192 results about how to "Improve memory utilization" patented technology

Distributed file system metadata management method facing to high-performance calculation

Active | CN103150394A | Tags: Inhibit migration, Load balancing, Transmission, Special data processing applications, Distributed File System, Metadata management

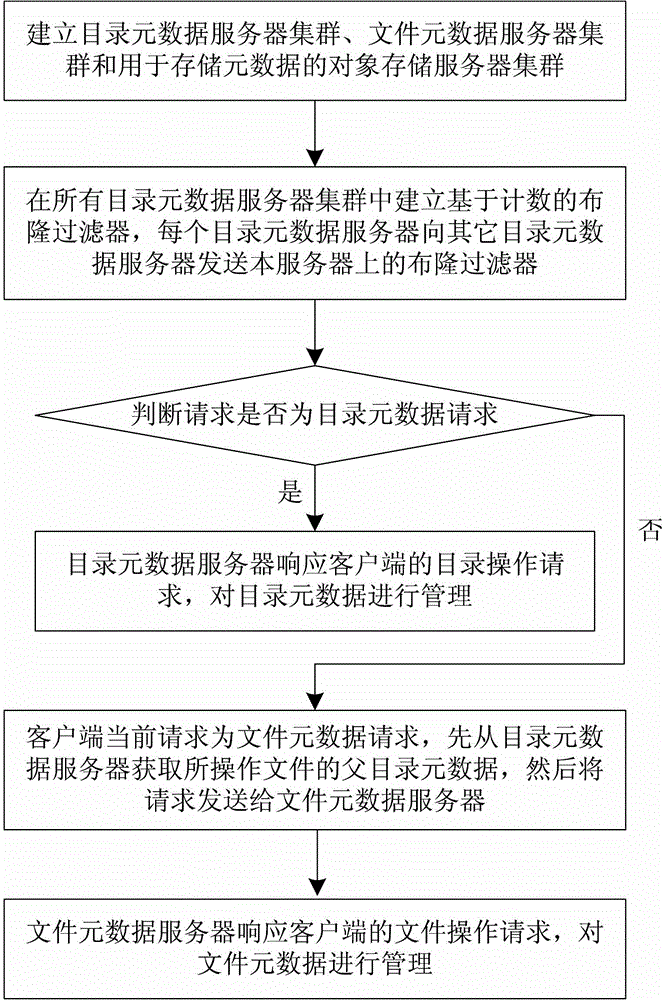

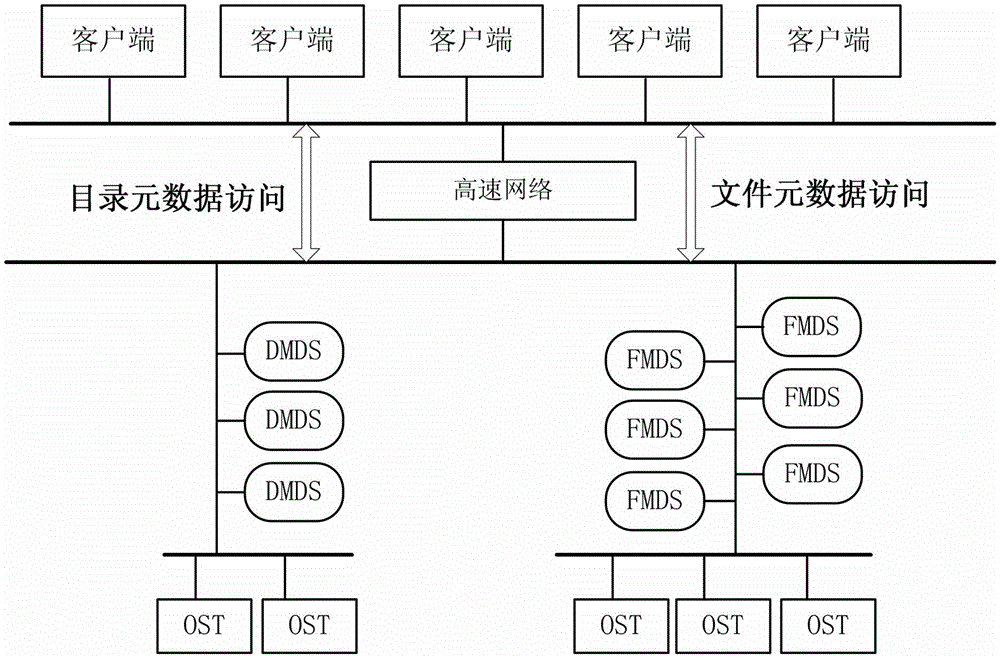

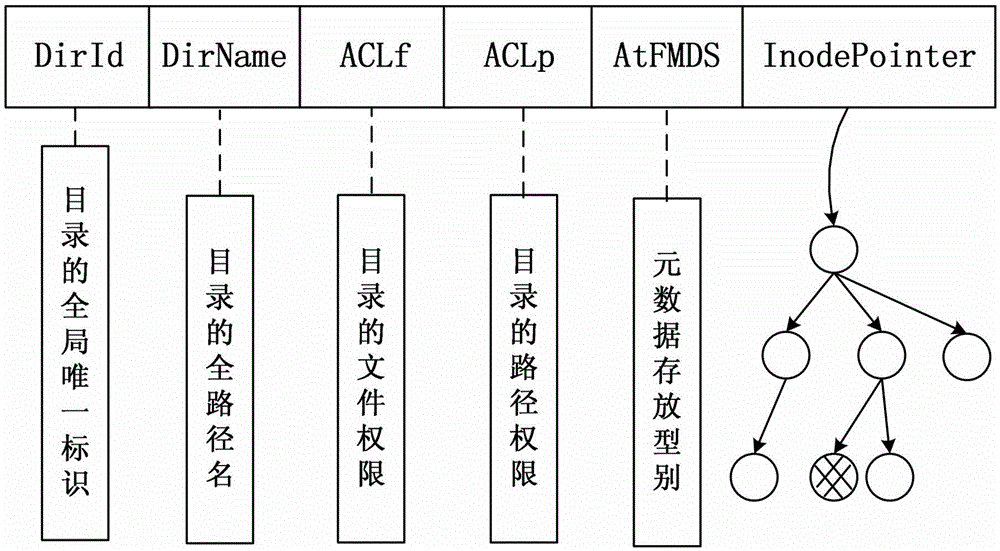

The invention discloses a metadata management method for distributed file systems oriented to high-performance computing. The method comprises the following steps: 1) establishing a directory metadata server cluster, a file metadata server cluster, and an object storage server cluster; 2) establishing a global counting Bloom filter in the directory metadata server cluster; 3) when an operation request from a client arrives, proceeding to step 4) or step 5) as appropriate; 4) the directory metadata server cluster responds to the client's directory operation requests and manages the directory metadata; and 5) the file metadata server cluster responds to the client's file operation requests and manages the file metadata. The method effectively solves the metadata migration problem caused by directory renaming and offers high storage performance, low maintenance overhead, high load capacity, no bottleneck, good scalability, and balanced load.

Owner: NAT UNIV OF DEFENSE TECH
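
The abstract relies on a global counting Bloom filter in the directory metadata cluster but gives no sizes or hash functions. The following is a minimal sketch of a counting Bloom filter in general, assuming a counter array of `size` slots and `num_hashes` positions taken from salted SHA-256 digests; the class and parameter names are illustrative, not from the patent. Counters (rather than single bits) are what allow an entry to be removed again, which would be needed if, say, a renamed directory's old path had to be dropped; the patent does not say exactly how the filter is used.

```python
import hashlib


class CountingBloomFilter:
    """Bloom filter whose slots are counters, so keys can also be removed."""

    def __init__(self, size: int = 1024, num_hashes: int = 4) -> None:
        self.size = size
        self.num_hashes = num_hashes
        self.counters = [0] * size

    def _positions(self, key: str) -> list[int]:
        # Derive num_hashes slot indices from salted SHA-256 digests of the key.
        return [
            int.from_bytes(hashlib.sha256(f"{i}:{key}".encode()).digest()[:8], "big") % self.size
            for i in range(self.num_hashes)
        ]

    def add(self, key: str) -> None:
        for pos in self._positions(key):
            self.counters[pos] += 1

    def remove(self, key: str) -> None:
        # Removing a key that was never added would corrupt the counters,
        # so refuse keys that are definitely absent.
        if key not in self:
            raise KeyError(key)
        for pos in self._positions(key):
            self.counters[pos] -= 1

    def __contains__(self, key: str) -> bool:
        # False positives are possible; false negatives are not.
        return all(self.counters[pos] > 0 for pos in self._positions(key))


if __name__ == "__main__":
    dirs = CountingBloomFilter()
    dirs.add("/home/alice/projects")
    print("/home/alice/projects" in dirs)  # True
    dirs.remove("/home/alice/projects")
    print("/home/alice/projects" in dirs)  # False
```

A hit can be a false positive, so it only tells the server that a path may exist and still has to be confirmed against the metadata itself.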

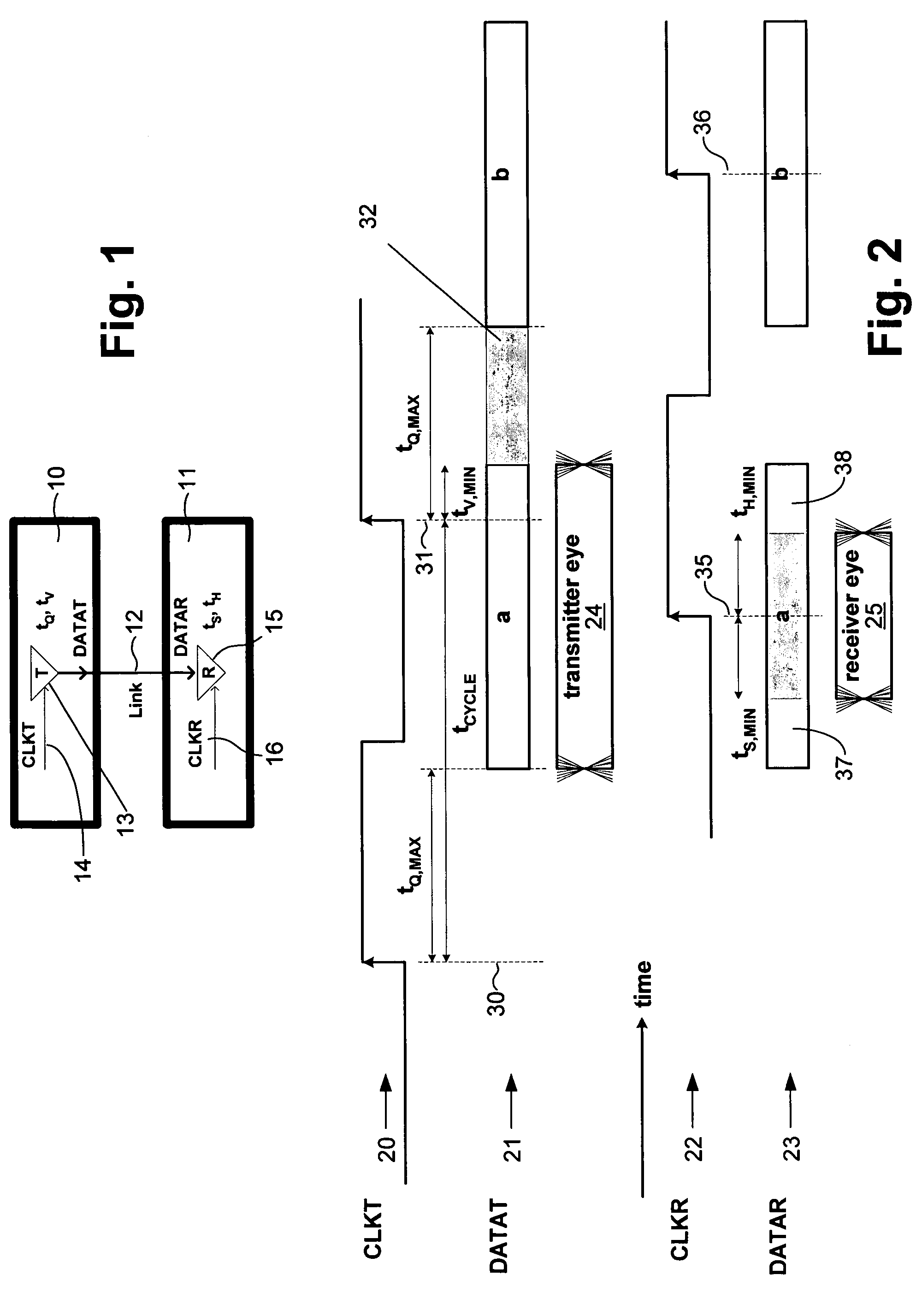

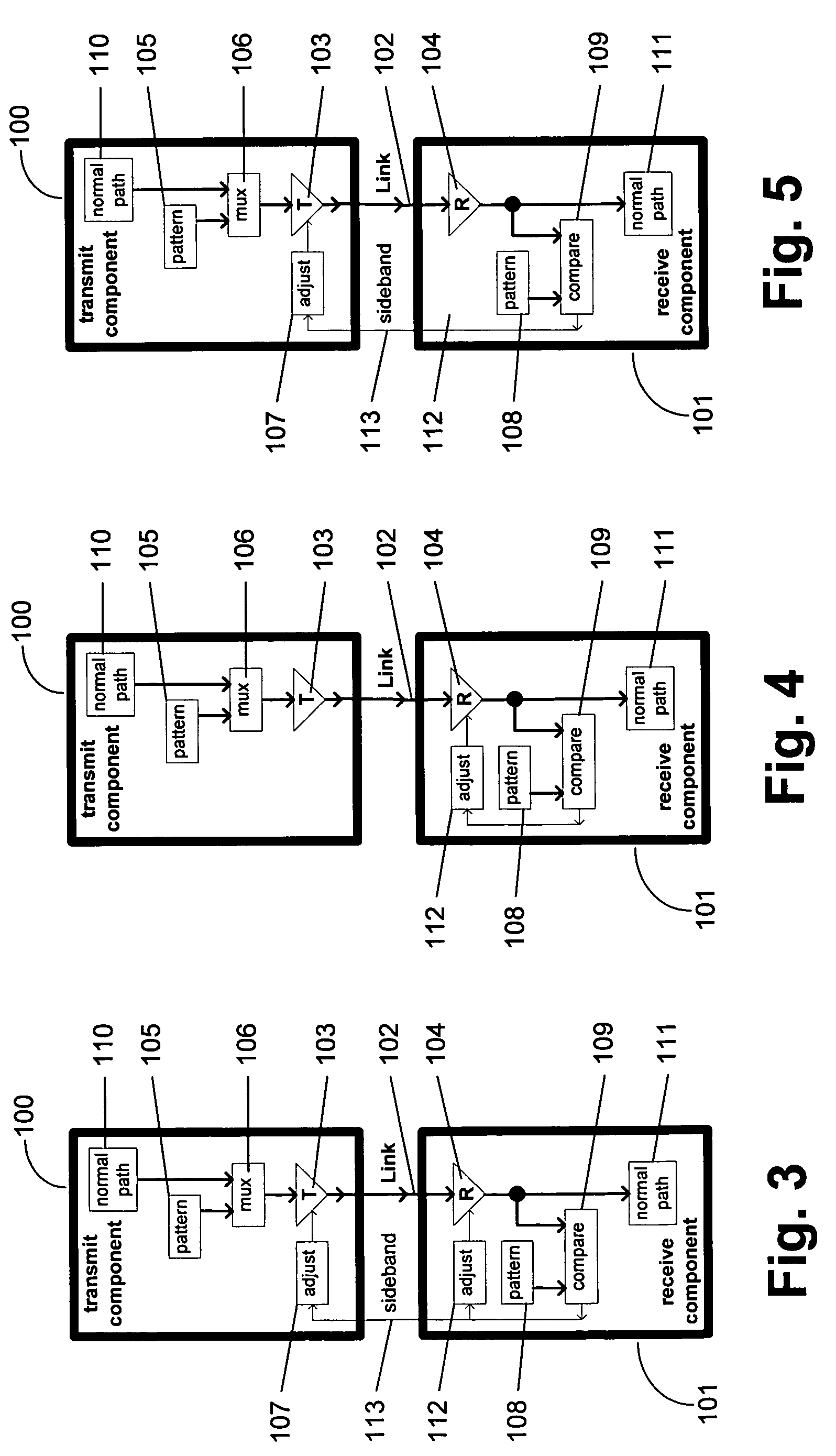

Communication channel calibration for drift conditions

Active | US7095789B2 | Tags: Improve memory utilization, Increase profit, Line impedance variation compensation, Correct operation testing, Telecommunications link, Data source

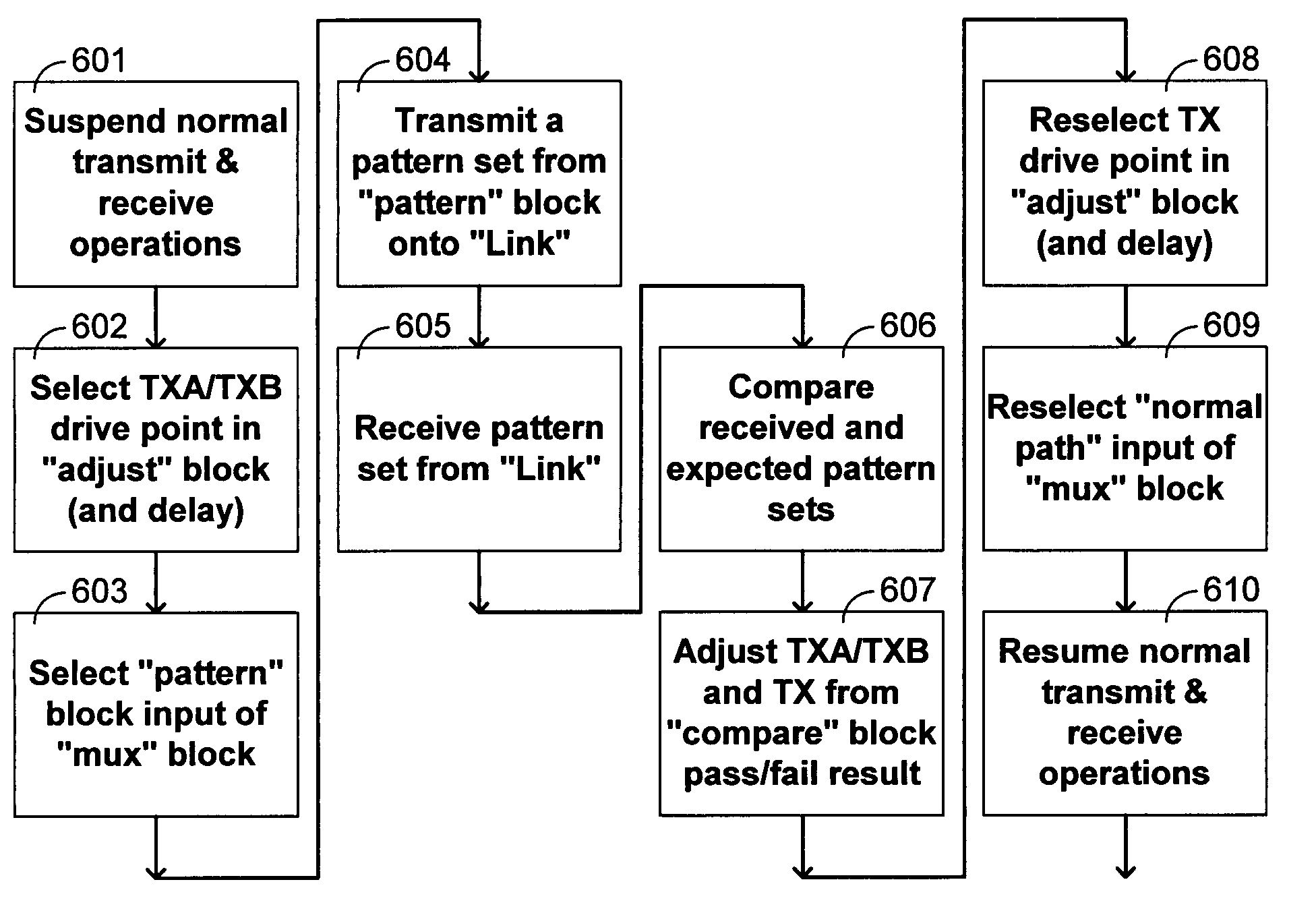

A method and system provide for the execution of calibration cycles from time to time during normal operation of a communication channel. A calibration cycle includes decoupling the normal data source from the transmitter and supplying a calibration pattern in its place. The calibration pattern is received from the communication link by the receiver on the second component, and a calibrated value of a parameter of the communication channel is determined from the received pattern. The steps of a calibration cycle can be reordered to account for the utilization patterns of the channel. For bidirectional links, the calibration cycles include storing the received calibration patterns on the second component and retransmitting them back to the first component, where they are used to adjust the channel's parameters at the first component.

Owner: K MIZRA LLC

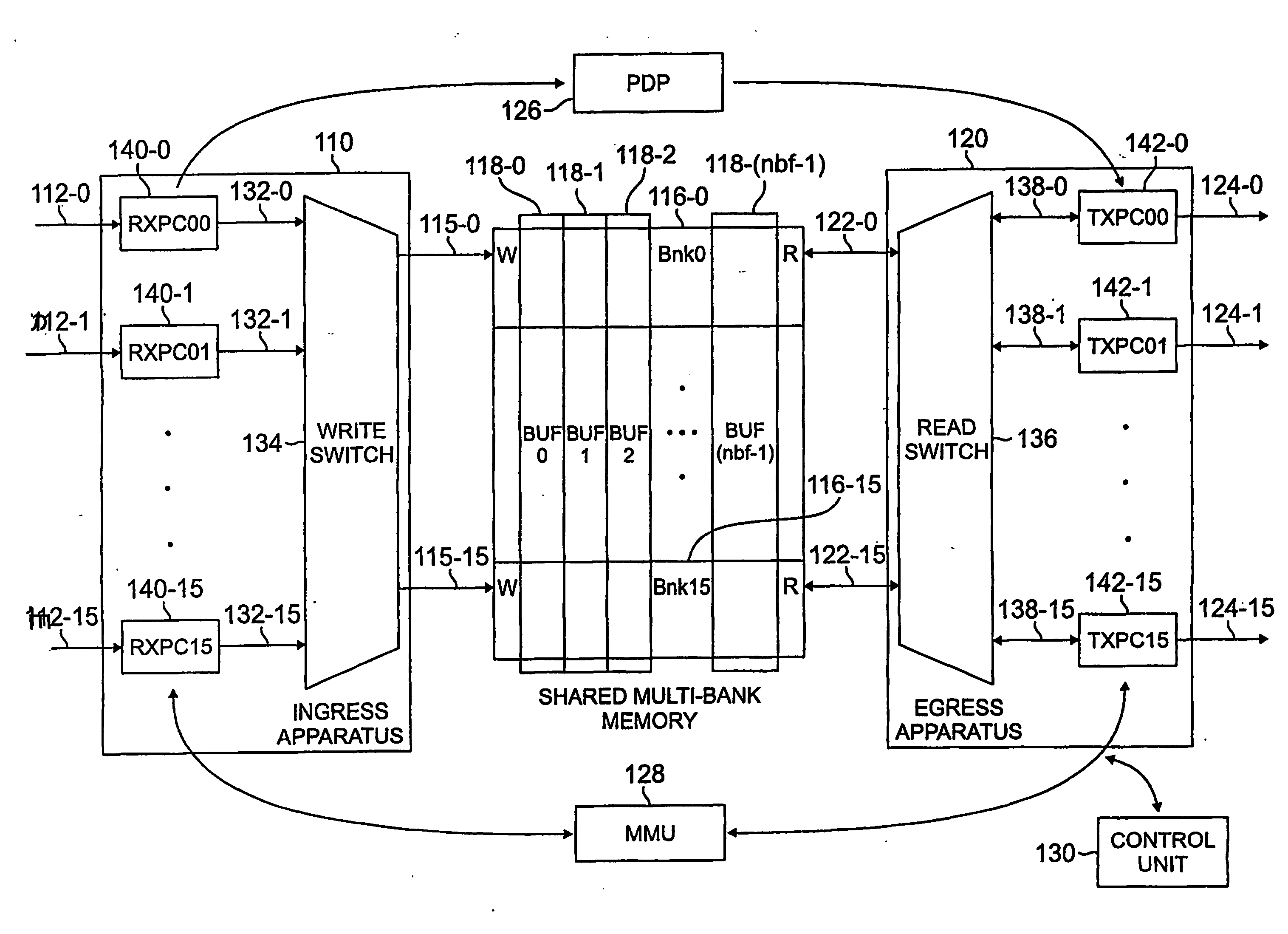

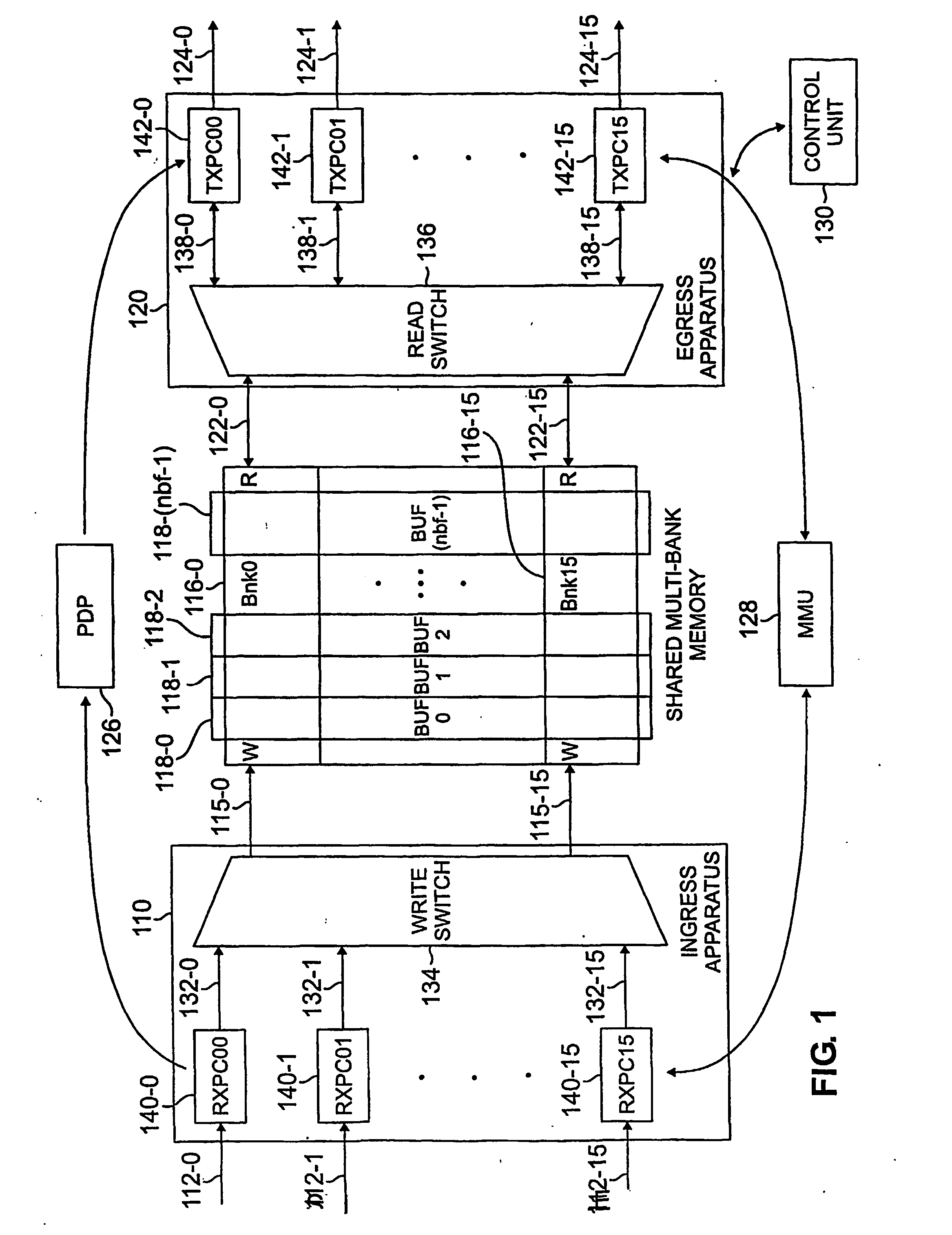

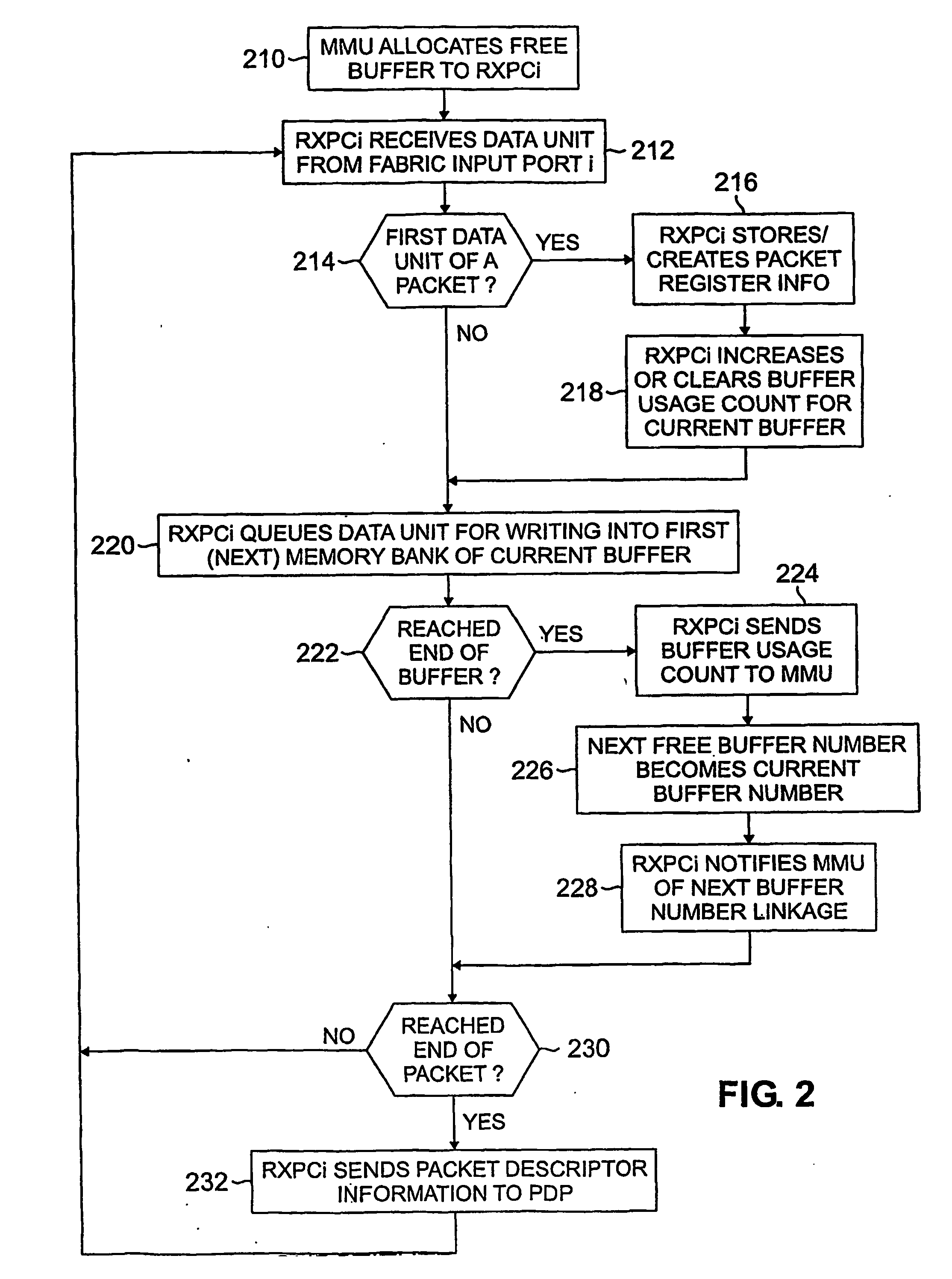

Method and apparatus for shared multi-bank memory in a packet switching system

Active | US20060221945A1 | Tags: Improve memory utilization, Multiplex system selection arrangements, Data switching by path configuration, Sequential data, Fast packet switching

A method and apparatus are disclosed that store sequential data units of a data packet received at an input port in contiguous banks of a buffer in a shared memory. Buffer memory utilization can be improved by storing multiple packets in a single buffer. For each buffer, a buffer usage count is stored that indicates the sum, over all packets represented in the buffer, of the number of output ports to which each packet is destined. The buffer usage count provides a mechanism for determining when a buffer is free, and it can also indicate the number of destination ports of a packet for a multicast operation. Buffers can comprise one or more groups, and each group can comprise a plurality of banks.

Owner: AVAGO TECH INT SALES PTE LTD
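
The buffer usage count described above can be sketched as follows. This is a minimal illustration, assuming that each output port reports once per packet copy it finishes sending; `SharedBuffer`, `store`, and `transmitted` are hypothetical names, and banks and groups are not modelled.

```python
from dataclasses import dataclass, field


@dataclass
class SharedBuffer:
    """One shared-memory buffer that may hold several packets."""

    packets: list[bytes] = field(default_factory=list)
    usage_count: int = 0  # sum of destination-port counts of the stored packets

    def store(self, packet: bytes, dest_ports: int) -> None:
        # A multicast packet adds one to the count per destination port.
        self.packets.append(packet)
        self.usage_count += dest_ports

    def transmitted(self) -> None:
        # Called each time an output port finishes sending one packet copy.
        if self.usage_count == 0:
            raise RuntimeError("buffer is already free")
        self.usage_count -= 1
        if self.usage_count == 0:
            self.packets.clear()  # every copy has left; the buffer can be reused

    @property
    def is_free(self) -> bool:
        return self.usage_count == 0
```

For example, a buffer holding one unicast packet and one packet multicast to three ports starts with a count of 4 and becomes free after the fourth transmission.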

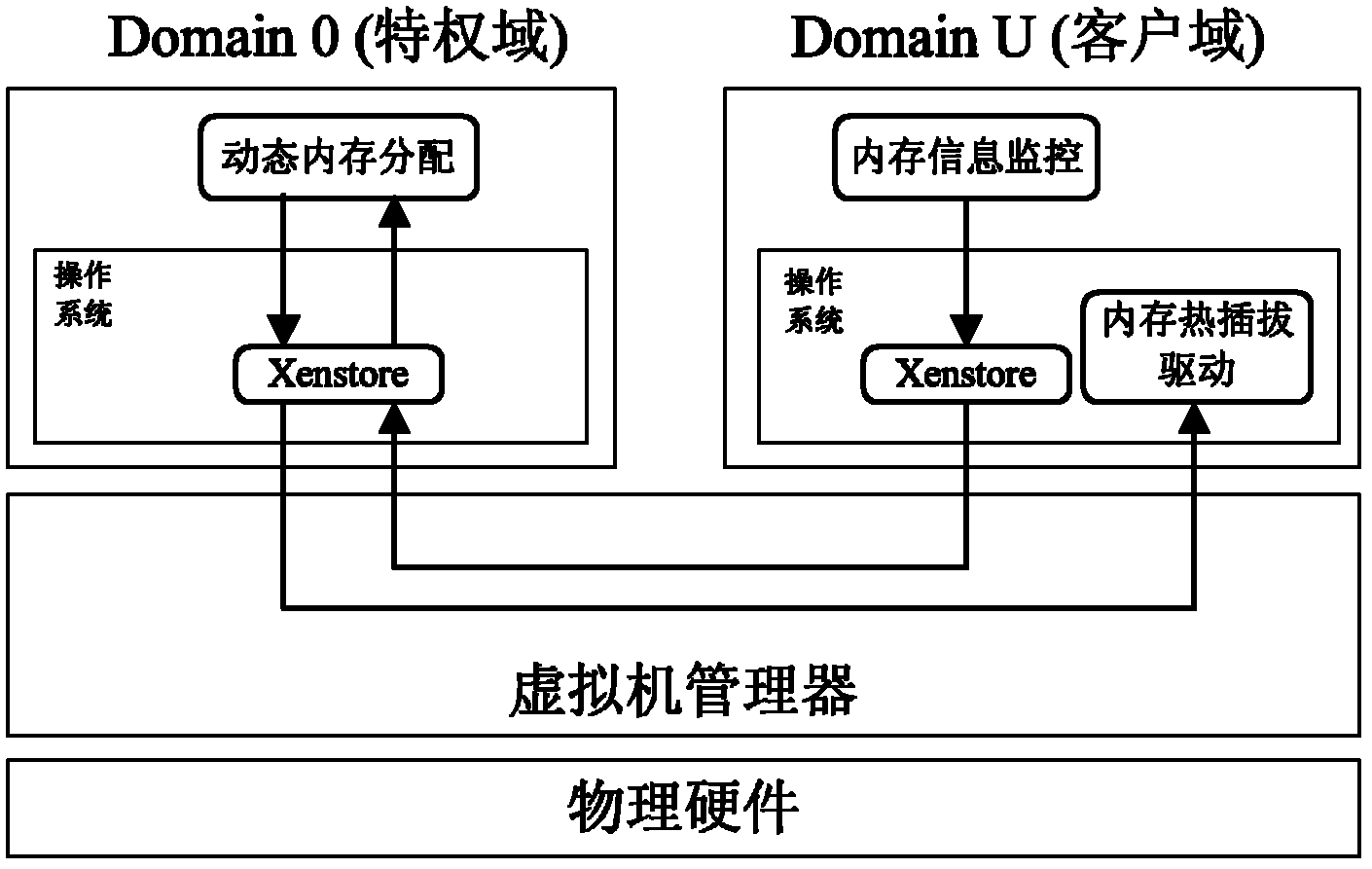

Dynamic memory management system based on memory hot plug for virtual machine

Inactive | CN102222014A | Tags: Memory pressure prediction, Memory pressure balance, Memory addressing/allocation/relocation, Software simulation/interpretation/emulation, Out of memory, GNU/Linux

The invention discloses a dynamic memory management system for virtual machines based on memory hot plug, comprising a memory monitoring module, a memory allocation module, and a memory hot-plug module. The memory hot-plug module uses the Linux memory hot-plug mechanism to implement memory hot plug on a paravirtualized Linux virtual machine, which lifts the virtual machine's initial memory ceiling and effectively improves its memory scalability by adding and removing memory at will. On the one hand, the memory allocation module dynamically predicts each virtual machine's memory demand and balances memory pressure across virtual machines, satisfying their memory requirements while improving the memory utilization of the physical machine. On the other hand, when the physical machine runs short of memory, the memory allocation module can create a new virtual machine by reasonably reducing the memory of existing virtual machines, thereby enabling memory overcommitment and further improving the memory utilization of the physical machine.

Owner: HUAZHONG UNIV OF SCI & TECH

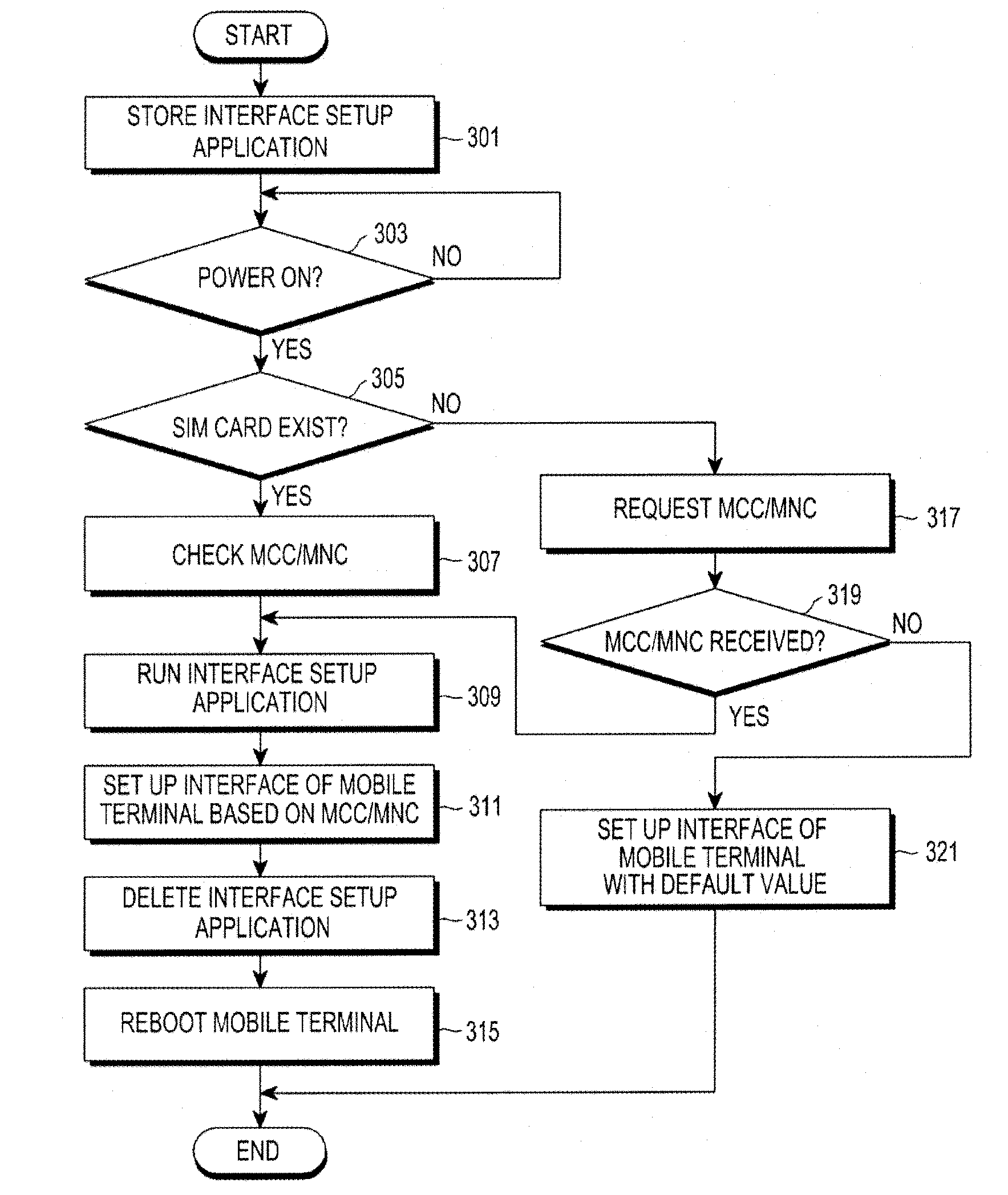

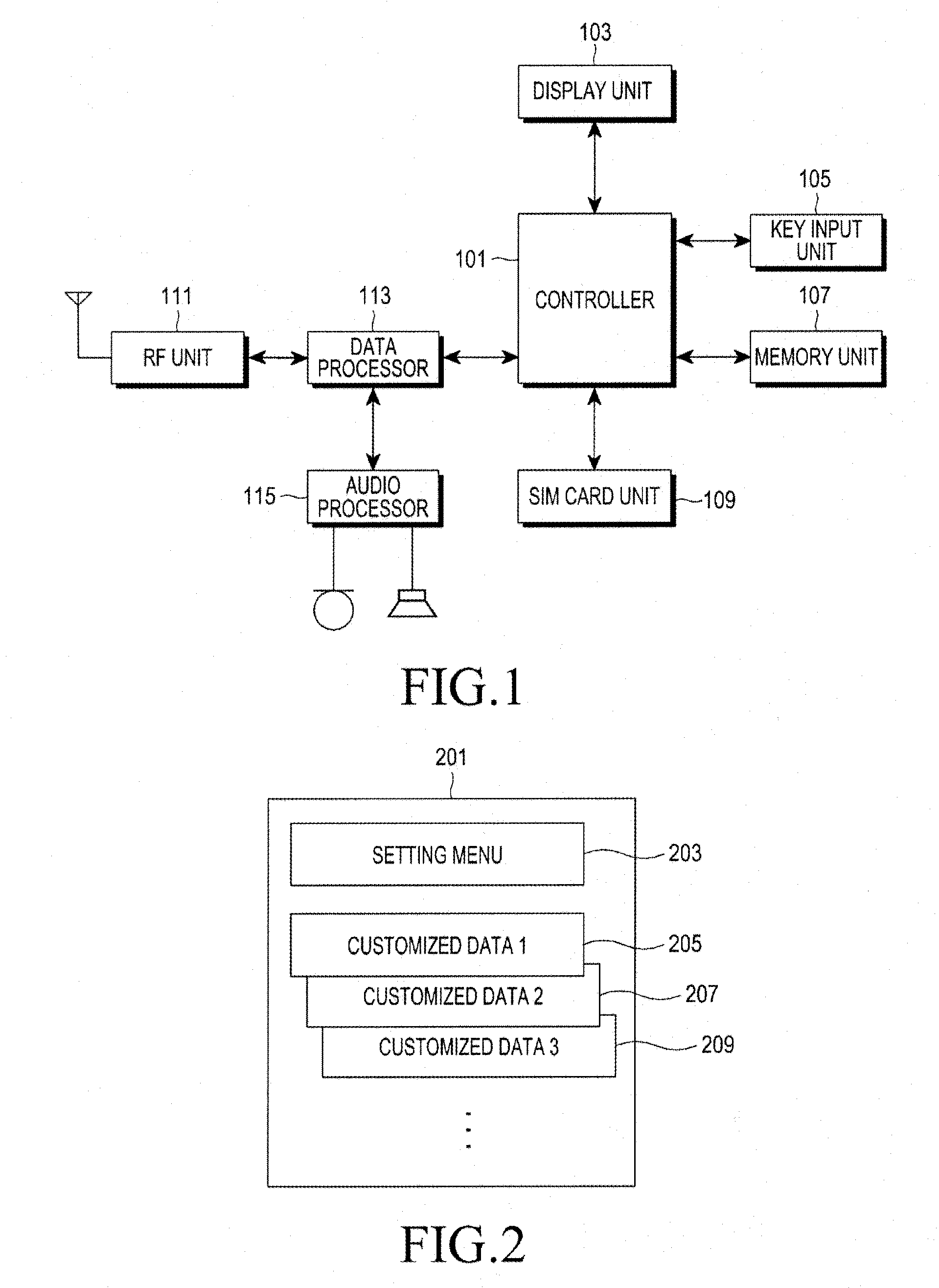

Apparatus and method for setting up an interface in a mobile terminal

Inactive | US20130184029A1 | Tags: Improve memory utilization, Substation equipment, Location information based service, Mobile network code, Subscriber identity module

An apparatus and method set up an interface in a mobile terminal. The method includes: storing an interface setup application that contains customized data specific to each country and operator; when the mobile terminal is powered on, determining whether a Subscriber Identity Module (SIM) card is inserted; if a SIM card is inserted, detecting the Mobile Country Code (MCC) and Mobile Network Code (MNC) stored on it; executing the interface setup application and searching the country- and operator-specific customized data for the entry that corresponds to the detected MCC and MNC; and installing an interface of the mobile terminal based on the customized data found.

Owner: SAMSUNG ELECTRONICS CO LTD

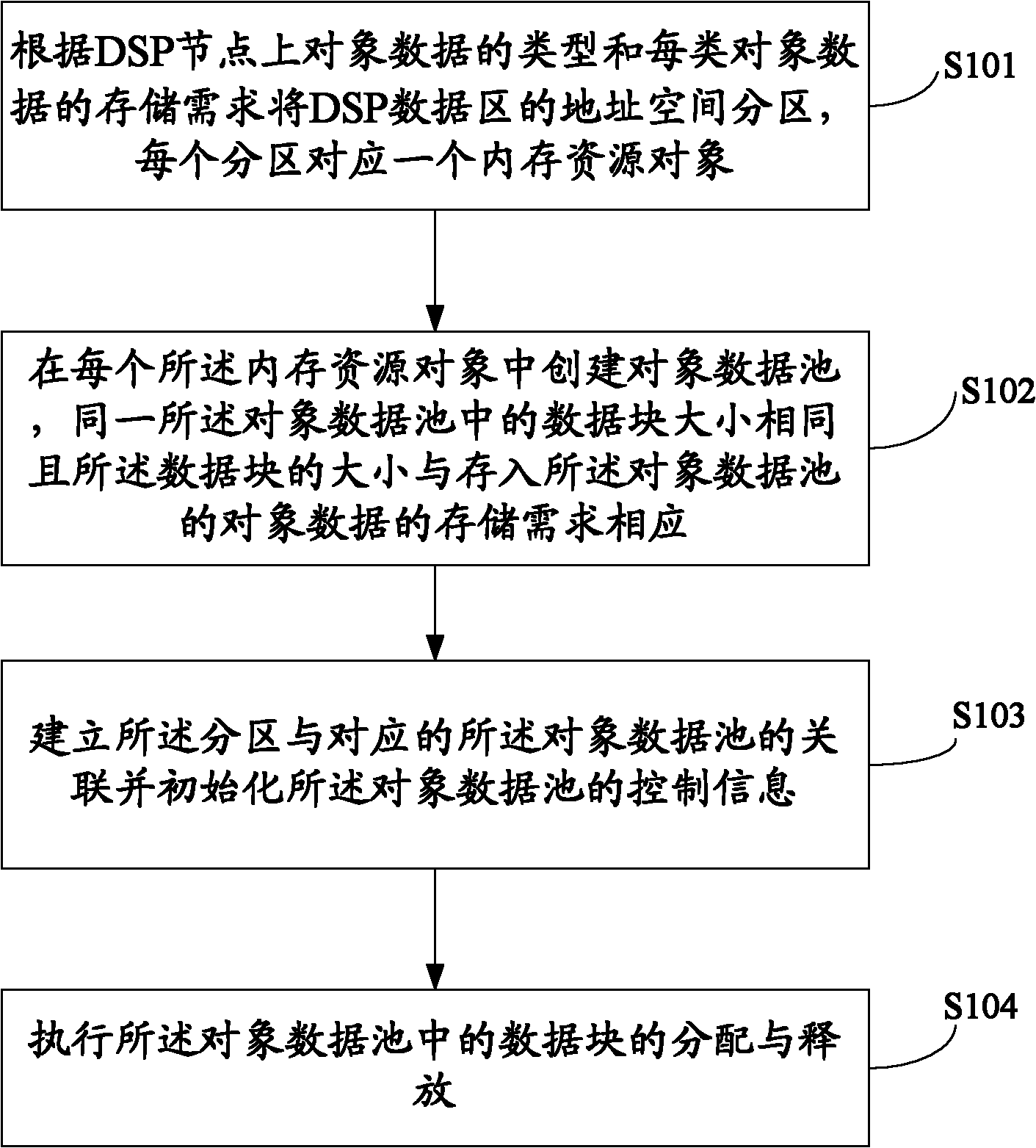

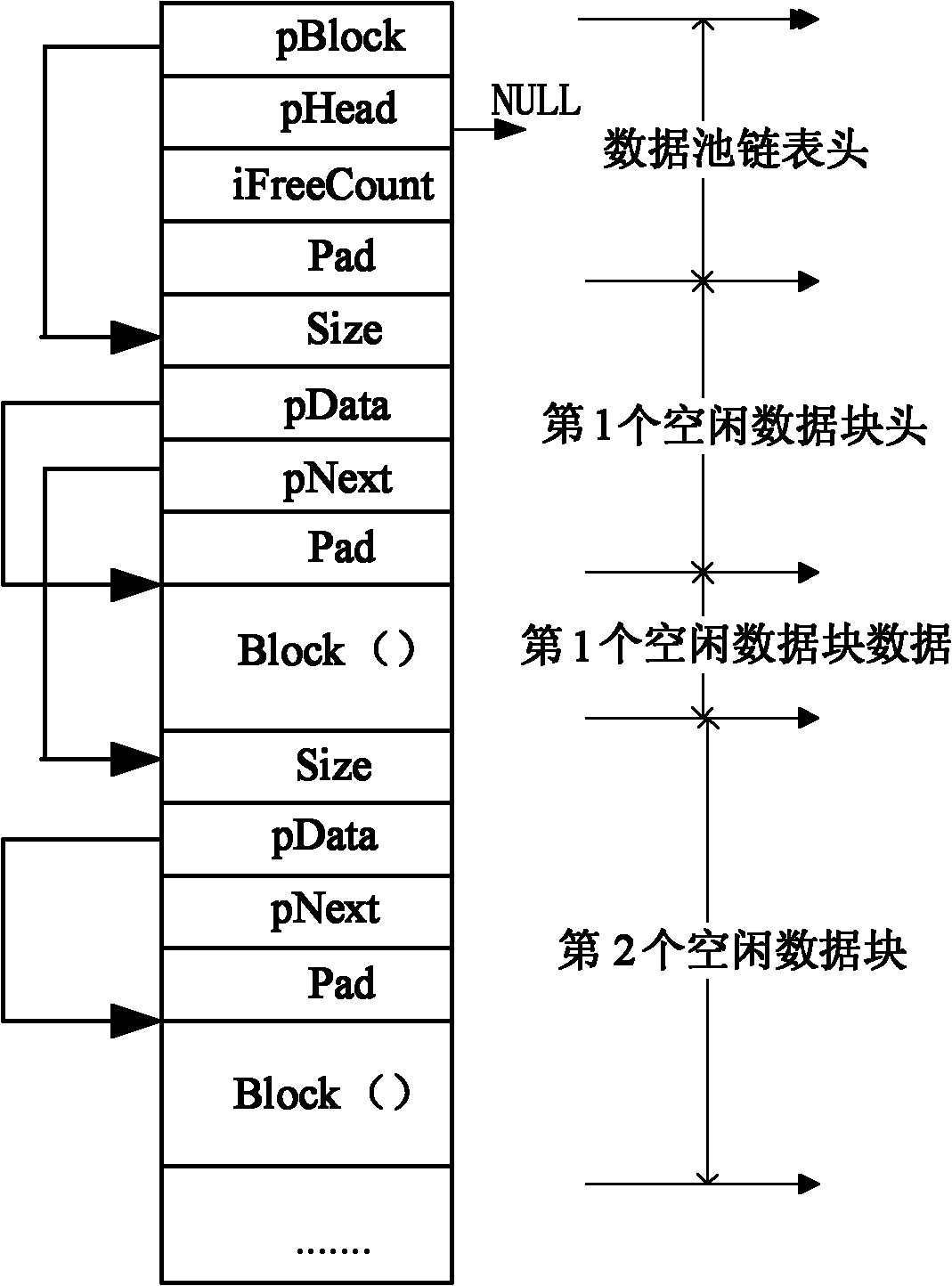

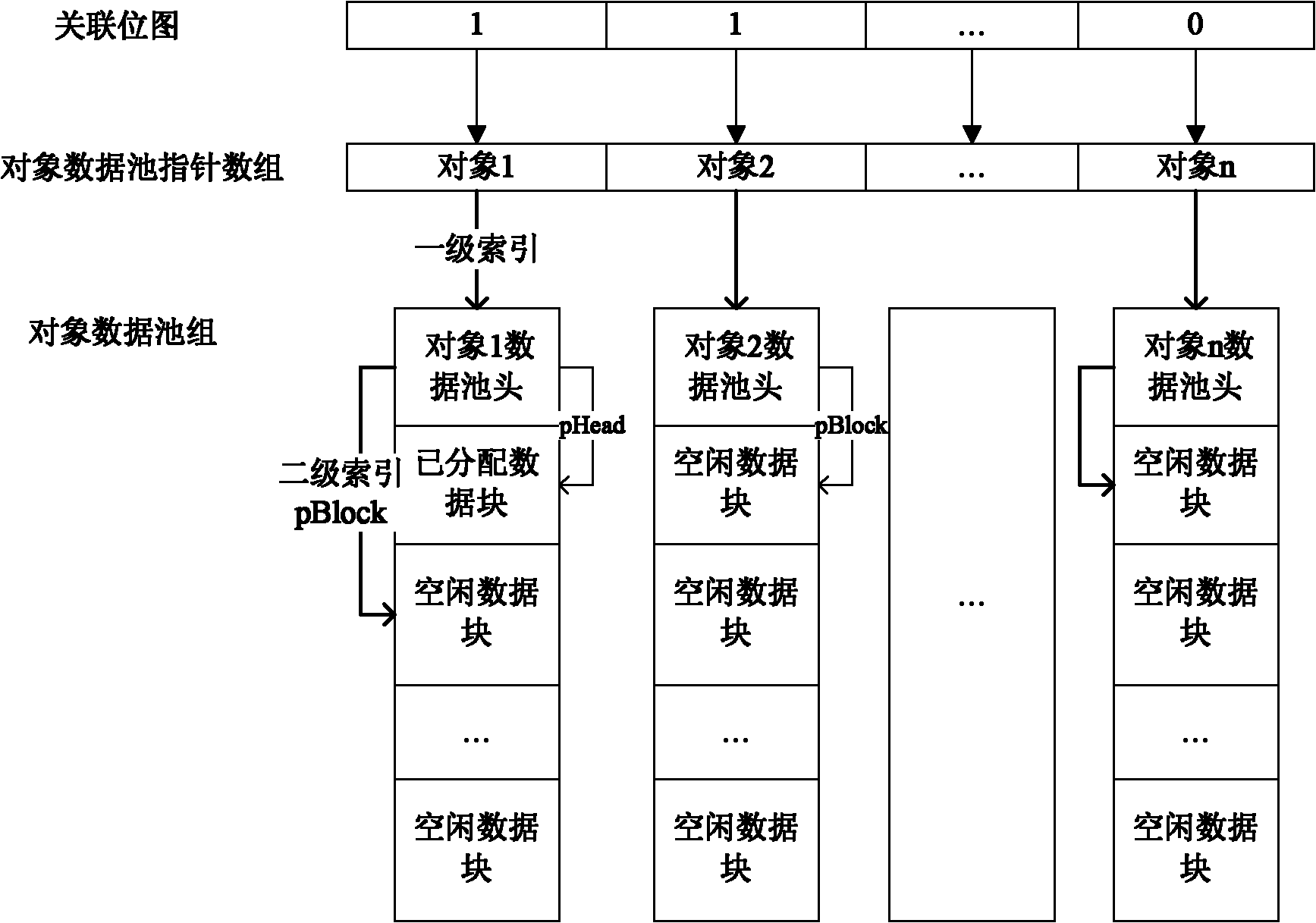

Dynamic management method of DSP data area

Active | CN101950273A | Tags: Improve memory utilization, Easy to create, Memory addressing/allocation/relocation, Dynamic management, Data storing

The invention discloses a dynamic management method for a DSP data area, comprising the following steps: partitioning the address space of the DSP data area according to the types of object data on a DSP node and the storage requirement of each type, with each partition corresponding to one memory resource object; building an object data pool in each memory resource object, where all data blocks in the same pool have the same size and that size matches the storage requirement of the object data stored in the pool; associating each partition with its object data pool and initializing the pool's control information; and allocating and releasing data blocks within the object data pools. The method dynamically manages the DSP memory data area according to the actual application, is efficient, and produces no memory fragmentation.

Owner: NAVAL UNIV OF ENG PLA
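
A minimal sketch of the partition-per-type scheme follows, assuming the data area is a flat range of addresses and that each object type's block size and block count are known up front. `ObjectPool`, `partition_data_area`, and the example type names are hypothetical. Because every block in a pool has the same size, a freed block can always satisfy the next request for that type, which is the usual reason such pools avoid external fragmentation.

```python
class ObjectPool:
    """Equal-size blocks carved out of one partition of the data area."""

    def __init__(self, base: int, block_size: int, block_count: int) -> None:
        self.base = base
        self.block_size = block_size
        self.limit = base + block_size * block_count
        # Free list of block start addresses; reversed so pop() returns the lowest first.
        self.free = [base + i * block_size for i in reversed(range(block_count))]

    def alloc(self) -> int:
        if not self.free:
            raise MemoryError("pool exhausted")
        return self.free.pop()

    def release(self, addr: int) -> None:
        if not (self.base <= addr < self.limit) or (addr - self.base) % self.block_size:
            raise ValueError(f"{addr:#x} is not a block of this pool")
        self.free.append(addr)


def partition_data_area(start: int, requirements: dict[str, tuple[int, int]]) -> dict[str, ObjectPool]:
    """Give each object type its own contiguous partition and pool.

    requirements maps a type name to (block size, number of blocks).
    """
    pools = {}
    addr = start
    for obj_type, (block_size, count) in requirements.items():
        pools[obj_type] = ObjectPool(addr, block_size, count)
        addr += block_size * count
    return pools
```

For instance, `partition_data_area(0x1000, {"task": (64, 4), "msg": (32, 8)})` places the `msg` pool right after the 256 bytes reserved for `task`, at `0x1100`.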

Multi-core DSP starting method

Active | CN107656773A | Tags: Increase flexibility, Improve memory utilization, Program loading/initiating, Register allocation, Processor register

The invention discloses a method for booting a multi-core DSP. The method comprises: S1, providing a ROM region in the multi-core DSP chip, loading a pre-built boot loader into the ROM region, and using a boot mode register to configure which peripheral is used during chip boot and which boot mode that peripheral uses, where the boot loader supports multiple boot modes of multiple peripherals for booting the multi-core DSP chip; and S2, after the chip is reset, the main core executes the boot loader, reads the boot mode register to obtain the target peripheral and target boot mode currently required, and loads the user program; once loading is complete, the other cores are triggered by interrupt to jump to specified addresses and begin executing their respective user programs. The method supports booting a multi-core DSP from multiple peripherals in multiple modes, with high memory utilization and efficiency, a wide range of applications, and good flexibility.

Owner: NAT UNIV OF DEFENSE TECH

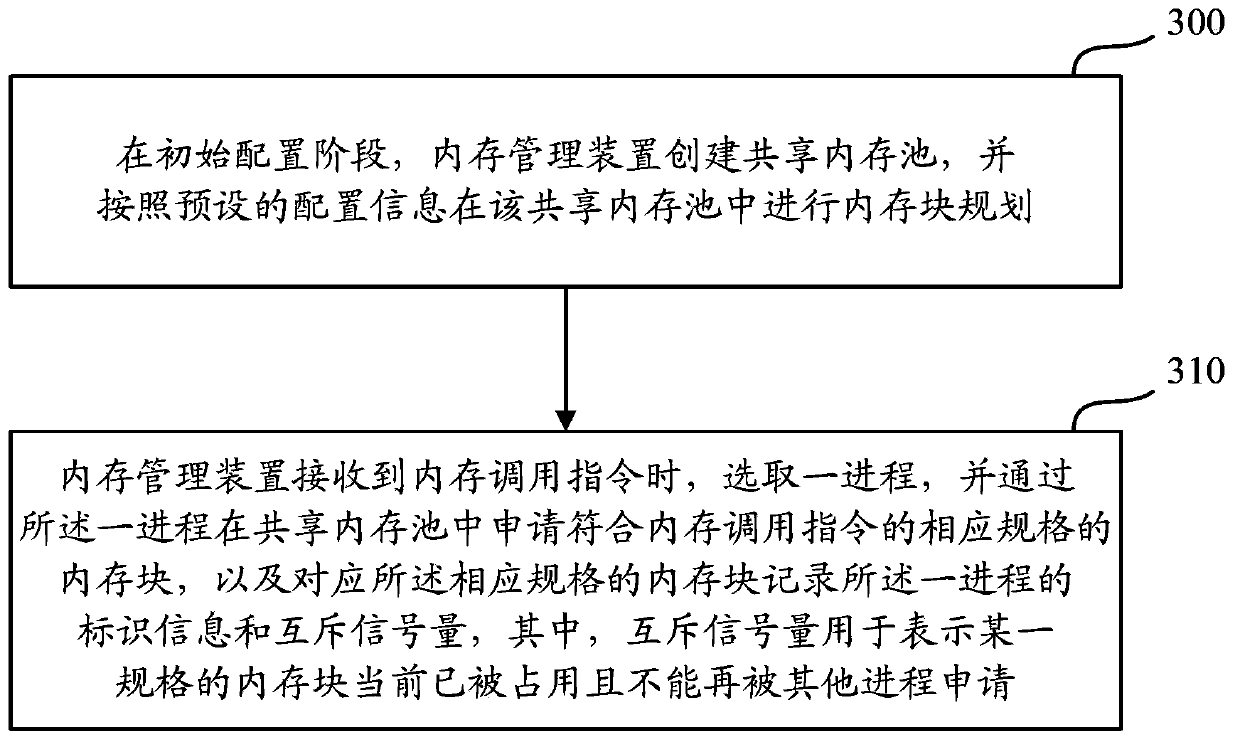

Memory management method and device for multiprocess system

Active | CN103425592A | Tags: Easy to manage, Avoid it happening again, Memory addressing/allocation/relocation, Specific program execution arrangements, Operational system, Out of memory

The invention discloses a memory management method and device for a multi-process system, intended to optimize the system's memory management and to improve both memory utilization and the success rate of memory allocation. In the method, the memory management device plans memory blocks centrally through a shared memory pool. Requests for and releases of memory blocks in the pool by multiple processes are distinguished by each process's identification information and a mutex semaphore, so that multiple processes can access memory blocks of the same size class without interfering with one another. This optimizes memory management, avoids the resource cost of repeatedly requesting memory from the operating system when memory runs short, prevents large amounts of memory fragmentation in the operating system, and effectively improves the system's memory utilization and allocation success rate.

Owner: DATANG MOBILE COMM EQUIP CO LTD
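
The following sketch shows the shape of a shared block pool in which each block is tagged with the ID of the process that requested it and a lock serializes requests and releases. It is illustrative only: threads and integer IDs stand in for processes, `threading.Lock` stands in for the mutex semaphore, and `SharedBlockPool` with its methods are hypothetical names. A real multi-process version would keep the pool and its lock in shared memory.

```python
from __future__ import annotations

import threading


class SharedBlockPool:
    """Equal-size blocks shared by several clients.

    Each block records the ID of the client that holds it, and one lock
    serializes every request and release.
    """

    def __init__(self, block_size: int, block_count: int) -> None:
        self.block_size = block_size
        self.owner: list[int | None] = [None] * block_count
        self._lock = threading.Lock()

    def alloc(self, pid: int) -> int | None:
        """Return the index of a free block, or None if the pool is full."""
        with self._lock:
            for idx, holder in enumerate(self.owner):
                if holder is None:
                    self.owner[idx] = pid
                    return idx
            return None

    def free(self, pid: int, idx: int) -> None:
        with self._lock:
            # A client may only release blocks it holds itself.
            if self.owner[idx] != pid:
                raise PermissionError(f"block {idx} is not held by client {pid}")
            self.owner[idx] = None
```

Returning `None` on exhaustion leaves the fallback (for example, asking the operating system for more memory) to the caller.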

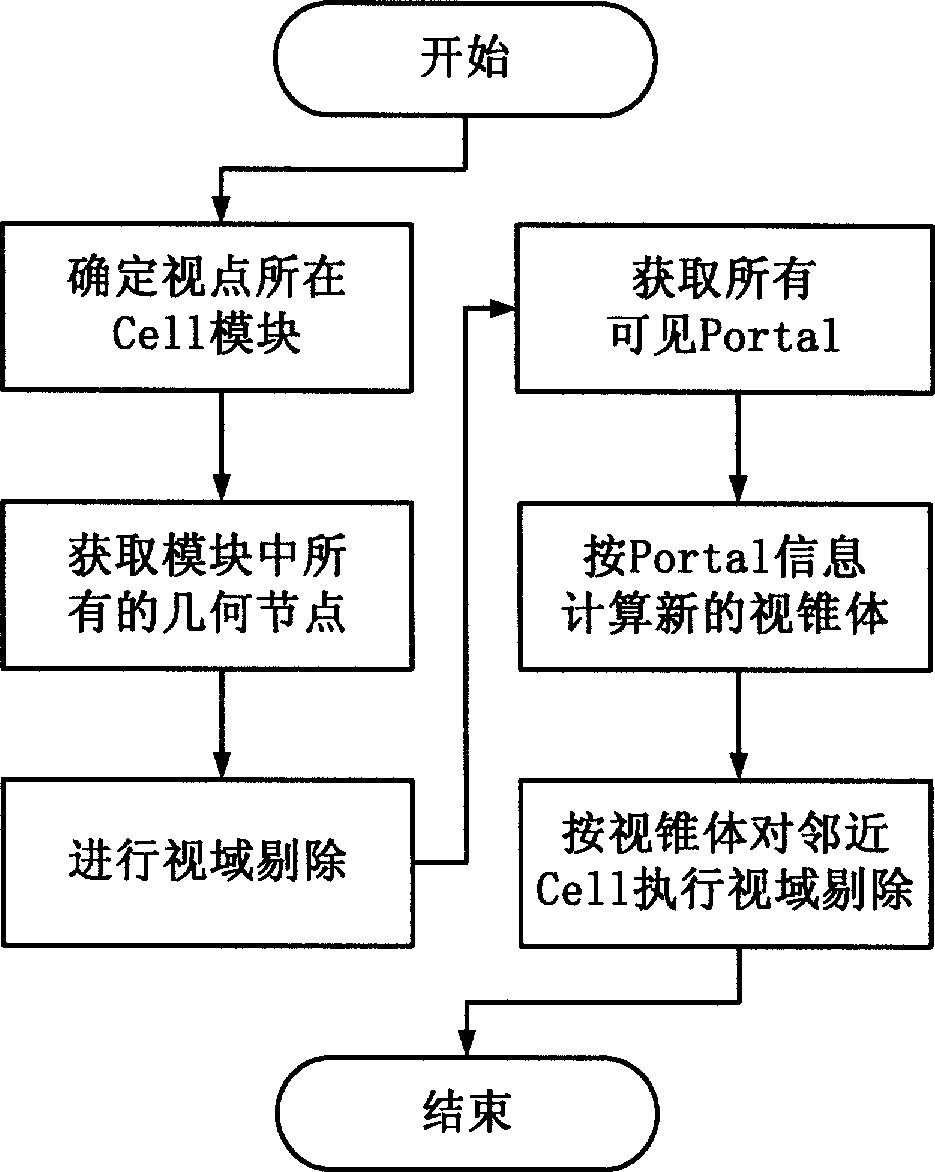

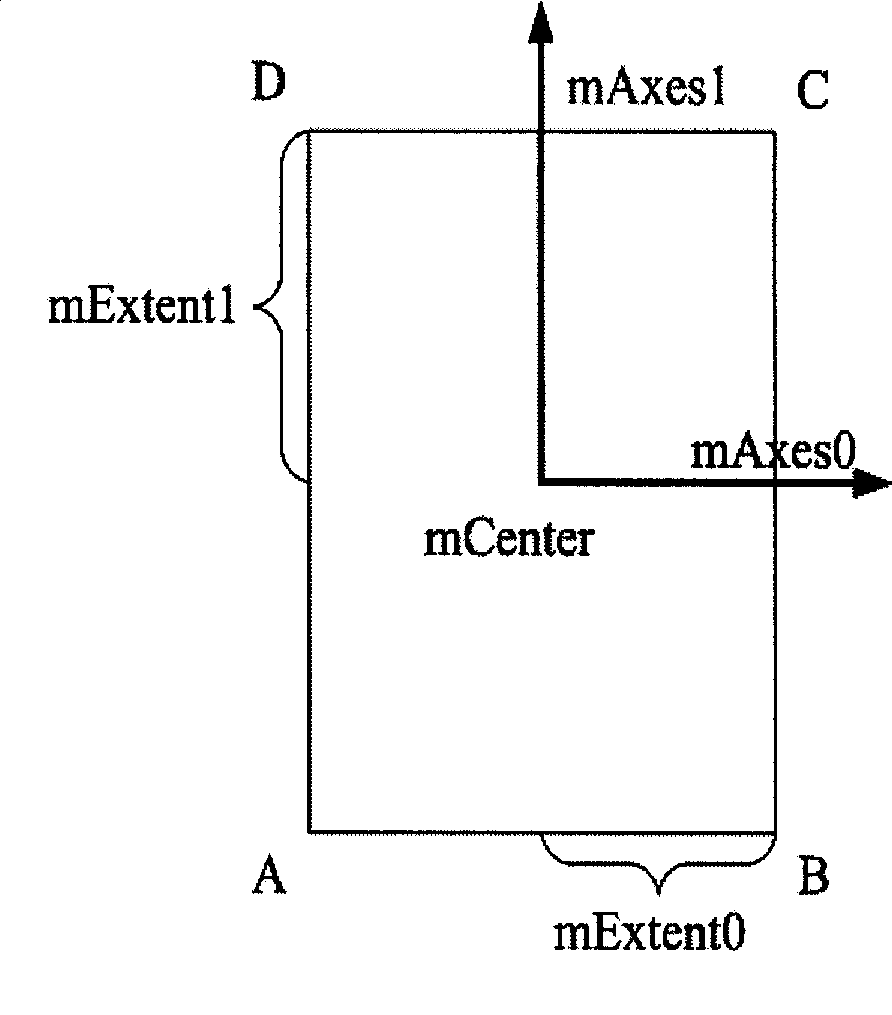

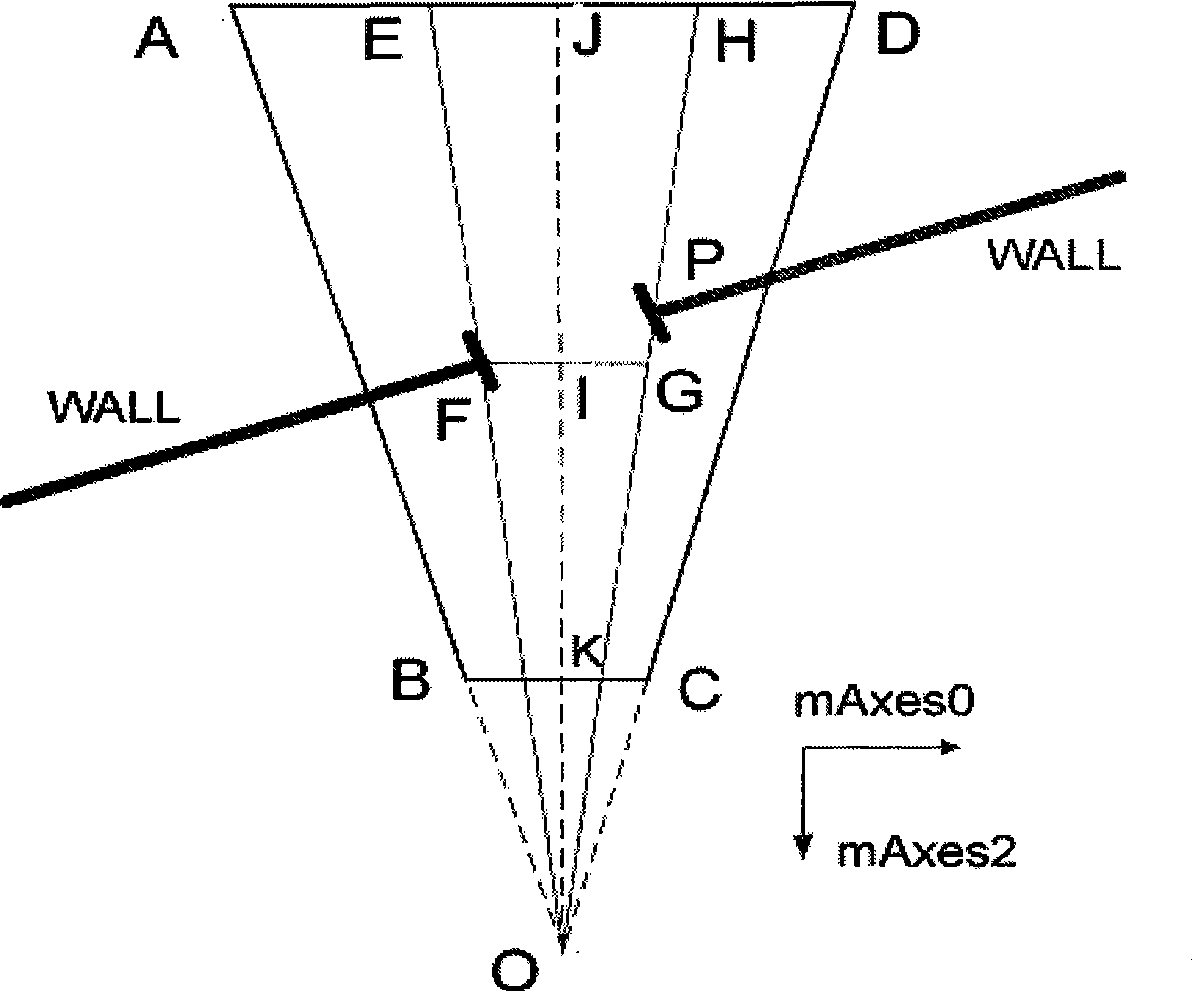

Complex indoor scene rapid drafting method based on view rejection

Inactive | CN101419721A | Tags: Improve efficiency, Improve memory utilization, 3D-image rendering, Computer vision, Viewing frustum

The invention relates to a fast rendering method for complex indoor scenes based on view frustum culling. The method comprises the following steps: first, dividing the whole scene into n areas, with adjacent areas connected by portals; then determining the position of the viewpoint and performing intersection tests between all geometric objects in the current area and the view frustum to complete view frustum culling; and finally, determining which portals lie within the visible range, computing a new view frustum through each of them, performing view frustum culling again with the new frustum, and repeating these steps recursively to obtain the nodes that need to be rendered. Experimental results show that the indoor scene management method greatly improves rendering efficiency when the indoor occlusion rate is high.

Owner: SHANGHAI UNIV

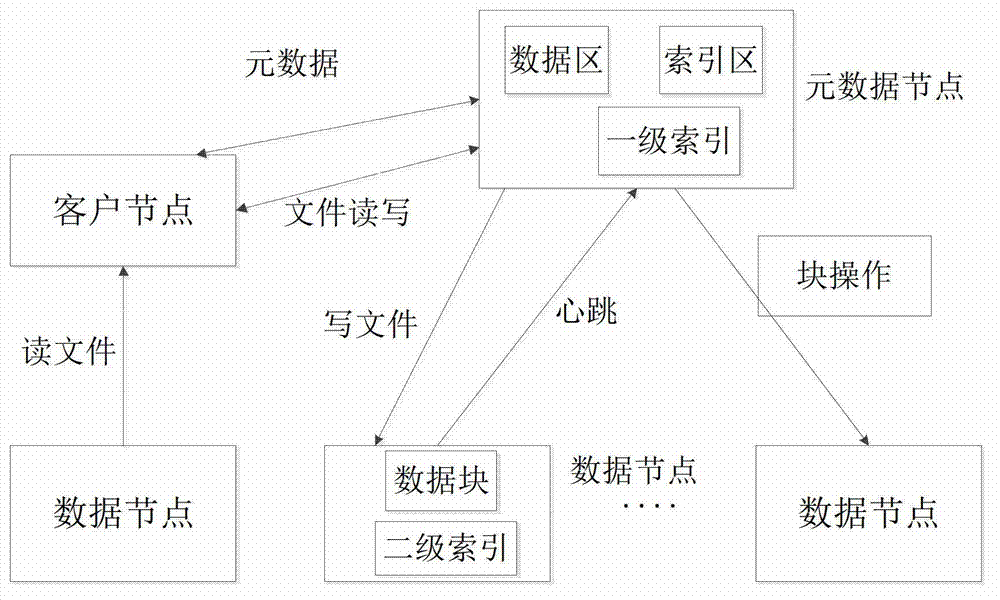

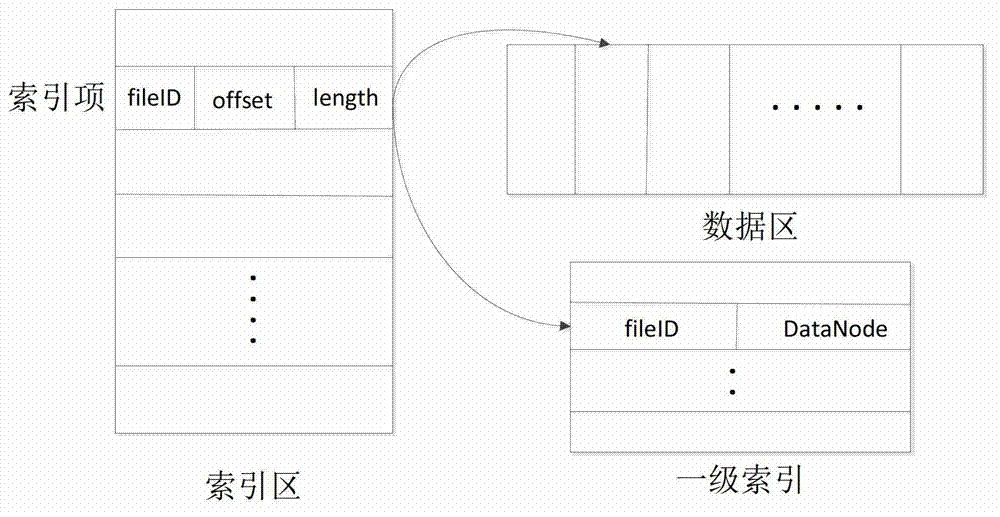

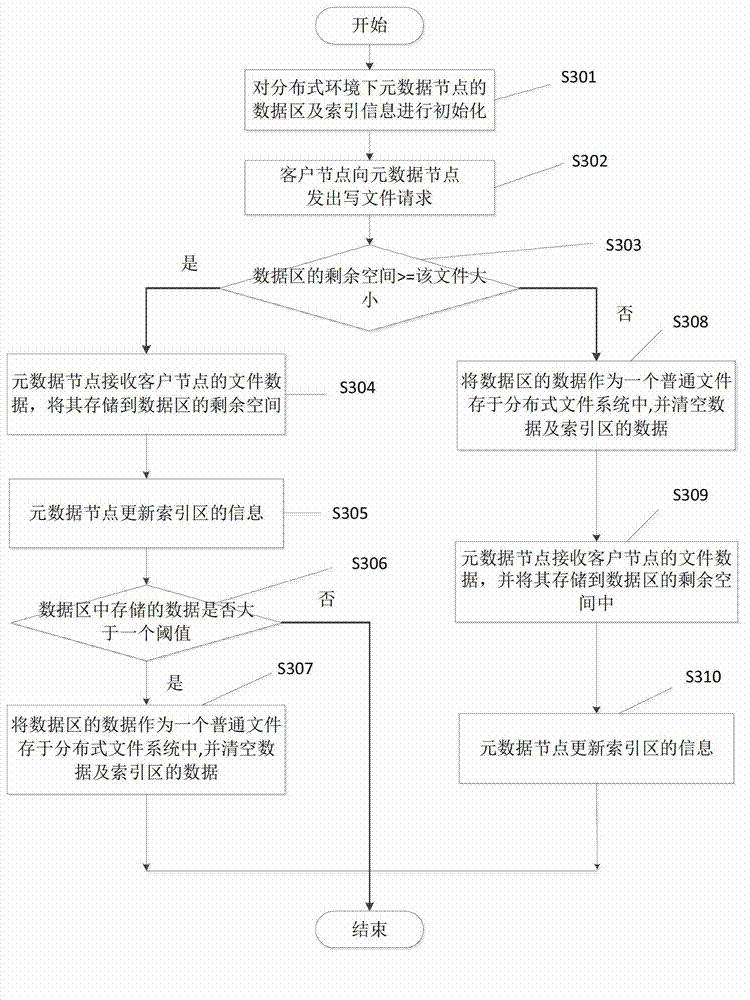

File quick reading and writing method under distributed environment

Inactive | CN103092927A | Tags: Save memory, Reduce memory usage, Special data processing applications, Distributed File System, Data node

The invention discloses a method for fast file reading and writing in a distributed environment. A client node sends a file read request to the metadata node and checks whether it is still connected to the data node that served its previous file read in the distributed file system. If it is not, the metadata node uses information in the index region to check whether the file exists in the file's data region; if it does not, the metadata node uses primary index information to find the data node that stores the file, and a connection is established between the client node and that data node. The data node then uses secondary index information to locate the data block containing the file and retrieve the file, and transmits the file to the client node, which receives the data and keeps its connection to the data node. The method addresses two problems in the prior art: the large amount of memory occupied by the metadata node and the low efficiency of writing large numbers of files.

Owner: HUAZHONG UNIV OF SCI & TECH

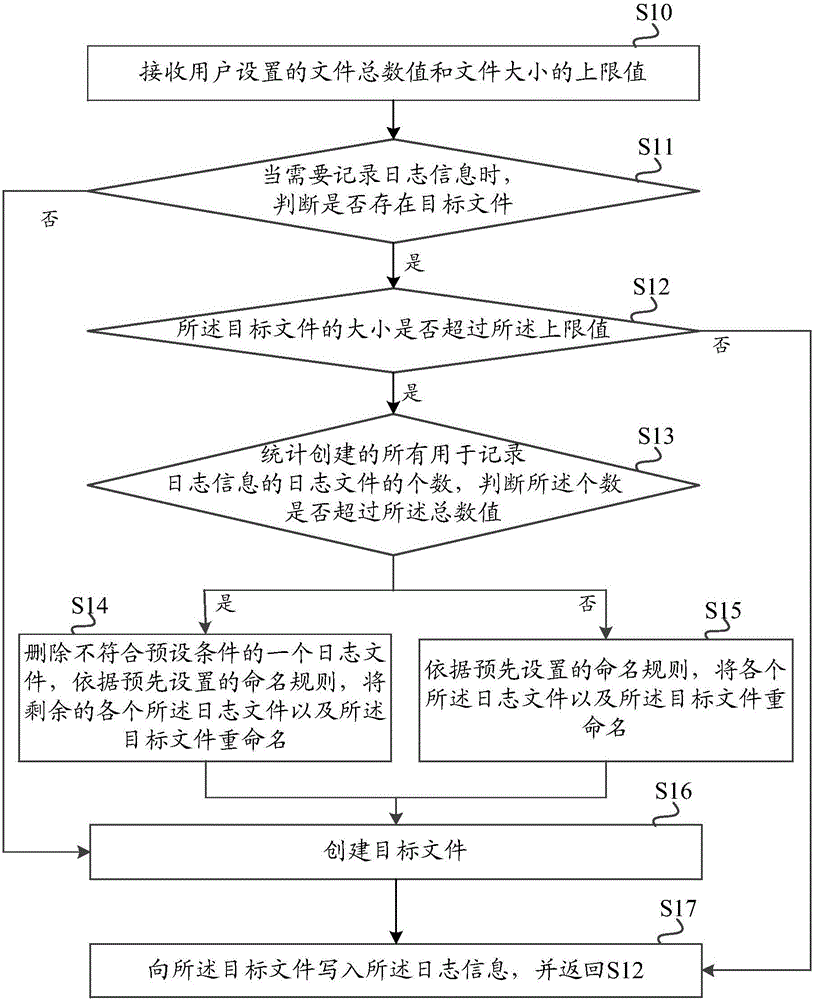

Embedded system log recording method and device

Inactive | CN107526674A | Tags: Long recording time, Improve memory utilization, Resource allocation, Hardware monitoring, Recording duration, File size

The embodiment of the invention discloses a log recording method and device for embedded systems. The method receives a total number of files and an upper limit on file size set by the user. Generated log information is stored in a target file, which is created first if it does not exist. When the size of the target file exceeds the upper limit, the target file is renamed, and a new target file with the original name is created to record newly generated log information. When the number of log files created exceeds the total number, a log file that does not meet a preset condition is deleted, all remaining log files and the target file are renamed according to preset naming rules, and the target file is created again. Logs are thus recorded in a rolling manner: once the recorded log volume reaches the limit, new logs overwrite the oldest ones, which increases memory utilization and extends the log recording period.

Owner: ZHENGZHOU YUNHAI INFORMATION TECH CO LTD
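
Python's standard library offers `logging.handlers.RotatingFileHandler`, which implements a close relative of this rolling scheme: `maxBytes` bounds each file, and `backupCount` sets how many renamed older files (`system.log.1`, `system.log.2`, and so on) are kept before the oldest is overwritten. This is a swapped-in standard approach, not the patent's own naming rules or deletion condition; the helper name `make_rolling_logger`, the file name, and the sizes are illustrative.

```python
import logging
import logging.handlers


def make_rolling_logger(path: str, max_bytes: int, total_files: int) -> logging.Logger:
    """Return a logger that keeps at most total_files files of about max_bytes each.

    When the current file would reach max_bytes it is renamed to path.1,
    older files shift up by one, and the file beyond total_files - 1 backups
    is discarded, so new logs effectively replace the oldest ones.
    """
    if total_files < 2:
        raise ValueError("need at least one backup file for rollover to happen")
    handler = logging.handlers.RotatingFileHandler(
        path, maxBytes=max_bytes, backupCount=total_files - 1
    )
    handler.setFormatter(logging.Formatter("%(message)s"))
    logger = logging.getLogger(f"rolling:{path}")
    logger.setLevel(logging.INFO)
    logger.addHandler(handler)
    logger.propagate = False  # keep these records out of the root logger
    return logger
```

With `max_bytes=200` and `total_files=3`, writing 100 ten-byte messages leaves only `system.log`, `system.log.1`, and `system.log.2` on disk, the current file holding the newest entries.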

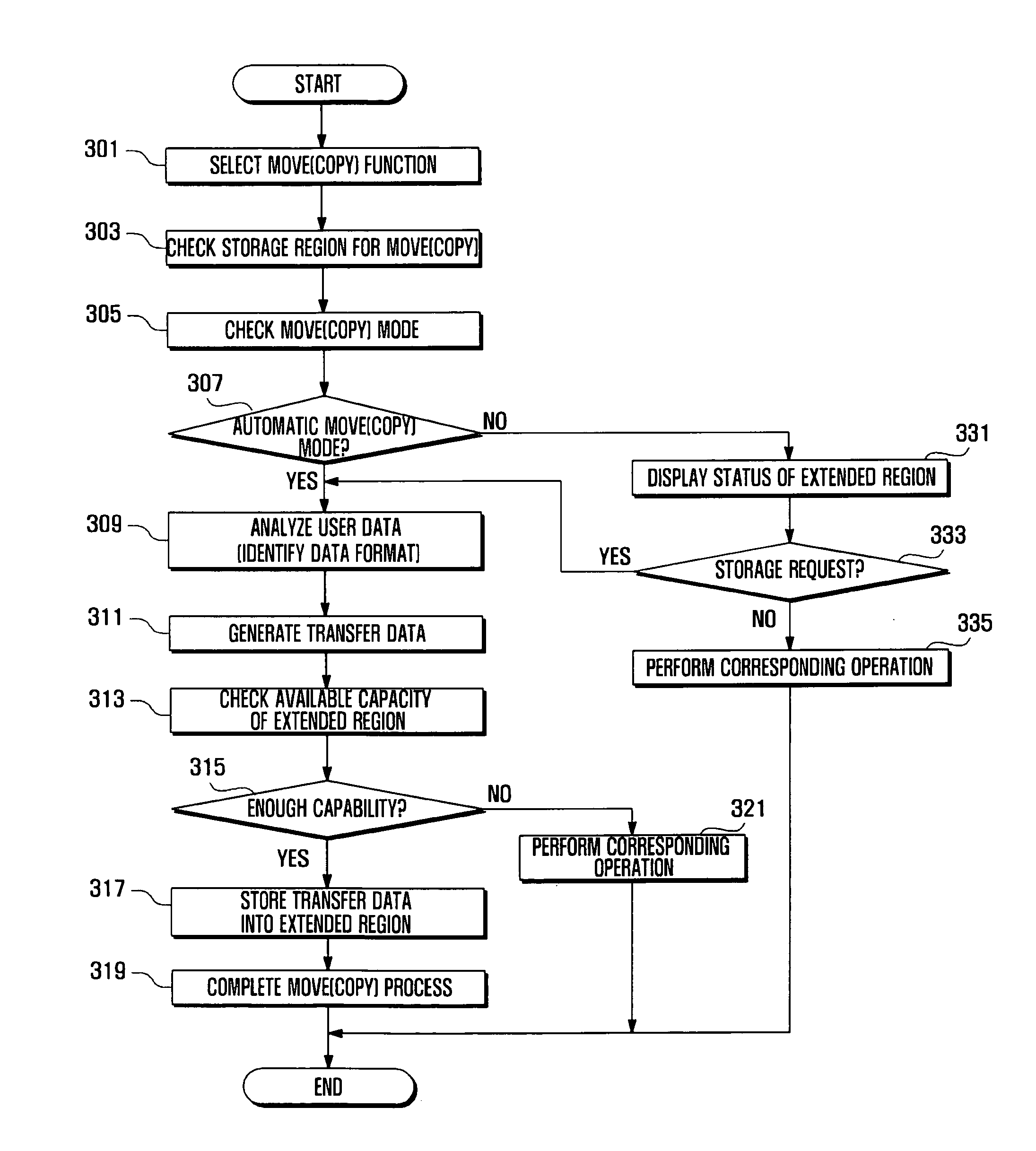

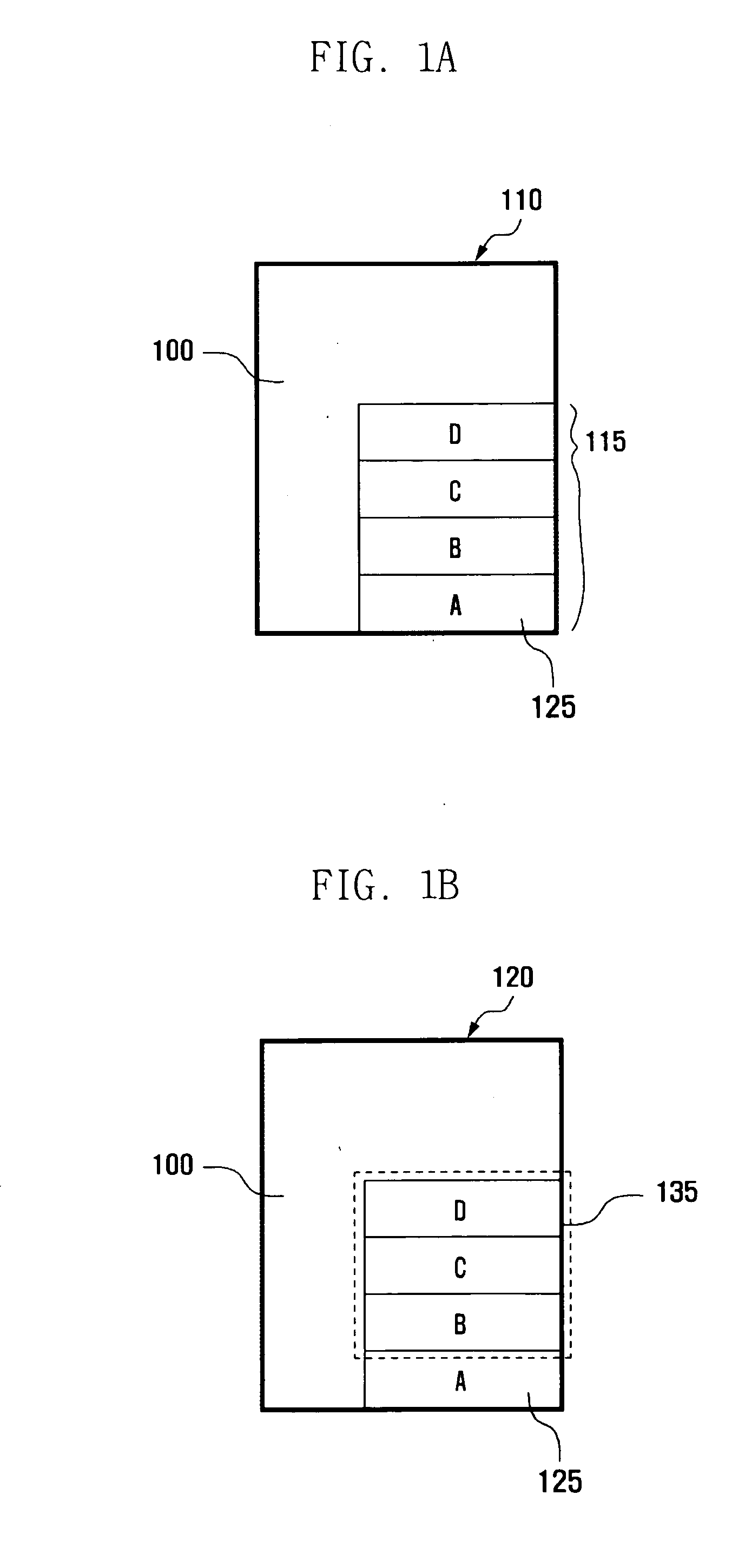

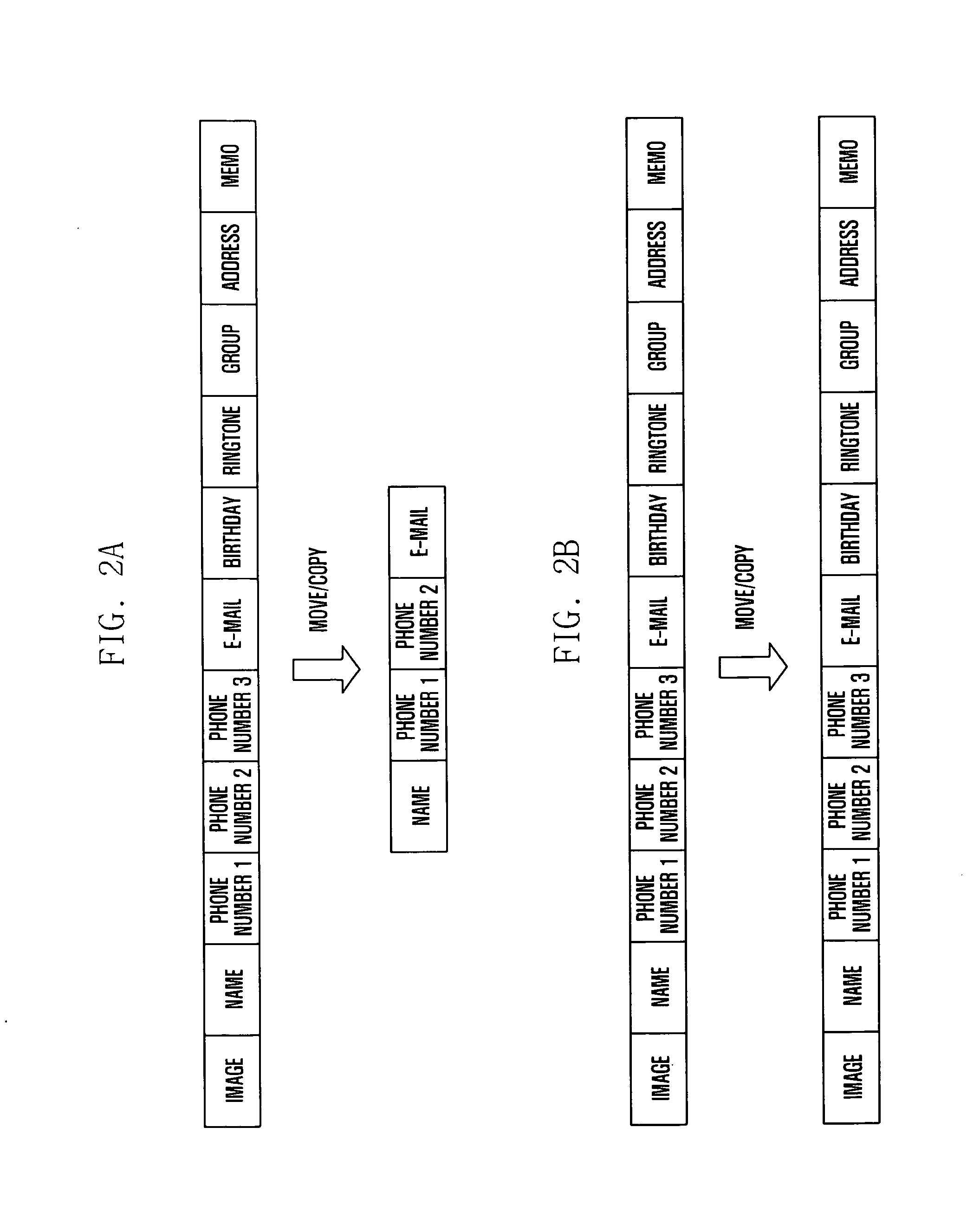

Data management method and apparatus of portable terminal

Active | US20110022568A1 | Tags: Improvement of memory utilization efficiency, Improve memory utilization, Digital data information retrieval, Memory loss protection, Computer terminal, Data management

A data management method and apparatus for a portable terminal improve memory utilization efficiency through a data move/copy function that allows user data preserved in the non-volatile memory region of the terminal's memory to be moved or copied. The data management method includes selecting at least one user data item in response to a selection request, checking an extended region for backing up the user data item in response to a backup request, creating transfer data corresponding to the user data item, and storing the transfer data in the extended region as a backup of the user data item.

Owner: SAMSUNG ELECTRONICS CO LTD

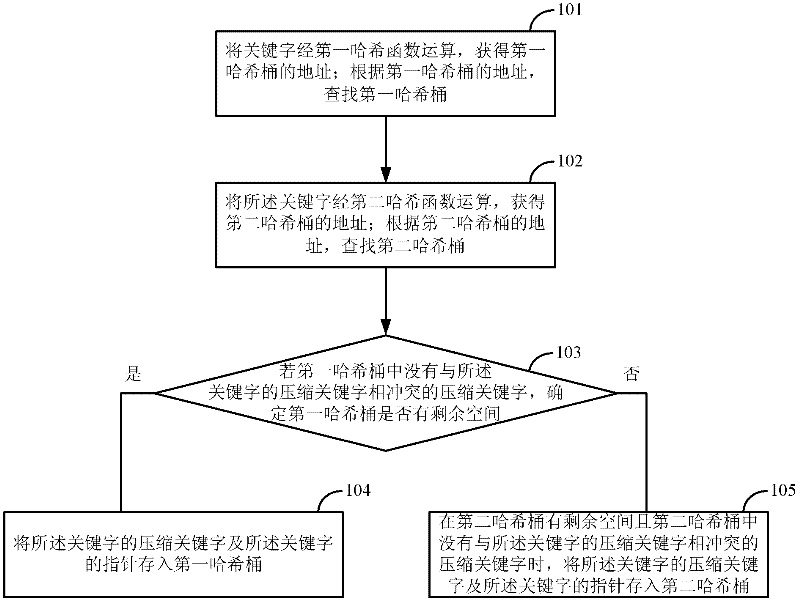

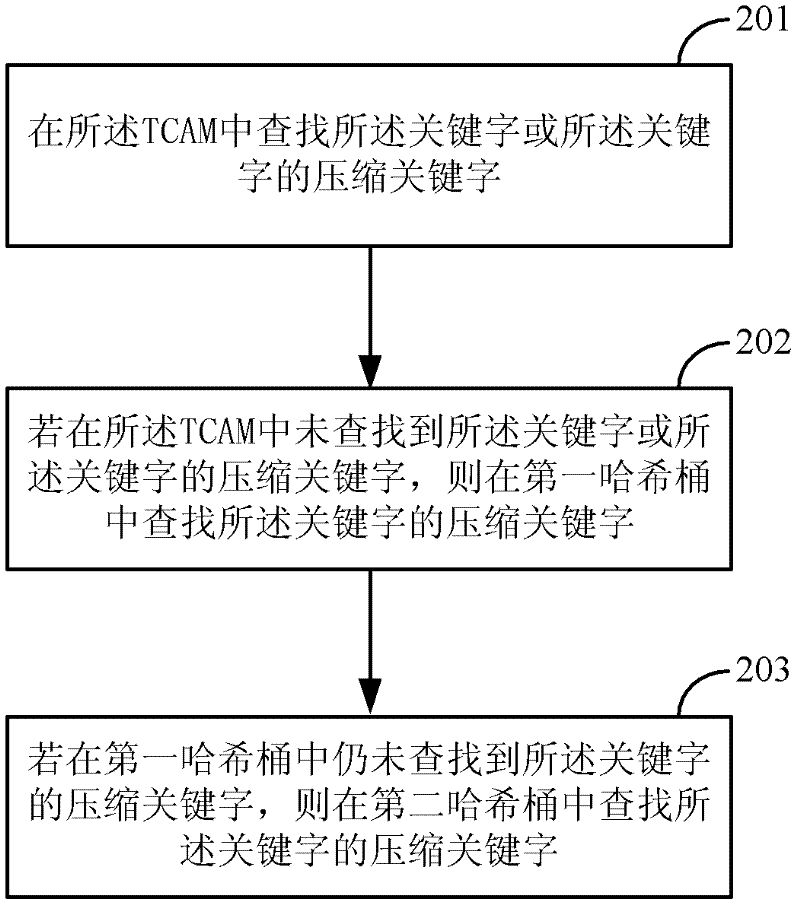

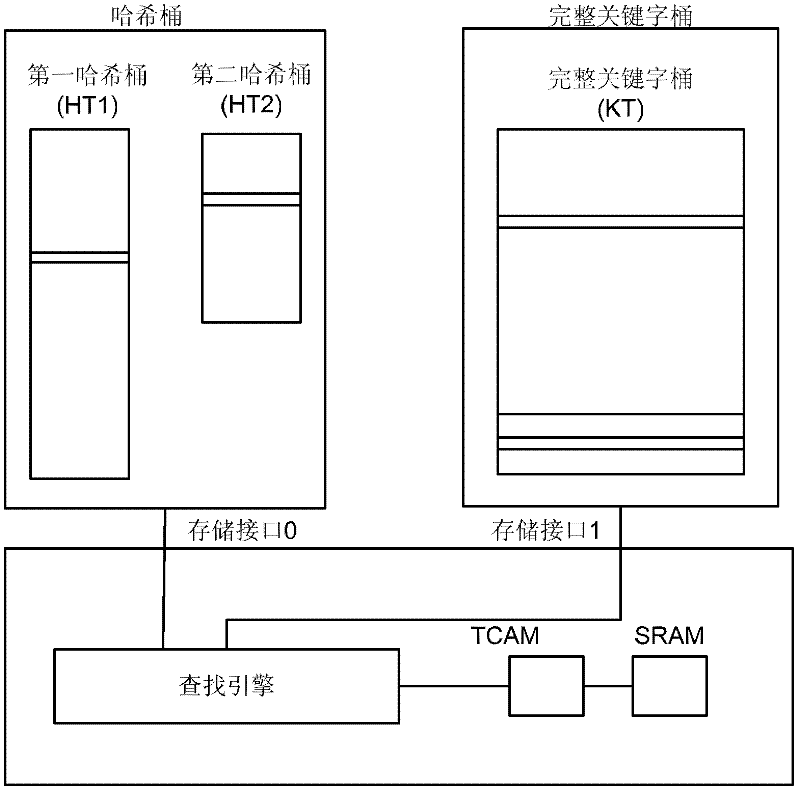

Method and device for storing and searching keyword

Active | CN102232219A | Tags: Avoid conflict situations, Improve memory utilization, Digital data information retrieval, Transmission, Hash function, Theoretical computer science

A method for storing a keyword is disclosed. The keyword is processed by a first and a second hash function to obtain a first and a second hash bucket address, and the first and second hash buckets are searched at those addresses. If no compressed keyword in the first hash bucket conflicts with the compressed form of the keyword, then: when the first bucket has free space, the compressed keyword and a pointer to the keyword are stored in the first bucket; when the first bucket is full but the second bucket has free space and contains no conflicting compressed keyword, they are stored in the second bucket. A method for searching for a keyword, a device for storing keywords, and a device for searching for keywords are also disclosed. The invention greatly increases memory utilization and saves memory space and bandwidth.

Owner: HUAWEI TECH CO LTD
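
The two-bucket insertion rule can be sketched as follows, assuming 16-bit fingerprints as the compressed keywords, a fixed number of slots per bucket, and a plain list as the keyword store that pointers index into. `TwoChoiceKeywordTable` and its parameters are hypothetical, and salted BLAKE2b digests stand in for the patent's unspecified first and second hash functions. The abstract does not say what happens when the first bucket already holds a conflicting fingerprint; this sketch simply rejects the insert.

```python
from __future__ import annotations

import hashlib


def _hash(keyword: str, salt: str) -> int:
    # Salted BLAKE2b stands in for the unspecified hash functions.
    digest = hashlib.blake2b(f"{salt}:{keyword}".encode(), digest_size=8).digest()
    return int.from_bytes(digest, "big")


class TwoChoiceKeywordTable:
    def __init__(self, num_buckets: int = 64, bucket_size: int = 4) -> None:
        self.num_buckets = num_buckets
        self.bucket_size = bucket_size
        # Each bucket holds (compressed keyword, pointer) pairs.
        self.buckets: list[list[tuple[int, int]]] = [[] for _ in range(num_buckets)]
        self.keywords: list[str] = []  # full keywords; a pointer is an index here

    def _bucket(self, keyword: str, which: int) -> list[tuple[int, int]]:
        return self.buckets[_hash(keyword, f"h{which}") % self.num_buckets]

    @staticmethod
    def _compress(keyword: str) -> int:
        return _hash(keyword, "fp") & 0xFFFF  # 16-bit fingerprint

    @staticmethod
    def _conflicts(bucket: list[tuple[int, int]], fp: int) -> bool:
        return any(stored == fp for stored, _ in bucket)

    def insert(self, keyword: str) -> bool:
        fp = self._compress(keyword)
        first, second = self._bucket(keyword, 1), self._bucket(keyword, 2)
        if self._conflicts(first, fp):
            return False  # case not covered by the abstract; rejected here
        if len(first) < self.bucket_size:
            target = first
        elif len(second) < self.bucket_size and not self._conflicts(second, fp):
            target = second
        else:
            return False  # both buckets full, or the second one conflicts
        target.append((fp, len(self.keywords)))
        self.keywords.append(keyword)
        return True

    def lookup(self, keyword: str) -> int | None:
        """Return the keyword's pointer, or None if it is not stored."""
        fp = self._compress(keyword)
        for which in (1, 2):
            for stored, ptr in self._bucket(keyword, which):
                # Cheap fingerprint comparison first, full check via the pointer second.
                if stored == fp and self.keywords[ptr] == keyword:
                    return ptr
        return None
```

Storing a 2-byte fingerprint plus a pointer per slot, instead of the full keyword, is what keeps the buckets small; the full keyword is read only when a fingerprint matches.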

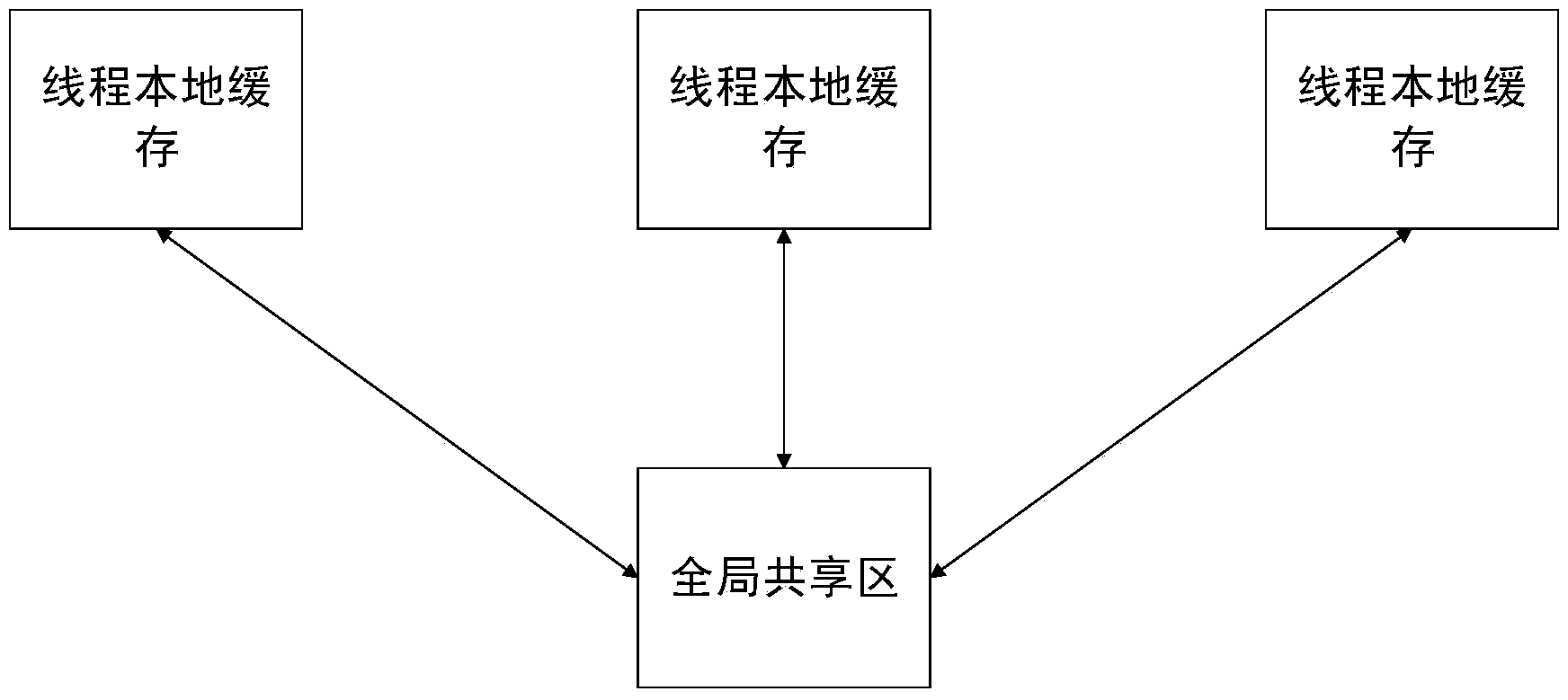

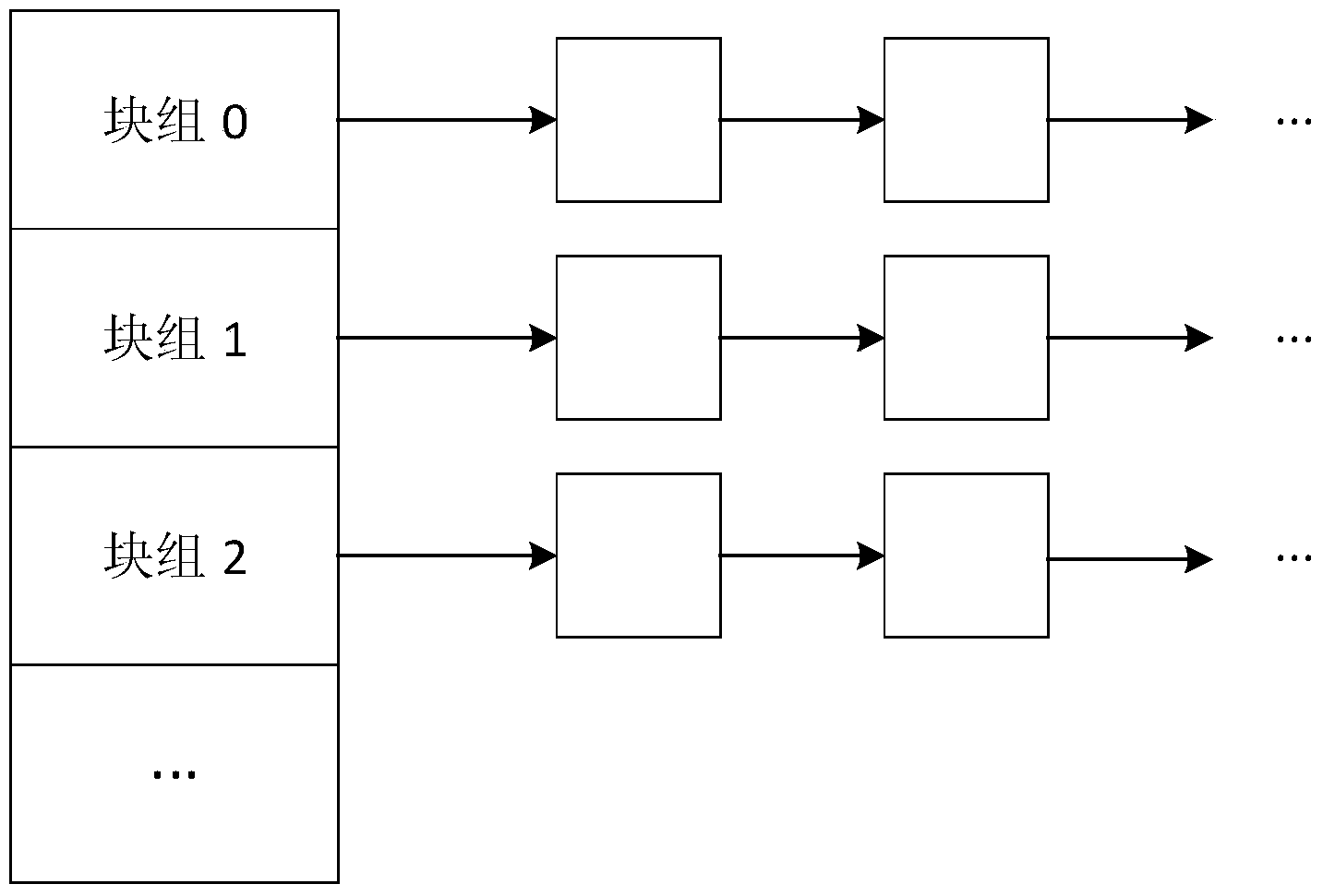

Cluster fine-grained memory management method

Active | CN103914265A | Tags: Improve memory utilization, Input/output to record carriers, Resource allocation, Page cache, Memory management unit

The invention provides a fine-grained memory management method for clusters, comprising the following steps. Step 1: dividing memory into two broad categories of regions. The first category is a global shared region responsible for allocating and reclaiming large objects whose size is greater than or equal to a threshold; it comprises a small-object allocation region and a global page cache. The second category consists of an independent thread-local cache for each thread, used to allocate small objects smaller than the threshold. Each thread-local cache consists of multiple block groups; each block group is a free list of memory objects, blocks within one group have the same size, and block sizes across groups form an arithmetic progression. Step 2: allocating the memory requested by an application program, distinguishing large objects from small objects and using the corresponding allocation mode for each. Step 3: releasing the memory. The method allocates and releases both large and small objects, is particularly suitable for multi-threaded applications, and achieves high memory utilization.

Owner: JIANGSU CAS JUNSHINE TECH
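
A minimal sketch of the large/small split follows. It assumes a 256-byte threshold and size classes spaced 16 bytes apart (both hypothetical), models memory blocks as small `Block` records rather than raw addresses, and uses `threading.local` for the per-thread free lists. `FineGrainedAllocator` is an illustrative name; the global page cache and the small-object region of the shared area are not modelled.

```python
from __future__ import annotations

import threading
from dataclasses import dataclass

THRESHOLD = 256  # hypothetical small/large cutoff in bytes
STEP = 16        # small size classes 16, 32, ..., 256 form an arithmetic progression


@dataclass(eq=False)  # eq=False keeps identity hashing, so blocks can live in a set
class Block:
    size: int    # bytes actually reserved (rounded up for small objects)
    large: bool  # True if served by the global shared region


class FineGrainedAllocator:
    def __init__(self) -> None:
        self._local = threading.local()       # holds each thread's own free lists
        self._global_lock = threading.Lock()  # guards the shared large-object region
        self.large_live: set[Block] = set()

    def _free_lists(self) -> dict[int, list[Block]]:
        # Created lazily per thread, so small allocations need no lock.
        if not hasattr(self._local, "free_lists"):
            self._local.free_lists = {}
        return self._local.free_lists

    def malloc(self, n: int) -> Block:
        if n <= 0:
            raise ValueError("size must be positive")
        if n >= THRESHOLD:
            block = Block(n, large=True)
            with self._global_lock:
                self.large_live.add(block)
            return block
        size = -(-n // STEP) * STEP  # round up to the next size class
        free = self._free_lists().setdefault(size, [])
        return free.pop() if free else Block(size, large=False)

    def free(self, block: Block) -> None:
        if block.large:
            with self._global_lock:
                self.large_live.discard(block)
        else:
            # A small block goes back to the freeing thread's cache.
            self._free_lists().setdefault(block.size, []).append(block)
```

Only large objects touch the shared lock, which is the property that makes this layout attractive for multi-threaded programs.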

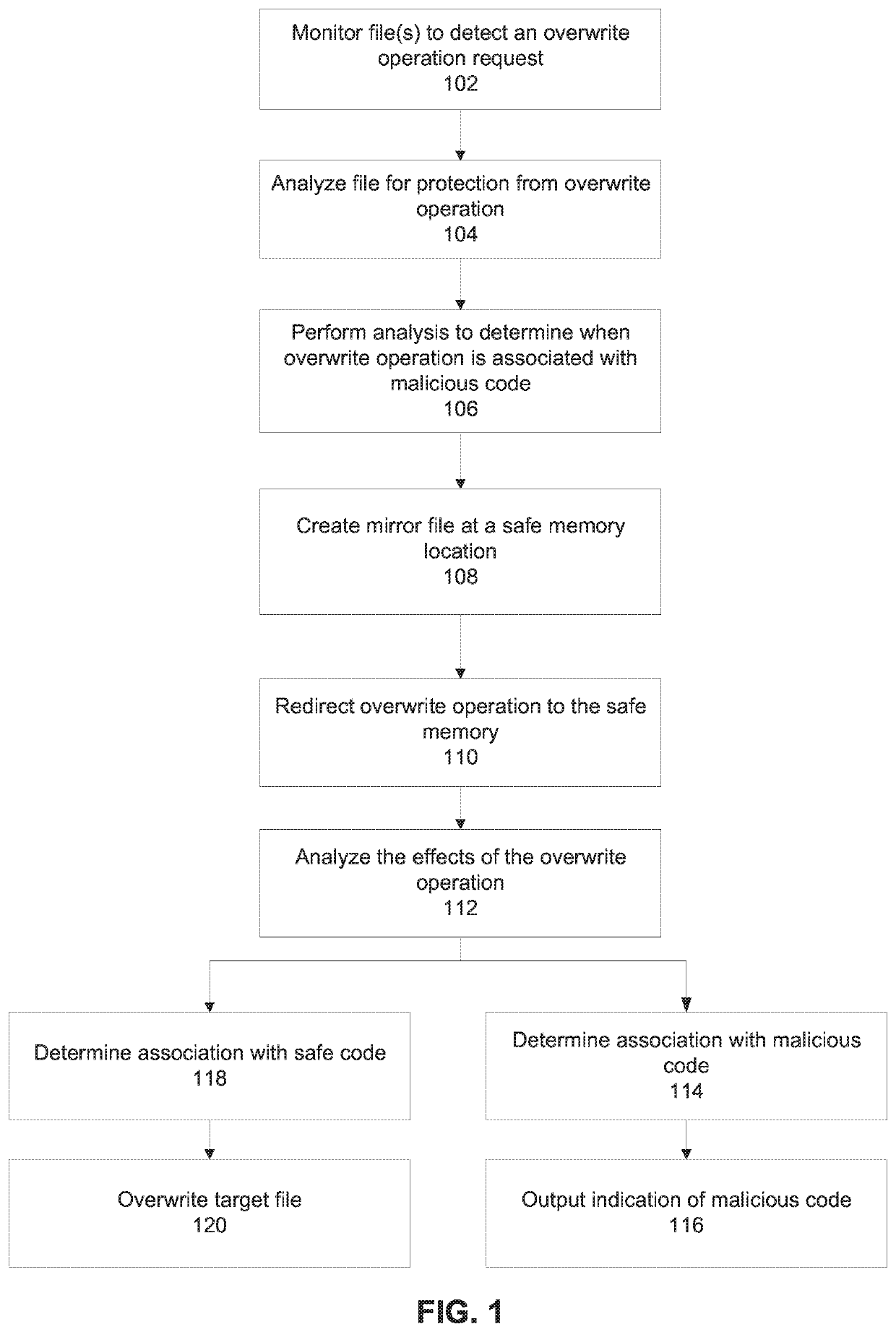

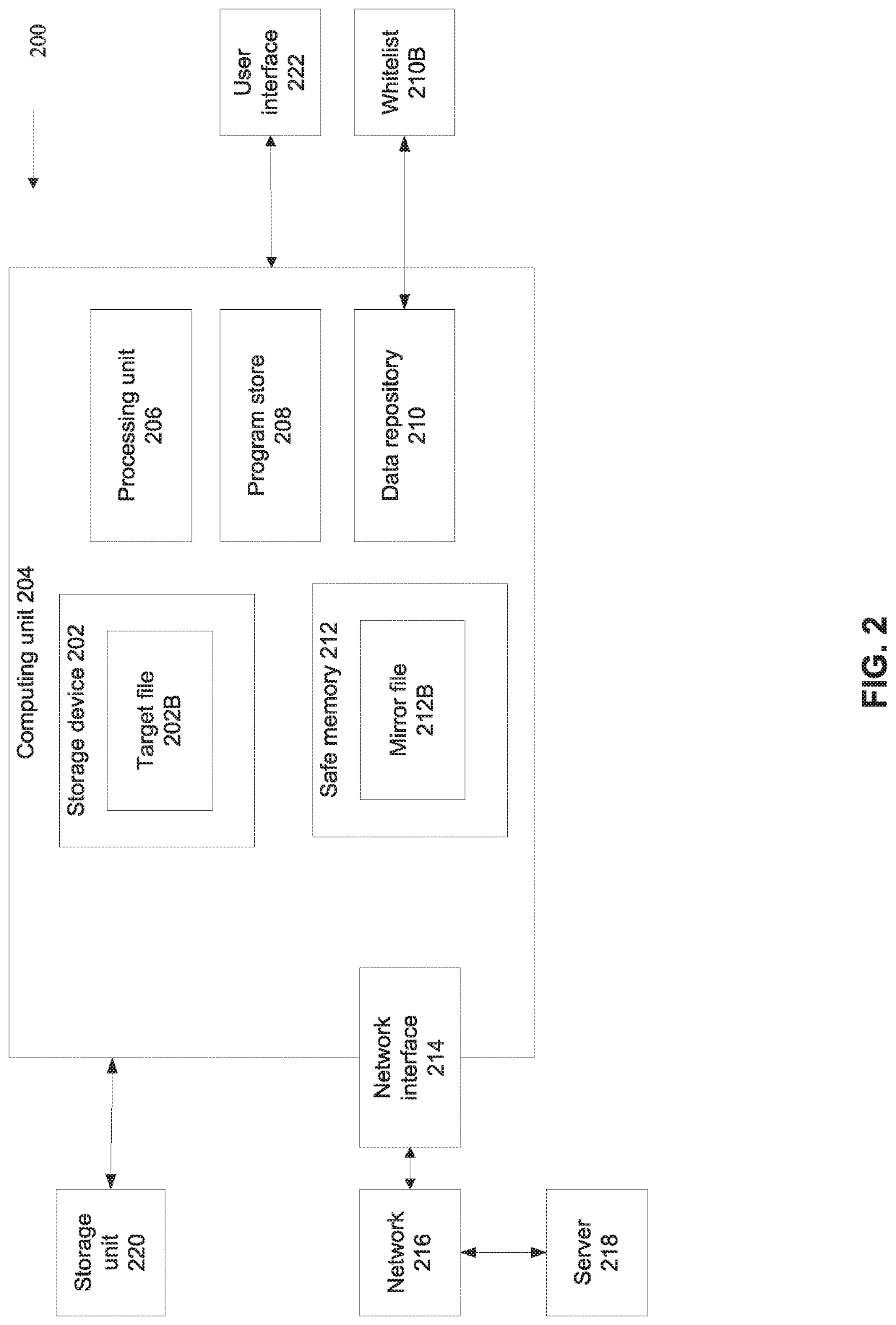

System and methods for detection of cryptoware

Active | US20190347415A1 | Tags: Improve computing power, Improve memory utilization, Platform integrity maintenance, Data storing, Data store

Owner: FORTINET

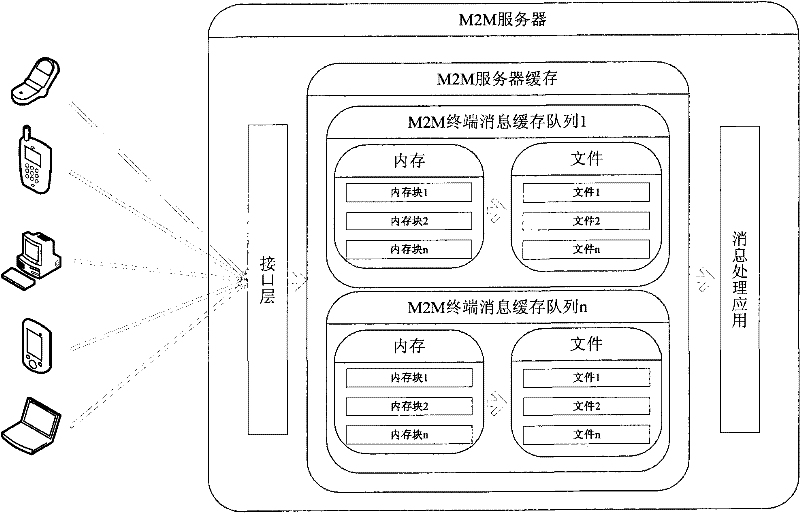

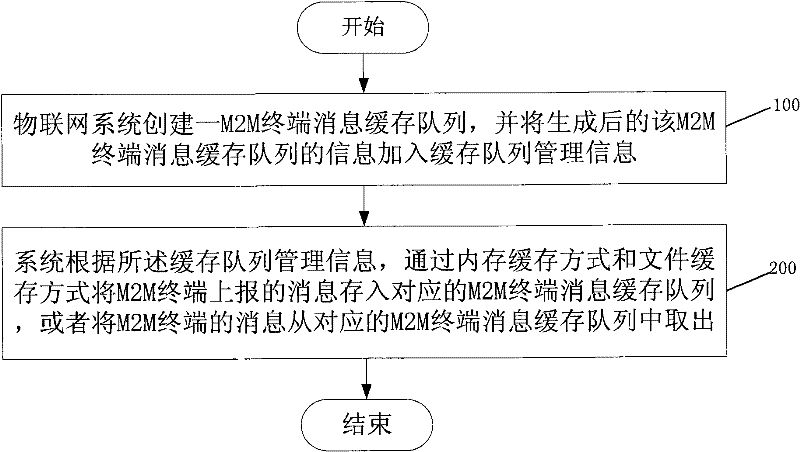

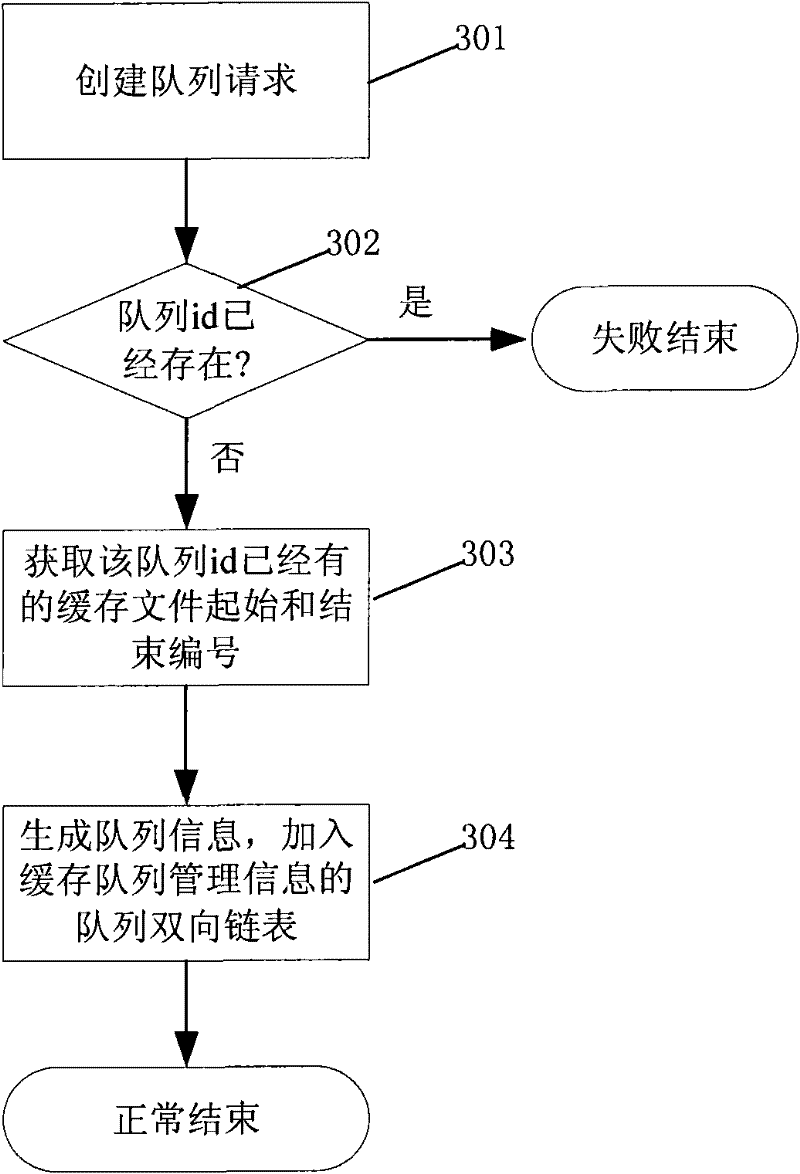

M2M system and cache control method therein

Inactive | CN102223681A | Tags: No waste, Avoid consumption, Network traffic/resource management, Network topologies, Operating system, Utilization rate

The invention discloses a cache control method for an M2M (machine-to-machine) system. The system creates a message cache queue for an M2M terminal and adds information about the new queue to the cache queue management information. Based on the cache queue management information, the system either stores a message reported by the M2M terminal into the corresponding terminal message cache queue, using both memory caching and file caching, or extracts an M2M terminal message from the corresponding queue. The invention provides efficient, unlimited cache space while also raising memory utilization.

Owner: 广州鸥特鸥供应链科技有限公司

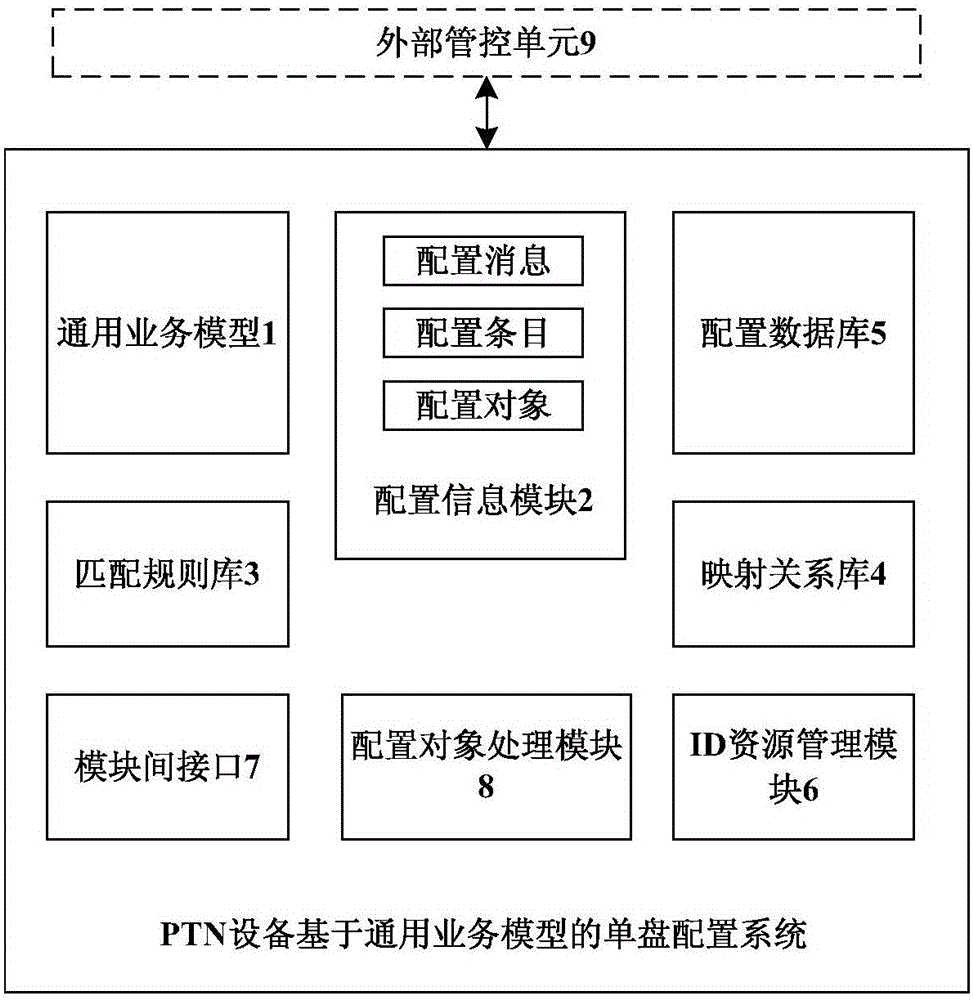

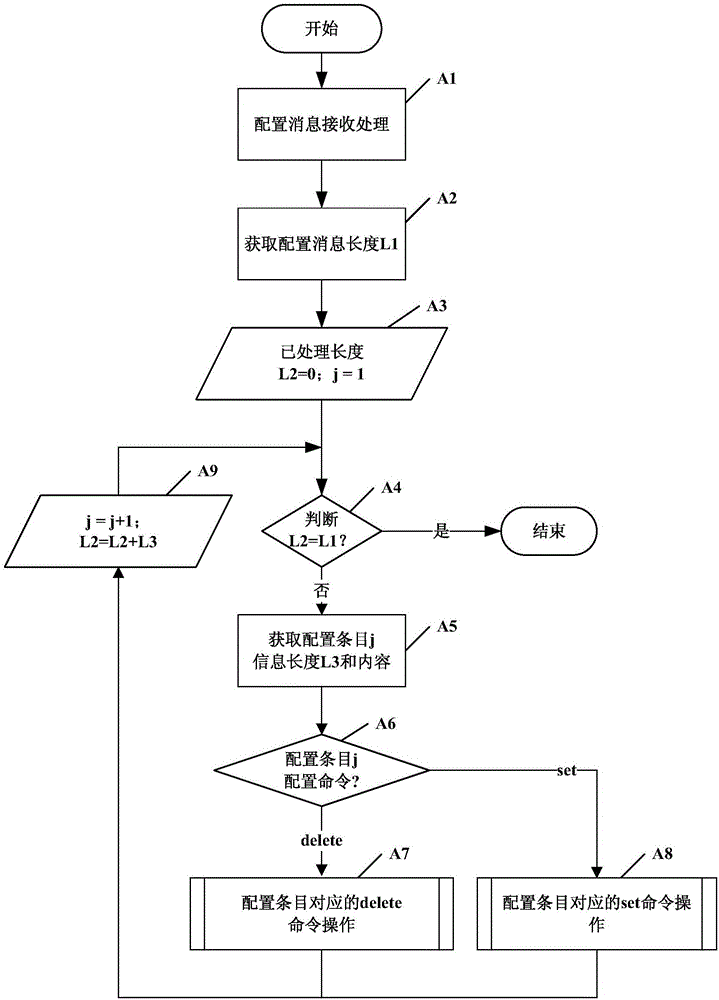

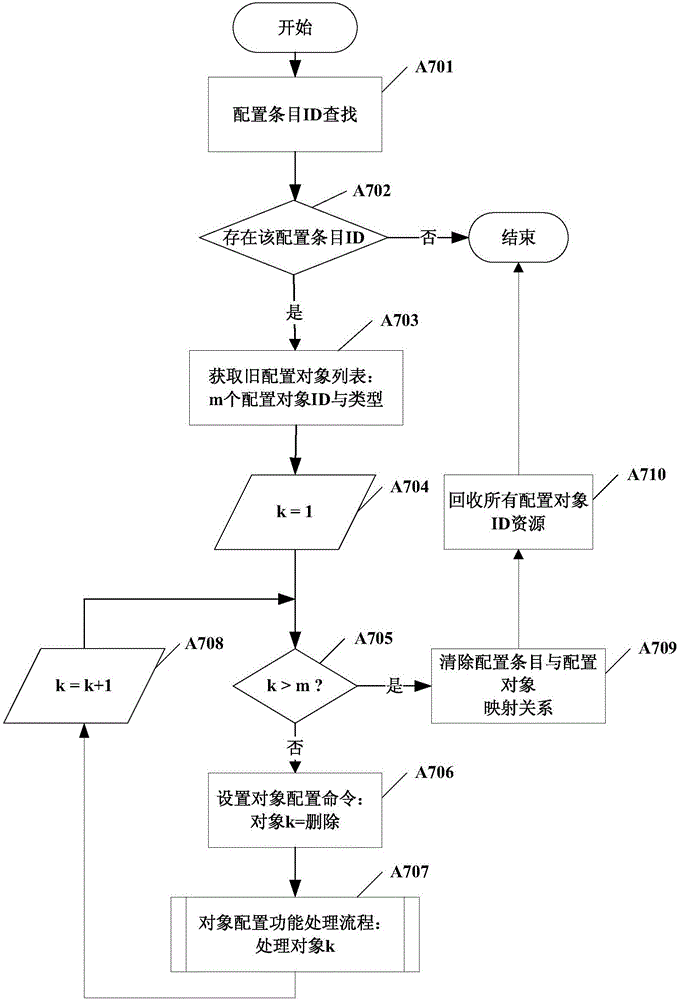

PTN equipment single-disk configuration system and method based on common business model

Active | CN105959135A | Tags: Reduce redundant backups, Save memory, Data switching networks, Internal memory, Processor register

The invention relates to the field of communication equipment and discloses a single-disk configuration system and method for PTN equipment based on a common business model. The method comprises: initializing a common business model, a matching rule base, and a configuration database; the single disk receives configuration information and splits it into a plurality of configuration entries, each of which maps to a plurality of configuration objects; the configuration information of the configuration objects is divided into configuration data and configuration commands; the configuration data is saved in the configuration database, and the configuration commands are sent to the adjacent lower-layer function module; each layer's function module adapts the configuration objects and completes the processing of the corresponding configuration commands, down to the bottom layer, where the configuration information is adapted into bit-level data that can be written directly into the chip's internal memory or internal hardware table, completing the configuration of the single-disk hardware. The invention reduces redundant backups of configuration data, saves CPU memory, and meets the expansion needs of PTN equipment.

Owner: FENGHUO COMM SCI & TECH CO LTD

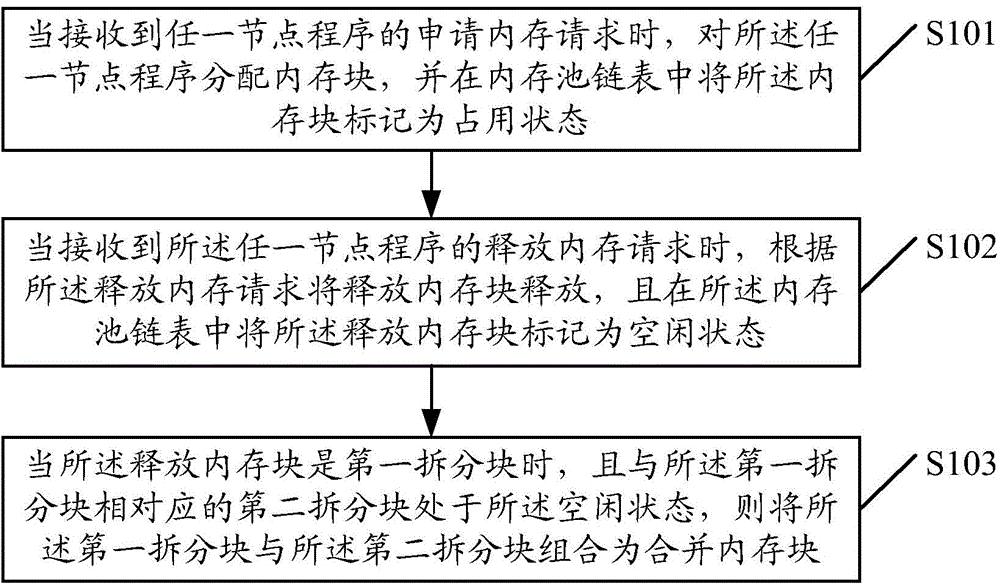

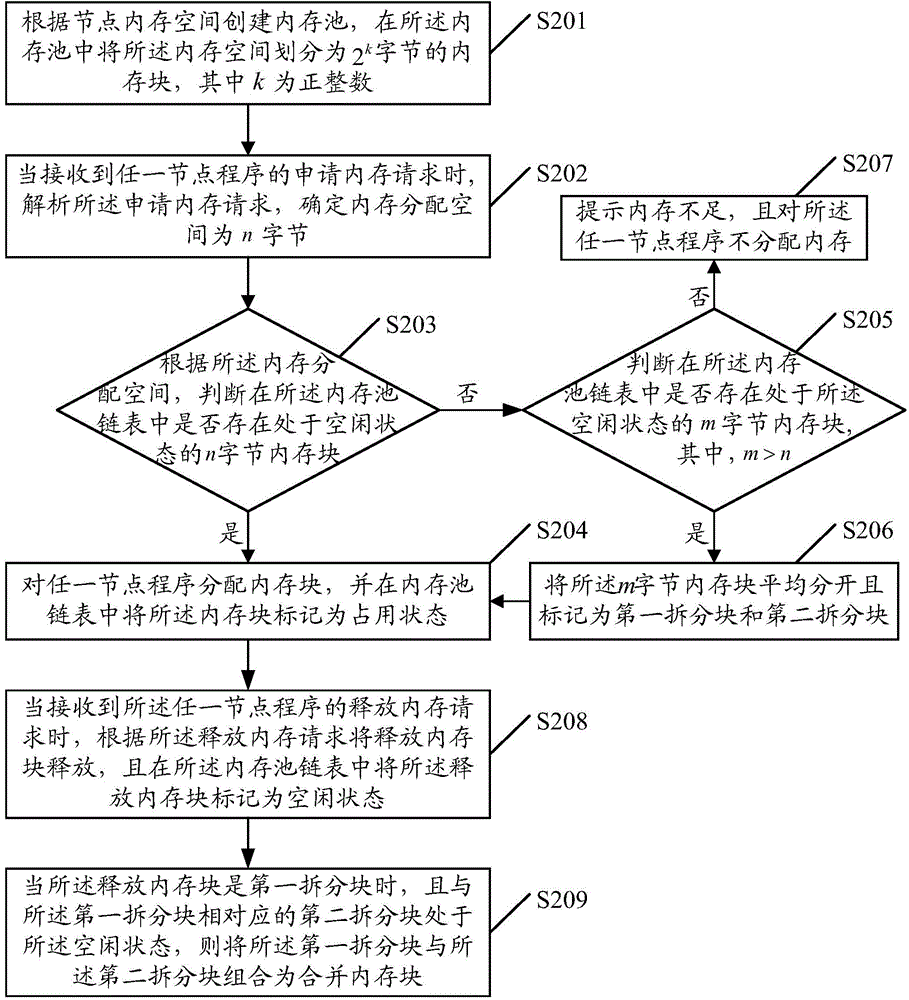

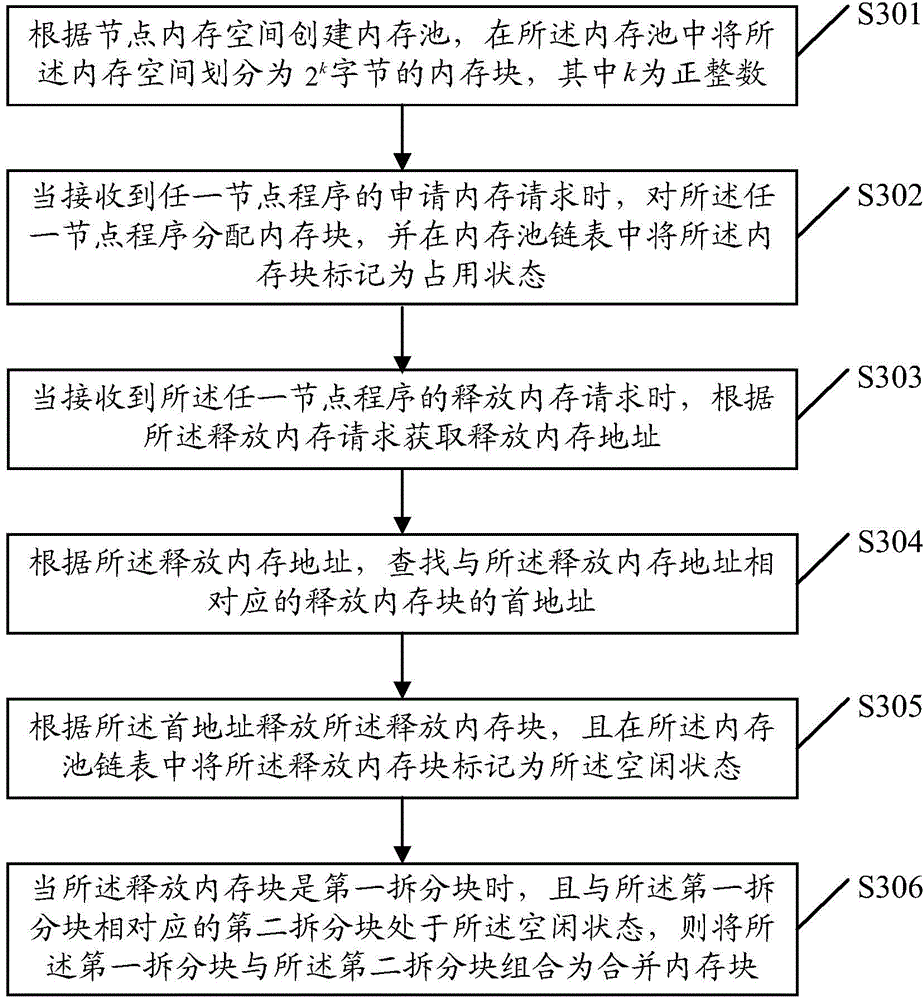

Memory management method and device

Inactive | CN105589809A | Tags: Avoid wasting, Improve memory utilization, Memory addressing/allocation/relocation, Memory management unit, Memory block

The embodiment of the invention discloses a memory management method and device. The method comprises: when a memory request is received from any node program, allocating a memory block to that program and marking the block as occupied in a memory pool linked list; when a memory release request is received from any node program, releasing the memory block specified by the request and marking it as idle in the memory pool linked list; and when the released block is a first split block and the corresponding second split block is also idle, merging the first and second split blocks into one combined memory block. Releasing the blocks named in node programs' release requests reduces the memory fragmentation caused by unpredictable request sizes and so avoids wasting resources, and splitting and merging memory blocks lets node memory be used reasonably, improving memory utilization.

Owner: SHENZHEN INST OF ADVANCED TECH CHINESE ACAD OF SCI
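
The split-and-merge behaviour described above matches the classic buddy allocator, sketched here as one possible reading; the abstract does not say that blocks are powers of two. `BuddyPool`, `min_block`, and the use of offsets instead of real addresses are assumptions. A block of size s sits at an offset that is a multiple of s, so its buddy (the other half of the same split) is found by flipping the bit worth s in the offset.

```python
class BuddyPool:
    """Buddy allocator over the offsets [0, total)."""

    def __init__(self, total: int, min_block: int = 16) -> None:
        for value in (total, min_block):
            if value <= 0 or value & (value - 1):
                raise ValueError("sizes must be powers of two")
        self.total = total
        self.min_block = min_block
        self.free: dict[int, set[int]] = {total: {0}}  # size -> offsets of idle blocks
        self.used: dict[int, int] = {}                 # offset -> size of occupied blocks

    def alloc(self, n: int) -> int:
        size = self.min_block
        while size < n:
            size *= 2
        # Smallest idle block that is large enough.
        cand = size
        while cand <= self.total and not self.free.get(cand):
            cand *= 2
        if cand > self.total:
            raise MemoryError(f"no idle block of {size} bytes")
        offset = self.free[cand].pop()
        # Split repeatedly; each upper half becomes an idle block.
        while cand > size:
            cand //= 2
            self.free.setdefault(cand, set()).add(offset + cand)
        self.used[offset] = size  # mark as occupied
        return offset

    def release(self, offset: int) -> None:
        size = self.used.pop(offset)
        # Merge with the buddy while the buddy is idle.
        while size < self.total and (offset ^ size) in self.free.get(size, set()):
            self.free[size].remove(offset ^ size)
            offset = min(offset, offset ^ size)
            size *= 2
        self.free.setdefault(size, set()).add(offset)
```

Freeing both halves of a split restores the larger block, so after every allocation is released the pool is again one idle block covering the whole range.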

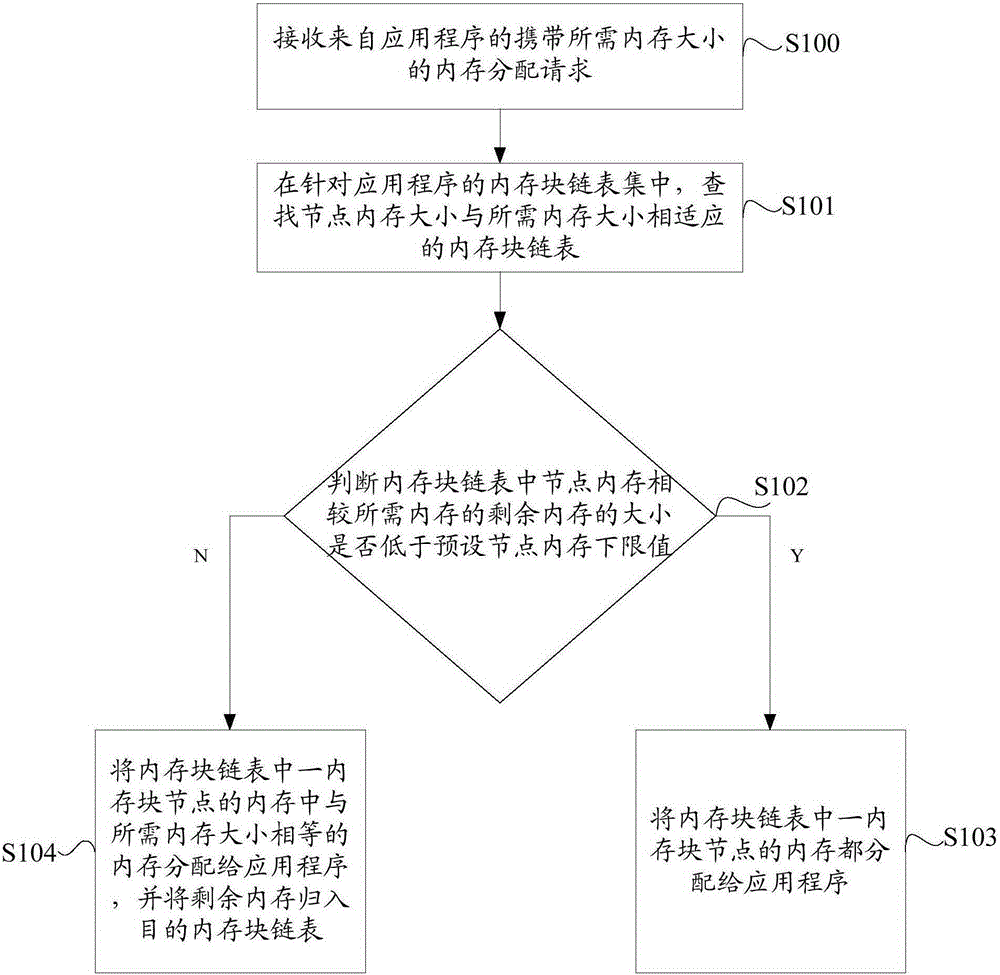

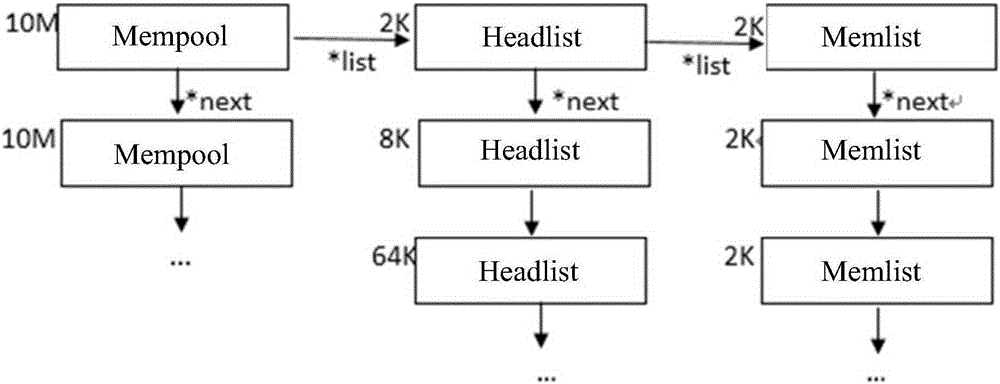

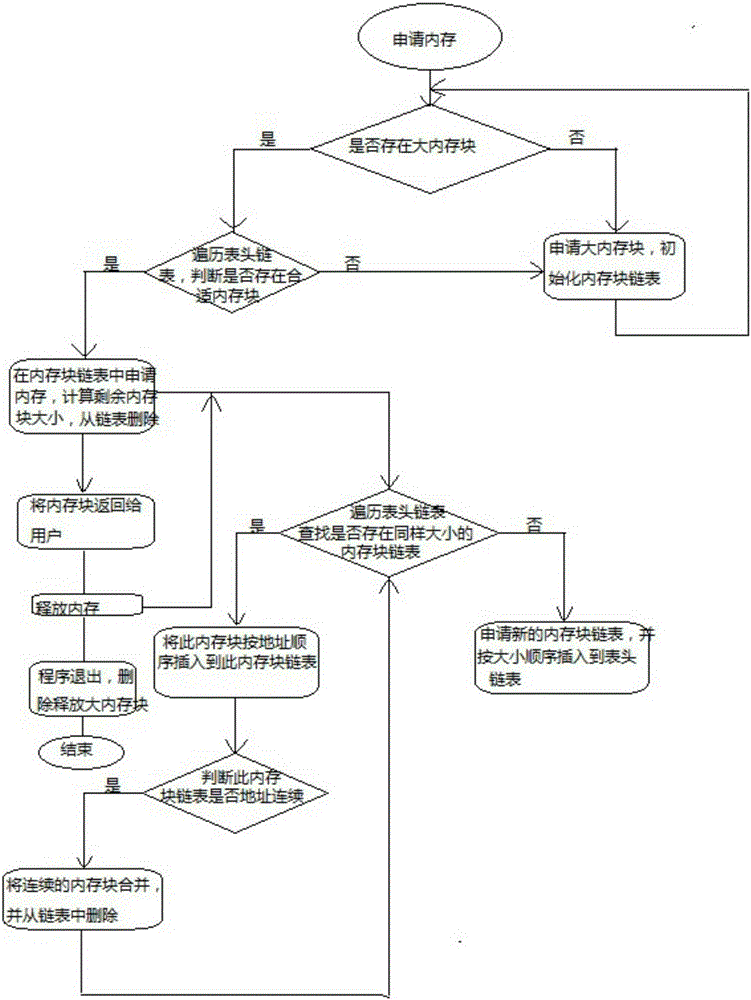

Memory allocation management method and memory allocation management system

Inactive | CN105302737A | Tags: Improve memory utilization, Frequently fall into the operation, Memory addressing/allocation/relocation, Parallel computing, Management system

The invention discloses a memory allocation management method and system. The method comprises: receiving from an application program a memory allocation request that carries the required memory size; searching the set of memory block linked lists for that application for the list whose node memory size fits the required size; and, in the list found, when the memory left in a node after subtracting the required size is not below a preset lower limit for node memory, allocating memory equal to the required size from that node's memory block to the application and moving the remaining memory into the target memory block linked list. Because the remaining memory is classified into the target linked list whose node memory size equals the remaining size, the memory fragments produced during allocation are managed reasonably, and memory utilization is effectively increased.

Owner: INSPUR BEIJING ELECTRONICS INFORMATION IND
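
The remainder-reclassification rule can be sketched as follows, assuming free blocks are tracked as offsets in lists keyed by exact size and that "fits" means the smallest listed size that is at least the request. `SegregatedFreeLists` and the default `lower_limit` of 32 bytes are hypothetical; freeing and coalescing are left out because the abstract covers only allocation.

```python
import bisect


class SegregatedFreeLists:
    """Free blocks grouped into lists keyed by exact block size."""

    def __init__(self, blocks: list[tuple[int, int]], lower_limit: int = 32) -> None:
        self.lower_limit = lower_limit          # hypothetical minimum worthwhile remainder
        self.lists: dict[int, list[int]] = {}   # size -> offsets of free blocks
        for offset, size in blocks:
            self.lists.setdefault(size, []).append(offset)

    def alloc(self, need: int) -> tuple[int, int]:
        """Return (offset, size actually handed out)."""
        sizes = sorted(s for s, offsets in self.lists.items() if offsets)
        i = bisect.bisect_left(sizes, need)
        if i == len(sizes):
            raise MemoryError(f"no free block of at least {need} bytes")
        size = sizes[i]
        offset = self.lists[size].pop()
        remainder = size - need
        if remainder >= self.lower_limit:
            # Split: the tail becomes a free block in the list of its exact size.
            self.lists.setdefault(remainder, []).append(offset + need)
            return offset, need
        return offset, size  # remainder too small to track; hand out the whole block
```

For example, a 40-byte request against free blocks of 64 and 128 bytes takes the 64-byte block whole (the 24-byte remainder is under the limit), while a later 80-byte request splits the 128-byte block and files its 48-byte tail in the 48-byte list.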

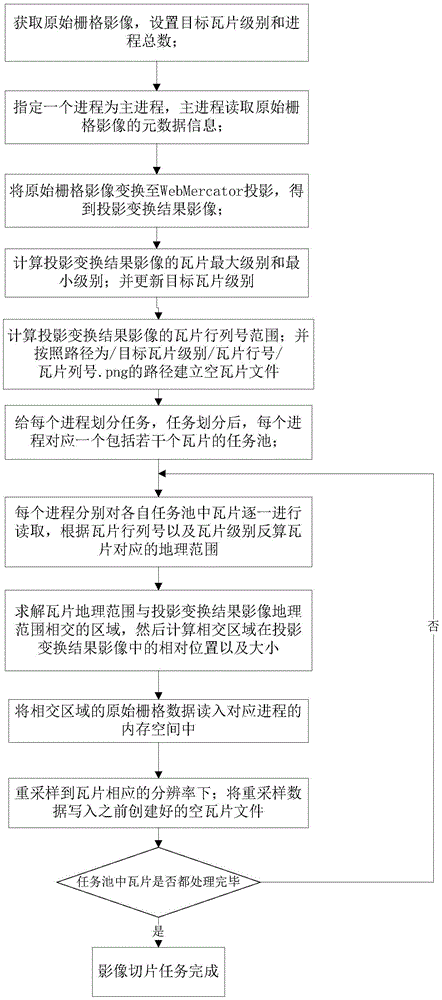

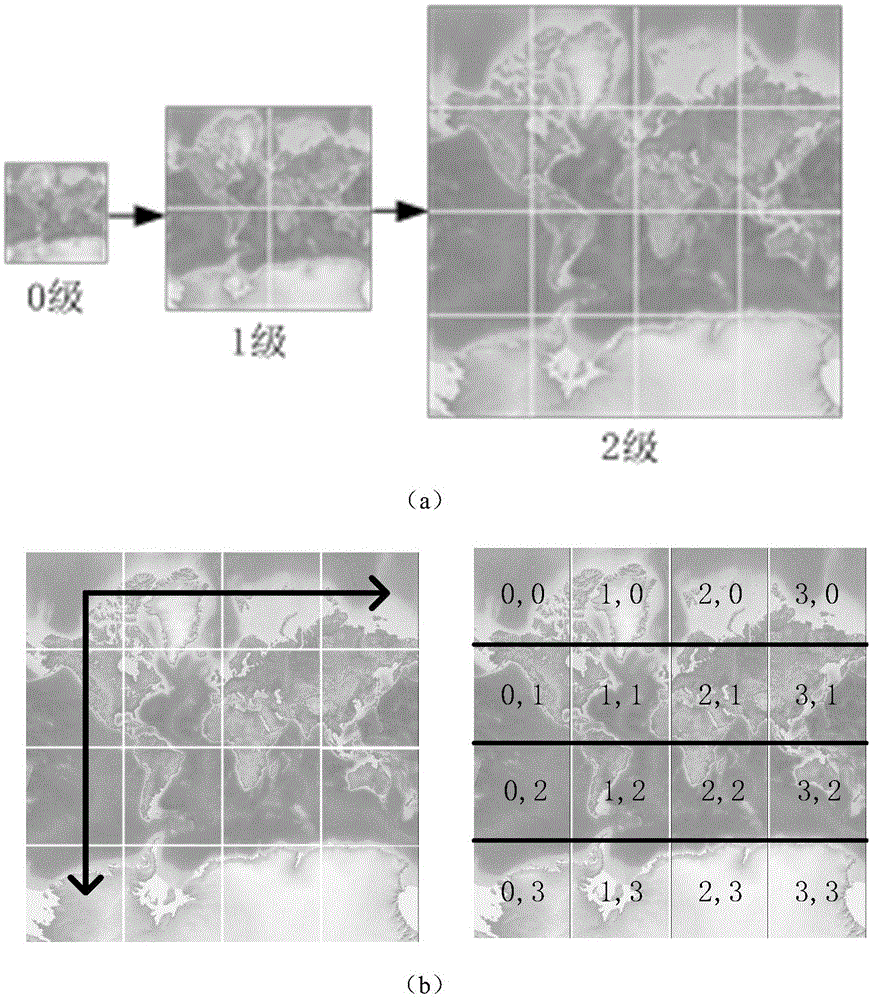

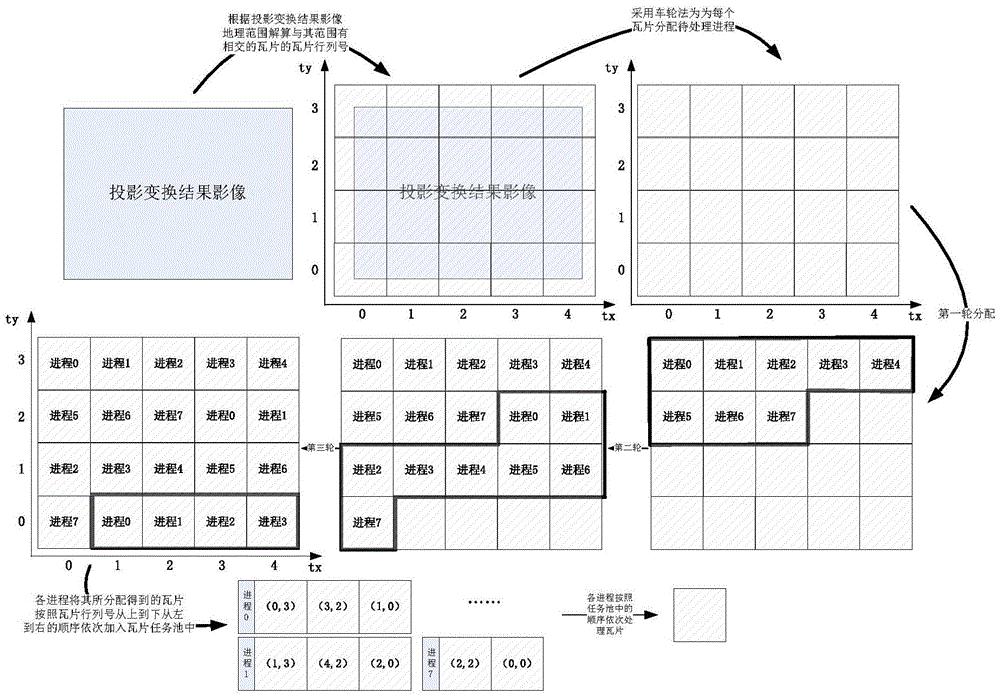

Parallel mode grid image slicing method

Active | CN105550977A | Tags: Simple configuration, Fast integration, Image enhancement, Image analysis, Information processing, Algorithm

The present invention belongs to the field of geospatial information processing and relates to a parallel raster image tiling method. The method comprises the following steps: step 1, obtaining the original raster image and setting the target tile level and the total number of processes; step 2, reading the metadata of the original raster image; step 3, reprojecting the original raster image to Web Mercator; step 4, calculating the maximum and minimum tile levels of the reprojected image; step 5, calculating the range of tile row and column numbers of the reprojected image and creating empty tile files; step 6, dividing tasks among the processes using a round-robin method; step 7, each process reads the tiles in its task pool one by one, inversely computes each tile's geographic extent, calculates the relative position and size of the intersecting region in the reprojected image, and reads the original raster data of the intersecting region into memory; and step 8, resampling the original raster data of the intersecting region and writing the resampled data into the corresponding empty tile file.

Owner: NAT UNIV OF DEFENSE TECH
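
Steps 5 and 6 can be sketched with the widely used XYZ tile scheme for Web Mercator, in which zoom level z has 2^z by 2^z tiles numbered from the top-left corner. That scheme is an assumption here, since the abstract does not name one, and the function names are illustrative. Tiles are dealt to processes in turn, which is one straightforward reading of the round-robin division in step 6.

```python
import math


def lonlat_to_tile(lon: float, lat: float, zoom: int) -> tuple[int, int]:
    """Column and row of the XYZ Web Mercator tile containing a WGS84 point."""
    n = 2 ** zoom
    x = int((lon + 180.0) / 360.0 * n)
    y = int((1.0 - math.asinh(math.tan(math.radians(lat))) / math.pi) / 2.0 * n)
    # Clamp points on the east or south edge into the last tile.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)


def tile_range(west: float, south: float, east: float, north: float, zoom: int) -> list[tuple[int, int, int]]:
    """All (zoom, column, row) tiles covering a bounding box (step 5)."""
    x0, y0 = lonlat_to_tile(west, north, zoom)  # top-left tile
    x1, y1 = lonlat_to_tile(east, south, zoom)  # bottom-right tile
    return [(zoom, x, y) for x in range(x0, x1 + 1) for y in range(y0, y1 + 1)]


def round_robin(tiles: list[tuple[int, int, int]], num_procs: int) -> list[list[tuple[int, int, int]]]:
    """Deal tiles to processes in turn: tile i goes to process i % num_procs (step 6)."""
    pools: list[list[tuple[int, int, int]]] = [[] for _ in range(num_procs)]
    for i, tile in enumerate(tiles):
        pools[i % num_procs].append(tile)
    return pools
```

For example, at zoom 1 the point (0, 0) falls in tile column 1, row 1, and a near-global box from latitude -85 to 85 covers all four zoom-1 tiles.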

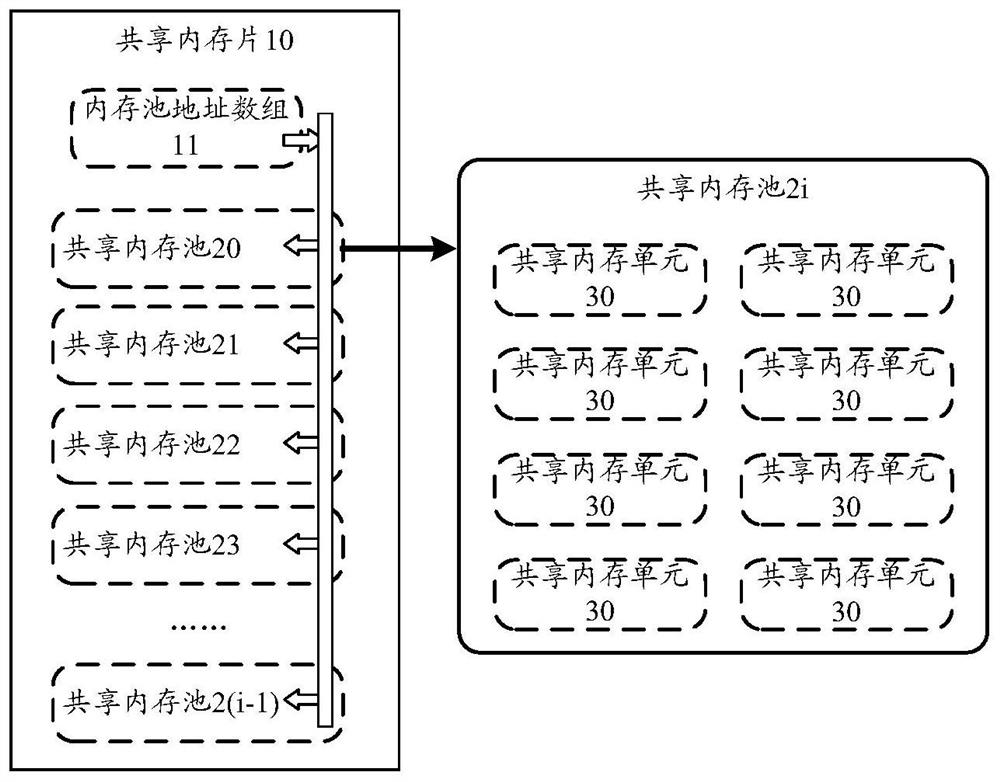

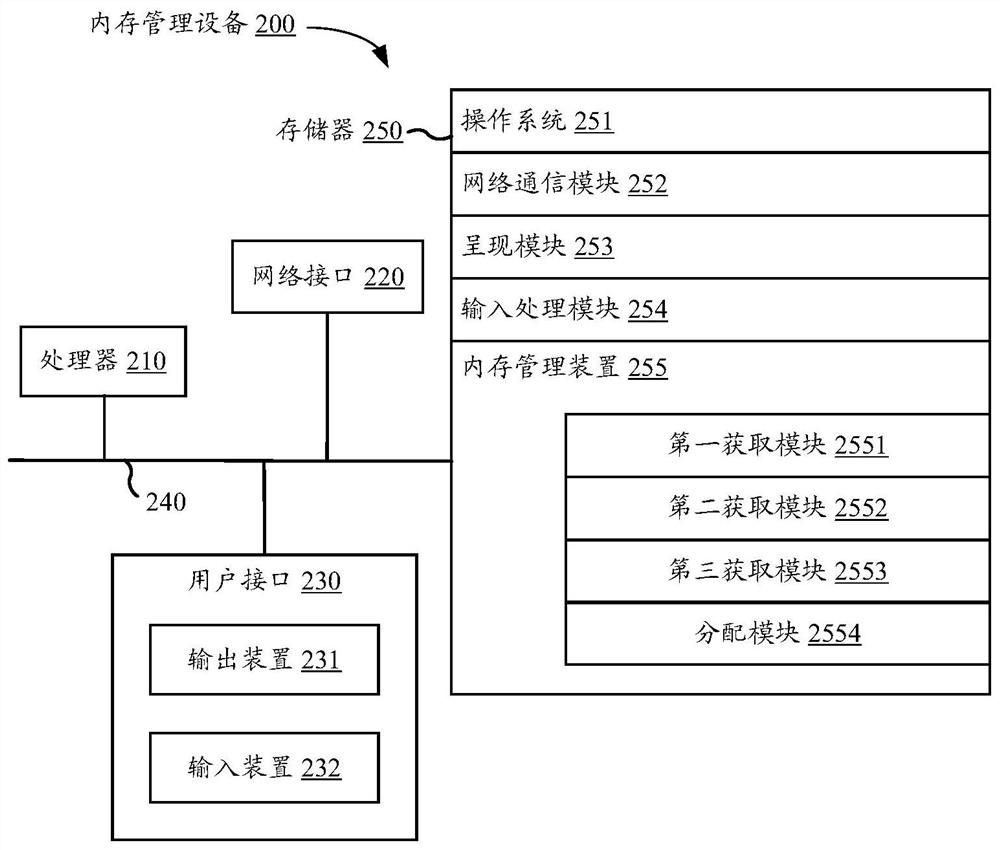

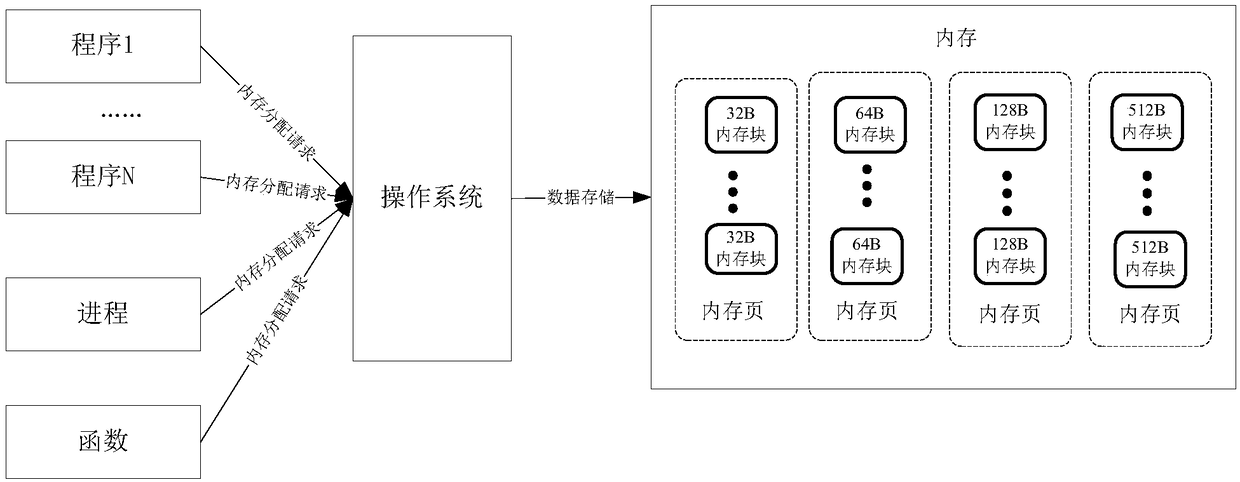

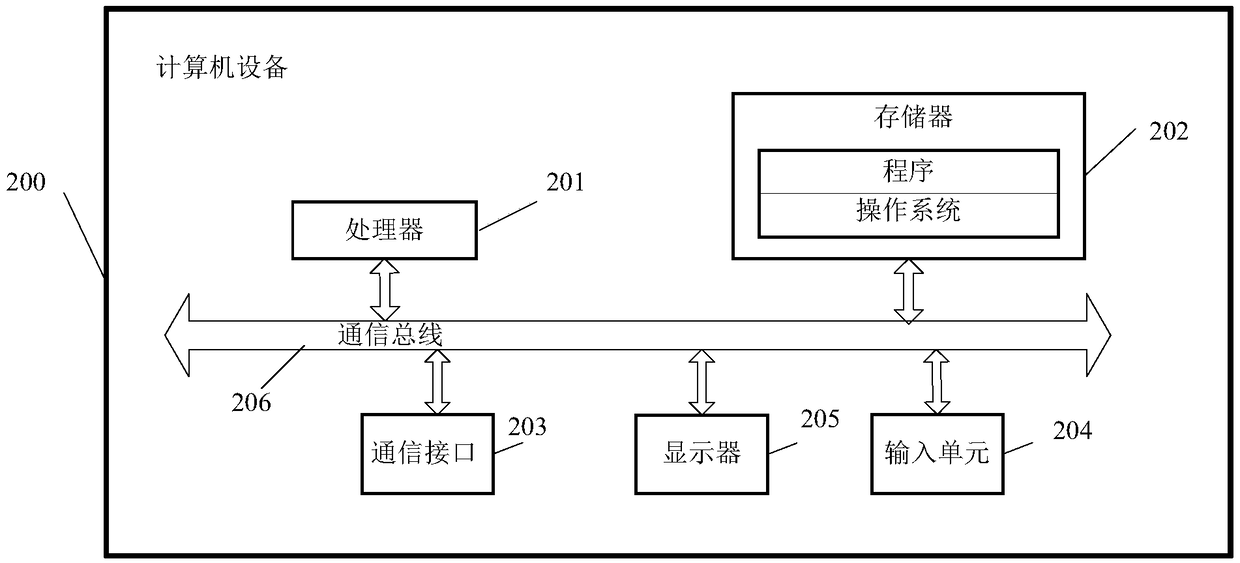

Memory management method, device and equipment and computer readable storage medium

Pending | CN112214329A | Tags: Meet memory usage requirements, Improve memory utilization, Resource allocation, Engineering, Term memory

The invention provides a memory management method, device, and equipment, and a computer-readable storage medium. The method comprises: obtaining the type of shared memory that a process requests to be allocated; obtaining, from a shared memory segment pre-created in memory, the management area address of the shared memory pool corresponding to that type; obtaining the memory page configuration information of the shared memory pool from the shared memory segment according to the management area address; and dynamically allocating a shared memory unit for the process from memory according to the memory page configuration information. Because the size of the shared memory pool can be scaled according to actual requirements, memory utilization can be improved and the memory needs of real services better met.

Owner: TENCENT TECH SHANGHAI

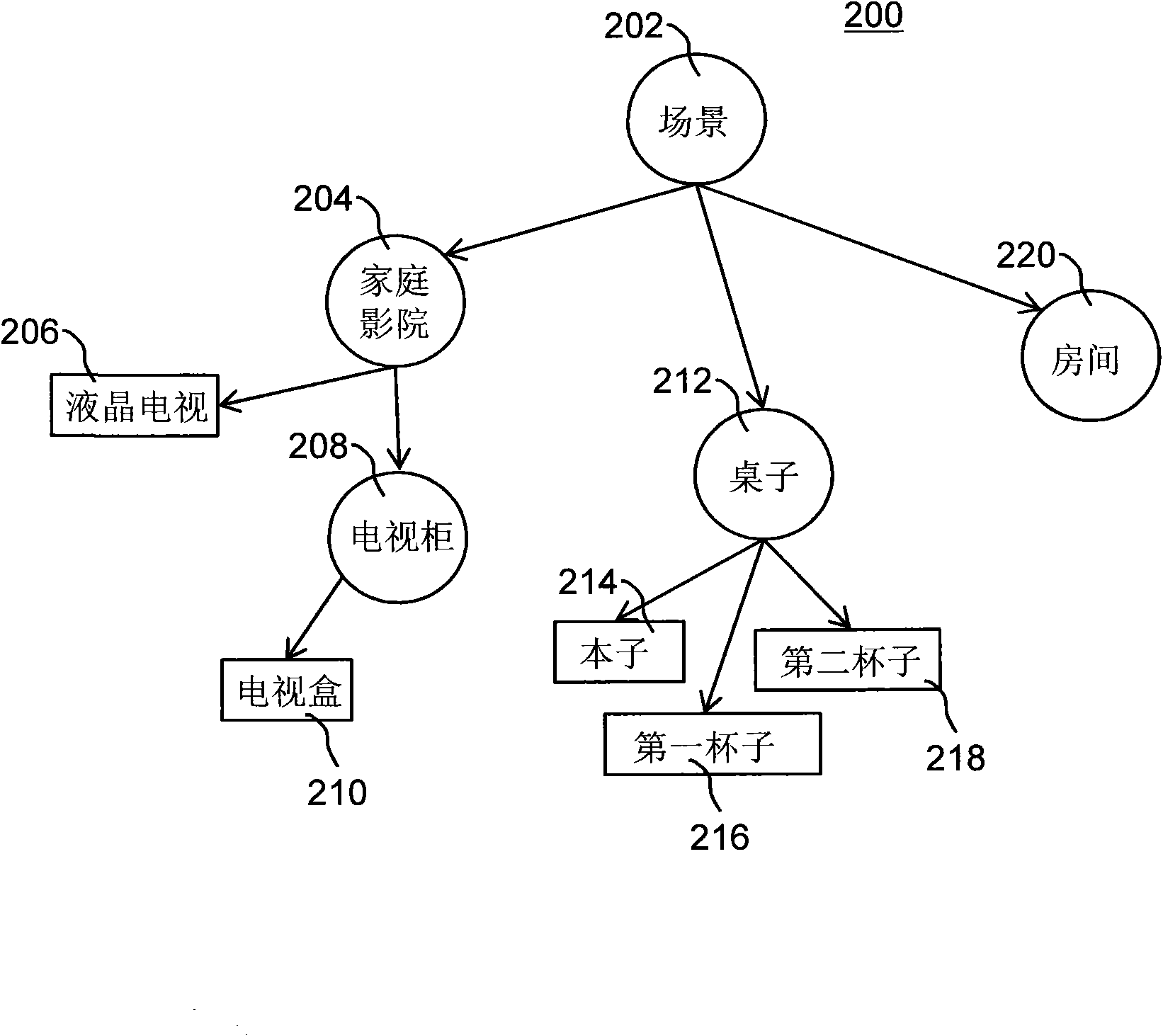

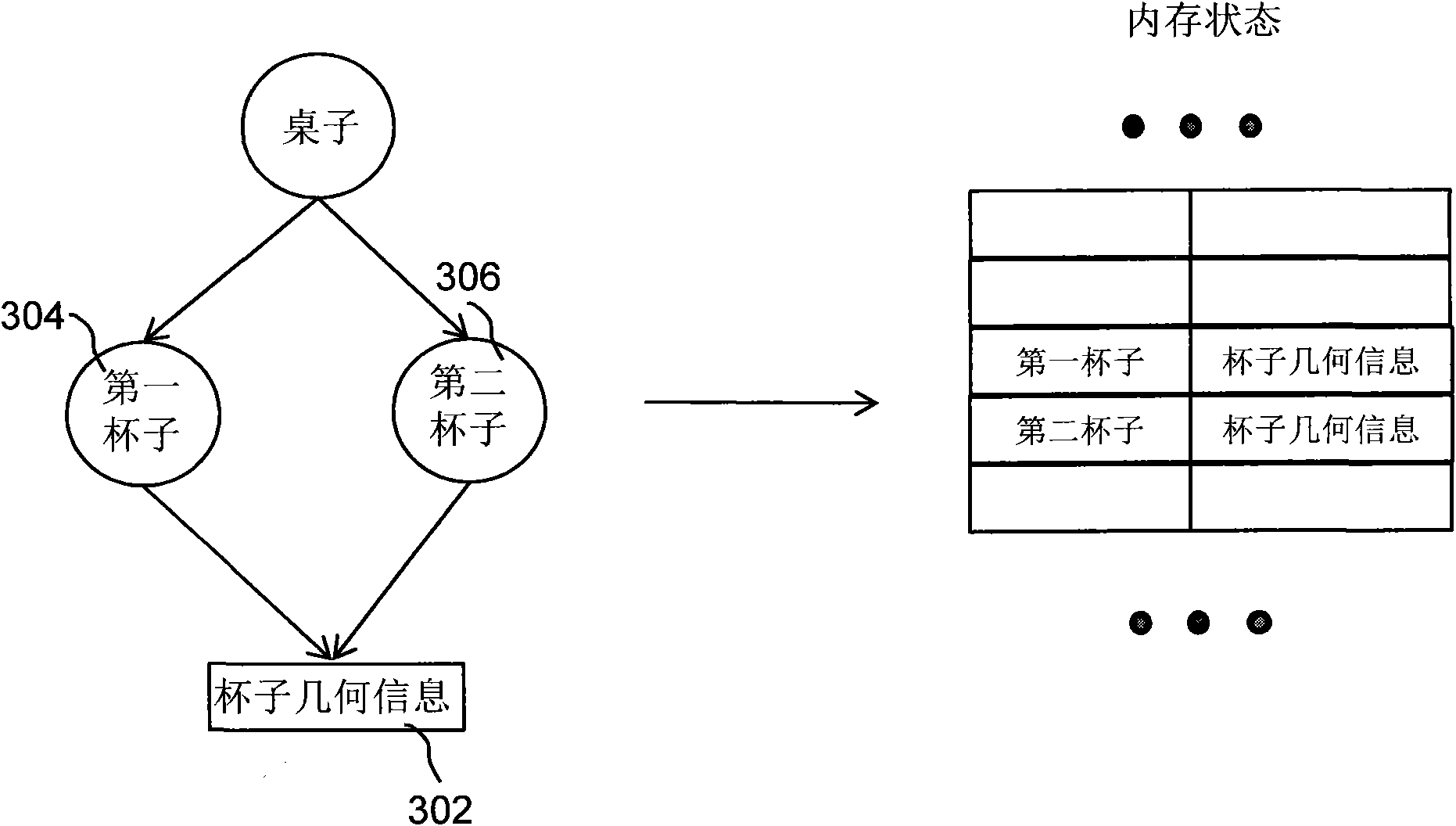

Game scene management method

Inactive | CN102214263A | Tags: Improve memory utilization, Good effect, Special data processing applications, Computer science, Game engine

The invention discloses a game scene management method for managing a scene graph in a game engine. The scene graph comprises a plurality of group nodes and leaf nodes in parent-child relationships, some of which leaf nodes carry reference counts. The method comprises: when the game engine, while traversing the scene graph, references a leaf node that carries a reference count for the first time, it loads the leaf node into memory, obtains an address pointer, and initializes the leaf node's reference count; when the engine references the leaf node again, it accesses the node through the address pointer and increments its reference count; when the engine finishes one reference to the leaf node, it decrements the reference count; and when the reference count falls below its initial value, the engine releases the memory occupied by the leaf node.

Owner: 无锡广新影视动画技术有限公司
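
The reference-counting rule can be sketched as a small cache of loaded leaf nodes. The abstract does not give the initial count, so `INITIAL_REFCOUNT = 1` is an assumption; with that value, releasing below the initial value is the same as releasing at zero. `LeafCache` and the `loader` callback are hypothetical, and a dictionary entry plays the role of the address pointer.

```python
from __future__ import annotations

from typing import Callable

INITIAL_REFCOUNT = 1  # assumption: the abstract does not give the initial value


class LeafCache:
    """Loads leaf nodes on first reference and frees them when their
    reference count drops below the initial value."""

    def __init__(self, loader: Callable[[str], object]) -> None:
        self.loader = loader
        self.resident: dict[str, object] = {}  # node id -> loaded node data
        self.refcount: dict[str, int] = {}

    def acquire(self, node_id: str) -> object:
        if node_id not in self.resident:
            # First reference: load into memory and initialise the count.
            self.resident[node_id] = self.loader(node_id)
            self.refcount[node_id] = INITIAL_REFCOUNT
        else:
            # Repeat reference: reuse the loaded node and increment the count.
            self.refcount[node_id] += 1
        return self.resident[node_id]

    def release(self, node_id: str) -> None:
        # One reference finished: decrement, and free once below the initial value.
        self.refcount[node_id] -= 1
        if self.refcount[node_id] < INITIAL_REFCOUNT:
            del self.resident[node_id]
            del self.refcount[node_id]
```

A node referenced twice therefore stays resident after the first release and is freed on the second.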

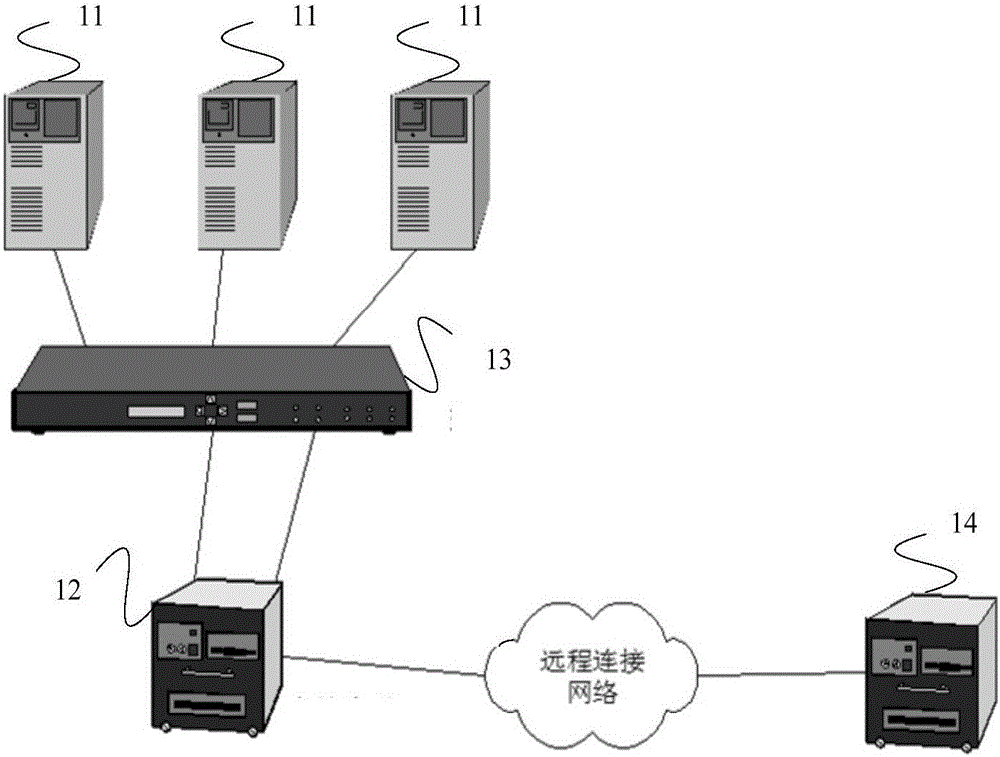

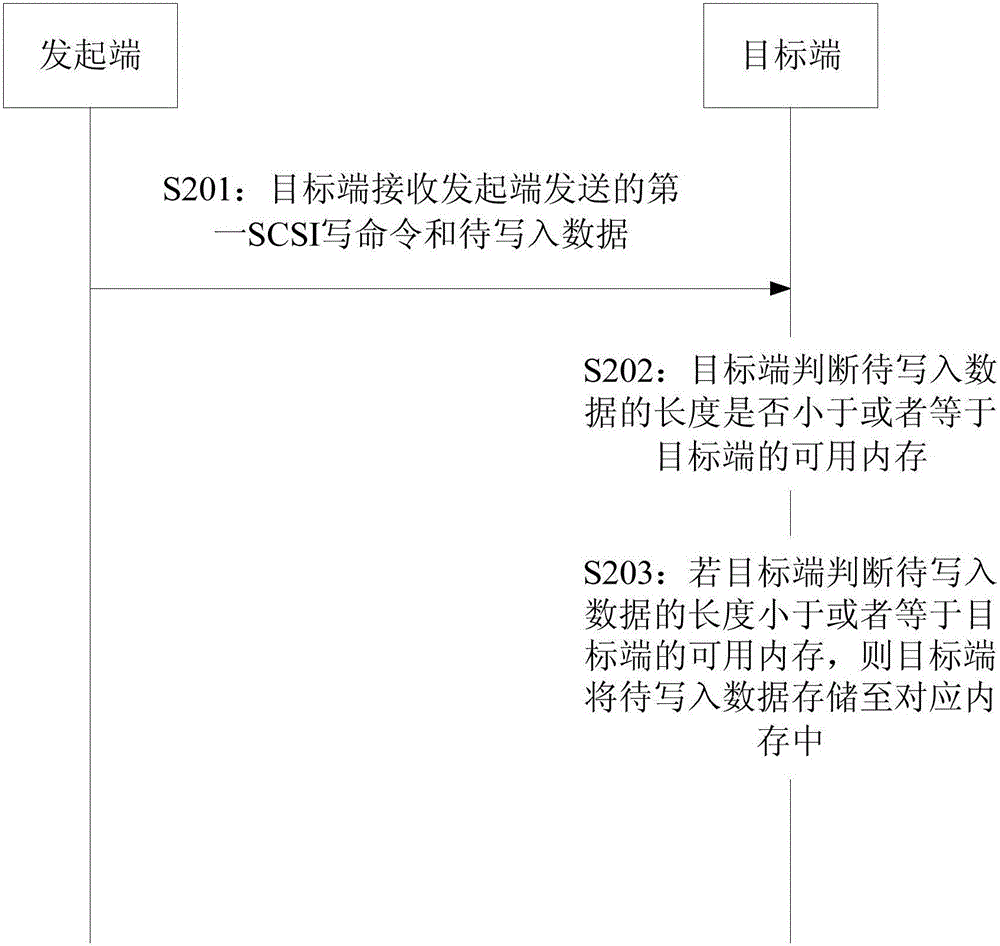

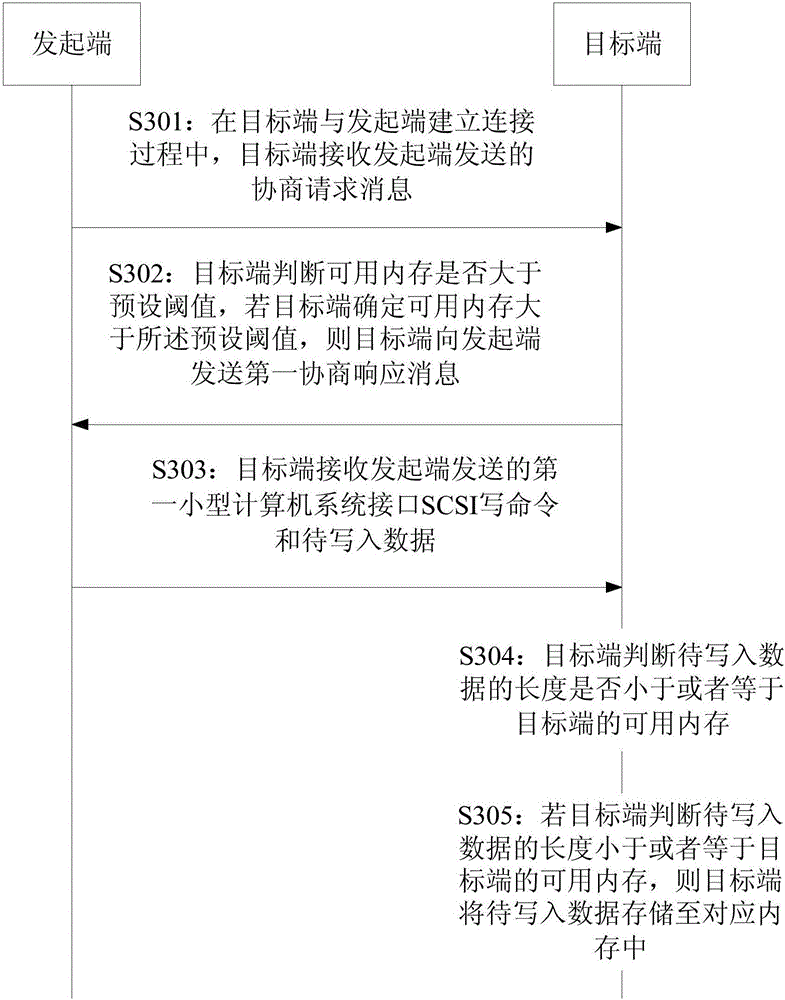

Data storage method and device

Active | CN106095694A | Tags: Improve memory utilization, Memory addressing/allocation/relocation, SCSI, Computer science

The embodiment of the invention provides a data storage method and device. The method comprises: a target receives a first SCSI write command and the data to be written from an initiator; the target determines whether the length of the data to be written is less than or equal to its available memory; and if so, the target stores the data in the corresponding memory, where the target's memory is shared by multiple initiators. Because multiple initiators share the memory, the target does not need to reserve memory for every initiator, which increases memory utilization.

Owner: HUAWEI TECH CO LTD

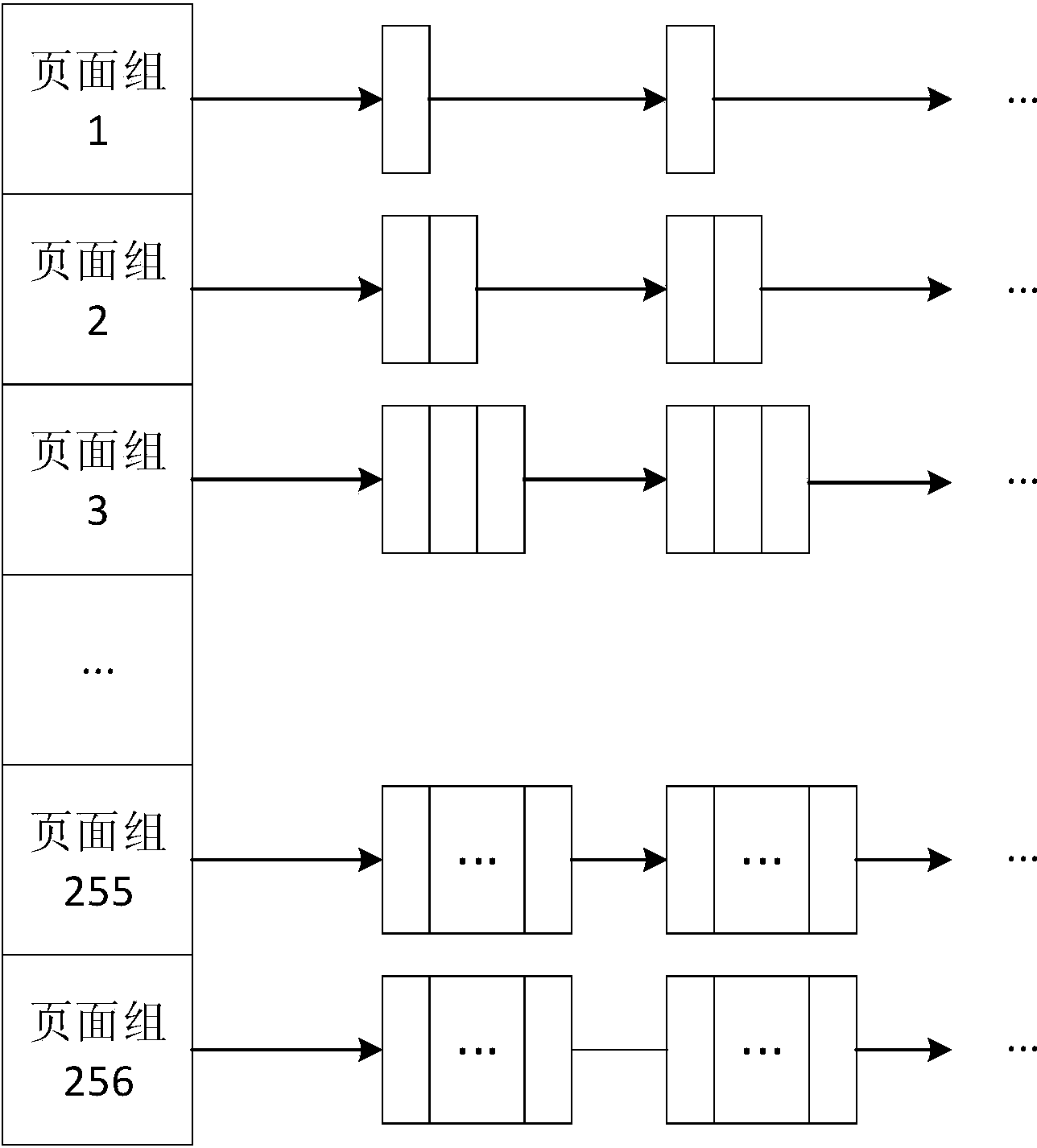

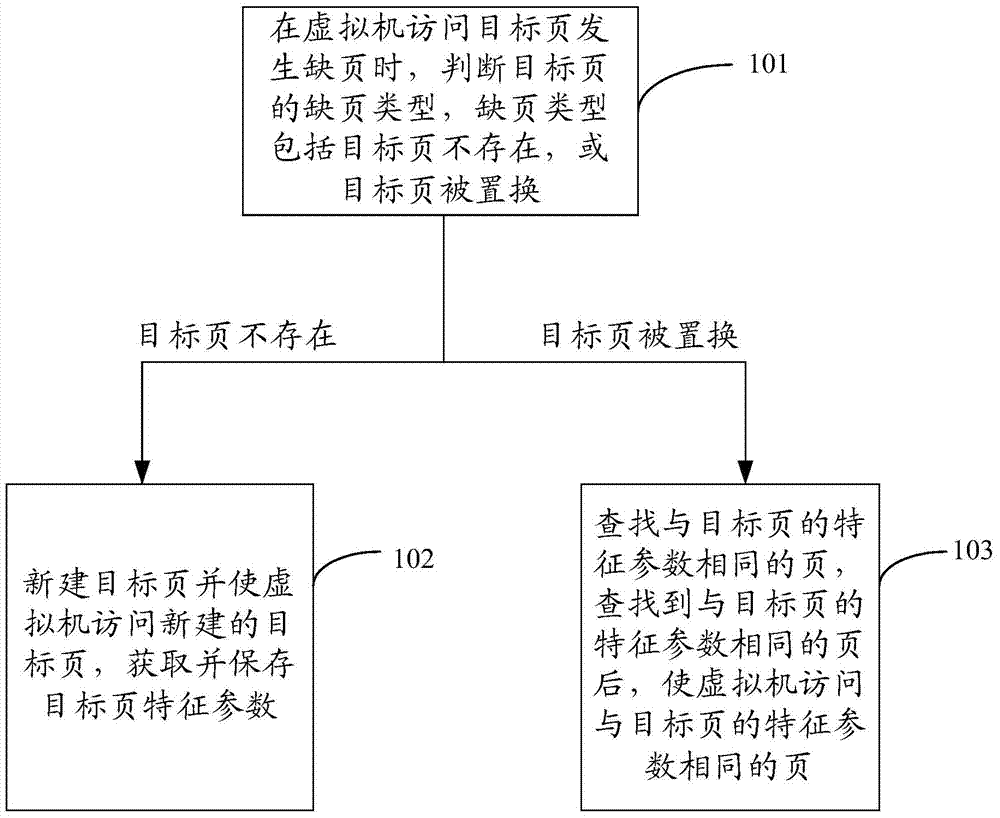

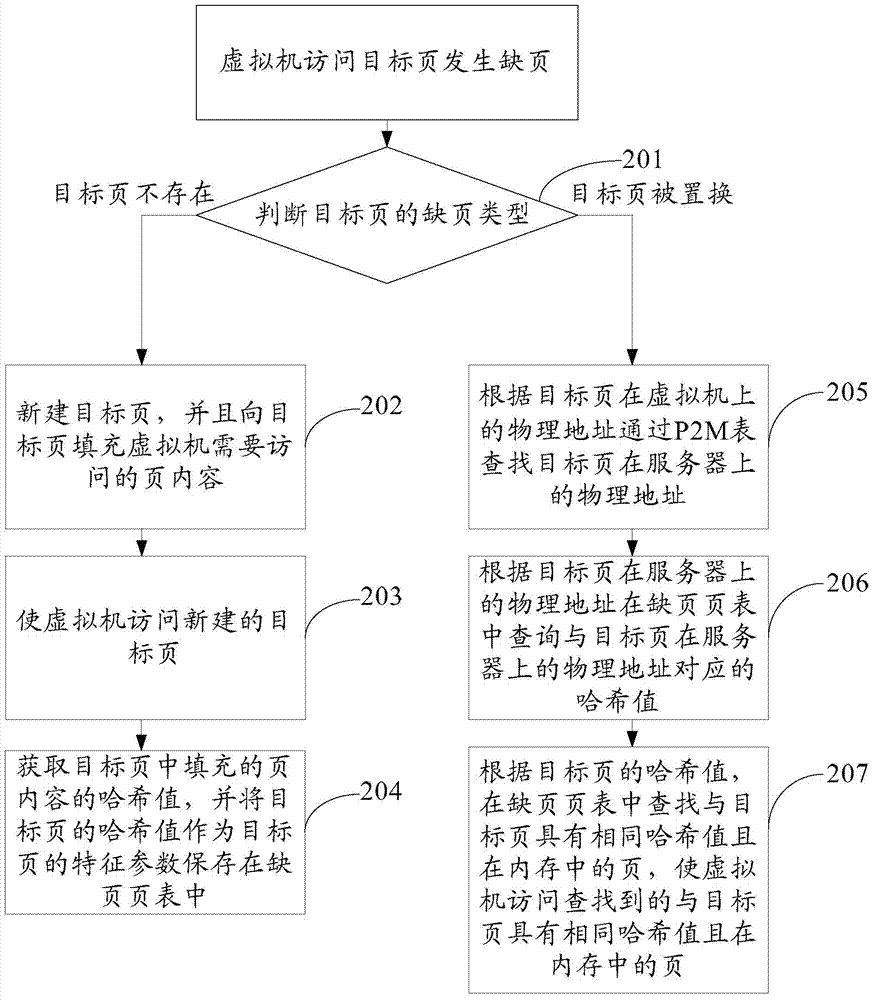

Page access method, page access device and server

Inactive | CN103488523A | Tags: Reduce overhead, Improve memory utilization, Memory architecture accessing/allocation, Memory addressing/allocation/relocation, Access method, Utilization rate

An embodiment of the invention provides a page access method, a page access device, and a server in the computer field, which reduce hard disk overhead while increasing memory utilization. When a destination page accessed by a virtual machine is missing, the type of page fault is determined: either the destination page does not exist, or it has been swapped out. If the destination page does not exist, it is newly created, the virtual machine accesses the new page, and the page's characteristic parameters are acquired and stored. If the destination page has been swapped out, pages whose characteristic parameters match those of the destination page are searched for, and once found, the virtual machine accesses them. The page access method, page access device, and server are used by virtual machines to access pages.

Owner: HUAWEI TECH CO LTD

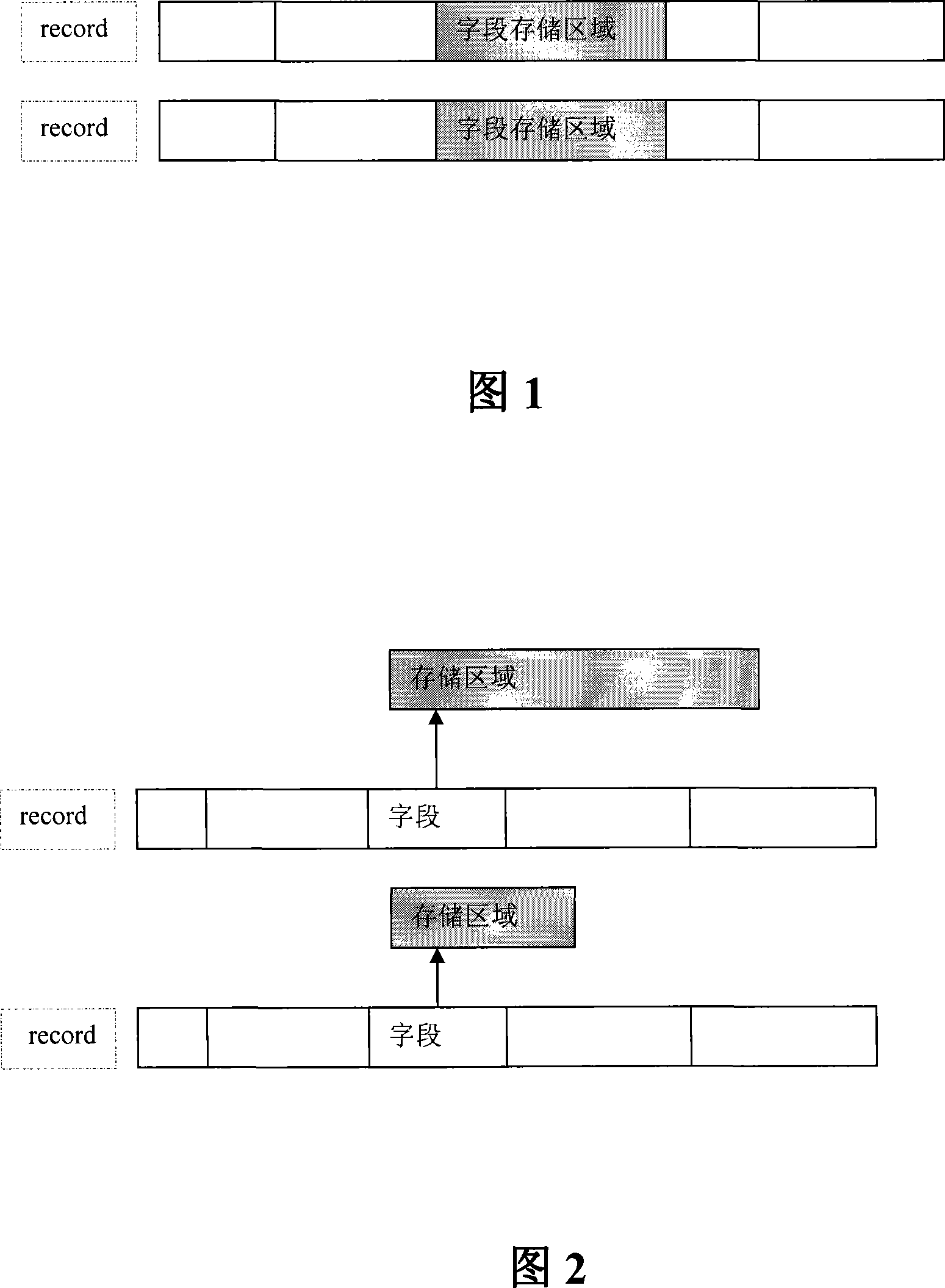

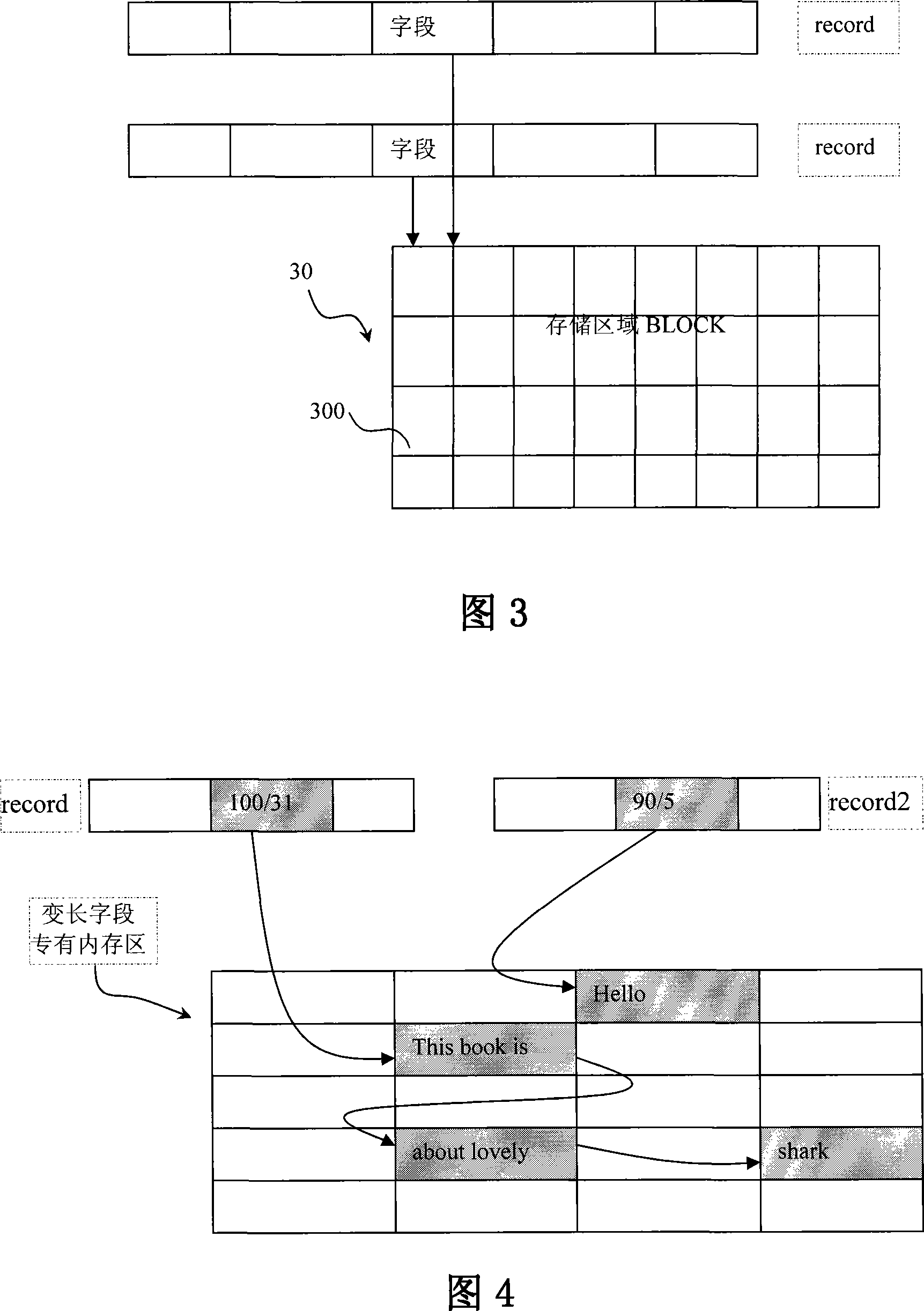

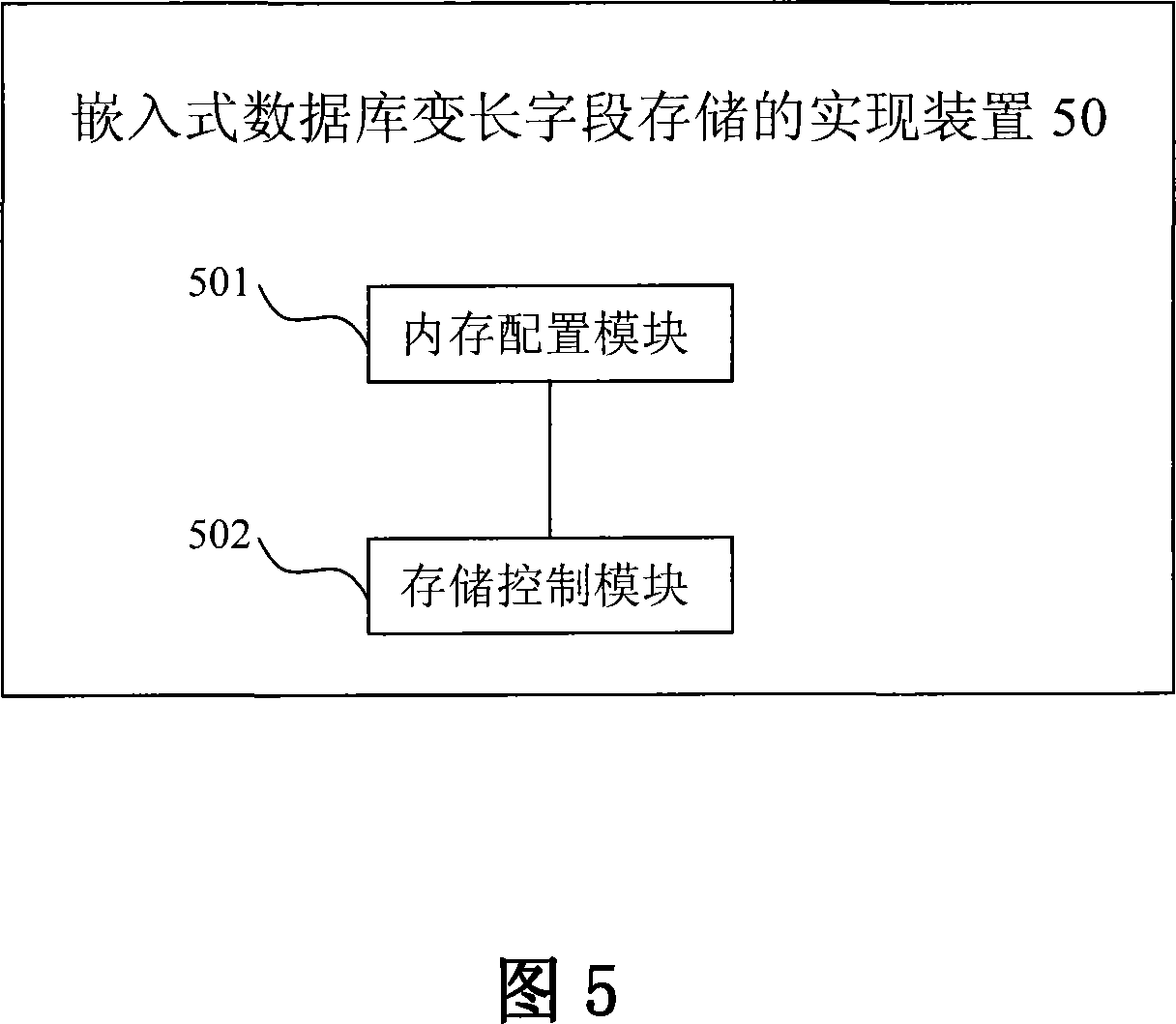

Method and device for storing length-various field of embedded database

InactiveCN101226553AAvoid memory fragmentationImprove memory utilizationSpecial data processing applicationsPre and postDatasheet

The invention discloses a realization method and a device of length-varying field memory of an imbedded database, which comprises: allocating a large memory block as an exclusive memory area of a length-varying field for each datasheet of a database, dividing the memory block into a plurality of BLOCKS with fixed sizes which are connected pre-and post through an index to form a BLOCK chain. When real length of a length-varying field is less than or equals to one BLOCK length, one BLOCK is allocated to store the length-varying field; when real length of a length-varying field is more than one BLOCK length, a plurality of BLOCKS are allocated to form a BLOCK chain to store the length-varying field. The invention can effectively solve the memory fragment problem and improves memory utility rate.

Owner:ZTE CORP
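
The BLOCK-chain scheme above can be illustrated with a small allocator. This is a minimal sketch under stated assumptions: the `VarFieldArea` class, the free-list stack, and the `(first BLOCK, length)` handle format are hypothetical, not ZTE's data structures. Because every allocation is a whole number of equal-size BLOCKs inside one pre-allocated region, freeing a field never leaves odd-sized holes behind.

```python
class VarFieldArea:
    """Per-table region for variable-length fields, cut into fixed-size BLOCKs (illustrative)."""

    def __init__(self, block_size: int, block_count: int) -> None:
        self.block_size = block_size
        self.area = bytearray(block_size * block_count)  # the one large pre-allocated memory block
        self.next = [-1] * block_count                    # index of the following BLOCK, -1 = end of chain
        self.free = list(range(block_count - 1, -1, -1))  # free BLOCK indexes; pop() yields 0 first

    def store(self, value: bytes) -> tuple[int, int]:
        """Store a field and return a (first BLOCK index, length) handle."""
        bs = self.block_size
        needed = max(1, -(-len(value) // bs))  # ceiling division, at least one BLOCK
        if needed > len(self.free):
            raise MemoryError("variable-length field area is full")
        blocks = [self.free.pop() for _ in range(needed)]
        for i, b in enumerate(blocks):
            chunk = value[i * bs:(i + 1) * bs]
            self.area[b * bs:b * bs + len(chunk)] = chunk
            # Link each BLOCK to the next one to form the BLOCK chain.
            self.next[b] = blocks[i + 1] if i + 1 < needed else -1
        return blocks[0], len(value)

    def load(self, handle: tuple[int, int]) -> bytes:
        """Follow the chain from the first BLOCK and trim to the stored length."""
        head, length = handle
        bs, out, b = self.block_size, bytearray(), head
        while b != -1:
            out += self.area[b * bs:(b + 1) * bs]
            b = self.next[b]
        return bytes(out[:length])

    def release(self, handle: tuple[int, int]) -> None:
        """Return every BLOCK of the chain to the free list."""
        b = handle[0]
        while b != -1:
            nxt = self.next[b]
            self.next[b] = -1
            self.free.append(b)
            b = nxt
```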

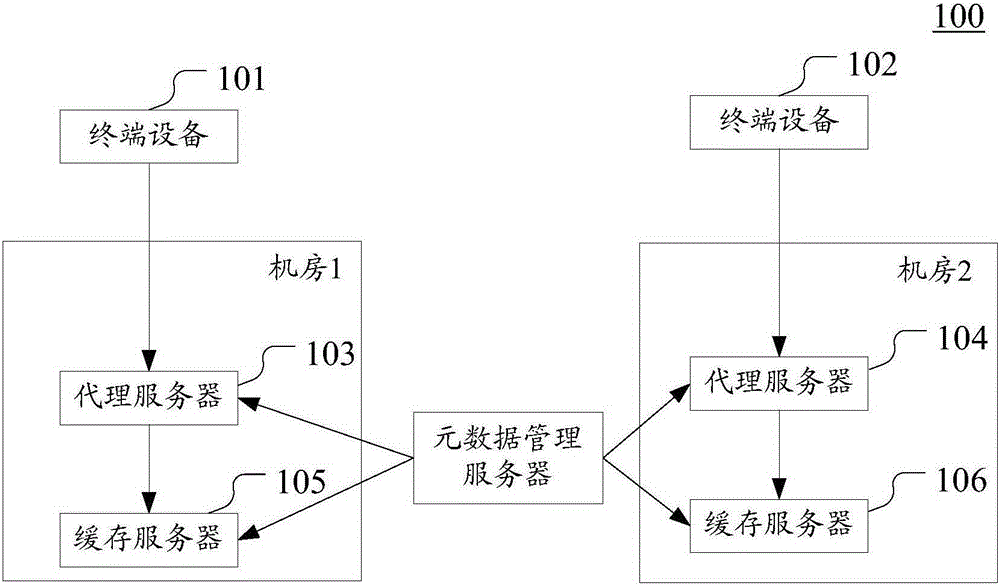

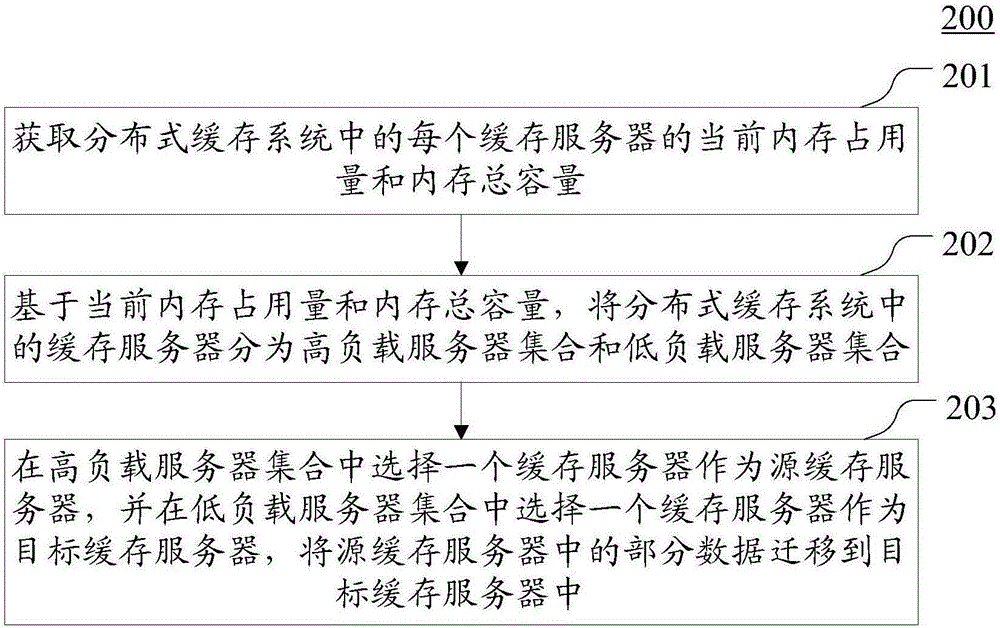

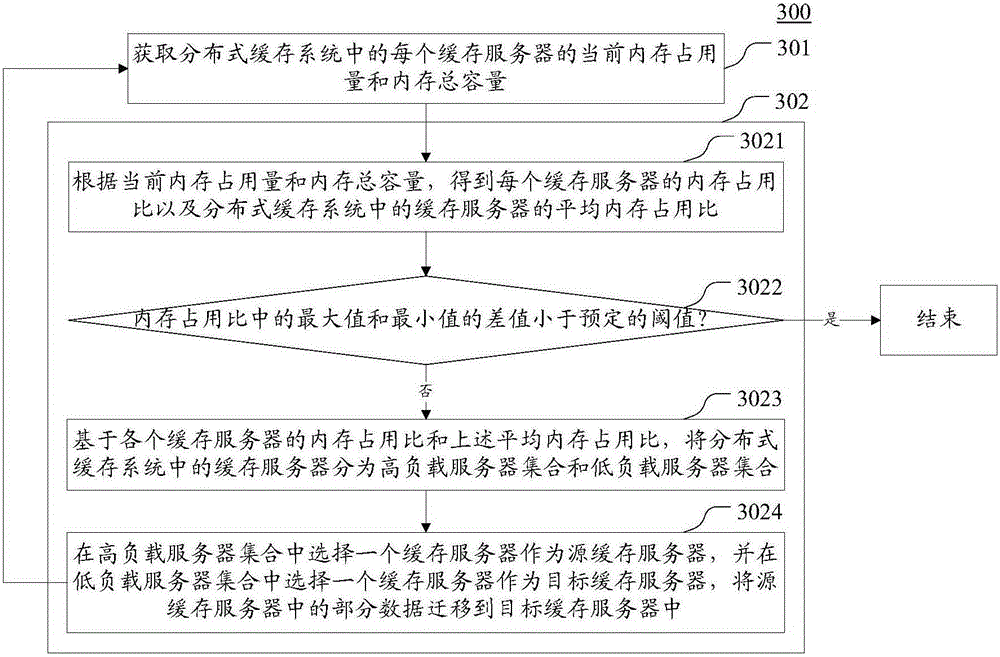

Data processing method and device used for distributed cache system

Active | CN105183670A | Improve memory utilization | Data balance | Memory addressing/allocation/relocation | Cache server | Low load

The invention discloses a data processing method and device for a distributed cache system. The method obtains the currently occupied memory capacity and the total memory capacity of each cache server in the distributed cache system and, based on these two values, performs data balancing across all cache servers: the cache servers are divided into a high-load server set and a low-load server set; one cache server in the high-load set is selected as the source cache server and one in the low-load set as the target cache server; and part of the data on the source cache server is transferred to the target cache server. This increases the memory utilization rate of the distributed cache system.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD
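
One balancing round of the kind described above might look like the sketch below. The abstract does not say where the high/low split lies or how much data to move, so two assumptions are made here and are hypothetical: the split threshold is the cluster-wide occupancy ratio, and items are moved smallest first until the source drops to that ratio or the next item would push the target above it.

```python
from dataclasses import dataclass, field


@dataclass
class CacheServer:
    name: str
    total: int                                            # total memory capacity
    items: dict[str, int] = field(default_factory=dict)   # key -> size of the cached value

    @property
    def used(self) -> int:
        return sum(self.items.values())

    @property
    def load(self) -> float:
        return self.used / self.total


def split_by_load(servers: list[CacheServer]):
    # Assumed threshold: the cluster-wide ratio of occupied to total memory.
    avg = sum(s.used for s in servers) / sum(s.total for s in servers)
    high = [s for s in servers if s.load > avg]
    low = [s for s in servers if s.load < avg]
    return high, low, avg


def rebalance_once(servers: list[CacheServer]) -> list[str]:
    """Move part of the data from the most loaded server to the least loaded one."""
    high, low, avg = split_by_load(servers)
    if not high or not low:
        return []
    source = max(high, key=lambda s: s.load)
    target = min(low, key=lambda s: s.load)
    moved = []
    for key, size in sorted(source.items.items(), key=lambda kv: kv[1]):  # smallest first
        if source.load <= avg or (target.used + size) / target.total > avg:
            break
        target.items[key] = source.items.pop(key)
        moved.append(key)
    return moved
```

For example, with a server at 80% occupancy and another at 10% (both of capacity 100), one round moves the 20-unit item and stops, leaving them at 60% and 30%.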

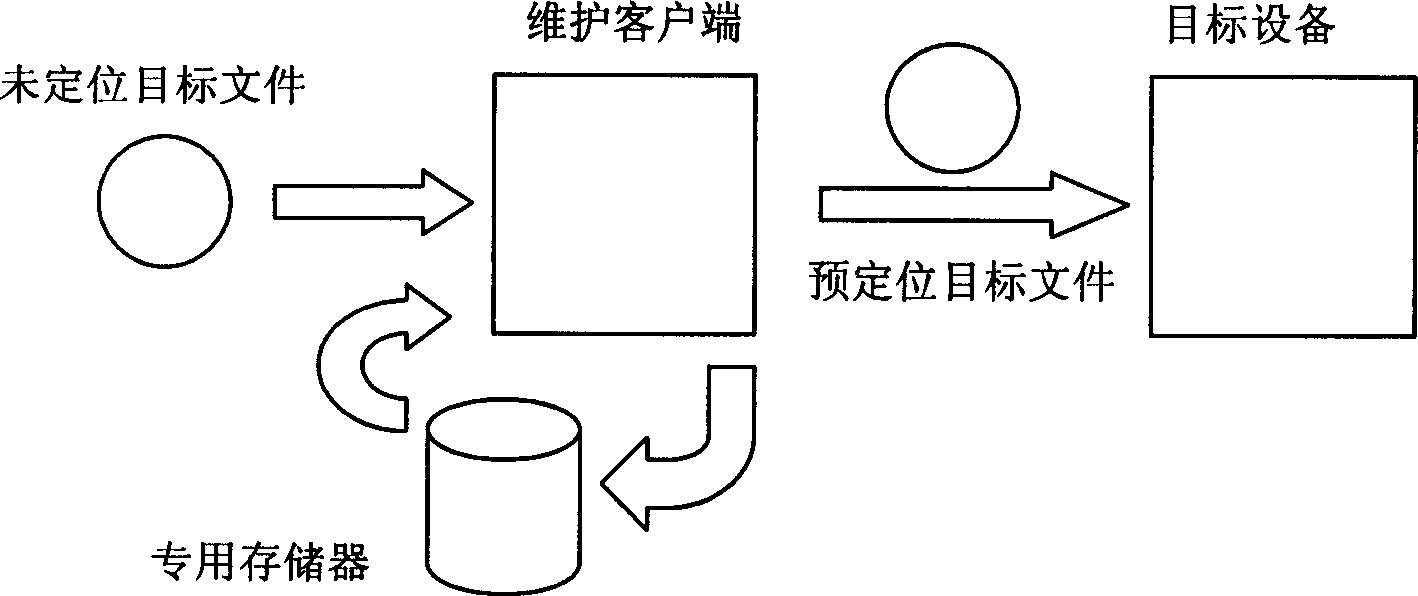

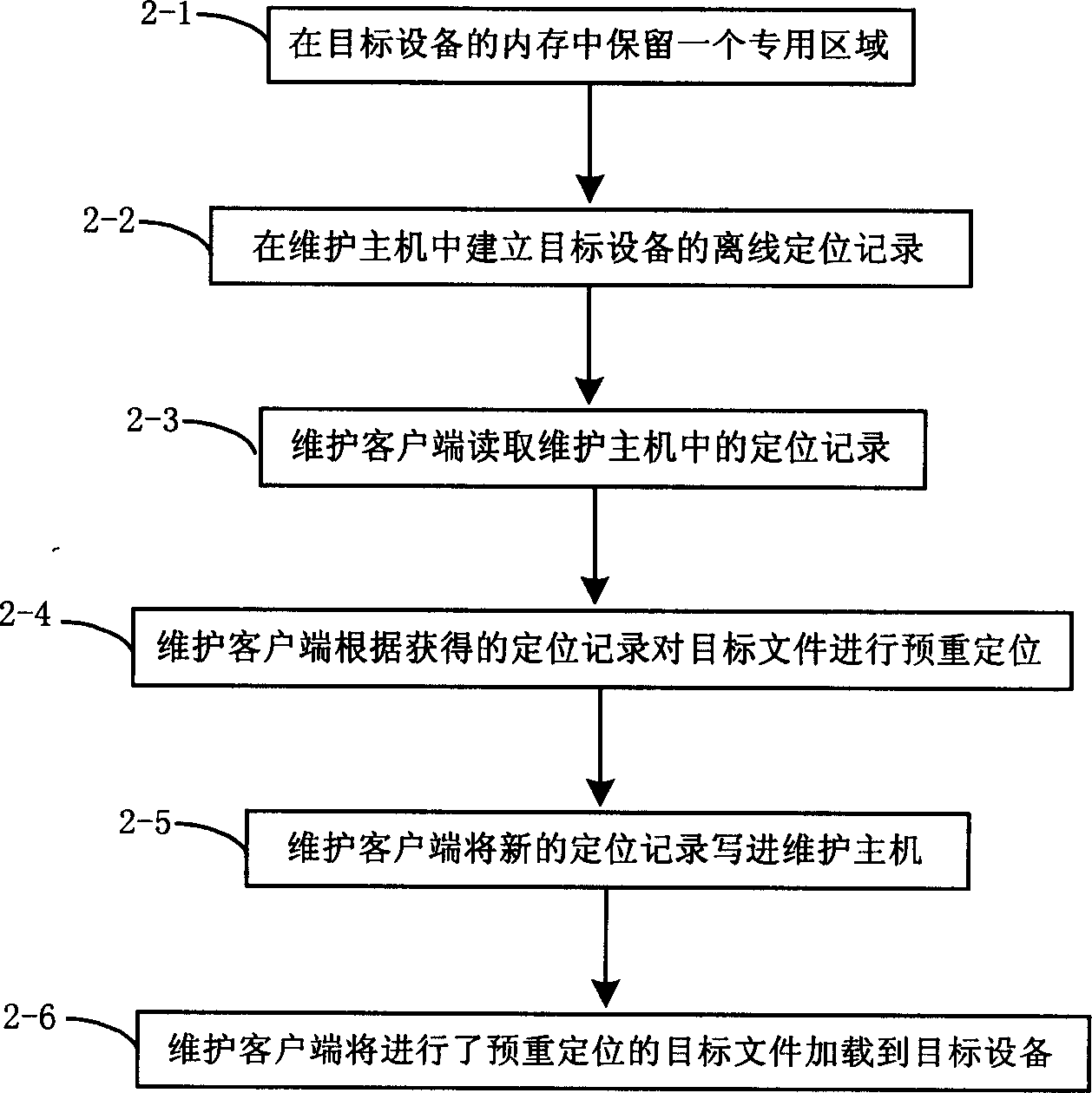

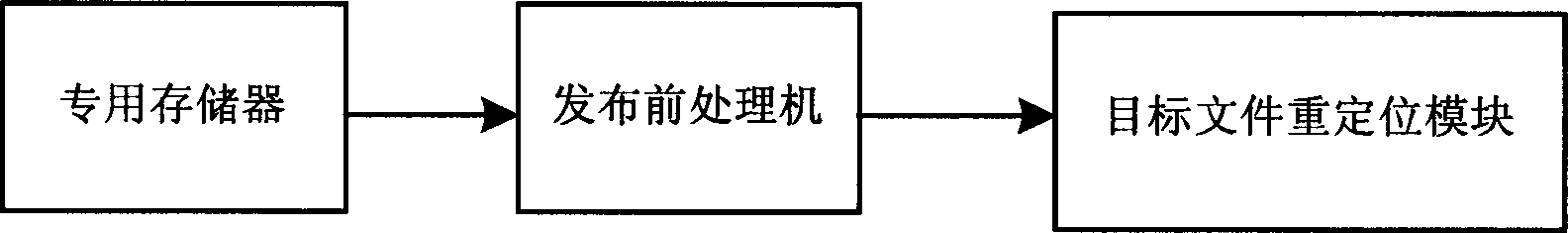

Method and apparatus for positioning target file

Inactive | CN1841329A | Improve memory utilization | Reduce complexity | Program control | Memory systems | Pre processor | Computer science

The provided method for locating a target file comprises: before loading, relocating the absolute addresses of the target file; after loading, relocating its relative addresses, which completes the whole relocation process. The associated apparatus comprises a specific memory, a distribution pre-processor, and a target-file relocation module. The invention can greatly improve memory utilization and reduces the complexity of relocation and maintenance.

Owner:HUAWEI TECH CO LTD
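
The two-phase relocation described above can be sketched as follows. This is a hypothetical illustration, not the patented procedure: the `Reloc` record format, the 4-byte little-endian fields, and the PC-relative convention (target minus the address just past the field) are assumptions. The split reflects the idea that absolute addresses depend only on where symbols live, which is assumed to be known before loading, whereas relative addresses also depend on where the patched field itself ends up.

```python
from dataclasses import dataclass


@dataclass
class Reloc:
    offset: int  # position of a 4-byte field inside the target file image
    symbol: str  # symbol whose address the field refers to
    kind: str    # "abs" or "rel" (hypothetical record format)


def patch(image: bytearray, offset: int, value: int) -> None:
    """Write a 32-bit little-endian value (two's complement for negatives)."""
    image[offset:offset + 4] = (value & 0xFFFFFFFF).to_bytes(4, "little")


def relocate_before_load(image: bytearray, relocs: list[Reloc], symbols: dict[str, int]) -> None:
    # Phase 1: absolute addresses need only the symbol addresses, assumed known in advance.
    for r in relocs:
        if r.kind == "abs":
            patch(image, r.offset, symbols[r.symbol])


def relocate_after_load(image: bytearray, relocs: list[Reloc], symbols: dict[str, int],
                        load_base: int) -> None:
    # Phase 2: relative addresses need the field's run-time address, known only after loading.
    for r in relocs:
        if r.kind == "rel":
            field_end = load_base + r.offset + 4  # assumed PC-relative convention
            patch(image, r.offset, symbols[r.symbol] - field_end)
```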

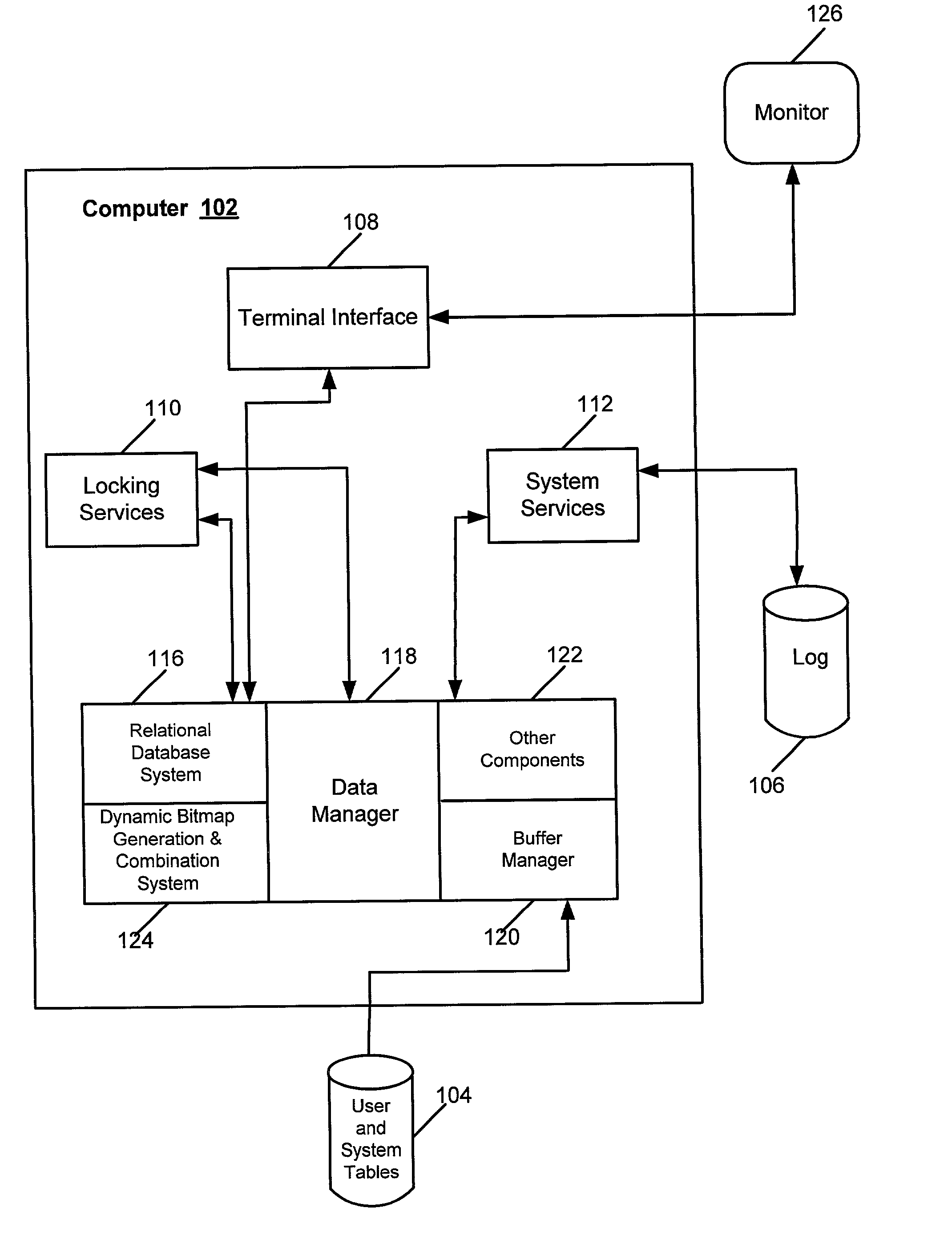

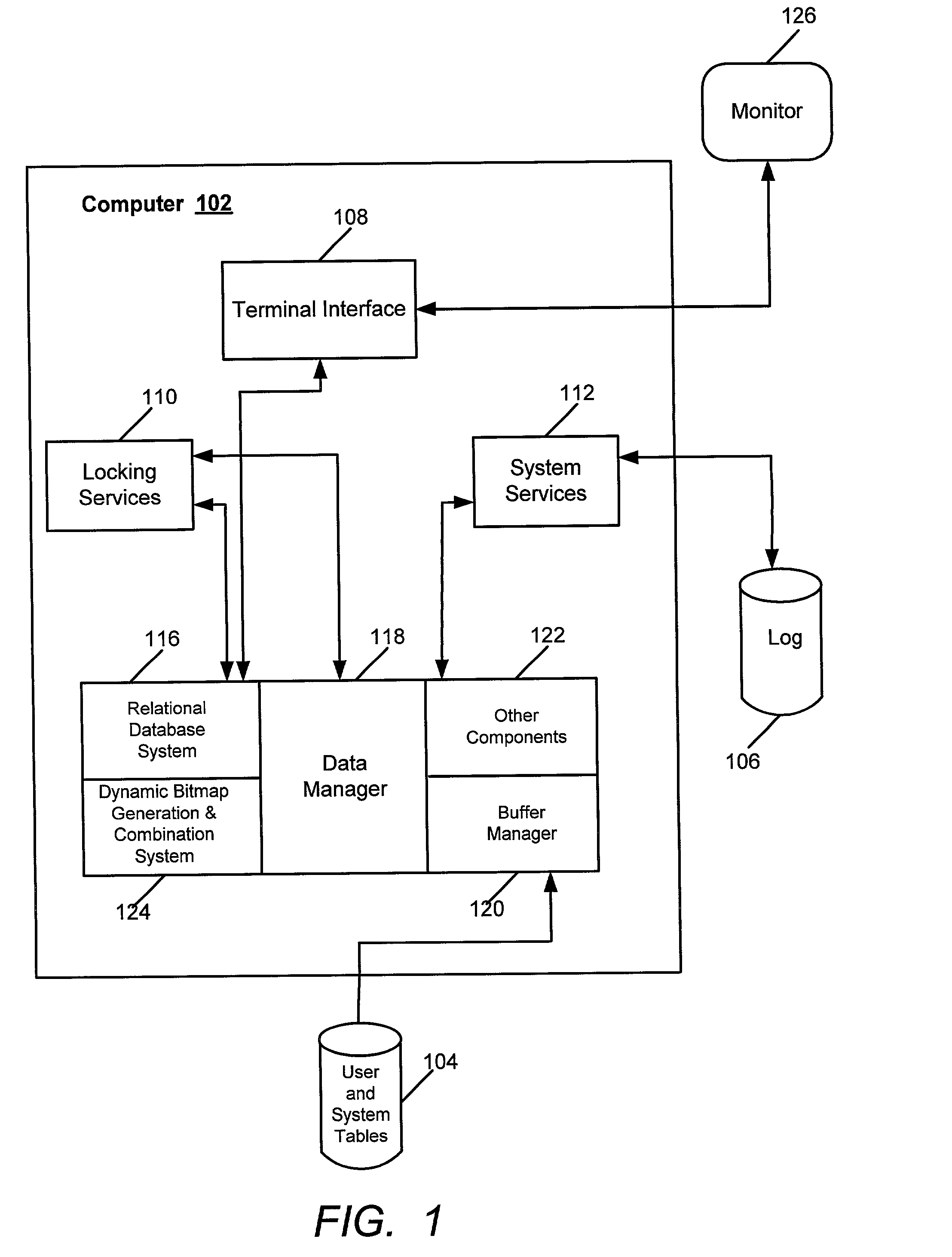

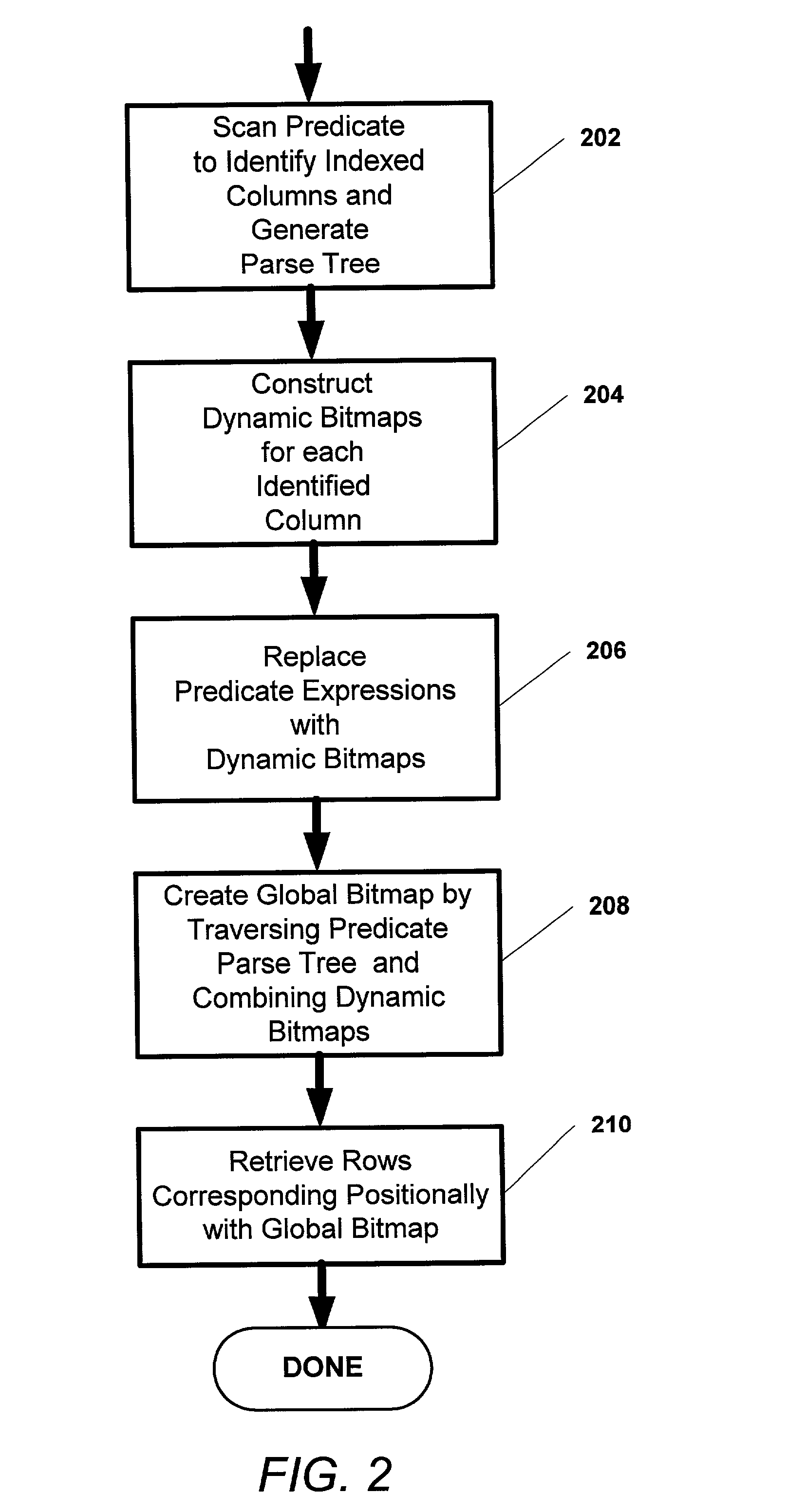

Method and apparatus to index a historical database for efficient multiattribute SQL queries

Inactive | US20020138464A1 | Improve memory utilization | Digital data information retrieval | Digital data processing details | As Directed | Data storing

A method, apparatus, and article of manufacture for a multiple index combination system. A query is executed to access data stored on a data storage device connected to a computer. In particular, while one or more single-column indexes are accessed to retrieve partition invariant designators, multiple bit maps are generated and populated. As directed by the query predicate, the bit maps are combined into a single, global bit map. The global bit map directs the retrieval process to the required rows.

Owner:CALASCIBETTA DAVID M
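
The bit map combination described above can be illustrated in a few lines. This is a simplified sketch: plain row positions stand in for the patent's partition invariant designators, Python integers serve as bit arrays, and the function names are hypothetical. Each single-column index yields one bit map per predicate, and the predicate's AND/OR decides how they merge into the global bit map.

```python
import operator
from collections import defaultdict
from functools import reduce


def build_index(rows: list[dict], column: str) -> dict:
    """Single-column index: column value -> list of row positions."""
    index = defaultdict(list)
    for pos, row in enumerate(rows):
        index[row[column]].append(pos)
    return index


def bitmap_for(index: dict, value) -> int:
    """Bit map with bit i set when row i holds `value`."""
    bits = 0
    for pos in index.get(value, []):
        bits |= 1 << pos
    return bits


def combine(bitmaps: list[int], op: str) -> int:
    """Merge per-predicate bit maps into one global bit map (AND or OR)."""
    return reduce(operator.and_ if op == "AND" else operator.or_, bitmaps)


def selected_rows(bitmap: int) -> list[int]:
    """Row positions the global bit map directs retrieval to."""
    pos, out = 0, []
    while bitmap:
        if bitmap & 1:
            out.append(pos)
        bitmap >>= 1
        pos += 1
    return out
```

For a predicate such as `region = 'EU' AND status = 'open'`, the two bit maps from the `region` and `status` indexes are ANDed, and only the rows whose bits survive are fetched.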

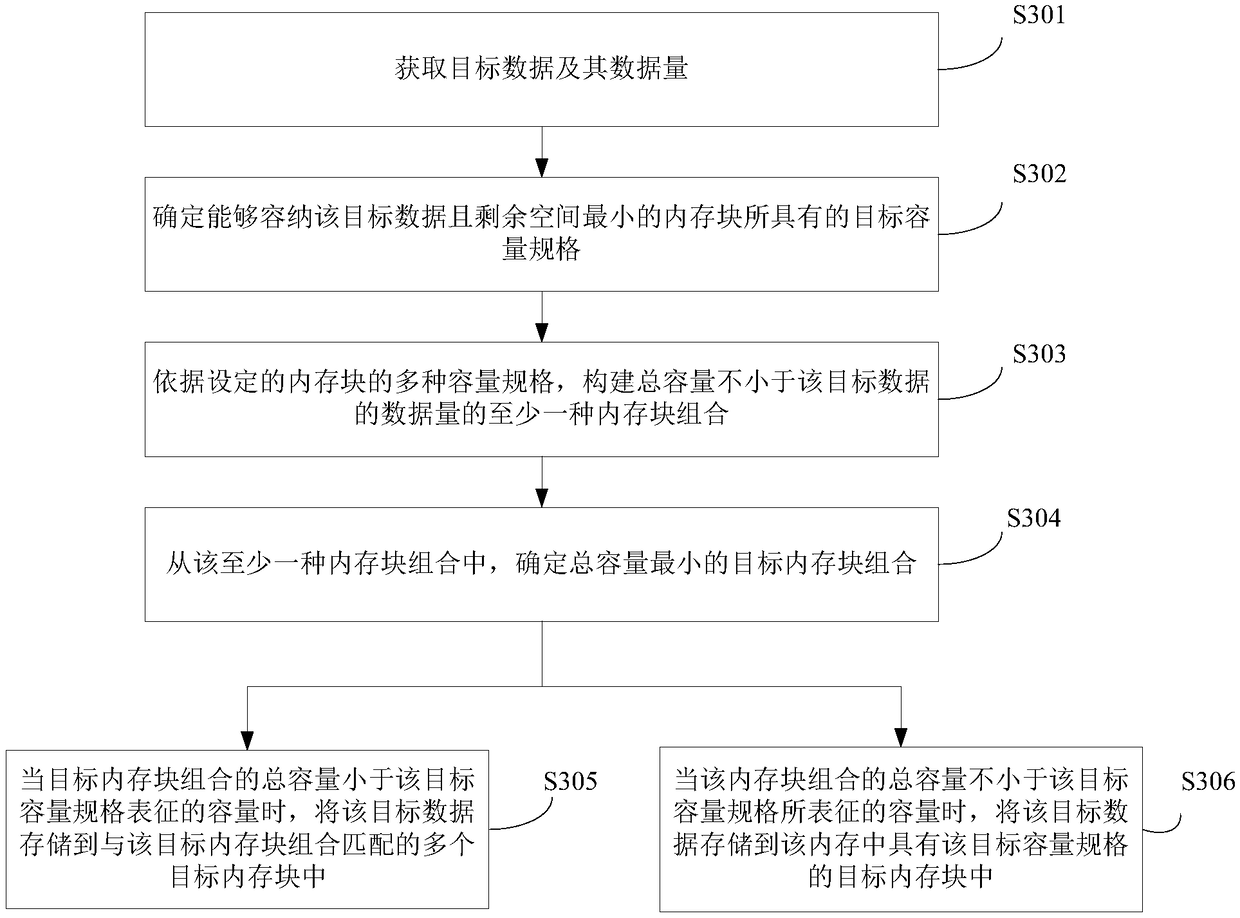

Data storage method, apparatus and device

Active | CN109117273A | Reduce generation | Reduce waste | Resource allocation | Memory addressing/allocation/relocation | Computer science | Utilization rate

The invention provides a data storage method, apparatus and device. After the target data to be stored in memory and its data amount are obtained, a target capacity specification is determined, according to the data amount, from a plurality of preset memory-block capacity specifications: it is the specification of the memory block that can hold the target data with the smallest remaining space. At least one memory-block combination whose total capacity is not less than the data amount is then constructed from the plurality of capacity specifications; each combination specifies one or more capacity specifications and the number of memory blocks of each, and contains more than one memory block in total. The target memory-block combination, namely the one with the smallest total capacity, is determined. When the total capacity of the target combination is less than the capacity of the target capacity specification, the target data is stored in the multiple memory blocks of that combination. The scheme reduces memory fragmentation and improves memory utilization.

Owner:TENCENT TECH (SHENZHEN) CO LTD
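
The selection rule above (smallest single block versus smallest multi-block combination) can be sketched as a small search. This is an illustrative sketch only: the unbounded coin-change style search, the search bound, the "larger blocks first" tie-break, and the fallback to a combination when no single block is large enough are assumptions, since the abstract does not specify them.

```python
from typing import Optional


def smallest_fitting_spec(size: int, specs: list[int]) -> Optional[int]:
    """Target capacity specification: the smallest single block that holds `size`."""
    fitting = [s for s in specs if s >= size]
    return min(fitting) if fitting else None


def smallest_multi_block_combo(size: int, specs: list[int]) -> list[int]:
    """Combination of two or more blocks with the smallest total capacity >= size."""
    order = sorted(specs, reverse=True)  # tie-break assumption: try larger blocks first
    biggest = order[0]
    limit = max(size + biggest, 2 * biggest)  # a minimal multi-block total never exceeds this
    # prev[t] = last block size used to reach total t with >= 1 block (0 = unreachable)
    prev = [0] * (limit + 1)
    for t in range(1, limit + 1):
        for s in order:
            if s == t or (s < t and prev[t - s]):
                prev[t] = s
                break

    def unwind(total: int) -> list[int]:
        blocks = []
        while total > 0:
            blocks.append(prev[total])
            total -= prev[total]
        return blocks

    for t in range(size, limit + 1):
        for s in order:
            if s < t and prev[t - s]:  # one block plus a reachable remainder = >= 2 blocks
                return [s] + unwind(t - s)
    raise ValueError("no combination found")  # not reached for positive block sizes


def plan_storage(size: int, specs: list[int]) -> list[int]:
    """Block sizes to allocate for `size` bytes of target data."""
    target = smallest_fitting_spec(size, specs)
    combo = smallest_multi_block_combo(size, specs)
    if target is None or sum(combo) < target:  # assumption when no single block fits
        return sorted(combo, reverse=True)
    return [target]
```

With block specifications of 16, 32, 64 and 128, storing 40 bytes in a 64-byte block would waste 24 bytes, while a 32 + 16 combination wastes only 8, so the combination is chosen; for 60 bytes the best combination totals 64, which is not smaller than the single 64-byte block, so the single block is used.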

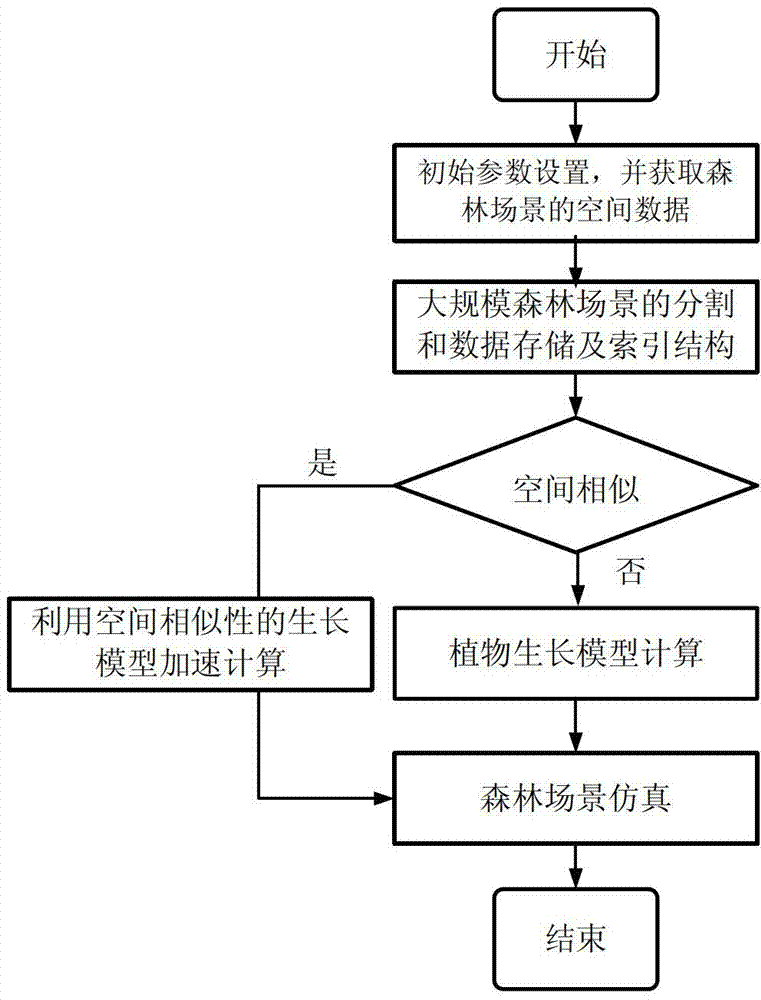

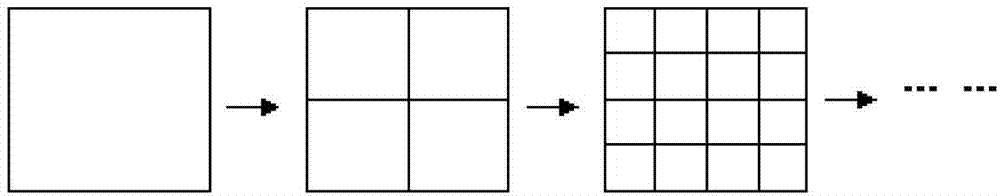

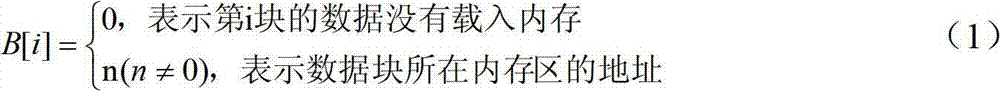

Large-scale forest scene quick generation method based on space similarity

The invention discloses a method for quickly generating large-scale forest scenes based on spatial similarity. The method comprises the steps of: 1) setting the initialization parameters of a large-scale forest simulation scene and acquiring the visible data of the whole scene and the spatial distribution of the forest; 2) partitioning the spatial data of the large-scale forest scene with a quadtree, dividing the scene into blocks of equal size; 3) calculating the spatial similarity of the forest scene; 4) judging the similarity between scene blocks so that the biomass of plants can be obtained quickly; and 5) if the similarity between scene blocks does not reach a certain proportion, calculating a growth model for those blocks: basic plants are determined from the initial parameter data supplied by the user, their influence range and biomass are calculated, a three-dimensional tree model is then introduced into the simulation to realize the forest scene, and the visual result is displayed to the user. The method is fast and accurate.

Owner:ZHEJIANG UNIV OF TECH
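
Steps 2) to 5) above can be sketched as "partition, compare, reuse or simulate". This is a hypothetical sketch: the per-cell feature grid, the Ruzicka (weighted Jaccard) similarity measure, the threshold value, and the `growth_model` callback are stand-ins, since the abstract names neither the similarity metric nor the growth model. Blocks similar enough to an already simulated block reuse its biomass; only dissimilar blocks pay for the growth model.

```python
from typing import Callable

Block = tuple[int, int, int]  # (x0, y0, size) of a square scene block


def quadtree_blocks(x0: int, y0: int, size: int, min_size: int) -> list[Block]:
    """Split a square region into quadrants recursively until blocks reach min_size."""
    if size <= min_size:
        return [(x0, y0, size)]
    half = size // 2
    leaves: list[Block] = []
    for dx, dy in ((0, 0), (half, 0), (0, half), (half, half)):
        leaves += quadtree_blocks(x0 + dx, y0 + dy, half, min_size)
    return leaves


def block_features(grid: list[list[float]], block: Block) -> list[float]:
    """Flatten the cells of one block into a feature vector."""
    x0, y0, size = block
    return [grid[y][x] for y in range(y0, y0 + size) for x in range(x0, x0 + size)]


def similarity(a: list[float], b: list[float]) -> float:
    """Ruzicka (weighted Jaccard) similarity of two non-negative feature vectors."""
    top = sum(min(p, q) for p, q in zip(a, b))
    bottom = sum(max(p, q) for p, q in zip(a, b))
    return 1.0 if bottom == 0 else top / bottom


def estimate_biomass(grid: list[list[float]], blocks: list[Block], threshold: float,
                     growth_model: Callable[[list[float]], float]) -> dict[Block, float]:
    simulated: list[tuple[list[float], float]] = []  # (features, biomass) already computed
    result: dict[Block, float] = {}
    for block in blocks:
        feats = block_features(grid, block)
        reused = next((bm for f, bm in simulated if similarity(f, feats) >= threshold), None)
        if reused is None:
            reused = growth_model(feats)  # expensive path, only for dissimilar blocks
            simulated.append((feats, reused))
        result[block] = reused
    return result
```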


© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com