358 results about "Dynamic load balancing" patented technology

Dynamic load balancing is a popular recent technique that protects ISP networks from sudden congestion caused by load spikes or link failures. Dynamic load balancing protocols, however, require schemes for splitting traffic across multiple paths at a fine granularity.

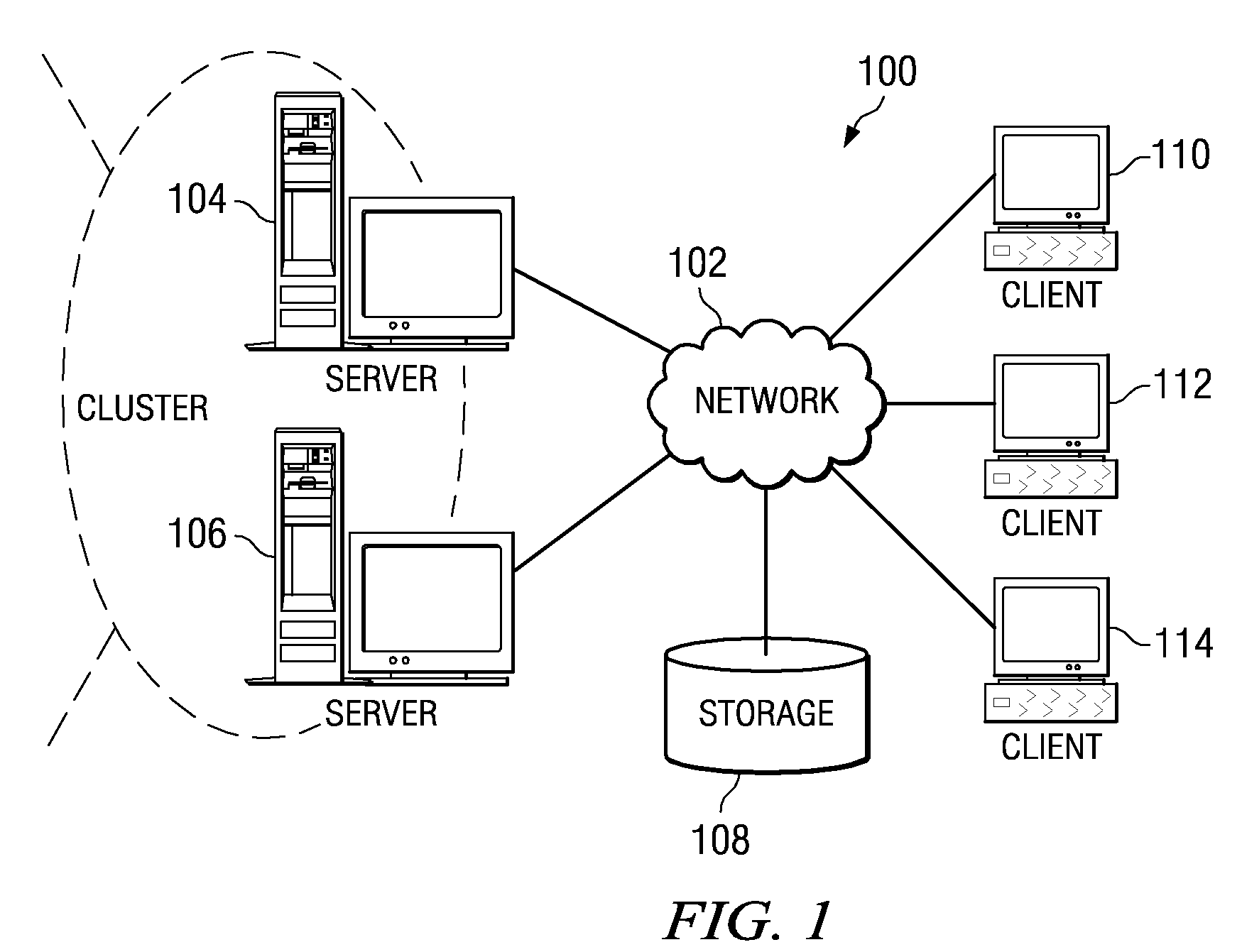

Dynamic load balancing of a network of client and server computer

Inactive | US20030126200A1 | Program synchronisation | Multiple digital computer combinations | Dynamic load balancing | Client-side

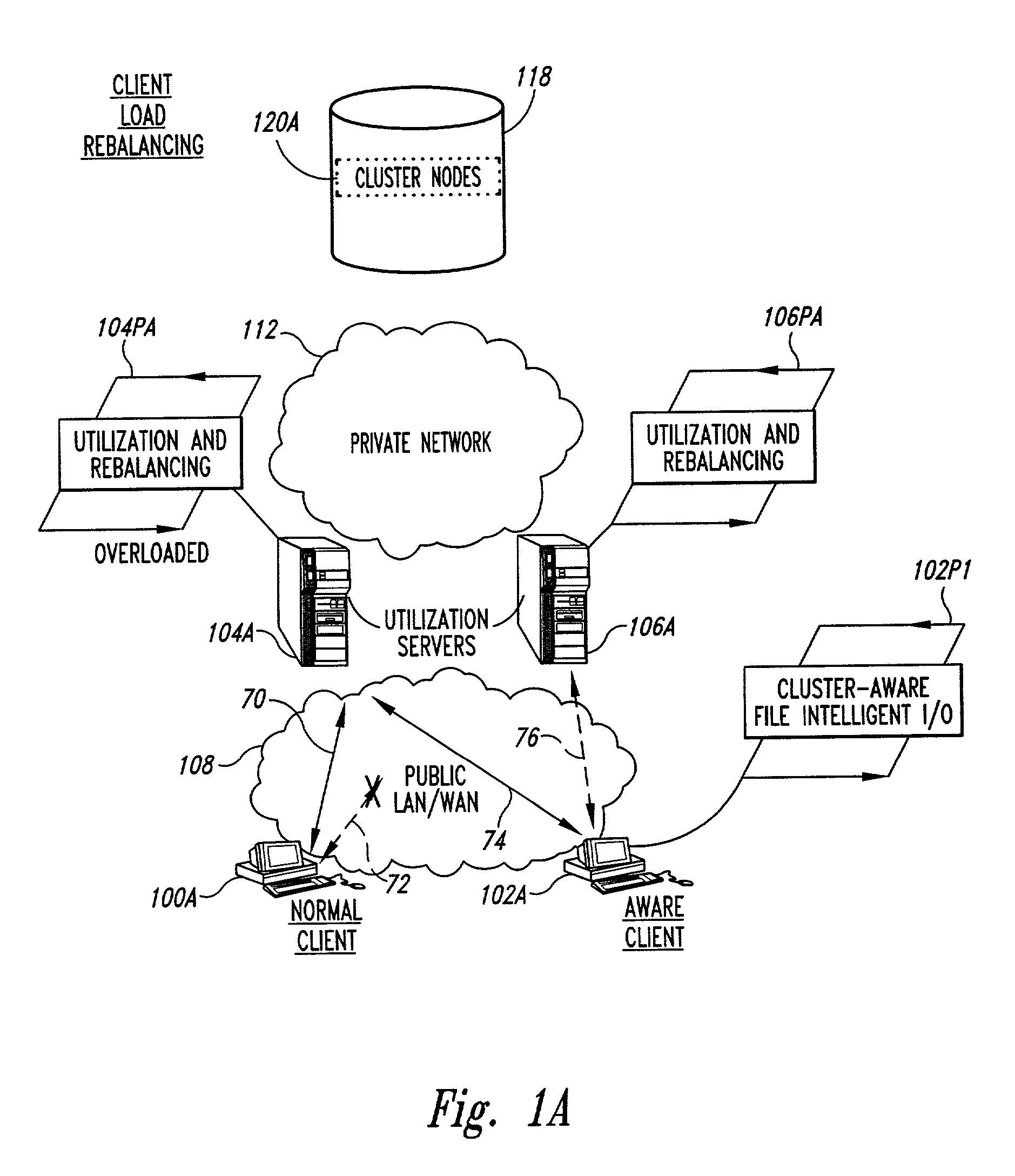

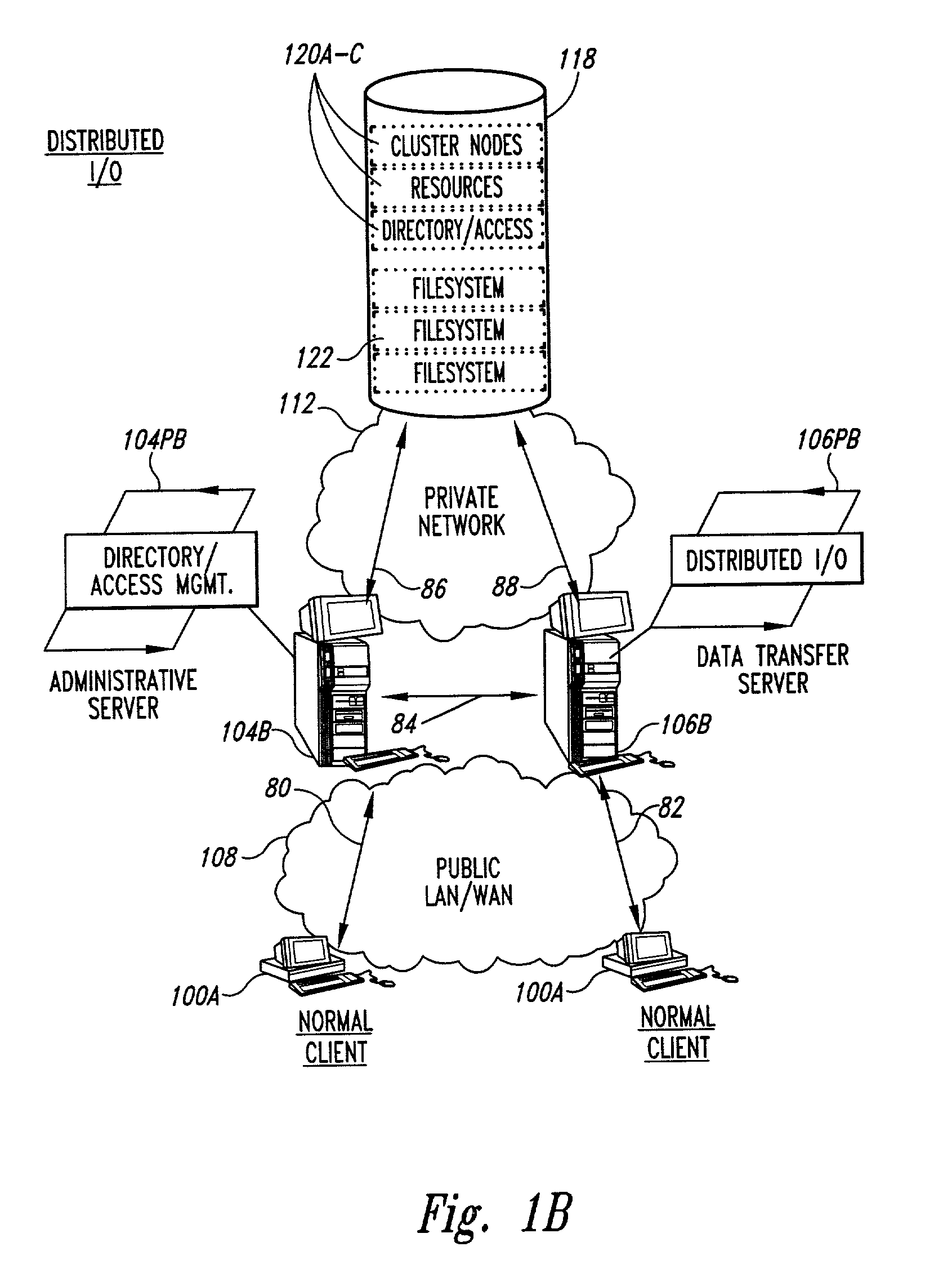

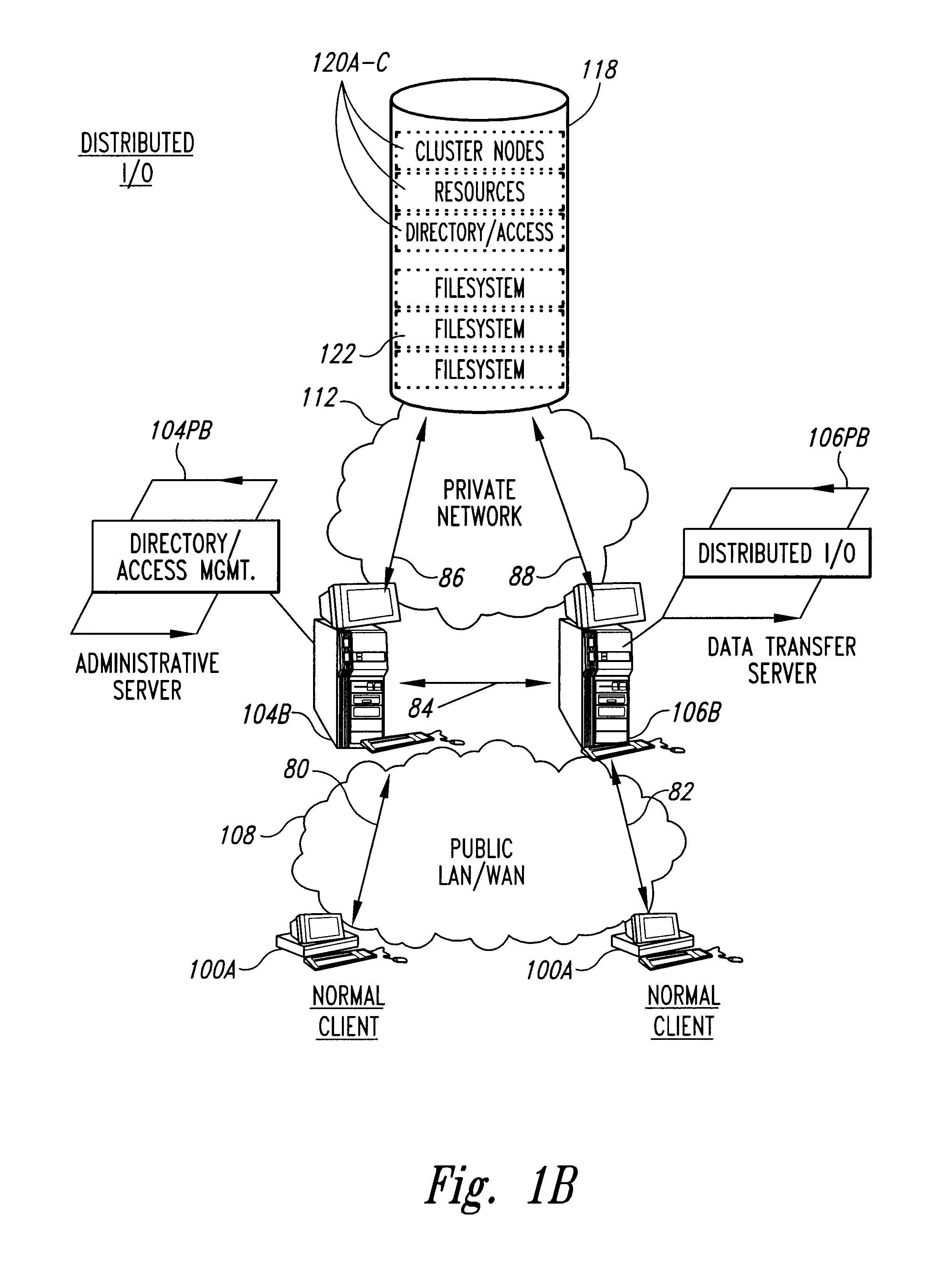

Methods for load rebalancing by clients in a network are disclosed. Client load rebalancing allows the clients to optimize throughput between themselves and the resources accessed by the nodes. A network that implements this embodiment of the invention can dynamically rebalance itself to optimize throughput by migrating client I/O requests from overutilized pathways to underutilized pathways. Client load rebalancing refers to the ability of a client enabled with processes in accordance with the current invention to remap a path through a plurality of nodes to a resource. The remapping may take place in response to a redirection command emanating from an overloaded node, e.g., a server. The disclosed embodiments allow more efficient, robust communication between a plurality of clients and a plurality of resources via a plurality of nodes. In an embodiment of the invention, a method for load balancing on a network is disclosed. The network includes at least one client node coupled to a plurality of server nodes, and at least one resource coupled to at least a first and a second server node of the plurality of server nodes. The method comprises the acts of: receiving at a first server node among the plurality of server nodes a request for the at least one resource; determining a utilization condition of the first server node; and re-directing subsequent requests for the at least one resource to a second server node among the plurality of server nodes in response to the determining act. In another embodiment of the invention, the method comprises the acts of: sending an I/O request from the at least one client to the first server node for the at least one resource; determining an I/O failure of the first server node; and re-directing subsequent requests from the at least one client for the at least one resource to another among the plurality of server nodes in response to the determining act.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP
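The redirection flow in the abstract above can be illustrated with a minimal Python sketch. It is not the patented method: the `ServerNode` and `Client` classes, the `capacity` field, and the "active requests >= capacity" test for the utilization condition are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class ServerNode:
    name: str
    capacity: int      # hypothetical limit on concurrent requests
    active: int = 0    # requests currently being served

    def overloaded(self) -> bool:
        # Assumed utilization condition: the node is at or above capacity.
        return self.active >= self.capacity


class Client:
    """Remembers, per resource, which server node it currently uses."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.path = {}  # resource name -> index of the server node in use

    def request(self, resource: str) -> str:
        idx = self.path.get(resource, 0)
        for _ in range(len(self.servers)):
            node = self.servers[idx]
            if not node.overloaded():
                node.active += 1
                # Remap the path so subsequent requests go to this node.
                self.path[resource] = idx
                return node.name
            # The overloaded node "redirects" the client to the next node.
            idx = (idx + 1) % len(self.servers)
        raise RuntimeError("all server nodes are overloaded")
```

With two nodes of capacity 2, the first two requests for a resource stay on the first node and later requests are remapped to the second; once both are full, the sketch raises an error rather than queueing.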

Dynamic load balancing of a network of client and server computer

Inactive | US6886035B2 | Improve throughput | Program synchronisation | Multiple digital computer combinations | Dynamic load balancing | Client-side

Methods for load rebalancing by clients in a network are disclosed. Client load rebalancing allows the clients to optimize throughput between themselves and the resources accessed by the nodes. A network that implements this embodiment of the invention can dynamically rebalance itself to optimize throughput by migrating client I/O requests from overutilized pathways to underutilized pathways. Client load rebalancing allows a client to remap a path through a plurality of nodes to a resource. The remapping may take place in response to a redirection command from an overloaded node.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

Dynamic load balancing for enterprise IP traffic

Active | US7308499B2 | Less network traffic | Improve computing efficiency | Multiple digital computer combinations | Data switching networks | Traffic capacity | Dynamic load balancing

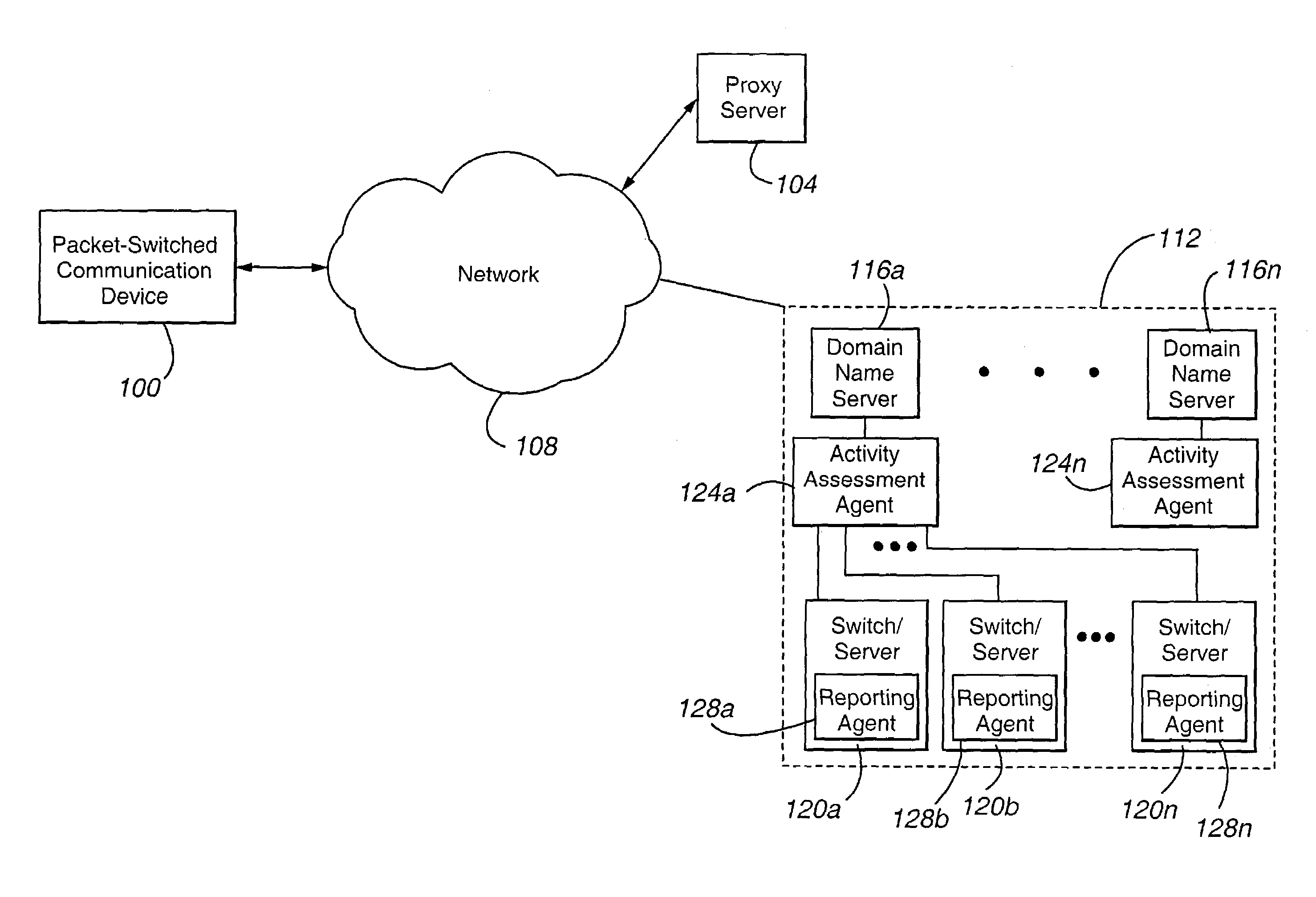

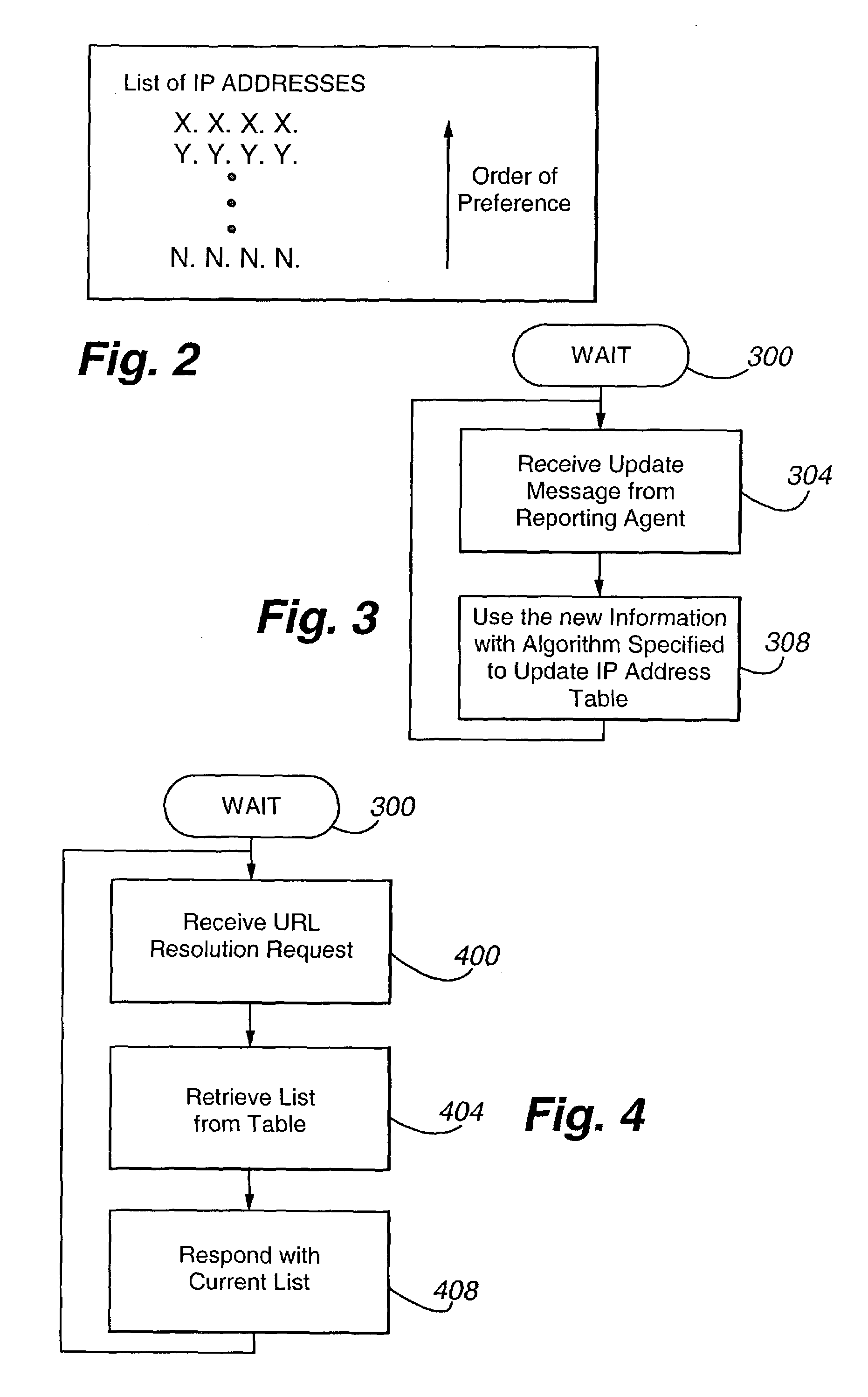

A method for effecting load balancing in a packet-switched network is provided. In one embodiment, the method includes the steps of: (a) providing a set of Internet Protocol (IP) addresses corresponding to a Universal Resource Locator (URL), wherein the ordering of the IP addresses in the set of IP addresses is indicative of a corresponding desirability of contacting each of the IP addresses and wherein the set of IP addresses are in a first order; (b) receiving activity-related information associated with at least one of the IP addresses; and (c) reordering the set of IP addresses to be in a second order different from the first order.

Owner:AVAYA INC
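Steps (a) to (c) above can be sketched in a few lines of Python. The sketch assumes that the activity-related information is a numeric load per address, that lower load means more desirable, and that addresses with no report count as idle; none of these details come from the abstract, and `reorder_addresses` is a hypothetical name.

```python
def reorder_addresses(addresses, activity, default_load=0.0):
    """Return the address list reordered so the least-loaded address is first.

    addresses: IP addresses in their current (first) order.
    activity:  mapping of address -> reported load (hypothetical metric).
    Addresses without a report are treated as having default_load.
    """
    # sorted() is stable, so addresses with equal load keep their prior order.
    return sorted(addresses, key=lambda ip: activity.get(ip, default_load))


# Example with documentation-only addresses (192.0.2.0/24).
first_order = ["192.0.2.1", "192.0.2.2", "192.0.2.3"]
reports = {"192.0.2.1": 0.9, "192.0.2.2": 0.2}
second_order = reorder_addresses(first_order, reports)
```

A resolver that returns `second_order` instead of `first_order` steers new connections toward the less busy hosts without changing the set of addresses.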

Transparent, look-up-free packet forwarding method for optimizing global network throughput based on real-time route status

Active | US20040032856A1 | Fast protection re-routing | Efficient multicasting | Data switching by path configuration | Private network | OSI model

A packet forwarding method for optimizing packet traffic flow across communications networks and simplifying network management. The invention provides look-up-free and packet-layer-protocol transparent forwarding of multi-protocol packet traffic among Layer-N (N = 2 or higher in the ISO OSI model) nodes. The invention enables flexible and efficient packet multicast and anycast capabilities along with real-time dynamic load balancing and fast packet-level traffic protection rerouting. Applications include fast and efficient packet traffic forwarding across administrative domains of the Internet, such as an ISP's backbone or an enterprise virtual private network, as well as passing packet traffic over a neutral Internet exchange facility between different administrative domains.

Owner:XENOGENIC DEV LLC

Distributed traffic controller for network data

Inactive | US7299294B1 | Improve availability | Ensure availability | Computer security arrangements | Multiple digital computer combinations | Network availability | Back end server

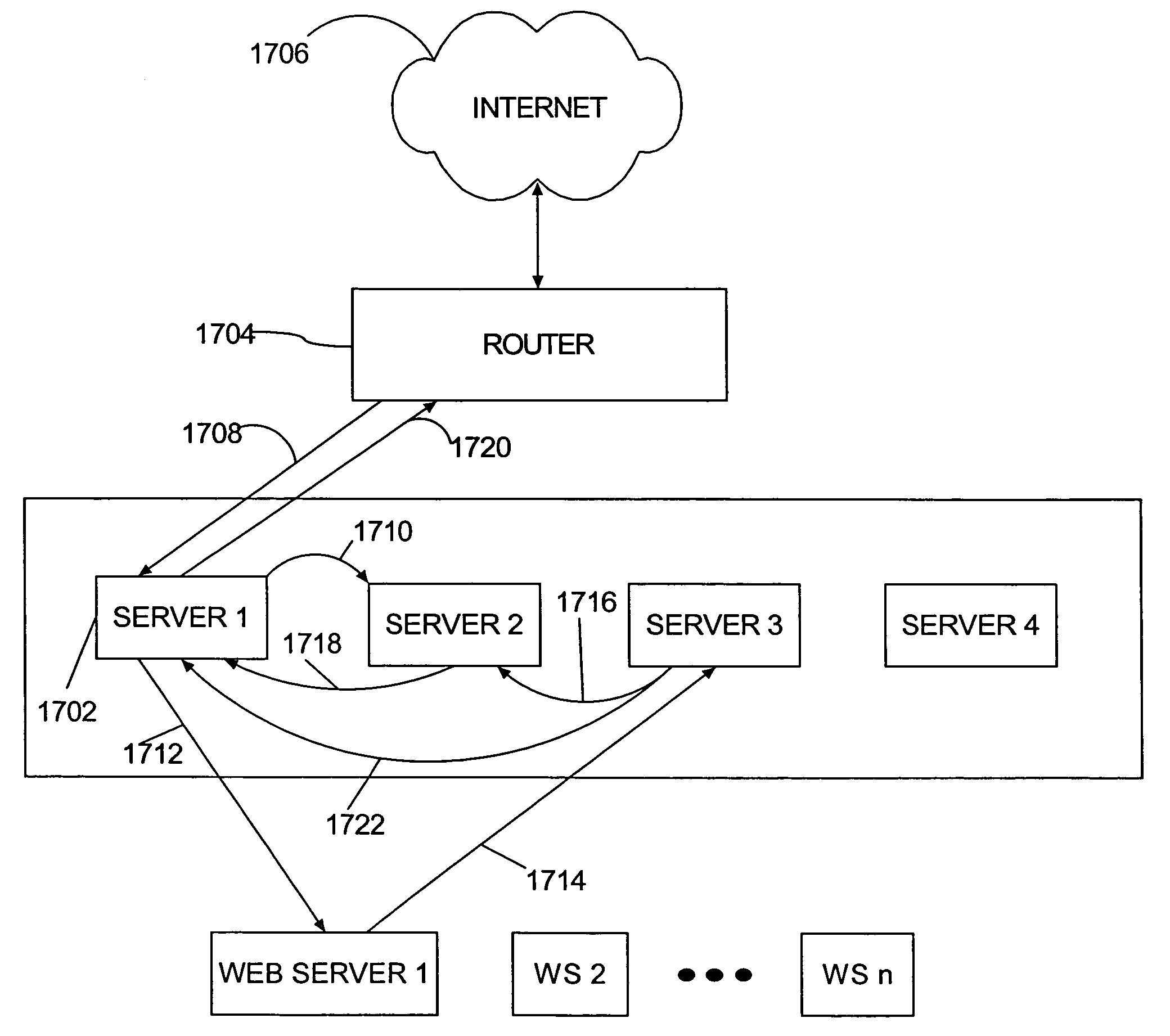

A distributed gateway for controlling computer network data traffic dynamically reconfigures traffic assignments among multiple gateway machines for increased network availability. If one of the distributed gateway machines becomes unavailable, traffic assignments are moved among the multiple machines such that network availability is substantially unchanged. The machines of the distributed gateway form a cluster and communicate with each other using a Group Membership protocol word such that automatic, dynamic traffic assignment reconfiguration occurs in response to machines being added and deleted from the cluster, with no loss in functionality for the gateway overall, in a process that is transparent to network users, thereby providing a distributed gateway functionality that is scalable. Operation of the distributed gateway remains consistent as machines are added and deleted from the cluster. A scalable, distributed, highly available, load balancing network gateway is thereby provided, having multiple machines that function as a front server layer between the network and a back-end server layer having multiple machines functioning as Web file servers, FTP servers, or other application servers. The front layer machines comprise a server cluster that performs fail-over and dynamic load balancing for both server layers.

Owner:EMC IP HLDG CO LLC

Network server having dynamic load balancing of messages in both inbound and outbound directions

Inactive | US6243360B1 | Improve performance | Promote recovery | Error prevention | Transmission systems | Dynamic load balancing | Network packet

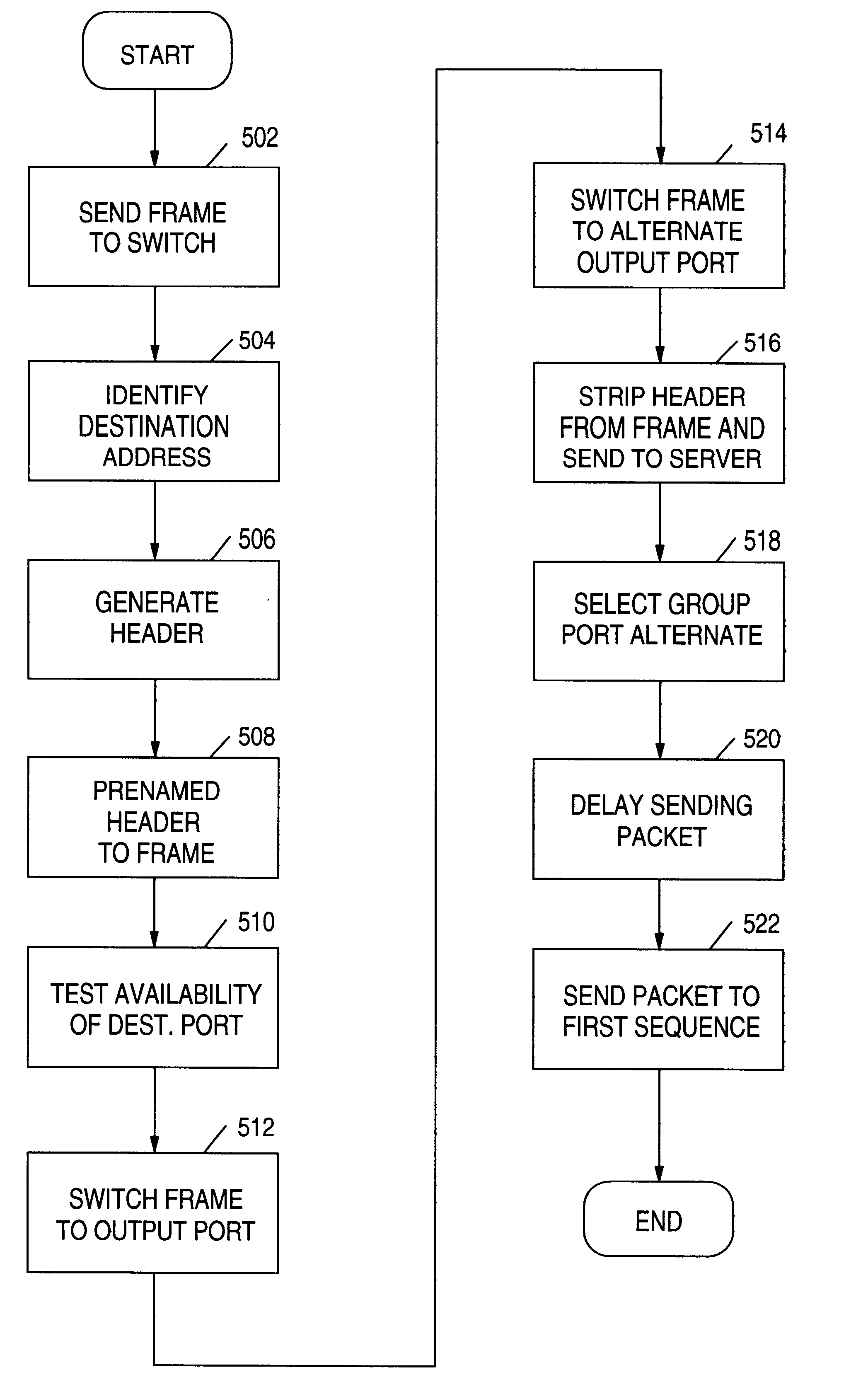

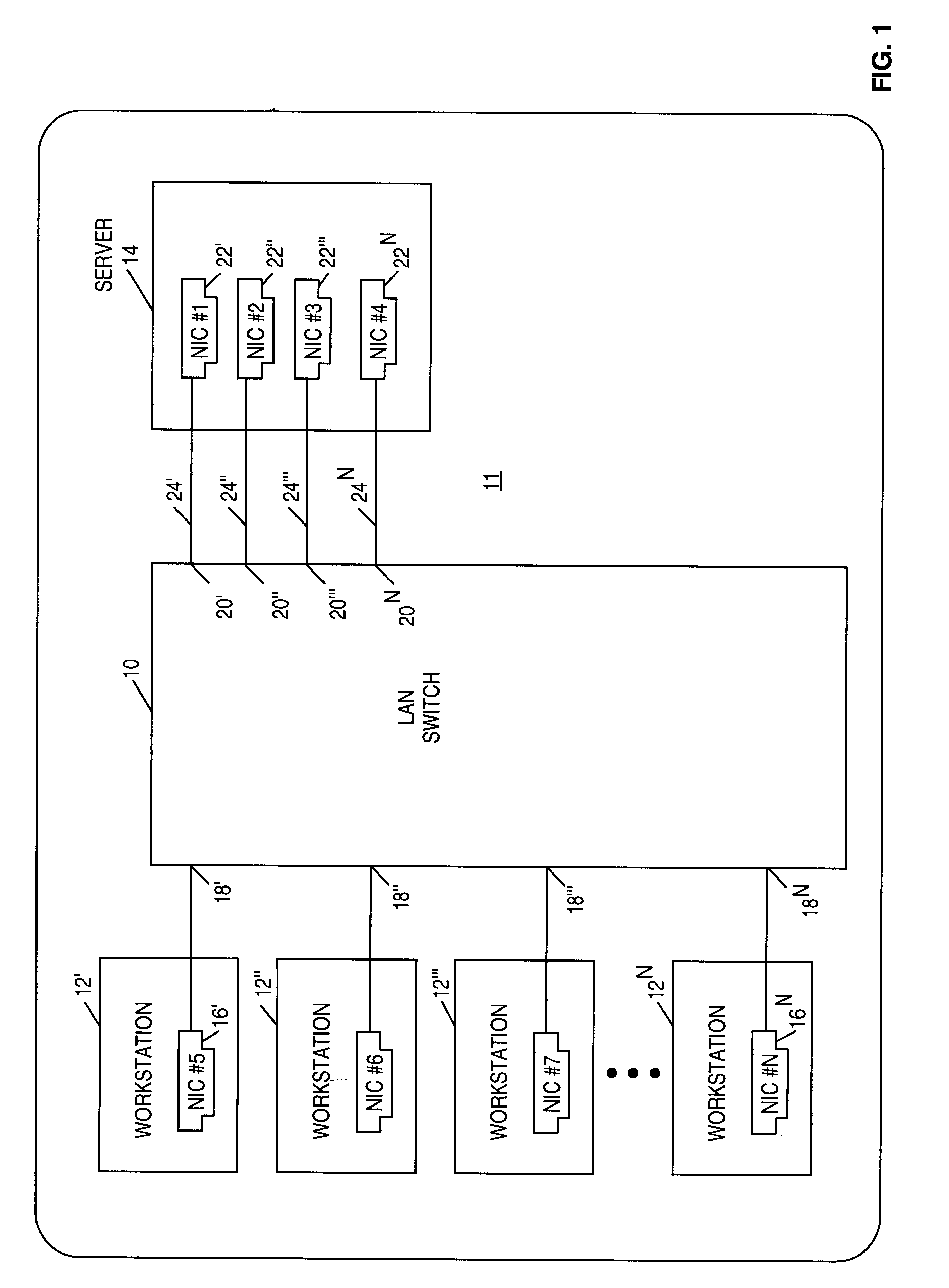

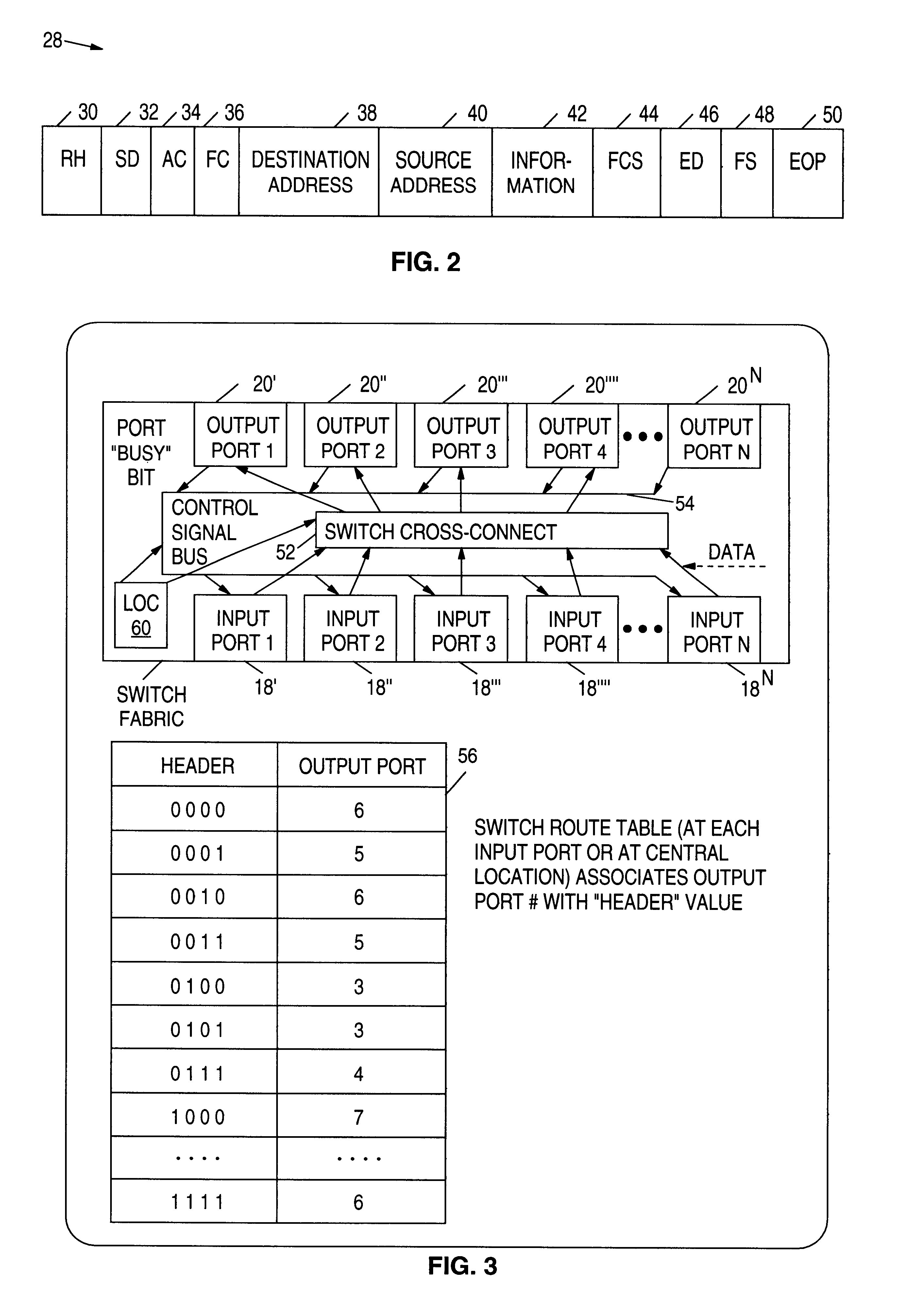

A communications network including a server having multiple entry ports and a plurality of workstations dynamically balances inbound and outbound traffic to and from the server, thereby reducing network latency and increasing network throughput. Workstations send data packets including network destination addresses to a network switch. A header is prepended to the data packet, the header identifying a switch output or destination port for transmitting the data packet to the network destination address. The network switch transfers the data packet from the switch input port to the switch destination output port whereby whenever the switch receives a data packet with the server address, the data packet is routed to any available output port of the switch that is connected to a Network Interface Card in the server. The switch includes circuitry for removing the routing header prior to the data packet exiting the switch. The server includes circuitry for returning to the workstation the address of the first entry port into the server or other network device that has more than one Network Interface Card installed therein.

Owner:IBM CORP

Dynamic load balancing resource allocation

Inactive | US20050055694A1 | Program initiation/switching | Resource allocation | Dynamic load balancing | Resource allocation

A method, a system, and a computer readable medium embodying a computer program with code for dynamic load balancing resource allocation. A desired allocation of resources is received for servicing a plurality of consumer group requests, and an actual allocation of the resources for a present operational period is determined. A temporary allocation of the resources for a next operational period is determined relative to the desired allocation and the actual allocation, and the resources are allocated to the consumer group requests in the next operational period according to the temporary allocation. Consumer group requests to be serviced by the resources are selected based upon availability of the consumer group requests and the number of consumer group requests being presently serviced.

Owner:HEWLETT PACKARD DEV CO LP

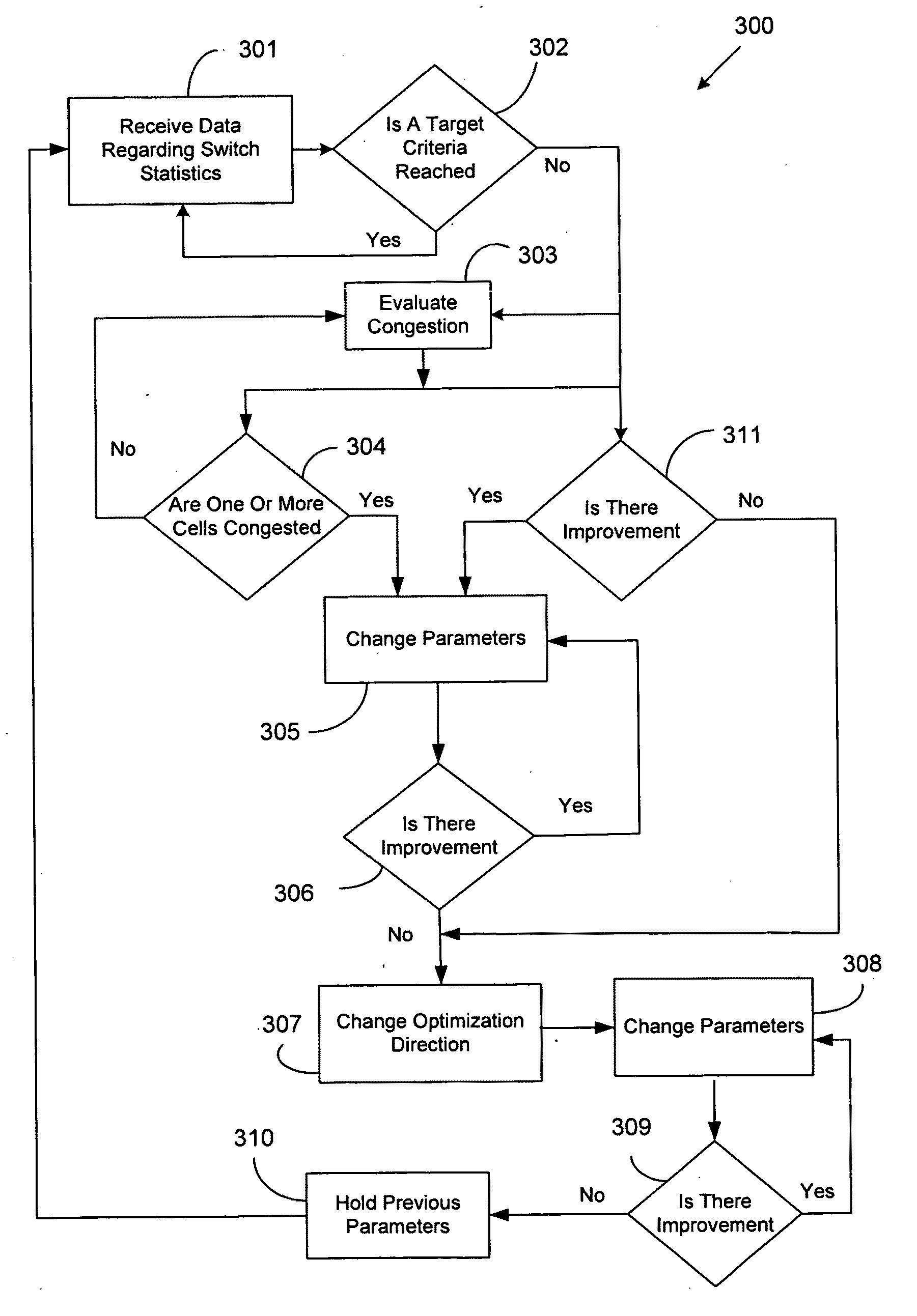

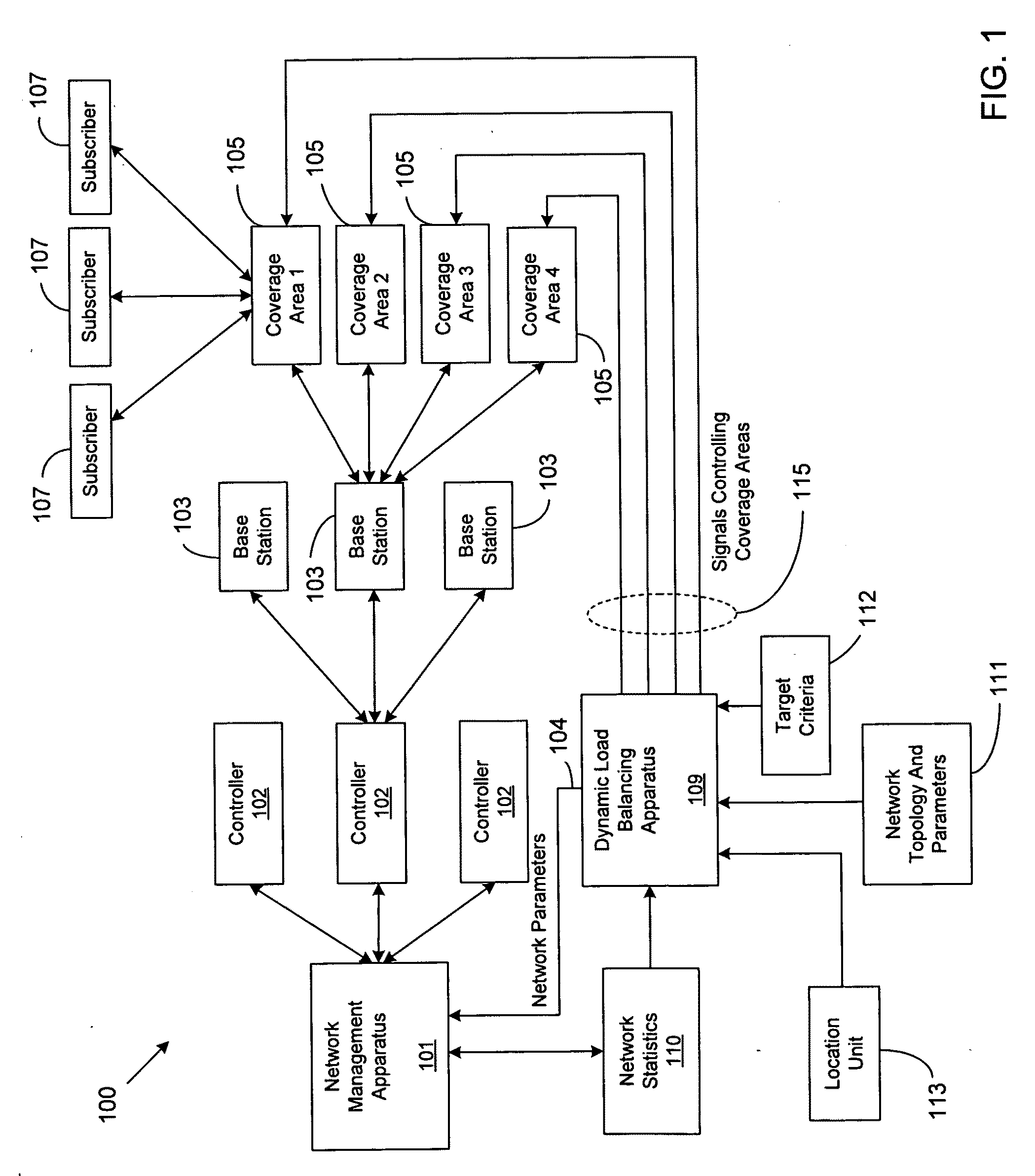

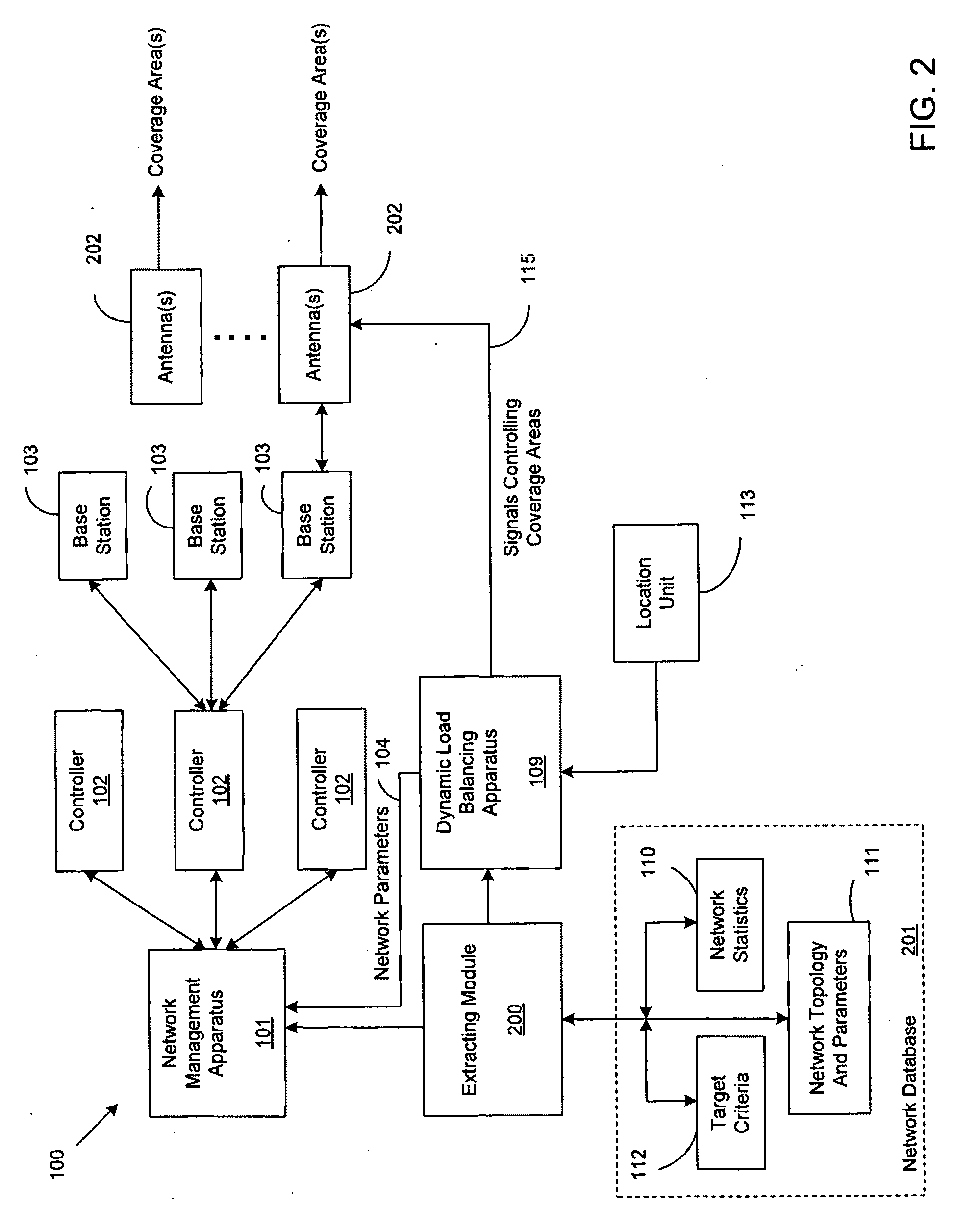

Dynamic load balancing

Active | US20090323530A1 | Increase range | Reduce in quantity | Error prevention | Transmission systems | Quality of service | Dynamic load balancing

A method, program, system and apparatus perform dynamic load balancing of coverage areas in a wireless communication network. The dynamic load balancing is performed by evaluating cell congestion based on location information of subscribers in the wireless communication network, collecting network parameters related to the wireless communication network and altering network parameters based on the evaluated cell congestion. After the network parameter is altered, the coverage areas are narrowed. Improvements in cell congestion and quality of service are then determined based on the narrowing of the coverage areas. Altering of the plurality of network parameters and evaluating of the cell congestion are performed continuously until a target quality of service is achieved.

Owner:VIAVI SOLUTIONS INC

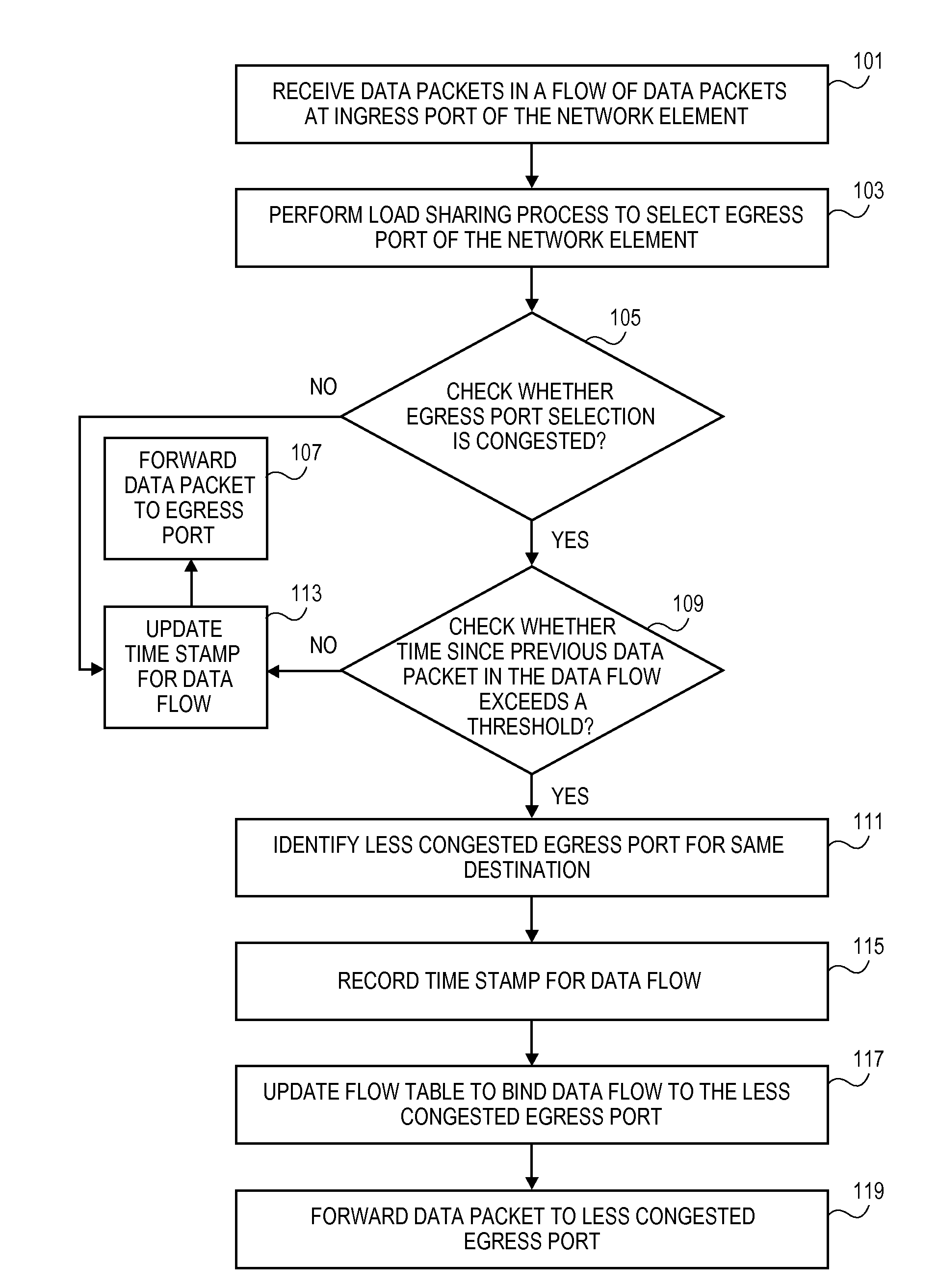

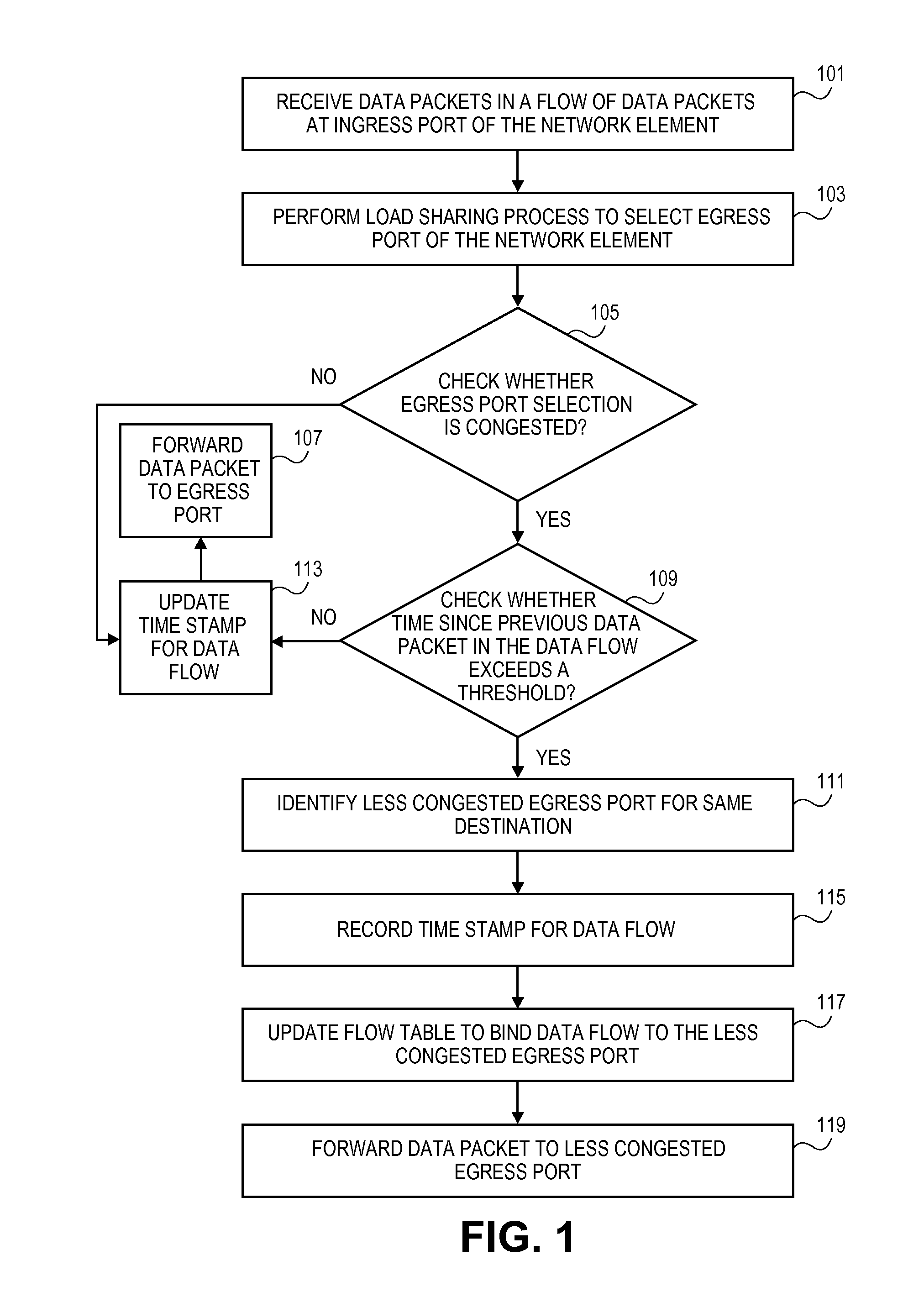

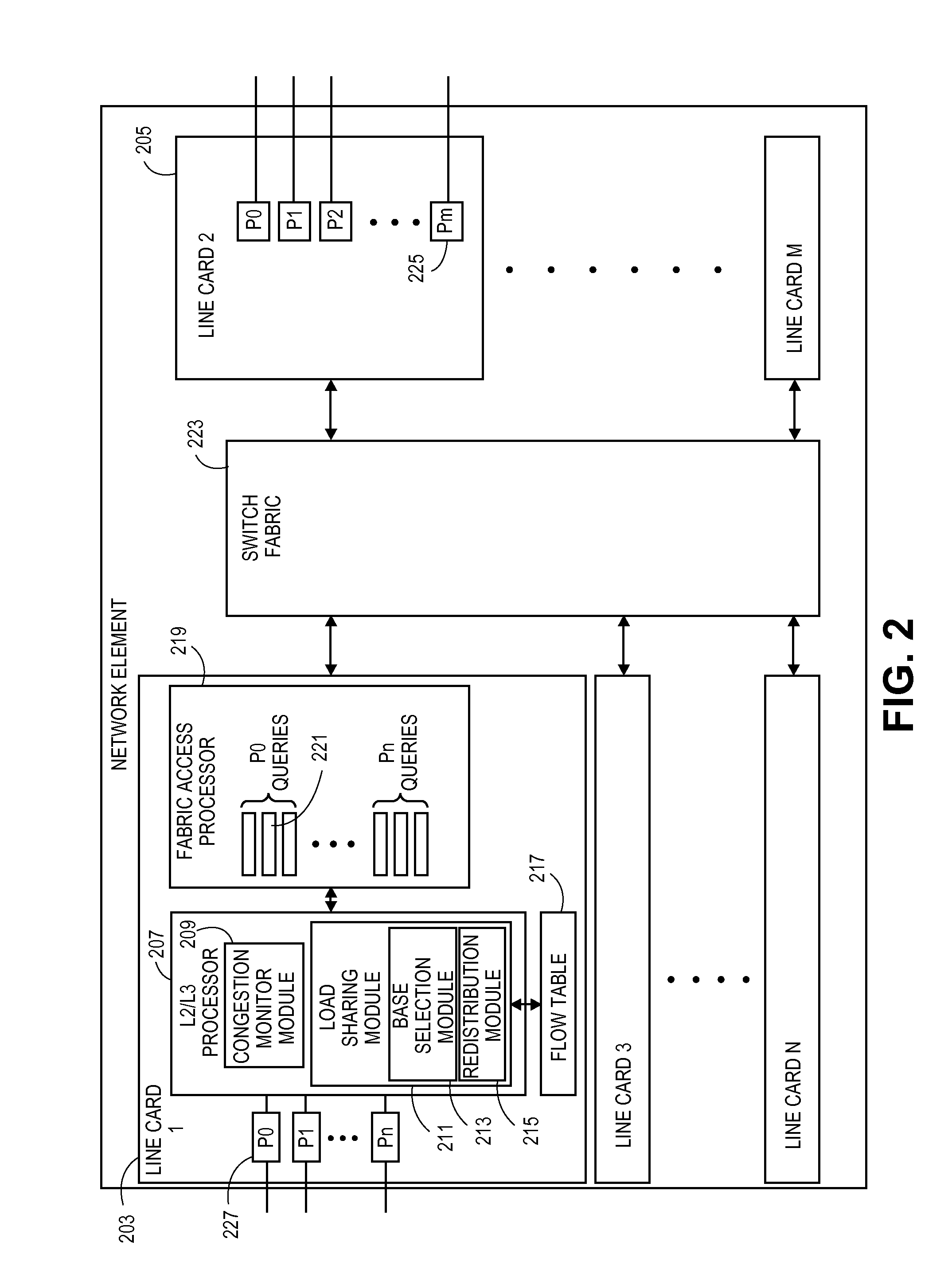

Method for dynamic load balancing of network flows on lag interfaces

Active | US20140119193A1 | Optimize load distribution | Error prevention | Transmission systems | Dynamic load balancing | Data stream

A method is implemented by a network element to improve load sharing for a link aggregation group by redistributing data flows to less congested ports in a set of ports associated with the link aggregation group. The network element receives a data packet in a data flow at an ingress port of the network element. A load sharing process is performed to select an egress port of the network element. A check is made whether the selected egress port is congested. A check is made whether a time since a previous data packet in the data flow was received exceeds a threshold value. A less congested egress port is identified in the set of ports. A flow table is updated to bind the data flow to the less congested egress port and the data packet is forwarded to the less congested egress port.

Owner:TELEFON AB LM ERICSSON (PUBL)
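The sequence of checks in the abstract above (select a port, test congestion, test the inter-packet gap, rebind the flow) might be sketched as follows. The class name `LagBalancer`, the CRC32 hash used as the load sharing process, the per-port `load` counters, and the treatment of a flow's first packet as satisfying the gap check are all assumptions for illustration, not details from the patent.

```python
import zlib


class LagBalancer:
    def __init__(self, ports, congestion_threshold, idle_gap):
        self.ports = list(ports)
        self.load = {p: 0 for p in self.ports}  # e.g. queued bytes per port
        self.congestion_threshold = congestion_threshold
        self.idle_gap = idle_gap                # seconds between packets
        self.flow_table = {}                    # flow id -> (port, last seen)

    def _hash_port(self, flow_id: str):
        # Assumed load sharing process: a static hash of the flow id.
        return self.ports[zlib.crc32(flow_id.encode()) % len(self.ports)]

    def forward(self, flow_id: str, now: float):
        entry = self.flow_table.get(flow_id)
        port = entry[0] if entry else self._hash_port(flow_id)
        # Rebinding is only safe when the flow has been quiet long enough
        # that earlier packets have drained, so ordering is preserved.
        gap_ok = entry is None or (now - entry[1]) > self.idle_gap
        if self.load[port] > self.congestion_threshold and gap_ok:
            port = min(self.ports, key=self.load.get)  # least congested port
        self.flow_table[flow_id] = (port, now)         # bind flow to port
        return port
```

The gap check is what keeps packets of one flow in order: a flow is moved only between bursts, never in the middle of one.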

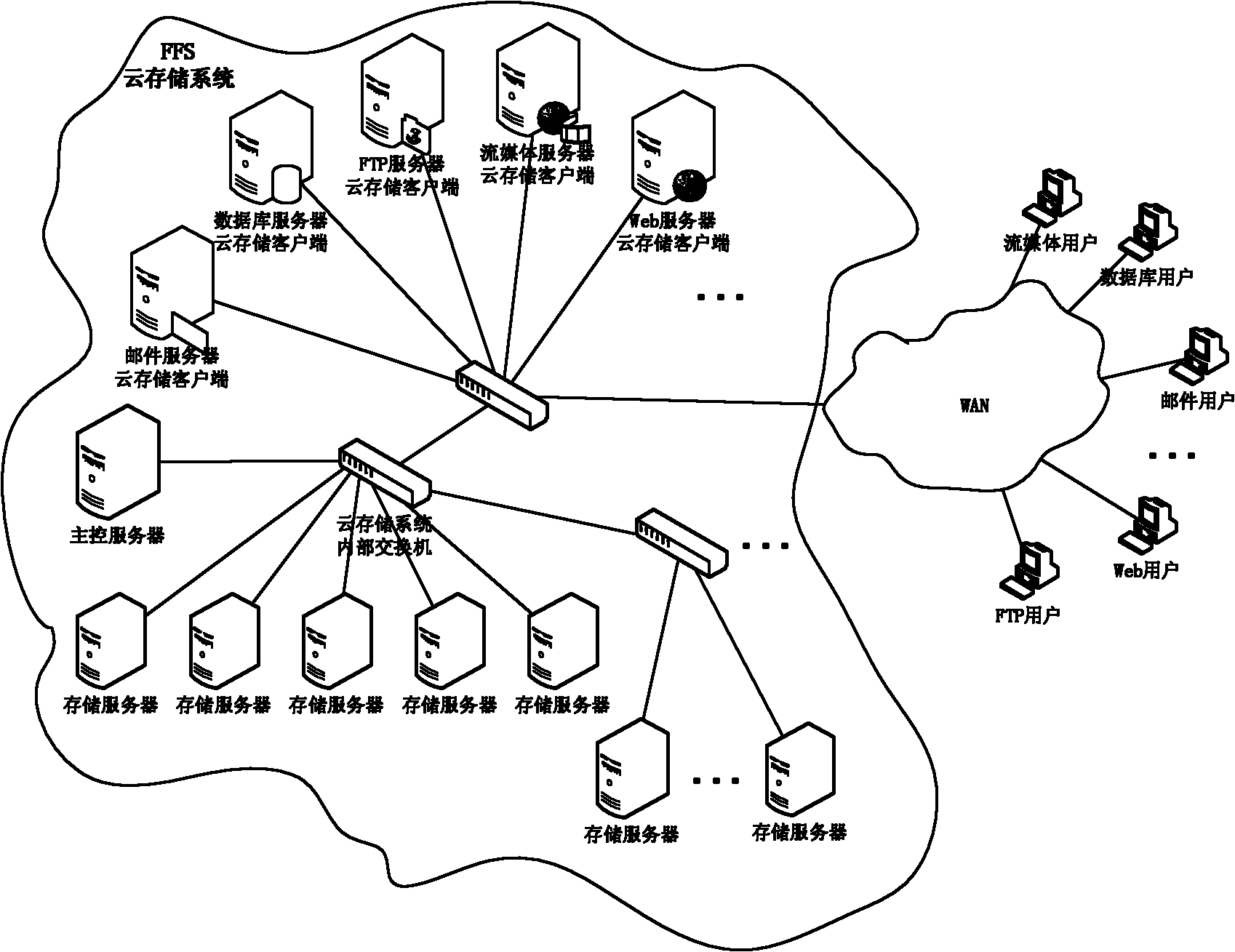

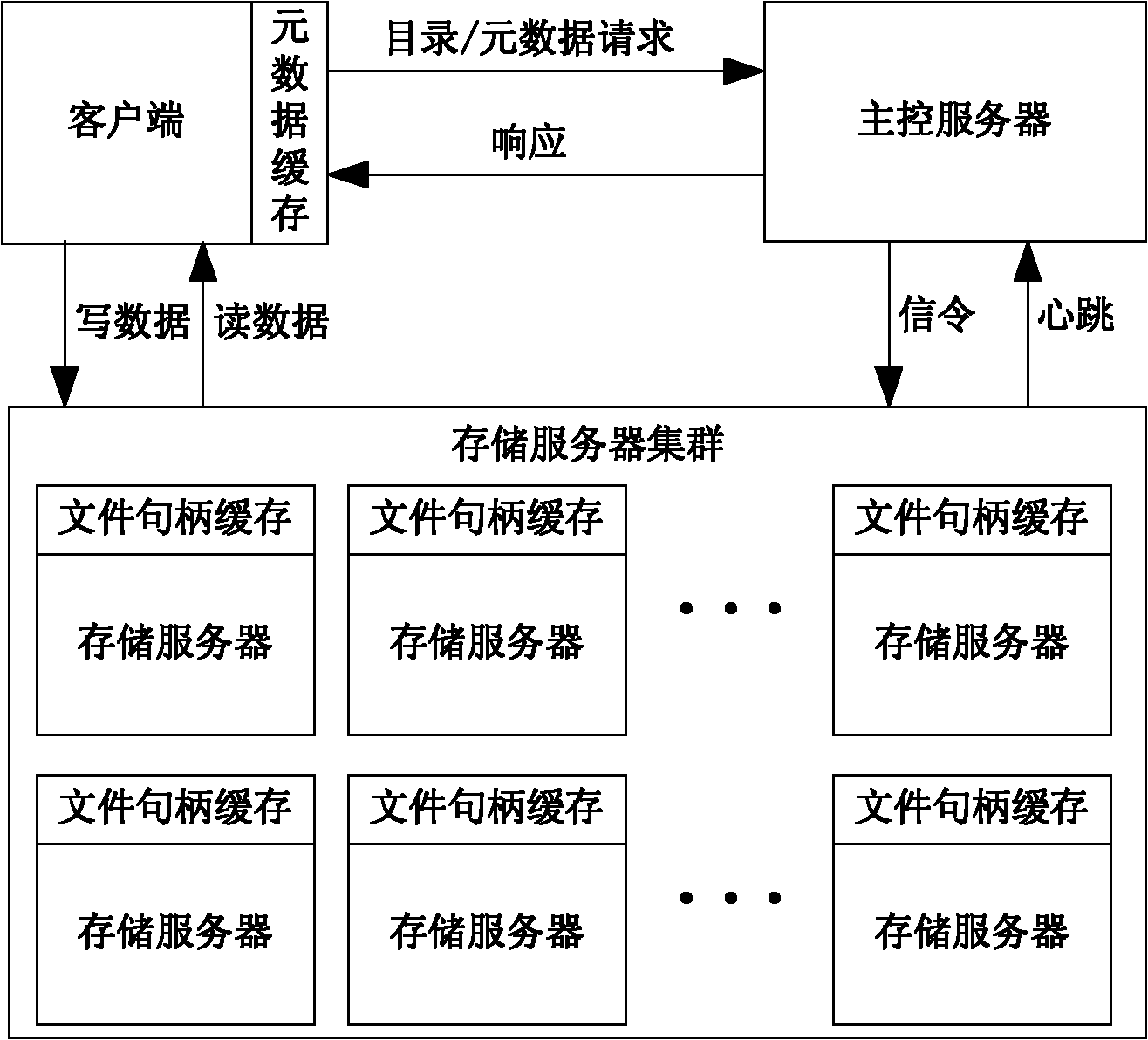

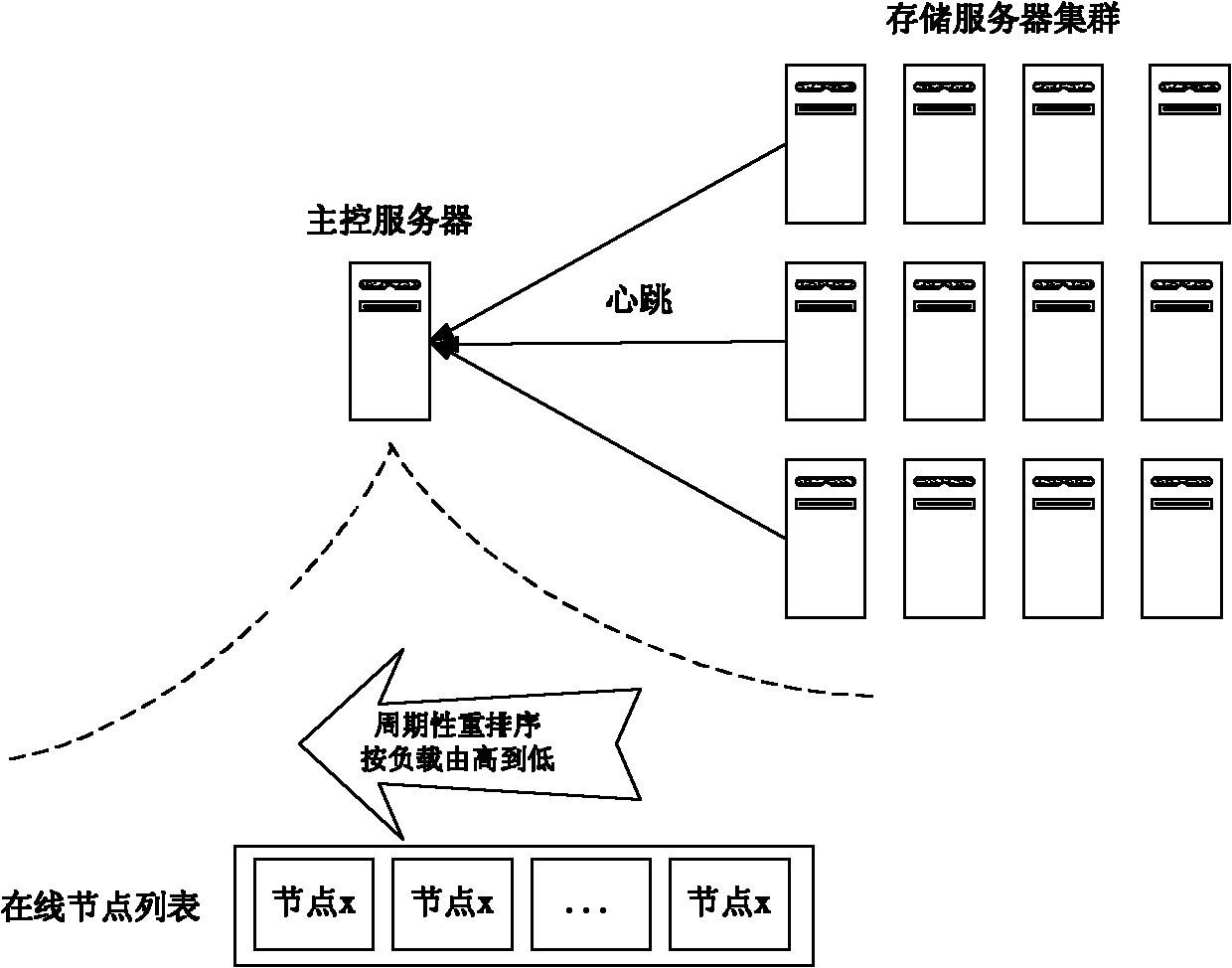

Network-based PB level cloud storage system and processing method thereof

Inactive | CN102143215A | Solve the problem of unbalanced load distribution | Solve the problem of difficult expansion | Data switching networks | General purpose | Dynamic load balancing

The invention relates to a network-based PB level cloud storage system and a processing method thereof. The network-based PB level cloud storage system comprises a main control server, a storage server cluster and a client side which are operated through an internal exchange, wherein the main control server is used for providing directory information and metadata to a cloud storage client side and for monitoring the storage server cluster; the storage server cluster comprises a plurality of storage servers used for data storage; the client side is used for providing a virtual disc service for a cloud storage client machine, submitting the cloud storage client machine's operation requests on a virtual disc to the main control server, and reading and writing file data from the storage servers; and a client-side module is arranged on the cloud storage client machine. The invention is easy to expand and convenient to manage, solves the storage island problem, and can construct, on a cluster of general-purpose low-performance PCs (personal computers), a cloud storage file system that provides dynamic load balancing, online backup and automatic recovery functions and can be expanded on demand.

Owner:PLA UNIV OF SCI & TECH

System and method for dynamic load balancing

Inactive | US20020178262A1 | Resource allocation | Digital computer details | Dynamic load balancing | Distributed computing

A method, system, and medium for dynamic load balancing of a multi-domain server are provided. A first computer system includes a plurality of domains and a plurality of system processor boards. A management console is coupled to the first computer system and is configurable to monitor the plurality of domains. An agent is configurable to gather a first set of information relating to the domains. The agent includes one or more computer programs that are configured to be executed on the first computer system. The agent is configurable to automatically migrate one or more of the plurality of system processor boards among the plurality of domains in response to the first set of gathered information relating to the domains.

Owner:BMC SOFTWARE

Load balancing method and system based on estimated elongation rates

Inactive | US6986139B1 | Little change | Reduce overhead | Resource allocation | Digital computer details | Load Shedding | Dynamic load balancing

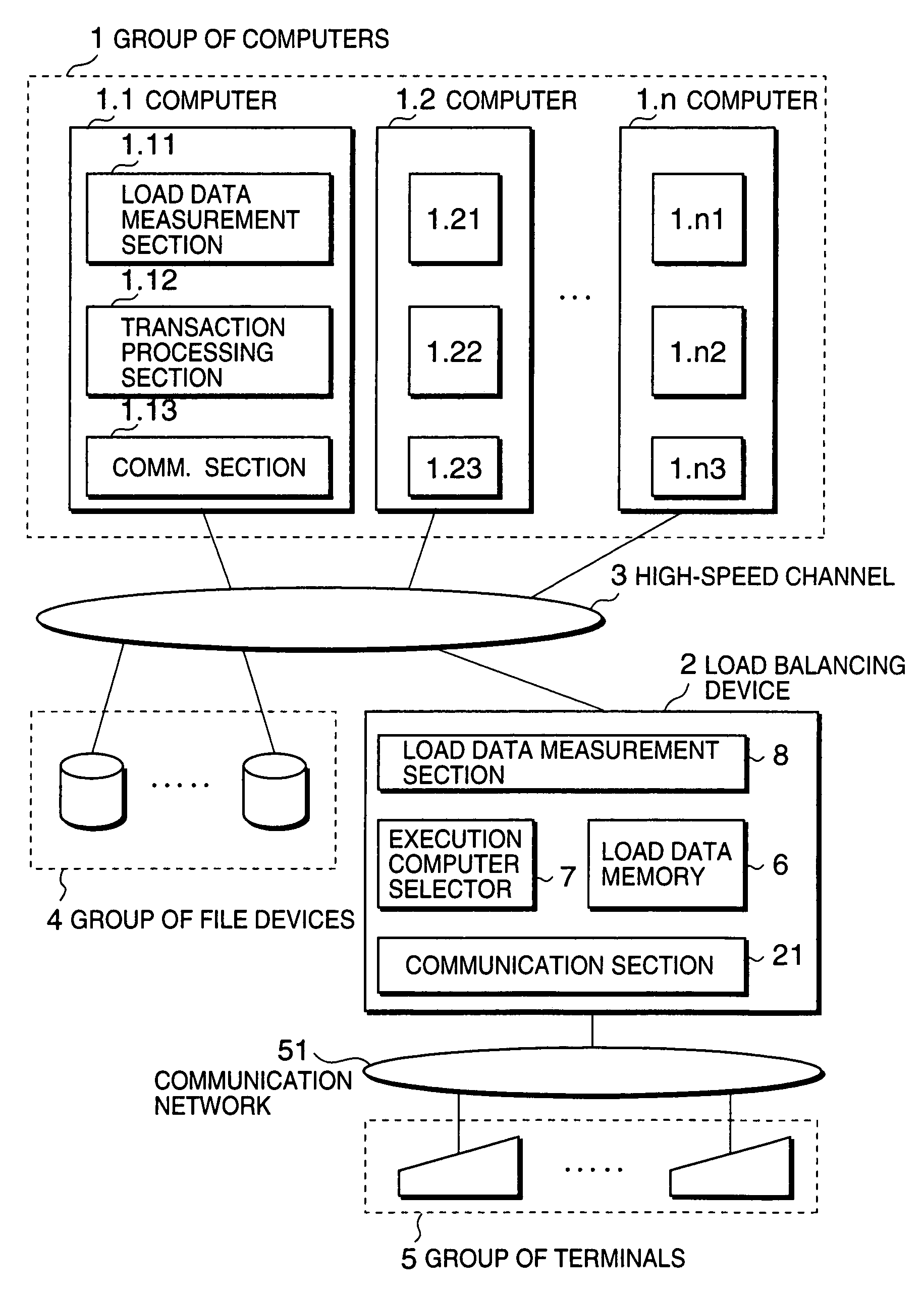

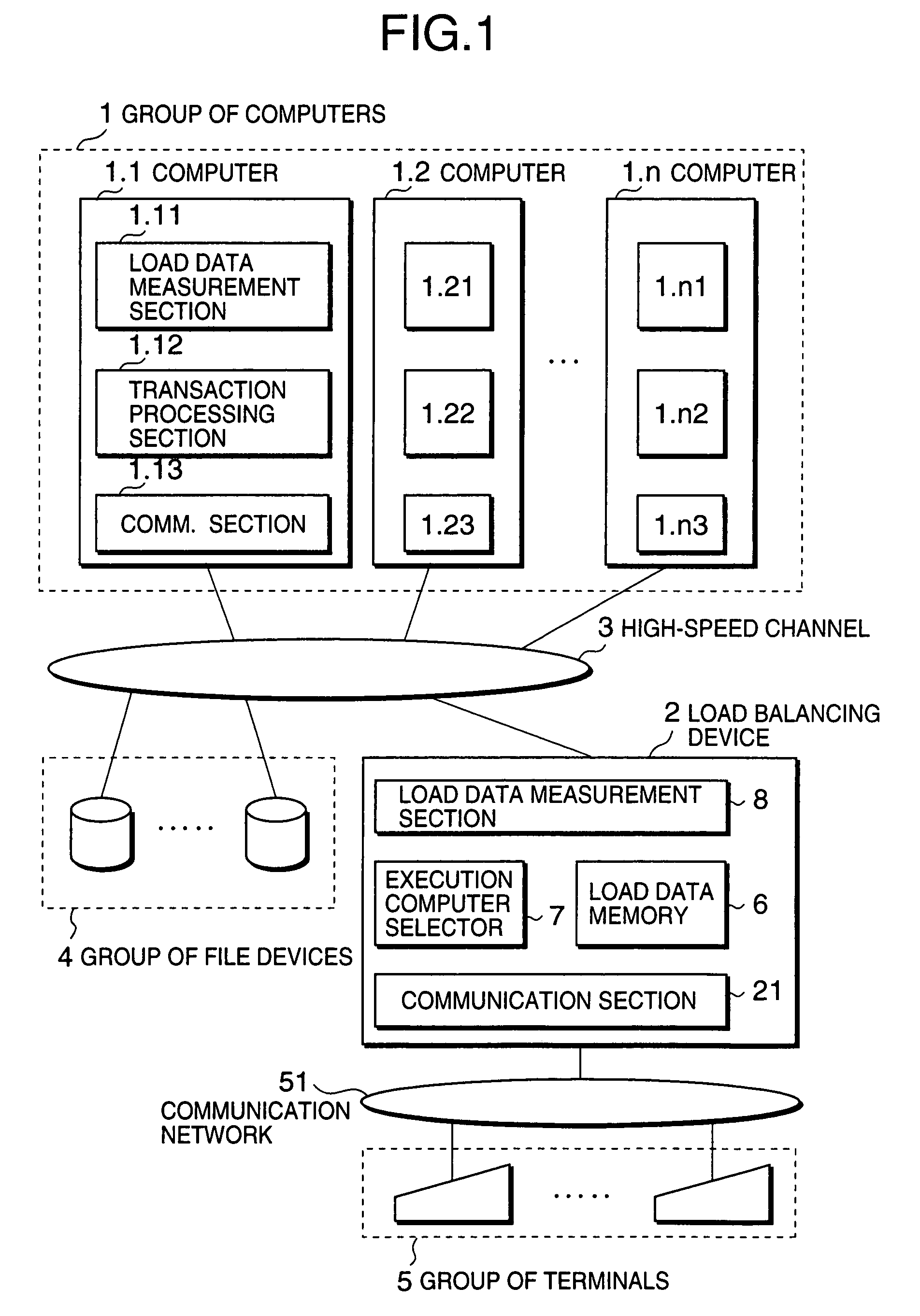

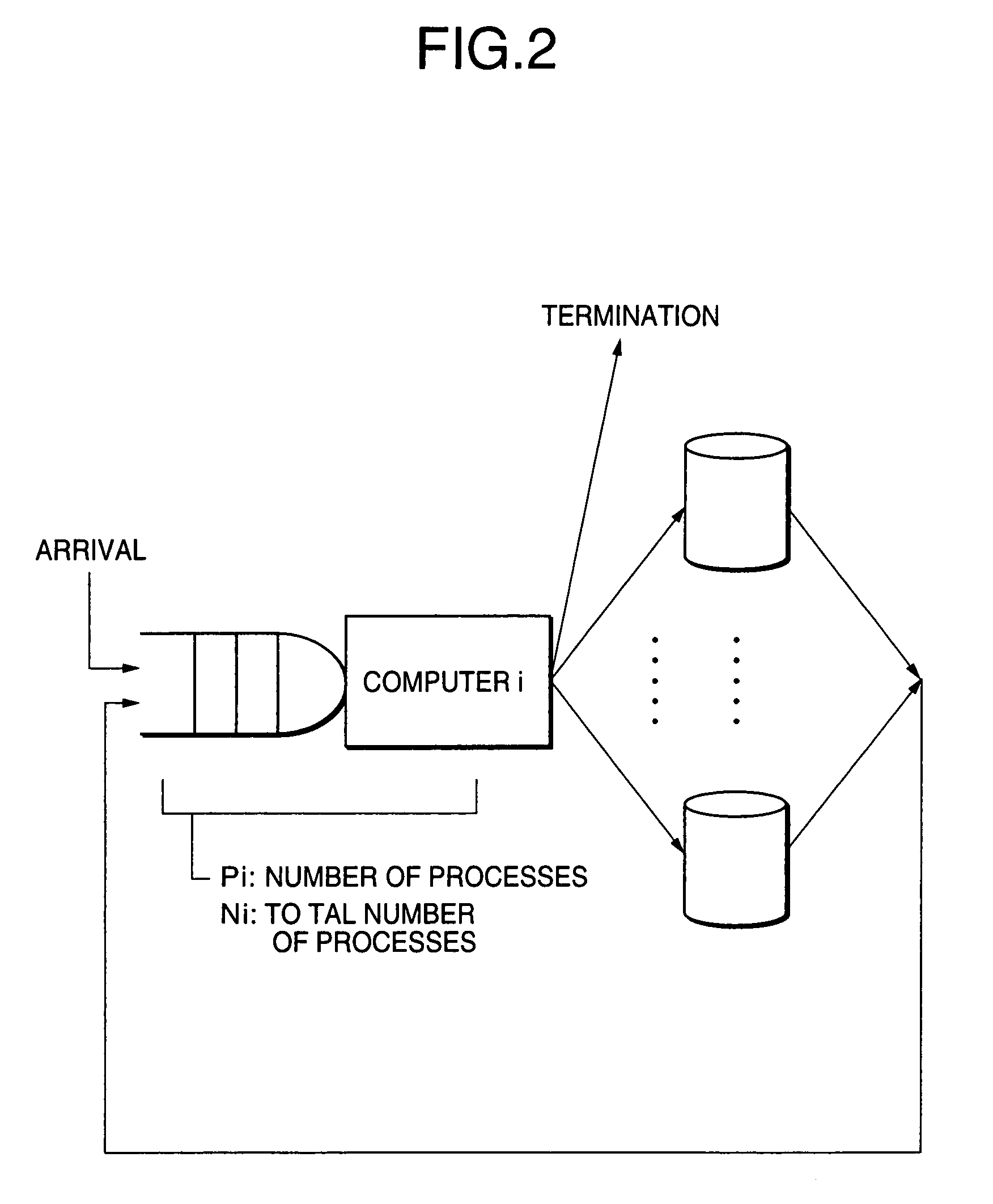

A dynamic load balancing method is disclosed that allows a transaction processing load to be balanced among computers by using estimated elongation rates. In a system composed of a plurality of computers for processing transaction processing requests originating from a plurality of terminals, elongation rates of processing time for respective ones of the computers are estimated, where an elongation rate is a ratio of a processing time required for processing a transaction to a net processing time which is a sum of CPU time and an input/output time for processing the transaction. After calculating load indexes of respective ones of the computers based on the estimated elongation rates, a destination computer is selected from the computers based on the load indexes, wherein the destination computer has the minimum load index.

Owner:NEC CORP
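The elongation rate defined above is the ratio of elapsed processing time to net processing time (CPU time plus I/O time). A small Python sketch shows how it could drive destination selection. Using the mean of recently observed rates as the load index is an assumption made here; the abstract does not say how the rates are estimated or how the index is derived, and the function names are hypothetical.

```python
def elongation_rate(elapsed: float, cpu_time: float, io_time: float) -> float:
    """Elapsed processing time divided by net time (CPU time + I/O time)."""
    net = cpu_time + io_time
    if net <= 0:
        raise ValueError("net processing time must be positive")
    return elapsed / net


def pick_destination(samples):
    """Pick the computer with the smallest load index.

    samples: computer name -> list of (elapsed, cpu_time, io_time) tuples
             for recently completed transactions.
    Returns (chosen computer, load index per computer).
    """
    # Assumed load index: the mean observed elongation rate per computer.
    index = {
        name: sum(elongation_rate(*obs) for obs in observed) / len(observed)
        for name, observed in samples.items()
    }
    return min(index, key=index.get), index
```

A rate of 1.0 means a transaction never waited; a rate of 2.5 means it took two and a half times its net work, which suggests queueing on that computer.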

Apparatus and method for building distributed fault-tolerant/high-availability computed applications

Inactive | US6865591B1 | Multiple failure | Optimal hardware utilization | Multiprogramming arrangements | Transmission | Operational system | System maintenance

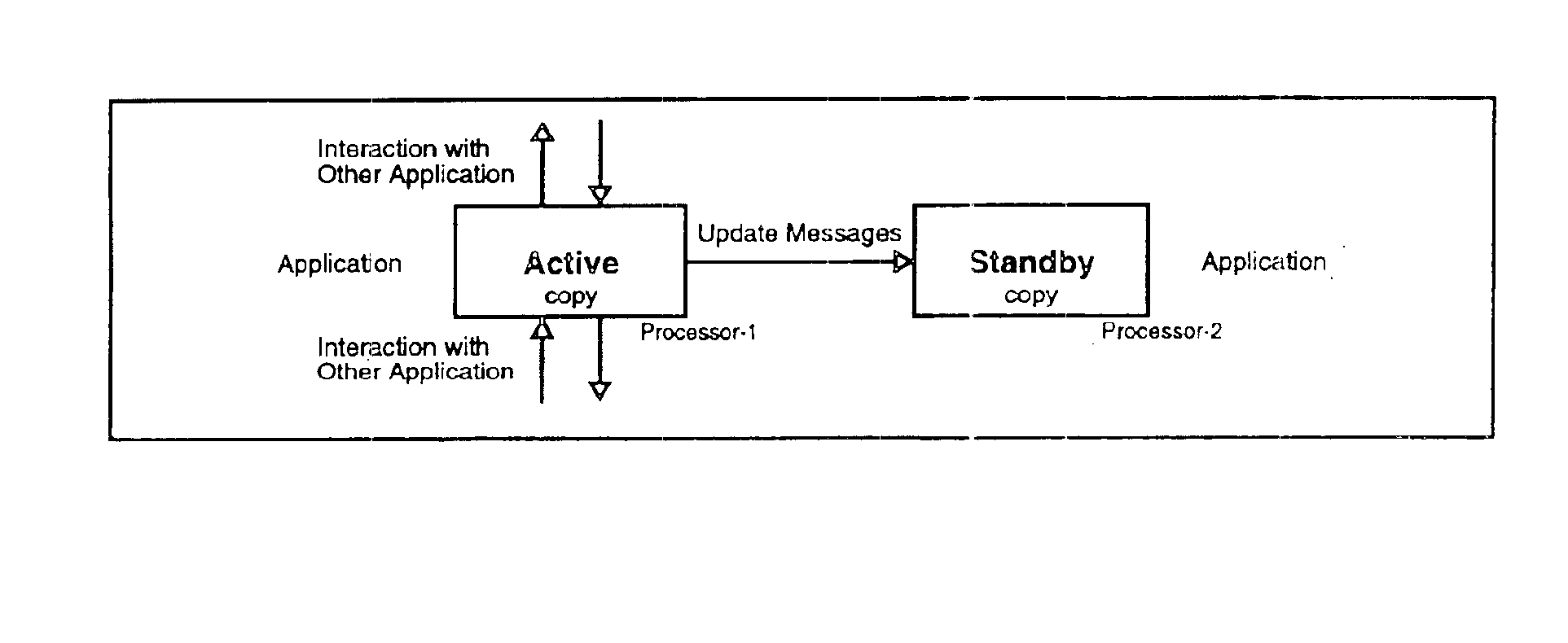

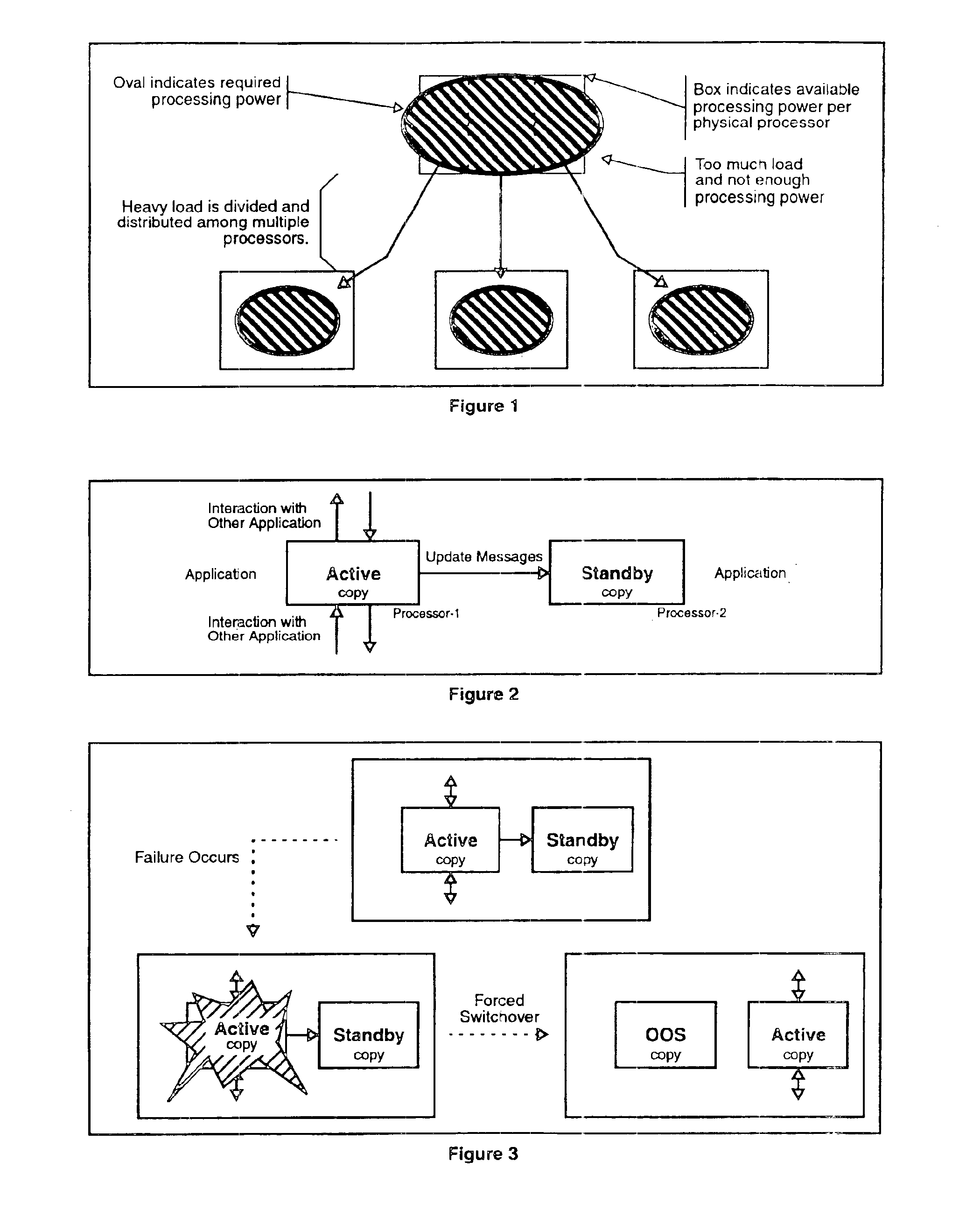

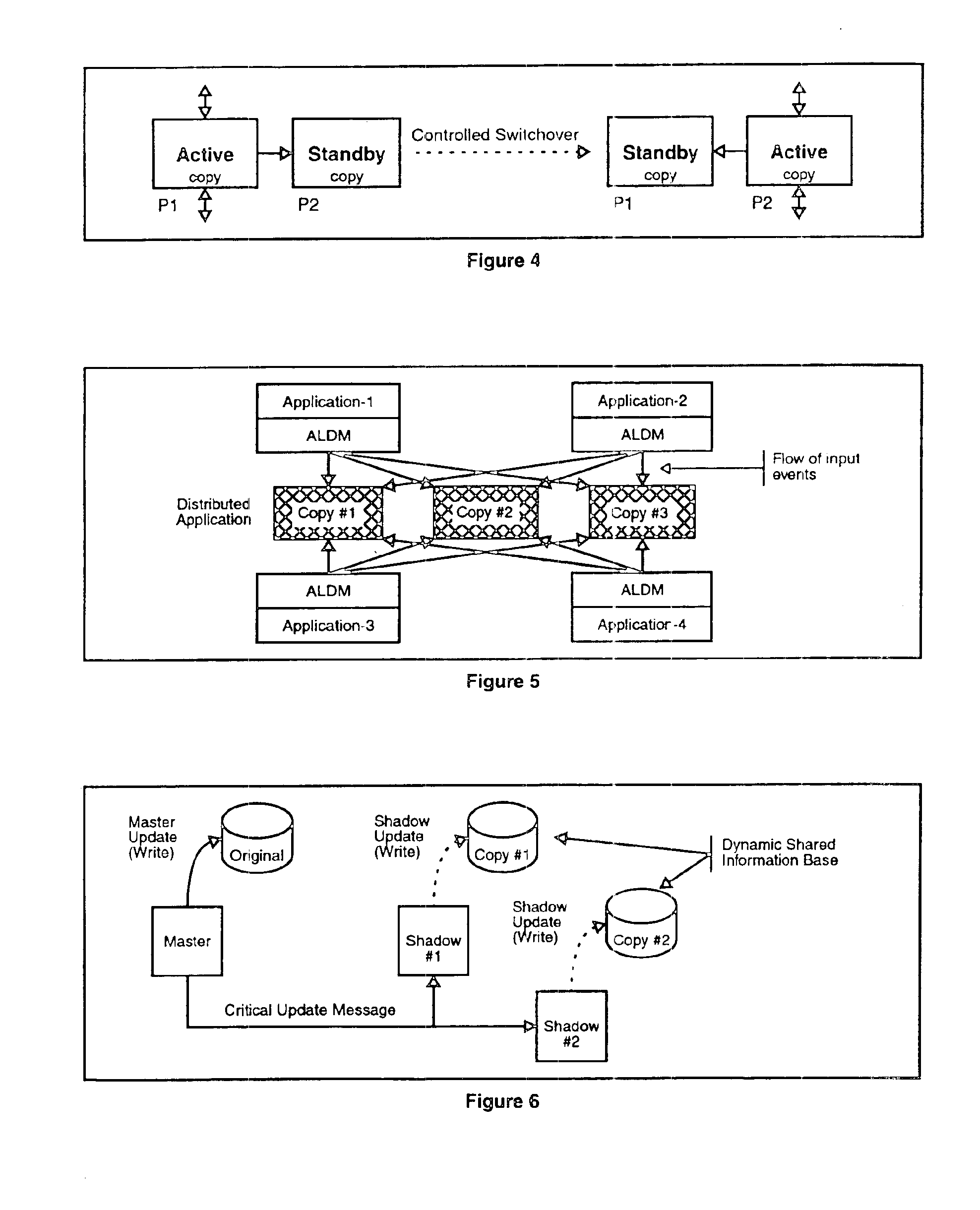

Software architecture for developing distributed fault-tolerant systems independent of the underlying hardware architecture and operating system. Systems built using architecture components are scalable and allow a set of computer applications to operate in fault-tolerant/high-availability mode, distributed processing mode, or many possible combinations of distributed and fault-tolerant modes in the same system without any modification to the architecture components. The software architecture defines system components that are modular and address problems in present systems. The architecture uses a System Controller, which controls system activation, initial load distribution, fault recovery, load redistribution, and system topology, and implements system maintenance procedures. An Application Distributed Fault-Tolerant/High-Availability Support Module (ADSM) enables an application(s) to operate in various distributed fault-tolerant modes. The System Controller uses ADSM's well-defined API to control the state of the application in these modes. The Router architecture component provides transparent communication between applications during fault recovery and topology changes. An Application Load Distribution Module (ALDM) component distributes incoming external events towards the distributed application. The architecture allows for a Load Manager, which monitors load on various copies of the application and maximizes the hardware usage by providing dynamic load balancing. The architecture also allows for a Fault Manager, which performs fault detection, fault location, and fault isolation, and uses the System Controller's API to initiate fault recovery. These architecture components can be used to achieve a variety of distributed processing high-availability system configurations, which results in a reduction of cost and development time.

Owner:TRILLIUM DIGITAL SYST

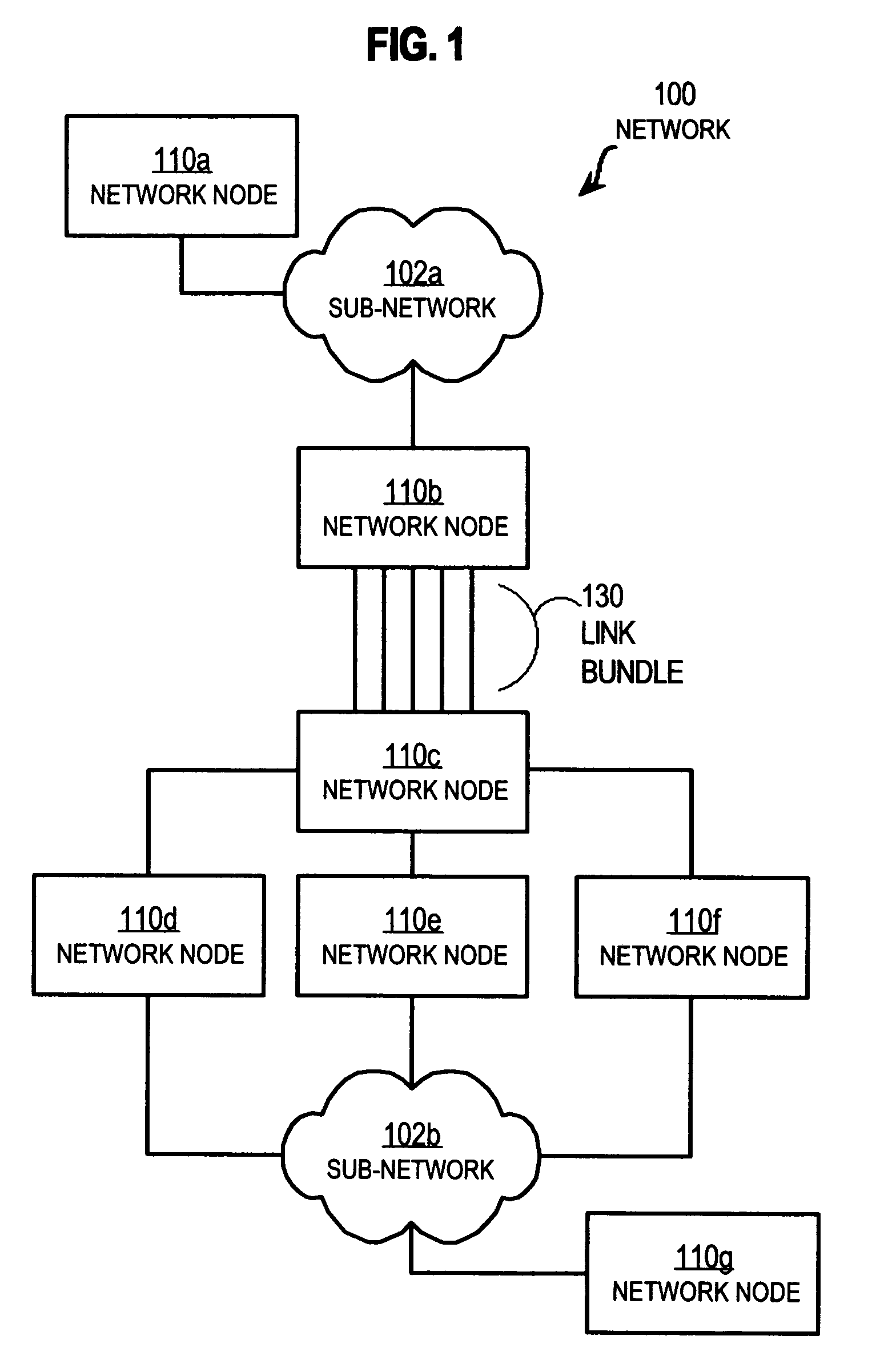

Method and apparatus for dynamic load balancing over a network link bundle

Inactive | US7623455B2 | Error prevention | Frequency-division multiplex details | Data stream | Telecommunications link

Techniques for distributing data packets over a network link bundle include storing an output data packet in a data flow queue based on a flow identification associated with the output data packet. The flow identification indicates a set of one or more data packets, including the output data packet, which are to be sent in the same sequence as received. State data is also received. The state data indicates a physical status of a first port of multiple active egress ports that are connected to a corresponding bundle of communication links with one particular network device. A particular data flow queue is determined based at least in part on the state data. A next data packet is directed from the particular data flow queue to a second port of the active egress ports. These techniques allow a more efficient use of a network link bundle.

Owner:CISCO TECH INC

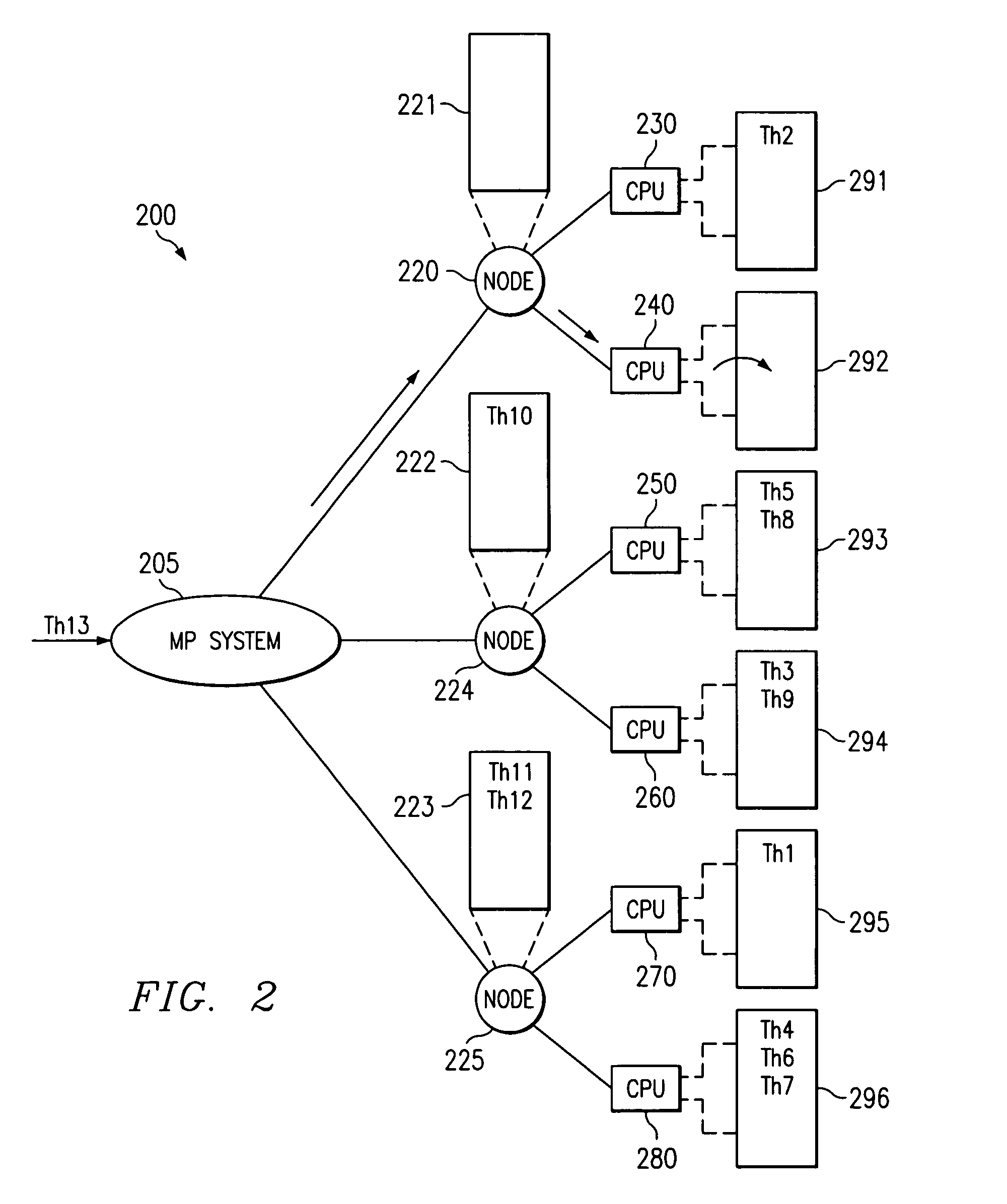

Two-Tiered Dynamic Load Balancing Using Sets of Distributed Thread Pools

By employing a two-tier load balancing scheme, embodiments of the present invention may reduce the overhead of shared resource management, while increasing the potential aggregate throughput of a thread pool. As a result, the techniques presented herein may lead to increased performance in many computing environments, such as graphics intensive gaming.

Owner:IBM CORP

Dynamic Load Balancing for Layer-2 Link Aggregation

Active | US20080291826A1 | Increase capacity | Energy efficient ICT | Error prevention | Dynamic load balancing | Link aggregation

Load balancing for layer-2 link aggregation involves initial assignment of link aggregation keys (LAGKs) and reassignment of LAGKs when a load imbalance condition that merits action is discovered. Load conditions change dynamically and for this reason load balancing tends to also be dynamic. Load balancing is preferably performed only when it is necessary. Thus an imbalance condition that triggers load balancing is preferably limited to conditions such as frame drops, loss of synchronization, or physical link capacity being exceeded.

Owner:HARRIS STRATEX NETWORKS OPERATING

Systems and methods for dynamic load balancing in a wireless network

Active | US8451735B2 | Minimize disruption | Error prevention | Transmission systems | Wireless mesh network | Telecommunications

The present disclosure relates to systems and methods for dynamic load balancing in a wireless network, such as a wireless local area network (WLAN) and the like. Specifically, the present invention periodically provides dynamic load balancing of mobile devices associated with a plurality of wireless access devices. This may include determining an optimum load and instructing wireless access devices that are overloaded to disassociate some mobile devices based upon predefined criteria. This disassociation is performed in a manner to minimize disruption by disassociating mobile devices with low usage, with close proximity to underutilized wireless access devices, and mobile devices not currently operating critical applications, such as voice.

Owner:EXTREME NETWORKS INC
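The disassociation criteria listed in the abstract above (low usage, proximity to an underutilized access device, no critical application) can be illustrated with a short Python sketch. Measuring load as the number of associated stations, the `Station` fields, and the ordering of the criteria are hypothetical choices; the abstract only says the criteria are predefined.

```python
from dataclasses import dataclass


@dataclass
class Station:
    mac: str
    usage_kbps: float
    near_underutilized_ap: bool  # another, less loaded access device is close
    critical_app: bool           # e.g. a voice call is in progress


def stations_to_disassociate(stations, optimum_load: int):
    """Choose which stations an overloaded access device should drop.

    Load is assumed to be the count of associated stations.
    """
    excess = len(stations) - optimum_load
    if excess <= 0:
        return []
    # Never disturb stations running critical applications.
    candidates = [s for s in stations if not s.critical_app]
    # Prefer stations that can roam nearby, then those with the lowest usage.
    candidates.sort(key=lambda s: (not s.near_underutilized_ap, s.usage_kbps))
    return [s.mac for s in candidates[:excess]]
```

If fewer non-critical stations exist than the excess, the sketch returns only those, leaving the access device above its optimum rather than interrupting a critical session.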

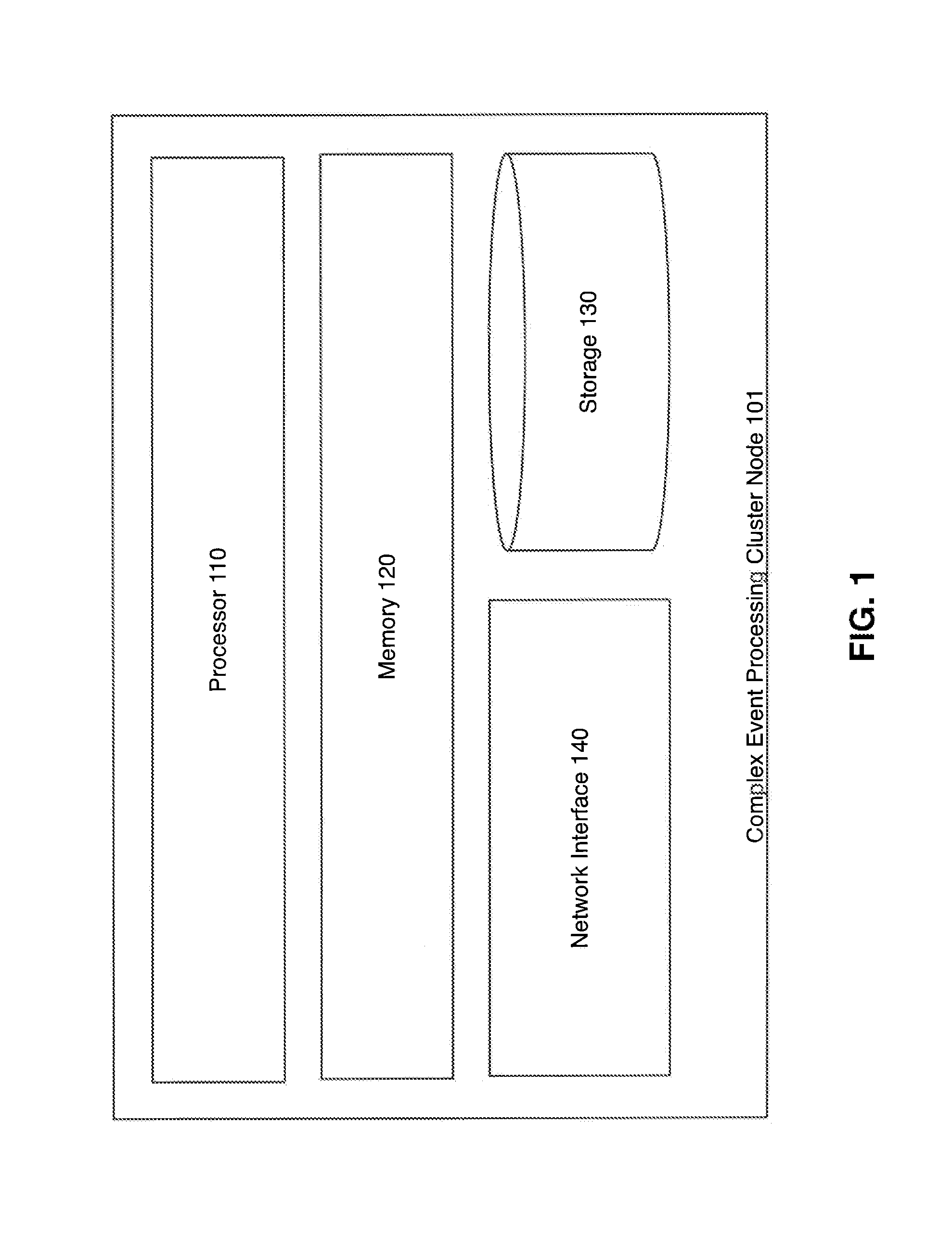

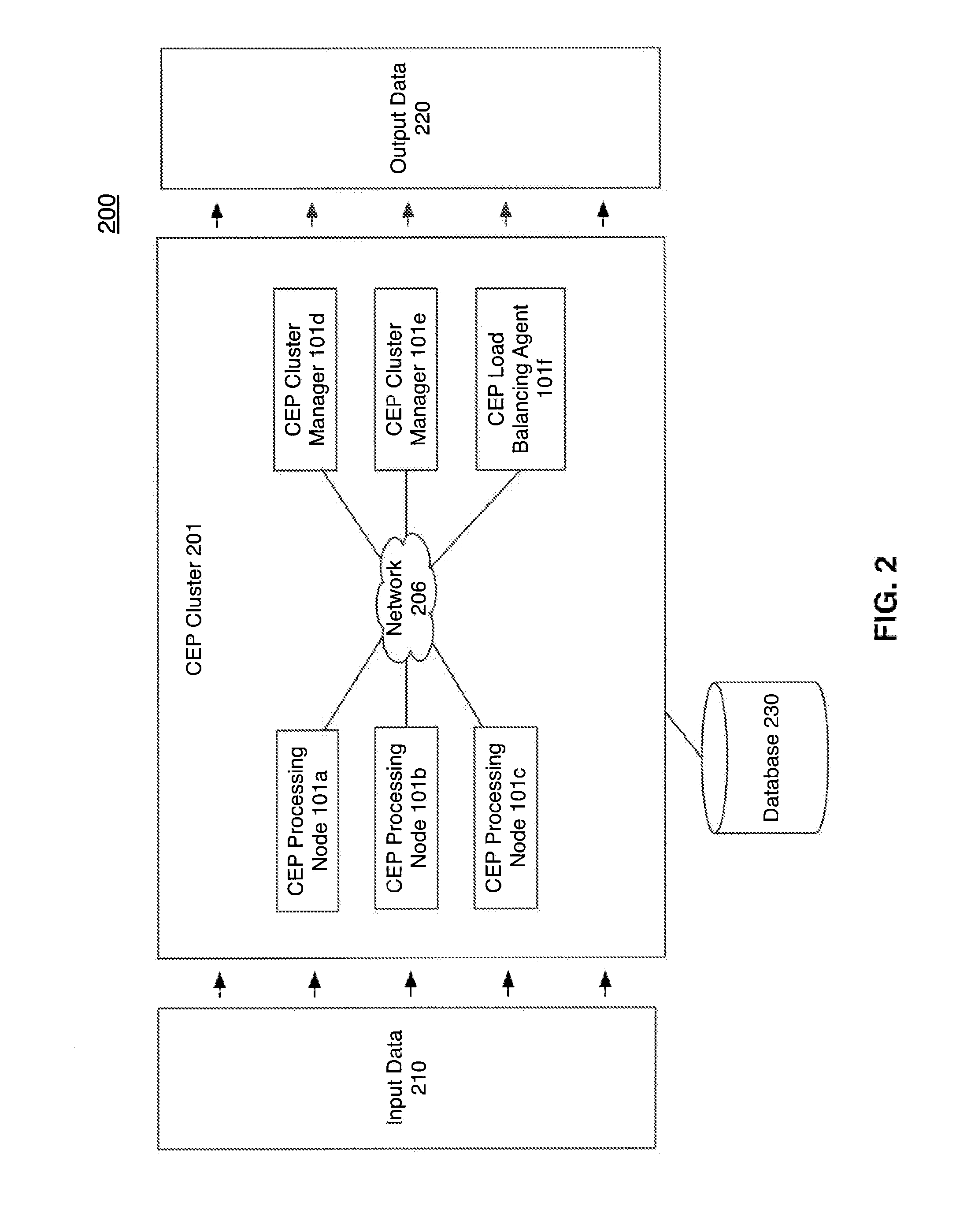

Dynamic Load Balancing for Complex Event Processing

Inactive | US20130160024A1 | Multiprogramming arrangements | Memory systems | Dynamic load balancing | Complex event processing

Disclosed herein are methods, systems, and computer readable storage media for performing load balancing actions in a complex event processing system. Static statistics of a complex event processing node, dynamic statistics of the complex event processing node, and project statistics for projects executing on the complex event processing node are aggregated. A determination is made as to whether the aggregated statistics satisfy a condition. A load balancing action may be performed, based on the determination.

Owner:SYBASE INC

Network switch providing dynamic load balancing

Inactive | US6208644B1 | Data switching by path configuration | Time-division multiplexing selection | Dynamic load balancing | Network addressing

A network switch routes data transmissions between network stations, each data transmission including network addresses of the source and destination network stations. The network switch includes a set of input/output (I/O) ports each for receiving data transmissions from and transmitting data transmissions to a subset of the network stations. Each I/O port is identified by a "physical" port ID and a "logical" port ID. While each I/O port's physical port ID is unique, all I/O ports that can route data to the same subset of network stations share the same logical port ID. Each I/O port receiving a data transmission from a network station sends its logical port ID and the network addresses included in the data transmission to an address translation system. The address translation system uses data in the translation request to maintain a lookup table relating each subset of network addresses to a logical port ID identifying all I/O ports that communicate with network stations identified by that subset of network addresses. The address translation system responds to an address translation request by returning the logical port ID of all I/O ports that can send data transmissions to a destination station identified by the destination address included in the data transmission. In response to the returned logical port ID, the network switch establishes a data path for the data transmission between the I/O port receiving the data transmission and any idle I/O port having that logical port ID.

Owner:RPX CORP

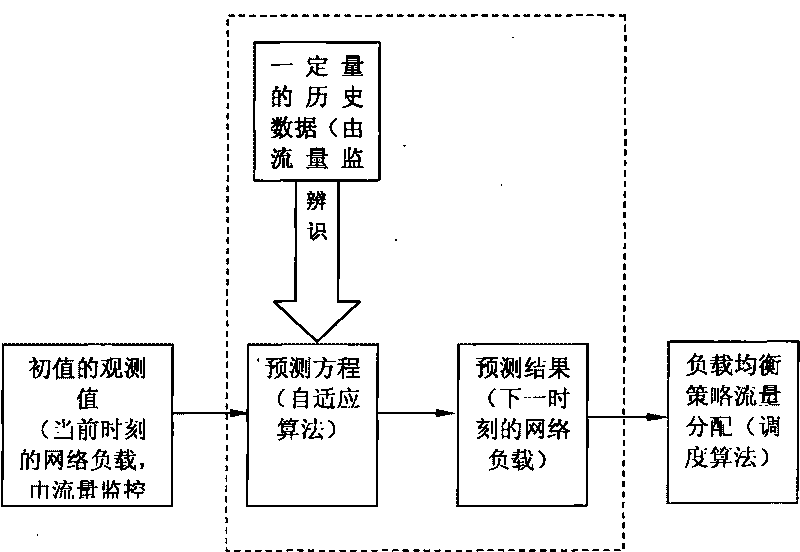

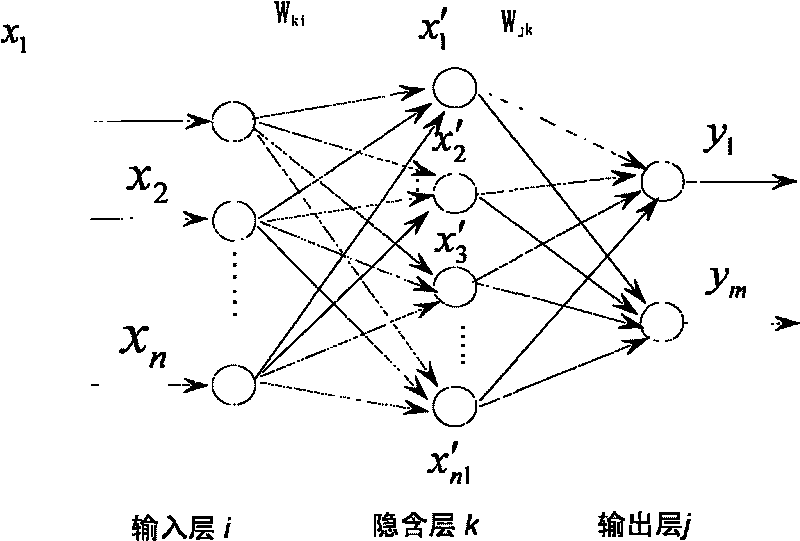

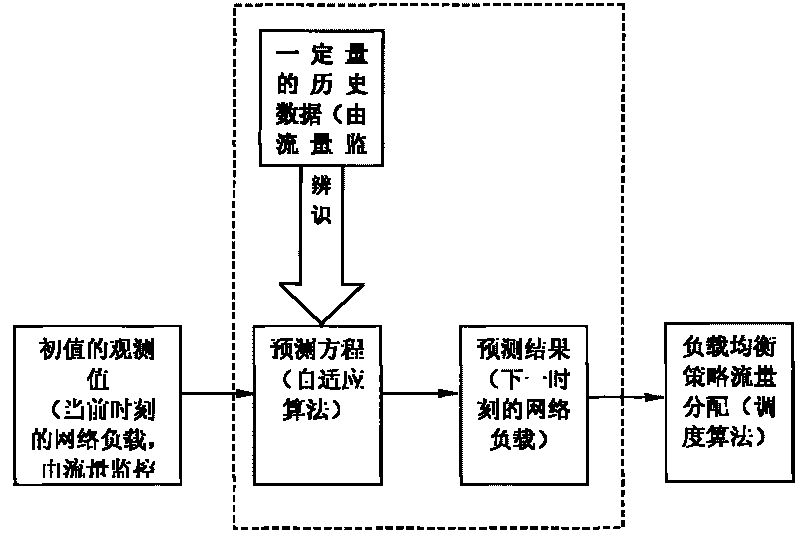

Dynamic load balancing method based on self-adapting prediction of network flow

Inactive | CN101695050A | Improve accuracy | Strong self-learning ability | Data switching networks | Back end server | Software

The invention provides a dynamic load balancing method based on self-adapting prediction of network flow. The scheme observes the regularity in historical data of the network load flowing into a load balancing switch or switching software over a certain time cycle; obtains the parameter values of the self-adapting algorithm in a prediction program to form a computing formula; substitutes the load observed at the present moment into the formula to predict the load at the next moment; and distributes flow to the back-end servers in real time according to the predicted value. The network load can thus be regulated in advance, avoiding lag effects and keeping the load in a comparatively balanced state. This greatly strengthens the network's ability to adapt to and self-regulate its load, and suits networks whose load follows a regular time cycle, such as those running regularly scheduled network backups.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

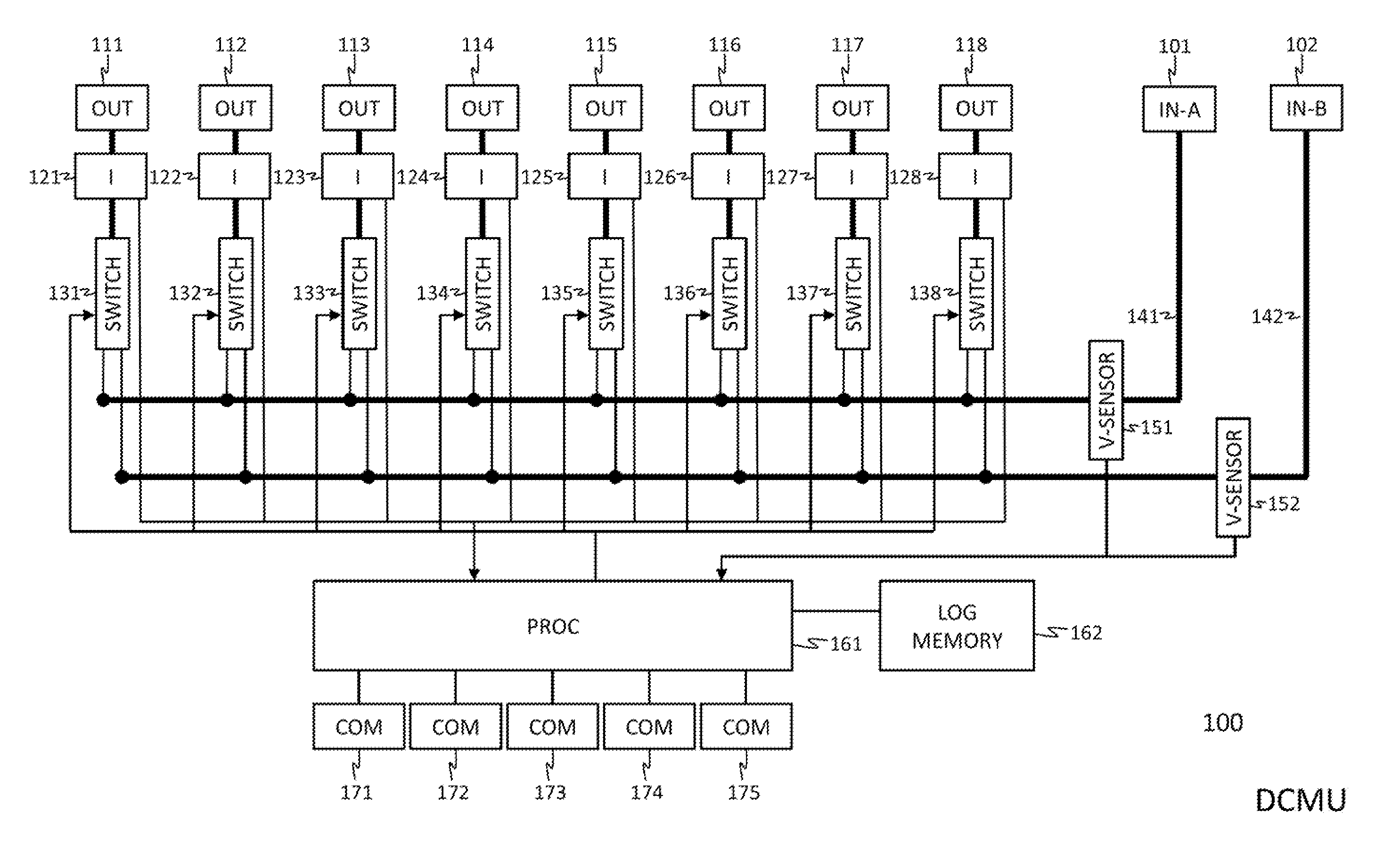

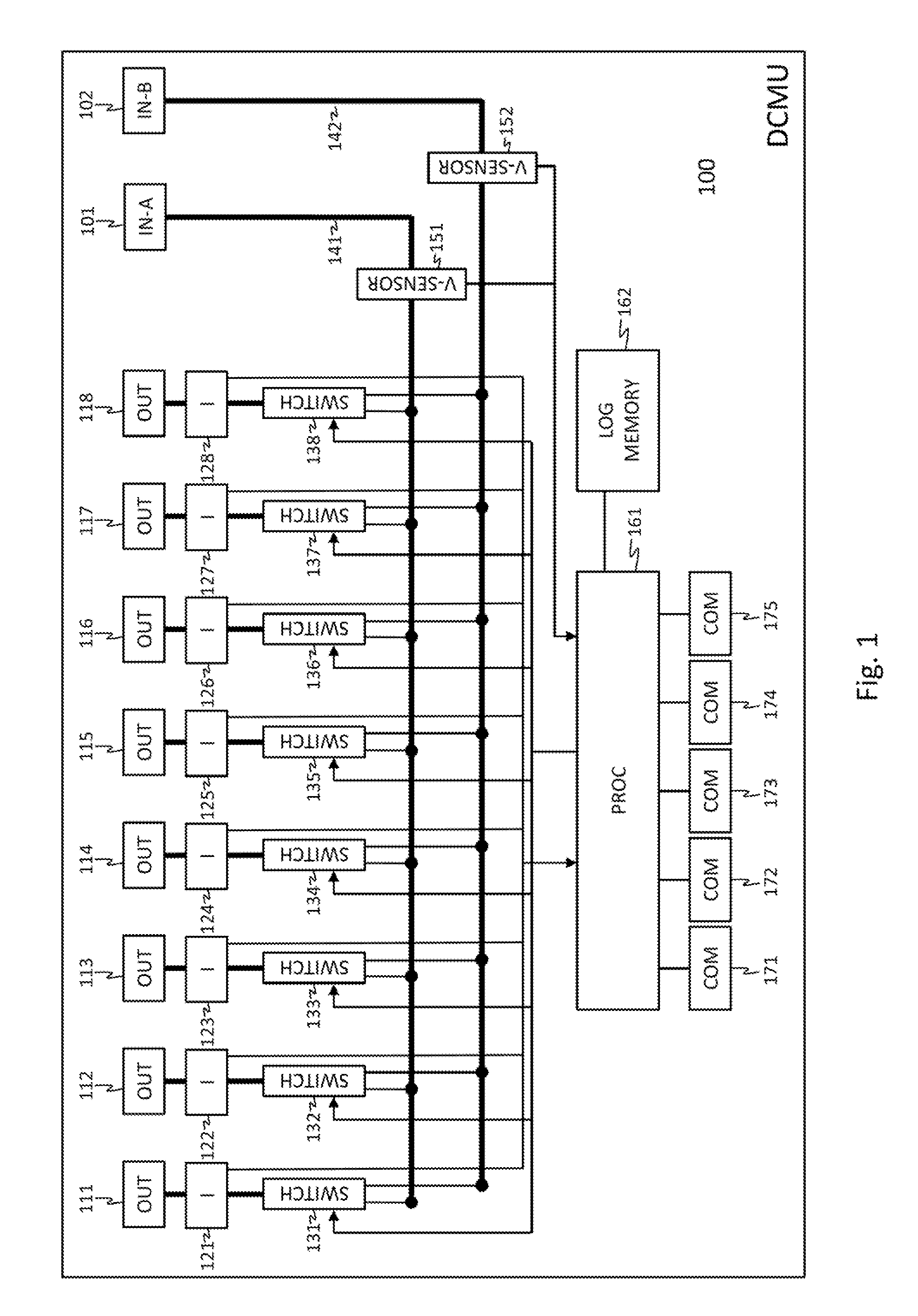

Data center management unit with dynamic load balancing

Inactive | US20110245988A1 | Keep the load balanced | Reduce steps | Mechanical power/torque control | Level control | Control power | Management unit

A data center management unit (100) for managing and controlling power distribution to computers in a data center, comprising: a first power inlet (101) for connectivity to a first power feed; a second power inlet (102) for connectivity to a second power feed; a plurality of power outlets (111, 112, 113, 114, 115, 116, 117, 118) for providing power to the computers; a plurality of power switches (131, 132, 133, 134, 135, 136, 137, 138) each having a first input coupled to the first power inlet, a second input coupled to the second power inlet, and an output coupled to a respective power outlet; and a processor (161) adapted to control the power switches for dynamically switching individual power outlets between the first power inlet and the second power inlet or vice versa, and for dynamically switching off individual power outlets.

Owner:RACKTIVITY

Method for determining idle processor load balancing in a multiple processors system

Inactive | US6986140B2 | Great likelihood | Resource allocation | Memory systems | Dynamic load balancing | Multi processor

An apparatus and methods for periodic load balancing in a multiple run queue system are provided. The apparatus includes a controller, memory, initial load balancing device, idle load balancing device, periodic load balancing device, and starvation load balancing device. The apparatus performs initial load balancing, idle load balancing, periodic load balancing and starvation load balancing to ensure that the workloads for the processors of the system are optimally balanced.

Owner:IBM CORP

Cloud computing platform server load balancing system and method

Active | CN103516807A | No added complexity | Improve equalization performance | Transmission | Time condition | Dynamic load balancing

The invention discloses a cloud computing platform server load balancing system and method, so as to overcome the defect that the current static load balancing technology cannot carry out dynamic adjustment according to the real-time condition of a load and the defect that a dynamic load balancing technology needs to additionally occupy system resources. According to the method, when a service request is received, a cloud computing platform selects a target server according to the number of requests which are processed and are being processed by each server in a cluster in recent preset unit time and the number of generated logs, and forwards the service request to the target server for processing. According to the embodiment of the invention, the load balancing can be effectively improved.

Owner:CHINA UNITED NETWORK COMM GRP CO LTD
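The selection rule in the abstract above uses three per-server counts from a recent time window: requests already processed, requests in progress, and logs generated. The sketch below combines them into one score and picks the lowest. The linear weighting, the default weights, and the names `ServerStats` and `select_target` are assumptions for illustration; the abstract does not state how the counts are combined.

```python
from dataclasses import dataclass


@dataclass
class ServerStats:
    name: str
    processed: int    # requests completed in the recent window
    in_progress: int  # requests currently being handled
    log_lines: int    # logs generated in the same window


def select_target(stats, w_processed=1.0, w_in_progress=2.0, w_logs=0.01):
    """Return the name of the server with the lowest weighted load score."""

    def score(s: ServerStats) -> float:
        # Hypothetical linear combination of the three counts.
        return (w_processed * s.processed
                + w_in_progress * s.in_progress
                + w_logs * s.log_lines)

    return min(stats, key=score).name
```

Because all three counts are by-products of normal request handling and logging, a scheme of this kind needs no separate probing of CPU or memory, which matches the stated goal of avoiding extra resource usage.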

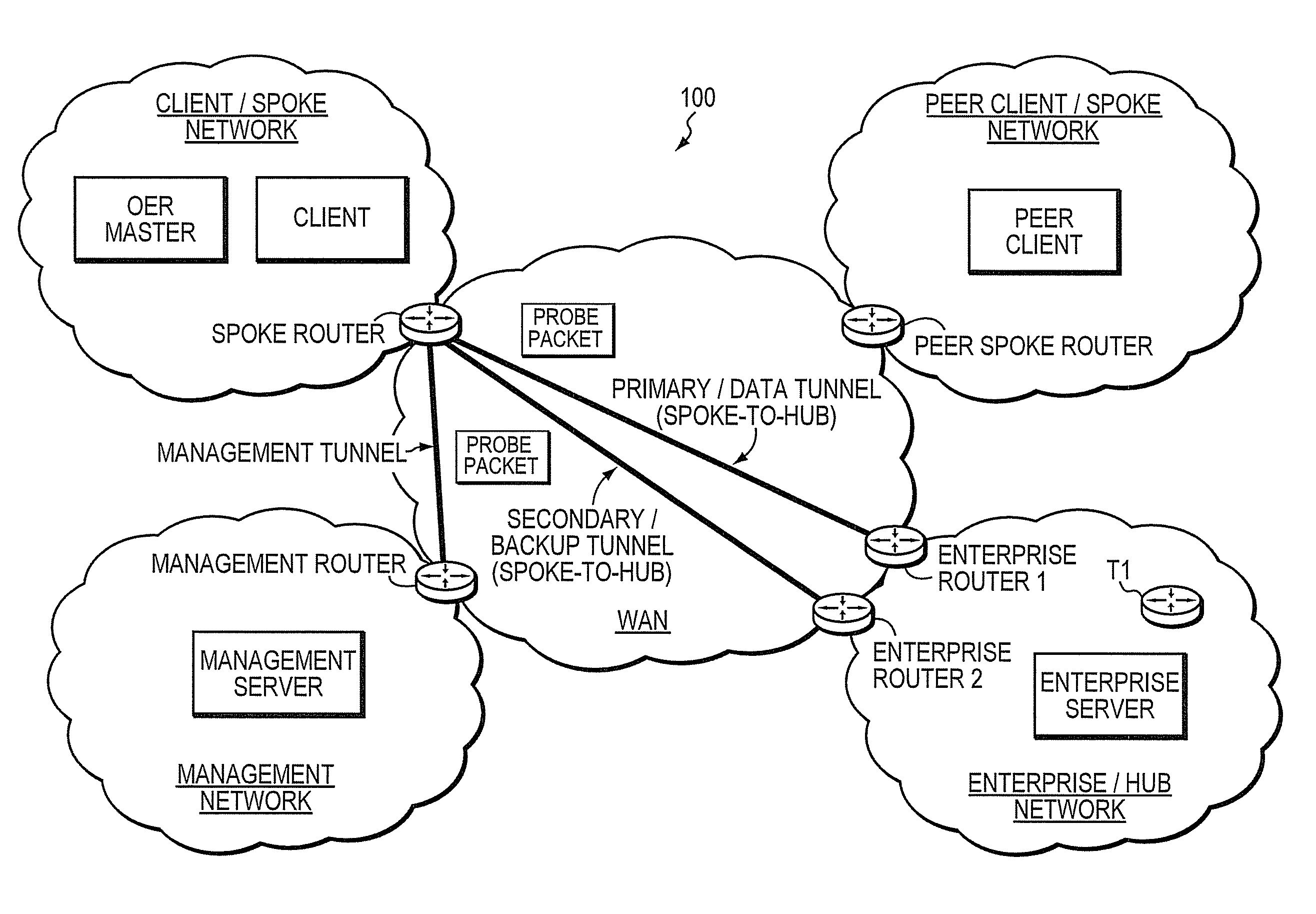

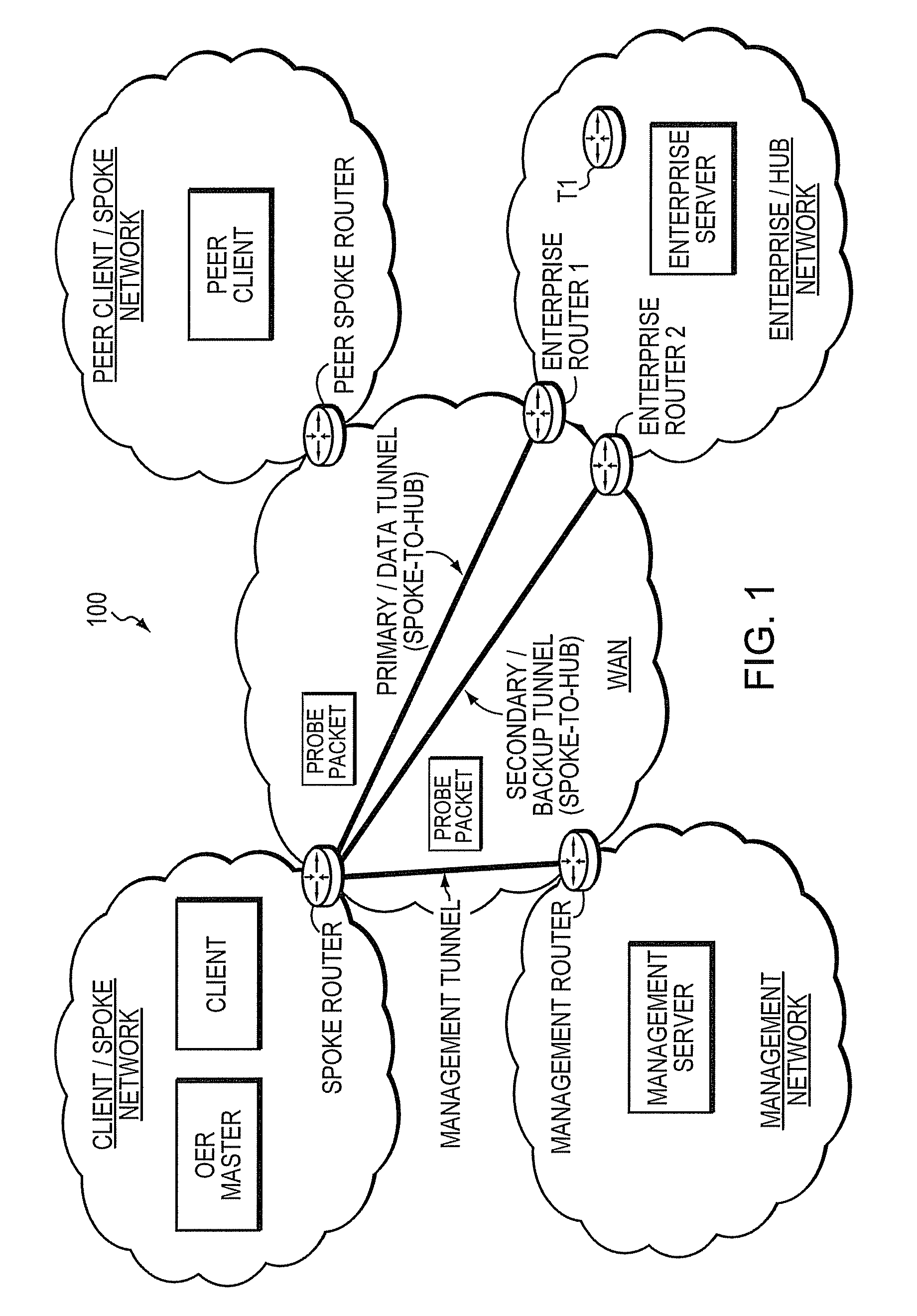

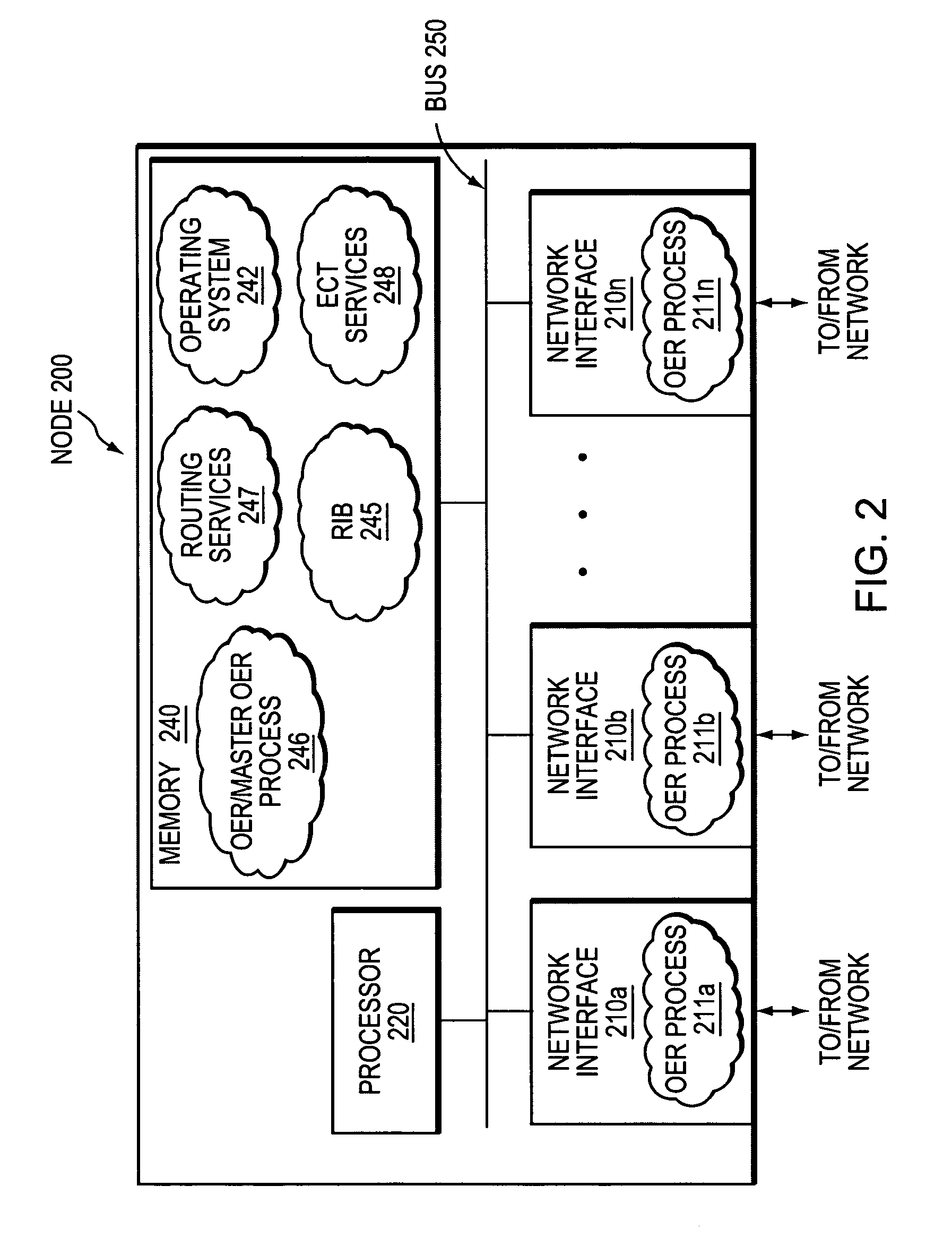

Technique for using OER with an ECT solution for multi-homed sites

Active | US8260922B1 | Extended run time | Improve usability | Digital computer details | Transmission | Traffic capacity | Reachability

A technique dynamically utilizes a plurality of multi-homed Virtual Private Network (VPN) tunnels from a client node to one or more enterprise networks in a computer network. According to the technique, a VPN client node, e.g., a “spoke,” creates a plurality of multi-homed VPN tunnels with one or more servers / enterprise networks, e.g., “hubs.” The spoke designates (e.g., for a prefix) one of the tunnels as a primary tunnel and the other tunnels as secondary (backup) tunnels, and monitors the quality (e.g., loss, delay, reachability, etc.) of all of the tunnels, such as, e.g., by an Optimized Edge Routing (OER) process. The spoke may then dynamically re-designate any one of the secondary tunnels as the primary tunnel for a prefix based on the quality of the tunnels to the enterprise. Notably, the spoke may also dynamically load balance traffic to the enterprise among the primary and secondary tunnels based on the quality of those tunnels.

Owner:CISCO TECH INC

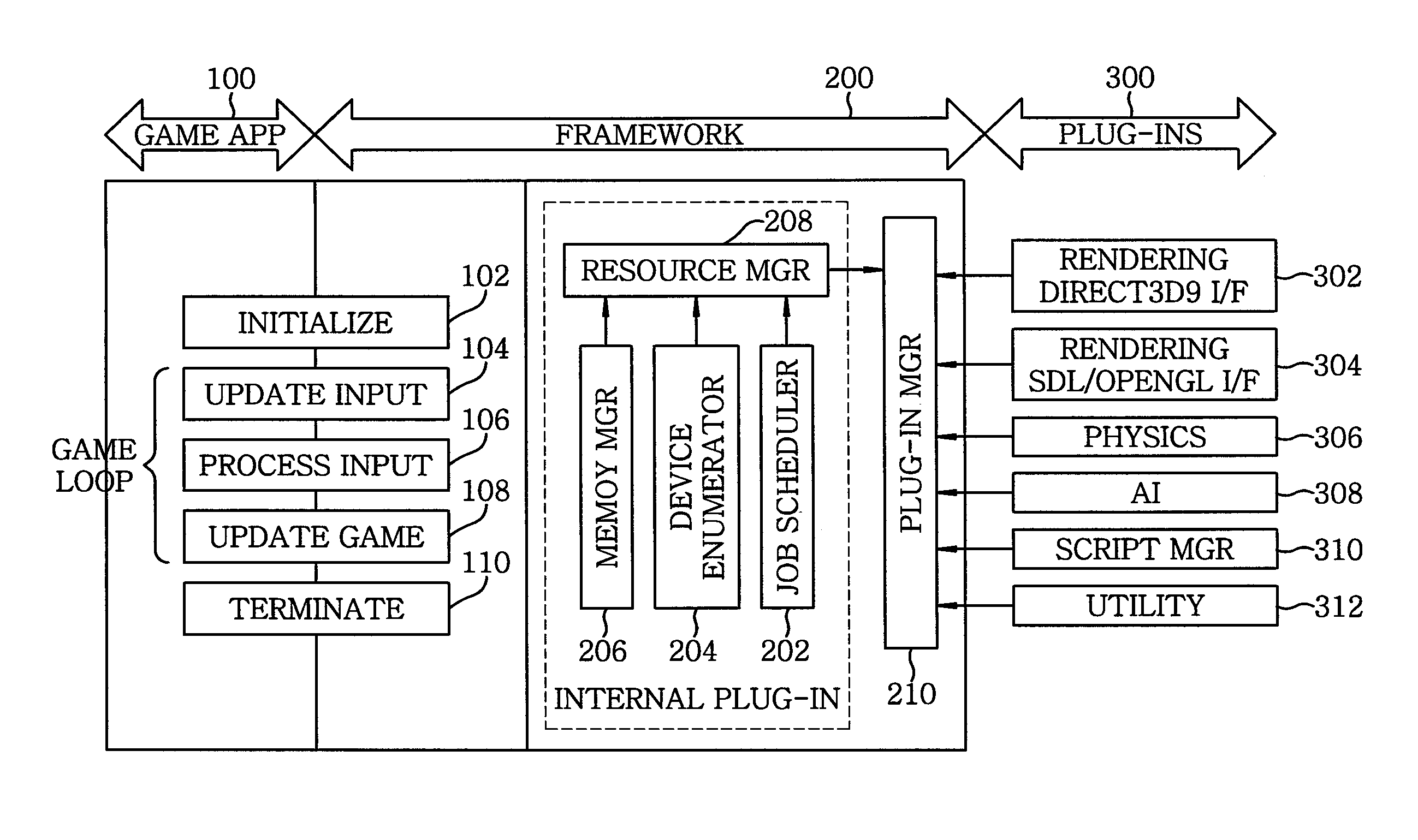

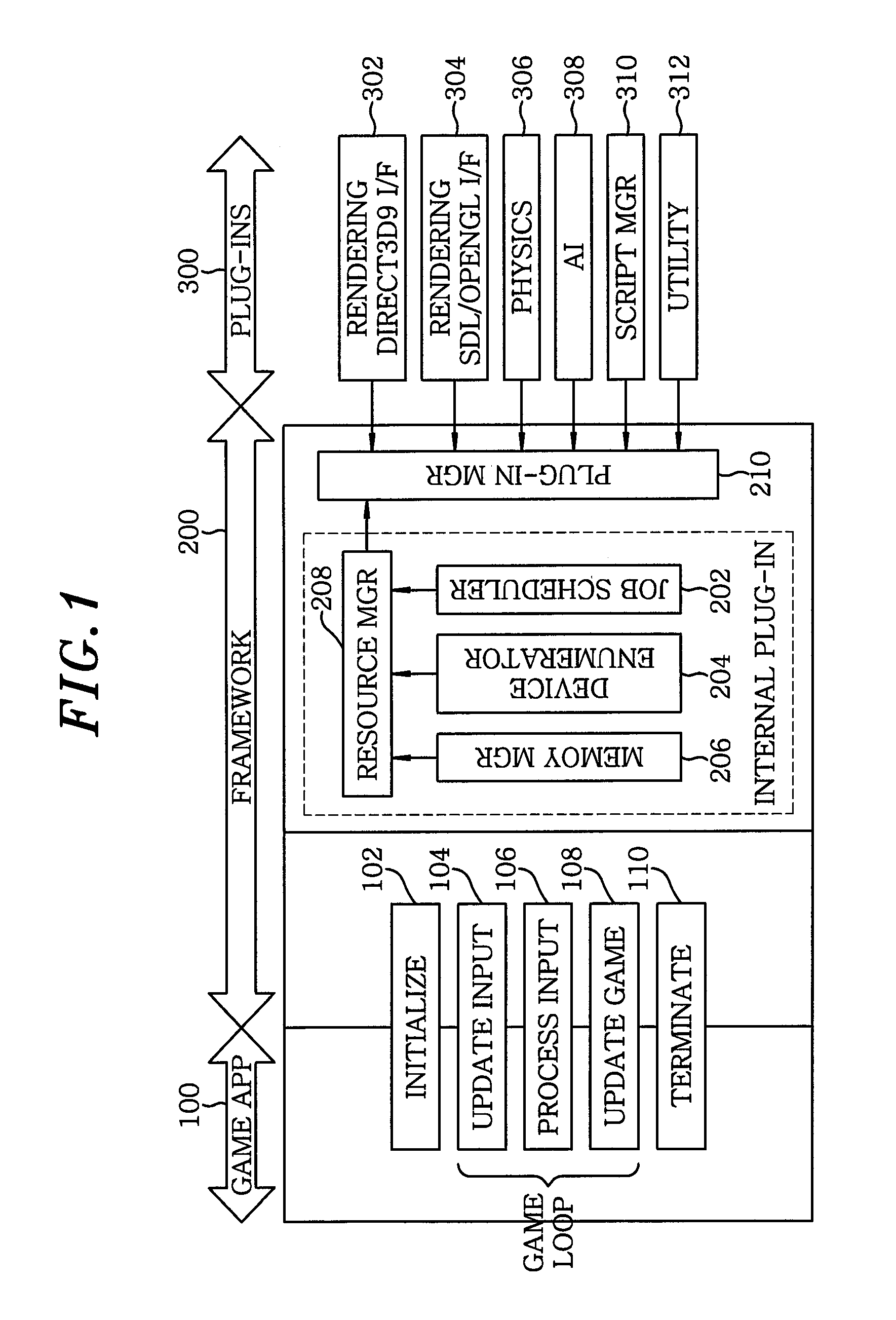

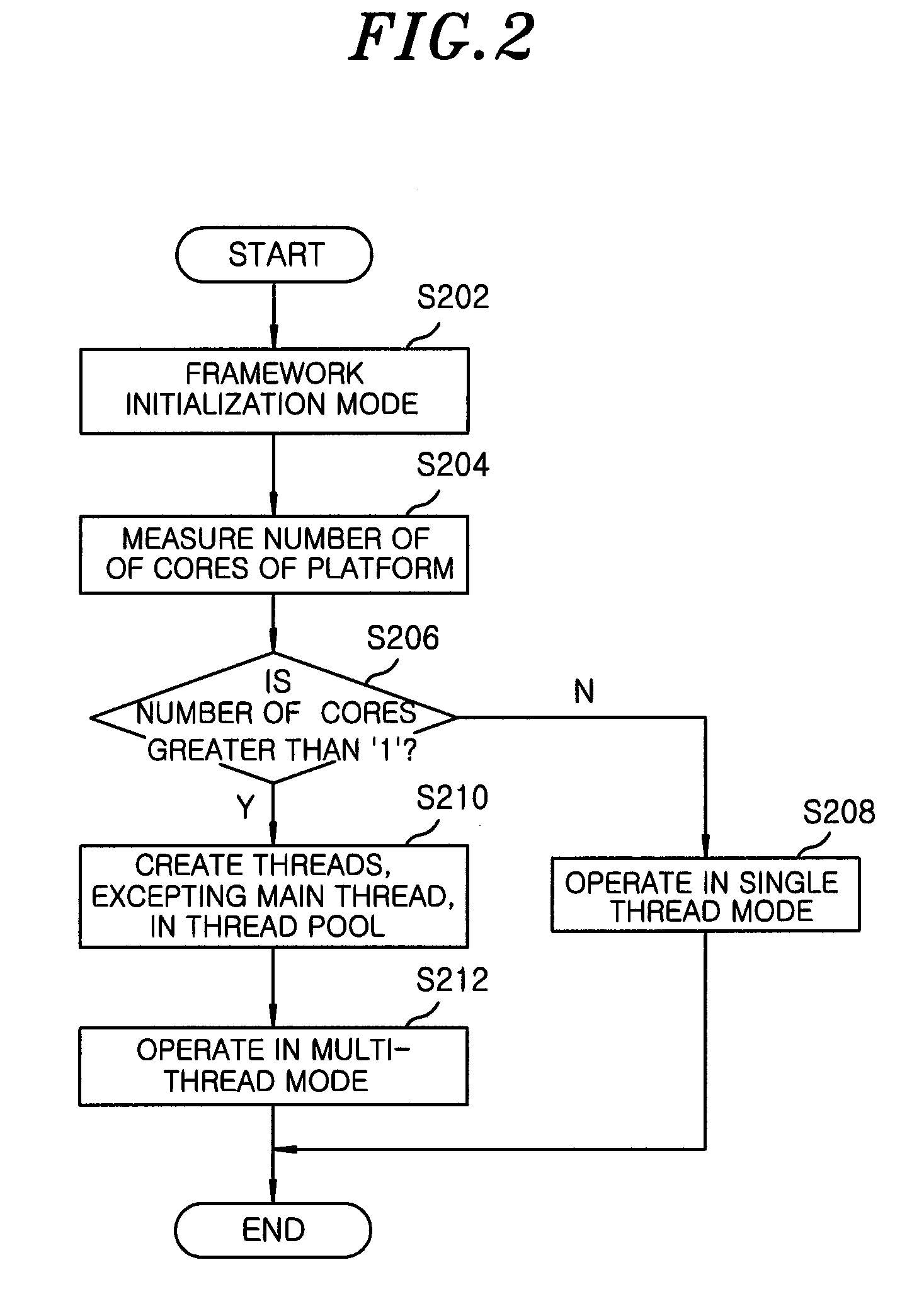

Multithreading framework supporting dynamic load balancing and multithread processing method using the same

Inactive | US20090150898A1 | Improve performance | Multiprogramming arrangements | Memory systems | Dynamic load balancing | Application software

A multithreading framework supporting dynamic load balancing, the multithreading framework being used to perform multi-thread programming, the multithreading framework includes a job scheduler for performing parallel processing by redefining a processing order of one or more unit jobs, transmitted from a predetermined application, based on unit job information included in the respective unit jobs, and transmitting the unit jobs to a thread pool based on the redefined processing order, a device enumerator for detecting a device in which the predetermined application is executed and defining resources used inside the application, a resource manager for managing the resources related to the predetermined application executed using the job scheduler or the device enumerator, and a plug-in manager for managing a plurality of modules which performs various types of functions related to the predetermined application in a plug-in manner, and providing such plug-in modules to the job scheduler.

Owner:ELECTRONICS & TELECOMM RES INST

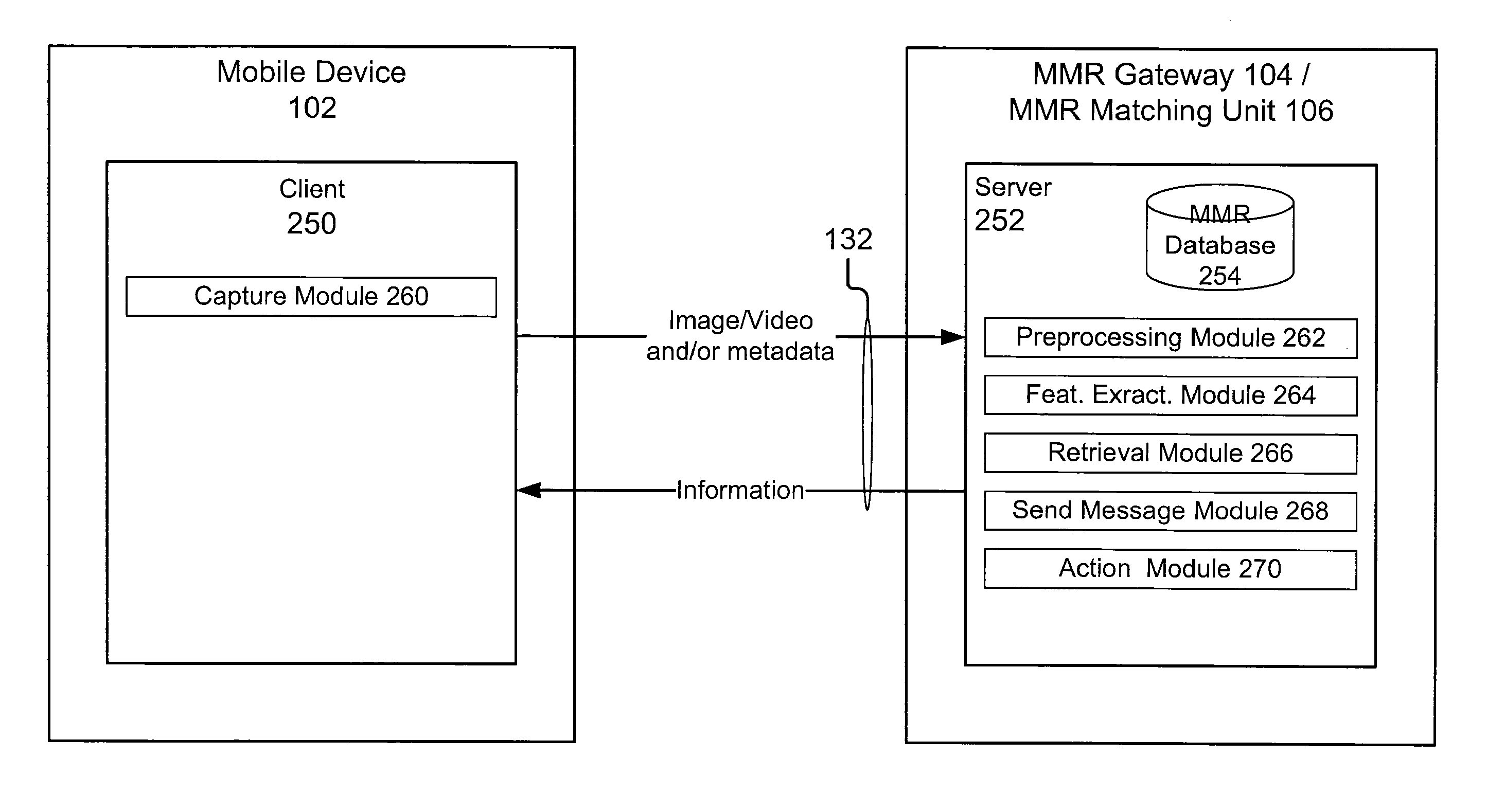

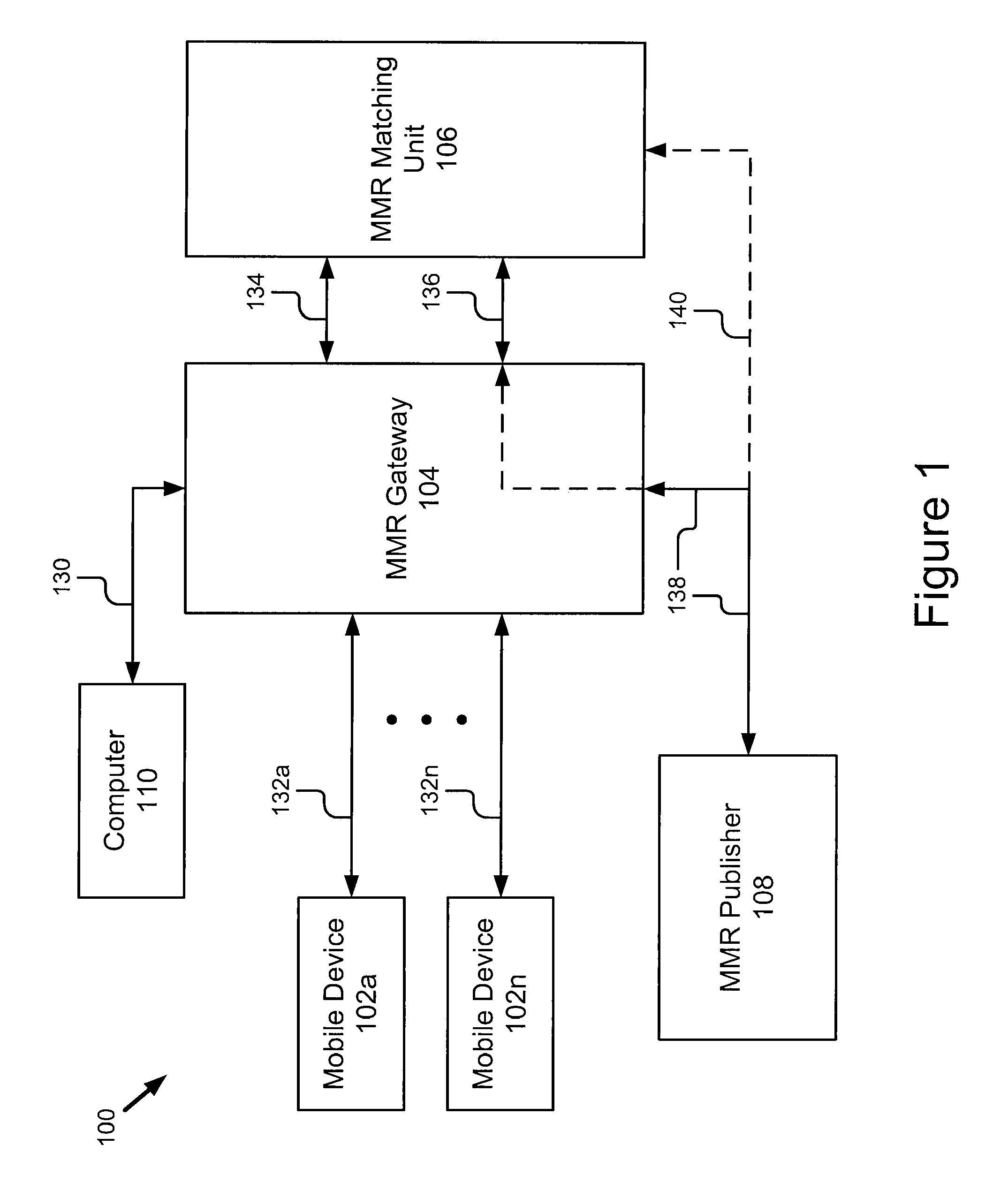

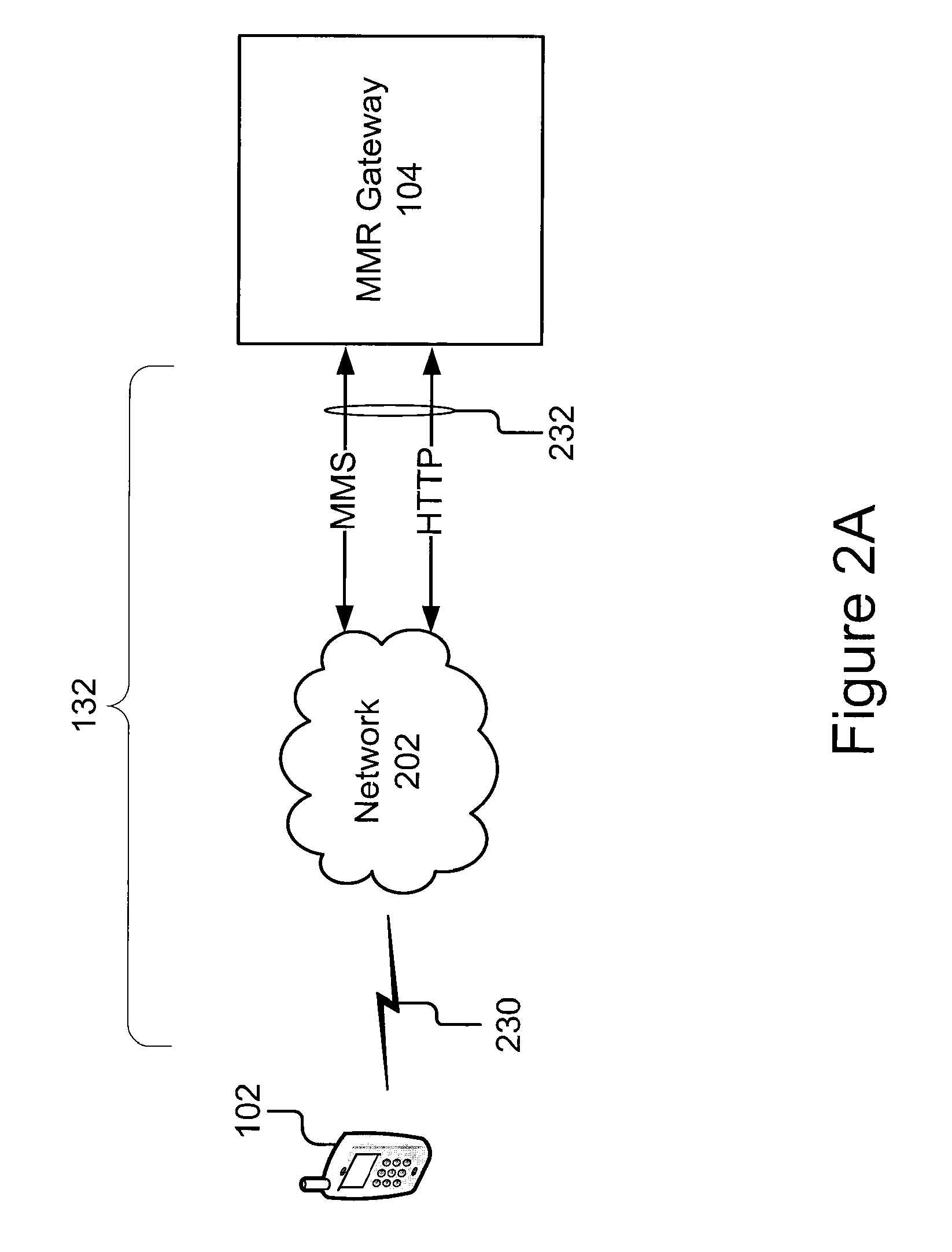

Architecture for mixed media reality retrieval of locations and registration of images

Active | US20090070415A1 | Digital data processing details | Character and pattern recognition | Dynamic load balancing | Image query

An MMR system for publishing comprises a plurality of mobile devices, an MMR gateway, an MMR matching unit and an MMR publisher. The mobile devices send retrieval requests including image queries and other contextual information. The MMR gateway processes the retrieval request from the mobile devices and then generates an image query that is passed on to the MMR matching unit. The MMR matching unit receives an image query from the MMR gateway and sends it to one or more of the recognition units to identify a result including a document, the page and the location on the page. The MMR matching unit includes an image registration unit that receives new content from the MMR publisher and updates the index table of the MMR matching unit. A method for automatically registering images and other data with the MMR matching unit, a method for dynamic load balancing and a method for image-feature-based queue ordering are also included.

Owner:RICOH KK

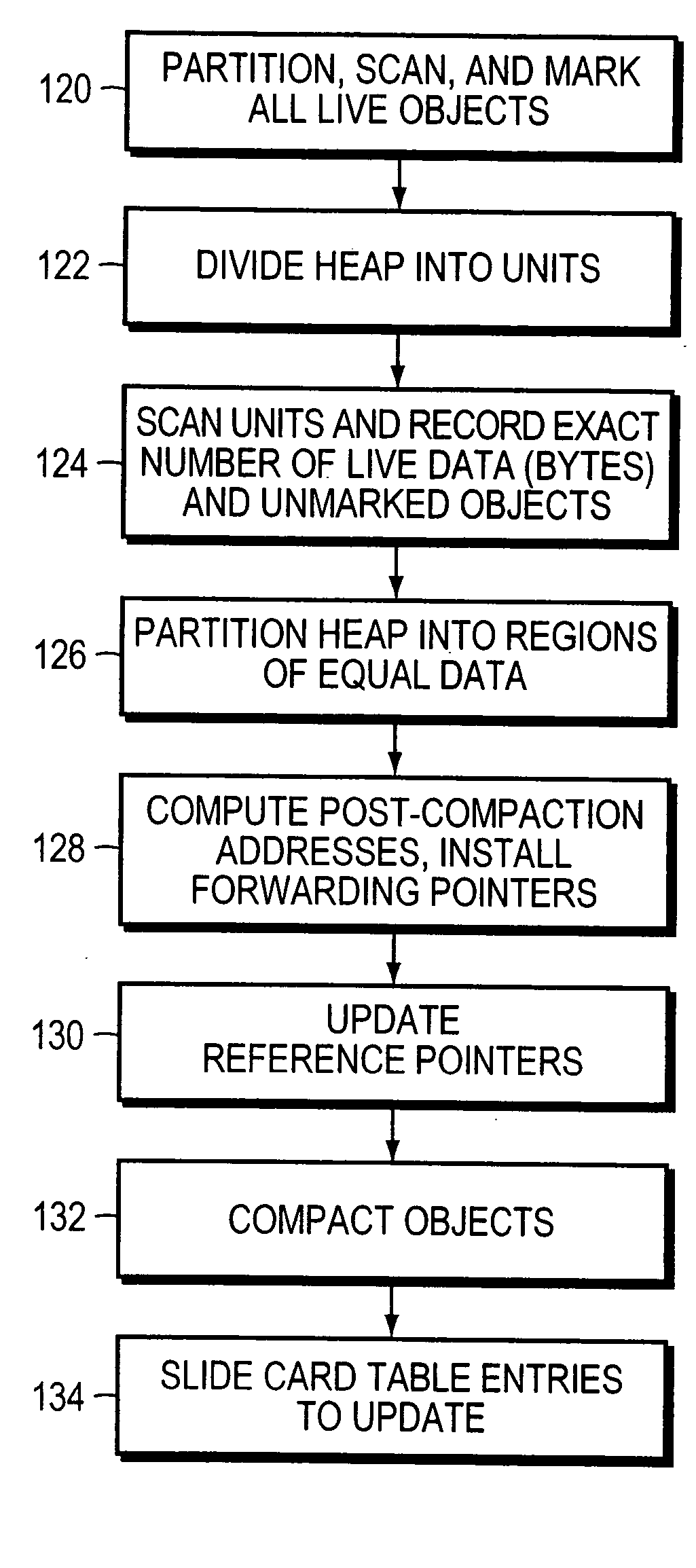

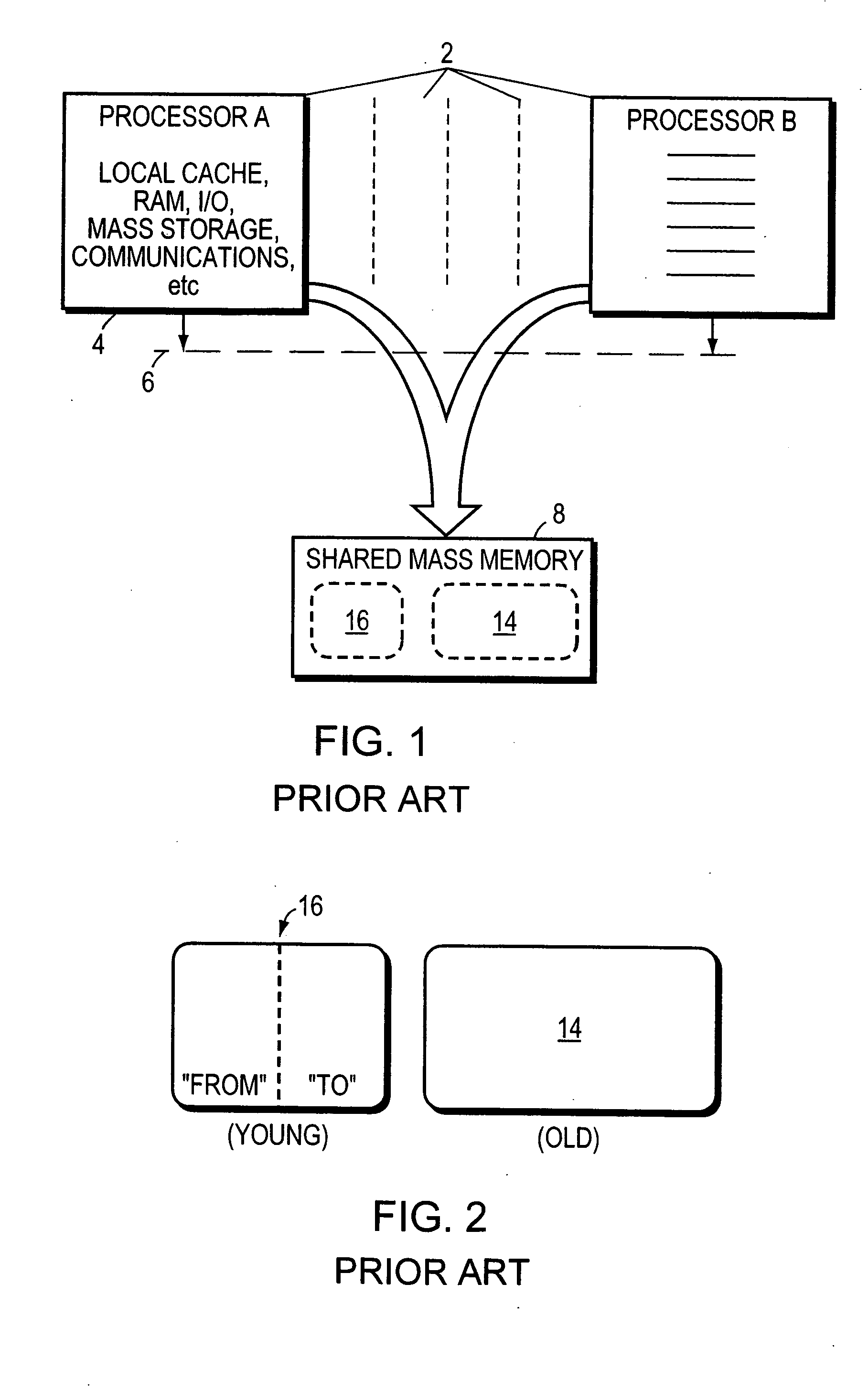

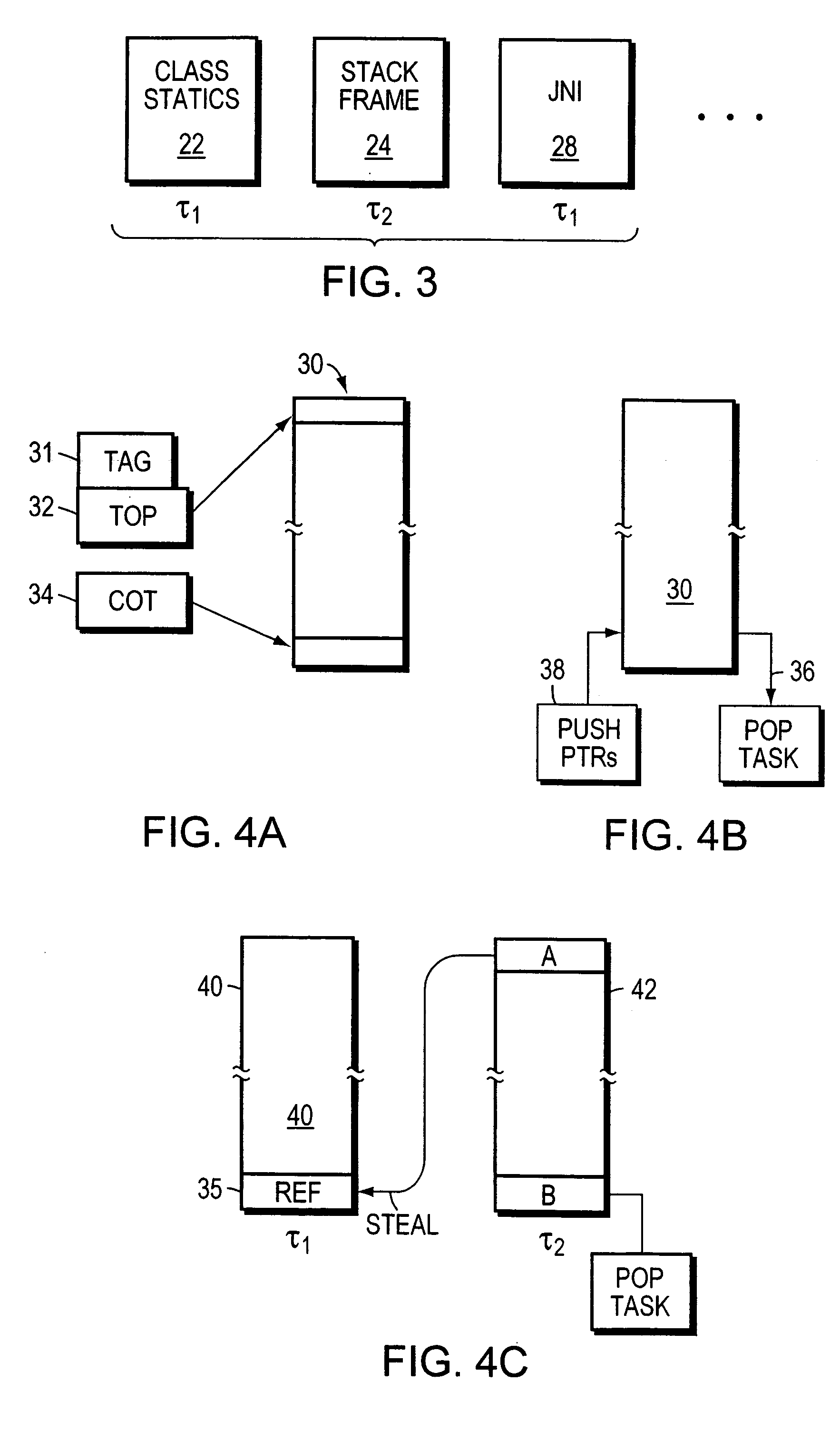

Work stealing queues for parallel garbage collection

Inactive · US20050132374A1 · Minimizes interrupting · Minimizes blocking · Data processing applications · Memory addressing/allocation/relocation · Dynamic load balancing · Refuse collection

A multiprocessor, multi-program, stop-the-world garbage collection program is described. The system initially over-partitions the root sources and then iteratively employs static and dynamic work balancing. Garbage collection threads compete dynamically for the initial partitions. Work-stealing double-ended queues, designed to reduce contention, provide dynamic load balancing among the threads; remaining contention is resolved using atomic instructions. The heap is divided into a young and an old generation: parallel semi-space copying collects the young generation, and parallel mark-compacting collects the old generation. Speed and efficiency of collection are enhanced by the use of card tables and linking objects, and overflow conditions are handled efficiently by linking using class pointers. Garbage collection termination employs a global status word.

Owner:ORACLE INT CORP
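
The work-stealing idea in the abstract above can be sketched in Python: each thread pushes and pops at one end of its own double-ended queue, while idle threads steal from the opposite end of another thread's queue. This is a simplified illustration under stated assumptions, not the patented collector. A lock is swapped in for the atomic instructions the abstract mentions, tasks are assumed not to spawn new tasks (so a worker may stop once every queue looks empty, instead of using a global status word), and `WorkStealingDeque` and `run_workers` are hypothetical names.

```python
import threading
from collections import deque
from typing import Callable, List, Optional


class WorkStealingDeque:
    """Owner works at the tail; thieves take from the head."""

    def __init__(self) -> None:
        self._items: deque = deque()
        self._lock = threading.Lock()  # stand-in for atomic instructions

    def push(self, task: object) -> None:
        with self._lock:
            self._items.append(task)

    def pop(self) -> Optional[object]:
        """Called by the owning thread: newest task first."""
        with self._lock:
            return self._items.pop() if self._items else None

    def steal(self) -> Optional[object]:
        """Called by other threads: oldest task first."""
        with self._lock:
            return self._items.popleft() if self._items else None


def run_workers(queues: List[WorkStealingDeque],
                handle: Callable[[int, object], None]) -> None:
    """Start one worker per queue; each drains its own queue, then steals."""

    def worker(my_id: int) -> None:
        while True:
            task = queues[my_id].pop()
            if task is None:
                for victim_id, victim in enumerate(queues):
                    if victim_id != my_id:
                        task = victim.steal()
                        if task is not None:
                            break
            if task is None:
                return  # valid only because tasks never create new tasks here
            handle(my_id, task)

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(len(queues))]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
```

Because the owner and the thieves operate on opposite ends, they only collide when a queue is nearly empty, which is the contention reduction the abstract refers to.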

System for preventing periodic load balancing if processor associated with lightest local run queue has benefited from idle processor load balancing within a determined time period

An apparatus and methods for periodic load balancing in a multiple run queue system are provided. The apparatus includes a controller, memory, initial load balancing device, idle load balancing device, periodic load balancing device, and starvation load balancing device. The apparatus performs initial load balancing, idle load balancing, periodic load balancing and starvation load balancing to ensure that the workloads for the processors of the system are optimally balanced.

Owner:INT BUSINESS MASCH CORP
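
The condition named in this entry's title (skip periodic balancing when the processor with the lightest run queue has recently benefited from idle load balancing) might look like the following Python sketch. The details are assumptions made for illustration: load is measured as queue length, one task moves per call, a difference of fewer than two tasks counts as balanced, and `periodic_balance`, `last_idle_balance`, and `grace_period` are hypothetical names.

```python
from typing import Dict, List


def periodic_balance(run_queues: Dict[int, List[str]],
                     last_idle_balance: Dict[int, float],
                     now: float,
                     grace_period: float) -> bool:
    """Move one task from the heaviest to the lightest run queue.

    Returns False without moving anything when the lightest queue's CPU
    received work through idle balancing within the grace period, or when
    the queues are already close to balanced.
    """
    lightest = min(run_queues, key=lambda cpu: len(run_queues[cpu]))
    heaviest = max(run_queues, key=lambda cpu: len(run_queues[cpu]))

    # A CPU with no recorded idle balancing is treated as "never".
    last = last_idle_balance.get(lightest, float("-inf"))
    if now - last < grace_period:
        return False

    # Assumed threshold: a gap below two tasks is not worth a migration.
    if len(run_queues[heaviest]) - len(run_queues[lightest]) < 2:
        return False

    run_queues[lightest].append(run_queues[heaviest].pop())
    return True
```

For example, with queues `{0: ["a", "b", "c", "d"], 1: []}` and CPU 1 idle-balanced 5 time units ago, a 10-unit grace period suppresses the move; once the grace period has elapsed, one task migrates from CPU 0 to CPU 1.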

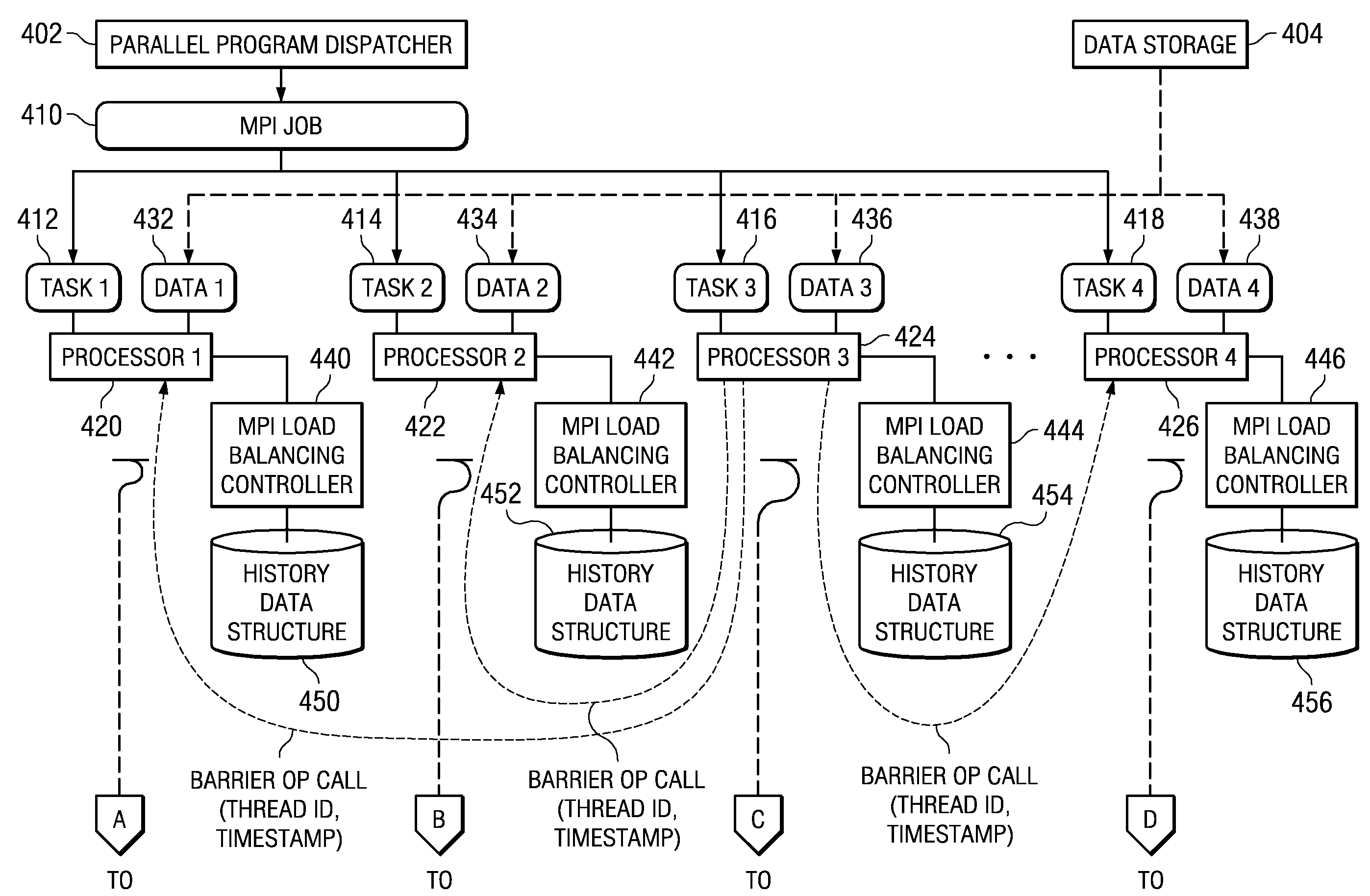

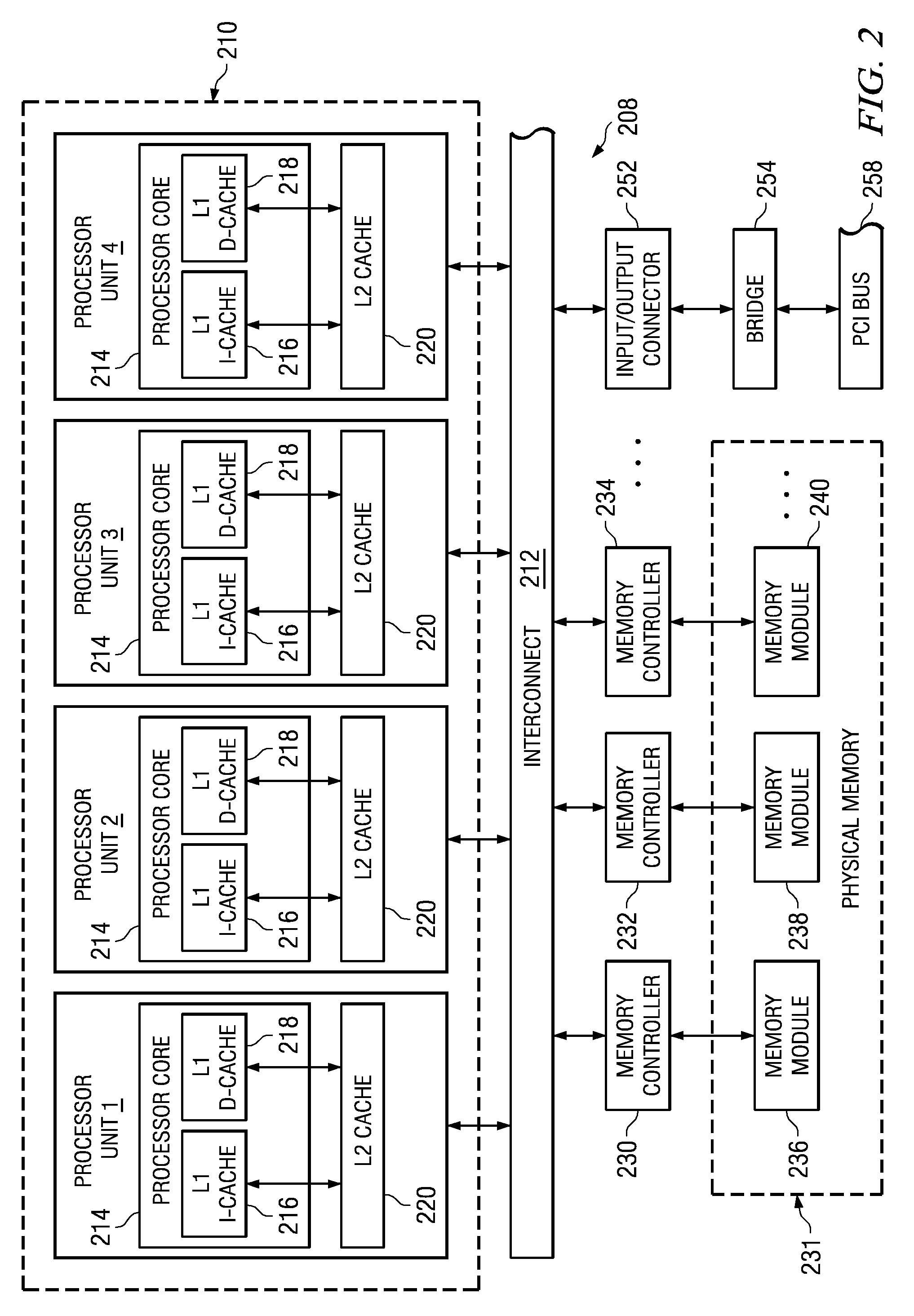

System and Method for Hardware Based Dynamic Load Balancing of Message Passing Interface Tasks By Modifying Tasks

Inactive · US20090064168A1 · Minimize period · Wasted processor cycles · Multiprogramming arrangements · Memory systems · Dynamic load balancing · Message Passing Interface

A system and method are provided for hardware-based dynamic load balancing of message passing interface (MPI) tasks by modifying tasks. Mechanisms adjust the balance of processing workloads across the processors executing tasks of an MPI job so as to minimize the periods spent waiting for all of the processors to call a synchronization operation. Each processor has an associated hardware-implemented MPI load balancing controller. The controller maintains a history that profiles the tasks with regard to their calls to synchronization operations. From this information, it can be determined which processors should have their processing loads lightened and which processors can handle additional load without significantly degrading the overall operation of the parallel execution system. Operations may then be performed to shift workloads from the slowest processor to one or more of the faster processors.

Owner:IBM CORP
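
The decision step described in the abstract above (use a history of synchronization calls to find the slowest processor and shift work toward faster ones) can be sketched in software. The patent describes hardware controllers; this Python sketch only illustrates the decision logic under assumptions: the history is a list of arrival times at the synchronization point per processor, the average arrival time ranks processors, work is counted in abstract units, and `rebalance` is a hypothetical name.

```python
from typing import Dict, List


def rebalance(history: Dict[str, List[float]],
              workloads: Dict[str, int],
              step: int = 1) -> Dict[str, int]:
    """Return new workloads with `step` units moved from the slowest to the fastest processor.

    `history[p]` holds the times at which processor p reached the
    synchronization operation in past iterations (later means slower).
    """
    average = {p: sum(times) / len(times) for p, times in history.items()}
    slowest = max(average, key=average.get)
    fastest = min(average, key=average.get)

    new_workloads = dict(workloads)  # leave the caller's mapping untouched
    if slowest == fastest or workloads[slowest] < step:
        return new_workloads         # nothing to shift

    new_workloads[slowest] -= step
    new_workloads[fastest] += step
    return new_workloads
```

With arrival histories `{"p0": [1.0, 1.2], "p1": [3.0, 3.4], "p2": [2.0, 2.0]}` and ten units of work on each processor, `rebalance(..., step=2)` moves two units from `p1`, the last to arrive on average, to `p0`.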


© 2025 PatSnap. All rights reserved.