148 results for "Message Passing Interface" patented technology

Message Passing Interface (MPI) is a standardized and portable message-passing standard designed by a group of researchers from academia and industry to function on a wide variety of parallel computing architectures. The standard defines the syntax and semantics of a core of library routines useful to a wide range of users writing portable message-passing programs in C, C++, and Fortran. There are several well-tested and efficient implementations of MPI, many of which are open-source or in the public domain. These fostered the development of a parallel software industry, and encouraged development of portable and scalable large-scale parallel applications.

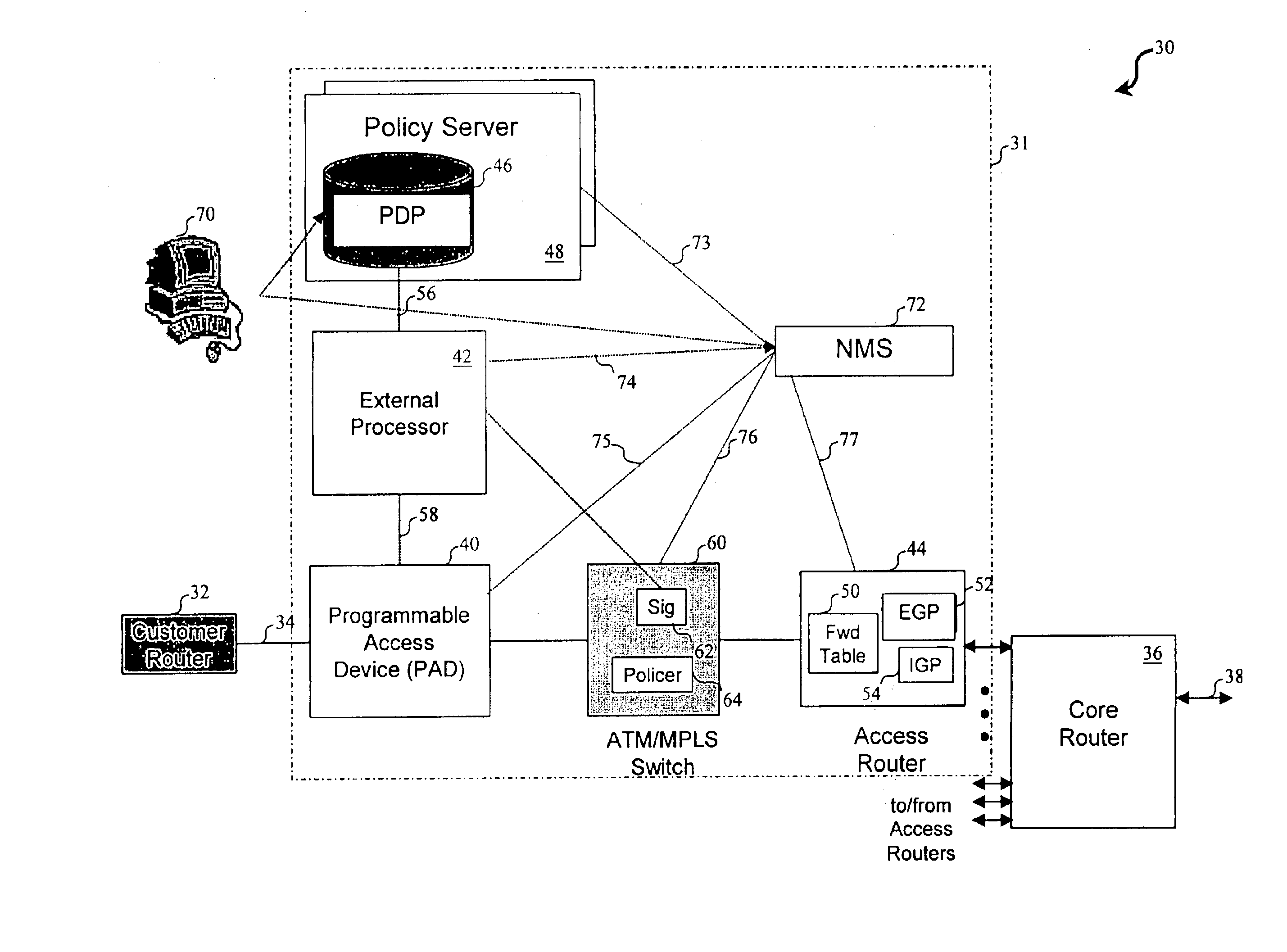

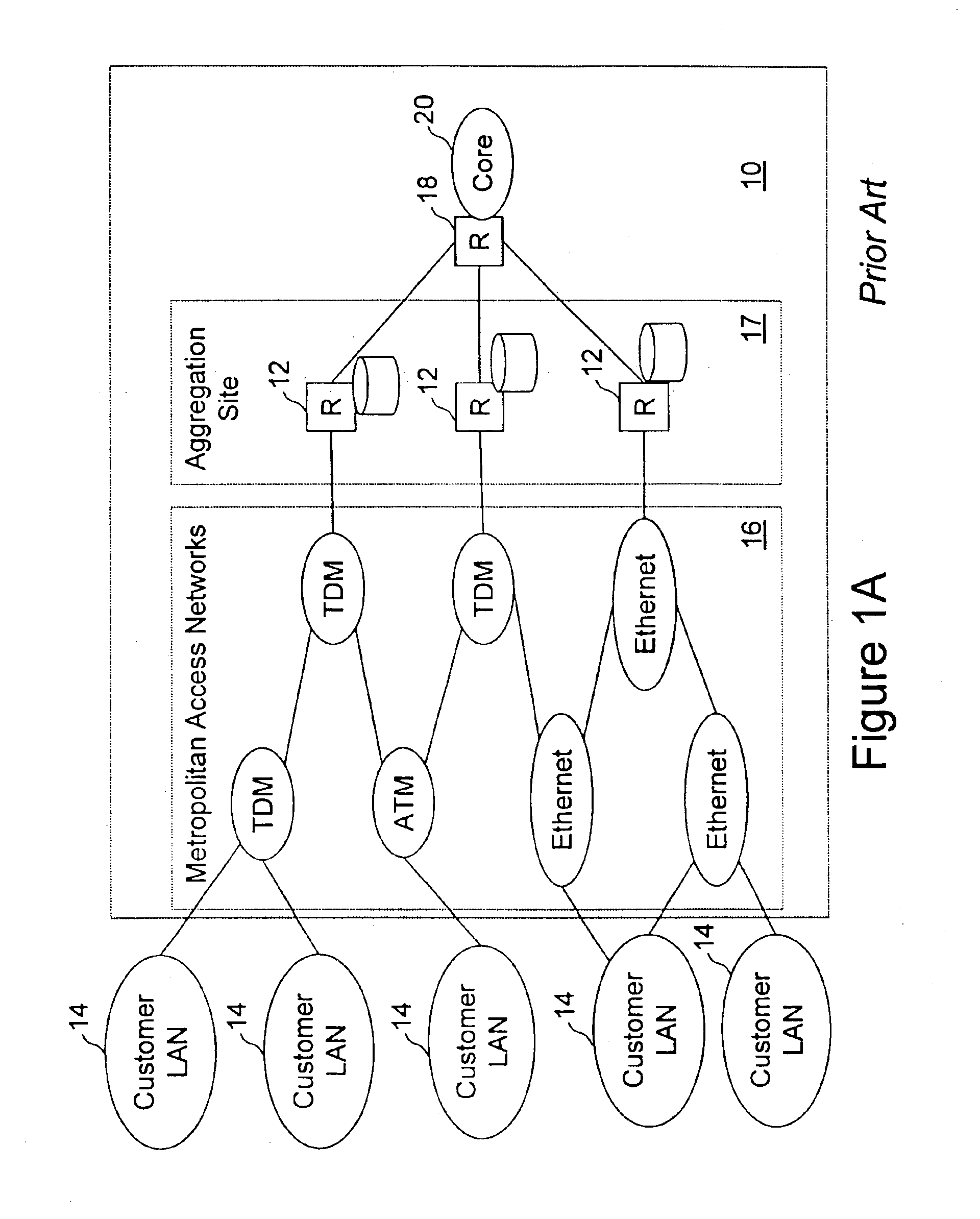

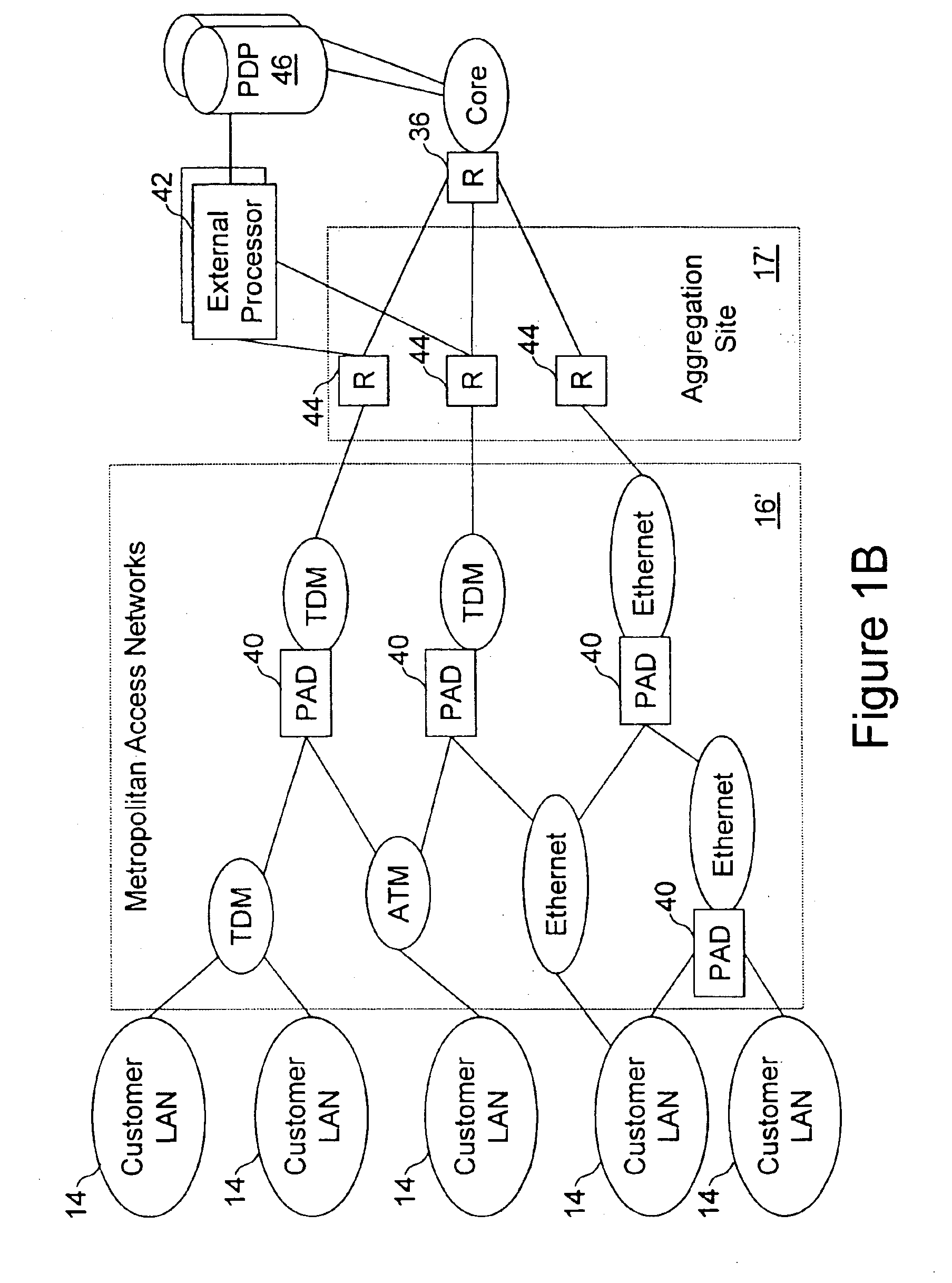

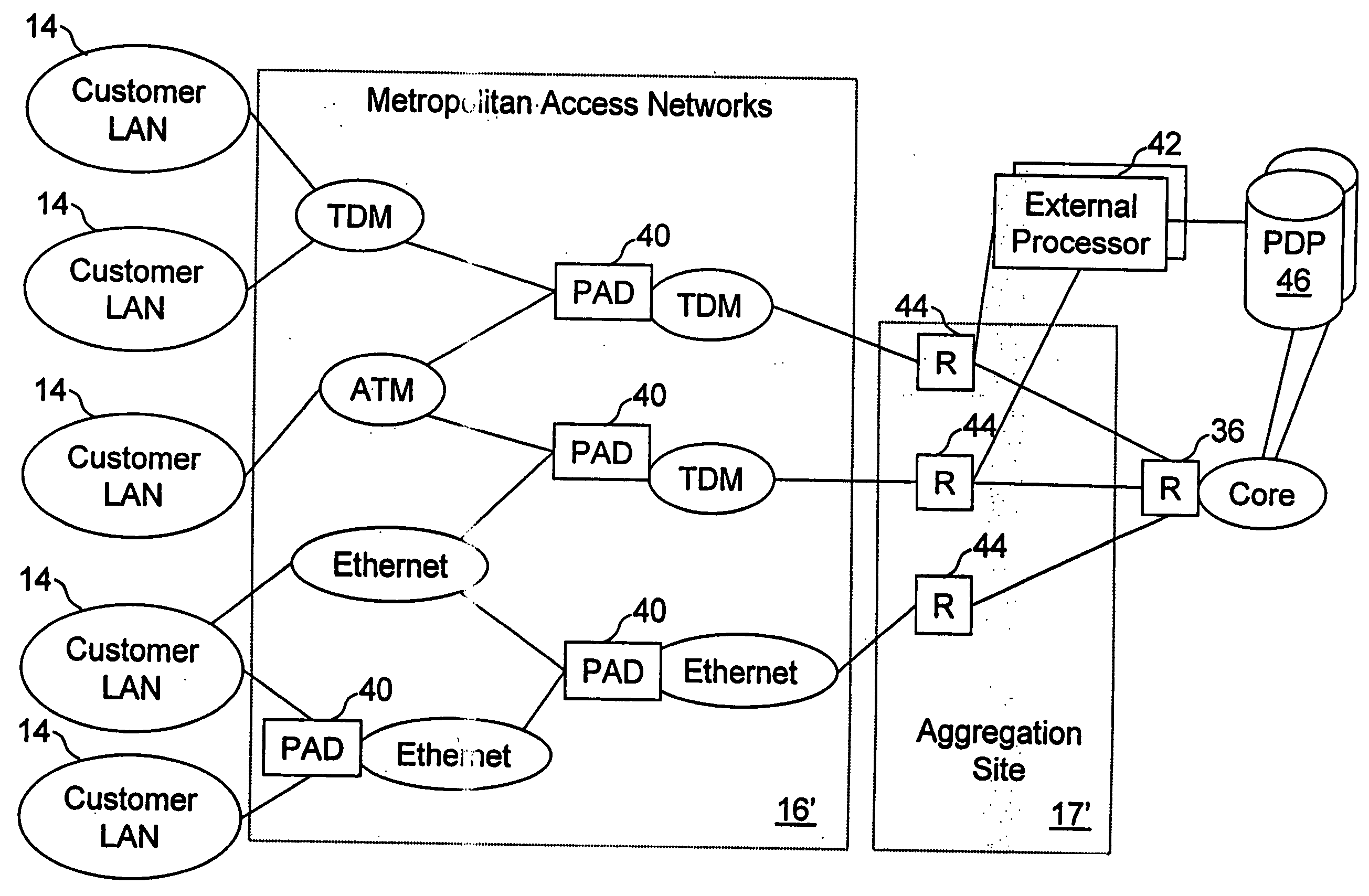

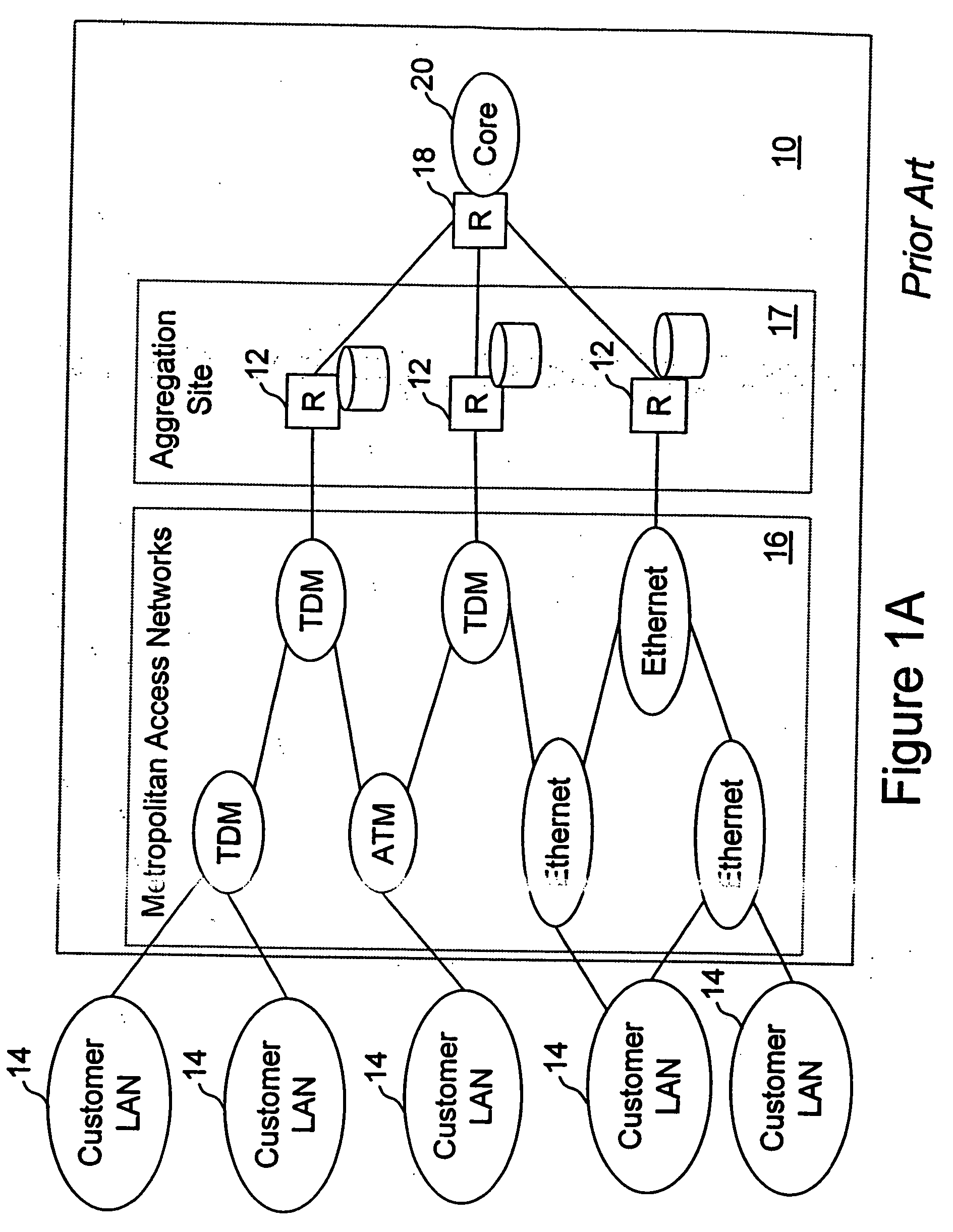

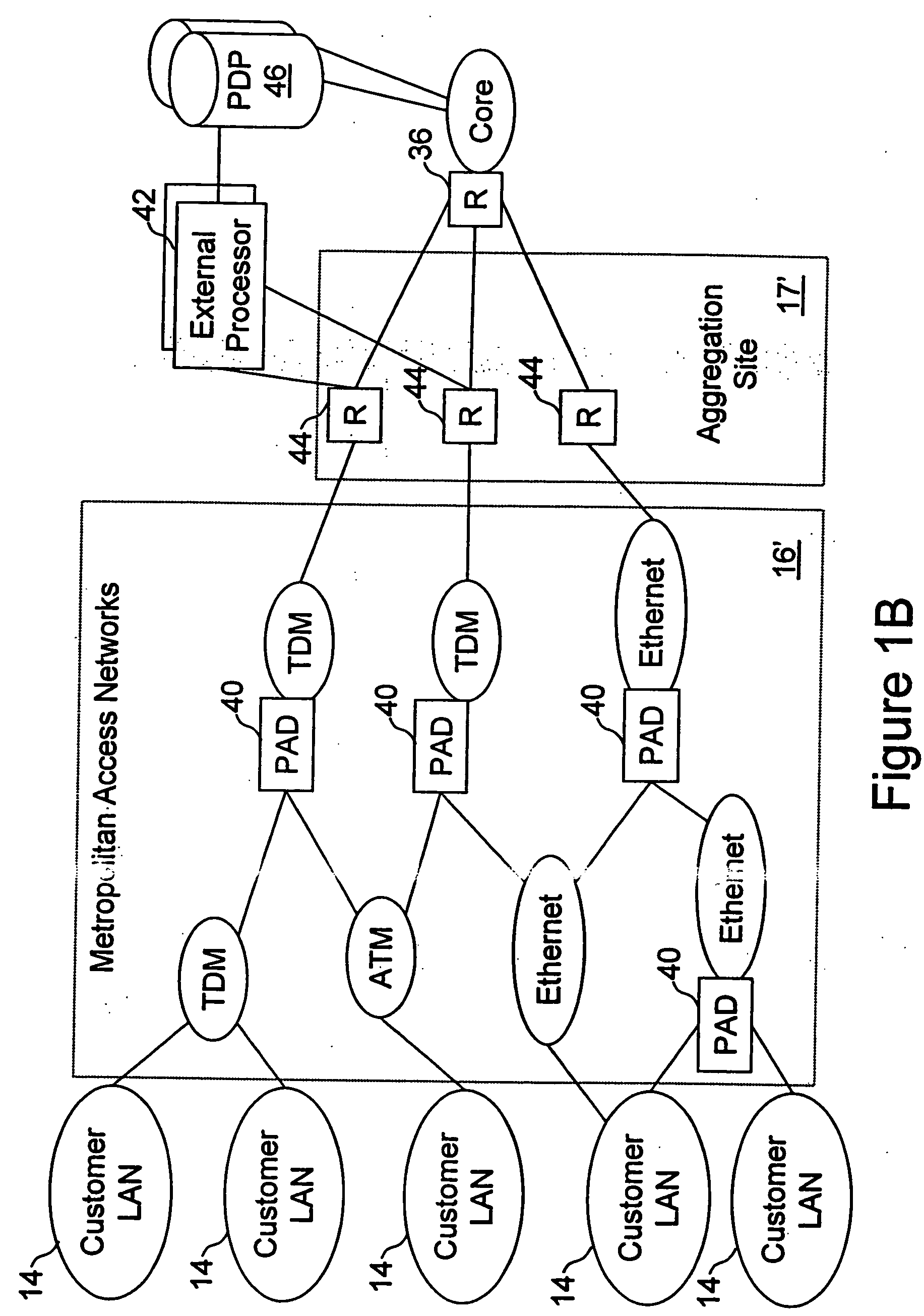

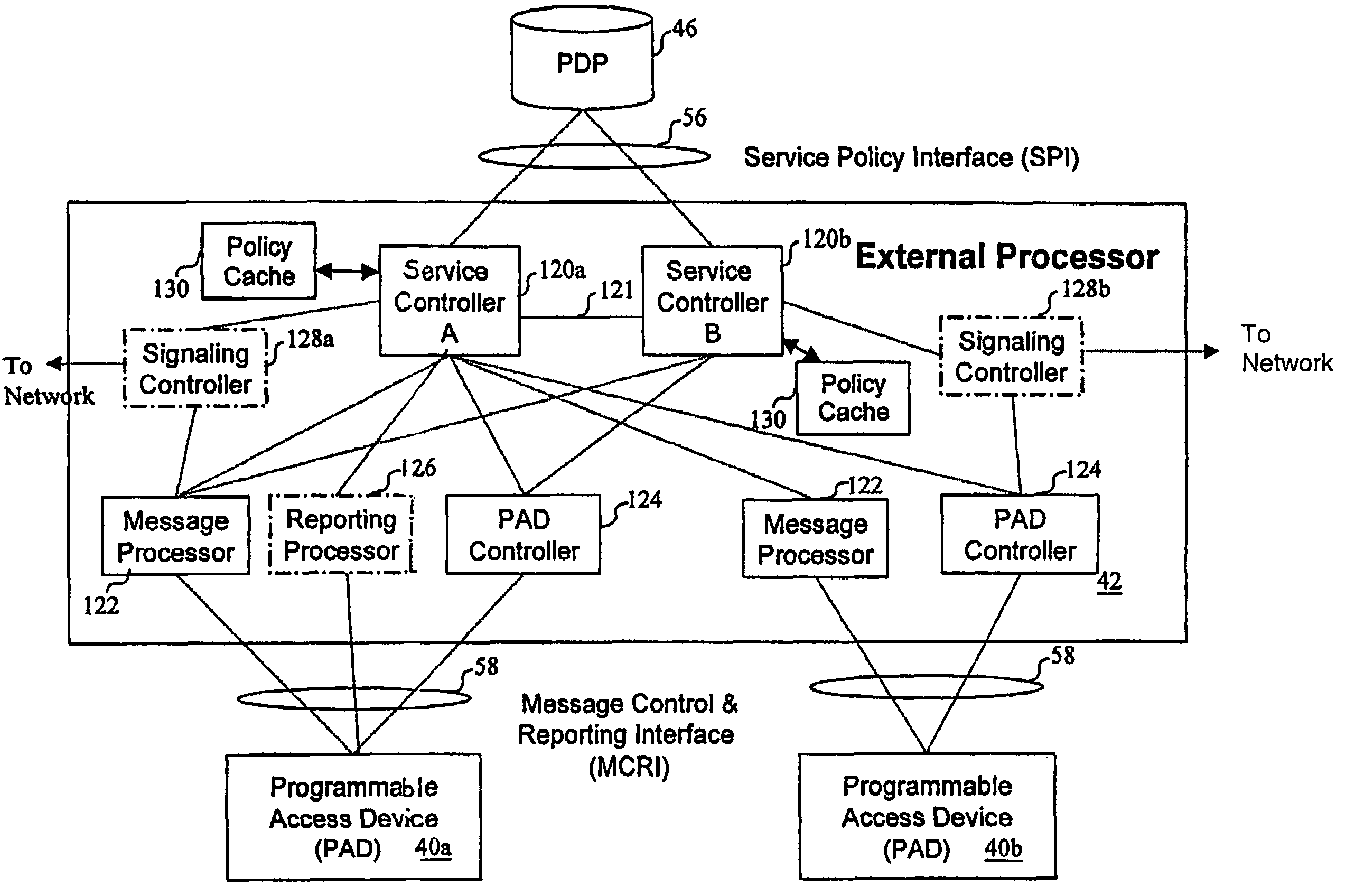

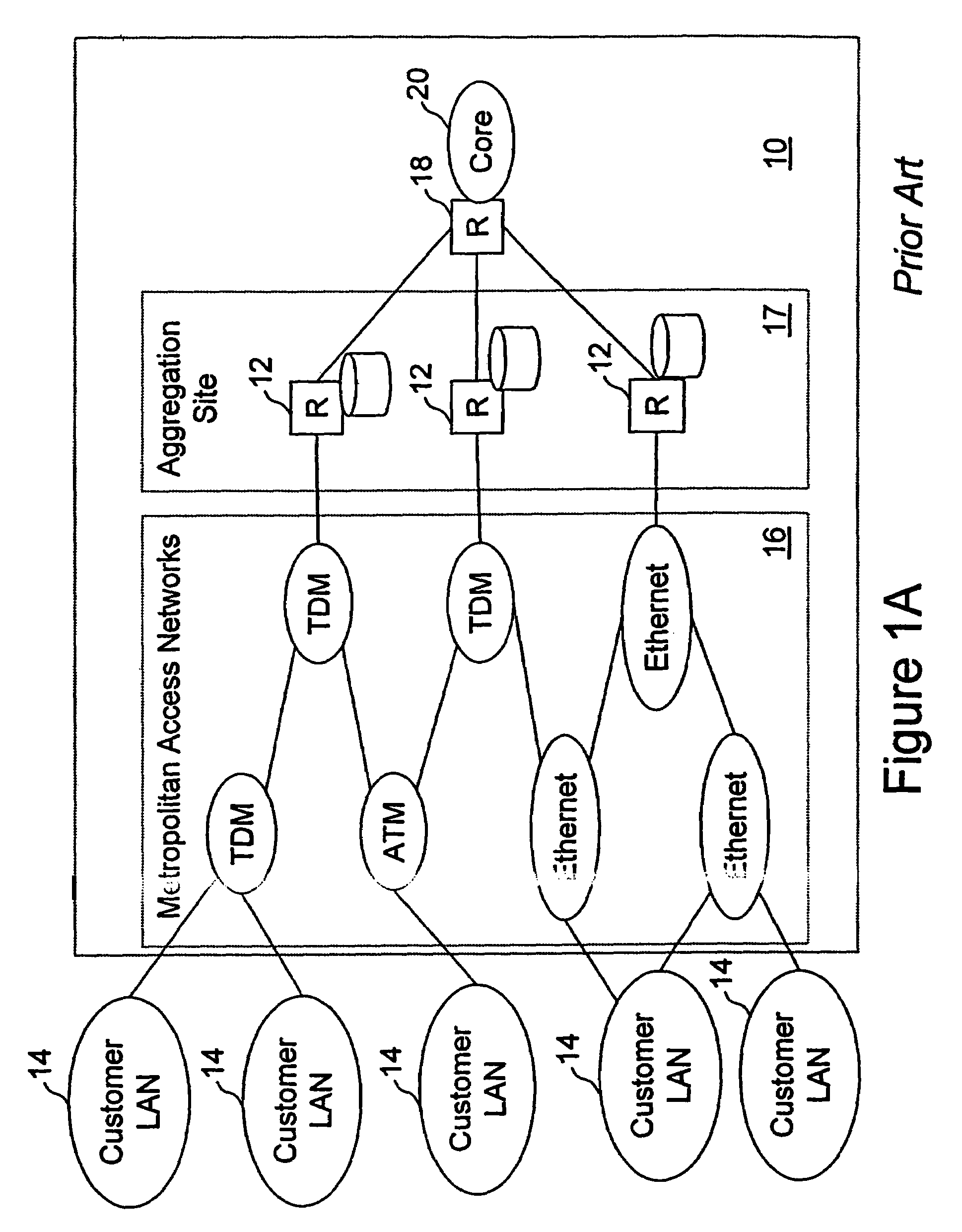

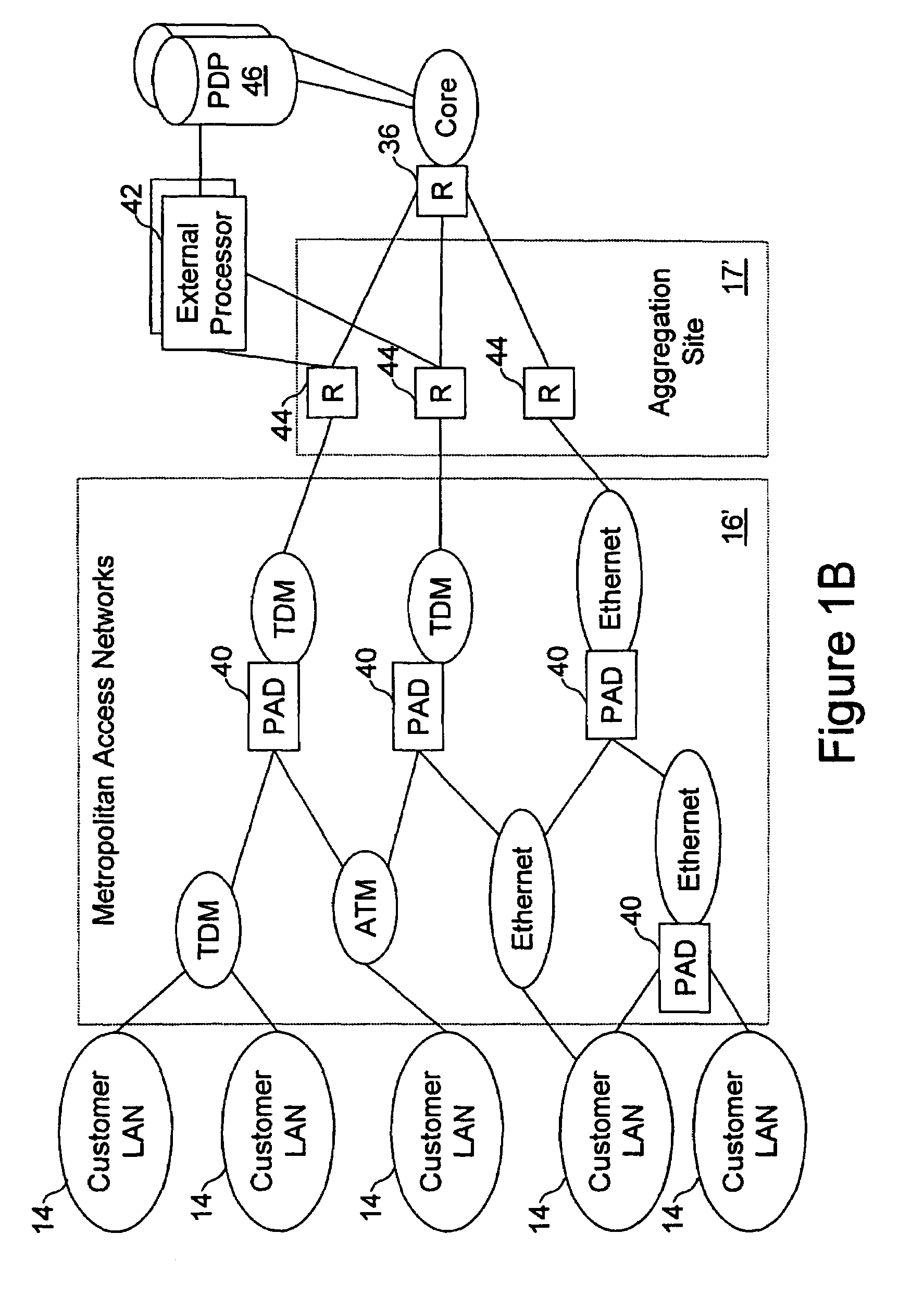

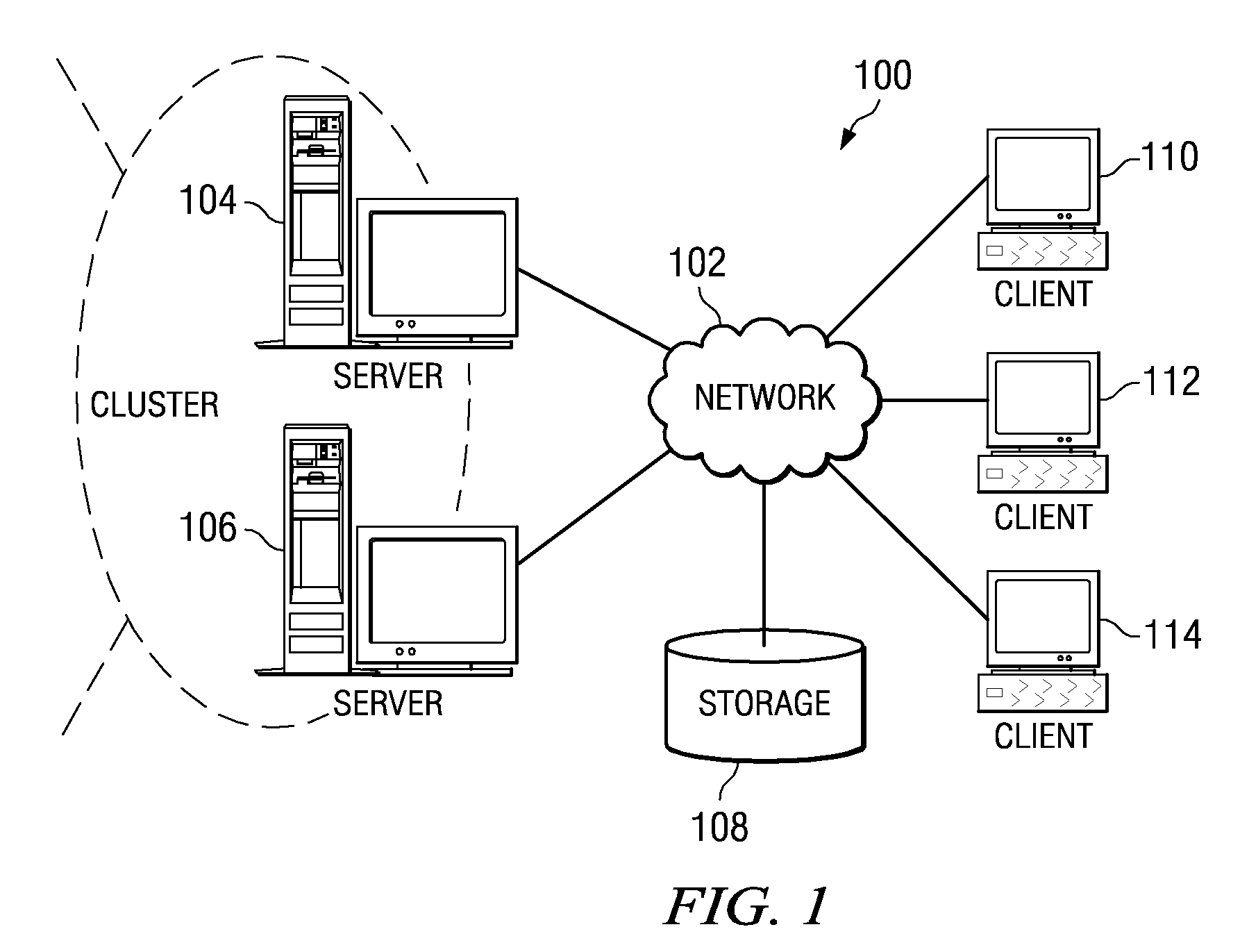

Network access system including a programmable access device having distributed service control

Status: Inactive | Publication: US7046680B1 | Tags: Good extensibility; Increase flexibility; Data switching by path configuration; Multiple digital computer combinations; Programmable logic device; Message Passing Interface

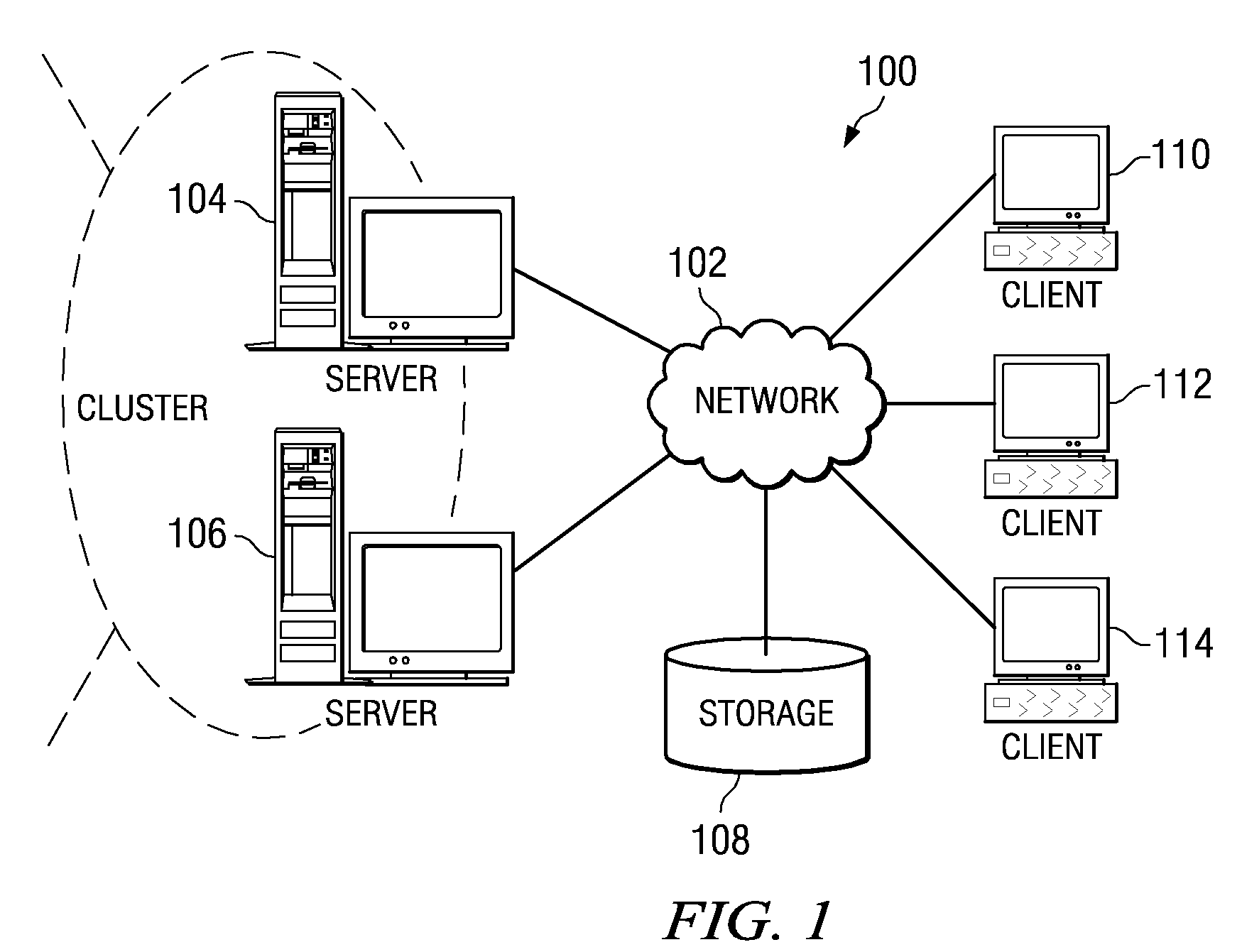

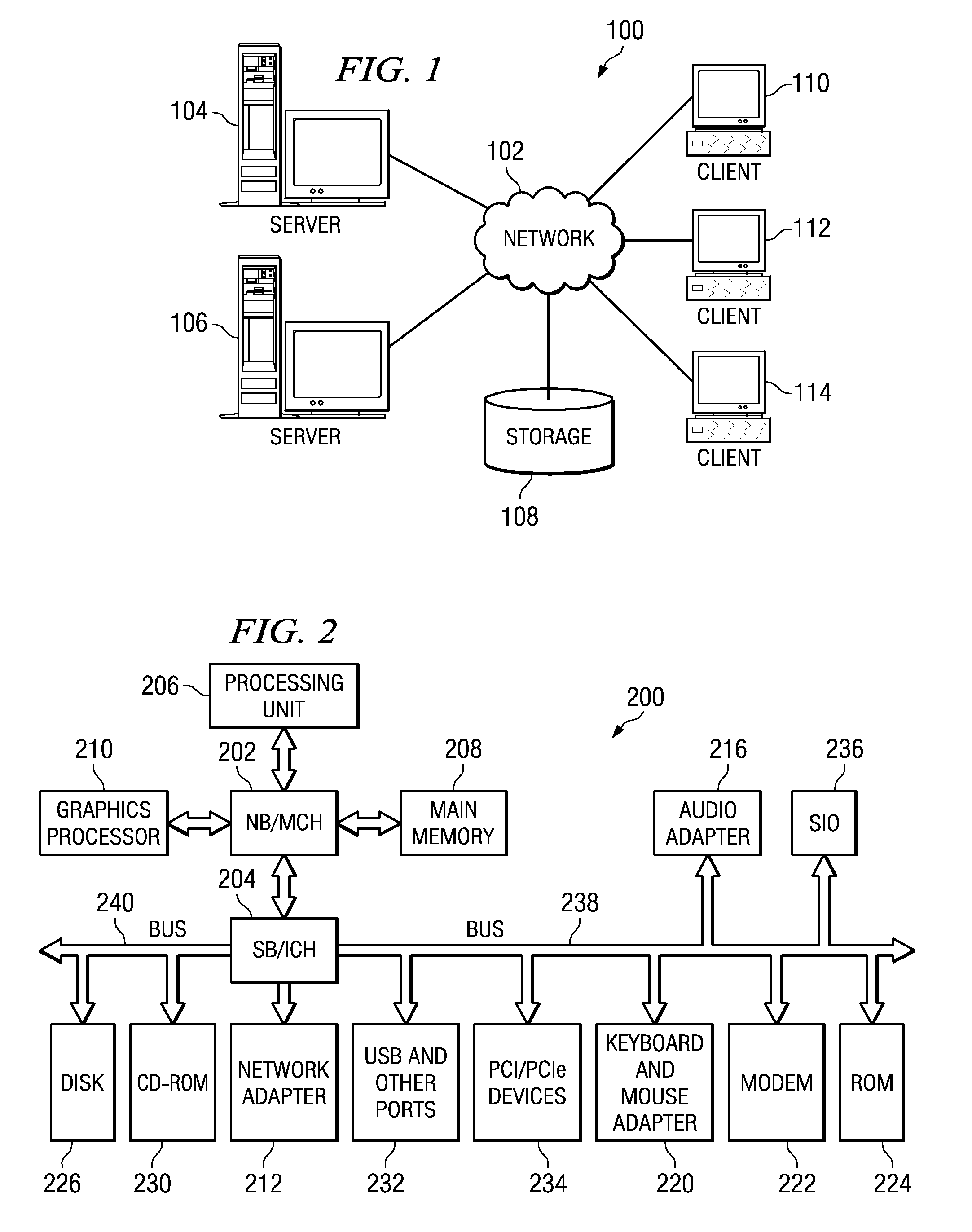

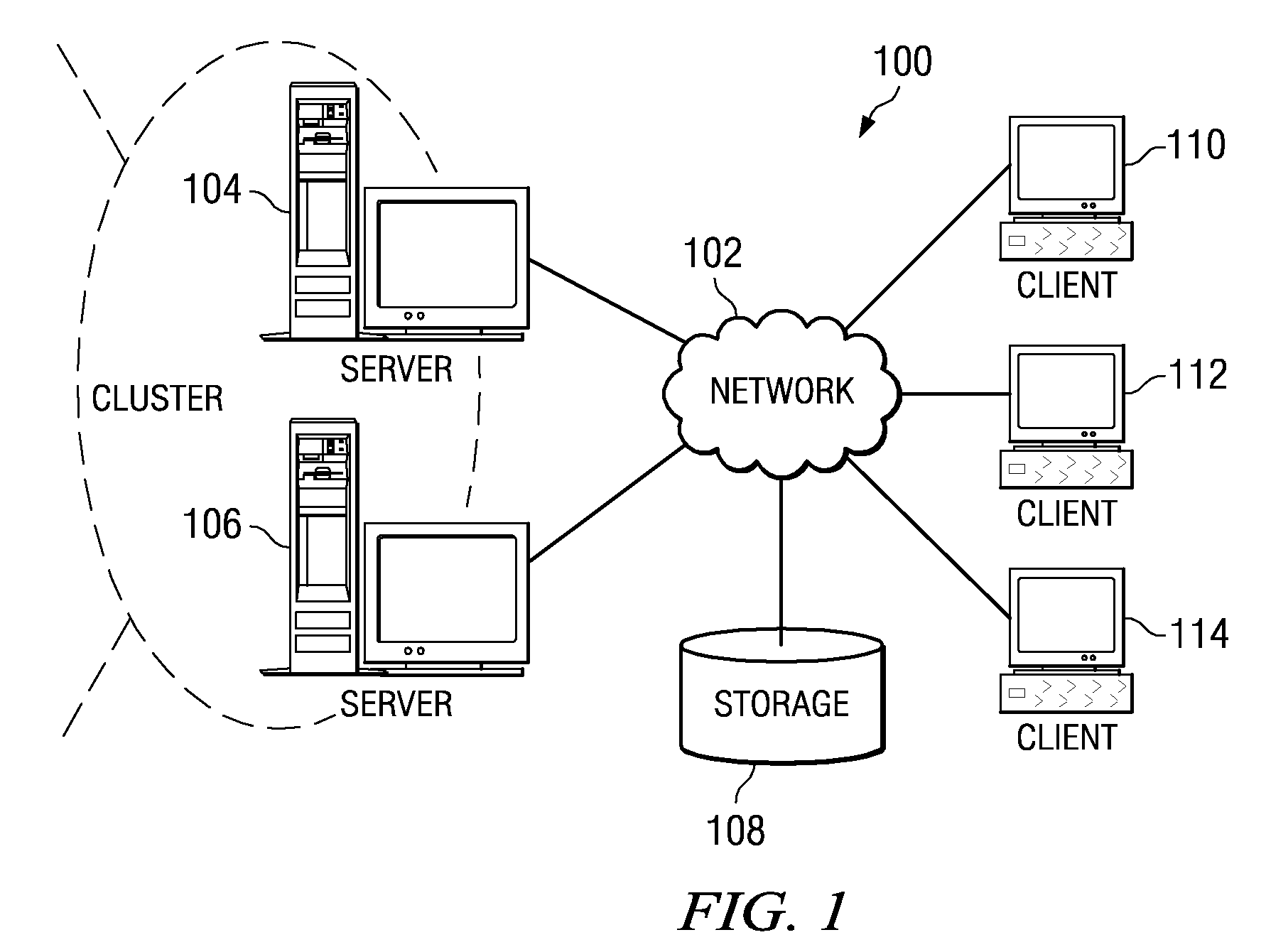

A distributed network access system in accordance with the present invention includes at least an external processor and a programmable access device. The programmable access device has a message interface coupled to the external processor and first and second network interfaces through which packets are communicated with a network. The programmable access device includes a packet header filter and a forwarding table that is utilized to route packets communicated between the first and second network interfaces. In response to receipt of a series of packets, the packet header filter in the programmable access device identifies messages in the series of messages upon which policy-based services are to be implemented and passes identified messages via the message interface to the external processor for processing. In response to receipt of a message, the external processor invokes service control on the message and may also invoke policy control on the message.

Owner:VERIZON PATENT & LICENSING INC
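
The filter-then-forward decision described in this abstract can be illustrated with a minimal Python sketch. It assumes a hypothetical `Packet` header record, filter rules expressed as predicates, a dictionary standing in for the forwarding table, and a list standing in for the message interface to the external processor; none of these names come from the patent, and a real device would make this decision in programmable hardware.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Packet:
    """Hypothetical header fields inspected by the packet header filter."""
    src: str
    dst: str
    protocol: str
    dst_port: int


# A filter rule is a predicate over packet headers (hypothetical representation).
FilterRule = Callable[[Packet], bool]


class ProgrammableAccessDevice:
    def __init__(self, forwarding_table: Dict[str, str], rules: List[FilterRule],
                 default_interface: str = "if1") -> None:
        self.forwarding_table = forwarding_table  # destination -> output interface
        self.rules = rules
        self.default_interface = default_interface
        self.to_external: List[Packet] = []  # stands in for the message interface

    def receive(self, pkt: Packet) -> str:
        # Header filter: packets needing policy-based services are handed to
        # the external processor instead of being forwarded locally.
        if any(rule(pkt) for rule in self.rules):
            self.to_external.append(pkt)
            return "external"
        # All other packets are routed with the forwarding table.
        return self.forwarding_table.get(pkt.dst, self.default_interface)


if __name__ == "__main__":
    device = ProgrammableAccessDevice(
        {"10.0.0.2": "if2"},
        [lambda p: p.protocol == "udp" and p.dst_port == 5060],  # example rule
    )
    print(device.receive(Packet("10.0.0.1", "10.0.0.2", "tcp", 80)))    # if2
    print(device.receive(Packet("10.0.0.1", "10.0.0.2", "udp", 5060)))  # external
```

In this sketch only the filtered packets cross the message interface, which is what keeps the service logic off the fast forwarding path.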

Network access system including a programmable access device having distributed service control

Status: Inactive | Publication: US20050117576A1 | Tags: Good extensibility; Increase flexibility; Data switching by path configuration; Packet communication; Service control

A distributed network access system in accordance with the present invention includes at least an external processor and a programmable access device. The programmable access device has a message interface coupled to the external processor and first and second network interfaces through which packets are communicated with a network. The programmable access device includes a packet header filter and a forwarding table that is utilized to route packets communicated between the first and second network interfaces. In response to receipt of a series of packets, the packet header filter in the programmable access device identifies messages in the series of messages upon which policy-based services are to be implemented and passes identified messages via the message interface to the external processor for processing. In response to receipt of a message, the external processor invokes service control on the message and may also invoke policy control on the message.

Owner:VERIZON PATENT & LICENSING INC

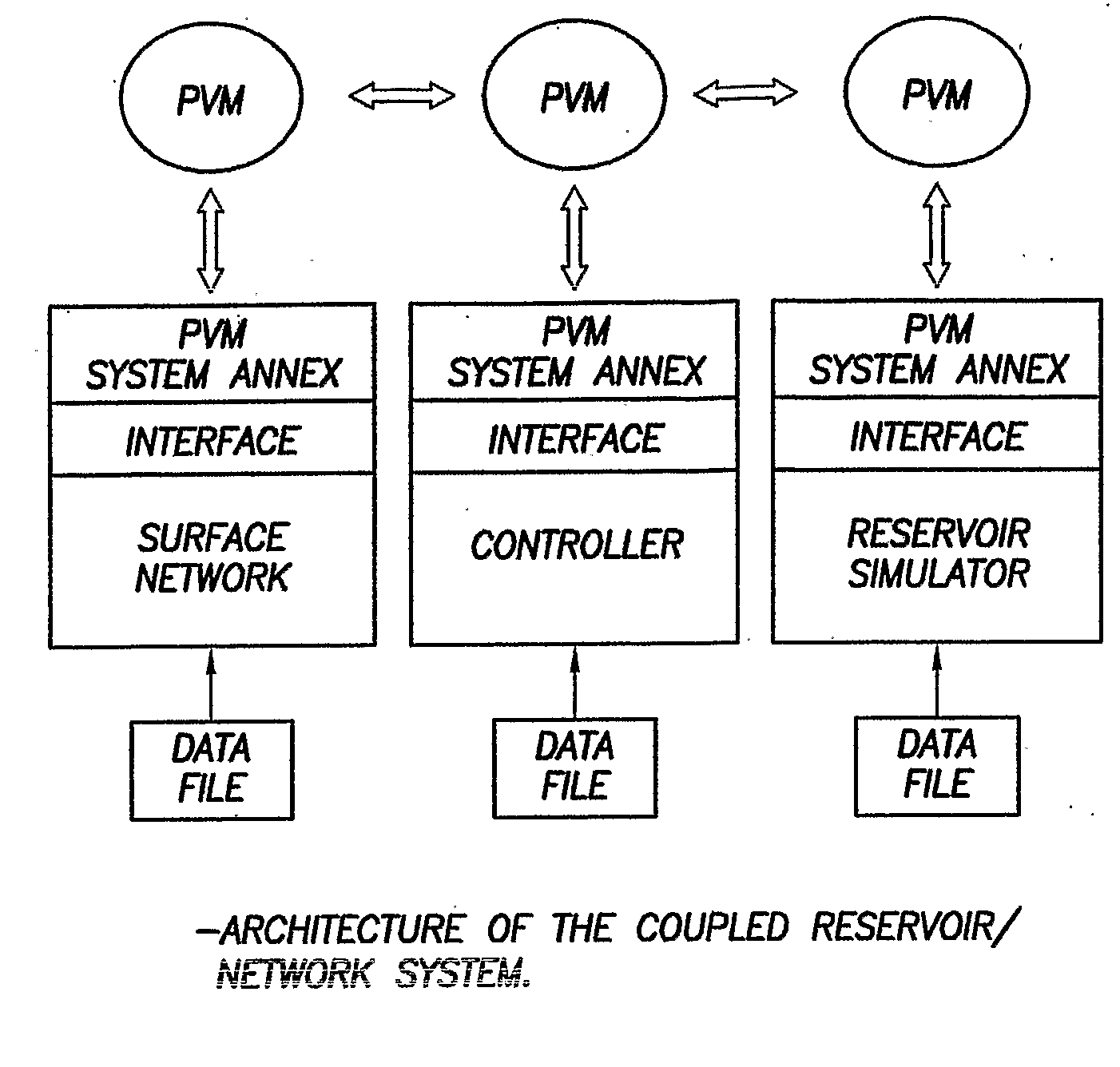

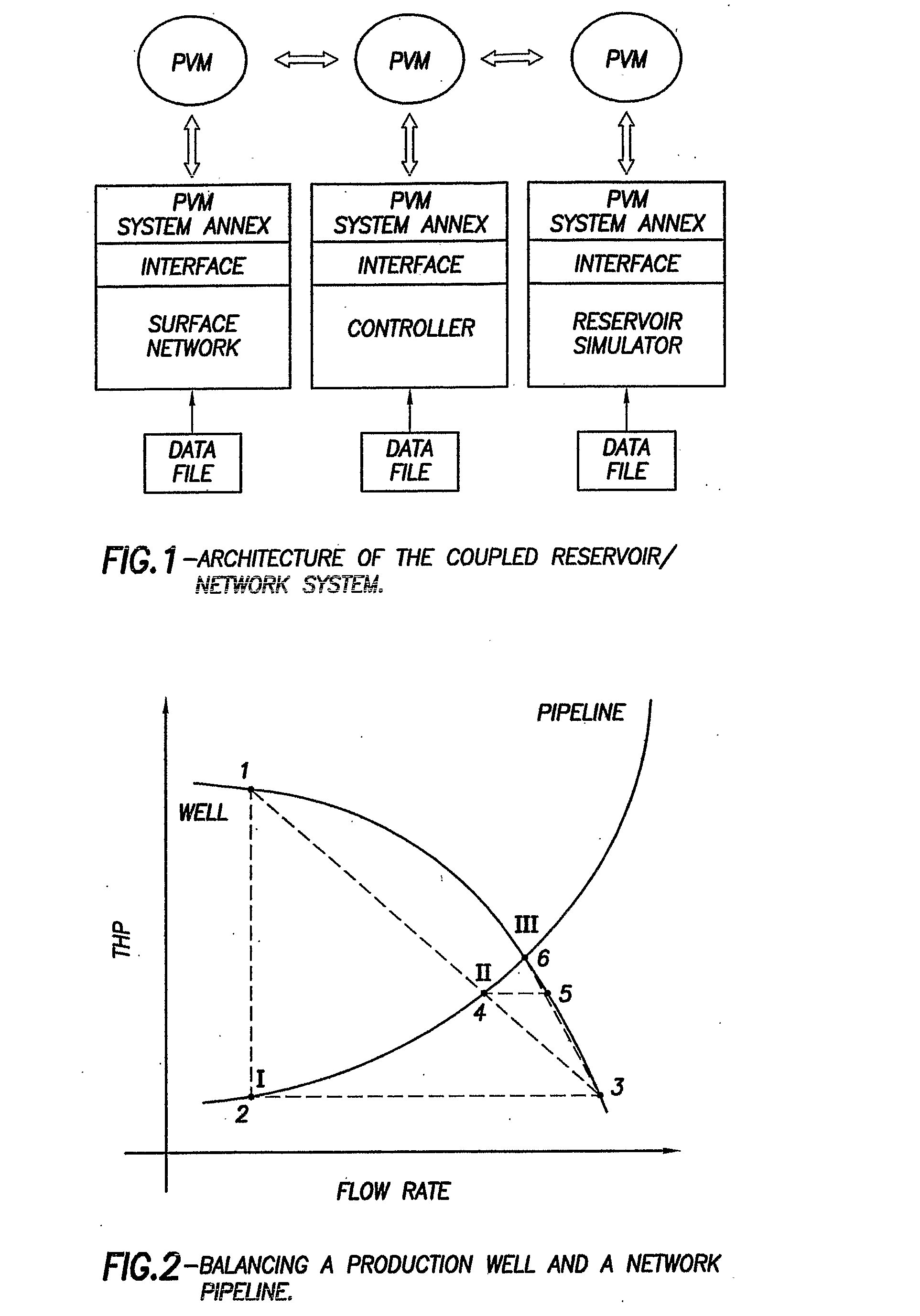

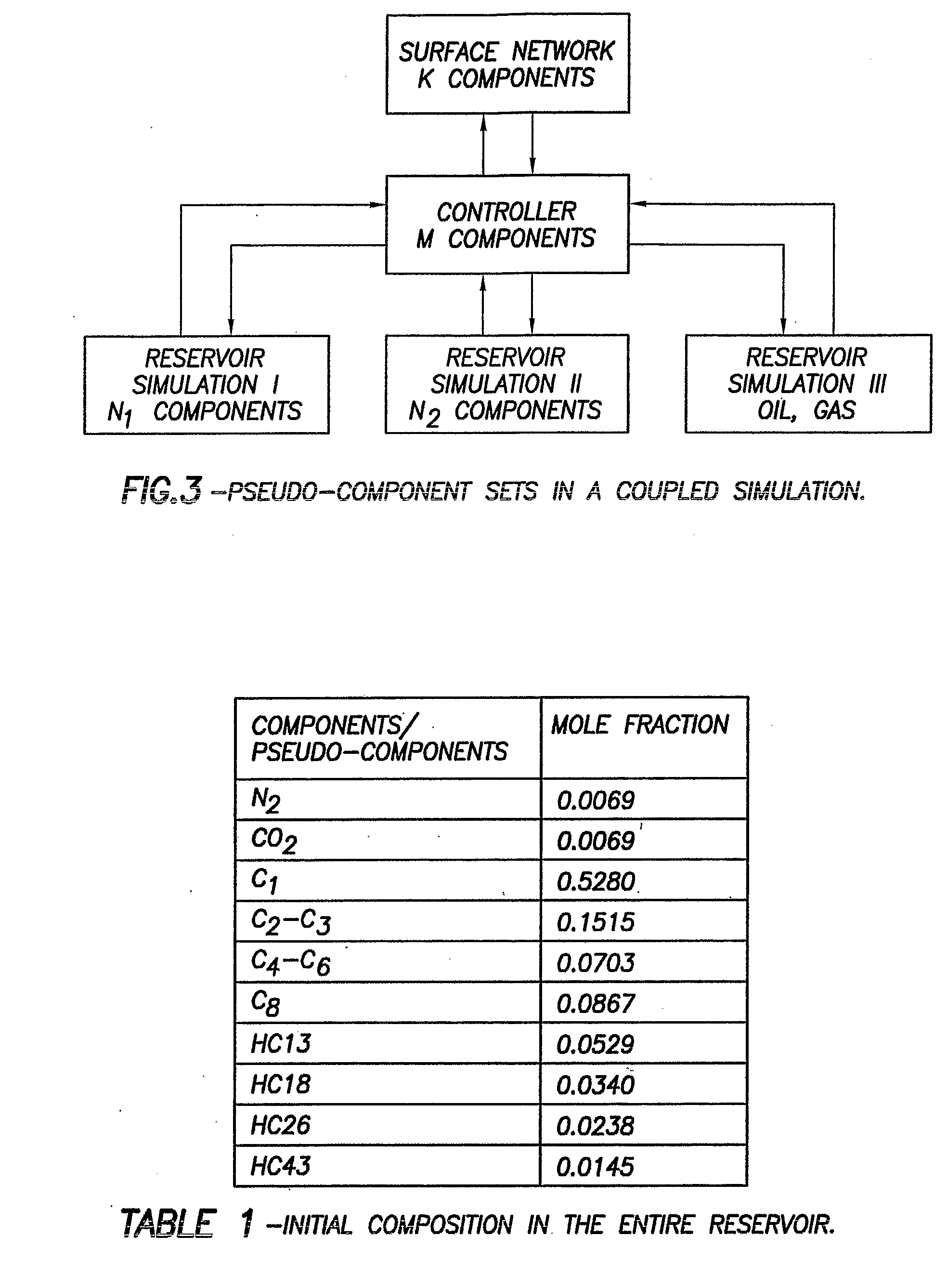

Method and system for integrated reservoir and surface facility networks simulations

Status: Inactive | Publication: US20070112547A1 | Tags: Design optimisation/simulation; Constraint-based CAD; Multi platform; Network model

Integrated surface-subsurface modeling has been shown to have a critical impact on field development and optimization. Integrated models are often necessary to properly analyze the pressure interaction between a reservoir and a constrained surface facility network, or to predict the behavior of several fields, which may have different fluid compositions, sharing a common surface facility. The latter case is becoming increasingly significant in recent deepwater field developments. These applications require an integrated solution with the following capabilities:

* To balance a surface network model with a reservoir simulation model in a robust and efficient manner.
* To couple multiple reservoir models and production and injection networks, synchronizing their advancement through time.
* To allow the reservoir and surface network models to use their own independent fluid descriptions (black-oil or compositional descriptions with differing sets of pseudo-components).
* To apply global production and injection constraints to the coupled system (including the transfer of re-injection fluids between reservoirs).

In this paper we describe a general-purpose, multi-platform reservoir and network coupling controller that has all of the above features. The controller communicates with a selection of reservoir simulators and surface network simulators via an open message-passing interface. It manages the balancing of the reservoirs and surface networks and synchronizes their advancement through time. The controller also applies the global production and injection constraints, and converts the hydrocarbon fluid streams between the different sets of pseudo-components used in the simulation models. The controller's coupling and synchronization algorithms are described, and example applications are provided. The flexibility of the controller's open interface makes it possible to plug in further modules (to perform optimization, for example) and additional simulators.

Owner:SCHLUMBERGER TECH CORP
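
As a rough illustration of what "balancing" a reservoir model against a surface network model means, here is a minimal Python sketch for a single well at a single time step. The linear inflow model, the quadratic network pressure-drop model, the parameter names and the bisection solver are all assumptions made for this example; the abstract does not disclose the controller's actual coupling algorithm.

```python
def balance_rate(p_res: float, pi: float, p_sep: float, k: float,
                 tol: float = 1e-9) -> float:
    """Find the rate q at which reservoir deliverability meets network demand.

    Assumed models (illustrative only, with pi > 0 and k >= 0):
      reservoir: bottomhole pressure available at rate q = p_res - q / pi
      network:   bottomhole pressure required at rate q  = p_sep + k * q**2
    """
    def mismatch(q: float) -> float:
        return (p_res - q / pi) - (p_sep + k * q * q)

    lo, hi = 0.0, pi * p_res  # at hi the reservoir can supply zero pressure
    if mismatch(lo) <= 0.0:
        return 0.0  # network back-pressure exceeds reservoir pressure: no flow
    # mismatch() decreases monotonically in q, so bisection converges.
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mismatch(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    # Hypothetical inputs: p_res=200, PI=1, separator at 100, friction k=0.01
    print(round(balance_rate(200.0, 1.0, 100.0, 0.01), 3))  # 61.803
```

In a time-stepping coupler, a solve like this would be repeated at each synchronization point, with the reservoir pressure updated by the reservoir simulator in between.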

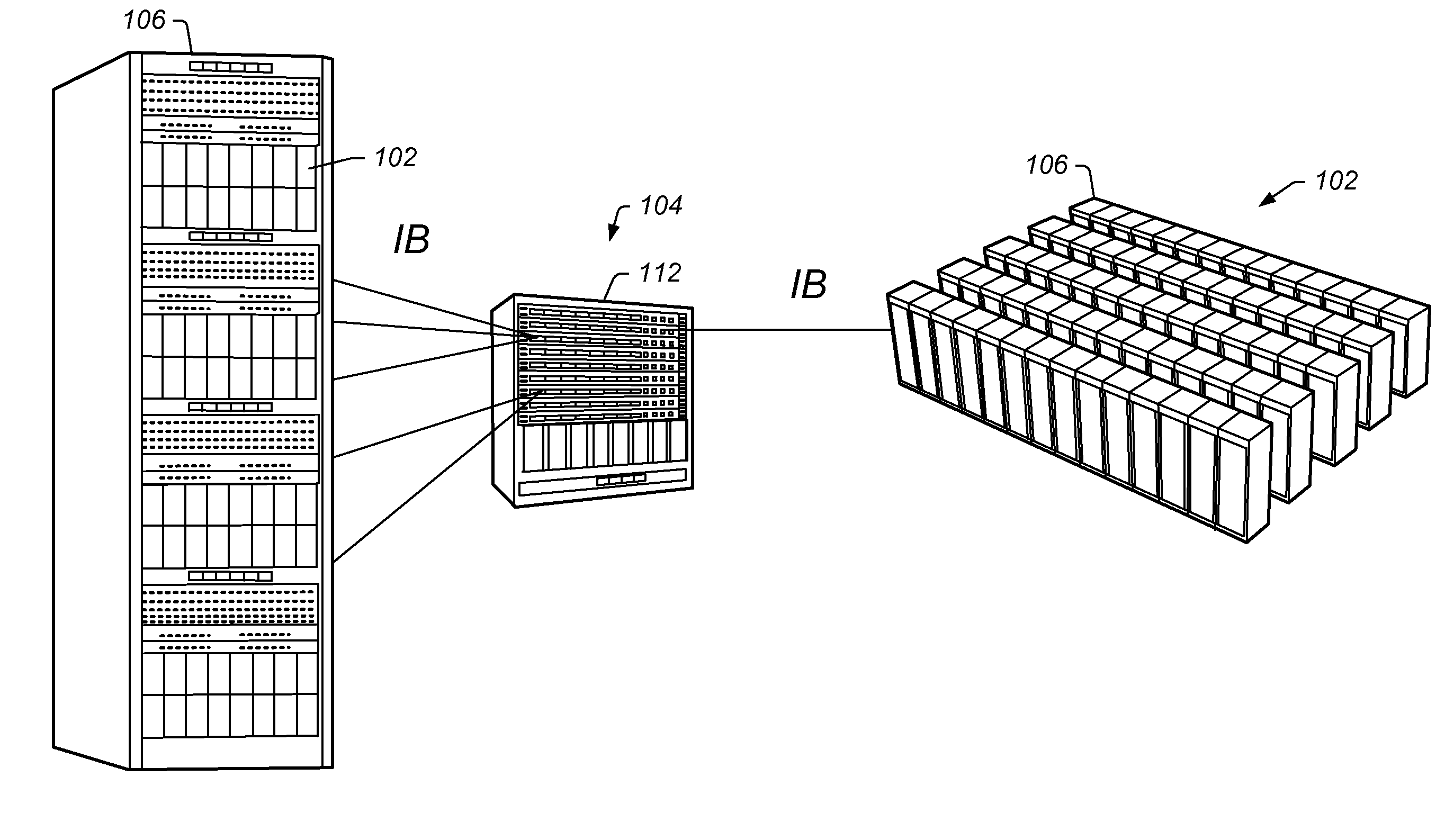

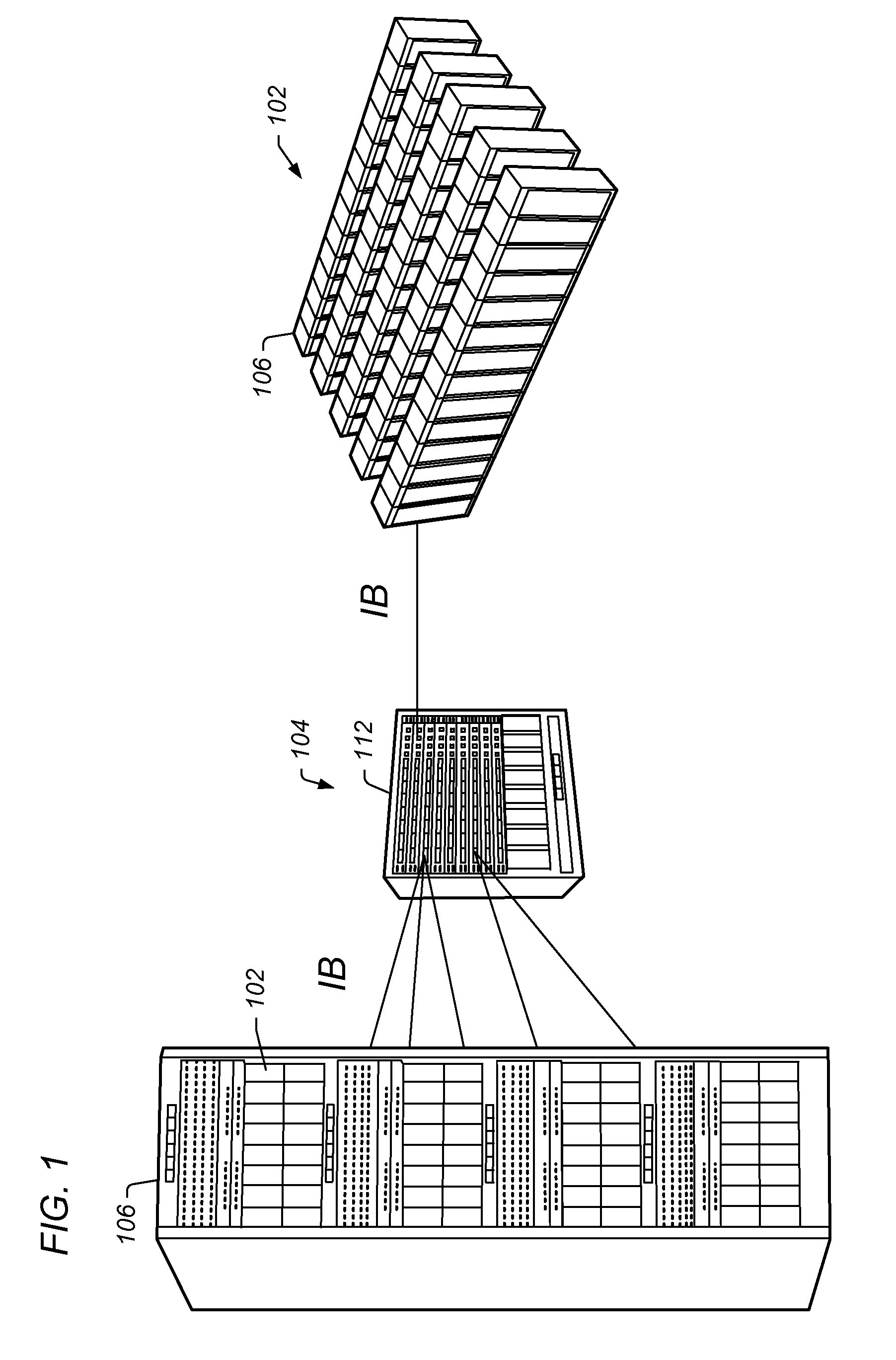

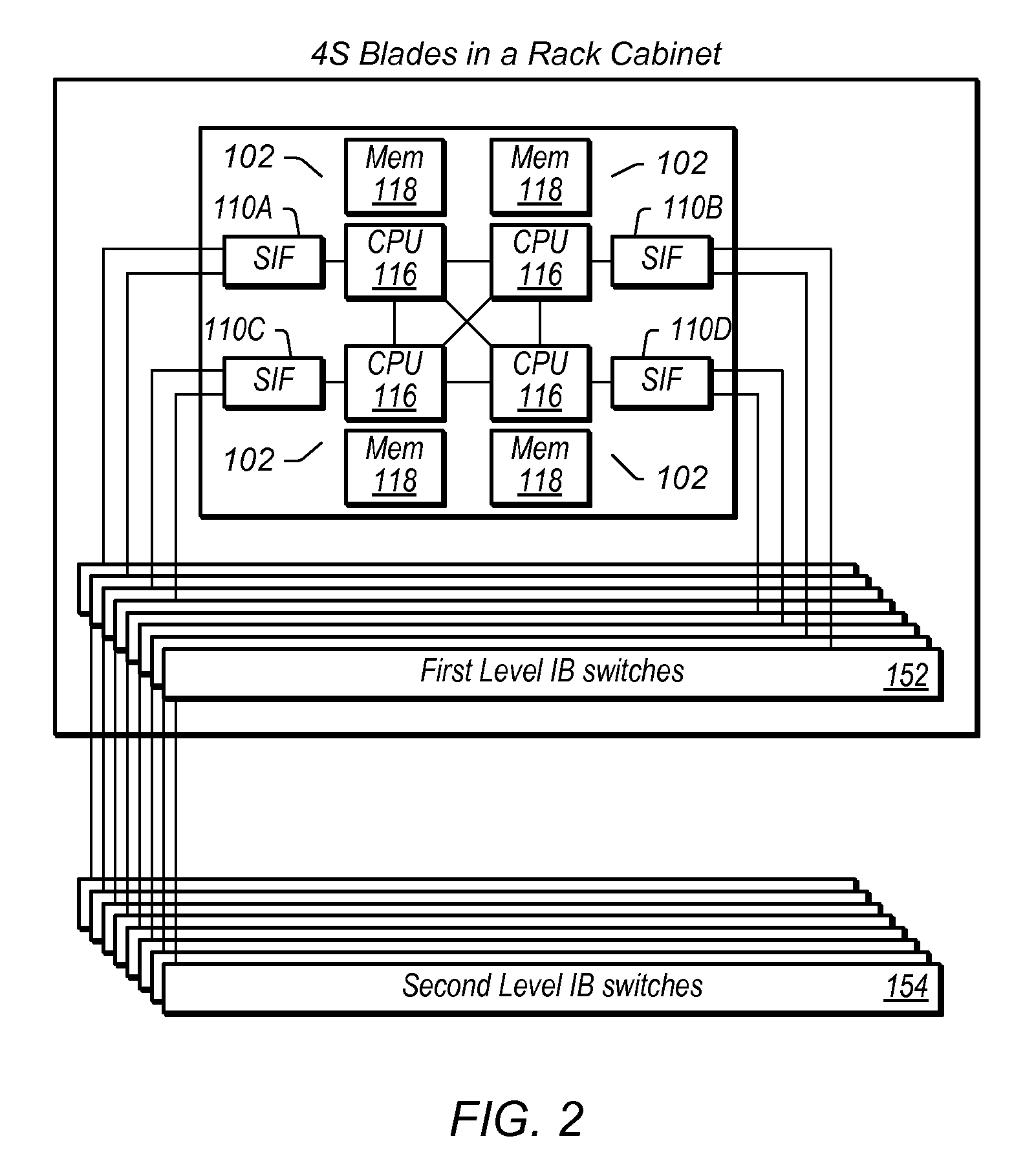

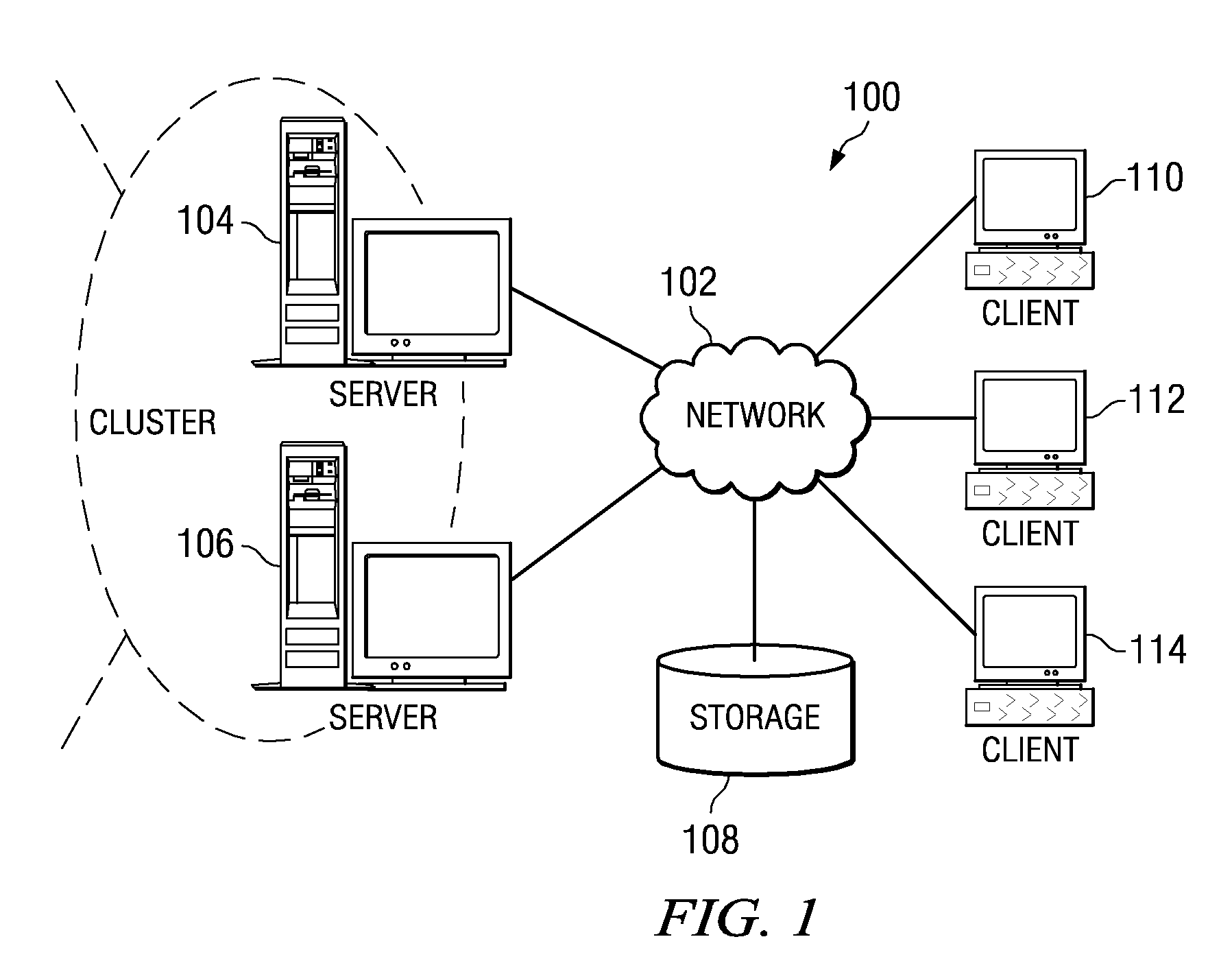

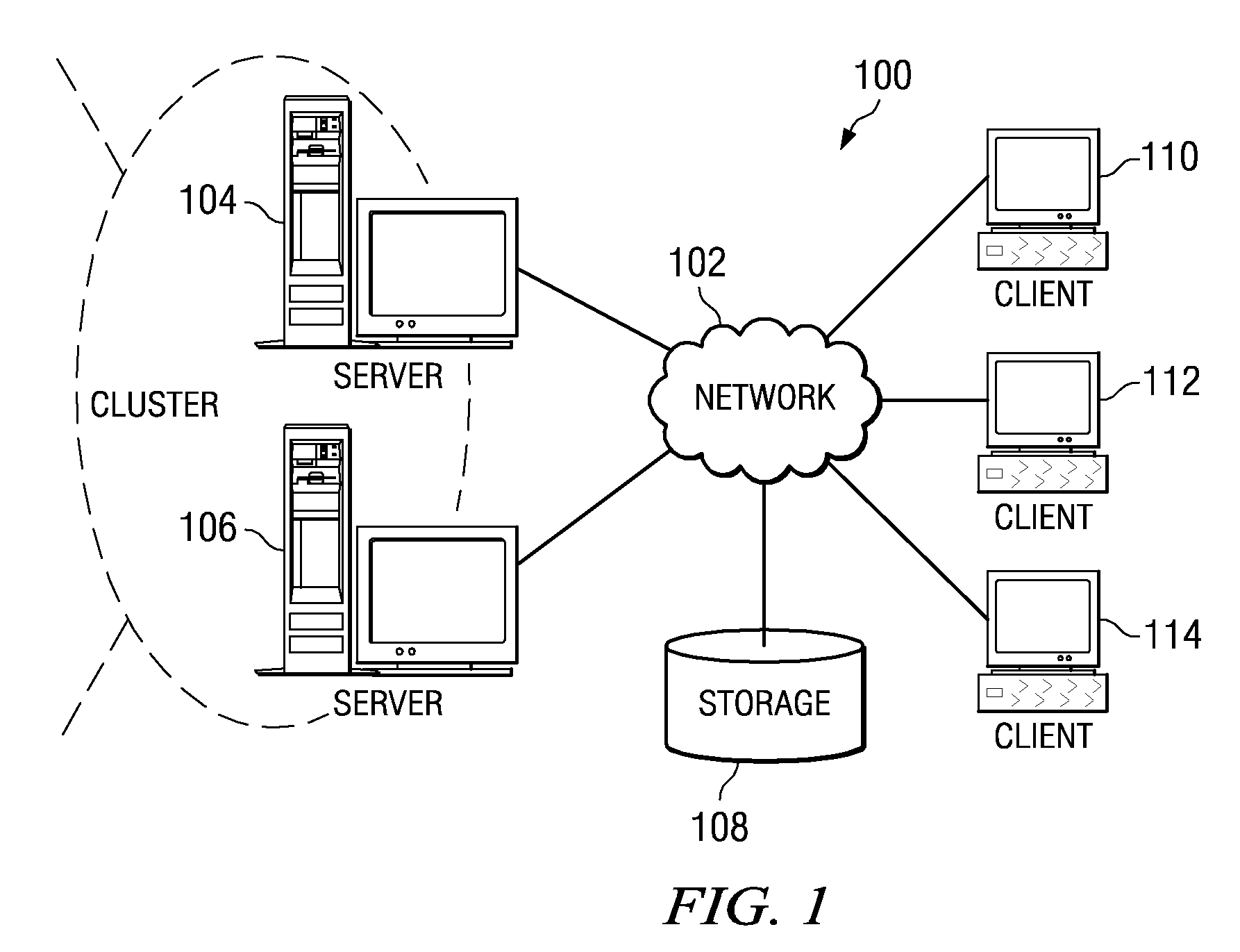

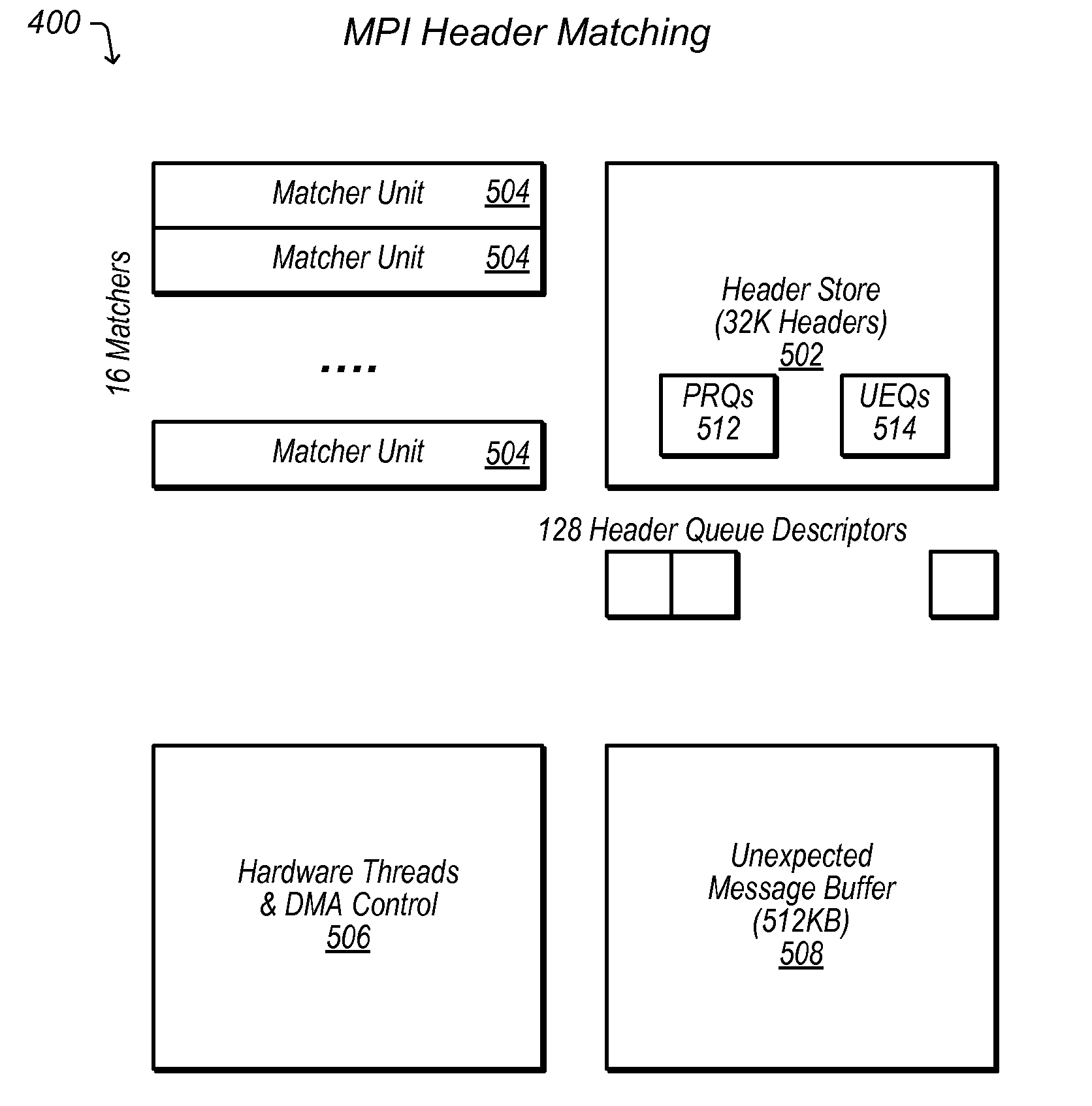

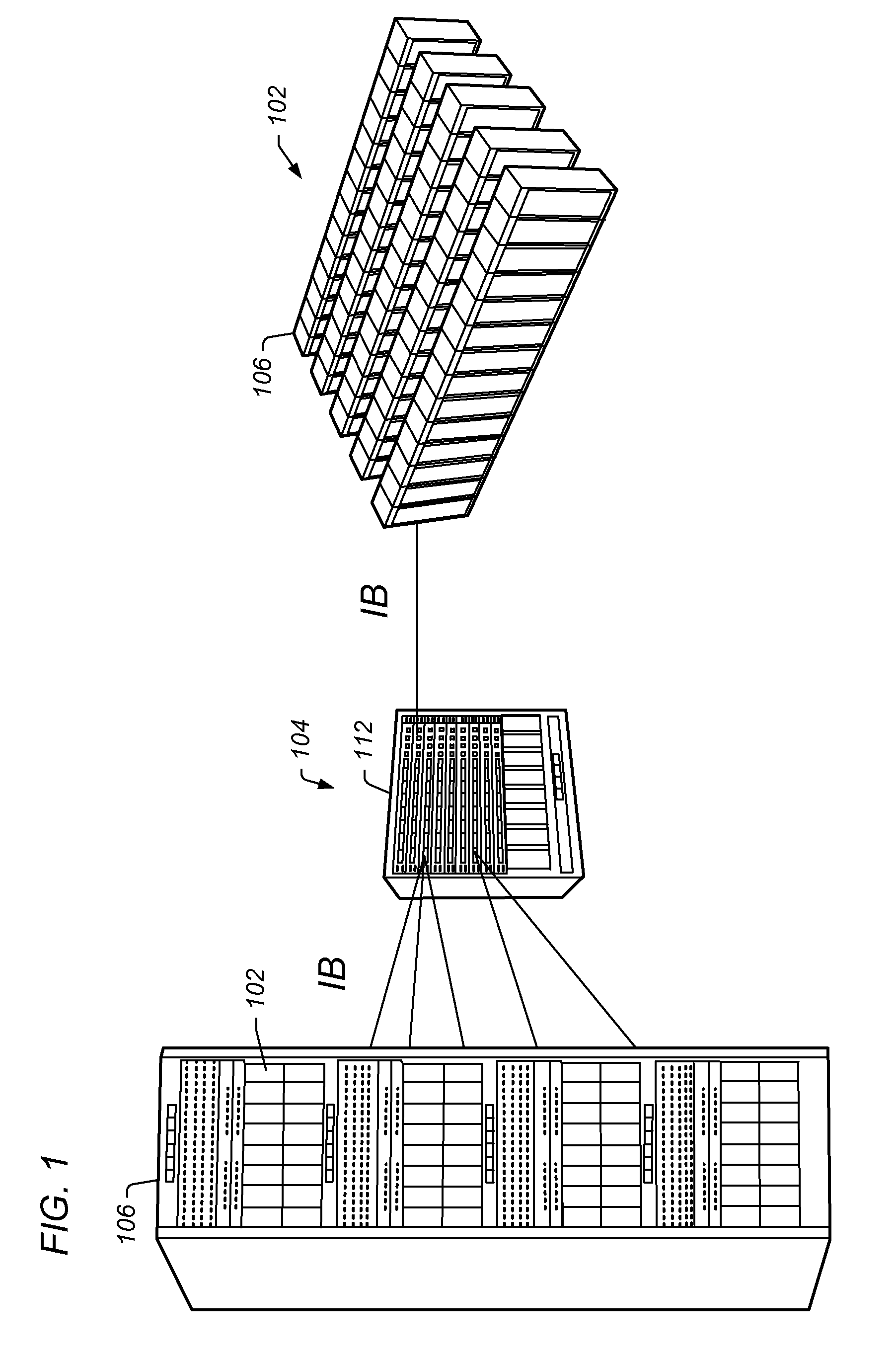

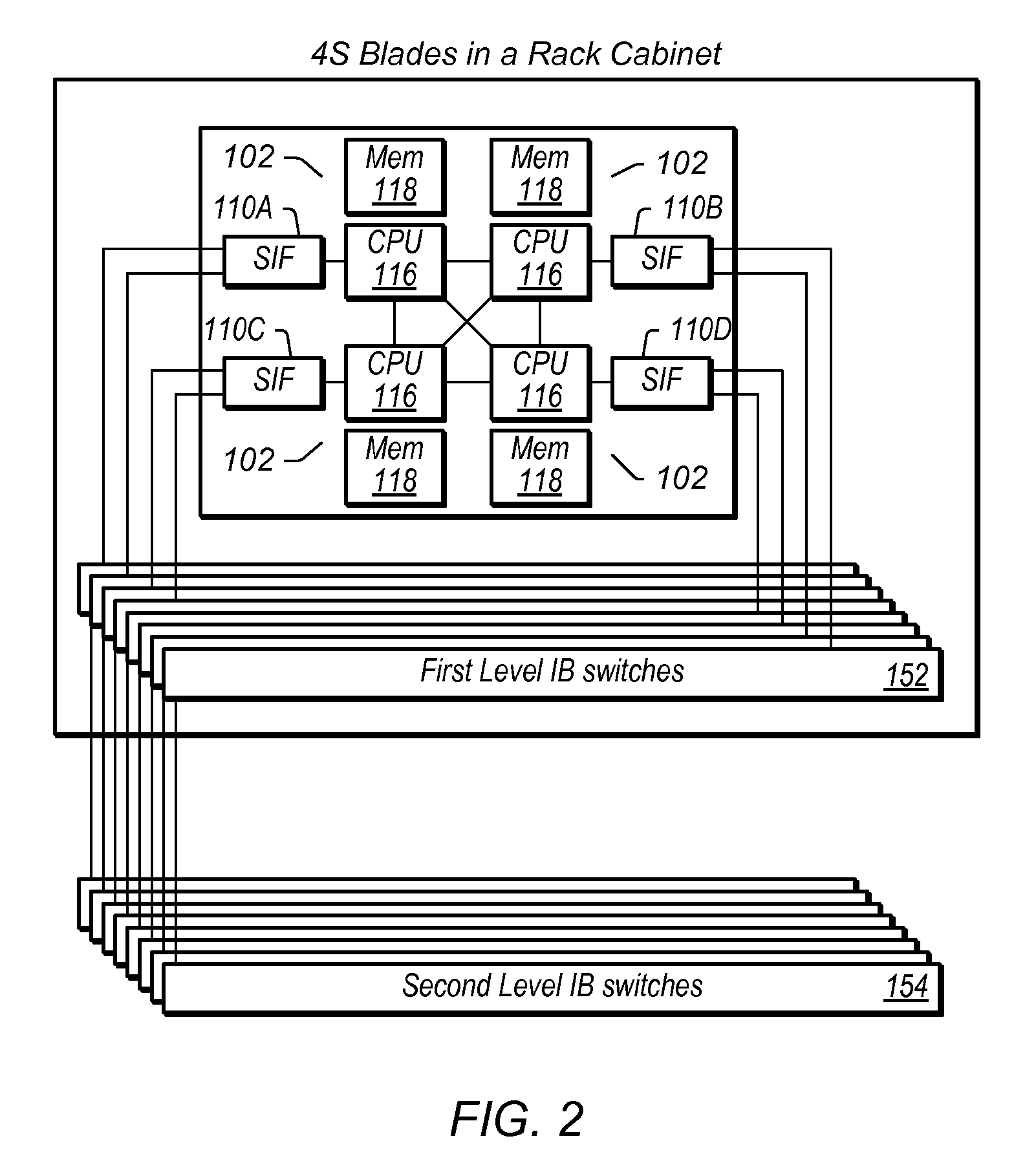

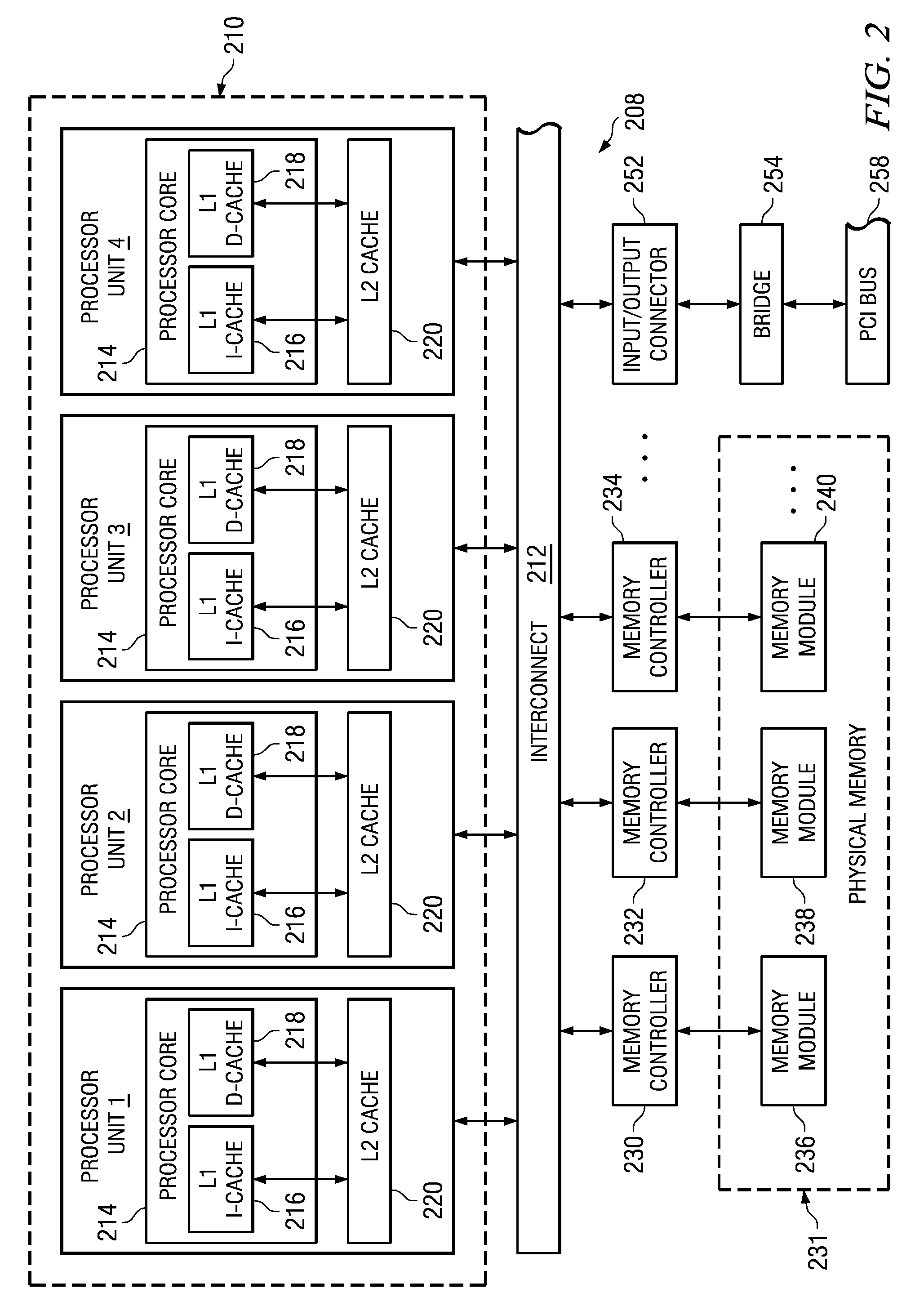

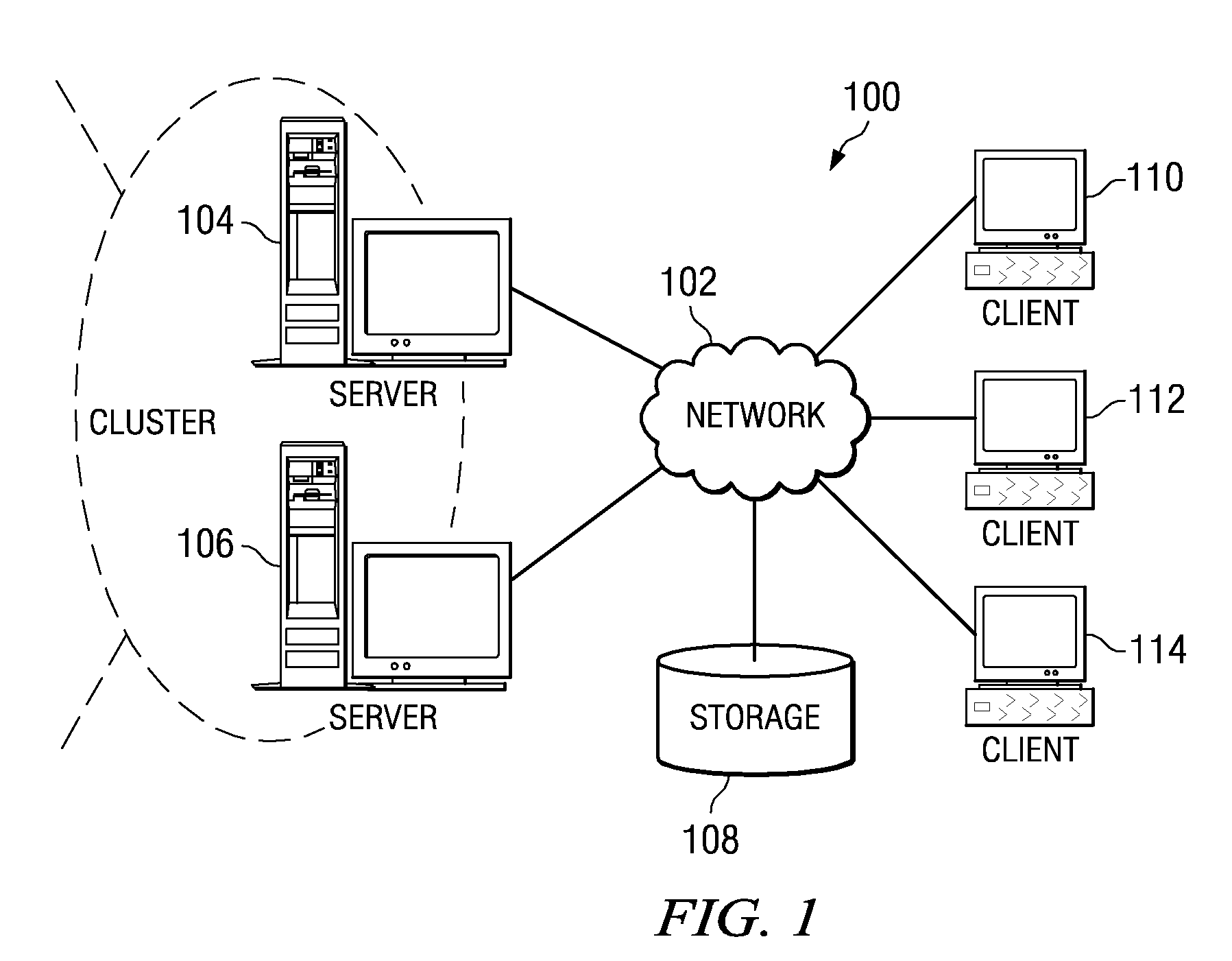

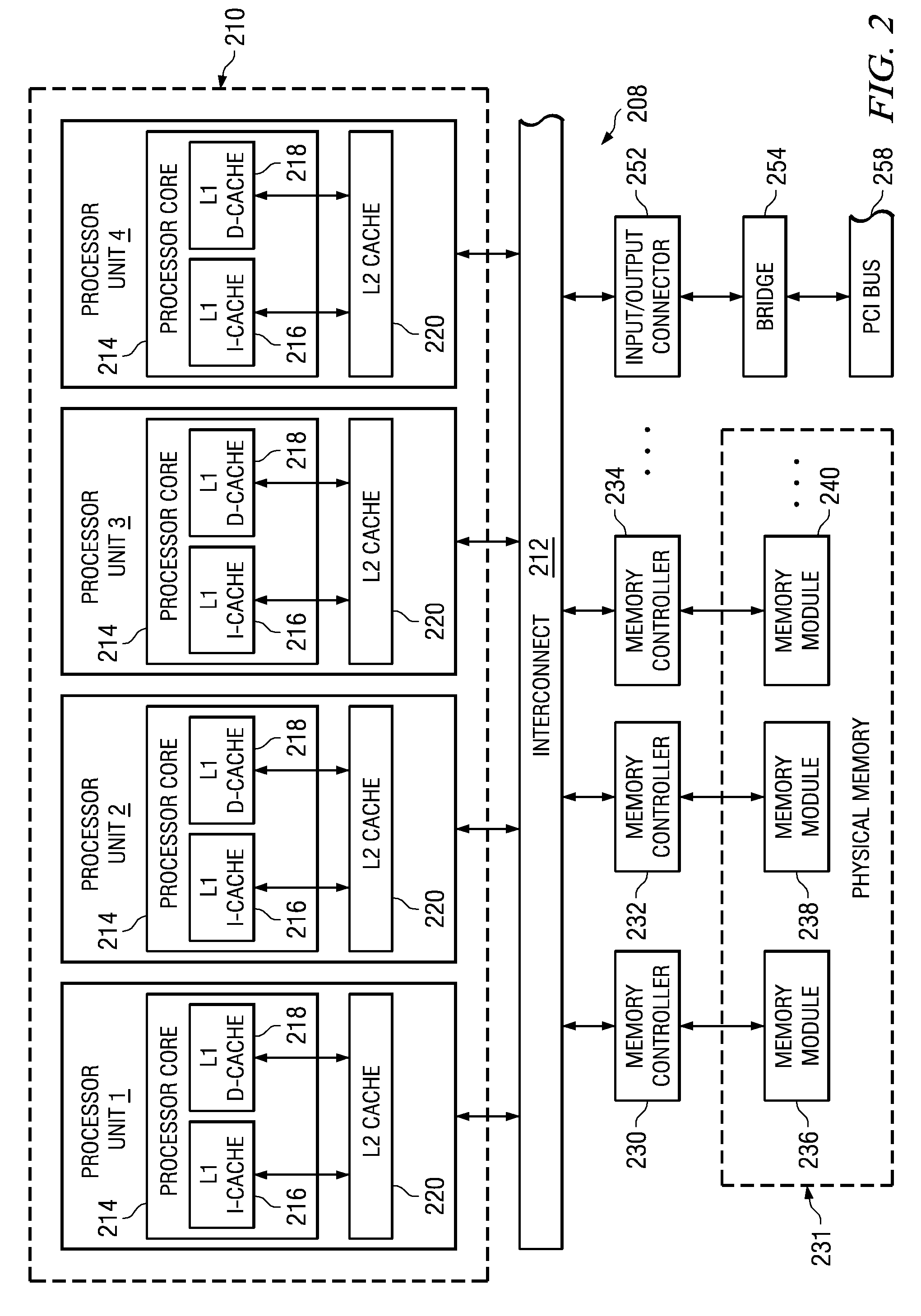

Scalable Interface for Connecting Multiple Computer Systems Which Performs Parallel MPI Header Matching

Status: Active | Publication: US20100232448A1 | Tags: Improve clustering performance; Improve performance; Digital computer details; Data switching by path configuration; Computer cluster; Message Passing Interface

An interface device for a compute node in a computer cluster which performs Message Passing Interface (MPI) header matching using parallel matching units. The interface device comprises a memory that stores posted receive queues and unexpected queues. The posted receive queues store receive requests from a process executing on the compute node. The unexpected queues store headers of send requests (e.g., from other compute nodes) that do not have a matching receive request in the posted receive queues. The interface device also comprises a plurality of hardware pipelined matcher units. The matcher units perform header matching to determine if a header in the send request matches any headers in any of the plurality of posted receive queues. Matcher units perform the header matching in parallel. In other words, the plural matching units are configured to search the memory concurrently to perform header matching.

Owner:ORACLE INT CORP
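
The matching rule the matcher units implement, and the reason a parallel search must still respect posting order, can be sketched in Python. This is a software model under stated assumptions: `ANY_SOURCE`/`ANY_TAG` are local stand-ins for MPI's wildcards, threads stand in for hardware matcher units, and the segment-per-unit split is one plausible partitioning, not necessarily the one the patent uses.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import List, Optional

ANY_SOURCE = -1  # stand-in for MPI_ANY_SOURCE
ANY_TAG = -1     # stand-in for MPI_ANY_TAG


@dataclass(frozen=True)
class Header:
    source: int
    tag: int
    comm: int  # communicator id; MPI has no wildcard for this field


def matches(recv: Header, msg: Header) -> bool:
    """MPI matching rule: same communicator, source and tag equal or wildcarded."""
    return (recv.comm == msg.comm
            and recv.source in (ANY_SOURCE, msg.source)
            and recv.tag in (ANY_TAG, msg.tag))


def scan_segment(posted: List[Header], start: int, end: int, msg: Header) -> Optional[int]:
    """One 'matcher unit': return the first matching index in its segment."""
    for i in range(start, end):
        if matches(posted[i], msg):
            return i
    return None


def parallel_match(posted: List[Header], msg: Header, units: int = 4) -> Optional[int]:
    """Search the posted-receive queue with several units at once."""
    if not posted:
        return None
    size = -(-len(posted) // units)  # ceiling division
    segments = [(s, min(s + size, len(posted))) for s in range(0, len(posted), size)]
    with ThreadPoolExecutor(max_workers=units) as pool:
        hits = list(pool.map(lambda seg: scan_segment(posted, seg[0], seg[1], msg), segments))
    found = [h for h in hits if h is not None]
    # MPI ordering: the earliest posted matching receive must win.
    return min(found) if found else None


def deliver(posted: List[Header], unexpected: List[Header], msg: Header) -> Optional[Header]:
    """Match an incoming send header, or park it on the unexpected queue."""
    idx = parallel_match(posted, msg)
    if idx is None:
        unexpected.append(msg)
        return None
    return posted.pop(idx)
```

Taking the minimum over per-segment hits is what keeps the parallel search equivalent to a sequential front-to-back scan. When a new receive is posted, an implementation would likewise search the unexpected queue first (not shown).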

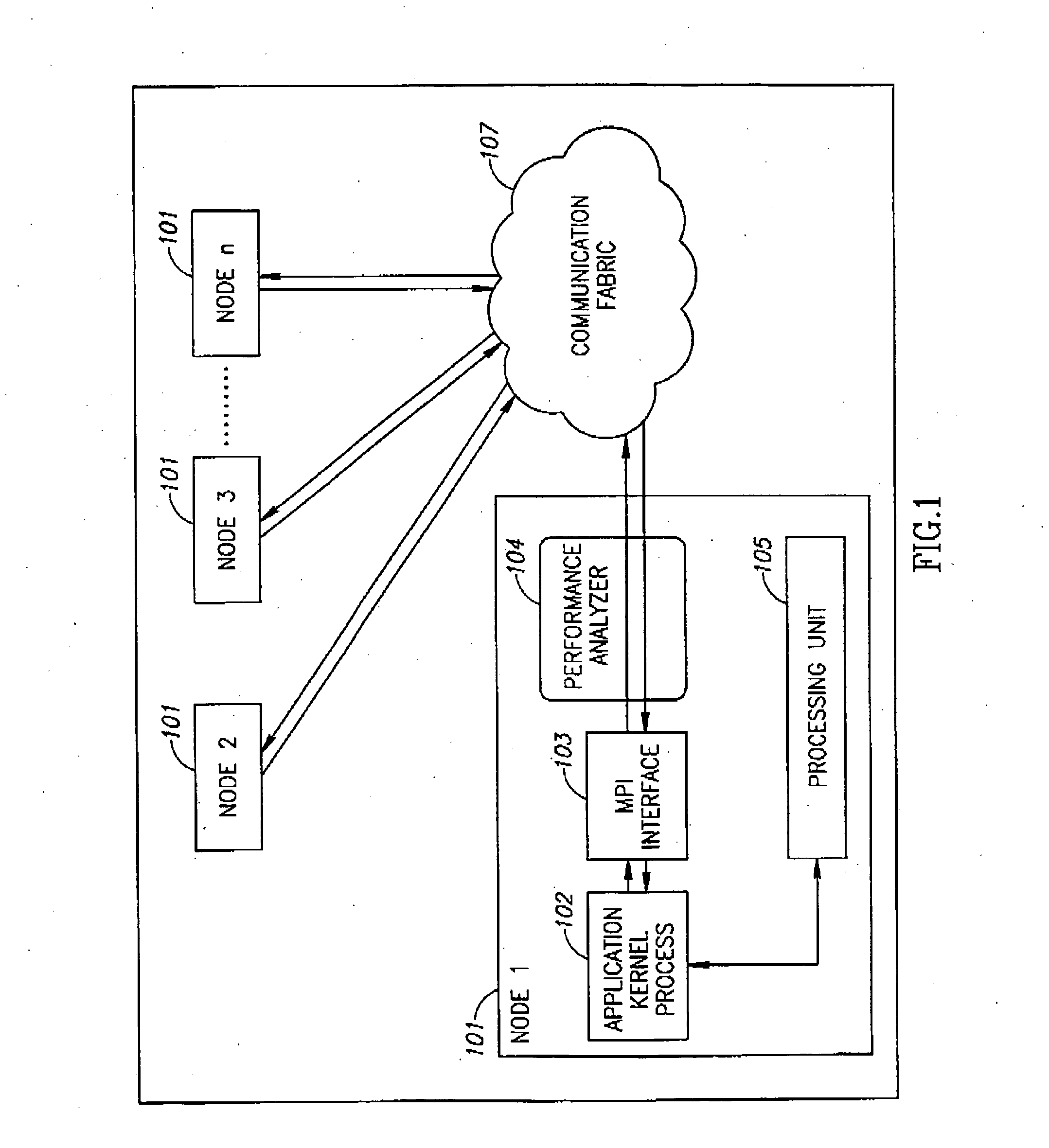

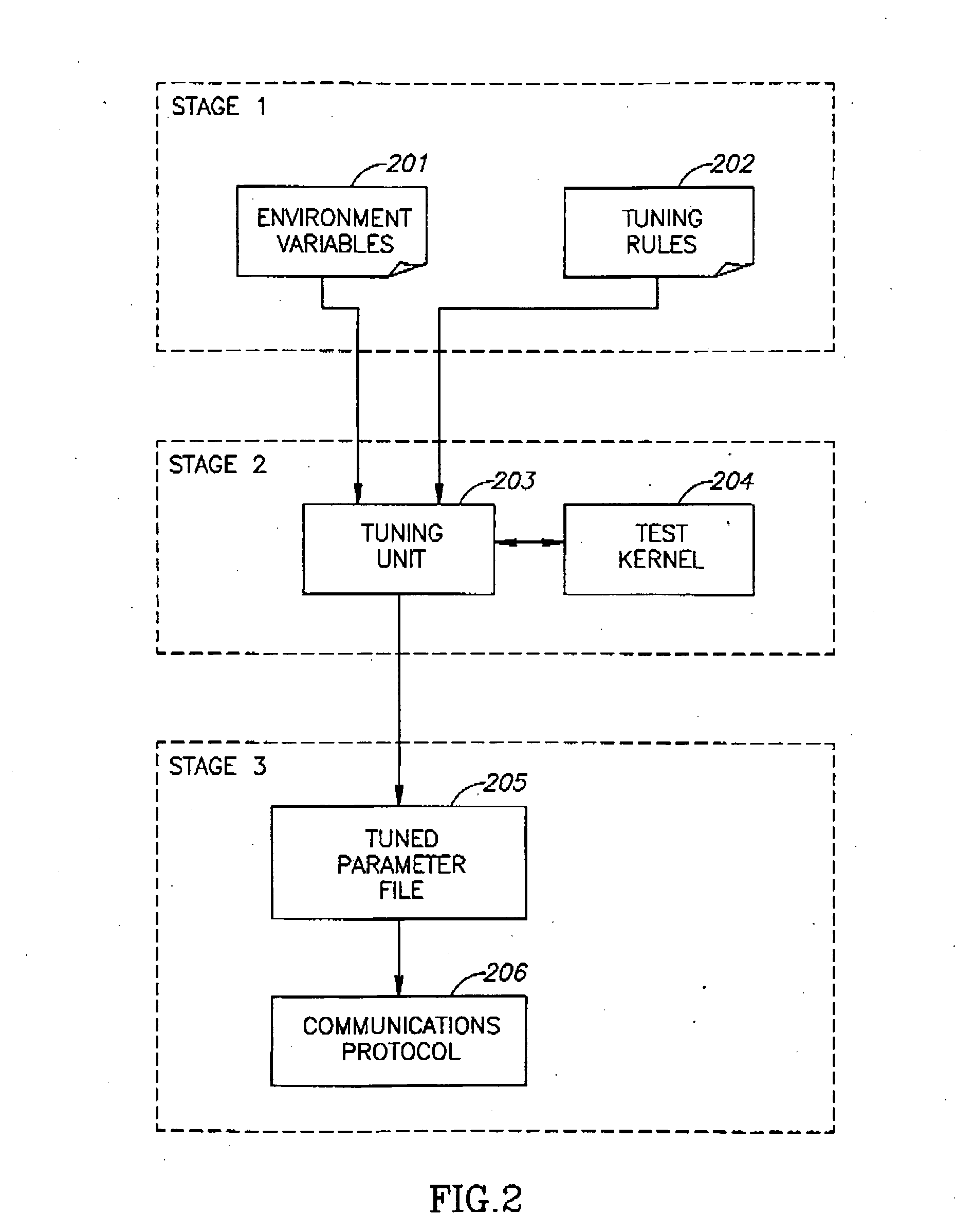

Automatic tuning of communication protocol performance

Status: Inactive | Publication: US20090129277A1 | Tags: Error prevention; Frequency-division multiplex details; Message Passing Interface; Application software

A method or device may optimize applications on a parallel computing system that uses protocols such as Message Passing Interface (MPI). Environment-variable data, together with a test kernel of an application, may be used to optimize communication protocol performance according to a set of predefined tuning rules. The tuning rules may specify the output parameters to be optimized, and may include a ranking or hierarchy of those output parameters. Optimization may be achieved through a tuning unit, which may execute the test kernel on the parallel computing system and monitor the output parameters for a series of input parameters. The input parameters may be varied over a range of values and combinations. Input parameters corresponding to optimized output parameters may be stored for future use. This information may be used to adjust the application's communication protocol performance “on the fly” by changing the input parameters for a given usage scenario.

Owner:INTEL CORP
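
A minimal Python sketch of the tuning loop: run a test kernel for each combination of input parameters, rank the runs by a priority-ordered list of output parameters, and store the winner for a usage scenario. The parameter names `eager_limit` and `num_buffers`, the exhaustive sweep, and the synthetic `fake_kernel` performance model are all hypothetical; a real tuner would time an actual MPI test kernel and set the corresponding library-specific environment variables.

```python
import itertools
from typing import Callable, Dict, List, Sequence, Tuple

Outputs = Dict[str, float]


def tune(test_kernel: Callable[..., Outputs],
         param_space: Dict[str, Sequence[int]],
         ranking: List[Tuple[str, str]]) -> Dict[str, int]:
    """Exhaustively sweep input parameters and return the best combination.

    ranking lists (output_name, "min" | "max") from highest to lowest priority,
    so ties on the first output are broken by the second, and so on.
    """
    def score(out: Outputs) -> Tuple[float, ...]:
        return tuple(out[name] if goal == "min" else -out[name] for name, goal in ranking)

    names = list(param_space)
    best_params, best_score = None, None
    for values in itertools.product(*(param_space[n] for n in names)):
        params = dict(zip(names, values))
        s = score(test_kernel(**params))
        if best_score is None or s < best_score:
            best_params, best_score = params, s
    return best_params


def fake_kernel(eager_limit: int, num_buffers: int) -> Outputs:
    """Made-up performance model standing in for timing a real test kernel."""
    latency_us = 1.0 + abs(eager_limit - 4096) / 1024
    bandwidth = 100.0 * num_buffers / (num_buffers + 1)
    return {"latency_us": latency_us, "bandwidth": bandwidth}


if __name__ == "__main__":
    tuned: Dict[str, Dict[str, int]] = {}  # stored results, keyed by usage scenario
    tuned["small-messages"] = tune(
        fake_kernel,
        {"eager_limit": [1024, 4096, 16384], "num_buffers": [1, 4, 8]},
        [("latency_us", "min"), ("bandwidth", "max")],
    )
    print(tuned["small-messages"])  # {'eager_limit': 4096, 'num_buffers': 8}
```

Exhaustive search is the simplest reading of "varied over a range of values and combinations"; for larger spaces a tuner would more likely prune or sample.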

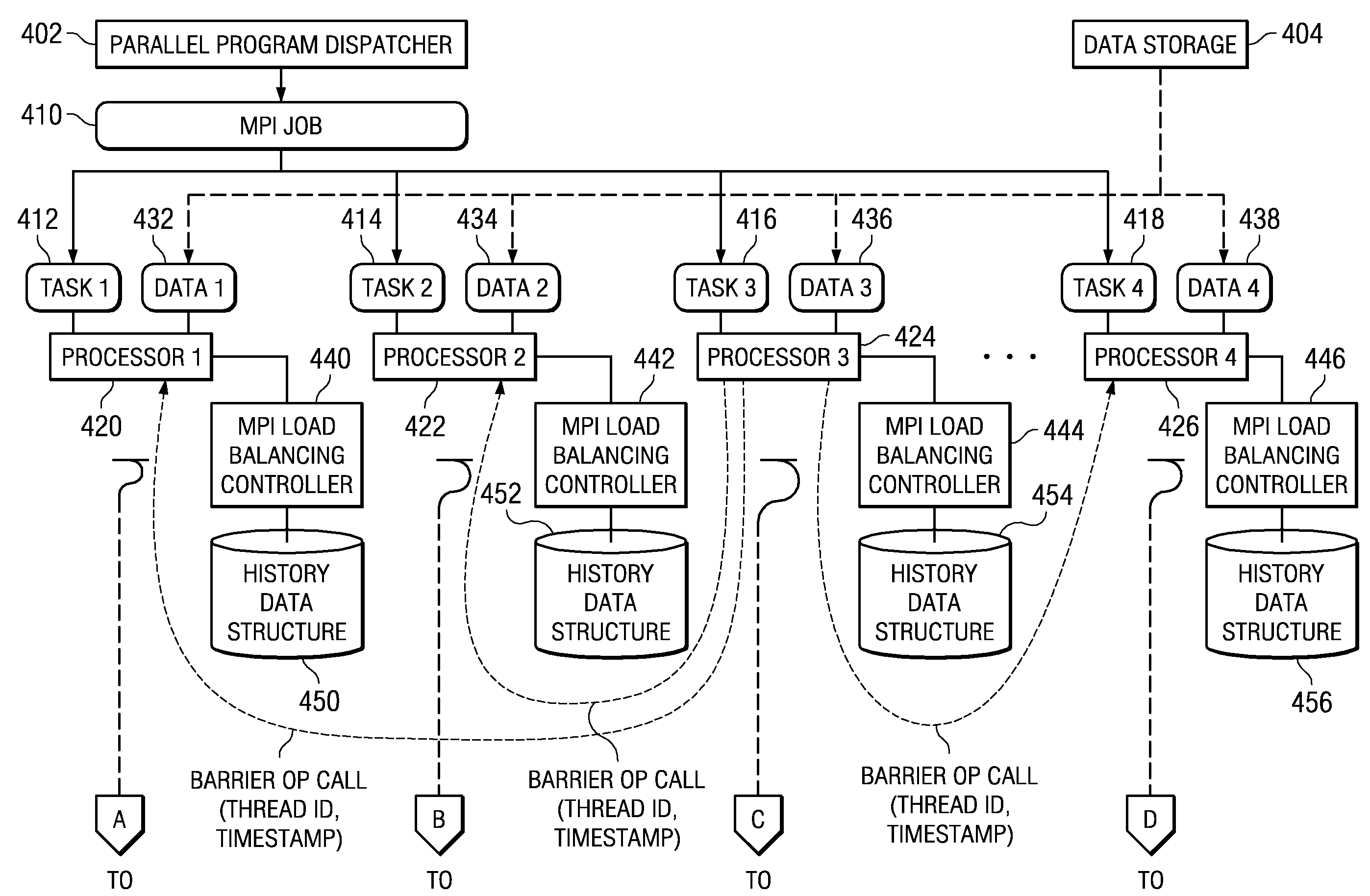

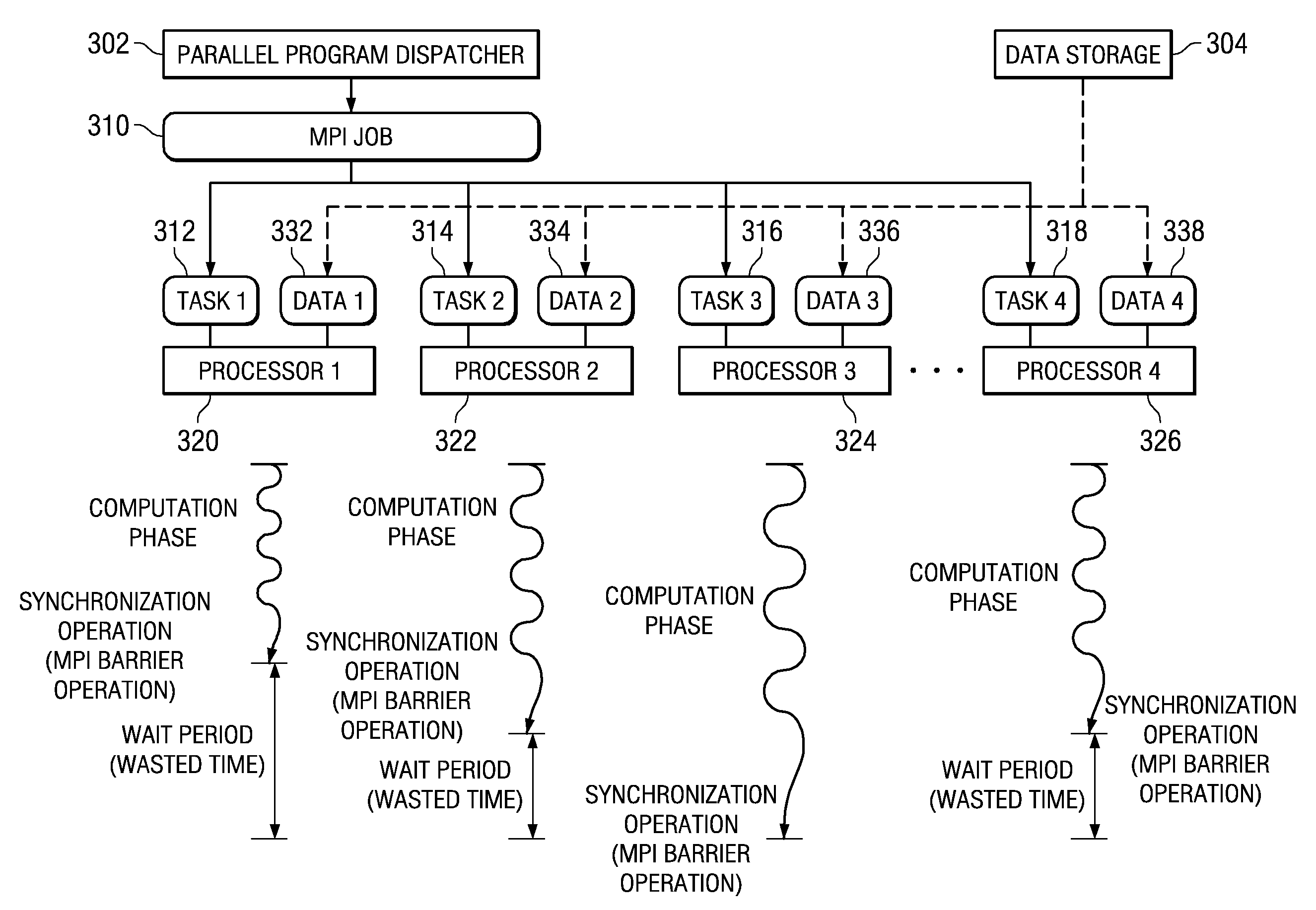

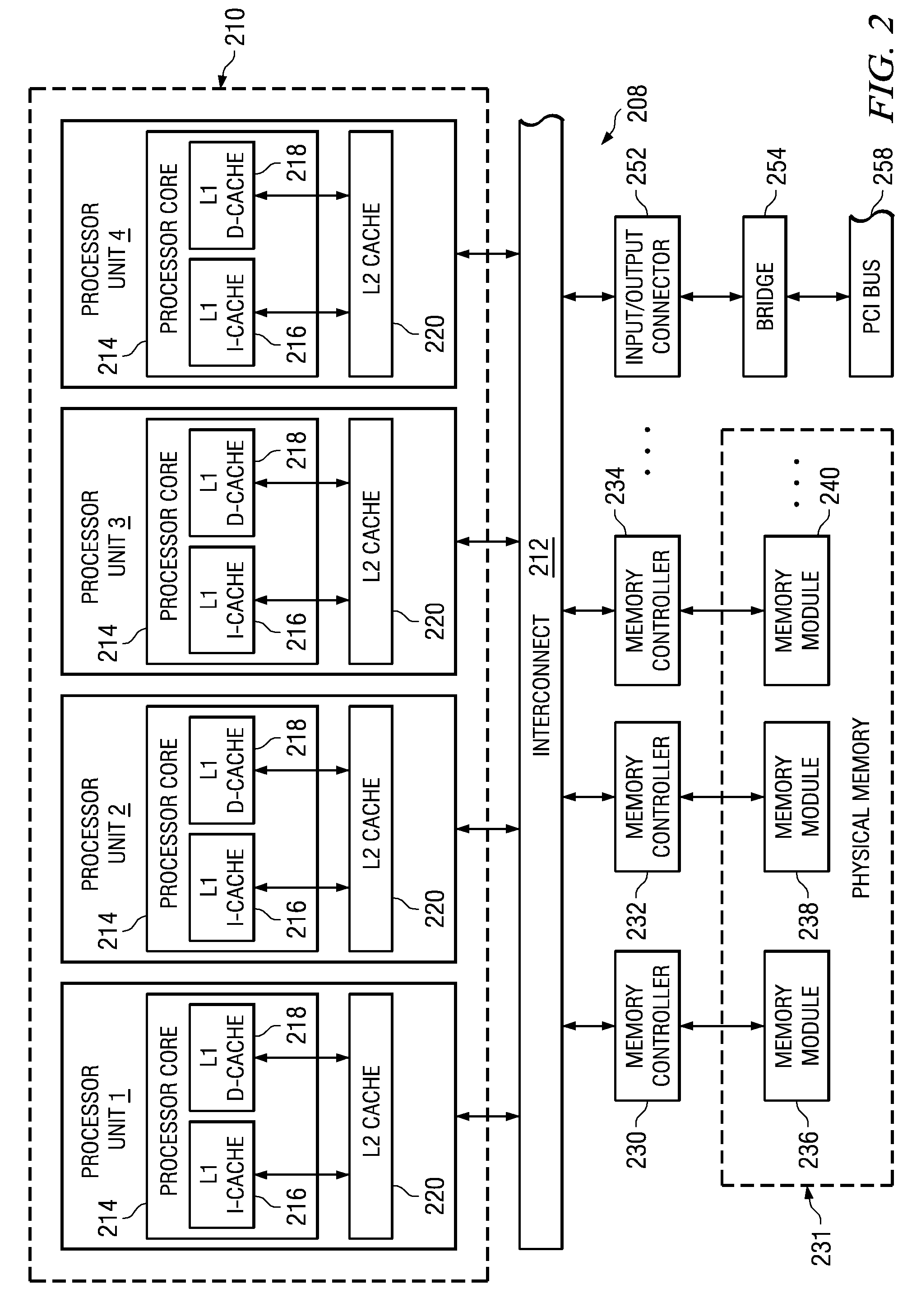

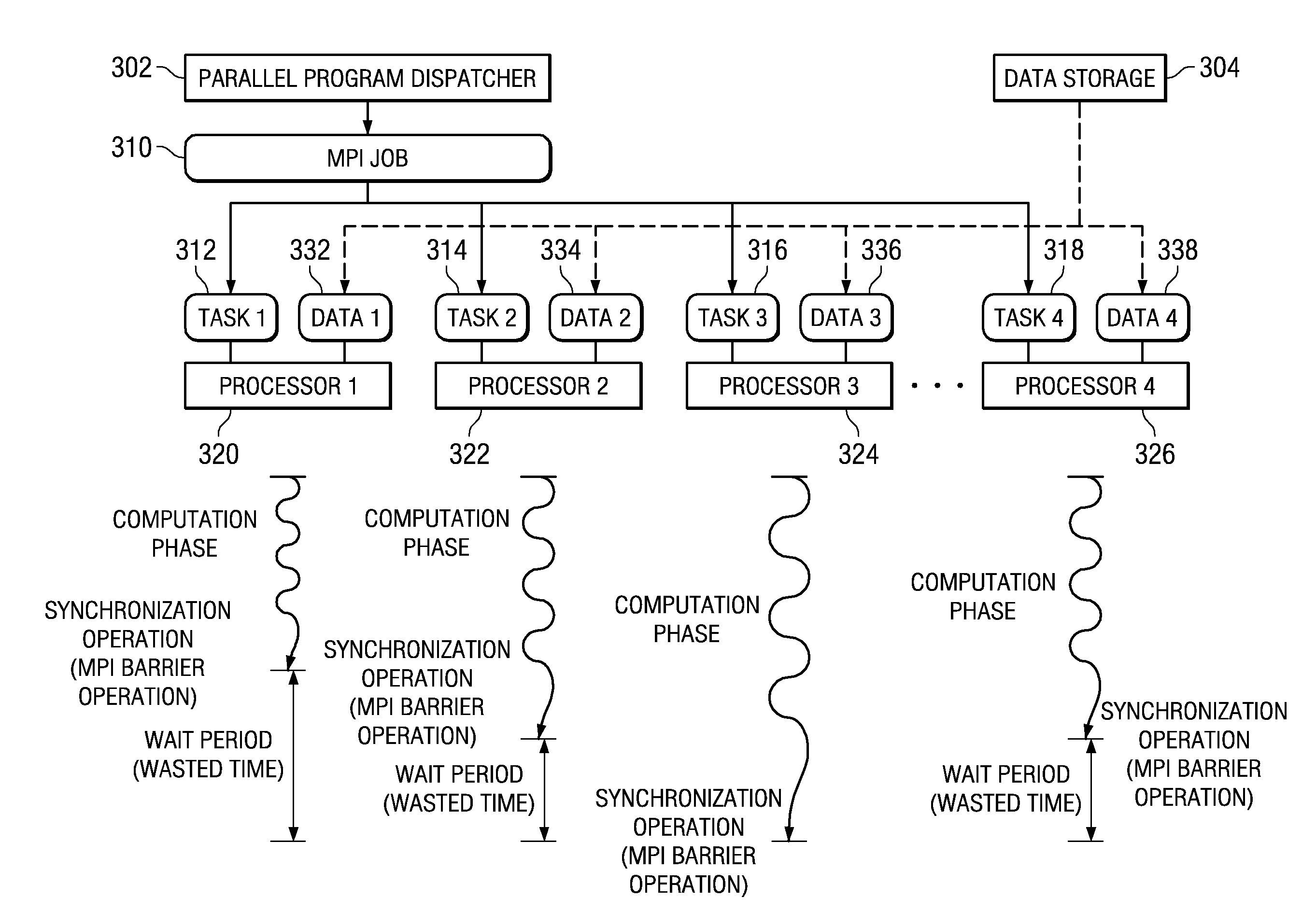

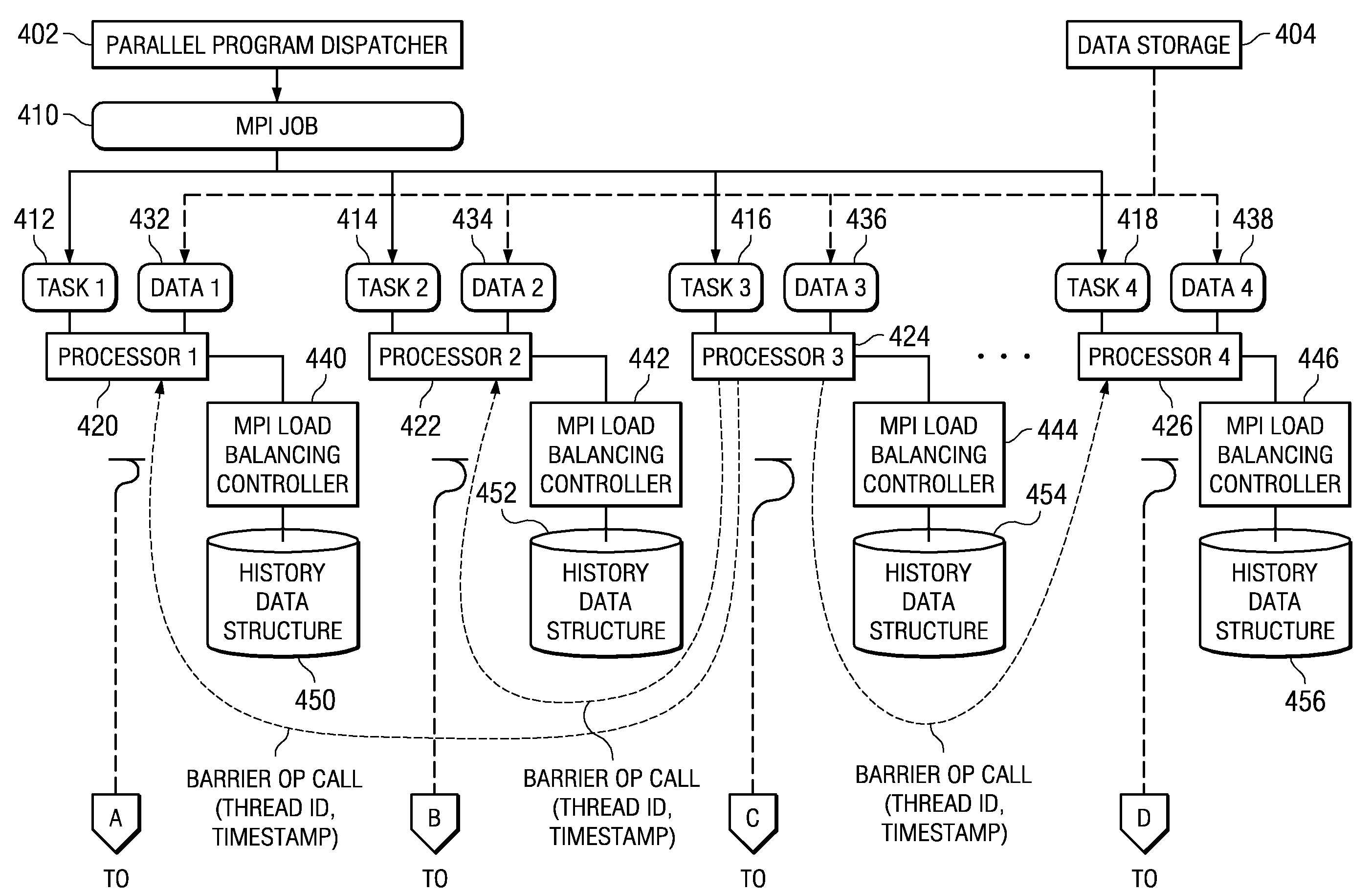

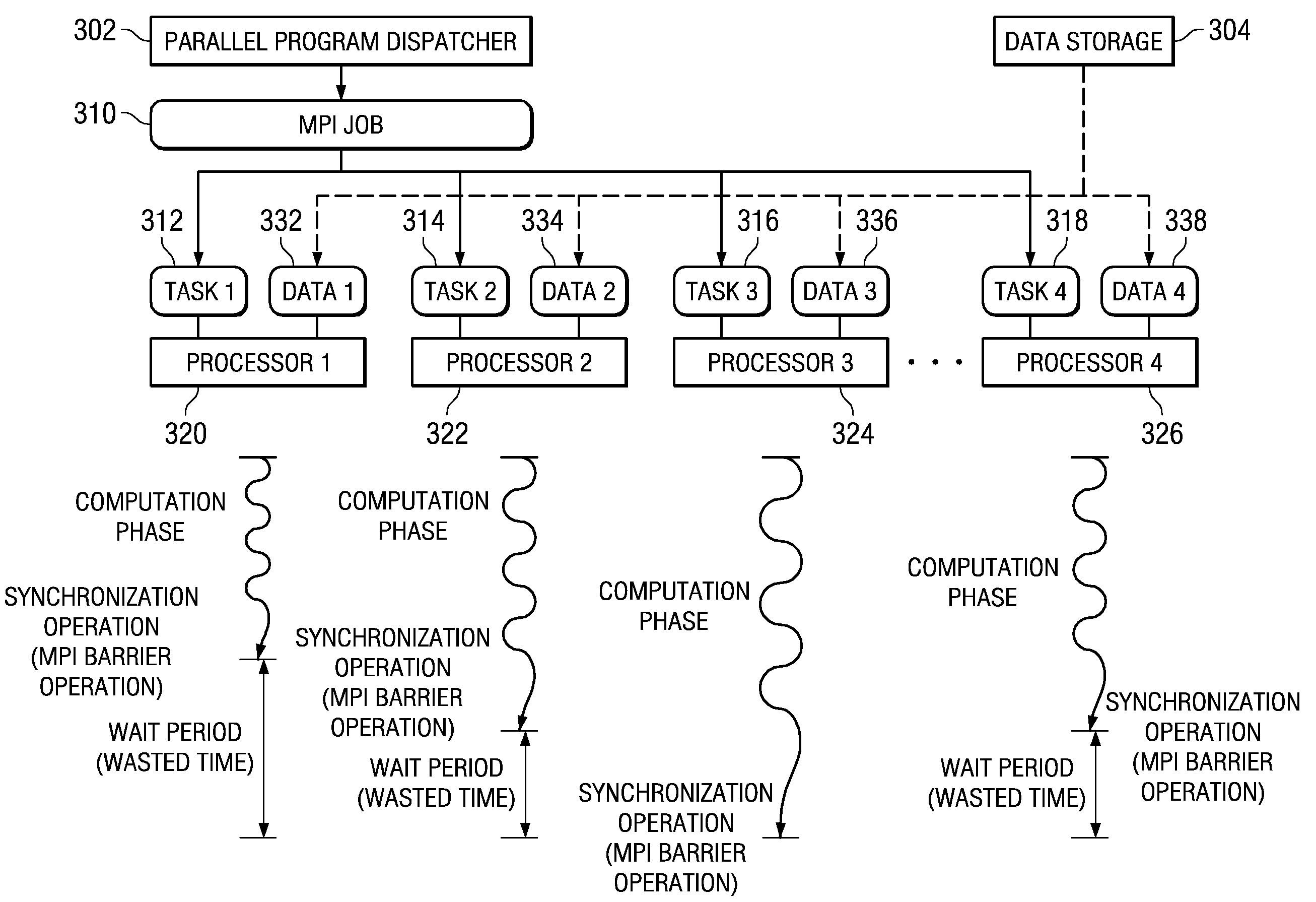

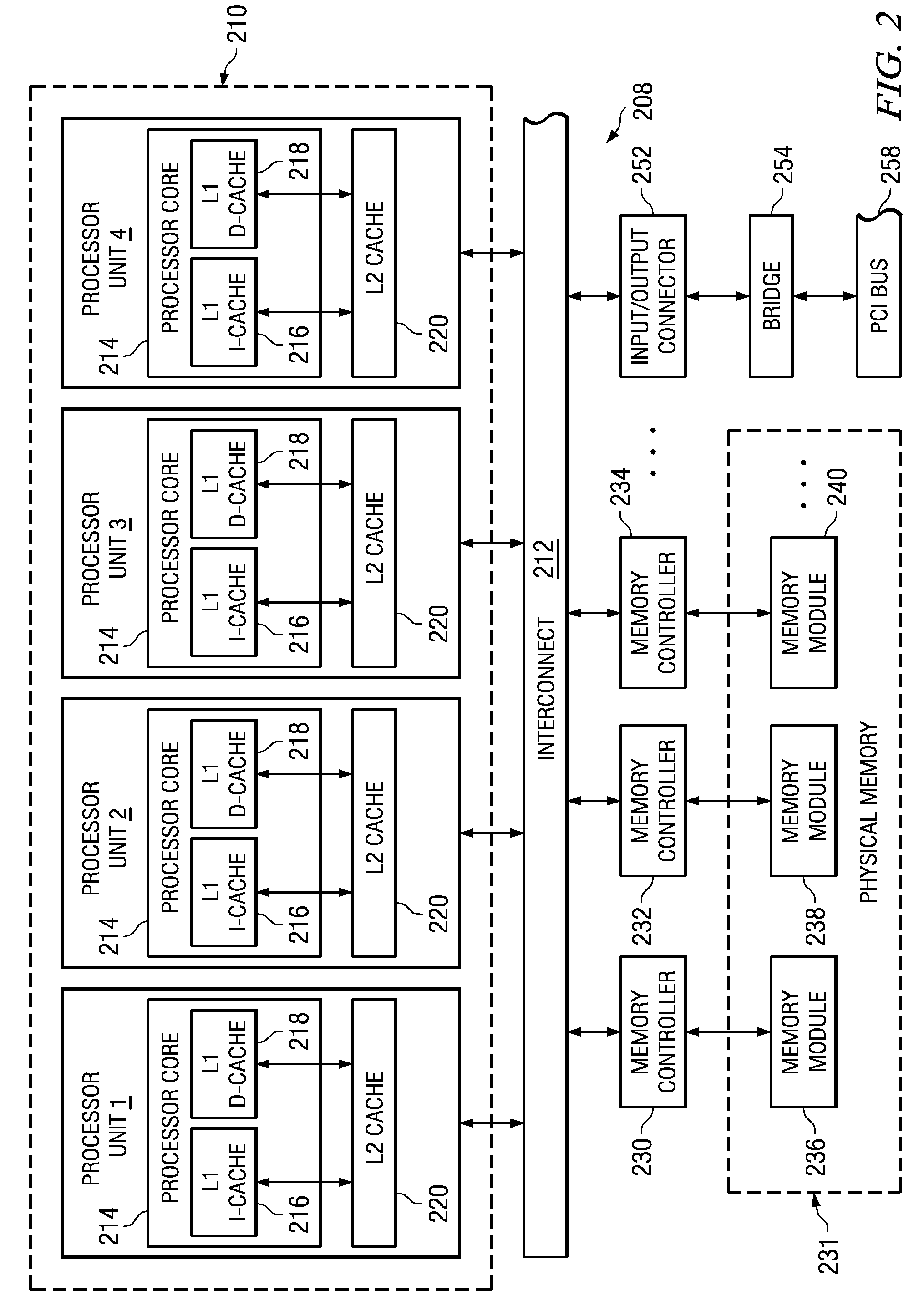

System and Method for Hardware Based Dynamic Load Balancing of Message Passing Interface Tasks By Modifying Tasks

Status: Inactive | Publication: US20090064168A1 | Tags: Minimize period; Wasted processor cycles; Multiprogramming arrangements; Memory systems; Dynamic load balancing; Message Passing Interface

A system and method are provided for hardware-based dynamic load balancing of message passing interface (MPI) tasks by modifying tasks. Mechanisms are provided for adjusting the balance of processing workloads among the processors executing tasks of an MPI job, so as to minimize the time spent waiting for all of the processors to call a synchronization operation. Each processor has an associated hardware-implemented MPI load balancing controller. The MPI load balancing controller maintains a history that provides a profile of the tasks with regard to their calls to synchronization operations. From this information, it can be determined which processors should have their processing loads lightened and which processors can handle additional processing loads without significantly degrading the overall operation of the parallel execution system. Thus, operations may be performed to shift workloads from the slowest processor to one or more of the faster processors.

Owner:IBM CORP
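
The history-driven rebalancing loop described above can be modeled in a few lines of Python. This is a sketch, not the patented hardware: it assumes arrival times at the synchronization point are observable, treats a processor's wait as the gap between its arrival and the last arrival, and moves a fixed number of hypothetical work units per cycle. The class and method names are illustrative.

```python
from collections import deque
from typing import Deque, List


class LoadBalanceController:
    """Software model of a per-job load balancing history (hypothetical API)."""

    def __init__(self, num_procs: int, history_len: int = 4) -> None:
        self.history: List[Deque[float]] = [deque(maxlen=history_len) for _ in range(num_procs)]

    def record_cycle(self, arrival_times: List[float]) -> None:
        # Everyone leaves the synchronization point when the last processor
        # arrives, so each processor's wait is (last arrival - own arrival).
        release = max(arrival_times)
        for p, t in enumerate(arrival_times):
            self.history[p].append(release - t)

    def avg_wait(self, p: int) -> float:
        h = self.history[p]
        return sum(h) / len(h) if h else 0.0

    def rebalance(self, work: List[int], units: int = 1) -> List[int]:
        """Shift `units` work items from the slowest to the fastest processor."""
        waits = [self.avg_wait(p) for p in range(len(work))]
        slowest = min(range(len(work)), key=waits.__getitem__)  # waits least
        fastest = max(range(len(work)), key=waits.__getitem__)  # waits most
        new_work = list(work)
        if slowest != fastest and new_work[slowest] > units:
            new_work[slowest] -= units
            new_work[fastest] += units
        return new_work


if __name__ == "__main__":
    ctrl = LoadBalanceController(num_procs=3)
    ctrl.record_cycle([1.0, 3.0, 2.0])  # processor 1 reached the barrier last
    print(ctrl.rebalance([10, 10, 10], units=2))  # [12, 8, 10]
```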

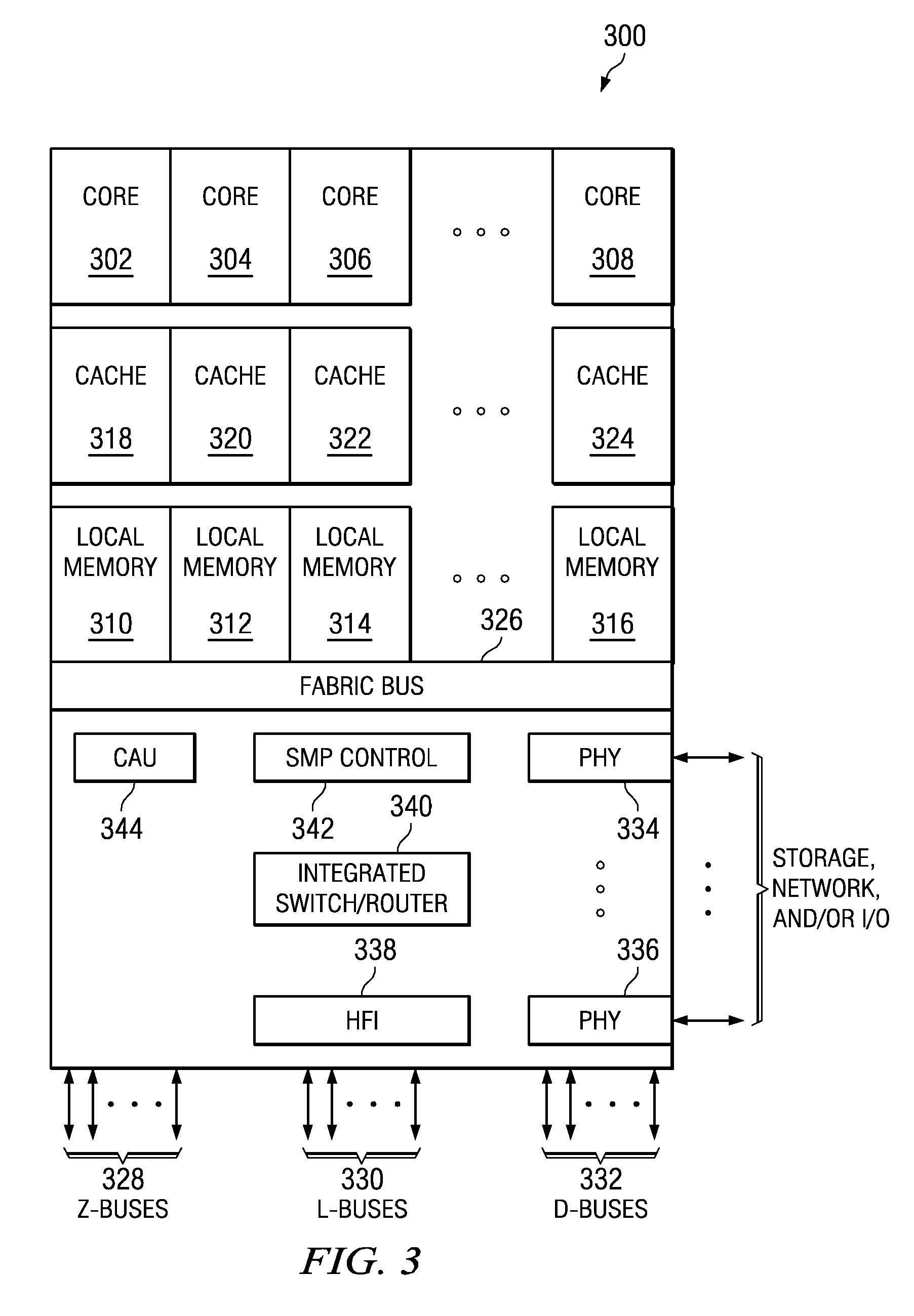

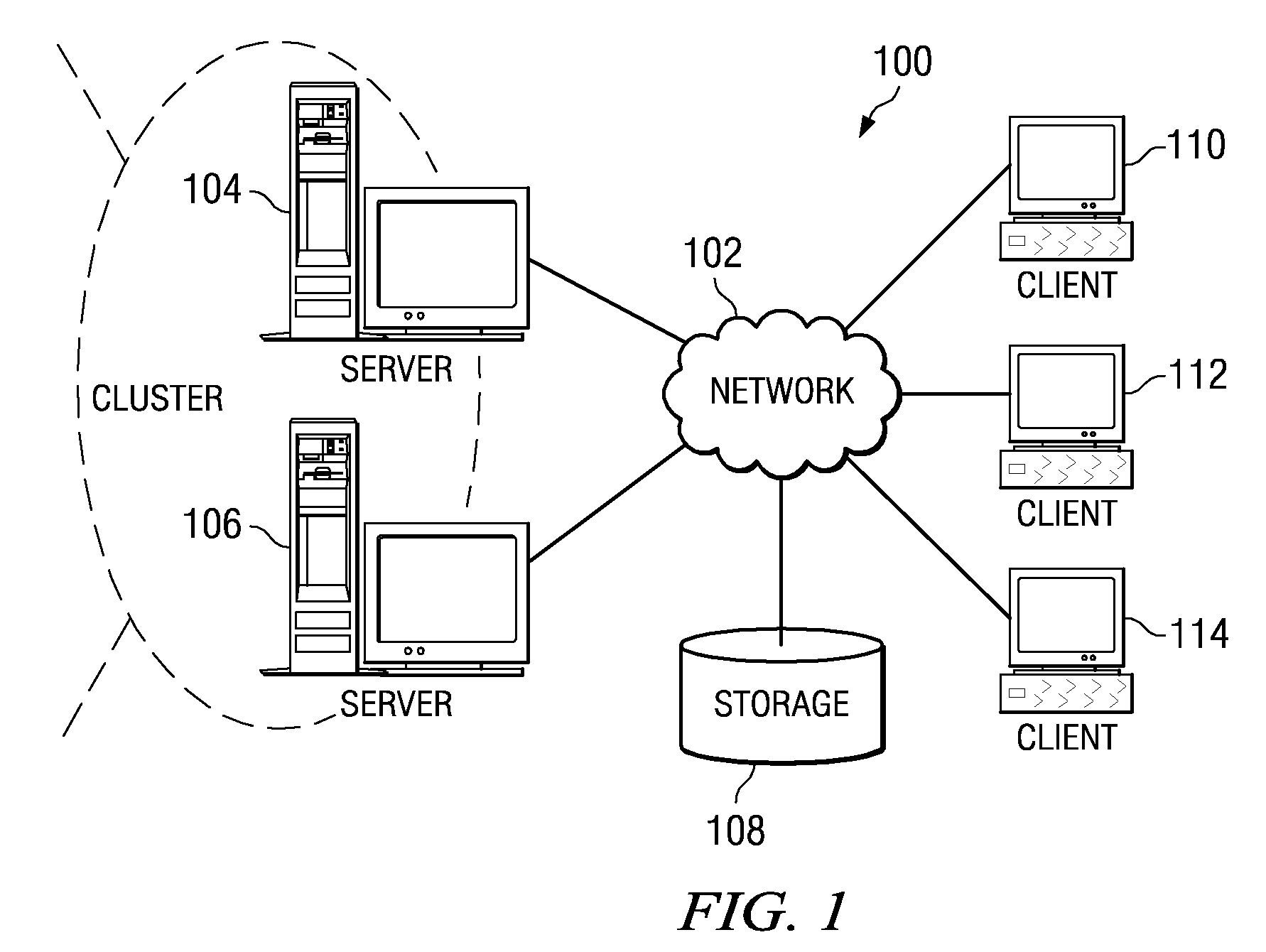

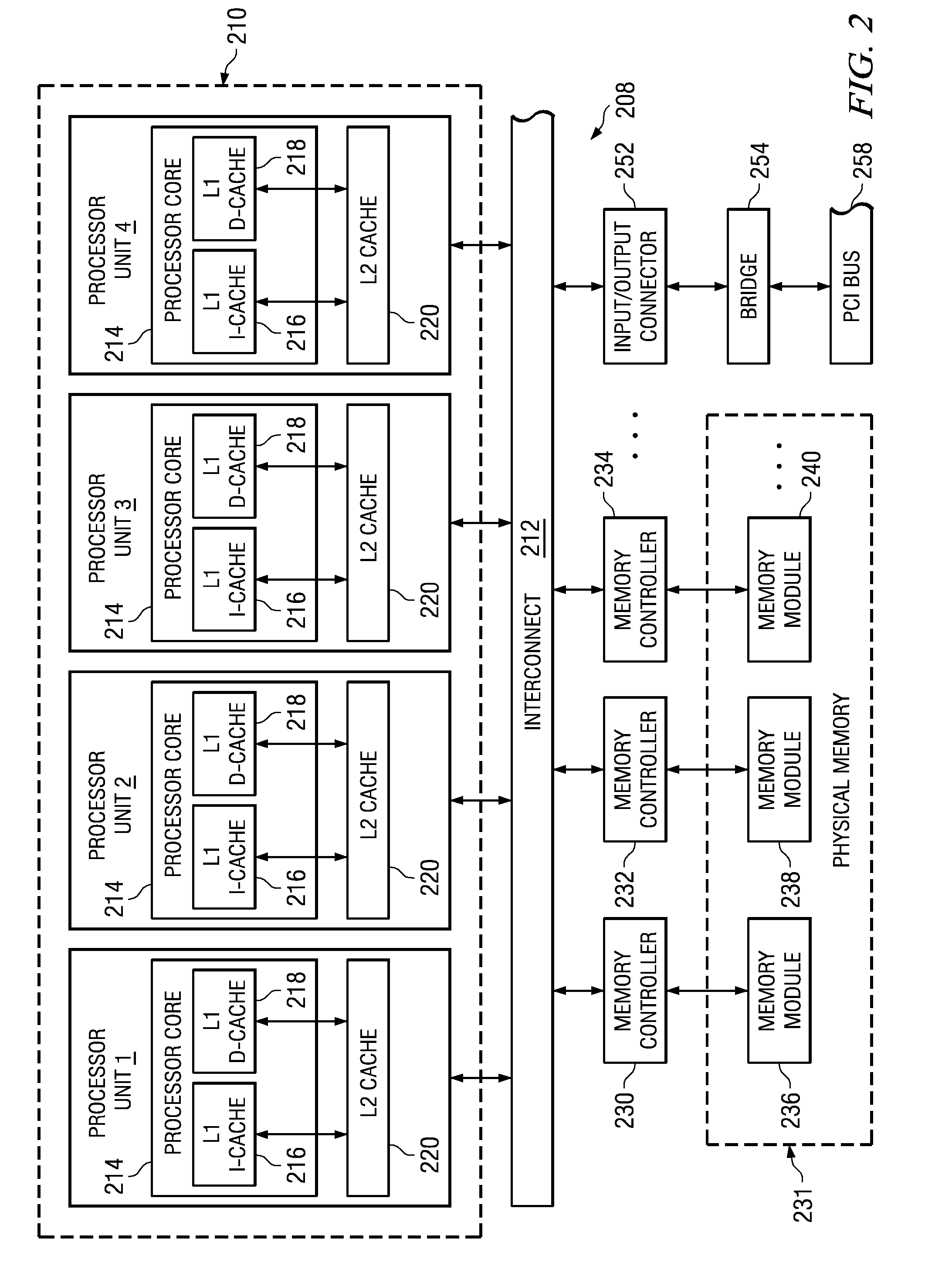

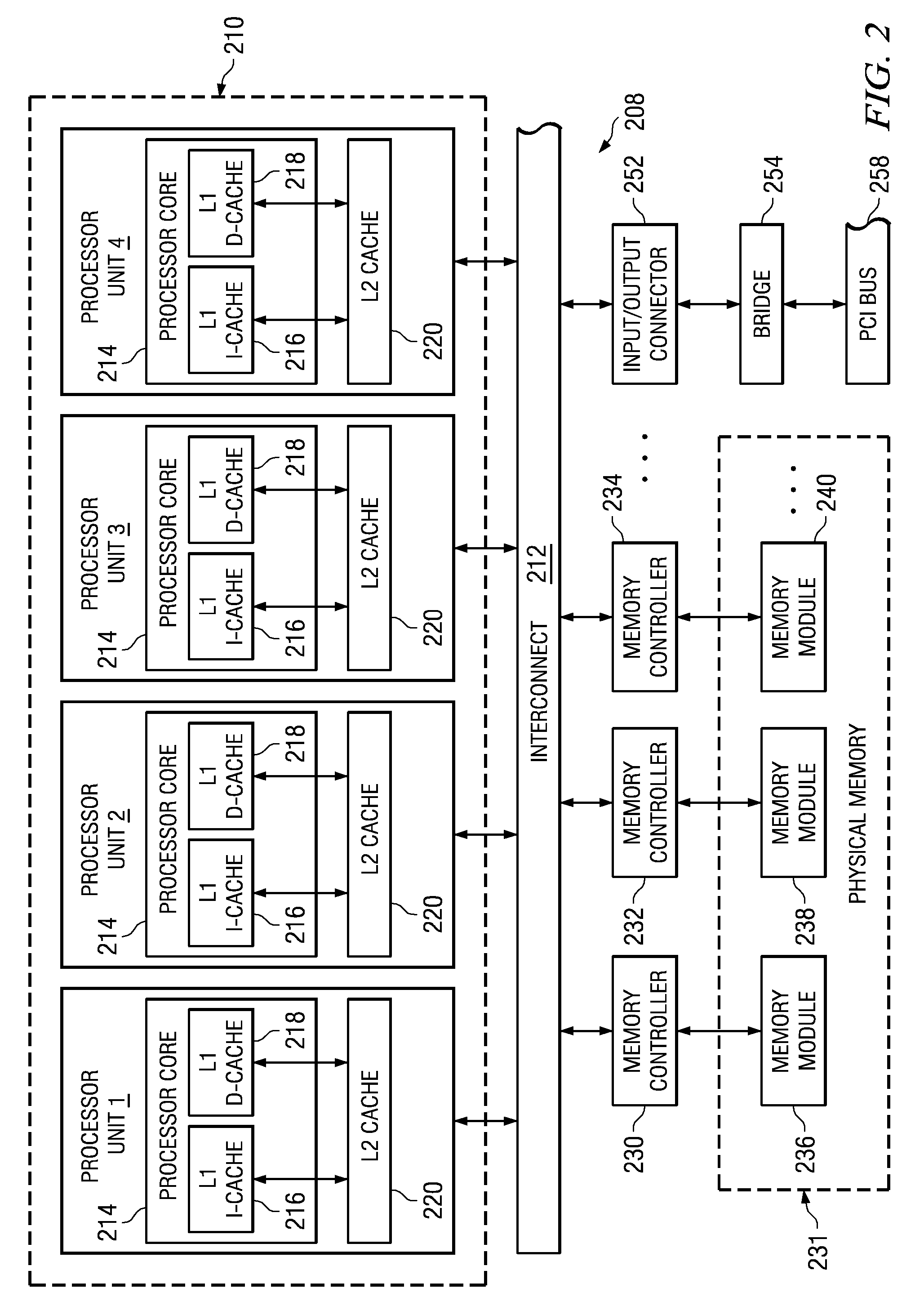

System and Method for Providing a High-Speed Message Passing Interface for Barrier Operations in a Multi-Tiered Full-Graph Interconnect Architecture

Status: Inactive | Publication: US20090063880A1 | Tags: Improve communication performance; Improve productivity; Volume/mass flow measurement; Multiprogramming arrangements; Data processing system; Message Passing Interface

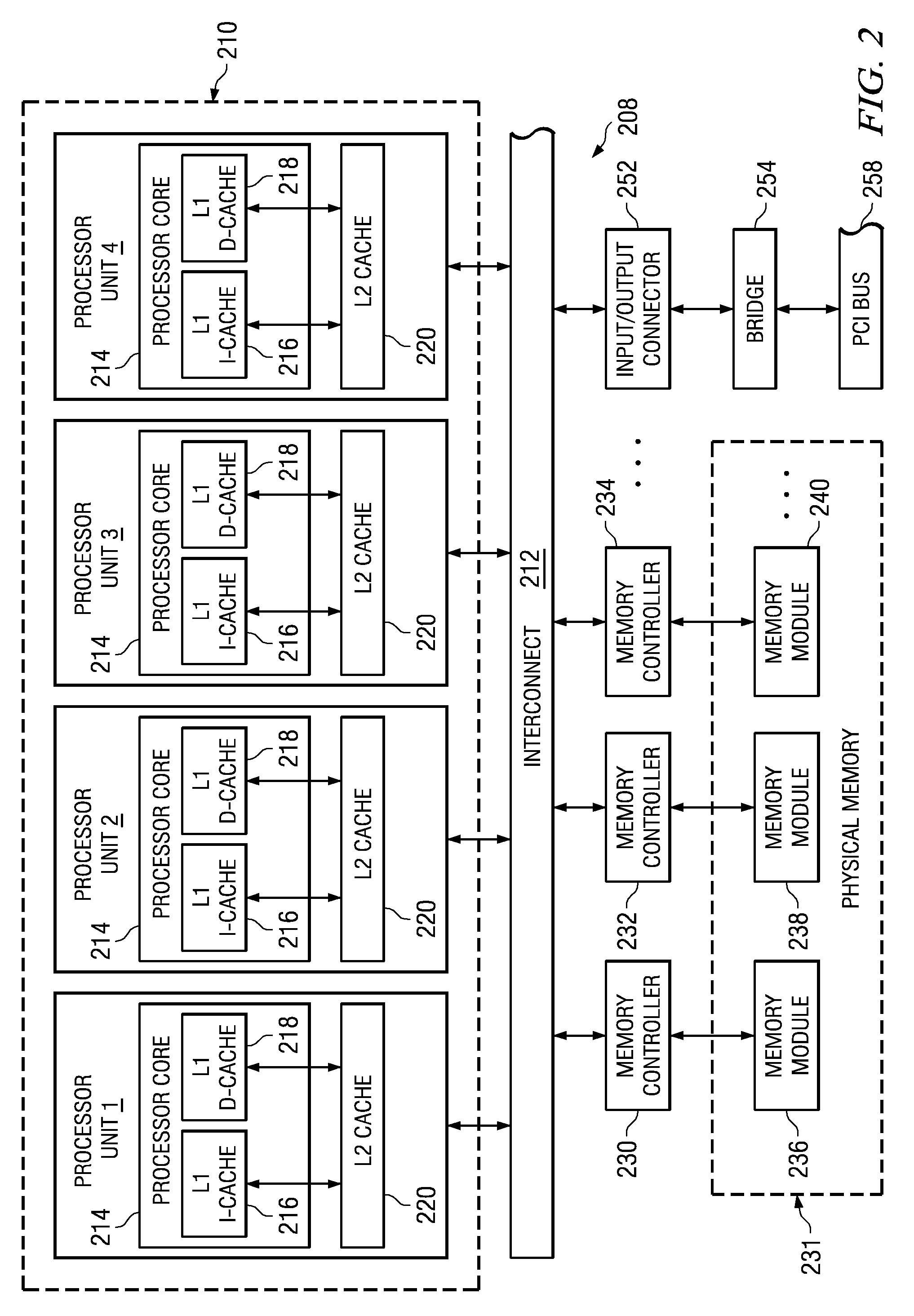

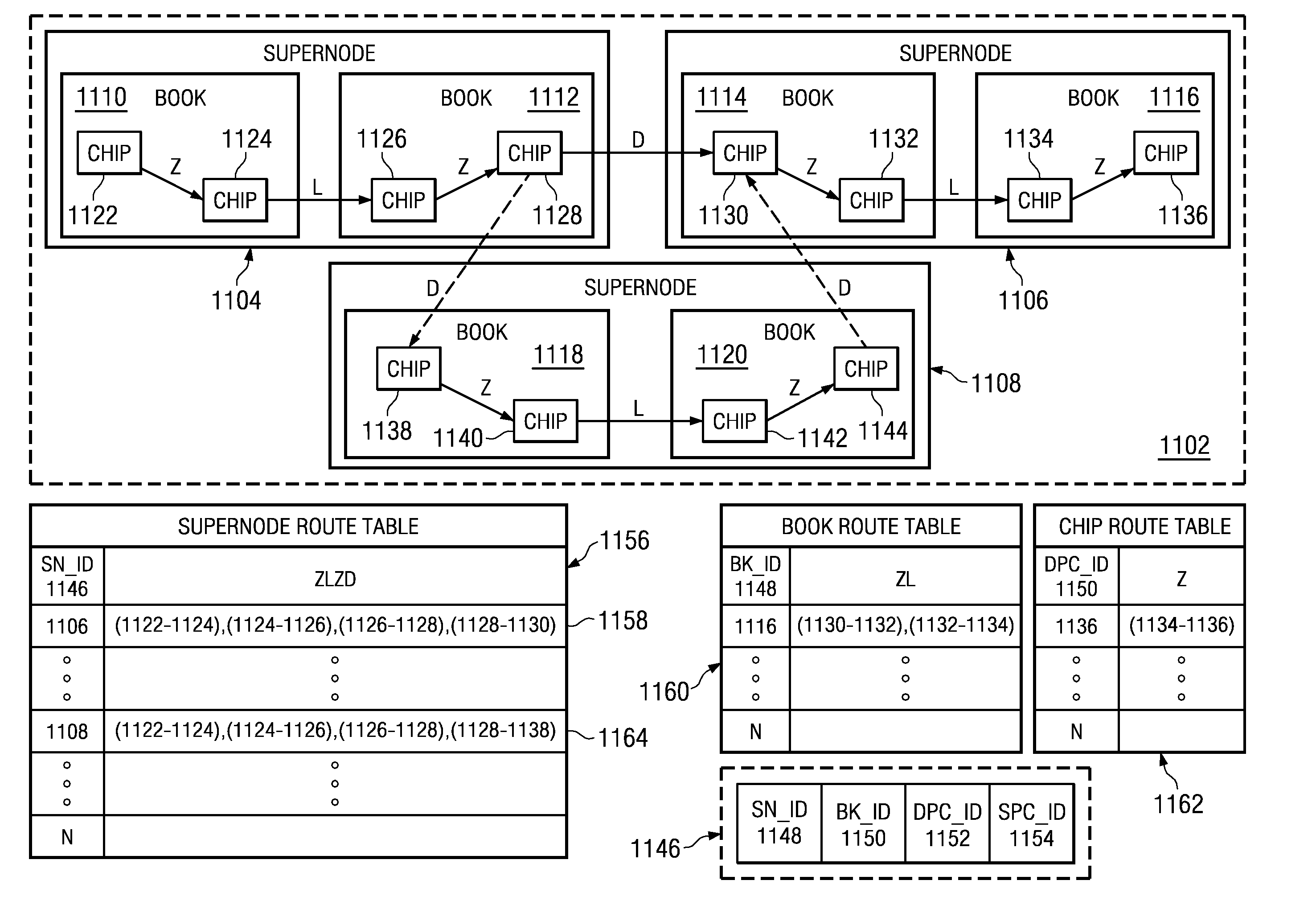

A method, computer program product, and system are provided for performing a Message Passing Interface (MPI) job. A first processor chip receives a set of arrival signals from a set of processor chips executing tasks of the MPI job in the data processing system. Each arrival signal identifies when a processor chip executes a synchronization operation for synchronizing the tasks of the MPI job. In response to receiving the set of arrival signals, the first processor chip identifies the fastest processor chip of the set, namely the one whose arrival signal arrived first. An operation of the fastest processor chip is then modified based on this identification. The processor chips in the set may be in the same processor book or in different processor books of the data processing system.

Owner:IBM CORP

System and Method for Performing Setup Operations for Receiving Different Amounts of Data While Processors are Performing Message Passing Interface Tasks

Status: Inactive | Publication: US20090064167A1 | Tags: Minimize period; Wasted processor cycles; Digital computer details; Multiprogramming arrangements; Message Passing Interface; Waiting period

A system and method are provided for performing setup operations for receiving different amounts of data while processors are performing message passing interface (MPI) tasks. Mechanisms are provided for adjusting the balance of processing workloads among the processors, so as to minimize the time spent waiting for all of the processors to call a synchronization operation. An MPI load balancing controller maintains a history that provides a profile of the tasks with regard to their calls to synchronization operations. From this information, it can be determined which processors should have their processing loads lightened and which processors can handle additional processing loads without significantly degrading the overall operation of the parallel execution system. As a result, setup operations may be performed while processors are performing MPI tasks, preparing them to receive differently sized portions of data in a subsequent computation cycle based on the history.

Owner:IBM CORP
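
One way the controller's history could drive the setup step is to size each processor's next data portion in proportion to its measured processing rate and pre-allocate matching receive buffers. The sketch below assumes rate = items processed / compute time and uses largest-remainder rounding; both the formula and the function names are illustrative assumptions, not the patent's method.

```python
from typing import List


def next_cycle_sizes(prev_sizes: List[int], compute_times: List[float], total: int) -> List[int]:
    """Split `total` items in proportion to each processor's observed rate."""
    rates = [n / t for n, t in zip(prev_sizes, compute_times)]  # items per second
    total_rate = sum(rates)
    exact = [total * r / total_rate for r in rates]
    sizes = [int(x) for x in exact]  # floor, since all values are non-negative
    # Largest-remainder rounding: give leftover items to the biggest fractions.
    leftover = total - sum(sizes)
    order = sorted(range(len(exact)), key=lambda i: exact[i] - sizes[i], reverse=True)
    for i in order[:leftover]:
        sizes[i] += 1
    return sizes


def allocate_receive_buffers(sizes: List[int], item_bytes: int) -> List[bytearray]:
    """The 'setup operation': allocate buffers before the next cycle starts."""
    return [bytearray(n * item_bytes) for n in sizes]


if __name__ == "__main__":
    # Processor 1 took twice as long last cycle, so it gets half as much next time.
    sizes = next_cycle_sizes([100, 100, 100], [1.0, 2.0, 1.0], total=300)
    print(sizes)  # [120, 60, 120]
    buffers = allocate_receive_buffers(sizes, item_bytes=8)
```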

Network access system including a programmable access device having distributed service control

Status: Inactive | Publication: US7499458B2 | Tags: Good extensibility; Increase flexibility; Data switching by path configuration; Programmable logic device; Message Passing Interface

A distributed network access system in accordance with the present invention includes at least an external processor and a programmable access device. The programmable access device has a message interface coupled to the external processor and first and second network interfaces through which packets are communicated with a network. The programmable access device includes a packet header filter and a forwarding table that is utilized to route packets communicated between the first and second network interfaces. In response to receipt of a series of packets, the packet header filter in the programmable access device identifies messages in the series of messages upon which policy-based services are to be implemented and passes identified messages via the message interface to the external processor for processing. In response to receipt of a message, the external processor invokes service control on the message and may also invoke policy control on the message.

Owner:VERIZON PATENT & LICENSING INC

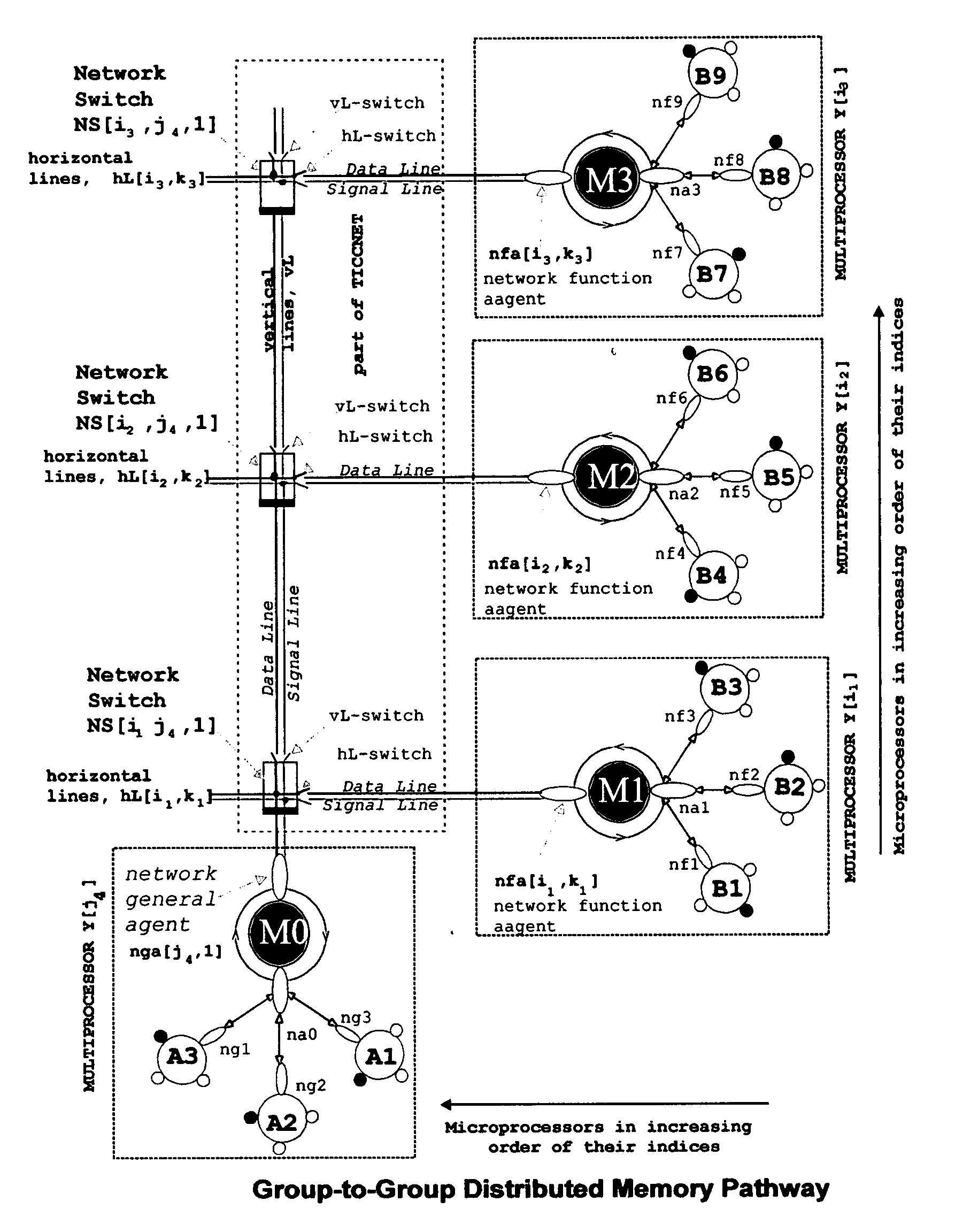

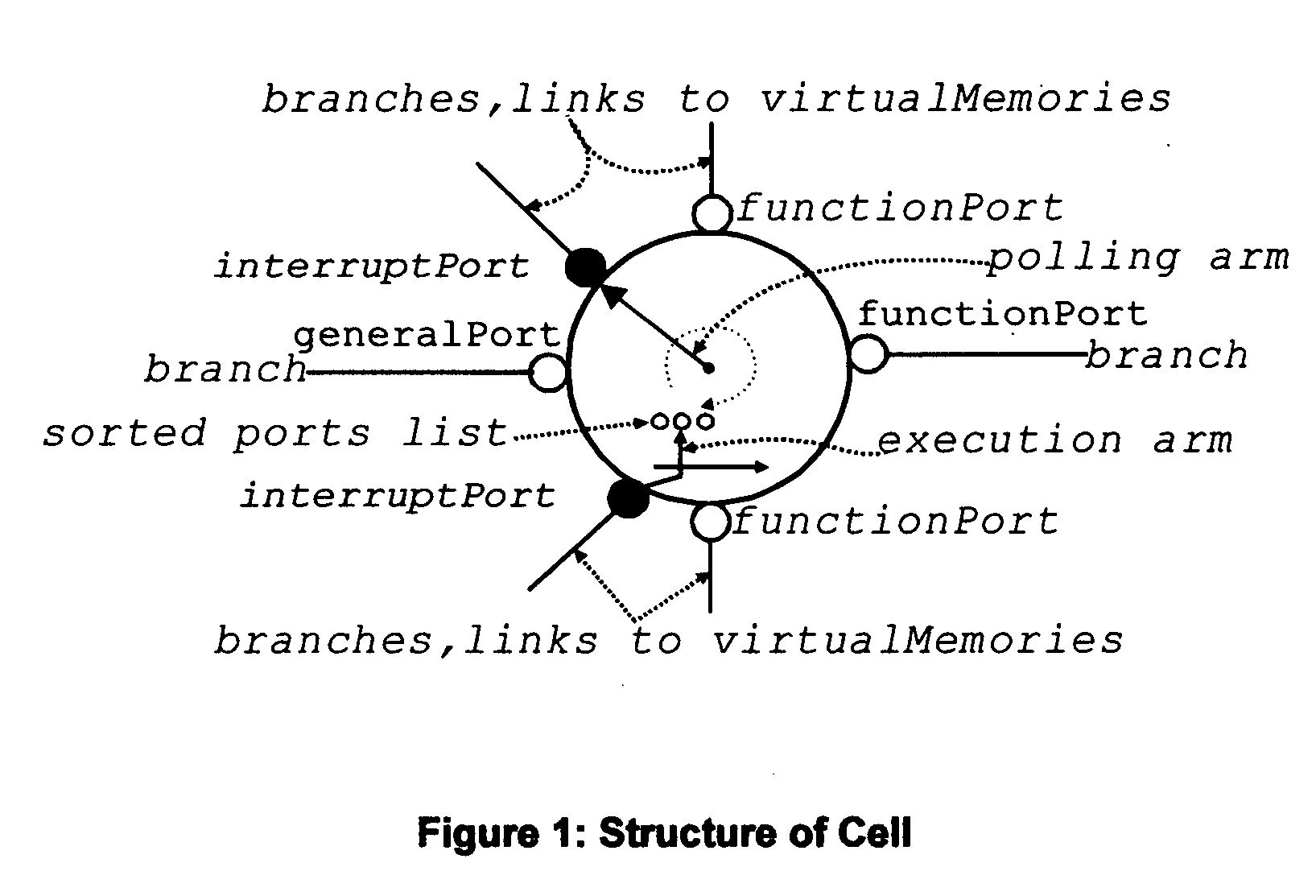

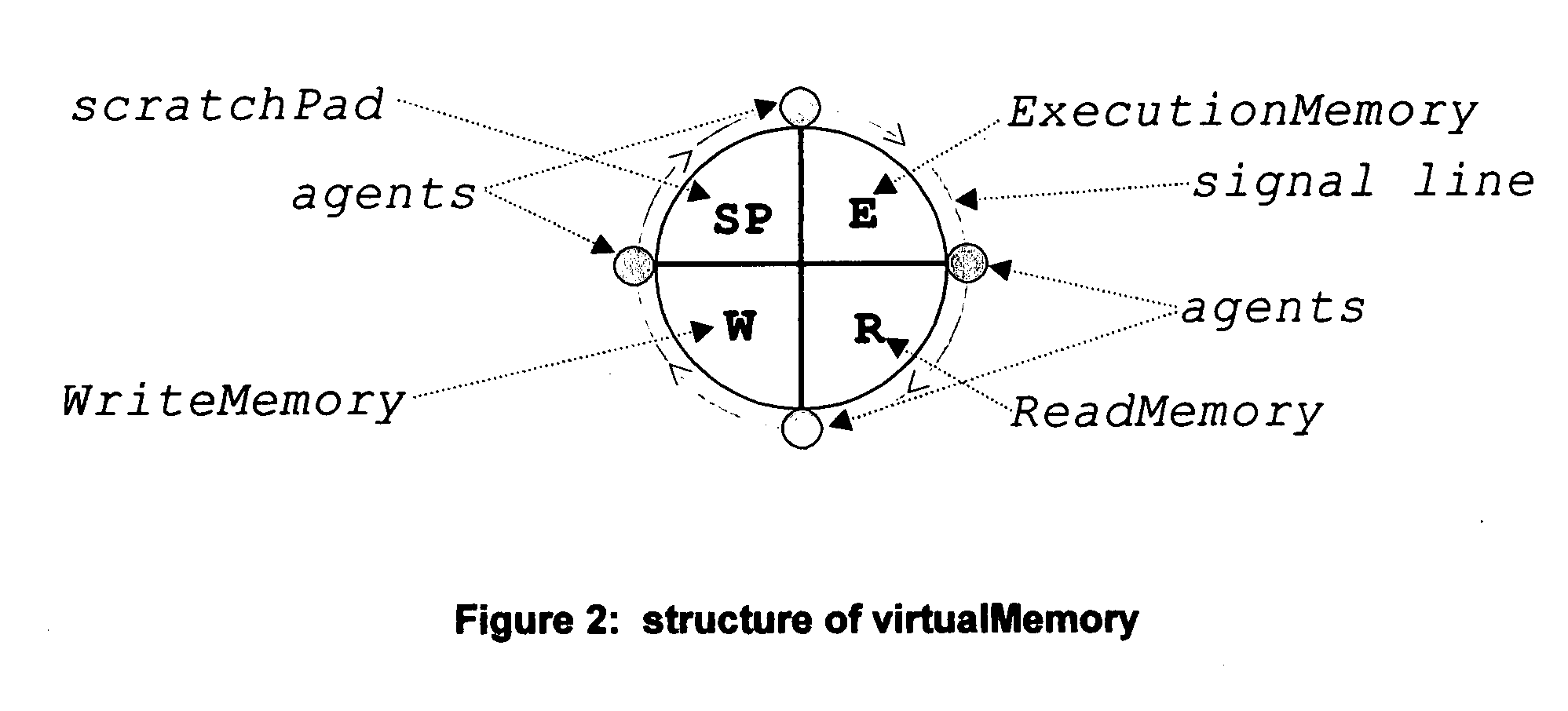

Self-scheduled real time software using real time asynchronous messaging

TICC™ (Technology for Integrated Computation and Communication), a patented technology [1], provides a high-speed message-passing interface for parallel processes. TICC™ performs high-speed asynchronous message passing with latencies on the nanosecond scale in shared-memory multiprocessors and on the microsecond scale over the distributed-memory local-area TICCNET™ (Patent Pending [2]). Ticc-Ppde (Ticc-based Parallel Program Development and Execution platform, Patent Pending [3]) provides a component-based parallel program development environment, and provides the infrastructure for dynamic debugging and updating of Ticc-based parallel programs, self-monitoring, self-diagnosis and self-repair. Ticc-Rtas (Ticc-based Real Time Application System) provides the system architecture for developing self-scheduled real-time distributed parallel processing software with real-time asynchronous messaging, using Ticc-Ppde. Implementing a Ticc-Rtas real-time application using Ticc-Ppde automatically generates the self-monitoring system for the Rtas. This self-monitoring system may be used to monitor the Rtas during its operation, in parallel with its operation, to recognize and report a priori specified observable events that may occur in the application, or to recognize and report system malfunctions, without interfering with the timing requirements of the Ticc-Rtas. The structure, the innovations underlying their operation, and details on developing an Rtas using Ticc-Ppde and TICCNET™ are presented here together with three illustrative examples: one on sensor fusion, one on image fusion, and a third on power transmission control in a fuel-cell-powered automobile.

Owner:EDSS INC

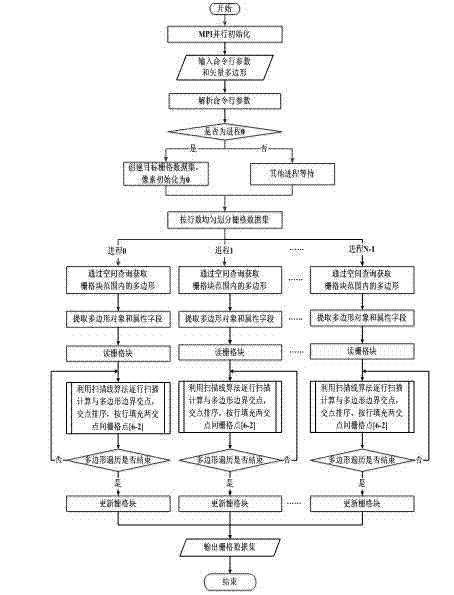

Polygonal rasterisation parallel conversion method based on scanning line method

Status: Inactive | Publication: CN102542035A | Tags: Implement parallel processing; Troubleshoot rasterization issues; Special data processing applications; Computational science; Algorithm

The invention discloses a parallel polygon rasterisation method based on the scan-line method, in the field of geographic information systems. The method comprises: inputting command-line parameters; performing MPI (Message Passing Interface) parallel initialization to obtain the total number of processes and the rank of the current process; using a peer parallel mode in which each process parses the command-line parameters itself, collects the parameter values that follow each leading flag, reads the vector data source with the OGROpen method, and checks whether it is process 0; using a data-parallel strategy in which the vector polygons to be rasterized are divided among all processes and each process rasterizes its polygons concurrently; and writing the raster data, with each process updating its raster block, and outputting the converted raster data. When used to rasterize large volumes of polygon data, the method achieves relatively high efficiency and a satisfactory conversion result, makes full use of the multi-core/multiprocessor capacity of a high-performance server for polygon rasterisation, and greatly shortens the conversion time.

Owner:NANJING UNIV
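
The two core ideas in this abstract, scan-line filling of each polygon and a data-parallel split of polygons across processes, can be sketched in plain Python. Assumptions: an integer pixel grid sampled at pixel centres, the even-odd fill rule, round-robin assignment of polygons to ranks, and a simple merge in place of writing raster blocks. The loop over `rank` only simulates MPI processes, and reading the vector source (`OGROpen` in the source) is omitted.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Grid = List[List[int]]


def rasterize_polygon(poly: List[Point], value: int, grid: Grid) -> None:
    """Scan-line fill using the even-odd rule, sampling at pixel centres."""
    height, width = len(grid), len(grid[0])
    n = len(poly)
    for row in range(height):
        y = row + 0.5
        xs = []
        for i in range(n):
            (x0, y0), (x1, y1) = poly[i], poly[(i + 1) % n]
            # Half-open test: skips horizontal edges and keeps the crossing
            # parity correct when the scan line passes through a vertex.
            if (y0 <= y < y1) or (y1 <= y < y0):
                xs.append(x0 + (y - y0) * (x1 - x0) / (y1 - y0))
        xs.sort()
        for left, right in zip(xs[0::2], xs[1::2]):
            # Fill pixels whose centre (col + 0.5) lies in [left, right).
            for col in range(max(0, math.ceil(left - 0.5)), min(width, math.ceil(right - 0.5))):
                grid[row][col] = value


def parallel_rasterize(polygons: List[List[Point]], width: int, height: int, nprocs: int) -> Grid:
    """Simulate the data-parallel strategy: polygons are dealt round-robin to
    processes, each rasterizes into its own block, and the blocks are merged."""
    merged = [[0] * width for _ in range(height)]
    indexed = list(enumerate(polygons, start=1))  # value = 1-based polygon id
    for rank in range(nprocs):  # each iteration stands in for one MPI process
        local = [[0] * width for _ in range(height)]
        for pid, poly in indexed[rank::nprocs]:
            rasterize_polygon(poly, pid, local)
        for r in range(height):
            for c in range(width):
                if local[r][c]:
                    merged[r][c] = local[r][c]
    return merged
```

Because every rank works on a disjoint set of polygons, no communication is needed until the final merge, which is why the data-parallel split scales well for large inputs.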

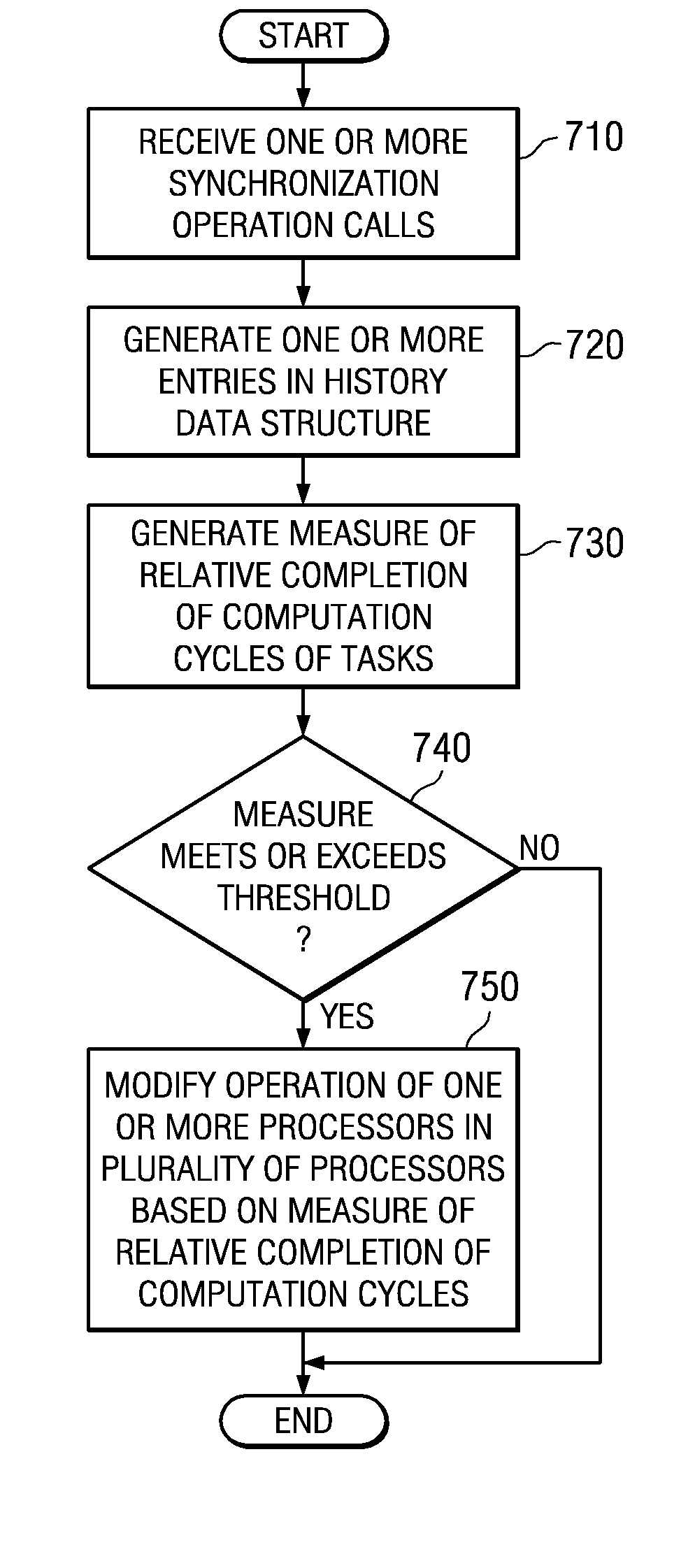

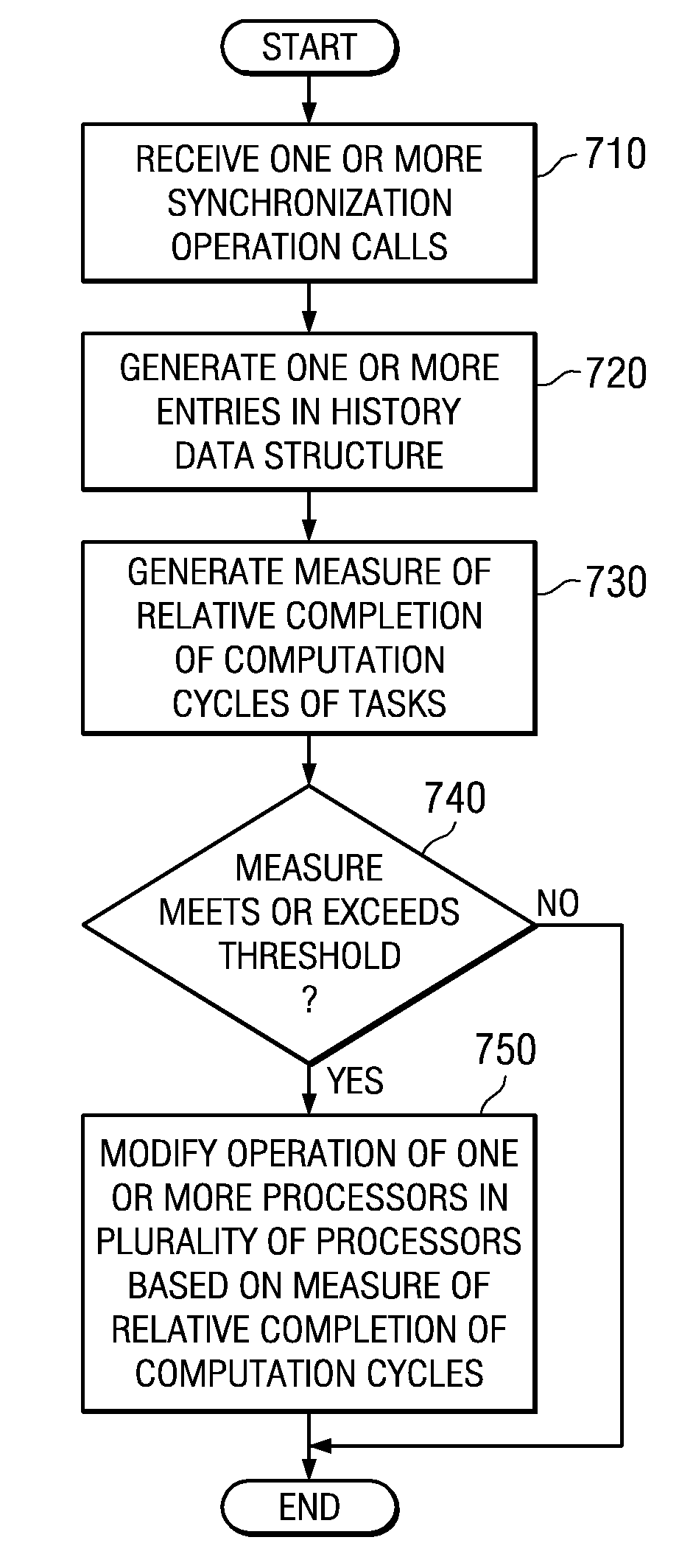

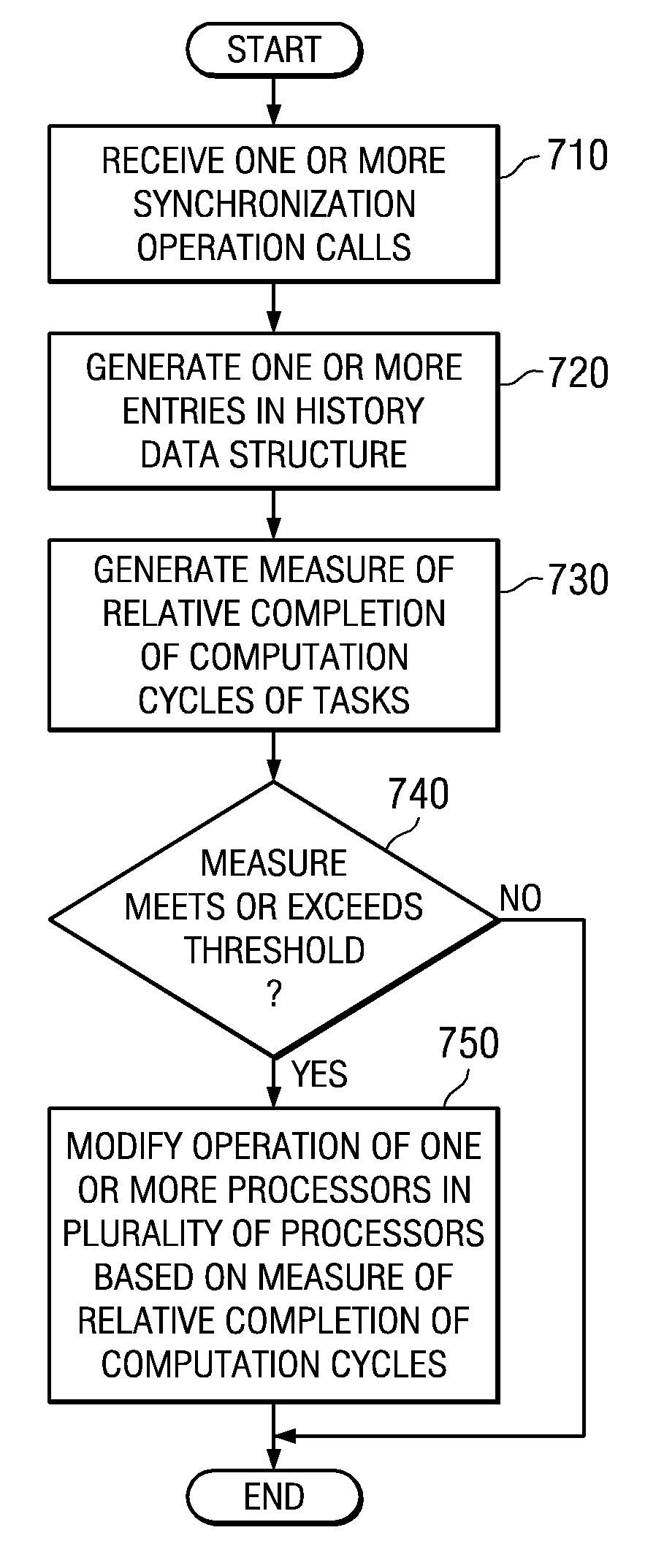

System and Computer Program Product for Modifying an Operation of One or More Processors Executing Message Passing Interface Tasks

Status: Inactive | Publication: US20090063885A1 | Tags: Minimize period; Wasted processor cycles; Program synchronisation; Multiple digital computer combinations; Message Passing Interface; Waiting period

A system and computer program product are provided for modifying an operation of one or more processors executing message passing interface (MPI) tasks. Mechanisms are provided for adjusting the balance of processing workloads among the processors, so as to minimize the time spent waiting for all of the processors to call a synchronization operation. Each processor has an associated hardware-implemented MPI load balancing controller. The MPI load balancing controller maintains a history that provides a profile of the tasks with regard to their calls to synchronization operations. From this information, it can be determined which processors should have their processing loads lightened and which processors can handle additional processing loads without significantly degrading the overall operation of the parallel execution system. As a result, operations may be performed to shift workloads from the slowest processor to one or more of the faster processors.

Owner:IBM CORP

Method for Hardware Based Dynamic Load Balancing of Message Passing Interface Tasks

Status: Inactive | Publication: US20090064165A1 | Tags: Minimize period; Wasted processor cycles; Digital computer details; Multiprogramming arrangements; Dynamic load balancing; Message Passing Interface

A method is provided for hardware-based dynamic load balancing of message passing interface (MPI) tasks. Mechanisms are provided for adjusting the balance of processing workloads among the processors executing tasks of an MPI job, so as to minimize the time spent waiting for all of the processors to call a synchronization operation. Each processor has an associated hardware-implemented MPI load balancing controller. The MPI load balancing controller maintains a history that provides a profile of the tasks with regard to their calls to synchronization operations. From this information, it can be determined which processors should have their processing loads lightened and which processors can handle additional processing loads without significantly degrading the overall operation of the parallel execution system. As a result, operations may be performed to shift workloads from the slowest processor to one or more of the faster processors.

Owner:IBM CORP

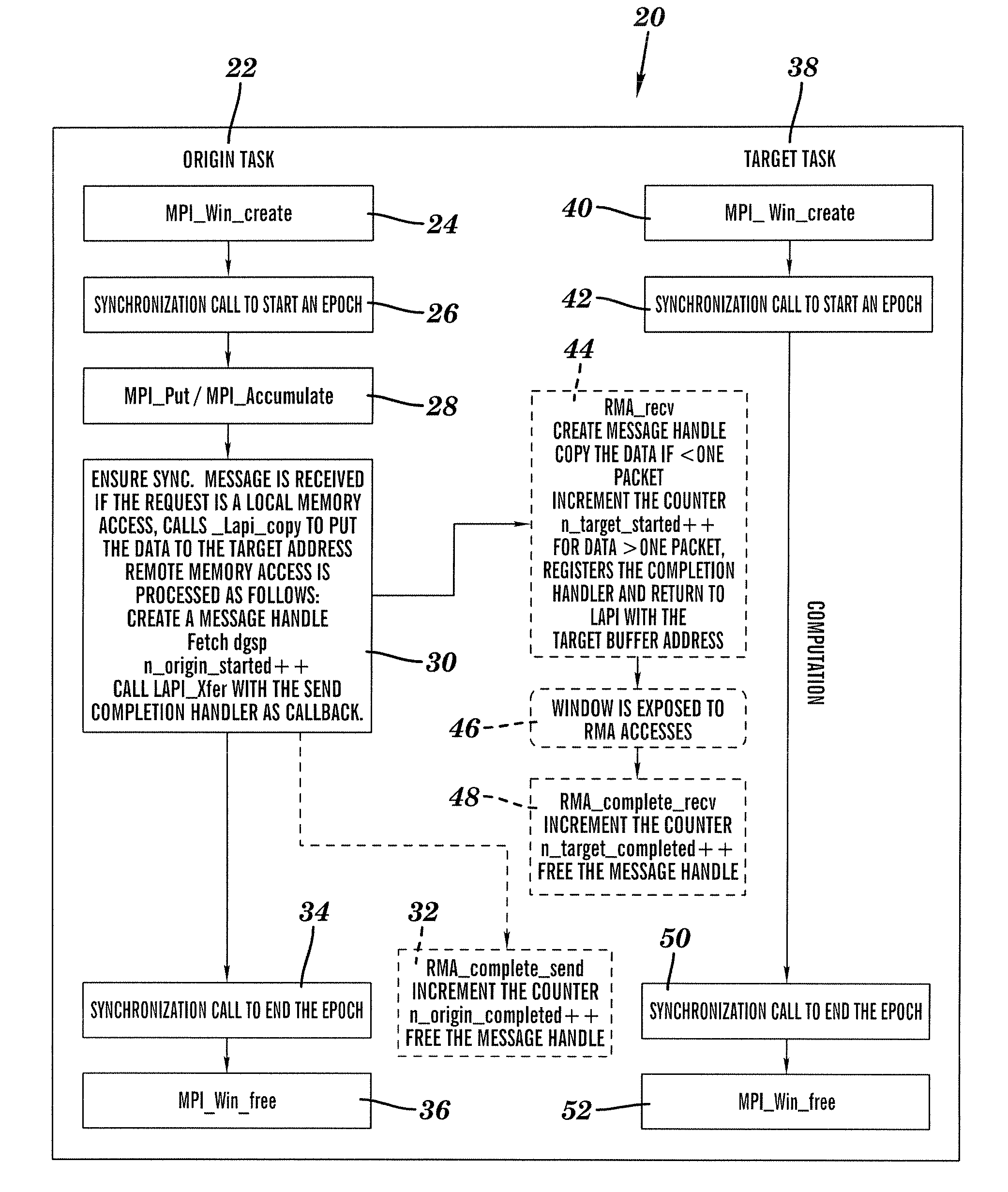

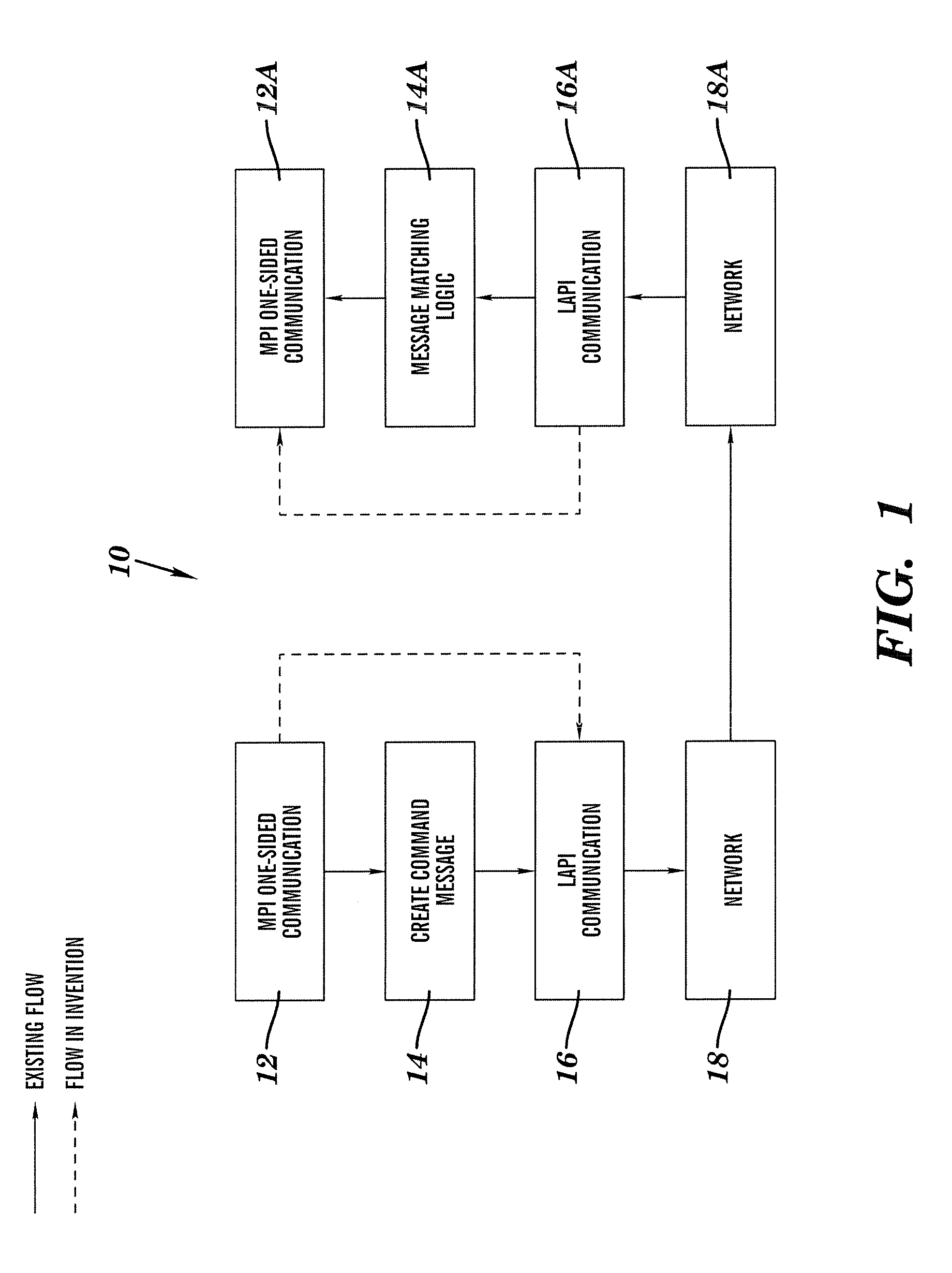

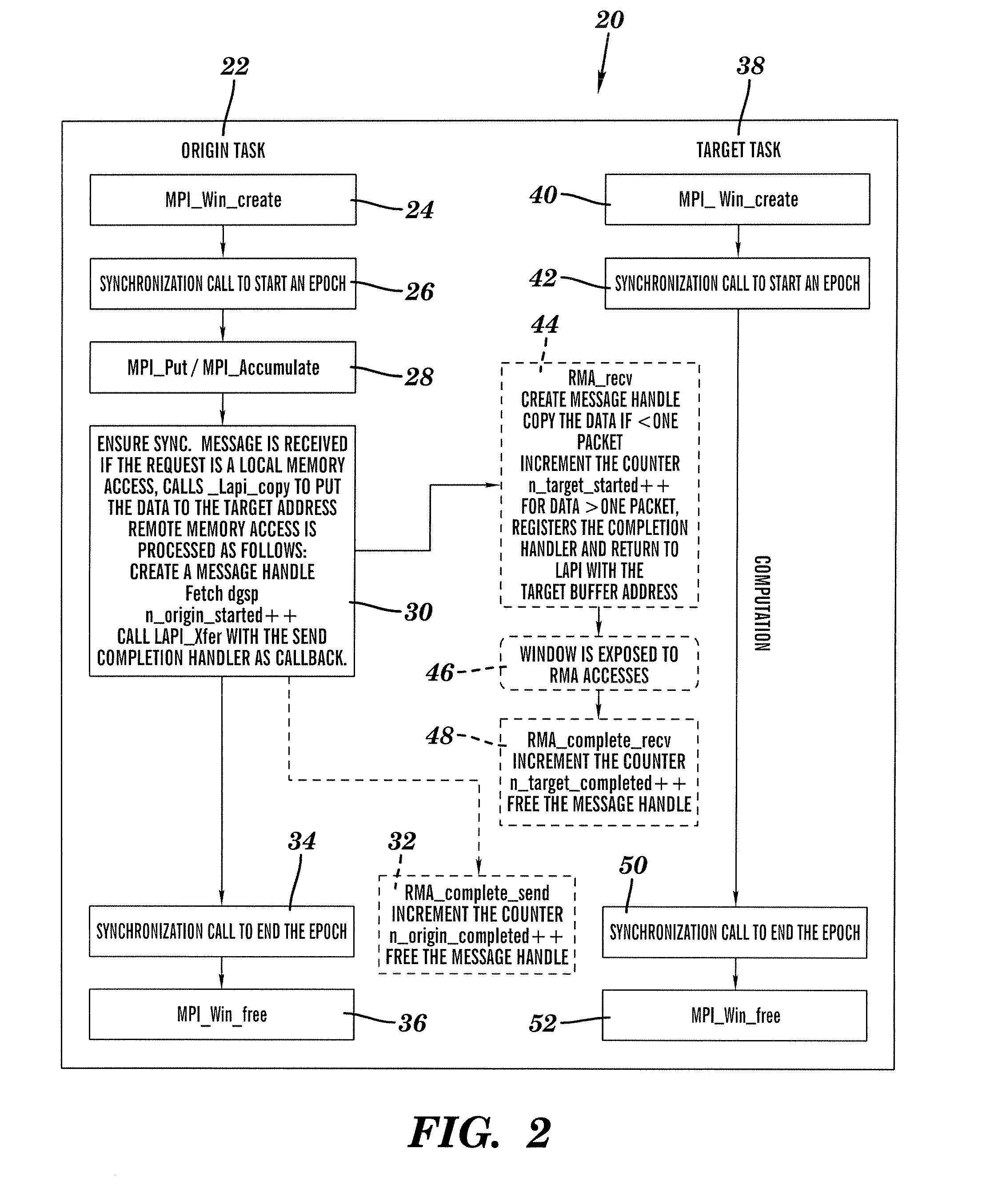

Method for implementing MPI-2 one-sided communication

A method for implementing Message Passing Interface (MPI-2) one-sided communication by using Low-level Applications Programming Interface (LAPI) active messaging capabilities, including: providing at least three data transfer types, one of which is used to send a message whose header is larger than one packet, with Data Gather and Scatter Programs (DGSP) placed in the message header; allowing a multi-packet header by using a LAPI data transfer type; sending the DGSP and the data as one message; reading the DGSP with a header handler; registering the DGSP with LAPI so that LAPI can scatter the data to one or more memory locations; defining two sets of counters, one for keeping track of the state of a prospective communication partner and another for recording the activities of local and Remote Memory Access (RMA) operations; comparing local and remote counts of completed RMA operations to complete synchronization mechanisms; and creating an mpci_wait_loop function.

Owner:TWITTER INC
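
The counter-comparison idea behind the synchronization step can be modeled in Python: each origin counts the RMA operations it issues per target, each target counts the operations applied to its window per origin, and a fence completes only when the two counts agree. The class and function names are hypothetical, the single-threaded `progress` loop only loosely resembles a wait loop such as the `mpci_wait_loop` named in the abstract, and no LAPI or DGSP behavior is modeled.

```python
from collections import defaultdict
from typing import DefaultDict, List, Tuple


class Window:
    """Toy RMA window with two counter sets (hypothetical model, not LAPI)."""

    def __init__(self, rank: int, nbytes: int) -> None:
        self.rank = rank
        self.mem = bytearray(nbytes)
        self.issued: DefaultDict[int, int] = defaultdict(int)   # ops sent, per target
        self.applied: DefaultDict[int, int] = defaultdict(int)  # ops landed here, per origin
        self.inbox: List[Tuple[int, int, bytes]] = []            # in-flight puts


def put(origin: Window, target: Window, offset: int, data: bytes) -> None:
    origin.issued[target.rank] += 1
    target.inbox.append((origin.rank, offset, bytes(data)))  # models an active message


def progress(target: Window) -> bool:
    """Models the target-side handler: apply one in-flight put and count it."""
    if not target.inbox:
        return False
    src, offset, data = target.inbox.pop(0)
    target.mem[offset:offset + len(data)] = data
    target.applied[src] += 1
    return True


def counts_match(windows: List[Window]) -> bool:
    # Every origin's issued count must equal the target's applied count.
    return all(o.issued[t.rank] == t.applied[o.rank] for o in windows for t in windows)


def fence(windows: List[Window]) -> None:
    """Wait (here: drive progress) until all RMA operations have completed."""
    while not counts_match(windows):
        # A list, not a generator, so every window gets a progress call.
        if not any([progress(w) for w in windows]):
            raise RuntimeError("counters disagree but nothing is in flight")
```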

Scalable interface for connecting multiple computer systems which performs parallel MPI header matching

Status: Active | Publication: US8249072B2 | Tags: Improve performance; Digital computer details; Data switching by path configuration; Computer cluster; Message Passing Interface

An interface device for a compute node in a computer cluster which performs Message Passing Interface (MPI) header matching using parallel matching units. The interface device comprises a memory that stores posted receive queues and unexpected queues. The posted receive queues store receive requests from a process executing on the compute node. The unexpected queues store headers of send requests (e.g., from other compute nodes) that do not have a matching receive request in the posted receive queues. The interface device also comprises a plurality of hardware pipelined matcher units. The matcher units perform header matching to determine if a header in the send request matches any headers in any of the plurality of posted receive queues. Matcher units perform the header matching in parallel. In other words, the plural matching units are configured to search the memory concurrently to perform header matching.

Owner:ORACLE INT CORP

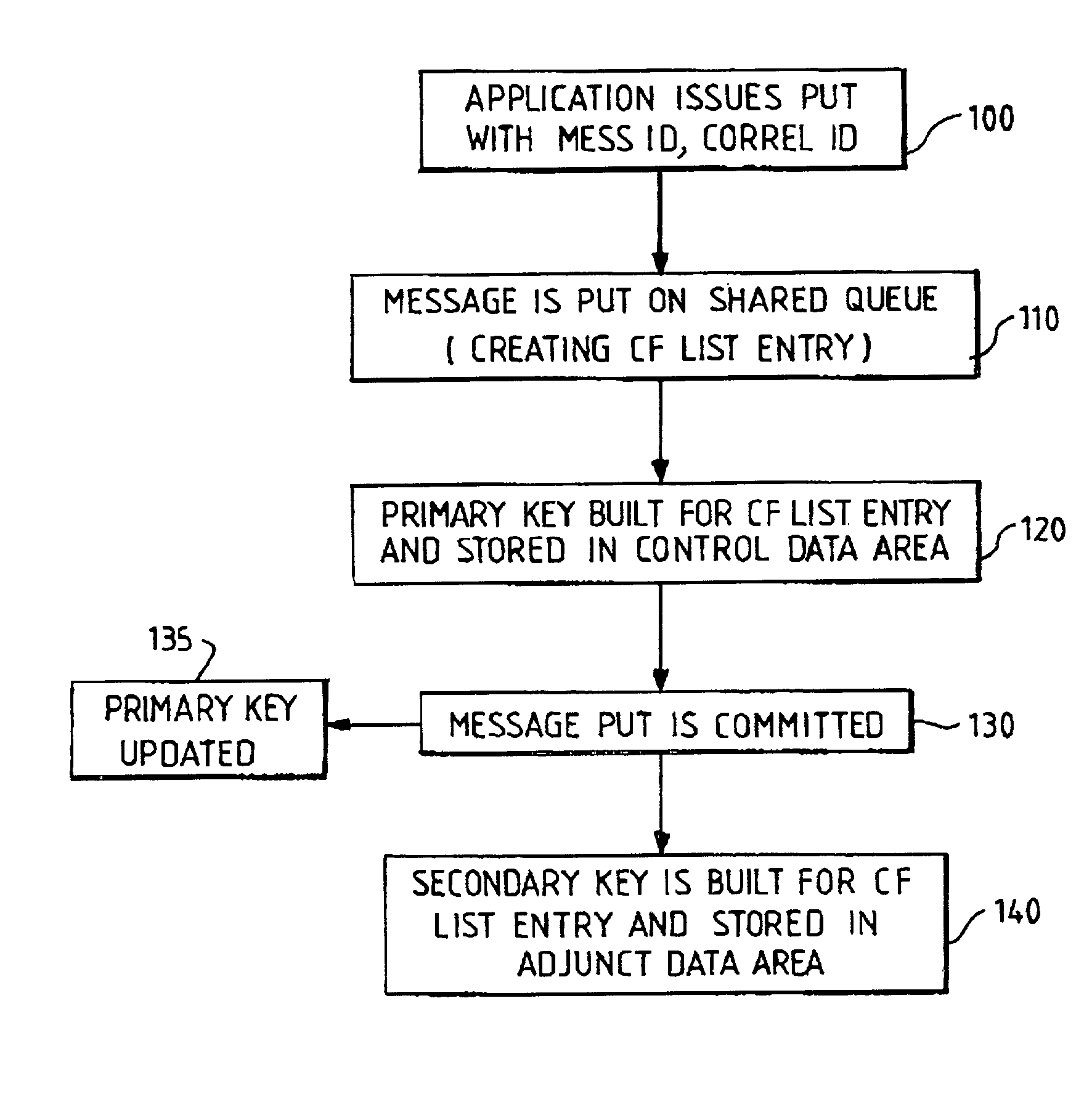

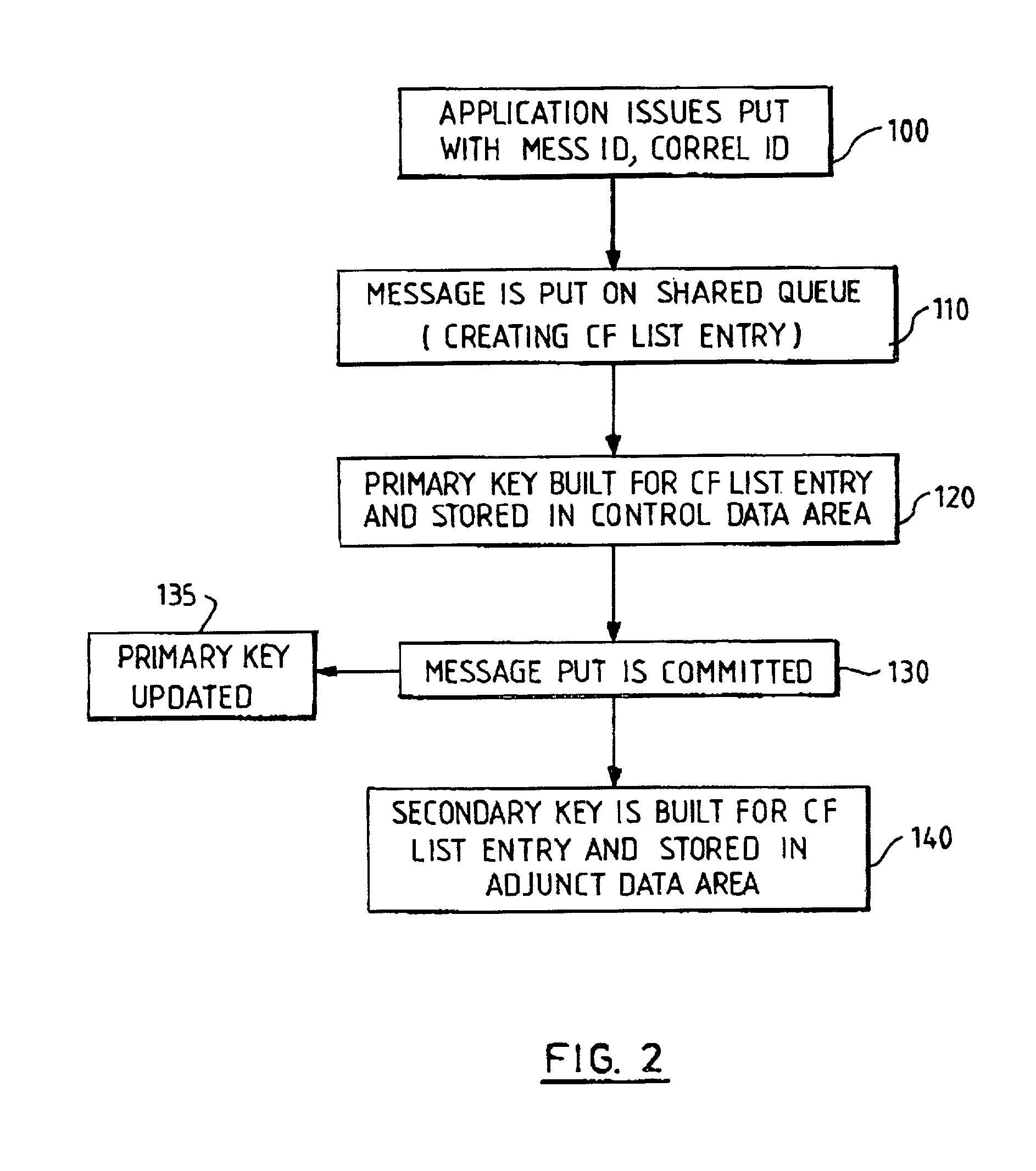

Implementing a message queuing interface (MQI) indexed queue support that adds a key to the index on put commit

Status: Inactive | Publication: US7035852B2 | Tags: Efficient processing; Easy access; Data processing applications; Digital data processing details; Message queue; Message Passing Interface

Provided are a method, computer program product and system for providing indexed queue support for efficient retrieval of messages from a queue. An index key for expediting message retrieval is assigned to a message when the operation of placing the message on a queue is committed. The index key assigned at commit time comprises an attribute (such as a message ID or correlation ID) specified by the sending application program, which placed the message on the queue. This deferred assignment of an index key until commit time means that the index key can be used to search for messages having the particular attribute without any possibility of identifying messages for retrieval before the messages have been committed. This maintains transactional requirements of a transaction-oriented messaging system which requires a message to only be made available for retrieval by receiver application programs after the sender application's put operation has committed.

Owner:IBM CORP
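
The ordering guarantee comes from when the index entry is created. A minimal Python model, assuming a single correlation-ID index, integer transaction ids and an in-memory store (all hypothetical; this is not the IBM MQ API), shows that an uncommitted put cannot be found by key even though its body is already stored.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, List, Optional, Tuple


class IndexedQueue:
    """Toy transactional queue whose index keys are added only at commit."""

    def __init__(self) -> None:
        self.messages: Dict[int, bytes] = {}
        self.index: DefaultDict[str, List[int]] = defaultdict(list)  # correl id -> msg ids
        self.pending: DefaultDict[int, List[Tuple[int, str]]] = defaultdict(list)  # txn -> puts
        self.next_id = 0

    def put(self, txn: int, correl_id: str, body: bytes) -> int:
        msg_id = self.next_id
        self.next_id += 1
        self.messages[msg_id] = body
        self.pending[txn].append((msg_id, correl_id))  # not indexed yet: invisible to getters
        return msg_id

    def commit(self, txn: int) -> None:
        for msg_id, correl_id in self.pending.pop(txn, []):
            self.index[correl_id].append(msg_id)  # the key is added on put commit

    def rollback(self, txn: int) -> None:
        for msg_id, _ in self.pending.pop(txn, []):
            del self.messages[msg_id]

    def get_by_correl_id(self, correl_id: str) -> Optional[bytes]:
        ids = self.index.get(correl_id)
        if not ids:
            return None
        return self.messages.pop(ids.pop(0))  # oldest committed match first
```

Since getters consult only the index, no separate "is this committed?" check is needed at retrieval time.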

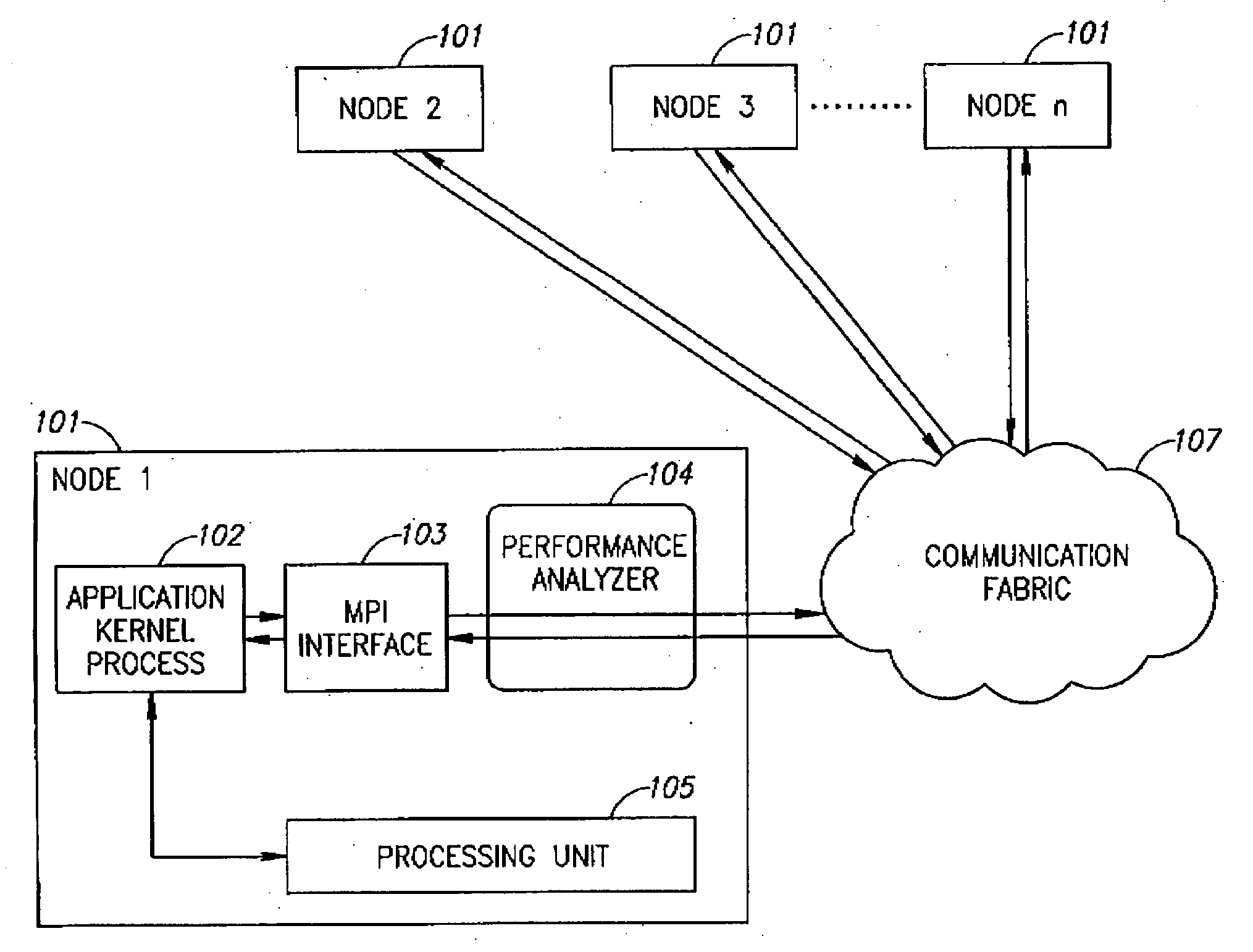

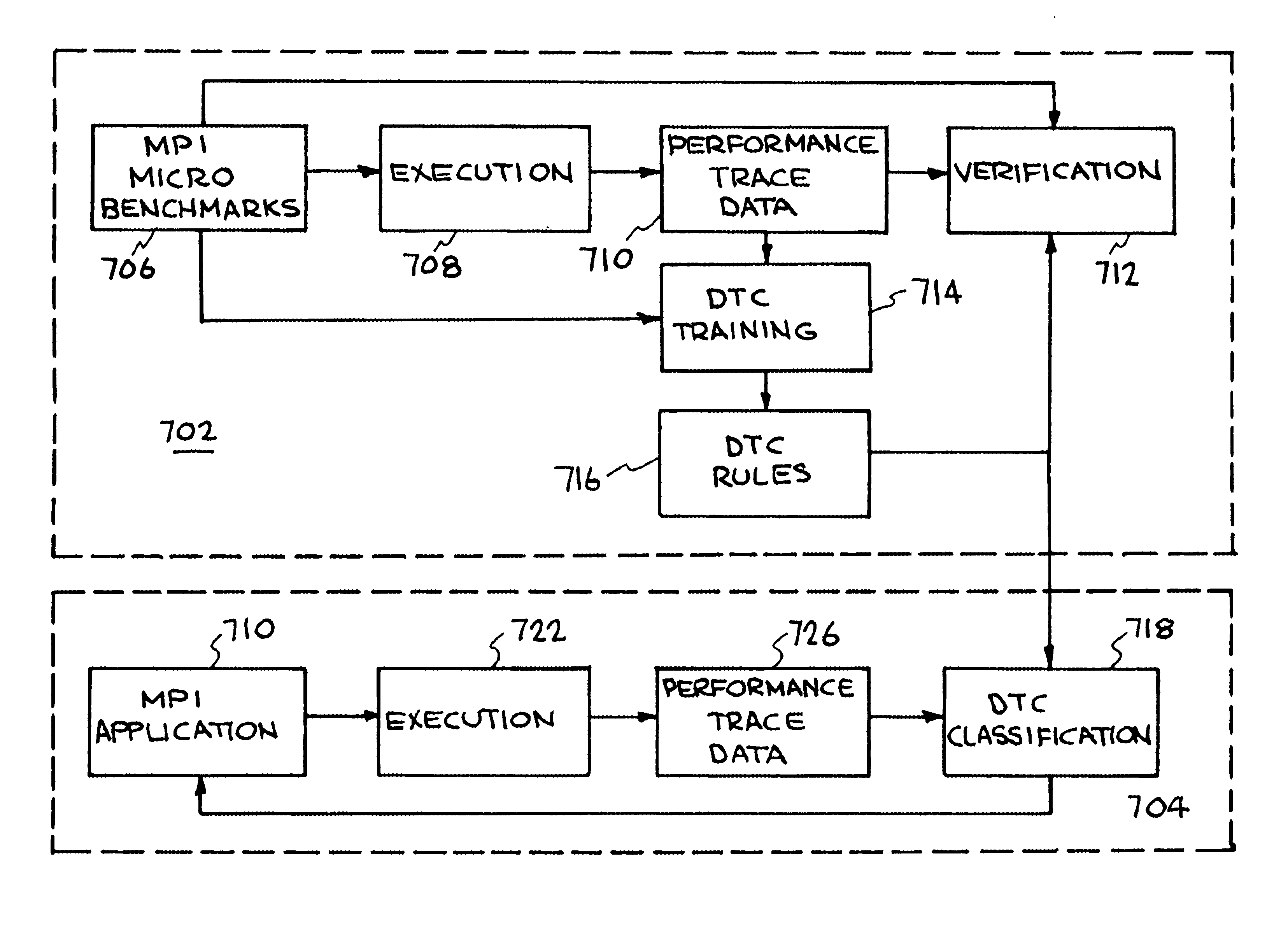

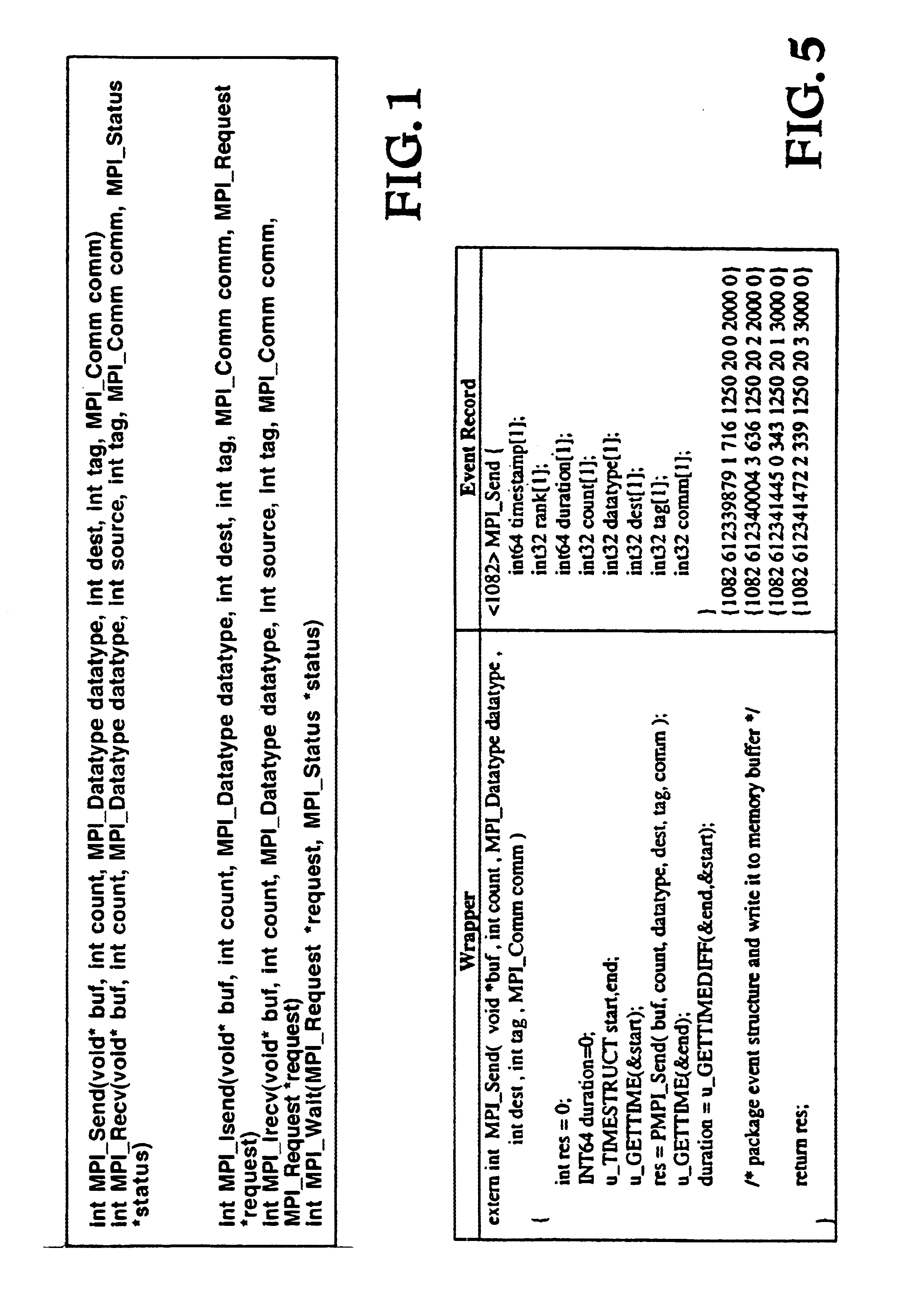

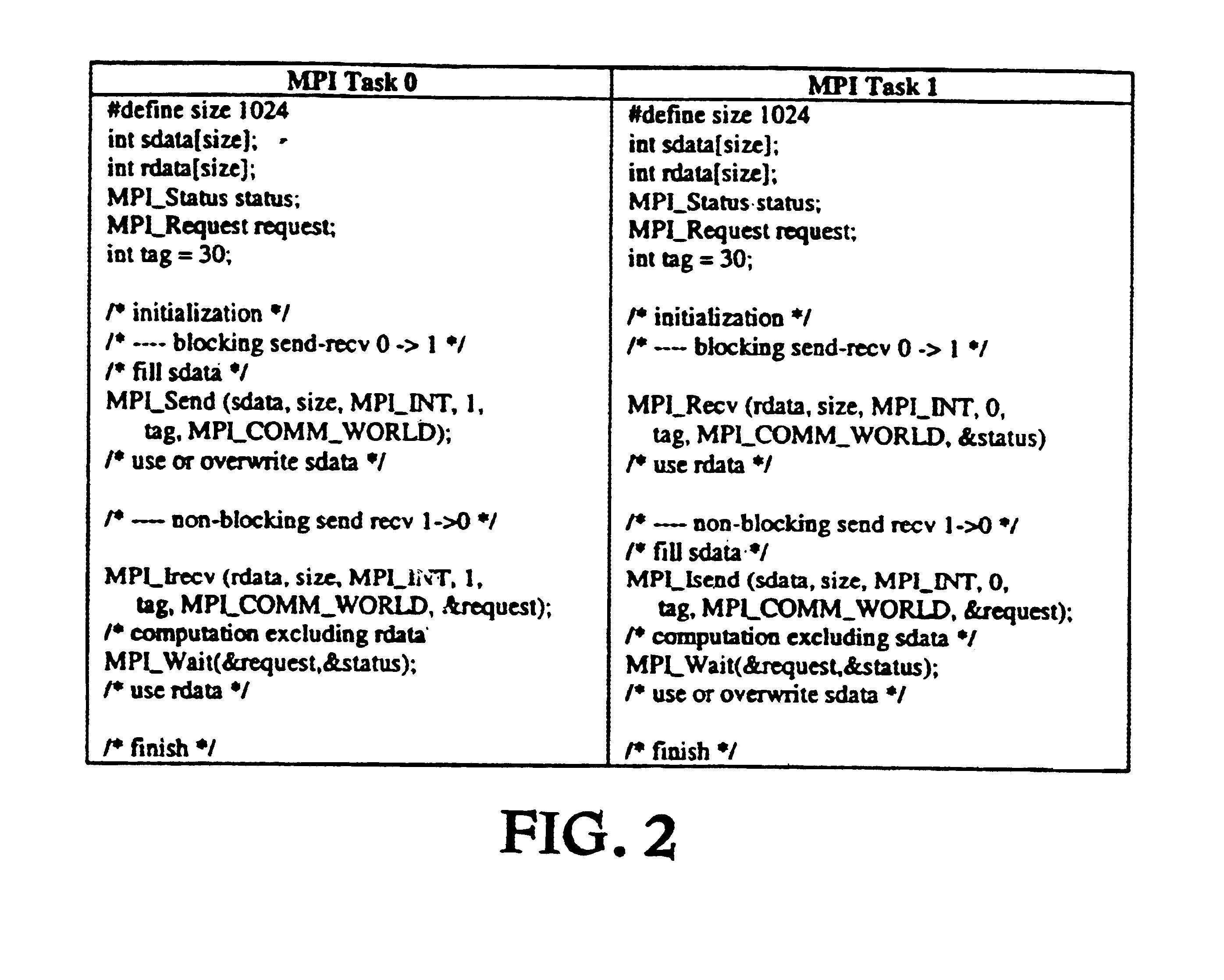

Performance analysis of distributed applications using automatic classification of communication inefficiencies

Status: Inactive | Publication: US6850920B2 | Tags: Error detection/correction; Digital computer details; Message Passing Interface; Goal system

The method and system described herein present a performance-analysis technique that helps users understand the communication behavior of their message-passing applications. The method and system may automatically classify individual communication operations and reveal the causes of communication inefficiencies in the application. This classification allows developers to focus quickly on the culprits of truly inefficient behavior, rather than manually foraging through massive amounts of performance data. Specifically, the method and system trace the message operations of Message Passing Interface (MPI) applications and then classify each individual communication event using a supervised learning technique: decision tree classification. The decision tree may be trained using microbenchmarks that demonstrate both efficient and inefficient communication. Because the method and system adapt to the target system's configuration through these microbenchmarks, they simultaneously automate the performance analysis process and improve classification accuracy. The method and system may improve the accuracy of performance analysis and dramatically reduce the amount of data that users must examine.

Owner:LAWRENCE LIVERMORE NAT SECURITY LLC
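
To illustrate the train-on-microbenchmarks, classify-each-event idea, here is a deliberately small Python sketch that fits a depth-1 decision tree (a decision stump) rather than the full decision-tree learner the abstract refers to. The two features (fraction of the operation's time spent blocked, and message size in KiB), the labels, and the training rows are invented for illustration, not measurements.

```python
from collections import Counter
from typing import Callable, List, Sequence

Classifier = Callable[[Sequence[float]], str]


def majority(labels: List[str]) -> str:
    return Counter(labels).most_common(1)[0][0]


def train_stump(X: List[Sequence[float]], y: List[str]) -> Classifier:
    """Fit a depth-1 decision tree: choose the (feature, threshold) split with
    the fewest training errors, predicting the majority label on each side."""
    best = None  # (errors, feature, threshold, left_label, right_label)
    for f in range(len(X[0])):
        values = sorted(set(row[f] for row in X))
        for a, b in zip(values, values[1:]):
            t = (a + b) / 2  # midpoint between consecutive distinct values
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            l_lab, r_lab = majority(left), majority(right)
            errors = sum(lab != l_lab for lab in left) + sum(lab != r_lab for lab in right)
            if best is None or errors < best[0]:
                best = (errors, f, t, l_lab, r_lab)
    if best is None:  # every feature is constant: fall back to the majority label
        label = majority(y)
        return lambda row: label
    _, f, t, l_lab, r_lab = best
    return lambda row: l_lab if row[f] <= t else r_lab


if __name__ == "__main__":
    # Invented microbenchmark rows: [blocked-time fraction, message size in KiB]
    X = [[0.05, 4], [0.10, 64], [0.08, 1], [0.70, 4], [0.85, 64], [0.60, 1]]
    y = ["efficient"] * 3 + ["late_sender"] * 3
    classify = train_stump(X, y)
    print(classify([0.9, 8]))  # late_sender
```

A full learner would apply the same split search recursively to each side; the stump keeps the example short while showing how labeled microbenchmarks turn into a per-event classifier.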

Hardware based dynamic load balancing of message passing interface tasks

Status: Inactive | Publication: US8127300B2 | Tags: Minimize period; Wasted processor cycles; Digital computer details; Multiprogramming arrangements; Dynamic load balancing; Message Passing Interface

Mechanisms are provided for hardware-based dynamic load balancing of message passing interface (MPI) tasks. These mechanisms adjust the balance of processing workloads among the processors executing tasks of an MPI job, so as to minimize the time spent waiting for all of the processors to call a synchronization operation. Each processor has an associated hardware-implemented MPI load balancing controller. The MPI load balancing controller maintains a history that provides a profile of the tasks with regard to their calls to synchronization operations. From this information, it can be determined which processors should have their processing loads lightened and which processors can handle additional processing loads without significantly degrading the overall operation of the parallel execution system. As a result, operations may be performed to shift workloads from the slowest processor to one or more of the faster processors.

Owner:INT BUSINESS MASCH CORP

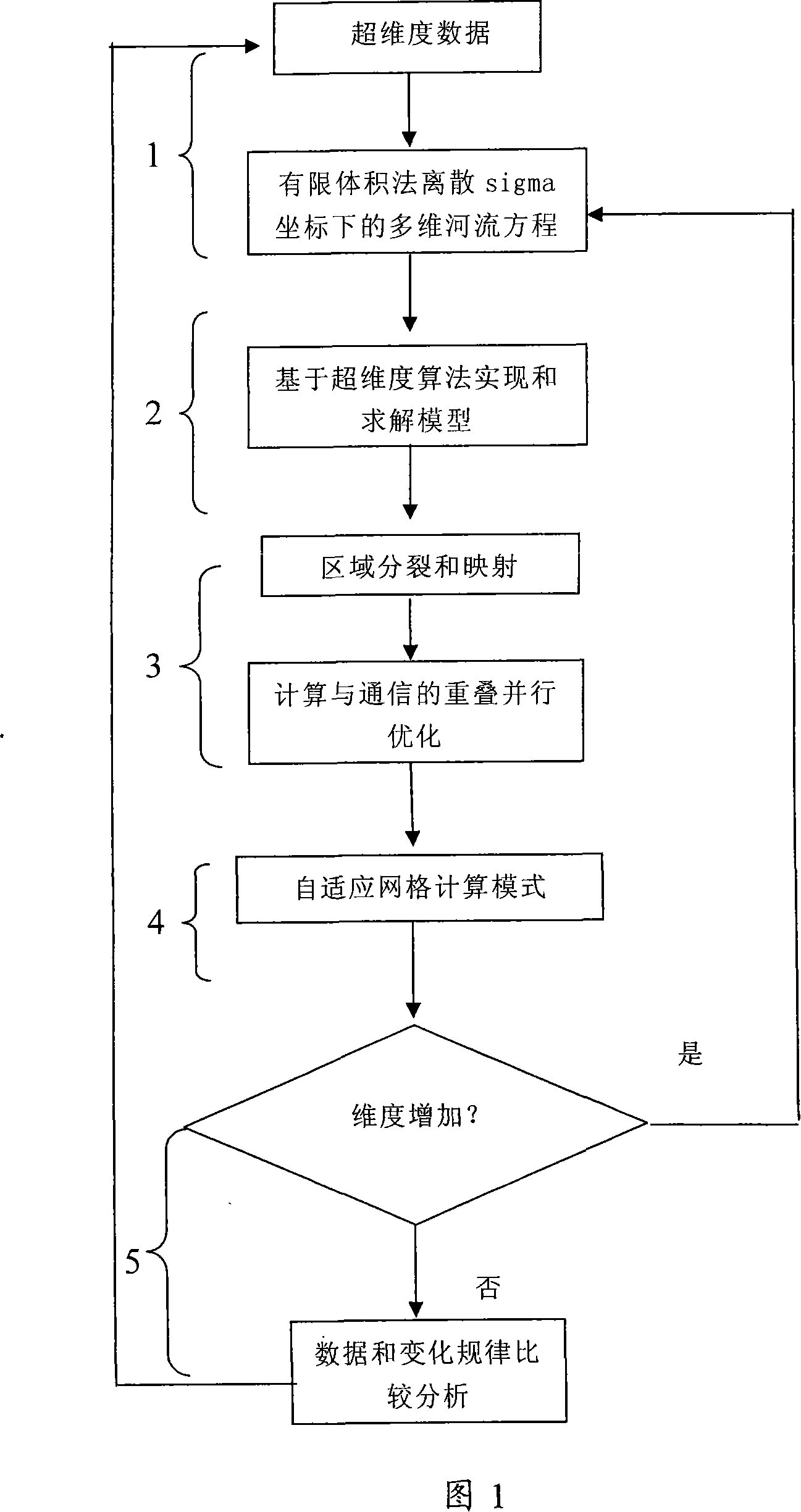

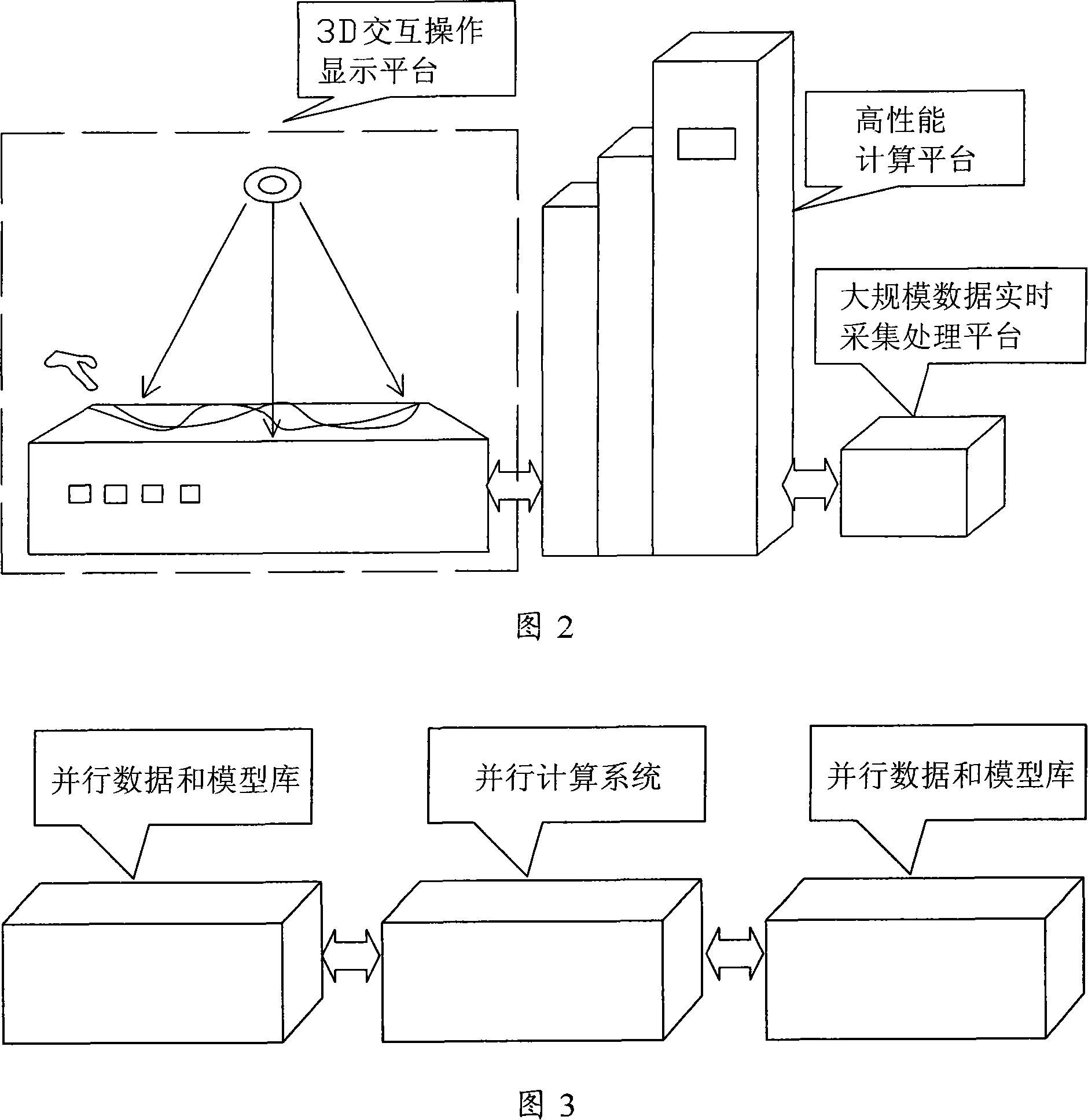

Ultra-dimension fluvial dynamics self-adapting parallel monitoring method

Status: Active | Publication: CN101158985A | Tags: Easy to monitor; Effectively reflect the changing law of the river; Hydrodynamic testing; Multiple digital computer combinations; Characteristic type; Parallel algorithm

The invention discloses a self-adaptive parallel method for monitoring super-dimensional river dynamics, comprising the following steps: inputting super-dimensional data into the system and classifying it according to the dimension to which the data belong; creating a super-dimensional unstructured-grid river dynamics model based on a characteristic-type high-resolution numerical algorithm; and performing intra-dimensional and inter-dimensional calculations with an efficient parallel algorithm for a super-dimensional fluid splitting scheme. The calculation region is divided into a plurality of sub-regions, each of which is mapped to a calculation node of the parallel system; the nodes communicate through a standard message passing interface; computation and communication are overlapped on the self-adaptive grid as a parallel optimization; and spatially associated variables are calculated independently. The method places super-dimensional river dynamics on an adaptive grid to carry out efficient parallel calculation of the splitting scheme while simultaneously handling changes of dimension, enabling convenient, timely and highly accurate river monitoring.

Owner:SHENZHEN INST OF ADVANCED TECH
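
Mapping sub-regions to nodes that exchange boundary data is the classic domain-decomposition-with-halo pattern. The Python sketch below substitutes a 1-D explicit diffusion update for the patent's river-dynamics model (an assumption made purely to keep the example short) and simulates the ranks in a loop, so it demonstrates only that the decomposed update reproduces the serial one. Overlapping communication with computation, as the abstract describes, would typically post non-blocking halo exchanges and update interior cells while they are in flight; that is not shown.

```python
import math
from typing import List


def step_serial(u: List[float], r: float) -> List[float]:
    """One explicit diffusion step on the whole domain; end cells are fixed."""
    return [u[0]] + [u[i] + r * (u[i - 1] - 2 * u[i] + u[i + 1])
                     for i in range(1, len(u) - 1)] + [u[-1]]


def split(u: List[float], parts: int) -> List[List[float]]:
    size = -(-len(u) // parts)  # ceiling division
    return [u[s:s + size] for s in range(0, len(u), size)]


def step_decomposed(subs: List[List[float]], r: float) -> List[List[float]]:
    """Same step, computed per sub-region with one ghost cell on each inner side."""
    last = len(subs) - 1
    new_subs = []
    for k, sub in enumerate(subs):
        # Halo exchange: copy neighbours' edge cells into ghost cells.
        # With MPI this would be a send/receive between neighbouring ranks.
        ghost_left = [subs[k - 1][-1]] if k > 0 else []
        ghost_right = [subs[k + 1][0]] if k < last else []
        padded = ghost_left + sub + ghost_right
        off = len(ghost_left)
        new = []
        for j in range(len(sub)):
            i = j + off
            if (k == 0 and j == 0) or (k == last and j == len(sub) - 1):
                new.append(sub[j])  # physical boundary cell: unchanged
            else:
                new.append(padded[i] + r * (padded[i - 1] - 2 * padded[i] + padded[i + 1]))
        new_subs.append(new)
    return new_subs


if __name__ == "__main__":
    u = [0.0, 0.0, 0.0, 10.0, 0.0, 0.0, 0.0, 0.0]
    serial, subs = list(u), split(u, 3)
    for _ in range(5):
        serial = step_serial(serial, 0.25)
        subs = step_decomposed(subs, 0.25)
    flat = [x for sub in subs for x in sub]
    print(all(math.isclose(a, b) for a, b in zip(serial, flat)))  # True
```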

System and Method for Hardware Based Dynamic Load Balancing of Message Passing Interface Tasks

Status: Inactive | Publication: US20090064166A1 | Tags: Minimize period; Wasted processor cycles; Multiprogramming arrangements; Memory systems; Dynamic load balancing; Message Passing Interface

A system and method are provided for hardware-based dynamic load balancing of message passing interface (MPI) tasks. Mechanisms are provided for adjusting the balance of processing workloads among the processors executing tasks of an MPI job, so as to minimize the time spent waiting for all of the processors to call a synchronization operation. Each processor has an associated hardware-implemented MPI load balancing controller. The MPI load balancing controller maintains a history that provides a profile of the tasks with regard to their calls to synchronization operations. From this information, it can be determined which processors should have their processing loads lightened and which processors can handle additional processing loads without significantly degrading the overall operation of the parallel execution system. As a result, operations may be performed to shift workloads from the slowest processor to one or more of the faster processors.

Owner:IBM CORP

Complicated function maximum and minimum solving method by means of parallel artificial bee colony algorithm based on computer cluster

Status: Inactive | Publication: CN102982008A | Tags: Low cost; Calculation speed; Concurrent instruction execution; Complex mathematical operations; Operational system; Computer cluster

The invention discloses a method for solving for the maxima and minima of complicated functions with a parallel artificial bee colony algorithm on a computer cluster, and belongs to the field of parallel computing. The method combines the parallel artificial bee colony algorithm with a message passing interface (MPI) software package on Linux so that the maxima and minima of complicated functions can be solved on multiple computers. Experimental results show that, when solving for the maxima and minima of complicated functions, the parallel artificial bee colony algorithm is both more accurate and faster than an ordinary serial algorithm, greatly improving the efficiency of computing function maxima and minima.

Owner:SHANDONG UNIV
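
A compact Python version of the basic artificial bee colony (ABC) algorithm, with employed, onlooker and scout phases, shows what each process would be running. The abstract does not say how the colony is split across the cluster, so the usage example assumes an island model: several independent colonies with different seeds whose best results are combined by a min-reduction. Maximization is handled by minimizing the negated function. The parameter defaults (`n_sources`, `limit`, `iterations`) are illustrative.

```python
import random
from typing import Callable, List, Sequence, Tuple

Bounds = Sequence[Tuple[float, float]]


def abc_minimize(f: Callable[[List[float]], float], bounds: Bounds,
                 n_sources: int = 20, limit: int = 50, iterations: int = 200,
                 seed: int = 0) -> Tuple[List[float], float]:
    """Basic artificial bee colony search for the minimum of f within bounds."""
    rng = random.Random(seed)
    dim = len(bounds)

    def random_source() -> List[float]:
        return [rng.uniform(lo, hi) for lo, hi in bounds]

    def fitness(v: float) -> float:
        return 1.0 / (1.0 + v) if v >= 0 else 1.0 + abs(v)

    sources = [random_source() for _ in range(n_sources)]
    values = [f(x) for x in sources]
    trials = [0] * n_sources
    best_i = min(range(n_sources), key=values.__getitem__)
    best = (list(sources[best_i]), values[best_i])

    def try_neighbour(i: int) -> None:
        nonlocal best
        k = rng.choice([j for j in range(n_sources) if j != i])
        d = rng.randrange(dim)
        cand = list(sources[i])
        cand[d] += rng.uniform(-1.0, 1.0) * (sources[i][d] - sources[k][d])
        lo, hi = bounds[d]
        cand[d] = min(max(cand[d], lo), hi)
        v = f(cand)
        if v < values[i]:  # greedy selection
            sources[i], values[i], trials[i] = cand, v, 0
            if v < best[1]:
                best = (list(cand), v)
        else:
            trials[i] += 1

    for _ in range(iterations):
        for i in range(n_sources):  # employed bees: one trial per source
            try_neighbour(i)
        weights = [fitness(v) for v in values]
        for _ in range(n_sources):  # onlookers favour fitter sources
            try_neighbour(rng.choices(range(n_sources), weights=weights)[0])
        for i in range(n_sources):  # scouts abandon exhausted sources
            if trials[i] > limit:
                sources[i] = random_source()
                values[i] = f(sources[i])
                trials[i] = 0
                if values[i] < best[1]:
                    best = (list(sources[i]), values[i])
    return best


if __name__ == "__main__":
    sphere = lambda x: sum(xi * xi for xi in x)
    # Each seed stands in for one MPI process running its own colony; the final
    # min() plays the role of a reduction such as MPI_Allreduce with MPI_MINLOC.
    results = [abc_minimize(sphere, [(-5.0, 5.0)] * 2, seed=rank) for rank in range(4)]
    best_x, best_v = min(results, key=lambda r: r[1])
    print(best_x, best_v)
```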

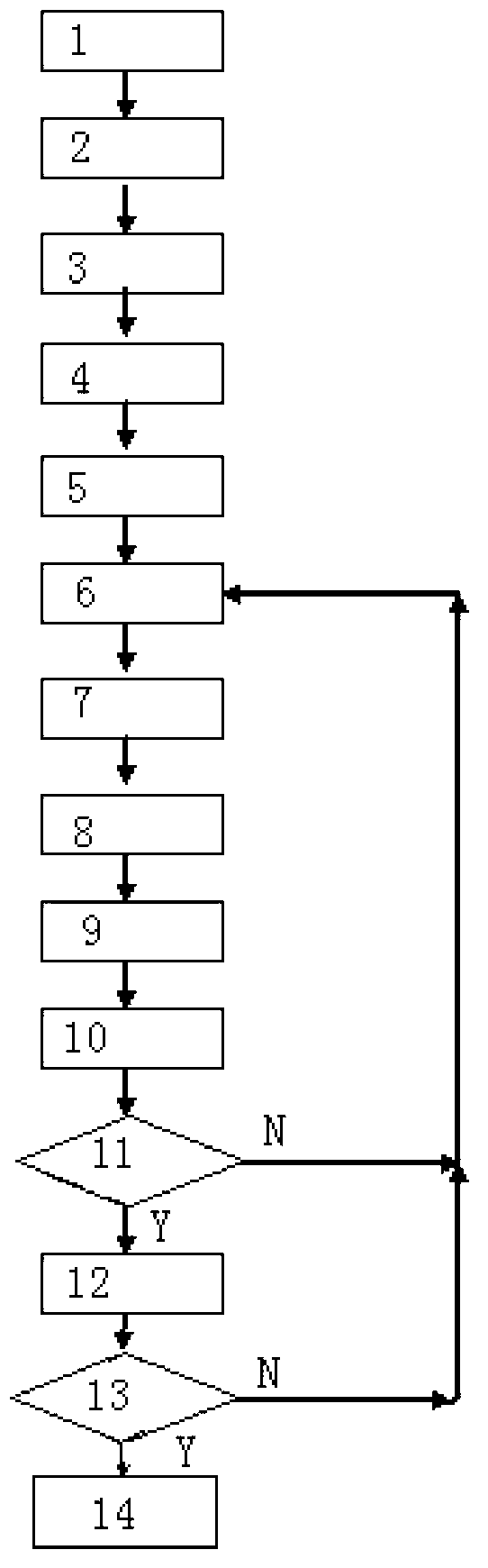

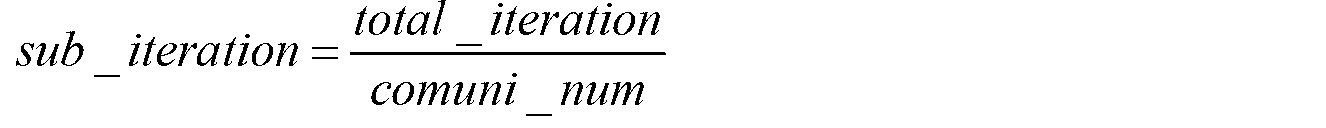

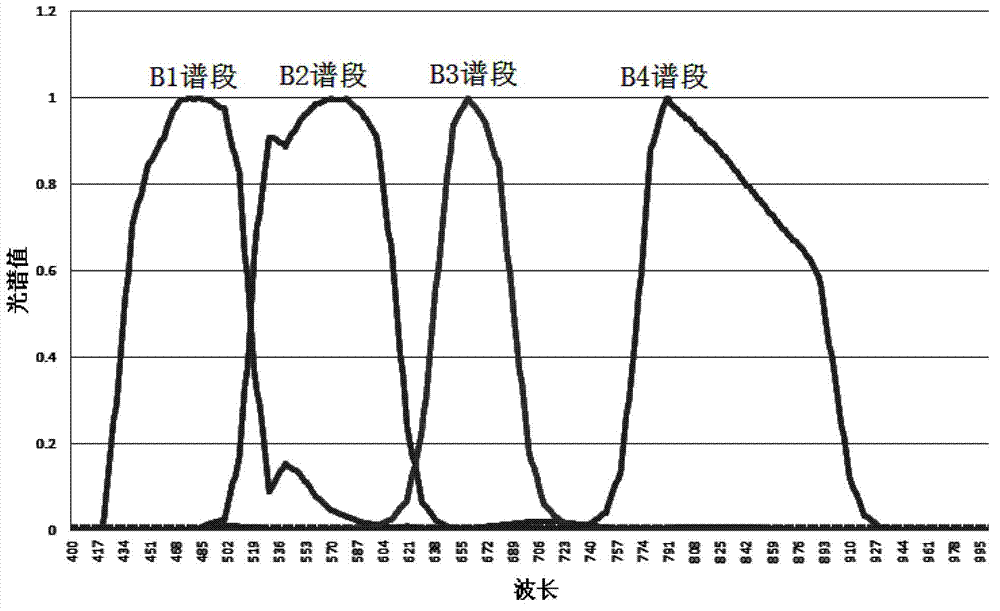

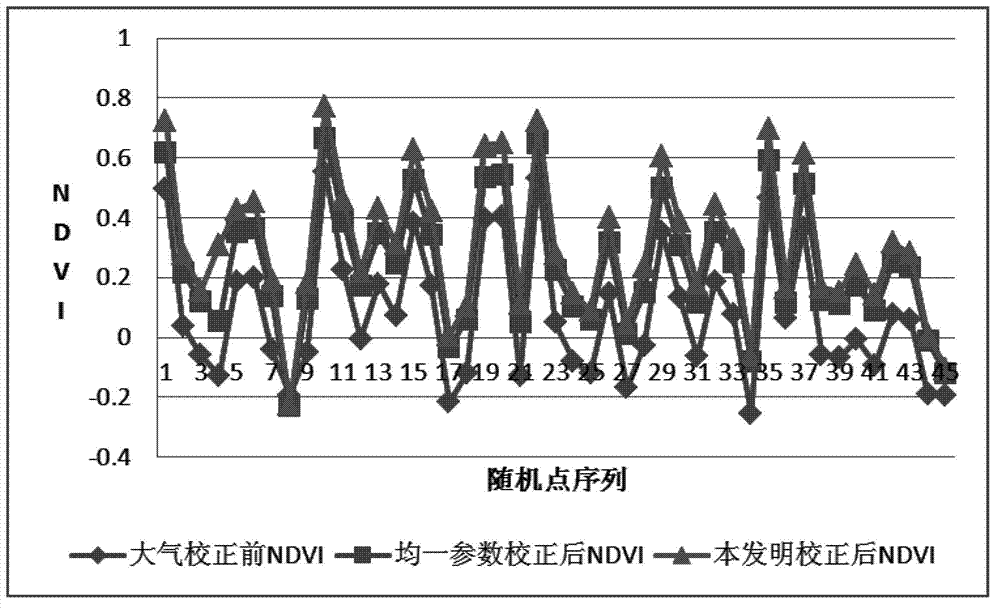

Atmospheric correction method and atmospheric correction module for satellite remote-sensing image

Status: Inactive | Publication: CN102778675A | Tags: High precision; Improve practicality; Wave based measurement systems; Message Passing Interface; Computer module

The invention discloses an atmospheric correction method and an atmospheric correction module for satellite remote-sensing images. The method comprises: establishing an atmospheric correction parameter look-up table (LUT) with the 6S (Second Simulation of a Satellite Signal in the Solar Spectrum) radiative transfer model; designing parallel atmospheric correction models, driven by data blocks and by a functional chain, in the master-slave parallel programming mode of the MPI (message passing interface) message-passing programming model; and then performing parallel atmospheric correction based on the 6S radiative-transfer LUT. This effectively addresses both the processing time and the accuracy of atmospheric correction for satellite remote-sensing images. The disclosed method and module are suitable for operational atmospheric correction of various satellite remote-sensing images.

Owner:CHINESE ACAD OF SURVEYING & MAPPING
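As a rough illustration of the data-block-driven master-slave pattern, the sketch below assumes that the LUT maps an aerosol optical depth to the three coefficients (xa, xb, xc) that 6S reports for its correction formula, `y = xa * L - xb` and `rho = y / (1 + xc * y)`, where `L` is the measured radiance. The LUT entries are made-up numbers, Python threads stand in for MPI slave processes, and the functional-chain-driven model is not shown.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Tuple

Image = List[List[float]]
Coeffs = Tuple[float, float, float]

# Hypothetical LUT: aerosol optical depth -> (xa, xb, xc). Values are illustrative only.
LUT: Dict[float, Coeffs] = {
    0.1: (0.0025, 0.10, 0.18),
    0.3: (0.0028, 0.13, 0.20),
}


def correct_pixel(radiance: float, coeffs: Coeffs) -> float:
    xa, xb, xc = coeffs
    y = xa * radiance - xb
    return y / (1.0 + xc * y)                  # surface reflectance


def correct_block(block: Image, coeffs: Coeffs) -> Image:
    # Work done by one "slave": correct every pixel in a block of rows.
    return [[correct_pixel(r, coeffs) for r in row] for row in block]


def master_correct(image: Image, aod: float, n_workers: int = 4,
                   rows_per_block: int = 2) -> Image:
    coeffs = LUT[aod]                                      # master: one LUT lookup
    blocks = [image[i:i + rows_per_block] for i in range(0, len(image), rows_per_block)]
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # Threads stand in for MPI slave ranks; map() returns results in block order.
        done = list(pool.map(lambda b: correct_block(b, coeffs), blocks))
    return [row for block in done for row in block]        # master reassembles the image
```

Because each block is independent, the partitioned result is identical to correcting the whole image serially; only the block size and the number of workers change how the work is spread.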

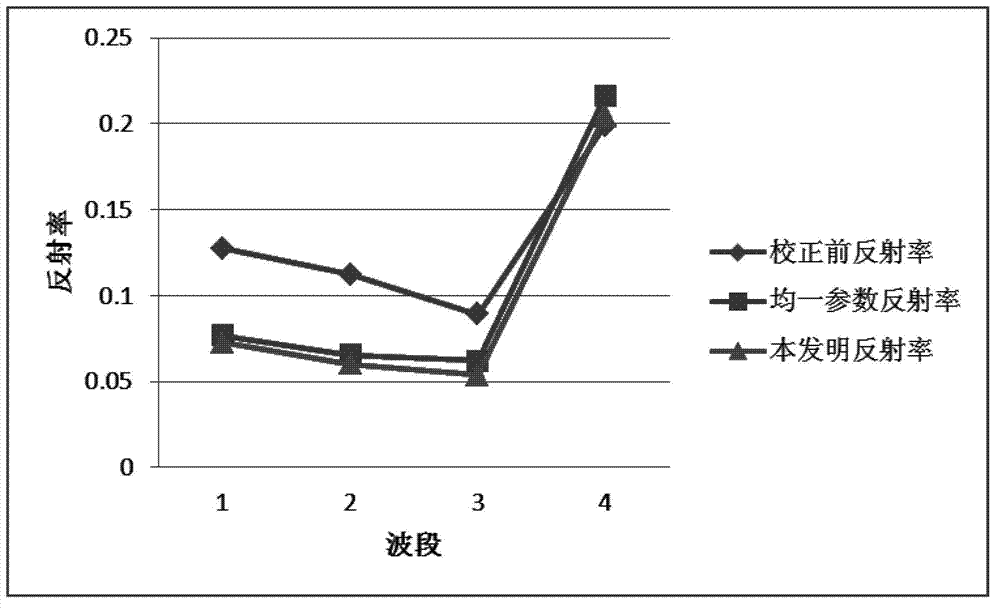

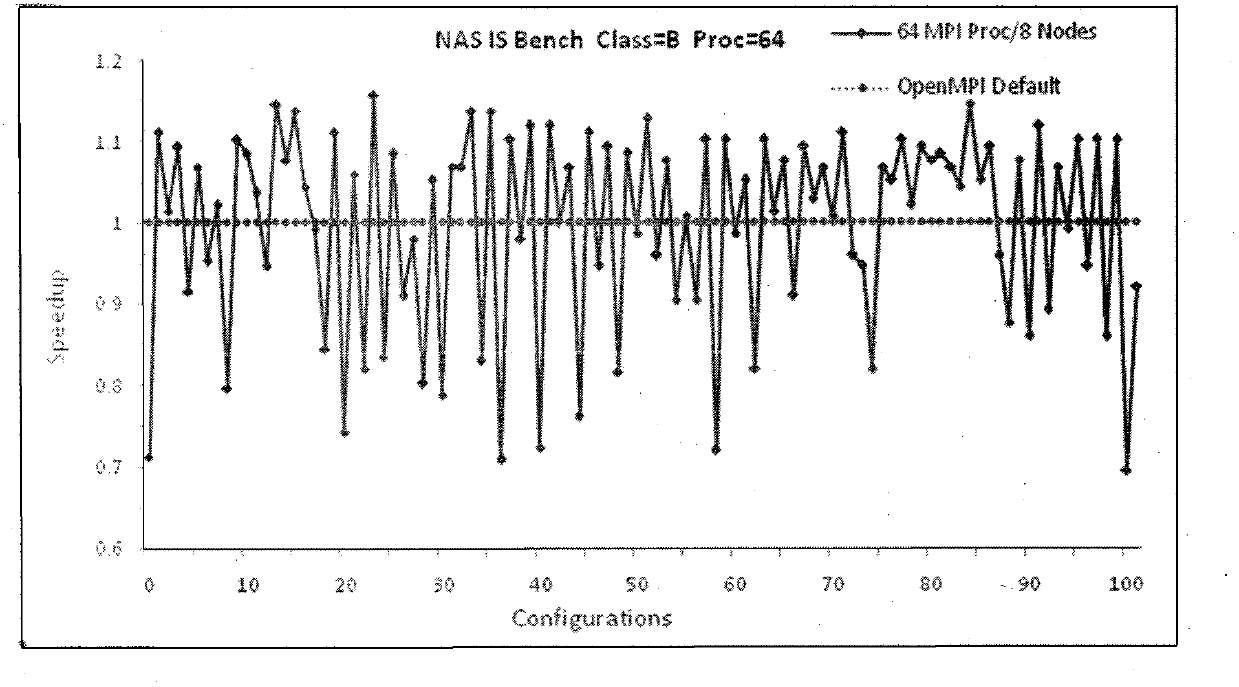

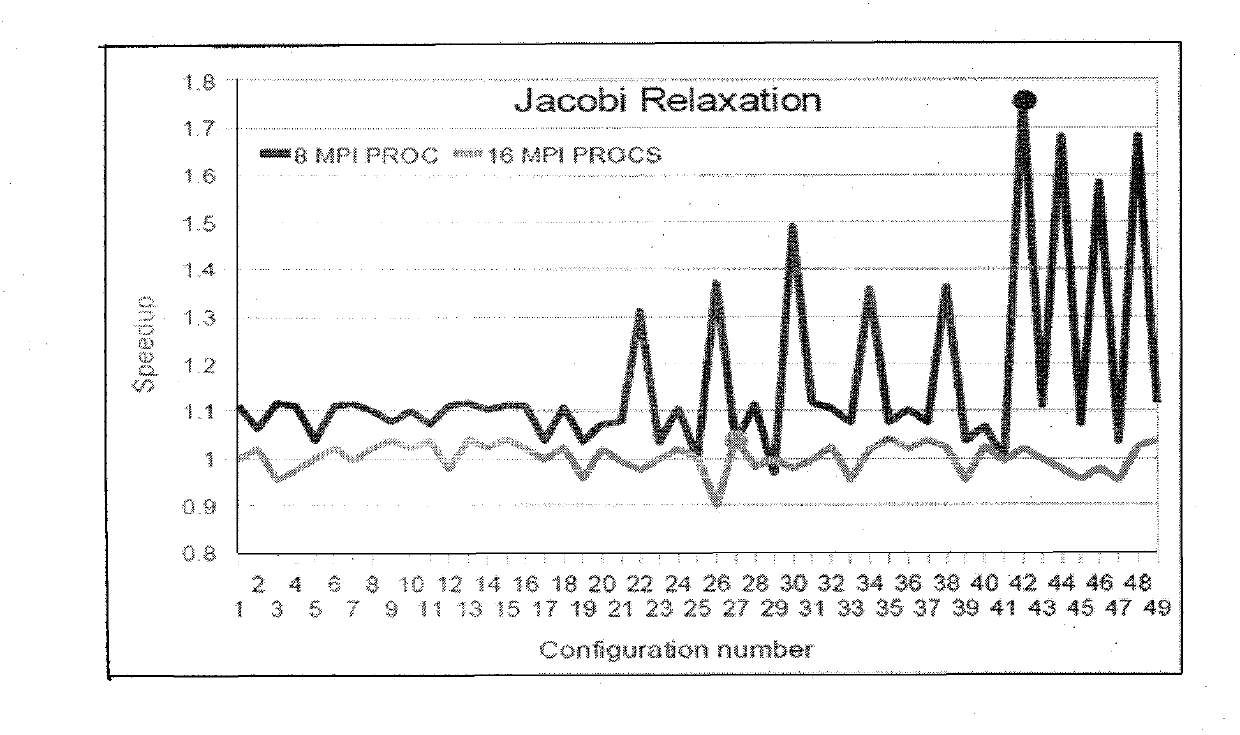

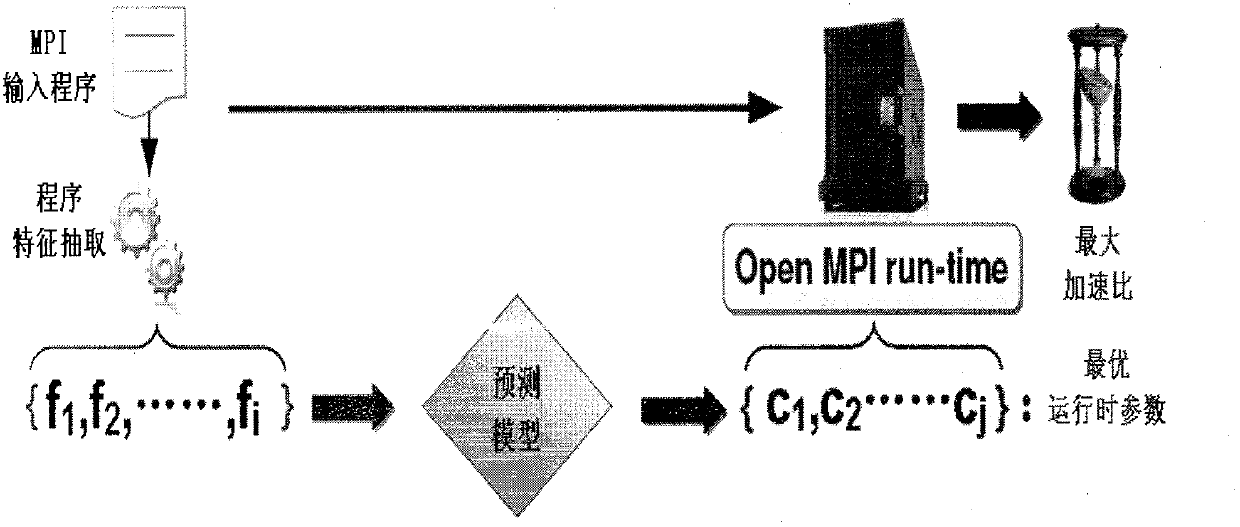

Machine learning based method for predicting parameters during MPI (message passing interface) optimal operation in multi-core environments

Inactive | CN102708404A | Improve noise immunity | Neural learning methods | Collective communication | Learning based

The invention provides a method for optimizing MPI (Message Passing Interface) applications in multi-core environments, specifically a machine-learning-based method for predicting the runtime parameters with which an MPI application performs optimally on a multi-core cluster. Training benchmarks with different ratios of point-to-point to collective communication data are designed to generate training data on a specific multi-core cluster. Runtime parameter optimization models are built with a REPTree decision tree, which outputs results quickly, and with an ANN (artificial neural network), which can produce multiple outputs and has good noise immunity. The models are trained on the data generated by the training benchmarks, and the trained models are then used to predict the unknown runtime parameters to pass to an MPI application for optimal operation. Experiments show that the speed-ups obtained with parameters predicted by the REPTree-based and ANN-based models average more than 90% of the practically attainable maximum speed-up.

Owner:BEIJING COMPUTING CENT
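The sketch below covers only the decision-tree half of the idea, under stated assumptions: it builds a small CART-style regression tree by minimizing squared error, without REPTree's reduced-error pruning and without the ANN model. The features (fraction of point-to-point traffic, log2 of message size) and the target (a hypothetical "processes per node" setting) are invented for illustration.

```python
from statistics import mean
from typing import List, Tuple, Union

Sample = Tuple[List[float], float]        # (features, best runtime parameter value)
Tree = Union[float, tuple]                # leaf value or (feature, threshold, left, right)


def sse(ys: List[float]) -> float:
    m = mean(ys)
    return sum((y - m) ** 2 for y in ys)


def build_tree(samples: List[Sample], depth: int = 0, max_depth: int = 3,
               min_leaf: int = 2) -> Tree:
    ys = [y for _, y in samples]
    if depth == max_depth or len(samples) < 2 * min_leaf:
        return mean(ys)
    best = None
    for f in range(len(samples[0][0])):
        for t in sorted({x[f] for x, _ in samples}):
            left = [s for s in samples if s[0][f] <= t]
            right = [s for s in samples if s[0][f] > t]
            if len(left) < min_leaf or len(right) < min_leaf:
                continue
            cost = sse([y for _, y in left]) + sse([y for _, y in right])
            if best is None or cost < best[0]:
                best = (cost, f, t, left, right)
    if best is None or best[0] >= sse(ys):    # no split reduces the squared error
        return mean(ys)
    _, f, t, left, right = best
    return (f, t, build_tree(left, depth + 1, max_depth, min_leaf),
            build_tree(right, depth + 1, max_depth, min_leaf))


def predict(tree: Tree, x: List[float]) -> float:
    # Walk from the root to a leaf by comparing one feature per internal node.
    while isinstance(tree, tuple):
        f, t, left, right = tree
        tree = left if x[f] <= t else right
    return tree
```

Training data would come from running the benchmarks under different parameter settings and recording the best one; the tree then predicts a setting for an unseen application profile.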

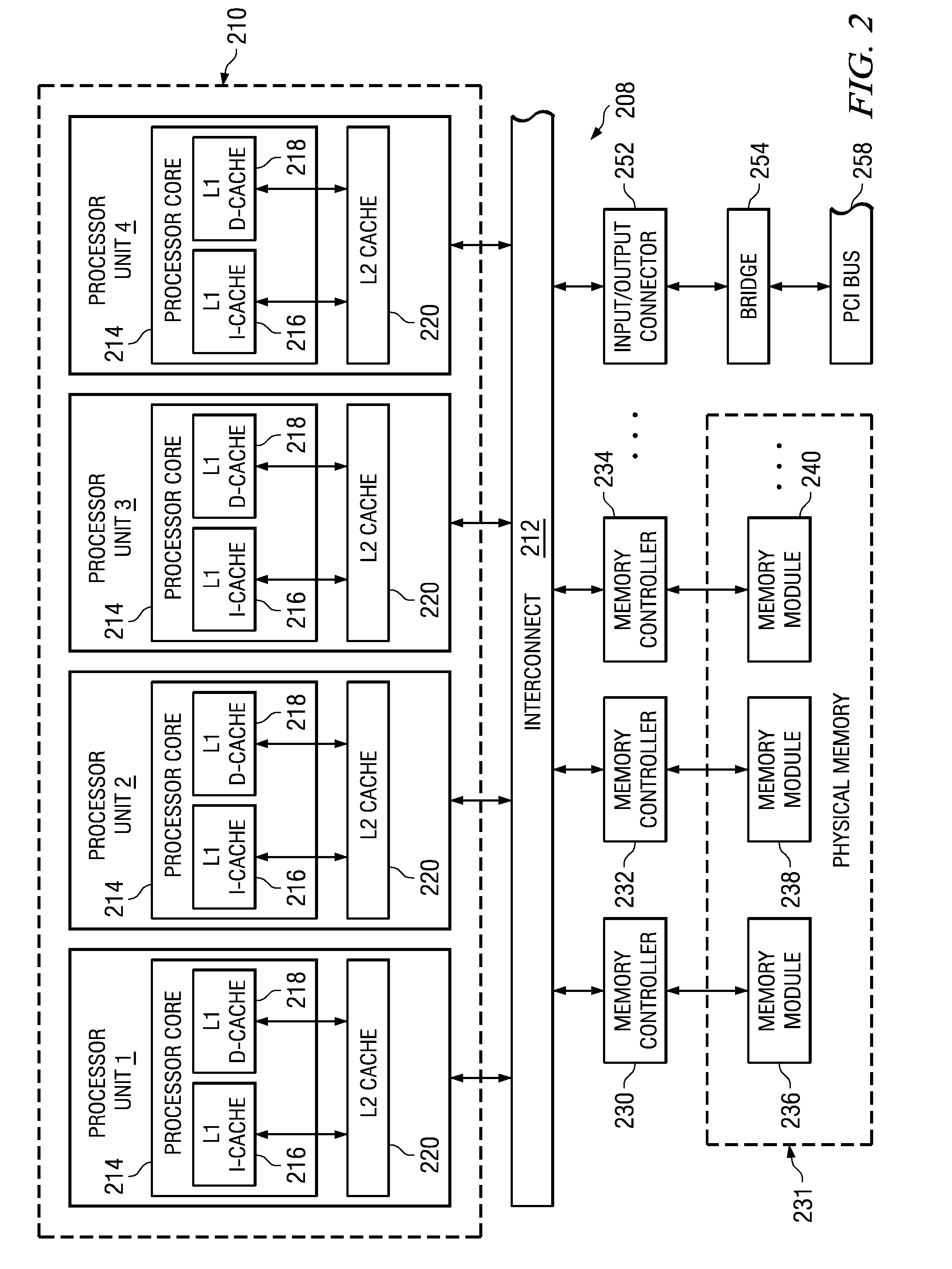

Apparatus for enhancing performance of a parallel processing environment, and associated methods

Inactive | US8499025B2 | Improve parallel processing capabilities | Facilitate communication | Resource allocation | Multiple digital computer combinations | Message Passing Interface | Parallel processing

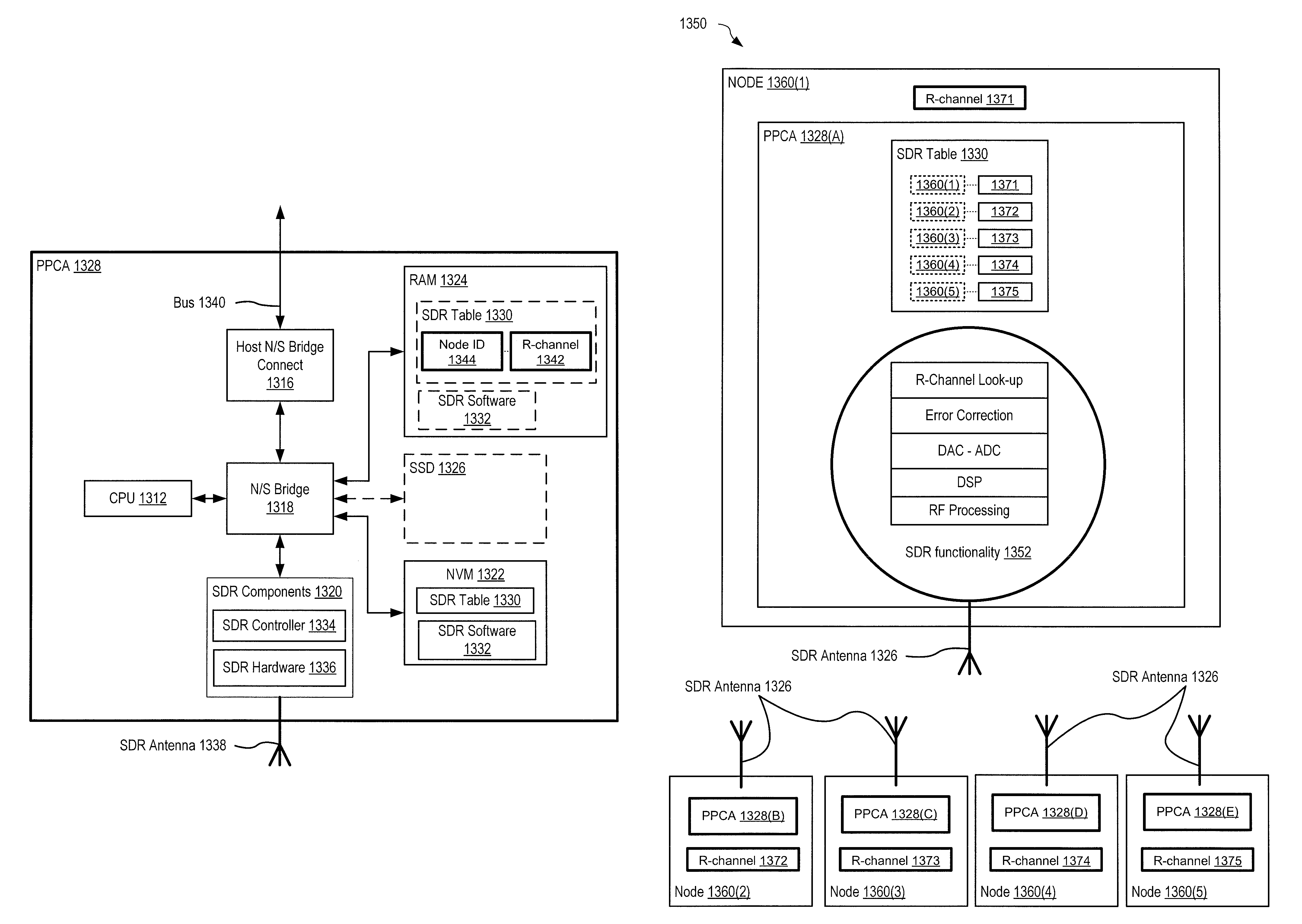

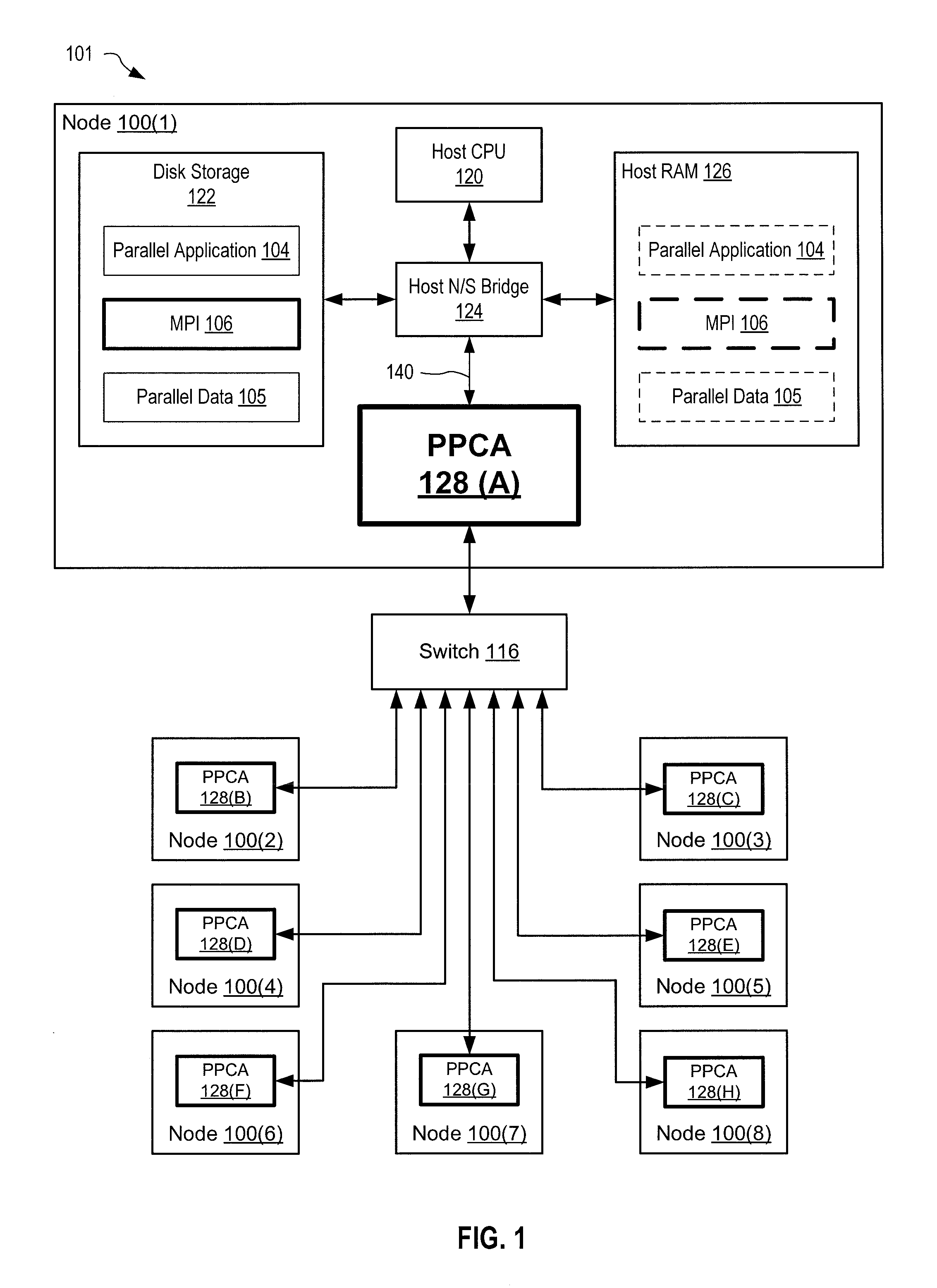

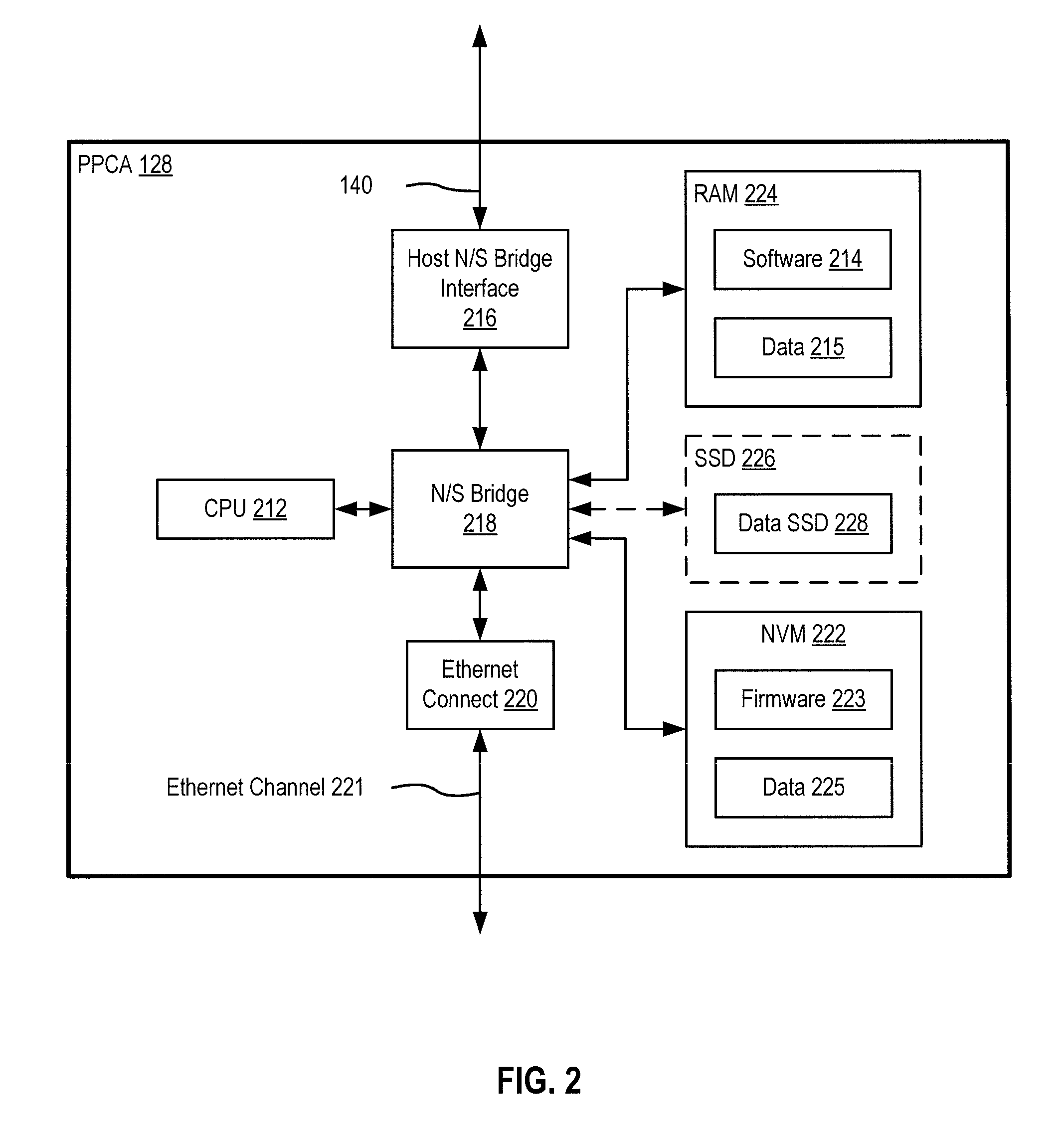

Parallel Processing Communication Accelerator (PPCA) systems and methods for enhancing performance of a Parallel Processing Environment (PPE). In an embodiment, a Message Passing Interface (MPI) devolver enabled PPCA is in communication with the PPE and a host node. The host node executes at least a parallel processing application and an MPI process. The MPI devolver communicates with the MPI process and the PPE to improve the performance of the PPE by offloading MPI process functionality to the PPCA. Offloading MPI processing to the PPCA frees the host node for other processing tasks, for example, executing the parallel processing application, thereby improving the performance of the PPE.

Owner:MASSIVELY PARALLEL TECH

Modifying an operation of one or more processors executing message passing interface tasks

Inactive | US8108876B2 | Minimize period | Wasted processor cycles | Program synchronisation | Multiple digital computer combinations | Message Passing Interface | Waiting period

Mechanisms for modifying an operation of one or more processors executing message passing interface (MPI) tasks are provided. Mechanisms for adjusting the balance of processing workloads among the processors are also provided, so as to minimize the periods spent waiting for all of the processors to call a synchronization operation. Each processor has an associated hardware-implemented MPI load balancing controller. The MPI load balancing controller maintains a history that provides a profile of the tasks with regard to their calls to synchronization operations. From this information, it can be determined which processors should have their processing loads lightened and which processors can handle additional processing loads without significantly degrading the overall operation of the parallel execution system. As a result, workloads may be shifted from the slowest processor to one or more of the faster processors.

Owner:INT BUSINESS MASCH CORP
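The patent places the controller in hardware; the following software sketch only illustrates the decision rule described in the abstract. It assumes that each processor's recorded wait time at the synchronization point is the load signal: the processor that waits least arrives last and is therefore treated as the slowest. The function name `rebalance` and the `chunk` parameter are hypothetical.

```python
from typing import List


def rebalance(work: List[int], wait_history: List[List[float]], chunk: int = 1) -> List[int]:
    """Move `chunk` work units from the slowest to the fastest processor.

    work[p] is processor p's current number of work units; wait_history[p] holds
    its recent wait times at the synchronization operation.
    """
    avg_wait = [sum(h) / len(h) for h in wait_history]
    slowest = min(range(len(work)), key=avg_wait.__getitem__)   # waits least: arrives last
    fastest = max(range(len(work)), key=avg_wait.__getitem__)   # waits most: idle longest
    new_work = work[:]
    # Only shift work if it leaves the slowest processor with at least one unit.
    if slowest != fastest and new_work[slowest] > chunk:
        new_work[slowest] -= chunk
        new_work[fastest] += chunk
    return new_work
```

Applying the rule once per computation cycle shifts work gradually, so a single noisy measurement cannot move a large share of the load at once.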

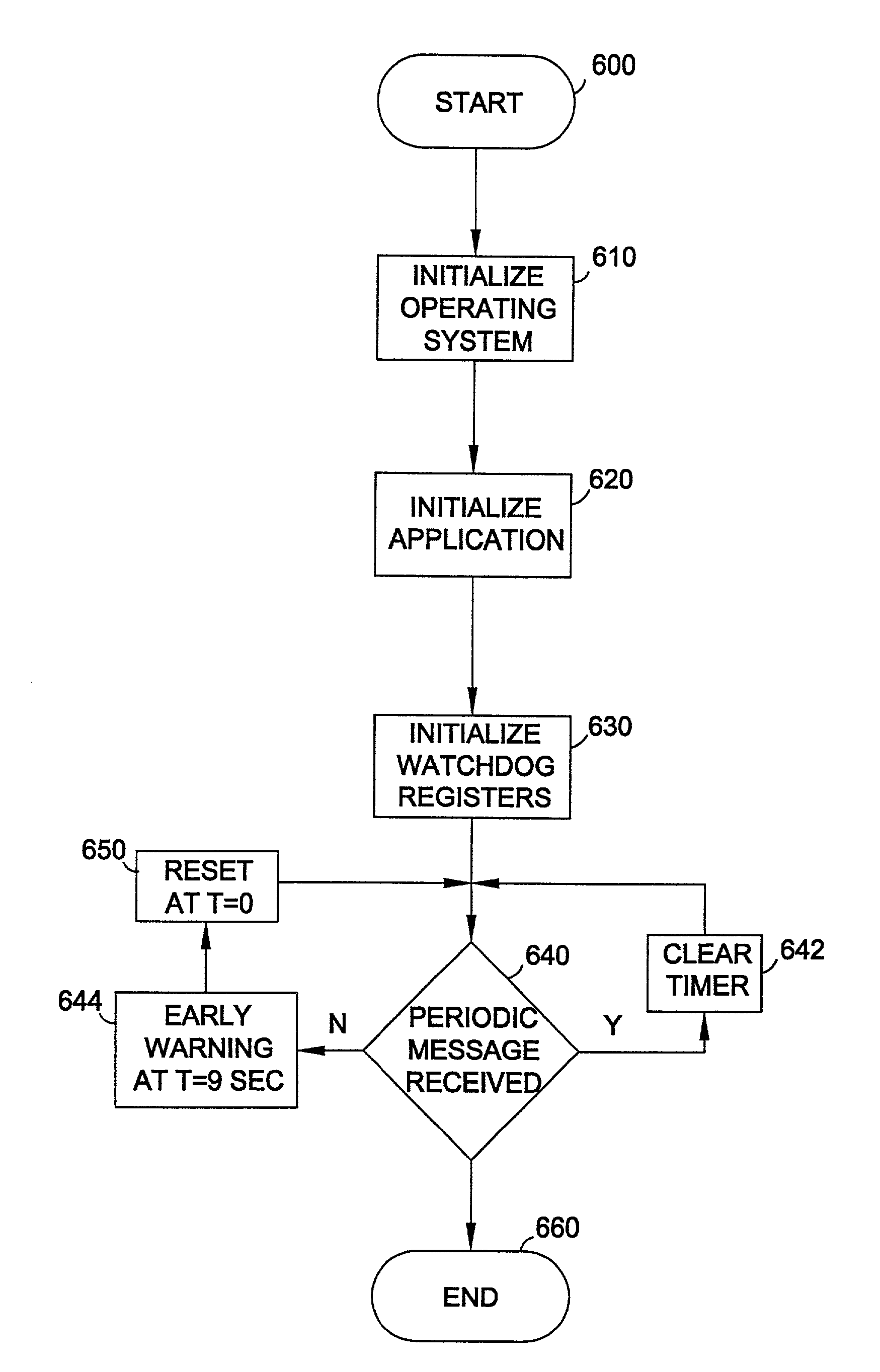

Hardware implementation of an application-level watchdog timer

Inactive | US7003775B2 | Error detection/correction | Interprogram communication | Operational system | Systems management

An application watchdog comprises a dedicated watchdog counter in the hardware layer and a watchdog driver operating in the kernel-mode layer of the computer operating system. The driver comprises a system thread configured to monitor a plurality of designated user applications running in the user mode of the operating system, and a message passing interface for receiving periodic signals from each of the user applications. The driver also uses an interface for transmitting timer reset commands to the dedicated watchdog counter. If the system thread receives a message from each of the designated user applications within an allotted period of time, the watchdog driver sends a timer reset command to the dedicated watchdog counter. Otherwise, the dedicated watchdog counter does not receive the reset command and subsequently issues a system reset command. Early warning signals may be issued before the system reset to alert system management.

Owner:COMPAQ INFORMATION TECH GRP LP A TEXAS LLP
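A minimal simulation of the described control flow, assuming discrete time periods instead of real timers: the driver resets the counter only when every monitored application has reported within the period, and the counter "fires" (standing in for the system reset) when it counts down to zero. Class and method names are hypothetical, and the early-warning signal is omitted.

```python
from typing import Iterable, List, Set


class WatchdogCounter:
    """Stand-in for the dedicated hardware counter: counts down unless reset."""

    def __init__(self, timeout_periods: int):
        self.timeout = timeout_periods
        self.remaining = timeout_periods
        self.fired = False

    def reset(self) -> None:
        self.remaining = self.timeout

    def tick(self) -> None:
        self.remaining -= 1
        if self.remaining <= 0:
            self.fired = True          # real hardware would issue a system reset here


class WatchdogDriver:
    """Stand-in for the kernel-mode driver and its monitoring thread."""

    def __init__(self, apps: Iterable[str], counter: WatchdogCounter):
        self.apps = set(apps)
        self.counter = counter
        self.heard: Set[str] = set()

    def heartbeat(self, app: str) -> None:
        # Periodic message from a user-mode application.
        if app in self.apps:
            self.heard.add(app)

    def end_of_period(self) -> None:
        # Reset the counter only if every designated application reported in time.
        if self.heard == self.apps:
            self.counter.reset()
        self.heard.clear()


def simulate(periods: List[Set[str]], apps: Iterable[str], timeout: int = 2) -> bool:
    """Return True if the watchdog fires during the given heartbeat schedule."""
    counter = WatchdogCounter(timeout)
    driver = WatchdogDriver(apps, counter)
    for beats in periods:
        for app in beats:
            driver.heartbeat(app)
        driver.end_of_period()
        counter.tick()
        if counter.fired:
            return True
    return False
```

Because the reset depends on all designated applications, a single hung application is enough to let the counter expire, which is the property the abstract describes.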

Performing setup operations for receiving different amounts of data while processors are performing message passing interface tasks

Inactive | US8234652B2 | Minimize period | Wasted processor cycles | Digital computer details | Multiprogramming arrangements | Message Passing Interface | Waiting period

Mechanisms are provided for performing setup operations for receiving different amounts of data while processors are performing message passing interface (MPI) tasks. Mechanisms for adjusting the balance of processing workloads among the processors are also provided, so as to minimize the periods spent waiting for all of the processors to call a synchronization operation. An MPI load balancing controller maintains a history that provides a profile of the tasks with regard to their calls to synchronization operations. From this information, it can be determined which processors should have their processing loads lightened and which processors can handle additional processing loads without significantly degrading the overall operation of the parallel execution system. As a result, setup operations may be performed while processors are performing MPI tasks, so that, based on the history, they are prepared to receive differently sized portions of data in a subsequent computation cycle.

Owner:INT BUSINESS MASCH CORP
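One way to prepare for differently sized portions, assuming that each processor's throughput in the last cycle (items per second) predicts the next cycle, is to split the next cycle's data proportionally and preallocate receive buffers of matching size. The proportional rule, the largest-remainder rounding and the 8-byte item size are assumptions for illustration, not details from the patent.

```python
from typing import List


def next_portions(sizes: List[int], compute_times: List[float]) -> List[int]:
    """Split the same total amount of data in proportion to last-cycle throughput."""
    total = sum(sizes)
    rates = [s / t for s, t in zip(sizes, compute_times)]      # items per second
    rate_sum = sum(rates)
    exact = [total * r / rate_sum for r in rates]
    portions = [int(e) for e in exact]
    # Largest-remainder rounding keeps the portions summing to `total`.
    by_remainder = sorted(range(len(exact)), key=lambda i: exact[i] - portions[i], reverse=True)
    for i in by_remainder[: max(0, total - sum(portions))]:
        portions[i] += 1
    return portions


def setup_receive_buffers(portions: List[int], item_size: int = 8) -> List[bytearray]:
    # "Setup operation": preallocate next cycle's receive buffers while current MPI tasks run.
    return [bytearray(n * item_size) for n in portions]
```

For example, two processors that each handled 100 items in 2.0 s and 1.0 s respectively would receive 67 and 133 items in the next cycle.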

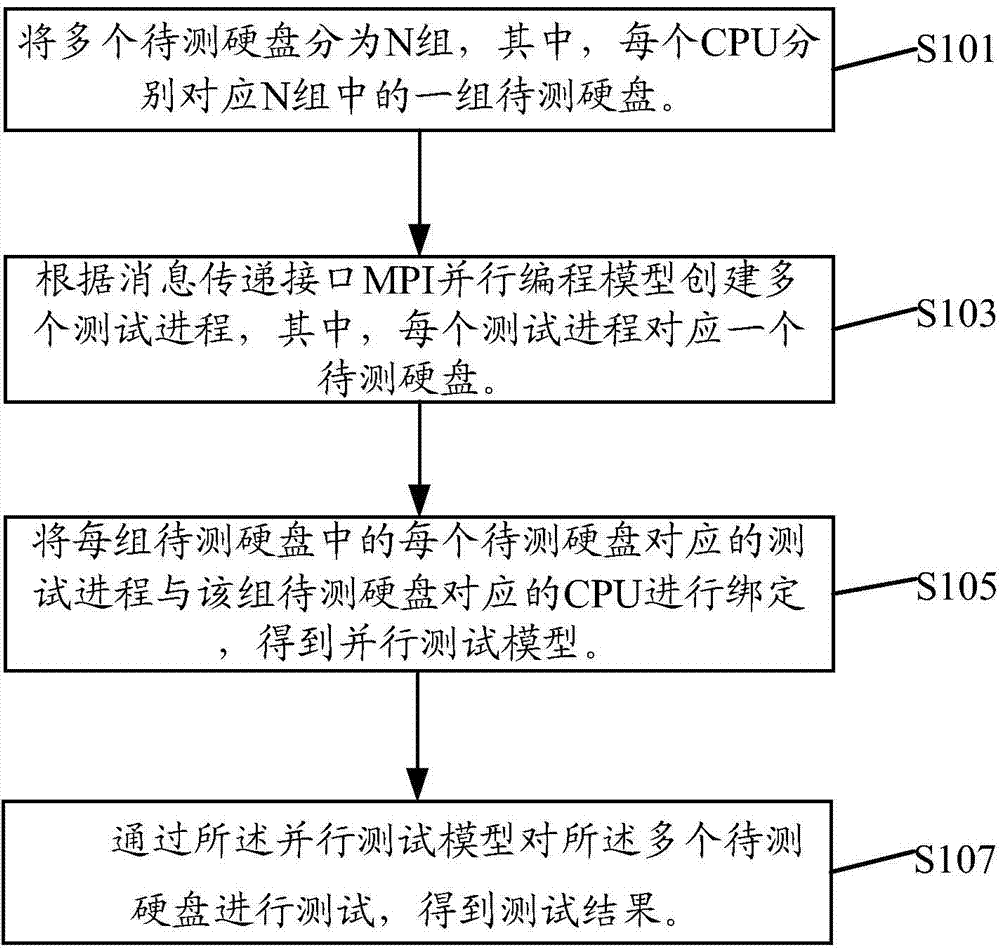

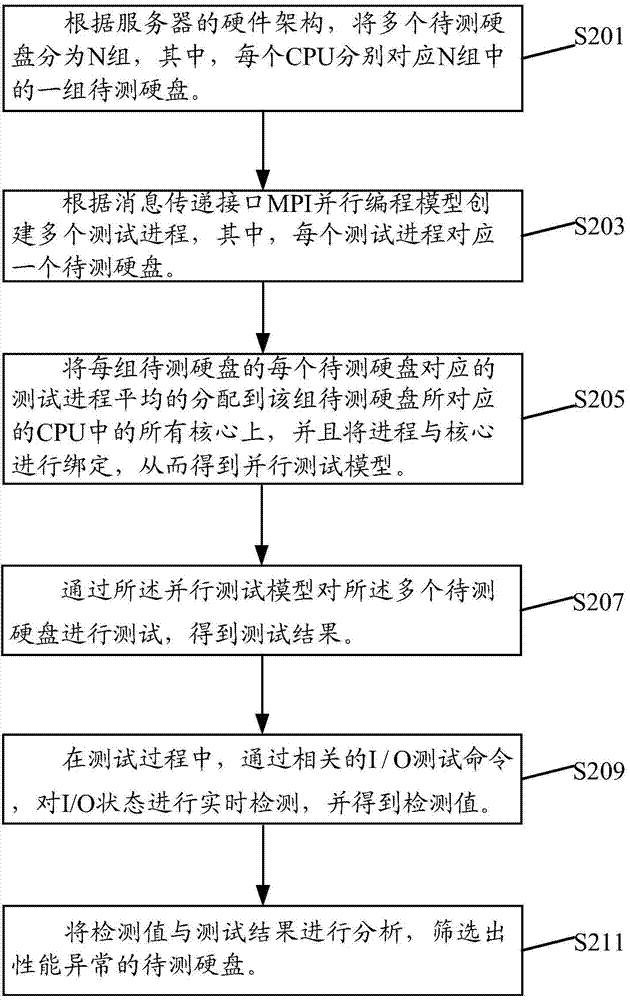

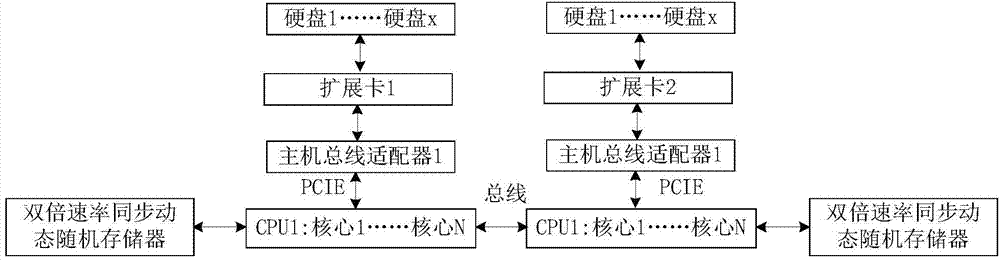

Method and device for testing performance of hard disk of high-density storage server

Active | CN104850480A | Real-time detectability | Real time monitoring | Functional testing | High density | Message Passing Interface

The present invention discloses a method and device for testing the performance of the hard disks of a high-density storage server. The server to which the method applies comprises N CPUs, where N is greater than or equal to 1. The method comprises: dividing the hard disks to be tested into N sets, with each CPU corresponding to one of the N sets; creating a plurality of test processes according to the parallel programming model of the Message Passing Interface (MPI), with one test process per hard disk to be tested; binding the test process for each hard disk in a set to the CPU corresponding to that set, so as to obtain a parallel test model; and testing the hard disks through the parallel test model to obtain a test result. The method ensures that every hard disk under test is tested fairly, which improves the accuracy and stability of high-density hard disk testing and also increases the degree of parallelism during testing.

Owner:DAWNING INFORMATION IND BEIJING +1
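The partitioning and binding steps can be sketched as follows, assuming contiguous, nearly equal disk sets and one test process (an MPI rank in the patent) per disk. The disk names are hypothetical, and the actual CPU binding is left out; on Linux, a process could pin itself with `os.sched_setaffinity(0, cores)`, where the set of cores belonging to each CPU socket would have to come from the platform.

```python
from typing import Dict, List


def partition_disks(disks: List[str], n_cpus: int) -> Dict[int, List[str]]:
    """Split disks into n_cpus nearly equal contiguous sets, one set per CPU."""
    base, extra = divmod(len(disks), n_cpus)
    sets: Dict[int, List[str]] = {}
    start = 0
    for cpu in range(n_cpus):
        size = base + (1 if cpu < extra else 0)   # the first `extra` CPUs get one more disk
        sets[cpu] = disks[start:start + size]
        start += size
    return sets


def build_test_plan(disks: List[str], n_cpus: int) -> List[dict]:
    # One test process (rank) per disk, bound to the CPU that owns the disk's set.
    plan: List[dict] = []
    for cpu, group in partition_disks(disks, n_cpus).items():
        for disk in group:
            plan.append({"rank": len(plan), "disk": disk, "cpu": cpu})
    return plan
```

Keeping each disk's test process on a fixed CPU is what gives every disk comparable conditions, which is the fairness argument in the abstract.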

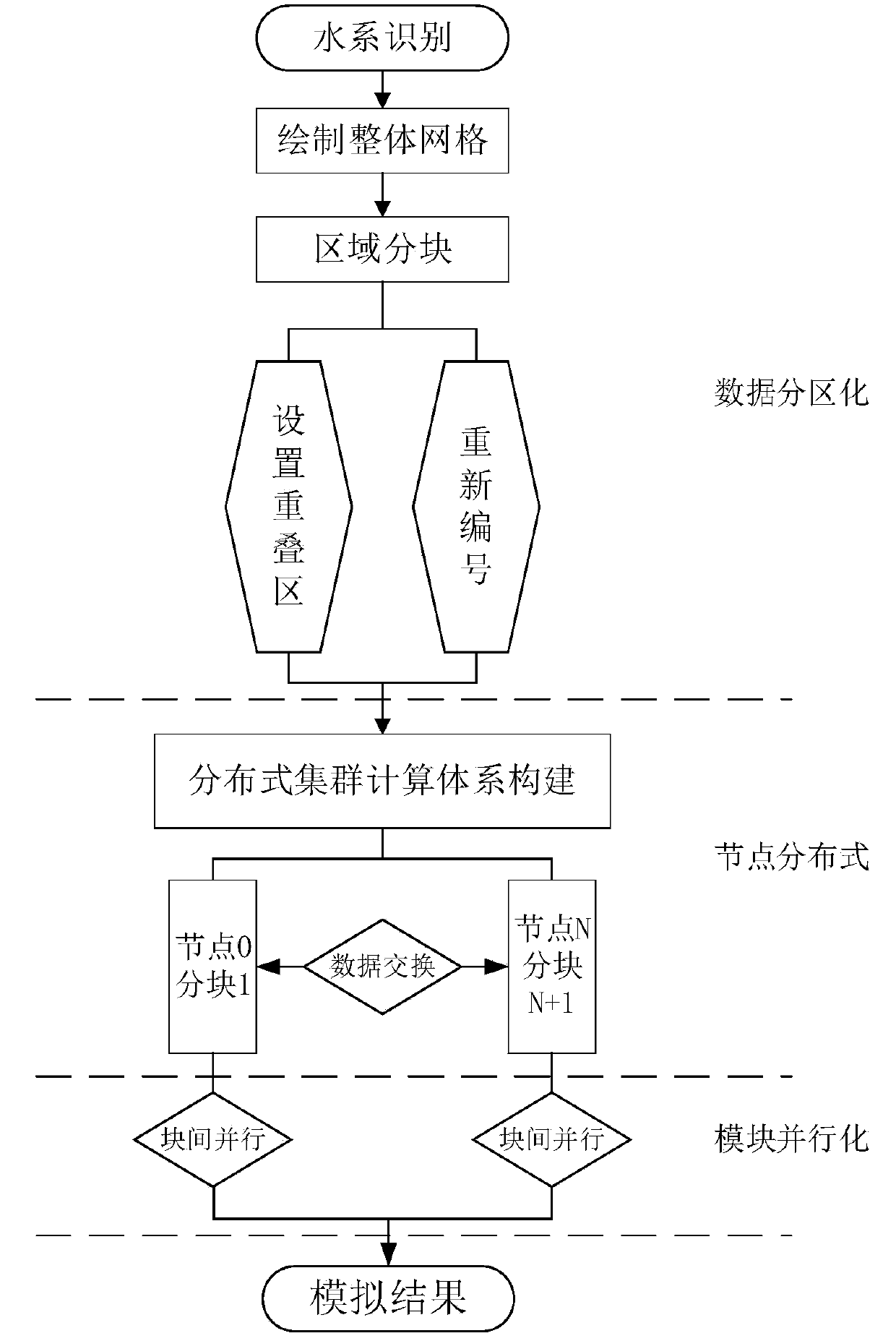

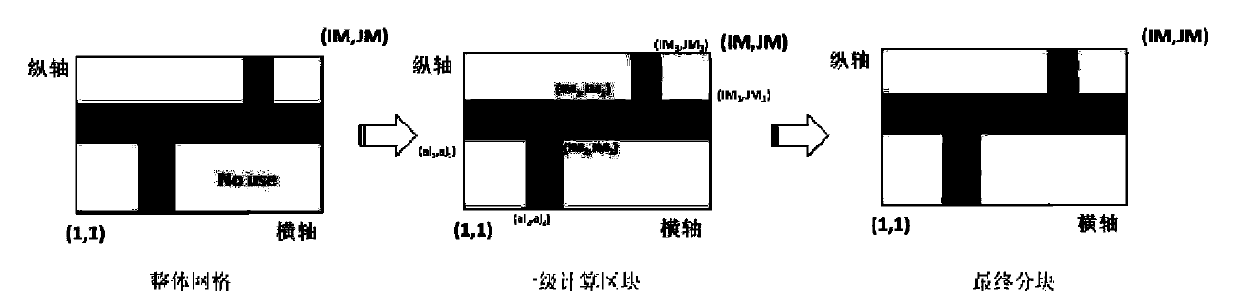

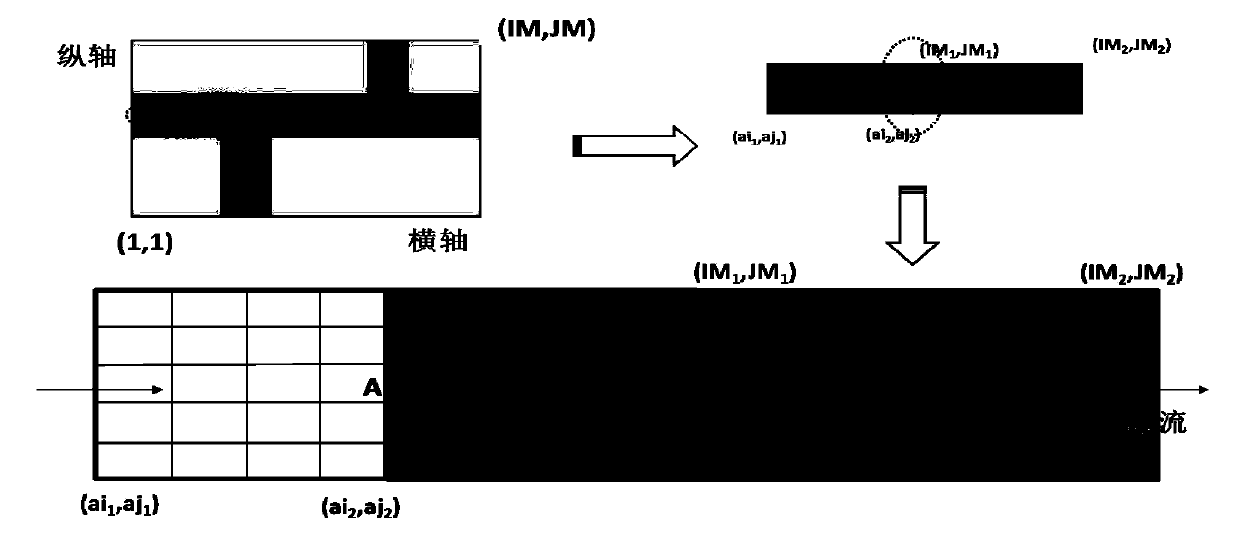

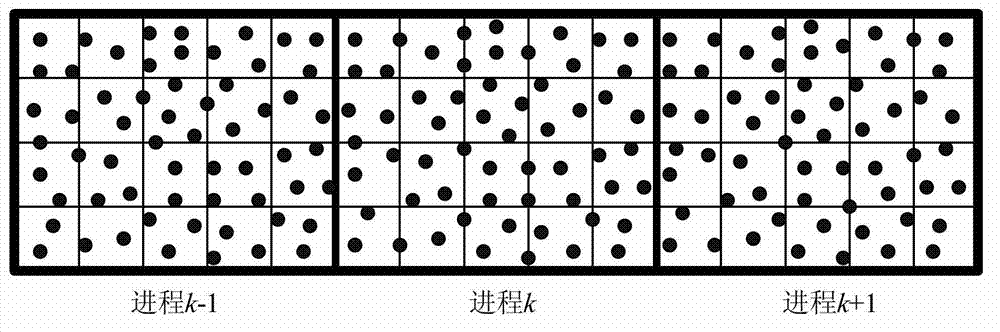

Parallel computing method for distributed hydrodynamic model of large-scale watershed system

Active | CN104200045A | Solve the problem of 2G memory array limit | Reduce mistakes | Concurrent instruction execution | Special data processing applications | Array data structure | Parallel algorithm

The invention discloses a parallel computing method for a distributed hydrodynamic model of a large-scale watershed system. A parallel algorithm is applied to the distributed numerical model of the large-scale watershed, with regular grid cells as the basic computing elements and partitioned zones as compute nodes. Dividing the watershed into zones and performing distributed cluster computing removes the 2 GB array memory limit that a Win32 program faces in large-scale watershed simulation, so that the simulation can be fine-grained. Based on a distributed message-passing model, data are exchanged through overlap zones established between adjacent partitions, which corrects errors in the boundary data of each partition node and keeps the whole-watershed simulation consistent and accurate. A general parallel scheme combining OpenMP and MPI (Message Passing Interface) parallelizes computation both across and within partition nodes, enabling efficient, fine-grained simulation of the large-scale watershed. With this method, numerical simulation of the hydrodynamics of a large-scale watershed system can be performed at low cost with high efficiency and speed.

Owner:珞珈浩景数字科技(湖北)有限公司 (Luojia Haojing Digital Technology (Hubei) Co., Ltd.)
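The overlap-zone exchange can be illustrated in one dimension, assuming that a simple explicit diffusion step stands in for the hydrodynamic update and a Python list of partitions stands in for the compute nodes. Each partition carries one ghost cell per side; before every step, the ghost cells are refreshed from the neighboring partitions' edge cells (in the patent's setting, this copy would be an MPI message). The function names are hypothetical.

```python
from typing import List


def split_with_halo(u: List[float], n_parts: int) -> List[List[float]]:
    """Split u into n_parts equal chunks, each padded with one ghost cell per side."""
    size = len(u) // n_parts                     # assumes len(u) is divisible by n_parts
    return [[0.0] + u[p * size:(p + 1) * size] + [0.0] for p in range(n_parts)]


def exchange_halos(parts: List[List[float]]) -> None:
    # Fill ghost cells from the neighbors' edge cells (the "overlap zone");
    # at the outer domain boundary, copy the partition's own edge value.
    for p in range(len(parts)):
        left = parts[p - 1][-2] if p > 0 else parts[p][1]
        right = parts[p + 1][1] if p < len(parts) - 1 else parts[p][-2]
        parts[p][0], parts[p][-1] = left, right


def diffuse_step(parts: List[List[float]], alpha: float = 0.25) -> None:
    exchange_halos(parts)
    for part in parts:
        part[1:-1] = [part[i] + alpha * (part[i - 1] - 2 * part[i] + part[i + 1])
                      for i in range(1, len(part) - 1)]


def serial_step(u: List[float], alpha: float = 0.25) -> List[float]:
    # Reference: the same update on the undivided domain.
    padded = [u[0]] + u + [u[-1]]
    return [padded[i] + alpha * (padded[i - 1] - 2 * padded[i] + padded[i + 1])
            for i in range(1, len(padded) - 1)]
```

Running both versions side by side shows the point of the overlap zones: with the ghost cells refreshed every step, the partitioned result matches the undivided one, whereas skipping the exchange would let boundary errors accumulate.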

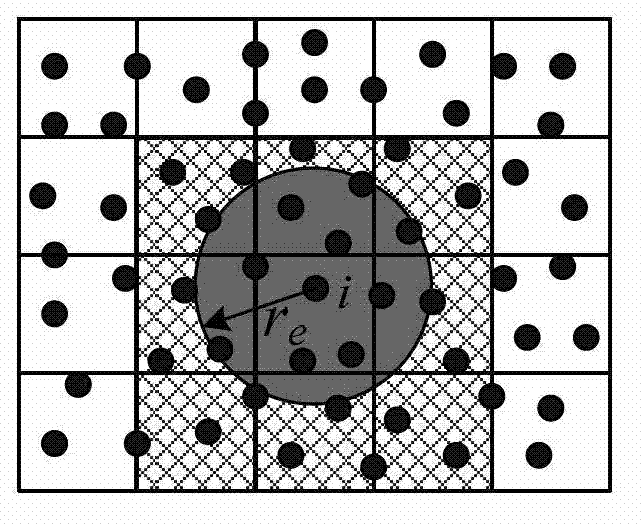

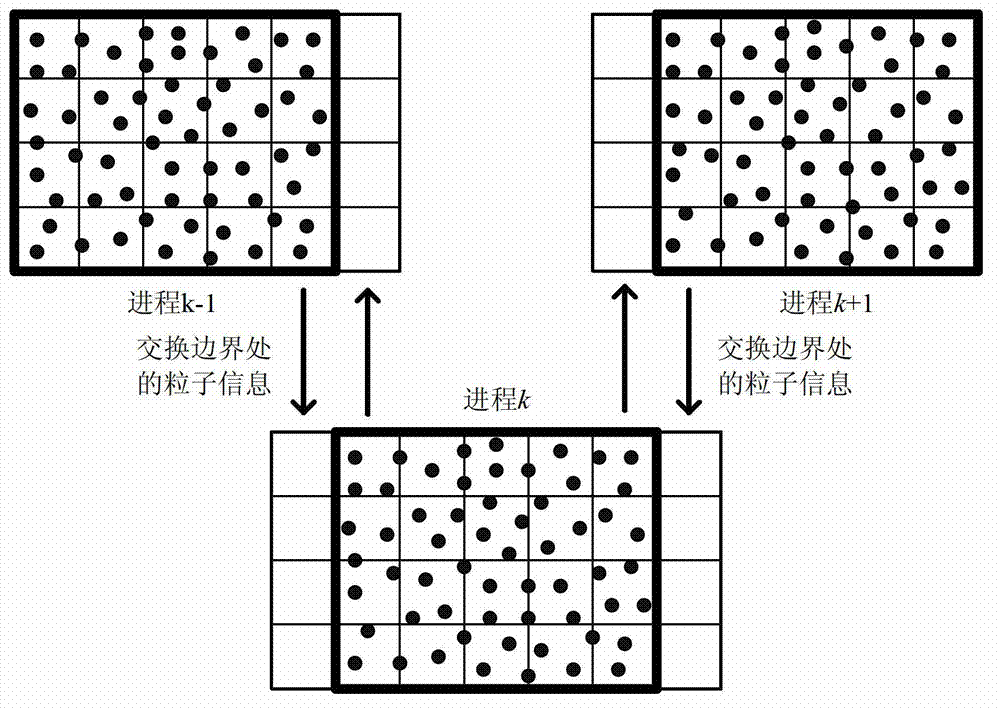

Large-scale parallel processing method of moving particle semi-implicit method

Inactive | CN102902514A | Realize simulation | Concurrent instruction execution | Special data processing applications | Message Passing Interface | Particle method

The invention provides a large-scale parallel processing method for the moving particle semi-implicit (MPS) method. The method comprises the following steps: (1) using the efficient and easily parallelized symmetric Lanczos algorithm (SLA) to solve the pressure Poisson equation in the semi-implicit particle method; and (2) accelerating the algorithm in parallel with shared-memory parallel models (such as OpenMP and OpenCL), a message-passing parallel model (MPI, Message Passing Interface), or a hybrid of these models. This meets the large-scale parallel acceleration requirements of solving the pressure Poisson equation in the moving particle semi-implicit method, and the parallel speed-up is significant.

Owner:XI AN JIAOTONG UNIV
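One standard form of the Lanczos method for a symmetric positive definite system `A x = b` builds an orthonormal Krylov basis `V` and a tridiagonal matrix `T` with the three-term Lanczos recurrence, solves `T y = ||b|| e1`, and sets `x = V y`. The sketch below assumes a 1-D finite-difference Laplacian as a stand-in for the MPS pressure Poisson matrix and runs serially; in an MPI or OpenMP version, the matrix-vector products and dot products are the operations that would be distributed. It performs no reorthogonalization, so it is only suitable for small, well-conditioned examples like this one.

```python
from math import sqrt
from typing import Callable, List

Vec = List[float]


def dot(a: Vec, b: Vec) -> float:
    return sum(x * y for x, y in zip(a, b))


def lanczos_solve(matvec: Callable[[Vec], Vec], b: Vec, m: int) -> Vec:
    """Approximate A x = b (A symmetric positive definite, x0 = 0) with m Lanczos steps."""
    beta0 = sqrt(dot(b, b))
    V = [[bi / beta0 for bi in b]]          # orthonormal Krylov basis vectors
    alphas: List[float] = []                # diagonal of T
    betas: List[float] = []                 # off-diagonal of T
    v_prev, beta = [0.0] * len(b), 0.0
    for j in range(m):
        w = matvec(V[j])
        w = [wi - beta * pi for wi, pi in zip(w, v_prev)]
        alpha = dot(w, V[j])
        w = [wi - alpha * vi for wi, vi in zip(w, V[j])]
        alphas.append(alpha)
        beta = sqrt(dot(w, w))
        if j == m - 1 or beta < 1e-14:      # last step, or Krylov space exhausted
            break
        betas.append(beta)
        v_prev = V[j]
        V.append([wi / beta for wi in w])
    # Solve the tridiagonal system T y = beta0 * e1 (Thomas algorithm, no pivoting).
    k = len(alphas)
    diag, rhs = alphas[:], [beta0] + [0.0] * (k - 1)
    for i in range(1, k):
        factor = betas[i - 1] / diag[i - 1]
        diag[i] -= factor * betas[i - 1]
        rhs[i] -= factor * rhs[i - 1]
    y = [0.0] * k
    y[-1] = rhs[-1] / diag[-1]
    for i in range(k - 2, -1, -1):
        y[i] = (rhs[i] - betas[i] * y[i + 1]) / diag[i]
    # x = V y
    return [sum(V[i][r] * y[i] for i in range(k)) for r in range(len(b))]


def poisson_1d(v: Vec) -> Vec:
    """Matrix-free 1-D Laplacian tridiag(-1, 2, -1) with zero Dirichlet boundaries."""
    n = len(v)
    return [2.0 * v[i] - (v[i - 1] if i > 0 else 0.0) - (v[i + 1] if i < n - 1 else 0.0)
            for i in range(n)]
```

The solver only touches `A` through `matvec`, which suits particle methods where the matrix is assembled from neighbor interactions rather than stored densely.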

© 2025 PatSnap. All rights reserved.