1498 results about "Back end server" patented technology

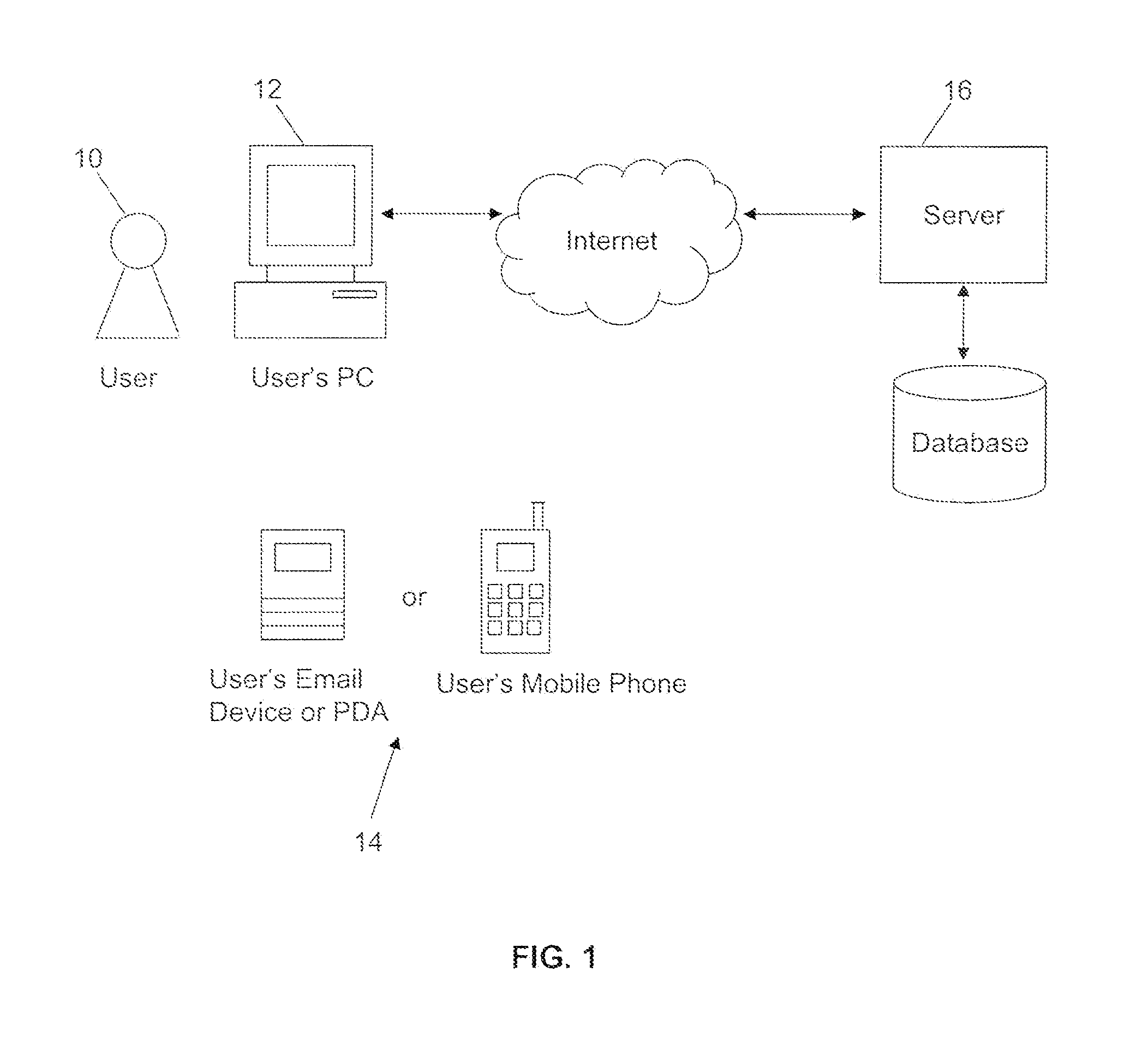

The back end is a combination of a database and software written in a server-side language, which run on web servers, cloud-based servers, or a hybrid of both. A network's server setup can vary, with the server-side workload divided among several machines (e.g., a server dedicated to housing the database).

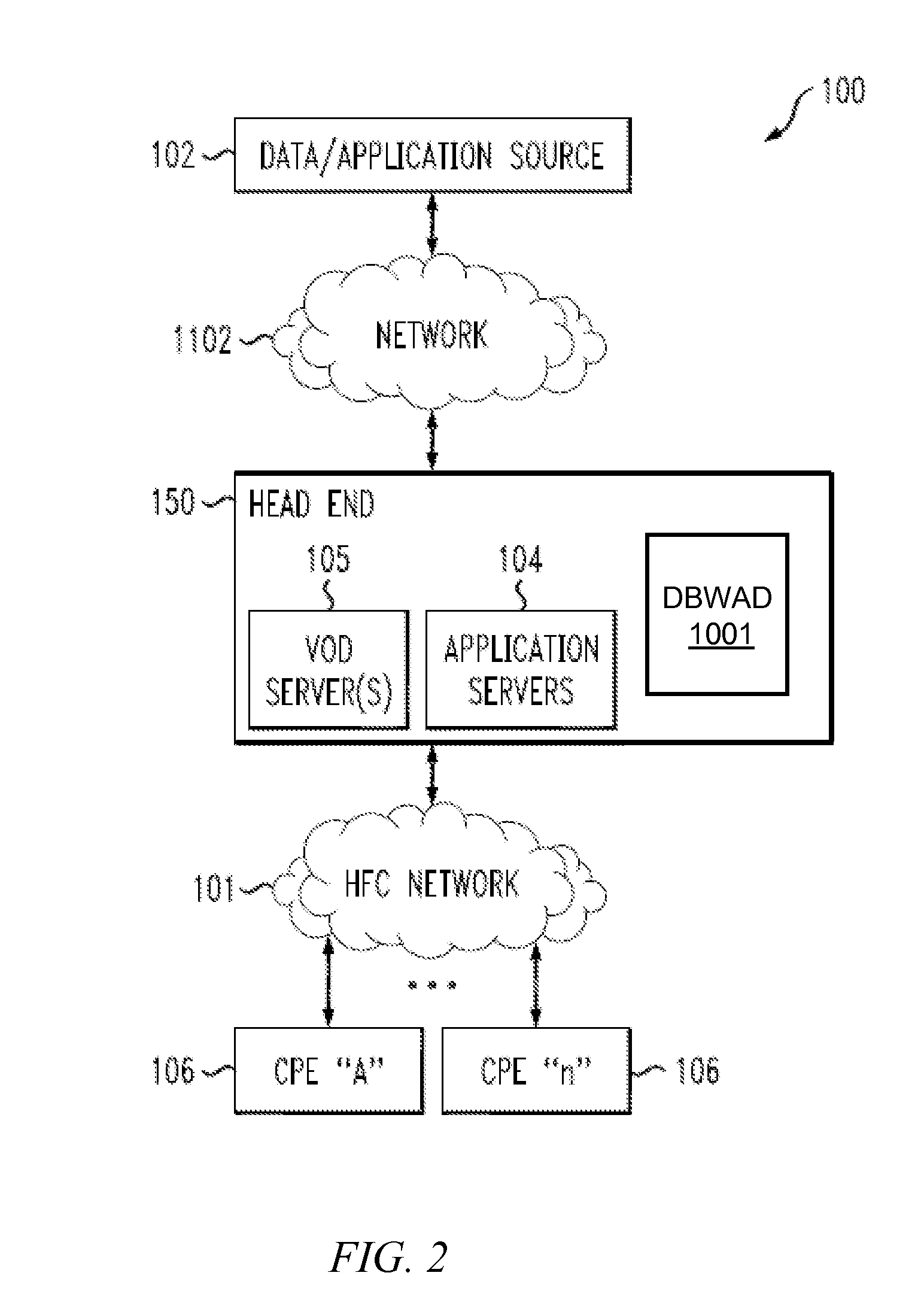

Wide-area content-based routing architecture

Status: Inactive; Patent: US20030099237A1; Tags: Easy to manage, Maximum connectivity, Data switching by path configuration, Wide area, Virtualization

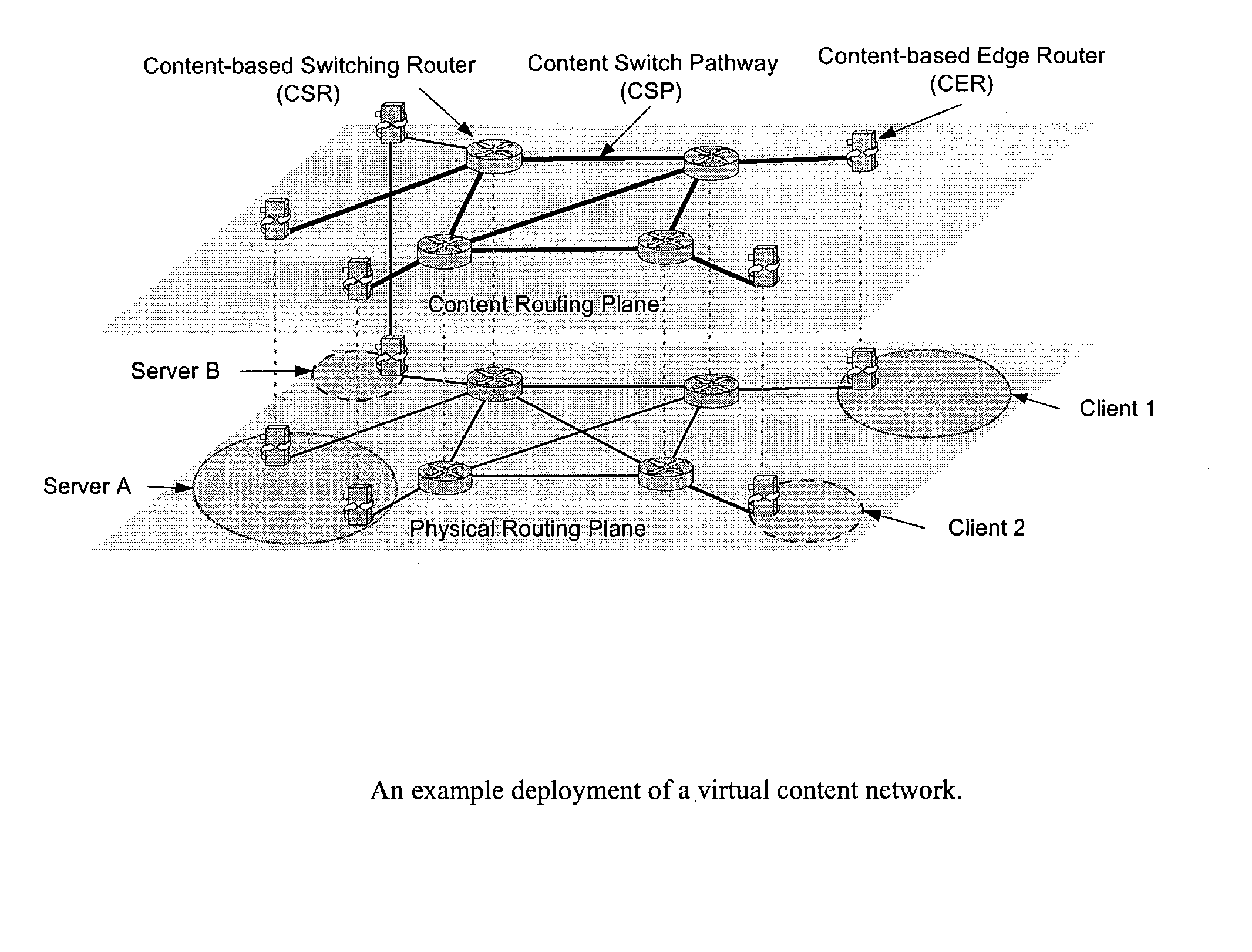

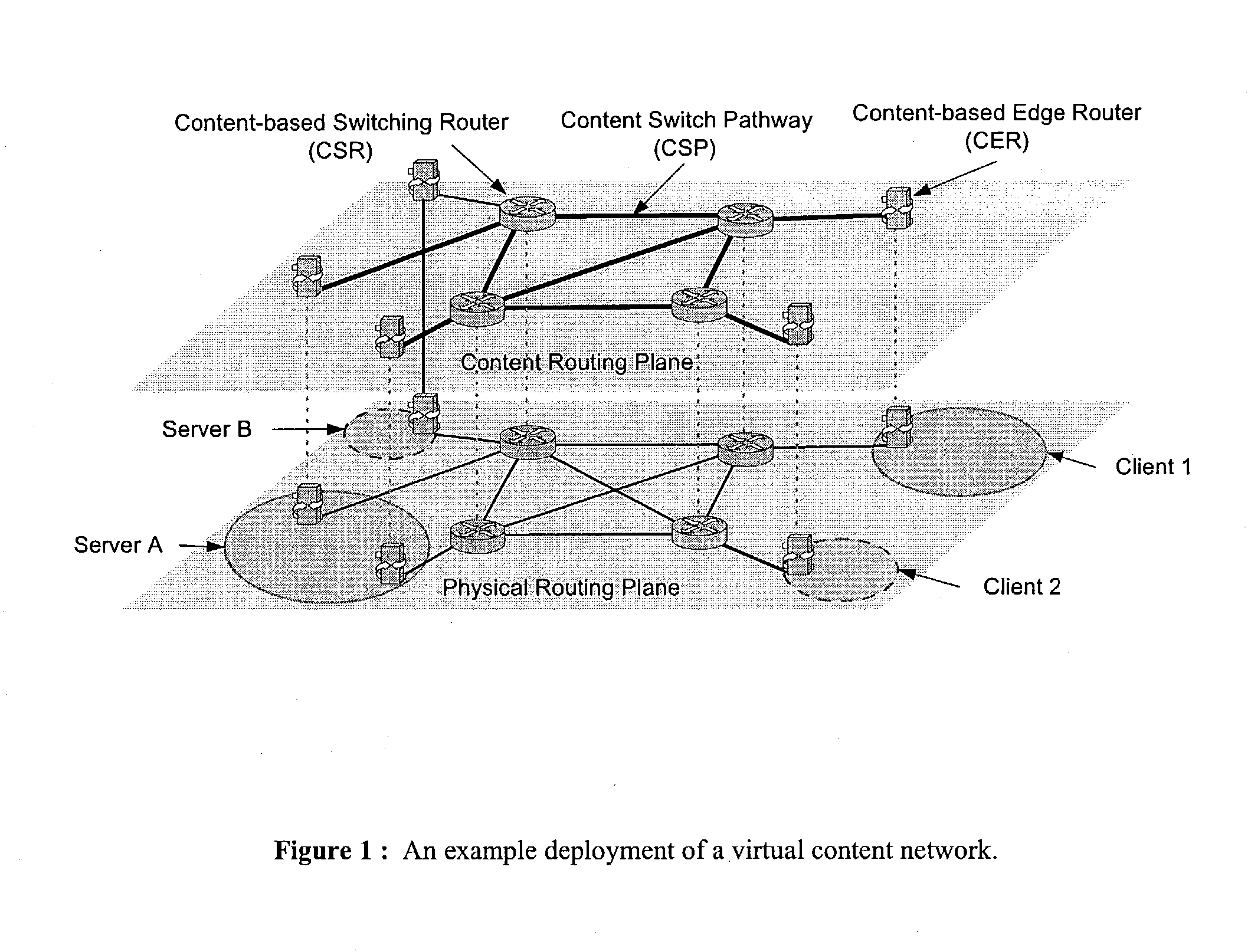

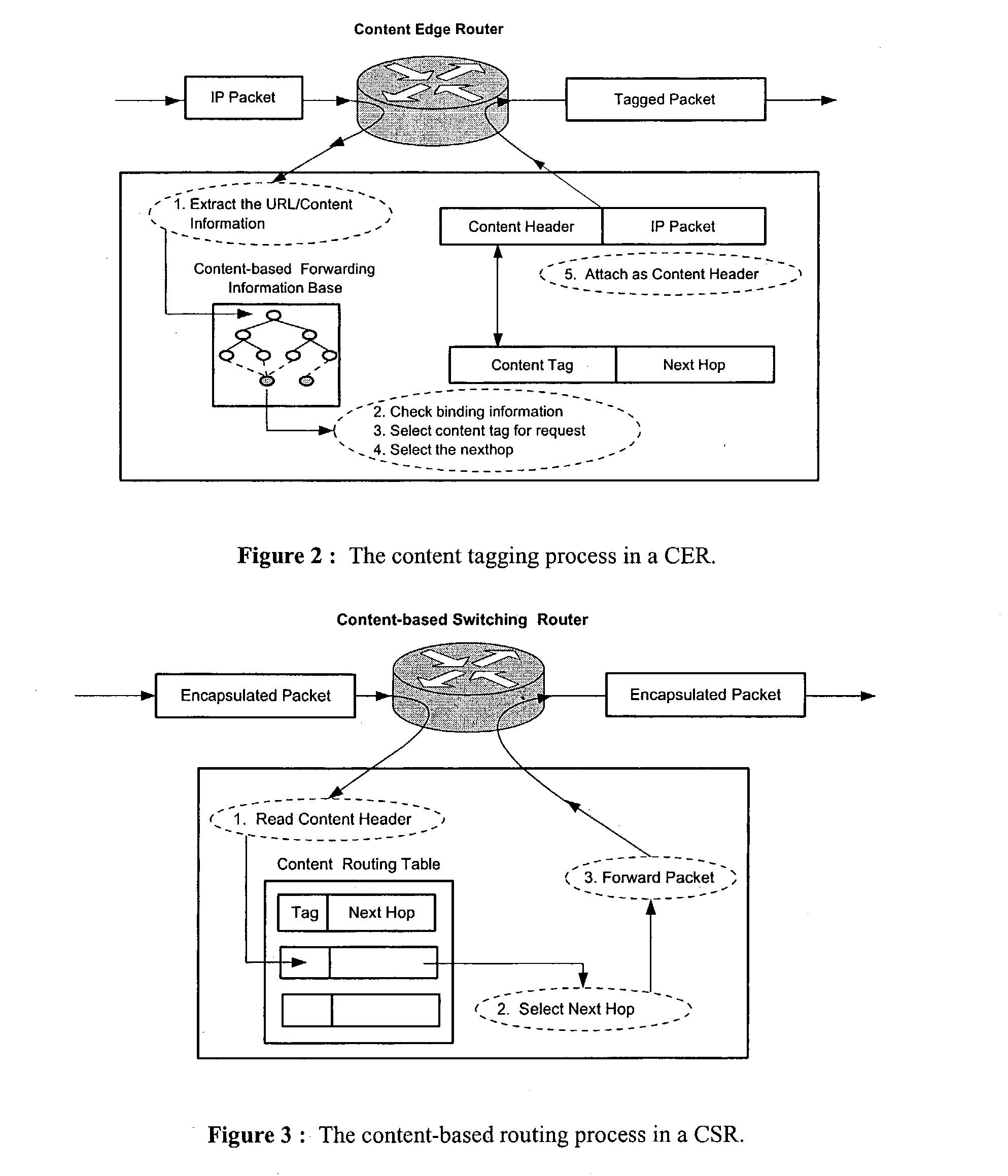

Content networking is an emerging technology, where the requests for content accesses are steered by "content routers" that examine not only the destinations but also content descriptors such as URLs and cookies. In the current deployments of content networking, "content routing" is mostly confined to selecting the most appropriate back-end server in virtualized web server clusters. This invention presents a novel content-based routing architecture that is suitable for global content networking. In this content-based routing architecture, a virtual overlay network called the "virtual content network" is superimposed over the physical network. The content network contains content routers as the nodes and "pathways" as links. The content-based routers at the edge of the content network may be either a gateway to the client domain or a gateway to the server domain whereas the interior ones correspond to the content switches dedicated for steering content requests and replies. The pathways are virtual paths along the physical network that connect the corresponding content routers. The invention is based on tagging content requests at the ingress points. The tags are designed to incorporate several different attributes of the content in the routing process. The path chosen for routing the request is the optimal path and is chosen from multiple paths leading to the replicas of the content.

Owner:TELECOMM RES LAB

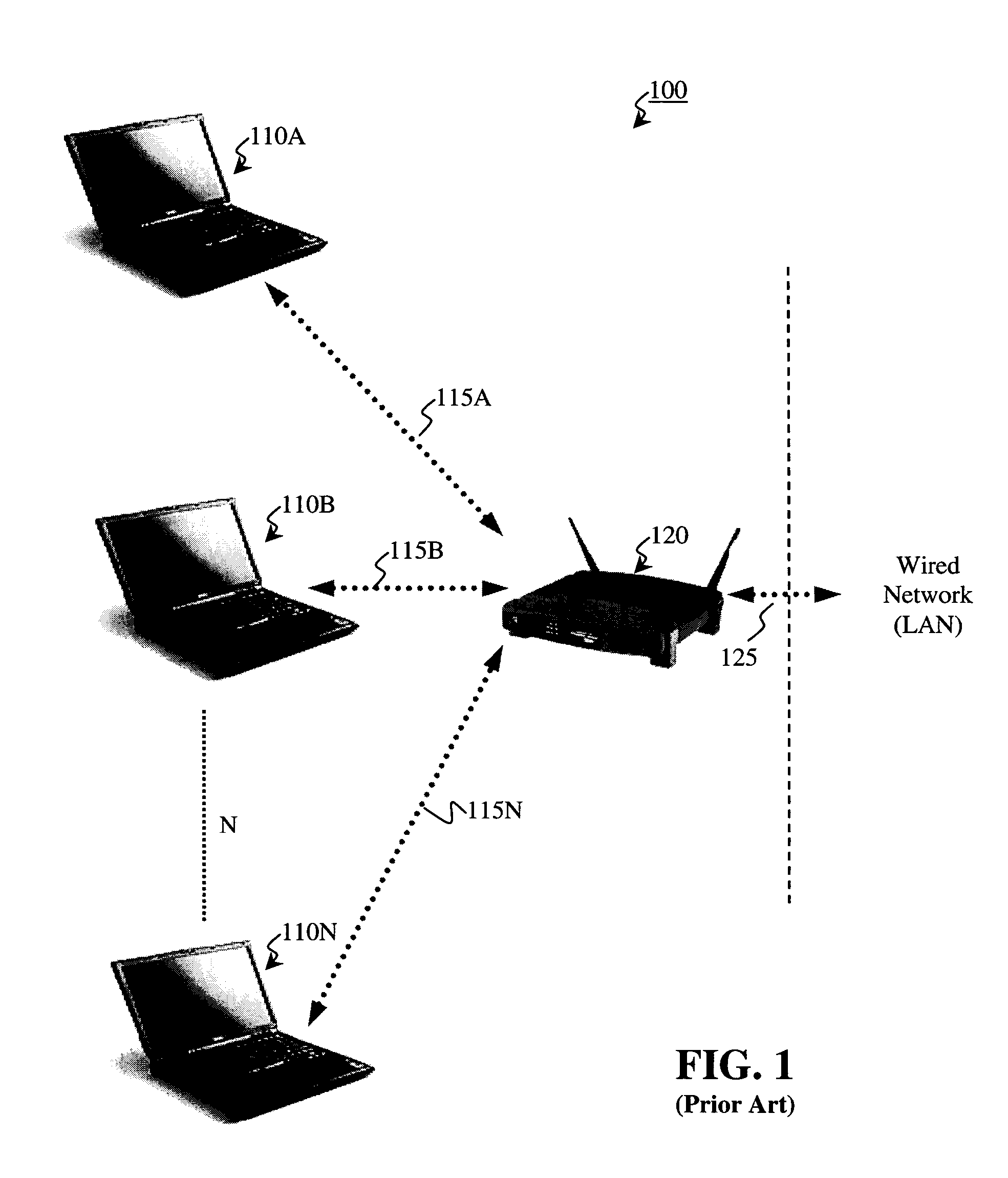

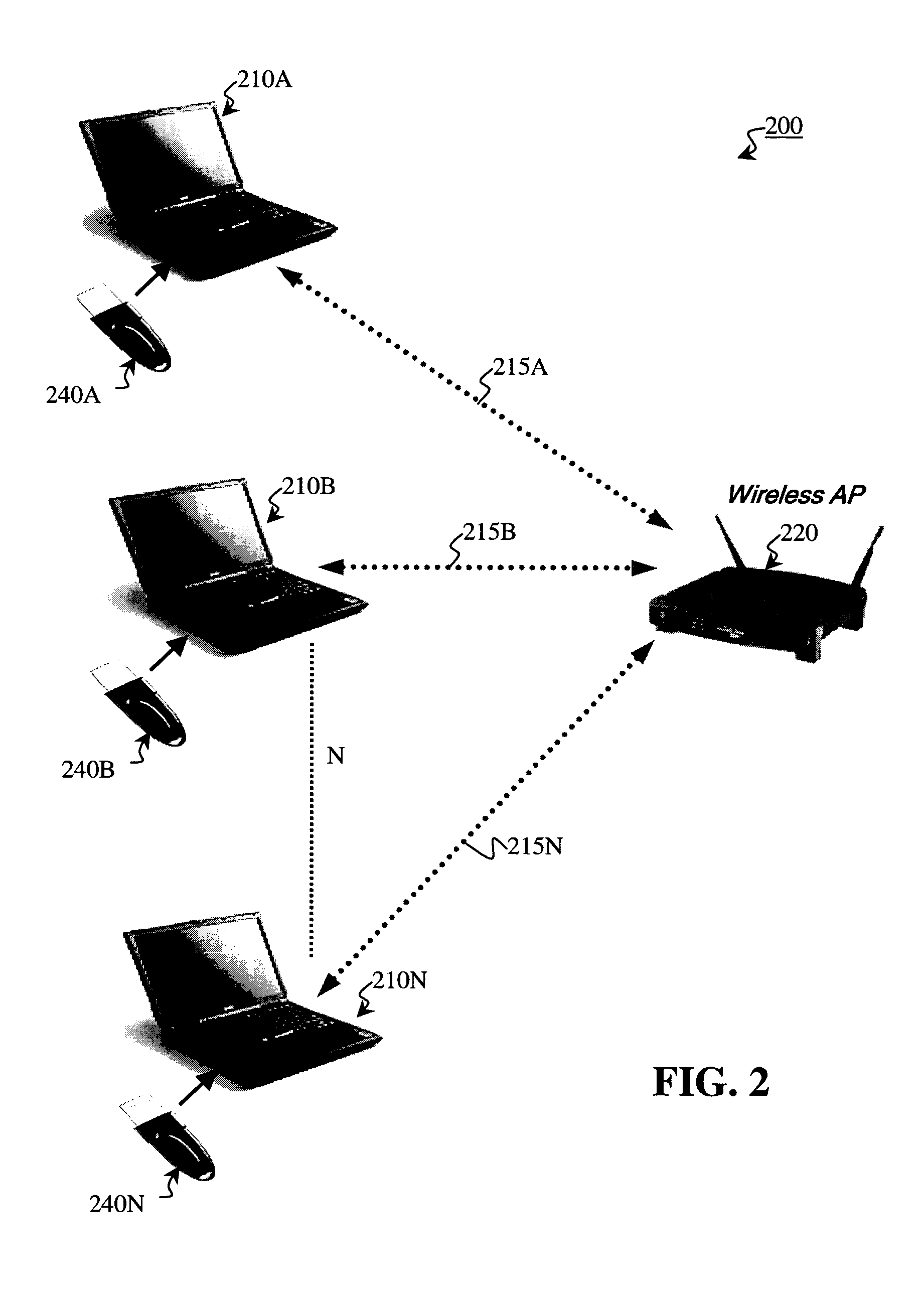

Self-managed network access using localized access management

Status: Active; Patent: US7574731B2; Tags: Provide usage, Avoid modification, Random number generators, User identity/authority verification, Program planning, Back end server

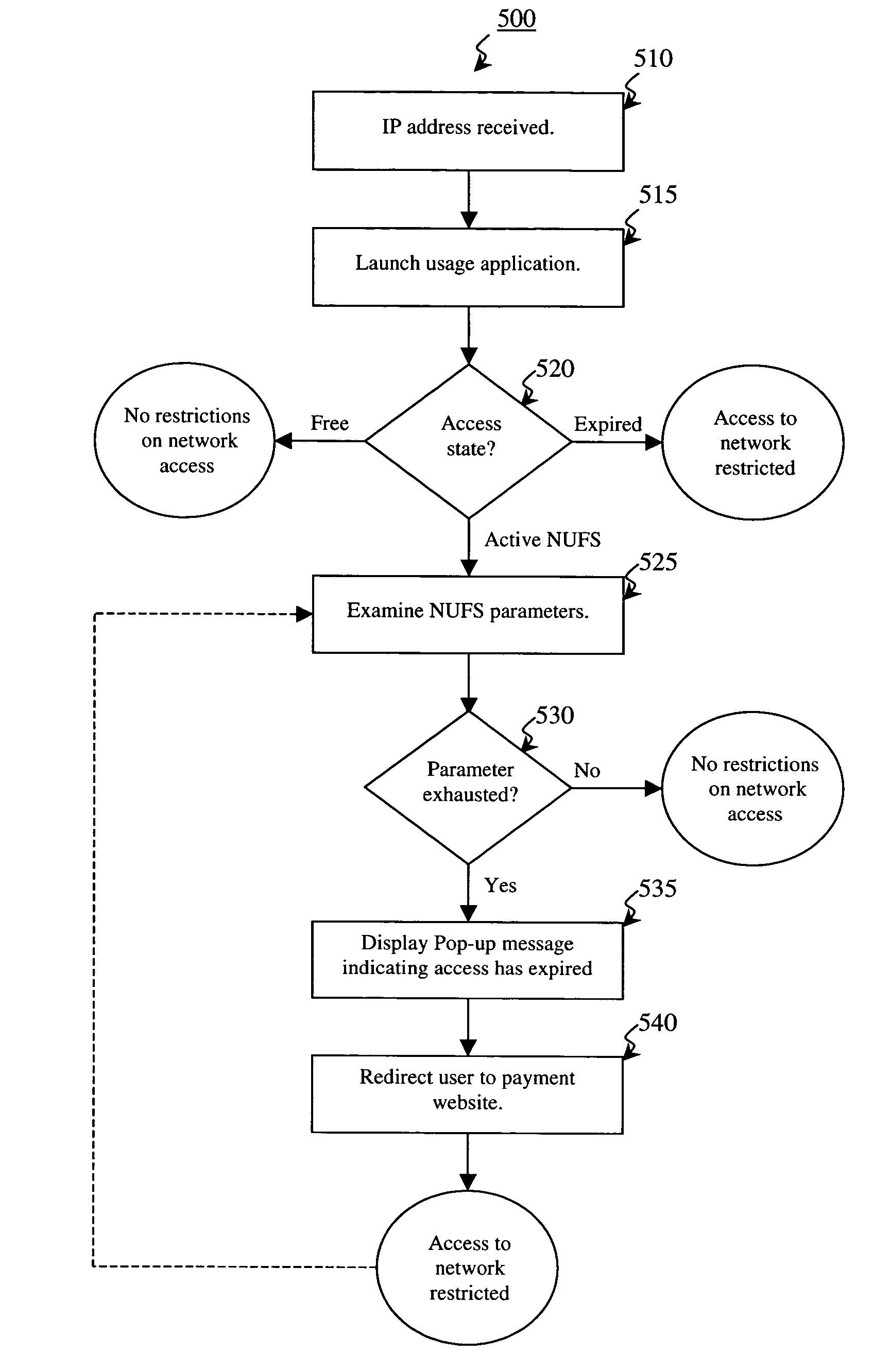

The invention provides a method and system for locally tracking network usage and enforcing usage plans at a client device. In an embodiment of the invention, a unique physical key, or token, is installed at a client device of one or more networks. The key comprises a usage application and one or more access parameters designating the conditions and/or limits of a particular network usage plan. Upon initial connection to the network, the usage application grants or denies access to the network based on an analysis of the current values of the access parameters. Network usage tracking and enforcement is therefore made simple and automatic without requiring any back-end servers on the network, while still providing flexibility in changing billing plans for any number of users at any time.

Owner:KOOLSPAN

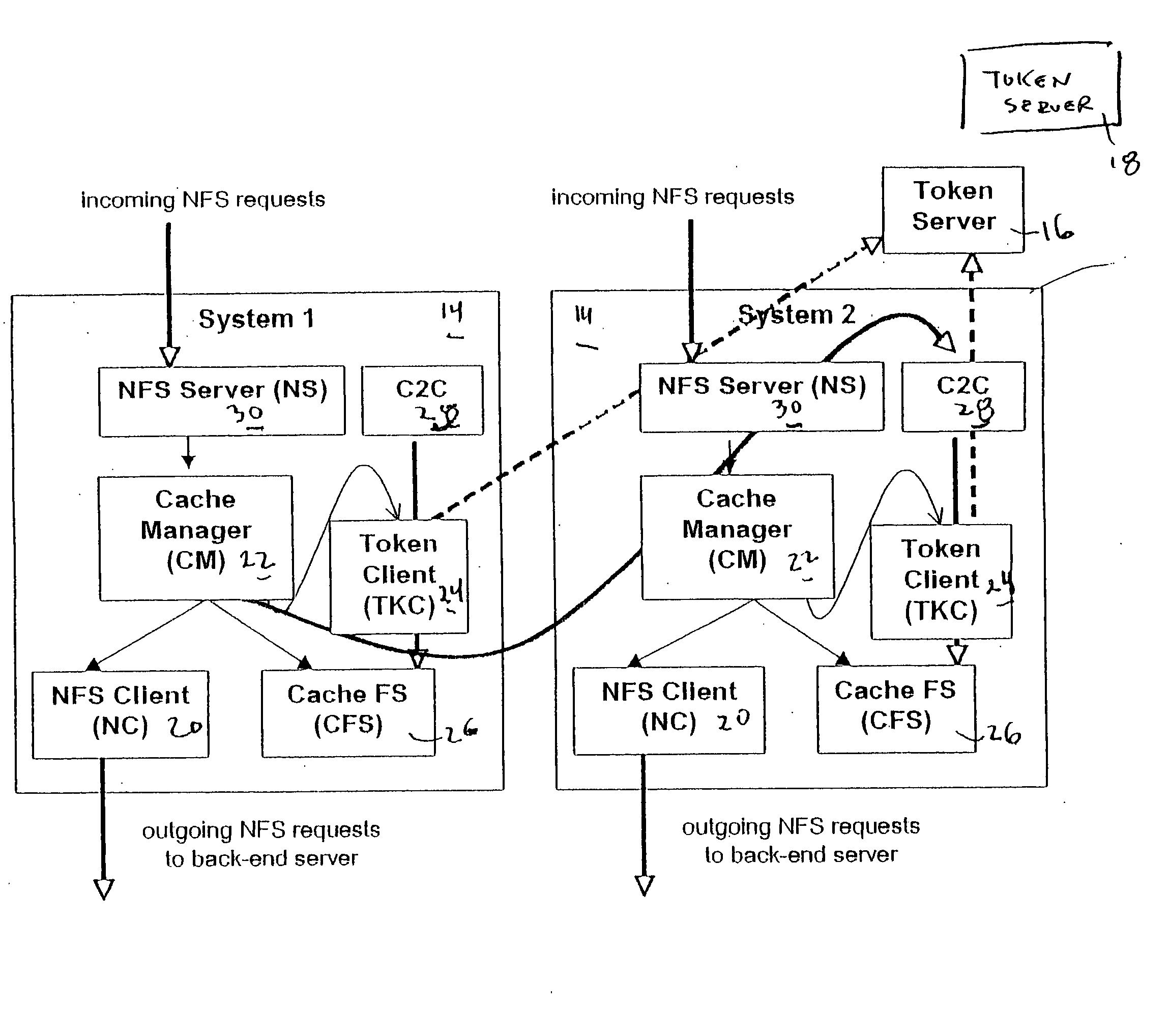

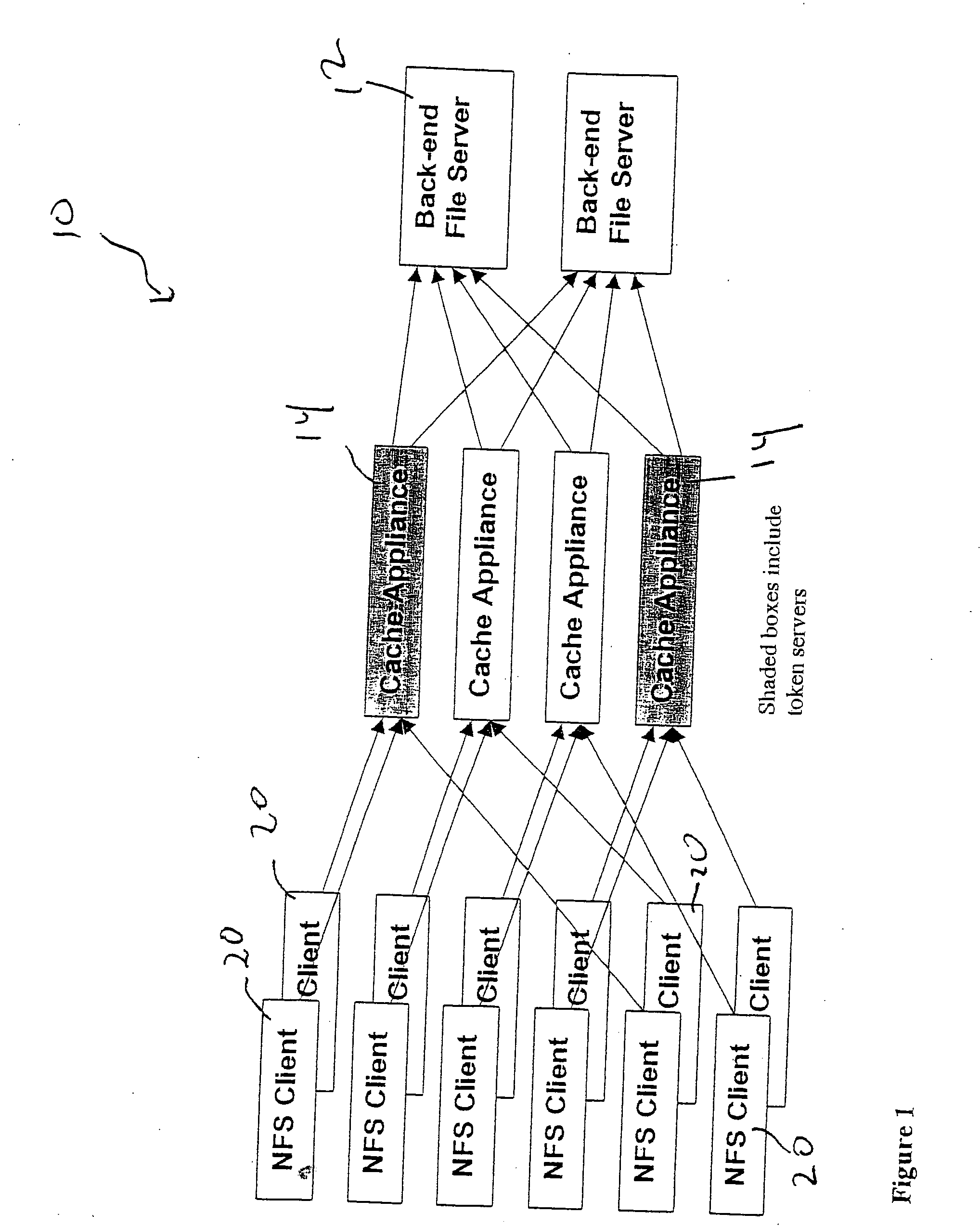

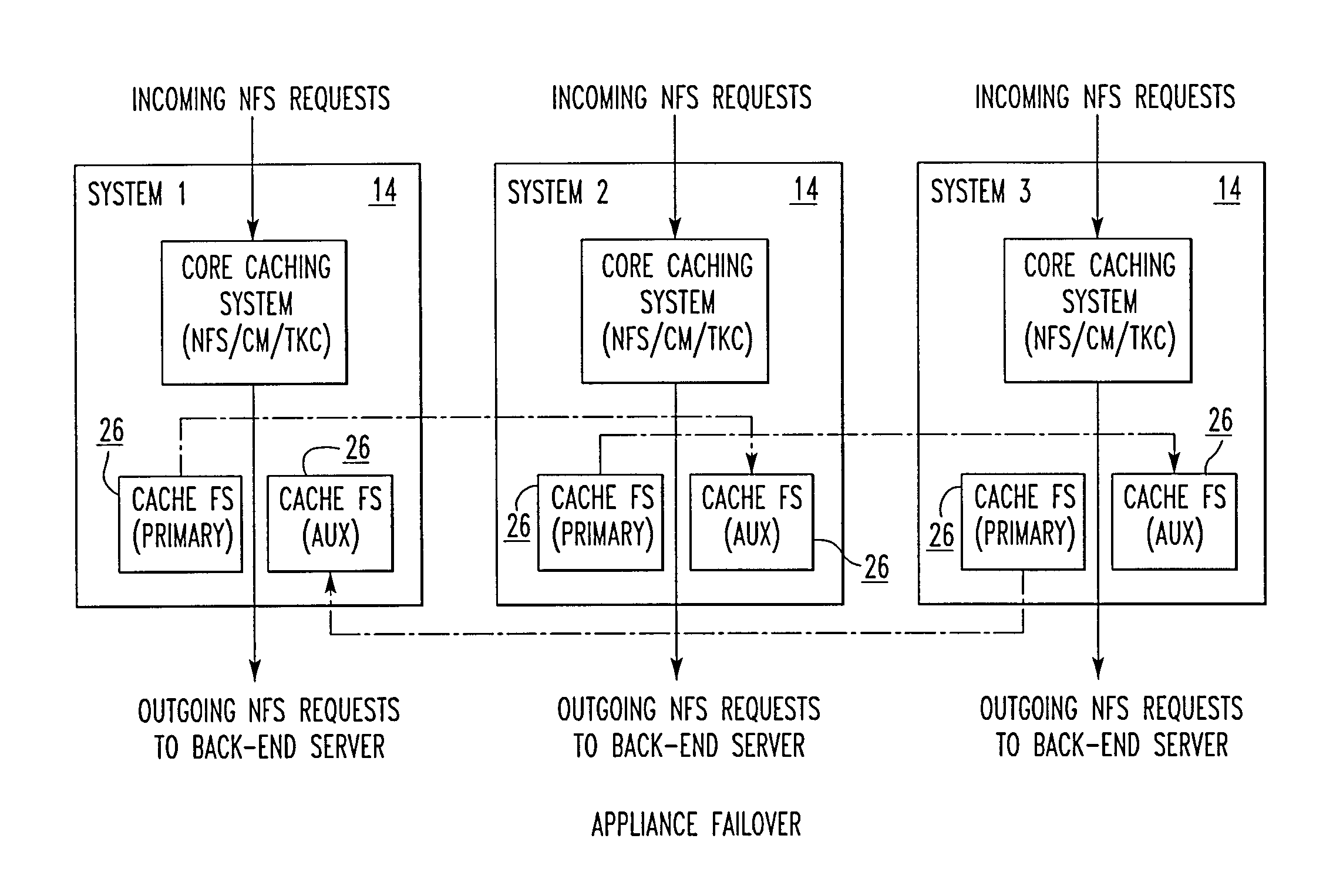

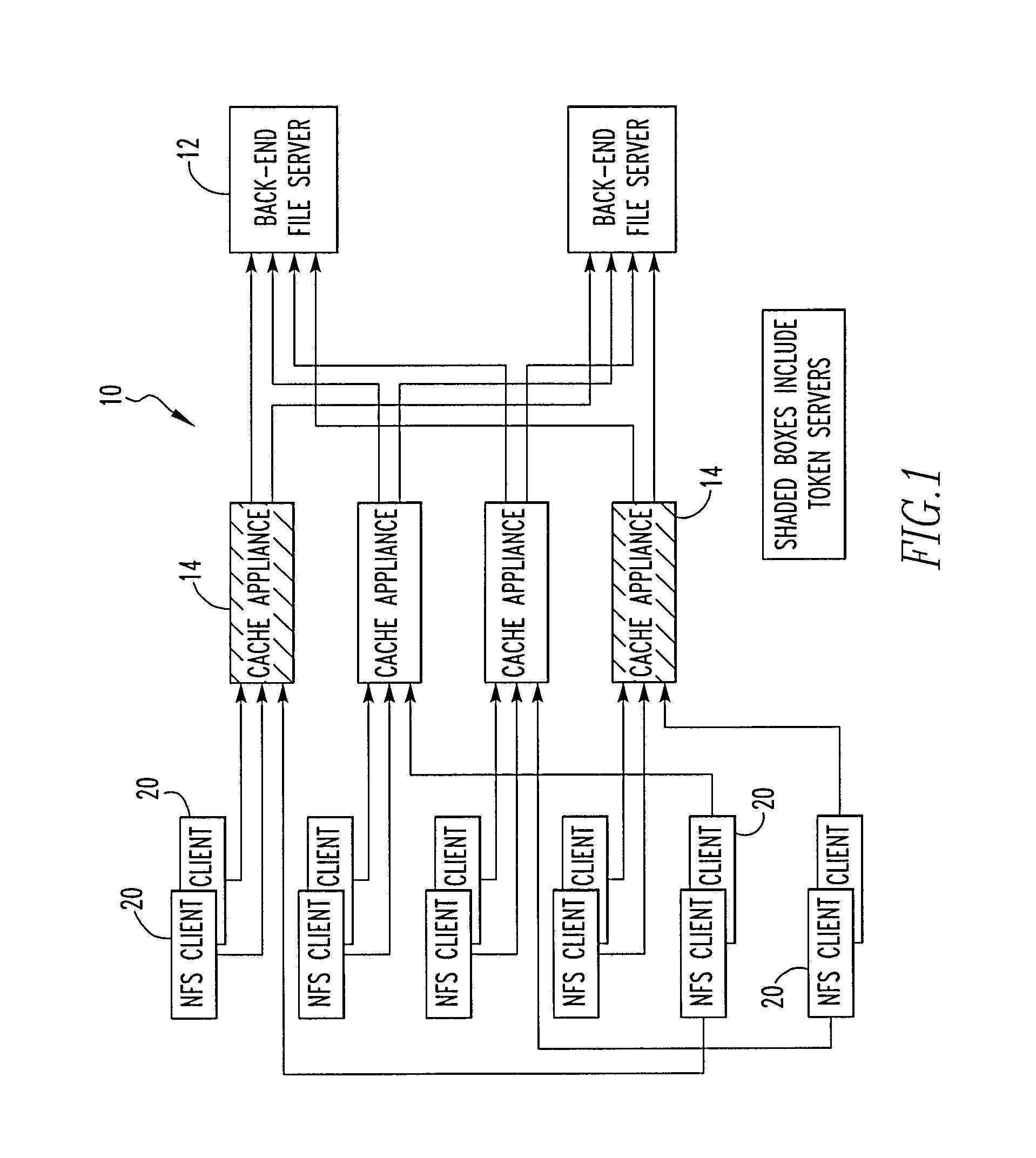

File storage system, cache appliance, and method

Status: Active; Patent: US20100094806A1; Tags: Increase profit, Low cost, Memory architecture accessing/allocation, Digital data processing details, File system, Back end server

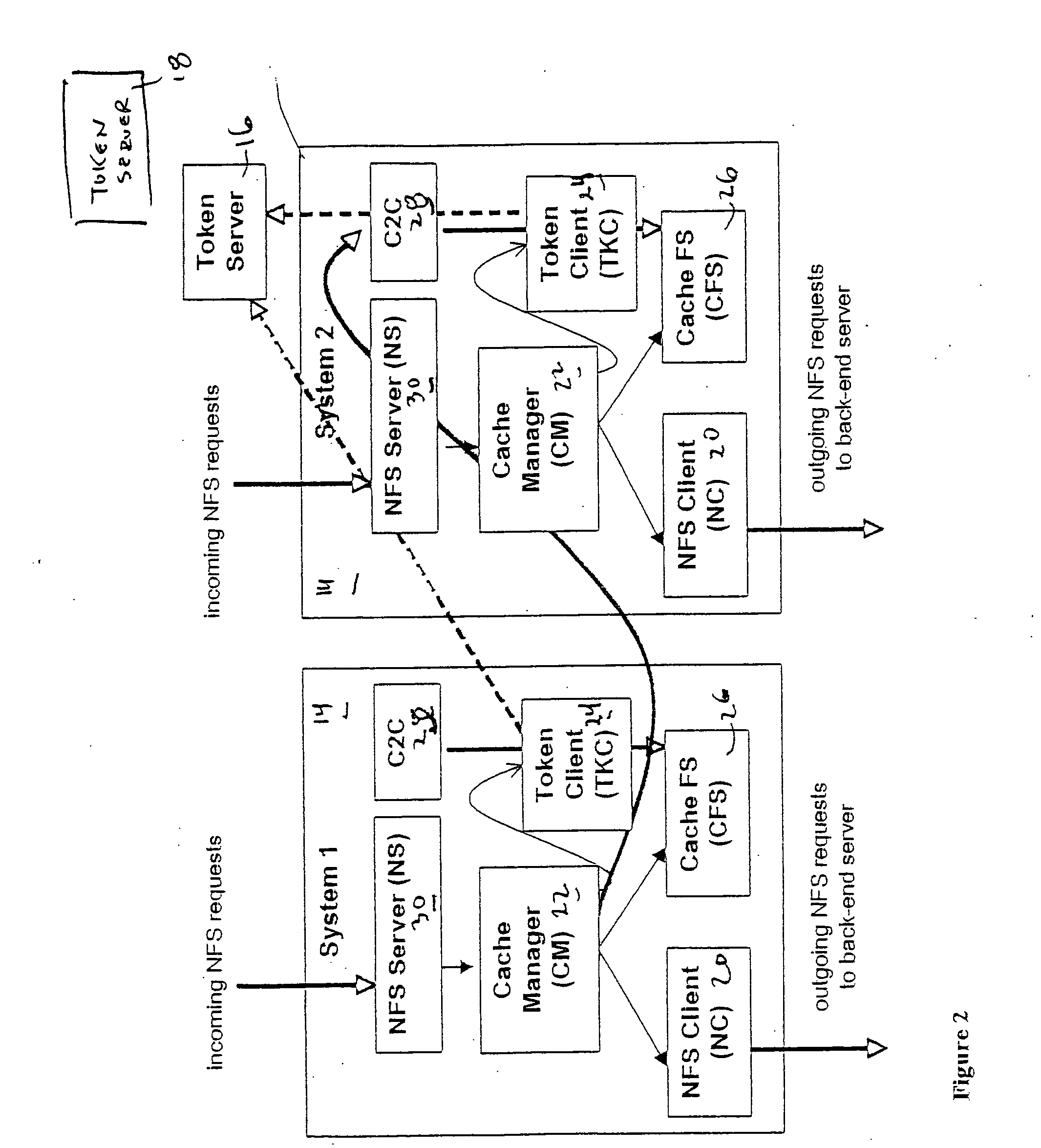

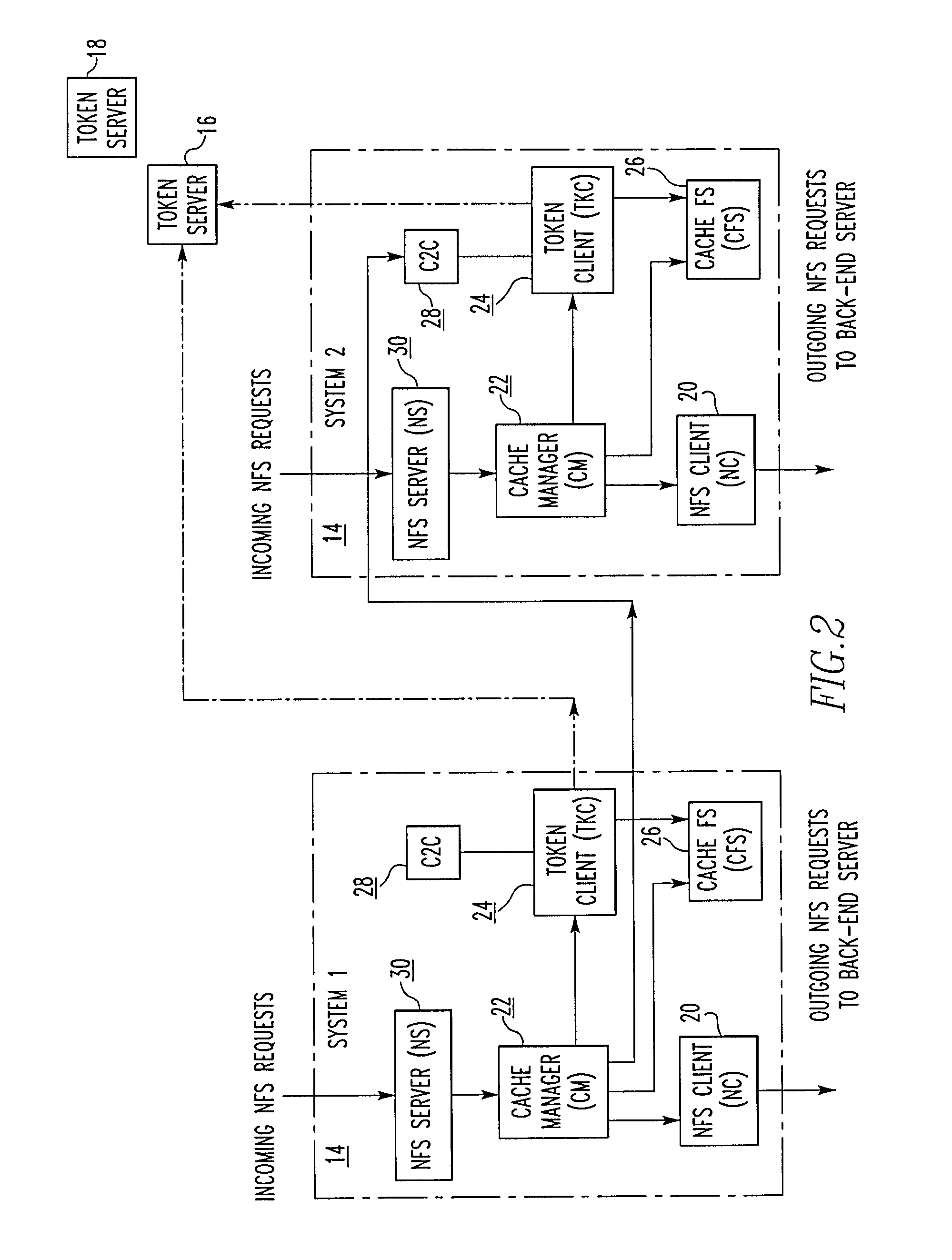

A file storage system for storing data of a file received from a client includes a back-end file server in which the data is stored. The system includes a cache appliance in communication with the file server, such that the appliance stores portions of the data or attributes of the file, and uses the stored data or attributes to process file system requests received from the client, and which reads and writes data and attributes to the back-end file server independently. A system for responding to a file system protocol request in regard to a back-end server includes a token server. The system includes a plurality of cache appliances in communication with the token server, each of which receives tokens from the token server to synchronize access to data and attributes caches of the cache appliances, and reading and writing data and attributes to the back-end servers when tokens are revoked, the cache appliance having persistent storage in which data are stored, and the token server having persistent storage in which tokens are stored. A storage system includes a plurality of backend servers. The system includes a token server which grants permission to read and write file attributes and data system, and includes a plurality of cache appliances in communication with at least one of the backend servers and the token server for processing an incoming NFS request to the one backend server. Each cache appliance comprises an NFS server which converts incoming NFS requests into cache manager operations; a token client module in communication with the token server having a cache of tokens obtained from the token server; a cache manager that caches data and attributes and uses tokens from the token client module to ensure that the cached data or attributes are the most recent data or attributes, and an NFS client which sends outgoing NFS requests to the back-end file server. Methods for storing data of a file received from a client.

Owner:MICROSOFT TECH LICENSING LLC

System and method for managing communication for component applications

Status: Active; Patent: US20060165105A1; Tags: Time-division multiplex, Data switching by path configuration, Back end server, Message passing

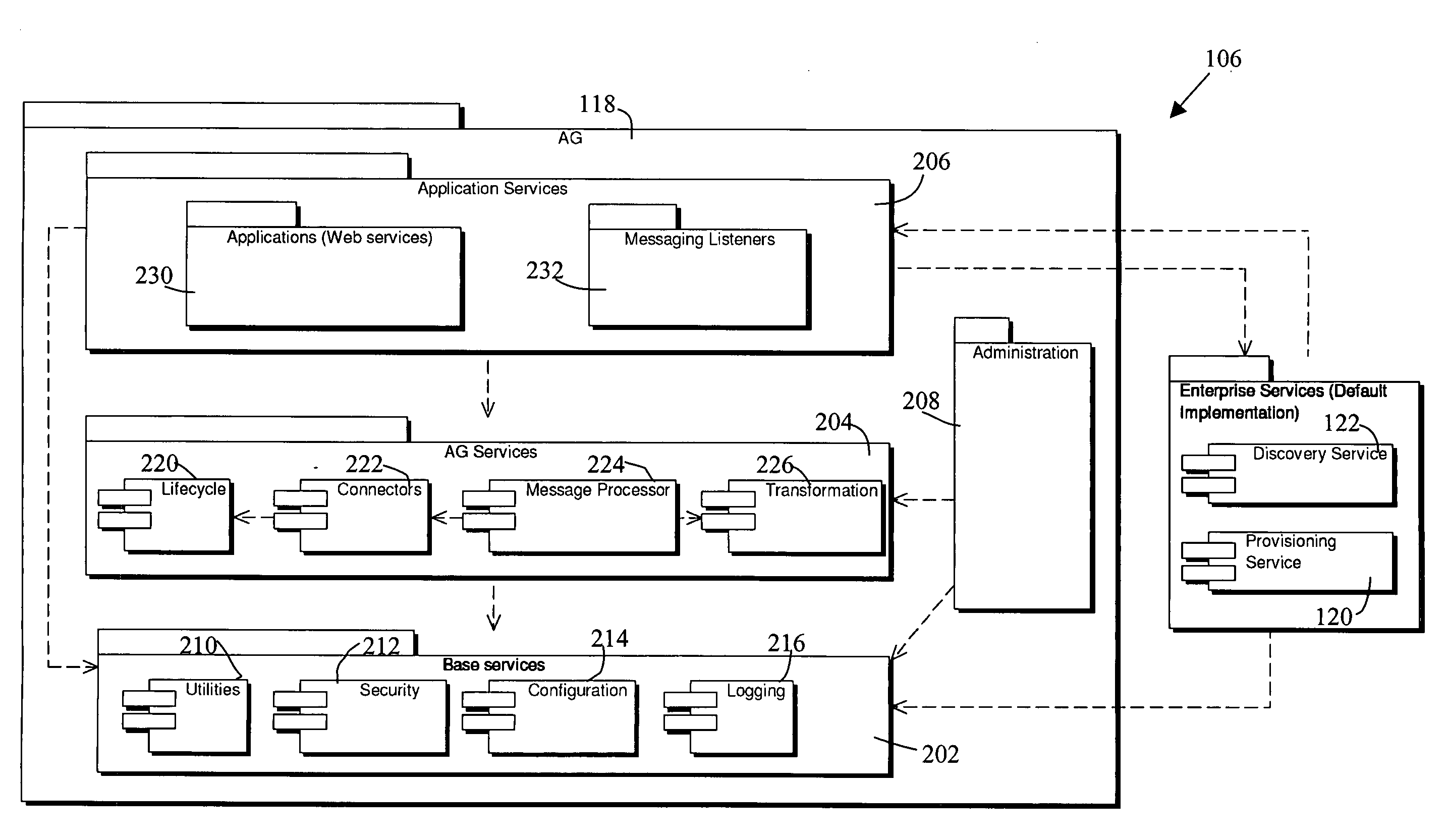

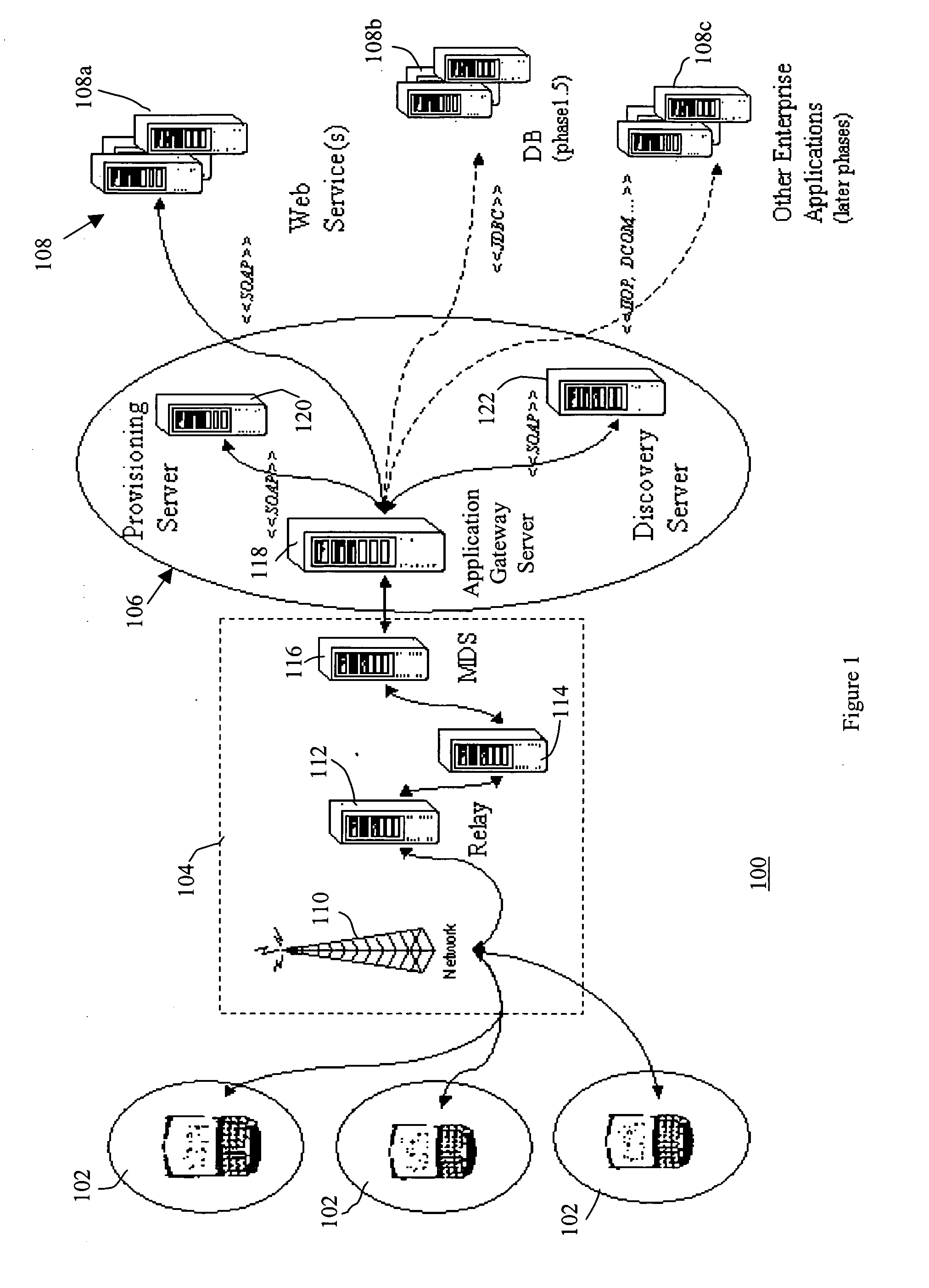

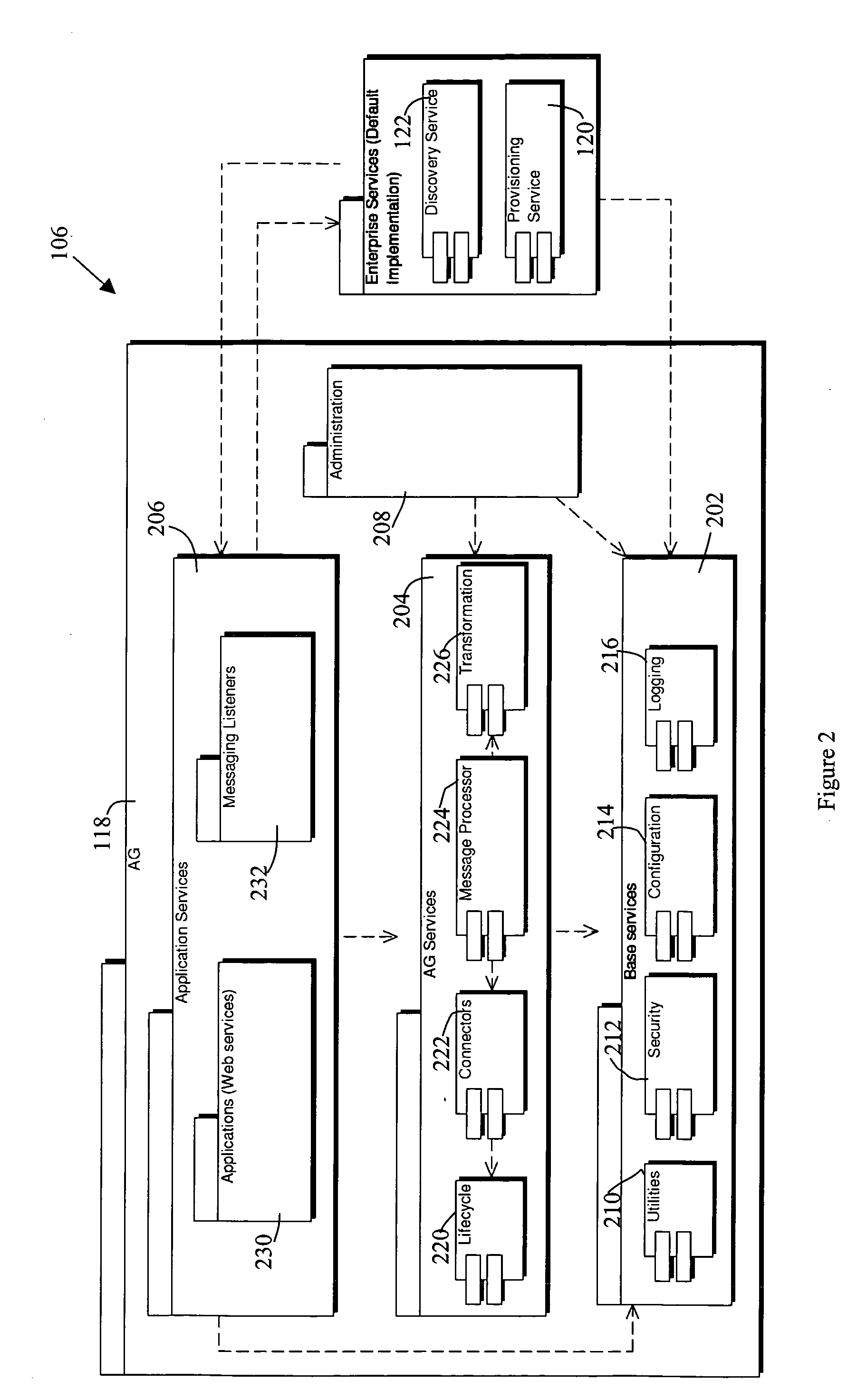

An application gateway server is provided for managing communication between an application executing in a runtime environment on a device and at least one backend server. The application gateway server comprises a message listener, a connector subsystem, and a messaging subsystem. The message listener receives messages from the component applications. The connector subsystem comprises a plurality of connectors, each of the plurality of connectors for communicating with one or more associated backend servers. The messaging subsystem comprises a message broker for processing messages received from the message listener and transmitting them to an associated one of the plurality of connectors and a communication mapping for identifying which of the plurality of connectors is to be used for each message in accordance with an origin of the message.

Owner:BLACKBERRY LTD

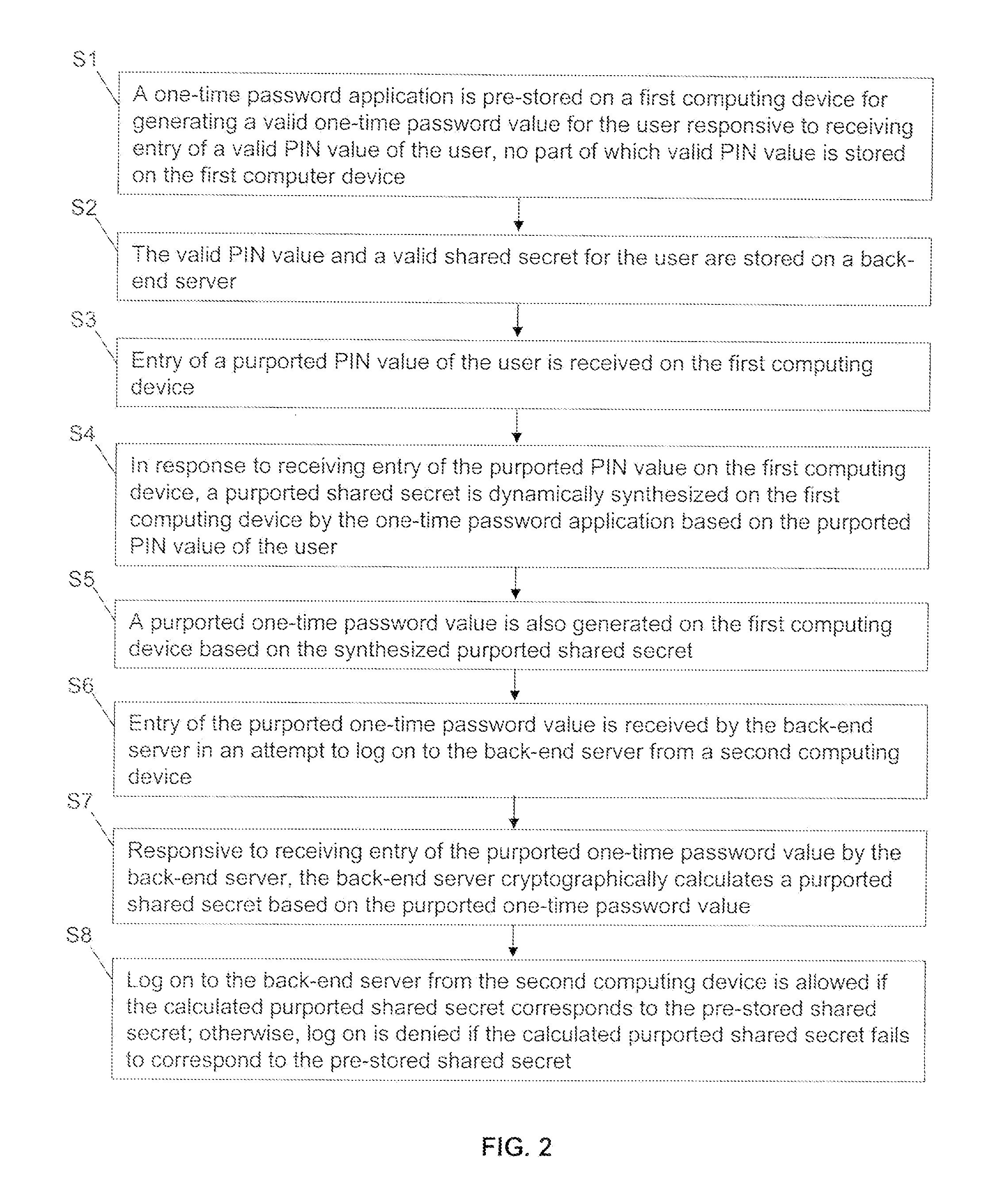

Methods and systems for secure user authentication

Status: Active; Patent: US20110197266A1; Tags: Reduce in quantity, Digital data processing details, User identity/authority verification, User authentication, Back end server

Methods and systems for secure user authentication using an OTP involve, for example, pre-storing an OTP application on a first computing device for generating a valid OTP value for the user responsive to receiving entry of a valid PIN value of the user, where no part of the valid PIN value is stored on the first computing device, and pre-storing on a back-end server the valid PIN value and a valid shared secret for the user. Upon receiving entry of a purported PIN value of the user, a purported shared secret is dynamically synthesized on the first computing device by the OTP application based on the purported PIN value of the user, and a purported OTP value is generated on the first computing device. When entry of the purported OTP value is received by the back-end server in an attempt to log on to the back-end server from a second computing device, the back-end server cryptographically calculates a window of OTP values, and log-on to the back-end server from the second computing device is allowed if the received OTP value corresponds to the calculated window of OTP values.

Owner:CITICORP CREDIT SERVICES INC (USA)
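The server-side window check described in the abstract above can be sketched as follows. This is a minimal illustration only, assuming a counter-based HOTP scheme (RFC 4226); the abstract does not name the OTP algorithm, and the function names `hotp` and `verify_in_window` and the default window size are hypothetical.

```python
import hashlib
import hmac
import struct


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """Compute an HOTP value (RFC 4226) from a shared secret and a counter."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify_in_window(secret: bytes, received: str, counter: int, window: int = 3):
    """Return the matching counter if `received` lies in the window, else None.

    The window covers counters `counter` .. `counter + window` (an assumed
    policy; the abstract only says a window of OTP values is calculated).
    """
    for candidate in range(counter, counter + window + 1):
        if hmac.compare_digest(hotp(secret, candidate), received):
            return candidate
    return None
```

In this sketch the server would advance its stored counter past the returned value after a successful log-on, so that the same OTP cannot be replayed.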

File storage system, cache appliance, and method

Status: Active; Patent: US9323681B2; Tags: Avoid the need, Memory architecture accessing/allocation, Digital data processing details, File system, Back end server

A file storage system for storing data of a file received from a client includes a back-end file server in which the data is stored. The system includes a cache appliance in communication with the file server, such that the appliance stores portions of the data or attributes of the file, and uses the stored data or attributes to process file system requests received from the client, and which reads and writes data and attributes to the back-end file server independently. A system for responding to a file system protocol request in regard to a back-end server includes a token server. The system includes a plurality of cache appliances in communication with the token server, each of which receives tokens from the token server to synchronize access to data and attributes caches of the cache appliances, and reading and writing data and attributes to the back-end servers when tokens are revoked, the cache appliance having persistent storage in which data are stored, and the token server having persistent storage in which tokens are stored. A storage system includes a plurality of backend servers. The system includes a token server which grants permission to read and write file attributes and data system, and includes a plurality of cache appliances in communication with at least one of the backend servers and the token server for processing an incoming NFS request to the one backend server. Each cache appliance comprises an NFS server which converts incoming NFS requests into cache manager operations; a token client module in communication with the token server having a cache of tokens obtained from the token server; a cache manager that caches data and attributes and uses tokens from the token client module to ensure that the cached data or attributes are the most recent data or attributes, and an NFS client which sends outgoing NFS requests to the back-end file server. Methods for storing data of a file received from a client.

Owner:MICROSOFT TECH LICENSING LLC

Distributed traffic controller for network data

Status: Inactive; Patent: US7299294B1; Tags: Improve availability, Ensure availability, Computer security arrangements, Multiple digital computer combinations, Network availability, Back end server

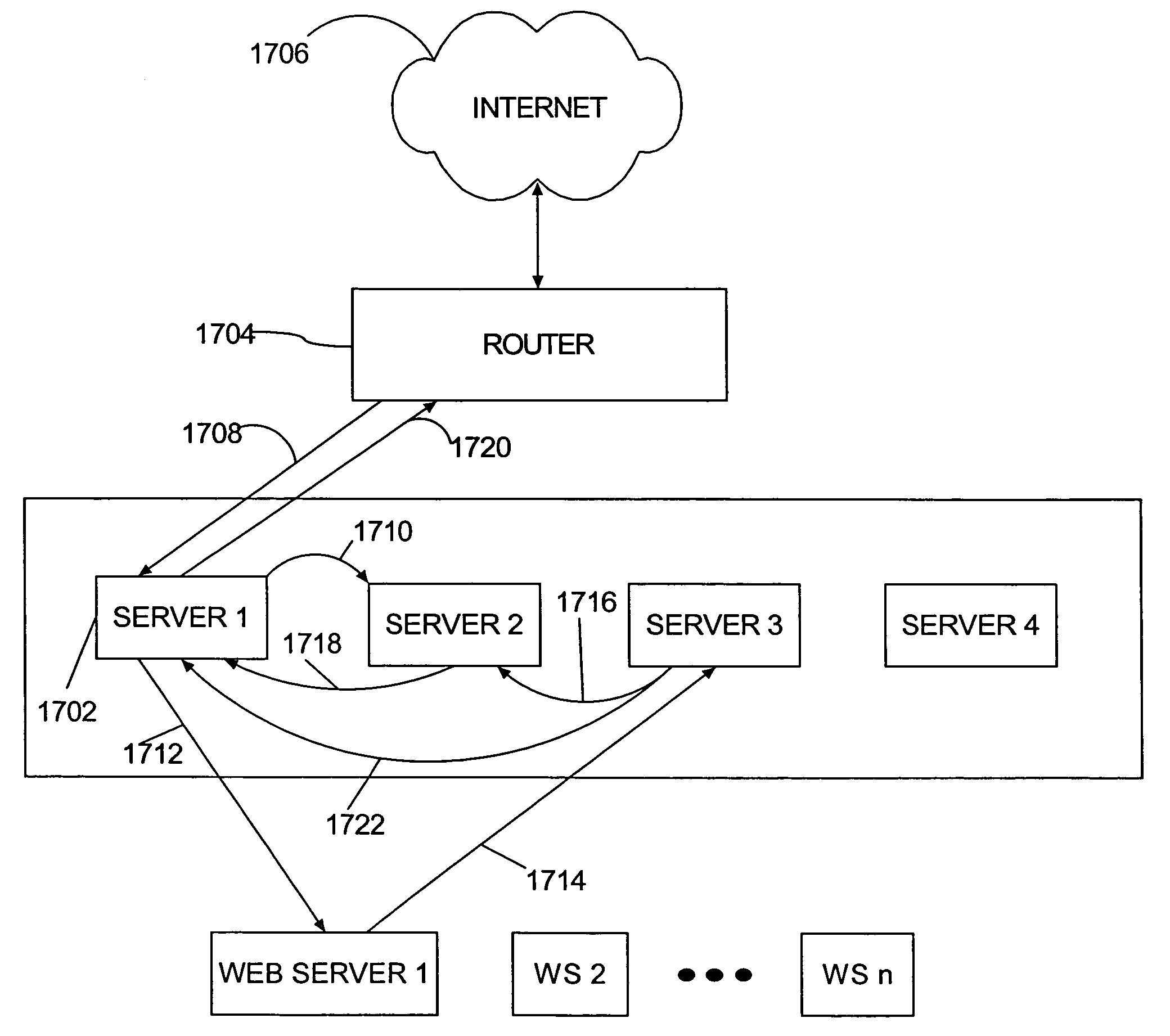

A distributed gateway for controlling computer network data traffic dynamically reconfigures traffic assignments among multiple gateway machines for increased network availability. If one of the distributed gateway machines becomes unavailable, traffic assignments are moved among the multiple machines such that network availability is substantially unchanged. The machines of the distributed gateway form a cluster and communicate with each other using a Group Membership protocol word such that automatic, dynamic traffic assignment reconfiguration occurs in response to machines being added and deleted from the cluster, with no loss in functionality for the gateway overall, in a process that is transparent to network users, thereby providing a distributed gateway functionality that is scalable. Operation of the distributed gateway remains consistent as machines are added and deleted from the cluster. A scalable, distributed, highly available, load balancing network gateway is thereby provided, having multiple machines that function as a front server layer between the network and a back-end server layer having multiple machines functioning as Web file servers, FTP servers, or other application servers. The front layer machines comprise a server cluster that performs fail-over and dynamic load balancing for both server layers.

Owner:EMC IP HLDG CO LLC

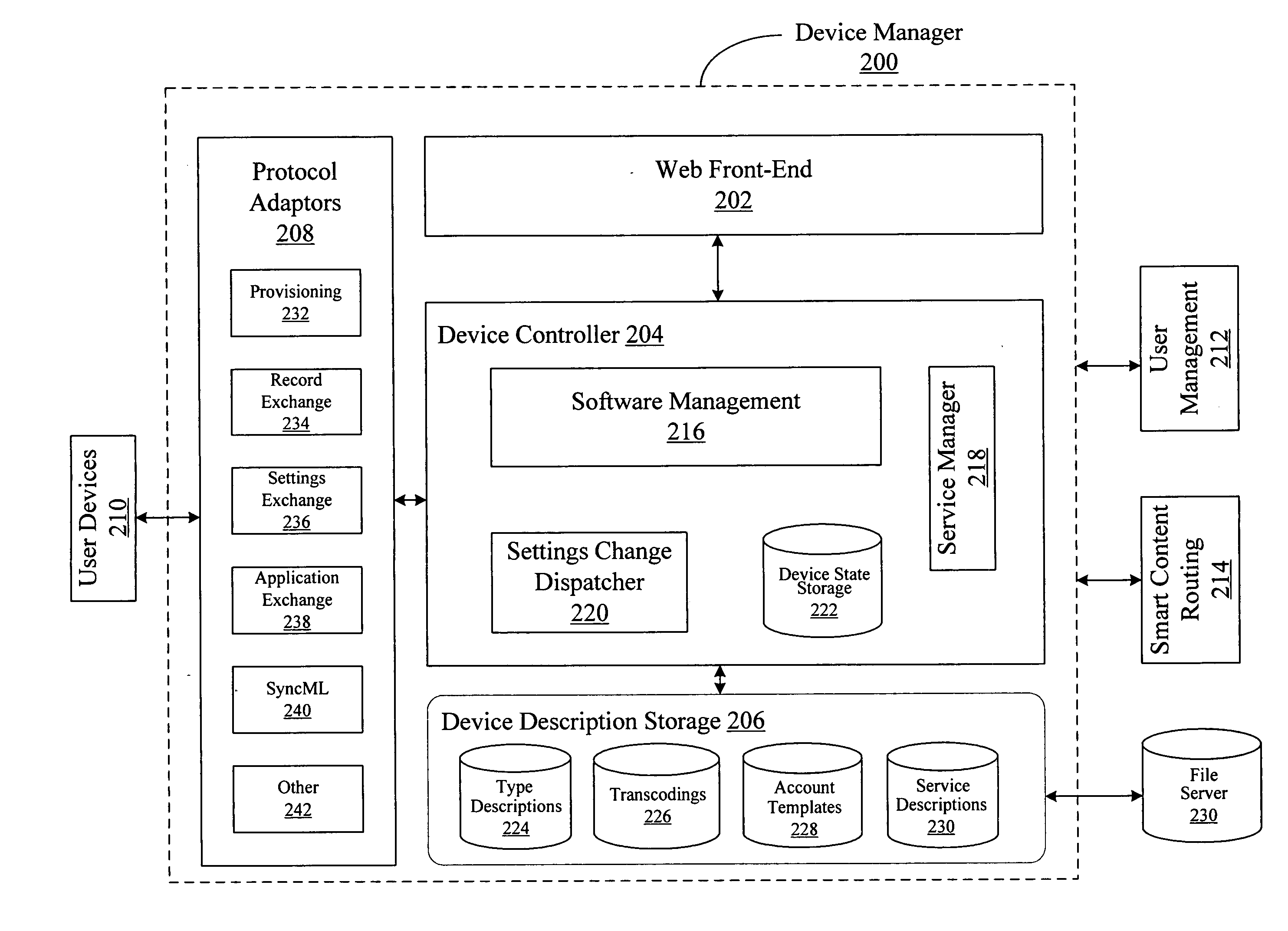

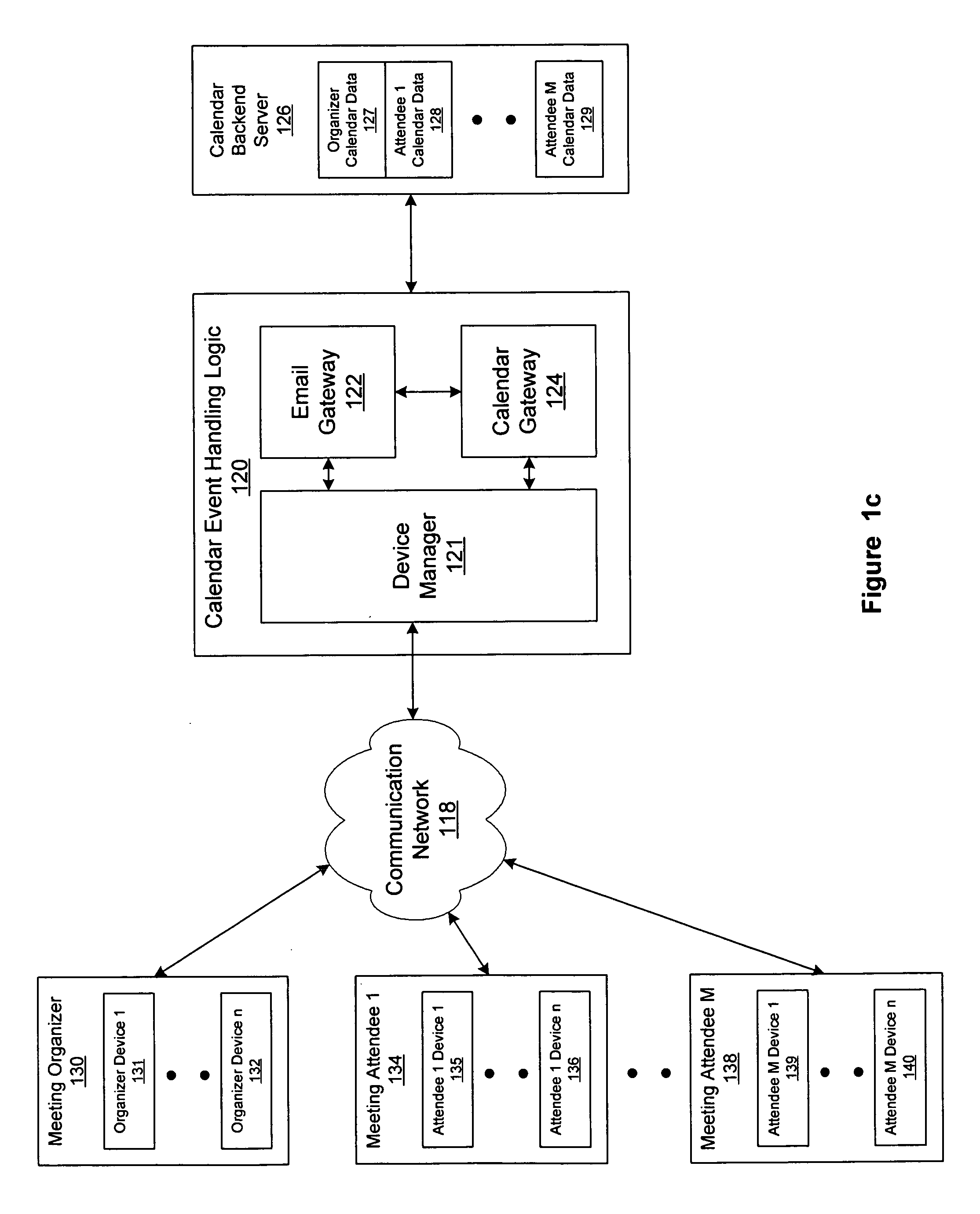

Universal calendar event handling

Status: Active; Patent: US20070016646A1; Tags: Multiple digital computer combinations, Input/output processes for data processing, User device, Data set

System and method for handling calendar events among a plurality of user devices in a communication network are disclosed. The system includes a device manager for interfacing with the plurality of user devices, where the plurality of user devices use different calendar applications and have calendar events in different data formats, and the plurality of user devices include at least a meeting organizer device and one or more attendee devices. The system further includes a calendar gateway for processing the calendar events to form a corresponding connected calendar-data-set for each connected user, where the connected calendar-data-set is stored in a calendar backend server and is shared among one or more devices of each connected user, and an email gateway for communicating the calendar events between the calendar gateway and the plurality of user devices.

Owner:SLACK TECH LLC

Selecting a server to service client requests

Status: Inactive; Patent: US6898633B1; Tags: Effective maintenance, Resource allocation, Multiple digital computer combinations, Back end server, Application software

Systems and methods for directing client requests and for selecting a back end server to service client requests. A front end server receives client requests and based on the URI of the requests, directs the request to a back end server. The client request can be for a private or a public folder and each back end server typically stores both private and public folders. If the request is for a private folder, then the front end server determines which server stores that user's private folder and directs the client request to that folder. If the request is for a home public folder, the front end server directs the client request to the server that is associated with the private folders of the users. If the request is for an application public folder, then the front end server selects one of the back end servers to service the client request. Advantageously, the front end server always directs the client request to the same server. If the selected server is unavailable, then the front end server is capable of redirecting the client request to an available server.

Owner:MICROSOFT TECH LICENSING LLC
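The routing rules in the abstract above can be sketched roughly as follows. This is an illustrative sketch, not the patented method: the URI prefixes (`/private/`, `/public/home/`), the `home_server` lookup table, and the use of a hash of the user name to pick a sticky server are all assumptions made for the example.

```python
import hashlib


def stable_index(key: str, n: int) -> int:
    """Map a key to a stable index in range(n) (same key, same index)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % n


def select_backend(uri, user, servers, home_server, available):
    """Pick a back-end server for a request, or None if none can serve it.

    Private and home public folders go to the server holding the user's
    private folders. Other (application) public folders go to a server chosen
    by a stable hash, falling over to the next available server in order.
    """
    if uri.startswith(("/private/", "/public/home/")):
        candidates = [home_server[user]]
    else:
        start = stable_index(user, len(servers))
        candidates = servers[start:] + servers[:start]
    for server in candidates:
        if server in available:
            return server
    return None
```

Because the hash depends only on the user name, repeated requests land on the same server while it stays available, which matches the "always directs the client request to the same server" behavior described in the abstract.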

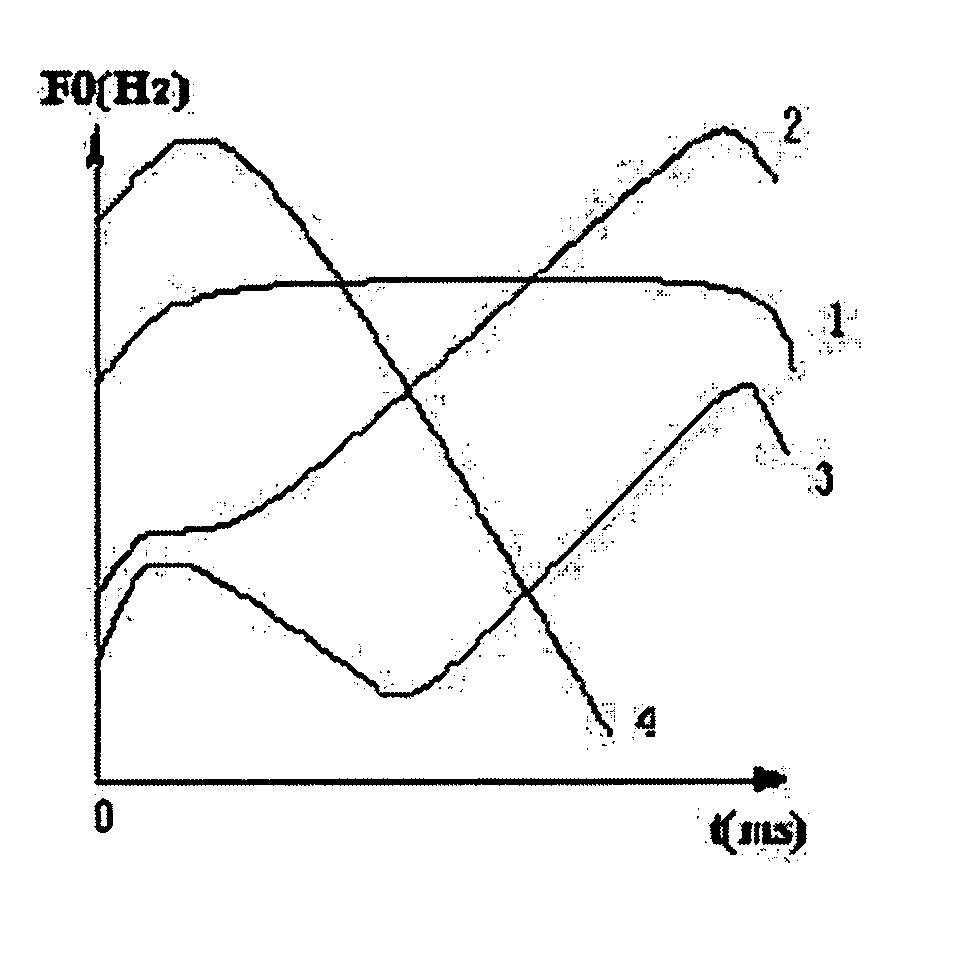

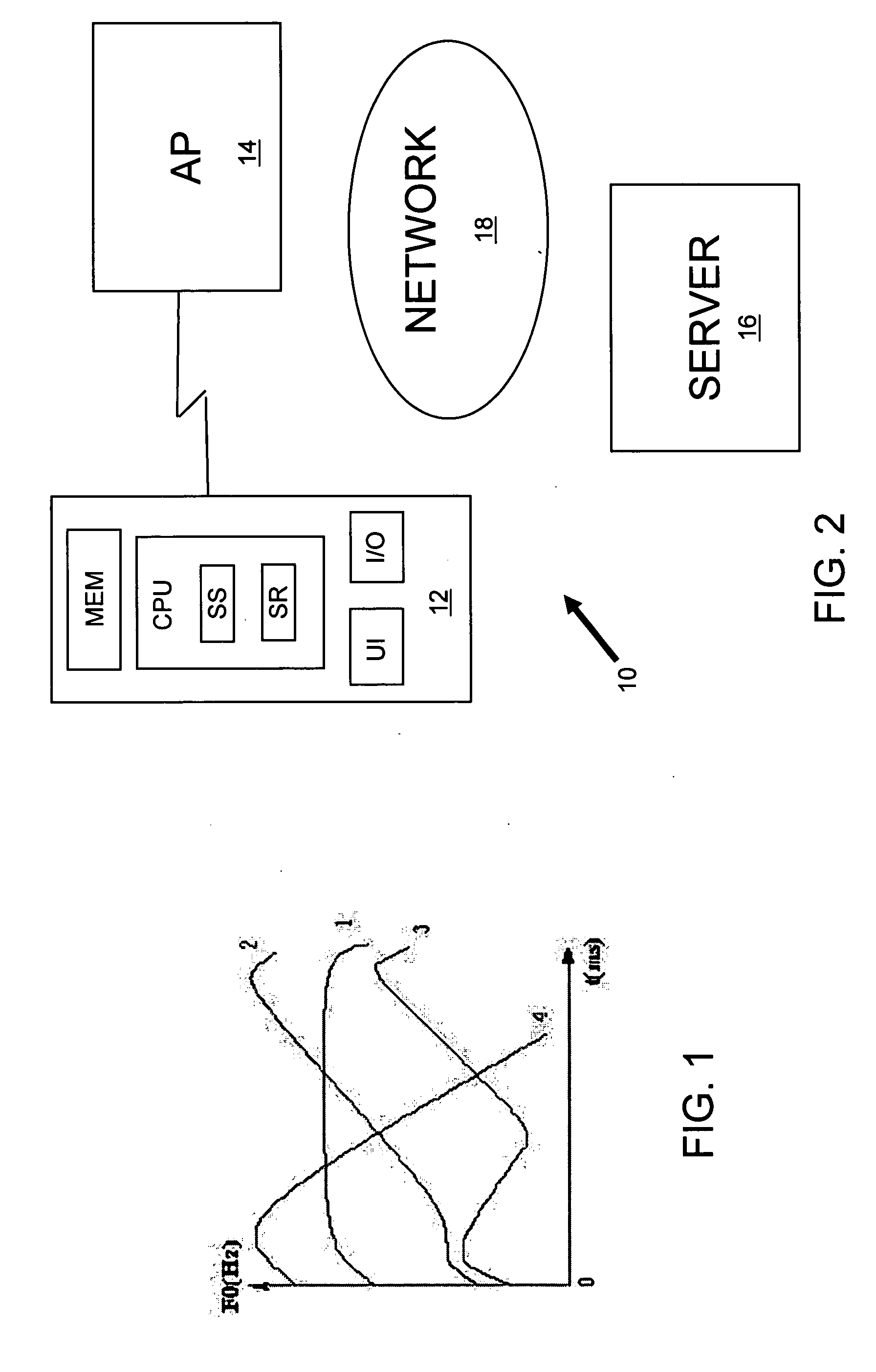

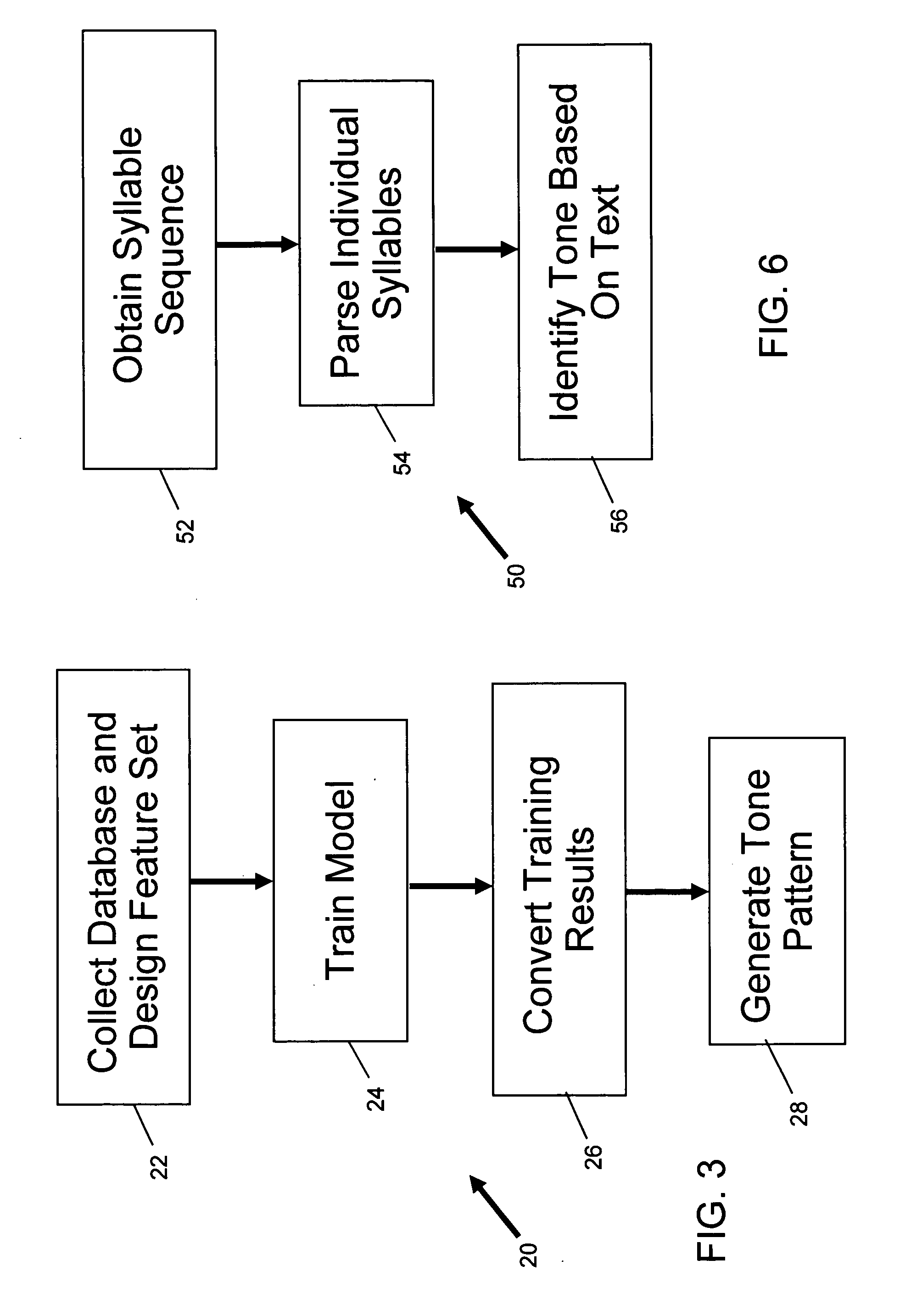

Predicting tone pattern information for textual information used in telecommunication systems

The techniques described include generating tonal information from a textual entry and, further, applying this tonal information to PINYIN sequences using decision trees. For example, a method of predicting tone pattern information for textual information used in telecommunication systems includes parsing a textual entry into segments and identifying tonal information for the textual entry using the parsed segments. The tonal information can be generated with a decision tree. The method can also be implemented in a distributed system where the conversion is done at a back-end server and the information is sent to a communication device after a request.

Owner:WSOU INVESTMENTS LLC

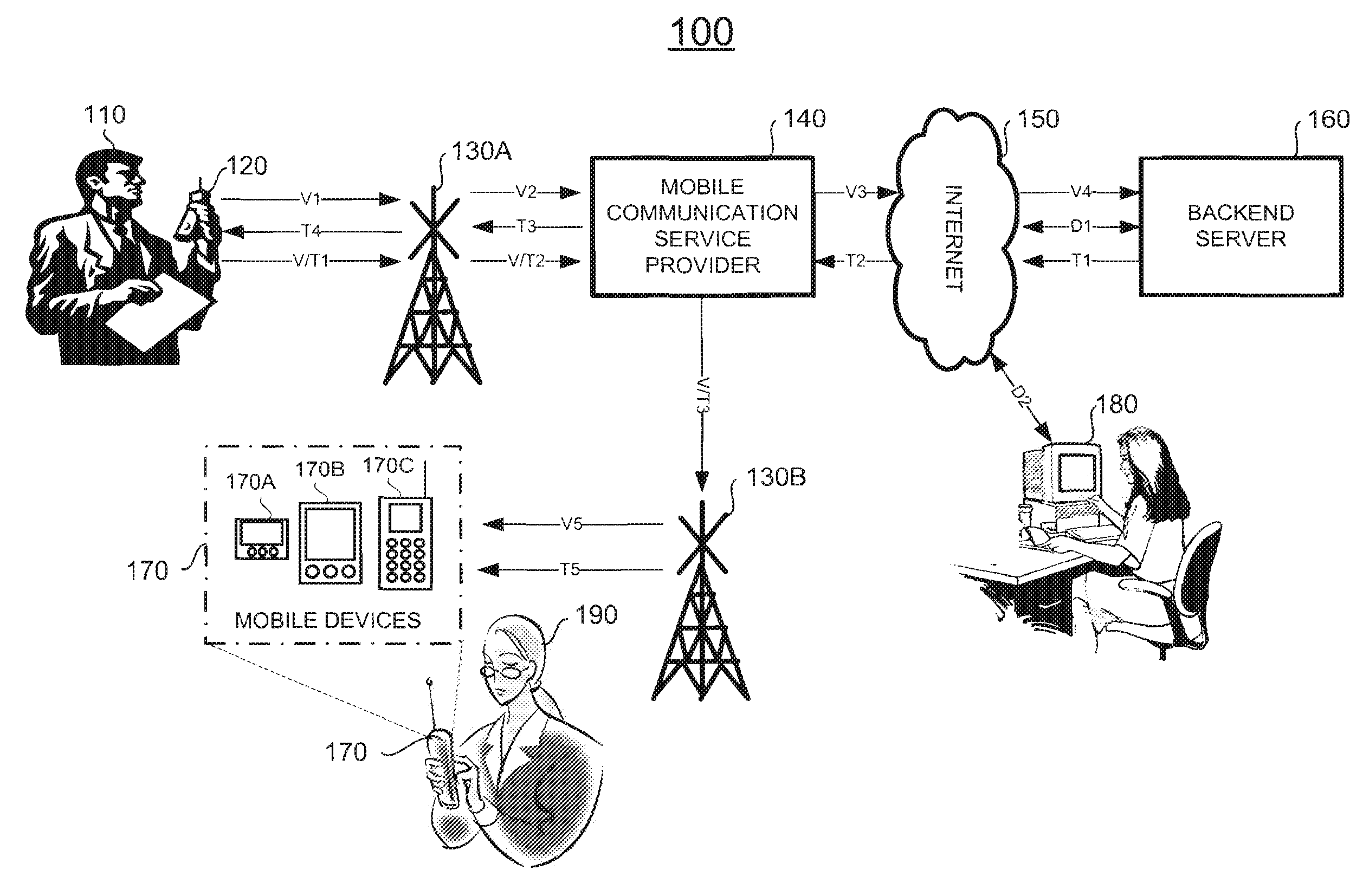

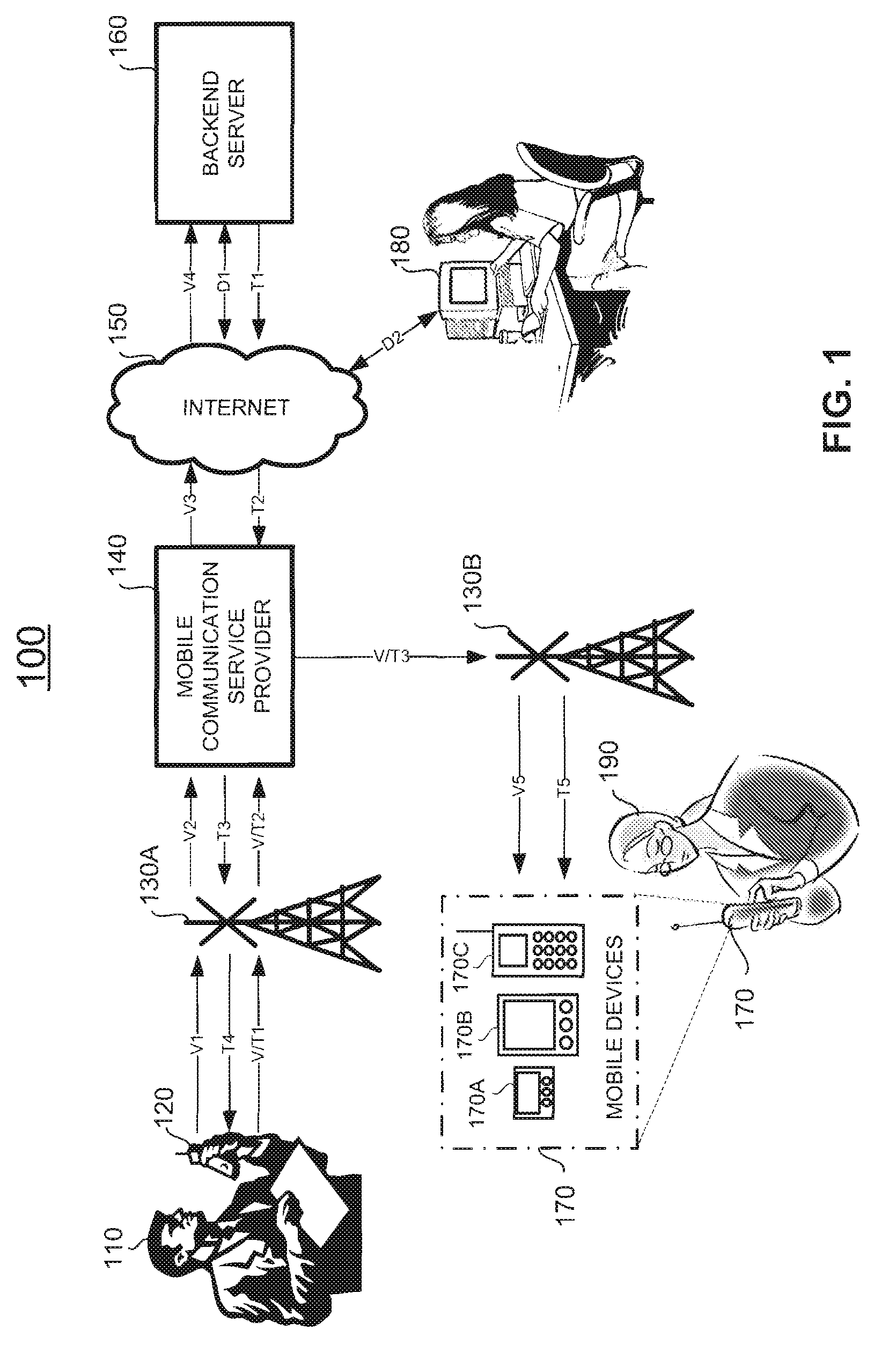

Hosted voice recognition system for wireless devices

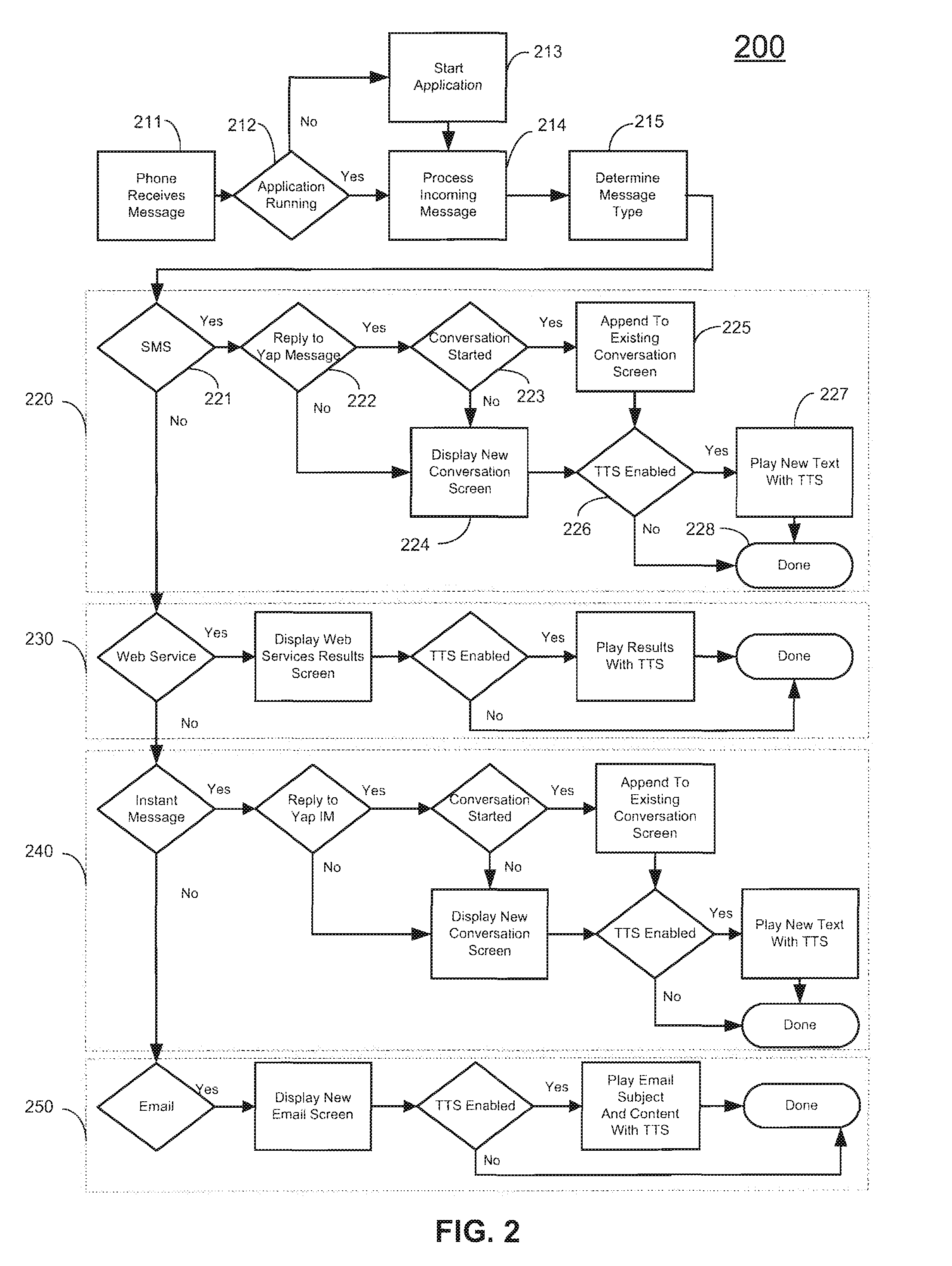

Status: Inactive; Patent: US20070239837A1; Tags: Multiple digital computer combinations, Substation equipment, Hand held, Network Communication Protocols

Methods, systems, and software for converting the audio input of a user of a hand-held client device or mobile phone into a textual representation by means of a backend server accessed by the device through a communications network. The text is then inserted into or used by an application of the client device to send a text message, instant message, email, or to insert a request into a web-based application or service. In one embodiment, the method includes the steps of initializing or launching the application on the device; recording and transmitting the recorded audio message from the client device to the backend server through a client-server communication protocol; converting the transmitted audio message into the textual representation in the backend server; and sending the converted text message back to the client device or forwarding it on to an alternate destination directly from the server.

Owner:AMAZON TECH INC +1

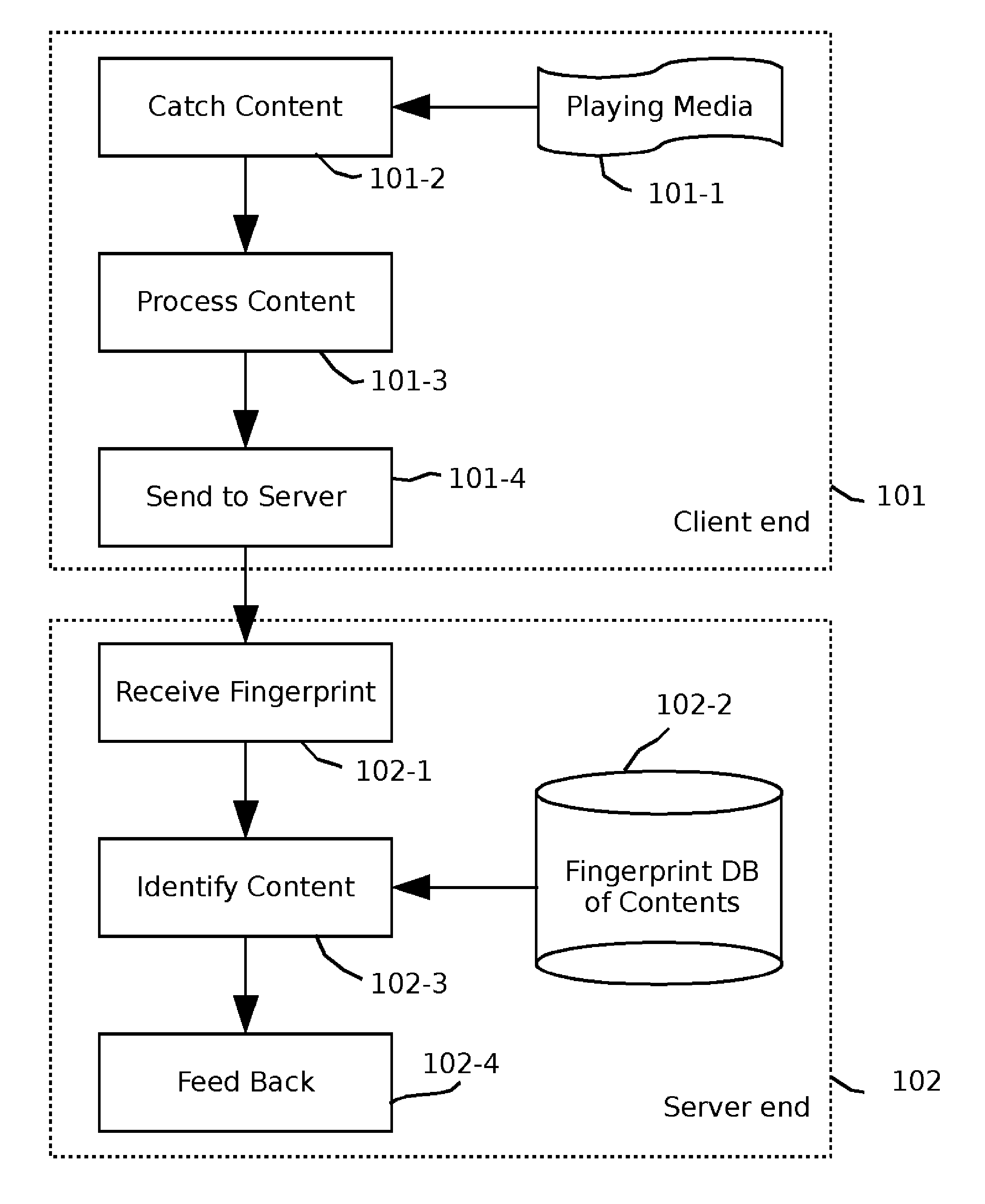

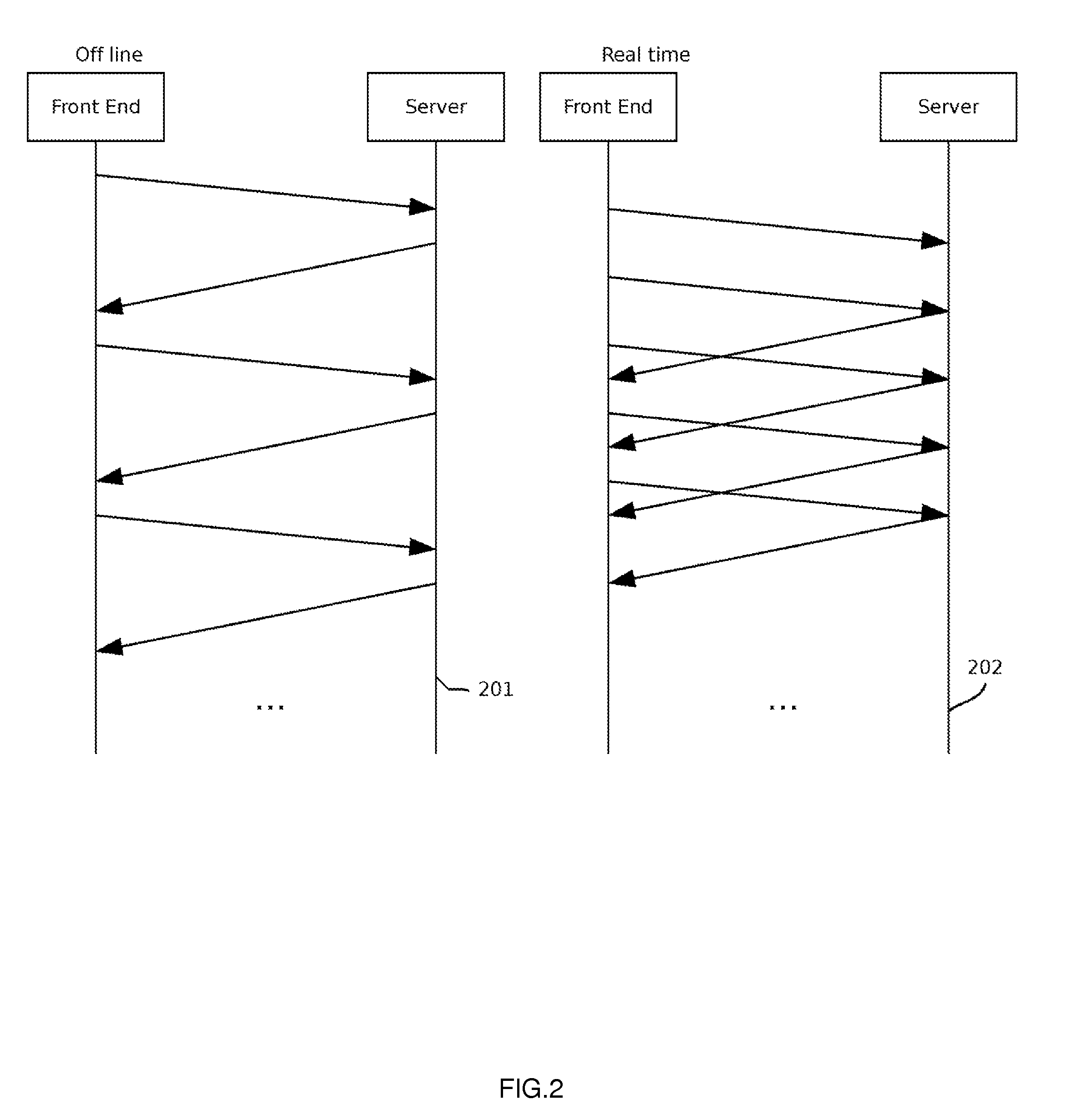

System and method for auto content recognition

Status: Active; Patent: US20110289114A1; Tags: Digital data information retrieval, Digital data processing details, The Internet, Back end server

A system and method for automatically recognizing media content comprise the steps of capturing media content from the Internet and/or devices, extracting fingerprints from the captured content and transferring them to backend servers for identification, and the backend servers processing the fingerprints and replying with the identified result.

Owner:VOBILE INC

A method for maintaining transaction integrity across multiple remote access servers

Status: Active; Patent: US20060047836A1; Tags: Avoid resource conflicts, Multiple digital computer combinations, Transmission, Failover, Back end server

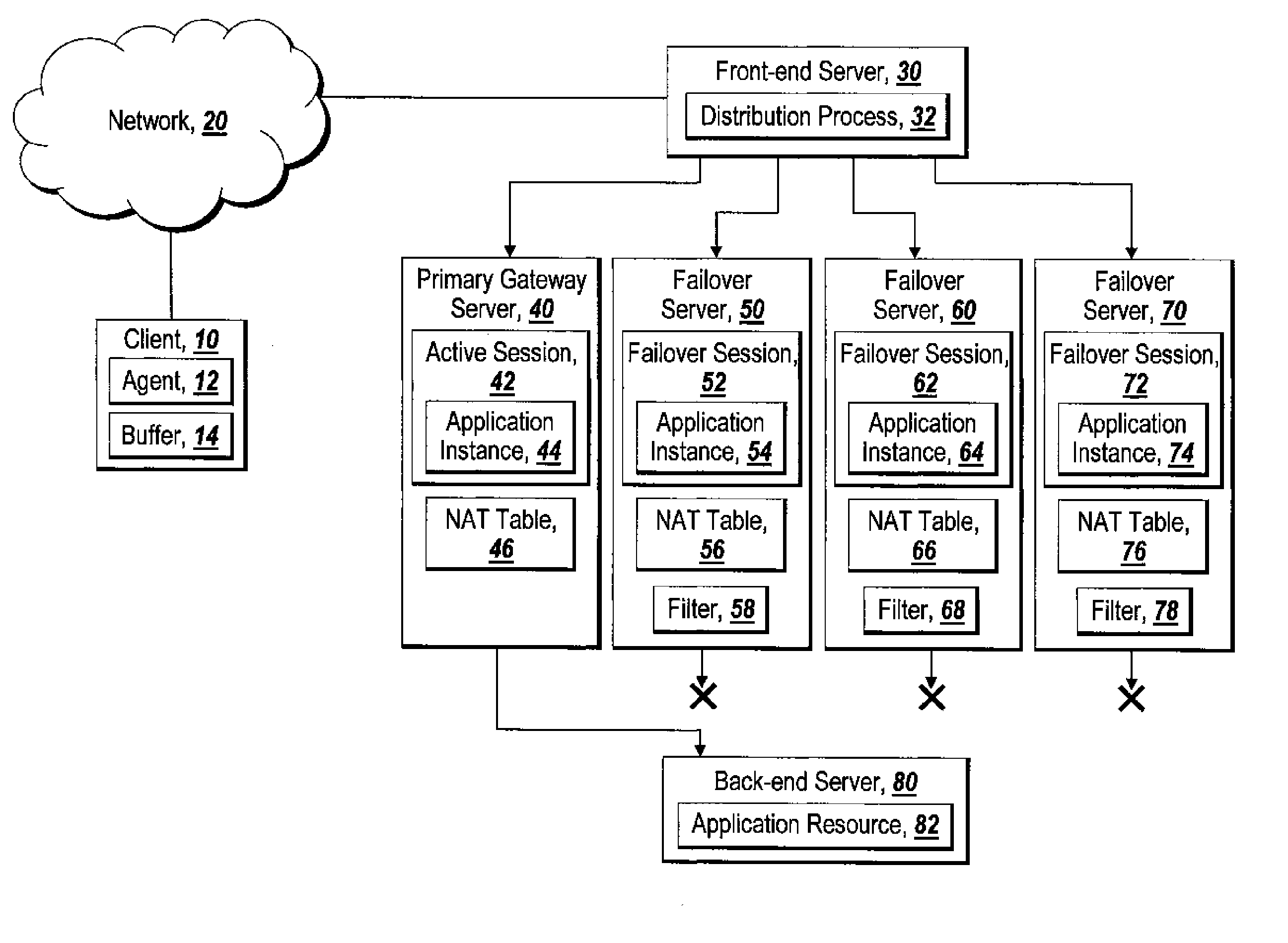

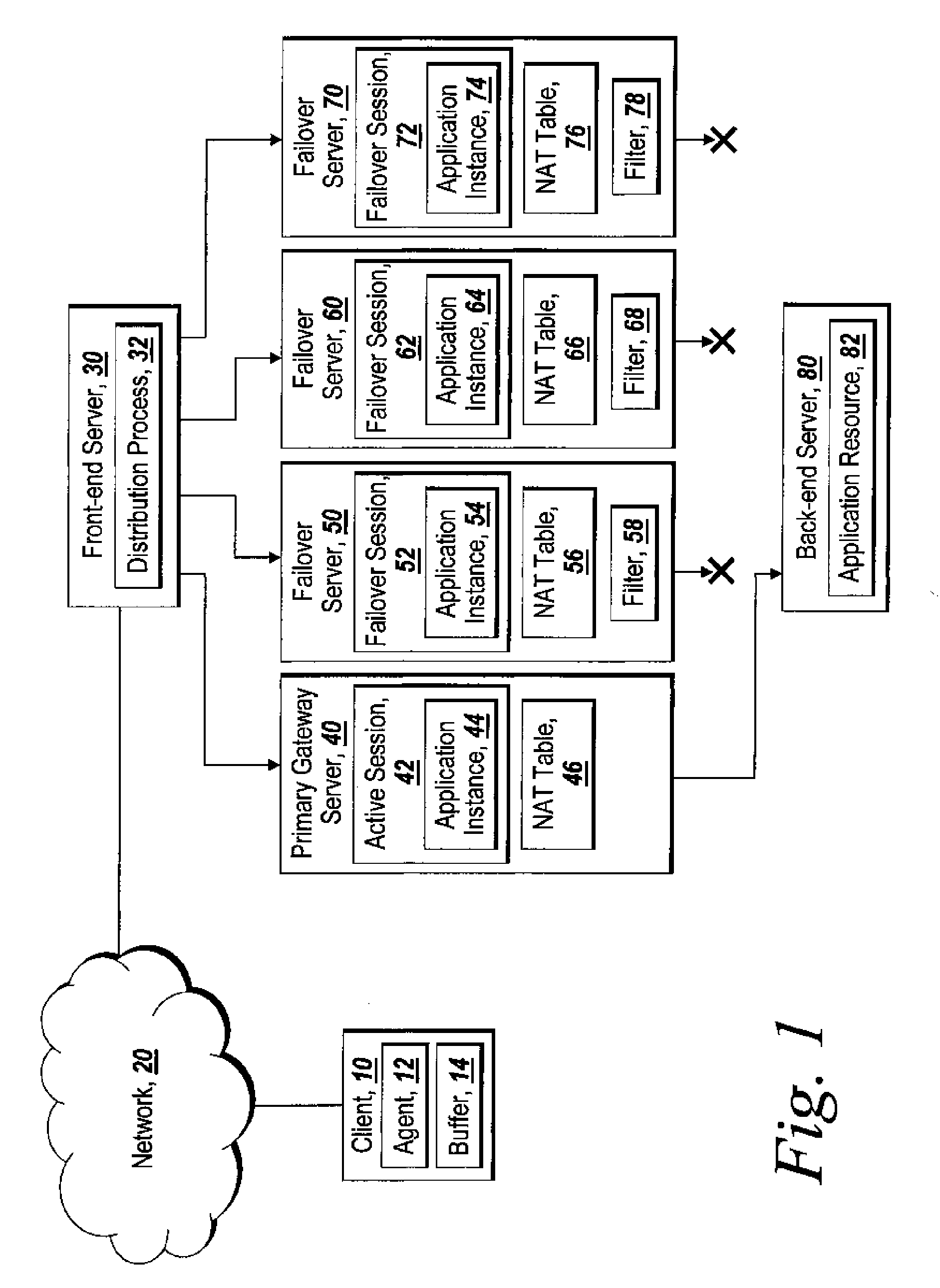

A system for providing failover redundancy in a remote access solution that includes at least one application resource on a back-end server is discussed. The system further includes multiple gateway servers. One of the multiple gateway servers is designated as a primary gateway server while the other servers are designated as failover gateway servers. Each of the multiple gateway servers hosts a session with at least one executing application instance for the same application with each of the sessions on the failover gateway servers being maintained in the same state as the session on the primary gateway server. The primary gateway server is the only one of the gateway servers that is allowed to communicate with the application resource(s). The system further includes a client device that is in communication over a VPN with the primary gateway server. The client device receives output of the application instance executing in the session on the primary gateway server over the VPN. The client device also sends input to the primary gateway server over the VPN. The received output is displayed on a viewer by the client device.

Owner:CITRIX SYST INC

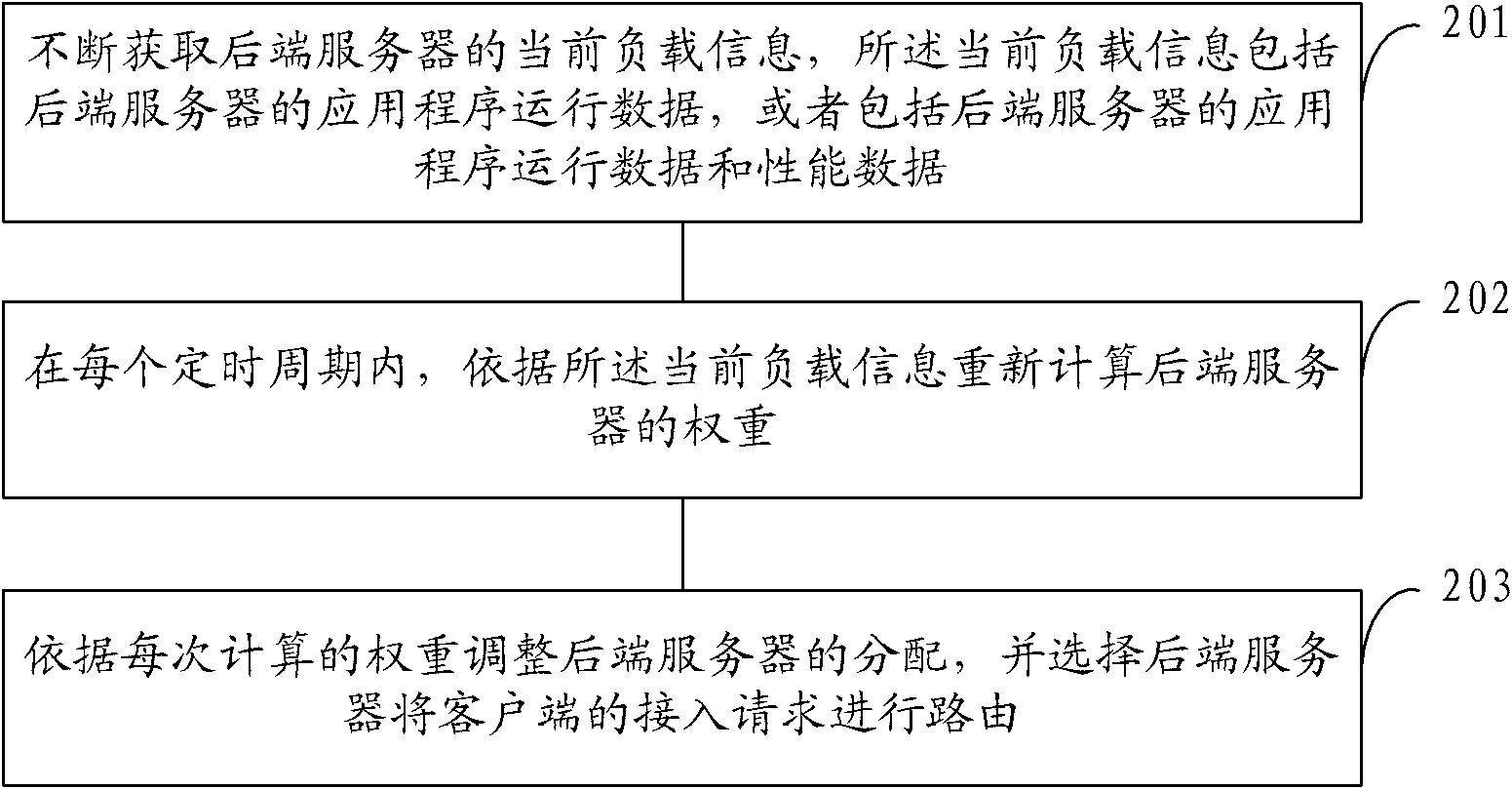

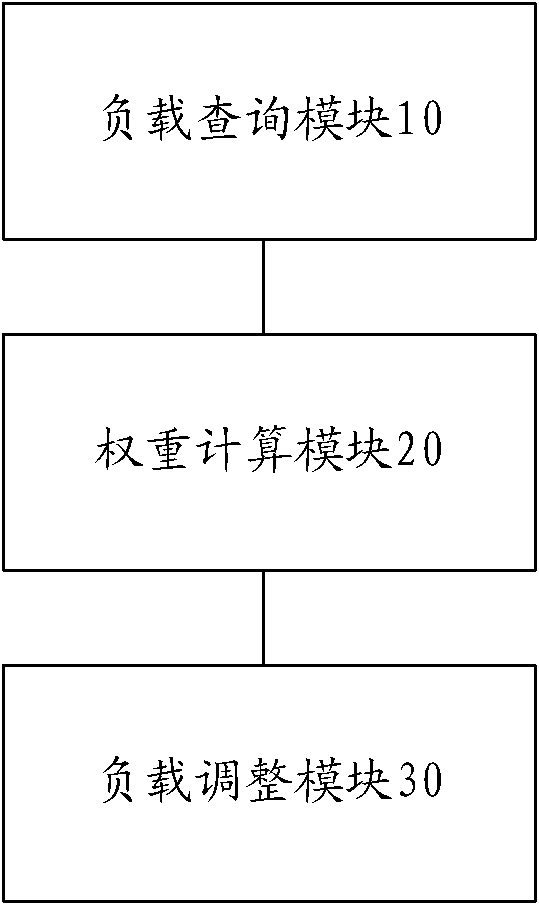

Load balancing method and system of application services

Status: Inactive; Patent: CN102611735A; Tags: Realize a reasonable distribution, Avoid allocation problems, Transmission, Current load, Back end server

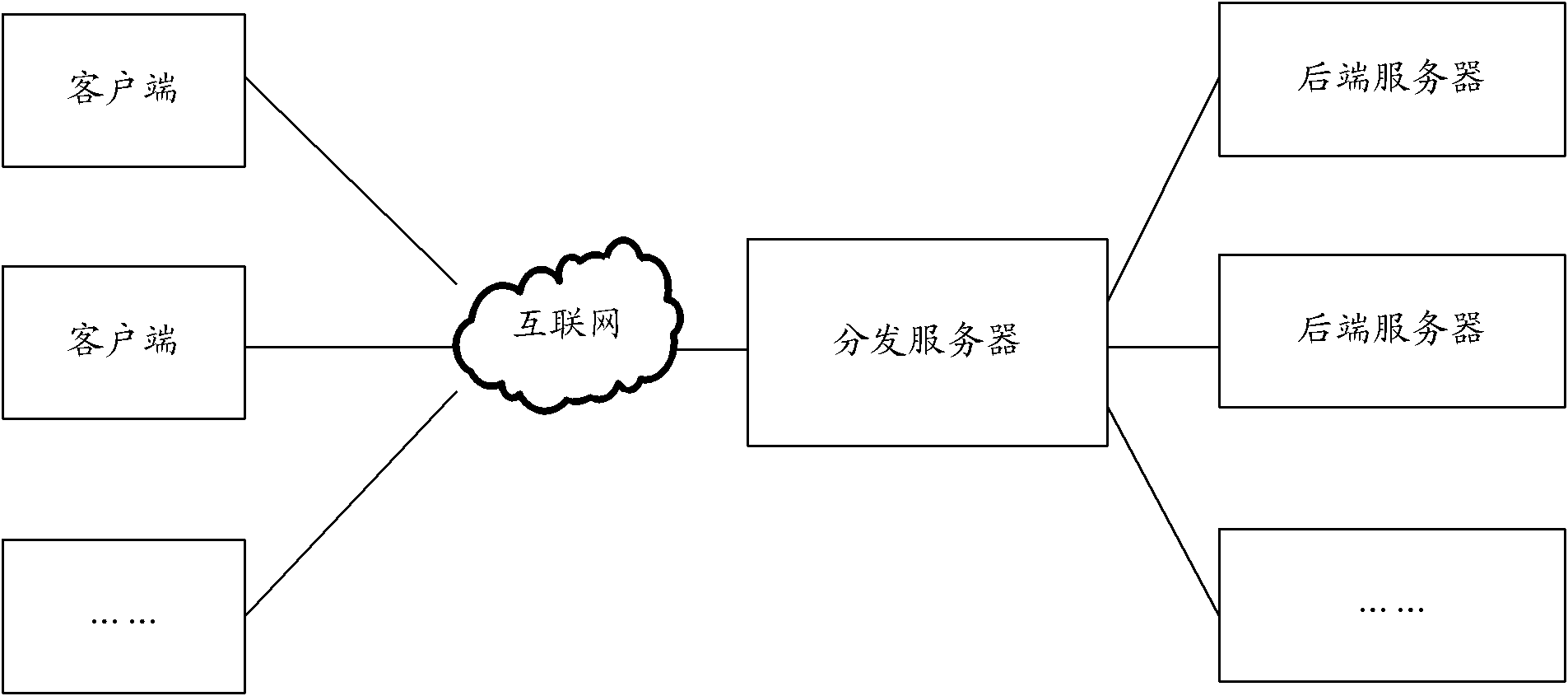

The invention provides a load balancing method and system for application services, which address the load balancing problem in existing network services. The method comprises the following steps: continuously acquiring the current load information of the back-end servers, wherein the current load information comprises application program operational data of a back-end server, or comprises both the application program operational data and performance data of the back-end server; in each acquisition period, recalculating the weight of each back-end server according to the current load information; and adjusting the distribution across the back-end servers according to each newly calculated weight, and selecting a back-end server to which a client's access request is routed. Compared with traditional load balancing methods for application services, this method distributes traffic reasonably among multiple network devices that can perform the same functions, avoiding the situation in which one device is too busy while other devices are not fully used.

Owner:BEIJING QIHOO TECH CO LTD +1
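The per-period weight recalculation and weighted selection described in the abstract above can be sketched as follows. This is a minimal illustration under stated assumptions: load is taken to be a single number between 0.0 (idle) and 1.0 (saturated) per server, the weight formula `1 - load` with a small floor is invented for the example, and the function names are hypothetical. The abstract does not specify how weights are derived from the load data.

```python
import random


def recompute_weights(load, floor=0.05):
    """Turn per-server load (0.0 idle .. 1.0 saturated) into routing weights.

    Lightly loaded servers get larger weights. The floor keeps a busy server
    reachable so that its load can still be observed in later periods.
    """
    weights = {}
    for server, value in load.items():
        clamped = min(max(value, 0.0), 1.0)
        weights[server] = max(floor, 1.0 - clamped)
    return weights


def pick_server(weights, rng=random):
    """Select one server at random, in proportion to its weight."""
    servers = list(weights)
    return rng.choices(servers, weights=[weights[s] for s in servers], k=1)[0]
```

In use, `recompute_weights` would be called once per acquisition period with fresh load readings, and `pick_server` once per incoming client request.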

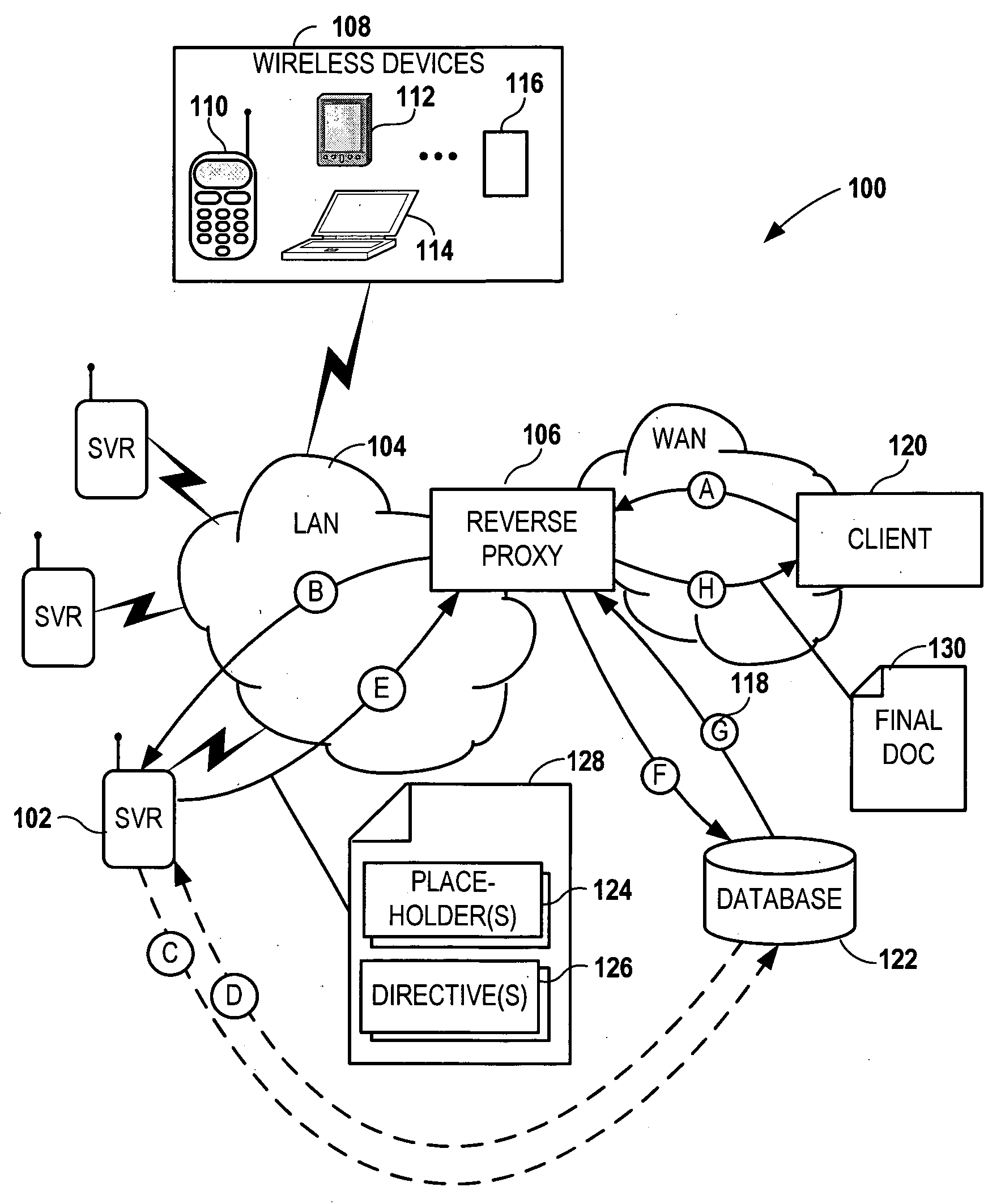

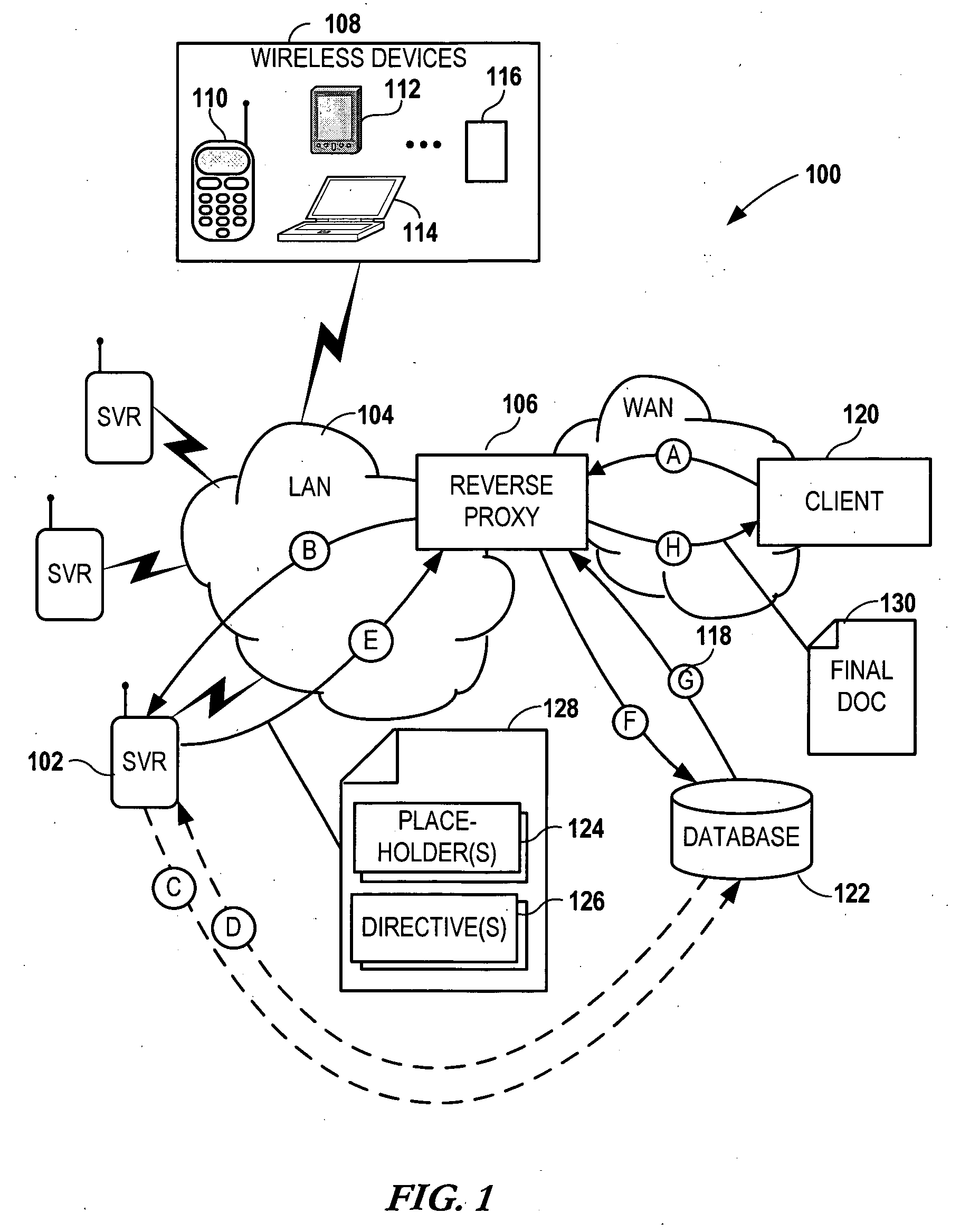

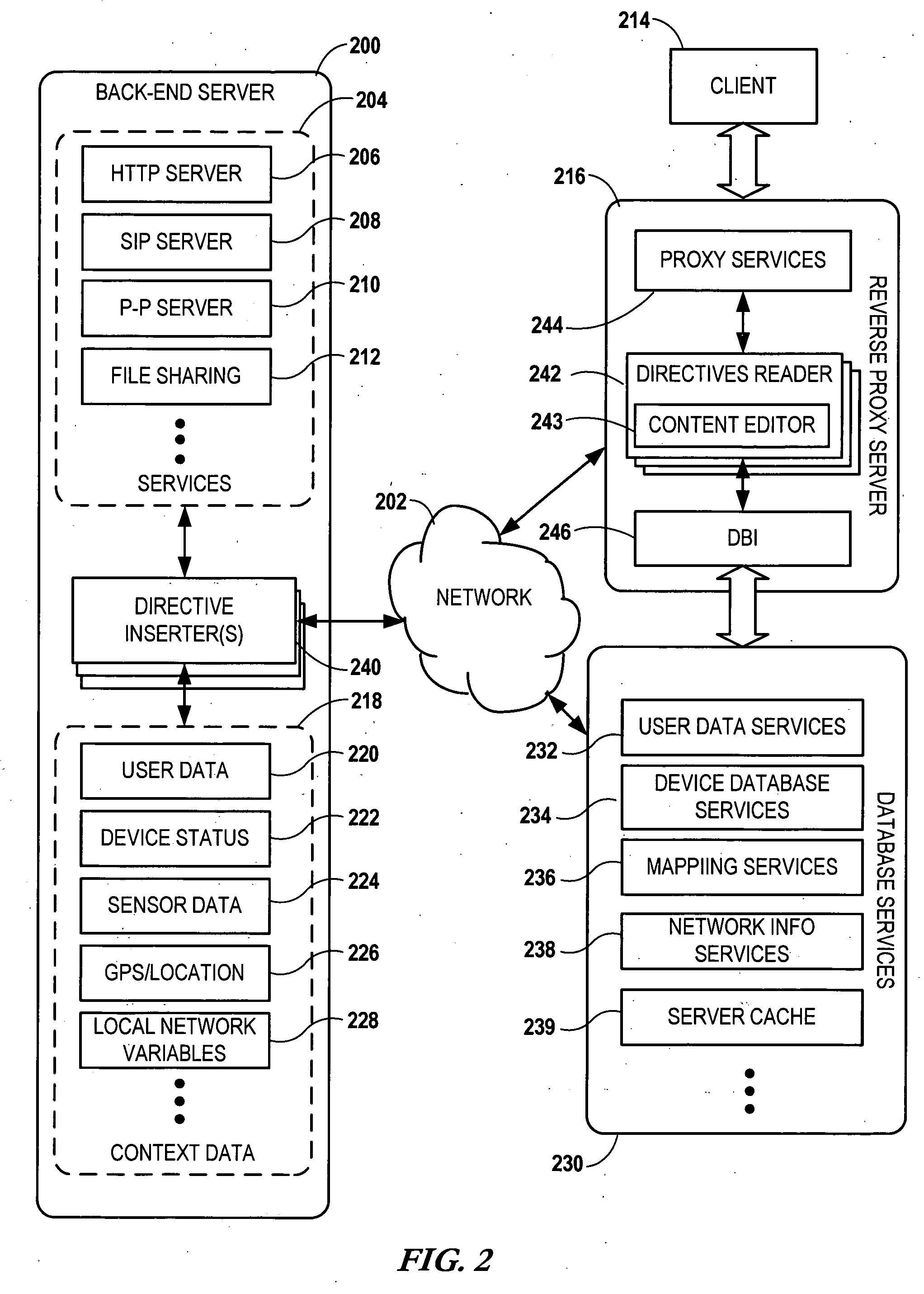

Modifying back-end web server documents at an intermediary server using directives

Status: Inactive; Patent: US20060200503A1; Tags: Digital data information retrieval, Special data processing applications, Reverse proxy, Paper document

Network services are provided by a network coupled back-end server that is accessed by an intermediary server such as a reverse proxy server. A service request is received at the back-end server via the intermediary server. The back-end server forms a first document in response to the service request. The first document contains a data generation directive targeted for the intermediary server. The first document is sent to the intermediary server. Additional data is generated at the intermediary server using the data generation directive and a second document is formed using the first document and the additional data. The second document is then sent from the intermediary server to an originator of the service request.

Owner:NOKIA CORP

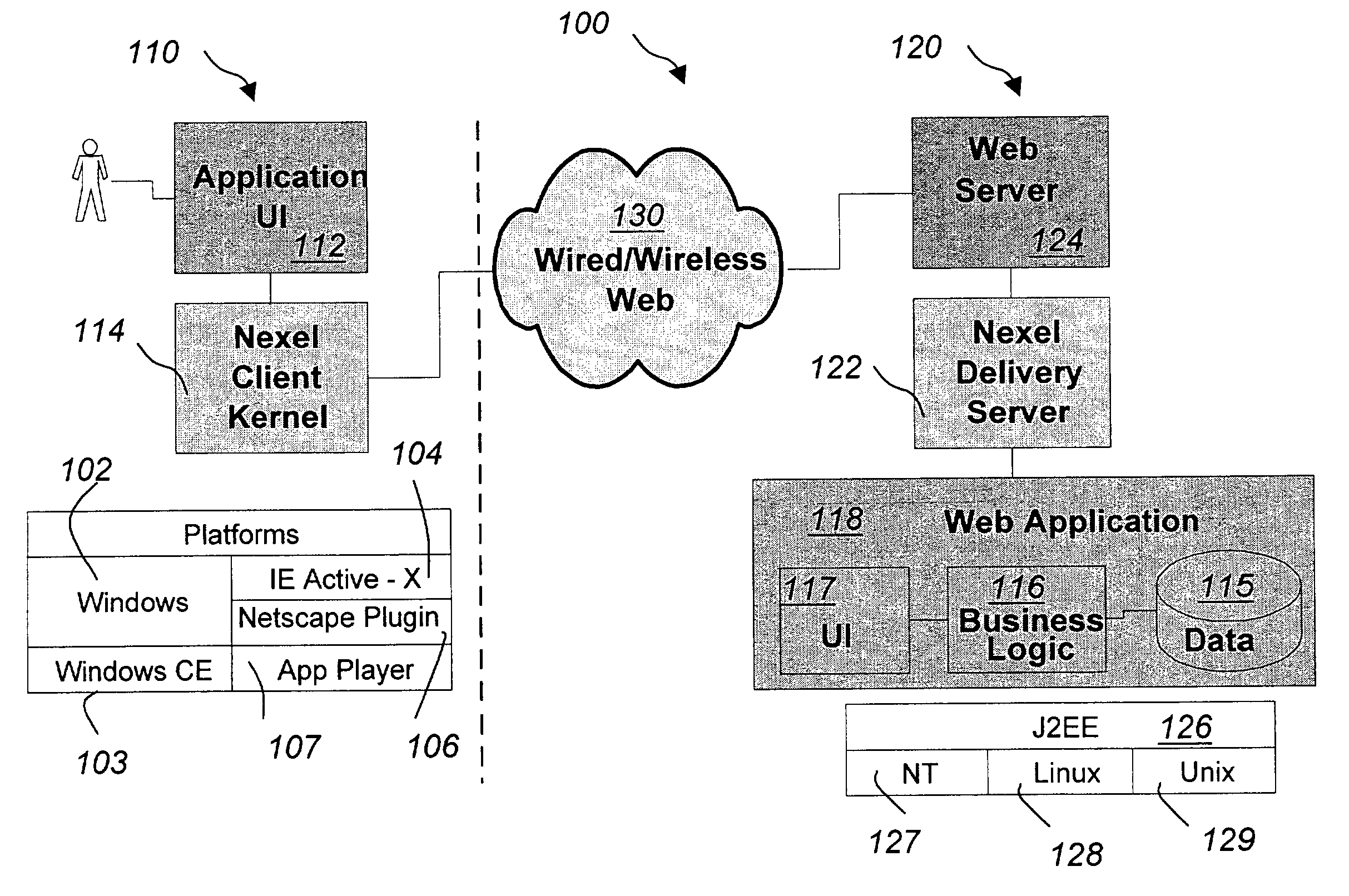

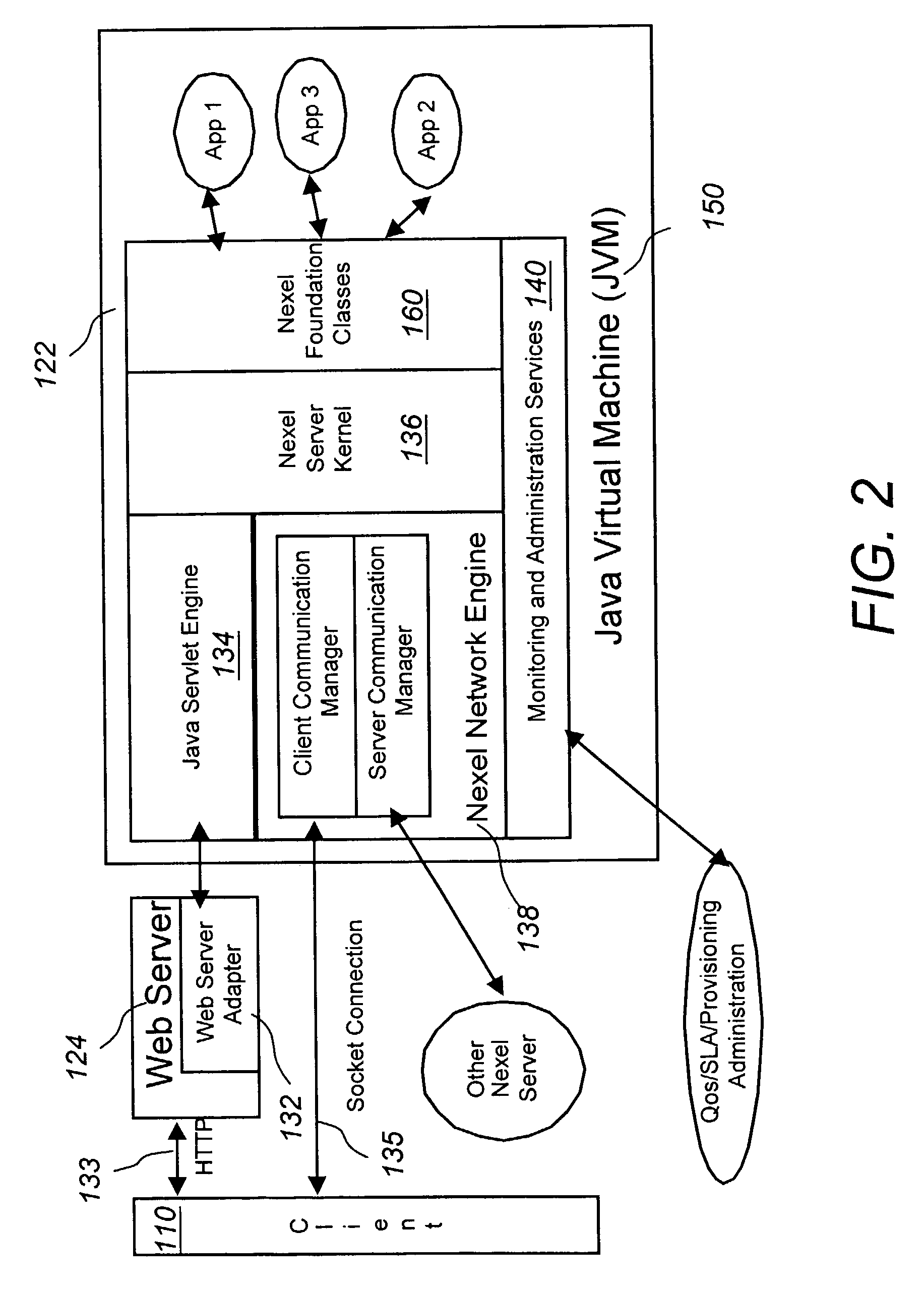

Methods and techniques for delivering rich Java applications over thin-wire connections with high performance and scalability

Status: Active; Patent: US7596791B2; Tags: Interprogram communication, Multiple digital computer combinations, Network connection, Application software

A method for delivering applications over a network where the application's logic runs on the backend server and the application's user interface is rendered on a client device, according to its display capabilities, through a network connection with the backend server. The application's GUI API and event processing API are implemented to be network-aware, transmitting the application's presentation layer information, event processing registries, and other related information between client and server. The transmission uses a high, object-level format, which minimizes network traffic. Client-side events are transmitted to the server for processing via a predetermined protocol, the server treating such events and inputs as if locally generated. The appropriate response to the input is generated and transmitted to the client device using the format to refresh the GUI on the client.

Owner:EMC IP HLDG CO LLC

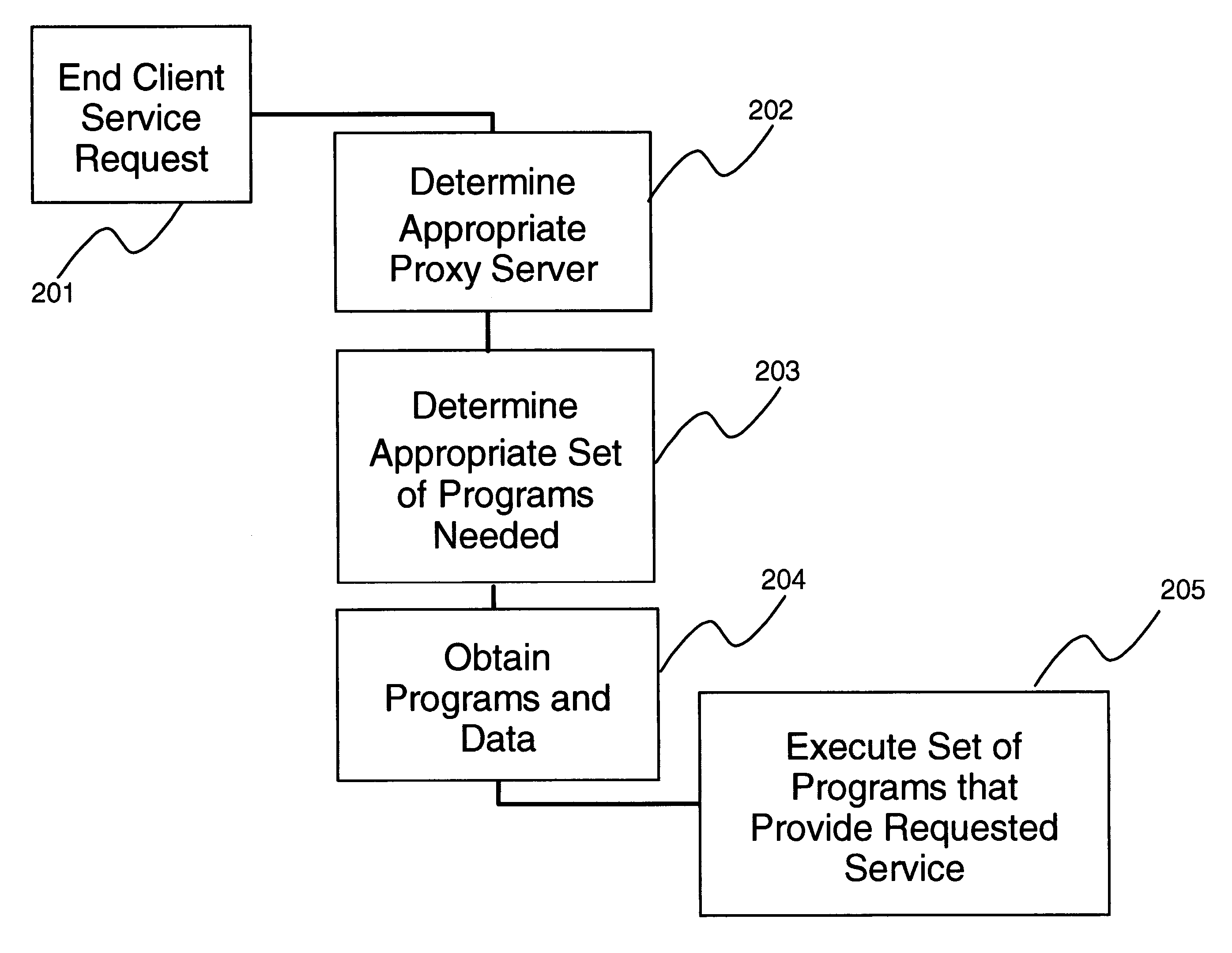

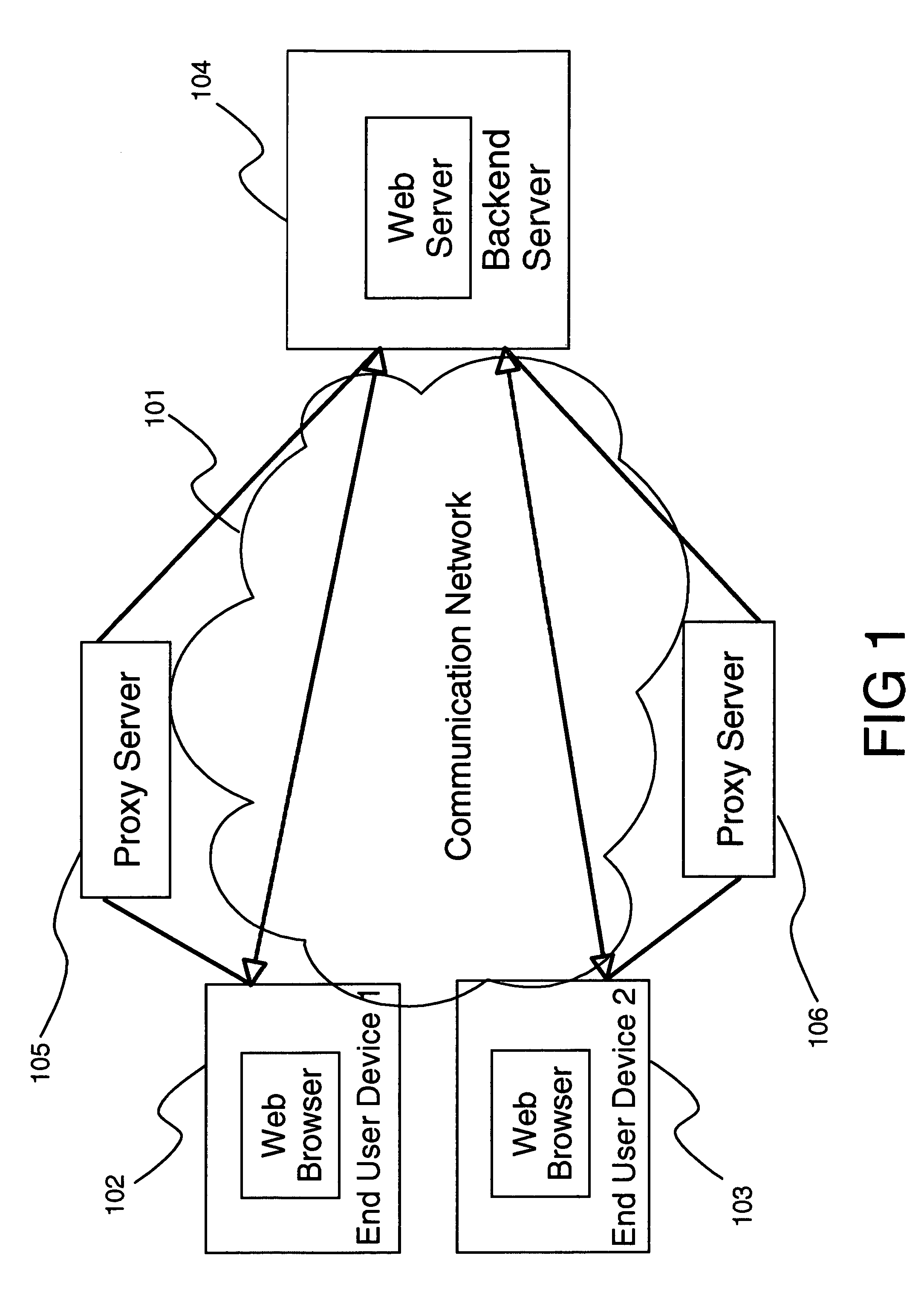

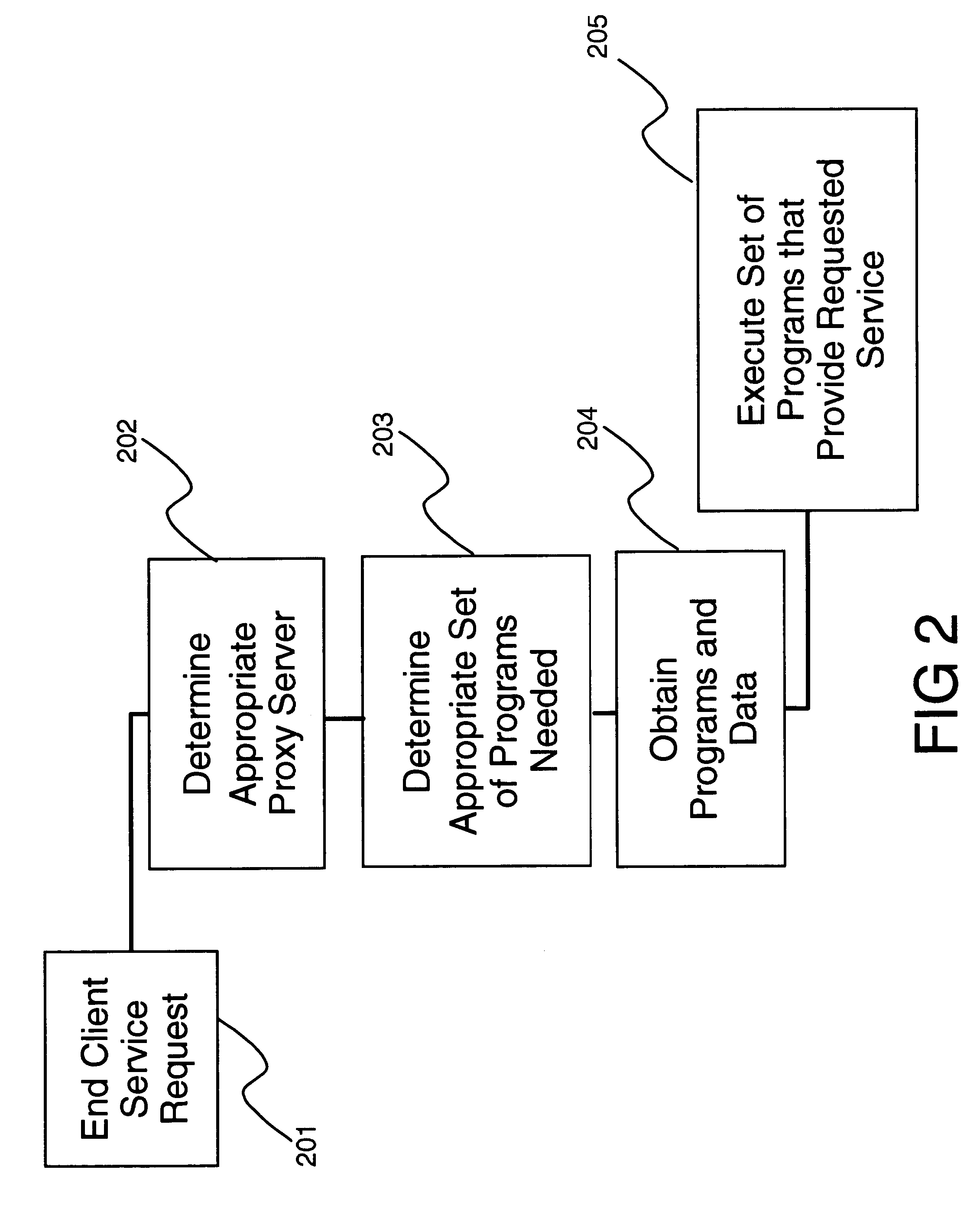

Method and apparatus for distributed application acceleration

The present invention presents methods and apparatus supporting acceleration of networked applications by means of dynamic distributed execution and maintenance. It also enables management and administration of the distributed components of the networked applications from a responsible point of origination. The method and apparatus deploys a plurality of proxy servers within the network. Clients are directed to one of the proxy servers using wide area load balancing techniques. The proxy servers download programs from backend servers and cache them in a local store. These programs, in conjunction with data stored at cached servers, are used to execute applications at the proxy server, eliminating the need for a client to communicate to a backend server to execute a networked application.

Owner:IBM CORP

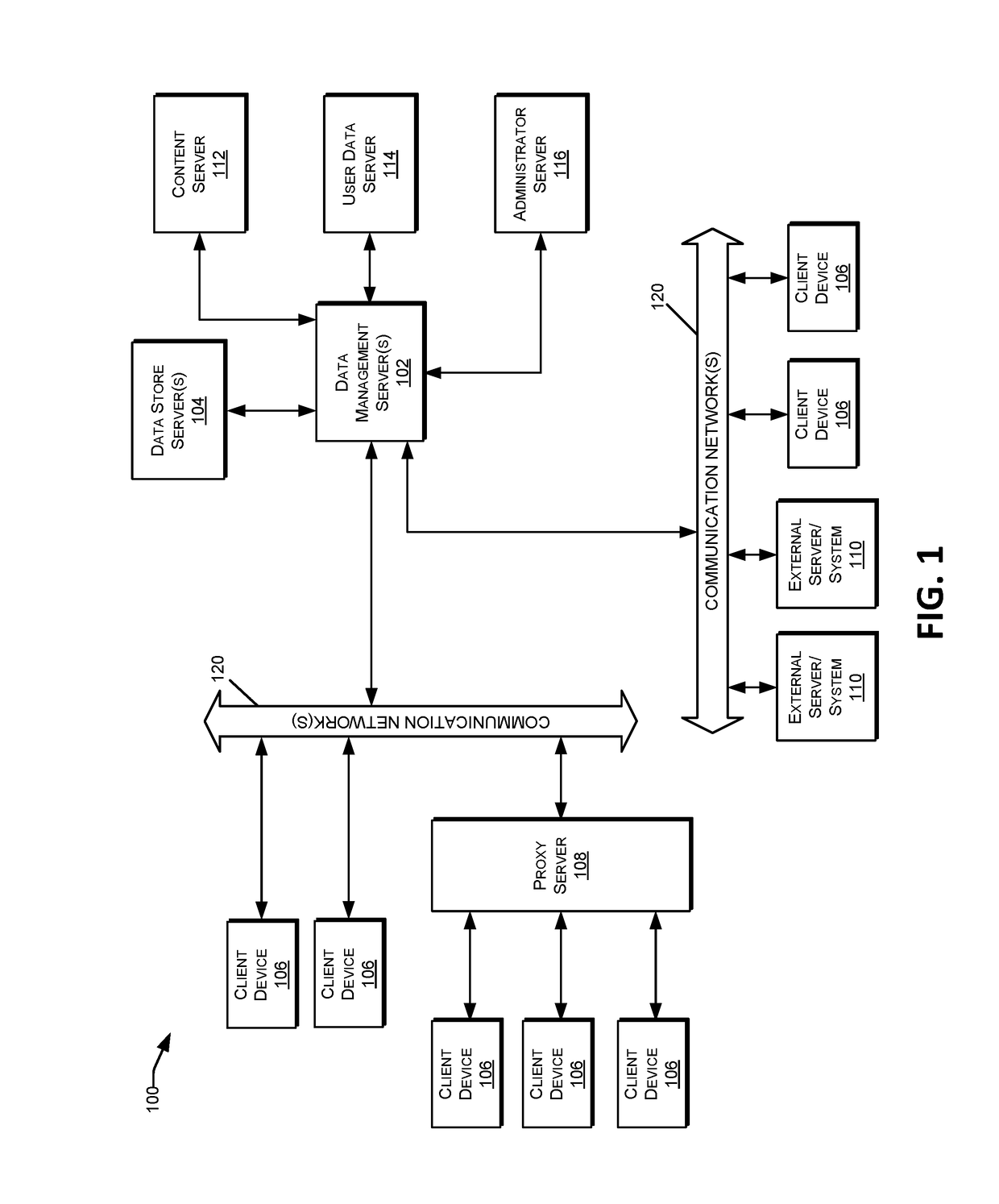

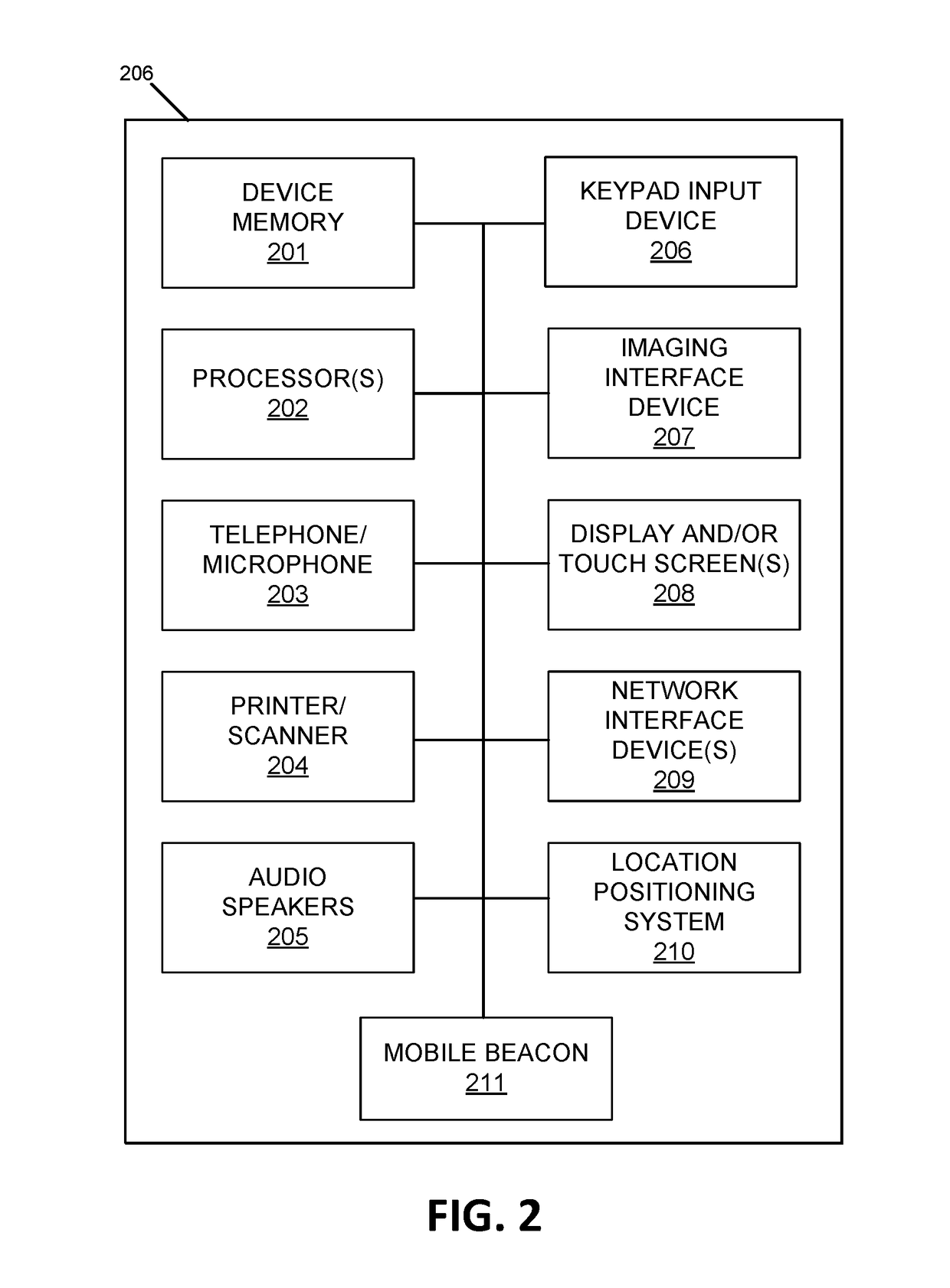

Multi-network transaction analysis

Techniques described herein relate to analyzing location-based transaction systems, based on data from multiple client devices detected and received via multiple communication networks, and providing customized data to client devices associated with particular locations and/or users of the transaction system. The characteristics of a transaction system and/or specific client locations of the transaction system may be determined, based on data received from multiple client devices. A back-end system may receive data from one or more digital kiosk systems associated with particular locations in a transaction system network, various mobile computing devices of users, and client computers within the transaction system. One or more back-end servers may analyze the data to determine various characteristics of the transaction system and/or characteristics of one or more particular locations in the transaction system network.

Owner:THE WESTERN UNION CO

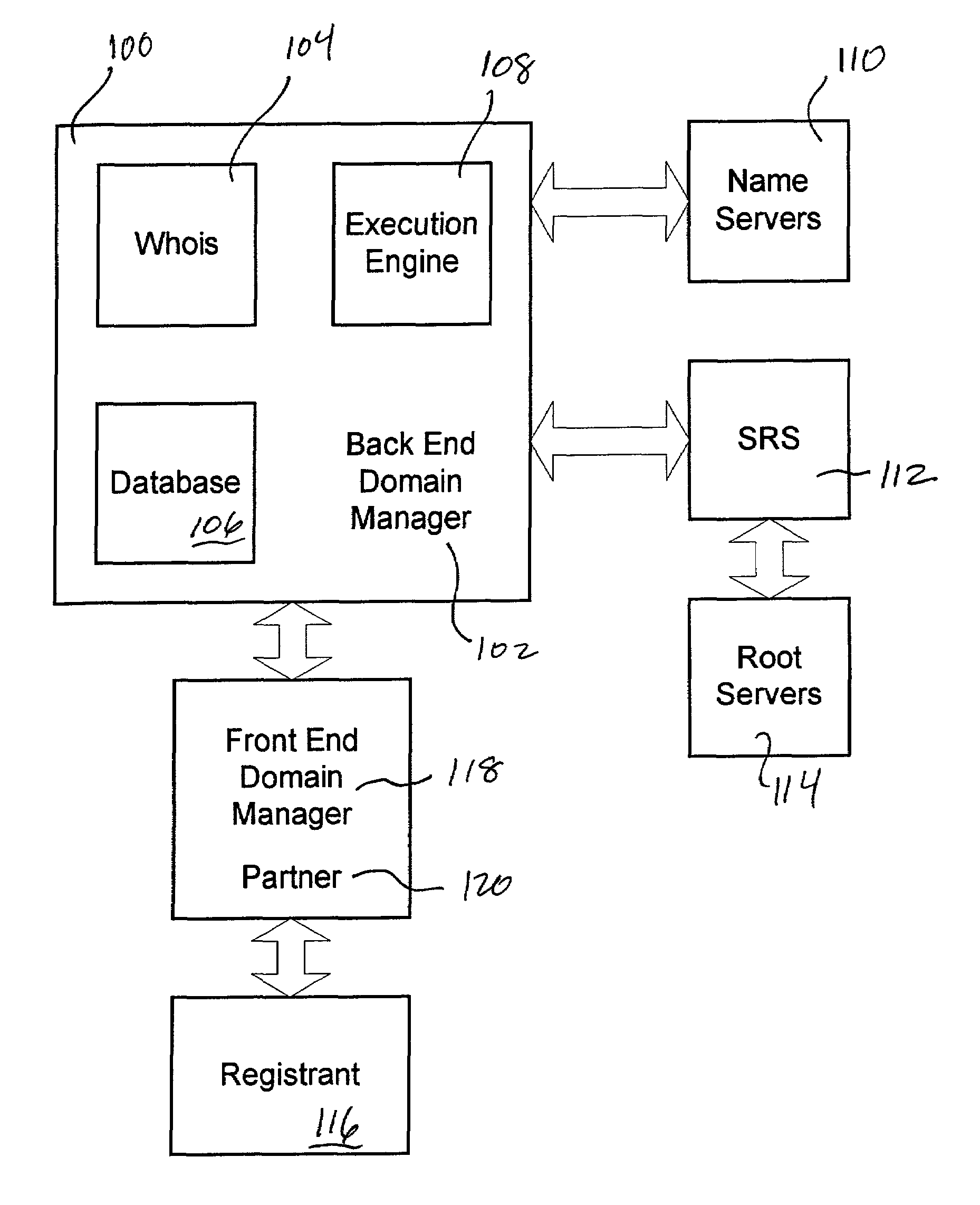

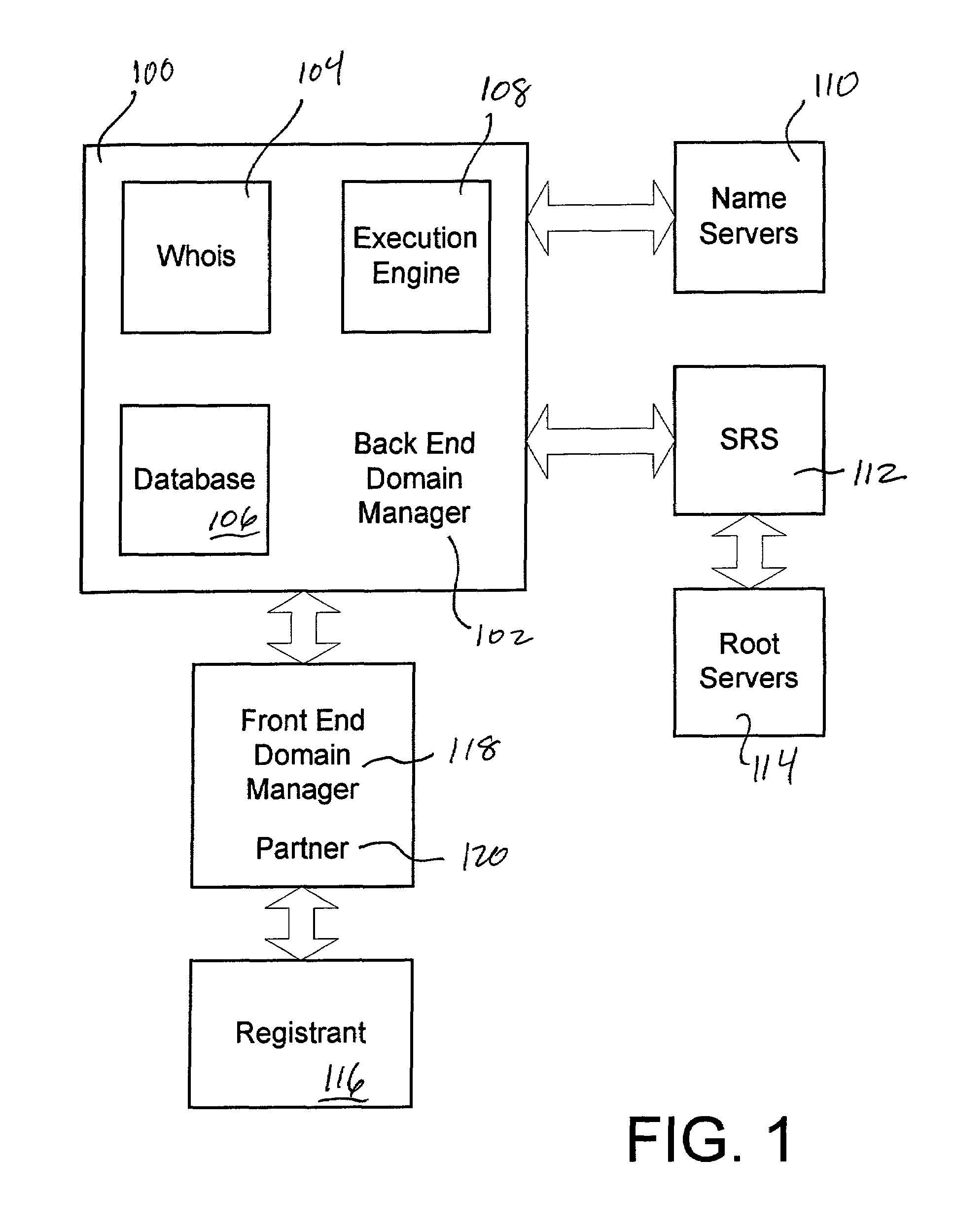

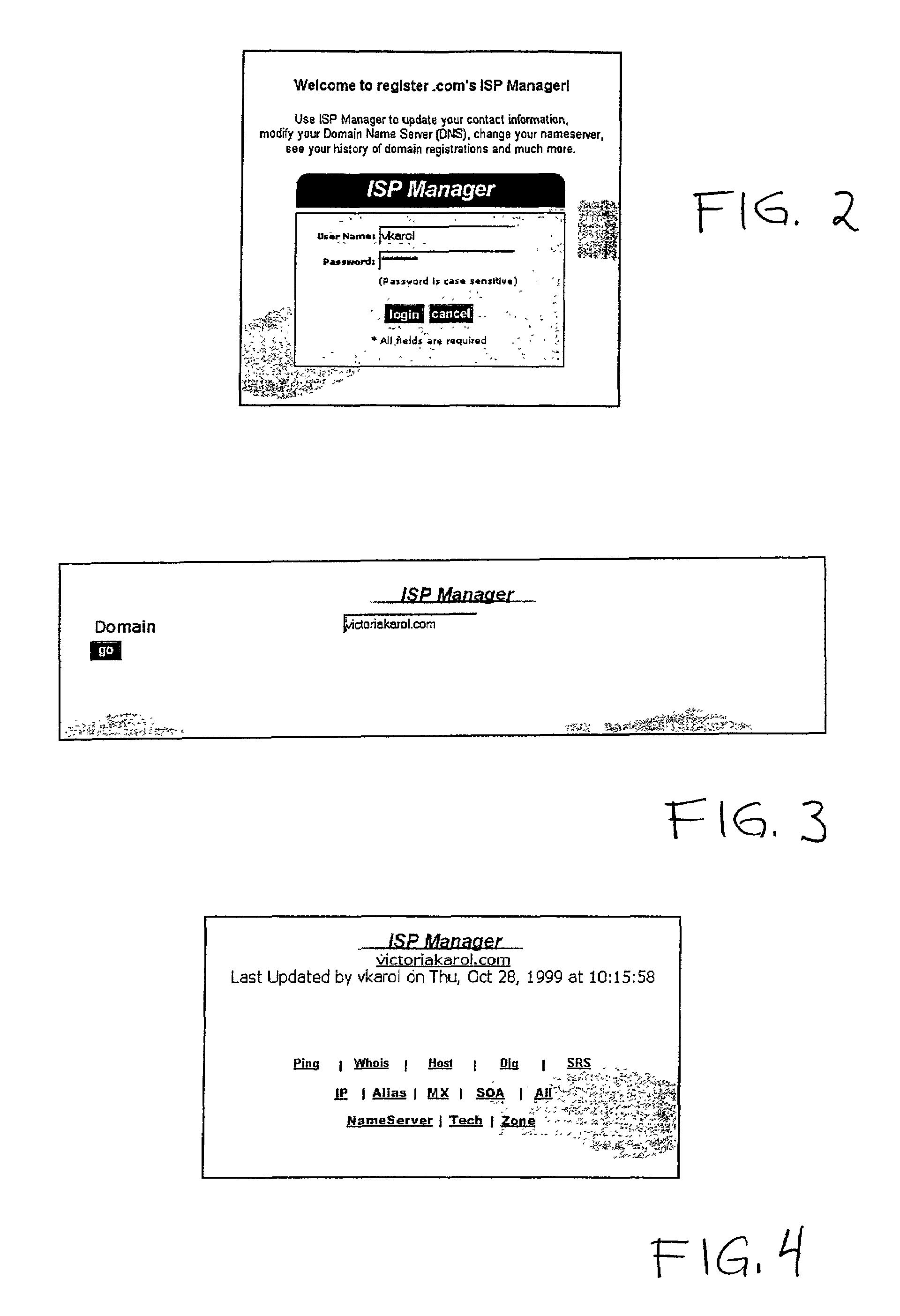

Method and apparatus providing distributed domain management capabilities

Status: Active; Patent: US7076541B1; Tags: Data processing applications, Multiple digital computer combinations, Graphics, Graphical user interface

A system and method facilitates communication of domain management functions and information between a front-end server, utilized for example by an unaccredited registrar or other type of partner site, and a back-end server. Typically the back-end server has direct access to the shared registry system (SRS) and is of the type such as might be found within an accredited registrar. Communication between the front-end server and the back-end server is through a lightweight protocol. The front-end server generates a graphical user interface on an operator's terminal. An operator enters information to the front-end server through the graphical user interface and generates a message from the operator terminal to the front-end server. The front-end server parses the message, extracts information appropriate to the requested function, and generates a compact command message to send to the back-end server. Preferably, the back-end server receives the command through a command-line or similar interpreter and performs a function or extracts information as requested by the front-end server. A variety of DNS or zone file information can be altered using simple graphical user interfaces into which an operator enters change information. The front-end server parses the change information from the operator and passes data representative of that change information to the back-end domain manager server. The requested information or a completion message is sent from the back-end server using a similar lightweight protocol.

Owner:WEB COM GRP

Correlation and parallelism aware materialized view recommendation for heterogeneous, distributed database systems

Status: Inactive; Patent: US20090177697A1; Tags: Digital data information retrieval, Special data processing applications, Cost–benefit analysis, Theoretical computer science

A method is provided for generating a materialized view recommendation for at least one back-end server that is connected to a front-end server in a heterogeneous, distributed database system that comprises parsing a workload of federated queries to generate a plurality of query fragments; invoking a materialized view advisor on each back-end server with the plurality of query fragments to generate a set of candidate materialized views for each of the plurality of query fragments; identifying a first set of subsets corresponding to all nonempty subsets of the set of candidate materialized views for each of the plurality of query fragments; identifying a second set of subsets corresponding to all subsets of the first set of subsets that are sorted according to a dominance relationship based upon a resource time for the at least one back-end server to provide results to the front-end server for each of the first set of subsets; and performing a cost-benefit analysis of each of the second set of subsets to determine a recommended subset of materialized views that minimizes a total resource time for running the workload against the at least one back-end server.

Owner:IBM CORP

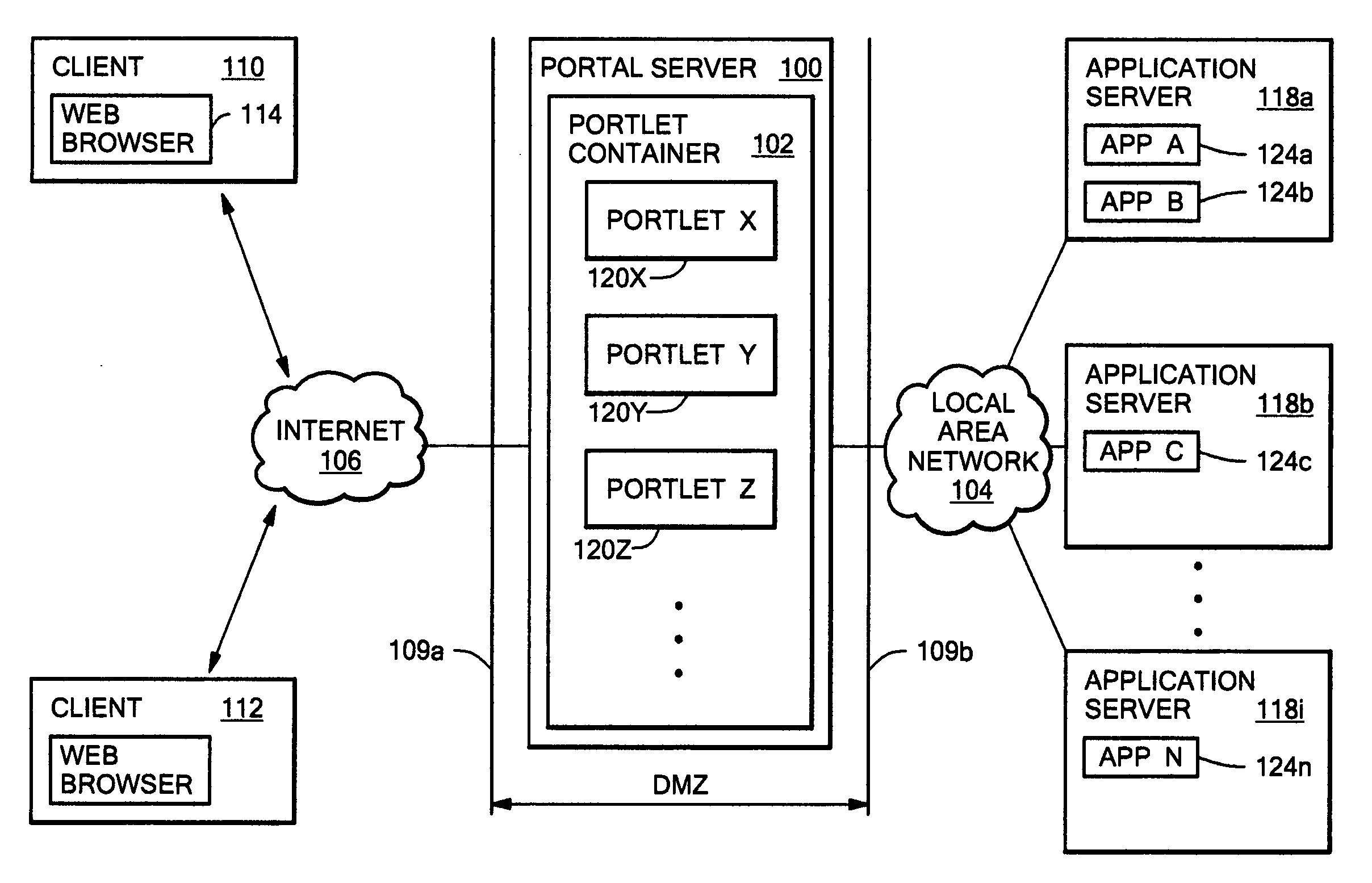

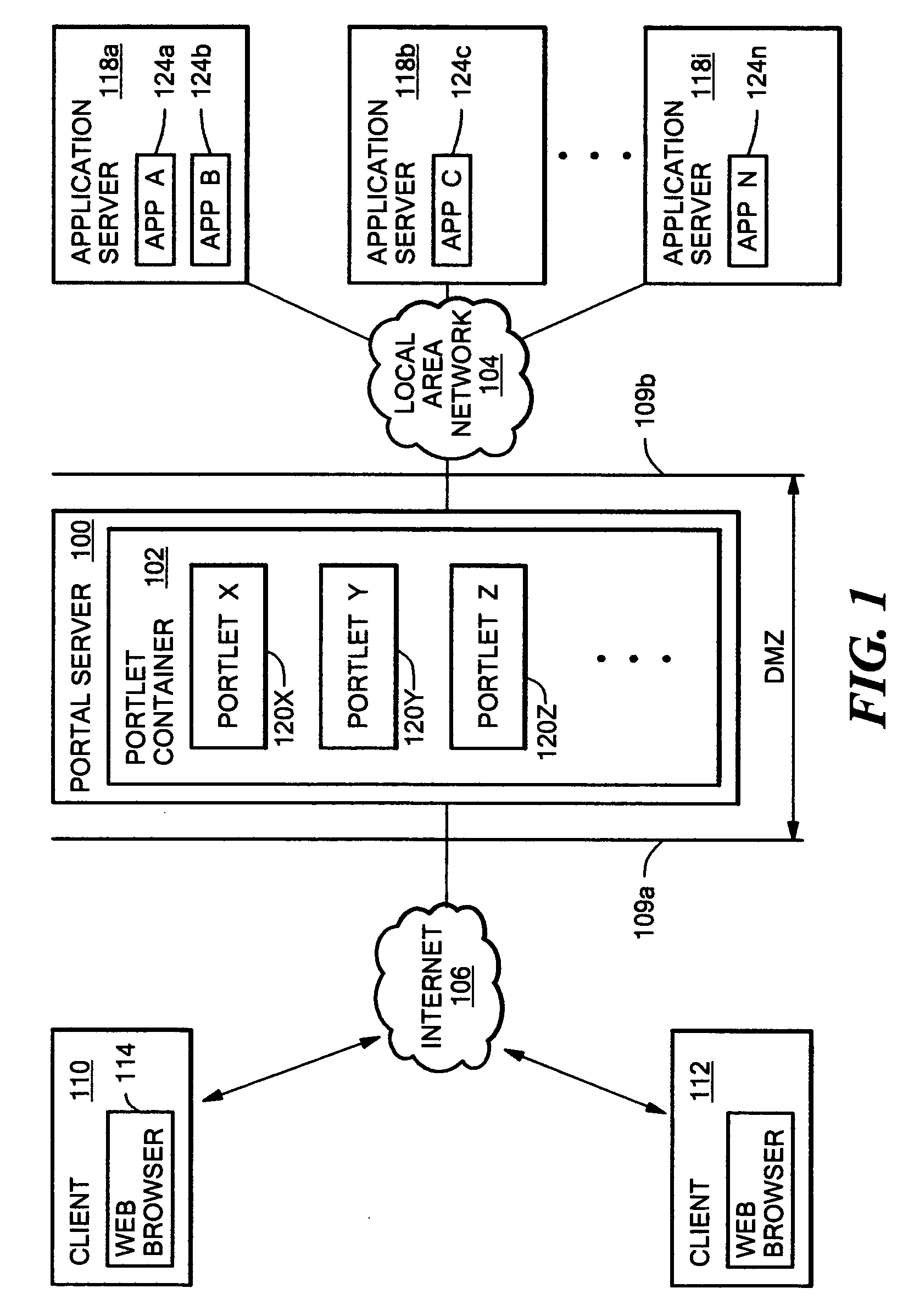

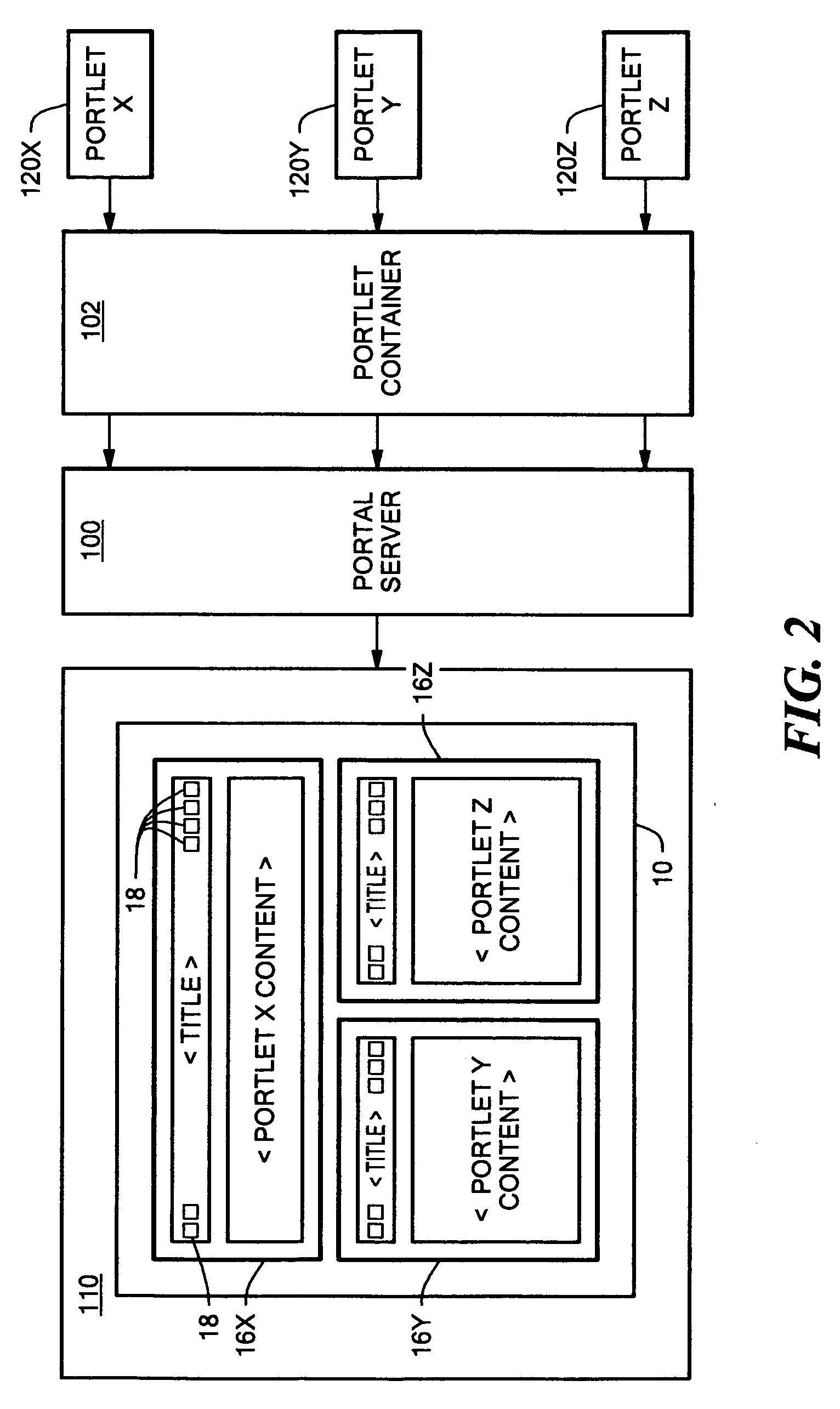

Reverse proxy portlet with rule-based, instance level configuration

Status: Inactive; Patent: US20060041637A1; Tags: Reduce overhead, Good flexibility, Multiple digital computer combinations, Program control, Web site, Portlet

A portal server having a reverse proxy mechanism for proxying one or more Web applications on a backend server in response to a request for Web content from a user. The reverse proxy mechanism includes a portlet, a set of configuration rules, and a rewriting mechanism. The rewriting mechanism is configured to forward a user request for Web content to a Web application on the backend server, receive a response from the Web application, and rewrite the received response in accordance with the configuration rules. The portlet is configured to produce a content fragment for a portal page from the rewritten response. The configuration rules include rules for rewriting any resource addresses, such as URLs, appearing in the received response from the Web application to point to the portal server rather than to the backend server. The disclosed system allows the portal server to appear to the client as the real content server. The portal server is arranged such that an external Web application, which is running on a separate backend server, is displayed to the user within the boundary of the portlet window on a portal page. The backend server remains behind a firewall and the reverse proxy function of the portlet allows a user to access the Web application on the portal server, without needing (or allowing) the user to have direct access to the backend server and backend application which provide the actual content.

Owner:LINKEDIN
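The address-rewriting step described in the abstract above can be sketched as follows. This is a simplified illustration, not the patent's rule engine: it assumes a single rewrite rule with one backend base URL and one portal base URL (both hypothetical), and it uses a regular expression on `href`, `src`, and `action` attributes where a production system would more likely use an HTML parser.

```python
import re

# Matches root-relative attribute values such as href="/img/a.png", but not
# protocol-relative ones such as src="//cdn.example/x.js".
_ROOT_RELATIVE = re.compile(r'''(\b(?:href|src|action)=["'])/(?!/)''')


def rewrite_urls(html: str, backend_base: str, portal_base: str) -> str:
    """Rewrite resource addresses so they point at the portal, not the backend."""
    # Absolute URLs that name the backend host directly.
    html = html.replace(backend_base, portal_base)
    # Root-relative URLs, which the browser would otherwise resolve itself.
    return _ROOT_RELATIVE.sub(lambda m: m.group(1) + portal_base + "/", html)
```

For example, with the made-up bases `http://backend.internal` and `https://portal.example/proxy`, a link to `http://backend.internal/app/page` would come back as `https://portal.example/proxy/app/page`, so the client never sees the backend address.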

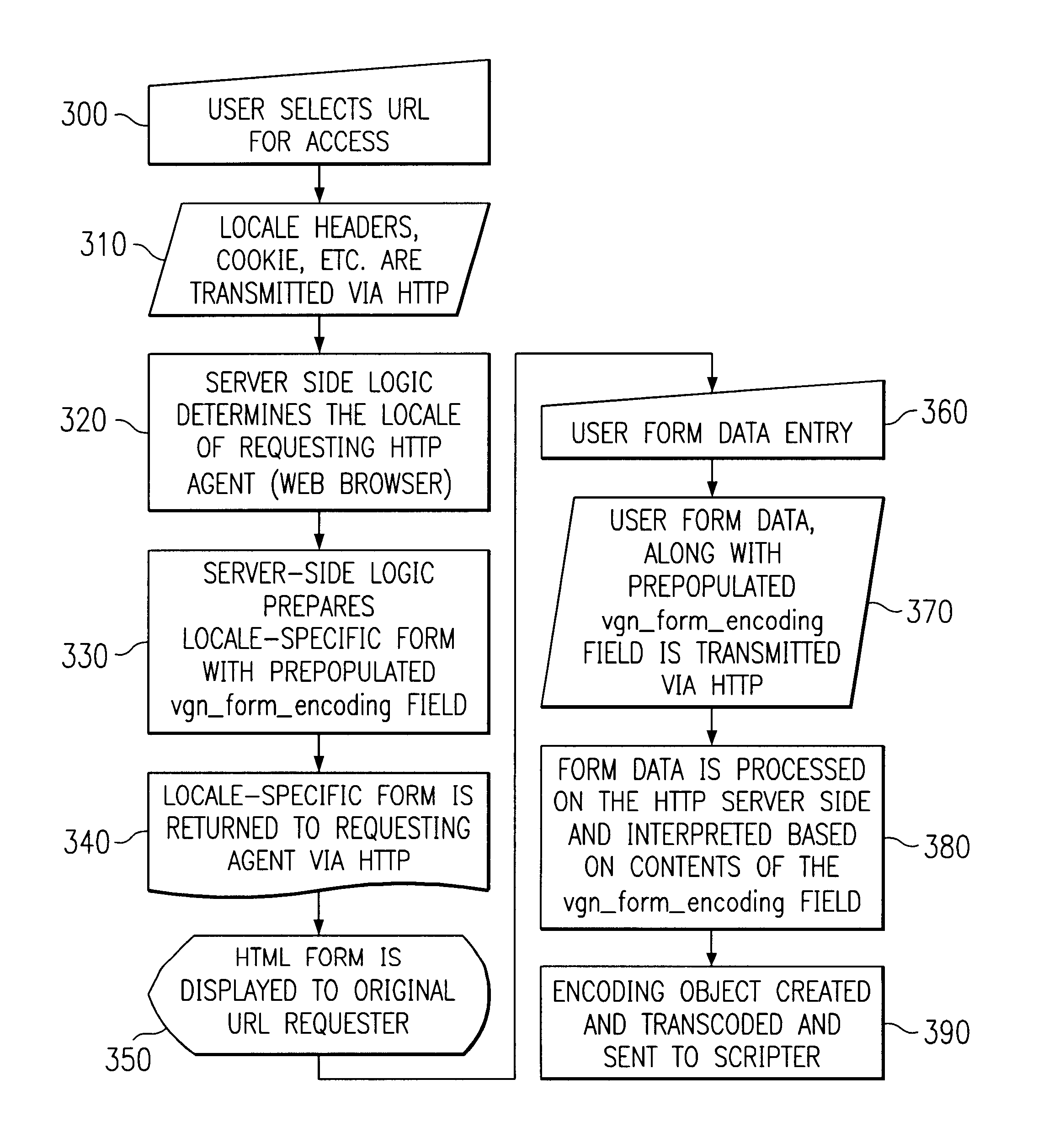

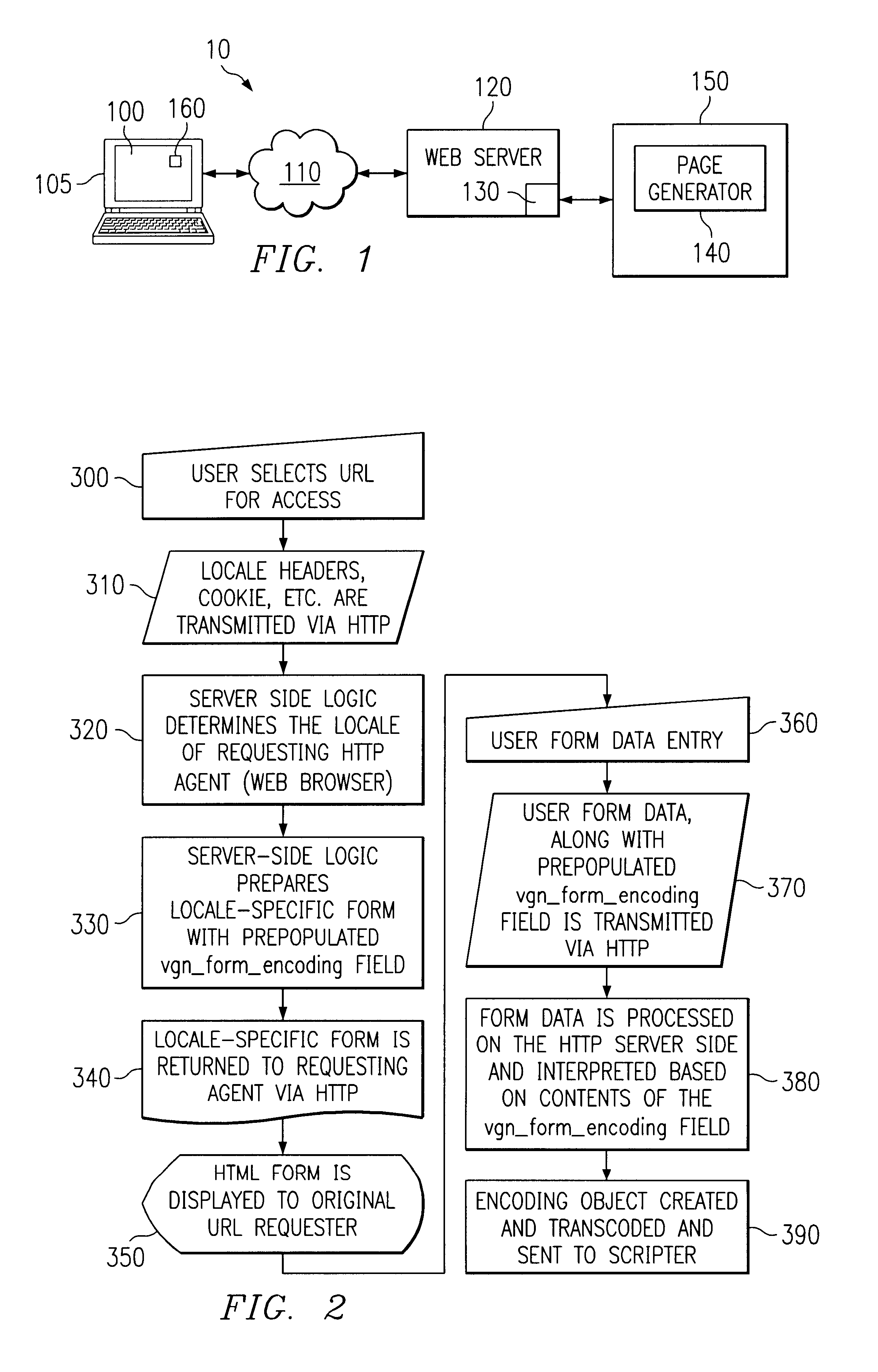

Method and system for native-byte form handling

Status: Inactive; Patent: US6850941B1; Tags: Data processing applications, Multiple digital computer combinations, Web site, Web browser

A method and system are provided for native-byte form handling, one embodiment comprising a method for encoding user-submitted native-byte form data in a client-server computer network. A user, at a client computer, can select a Uniform Resource Locator (“URL”) with his or her web browser for access to a content provider's website. When accessing the content provider's web server, the client computer can transmit client computer specific information to the web server that can be used by the web server to determine the user's locale. The web server can determine the user's (client computer's) locale using, for example, an automatic locale detection algorithm, and forward the user's locale information to a back-end server. The content provider's back-end server can generate a locale-specific form having a pre-populated, uniquely-named field, using, for example, a dynamic page generation program. The locale-specific form can be served to the user's web browser for display to the user. The user can enter, on the locale-specific form, information that he or she wishes to submit to the content provider (e.g., a request for content). The user can transmit the form-entered data, including the pre-populated, uniquely-named field, to the content provider's web server. The web server can process the user form data, and an encoding object can be created, based on the value of the pre-populated field. The user form data can then be transcoded from its original encoding to a new encoding (e.g., the content provider's scripting environment encoding) and forwarded to the content provider's scripting environment.

Owner:OPEN TEXT SA ULC

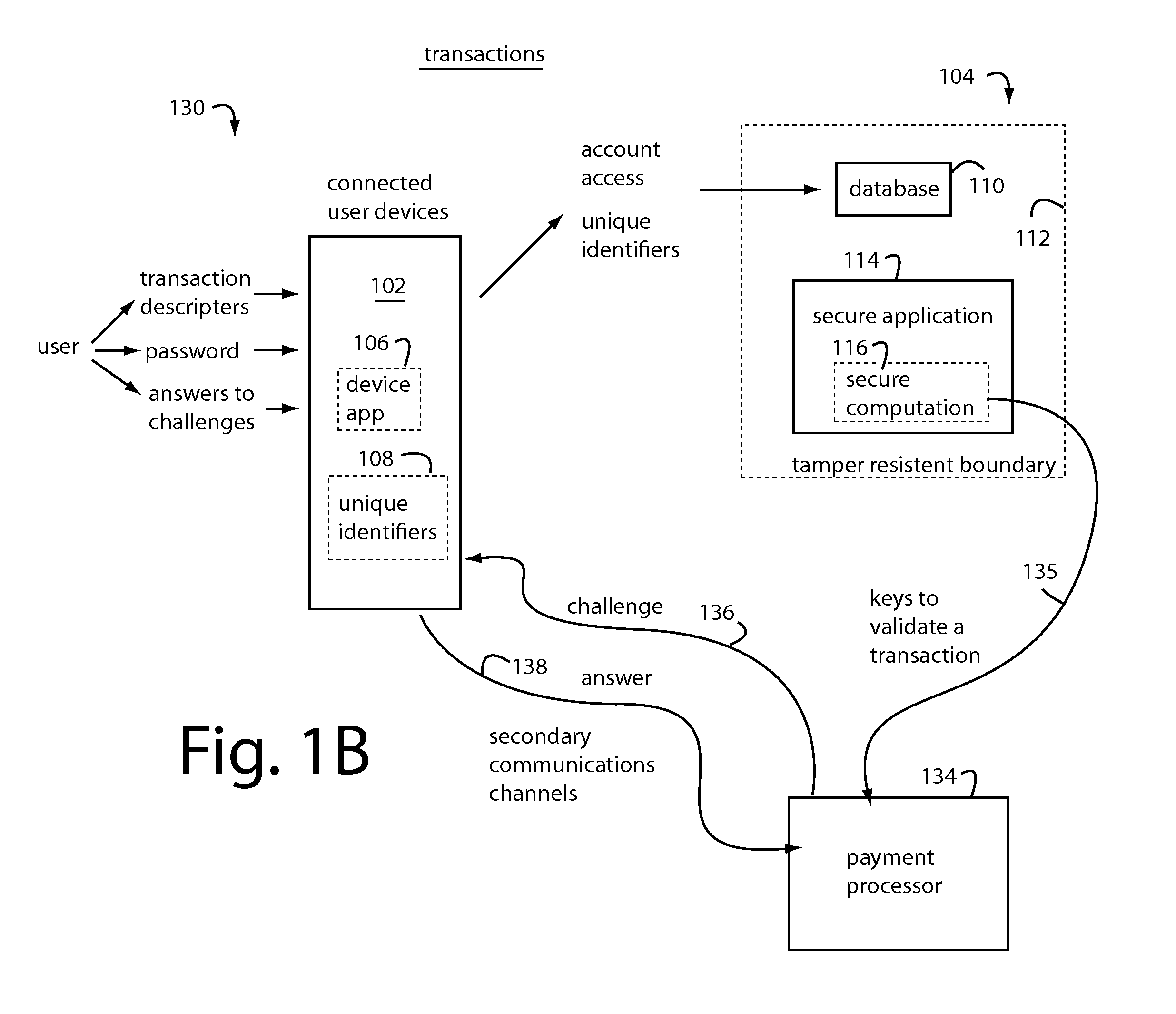

Cloud proxy secured mobile payments

Status: Inactive; Patent: US20130226812A1; Tags: Increase in number, Increase the number of, Protocol authorisation, Payment, Authentication

A secure payment system provisions a payment transaction proxy with virtual EMV-type chipcards on secure backend servers. Users authorize the proxy in each transaction to make payments in the Cloud for them. The proxy carries out the job without exposing the cryptographic keys to risk. User, message, and/or device authentication in multifactor configurations is set up in real time to validate each user's intent to permit the proxy to sign for a particular transaction on the user's behalf. Users are led through a series of steps by the proxy to validate their authenticity and intent, sometimes incrementally involving additional user devices and communications channels that were pre-registered. Authentication risk can be scored by the proxy, and high-risk transactions that are identified are handled by incrementally linking in more user devices, communications channels, and user challenges to increase the number of security factors required to authenticate.

Owner:CRYPTOMATHIC LTD

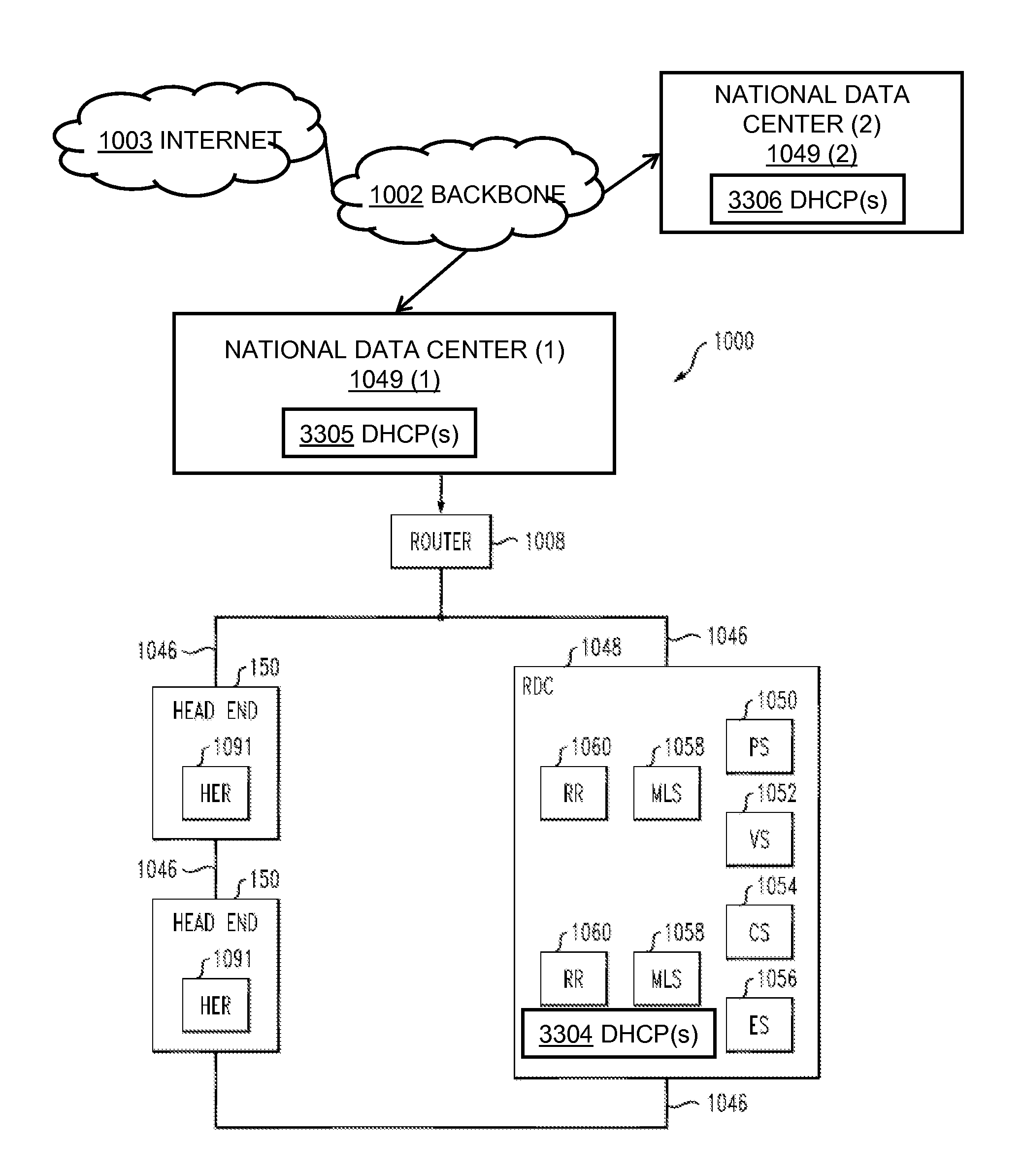

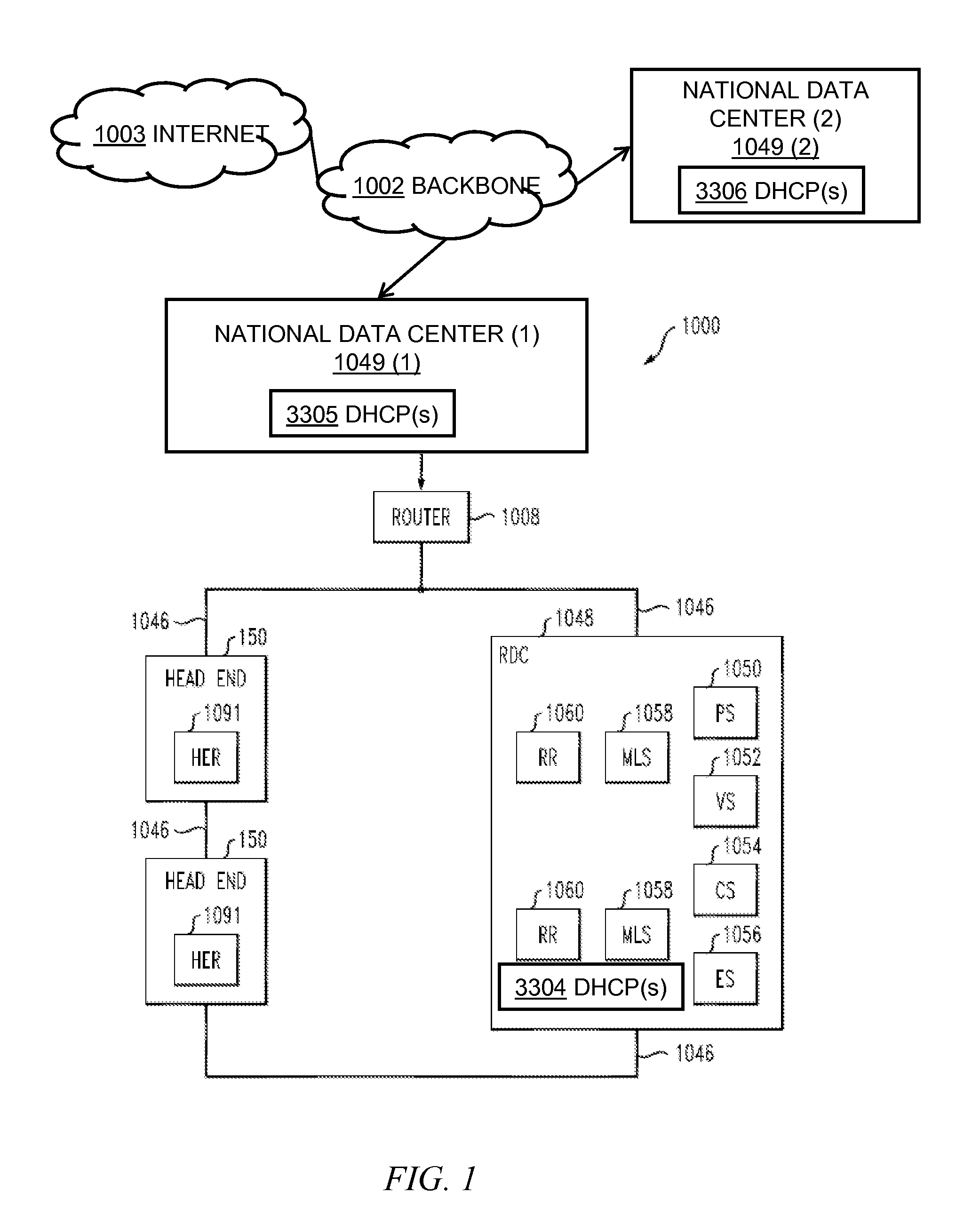

System and method for automatic routing of dynamic host configuration protocol (DHCP) traffic

Active | US20140281029A1 | Simple process | Ease in collecting metrics and/or statistics | Multiple digital computer combinations | Data switching networks | Traffic capacity | Automatic routing

At an intermediary dynamic host configuration protocol relay device, over a network, a dynamic host configuration protocol message is obtained from one of a plurality of remote dynamic host configuration protocol relay devices in communication with the intermediary dynamic host configuration protocol relay device over the network. The intermediary dynamic host configuration protocol relay device accesses data pertaining to a plurality of dynamic host configuration protocol back-end servers logically fronted by the intermediary dynamic host configuration protocol relay device. Based on information in the dynamic host configuration protocol message and the data pertaining to the plurality of dynamic host configuration protocol back-end servers, the dynamic host configuration protocol message is routed to an appropriate one of the plurality of back-end dynamic host configuration protocol servers.

Owner:TIME WARNER CABLE ENTERPRISES LLC
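
The routing decision in the abstract above can be sketched as follows. The abstract only says that routing uses "information in the message" and "data pertaining to the back-end servers"; this sketch assumes the relay agent address (`giaddr`) is the message information and that each back end is described by the subnets it serves. The server names and subnets are hypothetical.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class BackendServer:
    name: str
    subnets: tuple  # ipaddress network objects this server is responsible for


def route_message(giaddr: str, backends: Sequence[BackendServer]) -> Optional[BackendServer]:
    """Pick the back end whose most specific subnet contains the relay address."""
    addr = ipaddress.ip_address(giaddr)
    best, best_prefix = None, -1
    for backend in backends:
        for net in backend.subnets:
            # Longest-prefix match: a /24 wins over a /16 that also contains addr.
            if addr in net and net.prefixlen > best_prefix:
                best, best_prefix = backend, net.prefixlen
    return best


# Hypothetical data held by the intermediary relay about its back ends.
backends = [
    BackendServer("dhcp-east", (ipaddress.ip_network("10.1.0.0/16"),)),
    BackendServer("dhcp-lab", (ipaddress.ip_network("10.1.42.0/24"),)),
]
chosen = route_message("10.1.42.7", backends)
```

Longest-prefix matching is one possible selection rule; a real deployment could equally key on option 82 data or other message fields.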

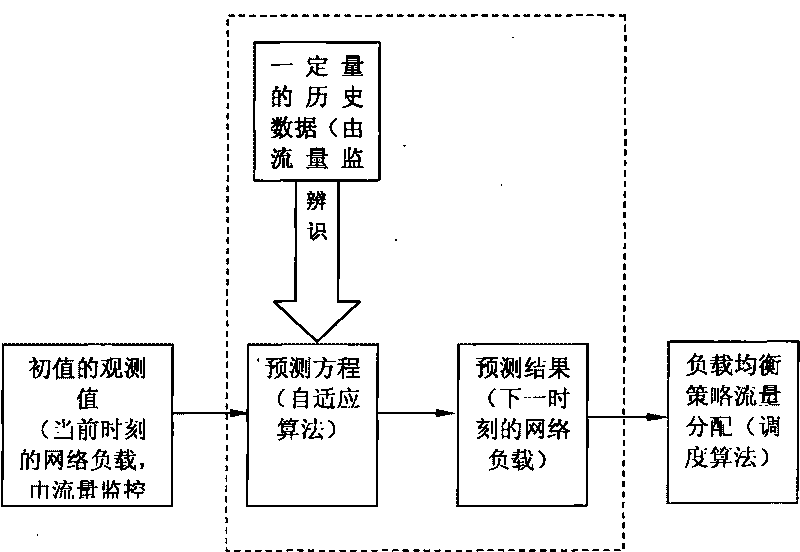

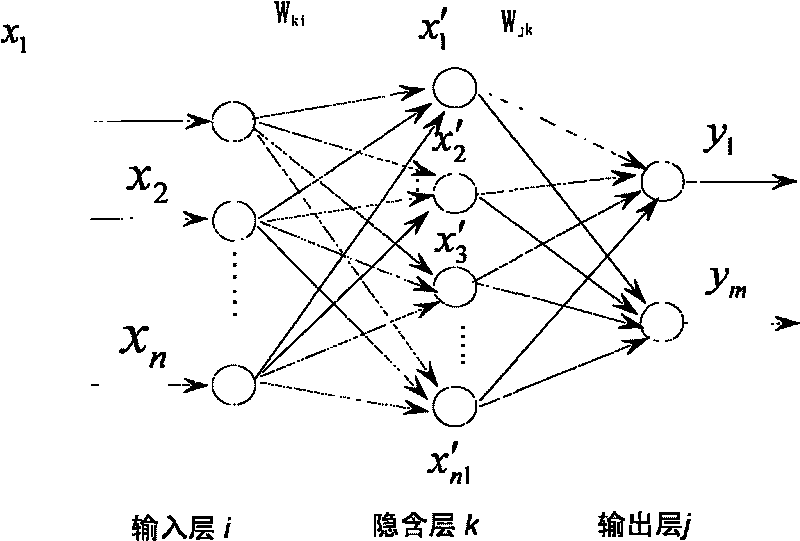

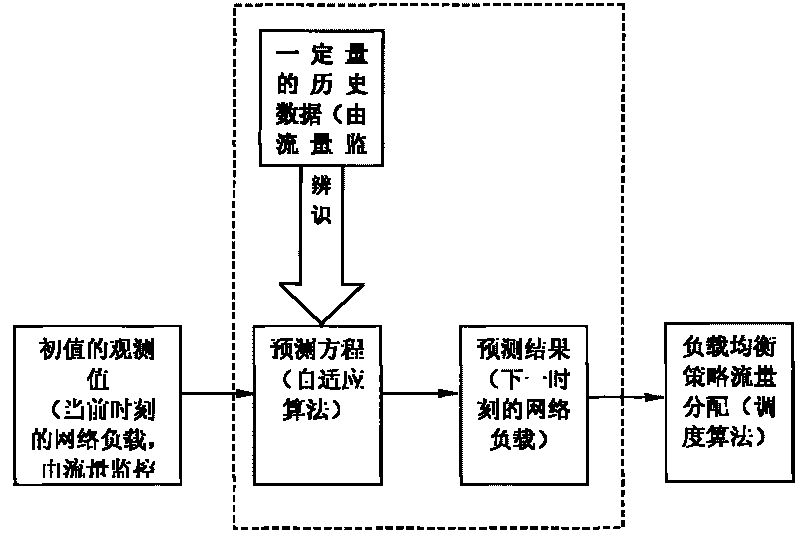

Dynamic load balancing method based on self-adapting prediction of network flow

Inactive | CN101695050A | Improve accuracy | Strong self-learning ability | Data switching networks | Back end server | Software

The invention provides a dynamic load balancing method based on self-adapting prediction of network flow. The scheme comprises: observing, over a given time cycle, the regularities in historical data for the network load flowing into a load-balancing switch or switching software; deriving the parameter values of a self-adapting algorithm in a prediction program to form a computing formula; substituting the load observed at the present moment into the formula to predict the load at the next moment; and distributing flow to the back-end servers in real time according to the predicted value. The network load can thus be regulated in advance, which avoids lag effects, keeps the load in a comparatively balanced state, and greatly strengthens the network's ability to adapt and self-regulate under load. The method suits networks whose load follows a regular time cycle, such as those running periodic network backups.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD
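
The fit, predict, and distribute steps described above can be sketched as follows. The abstract does not name its self-adapting algorithm, so this sketch substitutes simple exponential smoothing with the smoothing factor fitted to the history by grid search; the capacity-proportional split is likewise an assumption.

```python
ALPHA_GRID = [i / 10 for i in range(1, 10)]  # candidate smoothing factors 0.1 .. 0.9


def one_step_error(history, alpha):
    """Sum of squared one-step-ahead forecast errors over the history."""
    level, error = history[0], 0.0
    for observed in history[1:]:
        error += (observed - level) ** 2
        level = alpha * observed + (1 - alpha) * level
    return error


def fit_alpha(history):
    """Derive the parameter value from historical load (the 'self-adapting' step)."""
    return min(ALPHA_GRID, key=lambda a: one_step_error(history, a))


def predict_next(history, alpha):
    """Feed every observation up to the present into the formula to predict the next load."""
    level = history[0]
    for observed in history[1:]:
        level = alpha * observed + (1 - alpha) * level
    return level


def distribute(predicted_load, capacities):
    """Split the predicted load across back-end servers in proportion to capacity."""
    total = sum(capacities.values())
    return {name: predicted_load * cap / total for name, cap in capacities.items()}


history = [120.0, 135.0, 150.0, 160.0, 180.0]  # hypothetical load samples
alpha = fit_alpha(history)
plan = distribute(predict_next(history, alpha), {"srv-a": 1, "srv-b": 3})
```

Refitting `alpha` at the start of each time cycle keeps the predictor aligned with the most recent traffic pattern.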

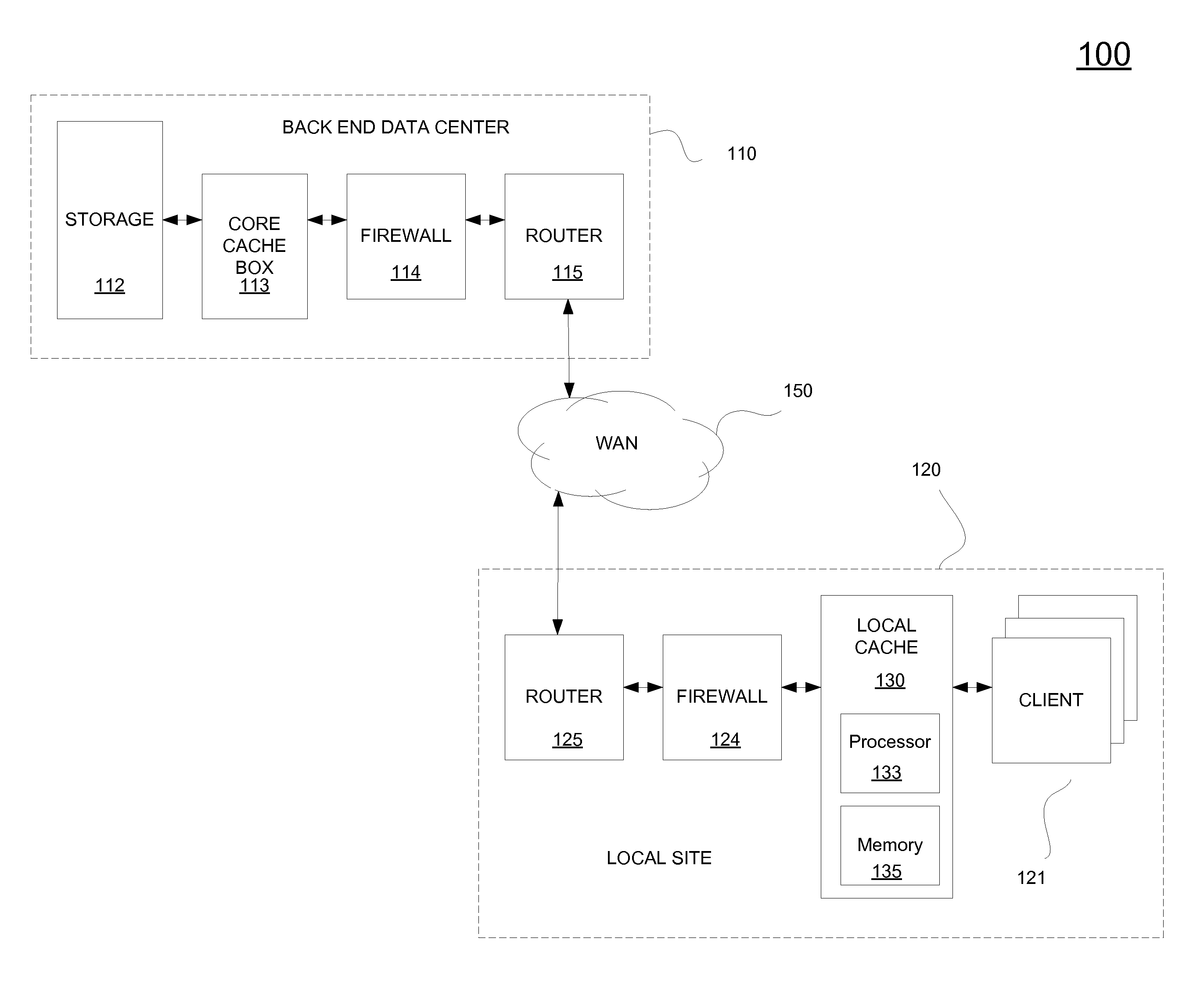

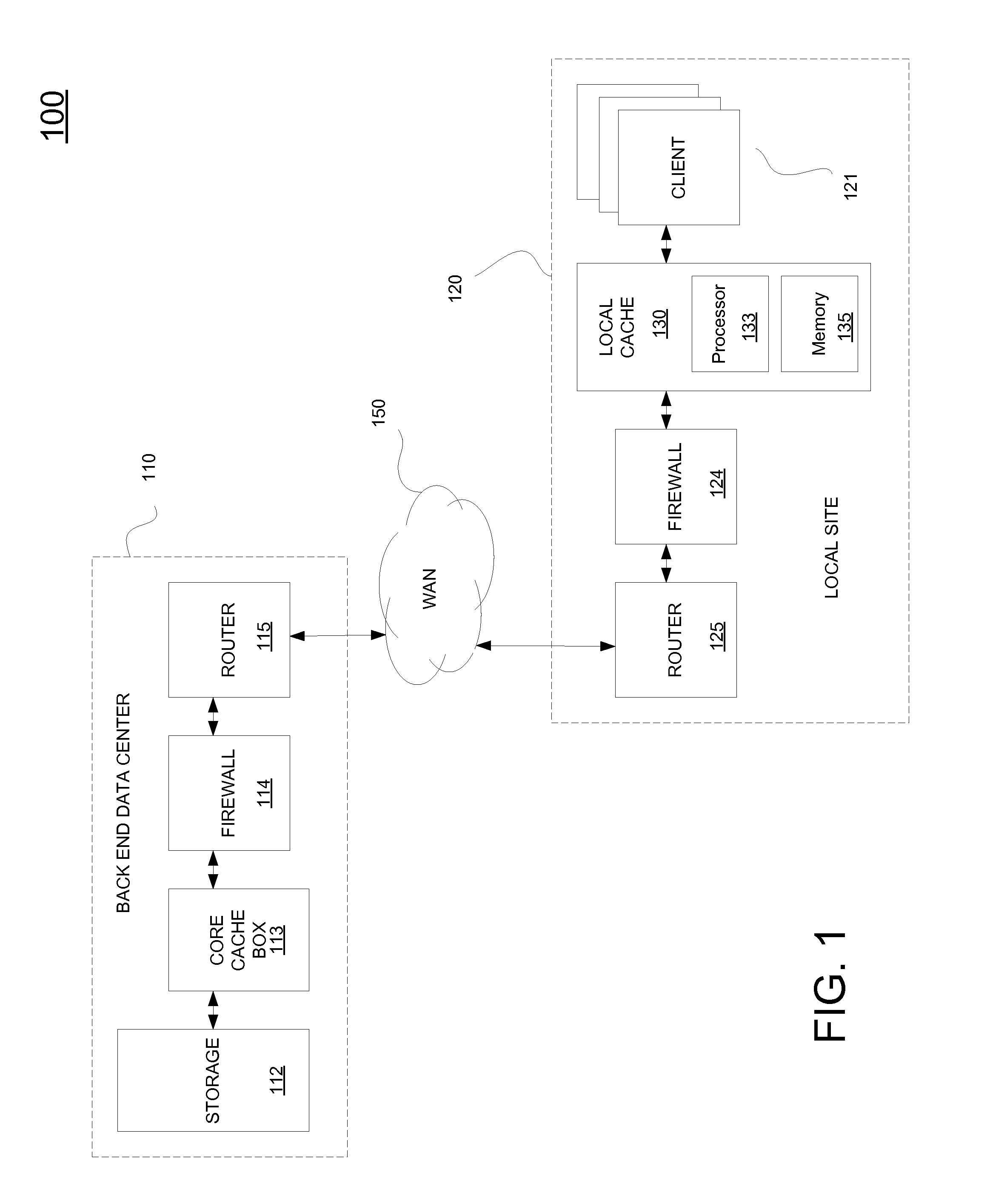

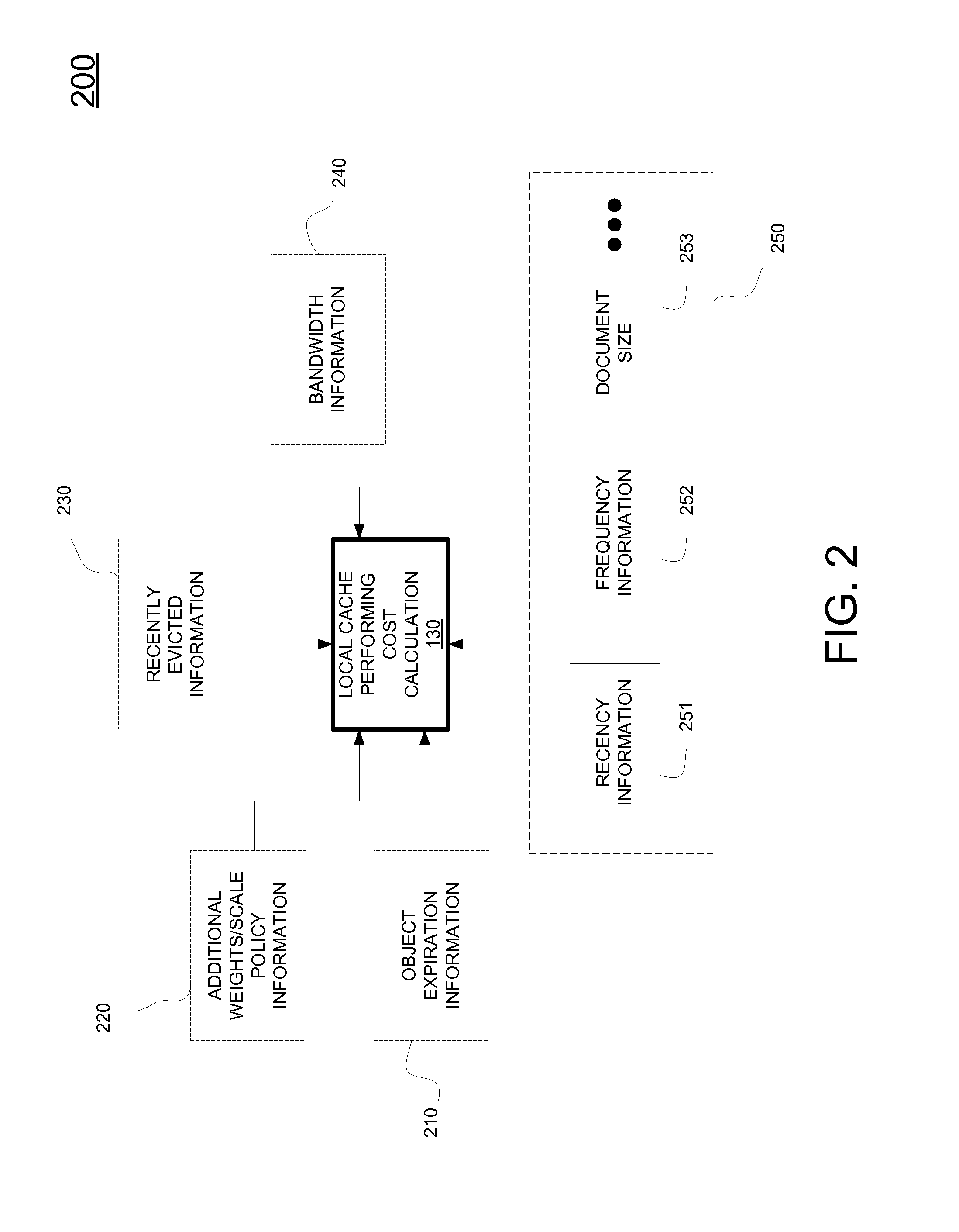

Method and system for smart object eviction for proxy cache

Inactive | US20160255169A1 | Improve overall utilization | Transmission | Memory systems | Smart objects | Back end server

A method for managing a local cache. The method includes receiving an object from a back end server at a local cache for access by one or more users at a local site. The method includes attaching an eviction policy parameter value to the object. The method includes storing the object in the local cache. The method includes evaluating at the local cache the eviction policy parameter value. The method includes evicting the object from the local cache based on the eviction policy parameter.

Owner:FUTUREWEI TECH INC
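
The five steps of the method above (receive, attach a parameter value, store, evaluate, evict) can be sketched as follows. The abstract does not say what the eviction policy parameter measures; this sketch assumes a numeric retention score where the lowest value is evicted first, and a fixed entry-count capacity.

```python
class LocalCache:
    """Proxy cache that evicts by a per-object policy value (assumed: lowest first)."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self._entries = {}  # key -> (object, policy_value)

    def store(self, key, obj, policy_value: float) -> None:
        # Attach the eviction policy parameter value to the object and store both.
        self._entries[key] = (obj, policy_value)
        self._evict_if_needed()

    def get(self, key):
        entry = self._entries.get(key)
        return entry[0] if entry is not None else None

    def _evict_if_needed(self) -> None:
        # Evaluate the attached values and evict the lowest-scoring objects
        # until the cache fits within its capacity again.
        while len(self._entries) > self.capacity:
            victim = min(self._entries, key=lambda k: self._entries[k][1])
            del self._entries[victim]


cache = LocalCache(capacity=2)
cache.store("report.pdf", b"...", policy_value=5.0)
cache.store("logo.png", b"...", policy_value=1.0)
cache.store("video.mp4", b"...", policy_value=3.0)  # pushes out logo.png
```

Because the value travels with the object, the back-end server (or the cache itself) can tune retention per object instead of relying on one global policy such as LRU.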

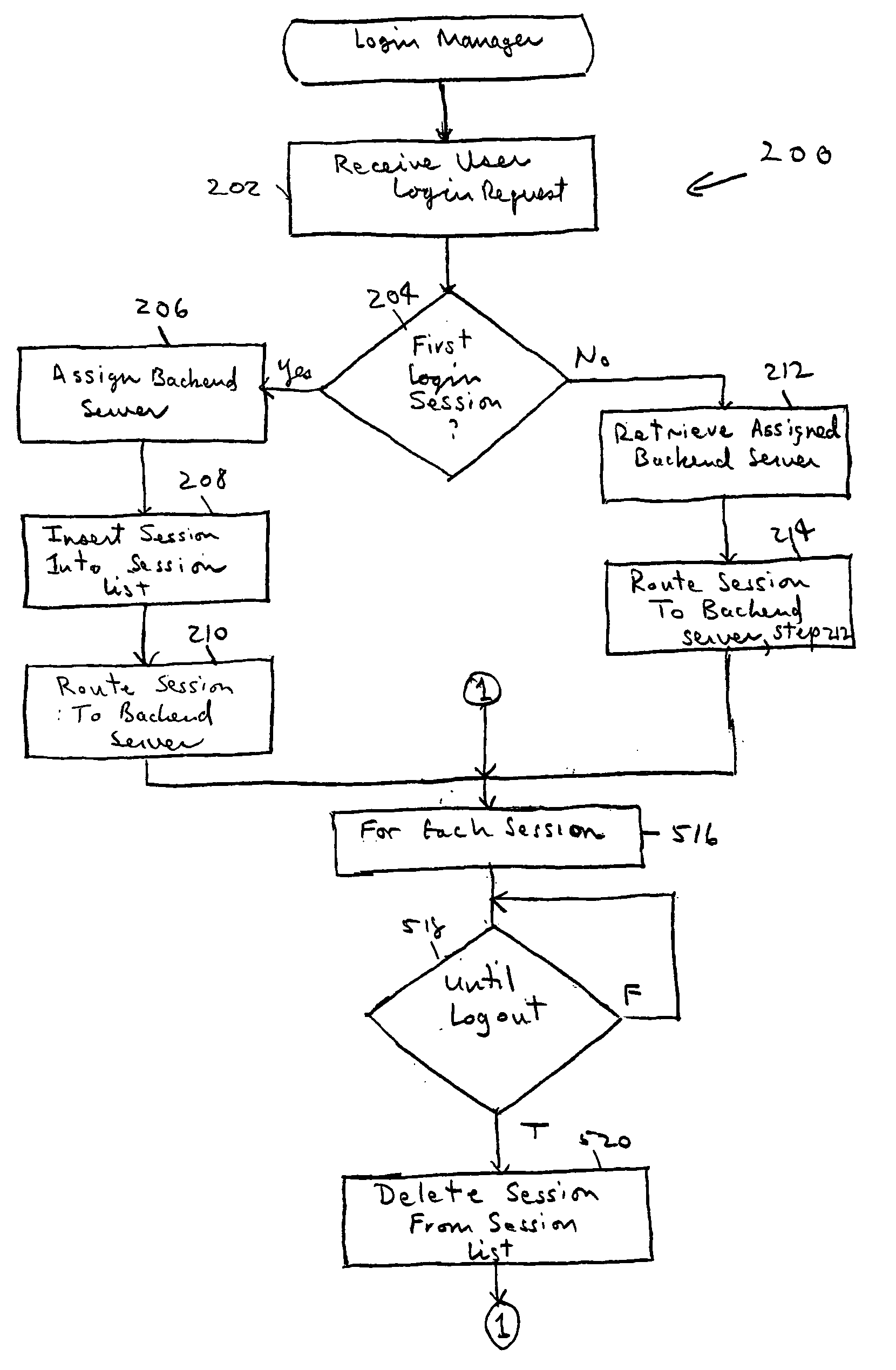

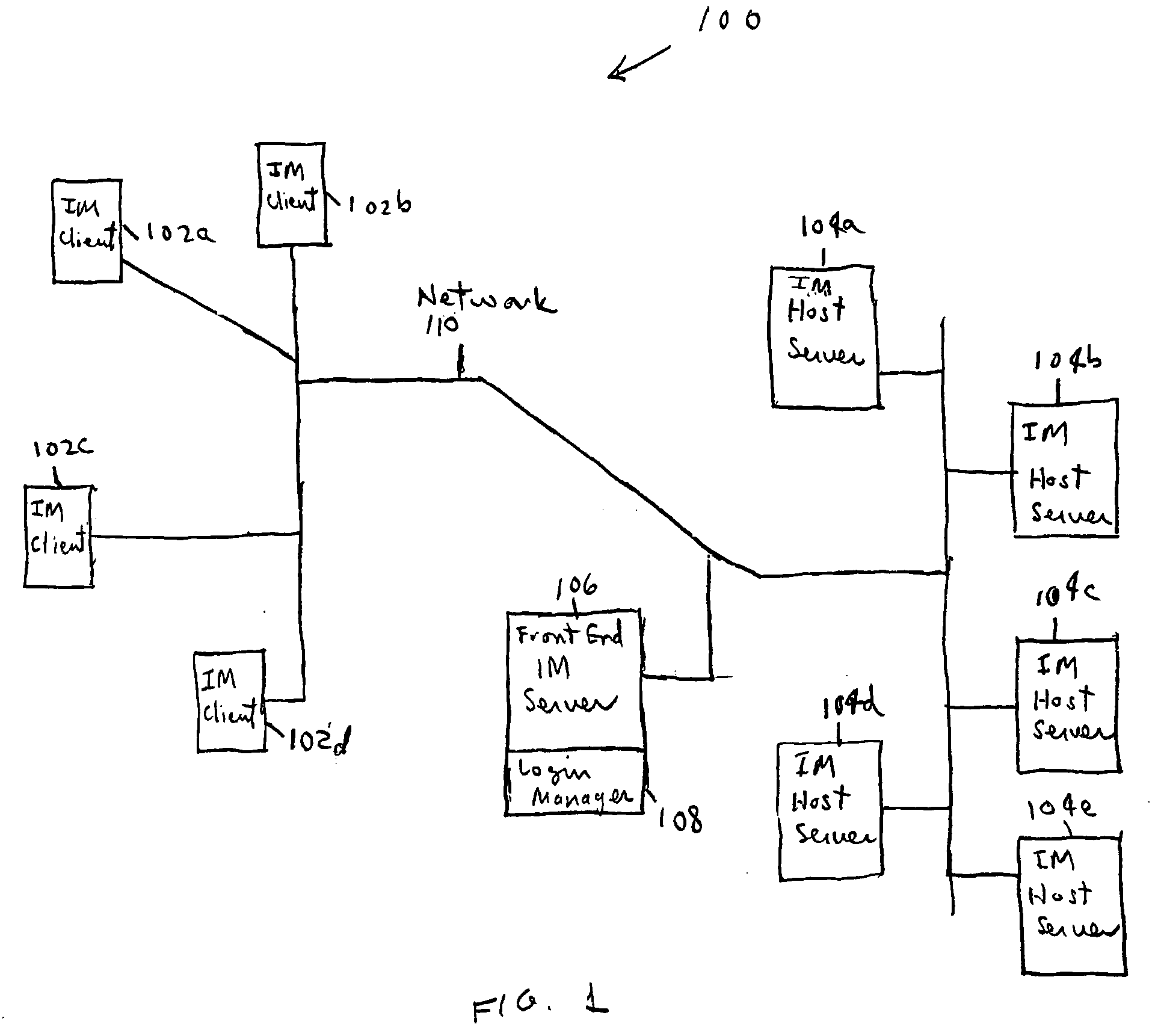

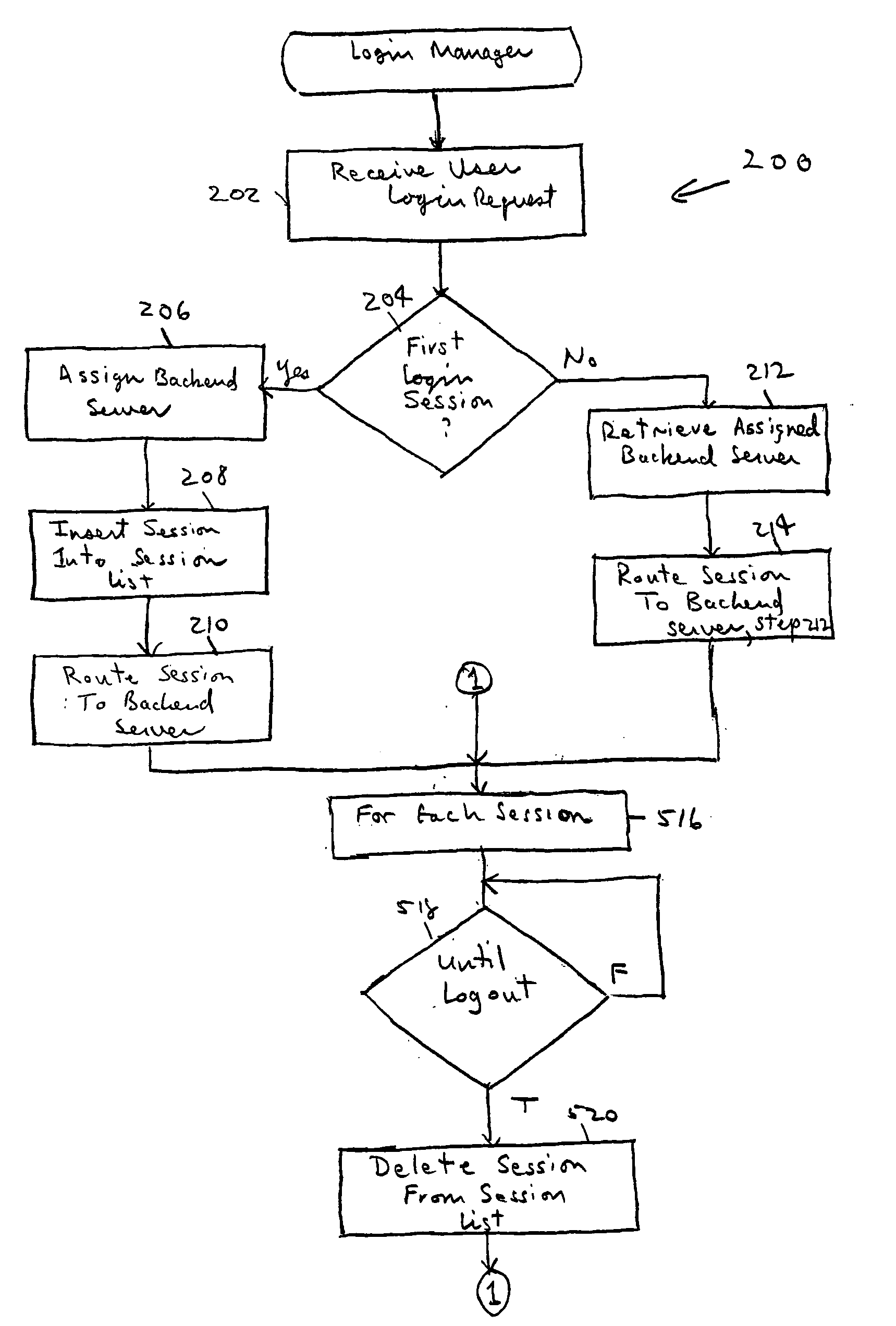

Method and system for multiple instant messaging login sessions

Active | US20050102365A1 | Special service provision for substation | Multiple digital computer combinations | Back end server | Login session

A mechanism for multiple instant messaging (IM) sessions associated with a single user name is provided. A front-end server receives user login requests and routes the instant messaging session to a back-end server. Each login associated with a particular user name is routed to the same back-end server (or IM host). Messages targeted to a recipient having a plurality of active IM sessions are broadcast to all the active sessions. Messages targeted to recipients having a single IM session are unicast.

Owner:SINOEAST CONCEPT
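
The routing and delivery behavior in the abstract above can be sketched as follows. The abstract does not say how the front end keeps every login for a user name on the same host; this sketch assumes a stable hash of the user name. The class and host names are hypothetical.

```python
import hashlib
from collections import defaultdict


class IMHost:
    """Back-end server holding every active session for the users routed to it."""

    def __init__(self, name):
        self.name = name
        self.sessions = defaultdict(list)  # user name -> list of session inboxes

    def login(self, user):
        inbox = []
        self.sessions[user].append(inbox)
        return inbox

    def deliver(self, user, message):
        # One session: effectively a unicast. Several sessions: broadcast to all.
        inboxes = self.sessions.get(user, [])
        for inbox in inboxes:
            inbox.append(message)
        return len(inboxes)


class Frontend:
    """Front-end server that maps each user name to one fixed IM host."""

    def __init__(self, hosts):
        self.hosts = hosts

    def host_for(self, user):
        # Assumption: a stable hash keeps all logins for a user on one host.
        digest = hashlib.sha256(user.encode("utf-8")).digest()
        return self.hosts[int.from_bytes(digest[:8], "big") % len(self.hosts)]

    def login(self, user):
        return self.host_for(user).login(user)

    def send(self, recipient, message):
        return self.host_for(recipient).deliver(recipient, message)


frontend = Frontend([IMHost("im-1"), IMHost("im-2"), IMHost("im-3")])
phone, laptop = frontend.login("alice"), frontend.login("alice")
frontend.send("alice", "hello")  # reaches both of alice's sessions
```

Keeping all of a user's sessions on one host means the broadcast needs no cross-server coordination.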

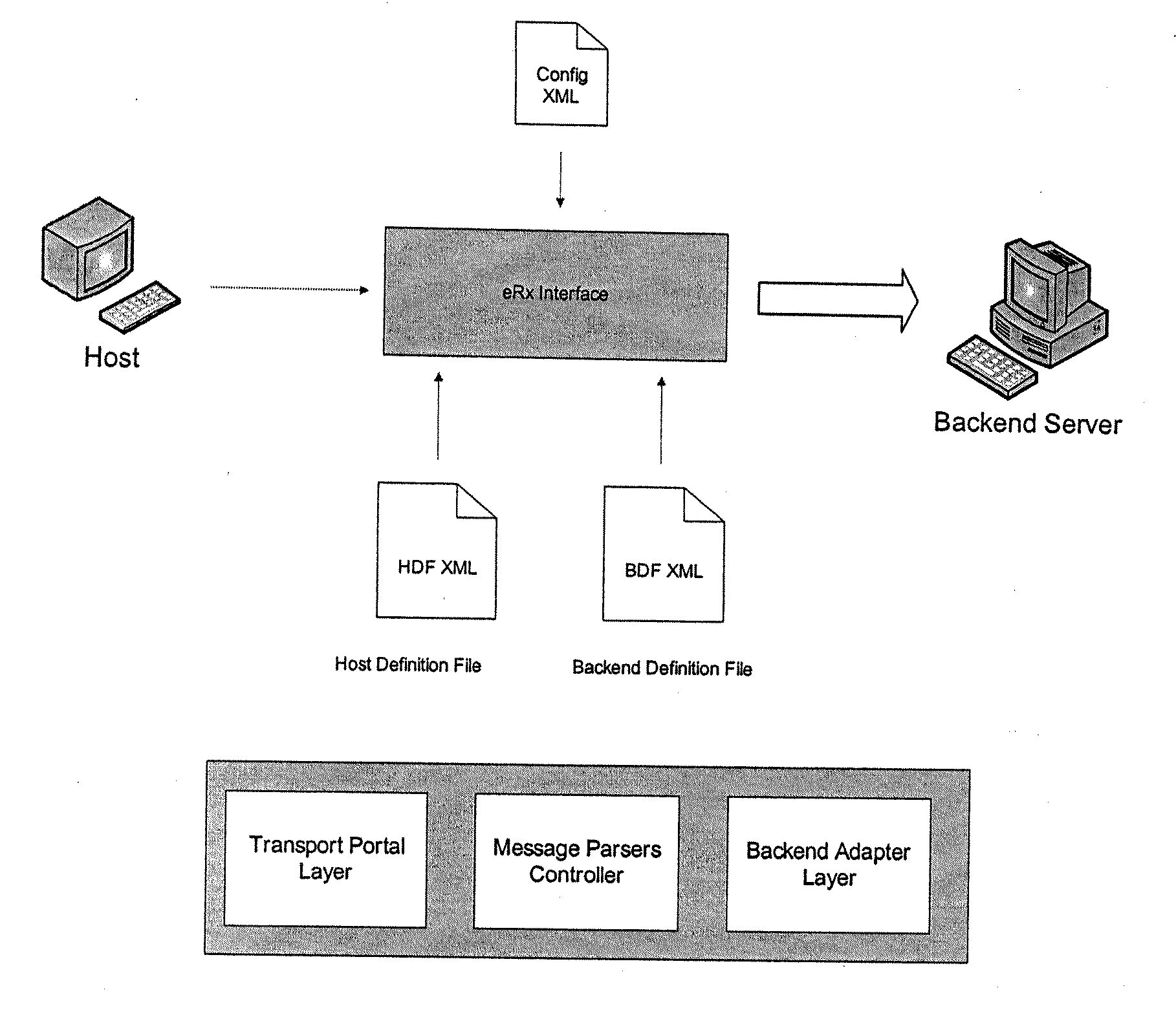

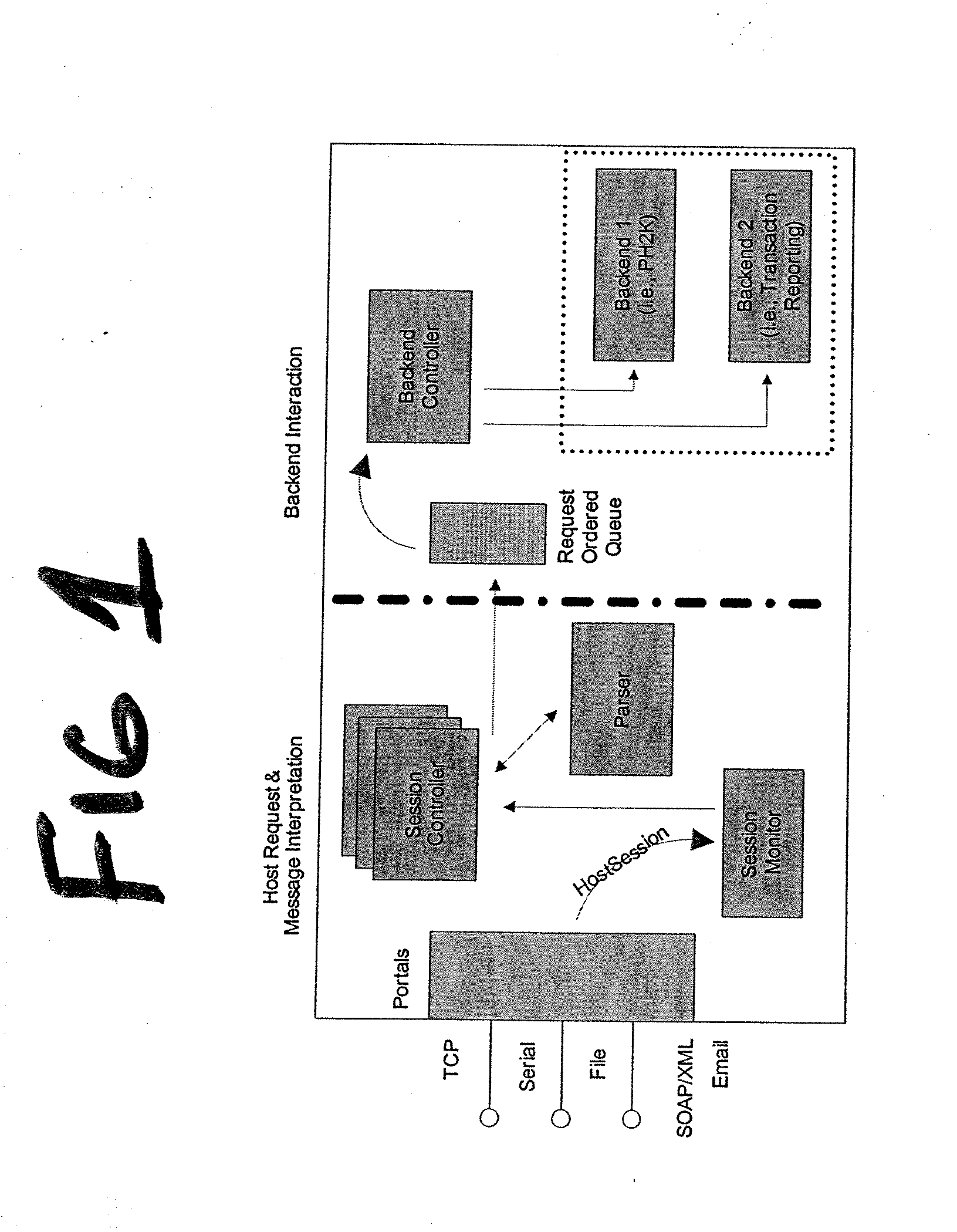

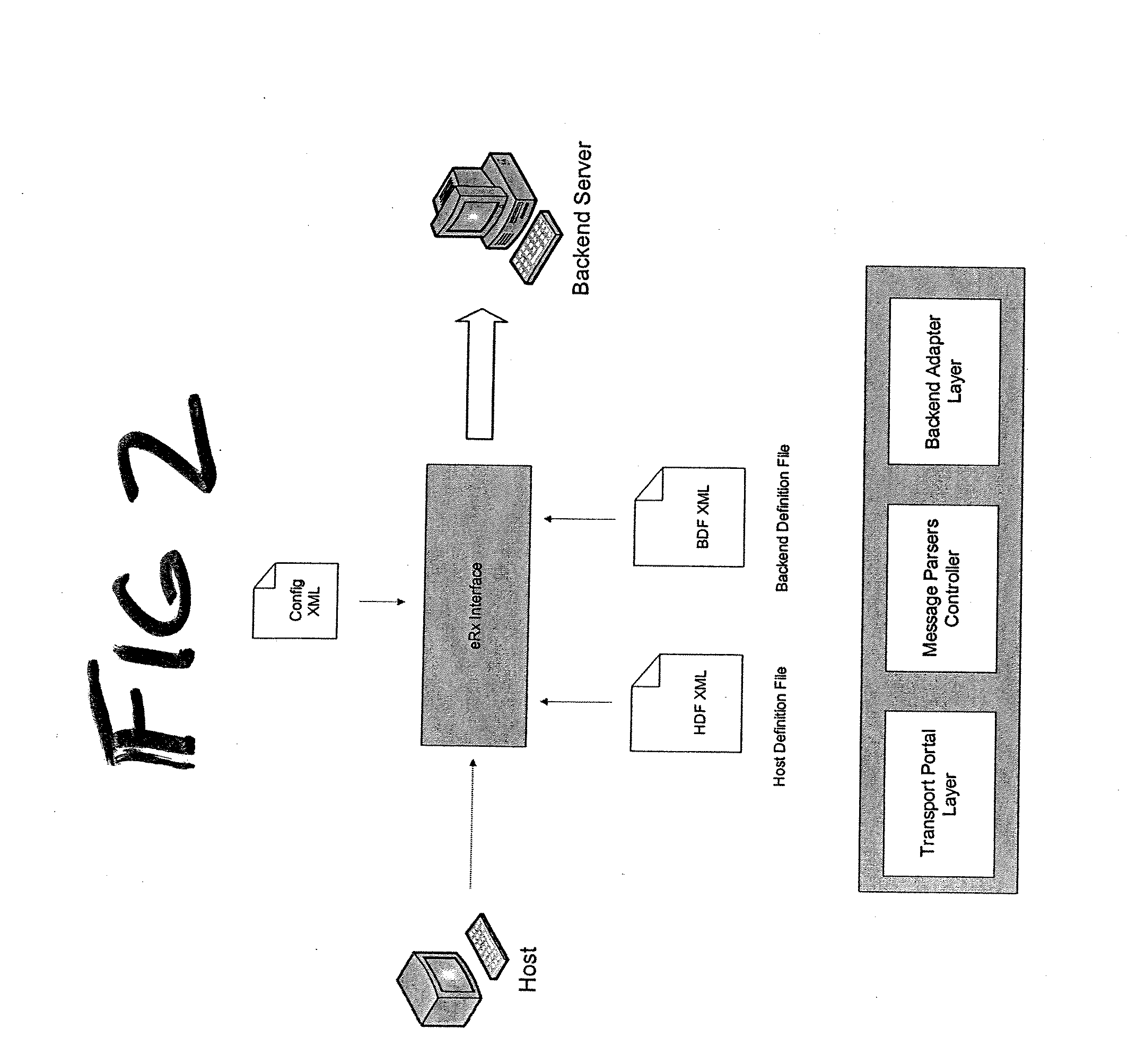

Adaptive interface for product dispensing systems

Active | US20070208454A1 | Excellent diagnostics | Excellent performance feedback | Digital data processing details | Coin-freed apparatus details | Extensibility | Back end server

An adaptive interface is provided that is capable of brokering requests from a diverse set of customer host systems to a diverse set of backend servers (or backend device or backend automation system) controlling product dispensing devices and/or systems. The interface may be fully configurable and extensible (i.e., there is a lot of control over the behavior, and the interface can support future features without requiring code changes). Two areas of extensibility of the interface may be adapting to new message formats from the same or new host systems, and supporting new backend services.

Owner:MCKESSON AUTOMATION SYST
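
The two extension points named in the abstract above (new message formats and new backend services) can be sketched with a pair of registries. This is an illustrative design only; the format names, the `service` key, and the `dispense` handler are all hypothetical.

```python
import json
from typing import Callable, Dict

# Registries: adding a format or a service is a registration, not a change to broker().
parsers: Dict[str, Callable[[str], dict]] = {}
backends: Dict[str, Callable[[dict], str]] = {}


def register_parser(fmt: str, fn: Callable[[str], dict]) -> None:
    parsers[fmt] = fn


def register_backend(service: str, fn: Callable[[dict], str]) -> None:
    backends[service] = fn


def broker(fmt: str, payload: str) -> str:
    """Normalize a host message, then hand it to the backend service it names."""
    request = parsers[fmt](payload)
    return backends[request["service"]](request)


# Two host message formats and one backend service (all hypothetical).
register_parser("json", json.loads)
register_parser("kv", lambda text: dict(part.split("=", 1) for part in text.split(";")))
register_backend("dispense", lambda req: f"dispensed {req['item']}")

reply = broker("kv", "service=dispense;item=gauze")
```

In a configurable deployment the registrations would typically be driven by configuration files rather than written inline as they are here.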

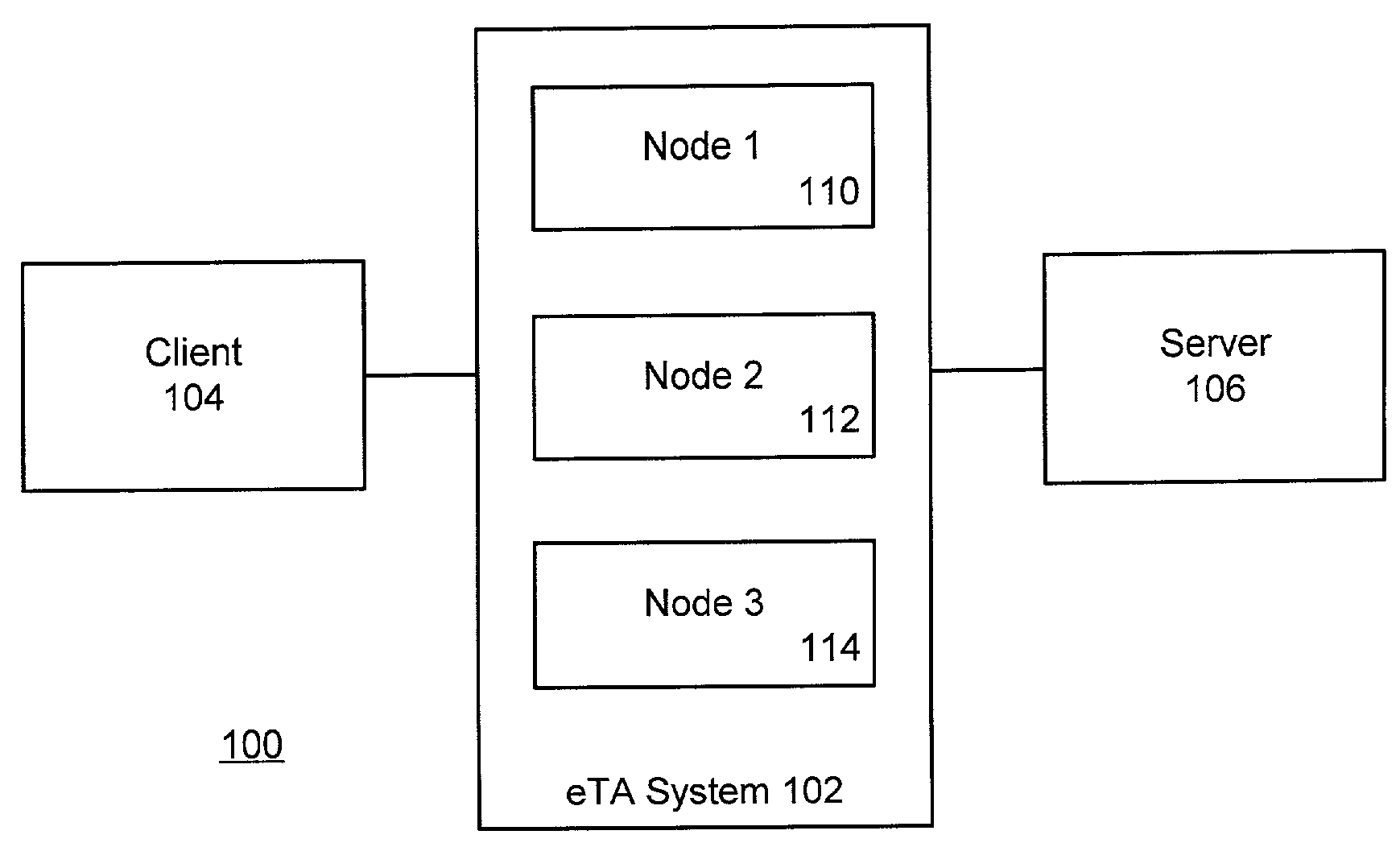

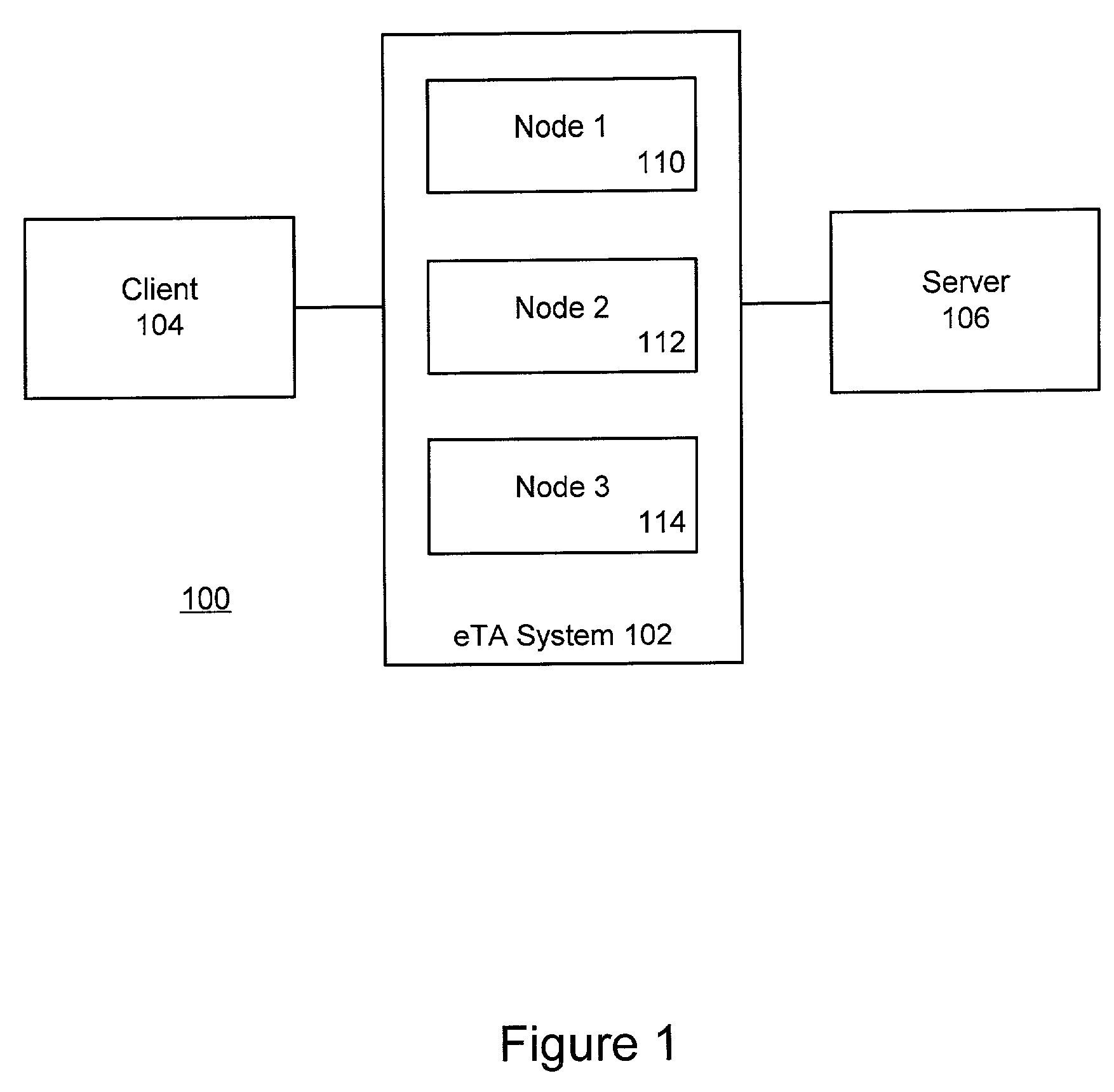

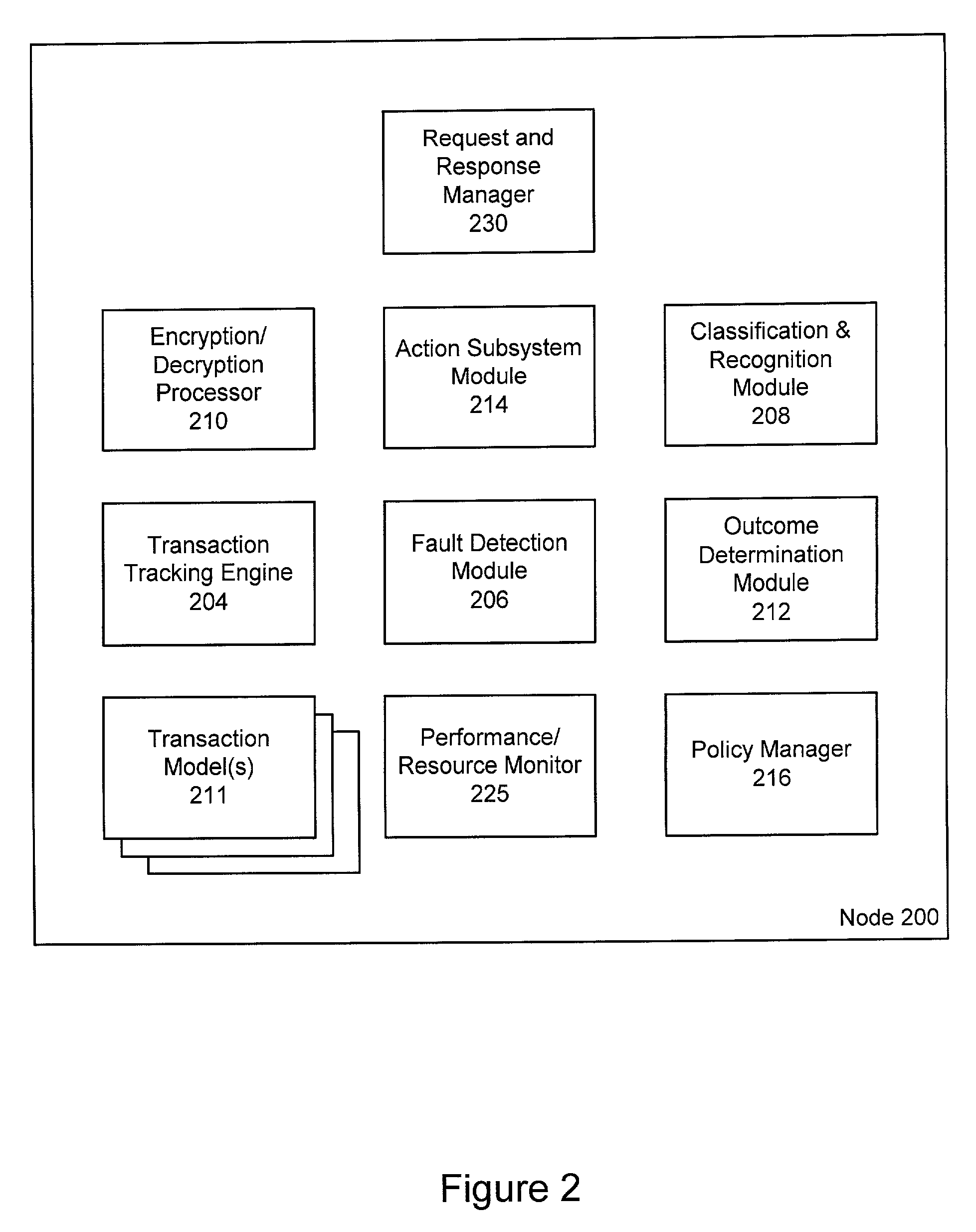

Highly available transaction failure detection and recovery for electronic commerce transactions

Active | US7539746B2 | Effective tracking | Multiple digital computer combinations | Transmission | Network connection | Transaction model

Electronic commerce transaction messages are processed over a network between a client and a server in a highly reliable fashion by establishing a secure or unsecured communications connection between the network client and the network server at an electronic transaction assurance (eTA) system, which is located in a communication path between the network client and the network server. The transaction type is identified in the message, and the progress of the transaction is tracked using transaction models. Any failure in the back-end server system or in the network connections is detected, and recovery is performed using an outcome determination technique. The failure of a node within the eTA system is masked from the network client by formulating an appropriate response and sending it back to the client, such that the network client and network server that were using the selected node do not see any interruption in their communications.

Owner:EMC IP HLDG CO LLC

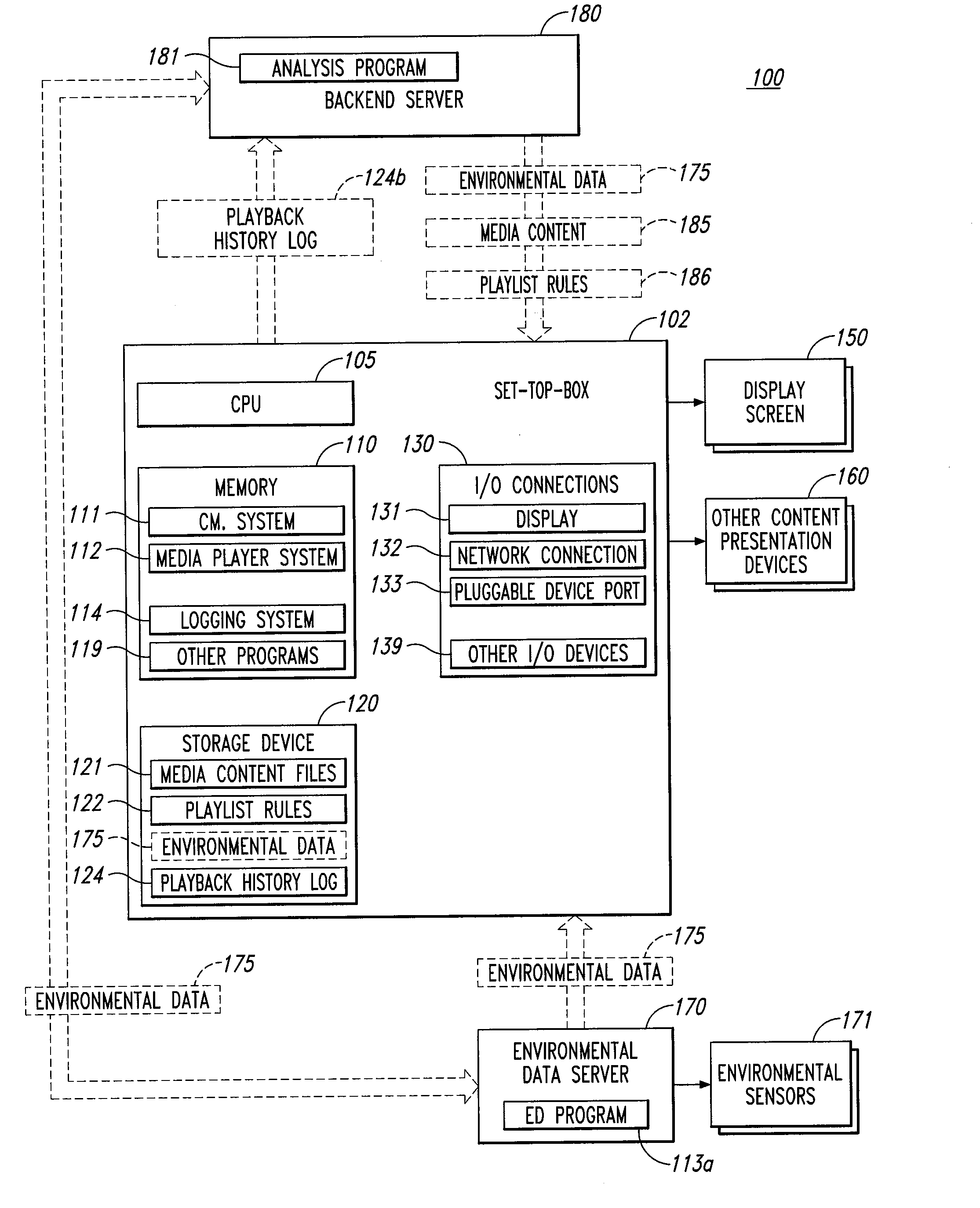

Media delivery system

A media delivery system is provided that may be used for tracking the number and type of human impressions of media content while the system renders that content. The media delivery system includes a rendering device for rendering media content, an environmental sensor for sensing impressions and other environmental variables, and a computing device configured to gather data related to the external states detected by the environmental sensor. The data may be provided to a backend server for correlating the data to the rendered media content. The system may include rules that interpret the data and may cause the system to custom select, tailor, or control future playback of media on the system.

Owner:VULCAN IP HLDG
