Dynamic scheduling method and system for collaborative computing between CPU and GPU based on two-level scheduling

A two-level dynamic scheduling technology (global scheduling plus node-level scheduling), applied in the field of distributed computing. It addresses problems such as failing to achieve the shortest task completion time, failing to fully utilize the computing capacity of the cluster, and computing nodes finishing at inconsistent times. Its effects are shorter task processing time, pipelined processing, and a guarantee that the processing units do not wait for one another.

Examples

Embodiment 1

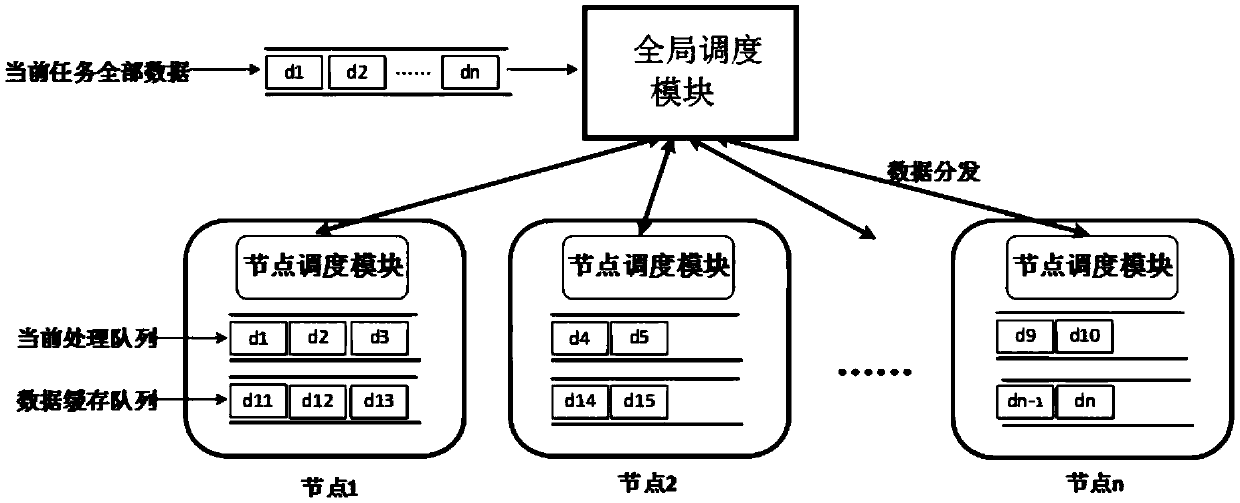

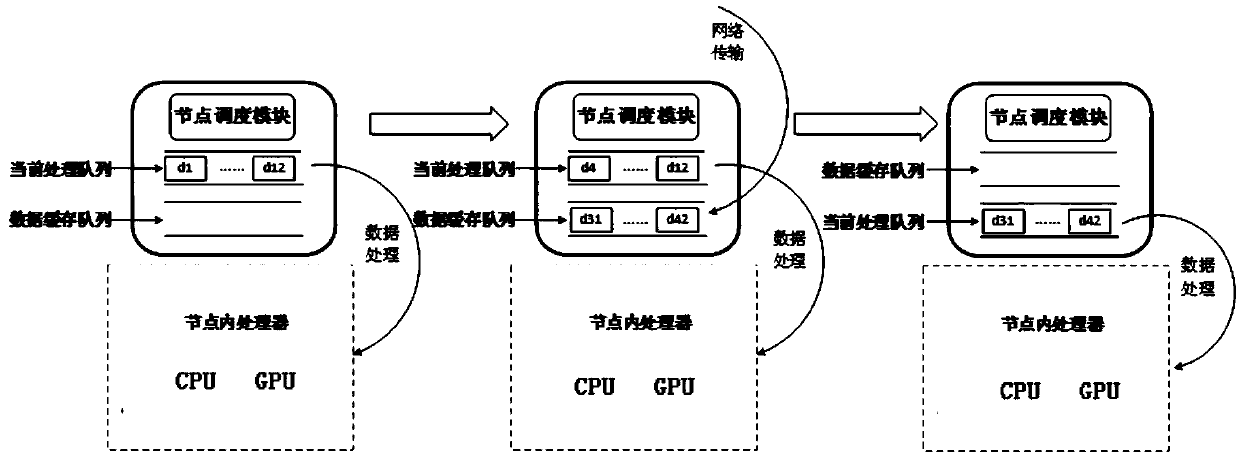

[0059] Figure 1 shows the software framework diagram of the dynamic scheduling method of the present invention. The global scheduling module in Figure 1 can be deployed on any node and uses active/standby redundancy to ensure reliability; a node scheduling module runs on each node. The global scheduling module distributes data blocks across the system according to the computing power of each node. Each node maintains two data storage queues: the current processing queue, which stores the data blocks currently being processed by the node's CPU and GPU, and the data cache queue, which stores data blocks that have been transmitted to the node over the network and are waiting to be processed.
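The two per-node queues can be modeled roughly as follows. This is a minimal sketch under assumptions, not the patented implementation: the class `NodeQueues`, its methods, and the low-watermark trigger for requesting more data are hypothetical names and policies chosen for illustration. It shows why keeping a cache queue filled ahead of time lets the CPU and GPU start on the next blocks without waiting for a network transfer.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class NodeQueues:
    """Hypothetical model of the two per-node queues described in [0059]."""
    # Blocks currently being processed by this node's CPU and GPU.
    processing: deque = field(default_factory=deque)
    # Blocks already received over the network and waiting to be processed.
    cache: deque = field(default_factory=deque)

    def receive(self, block):
        """A block arriving from the global scheduler goes into the cache queue."""
        self.cache.append(block)

    def finish(self, block):
        """Remove a completed block from the processing queue."""
        self.processing.remove(block)

    def refill(self, capacity):
        """Move cached blocks into the processing queue until it holds `capacity` blocks.

        Returns the number of blocks moved. Because the cache was filled in
        advance, processing can continue without waiting on the network.
        """
        moved = 0
        while self.cache and len(self.processing) < capacity:
            self.processing.append(self.cache.popleft())
            moved += 1
        return moved

    def needs_more_data(self, low_watermark):
        """Hypothetical trigger: request more data when the cache runs low."""
        return len(self.cache) < low_watermark
```

For example, after five blocks arrive and `refill(2)` runs, blocks 0 and 1 are being processed and blocks 2 to 4 wait in the cache; when block 0 finishes, another `refill(2)` promotes block 2 immediately. Requesting new data while the cache still holds blocks is what allows transfer and computation to overlap as a pipeline.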

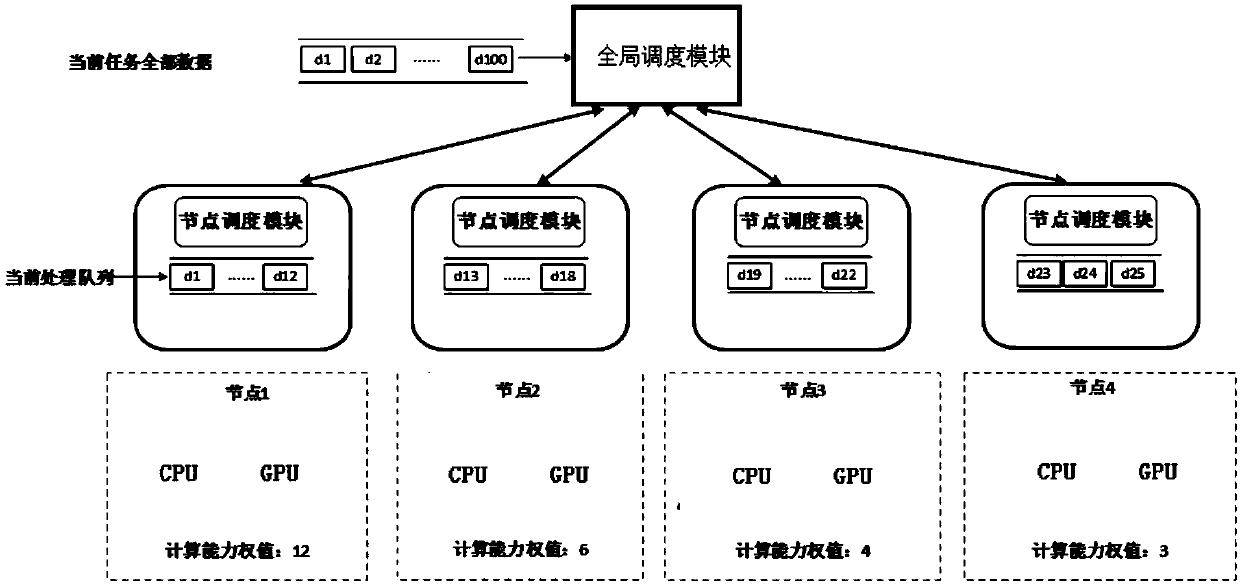

[0060] Figure 2 is a schematic diagram of data distribution by the global scheduling module. In Figure 2, the global scheduling module first determines the computing power weight of each node according to parameters such as the processor (includi...
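The text is cut off before the weighting formula is given, so the following is only an illustrative sketch under stated assumptions. It scores each node from the kinds of parameters listed in [0067] (CPU main frequency, number of cores, average idle rate, GPU stream processors) using a made-up linear formula with a hypothetical coefficient `gpu_factor`, normalizes the scores into weights, and splits a batch of data blocks in proportion to those weights with largest-remainder rounding. `NodeInfo`, `capacity_score`, `computing_power_weights`, and `allocate_blocks` are hypothetical names, not taken from the source.

```python
from dataclasses import dataclass
from math import floor


@dataclass
class NodeInfo:
    name: str
    cpu_ghz: float          # CPU main frequency
    cpu_cores: int          # number of CPU cores
    cpu_idle_rate: float    # average idle rate in [0, 1]
    gpu_stream_procs: int   # number of GPU stream processors (0 if no GPU)


def capacity_score(node, gpu_factor=0.01):
    """Hypothetical capacity estimate; the source's actual formula is not shown.

    CPU term: usable GHz-cores (frequency x cores x idle fraction).
    GPU term: stream processors scaled by an assumed per-processor factor.
    """
    cpu = node.cpu_ghz * node.cpu_cores * node.cpu_idle_rate
    gpu = gpu_factor * node.gpu_stream_procs
    return cpu + gpu


def computing_power_weights(nodes):
    """Normalize capacity scores so the weights sum to 1."""
    scores = {n.name: capacity_score(n) for n in nodes}
    total = sum(scores.values())
    return {name: s / total for name, s in scores.items()}


def allocate_blocks(weights, num_blocks):
    """Split num_blocks in proportion to weights (largest-remainder rounding)."""
    exact = {name: w * num_blocks for name, w in weights.items()}
    alloc = {name: floor(x) for name, x in exact.items()}
    leftover = num_blocks - sum(alloc.values())
    # Give the remaining blocks to the nodes with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda name: exact[name] - alloc[name], reverse=True)
    for name in by_remainder[:leftover]:
        alloc[name] += 1
    return alloc
```

With a CPU-only node A (2.0 GHz, 8 cores, 50% idle) and a node B (2.0 GHz, 4 cores, fully idle, 1200 stream processors), the assumed scores are 8 and 20, so 10 blocks split as 3 for A and 7 for B. Largest-remainder rounding is used so that every block is assigned exactly once while staying as close as possible to the ideal proportions.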

Embodiment 2

[0066] Based on the same inventive concept as in Embodiment 1, the embodiment of the present invention provides a dynamic scheduling system based on two-level scheduling for CPU and GPU collaborative computing, including:

[0067] A system-level real-time resource monitoring module; the real-time resource monitoring module monitors the relevant parameters of the CPU and GPU in each node in real time. These include CPU parameters such as the model, main frequency, number of cores, and average idle rate, and GPU parameters such as the model and number of stream processors;

[0068] Global scheduling module; the global scheduling module receives the information sent by the real-time resource monitoring module, estimates the processing capacity of each node in the system, and, batch by batch and in response to requests from the node scheduling module in each node, uses each node's estimated processing capacity to dynamically di...
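The request-driven, batch-wise distribution described for the global scheduling module can be sketched as a pull-based dispatcher. This is a sketch under assumptions rather than the source's design: the class `GlobalScheduler`, the `base_batch` parameter, and the rule that batch size scales with a node's capacity relative to the mean capacity are all hypothetical. Every node must report a capacity via `update_capacity` before it requests data.

```python
from collections import deque


class GlobalScheduler:
    """Hypothetical pull-based dispatcher: node schedulers request, it replies with a batch.

    Batch size is proportional to each node's estimated capacity, so faster
    nodes pull larger batches and all nodes tend to finish at about the same time.
    """

    def __init__(self, blocks, base_batch=4):
        self.pending = deque(blocks)    # data blocks not yet handed out
        self.capacity = {}              # node name -> estimated processing capacity
        self.base_batch = base_batch    # batch size for a node of average capacity

    def update_capacity(self, node, capacity):
        """Record the latest capacity estimate derived from monitoring data."""
        self.capacity[node] = capacity

    def batch_size(self, node):
        """Scale the base batch by this node's capacity relative to the mean."""
        mean = sum(self.capacity.values()) / len(self.capacity)
        return max(1, round(self.base_batch * self.capacity[node] / mean))

    def request(self, node):
        """Serve one request from a node scheduler; returns [] when no data is left."""
        size = min(self.batch_size(node), len(self.pending))
        return [self.pending.popleft() for _ in range(size)]
```

With 20 blocks, `base_batch=4`, and capacities of 1.0 for node A and 3.0 for node B, each request from B returns 6 blocks and each request from A returns 2. Because capacity estimates can be updated between requests, the dispatcher adapts batch sizes as monitored load changes, which is the dynamic aspect a static one-time split would lack.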