59 results about "Parallel computing architecture" patented technology

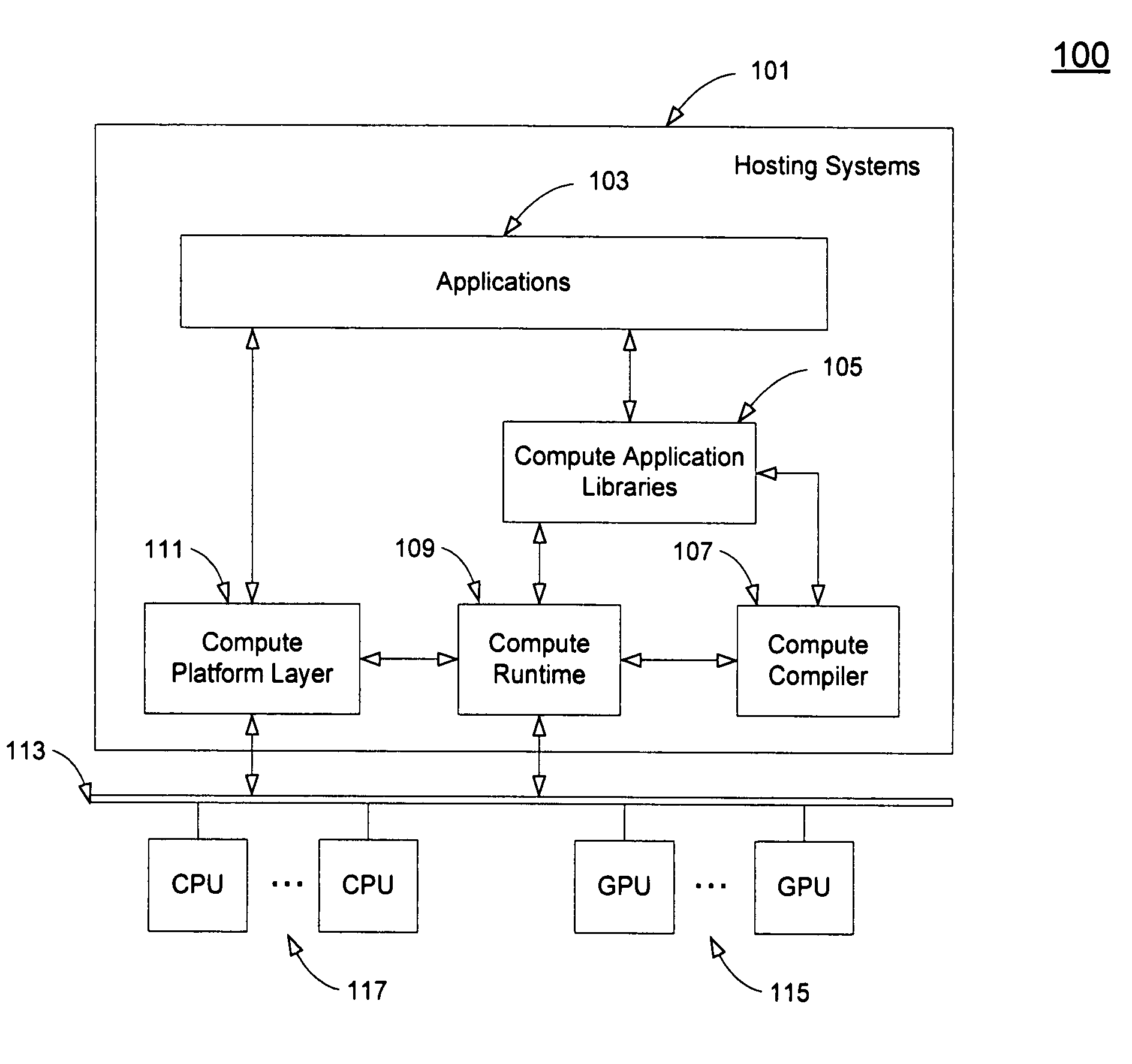

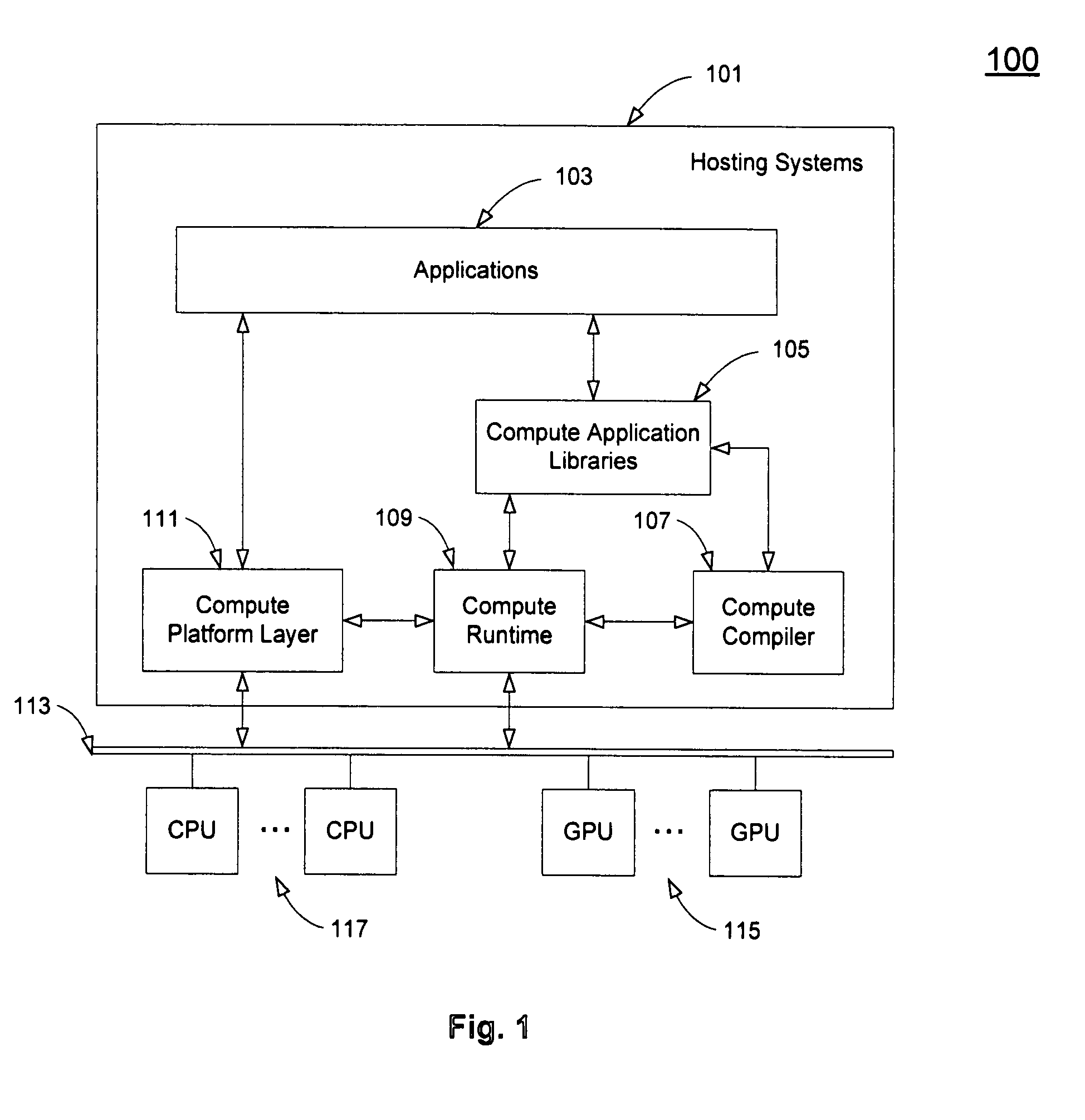

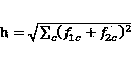

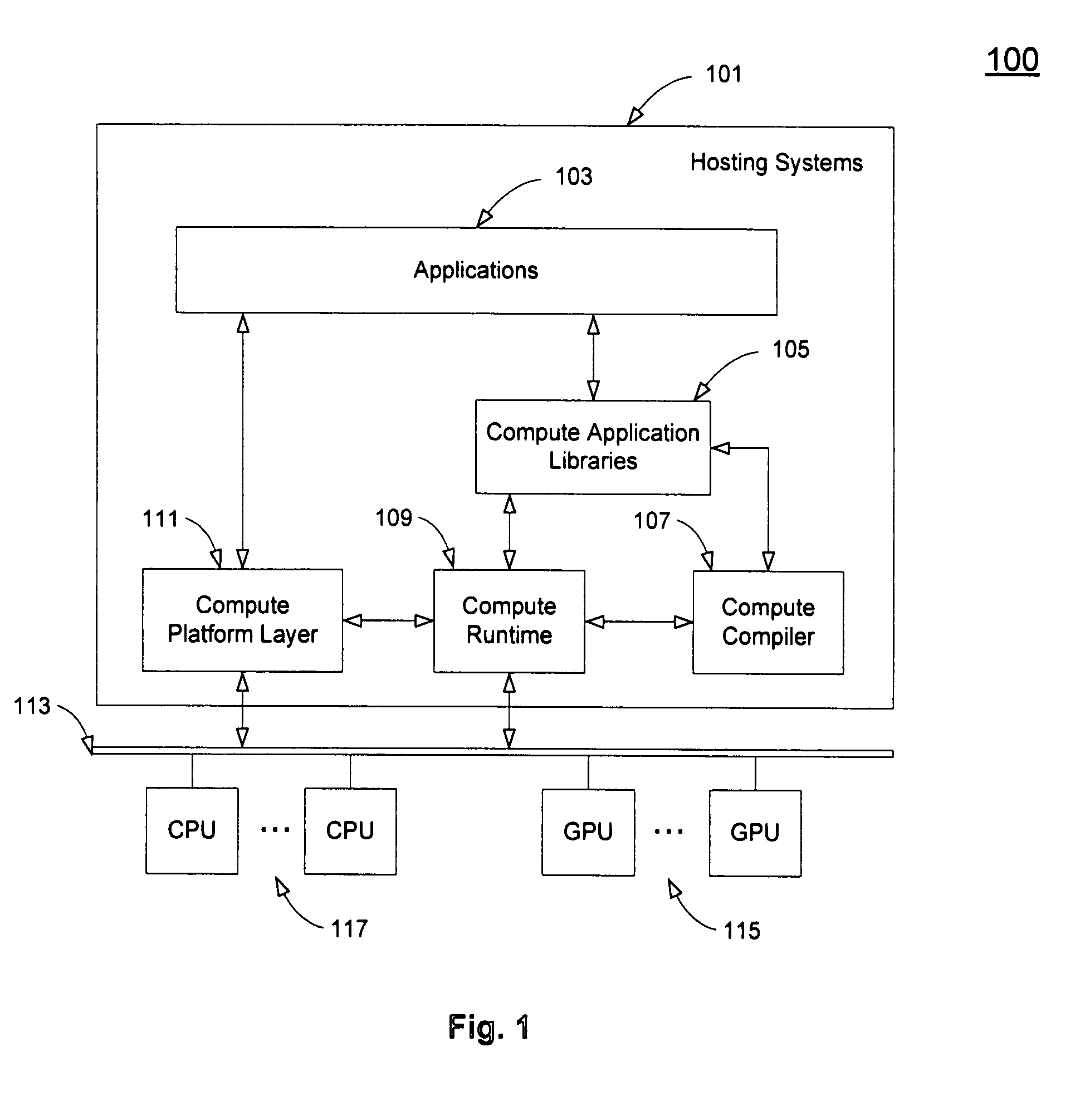

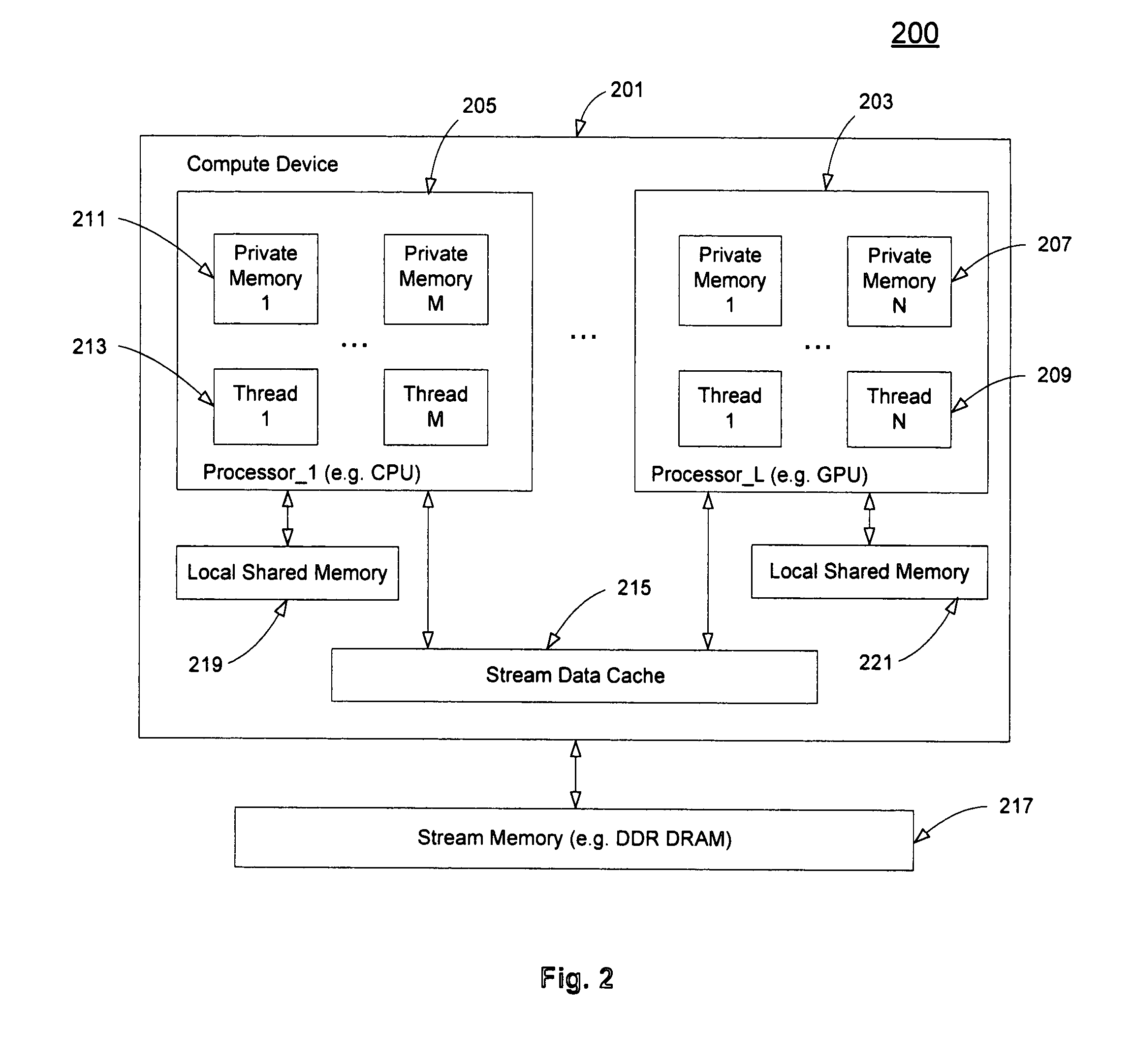

Application interface on multiple processors

Active | US20080276220A1 | Processor architectures/configuration | Specific program execution arrangements | Graphics | Multi processor

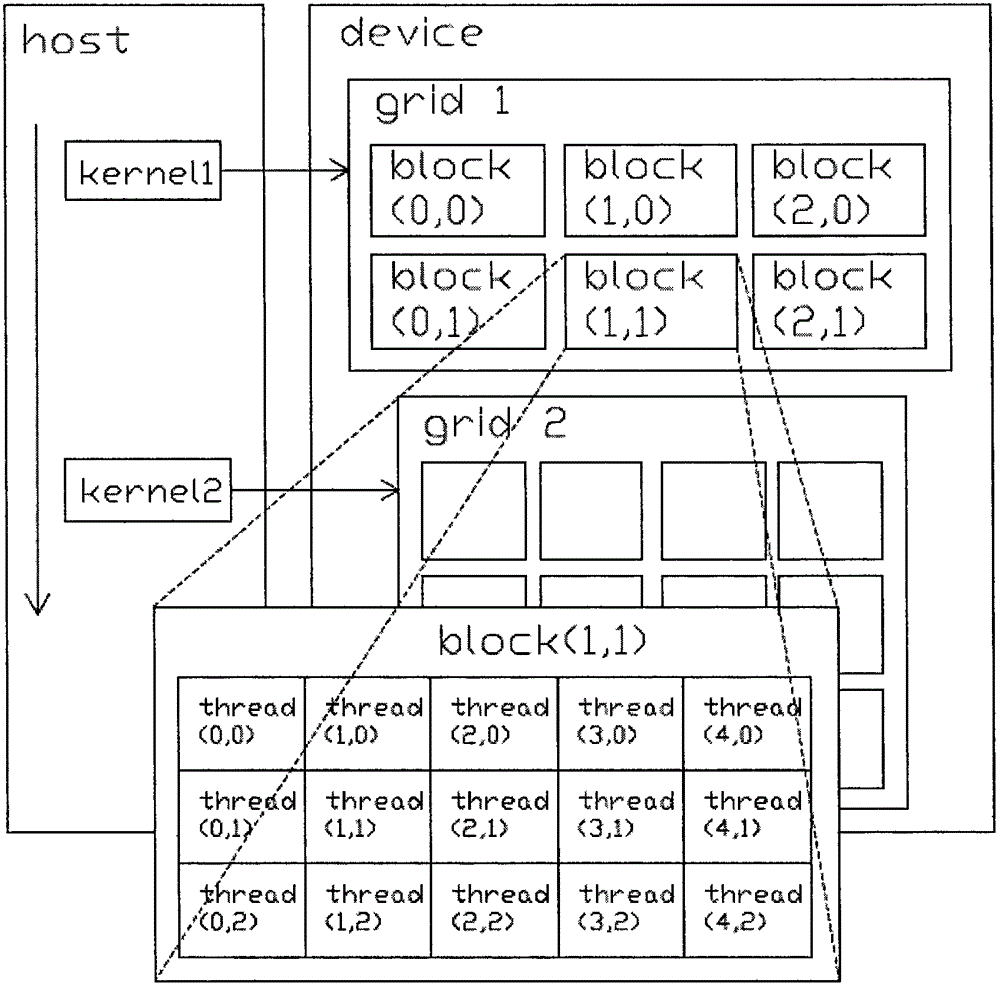

A method and an apparatus that execute a parallel computing program in a programming language for a parallel computing architecture are described. The parallel computing program is stored in memory in a system with parallel processors. The system includes a host processor, a graphics processing unit (GPU) coupled to the host processor and a memory coupled to at least one of the host processor and the GPU. The parallel computing program is stored in the memory to allocate threads between the host processor and the GPU. The programming language includes an API to allow an application to make calls using the API to allocate execution of the threads between the host processor and the GPU. The programming language includes host function data tokens for host functions performed in the host processor and kernel function data tokens for compute kernel functions performed in one or more compute processors, e.g. GPUs or CPUs, separate from the host processor. Standard data tokens in the programming language schedule a plurality of threads for execution on a plurality of processors, such as CPUs or GPUs in parallel. Extended data tokens in the programming language implement executables for the plurality of threads according to the schedules from the standard data tokens.

Owner:APPLE INC

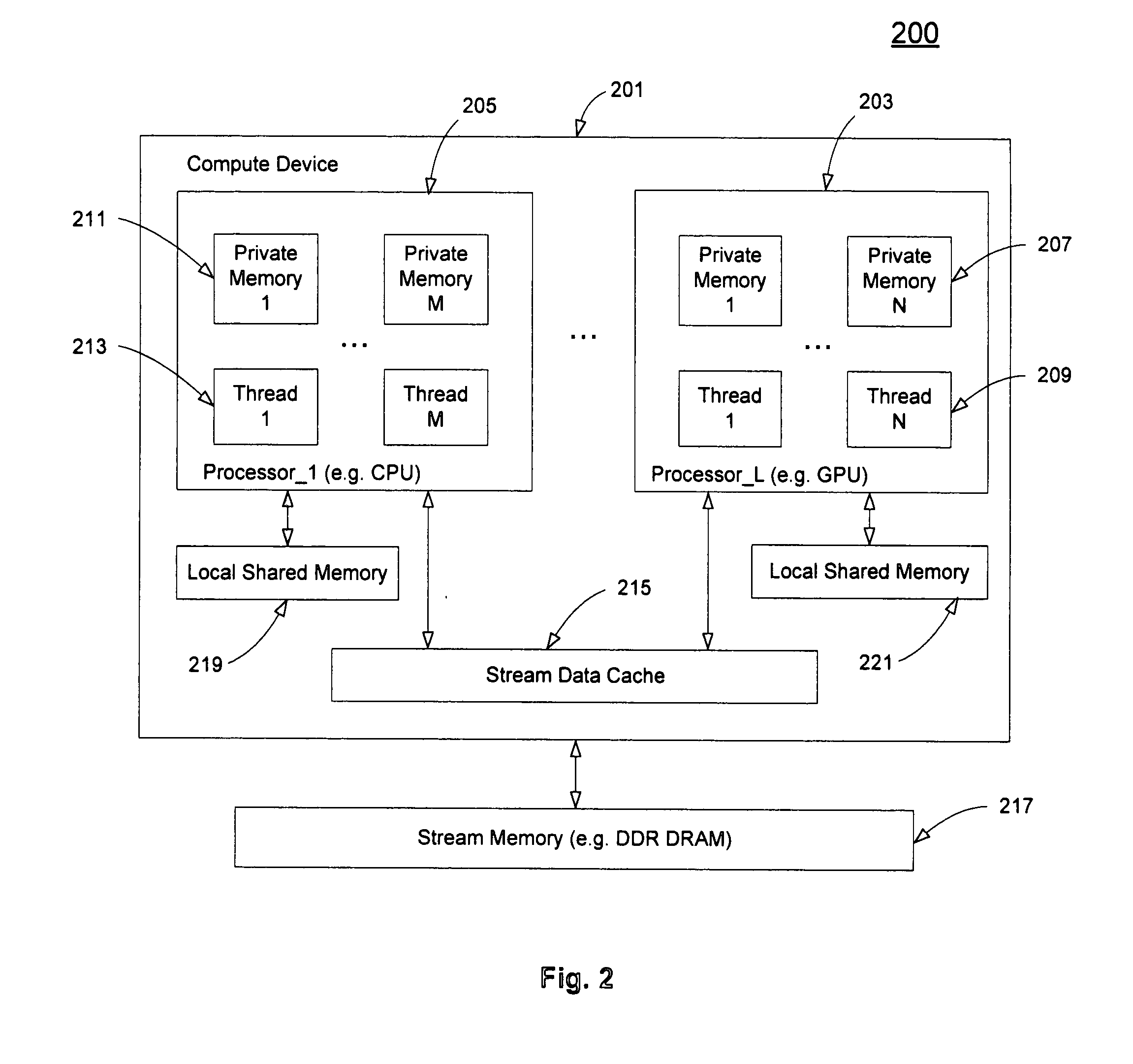

Lens boundary detection method

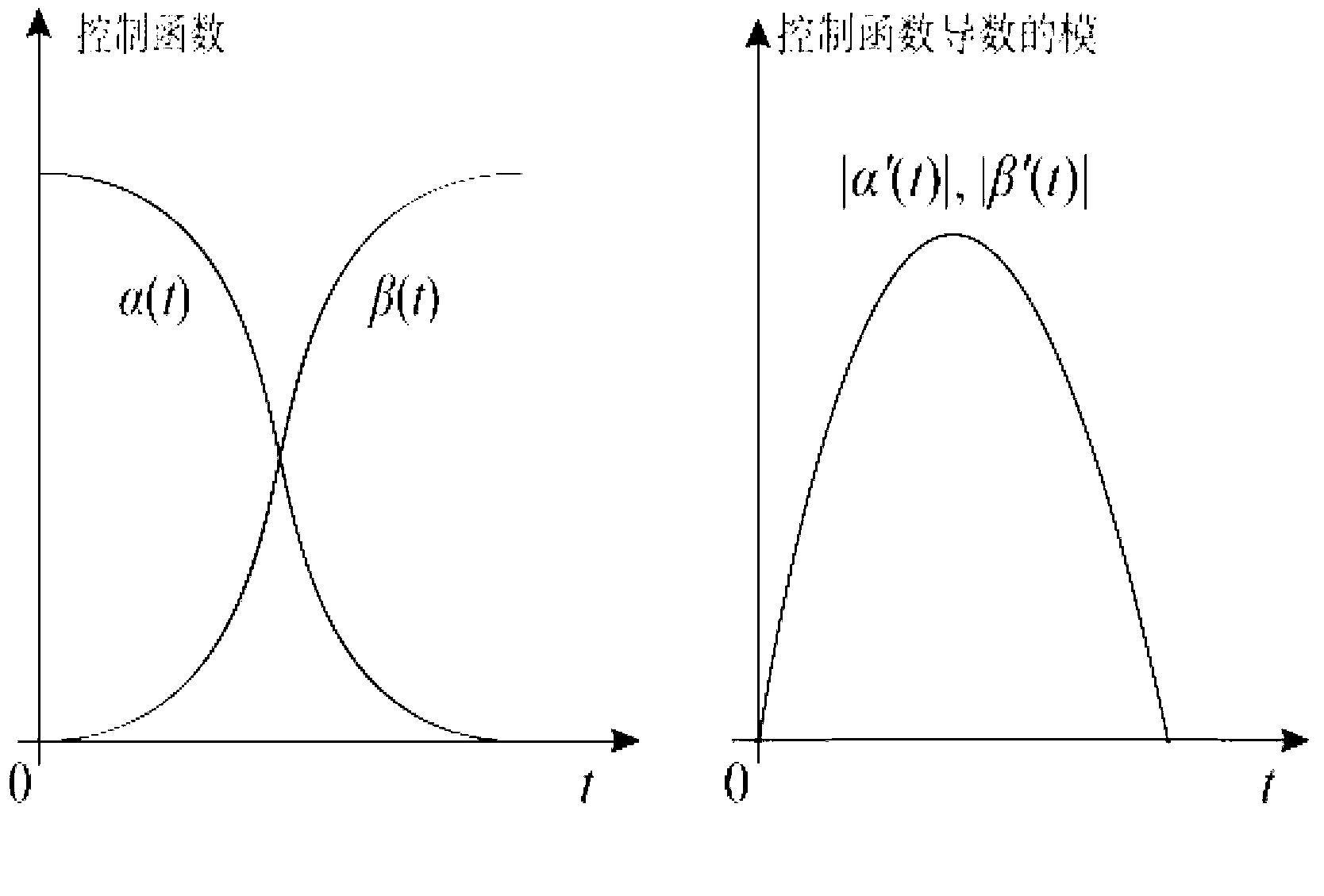

The invention discloses a lens boundary detection method comprising the following steps: 1) video frame feature representation: a non-uniform block histogram of each frame of the video is calculated in the HSV color space on a general-purpose parallel computing architecture and used as the feature representation of the frame; 2) obtaining the similarity sequence: the similarity of adjacent video frames is obtained as the weighted sum of the histogram distances of corresponding blocks, and the similarity sequence consists of the similarities of all adjacent frame pairs in the video; and 3) identification of lens boundaries: to detect lens shear boundaries, a threshold is calculated from the similarity sequence with an adaptive threshold algorithm, and any position whose similarity exceeds the threshold is a lens shear position. To detect lens gradient boundaries, candidate gradient boundaries are found with the inversion pair counting algorithm and given a unified expression by Fourier function fitting; each candidate boundary is then compared with the standard gradient model to determine the gradient boundary and identify its gradient type.
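The shear (cut) detection steps above can be sketched as follows. This is a minimal illustration, not the patented method: it assumes uniform rather than non-uniform blocks, a single hue channel instead of full HSV, an L1 histogram distance, and a hypothetical adaptive threshold of mean plus `k` standard deviations. All function names are made up for the example, and the loops over frames and blocks are the parts a parallel architecture would distribute.

```python
from statistics import mean, stdev

def block_histograms(frame, blocks=2, bins=4):
    """frame: 2D list of hue values in [0, 1). Returns one normalized histogram per block."""
    h, w = len(frame), len(frame[0])
    hists = []
    for by in range(blocks):
        for bx in range(blocks):
            hist = [0] * bins
            for y in range(by * h // blocks, (by + 1) * h // blocks):
                for x in range(bx * w // blocks, (bx + 1) * w // blocks):
                    hist[min(int(frame[y][x] * bins), bins - 1)] += 1
            total = sum(hist) or 1
            hists.append([c / total for c in hist])
    return hists

def frame_distance(ha, hb, weights):
    # Weighted sum of the per-block L1 histogram distances (assumed distance measure).
    return sum(w * sum(abs(a - b) for a, b in zip(x, y))
               for w, x, y in zip(weights, ha, hb))

def detect_cuts(frames, weights, k=1.5):
    feats = [block_histograms(f) for f in frames]          # independent per frame
    seq = [frame_distance(feats[i], feats[i + 1], weights)
           for i in range(len(feats) - 1)]
    threshold = mean(seq) + k * stdev(seq)                 # assumed adaptive threshold
    cuts = [i + 1 for i, d in enumerate(seq) if d > threshold]
    return cuts, seq

# Six 4x4 frames: three dark-hue frames followed by three light-hue frames.
frames = [[[0.1] * 4 for _ in range(4)] for _ in range(3)] + \
         [[[0.9] * 4 for _ in range(4)] for _ in range(3)]
cuts, seq = detect_cuts(frames, weights=[0.25] * 4)
```

Here `cuts` holds the index of the first frame after each detected cut. As in the abstract, the value compared with the threshold is built from histogram distances, so larger values mean less similar frames.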

Owner:南京来坞信息科技有限公司

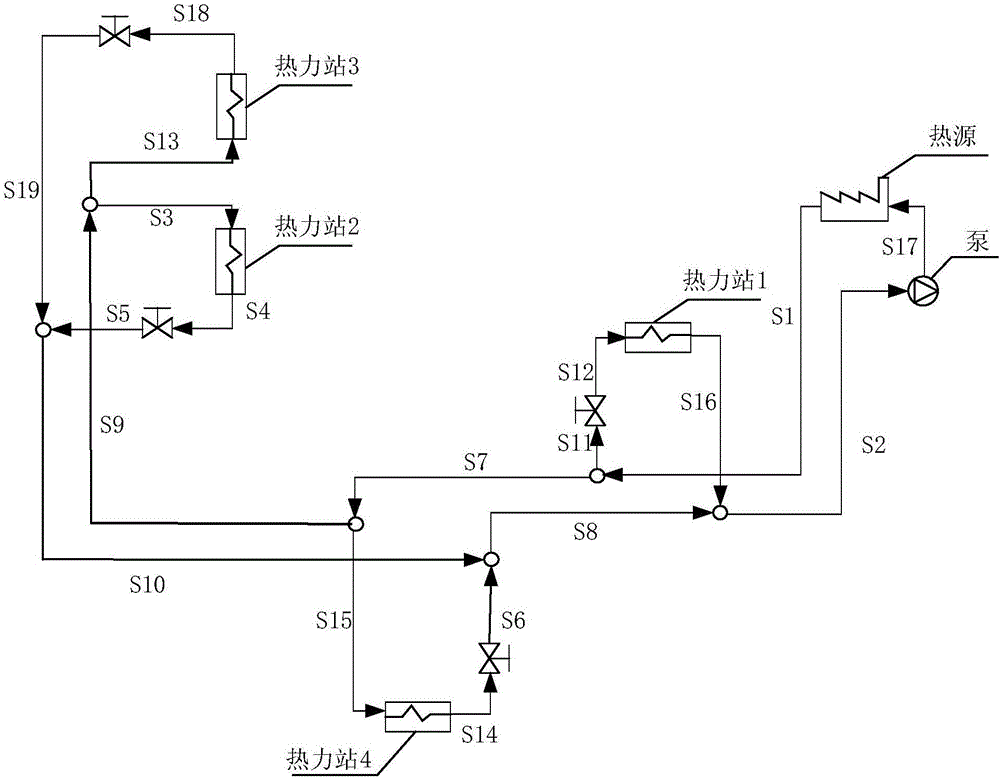

Heating pipe network hydraulic simulation model identification correction method and system, method of operation

Inactive | CN106682369A | High precision | Improve computing efficiency | Biological models | Design optimisation/simulation | Physical system | Engineering

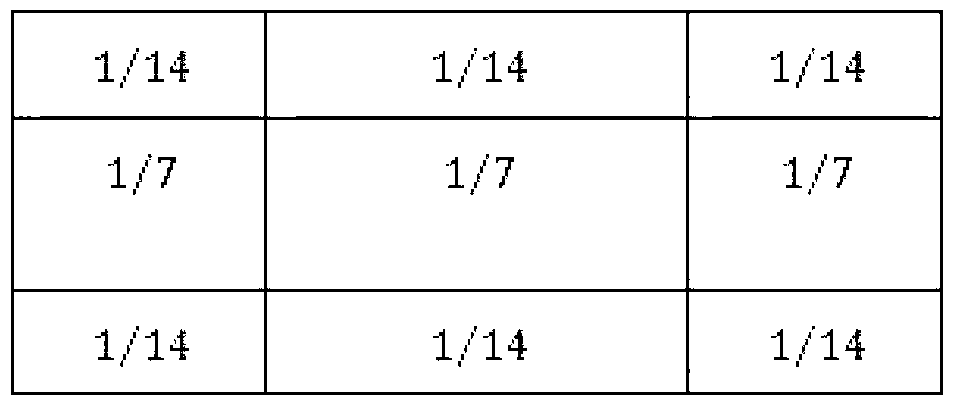

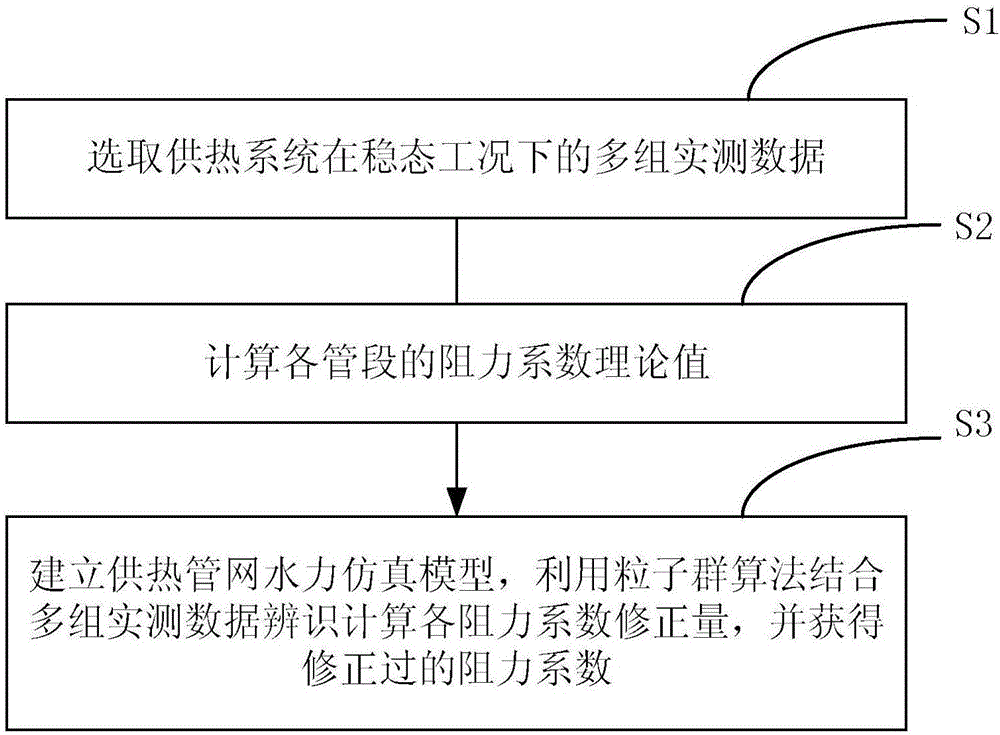

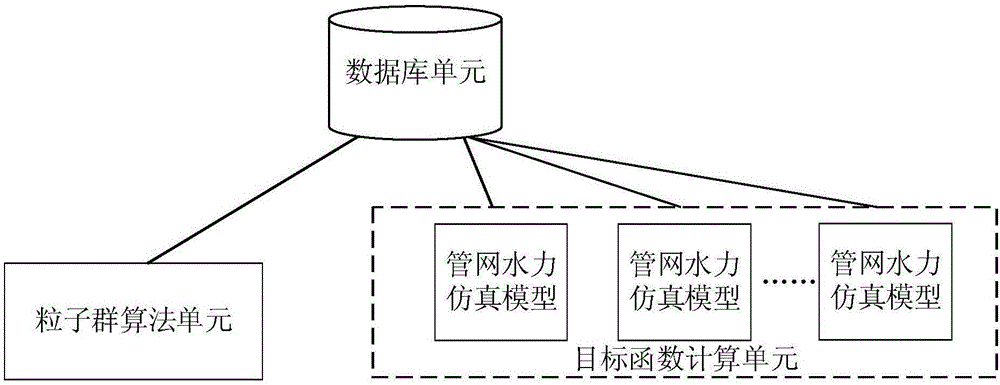

The invention relates to a method and a system for identifying and correcting a hydraulic simulation model of a heating pipe network. The method comprises the steps of: S1, selecting multiple groups of measured data of the heating system under steady working conditions; S2, calculating theoretical values of the resistance coefficients of all pipe sections; and S3, establishing a hydraulic simulation model of the heating pipe network, using particle swarm optimization with the multiple groups of measured data to identify candidate resistance coefficient correction vectors, applying each candidate vector in the hydraulic simulation model, comparing the simulated flow conditions with the measured data under the multiple working conditions, and thereby identifying the optimal resistance coefficient correction. The corrected model describes the actual physical system better and increases the precision of hydraulic calculations for the heating pipe network. A parallel computing architecture is used to run the particle swarm identification of the resistance coefficient corrections, which gives high identification efficiency.
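A minimal sketch of step S3, under loudly stated assumptions: each pipe section is modeled by the hypothetical relation `dp = (s + c) * q**2` (theoretical coefficient `s`, correction `c`, flow `q`), the "measured" data are synthetic, and the swarm parameters are common textbook values rather than anything from the patent. Particle fitness is evaluated through a thread pool only to show where the parallelism sits; in CPython this does not speed up pure-Python arithmetic.

```python
import random
from concurrent.futures import ThreadPoolExecutor

S_THEORY = [2.0, 3.5]                              # theoretical coefficients (hypothetical)
FLOWS = [[1.0, 0.8], [1.5, 1.1], [2.0, 1.6]]       # flows under three working conditions
TRUE_CORR = [0.3, -0.2]                            # corrections used to fake measurements
MEASURED = [[(s + c) * q * q for s, c, q in zip(S_THEORY, TRUE_CORR, cond)]
            for cond in FLOWS]

def cost(corr):
    """Squared mismatch between simulated and measured pressure drops."""
    err = 0.0
    for cond, meas in zip(FLOWS, MEASURED):
        for s, c, q, dp in zip(S_THEORY, corr, cond, meas):
            err += ((s + c) * q * q - dp) ** 2
    return err

def pso(n_particles=30, iters=200, seed=0, w=0.7, c1=1.5, c2=1.5):
    rng = random.Random(seed)
    dim = len(S_THEORY)
    pos = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    with ThreadPoolExecutor() as pool:
        pbest = [p[:] for p in pos]
        pbest_cost = list(pool.map(cost, pos))
        g = min(range(n_particles), key=pbest_cost.__getitem__)
        gbest, gbest_cost = pbest[g][:], pbest_cost[g]
        for _ in range(iters):
            for i in range(n_particles):
                for d in range(dim):
                    r1, r2 = rng.random(), rng.random()
                    vel[i][d] = (w * vel[i][d]
                                 + c1 * r1 * (pbest[i][d] - pos[i][d])
                                 + c2 * r2 * (gbest[d] - pos[i][d]))
                    pos[i][d] += vel[i][d]
            costs = list(pool.map(cost, pos))      # particles are evaluated independently
            for i, c in enumerate(costs):
                if c < pbest_cost[i]:
                    pbest[i], pbest_cost[i] = pos[i][:], c
                    if c < gbest_cost:
                        gbest, gbest_cost = pos[i][:], c
    return gbest, gbest_cost

best_corr, best_cost = pso()
```

With the synthetic data above, `best_corr` should land close to `TRUE_CORR`. A real pipe network would replace `cost` with a full hydraulic solve per particle, which is where parallel evaluation pays off.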

Owner:CHANGZHOU ENGIPOWER TECH

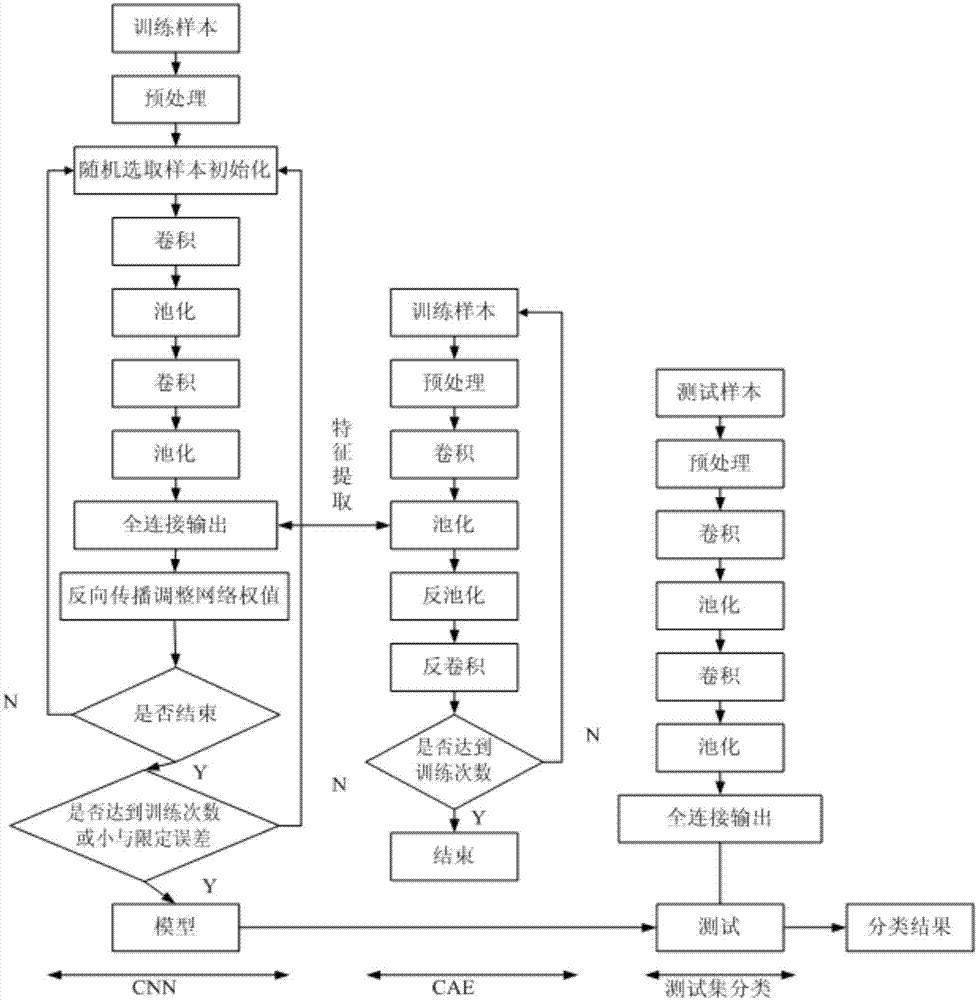

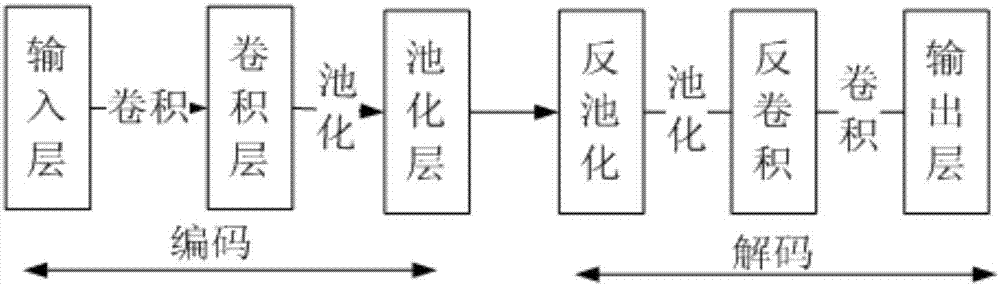

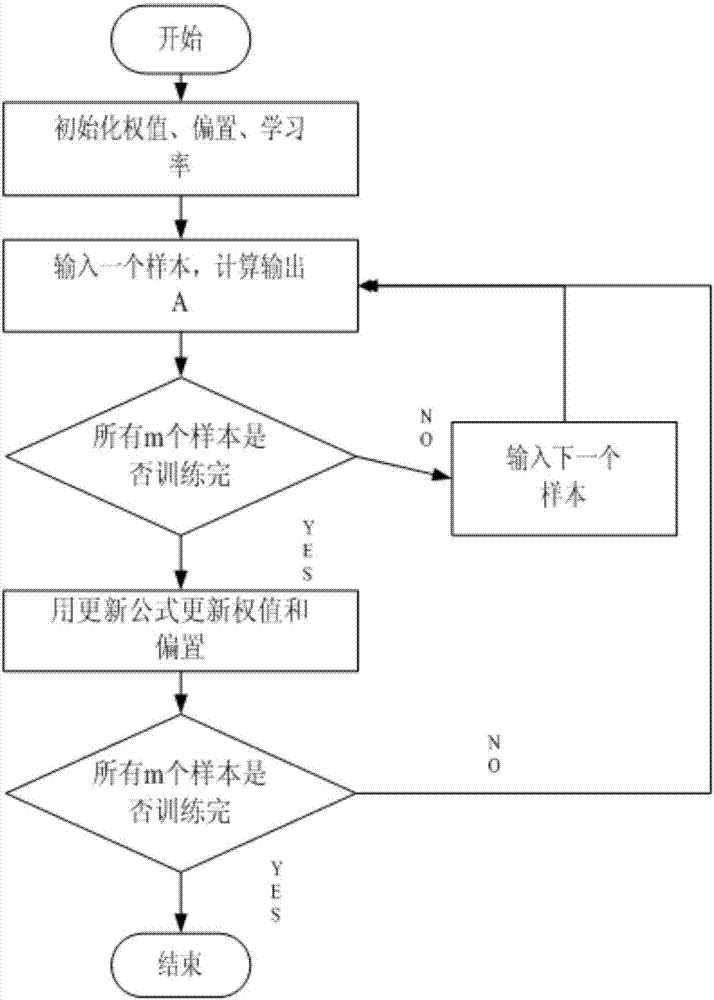

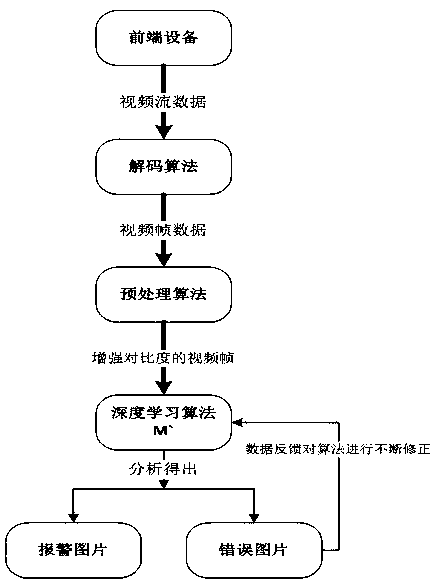

Video monitoring equipment fault automatic detection method

Inactive | CN107506695A | Automatic detection | Character and pattern recognition | Closed circuit television systems | Video monitoring | Network model

The invention discloses a video monitoring equipment fault automatic detection method which includes the following steps: a convolutional auto-encoder uses different numbers of network layers and different numbers of hidden nodes to extract the features of a monitoring image, image feature extraction is carried out in a pooling layer, and the convolutional auto-encoder is iterated until the accuracy of a classification model converges; a convolutional neural network uses the features of the monitoring image extracted by the convolutional auto-encoder as the basis of image classification to realize supervised learning; and the convolutional neural network uses a parallel computing architecture to train a network model, and after training of the network model, the model is applied to the classification process of a test image, and the damage condition of video monitoring equipment is judged according to the classification result. According to the video monitoring equipment fault automatic detection method of the invention, a corresponding improved algorithm is put forward for video monitoring equipment fault detection, and an automatic and fast fault detection method is put forward.

Owner:WUHAN UNIV OF TECH

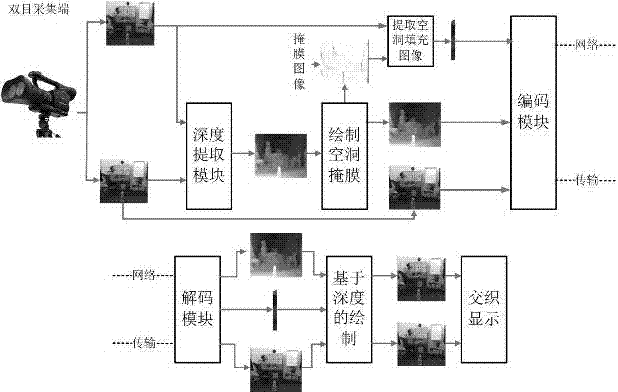

Quick generating method for integral imaging micro image array based on GPU

The invention provides a method for quickly generating an integral imaging micro image array on a GPU. The method comprises establishing a virtual camera array in a Direct3D program to obtain a disparity image array, and quickly converting the disparity image array into a micro image array in a CUDA program. A simplified virtual camera array structure is built on the computer graphics library Direct3D to render a virtual scene, which improves the efficiency of generating all the information of the micro image array. The micro image array is then generated quickly by exploiting the characteristics of the CUDA parallel computing architecture; almost no data needs to be transferred between the CPU and the GPU over the computer system bus, which further improves generation efficiency. With this method, the integral imaging micro image array is generated tens or even hundreds of times faster than on the CPU, which meets the requirements of real-time dynamic integral imaging 3D display.

Owner:SICHUAN UNIV

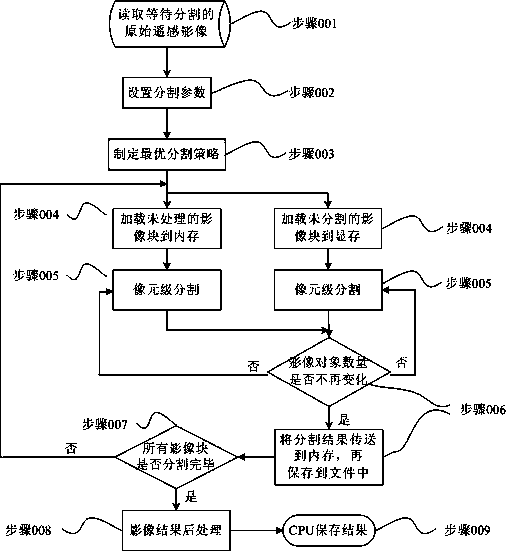

Central processing unit (CPU) and graphics processing unit (GPU)-based remote-sensing image multi-scale heterogeneous parallel segmentation method

Inactive | CN103617626A | Fully utilize the maximum computing power | Split support | Image analysis | Processor architectures/configuration | Image segmentation | Computer vision

Owner:WUHAN SHITU SPATIAL INFORMATION TECH

Application interface on multiple processors

Active | US8341611B2 | Processor architectures/configuration | Details involving image processing hardware | Application software | Human language

Owner:APPLE INC

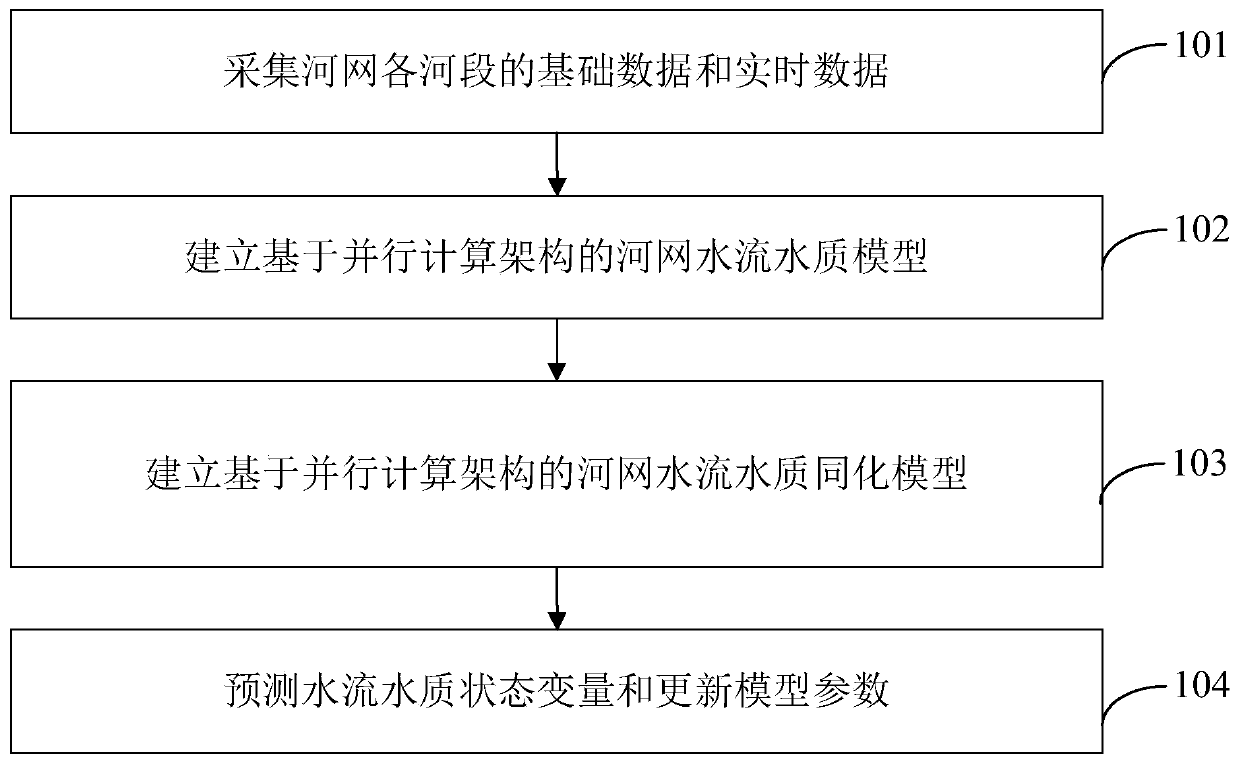

River network water flow quality real-time prediction method and device based on data assimilation

Inactive | CN110222372A | Improve forecast accuracy | Improve computing efficiency | General water supply conservation | Design optimisation/simulation | River network | Water quality

The invention provides a river network water flow quality real-time prediction method and device based on data assimilation. According to the technical scheme, on the basis of real-time monitoring data such as the water level, the flow and the water quality of the river network, real-time monitoring data are assimilated into the river network water flow and quality model through the ensemble Kalman filter or the improved algorithm of the ensemble Kalman filter, the river network water flow and quality data assimilation model is constructed, and the computing efficiency of the data assimilation model is improved through a parallel computing architecture. Due to the data assimilation technology, water flow and quality model structure errors, inlet and outlet boundary errors and observation value errors are comprehensively considered; real-time observation data are fused to dynamically correct water level, flow and water quality concentration state variables and roughness and water quality parameters of the water quality model, so that the water level, flow and water quality concentration of a complex river network system can be dynamically predicted by adopting a river network water quality data assimilation model and a parallel computing architecture, and the model prediction precision can be improved.
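To make the assimilation step concrete, here is a minimal sketch of one ensemble Kalman filter cycle for a single scalar state (say, a water level observed directly). It assumes the stochastic (perturbed-observation) variant and a made-up one-line `forecast` function standing in for the river network model; none of these names come from the patent. The ensemble members are forecast independently, which is the natural place for a parallel computing architecture.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from statistics import mean, variance

def forecast(level, inflow=0.1):
    # Hypothetical stand-in for one time step of a river network model.
    return level + inflow

def enkf_step(ensemble, obs, obs_var, rng):
    # Forecast: each ensemble member is propagated independently.
    with ThreadPoolExecutor() as pool:
        predicted = list(pool.map(forecast, ensemble))
    # Analysis: scalar Kalman gain from the sample forecast variance.
    p = variance(predicted)
    gain = p / (p + obs_var)
    noise_sd = obs_var ** 0.5
    # Each member is nudged toward its own perturbed copy of the observation.
    return [x + gain * (obs + rng.gauss(0.0, noise_sd) - x) for x in predicted]

rng = random.Random(1)
prior = [rng.gauss(2.0, 0.5) for _ in range(50)]    # prior water levels (synthetic)
analysis = enkf_step(prior, obs=3.0, obs_var=0.01, rng=rng)
```

Because the observation variance is much smaller than the ensemble spread, the analysis ensemble is pulled strongly toward the observed level and its spread shrinks.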

Owner:CHINA INST OF WATER RESOURCES & HYDROPOWER RES

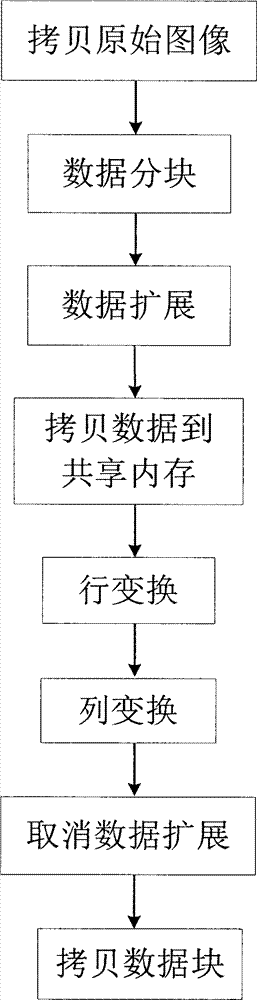

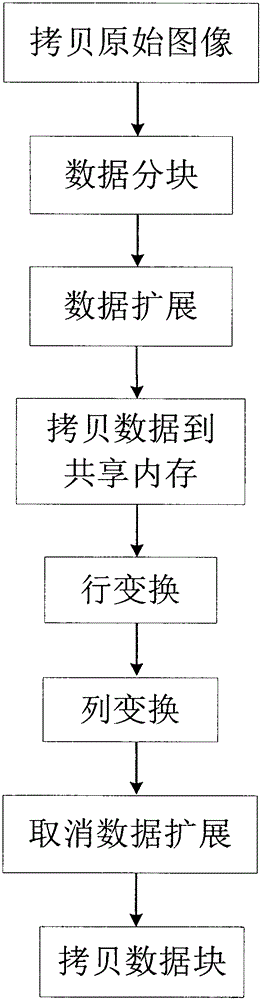

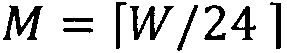

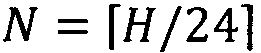

Method utilizing graphic processing unit (GPU) for achieving rapid wavelet transformation through segmentation

Active | CN103198451A | Guaranteed accuracy | Overcoming imprecise results | Processor architectures/configuration | Computational science | Graphics

The invention discloses a method that uses a graphics processing unit (GPU) to achieve rapid wavelet transformation through segmentation. The method mainly solves the problem that wavelet transformation is slow in the prior art. It is designed around the parallel computing framework of the GPU: the data are segmented and the segments are processed in parallel. The method includes the following steps: copying the original image, segmenting the data, expanding the data, copying the data to shared memory, transforming rows, transforming columns, canceling the data expansion, and copying the transformed data to host memory. Expanding the segmented data guarantees the accuracy of the wavelet transformation result; performing the transformation in shared memory improves data access speed and avoids repeated interaction with global memory; and processing image blocks in parallel, together with processing the pixels within each image block in parallel, improves the processing speed for the whole image.
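The role of the data expansion step can be illustrated in one dimension. The sketch below is an assumption-laden example, not the patented kernel: it uses the predict step of the LeGall 5/3 lifting wavelet (the abstract does not name a wavelet), plain Python lists instead of GPU shared memory, and hypothetical function names. Each segment is expanded by one neighboring sample so that its detail coefficients match those of the unsegmented transform.

```python
def detail_53(x):
    """Detail coefficients of the 5/3 lifting predict step.

    x has odd length 2n + 1; the last sample only serves as a right neighbor.
    """
    return [x[2 * i + 1] - (x[2 * i] + x[2 * i + 2]) / 2
            for i in range((len(x) - 1) // 2)]

def extend(signal):
    # Symmetric extension at the right edge so the last odd sample has a neighbor.
    return signal + [signal[-2]]

def segmented_detail(signal, seg_len):
    """Transform even-length segments independently, each with a one-sample halo."""
    ext = extend(signal)
    out = []
    for start in range(0, len(signal), seg_len):
        seg = ext[start:start + seg_len + 1]   # "data expansion": one extra sample
        out.extend(detail_53(seg))             # segments do not depend on each other
    return out

signal = [1, 4, 2, 8, 5, 7, 3, 6]
whole = detail_53(extend(signal))
parts = segmented_detail(signal, seg_len=4)
```

`parts` equals `whole`, which is the accuracy guarantee the abstract attributes to expansion. Without the extra sample, the last coefficient of each segment would be computed from a wrong neighbor.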

Owner:XIDIAN UNIV

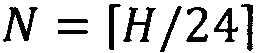

Real-time rendering method based on GPU (Graphics Processing Unit) in binocular system

Active | CN102930593A | Real-time rendering implementation | Objective quality is good | 3D-image rendering | Graphics | Objective quality

The invention relates to a real-time rendering method based on a GPU in a binocular system. The method comprises the following steps: rendering a hole mask image at the sending end of the binocular system and extracting texture information for large holes; then, at the receiving end of the system, filling the large holes with the extracted texture information and filling small holes with an interpolation algorithm. The GPU is used to accelerate the rendering of the virtual viewpoint image: first, CUDA (Compute Unified Device Architecture) is used to project each pixel into three-dimensional space; then OpenGL (Open Graphics Library) is used to project the pixels from three-dimensional space onto a two-dimensional plane, which completes the rendering of the virtual viewpoint image. The method greatly improves rendering speed, and the synthesized novel viewpoint image performs well in both subjective and objective quality, so that real-time rendering in the binocular system can be achieved.

Owner:SHANGHAI UNIV

A method to implement SHA-1 algorithm using GPU

Inactive | CN102298522A | Reduce load | Easy to handle | Resource allocation | Specific program execution arrangements | Concurrent computation | CPU load

The invention discloses a method for implementing the SHA-1 algorithm on a GPU, which includes the following steps: (1) implementing the SHA-1 algorithm on a general-purpose CPU; (2) porting the program to the GPU and preprocessing it as follows: A. create an empty function body; B. copy the parameters from main memory to the GPU's global memory space through the parameter pointer, and copy the result from the GPU's global memory space back to main memory; C. add the device configuration modifier; D. synchronize. The method takes advantage of rapidly developing graphics hardware and adopts NVIDIA's CUDA parallel computing architecture for the parallel implementation, using the GPU as a parallel computing device for program release and management operations. It is flexible, reduces CPU load, improves the processing performance of information security equipment, improves computing efficiency, and keeps the computing process highly secure.
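Step (1), the CPU reference implementation, is useful as a correctness baseline for any GPU port. The sketch below is a CPU-side analogue only: it hashes a batch of independent messages with Python's standard `hashlib.sha1` and a thread pool, mirroring the "one message per parallel worker" layout a GPU version would likely use. The function names `sha1_hex` and `sha1_batch` are invented for this example, and nothing here reproduces the patent's CUDA code.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor

def sha1_hex(message: bytes) -> str:
    """Reference SHA-1 digest of one message, as a hex string."""
    return hashlib.sha1(message).hexdigest()

def sha1_batch(messages, workers=4):
    """Hash independent messages concurrently; results keep the input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(sha1_hex, messages))

digests = sha1_batch([b"abc", b""])
```

The two digests are the well-known SHA-1 test vectors for `"abc"` and the empty string, so the same inputs can be used to check a ported kernel.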

Owner:四川卫士通信息安全平台技术有限公司

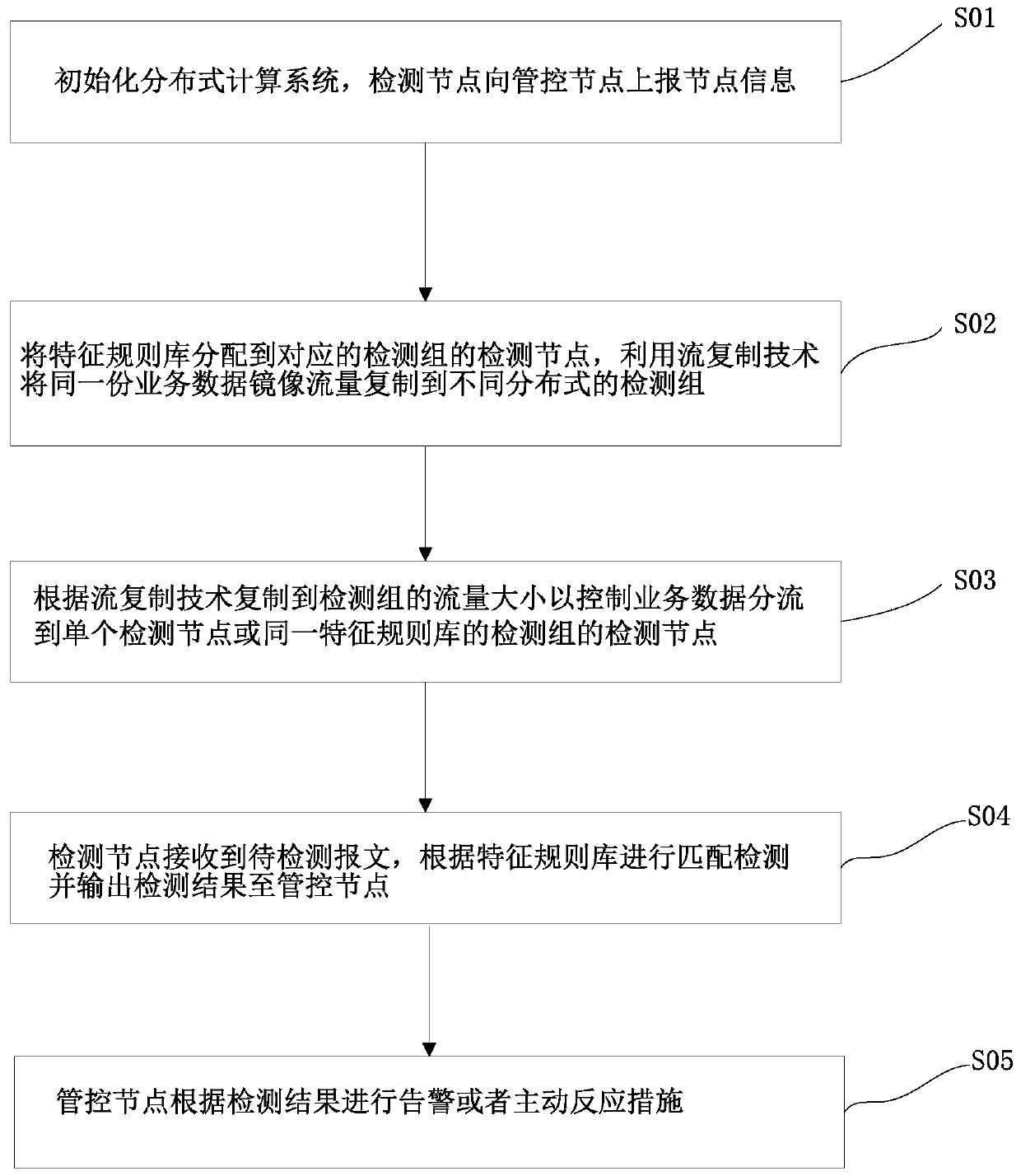

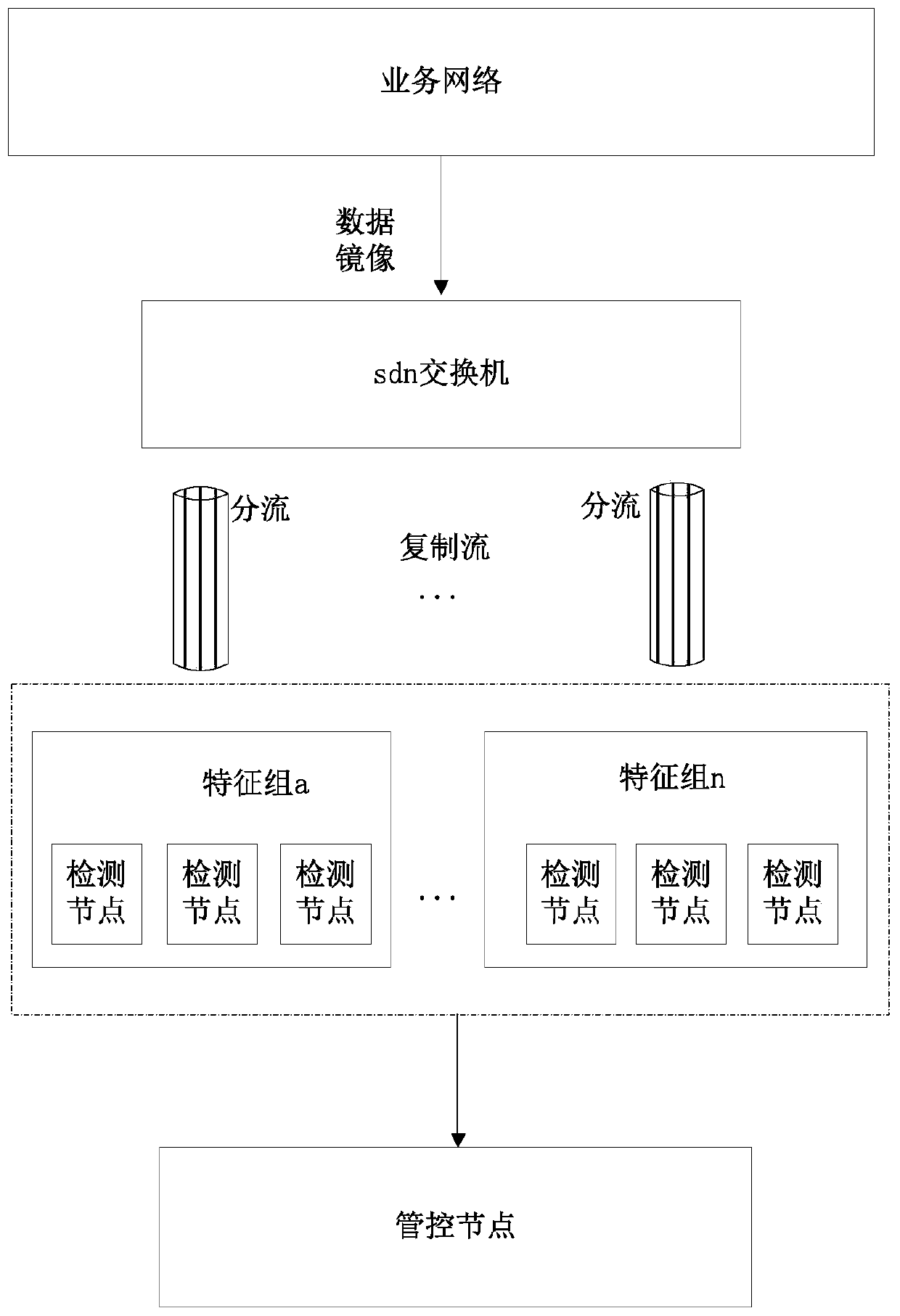

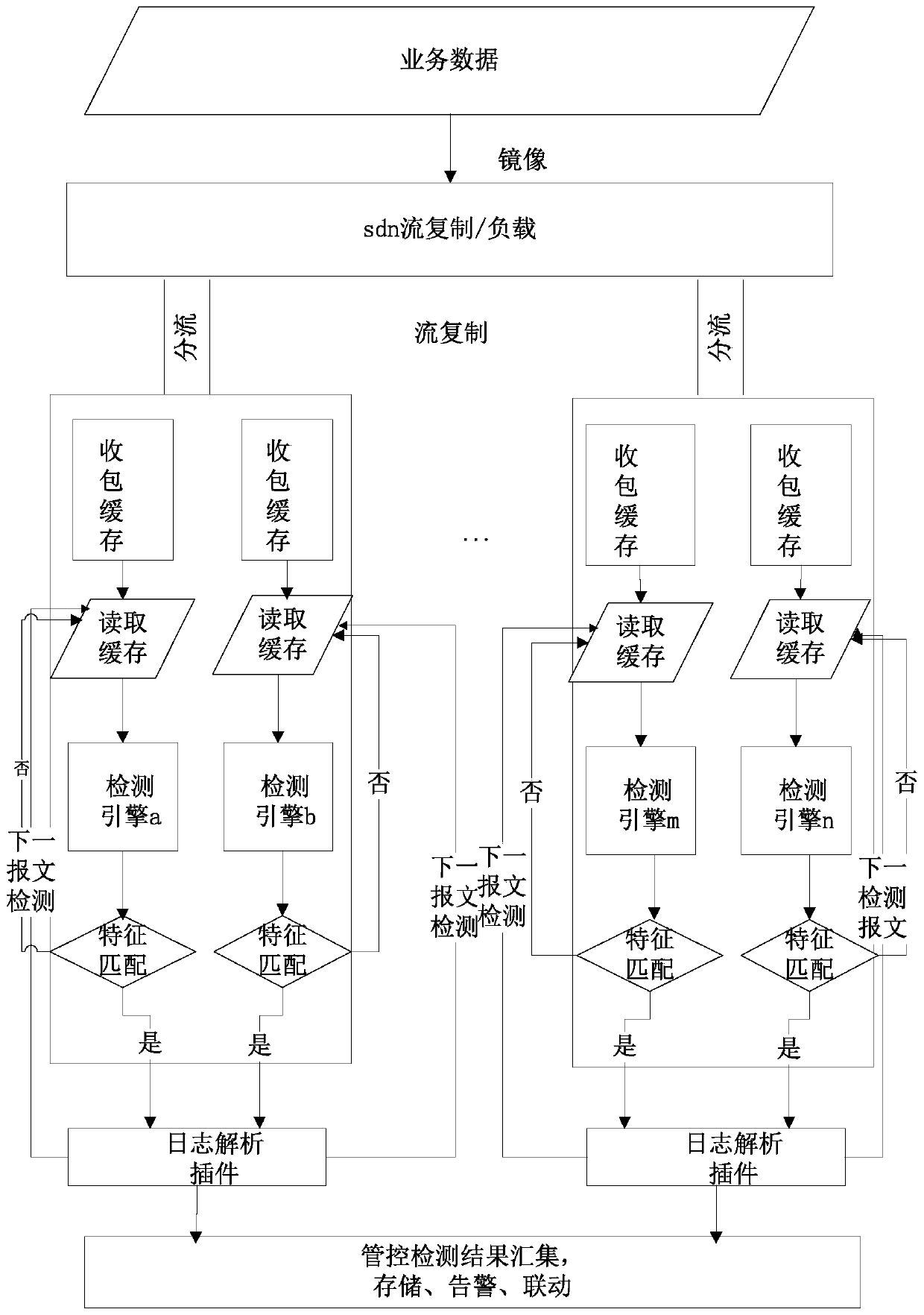

Network intrusion detection method, device and system and storage medium

Pending | CN111049849A | Improve security | Overcome technical issues that reduce detection efficiency | Transmission | Data stream | Concurrent computation

The invention discloses a network intrusion detection method, device, system and storage medium. The method comprises the steps of: initializing a distributed computing system and having each detection node report its node information to a management and control node; distributing feature rule bases to the detection nodes of the corresponding detection groups, and copying the same mirrored service data traffic to different distributed detection groups by stream replication; for the traffic copied to a detection group, shunting the service data to a single detection node or to the detection nodes of the group that share the same feature rule base; and having each detection node receive the messages to be detected, match them against the feature rule base, and output the detection result to the management and control node. By adopting a distributed, parallel computing architecture and using SDN switch stream replication to shunt the network data stream to a plurality of detection nodes, the invention solves the prior-art problem of low detection efficiency under high-volume traffic and improves the detection efficiency of the system.

Owner:SHENZHEN Y& D ELECTRONICS CO LTD

Rainfall sensor network node layout optimization method and device

Active | CN110232471A | Make up for uncertainty | High precision | Forecasting | Artificial life | Discretization | Particle swarm algorithm

The invention provides a rainfall sensor network node layout optimization method and device. Taking satellite remote sensing rainfall data as a background field and combining it with ground monitoring data, the method obtains a high-precision rainfall spatial distribution through simulation, converting discretized ground monitoring data into high-precision, continuous ground data. It also discretizes the ground area with Thiessen polygons to reduce the number of units to traverse and improve calculation efficiency. From the rainfall spatial distribution, the method establishes a multi-objective function and constraint conditions for node layout optimization to obtain a rainfall station layout optimization model, and solves the model with a multi-objective microscopic neighborhood particle swarm algorithm to obtain an optimal rainfall sensor network node layout, providing a theoretical basis for the reasonable layout of ground monitoring stations. In the solving process, the multi-objective microscopic neighborhood particle swarm algorithm is adapted to a parallel computing architecture to speed up the solution.

Owner:CHINA INST OF WATER RESOURCES & HYDROPOWER RES

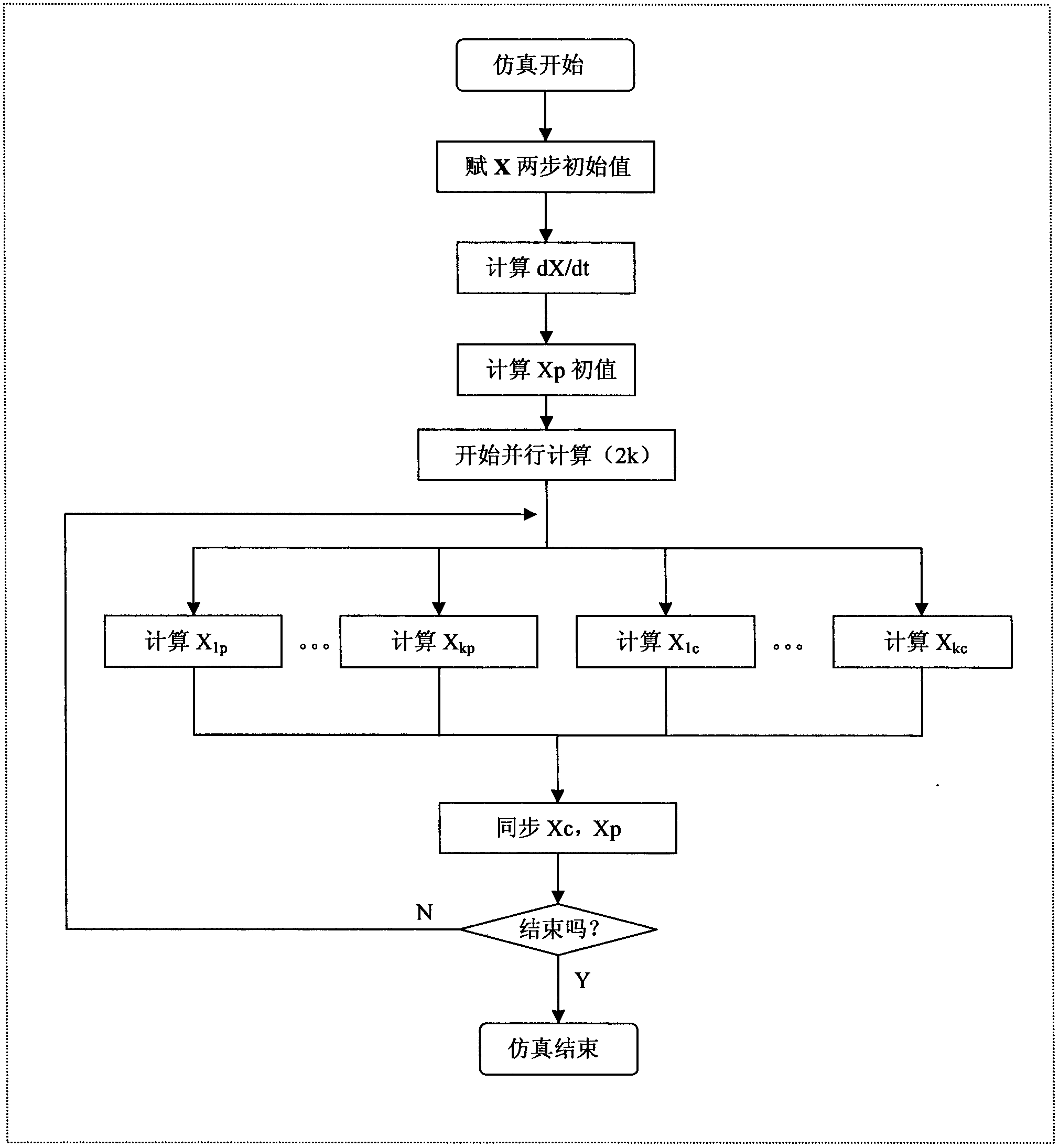

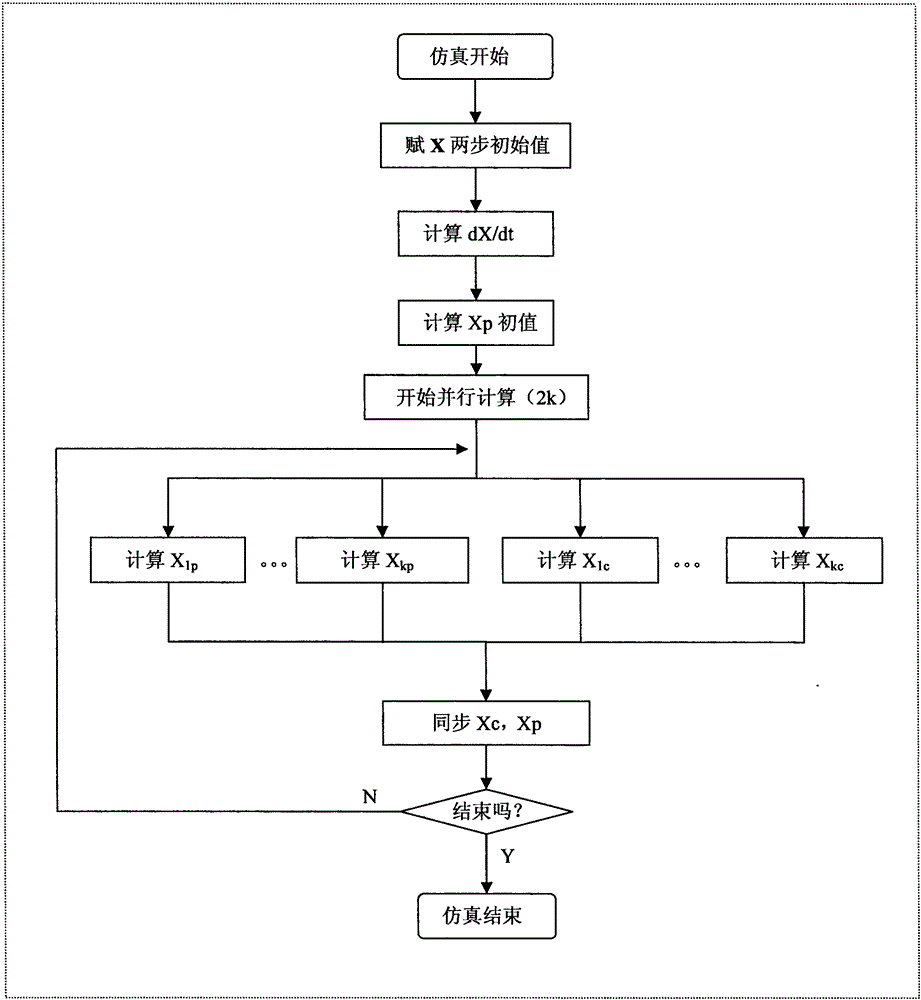

Test method for achieving electromagnetic transient real-time simulation of electrical power system based on CUDA (compute unified device architecture) parallel computing

Inactive | CN103186366A | Solve processing problems | Take advantage of parallel processing capabilities | Concurrent instruction execution | Electric power system | Power unit

The invention discloses a test method for achieving electromagnetic transient real-time simulation of an electrical power system based on CUDA parallel computing. The electromagnetic transient simulation test host computer adopts a CPU (central processing unit) plus GPU (graphics processing unit) mode; the GPU uses the CUDA platform parallel computing framework; and the simulation test host computer is connected to the collection devices for all signal channels through gigabit Ethernet. Because the method simulates the digital substation rather than the whole network or the whole system, the model can be greatly simplified, simulation can run dozens or hundreds of times faster than traditional simulation, and the requirements of real-time electromagnetic transient simulation for transformer substations are met. Compared with a large parallel computer or a professional parallel computer, the CUDA parallel computing platform costs far less. For electromagnetic transient real-time simulation of the electrical power system, the method greatly improves performance and substantially reduces cost, and it is well suited to wide adoption.

Owner:SHENZHEN KANGBIDA CONTROL TECH

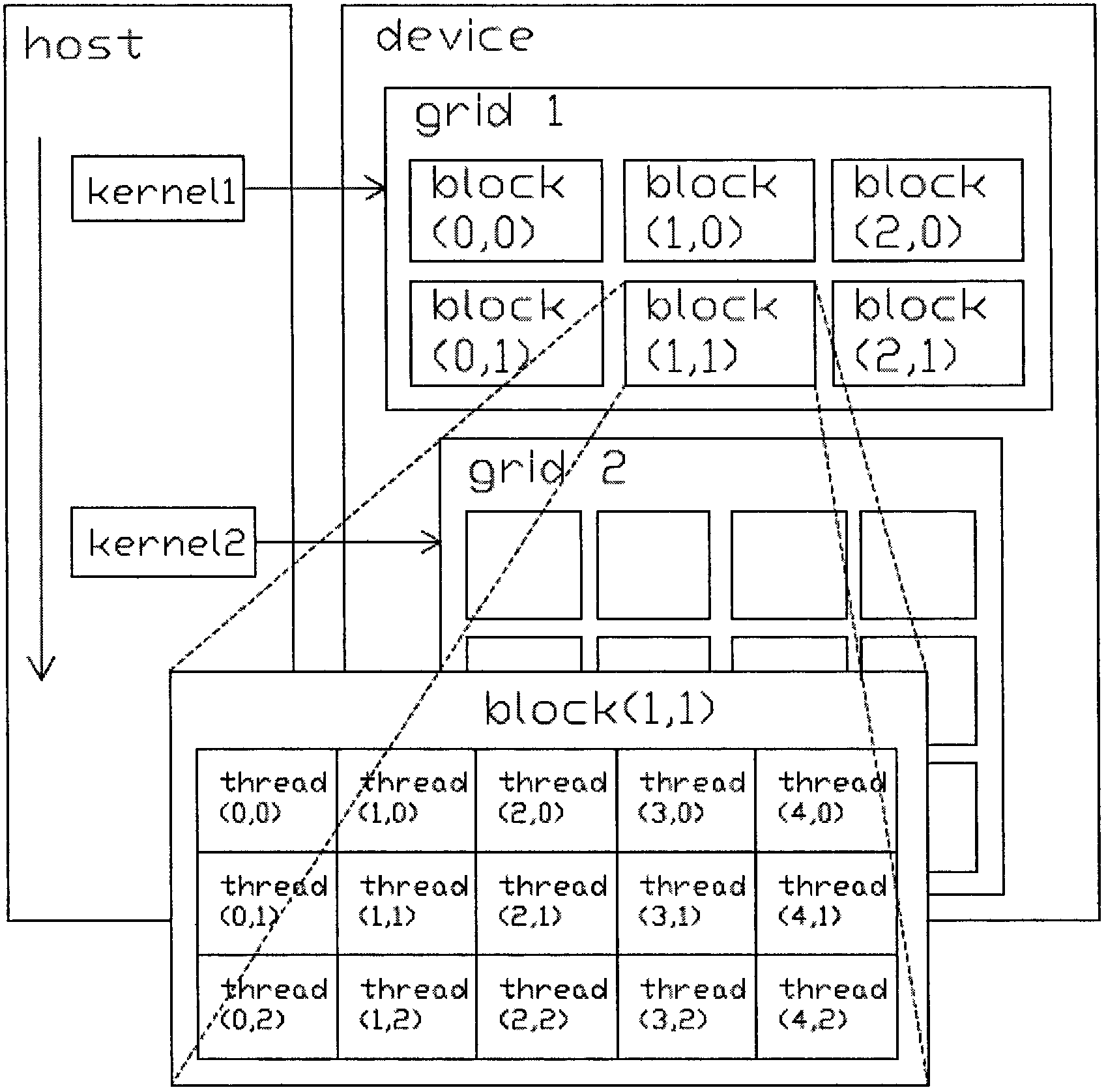

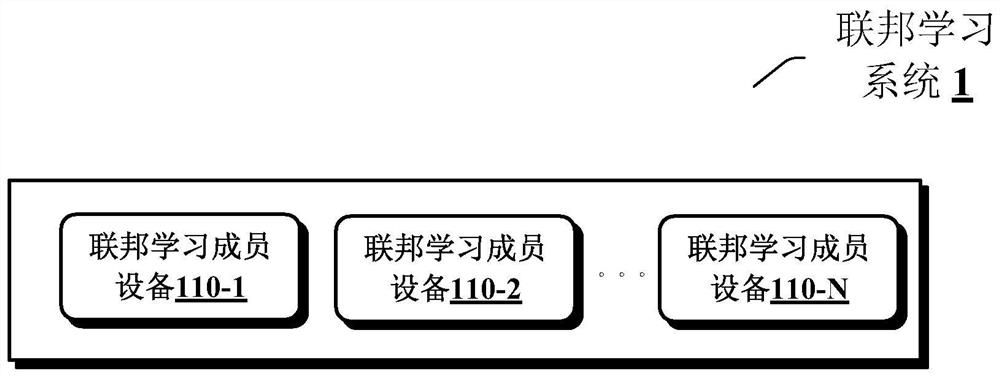

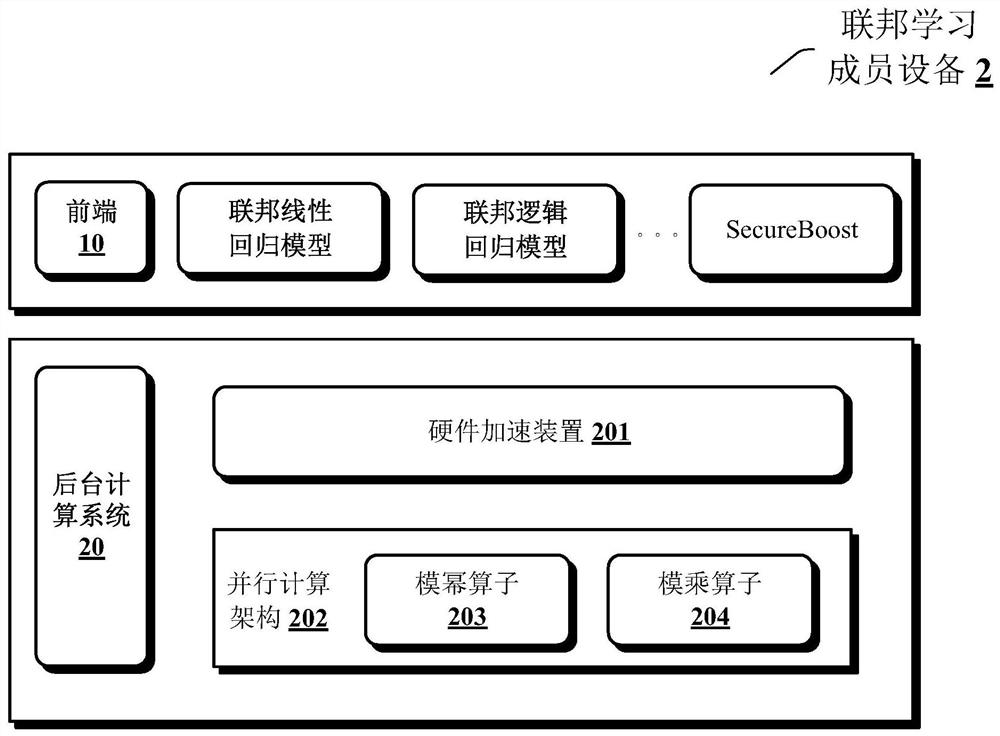

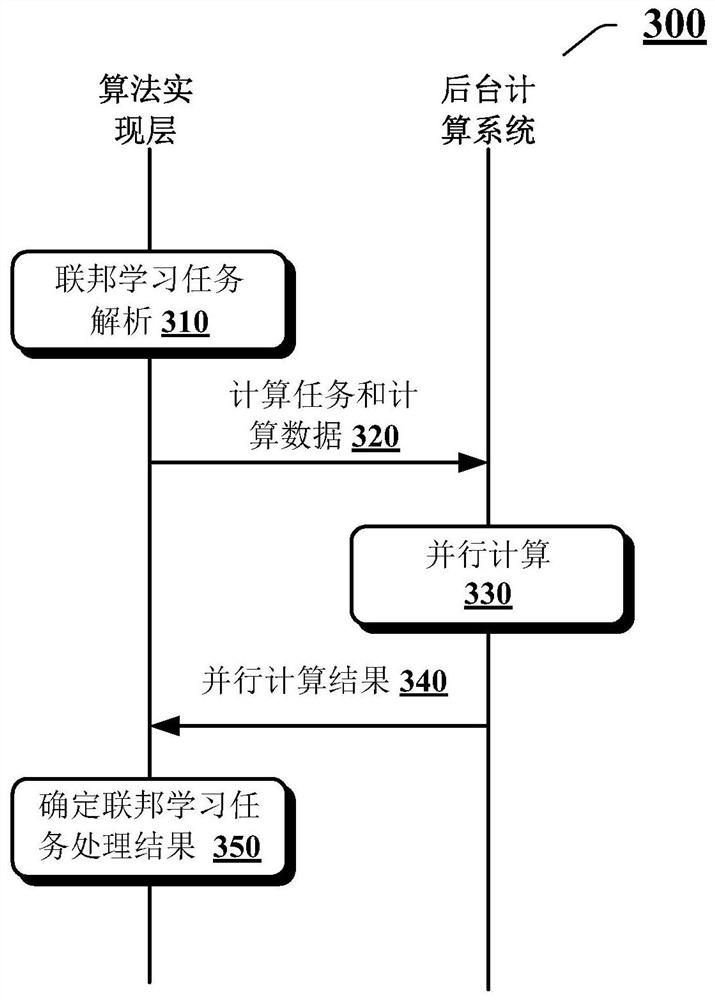

Computing task acceleration processing method, device and equipment for federated learning

Active | CN112148437A | Resource allocation | Digital data protection | Computer architecture | Concurrent computation

The embodiment of the invention provides a method and device for accelerated processing of a computing task. The method comprises the steps of: receiving a computing task and computing data from an external device; determining a parallel computing scheme for the computing task according to the operation type of the task and the data size of the computing data, wherein the scheme comprises the number of parallel computing blocks and a block thread resource allocation scheme for each parallel computing block, and the parallel computing within each block is carried out by the plurality of threads allocated to it; calling a parallel computing architecture to execute the parallel computing according to the block thread resource allocation scheme to obtain a computing result; and providing the computing result to the external device. With this method, a two-layer parallel processing scheme based on block parallelism and thread parallelism can be used to process computing tasks in parallel efficiently.
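The two-layer scheme can be sketched with nested thread pools: an outer pool for blocks and an inner pool for the threads of each block. Everything specific below is an assumption made for illustration, including the `THREADS_BY_OP` table, the `max_blocks` cap, the rule for choosing the block count, and the three sample operations. The abstract only states that the plan depends on operation type and data size.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass

@dataclass
class Plan:
    num_blocks: int
    threads_per_block: int

# Hypothetical policy: heavier operation types get more threads per block.
THREADS_BY_OP = {"add": 2, "mul": 4, "modexp": 8}
OPS = {
    "add": lambda a, b: a + b,
    "mul": lambda a, b: a * b,
    "modexp": lambda a, b: pow(a, b, 1_000_003),
}

def make_plan(op, n_items, max_blocks=4):
    threads = THREADS_BY_OP[op]
    blocks = max(1, min(max_blocks, -(-n_items // threads)))  # ceiling division
    return Plan(blocks, threads)

def run(op, xs, ys):
    if not xs:
        return []
    plan, fn = make_plan(op, len(xs)), OPS[op]
    size = -(-len(xs) // plan.num_blocks)                     # items per block
    chunks = [(xs[i:i + size], ys[i:i + size]) for i in range(0, len(xs), size)]

    def run_block(chunk):
        a, b = chunk
        # Inner layer: the threads allocated to this block.
        with ThreadPoolExecutor(max_workers=plan.threads_per_block) as inner:
            return list(inner.map(fn, a, b))

    # Outer layer: blocks run side by side.
    with ThreadPoolExecutor(max_workers=plan.num_blocks) as outer:
        return [r for block in outer.map(run_block, chunks) for r in block]

result = run("mul", [1, 2, 3, 4, 5], [2, 2, 2, 2, 2])
```

On a GPU the outer and inner layers would map naturally to thread blocks and threads; the thread pools here only show the structure of the plan.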

Owner:CLUSTAR TECH LO LTD +1

Accurate analysis method and system for enterprise industry segmentation based on big data

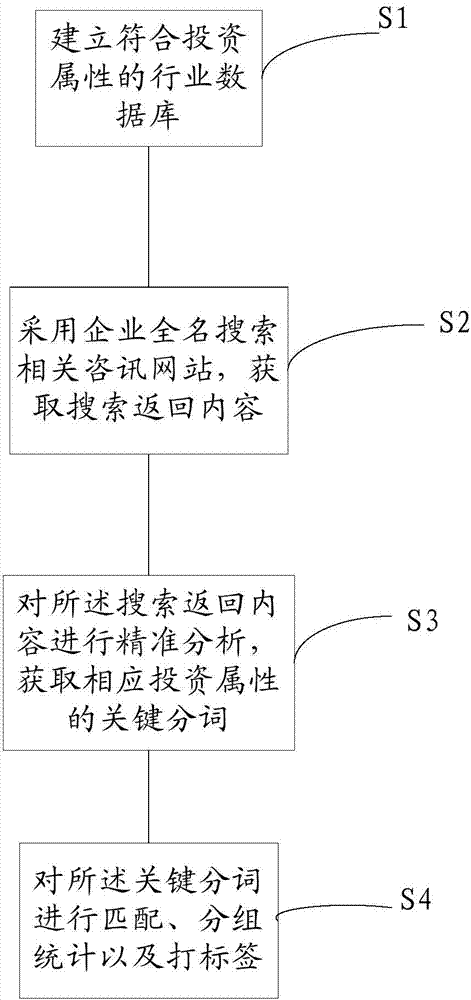

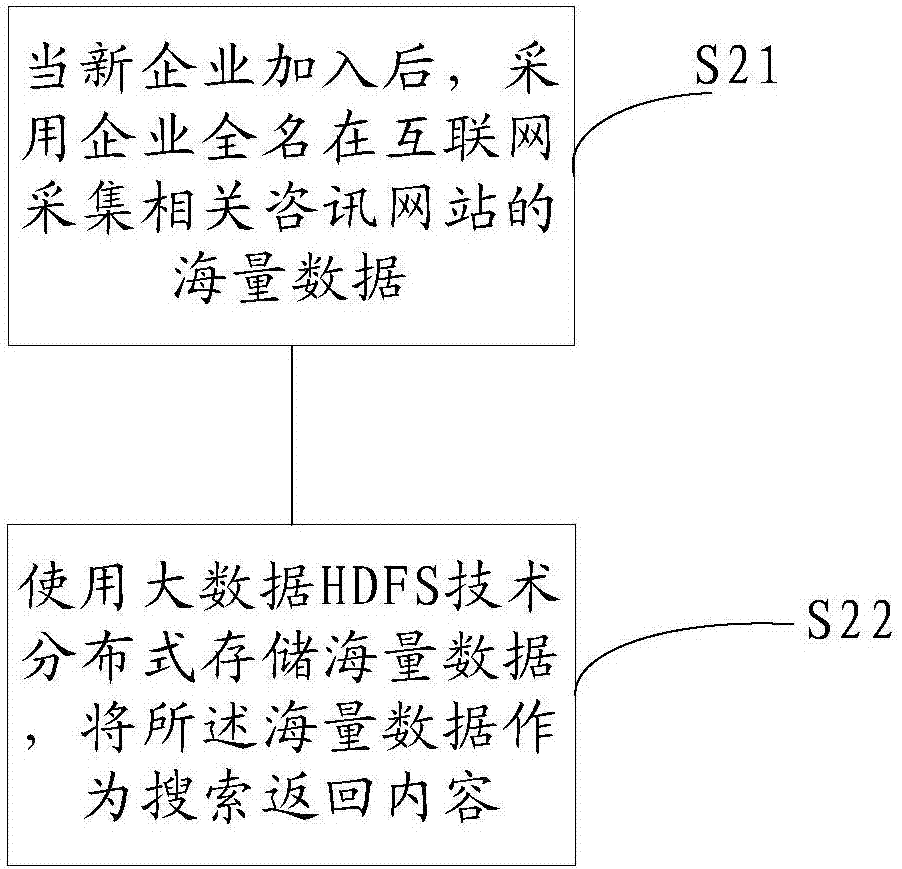

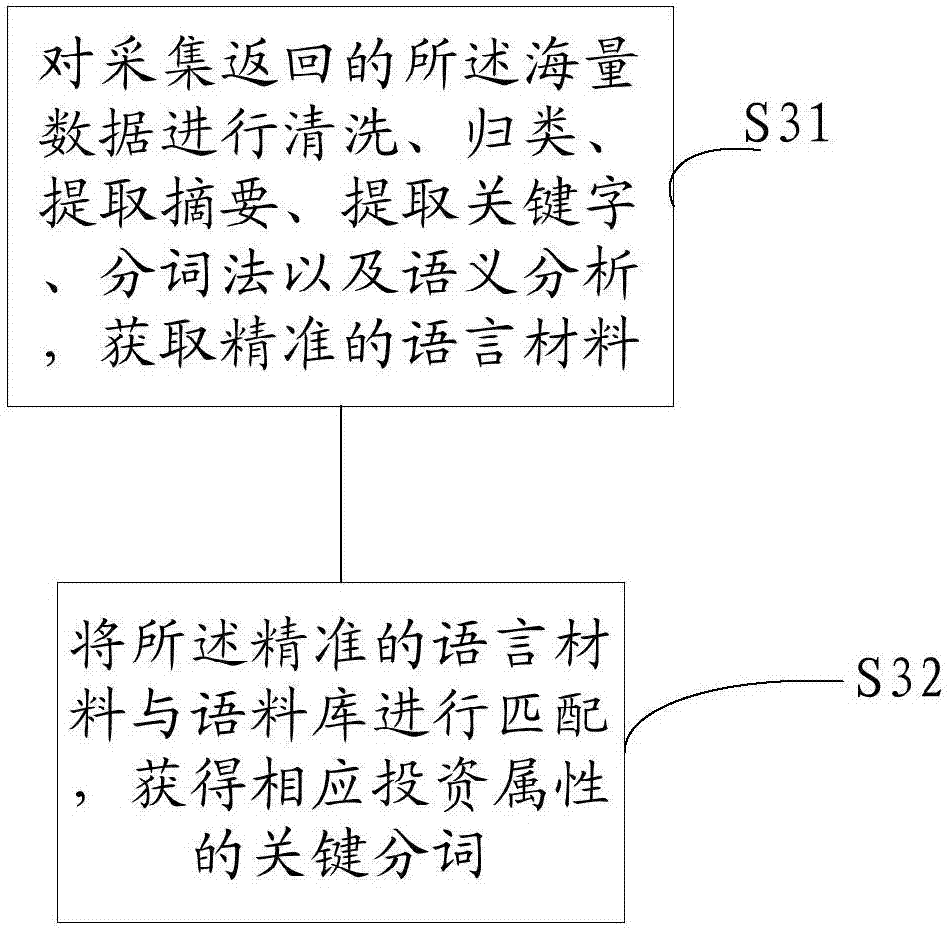

Inactive | CN106934054A | Helps with batch filtering | Improve the efficiency of obtaining investment targets | Special data processing applications | Relevant information | Analysis method

The present invention relates to an accurate analysis method and system for enterprise industry segmentation based on big data. The method comprises: establishing an industry database aligned with investment attributes; searching relevant information websites using the full name of the enterprise and obtaining the returned search content; analyzing the returned content accurately to obtain the keywords for the corresponding investment attributes; and matching, grouping and counting, and labeling the keywords. By establishing the industry database aligned with investment attributes and using a big data distributed parallel computing architecture, the method and system accurately extract, match and group keywords from massive data, and screen the corresponding enterprises by statistical methods. This makes it easier for an equity investment institution to screen enterprises in batches, improves the efficiency with which the institution obtains investment targets, and keeps the cost low.

Owner:前海梧桐(深圳)数据有限公司

Water surface garbage detection method based on a deep learning algorithm

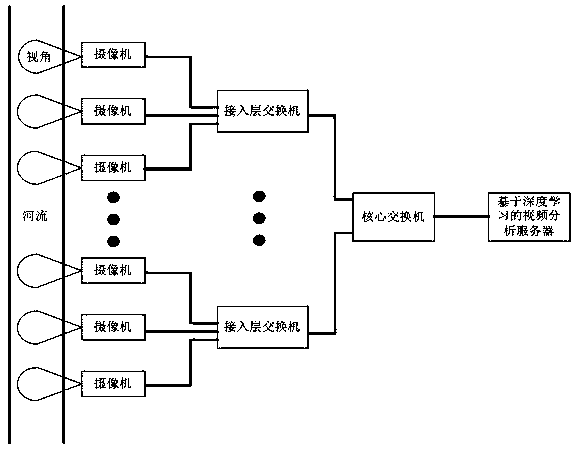

Inactive | CN109614924A | Improve computing power | Ability to guarantee real-time performance | Character and pattern recognition | Algorithm | Multi GPU

A water surface garbage detection method based on a deep learning algorithm comprises the following: hardware equipment is arranged and installed, with front-end cameras placed at a number of important sections of the water areas to be monitored, such as inland rivers and lakes, and connected to a core switch through access layer switches. A deep learning algorithm and a feedback mechanism are provided in the video analysis server; the feedback mechanism performs secondary training on pictures that were falsely reported or missed during deep learning training, so that the continuously trained model covers the relevant features more comprehensively and the false report rate and missed report rate are reduced. The video analysis server adopts a multi-GPU parallel computing architecture, which makes maximum use of the server hardware and saves investment cost. The CAFFE-based SSD deep learning algorithm is fast to compute, which effectively ensures real-time detection.

Owner:JIANGXI HONGDU AVIATION IND GRP

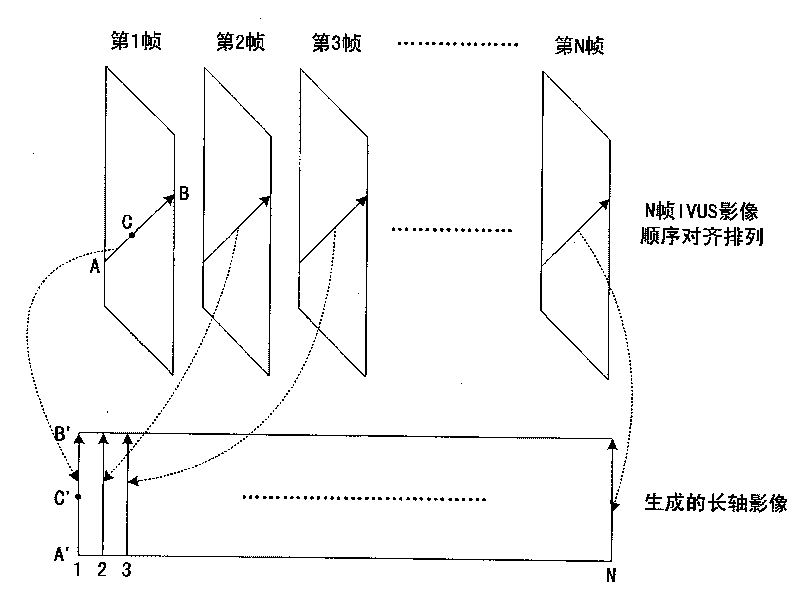

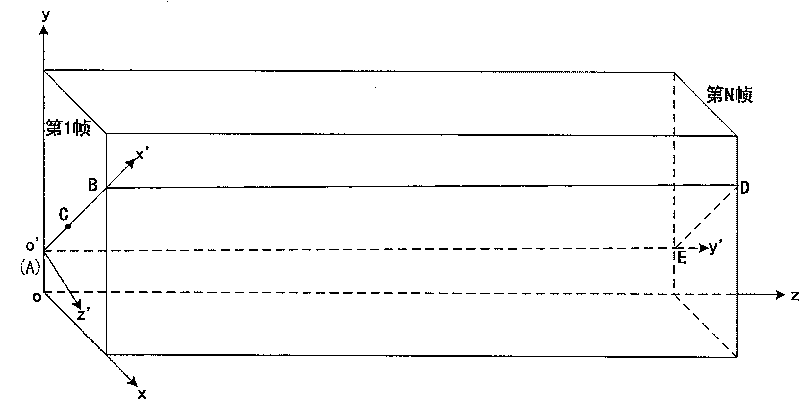

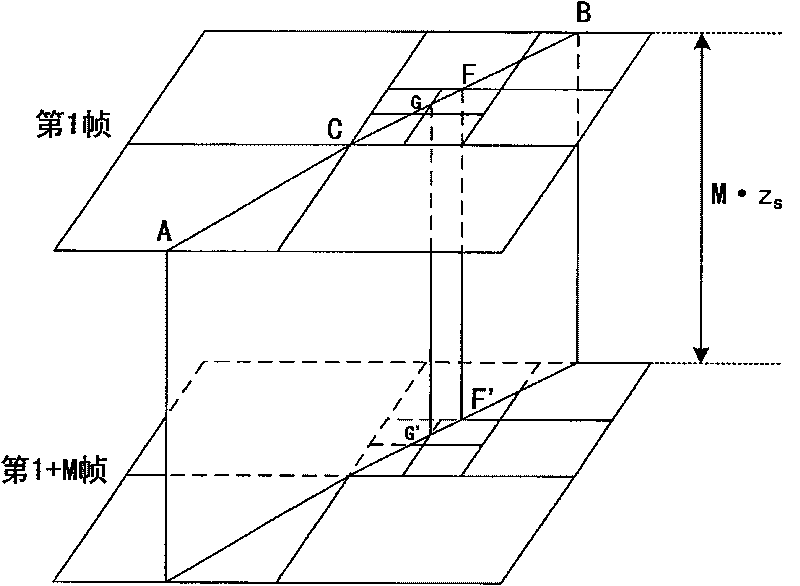

Method for structuring ultrasound long axis image quickly with high fidelity

Inactive | CN101739661A | Improve utilization efficiency | Quality improvement | Surgery | Catheter | Video memory | Computational science

The invention discloses a method for constructing ultrasound long axis images quickly and with high fidelity. The method uses a parallel computing architecture based on a graphics processor. It manages large three-dimensional IVUS data sets efficiently with a quadtree data structure, traverses the quadtree recursively to compute the intersection coordinates between the plane of the long axis image and the quadtree data nodes, decomposes the long axis image into a series of small planes, and sends these to the graphics processor for mapping and interpolation. The invention solves the problem that the data volume is too large to load into the display memory of the graphics processor, improves the utilization of the graphics processor's texture cache, and increases computation speed and image quality by computing the three-dimensional spline interpolation with the graphics processor's single-instruction multiple-data vector dot product. On common mid-range and high-end graphics cards, the invention constructs high-fidelity long axis images at more than 30 frames per second for large-scale IVUS image data of 512*512*4000*16 bit.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

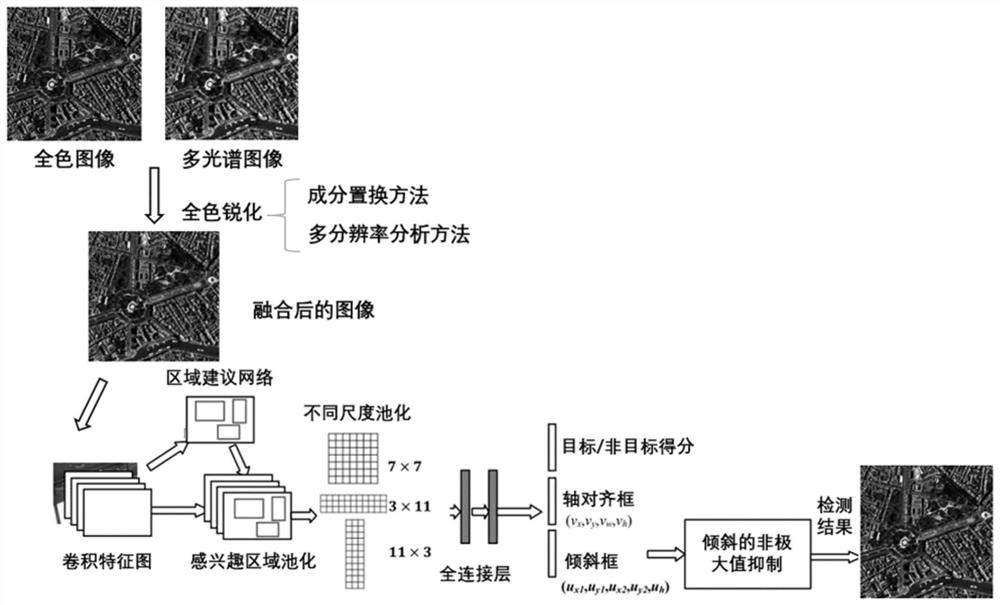

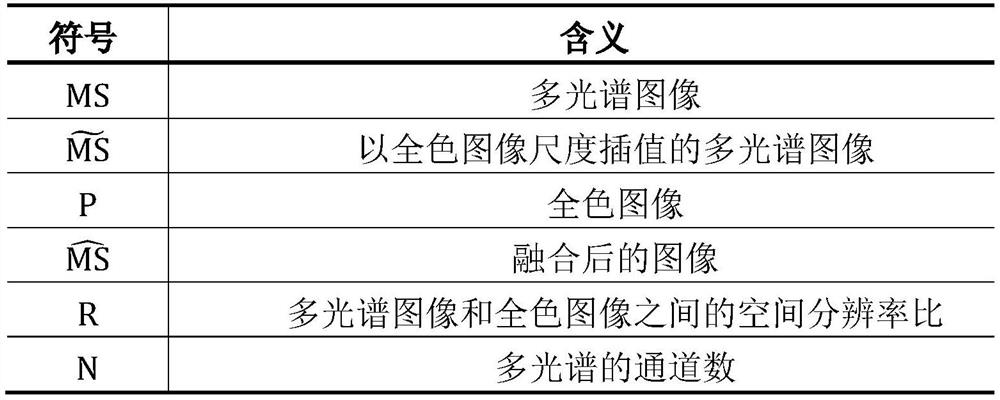

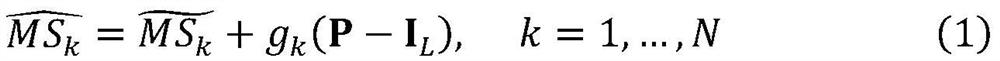

Satellite-borne target fusion detection method based on rotating region convolutional neural network

Pending | CN111898534A | Achieving small target detection | High resolution | Scene recognition | Neural architectures | Concurrent computation | Algorithm

The invention discloses a satellite-borne target fusion detection method based on a rotating region convolutional neural network, and relates to the field of satellite-borne target detection. The invention is oriented to intelligent computing platforms such as a universal parallel computing architecture processor, a field programmable gate array, a system-on-chip (SOC) and the like. The method comprises the following steps: firstly, fusing a panchromatic (PAN) image and a multispectral (MS) image by adopting a panchromatic sharpening method, and then detecting a target in the fused spaceborne image by adopting a target detection framework based on a rotating region convolutional neural network, wherein the target detection framework is based on a Faster R-CNN structure. According to the invention, the detection accuracy of the small target in the multi-source satellite-borne image is improved, the target detection framework has good generalization ability, and the method can be widely applied to the fields of target detection, safety monitoring and the like.

Owner:SHANGHAI JIAO TONG UNIV

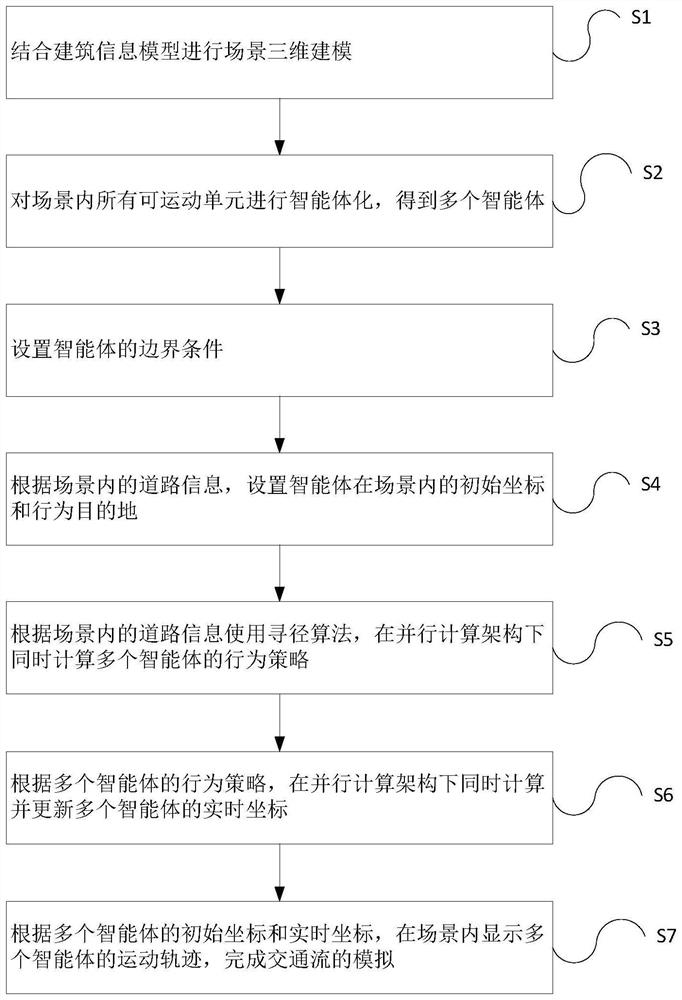

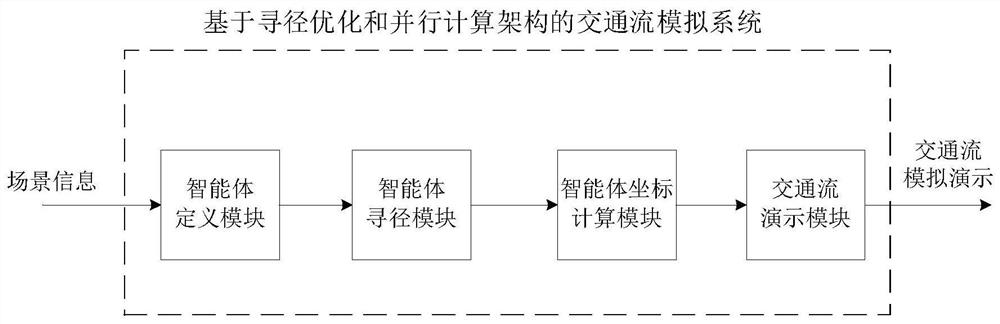

Traffic flow simulation method and system based on routing optimization and parallel computing architecture

Active | CN111651828A | Simulate the real | High simulation | Geometric CAD | Internal combustion piston engines | Concurrent computation | Dimensional modeling

The invention provides a traffic flow simulation method based on routing optimization and parallel computing architecture. The traffic flow simulation method comprises the following steps: performing scene three-dimensional modeling in combination with a building information model; performing intelligent agent processing on all the movable units in the scene to obtain a plurality of intelligent agents; setting boundary conditions of the intelligent agents; setting initial coordinates of the intelligent agents in the scene according to the road information in the scene; using a routing algorithm according to the road information in the scene, and calculating behavior strategies of a plurality of intelligent agents at the same time under a parallel computing architecture; updating real-time coordinates of the plurality of agents; and displaying the motion trails of the plurality of intelligent agents in the scene, completing the simulation of the traffic flow. The invention further provides a traffic flow simulation system based on the routing optimization and parallel computing architecture. The invention solves the technical problems that the traditional offline simulation mode through the following flow model cannot be linked with traffic scheme changes in real time, and that the difference between the calculated traffic flow simulation result and the real traffic condition of the road is large.

Owner:CHINA MERCHANTS CHONGQING COMM RES & DESIGN INST

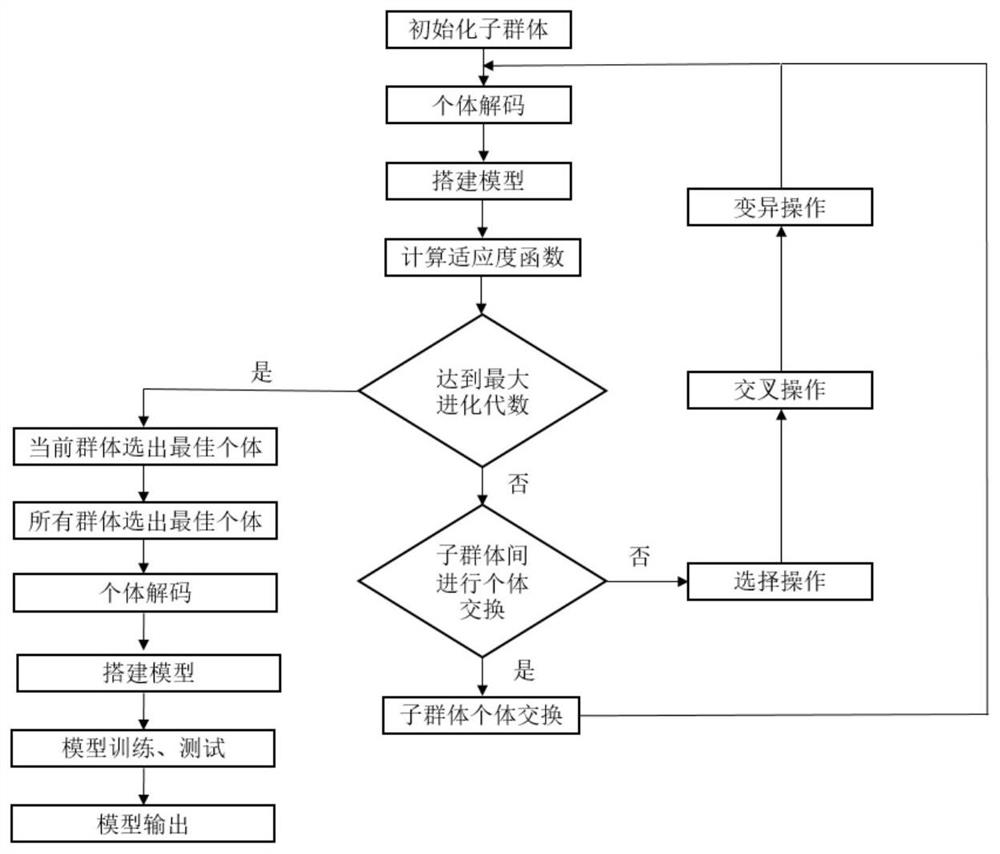

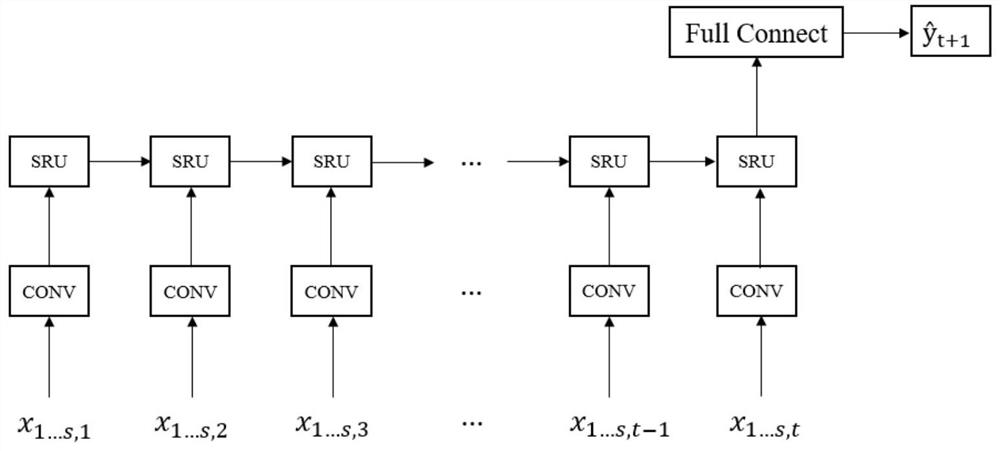

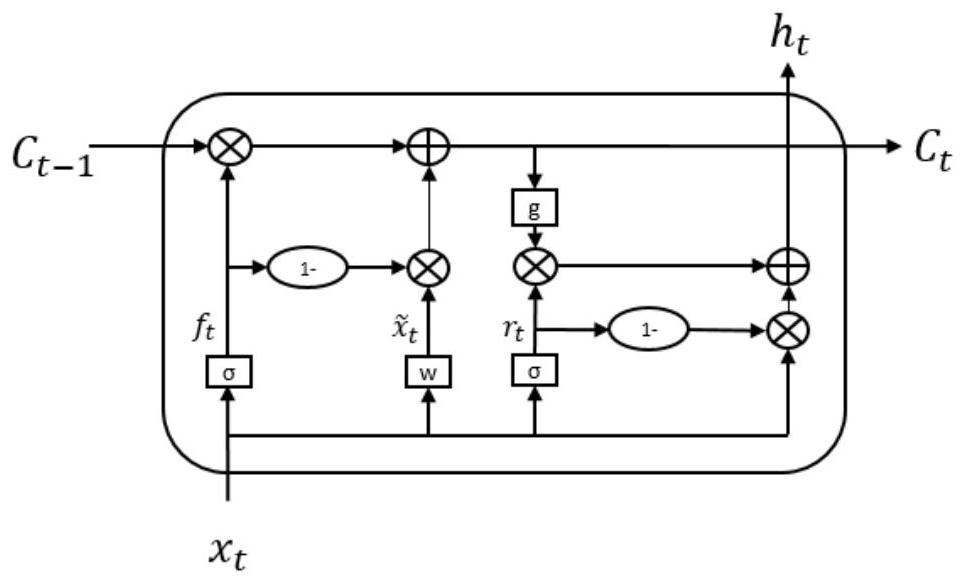

Deep learning parallel computing architecture method and hyper-parameter automatic configuration optimization thereof

Pending | CN111709519A | Predictable | Shorten the time | Neural architectures | Neural learning methods | Concurrent computation | Configuration optimization

The invention discloses a deep learning parallel computing architecture method and hyper-parameter automatic configuration optimization thereof, and particularly relates to the field of deep learning. First, a CNN is used to capture the spatial features of all locations; then, on the basis of these spatial features, an SRU is used to capture the sequential features of the spatiotemporal data. The result is used for regression prediction on the spatiotemporal data and for automatic hyper-parameter configuration optimization. The invention has the following beneficial effects: 1, regression prediction of spatiotemporal data is realized based on the SRU, so the model is accelerated in parallel to a certain extent and the time consumed by training and inference is reduced; 2, automatic configuration of the model's hyper-parameters is realized with a parallel genetic algorithm, which reduces the manpower, energy and time spent on hyper-parameter configuration, makes the hyper-parameters more reasonable, and improves the prediction performance of the model.

Owner:HUNAN UNIV

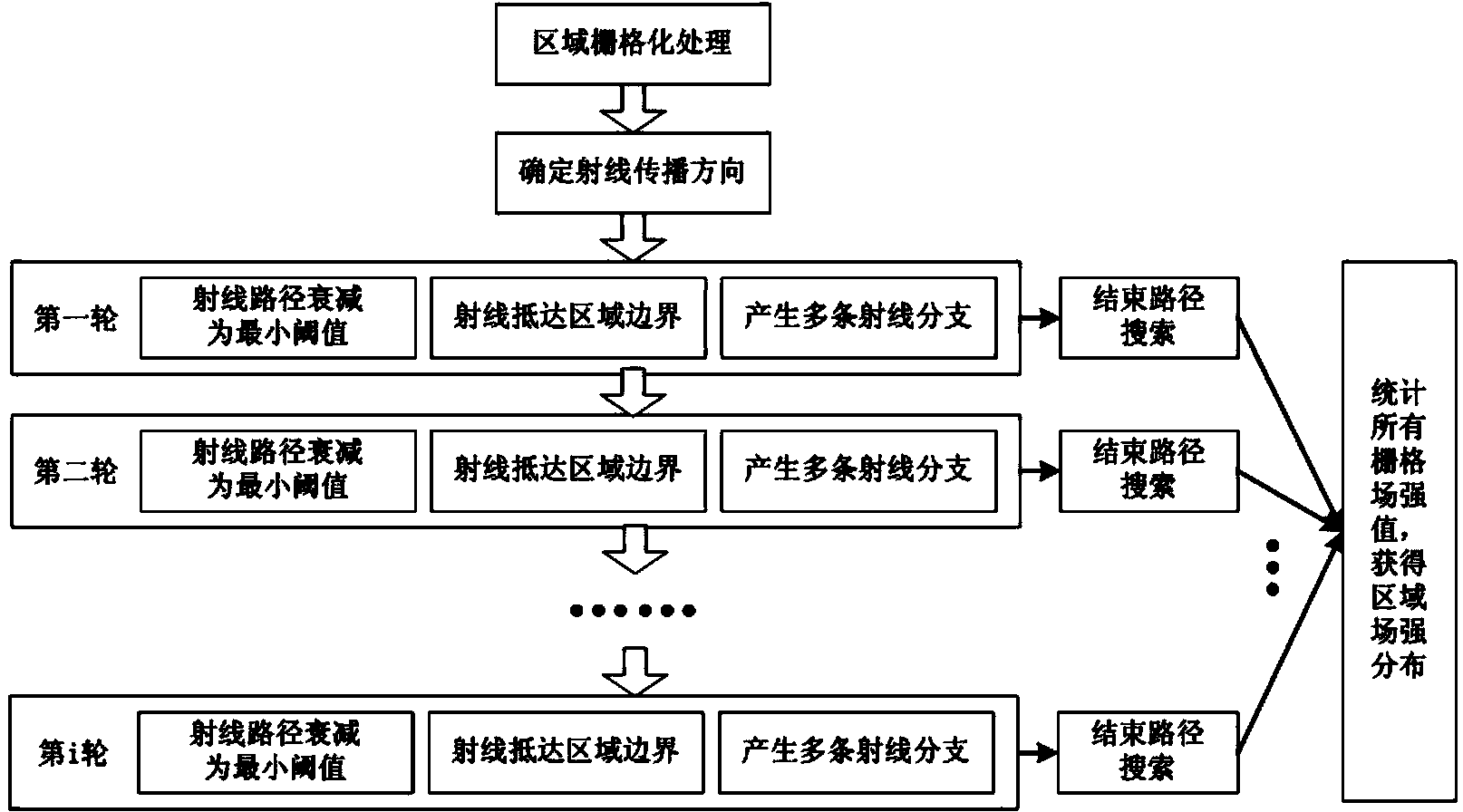

Regional electromagnetic environment ray propagation path parallel search method

Active | CN104280621A | Improve computing efficiency | Reduce the amount of calculation | Electromagnetic field characteristics | Size increase | Computational physics

The invention discloses a regional electromagnetic environment ray propagation path parallel search method. The method comprises the steps of rasterizing the electromagnetic environment region to be calculated, searching in parallel all ray propagation paths starting from a radiation source, and collecting statistics on all paths that reach each grid cell to obtain the field intensity distribution of the whole region. The method has the following advantages: once the field intensity at each small grid point in the region has been calculated, the field intensity distribution of the whole region is complete, so the amount of calculation is greatly reduced; all ray paths in the region can be searched concurrently, and parallel computing architectures such as CUDA, OpenCL, OpenMP, MPI and MapReduce are well suited to accelerating the search; and because the calculation for the whole region reduces to the calculation for the small grid points, the additional calculation caused by increasing the size of the region is greatly reduced, which removes the bottleneck limiting regional electromagnetic calculation.

Owner:SOUTHWEST CHINA RES INST OF ELECTRONICS EQUIP

Method for improving operating efficiency of parallel architecture

Inactive | CN104199638A | Improve operational efficiency | Overcome the disadvantage of equal status | Resource allocation | Concurrent instruction execution | Traffic capacity | Data stream

The invention discloses a method for improving the operating efficiency of a parallel architecture. A data-flow-based flow equalizing algorithm is provided in the parallel computing architecture. The method comprises the steps of first determining channel priority levels according to the processing capacities of the channels, and, after data is received, determining the processing capacity required by each data message according to feature fields in its header; sending the data that requires high processing capacity to high-priority channels to queue up; sending out the data in descending order of the amount of data buffered in the channels of different priority levels; and, when the buffered amounts are equal, sending out the data in the higher-priority channel first. The method meets the requirements of a multichannel-input, multichannel-output computing architecture in which a priority relation exists among the output channels, and the processing efficiency of the parallel computing architecture is remarkably improved.

Owner:INSPUR GROUP CO LTD
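
The dispatch rule in the abstract above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the patented implementation: the `Channel` class, the `required` and `size` message fields, and the rule of placing a message on the least capable channel that still meets its requirement are all hypothetical choices made for the example.

```python
from collections import deque


class Channel:
    """Hypothetical output channel; higher capacity means higher priority."""

    def __init__(self, name, capacity):
        self.name = name
        self.capacity = capacity
        self.queue = deque()
        self.buffered = 0  # total size of the messages waiting in this channel


def enqueue(channels, msg):
    """Queue msg on the least capable channel that meets its requirement.

    msg is a dict with assumed keys "id", "required" and "size"; "required"
    stands in for the feature field read from the data header.
    """
    able = [c for c in channels if c.capacity >= msg["required"]]
    if able:
        target = min(able, key=lambda c: c.capacity)
    else:
        # Nothing is capable enough: fall back to the most capable channel.
        target = max(channels, key=lambda c: c.capacity)
    target.queue.append(msg)
    target.buffered += msg["size"]
    return target


def drain(channels):
    """Send messages out, fullest buffer first; ties go to higher priority."""
    order = []
    while True:
        busy = [c for c in channels if c.queue]
        if not busy:
            return order
        ch = max(busy, key=lambda c: (c.buffered, c.capacity))
        msg = ch.queue.popleft()
        ch.buffered -= msg["size"]
        order.append((ch.name, msg["id"]))
```

Sorting on the pair `(buffered, capacity)` keeps both rules in one comparison: the buffered amount decides first, and channel priority only breaks ties.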

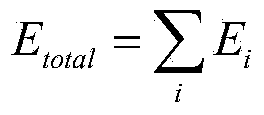

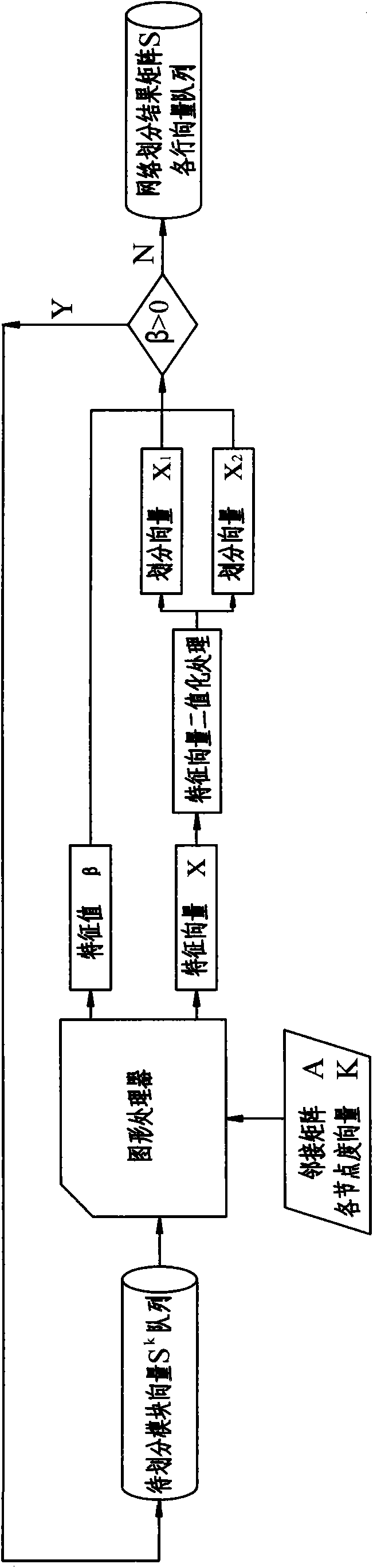

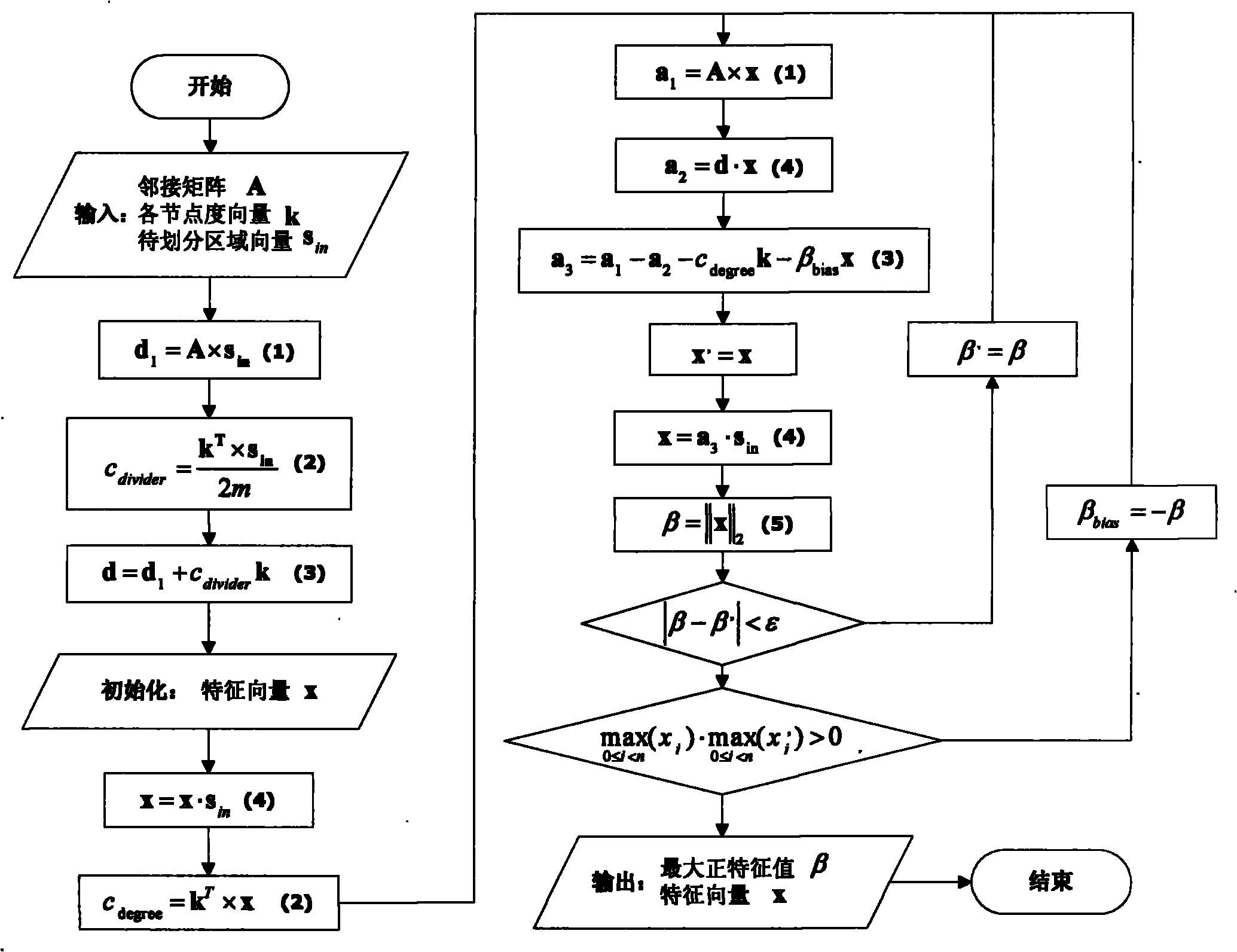

Method for partitioning large-scale static network based on graphics processor

ActiveCN101977120AGuaranteed continuityParallel Computing Performance ImprovementsData switching networksFeature vectorGraphics

The invention discloses a method for partitioning a large-scale static network based on a graphics processor, which mainly aims to solve the problem that existing network partitioning methods are inefficient and unsuitable for partitioning large-scale networks. The method comprises the following steps: by defining a result matrix S of network partitioning, transforming the operation of separately calculating the eigenvector and eigenvalue of each sub-module in network partitioning into the operation of calculating the eigenvector and eigenvalue of the whole network; and, according to the parallel computing architecture of the graphics processor, decomposing the calculation of the eigenvector and eigenvalue in network partitioning into a plurality of basic calculations, so that the original huge dense matrix calculation is simplified to computations between sparse matrices and vectors. In the invention, by reasonably combining the basic calculations, memory space is saved, the cost of data transmission is decreased, and the efficiency of network partitioning is effectively improved, so that partitioning an extra-large-scale static network, previously difficult to implement, becomes possible.

Owner:TSINGHUA UNIV

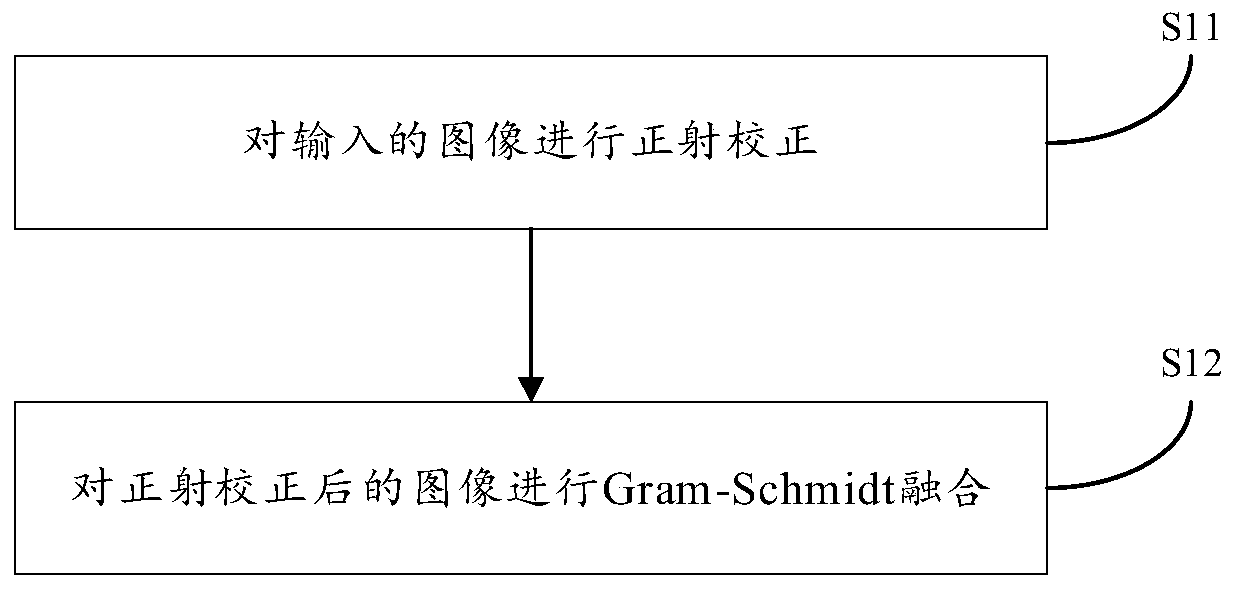

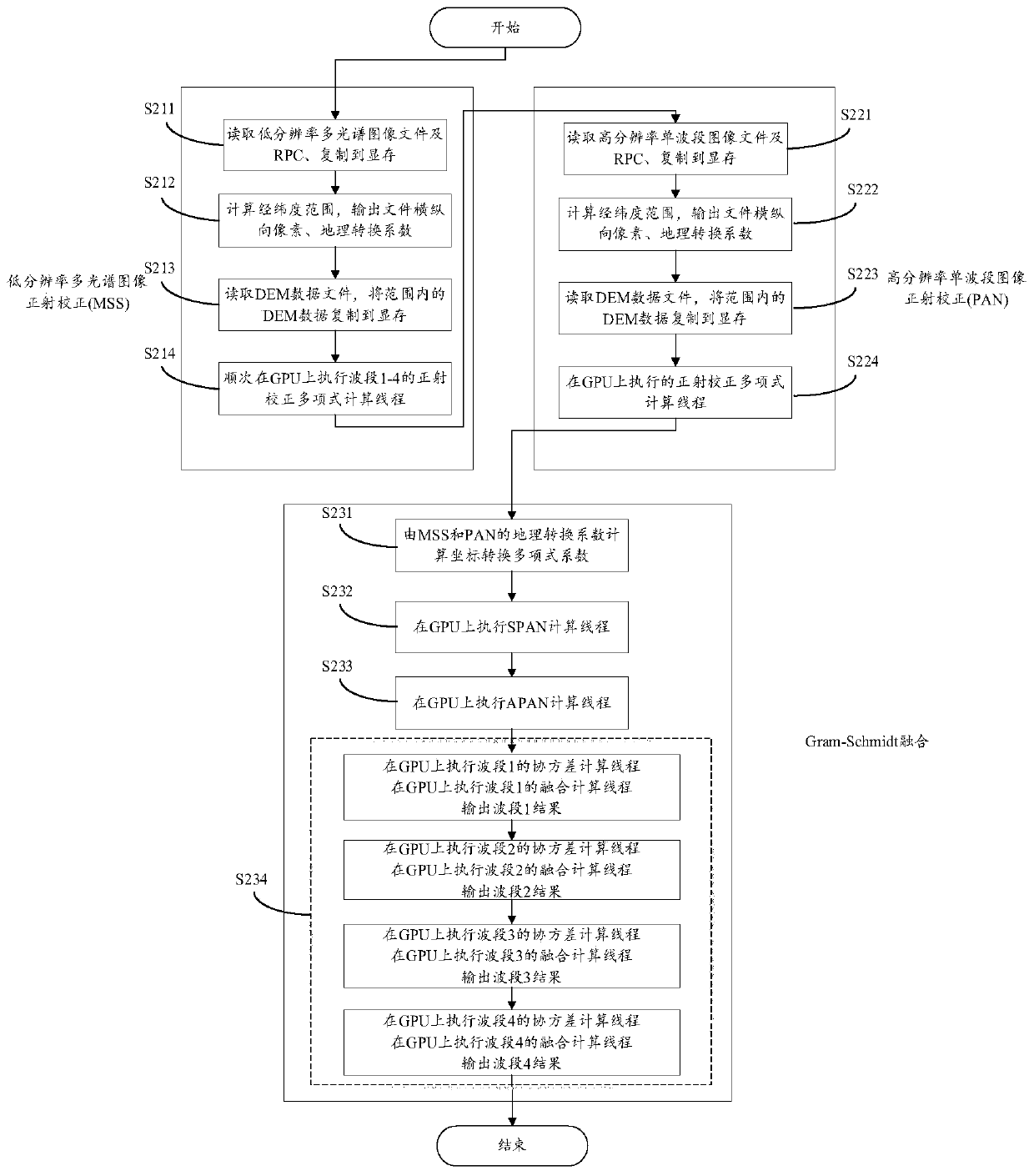

Remote sensing image preprocessing method

PendingCN111383158AAvoid multiple swapsReduce time consumptionImage enhancementImage analysisConcurrent computationComputer graphics (images)

The invention provides a remote sensing image preprocessing method. The method comprises a step of carrying out orthographic correction on input remote sensing images and a step of carrying out Gram-Schmidt fusion on the orthographically corrected images. For each waveband of each image, a plurality of computing threads are executed in parallel on a GPU; the images comprise single-scene low-resolution multispectral images (MSS) and high-resolution single-waveband images (PAN). The method is based on the CUDA (Compute Unified Device Architecture) general-purpose parallel computing architecture. According to the method, the remote sensing images are preprocessed by utilizing the parallel processing capability of the GPU, and the orthographic correction and Gram-Schmidt fusion algorithms used in processing are improved; therefore, parallel processing of the remote sensing images on thousands of GPU threads is realized, and processing speed is greatly increased.

Owner:GEOVIS CO LTD

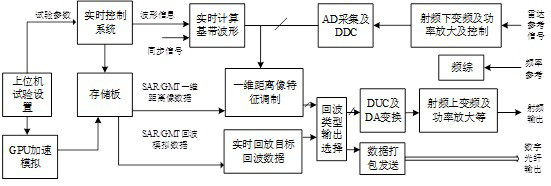

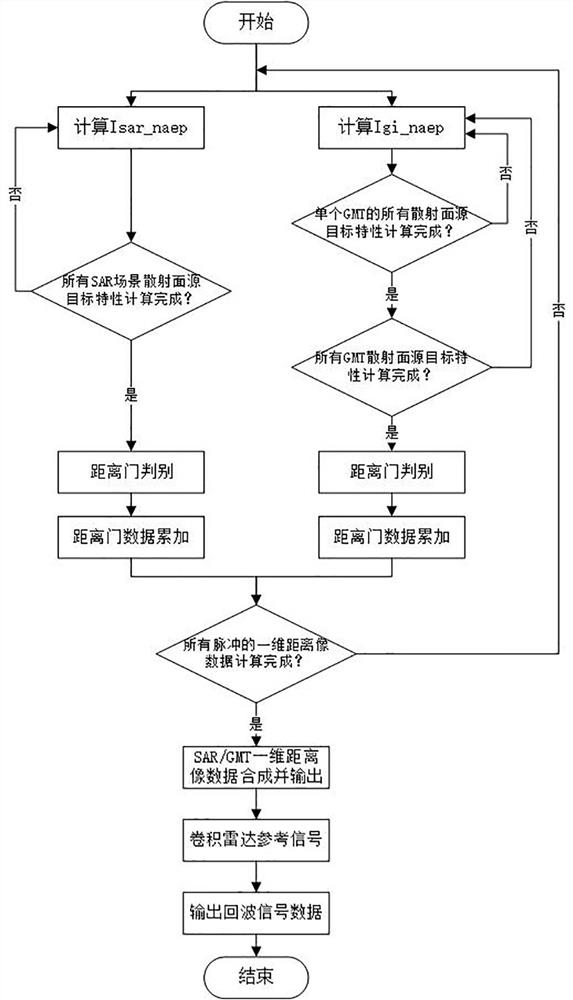

Simultaneous SAR/GMT echo simulation method and system

ActiveCN114252855AConducive to controlling the number of moving targetsWave based measurement systemsReal-time Control SystemConcurrent computation

The invention discloses a simultaneous SAR / GMT echo simulation method and system. The method comprises the following steps: setting test parameters in an upper computer; importing the test parameters, running an SAR / GMT distributed scattering surface source parallel algorithm under a GPU architecture to obtain independent and merged SAR / GMT one-dimensional range profile data and echo data, and sending the data to a storage board; outputting, by the upper computer, test parameters including the echo type to the real-time control system; selecting and calling, by the signal processing board card, data in the storage board according to the echo type and then outputting the data through an optical fiber network; and, according to the echo type in the test parameters, controlling, by the real-time control system, the signal processing board card to process the one-dimensional range profile data or the echo data, after which DUC and DA conversion is carried out and the data is output after being processed by the radio-frequency up-conversion system. According to the method, a GPU parallel computing architecture is adopted, and SAR radar echo signals synthesized by SAR / GMT at the same time can be simulated in a single channel.

Owner:南京雷电信息技术有限公司

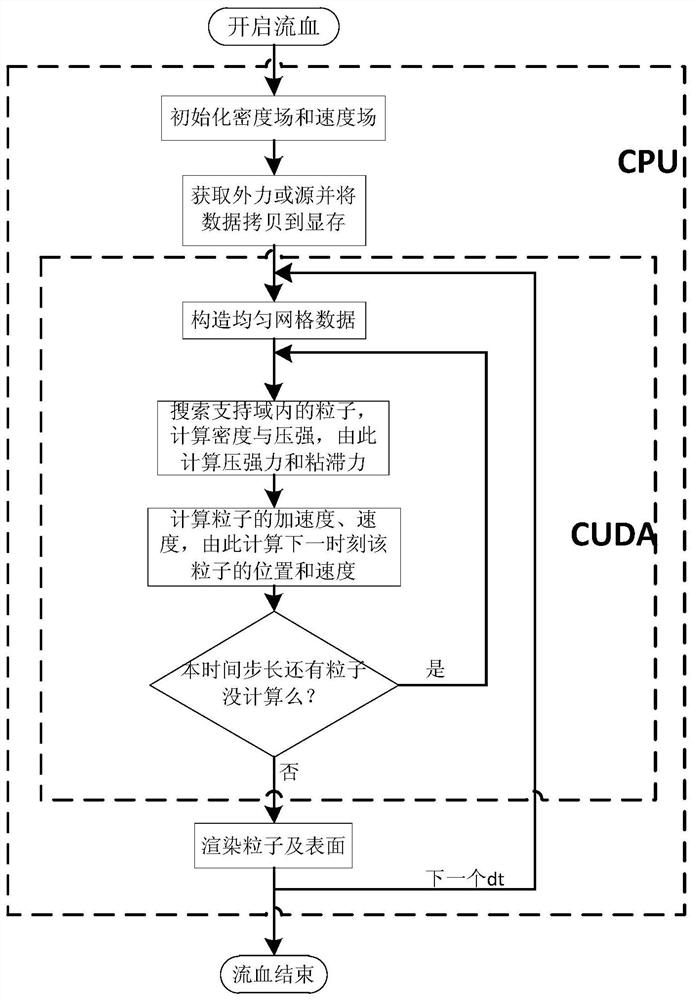

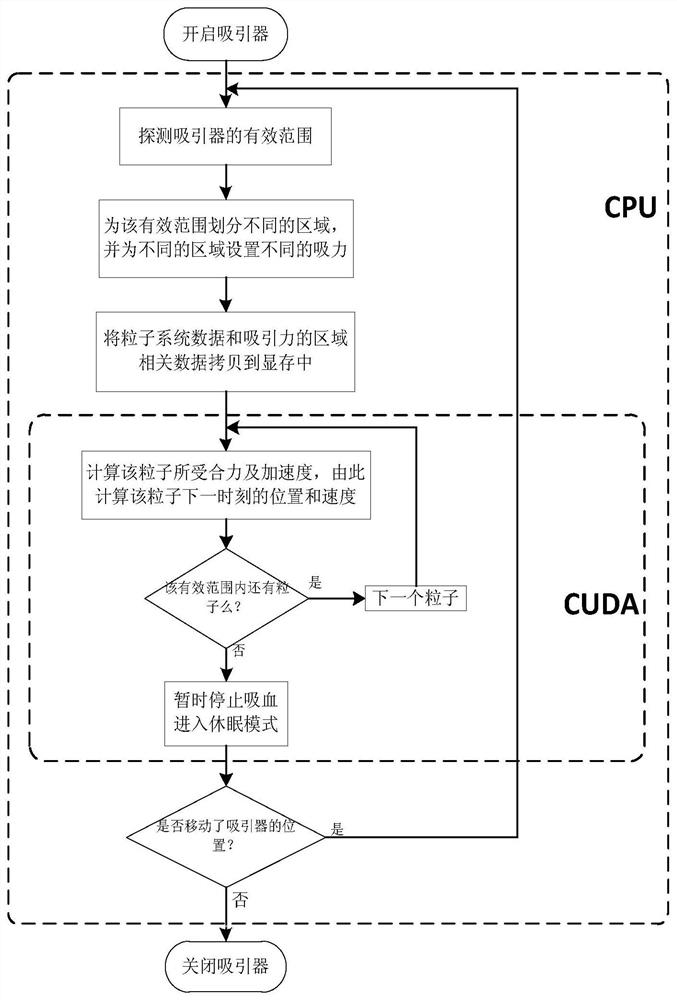

A Method for Simulating Bleeding and Handling Acceleration in Smooth Particle Hydrodynamics

ActiveCN107633123BReduce computationFast operationDesign optimisation/simulationProcessor architectures/configurationComputational scienceConcurrent computation

A method for smoothed particle hydrodynamics simulation of bleeding and processing acceleration. A GPU-accelerated simulation method is used to simulate bleeding in virtual surgery and the effect of sucking out blood using the siphon principle. At the same time, the grid method is used to divide the problem area in real time: a spatial grid is created with the support domain as the side length, nearest-neighbor particles are searched through the adjacent grid cells, and the solution of the particle governing equations and the calculation of the interaction between blood and solids are completed with CUDA multi-threaded parallel acceleration, which greatly improves computing efficiency and thereby the real-time performance of surgical training. In addition, an improved marching cubes algorithm is used to render fluid surfaces, which greatly improves the realism of surgical training.

Owner:ZHEJIANG UNIV OF TECH
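
The grid-based neighbor search mentioned in the abstract above can be illustrated with a small serial sketch. It assumes that the support radius `h` is used as the cell side length and that a neighbor is any other particle within distance `h`; the function names are hypothetical, and the patent's CUDA kernels are not reproduced here.

```python
import math
from collections import defaultdict
from itertools import product


def cell_of(point, h):
    """Integer cell coordinates of a point in a grid with side length h."""
    return tuple(math.floor(c / h) for c in point)


def build_grid(points, h):
    """Map each occupied cell to the indices of the particles inside it."""
    grid = defaultdict(list)
    for i, p in enumerate(points):
        grid[cell_of(p, h)].append(i)
    return grid


def neighbors(i, points, grid, h):
    """Indices of particles within distance h of particle i.

    Only the particle's own cell and the cells adjacent to it are scanned
    (27 cells in 3D), which is sufficient because the cell side equals h.
    """
    p = points[i]
    cell = cell_of(p, h)
    found = []
    for offset in product((-1, 0, 1), repeat=len(cell)):
        key = tuple(a + b for a, b in zip(cell, offset))
        for j in grid.get(key, ()):
            if j != i and math.dist(p, points[j]) <= h:
                found.append(j)
    return sorted(found)
```

Each call to `neighbors` reads the grid without modifying it, so the per-particle queries are independent of one another; that independence is what makes a one-thread-per-particle GPU mapping plausible.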

Real-time simulation test method of power system electromagnetic transient state based on CUDA parallel computing

InactiveCN103186366BRealize the requirements of electromagnetic transient real-time simulation calculationImprove performanceConcurrent instruction executionTransient stateReal-time simulation

The invention discloses a test method for achieving electromagnetic transient real-time simulation of an electrical power system based on CUDA parallel computing. The electromagnetic transient simulation test host computer adopts a CPU (central processing unit) plus GPU (graphics processing unit) mode; the GPU uses the CUDA platform parallel computing framework; and the simulation test host computer is connected with the collection devices of all signal channels through gigabit Ethernet. Because the simulation is mainly of the digital substation rather than of the whole network or whole system, the model can be greatly simplified, the simulation speed can be increased by dozens or hundreds of times over traditional simulation, and the requirements of transformer substation electromagnetic transient real-time simulation computing are met. Compared with the cost of a large parallel computer or a professional parallel computer, the cost of the CUDA parallel computing platform is greatly reduced. According to the method, in the aspect of electromagnetic transient real-time simulation of the electrical power system, performance is greatly improved and cost is substantially reduced, and the method has wide popularization value.

Owner:SHENZHEN KANGBIDA CONTROL TECH

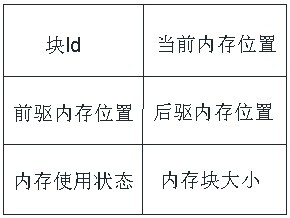

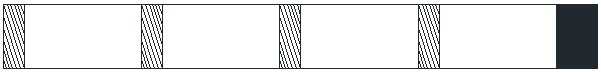

Dynamic memory allocation method and device based on GPU (Graphics Processing Unit) and memory linked list

ActiveCN113296961ASmall footprintResource allocationMemory adressing/allocation/relocationComputer architectureConcurrent computation

The invention discloses a dynamic memory allocation method based on a GPU (Graphics Processing Unit), which comprises the following steps of: recording the size of a memory block and the position of a first available memory in a system through a set memory linked list management array so as to search a corresponding memory block which can be used for allocation; and then, according to the size of the memory needing to be allocated and the information of the memory blocks which can be used for allocation, setting parameters of the data structure of the memory blocks needing to be allocated so as to apply for the memory from the system and realize dynamic allocation of the memory. The new memory linked list structure provided by the invention has the characteristics of small occupied memory and the like, and meanwhile, the dynamic memory allocation method disclosed by the invention can be applied to internal allocation in a parallel computing architecture OpenGL of a GPU processor so as to adapt to parallel computing. The invention further discloses a dynamic memory allocation device based on the GPU and a storage medium.

Owner:ZWCAD SOFTWARE CO LTD
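
As a rough illustration of the idea in the abstract above (tracking block sizes and the first available block in an array-backed linked list), here is a generic first-fit free list. It is a sketch under assumptions, not the patent's data structure: the `FreeListPool` class, its node layout `[offset, size, next_index]`, and the choice to link nodes by array index rather than by pointer are all hypothetical.

```python
class FreeListPool:
    """First-fit allocator over a fixed pool, linked by array indices."""

    def __init__(self, total):
        # nodes[i] = [offset, size, next_index]; -1 marks the end of the list.
        self.nodes = [[0, total, -1]]
        self.head = 0  # index of the first free block, or -1 if none is free

    def alloc(self, size):
        """Return the offset of a block of the given size, or None if full."""
        prev, cur = -1, self.head
        while cur != -1:
            offset, free, nxt = self.nodes[cur]
            if free >= size:
                if free == size:
                    # Exact fit: unlink the node from the free list.
                    if prev == -1:
                        self.head = nxt
                    else:
                        self.nodes[prev][2] = nxt
                else:
                    # Carve the request off the front of the free block.
                    self.nodes[cur][0] = offset + size
                    self.nodes[cur][1] = free - size
                return offset
            prev, cur = cur, nxt
        return None

    def free(self, offset, size):
        """Return a block to the front of the free list (no coalescing)."""
        self.nodes.append([offset, size, self.head])
        self.head = len(self.nodes) - 1
```

For example, a pool created with `FreeListPool(100)` hands out offsets 0 and 30 for requests of 30 and 50, and returns `None` once no free block is large enough. Merging adjacent free blocks is left out to keep the sketch short.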

A Method to Implement Fast Wavelet Transform by Blocking Using GPU

ActiveCN103198451BGuaranteed accuracyOvercoming imprecise resultsProcessor architectures/configurationComputational scienceGraphics

The invention discloses a method that uses a graphics processing unit (GPU) to achieve fast wavelet transformation through segmentation, mainly solving the problem that wavelet transformation in the prior art is slow. Targeting the features of the GPU's parallel computing framework, a method that segments the data and processes the segments in parallel is designed. The method includes the following steps: copying the original image, segmenting the data, expanding the data, copying the data to shared memory, transforming rows, transforming columns, canceling the data expansion, and copying the transformed data to host memory. According to the method, the accuracy of the wavelet transformation result is guaranteed by expanding the segmented data, data access speed is improved by performing the transformation in shared memory, interaction between the data and global memory is avoided, and the processing speed of the whole image is improved through parallelism across image blocks and across the pixels within each image block.

Owner:XIDIAN UNIV
