Patents

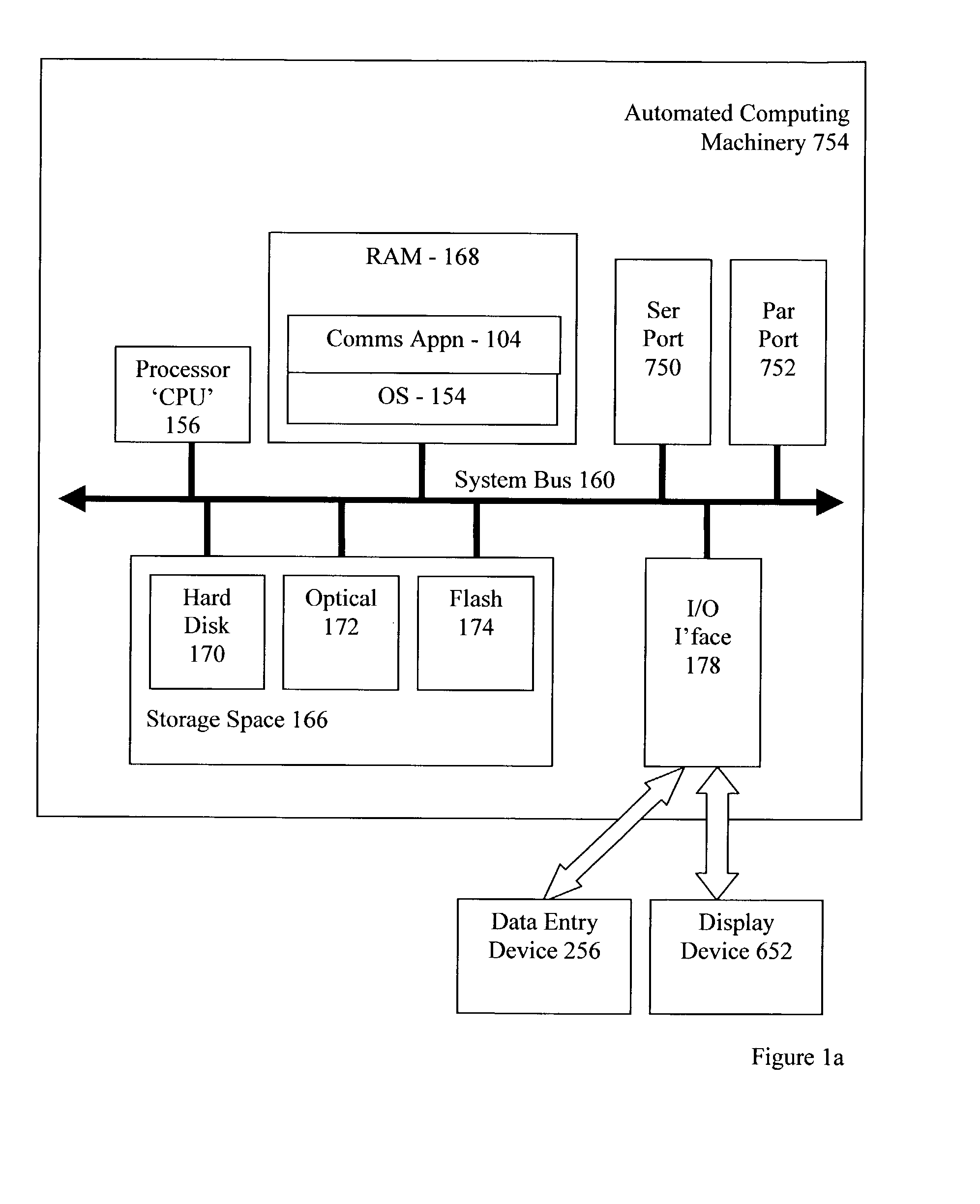

Literature

Hiro is an intelligent assistant for R&D personnel, combined with Patent DNA, to facilitate innovative research.

1411 results for "Virtual camera" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

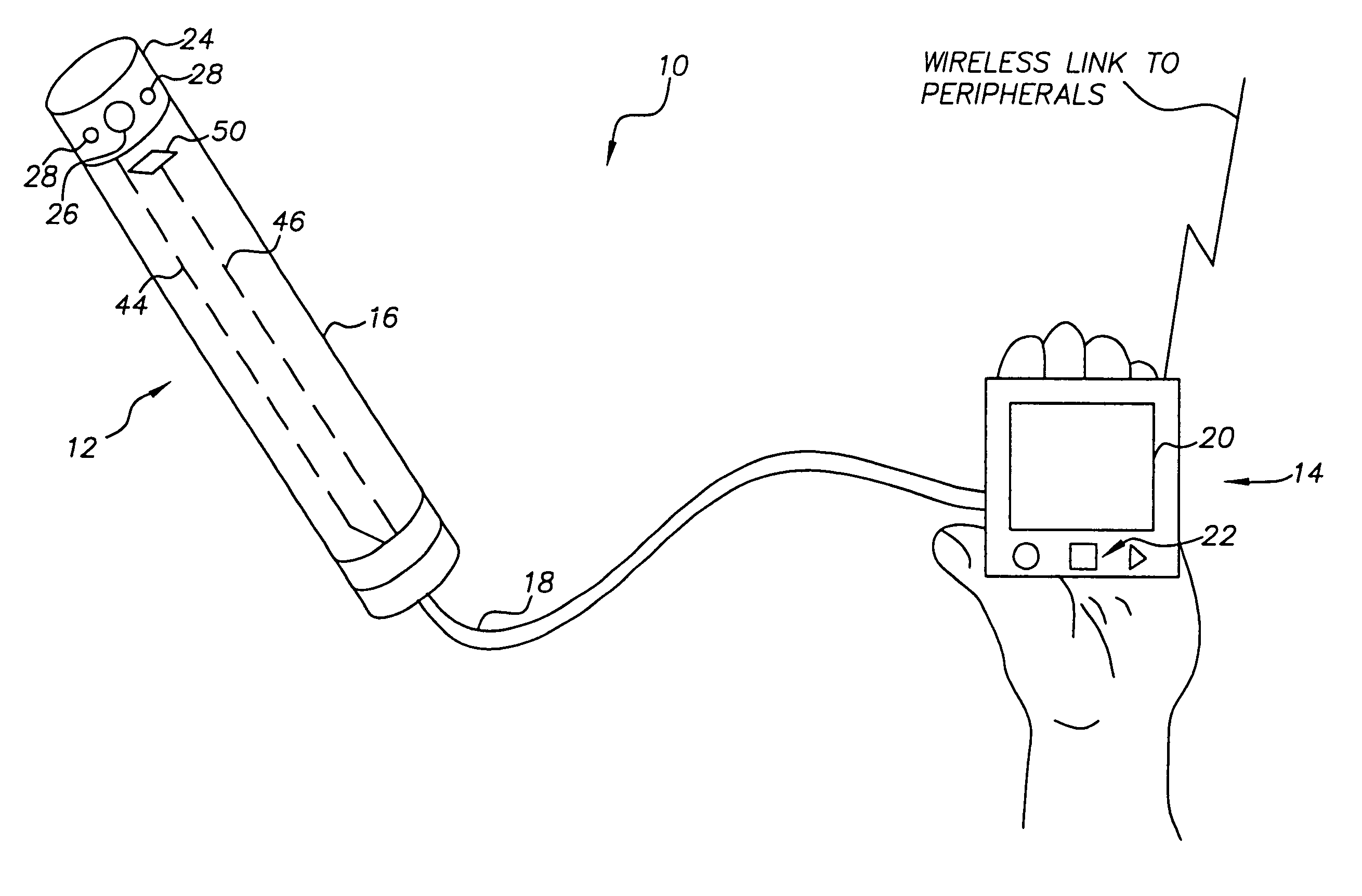

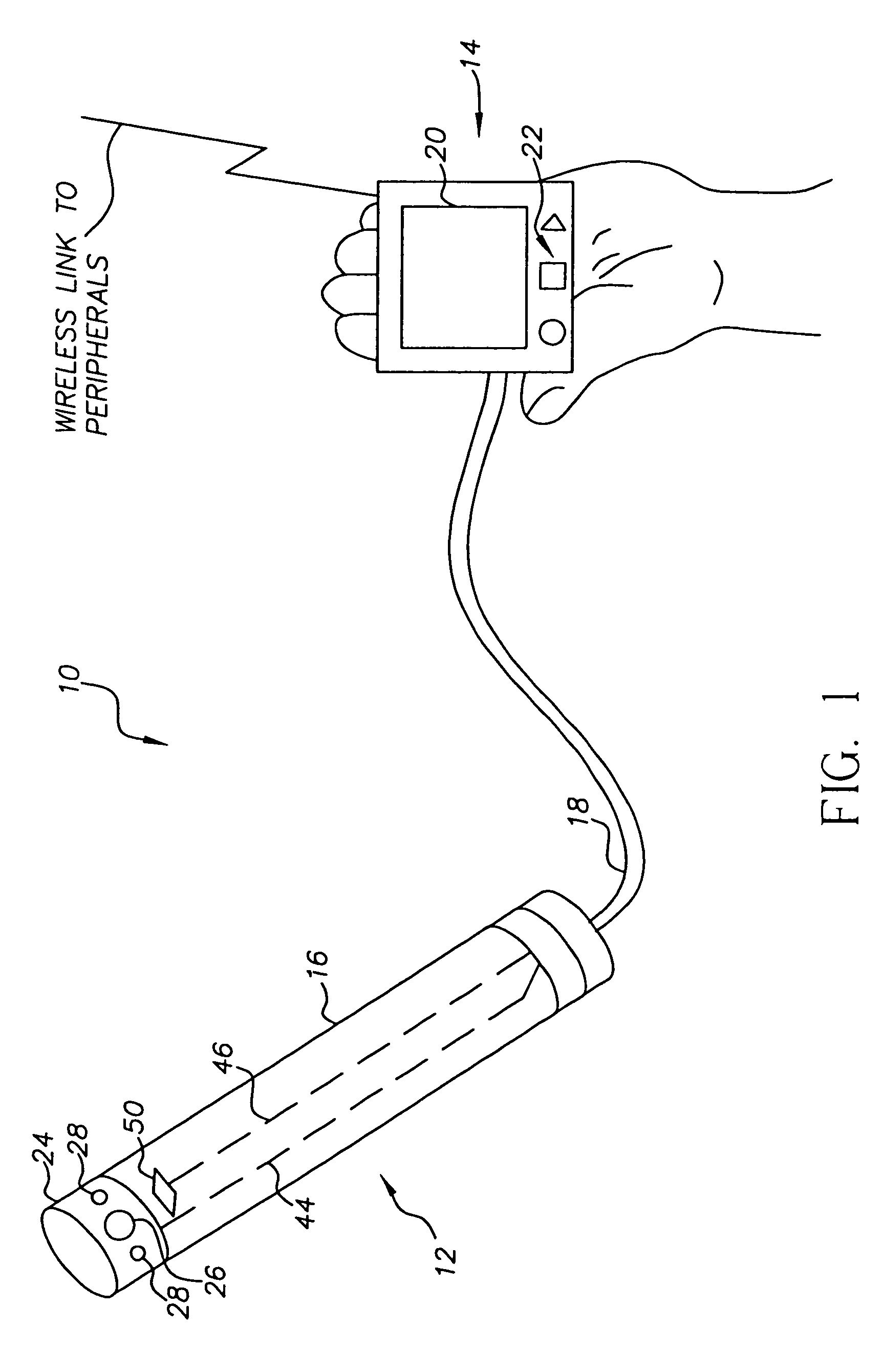

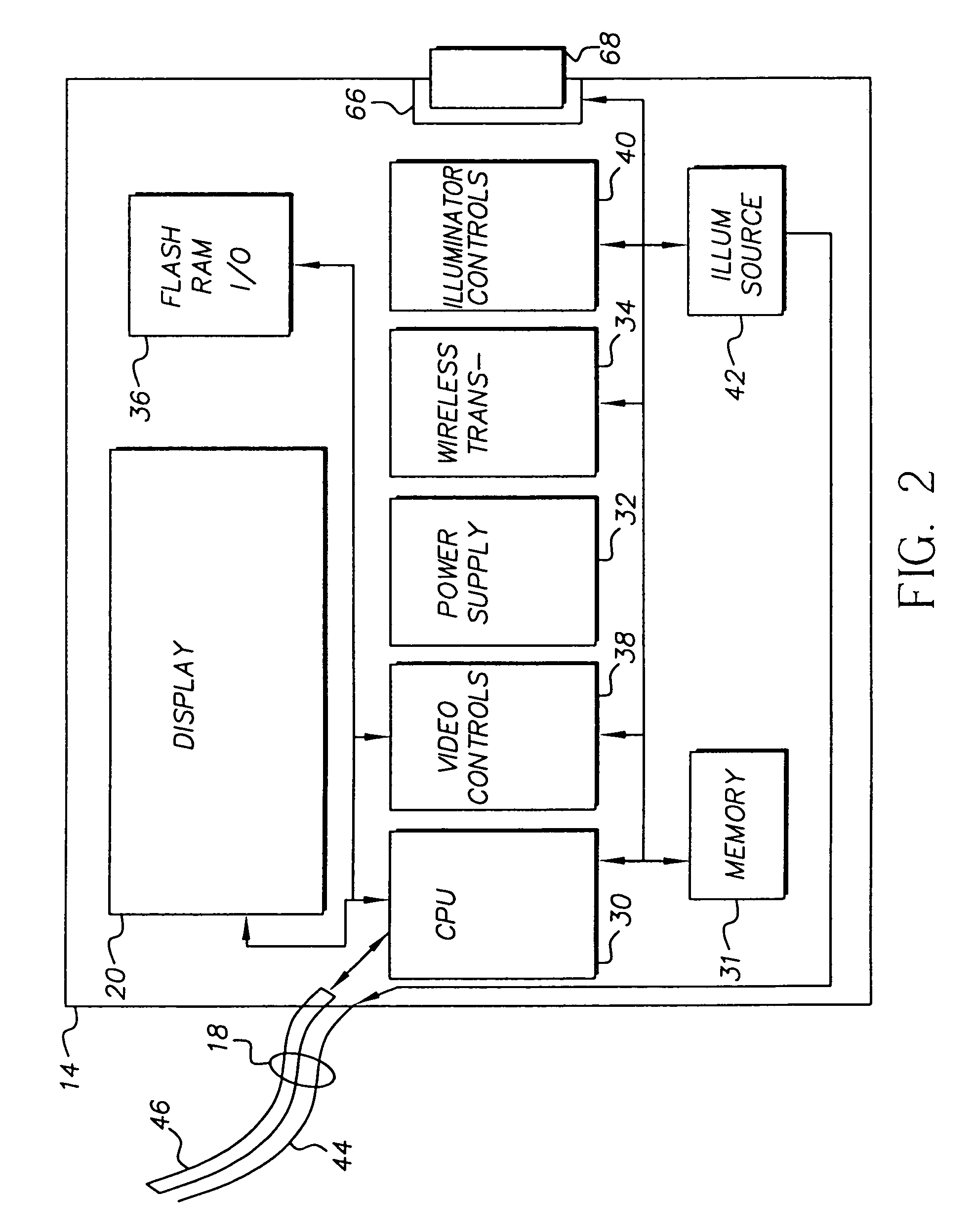

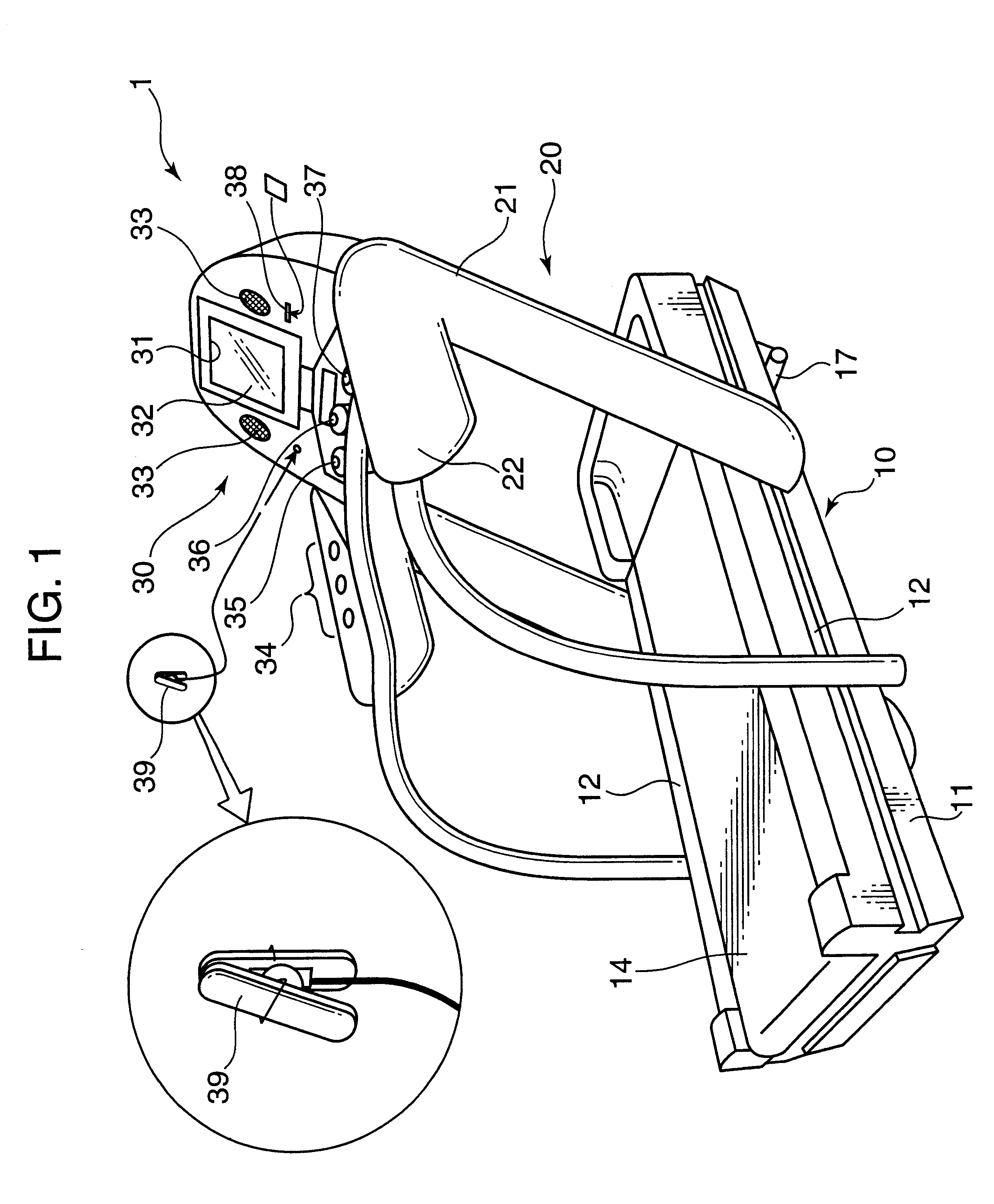

Intra-oral camera system with chair-mounted display

A portable intra-oral capture and display system, designed for use by a dental practitioner in connection with a patient seated in a dental chair, includes: a handpiece elongated for insertion into an oral cavity of the patient, where the handpiece includes a light emitter on a distal end thereof for illuminating an object in the cavity and an image sensor for capturing an image of the object and generating an image signal therefrom; a monitor interconnected with the handpiece, where the monitor contains electronics for processing the image for display and a display element for displaying the image, where the interconnection between the monitor and the handpiece includes an electrical connection for communicating the image signal from the image sensor in the camera to the electronics in the monitor; and a receptacle on the dental chair for receiving the monitor, wherein the receptacle conforms to the monitor such that the monitor may be withdrawn from the receptacle in order to allow the display element to be seen by the dental practitioner or the patient.

Owner:CARESTREAM HEALTH INC

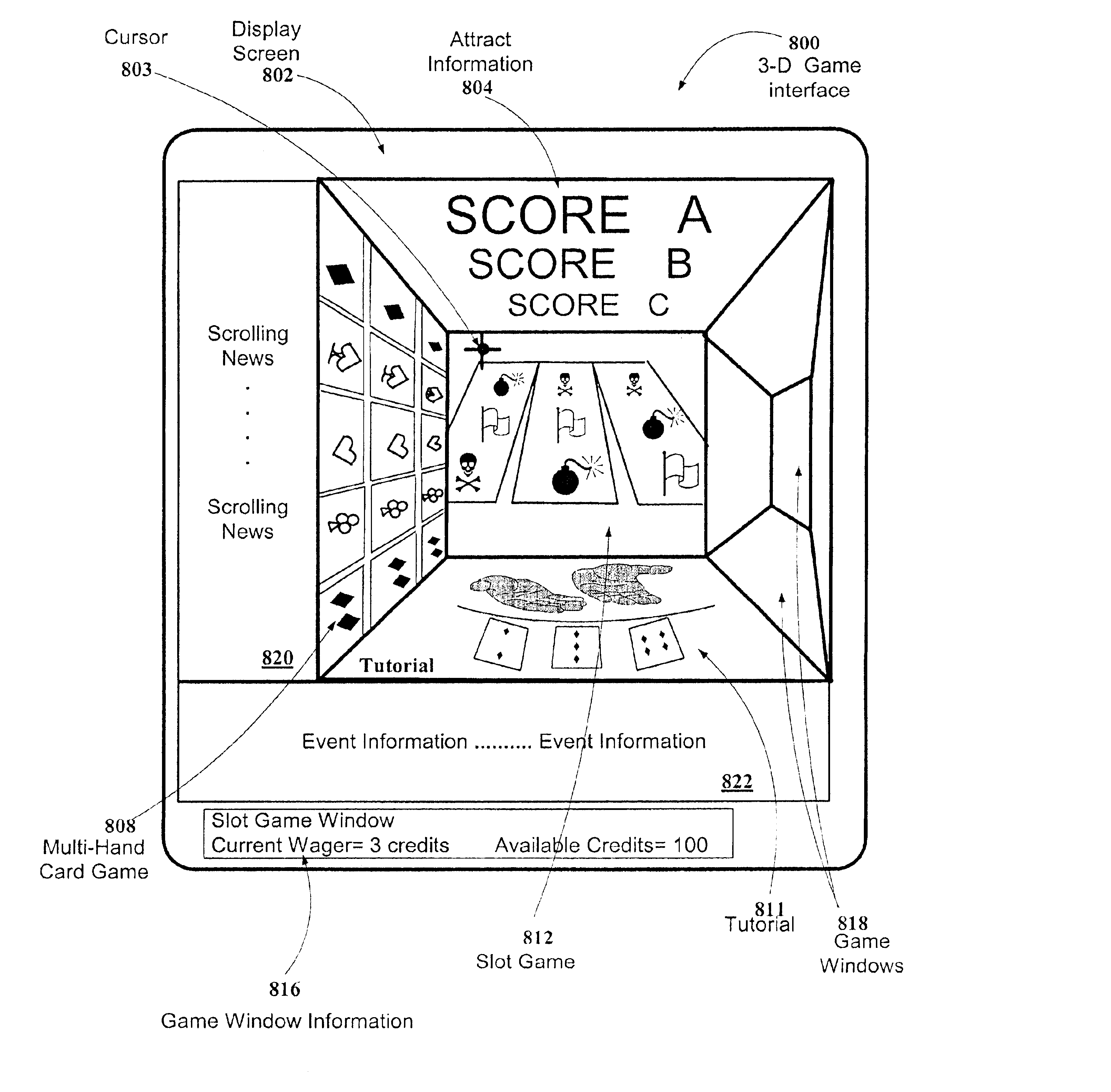

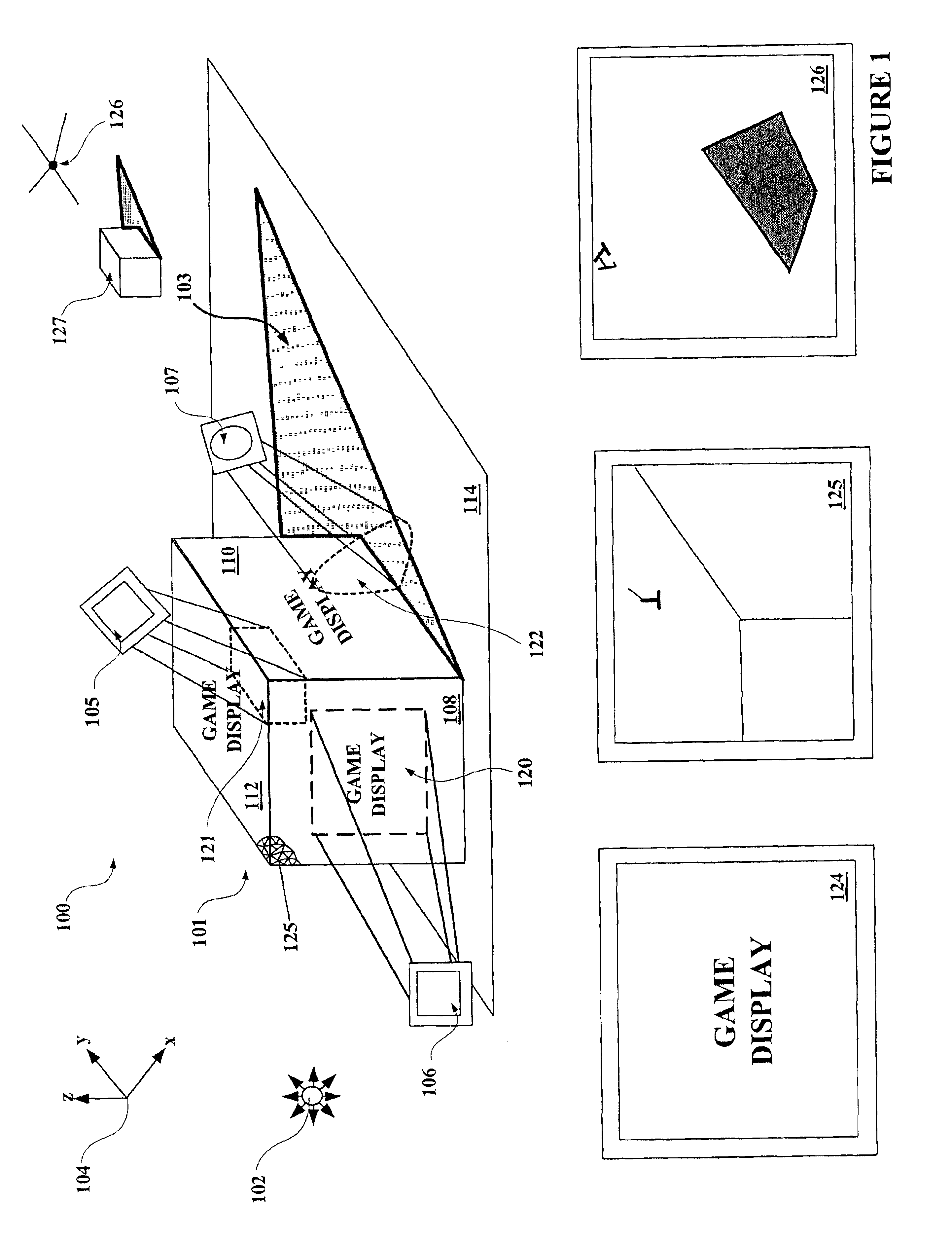

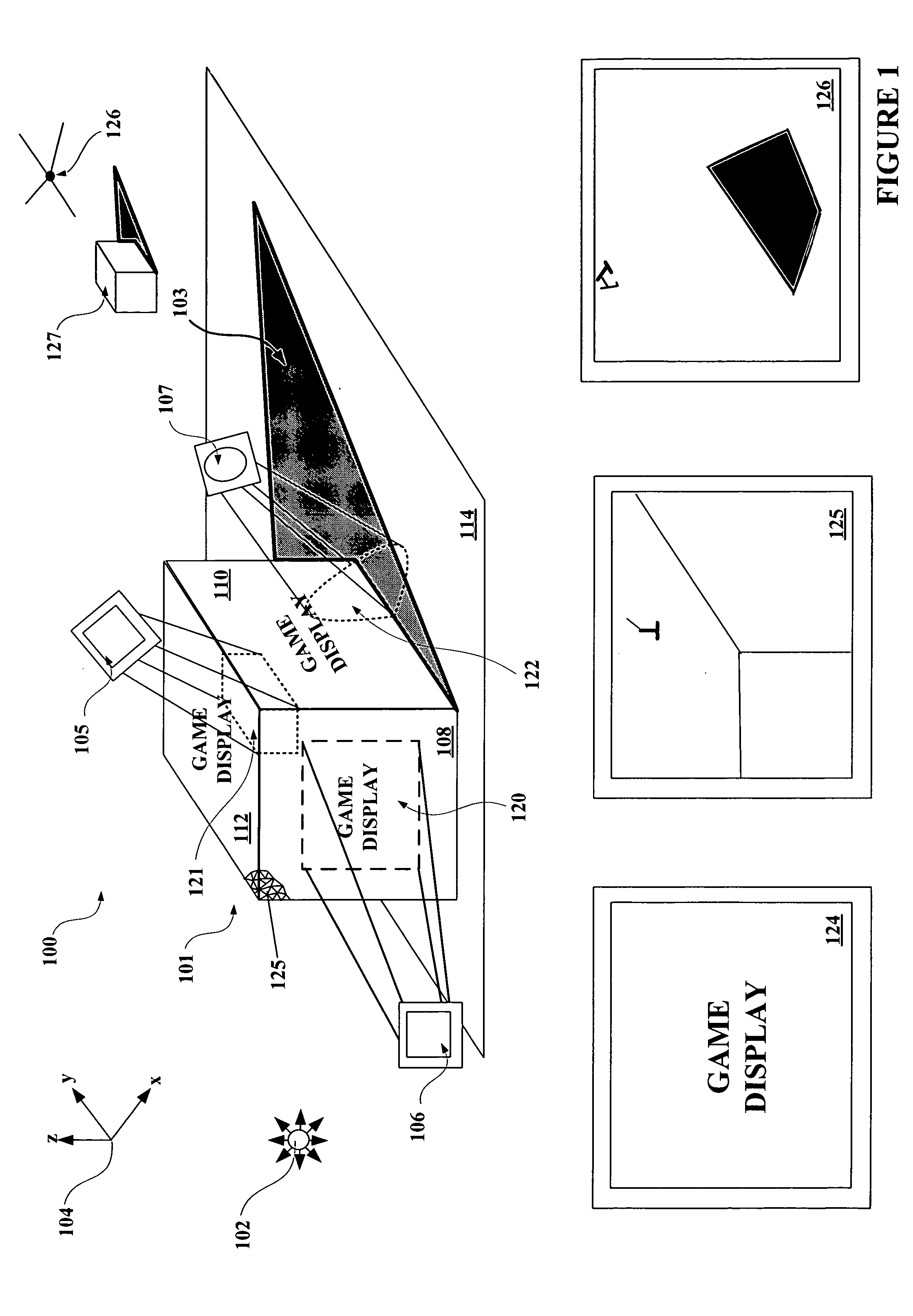

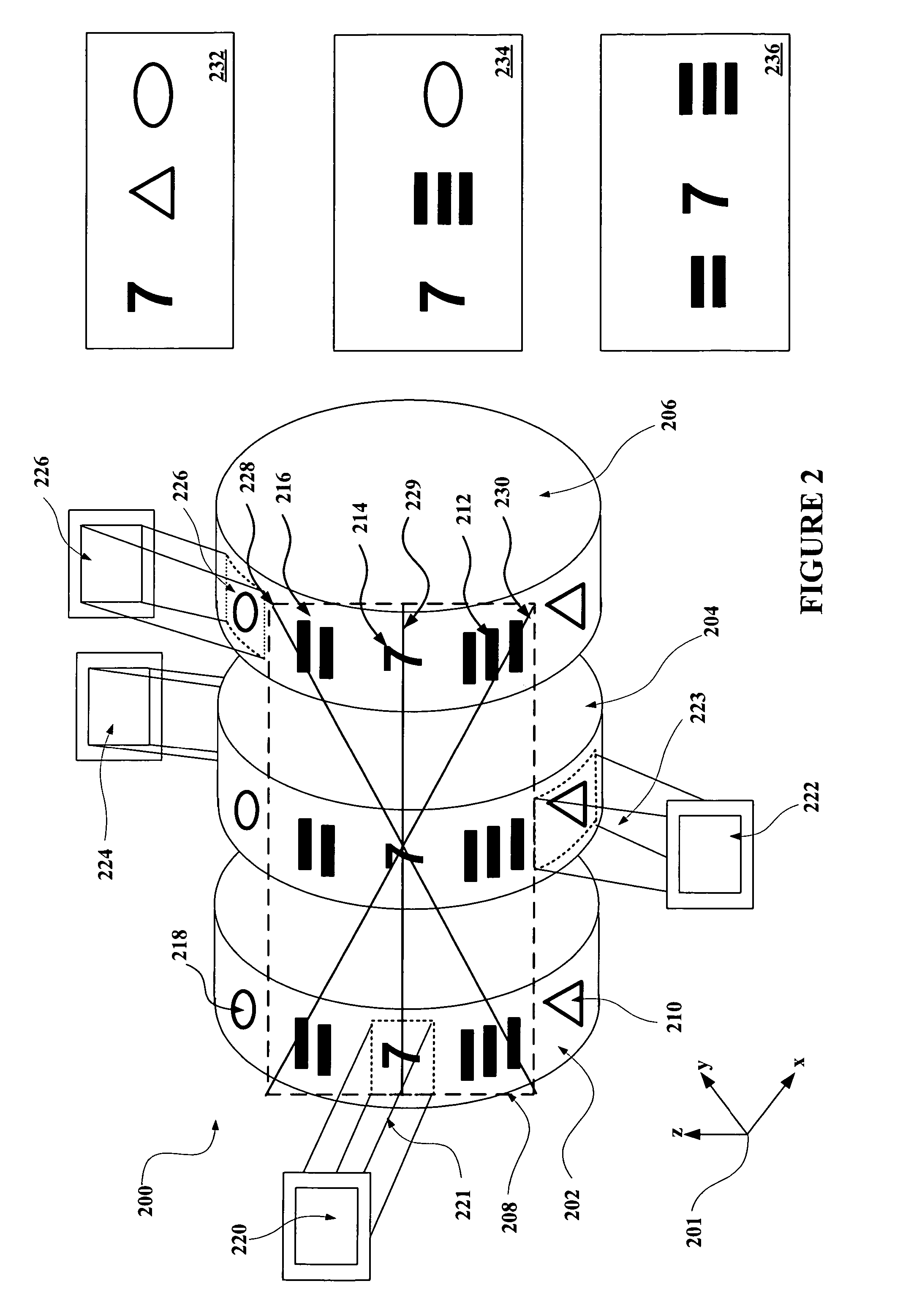

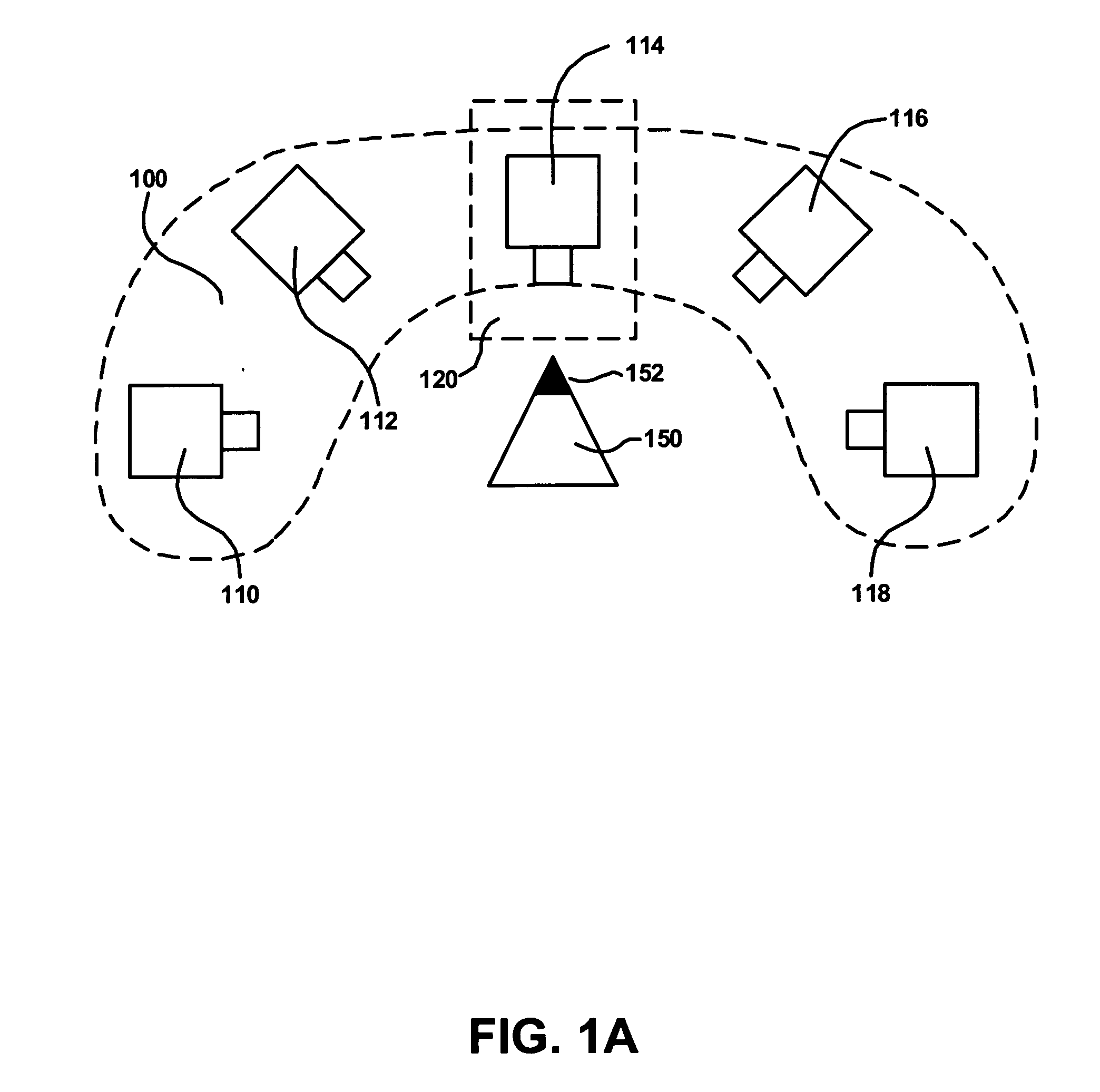

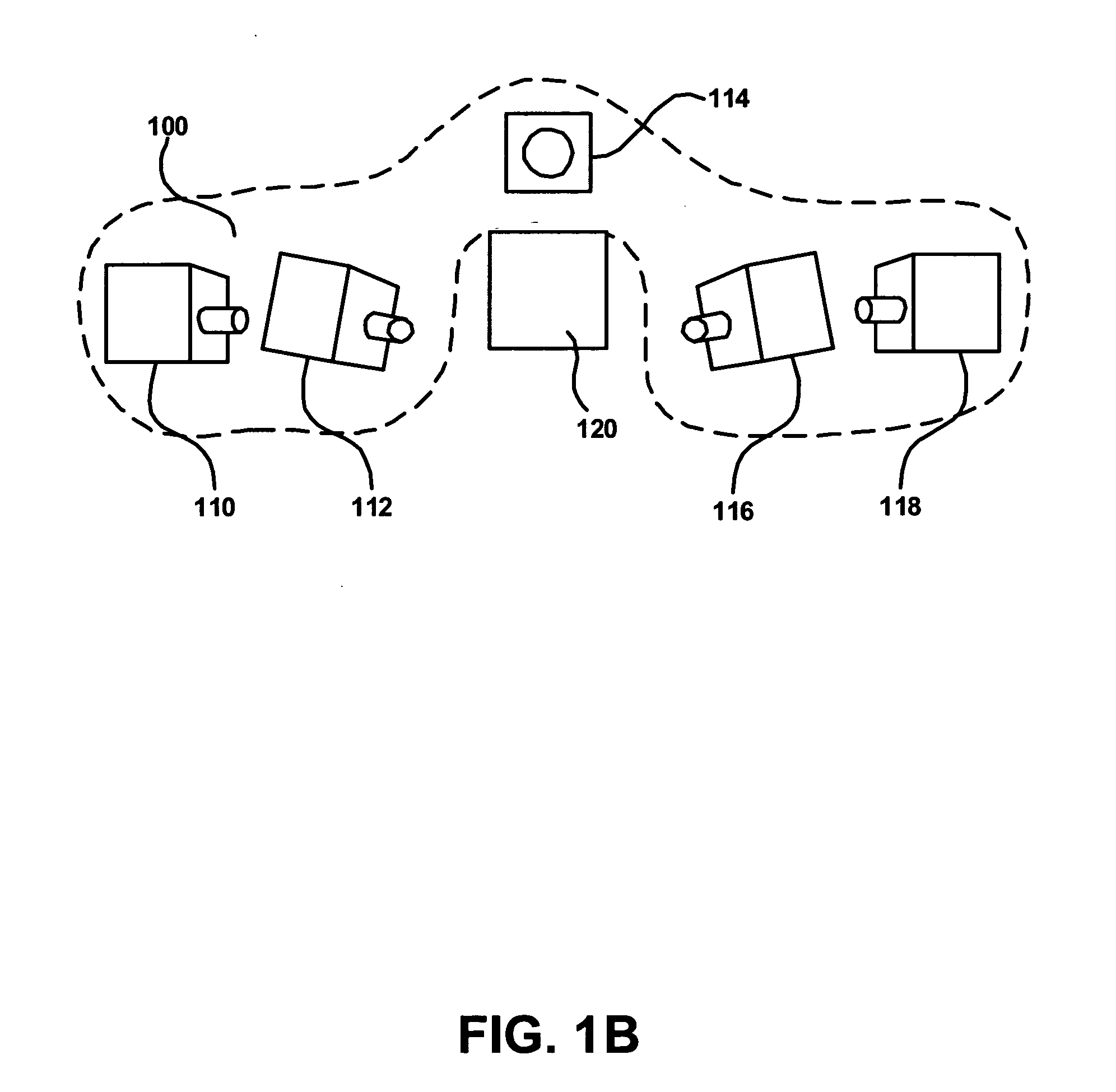

Virtual cameras and 3-D gaming environments in a gaming machine

Status: Inactive | US6887157B2 | Tags: Increase excitement; Roulette games; Apparatus for meter-controlled dispensing; Game player; Virtual camera

A disclosed gaming machine provides a method and apparatus for presenting a plurality of game outcome presentations derived from one or more virtual 3-D gaming environments stored on the gaming machine. While a game of chance is being played on the gaming machine, two-dimensional images derived from a three-dimensional object in the 3-D gaming environment may be rendered to a display screen on the gaming machine in real time as part of the game outcome presentation. To add excitement to the game, a 3-D position of the 3-D object and other features of the 3-D gaming environment may be controlled by a game player. A nearly unlimited variety of virtual objects, such as slot reels, gaming machines and casinos, may be modeled in the 3-D gaming environment.

Owner:IGT

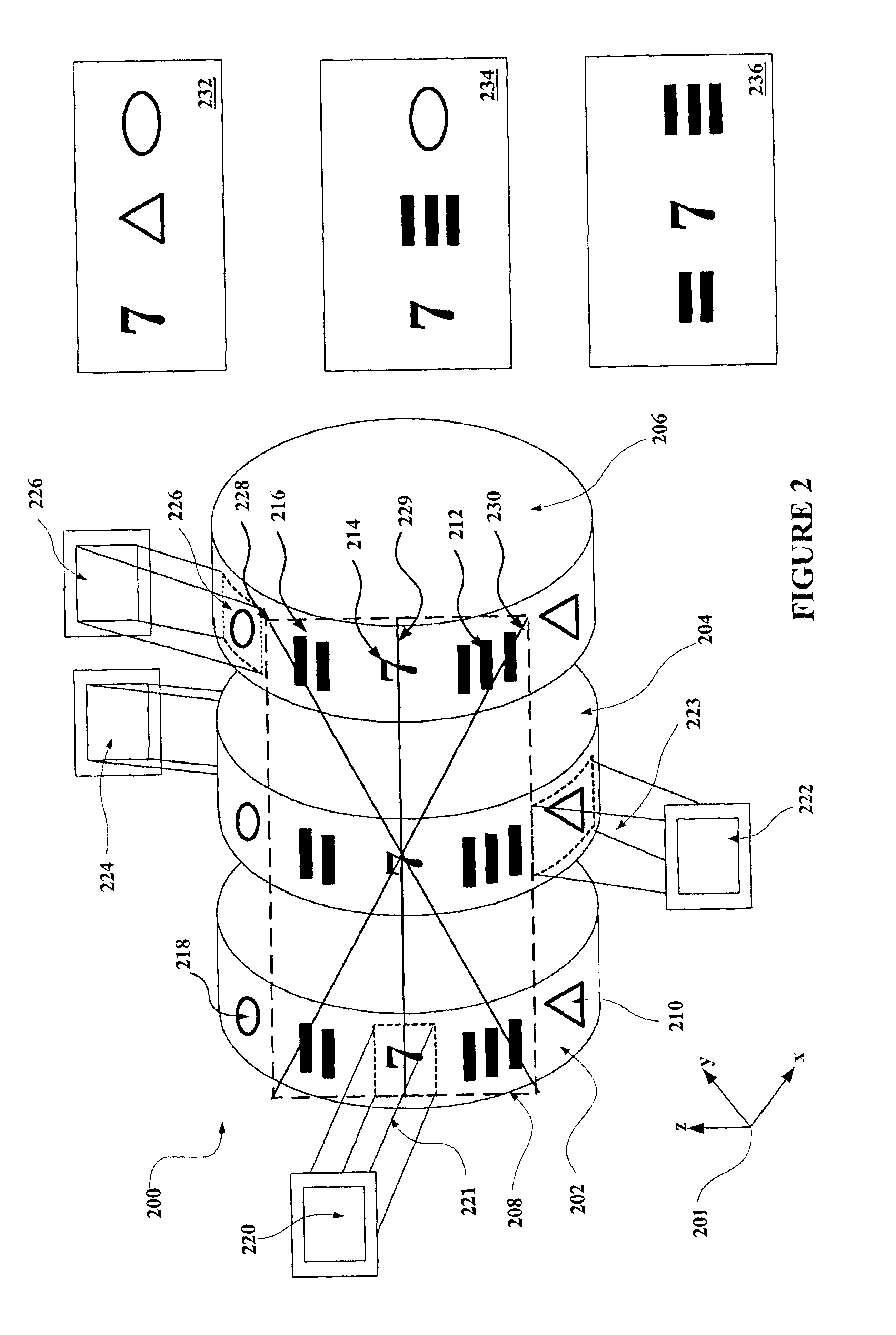

Method and apparatus for using a common pointing input to control 3D viewpoint and object targeting

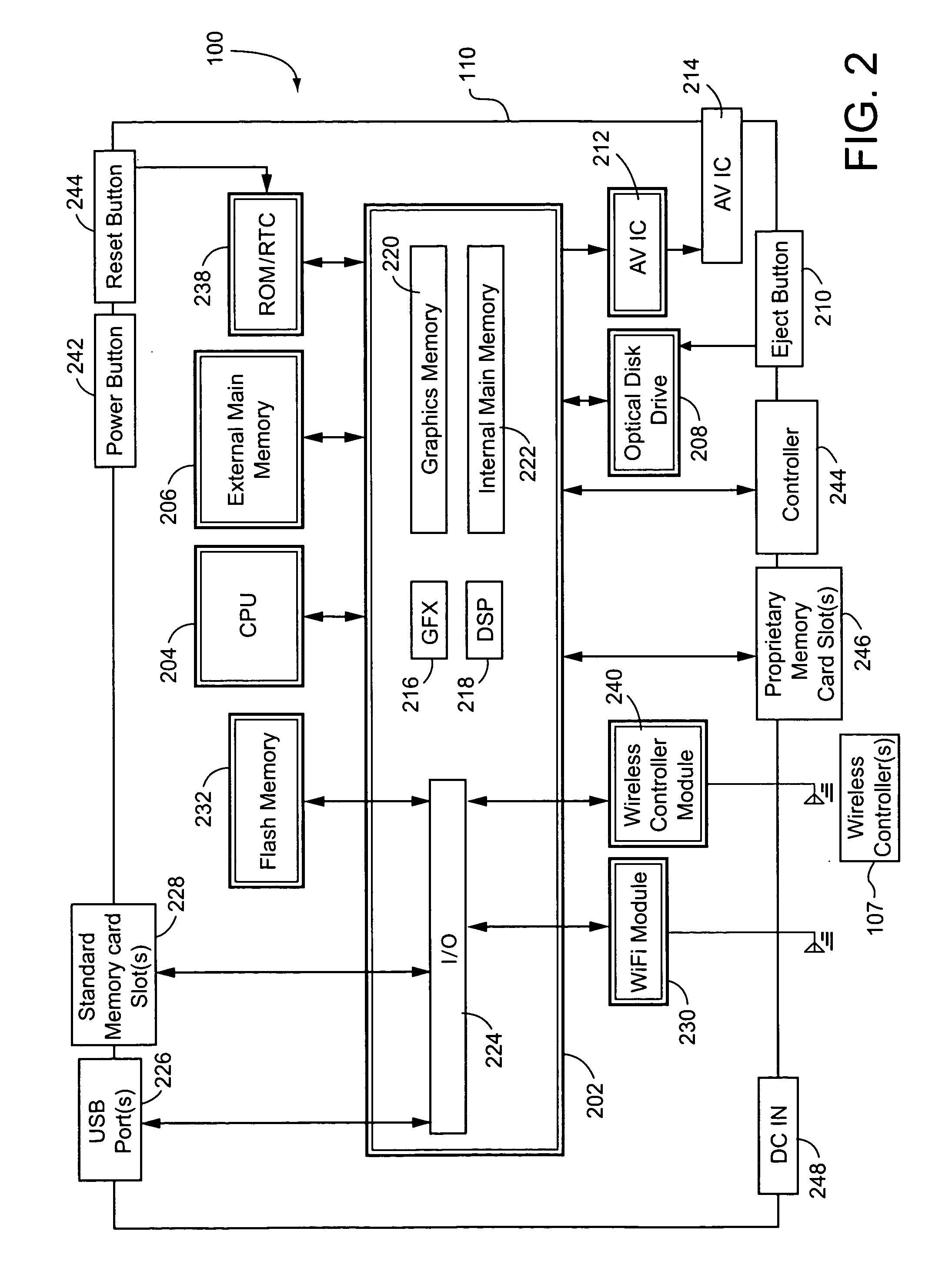

A computer graphics display system such as a video game system provides virtual camera 3-D viewpoint panning control based on a pointer. When the pointer is displayed within a virtual camera panning control region, the system automatically pans the virtual camera toward the pointer. When the pointer is displayed within a different region, panning is deactivated and the user can freely move the cursor (e.g., to control the direction a weapon is pointed) without panning the virtual camera viewpoint. Flexible viewpoint control and other animated features are provided based on a pointing device such as a handheld video game optical pointing control. Useful applications include but are not limited to first person shooter type video games.

Owner:NINTENDO CO LTD
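
A minimal sketch of the region-based panning described above, assuming a normalized pointer position in [-1, 1] on each axis (positive y taken as up), a central square dead zone as the free-aiming region, and a pan rate that ramps up toward the screen edge. `Camera`, `pan_toward_pointer`, and all parameter values are hypothetical; the abstract does not specify the region shapes or pan rates.

```python
from dataclasses import dataclass


@dataclass
class Camera:
    yaw: float = 0.0    # degrees; positive pans right
    pitch: float = 0.0  # degrees; positive pans up


def _axis_rate(p, dead_zone, max_rate):
    """Pan rate (deg/s) for one axis: zero inside the dead zone, ramping to max_rate at the edge."""
    excess = abs(p) - dead_zone
    if excess <= 0:
        return 0.0
    scale = min(excess / (1.0 - dead_zone), 1.0)
    return max_rate * scale if p > 0 else -max_rate * scale


def pan_toward_pointer(cam, px, py, dead_zone=0.6, max_rate=90.0, dt=1 / 60):
    """Pan `cam` toward a pointer at normalized screen coords (px, py) for one frame of length dt."""
    cam.yaw += _axis_rate(px, dead_zone, max_rate) * dt
    cam.pitch += _axis_rate(py, dead_zone, max_rate) * dt
    return cam
```

Moving the pointer back inside the dead zone stops panning immediately, so the same pointer can aim freely near the center and steer the view near the edges.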

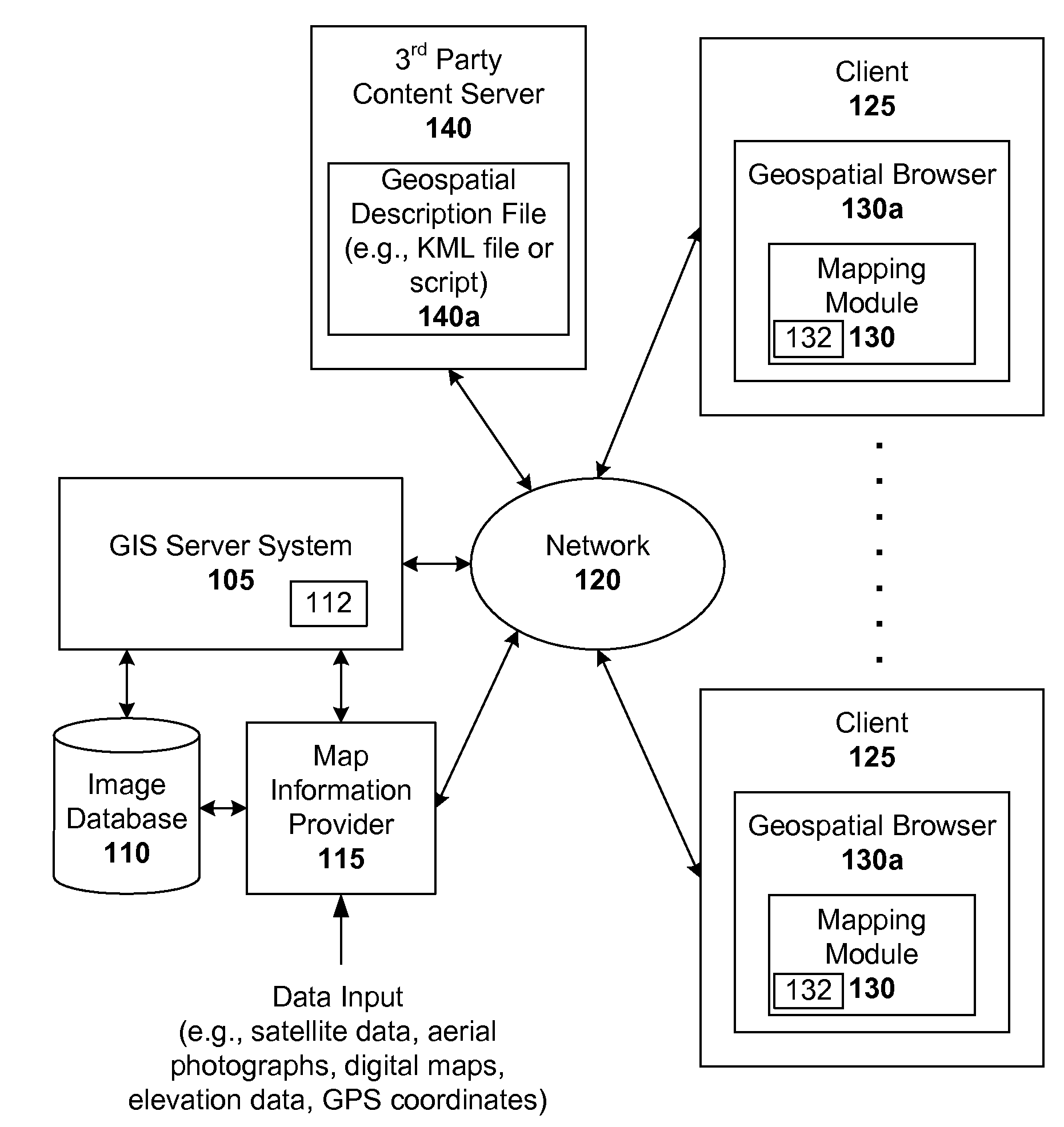

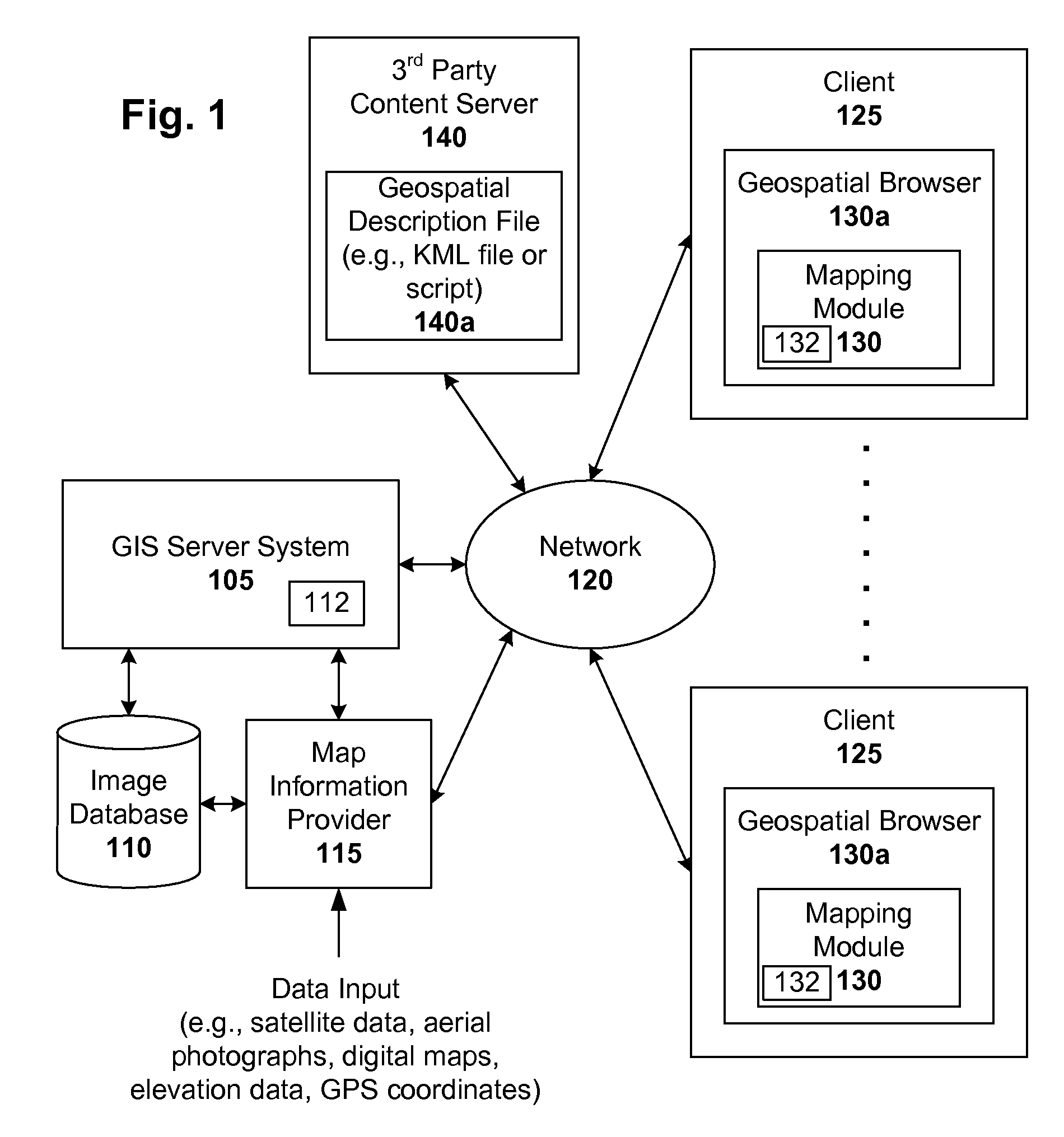

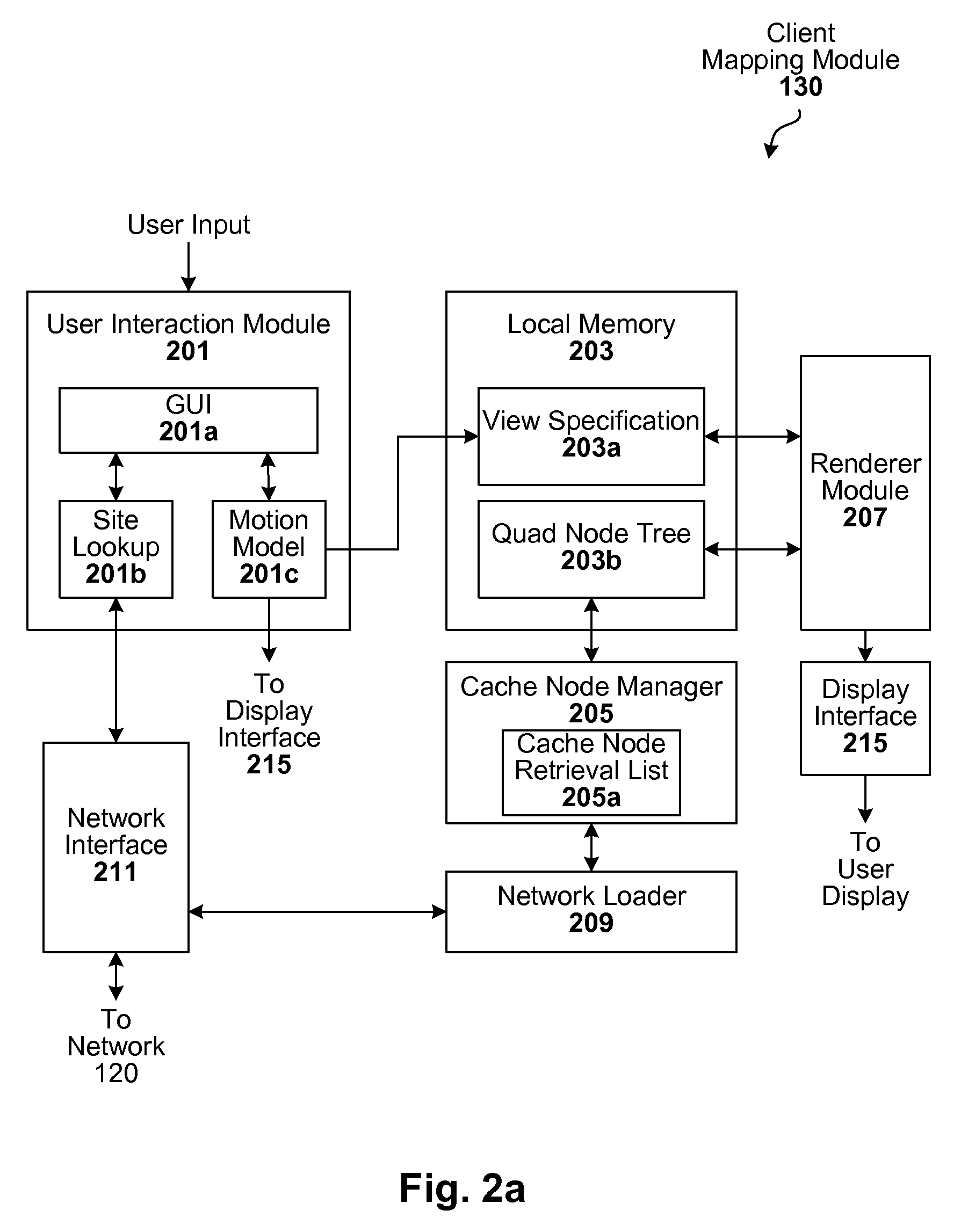

Markup language for an interactive geographic information system

Status: Active | US7353114B1 | Tags: Natural language data processing; Navigation instruments; Geographic feature; Network link

Interactive geographic information systems (GIS) and techniques are disclosed that provide users with a greater degree of flexibility, utility, and information. A markup language is provided that facilitates communication between servers and clients of the interactive GIS, which enables a number of GIS features, such as network links (time-based and view-dependent dynamic data layers), ground overlays, screen overlays, placemarks, 3D models, and stylized GIS elements, such as geometry, icons, description balloons, polygons, and labels in the viewer by which the user sees the target area. The markup language is used to describe a virtual camera view of a geographic feature. A compressed file format holds multiple files utilized to display a geographic feature in a single file.

Owner:GOOGLE LLC

Systems and methods for 2D image and spatial data capture for 3D stereo imaging

Status: Inactive | US20110222757A1 | Tags: Facilitate post-production; Large separation; Image enhancement; Image analysis; Virtual camera; Movie camera

Systems and methods for 2D image and spatial data capture for 3D stereo imaging are disclosed. The system utilizes a cinematography camera and at least one reference or “witness” camera spaced apart from the cinematography camera at a distance much greater than the interocular separation to capture 2D images over an overlapping volume associated with a scene having one or more objects. The captured image data is post-processed to create a depth map, and a point cloud is created from the depth map. The robustness of the depth map and the point cloud allows dual virtual cameras to be placed substantially arbitrarily in the resulting virtual 3D space, which greatly simplifies the addition of computer-generated graphics, animation and other special effects in cinematographic post-processing.

Owner:SHAPEQUEST
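
The point-cloud step can be illustrated with standard pinhole back-projection. This sketch assumes the depth map is already computed and that the camera intrinsics (`fx`, `fy`, `cx`, `cy`) are known; the abstract does not describe how either is obtained, and the function name is hypothetical.

```python
def depth_to_point_cloud(depth, fx, fy, cx, cy):
    """Back-project a depth map (rows of Z values) into 3D camera-space points.

    Uses the pinhole relations X = (u - cx) * Z / fx and Y = (v - cy) * Z / fy.
    Pixels with missing (None) or non-positive depth are skipped.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z is None or z <= 0:
                continue  # no valid depth for this pixel
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points
```

Once the points are in a shared 3D frame, a pair of virtual cameras can be placed anywhere in that space and the cloud re-projected into each of them.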

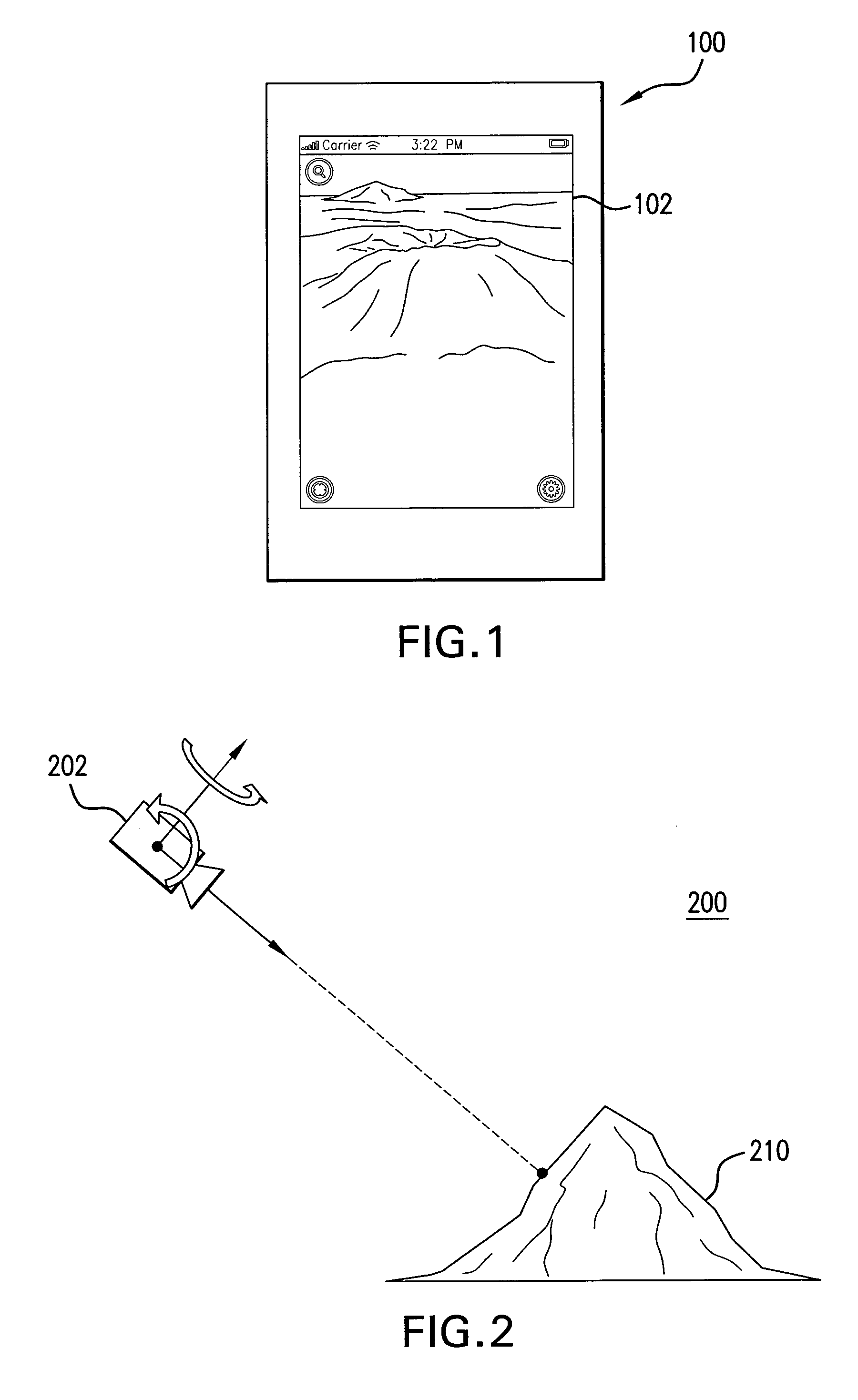

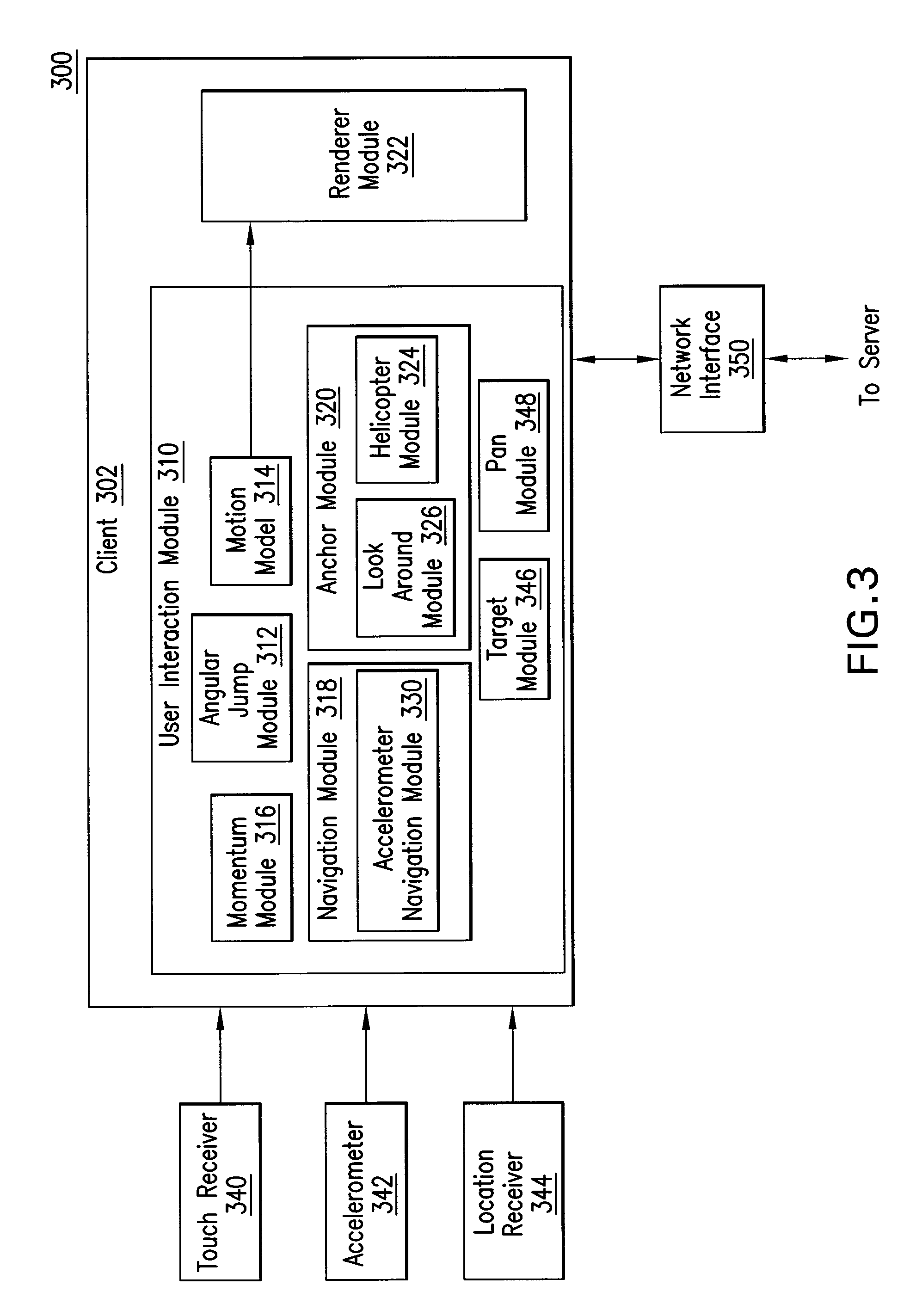

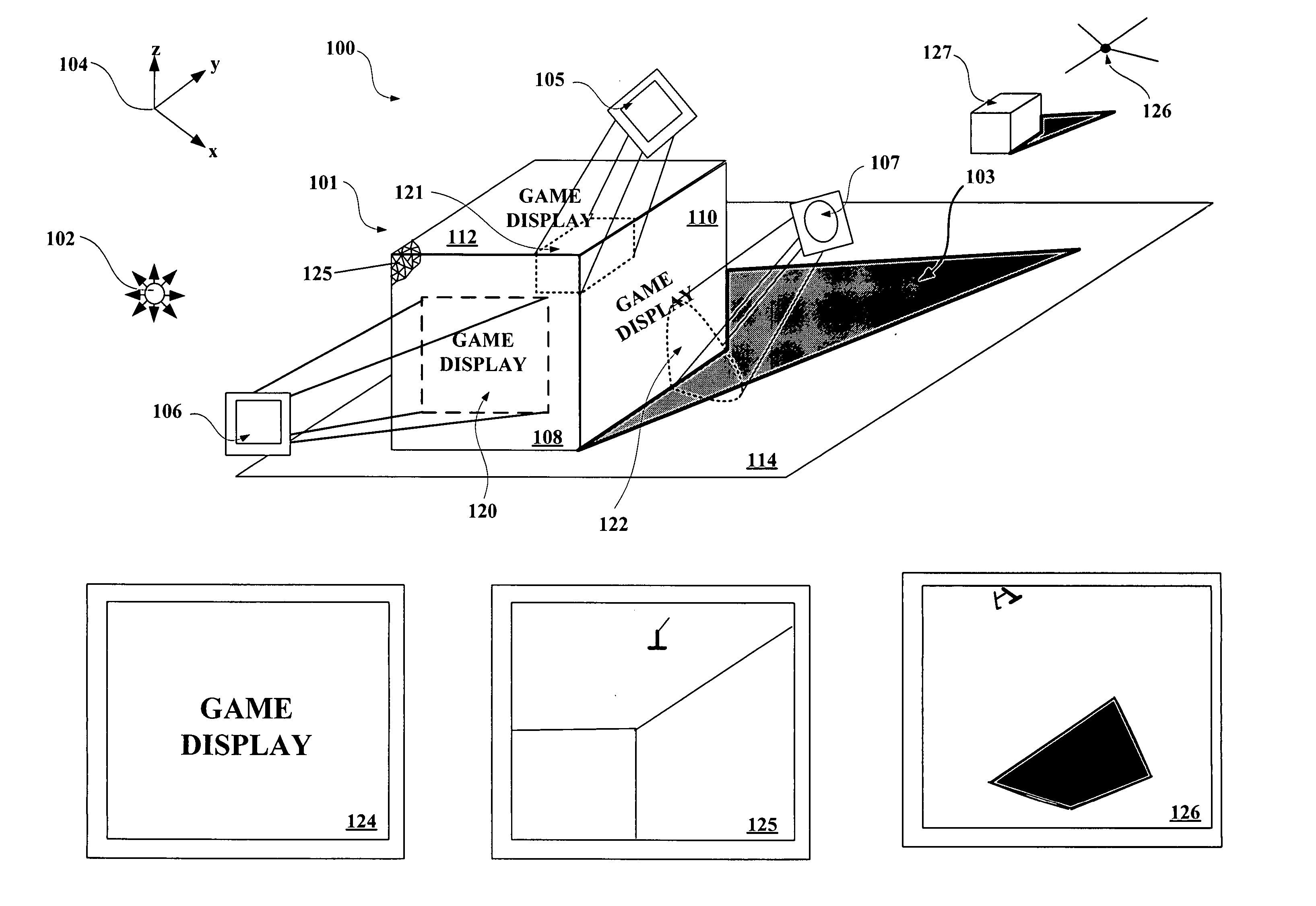

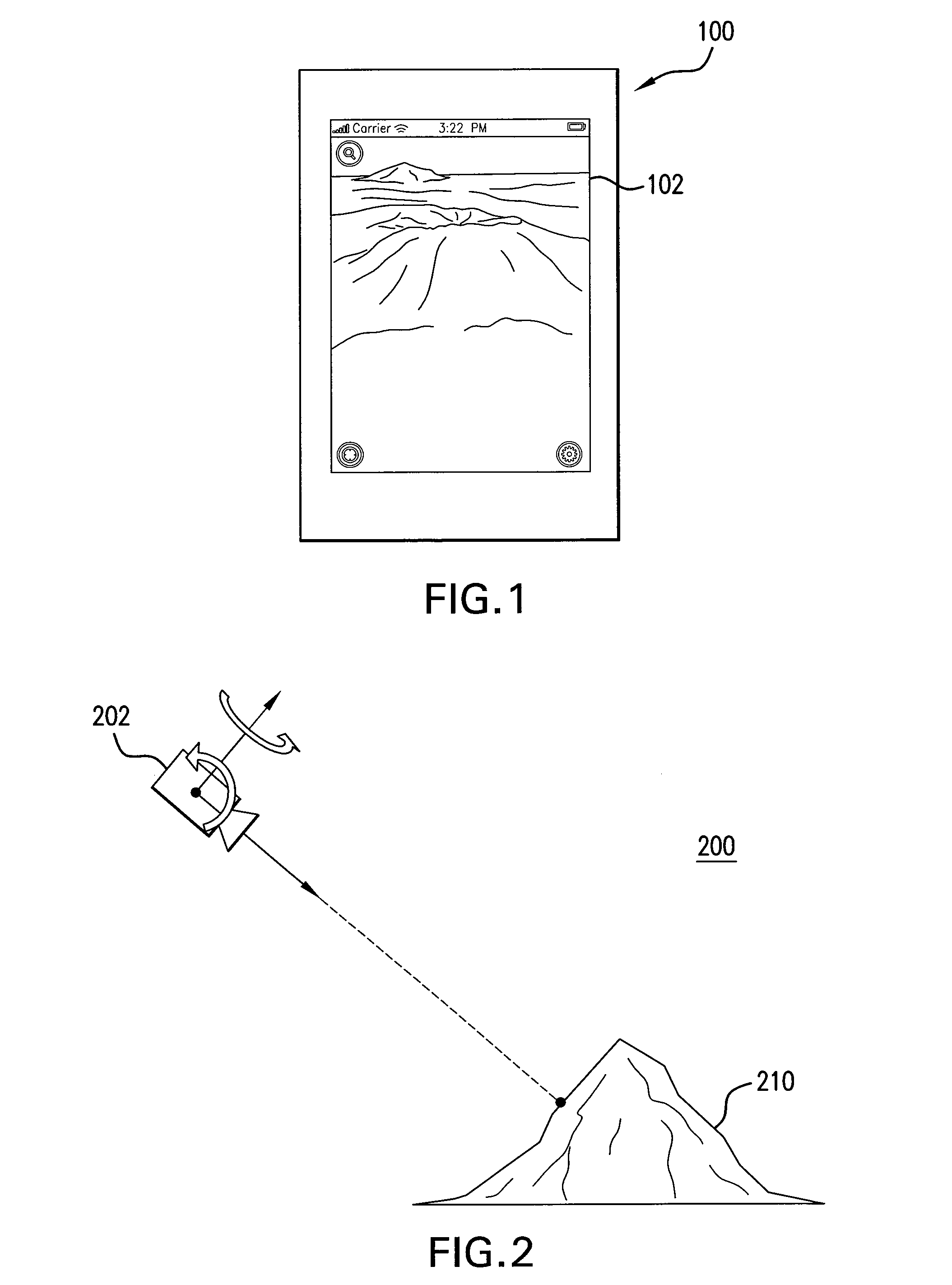

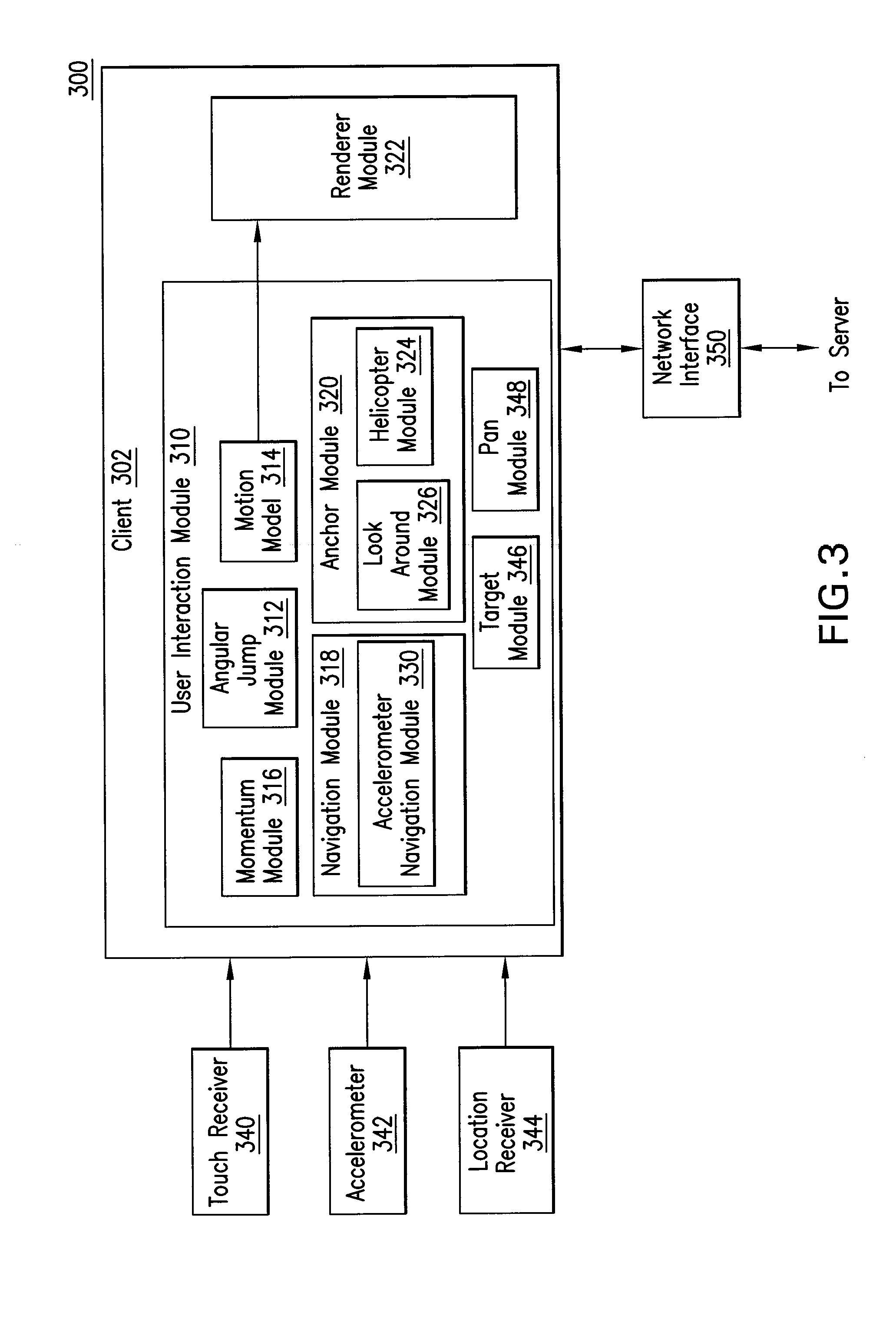

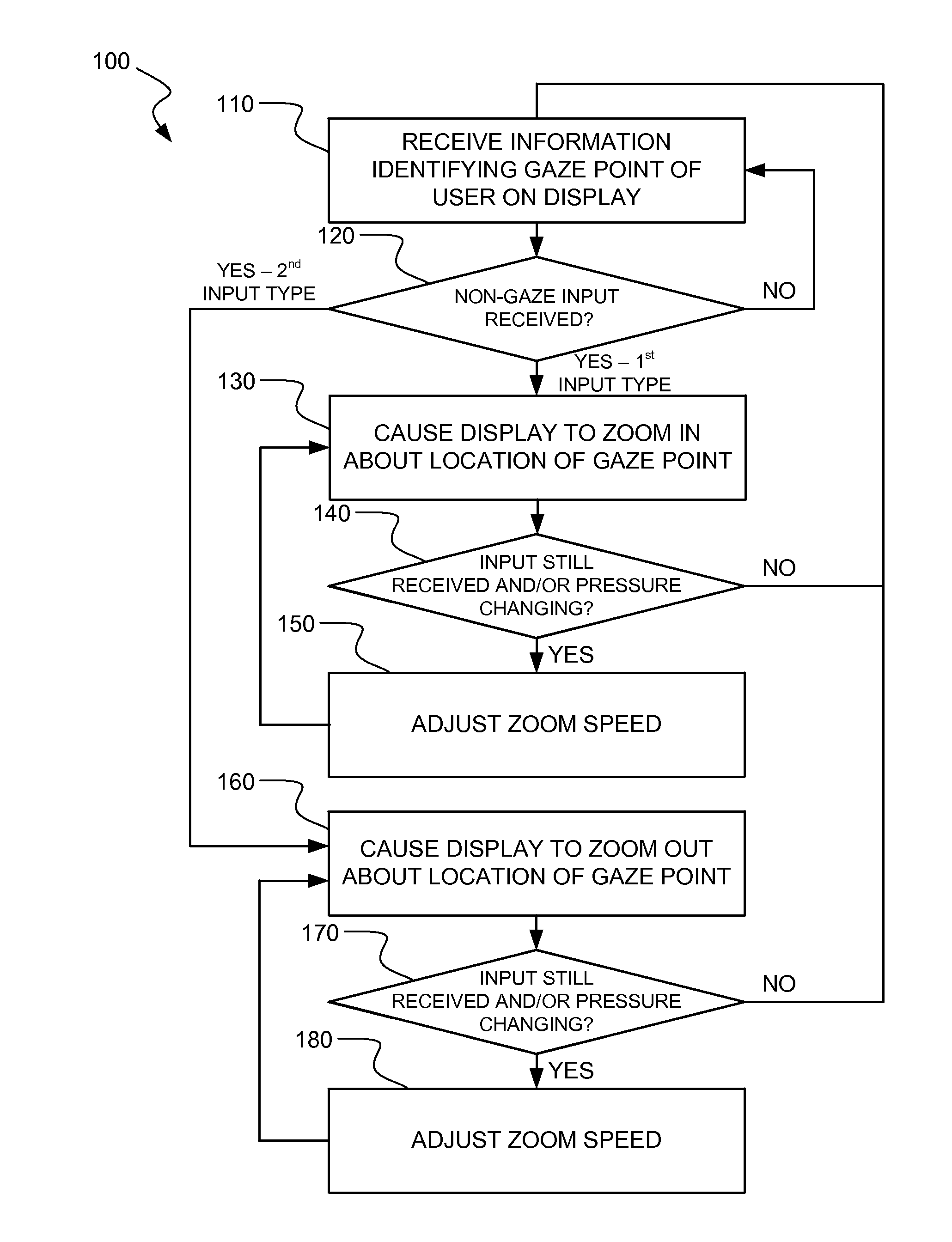

Anchored Navigation In A Three Dimensional Environment On A Mobile Device

Status: Inactive | US20100045666A1 | Tags: Digital data processing details; Input/output processes for data processing; User input; Virtual camera

This invention relates to anchored navigation in a three dimensional environment on a mobile device. In an embodiment, a computer-implemented method navigates a virtual camera in a three dimensional environment on a mobile device having a touch screen. A first user input is received indicating that a first object is approximately stationary on a touch screen of the mobile device. A second user input is received indicating that a second object has moved on the touch screen. An orientation of the virtual camera is changed according to the second user input.

Owner:GOOGLE LLC

Virtual cameras and 3-D gaming environments in a gaming machine

A disclosed gaming machine provides method and apparatus for presenting a plurality of game outcome presentations derived from one or more virtual 3-D gaming environments stored on the gaming machine. While a game of chance is being played on the gaming machine, two-dimensional images derived from a three-dimensional object in the 3-D gaming environment may be rendered to a display screen on the gaming machine in real-time as part of the game outcome presentation. To add excitement to the game, a 3-D position of the 3-D object and other features of the 3-D gaming environment may be controlled by a game player. Nearly an unlimited variety of virtual objects, such as slot reels, gaming machines and casinos, may be modeled in the 3-D gaming environment.

Owner:IGT

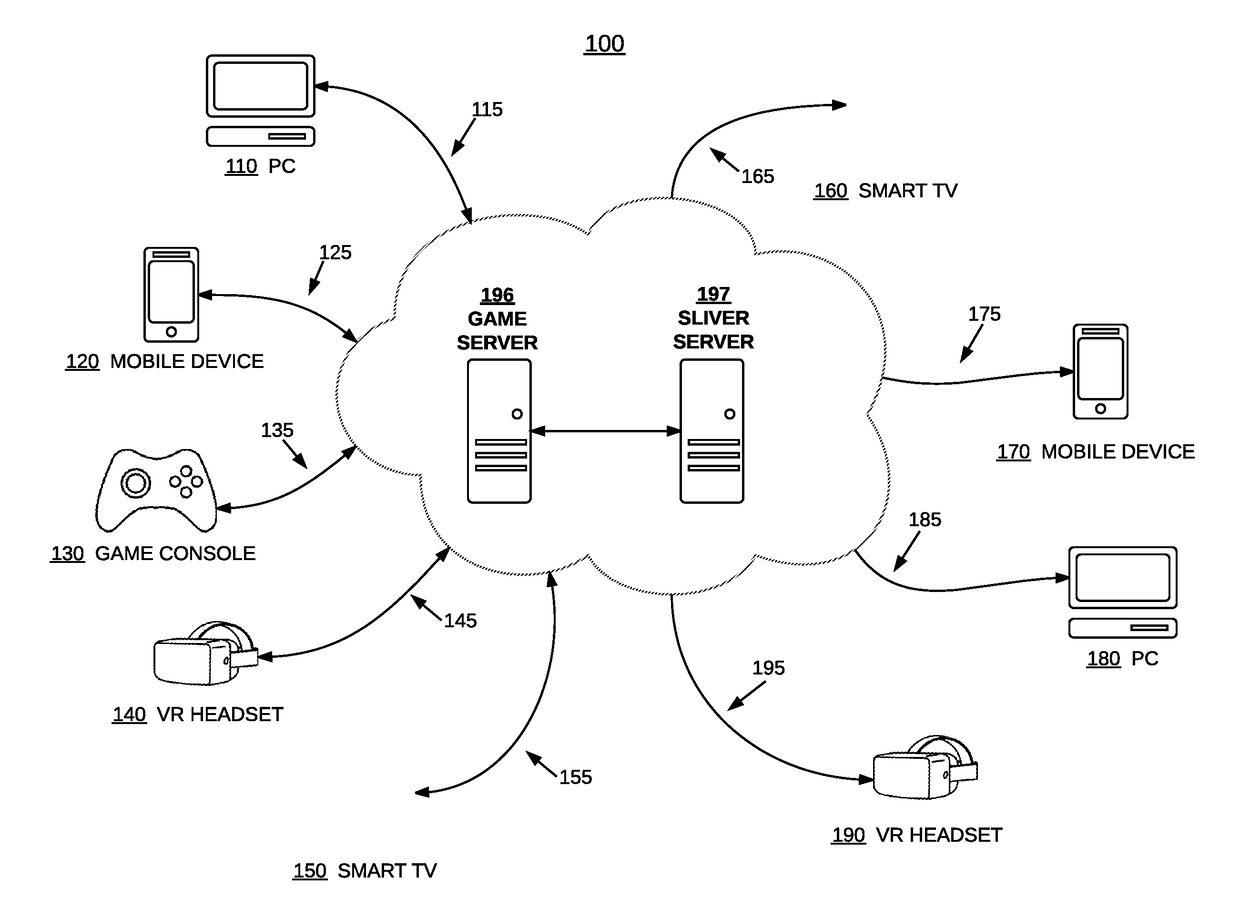

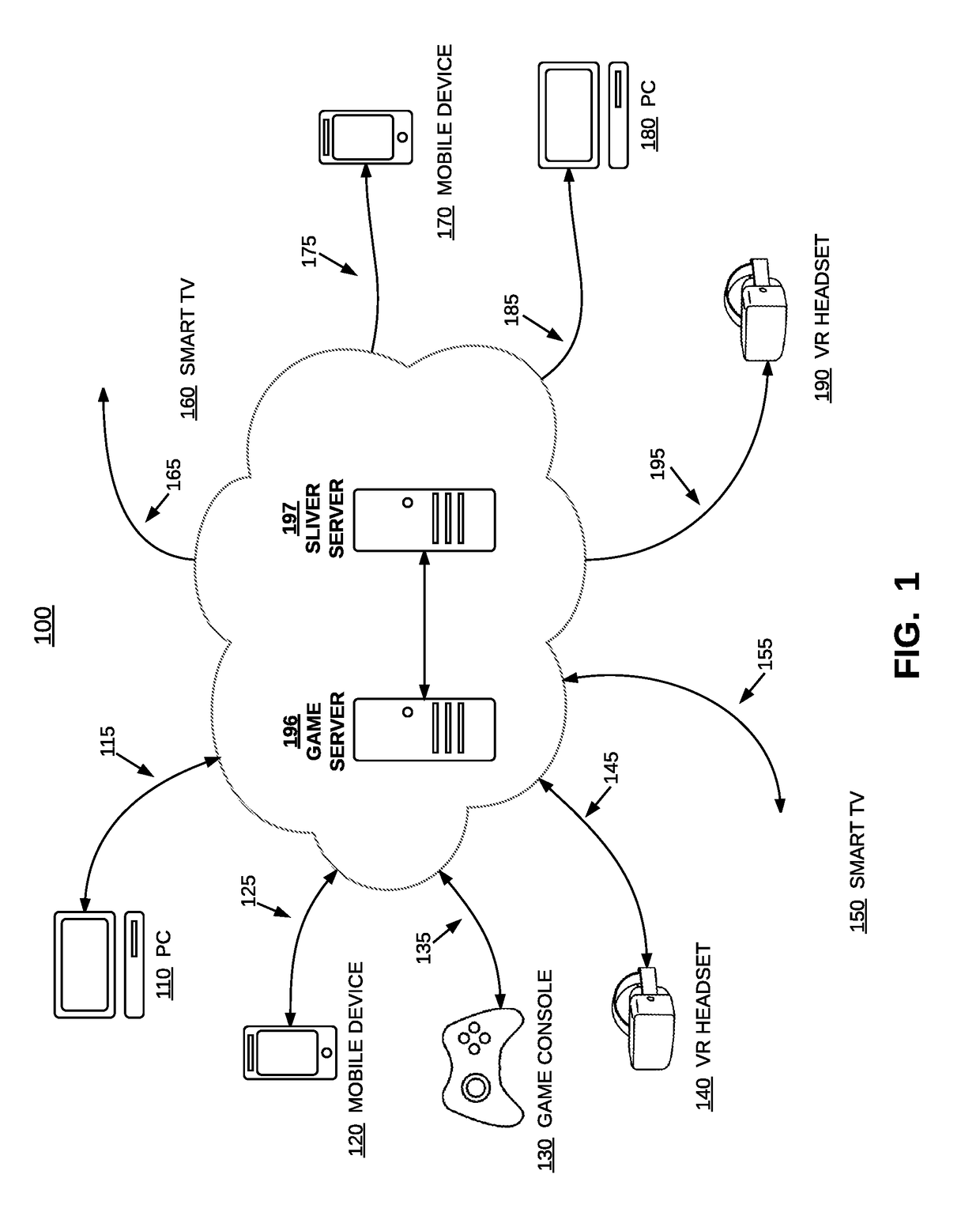

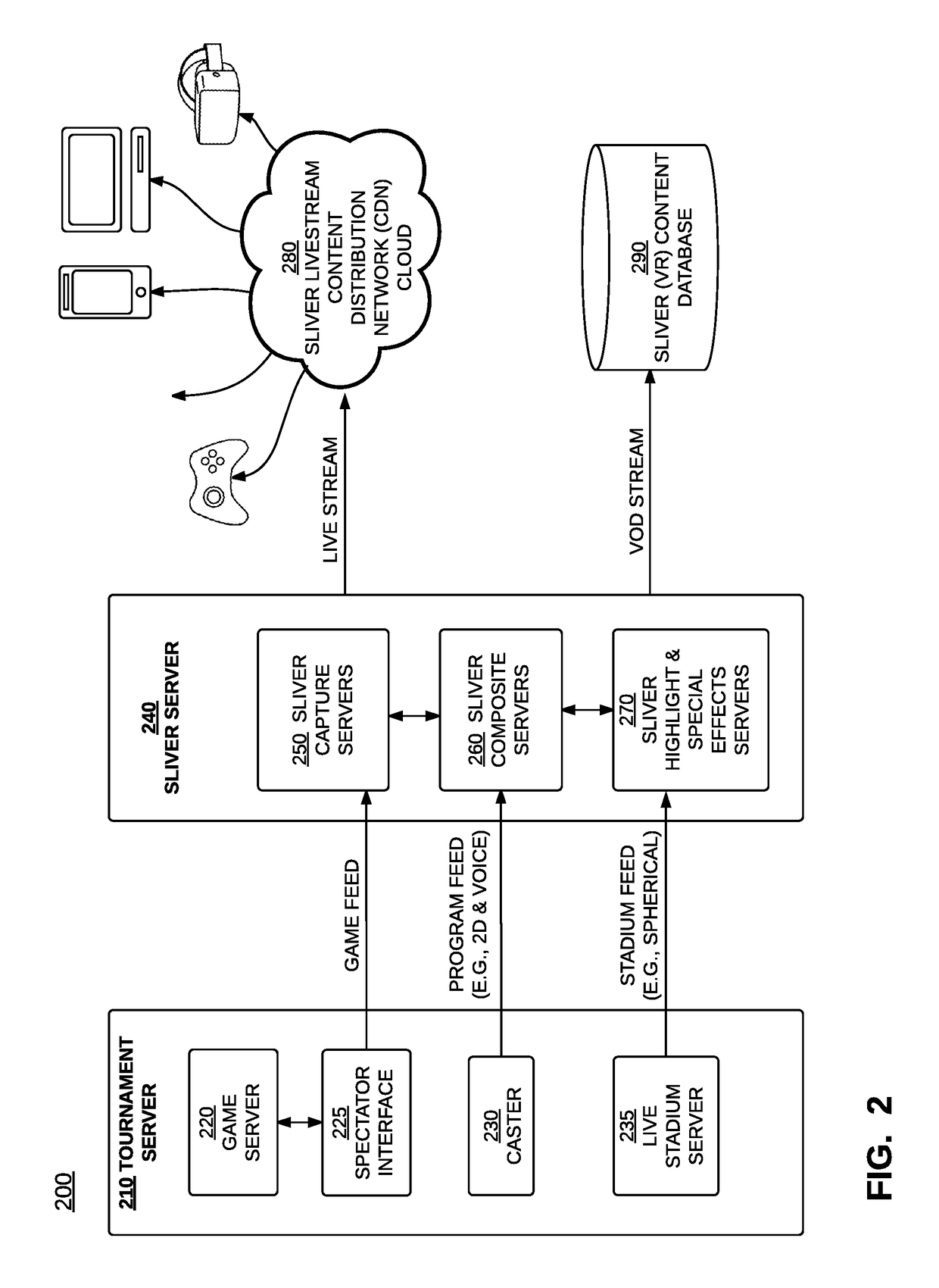

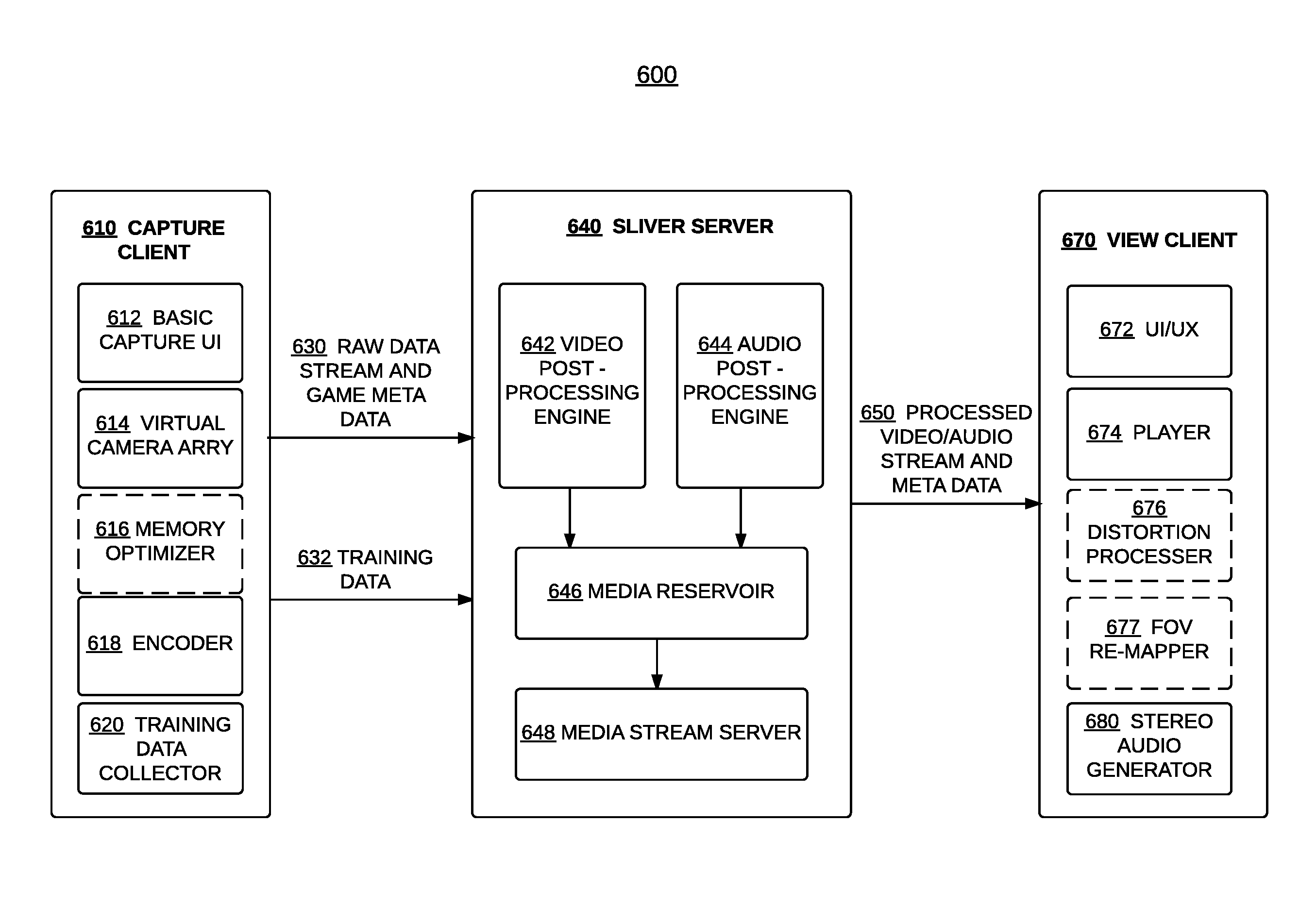

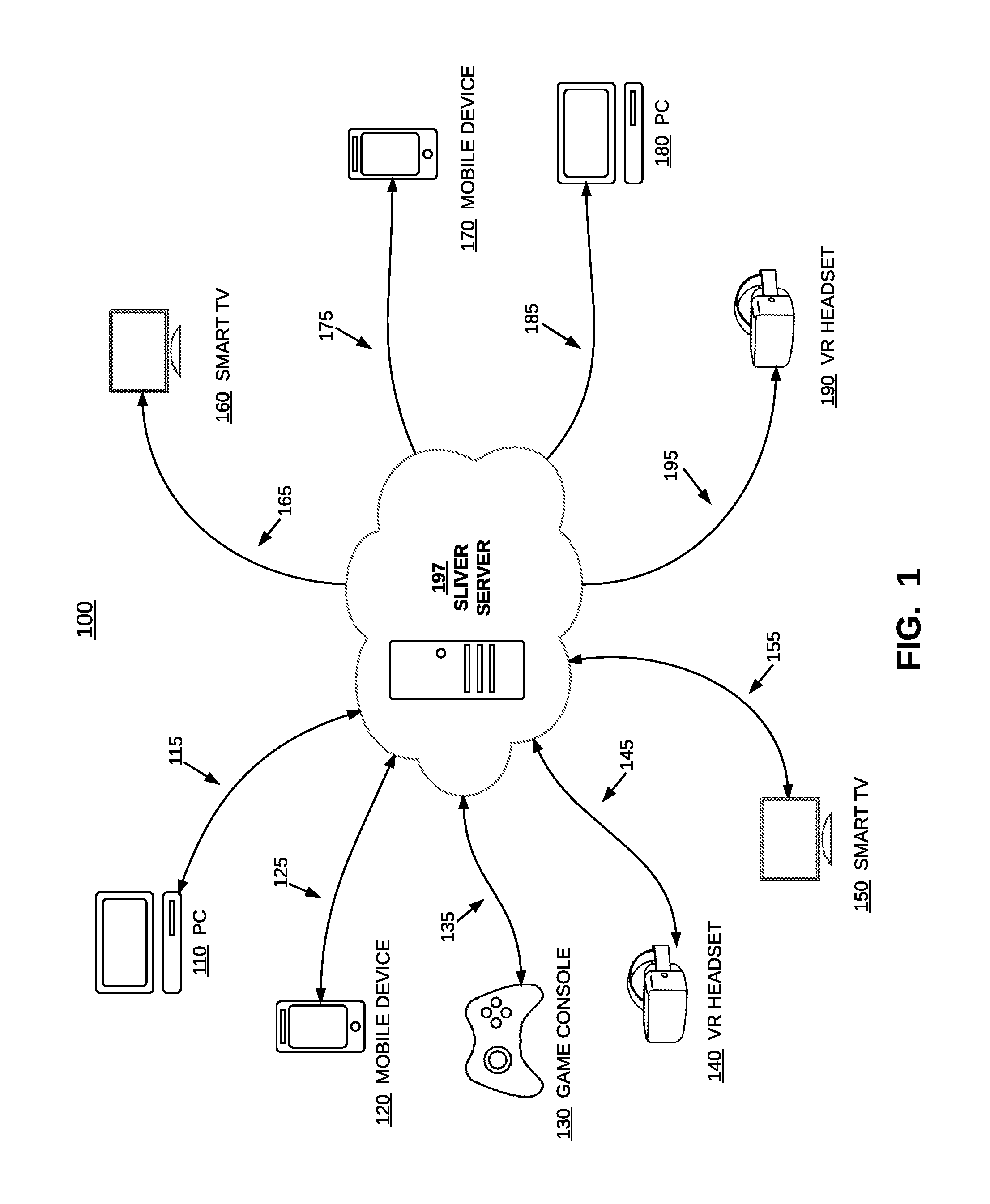

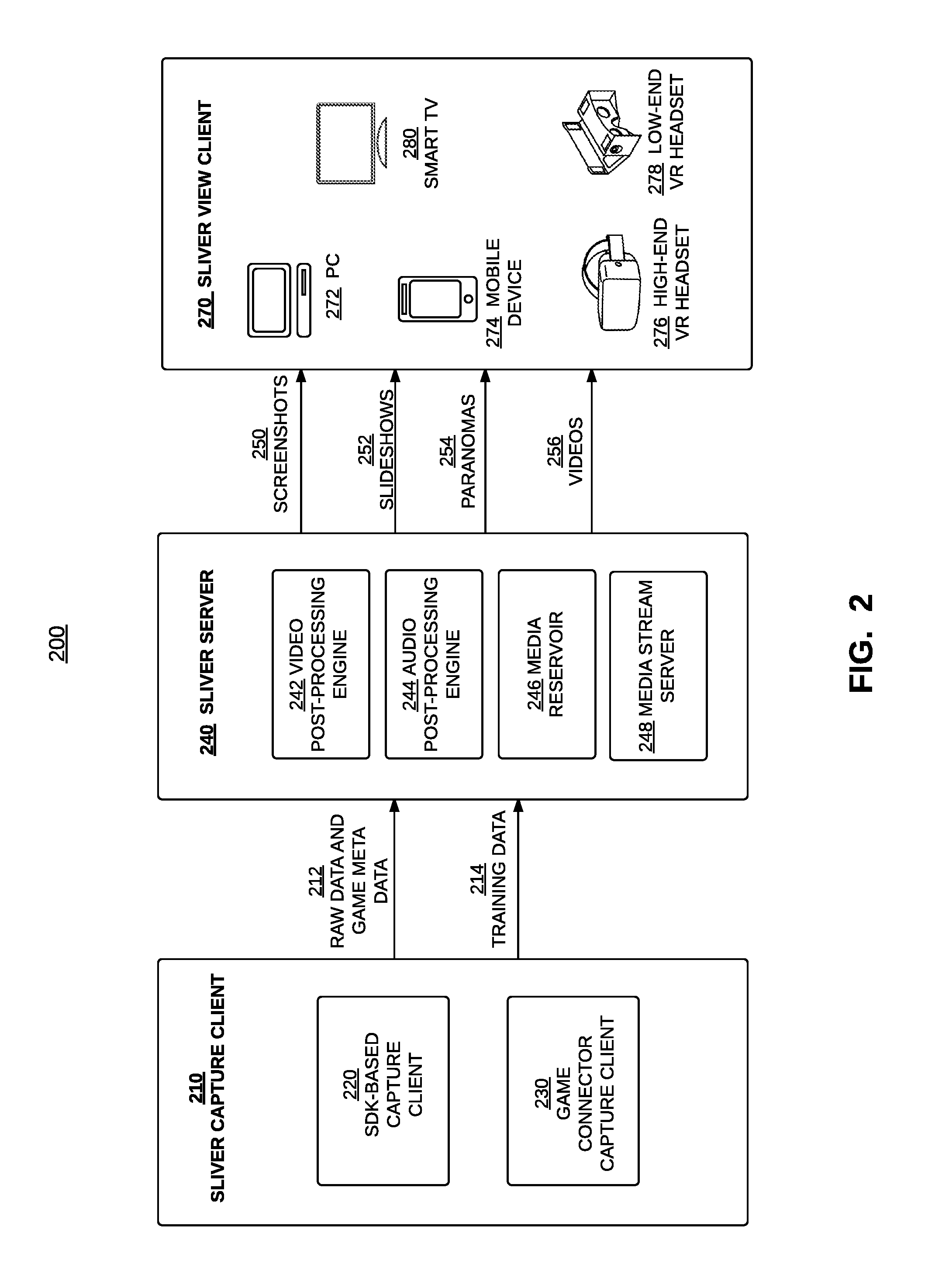

Methods and systems for computer video game streaming, highlight, and replay

Status: Active | US20170157512A1 | Tags: Electronic editing digitised analogue information signals; Video games; Traffic capacity; Virtual camera

Methods and systems for generating a highlight video of a critical gaming moment from a source computer game running on a game server are disclosed. The method, when executed by a processor accessible by a processing server, comprises receiving video recordings recorded using at least two game virtual cameras positioned at predetermined locations during a source game play, wherein at least one of the game virtual cameras was inserted into the source computer game using an SDK or a game connector module. Next, the received recordings are analyzed to extract visual, audio, and/or metadata cues; highlight metadata is generated; and the critical gaming moment is detected based on the highlight metadata. Finally, a highlight video of the critical gaming moment is generated from the received video recordings based on the highlight metadata. In some embodiments, the highlight videos can be used to drive additional traffic to the game tournament and/or streaming platform operator.

Owner:SLIVER VR TECH INC

Methods and systems for game video recording and virtual reality replay

Status: Active | US9473758B1 | Tags: Reduce rate; Reduce resolution; Television system details; Electronic editing digitised analogue information signals; Video sharing; Image resolution

Methods and systems for processing computer game videos for virtual reality replay are disclosed. The method, when executed by a processor, comprises first receiving a video recorded using a virtual camera array during a game play of a source computer game. Next, the received video is upscaled to a higher resolution, and neighboring video frames of the upscaled video are interpolated for insertion into the upscaled video at a server. Finally, a spherical video is generated from the interpolated video for replay in a virtual reality environment. The virtual camera array includes multiple virtual cameras, each facing a different direction, and the video is recorded at a frame rate and a resolution lower than those of the source computer game. The spherical videos are provided on a video sharing platform. The present invention solves the chicken-and-egg problem of mass adoption of virtual reality technology by easily generating VR content from existing computer games.

Owner:SLIVER VR TECH INC
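
As a rough illustration of the frame-interpolation step, the sketch below doubles the frame rate by inserting a 50/50 cross-fade between each pair of neighboring frames (grayscale frames as nested lists). A linear blend is only a stand-in chosen for simplicity; the abstract does not say which interpolation method is used, and motion-compensated interpolation would usually give sharper results. The function names are hypothetical.

```python
def blend(a, b, t):
    """Per-pixel linear blend of two equally sized frames: (1 - t) * a + t * b."""
    return [[(1 - t) * pa + t * pb for pa, pb in zip(ra, rb)] for ra, rb in zip(a, b)]


def insert_midframes(frames):
    """Return the frame list with one interpolated frame inserted between each neighbor pair."""
    if not frames:
        return []
    out = [frames[0]]
    for prev, nxt in zip(frames, frames[1:]):
        out.append(blend(prev, nxt, 0.5))  # synthetic in-between frame
        out.append(nxt)
    return out
```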

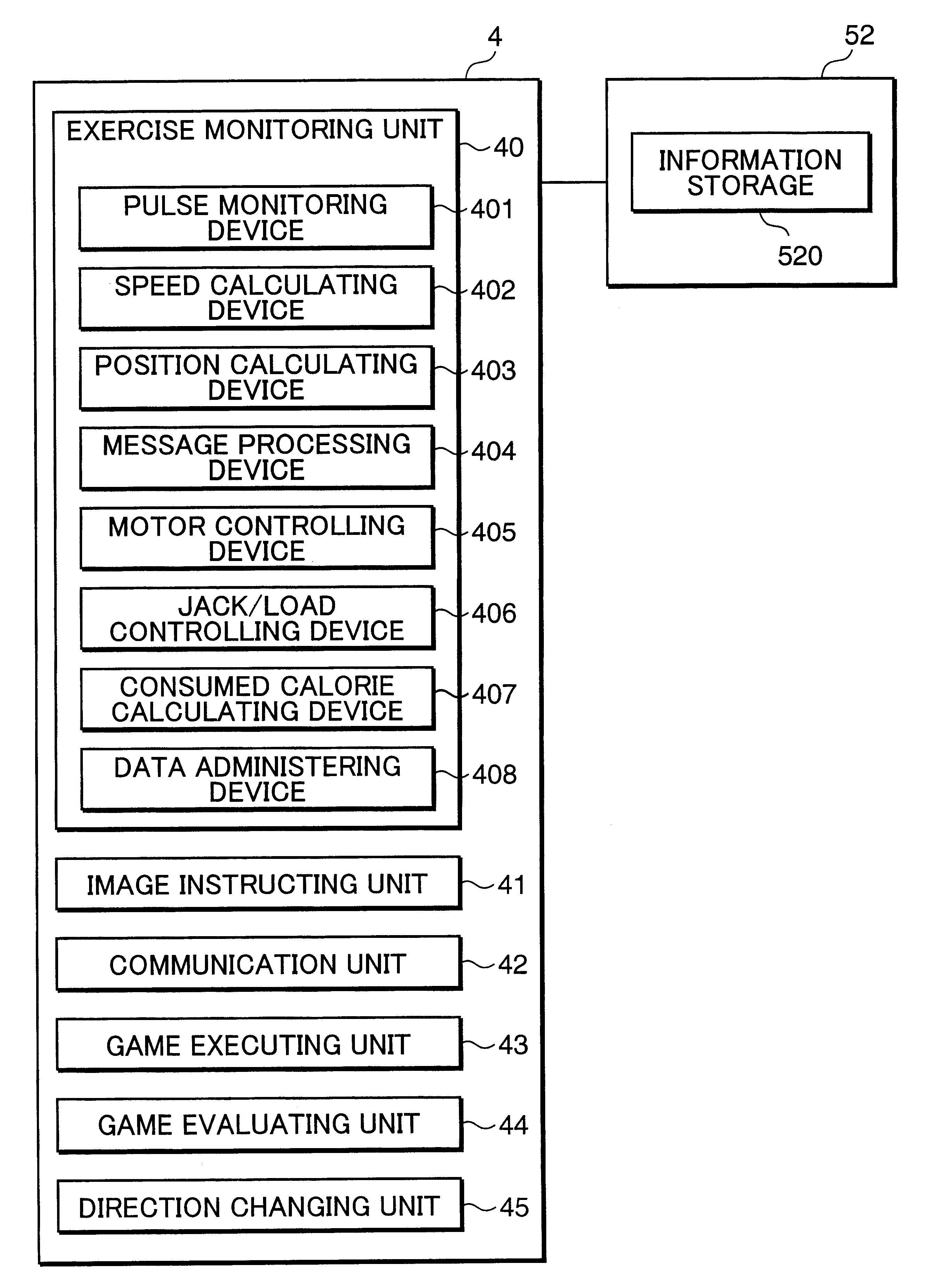

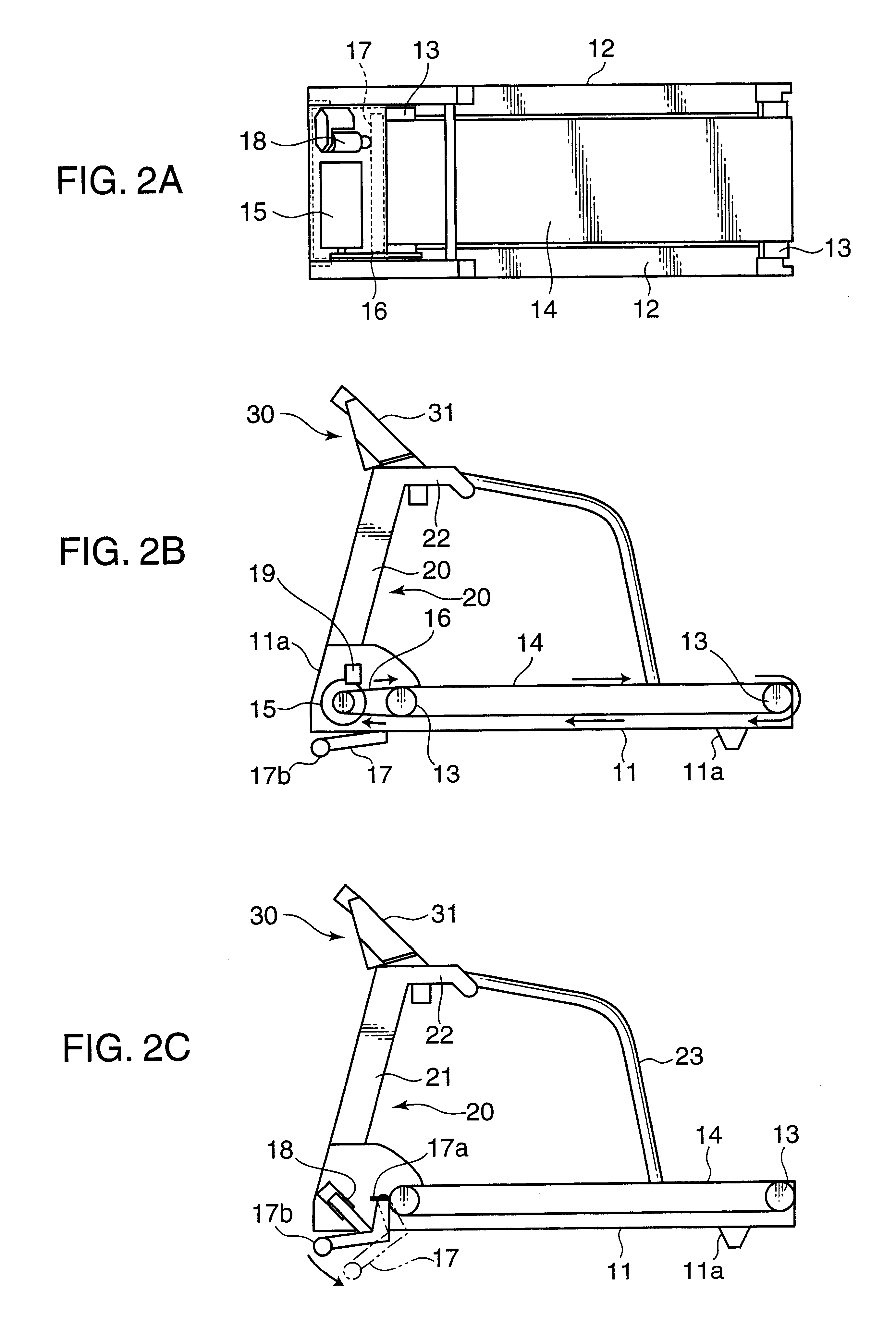

Exercise assistance controlling method and exercise assisting apparatus

Status: Inactive | US6796927B2 | Tags: Improve physical function; Function increase; Clubs; Therapy exercise; Engineering; Virtual camera

A running machine 1 is provided with an exercise monitoring unit 40 that turns a running belt 14, on which a user runs, by means of a motor 15 and monitors the running state of the user based on the driven state of the running belt 14. The running machine 1 includes a monitor 31 for displaying images at its front side. The running machine 1 is also provided with: an image instructing unit 41 for displaying on the monitor 31 a virtual image including a running road, as seen from the viewpoint of a virtual camera whose position changes based on the driven state of the running belt; a game executing unit 43 for displaying obstacle characters that appear on the running road in accordance with a specified rule; a direction changing unit 45 for receiving an instruction to change the running direction on the running road in response to an operation made by the user during the running exercise; and a game evaluating unit 44 for evaluating another athletic ability of the user based on how properly the user instructed changes of running direction to avoid virtual collisions with the obstacle characters.

Owner:KONAMI SPORTS & LIFE

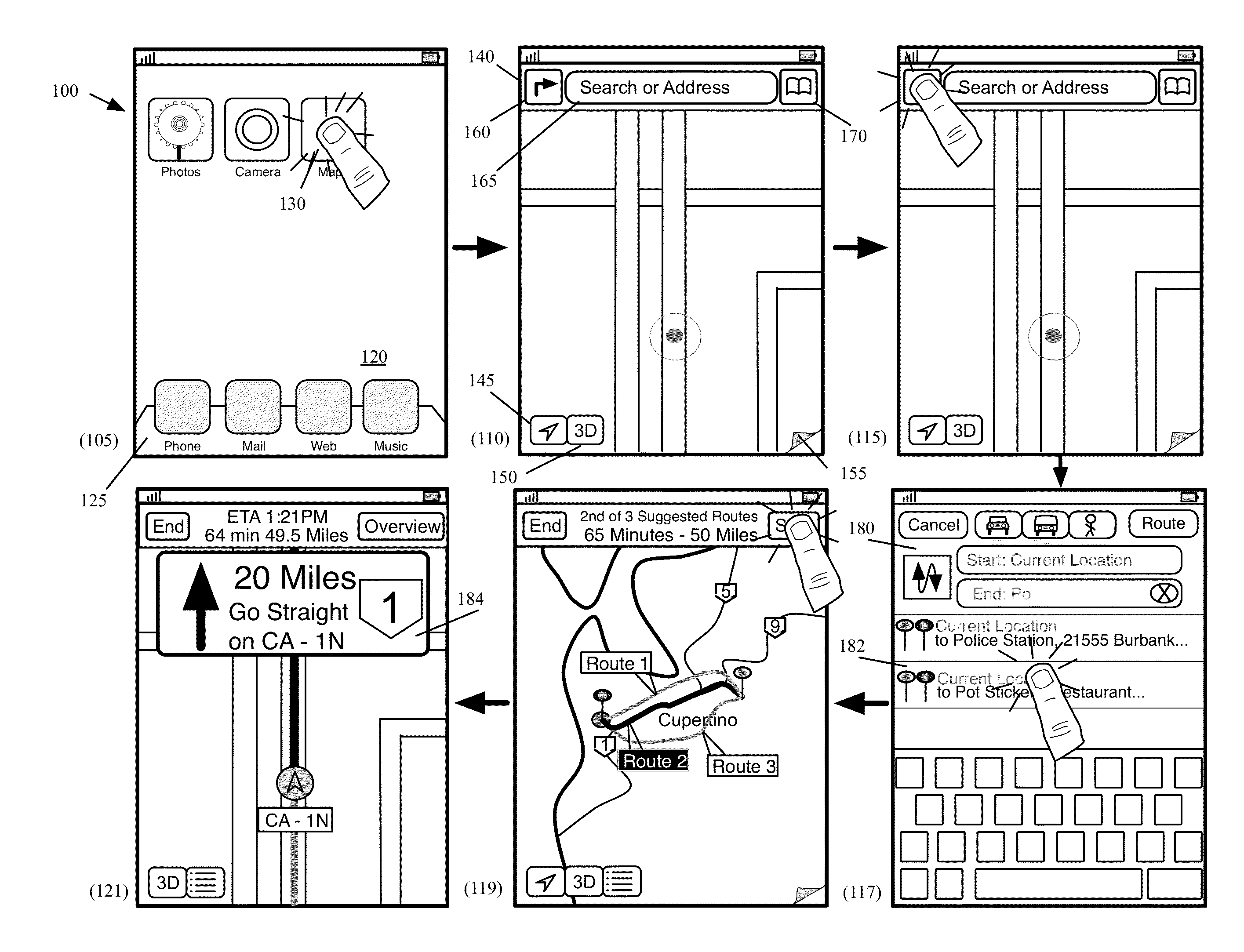

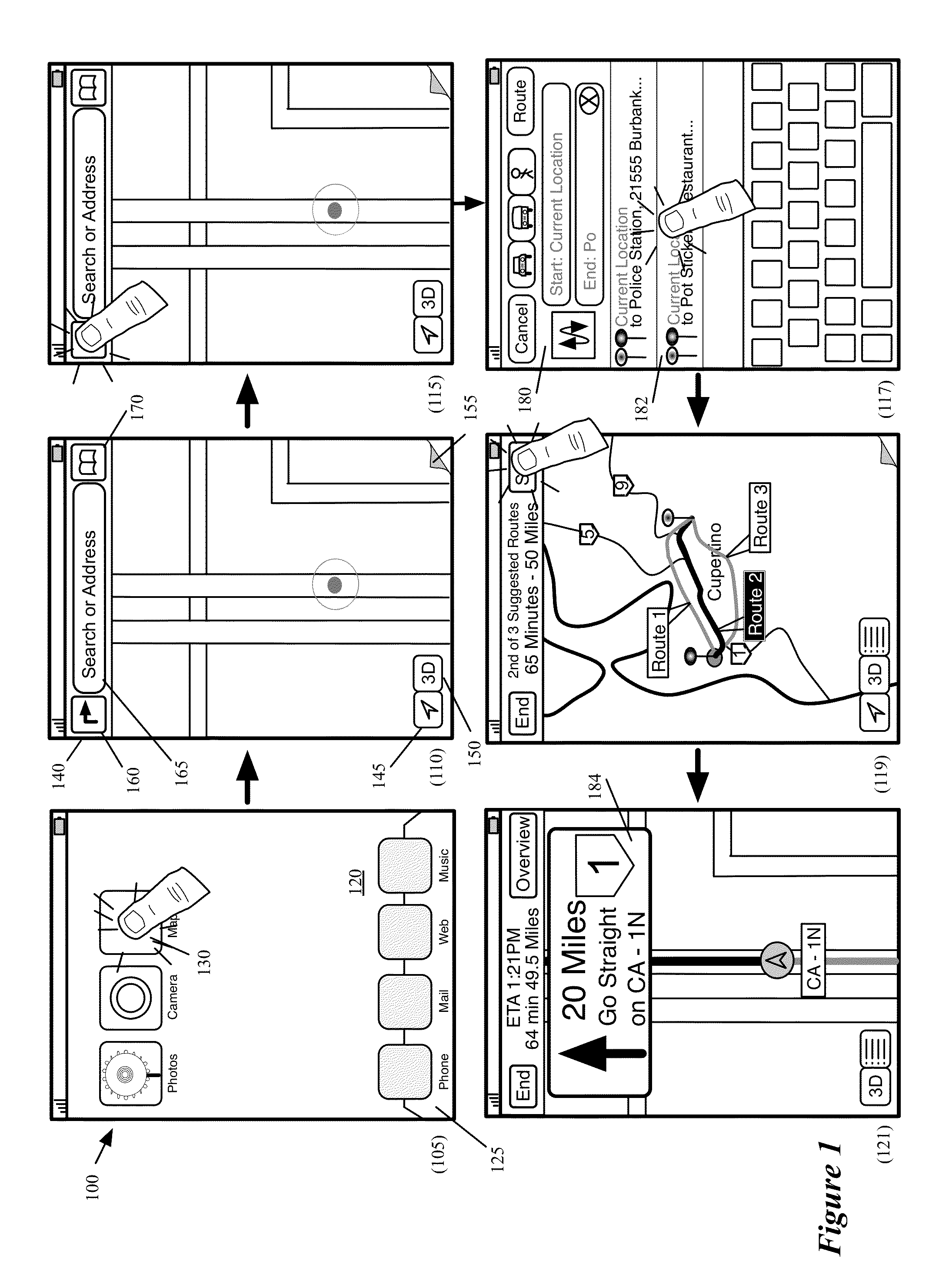

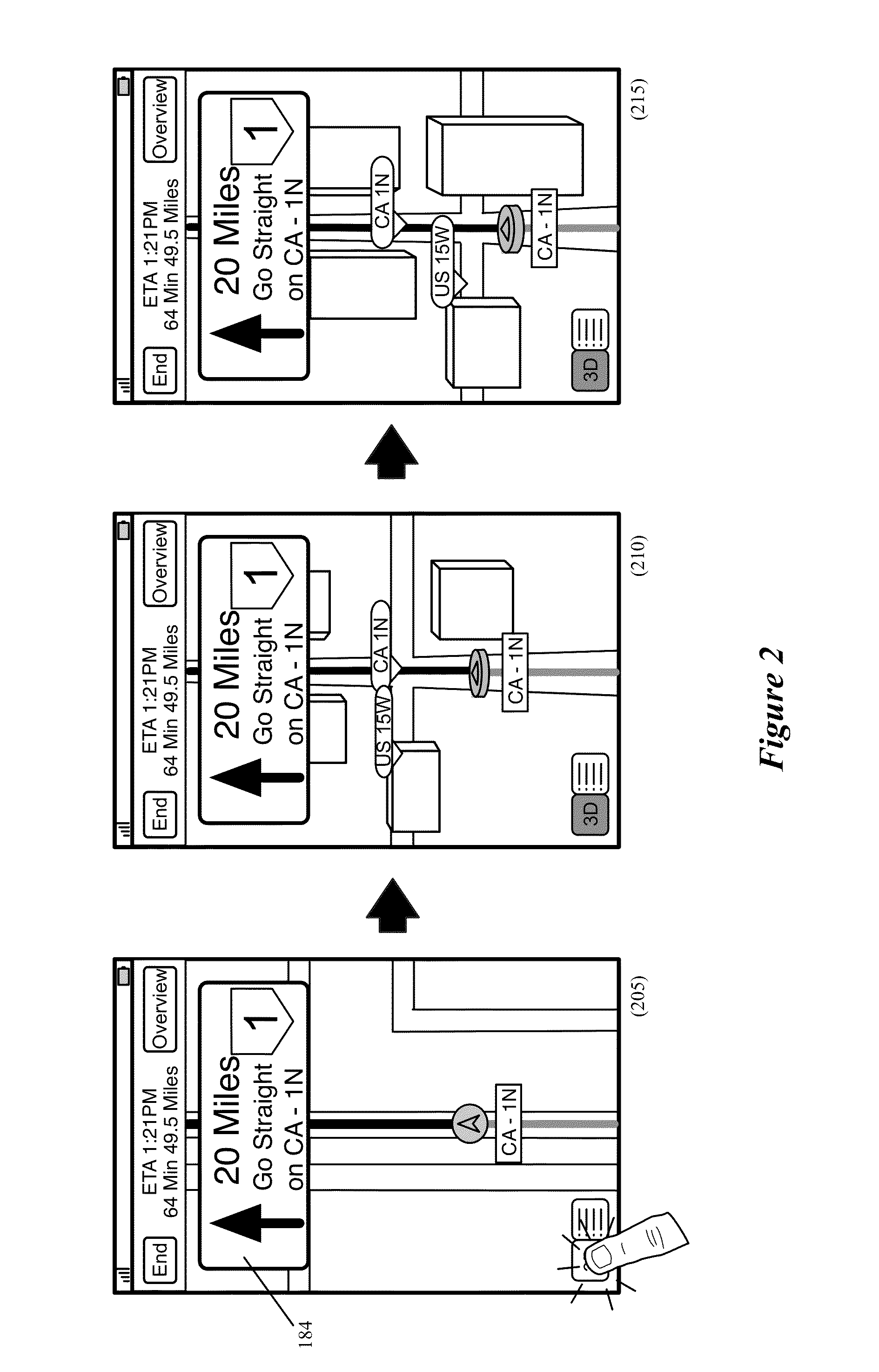

Virtual Camera for 3D Maps

Status: Active | US20130321401A1 | Tags: Smooth transit; Smooth transition; Maps/plans/charts; Navigation instruments; Animation; Virtual camera

Some embodiments provide a non-transitory machine-readable medium that stores a mapping application which when executed on a device by at least one processing unit provides automated animation of a three-dimensional (3D) map along a navigation route. The mapping application identifies a first set of attributes for determining a first position of a virtual camera in the 3D map at a first instance in time. Based on the identified first set of attributes, the mapping application determines the position of the virtual camera in the 3D map at the first instance in time. The mapping application identifies a second set of attributes for determining a second position of the virtual camera in the 3D map at a second instance in time. Based on the identified second set of attributes, the mapping application determines the position of the virtual camera in the 3D map at the second instance in time. The mapping application renders an animated 3D map view of the 3D map from the first instance in time to the second instance in time based on the first and second positions of the virtual camera in the 3D map.

Owner:APPLE INC
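
A minimal sketch of rendering the transition between the two computed camera positions, assuming positions are 3D tuples and using a smoothstep ease-in/ease-out curve. How the mapping application derives each position from its attribute set is not described in the abstract; the easing curve and function names are assumptions.

```python
def smoothstep(s):
    """Ease-in/ease-out curve on [0, 1]."""
    return s * s * (3 - 2 * s)


def camera_position_at(t, t1, p1, t2, p2):
    """Camera position at time t, eased between keyframes (t1, p1) and (t2, p2)."""
    if t <= t1:
        return p1
    if t >= t2:
        return p2
    s = smoothstep((t - t1) / (t2 - t1))
    return tuple(a + s * (b - a) for a, b in zip(p1, p2))


def animation_frames(t1, p1, t2, p2, fps=30):
    """Sample one camera position per rendered frame from t1 to t2 inclusive."""
    n = max(1, round((t2 - t1) * fps))
    return [camera_position_at(t1 + i * (t2 - t1) / n, t1, p1, t2, p2) for i in range(n + 1)]
```

Each sampled position would then be used to render one frame of the animated 3D map view.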

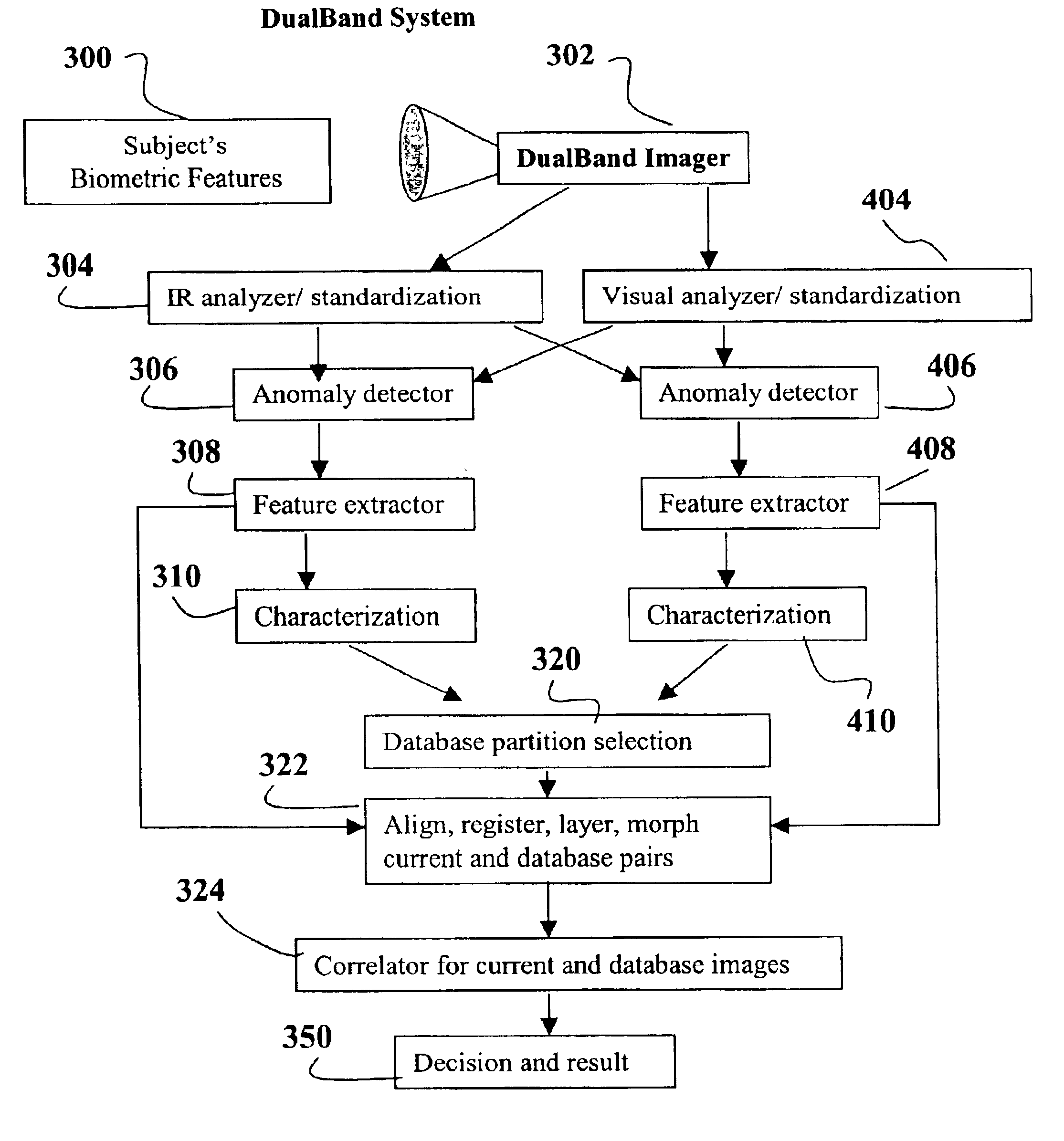

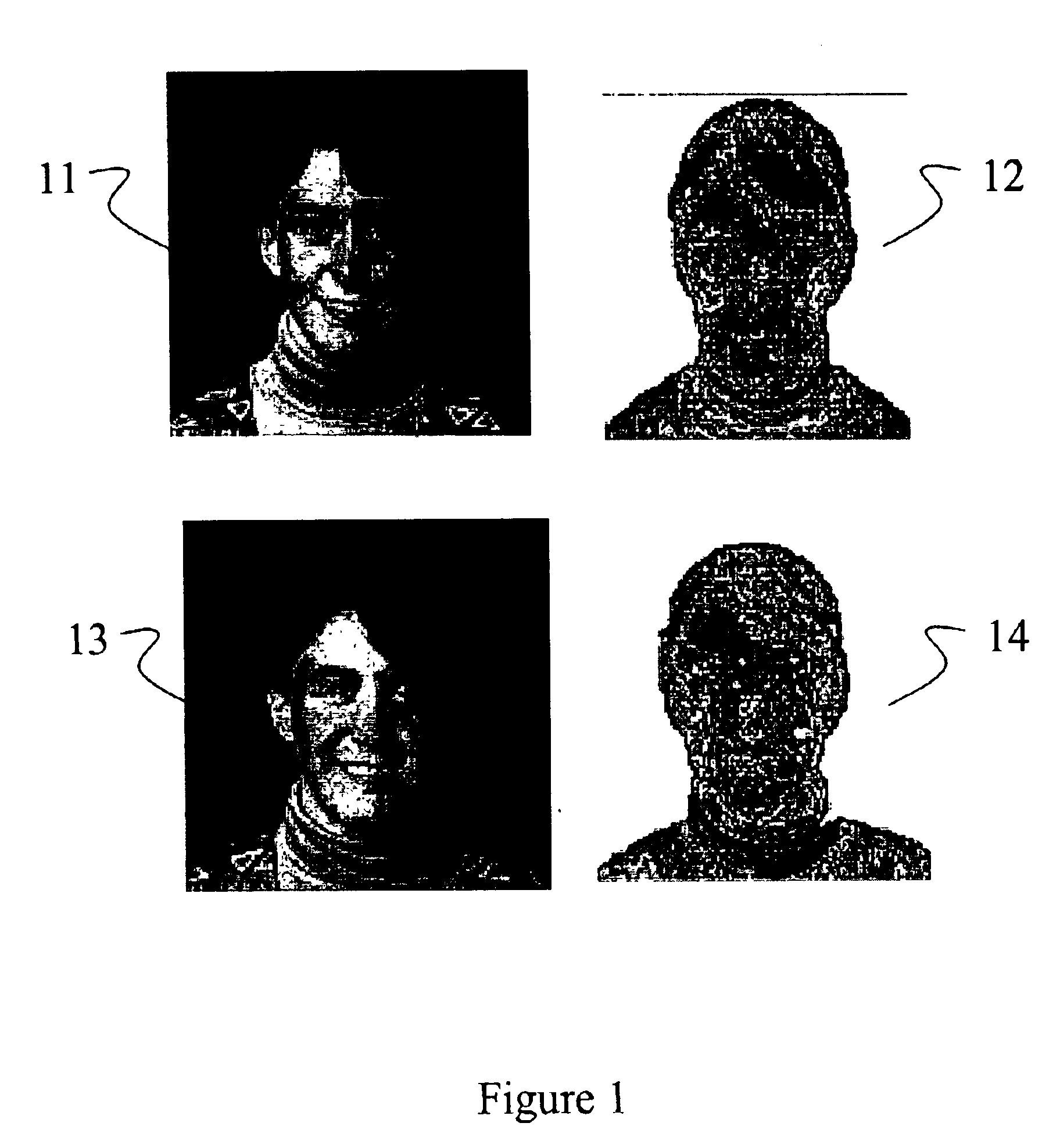

Dual band biometric identification system

Status: Inactive | US6920236B2 | Tags: Improve accuracy; Raise the possibility; Spoof detection; Subcutaneous biometric features; Virtual camera; Visual perception

Owner:MIKOS

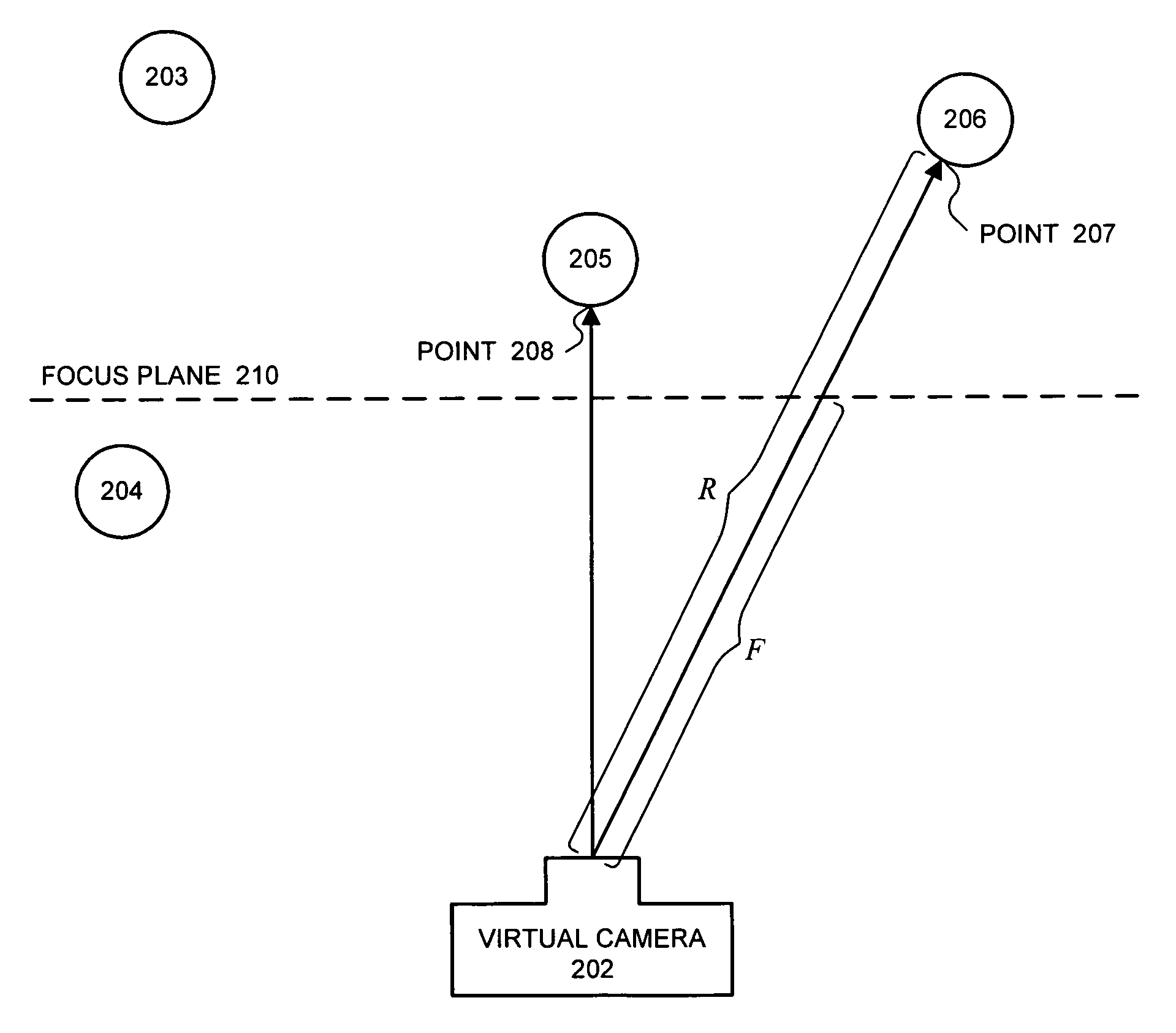

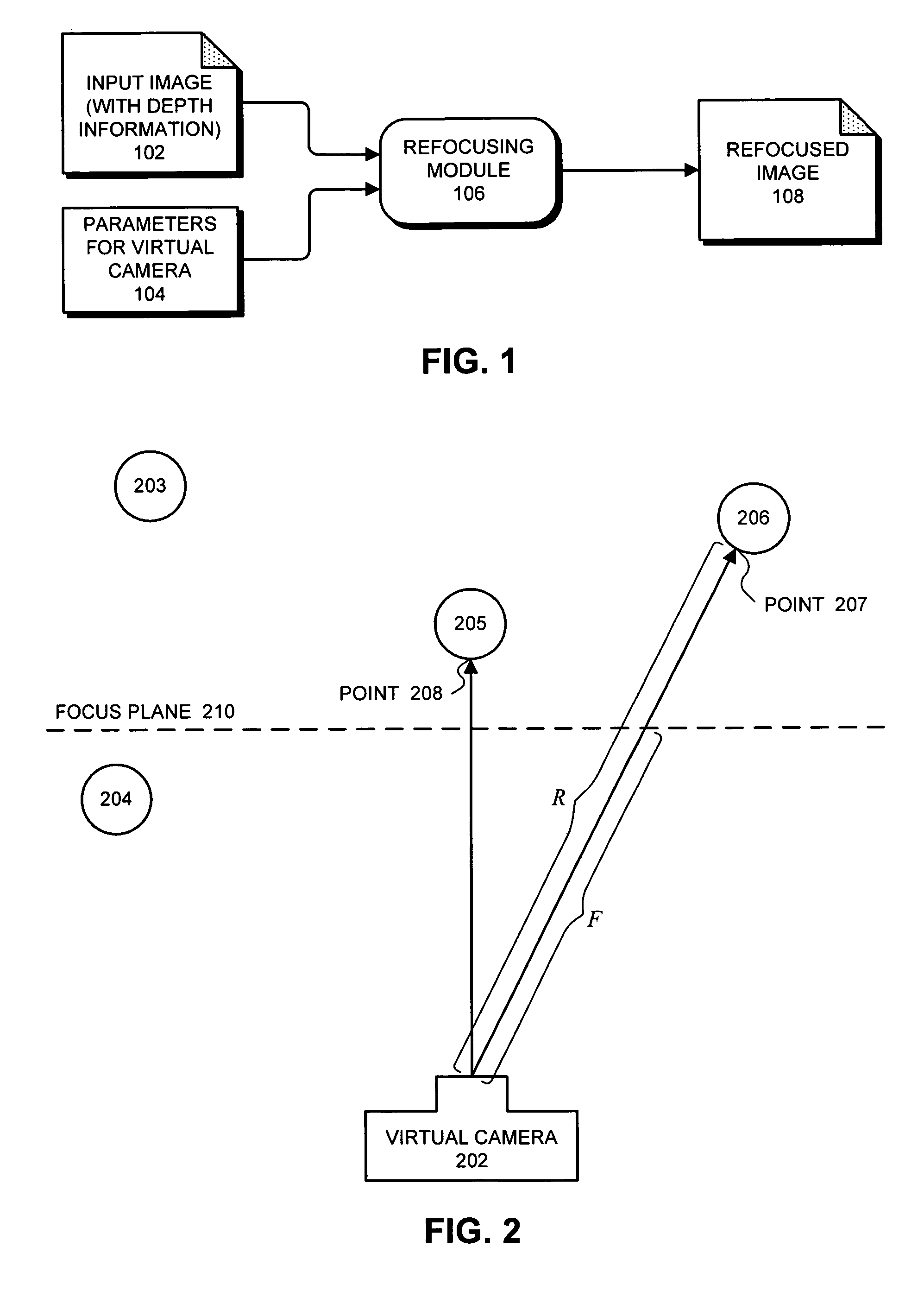

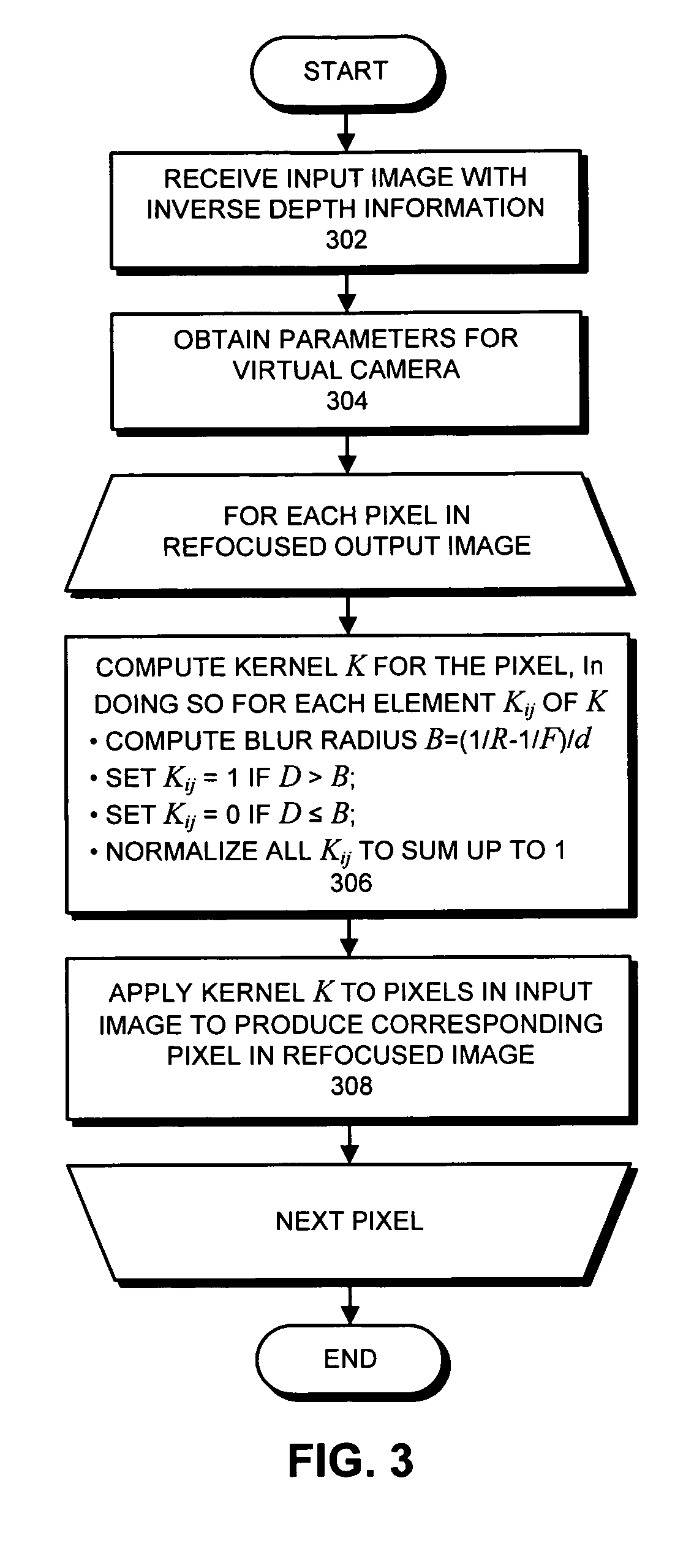

Method and apparatus for using a virtual camera to dynamically refocus a digital image

One embodiment of the present invention provides a system that dynamically refocuses an image to simulate a focus plane and a depth-of-field of a virtual camera. During operation, the system receives an input image, wherein the input image includes depth information for pixels in the input image. The system also obtains parameters that specify the depth-of-field d and the location of the focus plane for the virtual camera. Next, the system uses the depth information and the parameters for the virtual camera to refocus the image. During this process, for each pixel in the input image, the system uses the depth information and the parameters for the virtual camera to determine a blur radius B for the pixel. The system then uses the blur radius B for the pixel to determine whether the pixel contributes to neighboring pixels in the refocused image.

Owner:ADOBE INC
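
A minimal scatter-based sketch of the per-pixel blur-radius step on a grayscale image. The blur model in `blur_radius` (zero inside a band of width `dof` centered on the focus plane, then growing linearly with a hypothetical gain `k`) is an assumed choice; the abstract only states that B is computed from the depth and the virtual-camera parameters.

```python
def blur_radius(z, focus, dof, k=1.0, max_radius=8):
    """Hypothetical blur model: sharp within dof/2 of the focus plane, linear growth beyond."""
    excess = abs(z - focus) - dof / 2
    return 0.0 if excess <= 0 else min(k * excess, max_radius)


def refocus(image, depth, focus, dof, k=1.0):
    """Refocus a grayscale image (lists of floats) by scattering each pixel over its blur disk.

    Each source pixel spreads its value evenly over the neighbors within its
    blur radius B; a pixel with B < 1 contributes only to itself. Output pixels
    are normalized by the total weight they received.
    """
    h, w = len(image), len(image[0])
    acc = [[0.0] * w for _ in range(h)]
    wsum = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            b = blur_radius(depth[y][x], focus, dof, k)
            r = int(b)
            targets = [(y + dy, x + dx)
                       for dy in range(-r, r + 1) for dx in range(-r, r + 1)
                       if dx * dx + dy * dy <= b * b
                       and 0 <= y + dy < h and 0 <= x + dx < w]
            weight = 1.0 / len(targets)  # the pixel itself is always a target
            for ty, tx in targets:
                acc[ty][tx] += image[y][x] * weight
                wsum[ty][tx] += weight
    return [[acc[y][x] / wsum[y][x] for x in range(w)] for y in range(h)]
```

Scattering (rather than gathering) matches the abstract's description of each pixel's blur radius deciding which neighboring pixels it contributes to.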

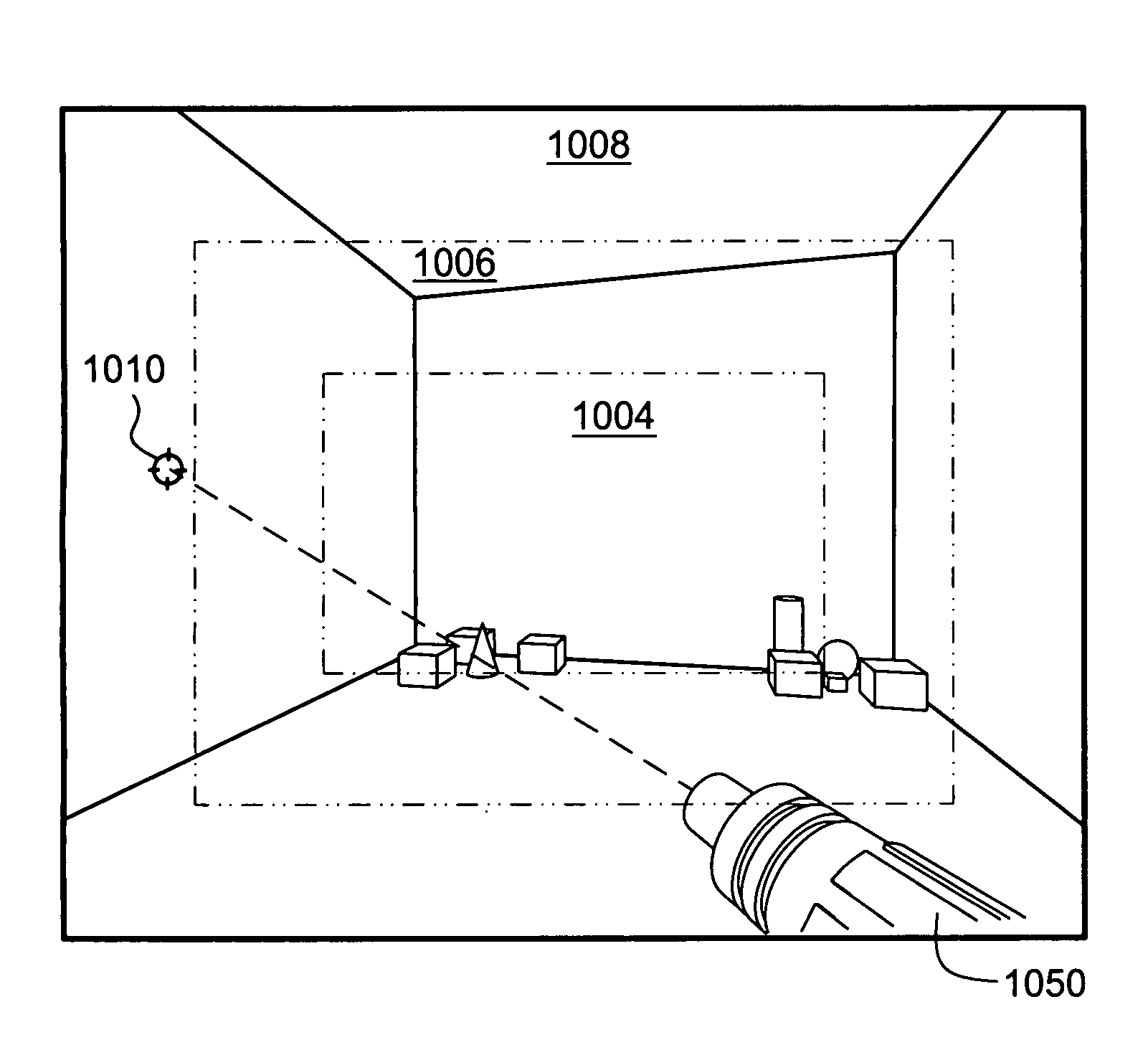

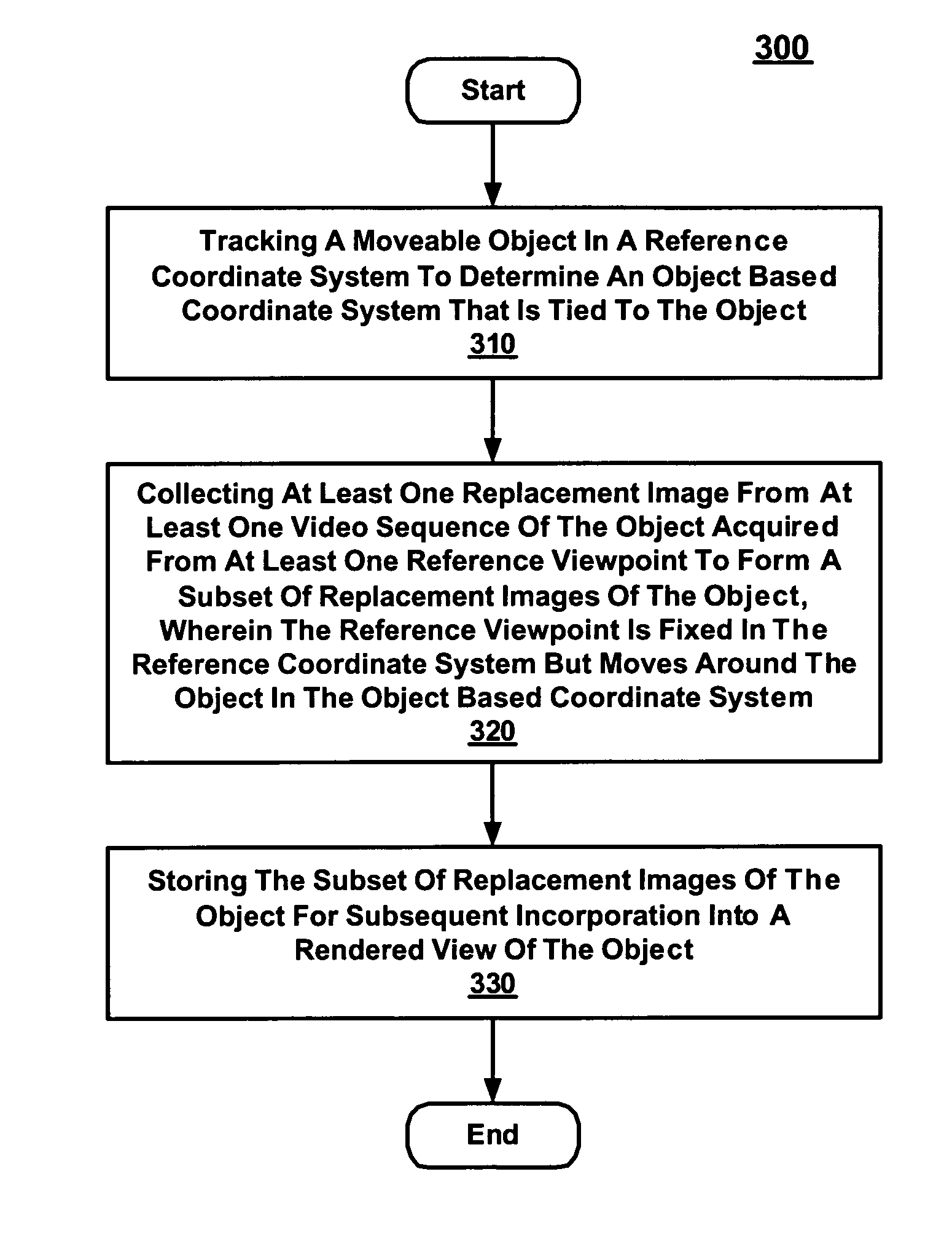

Method and system for providing extensive coverage of an object using virtual cameras

A system and method for generating texture information. Specifically, a method provides for extensive coverage of an object using virtual cameras. The method begins by tracking a moveable object in a reference coordinate system. By tracking the moveable object, an object based coordinate system that is tied to the object can be determined. The method continues by collecting at least one replacement image from at least one video sequence of the object to form a subset of replacement images of the object. The video sequence of the object is acquired from at least one reference viewpoint, wherein the reference viewpoint is fixed in the reference coordinate system but moves around the object in the object based coordinate system. The subset of replacement images is stored for subsequent incorporation into a rendered view of the object.

Owner:GENESIS COIN INC +1

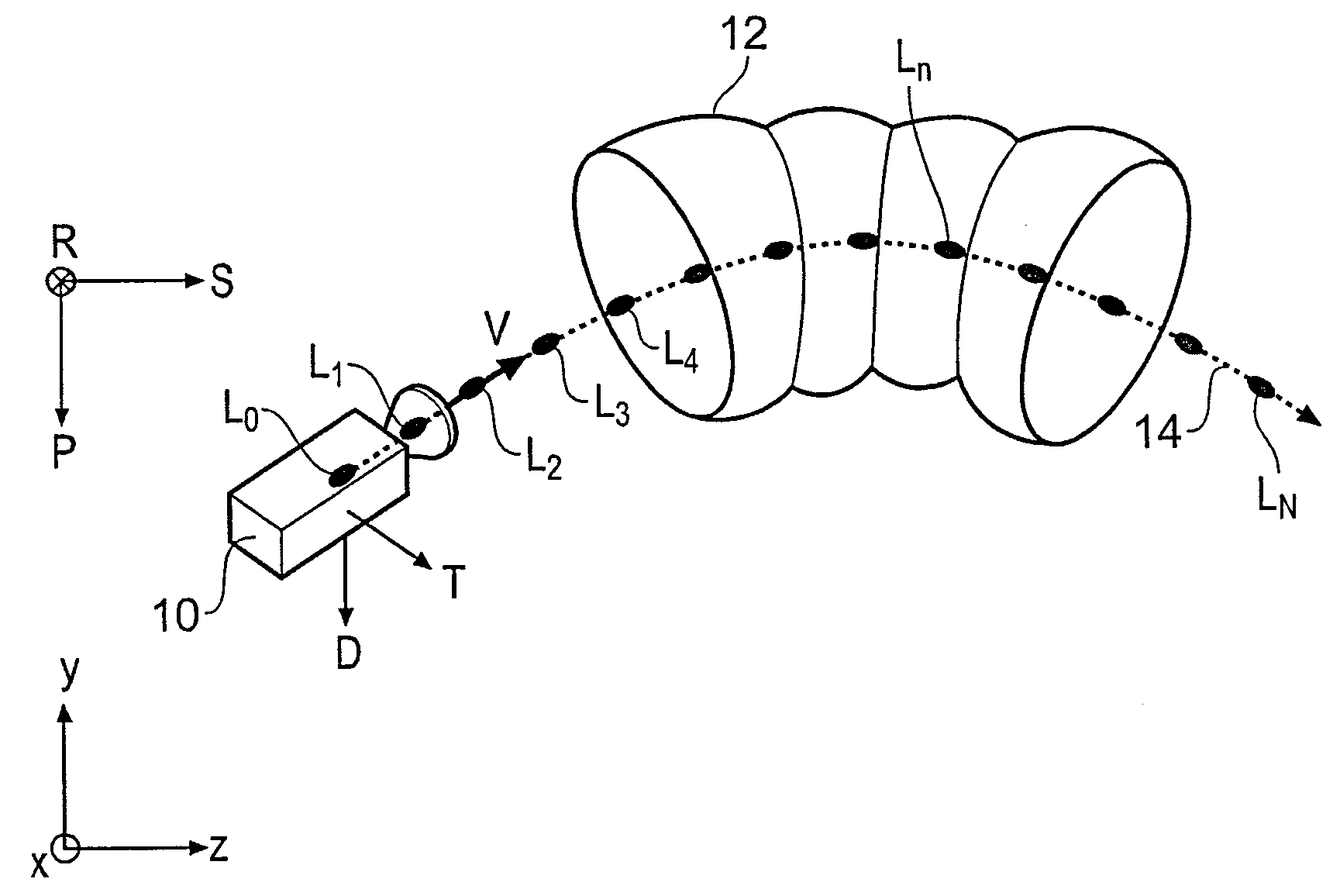

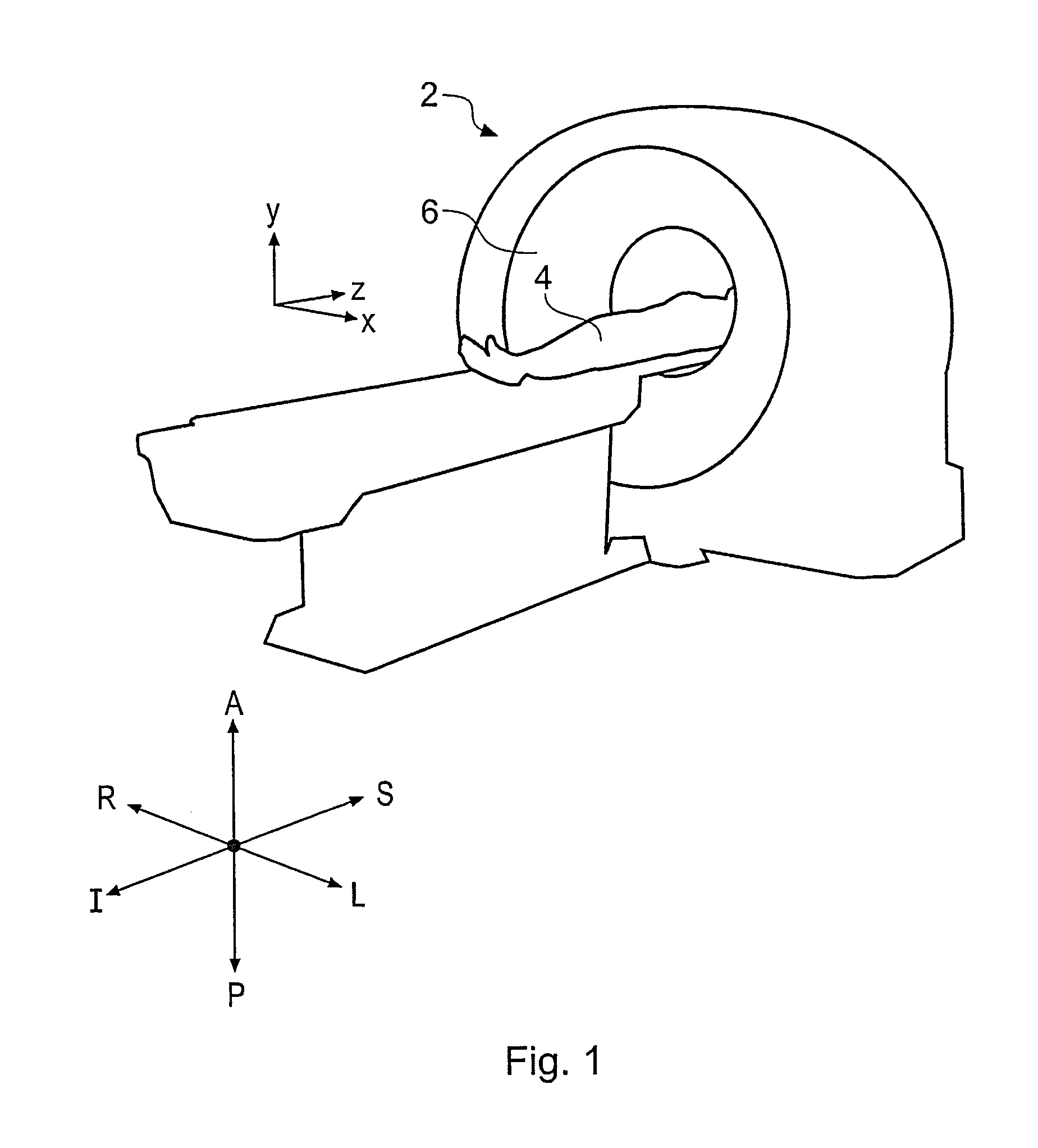

Virtual endoscopy

Status: Inactive | US20080118117A1 | Tags: Full effect; Improved angular separation; Surgery; Endoscopes; Data set; Virtual camera

A method of orienting a virtual camera for rendering a virtual endoscopy image of a lumen in a biological structure represented by a medical image data set, e.g., a colon. The method comprises selecting a location from which to render an image, determining an initial orientation for the virtual camera relative to the data set for the selected location based on the geometry of the lumen, determining an offset angle between the initial orientation and a bias direction, and orienting the virtual camera in accordance with a rotation from the initial orientation towards the bias direction by a fractional amount of the offset angle which varies according to the initial orientation. The fractional amount may vary according to the offset angle and/or a separation between a predetermined direction in the data set and a view direction of the virtual camera for the initial orientation. Thus the camera orientation can be configured to tend towards a preferred direction in the data set, while maintaining a good view of the lumen and avoiding barrel rolling effects.

Owner:TOSHIBA MEDICAL VISUALIZATION SYST EURO
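
To illustrate the fractional rotation toward a bias direction, this sketch reduces the orientation to a single angle in radians (for example, the camera's roll about its view direction). The rule for the fraction, which shrinks as the offset angle grows, is a hypothetical example of a fraction that varies with the offset angle; it is not the rule claimed in the patent, and the function names are illustrative.

```python
import math


def wrap_angle(a):
    """Wrap an angle in radians into the interval (-pi, pi]."""
    a = math.fmod(a + math.pi, 2 * math.pi)
    if a <= 0:
        a += 2 * math.pi
    return a - math.pi


def biased_orientation(initial, bias, max_fraction=0.5):
    """Rotate from `initial` toward `bias` by a fraction of the offset angle.

    Hypothetical rule: the fraction is largest when the offset is small and
    falls to zero for an offset of pi, so large corrections are applied gently.
    """
    offset = wrap_angle(bias - initial)
    fraction = max_fraction * (1 - abs(offset) / math.pi)
    return wrap_angle(initial + fraction * offset)
```

Applying only part of the offset at each location lets the view drift toward the preferred direction without abrupt rolls as the camera advances along the lumen.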

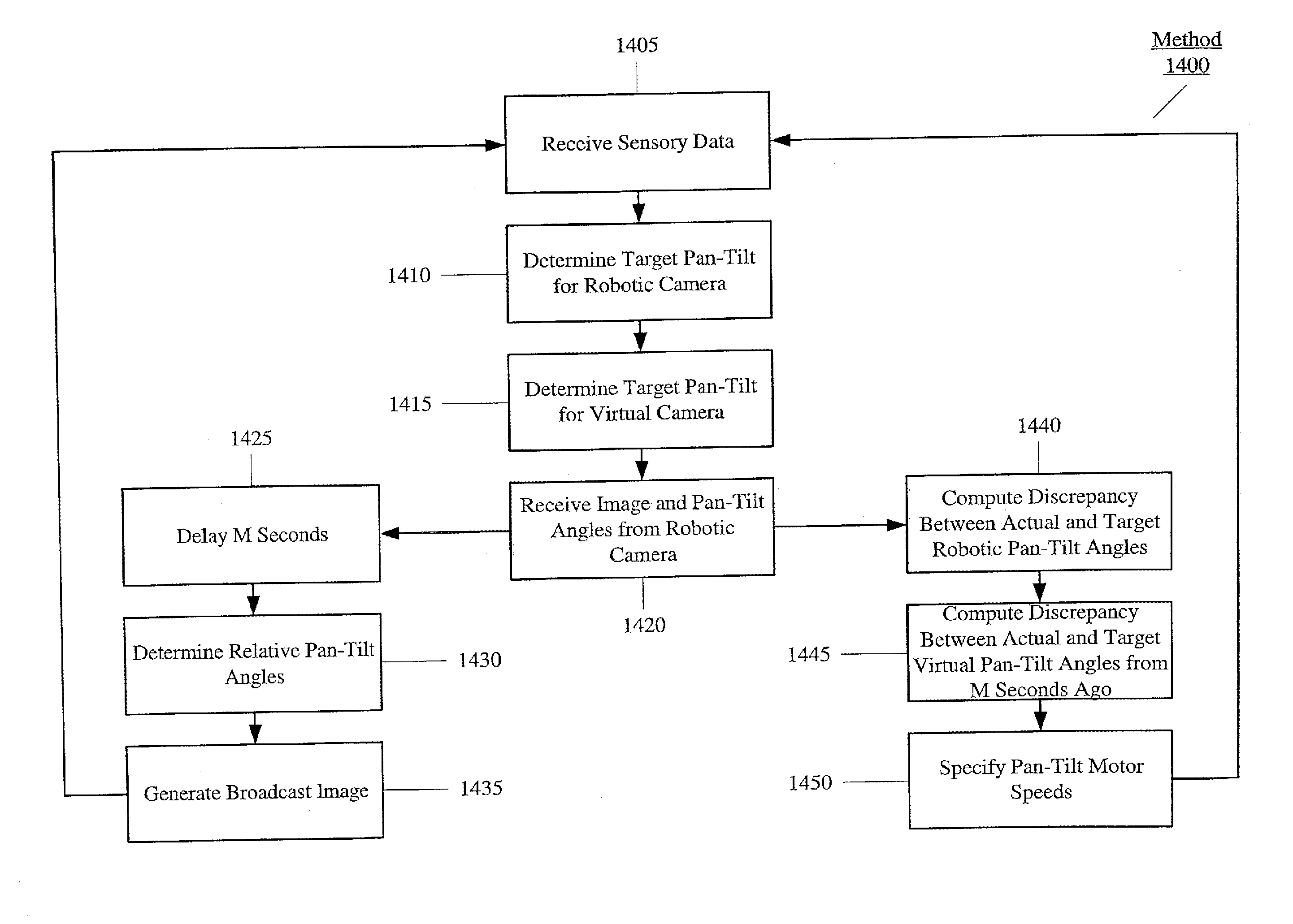

Method and device for spherical resampling for video generation

Status: Active | US20140232818A1 | Tags: Television system details; Color television details; Pattern recognition; Virtual camera

A system and method generates a broadcast image. The method includes receiving a first image captured by a first camera having a first configuration incorporating a first center of projection. The method includes determining a first mapping from a first image plane of the first camera onto a sphere. The method includes determining a second mapping from the sphere to a second image plane of a second virtual camera having a second configuration incorporating the first center of projection of the first configuration. The method includes generating a second image based upon the first image and a concatenation of the first and second mappings.

Owner:DISNEY ENTERPRISES INC
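
The two mappings and their concatenation can be sketched for ideal pinhole cameras (no lens distortion), each described by a focal length `f`, principal point (`cx`, `cy`), and a world-to-camera rotation `R`. Because both cameras share the same center of projection, only rotations are needed. The dictionary layout and function names are assumptions for illustration.

```python
import math


def mat_vec(m, v):
    """3x3 matrix times 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def transpose(m):
    return [[m[j][i] for j in range(3)] for i in range(3)]


def yaw_matrix(theta):
    """World-to-camera rotation about the vertical (y) axis by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, 0.0, -s], [0.0, 1.0, 0.0], [s, 0.0, c]]


def pixel_to_sphere(u, v, cam):
    """First mapping: pixel on the real camera's image plane -> direction on the unit sphere."""
    ray = [(u - cam["cx"]) / cam["f"], (v - cam["cy"]) / cam["f"], 1.0]
    world = mat_vec(transpose(cam["R"]), ray)  # R^T turns camera coords into world coords
    norm = math.sqrt(sum(c * c for c in world))
    return [c / norm for c in world]


def sphere_to_pixel(s, cam):
    """Second mapping: direction on the unit sphere -> pixel on the virtual camera's image plane."""
    c = mat_vec(cam["R"], s)
    if c[2] <= 0:
        return None  # direction lies behind the virtual camera
    return (cam["f"] * c[0] / c[2] + cam["cx"], cam["f"] * c[1] / c[2] + cam["cy"])


def resample_point(u, v, src, dst):
    """Concatenation of both mappings for a single pixel."""
    return sphere_to_pixel(pixel_to_sphere(u, v, src), dst)
```

Rendering a full second image would typically run the concatenated mapping in the opposite direction, looking up a source pixel for each output pixel, so that the output has no holes.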

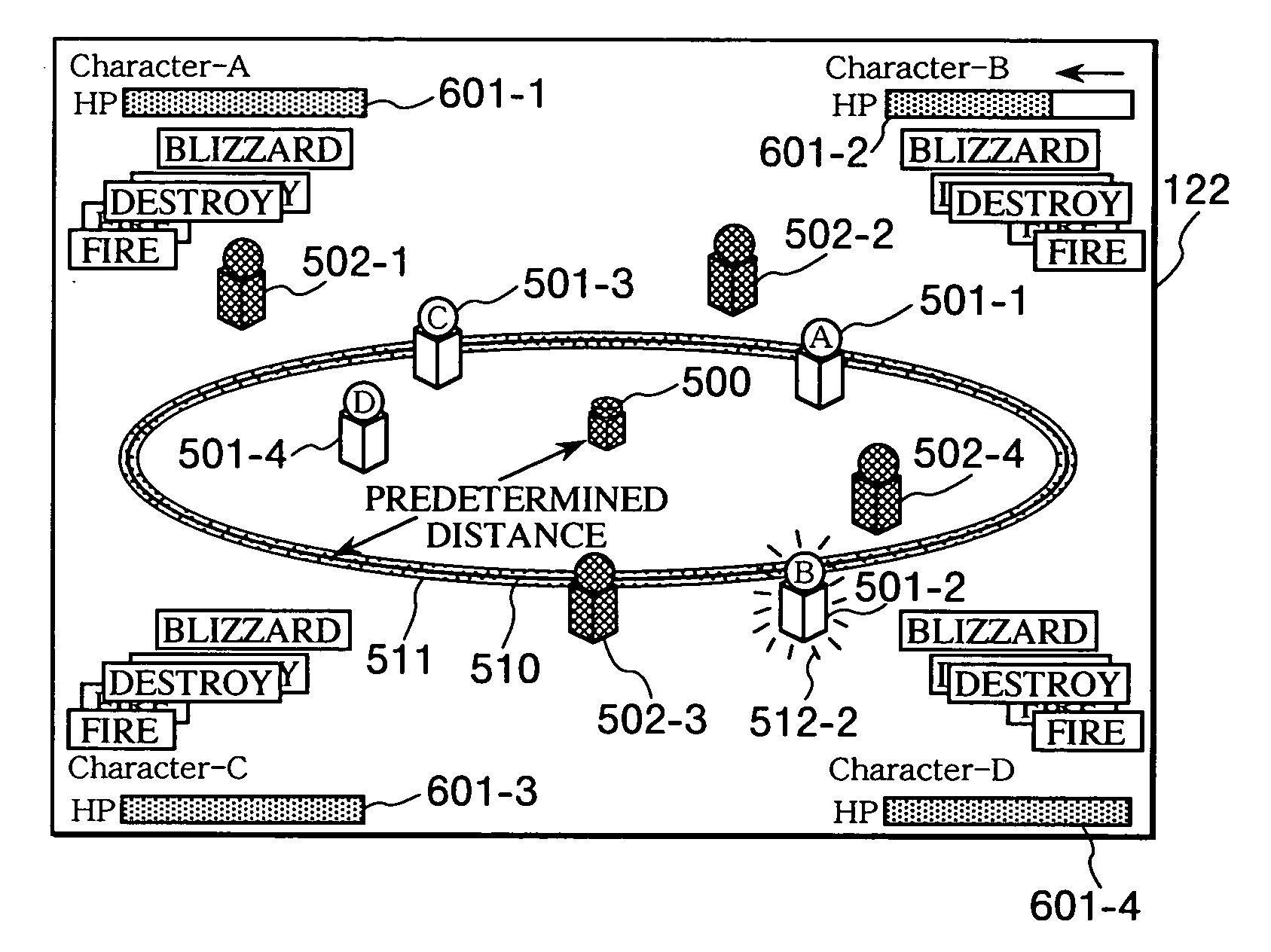

Video game that displays player characters of multiple players in the same screen

Status: Inactive | US20040157662A1 | Tags: Degree of advantage of the game progress; Advance the game advantageously; Video games; Special data processing applications; Three-dimensional space; Safety zone

In a virtual three-dimensional space, at least multiple player characters and a movable special object exist. The player characters move in the virtual three-dimensional space according to each player's operations, and the special object can be moved in the virtual three-dimensional space according to operations of each player character. An image perspective-transformed with the visual axis of a virtual camera directed toward the special object is displayed as the game screen. The area within a predetermined distance of the special object is fixed as a safety zone. Hit points of player characters inside the safety zone do not decrease, whereas hit points of player characters outside the safety zone do.

Owner:SQUARE ENIX HLDG CO LTD
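
The hit-point rule reduces to a distance test against the special object. In this sketch the safety-zone radius, the per-update `damage`, and the `Player` structure are hypothetical stand-ins for the patent's "predetermined distance" and game state.

```python
import math
from dataclasses import dataclass


@dataclass
class Player:
    name: str
    pos: tuple  # (x, y, z) position in the virtual 3D space
    hp: int


def apply_zone_damage(players, special_pos, safe_radius, damage):
    """One game update: players farther than safe_radius from the special object lose hit points."""
    for p in players:
        if math.dist(p.pos, special_pos) > safe_radius:
            p.hp = max(0, p.hp - damage)  # clamp so hit points never go negative
    return players
```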

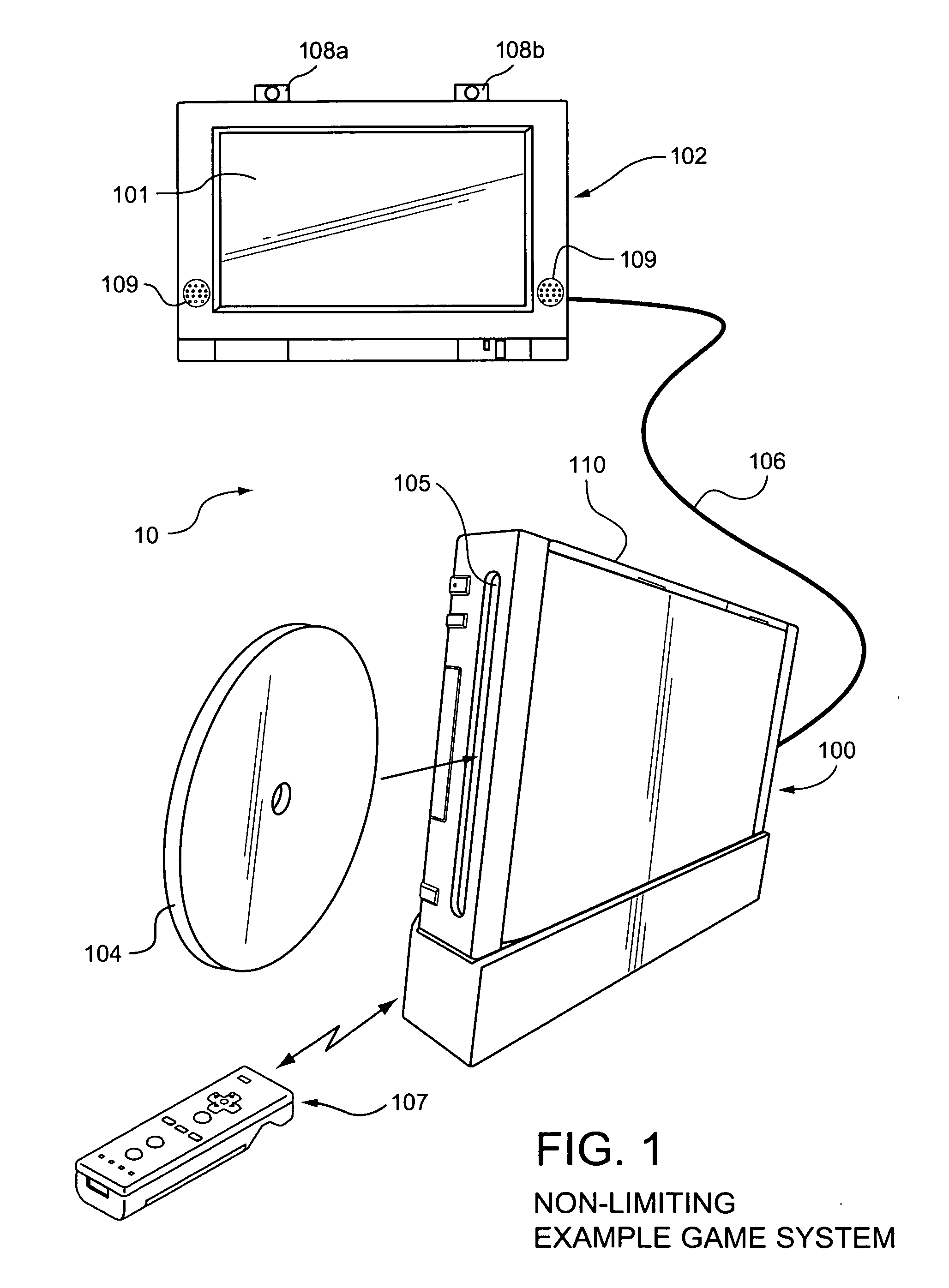

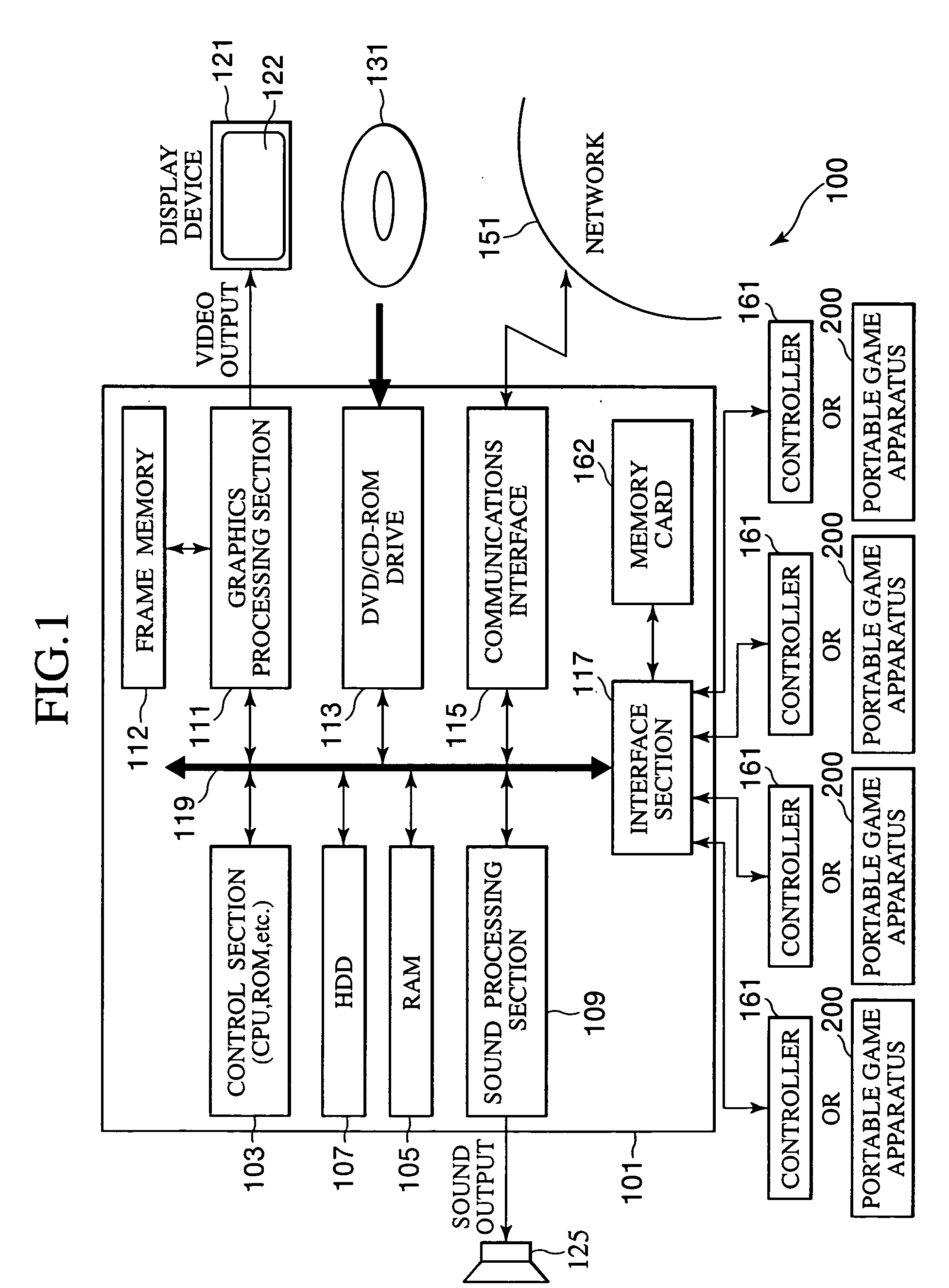

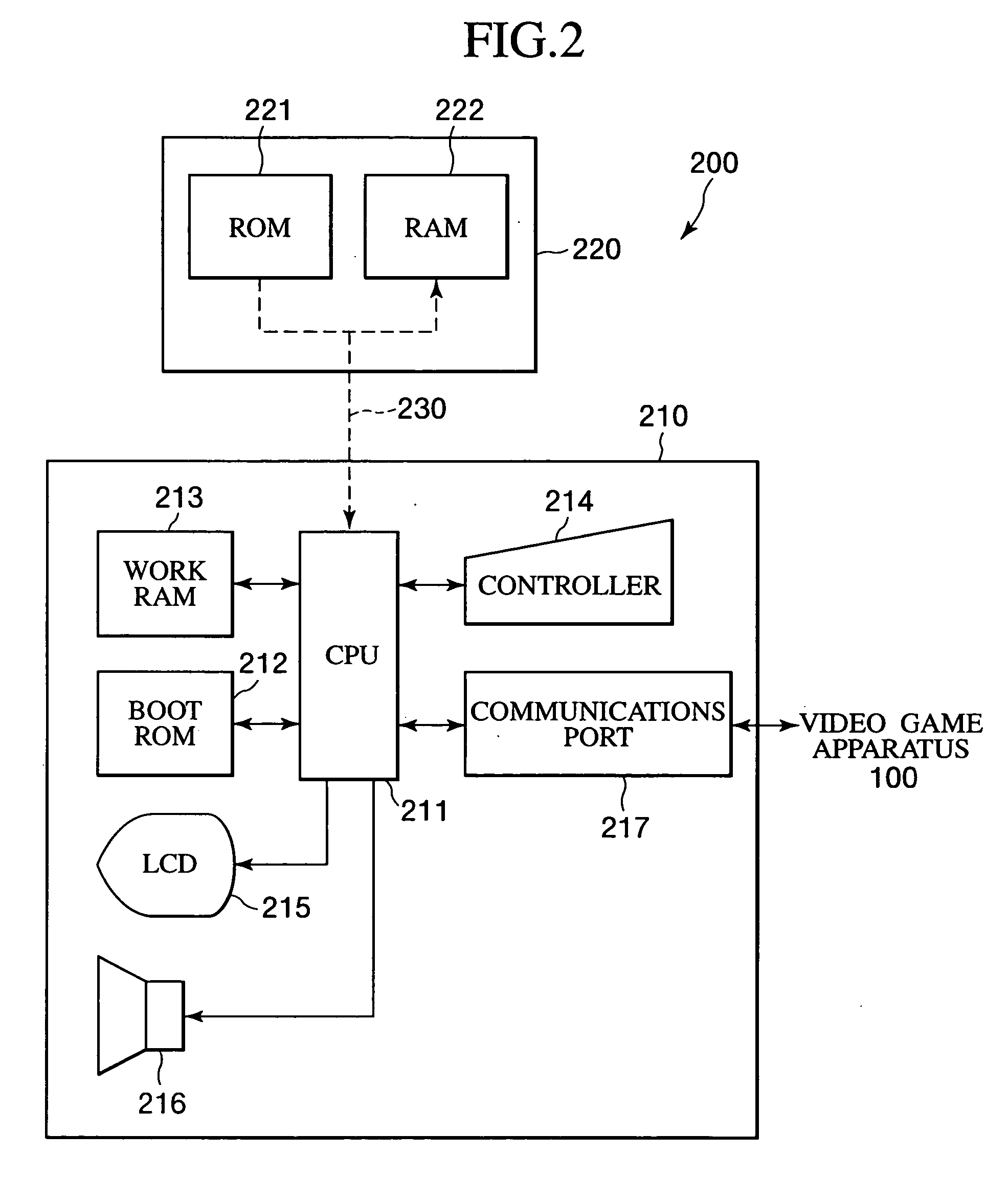

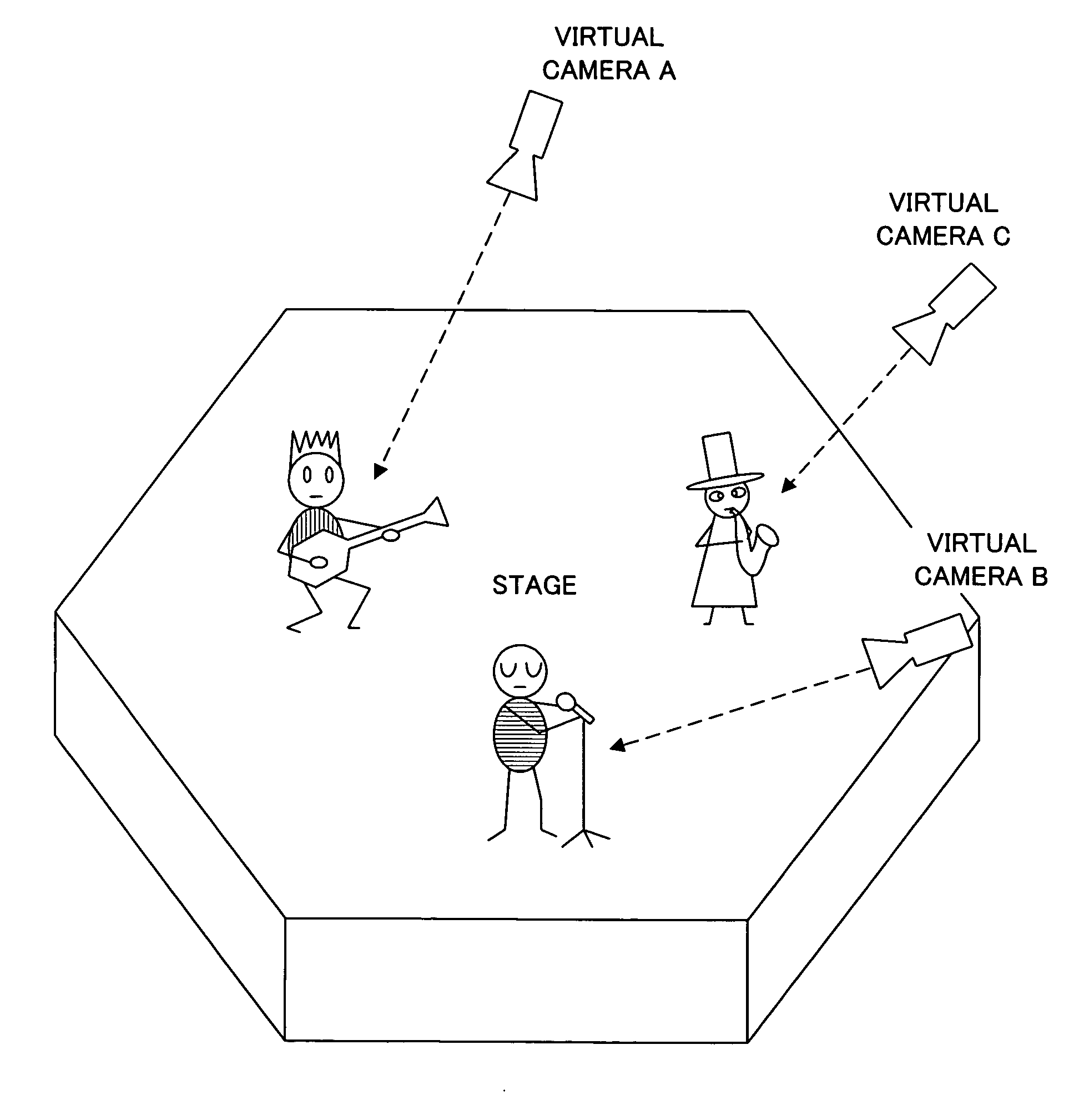

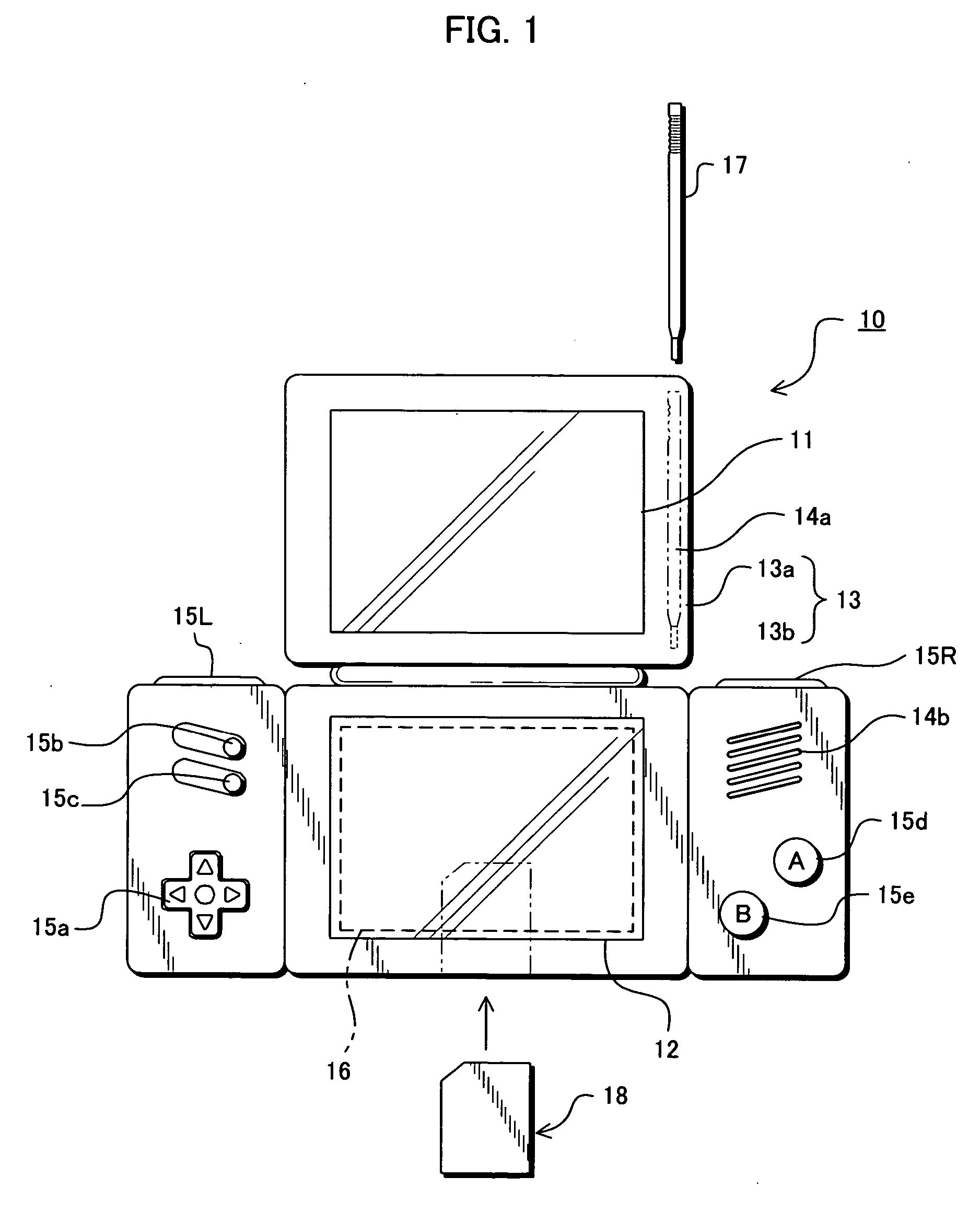

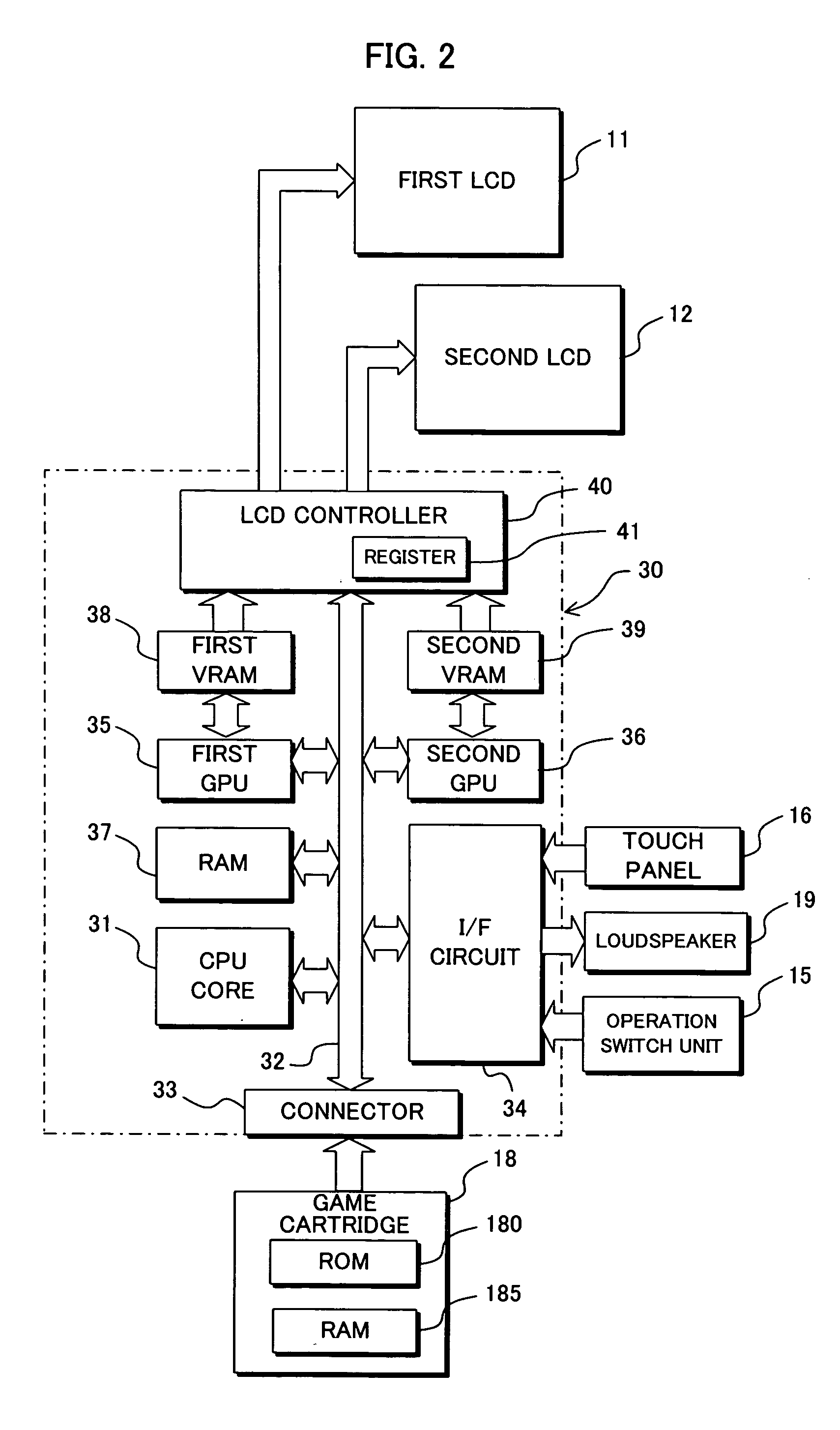

Game machine and data storage medium having stored therein game program

Status: Active | US20050187015A1 | Tags: Current change; Sure easy; Video games; Special data processing applications; Virtual camera; Virtual game

A game machine is provided with two display units, that is, a first LCD 11 and a second LCD 12. On the second LCD 12, feature information unique to each object located in a virtual game space is displayed. When a player selects, based on information displayed on the second LCD 12, one of the objects provided in the virtual game space, a focus point of a virtual camera provided in the virtual game space is set so that the virtual camera is oriented to a direction of the selected object, and the state of the virtual game space captured by the virtual camera is displayed on the first LCD 11. With this, the virtual game space can be displayed without impairing its worldview. Also, the player can be allowed to easily specify a desired focus target in the virtual game space.

Owner:NINTENDO CO LTD

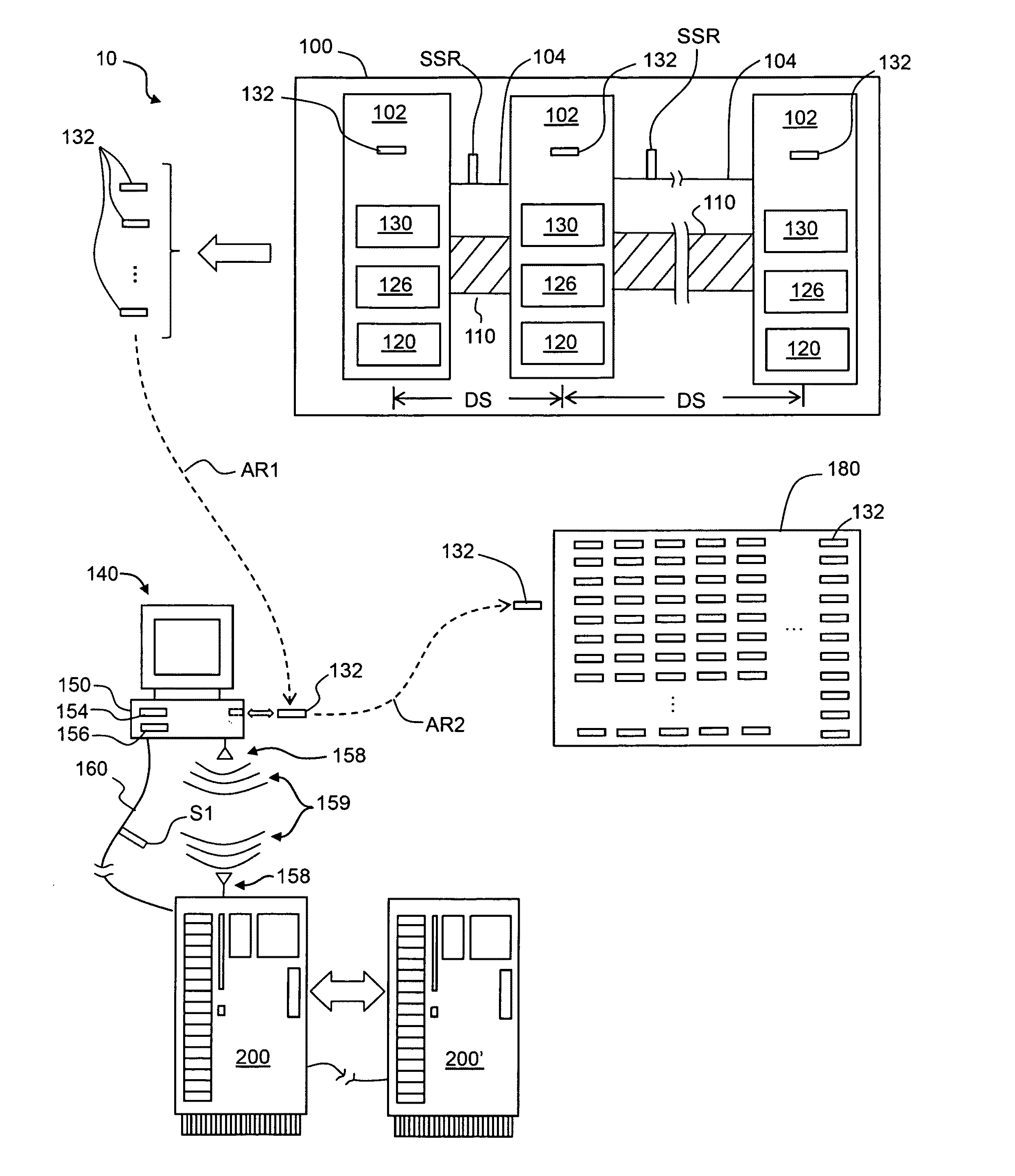

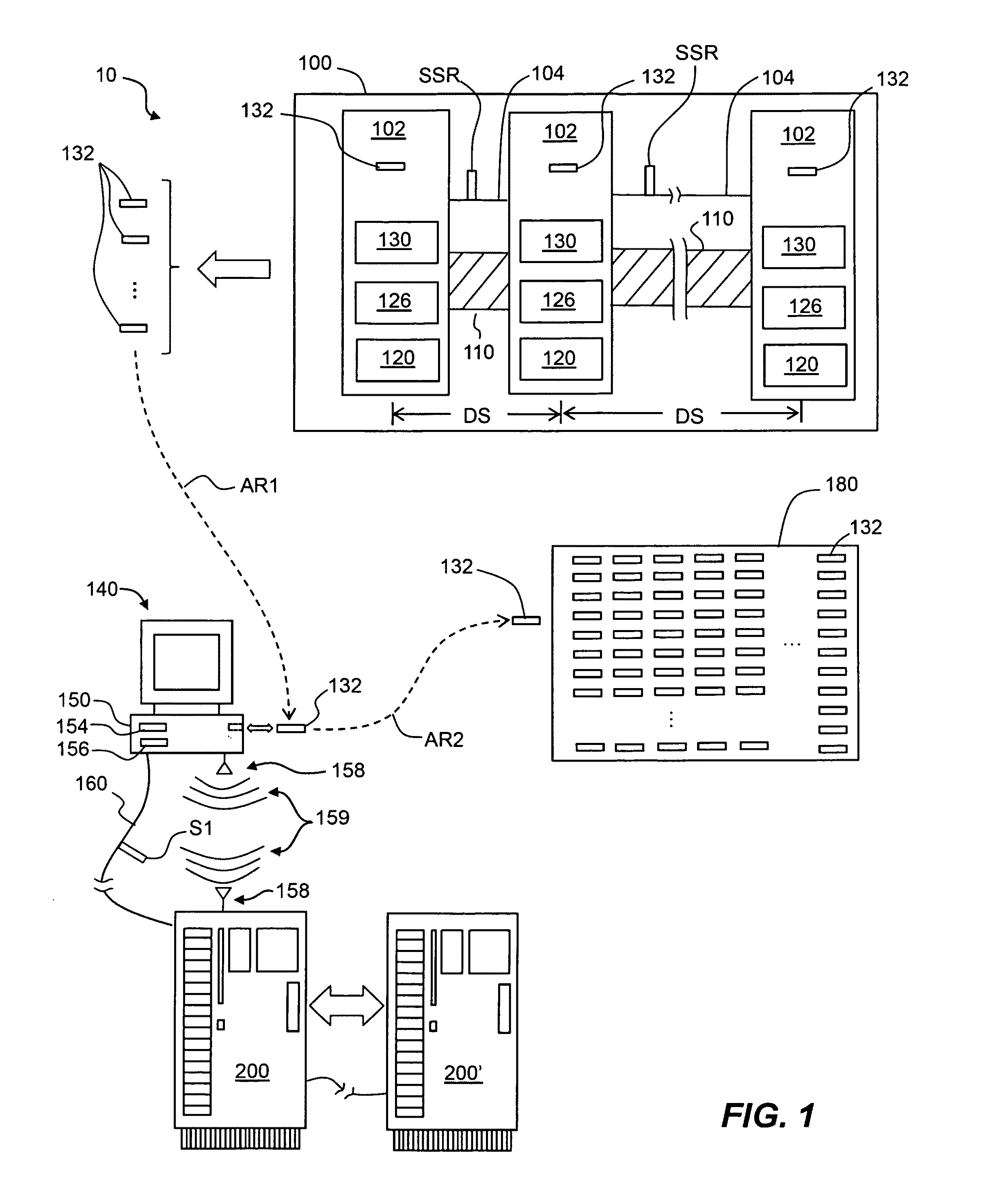

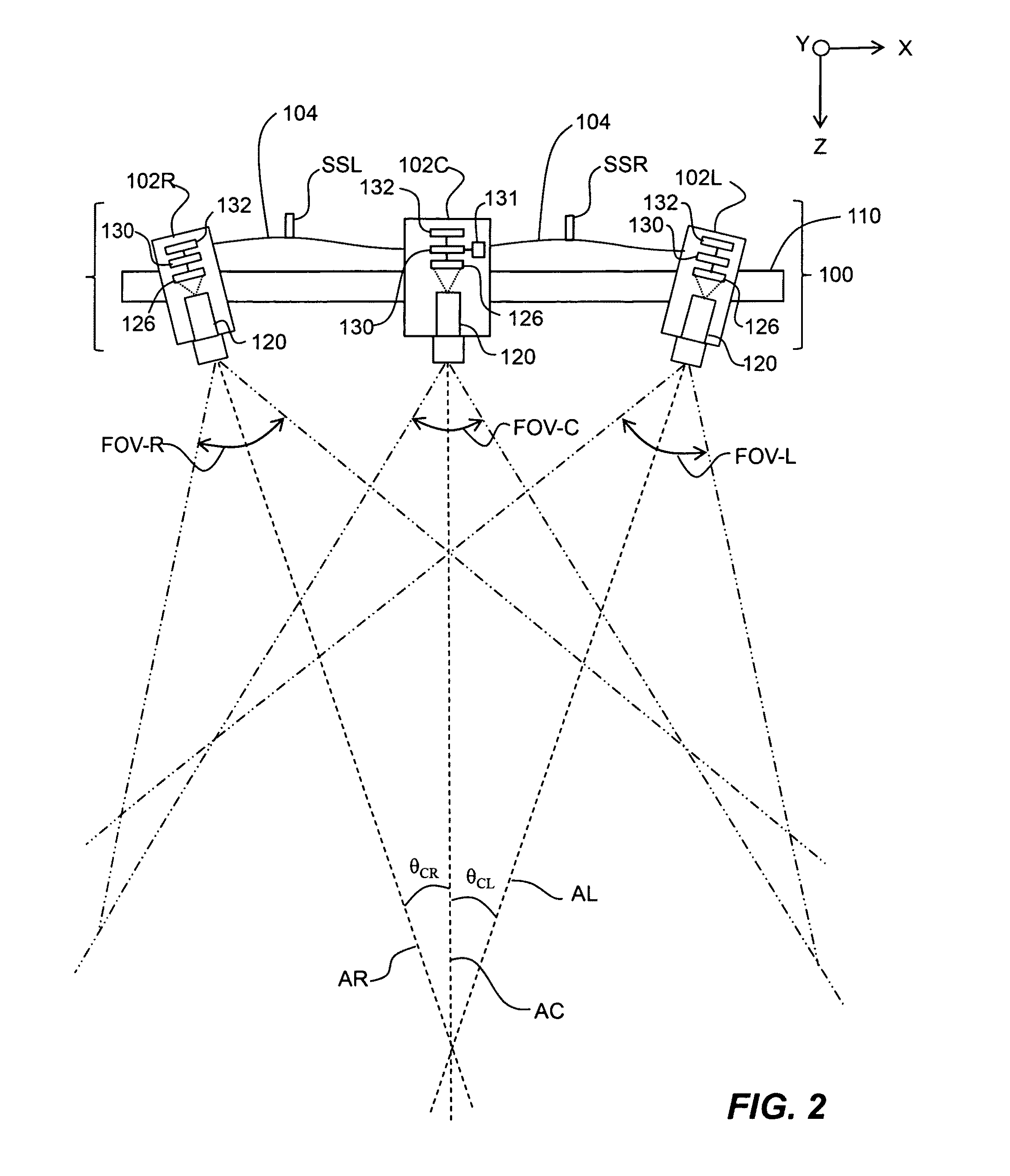

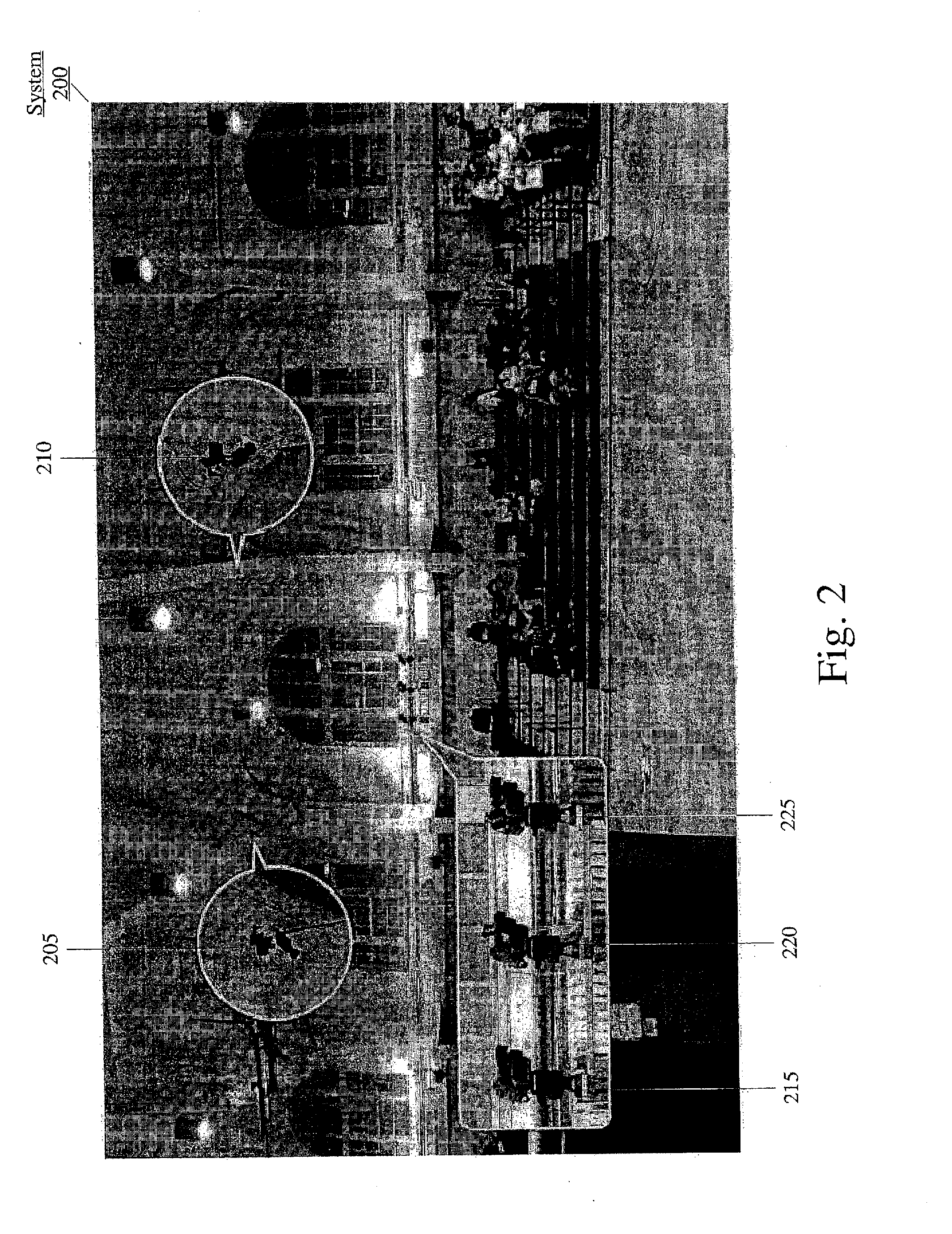

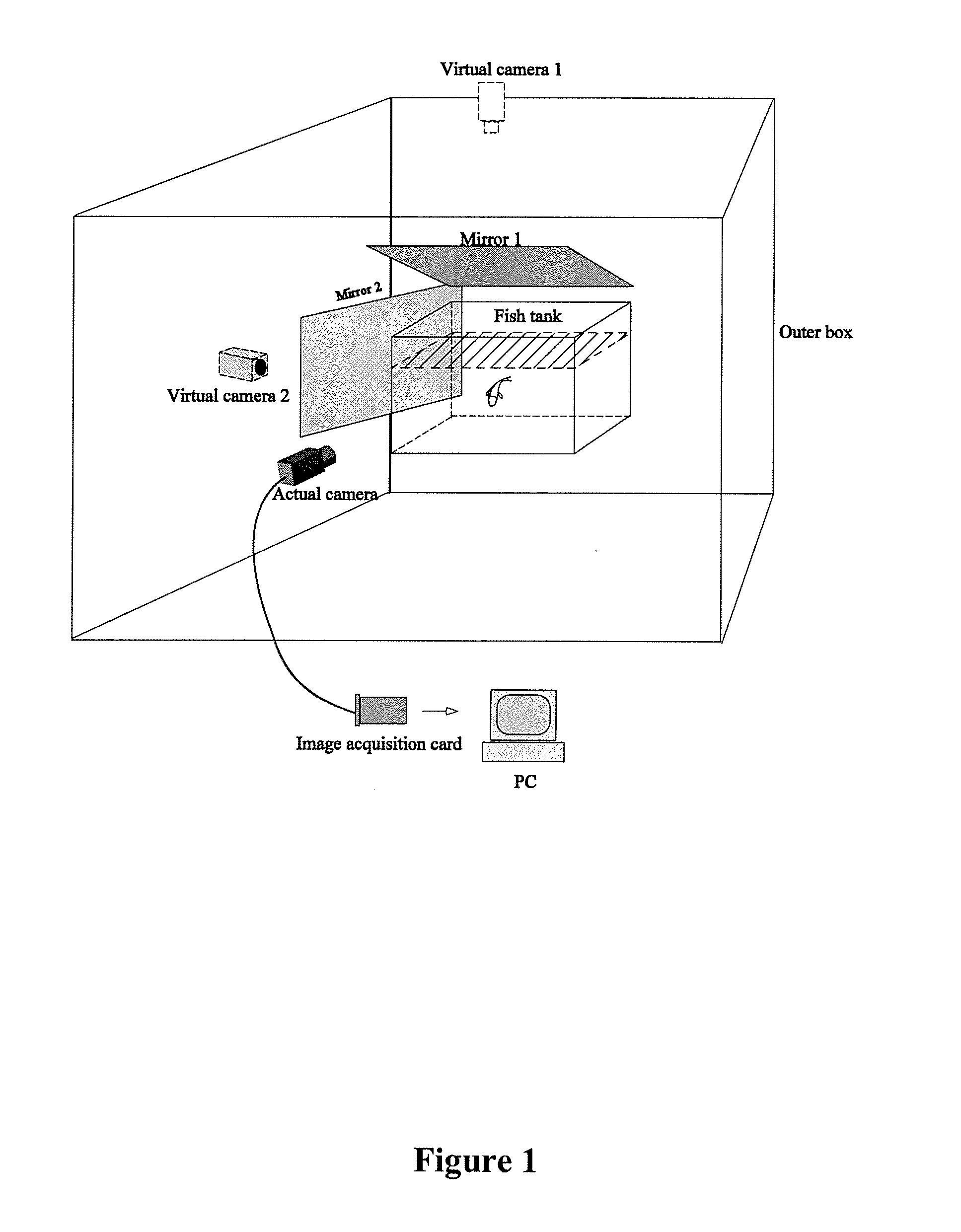

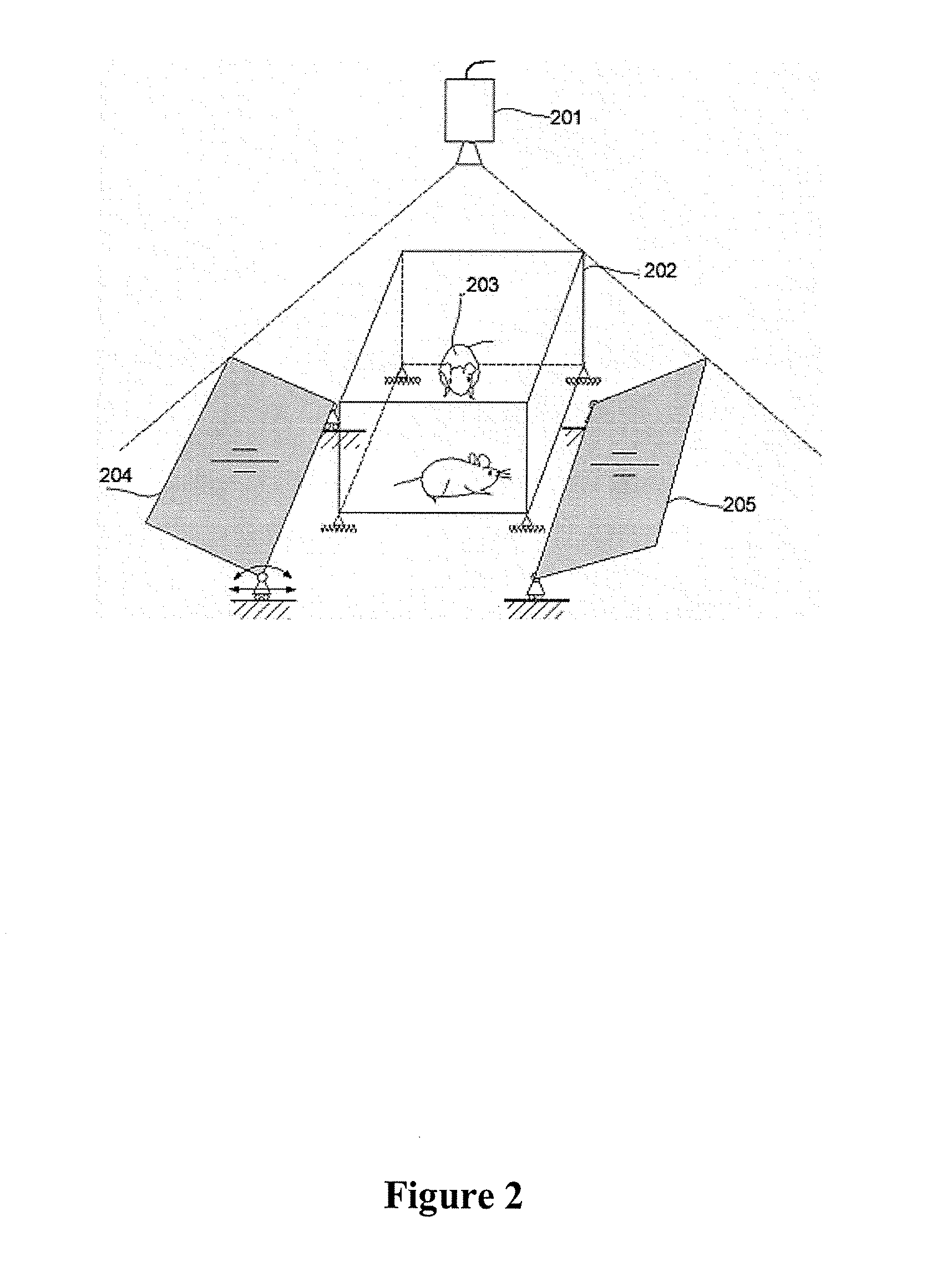

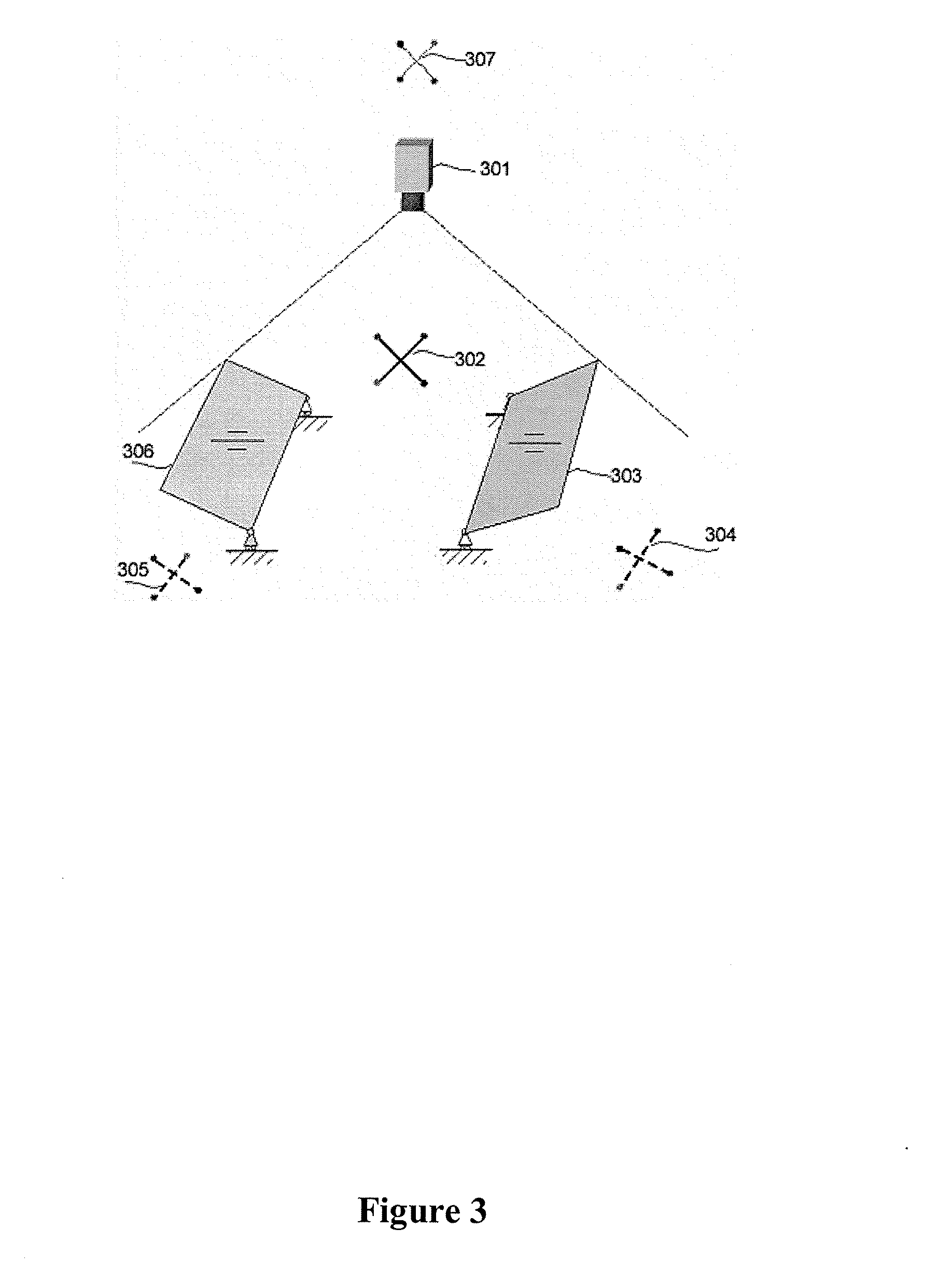

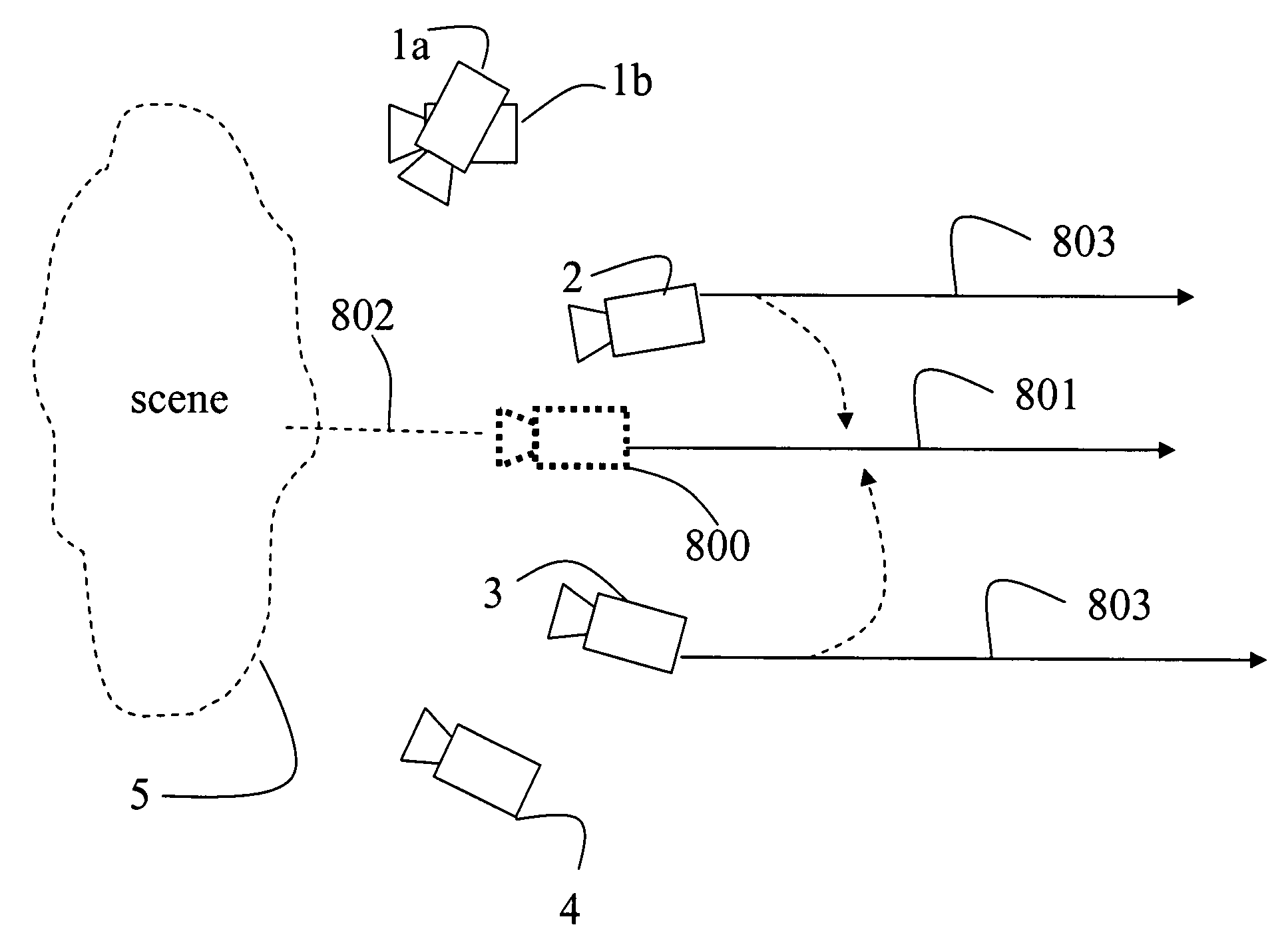

System For 3D Monitoring And Analysis Of Motion Behavior Of Targets

The present invention relates to a system for the 3-D monitoring and analysis of motion-related behavior of test subjects. The system comprises an actual camera, at least one virtual camera, and a computer connected to the actual camera; the computer is preferably installed with software capable of capturing the stereo images associated with the 3-D motion-related behavior of test subjects and of processing the acquired image frames to obtain the 3-D motion parameters of the subjects. The system of the invention comprises hardware components as well as software components. The hardware components preferably comprise a hardware setup or configuration, a hardware-based noise elimination component, an automatic calibration device component, and a lab animal container component. The software components preferably comprise a software-based noise elimination component, a basic calibration component, an extended calibration component, a linear epipolar structure derivation component, a non-linear epipolar structure derivation component, an image segmentation component, an image correspondence detection component, a 3-D motion tracking component, a software-based target identification and tagging component, a 3-D reconstruction component, and a data post-processing component. In a particularly preferred embodiment, the actual camera is a digital video camera and the virtual camera is the reflection of the actual camera in a planar reflective mirror. The preferred system is therefore a catadioptric stereo computer vision system.

Owner:INGENIOUS TARGETING LAB
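
In a catadioptric setup the virtual camera's pose follows from reflecting the real camera across the mirror plane. This sketch assumes the mirror is the plane dot(n, x) = d with unit normal `n`; the function names and plane parameters are illustrative, not taken from the patent. Because a reflection reverses handedness, images from the virtual camera are mirror-flipped relative to a real camera placed at that pose.

```python
def reflect_point(p, n, d):
    """Mirror point p across the plane dot(n, x) = d (n must be unit length)."""
    dist = sum(pi * ni for pi, ni in zip(p, n)) - d  # signed distance to the plane
    return tuple(pi - 2 * dist * ni for pi, ni in zip(p, n))


def reflect_direction(v, n):
    """Mirror a direction vector across a plane with unit normal n."""
    dot = sum(vi * ni for vi, ni in zip(v, n))
    return tuple(vi - 2 * dot * ni for vi, ni in zip(v, n))


def virtual_camera(center, view_dir, n, d):
    """Center and viewing direction of the virtual (mirrored) camera."""
    return reflect_point(center, n, d), reflect_direction(view_dir, n)
```

The real camera and its reflection then form a stereo pair from a single physical sensor.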

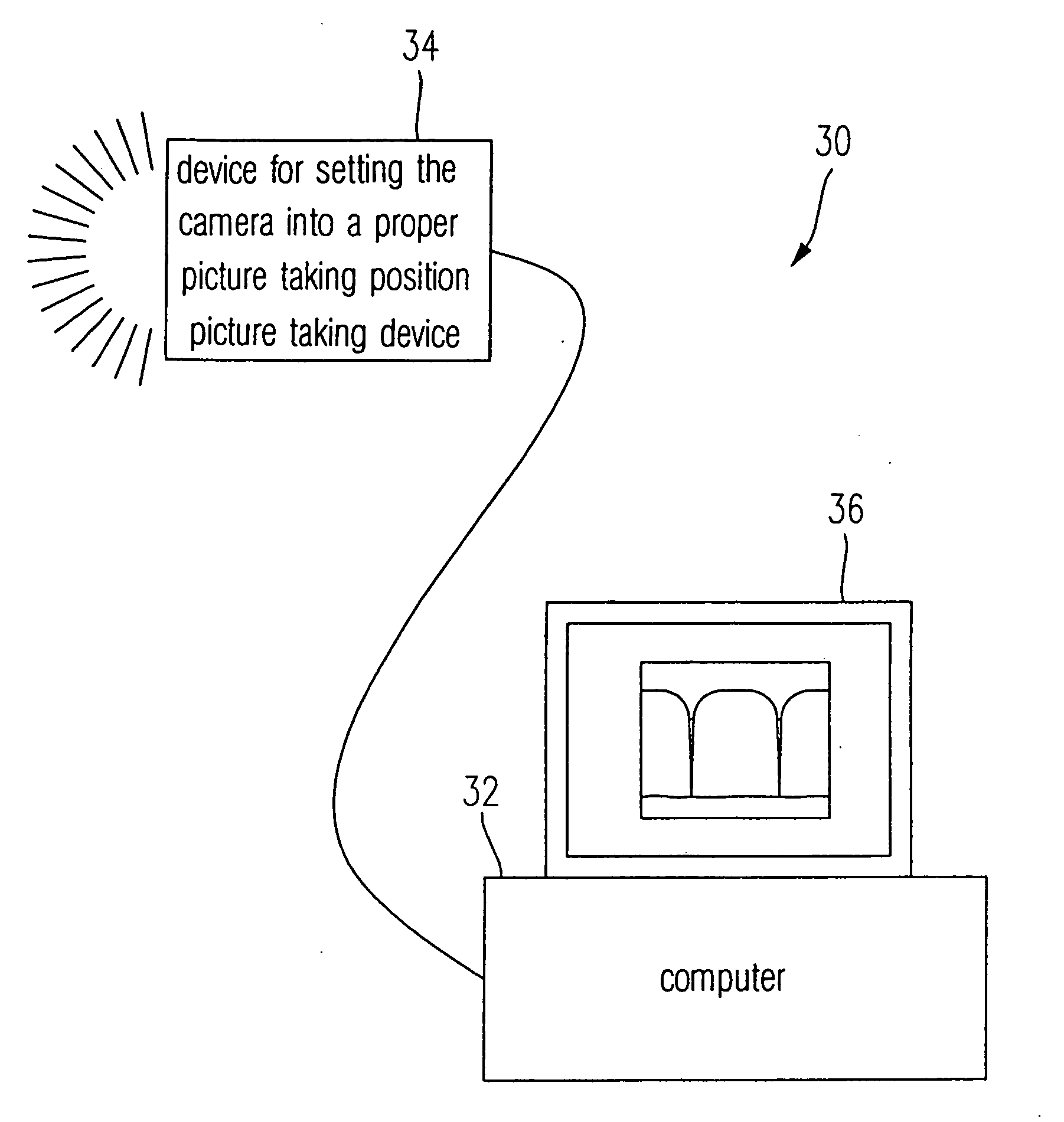

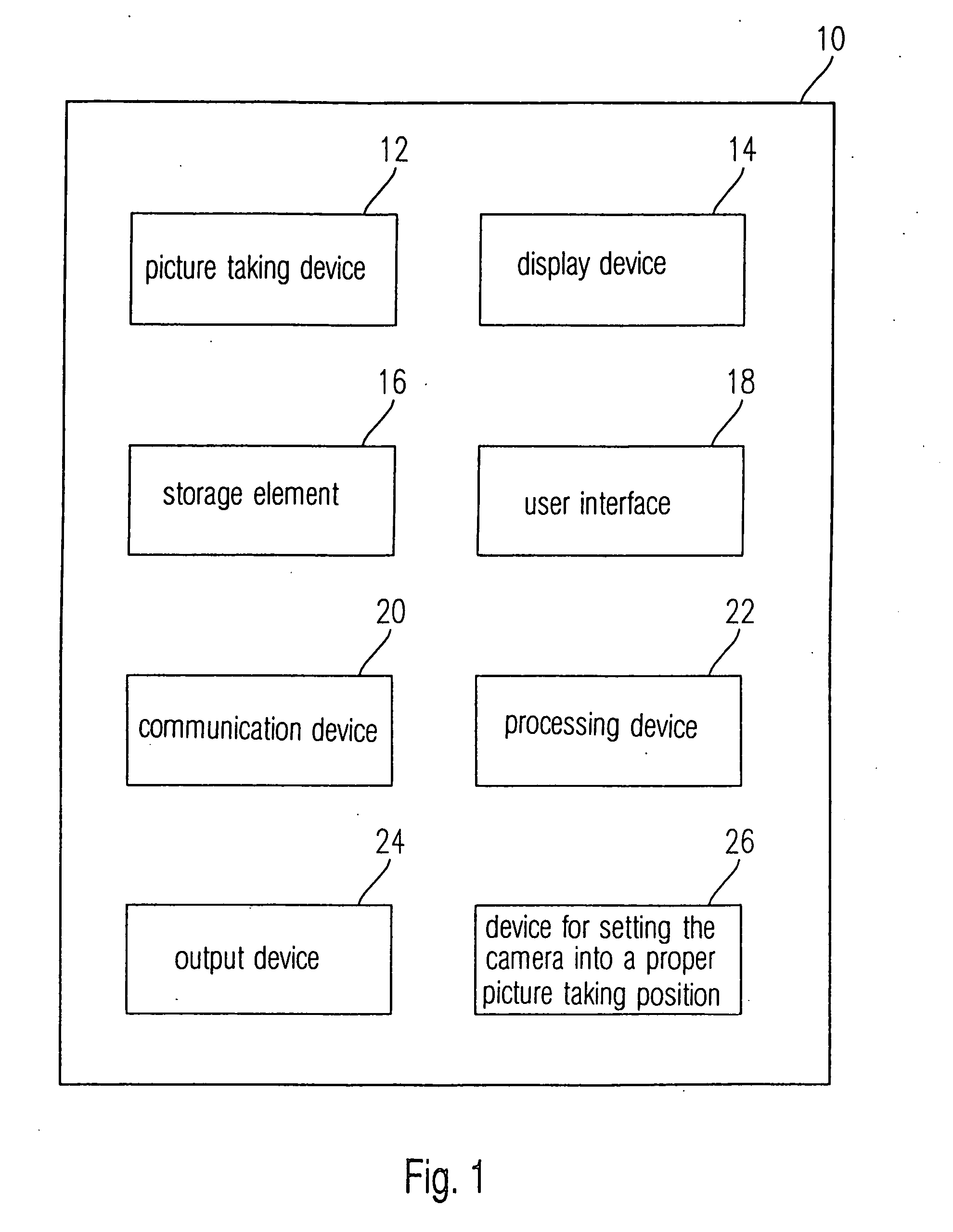

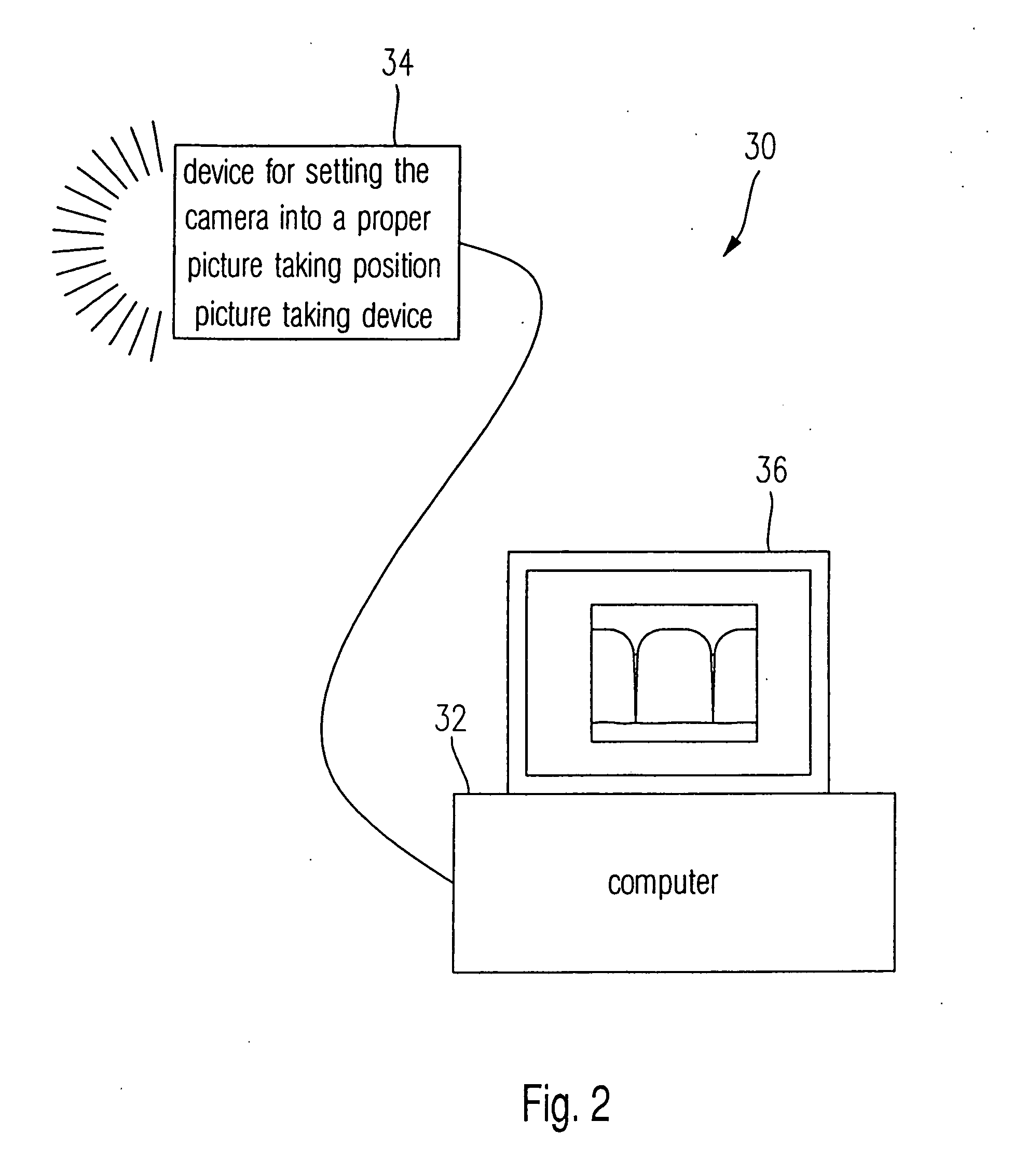

Intra-oral camera and a method for using same

Status: Inactive | US20050100333A1 | Tags: Quickly taken; Burdensome usage requirement is avoided; Printers; Surgery; Virtual camera; Endoscope

Owner:IVOCLAR VIVADENT AG

Method and system for synthesizing multiview videos

A system and method synthesizes multiview videos. Multiview videos are acquired of a scene with corresponding cameras arranged at poses such that there is view overlap between any pair of cameras. A synthesized multiview video is generated from the acquired multiview videos for a virtual camera. A reference picture list is maintained for each current frame of each of the multiview videos and the synthesized video. The reference picture list indexes temporal reference pictures and spatial reference pictures of the acquired multiview videos and the synthesized reference pictures of the synthesized multiview video. Then, each current frame of the multiview videos is predicted according to reference pictures indexed by the associated reference picture list during encoding and decoding.

Owner:MITSUBISHI ELECTRIC RES LAB +1

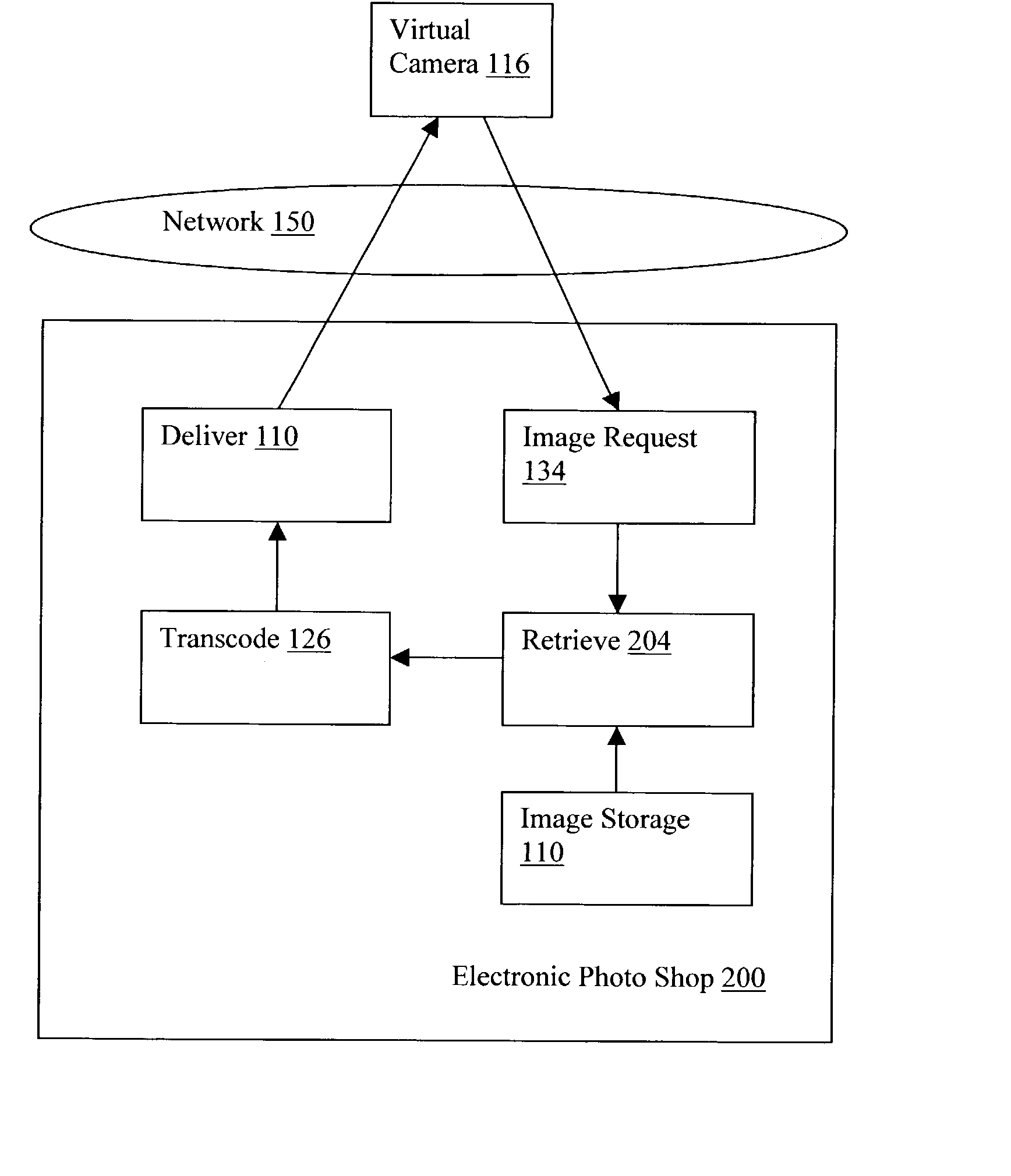

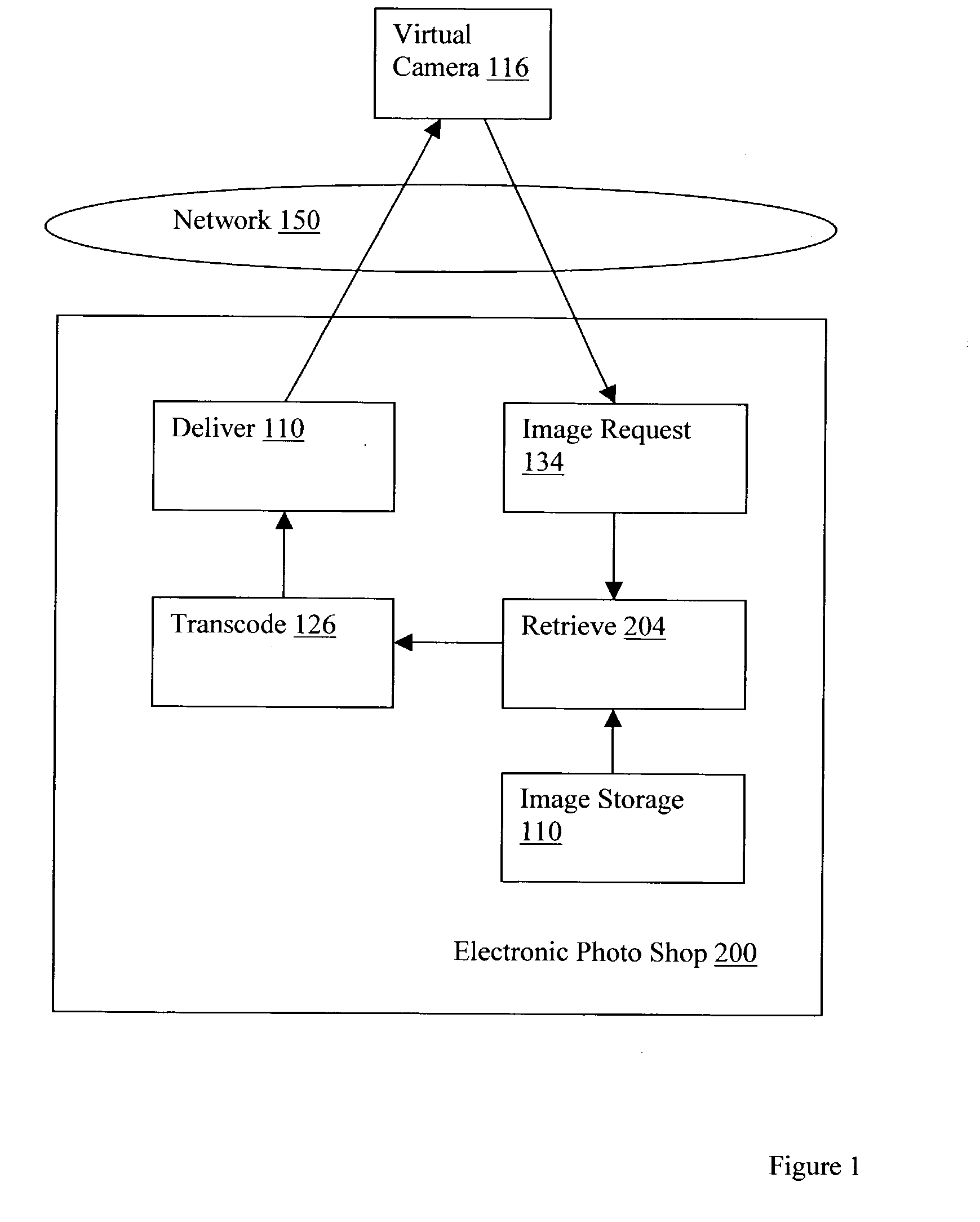

Editing and browsing images for virtual cameras

Digital imaging including creating, in a virtual camera, an unedited image request for an unedited digital image; editing the unedited image request, producing an edited image request for an edited image; communicating the edited image request to a web site for imaging for virtual cameras; receiving, in the virtual camera, the edited digital image from the web site; and displaying the edited digital image on a display device of a user interface of the virtual camera. Digital imaging including identifying a browsing image request data element from among image request data elements of an image request data structure; and creating, in dependence upon the identified browsing image request data element, a multiplicity of image requests for digital images, wherein values of the browsing image request data elements vary among the image requests.

Owner:IBM CORP

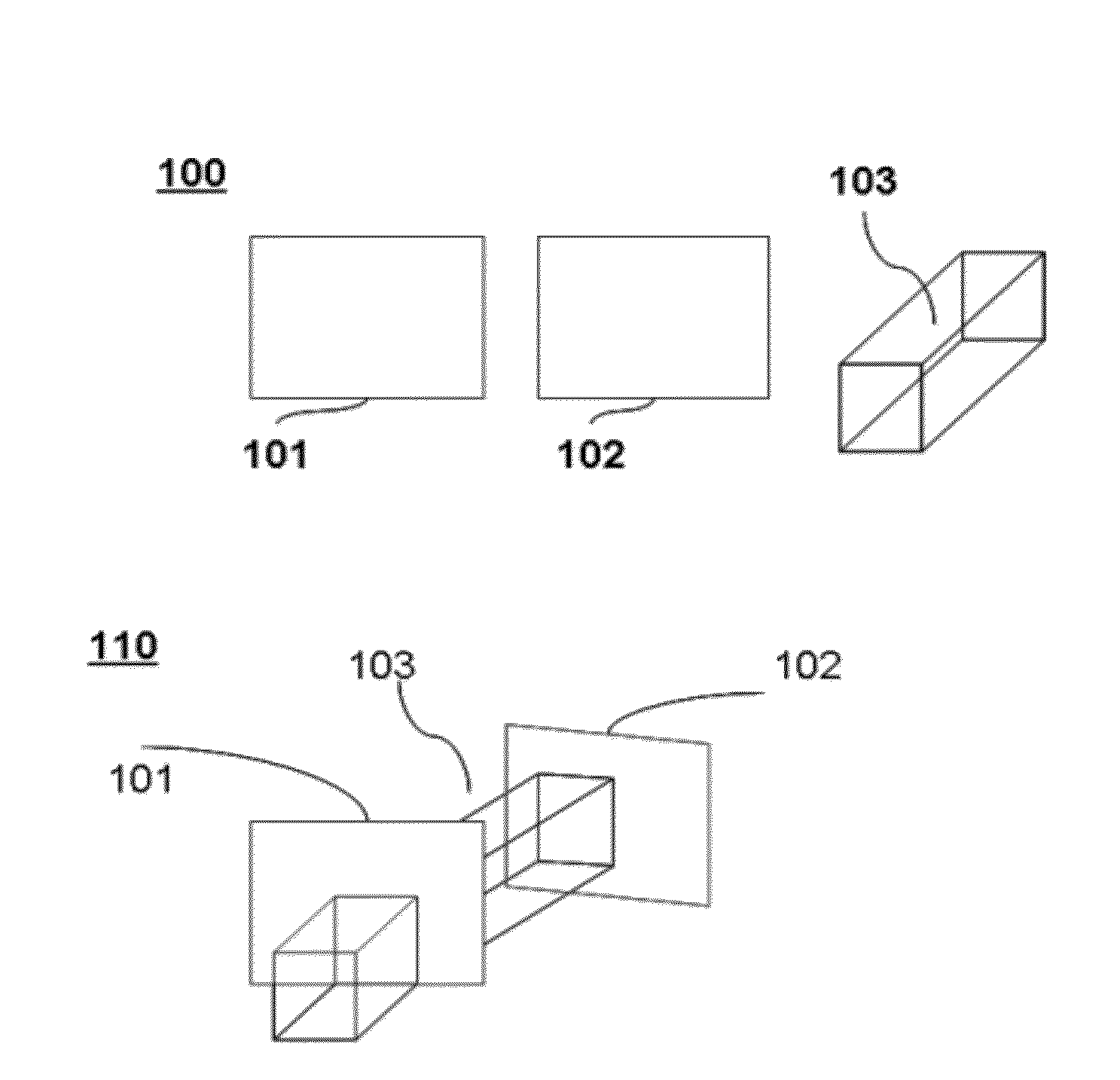

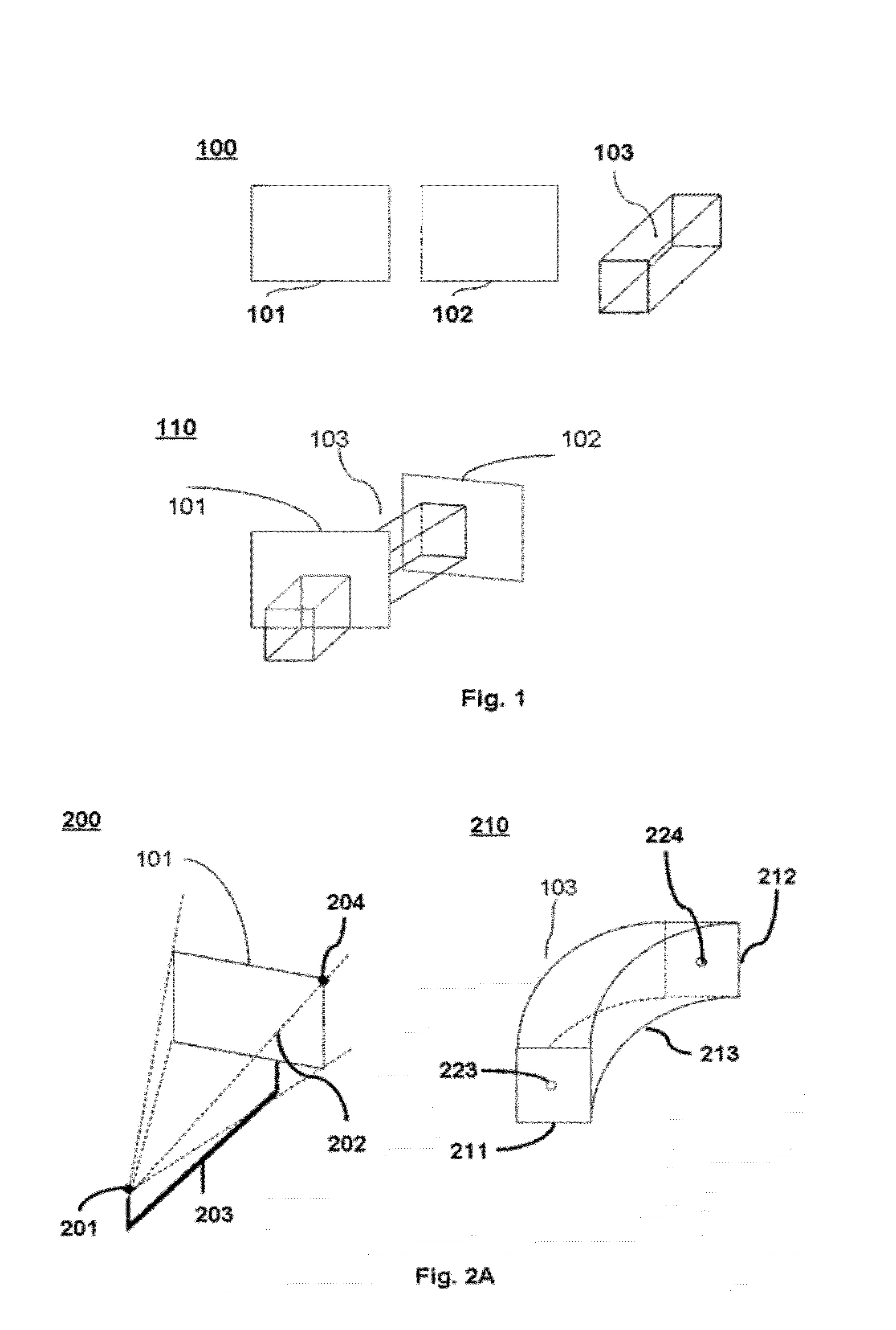

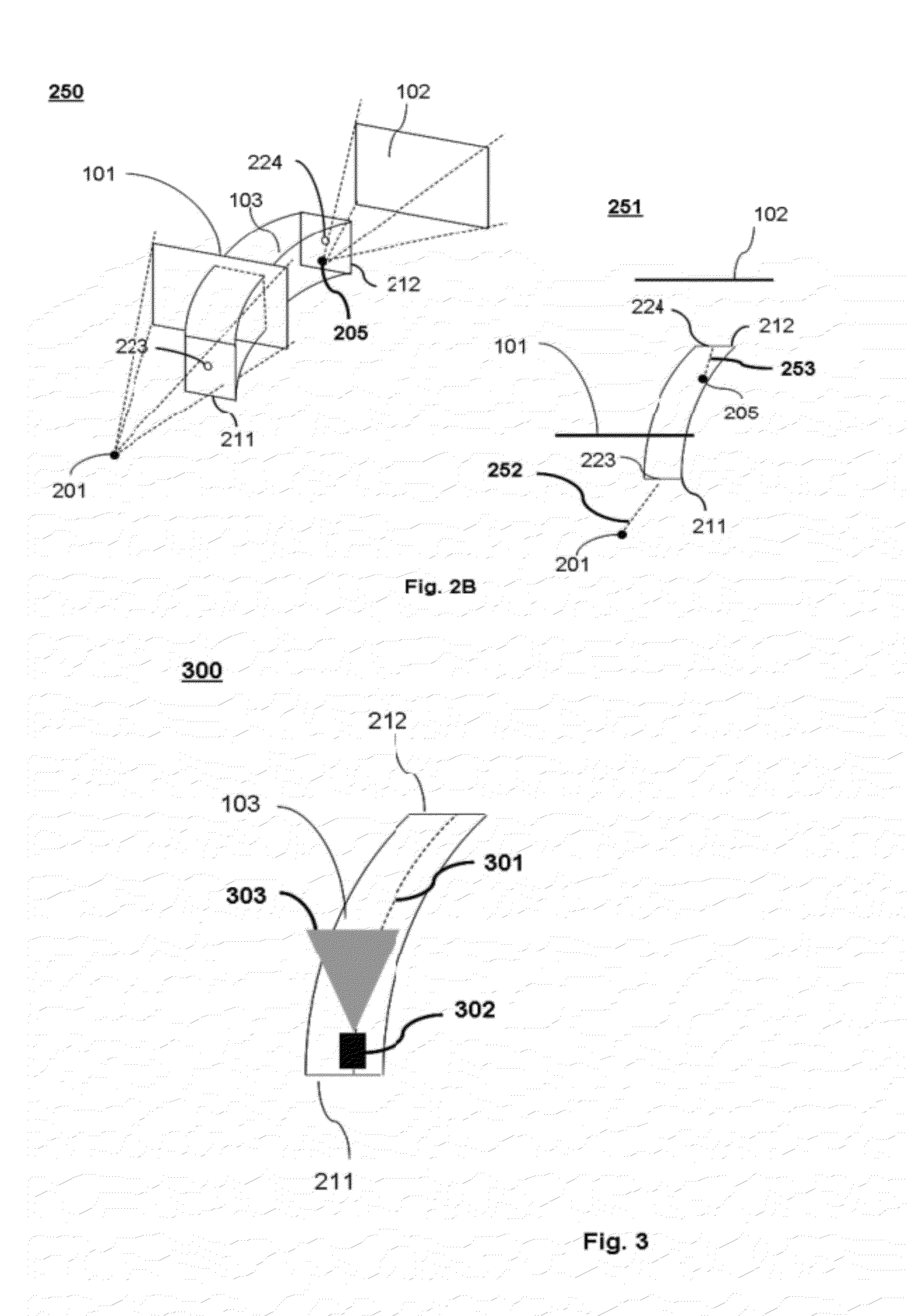

Generating Three-Dimensional Virtual Tours From Two-Dimensional Images

Status: Active | US20120099804A1 | Tags: Increase impression; Good effect; Character and pattern recognition; Web data navigation; User input; Virtual camera

Interactive three-dimensional (3D) virtual tours are generated from ordinary two-dimensional (2D) still images such as photographs. Two or more 2D images are combined to form a 3D scene, which defines a relationship among the 2D images in 3D space. 3D pipes connect the 2D images with one another according to defined spatial relationships and for guiding virtual camera movement from one image to the next. A user can then take a 3D virtual tour by traversing images within the 3D scene, for example by moving from one image to another, either in response to user input or automatically. In various embodiments, some or all of the 2D images can be selectively distorted to enhance the 3D effect, and thereby reinforce the impression that the user is moving within a 3D space. Transitions from one image to the next can take place automatically without requiring explicit user interaction.

Owner:3DITIZE SL

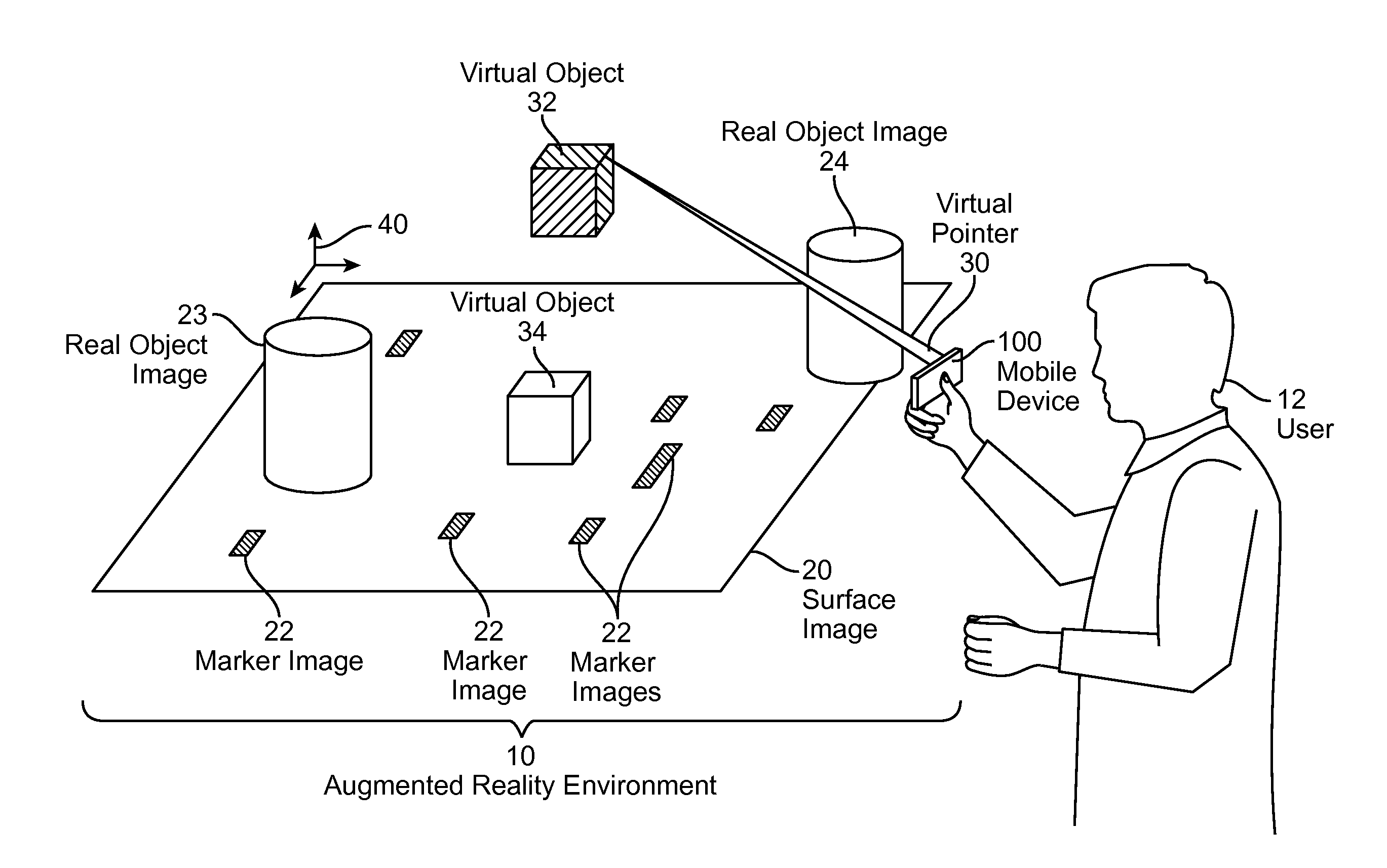

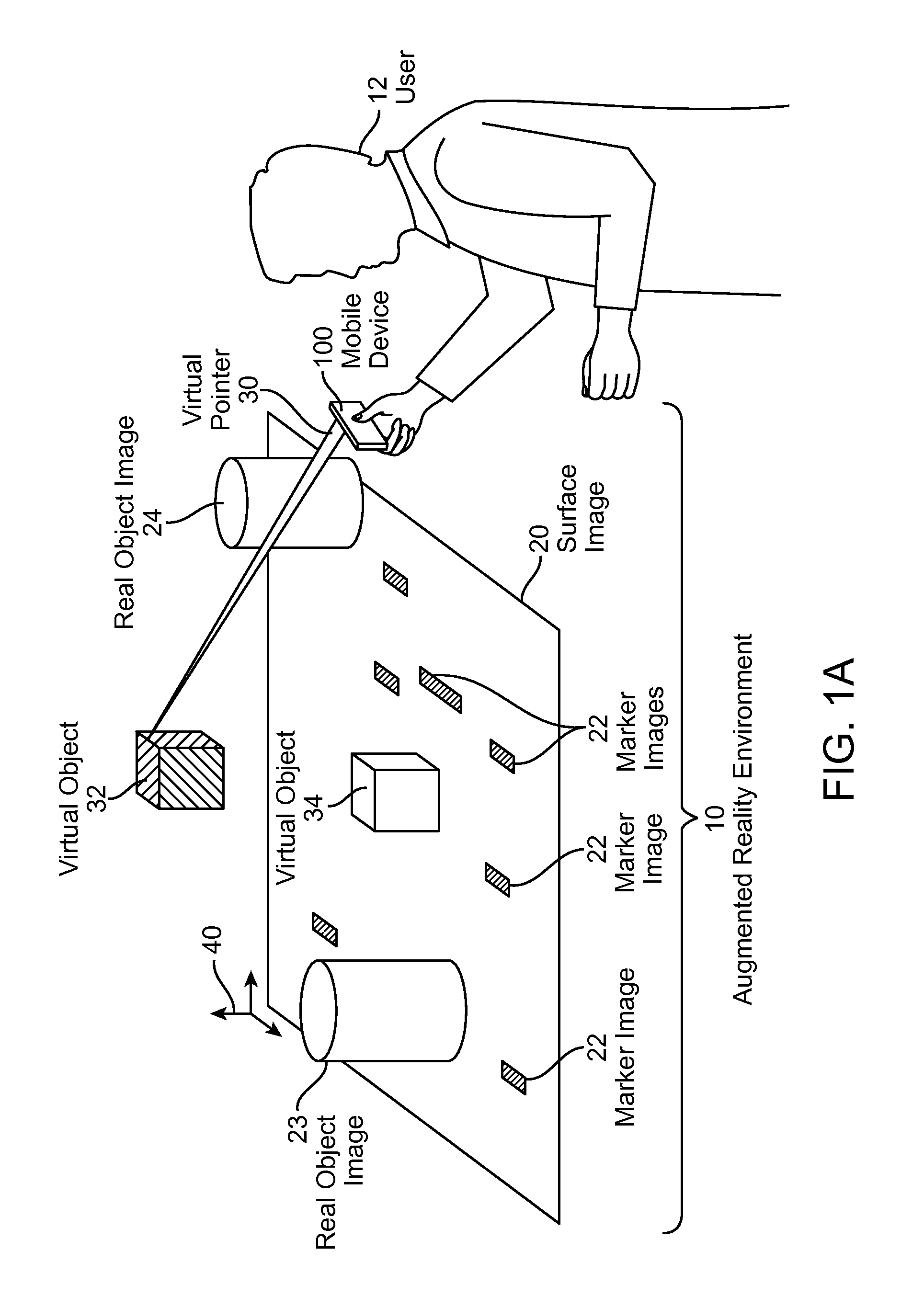

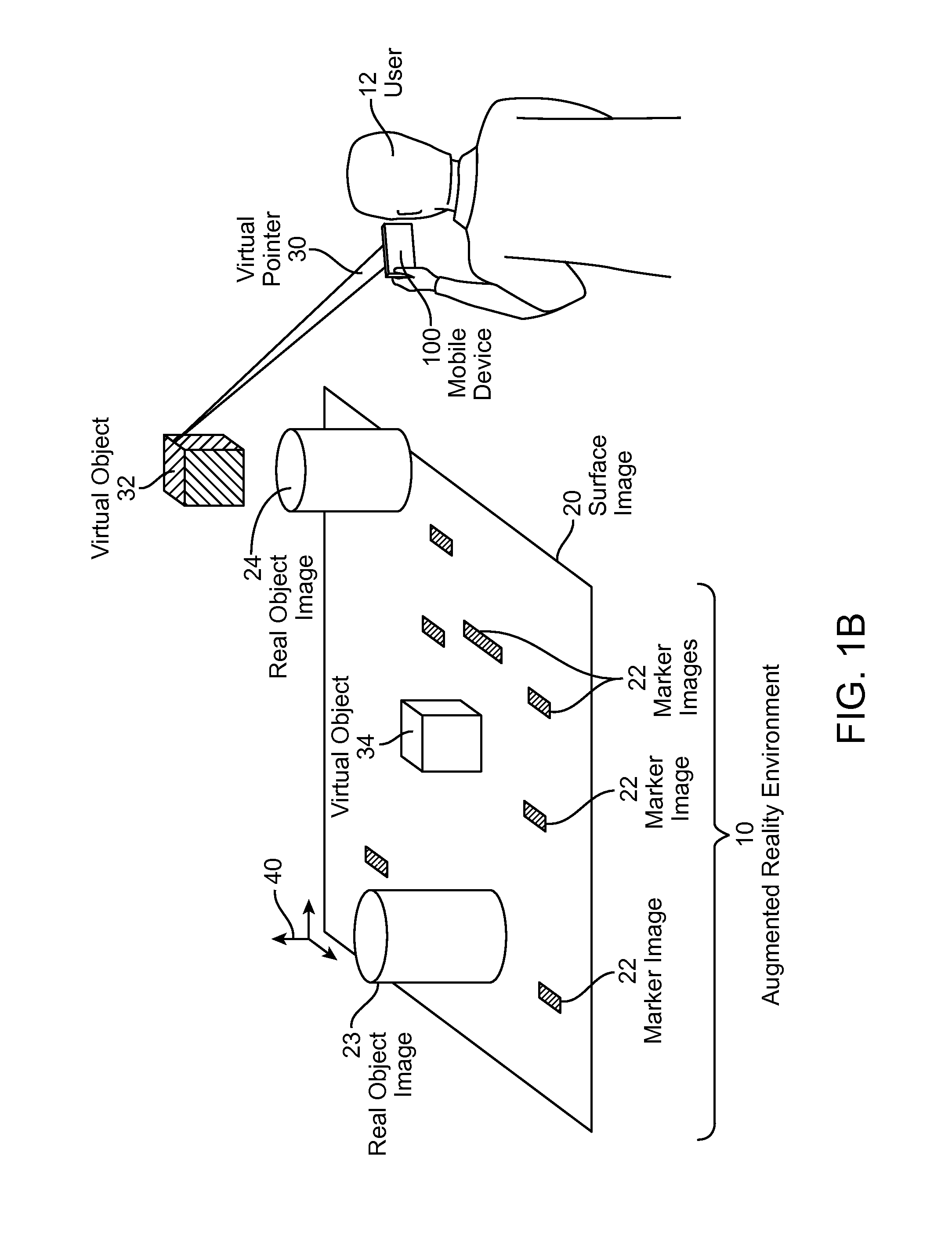

Virtual lasers for interacting with augmented reality environments

Status: Active | US20160196692A1 | Tags: Digital data processing details; Character and pattern recognition; Drag and drop; Virtual control

Systems and methods enabling users to interact with an augmented reality environment are disclosed. Real-world objects may have unique markers which are recognized by mobile devices. A mobile device recognizes the markers and generates a set of virtual objects associated with the markers in the augmented reality environment. Mobile devices employ virtual pointers and virtual control buttons to enable users to interact with the virtual objects. Users may aim the virtual pointer to a virtual object, select the virtual object, and then drag-and-drop the virtual object to a new location. Embodiments enable users to select, move, transform, create and delete virtual objects with the virtual pointer. The mobile device provides users with a means of drawing lines and geometrically-shaped virtual objects.

Owner:EON REALITY

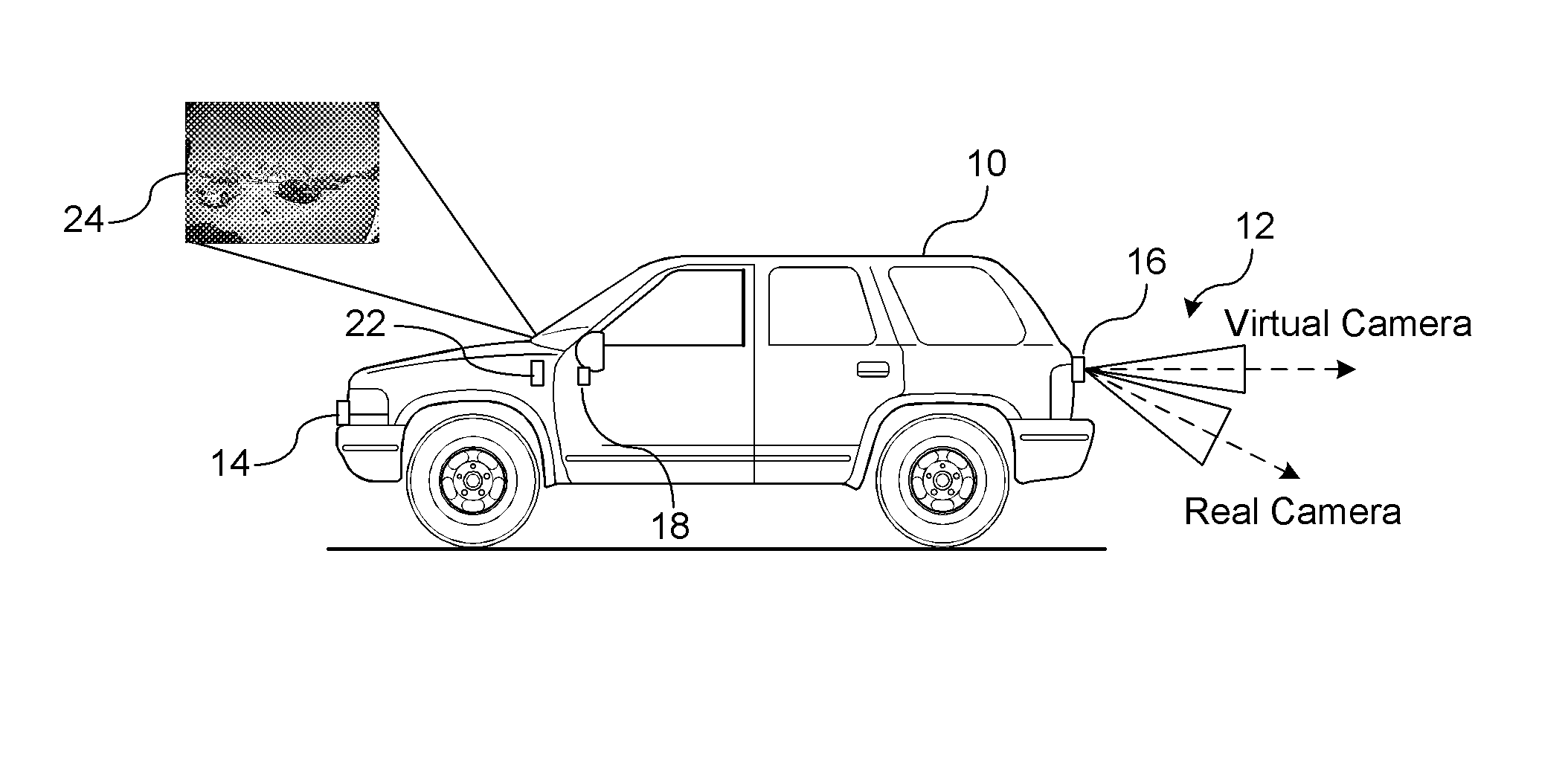

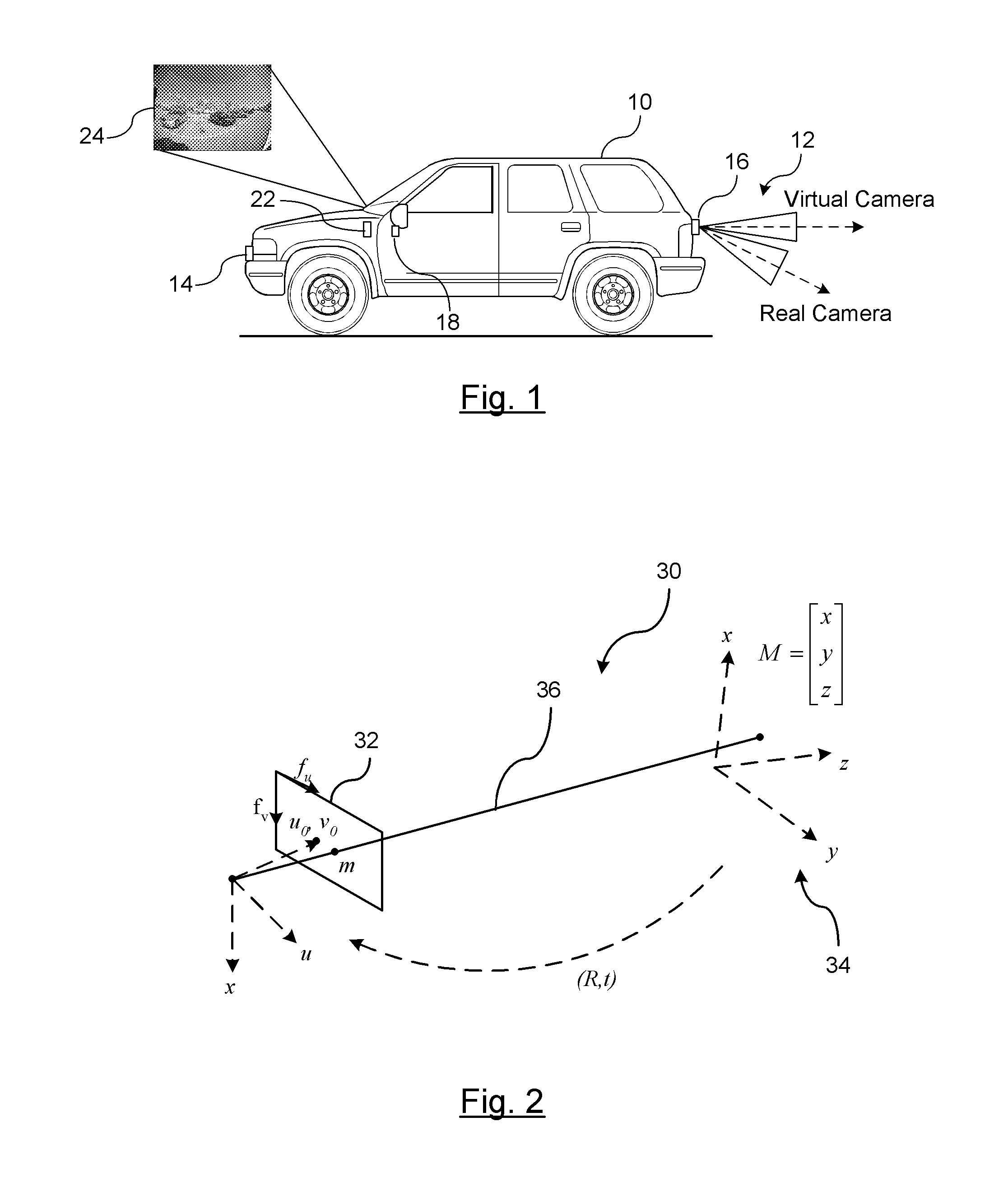

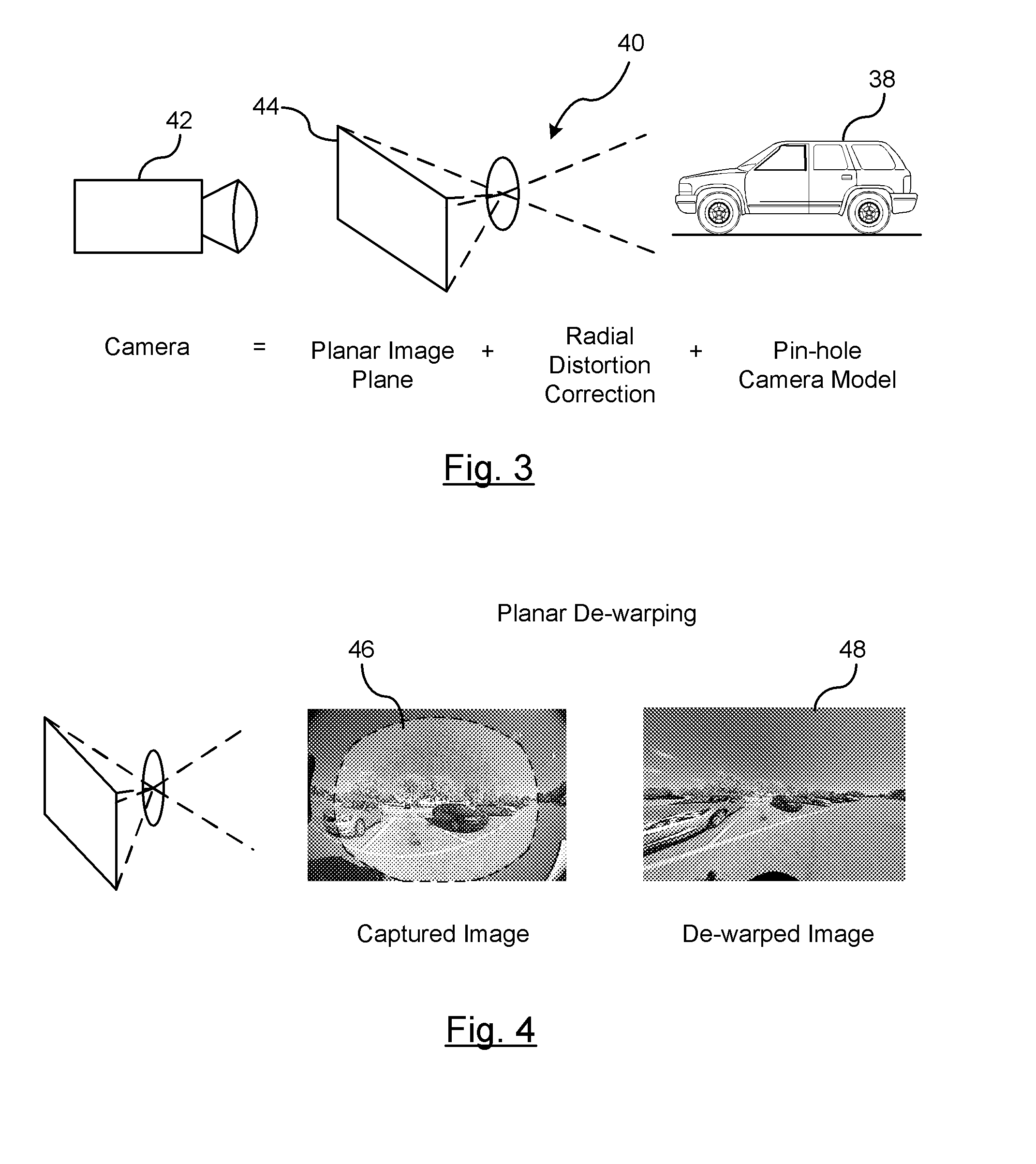

Imaging surface modeling for camera modeling and virtual view synthesis

Status: Active | US20140104424A1 | Tags: Enlarge region; Enhances region; Image enhancement; Image analysis; View synthesis; Display device

A method for displaying a captured image on a display device is disclosed. A real image is captured by a vision-based imaging device. A processor generates a virtual image from the captured real image based on a mapping that utilizes a virtual camera model with a non-planar imaging surface. The virtual image formed on the non-planar imaging surface of the virtual camera model is then projected to the display device.

Owner:GM GLOBAL TECH OPERATIONS LLC
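
The abstract does not say which non-planar surface is used. As one common example, this sketch compares an ordinary planar pinhole projection with projection onto a vertical cylinder of radius `f` around the camera center, which compresses wide-angle content horizontally. The function names and parameters are hypothetical.

```python
import math


def project_planar(p, f, cx, cy):
    """Standard pinhole projection of camera-space point p onto a flat image plane (needs z > 0)."""
    x, y, z = p
    return (f * x / z + cx, f * y / z + cy)


def project_cylindrical(p, f, cx, cy):
    """Project onto a vertical cylinder of radius f around the camera center, then unroll it.

    The horizontal coordinate is arc length (f times the azimuth angle); the
    vertical coordinate is height on the cylinder. Undefined for points on the
    cylinder axis (x == z == 0).
    """
    x, y, z = p
    r = math.hypot(x, z)
    return (f * math.atan2(x, z) + cx, f * y / r + cy)
```

For a point 60 degrees off-axis, the planar image coordinate grows with tan(60°) while the cylindrical one grows only with the angle itself, which keeps wide fields of view on screen with less stretching at the edges.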

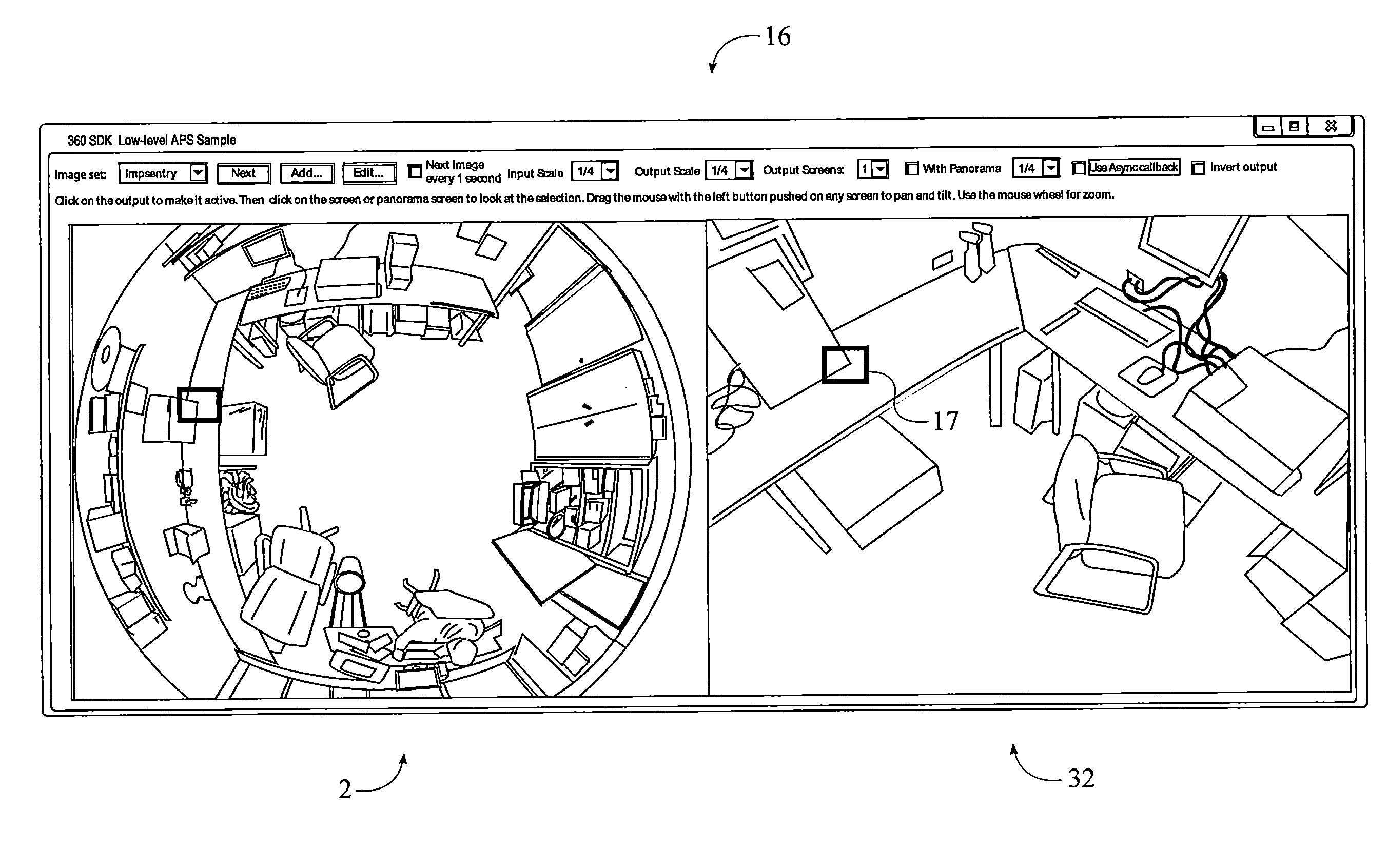

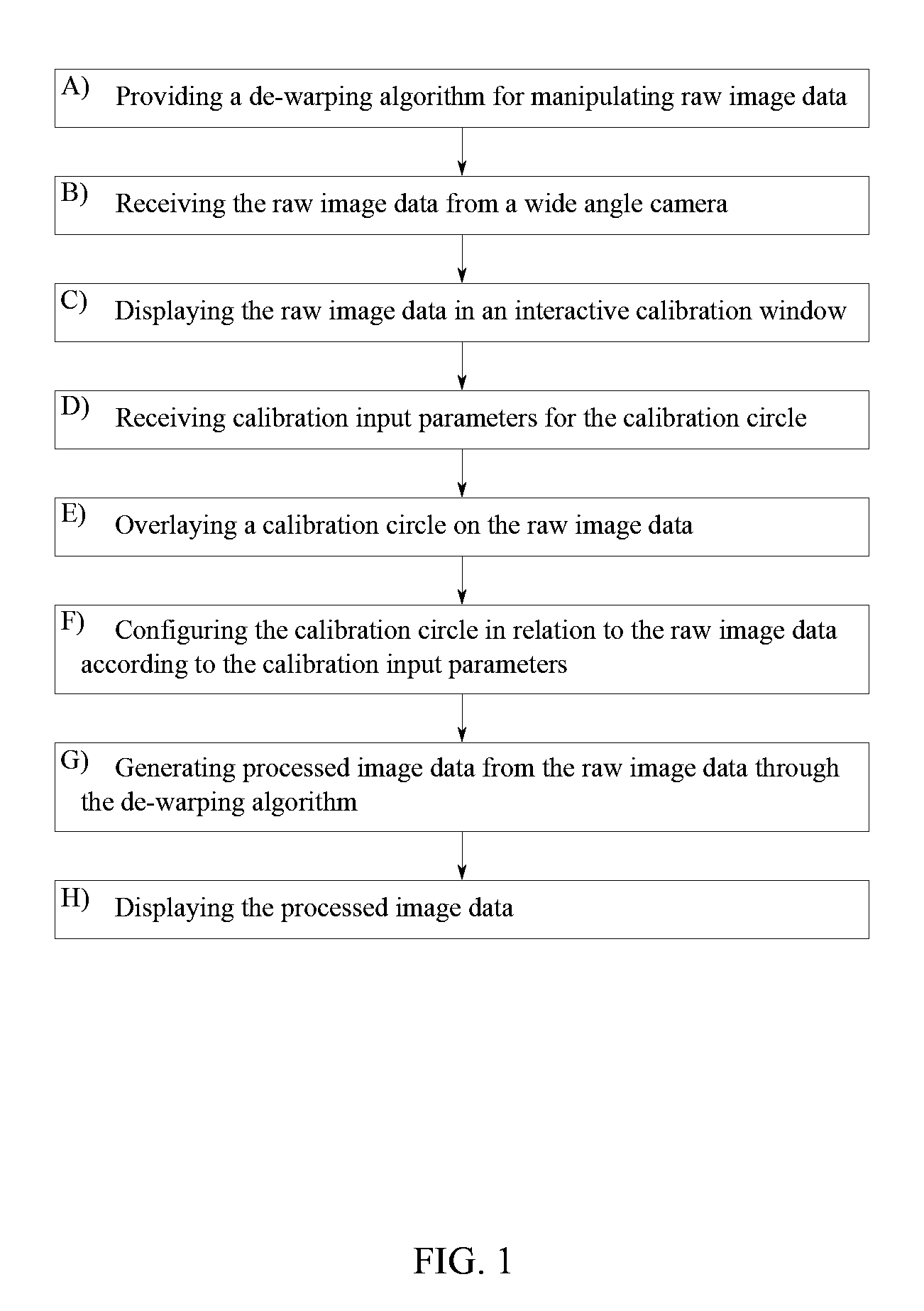

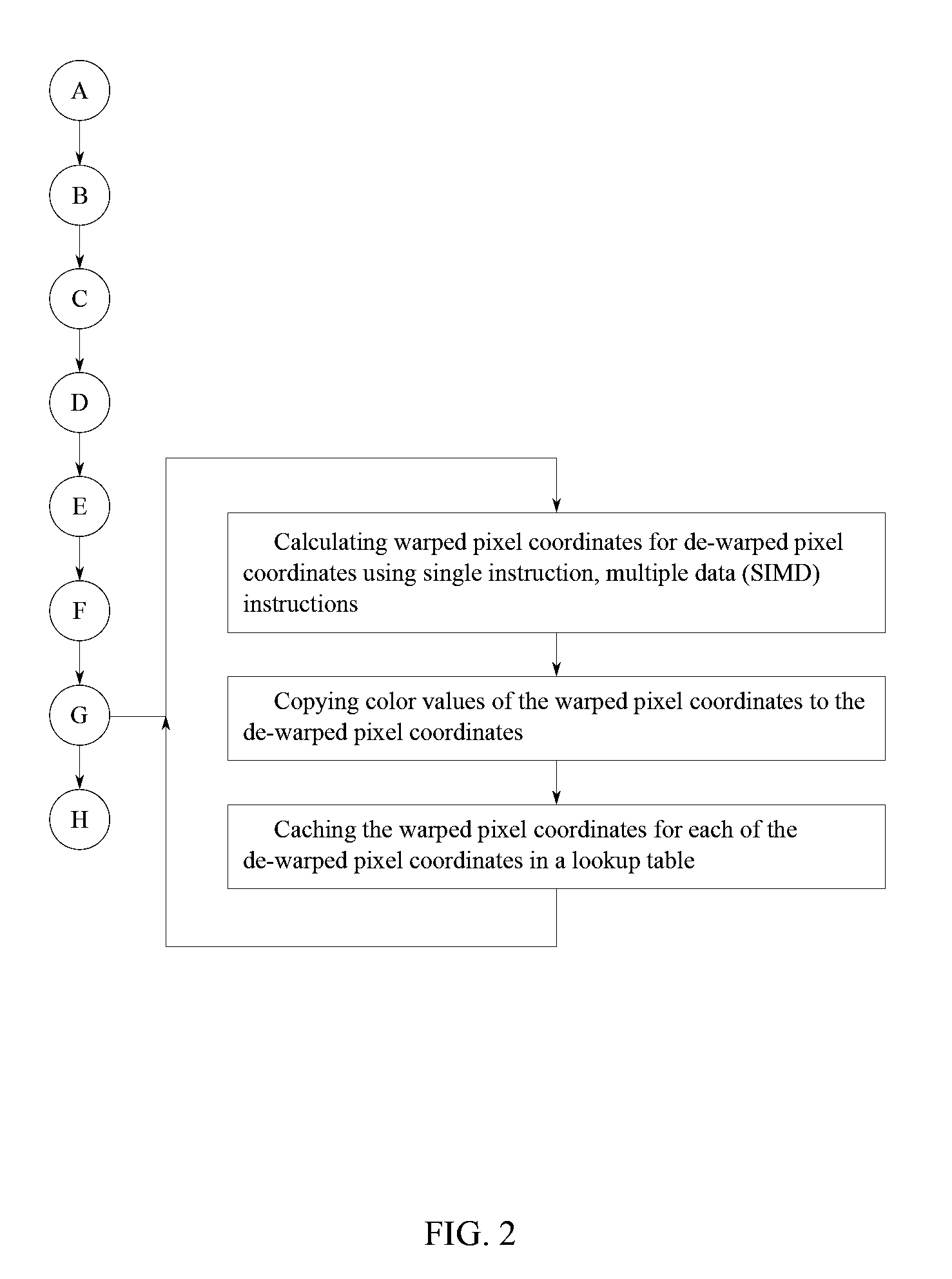

Optimized 360 Degree De-Warping with Virtual Cameras

Status: Inactive | US20160119551A1 | Tags: Television system details; Geometric image transformation; Panorama; Virtual camera

A software suite for optimizing the de-warping of wide angle lens images includes a calibration process utilizing a calibration circle to prepare raw image data. The calibration circle is used to map the raw image data about a warped image space, which is then used to map a de-warped image space for processed image data. The processed image data is generated from the raw image data by copying color values from warped pixel coordinates of the warped image space to de-warped pixel coordinates of the de-warped image space. The processed image data is displayed as a single perspective image and a panoramic image in a click-to-position virtual mapping interface alongside the raw image data. A user can make an area of interest selection by clicking the raw image data, the single perspective image, or the panoramic image in order to change the point of focus within the single perspective image.

Owner:SENTRY360
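
A minimal sketch of the copy-based de-warping step, assuming the calibration circle has already been located (center `cx`, `cy` and `radius`, in raw-image pixels) and that distance from the center maps linearly to panorama rows. Real fisheye lenses usually need a lens-specific radial profile; the function name and the nearest-neighbor sampling are assumptions.

```python
import math


def dewarp_to_panorama(raw, cx, cy, radius, out_w, out_h):
    """Unroll the image circle of a 360-degree fisheye frame into a panorama.

    Each de-warped pixel (col, row) looks up the warped pixel at angle
    2*pi*col/out_w and distance radius*row/out_h from the calibration-circle
    center, and copies its color value (nearest-neighbor sampling).
    """
    h, w = len(raw), len(raw[0])
    pano = [[None] * out_w for _ in range(out_h)]
    for row in range(out_h):
        r = radius * row / out_h
        for col in range(out_w):
            theta = 2 * math.pi * col / out_w
            x = round(cx + r * math.cos(theta))
            y = round(cy + r * math.sin(theta))
            # Clamp so samples near the circle edge stay inside the raw frame.
            x = min(max(x, 0), w - 1)
            y = min(max(y, 0), h - 1)
            pano[row][col] = raw[y][x]
    return pano
```

Because every output pixel is a plain copy from a precomputable source coordinate, the (row, col) to (x, y) table can be built once after calibration and reused for every frame.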

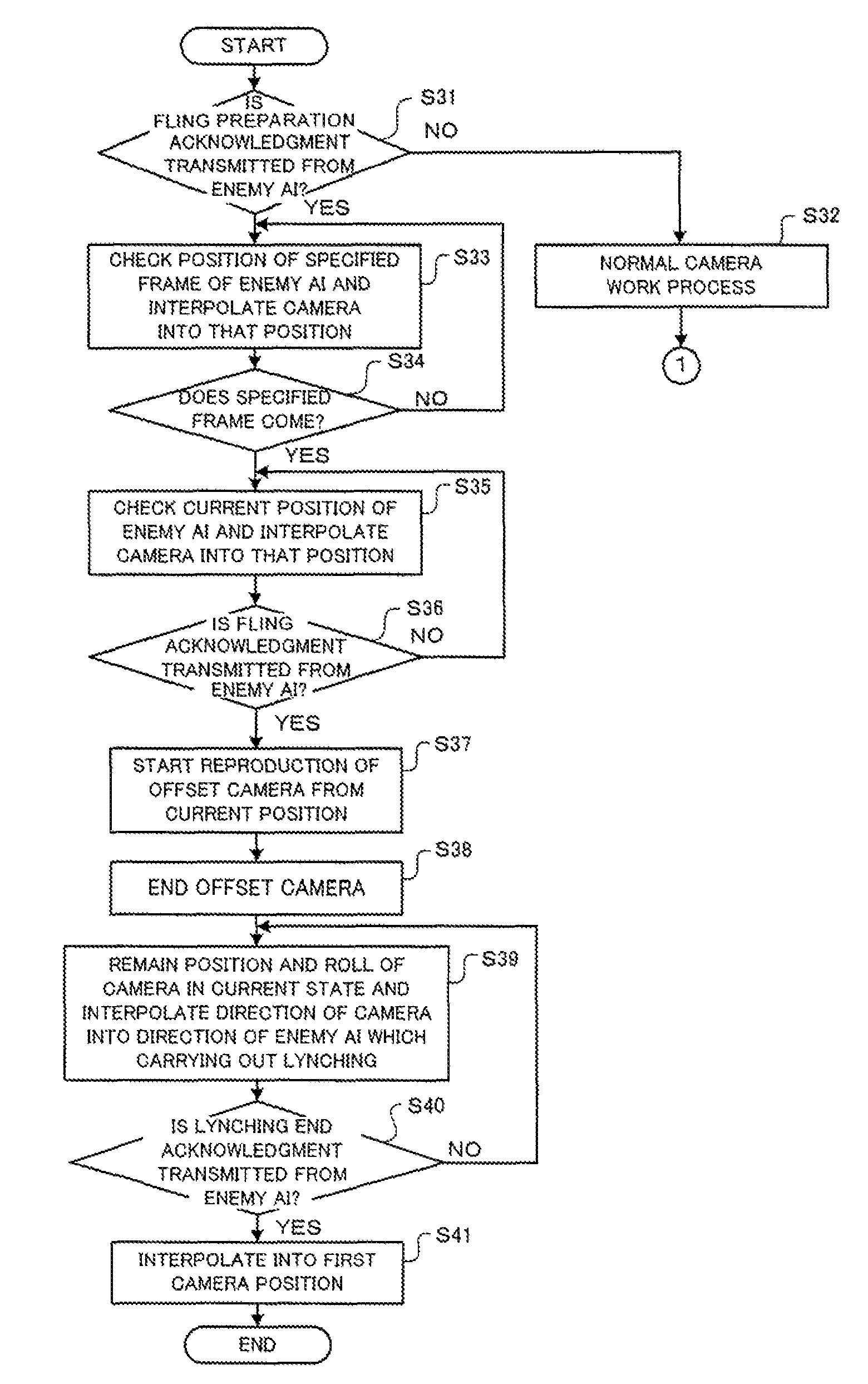

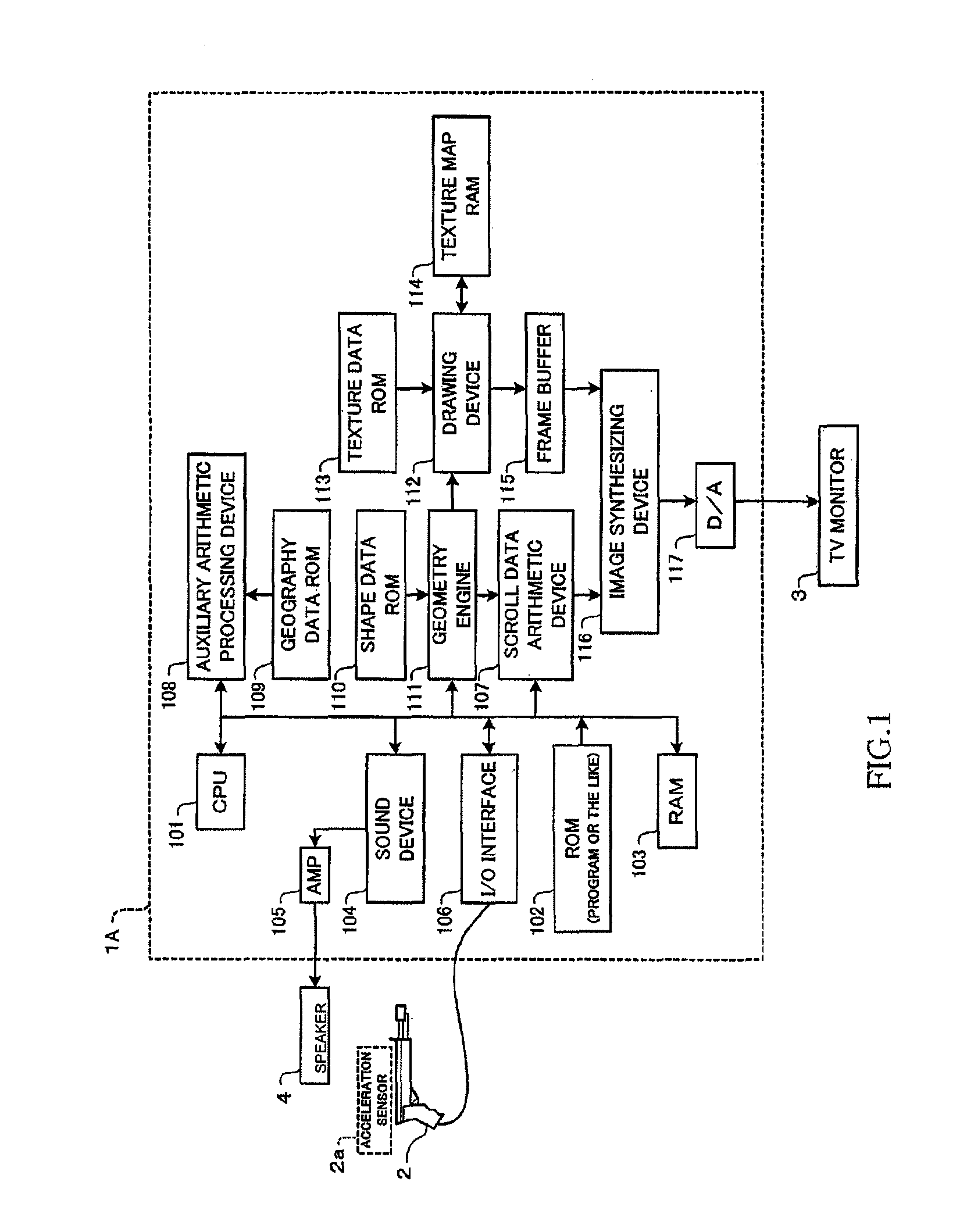

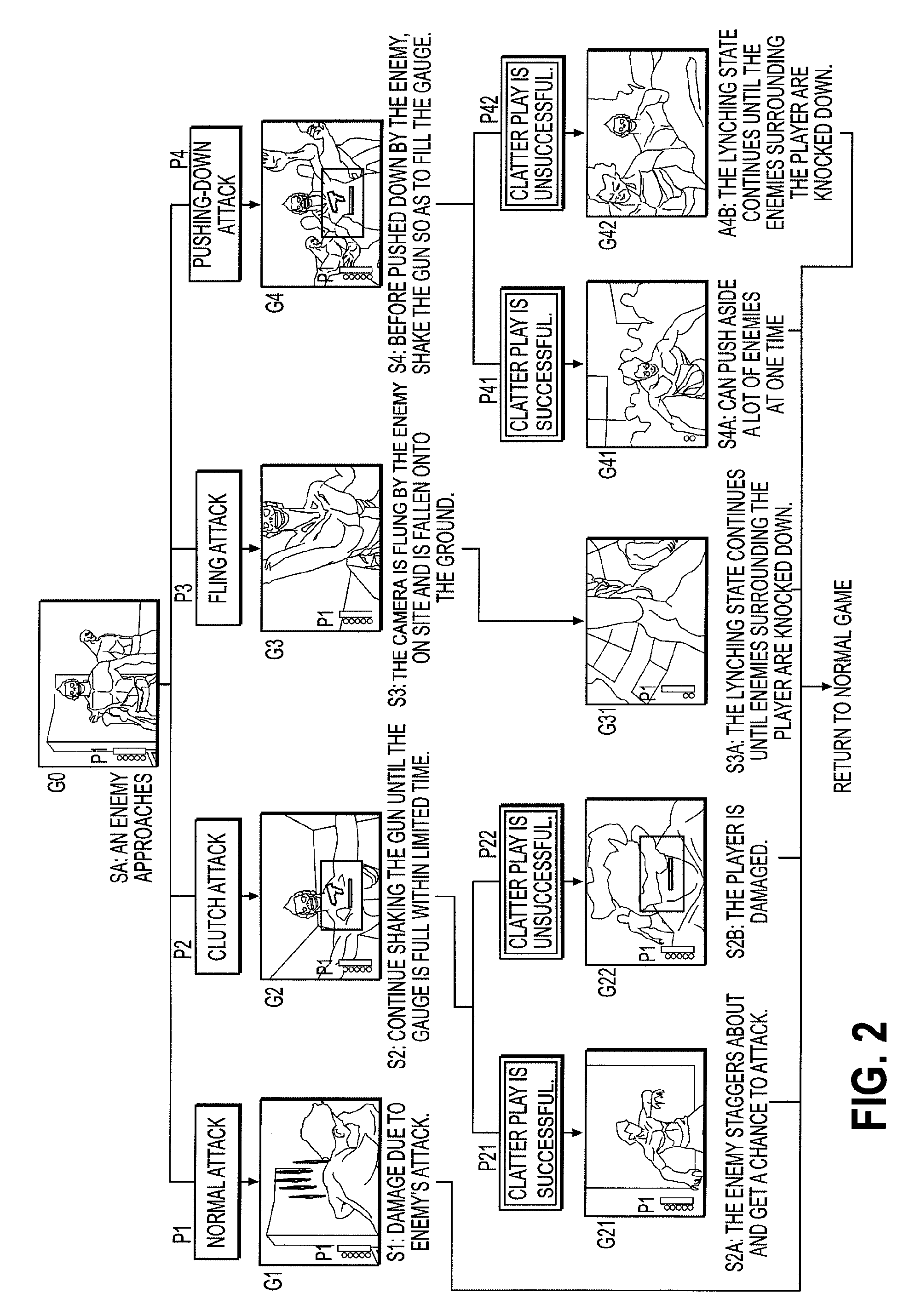

Game apparatus for changing a visual point position of a virtual camera in conjunction with an attack by an enemy character

Status: Active | US7775882B2 | Tags: Successfully expressing; Video games; Special data processing applications; Virtual camera; Visual perception

A game apparatus which can realize a totally new expression of fear in a game image is provided. The game apparatus includes an image generating means that uses a visual point position of a player character as a visual point position of a virtual camera and generates an image within a visual range captured by the virtual camera, an enemy character control means that allows the enemy character to mount an attack such that the visual point position of the player character is changed and moves an enemy character within a specified range viewed from the visual point position changed due to the attack to the visual range of the player character viewed from the visual point position, and a camera work control means that changes a visual point position in a three-dimensional coordinate system of the virtual camera in conjunction with the attack motions.

Owner:SEGA CORP

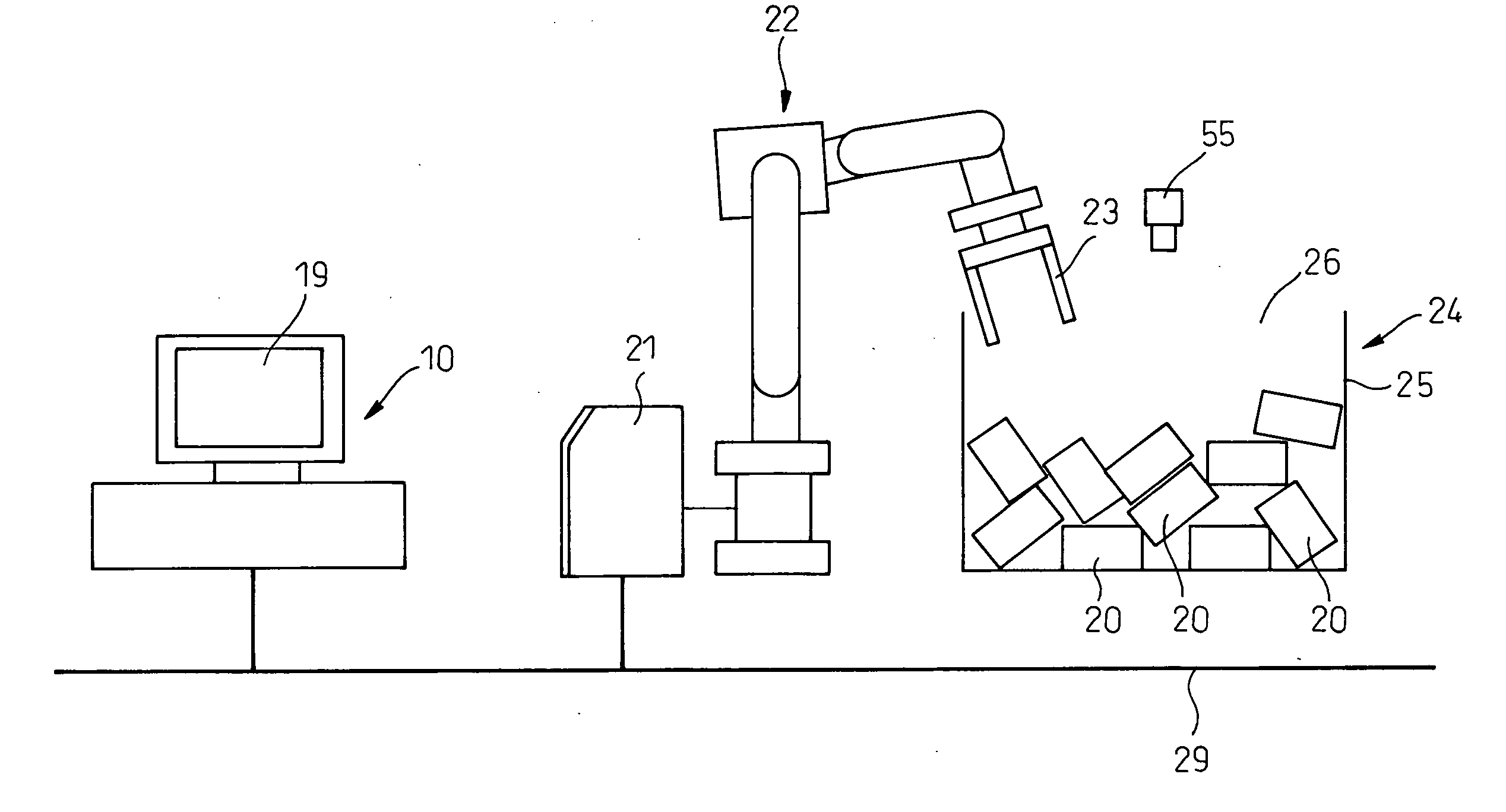

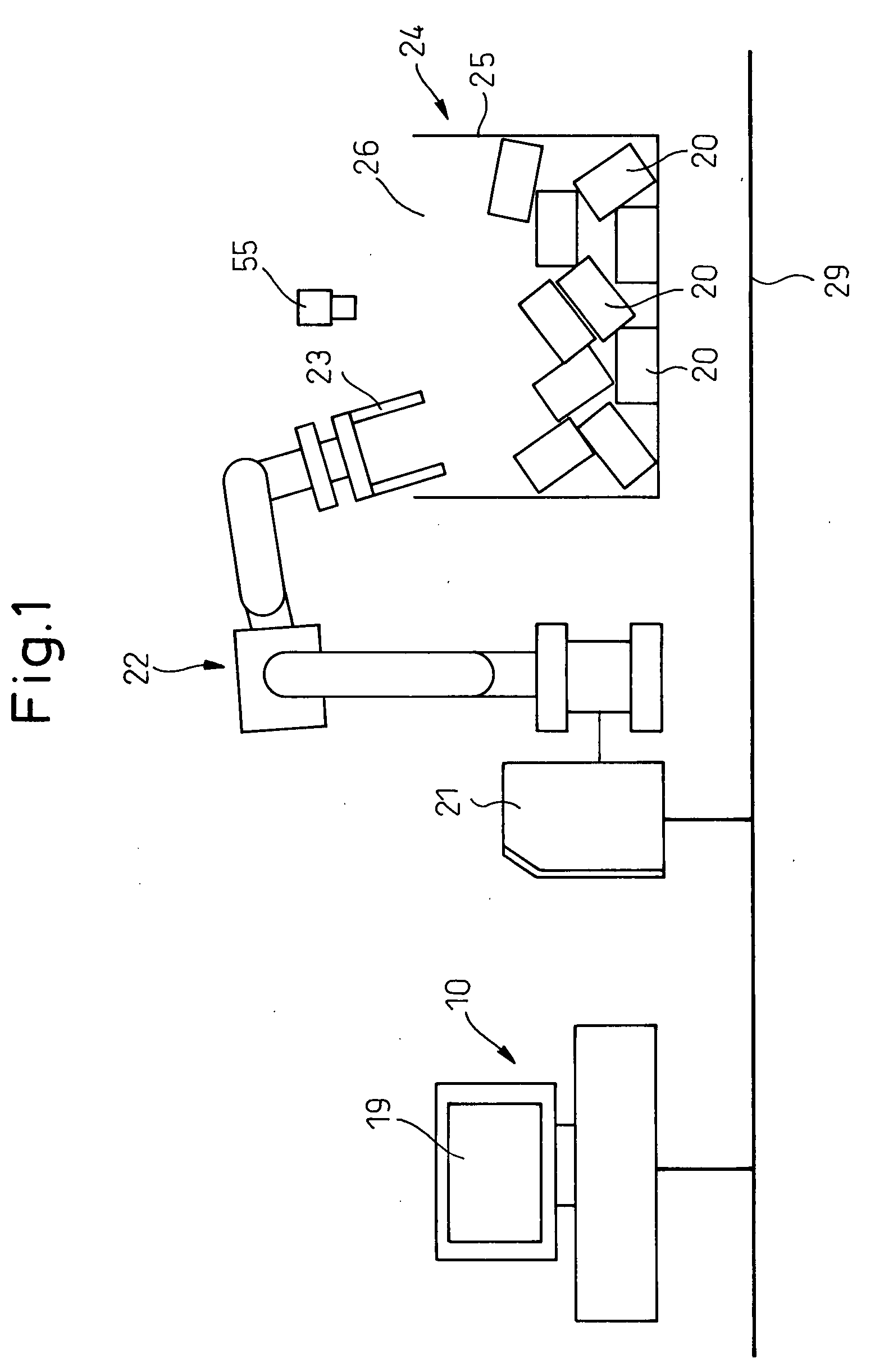

Robot simulation apparatus

Status: Active | US20070282485A1 | Tags: Simulation is accurate; Accurately determined; Programme control; Programme-controlled manipulator; Visual field loss; Virtual space

A robot simulation apparatus (10) capable of creating and executing a robot program includes a virtual space creating unit (31) for creating a virtual space (60), a workpiece model layout unit (32) for automatically arranging at least one workpiece model (40) in an appropriate posture at an appropriate position in a workpiece accommodation unit model (24) defined in the virtual space, a virtual camera unit (33) for acquiring a virtual image (52) of workpiece models existing in the range of a designated visual field as viewed from a designated place in the virtual space, a correcting unit (34) for correcting the teaching points in the robot program based on the virtual image, and a simulation unit (35) for simulating the operation of the robot handling the workpieces, and as a result, interference between the robot and the workpieces can be predicted while at the same time accurately determining the required workpiece handling time.

Owner:FANUC LTD

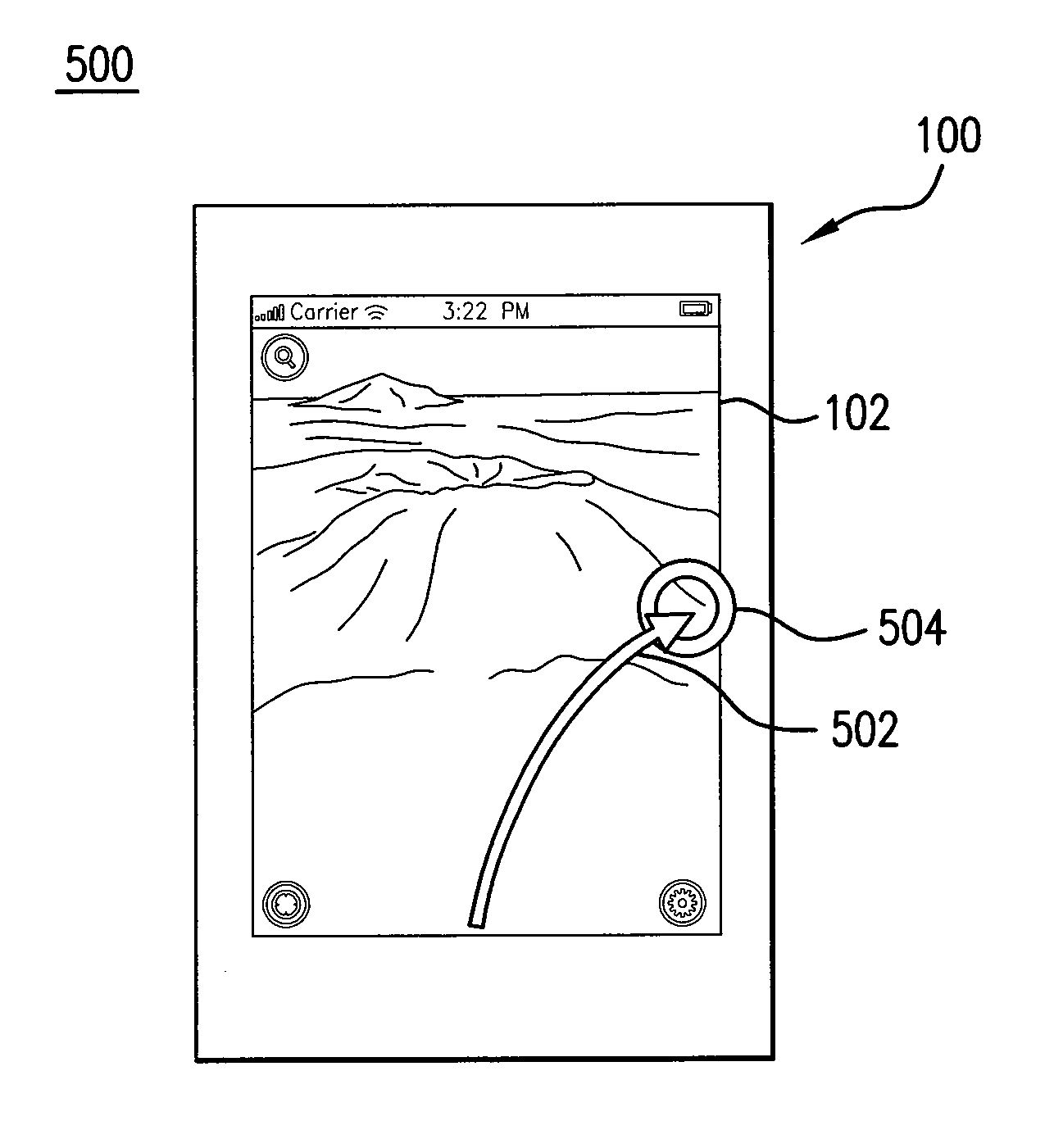

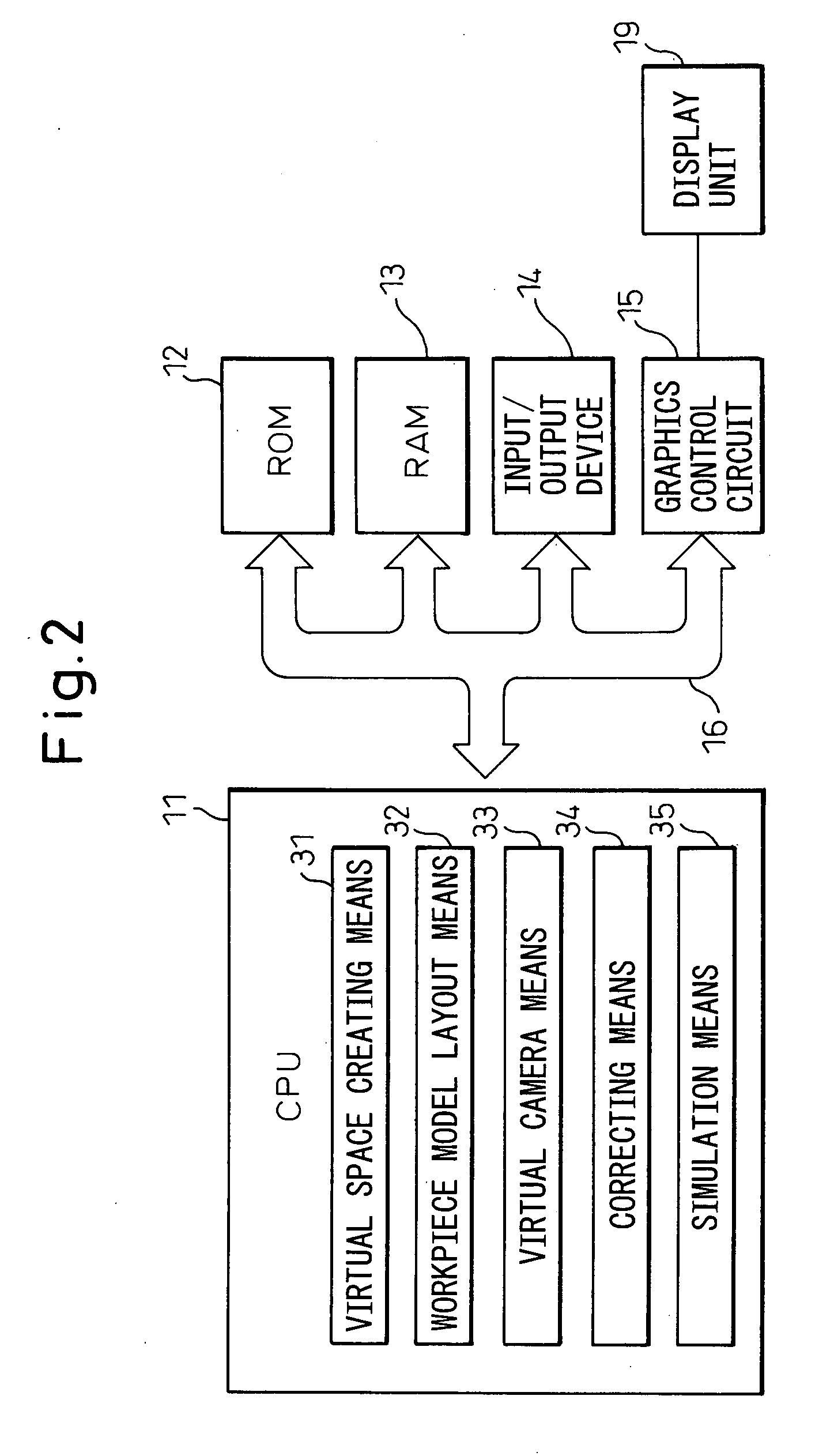

User Interface Gestures For Moving a Virtual Camera On A Mobile Device

Status: Inactive | US20100045703A1 | Tags: Digital data processing details; Cathode-ray tube indicators; Object based; User input

This invention relates to user interface gestures for moving a virtual camera on a mobile device. In an embodiment, a computer-implemented method navigates a virtual camera in a three dimensional environment on a mobile device having a touch screen. A user input is received indicating that two objects have touched a view of the mobile device and the two objects have moved relative to each other. A speed of the objects is determined based on the user input. A speed of the virtual camera is determined based on the speed of the objects. The virtual camera is moved relative to the three dimensional environment according to the speed of the virtual camera.

Owner:GOOGLE LLC
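
A minimal sketch of the speed-based camera motion, assuming two touch samples taken `dt` seconds apart, a pinch speed measured as the rate of change of the distance between the two fingers, and a hypothetical linear `gain` from finger speed to camera speed. Moving along the camera's unit forward vector (spreading the fingers moves forward) is also an assumption; the abstract only says the camera is moved according to the computed speed.

```python
import math


def pinch_speed(p1_a, p2_a, p1_b, p2_b, dt):
    """Rate (px/s) at which two touch points move apart (+) or together (-) between two samples."""
    return (math.dist(p1_b, p2_b) - math.dist(p1_a, p2_a)) / dt


def move_camera(position, forward, finger_speed, gain=0.01, dt=1 / 60):
    """Move the camera along its unit forward vector at a speed proportional to the gesture speed."""
    cam_speed = gain * finger_speed
    return tuple(p + f * cam_speed * dt for p, f in zip(position, forward))
```

Tying camera speed to gesture speed lets a quick pinch cover large distances while a slow pinch allows fine positioning.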

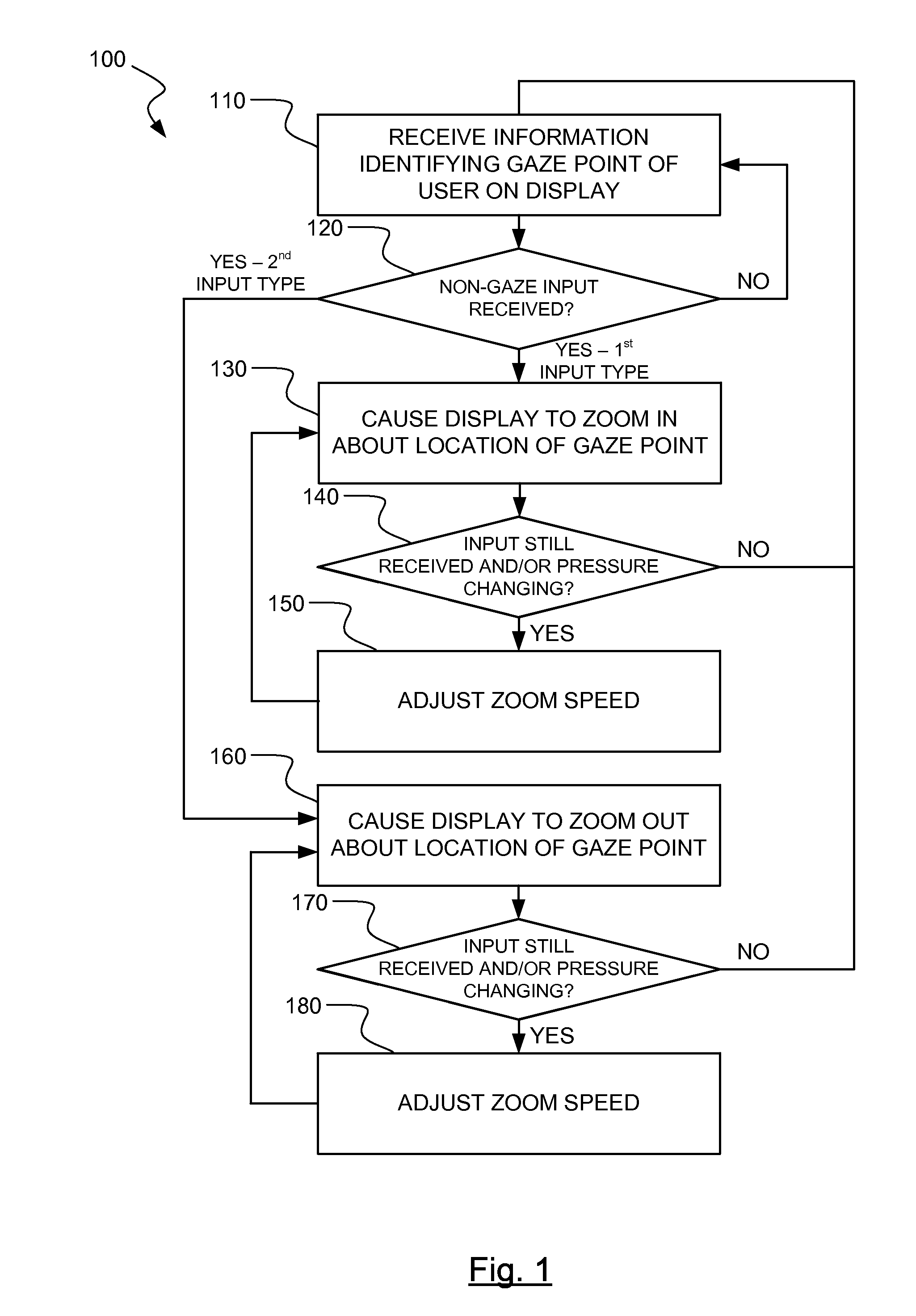

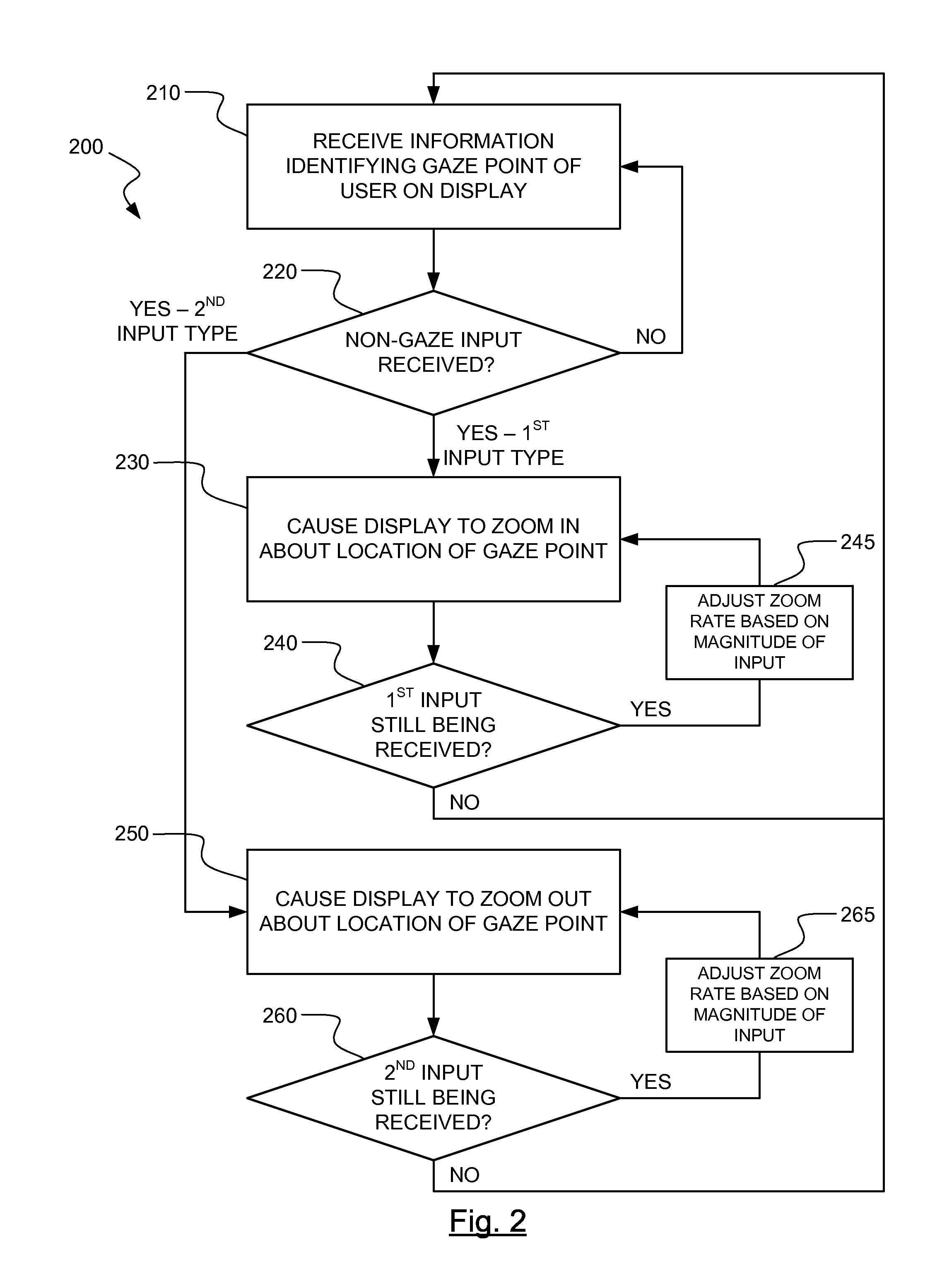

Component determination and gaze provoked interaction

Status: Active | US20150138079A1 | Tags: Input/output for user-computer interaction; Cathode-ray tube indicators; Display device; Virtual camera

According to the invention, a method for changing a display based at least in part on a gaze point of a user on the display is disclosed. The method may include receiving information identifying a location of the gaze point of the user on the display. The method may also include, based at least in part on the location of the gaze point, causing a virtual camera perspective to change, thereby causing content on the display associated with the virtual camera to change.

Owner:TOBII TECH AB

© 2025 PatSnap. All rights reserved.