50 results about how to "Reduce reading and writing" patented technology

Message processing method and device, and electronic device

Pending | CN108415759A | Improve stacking capacity | Reduce delay error | Program initiation/switching | Data file | Electronic equipment

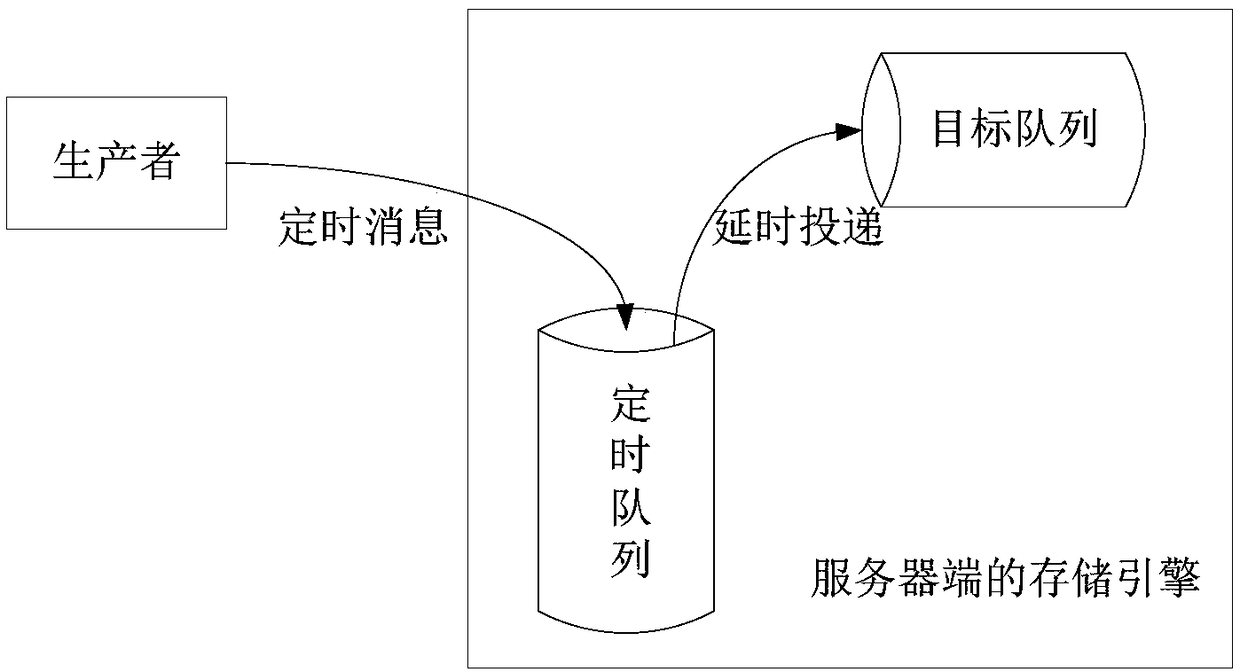

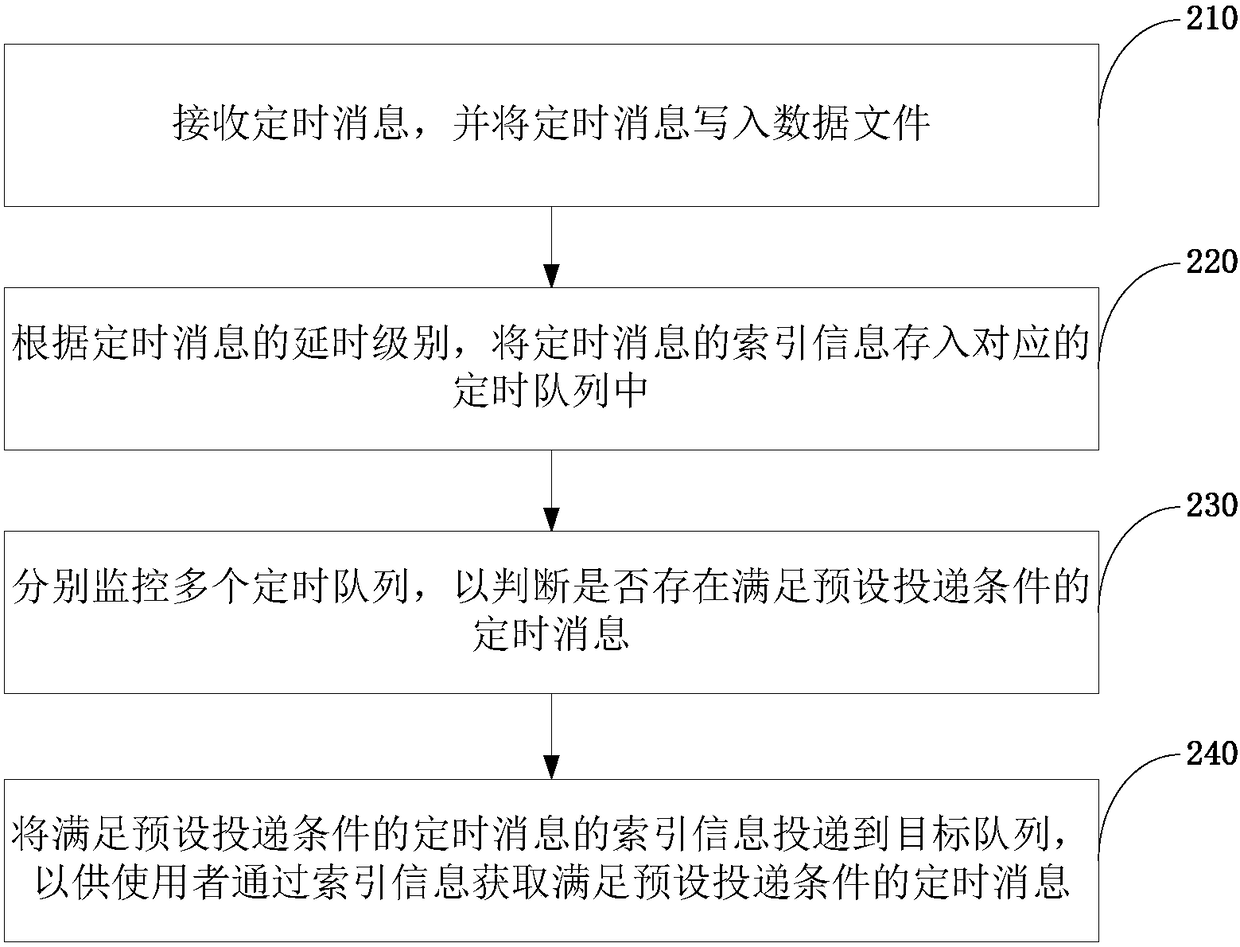

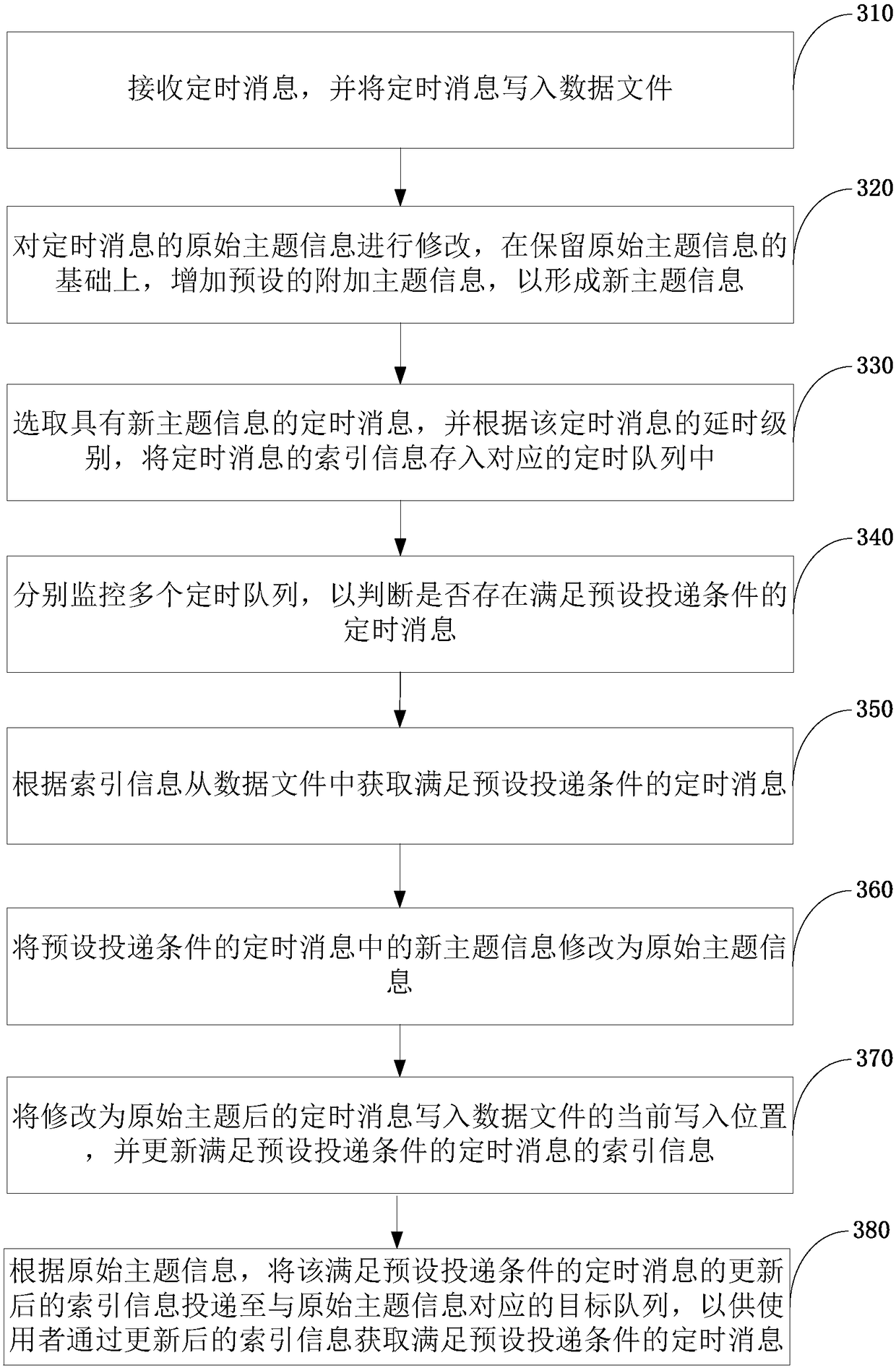

An embodiment of the present invention provides a message processing method and device, and an electronic device. A plurality of timing queues are provided, and the plurality of timing queues correspond to a plurality of preset delay levels respectively. The message processing method includes: receiving a timing message, and writing the timing message into a data file; storing index information of the timing message in the corresponding timing queue according to the delay level of the timing message; separately monitoring the plurality of timing queues to determine whether there is a timing message that satisfies a preset delivery condition; and, if there is such a timing message, delivering its index information to a target queue so that the user can acquire the timing message through the index information. The invention improves the timing message stacking capability; when the number of timing messages is large, timing message delay errors can be reduced, and the timing messages are guaranteed to be delivered on time to a greater extent.

Owner:ALIBABA GRP HLDG LTD
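
The flow described in the abstract above (CN108415759A) can be illustrated with a minimal in-memory sketch. It is not the patented implementation: the class name `TimingBroker`, the delay levels in `DELAY_LEVELS`, and the use of a Python list as the "data file" are all assumptions made for illustration.

```python
from collections import deque

DELAY_LEVELS = (1, 5, 30)  # seconds; hypothetical preset delay levels


class TimingBroker:
    def __init__(self):
        self.data_file = []  # stands in for the append-only data file
        # One FIFO queue per delay level; entries are (due_time, index).
        self.timing_queues = {level: deque() for level in DELAY_LEVELS}
        self.target_queue = []  # indexes that are ready for consumers

    def receive(self, payload, level, now):
        """Write the message to the data file and queue only its index."""
        index = len(self.data_file)
        self.data_file.append(payload)
        self.timing_queues[level].append((now + level, index))
        return index

    def poll(self, now):
        """Check each queue separately and deliver indexes that are due."""
        for queue in self.timing_queues.values():
            # Within one level the due times are already in arrival order,
            # so only the head of each queue has to be inspected.
            while queue and queue[0][0] <= now:
                _, index = queue.popleft()
                self.target_queue.append(index)

    def fetch(self):
        """Resolve delivered indexes back to message payloads."""
        return [self.data_file[i] for i in self.target_queue]
```

Because every message in a given queue shares the same delay, each queue stays sorted by due time without any re-sorting, which is one plausible reason for grouping by delay level.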

Method for storing configuration parameter in NOR FLASH based on Hash arithmetic

Inactive | CN101419571A | Speed up query | Reduce reading and writing | Memory addressing/allocation/relocation | Special data processing applications | Hash table | Operating system

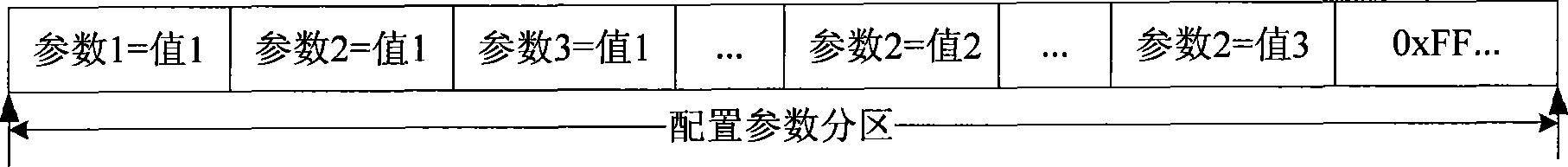

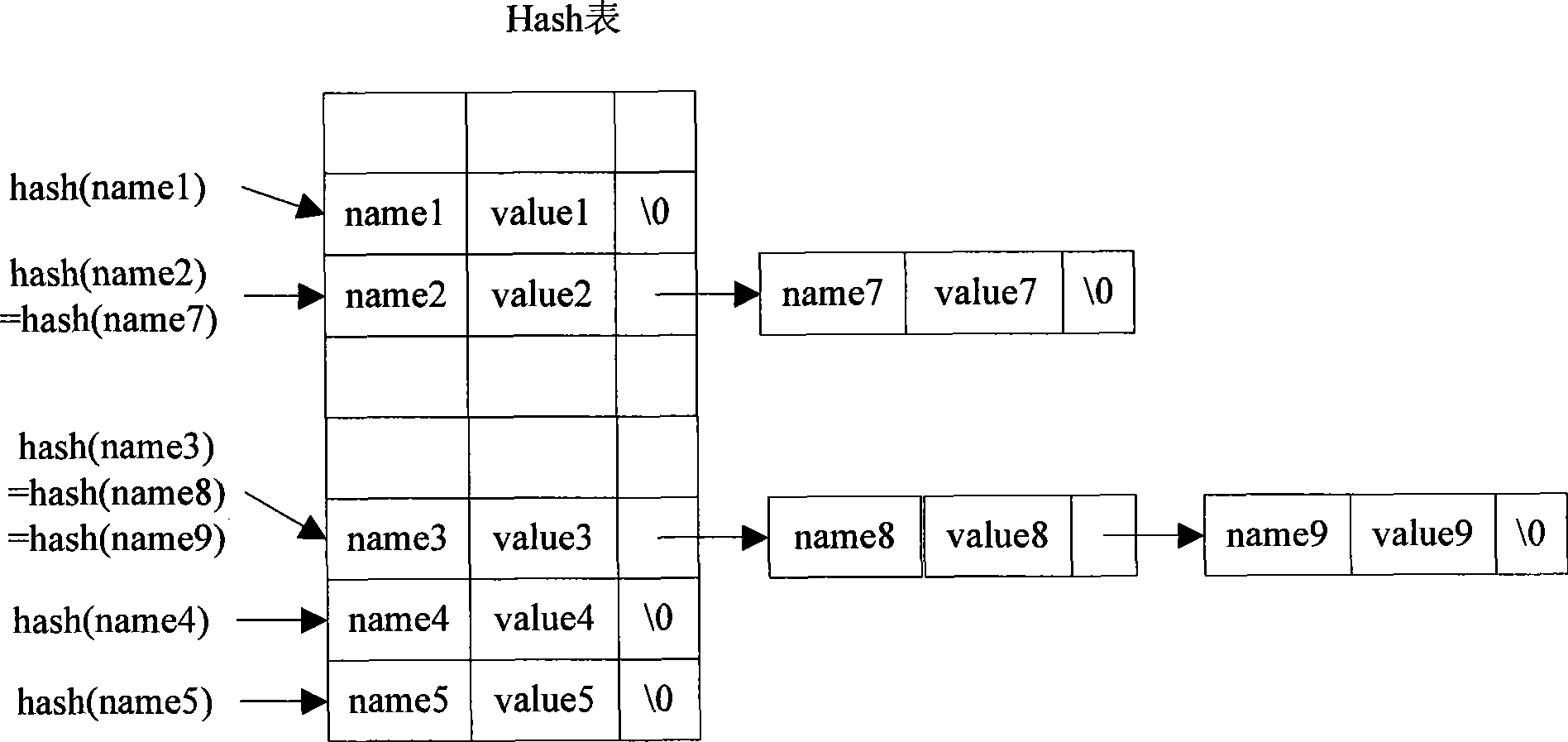

The invention relates to a method for storing configuration parameters in a NOR FLASH based on a Hash algorithm. The method comprises the following steps: configuration parameter items are sequentially written in a blank area of a configuration parameters partition without adding tags in a format 'name=value', effective configuration parameters are obtained by a positional relation of the blank area in FLASH after a Hash table is established for the configuration parameters, the Hash table is used for representing mapping between effective configuration information of the partition in a configuration parameters space and memory omission, when a certain parameter needs modification, similarly, the Hash algorithm is firstly carried out, then corresponding nodes in the Hash table are compared, and then a decision is made on whether the configuration parameter needs modification and whether the configuration parameter needs to be written in the FLASH. The method can effectively lower the reading and writing of the FLASH, and especially the erasing times of the FLASH, and improves the inquiry speed and the modification speed of the configuration parameters.

Owner:SHANGHAI UNIV
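
As a rough illustration of the idea in the abstract above (CN101419571A), the sketch below appends `name=value` records to a log and keeps a hash table of the latest value per name, so an unchanged parameter never triggers a write. The class `ConfigPartition`, the newline record separator, and the `bytearray` standing in for the flash partition are assumptions, not details from the patent.

```python
class ConfigPartition:
    def __init__(self):
        self.flash = bytearray()  # stands in for the partition; append-only
        self.table = {}           # name -> (value, offset of latest record)
        self.writes = 0           # number of records actually written

    def load(self):
        """Rebuild the hash table by scanning the records in order.

        Later records override earlier ones, so the record closest to the
        blank area is the effective value.
        """
        self.table.clear()
        offset = 0
        for record in self.flash.split(b"\n"):
            if record:
                name, value = record.decode().split("=", 1)
                self.table[name] = (value, offset)
            offset += len(record) + 1

    def set(self, name, value):
        """Append a record only if the value really changes."""
        current = self.table.get(name)
        if current is not None and current[0] == value:
            return False  # unchanged: no flash write needed
        offset = len(self.flash)
        self.flash += f"{name}={value}\n".encode()
        self.table[name] = (value, offset)
        self.writes += 1
        return True

    def get(self, name):
        return self.table[name][0]
```

Python's `dict` plays the role of the Hash table here; a real device would also need to handle erasing the partition once the blank area runs out, which this sketch omits.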

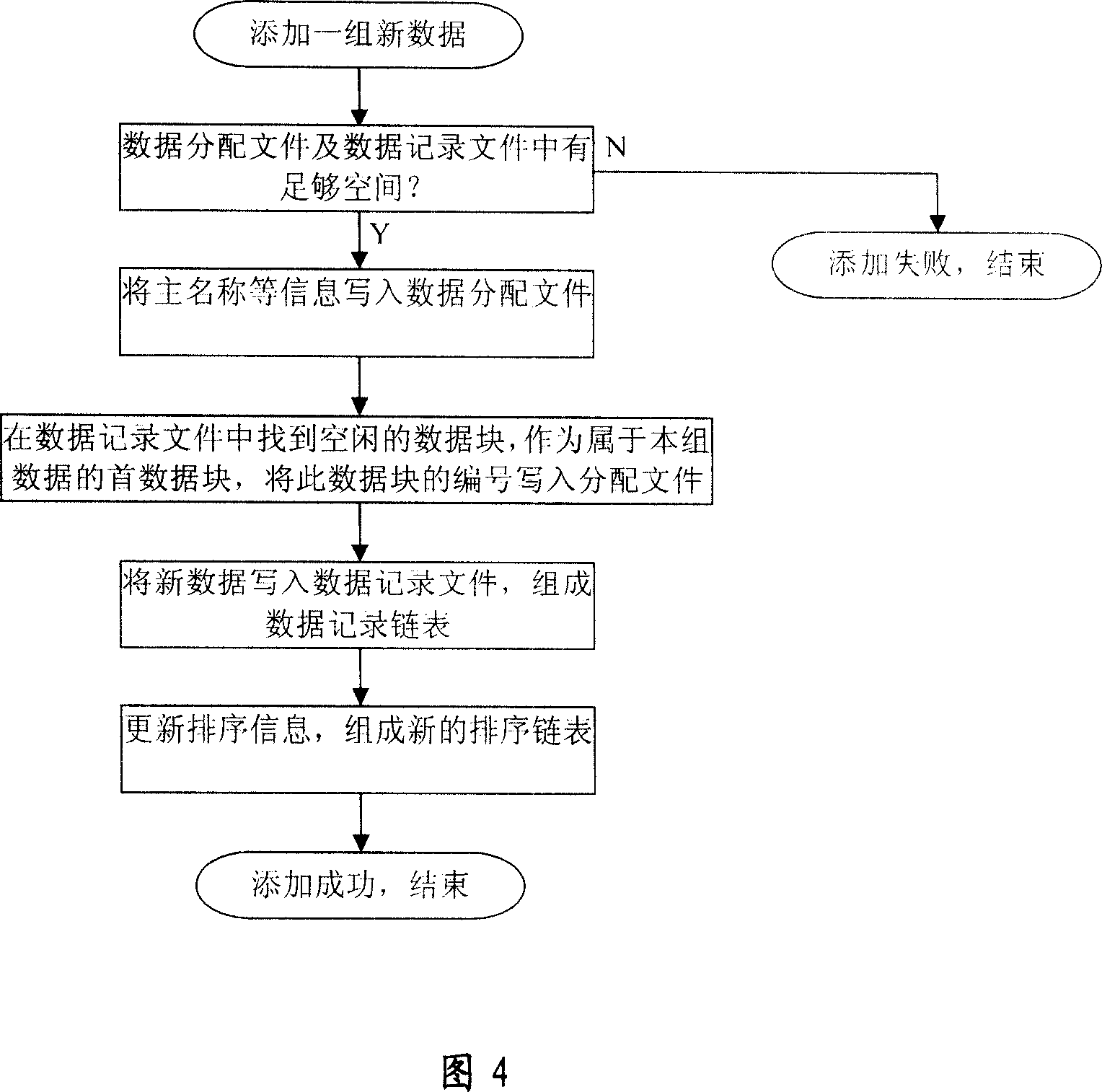

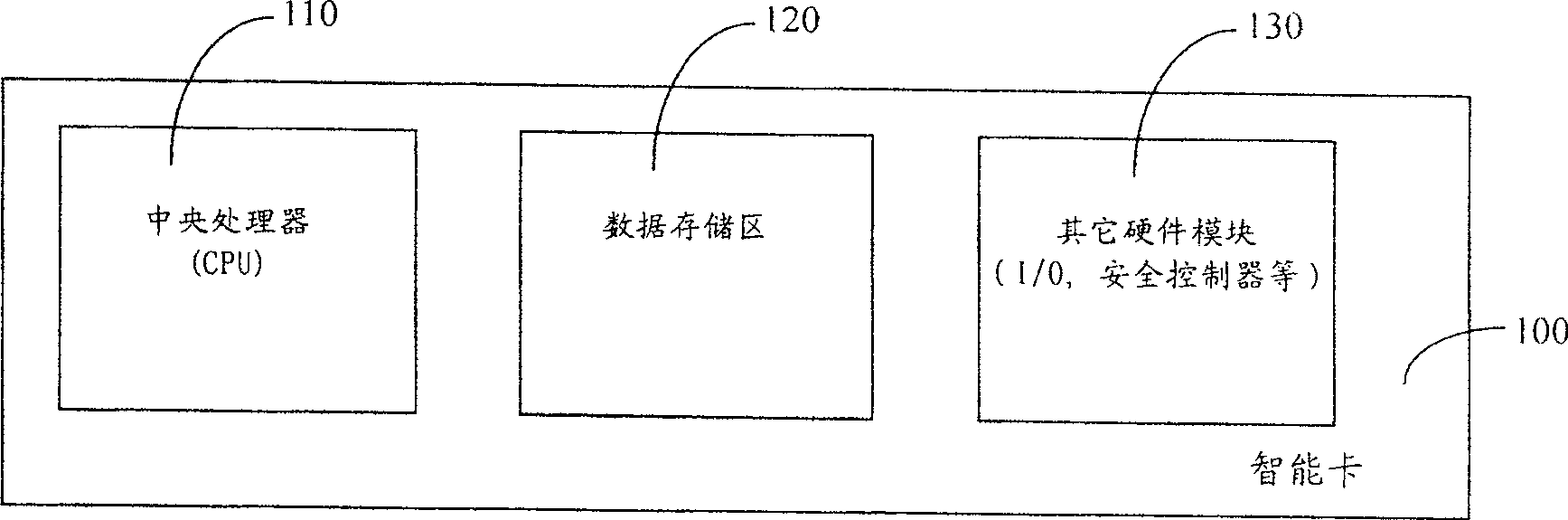

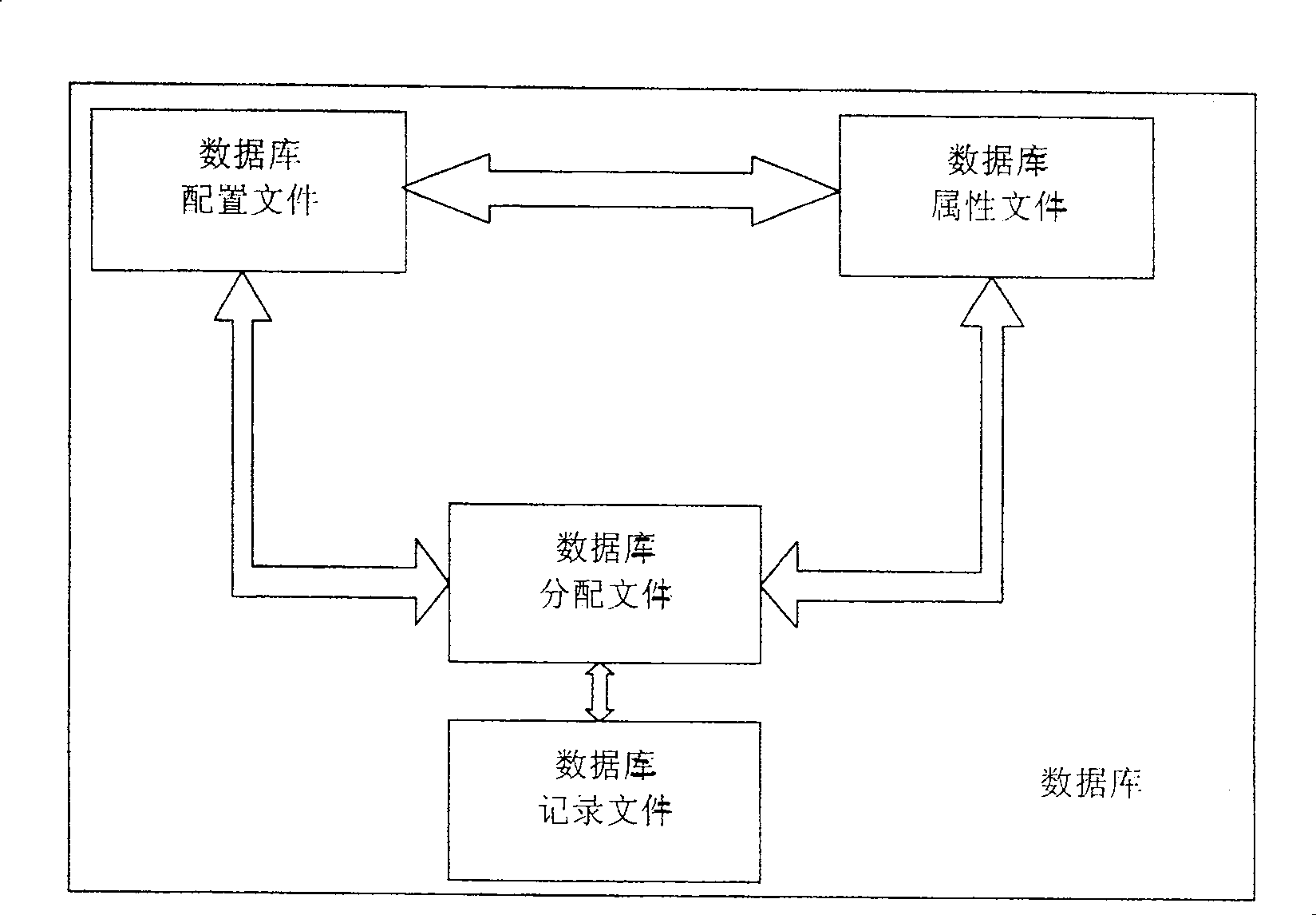

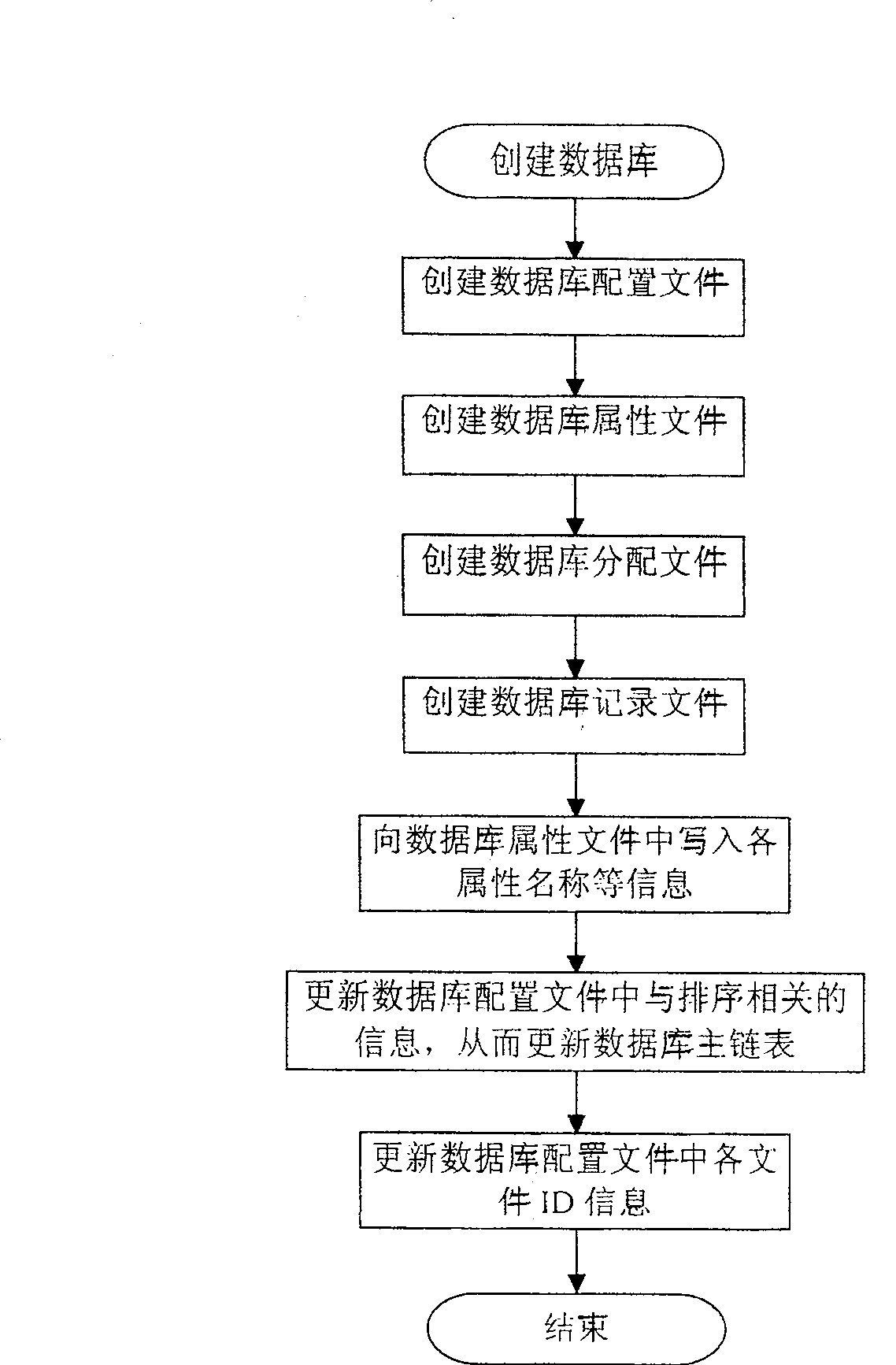

Mass storing and managing method of smart card

Active | CN101000635A | Reduce reading and writing | Run fast | Record carriers used with machines | Special data processing applications | Home position | Linked list

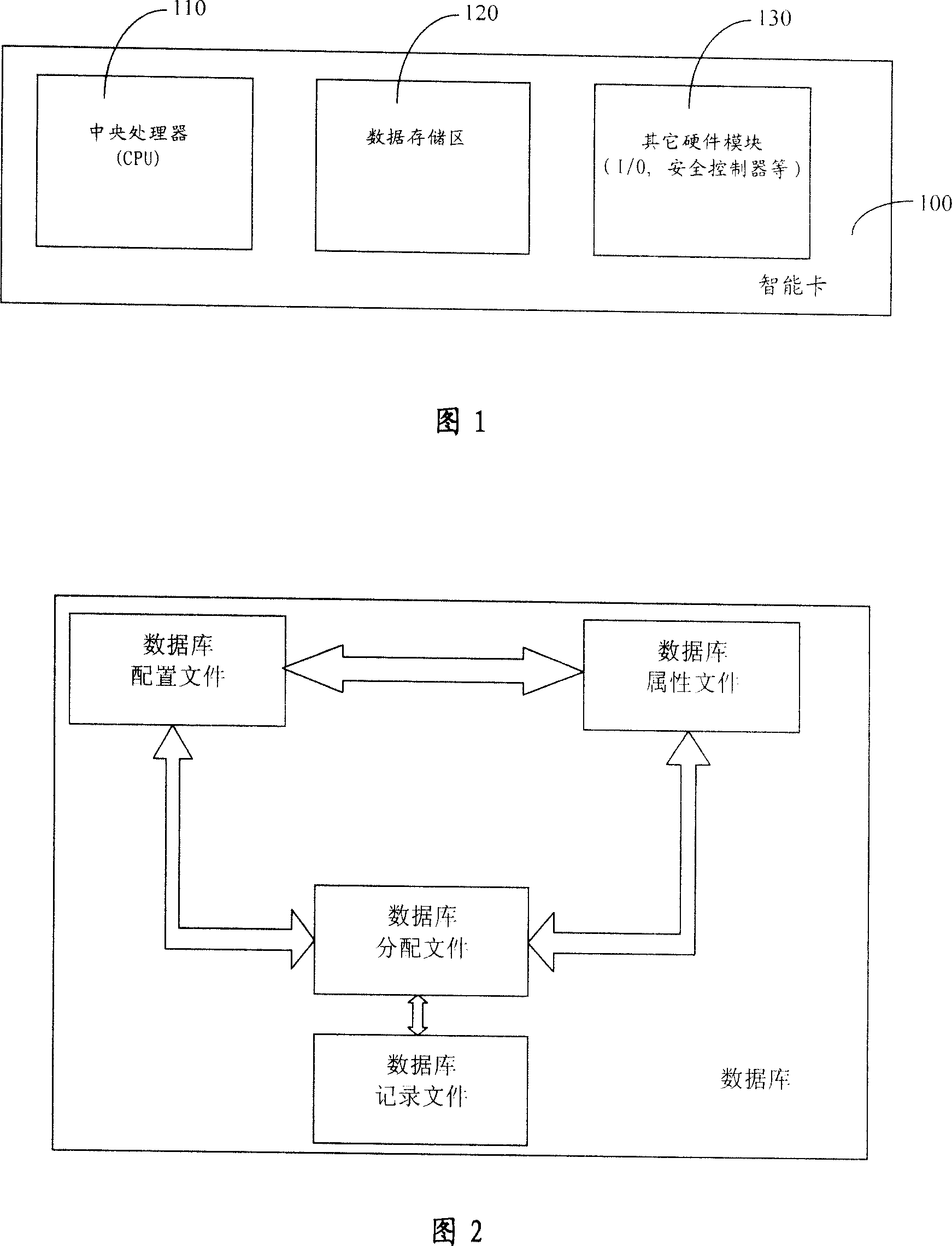

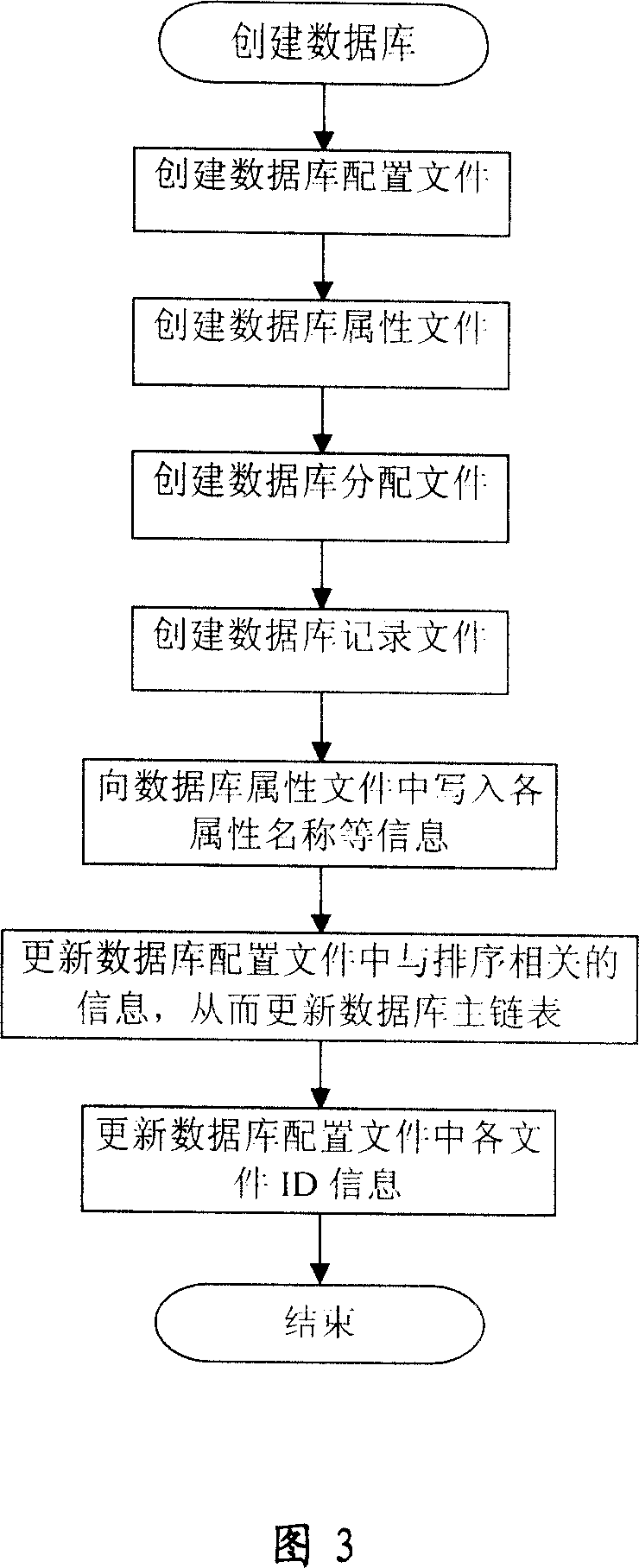

A method for storing and managing mass data of intelligent card includes setting up a databank with functions of basic storage, query, comparison and sequencing on said intelligent card, forming basic structure of said databank by two sets of chain lists, using each node in master chain list to store basic information of each record and initial position of sub-chain list, and using sub-chain list to store different attributes of the same record in structure of identification-length-value.

Owner:RDA MICROELECTRONICS SHANGHAI CO LTD

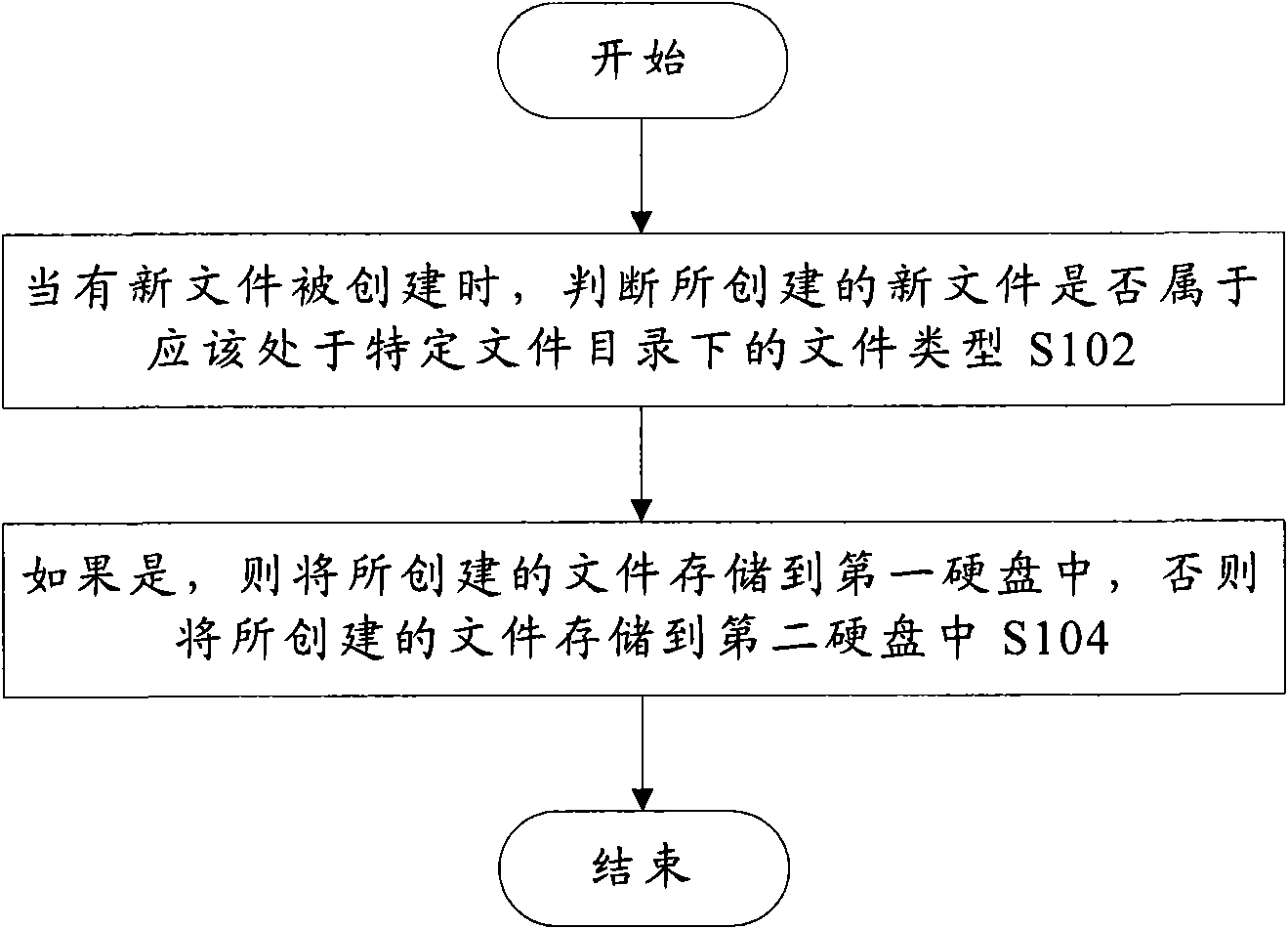

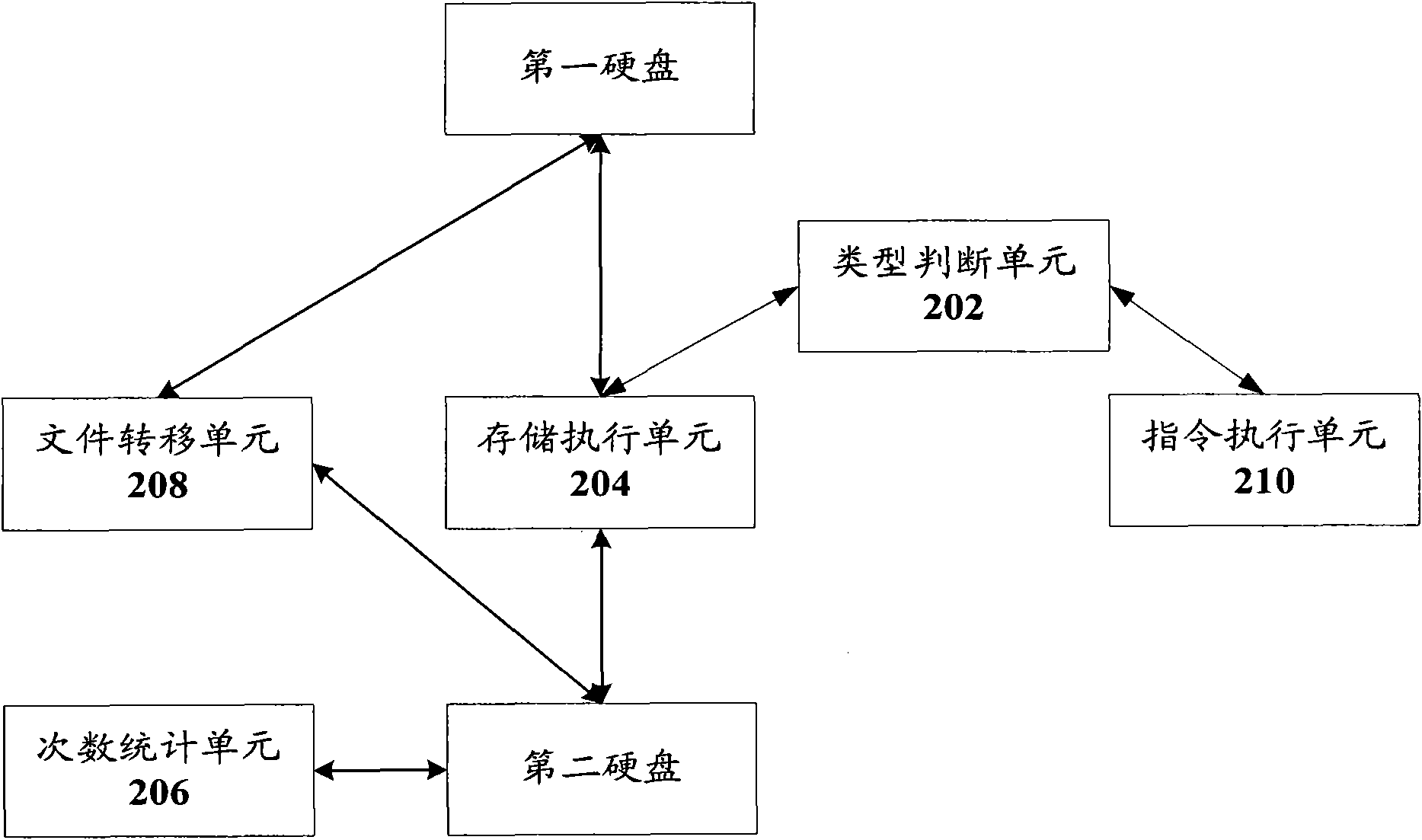

Computer and file processing method used in same

Inactive | CN101551804A | Reduce reading and writing | Extend your life | Special data processing applications | Document handling | Computerized system

The invention discloses a computer and a file processing method used in the same, wherein the computer comprises a first hard disk and a second hard disk, which are physically independent of each other; the computer also comprises a type judging unit and a memory executing unit; if a new file is created, the type judging unit is used for judging whether the file type of the new file belongs to the preset file types under a preset file directory and generating a first judging result; and the memory executing unit is used for saving the new file to the first hard disk if the first judging result is yes and saving the new file to the second hard disk if the first judging result is no. The computer saves all files that tend to produce fragments to a hard disk separate from the system hard disk, thereby reducing the fragmentation problem of the system hard disk and improving the system performance.

Owner:LENOVO (BEIJING) CO LTD

Large file selective encryption method for reducing write

Active | CN107070649A | Reduce reading and writing | Addresses a flaw that does not apply to large file encryption | Key distribution for secure communication | File access structures | Plaintext | Computer hardware

The invention belongs to the field of information security, relating to a large file encryption method. The method considers the phenomenon that a large file has huge data and is not suitable for full encryption, a large number of data will choose not to be encrypted, and in order to reduce the read and write of the data, especially the write, the method disclosed by the invention adopts the following steps: storing an original plaintext, covering part of the plaintext that is encrypted, and separately storing an encrypted ciphertext. As different encryption methods have different advantages and disadvantages, fully homomorphic encryption also cannot solve all delegated calculations, and a high cost of encryption is needed, an encryption mode with a misleading function is required for some data, and only a general symmetric encryption mode is required for other data, thereby, the appropriate encryption methods are segmentally selected as required, only part of the data needs to be encrypted according to rules or selections; and meanwhile, an optimum scheme for reducing key management and guaranteeing security is proposed, and thus the encryption and decryption of the files can be achieved by only using fewer keys.

Owner:桂林傅里叶电子科技有限责任公司

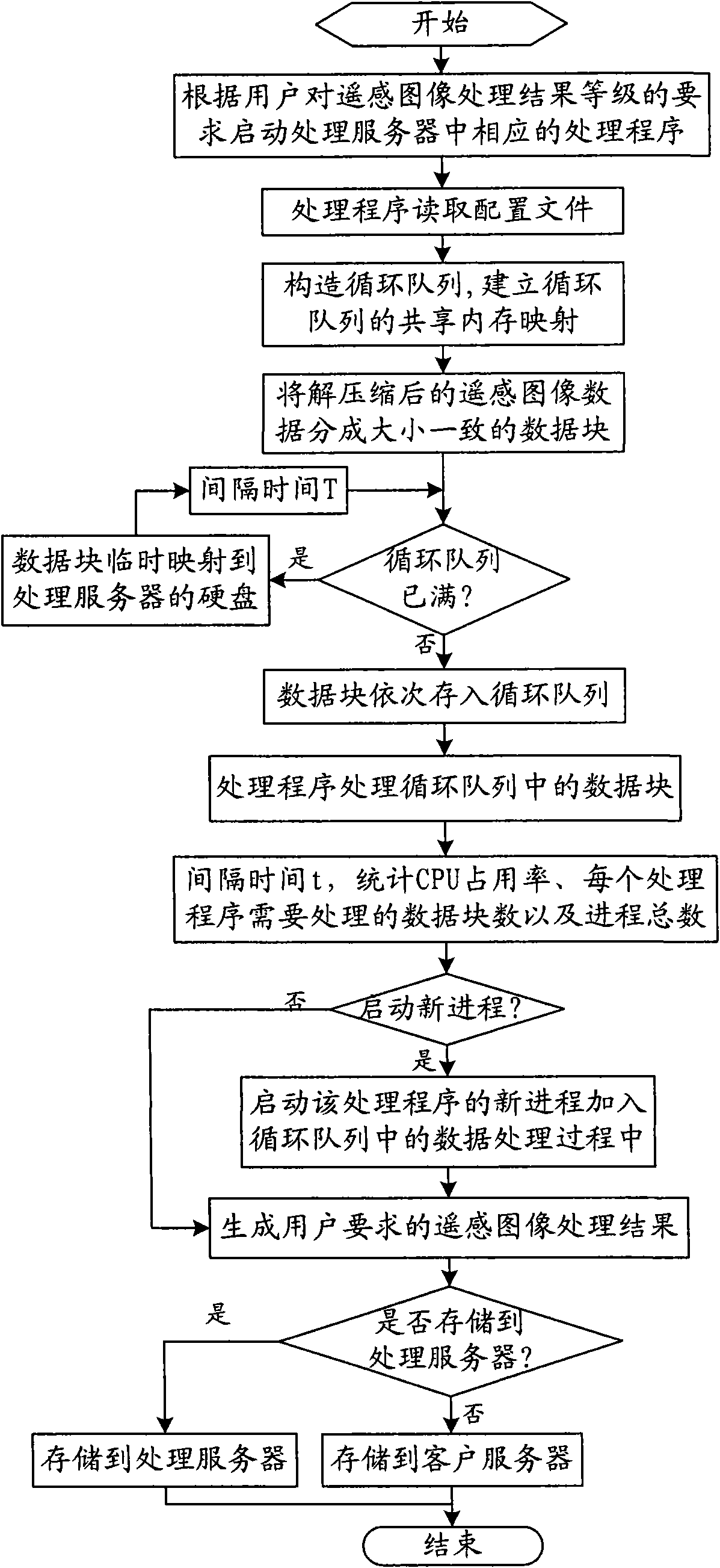

Adaptive real-time processing method for remote sensing images of ground system

Active | CN101968876A | Increase productivity | Easy to handle | Image data processing details | Sensing data | Problem of time

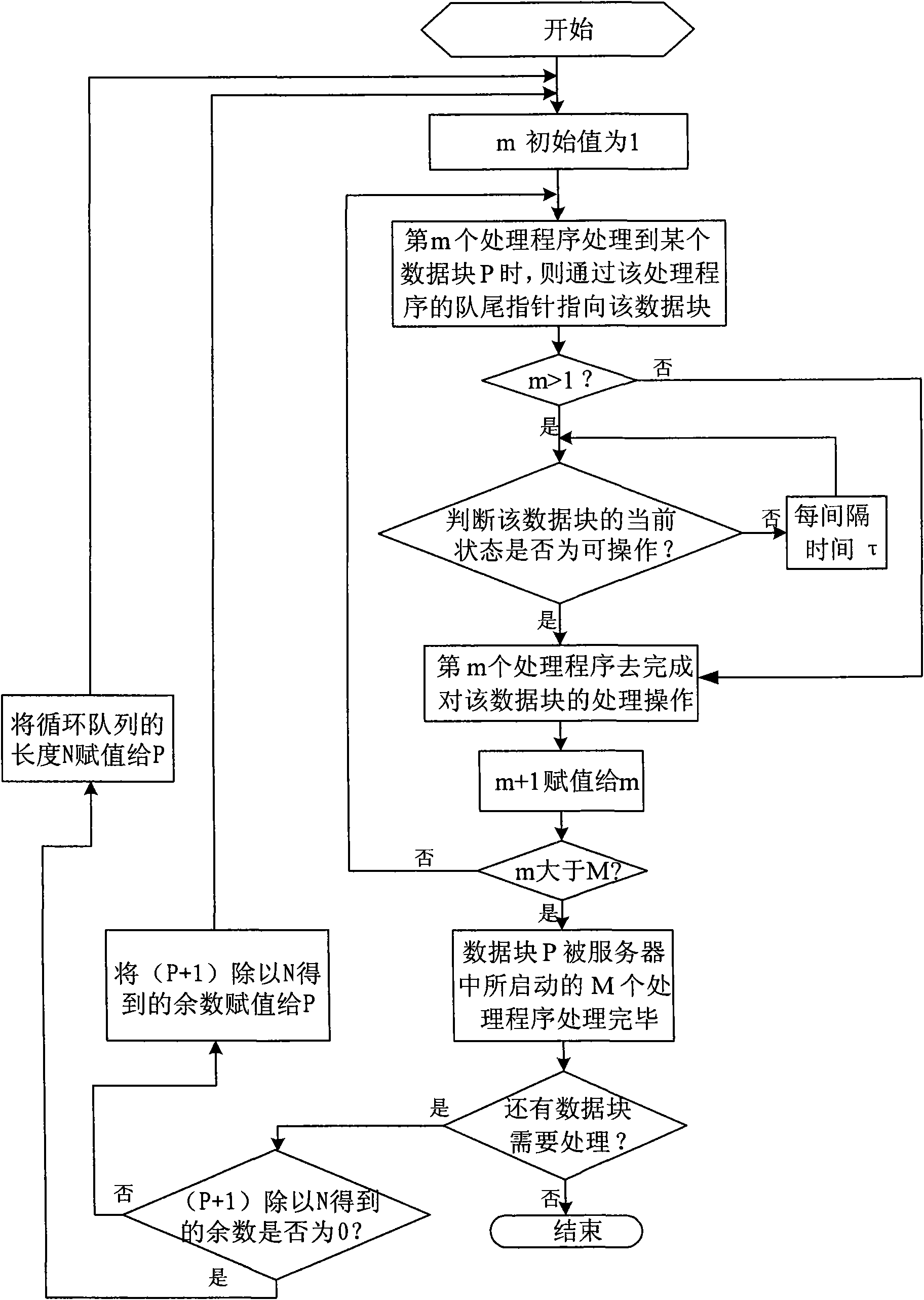

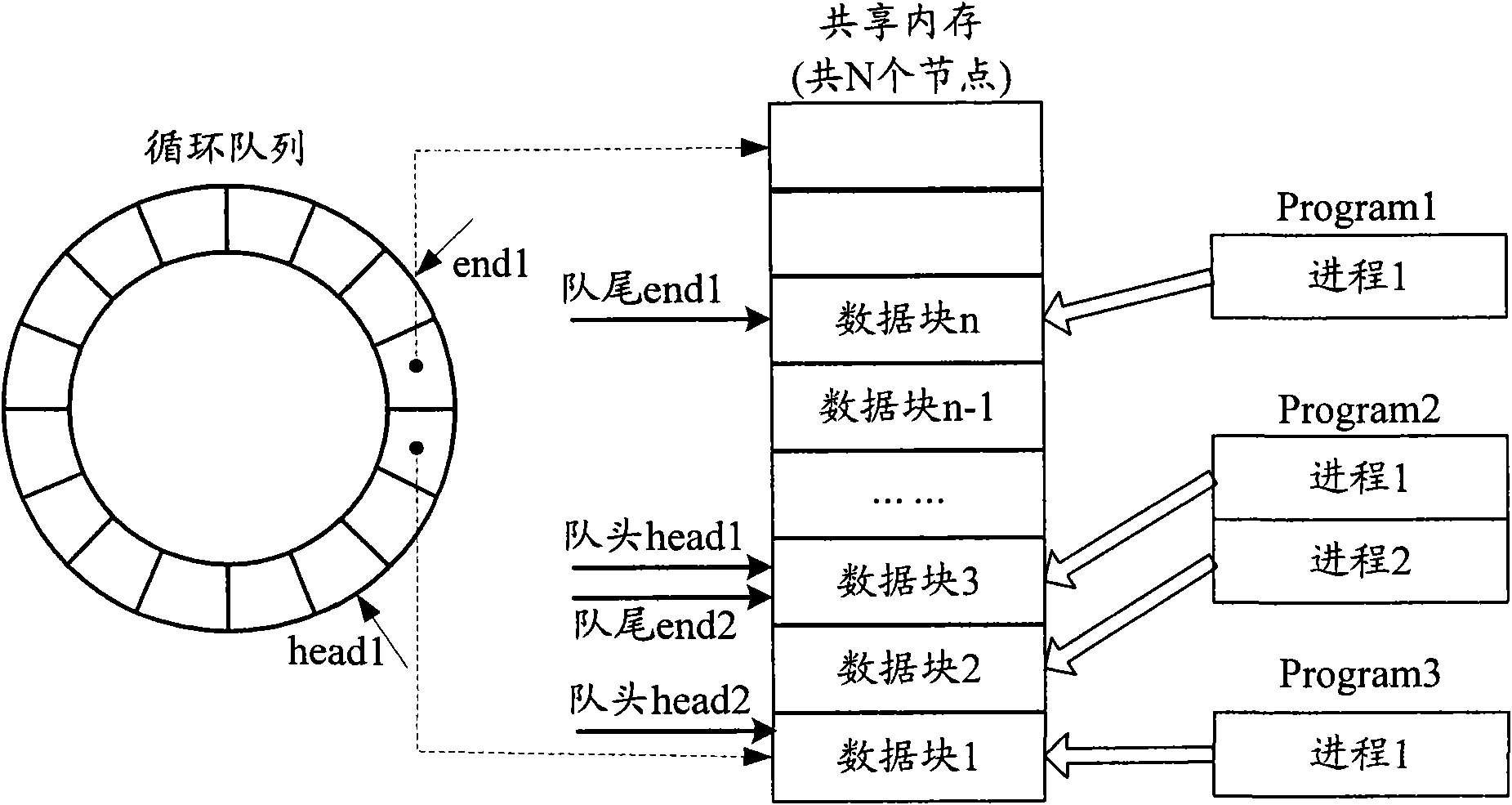

The invention relates to an adaptive real-time processing method for remote sensing images of a ground system, which comprises the following steps: starting a corresponding processing program in a processing server according to the requirement of a user for the processed result level of the remote sensing images; constructing a circular queue and establishing shared memory mapping, then decompressing the received remote sensing image data, dividing the decompressed remote sensing image data into data blocks of the same size, and sequentially storing into the circular queue; sequentially carrying out real-time processing on the data blocks in the circular queue by each processing program, and generating the processed results of the remote sensing images required by the user; and storing the processed results of the remote sensing images in the processing server or storing in a customer server. The invention solves the problem of time delay among the processing links of the current ground processing system, and greatly improves the operating efficiency of the system; and with the increasing amount of remote sensing data, the requirement of processing a large amount of remote sensing information in real time can be basically met by processing the remote sensing data by the processing mode based on the invention.

Owner:SPACE STAR TECH CO LTD

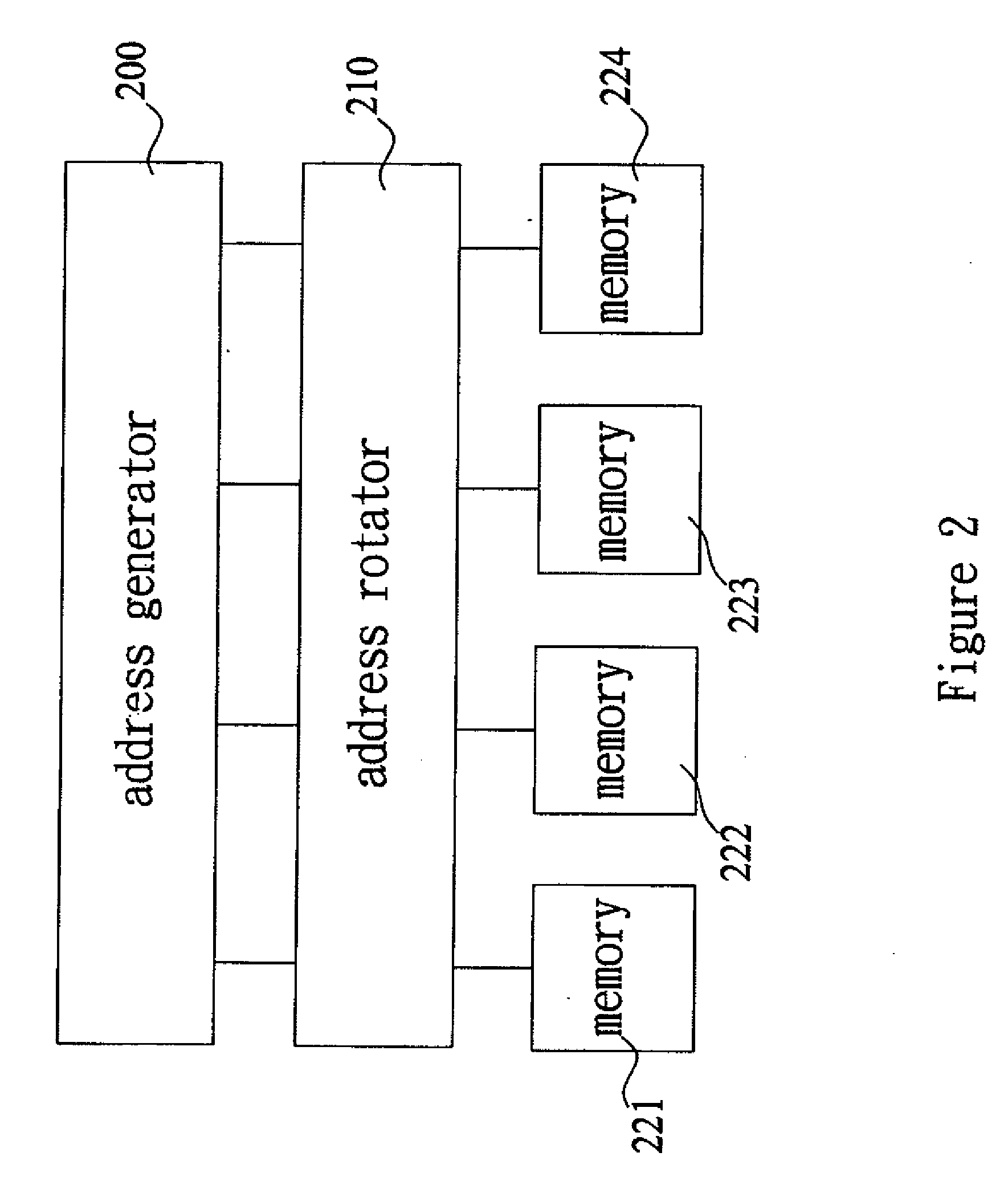

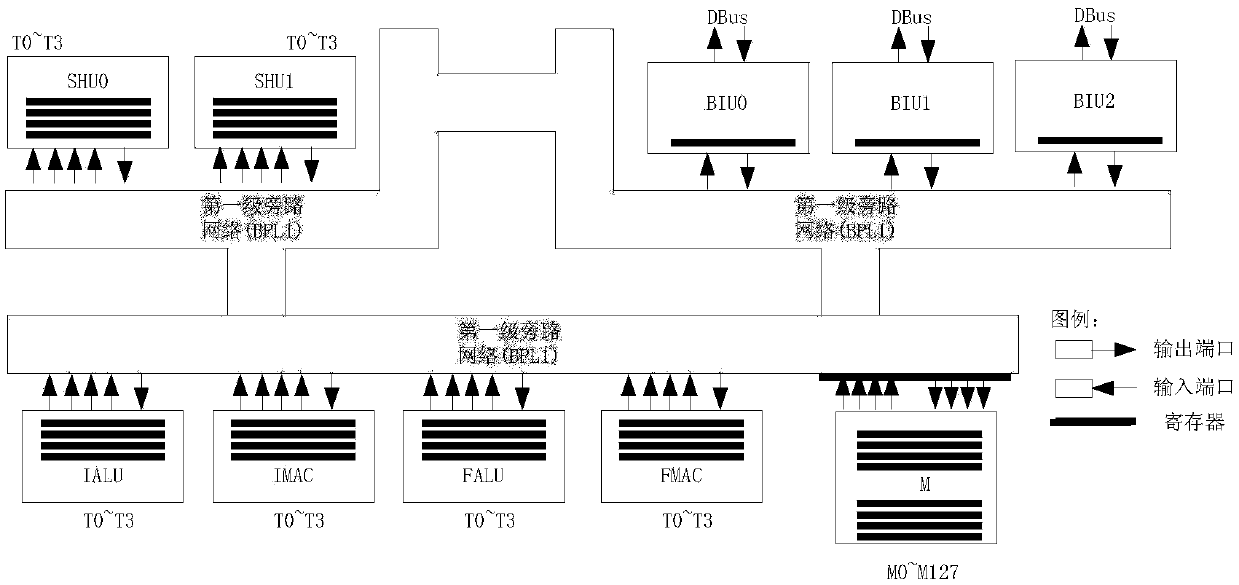

Digital signal processor structure for performing length-scalable fast fourier transformation

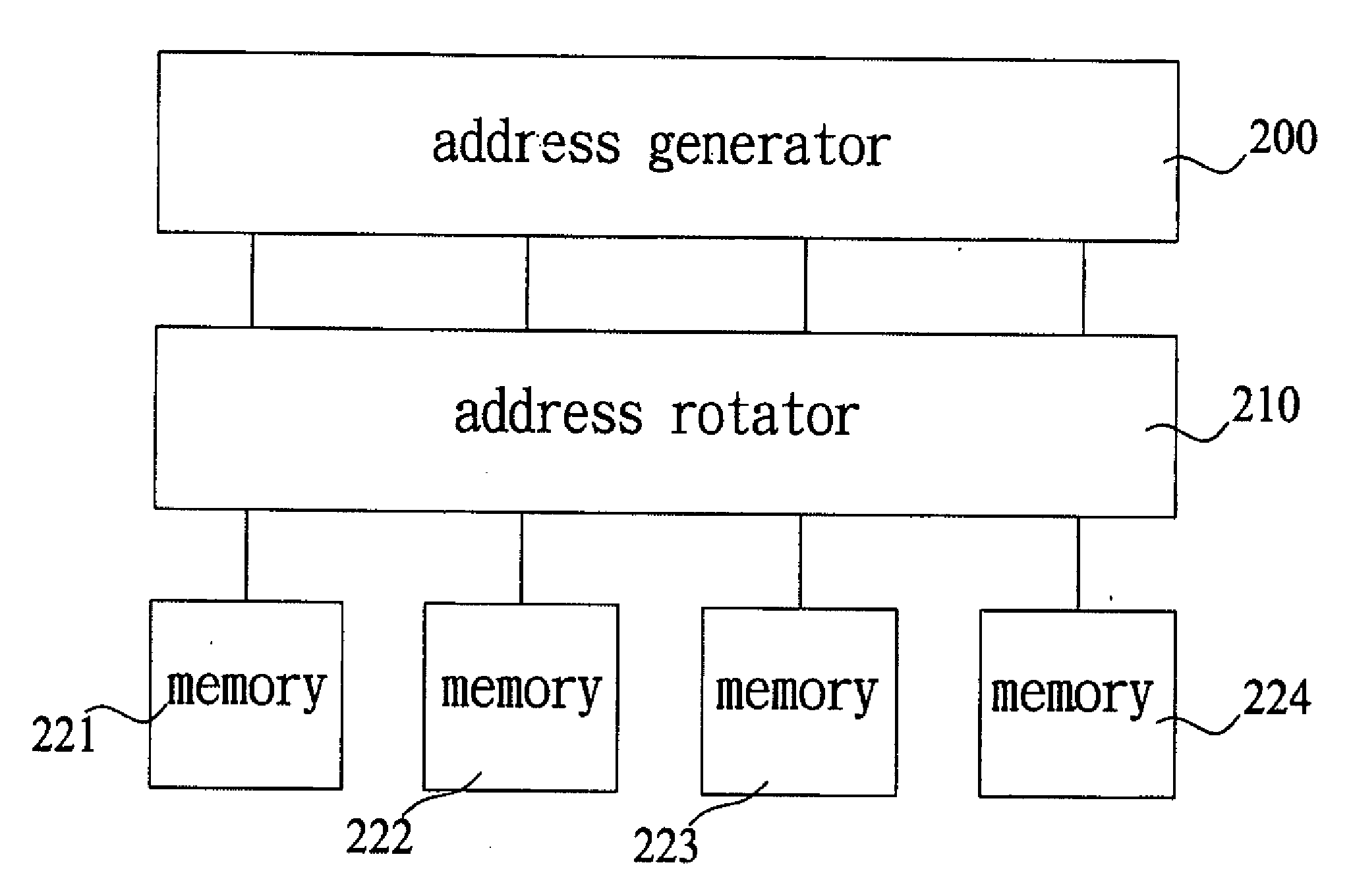

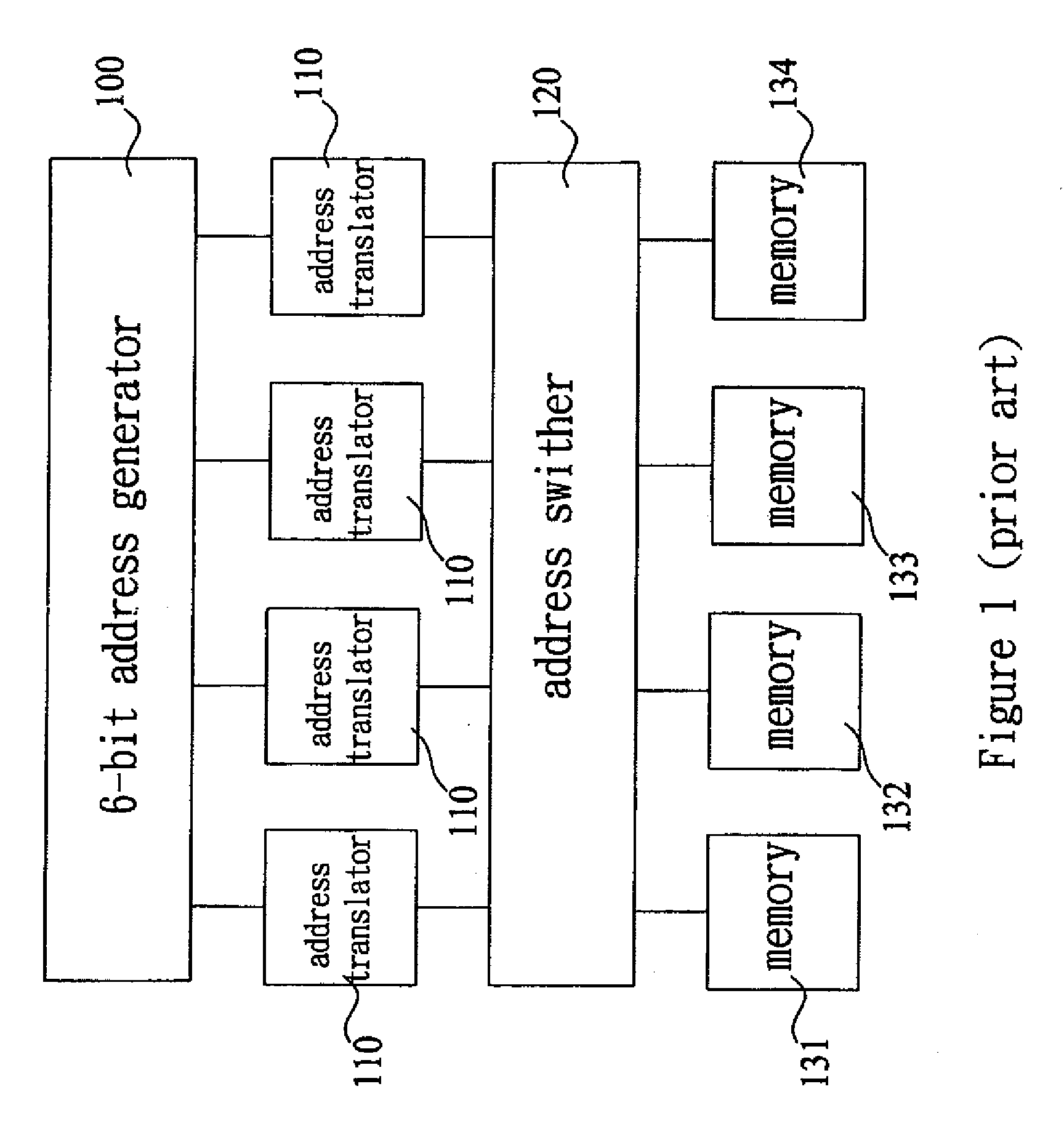

Inactive | US20080208944A1 | Improve performance | Reduce power consumption | Digital computer details | Complex mathematical operations | Address generator | FFT (fast Fourier transform)

A digital signal processor structure for performing length-scalable Fast Fourier Transformation (FFT) is disclosed, in which a single processor element (single PE) and a simple and effective address generator are used to achieve length scalability, high performance, and low power consumption in a split-radix-2/4 FFT or IFFT module. In order to meet different communication standards, the digital signal processor structure supports run-time configuration for different length requirements. Moreover, its execution time can fit the standards of Fast Fourier Transformation (FFT) or Inverse Fast Fourier Transformation (IFFT).

Owner:SUNG CHENG HAN +4

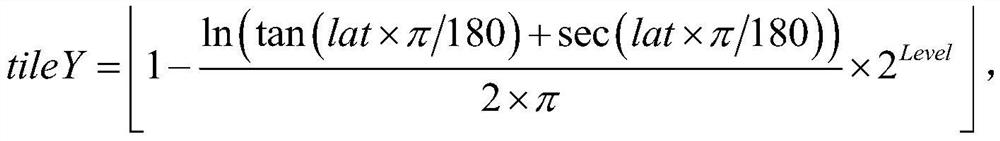

Vector tile real-time slicing and updating method based on multi-layer cache

Active | CN111930767A | Improve query efficiency | Avoid reslicing | Database updating | Geographical information databases | In-memory database | Minimum bounding rectangle

The invention discloses a vector tile real-time slicing and updating method based on multi-layer cache, which comprises the following steps of: retrieving in a spatial database according to an input map range, judging whether data updating is involved or not, and returning a complete geometrical shape of updated data to a minimum bounding rectangle to generate a corresponding vector tile; establishing a retrieval identifier according to the request range for generating the vector tiles, retrieving the memory database through the retrieval identifier, returning a retrieval result if the retrieval identifier exists, and retrieving the non-relational database if the retrieval identifier does not exist; if the search result exists in the non-relational database, loading the search result into a cache and returning the search result; and if not, calling a vector tile slicing tool to generate vector tiles in real time, storing the generated vector tiles into a memory database or a non-relational database, establishing a file index, and returning the vector tiles. The publishing efficiency of the vector tile data is effectively improved, and the requirements of a user for instant generation and updating of mass data are met.

Owner:CHONGQING GEOMATICS & REMOTE SENSING CENT
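
The lookup order in the abstract above (CN111930767A) resembles a layered cache with a generate-on-miss fallback. The sketch below shows that order only; the function name `get_tile`, the plain dictionaries standing in for the memory database and the non-relational database, and the decision to store a freshly sliced tile in both layers are assumptions.

```python
def get_tile(key, memory_db, nosql_db, slice_tile):
    """Return (tile, source) using memory first, then NoSQL, then slicing.

    memory_db and nosql_db are dict-like stores; slice_tile is a callable
    that generates a tile for the given retrieval identifier.
    """
    tile = memory_db.get(key)
    if tile is not None:
        return tile, "memory"

    tile = nosql_db.get(key)
    if tile is not None:
        memory_db[key] = tile  # load the hit into the faster layer
        return tile, "nosql"

    tile = slice_tile(key)     # both layers missed: slice in real time
    memory_db[key] = tile      # assumption: populate both layers
    nosql_db[key] = tile
    return tile, "sliced"
```

With this ordering the slicing tool runs at most once per retrieval identifier until the cached tile is invalidated; invalidation on data update is outside the scope of the sketch.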

Method for writing caches of storage servers in hybrid modes

Inactive | CN104268102A | Reduce reading and writing | Reduce the refresh rate | Memory addressing/allocation/relocation | Operating system | Disk buffer

The invention provides a method for writing caches of storage servers in hybrid modes. The method has the advantages that the hybrid modes of a write-back process and a write-through process are adopted, and the write-back process is adopted when sufficient spaces are available in the caches of disks, so that the read-write performance can be improved; the write-through process is adopted when the spaces available in the caches of the disk are reduced and reach a certain threshold value, so that read-write and refresh frequencies of the caches of the disks can be reduced, and the read-write speeds of the caches of the disks can be increased to a certain degree.

Owner:INSPUR GROUP CO LTD
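
The mode switch in the abstract above (CN104268102A) can be sketched as a threshold test on the free cache space. The class `HybridCache`, the slot-based capacity, and the exact comparison used are illustrative assumptions; the abstract does not specify them.

```python
class HybridCache:
    def __init__(self, capacity, threshold):
        self.capacity = capacity    # total cache slots
        self.threshold = threshold  # free slots at or below this: write-through
        self.dirty = {}             # blocks held in cache, not yet on disk
        self.disk = {}              # stands in for the backing disk

    def write(self, block, data):
        free = self.capacity - len(self.dirty)
        if free > self.threshold:
            # Enough room: defer the disk write (write-back).
            self.dirty[block] = data
            return "write-back"
        # Cache nearly full: write straight to disk (write-through).
        self.disk[block] = data
        self.dirty.pop(block, None)  # drop any stale cached copy
        return "write-through"

    def flush(self):
        """Persist all deferred writes and free the cache."""
        self.disk.update(self.dirty)
        self.dirty.clear()
```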

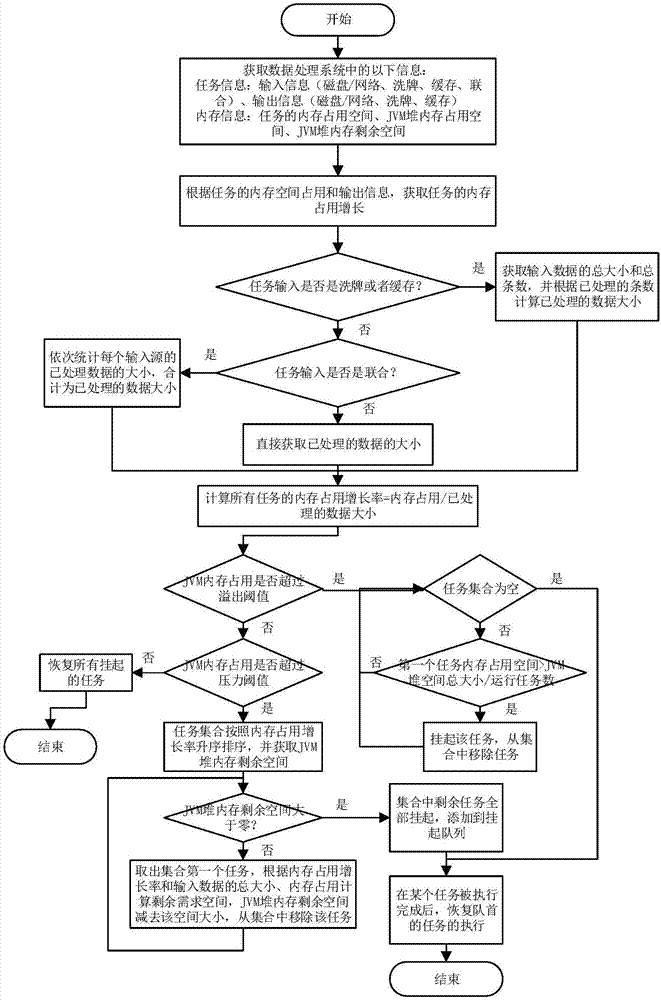

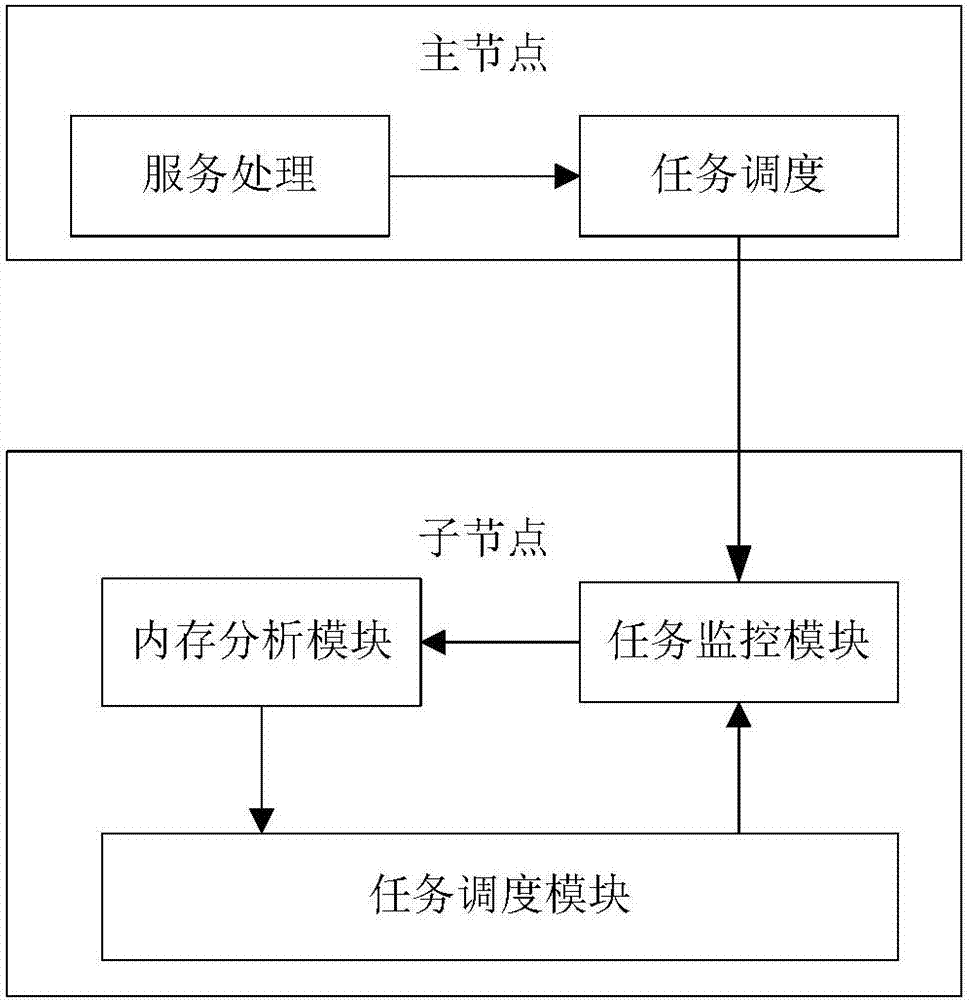

Scheduling method and system for relieving memory pressure in distributed data processing systems

Inactive | CN107066316A | Reduce memory pressure | Avoid waiting | Resource allocation | Distributed object oriented systems | Service system | Distributive data processing

The invention discloses a scheduling method for relieving memory pressure in distributed data processing systems. The scheduling method comprises the following steps of: analyzing a memory using law according to characteristics of an operation carried out on a key value pair by a user programming interface, and establishing a memory using model of the user programming interface in a data processing system; speculating memory using models of tasks according to a sequence of calling the programming interface by the tasks; distinguishing different models by utilizing a memory occupation growth rate; and estimating the influence, on memory pressure, of each task according to the memory using model and processing data size of the currently operated task, and hanging up the tasks with high influences until the tasks with low influences are completely executed or the memory pressure is relieved. According to the method, the influences, on the memory pressure, of all the tasks during the operation are monitored and analyzed in real time in the data processing systems, so that the expandability of service systems is improved.

Owner:HUAZHONG UNIV OF SCI & TECH

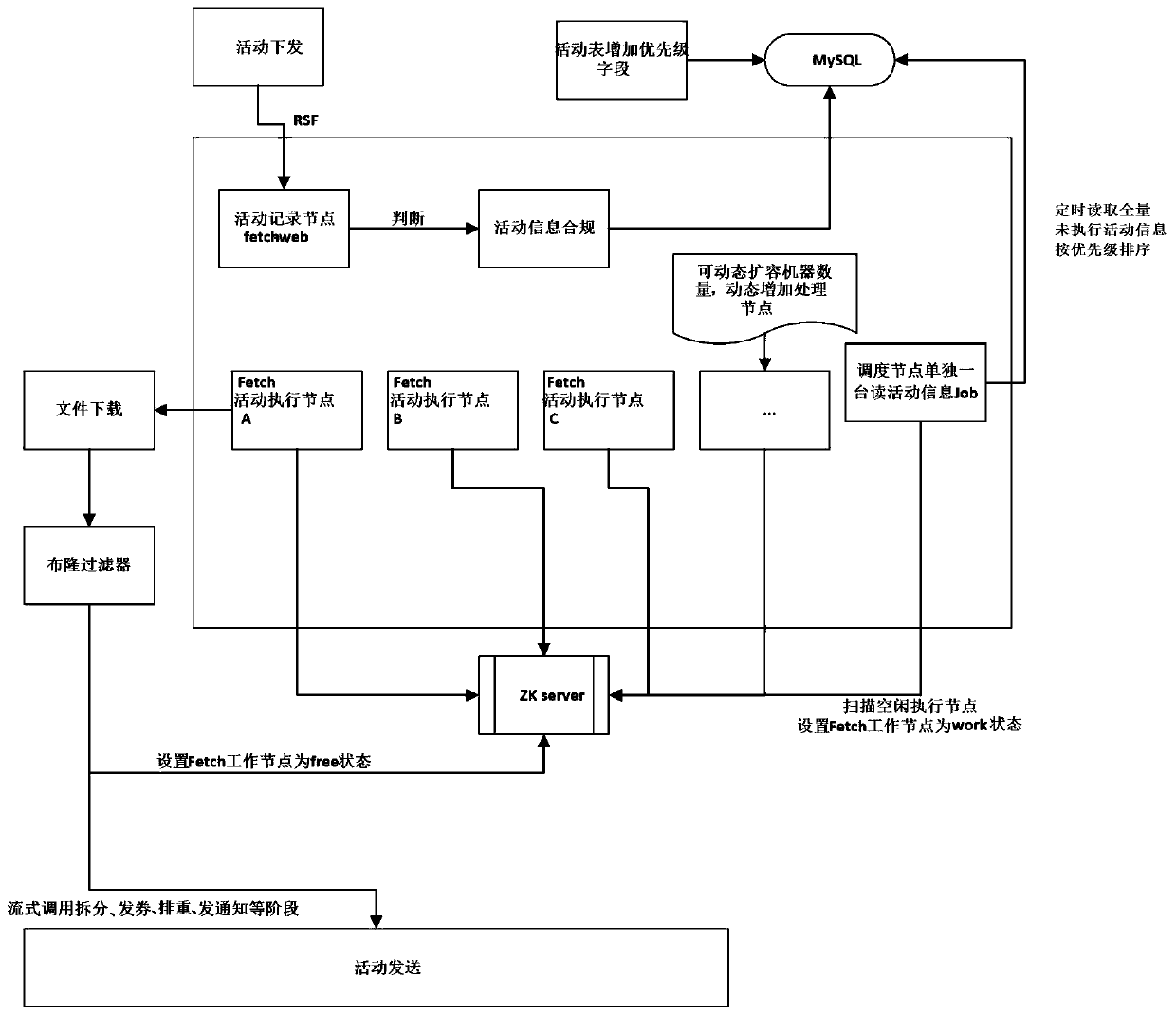

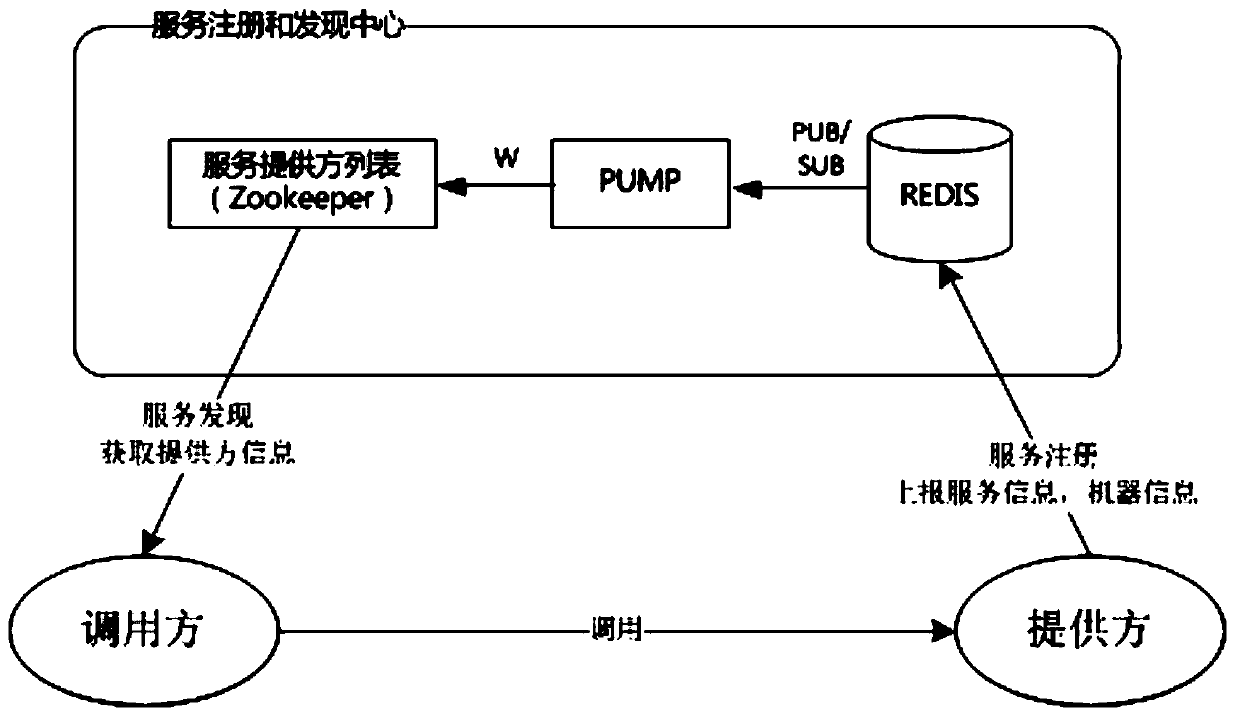

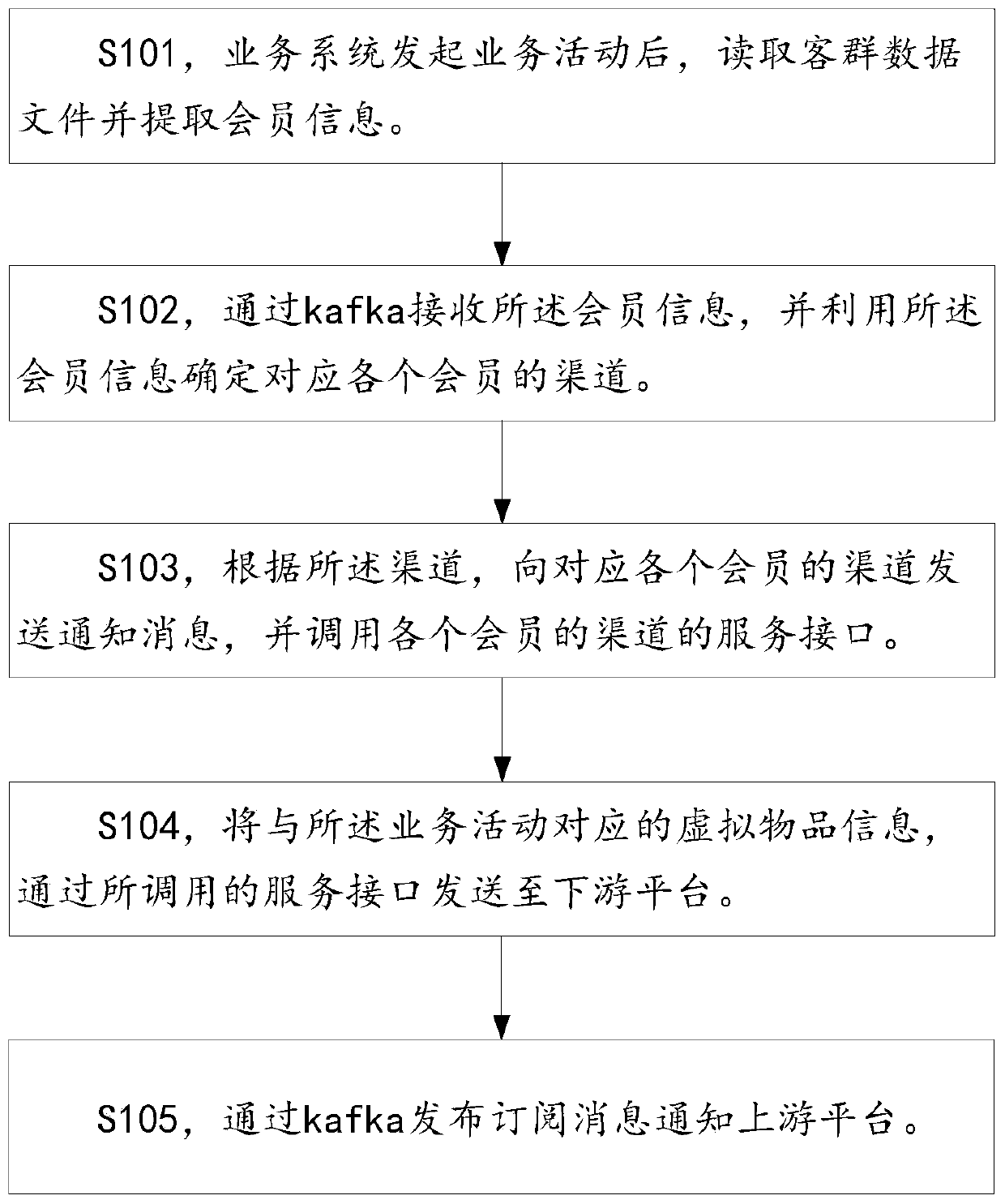

Service processing method and device for high-concurrency environment

Pending | CN110955857A | High Duplicate Data Efficiency | Reduce loss rate | Interprogram communication | Website content management | The Internet | Data file

The embodiment of the invention discloses a service processing method and device for a high-concurrency environment, relates to the technical field of the Internet, and can improve the stability of a system. The method comprises the following steps: after a service system initiates a service activity, reading a customer group data file and extracting member information; receiving the member information through Kafka, and determining a channel corresponding to each member by using the member information; according to the channel corresponding to each member, sending a notification message to the channel corresponding to each member, and calling a service interface of the channel of each member; sending virtual article information corresponding to the business activity to a downstream platform through the called service interface; and publishing a subscription message through Kafka to notify an upstream platform. The method is suitable for a distributed system in a high-concurrency environment.

Owner:SUNING CLOUD COMPUTING CO LTD

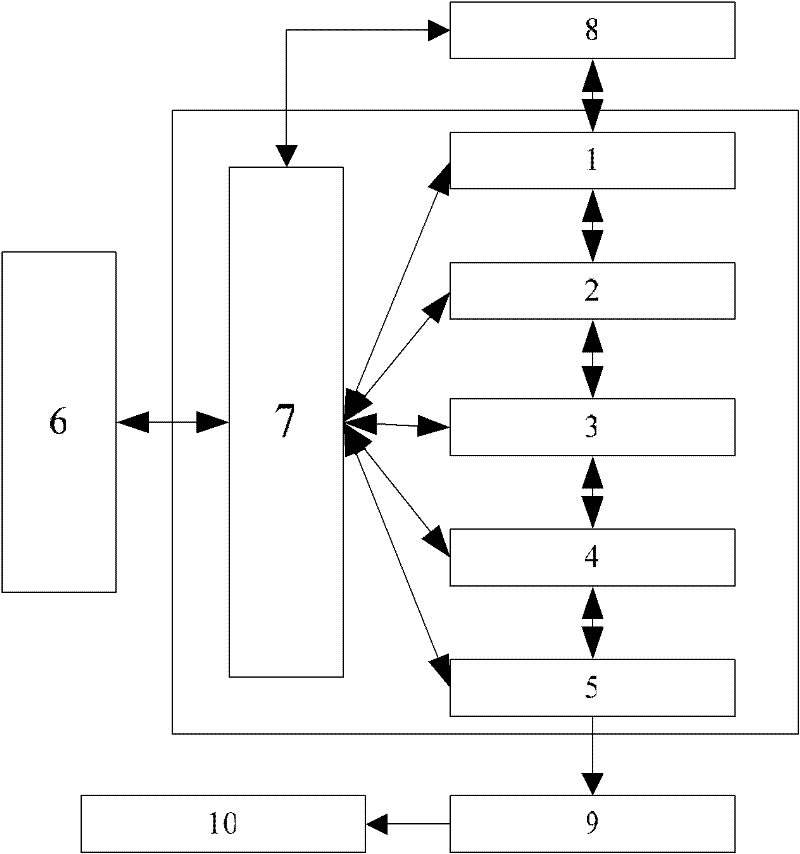

Cache management system and method for raid

Active | CN102262511A | Easy to manage | Reduce reading and writing | Input/output to record carriers | RAID | Computer module

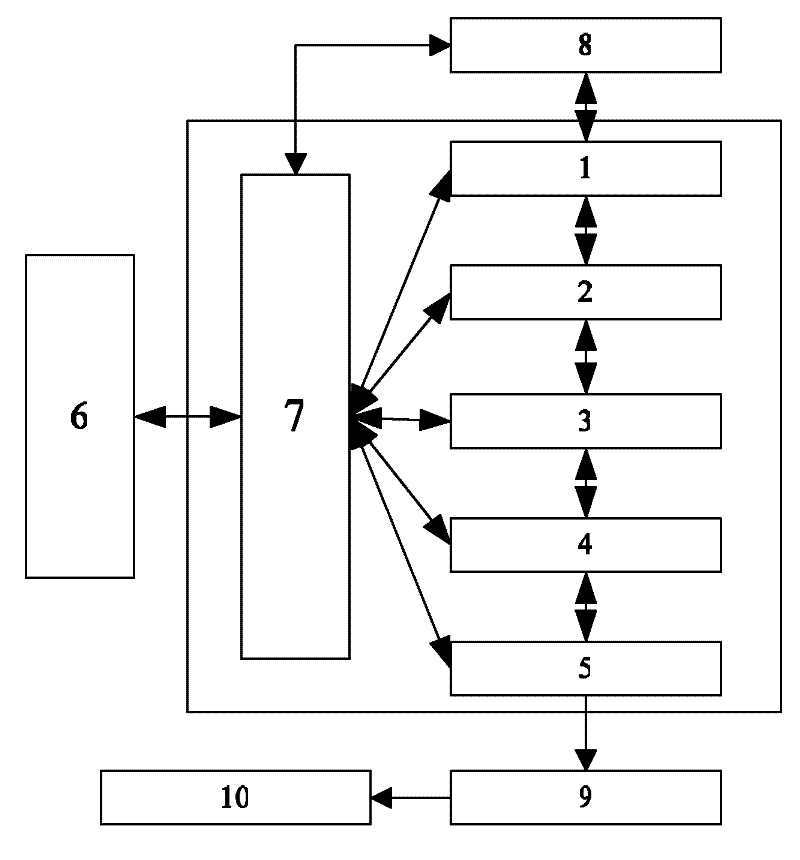

The invention relates to the technical field of computer hard disks, in particular to a cache management system and method for a RAID (Redundant Array of Independent Disks). The management system comprises a target module (1), a cache management module (2), a RAID nucleus module (3), an input / output scheduling module and a starter module which are sequentially connected. The cache management system not only can conveniently manage storage blocks in a cache, but also facilitates data communication with a next stage, and meanwhile has a good mechanism which can reduce read-write of magnetic discs, thus the efficiency and the performance of a whole system are improved.

Owner:SUZHOU ETRON TECH CO LTD

Magnetic random access memory with error correction and compression circuit

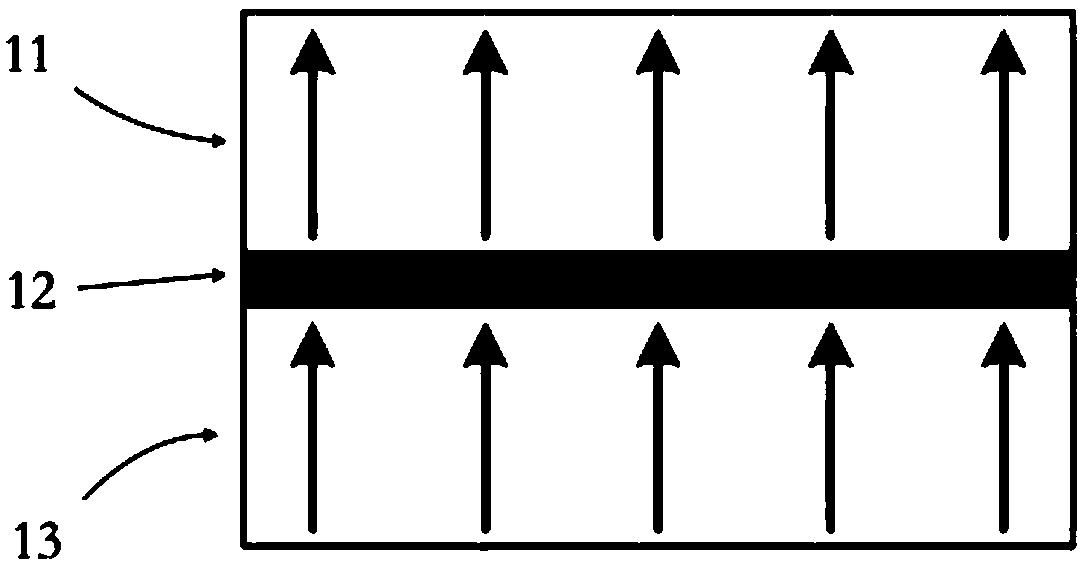

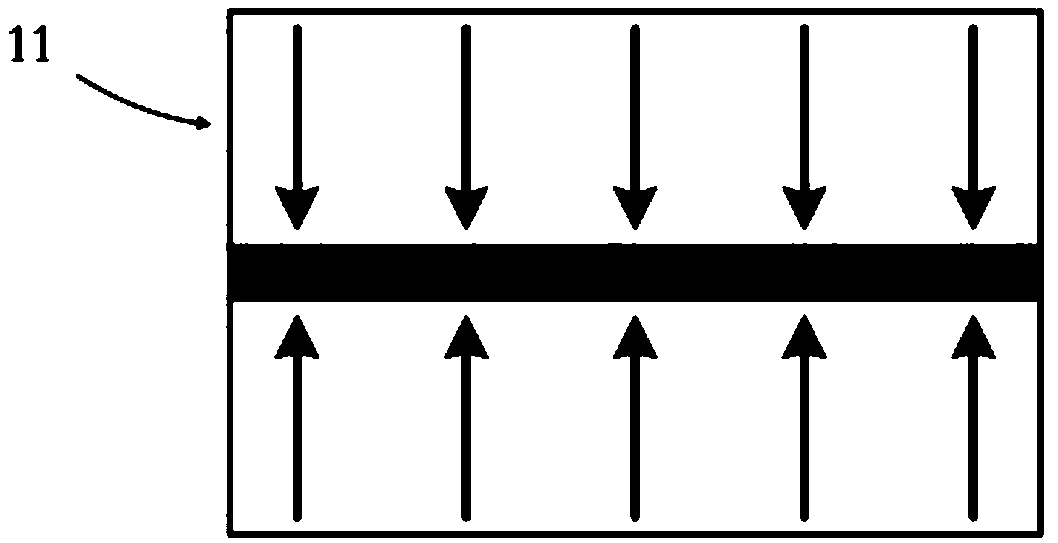

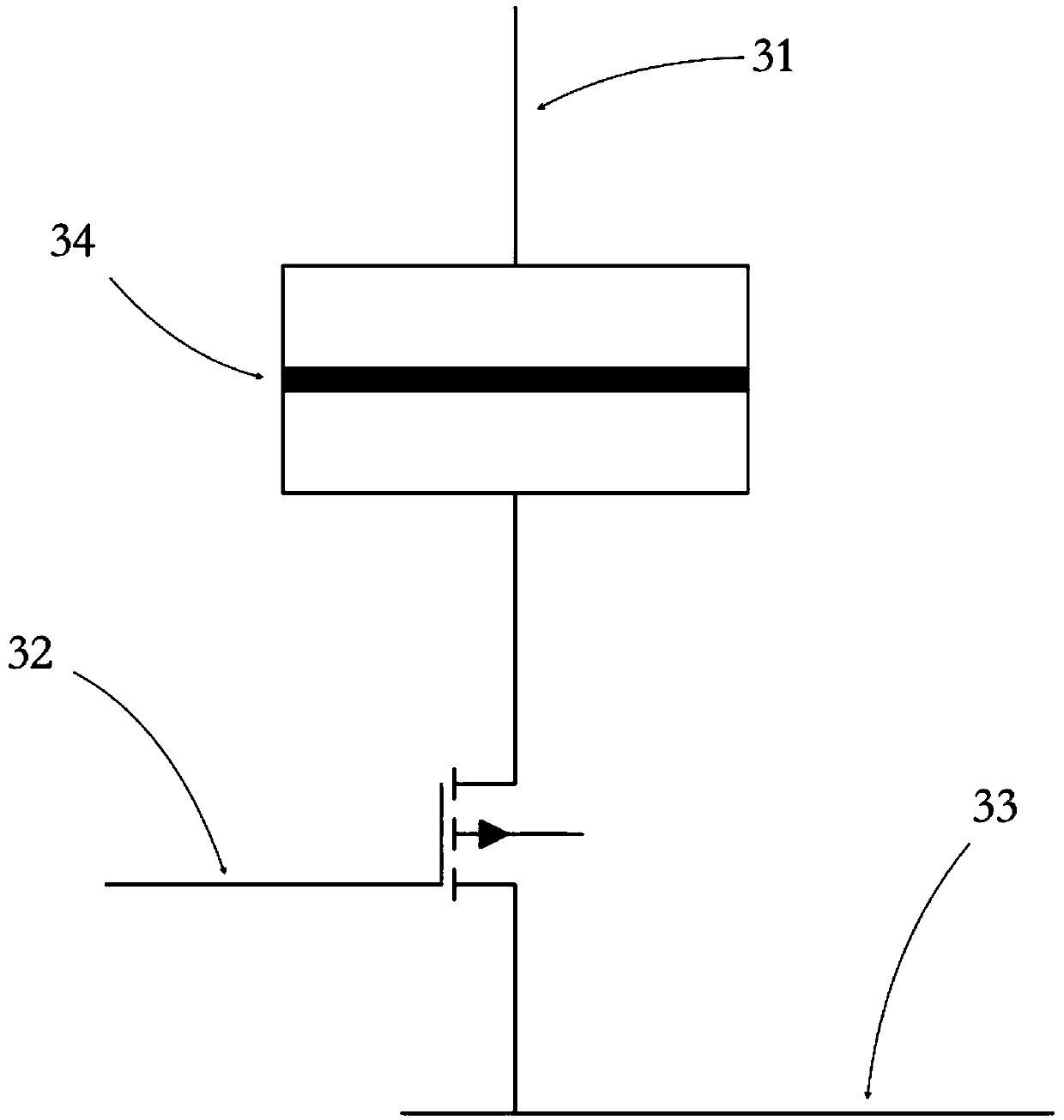

Active | CN110660421A | Reduce read and write operations | Troubleshoot data errors | Digital storage | Memory cell | Random access memory

The invention provides a magnetic random access memory with an error correction and compression circuit. The magnetic random access memory comprises a memory cell array, an error detection and correction circuit, a compression and decompression circuit, a control circuit, a status bit cache and a data cache. The reading operation steps of the chip are as follows: obtaining first compressed data in the memory cell array, processing the first compressed data through the error detection and correction circuit, decompressing the processed first compressed data through the compression and decompression circuit to obtain first data, and outputting the first data. The chip performs the following writing operation steps: compressing second data to be written through the compression and decompression circuit to obtain second compressed data, processing the second compressed data through the error detection and correction circuit, and writing the processed second compressed data into the memory cell array.

Owner:SHANGHAI CIYU INFORMATION TECH

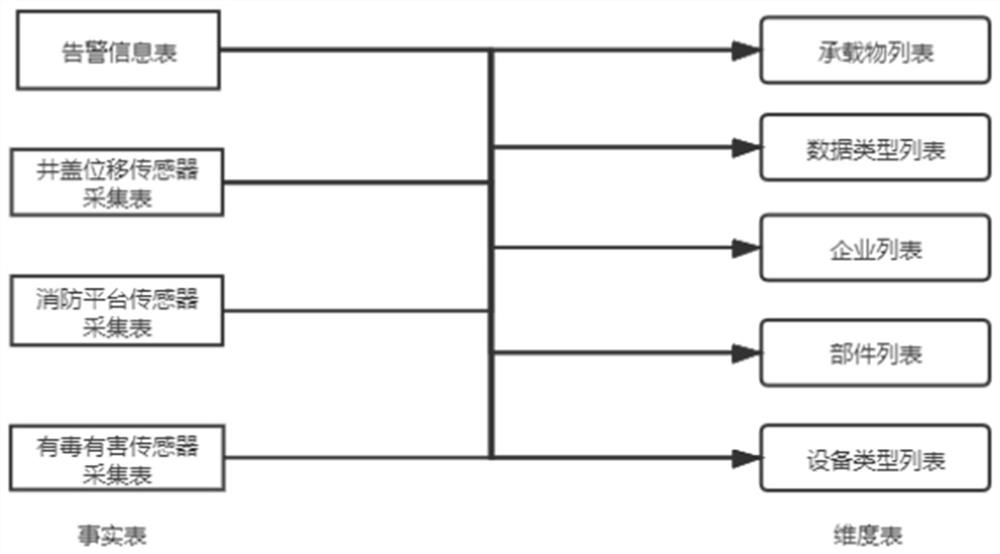

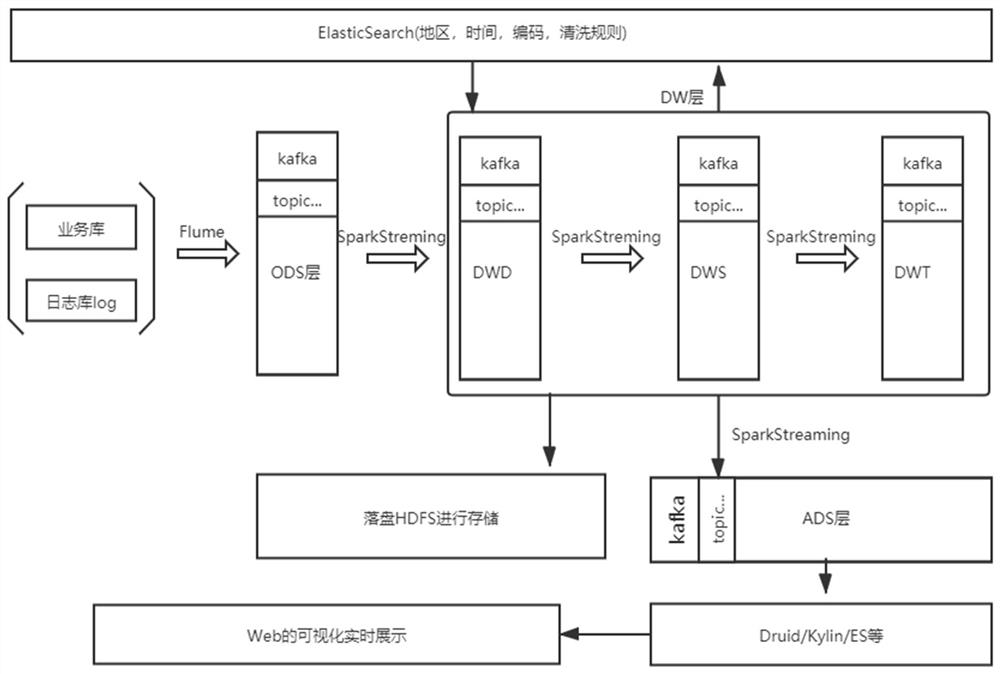

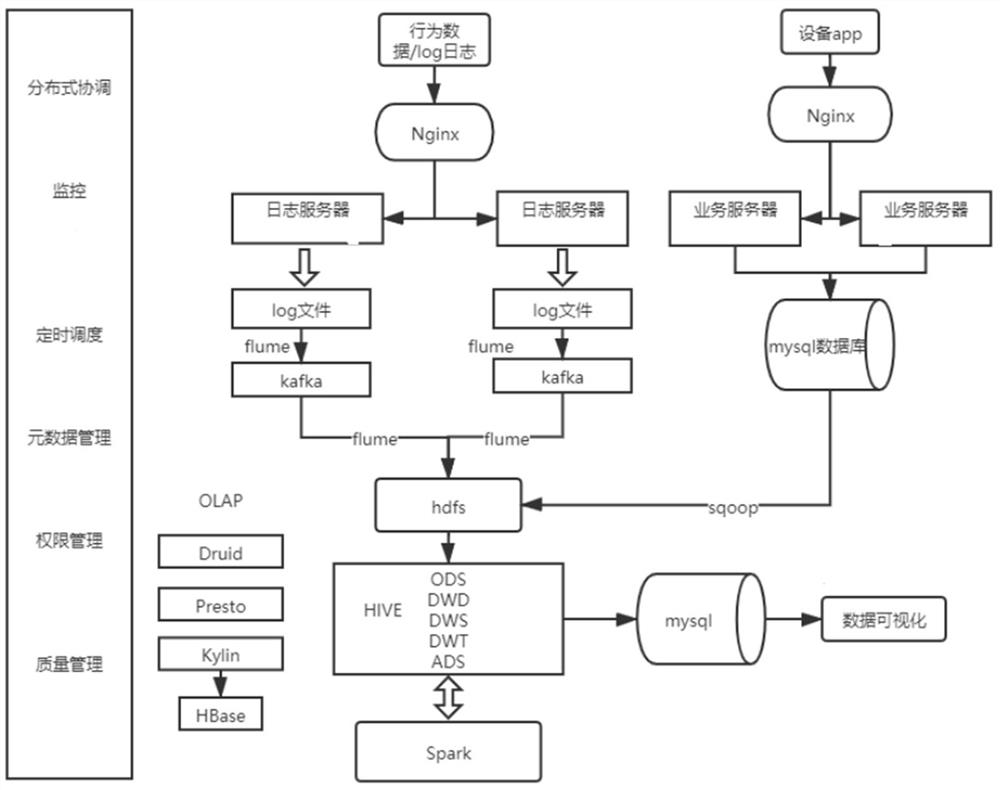

Data warehouse system based on urban brain

Inactive | CN112527886A | Reduce file read and write I/O | Improve efficiency | Database management systems | Multi-dimensional databases | Distributed File System | Online analysis

The embodiment of the invention provides a data warehouse system based on an urban brain. The data warehouse system based on the urban brain comprises a distributed file system based on Hadoop, a data ETL, a five-layer data warehouse, online analysis processing, a distributed computing engine based on Hadoop and metadata. According to the method, a Hadoop-based distributed file system and a computing engine are adopted to construct a distributed data warehouse system, and storage, unified processing and analysis are performed on multivariate heterogeneous data; the data warehouse is reasonably layered, the reuse rate of the data is improved, it is basically guaranteed that the data of the layer in the data warehouse depends on the data of the upper layer to be obtained, and repeated workloads caused by new requirements every time are avoided.

Owner:BEIJING ZHONGHAIJIYUAN DIGITAL TECH DEV CO LTD

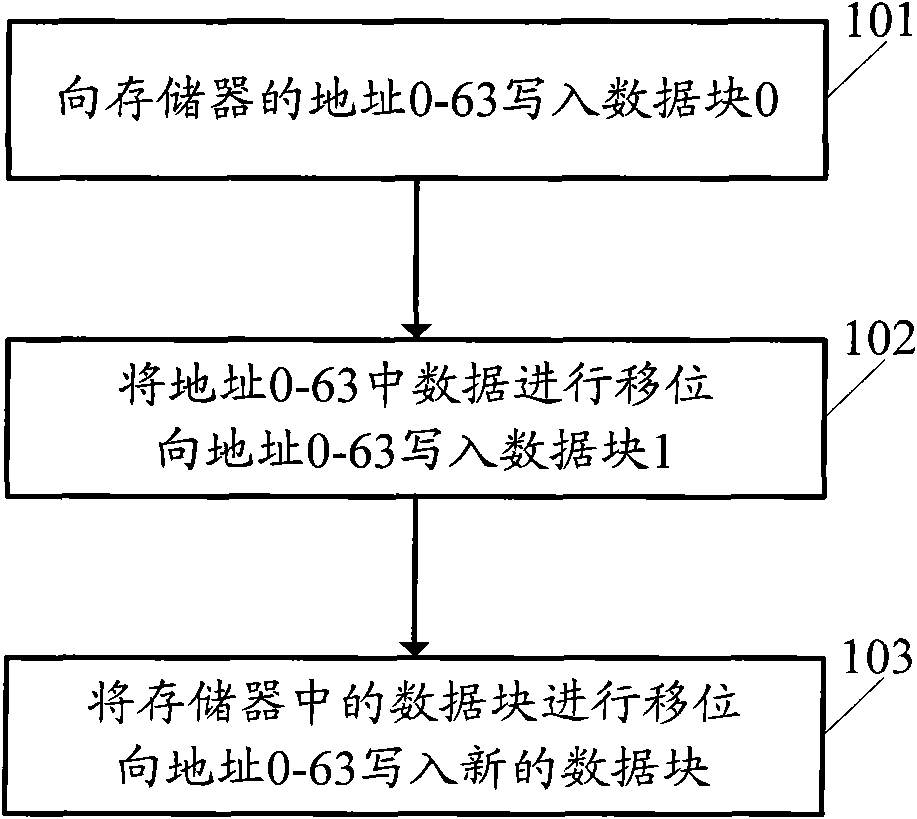

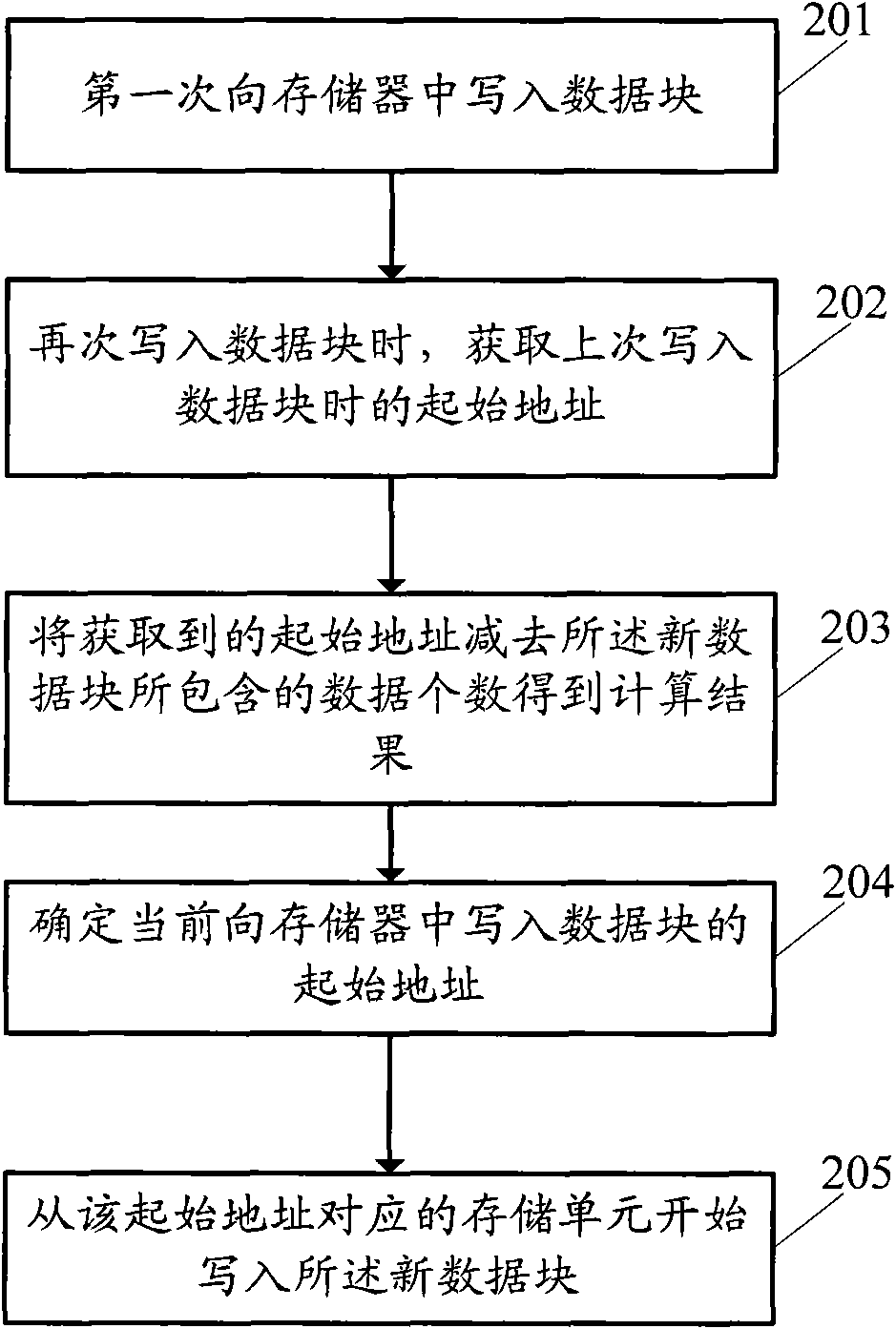

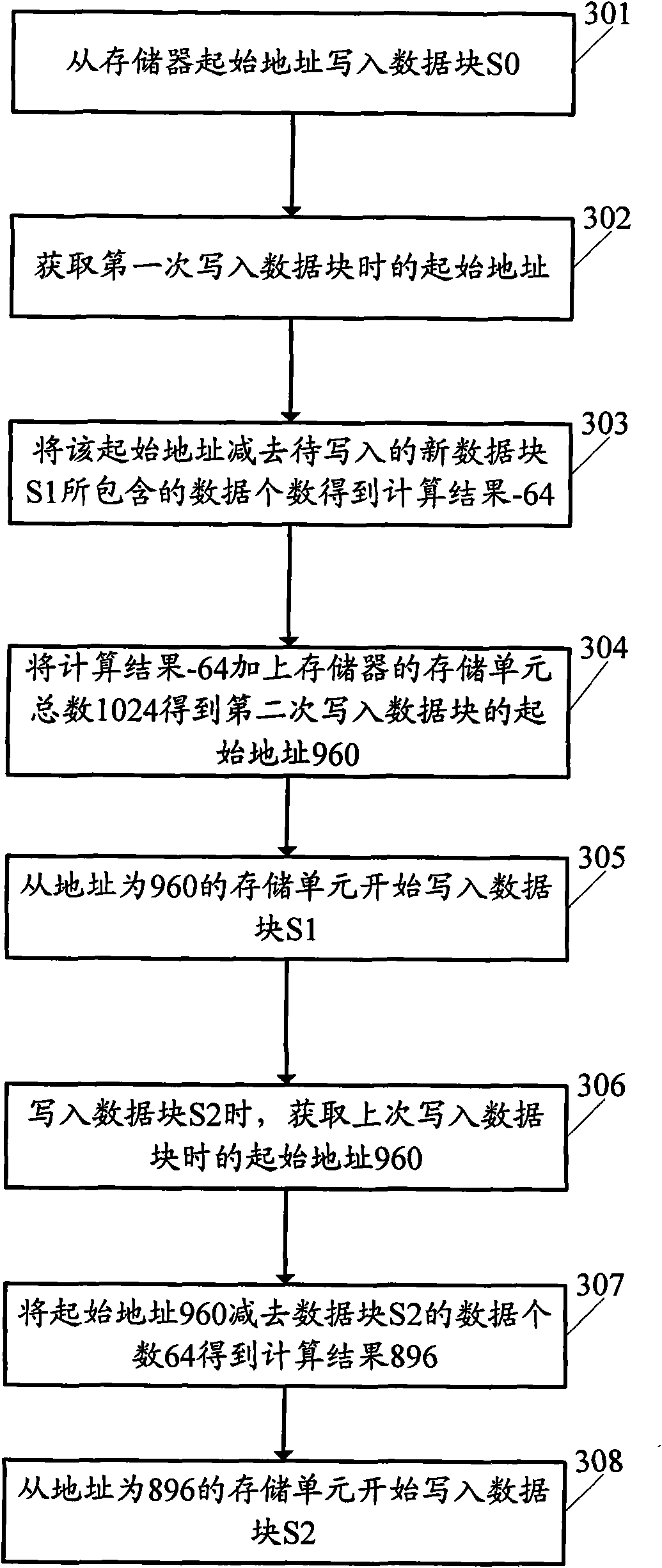

Data storage method and device

Inactive | CN101556556A | Reduce reading and writing | Shorten operation time | Energy efficient ICT | Memory addressing/allocation/relocation | Data store | Operating system

The invention discloses a data storage method and a device. The method comprises the following steps of: obtaining an initial storage address of a previous data block of the current data block to be written; subtracting the size of the current data block to be written from the initial storage address and obtaining an intermediate result; if the intermediate result is less than 0, mapping the intermediate result into an effective address of a memory and taking the mapped result as the initial storage address of the current data block to be written; if the intermediate result is more than or equal to 0, directly taking the intermediate result as the initial storage address of the current data block to be written; and starting to write in the current data block to be written from a storage unit corresponding to the initial storage address of the current data block to be written. The method provided by the embodiment of the invention can reduce read-write times to the memory, thus reducing the power consumption when writing data in the memory.

Owner:ACTIONS ZHUHAI TECH CO
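
The address calculation in the abstract above (CN101556556A) can be written as a small function. The abstract does not say how a negative intermediate result is mapped to an effective address; the sketch assumes wrap-around modulo the memory size, and the names `next_start_address` and `memory_size` are hypothetical.

```python
def next_start_address(prev_start, block_size, memory_size):
    """Compute where the next block starts when blocks are laid out downward.

    prev_start:  initial storage address of the previously written block
    block_size:  size of the block about to be written
    memory_size: size of the memory region (assumed wrap-around length)
    """
    intermediate = prev_start - block_size
    if intermediate < 0:
        # Assumption: map the negative result back into the valid range
        # by wrapping around the end of the memory region.
        return intermediate % memory_size
    return intermediate
```

For example, with a 16-unit memory, a previous start of 10 and a block of 4 gives 6, while a previous start of 2 and a block of 4 gives an intermediate result of -2, which this sketch maps to 14.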

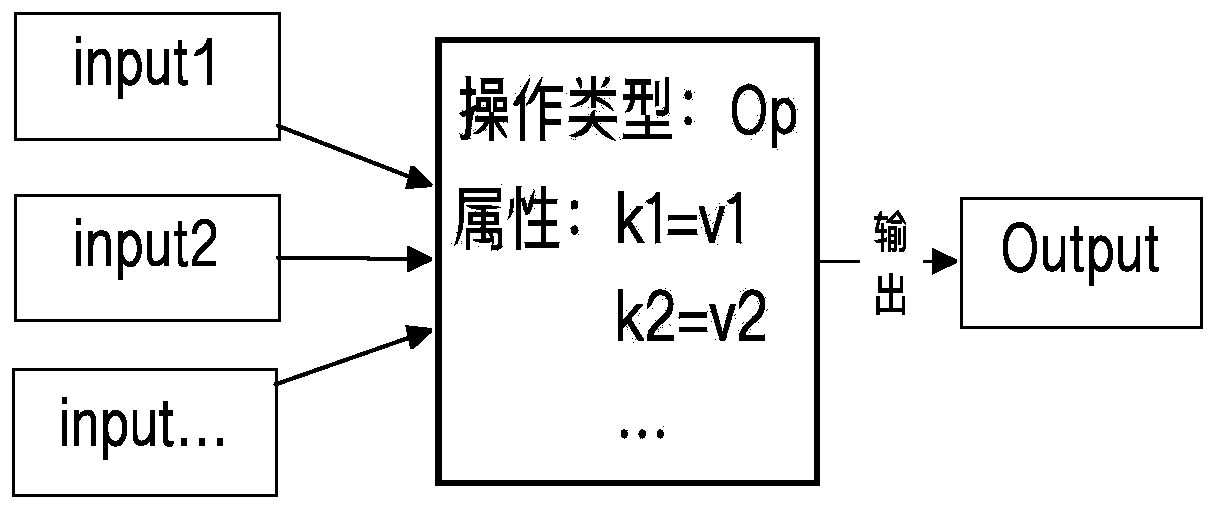

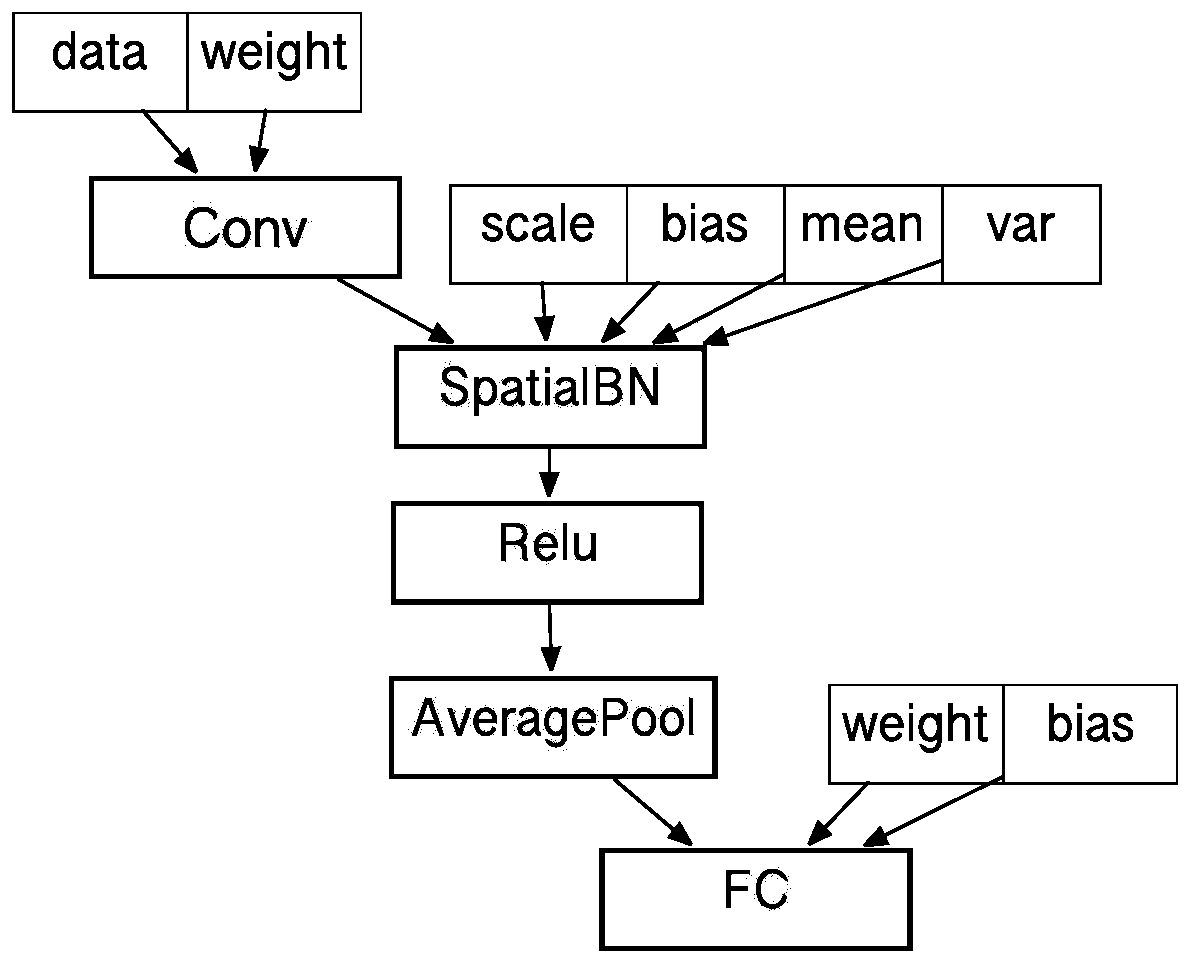

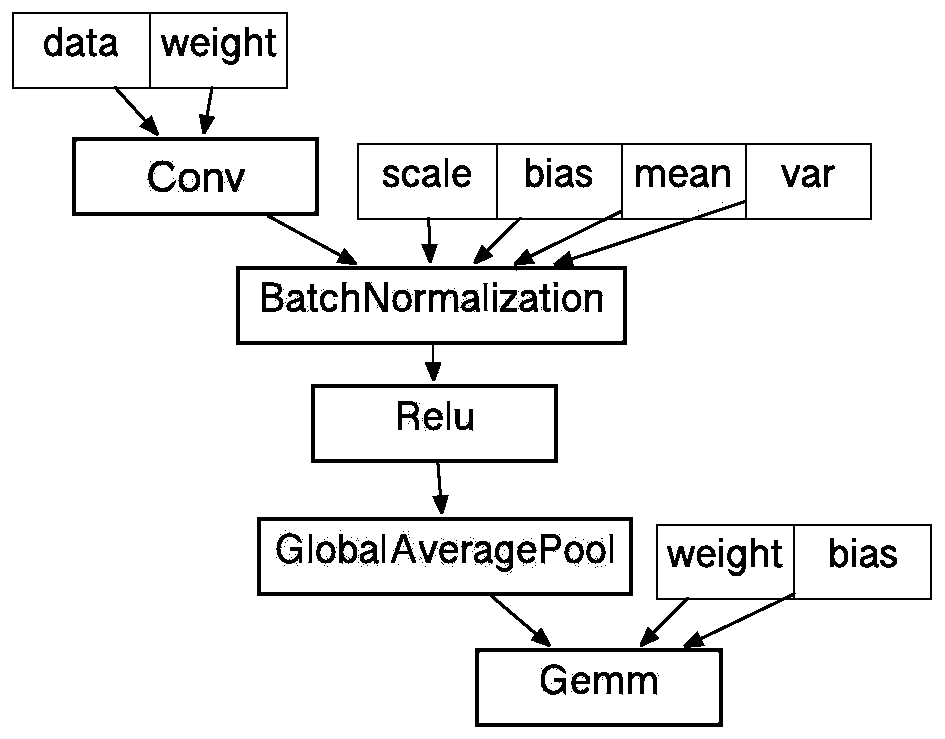

Model conversion method and system between deep learning frameworks based on minimum execution cost

Active | CN110532291A | Reduce reading and writing | Lower execution cost | Digital data information retrieval | Machine learning | Operational transformation | Theoretical computer science

The invention provides a model conversion method and system between deep learning frameworks based on minimum execution cost, and the method comprises the steps: adding an operation conversion cost value on the basis of the original technology, considering the fusion condition of a plurality of independent operations, and supplementing fusion mapping. The specific implementation of the model is embodied in the operation conversion of forming the model, and in the stage, a converted model structure with the lowest execution cost is obtained through a dynamic programming algorithm according to a model conversion mapping table. According to the method, the reading and writing of intermediate results between multiple operations can be reduced through operation fusion, so that the calculation performance and the storage space are optimized, and the execution cost of the converted model is reduced. Meanwhile, when multiple fusion options exist, a model conversion method with the minimum execution cost is obtained through a dynamic programming algorithm.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI
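
To make the dynamic programming step in the abstract above (CN110532291A) concrete, the sketch below chooses the cheapest way to convert a linear chain of operations when some adjacent operations can be fused. It is a simplified stand-in: real models are graphs rather than chains, and the function `min_cost_conversion`, the mapping table layout, and the cost values are all hypothetical.

```python
def min_cost_conversion(ops, mapping):
    """Return (total_cost, converted_ops) with minimum execution cost.

    ops:     list of source-framework operation names, in order
    mapping: dict from a tuple of consecutive source ops to
             (target_op_name, cost); multi-op keys are fusion mappings
    """
    inf = float("inf")
    # best[i] holds the cheapest conversion of the first i operations.
    best = [(inf, [])] * (len(ops) + 1)
    best[0] = (0, [])
    for end in range(1, len(ops) + 1):
        for start in range(end):
            key = tuple(ops[start:end])
            if key not in mapping or best[start][0] == inf:
                continue
            target, cost = mapping[key]
            candidate = best[start][0] + cost
            if candidate < best[end][0]:
                best[end] = (candidate, best[start][1] + [target])
    return best[len(ops)]
```

A usage example with made-up costs:

```python
table = {
    ("conv",): ("Conv", 5),
    ("bn",): ("BatchNorm", 3),
    ("relu",): ("ReLU", 2),
    ("conv", "bn"): ("ConvBN", 6),
    ("conv", "bn", "relu"): ("ConvBNReLU", 7),
}
cost, plan = min_cost_conversion(["conv", "bn", "relu"], table)
```

Here the fully fused option wins because its cost (7) is lower than any combination of the partial mappings.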

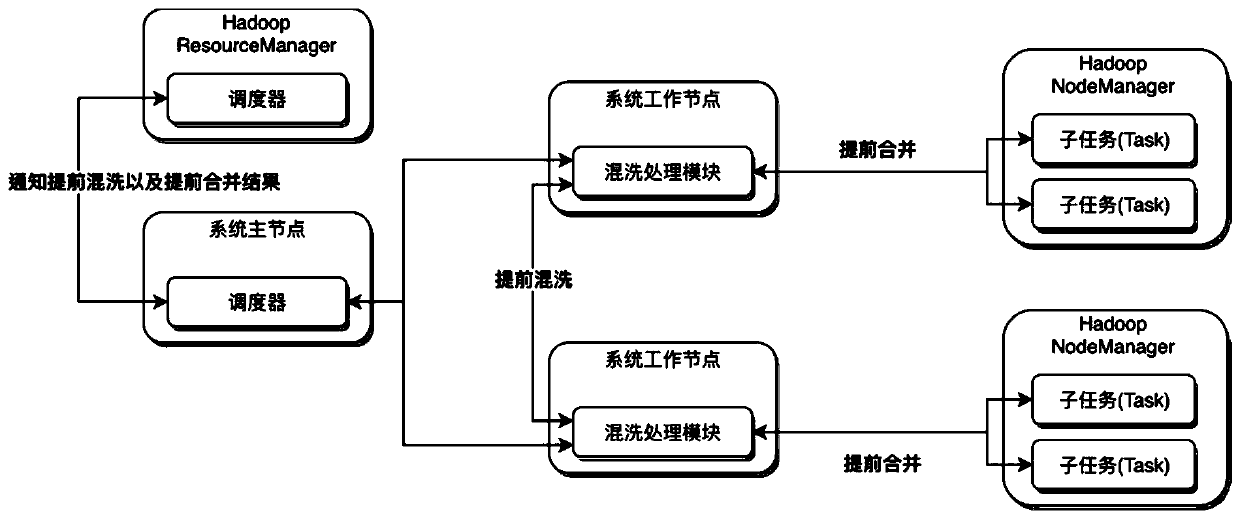

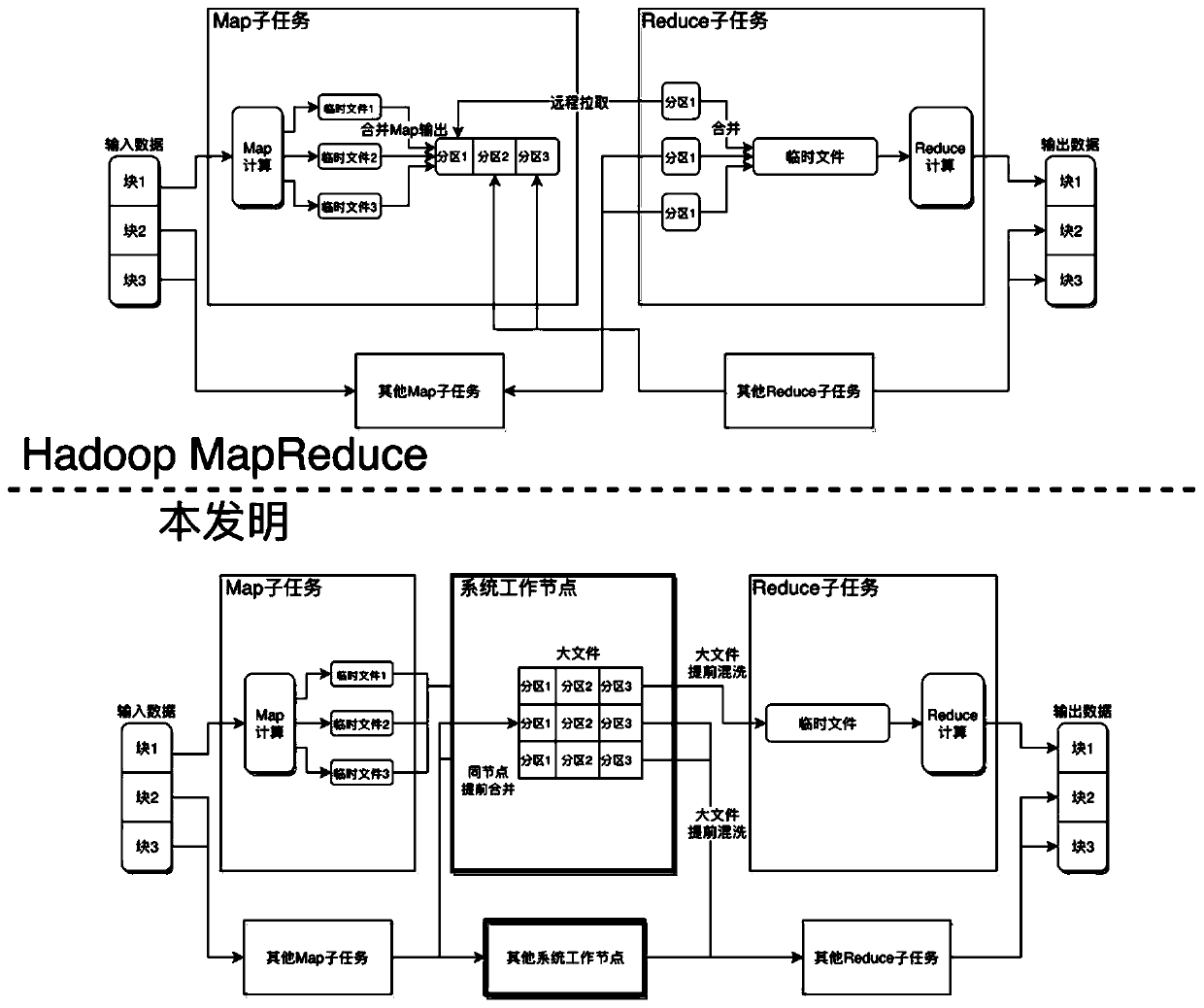

Optimization system and method for shuffling stage in Hadoop MapReduce

Active | CN110502337A | Reduce the number of reads and writes | Optimizing Tail Latency | Resource allocation | Energy efficient computing | Task completion | Inter-process communication

The invention provides an optimization system for a shuffling stage in Hadoop MapReduce. The optimization system runs in a working node and a main node of the Hadoop MapReduce in a daemon process mode, and communicates with the Hadoop MapReduce in an inter-process communication and remote process calling mode. Meanwhile, the invention provides an optimization method based on the optimization system. After the optimization system provided by the invention is operated, all intermediate data in Hadoop MapReduce task operation is taken over; and by means of pre-merging and pre-shuffling, on one hand, the idle network bandwidth in the Map stage is reasonably utilized, and on the other hand, small file reading and writing are effectively reduced after intermediate data in the same node is merged, so that the MapReduce task completion time is optimized.

Owner:SHANGHAI JIAO TONG UNIV

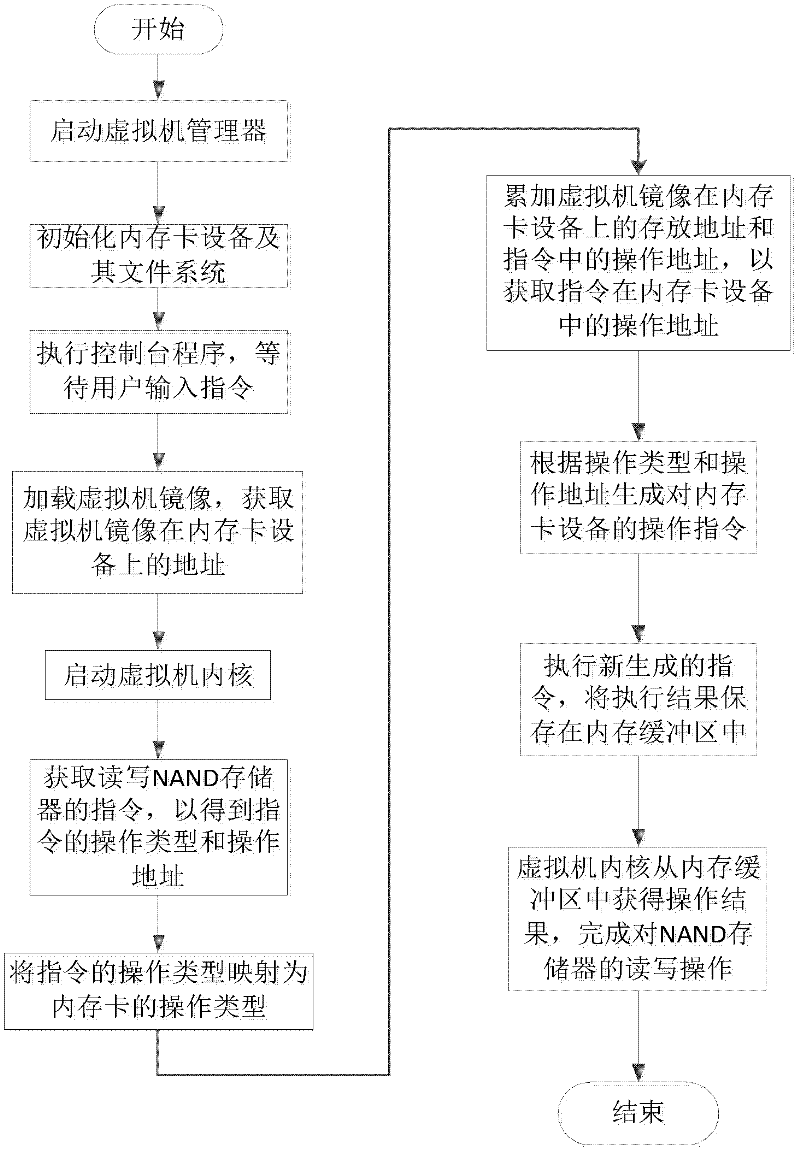

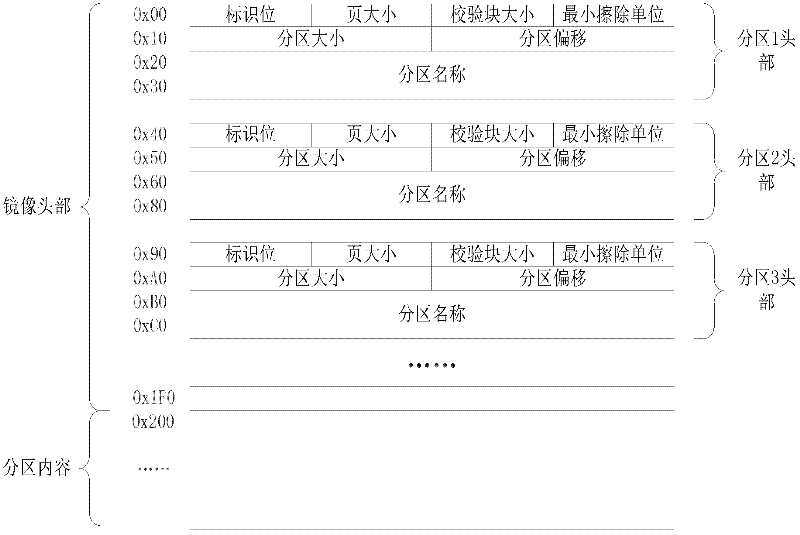

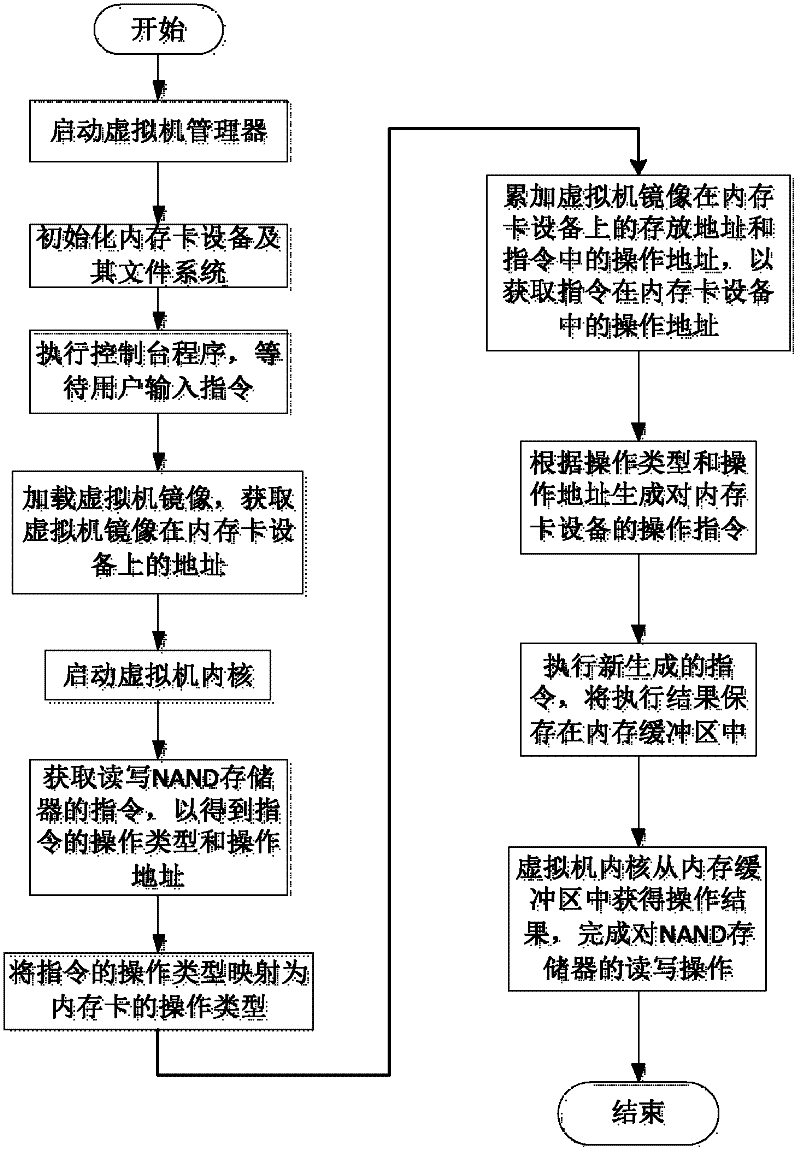

Method for replacing NAND memorizer by using virtual machine mirror image in embedded system

Inactive | CN102521013A | Save storage space | Save space | Software simulation/interpretation/emulation | File system | User input

The invention discloses a method for replacing an NAND memorizer by using a virtual machine mirror image in an embedded system, which comprises steps of starting a virtual machine supervisor, initializing memory card equipment and file systems of the equipment of an embedded virtual machine, executing control console procedures of the embedded virtual machine, waiting users for inputting loading orders of the virtual machine, loading the virtual machine mirror image according to the loading orders of the virtual machine, obtaining storage address of the virtual machine mirror image on the memory card equipment according to offset address in the head of the virtual machine mirror image, starting inner core of the virtual machine, obtaining orders for reading and writing the NAND memorizer in NAND memorizer drive of the inner core of the virtual machine so as to obtain operation types and operation address of the orders, enabling the operation types of the orders to be mapped to be the operation types of a memory card, accumulating the storage address of the virtual machine mirror image on the memory card equipment and the operation address of the orders so as to obtain the operation address of the orders in the memory card equipment, and generating operation orders of the memory card according to the operation types of the memory card and the operation address of the orders in the memory card equipment.

Owner:HUAZHONG UNIV OF SCI & TECH

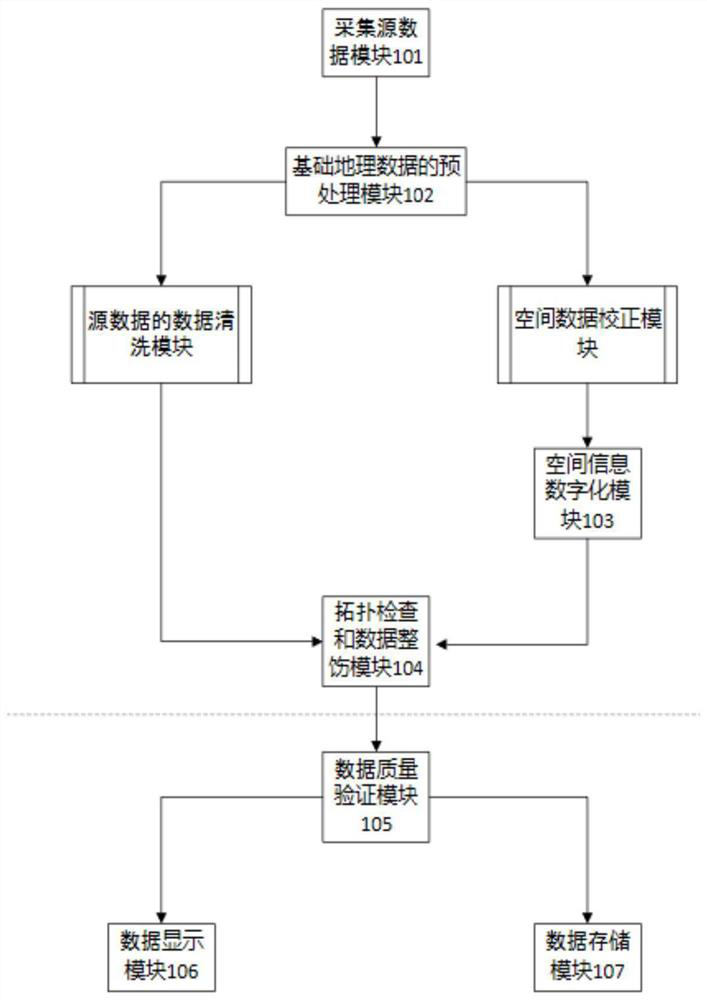

Standardized basic geographic data making method and system

Pending | CN111797083A | Avoid ambiguity | Improve positioning reliability | Geographical information databases | Special data processing applications | Data display | Data acquisition

The invention relates to the technical field of vector data editing of maps. The invention discloses a standardized basic geographic data making method and system. The normalized basic geographic data making system comprises a source data acquisition module, a basic geographic data preprocessing module, a spatial information digitization module, a topology check and data rectification module, a data quality verification module, a data display module and a data storage module. The basic geographic data made by the method can provide rich space analysis support for mobile signaling data analysis, avoids cutting of space entities and ambiguity of semantics, and can be used for improving base station cell positioning reliability, semantic enhancement analysis and the like. According to the method, standardized basic geographic data making requirements are provided, multi-source data can be integrated, so that a consistent API can be used during mobile signaling data analysis, and the problems of reading, writing and adaptation of data from different sources are reduced.

Owner:成都方未科技有限公司

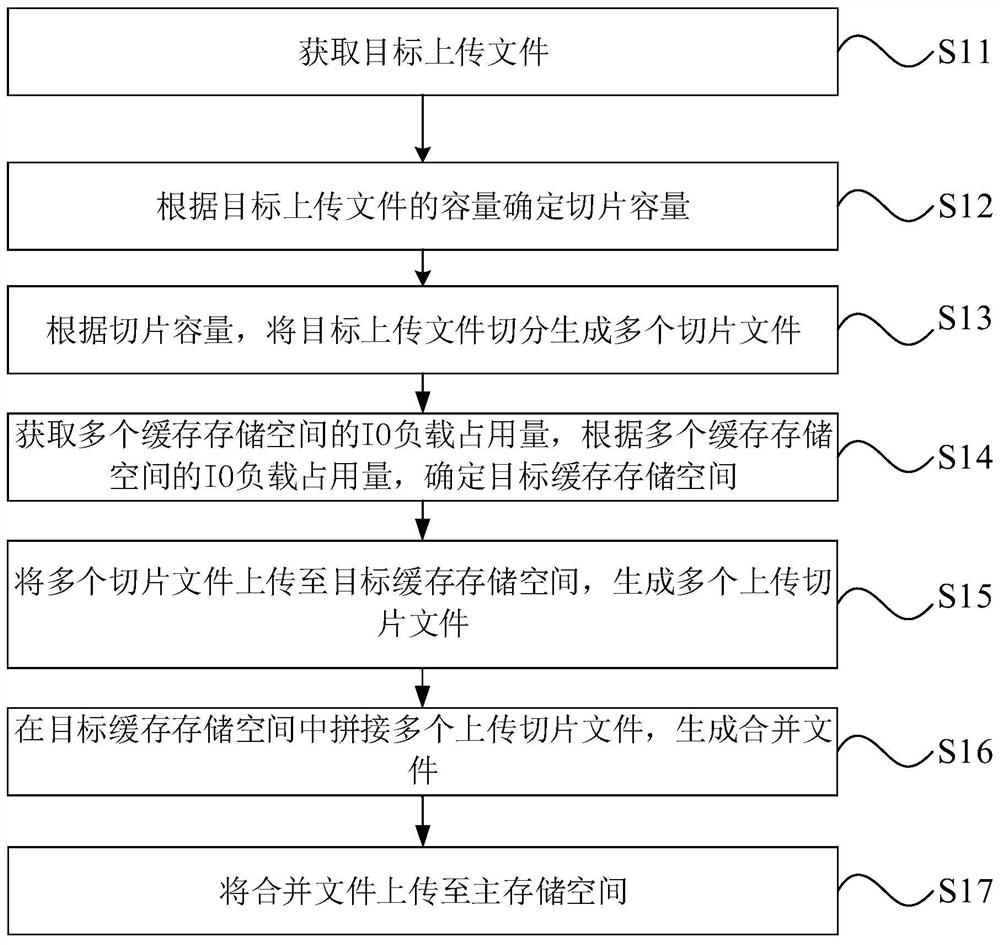

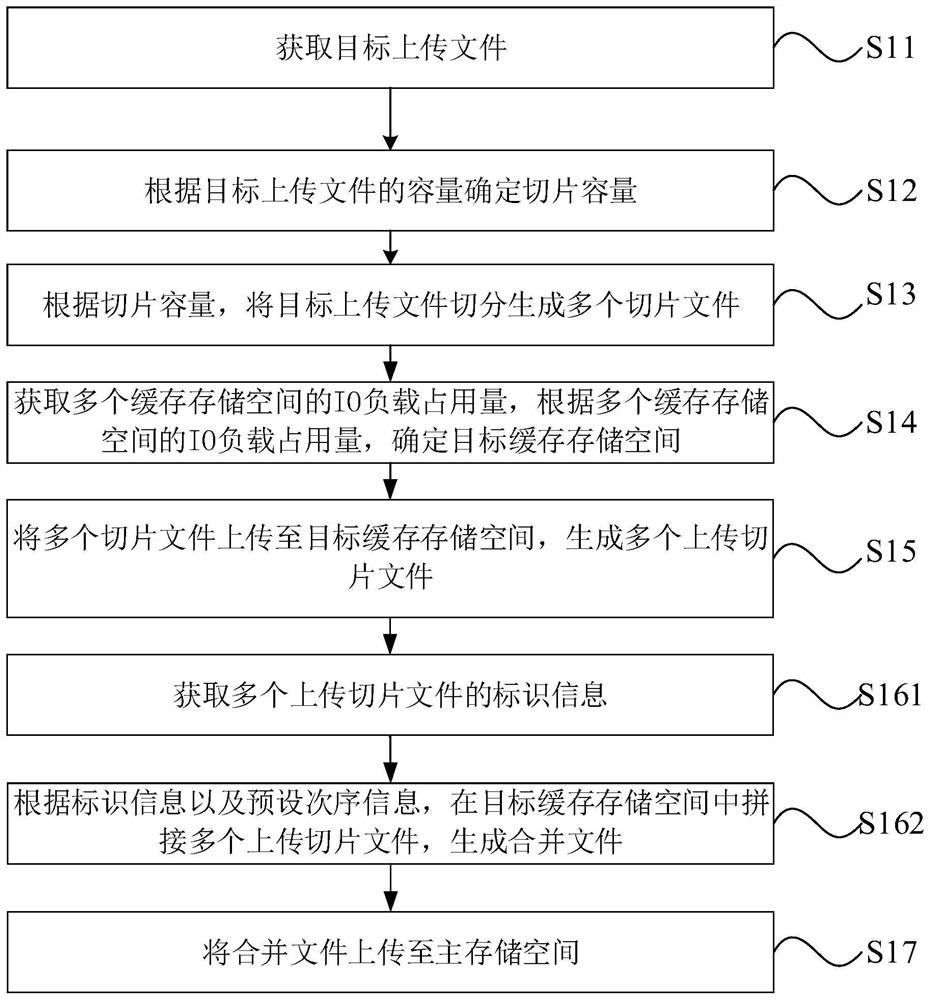

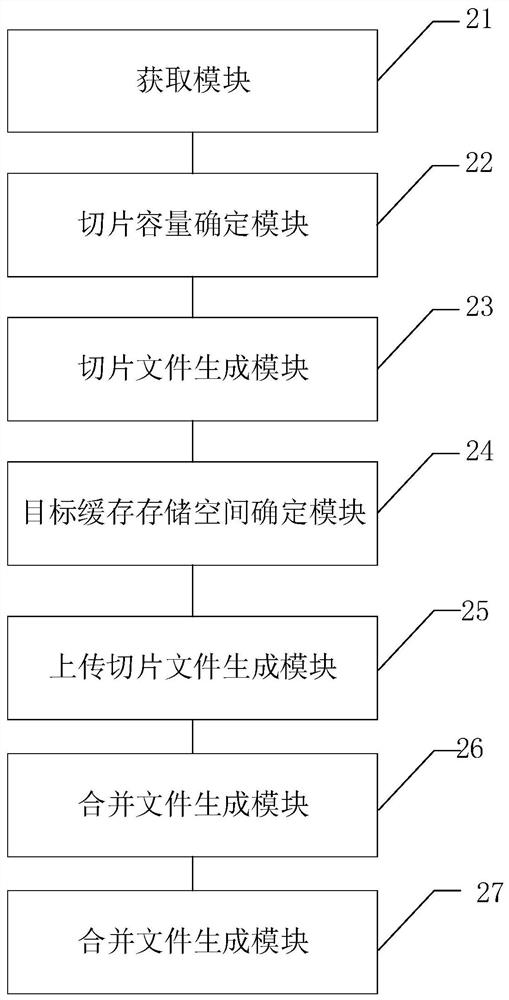

File uploading method and device and computer equipment

Pending | CN112199342A | Increase upload speed | Improve reliability | Digital data information retrieval | Special data processing applications | Computer hardware | Data transport

The invention discloses a file uploading method and device and computer equipment, and the method comprises the steps: determining the slice capacity through combining with the capacity of a target uploaded file; segmenting the target uploaded file into a plurality of slice files according to the slice capacity; acquiring IO load occupancy of the plurality of cache storage spaces, and determining a target cache storage space according to the IO load occupancy; uploading the plurality of slice files to a target cache storage space to generate a plurality of uploaded slice files; splicing a plurality of uploaded slice files in the target cache storage space to generate a merged file; and uploading the merged file to a main storage space. Uploading speed and reliability of the target uploaded file are improved, the possibility of overtime data transmission is reduced, and the slice files are stored in the target cache storage space during uploading and are written into the main storage space after being merged, so that unnecessary reading and writing of the main storage space are reduced.

Owner:TSD ELECTRONICS TECH
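
The upload path in the abstract above (CN112199342A) can be sketched with in-memory stand-ins. The function names, the dictionary layout used for cache storage spaces, and passing the slice size in as a parameter (rather than deriving it from the file size, as the abstract describes) are simplifying assumptions.

```python
def slice_bytes(data, slice_size):
    """Split the file content into consecutive slices of at most slice_size."""
    return [data[i:i + slice_size] for i in range(0, len(data), slice_size)]


def upload(name, data, slice_size, caches, main_storage):
    """Stage slices in the least-loaded cache, merge, then write once.

    caches:       dict cache_name -> {"io_load": float, "slices": list}
    main_storage: dict file_name -> bytes, stands in for the main storage
    Returns the name of the cache storage space that was chosen.
    """
    # Pick the cache storage space with the lowest IO load occupancy.
    target = min(caches, key=lambda c: caches[c]["io_load"])
    staged = caches[target]["slices"]
    for part in slice_bytes(data, slice_size):
        staged.append(part)  # each slice lands in the cache only
    merged = b"".join(staged)
    staged.clear()
    main_storage[name] = merged  # the main storage sees a single write
    return target
```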

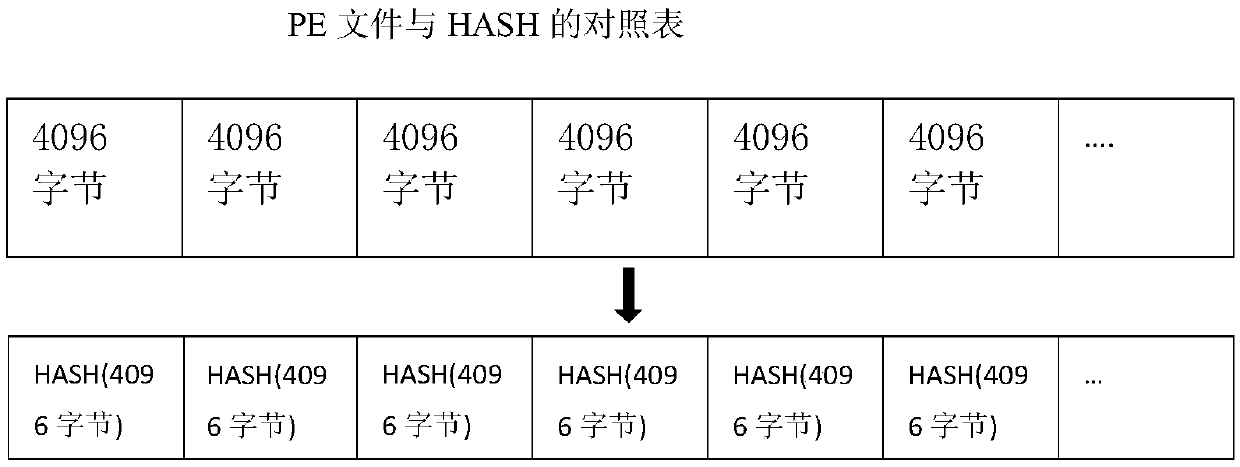

A method for quickly realizing file identification under a host white list mechanism

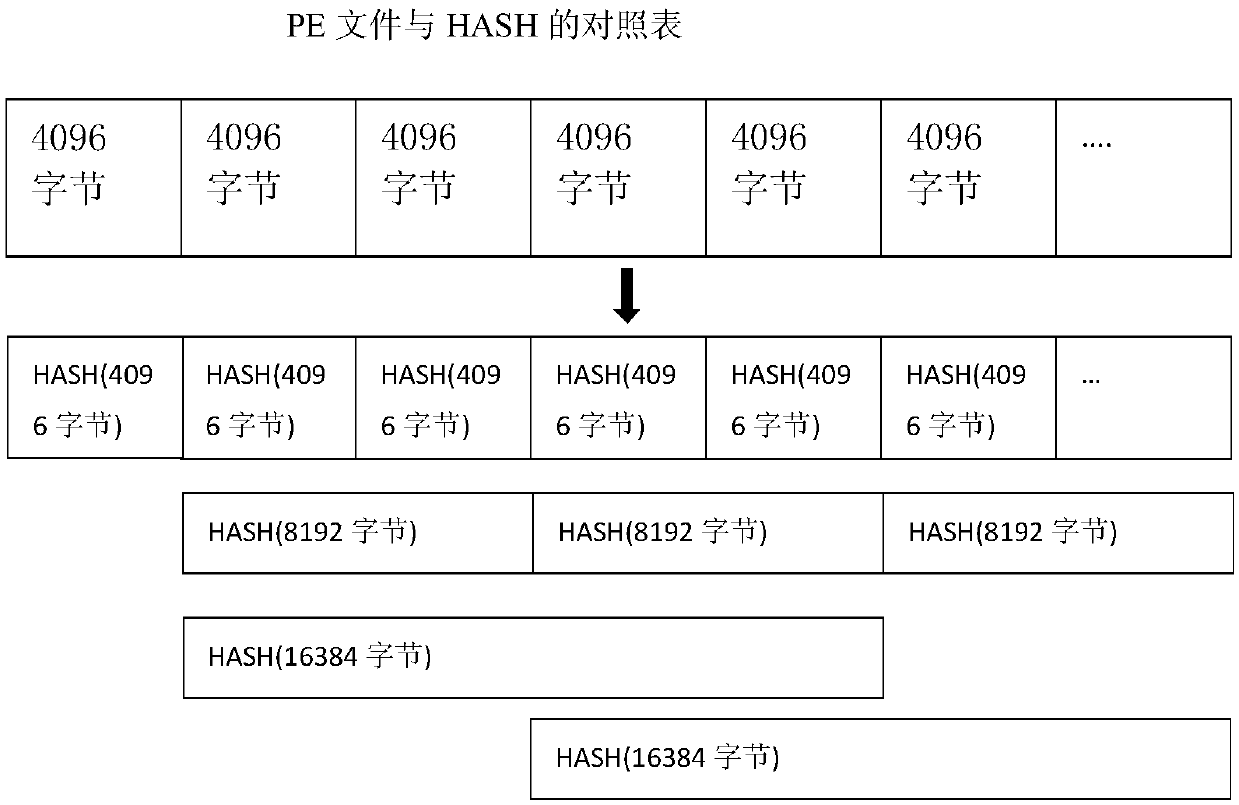

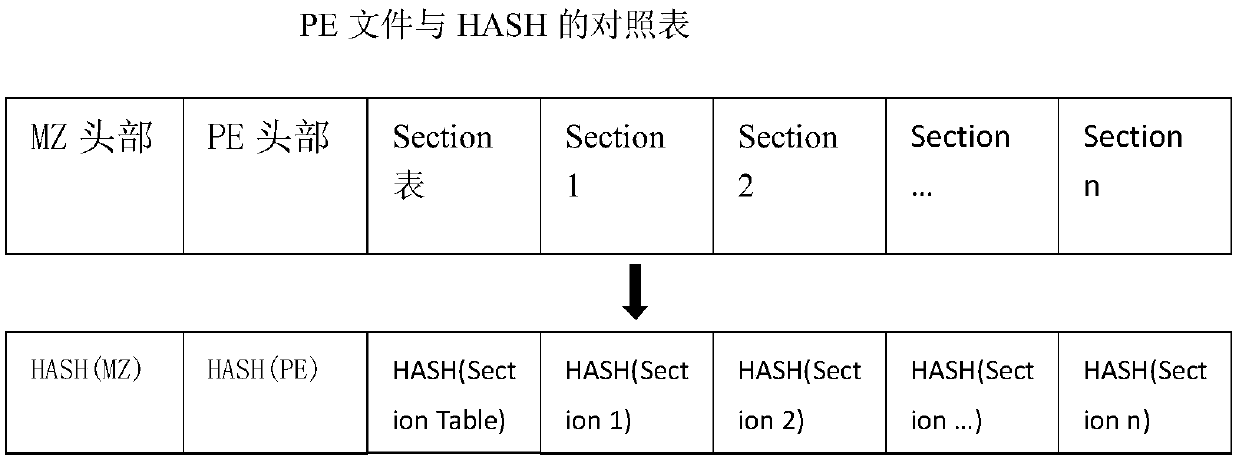

Active | CN109558752A | Easy to identify | Solve process loading | Digital data protection | Computer hardware | Paging

The invention discloses a method for quickly realizing file identification under a host white list mechanism. The method comprises the following steps: step 1, a first fragmentation algorithm; step 2, a second fragmentation algorithm, characterized by fragmenting according to a fixed size, combining a plurality of adjacent fragments, calculating a HASH value, and recording the HASH value in a database; step 3, a third fragmentation algorithm, characterized by performing fragmentation according to the Section of the PE, combining the attribute field of the Section with the Section data body part, calculating a HASH value, and recording and verifying the HASH value; and step 4, carrying out the fragmentation according to the Section of the PE, and combining the attribute field of the Section with the Section data body part. The beneficial effects of the invention are as follows: the problem that the process loading or running speed becomes slow due to the white list software is solved, the system jamming problem caused by existing white list software is solved, the memory paging control over executable files is achieved through kernel programming of Windows, and the HASH value of paging is quickly verified during paging exchange.

Owner:北京威努特技术有限公司 +1
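
Step 2 of the abstract above (CN109558752A), fixed-size fragmentation followed by hashing groups of adjacent fragments, can be sketched as follows. The choice of SHA-256, the function names, and the `group` parameter are assumptions; the abstract only says that a HASH value is calculated and recorded.

```python
import hashlib


def fragment_hashes(data, fragment_size, group):
    """Hash each run of `group` adjacent fixed-size fragments.

    Returns a list of hex digests, one per group, in file order.
    """
    fragments = [data[i:i + fragment_size]
                 for i in range(0, len(data), fragment_size)]
    digests = []
    for i in range(0, len(fragments), group):
        combined = b"".join(fragments[i:i + group])
        digests.append(hashlib.sha256(combined).hexdigest())
    return digests


def verify(data, fragment_size, group, recorded):
    """Compare freshly computed digests with the recorded whitelist entry."""
    return fragment_hashes(data, fragment_size, group) == recorded
```

Hashing per group rather than per file means a single page or region can be checked without reading the whole executable, which matches the stated goal of faster verification.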

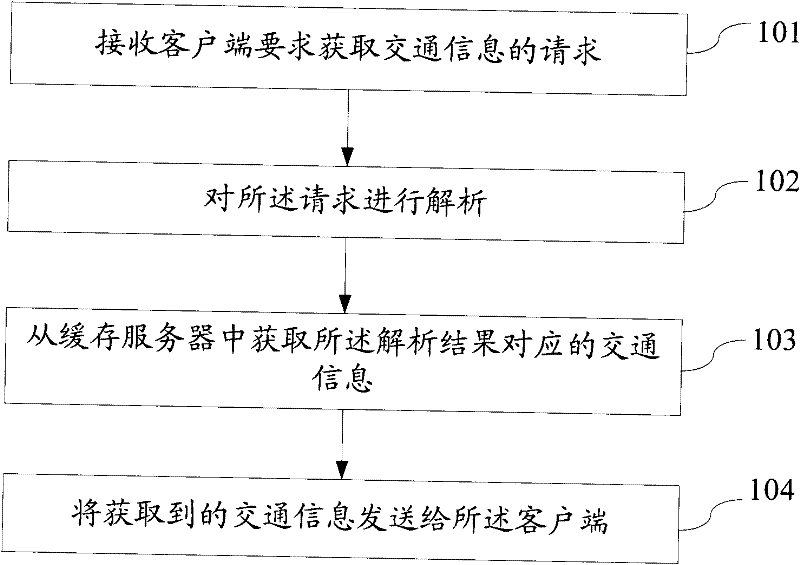

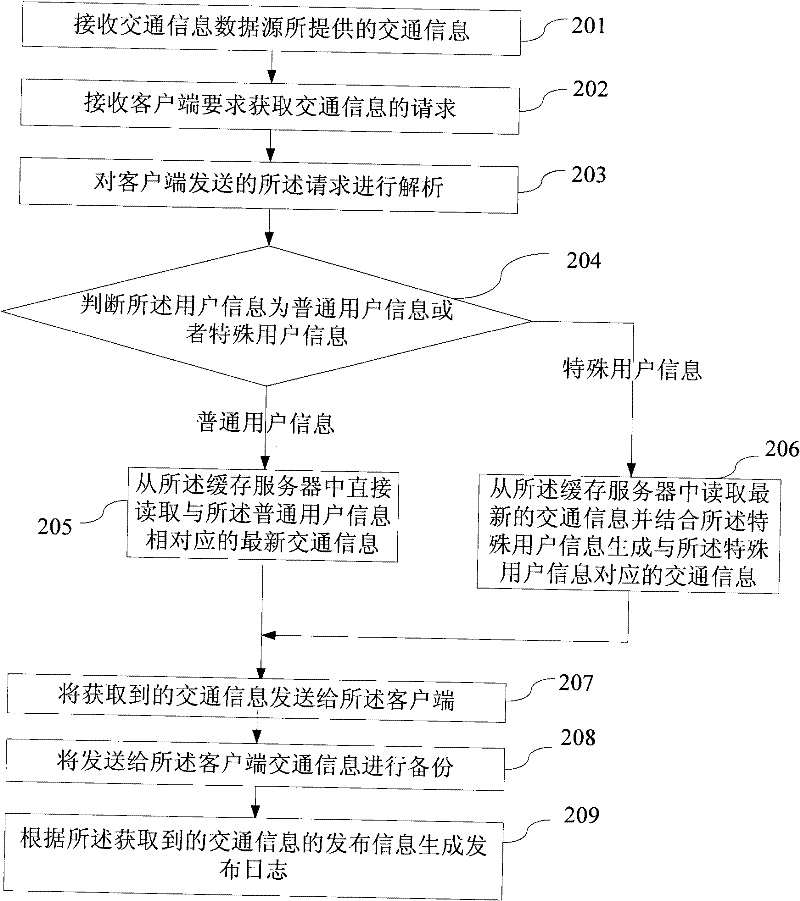

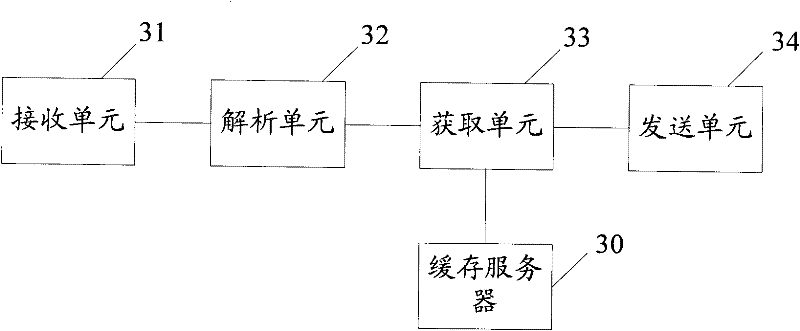

Method and system for releasing traffic information

Active | CN101887640B | Shorten the release cycle | Improve publishing efficiency | Arrangements for variable traffic instructions | Broadcast specific applications | Cache server | Distributed computing

The embodiment of the invention discloses a method and system for releasing traffic information and relates to the communication technical field. The method and system can be used to reduce the release cycle of road information and increase the release efficiency. The method comprises the following steps: receiving a request of a client for obtaining traffic information; analyzing the request; obtaining traffic information corresponding to the analytic result from a cache server; and sending the obtained traffic information to the client. The method and system provided by the embodiment of the invention are suitable to be used to release real-time traffic information to the client.

Owner:CENNAVI TECH

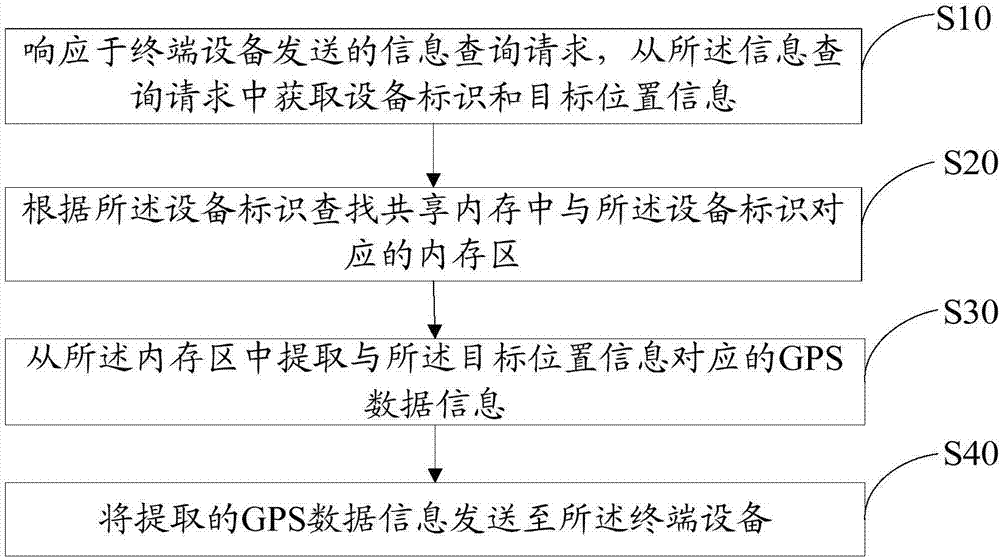

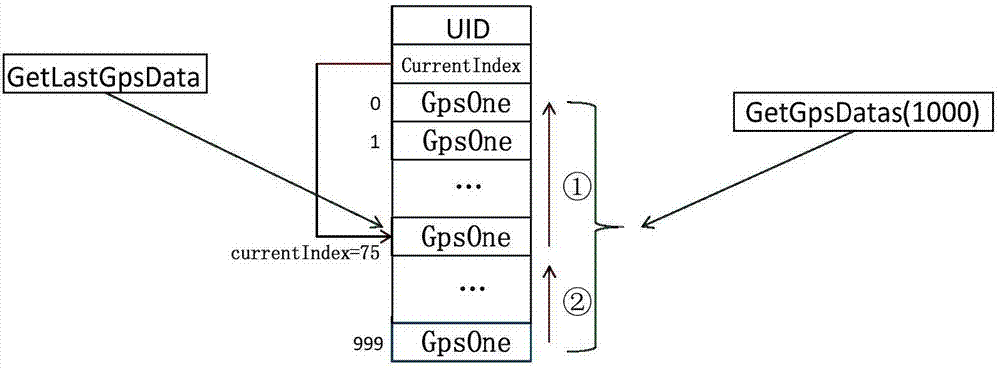

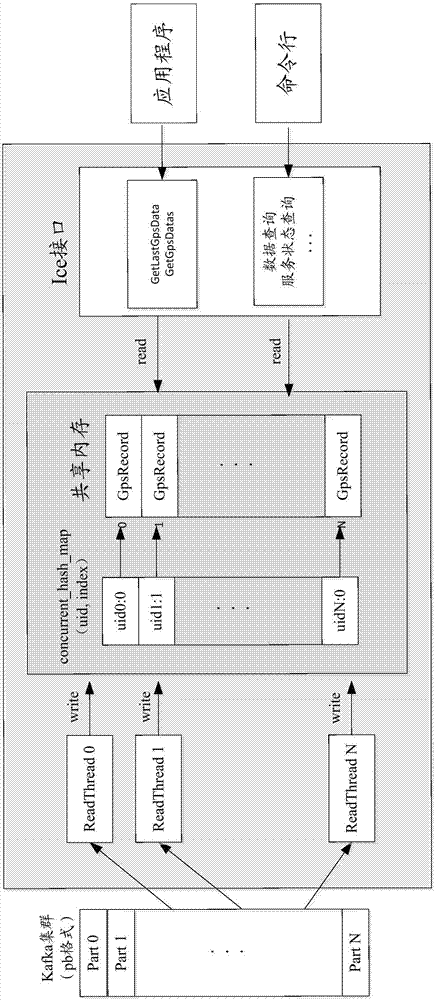

Shared memory-based GPS data information query method and system

Active | CN106970964A | Reduce reading and writing | Improve service capabilities | Geographical information databases | Special data processing applications | Data information | GPS data

Owner:SHENZHEN AUTONET CO LTD

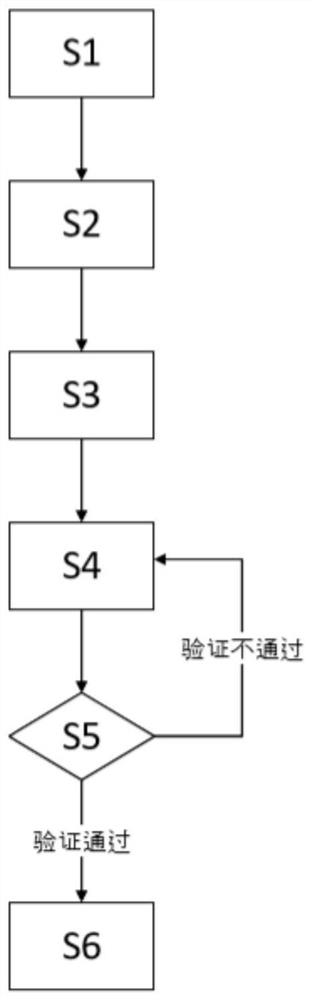

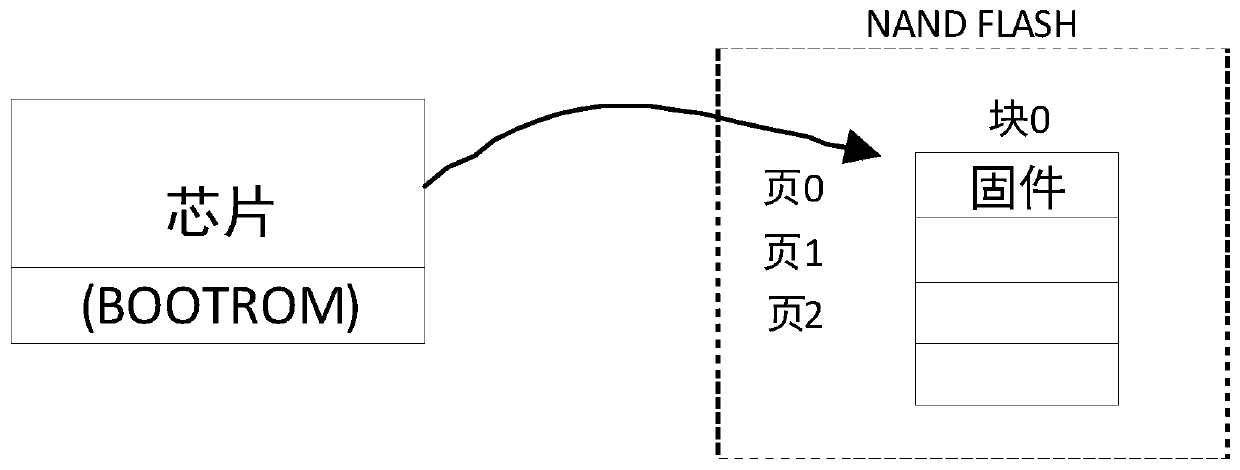

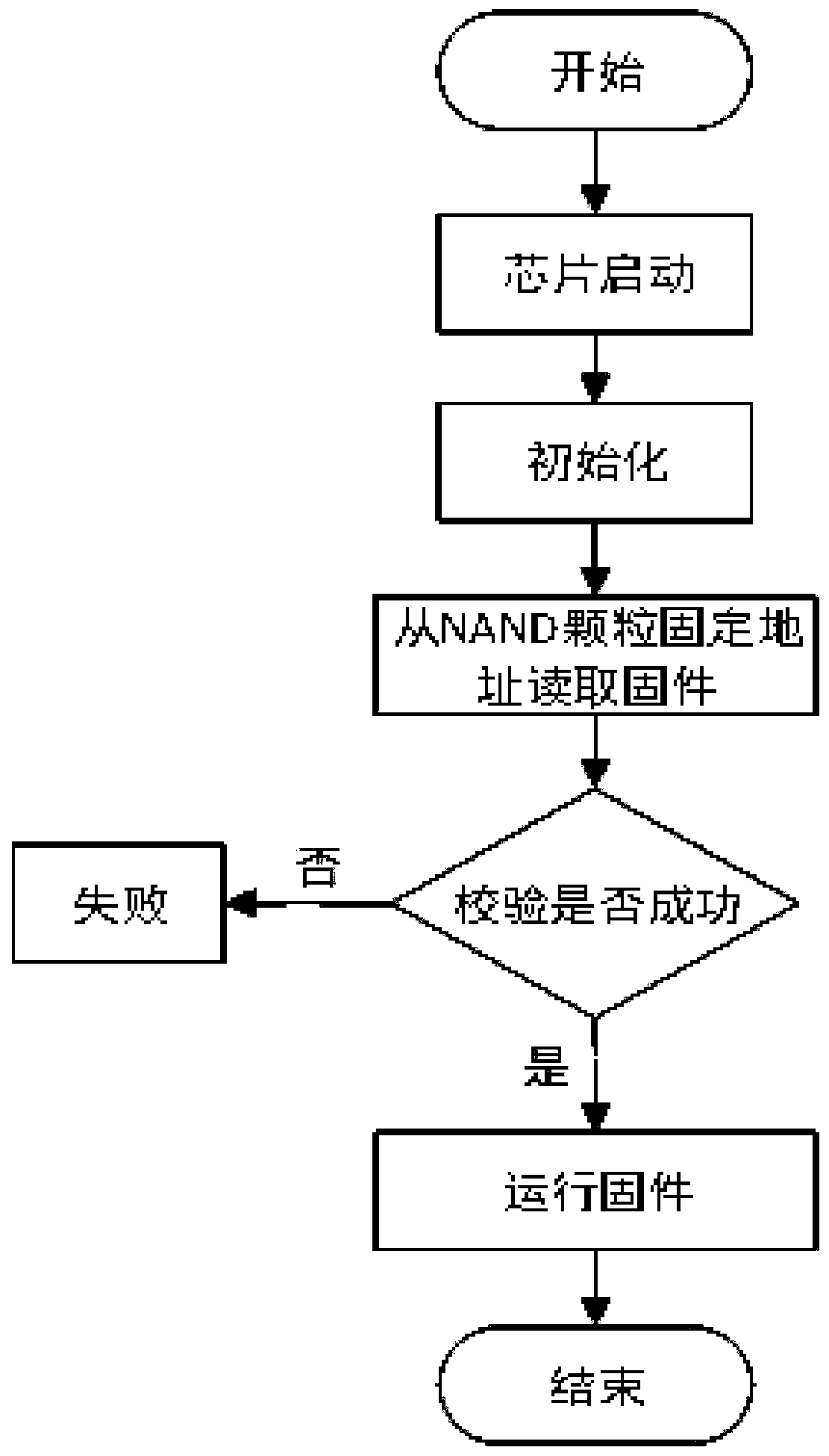

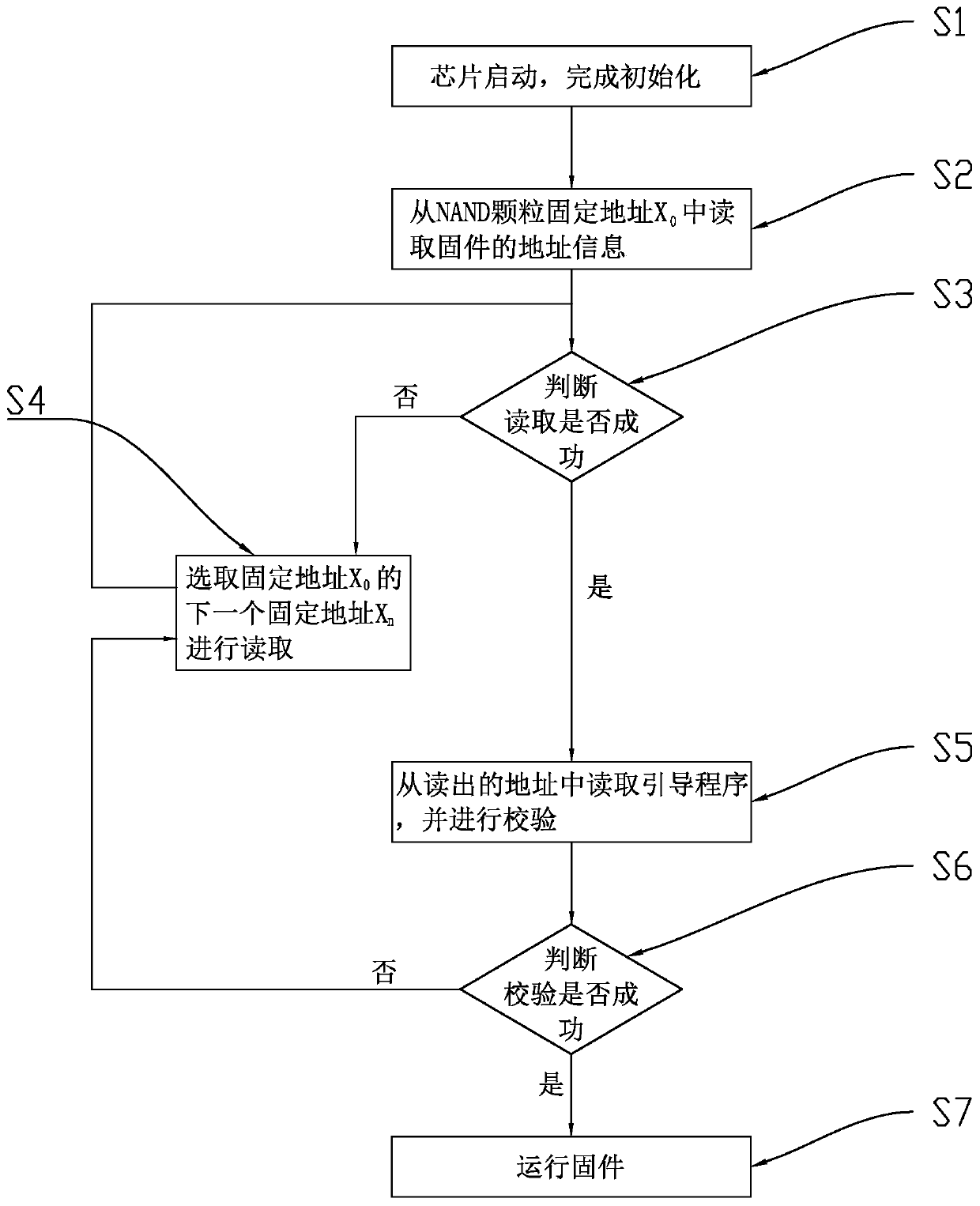

Method and system for effectively prolonging service life of NAND startup

Pending | CN110297604A | Reduce reading and writing | Avoid risk of error | Input/output to record carriers | Solid-state drive | Booting

The invention relates to a method and a system for effectively prolonging the service life of NAND startup. The method comprises the steps: S1, starting a chip, and completing initialization; S2, reading address information of the firmware from the NAND particle fixed address X0; S3, judging whether reading is successful or not; if yes, entering S5; if not, entering S4; S4, selecting the next fixed address Xn of the fixed address X0 for reading, and returning to the step S3; S5, reading the bootstrap program from the read address, and verifying the bootstrap program; S6, judging whether the verification is successful or not; if yes, entering S7; if not, returning to S4; S7, running firmware. Through two-stage mapping, the read fixed address is subjected to multiple backups, and firmware is separated from the storage address, so that repeated erasing and writing of the same address by NAND particles are reduced, the service life of chip NAND starting is prolonged, and the service time of the solid state disk is prolonged.

Owner:SHENZHEN YILIAN INFORMATION SYST CO LTD
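
Steps S2 to S7 in the abstract above (CN110297604A) form a retry loop over backup addresses. The sketch below models NAND as a dictionary and uses CRC32 as the verification step; both are assumptions, as are the function name `boot` and the record layout.

```python
import zlib


def boot(nand, fixed_addresses):
    """Find and return a valid firmware image via two-stage mapping.

    nand:            dict address -> content; a missing key models a
                     failed read
    fixed_addresses: [X0, X1, ...], each holding the firmware address
    """
    for fixed in fixed_addresses:
        firmware_addr = nand.get(fixed)      # S2: read address info
        if firmware_addr is None:            # S3 failed -> S4: next Xn
            continue
        record = nand.get(firmware_addr)     # S5: read the bootstrap program
        if record is None:
            continue
        image, checksum = record
        if zlib.crc32(image) == checksum:    # S6: verification (assumed CRC32)
            return image                     # S7: run firmware
        # Verification failed: fall through to the next fixed address (S4).
    raise RuntimeError("no valid firmware found")
```

Because the fixed addresses only hold pointers, relocating the firmware means rewriting a small address record rather than erasing and rewriting the firmware at the same location.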

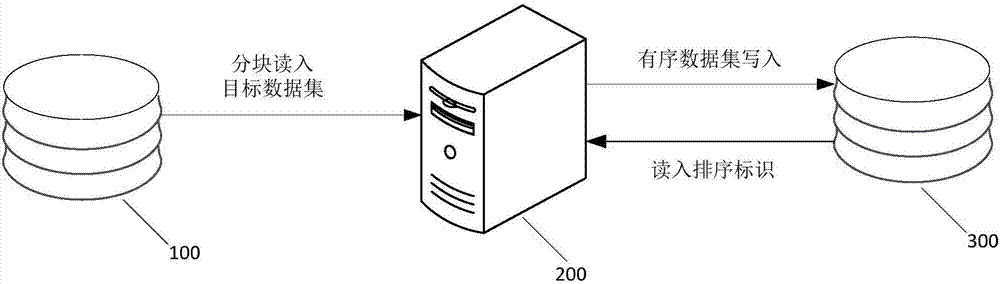

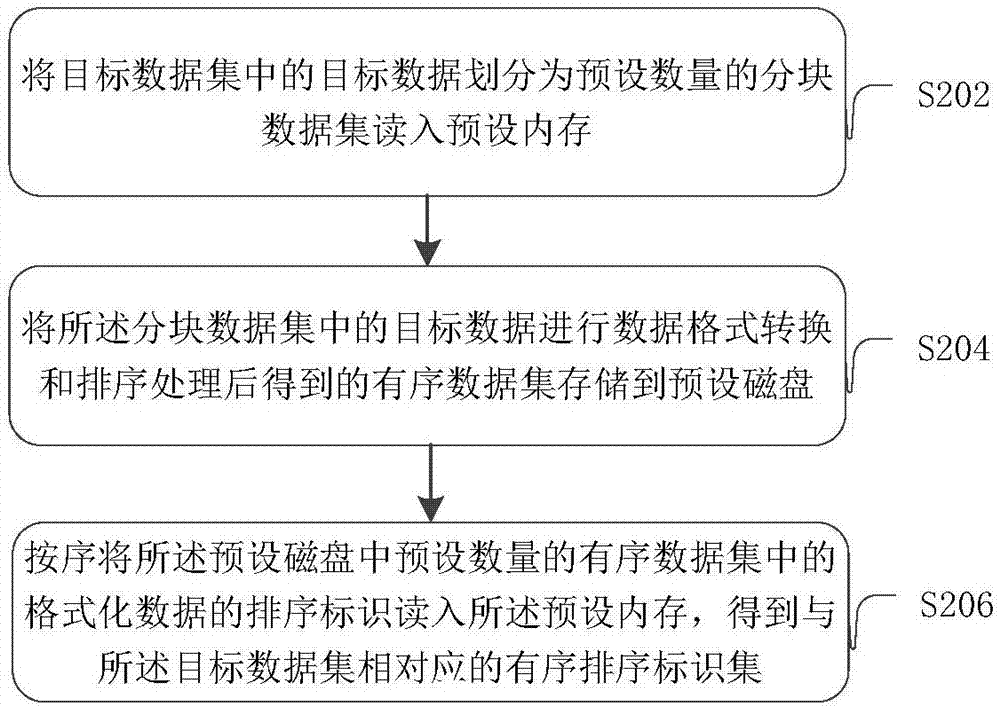

Data processing method and device and server

Active | CN107544753A | Improve processing efficiency | Reduce reading and writing | Input/output to record carriers | Data set | Data mining

The embodiment of the invention provides a data processing method and device and a server. The method comprises the steps that target data in a target dataset is divided into block datasets in a preset quantity, and the block datasets are read into a preset memory; orderly datasets obtained after data format conversion and ranking processing are performed on the target data in the block datasets are stored into a preset disk; and ranking identifiers of the formatted data in the orderly datasets in the preset quantity in the preset disk are read into the preset memory in sequence, and an orderly ranking identifier set corresponding to the target dataset is obtained.

Owner:ADVANCED NEW TECH CO LTD

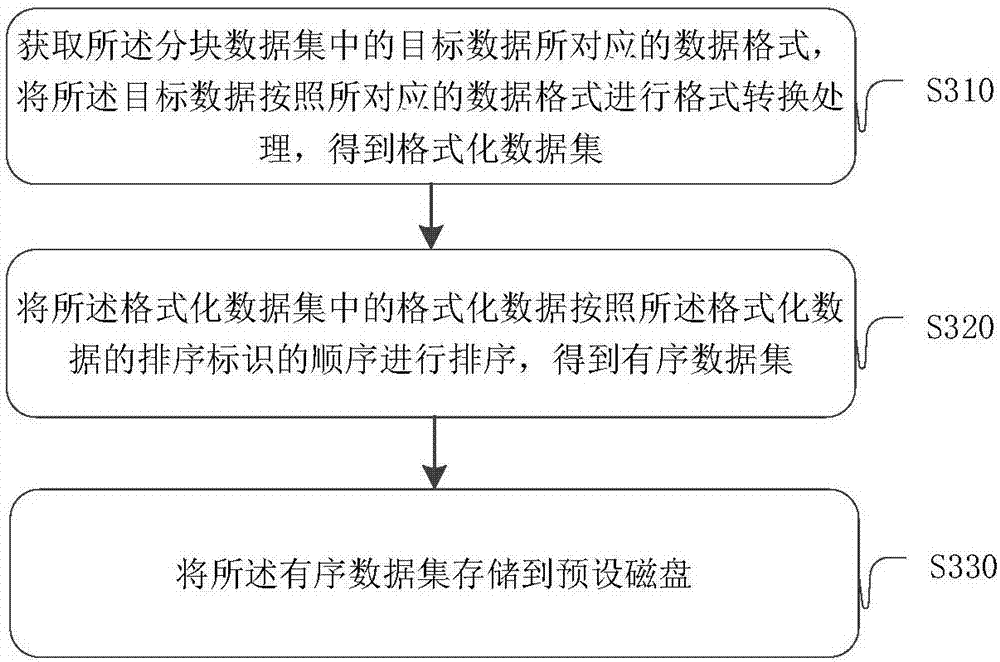

TCP protocol-based license authentication method of device function permission and authentication platform

Inactive | CN106487706A | Reduce reading and writing | Design science | Data switching networks | Networked Transport of RTCM via Internet Protocol | Data transmission

The invention discloses a TCP protocol-based license authentication method of device function permission and an authentication platform. When a network device actively sends a request to the authentication platform at startup, the method comprises the following steps: S1, receiving a service function permission request message sent by the network device over the network and decoding it, wherein the request message is in TLV format; S2, distributing the decoded request message to different authentication request processors through a load-balancing processor; S3, the authentication request processors obtaining from a KV database the device SN code corresponding to the decoded request message, comparing service deadlines and service module enabling identifiers, and judging whether the network device making the authentication request is legitimate. By storing authentication information in the KV database, the invention improves data transmission efficiency during communication; and because the TLV format is used as the basic storage format, delay in the real-time authentication process can be reduced.
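Steps S1 and S3 can be illustrated with a small sketch. Everything concrete here is an assumption made for the example: the TLV layout (1-byte type, 2-byte big-endian length), the tag numbers, the record fields, and the use of a plain dictionary in place of the KV database. The load-balancing step S2 is omitted.

```python
import struct
from datetime import date
from typing import Dict

TAG_SN = 1       # hypothetical tag for the device SN code
TAG_MODULE = 2   # hypothetical tag for the requested service module


def encode_tlv(tag: int, value: bytes) -> bytes:
    """Encode one field as type (1 byte), length (2 bytes, big-endian), value."""
    return struct.pack("!BH", tag, len(value)) + value


def decode_tlv(buf: bytes) -> Dict[int, bytes]:
    """S1: decode a request message made of consecutive TLV fields."""
    fields: Dict[int, bytes] = {}
    offset = 0
    while offset < len(buf):
        if offset + 3 > len(buf):
            raise ValueError("truncated TLV header")
        tag, length = struct.unpack_from("!BH", buf, offset)
        offset += 3
        value = buf[offset:offset + length]
        if len(value) != length:
            raise ValueError("truncated TLV value")
        fields[tag] = value
        offset += length
    return fields


def is_authorized(request: bytes, kv: Dict[str, dict], today: date) -> bool:
    """S3: look the device up by SN, then check deadline and module flag."""
    fields = decode_tlv(request)
    sn = fields.get(TAG_SN, b"").decode("ascii")
    module = fields.get(TAG_MODULE, b"").decode("ascii")
    record = kv.get(sn)
    if record is None:                  # unknown device
        return False
    return today <= record["deadline"] and module in record["enabled_modules"]
```

A request for the `vpn` module from device `SN001` would be built as `encode_tlv(TAG_SN, b"SN001") + encode_tlv(TAG_MODULE, b"vpn")`.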

Owner:苏州迈科网络安全技术股份有限公司

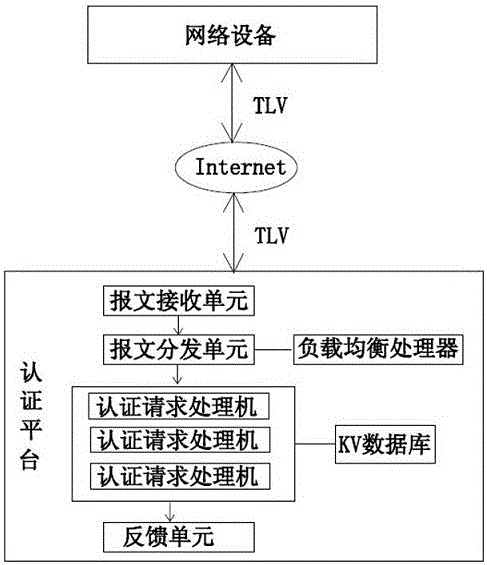

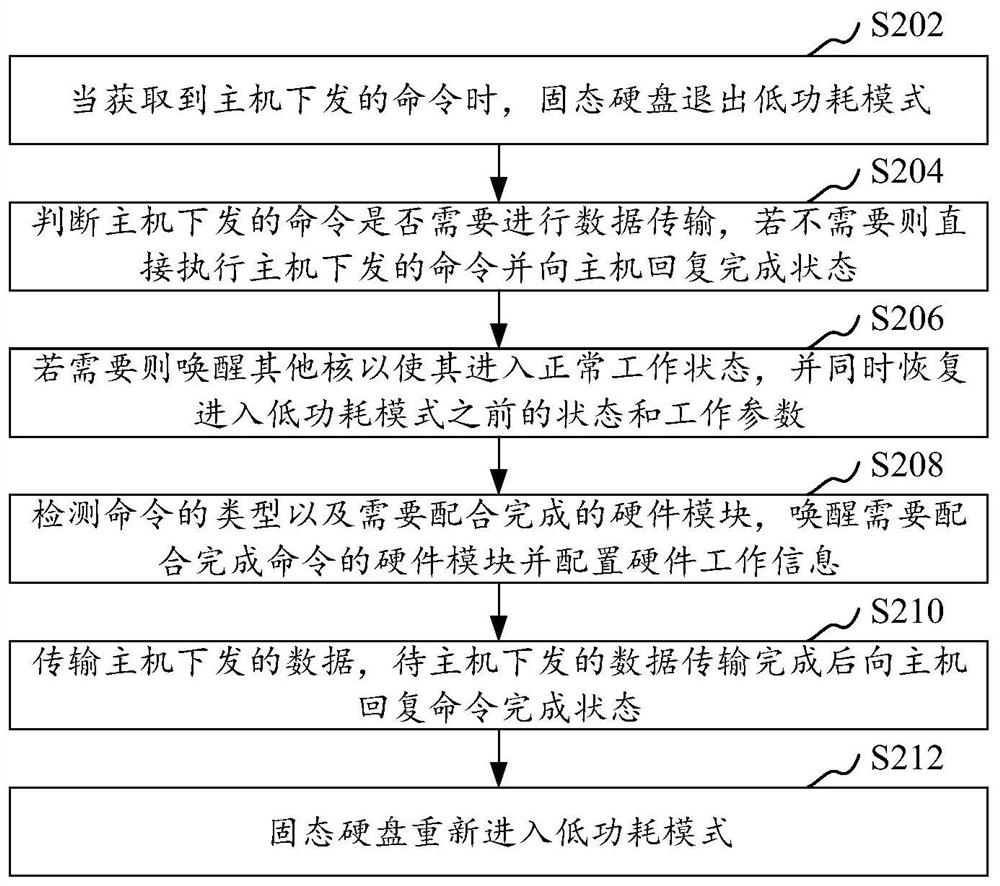

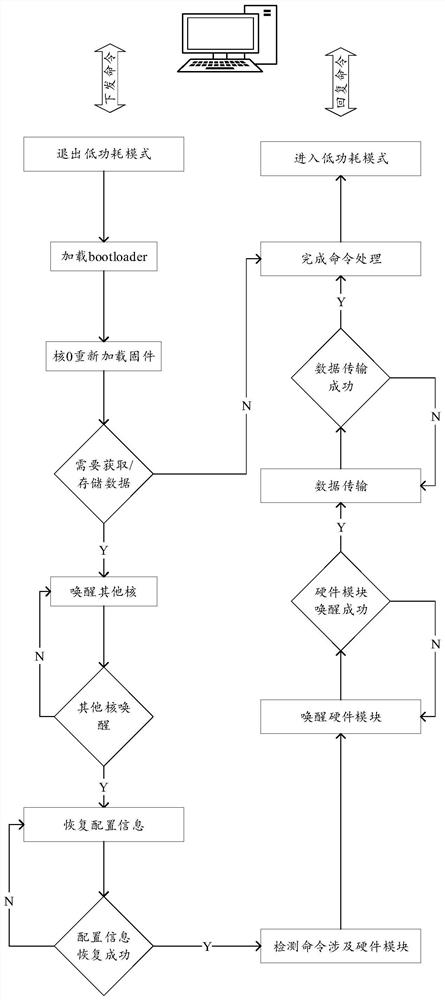

Low-power-consumption mode wakeup recovery method and device for solid state disk and computer equipment

Active | CN111625284A | Reduce power consumption | Improve performance | Digital data processing details | Bootstrapping | Computer hardware | Data transport

The invention relates to a low-power-consumption mode wakeup recovery method and device for a solid state disk, computer equipment and a storage medium. The method comprises the steps of: when a command issued by a host is obtained, causing the solid state disk to exit the low-power-consumption mode; judging whether the command issued by the host requires data transmission, and if not, directly executing the command and replying a completion state to the host; if so, waking the other cores into a normal working state while restoring the state and working parameters that were in effect before the low-power-consumption mode was entered; detecting the type of the command and the hardware modules it requires, waking those hardware modules, and configuring hardware working information; transmitting the data issued by the host, and replying a command completion state to the host after the data has been completely transmitted; and causing the solid state disk to enter the low-power-consumption mode again. The performance of the solid state disk is improved, its power consumption is reduced, and its stability is improved.
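The branching in this wakeup flow can be modelled as a small simulation. This is a sketch only: the `Command` and `Drive` classes, the module names and the log strings are hypothetical, and the sketch assumes the drive re-enters the low-power mode after both kinds of command, which the abstract does not state explicitly.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Command:
    name: str
    needs_data: bool                                   # does it move host data?
    modules: List[str] = field(default_factory=list)   # hardware it depends on


@dataclass
class Drive:
    low_power: bool = True
    log: List[str] = field(default_factory=list)

    def handle(self, cmd: Command) -> None:
        """Wake only as much of the drive as the command needs."""
        self.low_power = False
        self.log.append("exit low-power mode")
        if cmd.needs_data:
            self.log.append("wake other cores and restore saved state")
            for module in cmd.modules:                 # wake only required modules
                self.log.append(f"wake {module}")
            self.log.append("transfer data")
        else:
            self.log.append(f"execute {cmd.name} directly")
        self.log.append("reply completion to host")
        self.low_power = True                          # assumed for both branches
        self.log.append("enter low-power mode")
```

A command that carries no data never reaches the "wake other cores" step, which is the source of the power saving described above.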

Owner:SHENZHEN YILIAN INFORMATION SYST CO LTD

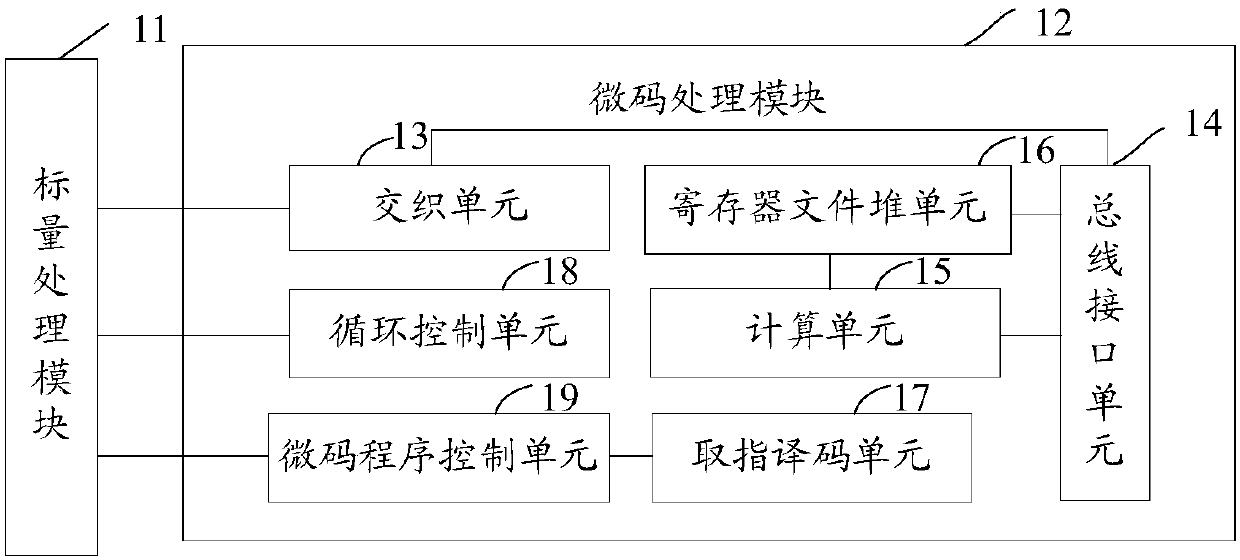

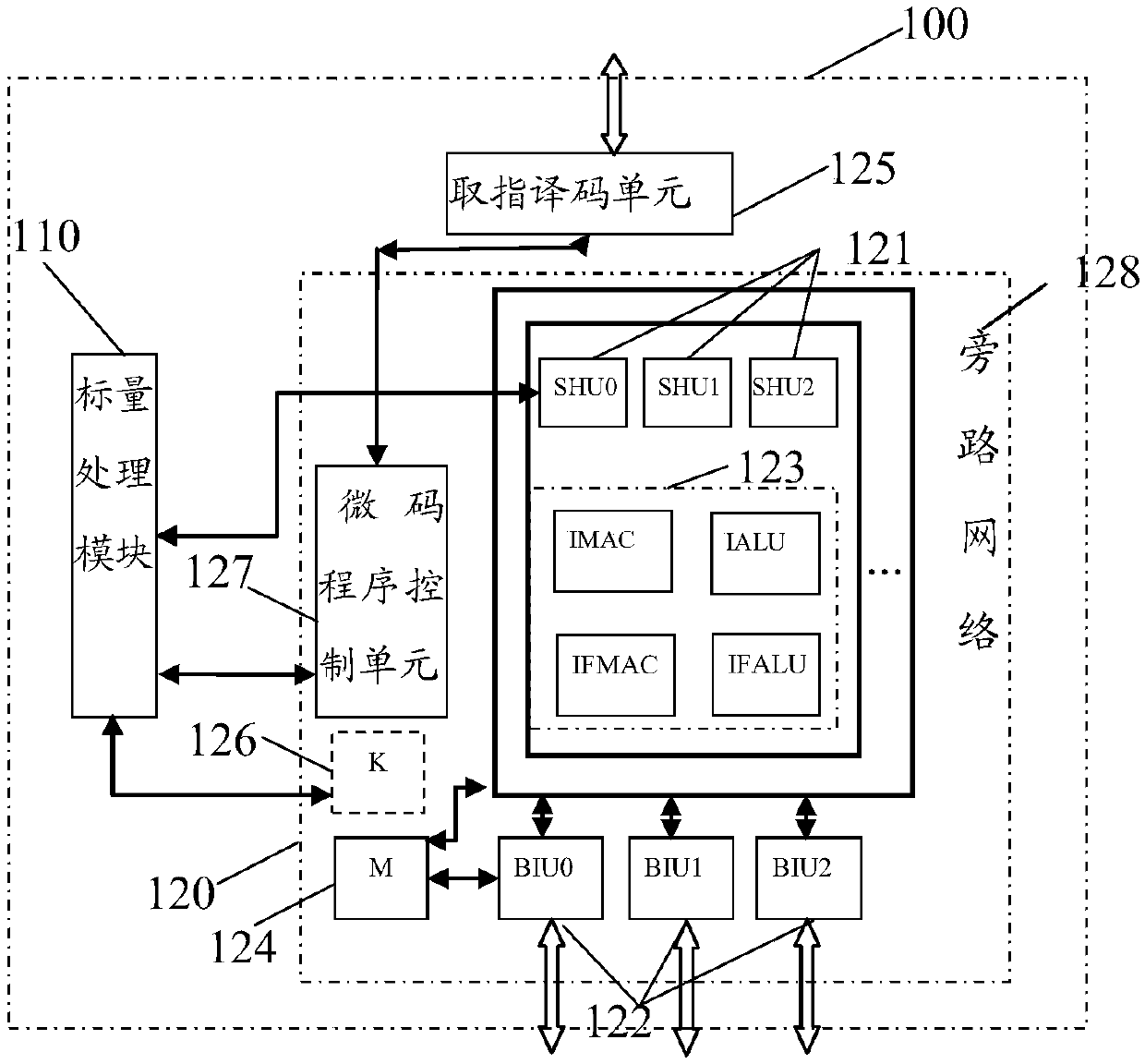

Communication processor

Active | CN110096307A | Improve computing power | Flexible access | Microcontrol arrangements | Processor register | Data access

The invention provides a communication processor. The communication processor comprises a scalar processing module and a microcode processing module connected with the scalar processing module. The microcode processing module comprises an interleaving unit that comprehensively supports data interleaving, a bus interface unit, a computing unit, a register file stack unit, an instruction fetch and decoding unit, a loop control unit and a microcode program control unit. At least one internal register is arranged in each unit of the microcode processing module. The microcode processing module further comprises an interleaving bus and a bypass network, and the internal registers of the units exchange data through the interleaving bus. Because each unit has its own independent internal register, the latency each unit incurs when accessing its associated data is reduced and its data access speed is improved; and because the interleaving unit comprehensively supports data interleaving, data access and use become more flexible.

Owner:BEIJING SMART LOGIC TECH CO LTD

Mass storing and managing method of smart card

Active | CN100524312C | No need to change the operation function | Reduce reading and writing | Record carriers used with machines | Special data processing applications | Smart card | Storage management

The invention relates to the design and application technology of smart cards in the field of microelectronics, in particular to a mass data storage and management method for smart cards. The method establishes a database with basic storage, query, comparison and sorting functions on the smart card. The basic structure of the database is two sets of linked lists. The first set contains only a main linked list, each node of which stores the basic information of one record and the starting position of that record's sub-linked list. The second set consists of sub-linked lists, whose number equals the number of records in the database. Every node on these sub-linked lists is a fixed-size "data block", and the different attributes belonging to a record are stored in its sub-linked list in an "identity-length-value" structure. Combined with corresponding hardware upgrades, the method can greatly expand the functions of existing smart cards, making them a new generation of data storage and management platform.
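The two-level structure can be sketched as follows. The details are assumptions for illustration: the block size of 8 bytes, the 1-byte identity and 1-byte length fields, and all class and function names are hypothetical, and the last block is left unpadded for brevity where a real fixed-size block would be padded.

```python
from dataclasses import dataclass
from typing import Dict, Optional

BLOCK_SIZE = 8  # hypothetical fixed size of one "data block" in bytes


@dataclass
class DataBlock:
    """One node of a record's sub-linked list."""
    payload: bytes
    next: Optional["DataBlock"] = None


@dataclass
class RecordNode:
    """One node of the main linked list: record info plus sub-list start."""
    record_id: int
    first_block: Optional[DataBlock]
    next: Optional["RecordNode"] = None


def pack_attributes(attrs: Dict[int, bytes]) -> bytes:
    """Serialize attributes as identity (1 byte), length (1 byte), value."""
    out = bytearray()
    for ident, value in attrs.items():
        out += bytes([ident, len(value)]) + value
    return bytes(out)


def build_chain(data: bytes) -> Optional[DataBlock]:
    """Split serialized attributes across a chain of fixed-size blocks."""
    head: Optional[DataBlock] = None
    for start in reversed(range(0, len(data), BLOCK_SIZE)):
        head = DataBlock(data[start:start + BLOCK_SIZE], head)
    return head


def read_attributes(node: RecordNode) -> Dict[int, bytes]:
    """Follow the sub-list, reassemble the bytes and decode the attributes."""
    data = bytearray()
    block = node.first_block
    while block is not None:
        data += block.payload
        block = block.next
    attrs: Dict[int, bytes] = {}
    i = 0
    while i < len(data):
        ident, length = data[i], data[i + 1]
        attrs[ident] = bytes(data[i + 2:i + 2 + length])
        i += 2 + length
    return attrs
```

Because an attribute may span several blocks, a record can grow by appending blocks to its own sub-list without moving any other record.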

Owner:RDA MICROELECTRONICS SHANGHAI CO LTD

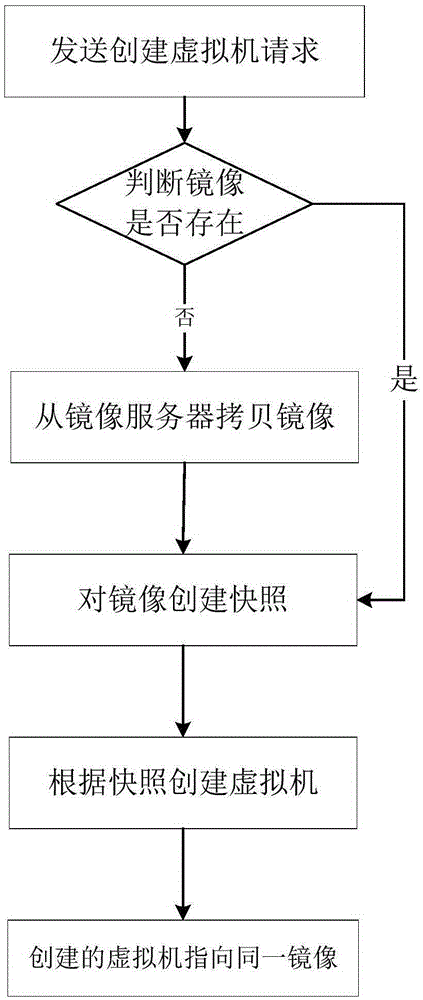

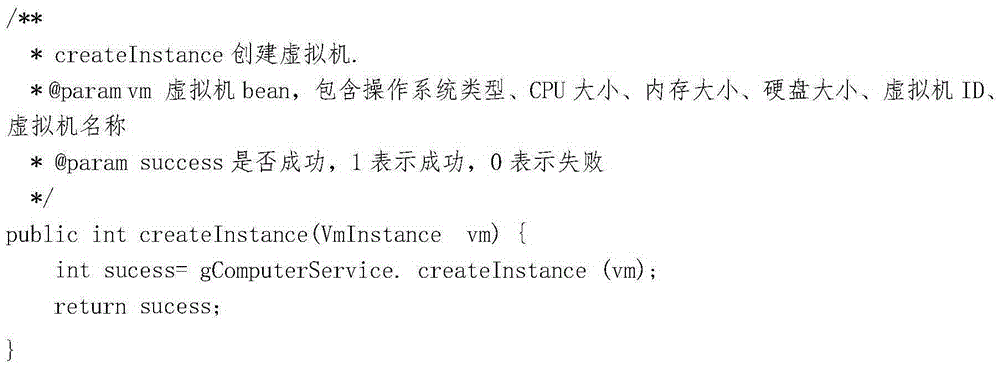

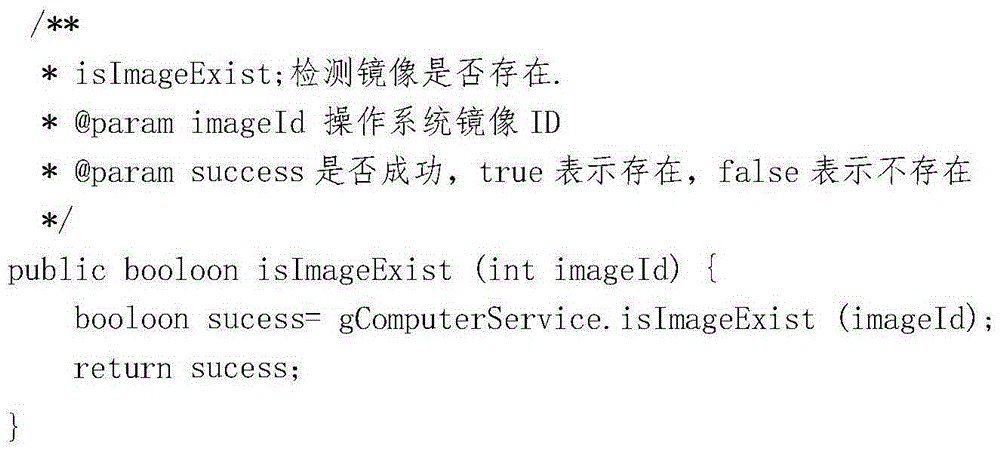

Method of reducing physical disk I/O reading and writing

Inactive | CN105260231A | Reduce reading and writing | Save storage space | Input/output to record carriers | Software simulation/interpretation/emulation | Operational system | Mirror image

The invention relates to the technical field of cloud computing, in particular to a method of reducing physical disk I/O reading and writing. The method comprises the steps of: sending a virtual machine creation request; detecting whether the operating system image required by the virtual machine to be created exists on the physical node; if the operating system image exists, creating a corresponding snapshot of the existing image, and otherwise copying the corresponding image from an image server to the physical node; and finally creating the virtual machine from the snapshot. The method reduces the physical disk I/O reading and writing performed by virtual machines, improves storage space utilization, and can be used to relieve physical disk I/O bottlenecks.
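The image check and snapshot step can be sketched as a toy model. The assumptions are stated up front: the image server and the node's local storage are plain dictionaries, a snapshot is modelled as a reference to the base image plus an empty set of changed blocks, the sketch assumes a snapshot is taken after a missing image has been copied, and the class and attribute names are hypothetical.

```python
from typing import Dict


class PhysicalNode:
    """Toy model of a host that caches base images and boots VMs from snapshots."""

    def __init__(self, image_server: Dict[str, bytes]) -> None:
        self.image_server = image_server
        self.local_images: Dict[str, bytes] = {}
        self.copies_from_server = 0

    def create_vm(self, vm_name: str, image_name: str) -> dict:
        # Copy the base image only if this node does not already hold it.
        if image_name not in self.local_images:
            self.local_images[image_name] = self.image_server[image_name]
            self.copies_from_server += 1
        # The snapshot shares the base image and records only changed blocks,
        # so each new VM adds no full-image write to the physical disk.
        snapshot = {"base": image_name, "changed_blocks": {}}
        return {"name": vm_name, "disk": snapshot}
```

Creating a second virtual machine from the same image reuses the local copy, so the full image is written to the physical disk once per node rather than once per virtual machine.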

Owner:G CLOUD TECH
