132 results about "Disk buffer" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

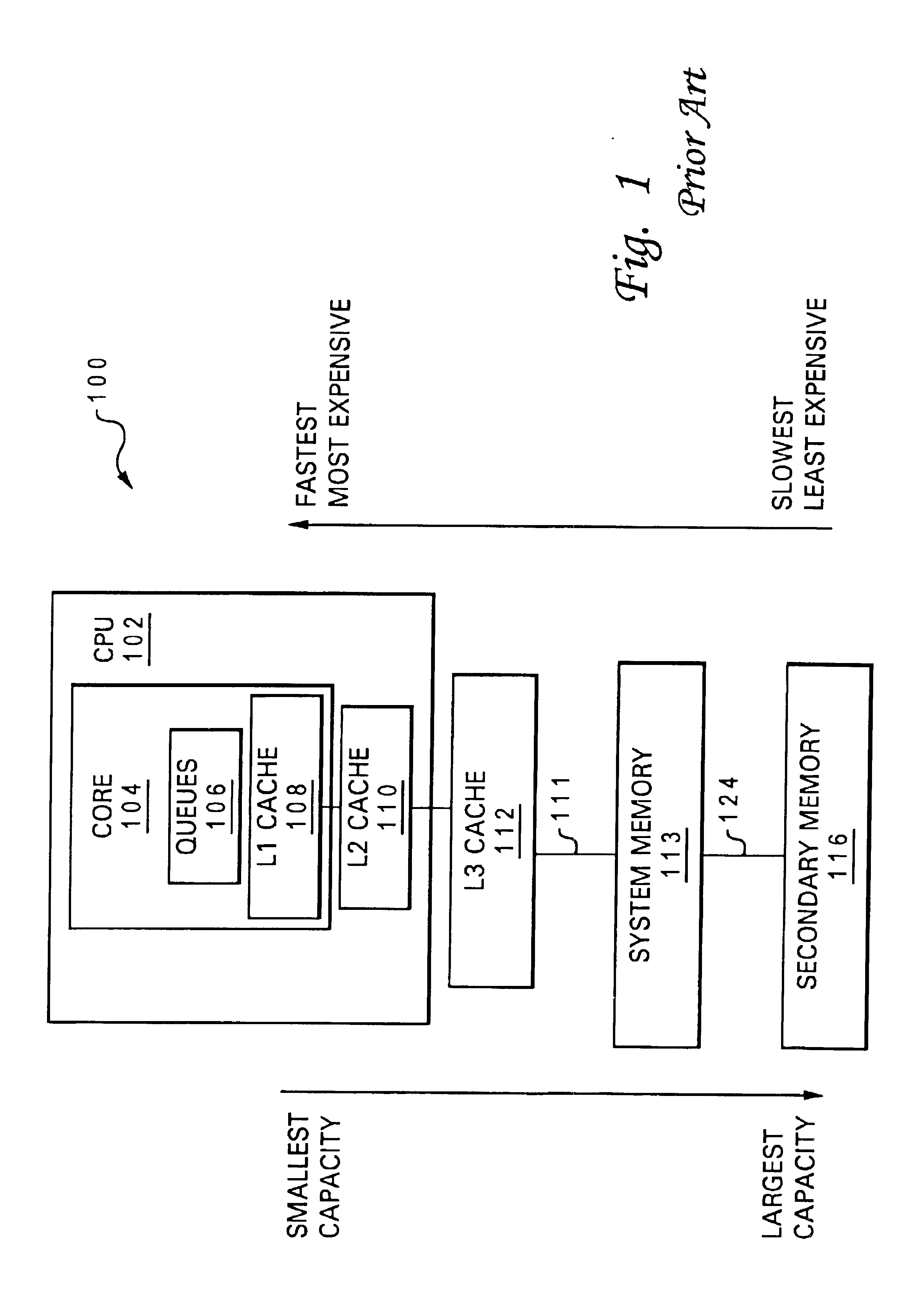

In computer storage, disk buffer (often ambiguously called disk cache or cache buffer) is the embedded memory in a hard disk drive (HDD) acting as a buffer between the rest of the computer and the physical hard disk platter that is used for storage. Modern hard disk drives come with 8 to 256 MiB of such memory, and solid-state drives come with up to 4 GB of cache memory.

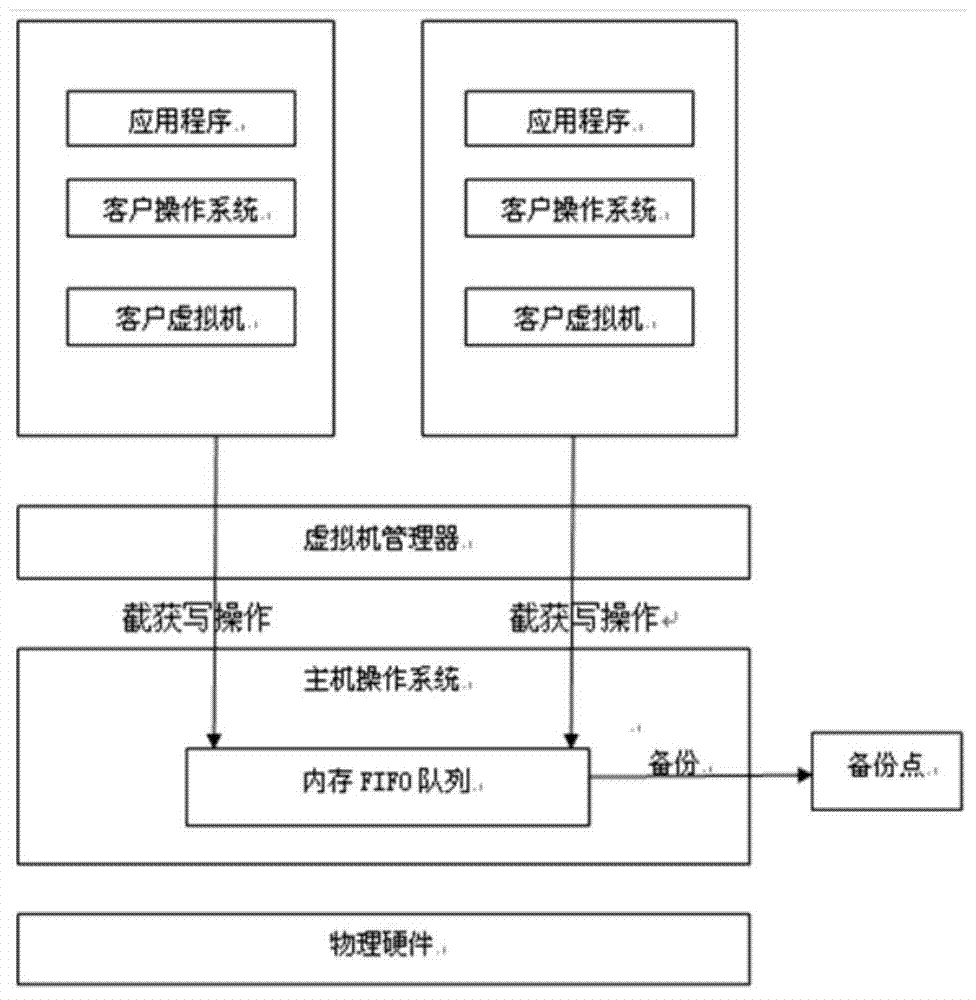

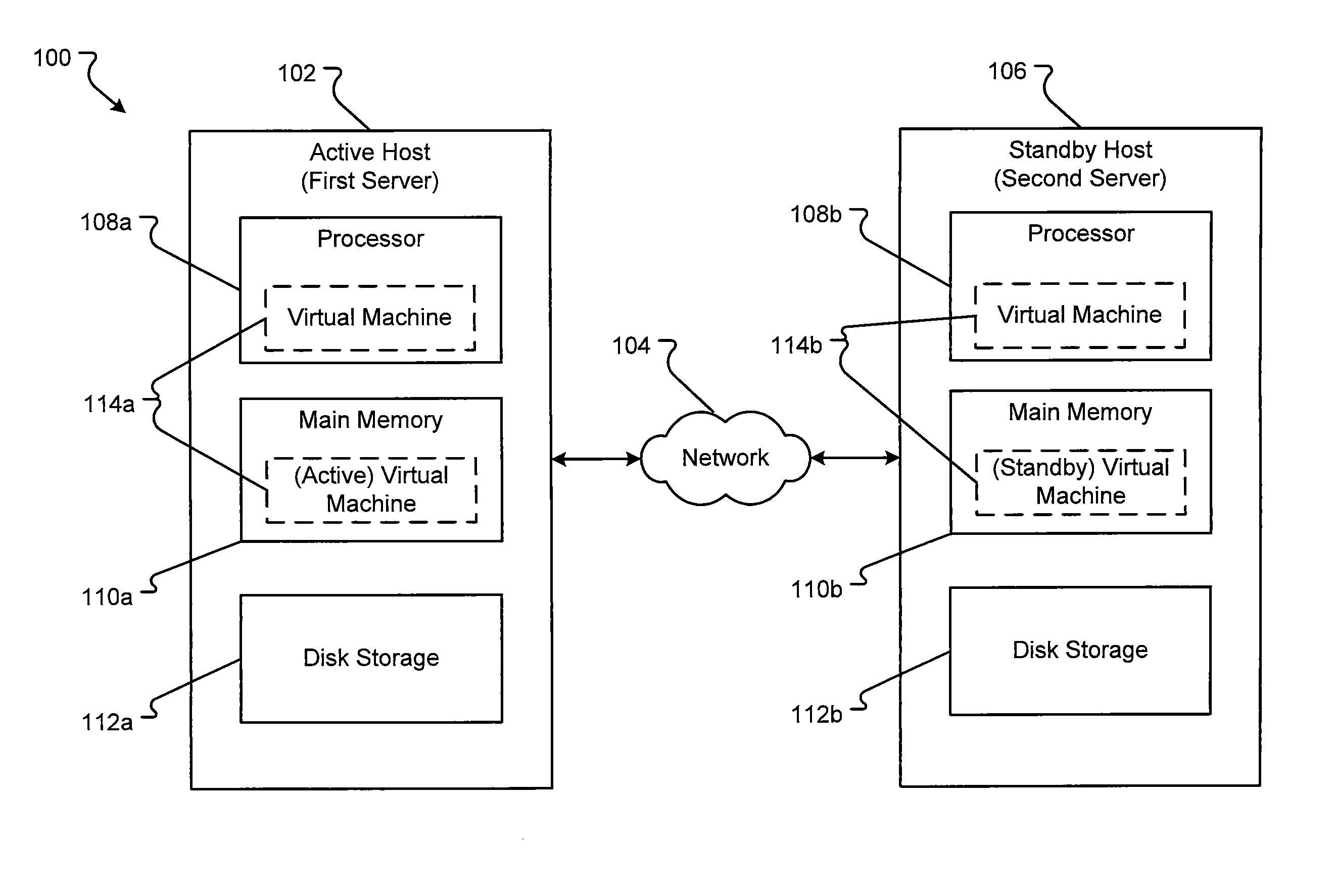

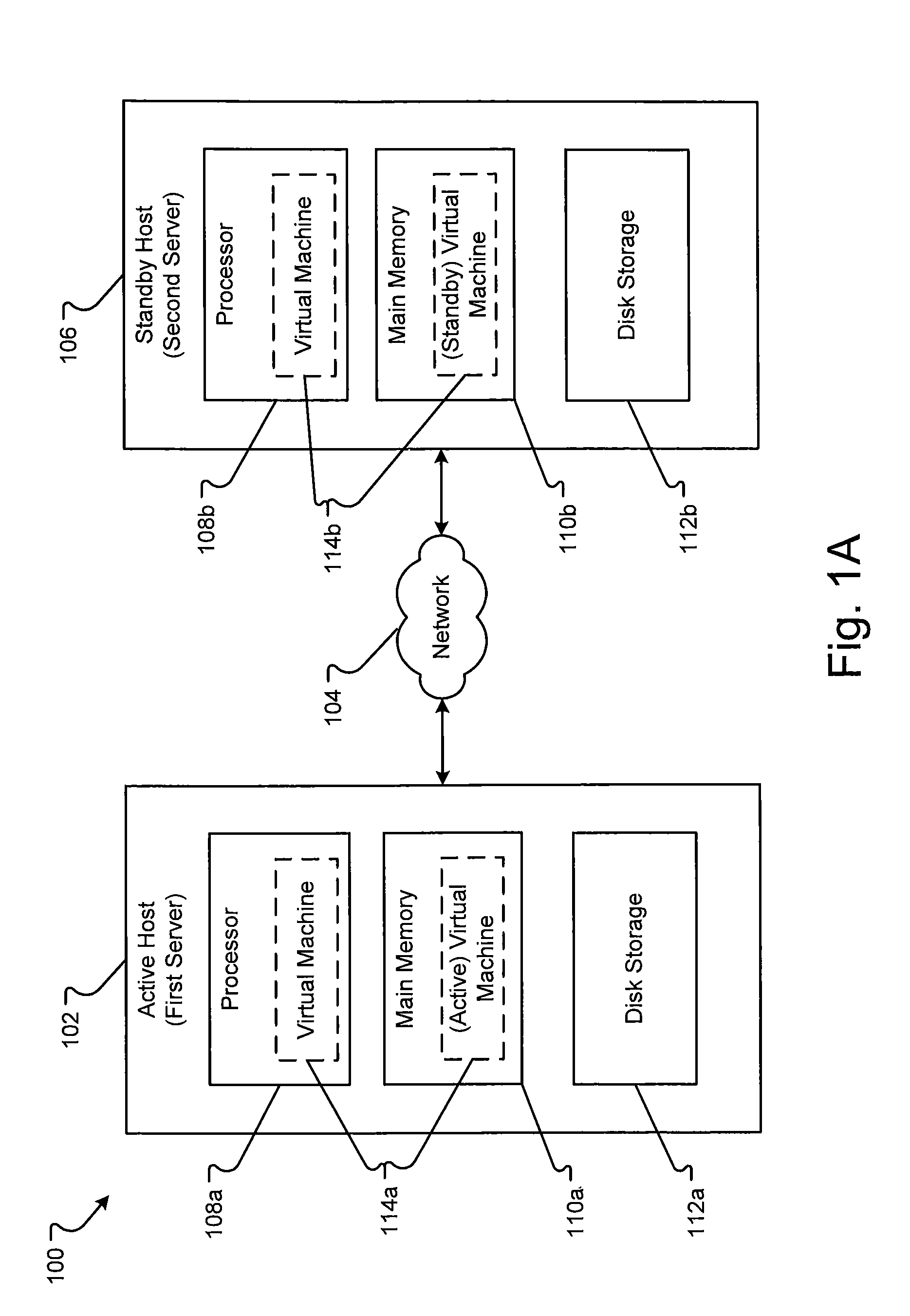

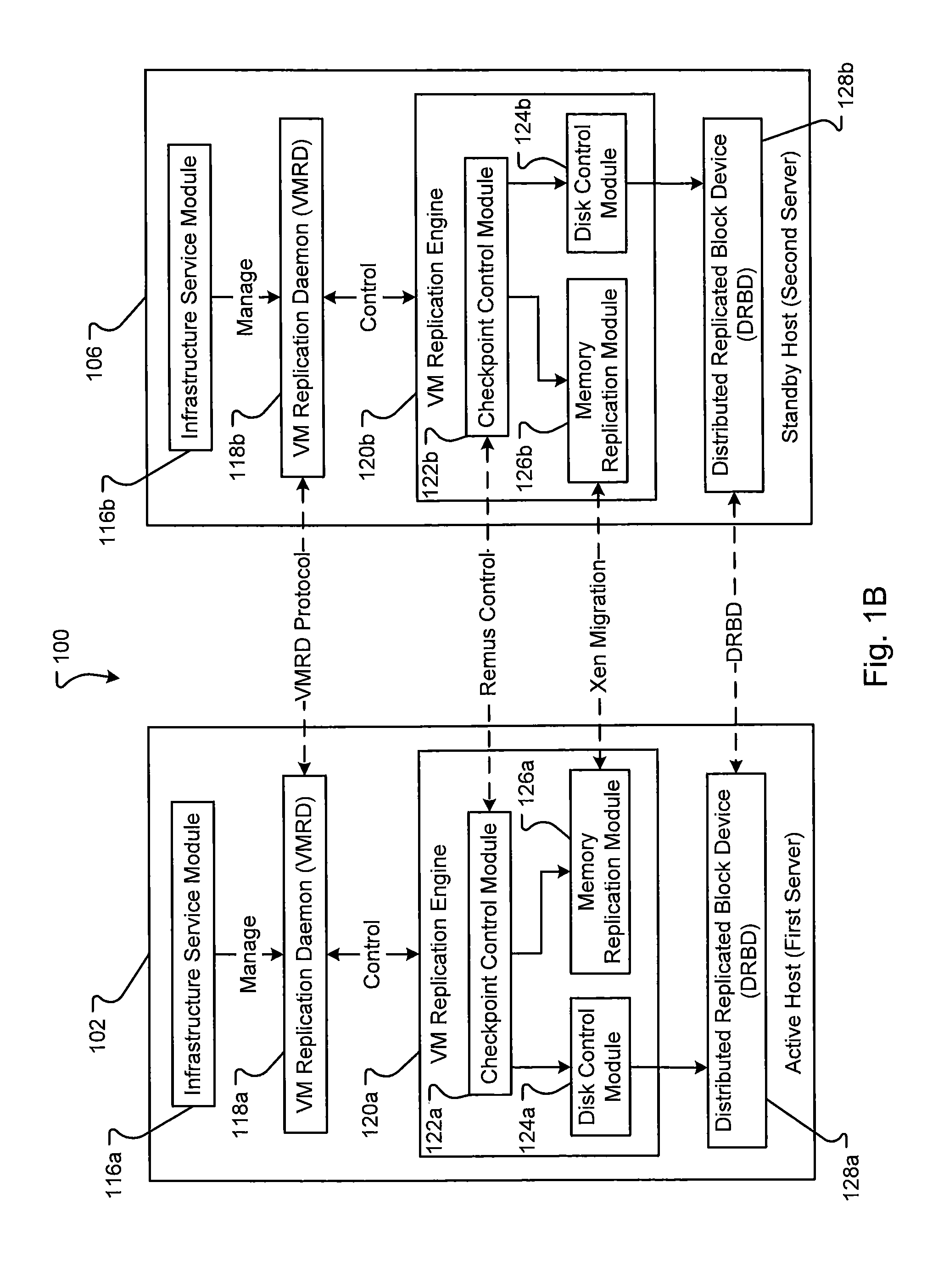

Method and apparatus for high availability (HA) protection of a running virtual machine (VM)

Active | US20110208908A1 | Minimal downtime, Memory loss protection, Error detection/correction, High availability, Disk buffer

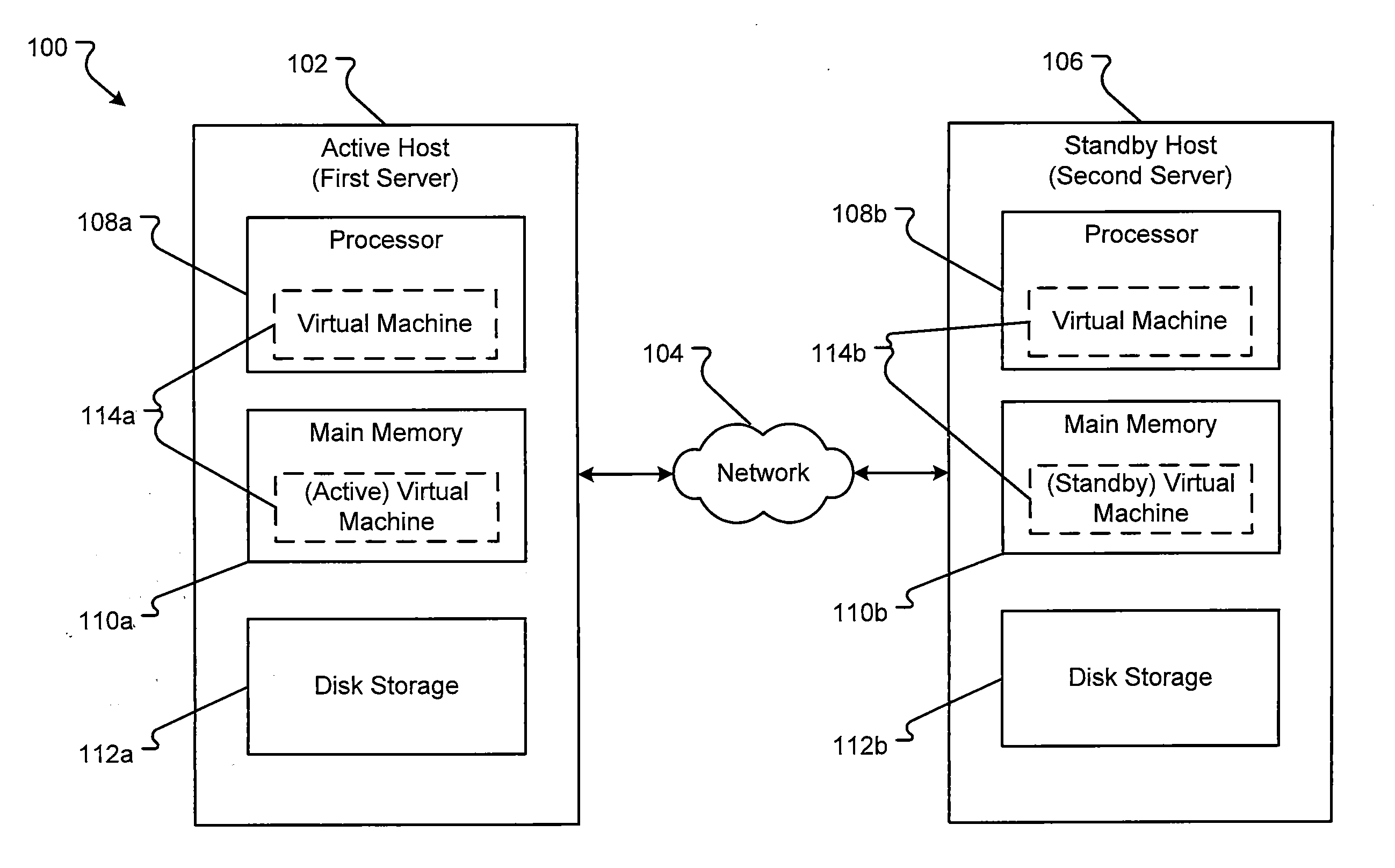

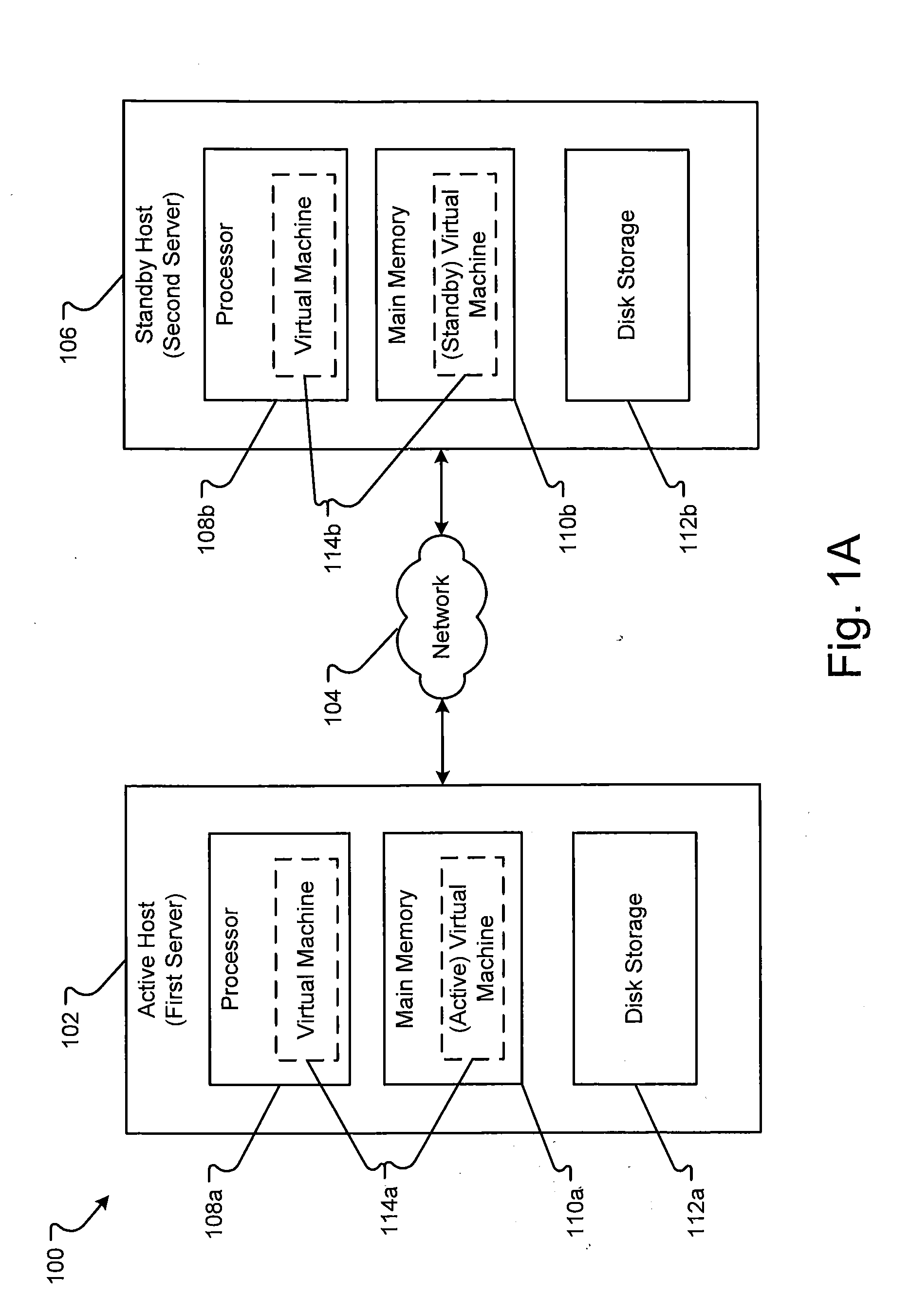

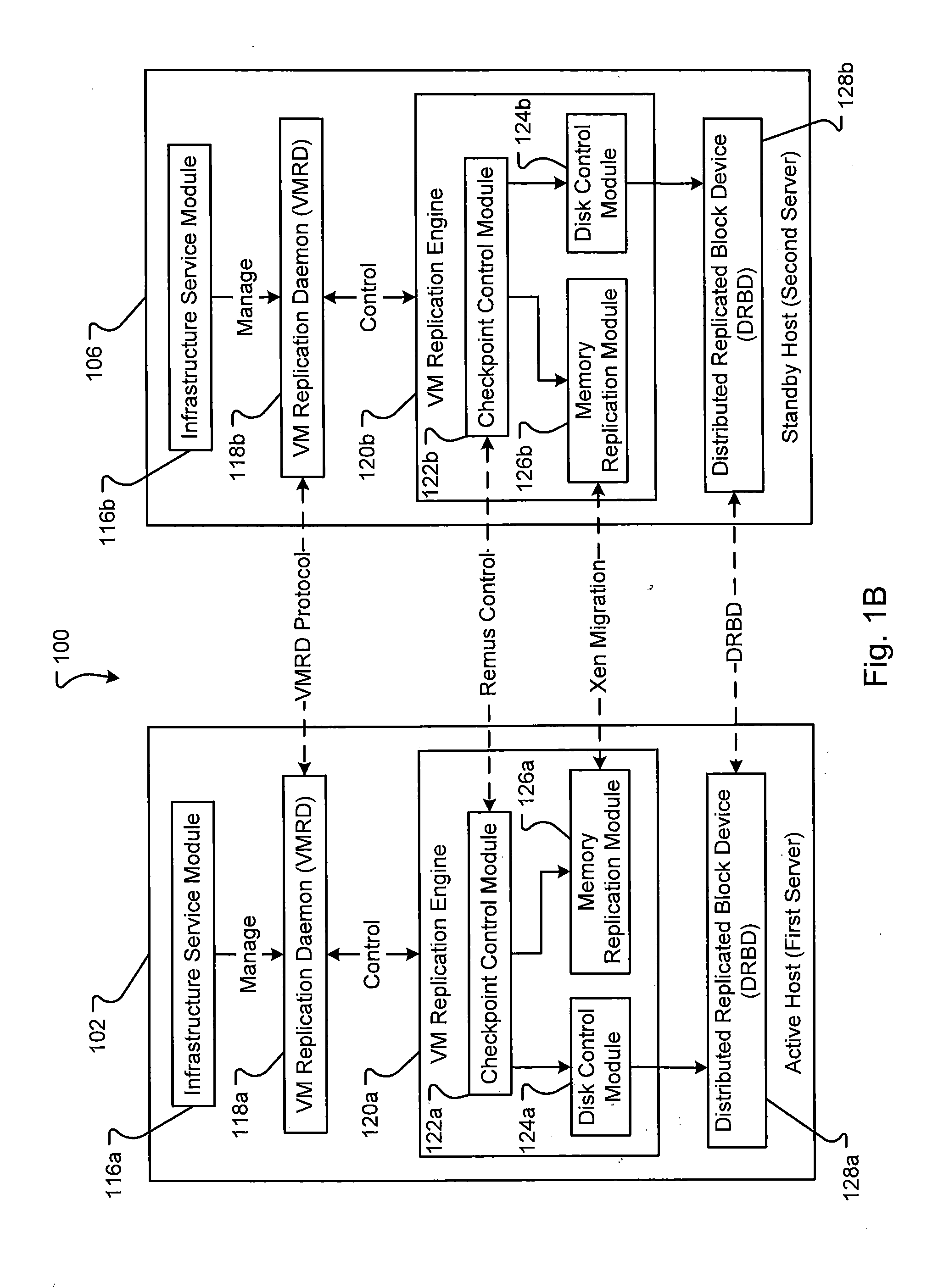

High availability (HA) protection is provided for an executing virtual machine. A standby server provides a disk buffer that stores disk writes associated with a virtual machine executing on an active server. At a checkpoint in the HA process, the active server suspends the virtual machine; the standby server creates a checkpoint barrier at the last disk write received in the disk buffer; and the active server copies dirty memory pages to a buffer. After the completion of these steps, the active server resumes execution of the virtual machine; the buffered dirty memory pages are sent to and stored by the standby server. Then, the standby server flushes the disk writes up to the checkpoint barrier into disk storage and writes newly received disk writes into the disk buffer after the checkpoint barrier.

Owner:AVAYA INC
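The checkpoint-barrier protocol in the abstract above can be modelled in a few lines. This is a minimal sketch under stated assumptions, not Avaya's implementation: the class `StandbyDiskBuffer` and the function `checkpoint` are hypothetical names, disk and memory are plain dicts, and VM suspend/resume are only marked by comments. It shows the key property: writes received after the barrier stay buffered until the next checkpoint.

```python
from collections import deque


class StandbyDiskBuffer:
    """Hypothetical model of the standby server's disk buffer."""

    def __init__(self):
        self.pending = deque()  # disk writes from the active server, in arrival order
        self.barrier = 0        # how many buffered writes the current checkpoint covers
        self.storage = {}       # committed disk image: block number -> data

    def receive_write(self, block, data):
        self.pending.append((block, data))

    def create_barrier(self):
        # The barrier sits just after the last write received so far.
        self.barrier = len(self.pending)

    def flush_to_barrier(self):
        # Commit only writes before the barrier; later writes stay buffered.
        for _ in range(self.barrier):
            block, data = self.pending.popleft()
            self.storage[block] = data
        self.barrier = 0


def checkpoint(vm_memory, dirty_pages, standby, standby_memory):
    """One checkpoint round (VM suspend/resume are only marked by comments)."""
    # VM suspended here.
    standby.create_barrier()
    snapshot = {page: vm_memory[page] for page in dirty_pages}  # copy dirty pages to a buffer
    dirty_pages.clear()
    # VM resumed here; new disk writes may now arrive after the barrier.
    standby_memory.update(snapshot)  # buffered pages sent to and stored by the standby
    standby.flush_to_barrier()
```

Because the barrier is just a position in the write log, the active server can resume the VM before the standby has finished committing anything.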

File system having predictable real-time performance

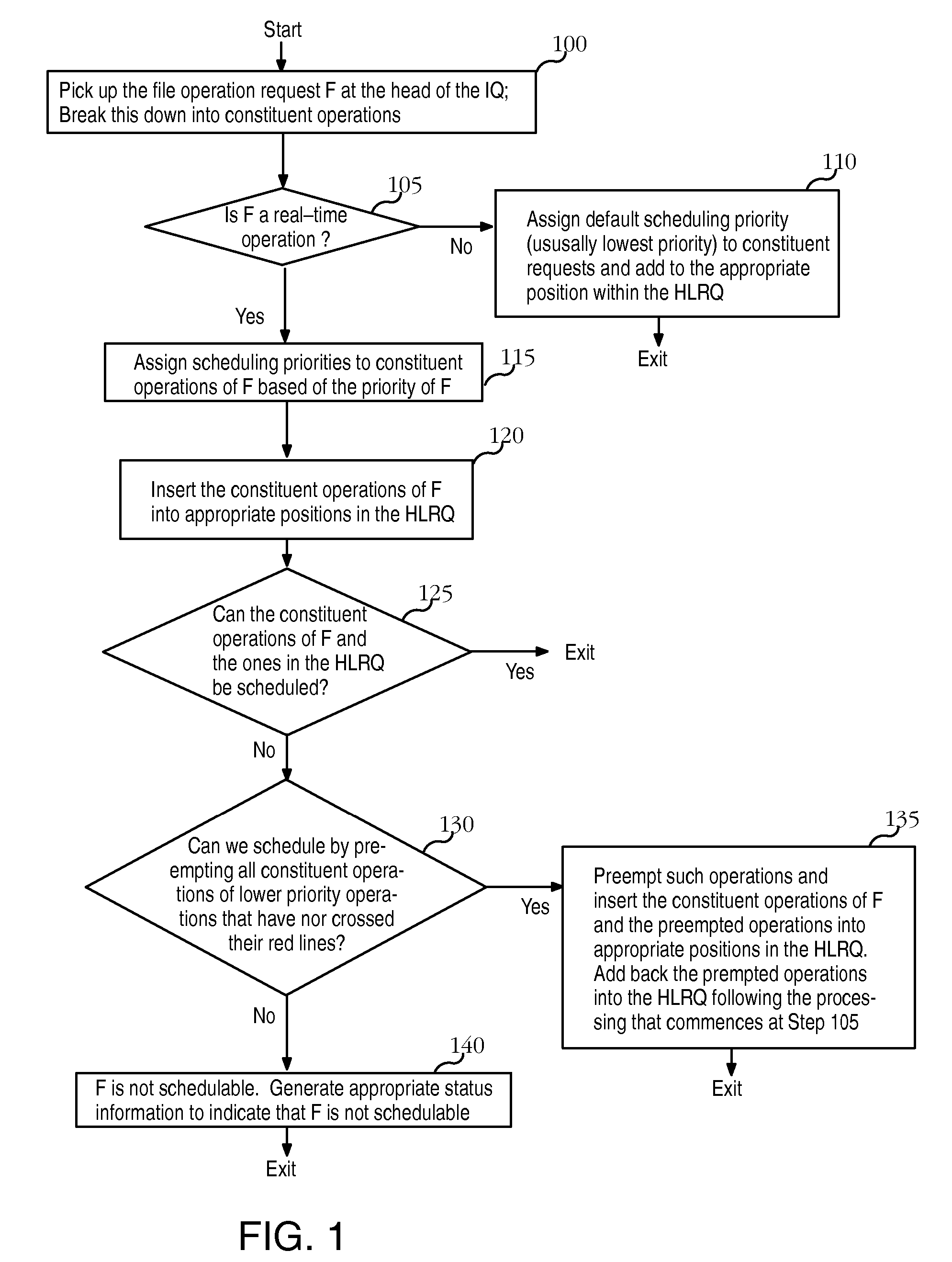

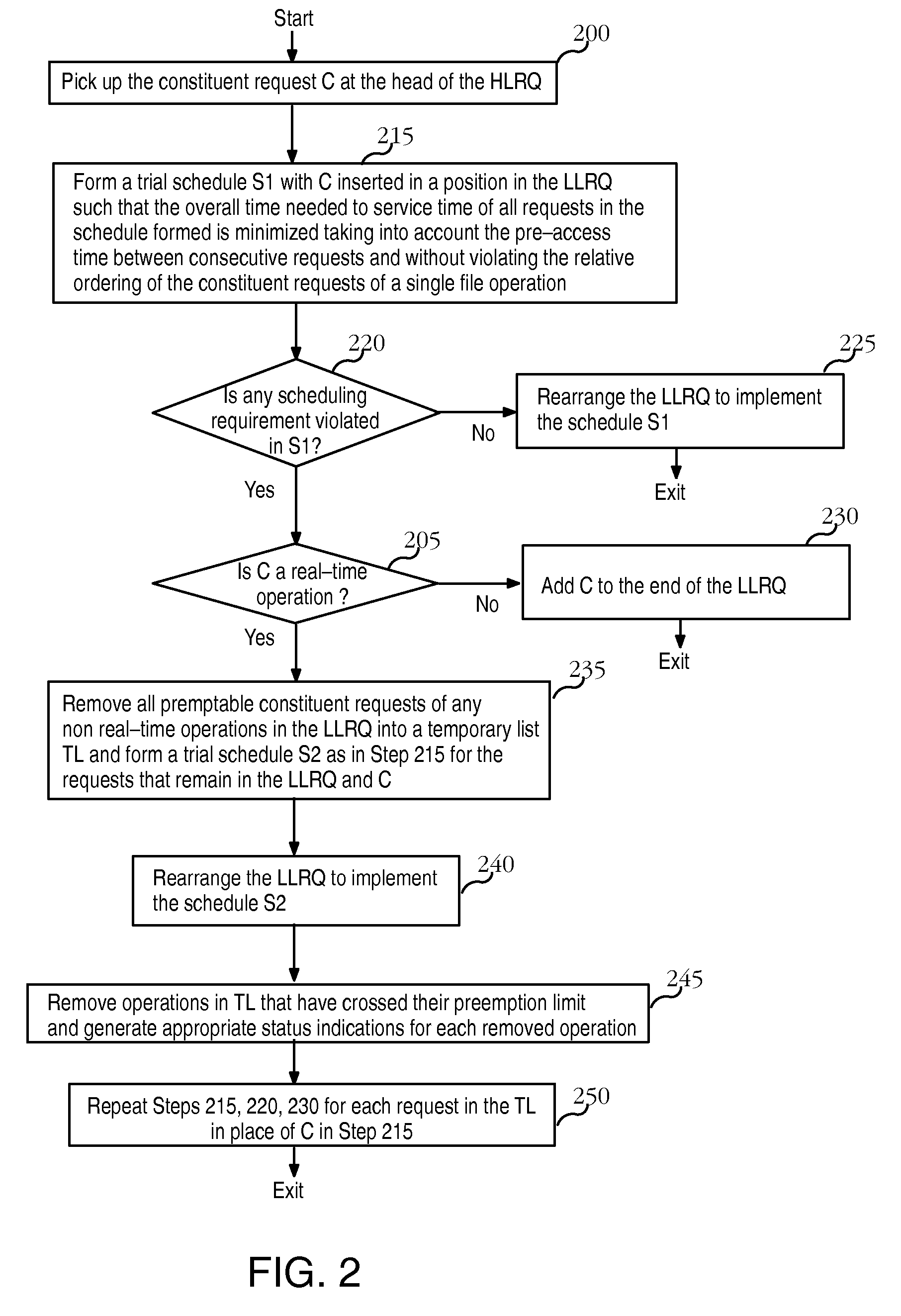

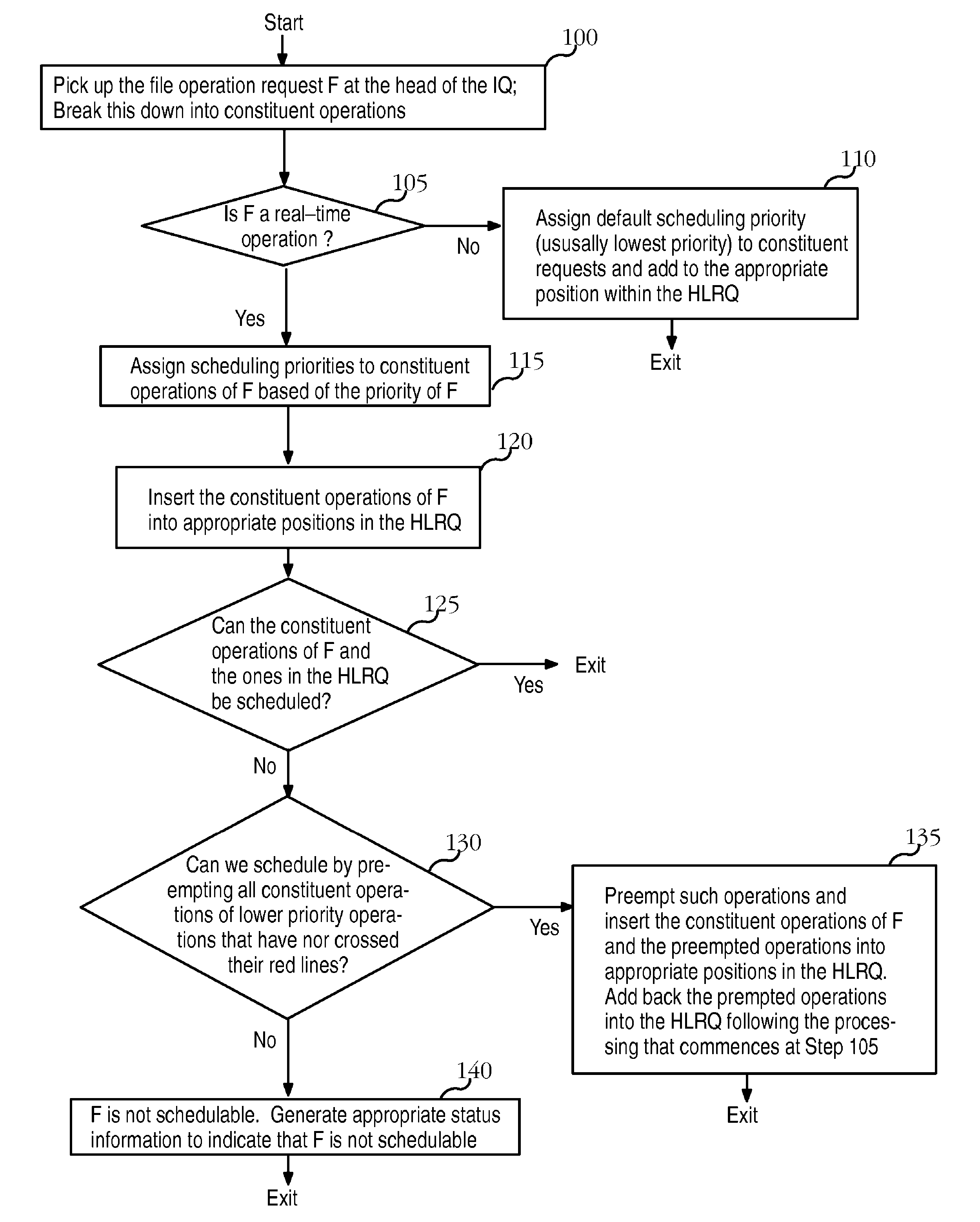

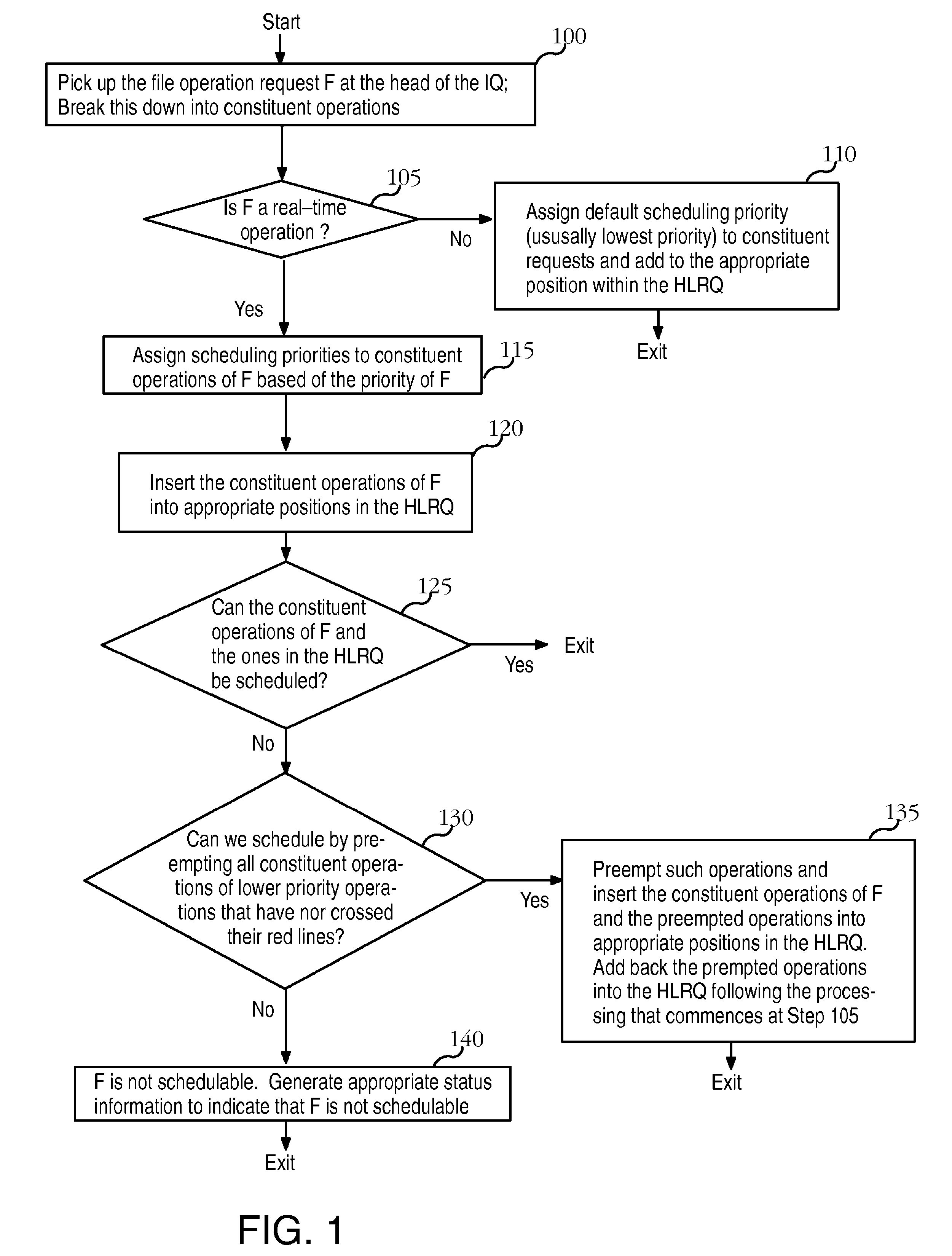

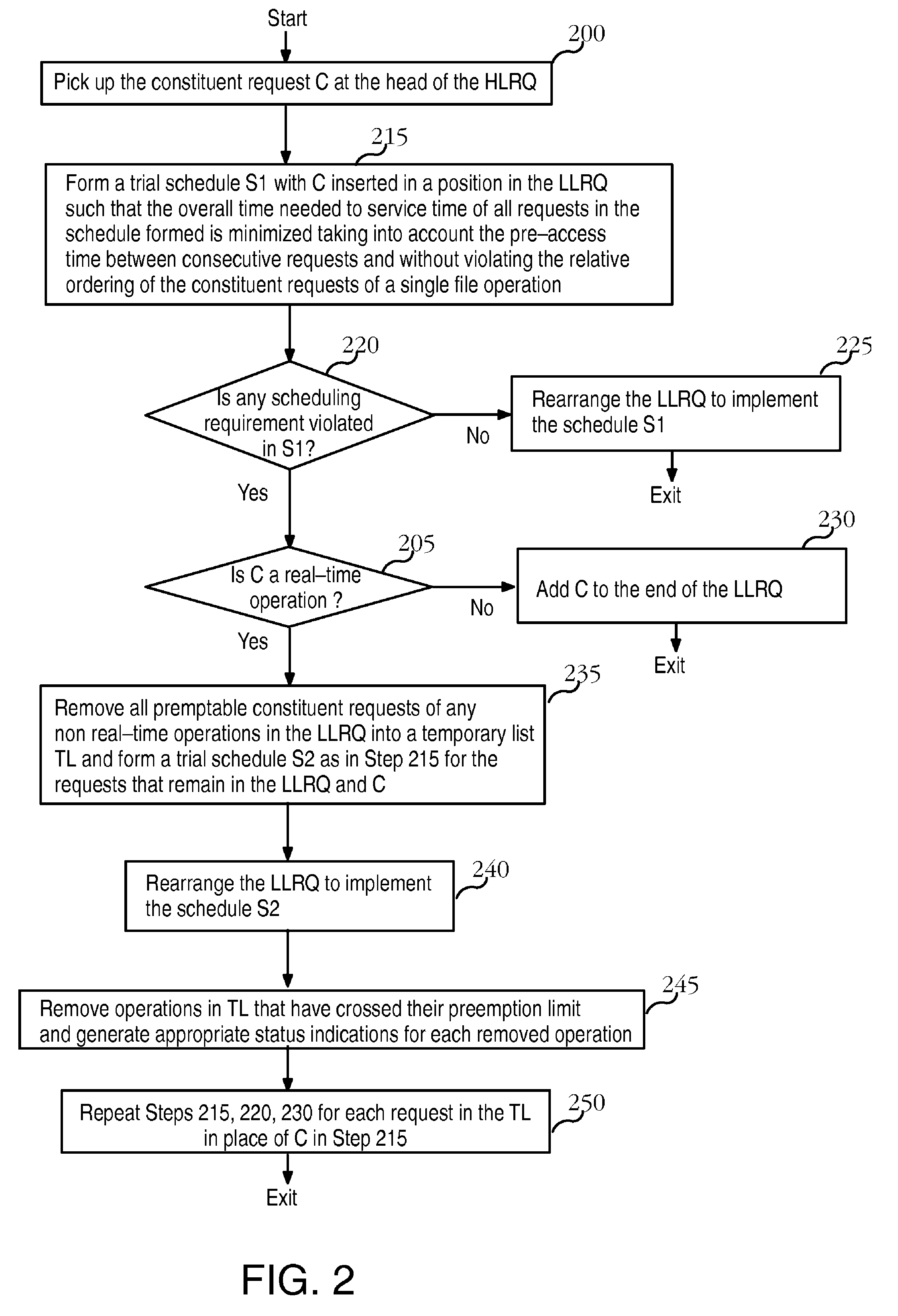

Inactive | US20070067595A1 | Improve abilities, Time required, Digital data information retrieval, Special data processing applications, Non real time, Quality of service

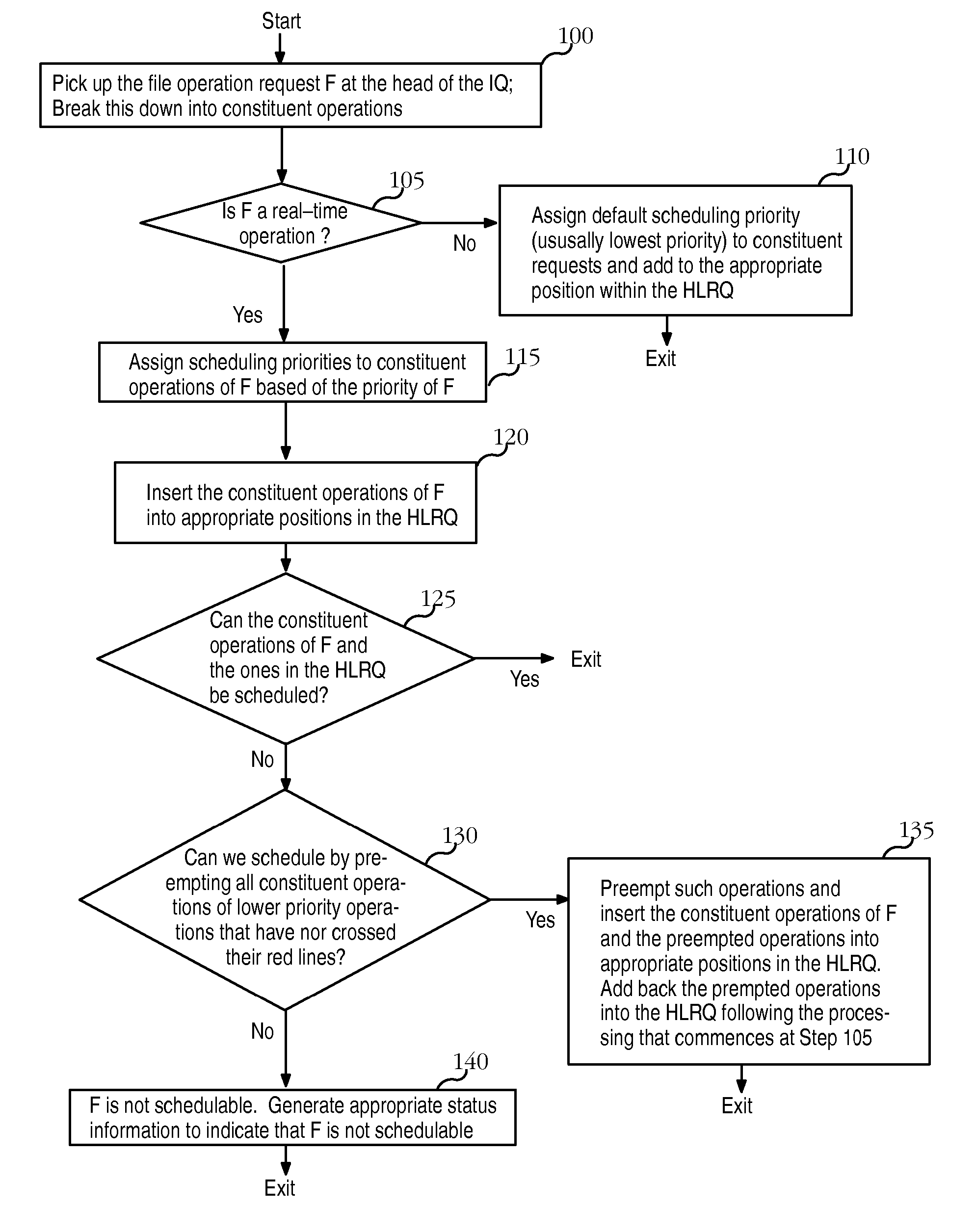

A file system that permits predictable access to file data stored on devices whose access latency may vary with the physical location of the file on the storage device. A variety of features guarantee timely, real-time responses to file system I/O requests that specify deadlines or other required quality-of-service parameters. The file system accommodates storage devices, such as disks, whose access time depends on the physical location of the data within the device. A two-phase, deadline-driven scheduler considers the impact of disk seek time on overall response times. Non-real-time file operations may be preempted. Files may be preallocated to help avoid access delays caused by non-contiguous storage. Disk buffers may also be preallocated to improve real-time file system performance.

Owner:THE RES FOUND OF STATE UNIV OF NEW YORK
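The abstract names a two-phase, deadline-driven scheduler that accounts for seek time but does not spell out the phases. The sketch below is one hypothetical reading, not the patented algorithm: phase 1 takes every real-time request whose deadline lies within an assumed `window` of the earliest deadline, and phase 2 serves that batch nearest-cylinder-first. Non-real-time requests run only after all real-time work, which stands in for preemption. `Request`, `schedule`, and `window` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class Request:
    name: str
    deadline: float        # absolute deadline (e.g. ms)
    cylinder: int          # physical location on the disk
    realtime: bool = True


def schedule(requests, head, window):
    """Hypothetical two-phase order: deadline batches, then seek-aware ordering."""
    rt = sorted((r for r in requests if r.realtime), key=lambda r: r.deadline)
    non_rt = [r for r in requests if not r.realtime]
    order = []
    while rt:
        # Phase 1: batch all real-time requests due soon after the earliest deadline.
        cutoff = rt[0].deadline + window
        batch = [r for r in rt if r.deadline <= cutoff]
        rt = [r for r in rt if r.deadline > cutoff]
        # Phase 2: inside the batch, serve the nearest cylinder first to cut seek time.
        while batch:
            nxt = min(batch, key=lambda r: abs(r.cylinder - head))
            batch.remove(nxt)
            order.append(nxt.name)
            head = nxt.cylinder
    # Non-real-time work yields to all real-time requests (arrival order kept).
    order.extend(r.name for r in non_rt)
    return order
```

For example, with the head at cylinder 15 and `window=5`, requests A (deadline 10, cylinder 90) and B (deadline 12, cylinder 20) form one batch, and B is served first because it is closer.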

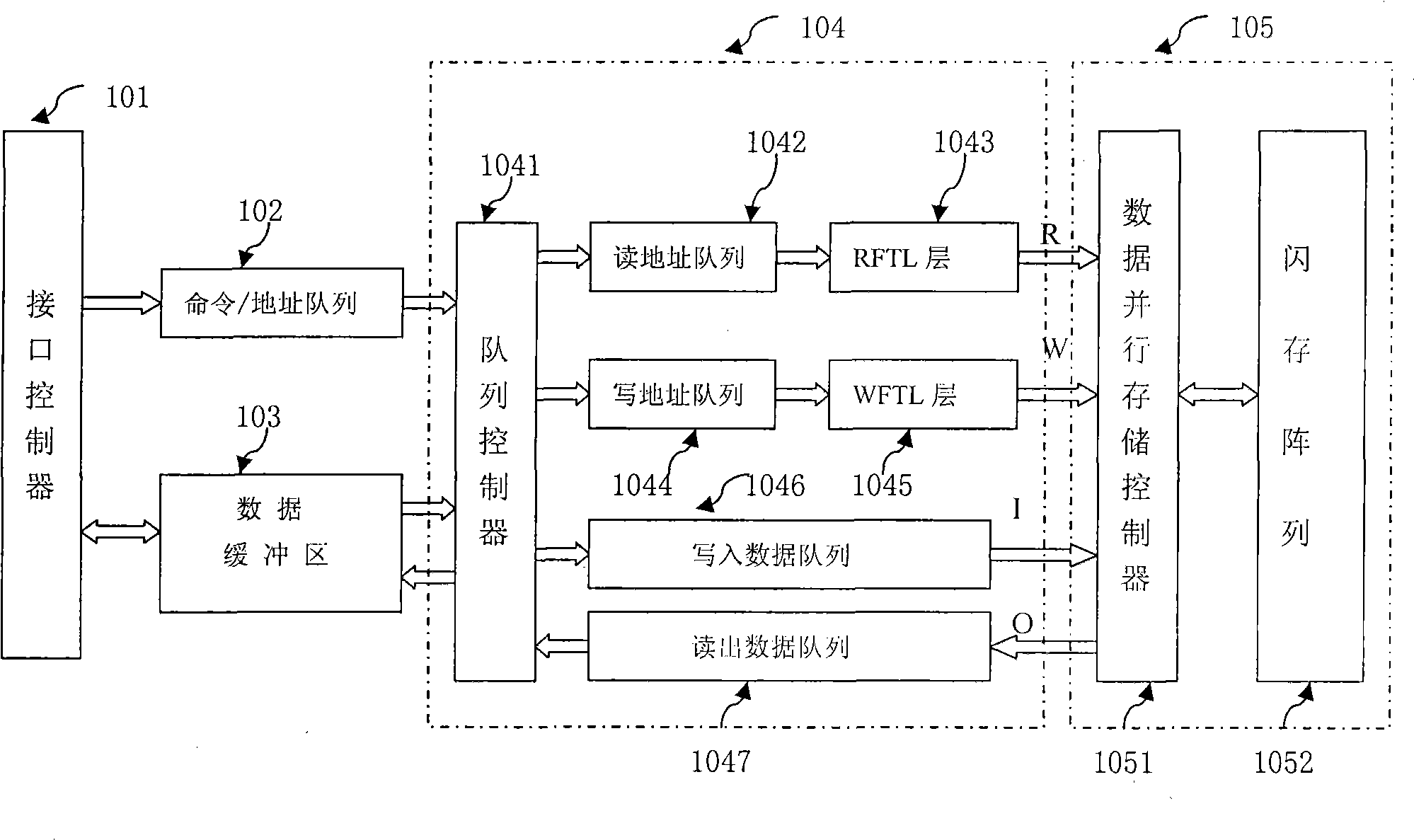

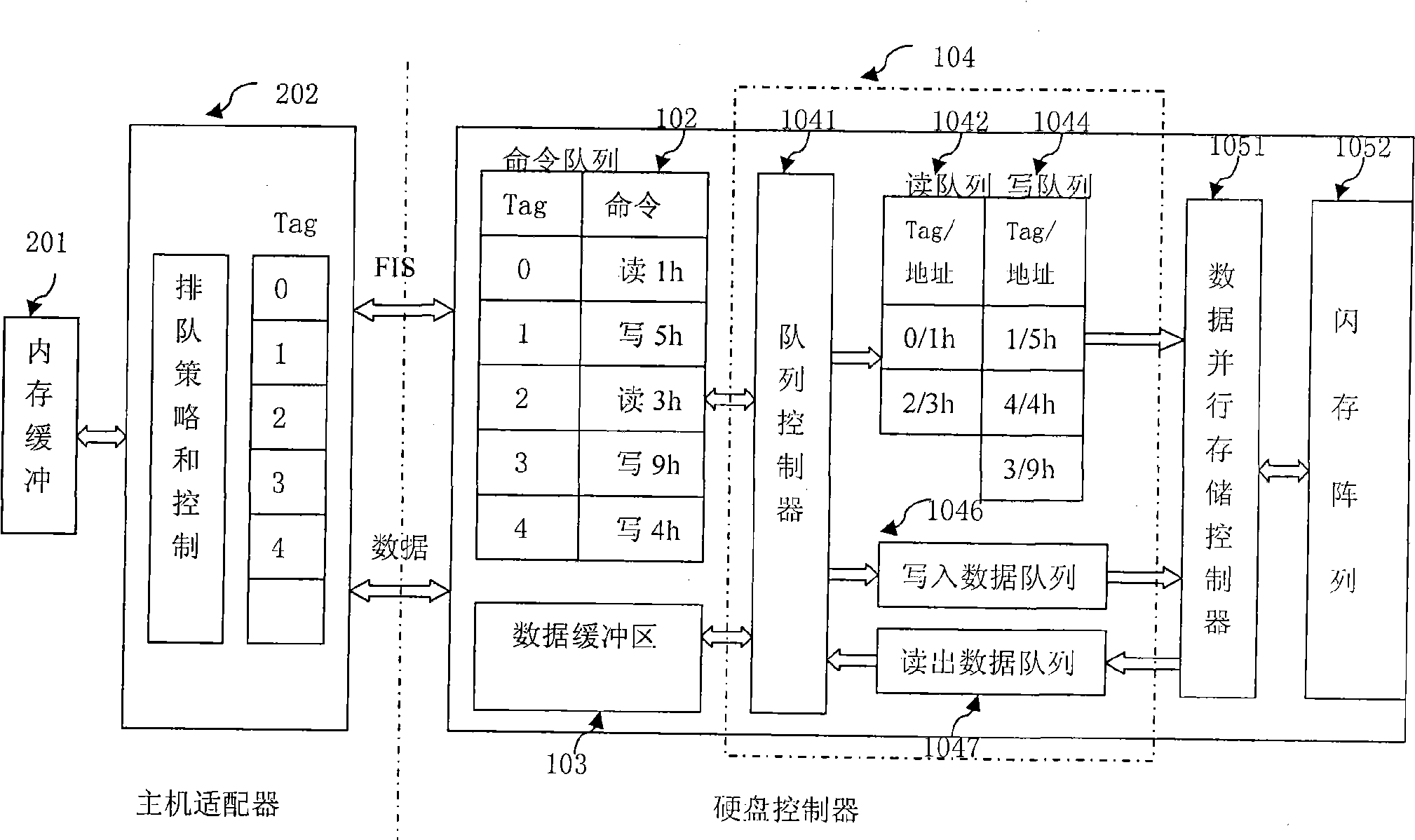

Solid state disk controller

Inactive | CN101498994A | Improve reading and writing efficiency, Implement parallel transfer, Input/output to record carriers, Memory addressing/allocation/relocation, Disk controller, Solid-state drive

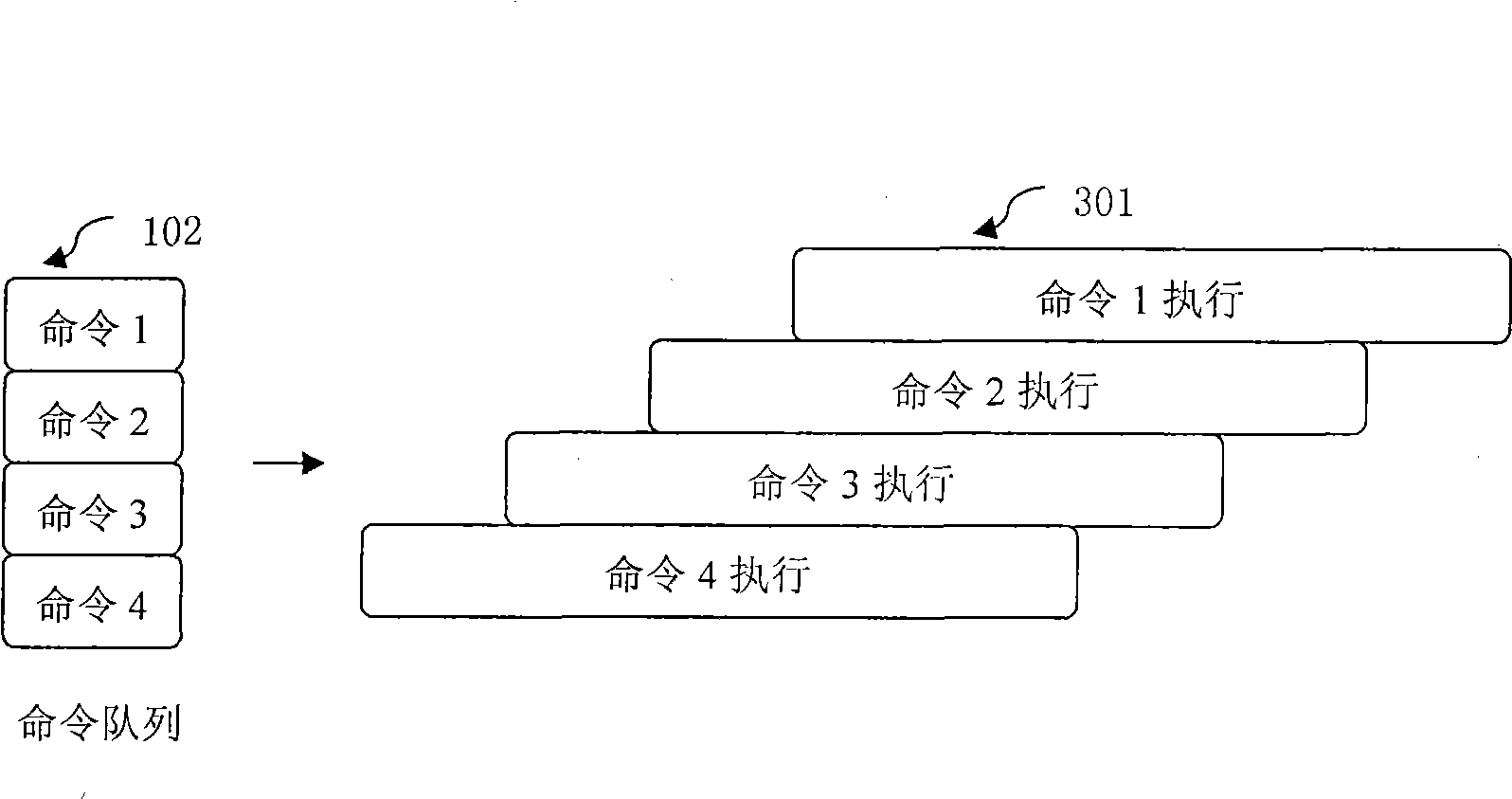

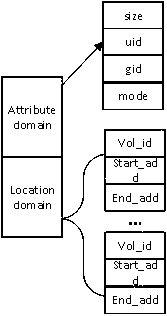

A solid-state disk controller in the technical field of hard disk storage. The controller comprises a command queue optimizing module and a flash memory parallel storage module. The command queue optimizing module acquires the command address queue and divides it into a write address queue and a read address queue. Based on the write address queue, it extracts data from the hard disk buffer and writes it into the flash memory parallel storage module; based on the read address queue, it reads data from the flash memory parallel storage module and outputs it to the hard disk buffer. The flash memory parallel storage module comprises multiple flash memory channels, and the flash memory chips in each channel are organized by bit-width extension. The invention processes write and read operations separately and transfers data in parallel through the flash memory parallel structure, thereby effectively improving the write/read efficiency of the solid-state disk.

Owner:HUAZHONG UNIV OF SCI & TECH
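The queue-splitting and channel-parallel idea can be sketched as follows. Assumptions, all hypothetical: commands are `("W", lba)` / `("R", lba)` tuples, each flash channel is a dict, and addresses are interleaved across channels by `lba % channels`. The groups returned by `per_channel` are what a real controller could transfer concurrently; this sketch runs them sequentially and also assumes no read and write in one batch target the same address, since all writes run before all reads.

```python
from collections import defaultdict


class ParallelFlash:
    """Hypothetical flash array: each channel is a dict of LBA -> data."""

    def __init__(self, channels):
        self.channels = [dict() for _ in range(channels)]

    def channel_of(self, lba):
        # Interleave consecutive addresses across channels (assumed mapping).
        return lba % len(self.channels)

    def write(self, lba, data):
        self.channels[self.channel_of(lba)][lba] = data

    def read(self, lba):
        return self.channels[self.channel_of(lba)][lba]


def split_queue(commands):
    """Split a mixed command queue into write and read address queues."""
    writes = [lba for op, lba in commands if op == "W"]
    reads = [lba for op, lba in commands if op == "R"]
    return writes, reads


def per_channel(flash, lbas):
    """Group addresses by channel; each group could be transferred in parallel."""
    groups = defaultdict(list)
    for lba in lbas:
        groups[flash.channel_of(lba)].append(lba)
    return dict(groups)


def execute(commands, disk_buffer, flash):
    writes, reads = split_queue(commands)
    for lba in writes:
        flash.write(lba, disk_buffer[lba])  # data taken from the hard disk buffer
    for lba in reads:
        disk_buffer[lba] = flash.read(lba)  # data returned to the hard disk buffer
```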

Method for speculative streaming data from a disk drive

Inactive | US6891740B2 | Input/output to record carriers, Memory addressing/allocation/relocation, Streaming data, Data stream

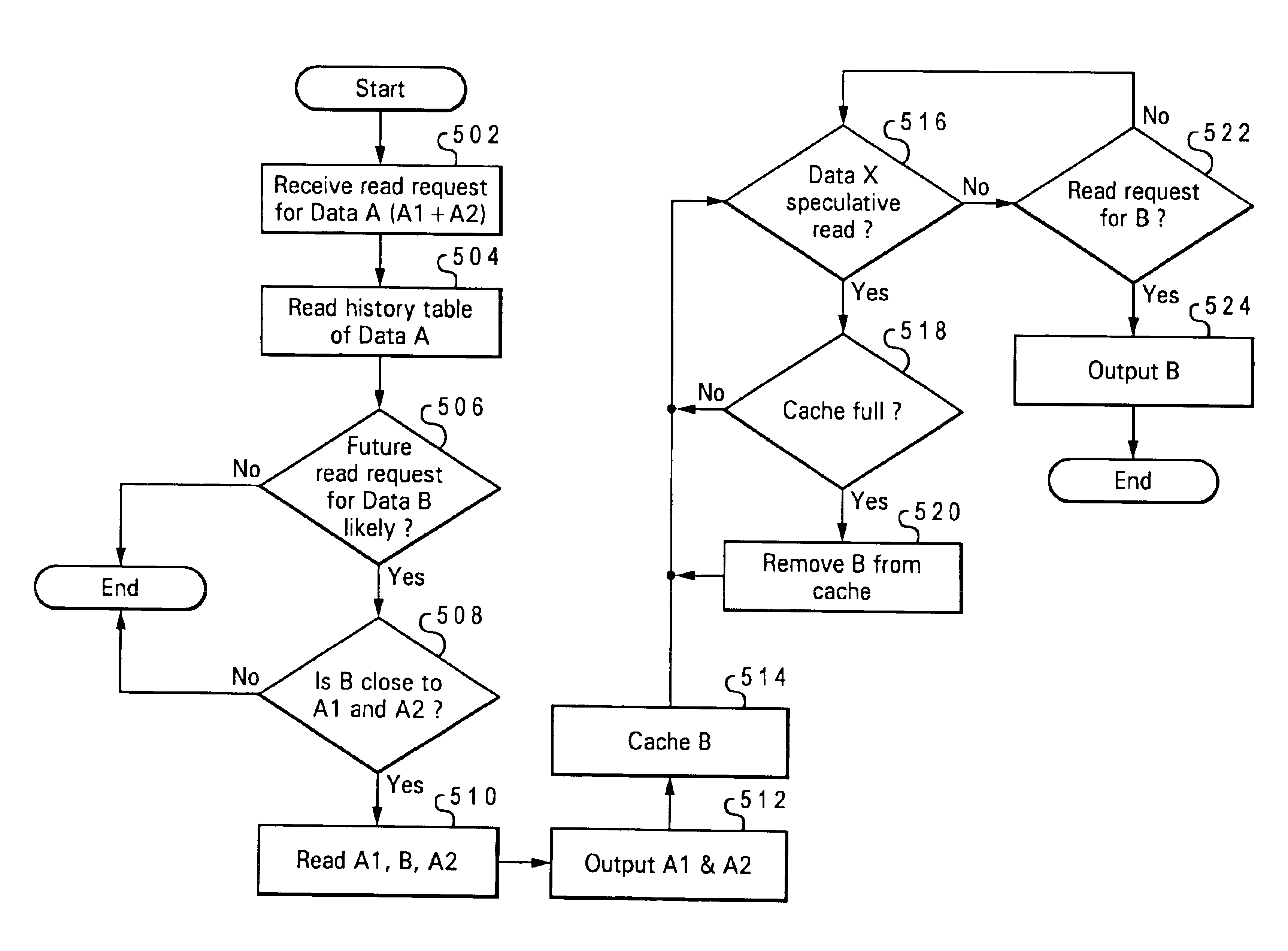

A method and program product supporting speculative data transfers in a disk drive. Requested first data are read from a disk. Before the first data are read, a determination is made as to whether there are un-requested second data that are likely to be requested at a later time as part of a data stream. If so, then a determination is made as to whether the second data and the first data are stored in locations that are physically / logically proximate on the disk. If the second data are close to the first data, then the second data are speculatively read and stored in a local disk cache. If a subsequent request comes to the disk drive for the second data, then the second data are quickly produced from the disk cache rather than being slowly read off the disk.

Owner:WESTERN DIGITAL TECH INC
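A minimal sketch of the speculative read decision, assuming the stream predictor is supplied from outside as `predicted_next` and that "proximate" means within a hypothetical LBA distance `proximity`. The dict `platter` stands in for the disk media; none of these names come from the patent.

```python
class DiskDrive:
    """Hypothetical drive model: `platter` maps LBA -> data and stands in for the media."""

    def __init__(self, platter, proximity):
        self.platter = platter
        self.proximity = proximity  # max LBA distance treated as "proximate" (assumed)
        self.cache = {}             # local disk cache
        self.cache_hits = 0

    def read(self, lba, predicted_next=None):
        if lba in self.cache:
            self.cache_hits += 1
            return self.cache[lba]  # fast path: served from the disk cache
        # Before reading the requested data, decide whether to fetch predicted stream data too.
        if predicted_next is not None and abs(predicted_next - lba) <= self.proximity:
            self.cache[predicted_next] = self.platter[predicted_next]  # speculative read
        return self.platter[lba]
```

Speculation is skipped when the predicted data is far away, because the extra seek would cost more than a later cache hit saves.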

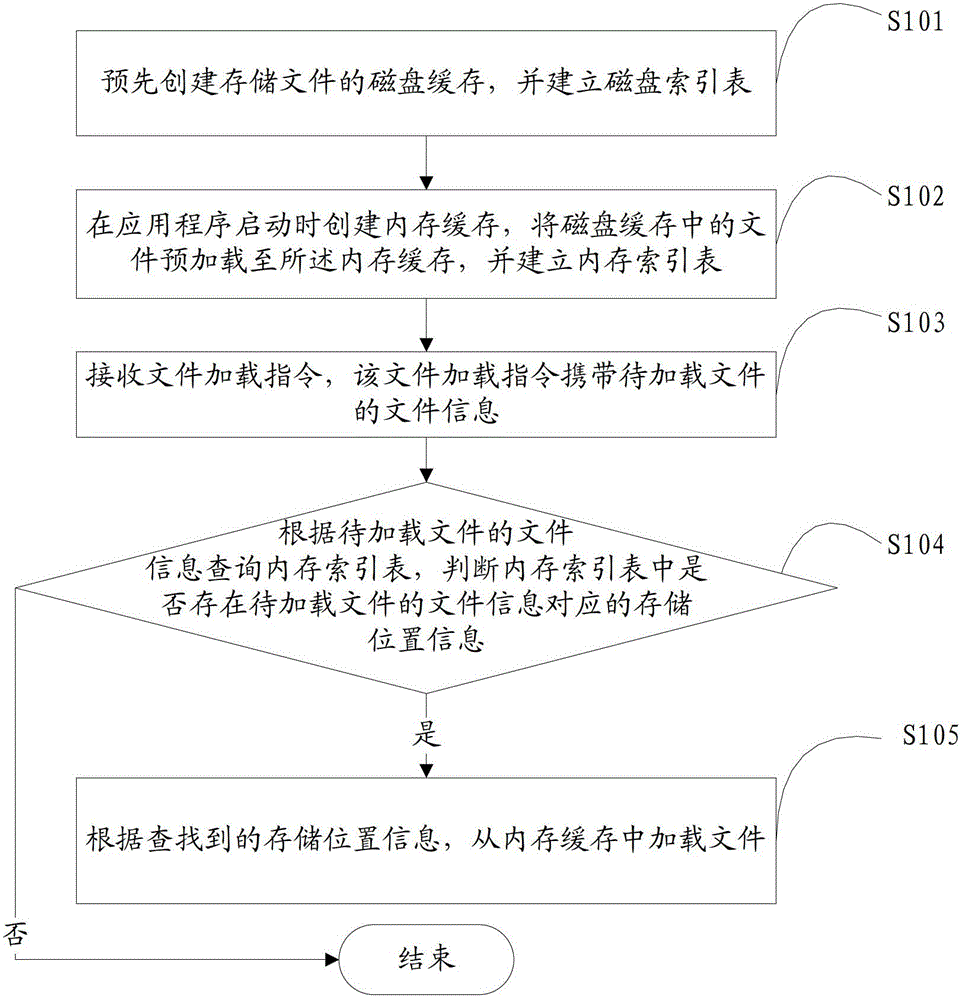

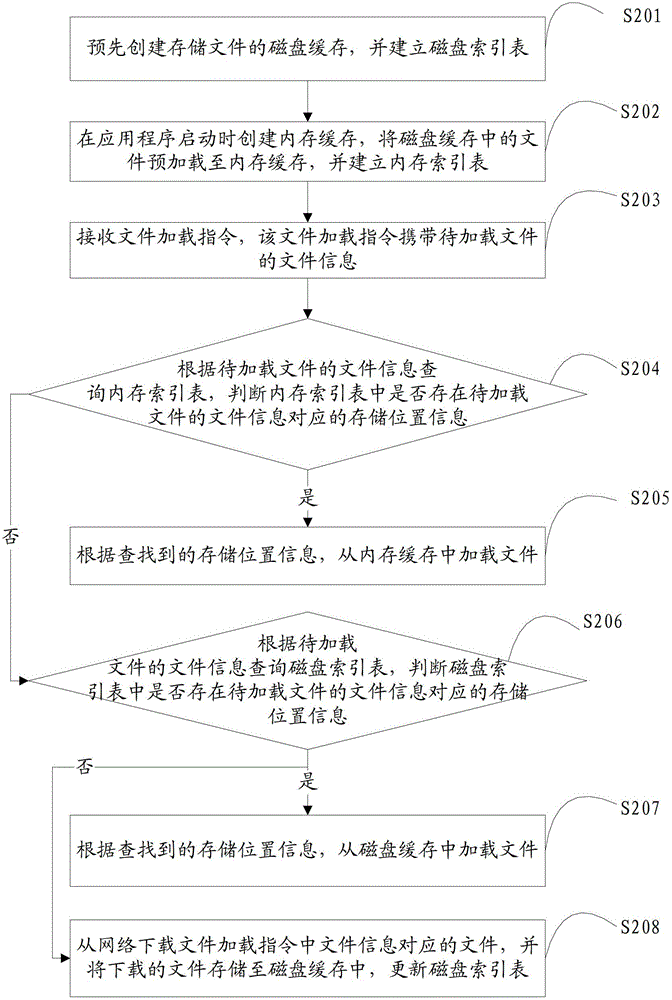

Method and device for loading file

Inactive | CN102750174A | Improve loading efficiency, High speed, Program loading/initiating, Access frequency, Computer terminal

The invention relates to multimedia applications and provides a method and a device for loading a file. The method comprises the following steps: receiving a file loading instruction that carries file information of the file to be loaded; querying a memory index table according to that file information, where the memory index table contains the storage location information and access frequency of files preloaded from a disk cache into a memory cache; and, if storage location information corresponding to the file information is found in the memory index table, loading the file from the memory cache according to that location and updating the file's access frequency in the memory index table. Files downloaded from the network are stored on the terminal in a two-level file cache. Because memory access latency is much lower than that of the disk cache, a file held in the memory cache, such as a display file, can be loaded rapidly, improving both loading efficiency and loading speed.

Owner:TCL CORPORATION
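The memory index table lookup can be sketched with dicts. Assumptions: `TwoLevelFileCache` and its fields are hypothetical, memory "slots" are just integer keys, and falling back to the disk cache on an index miss is an assumption, since the abstract only describes the hit path.

```python
class TwoLevelFileCache:
    """Hypothetical two-level cache: a disk cache plus a memory cache with an index table."""

    def __init__(self, disk_cache):
        self.disk_cache = disk_cache  # file name -> bytes, stands in for the disk cache
        self.memory = {}              # memory cache: slot -> bytes
        self.index = {}               # memory index table: name -> {"slot", "freq"}

    def preload(self, name):
        # Copy a file from the disk cache into the memory cache and index it.
        slot = len(self.memory)
        self.memory[slot] = self.disk_cache[name]
        self.index[name] = {"slot": slot, "freq": 0}

    def load(self, name):
        entry = self.index.get(name)
        if entry is not None:
            entry["freq"] += 1                 # update access frequency on every hit
            return self.memory[entry["slot"]]  # fast path: memory cache
        return self.disk_cache[name]           # assumed fallback: slower disk cache
```

The recorded frequency gives a natural basis for deciding which files deserve preloading next, although the abstract does not say how it is used.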

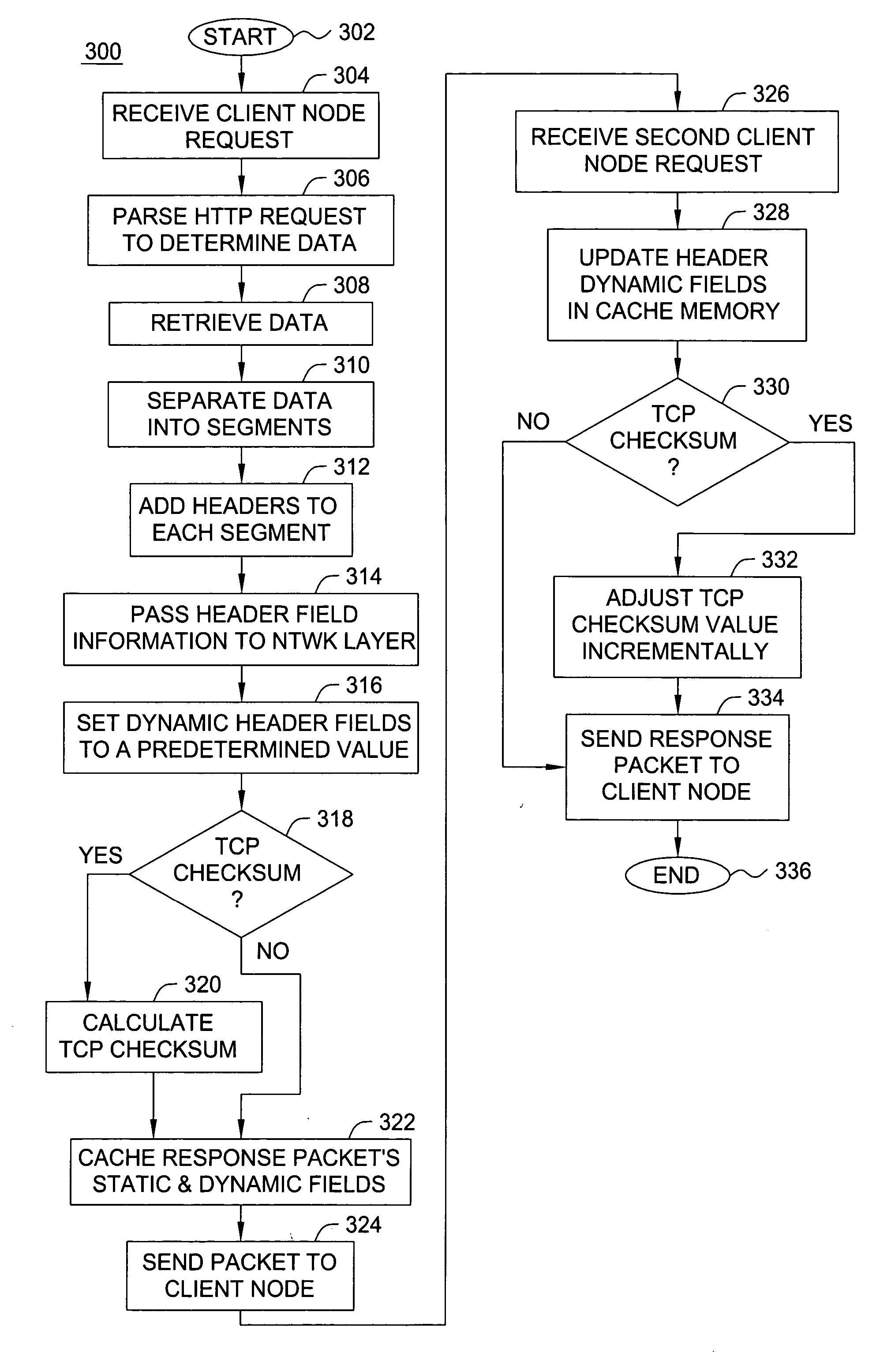

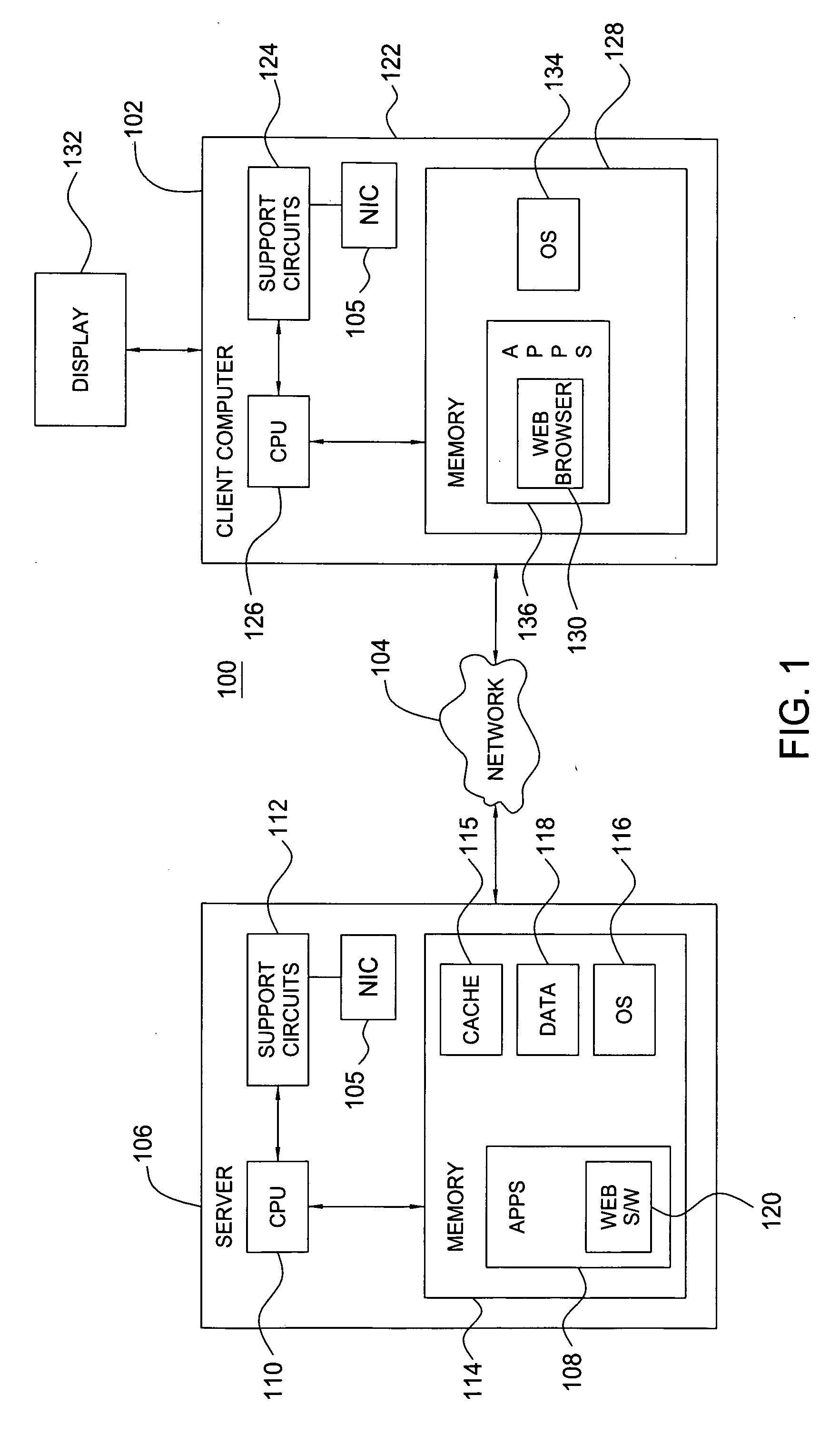

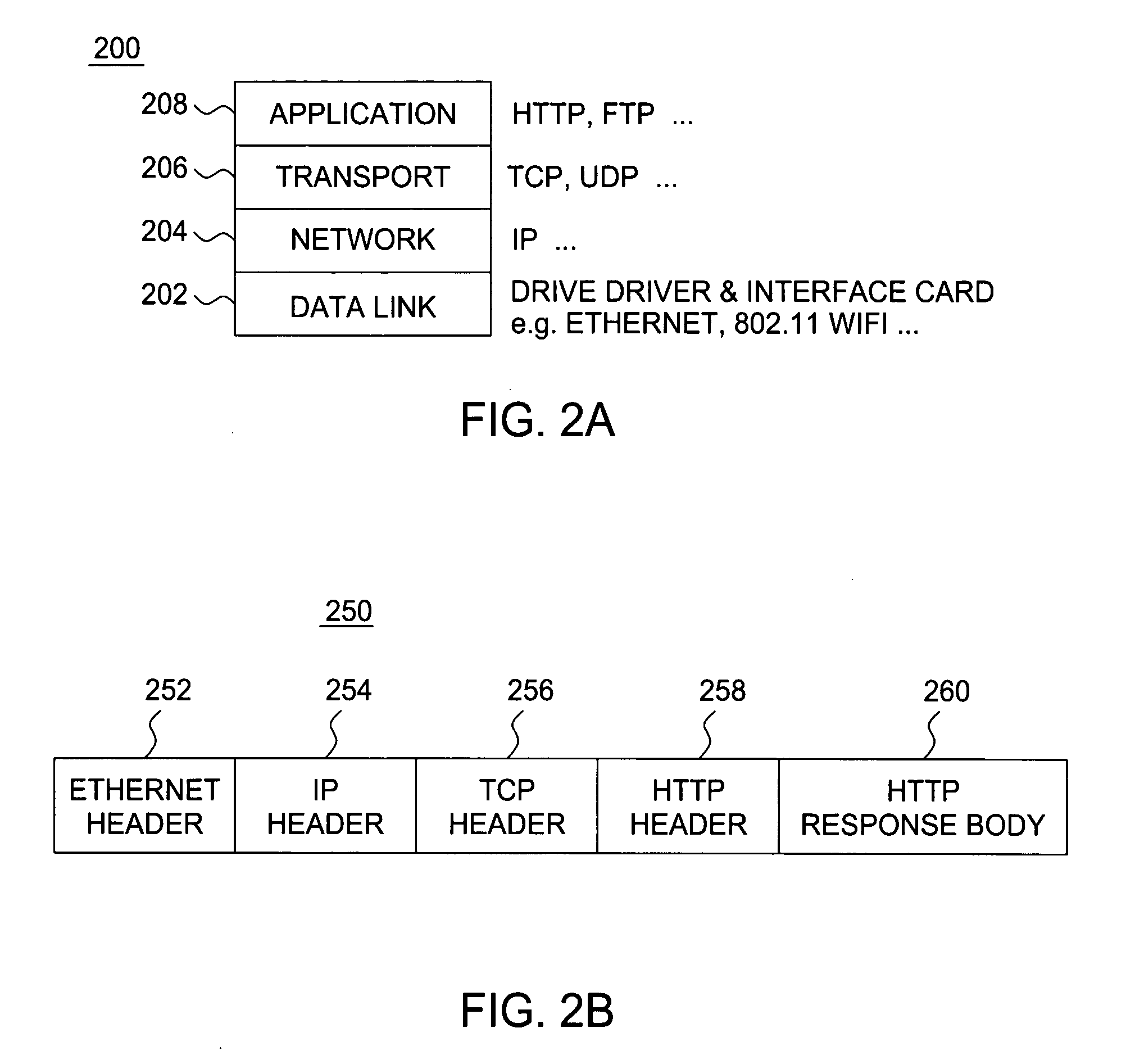

Method and apparatus for pre-packetized caching for network servers

Inactive | US20060112185A1 | Short response time, Increasing network server hardware, Digital computer details, Transmission, Data pack, Cache server

A method and apparatus for caching client node requested data stored on a network server is disclosed. The method and apparatus comprise caching server responses at the pre-packetized level thereby decreasing response time by minimizing packet processing overhead, reducing disk file reading and reducing copying of memory from disk buffers to network interfaces.

Owner:ALCATEL-LUCENT USA INC +1

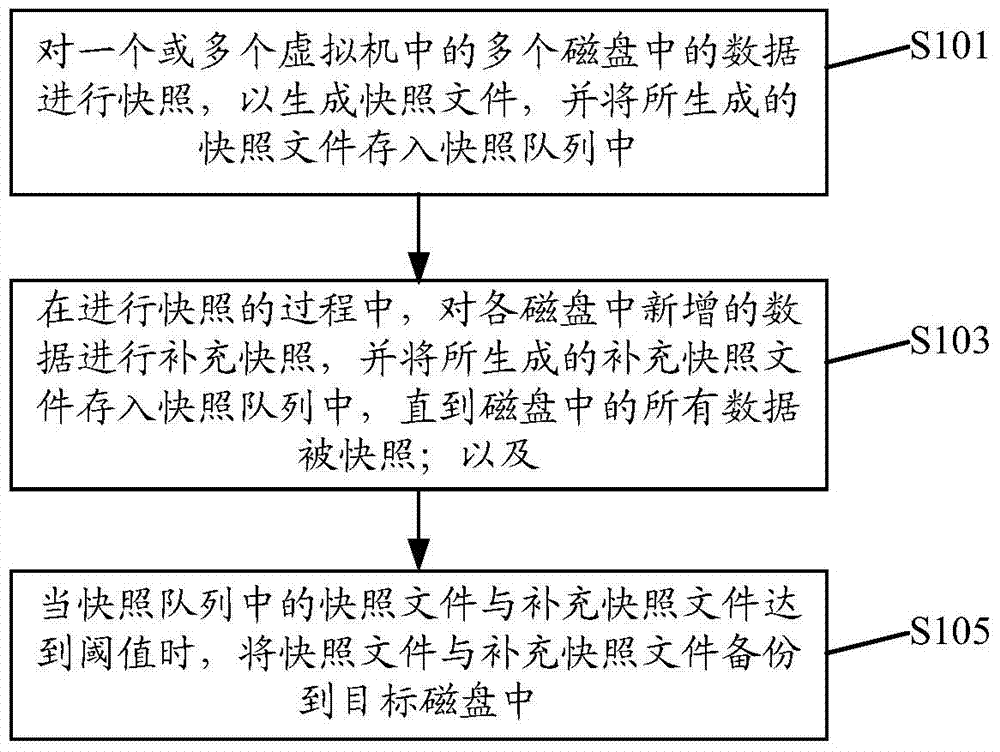

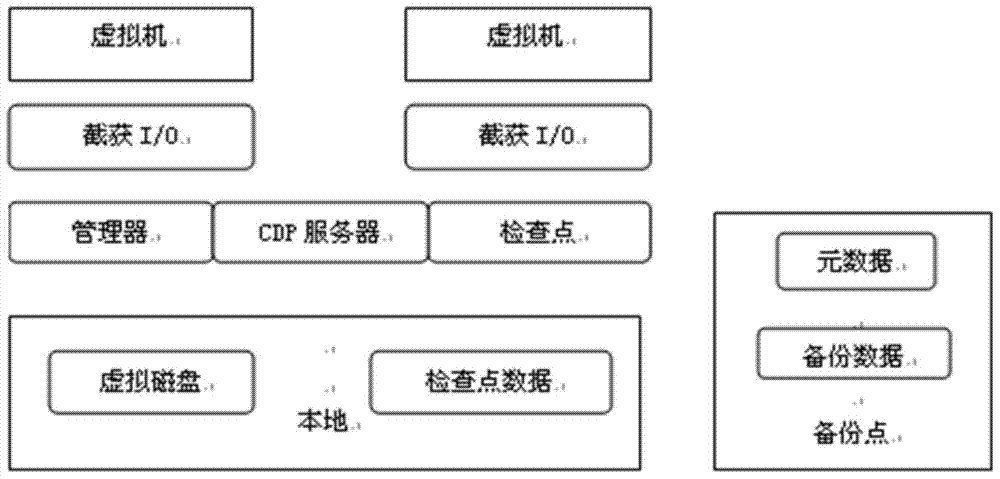

Data backup method for virtual machines

Active | CN103678045A | Shorten the time, Little impact on performance, Input/output to record carriers, Redundant operation error correction, Disk buffer, Virtual machine

The invention discloses a data backup method for virtual machines. The method comprises the following steps: taking snapshots of the data on multiple disks in one or more virtual machines to generate snapshot files, and storing the generated snapshot files in a snapshot queue; during snapshotting, taking complementary snapshots of data newly added to each disk and storing the resulting complementary snapshot files in the snapshot queue, until all data on the disks has been captured; and, when the snapshot files and complementary snapshot files in the snapshot queue reach a threshold, backing them up to a target disk. Because the data is captured by snapshots and complementary snapshots, and the files are transferred as soon as the threshold is reached, the backup time of the virtual machines is shortened. Moreover, the virtual machines keep running during backup, which reduces the impact on the physical server and improves the consistency between the virtual machines' memory data and the disk cache data.

Owner:DAWNING CLOUD COMPUTING TECH CO LTD

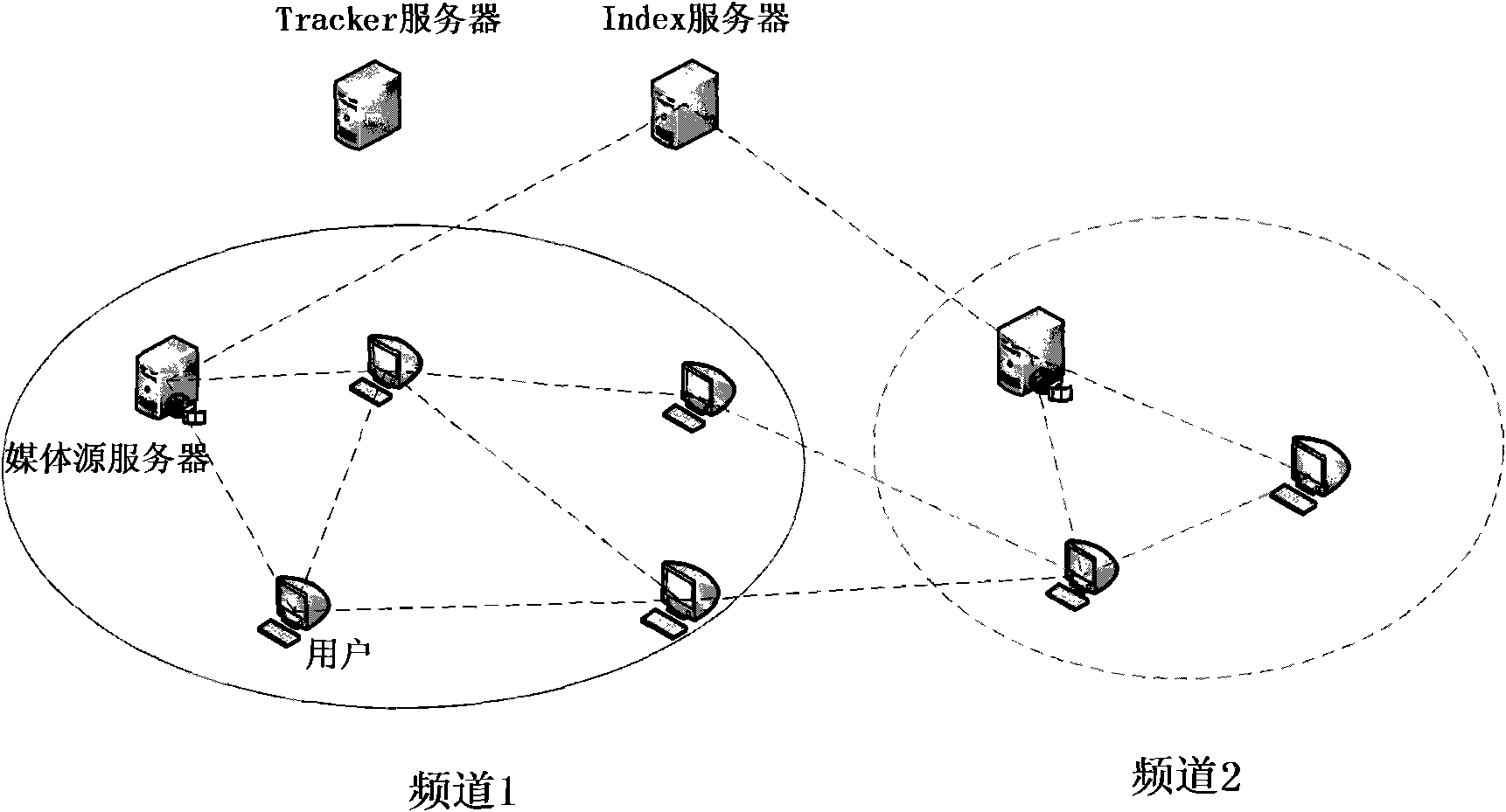

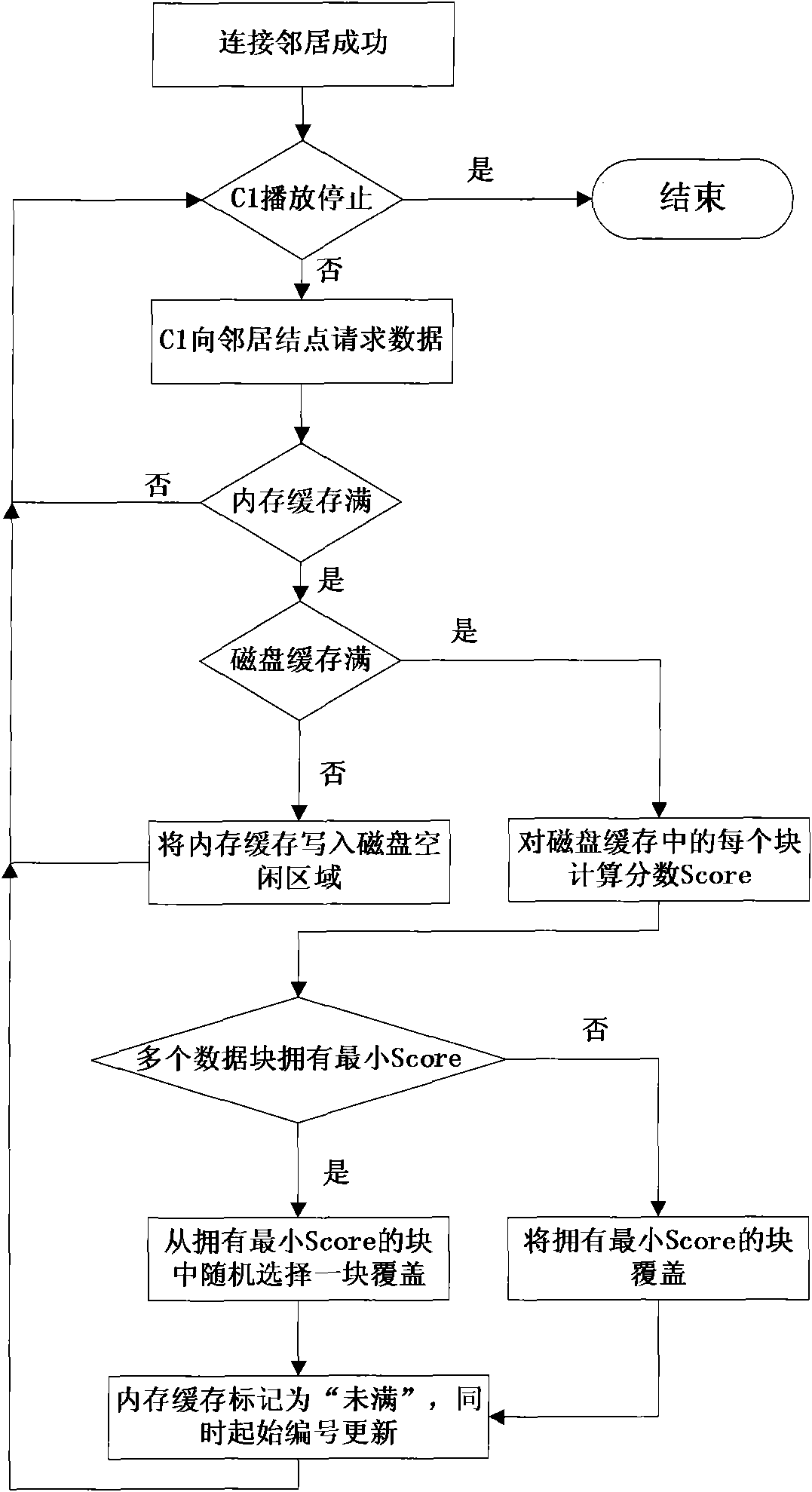

Disk buffering method in use for video on demand system of peer-to-peer network

Inactive | CN1874490A | Increase cache content, Widely distributed, Memory addressing/allocation/relocation, Two-way working systems, Computer architecture, Media server

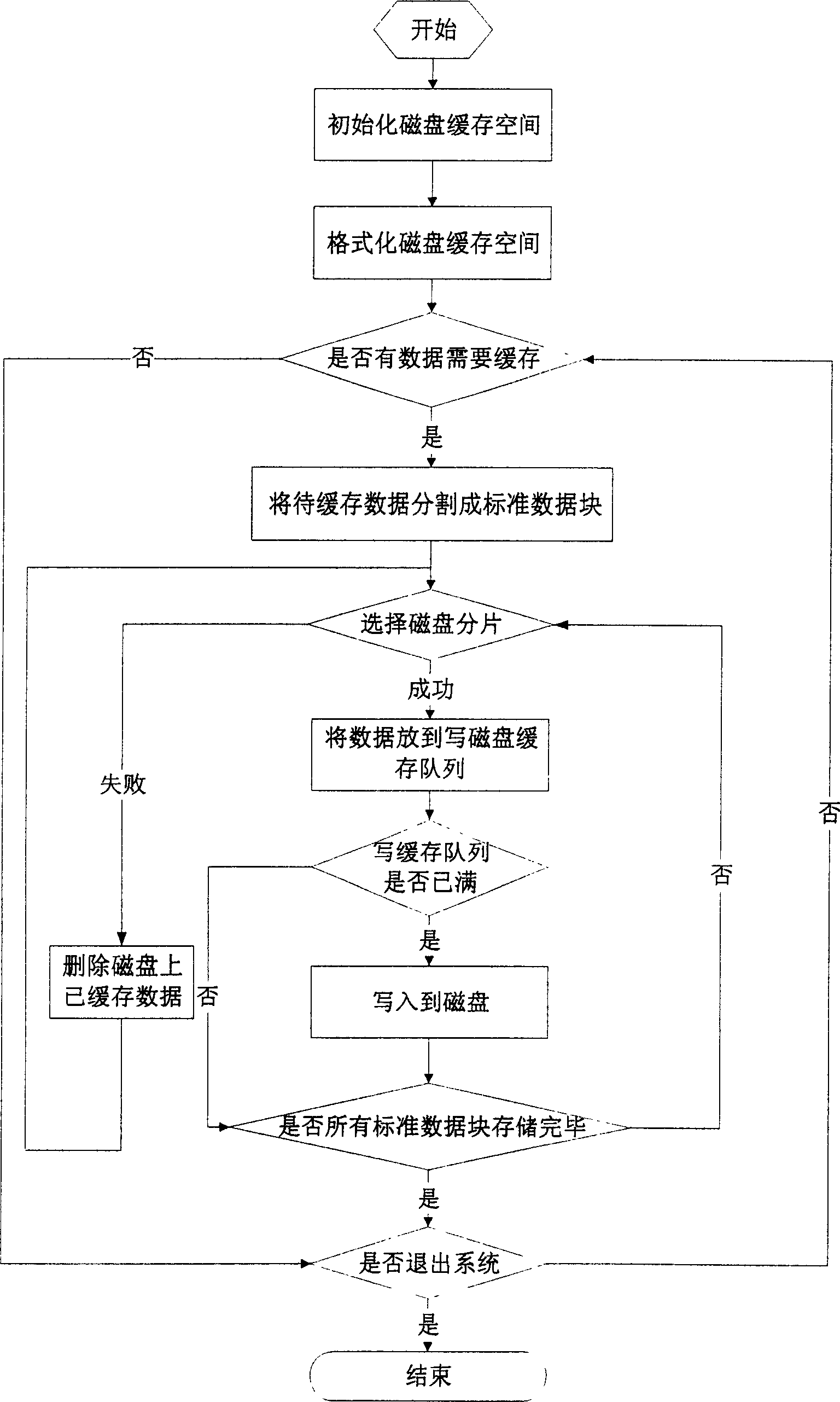

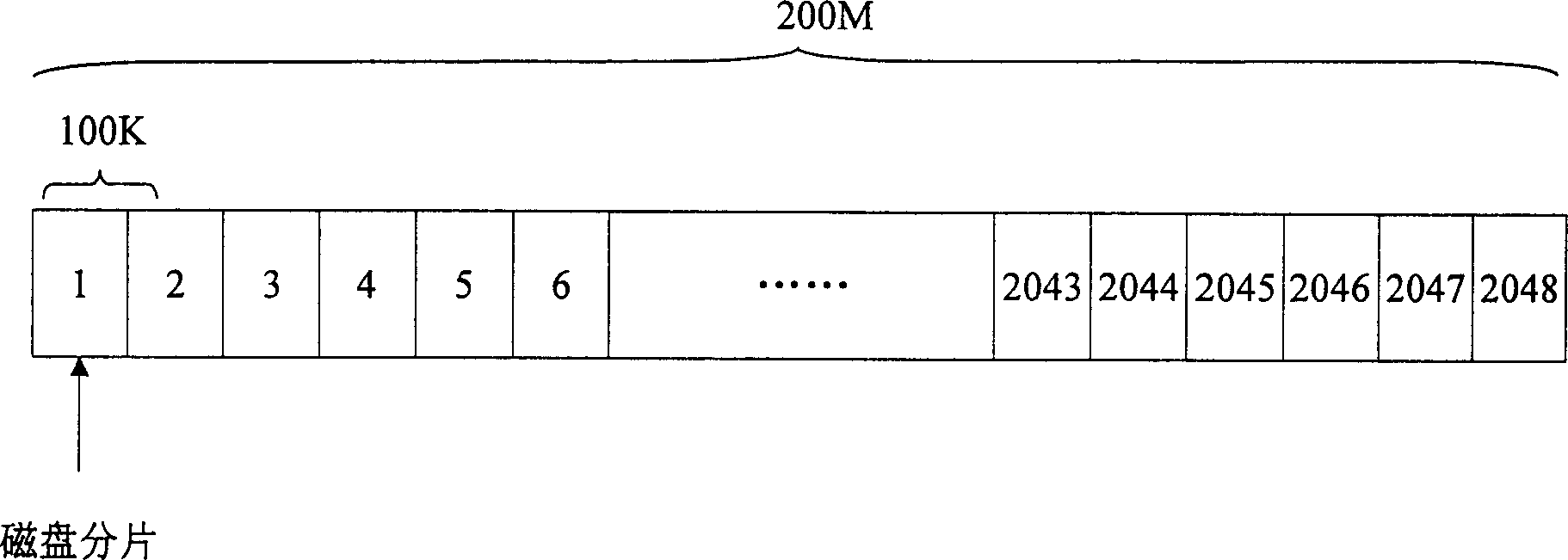

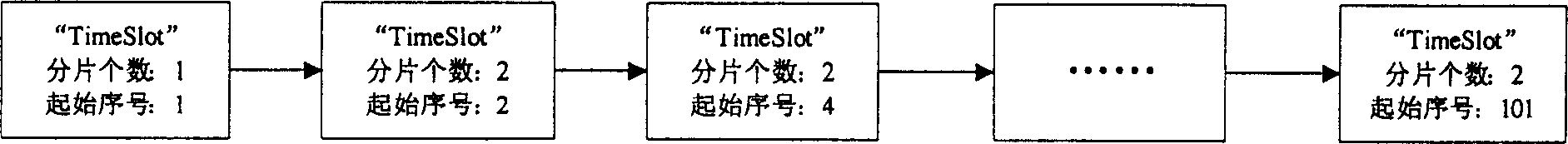

In the invention, each node executes the following steps: 1) initializing the disk space; 2) formatting the disk space; 3) checking whether there is data to be buffered; 4) dividing the data to be buffered into normal data blocks; 5) selecting a suitable sub-disk; 6) inserting the data to be buffered into the disk buffer queue; 7) deleting buffered data already on the disk; 8) checking whether the buffer queue awaiting writes to the disk is full; 11) deciding whether the user has quit the system.

Owner:HUAZHONG UNIV OF SCI & TECH

Object caching method based on disk

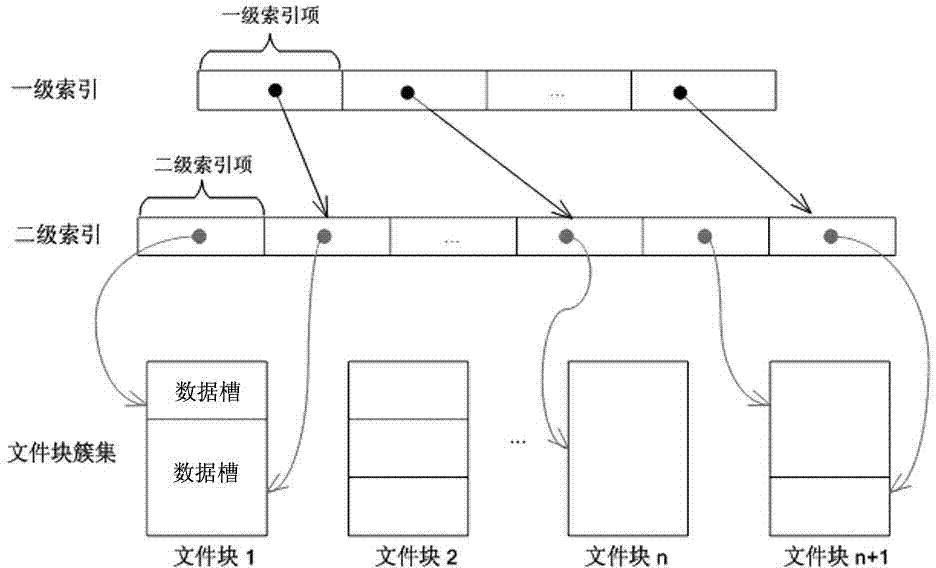

Active | CN103678638A | Take advantage of, Improve reading and writing efficiency, Memory addressing/allocation/relocation, Special data processing applications, Cache optimization, Failure mechanism

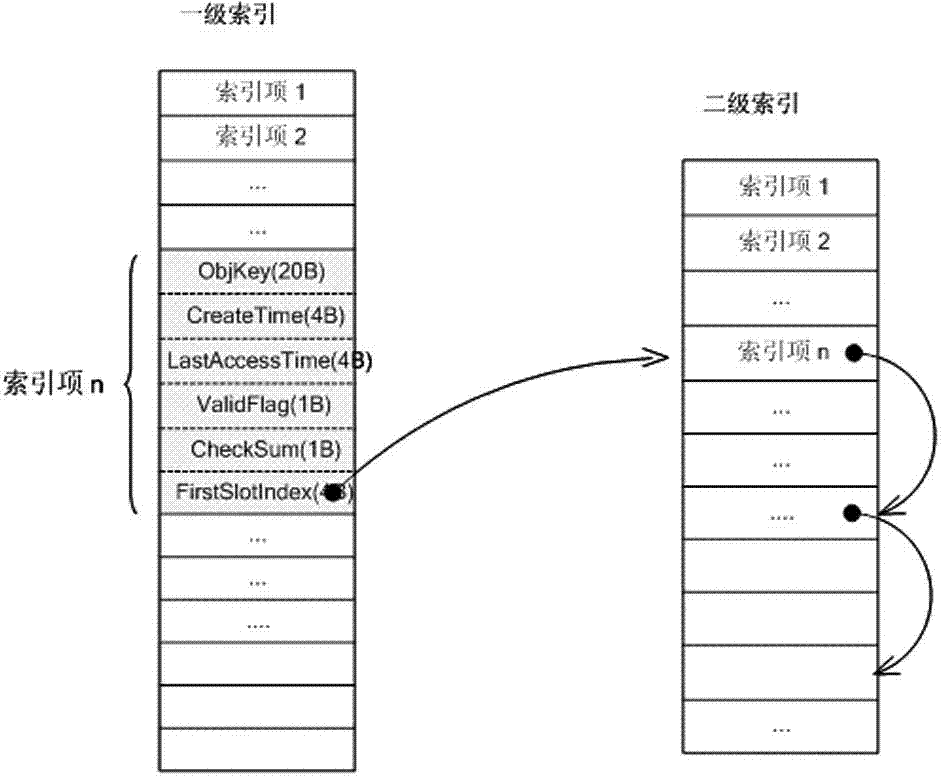

The invention relates to the technical field of disk caching, and in particular to a disk-based object caching method. The method divides the file storage space to build a file storage structure with secondary indexes, and on that structure implements adding objects to the disk cache, retrieving and deleting cached objects, a cache expiration mechanism, and a cache optimization and reorganization process. Object data is stored in fixed-size chunks: multiple small objects are merged into one chunk, while a large object is split into several object blocks stored in separate chunks. Objects of any size and type can therefore be cached, and caching efficiency is higher.

Owner:XIAMEN YAXON NETWORKS CO LTD
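The fixed-size chunk layout can be sketched as an append-only list of chunks plus an index from object key to `(chunk, offset, length)` pieces. This is a simplified illustration, not the patented secondary-index structure: `ChunkStore` is hypothetical, the 8-byte chunk is chosen only to keep the example small, and, unlike the patent's description, any object (not only a large one) may straddle a chunk boundary here.

```python
CHUNK_SIZE = 8  # bytes per chunk; a tiny value chosen only for illustration


class ChunkStore:
    """Hypothetical chunk layout: small objects share chunks, large ones span several."""

    def __init__(self, chunk_size=CHUNK_SIZE):
        self.chunk_size = chunk_size
        self.chunks = [bytearray()]  # the last chunk is the one currently being filled
        self.index = {}              # key -> list of (chunk_no, offset, length)

    def put(self, key, data):
        pieces = []
        pos = 0
        while pos < len(data):
            chunk = self.chunks[-1]
            free = self.chunk_size - len(chunk)
            if free == 0:
                self.chunks.append(bytearray())  # current chunk full: open a new one
                continue
            part = data[pos:pos + free]
            pieces.append((len(self.chunks) - 1, len(chunk), len(part)))
            chunk.extend(part)
            pos += len(part)
        self.index[key] = pieces

    def get(self, key):
        # Reassemble the object from its pieces, in order.
        return b"".join(bytes(self.chunks[c][off:off + n]) for c, off, n in self.index[key])
```

Merging small objects avoids wasting a whole chunk (or file) per object, and splitting large ones keeps every on-disk unit the same size.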

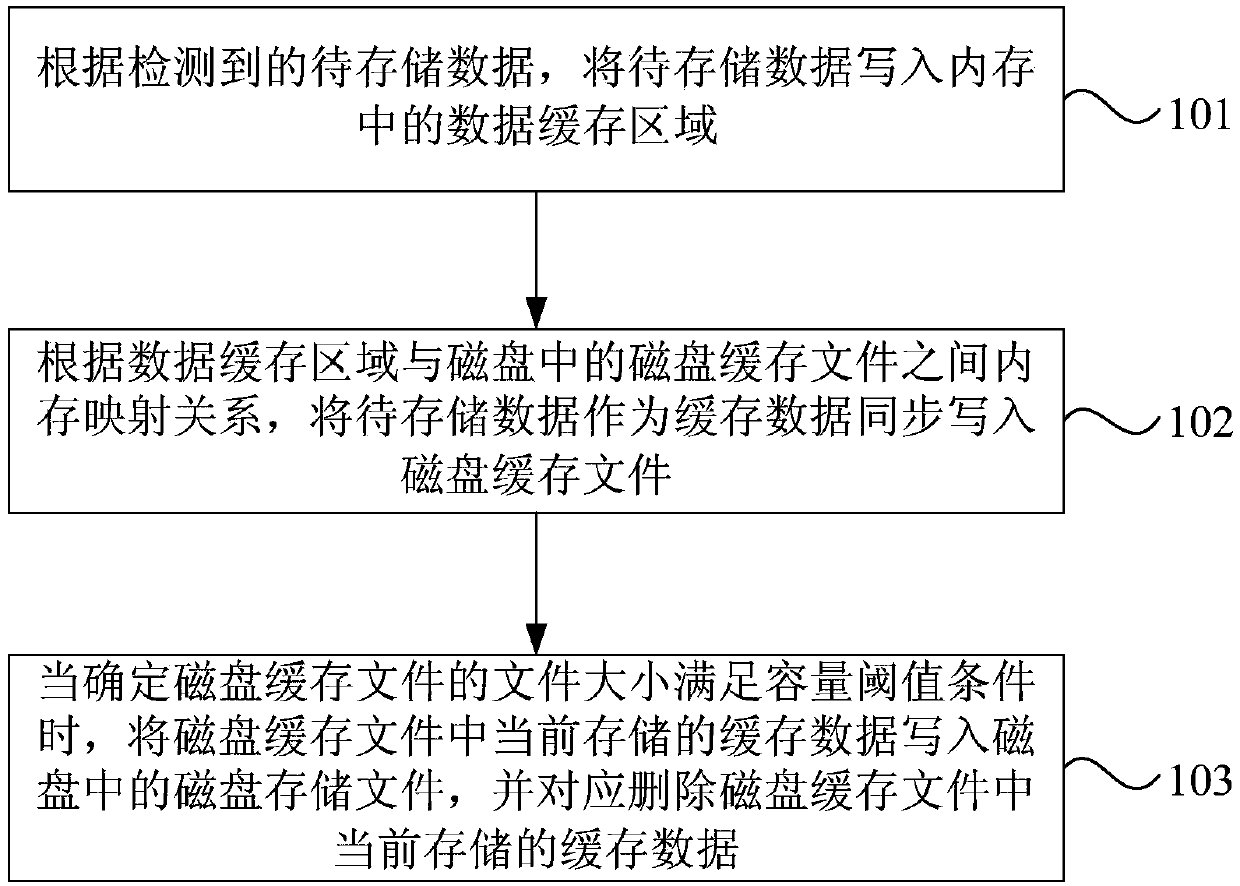

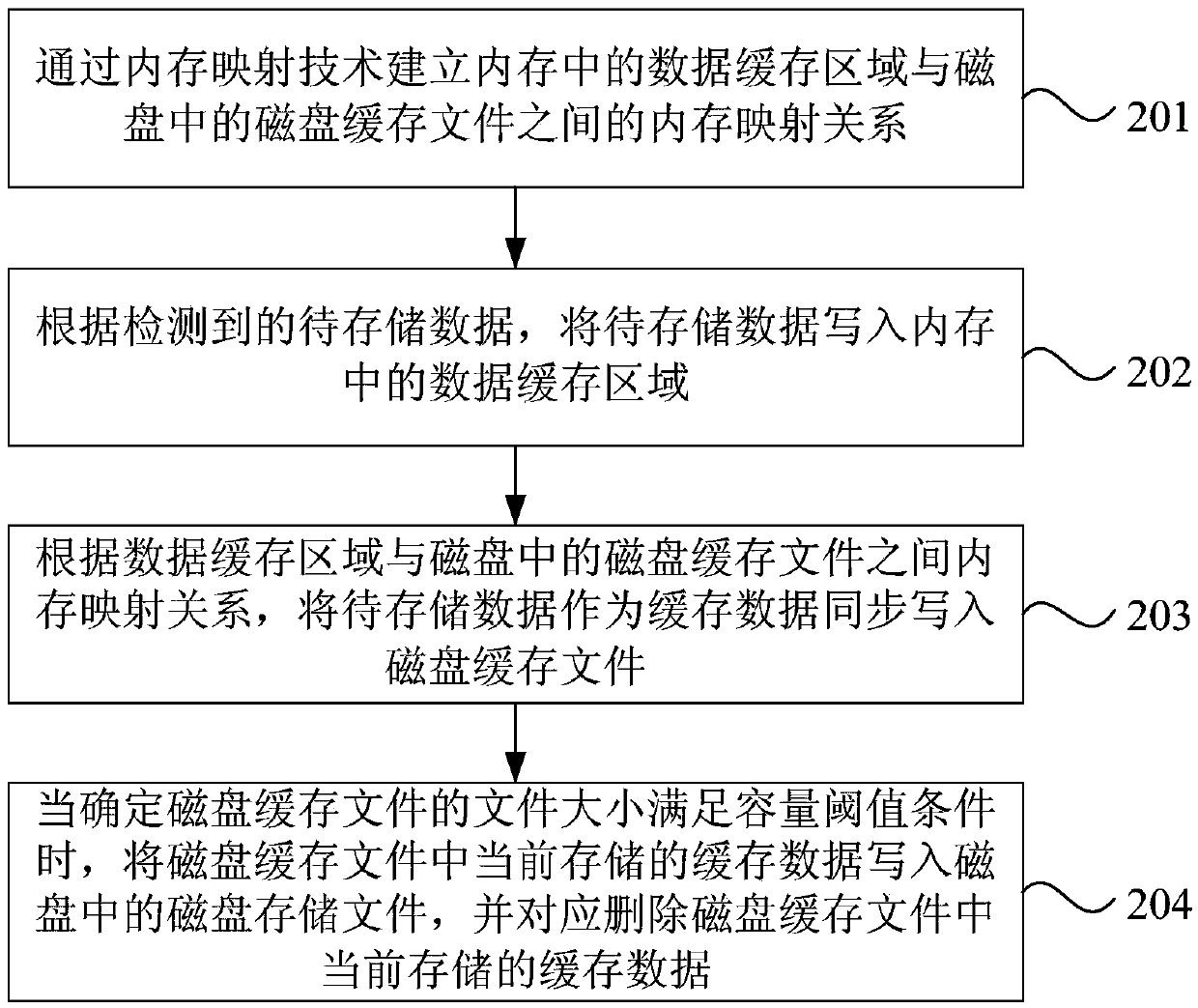

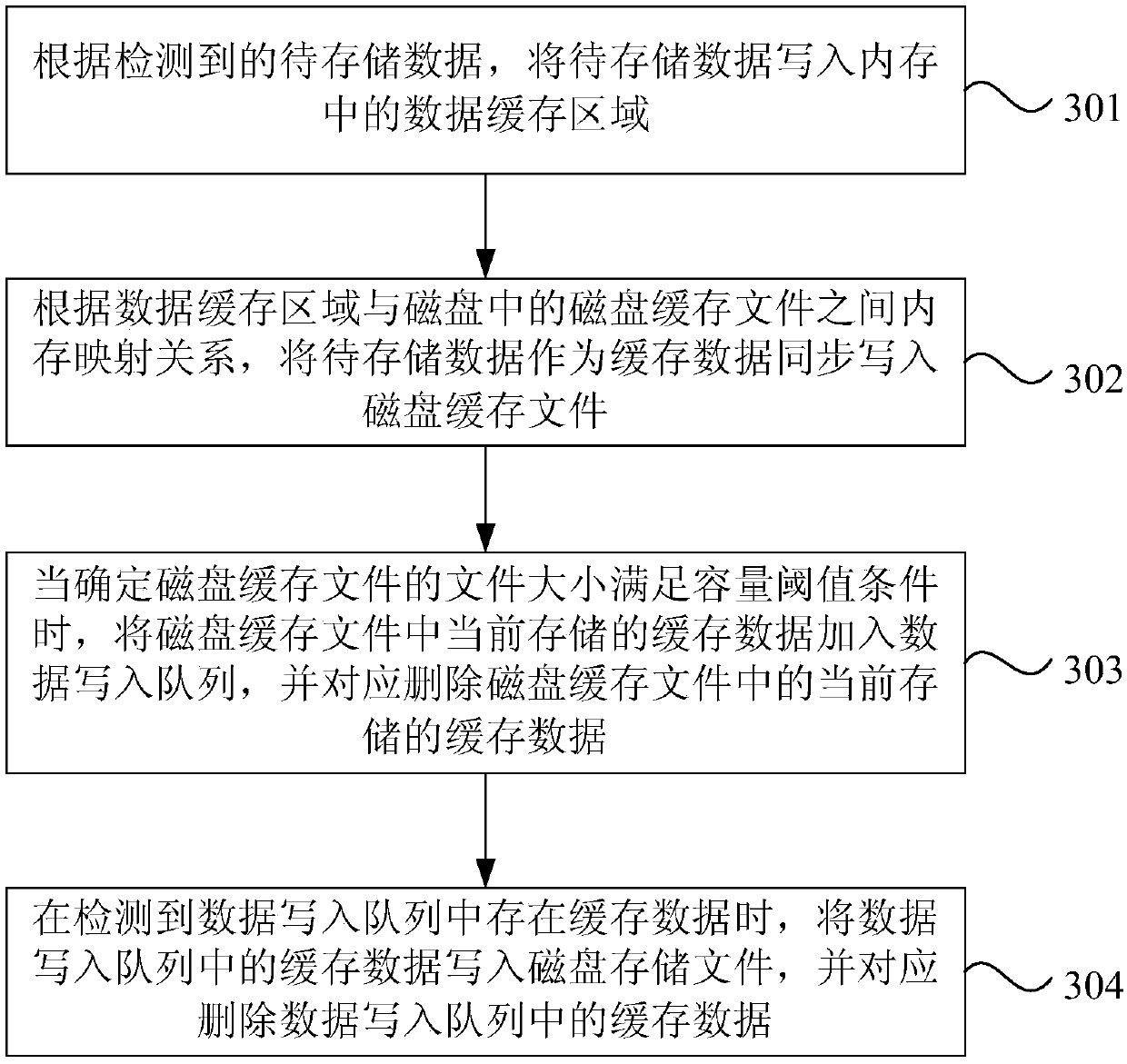

Data storage method and device, terminal equipment and storage medium

Active | CN109597568A | Prevent loss, Integrity guaranteed, Input/output to record carriers, Data synchronization, Terminal equipment

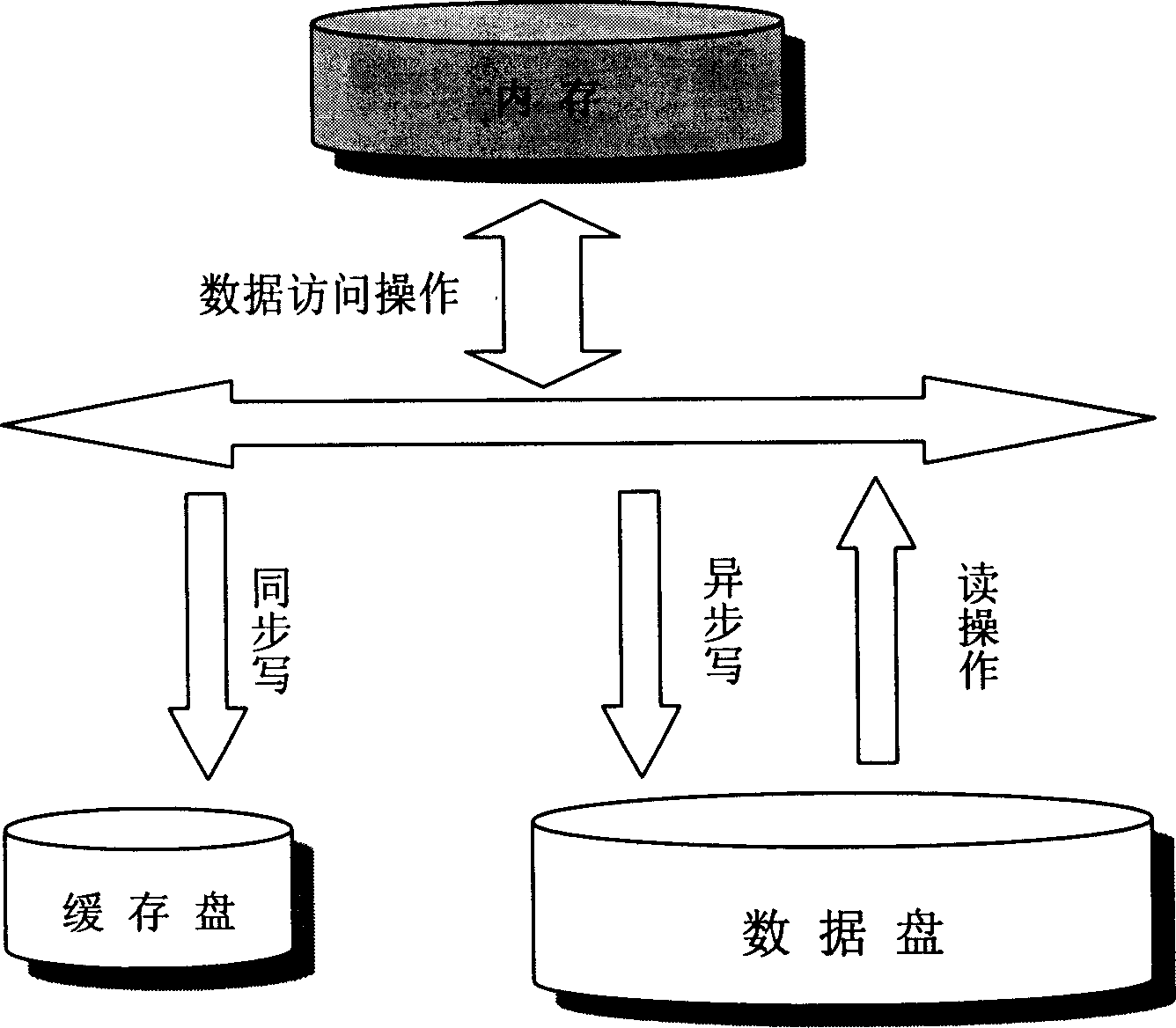

The invention discloses a data storage method and device, terminal equipment, and a storage medium. The method comprises the following steps: upon detecting data to be stored, writing it into a data cache area in memory; synchronously writing that data, as cache data, into a disk cache file according to a memory mapping between the data cache area and the disk cache file on the disk; and, when the size of the disk cache file meets a capacity threshold condition, writing the cache data currently stored in the disk cache file into a disk storage file on the disk and deleting that cache data from the disk cache file. Embodiments of the invention avoid the data loss that can occur with prior-art memory caching, and they reduce the frequency of input/output operations, lower system power consumption, and improve system performance while ensuring data integrity.

Owner:脸萌技术(深圳)有限公司
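A simplified sketch of the cache-file-then-storage-file flow. To stay short and portable it swaps the patent's memory mapping for an explicit append plus `os.fsync` on every write, which gives the same "already on disk" guarantee; `DiskCachedWriter` and the byte-size `threshold` are hypothetical. A production version might use Python's `mmap` module instead.

```python
import os


class DiskCachedWriter:
    """Hypothetical writer: memory area mirrored to a disk cache file, spilled at a threshold."""

    def __init__(self, cache_path, storage_path, threshold):
        self.cache_path = cache_path
        self.storage_path = storage_path
        self.threshold = threshold     # capacity threshold for the cache file, in bytes
        self.memory_area = bytearray()  # data cache area in memory
        open(cache_path, "ab").close()  # make sure the disk cache file exists

    def write(self, data: bytes):
        self.memory_area.extend(data)
        # Synchronous copy to the disk cache file (stands in for the memory mapping).
        with open(self.cache_path, "ab") as f:
            f.write(data)
            f.flush()
            os.fsync(f.fileno())
        if os.path.getsize(self.cache_path) >= self.threshold:
            self._spill()

    def _spill(self):
        # Move everything in the cache file to the storage file, then empty the cache file.
        with open(self.cache_path, "rb") as src, open(self.storage_path, "ab") as dst:
            dst.write(src.read())
        open(self.cache_path, "wb").close()
        self.memory_area.clear()  # keep the memory area mirroring the (now empty) cache file
```

Batching into the storage file at the threshold is what reduces the number of I/O operations, while the synchronous cache-file write is what prevents loss if the process dies.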

Method and apparatus for high availability (HA) protection of a running virtual machine (VM)

Active | US8417885B2 | Minimal downtime, Error detection/correction, Software simulation/interpretation/emulation, High availability, Virtual machine

High availability (HA) protection is provided for an executing virtual machine. A standby server provides a disk buffer that stores disk writes associated with a virtual machine executing on an active server. At a checkpoint in the HA process, the active server suspends the virtual machine; the standby server creates a checkpoint barrier at the last disk write received in the disk buffer; and the active server copies dirty memory pages to a buffer. After the completion of these steps, the active server resumes execution of the virtual machine; the buffered dirty memory pages are sent to and stored by the standby server. Then, the standby server flushes the disk writes up to the checkpoint barrier into disk storage and writes newly received disk writes into the disk buffer after the checkpoint barrier.

Owner:AVAYA INC

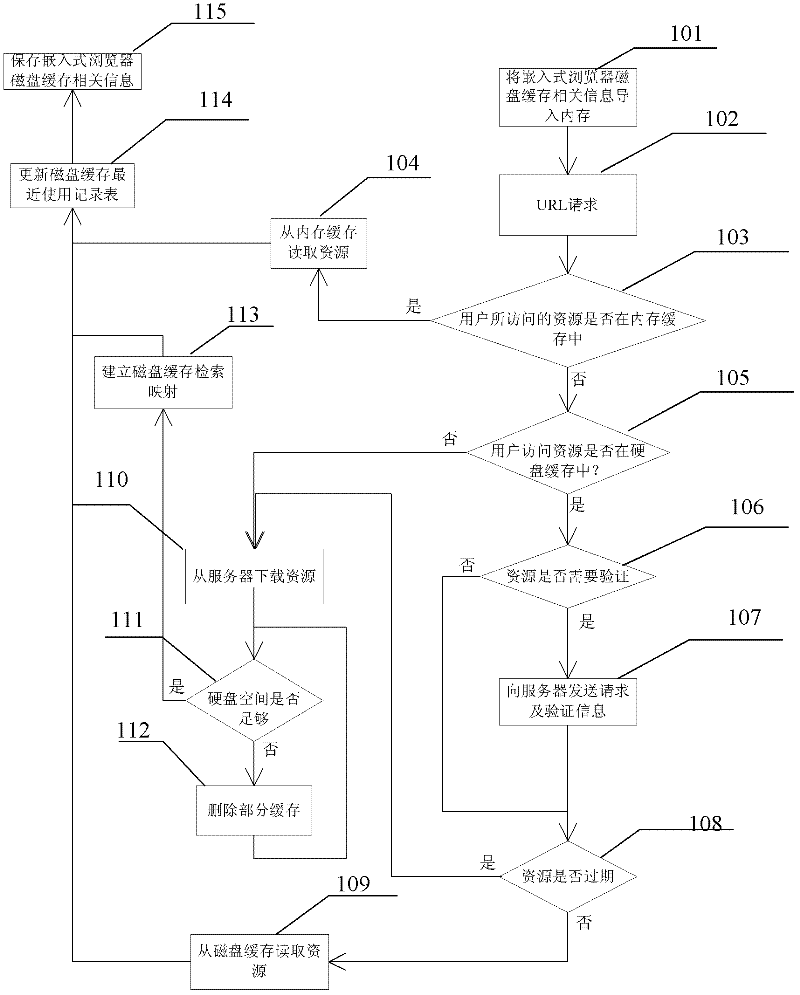

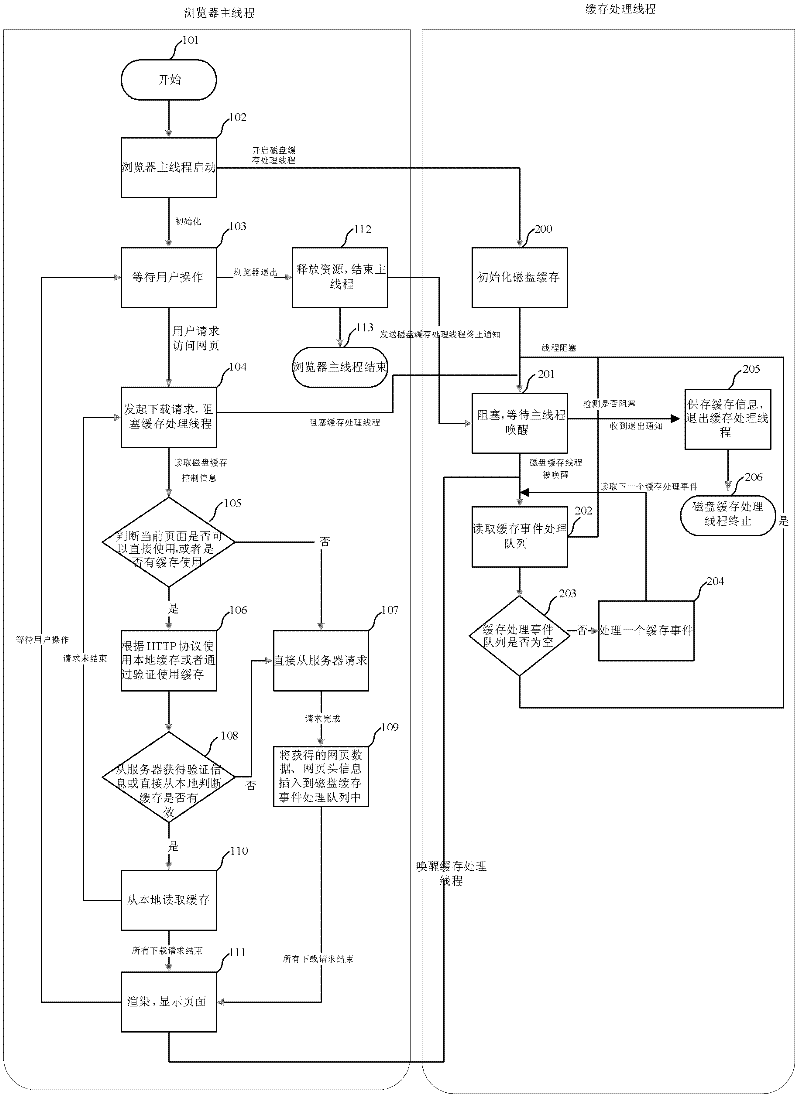

Disk caching method for embedded browser

Inactive | CN102479250A | Avoid distinction, Easy to read, Special data processing applications, Uniform resource locator, Web page

The invention provides a disk caching method for an embedded browser, in which the browser uses a disk as its caching medium. The method comprises the following steps: storing the webpage resources accessed by a user on the disk medium; establishing a mapping between each resource URL (uniform resource locator) and its disk cache data, and recording and maintaining that mapping on the disk medium; and, when a resource URL is accessed, querying the mapping. If a corresponding mapping value is found, the resource is determined to be on the disk and its data is read from the disk medium; otherwise, the required webpage resource data is downloaded from the server. Establishing the mapping comprises the following substeps: computing a hash value of the resource URL with a hash algorithm, and writing the key-value pair formed by the resource URL and the hash value into a disk cache retrieval table.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI
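The URL-to-hash mapping can be sketched with `hashlib`. Assumptions: SHA-1 is an arbitrary choice of hash algorithm, the hex digest doubles as the cache file name, `download` is a caller-supplied function, and the retrieval table lives in memory here, whereas the patent records it on the disk medium as well.

```python
import hashlib
import os


class BrowserDiskCache:
    """Hypothetical disk cache keyed by a hash of the resource URL."""

    def __init__(self, root):
        self.root = root
        self.table = {}  # disk cache retrieval table: URL -> hash key

    def _key(self, url):
        return hashlib.sha1(url.encode("utf-8")).hexdigest()

    def store(self, url, body: bytes):
        key = self._key(url)
        with open(os.path.join(self.root, key), "wb") as f:
            f.write(body)
        self.table[url] = key  # record the URL -> cache data mapping

    def fetch(self, url, download):
        key = self.table.get(url)
        if key is not None:
            with open(os.path.join(self.root, key), "rb") as f:
                return f.read()  # mapping found: read the resource from disk
        body = download(url)     # no mapping: download from the server
        self.store(url, body)
        return body
```

Hashing gives every URL a fixed-length, filesystem-safe name, which matters on embedded targets where URLs can be long and contain characters that are illegal in file names.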

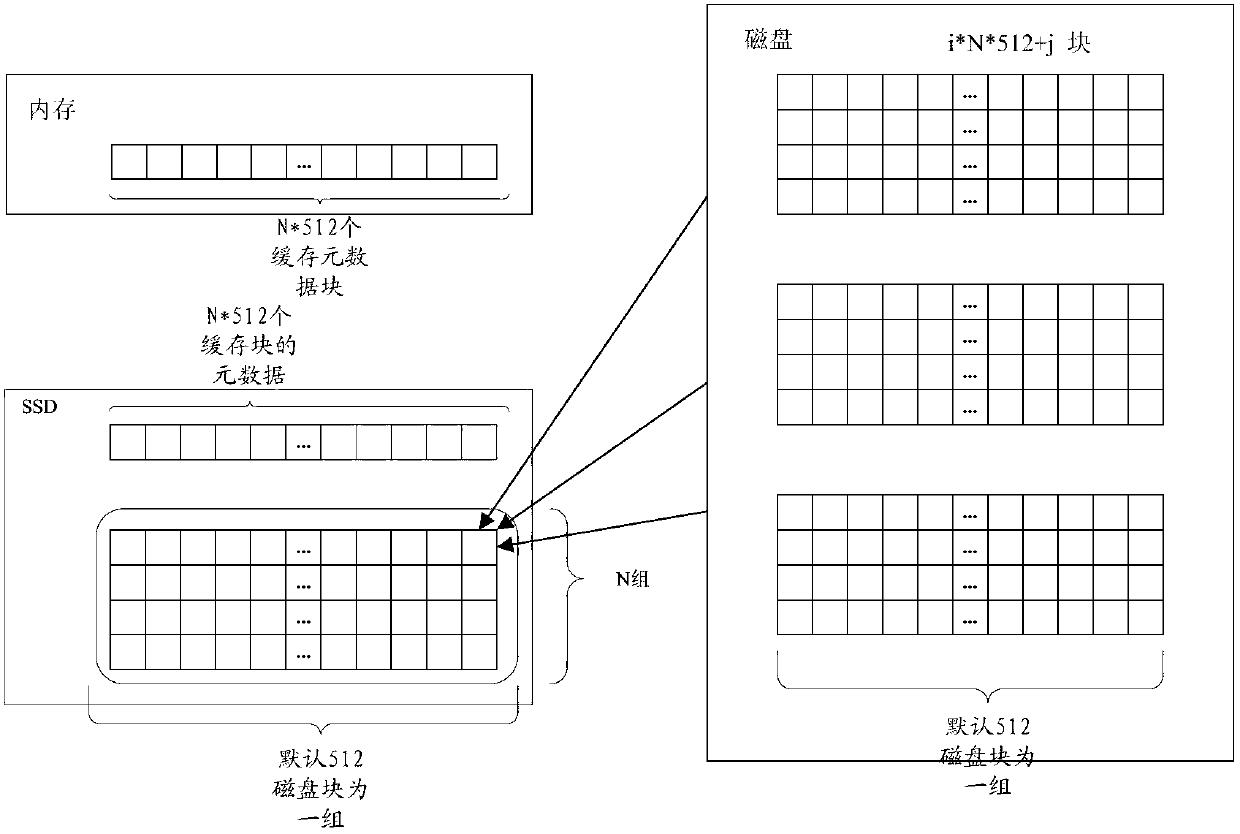

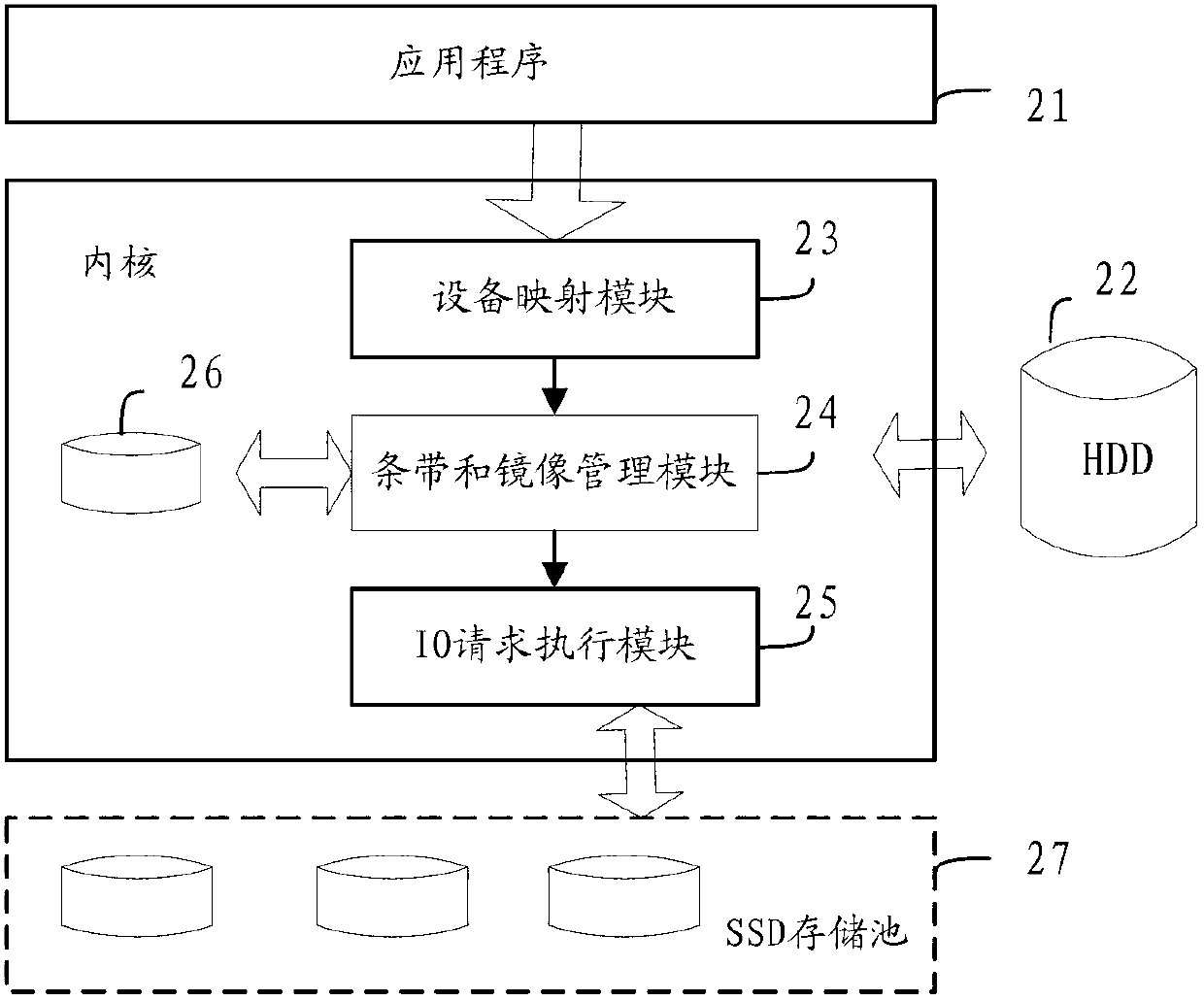

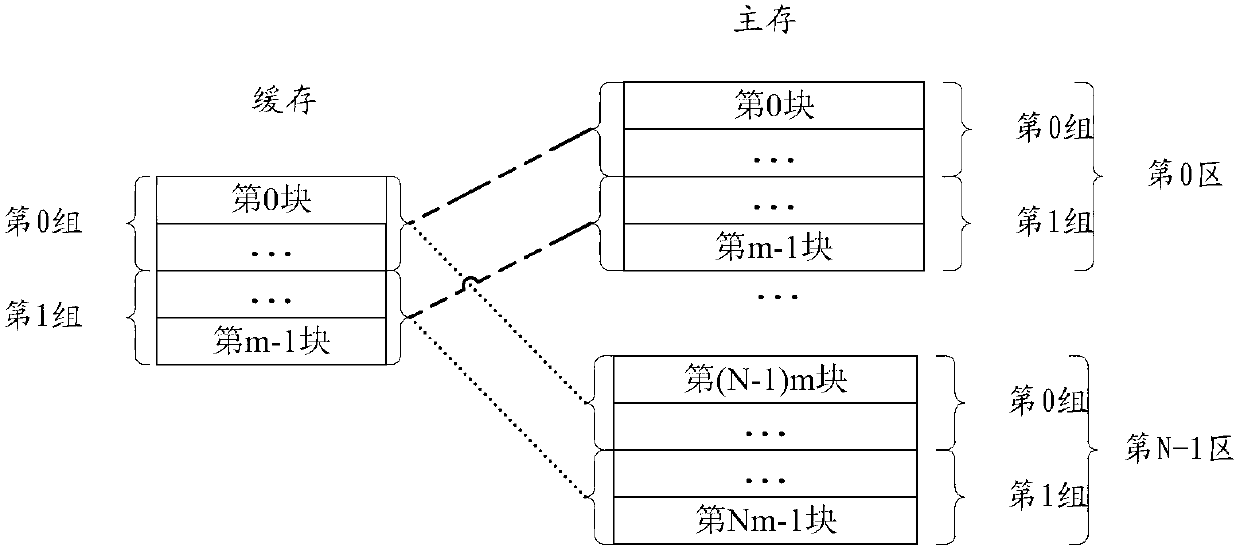

Multi-device mirror images and stripe function-providing disk cache method, device, and system

Active | CN102713828A | Improve read performance, Improve reliability, Input/output to record carriers, Memory addressing/allocation/relocation, Parallel processing, Cache management

The invention provides a method, a device, and a system for performing I/O operations on multiple cache devices. The method comprises the following steps: a cache management module reads cache block information containing at least two addresses, which point to at least two different SSD cache devices; and the cache management module initiates read operations on those SSD cache devices according to the addresses in the cache block information. By striping cache blocks across cache devices, embodiments of the invention process I/O on multiple devices in parallel and improve cache block read performance; by adding mirror copies of dirty cache blocks, they improve data reliability.

Owner:HUAWEI TECH CO LTD
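Striped reads and mirrored dirty blocks can be sketched with `concurrent.futures`. Assumptions: `SSDCacheDevice` is a hypothetical dict-backed device, a cache block is described as a list of `(device_index, address)` stripes, and stripes are concatenated in listed order.

```python
from concurrent.futures import ThreadPoolExecutor


class SSDCacheDevice:
    """Hypothetical SSD cache device backed by a dict."""

    def __init__(self):
        self.blocks = {}

    def read(self, addr):
        return self.blocks[addr]

    def write(self, addr, data):
        self.blocks[addr] = data


def read_striped(devices, cache_block):
    """cache_block: list of (device_index, address) stripes on at least two devices."""
    with ThreadPoolExecutor(max_workers=len(cache_block)) as pool:
        # Issue one read per stripe concurrently, then join them in stripe order.
        parts = pool.map(lambda s: devices[s[0]].read(s[1]), cache_block)
        return b"".join(parts)


def write_dirty_mirrored(devices, data, primary, mirror, addr):
    # A dirty block exists only in the cache, so keep a second copy on another device.
    devices[primary].write(addr, data)
    devices[mirror].write(addr, data)
```

Mirroring is applied only to dirty blocks because clean blocks can always be re-read from the backing disk if a cache device fails.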

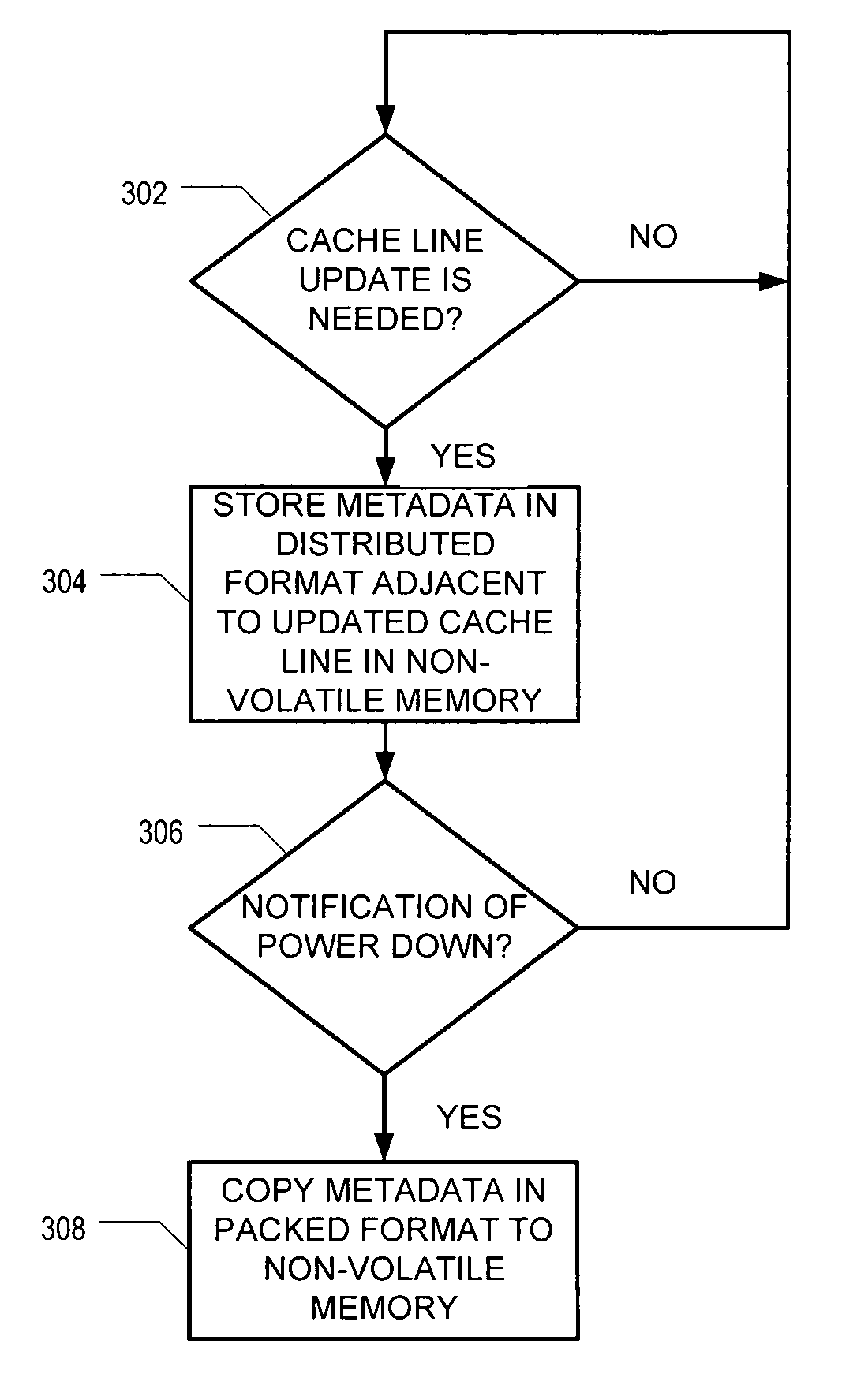

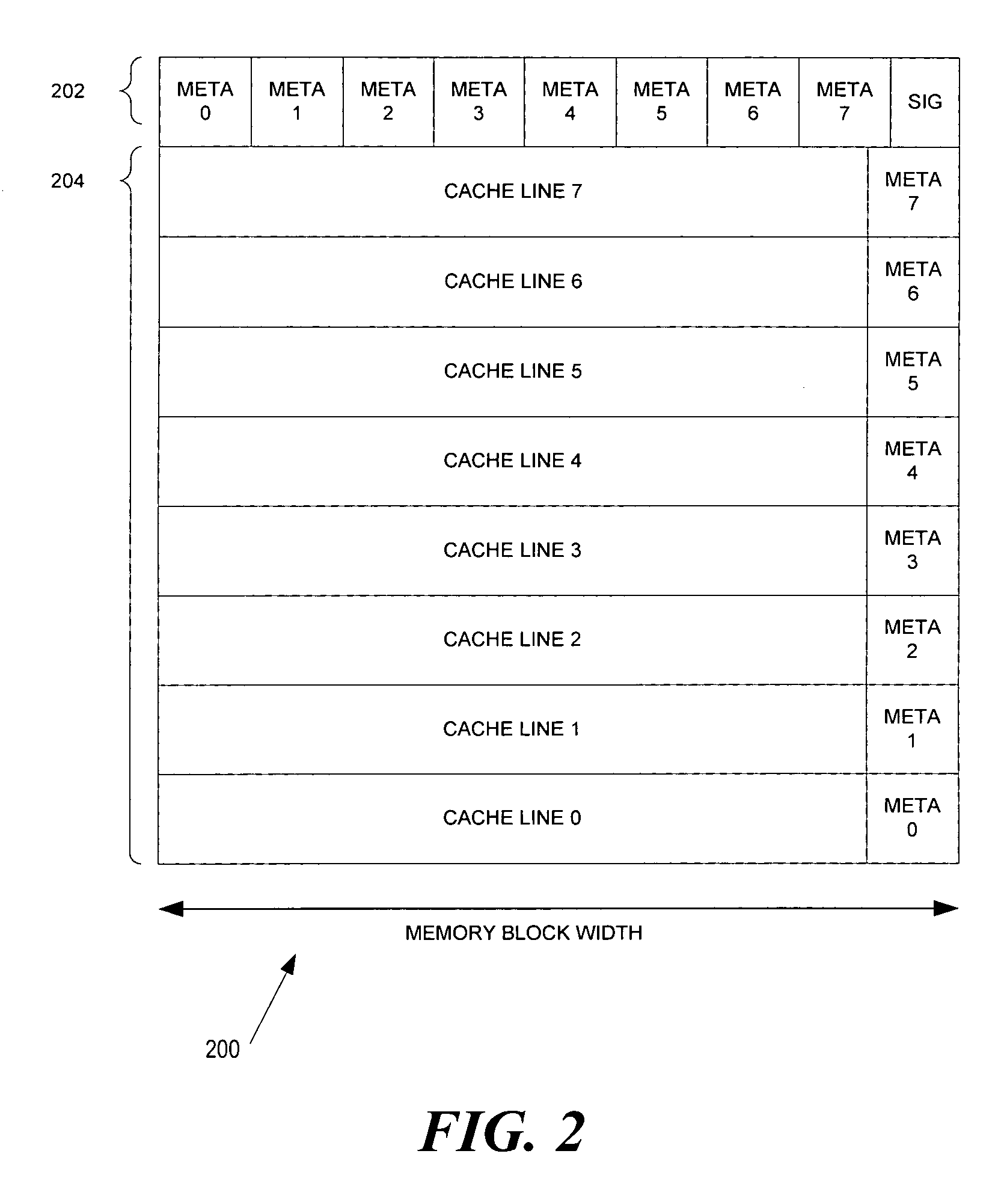

Distributed and packed metadata structure for disk cache

Owner:INTEL NDTM US LLC

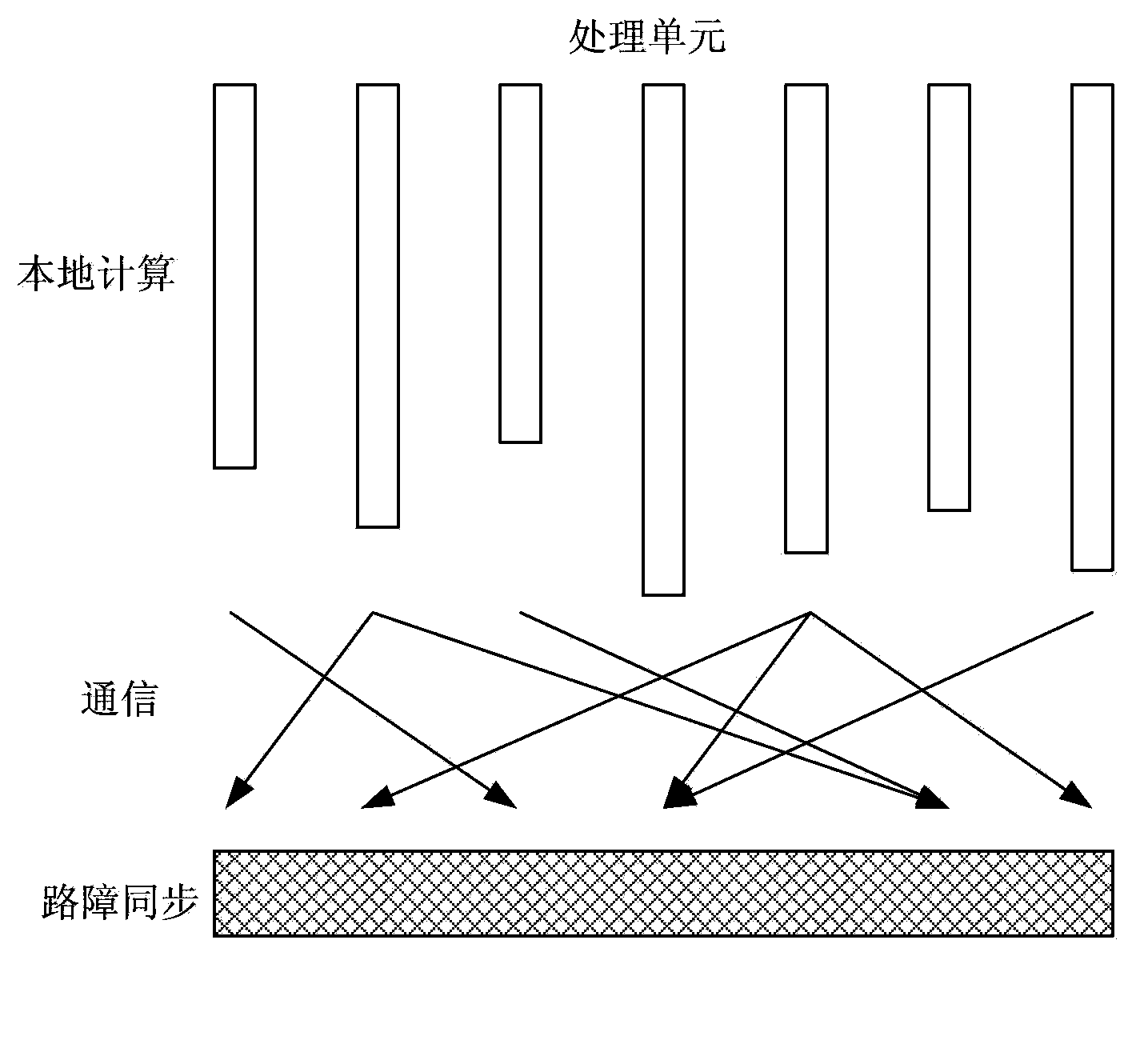

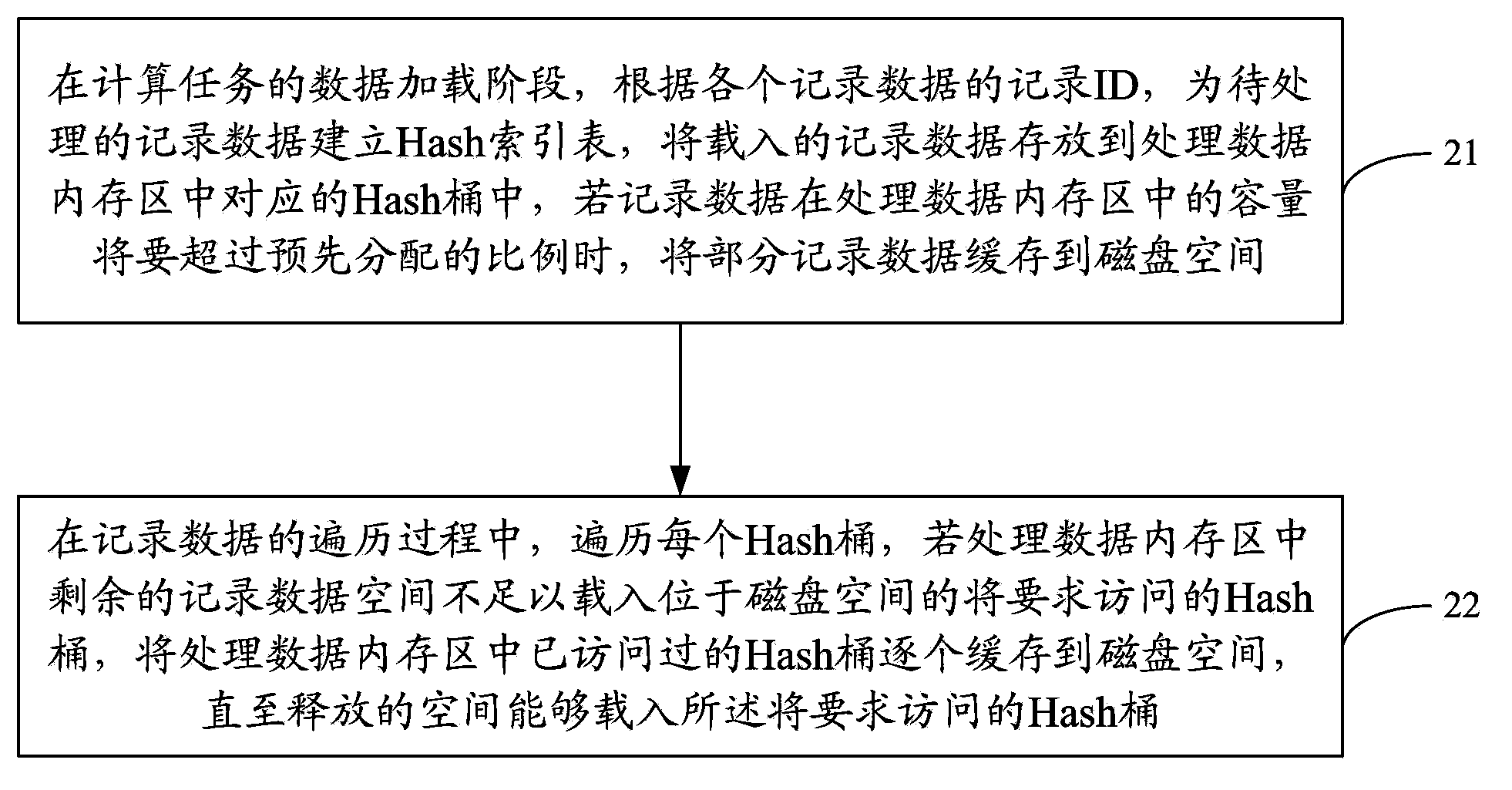

Disk cache method and device in parallel computer system

Active | CN103914399A | Avoid problems with throwing exceptions or even job failures, Guaranteed uptime, Memory addressing/allocation/relocation, Parallel computing, Information data

The invention provides a disk caching method and device for a parallel computer system. The method comprises: pre-assigning the shares of a data processing memory area to be occupied by record data and by information data; during data loading, when the record data in the data processing memory area is about to exceed its pre-assigned share, caching part of the record data to disk space in units of hash buckets; and, when a computation task traverses the record data, if a hash bucket to be accessed is in disk space and the remaining record data space in the data processing memory area is insufficient to load it, caching hash buckets from the data processing memory area to disk space one by one until the freed space can hold the hash bucket to be accessed. The method and device enable automatic caching of data to disk in a parallel iterative computation system based on the BSP (bulk synchronous parallel) model.

Owner:CHINA MOBILE COMM GRP CO LTD

P2P technique based video on-demand program caching method

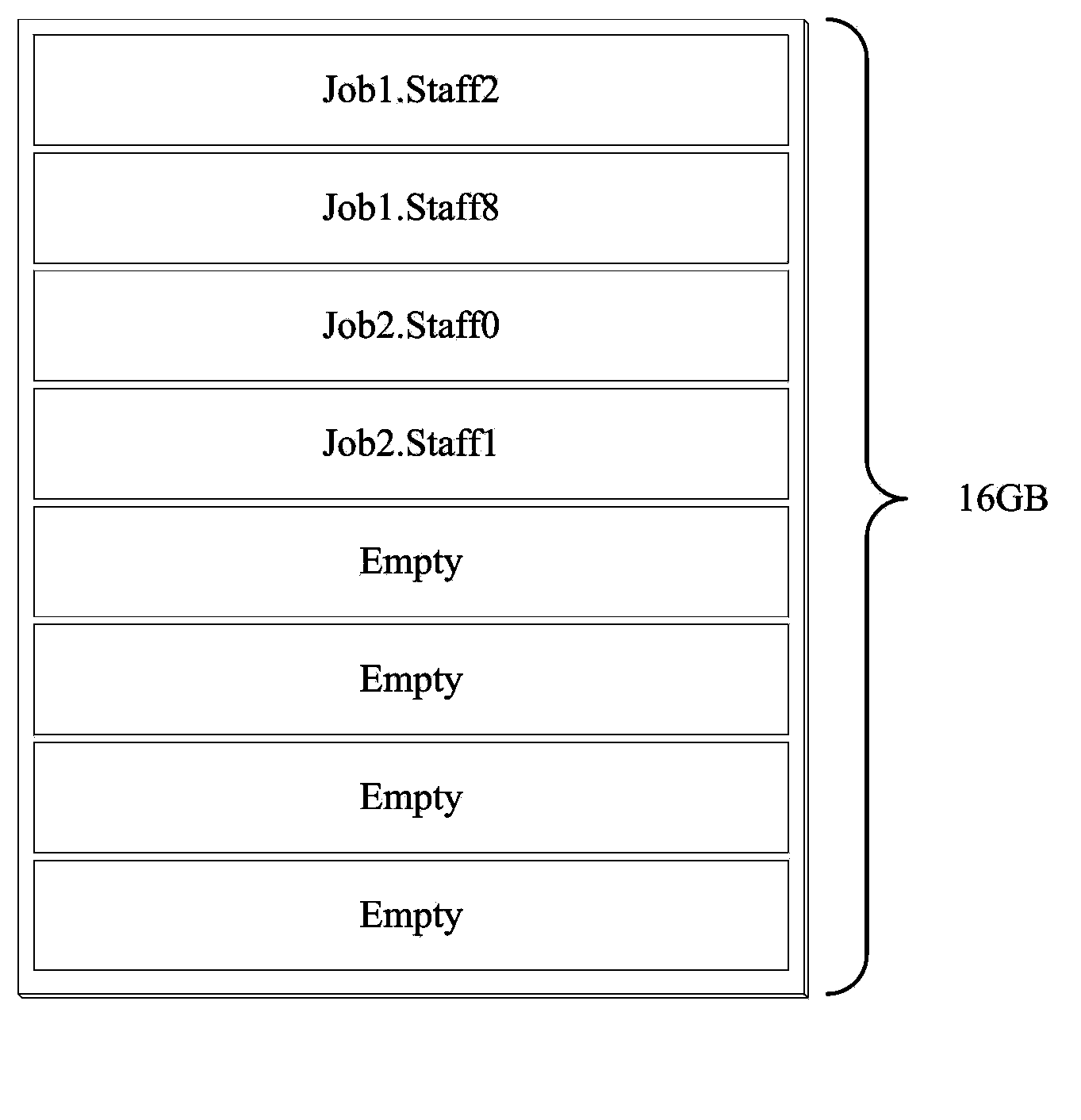

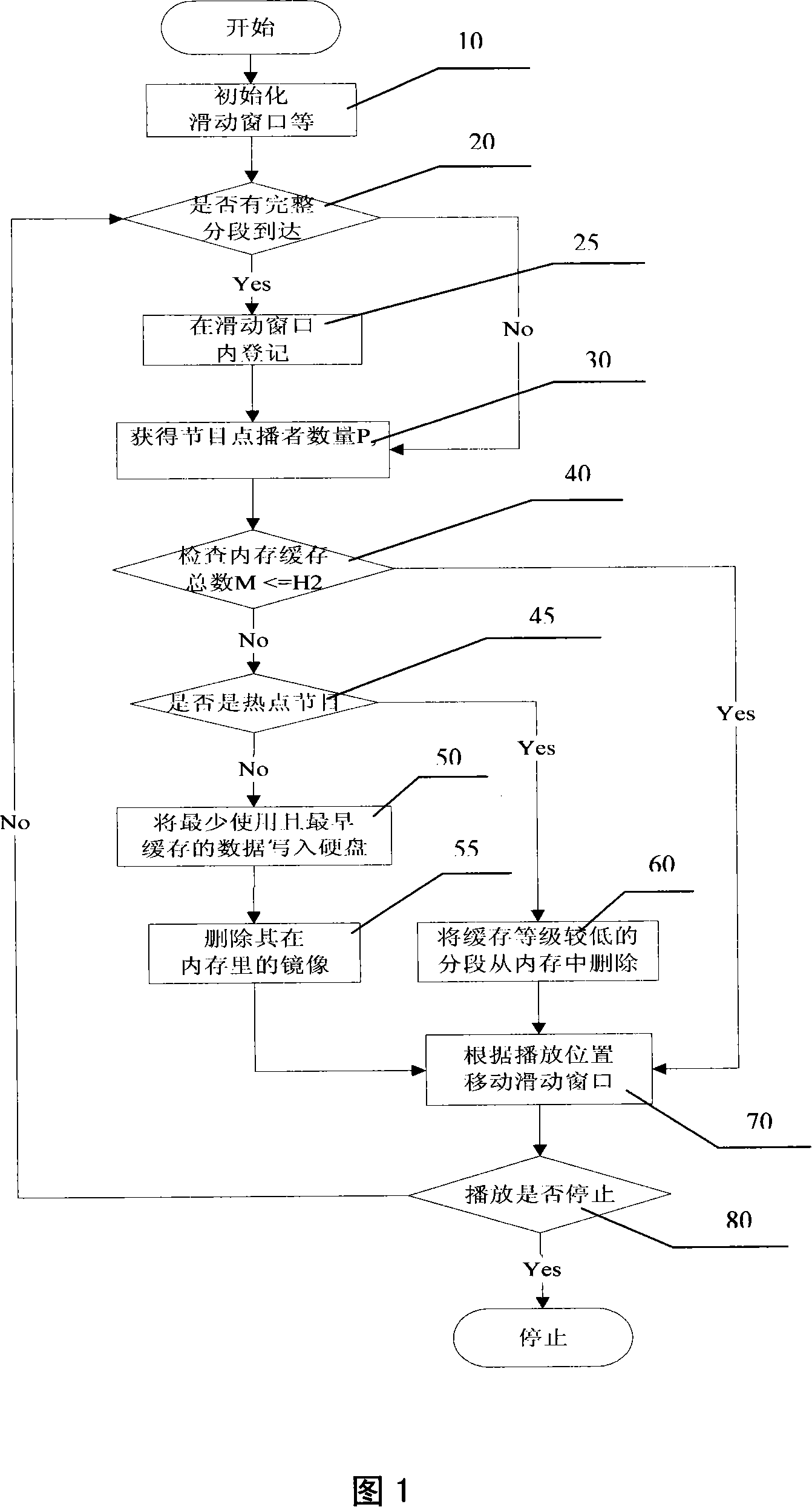

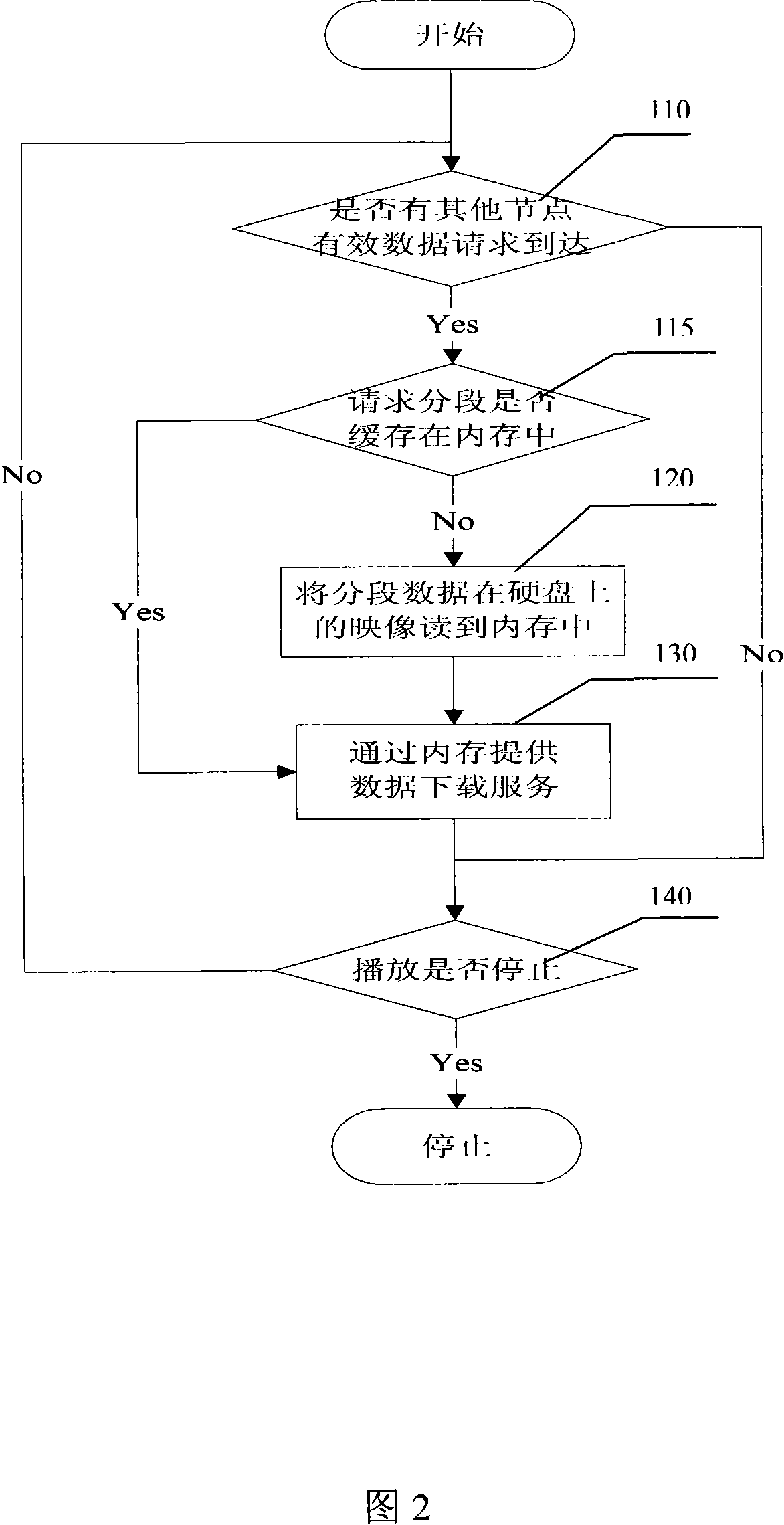

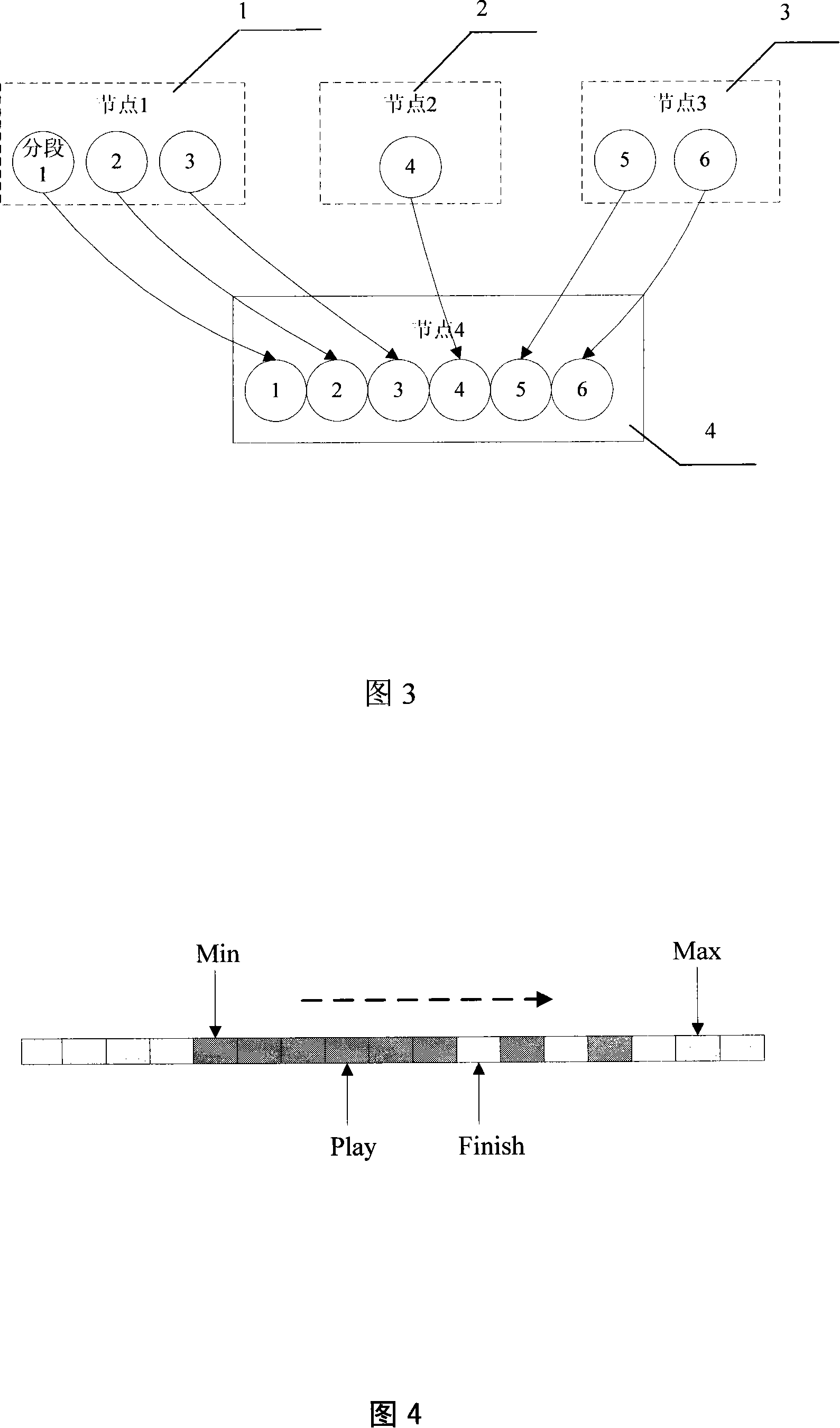

Inactive | CN101141623A | Reduce the number of reads and writes, Do not need to read frequently, Two-way working systems, Internal memory, Video on demand

The present invention provides a buffering method for video-on-demand programs. The method comprises: a step of obtaining the number of demanders of a program, that is, the number of nodes requesting the program; a step of judging, according to that number, whether the requested program is a hot program; and a step of writing non-hot programs to the hard disk, in which the least-used, earliest-buffered data is written to the hard disk. Because non-hot programs are buffered using a combination of internal memory and the hard disk, the number of hard disk reads and writes is greatly reduced and better user satisfaction is achieved. For hot programs, the invention buffers the scarce resources in the network directly in internal memory; this improves buffering efficiency, and because hot programs are never buffered on the hard disk, the disk does not need to be read frequently.

Owner:HUAZHONG UNIV OF SCI & TECH
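The hot/non-hot split can be sketched with an `OrderedDict` kept in least-recently-used order. Assumptions, all hypothetical: "hot" means at least `hot_threshold` distinct requesting nodes, memory holds `memory_capacity` programs, and if only hot programs remain the sketch lets memory overflow rather than write them to disk.

```python
from collections import OrderedDict


class VodCache:
    """Hypothetical VoD buffer: hot programs stay in memory, non-hot ones may go to disk."""

    def __init__(self, hot_threshold, memory_capacity):
        self.hot_threshold = hot_threshold  # demanders needed to count as "hot" (assumed)
        self.memory_capacity = memory_capacity
        self.memory = OrderedDict()  # program -> data, least recently used first
        self.disk = {}
        self.demanders = {}          # program -> set of requesting nodes

    def is_hot(self, program):
        return len(self.demanders.get(program, ())) >= self.hot_threshold

    def on_demand(self, program, node, data):
        self.demanders.setdefault(program, set()).add(node)
        self.memory[program] = data
        self.memory.move_to_end(program)  # mark as most recently used
        while len(self.memory) > self.memory_capacity:
            # Write the least recently used non-hot program to the hard disk.
            victim = next((p for p in self.memory if not self.is_hot(p)), None)
            if victim is None:
                break  # only hot programs left: keep them in memory (assumption)
            self.disk[victim] = self.memory.pop(victim)
```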

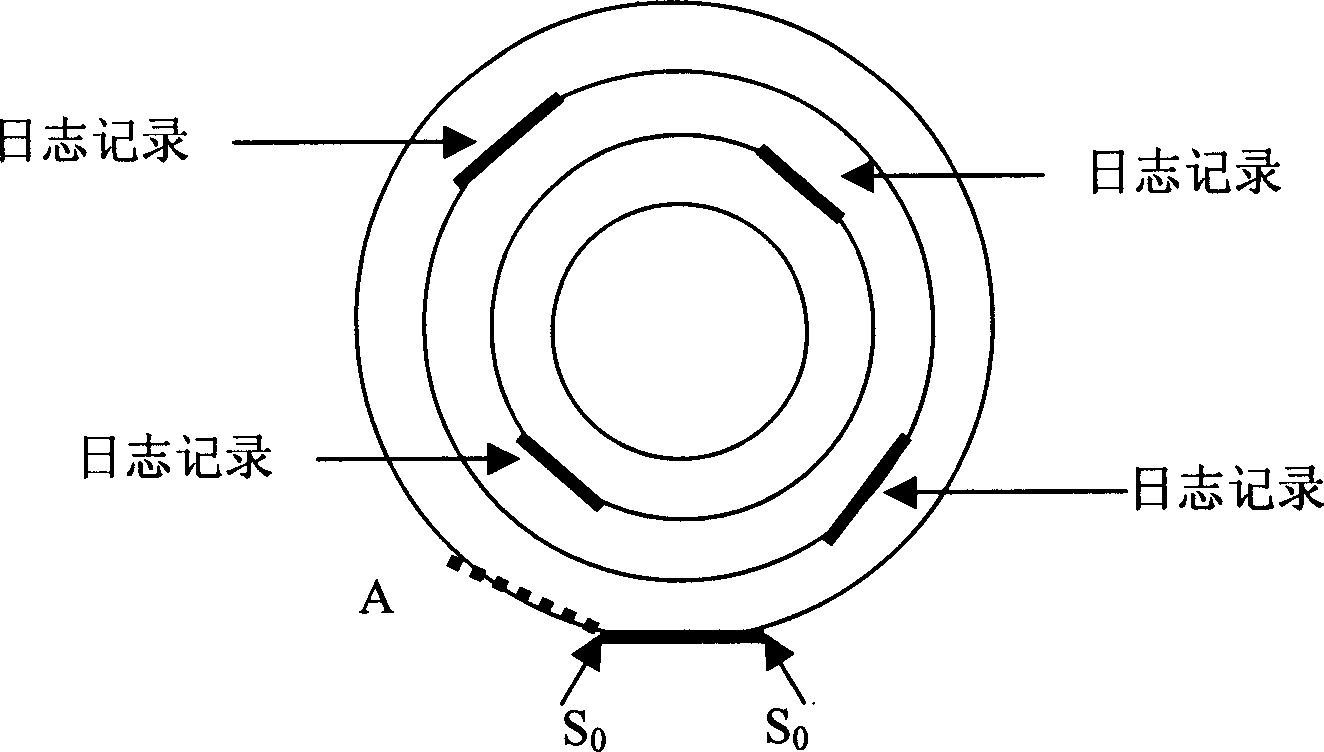

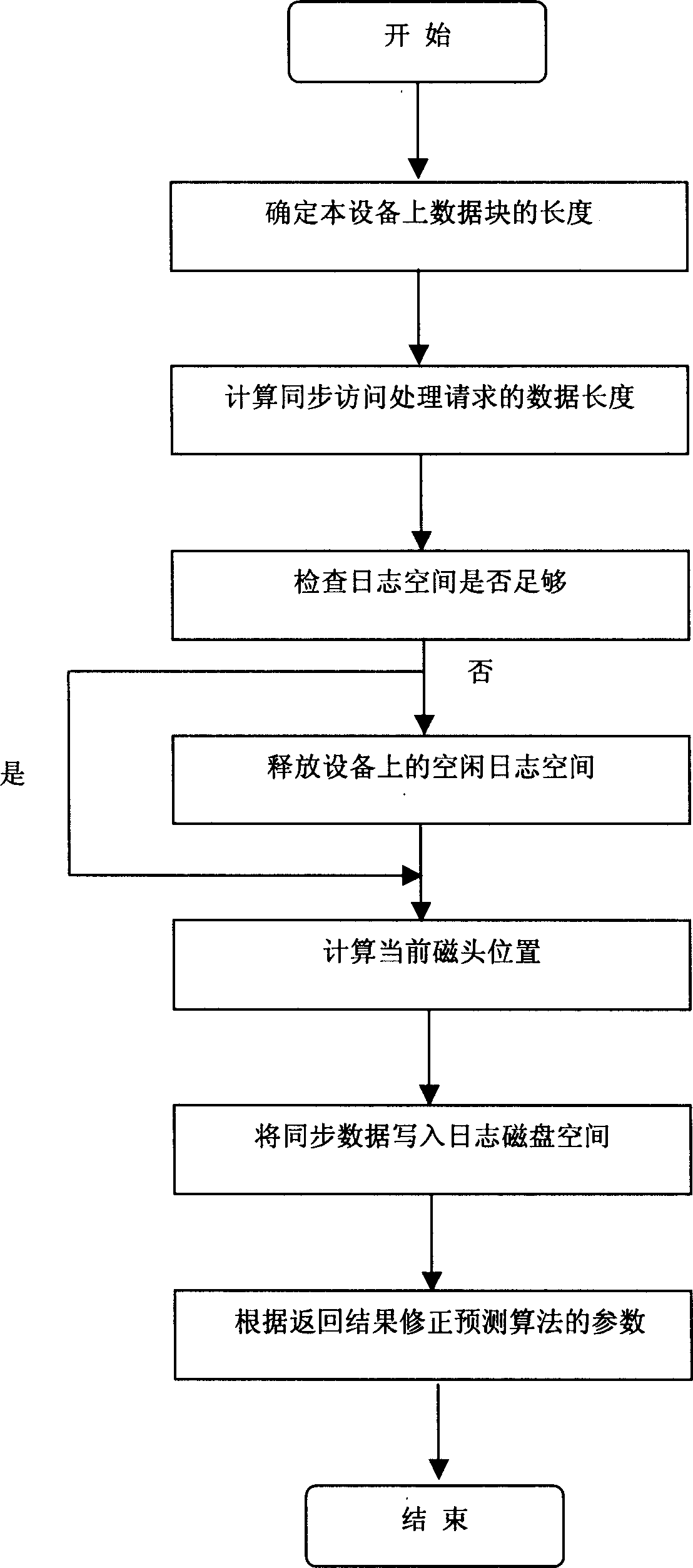

Fast synchronous and high performance journal device and synchronous writing operation method

Inactive | CN1564138A | Improve performance, Low cost, Memory addressing/allocation/relocation, SCSI, Computer architecture

A small-capacity, high-performance journal disk is used as a disk buffer to accelerate synchronous disk writes in a three-layer storage structure of memory, journal disk, and data disk. When the operating system executes a synchronous write, the method converts it into a synchronous write to the journal disk plus an asynchronous write through the system buffer, which speeds up synchronous writes. Rotational delay is largely eliminated by predicting the position of the magnetic head, so that each write command is executed at the location just ahead of the moving head. The method also parks the head on a free track in advance, so in most cases seek overhead is eliminated as well. The journal disk is attached through SCSI to improve write performance.

Owner:TSINGHUA UNIV

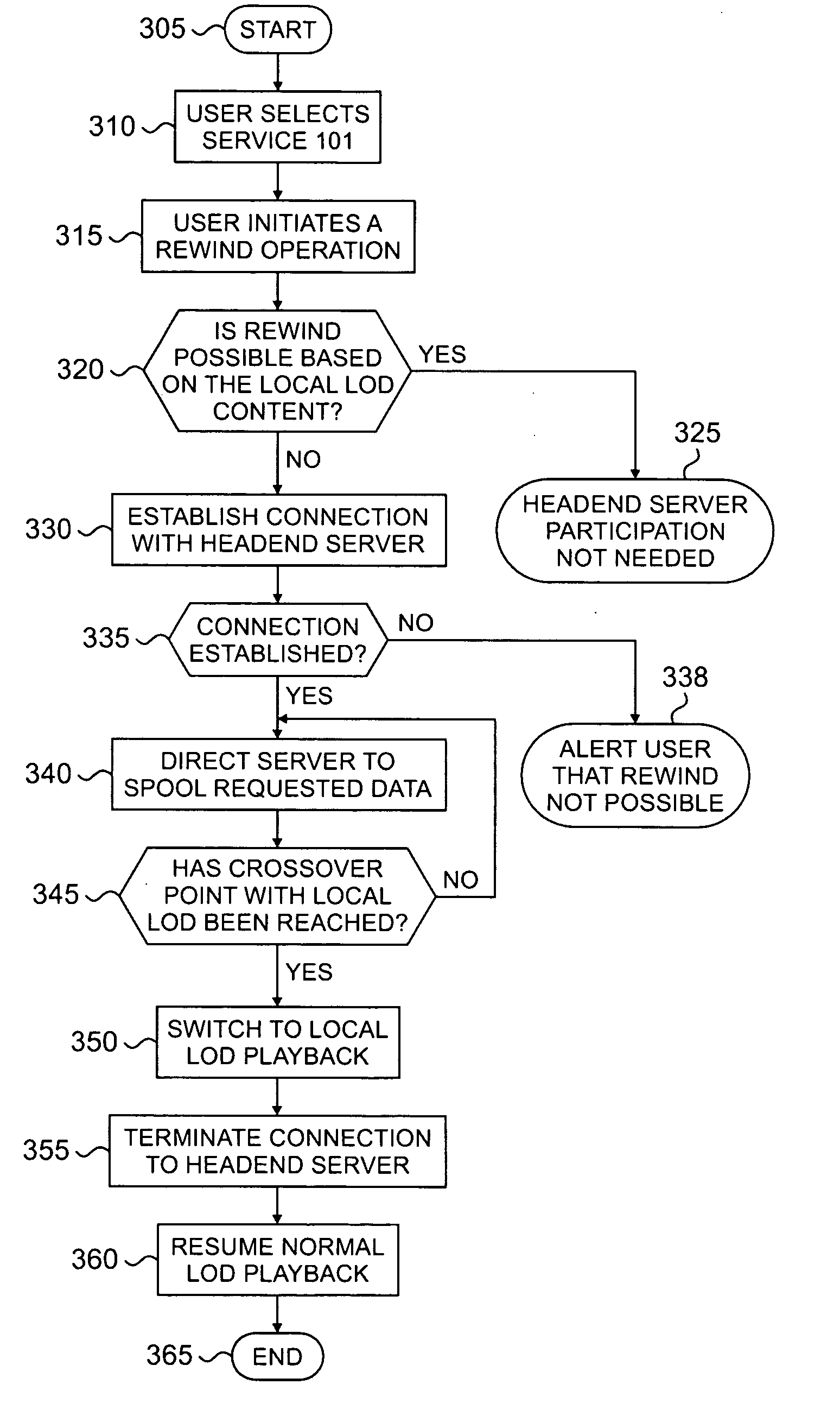

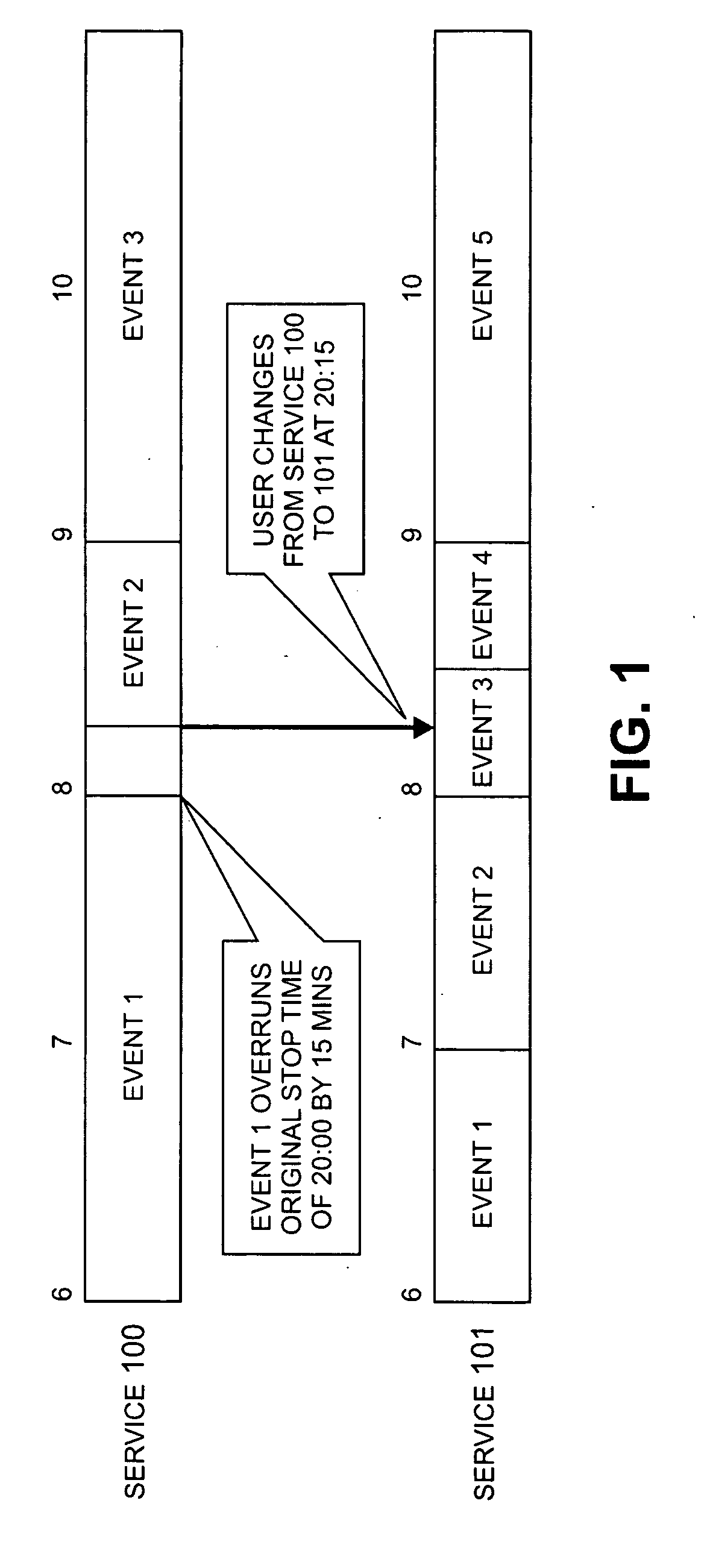

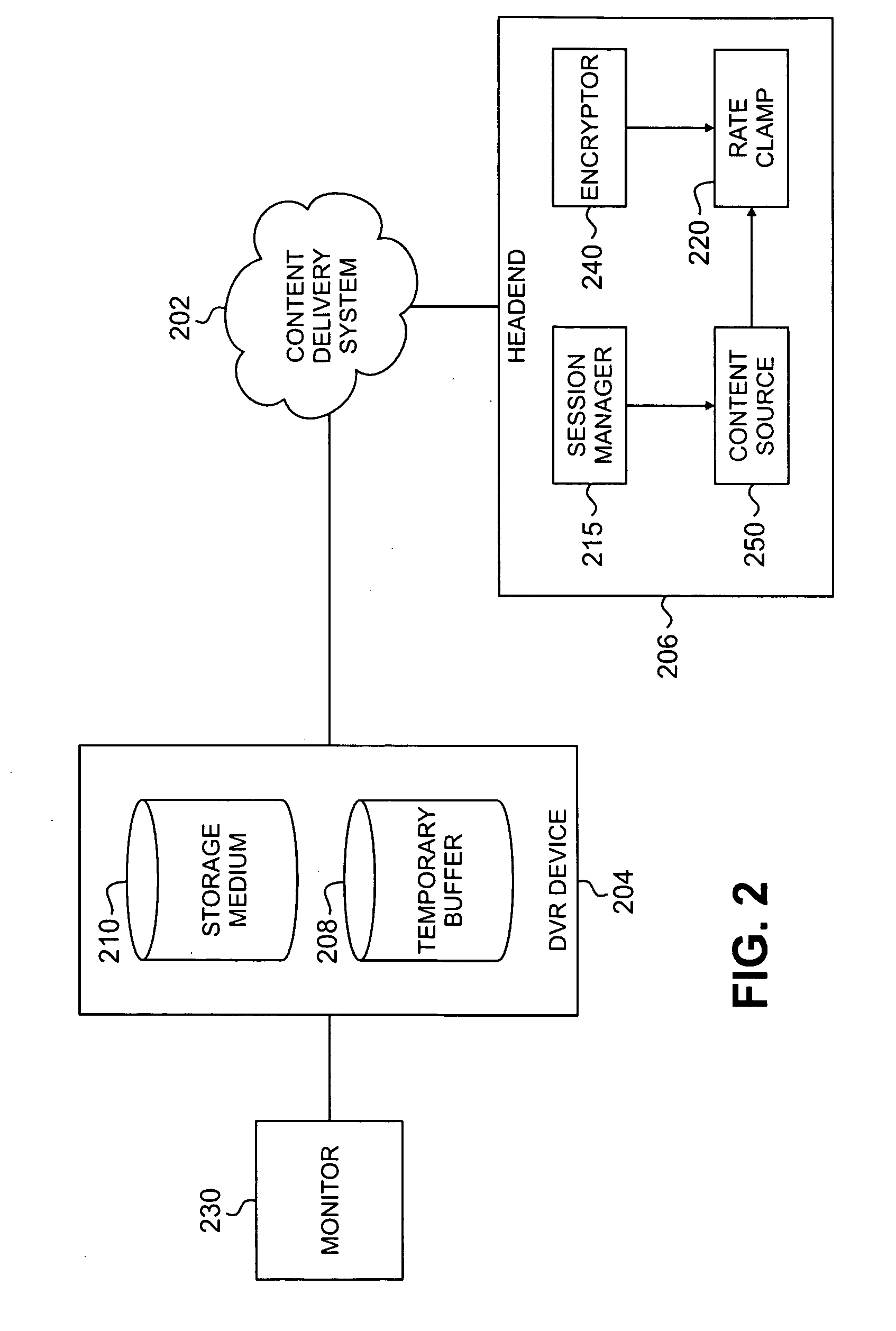

Digital video recorder having live-off-disk buffer for receiving missing portions of buffered events

Active | US20100115574A1 | Analogue secrecy/subscription systems, Two-way working systems, Digital video, World Wide Web

In accordance with one aspect of the invention, a method of receiving content over a content delivery system is provided. The method includes receiving a user request to initiate a rewind operation on content locally available in a live-off-disk (LOD) buffer. The content is associated with an event received over a content delivery system. If the entire event is not available in the LOD buffer, a message is sent over the content delivery system to a headend, requesting the remaining content associated with the unreceived portion of the event. In response to the message, the remaining content is received from the headend over the content delivery system.

Owner:ARRIS ENTERPRISES INC

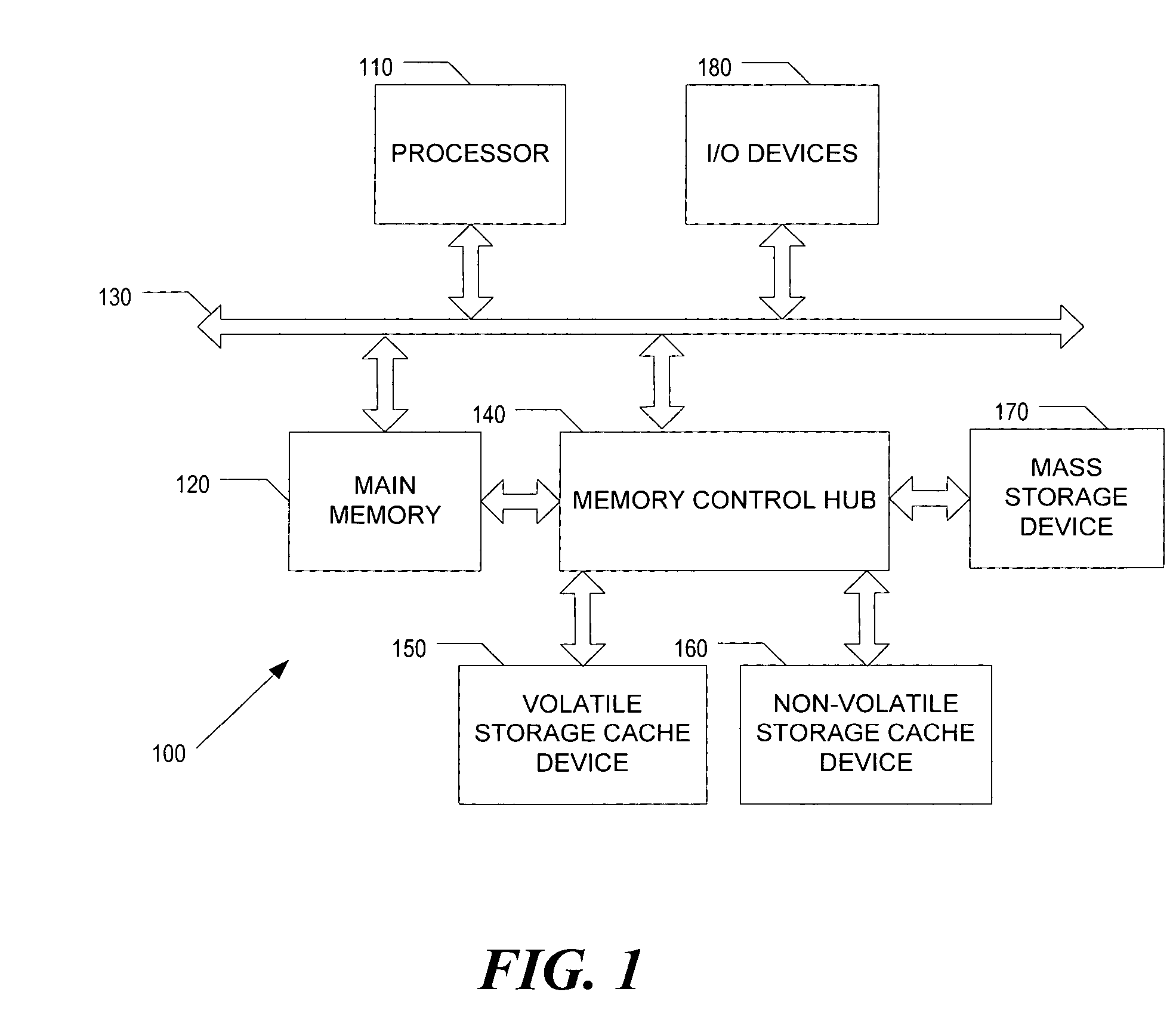

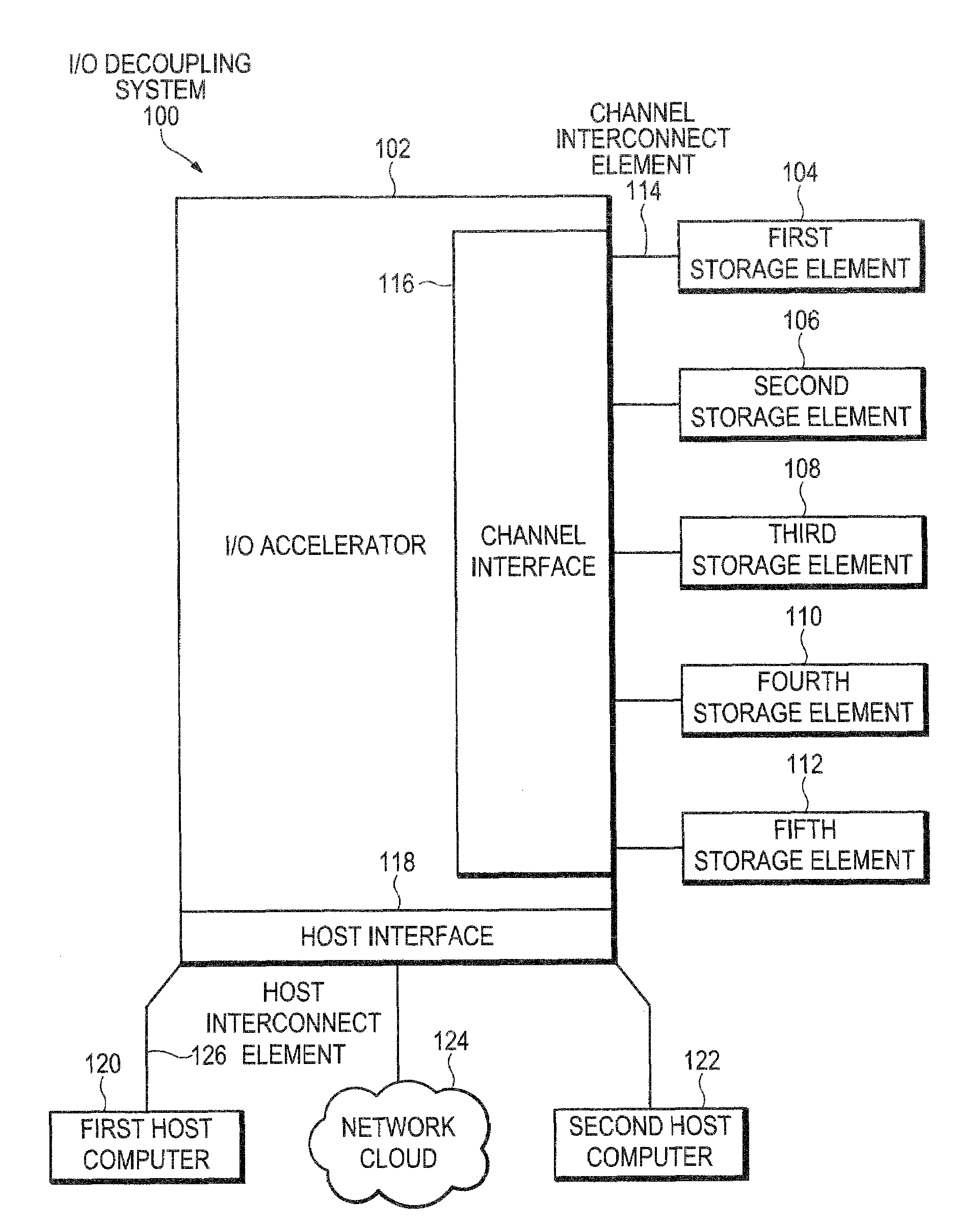

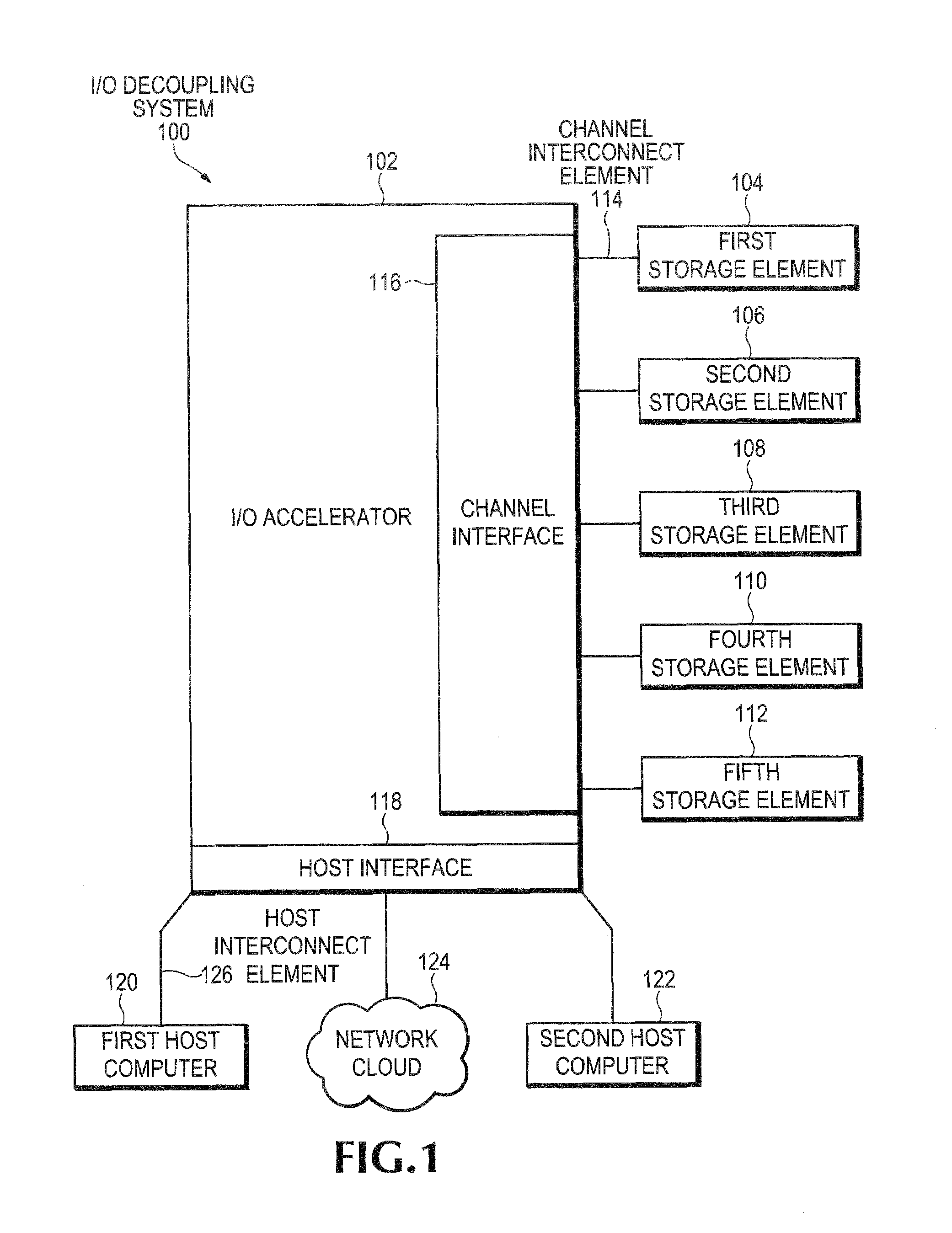

Input/output decoupling system method having a cache for exchanging data between non-volatile storage and plurality of clients having asynchronous transfers

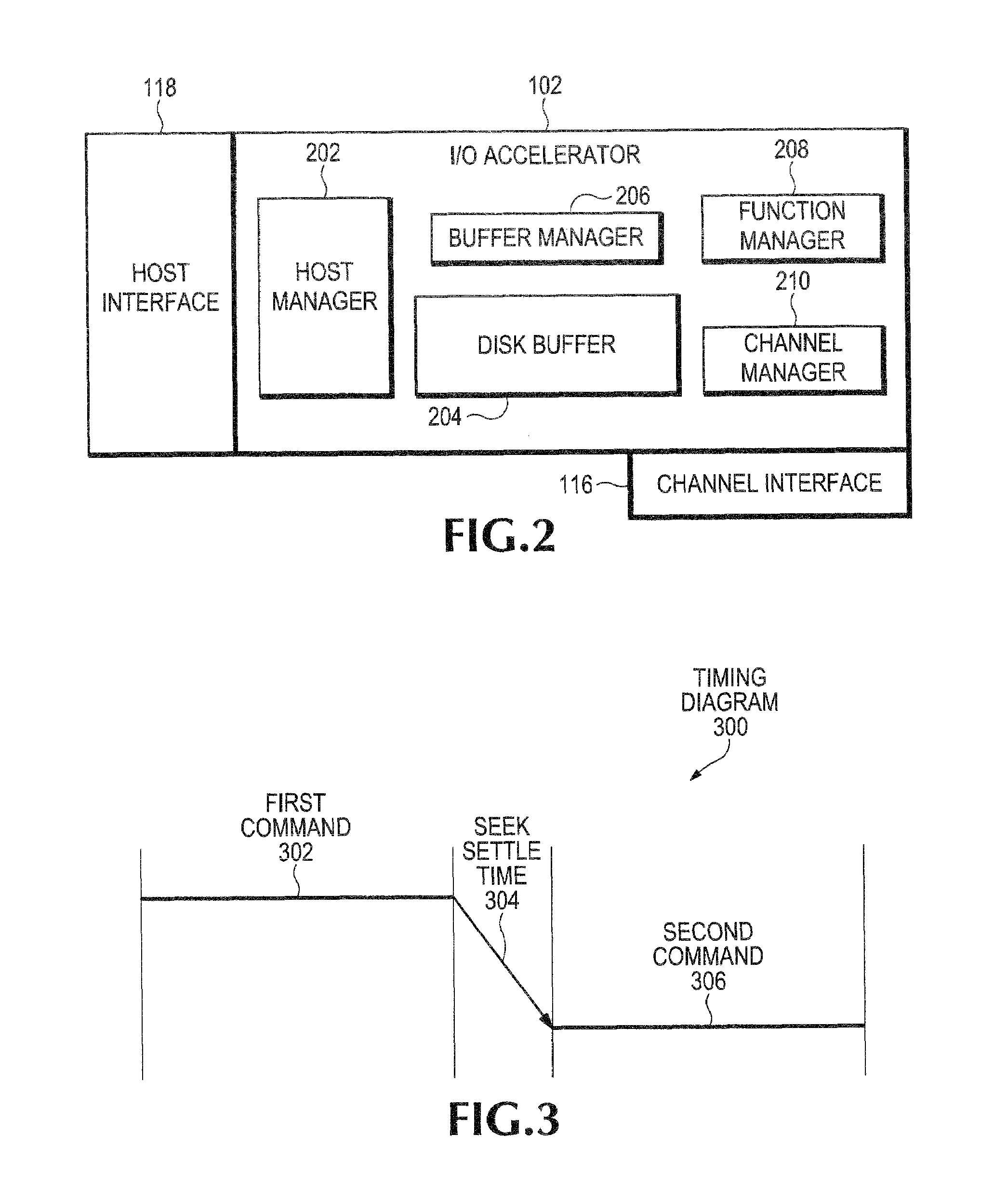

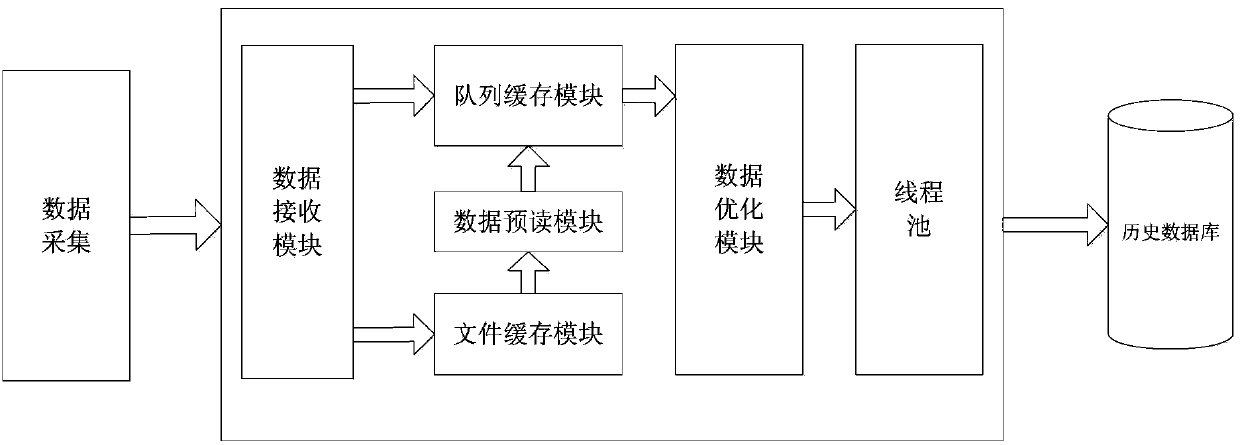

An I/O decoupling system comprising an I/O accelerator coupled between a host interface and a channel interface, wherein the I/O accelerator comprises a host manager, a buffer manager, a function manager, and a disk buffer. The host manager is coupled to the host interface to receive requests from a connected host computer. In response to a request, the function manager allocates the disk buffer and determines a threshold offset for it, while coordinating the movement of data into the disk buffer through the channel interface coupled to the disk buffer.

Owner:SILICON VALLEY BANK +1

Explosive type data caching and processing system for SCADA system and method thereof

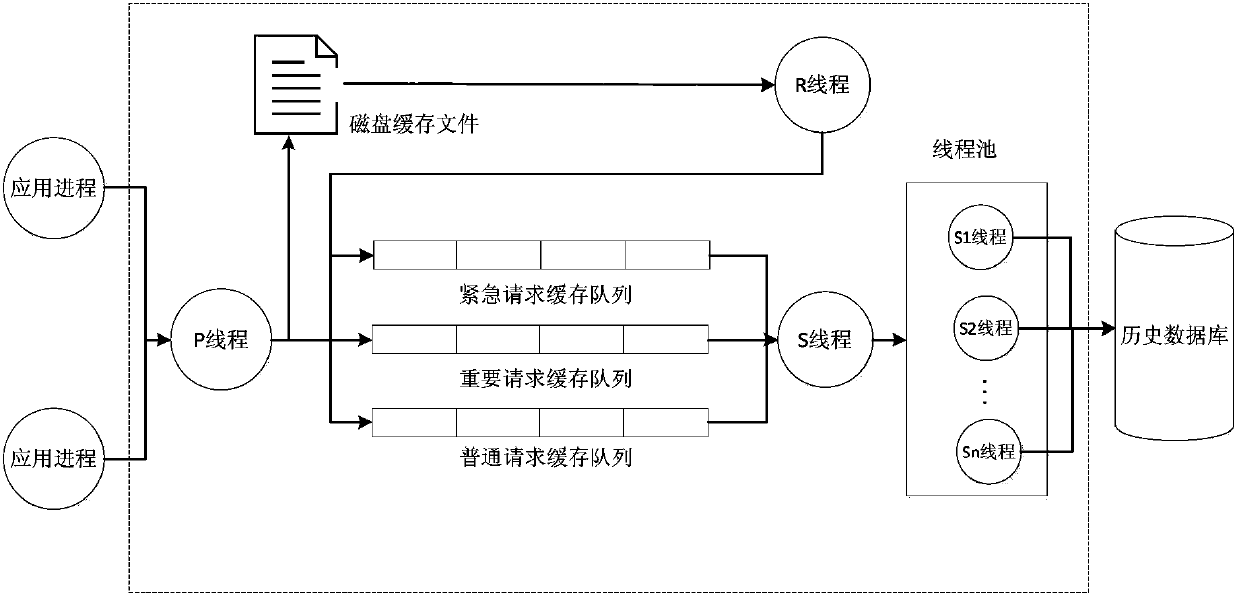

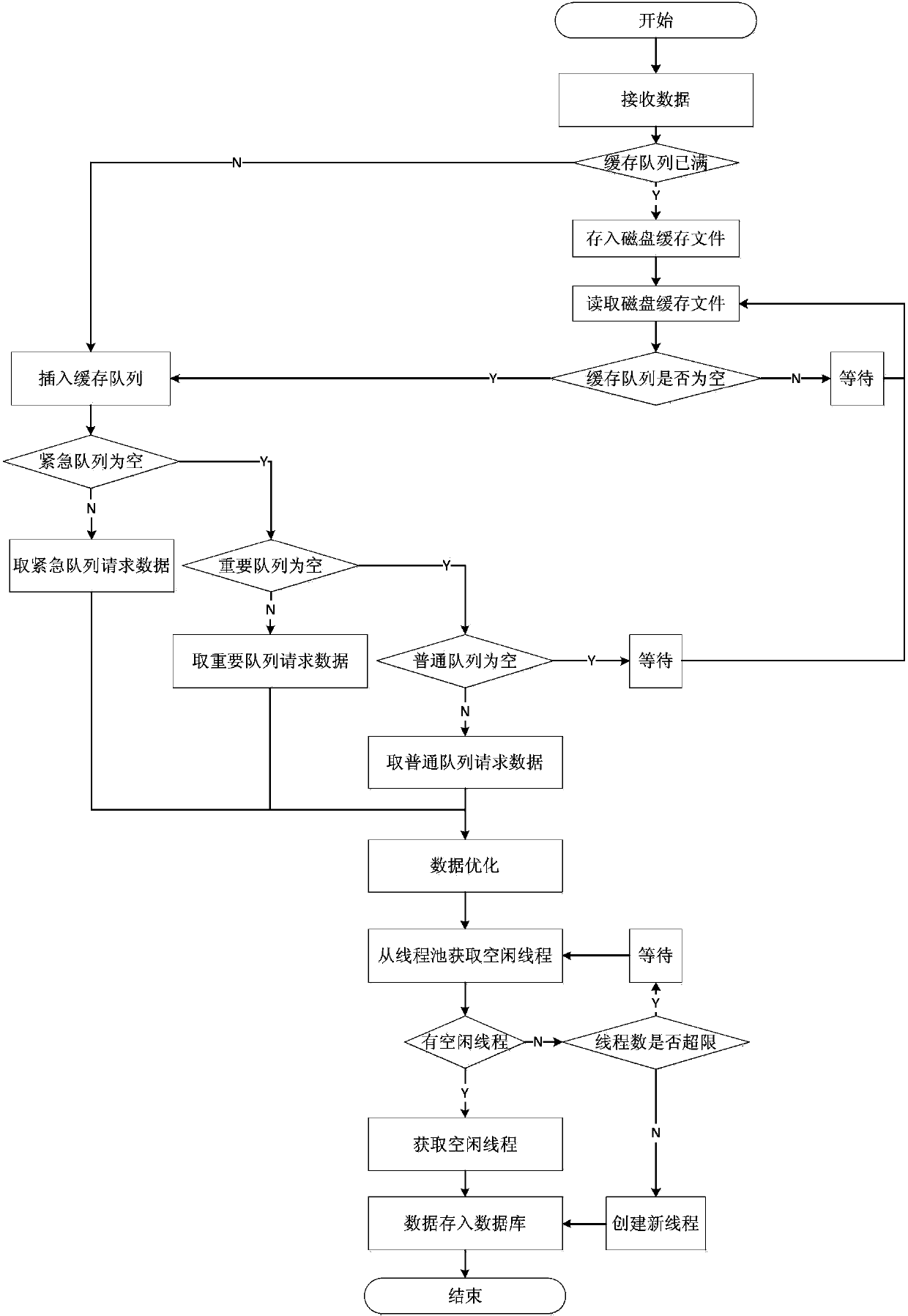

Inactive | CN103399856A | Fix security issues, Ensure safety, Special data processing applications, SCADA, Application software

The invention discloses an explosive-type (burst) data caching and processing system for a SCADA system, and a corresponding method. The system comprises a data receiving module, a file caching module, a data pre-reading module, a queue caching module, a data optimization module, and a thread pool module. The data receiving module receives data storage request messages sent by application programs and, depending on the state of the caching queue, places each message either in a disk caching file of the file caching module or in the caching queue. The data pre-reading module moves data storage requests stored in the file caching module into the caching queue. The data optimization module reads and optimizes the data storage requests and then calls an idle thread in the thread pool to perform the data storage operation. The system and method address the safety of both the data and the SCADA system when massive numbers of data storage requests are submitted.

Owner:BEIJING KEDONG ELECTRIC POWER CONTROL SYST
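The queue-or-spool behaviour can be sketched as a bounded in-memory queue that overflows to a JSON-lines disk file, a pre-reader that refills the queue from that file, and a thread pool that executes queued requests. Everything here is hypothetical: `BurstBuffer`, `queue_limit`, the JSON-lines spool format, and the caller-supplied `store` function. The data optimization step is omitted.

```python
import json
import os
from collections import deque
from concurrent.futures import ThreadPoolExecutor


class BurstBuffer:
    """Hypothetical burst buffer: bounded queue first, disk spool file on overflow."""

    def __init__(self, spool_path, queue_limit):
        self.queue = deque()
        self.queue_limit = queue_limit
        self.spool_path = spool_path

    def receive(self, request):
        # Choose the caching queue or the disk caching file based on the queue state.
        if len(self.queue) < self.queue_limit:
            self.queue.append(request)
        else:
            with open(self.spool_path, "a", encoding="utf-8") as f:
                f.write(json.dumps(request) + "\n")

    def preread(self):
        """Move spooled requests back into the queue while it has room."""
        if not os.path.exists(self.spool_path):
            return
        with open(self.spool_path, encoding="utf-8") as f:
            lines = f.readlines()
        room = self.queue_limit - len(self.queue)
        for line in lines[:room]:
            self.queue.append(json.loads(line))
        with open(self.spool_path, "w", encoding="utf-8") as f:
            f.writelines(lines[room:])  # keep only what did not fit

    def drain(self, store, workers=4):
        """Execute all queued and spooled requests on a thread pool."""
        with ThreadPoolExecutor(max_workers=workers) as pool:
            while True:
                batch = list(self.queue)
                self.queue.clear()
                if not batch:
                    self.preread()
                    if not self.queue:
                        return  # queue and spool file are both empty
                    continue
                list(pool.map(store, batch))  # idle threads perform the storage operations
```

Spooling to disk instead of rejecting requests is what keeps a sudden burst from either dropping data or exhausting memory.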

File system having predictable real-time performance

Inactive | US8046558B2 | Improve abilities, Time required, Digital data information retrieval, Special data processing applications, Quality of service, Non real time

A file system that permits predictable access to file data stored on devices whose access latency may vary with the physical location of the file on the storage device. A variety of features guarantee timely, real-time responses to file system I/O requests that specify deadlines or other required quality-of-service parameters. The file system accommodates storage devices, such as disks, whose access time depends on the physical location of the data within the device. A two-phase, deadline-driven scheduler considers the impact of disk seek time on overall response times. Non-real-time file operations may be preempted. Files may be preallocated to help avoid access delays caused by non-contiguous storage. Disk buffers may also be preallocated to improve real-time file system performance.

Owner:THE RES FOUND OF STATE UNIV OF NEW YORK

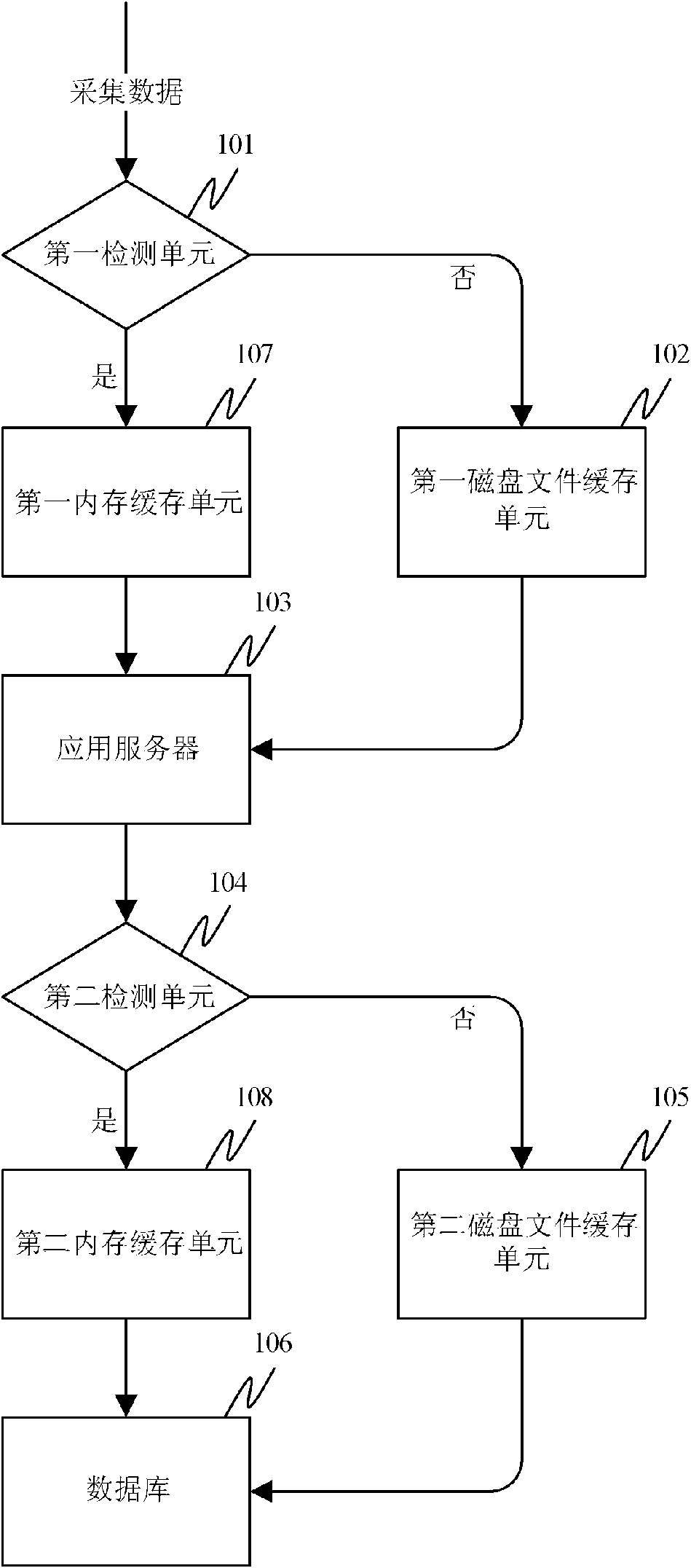

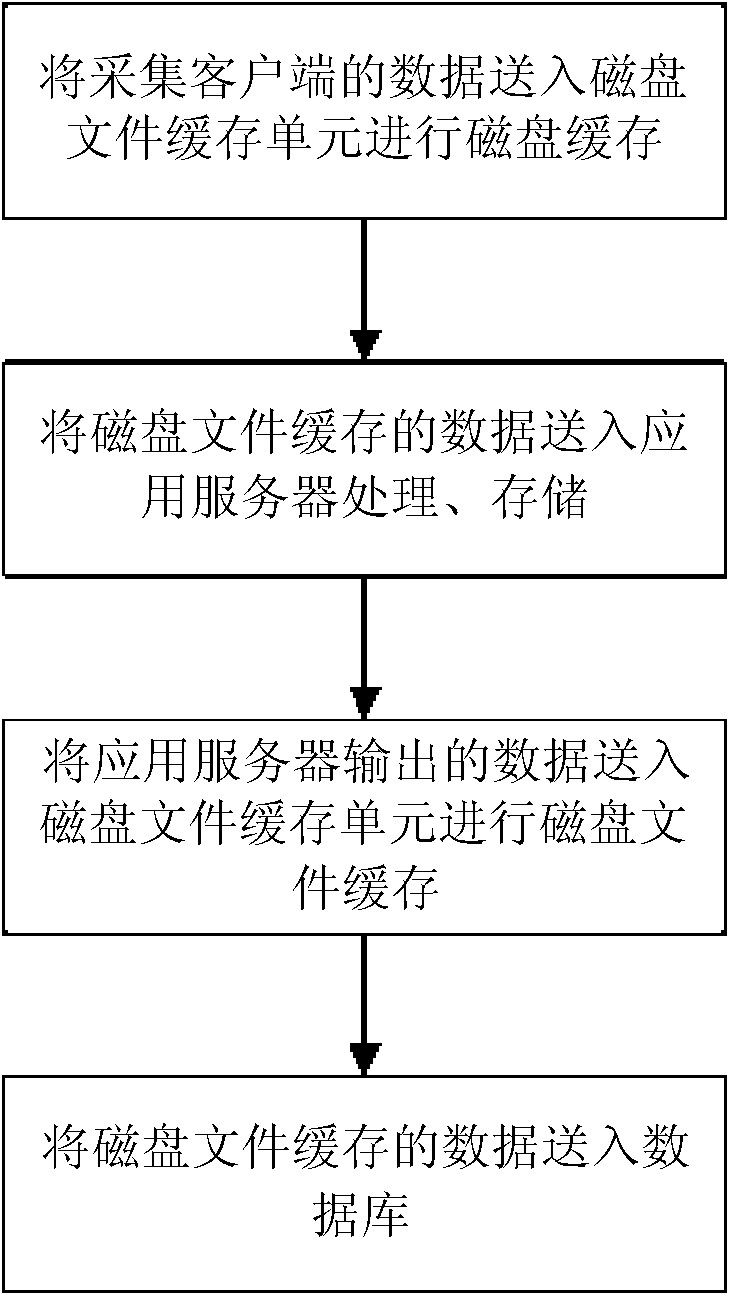

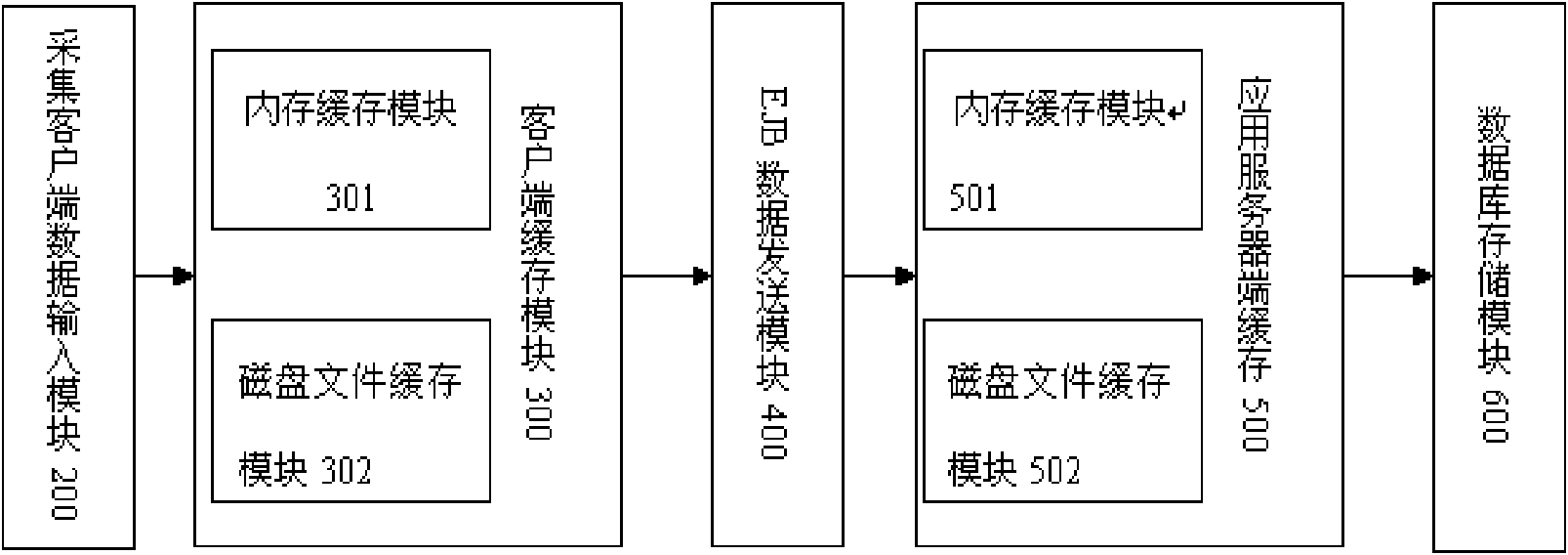

Data storing system and method based on cache

Inactive | CN101576855A | Integrity guaranteed, Avoid the problem of duplicate data collection, Input/output to record carriers, Memory addressing/allocation/relocation, Application server, Computer module

The invention relates to a cache-based data storage system and method. The system comprises: a first detecting unit for detecting faults in the application server or in the network connected to it; a first disk file caching unit for caching data collected from clients on disk; and an application server for storing or processing the data. The first detecting unit is connected to both the first disk file caching unit and the application server, and the first disk file caching unit is connected to the application server. The cache-based data storage method comprises the following steps: step 1, transmitting the data collected from the client to the disk file caching unit for disk caching; and step 2, transmitting the data cached in the disk file to the application server for processing and storage. With this system and method, storage of the collected data is unaffected when the network service or a program module fails, which ensures the integrity of the collected data and improves efficiency.

Owner:SHENZHEN CLOU ELECTRONICS

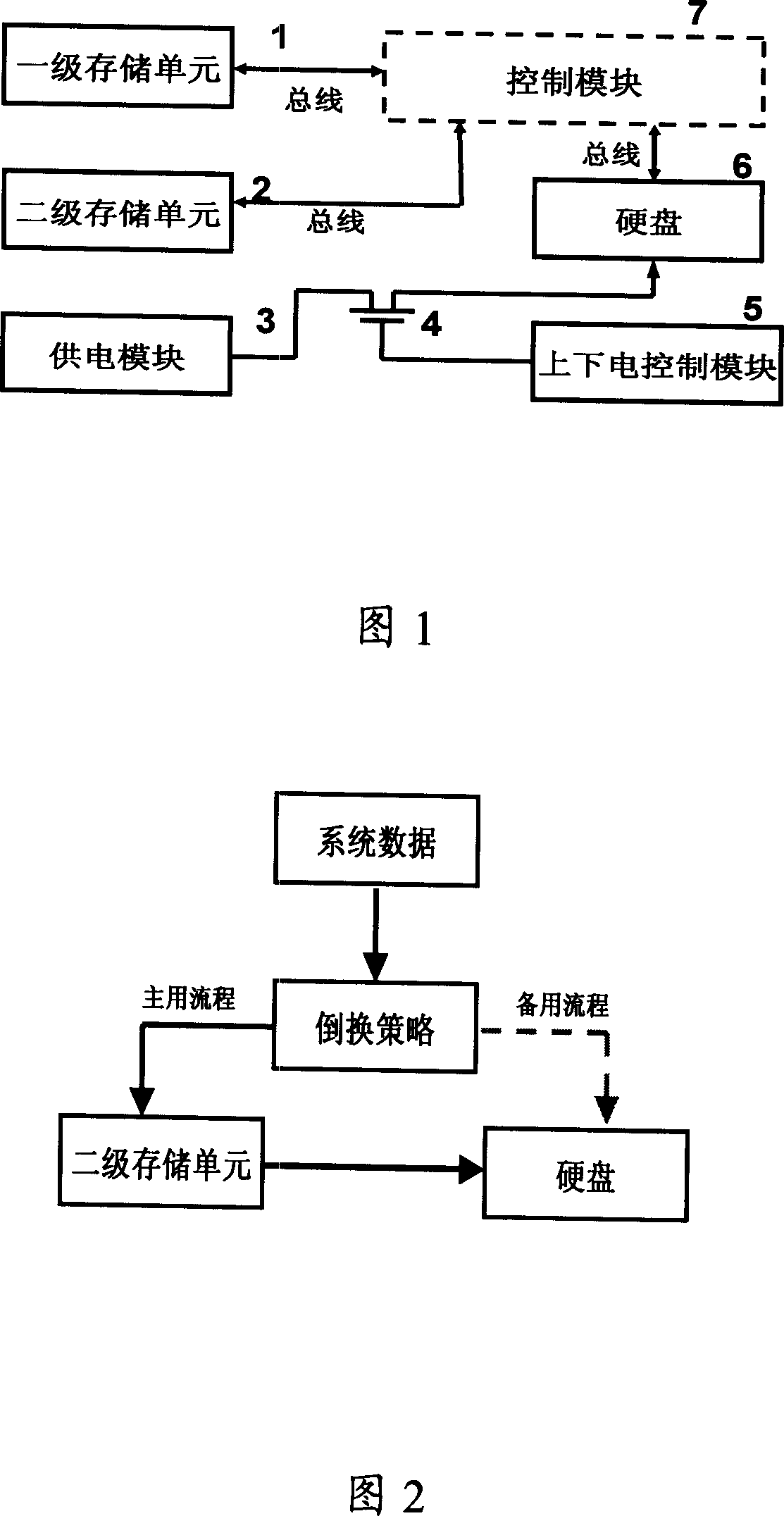

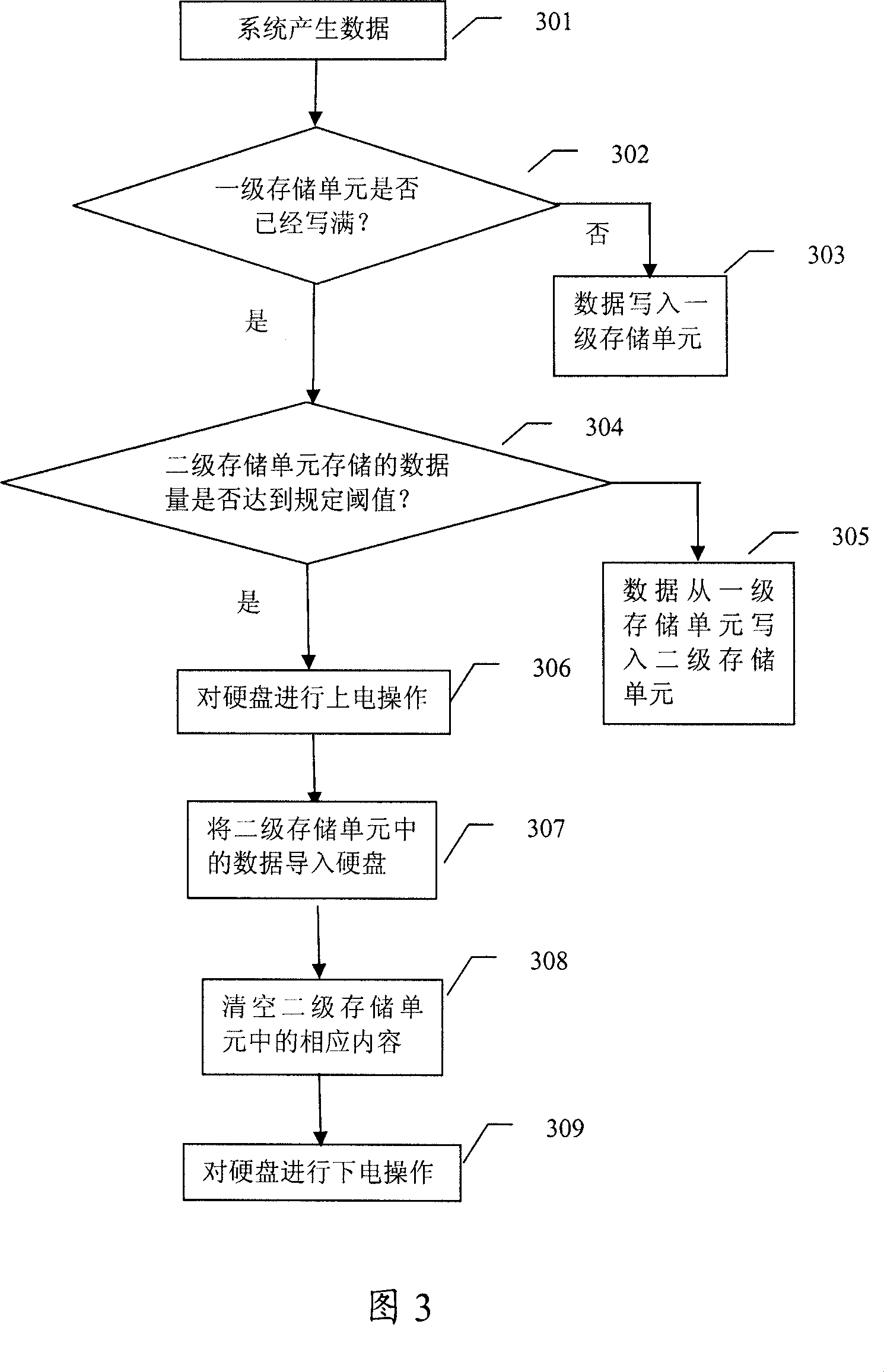

Multi-level buffering type memory system and method therefor

Inactive | CN1996266A | Save time at work, Extended service life, Energy efficient ICT, Digital data processing details, Control data, Embedded system

The invention relates to storage technology in the field of data communication and uses a multi-level buffered storage system. The system comprises a first-level storage unit, a second-level storage unit, a hard disk, and a control module. The first-level storage unit is a disk buffer area that temporarily holds data being written and read; the control module controls the reading and writing of the data; and the second-level storage unit, on instruction from the control module, receives the data temporarily stored in the first-level storage unit and transfers its stored data to the hard disk before the total amount of stored data reaches a preset threshold. This better protects the hard disk, extends its service life, and improves the reliability and stability of the storage system.

Owner:HUAWEI TECH CO LTD

Method and apparatus for buffering data

Inactive | CN101277211A | Improve performance, Multiple shared resources, Special service provision for substation, Memory addressing/allocation/relocation, Shared resource, Database

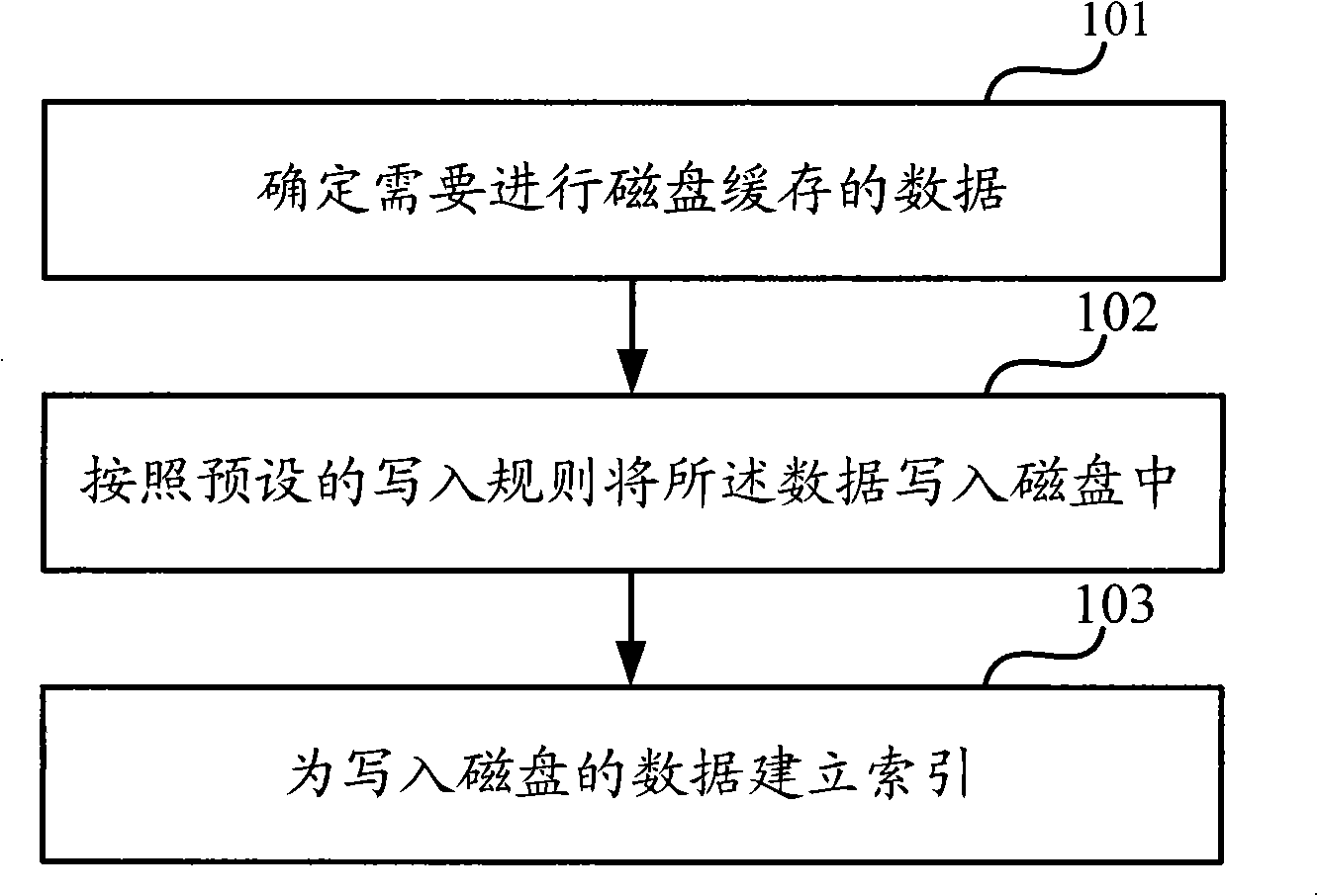

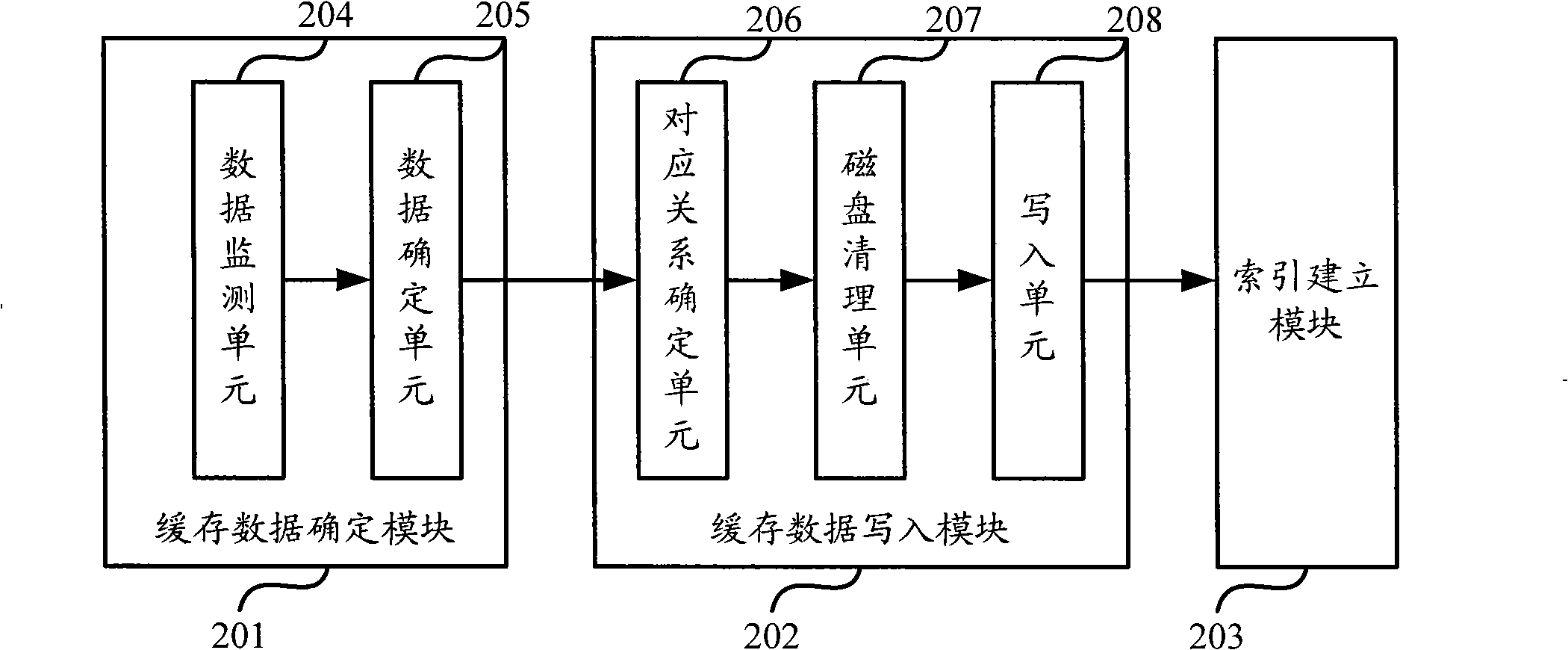

The invention discloses a data caching method and a data caching device. The method comprises the following steps: determining the data that requires disk caching; writing the data to disk according to a preset writing rule; and building an index for the data written to disk, so that the disk serves as a data cache. Because disks offer large capacity and non-volatility, each node in a P2P on-demand system can share more resources, not limited to the media resource currently being played, which improves the overall performance of the P2P on-demand system.

Owner:TENCENT TECH (SHENZHEN) CO LTD

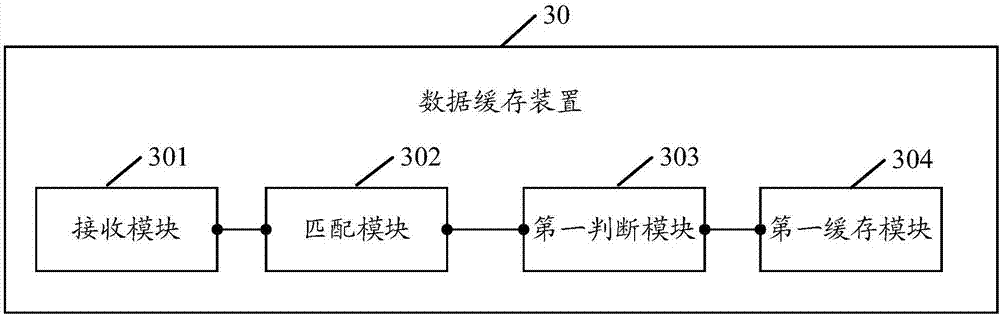

Data caching method and terminal device

Inactive | CN107992432A | Cater to diverse needs, Improve the efficiency of reading data, Memory systems, Terminal equipment, Database

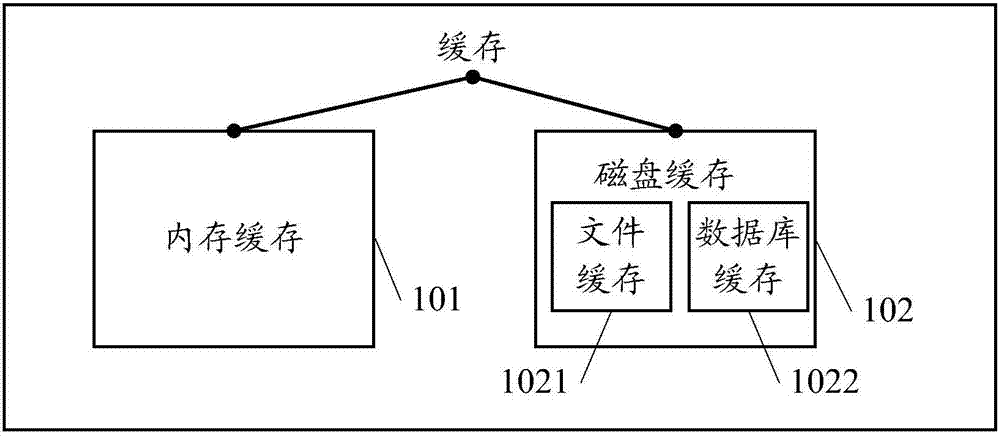

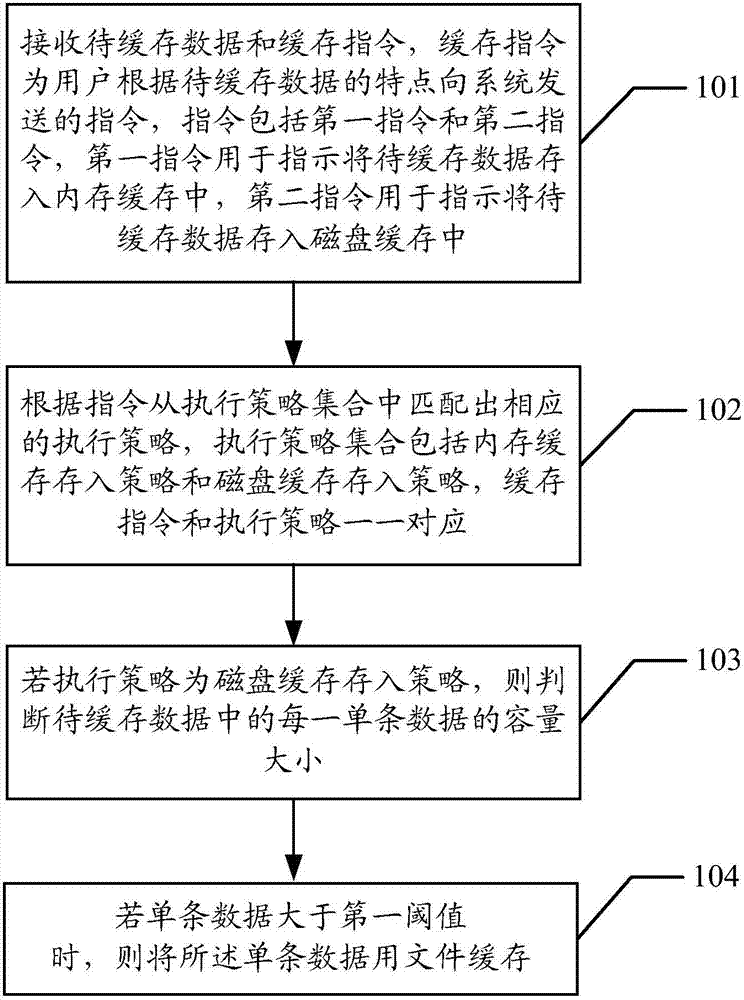

An embodiment of the invention discloses a data caching method. The method comprises the following steps: receiving data to be cached and a caching instruction, where the caching instruction is sent by the user to the system according to the characteristics of the data and is either a first instruction, indicating that the data should be stored in a memory cache, or a second instruction, indicating that the data should be stored in a disk cache; matching the caching instruction to an execution strategy from an execution strategy set that contains a memory-cache storage strategy and a disk-cache storage strategy, with a one-to-one correspondence between instructions and strategies; if the matched strategy is the disk-cache storage strategy, checking the size of each individual data item in the data to be cached; and, if an item is larger than a first threshold, caching it as a file. The method thus gains the advantages of each caching mode according to the characteristics of the data being cached.

Owner:福建中金在线信息科技有限公司

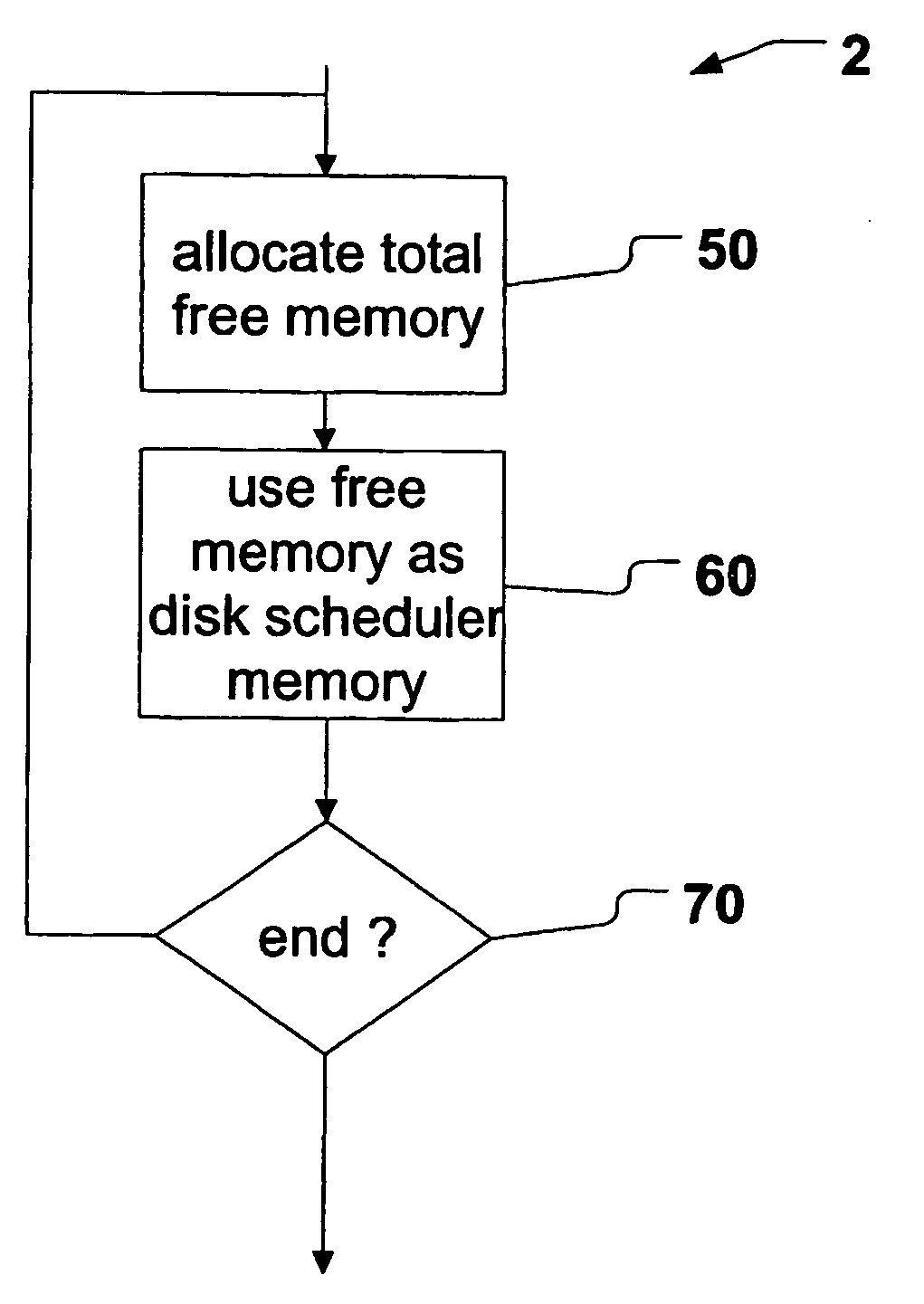

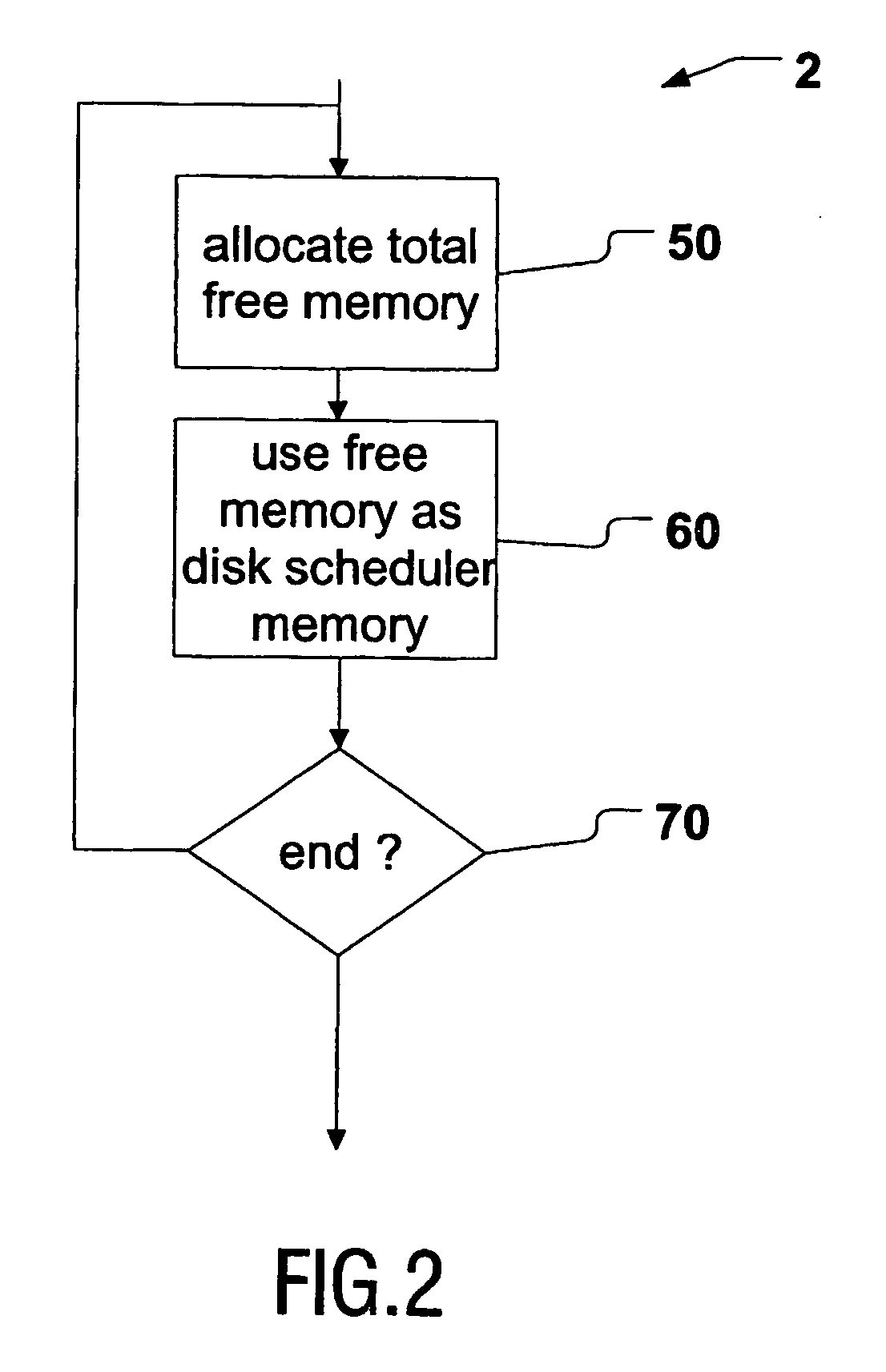

Power saving method for portable streaming devices

Inactive | US20060143420A1 | Extend the life cycle, Quantity minimization, Television system details, Energy efficient ICT, Start stop, Processing element

A method (2) of controlling memory usage in a portable streaming device (100), a portable streaming device (100), and a computer-readable medium (110). The portable streaming device (100) comprises at least one memory (102), at least one processing unit (101), and at least one storage device (103) operatively connected to the memory (102) under control of the processing unit (101). The method (2) adaptively maximises the size of the disk scheduler buffer memory within the memory of the portable streaming device at all times: free memory available within the device is continuously allocated (50), and at least a portion of the allocated free memory is designated as disk scheduler buffer memory (60). This improves solid-state memory utilisation in the portable streaming device, and because the larger disk buffer means fewer start-stop cycles of the storage device, the device has a longer life cycle.

Owner:KONINKLIJKE PHILIPS ELECTRONICS NV

Disk cache method of embedded browser

Inactive | CN102411631A | Improve Internet experience, Improve access speed, Special data processing applications, Resource information, Event queue

The invention relates to a disk cache method for an embedded browser. The browser runs a first thread, which issues network download requests, and a second thread, which stores and processes the disk cache. The method comprises the following steps: running the first thread, which starts the second thread and sets it to a blocked state; the first thread obtaining network resource information according to the network download request, generating disk cache events, and inserting them into a disk cache event queue; and, when the first thread finishes downloading, the first thread setting the second thread to a running state, whereupon the second thread processes the disk cache events in the queue and stores the network resource information to disk. The invention increases resource access speed, improves the user's browsing experience, and further improves the performance of the embedded browser.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI +1
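The blocked-worker pattern in this abstract can be sketched with Python's standard `threading` and `queue` modules. This is an illustrative sketch, not the patented implementation. The calling (main) thread plays the role of the first thread, a `threading.Event` models the second thread's blocked state, and a plain dict stands in for the on-disk cache. The names `run_browser_cache` and `resources` are hypothetical.

```python
import queue
import threading


def run_browser_cache(resources):
    """resources: list of (url, body) pairs the download thread has fetched."""
    events = queue.Queue()               # disk cache event queue
    download_done = threading.Event()    # second thread blocks until this is set
    disk = {}                            # stand-in for the on-disk cache

    def cache_worker():
        download_done.wait()             # blocked state until downloads finish
        while True:                      # running state: drain the event queue
            try:
                url, body = events.get_nowait()
            except queue.Empty:
                break
            disk[url] = body             # "store the resource to disk"

    worker = threading.Thread(target=cache_worker)
    worker.start()                       # started by the first (calling) thread

    for url, body in resources:          # first thread generates cache events
        events.put((url, body))

    download_done.set()                  # first thread wakes the cache worker
    worker.join()
    return disk
```

For example, `run_browser_cache([("a.css", b"x")])` returns `{"a.css": b"x"}`. Deferring disk writes until downloading finishes keeps slow I/O off the download path. The trade-off is that cache entries only become durable after the batch completes.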

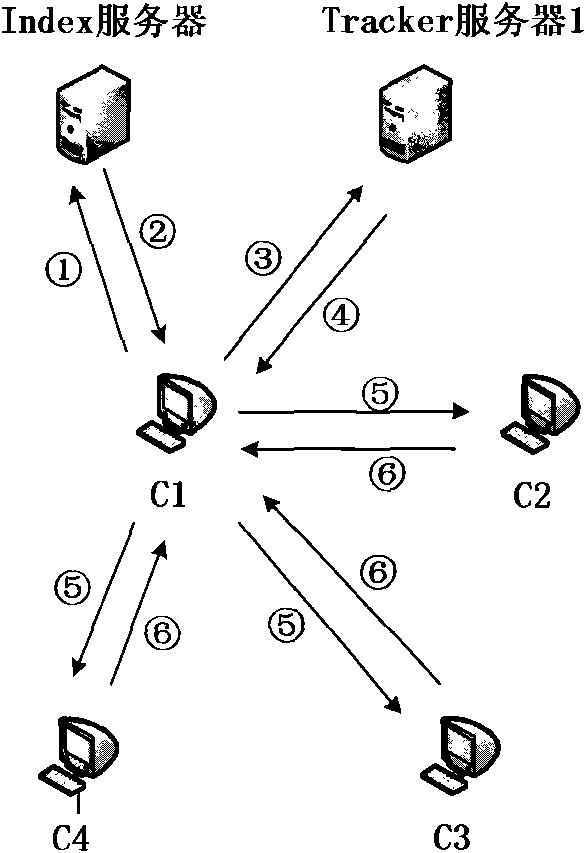

Method of magnetic disc cache replacement in P2P video on demand system

Active · CN101551781A · Improve collaboration, Relieve pressure, Memory addressing/allocation/relocation, Two-way working systems, Parallel computing, Client-side

The present invention provides a method of magnetic disk cache replacement in a P2P video-on-demand system, comprising the following steps: 1) each client node sends the latest data cache information of the current node to its neighbor nodes; 2) for each data block in the disk cache of a client node whose disk cache data needs to be replaced, the number of neighbor nodes that urgently need the data block and the number of neighbor nodes that commonly need it are obtained from the data cache information provided by each neighbor node; 3) a priority value is computed for each data block from these two counts, and the data blocks to be replaced are selected according to the priority value; data in the client node's memory cache then overwrites the data blocks to be replaced. The invention can improve collaboration among nodes in the system network and reduce the load on the media source server.

Owner:ALIBABA (CHINA) CO LTD
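Steps 2 and 3 amount to neighbor-aware eviction. The sketch below is a hypothetical reading of that process. The abstract does not say how the two counts combine into a priority, so the weighted sum (`w_urgent`, `w_common`) is an assumption. The report format (block id mapped to `"urgent"` or `"common"`) and the function names are also illustrative.

```python
def choose_victims(local_blocks, neighbor_reports, n_evict,
                   w_urgent=2.0, w_common=1.0):
    """Rank local disk-cache blocks by how much neighbors need them and
    return the n_evict lowest-priority block ids (hypothetical weighting)."""
    scores = {}
    for block in local_blocks:
        urgent = sum(1 for r in neighbor_reports if r.get(block) == "urgent")
        common = sum(1 for r in neighbor_reports if r.get(block) == "common")
        scores[block] = w_urgent * urgent + w_common * common
    # Lowest priority first; tie-break on block id for a deterministic order.
    ranked = sorted(local_blocks, key=lambda b: (scores[b], b))
    return ranked[:n_evict]


def replace_blocks(disk_cache, memory_cache, neighbor_reports):
    """Overwrite the least-needed disk blocks with blocks from the memory cache."""
    victims = choose_victims(list(disk_cache), neighbor_reports, len(memory_cache))
    for victim, (block_id, data) in zip(victims, memory_cache.items()):
        del disk_cache[victim]
        disk_cache[block_id] = data
    return victims
```

Blocks that many neighbors still want stay cached, so the node can keep serving them to peers instead of sending those requests to the media source server.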

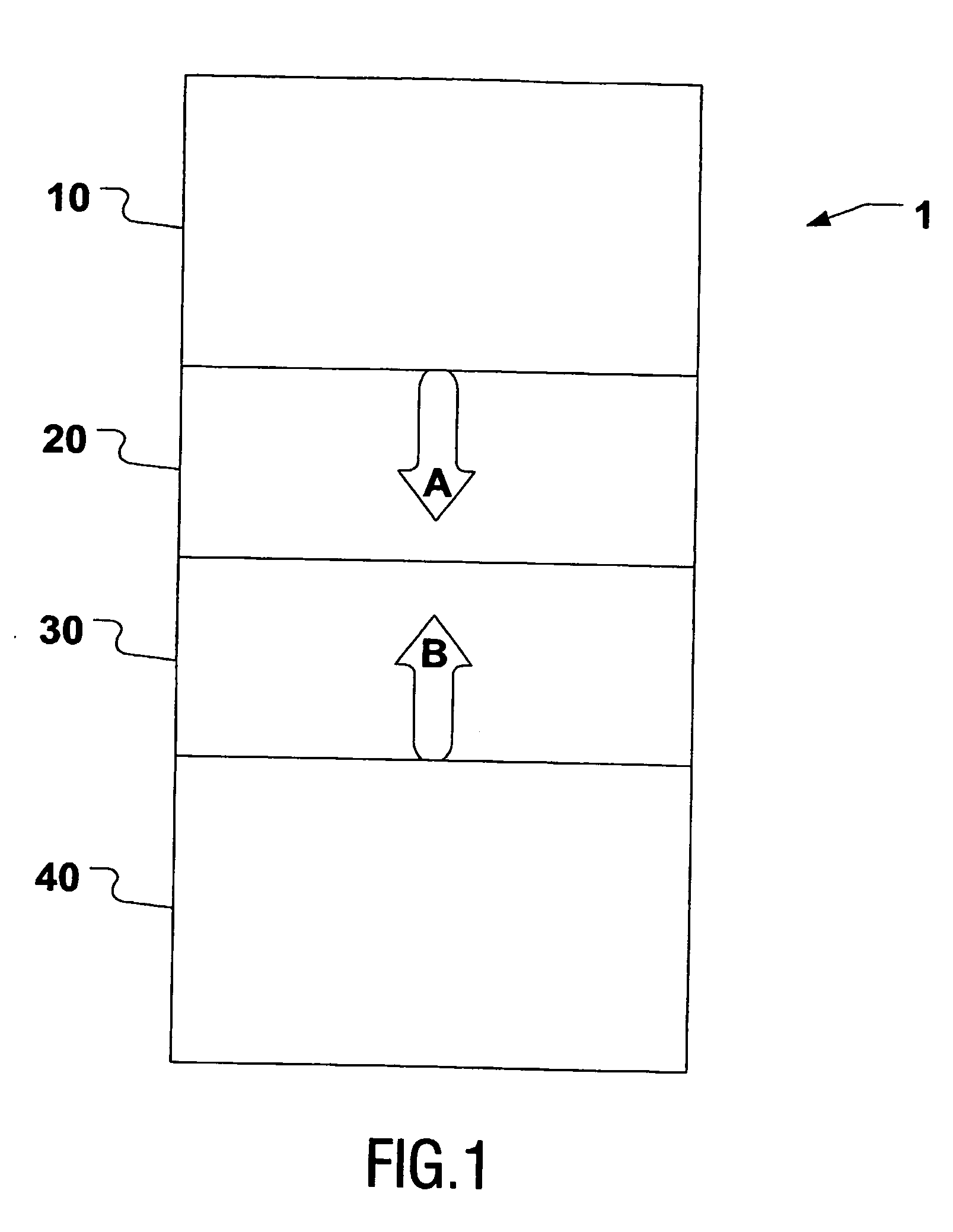

Information processing system and recording medium recording a program to cause a computer to execute steps

Inactive · US20020174294A1 · Input/output to record carriers, Memory systems, Information processing, Data storing

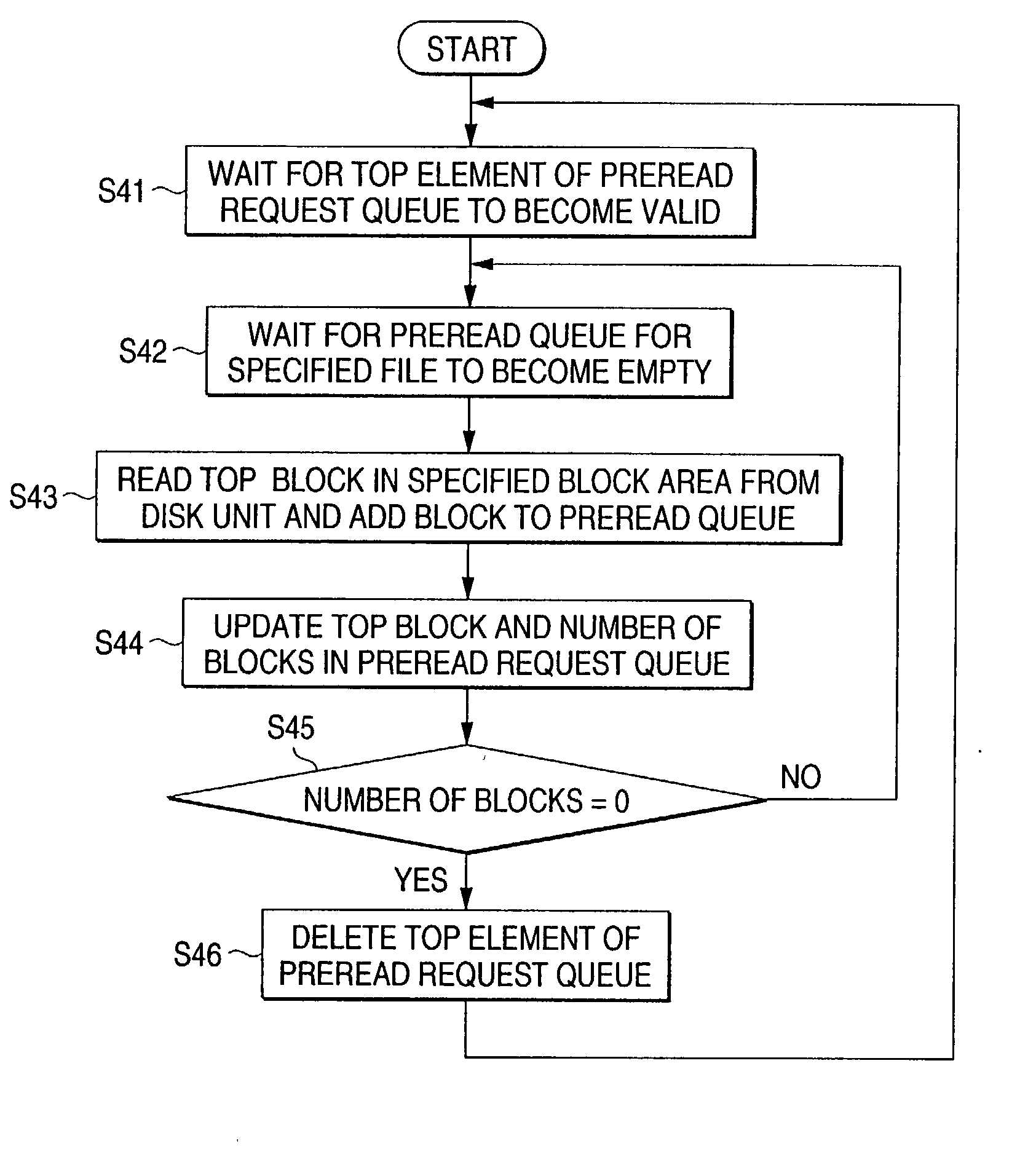

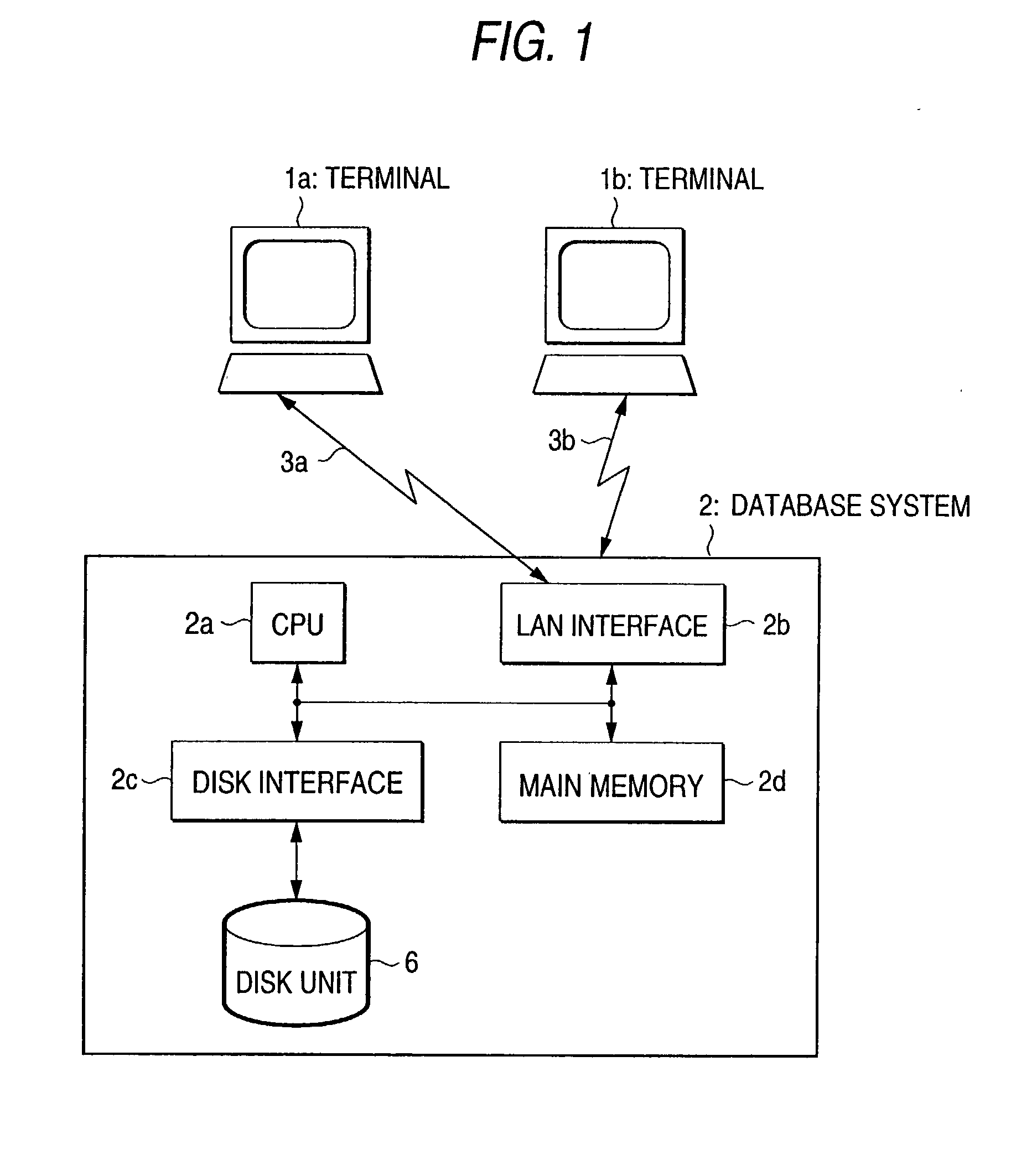

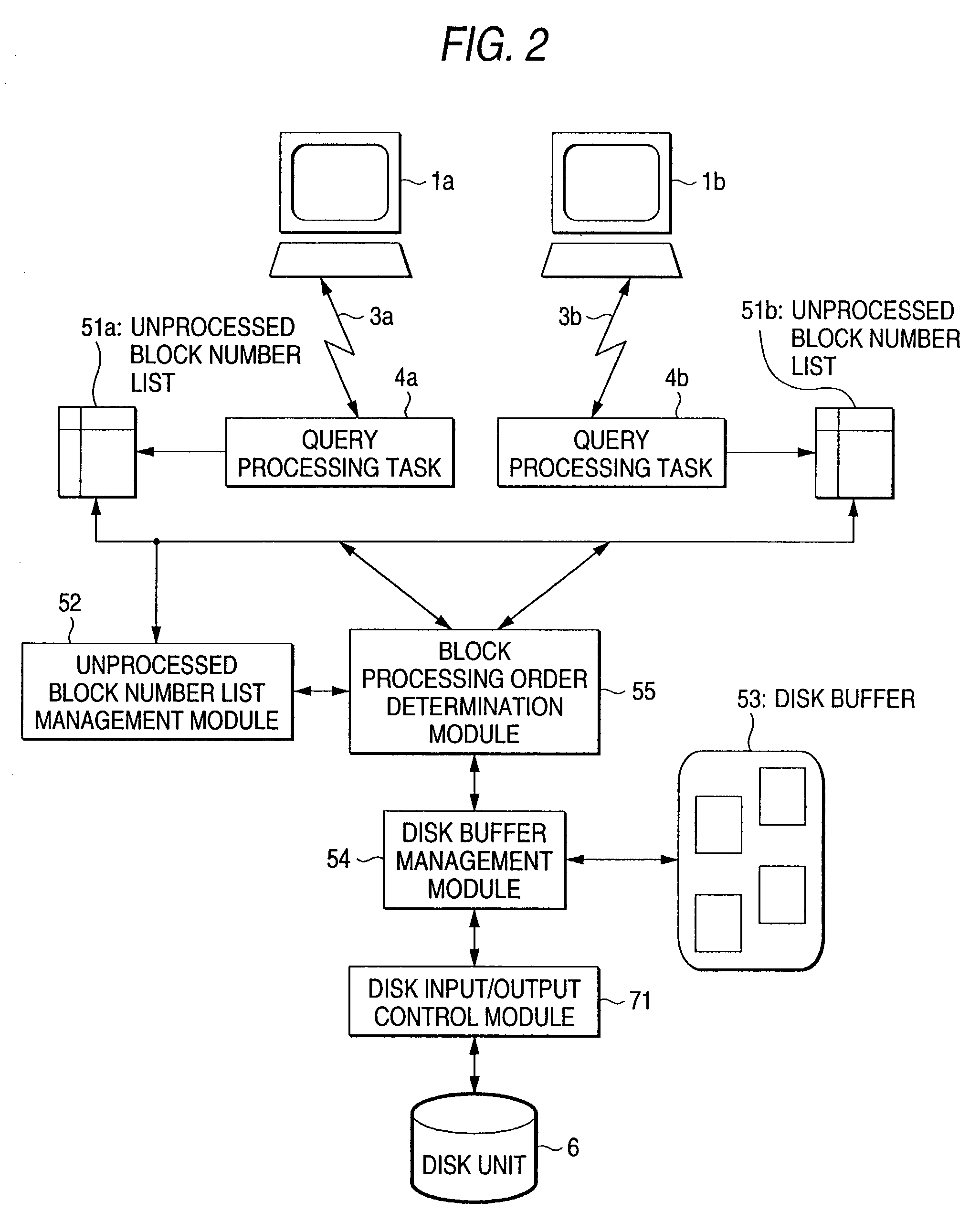

An information processing system having: a disk buffer 53 that temporarily stores a plurality of data pieces read from a disk unit 6; a block processing order determination module 55 that, given a processing request for a plurality of data pieces stored on the disk unit 6, detects which of the requested data pieces match data already stored in the disk buffer 53 and determines a read order in which those matching data pieces are read before the remaining requested data; and read means that, in accordance with the read order determined by the block processing order determination module 55, read the matching data pieces from the disk buffer 53 before reading the remaining data from the disk unit 6 into the disk buffer 53.

Owner:MITSUBISHI ELECTRIC CORP
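The "buffer hits first" read ordering can be sketched in a few lines. This is a hypothetical illustration, not Mitsubishi's implementation. Dicts model the disk buffer 53 and the disk unit 6, block ids are plain integers, and `determine_read_order` and `serve_request` are invented names.

```python
def determine_read_order(requested, disk_buffer):
    """Order requested blocks so those already in the buffer come first,
    preserving request order within each group."""
    hits = [b for b in requested if b in disk_buffer]
    misses = [b for b in requested if b not in disk_buffer]
    return hits + misses


def serve_request(requested, disk_buffer, disk_unit):
    """Read blocks in the determined order: hits straight from the buffer,
    misses loaded from the disk unit into the buffer first."""
    out = []
    for block in determine_read_order(requested, disk_buffer):
        if block not in disk_buffer:
            disk_buffer[block] = disk_unit[block]   # disk unit -> disk buffer
        out.append((block, disk_buffer[block]))
    return out
```

For example, with block 3 already buffered, a request for `[1, 3, 2]` is served as 3, 1, 2. Processing hits first means buffered data is consumed before slower disk reads can evict it from the buffer.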

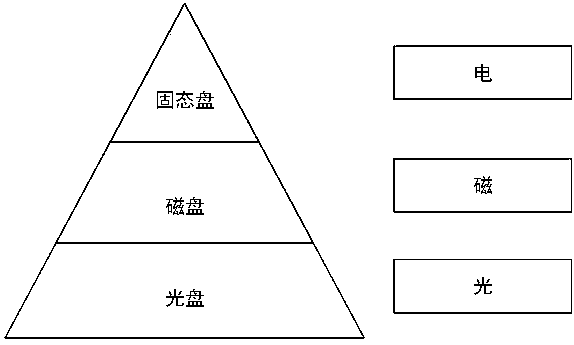

Magnetic-optical-electric hybrid optical-disk library and management method and management system thereof

Active · CN107704211A · Improve reliability, Improve access speed, Input/output to record carriers, Disk controller, Data access

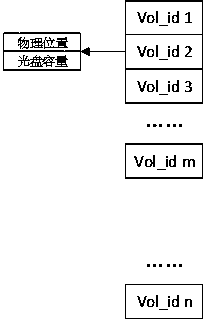

The invention provides a magnetic-optical-electric hybrid optical-disk library, together with a management method and a management system for it. The optical-disk library includes an optical-disk library controller, a solid-state disk, a magnetic disk, multiple optical-disk drives, and multiple optical disks. The solid-state disk serves as the metadata area, the magnetic disk serves as the data cache area, and the optical disks serve as the long-term storage area. The optical-disk library controller is connected to the solid-state disk, the magnetic disk, and the optical-disk drives through high-speed data channels. It virtualizes the whole optical-disk library into file volumes, controls the writing and reading of data, and provides standard external network storage interfaces. The library combines the fast access speeds of the solid-state disk and the magnetic disk with the long-term data storage of the optical disks to build a hierarchical storage system that gives users a global file view and online data access. File data written by users is stored in the magnetic-disk cache, and frequently read and written metadata is stored in the metadata area formed by the solid-state disk, which increases data access speed.

Owner:WUHAN OPSTOR TECH LTD
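The three-tier layout can be modeled as a toy class. This is a minimal sketch under stated assumptions: dicts stand in for the SSD metadata area, the magnetic-disk cache, and the optical disks. The `HybridLibrary` class and its `migrate` step are hypothetical, because the abstract does not describe when data moves from the disk cache to optical media.

```python
from dataclasses import dataclass, field


@dataclass
class HybridLibrary:
    ssd_metadata: dict = field(default_factory=dict)  # metadata area (global file view)
    hdd_cache: dict = field(default_factory=dict)     # magnetic-disk data cache
    optical: dict = field(default_factory=dict)       # long-term optical storage

    def write(self, path: str, data: bytes) -> None:
        """User writes land in the magnetic-disk cache; metadata goes to the SSD."""
        self.hdd_cache[path] = data
        self.ssd_metadata[path] = {"size": len(data), "tier": "hdd"}

    def migrate(self, path: str) -> None:
        """Hypothetical migration step: move a file's data to optical storage."""
        self.optical[path] = self.hdd_cache.pop(path)
        self.ssd_metadata[path]["tier"] = "optical"

    def read(self, path: str) -> bytes:
        """The SSD metadata says which tier currently holds the file."""
        tier = self.ssd_metadata[path]["tier"]
        return self.hdd_cache[path] if tier == "hdd" else self.optical[path]
```

Because every lookup goes through the metadata dict first, callers see one file namespace regardless of which tier holds the data. This mirrors the "global file view" the abstract mentions.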


© 2025 PatSnap. All rights reserved.