
202 results about "Fifo queue" patented technology


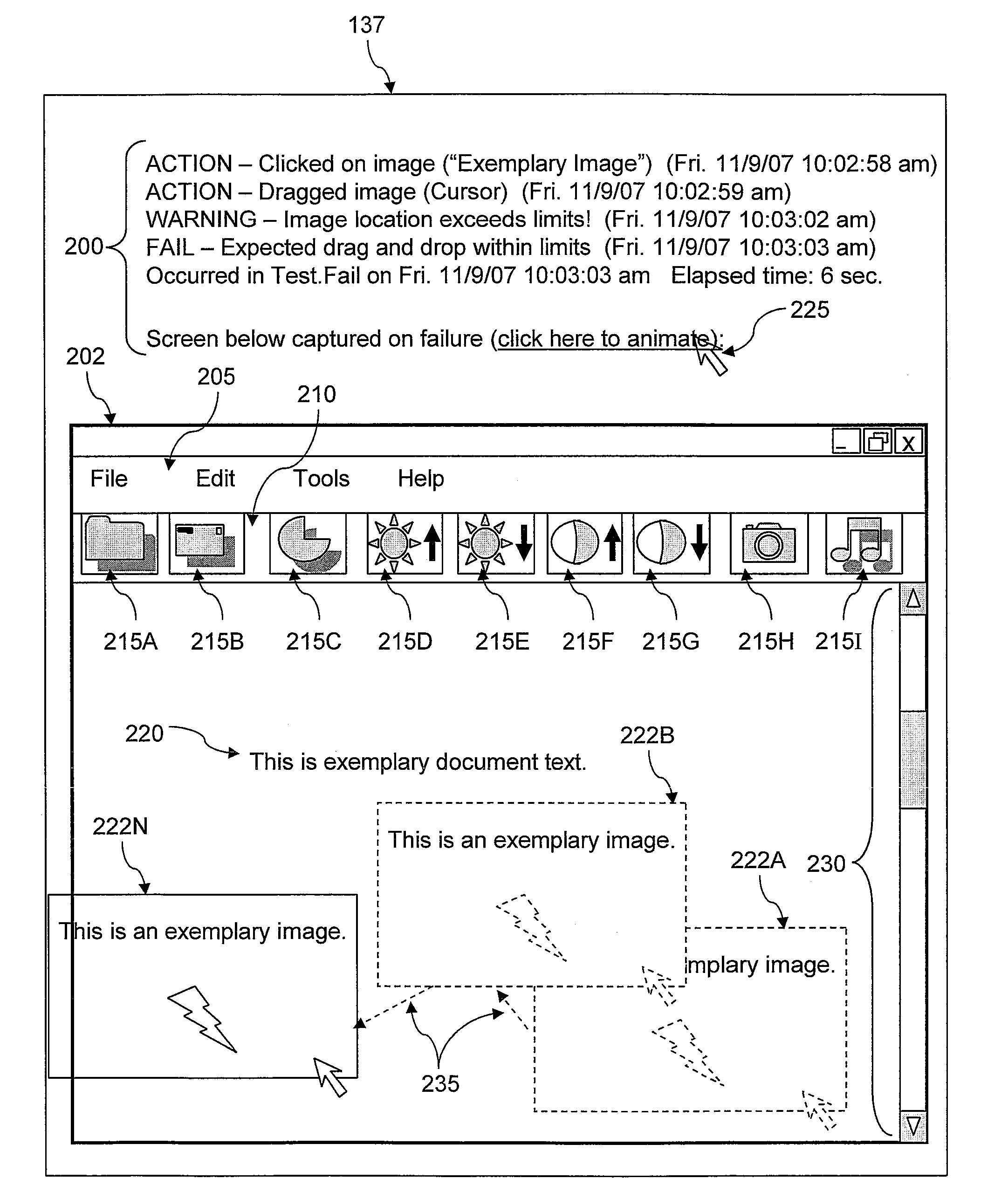

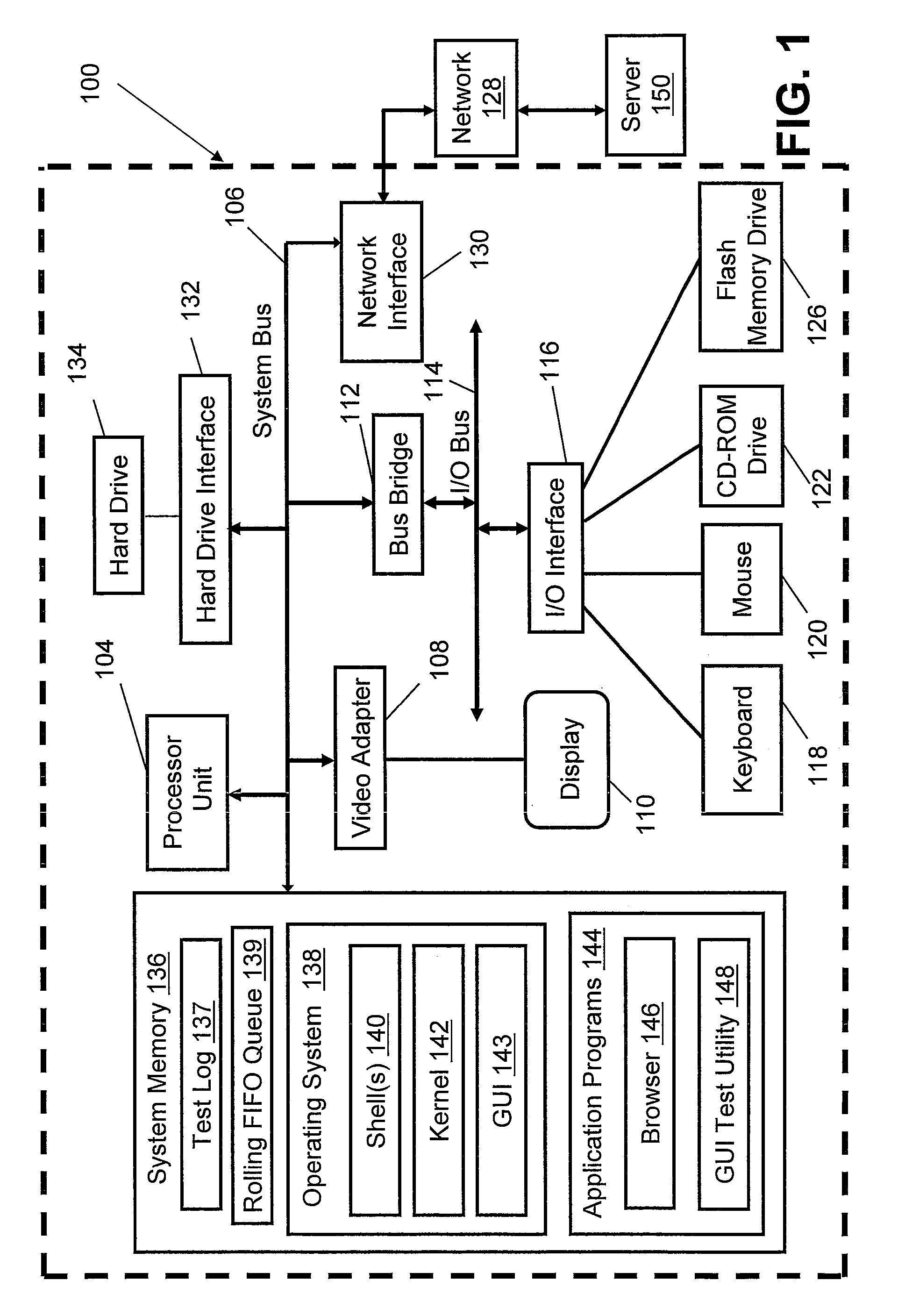

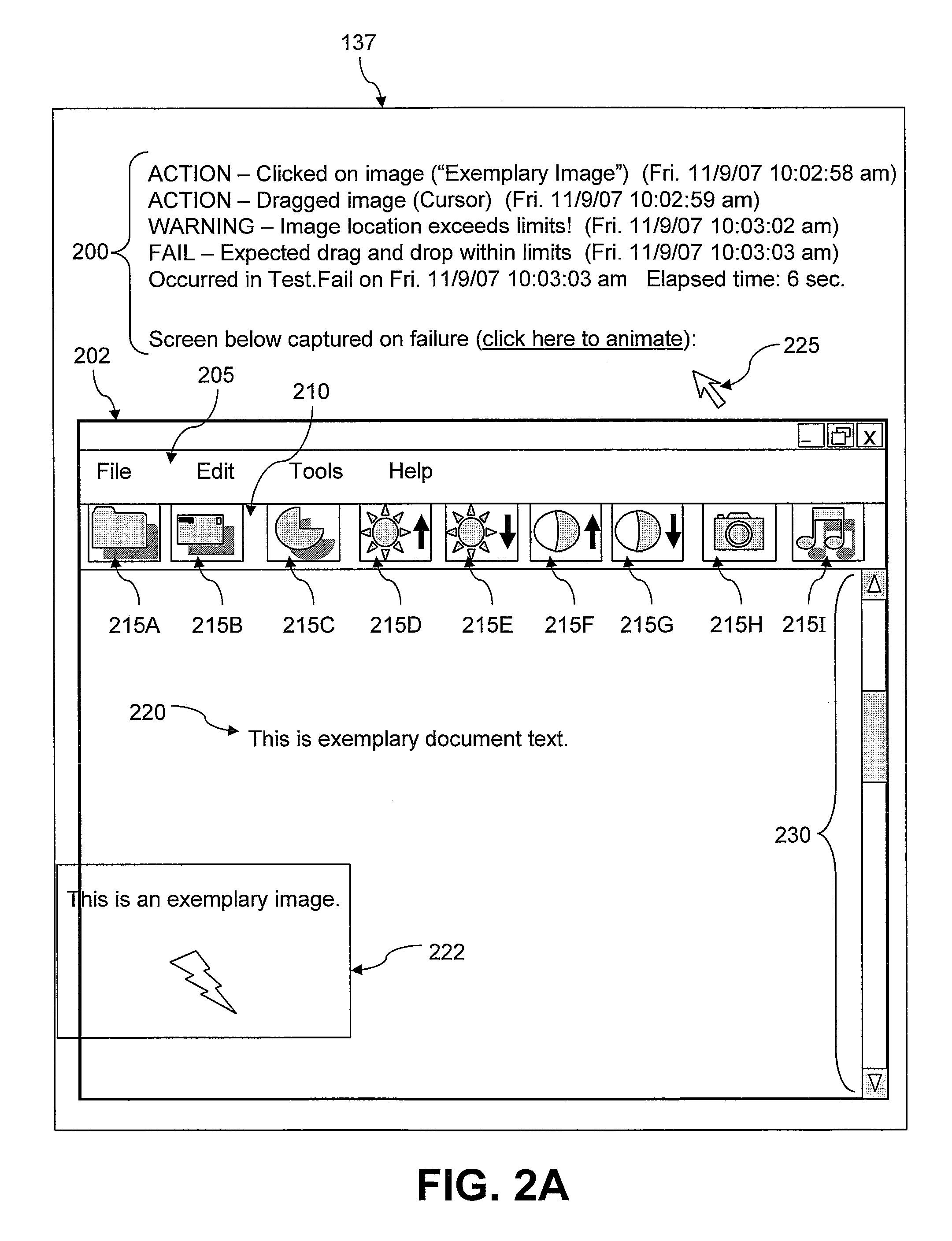

Method and System for Capturing Movie Shots at the Time of an Automated Graphical User Interface Test Failure

Inactive | US20090150868A1 | Error detection/correction | Specific program execution arrangements | Graphics | Computer hardware

A method of capturing movie shots at the time of an automated Graphical User Interface (GUI) test failure. When an automated GUI test application performs an action during a test of a GUI, the GUI test application adds a text description of the action to a test log and captures a screenshot image of the GUI. The GUI test application adds the screenshot image to a rolling First-In-First-Out (FIFO) queue that includes up to a most recent N screenshot images, where N is a pre-defined configurable number. If an error occurs, the GUI test application captures a final failure point screenshot image. The GUI test application adds the final screenshot image to the rolling FIFO queue and the test log. The GUI test application assembles the screenshot images from the rolling FIFO queue into a chronologically animated movie file and attaches the movie file to the test log.

Owner:IBM CORP
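
The rolling queue in this abstract can be sketched with a bounded deque. This is a minimal illustration, not the patented implementation: the class name `RollingShots`, the string stand-ins for images, and the choice to let the final failure frame count toward the N-frame limit are all assumptions.

```python
from collections import deque


class RollingShots:
    """Keeps only the most recent n screenshots, oldest first (hypothetical helper)."""

    def __init__(self, n):
        # A deque with maxlen silently drops the oldest item when full.
        self.frames = deque(maxlen=n)

    def record(self, image):
        self.frames.append(image)

    def on_failure(self, final_image):
        # Assumption: the failure-point frame also counts toward the limit n.
        self.frames.append(final_image)
        return list(self.frames)  # chronological order, ready to assemble into a movie


shots = RollingShots(n=3)
for step in ["login", "open menu", "click save", "confirm"]:
    shots.record(f"shot:{step}")
movie_frames = shots.on_failure("shot:FAILURE")
```

With N = 3, only the last two action screenshots and the failure frame survive, which is the behavior the abstract describes for a rolling queue.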

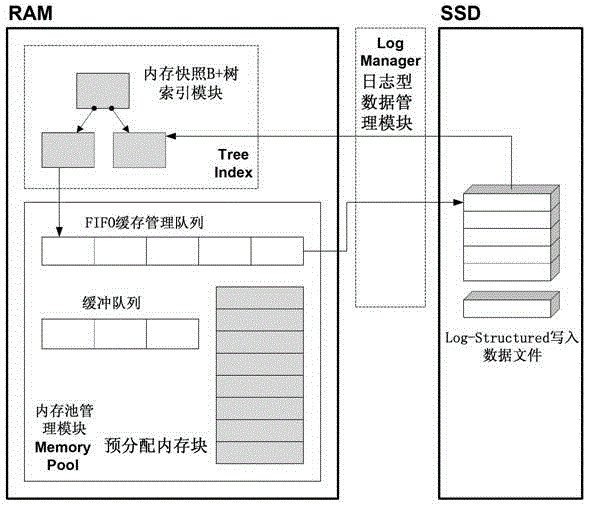

Key-Value local storage method and system based on solid state disk (SSD)

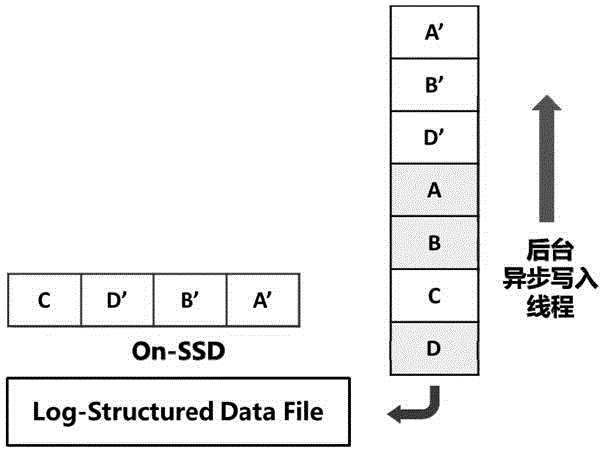

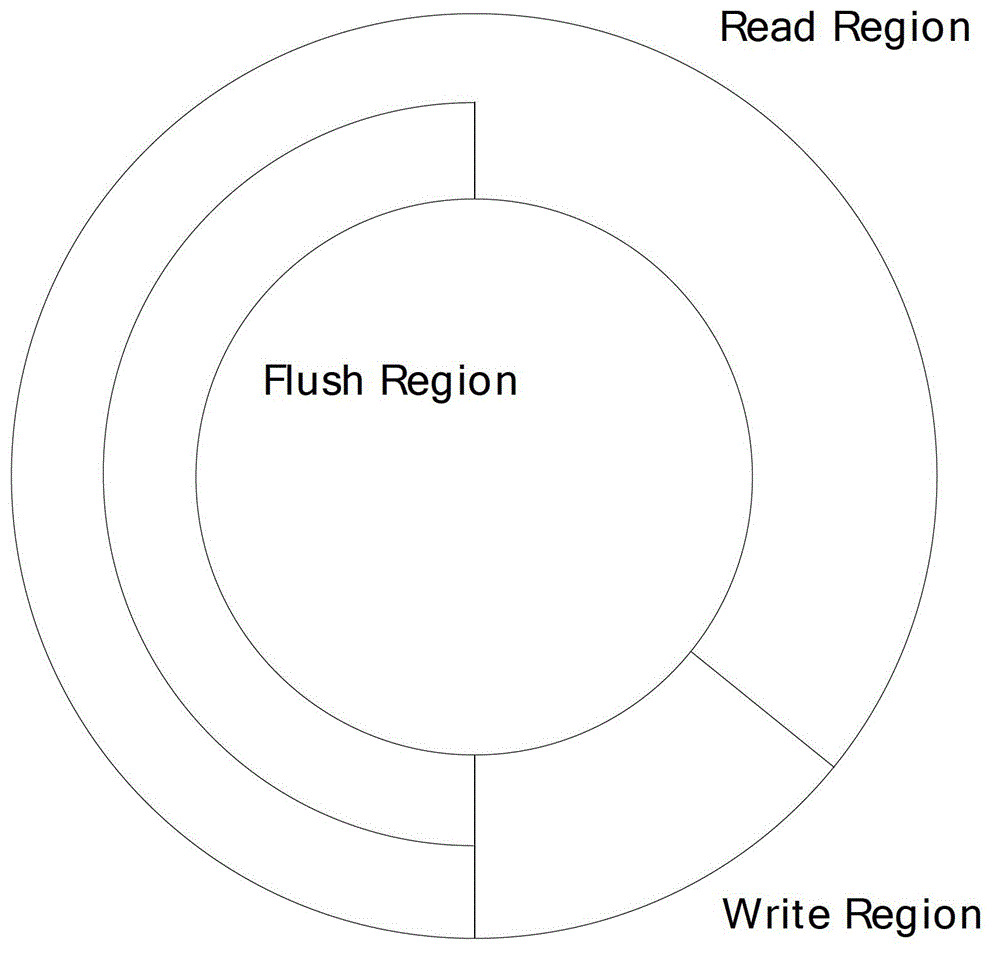

Active | CN102722449A | Easy to wear | Realize read-write separation | Digital data information retrieval | Memory addressing/allocation/relocation | Solid-state drive | Fifo queue

The invention discloses a Key-Value local storage method and a Key-Value local storage system based on a solid state disk (SSD). The method comprises the following steps of: 1, performing read-write separation operation of a memory on data by adopting a memory snapshot B+ tree index structure; 2, performing first in first out (FIFO) queue management and caching on the indexed data aiming at a B+ tree; and 3, performing read-write operation on the data. Mapping management of logical page number and physical position is realized through an empty file mechanism in the log type additional write-in data.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI +1

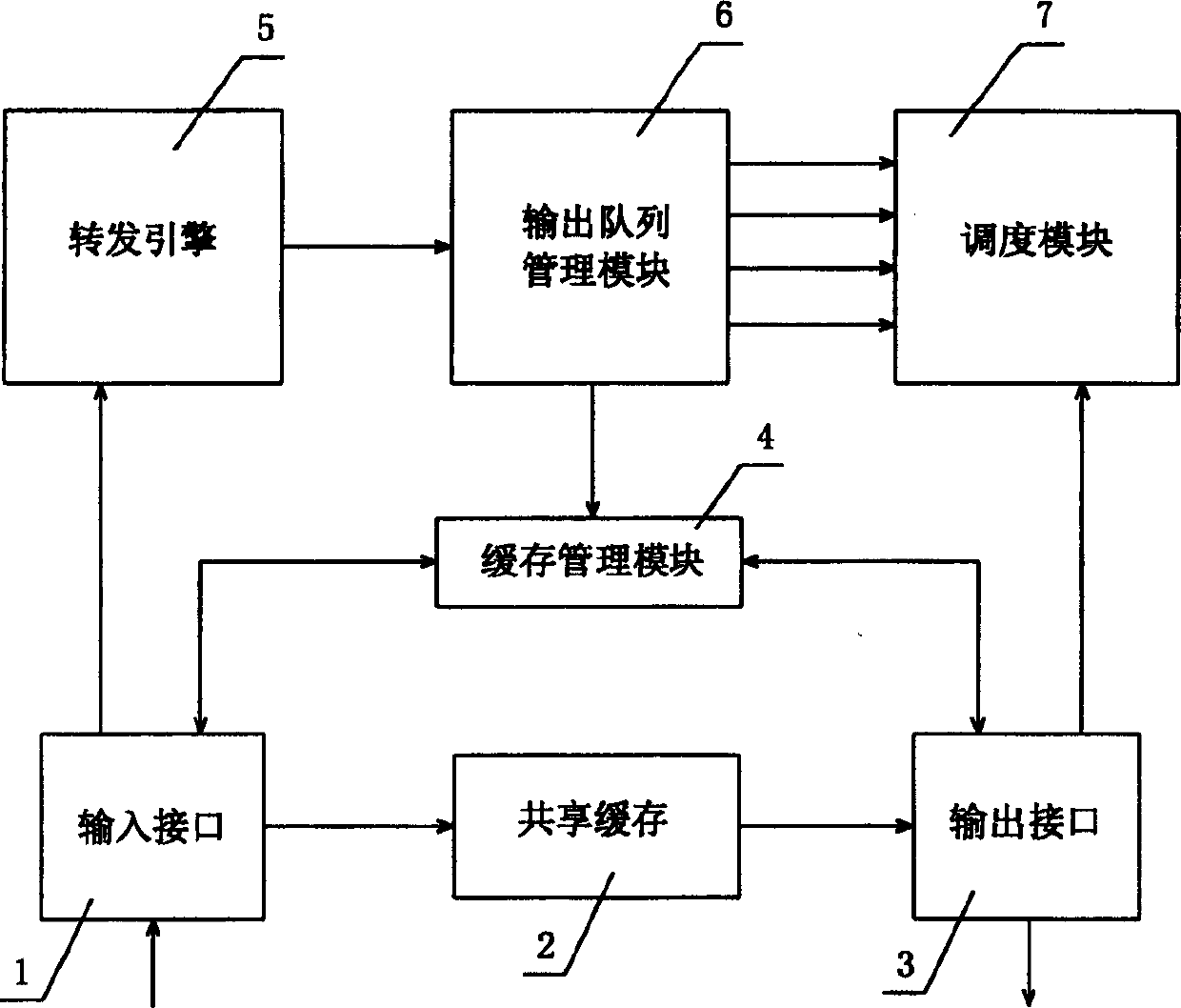

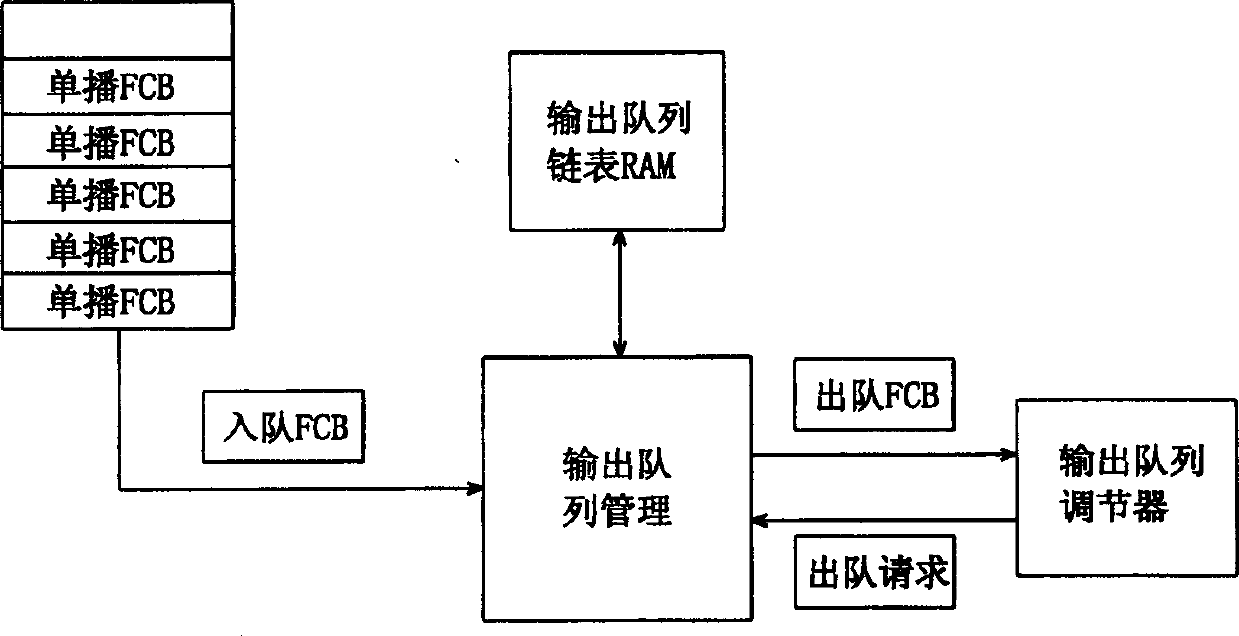

Ethernet exchange chip output queue management and dispatching method and device

Inactive | CN1411211A | Distribute quickly | Effective distribution | Data switching by path configuration | Message queue | Fifo queue

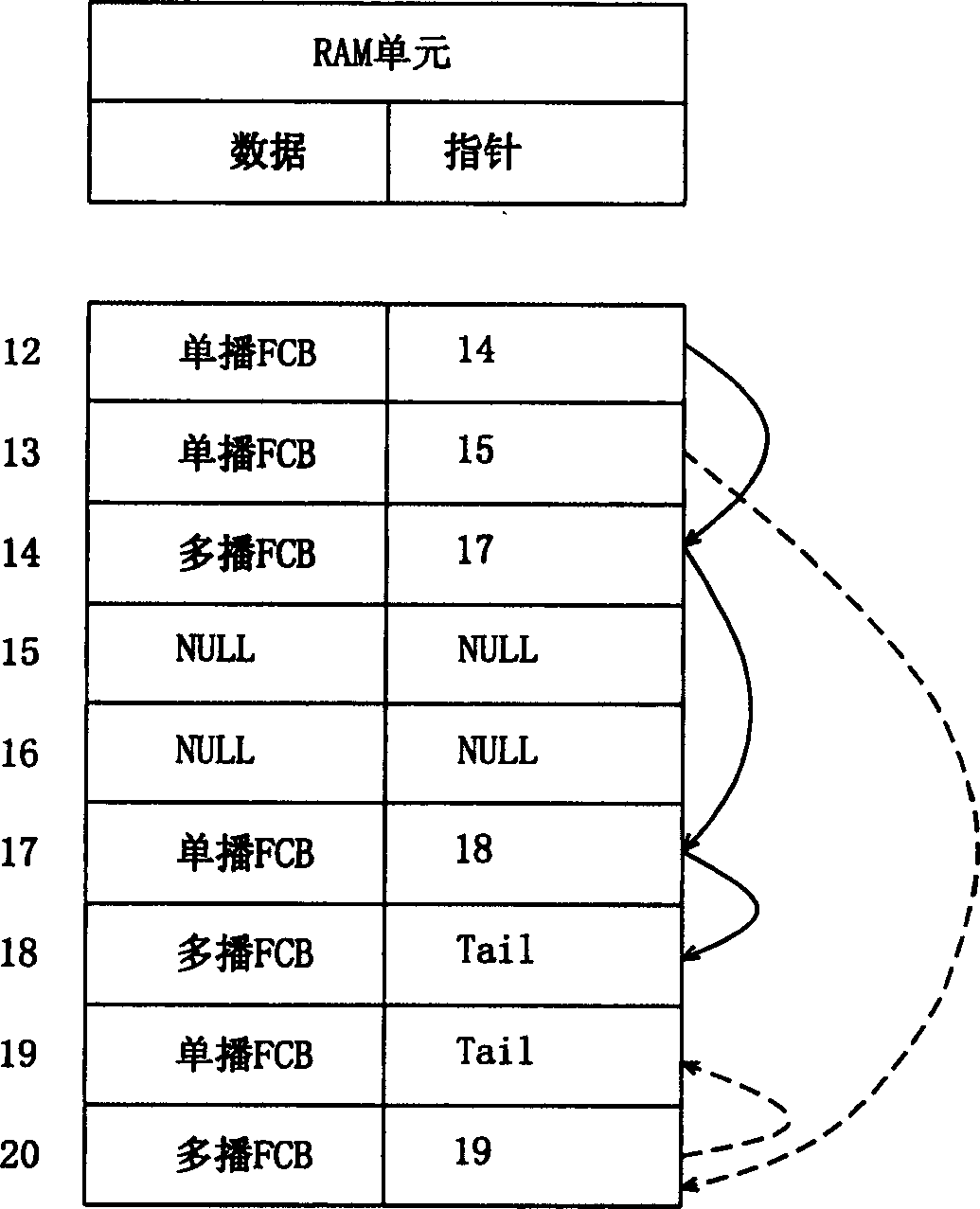

A management method and device for the output queues of an Ethernet switch chip. A single / multi message control separation module takes the output queues from the frame control module and separates them into single-message and multi-message queues for each interface. The single data frame control module organizes the single-message queues as multiple priority queues and uses a congestion control algorithm to maintain them. The multi-message queue is organized as a FIFO queue, with a congestion control algorithm that discards directly at the queue tail, and is then matched against the single / multi message priority queues. After dispatch between interfaces and priority dispatch inside each interface in output queue scheduling, if single / multiple in priority.

Owner:HISILICON TECH

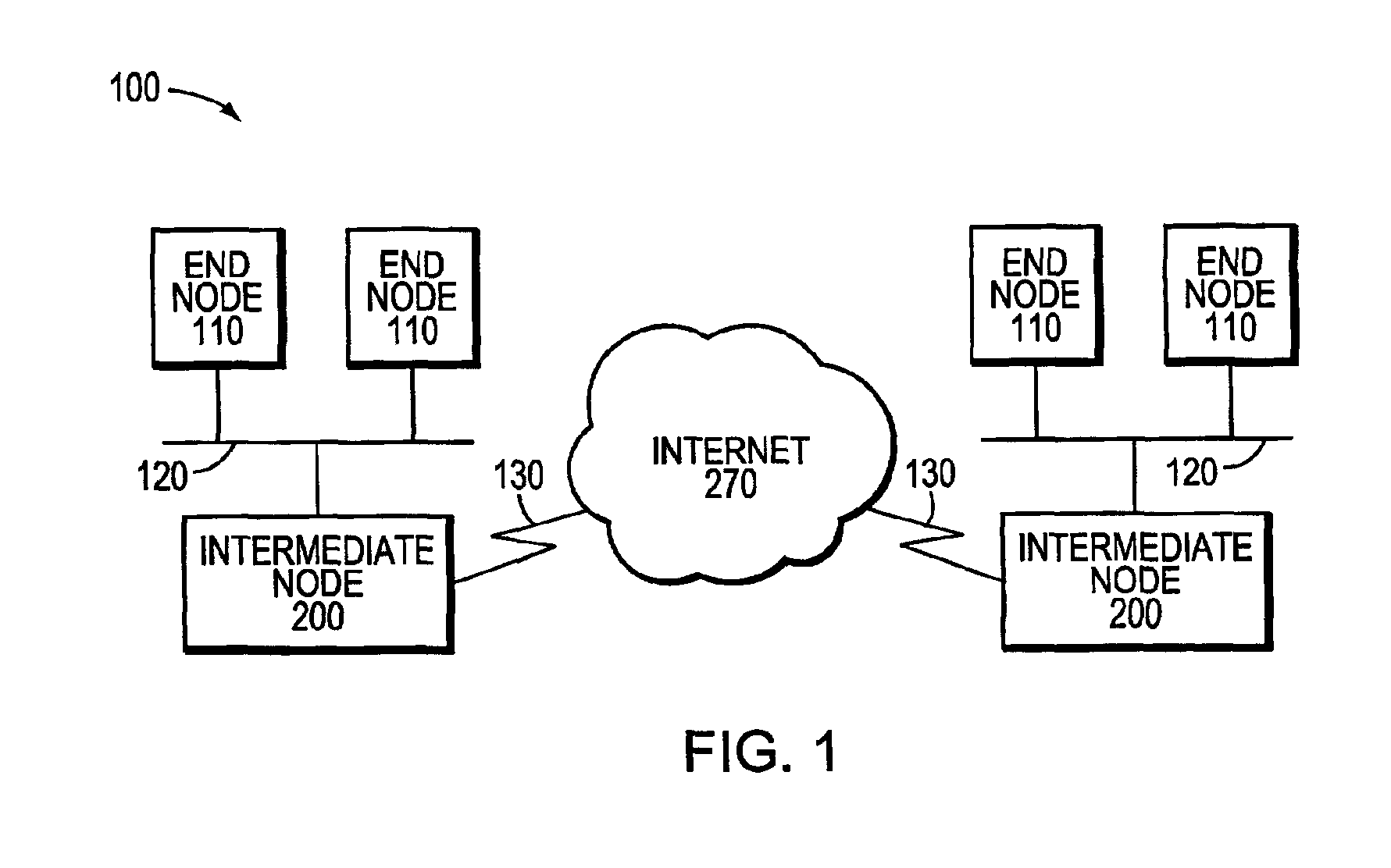

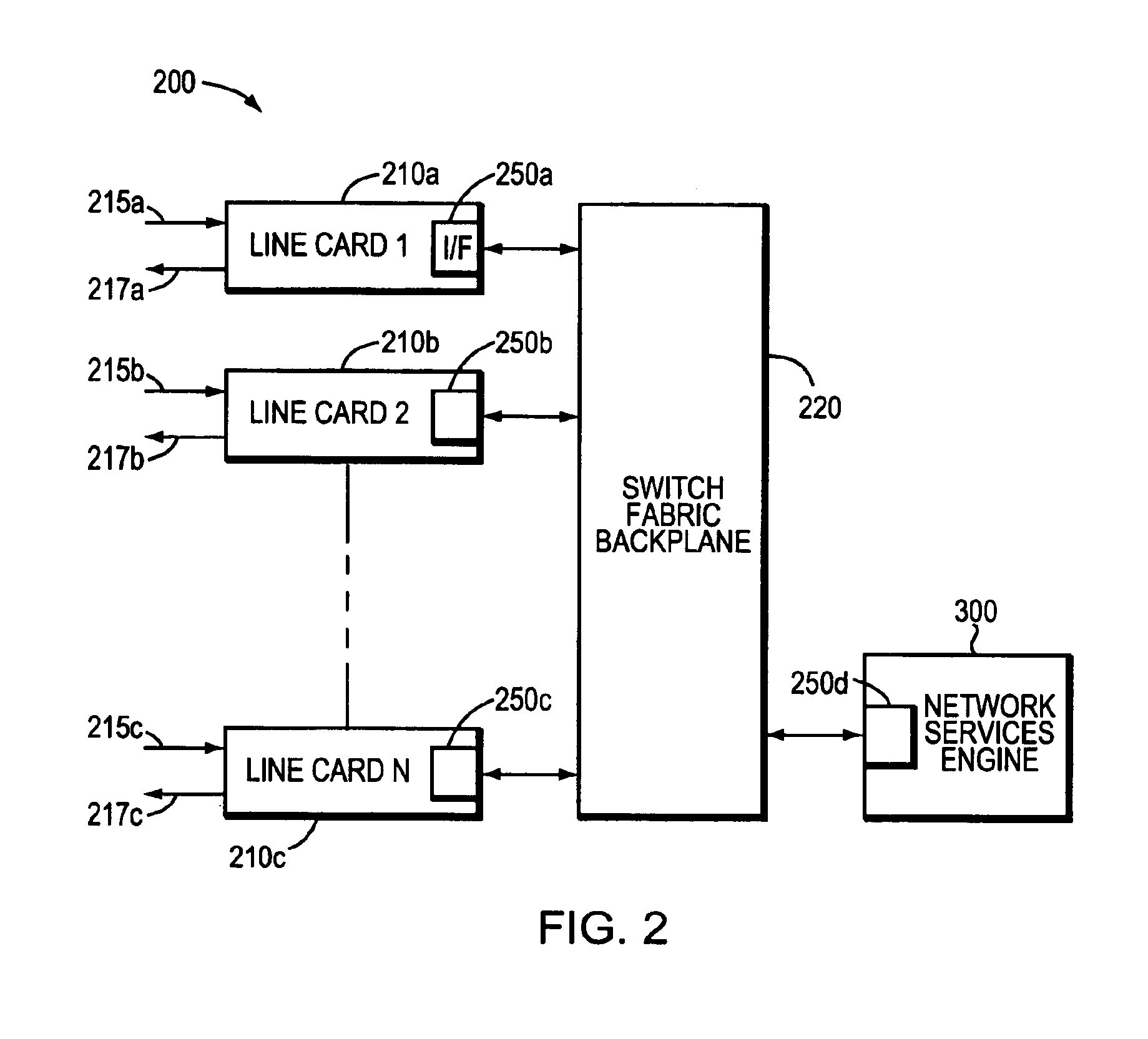

Traffic control method for network equipment

Inactive | US20060098675A1 | Drop in throughput can be avoided | Reduce steps | Memory addressing/allocation/relocation | Data switching by path configuration | Record status | Network packet

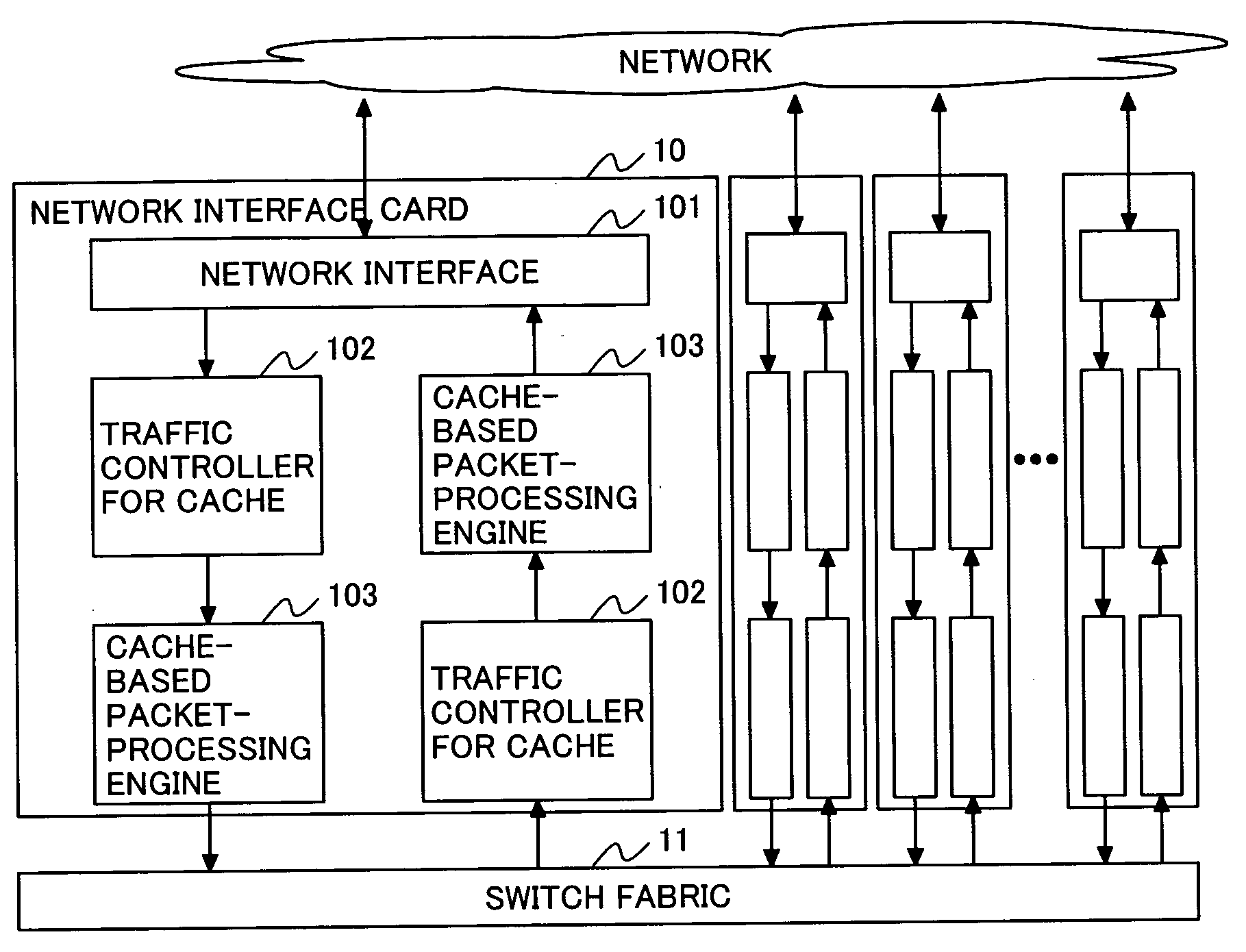

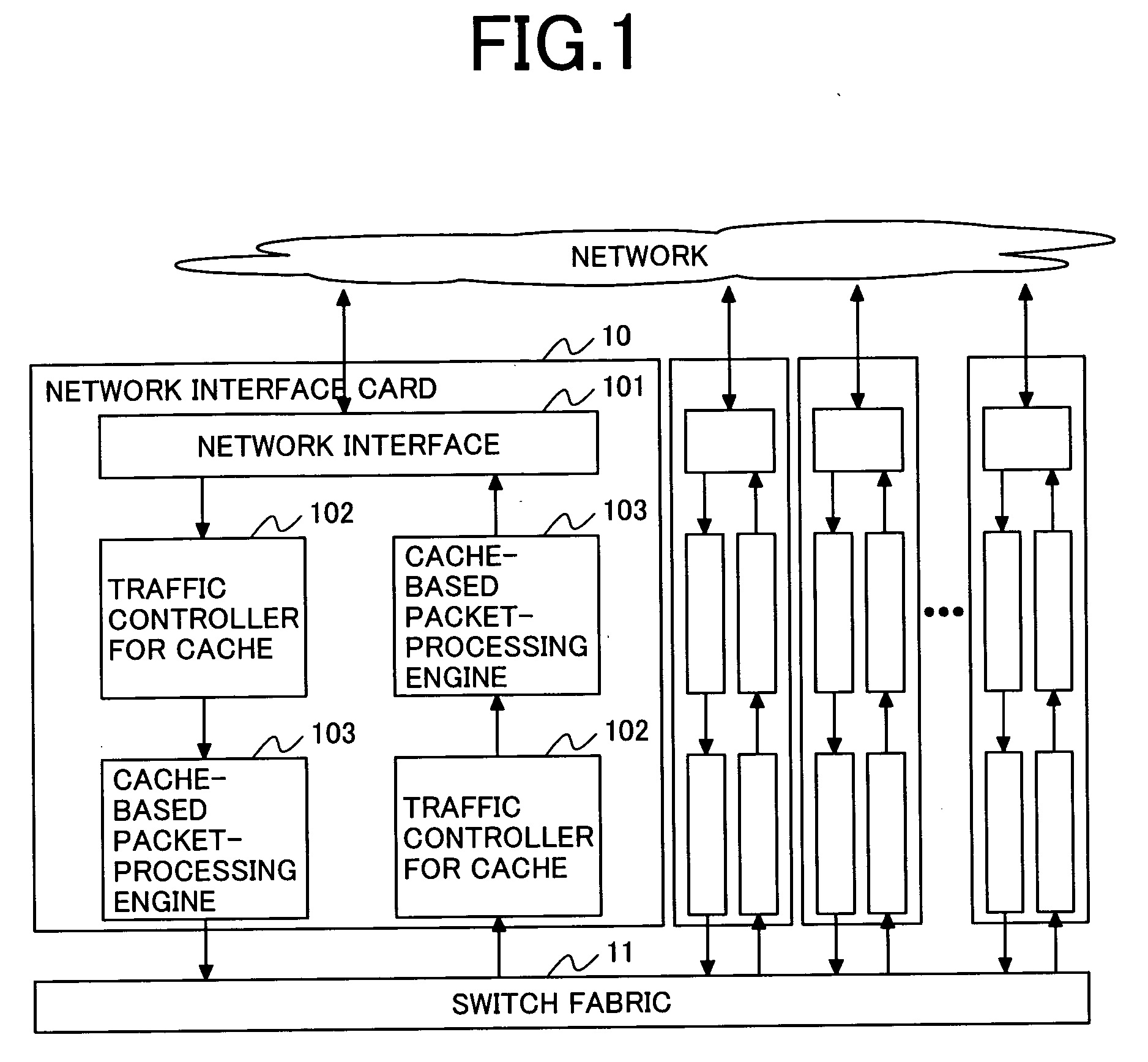

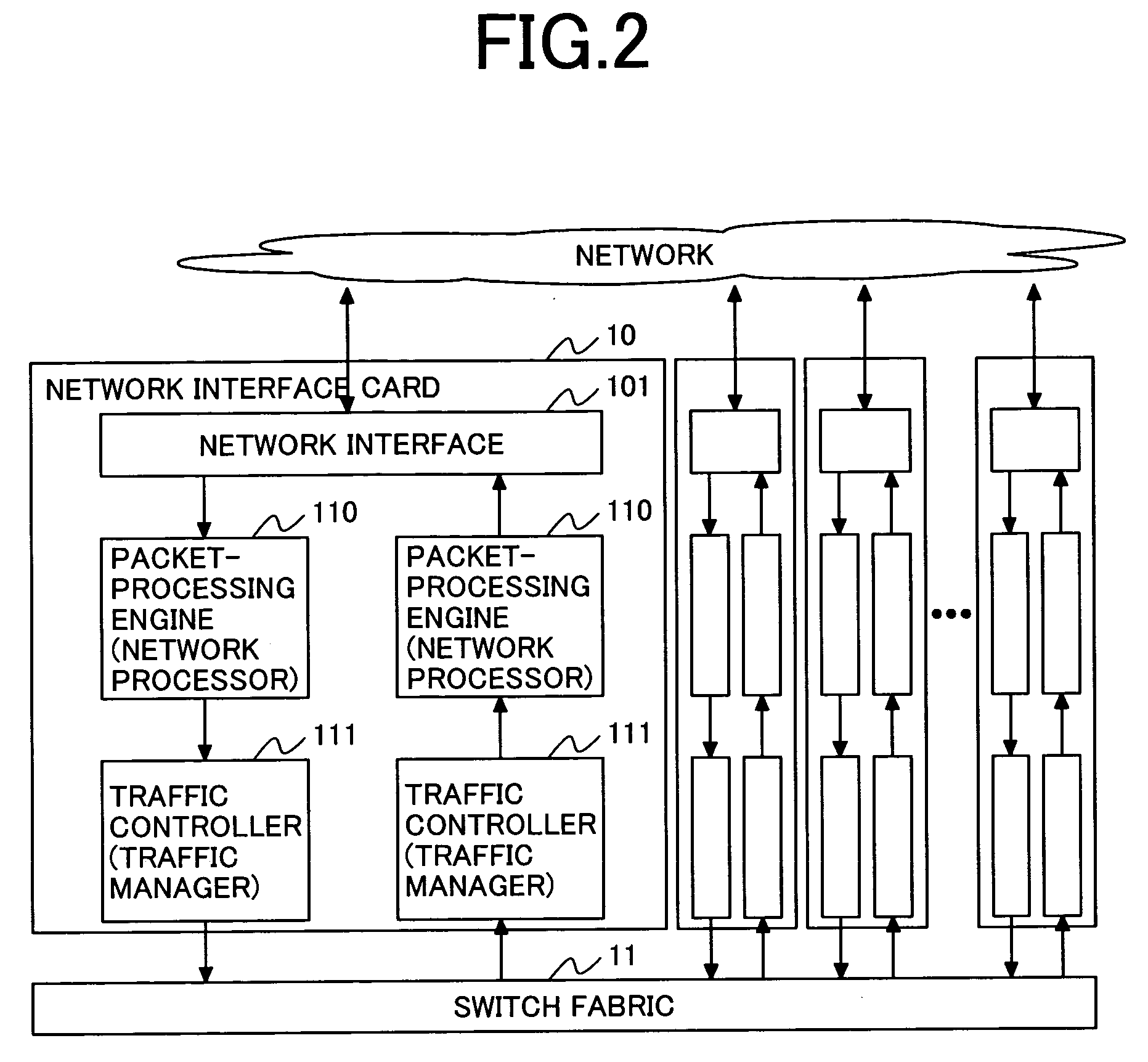

A function block containing a process-cache tag for storing process-cache tags in the pre-stage of a process cache and an FIFO queue for each tag entry are installed as a traffic controller. The traffic controller stacks packet groups, identified as being from the same flow, in the same FIFO queue. Each FIFO queue records the logged state of the corresponding process queues, and when a packet arrives at an FIFO queue entry in a non-registered state, only its first packet is conveyed to a function block for processing the process-cache misses, and then it awaits registration in a process cache. Access to the process cache from the FIFO queue is implemented at the time that registration of the second and subsequent packets in the process cache are completed. This allows packets other than the first packet in the flow to always access the process cache for a cache hit.

Owner:HITACHI LTD

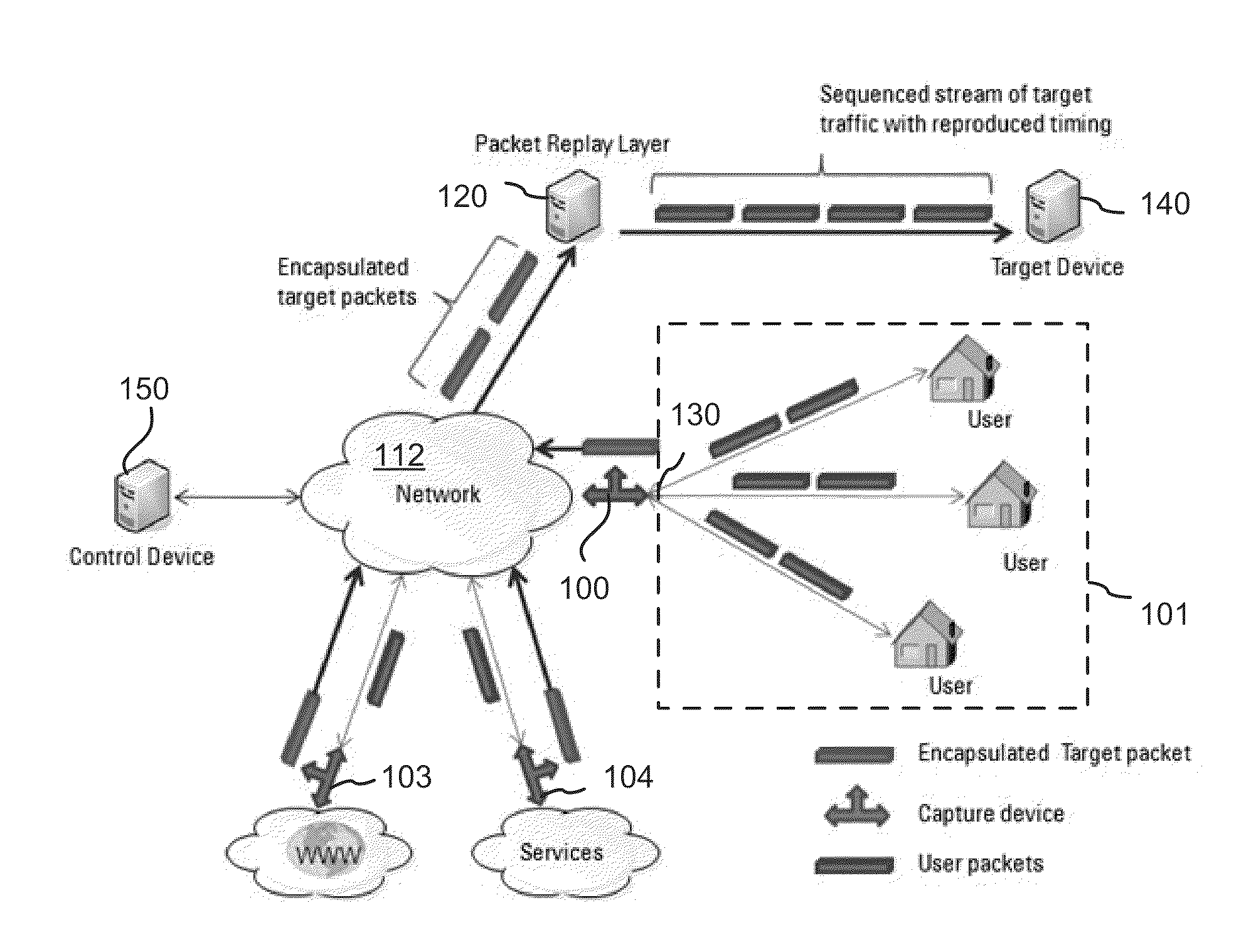

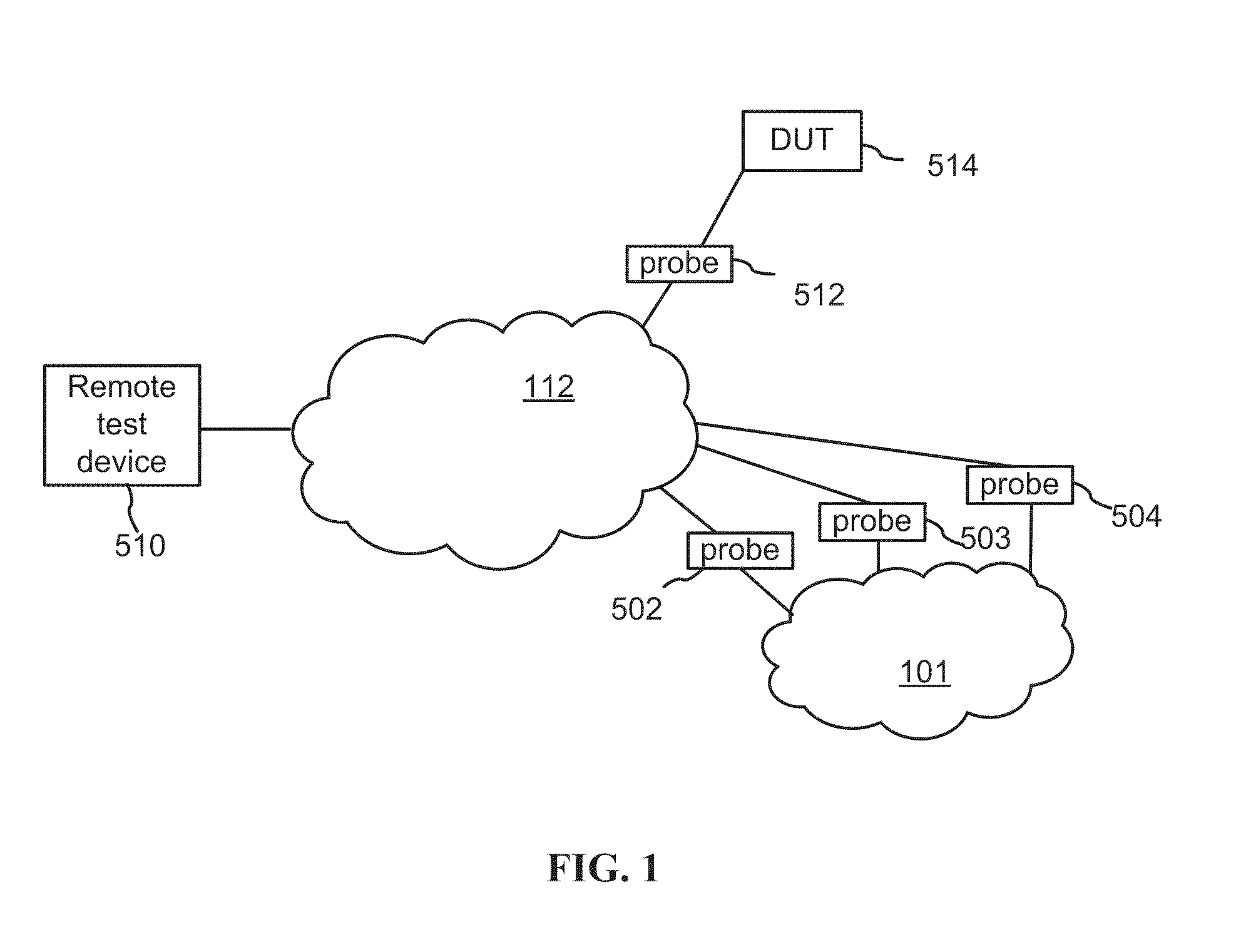

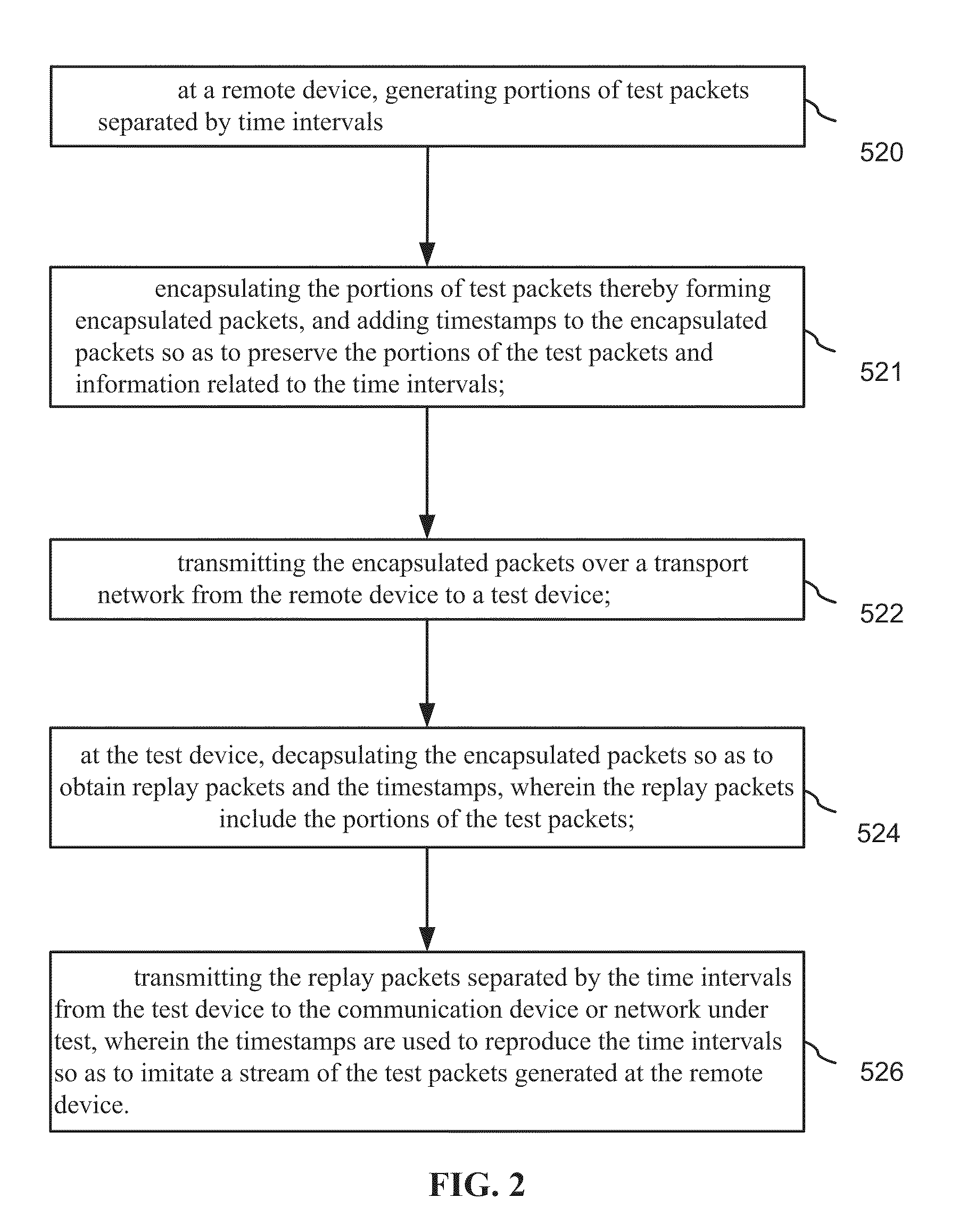

Method of remote active testing of a device or network

A test device includes a packet input receiver for receiving encapsulated packets from a network; a packet reader for extracting timing information from the encapsulated packets, and for decapsulating encapsulated packets so as to obtain test packets; a FIFO queue for storing the test packets; a packet controller for reading the test packets from the FIFO queue and writing the test packets into a de-jitter buffer in accordance with the timing information, the de-jitter buffer for storing the reordered test packets; and, a packet output generator for providing the test packets to a target device wherein time intervals between the test packets are reproduced using the timing information.

Owner:VIAVI SOLUTIONS INC

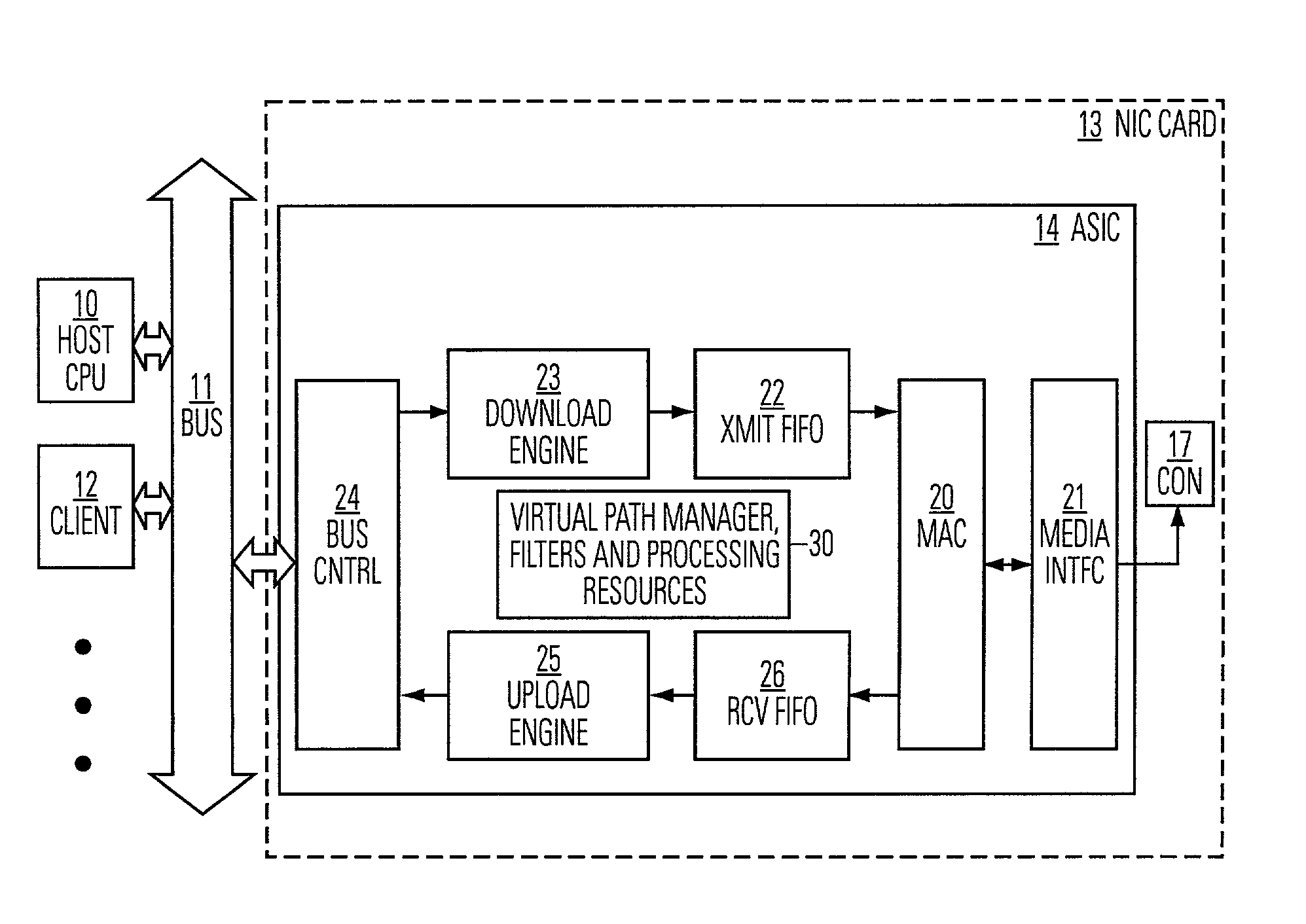

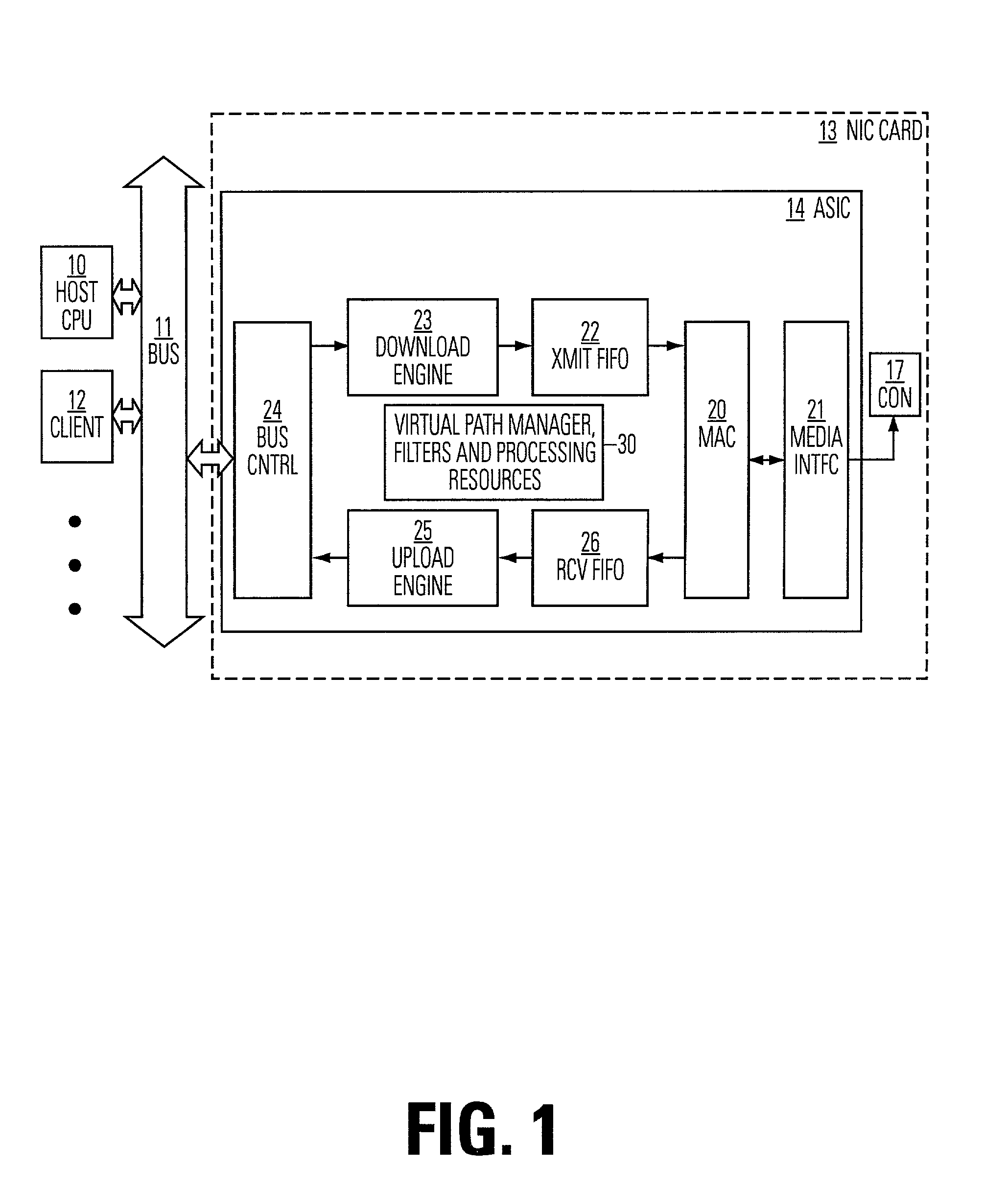

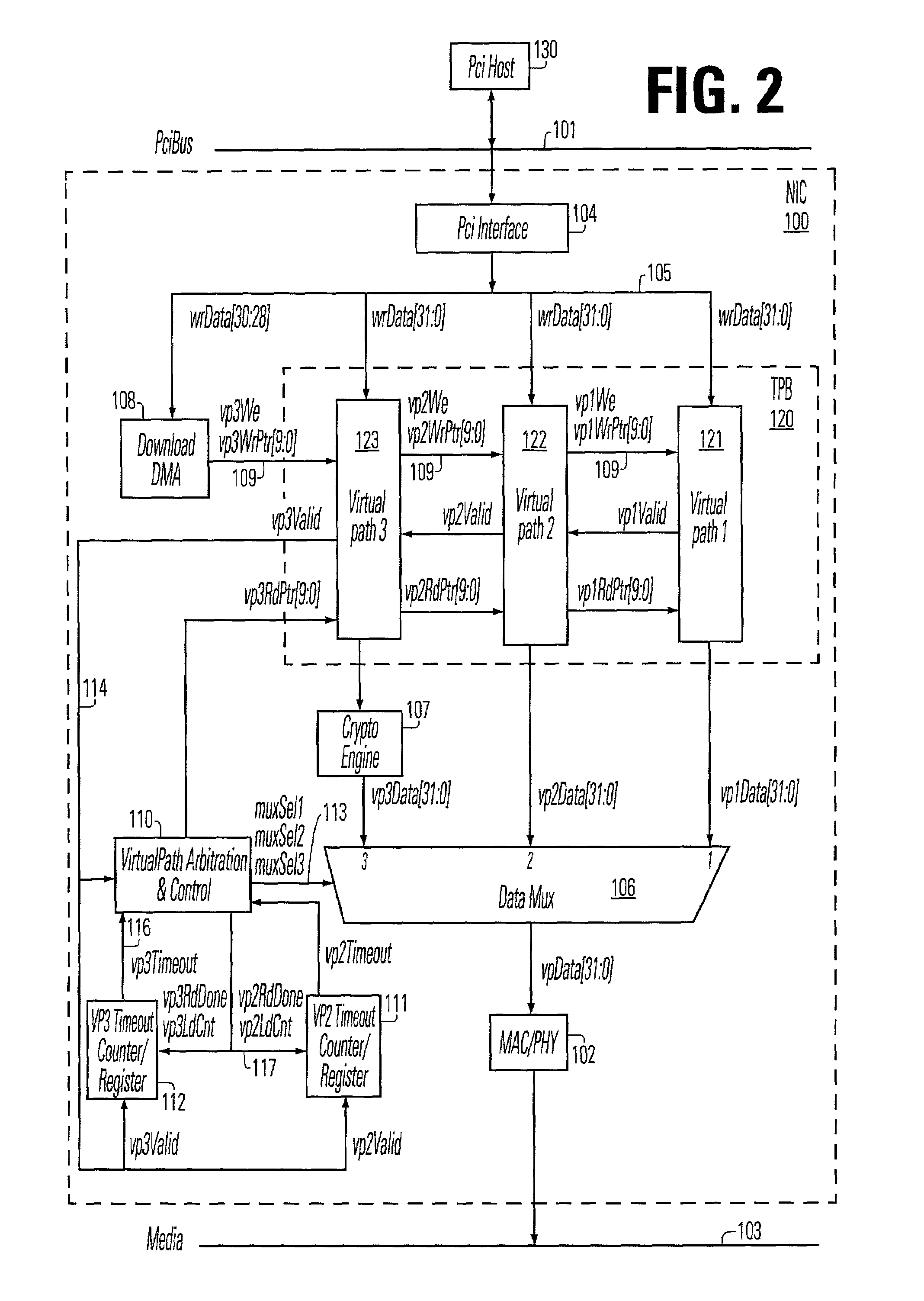

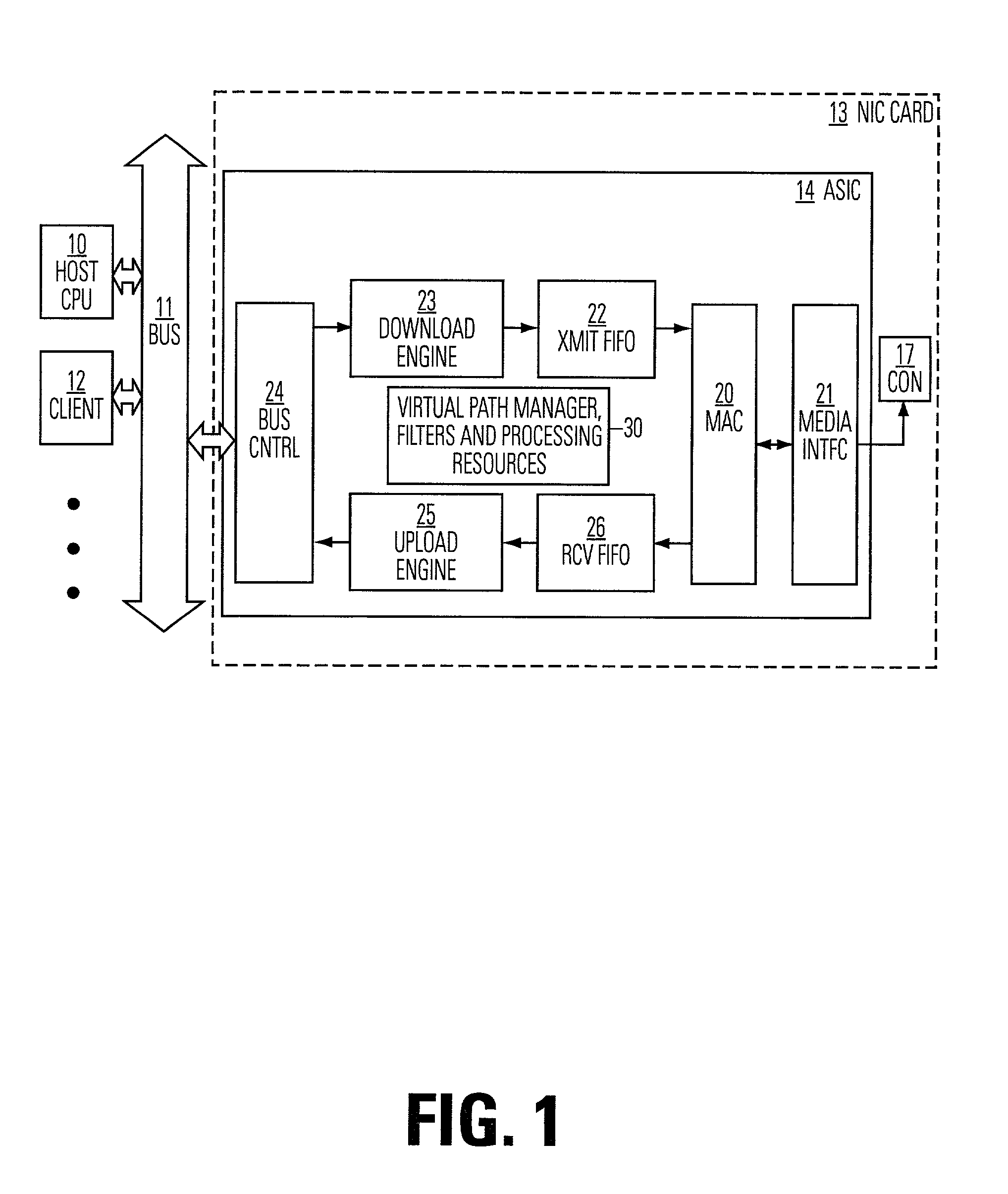

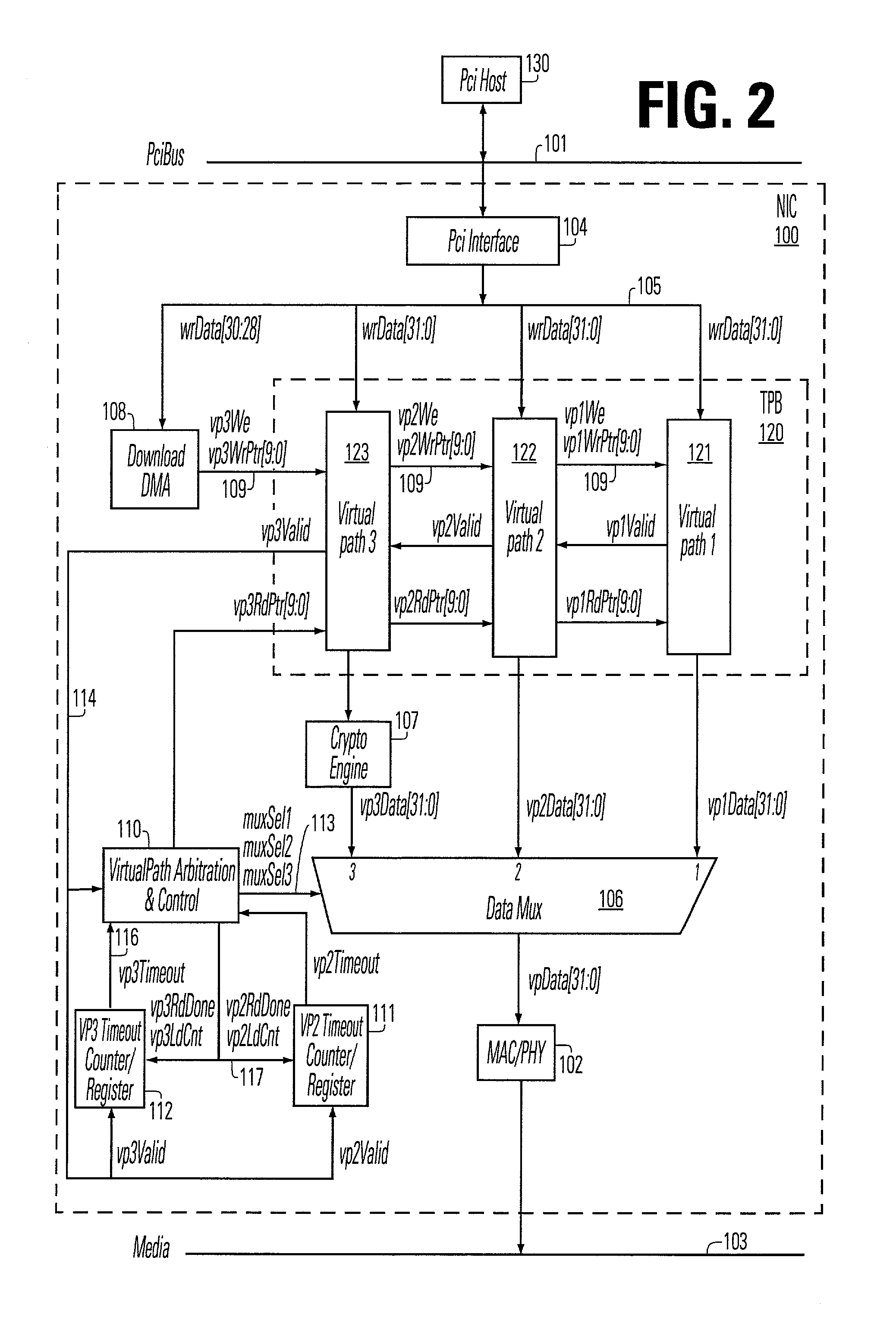

Network interface supporting virtual paths for quality of service

Active | US6970921B1 | Multiple digital computer combinations | Data switching networks | Quality of service | Fifo queue

A plurality of virtual paths in a network interface between a host port and a network port are managed according to respective priorities. Thus, multiple levels of quality of service are supported through a single physical network port. Variant processes are applied for handling packets which have been downloaded to a network interface, prior to transmission onto the network. The network interface also includes memory used as a transmit buffer, that stores data packets received from the host computer on the first port, and provides data to the second port for transmission on the network. A control circuit in the network interface manages the memory as a plurality of first-in-first-out FIFO queues having respective priorities. Logic places a packet received from the host processor into one of the plurality of FIFO queues according to a quality of service parameter associated with the packets. Logic transmits the packets in the plurality of FIFO queues according to respective priorities. The transmit packet buffer may be statically or dynamically allocated memory.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP +1
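
A transmit buffer managed as several FIFO queues with respective priorities might look like the sketch below. The abstract does not say how priorities are arbitrated, so strict priority (always serve the highest non-empty queue) is an assumption here, as are the class name `VirtualPathBuffer` and the integer `qos` parameter.

```python
from collections import deque


class VirtualPathBuffer:
    """Hypothetical transmit buffer: one FIFO queue per priority level."""

    def __init__(self, levels):
        # Index 0 is treated as the highest priority (an assumption).
        self.queues = [deque() for _ in range(levels)]

    def enqueue(self, packet, qos):
        # Place the packet in the queue selected by its quality-of-service value.
        self.queues[qos].append(packet)

    def transmit(self):
        # Strict priority: serve the first non-empty queue, FIFO within it.
        for q in self.queues:
            if q:
                return q.popleft()
        return None


nic = VirtualPathBuffer(levels=2)
for packet, qos in [("a", 1), ("b", 0), ("c", 1), ("d", 0)]:
    nic.enqueue(packet, qos)
sent = [nic.transmit() for _ in range(4)]
```

Packets keep their arrival order within a priority level, while higher-priority packets overtake lower-priority ones.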

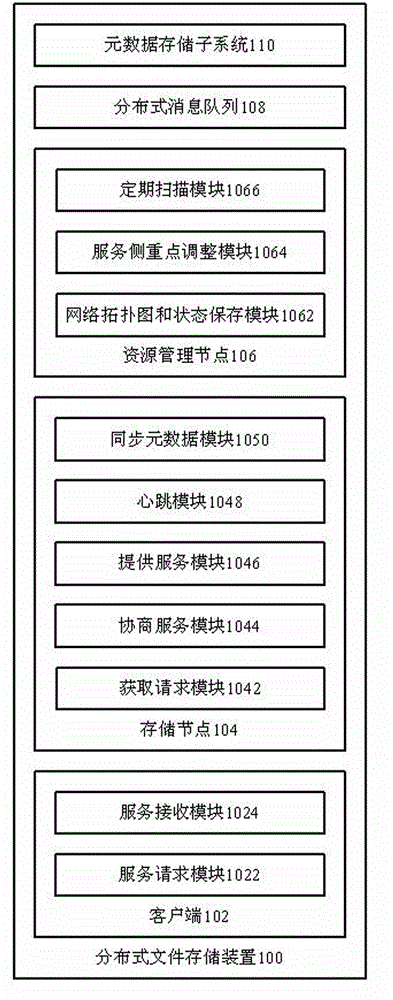

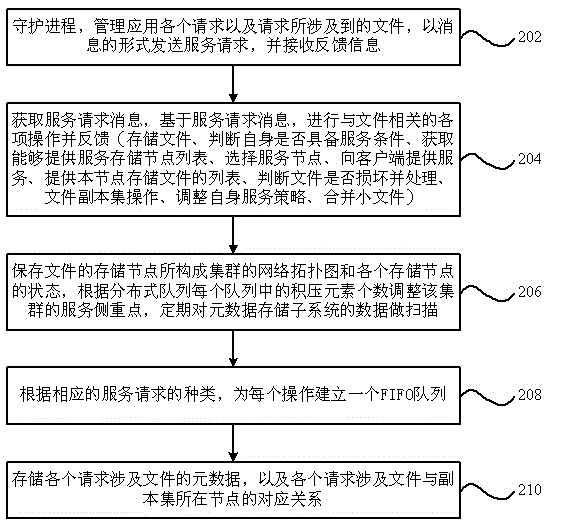

Distributed file storage device and method

Inactive | CN104391930A | Does not cause a bottleneck | Special data processing applications | Message queue | Parallel computing

The invention provides a distributed file storage device. The distributed file storage device comprises a client side for managing and applying all requests and requesting for related files, storage nodes for obtaining service request messages to perform various operations relevant to the files and making feedback, resource management nodes for saving a network topological graph of clusters and the states of the storage nodes, adjusting the service emphasis of the clusters and performing periodical scanning, distributed message queues for establishing corresponding FIFO queues according to request categories, and a metadata storage subsystem for storing metadata of the files involved with the requests and the correspondence relation of the nodes where the files and replica sets are located. The invention further provides a distributed file storage method. By means of the technical scheme, the single-object type can be fully utilized to complete storage of multiple-object type of files based on an existing file storage mode, and multiple-object type involved and distributed file oriented universal and unified storage thought is established.

Owner:YONYOU NETWORK TECH

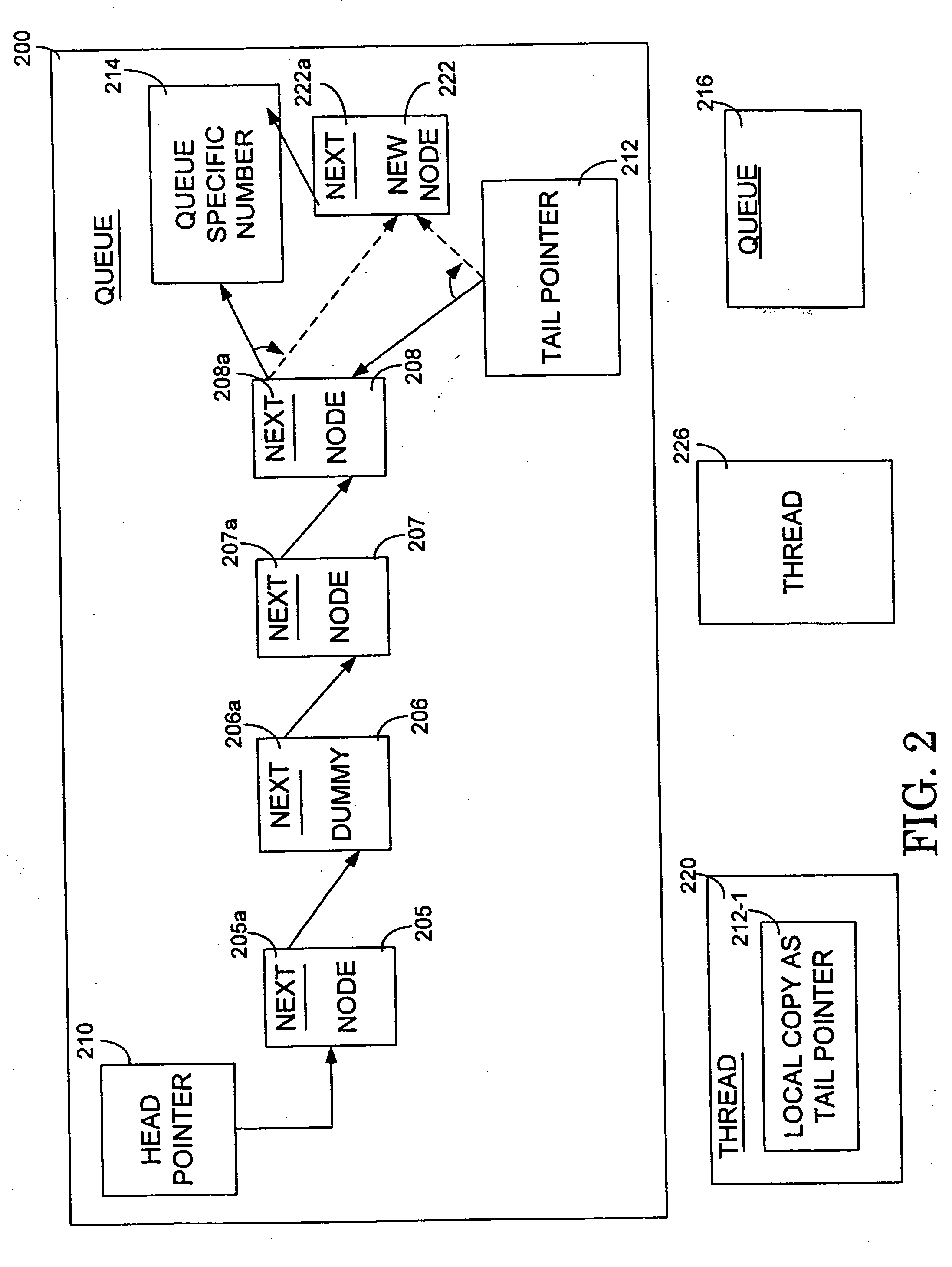

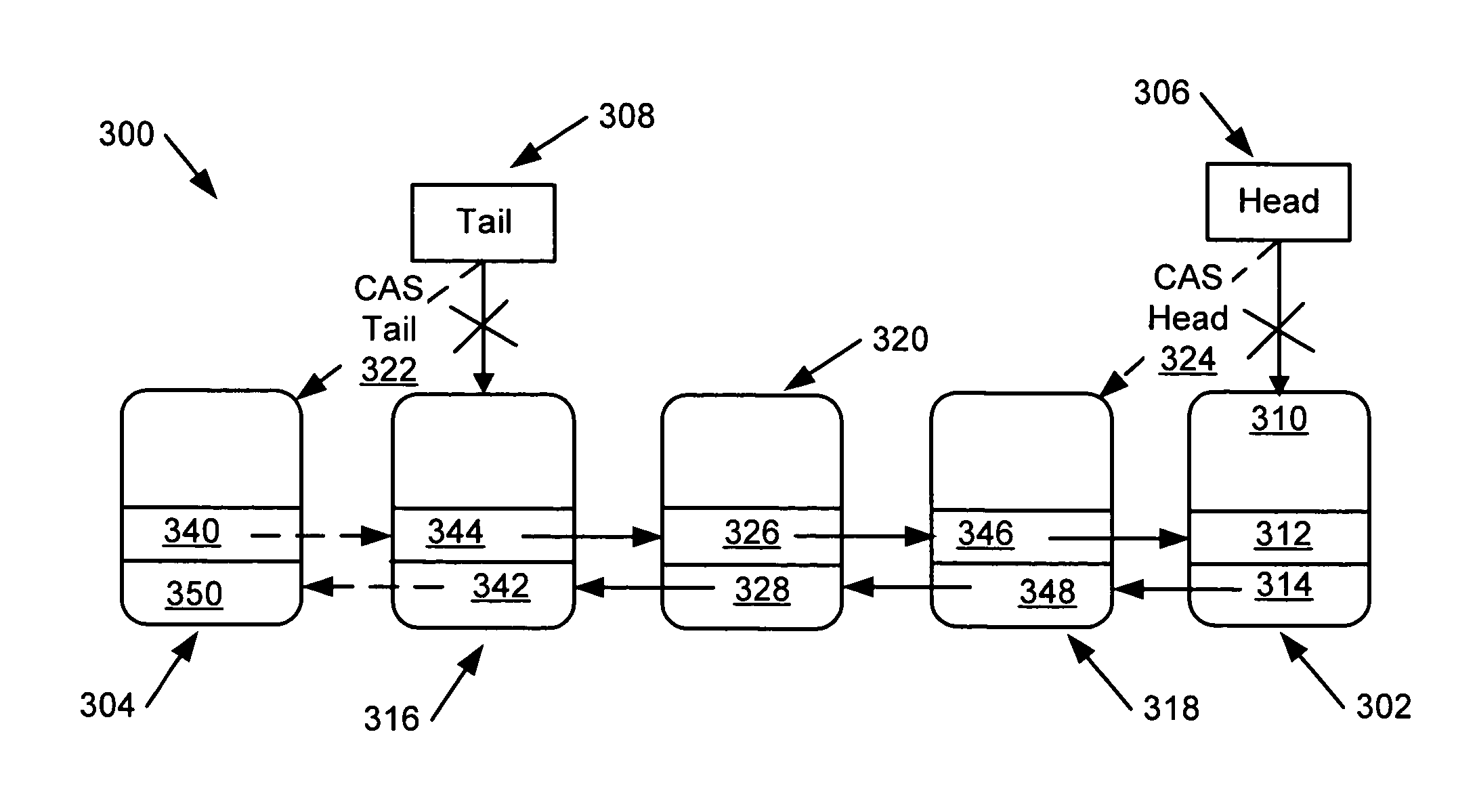

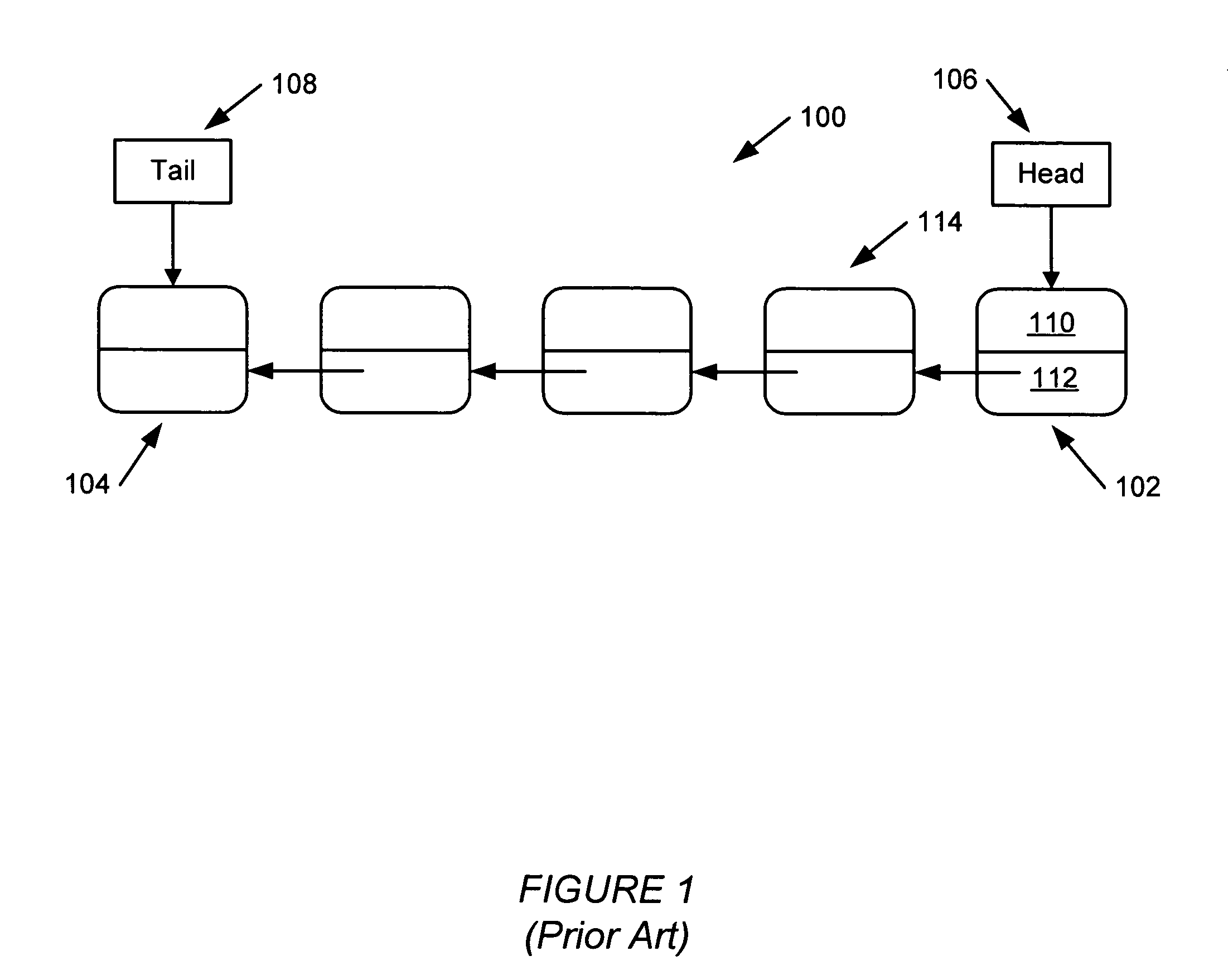

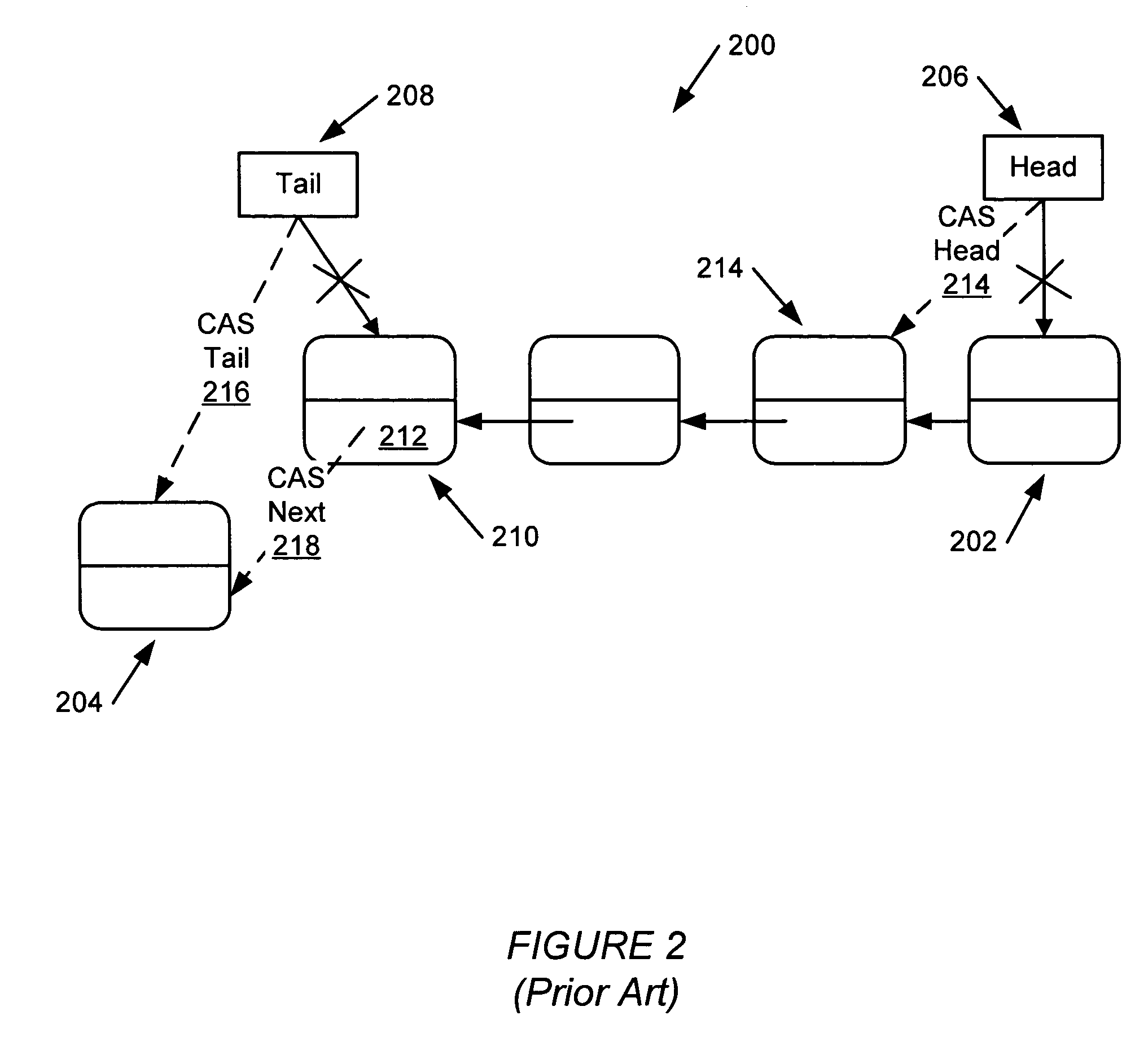

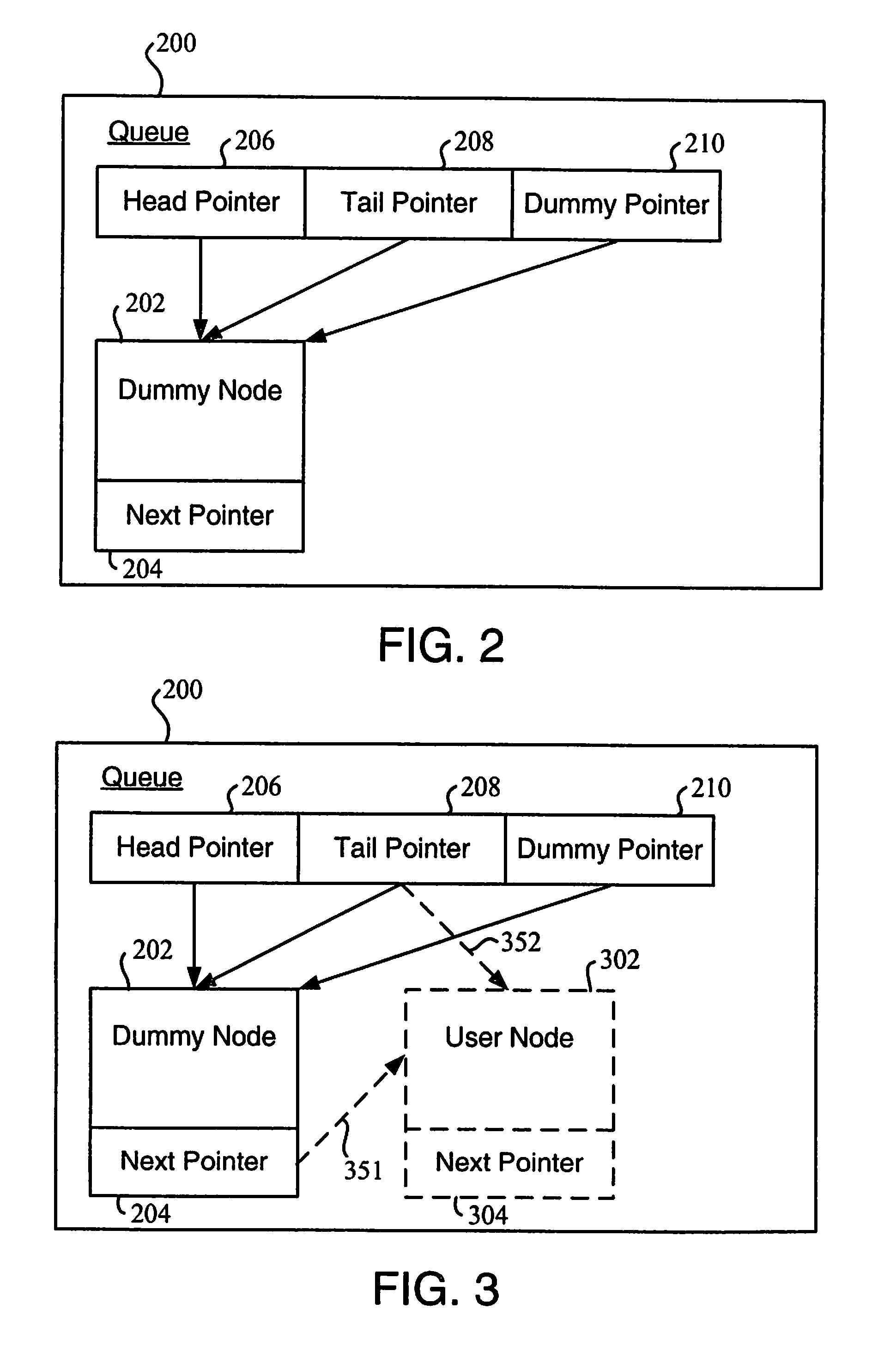

Non-blocking concurrent queues with direct node access by threads

Inactive | US20050066082A1 | Digital computer details | Specific program execution arrangements | Parallel computing | Engineering

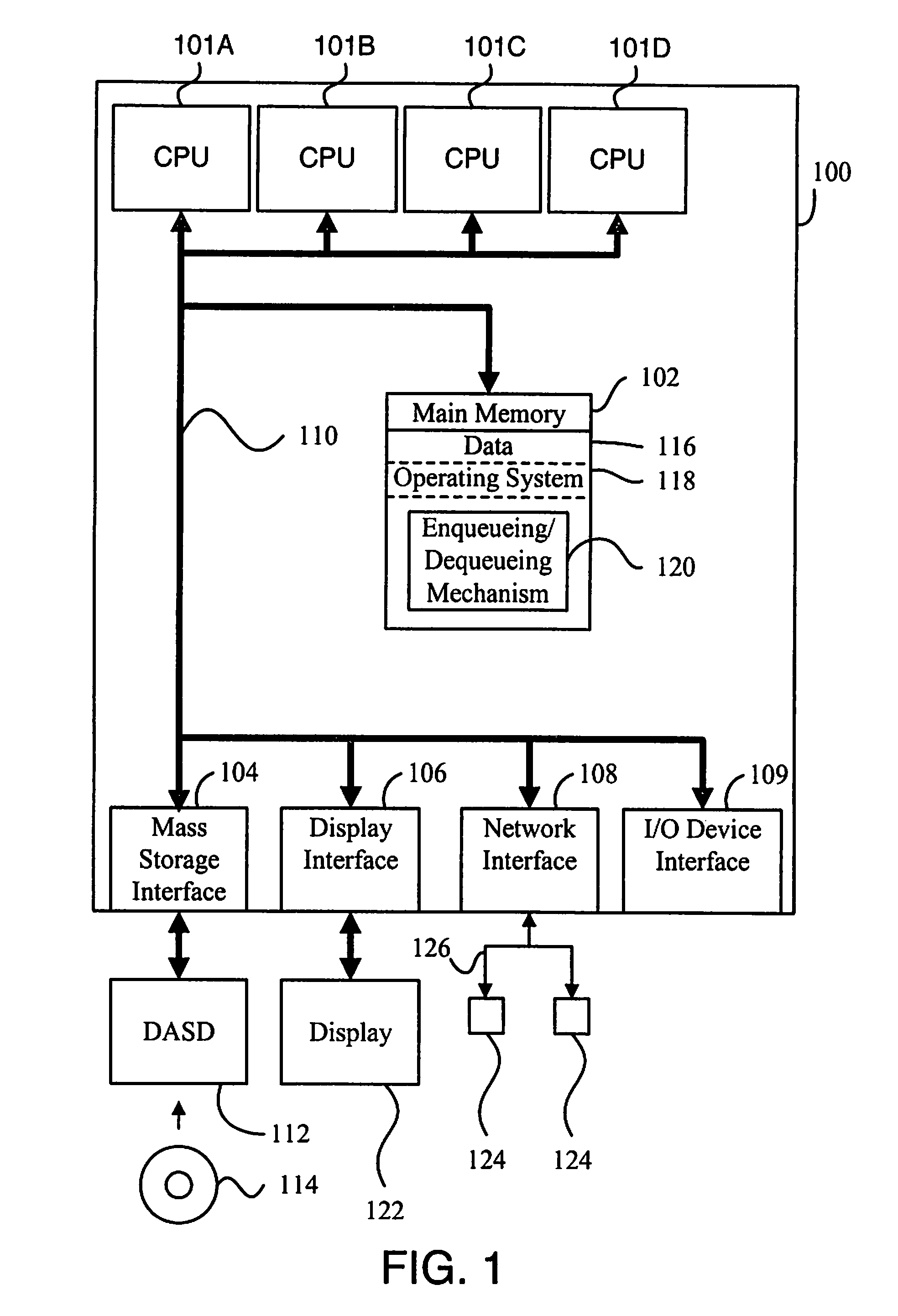

Multiple non-blocking FIFO queues are concurrently maintained using atomic compare-and-swap (CAS) operations. In accordance with the invention, each queue provides direct access to the nodes stored therein to an application or thread, so that each thread may enqueue and dequeue nodes that it may choose. The prior art merely provided access to the values stored in the node. In order to avoid anomalies, the queue is never allowed to become empty by requiring the presence of at least a dummy node in the queue. The ABA problem is solved by requiring that the next pointer of the tail node in each queue point to a “magic number” unique to the particular queue, such as the pointer to the queue head or the address of the queue head, for example. This obviates any need to maintain a separate count for each node.

Owner:MICROSOFT TECH LICENSING LLC

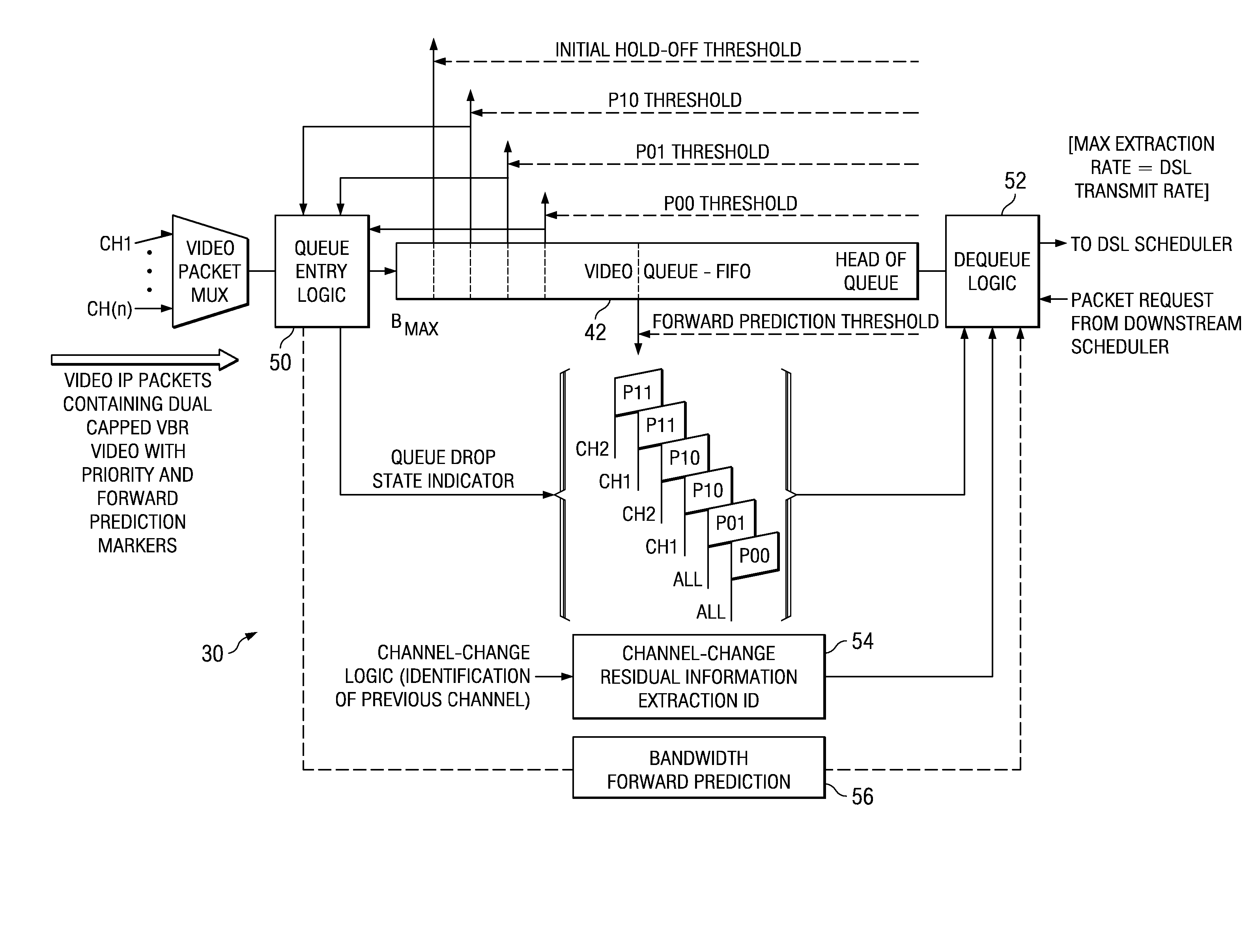

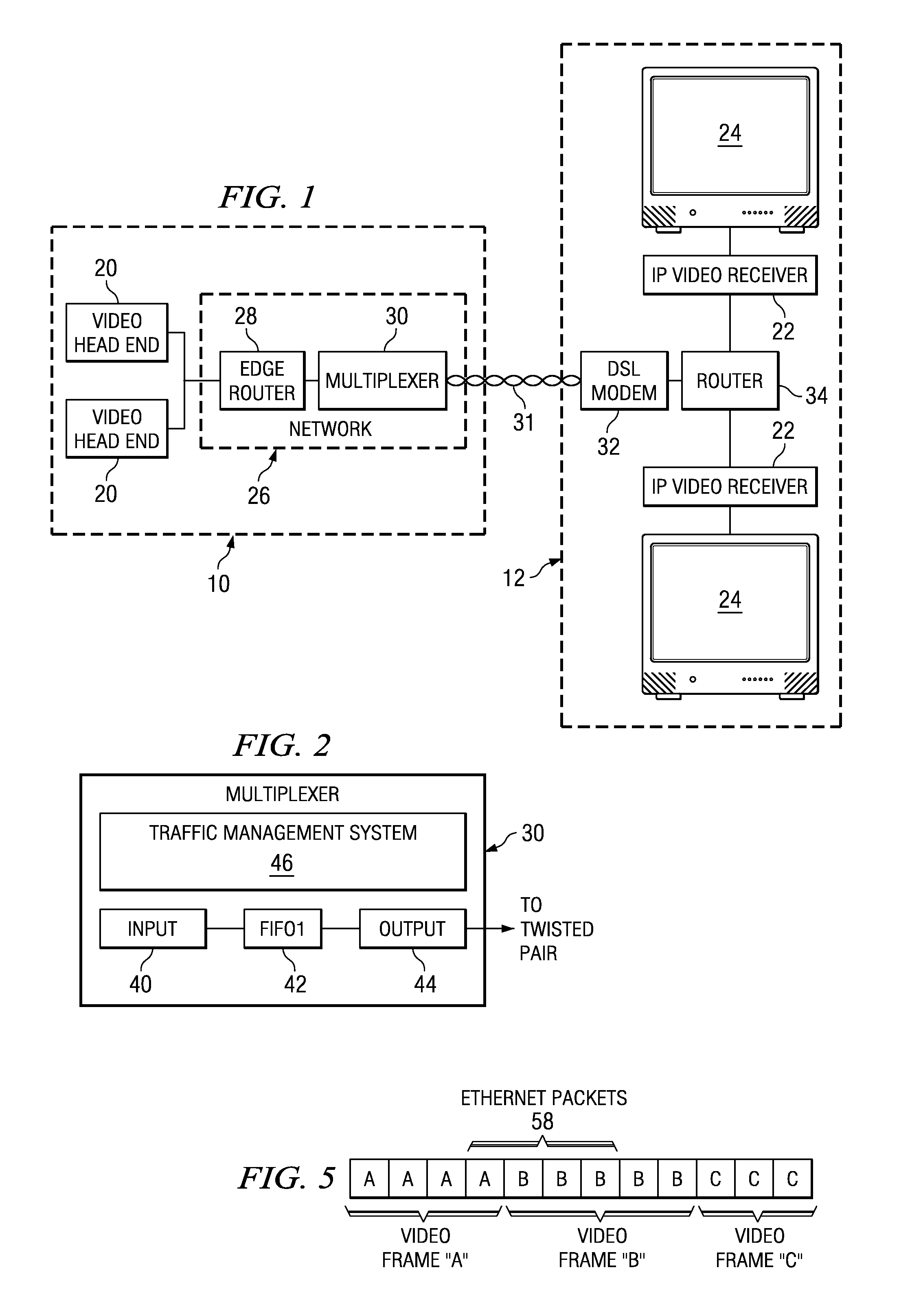

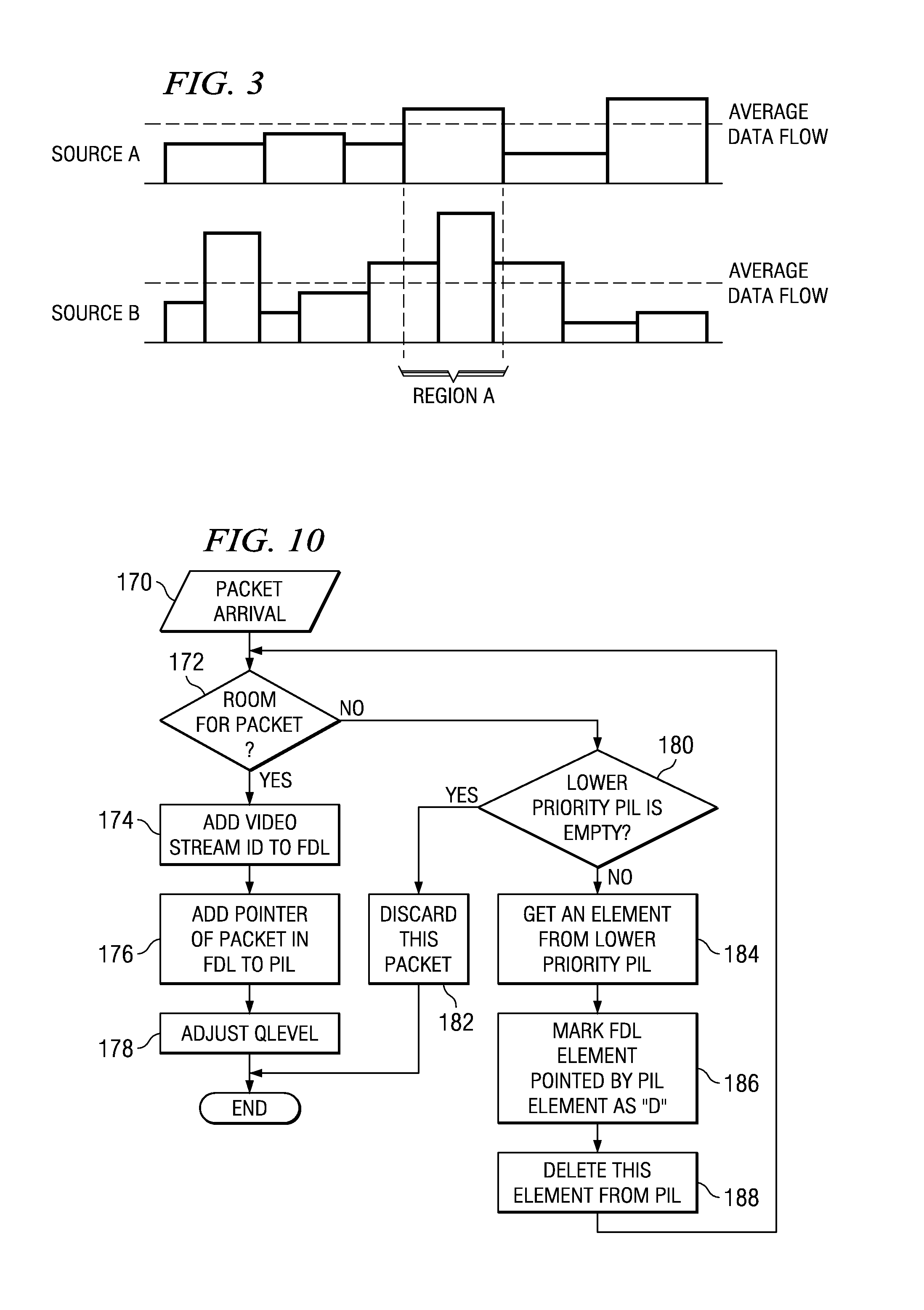

Video aware traffic management

Active | US20070171928A1 | Improve reception performance | Reduce result | Time-division multiplex | Data switching by path configuration | Traffic capacity | Network packet

A multiplexer selectively chooses packets for discarding during periods of congestion. In a first embodiment, thresholds for fill levels of a FIFO queue are set for a plurality of priority types. As thresholds are exceeded, incoming packets below a set priority level are prevented from entering the queue, and packets at the front of the queue below the set priority are discarded. In a second embodiment, packets within a queue may be marked for deletion. A forward / discard list assigns an index to a new packet and maintains a list of the stream associated with each packet. A priority index list maintains a list of packets for each priority type by index number. A video metadata buffer stores the metadata for each enqueued packet. A physical video buffer stores the packets. When an incoming packet cannot be enqueued, packets of lower priority are detected by reference to the index list and marked for discard.

Owner:ALCATEL-LUCENT USA INC +1
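
The first embodiment (fill-level thresholds per priority) can be illustrated roughly as follows. The threshold table, the convention that larger numbers mean higher priority, and the class name `ThresholdQueue` are assumptions made for this sketch; the second embodiment with index lists is not modeled.

```python
from collections import deque


class ThresholdQueue:
    """Hypothetical FIFO whose admission floor rises as the queue fills."""

    def __init__(self, thresholds):
        # thresholds maps a fill level to the minimum priority admitted
        # once the queue holds at least that many packets.
        self.thresholds = sorted(thresholds.items())
        self.q = deque()

    def min_priority(self):
        floor = 0
        for fill, priority in self.thresholds:
            if len(self.q) >= fill:
                floor = priority
        return floor

    def enqueue(self, packet, priority):
        if priority < self.min_priority():
            return False  # refused: below the current priority floor
        self.q.append((priority, packet))
        return True

    def dequeue(self):
        # Discard packets at the front that fall below the current floor.
        while self.q and self.q[0][0] < self.min_priority():
            self.q.popleft()
        return self.q.popleft()[1] if self.q else None


tq = ThresholdQueue({2: 1, 4: 2})
admitted = [tq.enqueue(name, prio) for name, prio in
            [("p0a", 0), ("p0b", 0), ("p0c", 0), ("p1", 1), ("p2", 2)]]
first_out = tq.dequeue()
```

In this run the third priority-0 packet is refused at the door, and the two priority-0 packets already at the front are dropped once the queue reaches the higher threshold.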

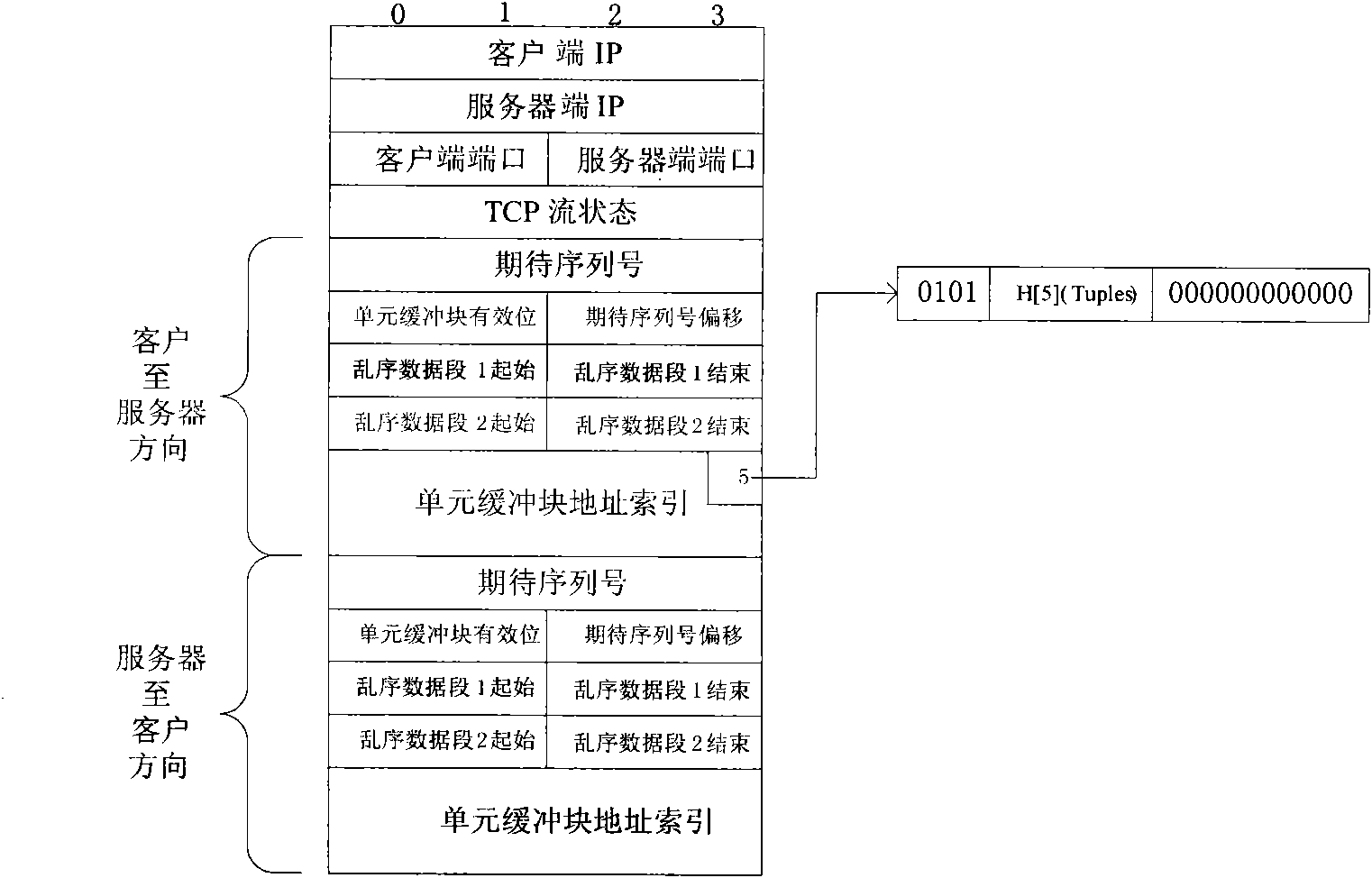

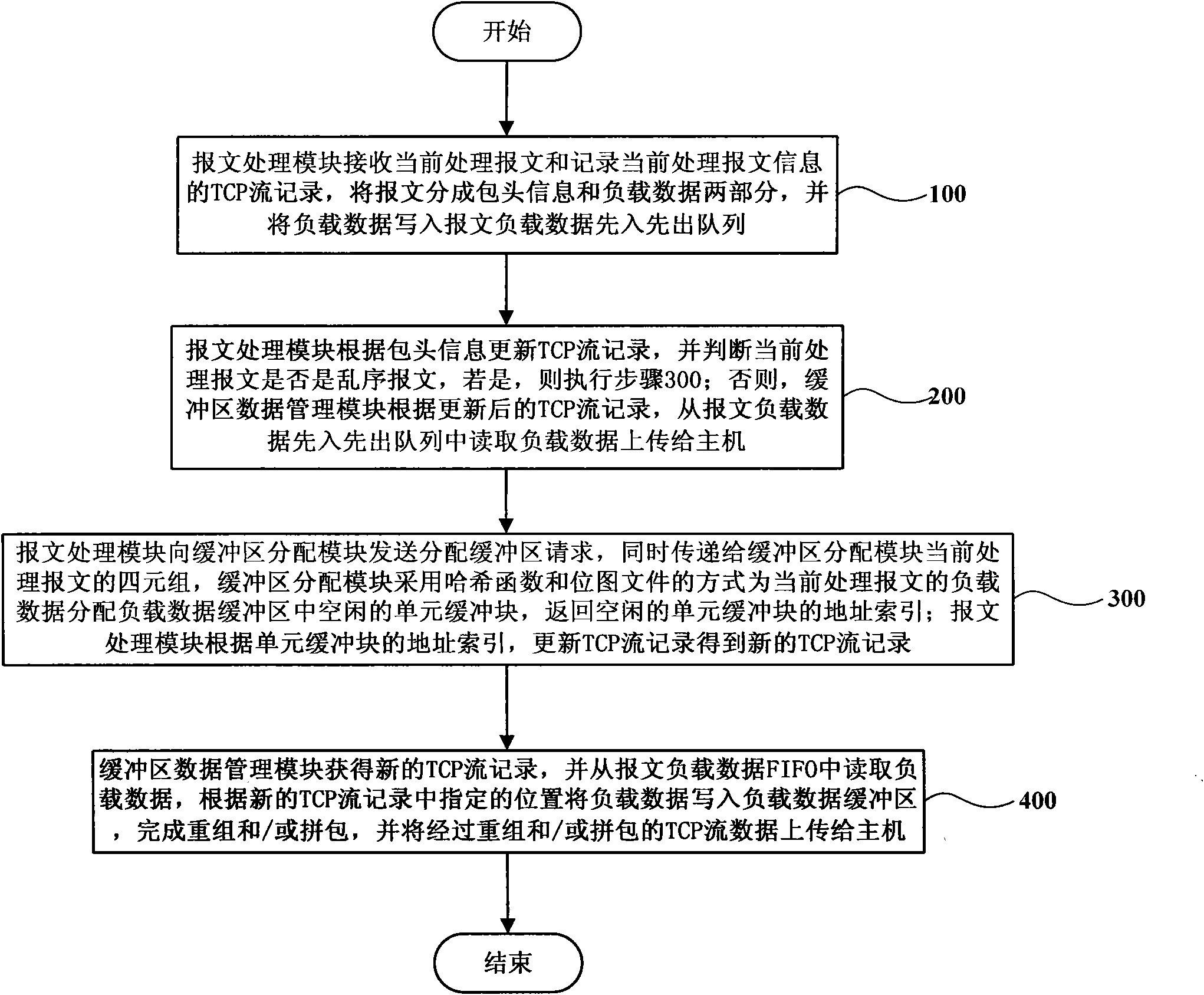

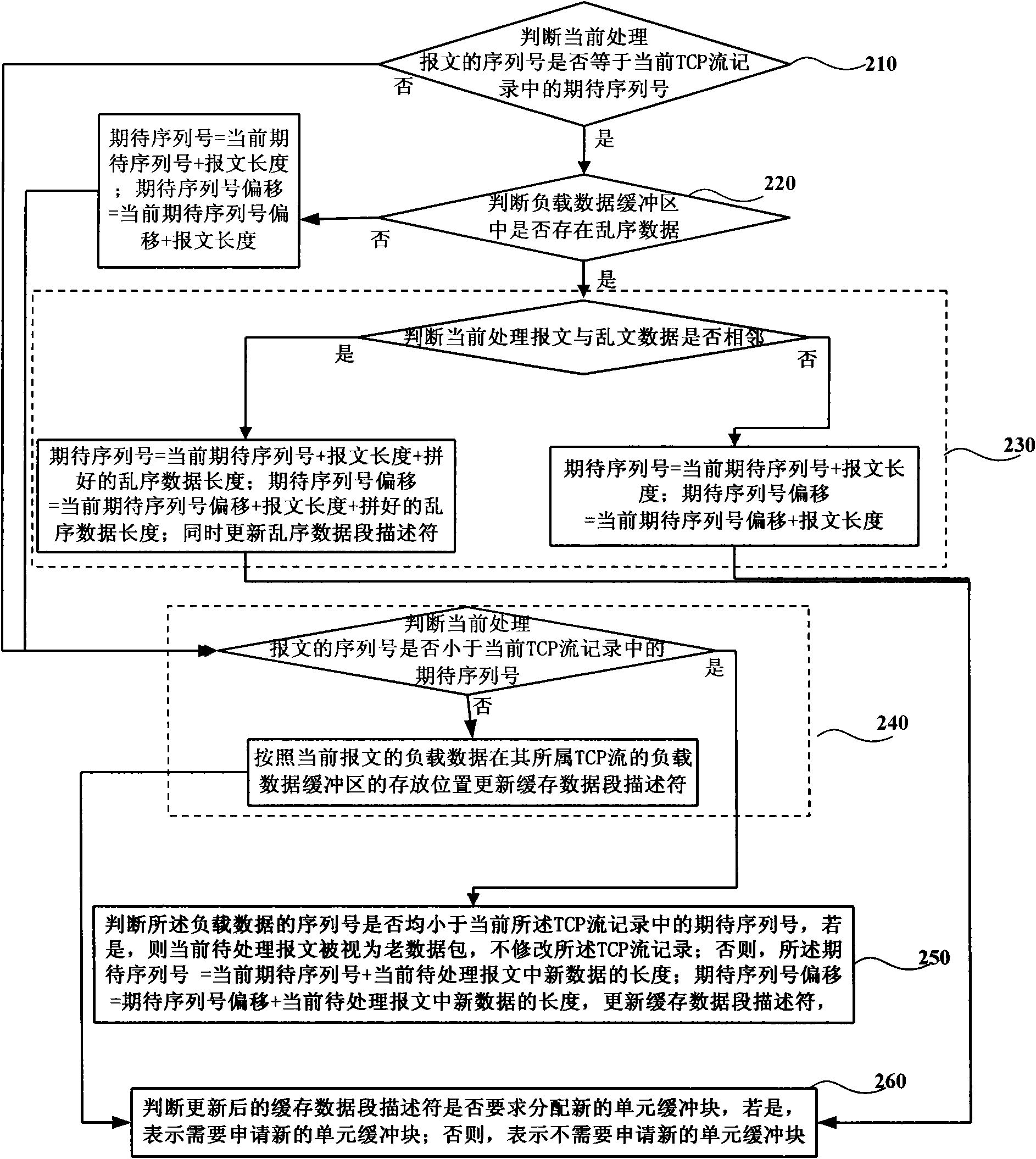

TCP stream restructuring and/or packetizing method and device

Active | CN101841545A | Reduce interrupt overhead | Reduce overhead | Data switching networks | Fifo queue | Data buffer

The invention discloses a TCP stream restructuring and / or packetizing method and a device. The method comprises the following steps that: a current processing packet is received and the TCP stream records of the current processing packet information are recorded, the packet is divided into header information and load data, and the load data is written into a packet load data FIFO queue; whether the caching of the current processing packet needs to apply for a novel buffer area is judged; if yes, a request for distributing the buffer area is sent, a vacant unit buffer block in a caching space is distributed for the load data of the current processing packet, the address index of the vacant unit buffer block is returned, and the TCP stream records are updated; and otherwise, the load data is read from the packet load data FIFO queue and written into the load data buffer area according to the positions specified by the new TCP stream records so as to complete restructuring and / or packetizing.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

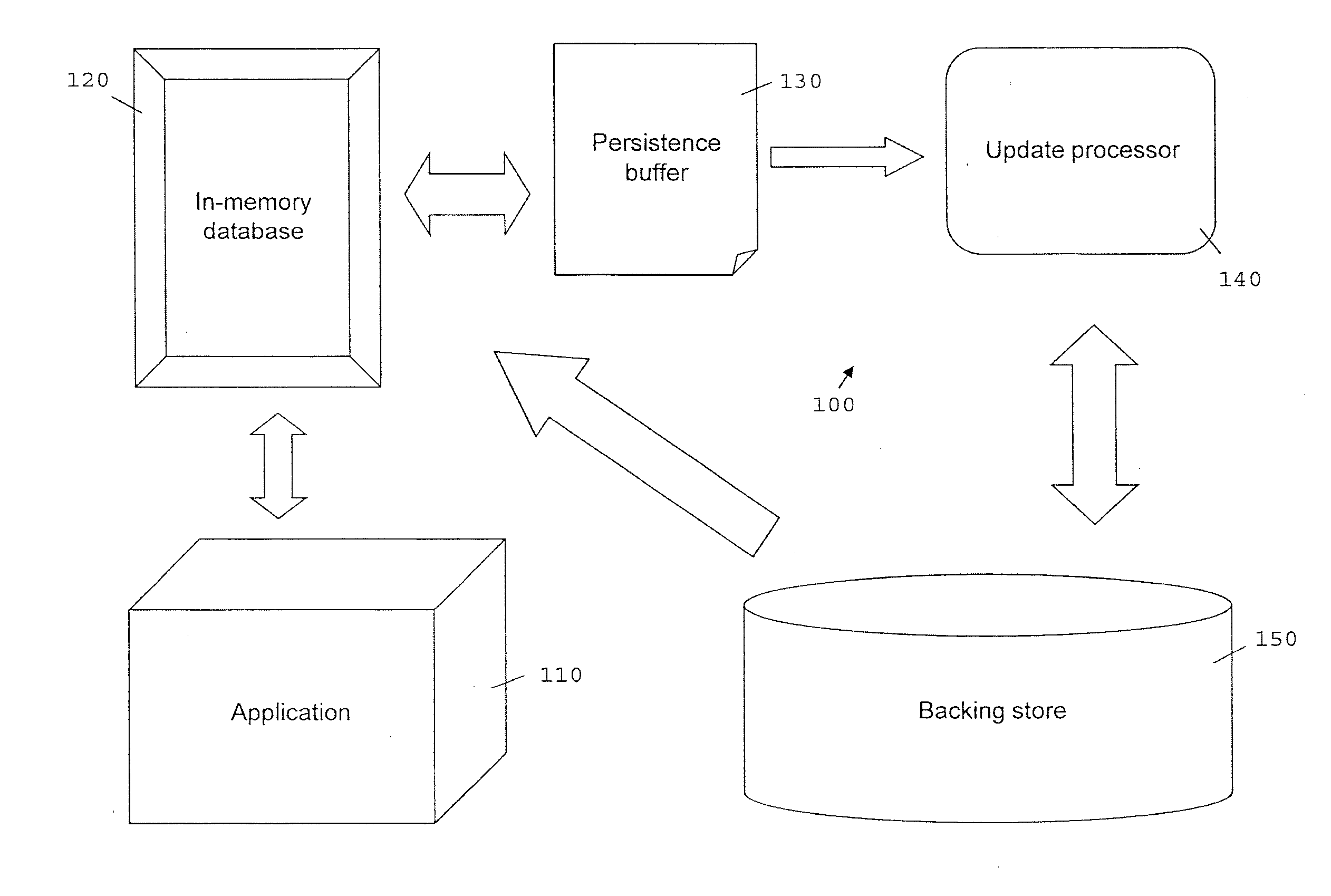

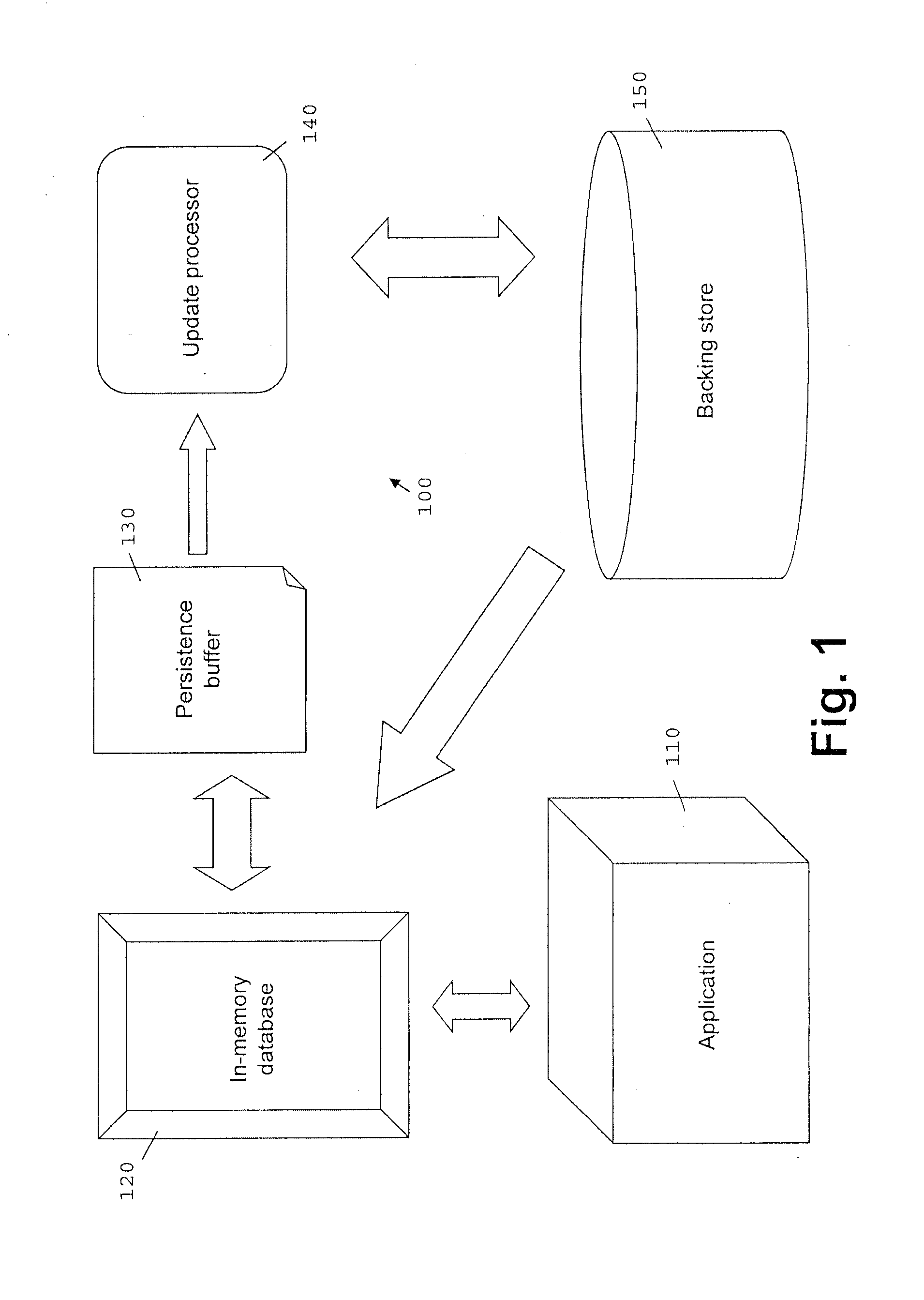

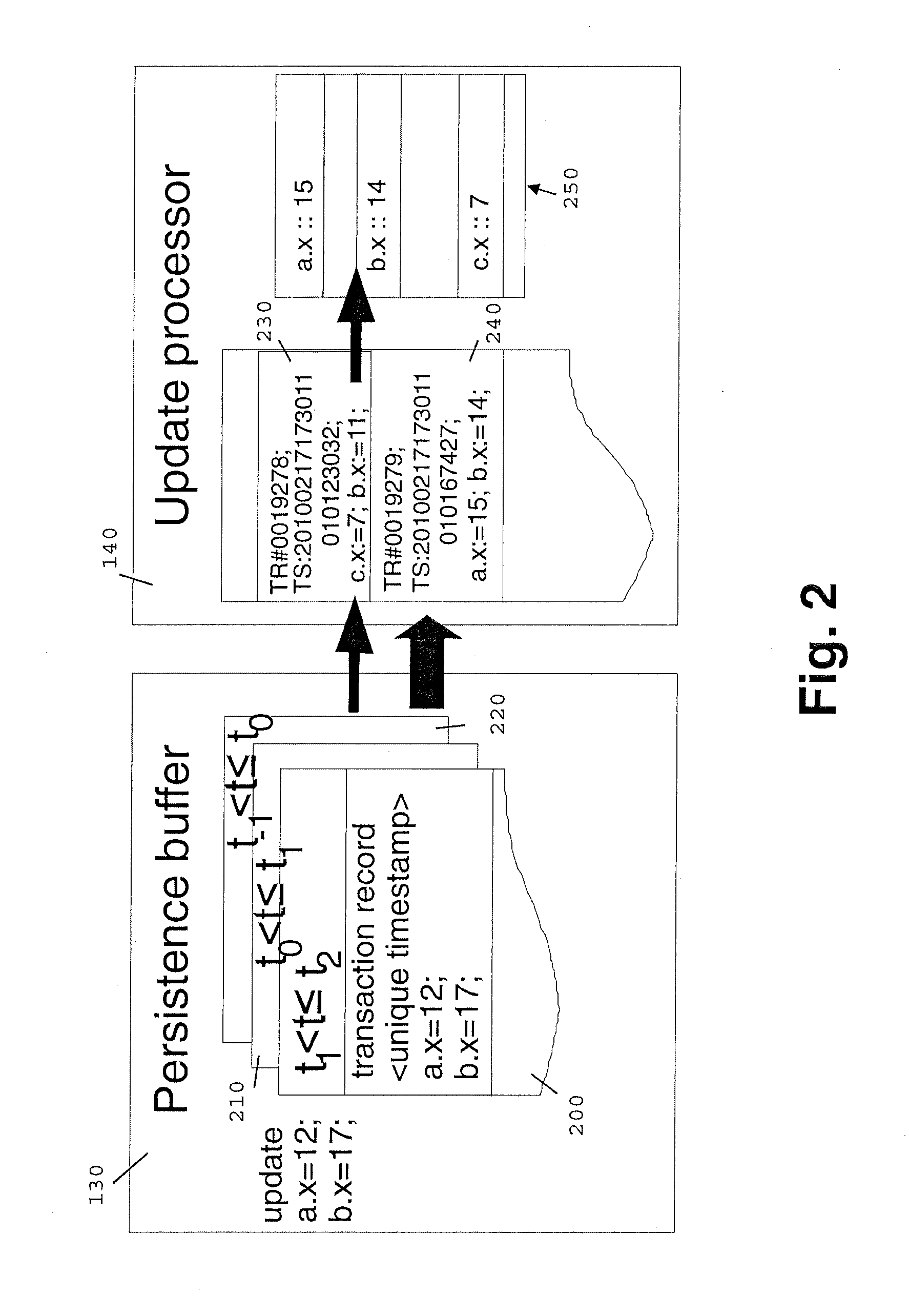

Persisting of a low latency in-memory database

Active | US20120265743A1 | Digital data information retrieval | Digital data processing details | In-memory database | Transaction data

Processing is provided for operating an in-memory database, wherein transaction data is stored by a persistence buffer in a FIFO queue, and an update processor subsequently: waits for a trigger; extracts the last transactional data associated with a single transaction of the in-memory database from the FIFO memory queue; determines if the transaction data includes updates to data fields in the in-memory database which were already processed; and if not, then stores the extracted transaction data to a store queue, remembering the fields updated in the in-memory database, or otherwise updates the store queue with the extracted transaction data. The process continues until the extracting is complete, and the content of the store queue is periodically written into a persistent storage device.

Owner:IBM CORP

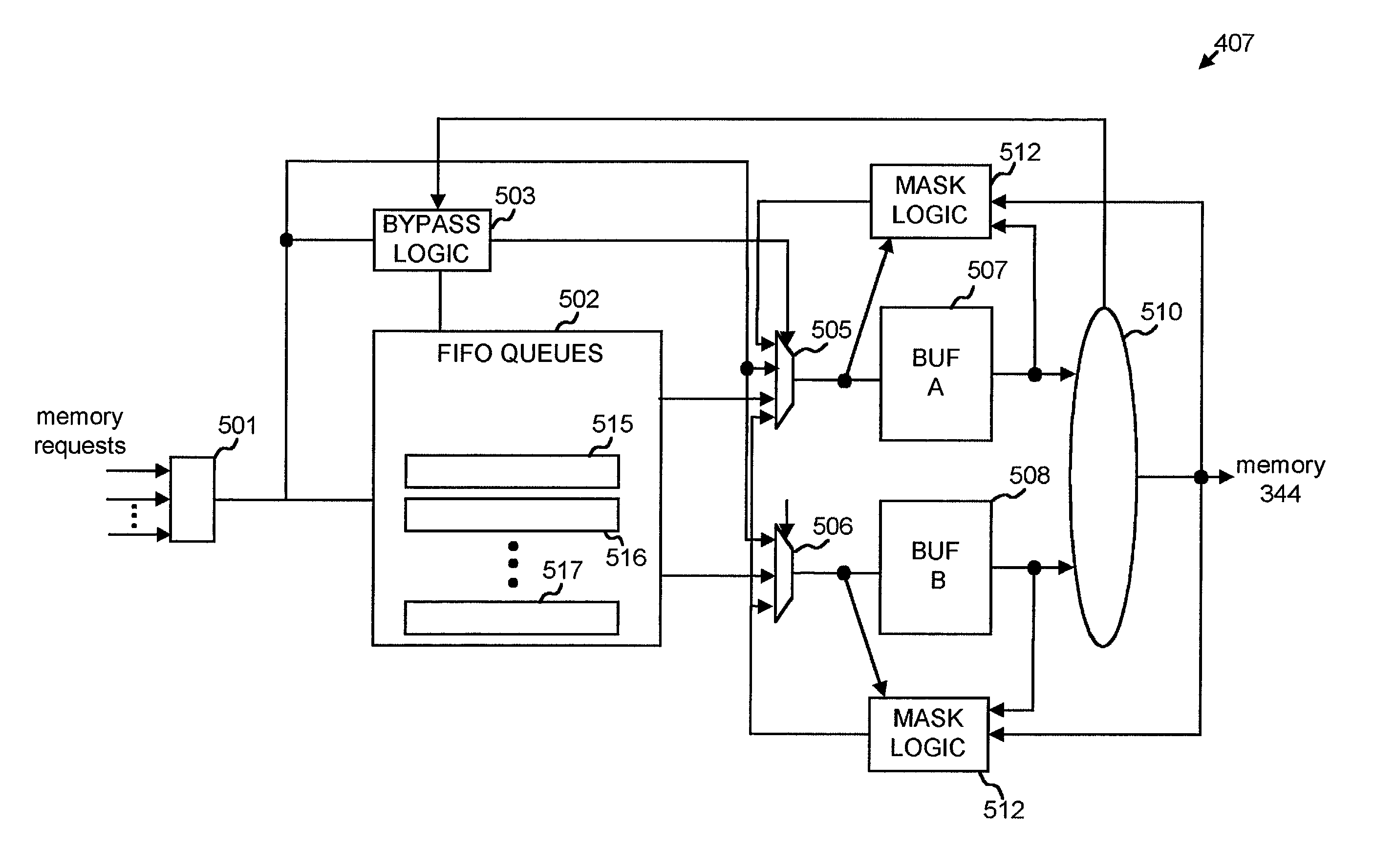

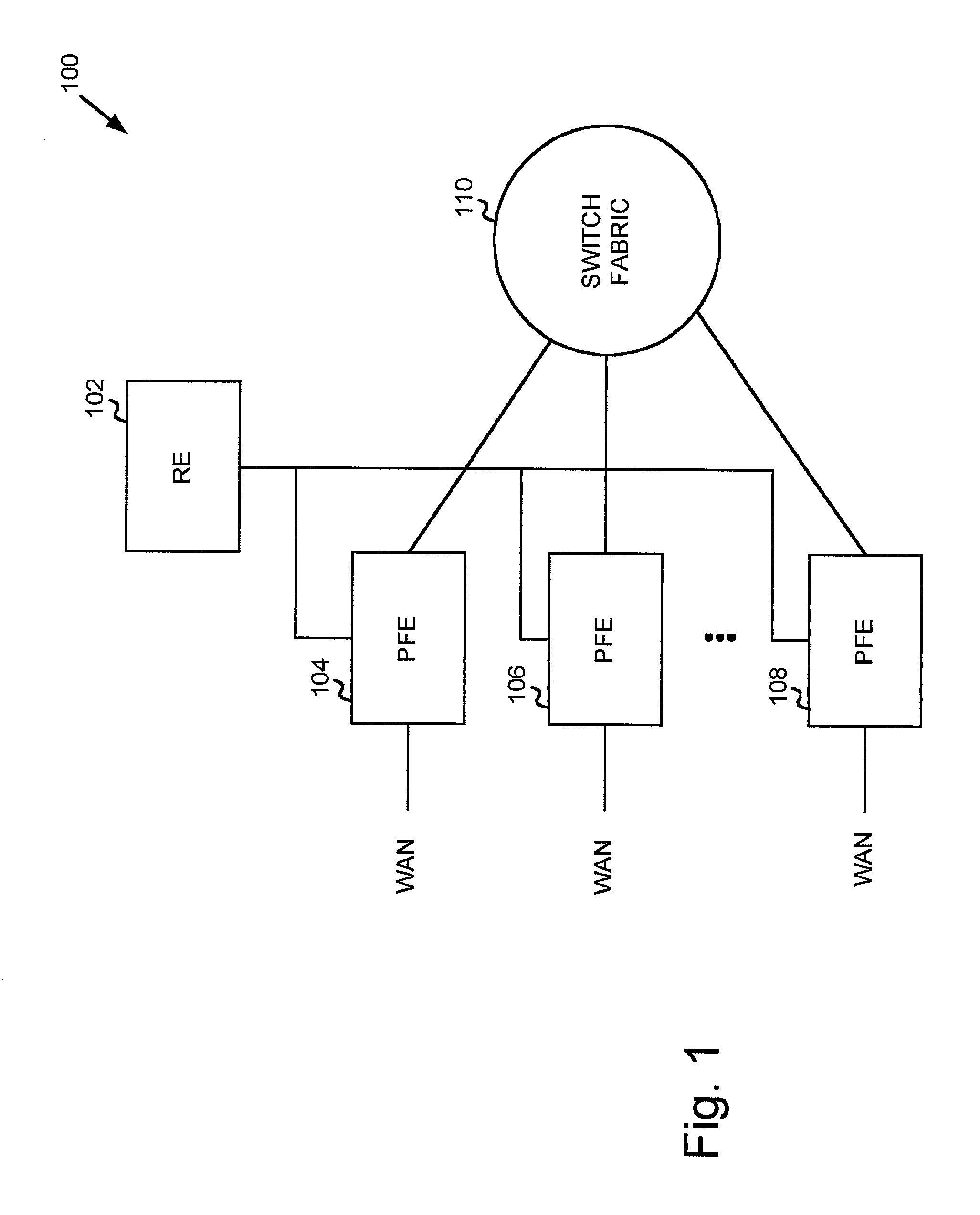

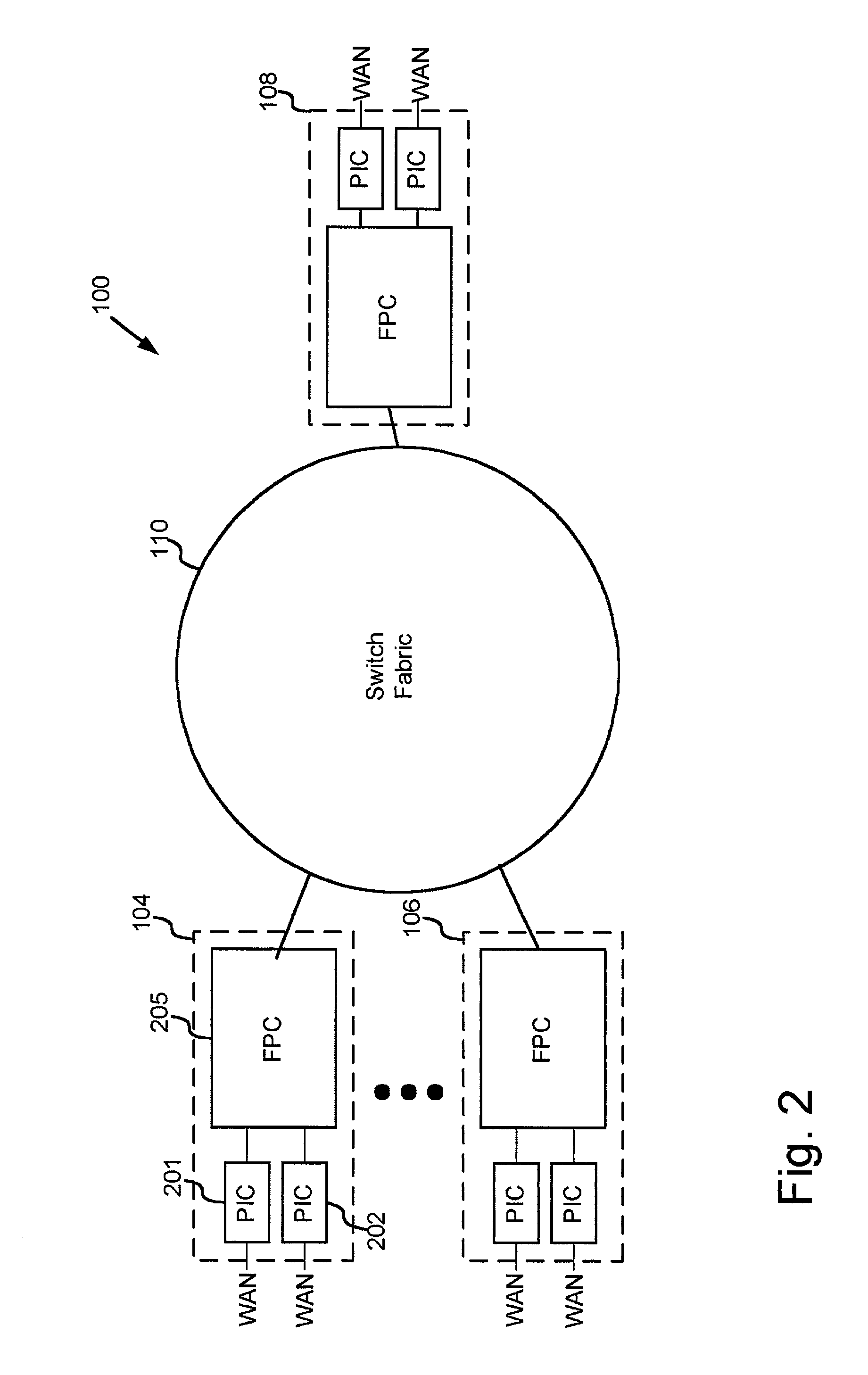

Low latency request dispatcher

Inactive | US7039770B1 | Delay minimization | Reduce request latency | Memory systems | Data conversion | Multiplexer | Latency (engineering)

A first-in-first-out (FIFO) queue optimized to reduce latency in dequeuing data items from the FIFO. In one implementation, a FIFO queue additionally includes buffers connected to the output of the FIFO queue and bypass logic. The buffers act as the final stages of the FIFO queue. The bypass logic causes input data items to bypass the FIFO and to go straight to the buffers when the buffers are able to receive data items and the FIFO queue is empty. In a second implementation, arbitration logic is coupled to the queue. The arbitration logic controls a multiplexer to output a predetermined number of data items from a number of final stages of the queue. In this second implementation, the arbitration logic gives higher priority to data items in later stages of the queue.

Owner:JUNIPER NETWORKS INC
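
The bypass idea from the first implementation can be modeled in software, although the patent concerns hardware latency that a sketch like this cannot show. The code only demonstrates that sending items straight to the output buffers when the main FIFO is empty preserves first-in-first-out order. The class name `BypassFifo` and the `stages` parameter are hypothetical.

```python
from collections import deque


class BypassFifo:
    """Hypothetical model: a main FIFO followed by a few output buffers."""

    def __init__(self, stages):
        self.fifo = deque()   # main FIFO storage
        self.out = deque()    # output buffers acting as the final stages
        self.stages = stages

    def push(self, item):
        if not self.fifo and len(self.out) < self.stages:
            self.out.append(item)   # bypass: skip the main FIFO entirely
        else:
            self.fifo.append(item)

    def pop(self):
        item = self.out.popleft() if self.out else None
        # Refill the freed output buffer from the main FIFO, if it has data.
        if self.fifo and len(self.out) < self.stages:
            self.out.append(self.fifo.popleft())
        return item


f = BypassFifo(stages=2)
for i in (1, 2, 3):
    f.push(i)
first = f.pop()
f.push(4)
rest = [f.pop() for _ in range(3)]
```

Items are only bypassed when nothing older is waiting in the main FIFO, so the output order matches the input order.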

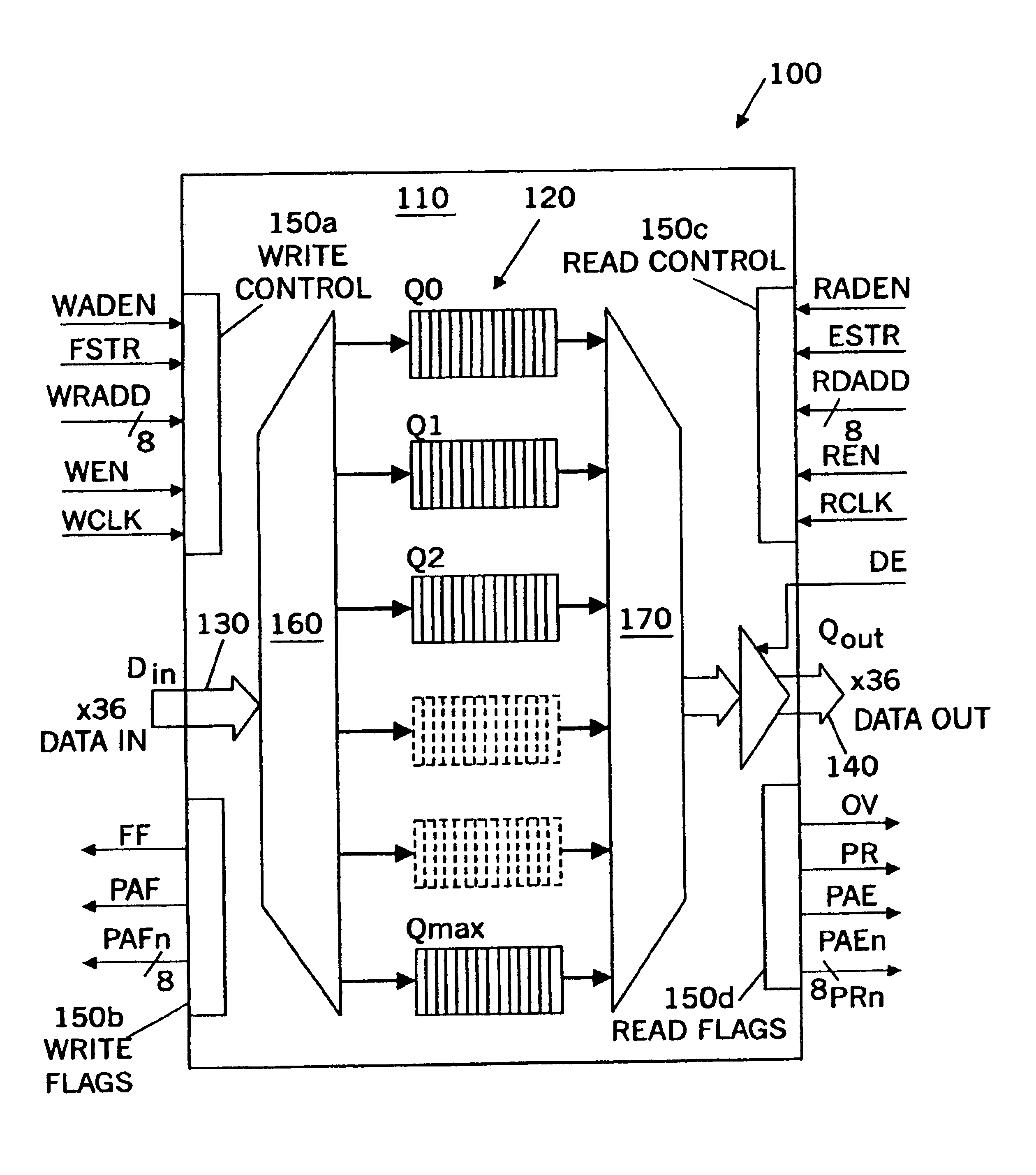

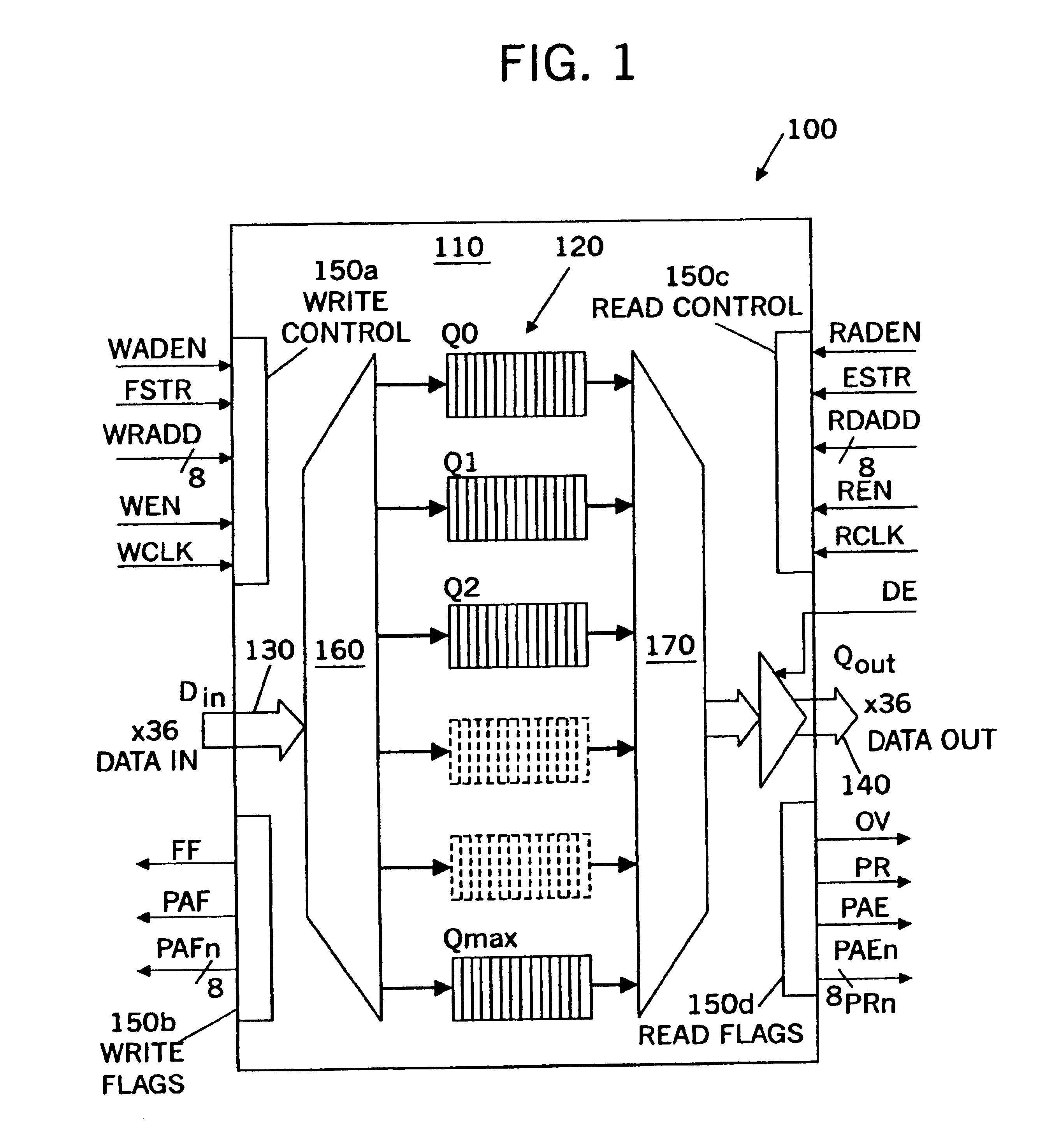

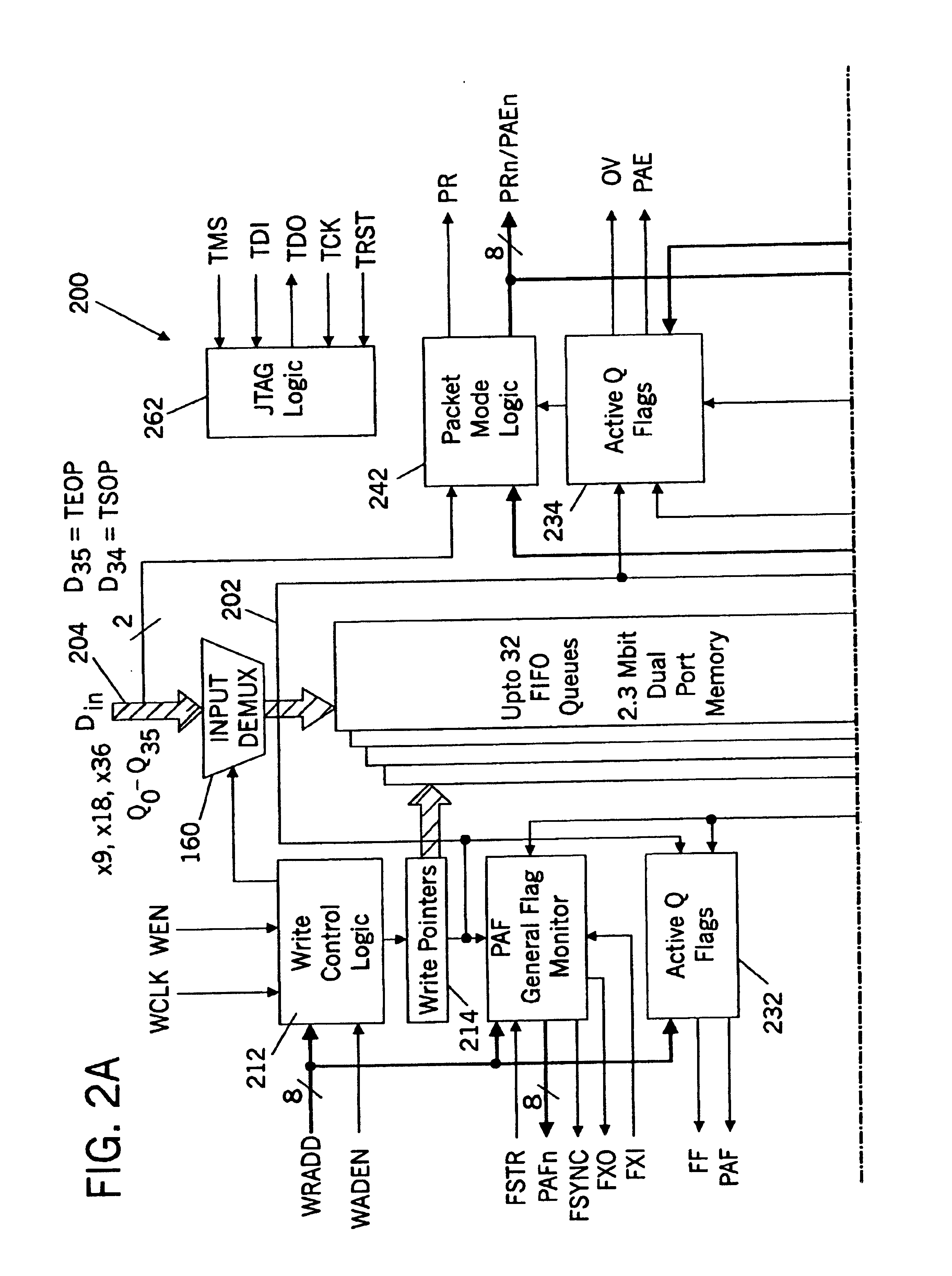

Integrated circuit FIFO memory devices that are divisible into independent FIFO queues, and systems and methods for controlling same

Inactive | US6907479B2 | Improve scalability | Efficient storage | Memory systems | Input/output processes for data processing | Processor register | Fifo queue

Integrated circuit FIFO memory devices may be controlled using a register file, an indexer and a controller. The FIFO memory device includes a FIFO memory that is divisible into up to a predetermined number of independent FIFO queues. The register file includes the predetermined number of words. A respective word is configured to store one or more parameters for a respective one of the FIFO queues. The indexer is configured to index into the register file, to access a respective word that corresponds to a respective FIFO queue that is accessed. The controller is responsive to the respective word that is accessed, and is configured to control access to the respective FIFO queue based upon at least one of the one or more parameters that is stored in the respective word. Thus, as the number of FIFO queues expands, the number of words in the register file may need to expand, but the controller and / or indexer need not change substantially. The register file may include multiple register subfiles, and the controller may include multiple controller subblocks.

Owner:INTEGRATED DEVICE TECH INC

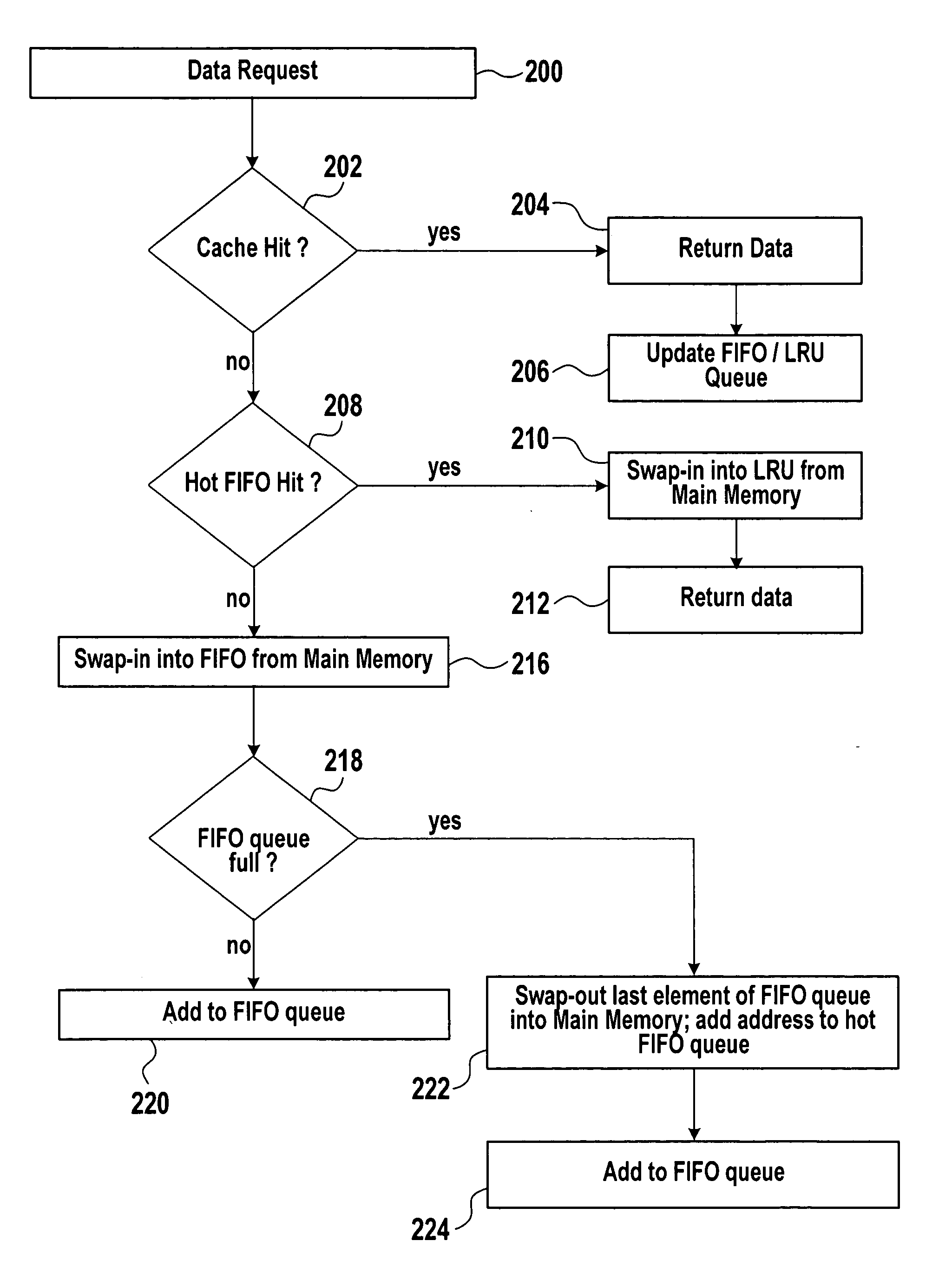

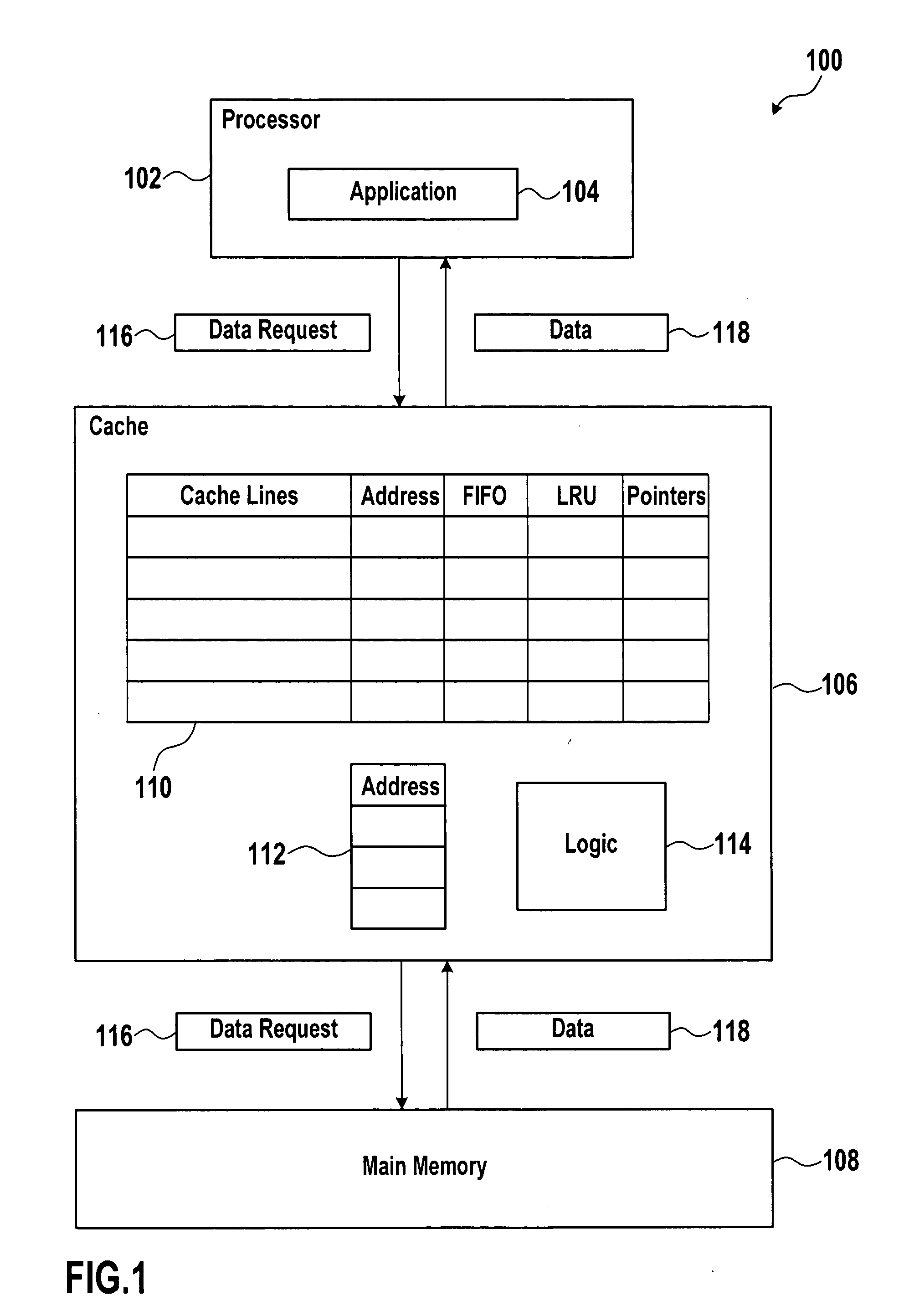

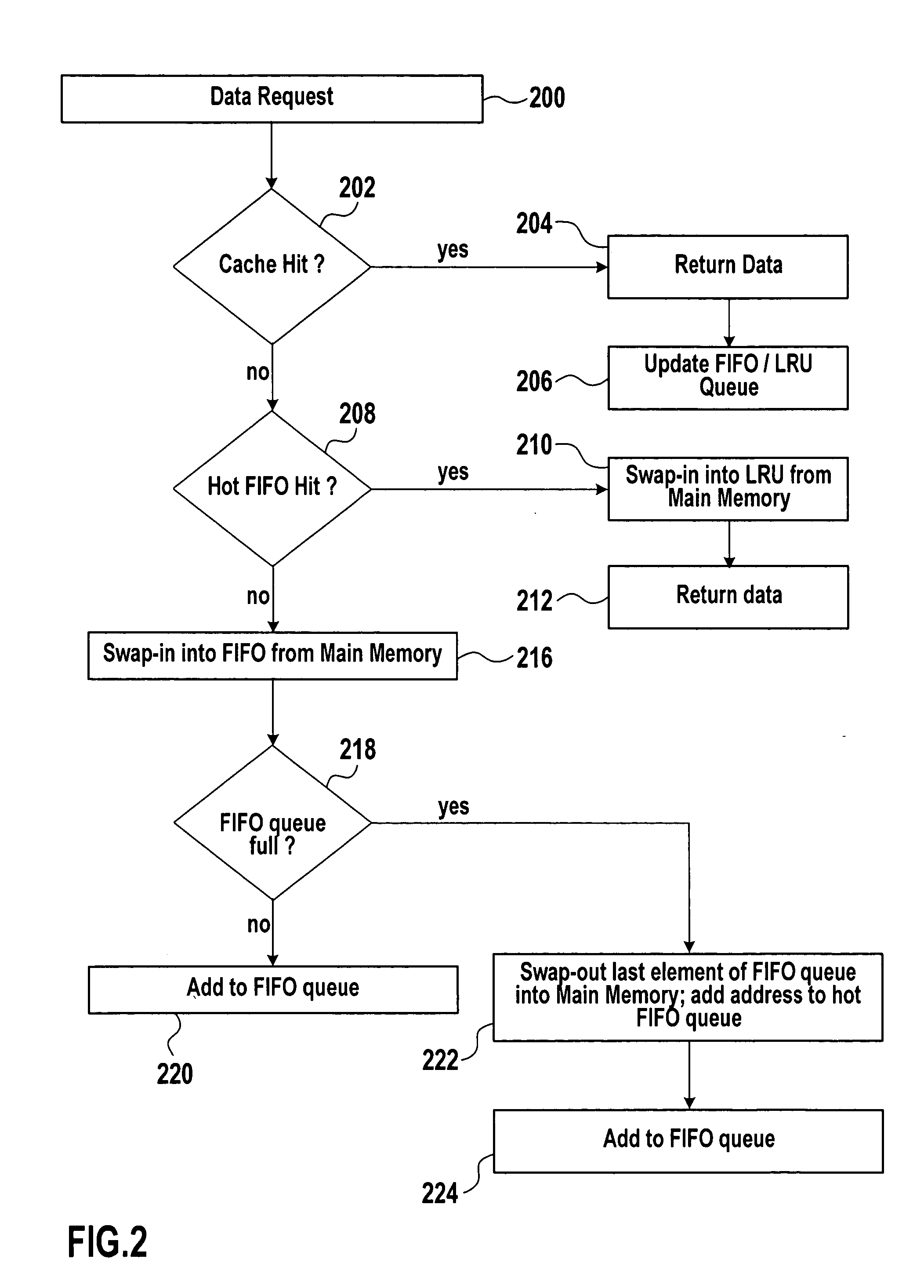

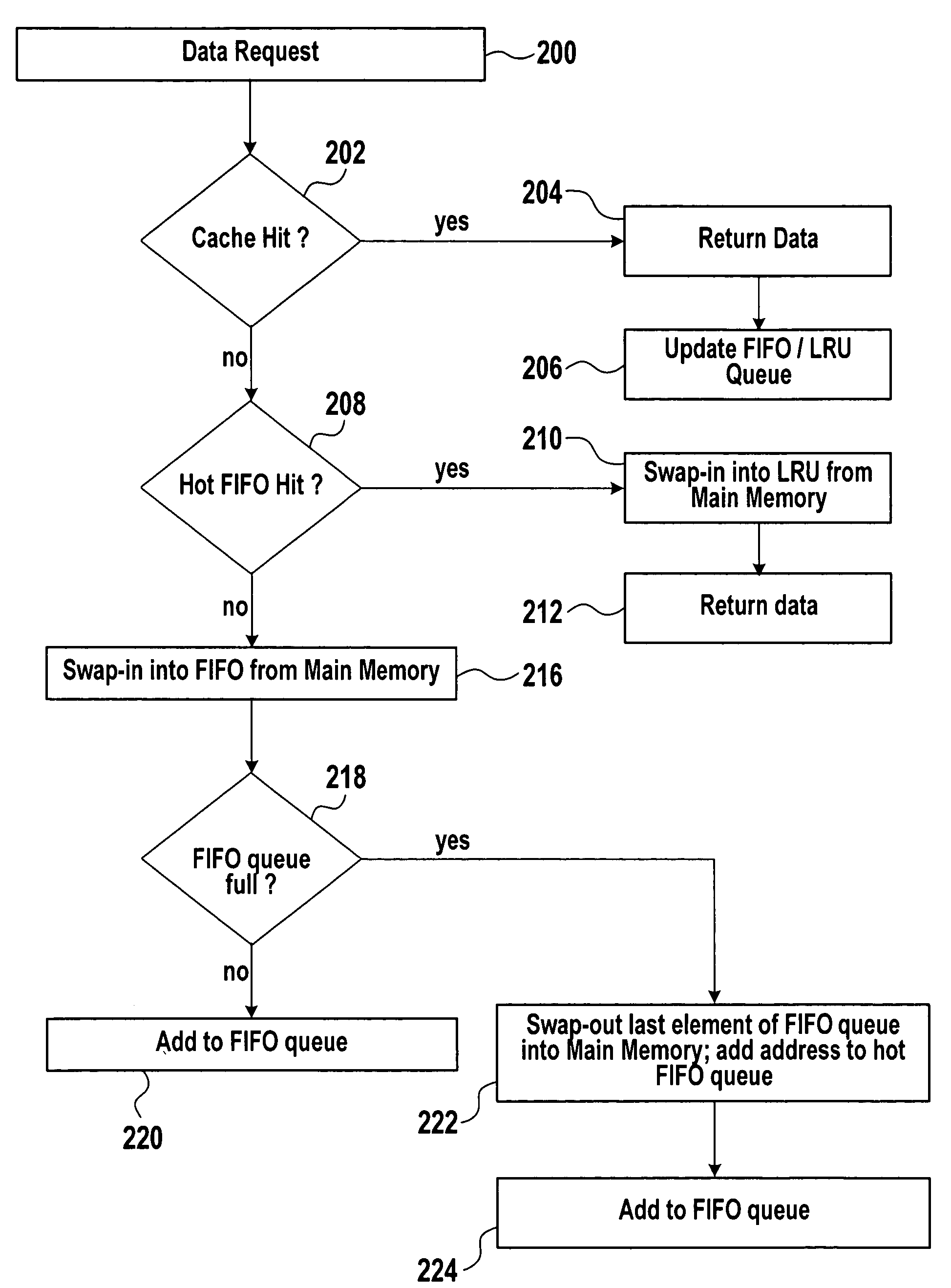

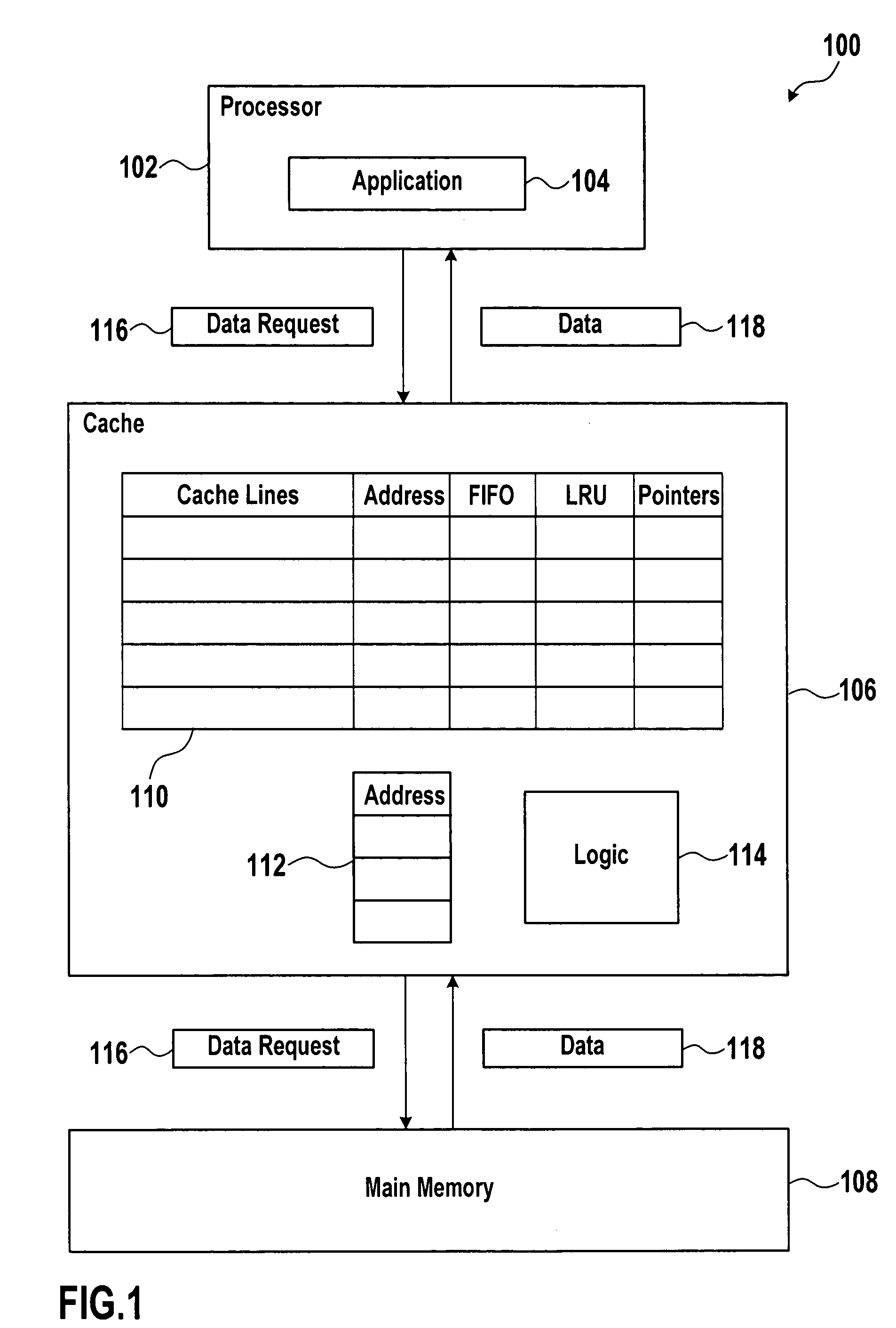

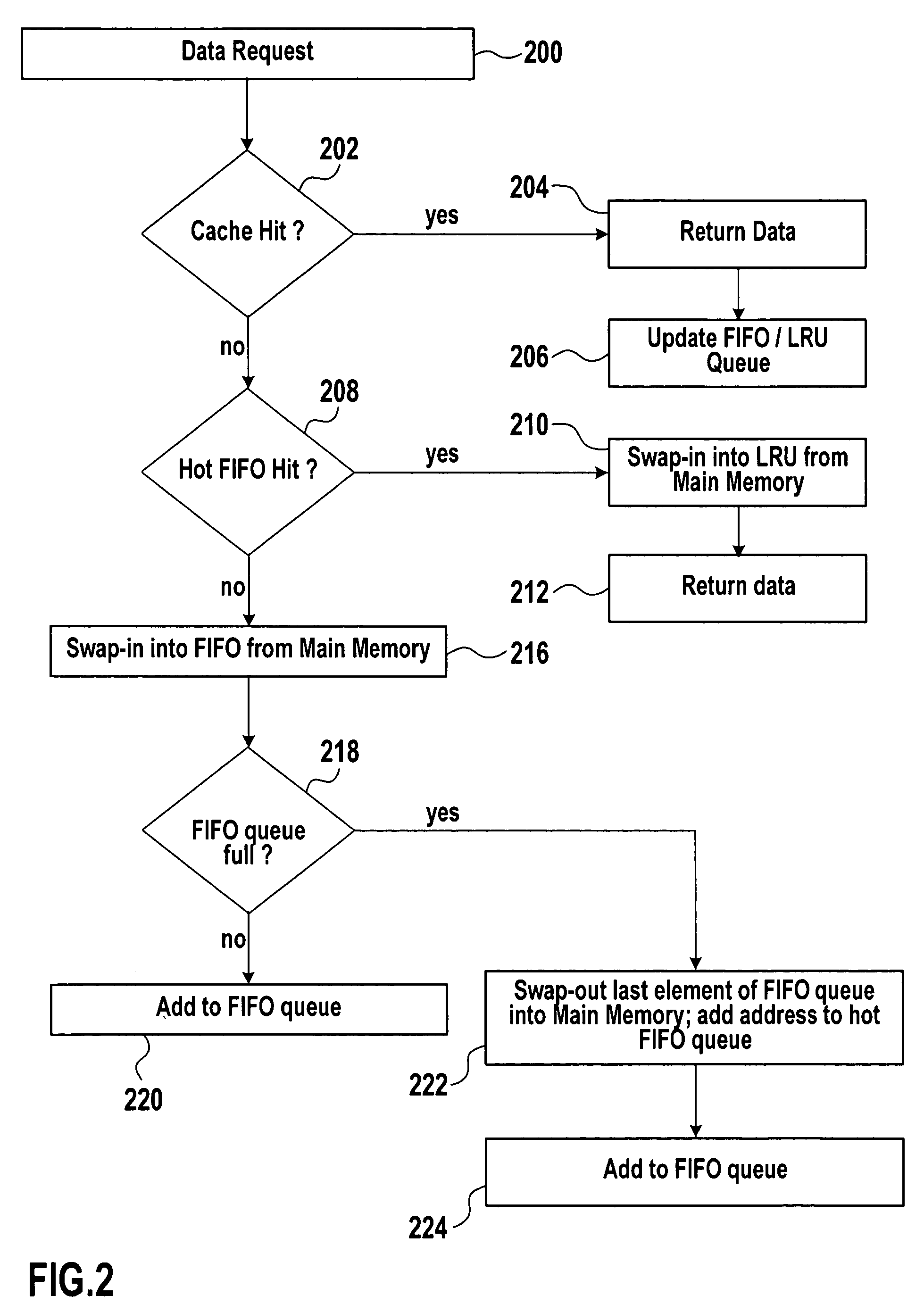

Systems and methods for data caching

Active | US20050055511A1 | Positively performance | Improve cache hit ratio | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | External storage | Parallel computing

Systems and methods are provided for data caching. An exemplary method for data caching may include establishing a FIFO queue and a LRU queue in a cache memory. The method may further include establishing an auxiliary FIFO queue for addresses of cache lines that have been swapped-out to an external memory. The method may further include determining, if there is a cache miss for the requested data, if there is a hit for requested data in the auxiliary FIFO queue and, if so, swapping-in the requested data into the LRU queue, otherwise swapping-in the requested data into the FIFO queue.

Owner:SAP AG
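
The three-queue arrangement in this abstract (a FIFO queue, an LRU queue, and an auxiliary FIFO of swapped-out addresses) can be sketched as below. The class name `TwoQueueCache`, the queue sizes, the dict standing in for external memory, and the choice to record evictions from both queues in the auxiliary queue are assumptions, not details taken from the patent.

```python
from collections import OrderedDict, deque


class TwoQueueCache:
    """Hypothetical cache with a FIFO queue, an LRU queue, and an address-only history."""

    def __init__(self, fifo_size, lru_size, aux_size, backing):
        self.fifo = OrderedDict()            # insertion order, never reordered on a hit
        self.lru = OrderedDict()             # reordered on a hit, least recent first
        self.aux = deque(maxlen=aux_size)    # addresses of swapped-out lines only
        self.fifo_size, self.lru_size = fifo_size, lru_size
        self.backing = backing               # stands in for the external memory

    def get(self, addr):
        if addr in self.lru:
            self.lru.move_to_end(addr)       # refresh recency
            return self.lru[addr]
        if addr in self.fifo:
            return self.fifo[addr]
        value = self.backing[addr]           # cache miss: fetch from external memory
        if addr in self.aux:                 # seen recently: promote to the LRU queue
            self.aux.remove(addr)
            self._put(self.lru, self.lru_size, addr, value)
        else:                                # first sighting: goes to the FIFO queue
            self._put(self.fifo, self.fifo_size, addr, value)
        return value

    def _put(self, queue, size, addr, value):
        if len(queue) >= size:
            old_addr, _ = queue.popitem(last=False)  # swap out the oldest line
            self.aux.append(old_addr)
        queue[addr] = value


cache = TwoQueueCache(fifo_size=2, lru_size=2, aux_size=4,
                      backing={a: a * 10 for a in range(10)})
for a in (1, 2, 3):
    cache.get(a)
value = cache.get(1)
```

Address 1 is pushed out of the FIFO queue by address 3, and its second miss finds it in the auxiliary queue, so it is swapped back in to the LRU queue rather than the FIFO queue.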

Implementing optimistic concurrent data structures

A concurrent FIFO queue is implemented as an “optimistic” doubly-linked list. Nodes of the optimistic doubly-linked list are allocated dynamically and links between the nodes are updated optimistically, i.e., assuming that threads concurrently accessing the FIFO queue will not interfere with each other, using a simple store operation. Concurrent, linearizable, and non-blocking enqueue and dequeue operations on the two ends of the doubly-linked list proceed independently, i.e., disjointly. These operations require only one successful single-word synchronization operation on the tail pointer and the head pointer of the doubly-linked list. If a bad ordering of operations on the optimistic FIFO queue by concurrently executing threads creates inconsistencies in the links between the nodes of the doubly-linked list, a fix-up process is invoked to correct the inconsistencies.

Owner:ORACLE INT CORP

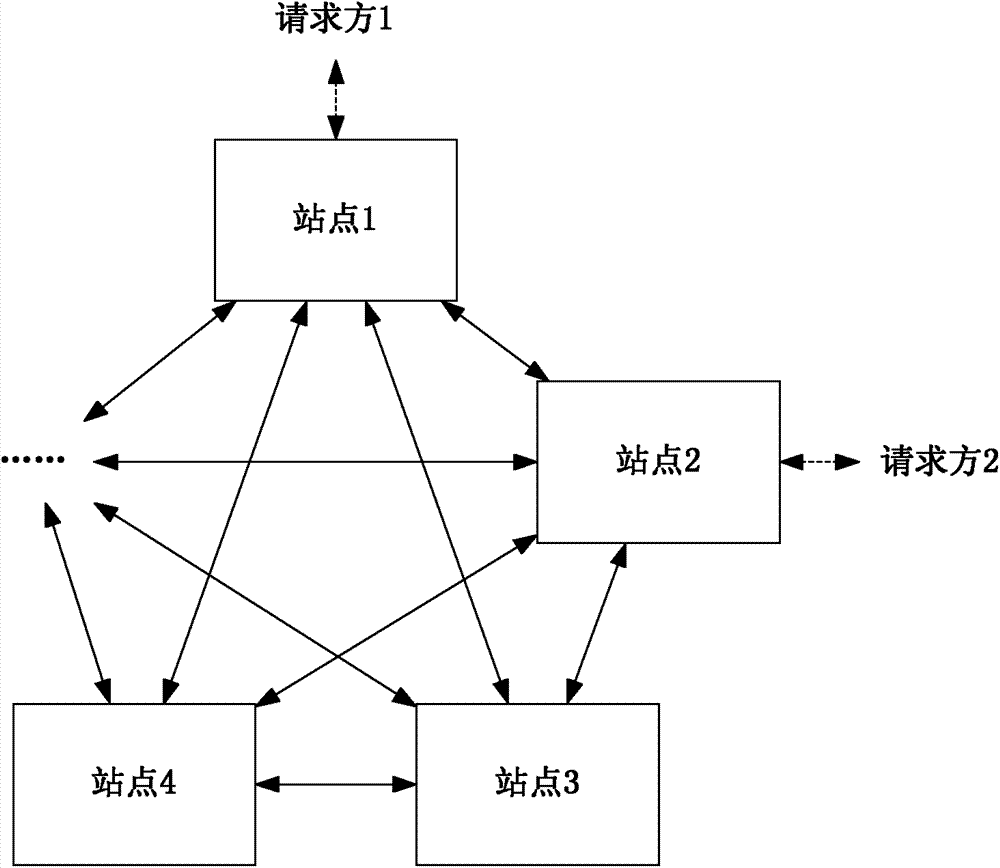

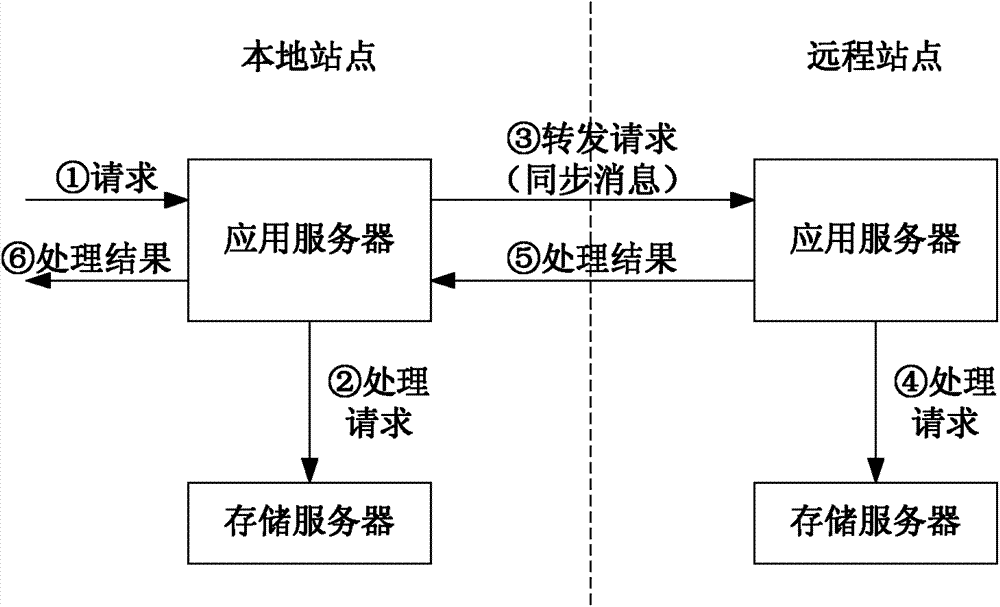

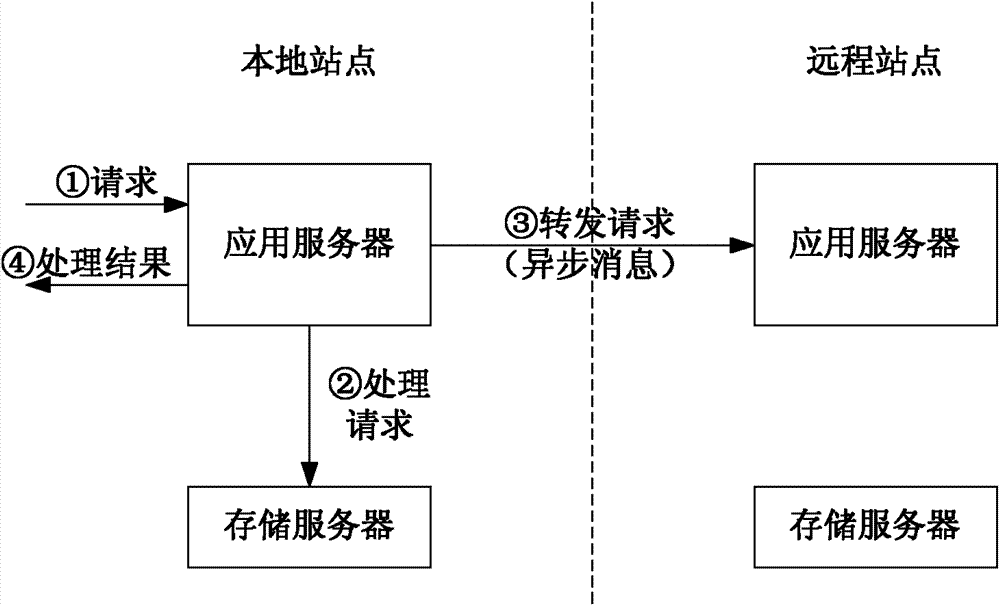

Cross-machine-room data synchronism method and system

The invention discloses a cross-machine-room data synchronization method comprising a request processing method and a remote synchronization method that run in parallel. In the request processing method, the local site processes requests, builds an index key that uniquely locates each piece of changed data, and adds the index key to a first in first out (FIFO) queue. In the remote synchronization method, the local site finds the corresponding changed data content according to the index keys in the FIFO queue and sends synchronization instructions to each remote site. The invention further discloses a cross-machine-room data synchronization system corresponding to the method. The method and the system have the advantages of short delay and waiting time, low energy consumption, and correct data synchronization at the remote sites from beginning to end.

Owner:ALIBABA GRP HLDG LTD
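
The split between request processing and remote synchronization can be illustrated with a queue that holds only index keys. This is a single-threaded sketch under assumed names (`handle_request`, `sync_remote_sites`), with plain dicts standing in for the local and remote sites; the parallel execution described in the abstract is not modeled.

```python
from collections import deque

local_store = {}     # data held by the local site
pending = deque()    # FIFO queue of index keys for changed data


def handle_request(key, value):
    # Apply the change locally, then queue only the key that locates it.
    local_store[key] = value
    pending.append(key)


def sync_remote_sites(remote_sites):
    # Look up the current content for each queued key and push it to every remote site.
    while pending:
        key = pending.popleft()
        for site in remote_sites:
            site[key] = local_store[key]


remote_a, remote_b = {}, {}
handle_request("user:1", "alice")
handle_request("user:1", "alice-v2")
handle_request("user:2", "bob")
sync_remote_sites([remote_a, remote_b])
```

Because the queue stores keys rather than values, a key that changed twice is resolved to its latest content when it is synchronized.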

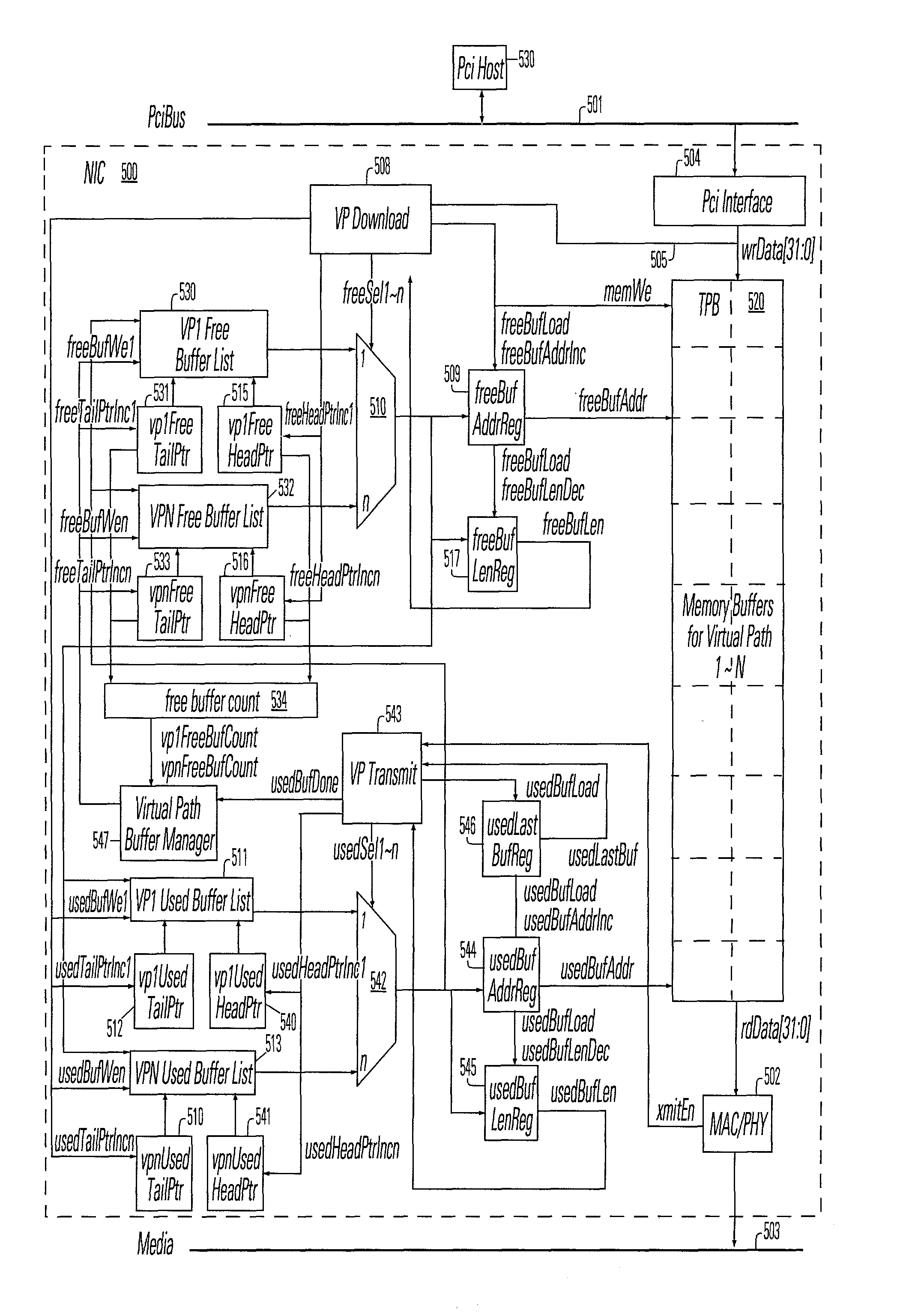

Network interface supporting of virtual paths for quality of service with dynamic buffer allocation

A plurality of virtual paths in a network interface between a host port and a network port are managed according to respective priorities using dynamic buffer allocation. Thus, multiple levels of quality of service are supported through a single physical network port. Variant processes are applied for handling packets which have been downloaded to a network interface, prior to transmission onto the network. The network interface also includes memory used as a transmit buffer, that stores data packets received from the host computer on the first port, and provides data to the second port for transmission on the network. A control circuit in the network interface manages the memory as a plurality of first-in-first-out FIFO queues having respective priorities. Logic places a packet received from the host processor into one of the plurality of FIFO queues according to a quality of service parameter associated with the packets. Logic transmits the packets in the plurality of FIFO queues according to respective priorities. Logic dynamically allocates the memory using a list of buffer descriptors for corresponding buffers in said memory. The list of buffer descriptors comprises a free buffer list and a used buffer list for each of the virtual paths served by the system. A used buffer descriptor is released from the used buffer list, after the data stored in the corresponding used buffer has been transmitted, to the free buffer list for a virtual path which has the largest amount traffic or which has the smallest number of free buffers in its free buffer list.

Owner:HEWLETT PACKARD DEV CO LP

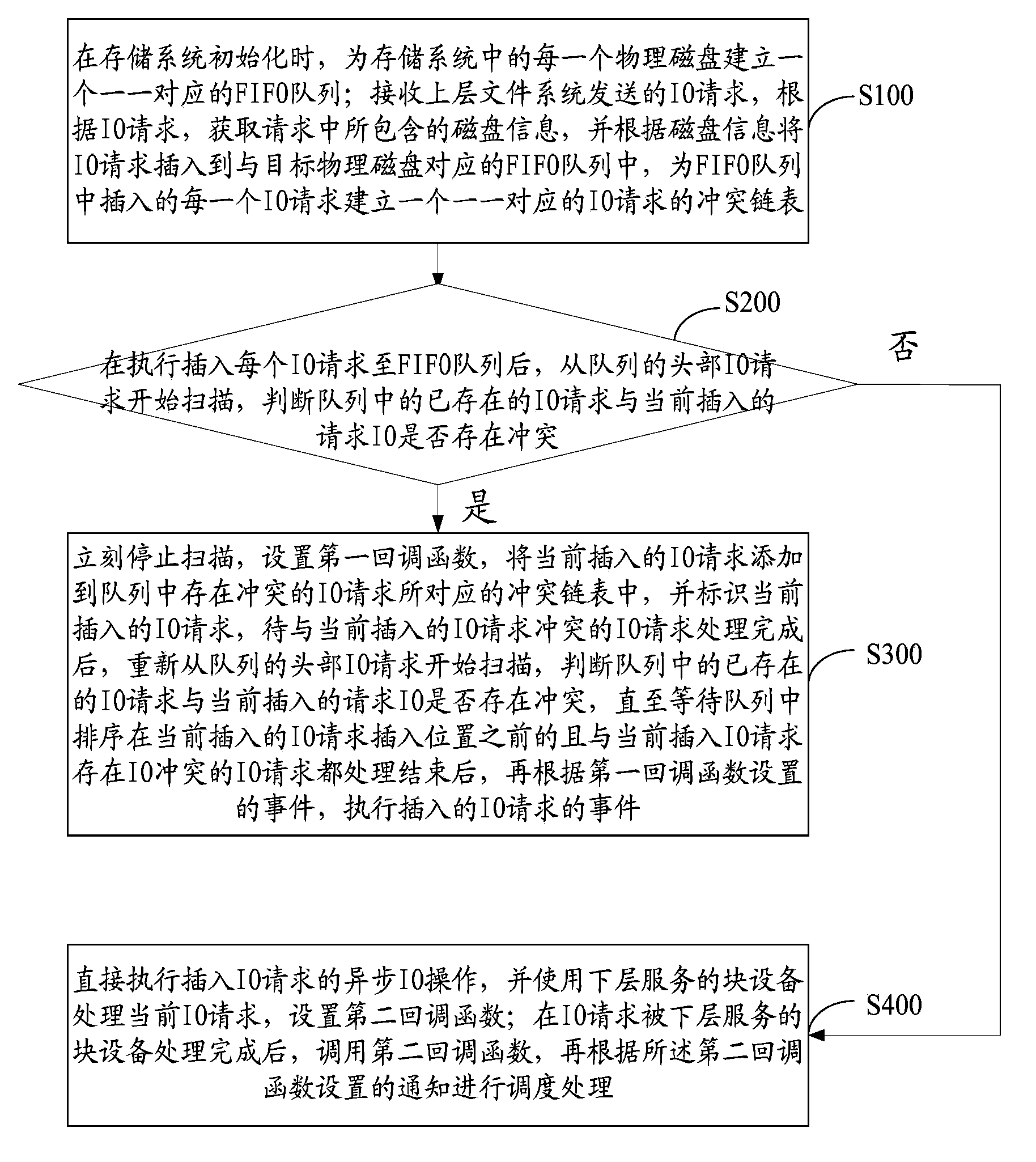

IO (input output) scheduling method and IO scheduling device

Active | CN103823636A | Easy to handle | Improve read and write speed | Input/output to record carriers | Solid-state drive | Fifo queue

The invention discloses an IO (input output) scheduling method and IO scheduling device. The IO scheduling method includes steps of creating a FIFO (first in, first out) queue for each physical disk in a storage system when the storage system is initialized; creating an IO request conflict link table for each IO request inserted into the corresponding FIFO queue; starting scanning head IO requests of the queues after each IO request inserted into the corresponding FIFO queue is executed, judging queue conflicts, recording the IO request conflict link tables, preparing to-be-processed events and transmitting notification by the aid of the conflict link tables. The FIFO queues are in one-to-one correspondence with the physical disks. The IO request conflict link tables are in one-to-one correspondence with the IO requests. The notification indicates that other IO request conflicts in the link tables are relieved. The IO scheduling method and the IO scheduling device have the advantages that large quantities of IO conflicts caused when solid state disks are used as caches and are processed can be prevented, and I/O reading and writing rates and transmission rates can be effectively increased.

Owner:常熟它思清源科技有限公司

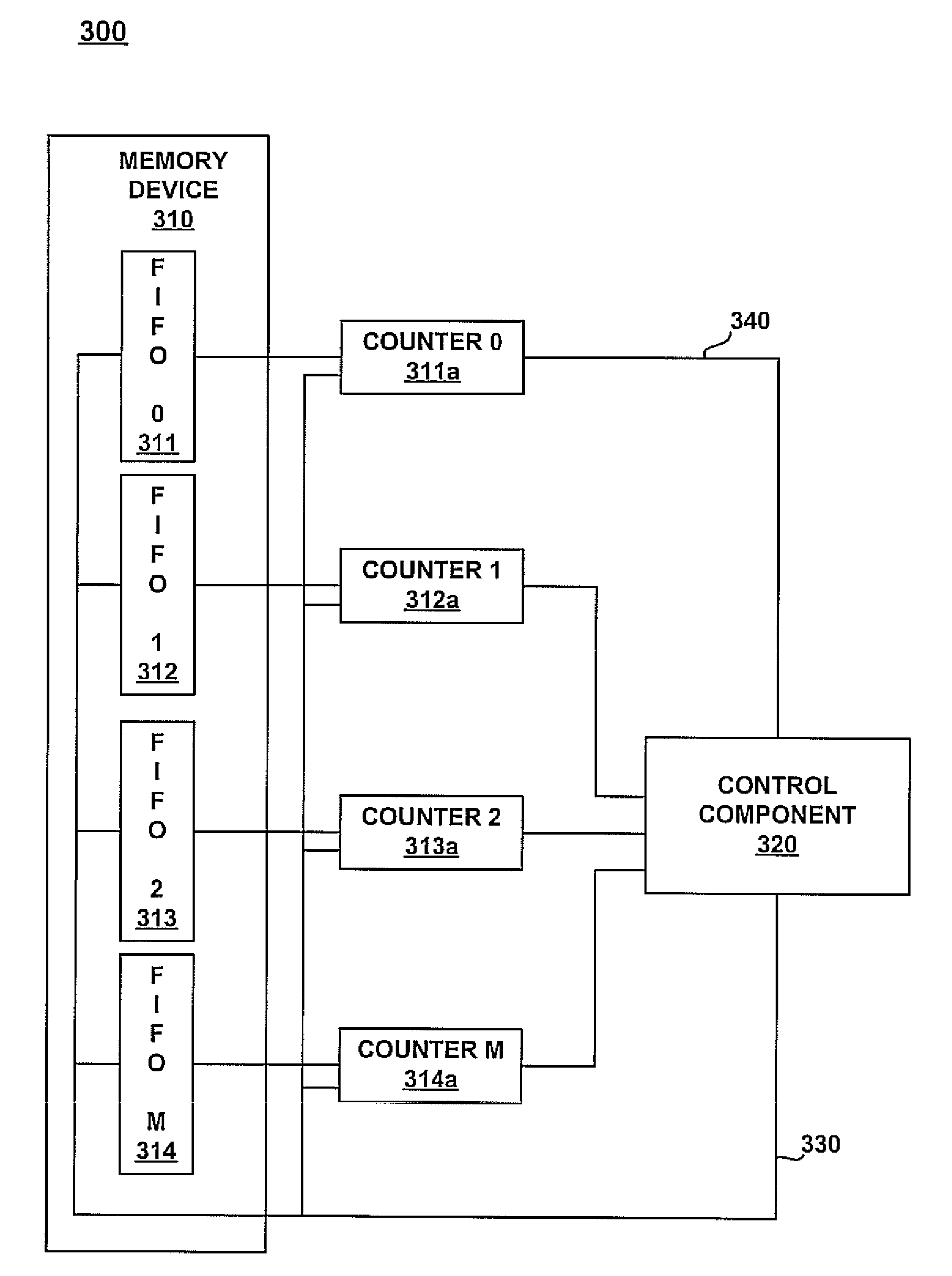

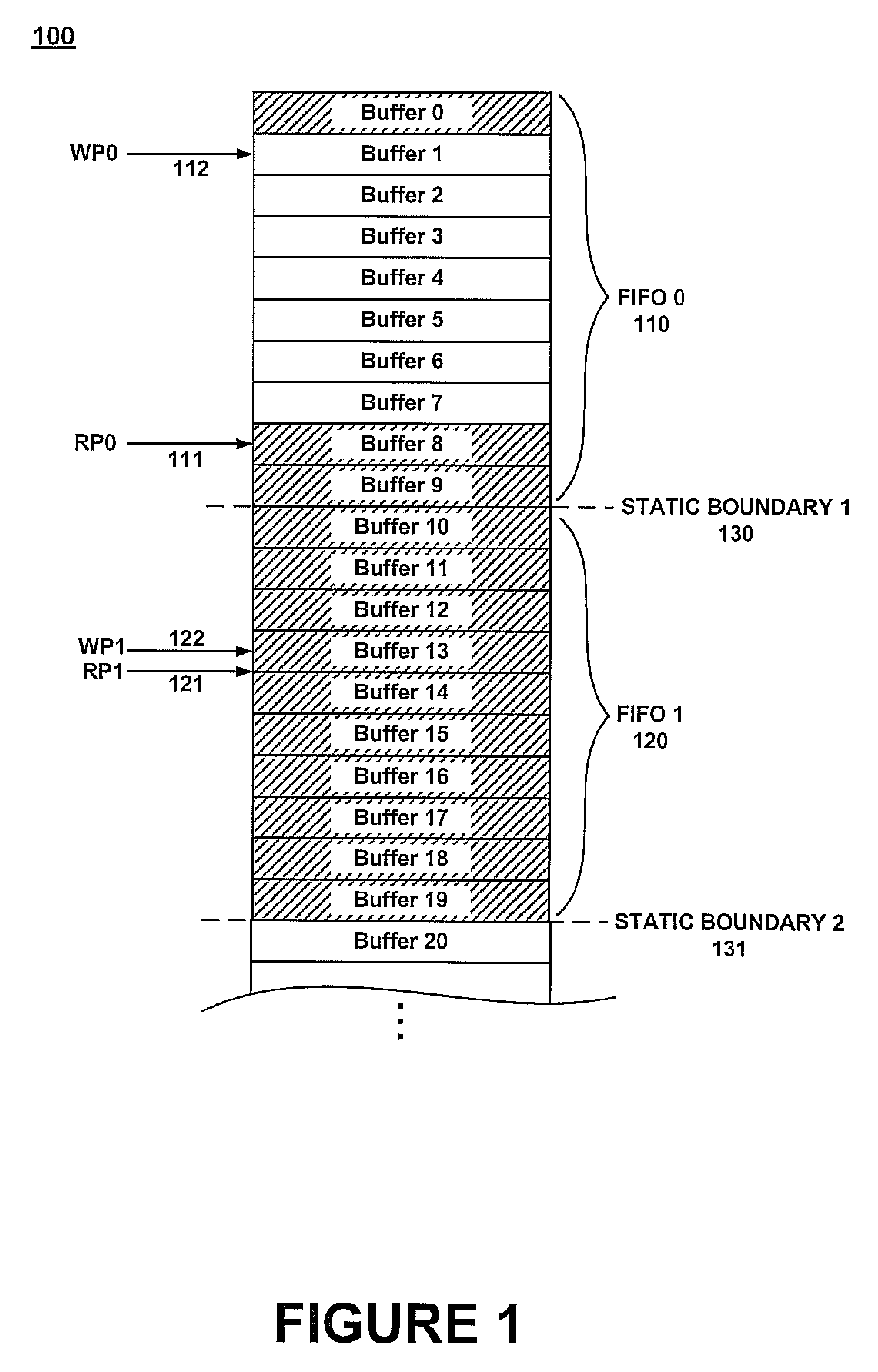

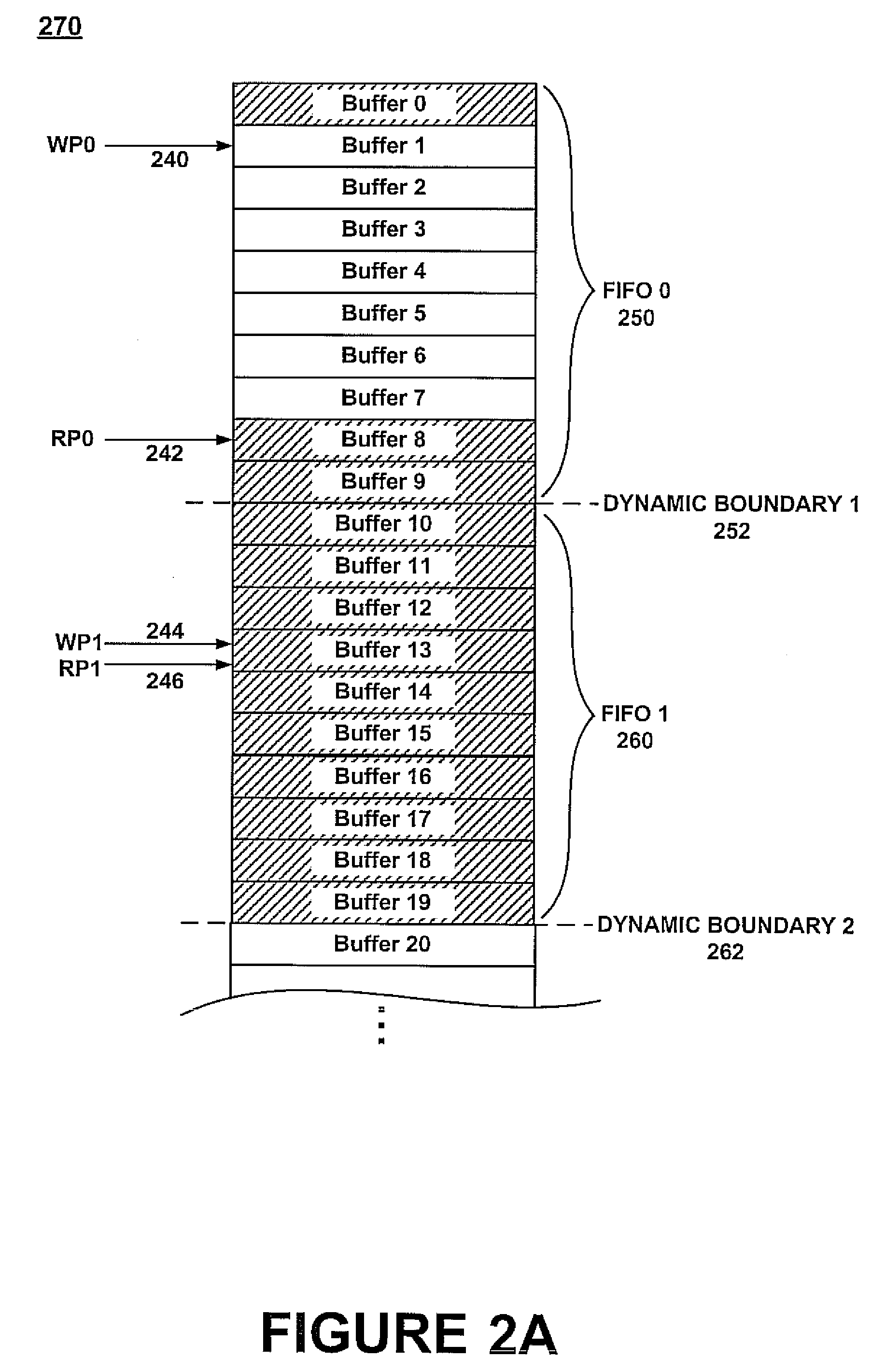

Automatic resource sharing between FIFOs

Active | US7444491B1 | Reduce in quantity | Exact number | Memory systems | Data conversion | Parallel computing | Fifo queue

Embodiments of the present invention recite a method and system for allocating memory resources. In one embodiment, a control component coupled with a memory device determines that a data buffer adjacent to a boundary of a first FIFO queue does not contain current data. The control component also determines that a second data buffer of a second FIFO queue adjacent to the boundary does not contain current data. The control component then automatically shifts the boundary to include the second data buffer in the first FIFO queue.

Owner:NVIDIA CORP

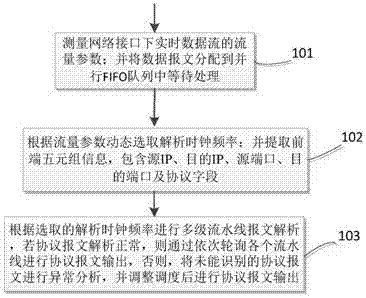

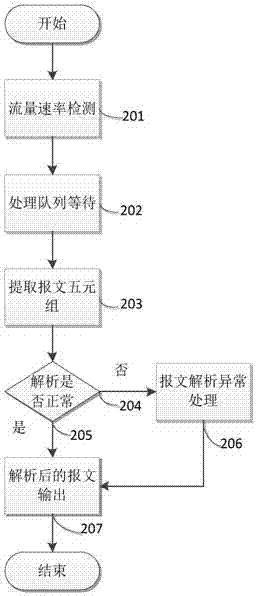

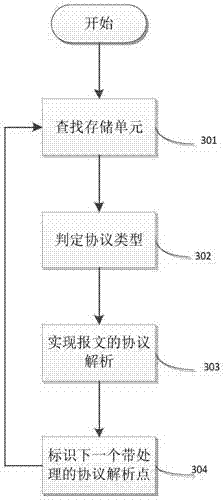

Message analysis method and device based on FPGA hardware parallel pipelines

Active | CN106961445A | Easy construction | Fast transfer | Data switching networks | Structure of Management Information | Operating frequency

The invention relates to a message analysis method and device based on FPGA hardware parallel pipelines. The method comprises the steps of measuring traffic parameters of real-time data streams under network interfaces; allocating data messages to parallel FIFO queues for processing; dynamically selecting analysis clock frequencies according to the traffic parameters; extracting front end quintuple information; and carrying out multilevel pipeline message analysis according to the selected analysis clock frequencies, if the protocol message analysis is normal, polling each pipeline in sequence for protocol message output, otherwise, carrying out abnormality analysis on the unidentified protocol messages, adjusting scheduling and then carrying out protocol message output. According to the method and the device, on the basis of a high-speed parallel pipeline structure, a message analysis rate is improved; working frequencies are dynamically changed through traffic detection; the system power consumption cost is greatly reduced; a solidified and sealed mode of a traditional network is broken through; a utilization rate of link resources is improved; and the basic network construction cost is reduced.

Owner:THE PLA INFORMATION ENG UNIV

Systems and methods for data caching

Active | US7284096B2 | Positively performance | Improve cache hit ratio | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | External storage | Data exchange

Systems and methods are provided for data caching. An exemplary method for data caching may include establishing a FIFO queue and a LRU queue in a cache memory. The method may further include establishing an auxiliary FIFO queue for addresses of cache lines that have been swapped-out to an external memory. The method may further include determining, if there is a cache miss for the requested data, if there is a hit for requested data in the auxiliary FIFO queue and, if so, swapping-in the requested data into the LRU queue, otherwise swapping-in the requested data into the FIFO queue.

Owner:SAP AG

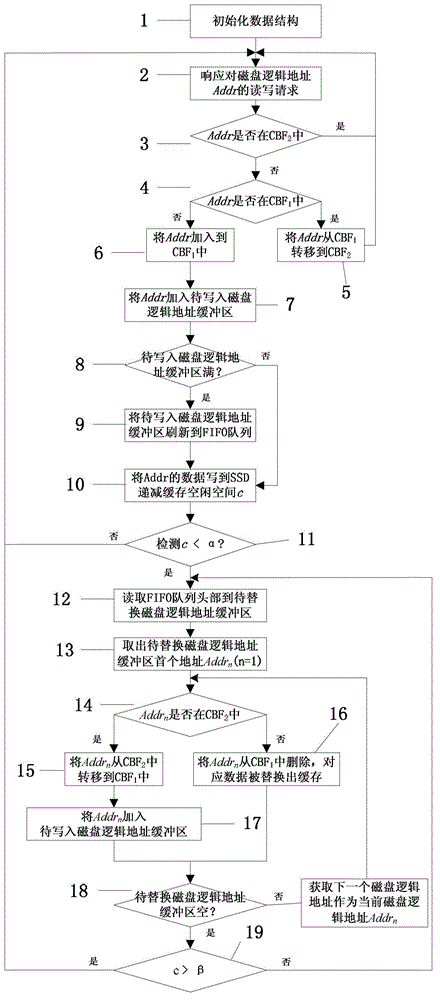

Implementation method of least recently used (LRU) policy in solid state drive (SSD)-based high-capacity cache

Active | CN103150136A | Easy to implement | Complicated operation | Data conversion | Bloom filter | Solid-state drive

The invention discloses an implementation method of a least recently used (LRU) policy in a solid state drive (SSD)-based high-capacity cache. According to the method, logic addresses of an upper-layer application read-write request are combined into a first in first out (FIFO) queue; two counting Bloom filters are respectively used for recording the logic addresses which are accessed for one or more times in the FIFO queue; and the FIFO queue and the two counting Bloom filters can accurately simulate the behavior of an LRU queue. The FIFO queue is stored on an SSD, and does not occupy a memory space. The two counting Bloom filters are stored in a memory, and occupy a very small memory space. Functions of the LRU queue are realized with extremely-low memory overhead. The implementation method has the advantages of simplicity in implementation, quickness in operation, small storage occupation space and low memory overhead.

Owner:NAT UNIV OF DEFENSE TECH

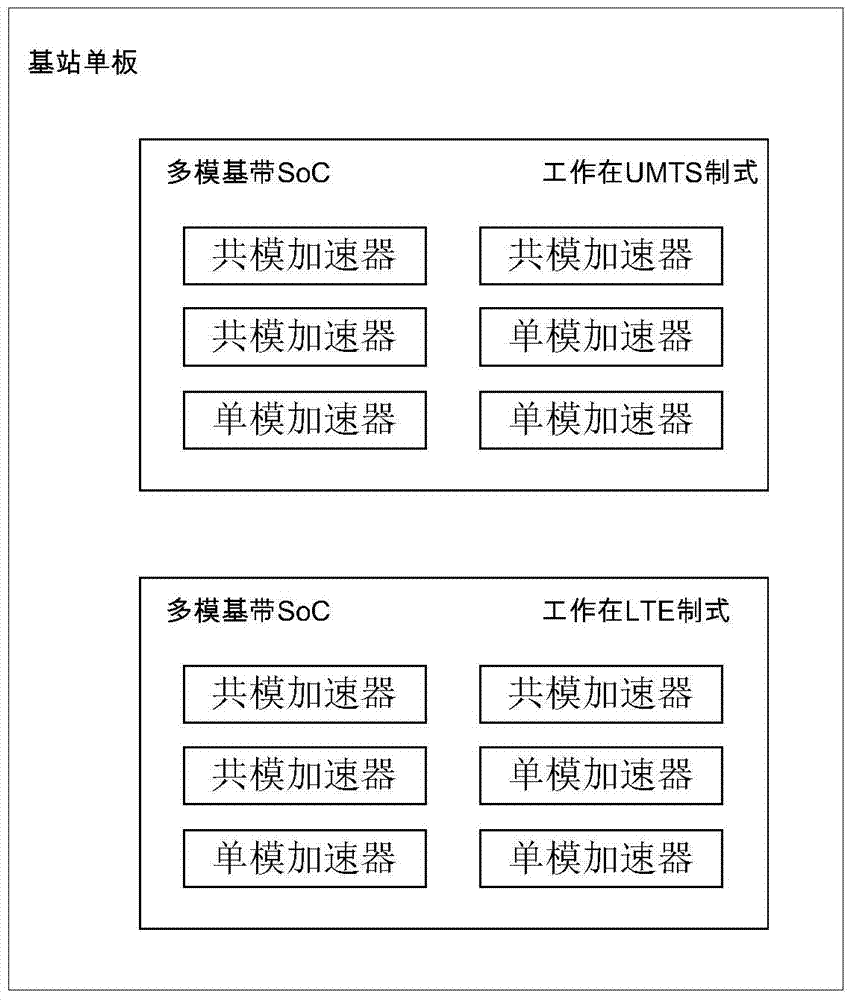

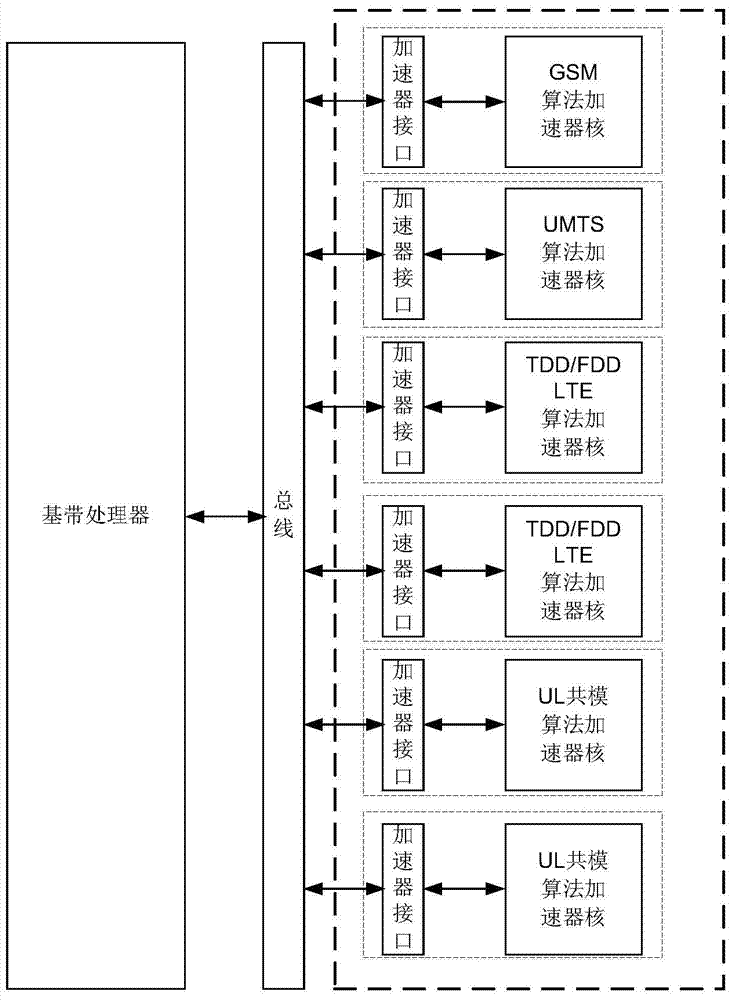

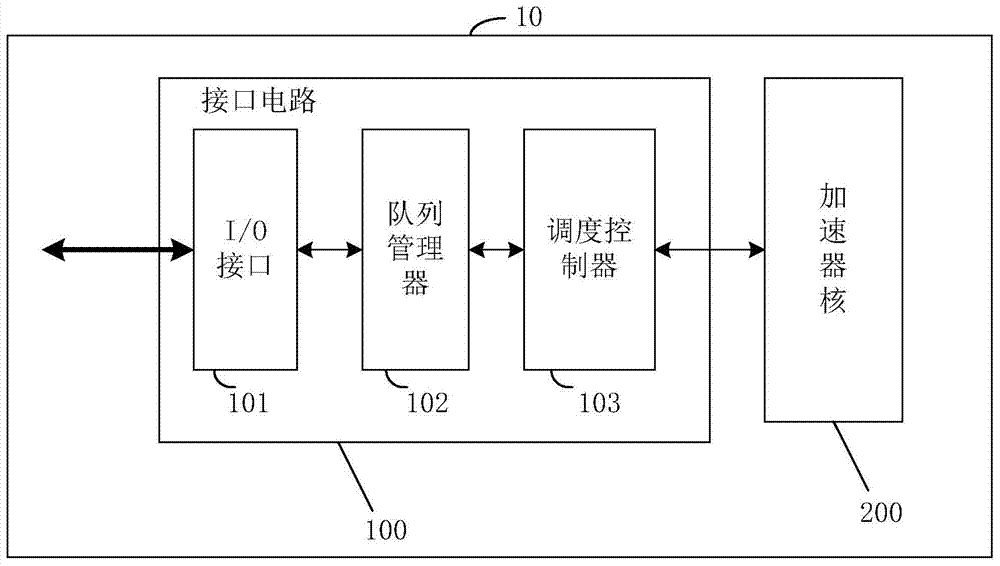

Hardware accelerator and chip

Active | CN104503728A | Avoid interaction | Expected processing capacity | Program control | Transmission | Fifo queue | Interface circuits

The embodiment of the invention discloses a hardware accelerator and a chip. The hardware accelerator comprises an interface circuit, and an accelerator core which is coupled to the interface circuit; the interface circuit is used for receiving a first task request and decoding the first task request so as to obtain marking information, as well as configuring the first task request into an FIFO array in match with the marking information according to the marking information; a scheduling controller is used for determining a target channel set with at least one second task request to be treated in the n cycle from at least two channel sets, receiving the time parameters fed back by the accelerator core and respectively corresponding to the targeted channel sets, and scheduling the at least one second task request of the targeted channel sets according to the time parameters and the weighted round robin algorithm; the accelerator core is used for responding to the at least one second task request after scheduling. With the adoption of the hardware accelerator, the isolation in the configuration process can be effectively achieved, so that the mutual influence can be avoided.

Owner:HUAWEI TECH CO LTD

Concurrent, Non-Blocking, Lock-Free Queue and Method, Apparatus, and Computer Program Product for Implementing Same

Inactive | US20080112423A1 | Reduce overhead | Error prevention | Frequency-division multiplex details | Load-link/store-conditional | Fifo queue

A dummy node is enqueued to a concurrent, non-blocking, lock-free FIFO queue only when necessary to prevent the queue from becoming empty. The dummy node is only enqueued during a dequeue operation and only when the queue contains a single user node during the dequeue operation. This reduces overhead relative to conventional mechanisms that always keep a dummy node in the queue. User nodes are enqueued directly to the queue and can be immediately dequeued on-demand by any thread. Preferably, the enqueueing and dequeueing operations include the use of load-linked/store-conditional (LL/SC) synchronization primitives. This solves the ABA problem without requiring the use of a unique number, such as a queue-specific number, and contrasts with conventional mechanisms that include the use of compare-and-swap (CAS) synchronization primitives and address the ABA problem through the use of a unique number. In addition, storage ordering fences are preferably inserted to allow the algorithm to run on weakly consistent processors.

Owner:IBM CORP

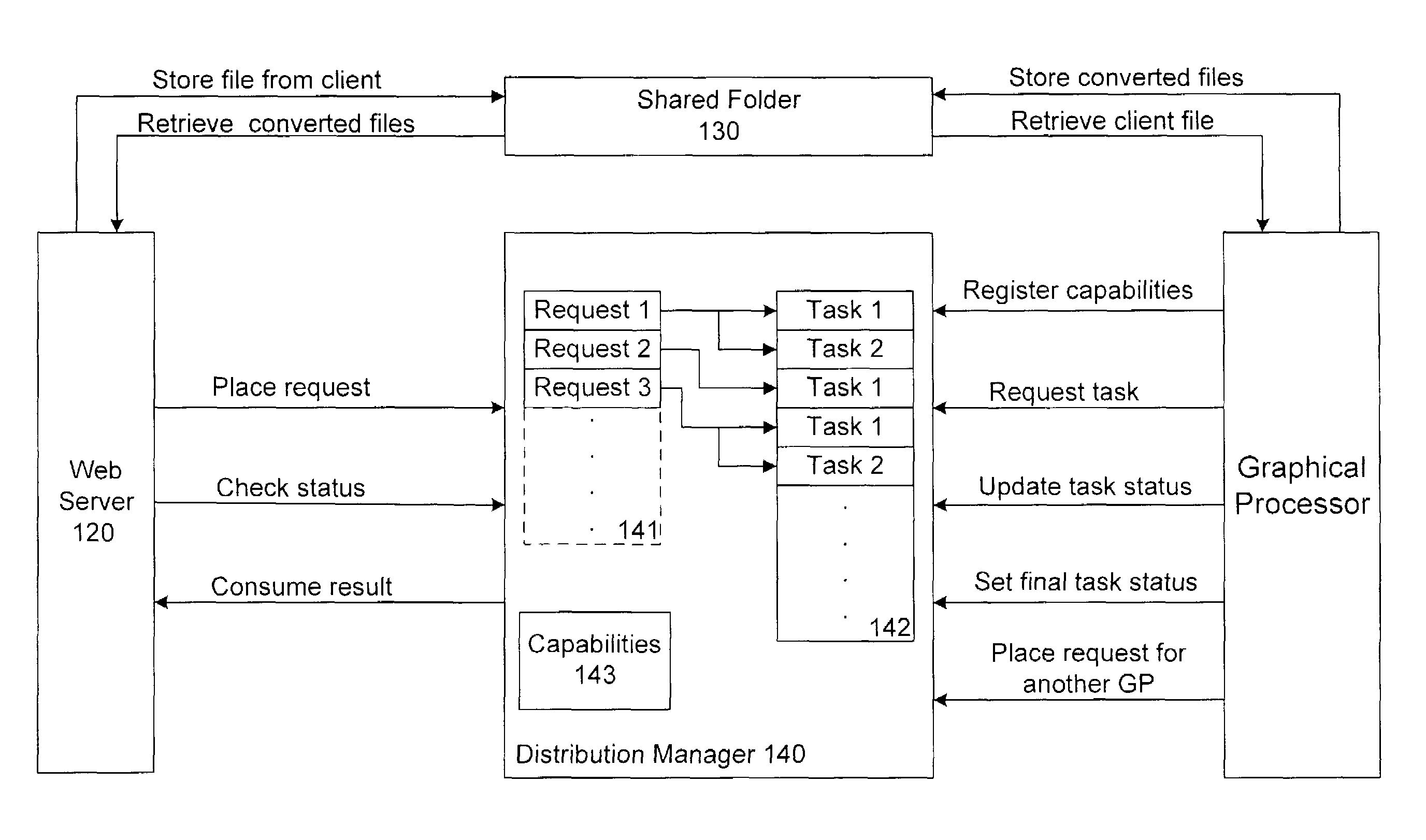

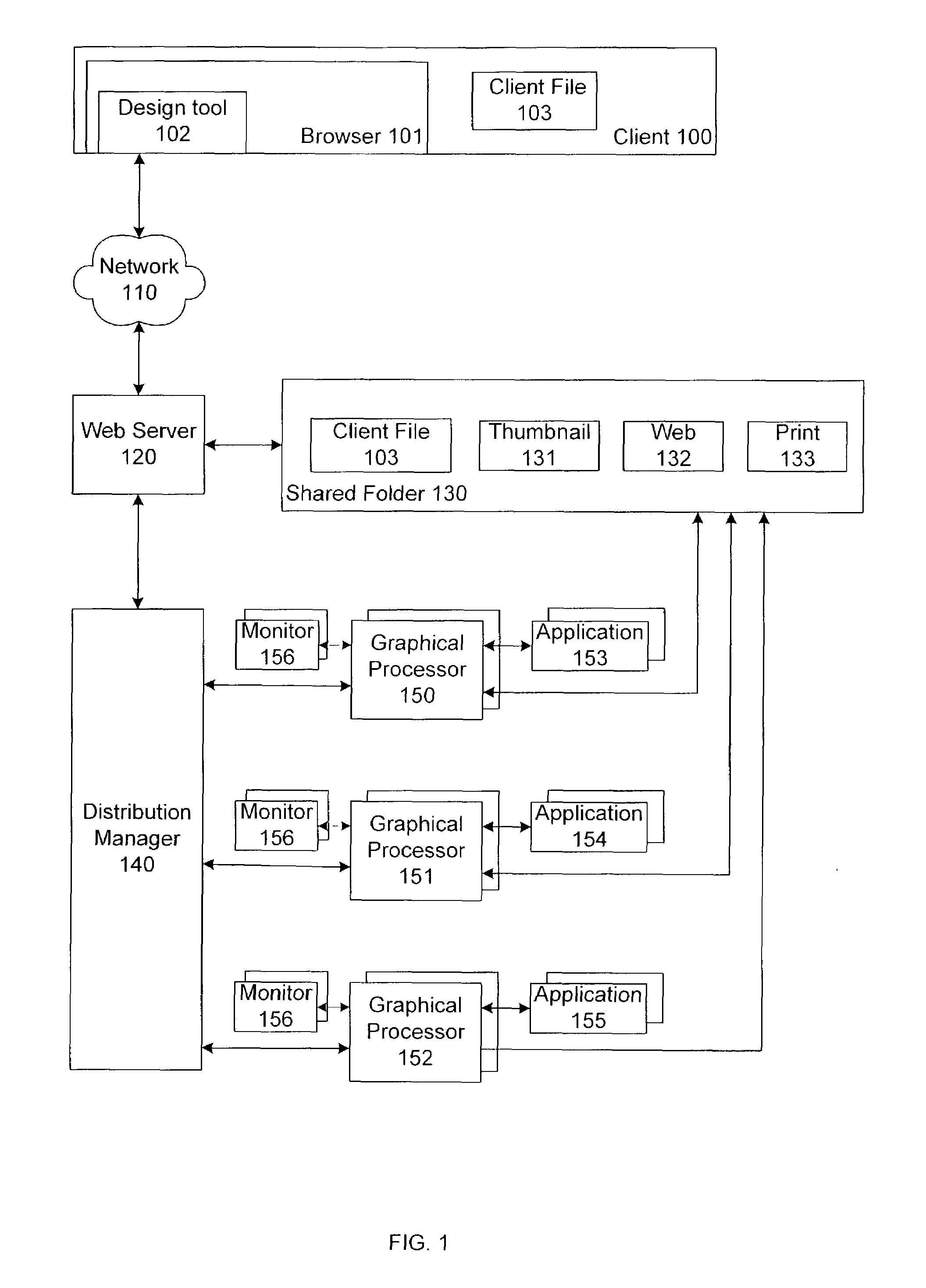

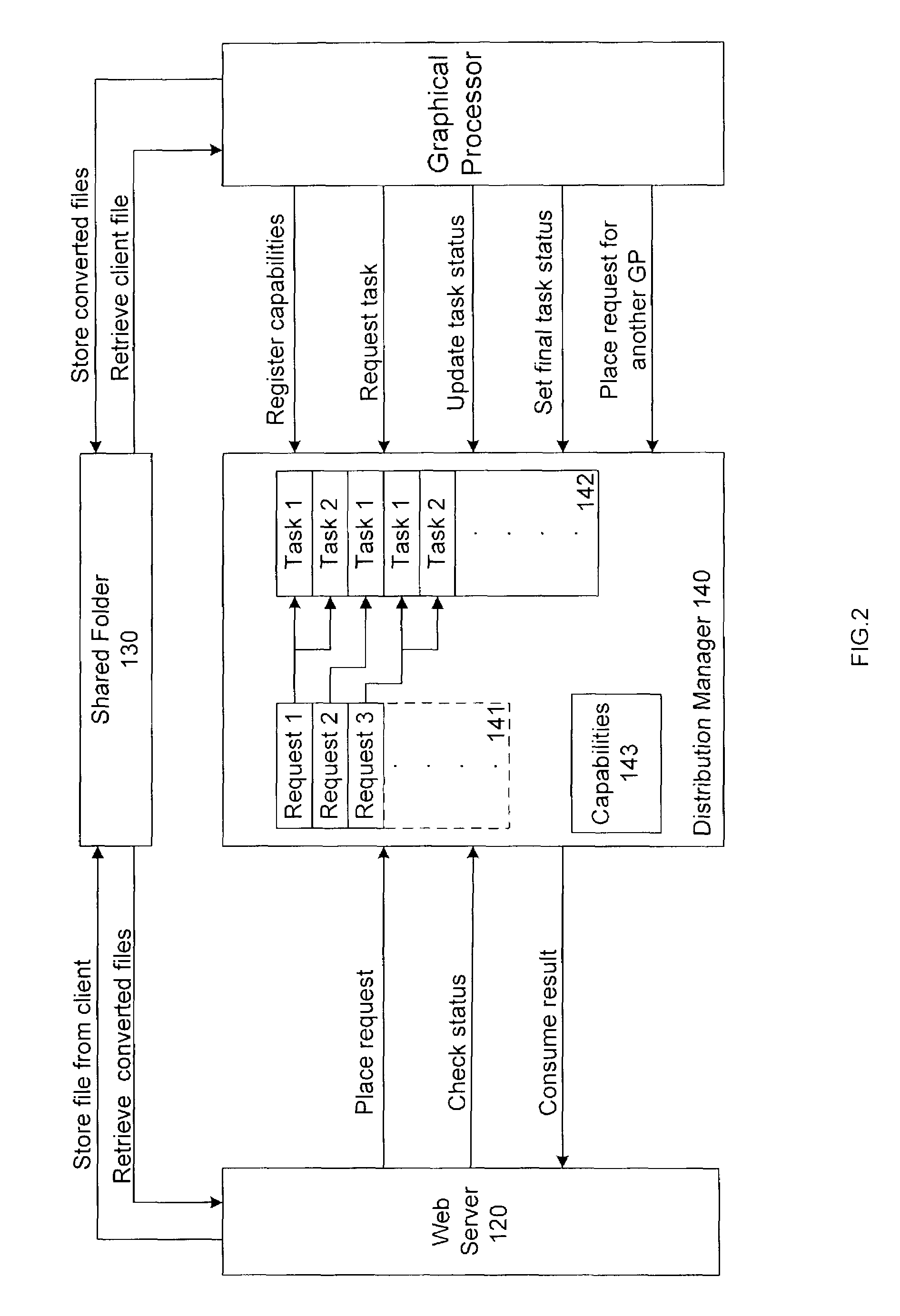

File conversion system and method

Active | US7681122B1 | Digital data information retrieval | Special data processing applications | Graphics | Web service

A flexible and scalable file format conversion system is capable of supporting a number of contemporaneous conversion requests. For each file, a conversion request entry and one or more related conversion task entries are maintained in FIFO queues under the control of a distribution manager program. Conversion operations are handled by application programs under the control of associated graphical processor programs. Conversion tasks are assigned to graphical processors by the distribution manager based on the conversion capabilities of the associated application program. The uploaded files received from clients for conversion are stored in a shared folder pending access by the assigned graphical processor. The graphical processors have a uniform interface with the queues and the memory. The results of the processing are stored in the shared folder where they are subsequently accessed by the web server and transmitted back to the client.

Owner:CIMPRESS SCHWEIZ

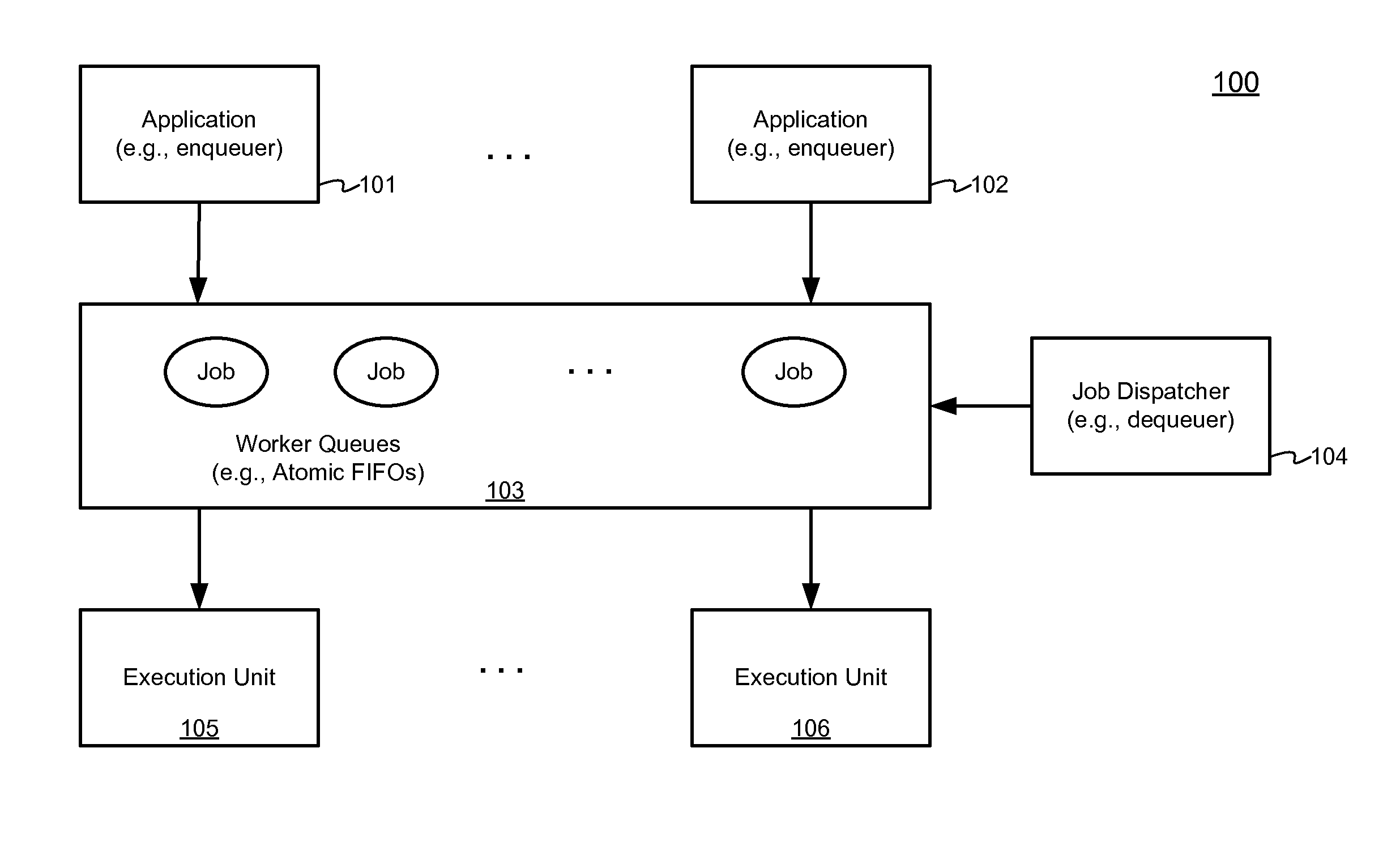

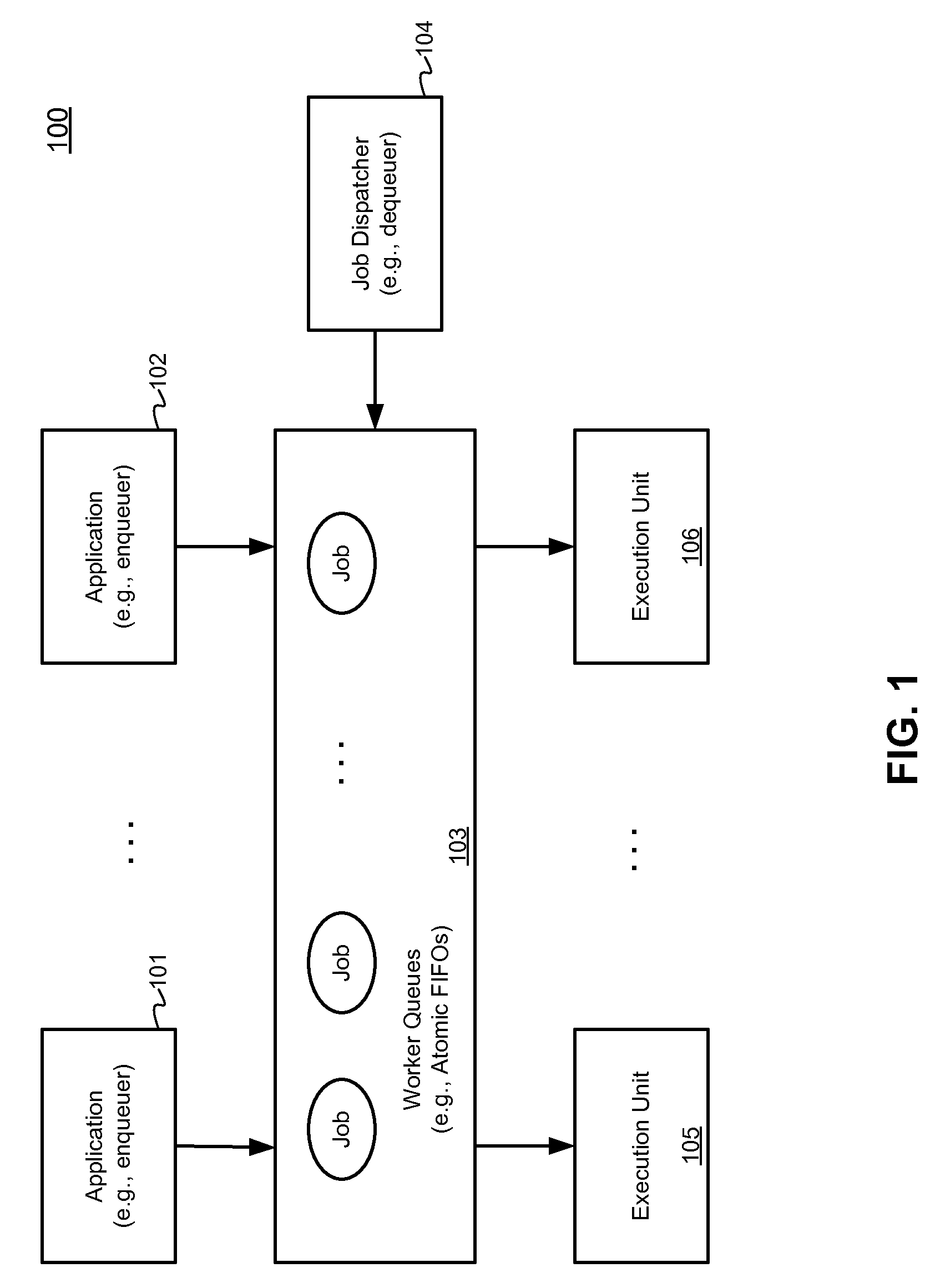

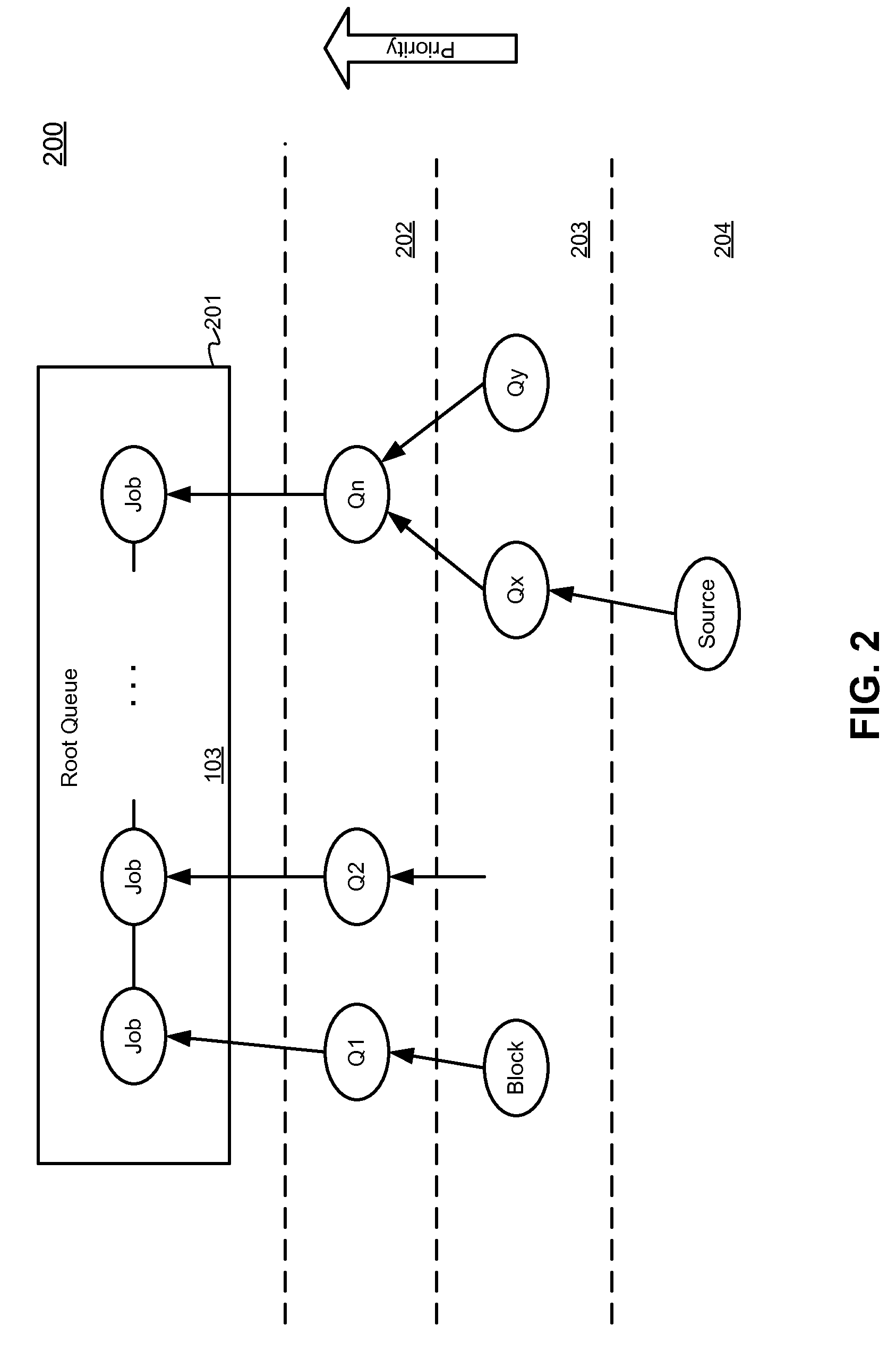

Method and apparatus for implementing atomic FIFO

Active | US20100313208A1 | Program synchronisation | Specific program execution arrangements | Continuation | Fifo queue

Techniques for implementing an atomic FIFO queue and system for processing queue elements are described herein. According to one embodiment, in a first thread of execution, new data is atomically merged with existing data of an object via an atomic instruction associated with hardware that executes the first thread. An attempt is made to acquire ownership of the object (exclusive access). If successful, the object is enqueued on an atomic FIFO queue as a continuation element for further processing. Otherwise, another thread of execution is safely assumed to have acquired ownership and taken responsibility to enqueue the object. A second thread of execution processes the atomic FIFO queue and assumes ownership of the continuation elements. The second thread invokes a function member of the continuation element with a data member of the continuation element, the data member including the newly merged data. Other methods and apparatuses are also described.

Owner:APPLE INC

System-on-chip communication manager

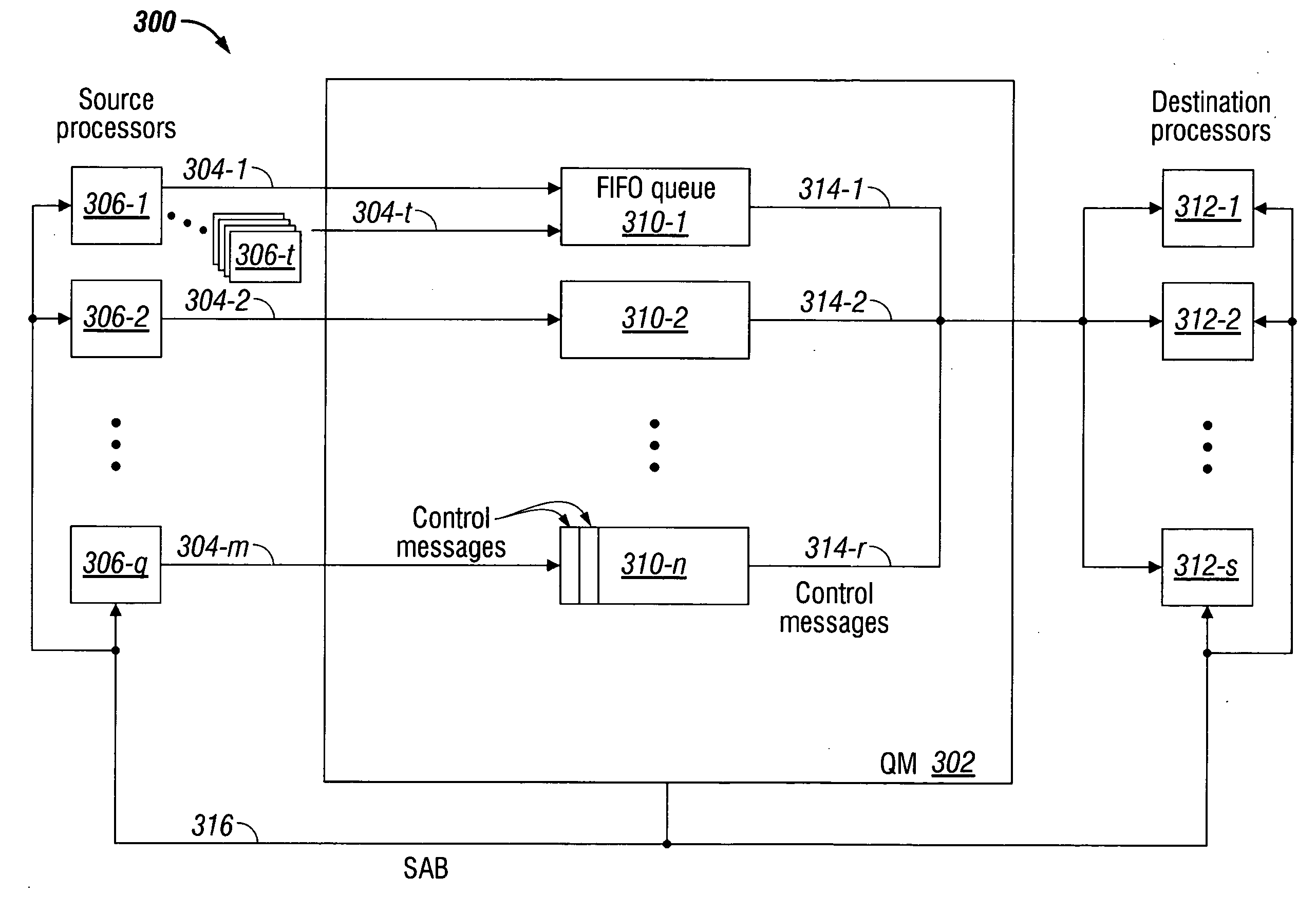

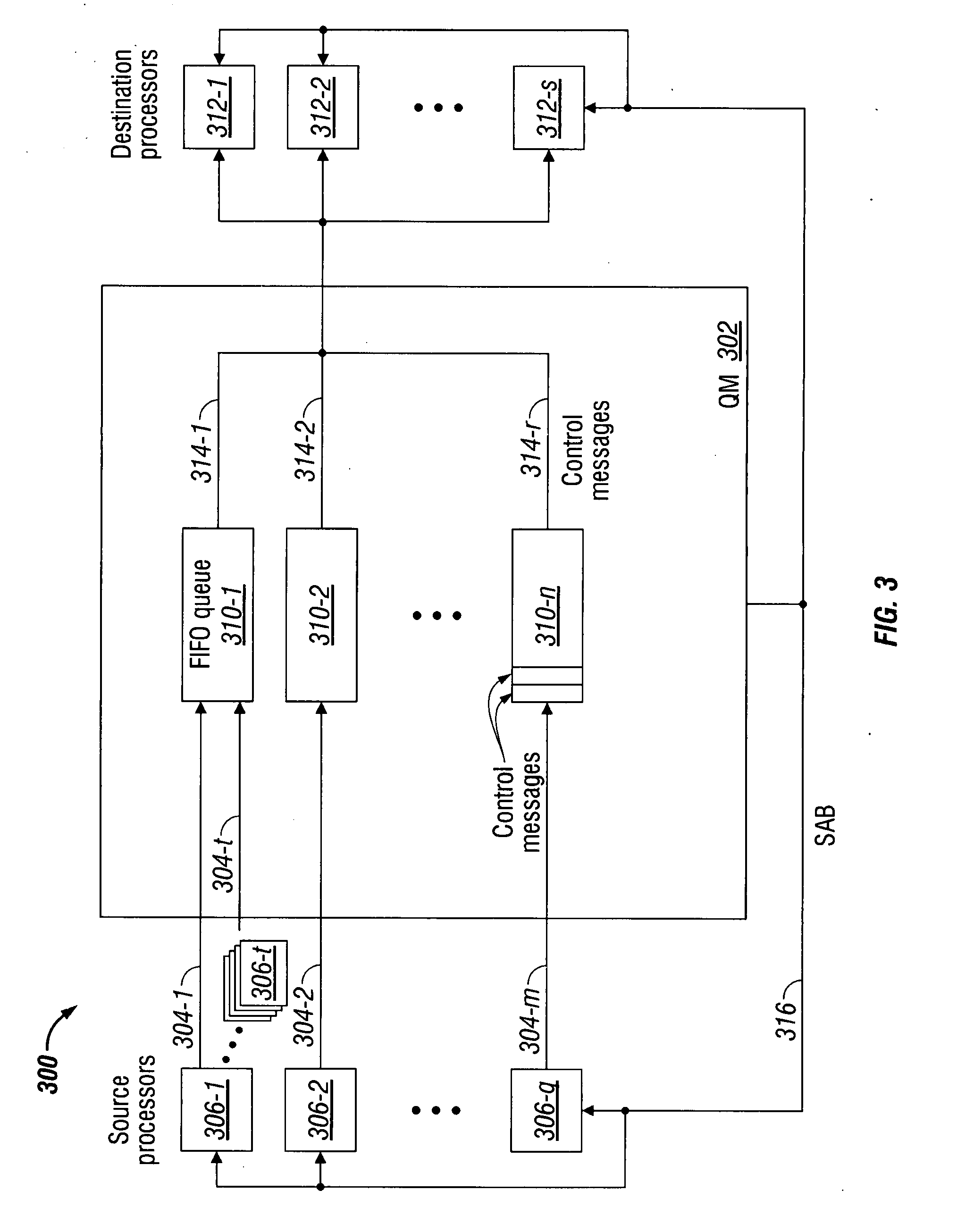

A Queue Manager (QM) system and method are provided for communicating control messages between processors. The method accepts control messages from a source processor addressed to a destination processor. The control messages are loaded in a first-in first-out (FIFO) queue associated with the destination processor. Then, the method serially supplies loaded control messages to the destination processor from the queue. The messages may be accepted from a plurality of source processors addressed to the same destination processor. The control messages are added to the queue in the order in which they are received. In one aspect, a plurality of parallel FIFO queues may be established that are associated with the same destination processor. Then, the method differentiates the control messages into the parallel FIFO queues and supplies control messages from the parallel FIFO queues in an order responsive to criteria such as queue ranking, weighting, or shaping.

Owner:MACOM CONNECTIVITY SOLUTIONS LLC
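
As a rough sketch of the per-destination queues described above, the following assumes strict ranking as the ordering criterion (the abstract also mentions weighting and shaping, which are not modelled). The `QueueManager` class and its method names are hypothetical.

```python
from collections import deque


class QueueManager:
    """Hypothetical model: parallel FIFO queues per destination processor."""

    def __init__(self, num_ranks=2):
        self.num_ranks = num_ranks
        # destination -> list of FIFO queues; index 0 is the highest rank
        self.queues = {}

    def send(self, source, destination, message, rank=0):
        """Load a control message into the destination's queue for this rank."""
        per_dest = self.queues.setdefault(
            destination, [deque() for _ in range(self.num_ranks)]
        )
        per_dest[rank].append((source, message))

    def receive(self, destination):
        """Serially supply the next message, highest-ranked queue first."""
        for queue in self.queues.get(destination, []):
            if queue:
                return queue.popleft()
        return None  # nothing pending for this destination
```

Messages from different sources to the same destination keep their arrival order within a rank, while a higher-ranked queue is always served before a lower-ranked one.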

System and method for operating a packet buffer

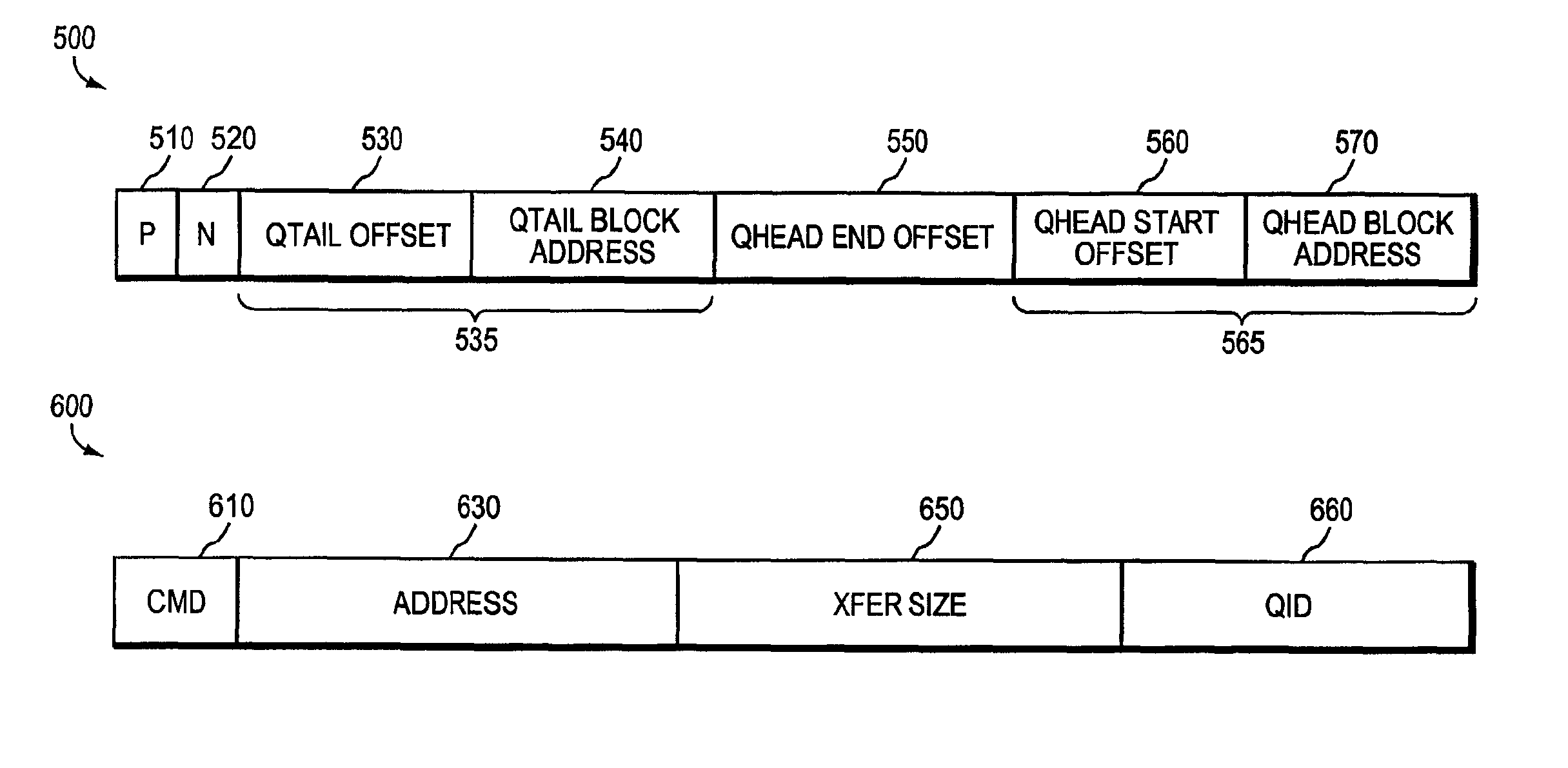

A technique is provided for implementing a novel high-speed, high-density packet buffer that uses a combination of high-speed and low-speed memory devices. The novel packet buffer is organized as a plurality of first-in-first-out (FIFO) queues where each FIFO queue is associated with a particular input or output line. Each queue comprises a high-speed cache portion that resides in high-speed memory and a low-speed high-density portion that resides in low-speed high-density memory. Each high-speed cache portion contains FIFO data that contains head and/or tail information associated with a corresponding FIFO queue. The low-speed high-density portion contains FIFO data that is not contained in the high-speed cache portion. A queue identifier (QID) directory refills the high-speed portion of one or more queues with data from a corresponding low-speed portion. Queue head start and end offsets are used to determine whether a corresponding queue is empty.

Owner:CISCO TECH INC
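
The split between a small fast portion and a large slow portion can be illustrated with a simplified two-tier queue. This sketch makes several assumptions: it caches only the head of the queue (the abstract allows head and/or tail), it uses two in-memory deques to stand in for the two memory types, and it omits the QID directory and offset bookkeeping. `TieredFifo` is a hypothetical name.

```python
from collections import deque


class TieredFifo:
    """Hypothetical FIFO with a small head cache backed by bulk storage."""

    def __init__(self, cache_size=2):
        self.cache_size = cache_size
        self.head_cache = deque()  # models the high-speed cache portion
        self.bulk = deque()        # models the low-speed high-density portion

    def enqueue(self, packet):
        # A packet may enter the cache directly only when nothing older is
        # waiting in bulk storage; otherwise FIFO order would be violated.
        if not self.bulk and len(self.head_cache) < self.cache_size:
            self.head_cache.append(packet)
        else:
            self.bulk.append(packet)

    def refill(self):
        """Move the oldest bulk packets into the cache until it is full."""
        while self.bulk and len(self.head_cache) < self.cache_size:
            self.head_cache.append(self.bulk.popleft())

    def dequeue(self):
        if not self.head_cache:
            self.refill()
        if not self.head_cache:
            return None  # queue is empty
        packet = self.head_cache.popleft()
        self.refill()
        return packet

    def is_empty(self):
        return not self.head_cache and not self.bulk
```

Dequeues are always served from the fast portion, and each dequeue tops the cache back up from the slow portion so the next head is already in fast memory.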

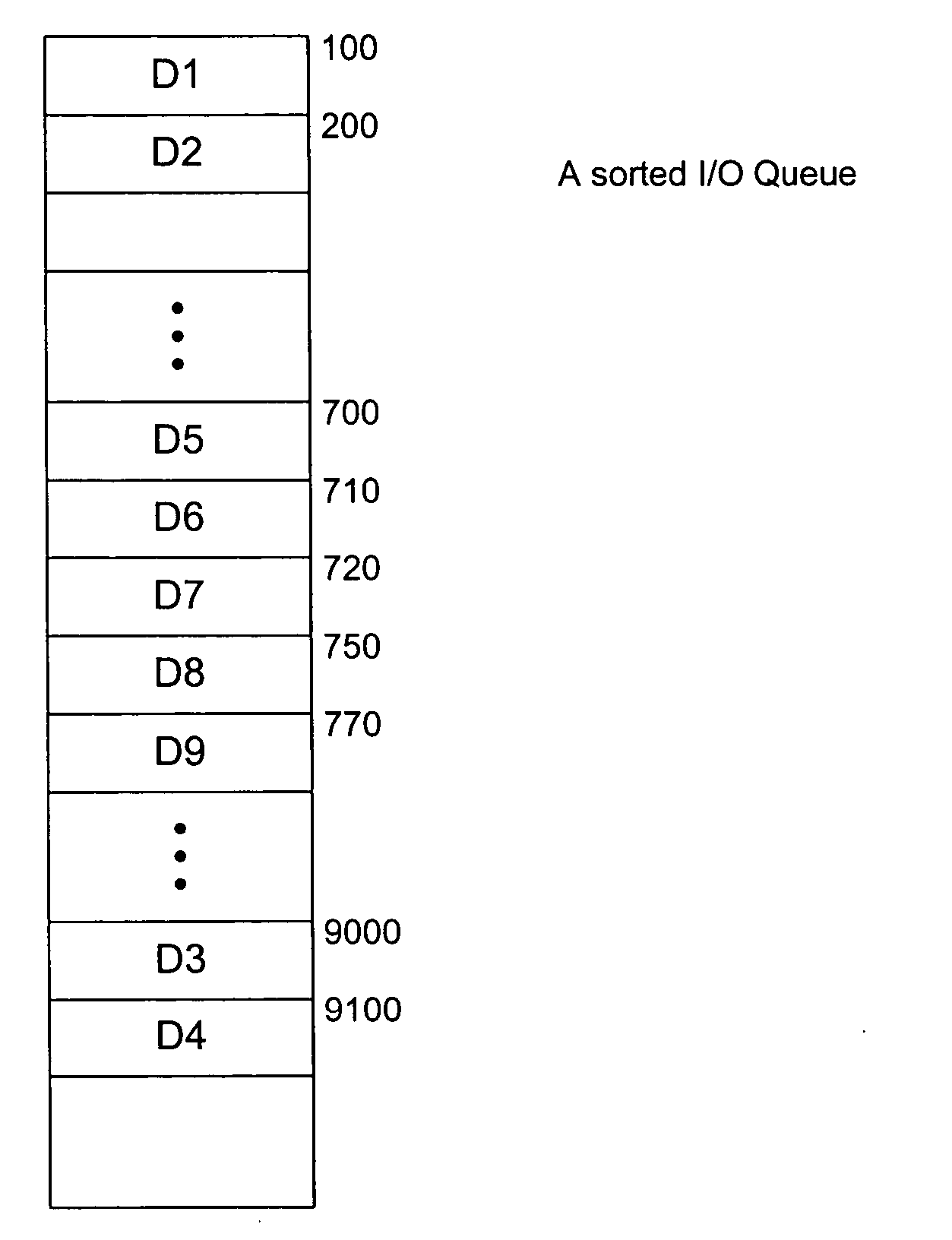

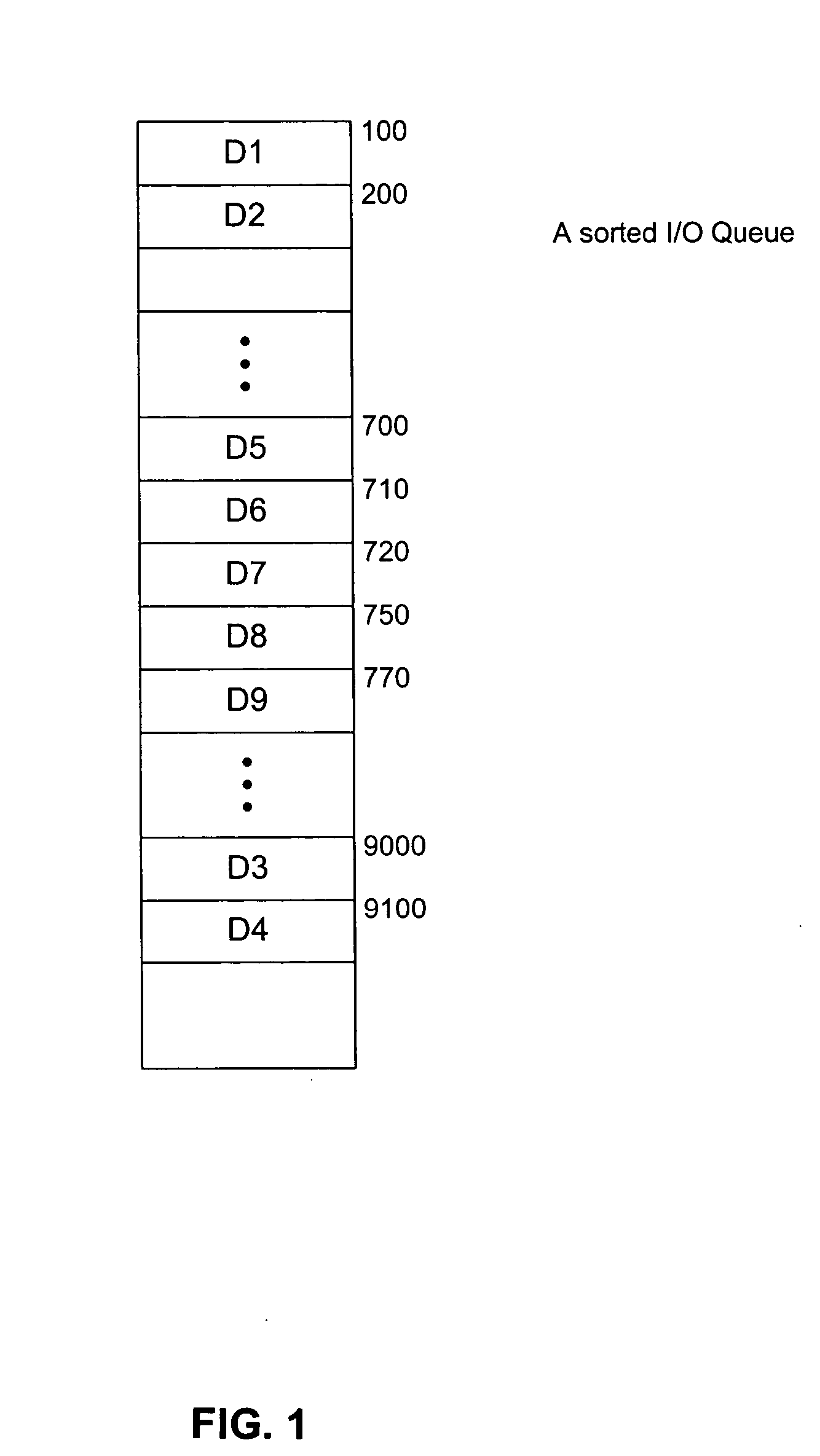

Method and computer program product to improve I/O performance and control I/O latency in a redundant array

Inactive | US20060112301A1 | Improve I/O performance | Redundant hardware error correction | Input/output processes for data processing | Fifo queue | Distributed computing

A method and computer program product for improving I/O performance and controlling I/O latency for reading or writing to a disk in a redundant array. The method comprises: determining an optimal number of I/O sort queues, their depth, and a latency control number; directing incoming I/Os to a second sort queue if the queue depth or latency control number for the first queue is exceeded; directing incoming I/Os to a FIFO queue if all sort queues are saturated; and issuing I/Os to a disk in the redundant array from the sort queue having the foremost I/Os.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE
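
The routing rule in the abstract above (fill one sort queue, spill into the next, fall back to a FIFO queue when every sort queue is full) might look like the sketch below. It is heavily simplified and rests on assumptions: saturation is judged by queue depth only, because the abstract does not define the latency control number; "foremost" is interpreted as the lowest-indexed non-empty sort queue; and I/Os are represented by their block addresses. `IoScheduler` is a hypothetical name.

```python
import heapq
from collections import deque


class IoScheduler:
    """Hypothetical router: bounded sort queues with a FIFO overflow queue."""

    def __init__(self, num_sort_queues=2, depth=2):
        self.depth = depth
        # Each sort queue is a min-heap ordered by block address.
        self.sort_queues = [[] for _ in range(num_sort_queues)]
        self.fifo = deque()  # used only when all sort queues are saturated

    def submit(self, block):
        """Place an I/O in the first sort queue with room, else in the FIFO."""
        for queue in self.sort_queues:
            if len(queue) < self.depth:
                heapq.heappush(queue, block)
                return
        self.fifo.append(block)

    def issue(self):
        """Issue the next I/O: sort queues in order, then the FIFO queue."""
        for queue in self.sort_queues:
            if queue:
                return heapq.heappop(queue)
        if self.fifo:
            return self.fifo.popleft()
        return None
```

Within a sort queue, I/Os are issued in ascending block order, while overflow I/Os in the FIFO queue keep their arrival order.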

Saving burst data by using semi-merge sorting module

Inactive | US6892199B2 | Improve throughput | Improve efficiency | Data processing applications | Relational databases | Message queue | Merge sort

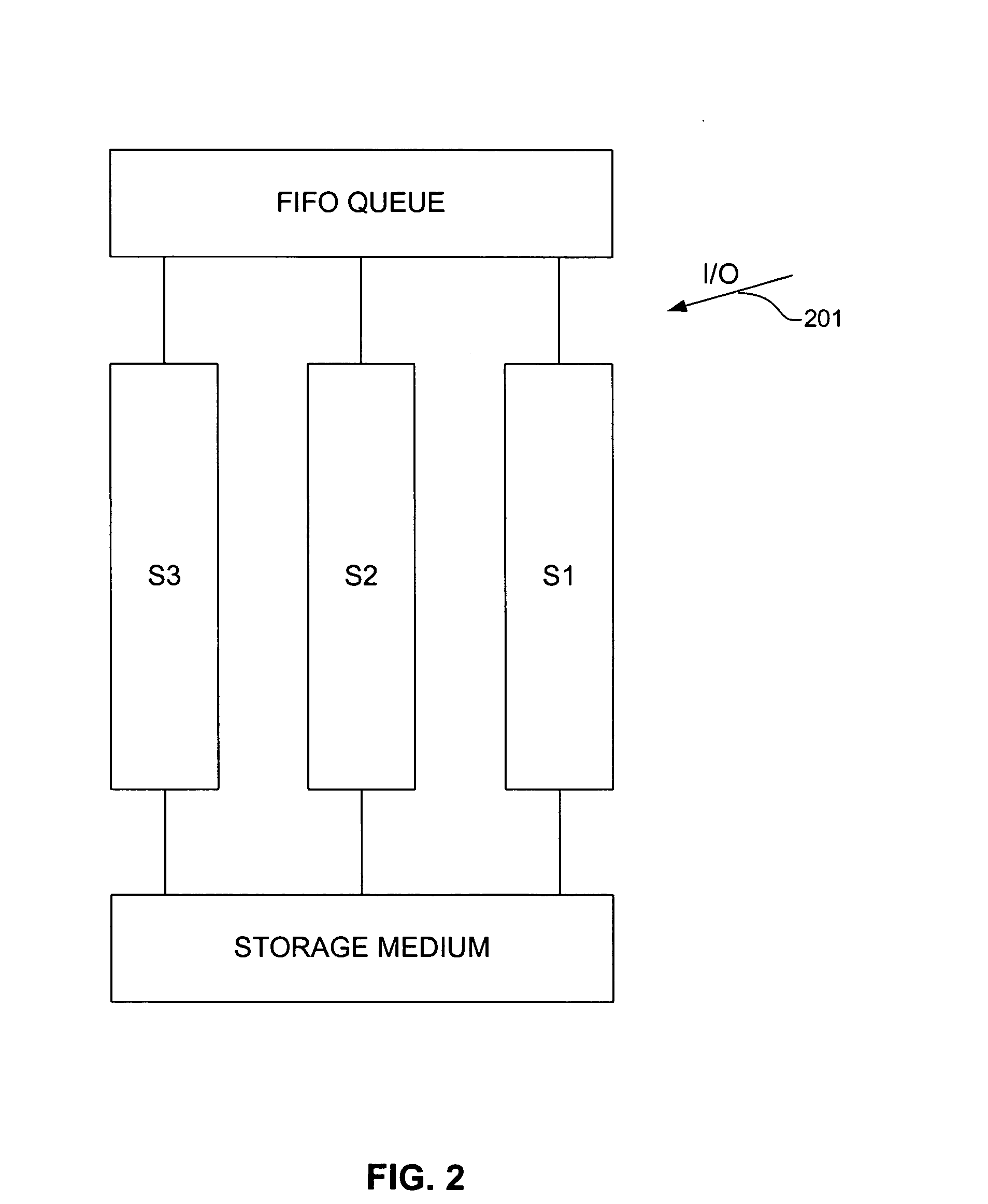

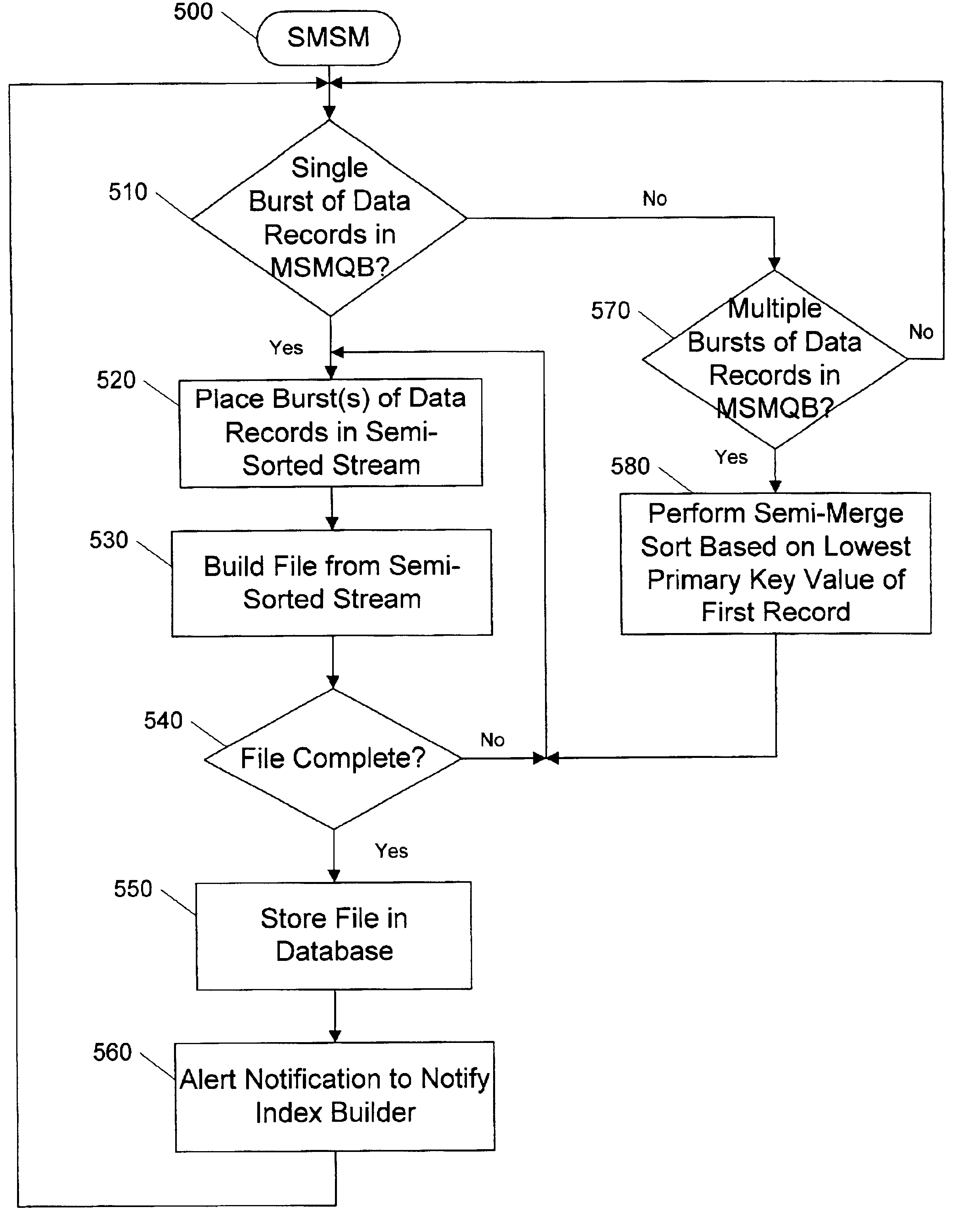

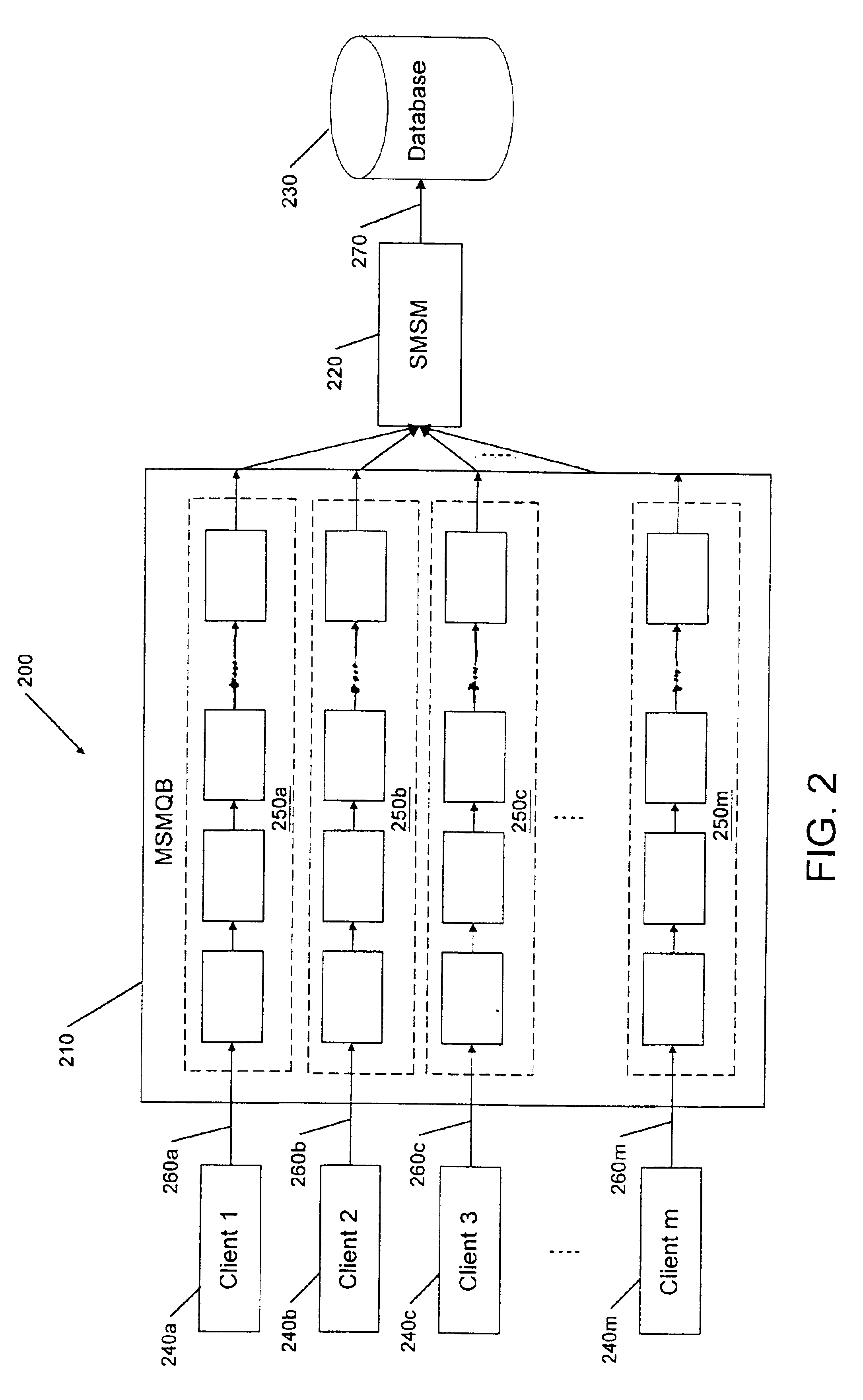

Systems, methods and computer program products for storing data from multiple clients in a database include a Multi-thread Shared Memory message Queue Buffer (MSMQB) that includes multiple First-In First-Out (FIFO) queues, a respective one of which is associated with a respective one of the clients. The MSMQB is configured to store sequential bursts of data records that are received from the clients in the associated FIFO queues. A Semi-Merge Sort Module (SMSM) is configured to sort the sequential bursts in the FIFO queues based on the primary key of at least one selected record, but not every record, to produce a semi-sorted record stream that is serially stored in the database.

Owner:VIAVI SOLUTIONS INC
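
One way to picture the semi-merge step is to order whole bursts by the key of a single selected record instead of sorting every record. The sketch below assumes the selected record is the first record of each burst and that records are `(primary_key, payload)` tuples; both choices, and the `semi_merge` name, are illustrative assumptions rather than details from the patent.

```python
from collections import deque


def semi_merge(client_queues):
    """Merge per-client FIFO queues of bursts into one semi-sorted stream.

    Each queue holds bursts (lists of (primary_key, payload) records).
    Bursts are ordered by the key of their first record only, so records
    inside a burst keep their arrival order. The input deques are consumed.
    """
    stream = []
    queues = [q for q in client_queues if q]
    while queues:
        # Pick the queue whose head burst starts with the smallest key.
        best = min(queues, key=lambda q: q[0][0][0])
        stream.extend(best.popleft())
        queues = [q for q in queues if q]
    return stream
```

The output is only partially ordered: burst boundaries are sorted by their leading key, but keys inside a burst may still be out of order relative to neighbouring bursts.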


© 2025 PatSnap. All rights reserved.