Implementation method of least recently used (LRU) policy in solid state drive (SSD)-based high-capacity cache

An implementation method for large-capacity caching, applied in data transformation, instrumentation, electrical digital data processing and related fields. It can solve problems such as inapplicability, and it is simple and offers high read and write speed.


Embodiment Construction

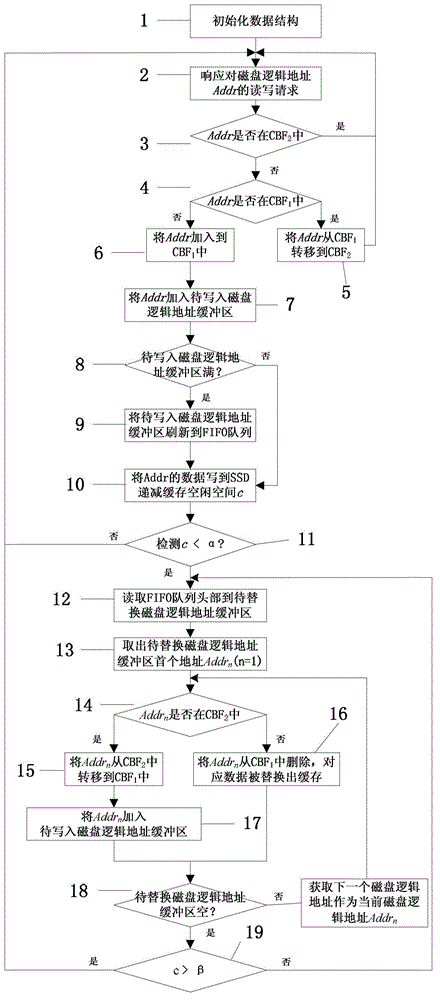

[0039] As shown in Figure 1, the LRU policy implementation method for the SSD-based large-capacity cache in this embodiment is carried out in the following steps:

[0040] 1) Allocate a contiguous address space on the SSD and initialize the FIFO queue in it. In memory, create the data structures for a first counting Bloom filter, CBF1, which records the disk logical addresses that have been accessed only once, and a second counting Bloom filter, CBF2, which records the disk logical addresses that have been accessed two or more times. Also allocate two regions in memory to serve, respectively, as the buffer of disk logical addresses to be written and the buffer of disk logical addresses to be replaced. Then proceed to the next step.
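The in-memory structures created in step 1 can be sketched in Python as below. This is a minimal sketch under stated assumptions, not the patent's implementation: the hash scheme (double hashing over a SHA-256 digest), the filter sizes, and the names `CountingBloomFilter`, `CacheMetadata`, `init_metadata` and `record_access` are all hypothetical. `record_access` shows only one plausible way to maintain the stated roles of CBF1 and CBF2. The patent's own update rules belong to later steps that this excerpt does not include, and the SSD-resident FIFO queue is left out here.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass, field


class CountingBloomFilter:
    """Bloom filter whose slots are counters, so keys can also be removed."""

    def __init__(self, num_counters: int, num_hashes: int) -> None:
        self.m = num_counters
        self.k = num_hashes
        self.counters = [0] * num_counters

    def _positions(self, key: int) -> list[int]:
        # k slot indices via double hashing over one SHA-256 digest (assumed scheme).
        digest = hashlib.sha256(key.to_bytes(8, "little")).digest()
        h1 = int.from_bytes(digest[:8], "little")
        h2 = int.from_bytes(digest[8:16], "little") | 1  # odd step size
        return [(h1 + i * h2) % self.m for i in range(self.k)]

    def add(self, key: int) -> None:
        for p in self._positions(key):
            self.counters[p] += 1

    def remove(self, key: int) -> None:
        # Only remove keys that were added; removing a false positive
        # would decrement counters that belong to other keys.
        if not self.contains(key):
            raise KeyError(key)
        for p in self._positions(key):
            self.counters[p] -= 1

    def contains(self, key: int) -> bool:
        # False positives are possible; false negatives are not.
        return all(self.counters[p] > 0 for p in self._positions(key))


@dataclass
class CacheMetadata:
    cbf1: CountingBloomFilter  # disk logical addresses accessed only once
    cbf2: CountingBloomFilter  # disk logical addresses accessed two or more times
    to_write: list[int] = field(default_factory=list)    # buffer: addresses to be written
    to_replace: list[int] = field(default_factory=list)  # buffer: addresses to be replaced


def init_metadata(num_counters: int = 1 << 16, num_hashes: int = 4) -> CacheMetadata:
    # Hypothetical sizes; the excerpt gives no values.
    return CacheMetadata(
        CountingBloomFilter(num_counters, num_hashes),
        CountingBloomFilter(num_counters, num_hashes),
    )


def record_access(meta: CacheMetadata, lba: int) -> None:
    # Hypothetical rule: a first access goes into CBF1, and a second access
    # moves the address from CBF1 to CBF2.
    if meta.cbf2.contains(lba):
        return
    if meta.cbf1.contains(lba):
        meta.cbf1.remove(lba)
        meta.cbf2.add(lba)
    else:
        meta.cbf1.add(lba)
```

The filters must be counting filters rather than plain Bloom filters because a plain Bloom filter cannot delete an entry, so it could not move an address out of CBF1 once that address has been seen again.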

[0041] In this embodiment, the FIFO queue is stored in a contiguous address space on the SSD, and this space is reused cyclically. The size of the contiguous address space depends on the length of the logical add...
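A contiguous region reused cyclically is a circular (ring) buffer. The Python sketch below models one, using a `bytearray` to stand in for the SSD address range. Several details are assumptions because the excerpt is truncated before it specifies them: the class name `CircularFifo`, the 8-byte little-endian entry format, and the choice to raise an error when the region is full. A real cache would presumably evict the oldest entry first.

```python
import struct


class CircularFifo:
    """FIFO queue of fixed-size entries in one contiguous, cyclically reused region."""

    SLOT = 8  # bytes per entry (assumed 64-bit logical addresses)

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.region = bytearray(capacity * self.SLOT)  # stand-in for the SSD range
        self.head = 0  # slot index of the oldest entry
        self.size = 0  # number of entries currently stored

    def push(self, lba: int) -> None:
        if self.size == self.capacity:
            raise OverflowError("FIFO region is full")
        # The tail wraps back to slot 0 after the last slot in the region.
        tail = (self.head + self.size) % self.capacity
        struct.pack_into("<Q", self.region, tail * self.SLOT, lba)
        self.size += 1

    def pop(self) -> int:
        if self.size == 0:
            raise IndexError("pop from empty FIFO")
        (lba,) = struct.unpack_from("<Q", self.region, self.head * self.SLOT)
        self.head = (self.head + 1) % self.capacity
        self.size -= 1
        return lba
```

Entries are written at consecutive offsets and wrap to the start of the region, so the space is reused without compaction, and only the `head` and `size` values have to be tracked.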
