219 results for patented technology on how to "Improve I/O performance"

Apparatus and method to provide virtual solid state disk in cache memory in a storage controller

Inactive | US6567889B1 | Reduce in quantity | Improve I/O performance | Memory architecture accessing/allocation | Input/output to record carriers | RAID | Control store

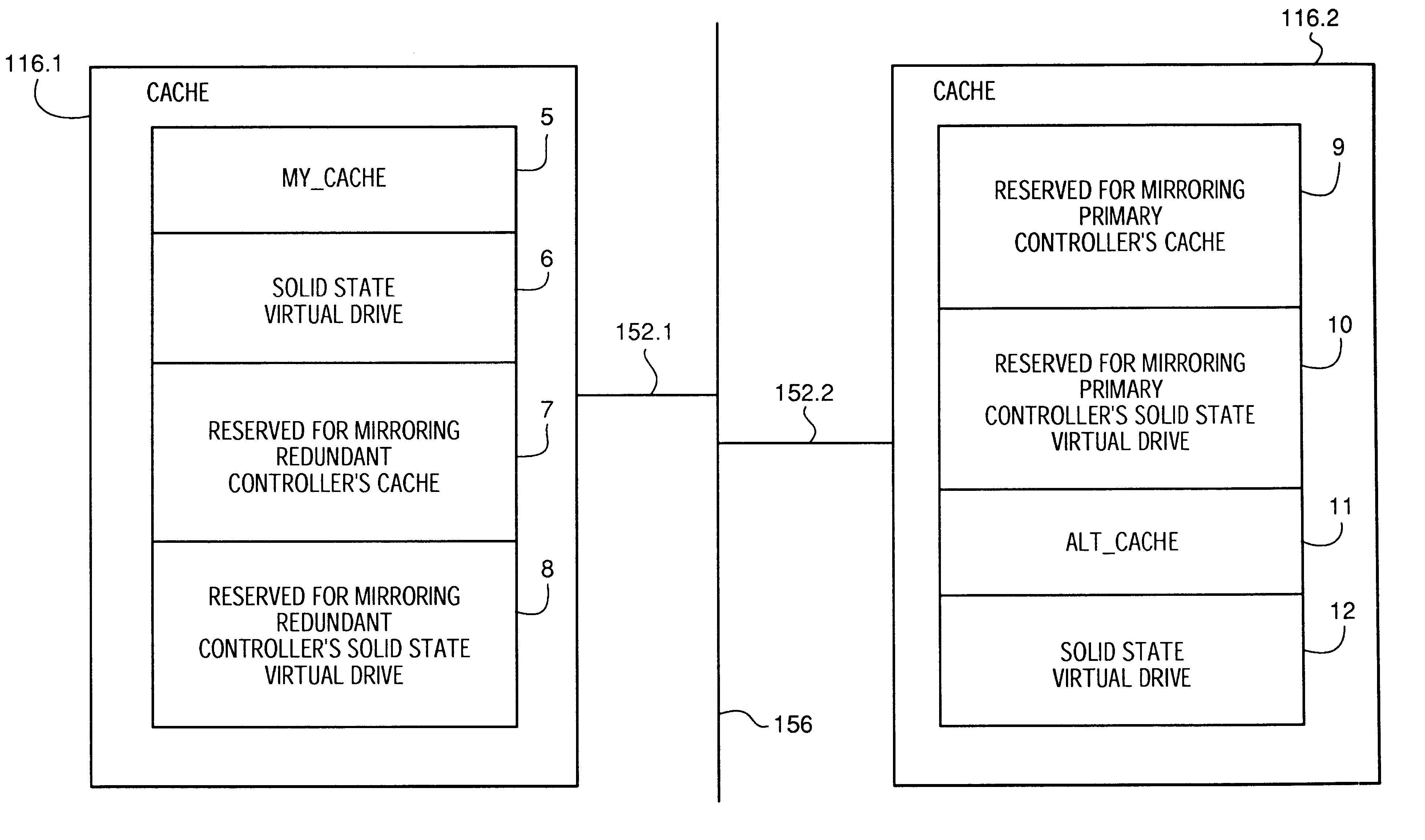

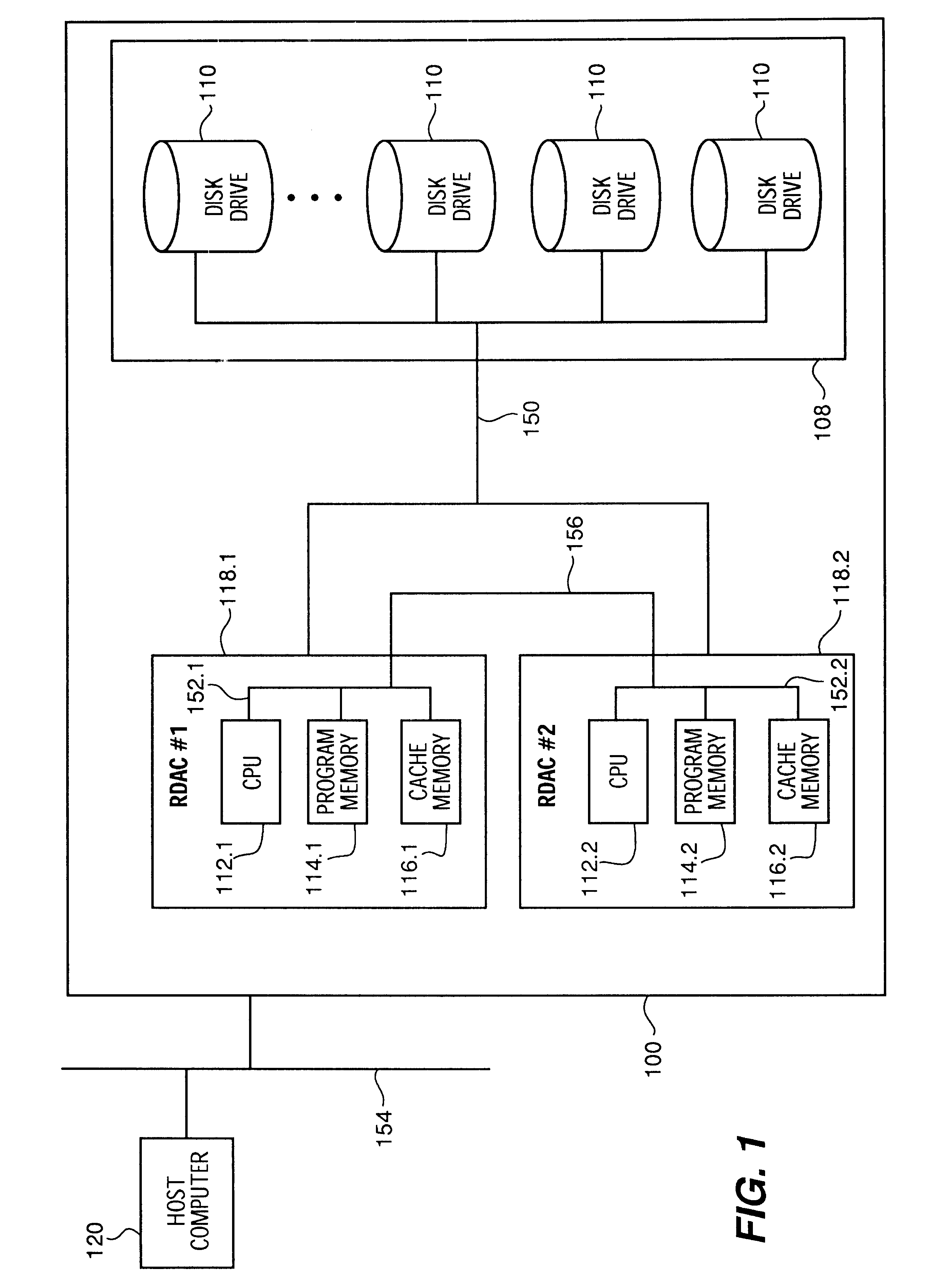

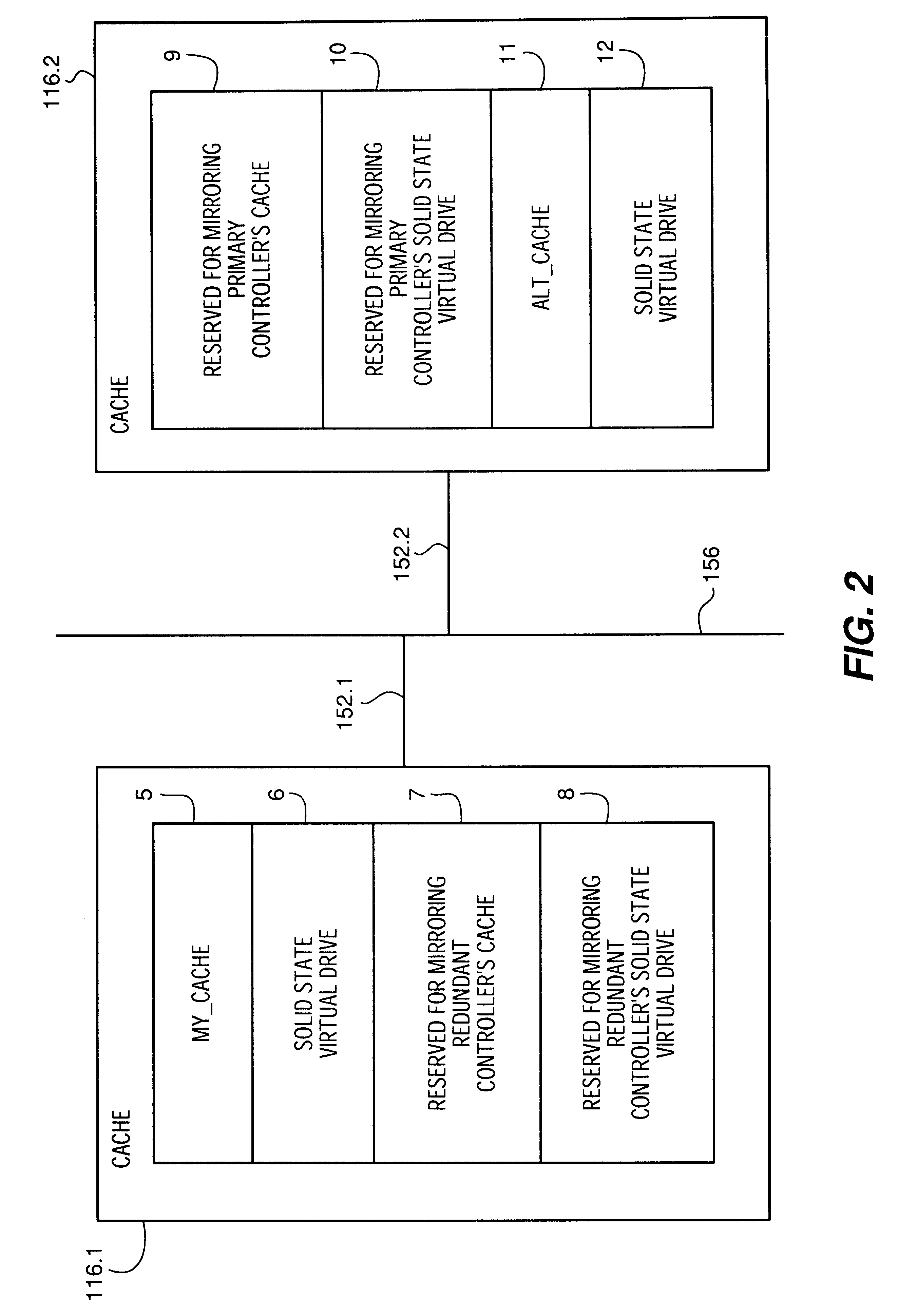

A portion of a storage controller's cache memory is used as a virtual solid state disk storage device to improve overall storage subsystem performance. In a first embodiment, the virtual solid state disk storage device is a single virtual disk drive for storing controller based information. In the first embodiment, the virtual solid state disk is reserved for use by the controller. In a second embodiment, a hybrid virtual LUN is configured as one or more virtual solid state disks in conjunction with one or more physical disks and managed using RAID levels 1-6. Since the hybrid virtual LUN is in the cache memory of the controller, data access times are reduced and throughput is increased by reduction of the RAID write penalty. The hybrid virtual LUN provides write performance that is typical of RAID 0. In a third embodiment, a high-speed virtual LUN is configured as a plurality of virtual solid state disks and managed as an entire virtual RAID LUN. Standard battery backup and redundant controller features of RAID controller technology ensure virtual solid state disk storage device non-volatility and redundancy in the event of controller failures.

Owner:NETWORK APPLIANCE INC

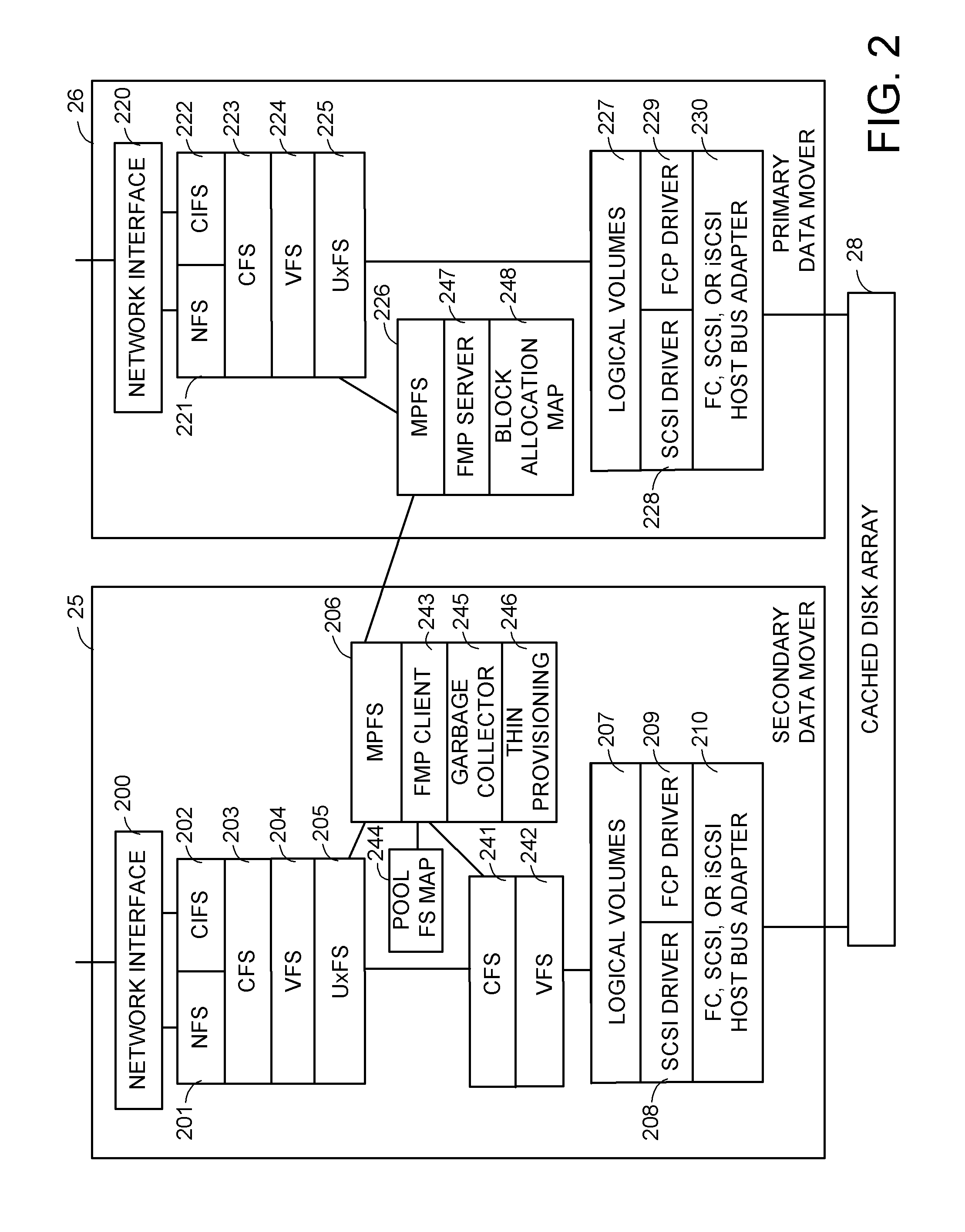

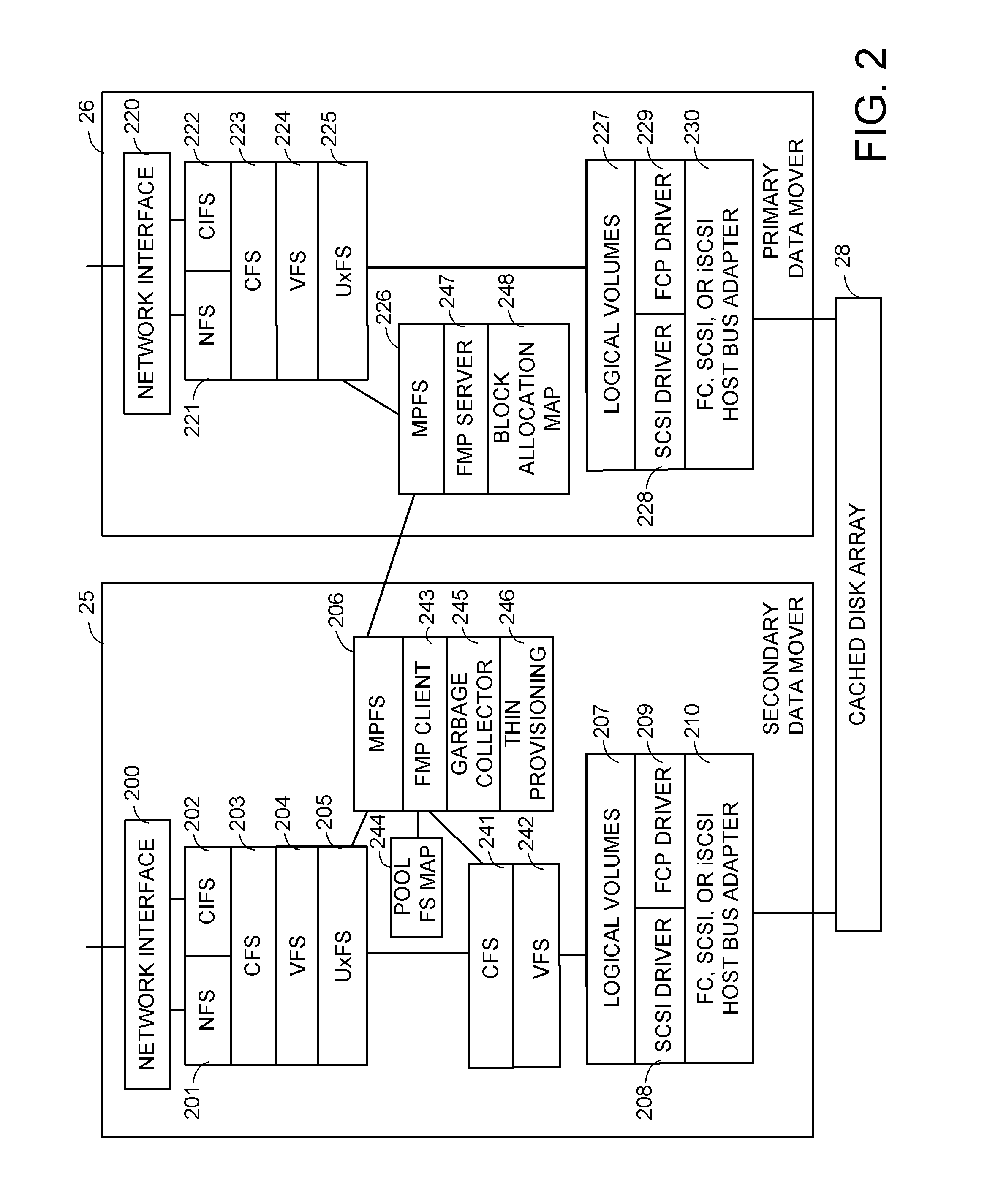

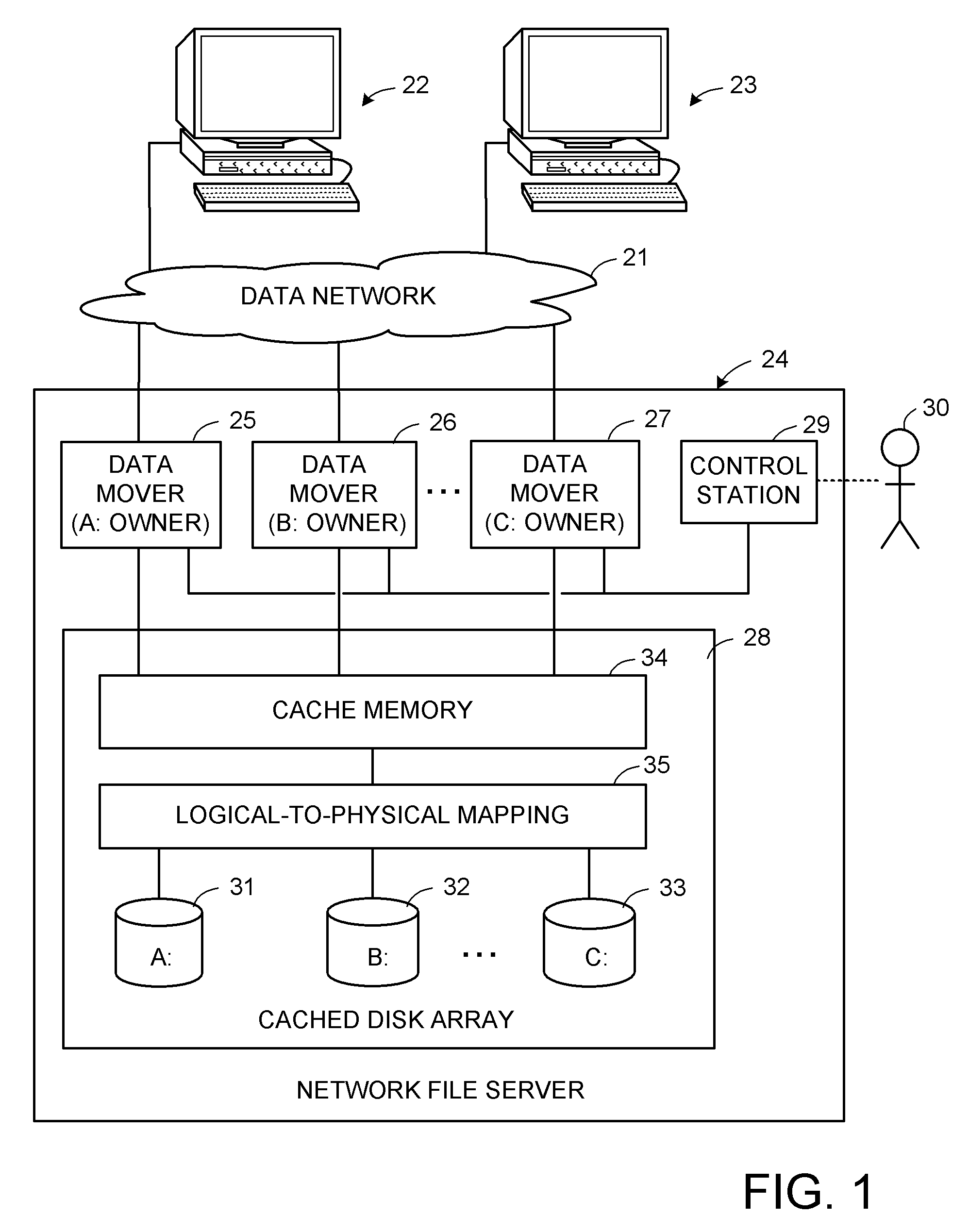

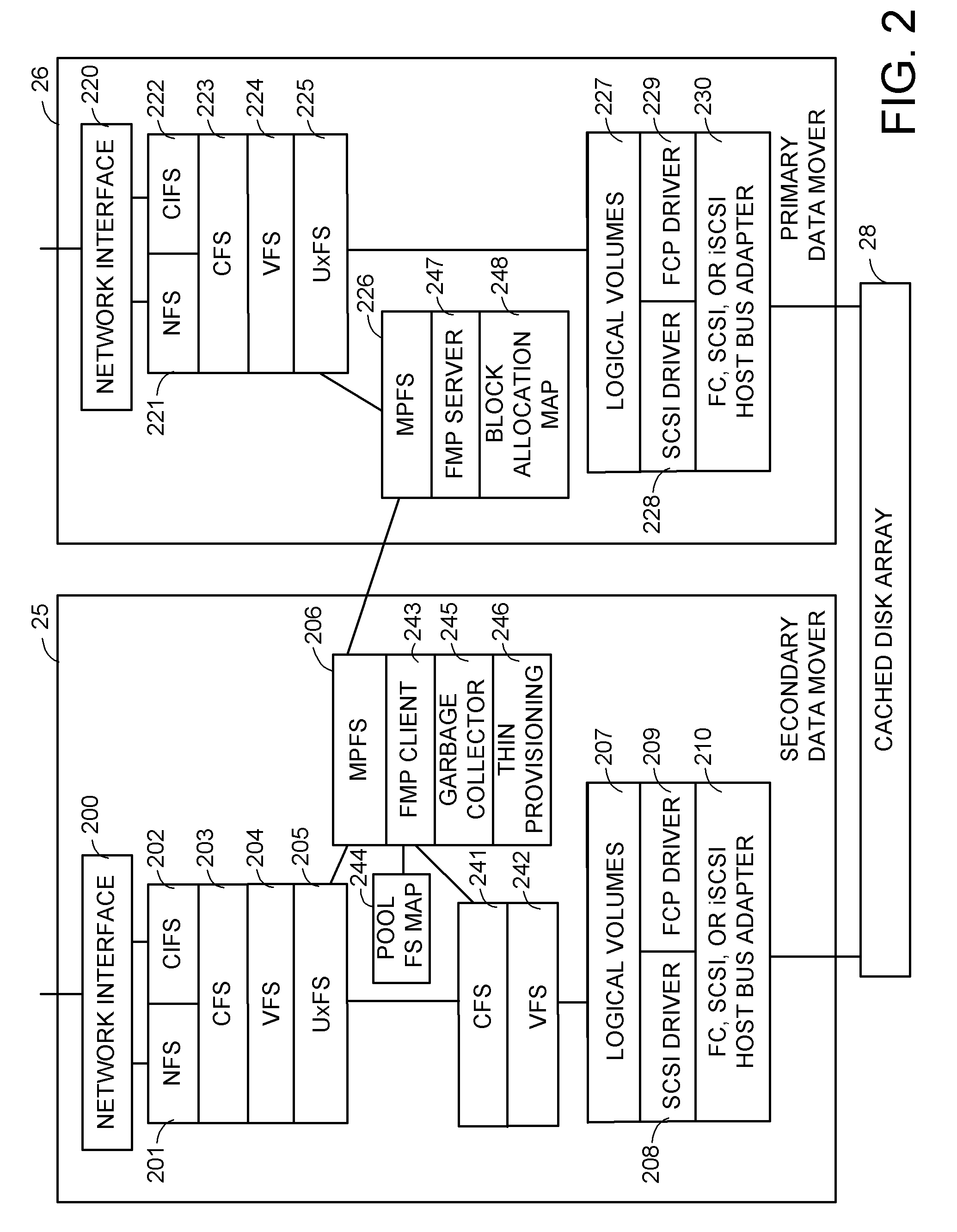

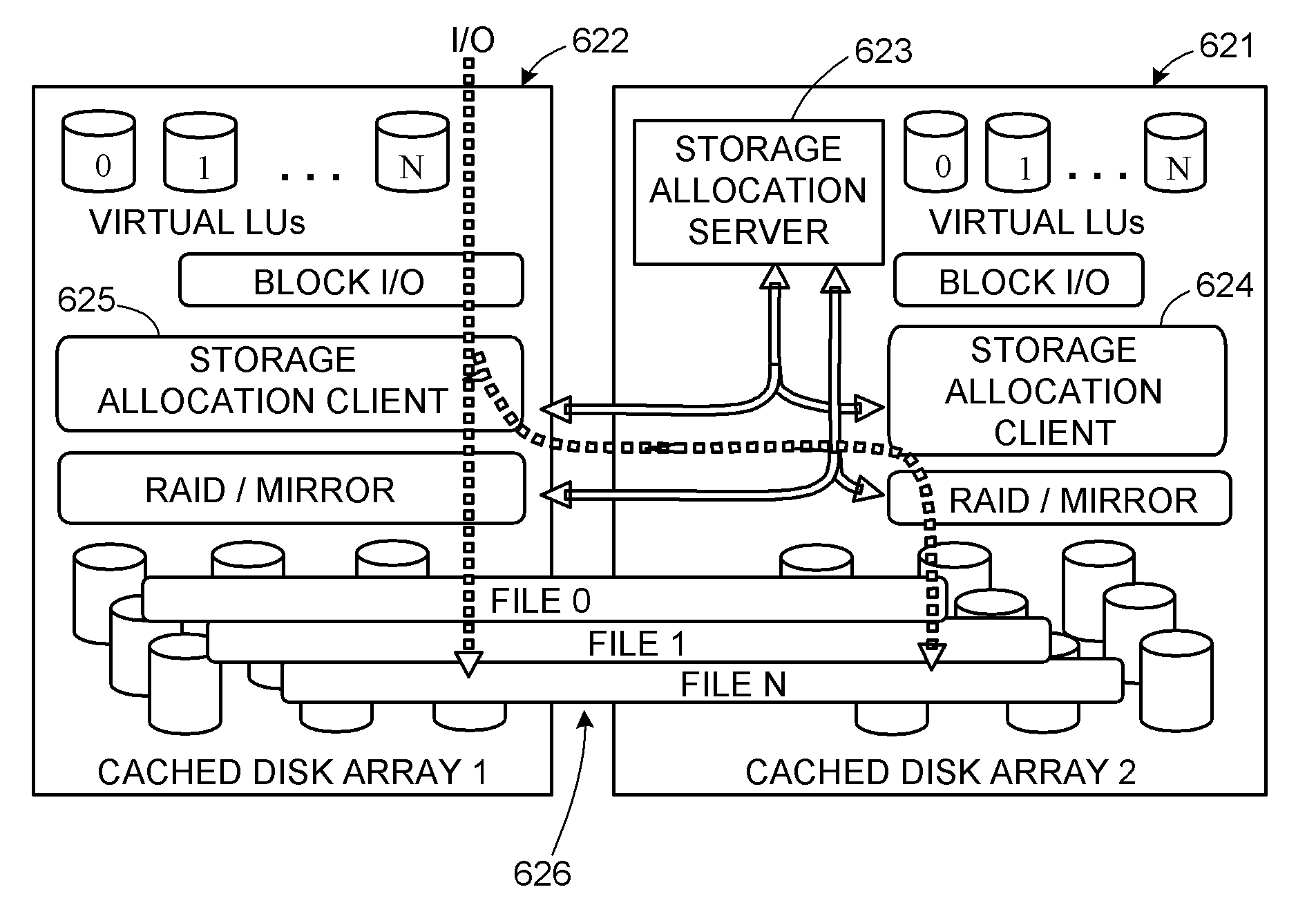

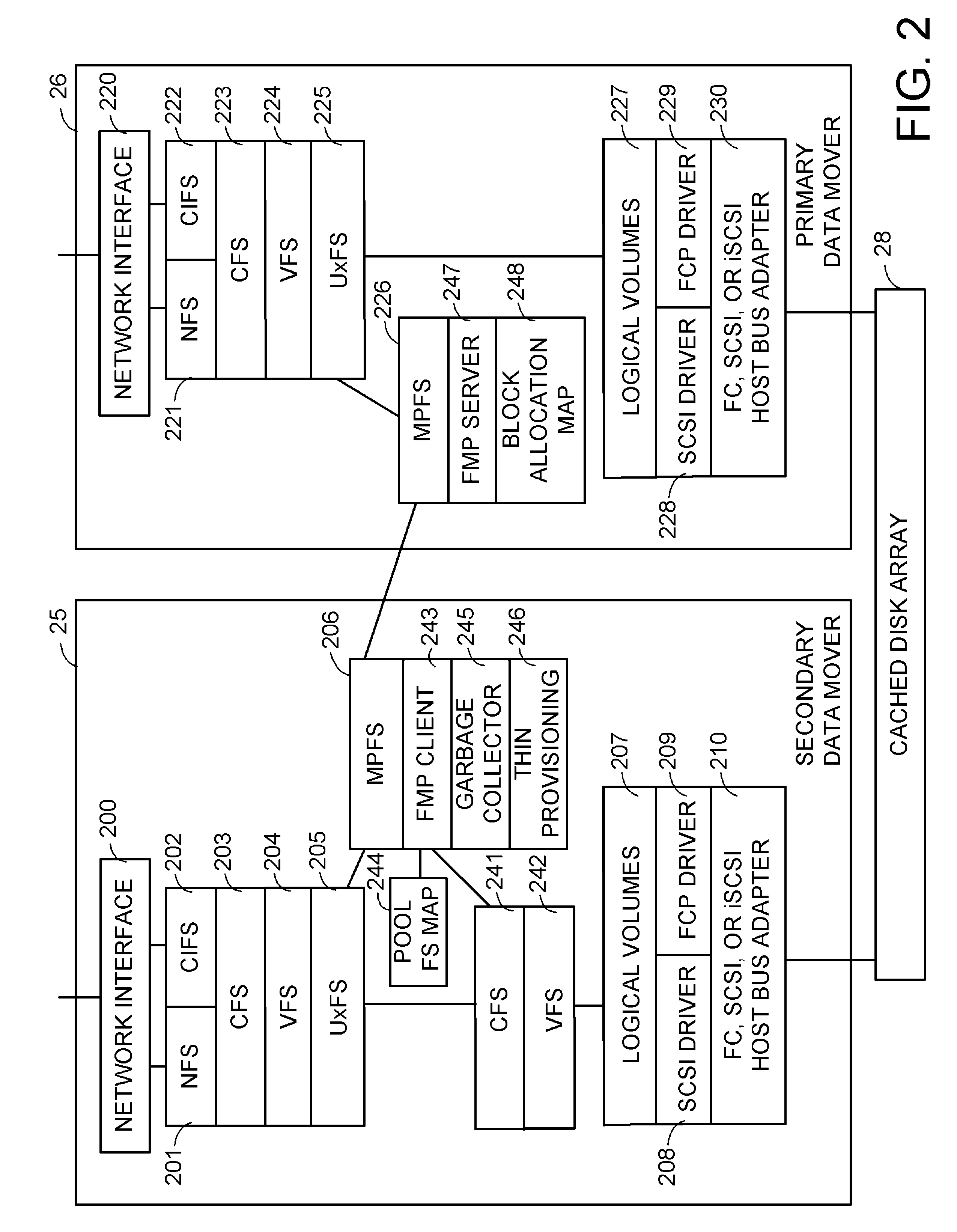

Pre-allocation and hierarchical mapping of data blocks distributed from a first processor to a second processor for use in a file system

Active | US20070260842A1 | Improve I/O performance | Reduce block scatter on disk | Memory systems | Input/output processes for data processing | Data processing system | File system

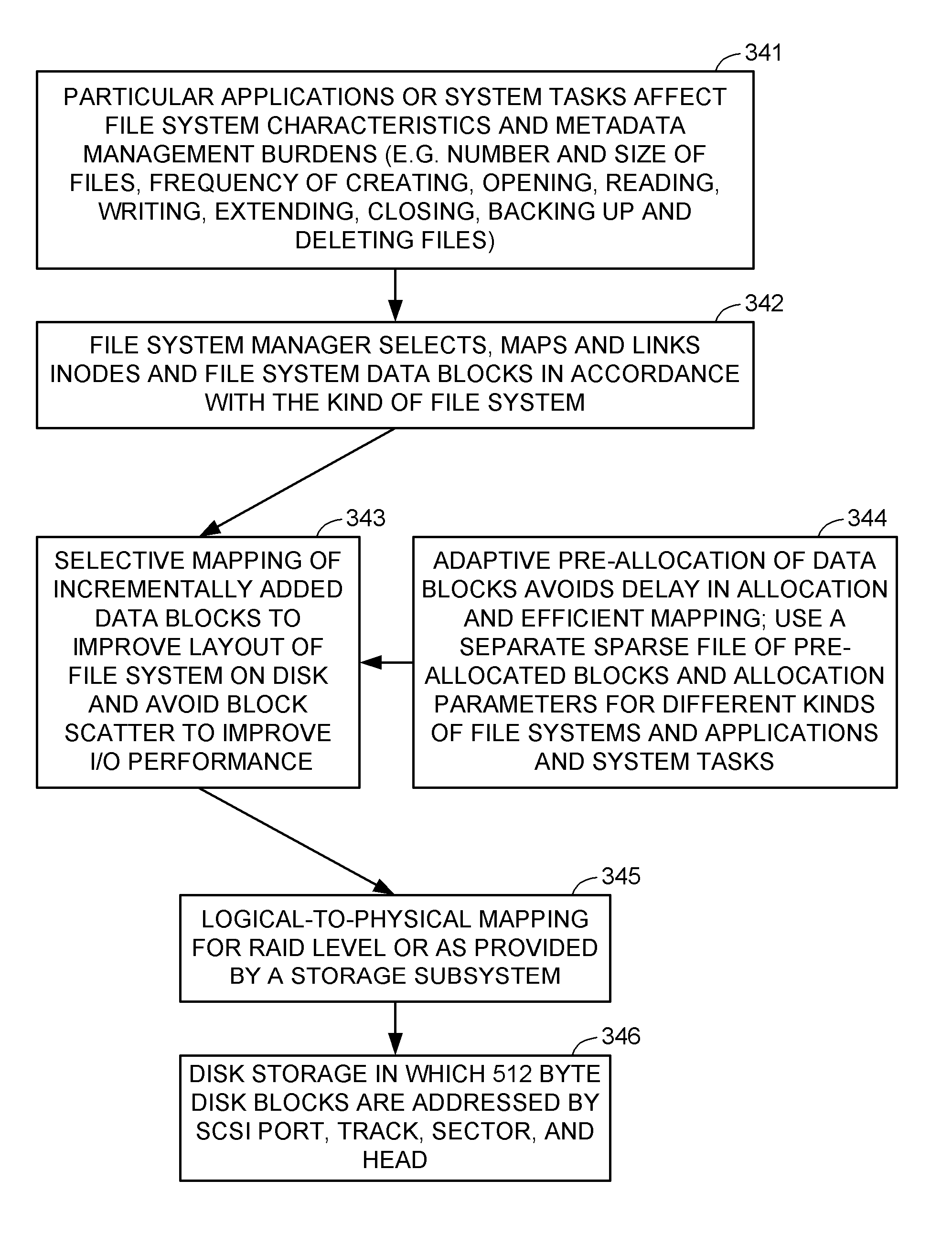

In a data processing system, a first processor pre-allocates data blocks for use in a file system at a later time when a second processor needs data blocks for extending the file system. The second processor selectively maps the logical addresses of the pre-allocated blocks so that when the pre-allocated blocks are used in the file system, the layout of the file system on disk is improved to avoid block scatter and enhance I/O performance. The selected mapping can be done at a program layer between a conventional file system manager and a conventional logical volume layer so that there is no need to modify the data block mapping mechanism of the file system manager or the logical volume layer. The data blocks can be pre-allocated adaptively in accordance with the allocation history of the file system.

Owner:EMC IP HLDG CO LLC

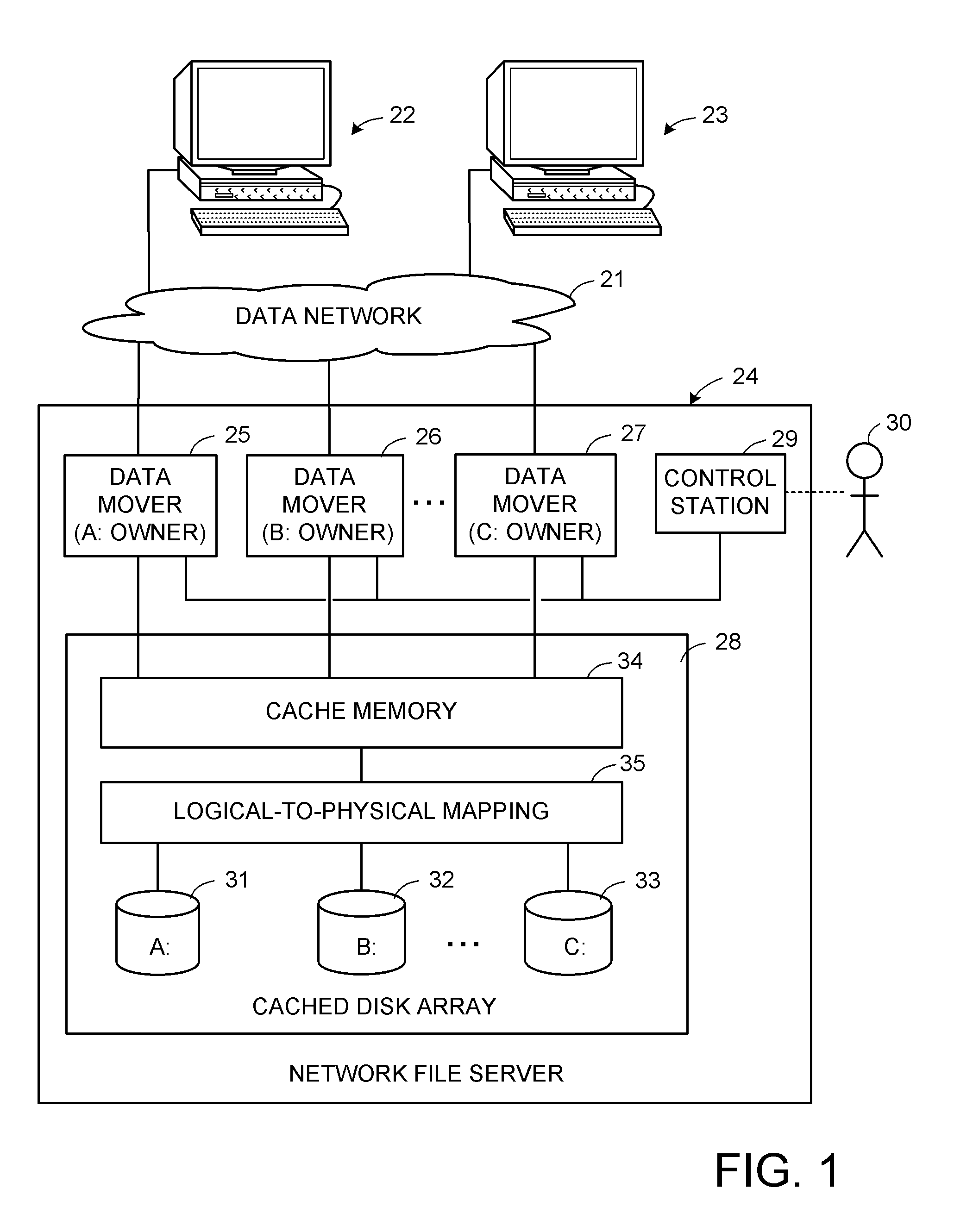

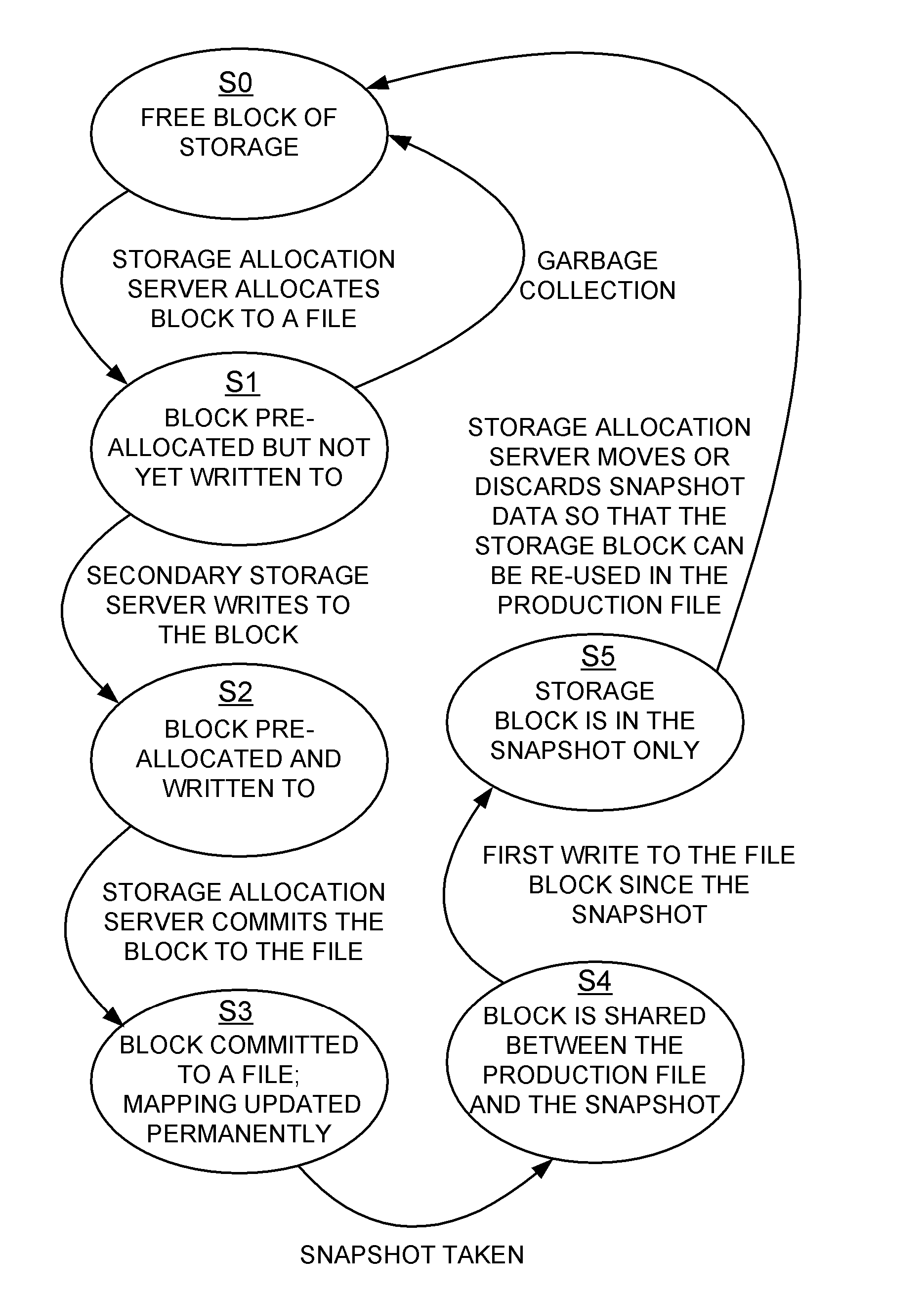

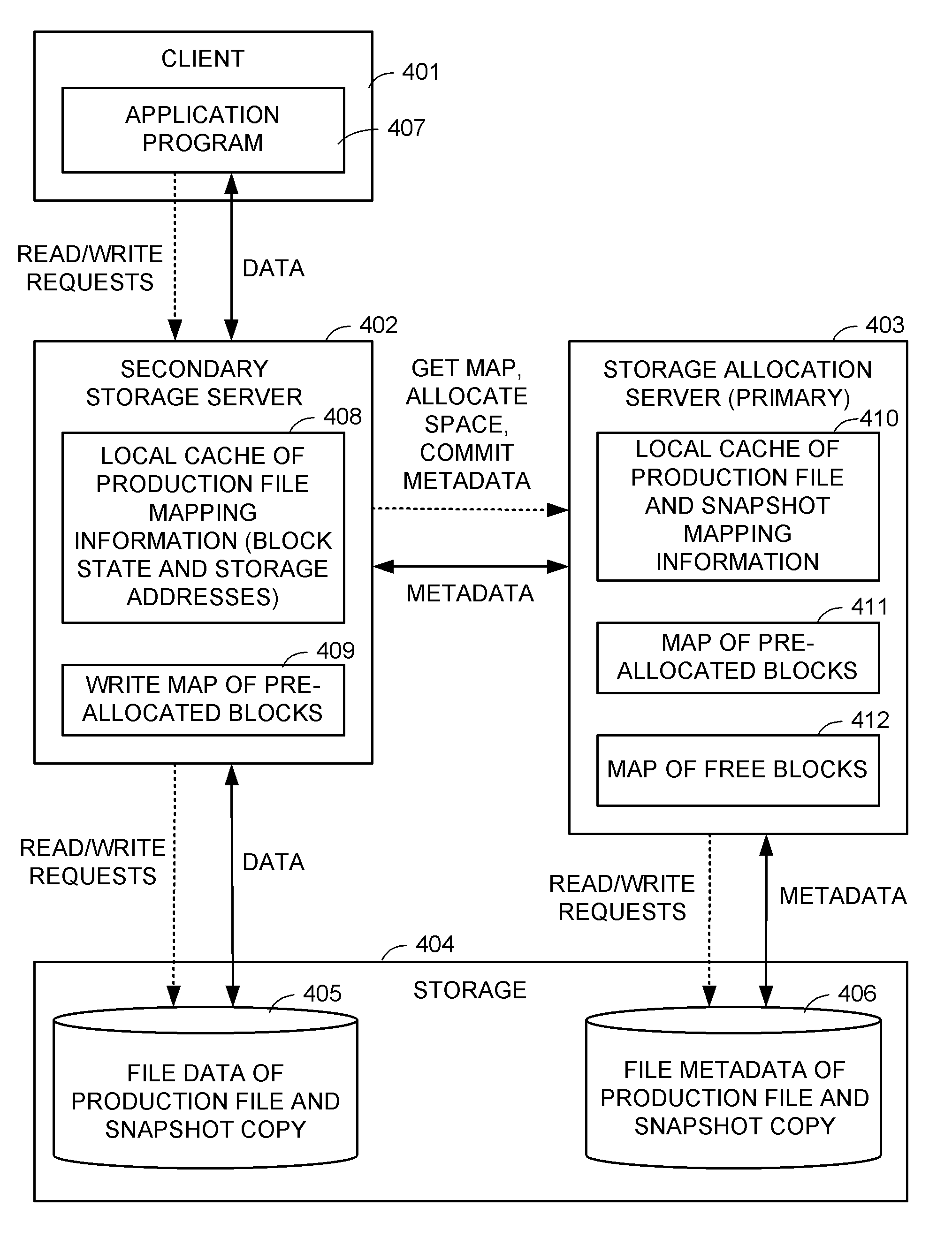

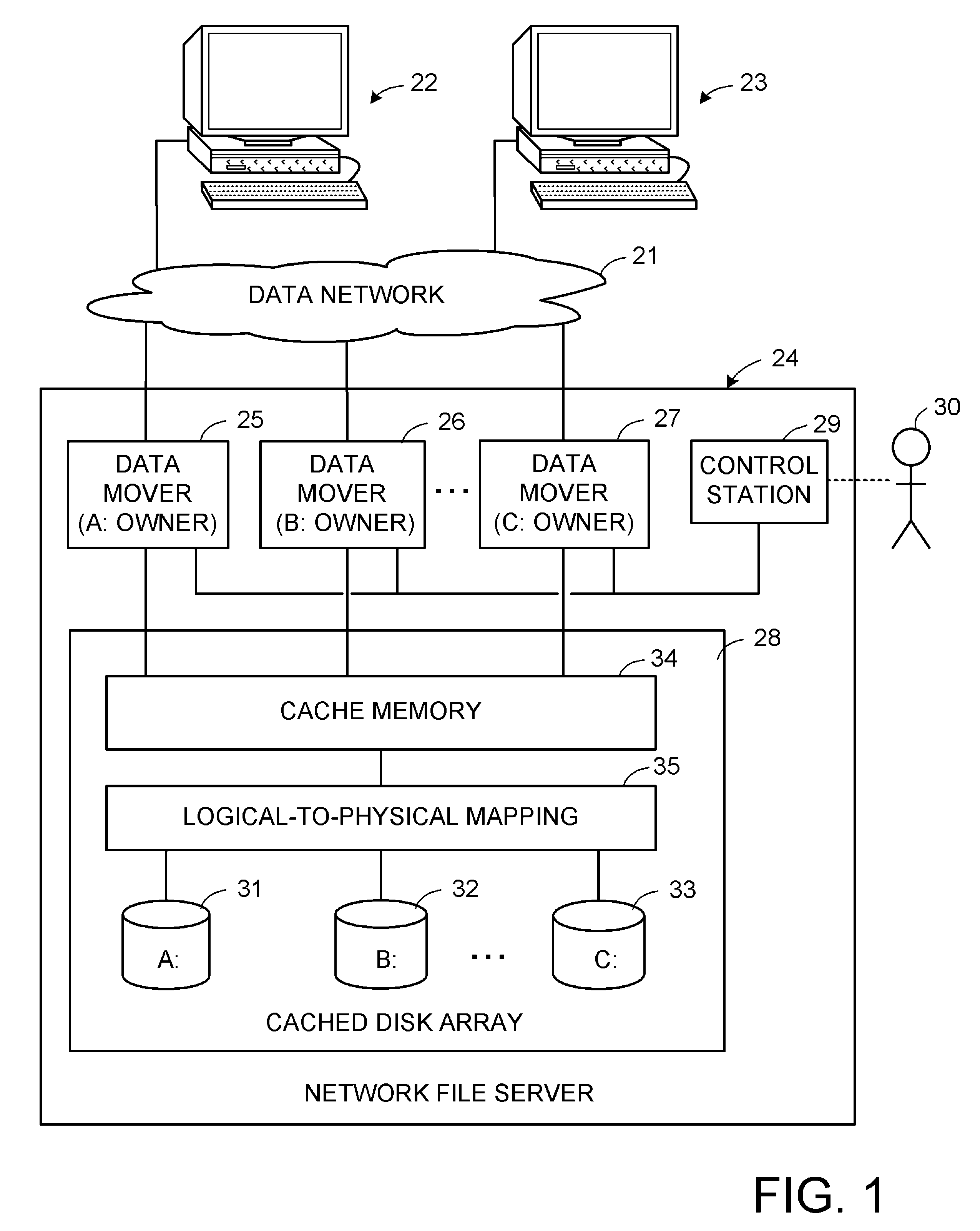

Distributed maintenance of snapshot copies by a primary processor managing metadata and a secondary processor providing read-write access to a production dataset

Active | US20070260830A1 | Degrades I/O performance | Improve I/O performance | Memory loss protection | Digital data processing details | Data set | Coprocessor

A primary processor manages metadata of a production dataset and a snapshot copy, while a secondary processor provides concurrent read-write access to the primary dataset. The secondary processor determines when a first write is being made to a data block of the production dataset, and in this case sends a metadata change request to the primary data processor. The primary data processor commits the metadata change to the production dataset and maintains the snapshot copy while the secondary data processor continues to service other read-write requests. The secondary processor logs metadata changes so that the secondary processor may return a “write completed” message before the primary processor commits the metadata change. The primary data processor pre-allocates data storage blocks in such a way that the “write anywhere” method does not result in a gradual degradation in I/O performance.

Owner:EMC IP HLDG CO LLC

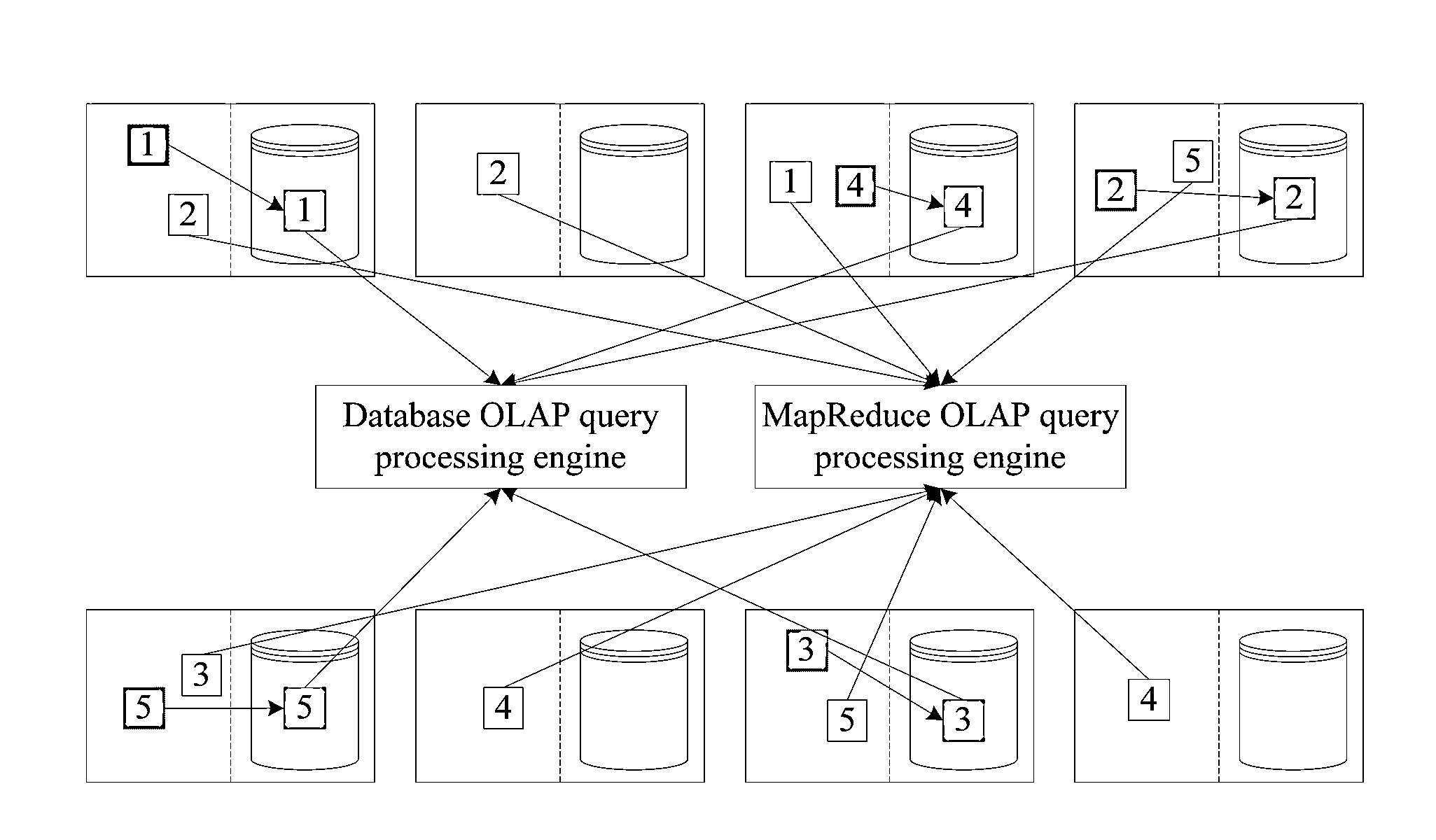

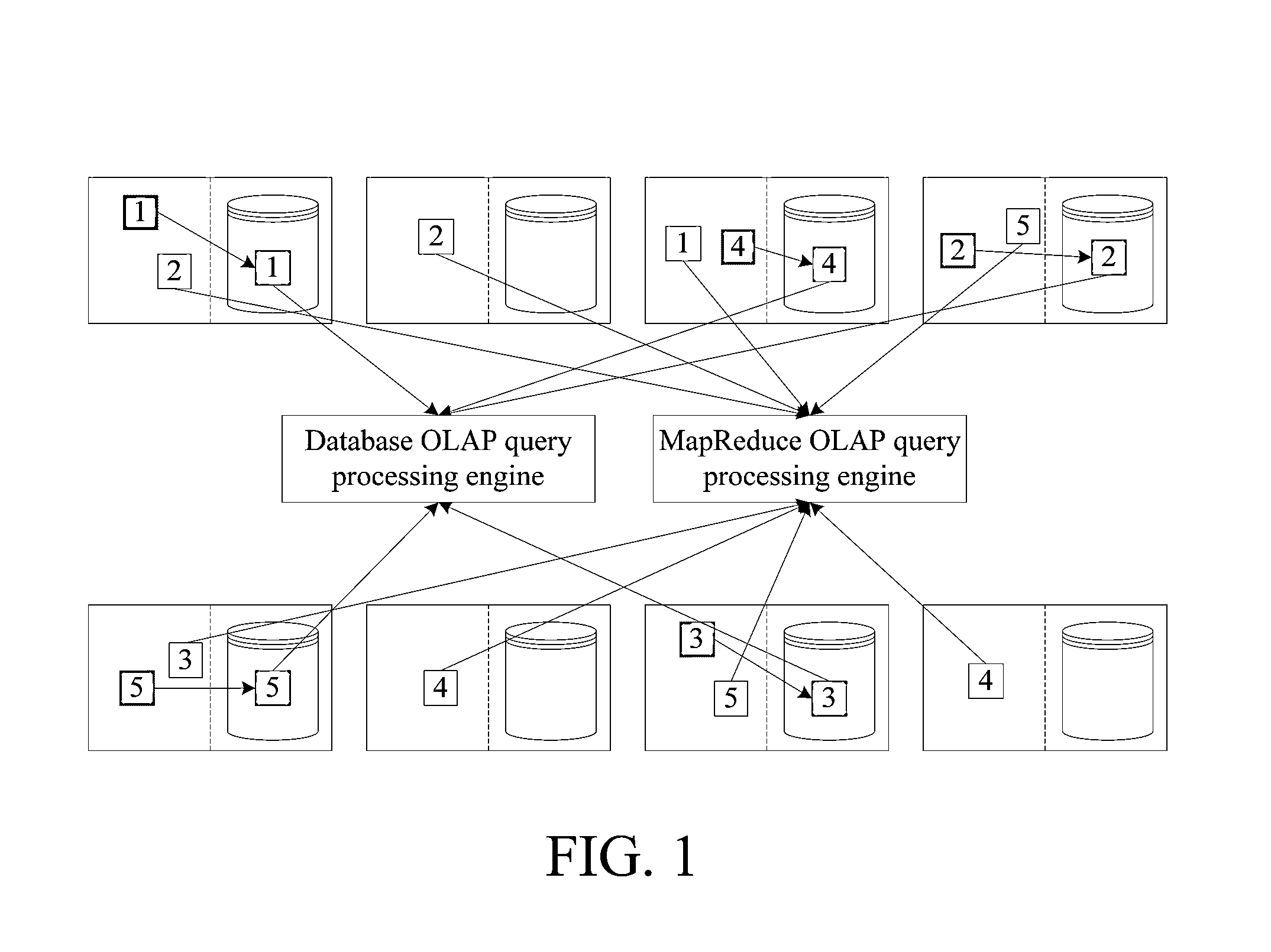

OLAP Query Processing Method Oriented to Database and HADOOP Hybrid Platform

Active | US20130282650A1 | Improve performance | Improve storage efficiency | Digital data processing details | Multi-dimensional databases | Fault tolerance | High availability

An OLAP query processing method oriented to a hybrid database and Hadoop platform is described. An OLAP query is first executed on the primary working copy, and the query result is recorded in an aggregate result table of the local database. When a working node fails, the node information of the fault-tolerant copy corresponding to the primary working copy is looked up through the NameNode, and a MapReduce task is invoked to complete the OLAP query on the fault-tolerant copy. The method combines the storage performance of the database with the high scalability and high availability of Hadoop, and integrates database query processing and MapReduce query processing in a loosely coupled manner, achieving both high query processing performance and high fault tolerance.

Owner:RENMIN UNIVERSITY OF CHINA

Distributed maintenance of snapshot copies by a primary processor managing metadata and a secondary processor providing read-write access to a production dataset

Active | US7676514B2 | Lower performance requirements | Improve I/O performance | Digital data processing details | Special data processing applications | Data set | Coprocessor

A primary processor manages metadata of a production dataset and a snapshot copy, while a secondary processor provides concurrent read-write access to the primary dataset. The secondary processor determines when a first write is being made to a data block of the production dataset, and in this case sends a metadata change request to the primary data processor. The primary data processor commits the metadata change to the production dataset and maintains the snapshot copy while the secondary data processor continues to service other read-write requests. The secondary processor logs metadata changes so that the secondary processor may return a “write completed” message before the primary processor commits the metadata change. The primary data processor pre-allocates data storage blocks in such a way that the “write anywhere” method does not result in a gradual degradation in I/O performance.

Owner:EMC IP HLDG CO LLC

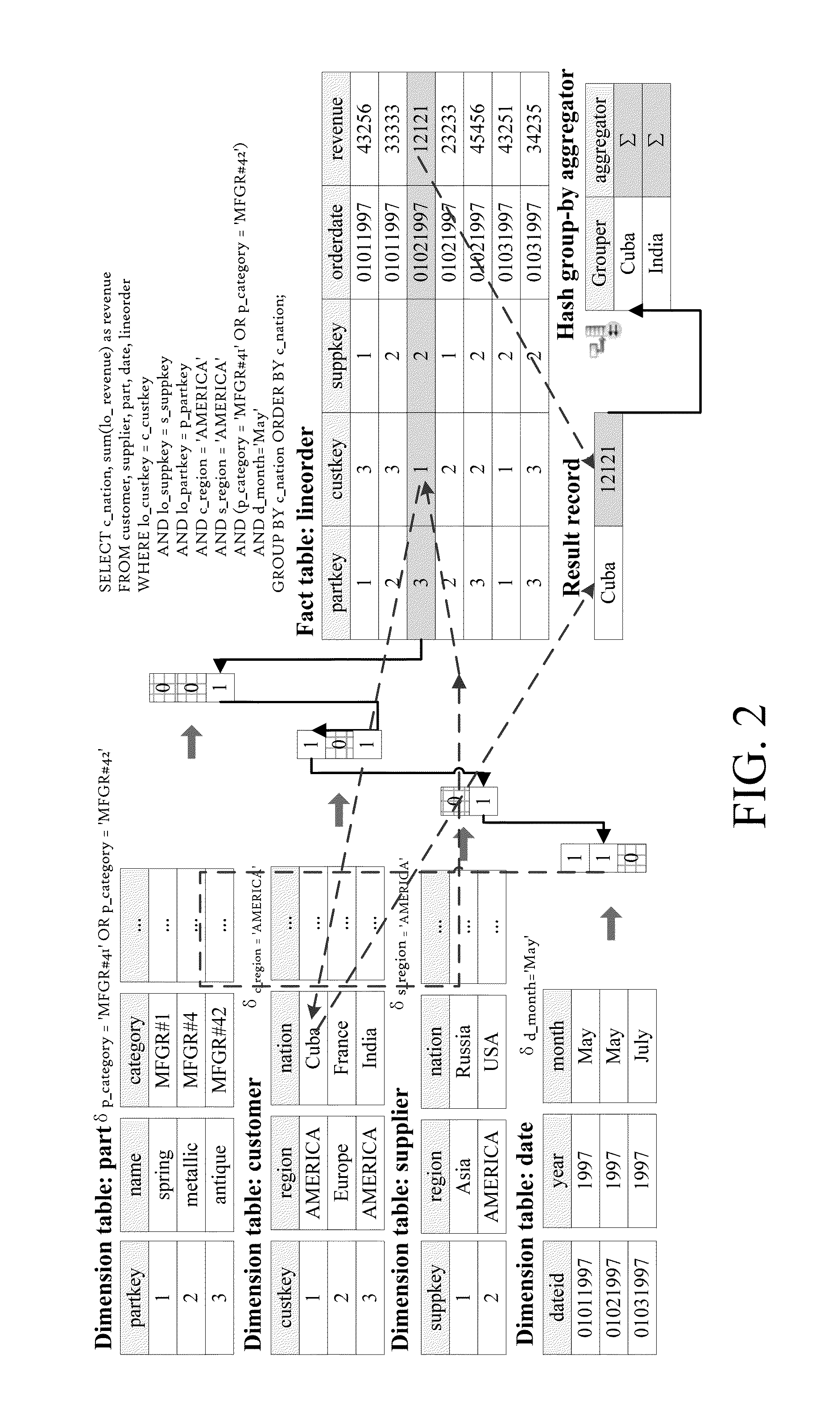

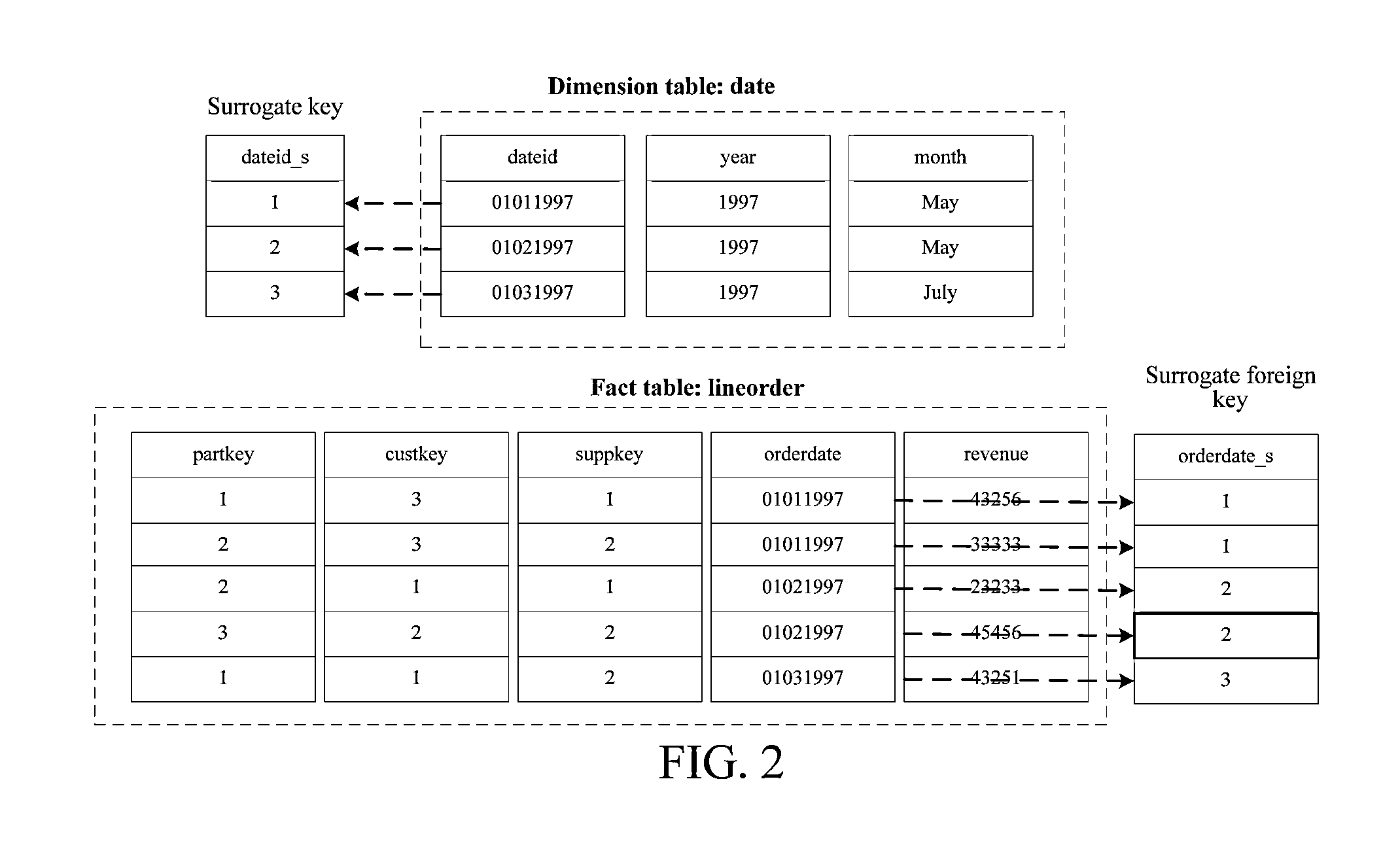

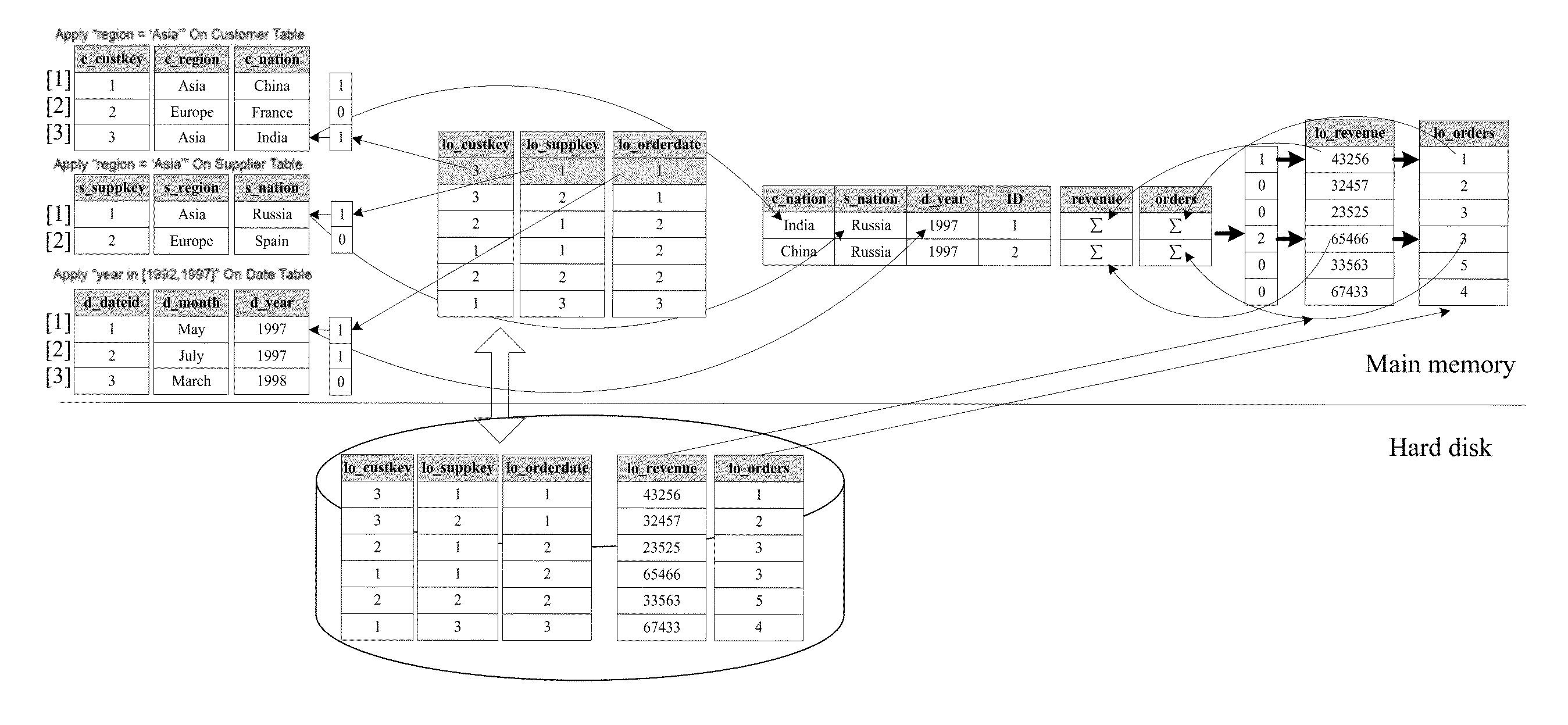

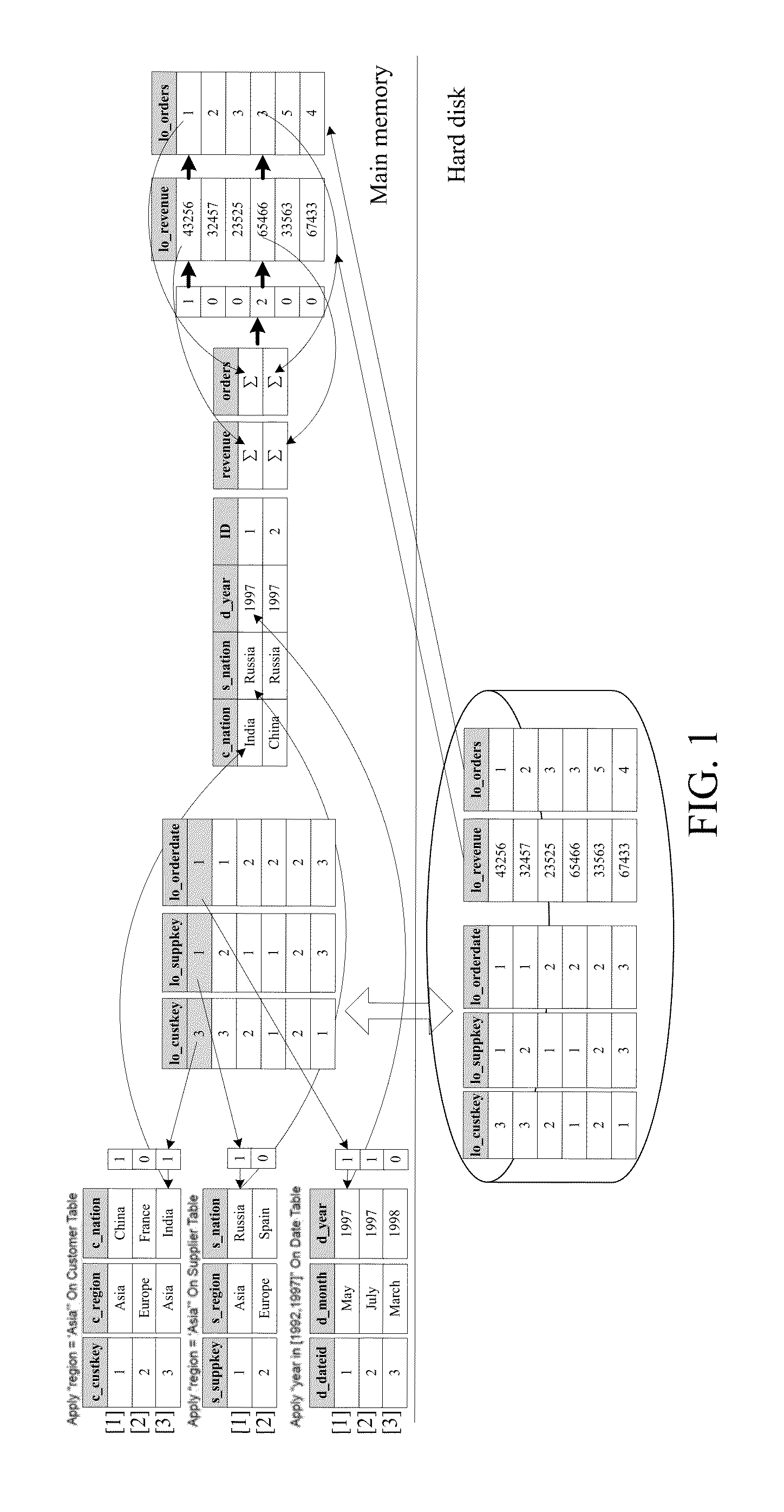

Multi-Dimensional OLAP Query Processing Method Oriented to Column Store Data Warehouse

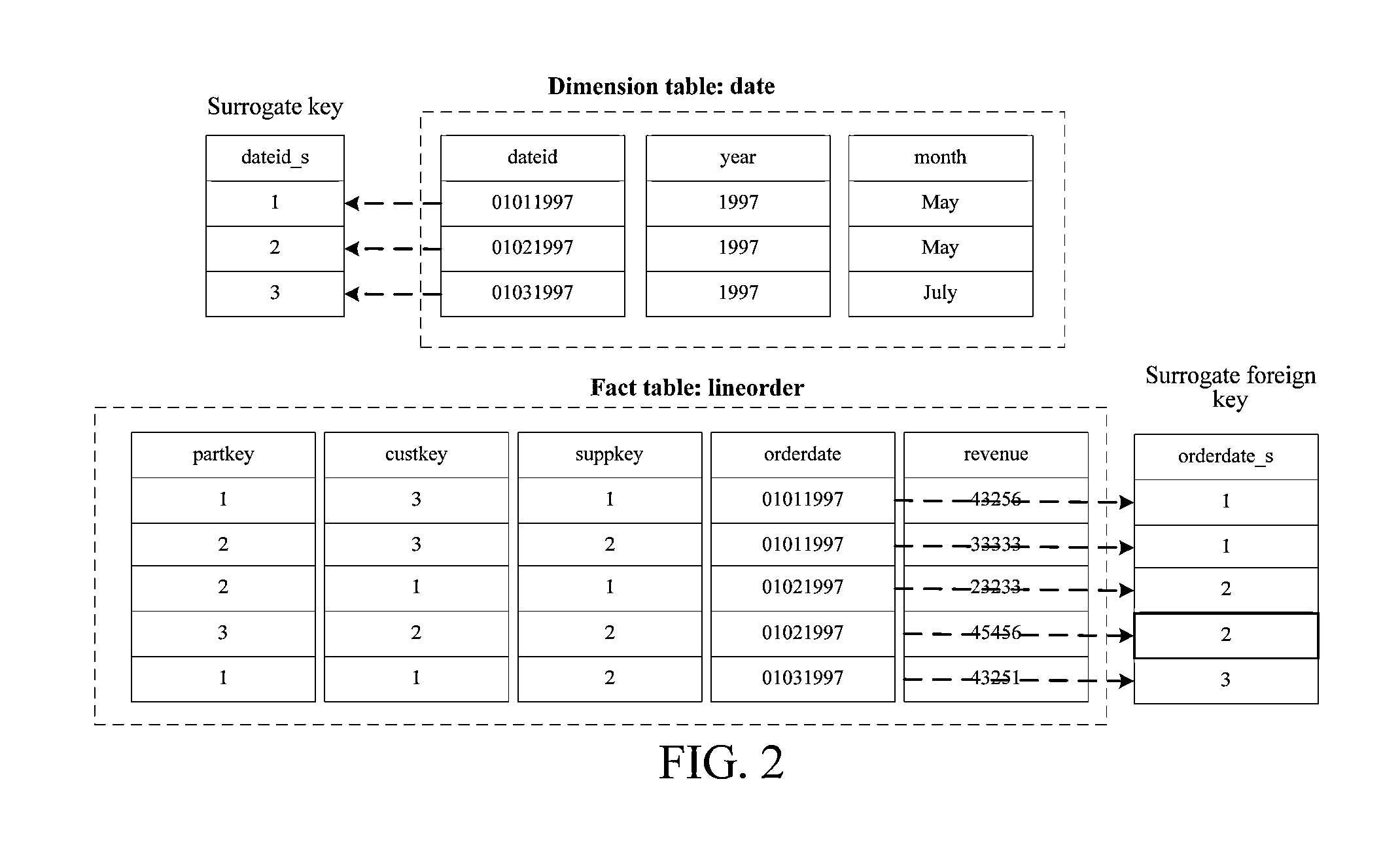

Active | US20130275365A1 | Improve I/O performance | Reduce complexity | Digital data processing details | Multi-dimensional databases | One pass | Surrogate key

A multi-dimensional OLAP query processing method oriented to a column store data warehouse is described. With this method, an OLAP query is divided into a bitmap filtering operation, a group-by operation and an aggregate operation. In the bitmap filtering operation, a predicate is first executed on a dimension table to generate a predicate vector bitmap, and a join operation is converted, through address mapping of a surrogate key, into a direct dimension table tuple access operation; in the group-by operation, a fact table tuple satisfying a filtering condition is pre-generated into a group-by unit according to a group-by attribute in an SQL command and is allocated with an increasing ID; and in the aggregate operation, group-by aggregate calculation is performed according to a group item of a fact table filtering group-by vector through one-pass column scan on a fact table measure attribute.

Owner:RENMIN UNIVERSITY OF CHINA
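
The three phases described in this abstract (bitmap filtering, group-by, aggregation) can be illustrated with a minimal in-memory Python sketch. It assumes that every column is a plain Python list, that a dimension row's position in its list equals its surrogate key, and that the aggregate is a SUM; the function and parameter names are hypothetical, and this is a sketch of the idea rather than the patented implementation.

```python
def olap_sum(dim_attr, dim_pred, fact_fk, fact_group, fact_measure):
    """Bitmap-filter / group-by / one-pass-aggregate over column lists.

    dim_attr:     dimension attribute column; list index == surrogate key (assumed)
    dim_pred:     predicate evaluated on each dimension attribute value
    fact_fk:      fact-table column of surrogate keys into the dimension
    fact_group:   fact-table group-by column
    fact_measure: fact-table measure column to be summed
    """
    # 1. Bitmap filtering: evaluate the predicate once per dimension row.
    bitmap = [bool(dim_pred(v)) for v in dim_attr]

    # 2. Group-by: the join becomes a positional lookup bitmap[sk]; each
    #    qualifying fact row gets the ID of its group (IDs increase from 0).
    group_ids = {}
    group_vec = [None] * len(fact_fk)
    for row, sk in enumerate(fact_fk):
        if bitmap[sk]:
            key = fact_group[row]
            if key not in group_ids:
                group_ids[key] = len(group_ids)
            group_vec[row] = group_ids[key]

    # 3. Aggregate: a single pass over the measure column.
    sums = [0] * len(group_ids)
    for row, gid in enumerate(group_vec):
        if gid is not None:
            sums[gid] += fact_measure[row]
    return {key: sums[gid] for key, gid in group_ids.items()}
```

Because the surrogate key doubles as an array index, the dimension join costs one list lookup per fact row instead of a hash or sort-merge join.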

Pre-allocation and hierarchical mapping of data blocks distributed from a first processor to a second processor for use in a file system

Active | US7945726B2 | Reduce block scatter on disk | Improve I/O performance | Memory systems | Input/output processes for data processing | Data processing system | File system

Owner:EMC IP HLDG CO LLC

Method and system for internal cache management of solid state disk based on novel memory

Active | CN103049397A | Overcome read and write imbalance | Easy to operate | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Flash memory controller | Cache management

The invention provides a method and a system for internal cache management of a solid state disk (SSD) based on a novel memory. The system comprises a SATA (Serial Advanced Technology Attachment) interface controller, a microprocessor, a DRAM (dynamic random access memory), a local bus, a flash controller, NAND flash, and a PCRAM (phase change random access memory) cache. The PCRAM cache comprises a data block replacement area and a mapping table storage area: the replacement area stores data blocks evicted from the DRAM into the PCRAM cache, and the mapping table storage area stores the mapping tables between the logical and physical addresses of data pages. By using the PCRAM as a write cache for the SSD, the method overcomes the read/write imbalance of the SSD, effectively improves write performance, and reduces random write and erase operations on the SSD, which prolongs its service life and improves its overall I/O (input/output) performance.

Owner:SHANGHAI INST OF MICROSYSTEM & INFORMATION TECH CHINESE ACAD OF SCI

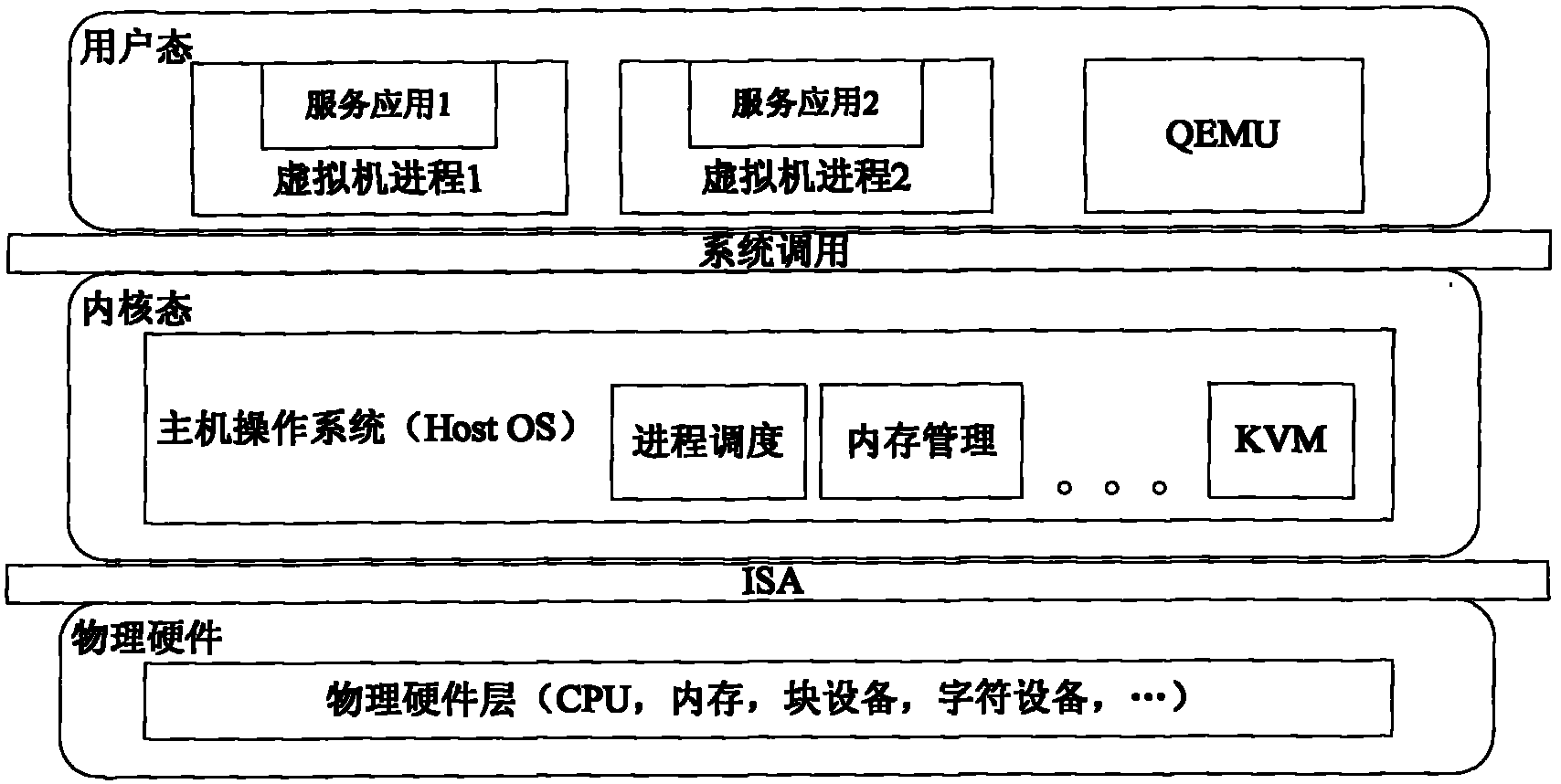

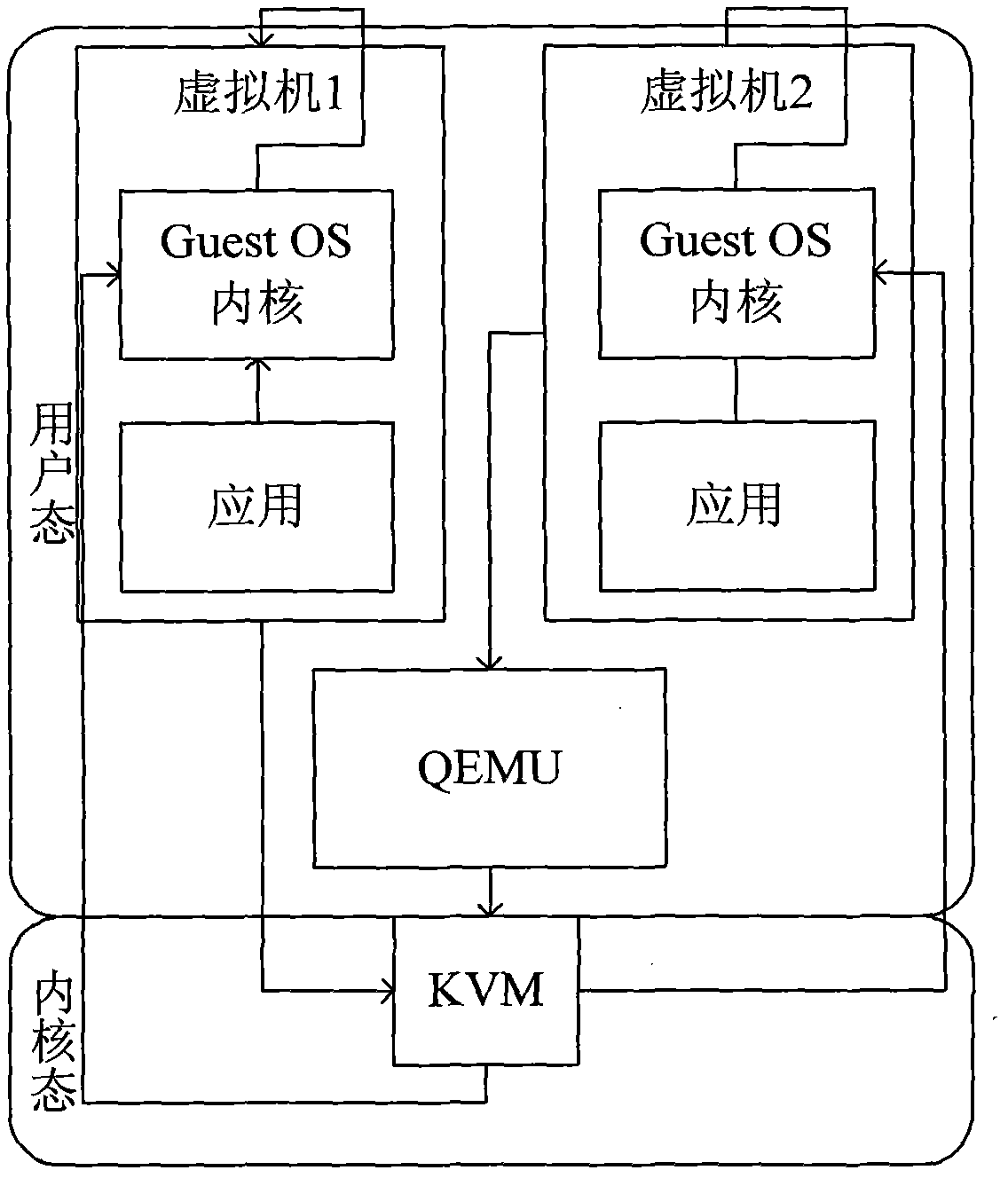

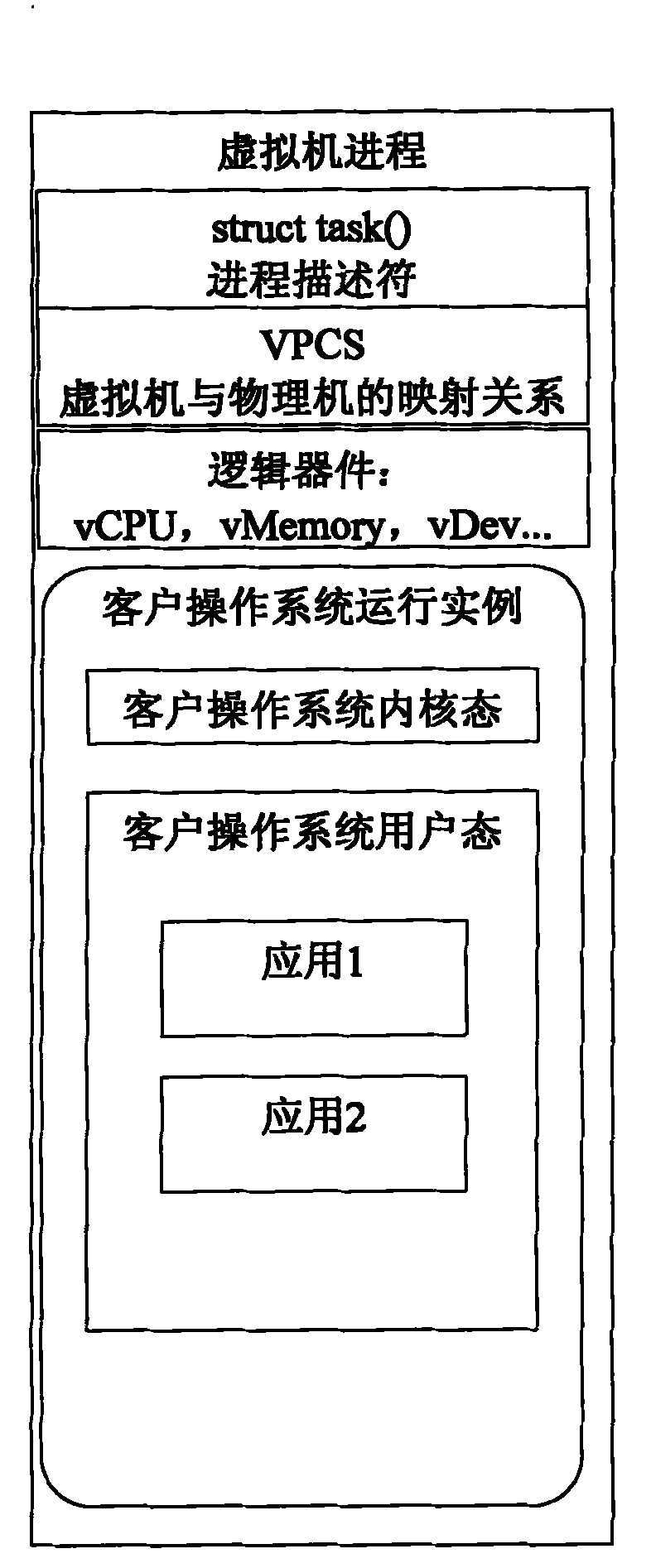

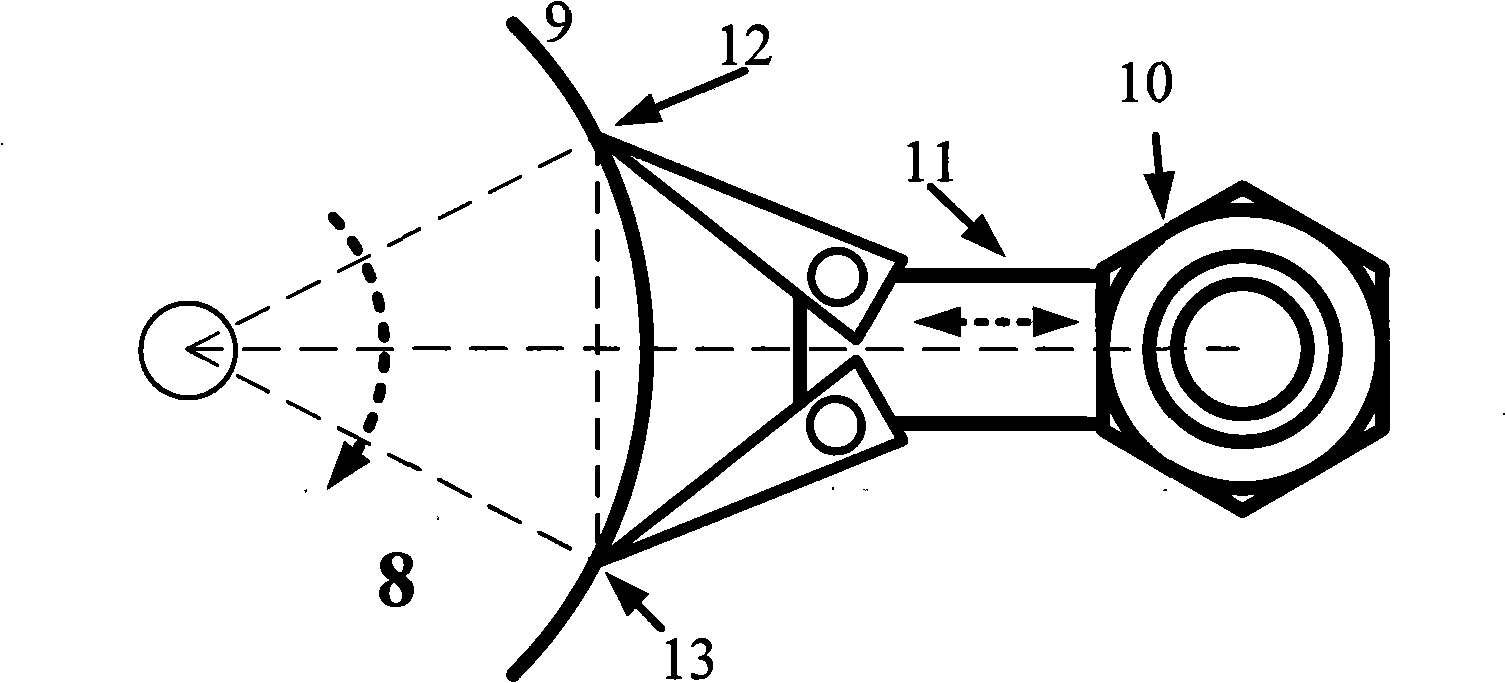

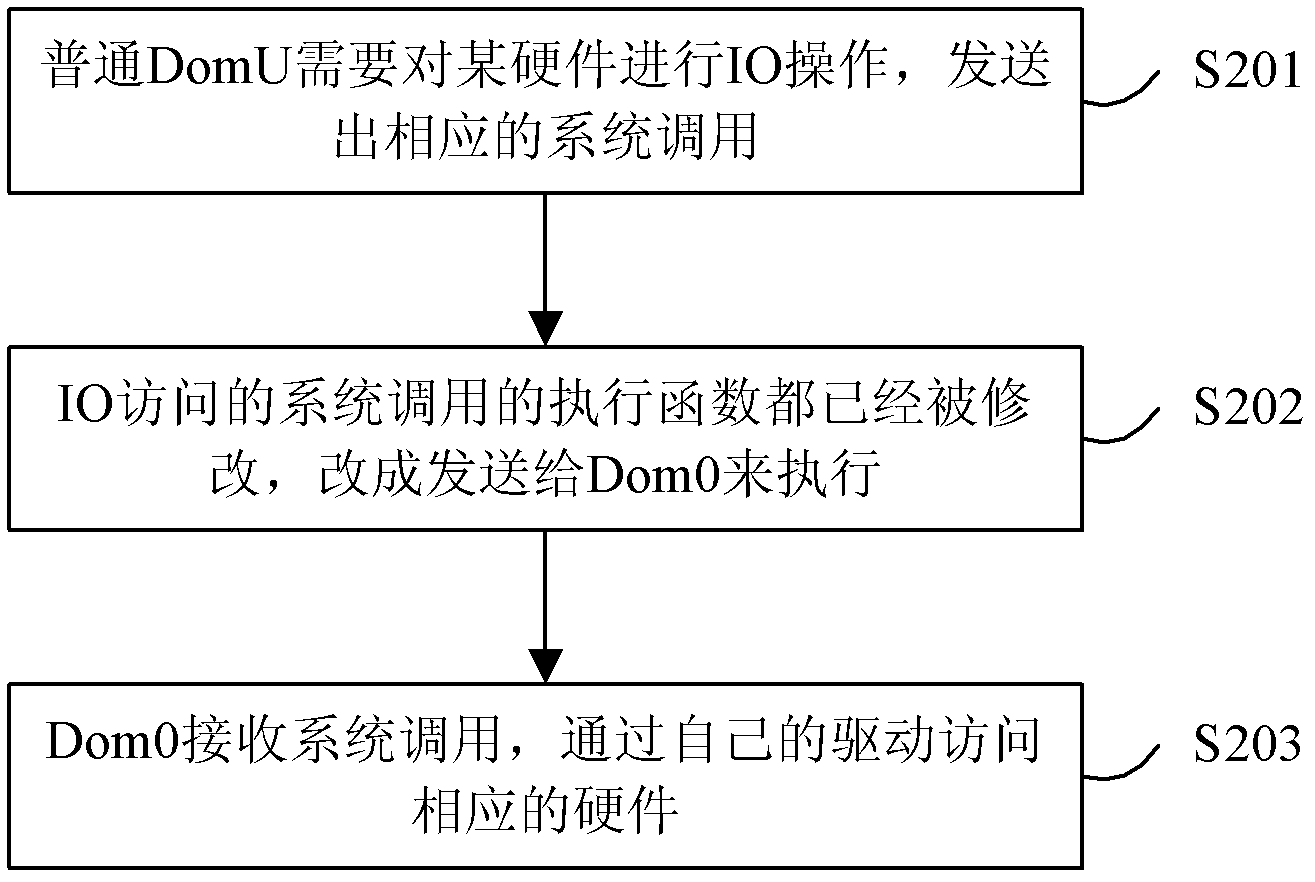

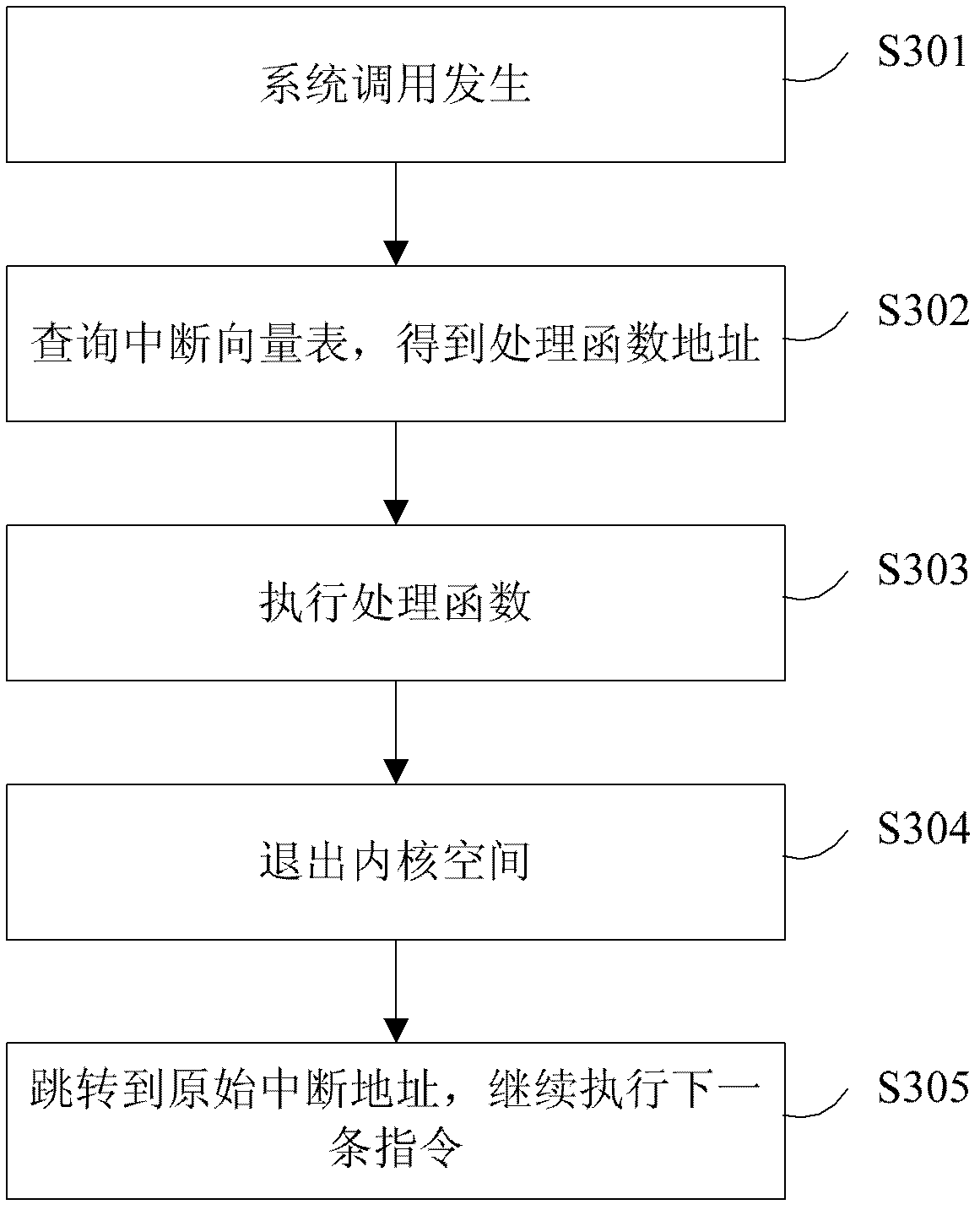

Method for implementing organizational architecture mode of kernel-based virtual machine (KVM)

Inactive | CN101968746A | Enhanced interaction | Improve I/O performance | Multiprogramming arrangements | Software simulation/interpretation/emulation | Virtualization | Data center

The invention mainly relates to a kernel-based virtual machine (KVM) architecture and an optimization technique for it. Building on KVM, the software structure is optimized to reduce the host resources occupied by each virtual machine and to provide a more efficient virtual machine execution mode. In particular, a VPCS (virtual process control structure) created for each virtual machine process provides a clean interface for direct mapping between physical and logical resources. Hardware virtualization requires hardware support, yet a large share of the servers owned by current large data centers and companies do not support it; the optimization technique allows virtualization to make better use of these computing resources. The invention therefore has good application prospects.

Owner:BEIHANG UNIV

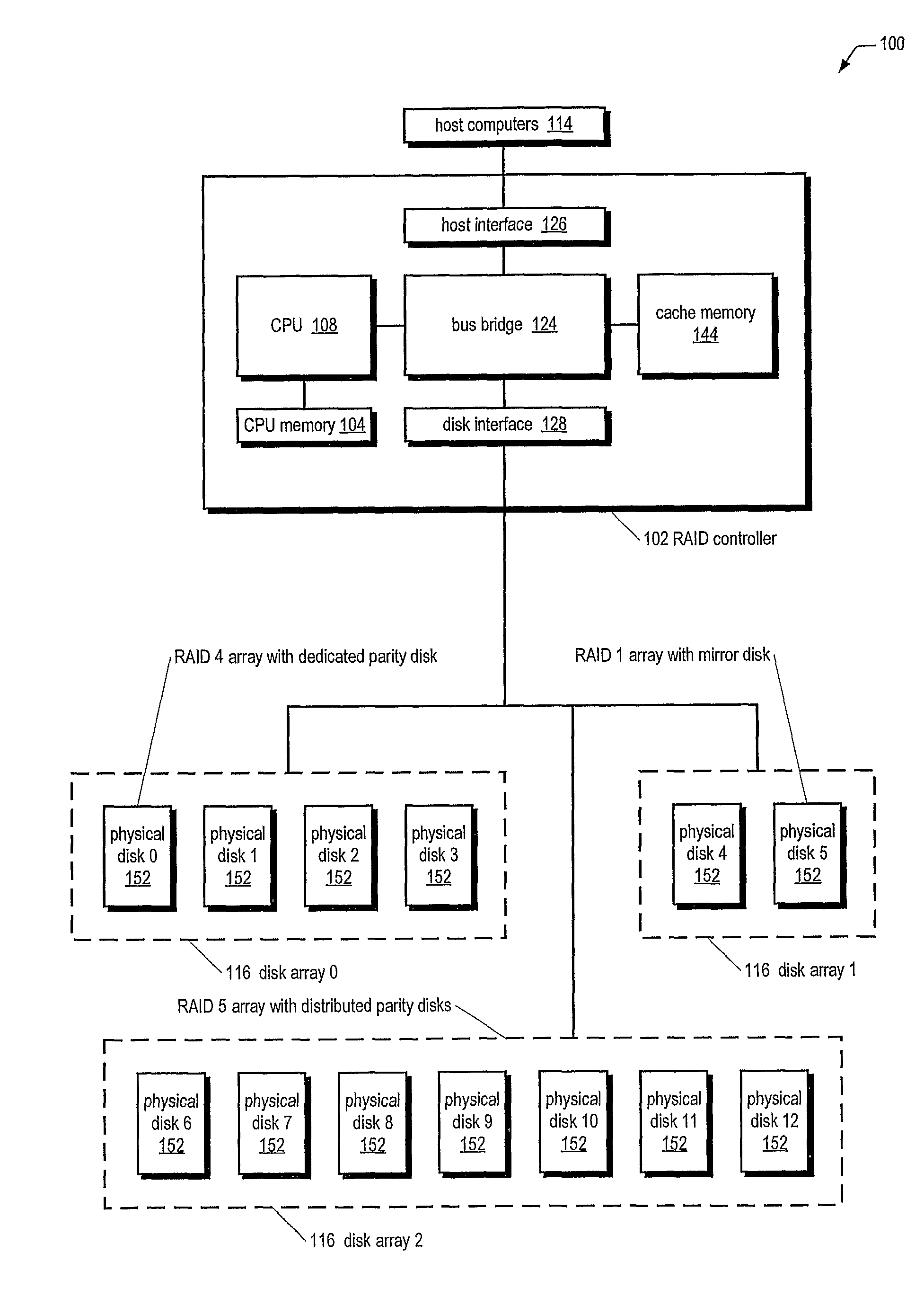

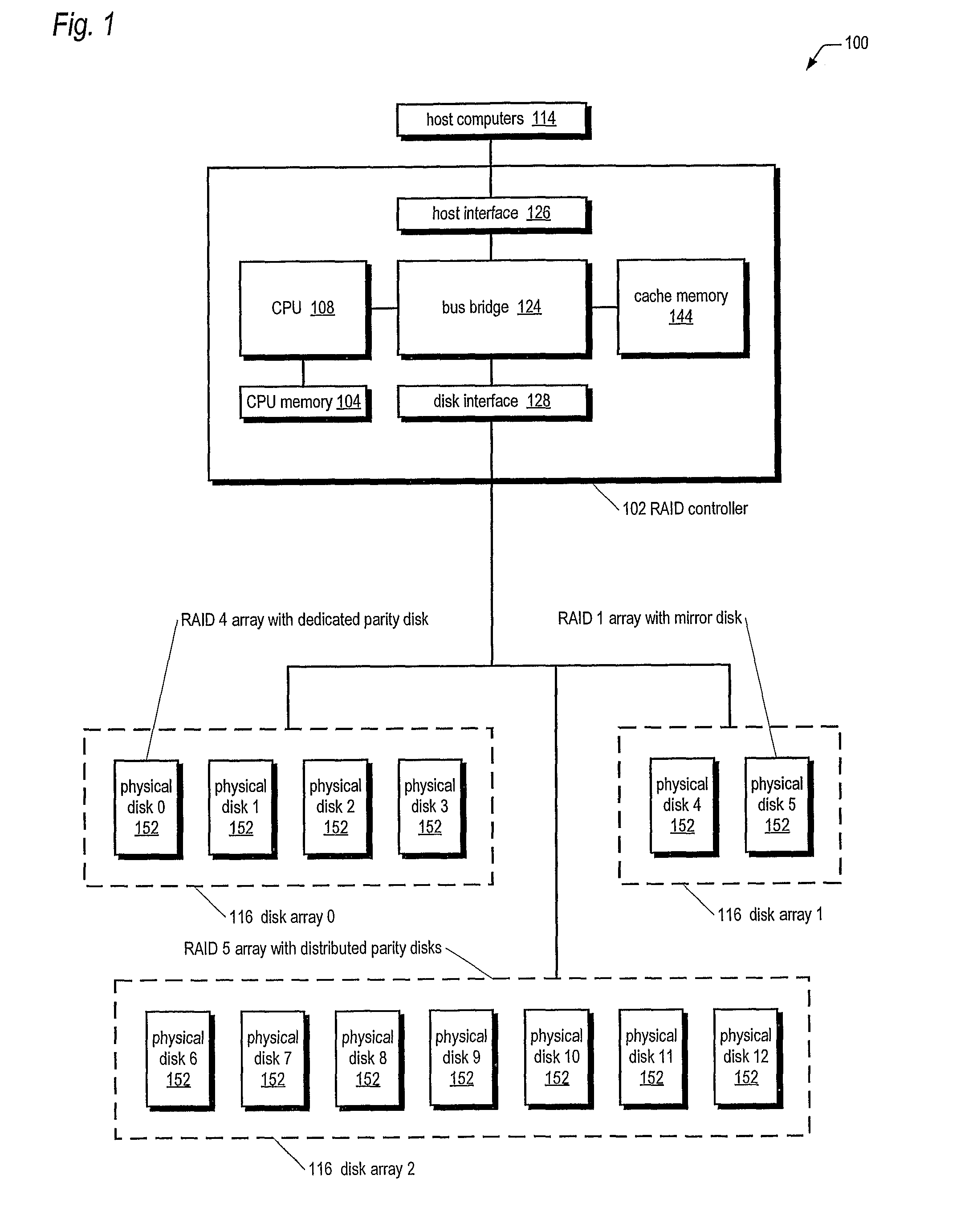

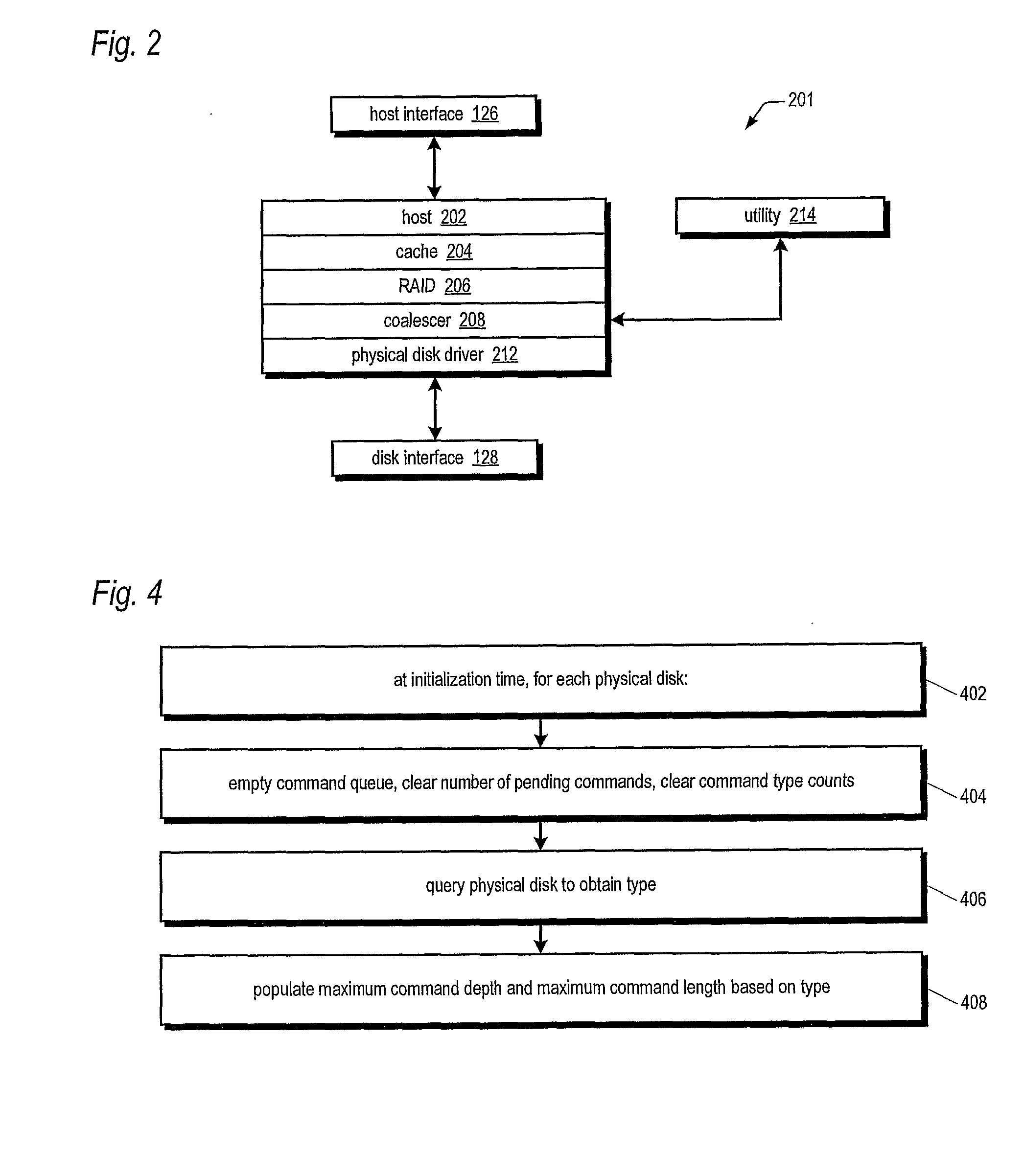

Command-Coalescing RAID Controller

Active | US20080120463A1 | Reduce in quantity | High bandwidth | Memory systems | Input/output processes for data processing | RAID | Computer users

A RAID controller is disclosed. The controller controls at least one redundant array of physical disks, receives I/O requests for the array from host computers, and responsively generates disk commands for each of the disks. Some commands specify host computer user data, and others specify internally generated redundancy data. The controller executes coalescer code that maintains the commands on a queue for each disk. Whenever a disk completes a command, the coalescer determines whether there are two or more commands on the disk's queue that have the same read/write type and specify adjacent locations on the disk, and if so, coalesces them into a single command, and issues the coalesced command to the disk. The coalescer immediately issues a received command, rather than queuing it, if the number of pending commands to the disk is less than a maximum command depth, which may be different for each disk.

Owner:DOT HILL SYST
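
The queueing rule in this abstract (issue immediately while below the command depth, otherwise queue, and coalesce adjacent same-type commands when a completion frees a slot) can be modeled in a few lines. This is a hedged single-disk sketch: `DiskCommand` and `CommandCoalescer` are hypothetical names, commands are reduced to (type, starting block, length), and merging starts from the oldest queued command rather than from any particular ordering the patent may use.

```python
from collections import deque
from dataclasses import dataclass

@dataclass
class DiskCommand:
    op: str       # "read" or "write"
    lba: int      # first block addressed
    length: int   # number of blocks

class CommandCoalescer:
    """Per-disk queue that merges adjacent commands of the same type."""

    def __init__(self, max_depth):
        self.max_depth = max_depth   # maximum commands outstanding at this disk
        self.pending = 0             # issued but not yet completed
        self.queue = deque()         # commands waiting to be issued
        self.issued = []             # record of what was sent to the disk

    def submit(self, cmd):
        # Issue immediately while the disk is below its command depth.
        if self.pending < self.max_depth:
            self._issue(cmd)
        else:
            self.queue.append(cmd)

    def on_complete(self):
        # A completion frees a slot: coalesce from the queue and issue.
        self.pending -= 1
        if self.queue:
            self._issue(self._coalesce())

    def _issue(self, cmd):
        self.pending += 1
        self.issued.append(cmd)

    def _coalesce(self):
        first = self.queue.popleft()
        merged = DiskCommand(first.op, first.lba, first.length)
        changed = True
        while changed:               # repeat until no queued command is adjacent
            changed = False
            for cmd in list(self.queue):
                if cmd.op != merged.op:
                    continue
                if cmd.lba == merged.lba + merged.length:    # directly after
                    merged.length += cmd.length
                elif cmd.lba + cmd.length == merged.lba:     # directly before
                    merged.lba = cmd.lba
                    merged.length += cmd.length
                else:
                    continue
                self.queue.remove(cmd)
                changed = True
        return merged
```

With `max_depth=1`, a write to blocks 16 to 23 queued behind a write to blocks 8 to 15 leaves the queue as one 16-block write, so the disk sees one command instead of two.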

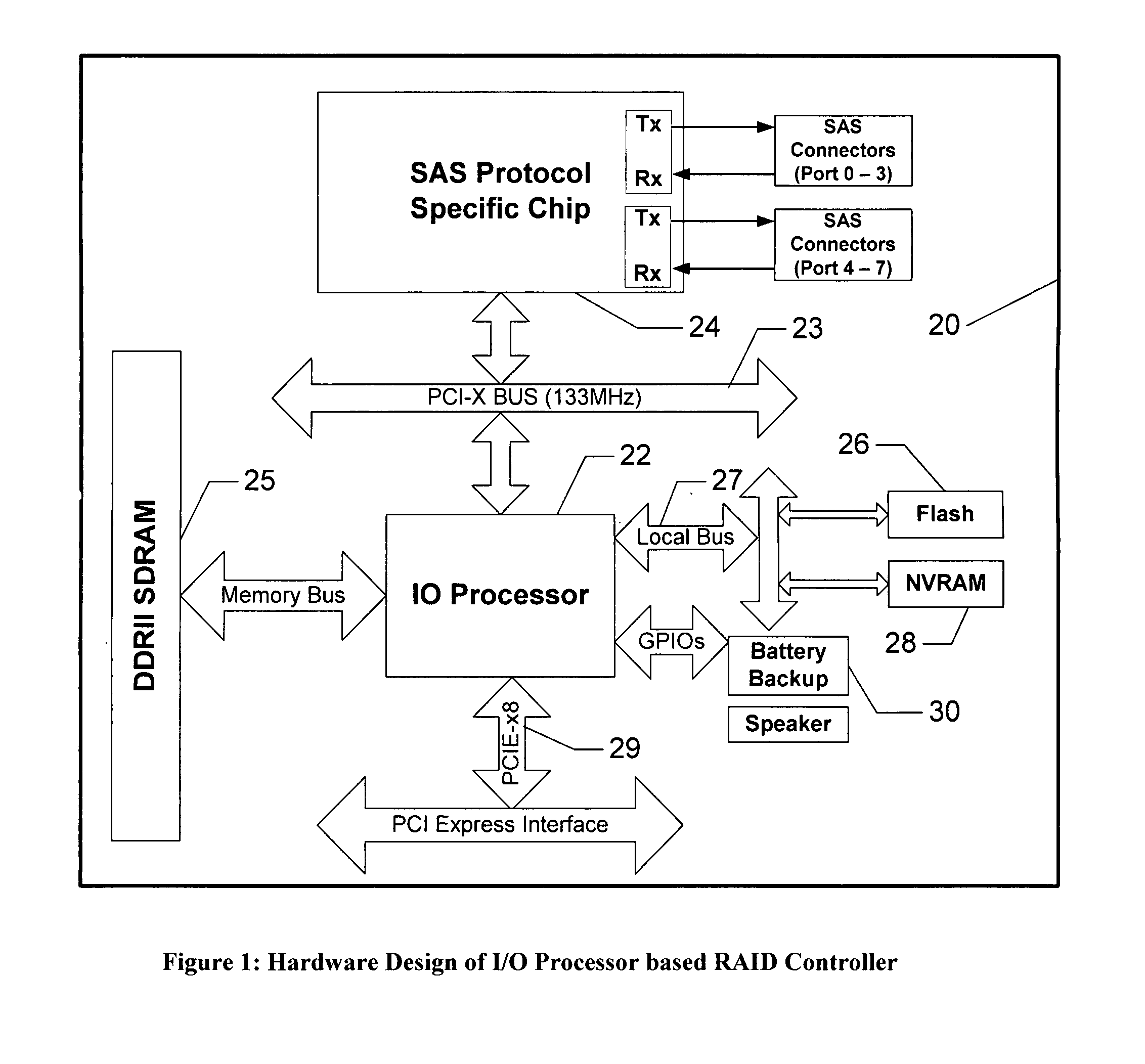

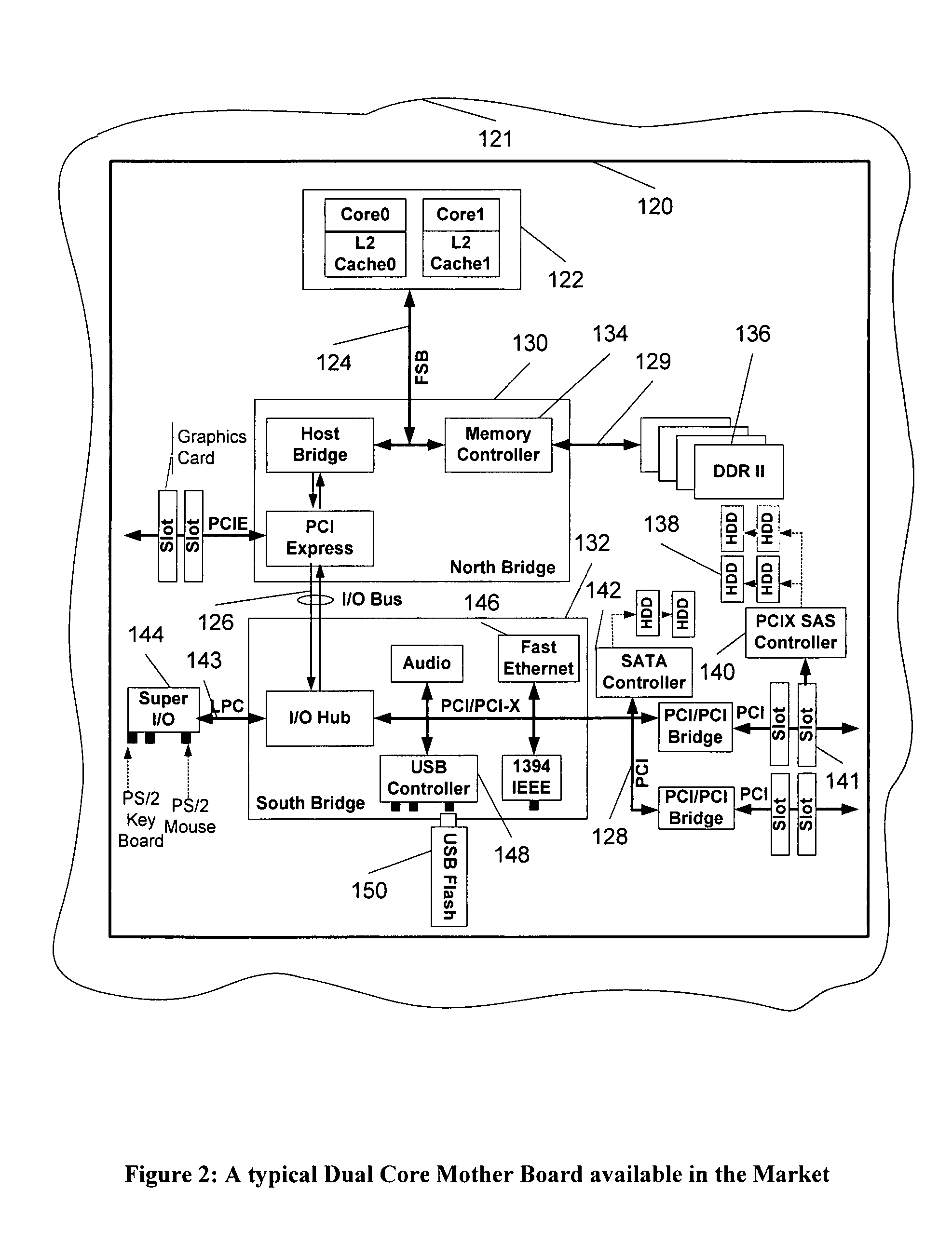

Apparatus and method for use of redundant array of independent disks on a multicore central processing unit

Inactive | US20090119449A1 | Simple structure | Easy to process | Error detection/correction | Memory systems | RAID | Operational system

The invention is based on running the entire RAID stack on one dedicated core of a multi-core CPU. This makes it possible to eliminate the conventional separate RAID controller and replace its function with a special flash memory chip containing a program that isolates the dedicated core from the rest of the operating system and turns it into a powerful RAID engine. Part of the flash memory chip can also be used to store data during a power failure, which removes the need for a battery backup module. The method of running RAID on a multi-core CPU may have many useful enterprise-level applications, e.g., increasing accessibility and preserving critical data.

Owner:CHAUDHURI PUBALI RAY +2

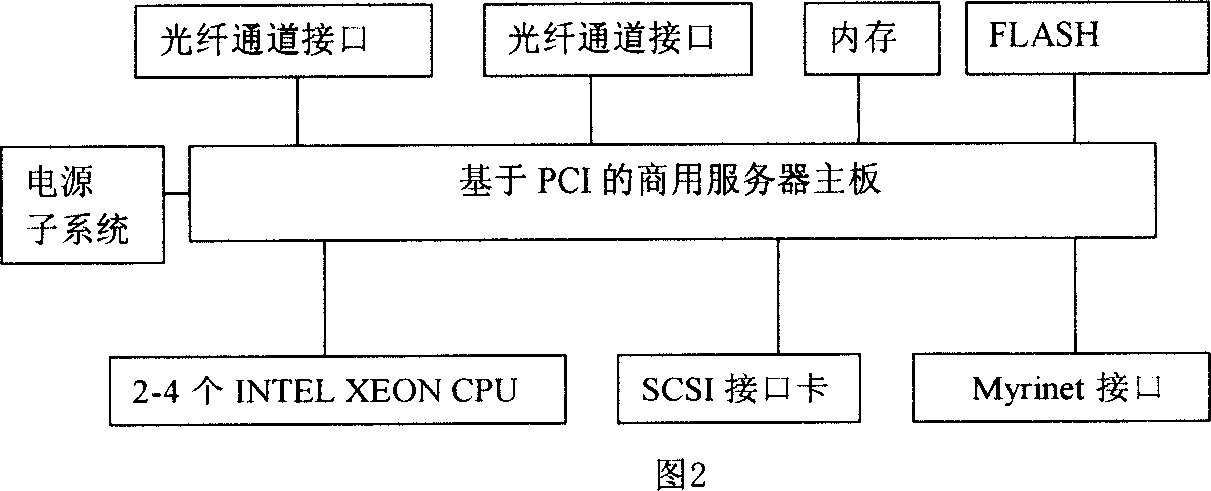

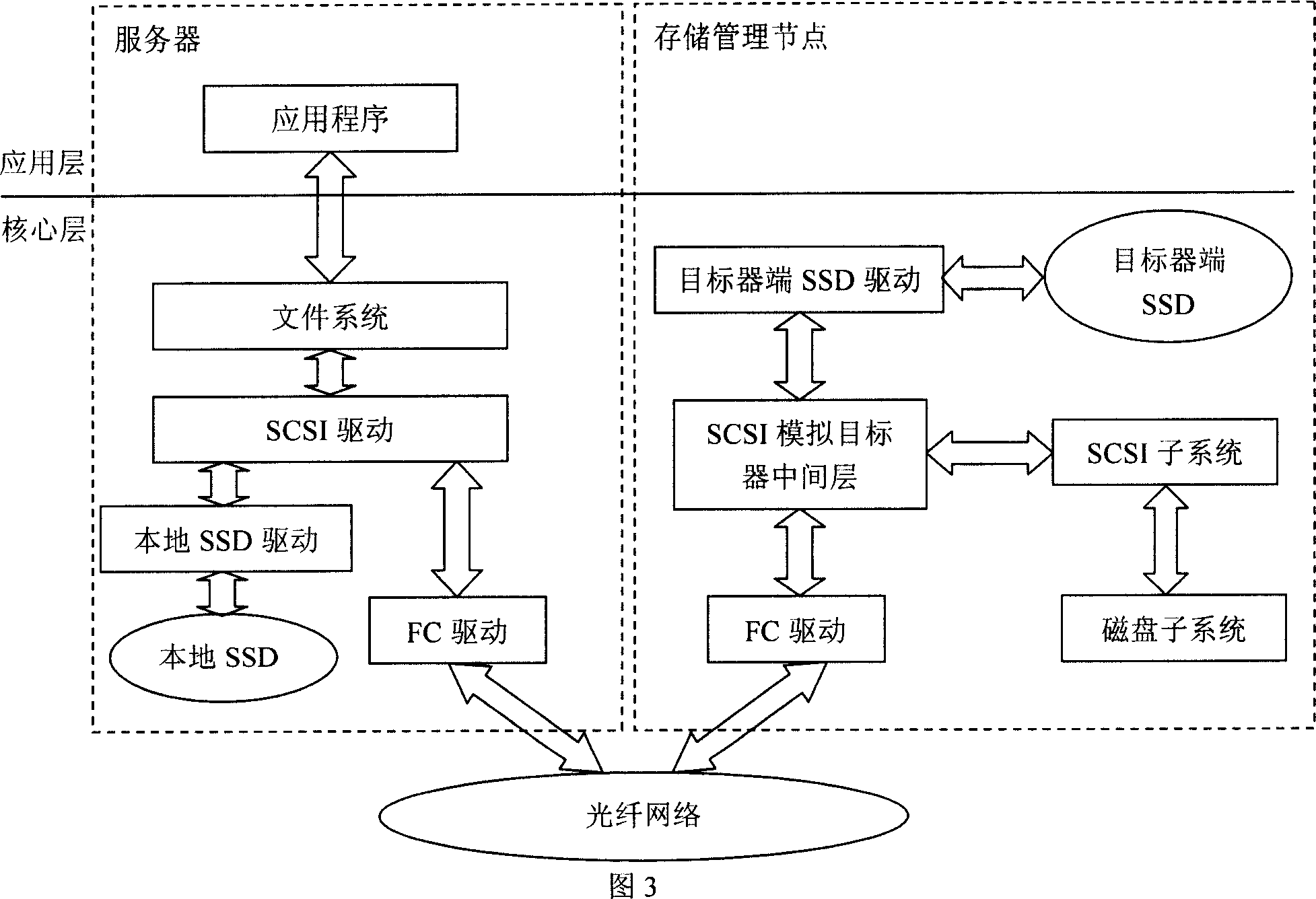

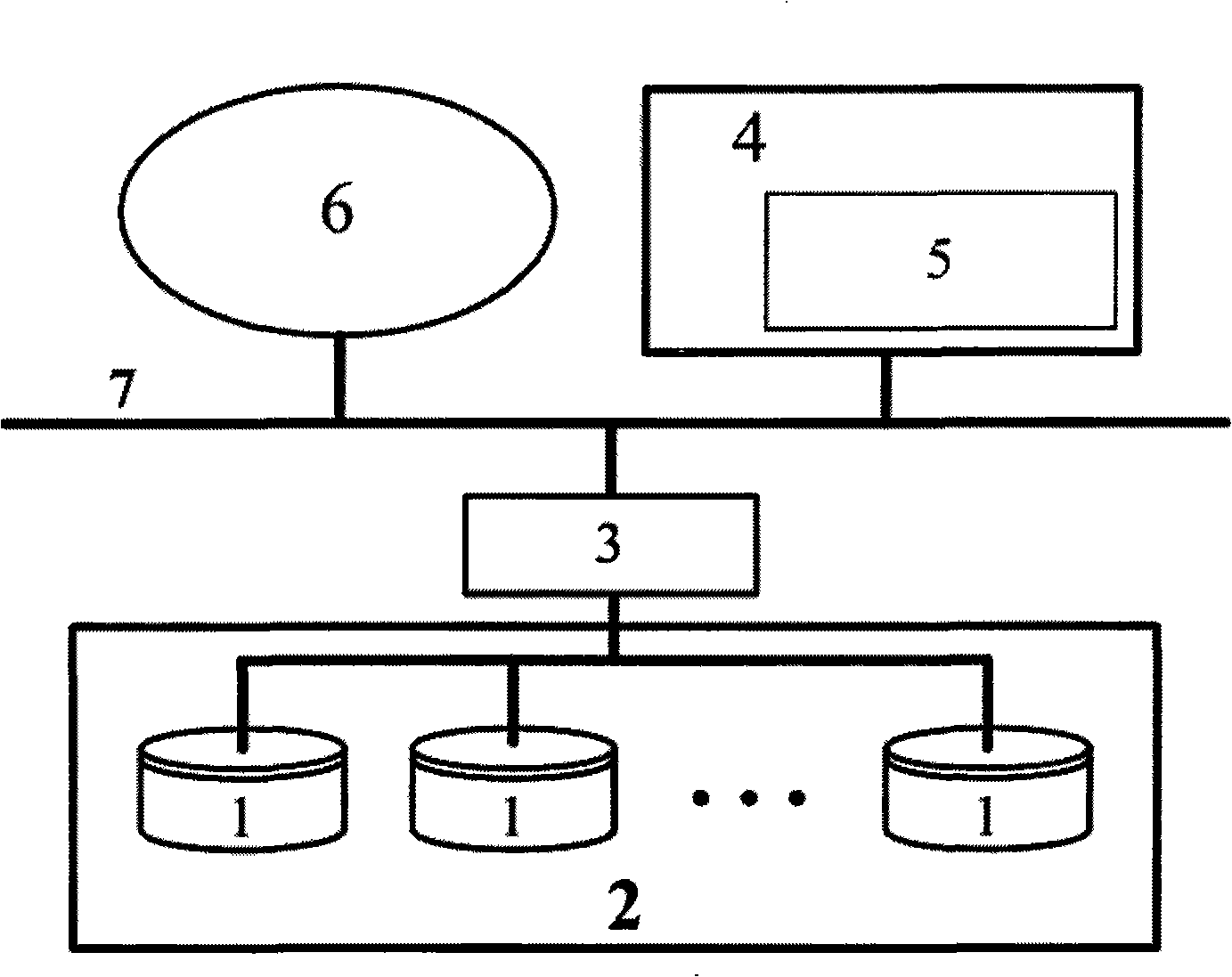

Method for implementing a high-speed solid-state storage device based on a storage area network

Inactive | CN1945537A | Improve I/O performance | Improve performance | Input/output to record carriers | Memory addressing/allocation/relocation | Storage area network | Virtual hardware

This invention belongs to the field of storage area network (SAN) technology. It aggregates the surplus DRAM memory resources of the front-end servers and target node servers in a SAN into a high-speed virtual device, TH-SSD, which presents users with the same read/write interface as an ordinary disk. The method uses virtualization to map the originally scattered memory resources onto a contiguous virtual disk address space, so every data request the front end sends to TH-SSD is a request to the virtual disk. It enlarges the usable virtual disk space by using the memory extension technology of the x86 platform; it ensures data integrity in TH-SSD through a mirroring strategy with dual logs and dual copies; and it supports both FC SAN and IP SAN seamlessly, so that TH-SSD works well over both Fibre Channel and Ethernet networks.

Owner:TSINGHUA UNIV

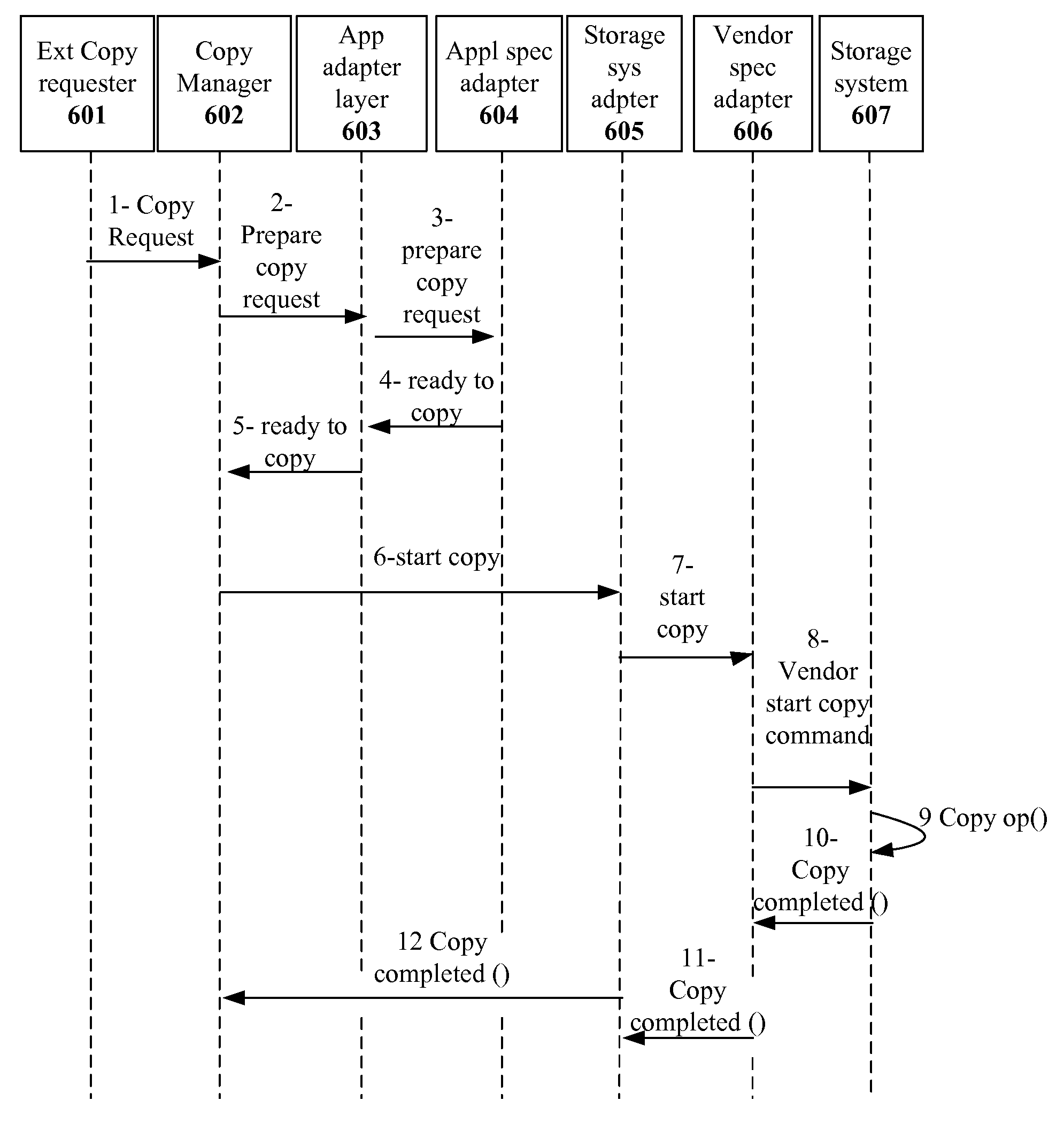

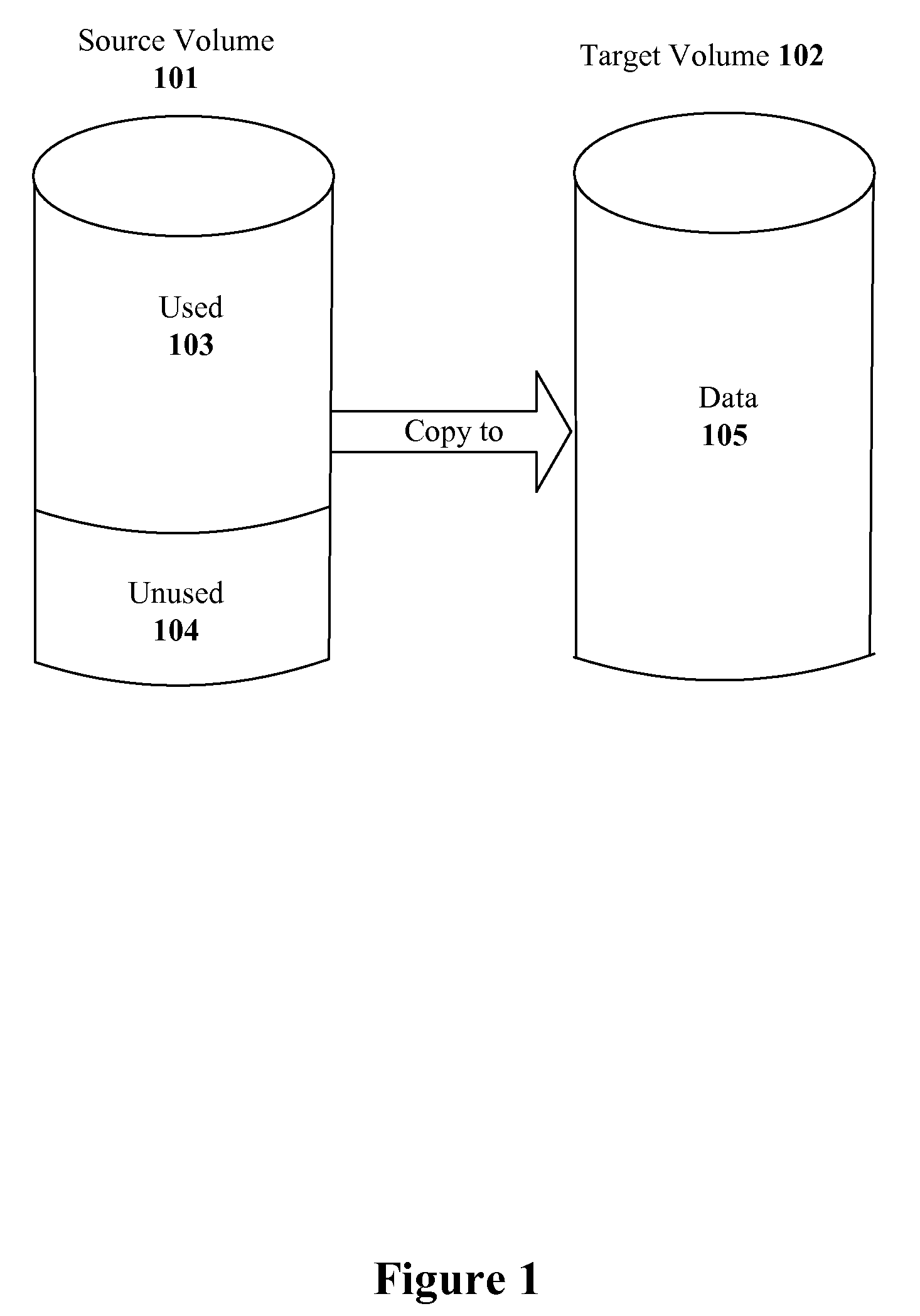

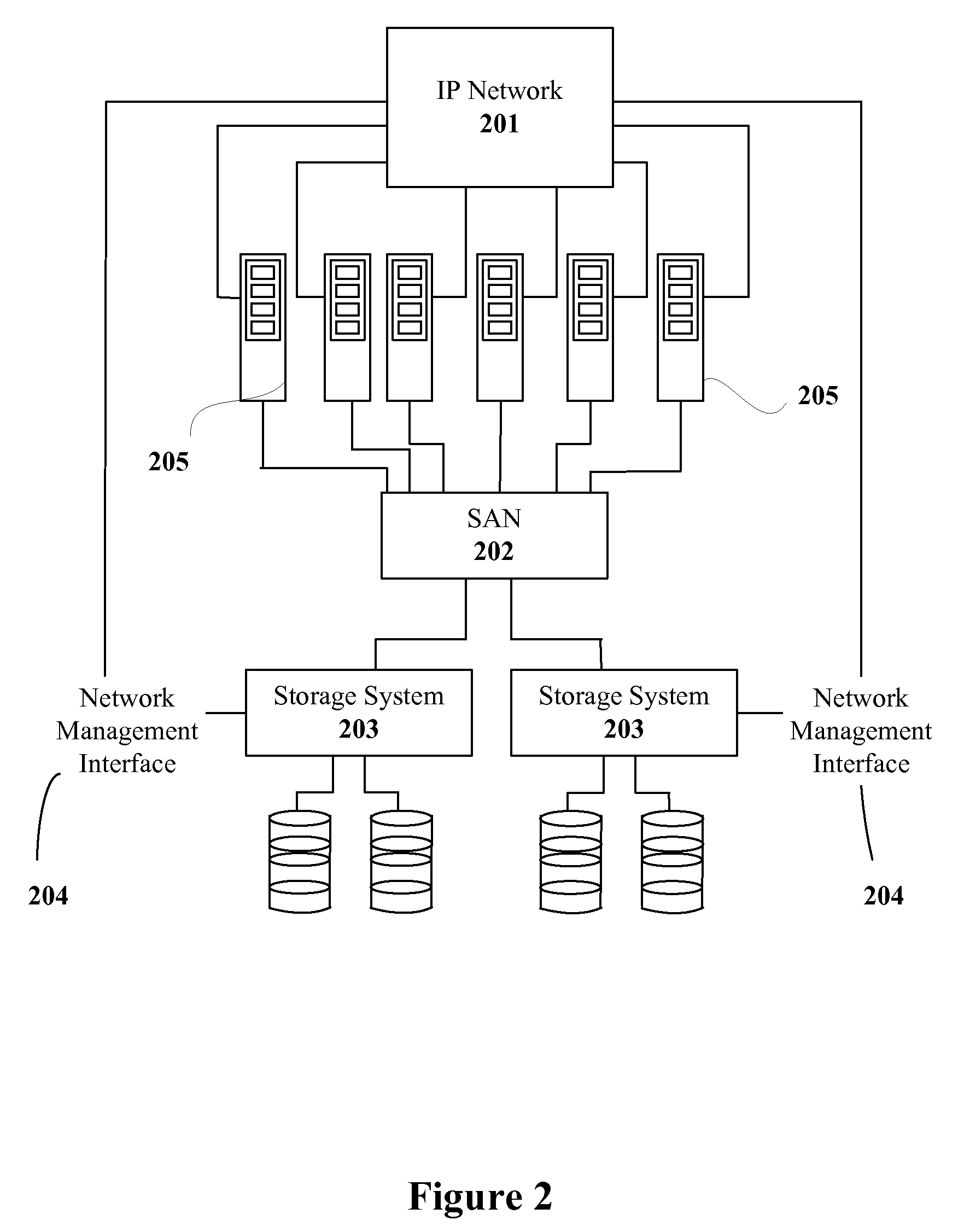

Application Integrated Storage System Volume Copy and Remote Volume Mirror

Inactive | US20080183988A1 | Reduce process | Bandwidth | Memory loss protection | Error detection/correction | Application software | Application Adapter

Systems, devices and methods are described for copying the used data blocks of a source volume to a target volume. A copy manager on a host system coordinates copy requests originating from external sources. An application adapter layer provides lists of the blocks occupied on the source volume by specific applications. The occupied-block lists are forwarded along with the copy requests to a storage system adapter layer, and the storage system copies only the data written in the occupied blocks of the source volume to the target volume. During the copy operation, all I/O requests are paused, and they resume after the copy operation is complete.

Owner:LSI CORPORATION
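
The core saving here, copying only the blocks an application reports as occupied, can be sketched as follows. The volumes are modeled as equal-length `bytearray`s and the block list as plain integers; these representations and the function name are assumptions for illustration, and the pause/resume of host I/O is not modeled.

```python
def copy_occupied_blocks(source, target, occupied_blocks, block_size):
    """Copy only the listed blocks from source to target.

    source, target:  equal-length bytearrays standing in for the two volumes
    occupied_blocks: block numbers reported by the (hypothetical) application adapter
    Returns the number of blocks copied.
    """
    if len(source) != len(target):
        raise ValueError("source and target volumes must be the same size")
    copied = 0
    for block in sorted(set(occupied_blocks)):   # drop duplicates, copy in order
        start = block * block_size
        target[start:start + block_size] = source[start:start + block_size]
        copied += 1
    return copied
```

Unused blocks on the target are left untouched, so the copy time scales with the occupied data rather than with the volume size.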

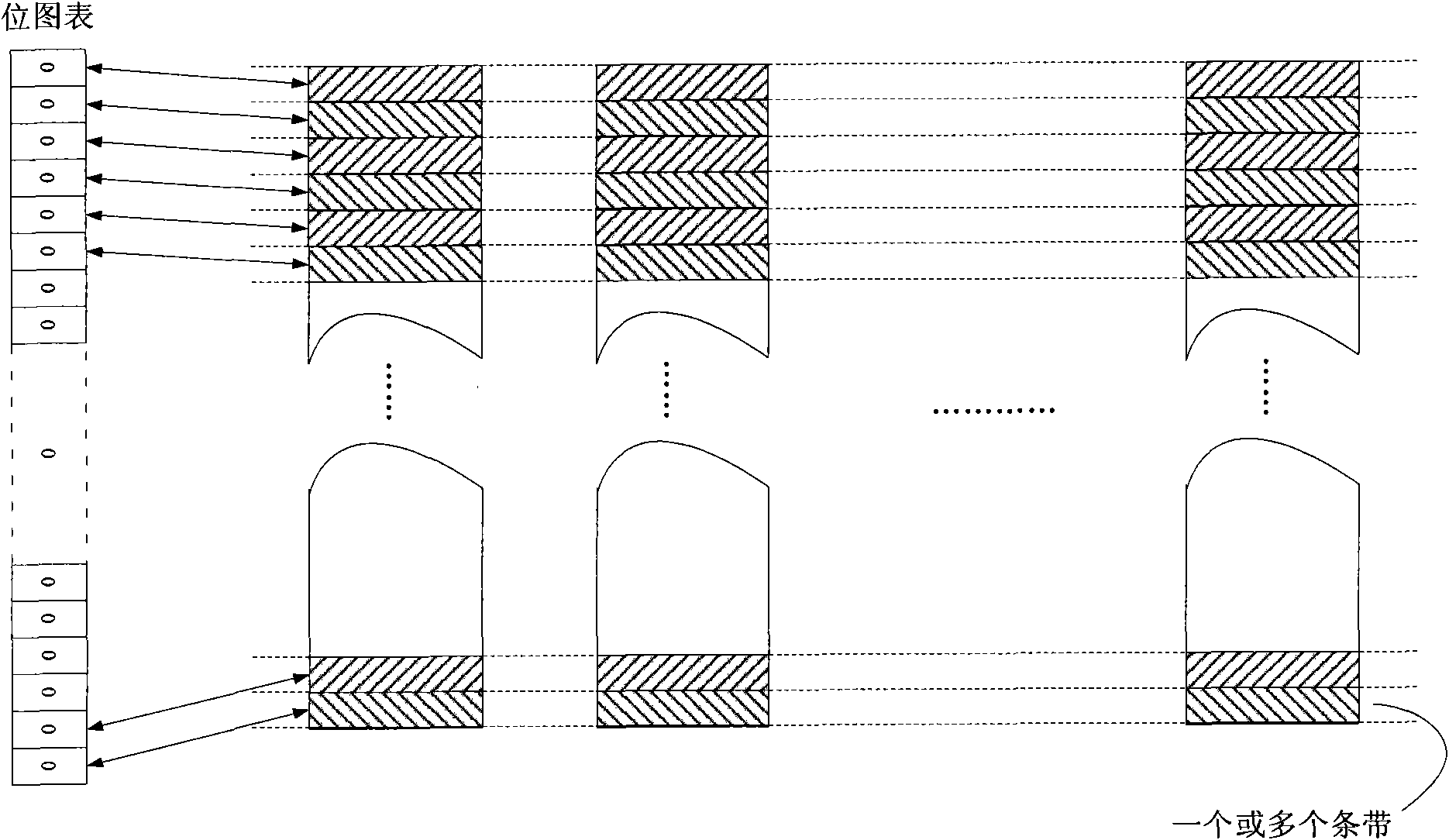

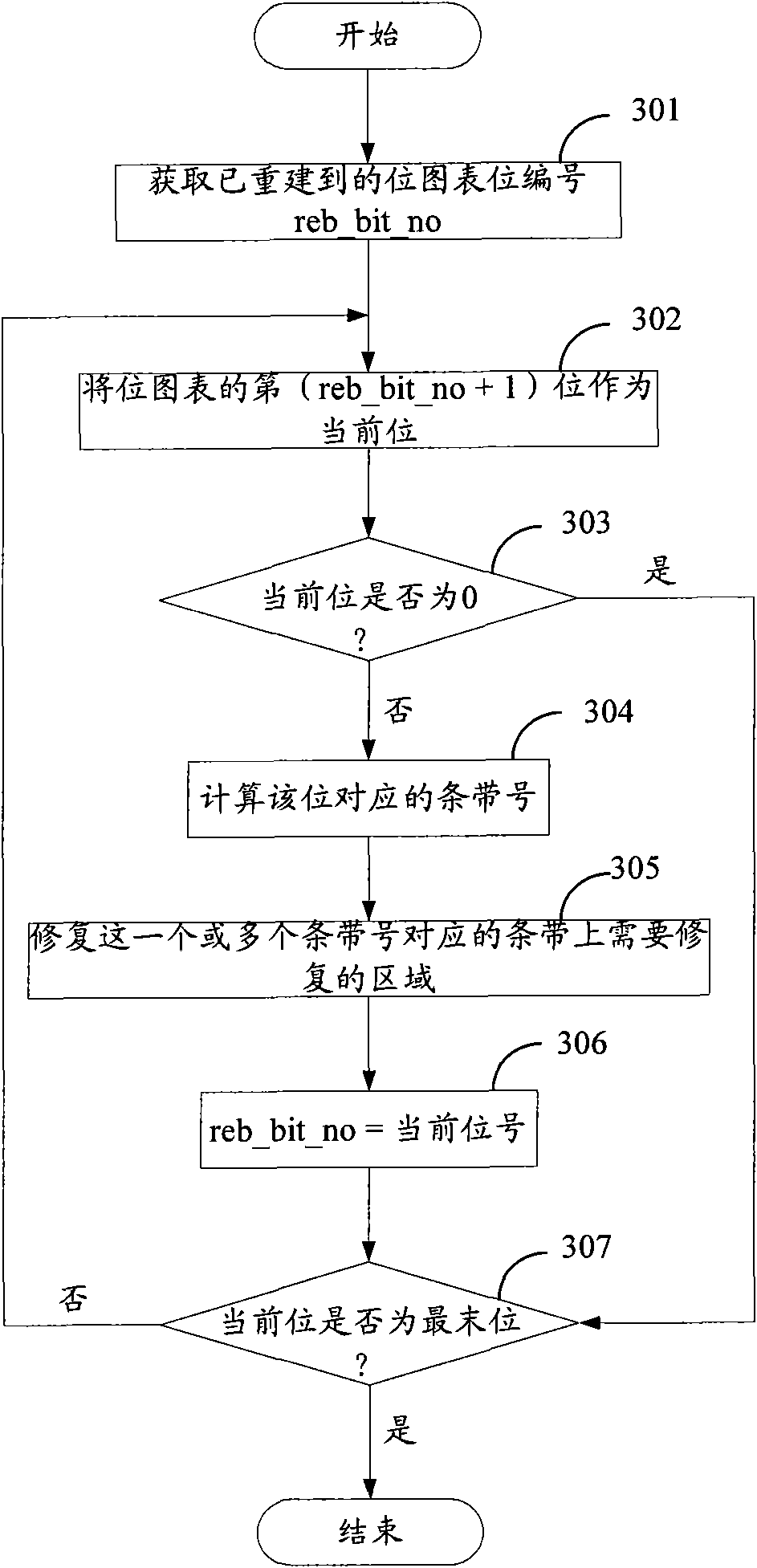

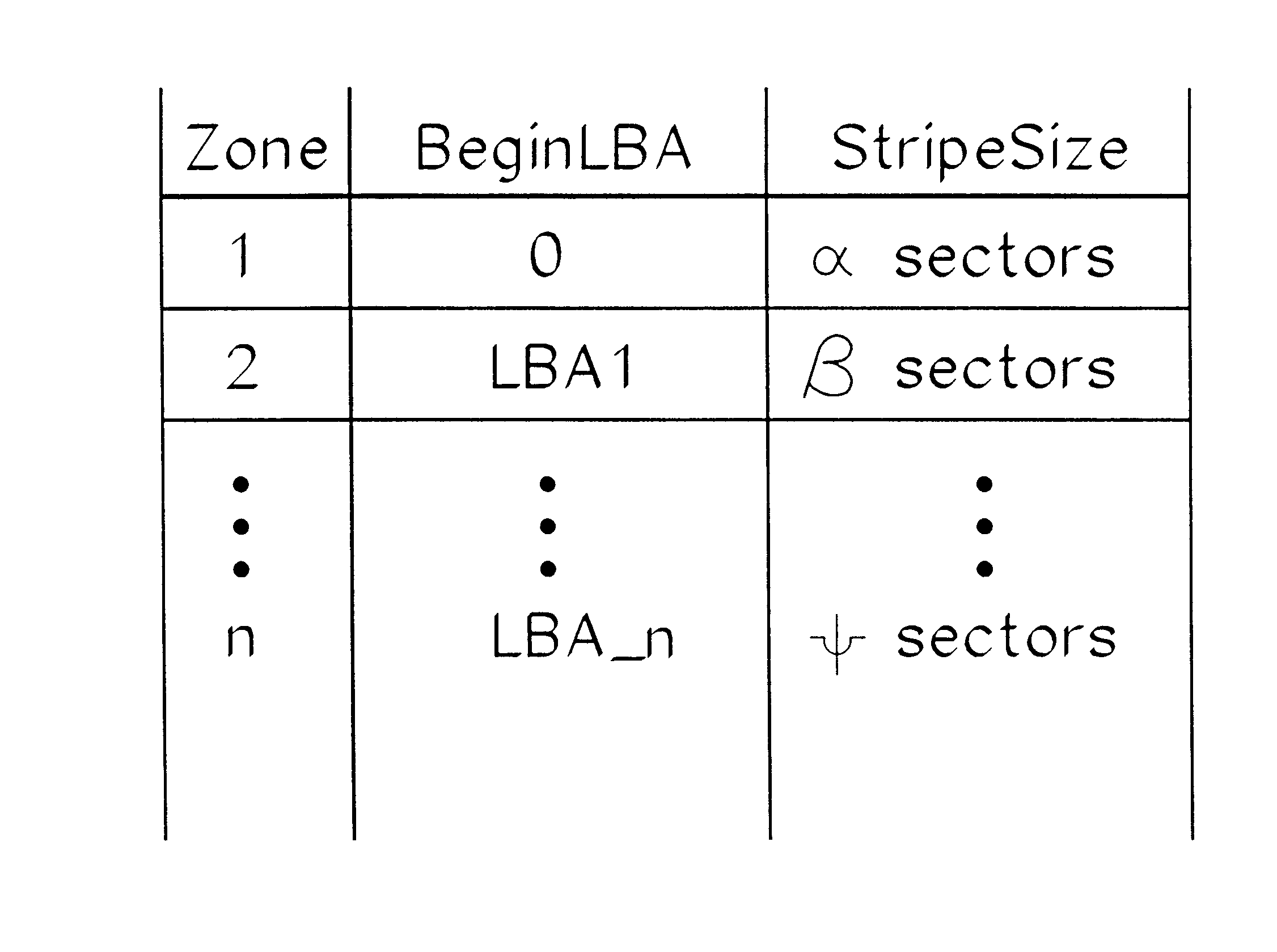

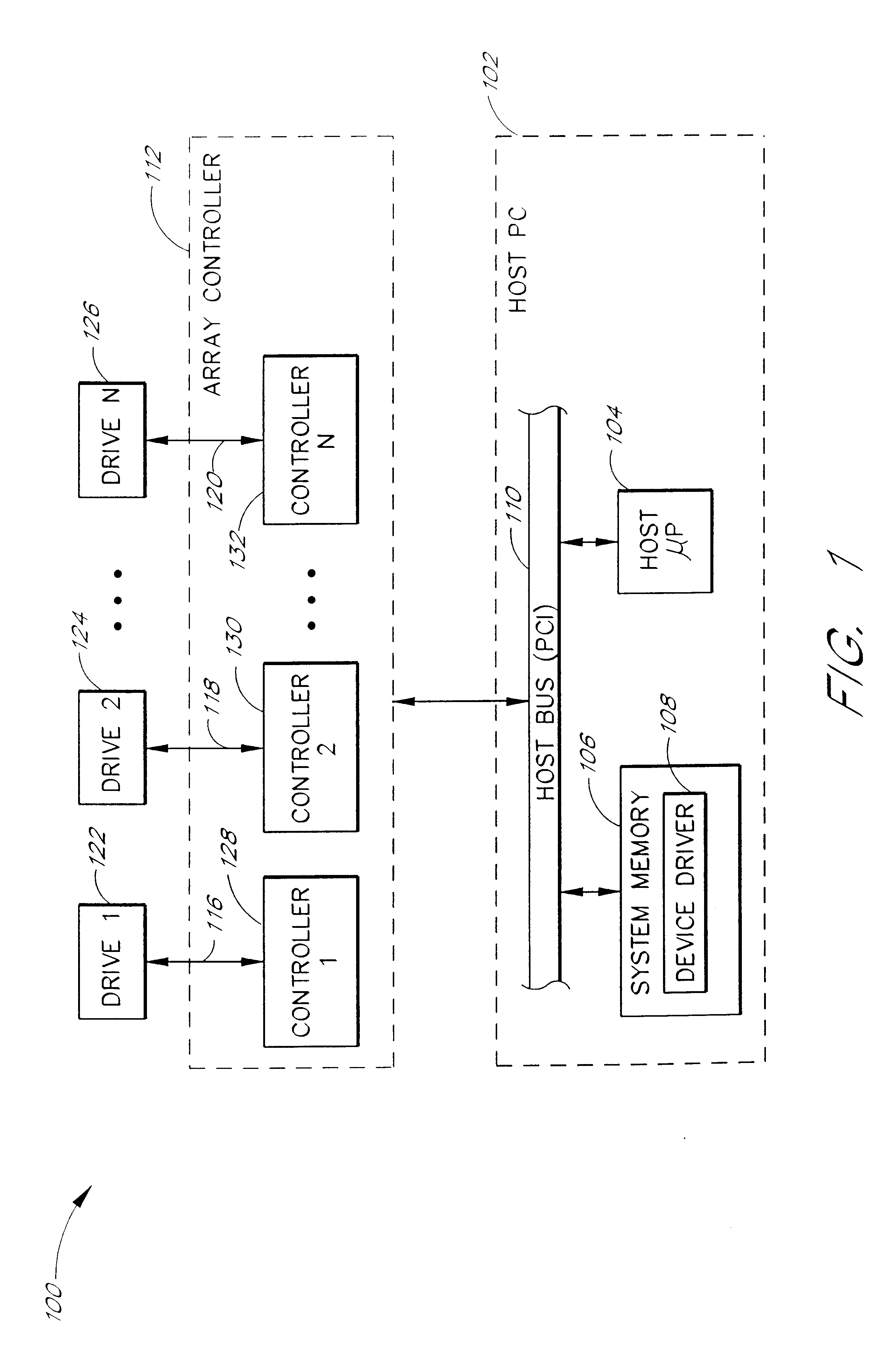

Rapid reconstruction method and device of RAID (Redundant Array of Independent Disks) system

Inactive | CN101840360A | Repair processing to avoid | The repair process is realized quickly | Input/output to record carriers | Redundant data error correction | RAID | Reconstruction method

The invention discloses a rapid reconstruction method for a RAID (Redundant Array of Independent Disks) system. The method comprises the following steps: A, setting up a bitmap whose bits are initially invalid, wherein each bit in the bitmap corresponds to at least one stripe in the RAID system; B, setting the bit corresponding to a stripe to valid when a write operation is performed on that stripe; and C, using the bitmap to find the stripes on which write operations were performed, and recovering the data of those stripes. The invention also discloses a rapid reconstruction device for the RAID system. With this scheme, RAID reconstruction can be completed quickly, and the I/O (input/output) performance and reliability of the system can be improved.

Owner:UNITED INFORMATION TECH +1
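
Steps A to C above amount to a write-intent bitmap. The following is a minimal sketch under stated assumptions: the bitmap is a packed `bytearray`, one bit may cover several consecutive stripes (`stripes_per_bit`, a hypothetical parameter matching "at least one stripe"), and `recover_stripe` is a caller-supplied callback standing in for the actual parity rebuild.

```python
class StripeWriteBitmap:
    """Tracks which RAID stripes have been written (steps A and B)."""

    def __init__(self, num_stripes, stripes_per_bit=1):
        self.num_stripes = num_stripes
        self.stripes_per_bit = stripes_per_bit
        num_bits = -(-num_stripes // stripes_per_bit)   # ceiling division
        self.bits = bytearray(-(-num_bits // 8))         # step A: all bits start invalid (0)

    def _bit(self, stripe):
        return stripe // self.stripes_per_bit

    def mark_written(self, stripe):
        """Step B: set the bit covering `stripe` when the stripe is written."""
        b = self._bit(stripe)
        self.bits[b // 8] |= 1 << (b % 8)

    def is_marked(self, stripe):
        b = self._bit(stripe)
        return bool(self.bits[b // 8] & (1 << (b % 8)))

def rebuild(bitmap, recover_stripe):
    """Step C: recover only the stripes whose bit is valid; return their indices."""
    rebuilt = []
    for stripe in range(bitmap.num_stripes):
        if bitmap.is_marked(stripe):
            recover_stripe(stripe)
            rebuilt.append(stripe)
    return rebuilt
```

Coarser bits (larger `stripes_per_bit`) shrink the bitmap at the cost of rebuilding some unwritten neighbors: marking stripe 3 with two stripes per bit also rebuilds stripe 2.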

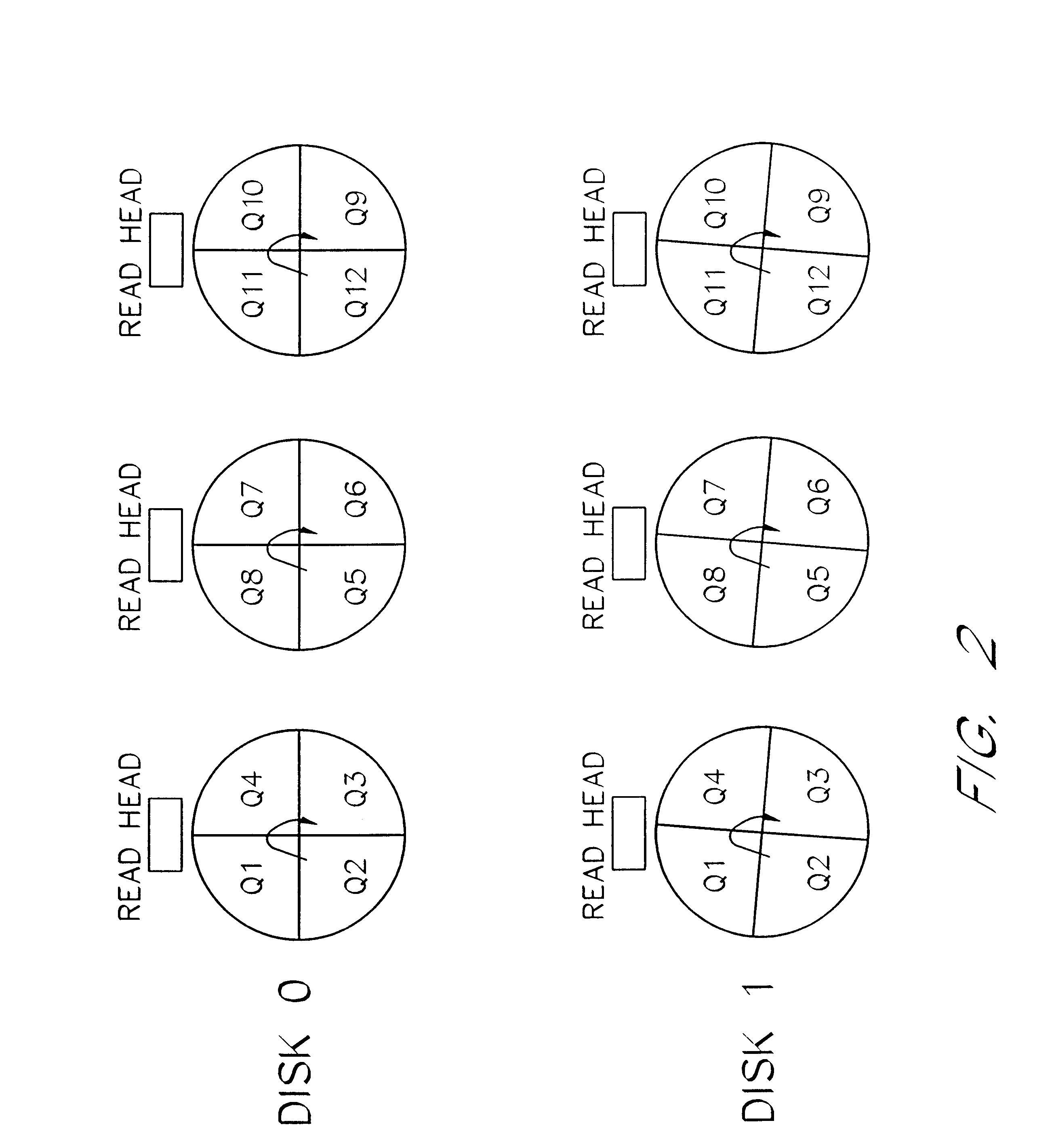

Methods and systems for mirrored disk arrays

Inactive | US6591338B1 | Enhance I/O operation | Speeding access time | Recording carrier details | Input/output to record carriers | Data rate | Disk array

Owner:SUMMIT DATA SYST

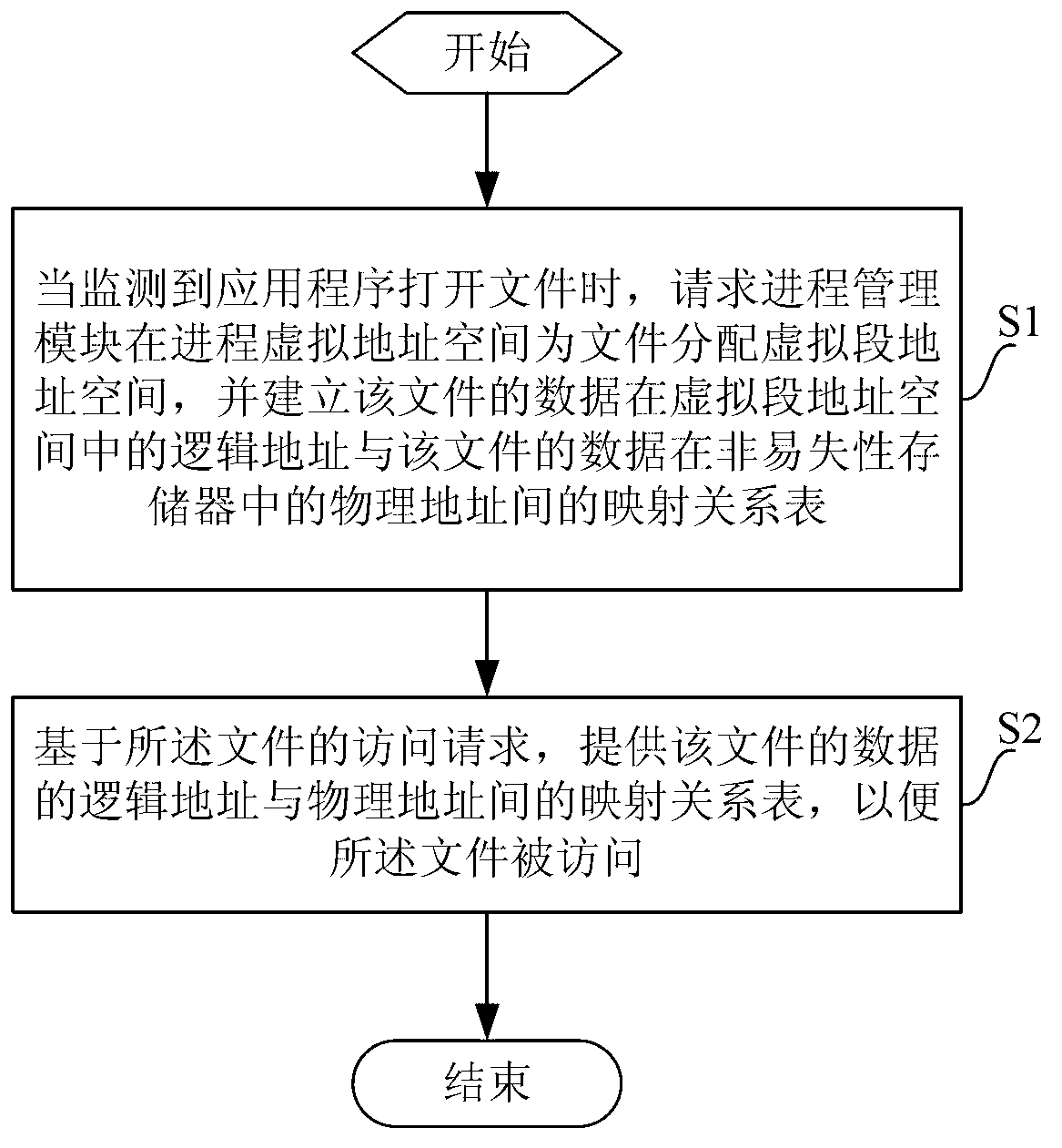

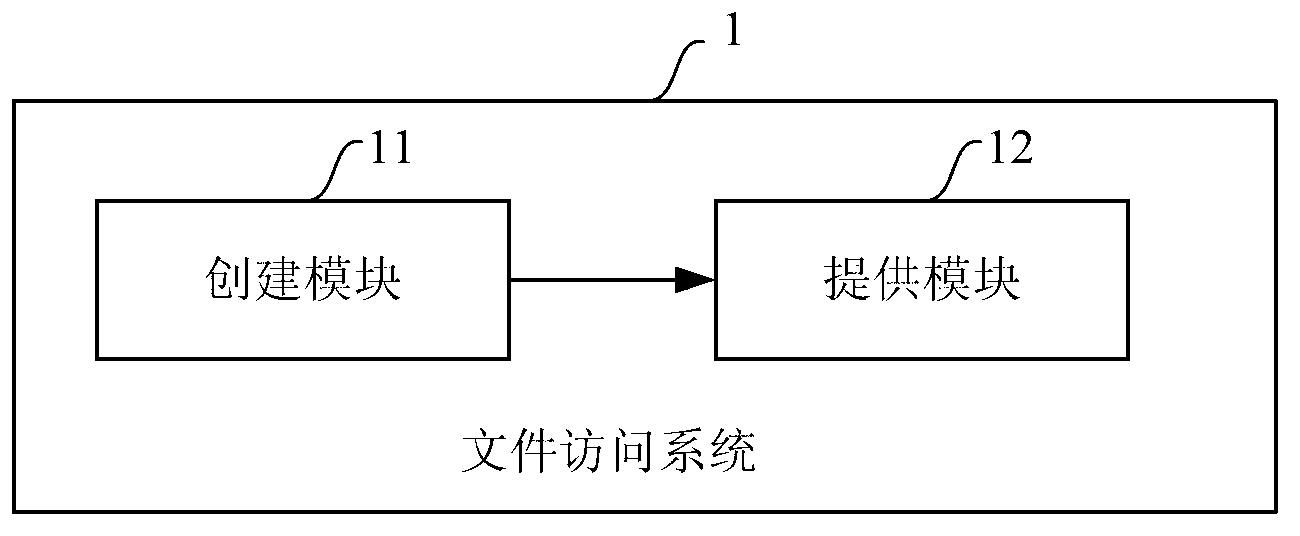

File access method and file access system

Active | CN103218312A | Improve read and write access speed | Save DRAM resources | Memory addressing/allocation/relocation | Logical address | Address space

The invention provides a file access method and a file access system for a memory system comprising dynamic random access memory (DRAM) and nonvolatile memory. When the method detects that an application program opens a file, it first requests the process management module to allocate a virtual segment address space for the file within the process virtual address space, and establishes a mapping table between the logical addresses of the file data in the allocated virtual address space and the physical addresses of the file data in the nonvolatile memory. File access requests are then served using this mapping table, so that the file can be accessed conveniently. With this method and system, file data in the nonvolatile memory can be accessed in the same way as main memory, file read/write speed is improved, and limited DRAM resources are saved, so that the I/O (input/output) performance of the system improves and application programs can access file data quickly.

Owner:SHANGHAI INST OF MICROSYSTEM & INFORMATION TECH CHINESE ACAD OF SCI
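
A loose user-space analogue of accessing file data "in an internal memory mode" is memory-mapped file I/O, where a file is mapped into the process address space and read or written with ordinary memory operations. The sketch below uses Python's standard `mmap` module; note the assumption that pages go through the operating system's page cache rather than being mapped directly from nonvolatile memory as in the patent, and that the function names are hypothetical.

```python
import mmap

def overwrite_via_mapping(path, offset, data):
    """Overwrite bytes of an existing, non-empty file through a shared mapping.

    The range [offset, offset + len(data)) must lie inside the current file size.
    """
    with open(path, "r+b") as f:
        with mmap.mmap(f.fileno(), 0) as mm:      # length 0 maps the whole file
            mm[offset:offset + len(data)] = data  # a memory store, no write() call
            mm.flush()                            # push dirty pages back to the file

def read_via_mapping(path, offset, length):
    """Read a byte range by slicing the mapped region instead of calling read()."""
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
            return mm[offset:offset + length]
```

Mapping a zero-length file raises an error, so callers should create the file with its final size before mapping it.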

Method for finding the longest common subsequences between files with applications to differential compression

Inactive | US7487169B2 | Low cost | Enhance memory | Digital data processing details | Code conversion | Longest common subsequence problem | Granularity

A differential compression method and computer program product combines hash value techniques and suffix array techniques. The invention finds the best matches for every offset of the version file, with respect to a certain granularity and above a certain length threshold. The invention has two variations depending on block size choice. If the block size is kept fixed, the compression performance of the invention is similar to that of the greedy algorithm, without the expensive space and time requirements. If the block size is varied linearly with the reference file size, the invention can run in linear-time and constant-space. It has been shown empirically that the invention performs better than certain known differential compression algorithms in terms of compression and speed.

Owner:INT BUSINESS MASCH CORP
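
The fixed-block-size variant can be illustrated with a simplified greedy differential encoder. This sketch covers only the hash-value half of the technique: it indexes aligned blocks of the reference file in a Python `dict` (which hashes each block) and extends each match forward byte by byte. It does not use suffix arrays, and the `("copy", offset, length)` / `("insert", bytes)` operation format is a hypothetical choice for illustration.

```python
def diff_encode(reference: bytes, version: bytes, block: int = 4):
    """Encode `version` as copy/insert operations against `reference`."""
    # Index every aligned block of the reference file by its content.
    index = {}
    for off in range(0, len(reference) - block + 1, block):
        index.setdefault(reference[off:off + block], off)

    ops, literal, i = [], bytearray(), 0
    while i < len(version):
        off = index.get(version[i:i + block])   # a short tail never matches a full block
        if off is None:
            literal.append(version[i])          # no match at this offset: literal byte
            i += 1
            continue
        n = block                               # extend the match forward greedily
        while (off + n < len(reference) and i + n < len(version)
               and reference[off + n] == version[i + n]):
            n += 1
        if literal:
            ops.append(("insert", bytes(literal)))
            literal = bytearray()
        ops.append(("copy", off, n))
        i += n
    if literal:
        ops.append(("insert", bytes(literal)))
    return ops

def diff_decode(reference: bytes, ops) -> bytes:
    """Rebuild the version file from the reference and the operation list."""
    out = bytearray()
    for op in ops:
        if op[0] == "copy":
            _, off, n = op
            out += reference[off:off + n]
        else:
            out += op[1]
    return bytes(out)
```

For `reference = b"the quick brown fox jumps"` and `version = b"the quick red fox jumps"`, the encoder emits two copies and one four-byte insert (`b"red "`).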

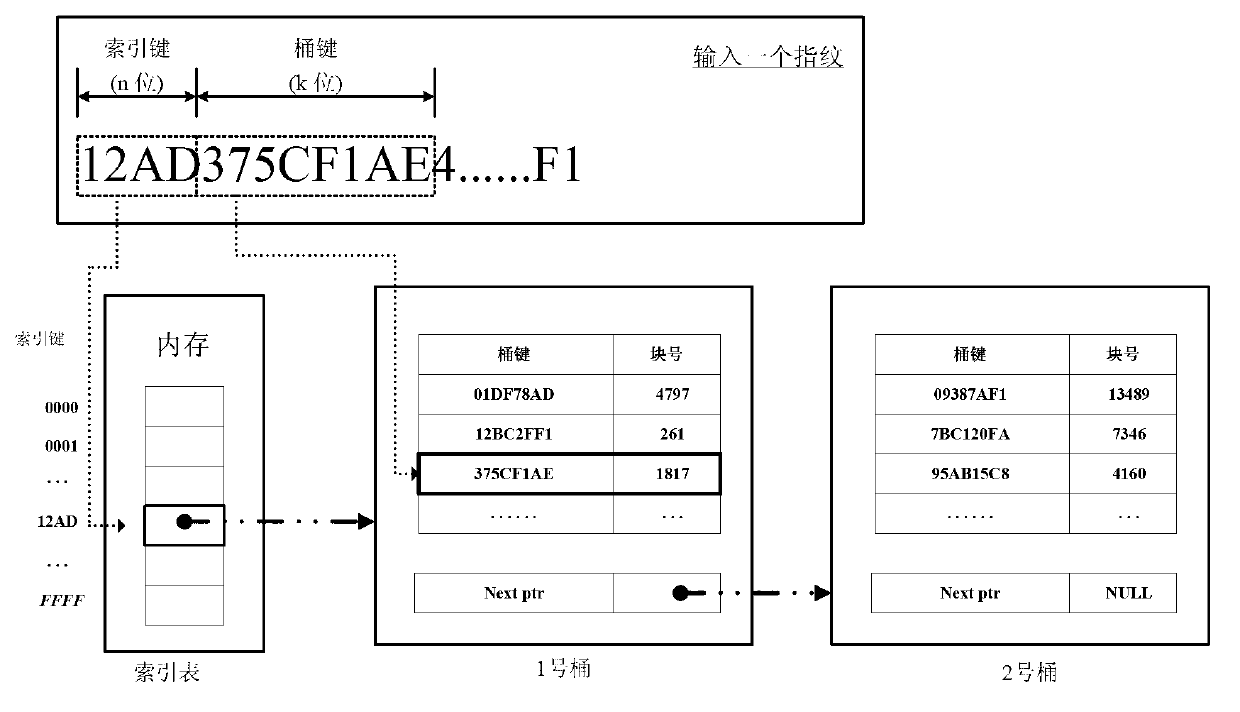

Virtual machine image management optimization method based on data de-duplication

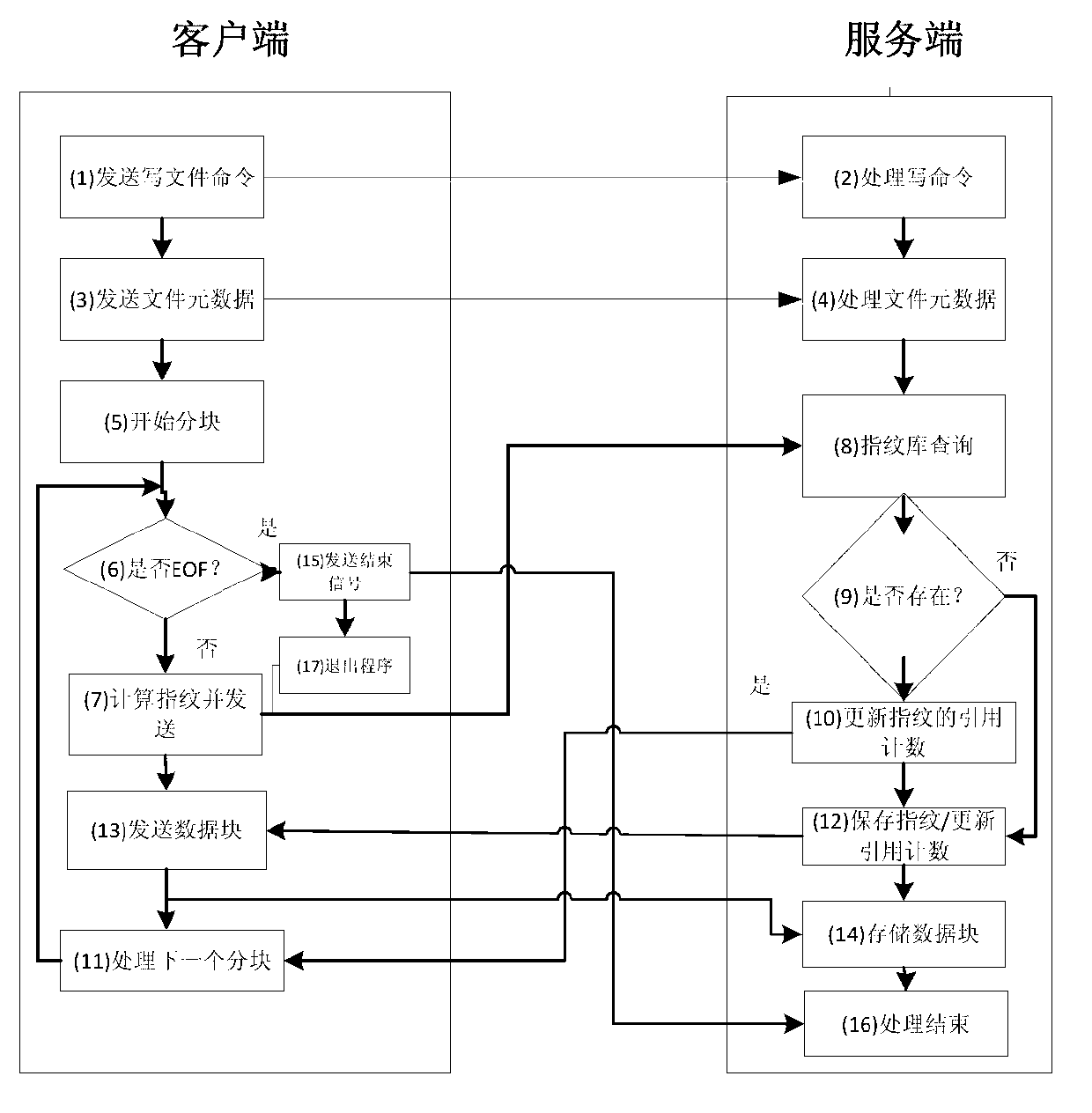

Inactive | CN103139300A | Avoid duplicate transmission | Improve transmission efficiency | Transmission | Software simulation/interpretation/emulation | File system | The Internet

The invention discloses a virtual machine image management optimization method based on data de-duplication. When a virtual machine image is uploaded, the client divides the image file into data blocks using fixed-size partitioning, with each block the same size as a cluster of the local file system, and computes the MD5 (Message-Digest Algorithm 5) value of each block. Using socket programming, the client sends these fingerprints to the server; the server looks up each fingerprint and returns the result, and the client decides from the result which data blocks need to be sent, saving network resources. When searching fingerprints, the server uses a fingerprint filter and a fingerprint store to reduce memory usage and disk accesses. When the data are saved, each data block is stored directly in a complete cluster, eliminating repeated image updating and segmentation work. The method implements a kernel-mode file system with online data de-duplication, reducing both disk storage usage and network consumption.

Owner:HANGZHOU DIANZI UNIV
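
The upload protocol (fixed-size chunks, MD5 fingerprints, server-side lookup, send only missing chunks) can be sketched in-process as follows. Assumptions: a 4096-byte cluster size, a plain `dict` in place of the fingerprint filter and fingerprint store, and direct method calls in place of sockets; `DedupServer` and `upload` are hypothetical names.

```python
import hashlib

CLUSTER_SIZE = 4096   # assumed local file-system cluster size

def fingerprint_chunks(image: bytes, chunk_size: int = CLUSTER_SIZE):
    """Fixed-size partitioning plus an MD5 fingerprint per chunk."""
    chunks = [image[i:i + chunk_size] for i in range(0, len(image), chunk_size)]
    return chunks, [hashlib.md5(c).hexdigest() for c in chunks]

class DedupServer:
    """Server side: fingerprint -> chunk store (a plain dict, no filter)."""

    def __init__(self):
        self.store = {}

    def lookup(self, fingerprints):
        """Return the set of fingerprints the server does not hold yet."""
        return {fp for fp in fingerprints if fp not in self.store}

    def put(self, fp, chunk):
        self.store[fp] = chunk

def upload(image, server, chunk_size=CLUSTER_SIZE):
    """Client side: send only chunks the server lacks; return (recipe, chunks_sent)."""
    chunks, fps = fingerprint_chunks(image, chunk_size)
    missing = server.lookup(fps)
    sent = 0
    for fp, chunk in zip(fps, chunks):
        if fp in missing:
            server.put(fp, chunk)
            missing.discard(fp)    # a chunk repeated within one image is sent once
            sent += 1
    return fps, sent
```

The returned fingerprint list acts as the image's recipe: concatenating the stored chunks in that order reconstructs the image, while a second image sharing chunks with the first transfers only its new chunks.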

Method to improve the performance of a read ahead cache process in a storage array

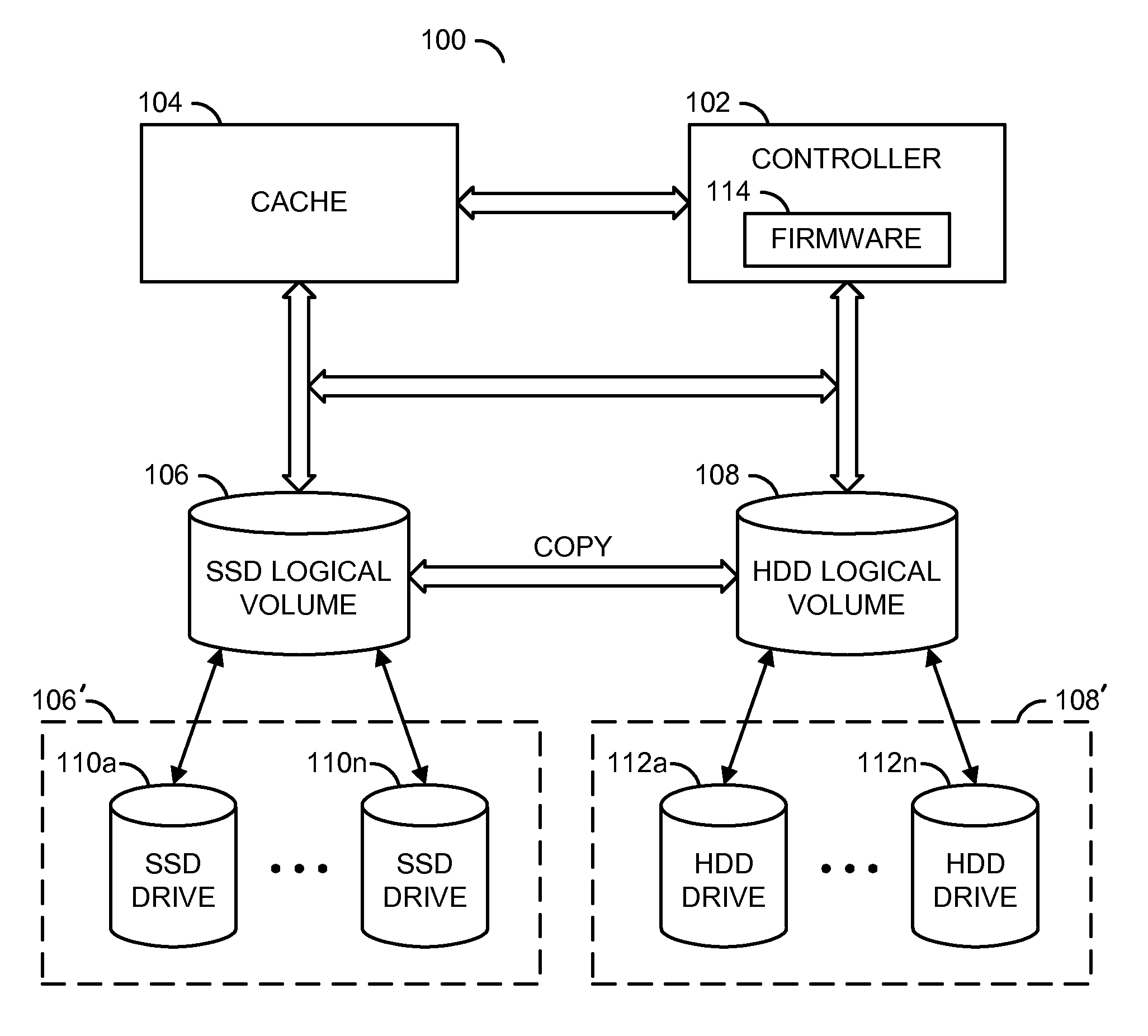

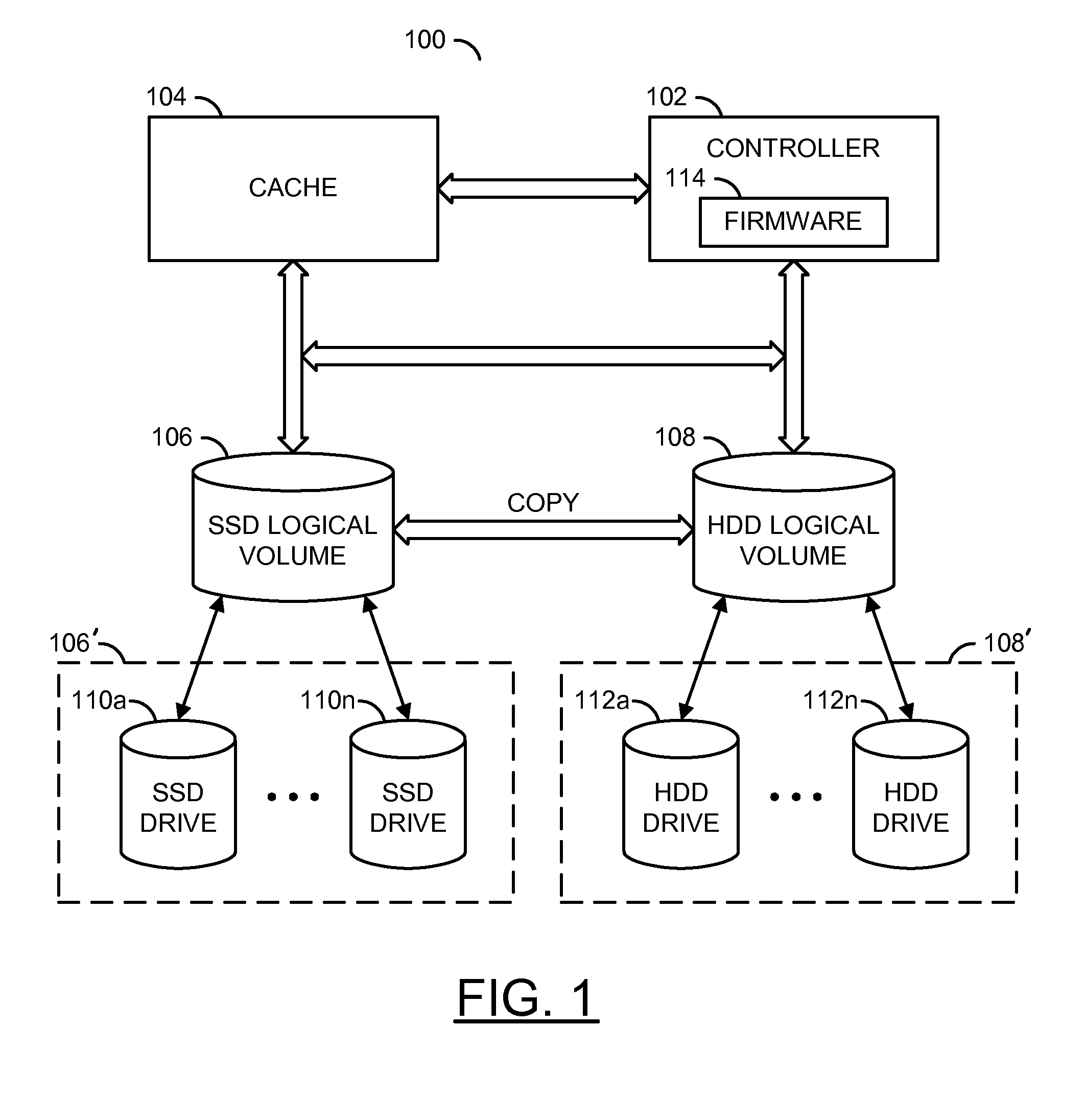

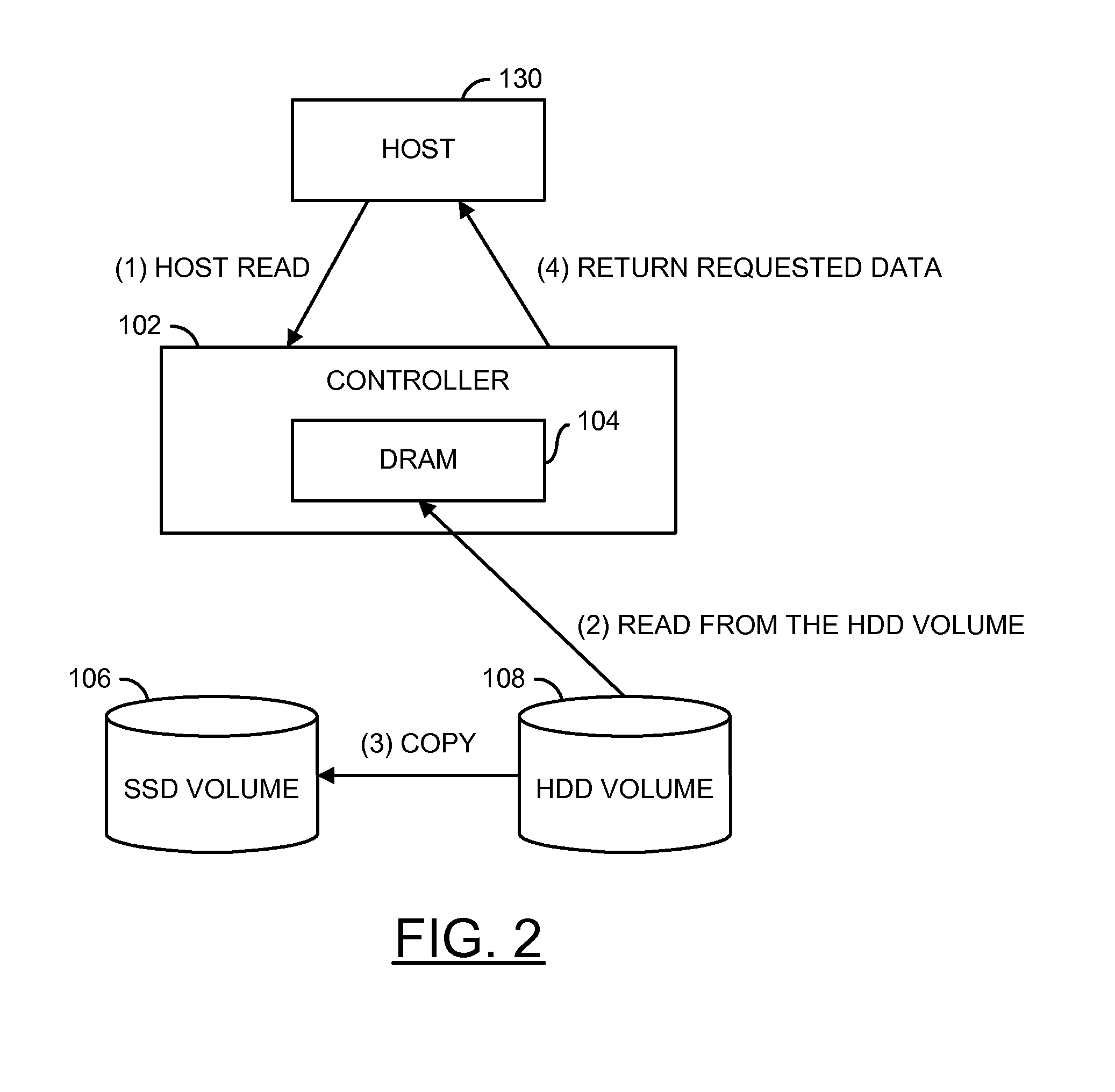

Active | US20120331222A1 | Improve read performance | Improve I/O performance | Memory architecture accessing/allocation | Memory loss protection | Parallel computing | Data storing

An apparatus comprising an array controller and a cache. The array controller may be configured to read/write data to a first array of drives of a first drive type in response to one or more input/output requests. The cache may be configured to (i) receive said input/output requests from the array controller, (ii) temporarily store the input/output requests, and (iii) read/write data to a second array of drives of a second drive type in response to the input/output requests. The first array of drives may be configured to copy the data directly to/from the second array of drives during a cache miss condition such that the array controller retrieves the data stored in the first array of drives through the second array of drives without writing the data to the cache.

Owner:AVAGO TECH INT SALES PTE LTD

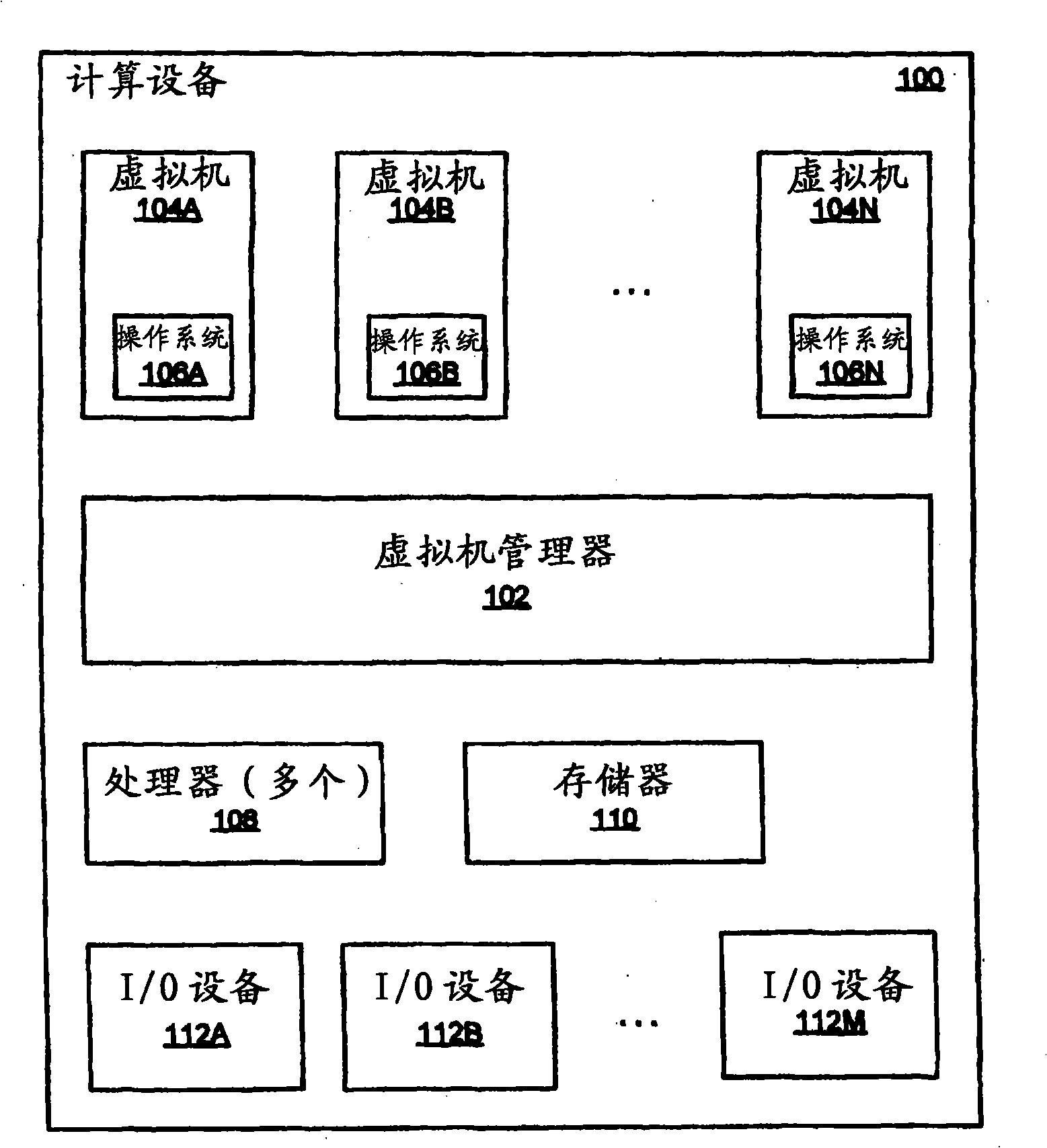

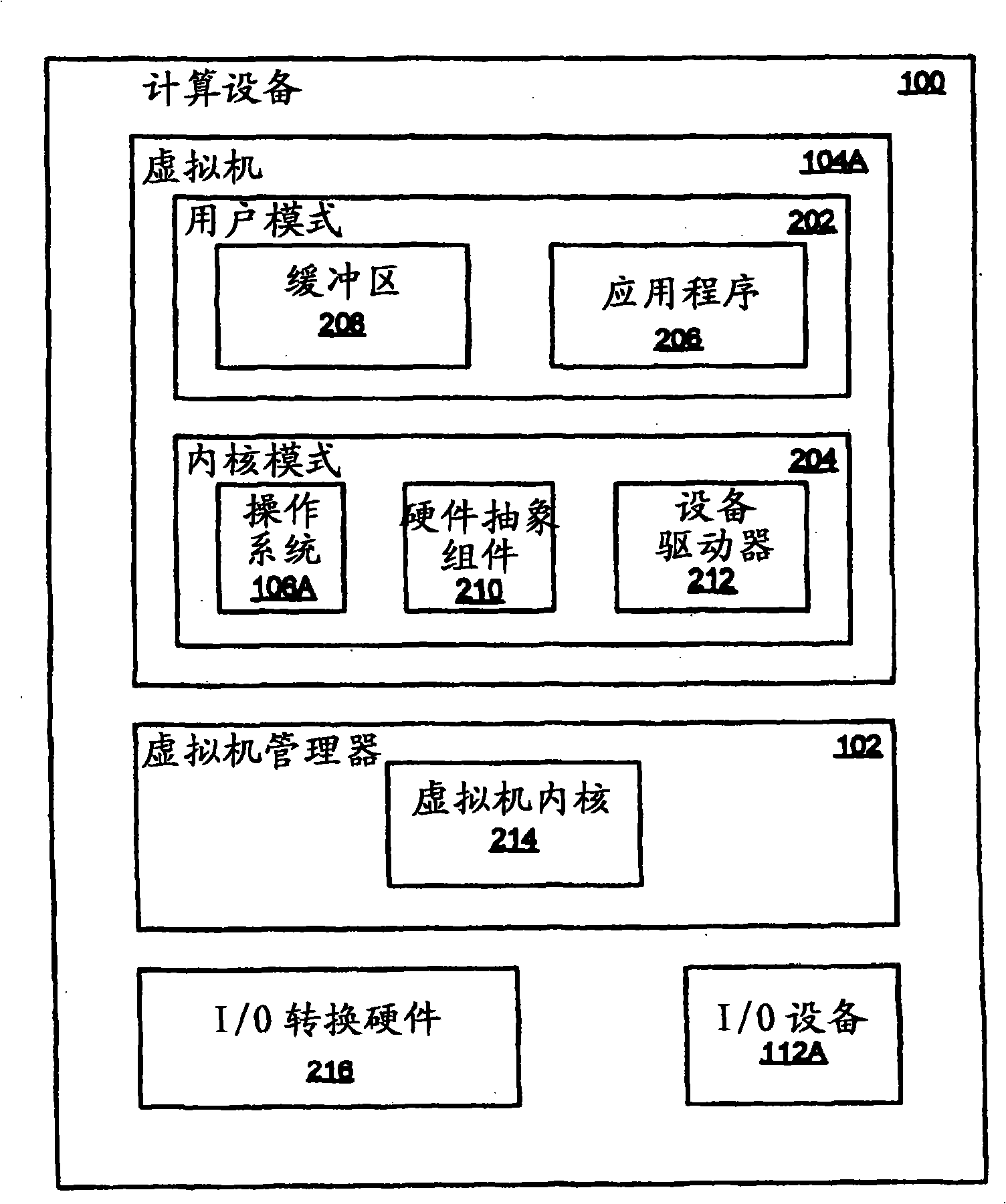

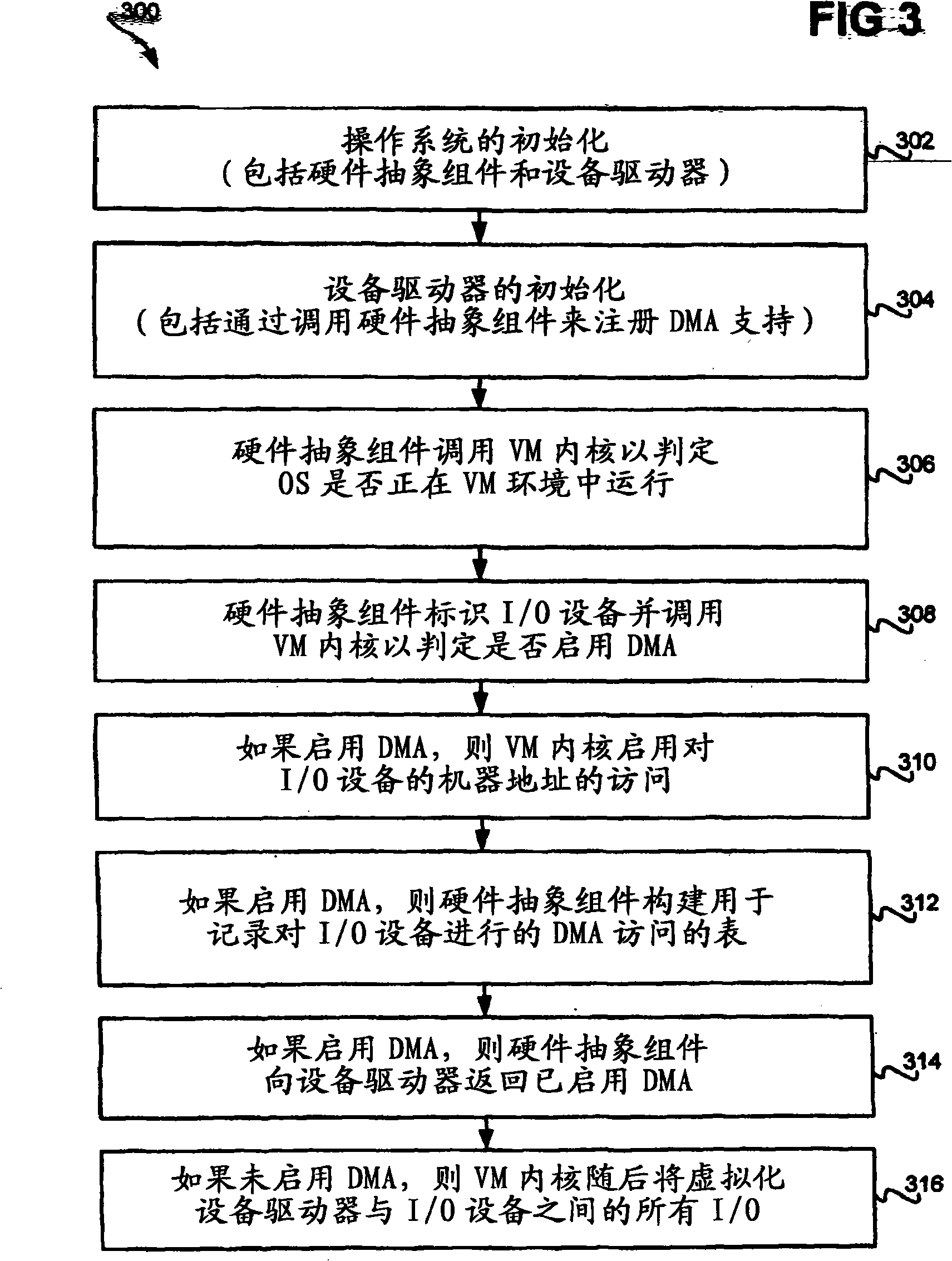

Direct-memory access between input/output device and physical memory within virtual machine environment

Inactive | CN101278263A | Improve I/O performance | Improve compatibility | Software simulation/interpretation/emulation | Memory systems | Direct memory access | Operational system

Direct memory access (DMA) is provided between input/output (I/O) devices and memory within virtual machine (VM) environments. A computing device includes an I/O device, an operating system (OS) running on a VM of the computing device, a device driver for the I/O device, a VM manager (VMM), I/O translation hardware, and a hardware abstraction component for the OS. The I/O translation hardware is for translating physical addresses of the computing device assigned to the OS to machine addresses of the I/O device. The hardware abstraction component and the VMM cooperatively interact to enable the device driver to initiate DMA between the I/O device and memory via the translation hardware. The OS may be unmodified to run on the VM of the computing device, except that the hardware abstraction component is particularly capable of cooperatively interacting with the VMM to enable the device driver to receive DMA from the I/O device.

Owner:INT BUSINESS MASCH CORP

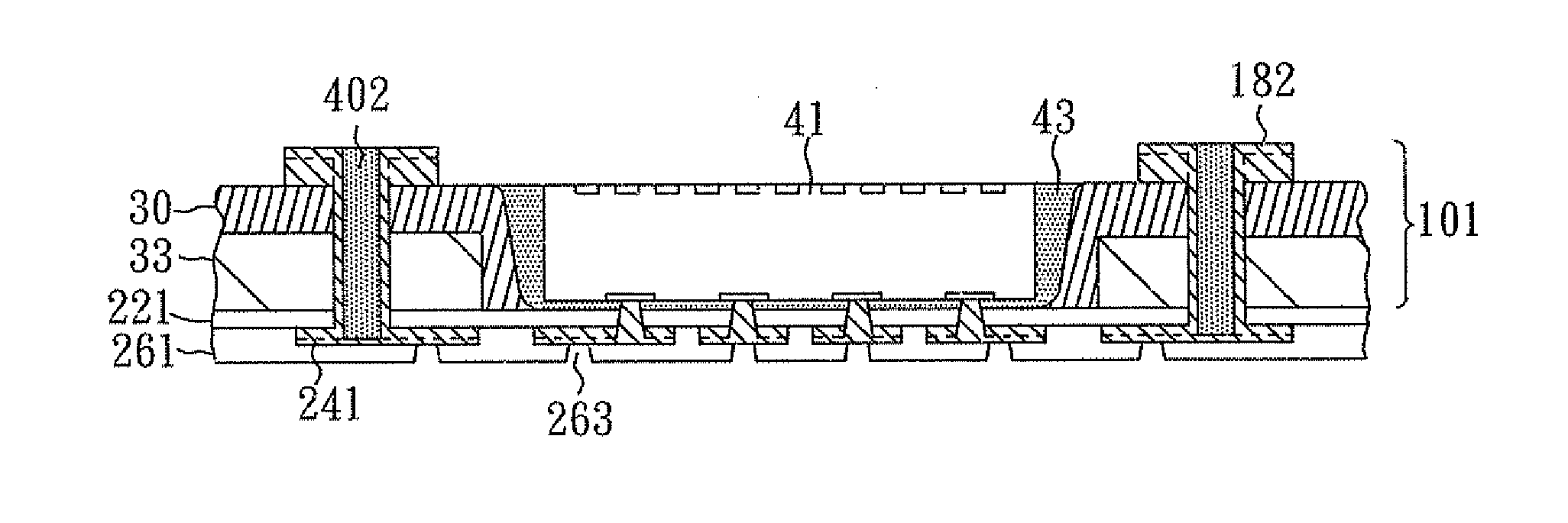

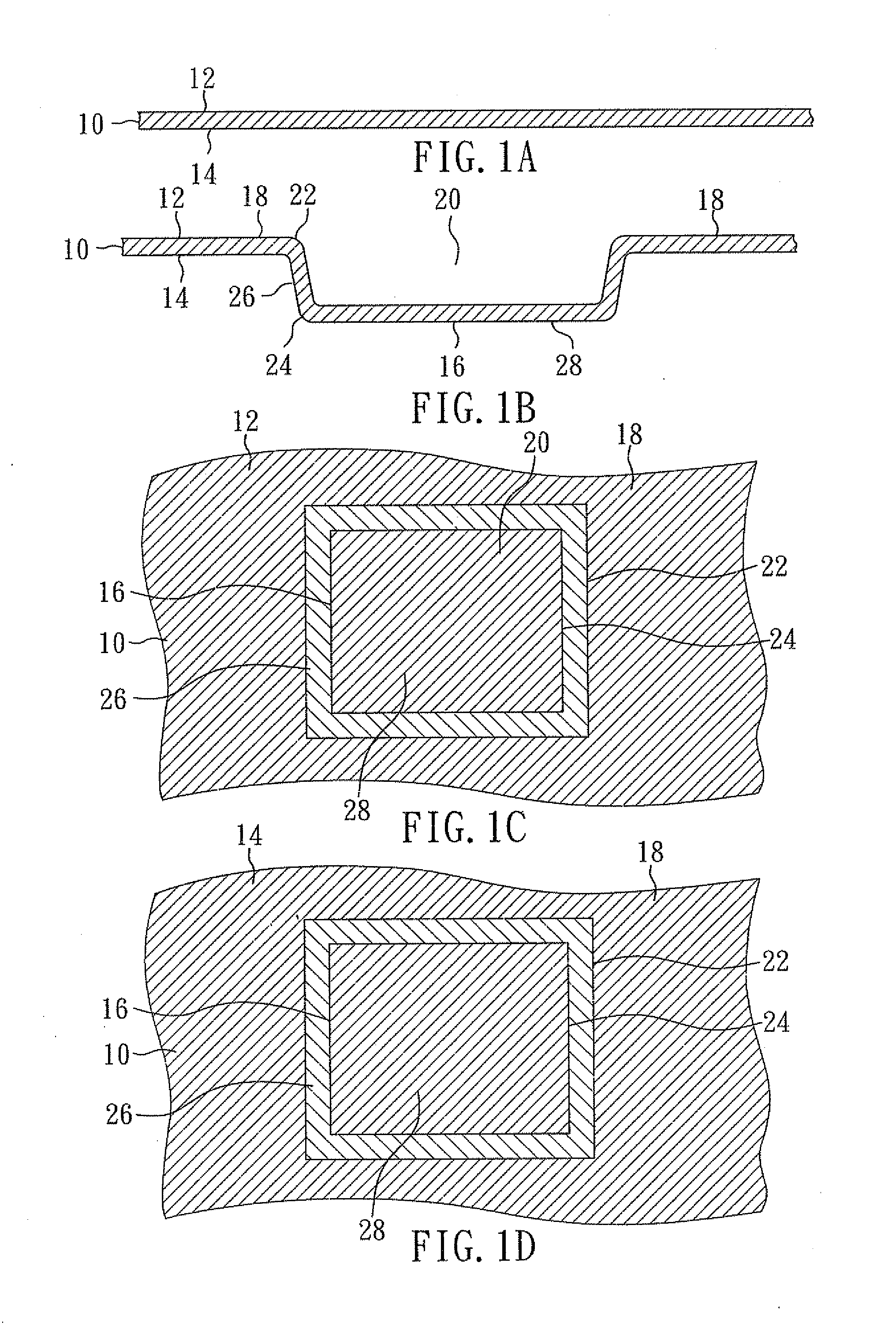

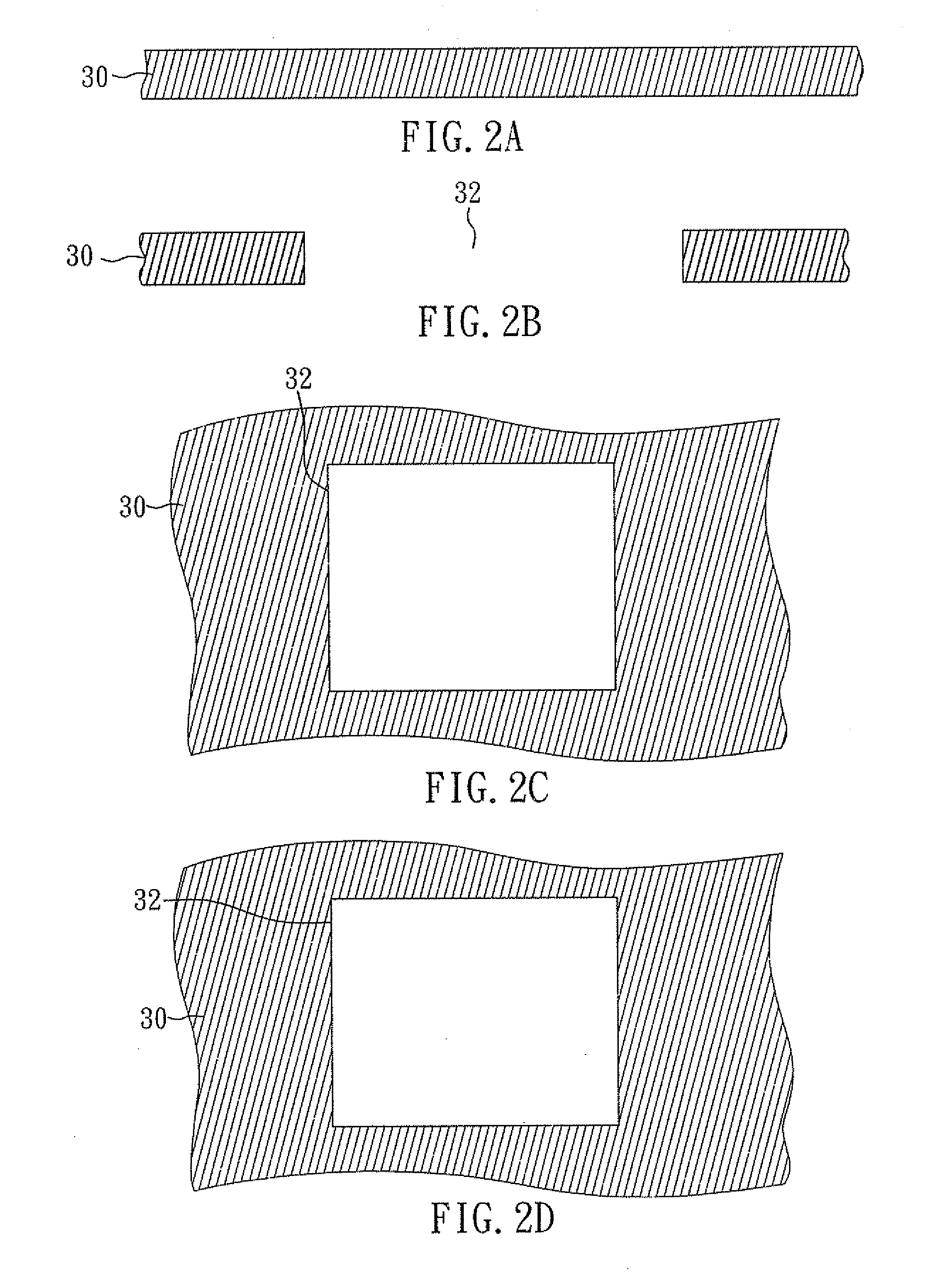

Method of making hybrid wiring board with built-in stiffener and interposer and hybrid wiring board manufactured thereby

Inactive | US20140291001A1 | Easy to handle | Improve production yield | Semiconductor/solid-state device details | Printed circuit aspects | Signal routing | Adhesive

The present invention relates to a method of making a hybrid wiring board. In accordance with a preferred embodiment, the method includes: preparing a dielectric layer and a supporting board including a stiffener, a bump/flange sacrificial carrier and an adhesive, wherein the adhesive bonds the stiffener to the sacrificial carrier and the dielectric layer covers the supporting board; then removing the bump and a portion of the flange to form a cavity and expose the dielectric layer; then mounting an interposer into the cavity; and then forming a build-up circuitry that includes a first conductive via in direct contact with the interposer and provides signal routing for the interposer. Accordingly, the direct electrical connection between the interposer and the build-up circuitry is advantageous to high I/O and high performance, and the stiffener can provide adequate mechanical support for the build-up circuitry and the interposer.

Owner:BRIDGE SEMICON

Method for updating user data in a large-scale fault-tolerant disk array storage system using dual-head disk drives

Inactive | CN101349979A | Improve I/O performance | Reduce update operation time overhead | Input/output to record carriers | Redundant data error correction | Fault tolerance | Operational system

The invention relates to a method for updating user data in a large-scale fault-tolerant disk array storage system with dual-head disk drives, and belongs to the technical field of fault-tolerant disk array storage systems. The method applies to an erasure-code-based fault-tolerant disk array storage system composed of a disk array, a storage adapter, a memory storing a storage operating system, and a processor. First, a read operation fetches the old user data block from disk; the new user data block then overwrites the old one on disk, and at the same time an XOR of the new and old user data blocks yields their difference. Next, the update difference for each parity (check) data block is calculated from that parity block's product coefficient with respect to the user data block in the adopted erasure code. Finally, compound operations performed inside the dual-head disk drive, based on staged pipelining, complete the update of each parity data block. Experiments show that the invention significantly reduces the number of I/O operations and shortens the average I/O response time.

Owner:TSINGHUA UNIV
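
The arithmetic behind this delta update is the linearity of the erasure code: if parity block r is the XOR-sum of coefficient times data block over all data blocks, then rewriting one data block changes parity r by its coefficient times (old XOR new). The sketch below assumes a Reed-Solomon-style code over GF(2^8) with the reduction polynomial 0x11D, a common choice that the abstract does not specify; the coefficient matrix and function names are hypothetical, and the dual-head pipelining is not modeled.

```python
def gf_mul(a: int, b: int, poly: int = 0x11D) -> int:
    """Multiply two bytes in GF(2^8): carry-less multiply reduced by `poly`."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & 0x100:          # reduce as soon as the product leaves 8 bits
            a ^= poly
        b >>= 1
    return result

def encode_parity(data_blocks, coeff_rows):
    """Full-stripe encode: parity_r[k] = XOR over j of coeff_rows[r][j] * data_j[k]."""
    parities = []
    for row in coeff_rows:
        p = bytearray(len(data_blocks[0]))
        for c, block in zip(row, data_blocks):
            for k, byte in enumerate(block):
                p[k] ^= gf_mul(c, byte)
        parities.append(bytes(p))
    return parities

def update_parity(old_data, new_data, old_parities, coeffs):
    """Delta update after rewriting one data block.

    coeffs[r] is the rewritten block's coefficient in parity row r.
    """
    delta = bytes(o ^ n for o, n in zip(old_data, new_data))   # XOR difference
    return [bytes(p ^ gf_mul(c, d) for p, d in zip(parity, delta))
            for parity, c in zip(old_parities, coeffs)]
```

The delta path reads only the old data block and the affected parity blocks, whereas `encode_parity` would need every data block in the stripe; the two must agree, which is a convenient consistency check.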

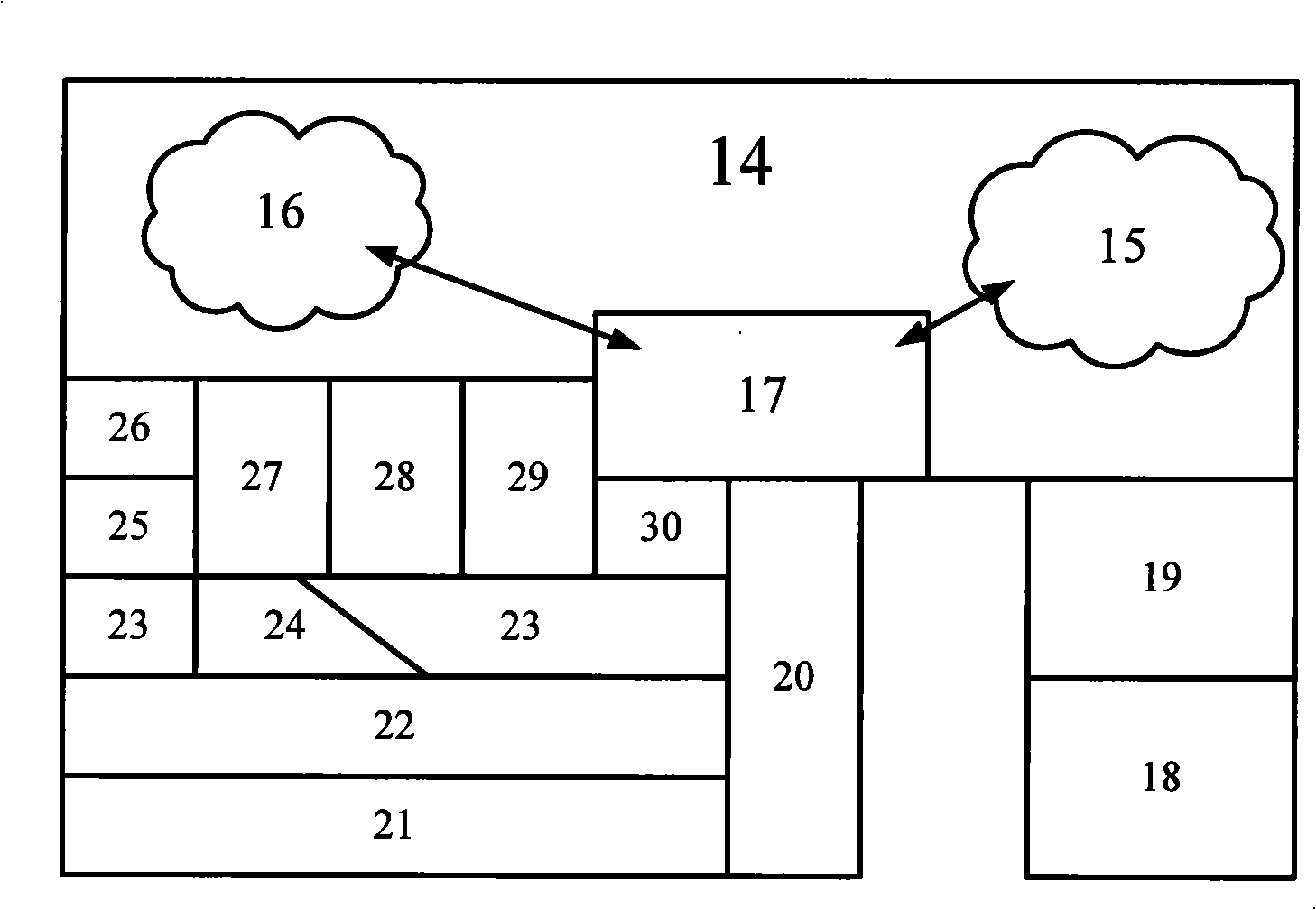

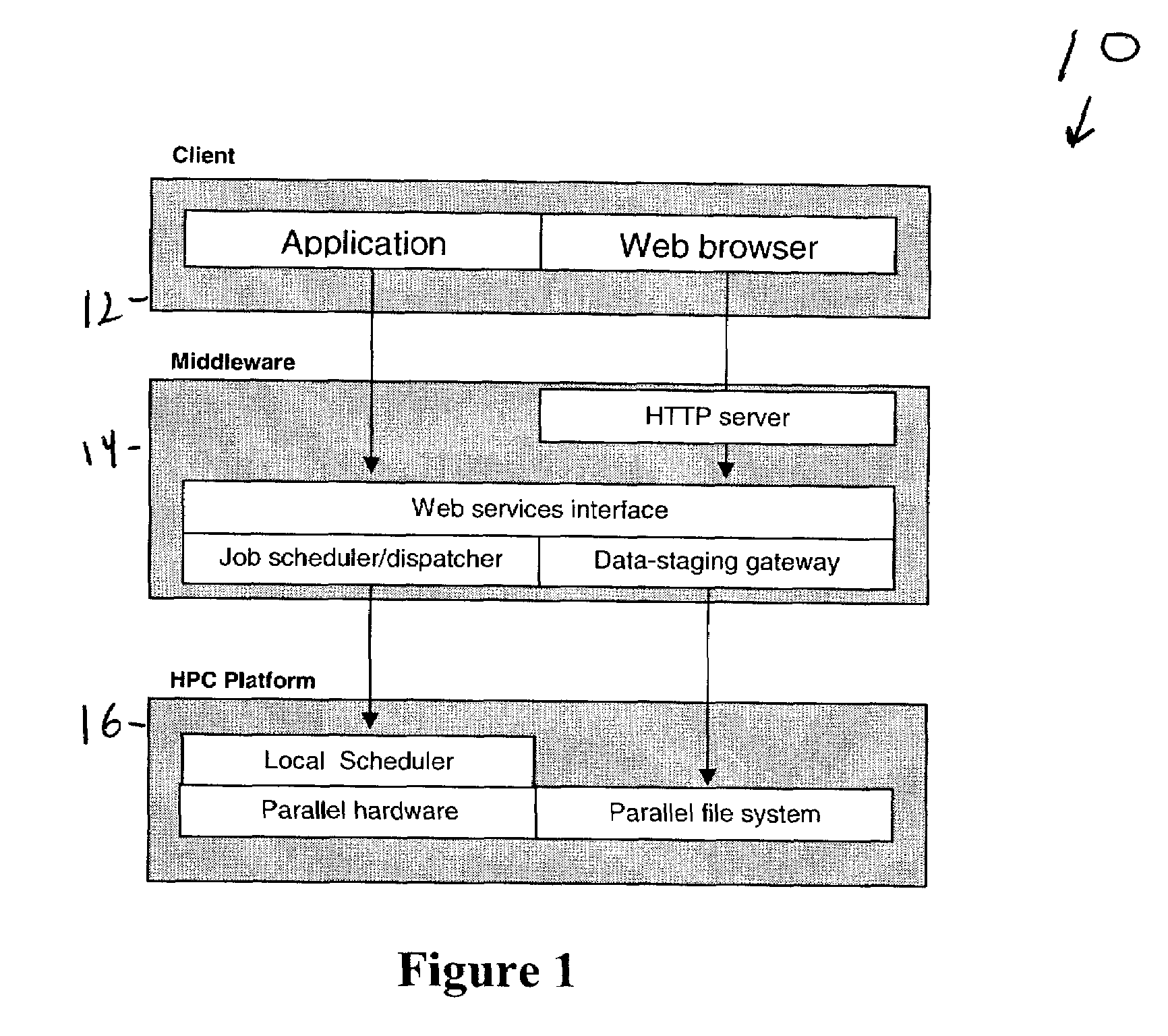

System and method for automating and scheduling remote data transfer and computation for high performance computing

Inactive | US20080178179A1 | Rapid response | Broaden application | Multiprogramming arrangements | Specific program execution arrangements | Blue gene | Web service

The invention pertains to a system and method providing a set of middleware components that support the execution of computational applications on a high-performance computing platform. A specific embodiment of this invention was used to deploy a financial risk application on the Blue Gene/L parallel supercomputer. The invention is relevant to any application whose input and output data are stored in external sources, such as SQL databases, and for which automatic pre-staging and post-staging of the data between the external data sources and the computational platform is desirable. This middleware provides a number of core features to support these applications, including, for example, an automated data extraction and staging gateway, a standardized high-level job specification schema, a well-defined web services (SOAP) API for interoperability with other applications, and a secure HTML/JSP web-based interface suitable for non-expert and non-privileged users.

Owner:IBM CORP

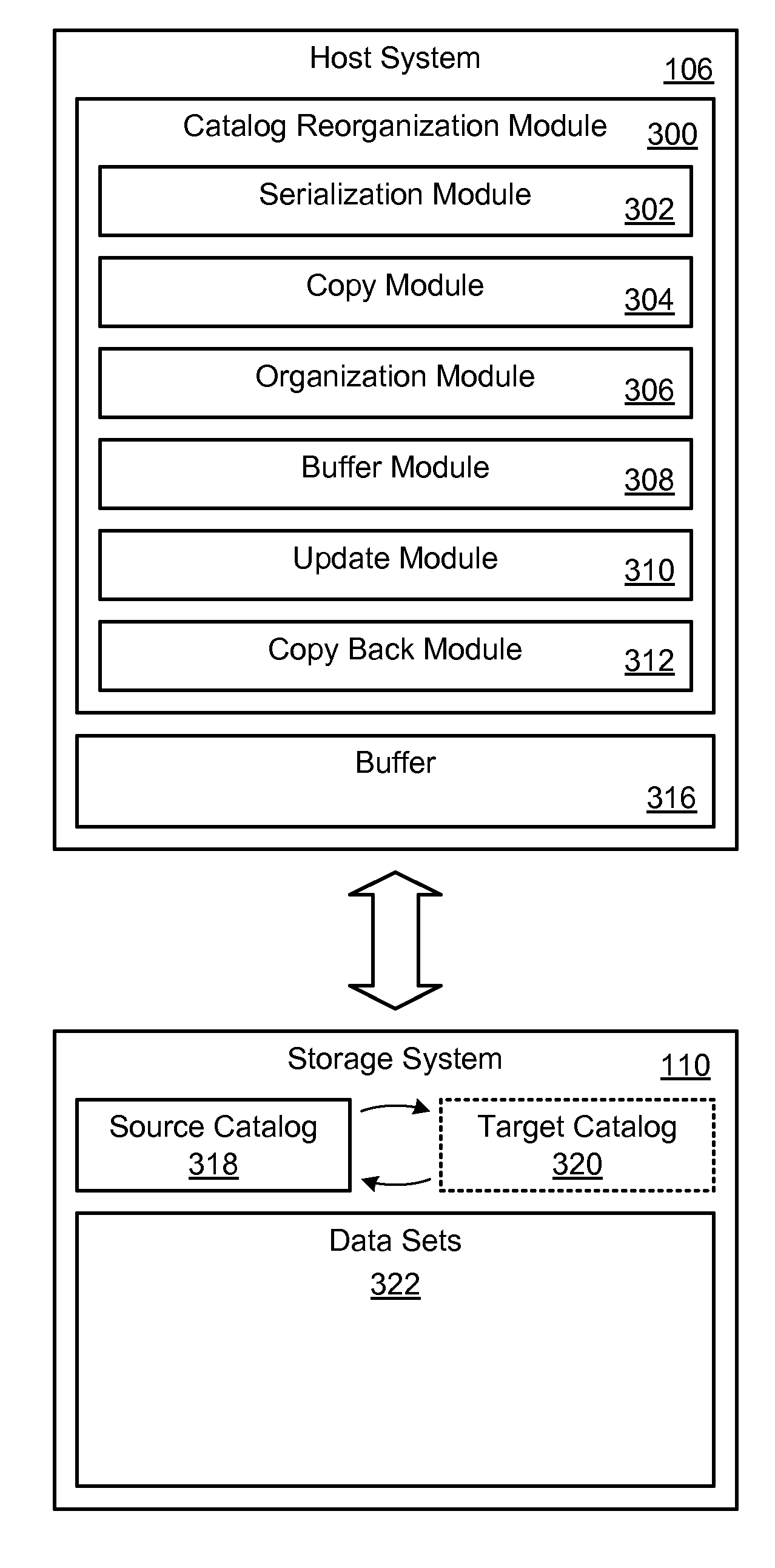

Catalog reorganization apparatus and method

Inactive | US20110173154A1 | Efficiently reorganize | Minimally disrupting | Digital data processing details | Structured data retrieval | Library science | Mirror image

A method for reorganizing a catalog to improve I/O performance includes initially placing a shared lock on a source catalog. The method then makes a point-in-time copy of the source catalog to generate a target catalog. Once the target catalog is generated, the method reorganizes the contents of the target catalog. Optionally, while reorganizing the contents of the target catalog, the method temporarily releases the shared lock on the source catalog and mirrors I/O intended for the source catalog to a buffer. The buffered I/O may then be used to update the target catalog to bring it current with the source catalog. When the target catalog is reorganized and up-to-date, the method upgrades the shared lock on the source catalog to an exclusive lock, overwrites the source catalog with the target catalog, and releases the exclusive lock. A corresponding apparatus and computer program product are also disclosed and claimed herein.

Owner:IBM CORP

Multi-dimensional OLAP query processing method oriented to column store data warehouse

Active | US8660985B2 | Improve I/O performance | Reduce complexity | Digital data processing details | Multi-dimensional databases | One pass | Surrogate key

A multi-dimensional OLAP query processing method oriented to a column store data warehouse is described. With this method, an OLAP query is divided into a bitmap filtering operation, a group-by operation and an aggregate operation. In the bitmap filtering operation, a predicate is first executed on a dimension table to generate a predicate vector bitmap, and a join operation is converted, through address mapping of a surrogate key, into a direct dimension table tuple access operation; in the group-by operation, a fact table tuple satisfying a filtering condition is pre-generated into a group-by unit according to a group-by attribute in an SQL command and is allocated with an increasing ID; and in the aggregate operation, group-by aggregate calculation is performed according to a group item of a fact table filtering group-by vector through one-pass column scan on a fact table measure attribute.

Owner:RENMIN UNIVERSITY OF CHINA

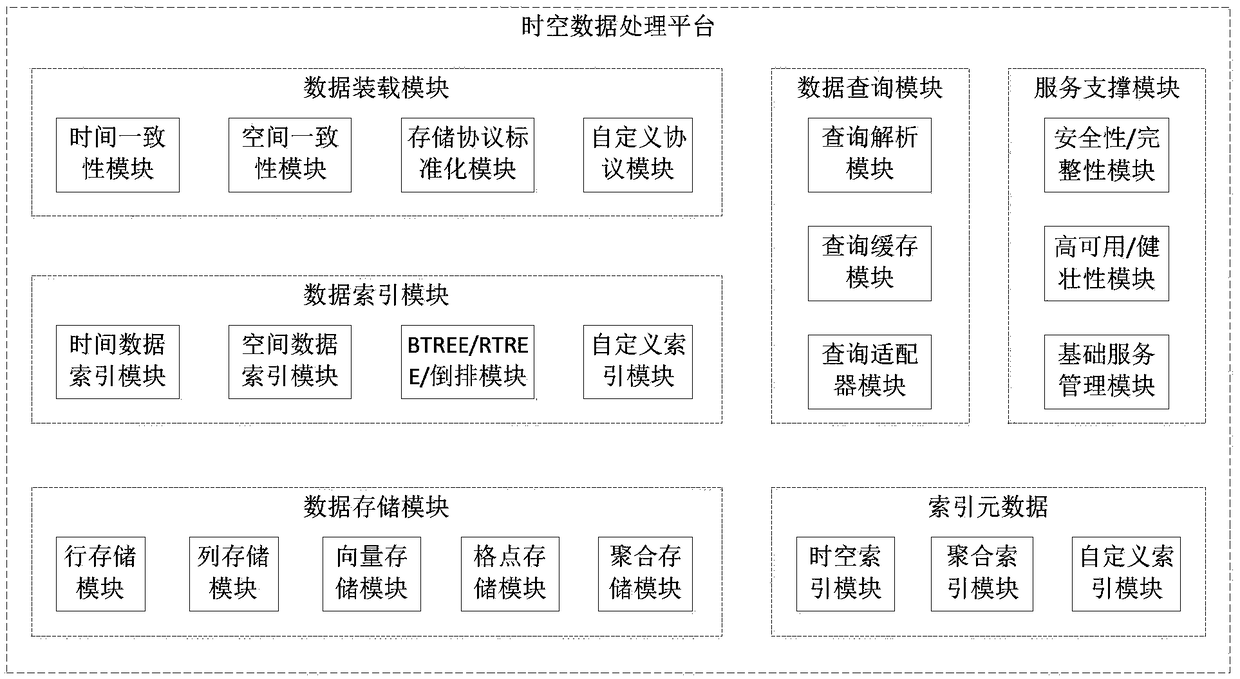

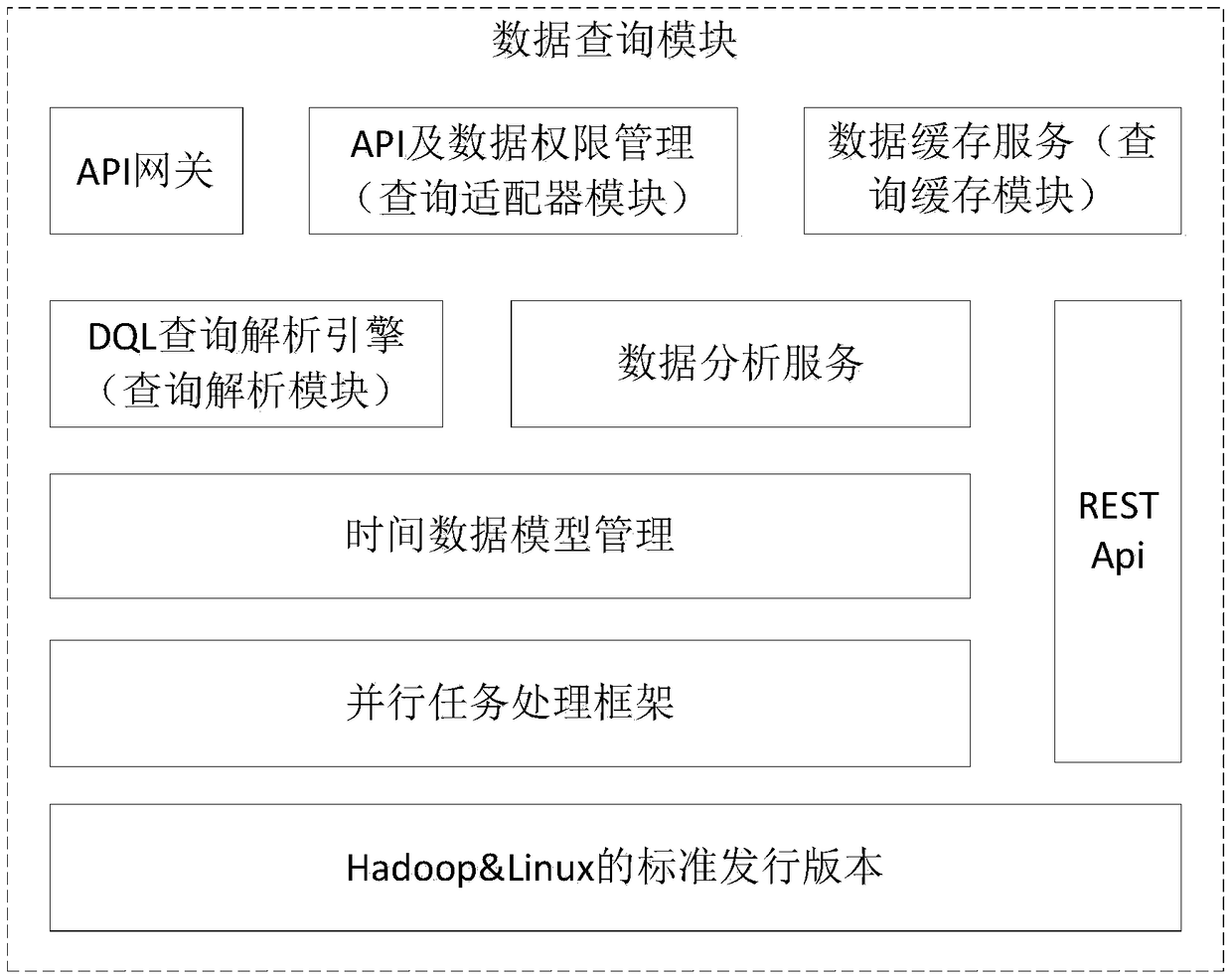

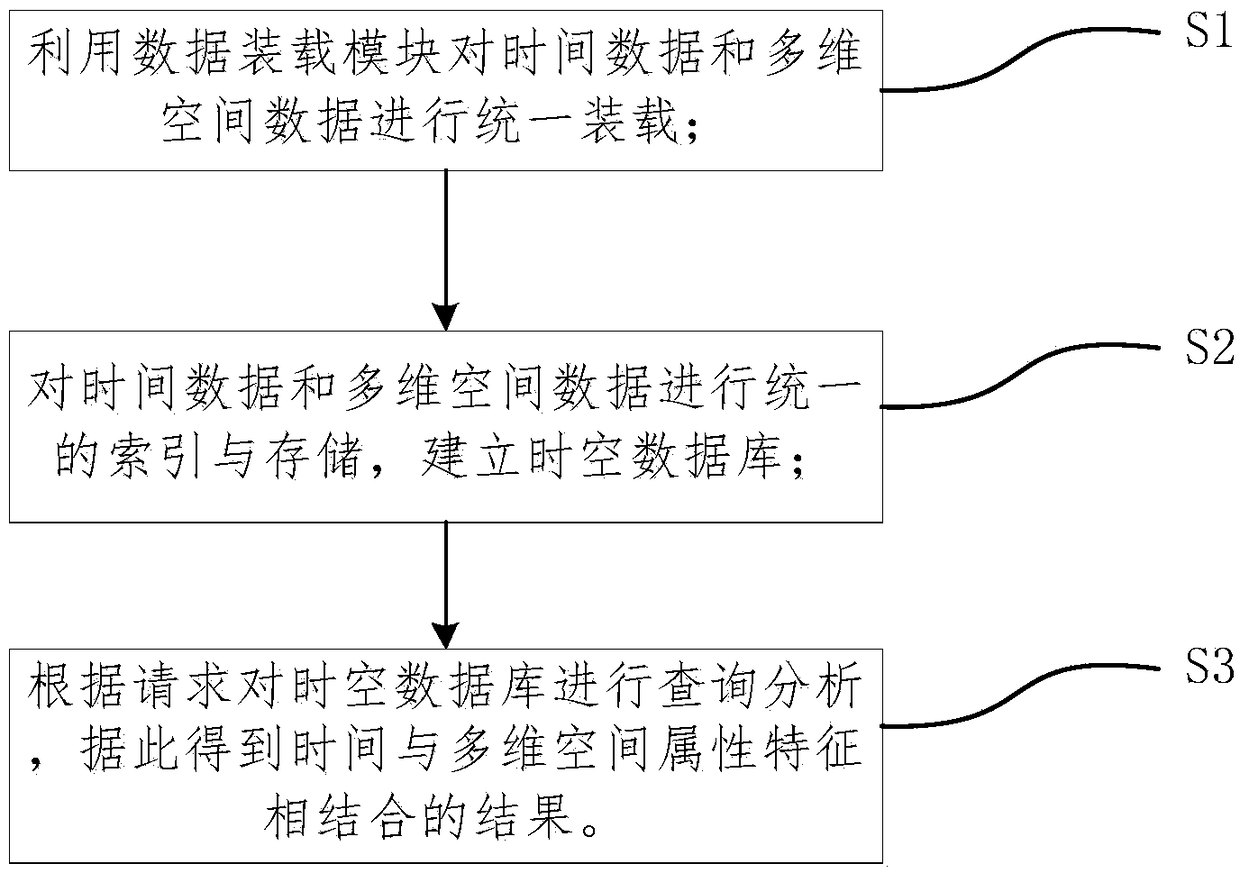

Space-time data processing platform and method based on time and space data models

Pending | CN108959352A | Promote rapid development | Low professional knowledge requirement | Special data processing applications | Spacetime | Latitude

The invention relates to a space-time data processing platform and method based on temporal and spatial data models, and belongs to the technical field of space-time big data processing and analysis. A data model is established by combining temporal and spatial data, so that changes of spatial factors over time can be represented and the temporal, spatial, and attribute characteristics of geographic entities and phenomena can be organically combined, enriching how those entities and phenomena are expressed. The platform also supports rapid analysis, writing, persistence, and multi-dimensional aggregate queries of space-time data.

Owner:北京天机数测数据科技有限公司

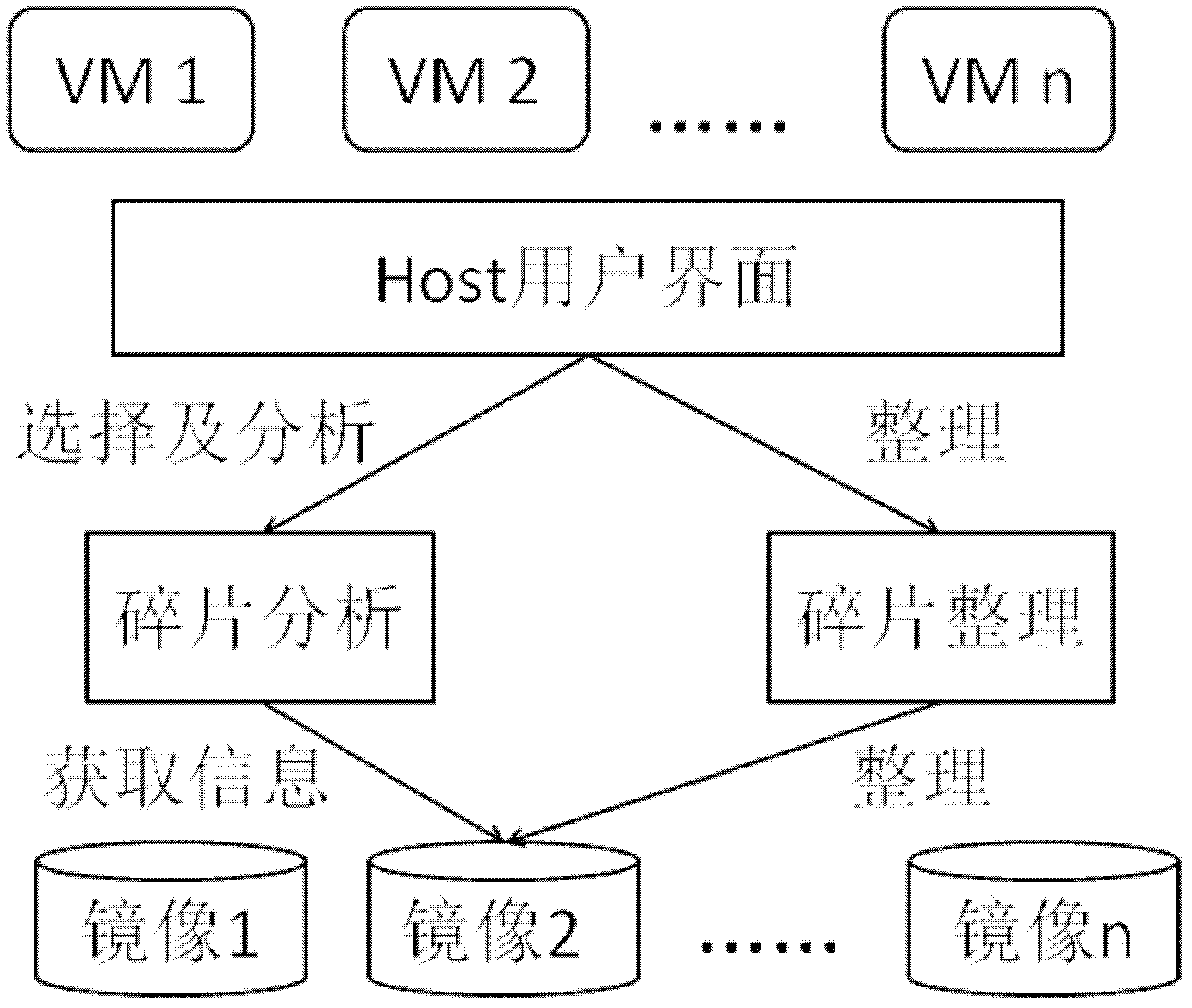

Zero-copy defragmentation method for virtual file system fragments

Inactive | CN102194010A | Improve reading performance | Reduce overhead | Special data processing applications | Virtual file system | Zero-copy

The invention discloses a zero-copy defragmentation method for virtual file system fragments. The method is zero-copy: no data block on the physical disk needs to be moved or copied; only the metadata mapping between the virtual file system and the corresponding physical file system is modified, which makes the method efficient and low-cost. Compared with traditional defragmentation methods for virtual machines, the disclosed method is more effective, more efficient, cheaper, and more flexible to use.

Owner:HUAZHONG UNIV OF SCI & TECH

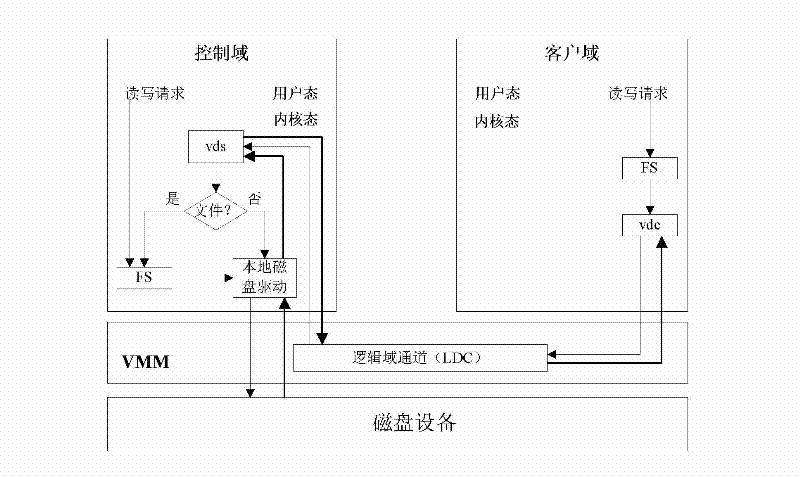

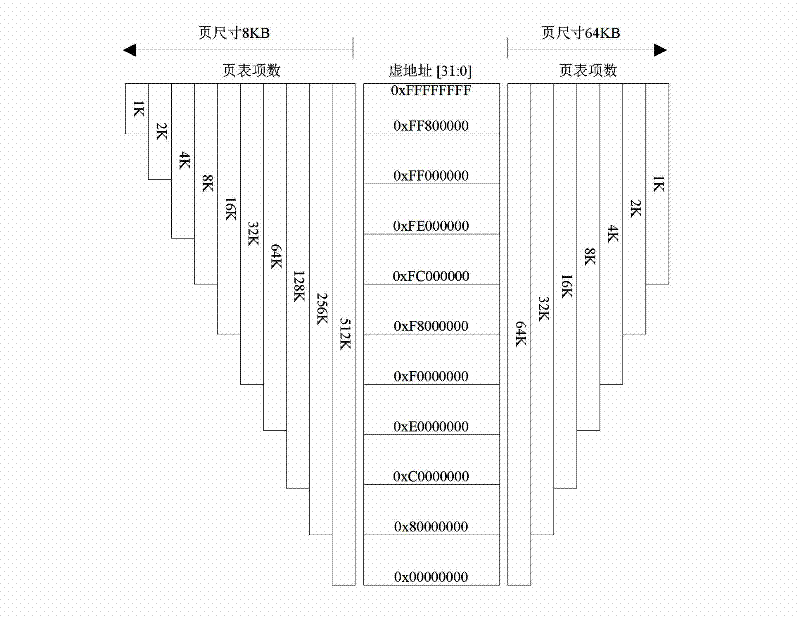

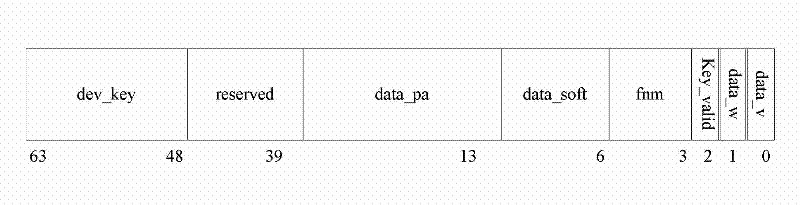
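The zero-copy idea, defragmenting by rewriting mappings rather than moving data, can be shown with a toy block map. This sketch assumes a simple array `v2p` mapping each virtual-file-system block to a block of the backing physical file, and makes a file contiguous in the virtual layer by remapping only; the class and method names are hypothetical, and the patent's actual metadata structures are not described in the abstract.

```python
class VirtualDisk:
    """Toy model of a virtual file system layered on a physical (host) file.

    v2p[v] is the physical block that virtual block v maps to (None = free).
    files[name] is the file's ordered list of virtual blocks.
    """

    def __init__(self, n_virtual_blocks):
        self.v2p = [None] * n_virtual_blocks
        self.files = {}

    def find_free_run(self, length):
        """Return the first virtual block of a run of `length` free blocks."""
        run = 0
        for v, p in enumerate(self.v2p):
            run = run + 1 if p is None else 0
            if run == length:
                return v - length + 1
        raise RuntimeError("no contiguous free range large enough")

    def defragment(self, name):
        """Make a file contiguous by editing mappings only; no data block is copied."""
        old = self.files[name]
        start = self.find_free_run(len(old))
        new = list(range(start, start + len(old)))
        for o, n in zip(old, new):
            self.v2p[n] = self.v2p[o]   # same physical block, new virtual position
            self.v2p[o] = None
        self.files[name] = new


disk = VirtualDisk(10)
disk.v2p[:6] = [10, 20, 21, 11, 22, 12]   # file "a" uses virtual blocks 0, 3, 5
disk.files["a"] = [0, 3, 5]
disk.defragment("a")
print(disk.files["a"], [disk.v2p[v] for v in disk.files["a"]])
# [6, 7, 8] [10, 11, 12]
```

The cost of `defragment` is proportional to the number of mapping entries touched, independent of block size, which is where the low overhead of a zero-copy approach comes from.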

DMA (direct memory access) resource allocation method for virtual machine under sun4v architecture

Active | CN102521054A | Improve I/O performance | Minor changes | Resource allocation | Software simulation/interpretation/emulation | Direct memory access | Management unit

The invention discloses a DMA (direct memory access) resource allocation method for a virtual machine under the sun4v architecture, comprising the steps of: 1) having the control domain and the guest domain keep a globally reserved virtual address space and set up address translation page tables; 2) having the guest domain obtain DMA virtual addresses through negotiation with the control domain, which allocates them to the guest domain from the globally reserved virtual address space; 3) using the virtual machine monitor to expose the service interfaces of the input/output memory management unit (IOMMU) to the guest domain; and 4) having the guest domain look up the address translation page tables to obtain real addresses and table entry indices, and call the IOMMU service interfaces with those real addresses and entry indices so that it can perform DMA to and from physical memory, releasing the allocated DMA virtual addresses after the DMA operation completes. With this method the guest domain can perform DMA operations directly, without the help of the control domain, and the method offers good reliability, high security, low performance overhead, good flexibility and adaptability, and a wide range of application.

Owner:NAT UNIV OF DEFENSE TECH

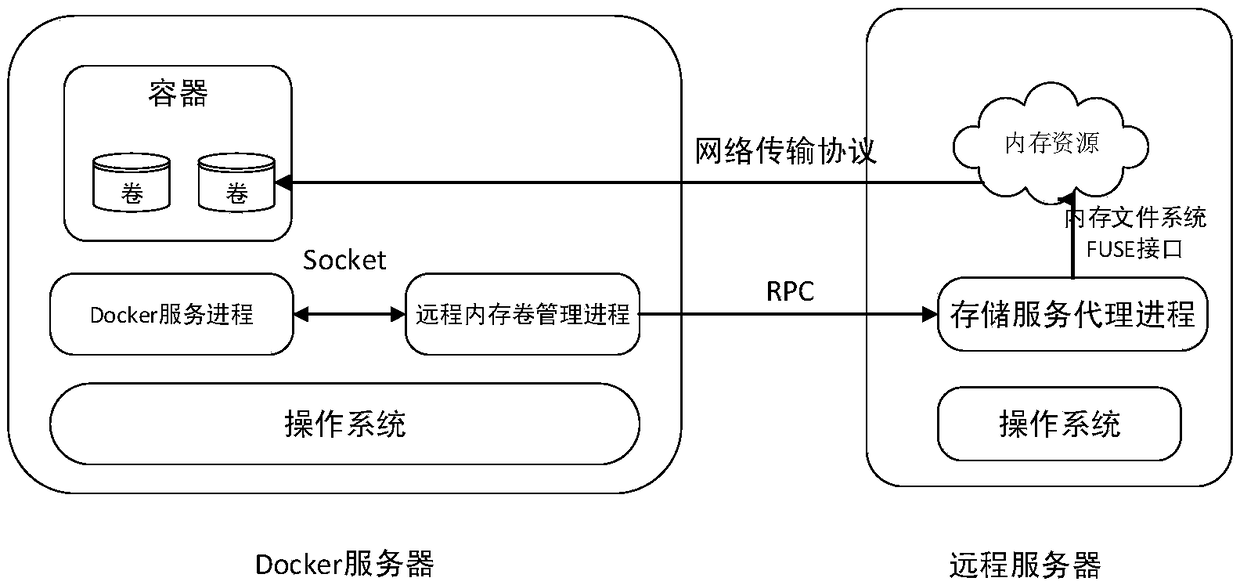

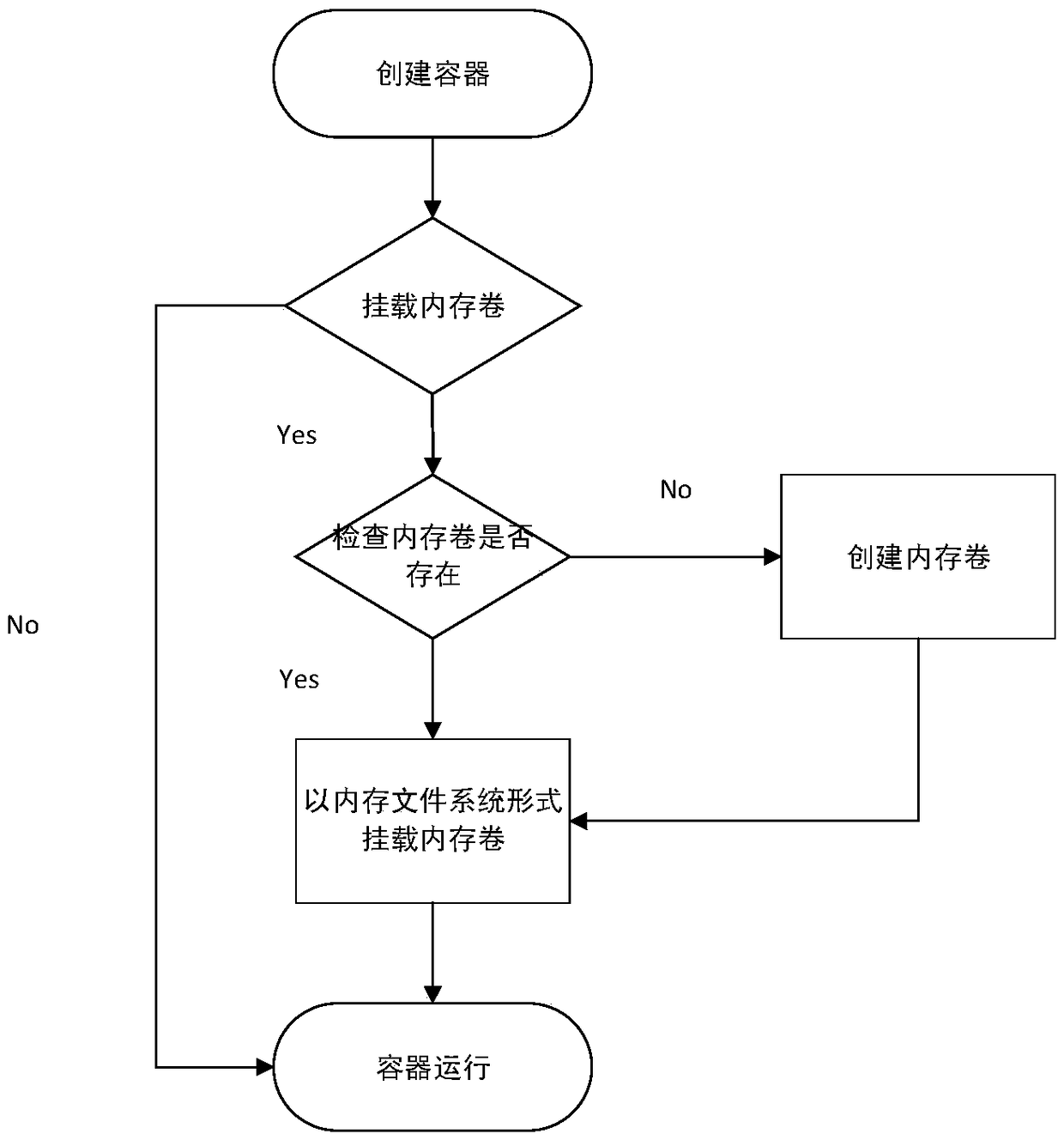

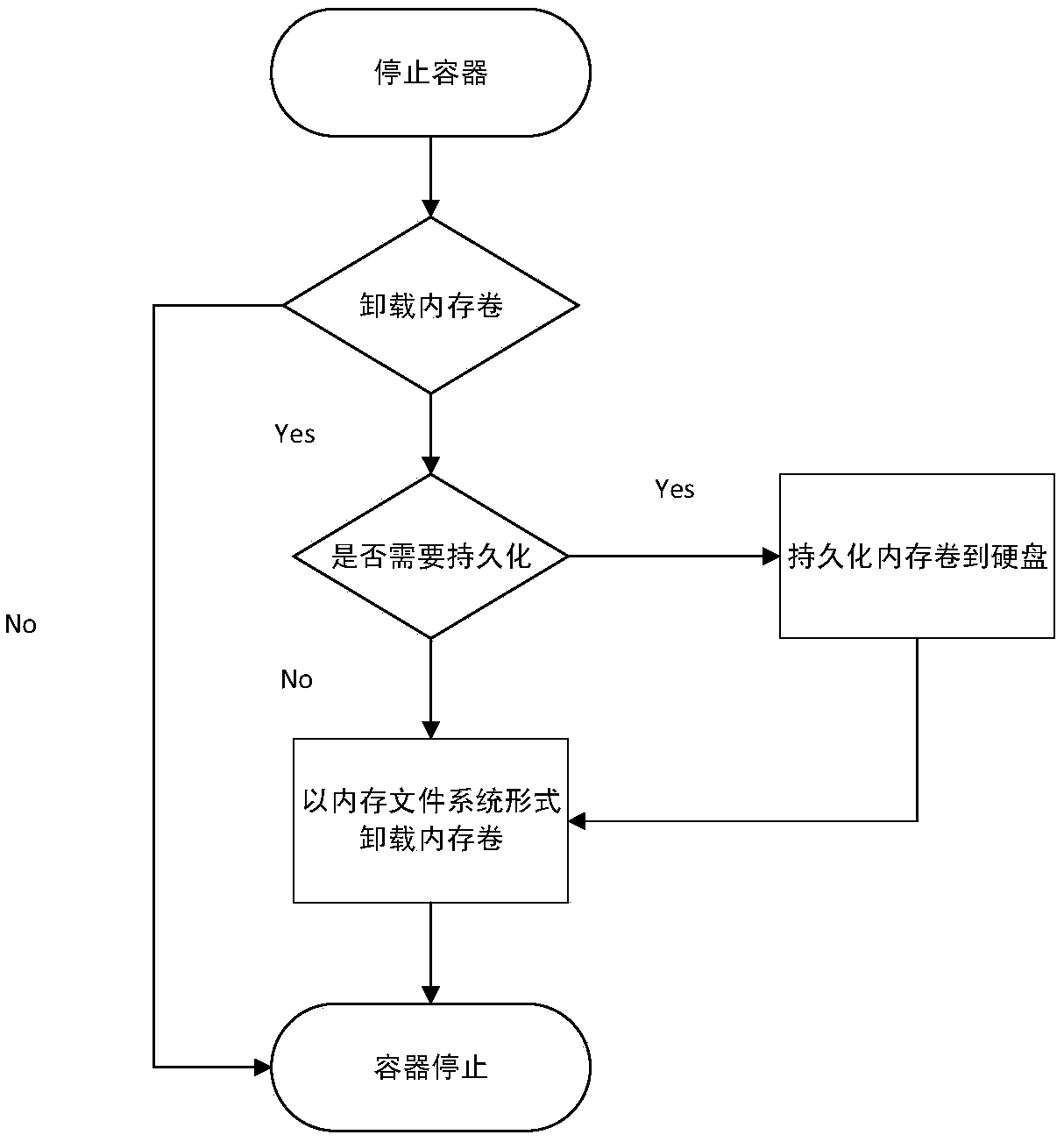
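The four steps of the sun4v DMA allocation method can be simulated in a few classes to show who owns which resource. Everything here is an assumption for illustration: the 8 KB page size, the ownership check, and the names `ControlDomain`, `IommuService`, `guest_map`, and `guest_unmap` are hypothetical and do not correspond to the real sun4v hypervisor API.

```python
PAGE = 8192  # assumed IOMMU page size for this sketch


class ControlDomain:
    """Owns the globally reserved DMA virtual address range (steps 1-2)."""

    def __init__(self, base, npages):
        self.base = base
        self.free = [base + i * PAGE for i in range(npages)]
        self.owner = {}                      # DMA virtual page -> guest name

    def allocate(self, guest, npages):
        if npages > len(self.free):
            raise MemoryError("reserved DMA space exhausted")
        pages, self.free = self.free[:npages], self.free[npages:]
        for va in pages:
            self.owner[va] = guest
        return pages

    def release(self, guest, pages):
        for va in pages:
            if self.owner.get(va) != guest:
                raise PermissionError(f"{guest} does not own {va:#x}")
            del self.owner[va]
            self.free.append(va)


class IommuService:
    """Hypothetical hypervisor interface exposing IOMMU mapping to guests (step 3)."""

    def __init__(self, control):
        self.control = control
        self.table = {}                      # IOMMU entry index -> real address

    def _check(self, guest, index):
        if self.control.owner.get(self.control.base + index * PAGE) != guest:
            raise PermissionError(f"{guest} may not use IOMMU entry {index}")

    def map(self, guest, index, real_addr):
        self._check(guest, index)
        self.table[index] = real_addr

    def unmap(self, guest, index):
        self._check(guest, index)
        self.table.pop(index, None)


def guest_map(guest, control, iommu, page_table, guest_pages):
    """Step 4: obtain DMA pages, then map them directly through the hypervisor."""
    dma_pages = control.allocate(guest, len(guest_pages))
    for va, gpage in zip(dma_pages, guest_pages):
        index = (va - control.base) // PAGE  # entry index for this DMA page
        iommu.map(guest, index, page_table[gpage])
    return dma_pages


def guest_unmap(guest, control, iommu, dma_pages):
    """After the DMA completes: clear the IOMMU entries and release the pages."""
    for va in dma_pages:
        iommu.unmap(guest, (va - control.base) // PAGE)
    control.release(guest, dma_pages)


control = ControlDomain(base=0x8000_0000, npages=4)
iommu = IommuService(control)
page_table = {0: 0x1_0000_0000, 1: 0x1_0000_2000}   # guest page -> real address
pages = guest_map("ldom1", control, iommu, page_table, [0, 1])
print({i: hex(a) for i, a in iommu.table.items()})
guest_unmap("ldom1", control, iommu, pages)
```

The control domain only hands out address ranges; the per-transfer mapping goes straight from the guest to the (simulated) hypervisor service, which checks ownership before touching the IOMMU table.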

Remote memory volume management method and system of Docker container

The invention discloses a remote memory volume management method and system for Docker containers. The method comprises four operations on a remote memory volume: creating, loading, unloading, and destroying it. Creating: when the remote memory volume management process receives a creation request, it acquires the IP address of a storage node from the storage server cluster and records the mapping between the remote memory volume name and that IP address. Loading: when a loading request is received, the process connects to the storage node, whose agent process loads the remote memory volume onto the local node. Unloading: when an unloading request is received, the process notifies the agent process to unload the volume. Destroying: when a destroying request is received, the process notifies the agent process to release the volume's resources. The method improves the I/O performance of applications running in Docker containers on the local node.

Owner:ZHEJIANG UNIV

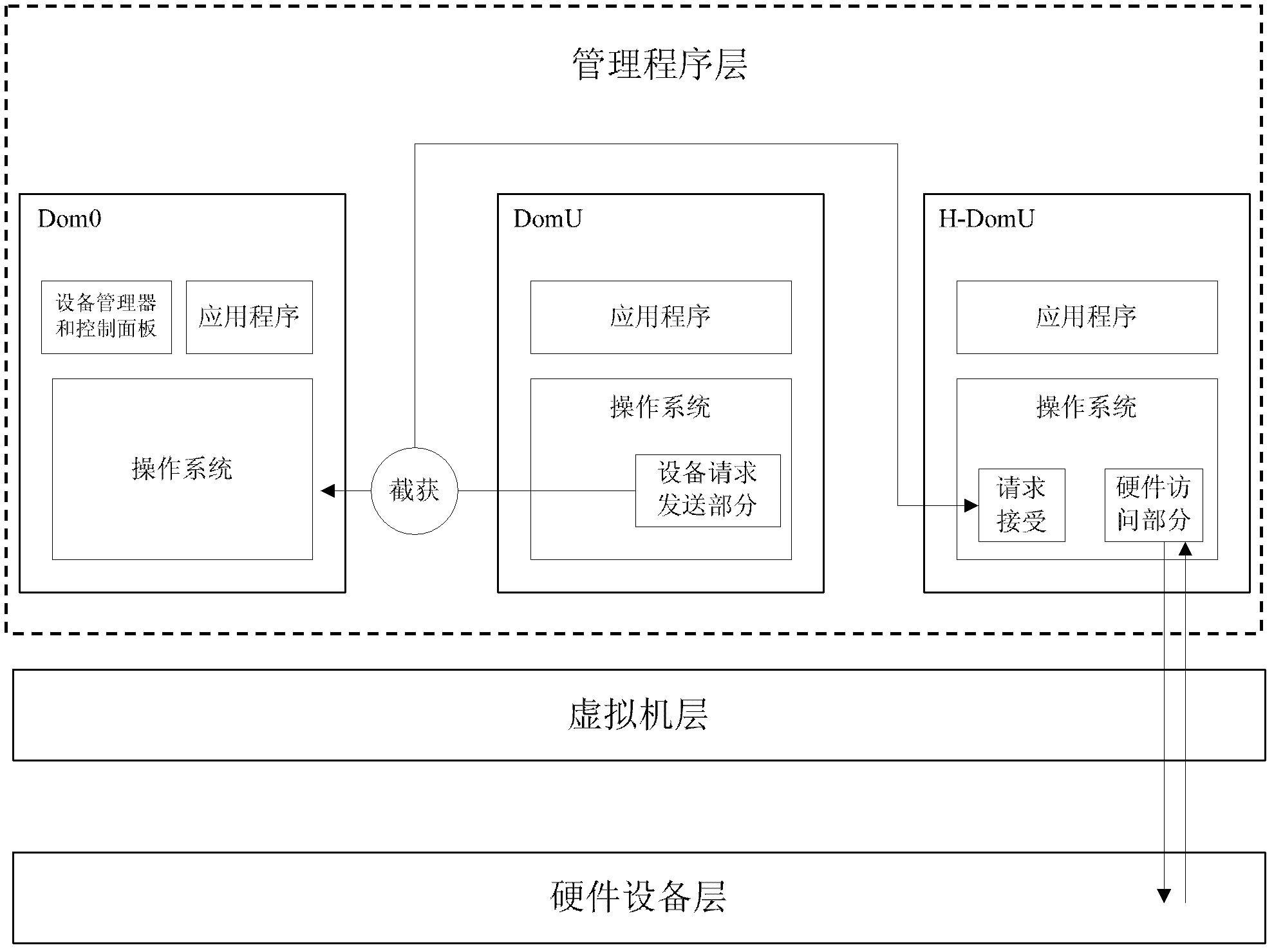
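The four lifecycle operations of the remote memory volume method map naturally onto a small manager/agent pair. This is a minimal sketch: round-robin storage-node selection is an assumed policy (the abstract only says an IP address is acquired from the cluster), and `StorageAgent` and `RemoteMemoryVolumeManager` are hypothetical names, not the Docker volume plugin API.

```python
import itertools


class StorageAgent:
    """Hypothetical agent process running on one storage node."""

    def __init__(self, ip):
        self.ip = ip
        self.attached = {}                   # volume name -> local node it is loaded on

    def load(self, volume, local_node):
        self.attached[volume] = local_node

    def unload(self, volume):
        self.attached.pop(volume, None)

    def release(self, volume):
        # A real agent would also free the memory backing the volume here.
        self.unload(volume)


class RemoteMemoryVolumeManager:
    """Management process: tracks volume name -> storage-node IP."""

    def __init__(self, agents):
        self.agents = agents                 # storage-node IP -> StorageAgent
        self._next_ip = itertools.cycle(sorted(agents))  # round-robin (assumption)
        self.volumes = {}

    def create(self, name):
        if name in self.volumes:
            raise ValueError(f"volume {name!r} already exists")
        ip = next(self._next_ip)             # acquire a storage node's IP
        self.volumes[name] = ip              # record the name -> IP mapping
        return ip

    def load(self, name, local_node):
        self.agents[self.volumes[name]].load(name, local_node)

    def unload(self, name):
        self.agents[self.volumes[name]].unload(name)

    def destroy(self, name):
        ip = self.volumes.pop(name)
        self.agents[ip].release(name)


agents = {ip: StorageAgent(ip) for ip in ("10.0.0.1", "10.0.0.2")}
manager = RemoteMemoryVolumeManager(agents)
manager.create("vol-a")
manager.load("vol-a", "host-1")
print(agents["10.0.0.1"].attached)
manager.unload("vol-a")
manager.destroy("vol-a")
```

Keeping the name-to-IP mapping in the management process means later load, unload, and destroy requests only need the volume name.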

Separated access method and system for PCI (Peripheral Component Interconnect) equipment in virtualization environment

Inactive | CN102426557A | Improve performance | Overcoming problems that become input-output bottlenecks | Electric digital data processing | Virtualization | Access method

The invention relates to a separated access method and system for PCI (Peripheral Component Interconnect) devices in a virtualization environment. The separated access system comprises a privileged domain and an isolation driver domain. The privileged domain receives the system calls issued by an unprivileged domain to access I/O (input/output) devices. The isolation driver domain is connected to the privileged domain and takes over part of the privileged domain's permission to access the I/O devices: it intercepts the system calls sent from the unprivileged domain to the privileged domain, establishes an interaction channel between the front-end device of the unprivileged domain and the back-end device of the isolation driver domain, obtains permission to access the I/O device, accesses the device through the back-end device, and returns data through the interaction channel. By intercepting the system calls, the invention reduces the load on the privileged domain and thereby improves network I/O performance.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI
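The separated access architecture above can be modeled as a privileged domain that delegates device access, an isolation driver domain that serves requests through a back-end, and a channel used by the unprivileged domain's front-end. This toy sketch assumes a dict stands in for the PCI device and a direct function call stands in for the interaction channel; all class and method names are hypothetical.

```python
class PrivilegedDomain:
    """Dom0: owns the PCI device and receives unprivileged domains' I/O calls."""

    def __init__(self, device):
        self._device = device

    def delegate_device(self):
        """Hand part of the device access permission to a driver domain."""
        return self._device


class IsolationDriverDomain:
    """Intercepts calls meant for Dom0 and serves them through its back-end."""

    def __init__(self, dom0):
        self.device = dom0.delegate_device()

    def intercept(self, domu):
        """Set up the front-end/back-end interaction channel for `domu`."""
        return Channel(domu, self.backend)

    def backend(self, op, addr, data=None):
        if op == "read":
            return self.device.get(addr, b"")
        if op == "write":
            self.device[addr] = data
            return b"ok"
        raise ValueError(f"unsupported operation {op!r}")


class Channel:
    """Front-end side of the channel, used by the unprivileged domain."""

    def __init__(self, domu, backend):
        self.domu = domu
        self._backend = backend
        self.requests = 0

    def submit(self, op, addr, data=None):
        self.requests += 1
        return self._backend(op, addr, data)


device = {}                                  # stands in for the PCI device
dom0 = PrivilegedDomain(device)
idd = IsolationDriverDomain(dom0)
front_end = idd.intercept("domU-1")
front_end.submit("write", 7, b"hello")
print(front_end.submit("read", 7))
# b'hello'
```

Once the channel exists, data requests flow between the front-end and the driver domain's back-end, and the privileged domain is no longer on the data path.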


© 2025 PatSnap. All rights reserved.