241 results about how to "Improve locality" patented technology

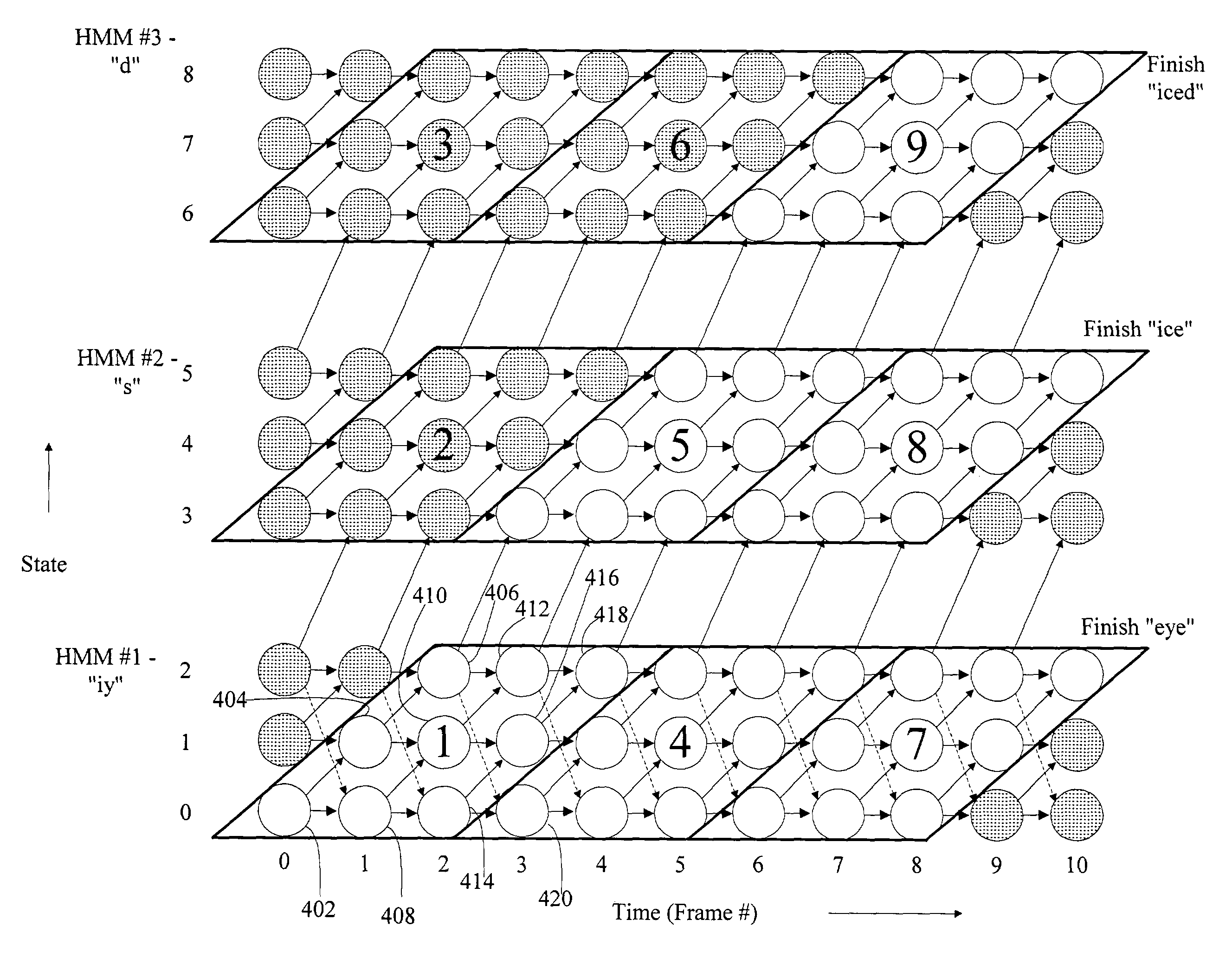

Block synchronous decoding

Inactive | US7529671B2 | Improved pattern recognition speed | Improve cache locality | Kitchenware cleaners | Gloves | Hidden Markov model | Cache locality

A pattern recognition system and method are provided. Aspects of the invention are particularly useful in combination with multi-state Hidden Markov Models. Pattern recognition is effected by processing Hidden Markov Model Blocks. This block-processing allows the processor to perform more operations upon data while such data is in cache memory. By so increasing cache locality, aspects of the invention provide significantly improved pattern recognition speed.

Owner:MICROSOFT TECH LICENSING LLC

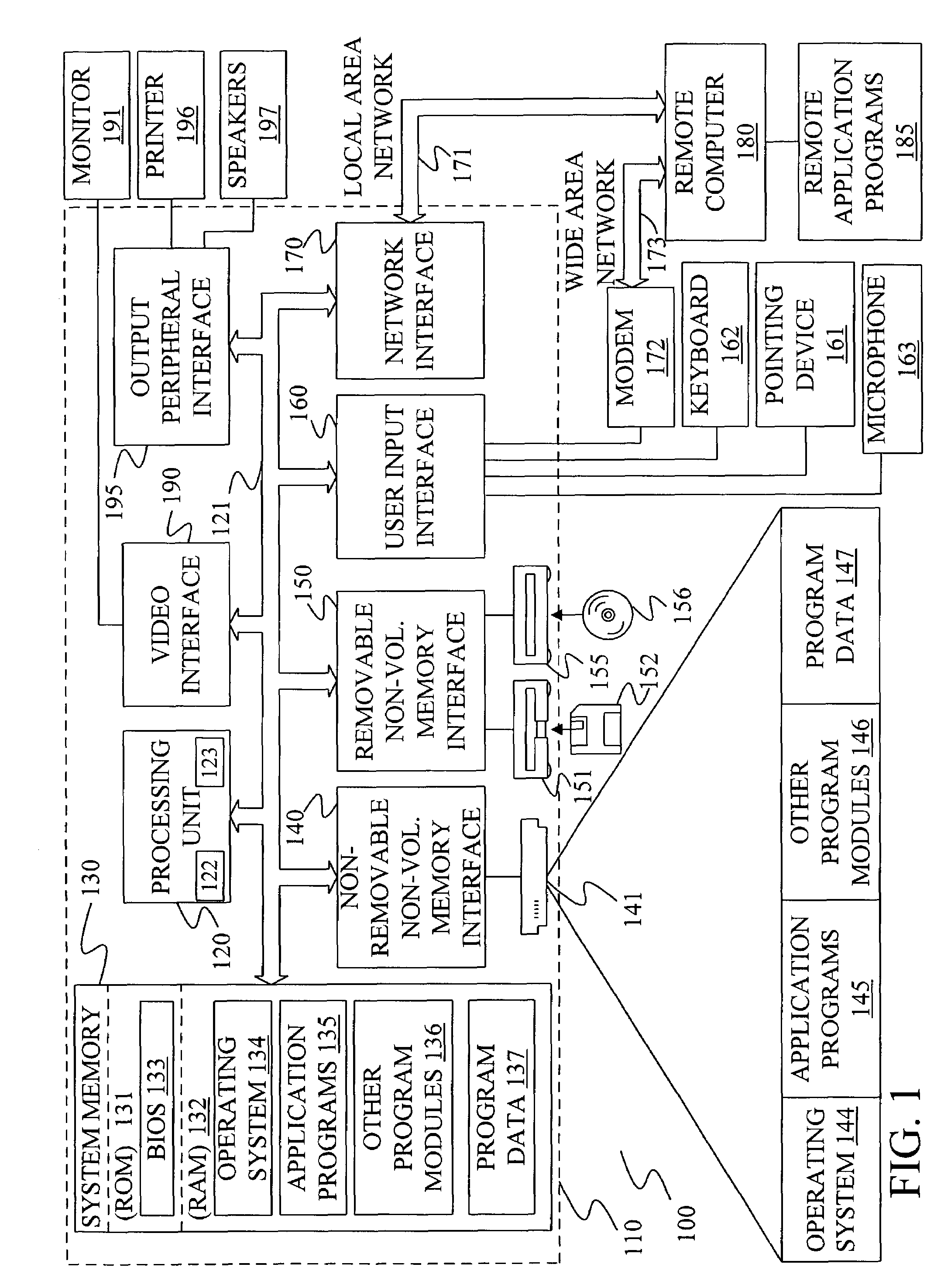

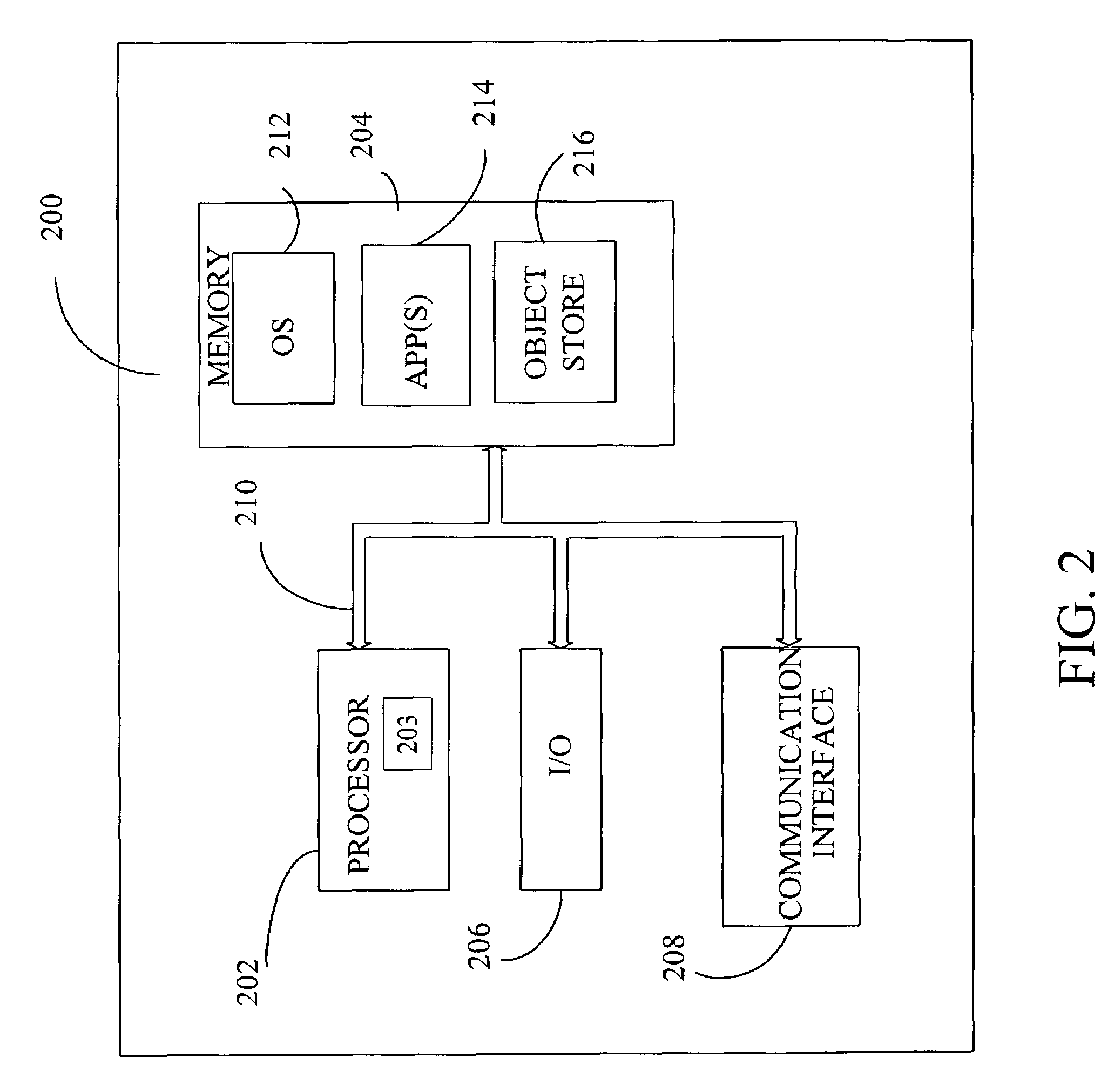

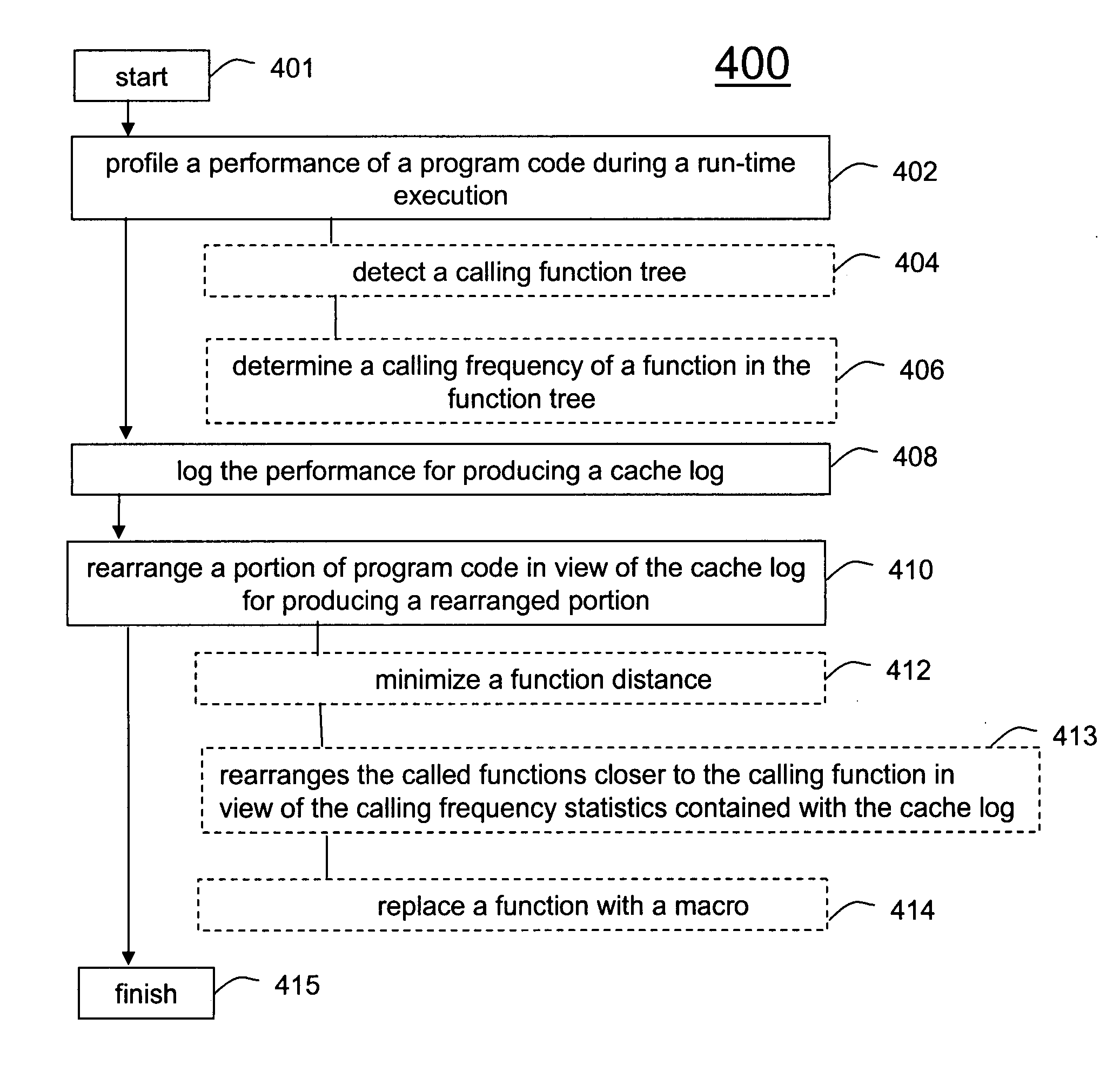

Method and system for run-time cache logging

Inactive | US20070150881A1 | Increase height | Maximize cache locality compile time | Error detection/correction | Specific program execution arrangements | Cache optimization | Parallel computing

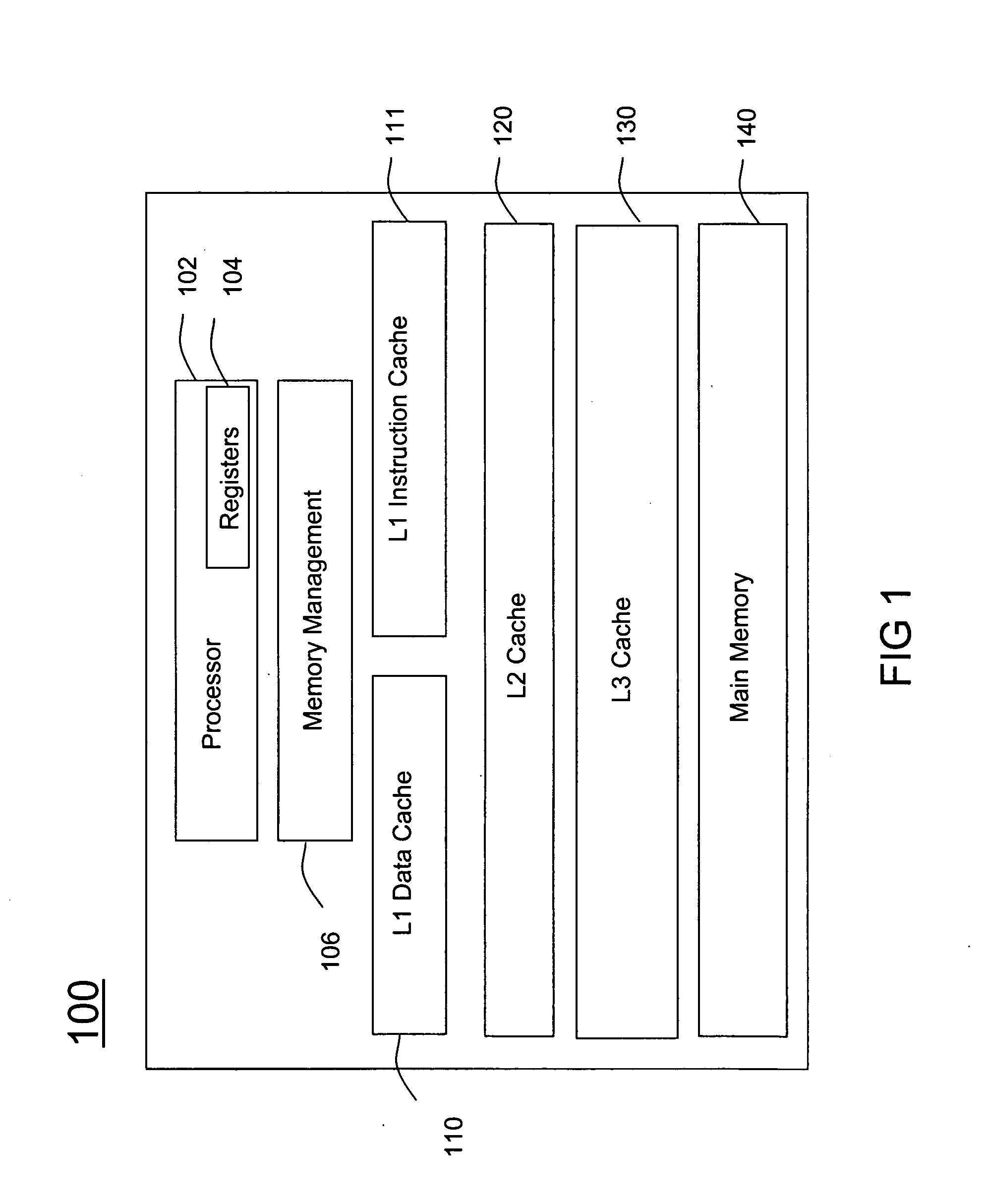

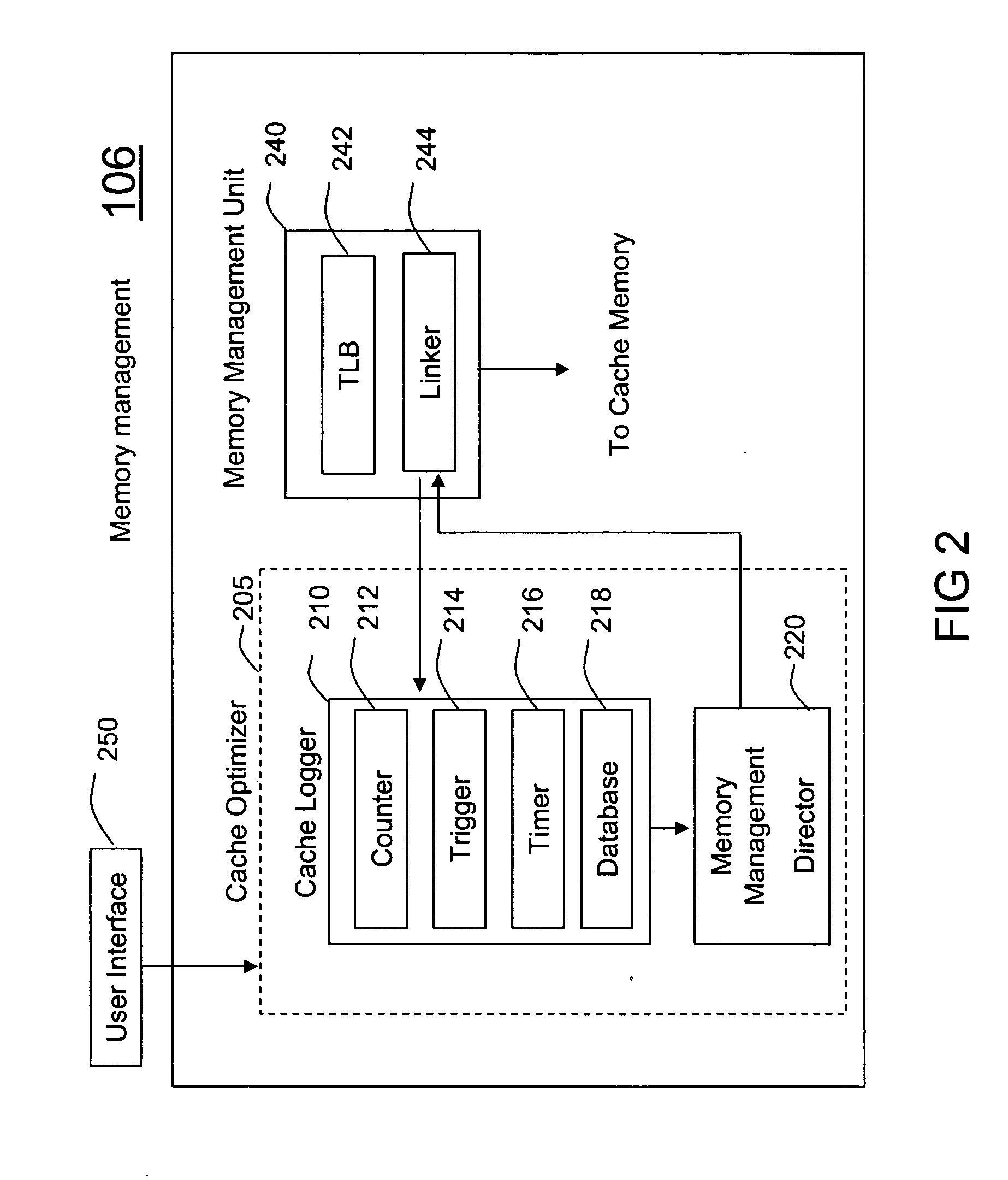

A method (400) and system (106) are provided for run-time cache optimization. The method includes profiling (402) the performance of program code during run-time execution, logging (408) the performance to produce a cache log, and rearranging (410) a portion of the program code in view of the cache log to produce a rearranged portion. The rearranged portion is supplied to a memory management unit (240) for managing at least one cache memory (110-140). The cache log can be collected during real-time operation of a communication device and is fed back to a linking process (244) to maximize cache locality at compile time. The method further includes loading a saved profile corresponding to a run-time operating mode, and reprogramming a new code image associated with the saved profile.

Owner:MOTOROLA INC

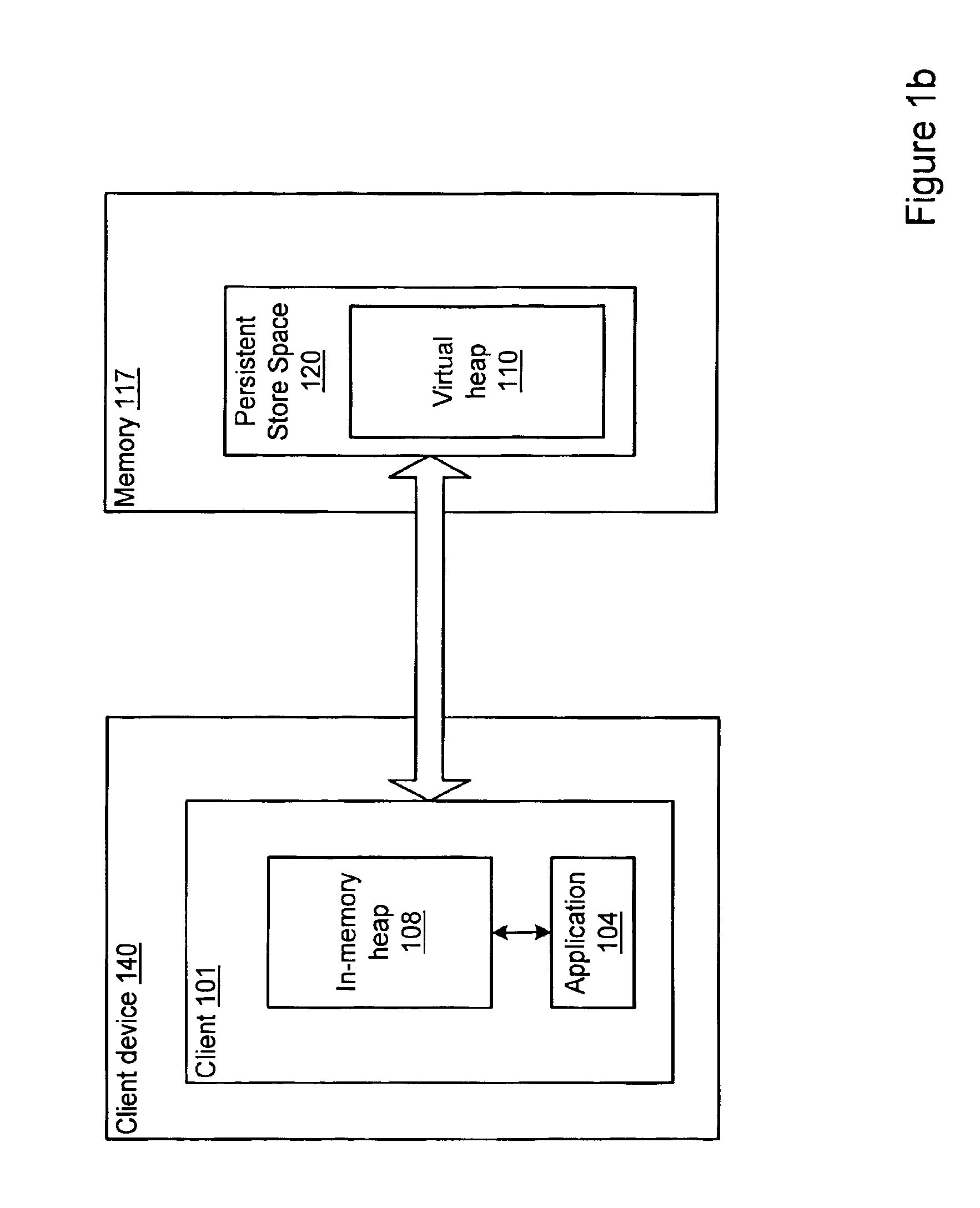

Database store for a virtual heap

Inactive | US6957237B1 | Meet constraints | Ensure consistency | Data processing applications | Memory addressing/allocation/relocation | Application programming interface | Application software

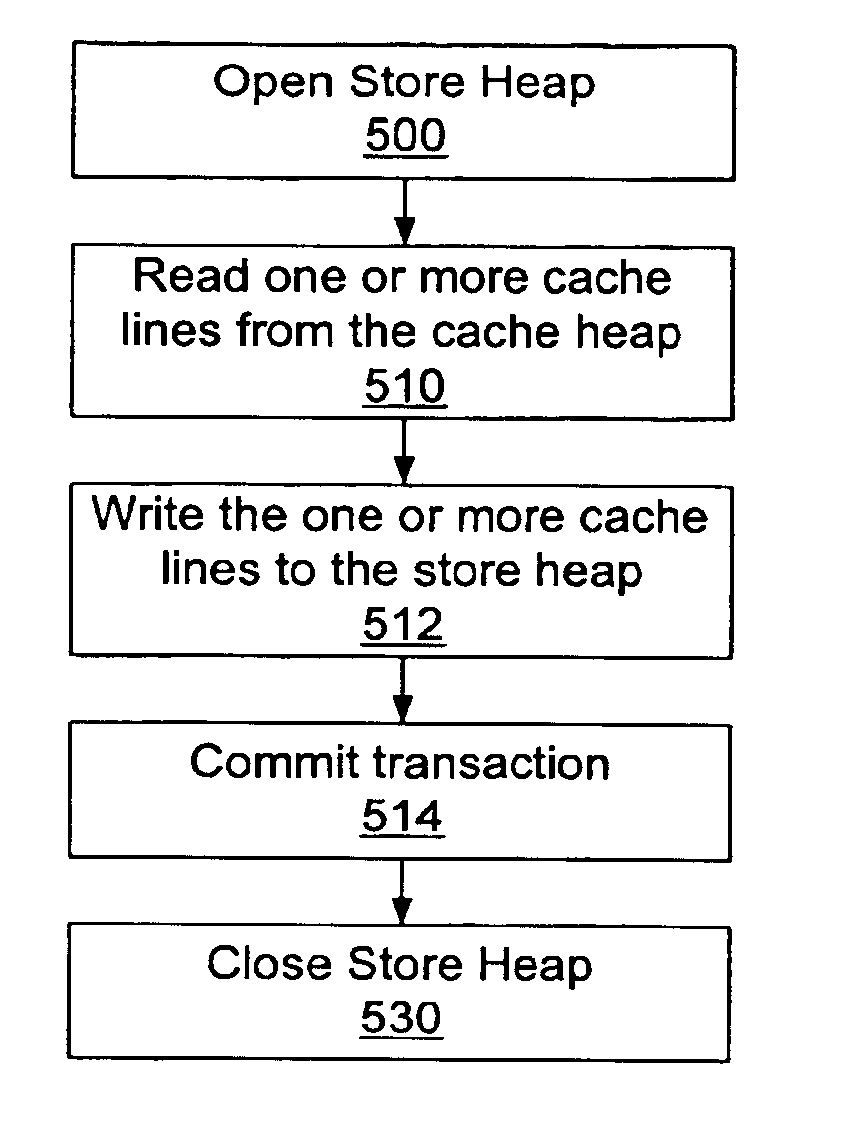

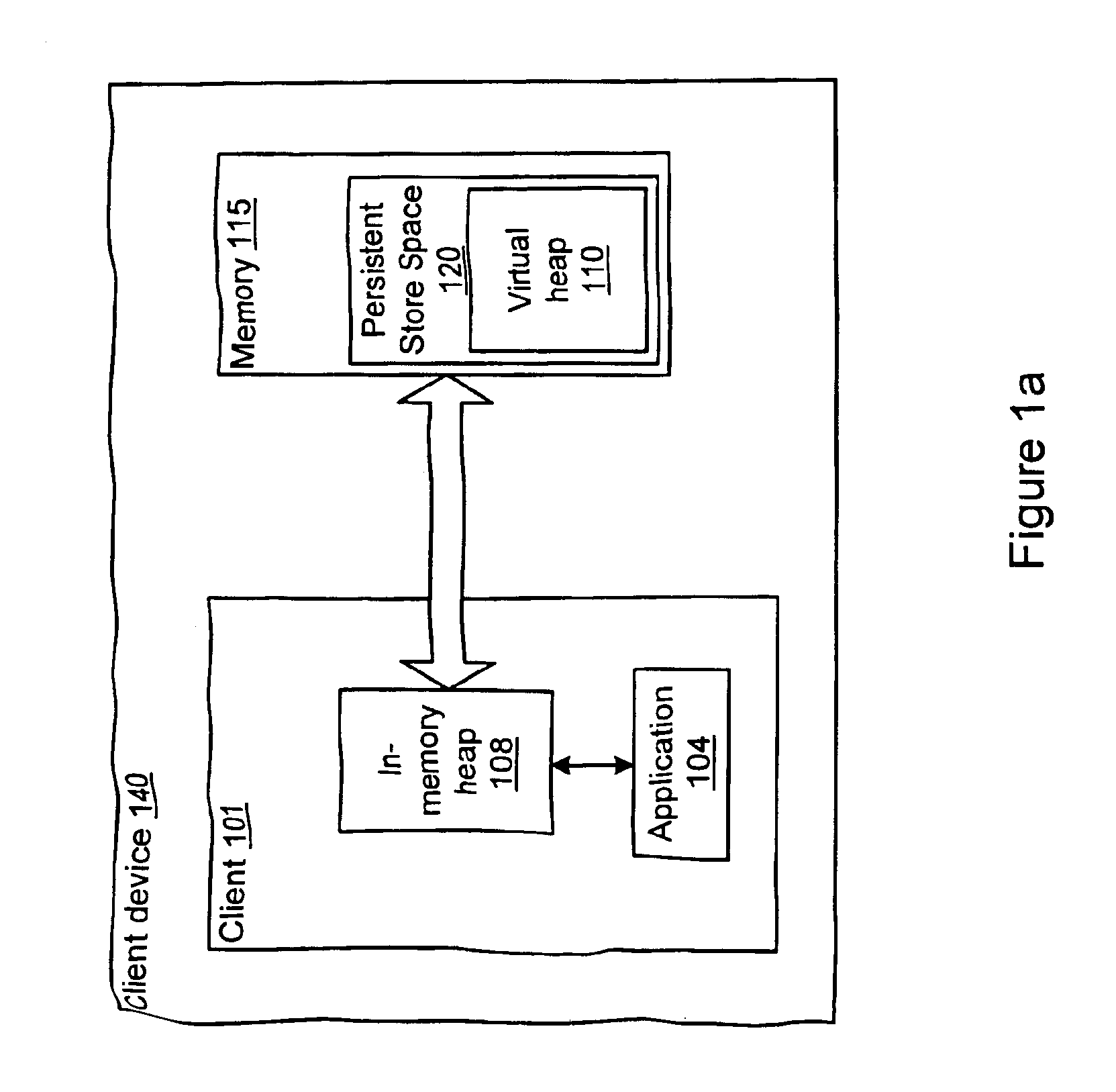

A database store method and system for a virtual persistent heap may include an Application Programming Interface (API) that provides a mechanism to cache portions of the virtual heap into an in-memory heap for use by an application. The virtual heap may be stored in a persistent store that may include one or more virtual persistent heaps, with one virtual persistent heap for each application running in the virtual machine. Each virtual persistent heap may be subdivided into cache lines. The store API may provide atomicity on the store transaction to substantially guarantee the consistency of the information stored in the database. The database store API provides several calls to manage the virtual persistent heap in the store. The calls may include, but are not limited to: opening the store, closing the store, atomic read transaction, atomic write transaction, and atomic delete transaction.

Owner:ORACLE INT CORP

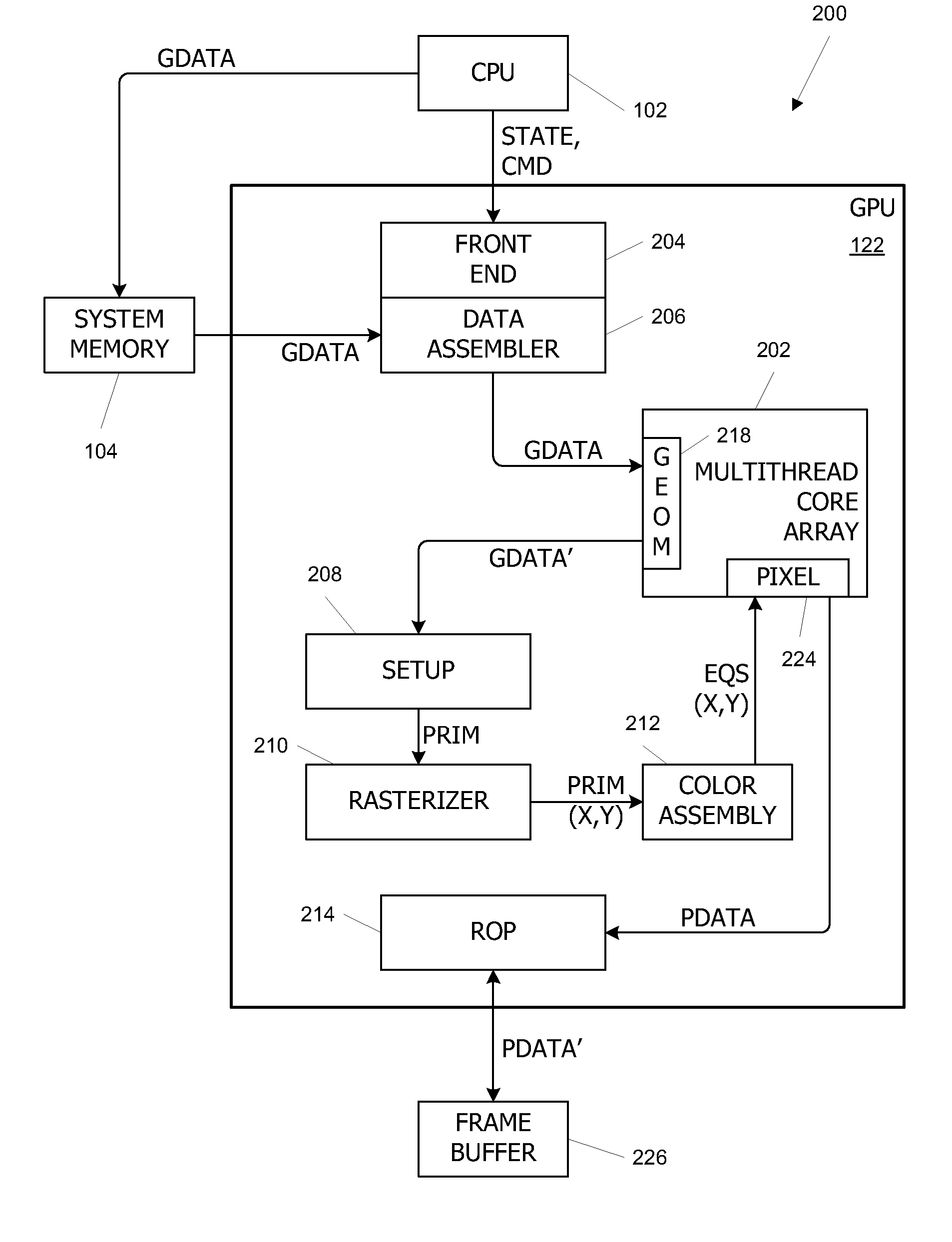

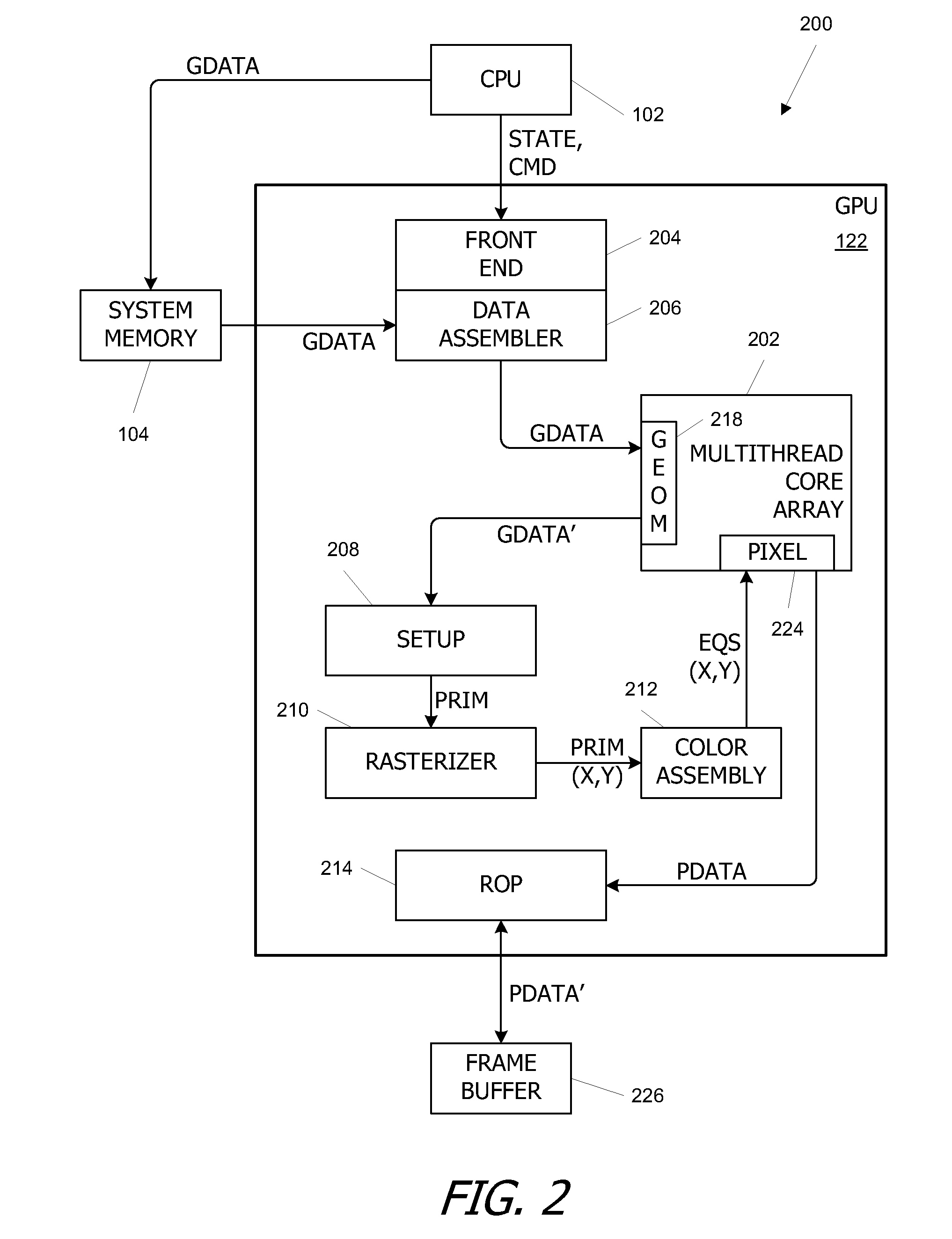

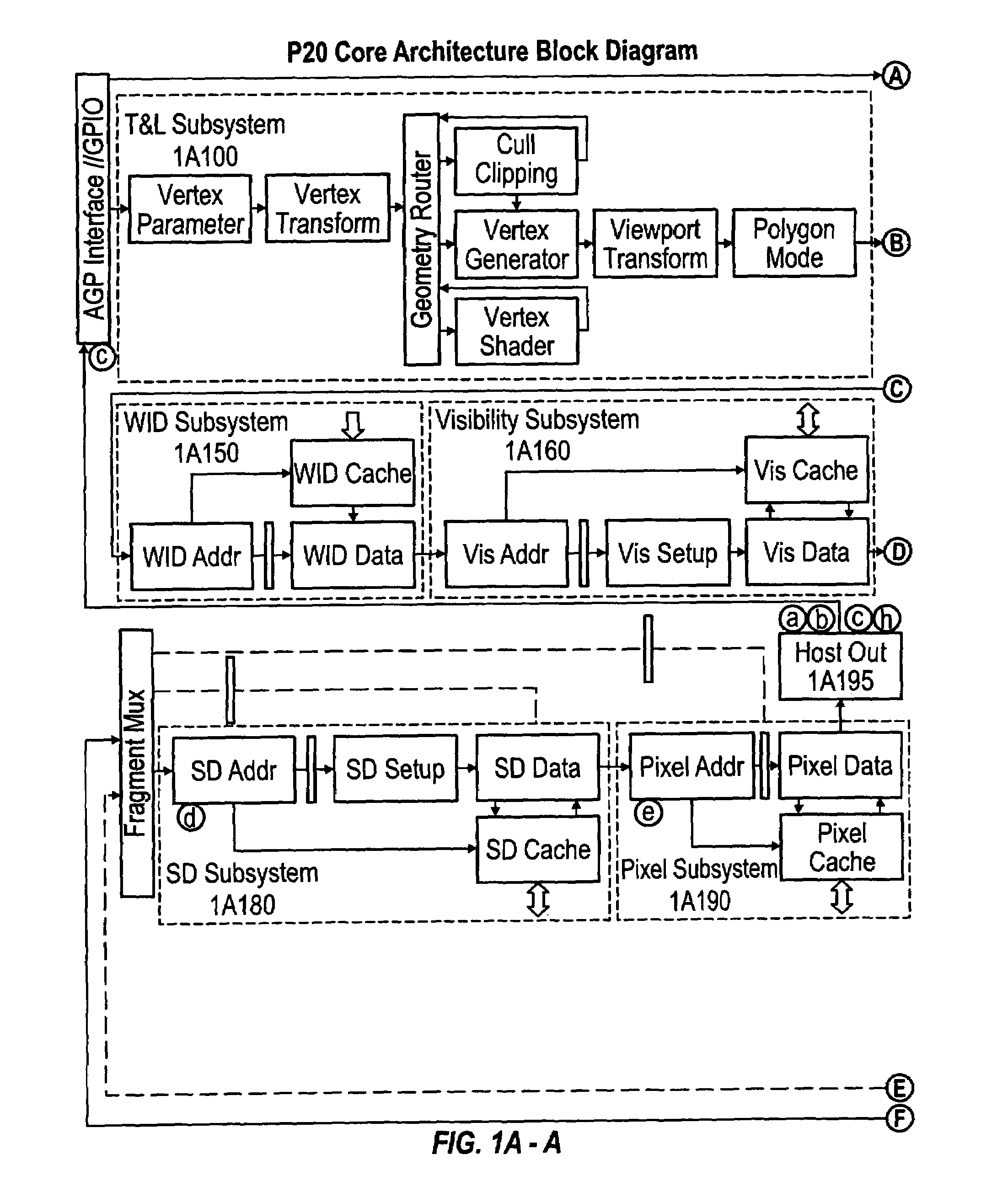

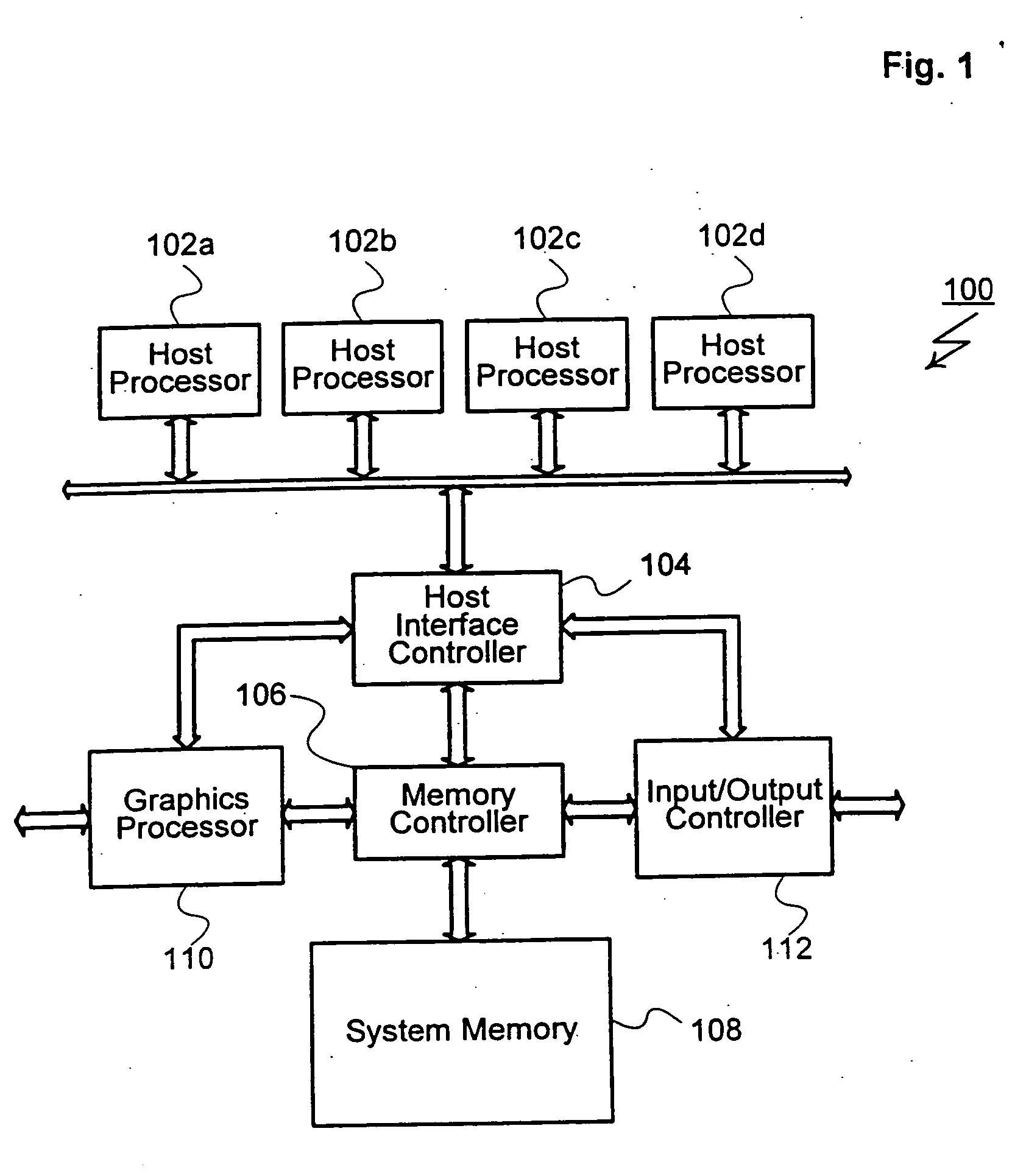

Parallel Array Architecture for a Graphics Processor

Inactive | US20070159488A1 | Improving memory locality | Improve locality | Single instruction multiple data multiprocessors | Cathode-ray tube indicators | Processing core | Parallel computing

A parallel array architecture for a graphics processor includes a multithreaded core array including a plurality of processing clusters, each processing cluster including at least one processing core operable to execute a pixel shader program that generates pixel data from coverage data; a rasterizer configured to generate coverage data for each of a plurality of pixels; and pixel distribution logic configured to deliver the coverage data from the rasterizer to one of the processing clusters in the multithreaded core array. The pixel distribution logic selects one of the processing clusters to which the coverage data for a first pixel is delivered based at least in part on a location of the first pixel within an image area. The processing clusters can be mapped directly to the frame buffer partitions without a crossbar so that pixel data is delivered directly from the processing cluster to the appropriate frame buffer partitions. Alternatively, a crossbar coupled to each of the processing clusters is configured to deliver pixel data from the processing clusters to a frame buffer having a plurality of partitions. The crossbar is configured such that pixel data generated by any one of the processing clusters is deliverable to any one of the frame buffer partitions.

Owner:NVIDIA CORP

Order-independent 3D graphics binning architecture

Active | US7505036B1 | Increased complexity | Increase frame rate | Details involving image processing hardware | Electric digital data processing | Graphics | Depth peeling

A binning architecture allows opaque and transparent primitives to be segregated automatically into pairs of bins covering the same bin rectangle on the screen. When the frame is complete, the opaque bin is rendered first and then the transparent bin is rendered repeatedly using the depth peeling algorithm until all the layers have been resolved. This happens automatically, without requiring the application to isolate and store the transparent primitives until all of the opaque primitives have been rendered, or to submit the transparent primitives repeatedly until all of the transparent layers have been resolved.

Owner:XUESHAN TECH INC

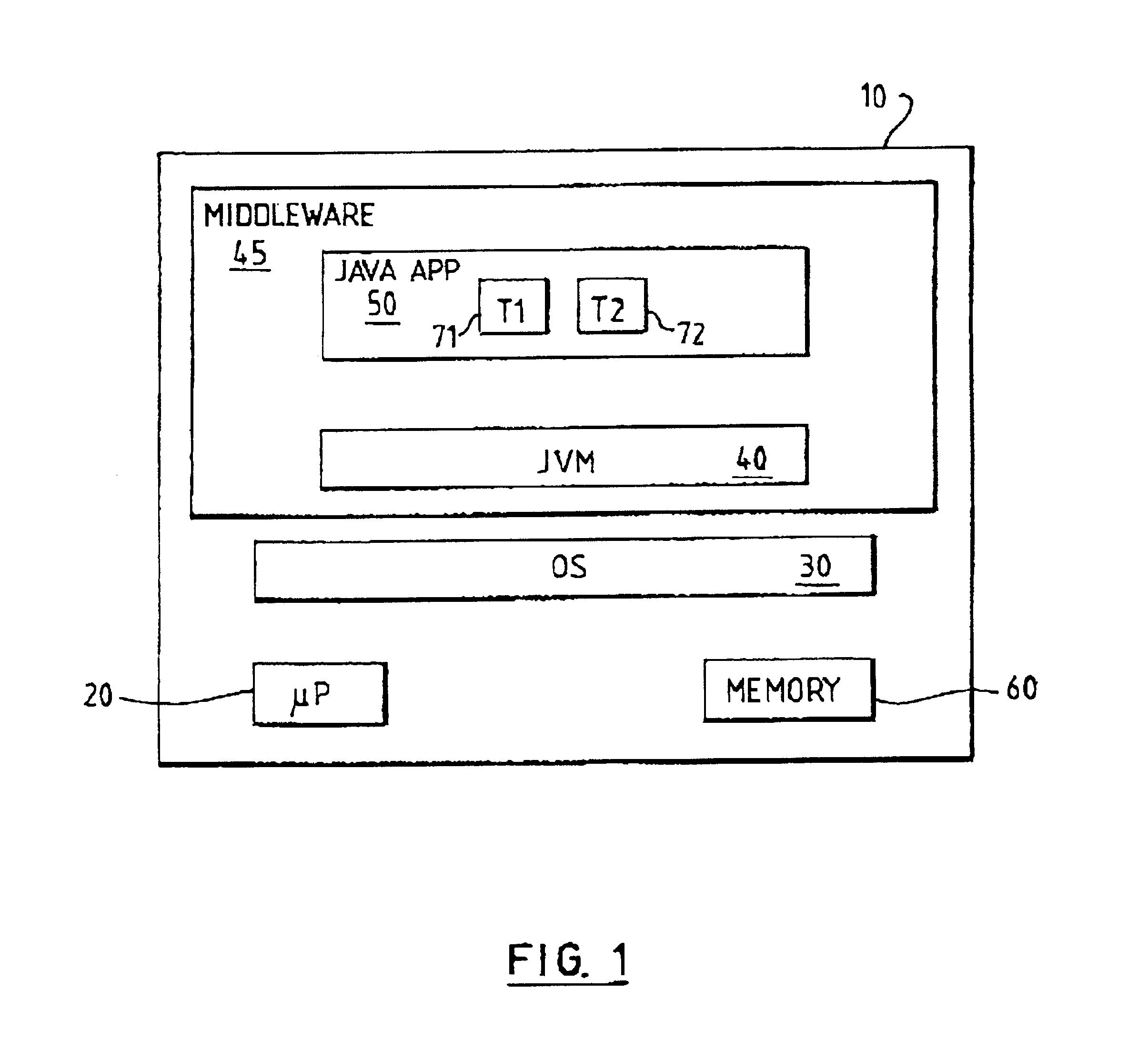

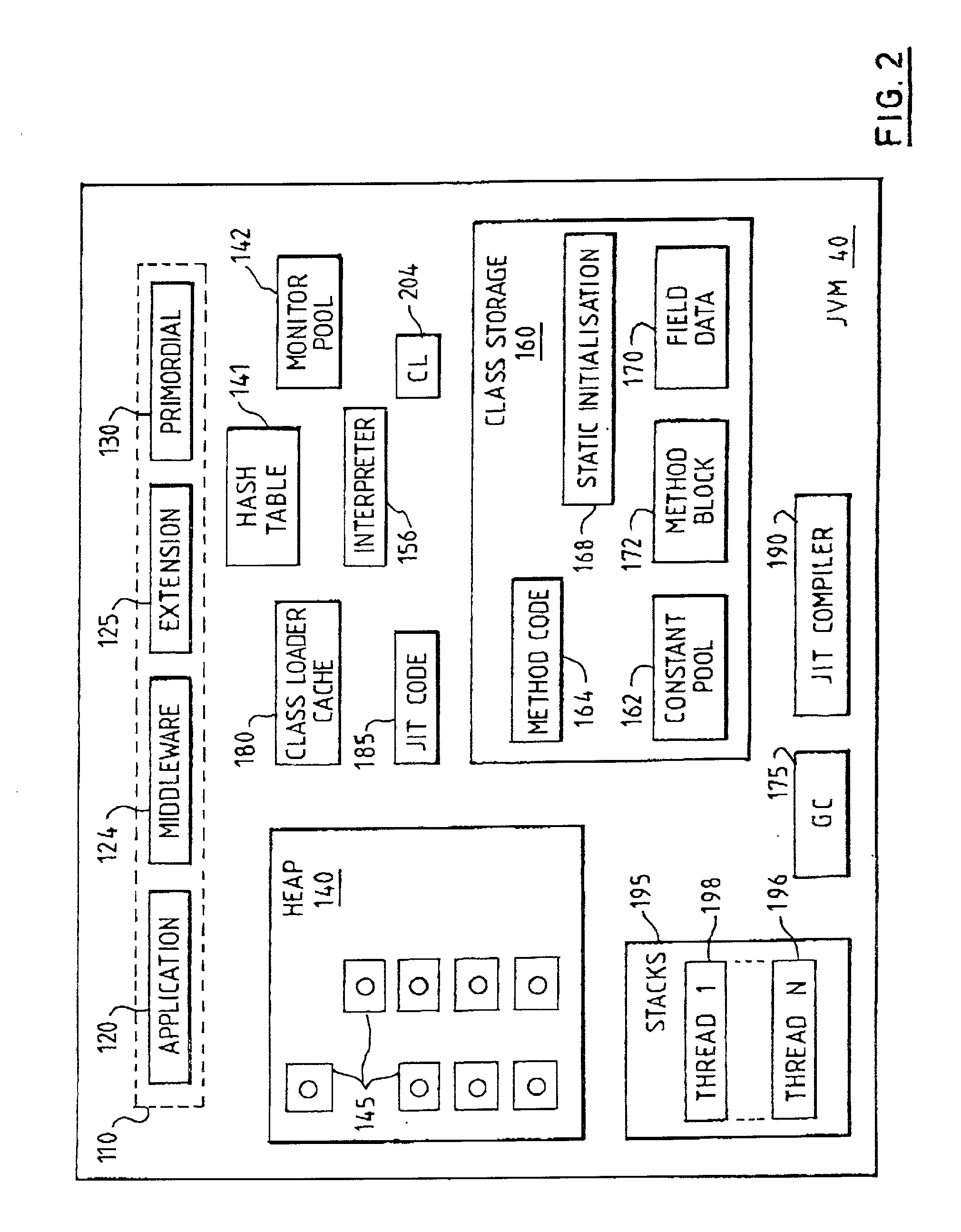

System and method for class loader constraint checking

Inactive | US6851111B2 | Improve scalability | Improve efficiency | Program loading/initiating | Software simulation/interpretation/emulation | Programming language | Computerized system

A computer system includes multiple class loaders for loading program class files into the system. A constraint checking mechanism is provided wherein a first class file loaded by a first class loader makes a symbolic reference to a second class file loaded by a second class loader, the symbolic reference including a descriptor of a third class file. The constraint mechanism requires that the first and second class files agree on the identity of the third class file and stores a list of constraints as a set of asymmetric relationships between class loaders. Each stored constraint, for a class loader which loaded a class file that contains a symbolic reference to another class file, includes a first parameter denoting the class loader which loaded the class file to which the symbolic reference is made; and a second parameter denoting a class file which is identified by a descriptor in said symbolic reference.

Owner:INT BUSINESS MASCH CORP

System and method for class loader constraint checking

Inactive | US20020129177A1 | Improve efficiency | Reduce number | Interprogram communication | Digital computer details | Programming language | Computerized system

An object-oriented computer system includes two or more class loaders for loading program class files into the system. A constraint checking mechanism is provided so that where a first class file loaded by a first class loader makes a symbolic reference to a second class file loaded by a second class loader, with said symbolic reference including a descriptor of a third class file, the constraint enforces that the first and second class files agree on the identity of the third class file. The constraint checking mechanism stores a list of constraints as a set of asymmetric relationships between class loaders. Each stored constraint, for a class loader which loaded a class file that contains a symbolic reference to another class file, includes a first parameter denoting the class loader which loaded the class file to which the symbolic reference is made; and a second parameter denoting a class file which is identified by a descriptor in said symbolic reference.

Owner:IBM CORP

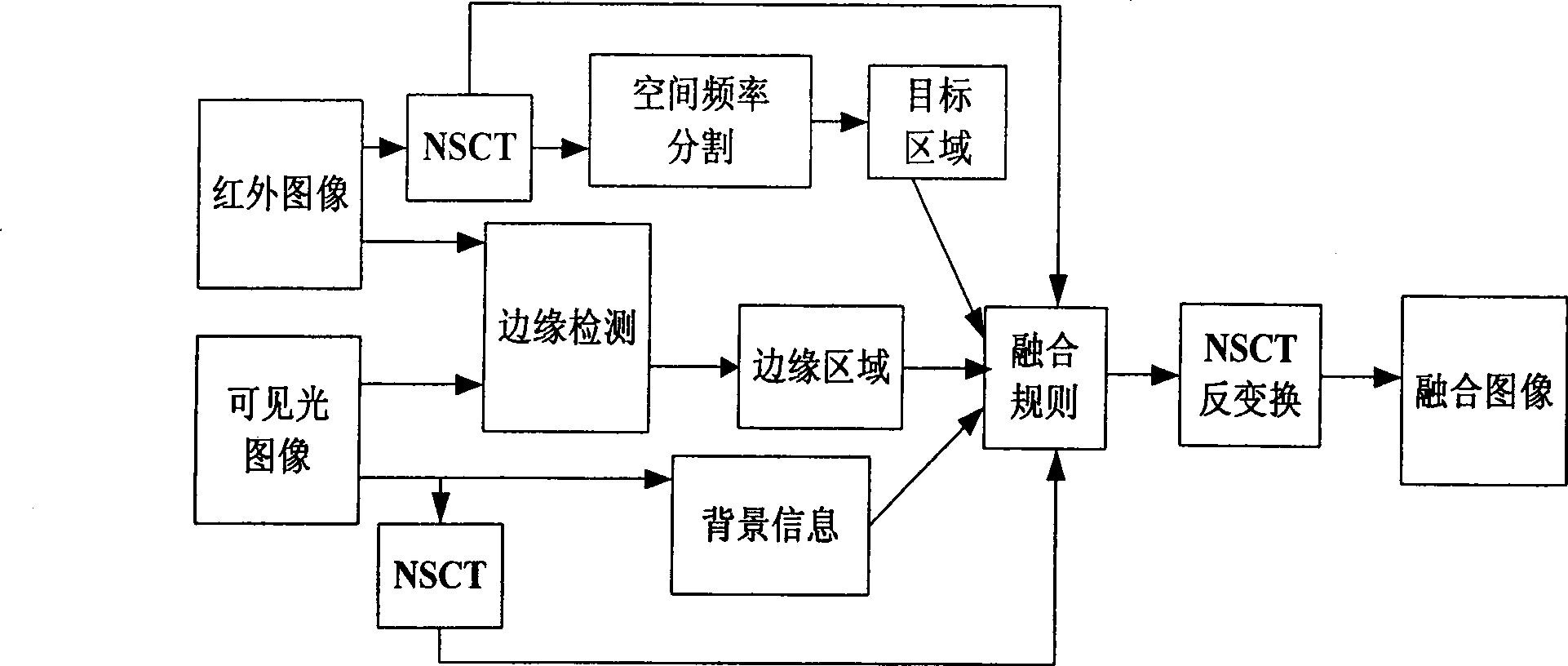

Image fusion of sequence infrared and visible light based on region segmentation

Active | CN101546428A | Efficient capture | Good multi-resolution | Image enhancement | Image analysis | Contourlet | Image fusion

The invention relates to a method for fusing sequences of infrared and visible-light images based on region segmentation. The infrared images are segmented into different regions according to the inter-frame target change and the degree of gray-scale change; the images are decomposed into different frequency domains in different directions using the non-subsampled Contourlet transform; different fusion rules are selected based on the characteristics of the different regions in the different frequency domains; and the image is reconstructed from the processed coefficients to obtain the final fusion result. Because the method takes the characteristics within the regions into account, it effectively reduces fusion errors caused by noise and low matching precision, and is more robust.

Owner:JIANGSU HUAYI GARMENT CO LTD +1

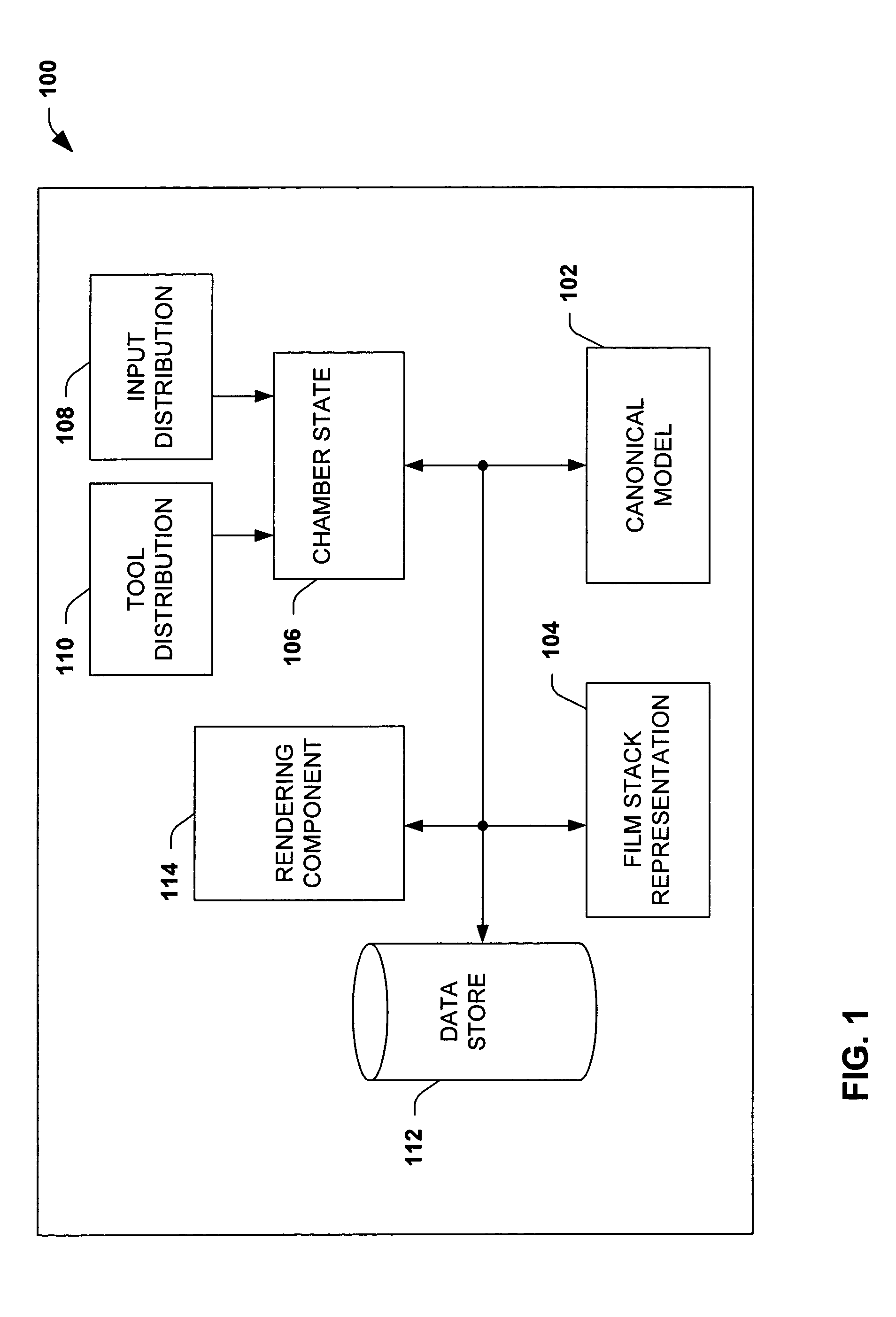

System and method for validating and visualizing APC assisted semiconductor manufacturing processes

Inactive | US20050010319A1 | High simulation | Overcome deficiencies | Semiconductor/solid-state device manufacturing | Special data processing applications | Canonical model | Computer science

A system and/or methodology that facilitates verifying and/or validating an APC assisted process via simulation is provided. The system comprises a film stack representation and a canonical model. The canonical model can predict process rates based at least in part upon an exposed material in the film stack representation. A solver component can also be provided to generate an updated recipe set-point according to inputs and outputs of the canonical model.

Owner:BLUE CONTROL TECH

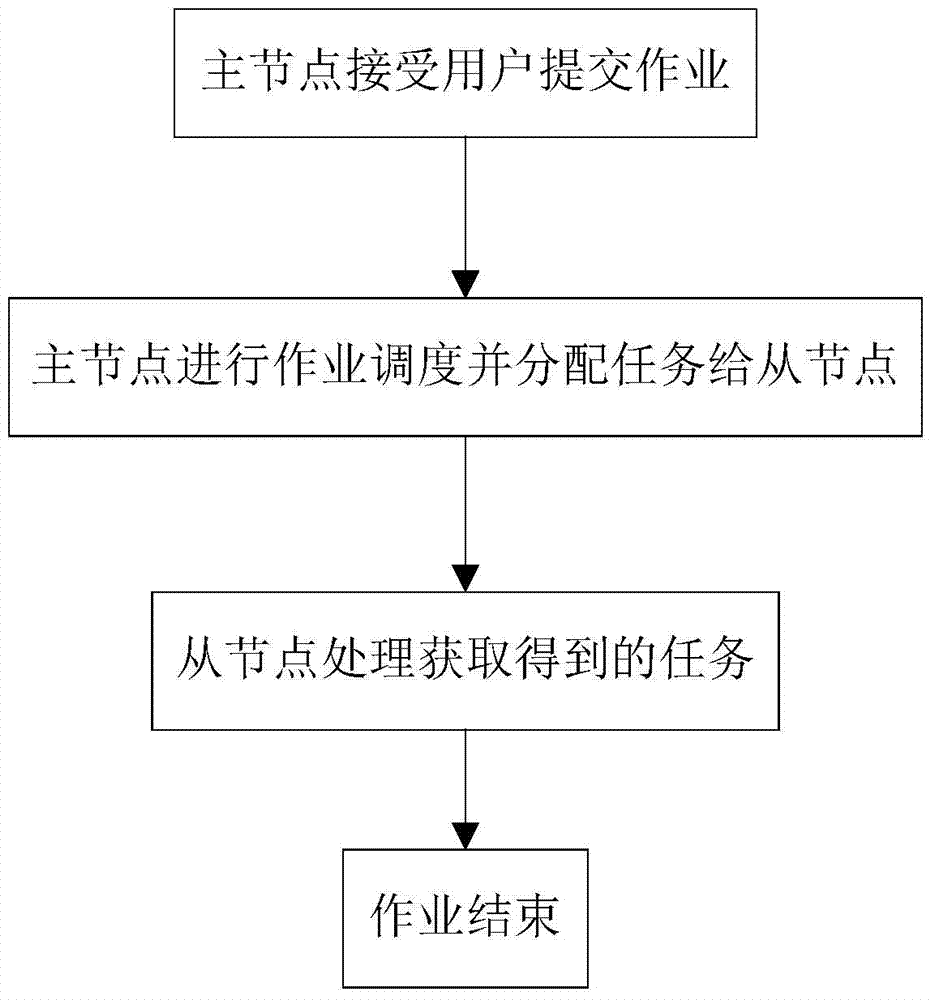

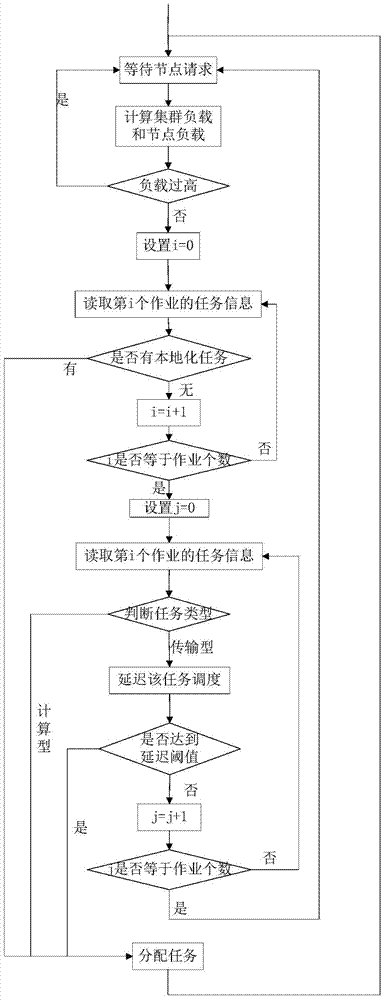

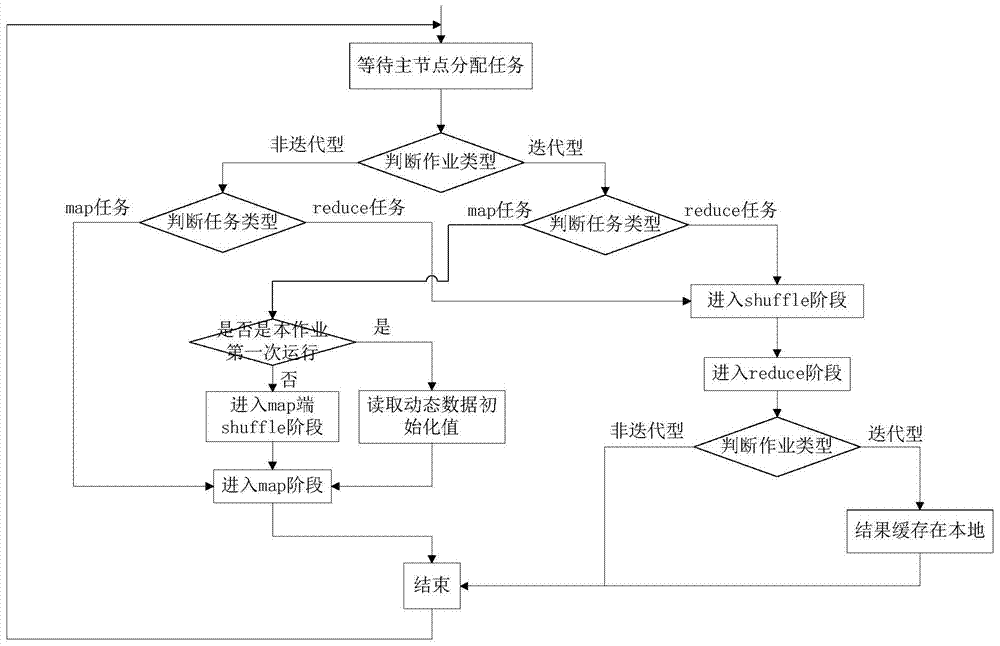

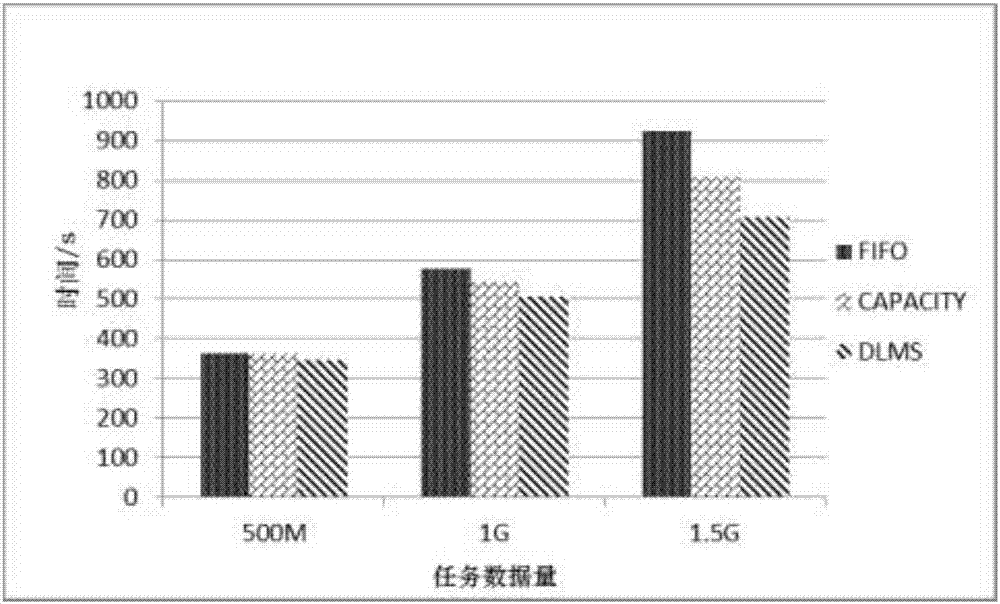

MapReduce optimizing method suitable for iterative computations

Active | CN103617087A | Increase the localization ratio | Reduce latency overhead | Resource allocation | Trunking | Dynamic data

The invention discloses a MapReduce optimizing method suitable for iterative computations. The method is applied to a Hadoop cluster comprising a master node and a plurality of worker nodes, and includes the following steps: the master node receives a plurality of Hadoop jobs submitted by a user; a job service process on the master node places the jobs in a job queue, where they wait to be scheduled by the master node's job scheduler; the master node waits for task requests from the worker nodes; when the master node receives a task request, its job scheduler preferentially schedules localized tasks; and if the requesting worker node has no localized tasks, prediction scheduling is performed according to the task types of the Hadoop jobs. The method supports traditional data-intensive applications and also supports iterative computations transparently and efficiently; dynamic data and static data can be handled separately; and the volume of data transmitted can be reduced.

Owner:HUAZHONG UNIV OF SCI & TECH
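
The locality-first step in the abstract above can be illustrated with a minimal sketch. Everything named here (`pick_task`, the task dictionaries, `blocks_on_node`) is hypothetical, and the fallback is a plain first-in-first-out choice standing in for the patent's prediction scheduling, which the abstract does not specify.

```python
def pick_task(pending, node, blocks_on_node):
    """Return (task, is_local) for a node that has requested work.

    pending        -- list of task dicts, oldest first (hypothetical layout)
    blocks_on_node -- dict: node name -> set of block ids stored on that node
    """
    local_blocks = blocks_on_node.get(node, set())
    # Prefer a task whose input block already lives on the requesting node.
    for task in pending:
        if task["block"] in local_blocks:
            pending.remove(task)
            return task, True
    # No local task: fall back to the oldest pending task.
    # (Assumption: FIFO stands in for the patent's prediction scheduling.)
    if pending:
        return pending.pop(0), False
    return None, False


pending = [{"id": 1, "block": "b1"}, {"id": 2, "block": "b2"}]
blocks_on_node = {"n1": {"b2"}, "n2": set()}
first, first_local = pick_task(pending, "n1", blocks_on_node)
second, second_local = pick_task(pending, "n2", blocks_on_node)
```

Here node `n1` receives task 2 because block `b2` is stored locally, while `n2` holds no blocks and takes the remaining task non-locally.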

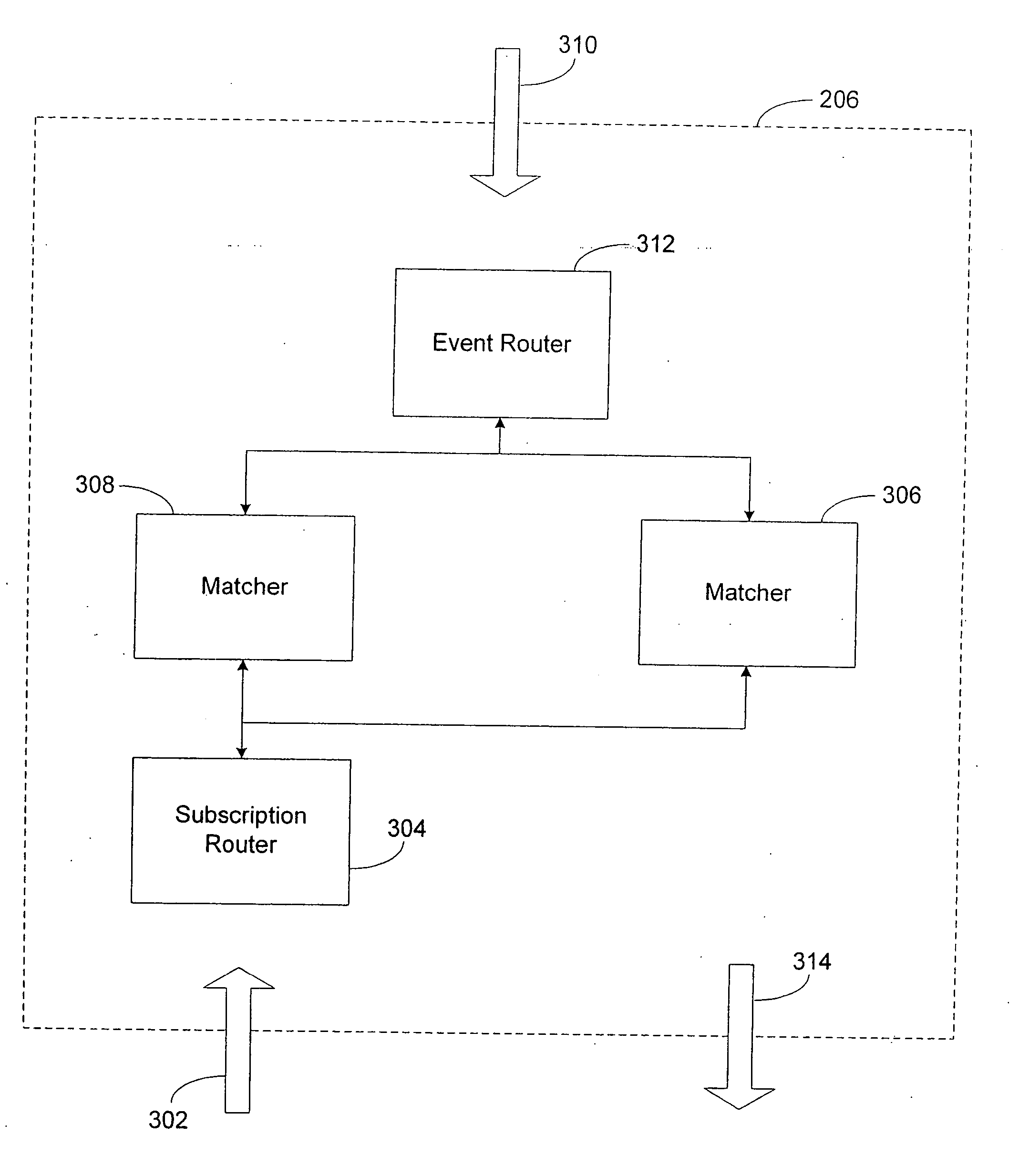

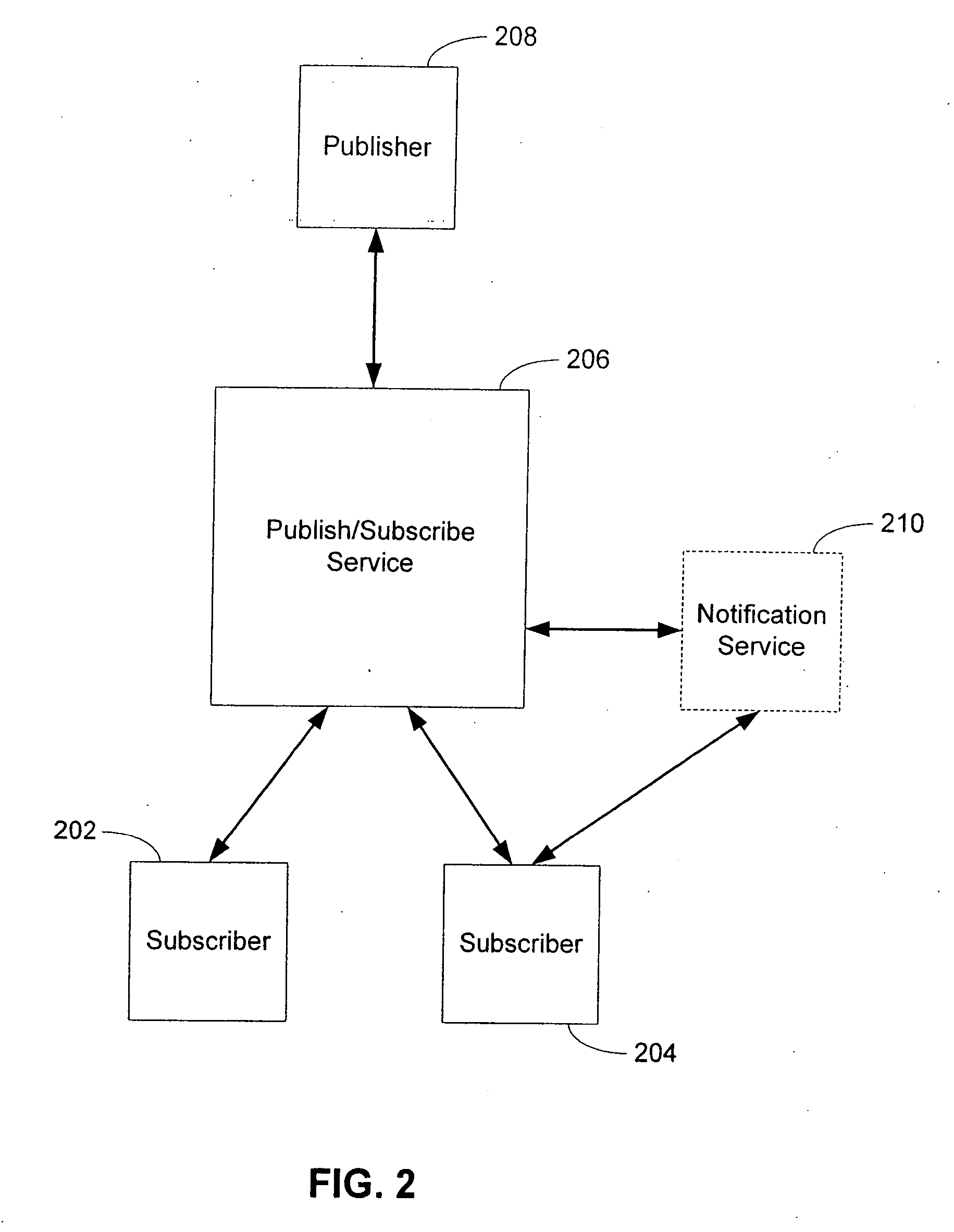

Summary-based routing for content-based event distribution networks

Active | US7200675B2 | Precise cutting | High precision | Special service provision for substation | Multiple digital computer combinations | Traffic capacity | Publish–subscribe pattern

A system and method for enabling highly scalable multi-node event distribution networks through the use of summary-based routing, particularly event distribution networks using a content-based publish / subscribe model to distribute information. By allowing event routers to use imprecise summaries of the subscriptions hosted by matcher nodes, an event router can eliminate itself as a bottleneck thus improving overall event distribution network throughput even though the use of imprecise summaries results in some false positive event traffic. False positive event traffic is reduced by using a filter set partitioning that provides for good subscription set locality at each matcher node, while at the same time avoiding overloading any one matcher node. Good subscription set locality is maintained by routing new subscriptions to a matcher node with a subscription summary that best covers the new subscription. Where event space partitioning is desirable, an over-partitioning scheme is described that enables load balancing without repartitioning.

Owner:MICROSOFT TECH LICENSING LLC +1

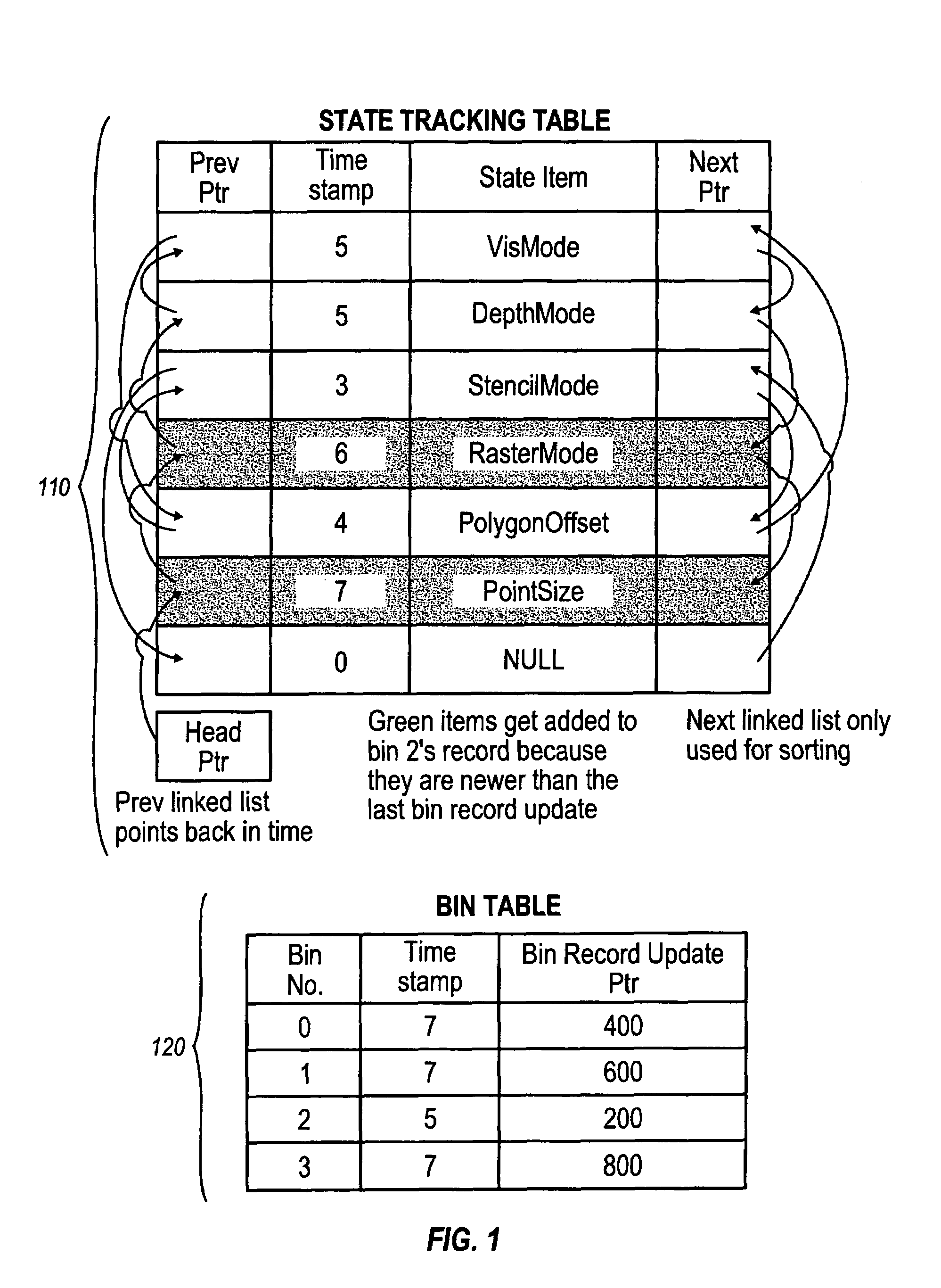

State tracking methodology

Inactive | US7385608B1 | Increased complexity | Increase frame rate | Cathode-ray tube indicators | Processor architectures/configuration | Operating system | Time sequence

Redundant changes of tracked state issued by an application are filtered out by comparing the new state value with the old value, and if they are the same, no update is made. State changes are collected in on-chip memory and added to the bin if the state vector associated with the bin is out of date. State changes within a bin are done incrementally in temporal order, and a bin is only brought up to date prior to adding in a new primitive if the state has changed since the last primitive was added to it.

Owner:RPX CORP
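
A small sketch may help make the two ideas in the state tracking abstract above concrete: dropping redundant state changes, and bringing a bin up to date only when the state has changed since its last primitive. The class and function names are hypothetical, and for brevity the bin receives a full state snapshot rather than the incremental, temporally ordered changes the abstract describes.

```python
class StateTracker:
    """Tracks state values and filters out redundant changes."""

    def __init__(self):
        self.current = {}   # latest value of every tracked state
        self.version = 0    # incremented on every change that is not redundant

    def set_state(self, name, value):
        # Redundant change: the new value equals the old one, so skip it.
        if name in self.current and self.current[name] == value:
            return False
        self.current[name] = value
        self.version += 1
        return True


class Bin:
    """A bin that remembers which state version it last saw."""

    def __init__(self):
        self.seen_version = -1
        self.commands = []


def add_primitive(bin_, tracker, primitive):
    # Update the bin's state only if it is out of date.
    # (Simplification: a full snapshot instead of incremental changes.)
    if bin_.seen_version != tracker.version:
        bin_.commands.append(("state", dict(tracker.current)))
        bin_.seen_version = tracker.version
    bin_.commands.append(("prim", primitive))


tracker = StateTracker()
changed = tracker.set_state("blend", 1)
repeated = tracker.set_state("blend", 1)   # filtered out
tile_bin = Bin()
add_primitive(tile_bin, tracker, "tri0")
add_primitive(tile_bin, tracker, "tri1")   # no state update needed
tracker.set_state("blend", 2)
add_primitive(tile_bin, tracker, "tri2")   # state update, then primitive
```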

Systems and methods for parallelizing and optimizing sparse tensor computations

Active | US20150169369A1 | Efficiently execute | Facilitate parallel execution | Program initiation/switching | Interprogram communication | Tensor | Scheduling system

A scheduling system can schedule several operations for parallel execution on a number of work processors. At least one of the operations is not to be executed, and the determination of which operation or operations are not to be executed and which ones are to be executed can be made only at run time. The scheduling system partitions a subset of operations, which excludes the one or more operations that are not to be executed, into several groups based, at least in part, on an irregularity of operations resulting from the one or more operations that are not to be executed. In addition, the partitioning is based, at least in part, on locality of data elements associated with the subset of operations to be executed or on loading of the several work processors.

Owner:QUALCOMM TECHNOLOGIES INC

Audio/video data access method and device based on raw device

Inactive | CN101008919A | Will not cause loss | Extended service life | Recording carrier details | Memory addressing/allocation/relocation | Electricity | File system

This invention discloses an audio/video data storage method based on a raw device, which comprises the following steps: dividing the disk space into several blocks and assigning each block a number, then writing and reading data in units of these disk-space blocks. The method avoids data loss and preserves data across a power failure.

Owner:ZHEJIANG UNIV

Summary-based routing for content-based event distribution networks

Inactive | US20070168550A1 | Precise cutting | High precision | Special service provision for substation | Digital computer details | Arid | Space partitioning

A system and method for enabling highly scalable multi-node event distribution networks through the use of summary-based routing, particularly event distribution networks using a content-based publish / subscribe model to distribute information. By allowing event routers to use imprecise summaries of the subscriptions hosted by matcher nodes, an event router can eliminate itself as a bottleneck thus improving overall event distribution network throughput even though the use of imprecise summaries results in some false positive event traffic. False positive event traffic is reduced by using a filter set partitioning that provides for good subscription set locality at each matcher node, while at the same time avoiding overloading any one matcher node. Good subscription set locality is maintained by routing new subscriptions to a matcher node with a subscription summary that best covers the new subscription. Where event space partitioning is desirable, an over-partitioning scheme is described that enables load balancing without repartitioning.

Owner:MICROSOFT TECH LICENSING LLC

Credit-Based Streaming Multiprocessor Warp Scheduling

Active | US20110072244A1 | Improves cache locality | Improve system performance | Digital computer details | Concurrent instruction execution | Cache access | Scheduling instructions

One embodiment of the present invention sets forth a technique for ensuring cache access instructions are scheduled for execution in a multi-threaded system to improve cache locality and system performance. A credit-based technique may be used to control instruction by instruction scheduling for each warp in a group so that the group of warps is processed uniformly. A credit is computed for each warp and the credit contributes to a weight for each warp. The weight is used to select instructions for the warps that are issued for execution.

Owner:NVIDIA CORP

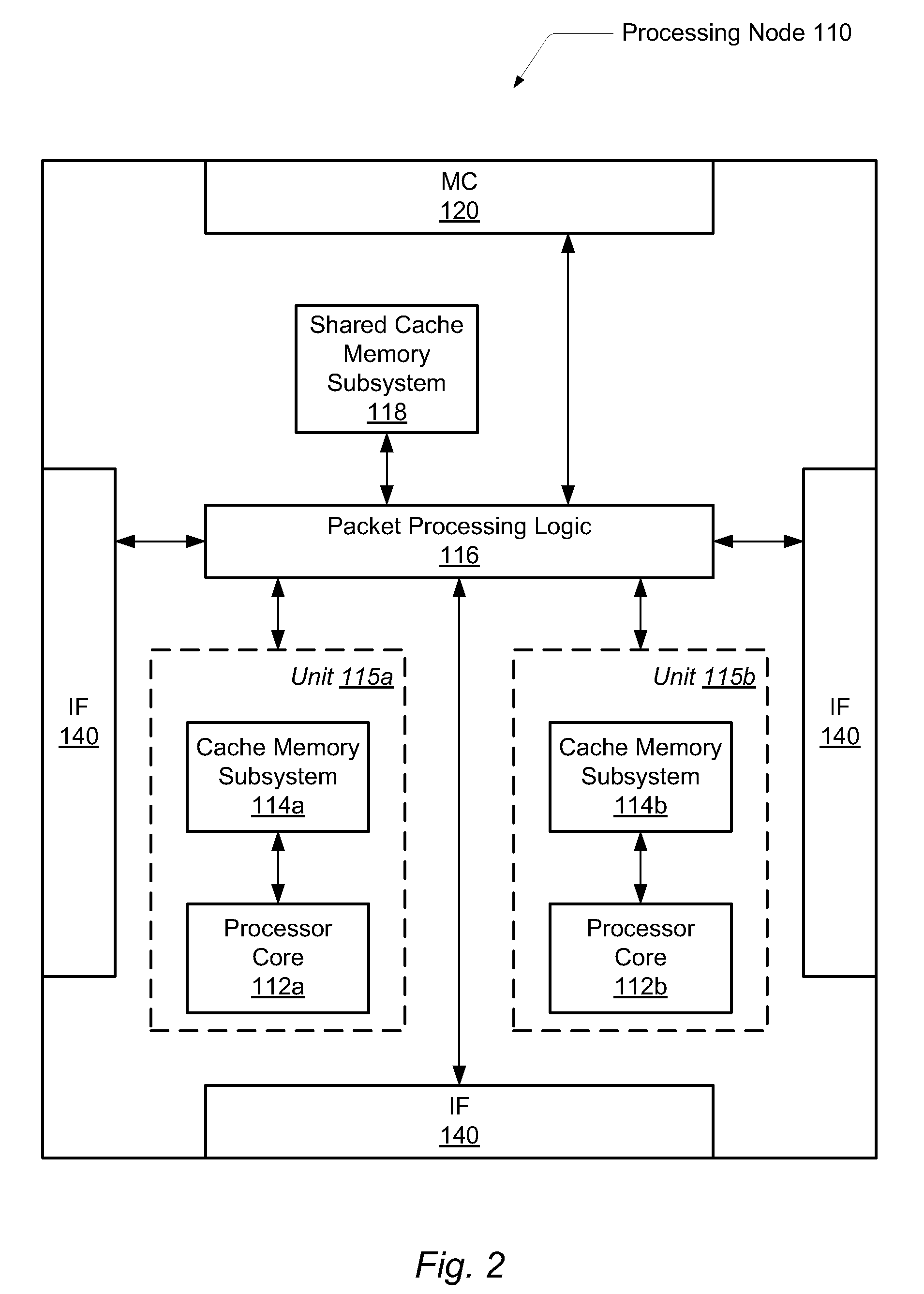

High-speed packet filtering device and method realized based on shunting network card and multi-core CPU (Central Processing Unit)

Inactive | CN102497322A | Improve locality | Improve performance | Multiprogramming arrangements | Data switching networks | Traffic capacity | Internal memory

The invention provides a high-speed packet filtering device and method based on a shunting network card and a multi-core CPU (Central Processing Unit). The shunting network card is specially customized hardware that removes the transmission bottleneck for network data between the network card and each CPU core. The high-speed packet filtering method comprises the steps of: grouping the network traffic so that packets with the same source and destination are kept together; distributing the traffic, in a load-balanced manner, into the message reception buffer corresponding to each CPU core; reading the data in each core's message transmission buffer into the network card in parallel and sending it out; and starting one process (or thread) on each core of the multi-core CPU. Because the message buffers used for receiving and sending data reside in the local memory of each CPU core, memory access locality is improved. Compared with the prior art, the device and method improve packet filtering performance.

Owner:DAWNING INFORMATION IND BEIJING

System and method for loop unrolling in a dynamic compiler

Inactive | US6948160B2 | Improve locality | Decrease branching overhead | Software engineering | Program control | Loop unrolling | Computer program

Provided is a method for performing loop-unrolling optimization during program execution. In one example, a method for loop optimization within a dynamic compiler system is disclosed. A computer program having a loop structure is executed, wherein the loop structure includes a loop exit test to be performed during each loop iteration. The loop structure is compiled during the execution of the computer program, and an unrolled loop structure is created during the compiling operation. The unrolled loop structure includes a plurality of loop bodies based on the original loop structure. Further, the unrolled loop structure can include the loop exit test, which can be performed once for each iteration of the plurality of loop bodies.

Owner:ORACLE INT CORP
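
The transformation named in the loop unrolling abstract above can be shown by hand. The sketch below is only an illustration of the before and after shapes of a loop, with hypothetical function names and an unroll factor of four chosen arbitrarily; it is not a dynamic compiler, and the remainder loop is a common companion technique that the abstract does not mention.

```python
def sum_rolled(values):
    """Original loop: the exit test runs once per loop body."""
    total = 0
    i = 0
    while i < len(values):
        total += values[i]
        i += 1
    return total


def sum_unrolled_by_4(values):
    """Unrolled loop: one exit test covers four copies of the loop body."""
    total = 0
    i = 0
    n = len(values)
    while i + 4 <= n:            # single exit test per four bodies
        total += values[i]
        total += values[i + 1]
        total += values[i + 2]
        total += values[i + 3]
        i += 4
    while i < n:                 # remainder loop for the leftover elements
        total += values[i]
        i += 1
    return total
```

Both functions return the same result for any list of numbers; the unrolled version simply evaluates its loop condition about a quarter as often.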

Method for saving virtual machine state to a checkpoint file

Active | US20140164722A1 | Reduce in quantity | Speed up checkpointing process | Memory loss protection | Error detection/correction | Copying | Data buffer

A process for lazy checkpointing a virtual machine is enhanced to reduce the number of read / write accesses to the checkpoint file and thereby speed up the checkpointing process. The process for saving a state of a virtual machine running in a physical machine to a checkpoint file maintained in persistent storage includes the steps of copying contents of a block of memory pages, which may be compressed, into a staging buffer, determining after the copying if the buffer is full, and upon determining that the buffer is full, saving the buffer contents in a storage block of the checkpoint file.

Owner:VMWARE INC
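
The staging-buffer step in the checkpointing abstract above can be sketched as follows. This is a minimal illustration under stated assumptions: `checkpoint` and its parameters are hypothetical names, pages are plain byte strings, an in-memory `io.BytesIO` stands in for the checkpoint file, and compression and lazy page fetching are left out.

```python
import io


def checkpoint(pages, out, buffer_size):
    """Copy pages into a staging buffer and write it out whenever it is full.

    Returns the number of write calls issued to `out`.
    """
    staging = bytearray()
    writes = 0
    for page in pages:
        staging += page                      # copy page contents into the buffer
        while len(staging) >= buffer_size:   # buffer full: save one storage block
            out.write(staging[:buffer_size])
            del staging[:buffer_size]
            writes += 1
    if staging:                              # flush whatever is left at the end
        out.write(staging)
        writes += 1
    return writes


checkpoint_file = io.BytesIO()
pages = [b"a" * 4, b"b" * 4, b"c" * 4]
write_count = checkpoint(pages, checkpoint_file, buffer_size=8)
```

With three 4-byte pages and an 8-byte buffer, the sketch issues two writes instead of three, which is the kind of reduction in file accesses the abstract aims for.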

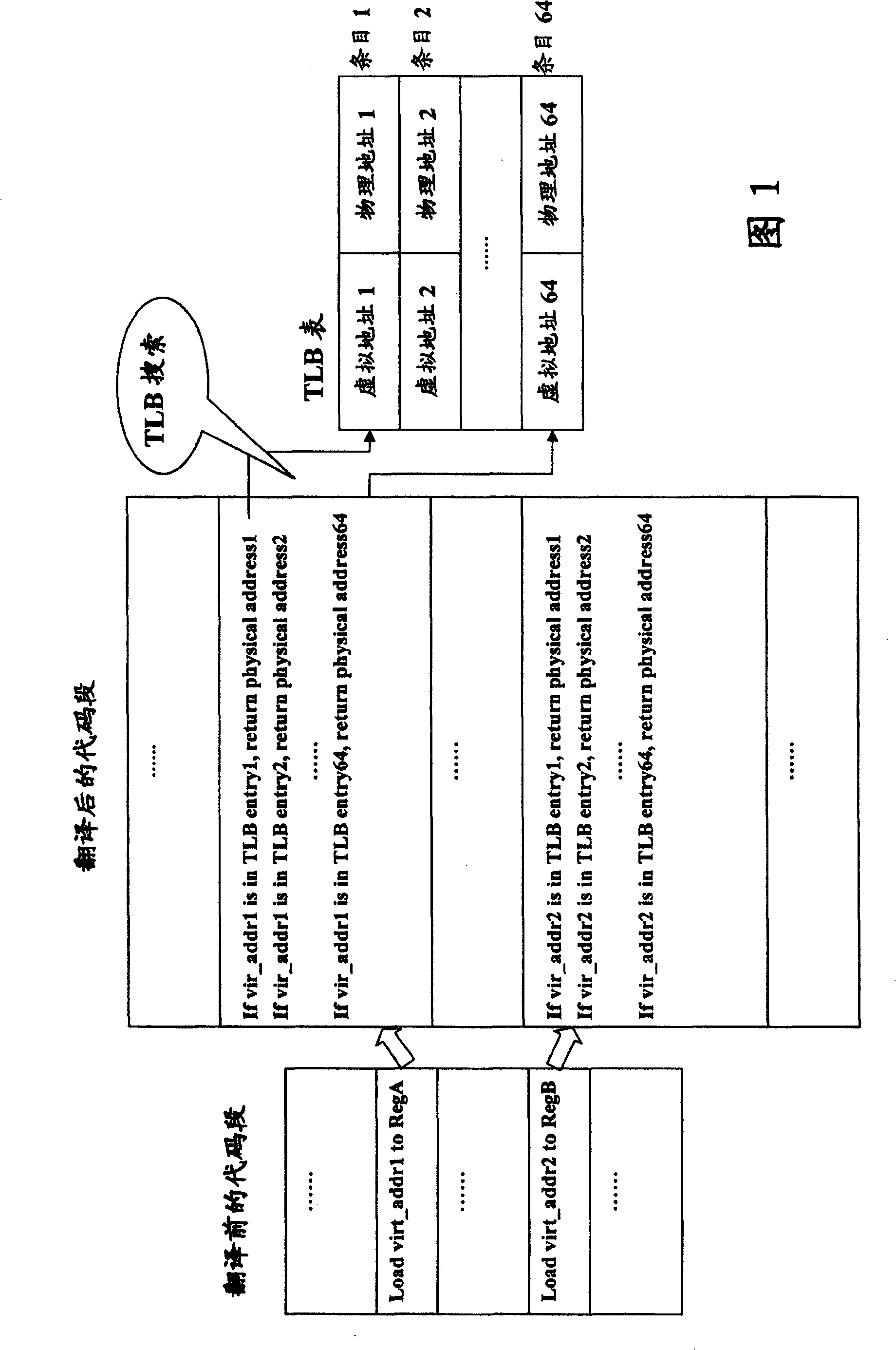

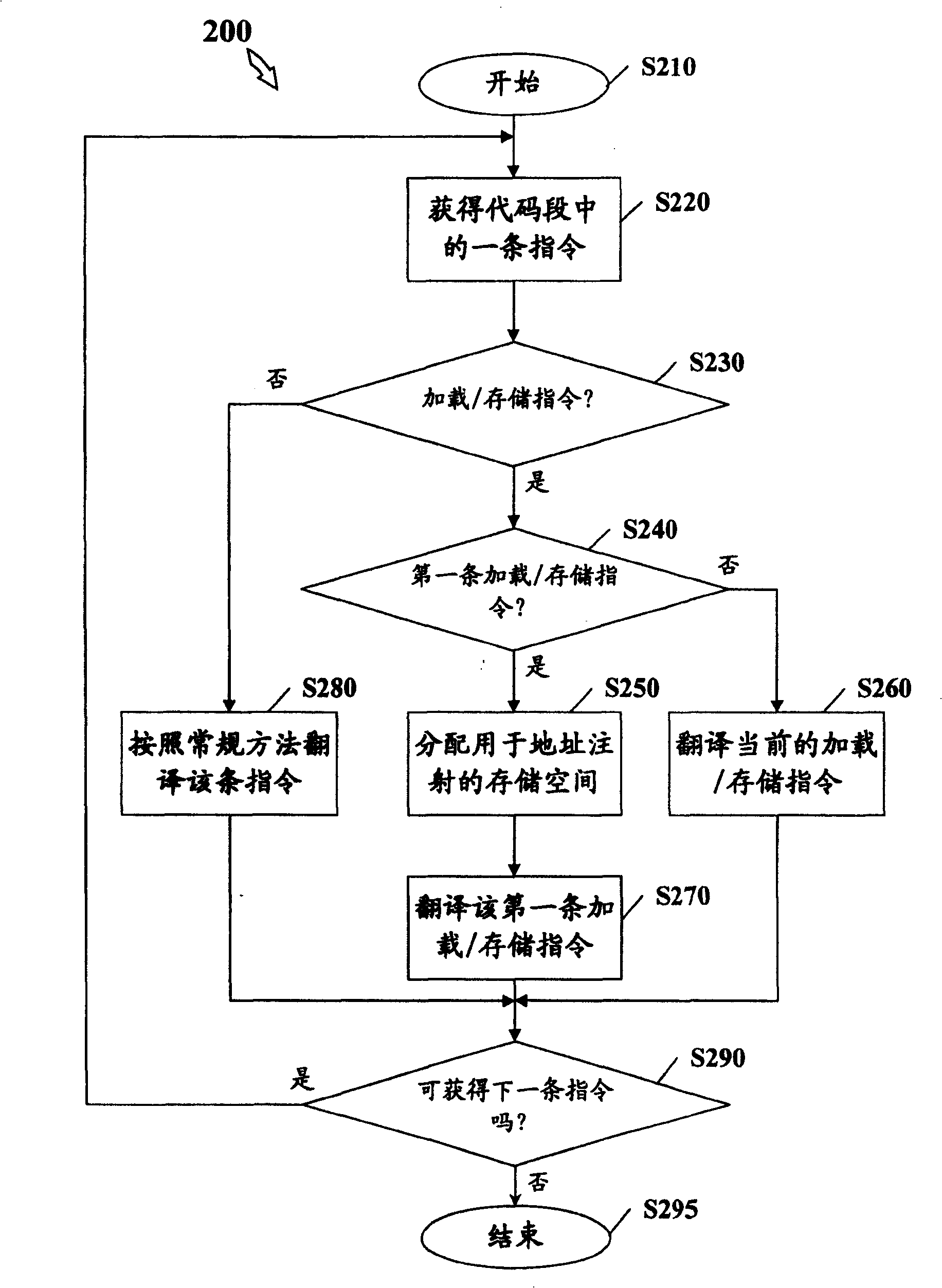

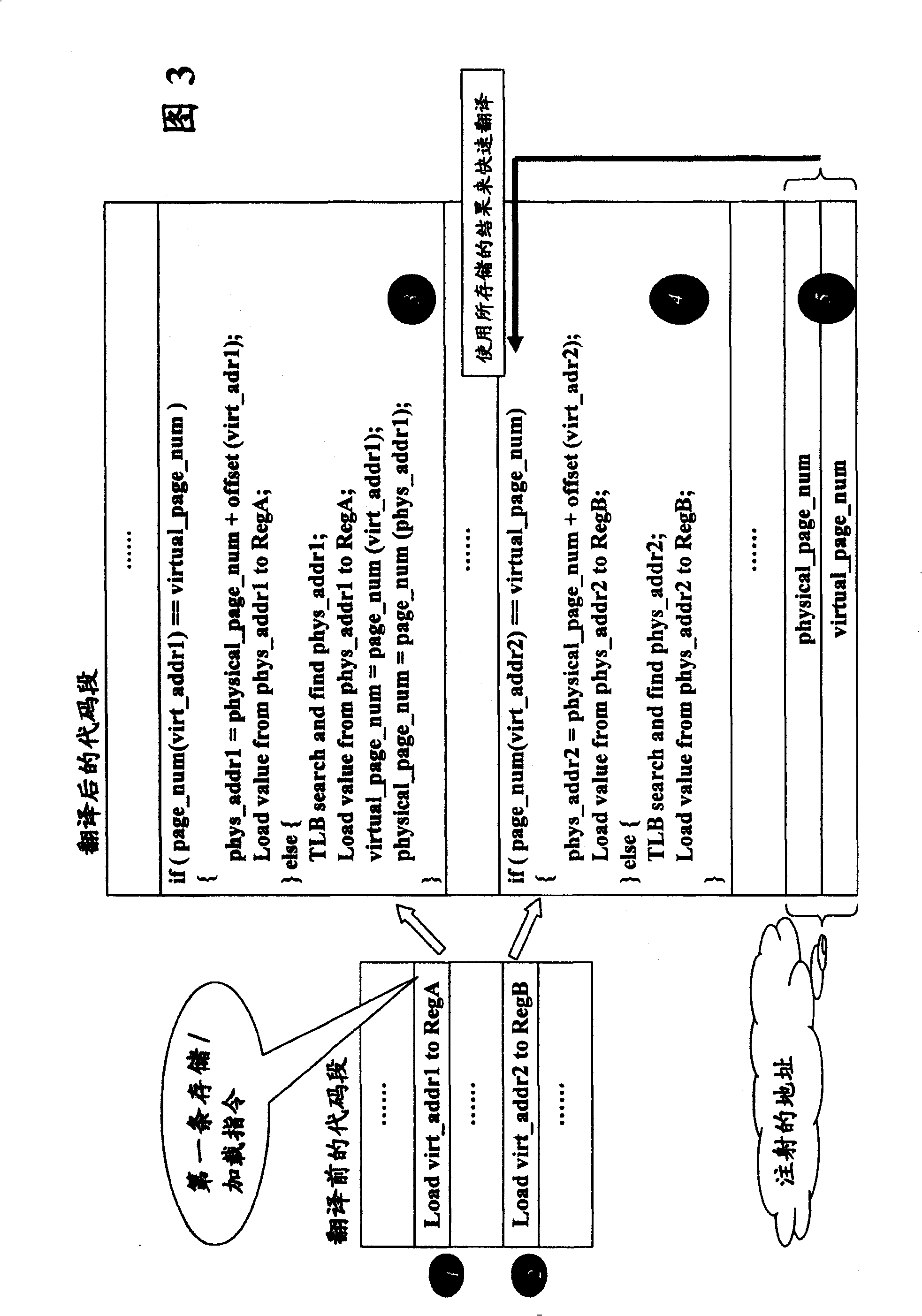

Method and apparatus for fast MMU simulation, and full-system simulator

Inactive | CN101246452A | Good data locality | Improve locality | Memory addressing/allocation/relocation | Program loading/initiating | Management unit | Computerized system

The invention provides a method for performing fast MMU simulation for a computer program in a computer system, wherein an address injection space of predetermined size, in which a virtual page number and the corresponding physical page number are stored, is allocated in the computer system. The method comprises the following steps: for a load/store instruction in a code segment of the computer program, comparing the virtual page number of the instruction's virtual address with the virtual page number stored in the address injection space; if the virtual page numbers are identical, obtaining the corresponding physical address from the physical page number stored in the address injection space; otherwise, performing a translation lookaside buffer (TLB) lookup to obtain the corresponding physical address; and reading data from, or writing data to, the physical address so obtained. The invention also discloses a device and a full-system simulator for implementing the method.

Owner:INT BUSINESS MASCH CORP
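
The fast path in the MMU abstract above, comparing the virtual page number of an access against a stored page-number pair before falling back to a slower lookup, can be sketched as below. The names (`FastMMU`, `translate`), the 4 KiB page size, and the use of a dictionary as the slow lookup are all assumptions for illustration. Refreshing the stored pair after a slow lookup is also an assumption; the abstract does not say when the pair is updated.

```python
PAGE_SHIFT = 12                      # assumed 4 KiB pages
PAGE_MASK = (1 << PAGE_SHIFT) - 1


class FastMMU:
    def __init__(self, page_table):
        self.page_table = page_table  # dict vpn -> ppn, stand-in for the TLB lookup
        self.cached_vpn = None        # stored virtual page number
        self.cached_ppn = None        # stored physical page number
        self.fast_hits = 0
        self.slow_lookups = 0

    def translate(self, vaddr):
        vpn = vaddr >> PAGE_SHIFT
        if vpn == self.cached_vpn:
            # Fast path: same page as the stored pair, no lookup needed.
            self.fast_hits += 1
        else:
            # Slow path: full lookup, then remember the pair (assumption).
            self.slow_lookups += 1
            self.cached_ppn = self.page_table[vpn]
            self.cached_vpn = vpn
        return (self.cached_ppn << PAGE_SHIFT) | (vaddr & PAGE_MASK)


mmu = FastMMU({0x1: 0x80, 0x2: 0x90})
first = mmu.translate(0x1004)    # slow lookup for page 0x1
second = mmu.translate(0x1FF8)   # same page: fast path
third = mmu.translate(0x2000)    # different page: slow lookup
```

Consecutive accesses to the same page, which are common because of spatial locality, skip the lookup entirely.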

GPU assisted garbage collection

Active | US20100082930A1 | Easy to operate | Burden inefficiency | Memory addressing/allocation/relocation | Special data processing applications | Graphics | Main processing unit

A system and method for efficient garbage collection. A general-purpose central processing unit (CPU) sends a garbage collection request and a first log to a special processing unit (SPU). The first log includes an address and a data size of each allocated data object stored in a heap in memory corresponding to the CPU. The SPU has a single instruction multiple data (SIMD) parallel architecture and may be a graphics processing unit (GPU). The SPU efficiently performs operations of a garbage collection algorithm due to its architecture on a local representation of the data objects stored in the memory. The SPU records a list of changes it performs to remove dead data objects and compact live data objects. This list is subsequently sent to the CPU, which performs the included operations.

Owner:ADVANCED MICRO DEVICES INC

Mine roof and floor water inrush monitoring and prediction system and method

Active | CN103529488A | Monitoring water inrush problems | Avoid distractions | Geological measurements | Prediction system | Prediction methods

The invention discloses a mine roof and floor water inrush monitoring and prediction system and a mine roof and floor water inrush monitoring and prediction method. The system comprises a ground control room host terminal, an underground site host, a comprehensive cable assembly and a plurality of detection terminals, wherein the underground site host is connected with the ground control room host terminal; the comprehensive cable assembly is connected with the underground site host; the detection terminals are arranged on a roadway wall, a roadway floor or a roadway roof along the mine roadway direction; each detection terminal comprises a controller, a three-dimensional vibration sensor, an electrode and a memory connected with the controller; signal output ends of the three-dimensional vibration sensor and the electrode are connected with a signal input end of the controller; a data communication end of each controller is connected with the underground site host through the comprehensive cable assembly. According to the system and the method, the accuracy and the real-time property of a mine roof and floor water inrush monitoring result can be obviously improved.

Owner:WUHAN CONOURISH COALMINE SAFETY TECH

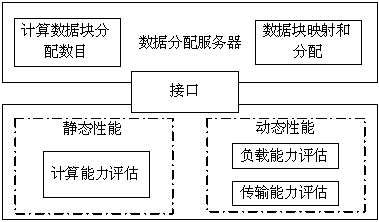

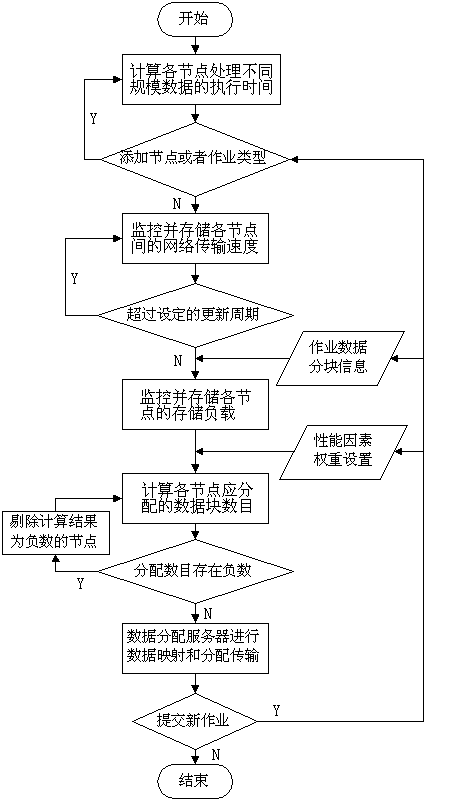

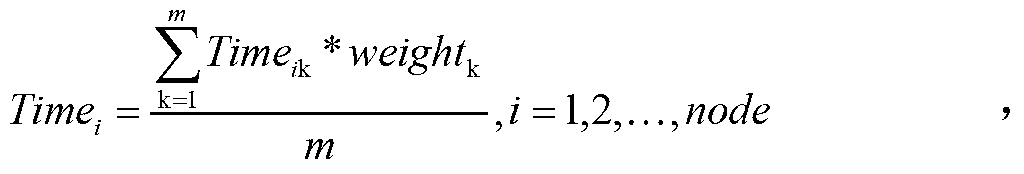

Data allocation strategy in Hadoop heterogeneous cluster

Active | CN103218233A | Improve adaptability | Improve locality | Resource allocation | Specific program execution arrangements | Heterogeneous cluster | Industrial engineering

The invention relates to a data allocation strategy in a Hadoop heterogeneous cluster. The data allocation strategy comprises the following steps: step S01, testing and storing the execution time each node needs to process data of different scales, and transforming the execution time into a static performance reference index; step S02, monitoring and storing the storage load of each node and the network transmission speed between nodes, and transforming them into dynamic performance reference indexes; and step S03, calculating, with a calculating module, the number of data blocks to allocate to each node according to a preset weight for each performance factor, then mapping data blocks to nodes and transmitting them with a data allocation server. Through flexible configuration of each performance factor of the static and dynamic performance reference indexes, the data allocation strategy enhances adaptability, ensures effectiveness, effectively increases data locality, reduces job response time and network transmission, improves the load stability of the system, and optimizes cluster resources.

Owner:FUZHOU UNIV
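
Step S03 of the abstract above, turning weighted performance indexes into a per-node block count, might look like the sketch below. The abstract gives no formula, so this is one plausible reading: each node gets a score equal to the weighted sum of its indexes, blocks are shared out in proportion to the scores, and leftover blocks go to the nodes with the largest fractional shares. The function name, metric names, and the assumption that every index is normalized so that higher is better are all hypothetical.

```python
def allocate_blocks(total_blocks, nodes, weights):
    """Split `total_blocks` across nodes in proportion to weighted scores.

    nodes   -- dict: node name -> dict of metric name -> value (higher is better)
    weights -- dict: metric name -> preset weight
    """
    scores = {
        name: sum(weights[m] * metrics[m] for m in weights)
        for name, metrics in nodes.items()
    }
    total_score = sum(scores.values())
    exact = {n: total_blocks * s / total_score for n, s in scores.items()}
    alloc = {n: int(x) for n, x in exact.items()}       # round down first
    leftover = total_blocks - sum(alloc.values())
    # Give the remaining blocks to the nodes with the largest fractional part.
    by_fraction = sorted(exact, key=lambda n: exact[n] - alloc[n], reverse=True)
    for n in by_fraction[:leftover]:
        alloc[n] += 1
    return alloc


nodes = {
    "fast": {"cpu": 3.0, "free_disk": 1.0, "net": 1.0},
    "slow": {"cpu": 1.0, "free_disk": 1.0, "net": 1.0},
}
weights = {"cpu": 0.5, "free_disk": 0.25, "net": 0.25}
plan = allocate_blocks(10, nodes, weights)
```

In this example the faster node scores 2.0 against 1.0 and therefore receives roughly two thirds of the ten blocks.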

Dynamic label matching scheduling method under Hadoop Platform

Active | CN107038069A | Improve locality | Lower the level of locality | Program initiation/switching | Resource allocation | Cluster Node | Yarn

The present invention discloses a dynamic label matching scheduling method under a Hadoop platform, and belongs to the field of computer software. To address the large performance differences among Hadoop cluster nodes, the randomness of resource allocation, and overly long execution times, the present invention provides a scheduler that dynamically matches a node performance label (hereinafter referred to as a node label) with a job category label (hereinafter referred to as a job label). Each node is initially classified and assigned an original node label; the node monitors its own performance indicators to generate a dynamic node label; each job is classified according to partial run-time information to generate a job label; and a resource scheduler allocates node resources to the jobs with the corresponding label. Experimental results show that the scheduler provided by the present invention shortens job execution time compared with the scheduler shipped with YARN.

Owner:BEIJING UNIV OF TECH

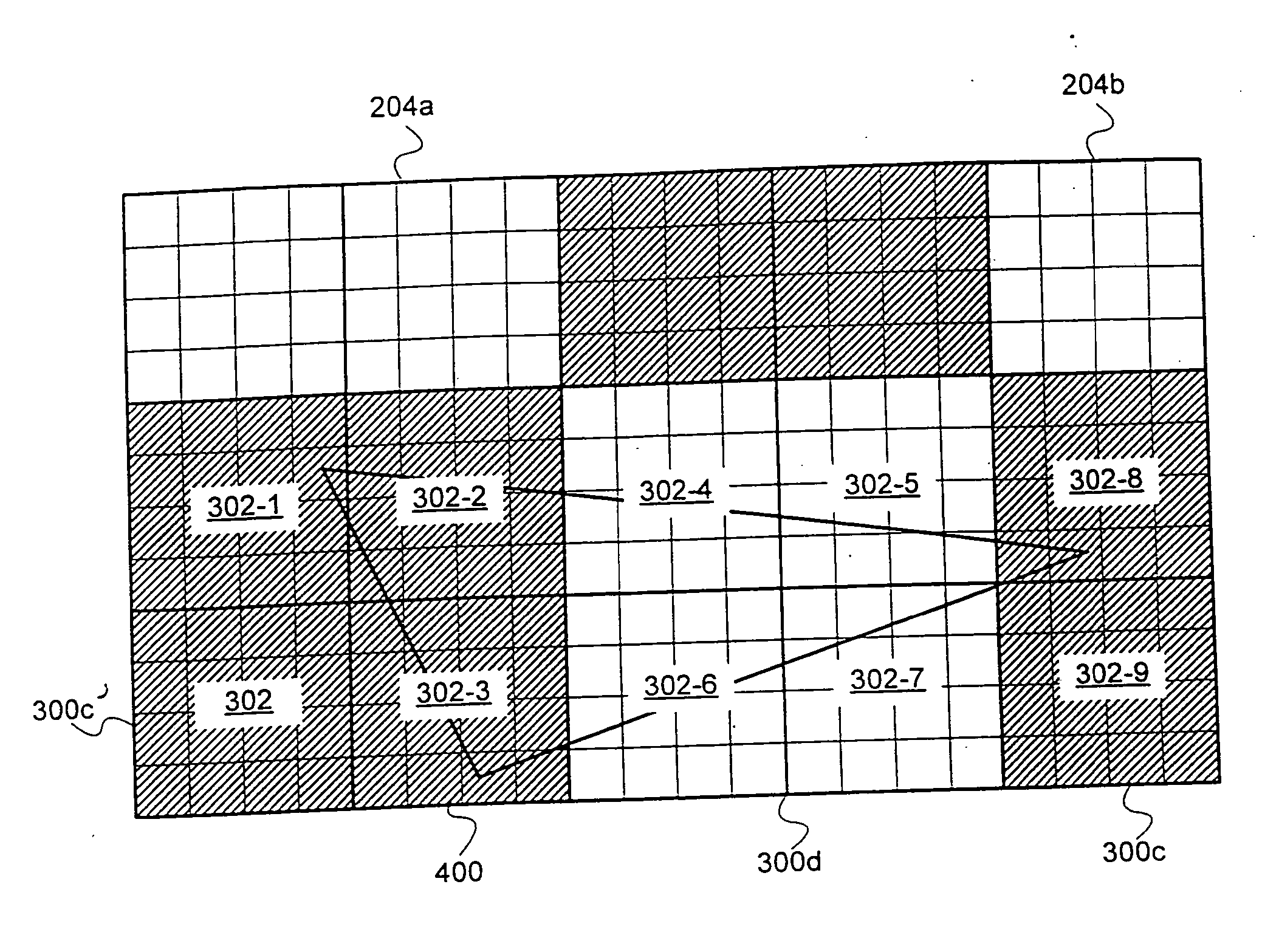

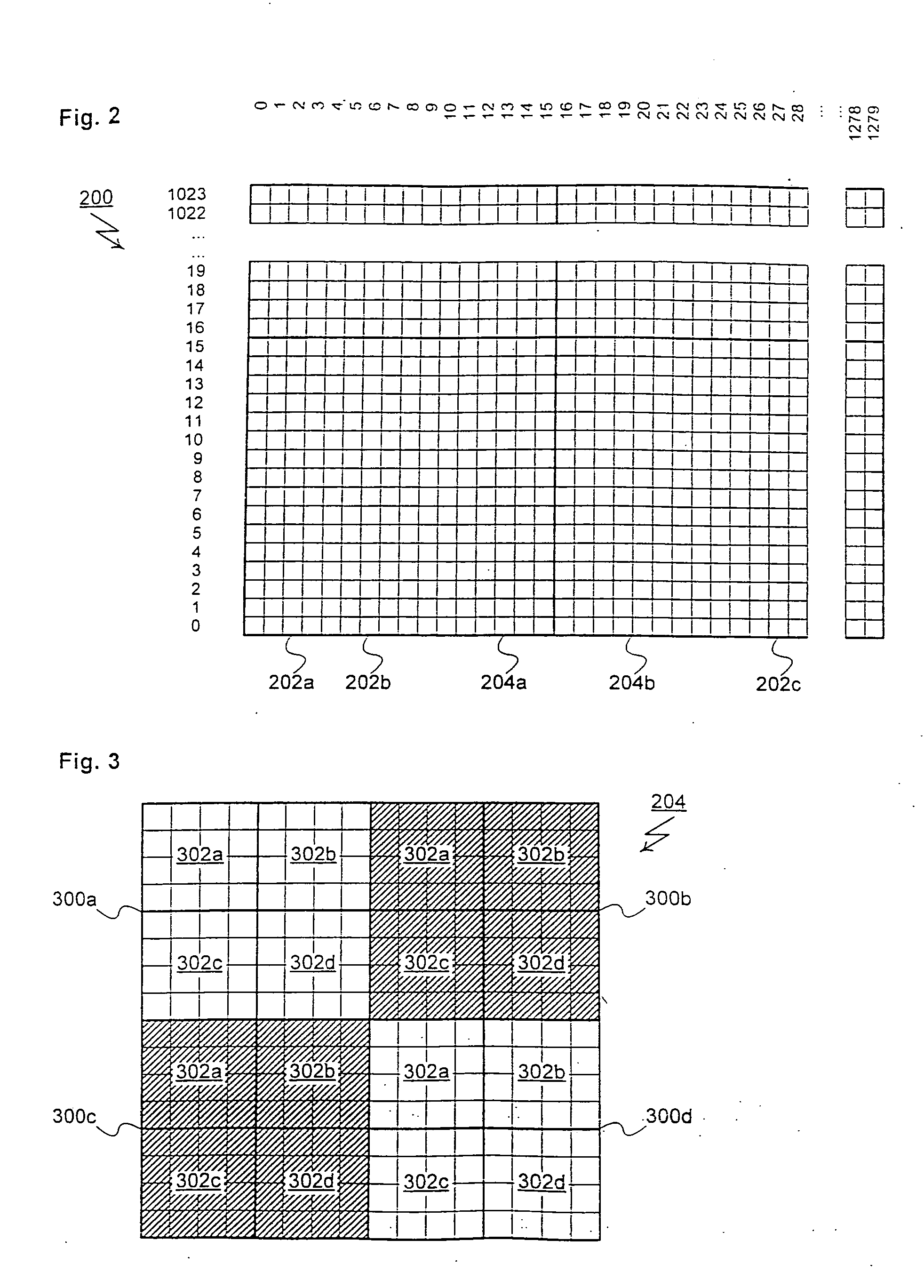

Method and apparatus for rasterizing in a hierarchical tile order

Inactive | US20050088448A1 | Enhance performance | Increase temporal locality | Drawing from basic elements | Cathode-ray tube indicators | Base address | Graphics

A method and apparatus for efficiently rasterizing graphics is provided. The method is intended to be used in combination with a frame buffer that provides fast tile-based addressing. Within this environment, frame buffer memory locations are organized into a tile hierarchy. For this hierarchy, smaller low-level tiles combine to form larger mid-level tiles. Mid-level tiles combine to form high-level tiles. The tile hierarchy may be expanded to include more levels, or collapsed to include fewer levels. A graphics primitive is rasterized by selecting a starting vertex. The low-level tile that includes the starting vertex is then rasterized. The remaining low-level tiles that are included in the same mid-level tile as the starting vertex are then rasterized. Rasterization continues with the mid-level tiles that are included in the same high-level tile as the starting vertex. These mid-level tiles are rasterized by rasterizing their component low-level tiles. The rasterization process proceeds bottom-up completing at each lower level before completing at higher levels. In this way, the present invention provides a method for rasterizing graphics primitives that accesses memory tiles in an orderly fashion. This reduces page misses within the frame buffer and enhances graphics performance.

Owner:MICROSOFT TECH LICENSING LLC
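
The bottom-up visiting order described in the rasterization abstract above can be sketched with tile coordinates alone. The code below is an illustrative assumption, not the patented method: `raster_order` and `tiles_in` are hypothetical names, tiles are grouped 2 by 2 at every level, and the sketch visits every low-level tile in the enclosing top-level tile instead of testing which tiles the primitive actually covers.

```python
def tiles_in(level, origin, group):
    """List the low-level tile coordinates inside the tile at `origin`.

    A level-0 tile is a single low-level tile; a level-k tile holds
    group x group tiles of level k-1.
    """
    if level == 0:
        return [origin]
    size = group ** (level - 1)          # width of each child, in low-level tiles
    out = []
    for dy in range(group):
        for dx in range(group):
            child = (origin[0] + dx * size, origin[1] + dy * size)
            out.extend(tiles_in(level - 1, child, group))
    return out


def raster_order(start, levels=2, group=2):
    """Visit order for all low-level tiles of the top-level tile holding `start`."""
    order = [start]                      # the tile with the starting vertex first
    for level in range(1, levels + 1):
        size = group ** level
        origin = (start[0] // size * size, start[1] // size * size)
        # Finish the enclosing tile at this level before moving one level up.
        for tile in tiles_in(level, origin, group):
            if tile not in order:
                order.append(tile)
    return order


order = raster_order((1, 0))
```

With the defaults, the first four entries all lie in the mid-level tile that contains the starting tile, and each later group of four stays within a single mid-level tile, so consecutive accesses remain close together in memory.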

Image defogging method with optimal contrast ratio and minimal information loss

The invention relates to an image defogging method with optimal contrast ratio and minimal information loss. The method is realized using a Gaussian mixture model, a quadtree, and maximal-contrast-ratio and minimal-information-loss functions. It comprises the following steps: first, based on the Gaussian mixture model and the expectation-maximization algorithm, the image is segmented into two categories, a sky region and a non-sky region; second, a quadtree iteration method is applied to the sky region to estimate the atmospheric light intensity of the atmospheric scattering model; then, a grid partition method is used to partition the non-sky region, and the maximal-contrast-ratio and minimal-information-loss functions are used to calculate the transmission of each grid cell; based on a constant coefficient and the optimal transmission of the non-sky region, the transmission of the sky region is estimated; finally, the recovered images of the sky region and the non-sky region are merged and output.

Owner:HUAWEI TECHNOLOGIES CO LTD

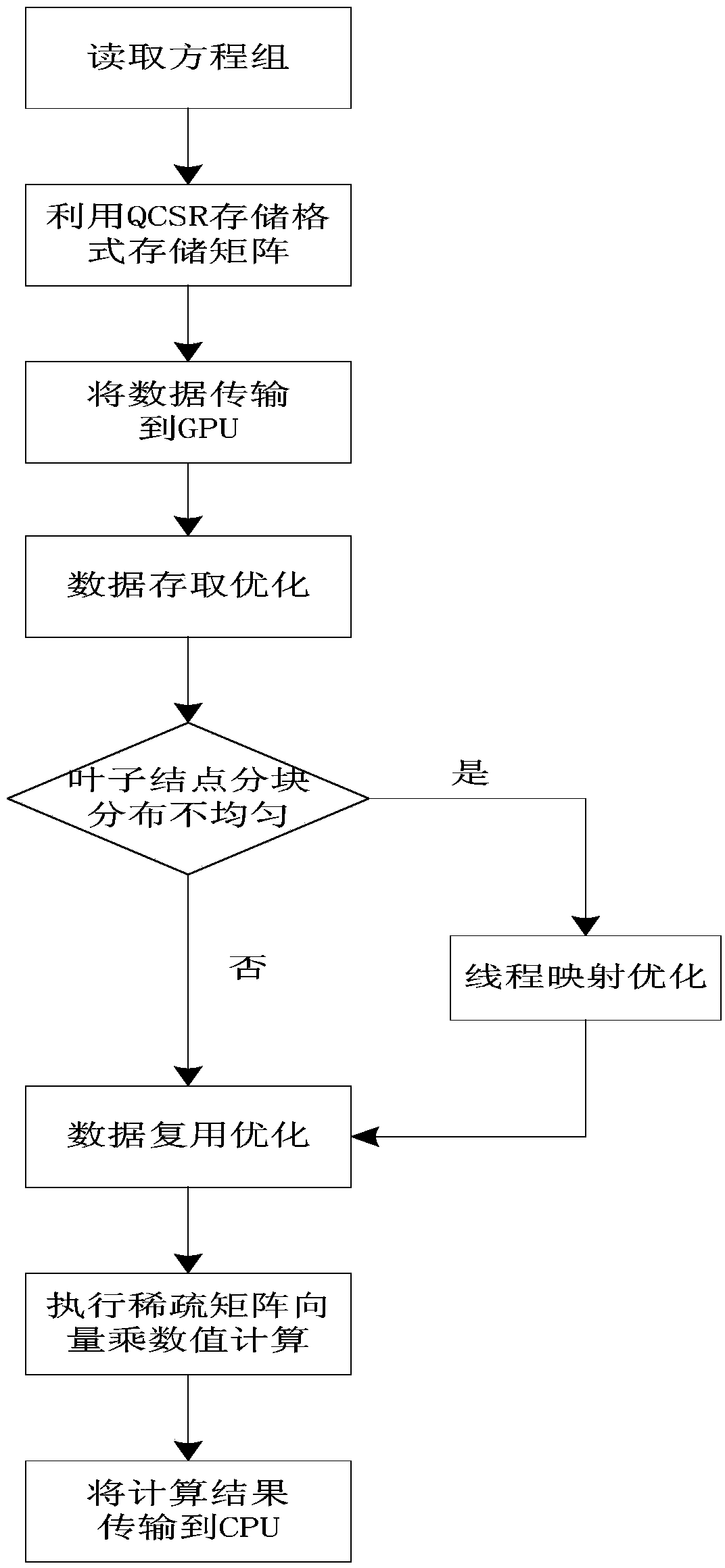

Method optimizing sparse matrix vector multiplication to improve incompressible pipe flow simulation efficiency

Active | CN103984527A | Improve data locality and cache hit ratio | Few influencing factors | Concurrent instruction execution | Data transmission | Decomposition

The invention discloses a method for optimizing sparse matrix-vector multiplication to improve the efficiency of incompressible pipe flow simulation. The method uses a QCSR storage structure, which combines the advantages of a quadtree structure and the CSR storage structure, to recursively decompose and rearrange a sparse matrix for storage, so that the sparse matrix-vector multiplication applies to matrices of general form and is particularly suitable for matrices that are sparse overall but contain a number of dense sub-matrices locally. The method implements sparse matrix-vector multiplication on the QCSR storage structure through four strategies in a CPU/GPU (central processing unit/graphics processing unit) heterogeneous parallel system: thread mapping optimization, data storage optimization, data transmission optimization, and data reuse optimization. The method improves data locality and the cache hit rate during sparse matrix-vector multiplication and achieves better computational and overall speedup, so that the efficiency of incompressible pipe flow simulation is improved.

Owner:HANGZHOU DIANZI UNIV
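For readers unfamiliar with the CSR layout that the entry above builds on, the following minimal sketch shows a plain CSR sparse matrix-vector product. It is not the patent's quadtree-based structure or its GPU implementation; the function name `csr_spmv` and the small example matrix are illustrative assumptions.

```python
def csr_spmv(values, col_idx, row_ptr, x):
    """Compute y = A @ x for a matrix A stored in CSR form.

    values  : nonzero entries, row by row
    col_idx : column index of each nonzero
    row_ptr : row_ptr[i]..row_ptr[i + 1] delimits the nonzeros of row i
    """
    y = [0.0] * (len(row_ptr) - 1)
    for row in range(len(y)):
        acc = 0.0
        # The nonzeros of one row are contiguous, so values and col_idx
        # are read sequentially; only the reads of x are scattered.
        for k in range(row_ptr[row], row_ptr[row + 1]):
            acc += values[k] * x[col_idx[k]]
        y[row] = acc
    return y


# Example matrix:
# [[4, 0, 1],
#  [0, 2, 0],
#  [3, 0, 5]]
values = [4.0, 1.0, 2.0, 3.0, 5.0]
col_idx = [0, 2, 1, 0, 2]
row_ptr = [0, 2, 3, 5]
y = csr_spmv(values, col_idx, row_ptr, [1.0, 2.0, 3.0])
```

The scattered reads of `x` are where locality is usually lost, which is why reordering and blocking schemes target them.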

Efficient and scalable computations with sparse tensors

Active | US10936569B1 | Improve data locality | Memory storage benefits | Complex mathematical operations | Database indexing | Locality of reference | Algorithm

In a system for storing in memory a tensor that includes at least three modes, elements of the tensor are stored in a mode-based order for improving locality of references when the elements are accessed during an operation on the tensor. To facilitate efficient data reuse in a tensor transform that includes several iterations, on a tensor that includes at least three modes, a system performs a first iteration that includes a first operation on the tensor to obtain a first intermediate result, and the first intermediate result includes a first intermediate-tensor. The first intermediate result is stored in memory, and a second iteration is performed in which a second operation on the first intermediate result accessed from the memory is performed, so as to avoid a third operation, that would be required if the first intermediate result were not accessed from the memory.

Owner:QUALCOMM INC
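The idea of storing tensor elements in a mode-based order, as described in the entry above, can be sketched for a sparse tensor in coordinate form. This is a minimal illustration under assumed conventions, not the patented storage scheme: the function name `sort_by_mode_order` and the list-of-tuples representation are hypothetical.

```python
def sort_by_mode_order(entries, mode_order):
    """Sort sparse tensor entries lexicographically by the given mode order.

    entries    : list of (coords, value) pairs, coords being a tuple of indices
    mode_order : tuple of mode positions, most significant first
    """
    return sorted(entries, key=lambda e: tuple(e[0][m] for m in mode_order))


# A small 3-mode tensor with four nonzeros.
entries = [
    ((1, 0, 2), 5.0),
    ((0, 1, 0), 1.0),
    ((1, 0, 0), 3.0),
    ((0, 0, 1), 2.0),
]

# Order for an operation that walks the tensor along mode 2 first:
# entries sharing a mode-2 index become adjacent in memory.
by_mode_2 = sort_by_mode_order(entries, (2, 0, 1))
ordered_values = [value for _, value in by_mode_2]
```

An operation that iterates over mode 2 then touches neighboring entries consecutively instead of jumping through the list.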

Data block balancing method in operation process of HDFS (Hadoop Distributed File System)

Inactive | CN102937918A | Improve locality | Promote balance in task execution | Resource allocation | Special data processing applications | Distributed File System | Non local

The invention discloses a data block balancing method for a running HDFS (Hadoop Distributed File System). The method comprises the following steps: first, the local task list of each node is preprocessed and divided into entirely local tasks and non-entirely local tasks, which provides the basis for deciding when to start HDFS data block balancing; second, the operation rate of each node is estimated and its task requests are predicted; third, the task assignment process of each node is designed and implemented once the preceding steps are complete; fourth, suitable nodes are selected and a data block is moved between them so that the block distribution matches the predicted sequence of node task requests; finally, the data blocks are balanced. By predicting node task requests in advance, the method identifies non-local map task executions that are likely to occur and moves the appropriate data blocks between the corresponding nodes, so that a node can be assigned a local map task when it sends an actual task request. The completion efficiency of the Map phase is thereby improved.

Owner:XI AN JIAOTONG UNIV
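The prediction and block-moving steps of the entry above can be sketched as a small simulation. This is a simplified illustration under stated assumptions, not the patented procedure and not a Hadoop API: the functions `predict_request_order` and `plan_moves`, the fixed per-node task time, and the "take from the node with the most pending blocks" rule are all hypothetical.

```python
import heapq


def predict_request_order(seconds_per_task, n_requests):
    """Predict which node asks for a task next, assuming each node finishes
    one task every seconds_per_task[node] seconds."""
    heap = [(t, node) for node, t in seconds_per_task.items()]
    heapq.heapify(heap)
    order = []
    for _ in range(n_requests):
        finish, node = heapq.heappop(heap)
        order.append(node)
        heapq.heappush(heap, (finish + seconds_per_task[node], node))
    return order


def plan_moves(order, local_blocks):
    """Walk the predicted requests; when a node would run out of local blocks,
    plan a move from the node that still holds the most pending blocks."""
    remaining = {node: list(blocks) for node, blocks in local_blocks.items()}
    moves = []
    for node in order:
        if remaining[node]:
            remaining[node].pop(0)  # served by a local block
        else:
            donor = max(remaining, key=lambda n: len(remaining[n]))
            if remaining[donor]:
                block = remaining[donor].pop()
                moves.append((block, donor, node))
    return moves


rates = {"fast": 1.0, "slow": 3.0}
blocks = {"fast": ["b1"], "slow": ["b2", "b3", "b4"]}
order = predict_request_order(rates, n_requests=4)
moves = plan_moves(order, blocks)
```

In this toy setup the fast node is predicted to exhaust its single local block early, so two blocks are scheduled to move to it ahead of its requests.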

Information recording method, information recording device, information recording system, program, and recording medium

Inactive | US20050063290A1 | Suppress mutation | Improve reproductive characteristics | Television system details | Record information storage | Computer hardware | Recording layer

An information recording method records information to a multilayer recording medium in which a number of record layers are laminated and information can be recorded to each record layer. When the amount of information to be recorded does not exceed the maximum amount that the medium can hold, the information is divided into as many data blocks as there are record layers. The data blocks are recorded to the data areas of the respective record layers so that the recorded areas of the layers overlap one another in the thickness direction of the medium.

Owner:RICOH KK
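The block division described in the entry above can be sketched as a small layout calculation. This is a minimal illustration under assumptions that do not come from the abstract: the function name `layout_blocks`, the sector-based units, the near-equal split, and the common start address 0 on every layer are hypothetical.

```python
def layout_blocks(total_sectors, capacity_per_layer, n_layers):
    """Split the data into one block per layer and place every block at the
    same start address, so the recorded areas overlap across layers.

    Returns a list of (layer, start_address, length) tuples.
    """
    if total_sectors > capacity_per_layer * n_layers:
        raise ValueError("data exceeds the capacity of the medium")
    block = -(-total_sectors // n_layers)  # ceiling division
    layout = []
    remaining = total_sectors
    for layer in range(n_layers):
        length = min(block, remaining)
        layout.append((layer, 0, length))  # assumed common start address
        remaining -= length
    return layout


even = layout_blocks(total_sectors=1000, capacity_per_layer=2000, n_layers=2)
odd = layout_blocks(total_sectors=1001, capacity_per_layer=2000, n_layers=2)
```

Because every block starts at the same address and has nearly the same length, the recorded regions of the layers line up through the thickness of the medium.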


© 2025 PatSnap. All rights reserved.