211 results about "Job queue" patented technology

In system software, a job queue (sometimes called a batch queue) is a data structure, maintained by job scheduler software, that contains the jobs to run. Users submit the programs they want executed ("jobs") to the queue for batch processing, and the scheduler software maintains the queue as the pool of jobs available for it to run.
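As a minimal illustration of this definition (a sketch, not the interface of any real scheduler), the Python below keeps jobs in a first-in, first-out `collections.deque` owned by the scheduler. The `Job` and `JobScheduler` names are hypothetical, and real batch systems add priorities, resource checks, and persistence that are omitted here.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Job:
    name: str
    run: Callable[[], Any]  # the user's program, modelled as a callable


@dataclass
class JobScheduler:
    # The pool of jobs available to run, kept in submission (FIFO) order.
    queue: deque = field(default_factory=deque)

    def submit(self, job: Job) -> None:
        """A user submits a job: it joins the tail of the queue."""
        self.queue.append(job)

    def run_all(self) -> list:
        """Take jobs from the head of the queue and run them one by one."""
        results = []
        while self.queue:
            job = self.queue.popleft()
            results.append((job.name, job.run()))
        return results


sched = JobScheduler()
sched.submit(Job("report", lambda: "done"))
sched.submit(Job("sum", lambda: sum(range(5))))
print(sched.run_all())  # [('report', 'done'), ('sum', 10)]
```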

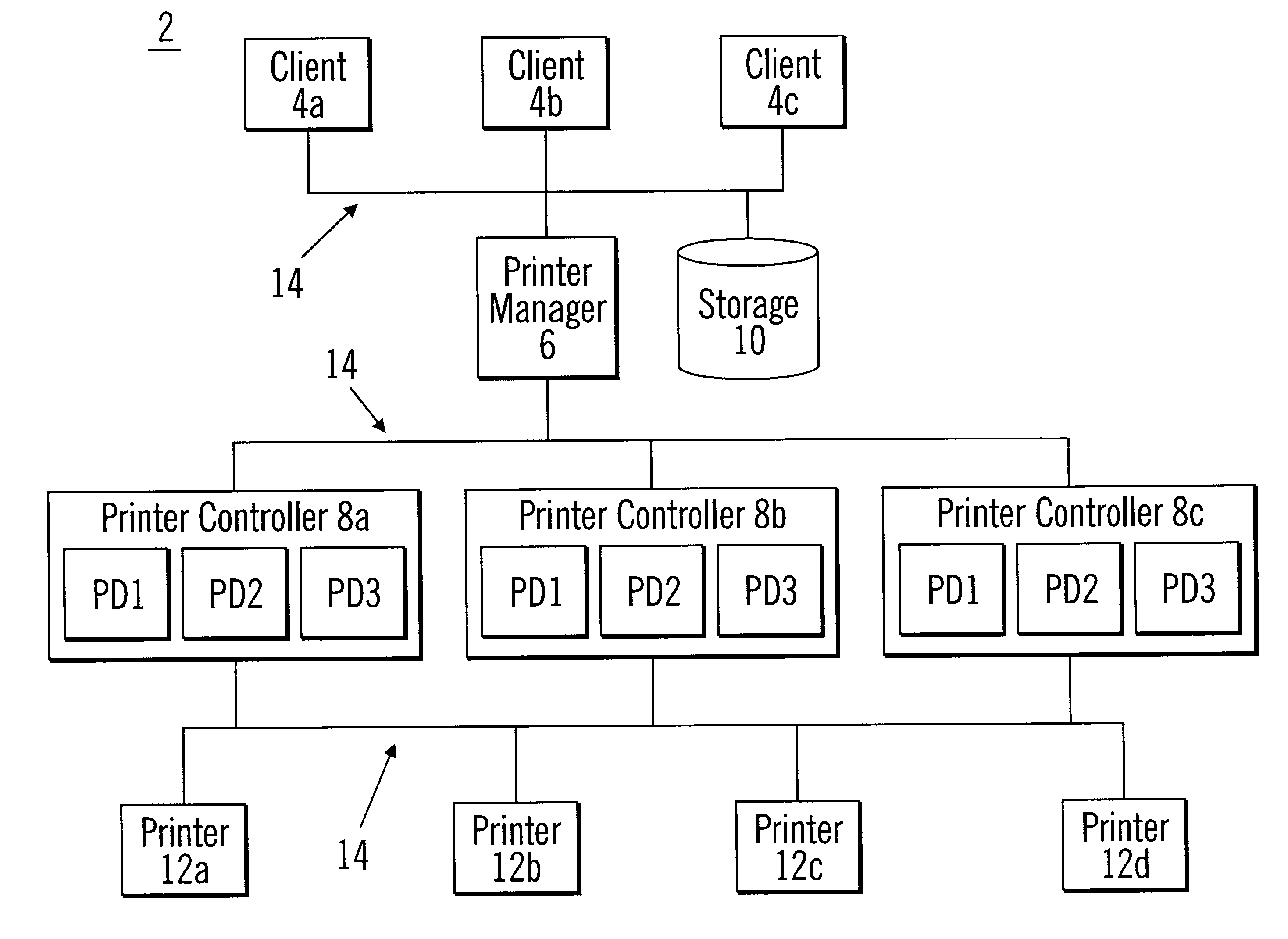

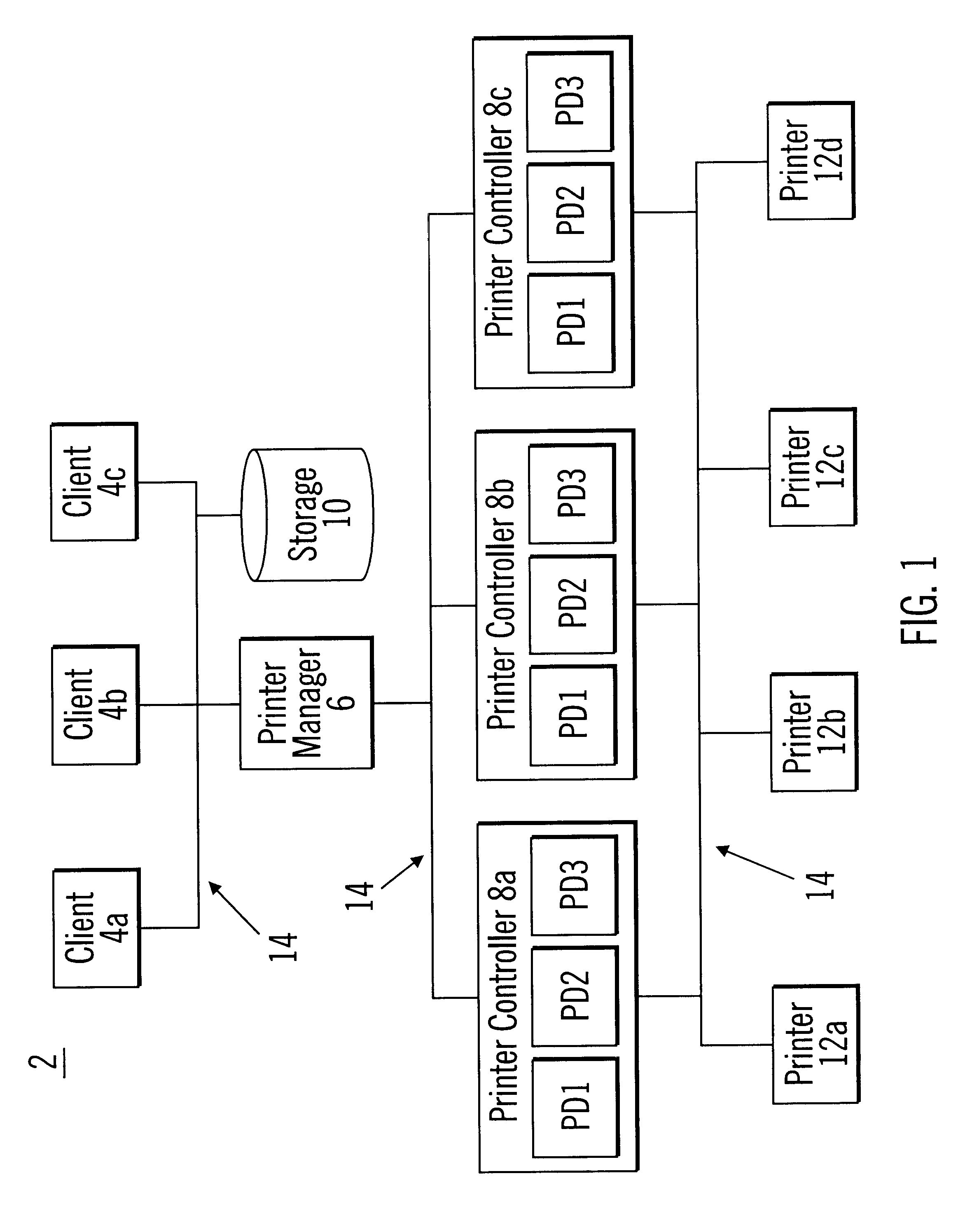

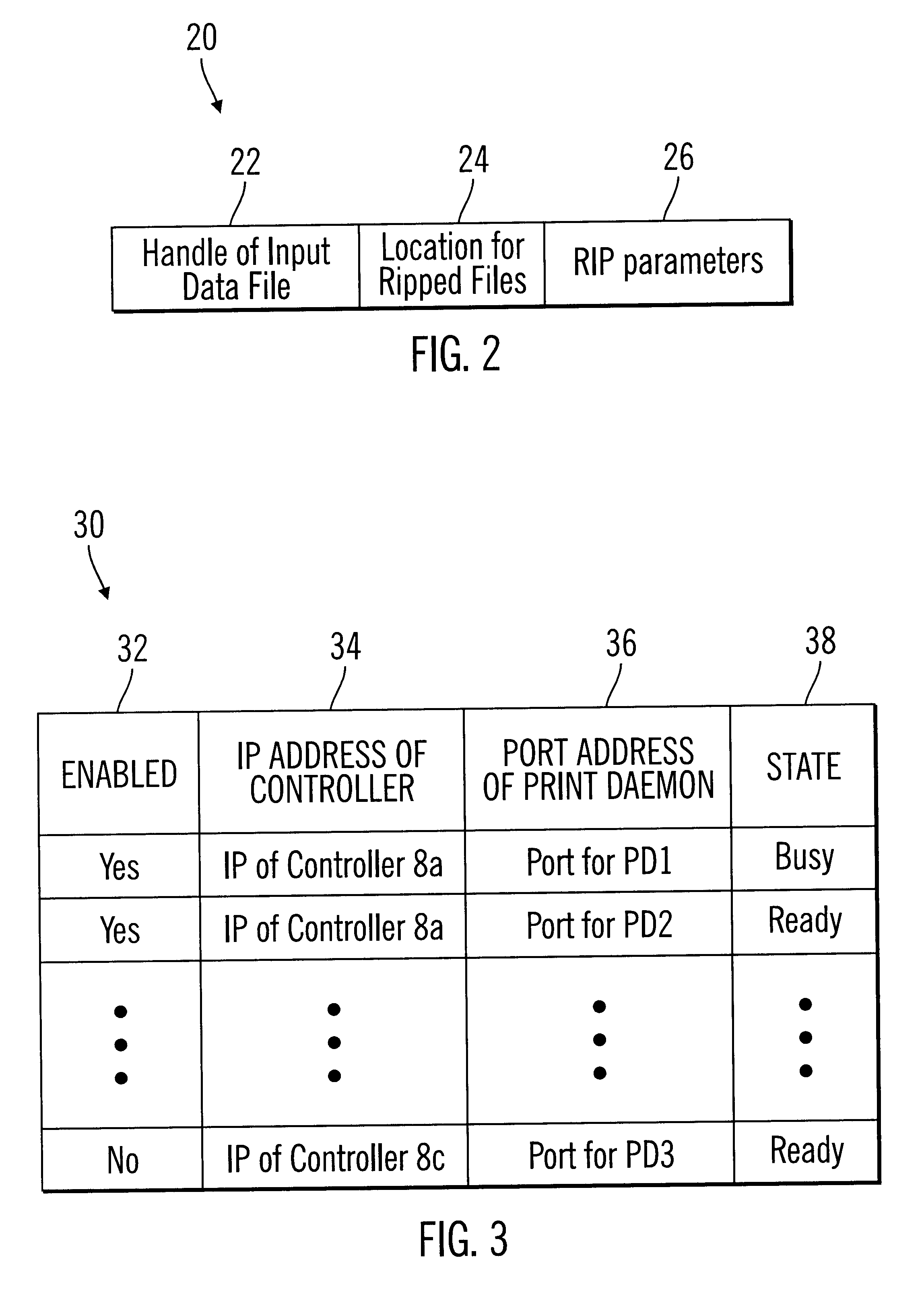

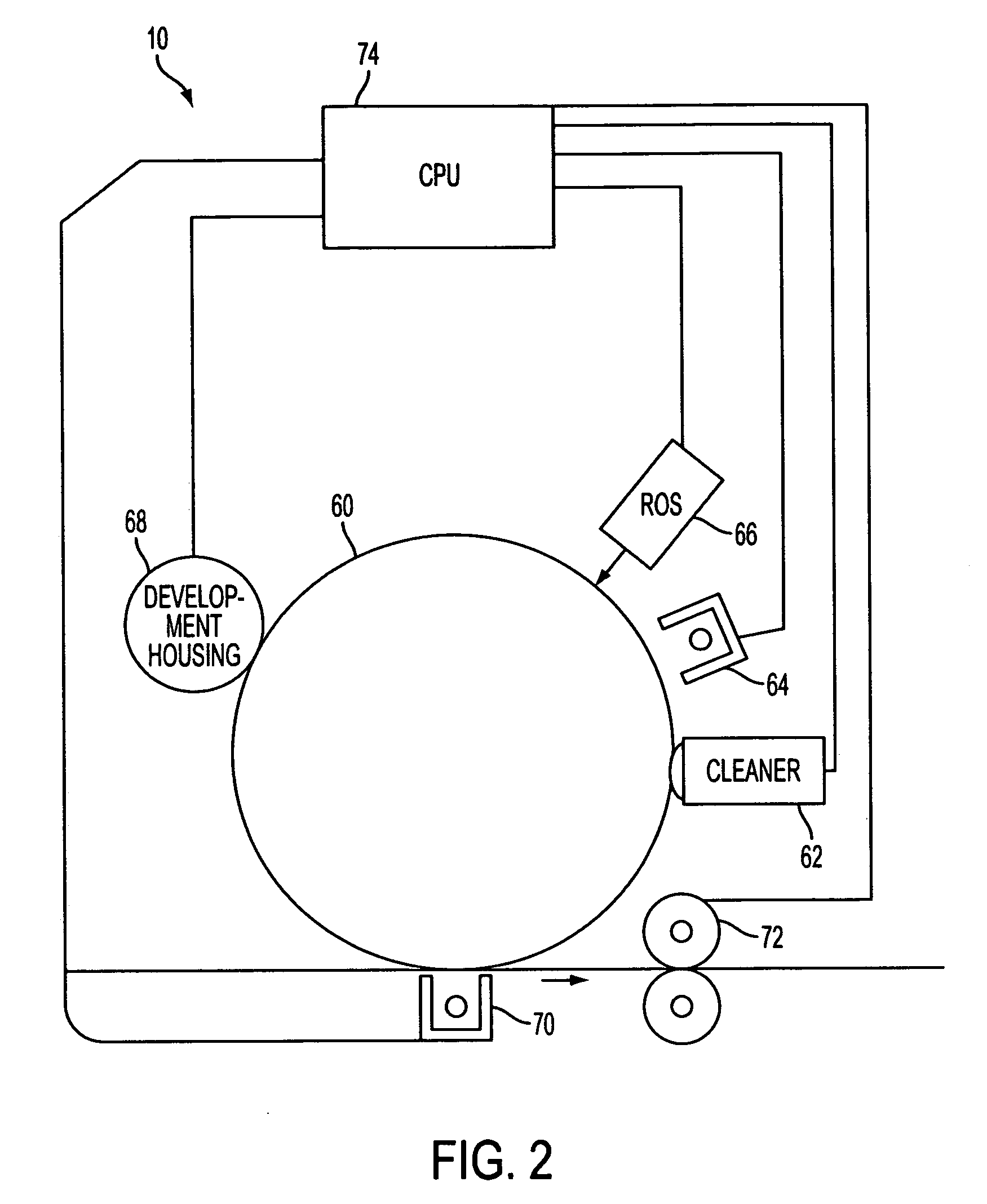

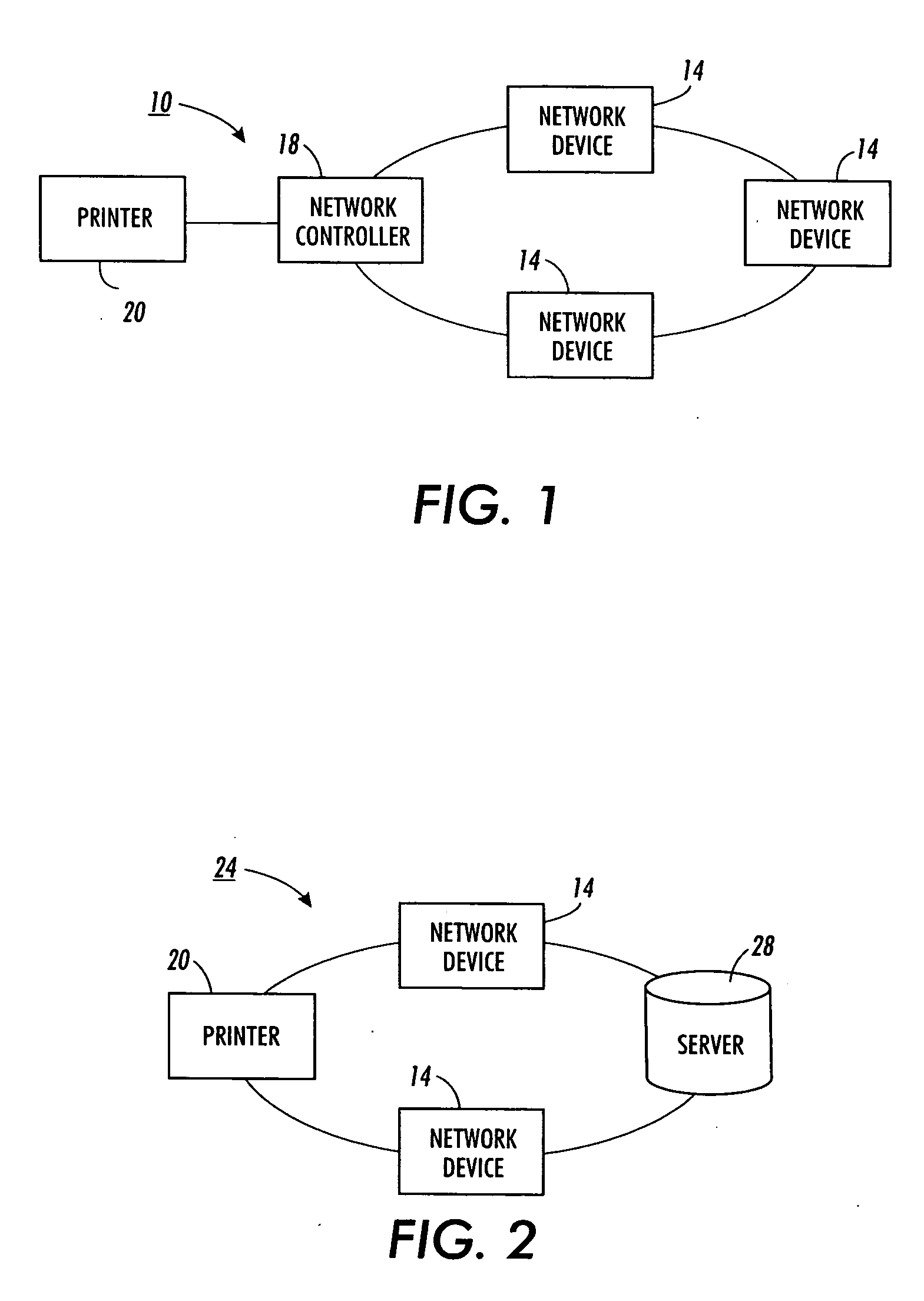

Load balancing for processing a queue of print jobs

Inactive | US6373585B1 | Maximize use, Save consumption, Digital computer details, Visual presentation, Data stream, Data file

A system is provided for processing a print job. A processing unit, such as a server, receives a plurality of print job files. Each print job file is associated with a data file. The print job files are maintained in a queue of print job files. The processing unit selects a print job file in the queue and processes a data structure indicating a plurality of transform processes and the availability of each indicated transform process to process a data file. The processing unit selects an available transform process, such as a RIP process, in response to processing the data structure and indicates in the data structure that the selected transform process is unavailable. The transform process processes the data file associated with the selected print job to generate a printer supported output data stream.

Owner:RICOH KK
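The selection step in this abstract can be sketched in Python as follows. This is a loose illustration under stated assumptions, not RICOH's implementation: `PrintServer`, `dispatch_next`, and the `release` step (which returns a finished process to the pool) are hypothetical, and the availability table is modelled as a plain dictionary.

```python
from collections import deque


class PrintServer:
    """Hypothetical model: a queue of print jobs plus a table recording
    which transform (e.g. RIP) processes are currently available."""

    def __init__(self, transform_names):
        self.jobs = deque()  # queue of (job_id, data_file) pairs
        # Availability table: transform process name -> True if free.
        self.available = {name: True for name in transform_names}

    def submit(self, job_id, data_file):
        self.jobs.append((job_id, data_file))

    def dispatch_next(self):
        """Pair the job at the head of the queue with a free transform process.

        Marks the chosen process unavailable and returns (job_id, process).
        Returns None if the queue is empty or every process is busy."""
        if not self.jobs:
            return None
        for name, free in self.available.items():
            if free:
                self.available[name] = False  # record that it is now busy
                job_id, _data_file = self.jobs.popleft()
                return job_id, name
        return None  # no free process; the job stays at the head of the queue

    def release(self, name):
        """Assumed step: a transform process becomes available when it finishes."""
        self.available[name] = True


server = PrintServer(["rip-1", "rip-2"])
for job in ("a", "b", "c"):
    server.submit(job, f"{job}.ps")
print(server.dispatch_next())  # ('a', 'rip-1')
print(server.dispatch_next())  # ('b', 'rip-2')
print(server.dispatch_next())  # None: both RIP processes are busy
server.release("rip-1")
print(server.dispatch_next())  # ('c', 'rip-1')
```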

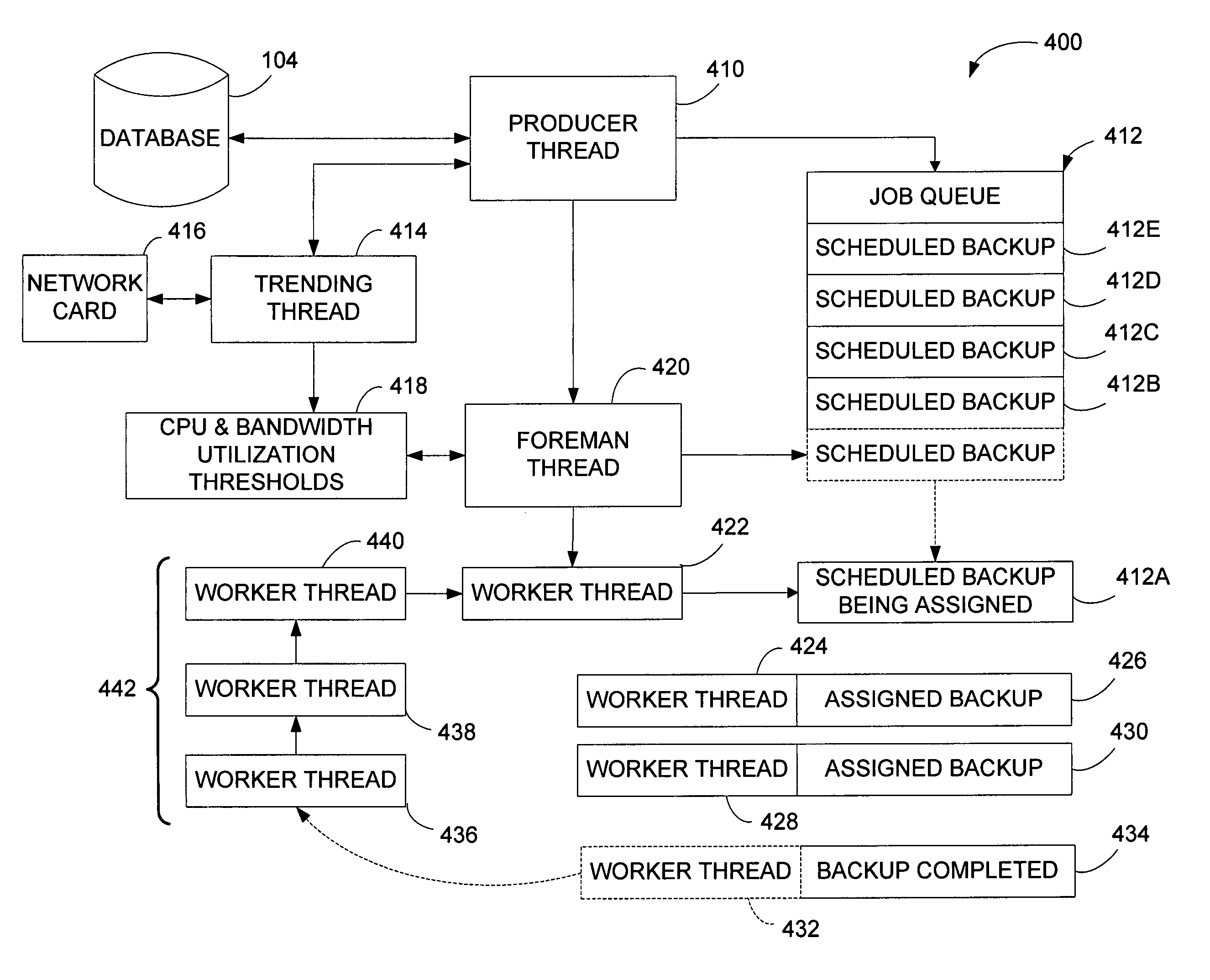

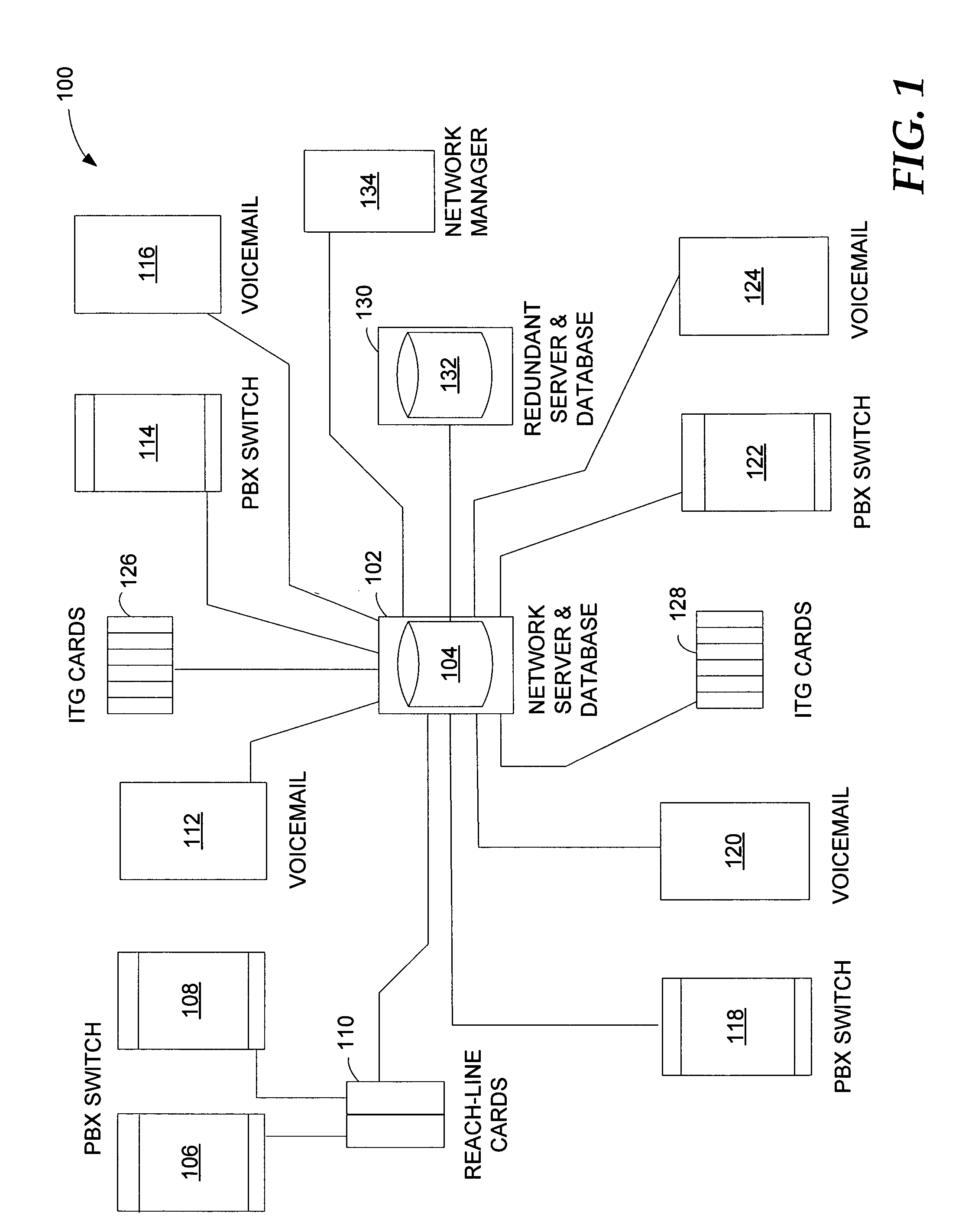

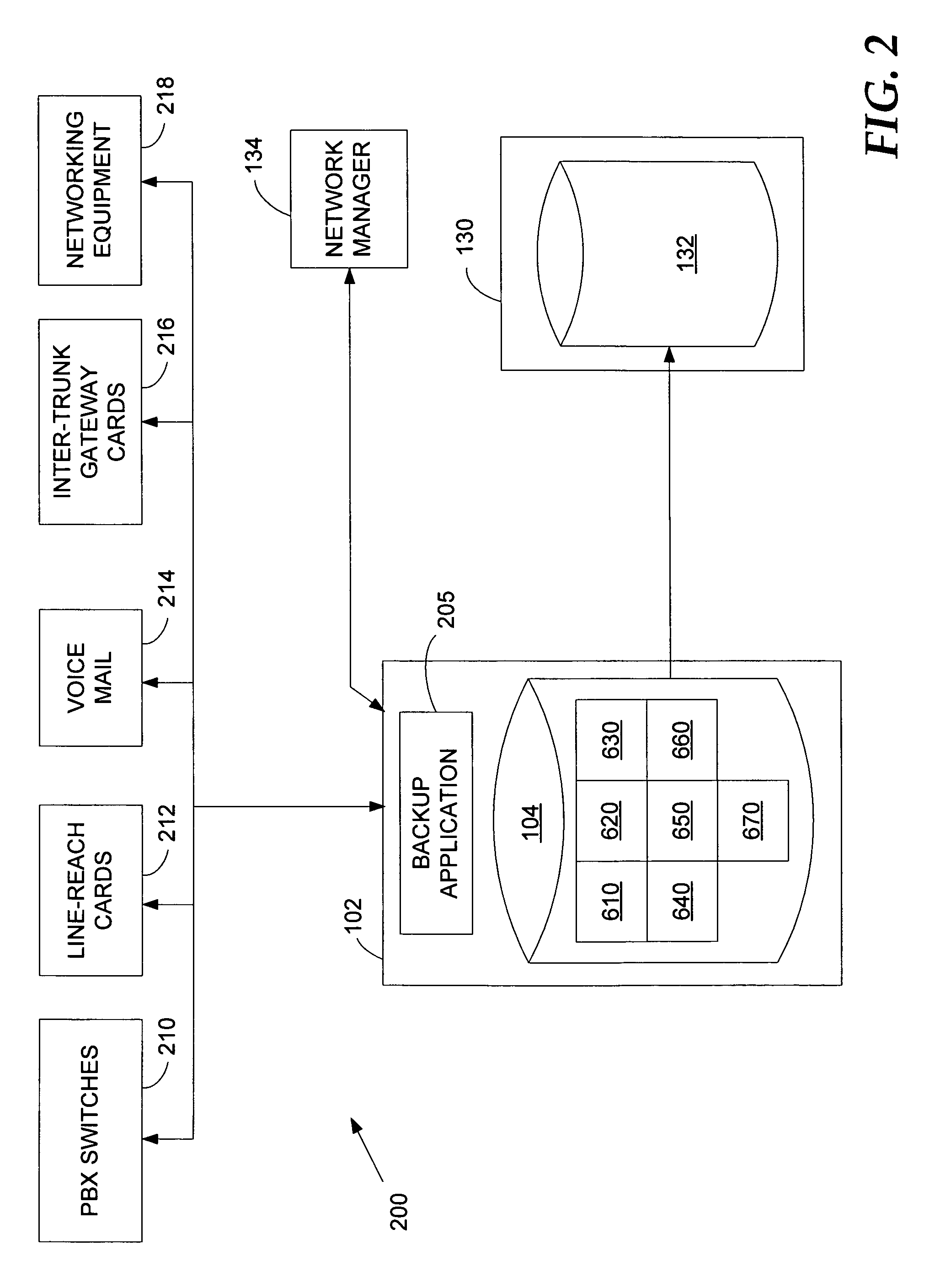

Method and system for backing up or restoring data in remote devices over a communications network

Active | US7467267B1 | Eliminate need, Error detection/correction, Memory systems, Monitoring system, Network communication

Methods and media are provided for backing up data stored in one or more network-communications devices located in a geographic area distinct from where the backup data is to be stored. Using a producer thread, the system threads perform interactive processing to initiate an automatic scheduling procedure and a job queue. The other threads are a trending thread that retrieves and monitors system performance factors; a foreman thread that monitors those performance factors to determine when an assignment is performed; and one or more worker threads that remotely extract backup data from network equipment, determine and record informational logs about each backup performed, and send informational traps to a surveillance manager.

Owner:T MOBILE INNOVATIONS LLC

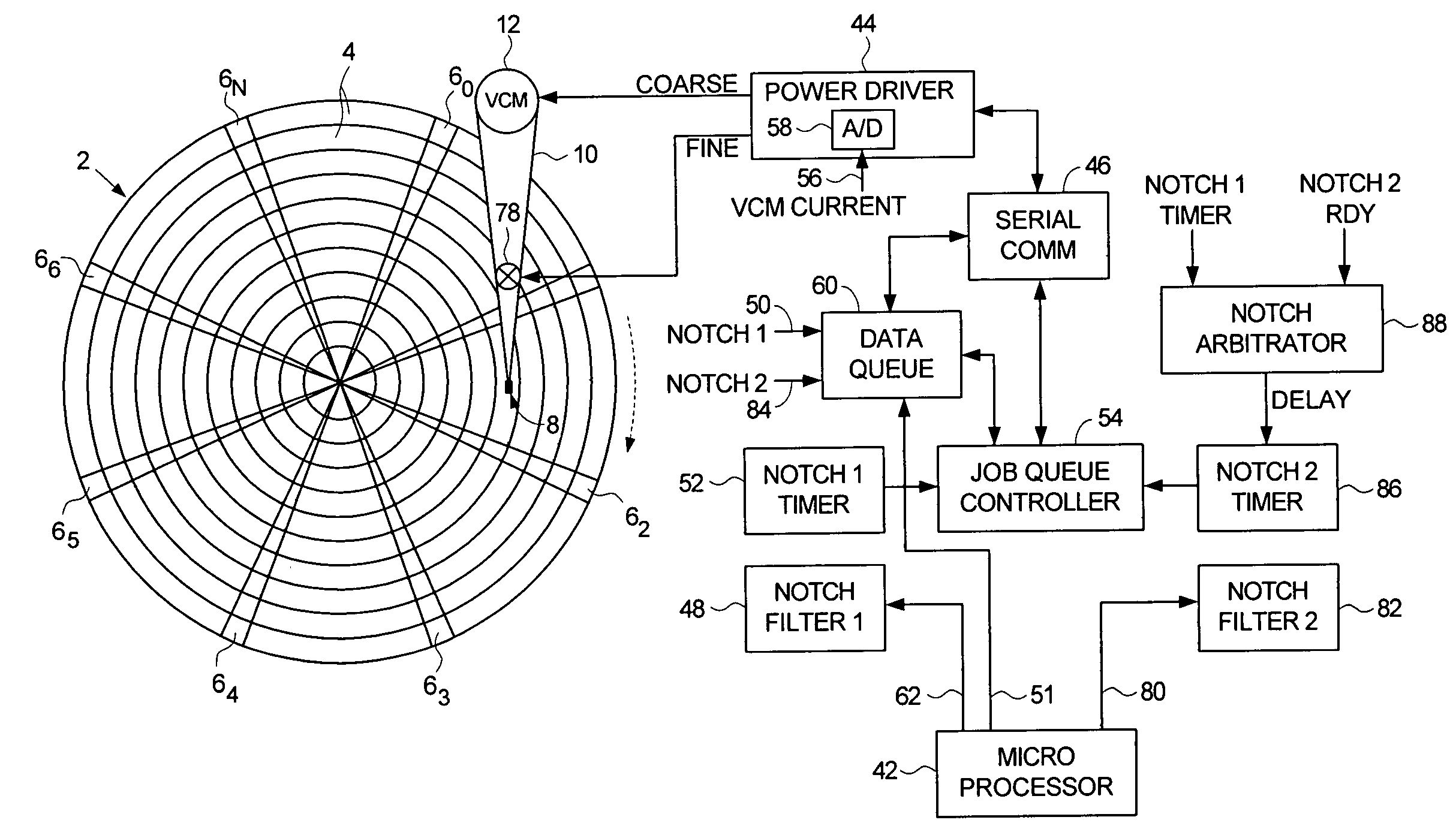

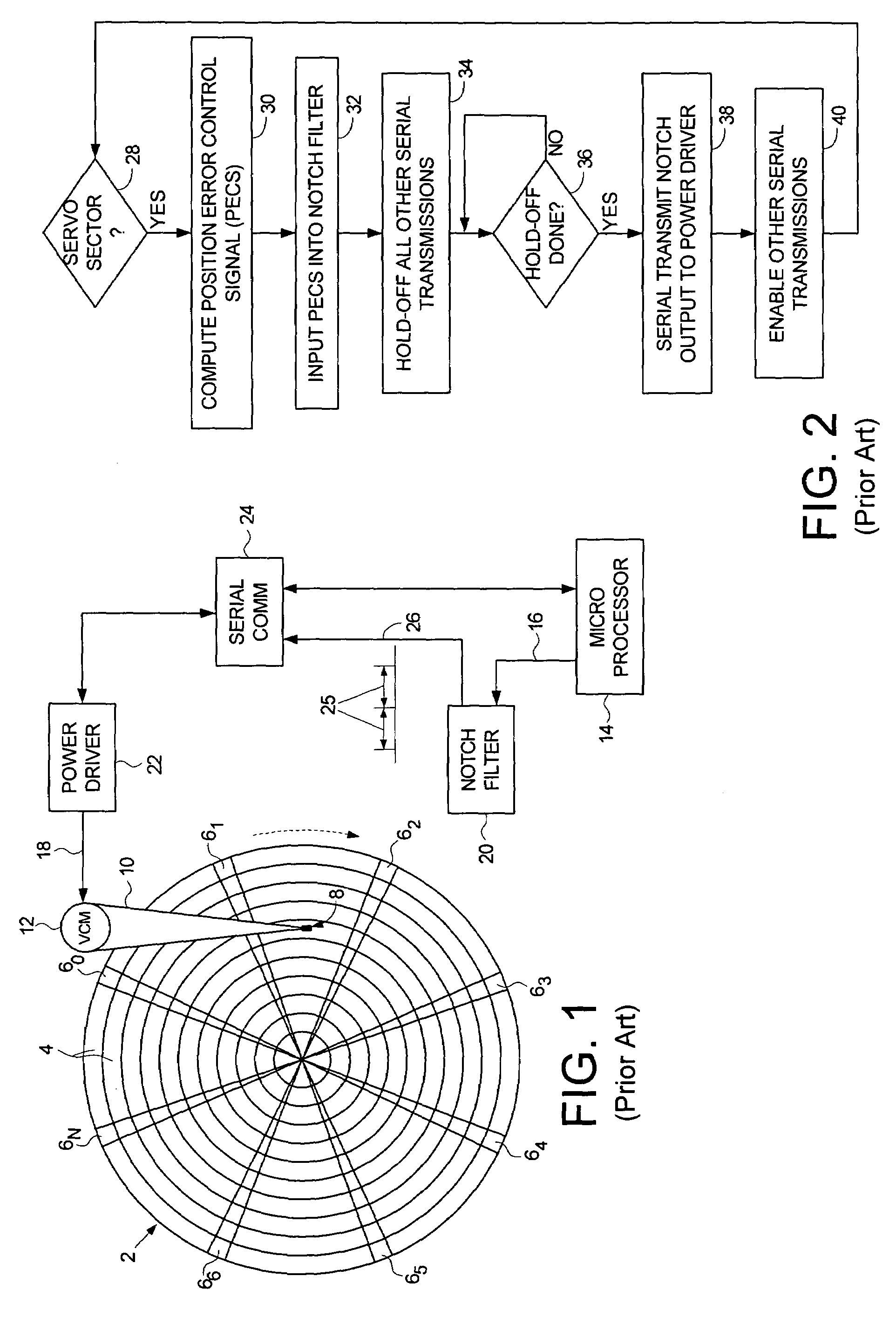

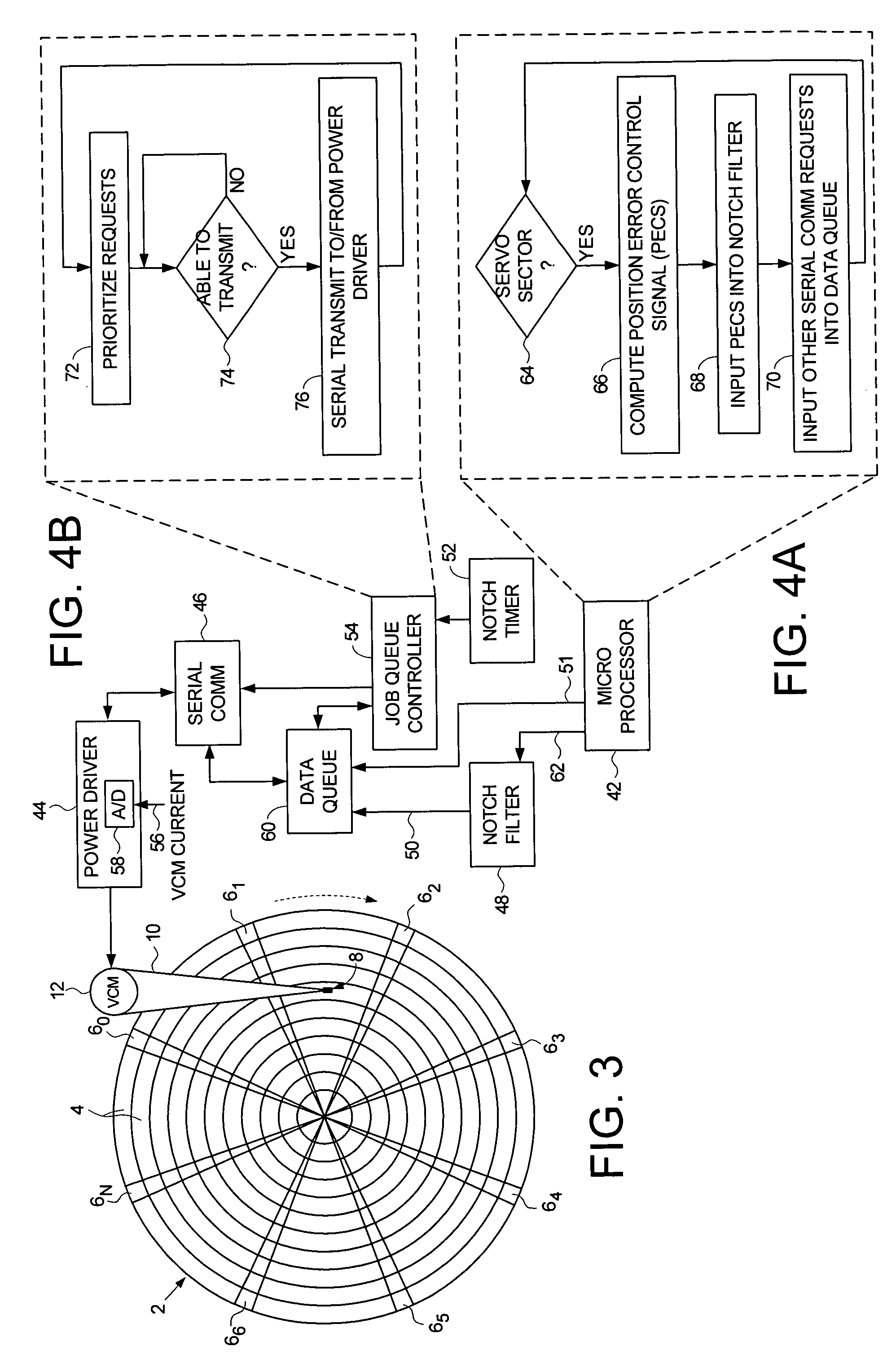

Disk drive using a timer to arbitrate periodic serial communication with a power driver

Inactive | US7106537B1 | Driving/moving recording heads, Filamentary/web record carriers, Control signal, Engineering

A disk drive is disclosed comprising a microprocessor and a power driver for generating at least one actuator signal applied to a voice coil motor (VCM) actuator, wherein a serial communication circuit is used to communicate with the power driver. At least one control component periodically generates a control signal, wherein the control signal is transmitted at a substantially constant periodic interval using the serial communication circuit, and a timer times the periodic interval. A job queue controller queues and arbitrates serial communication requests to access the power driver using the serial communication circuit. The serial communication requests include a request to transmit the control signal and requests generated by the microprocessor. The job queue controller services a communication request generated by the microprocessor if the communication request can be serviced before the timer expires.

Owner:WESTERN DIGITAL TECH INC
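The arbitration rule (service a microprocessor request only if it can finish before the timer expires) can be simulated in a few lines. This sketch assumes discrete time ticks, a control signal that occupies one tick, and strict first-come, first-served handling of microprocessor requests; the function name `arbitrate` is hypothetical.

```python
from collections import deque


def arbitrate(period, horizon, requests):
    """Simulate a timer-arbitrated serial link (hypothetical model).

    period   -- ticks between transmissions of the periodic control signal
    horizon  -- total ticks to simulate
    requests -- (name, duration) microprocessor requests in arrival order
    Returns a log of (start_tick, name) transmissions.
    """
    pending = deque(requests)
    log = []
    for window_start in range(0, horizon, period):
        log.append((window_start, "control"))  # sent each time the timer fires
        now = window_start + 1                 # assume the control signal takes 1 tick
        deadline = window_start + period       # when the timer next expires
        # Service the head request only if it completes before the timer expires.
        while pending and now + pending[0][1] <= deadline:
            name, duration = pending.popleft()
            log.append((now, name))
            now += duration
    return log


print(arbitrate(10, 20, [("r1", 4), ("r2", 6), ("r3", 3)]))
# [(0, 'control'), (1, 'r1'), (10, 'control'), (11, 'r2'), (17, 'r3')]
```

Because requests are served strictly in order, a request longer than `period - 1` ticks would never be serviced in this model; the abstract does not say how such requests are handled.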

Method, apparatus, and article of manufacture for a control system in a selective deposition modeling system

Inactive | US6490496B1 | Programme control, Additive manufacturing apparatus, Control system, Selective deposition

Techniques for controlling jobs in a selective deposition modeling (SDM) system. A client computer system can connect directly to an SDM system to work with a job queue located at the SDM system. Jobs in the job queue may be previewed in three-dimensional form. Moreover, jobs may be moved to different positions in the job queue or deleted. Additionally, multiple jobs may be automatically combined into a single build process.

Owner:3D SYST INC

Workflow control of reservations and regular jobs using a flexible job scheduler

Inactive | US20120198462A1 | Efficiently managing workflow, General purpose stored program computer, Multiprogramming arrangements, Wait state, Active state

A scheduler receives at least one flexible reservation request for scheduling in a computing environment comprising consumable resources. The flexible reservation request specifies a duration and at least one required resource. The consumable resources comprise at least one machine resource and at least one floating resource. The scheduler creates a flexible job for the at least one flexible reservation request and places the flexible job in a prioritized job queue for scheduling, wherein the flexible job is prioritized relative to at least one regular job in the prioritized job queue. The scheduler adds a reservation, set to a waiting state, for the at least one flexible reservation request. Responsive to detecting that the flexible job is positioned in the prioritized job queue to be scheduled next and detecting a selection of consumable resources available to match the at least one required resource for the duration, the scheduler transfers the selection of consumable resources to the reservation and sets the reservation to an active state. The reservation is activated as the selection of consumable resources becomes available, and at least one job bound to the flexible reservation has uninterrupted use of that selection for the duration.

Owner:IBM CORP

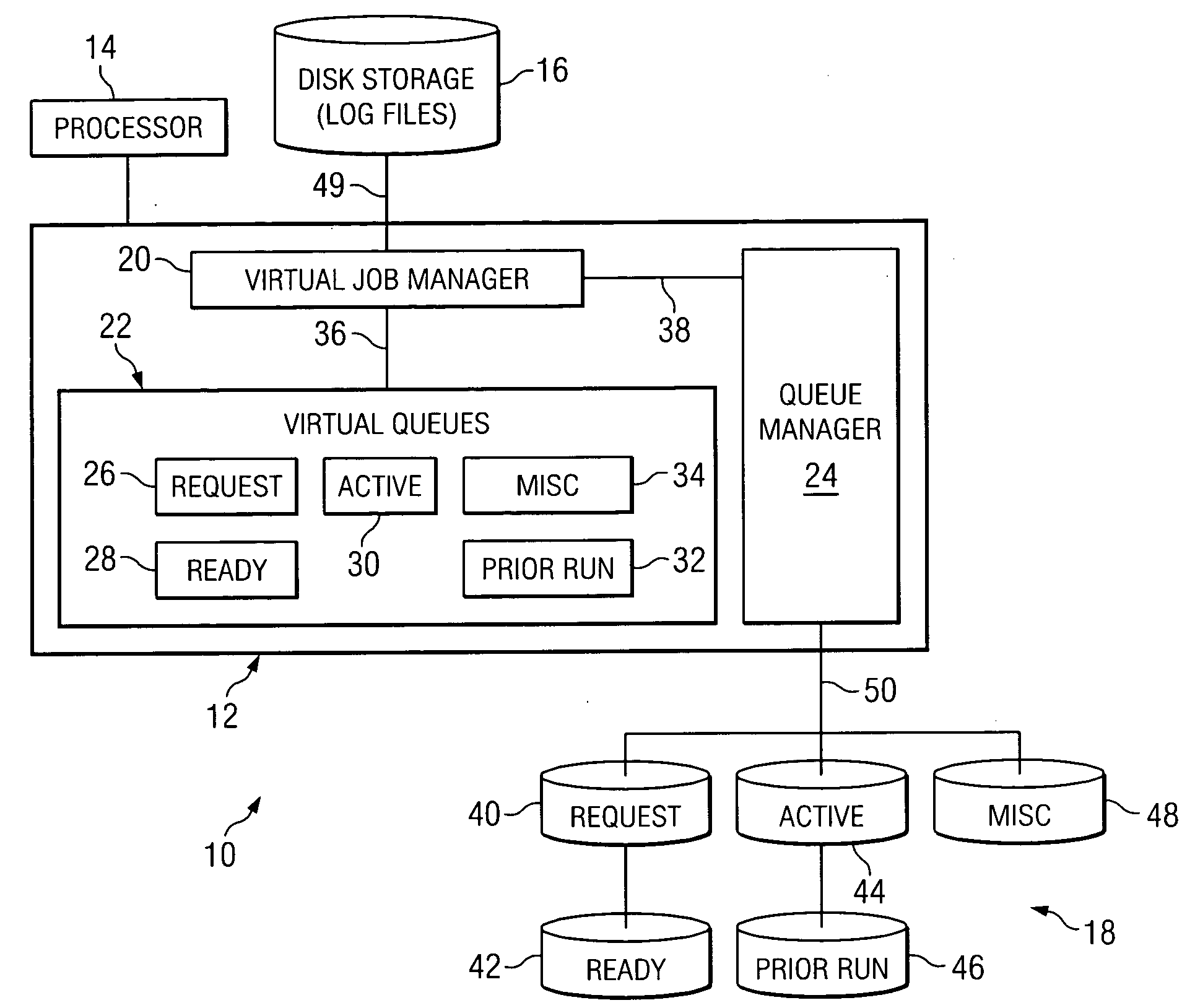

Method and system for scheduling jobs in a computer system

Active | US7898679B2 | Shorten access time, Quick dispatch, Multiple digital computer combinations, Program control, Random access memory, Computerized system

A method for scheduling jobs in a computer system includes storing a plurality of job queue files in a random access memory, accessing at least one of the job queue files stored in random access memory, and scheduling, based in part on the at least one job queue file, execution of a job associated with the at least one job queue file. In a more particular embodiment, a method for scheduling jobs in a computer system includes storing a plurality of job queue files in a random access memory. The plurality of job queue files include information associated with at least one job. The method also includes storing the information external to the random access memory and accessing at least one of the job queue files stored in the random access memory. The method also includes scheduling, based at least in part on the at least one job queue file, execution of a job associated with at least one job queue file. In response to the scheduling, the method includes updating the information stored in the job queue file and random access memory and the information stored external to the random access memory.

Owner:COMP ASSOC THINK INC

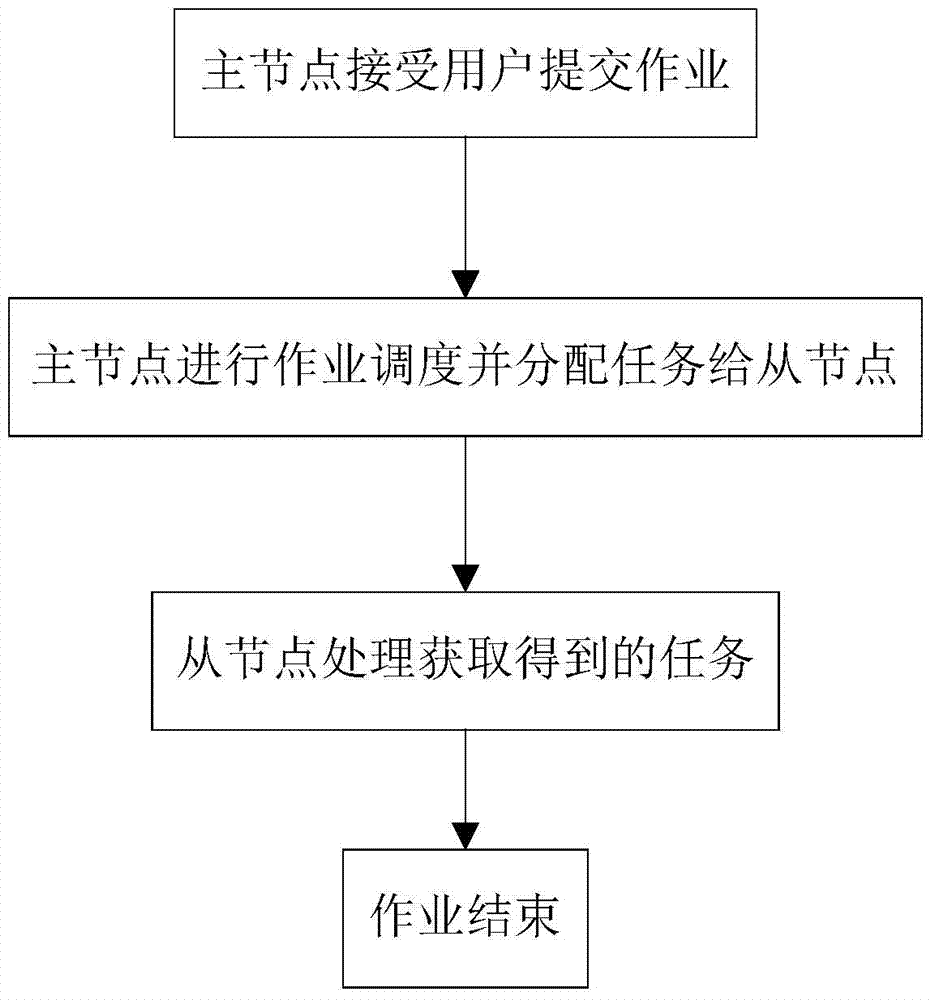

Task optimization scheduling method based on Hadoop

The invention discloses a task optimization scheduling method based on Hadoop. The resource demands of the running tasks in all jobs on every node of a Hadoop cluster are analyzed, and the resource demands of unexecuted tasks are predicted. Tasks are allocated to job nodes according to resource occupancy, where the resources are cluster CPU, memory, and input/output (I/O) bandwidth. Job schedulers assign tasks to the task trackers of the job nodes and update each node's list of waiting tasks; the task execution sequence is then optimized under a rule that local tasks take priority, local resources are configured according to that sequence, and the jobs are executed. When a node's job queue is empty and a query finds no task in its current job queue, three indicators are used as parameters (the number of backup copies of the file data, the predicted idle time of each node in the cluster, and disk capacity) to execute other waiting tasks in the Hadoop cluster. The invention improves the utilization efficiency of cluster resources.

Owner:THE 28TH RES INST OF CHINA ELECTRONICS TECH GROUP CORP
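Of the steps above, the "local tasks first" ordering is the easiest to illustrate. The sketch below assumes each waiting task records which nodes hold its input data in a hypothetical `data_nodes` field; it does not model the resource prediction or the three-indicator fallback used when a node's job queue is empty.

```python
def order_tasks(waiting, node):
    """Return `waiting` with data-local tasks for `node` first.

    Each task is a dict with a hypothetical 'data_nodes' set naming the nodes
    that hold its input. sorted() is stable, so the original order is kept
    within the local group and within the remote group."""
    return sorted(waiting, key=lambda task: node not in task["data_nodes"])


waiting = [
    {"id": 1, "data_nodes": {"n2"}},
    {"id": 2, "data_nodes": {"n1", "n3"}},
    {"id": 3, "data_nodes": {"n1"}},
]
print([t["id"] for t in order_tasks(waiting, "n1")])  # [2, 3, 1]
```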

Scheduling system

Inactive | US20060268317A1 | Minimizing printer downtime, Maximizing continuous printer run time, Digitally marking record carriers, Digital computer details, Operation scheduling, Computer science

A system suited to scheduling print jobs for a printing system includes a first processing component which identifies preliminary attributes of print jobs to be printed on sheets. A job scheduler receives the preliminary attributes and assigns each of the print jobs to one of a plurality of job queues in time order for printing. Print jobs spanning the same time are scheduled for printing contemporaneously. In one mode of operation, the assignment of the print jobs to the job queues is based on their preliminary attributes and on the application of at least one constraint which affects contemporaneous printing of at least two of the plurality of print jobs. A second processing component identifies detailed attributes of the print jobs. A sheet scheduler receives information on the assignments of the print jobs and their detailed attributes and forms an itinerary for each sheet to be printed.

Owner:XEROX CORP

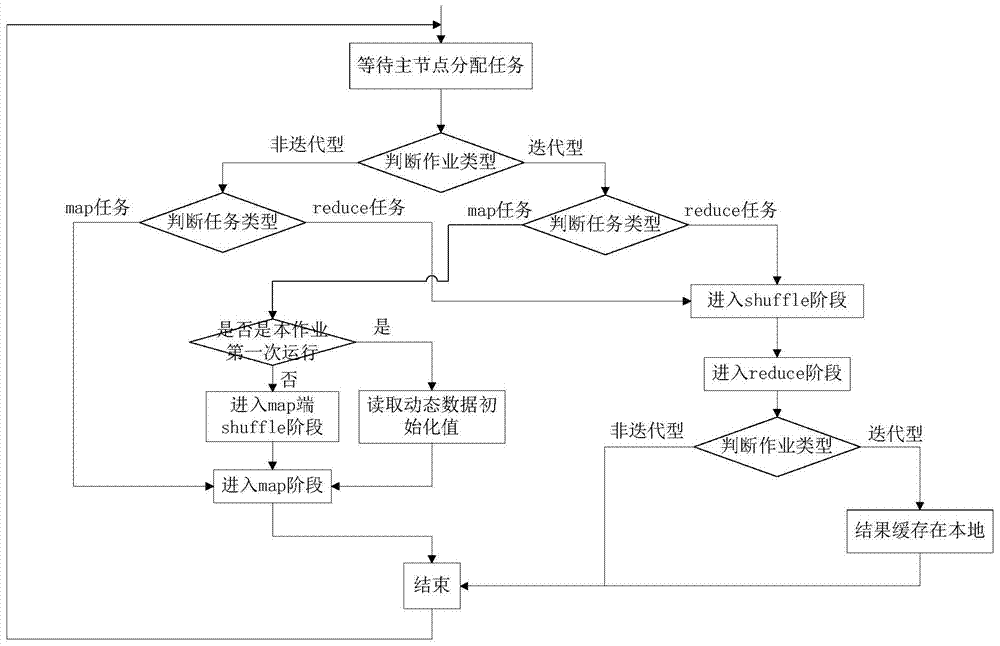

MapReduce optimizing method suitable for iterative computations

Active | CN103617087A | Increase the localization ratio, Reduce latency overhead, Resource allocation, Trunking, Dynamic data

The invention discloses a MapReduce optimization method suitable for iterative computations, applied to a Hadoop cluster comprising a master node and a plurality of slave nodes. The method comprises the following steps: the master node receives a plurality of Hadoop jobs submitted by a user; a job service process on the master node places the jobs in a job queue, where they wait to be scheduled by the master node's job scheduler; the master node waits for task requests from the slave nodes; on receiving a task request, the master node's job scheduler schedules data-local tasks first; and if the slave node that sent the request has no local tasks, predictive scheduling is performed according to the task types of the Hadoop jobs. The method supports traditional data-intensive applications and also supports iterative computations transparently and efficiently; dynamic data and static data can be processed separately, and the amount of data transferred can be reduced.

Owner:HUAZHONG UNIV OF SCI & TECH

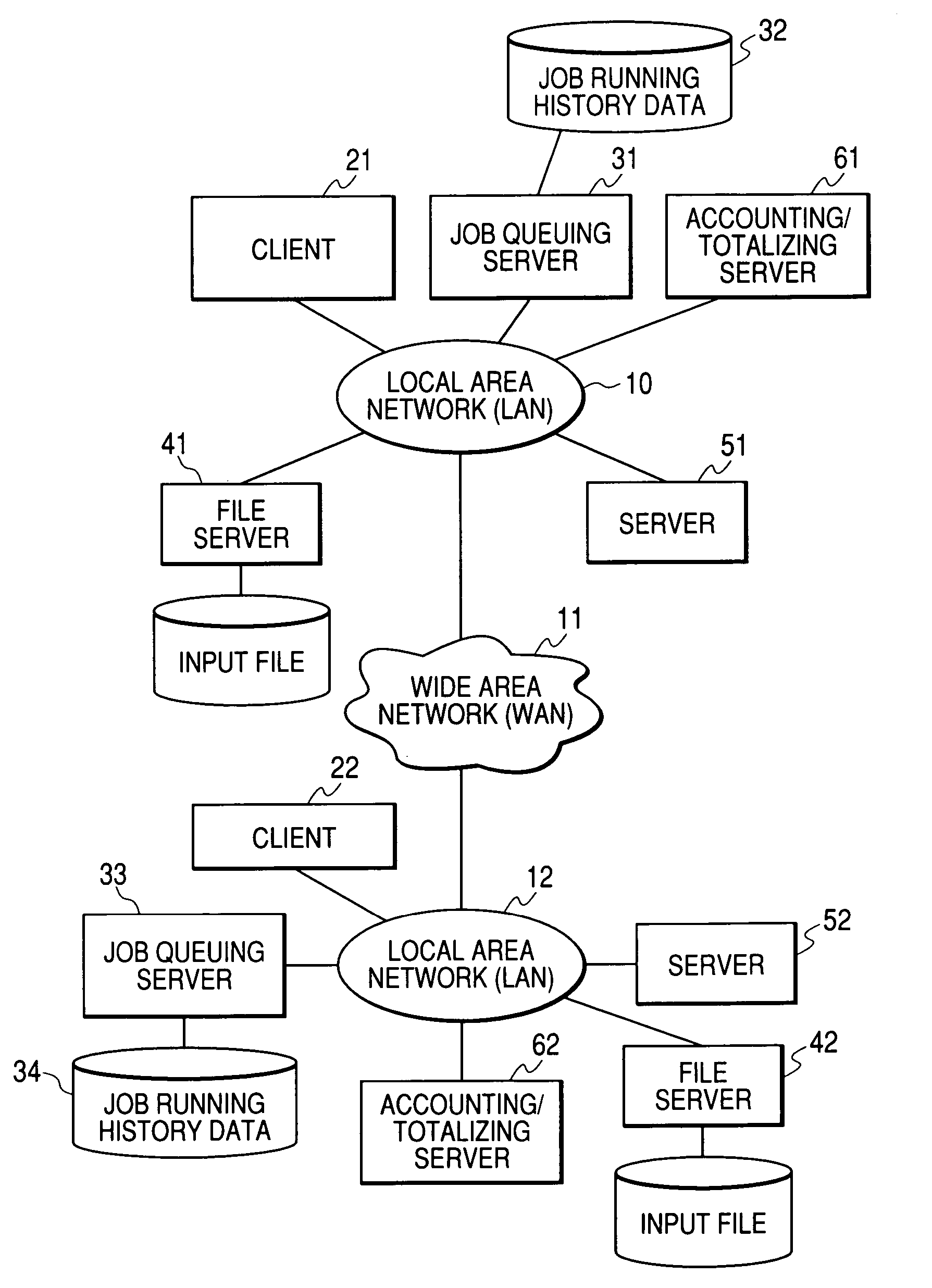

Decentralized processing system, job decentralized processing method, and program

Inactive | US20050144234A1 | Easy to operate, Ensure correct execution, Program initiation/switching, Resource allocation, Operating system, Job queue

Jobs can be distributed across a plurality of computer systems while taking job completion deadlines into account. Job queuing servers (31, 33) have a function to share operating information about each computer system; a function to forecast the completion date of a submitted job by optimizing its execution priority and execution term; a function to forecast the completion date again under the execution priority as modified according to the forecast result; and a function to request, according to the forecast result, that another computer system sharing the operating information execute the job.

Owner:PANASONIC CORP

Grid computing system having node scheduler

Inactive | US20060167966A1 | Multiple digital computer combinations, Program control, Information repository, Resource utilization

A scheduler for a grid computing system includes a node information repository and a node scheduler, both operative at a node of the grid computing system. The node information repository stores node information associated with resource utilization of the node. The node scheduler is configured to determine whether to accept jobs assigned to the node and includes an input job queue for accepted jobs; each accepted job is launched at a time determined by the node scheduler using the node information.

Owner:HEWLETT PACKARD DEV CO LP

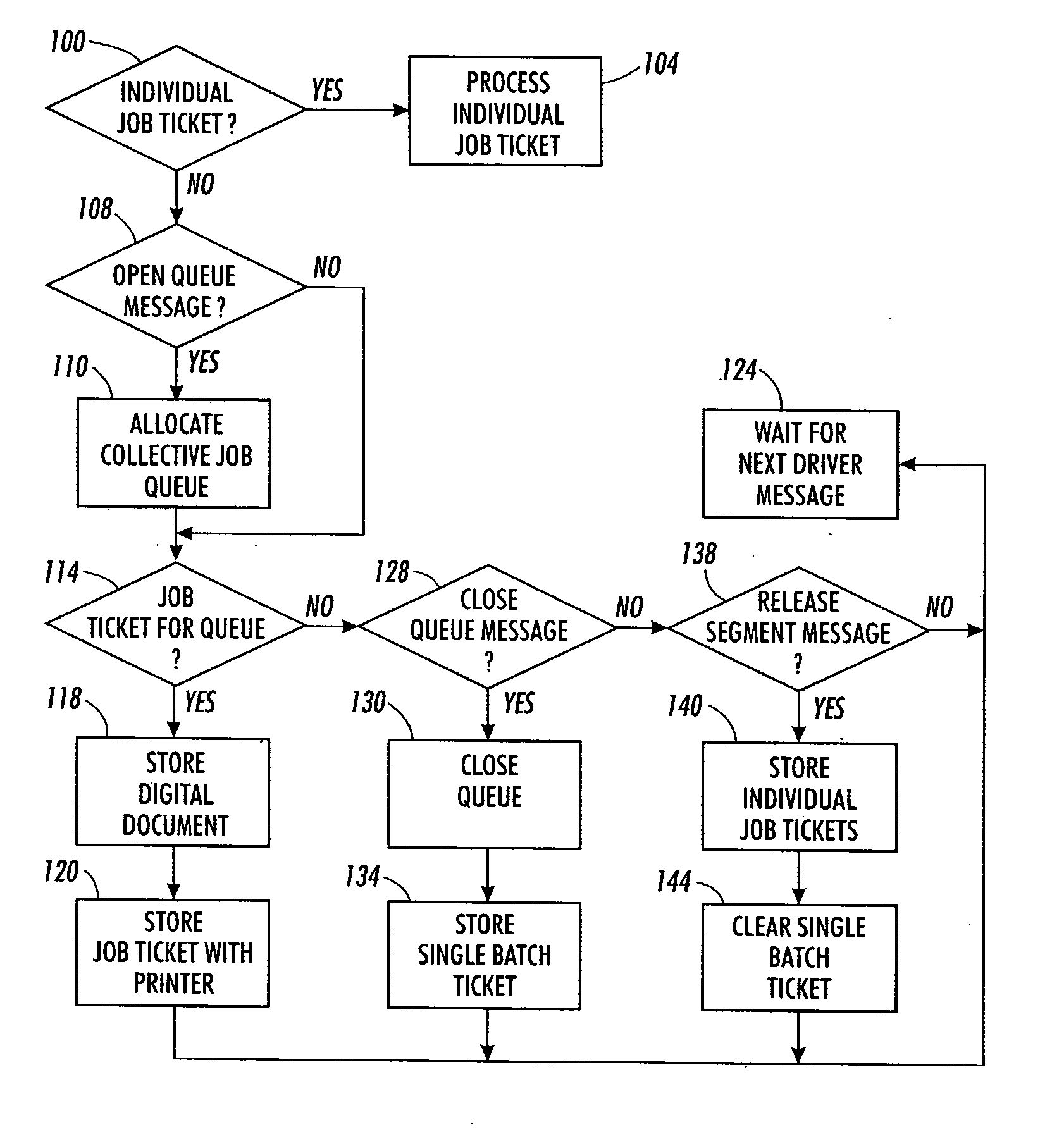

Method and system for managing print job files for a shared printer

Inactive | US20060033958A1 | Promote recovery, Efficient printing, Digitally marking record carriers, Visual presentation using printers, Computer science, Queue manager

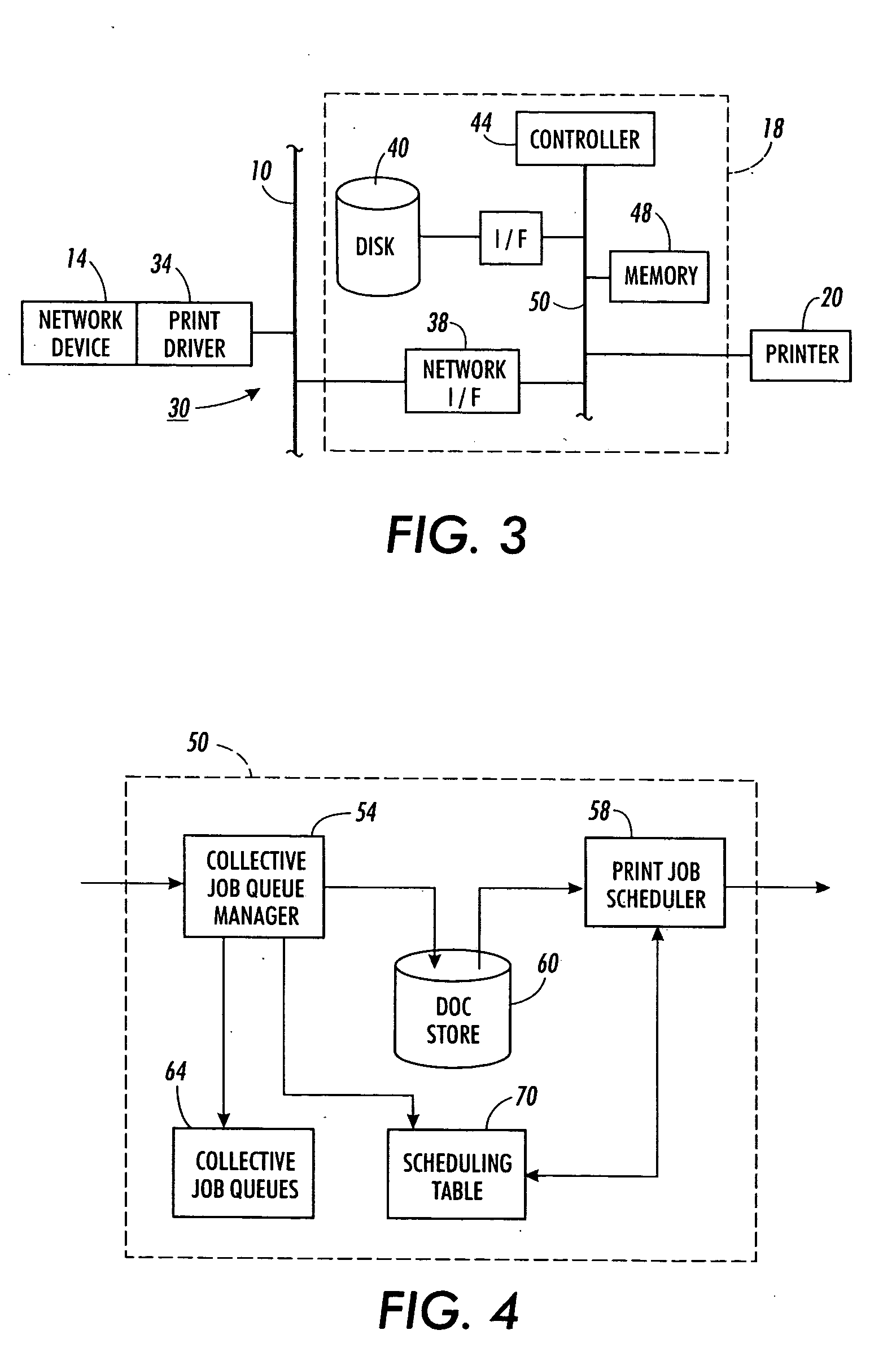

A system and method enable a user to generate a single batch job ticket for a plurality of print job tickets. The system includes a print driver, a print job manager, and a print engine. The print driver enables a user to request generation of a collective job queue and to provide a plurality of job tickets for the job queue. The print job manager includes a collective job queue manager and a print job scheduler. The collective job queue manager collects job tickets for a job queue and generates a single batch job ticket for the print job scheduling table when the job queue is closed. The print job scheduler selects single batch job tickets in accordance with various criteria and releases the job segments to a print engine for contiguous printing of the job segments.

Owner:XEROX CORP

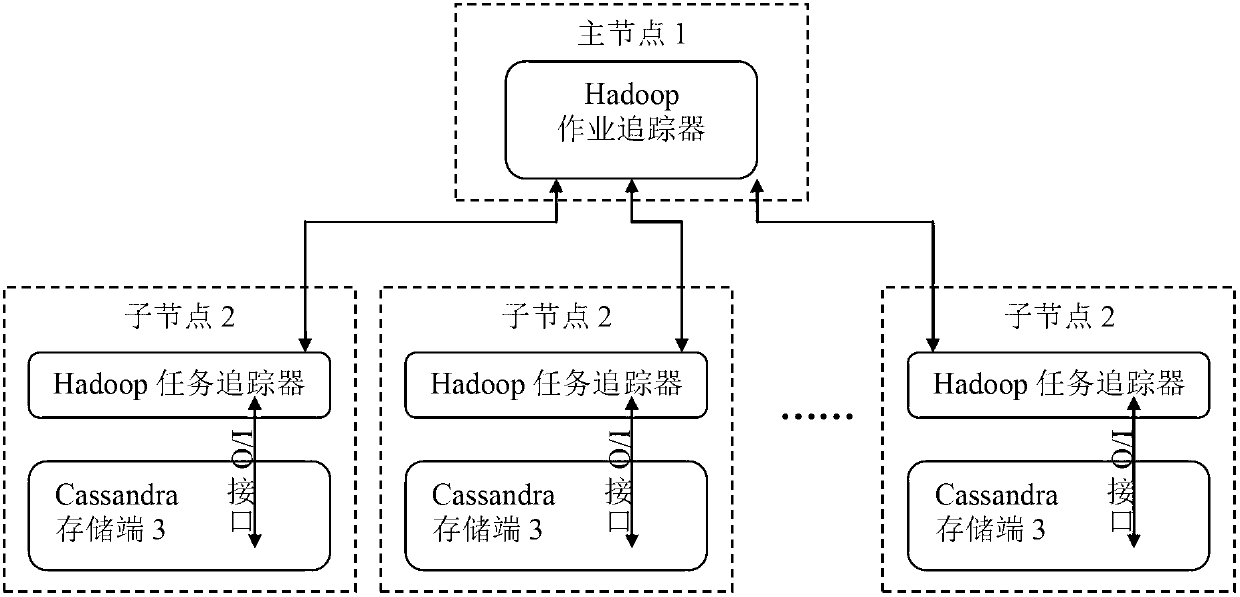

Data parallel processing system based on Cassandra

Active | CN103106249A | Improve reliability, Improve usability, Special data processing applications, Job tracker, Parallel processing

The invention discloses a data parallel processing system based on Cassandra. The system comprises a Hadoop main node, a plurality of Hadoop auxiliary nodes, and a Cassandra storage end arranged on the auxiliary nodes. The main node comprises a user interface module, a Cassandra query module, a job scheduling module, a job queue module, and a job tracker; each auxiliary node comprises a task tracker, an input module, an output module, and a MapReduce module. The user interface module receives a user request and determines whether it is a data query request, a data processing job submission request, or a job information query request. A data query request is sent to the Cassandra query module; a job submission request or a job information query request is sent to the job scheduling module. The system offers high reliability, good scalability, and high throughput. It supports simple queries with rapid response as well as complex processing of massive data.

Owner:HUAZHONG UNIV OF SCI & TECH

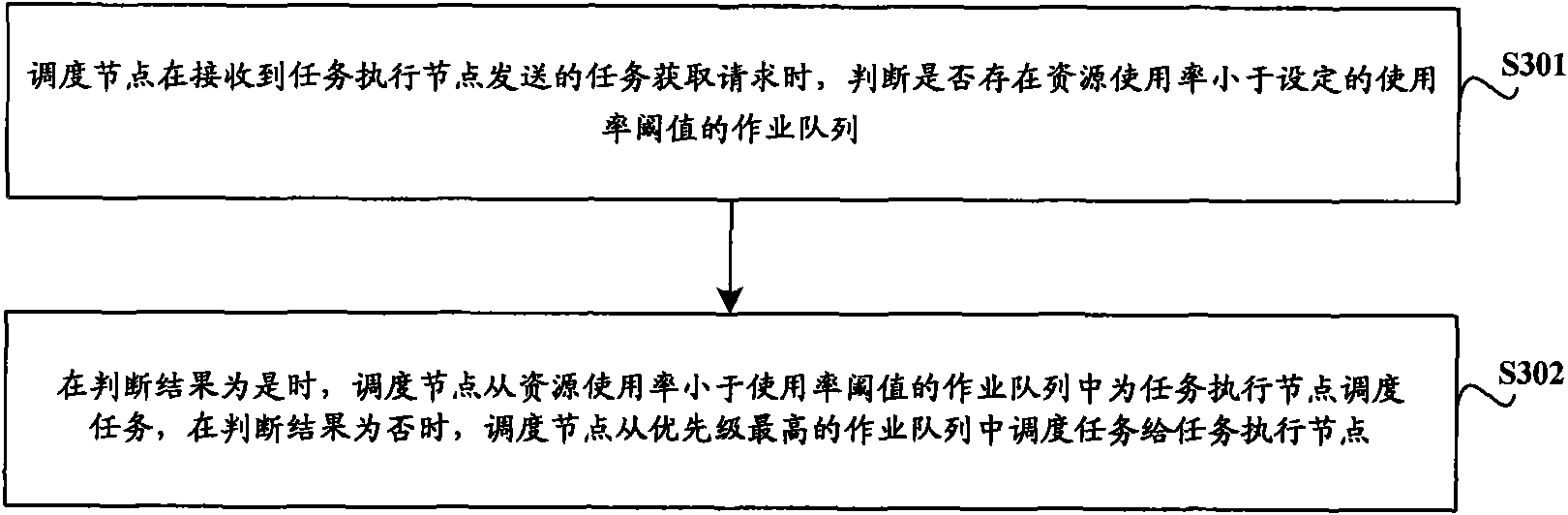

Multi-queue task scheduling method and related system and equipment

Inactive | CN102096599A | Timely schedule operation, Optimize resource allocation, Resource allocation, Resource utilization

The invention discloses a multi-queue task scheduling method and related system and equipment, which address the problem that conventional multi-queue task scheduling does not support queue priorities and therefore cannot allocate resources differentially to each job queue. In the method, when a scheduling node receives a task acquisition request from a task execution node, it determines whether any job queue has a resource utilization ratio below a set utilization threshold. If such a queue exists, the scheduling node schedules a task from it to the task execution node; otherwise, the scheduling node schedules a task from the job queue with the highest priority.

Owner:CHINA MOBILE COMM GRP CO LTD
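The selection rule described above can be sketched as follows. The `JobQueue` fields, the convention that a larger `priority` number means higher priority, and the lowest-utilization tie-break among under-used queues are assumptions made for illustration; in this sketch utilization values are static, whereas a real scheduler would update them as tasks start and finish.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class JobQueue:
    name: str
    priority: int       # assumption: a larger number means higher priority
    utilization: float  # fraction of its resource share the queue is using
    tasks: deque = field(default_factory=deque)


def pick_task(queues, threshold):
    """Return a task for a node that has just requested work, or None."""
    candidates = [q for q in queues if q.tasks]
    if not candidates:
        return None
    under = [q for q in candidates if q.utilization < threshold]
    if under:
        # A queue is below the threshold: serve it (lowest utilization first,
        # an assumed tie-break when several qualify).
        source = min(under, key=lambda q: q.utilization)
    else:
        # No under-used queue: fall back to the highest-priority queue.
        source = max(candidates, key=lambda q: q.priority)
    return source.tasks.popleft()


queues = [
    JobQueue("etl", priority=1, utilization=0.9, tasks=deque(["e1"])),
    JobQueue("adhoc", priority=5, utilization=0.8, tasks=deque(["a1"])),
    JobQueue("batch", priority=2, utilization=0.2, tasks=deque(["b1"])),
]
print([pick_task(queues, threshold=0.5) for _ in range(4)])  # ['b1', 'a1', 'e1', None]
```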

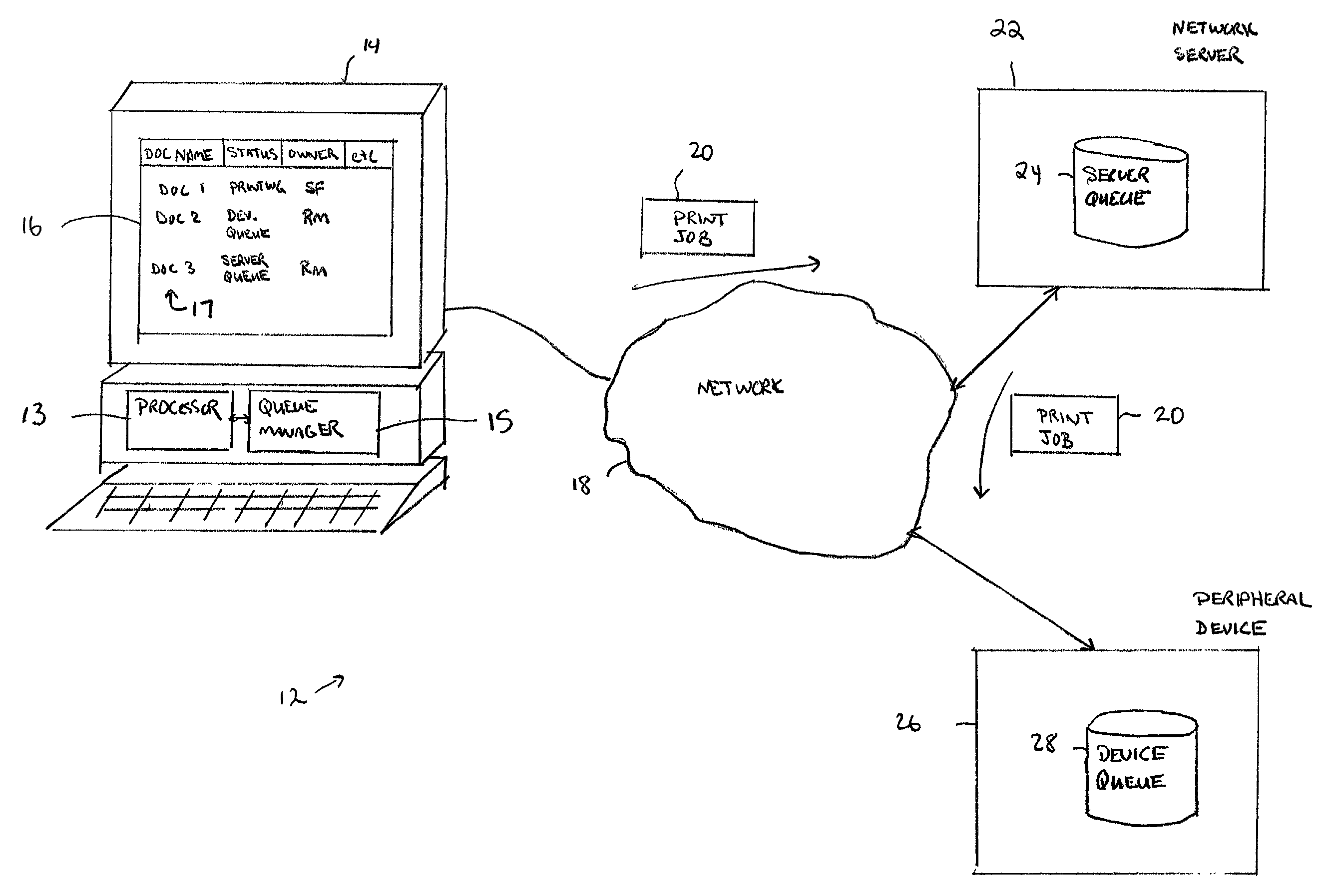

Method and apparatus for managing job queues

A queue manager monitors status of a server queue in a network server and status of a device queue in a peripheral device at the same time. A user interface displays the status of jobs in the server queue and device queue on the same display and allows a user to manipulate any of the jobs on either queue using the same user interface.

Owner:SHARP KK

Multiple independent intelligent pickers with dynamic routing in an automated data storage library

Inactive | US6438459B1 | Improve efficiency, Avoid interference, Filamentary/web carriers operation control, Input/output to record carriers, Telecommunications link, Communication link

Multiple intelligent pickers are provided for an automated data storage library having a library controller, which identifies the start and end locations of received move jobs and places the move jobs in a job queue. The picker processor receives information over a communication link from the other pickers describing movement information for each one's current move job. Upon completion of a move job, the picker communicates with the library controller and selects a move job that avoids interference with the movement of the other pickers. The movement for the selected move job is determined so as to avoid interference with the stored movement profiles of the other pickers. A movement profile may be communicated to the other pickers over the communication link, and the move job is conducted according to that movement profile. Alternatively, movement information comprising the current location and vector is communicated.

Owner:IBM CORP

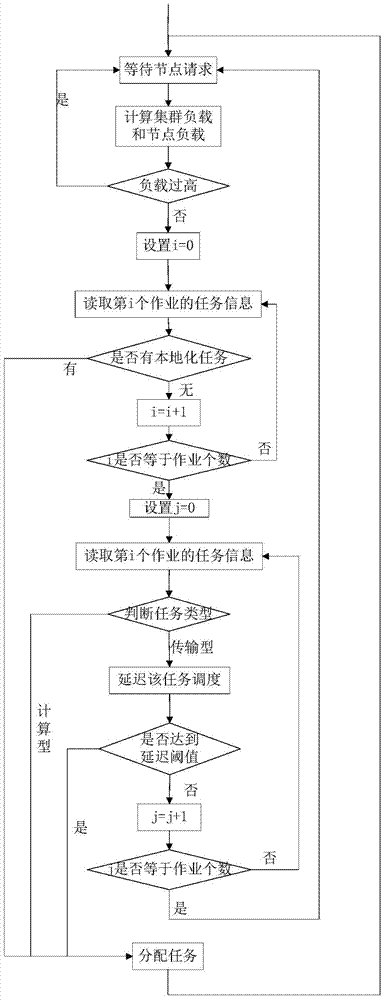

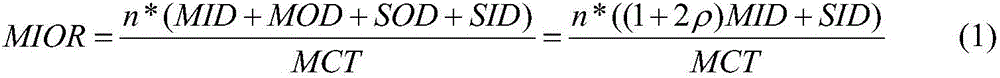

Multi-tenant resource optimization scheduling method facing different types of loads

Active | CN106502792A | Achieve localization, Improve throughput, Resource allocation, Resource management, Distributed computing

The invention relates to a multi-tenant resource optimization scheduling method for different types of loads. The method comprises the following steps: 1, system tenants submit jobs, which are added to a job queue; 2, job load information is collected and sent to a resource manager; 3, the resource manager determines the load type of each job from the job load information and sends the type information to a job scheduler; 4, the job scheduler schedules jobs according to their load types: a compute-intensive job is scheduled at the node, while an I/O-intensive job is delayed to wait; and 5, job scheduling decision information is collected into a scheduling reconstruction decision device, the target computing node is reconstructed, and jobs are scheduled according to the final decision. The method realizes a multi-tenant shared cluster, which reduces the cost of building independent clusters while letting multiple tenants share more big-data resources. Optimizing for different load types achieves better data locality and a good balance between fairness and efficiency in job scheduling, and improves the performance of the whole cluster, such as throughput and job response time.

Owner:SOUTH CHINA UNIV OF TECH
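The "delay and wait" treatment of I/O-intensive jobs resembles delay scheduling, a known Hadoop technique in which a job declines a limited number of non-local slots while waiting for a node that holds its data. The sketch below uses that technique as a stand-in; the classification rule (I/O time exceeding CPU time), the field names, and `max_skips` are all hypothetical, and the abstract does not confirm this is how the patented method works.

```python
def decide(job, node, max_skips=3):
    """Return "run" or "wait" when `node` offers `job` a free slot.

    job -- dict with hypothetical fields: 'cpu_time' and 'io_time' (from the
    collected load information), 'data_nodes' (nodes holding its input) and
    'skips' (how many offers it has already declined).
    """
    io_intensive = job["io_time"] > job["cpu_time"]  # assumed classification rule
    if not io_intensive:
        return "run"  # compute-intensive: schedule on the offering node
    if node in job["data_nodes"] or job["skips"] >= max_skips:
        return "run"  # data is local, or the job has waited long enough
    job["skips"] += 1  # I/O-intensive: delay and wait for a data-local node
    return "wait"


io_job = {"cpu_time": 1, "io_time": 9, "data_nodes": {"n7"}, "skips": 0}
print([decide(io_job, "n1") for _ in range(4)])  # ['wait', 'wait', 'wait', 'run']
```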

Intelligent scheduler for multi-level exhaustive scheduling

Inactive | US20050097556A1 | Reduced resource, Tasks to be executed more efficiently, Program initiation/switching, Memory systems, Analysis working, Low resource

A method and apparatus are provided for scheduling tasks within a computing device such as a communication switch. When a task is to be scheduled, other tasks in the work queue are analyzed to see if any can be executed simultaneously with the task to be scheduled. If so, the two tasks are combined to form a combined task, and the combined task is placed within the job queue. In addition, if the computing device has insufficient resources to execute the task to be scheduled, the task is placed back into the work queue for future scheduling. This is done in a way which avoids immediate reselection of the task for scheduling. Task processing efficiency is increased, since combining tasks reduces the waiting time for lower priority tasks, and tasks for which there are insufficient resources are delayed only a short while before a new scheduling attempt, rather than rejecting the task altogether.

Owner:ALCATEL LUCENT SAS
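A rough sketch of one scheduling step, assuming tasks are combined greedily with every waiting task compatible with the whole group, and that a task lacking resources is sent to the tail of the work queue so it is not picked again immediately. The function and parameter names are hypothetical; the compatibility and resource checks are passed in as plain functions.

```python
from collections import deque


def schedule_one(work_queue, job_queue, compatible, fits):
    """Run one scheduling step (hypothetical sketch).

    work_queue -- non-empty deque of pending tasks; the head is scheduled next
    job_queue  -- list receiving tuples of tasks that will execute together
    compatible -- compatible(a, b) is True if a and b can run simultaneously
    fits       -- fits(tasks) is True if the device has resources for them
    """
    group = [work_queue.popleft()]
    # Combine every waiting task that can run alongside the whole group so far.
    for other in list(work_queue):
        if all(compatible(other, t) for t in group):
            group.append(other)
            work_queue.remove(other)
    if fits(group):
        job_queue.append(tuple(group))  # the combined task
    else:
        # Insufficient resources: requeue at the tail rather than reject, so the
        # same tasks are not reselected on the very next step.
        work_queue.extend(group)


def different_resource(a, b):
    # Tasks are strings whose first letter names the resource they need.
    return a[0] != b[0]


work, ready = deque(["a1", "b1", "a2", "c1"]), []
schedule_one(work, ready, different_resource, lambda group: len(group) <= 3)
print(ready, list(work))  # [('a1', 'b1', 'c1')] ['a2']
```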

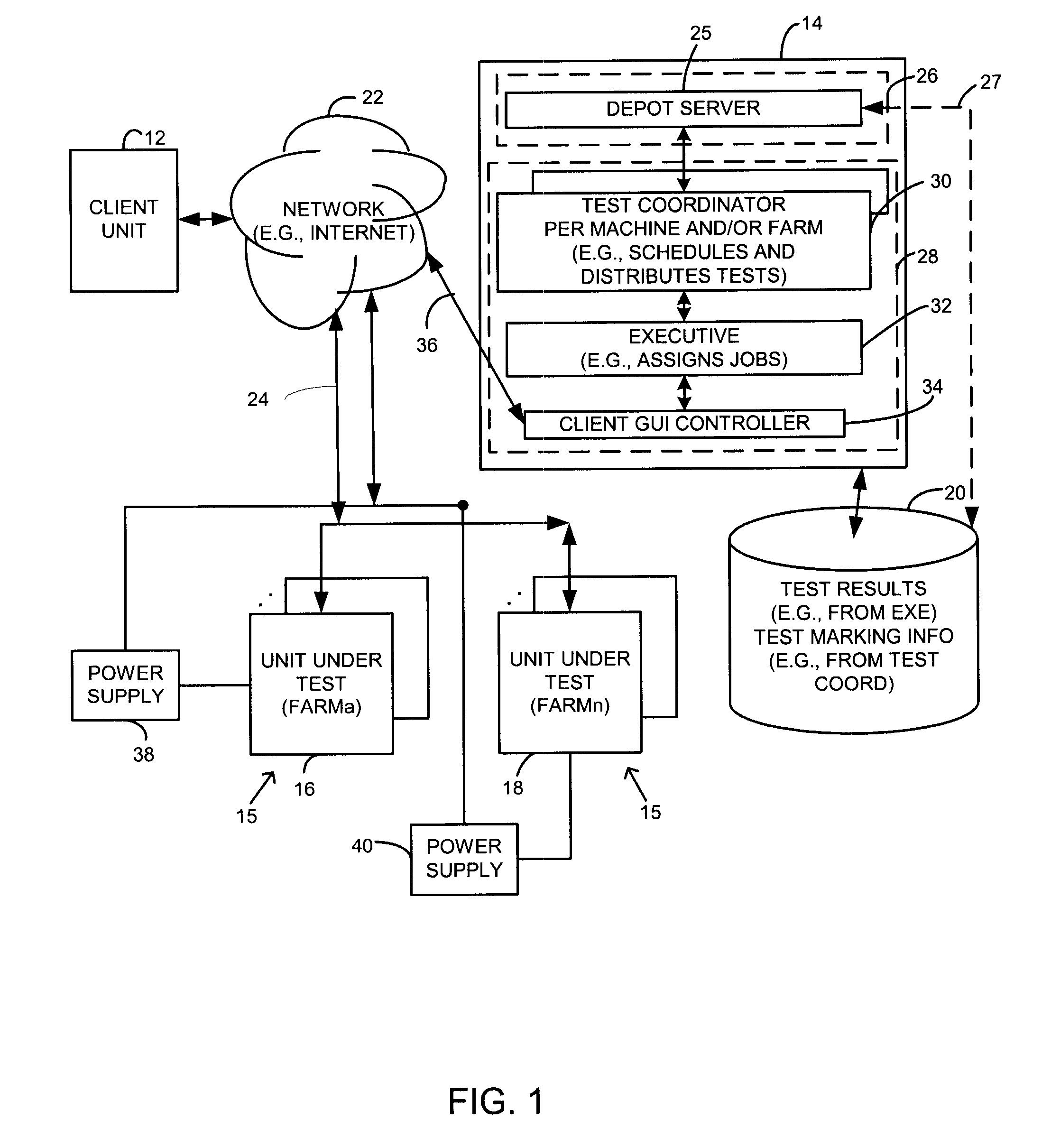

Software or hardware test apparatus and method

Inactive | US20070244663A1 | Resistance/reactance/impedance, Nuclear monitoring, Parallel computing, Test status

A software or hardware test system and method repeatedly obtains the testing status of a plurality of test units in a group while the test units are testing hardware or software being executed on them. The system and method provides for display of the current testing status of the plurality of units of the group while the test units are performing software testing. In another embodiment, a test system and method compiles heuristic data for a plurality of test units that are assigned to one or more groups of test units. The heuristic data may include, for example, data representing a frequency of use on a per-test-unit basis over a period of time, and other heuristic data. The test system and method evaluates job queue sizes on a per-group basis to determine whether there are under-utilized test units in the group, and determines, for each group of test units, whether a first group allows dynamic reassignment of a test unit in the group based on at least the compiled heuristic data. If the unit is allowed to be dynamically reassigned, the test system and method reassigns the test unit whose heuristic data meets a specific set of criteria.

Owner:ATI TECH INC

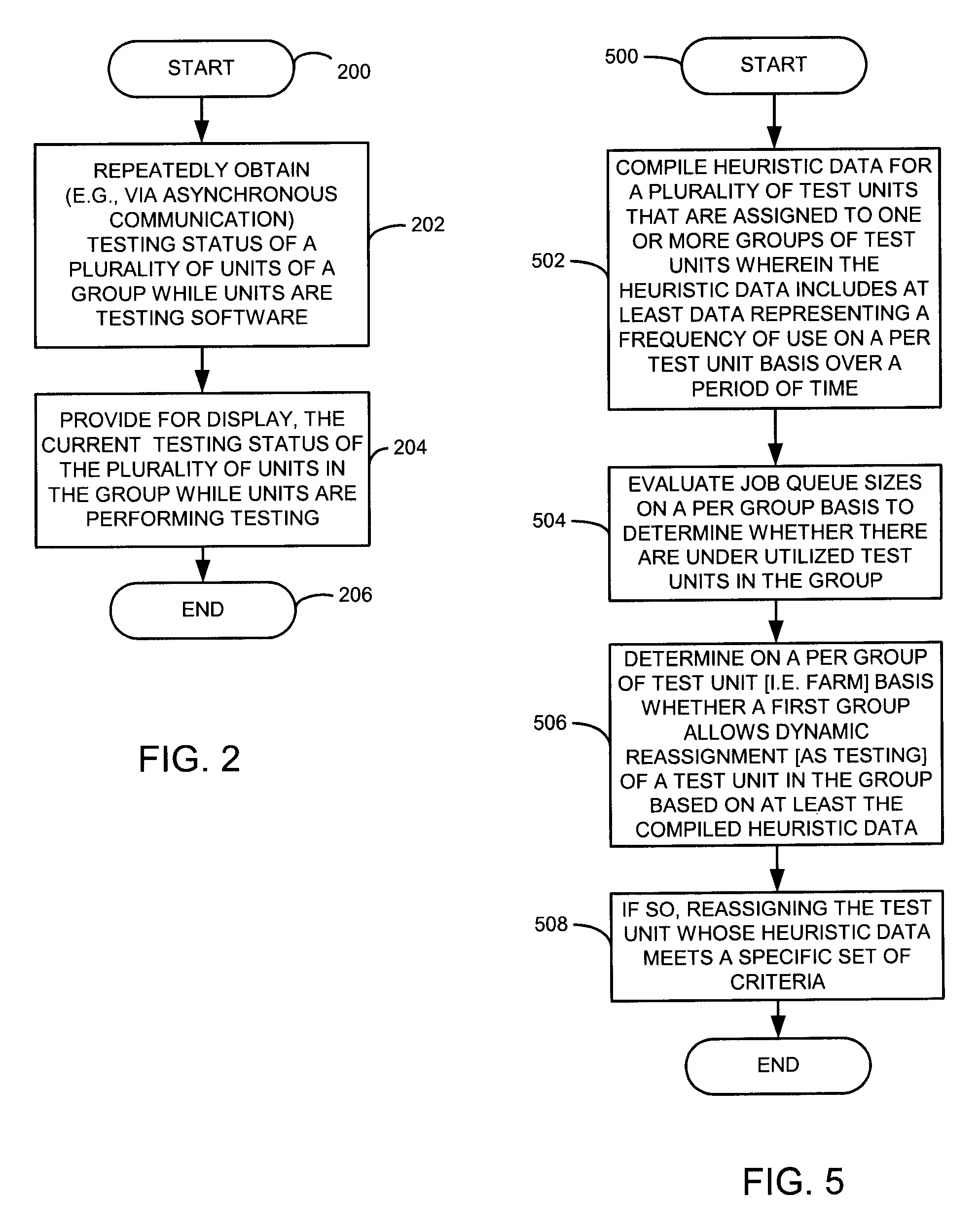

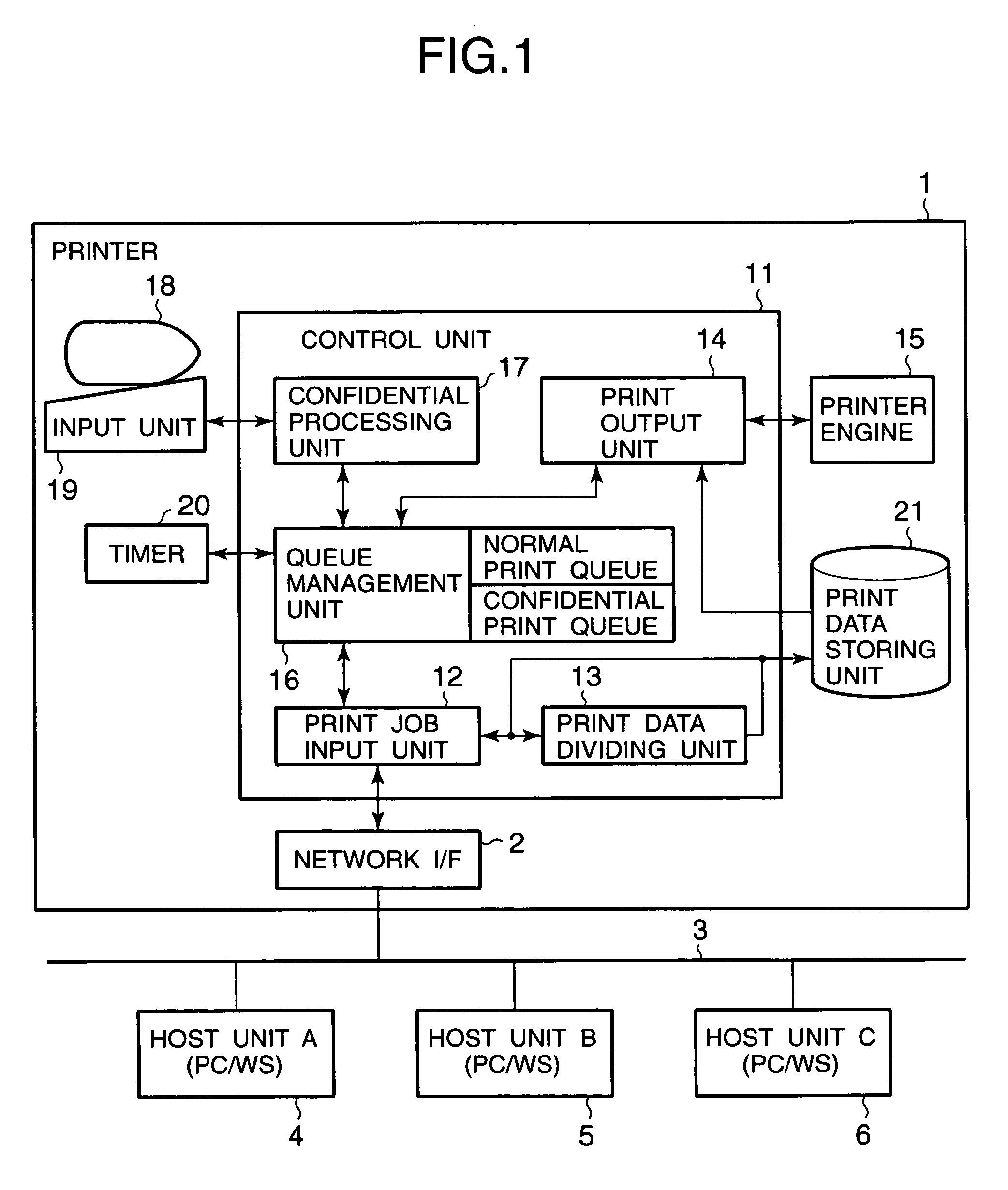

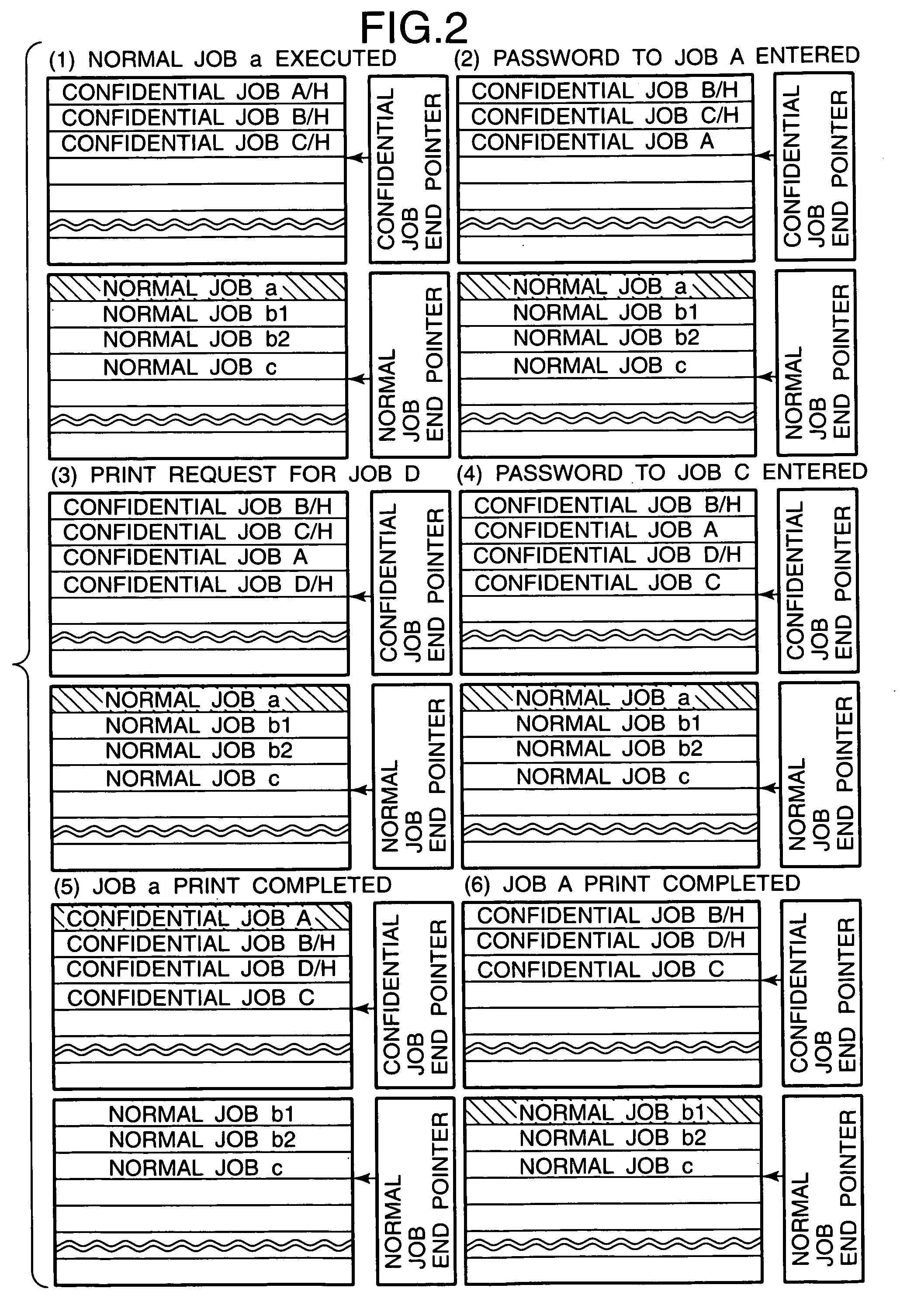

Printing apparatus, printing method, and printing system for printing password-protected and normal print jobs

Inactive | US7298505B2 | Mixing, Avoid overuse, Electric signal transmission systems, Digital data processing details, Management unit, Password

A printing apparatus is provided in which confidential prints are kept secret from each other even when passwords for several confidential print jobs are entered in quick succession. A queue management unit 16 holds a confidential print job queue and a normal print job queue, with a pointer at the end of each, and new print jobs are stored at the corresponding end. When a user enters a valid password for a confidential print job A from an input unit 19, a control unit 11 releases the hold status of job A. The control unit 11 also refers to the end pointer for confidential print jobs and moves job A to the end of the confidential print jobs. When a normal print job a is completed, the control unit 11 skips the held confidential print jobs B and D and processes confidential print job A, the first job released from hold. A normal print job b1 is then executed, so that no confidential print job immediately follows job A.

Owner:CEDAR LANE TECH INC

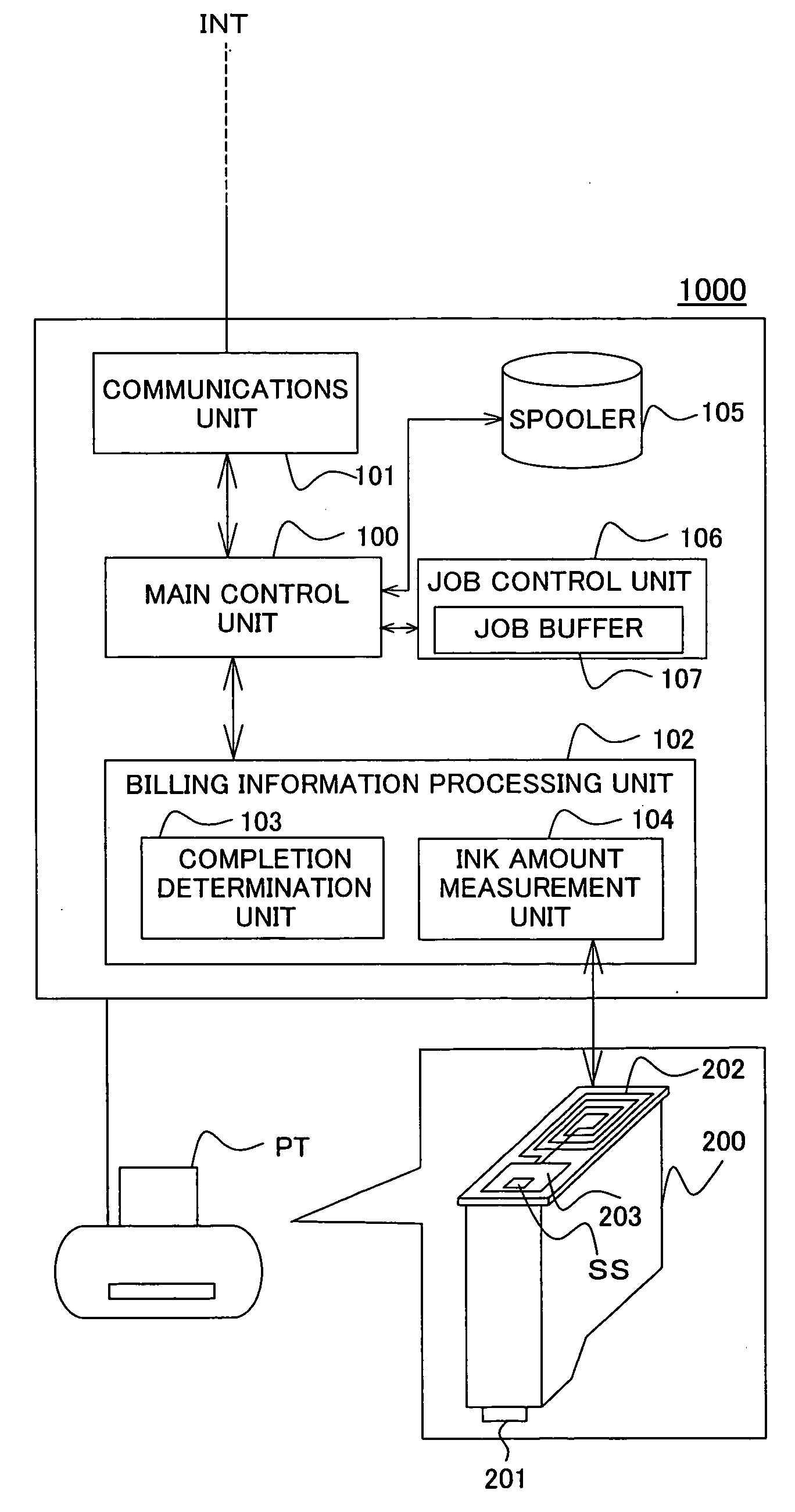

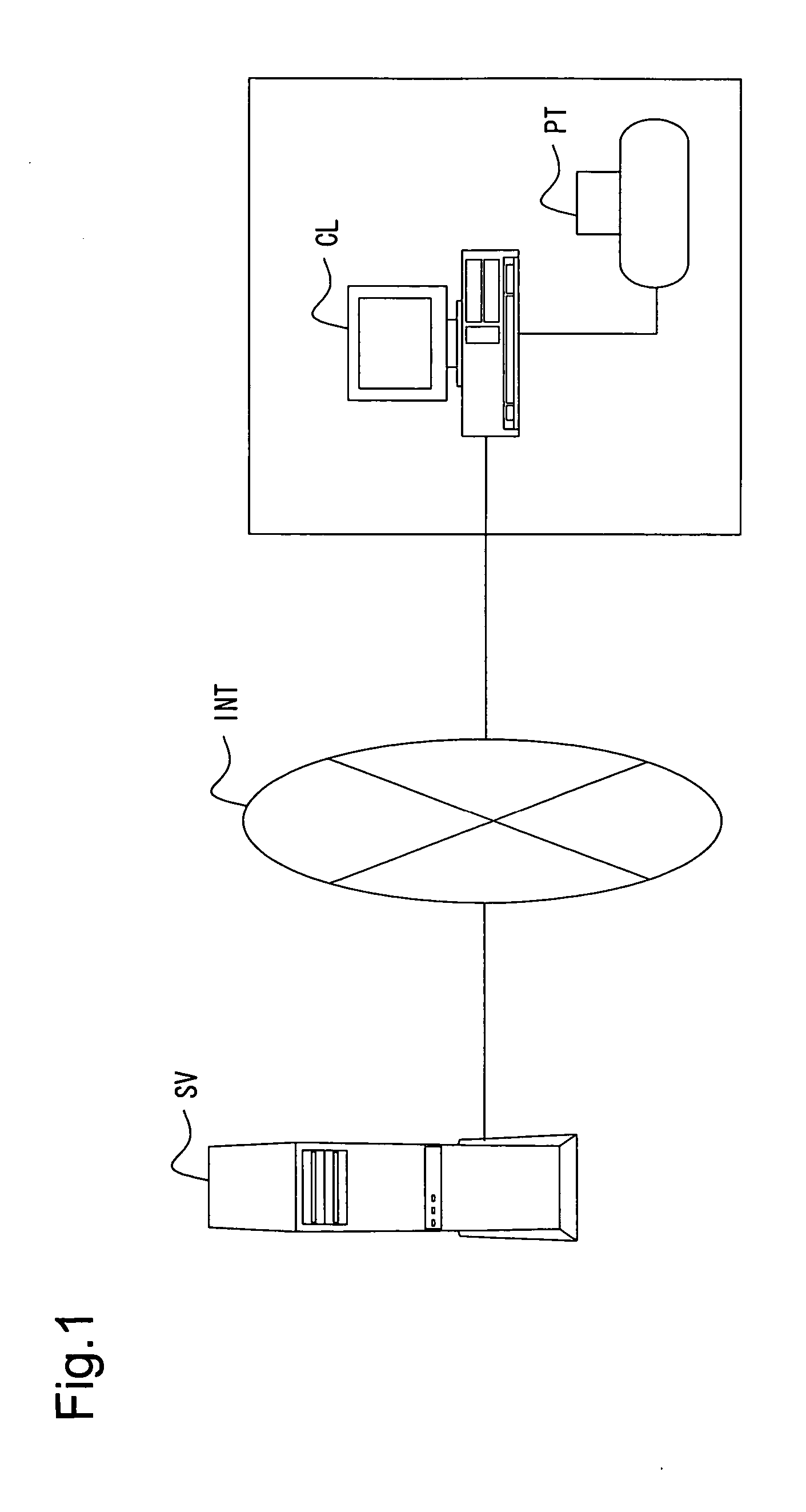

Monitoring printer via network

Inactive | US20050039091A1 | Quick calculation, High measurement accuracy, Electronic circuit testing, Resources, Computer science, Network monitoring

The amounts of consumables used by a printer are measured accurately. A job control unit 106 transfers a print job queued in a job buffer 107 to a spooler 105 page by page. The spooler 105 sends the print job to a printer PT and notifies a completion determination unit 103 that printing has started. On receiving this notification, the completion determination unit monitors the status of the printer PT and the data remaining in the spooler 105; when the printer PT status is "ready to print" and no data remains in the spooler 105, it decides that printing has completed and counts the number of sheets of paper. An ink amount measurement unit 104 uses predetermined correcting means to correct the amount of ink consumed during printing so that it approximates the amount of ink actually used. A server SV is notified of the number of sheets of paper and the corrected amount of ink consumed, whereupon the server performs a billing process.

Owner:SEIKO EPSON CORP

Method and system for scheduling jobs in a computer system

Active | US20060268321A1 | Shorten access time, Eliminate bottlenecks, Program control, Digital output to print units, Random access memory, Computerized system

A method for scheduling jobs in a computer system includes storing a plurality of job queue files in a random access memory, accessing at least one of the job queue files stored in random access memory, and scheduling, based in part on the at least one job queue file, execution of a job associated with the at least one job queue file. In a more particular embodiment, a method for scheduling jobs in a computer system includes storing a plurality of job queue files in a random access memory. The plurality of job queue files include information associated with at least one job. The method also includes storing the information external to the random access memory and accessing at least one of the job queue files stored in the random access memory. The method also includes scheduling, based at least in part on the at least one job queue file, execution of a job associated with at least one job queue file. In response to the scheduling, the method includes updating the information stored in the job queue file and random access memory and the information stored external to the random access memory.

Owner:COMP ASSOC THINK INC

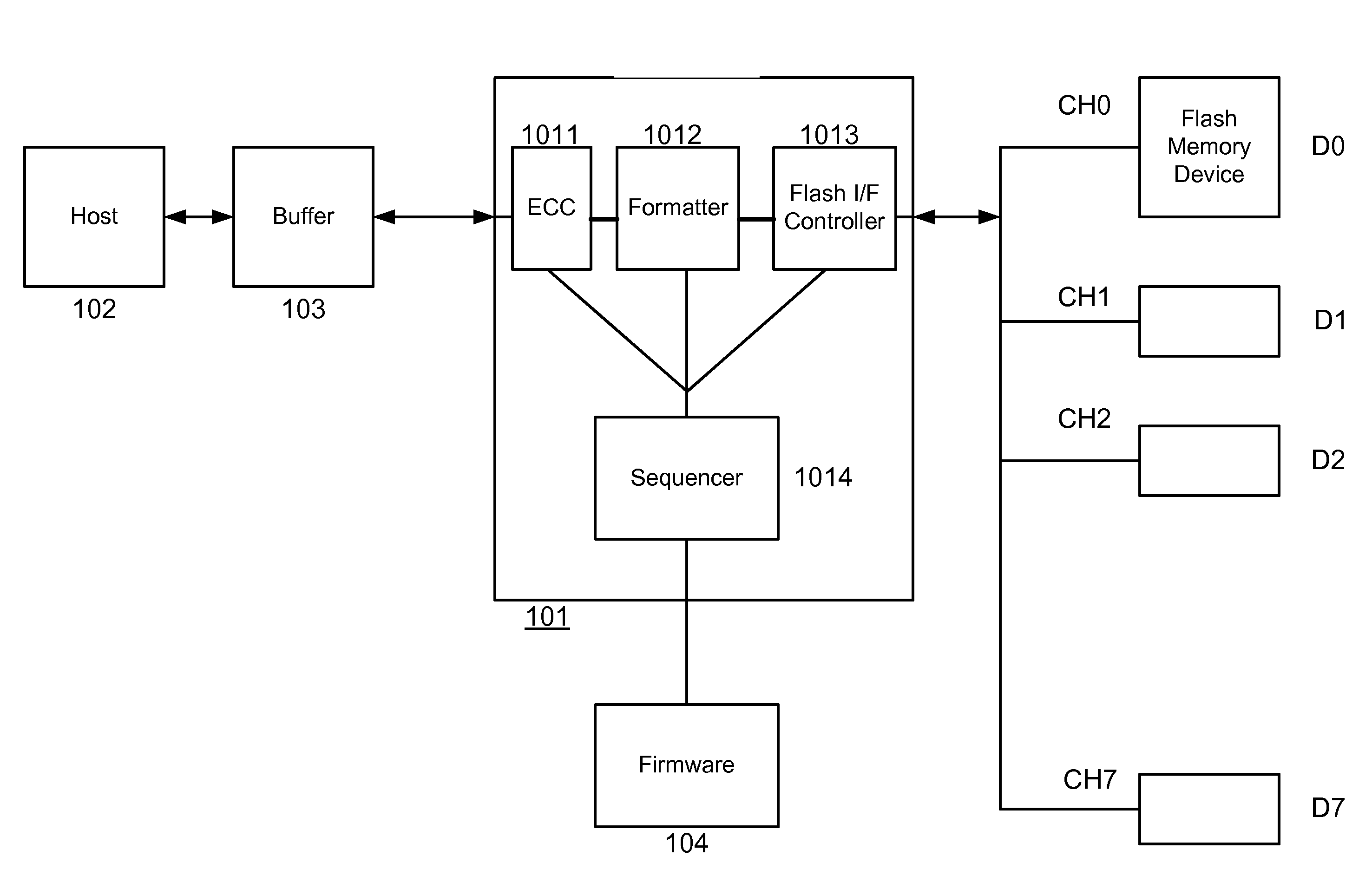

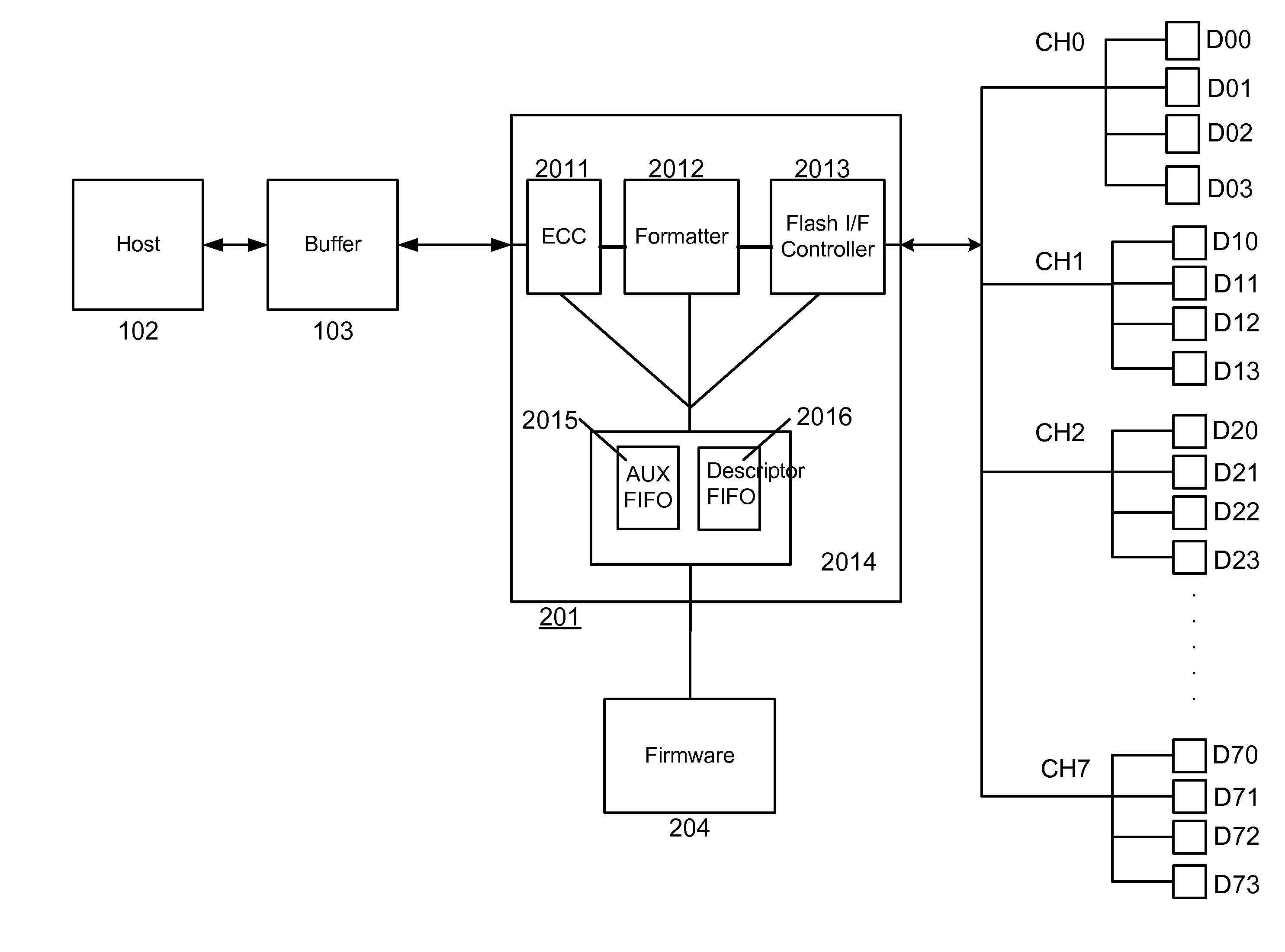

Flexible sequencer design architecture for solid state memory controller

Active | US8185713B2 | Reduce waste, Minimal intervention, Multiprogramming arrangements, Memory systems, Solid state memory, Host machine

A method and apparatus are provided for controlling access to solid state memory devices, which may allow maximum parallelism in accessing solid state memory devices with minimal intervention from firmware. To reduce wasted host time, multiple flash memory devices may be connected to each channel. A job/descriptor architecture may be used to increase parallelism by allowing each memory device to operate separately. A job may represent a read, write, or erase operation. When firmware wants to assign a job to a device, it may issue a descriptor, which may contain information about the target channel, the target device, the type of operation, and so on. The firmware may provide descriptors without waiting for a response from a memory device, and several jobs may be issued continuously to form a job queue. After the firmware finishes programming descriptors, a sequencer may handle the remaining work so that the firmware can concentrate on other tasks.

Owner:MARVELL ASIA PTE LTD
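The job/descriptor idea can be modelled in a few lines of Python. This is a behavioural sketch only, with a hypothetical `Descriptor` and `Sequencer`: firmware posts descriptors without waiting, and each call to `step` starts at most one job per (channel, device) pair, which is how the sketch represents devices operating in parallel.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Descriptor:
    channel: int
    device: int
    op: str  # "read", "write" or "erase"


class Sequencer:
    """Hypothetical model: firmware posts descriptors without waiting for
    replies; the sequencer keeps one job queue per (channel, device)."""

    def __init__(self):
        self.queues = {}

    def post(self, desc):
        # Firmware side: enqueue and return immediately.
        self.queues.setdefault((desc.channel, desc.device), deque()).append(desc)

    def step(self):
        """Start the next job on every device that has one queued, so that
        devices work in parallel; returns the descriptors started."""
        return [q.popleft() for q in self.queues.values() if q]


seq = Sequencer()
seq.post(Descriptor(0, 0, "write"))
seq.post(Descriptor(0, 1, "read"))
seq.post(Descriptor(0, 0, "erase"))
print([d.op for d in seq.step()])  # ['write', 'read']
print([d.op for d in seq.step()])  # ['erase']
```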

Parallel symbolic execution on cluster of commodity hardware

Inactive | US8863096B1 | Multiprogramming arrangements, Software testing/debugging, Programming language, Job transfer

A symbolic execution task is dynamically divided among multiple computing nodes. Each of the multiple computing nodes explores a different portion of a same symbolic execution tree independently of other computing nodes. Workload status updates are received from the multiple computing nodes. A workload status update includes a length of a job queue of a computing node. A list of the multiple computing nodes ordered based on the computing nodes' job queue lengths is generated. A determination is made regarding whether a first computing node in the list is underloaded. A determination is made regarding whether a last computing node in the list is overloaded. Responsive to the first computing node being underloaded and the last computing node being overloaded, a job transfer request is generated that instructs the last computing node to transfer a set of one or more jobs to the first computing node.

Owner:MATHE MEDICAL +1
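The balancing check described above can be sketched as follows. The `low`/`high` thresholds and the choice to move half the difference between the two queue lengths are assumptions for illustration; the abstract says only that a set of one or more jobs is transferred.

```python
def balance(queue_lengths, low, high):
    """Return a job-transfer request, or None if no transfer is needed.

    queue_lengths -- node -> job-queue length, from the latest status updates
    low, high     -- hypothetical thresholds for "underloaded" and "overloaded"
    """
    ordered = sorted(queue_lengths, key=queue_lengths.get)  # shortest queue first
    first, last = ordered[0], ordered[-1]
    if queue_lengths[first] < low and queue_lengths[last] > high:
        # Assumed policy: move half the difference so the two queues even out.
        count = (queue_lengths[last] - queue_lengths[first]) // 2
        return {"from": last, "to": first, "jobs": count}
    return None


print(balance({"n1": 40, "n2": 2, "n3": 15}, low=5, high=30))
# {'from': 'n1', 'to': 'n2', 'jobs': 19}
```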

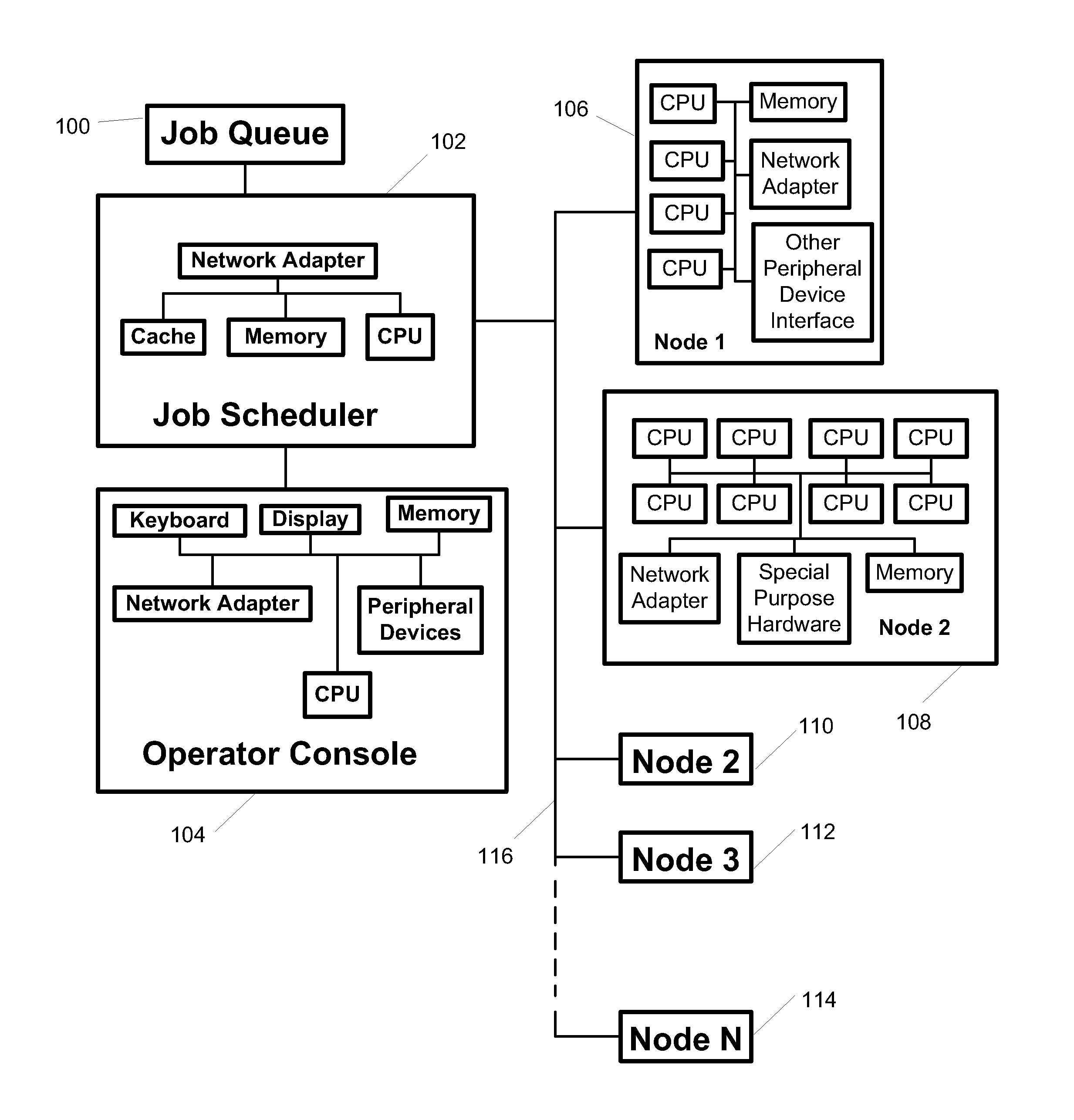

Computer job scheduler with efficient node selection

The present invention provides a method, program product, and information processing system that efficiently dispatch jobs from a job queue to the computational nodes in the system. First, for each job, the number of nodes required to perform the job and the computational resources required on each of those nodes are determined. Then, for each required node, a node is selected and the job scheduler checks whether it has a record indicating whether this node meets the computational resource requirement. If no record exists, the job scheduler analyzes whether the node meets the requirement, given that other jobs may currently be executing on that node, and records the result. If the node meets the requirement, it is assigned to the job; if not, the next available node is selected. The method continues until all required nodes are assigned and the job is dispatched to them. Alternatively, if the required number of nodes is not available, the scheduler indicates that the job cannot be run at this time.

Owner:IBM CORP
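The record-then-reuse pattern in this abstract can be sketched as follows. The field names and the integer "resource units" model are hypothetical simplifications, not IBM's data structures.

```python
def select_nodes(job, nodes, free, record):
    """Assign nodes to `job`, or return None if it cannot run now.

    job    -- dict with 'count' (nodes needed) and 'need' (units per node)
    nodes  -- candidate node names, in the order they are tried
    free   -- node -> resource units left after the jobs already running there
    record -- (node, need) -> bool, the scheduler's saved feasibility results
    """
    assigned = []
    for node in nodes:
        key = (node, job["need"])
        if key not in record:
            # No record yet: analyse the node once and save the answer.
            record[key] = free[node] >= job["need"]
        if record[key]:
            assigned.append(node)
            if len(assigned) == job["count"]:
                return assigned  # all required nodes found: dispatch
    return None  # not enough suitable nodes at this time


free = {"a": 4, "b": 1, "c": 8, "d": 2}
record = {}
print(select_nodes({"count": 2, "need": 2}, ["a", "b", "c", "d"], free, record))  # ['a', 'c']
print(len(record))  # 3: node "d" was never examined
```

In practice the saved records would need to be discarded whenever the load on a node changes; this sketch leaves that to the caller.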

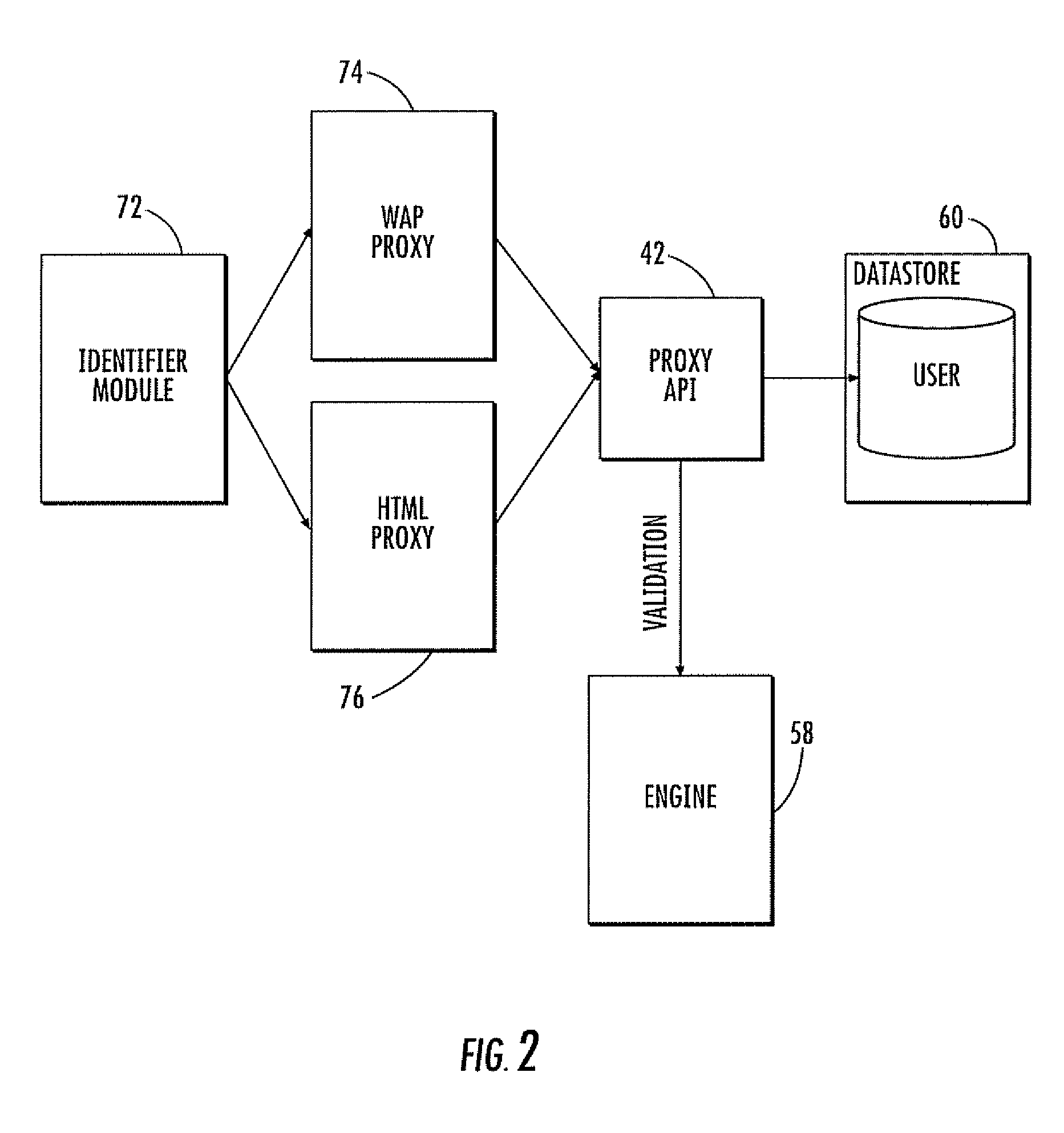

Email Server Performing Email Job Processing for a Given User and Related Methods

Inactive | US20070088791A1 | Multiple digital computer combinations, Data switching networks, World Wide Web, Queue manager

An electronic mail (email) server may include a pending email job queue manager for storing a plurality of email jobs for a plurality of users, and a processing email job queue manager. The processing email job queue manager may be for processing a plurality of email jobs from the pending email job queue manager for a given user, if available, and otherwise processing at least one email job from the pending email job queue manager for a different user.

Owner:MALIKIE INNOVATIONS LTD
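A small sketch of the fallback behaviour, assuming pending jobs are grouped into one queue per user and that "a different user" means the user whose queue was created earliest; the class and method names are hypothetical.

```python
from collections import deque


class EmailJobQueues:
    """Hypothetical model of a pending email job queue, grouped by user."""

    def __init__(self):
        self.pending = {}  # user -> deque of jobs; dicts keep insertion order

    def add(self, user, job):
        self.pending.setdefault(user, deque()).append(job)

    def next_batch(self, user, batch_size=10):
        """Take up to `batch_size` jobs for `user`. If that user has none,
        serve the user whose queue was created earliest instead."""
        if user not in self.pending:
            if not self.pending:
                return None, []
            user = next(iter(self.pending))  # fall back to a different user
        jobs = self.pending[user]
        batch = [jobs.popleft() for _ in range(min(batch_size, len(jobs)))]
        if not jobs:
            del self.pending[user]  # empty queues are dropped, so none linger
        return user, batch


q = EmailJobQueues()
q.add("alice", "m1")
q.add("bob", "m2")
q.add("alice", "m3")
print(q.next_batch("alice"))  # ('alice', ['m1', 'm3'])
print(q.next_batch("alice"))  # ('bob', ['m2'])
print(q.next_batch("carol"))  # (None, [])
```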

Intelligent scheduler for multi-level exhaustive scheduling

Inactive | US7559062B2 | Reduced resource, Tasks to be executed more efficiently, Program initiation/switching, Memory systems, Analysis working, Low resource

A method and apparatus are provided for scheduling tasks within a computing device such as a communication switch. When a task is to be scheduled, other tasks in the work queue are analyzed to see if any can be executed simultaneously with the task to be scheduled. If so, the two tasks are combined to form a combined task, and the combined task is placed within the job queue. In addition, if the computing device has insufficient resources to execute the task to be scheduled, the task is placed back into the work queue for future scheduling. This is done in a way which avoids immediate reselection of the task for scheduling. Task processing efficiency is increased, since combining tasks reduces the waiting time for lower priority tasks, and tasks for which there are insufficient resources are delayed only a short while before a new scheduling attempt, rather than rejecting the task altogether.

Owner:ALCATEL LUCENT SAS
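
The two ideas in this abstract can be illustrated together:

- **Combining.** A task is merged with a waiting task that can run at the same time.
- **Requeuing.** A task that lacks resources goes back into the work queue without being reselected immediately.

This is a hedged sketch with deliberate simplifications. The compatibility test (the other task's resources fit in what remains free) and the resource model (sets of resource names) are assumptions. The caller is also assumed to track which resources are free between attempts.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    name: str
    resources: frozenset  # resources the task needs exclusively (assumption)


def schedule_one(work_queue, job_queue, free_resources):
    """Make one scheduling attempt for the task at the head of `work_queue`."""
    task = work_queue.popleft()
    if not task.resources <= free_resources:
        # Insufficient resources: put the task at the tail of the work queue.
        # Other tasks are tried first, so it is not reselected immediately,
        # and it is retried shortly instead of being rejected.
        work_queue.append(task)
        return None
    remaining = free_resources - task.resources
    for other in list(work_queue):
        if other.resources <= remaining:  # can execute simultaneously
            work_queue.remove(other)
            task = Task(f"{task.name}+{other.name}",
                        task.resources | other.resources)
            break
    job_queue.append(task)  # place the (possibly combined) task in the job queue
    return task
```

Combining lets a lower-priority task ride along with the head task instead of waiting for its own turn. This matches the abstract's claim about reduced waiting time.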

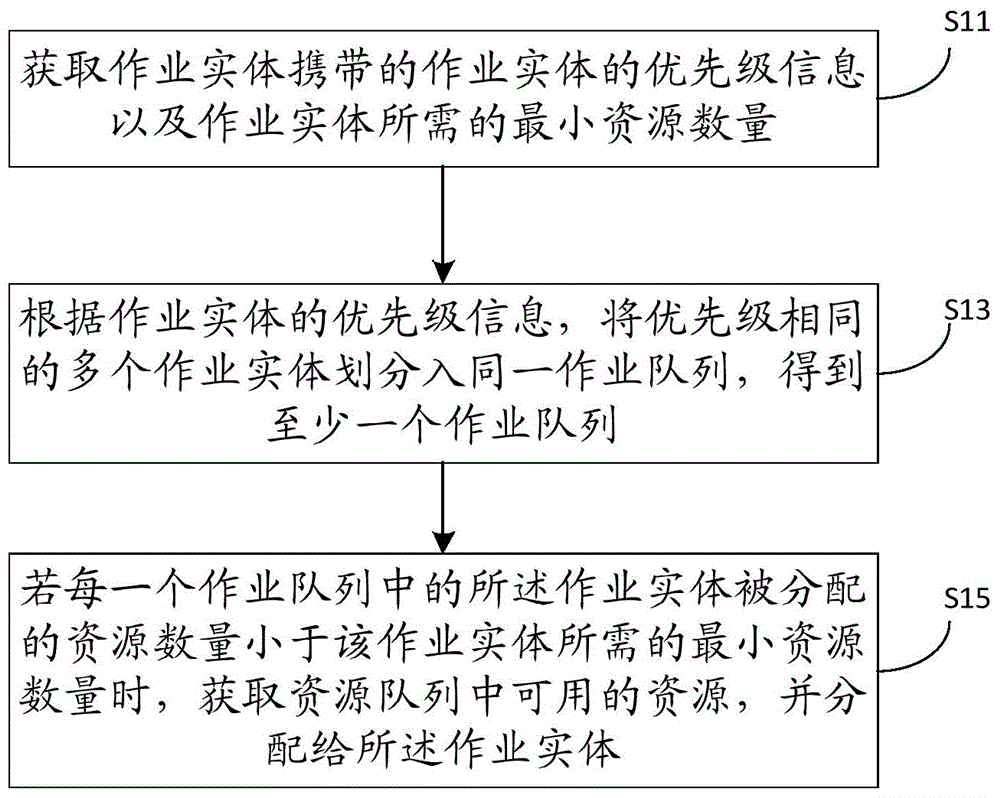

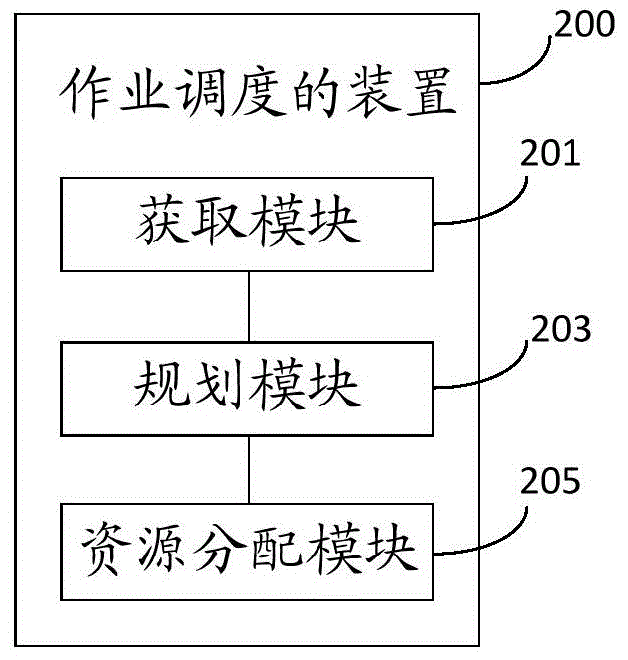

Job scheduling method and apparatus

Inactive | CN105718316A | Achieve balance | Increase profit | Resource allocation | Service-level agreement | Computer science

The invention provides a job scheduling method and apparatus. The method comprises the following steps:

- obtaining the priority information carried by each job entity and the minimum resource quantity it requires;
- placing job entities with the same priority into the same job queue according to their priority information, yielding at least one job queue;
- if the resources allocated to the job entities in a job queue are less than the minimum quantity they require, obtaining available resources from a resource queue and allocating them to those job entities.

With this method and apparatus, service level agreements can be guaranteed for different job entities when multiple job entities execute concurrently. This covers resource allocation both for job entities of different priorities and for job entities of the same priority. Resource sharing is balanced against guaranteed allocations, so the service level agreement of each job entity is met and overall cluster resource utilization is improved.

Owner:CHINA MOBILE COMM GRP CO LTD +1
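
A minimal sketch of the grouping and top-up steps follows. Several choices are assumptions, not taken from the patent:

- Resources are a single integer count of units.
- The "resource queue" is reduced to one free pool.
- A larger priority number means more important.
- Queues are topped up from highest to lowest priority.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class JobEntity:
    name: str
    priority: int        # assumption: larger number = higher priority
    min_resources: int   # minimum resource units the job entity requires
    allocated: int = 0


def build_queues(jobs):
    """Place job entities with the same priority into the same job queue."""
    queues = defaultdict(list)
    for job in jobs:
        queues[job.priority].append(job)
    return queues


def top_up(queues, free_pool):
    """Raise each job entity toward its minimum from the free pool.

    Queues are visited highest priority first. Returns the units left over.
    """
    for priority in sorted(queues, reverse=True):
        for job in queues[priority]:
            shortfall = job.min_resources - job.allocated
            if shortfall > 0 and free_pool > 0:
                grant = min(shortfall, free_pool)
                job.allocated += grant
                free_pool -= grant
    return free_pool
```

For example, with 6 free units, a priority-2 job needing 3 units is fully served first. A priority-1 job needing 4 units then receives the remaining 3.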

Flexible Sequencer Design Architecture for Solid State Memory Controller

Active | US20090077305A1 | Reduce waste | Minimal intervention | Interprogram communication | Memory addressing/allocation/relocation | Solid state memory | Host machine

A method and apparatus for controlling access to solid state memory devices, which may allow maximum parallelism in accessing the devices with minimal firmware intervention. To reduce wasted host time, multiple flash memory devices may be connected to each channel. A job/descriptor architecture may be used to increase parallelism by allowing each memory device to operate independently. A job may represent a read, write or erase operation. When firmware wants to assign a job to a device, it may issue a descriptor containing information such as the target channel, the target device and the type of operation. The firmware may issue descriptors without waiting for a response from a memory device, and several jobs may be issued in succession to form a job queue. After the firmware finishes programming descriptors, a sequencer may handle the remaining work so that the firmware can concentrate on other tasks.

Owner:MARVELL ASIA PTE LTD
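
The firmware/sequencer split can be modeled in software as follows. This is a hedged sketch, not Marvell's design:

- The `block` field and the round-robin interleaving are hypothetical.
- The interleaving stands in for real hardware, where devices on separate channels run concurrently.

Firmware calls `issue` and returns at once. The sequencer later drains the job queue into per-device lanes and interleaves them so that no device waits behind another device's backlog.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from enum import Enum


class Op(Enum):
    READ = "read"
    WRITE = "write"
    ERASE = "erase"


@dataclass(frozen=True)
class Descriptor:
    channel: int
    device: int
    op: Op
    block: int  # hypothetical target address field


class Sequencer:
    def __init__(self):
        self.job_queue = deque()

    def issue(self, desc):
        """Firmware side: enqueue a descriptor without waiting for completion."""
        self.job_queue.append(desc)

    def run(self):
        """Drain the job queue into per-(channel, device) lanes, then emit
        descriptors round-robin so every device gets work each round."""
        lanes = defaultdict(deque)
        while self.job_queue:
            d = self.job_queue.popleft()
            lanes[(d.channel, d.device)].append(d)
        order = []
        while lanes:
            for key in list(lanes):  # snapshot, since lanes shrink as they empty
                order.append(lanes[key].popleft())
                if not lanes[key]:
                    del lanes[key]
        return order
```

Two writes queued for device (0, 0) are separated by the jobs for devices (0, 1) and (1, 0). A hardware sequencer can therefore keep all three devices busy at once.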

Systems and methods for controlling an image forming system based on customer replaceable unit status

Inactive | US7009719B2 | Avoid delay | Stay productive | Digitally marking record carriers | Digital computer details | Image formation | System failure

Informing the user when consumables and/or customer replaceable units will need to be resupplied, changed and/or replaced, relative to the jobs sent to the image forming system, is a useful way to avoid unnecessary printing delays. When a job is added to the job queue or repositioned within it, a warning can be associated with that job and displayed to the user. With this information, the user can pre-emptively add a consumable and/or replace a customer replaceable unit to avoid delays and maintain productivity. Alternatively, if such a fault would occur before an urgent job reaches the top of the job queue, the user can change the order and/or presence of the jobs in the queue to ensure that the high-priority job is completed before the consumable is exhausted and/or the customer replaceable unit reaches the end of its useful life.

Owner:XEROX CORP
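
The per-job warning can be illustrated by walking the queue and accumulating page counts. This sketch makes two assumptions:

- A consumable's remaining life is a hypothetical page estimate, `pages_left`.
- A job is flagged when the running total passes that estimate.

The function is meant to be re-run whenever a job is added or moved.

```python
from dataclasses import dataclass


@dataclass
class PrintJob:
    name: str
    pages: int


def consumable_warnings(queue, pages_left):
    """Return {job name: warning} for jobs the consumable cannot finish.

    `pages_left` is a hypothetical estimate of how many more pages the
    consumable or customer replaceable unit can print.
    """
    warnings = {}
    used = 0
    for job in queue:  # queue order = print order
        used += job.pages
        if used > pages_left:
            warnings[job.name] = f"consumable runs out before {job.name} completes"
    return warnings
```

Take a queue of memo (50 pages), report (400) and urgent (20) with 300 pages left: both report and urgent are flagged. Moving urgent ahead of report leaves only report flagged. This is the reordering the abstract describes for completing a high-priority job first.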

© 2025 PatSnap. All rights reserved.