76 results about "Extreme scale computing" patented technology

The Joint Laboratory for Extreme Scale Computing (JLESC) is an international, virtual organization whose goal is to enhance the ability of member organizations and investigators to bridge the gap between petascale and extreme-scale computing. The founding partners of the JLESC are INRIA and UIUC.

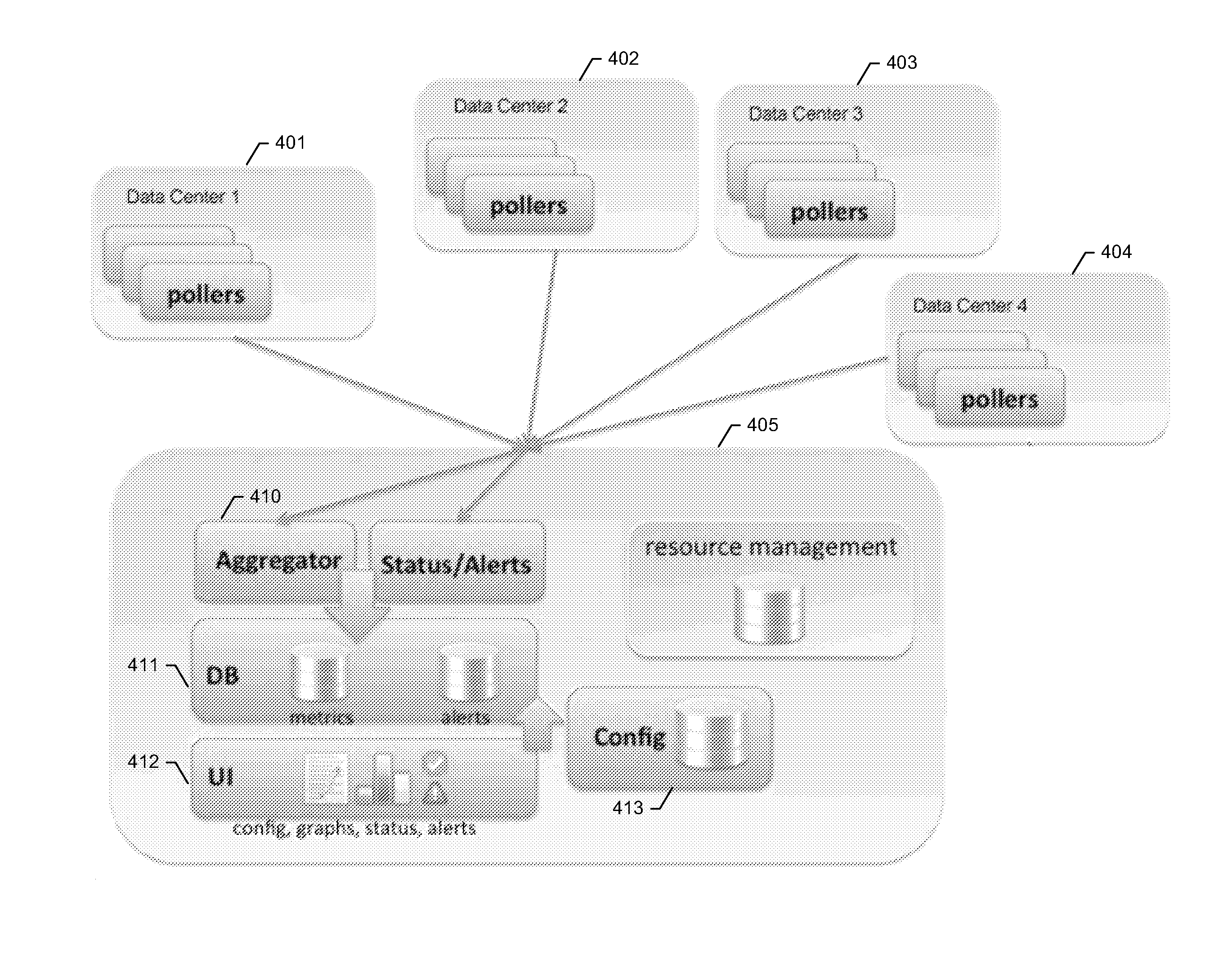

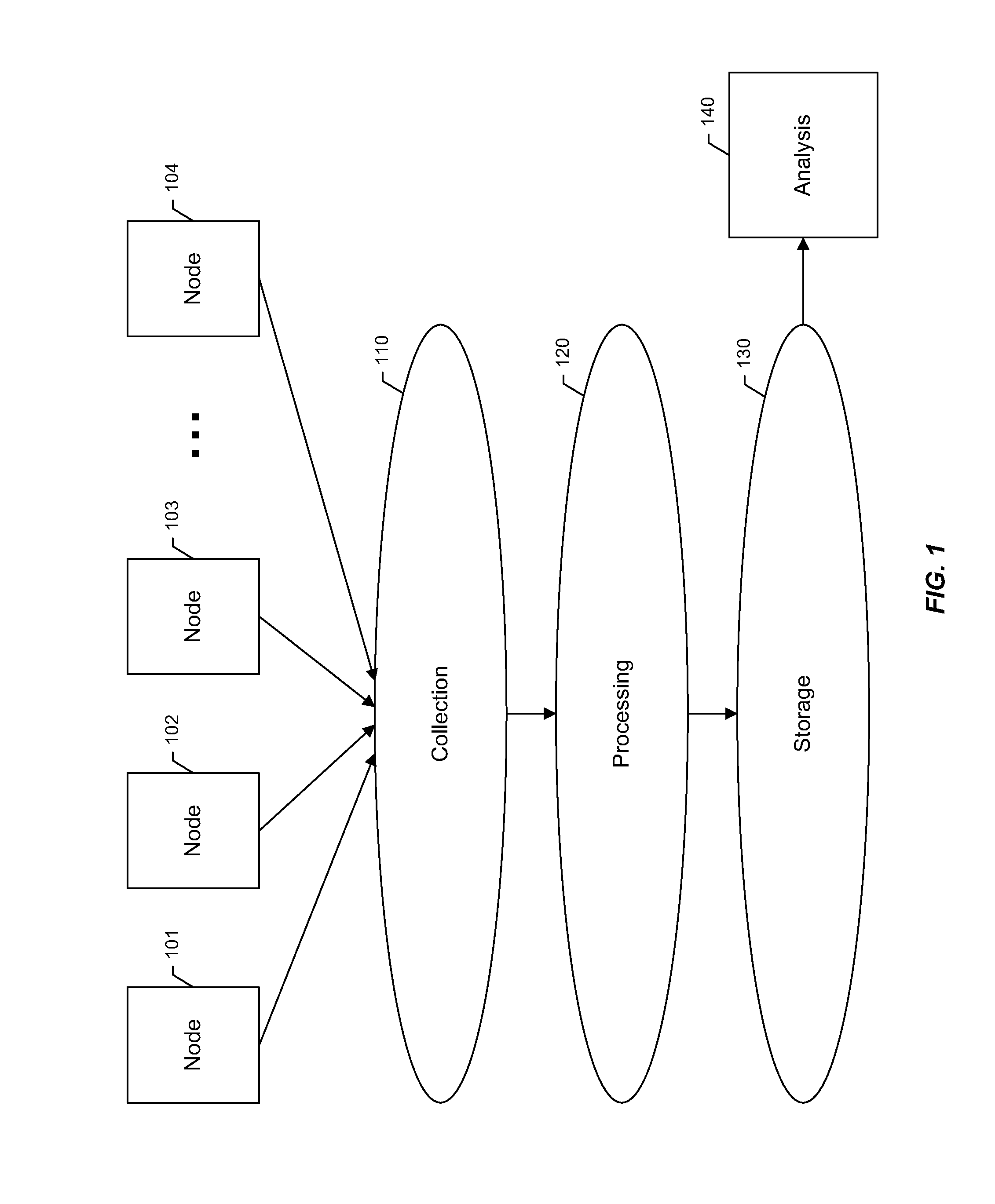

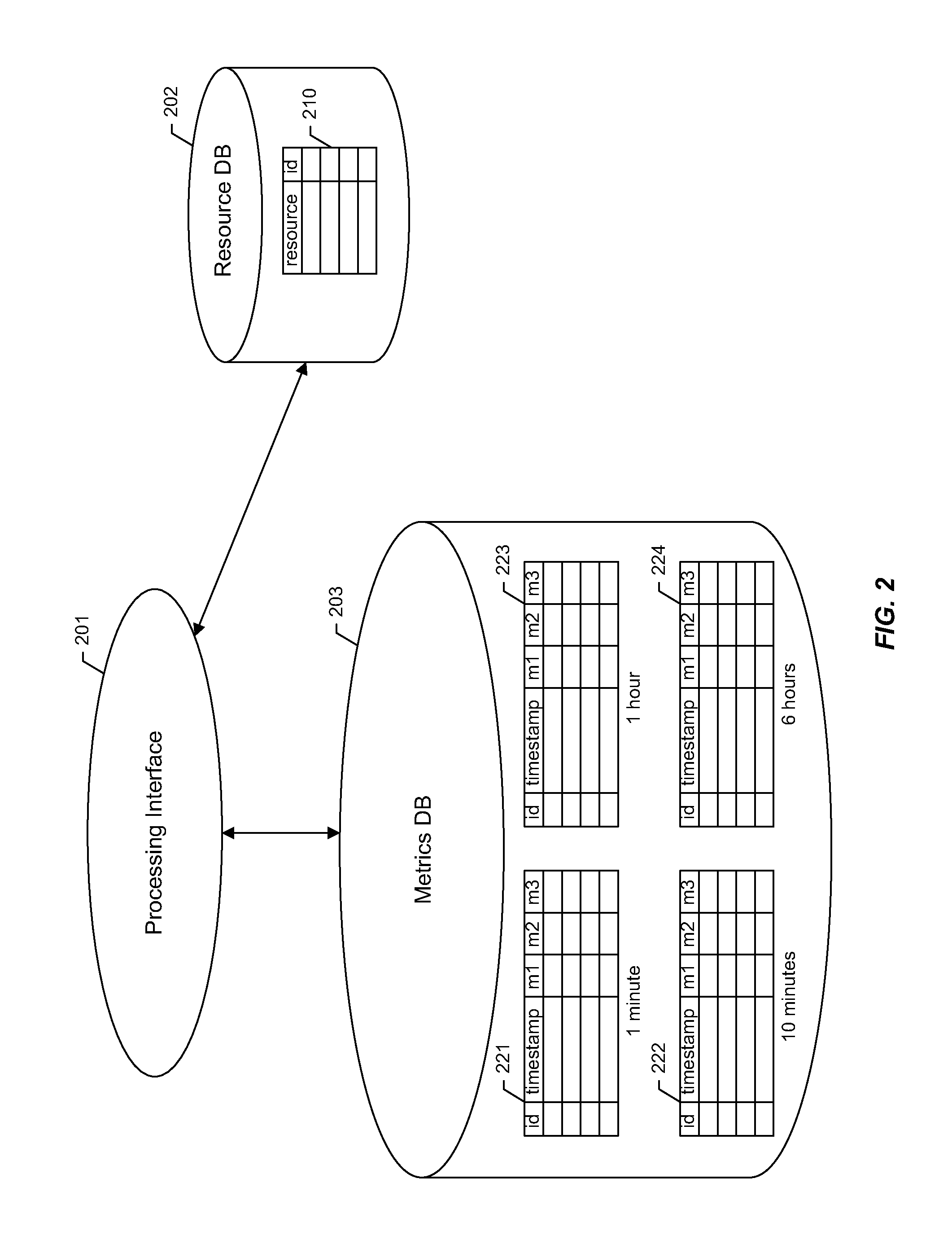

Time series storage for large-scale monitoring system

Inactive | US20110153603A1 | Efficient storage | Digital data information retrieval | Digital data processing details | Retention period | Internet traffic

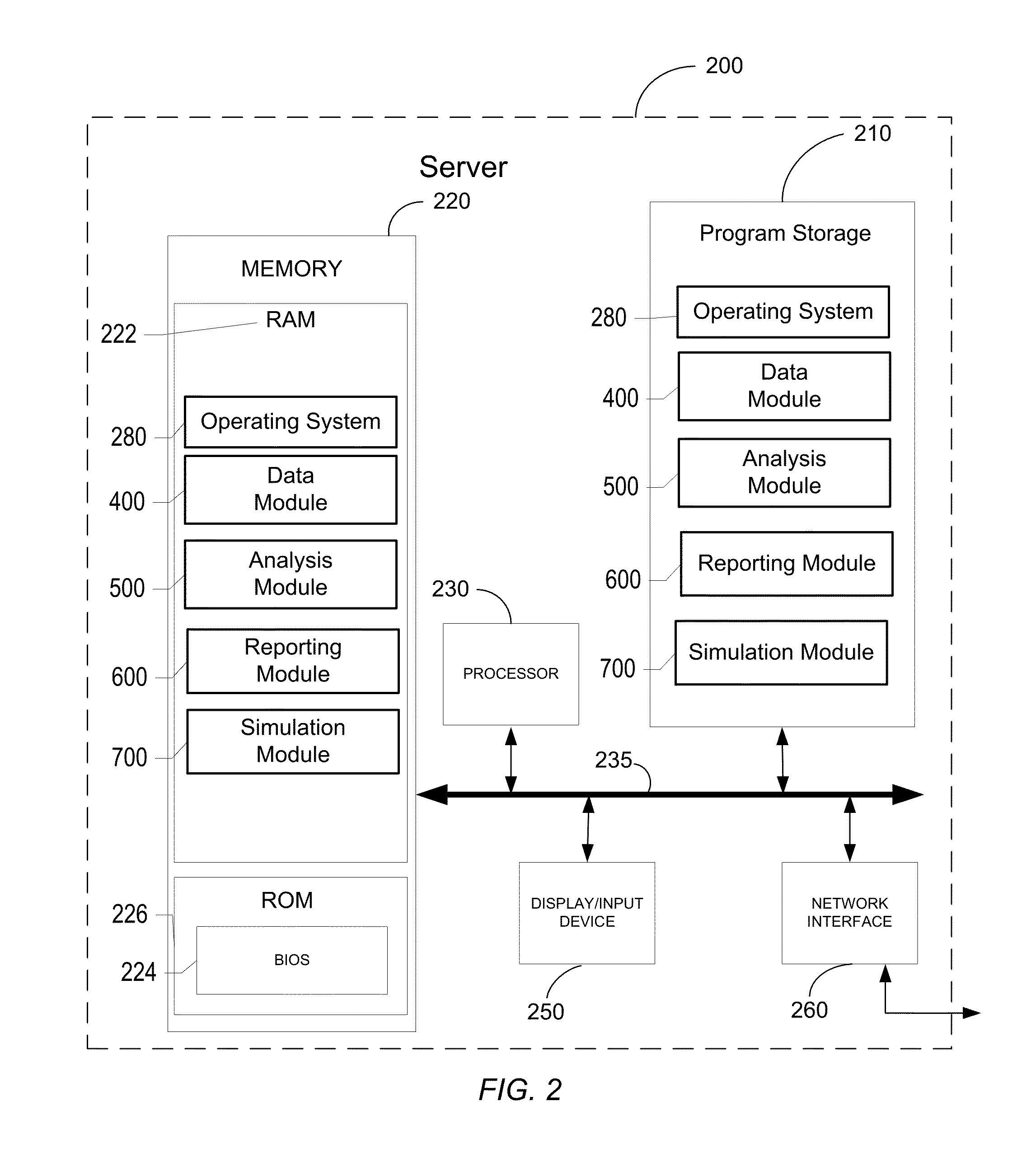

Methods and apparatus are described for collecting and storing large volumes of time series data. For example, such data may comprise metrics gathered from one or more large-scale computing clusters over time. Data are gathered from resources which define aspects of interest in the clusters, such as nodes serving web traffic. The time series data are aggregated into sampling intervals, which measure data points from a resource at successive periods of time. These data points are organized in a database according to the resource and sampling interval. Profiles may also be used to further organize data by the types of metrics gathered. Data are kept in the database during a retention period, after which they may be purged. Each sampling interval may define a different retention period, allowing operating records to stretch far back in time while respecting storage constraints.

Owner:OATH INC
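
The per-interval retention idea in the abstract above can be sketched as follows. This is a minimal in-memory illustration, not the patented implementation: the class name `TimeSeriesStore`, the averaging aggregation, and the use of plain integer timestamps in seconds are all assumptions made for the example.

```python
from collections import defaultdict


class TimeSeriesStore:
    """Toy store keyed by (resource, sampling interval). Hypothetical API."""

    def __init__(self, retention_by_interval):
        # Maps sampling interval (seconds) -> retention period (seconds).
        self.retention = dict(retention_by_interval)
        # (resource, interval) -> {bucket_start: [sum, count]}
        self.buckets = defaultdict(dict)

    def record(self, resource, timestamp, value):
        """Fold one raw measurement into every configured sampling interval."""
        for interval in self.retention:
            start = timestamp - (timestamp % interval)
            acc = self.buckets[(resource, interval)].setdefault(start, [0.0, 0])
            acc[0] += value
            acc[1] += 1

    def average(self, resource, interval, bucket_start):
        """Return the mean of the values aggregated into one bucket."""
        total, count = self.buckets[(resource, interval)][bucket_start]
        return total / count

    def purge(self, now):
        """Drop buckets older than the retention period of their interval."""
        for (_, interval), series in self.buckets.items():
            cutoff = now - self.retention[interval]
            for start in [s for s in series if s < cutoff]:
                del series[start]
```

With a short retention for fine-grained intervals and a long retention for coarse ones, a `purge` call discards the minute-level buckets while the hourly summary of the same period survives.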

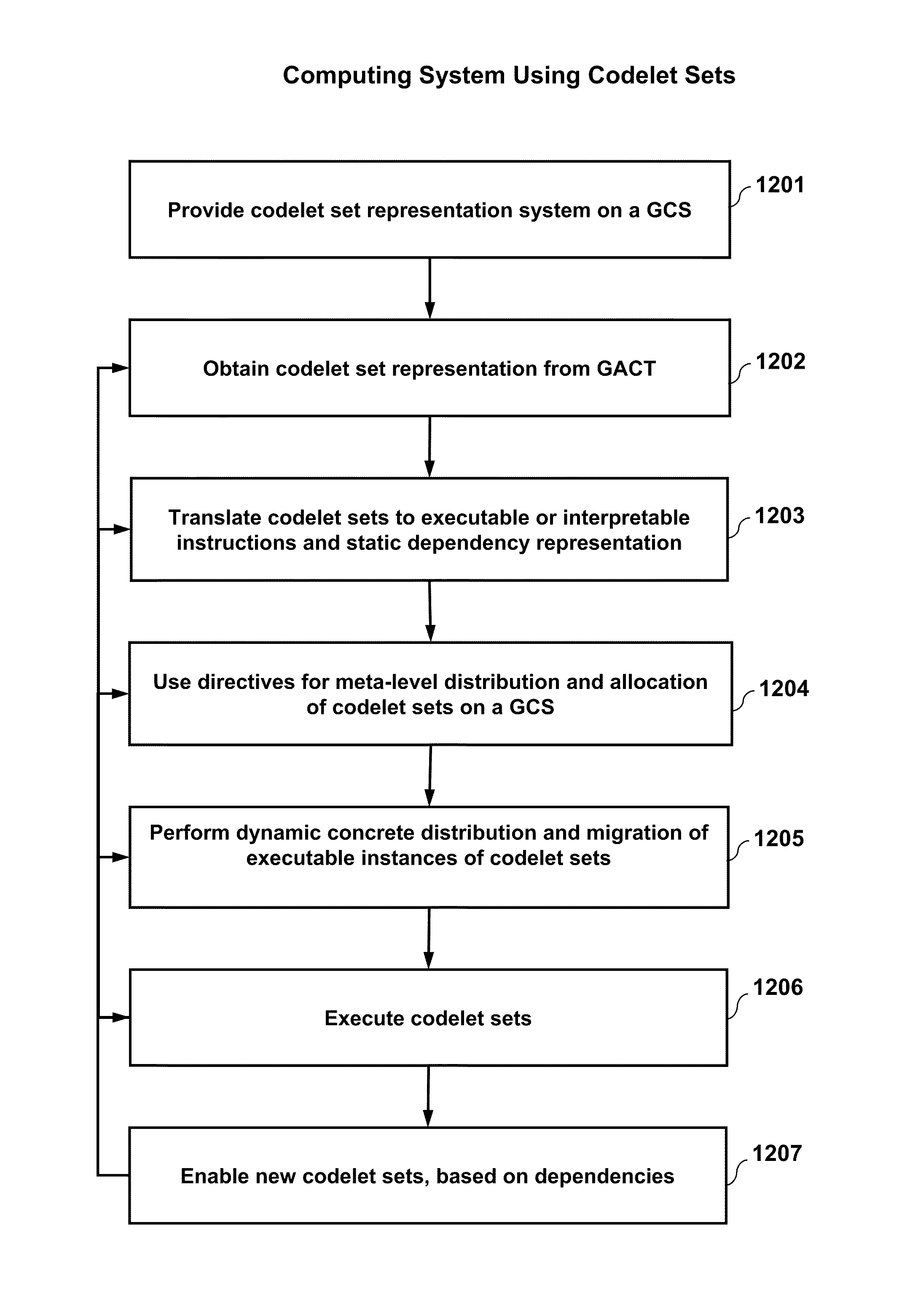

Efficient execution of parallel computer programs

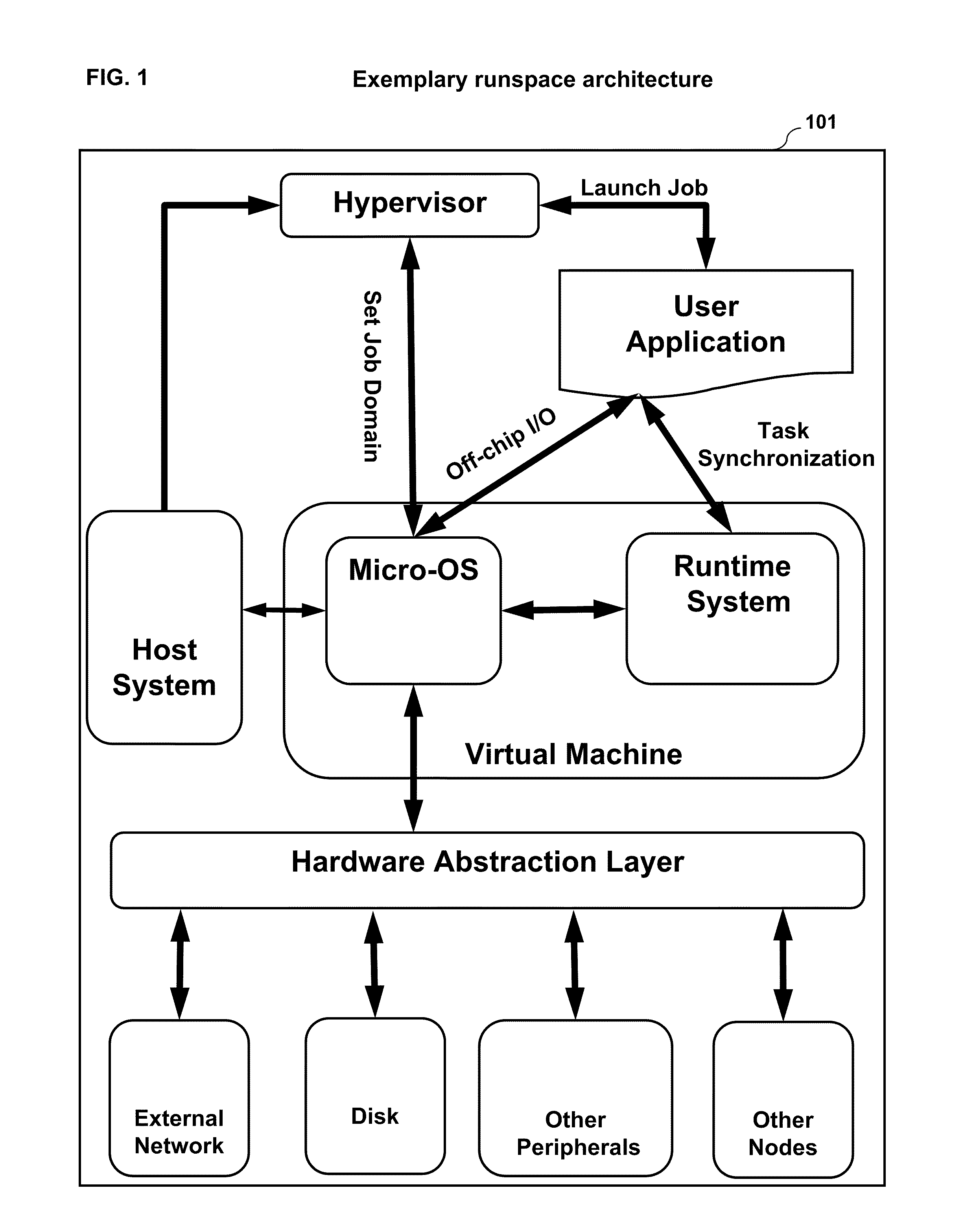

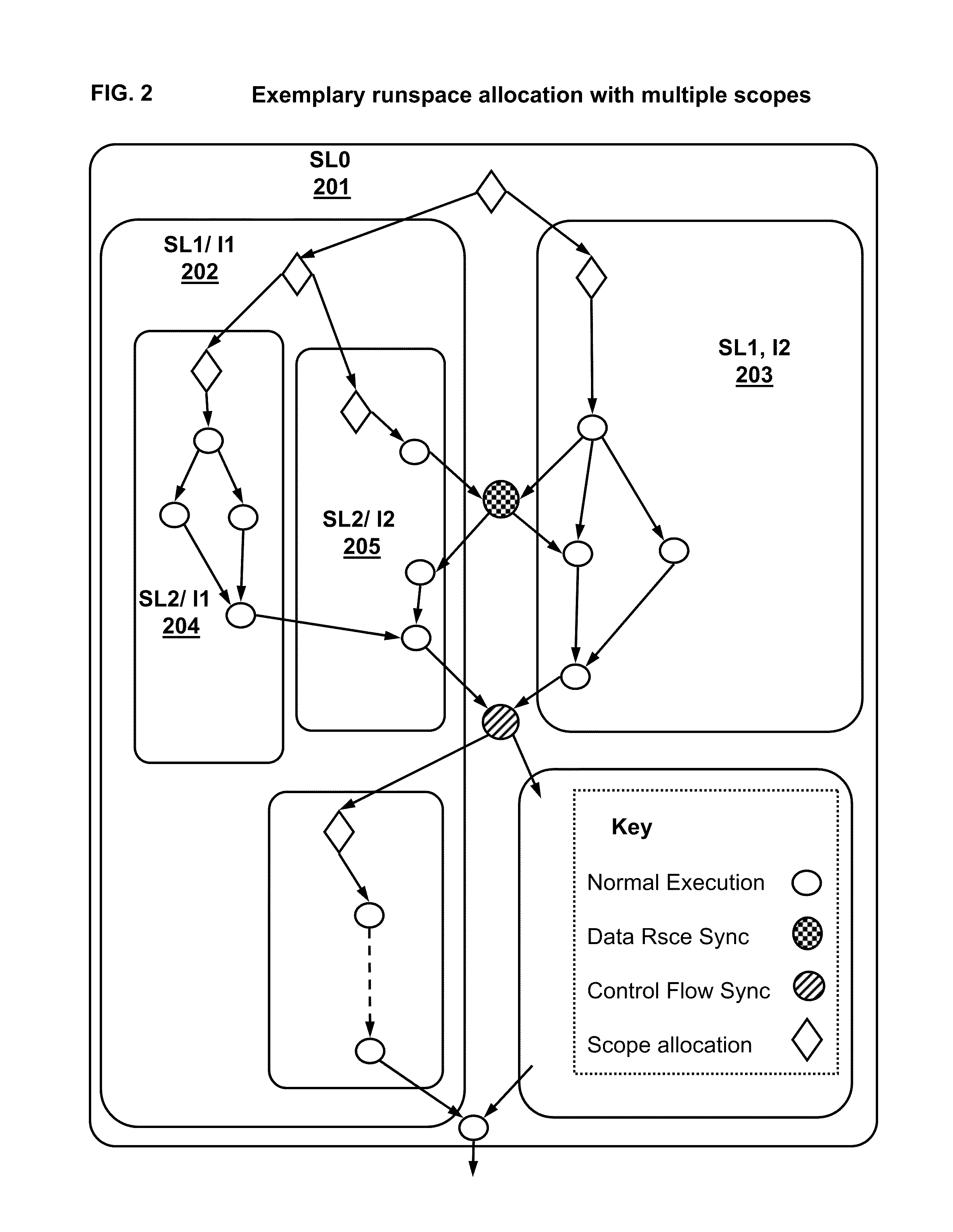

Active | US9542231B2 | Effective distribution | Energy efficient ICT | Resource allocation | Concurrent computation | Systems management

The present invention, known as runspace, relates to the field of computing system management, data processing and data communications, and specifically to synergistic methods and systems which provide resource-efficient computation, especially for decomposable many-component tasks executable on multiple processing elements, by using a metric space representation of code and data locality to direct allocation and migration of code and data, by performing analysis to mark code areas that provide opportunities for runtime improvement, and by providing a low-power, local, secure memory management system suitable for distributed invocation of compact sections of code accessing local memory. Runspace provides mechanisms supporting hierarchical allocation, optimization, monitoring and control, and supporting resilient, energy efficient large-scale computing.

Owner:ET INT

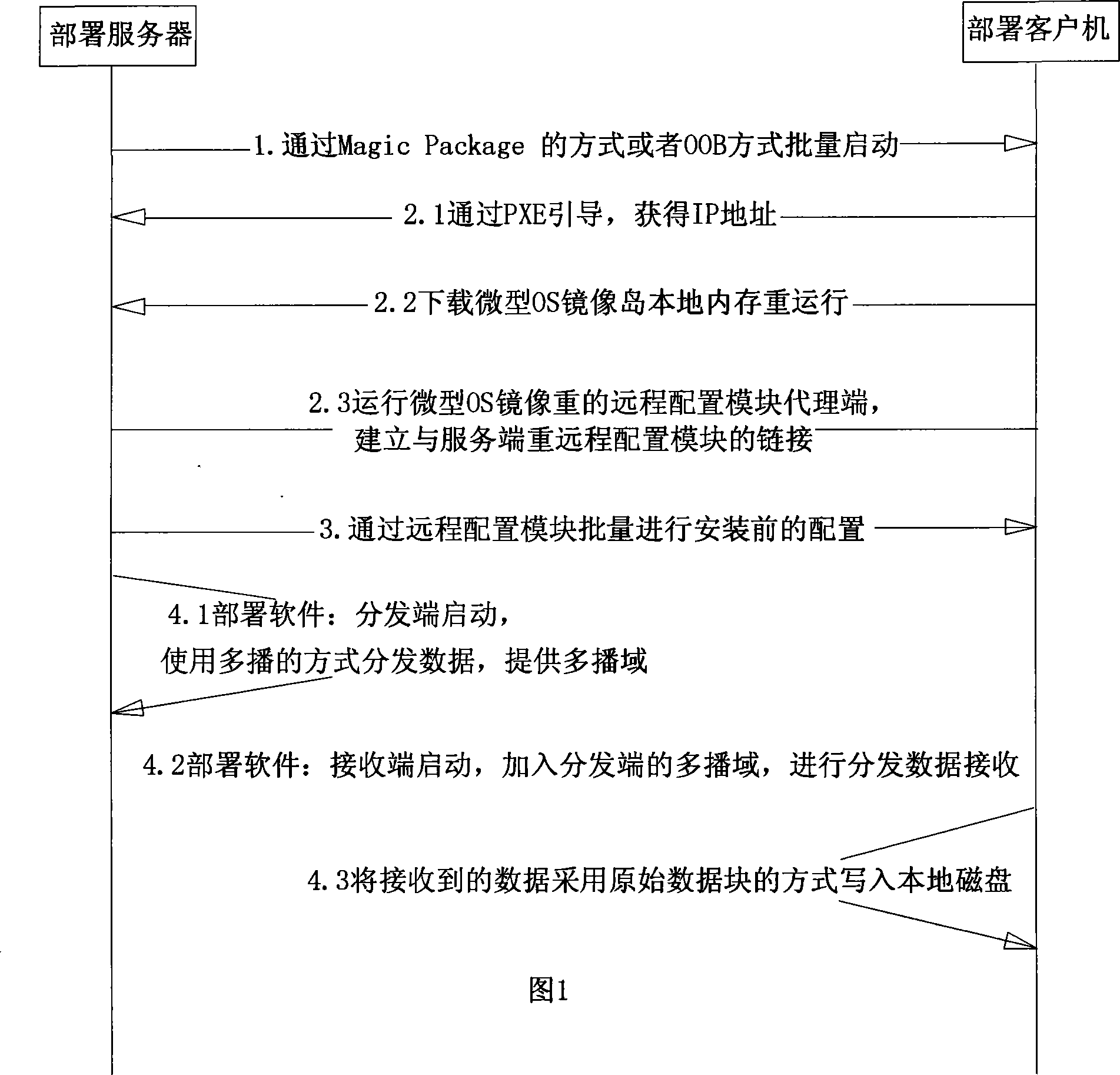

Method for allocating operating system through network guide

Active | CN101232400A | Improve transmission performance | Data switching networks | Operational system | IP address

The invention provides a method for deploying an operating system via a network guide (network boot). A remote control module remotely starts a client computer by transmitting a Wake-on-LAN magic packet or through out-of-band (OOB) management; the remote control module also centrally performs the necessary deployment steps across multiple clients and controls starting and stopping of the software receiving terminal; a DHCP module assigns dynamic IP addresses to the client computers; and a micro OS image runs on the client computer. The method is completely independent of local storage media such as an optical drive or a floppy drive, and is suitable for various operating systems on IA servers. The method improves network transmission performance by using multicast, avoids file-system limitations by operating on primitive data blocks at a low level, and can be widely applied to the deployment of large-scale computer systems.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD
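
The remote start step mentioned in the abstract relies on a Wake-on-LAN magic packet, whose payload is six `0xFF` bytes followed by sixteen repetitions of the target MAC address. The sketch below shows that packet format only; the function names, the broadcast address, and the choice of UDP port 9 are illustrative assumptions and are not taken from the patent.

```python
import socket


def build_magic_packet(mac: str) -> bytes:
    """Build a Wake-on-LAN payload: 6 x 0xFF, then the MAC repeated 16 times."""
    hex_digits = mac.replace(":", "").replace("-", "")
    if len(hex_digits) != 12:
        raise ValueError("MAC address must contain 12 hex digits")
    mac_bytes = bytes.fromhex(hex_digits)  # raises ValueError on non-hex input
    return b"\xff" * 6 + mac_bytes * 16


def send_magic_packet(mac: str, broadcast: str = "255.255.255.255", port: int = 9) -> None:
    """Broadcast the packet over UDP (address and port are assumed defaults)."""
    packet = build_magic_packet(mac)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(packet, (broadcast, port))
```

A deployment controller would call `send_magic_packet` once per client MAC before the DHCP and image transfer stages begin.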

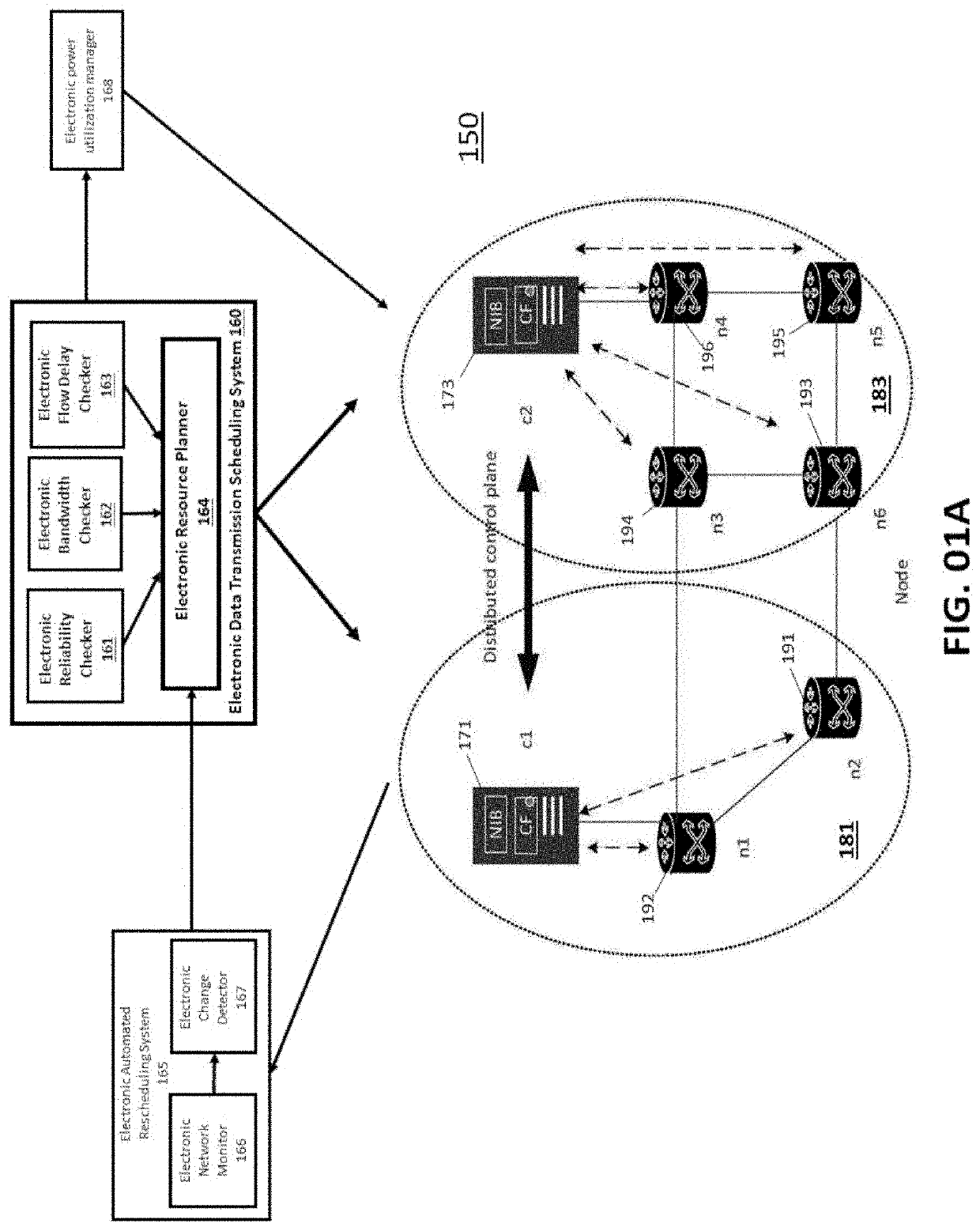

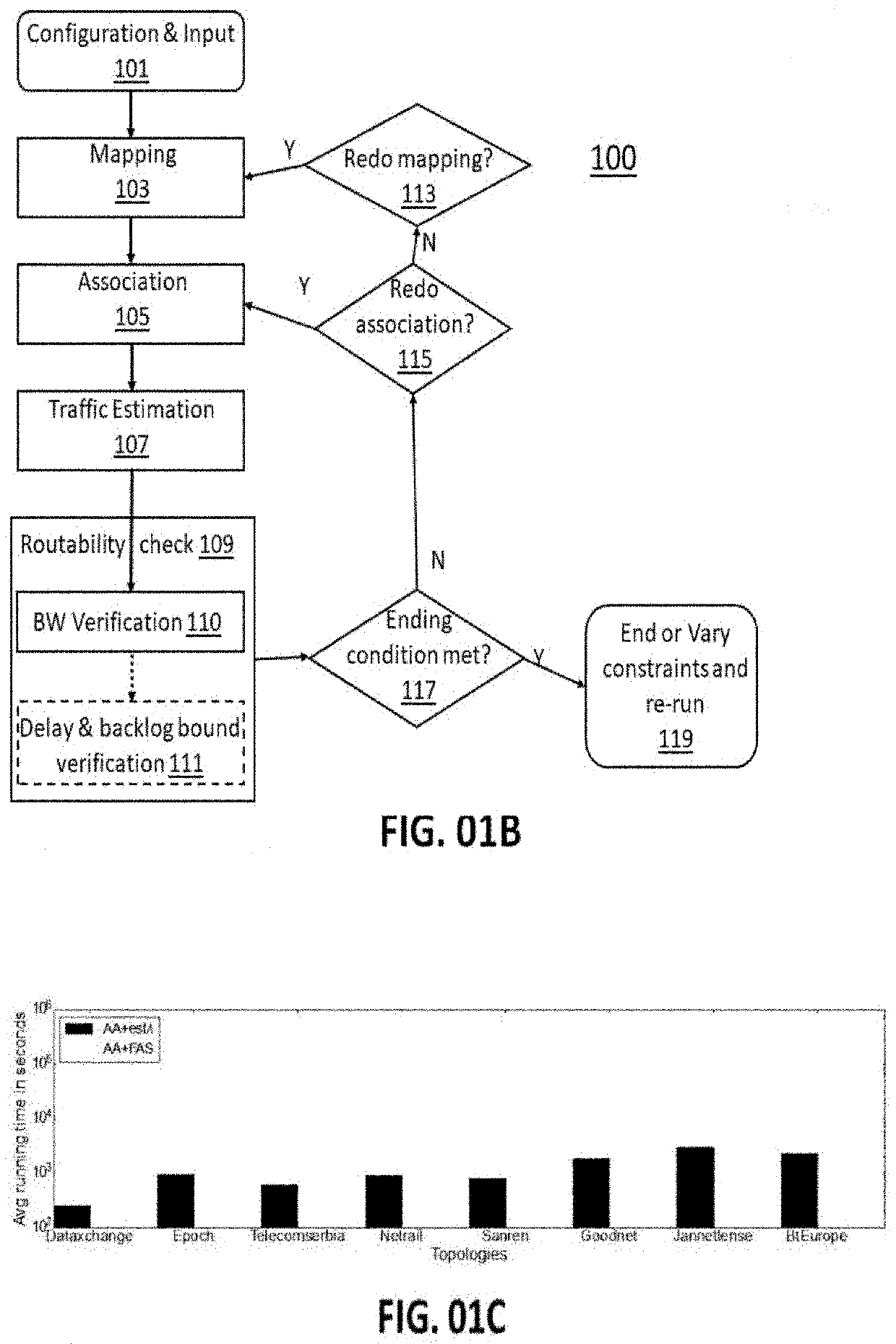

Dynamic Deployment of Network Applications Having Performance and Reliability Guarantees in Large Computing Networks

Embodiments of the invention relate to computerized systems and computerized methods configured to optimize data transmission paths in a large-scale computerized network relative to reliability and bandwidth requirements. Embodiments of the invention further relate to computerized systems and methods that direct and control the physical adjustments to data transmission paths in a large-scale network's composition of computerized data transmission nodes in order to limit data end-to-end transmission delay in a computerized network to a delay within a calculated worst-case delay bound.

Owner:RISE RES INST OF SWEDEN AB

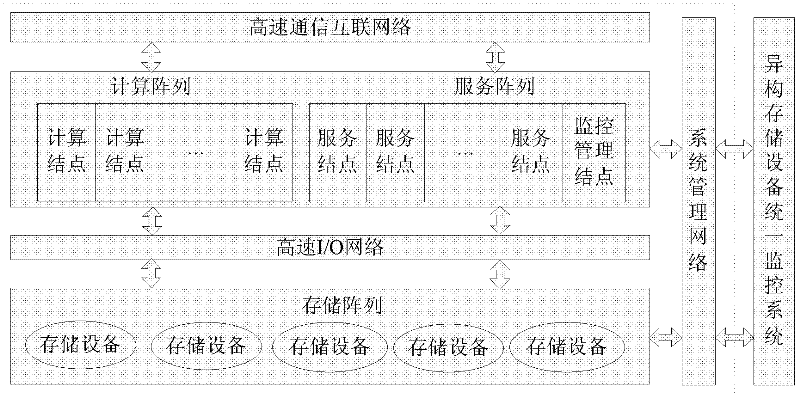

Mass storage system monitoring method integrating heterogeneous storage devices

Active | CN102638378A | Guaranteed validity | Guaranteed uptime | Data switching networks | Computerized system | Monitoring system

The invention discloses a mass storage system monitoring method integrating heterogeneous storage devices, which aims to realize unified monitoring of numerous heterogeneous storage devices in a large-scale computer system. The method constructs a unified monitoring system for heterogeneous storage devices consisting of a storage device information sheet, a system configuration information sheet, a monitoring information frame, a monitoring client side, an event acquisition module, a warning information mapping module and a warning information filtering module. The monitoring system monitors the heterogeneous storage devices in the mass storage system and acquires the monitoring results of all the storage devices through the event acquisition module; the warning information mapping module and the warning information filtering module map and filter the monitoring results respectively; and the monitoring client side displays warning event information of the heterogeneous storage devices in a unified format. The method can guarantee normal operation of the storage devices, reduce maintenance cost and improve the efficiency of monitoring heterogeneous storage devices in a large-scale storage system.

Owner:NAT UNIV OF DEFENSE TECH

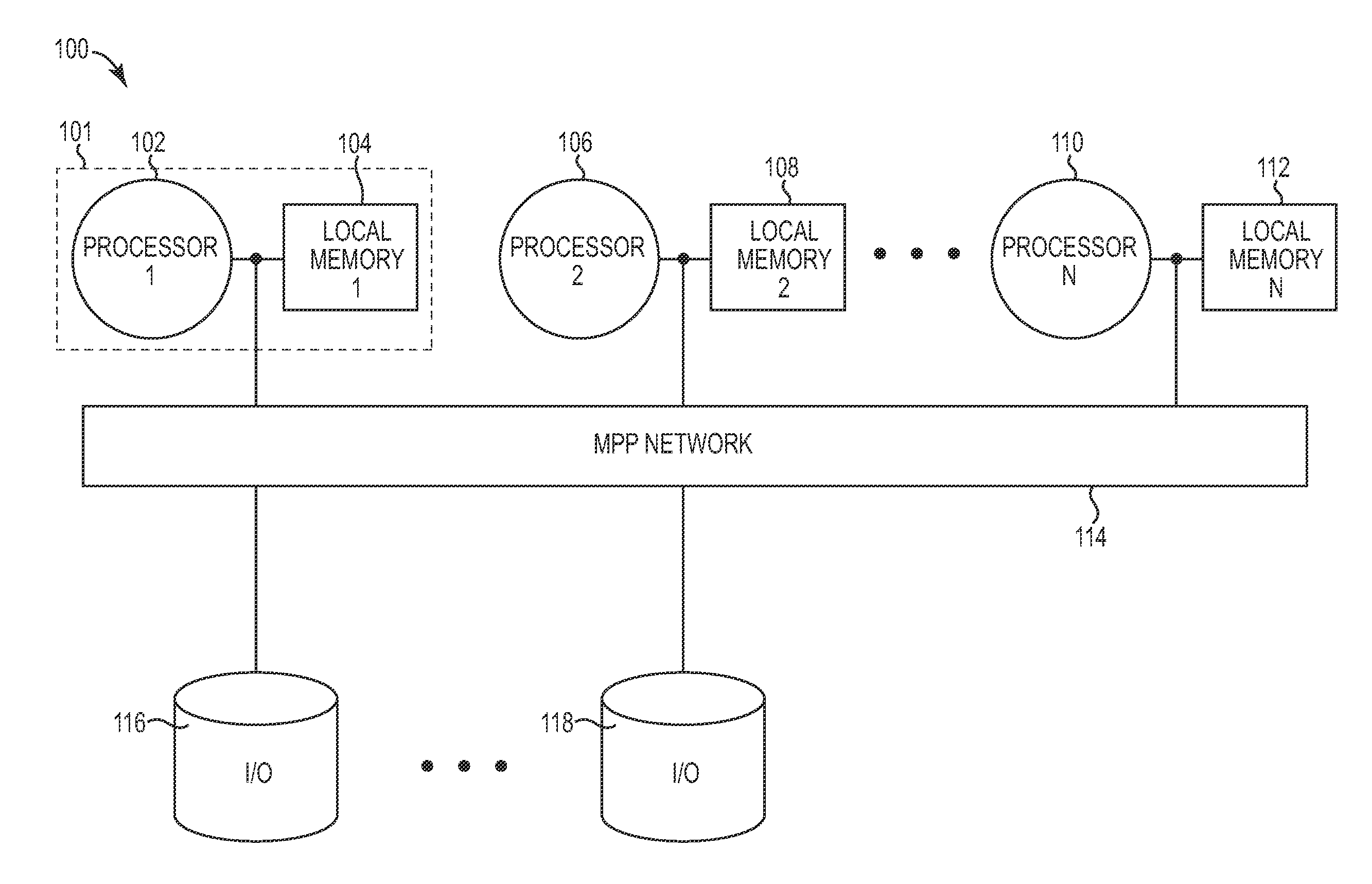

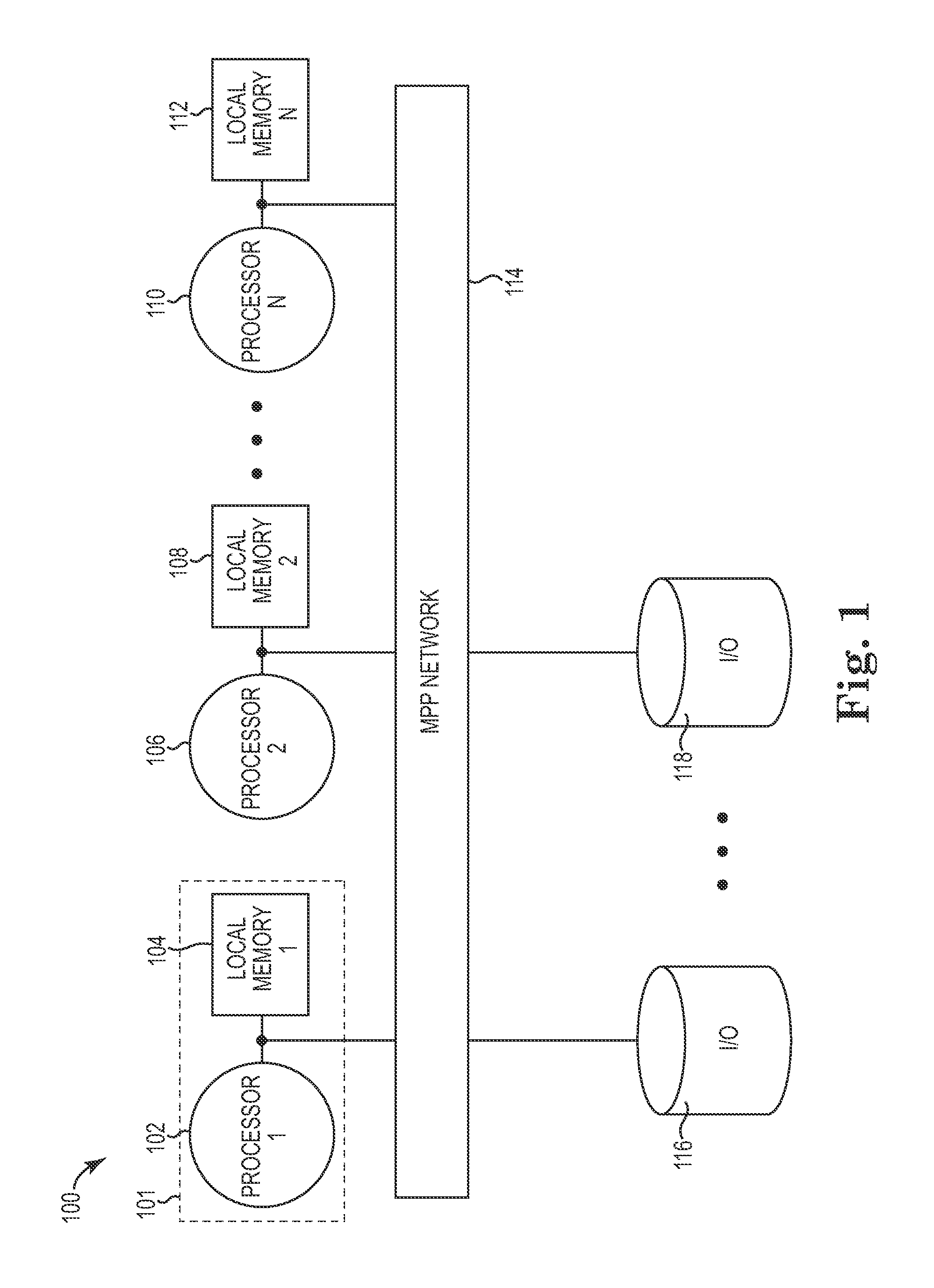

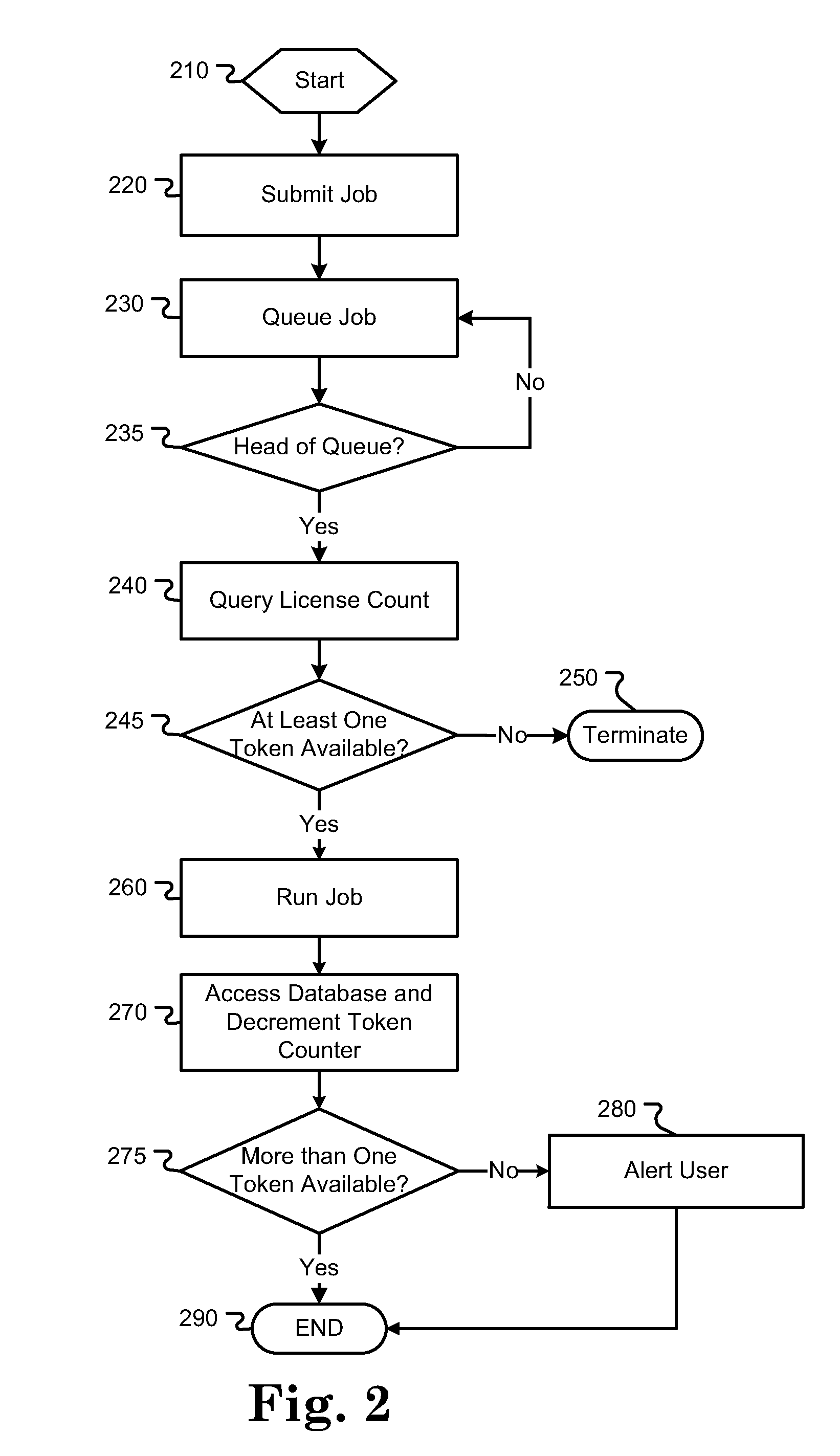

Software license serving in a massively parallel processing environment

Active | US20110296402A1 | Facilitate license usage | Easy to use | Digital data processing details | Analogue secrecy/subscription systems | Massively parallel | Software license

Techniques for implementing software licensing in a massive parallel processing environment on the basis of the actual use of licensed software instances are disclosed. In one embodiment, rather than using a license server or a node-locked license strategy, each use of a licensed software instance is monitored and correlated with a token. A store of tokens is maintained within the licensing system and a token is consumed after each instance successfully executes. Further, a disclosed embodiment also allows jobs that execute multiple software instances to complete execution, even if an adequate number of tokens does not exist for each remaining software instance. Once the license tokens are repurchased and replenished, any overage consumed from previous job executions may be reconciled. In this way, token-based licensing can be adapted to large scale computing environments that execute jobs of large and unpredictable sizes, while the cancellation of executing jobs may be avoided.

Owner:IBM CORP
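
The token accounting described in the abstract above (consume a token per successful instance, let a running job finish on credit, reconcile the overage when tokens are replenished) can be sketched like this. The class and function names are hypothetical, and the rule that a new job may start only when tokens remain and no overage is outstanding is an assumption added for the example.

```python
class TokenLicensePool:
    """Toy token store with overage tracking. Hypothetical API."""

    def __init__(self, tokens: int):
        self.tokens = tokens
        self.overage = 0  # instances executed without a backing token

    def can_start_job(self) -> bool:
        # Assumed admission rule: tokens available and no unpaid overage.
        return self.tokens > 0 and self.overage == 0

    def consume(self) -> None:
        """Called after one licensed instance executes successfully."""
        if self.tokens > 0:
            self.tokens -= 1
        else:
            self.overage += 1  # job keeps running; the debt is recorded

    def replenish(self, purchased: int) -> None:
        """Add purchased tokens, settling any outstanding overage first."""
        settled = min(purchased, self.overage)
        self.overage -= settled
        self.tokens += purchased - settled


def run_job(pool: TokenLicensePool, instance_count: int) -> bool:
    """Run every instance of a job to completion once it has been admitted."""
    if not pool.can_start_job():
        return False
    for _ in range(instance_count):
        pool.consume()
    return True
```

The design point illustrated is that admission is checked once per job rather than once per instance, so a job of unpredictable size is never cancelled midway.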

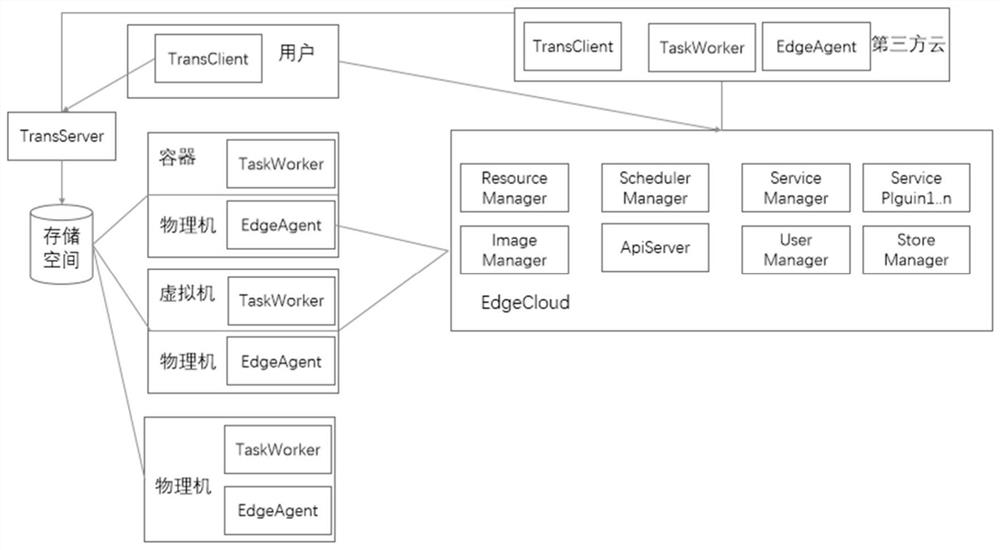

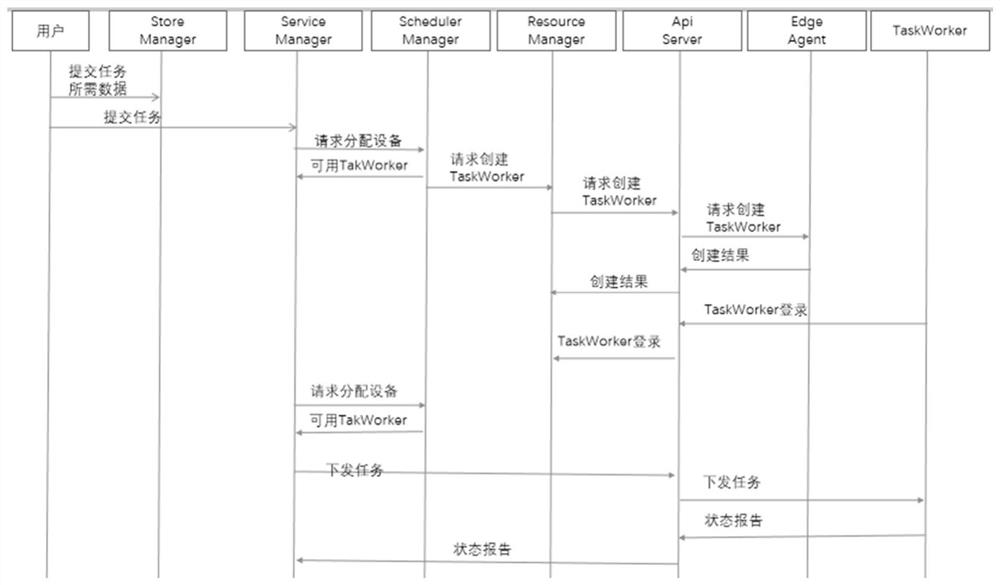

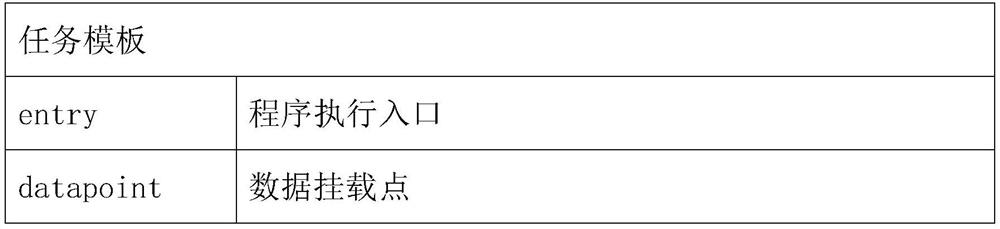

Resource scheduling system and method based on edge computing in heterogeneous environment

Active | CN111858054A | Improve execution speed | Realize group scheduling | Resource allocation | Energy efficient computing | Resource pool | Task analysis

The embodiment of the invention discloses a resource scheduling system and method based on edge computing in a heterogeneous environment in the technical field of edge computing. The resource scheduling system includes an EdgeCloud, which is used for task analysis, scheduling, resource management and orchestration; an EdgeAgent, which creates and destroys the resources required for computation according to instructions from the EdgeCloud and monitors its own resource load; and a TaskWorker, which executes the computing tasks allocated by the EdgeCloud. According to the method, the scattered resources in each region are grouped and added to the resource pool, and a dynamically calculated device score determines the scheduling priority of each device; through the stored namespace, the method and system address the data sharing problem during large-scale computation, so that data can be shared with the device resource group.

Owner:北京秒如科技有限公司

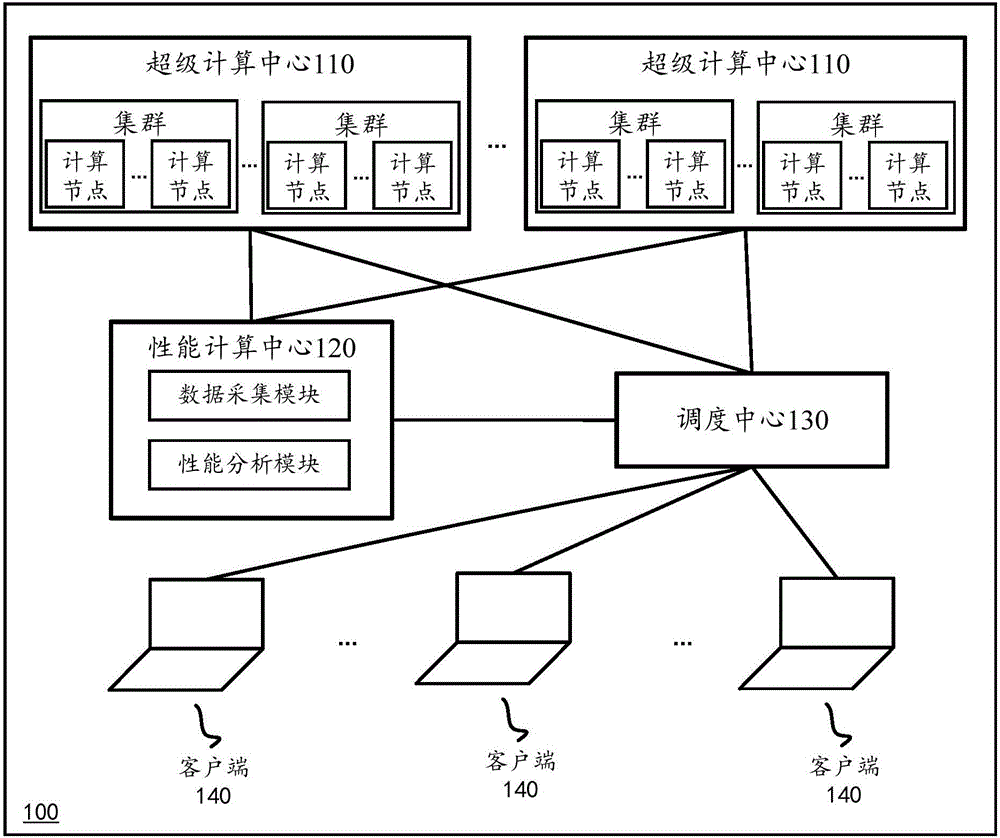

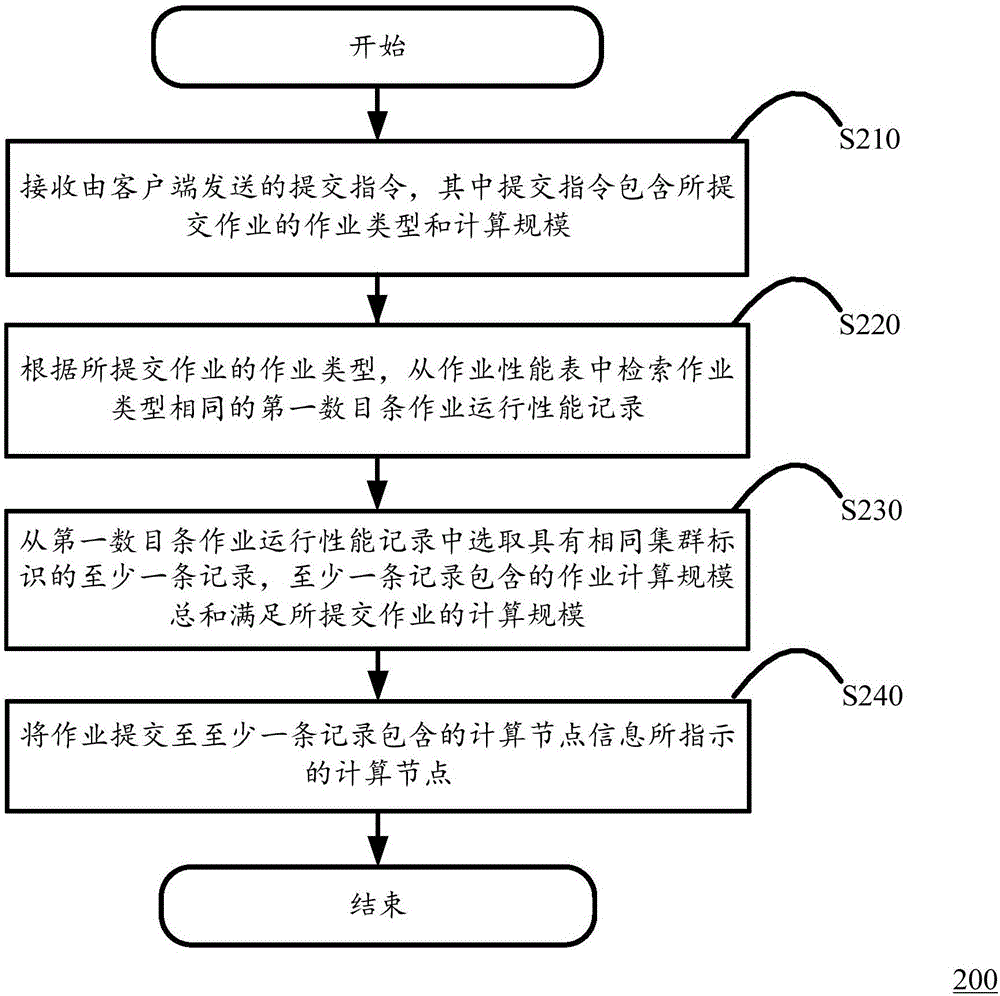

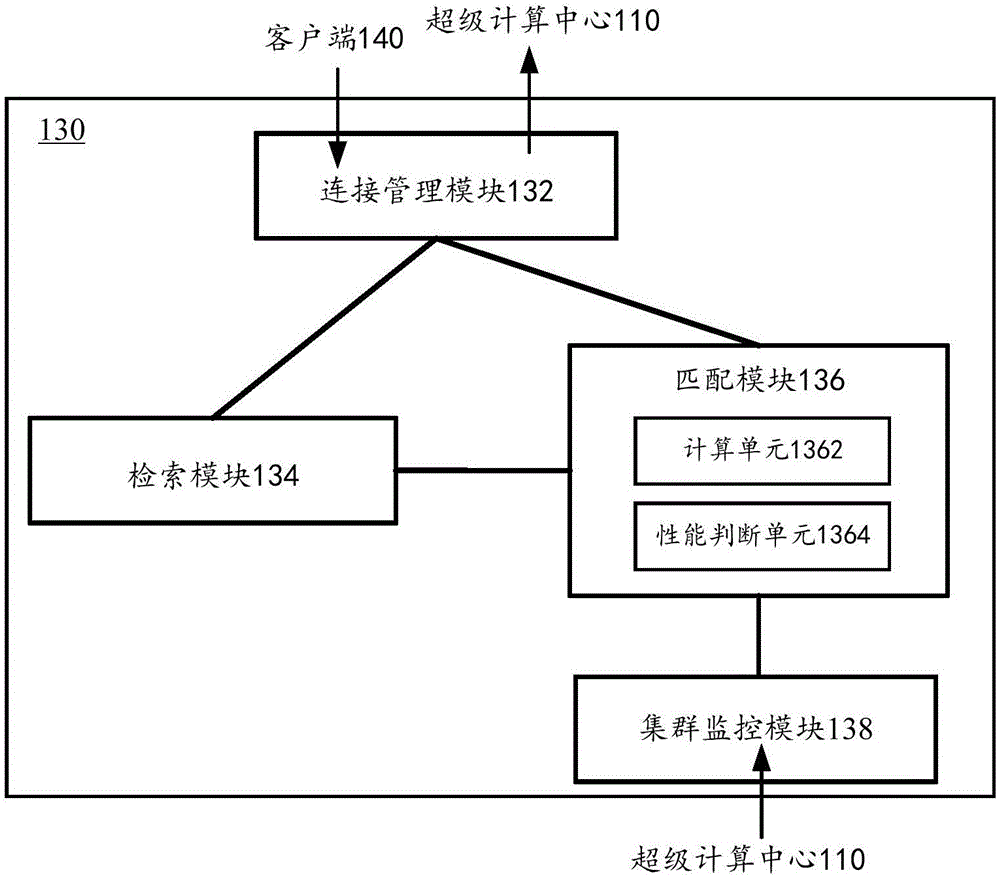

Dispatching method and system for computing resources and dispatching center

Active | CN106790529A | Avoid wasting | Efficient matching | Data switching networks | Computing center | Performance computing

The invention discloses a dispatching system for computing resources. The system comprises supercomputing centers, which run the jobs submitted by clients; a performance computing center, which computes job performance feature values from job performance parameters and generates a job performance table, wherein each record of the table stores, in association, the job identifier, the job type, the identifier of the supercomputing center that ran the job, the identifier of the cluster that ran the job, the job's computing scale, the computing node information and the job performance feature value; clients, which respond to users' job submission requests and transmit submission instructions to the dispatching center, wherein the submission instructions comprise the job type and the computing scale; and the dispatching center, which matches at least one record from the job performance table according to the job type of the submitted job and submits the job to the computing nodes contained in that record. The invention also discloses the corresponding dispatching center and dispatching method.

Owner:BEIJING PARATERA TECH
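
The record-matching step performed by the dispatching center can be sketched as a lookup over the performance table. The abstract only states that a record is matched by type; the field names below and the tie-breaking rule (closest computing scale first, then highest performance feature value) are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class PerformanceRecord:
    """One row of the performance table (field names are hypothetical)."""
    job_type: str
    center_id: str
    cluster_id: str
    scale: int                 # e.g. number of cores used in the recorded run
    nodes: Tuple[str, ...]     # computing nodes used in the recorded run
    performance_score: float   # performance feature value


def match_record(
    table: Sequence[PerformanceRecord], job_type: str, scale: int
) -> Optional[PerformanceRecord]:
    """Pick a record of the same type, preferring similar scale and high score."""
    candidates = [r for r in table if r.job_type == job_type]
    if not candidates:
        return None
    # Assumed ranking: smallest scale difference, then best recorded performance.
    return min(candidates, key=lambda r: (abs(r.scale - scale), -r.performance_score))
```

A submission would then be routed to the `nodes` of the returned record, or handled by a fallback policy when no record of that type exists yet.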

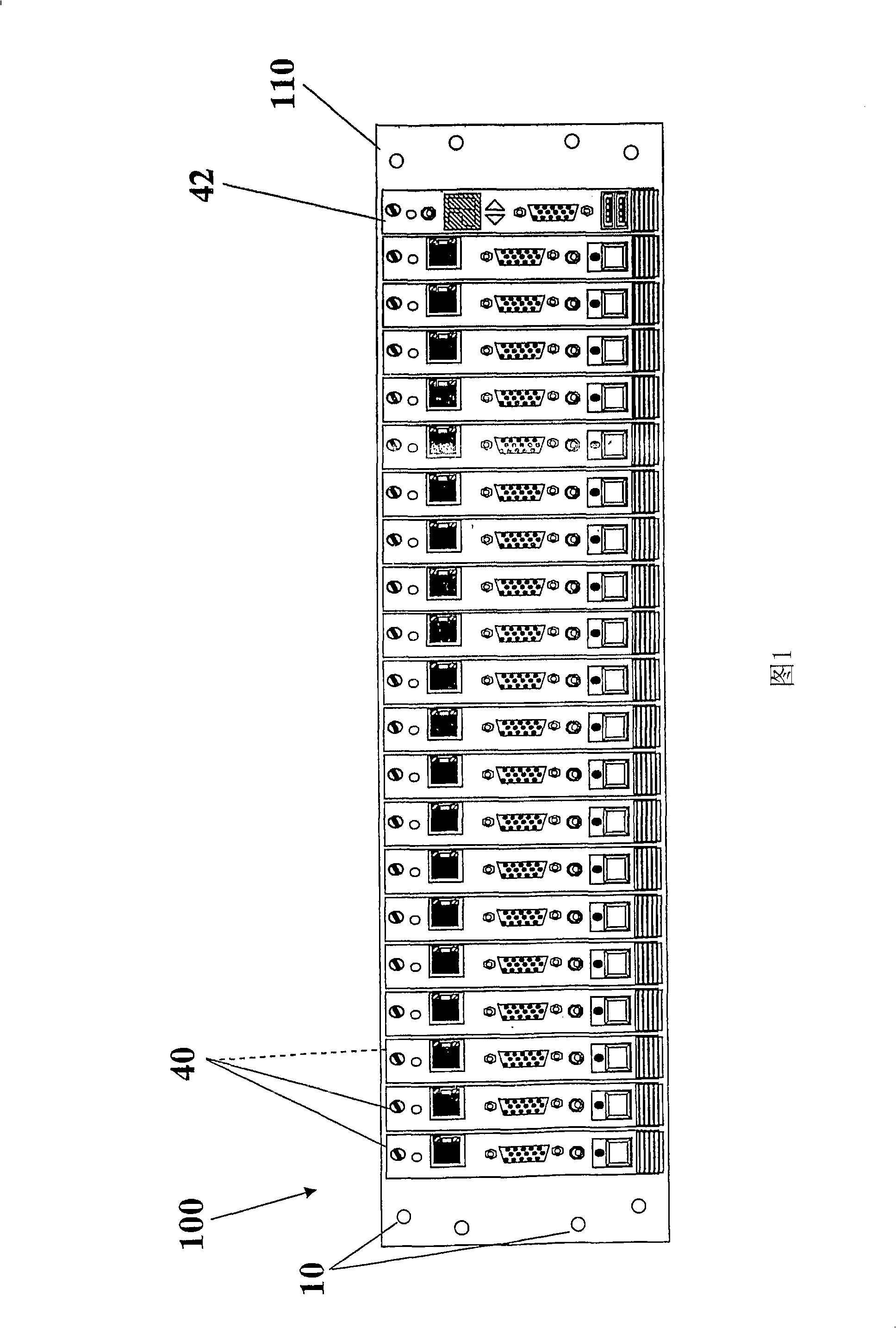

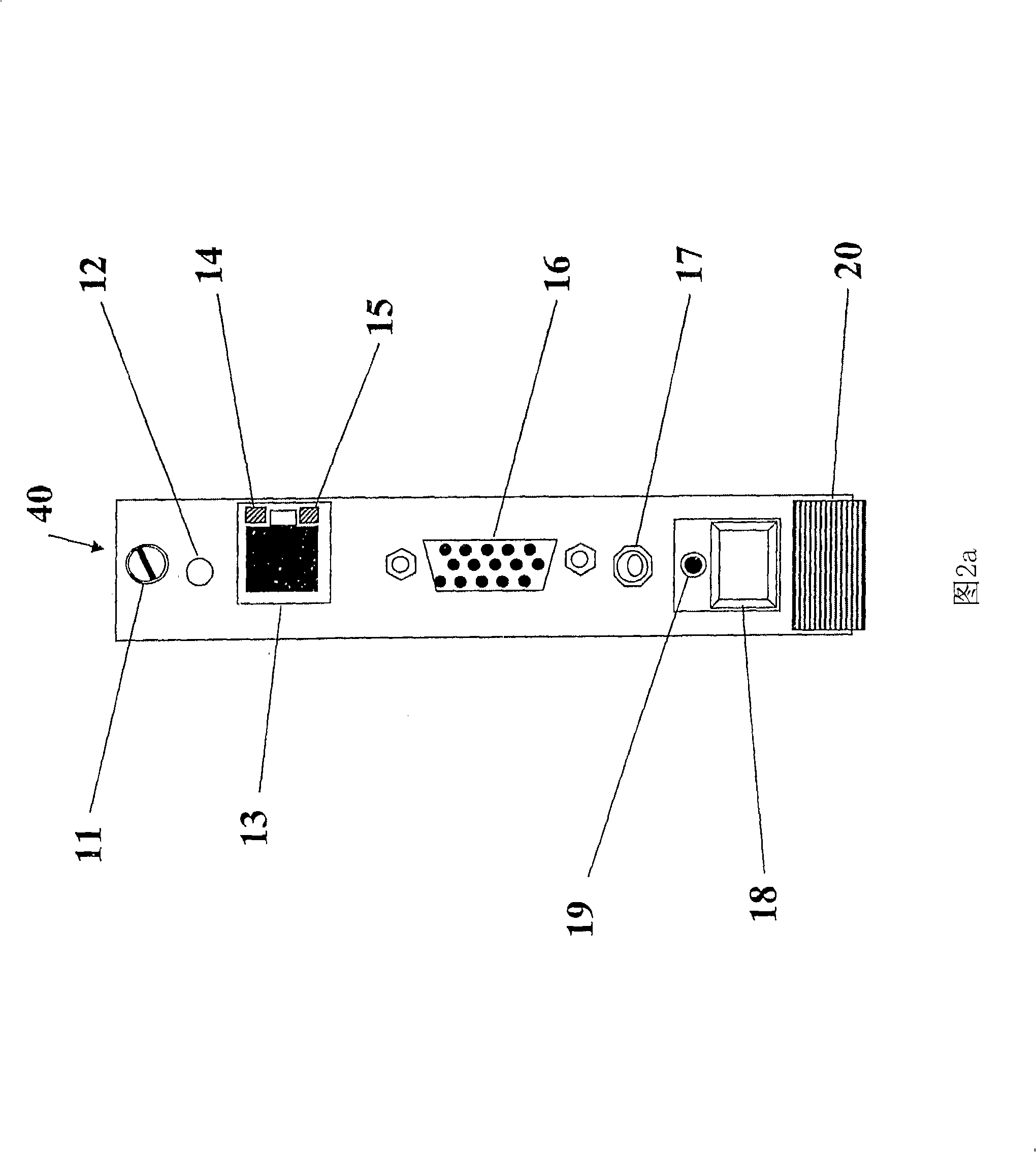

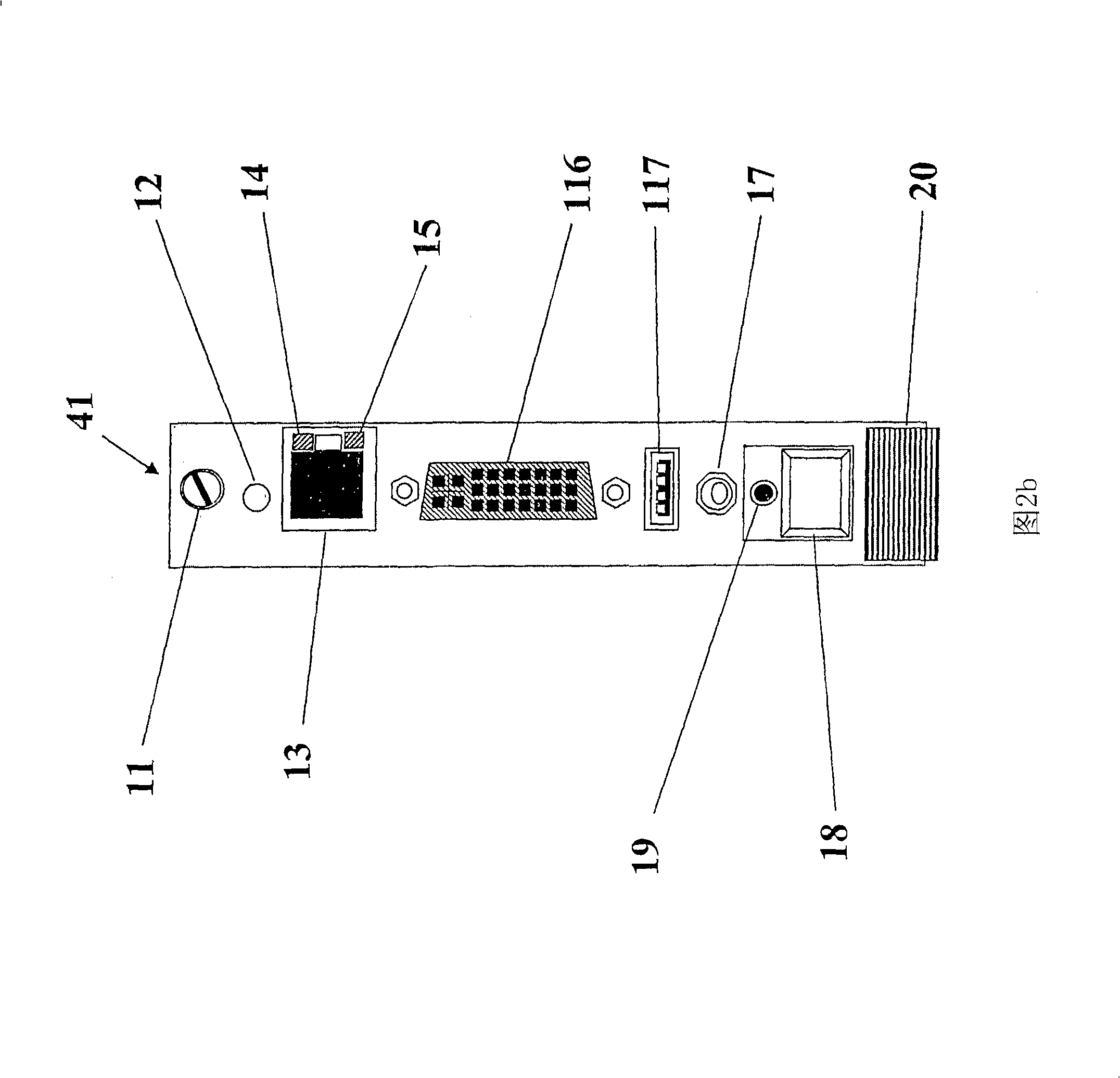

Apparatus, method and system of thin client blade modularity

Inactive | CN101341810A | Easy to manage | Servers | Multiple digital computer combinations | Modularity | Engineering

The present invention provides a modular chassis comprising multiple thin-client blades removably connectable to a common midplane and to one or more power supplies and one or more management modules, to simulate multiple thin clients operating with one or more computer networks. The invention enables building large-scale computer laboratory environments having many thin-client devices and possibly many simulated users, easily connected and managed to simulate large computer infrastructure. Also disclosed in the present invention is a method for performing combinations of functions including testing and simulation of normal and abnormal operational scenarios in complex server-based computing environments.

Owner:CHIP PC ISRAEL LTD

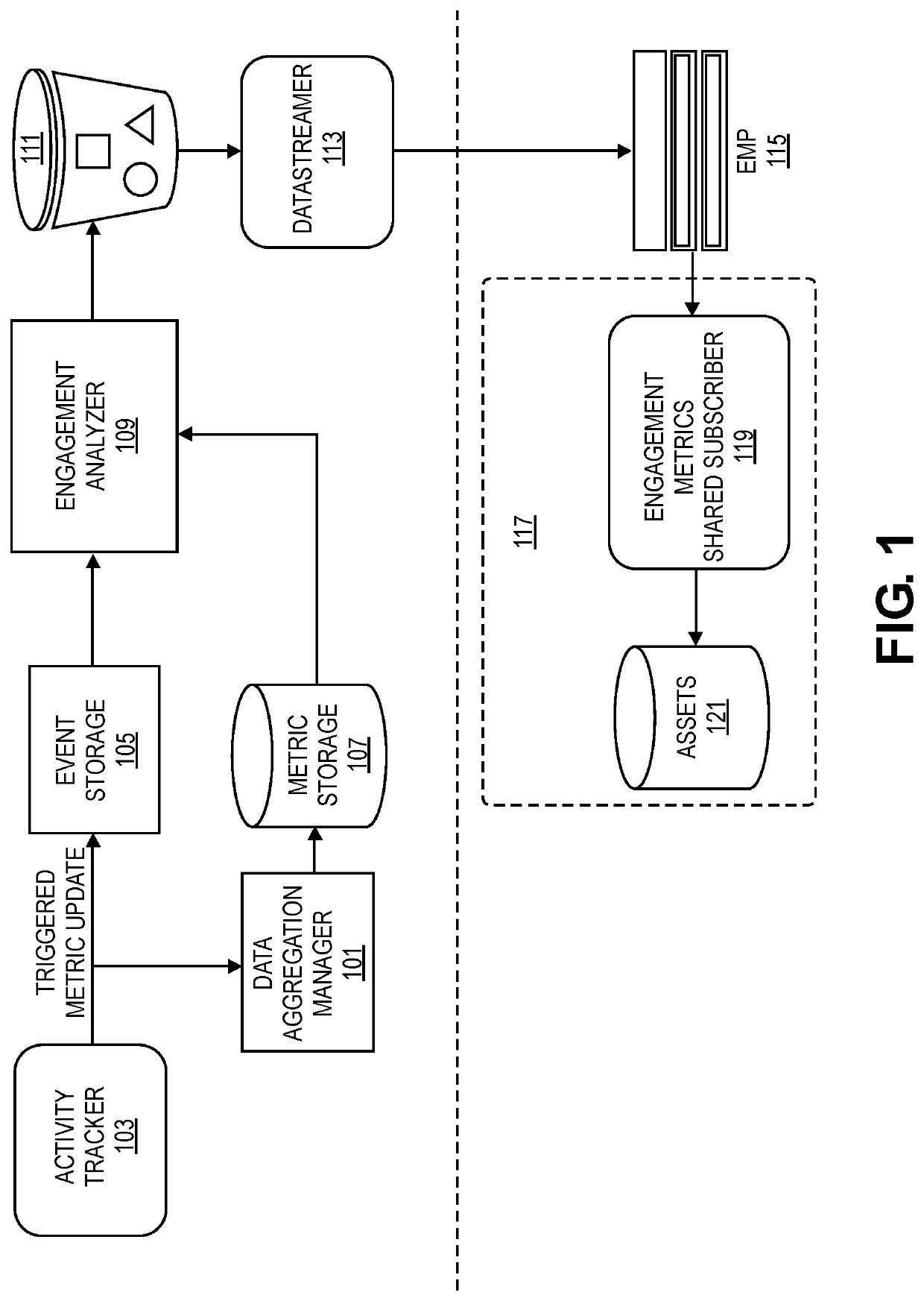

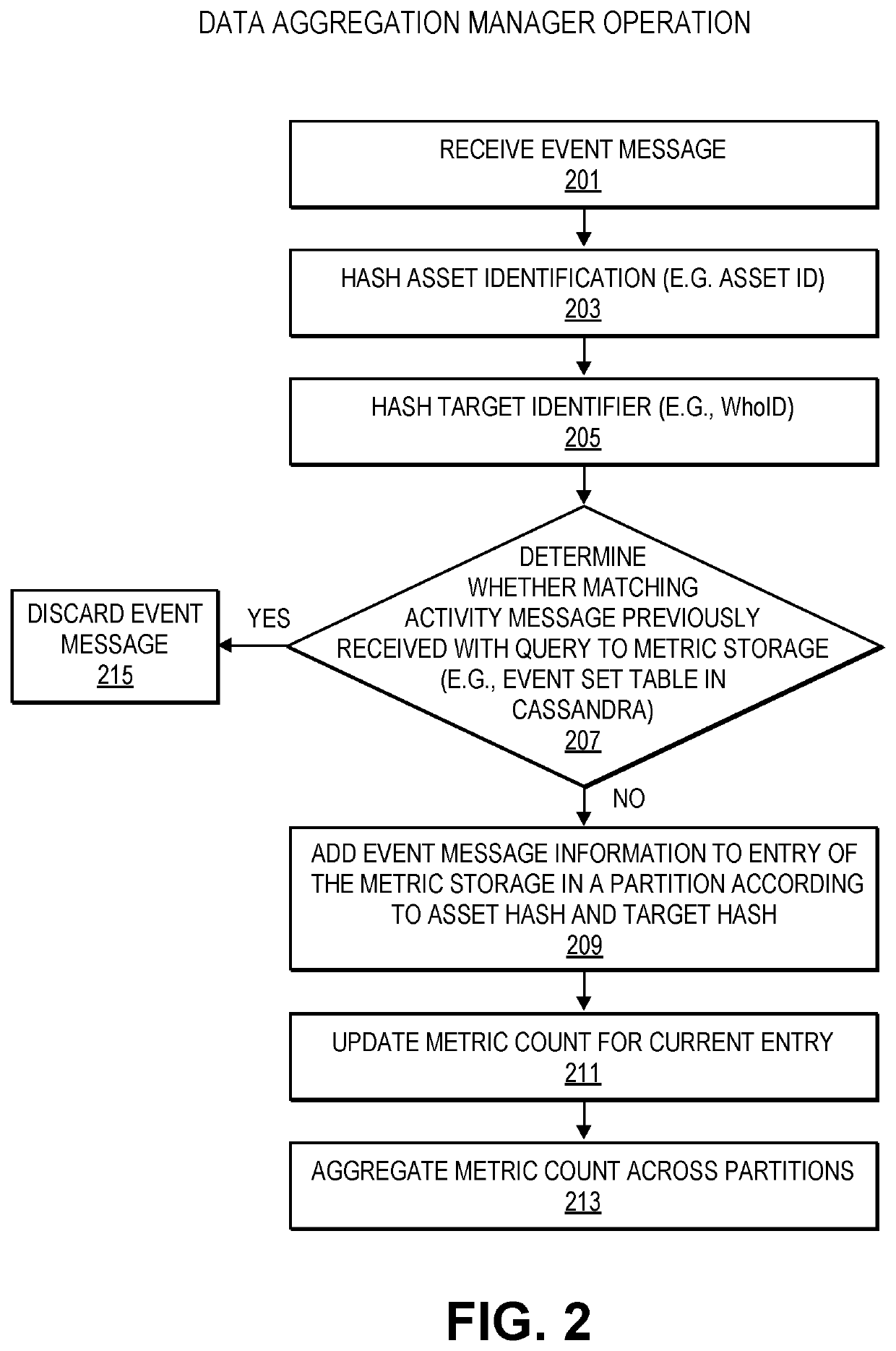

Multidimensional partition of data to calculate aggregation at scale

Active | US20210240678A1 | Database distribution/replication | Multi-dimensional databases | Theoretical computer science | Data aggregator

A method enables data aggregation in a multi-tenant system. The method includes receiving, at a data aggregation manager, an event from an activity tracking component, generating, by the data aggregation manager, a first hash value based on a first identifier in the event, generating, by the data aggregation manager, a second hash value based on a second identifier in the event, and storing event message information to an entry of a metric storage database in a partition according to the first hash value and the second hash value.

Owner:SALESFORCE COM INC
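
The two-hash partitioning described in the abstract above can be sketched as follows. The event field names `tenant_id` and `object_id`, the bucket counts, and the use of SHA-256 are all assumptions chosen for the example; the abstract specifies only that two identifiers in the event are hashed and that the pair of hash values selects the partition.

```python
import hashlib
from collections import defaultdict


def stable_hash(value: str, buckets: int) -> int:
    """Deterministic hash into [0, buckets), stable across processes."""
    digest = hashlib.sha256(value.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % buckets


class MetricStore:
    """Toy metric storage partitioned on two hashed identifiers."""

    def __init__(self, first_buckets: int = 4, second_buckets: int = 8):
        self.first_buckets = first_buckets
        self.second_buckets = second_buckets
        self.partitions = defaultdict(list)  # (hash1, hash2) -> stored events

    def partition_for(self, event: dict) -> tuple:
        # Assumed identifiers: tenant first, tracked object second.
        return (
            stable_hash(event["tenant_id"], self.first_buckets),
            stable_hash(event["object_id"], self.second_buckets),
        )

    def store(self, event: dict) -> tuple:
        """Append the event to its partition and return the partition key."""
        key = self.partition_for(event)
        self.partitions[key].append(event)
        return key
```

Because both hashes are deterministic, every event for the same pair of identifiers lands in the same partition, so an aggregation over that pair only has to read one partition.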

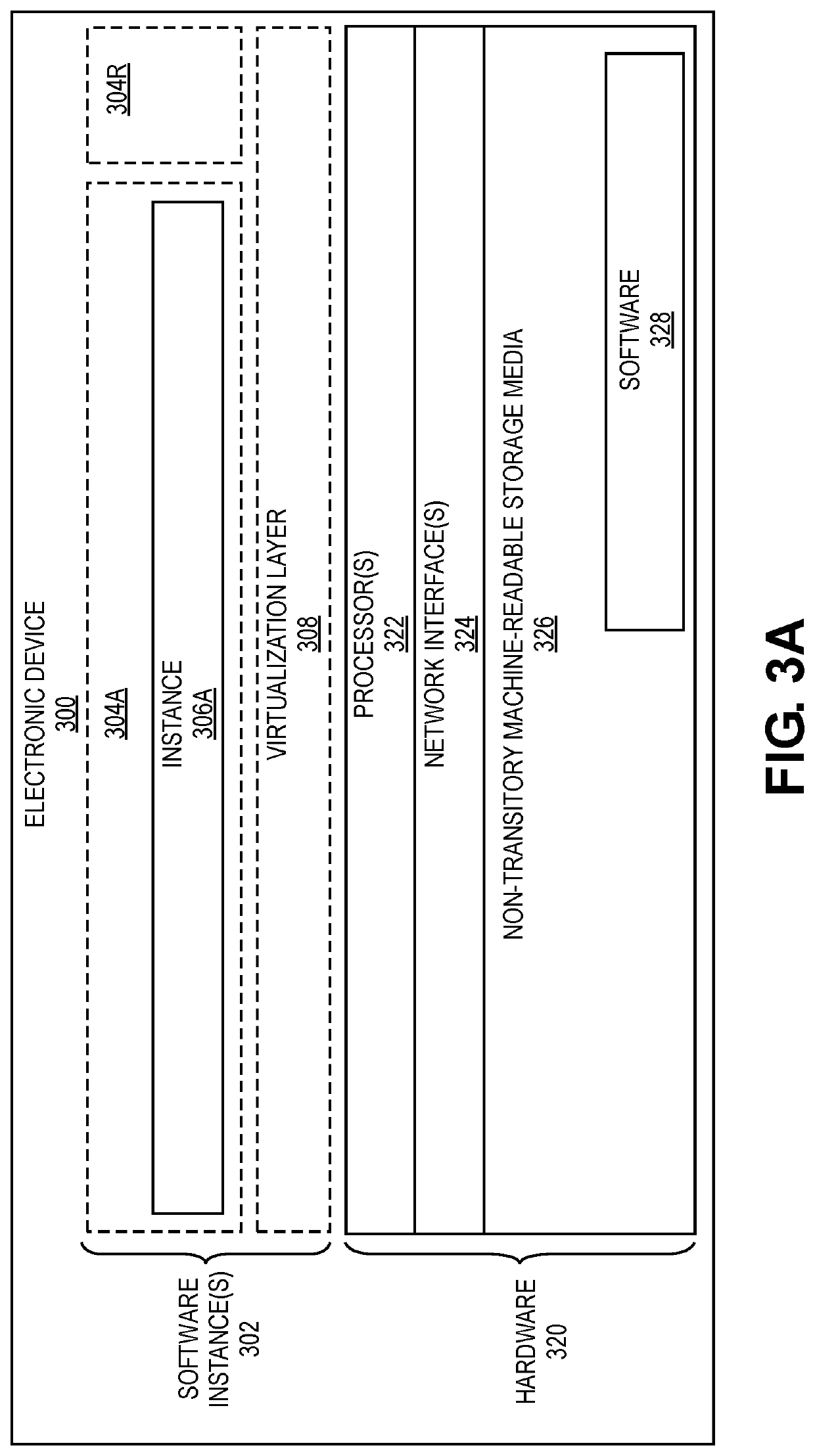

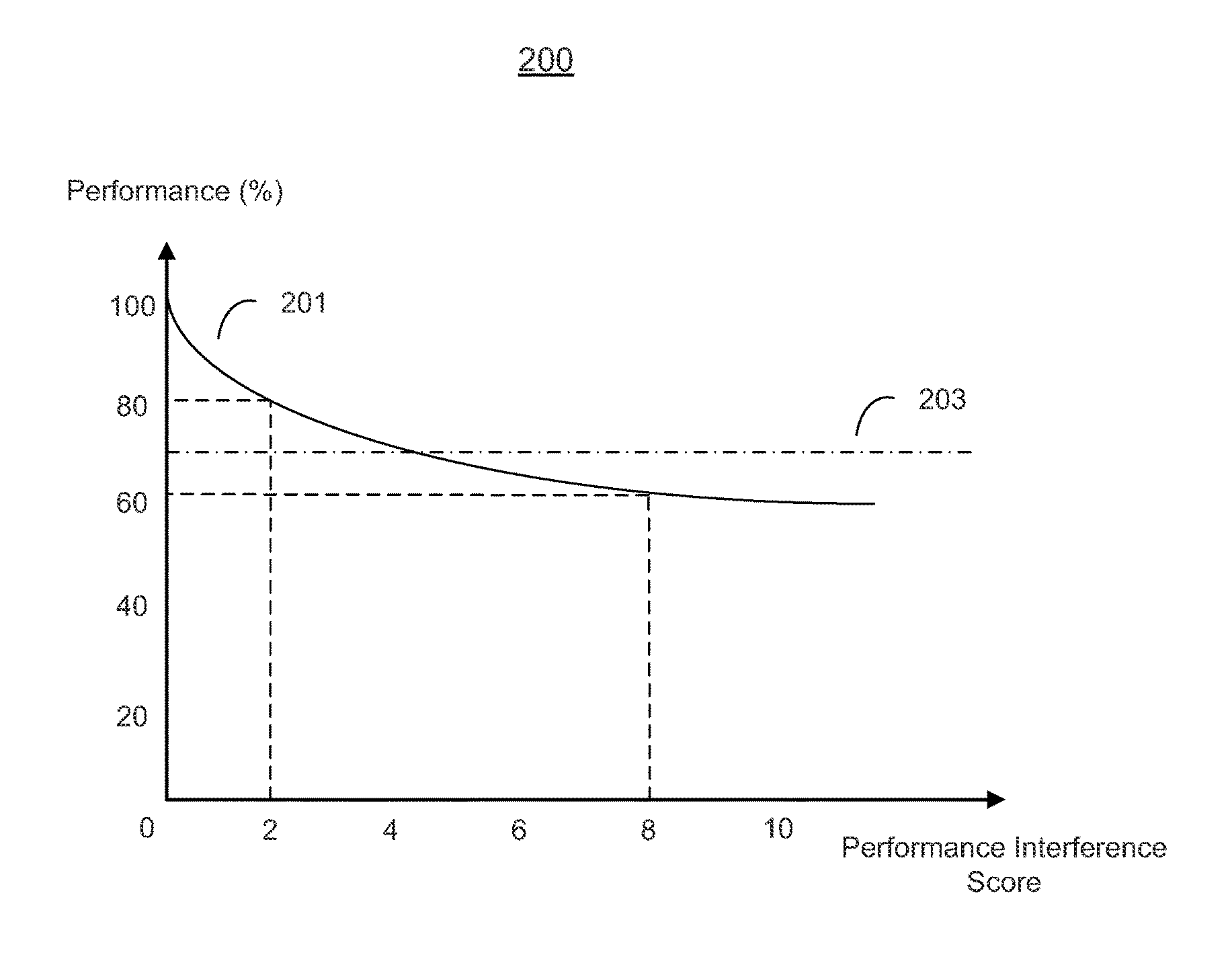

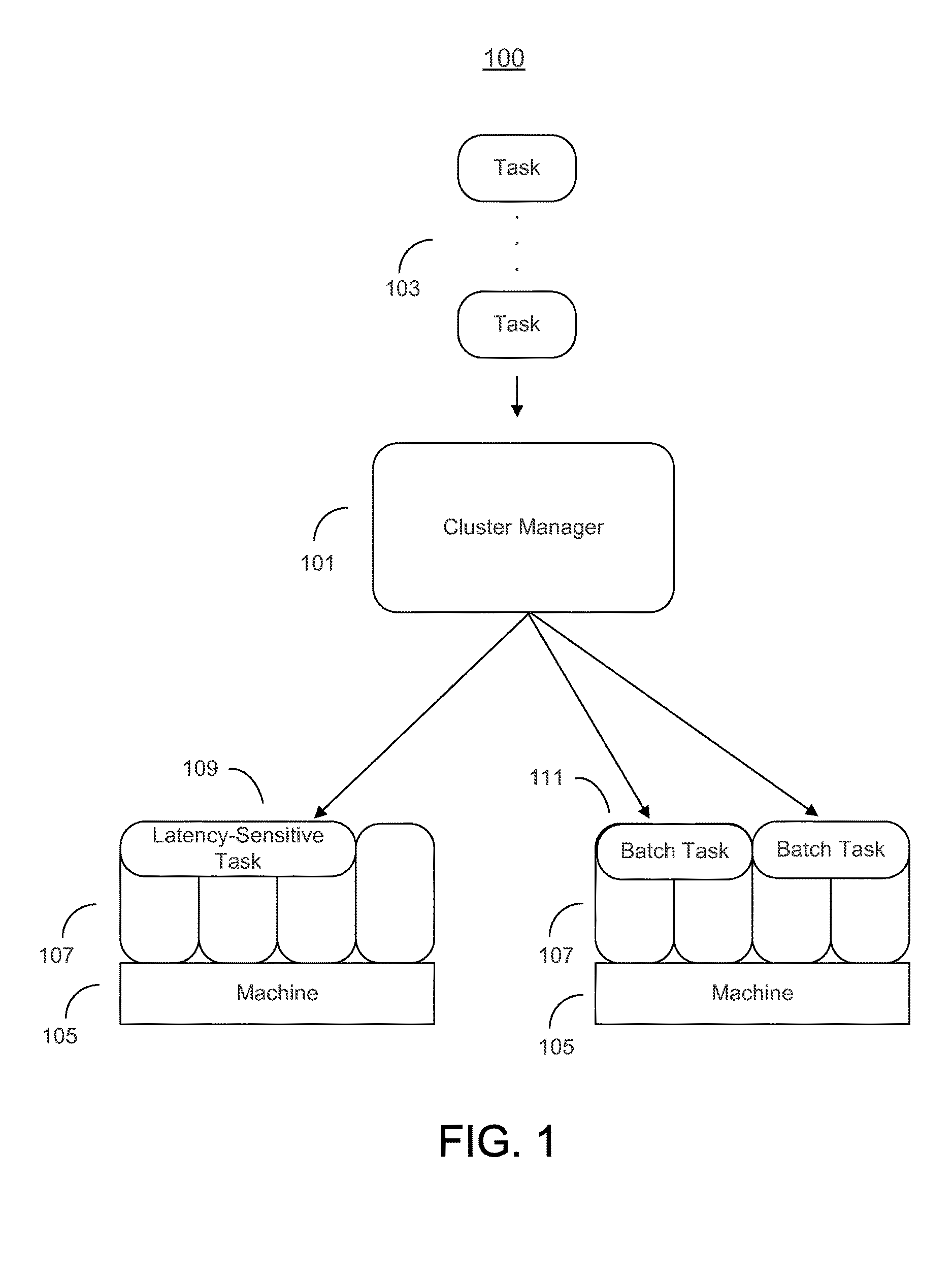

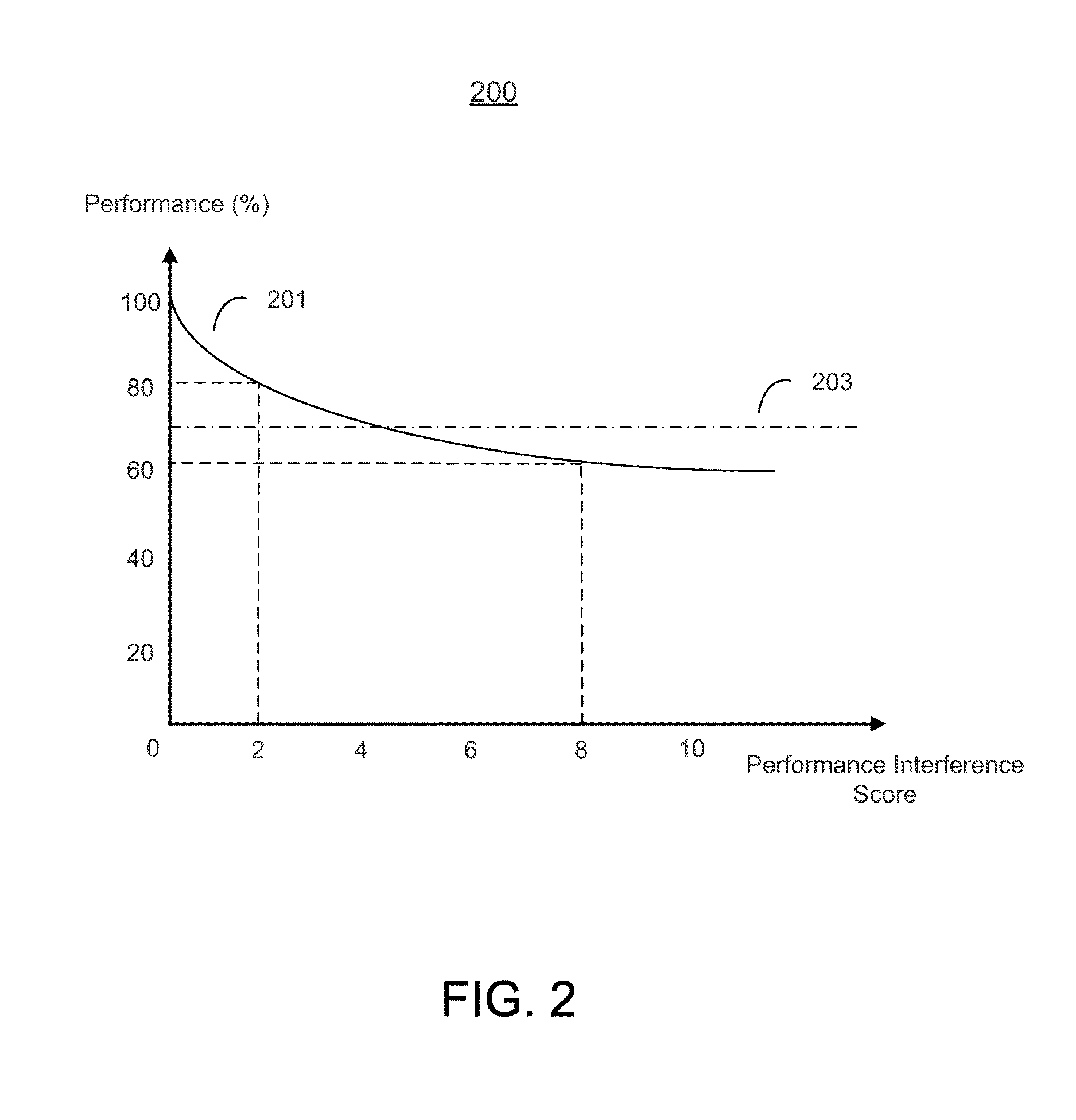

Allocation of tasks in large scale computing systems

Active | US9563532B1 | Digital computer details | Hardware monitoring | Parallel computing | Predicting performance

Aspects of the invention may be used to allocate tasks among computing machines in large scale computing systems. In one aspect, the method includes executing a first task in the plurality of tasks on a first computing machine and determining a performance degradation threshold for the first task. The method further includes calculating a predicted performance degradation of the first task when a second task is executed on the first computing machine, wherein the predicted performance degradation is determined by comparing a performance interference score of the second task with a performance sensitivity curve of the first task. The method further includes executing the second task on the first computing machine when the predicted performance degradation of the first task is below the performance degradation threshold.

Owner:GOOGLE LLC
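
The co-location test in the abstract above compares the second task's interference score against the first task's sensitivity curve and admits the second task only if the predicted degradation stays below a threshold. A minimal sketch follows; representing the curve as sorted `(interference_score, degradation)` points with linear interpolation between them is an assumption, as are the function names.

```python
from bisect import bisect_left


def predicted_degradation(curve, interference_score):
    """Interpolate degradation from a sorted list of (score, degradation) points.

    The piecewise-linear curve representation is an assumption for this sketch;
    the points must have strictly increasing scores.
    """
    xs = [x for x, _ in curve]
    if interference_score <= xs[0]:
        return curve[0][1]
    if interference_score >= xs[-1]:
        return curve[-1][1]
    i = bisect_left(xs, interference_score)
    (x0, y0), (x1, y1) = curve[i - 1], curve[i]
    return y0 + (y1 - y0) * (interference_score - x0) / (x1 - x0)


def can_colocate(first_task_curve, second_task_score, degradation_threshold):
    """Admit the second task only if the first task degrades less than allowed."""
    return predicted_degradation(first_task_curve, second_task_score) < degradation_threshold
```

A scheduler would evaluate `can_colocate` for each candidate machine and place the second task on one where the check passes.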

Energy-efficient networking method in heterogeneous sensor network

Active | CN110121200A | Improve utilization efficiency | Reduce communication energy consumption | High level techniques | Wireless communication | Heterogeneous network | Joint influence

The invention discloses an energy-efficient networking method in a heterogeneous sensor network, and belongs to the technical field of wireless sensor networks. According to the method, cluster head election is carried out based on the neighbor information and residual energy Ei of each node, and the structure and scale of each cluster are determined; a self-adaptive, energy-efficiency-based cluster scale networking mode is adopted. The physical radius of the cluster is reduced while the communication distance requirement is still met, so that the single-hop communication distance of each node is short and effective. By providing a cluster head election mechanism under the joint influence of energy and neighbors, the method overcomes the unreasonable situation in probabilistic election where nodes of unequal capacity have equal chances of being selected as cluster heads. A concept of adaptive value radius is introduced, and the cluster head communication distance is dynamically controlled to adjust the structure and scale of the cluster. The communication cost between cluster heads is calculated to serve as the basis of communication path selection, so that the utilization efficiency of node energy in the random heterogeneous sensor network is improved and communication energy consumption is reduced.

Owner:无锡尚合达智能科技有限公司

Mobile virtual reality language communication simulation learning calculation system and method

Active | CN110794965A | Troubleshoot sample labeling issues | For fast changing situations | Input/output for user-computer interaction | Data processing applications | Simulation | Extreme scale computing

The invention discloses a mobile virtual reality language communication simulation learning calculation system and method. A mobile edge computing system with an energy harvesting function is constructed; a task offloading decision for edge computing is then generated through a deep reinforcement learning method. The algorithm does not need any manually labeled training data and learns from past task offloading experience, and the task offloading actions generated by the DNN are improved through reinforcement learning. The convergence speed of the algorithm is increased by a shrinking local search method, and the trained DNN can make online, real-time task offloading decisions. The method considers energy harvesting together with task offloading computation, which addresses the energy limitation of the mobile terminal. Through cooperation of mobile edge computing and cloud computing, the method addresses the time delay and energy consumption of large-scale computation in the emerging fields of virtual reality and augmented reality, and a user can carry out simulated learning of virtual reality language communication in a mobile environment.

Owner:HUNAN NORMAL UNIVERSITY

Systems, methods, and computer program products providing a yield management tool that enhances visibility of recovery rates of recovered and disposed assets

Active | US20150235248A1 | Easy to implement | Increase awareness | Market predictions | Resources | Visibility | Logistics management

A yield management system for dynamically enhancing visibility of yield rates realized for one or more recovered assets is provided. The system is configured to retrieve disposition data associated with a plurality of activities undertaken with handling and processing of one or more recovered assets for disposal thereof and comprising at least one component of disposition cost. Based thereon the system calculates a yield rate for each of said one or more recovered assets, compares said calculated yield rate to one or more market yield rates to identify one or more discrepancies, and if discrepancies exist, generate an indication thereof. A conservation logistics system is also provided, which calculates an index value along a consolidated index scale, compares said index value to one or more pre-determined goals so as to identify one or more discrepancies, and if discrepancies exist, facilitates implementation of one or more conservation logistics initiatives.

Owner:UNITED PARCEL SERVICE OF AMERICAN INC

CPU+MIC mixed heterogeneous cluster system for achieving large-scale computing

Inactive | CN103294639A | Implement extensions | Meet the requirements of high-performance applications | Multiple digital computer combinations | Extreme scale computing | Heterogeneous cluster

The invention provides a CPU+MIC mixed heterogeneous cluster system for achieving large-scale computing. The mixed heterogeneous cluster system comprises a pure CPU node cluster system and an MIC node cluster system, wherein each node in the pure CPU node cluster system only comprises a CPU computing chip, and each node in the MIC node cluster system comprises a CPU chip and at least one MIC. The mixed heterogeneous cluster system can achieve larger-scale computing expanding based on an original CPU cluster system, effectively improve system performance, and meet the requirements for high-performance application of the larger-scale computing.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

Task processing method, device and equipment and storage medium

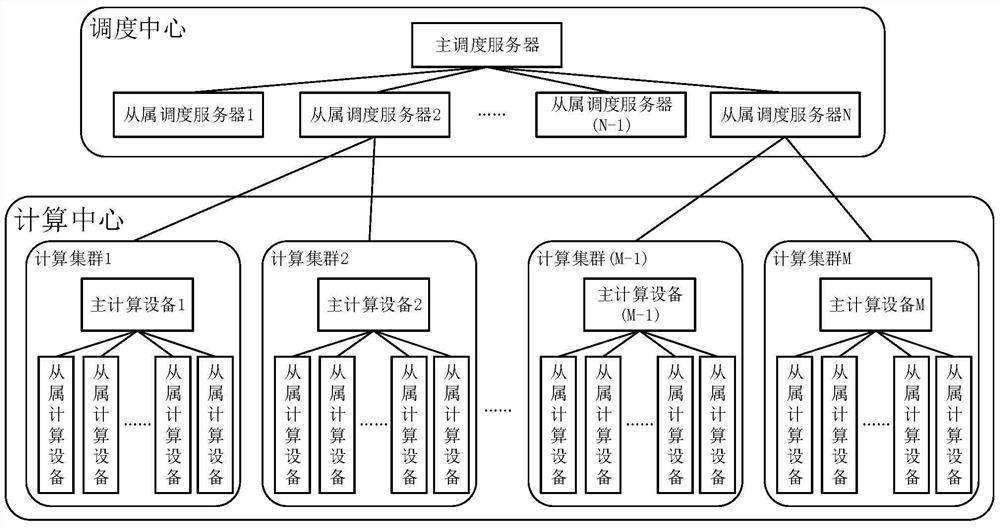

Pending | CN112148439A | Relieve pressure | Implement scheduling | Program initiation/switching | Resource allocation | Extreme scale computing | Scheduling (computing)

The embodiment of the invention discloses a task processing method and device, equipment and a storage medium. The method comprises the steps of receiving a target computing task initiated by a user in response to a task processing request of the user; selecting a target slave scheduling server from at least two slave scheduling servers; and sending the target computing task to the target slave scheduling server to instruct it to select a target computing cluster from the connected computing clusters, and distributing the target computing task, through a master computing device in the target computing cluster, to a slave computing device in that cluster for processing. By adopting the scheme of the embodiment of the invention, the pressure on the scheduling server can be greatly reduced, scheduling of large-scale computing equipment is achieved, and received computing tasks can be distributed in order to suitable slave computing devices for processing.

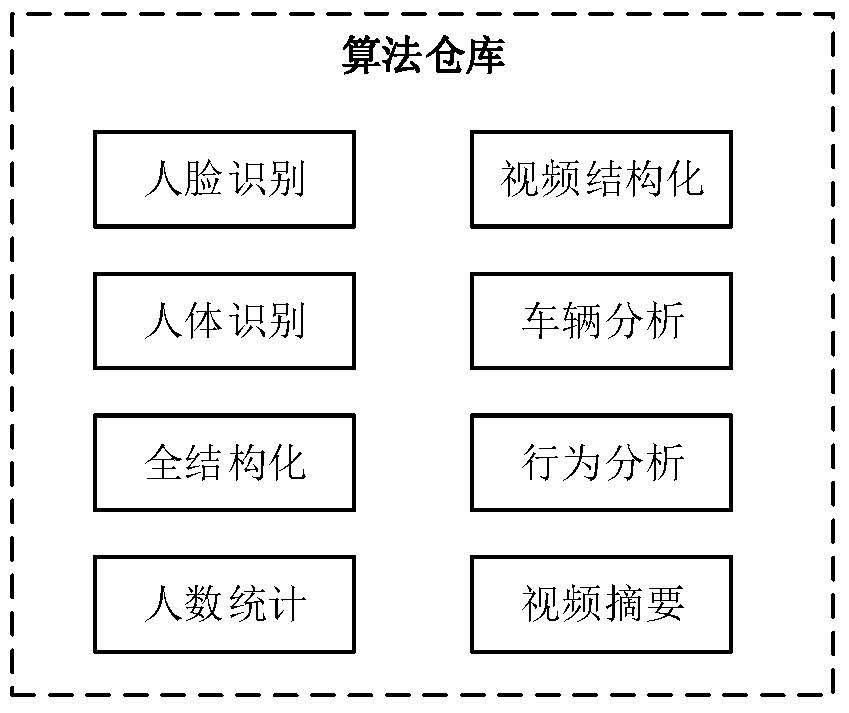

Owner:ZHEJIANG UNIVIEW TECH CO LTD

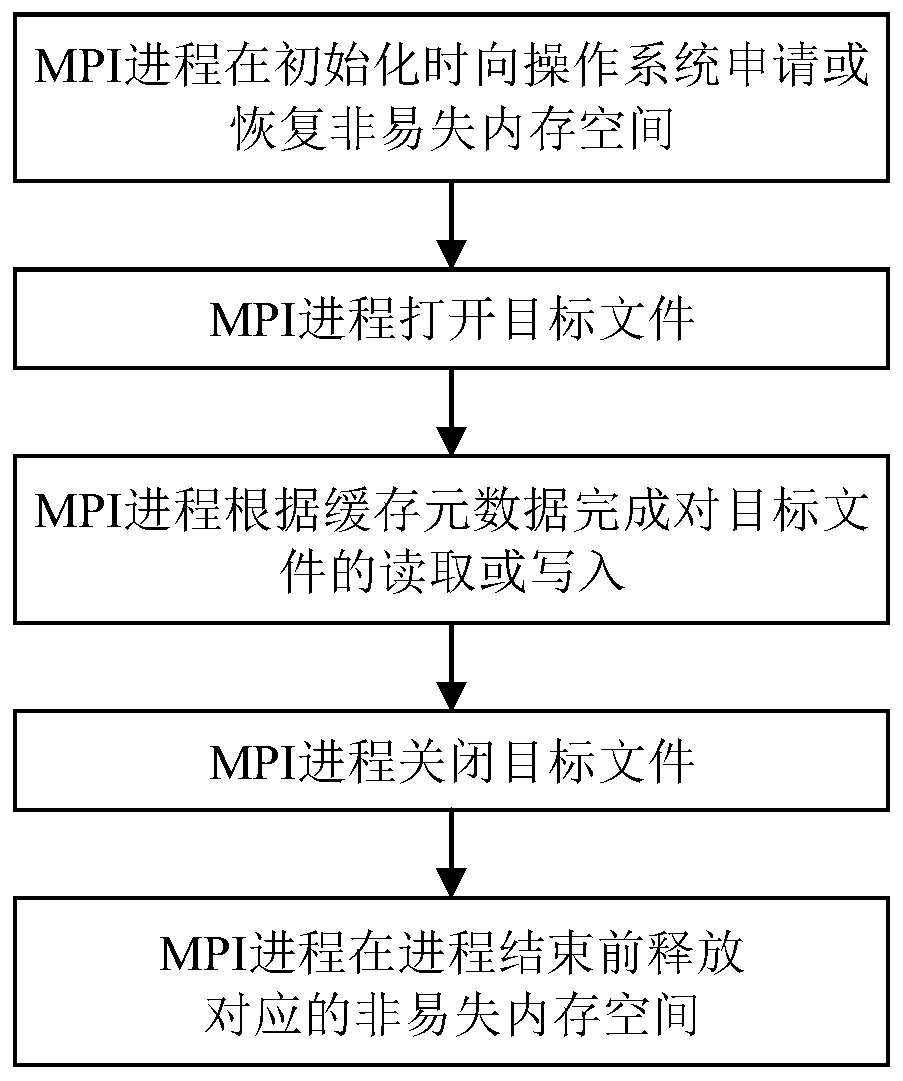

Nonvolatile memory management method and system based on MPI-IO middleware

Active | CN111061652A | Realize unified management | Reduce IO overhead | Memory addressing/allocation/relocation | Interprogram communication | Computer architecture | Performance computing

The invention discloses a nonvolatile memory management method and system based on MPI-IO middleware. In the method, an MPI process applies for or recovers a nonvolatile memory space in a nonvolatile memory device from the operating system during initialization, and releases the corresponding nonvolatile memory space before the process ends; IO data can be cached using the nonvolatile memory device, and the MPI process completes reading or writing of the target file according to the metadata cached in the nonvolatile memory space. According to the invention, a plurality of nonvolatile memory devices in a high-performance computing environment can be managed in a unified manner; IO data is cached, reducing the IO overhead of a program; files in large-scale computation are efficiently stored temporarily and recovered; nonvolatile memory devices are used in a non-exclusive mode, so that hardware resources are fully utilized; and the API provided to the user is not modified, so the method is simple and easy to use.

Owner:SUN YAT SEN UNIV

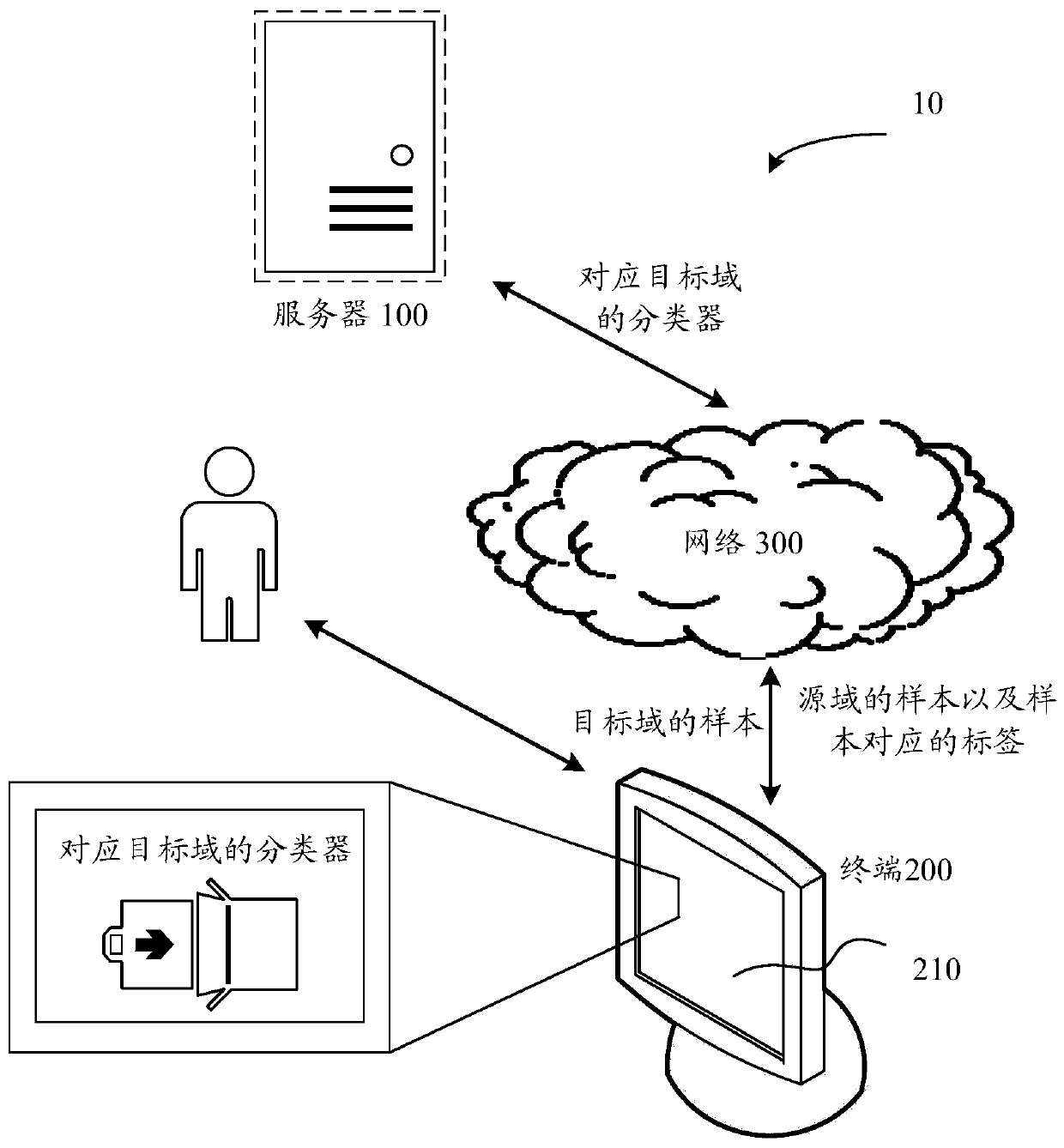

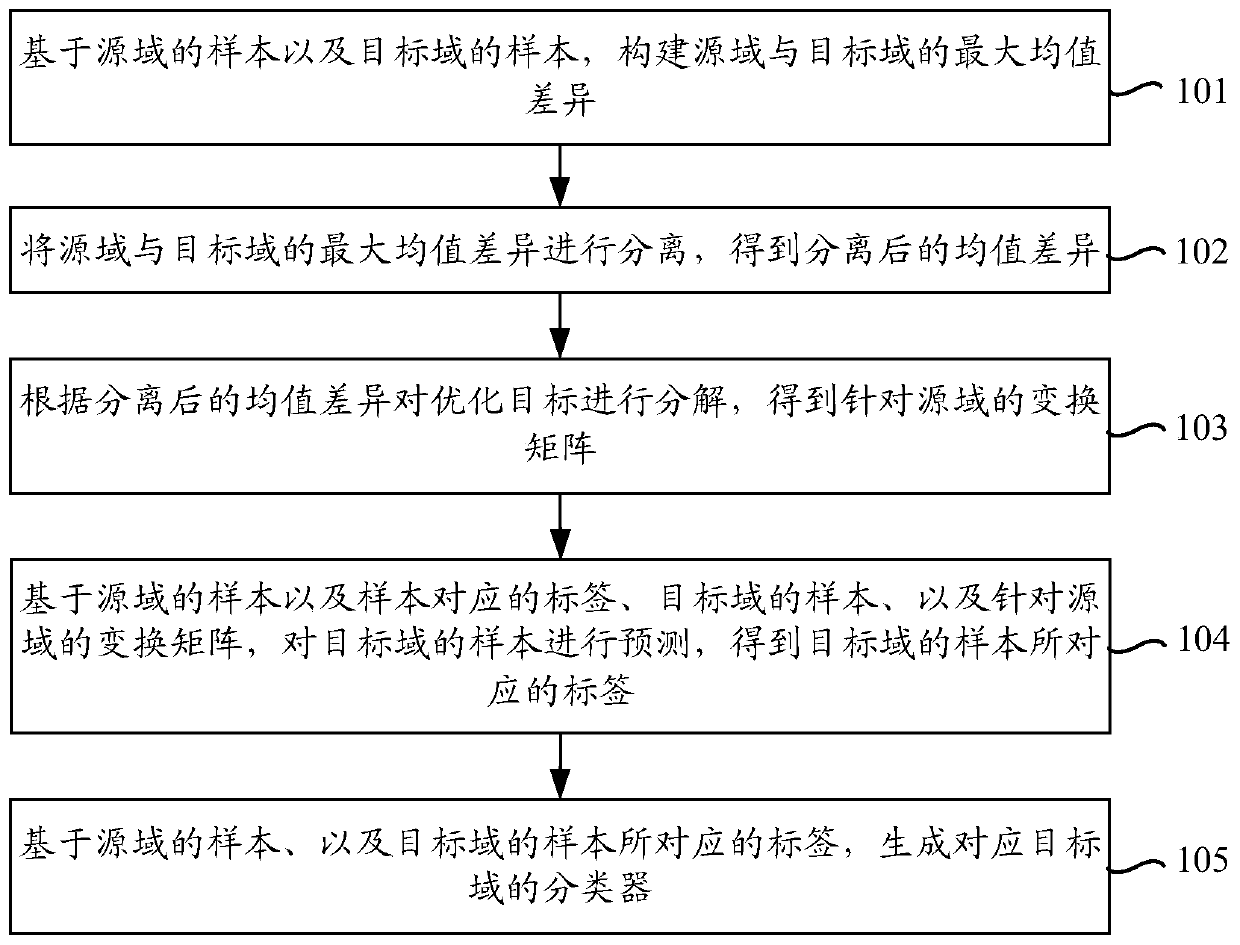

Classifier generation method, device, equipment and storage medium

Pending | CN110781970A | Solve the multiplication problem | Scale up | Character and pattern recognition | Complex mathematical operations | Computation complexity | Computational physics

The invention provides a classifier generation method and device, electronic equipment and a storage medium. The method comprises: constructing a maximum mean discrepancy (MMD) between a source domain and a target domain based on samples of the source domain and samples of the target domain; separating the MMD between the source domain and the target domain to obtain a separated mean discrepancy; decomposing the optimization target according to the separated mean discrepancy to obtain a transformation matrix for the source domain; predicting labels for the samples of the target domain based on the samples of the source domain, their labels, the samples of the target domain and the transformation matrix; and generating a classifier corresponding to the target domain based on the samples of the source domain and the labels corresponding to the samples of the target domain. The MMD term, which involves a large amount of computation in transfer learning, can thereby be separated, so that the computational complexity of transfer learning is reduced.

Owner:TENCENT TECH (SHENZHEN) CO LTD
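
The mean-difference quantity between source-domain and target-domain samples in the abstract above is assumed here to correspond to what is commonly called the maximum mean discrepancy (MMD). The sketch below computes only the standard linear-kernel form, which reduces to the squared distance between the two feature means; it does not attempt the separation or the transformation-matrix step from the patent, whose details the abstract does not give. Function names are illustrative.

```python
def feature_mean(samples):
    """Column-wise mean of a non-empty list of equal-length feature vectors."""
    n = len(samples)
    dim = len(samples[0])
    return [sum(row[j] for row in samples) / n for j in range(dim)]


def linear_mmd_squared(source, target):
    """Squared MMD with a linear kernel: ||mean(source) - mean(target)||^2."""
    mu_s = feature_mean(source)
    mu_t = feature_mean(target)
    return sum((a - b) ** 2 for a, b in zip(mu_s, mu_t))
```

A value near zero indicates that the two domains have similar first-order statistics in the chosen feature space, which is the condition a learned transformation would try to achieve.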

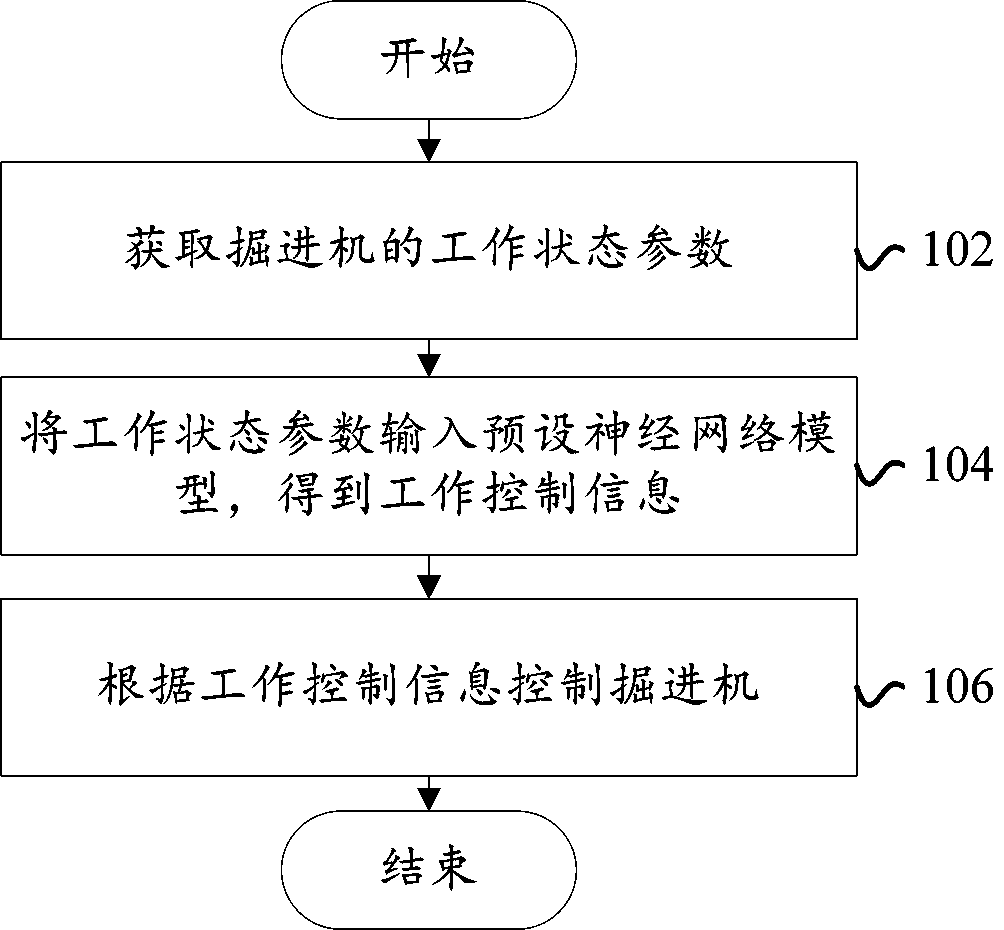

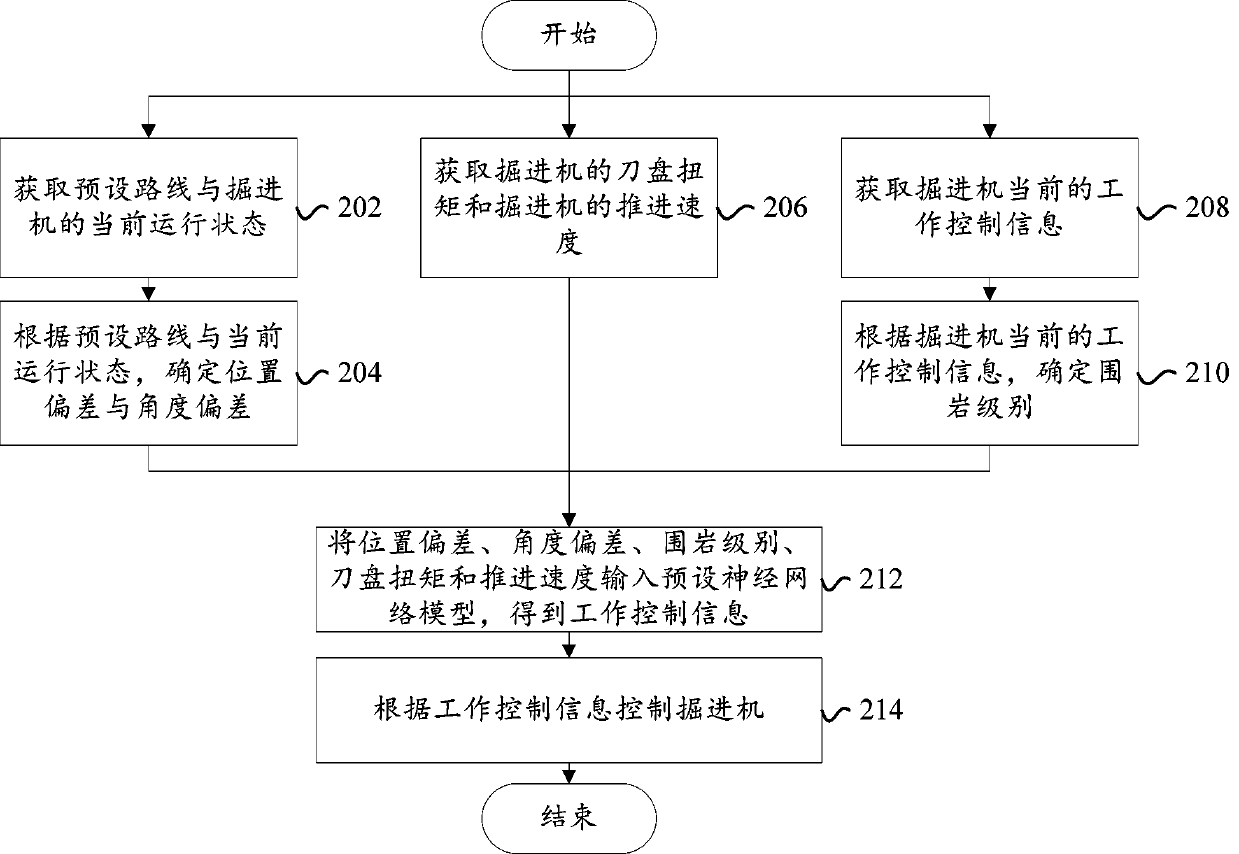

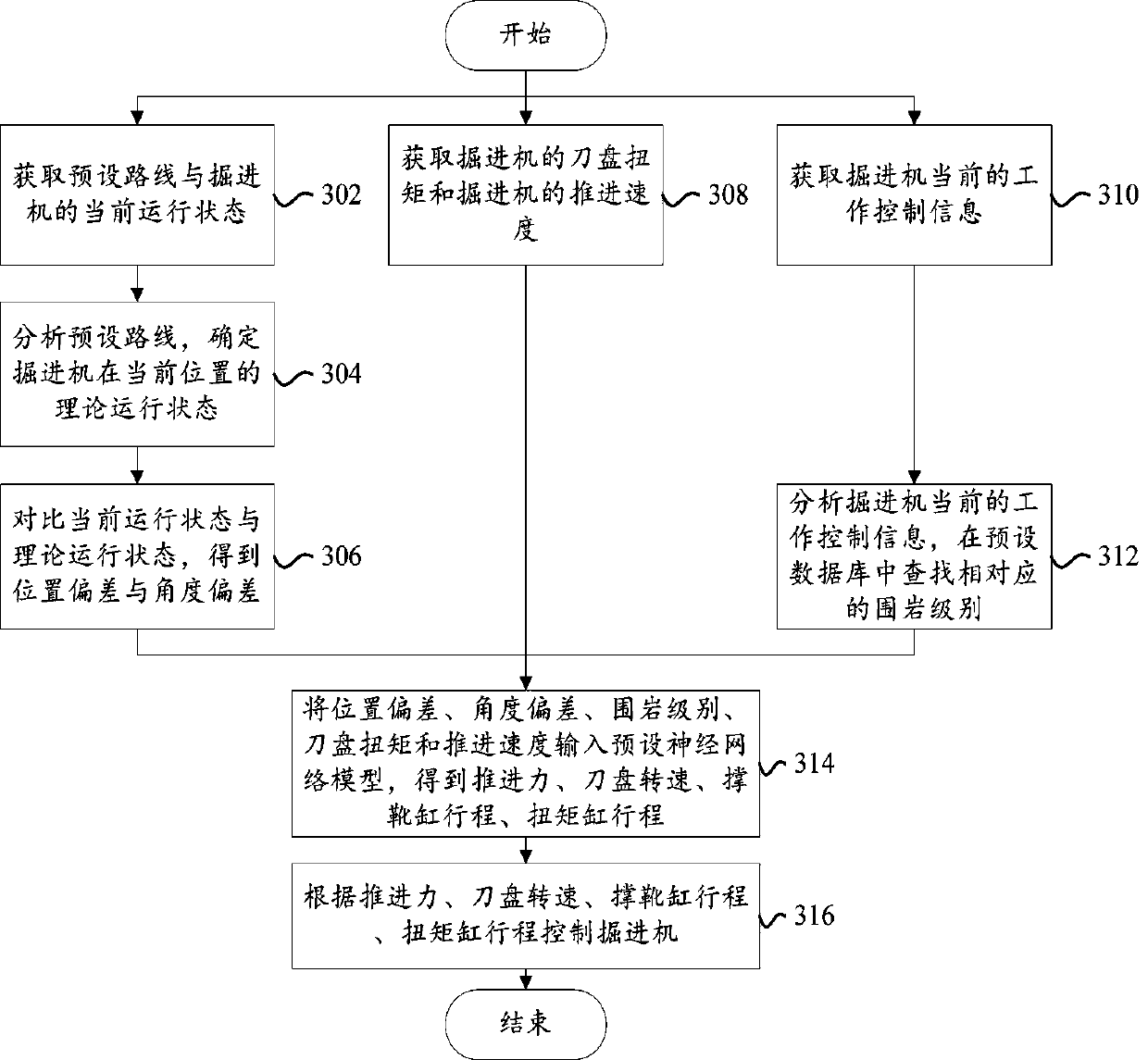

Control method of heading machine, heading machine and computer readable storage medium

Active | CN110989366A | Realize fully automatic control | Promote self-learning | Adaptive control | Automatic control | Network model

The invention provides a control method of a heading machine, the heading machine and a computer readable storage medium. The control method of the heading machine comprises the steps of acquiring working state parameters of the heading machine; inputting the working state parameters into a preset neural network model to obtain work control information; and controlling the heading machine according to the work control information. According to the control method provided by the invention, the working state parameters of the heading machine during operation are processed on the basis of the preset neural network model, and the work control information is then output according to the working state parameters so as to control the heading machine to operate with proper working state parameters. Full-automatic control of the heading machine is thereby achieved, the uncertainty caused by manual operation is reduced, and the automation level is improved. Meanwhile, the neural network has good self-learning and parallel problem processing capacity, and the requirements for environment adaptability, real-time large-scale calculation and the like can be fully met.

Owner:CHINA RAILWAY CONSTR HEAVY IND

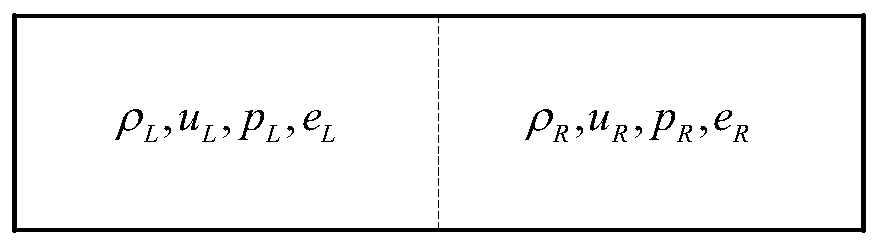

Method for determining size of computational fluid dynamics analysis grid of digital reactor

Active | CN110728072A | Meet the calculation accuracy requirements | Mathematical principles are clear | Design optimisation/simulation | Engineering | Extreme scale computing

The invention discloses a method for determining the size of a computational fluid mechanics analysis grid of a digital reactor, which comprises the following steps: dividing the digital reactor into a group of computational grids in space, and determining the geometric size and the central coordinate of each grid; obtaining initial boundary condition parameters; establishing an equation set corresponding to the one-dimensional Riemann problem, and solving it to obtain an exact solution on the grid; establishing an approximate discrete solving model for the one-dimensional Riemann problem, and obtaining an approximate solution on the grid through calculation of the model; and comparing the approximate solution on the grid with the exact solution on the grid. The method has the characteristics of clear principle, high precision, simple calculation input and low calculation time consumption, can meet the modeling requirements of large-scale computational fluid mechanics analysis and calculation grids and quickly determine the sizes of the unit calculation grids, and is particularly suitable for evaluating a grid modeling scheme of large-scale CFD calculation.

Owner:NUCLEAR POWER INSTITUTE OF CHINA
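
The comparison of an approximate discrete solution against an exact Riemann solution can be illustrated on the simplest possible case. The sketch below substitutes the linear advection equation with a step initial condition for the patent's unspecified equation set, uses a first-order upwind scheme as the approximate model, and picks the coarsest candidate grid whose L1 error meets a tolerance. Every name, parameter value, and the selection rule itself are assumptions for illustration.

```python
def exact_solution(x, t, a=1.0, left=1.0, right=0.0, x0=0.5):
    """Exact solution of u_t + a u_x = 0 with a step at x0: the step moves at speed a."""
    return left if x - a * t < x0 else right


def upwind_solution(n_cells, t_end, a=1.0, left=1.0, right=0.0, x0=0.5, cfl=0.5):
    """First-order upwind scheme on [0, 1] for a > 0, with a fixed inflow state."""
    dx = 1.0 / n_cells
    centers = [(i + 0.5) * dx for i in range(n_cells)]
    u = [left if x < x0 else right for x in centers]
    t = 0.0
    while t < t_end - 1e-12:
        dt = min(cfl * dx / a, t_end - t)
        # Each cell is updated from its upstream neighbour (or the inflow state).
        u = [
            u[i] - a * dt / dx * (u[i] - (u[i - 1] if i > 0 else left))
            for i in range(n_cells)
        ]
        t += dt
    return centers, u


def l1_error(n_cells, t_end=0.25):
    """Discrete L1 distance between the approximate and exact solutions."""
    centers, u = upwind_solution(n_cells, t_end)
    dx = 1.0 / n_cells
    return sum(abs(ui - exact_solution(x, t_end)) for x, ui in zip(centers, u)) * dx


def coarsest_grid(tolerance, candidates=(25, 50, 100, 200, 400, 800)):
    """Return the smallest candidate cell count whose error meets the tolerance."""
    for n in candidates:
        if l1_error(n) <= tolerance:
            return n
    return None
```

Refining the grid reduces the error, so the first candidate that satisfies the tolerance gives the largest acceptable cell size under these assumptions.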

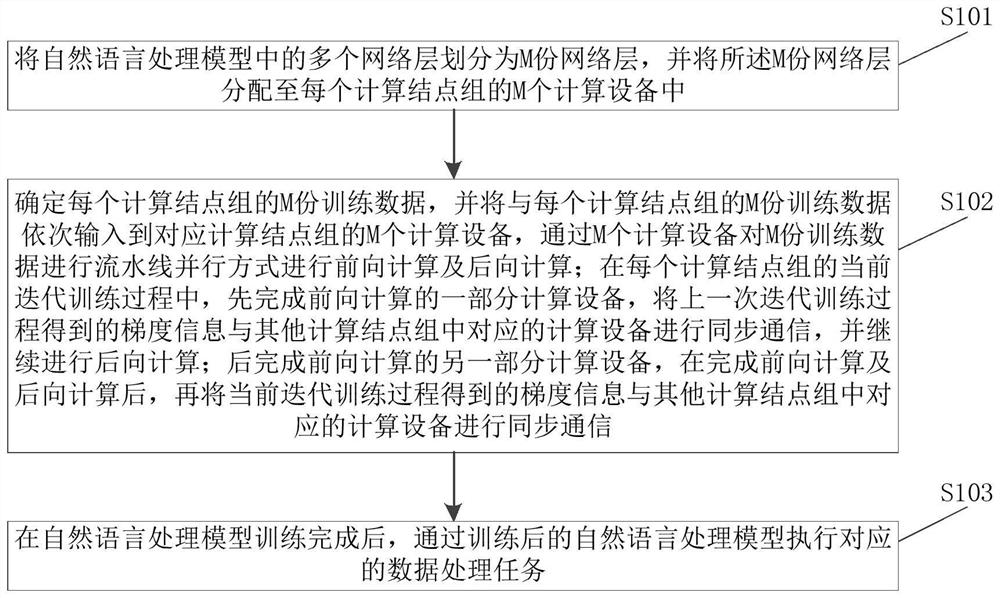

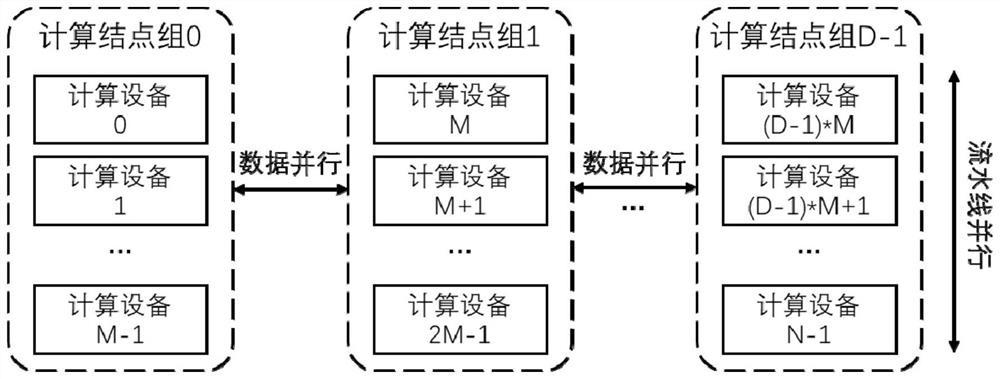

Parallel computing method and device for natural language processing model, equipment and medium

Pending | CN114356578A | Guaranteed treatment effect | Reduce computing time | Resource allocation | Interprogram communication | Concurrent computation | Theoretical computer science

The invention discloses a parallel computing method and device for a natural language processing model, equipment and a medium. In the scheme, the computing devices within each computing node group are trained in a pipeline-parallel mode, and different computing node groups share gradients in a data-parallel mode, so that pipeline parallelism is confined to a bounded number of nodes and the problem of having too many nodes in a single pipeline during large-scale training is avoided. The method is therefore well suited to parallel training of a large-scale network model on large-scale computing nodes. Moreover, the synchronous communication between computing node groups is hidden within the pipeline-parallel computation, so that each computing node group can enter the next iteration as soon as possible after finishing the current one. In this way the processing efficiency of the natural language processing model is improved while its processing effect is preserved, the training time of the model is shortened, and the efficiency of distributed training is improved.

Owner:NAT UNIV OF DEFENSE TECH

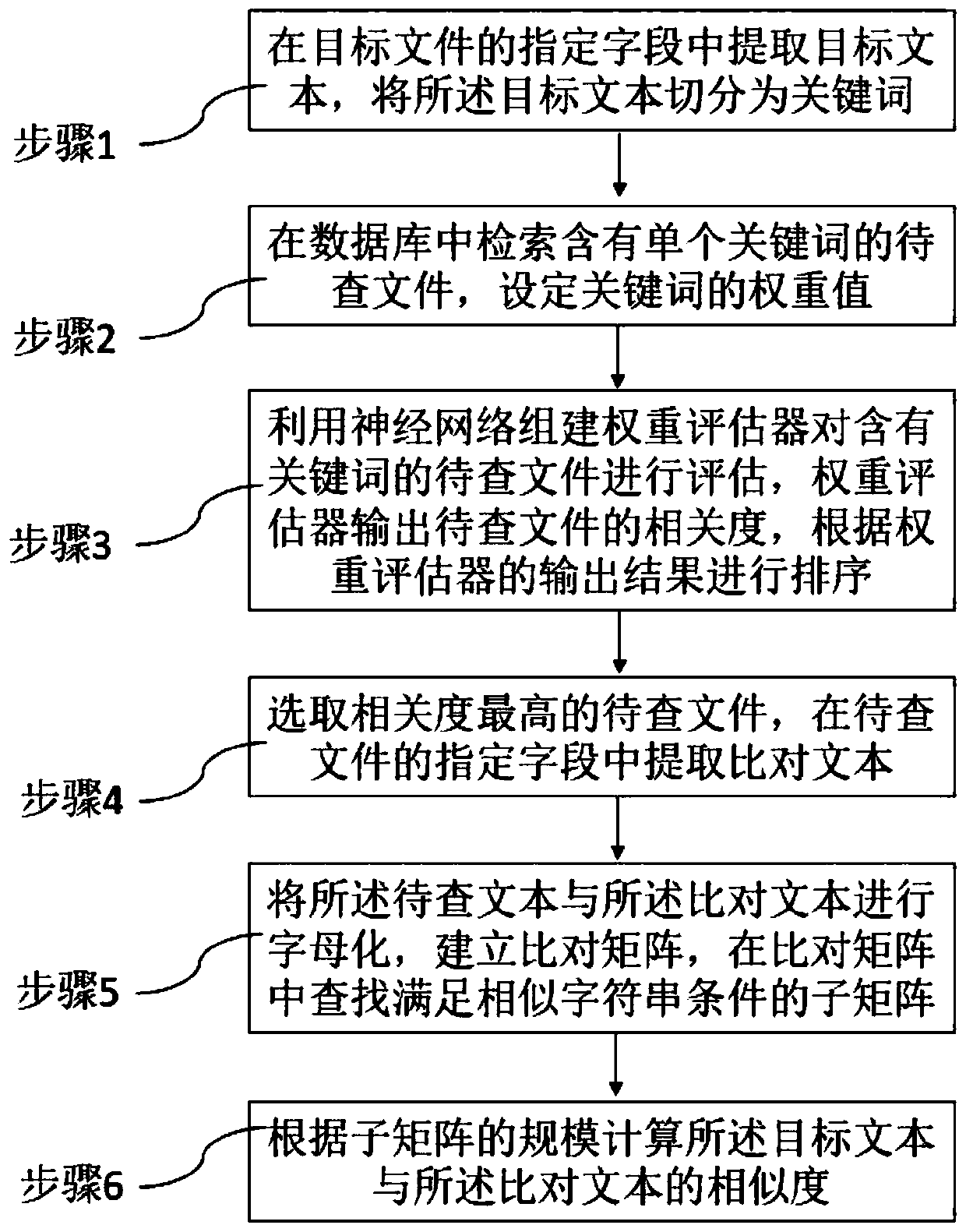

Science and technology project duplicate checking method for automatically realizing field weight allocation based on deep learning algorithm

Pending | CN110941743A | Efficient comparison | Quick comparison | Natural language data processing | Other databases querying | Engineering | Extreme scale computing

The invention provides a science and technology project duplicate checking method for automatically realizing field weight allocation based on a deep learning algorithm, which comprises the following steps: extracting a target text from a specified field of a target file, and segmenting the target text into keywords; retrieving from a database the to-be-queried files containing a single keyword, and setting a weight value for the keyword; using a neural network to establish a weight evaluator that evaluates and sorts the to-be-queried files containing the keywords; selecting the to-be-queried file with the highest relevancy, and extracting a comparison text from a specified field of that file; and establishing a comparison matrix, and calculating the similarity between the target text and the comparison text according to the scale of the sub-matrix. According to the method, the neural network is trained on related samples, and after training is completed, a file similarity comparison (duplicate checking) task can be completed efficiently and rapidly.

Owner:广西壮族自治区科学技术情报研究所

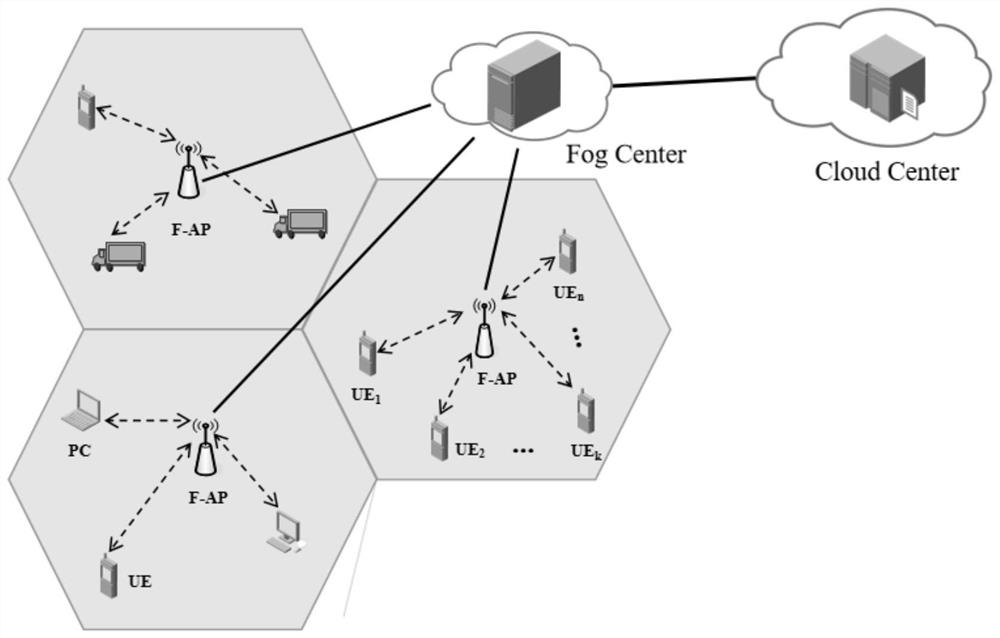

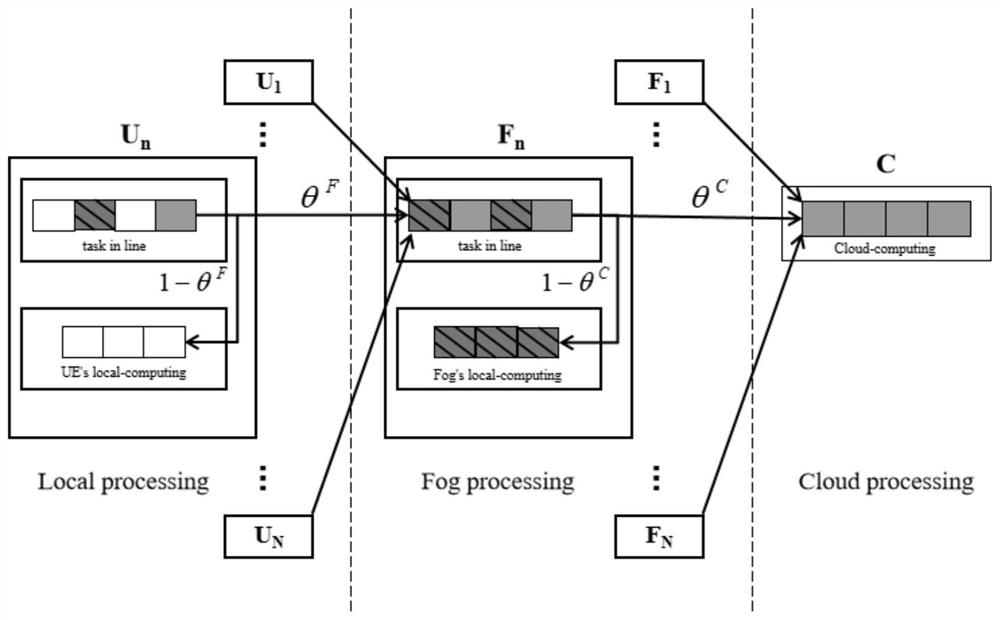

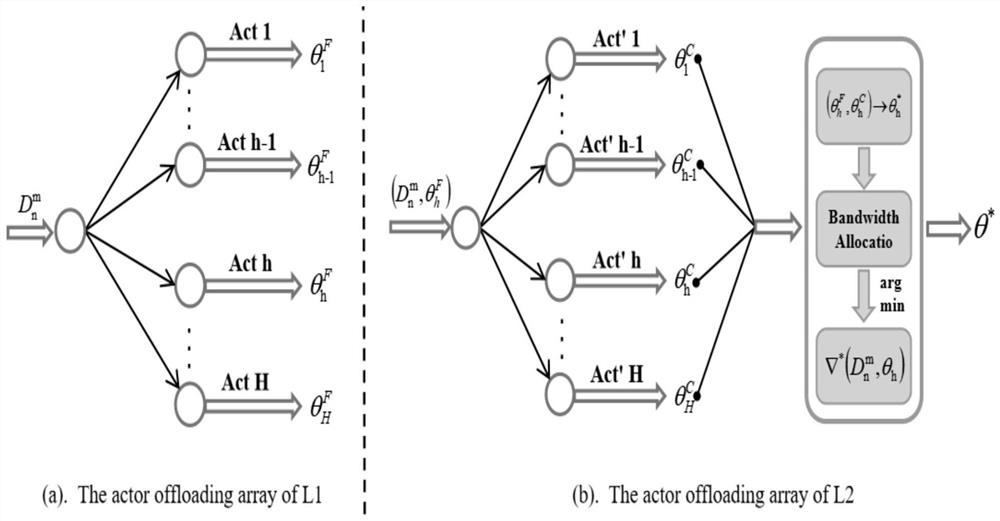

Distributed DNN-based mobile fog computing loss joint optimization system and method

Active | CN112910716A | Low differential sensitivity | Reached a state of convergence | Energy efficient computing | Data switching networks | Fog computing | Engineering

The invention discloses a distributed DNN-based mobile fog computing loss joint optimization system and method. The system comprises a local computing layer, a fog computing layer and a cloud computing layer. The local computing layer computes a task on a user device. The fog computing layer provides fog computing service for offloaded tasks so as to reduce the time delay and energy consumption of computing on the user equipment. The cloud computing layer processes large-scale and high-complexity computing. An offloaded task is first sent over a wireless network to a fog receiving node away from the local layer, uploaded to the fog computing layer through the fog receiving node, and finally uploaded to the cloud computing layer through the fog computing layer. The user independently decides whether to offload the task to a fog server for computing, and the fog server can in turn decide whether to offload the task to the cloud server on the upper layer. The system has the advantages that the optimal offloading decision for each task is given in a short time, the average accuracy of offloading is high, each neural network model is optimized, and the convergence state can be reached more quickly.

Owner:NORTH CHINA UNIVERSITY OF TECHNOLOGY

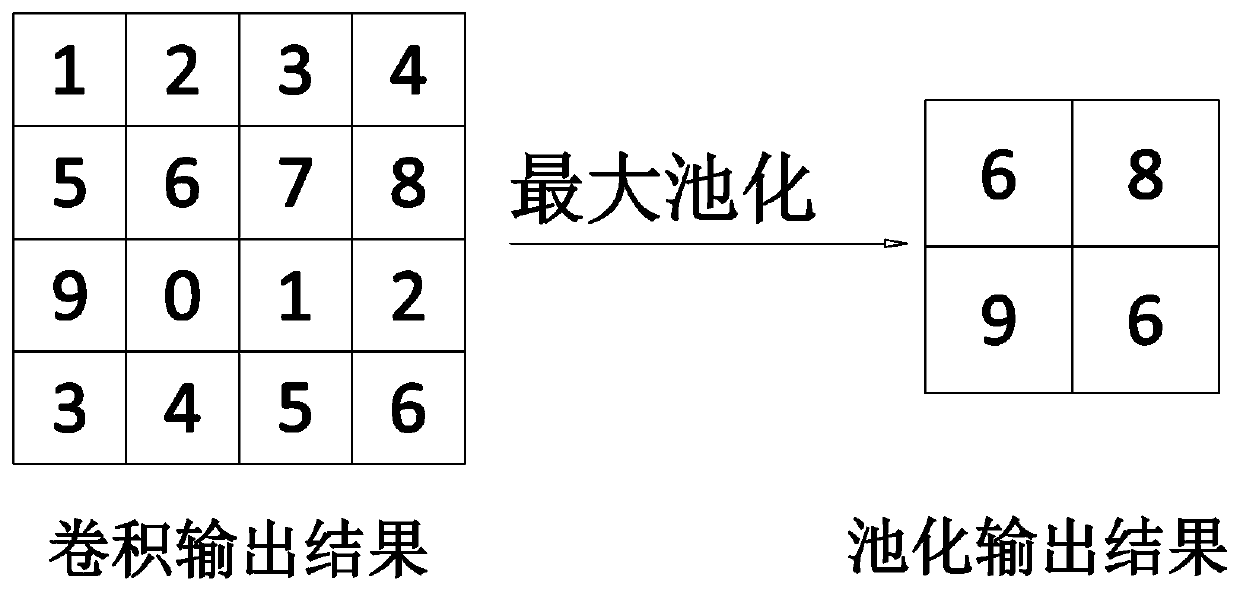

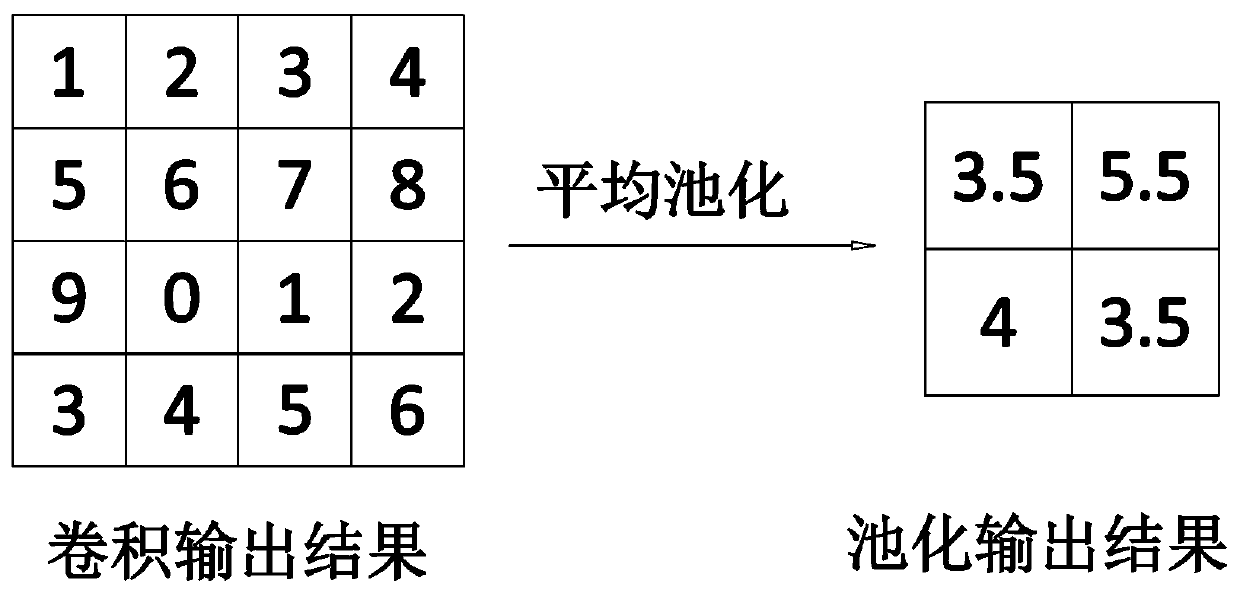

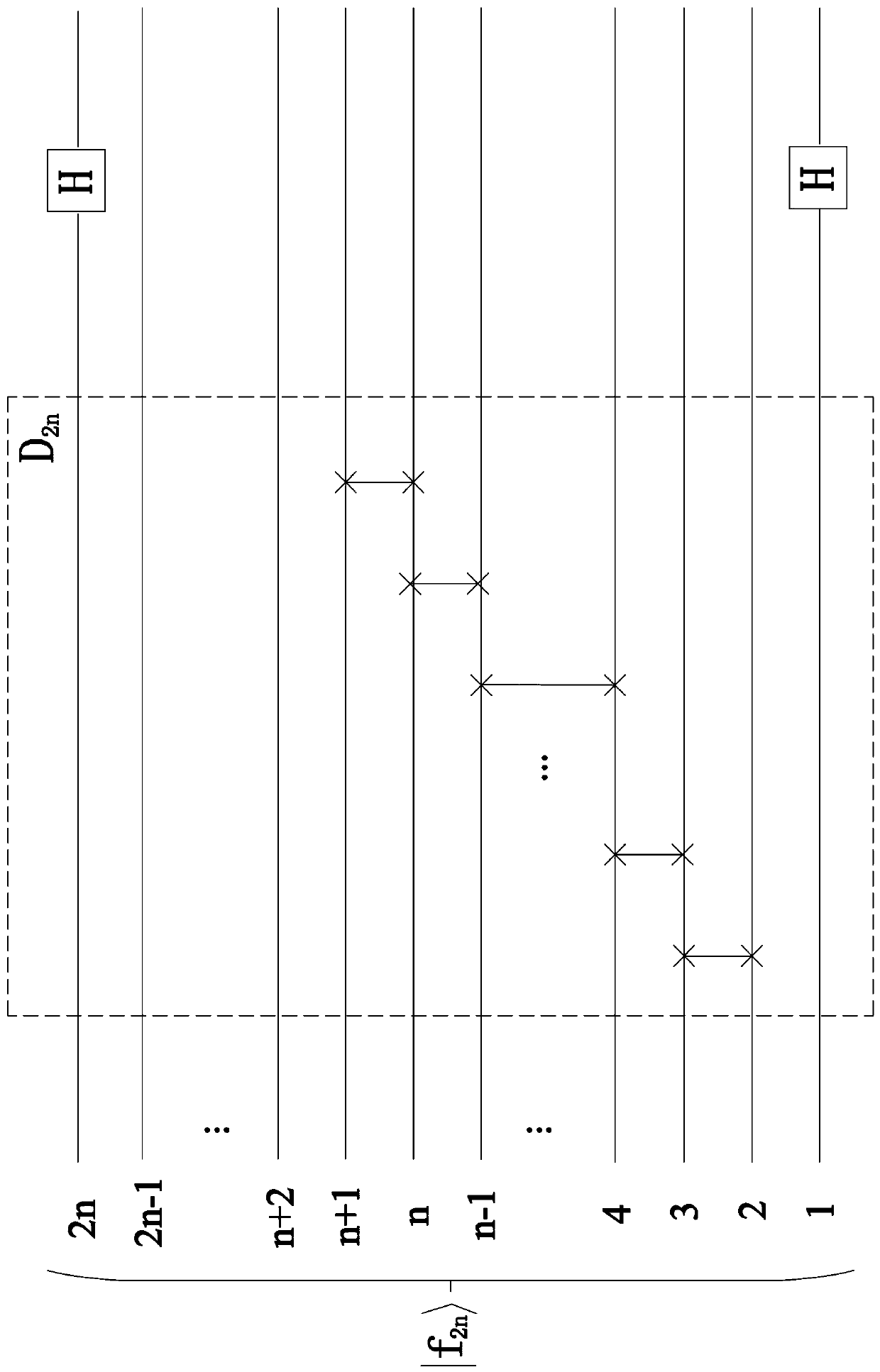

Quantum average pooling calculation method

Pending | CN111340193A | Easy transfer | Efficient storage | Quantum computers | Neural architectures | Extreme scale computing | Particle physics

The invention relates to a quantum average pooling calculation method. The method comprises the following steps: expanding the quantum convolution result |f_n>, whose length and width are each encoded in n qubits, row by row to obtain |f_2n> = (c0, c1, c2, ..., c2n)^T as the input, and feeding the quantum state |f_2n> into the constructed quantum average pooling circuit model; applying the exchange gate D_2n while keeping the positions of the basis states of |f_2n> unchanged; applying an H gate to the first qubit and the 2n-th qubit and the identity matrix to the remaining qubits, the combined operation being a tensor product, which yields basis-state amplitudes formed by addition and subtraction; and measuring the resulting quantum state, the quantum average pooling result being output when the measurement result is the |00> state. The method is claimed to offer computing capability that a classical computer cannot match, and provides a solution for large-scale computing problems such as artificial intelligence and deep learning convolutional neural networks.

Owner:HUBEI NORMAL UNIV

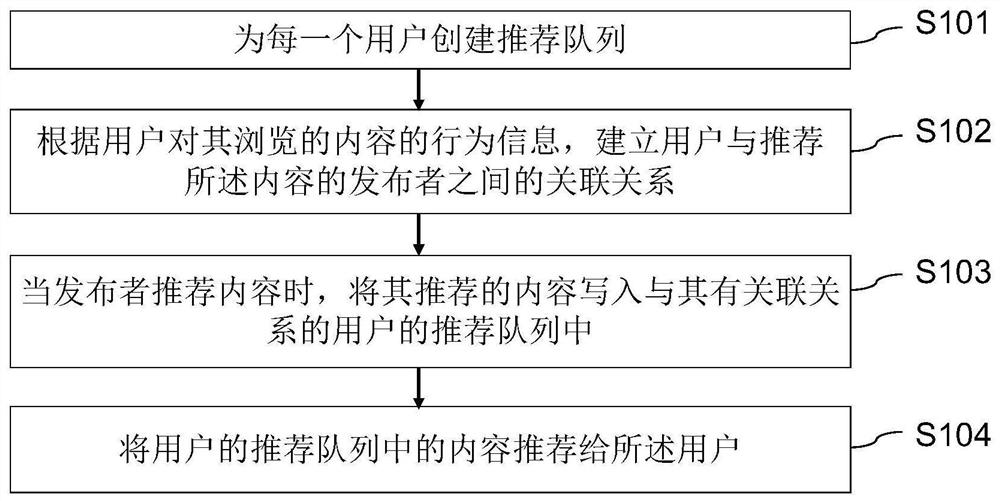
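
The role of the H gates and the |00> measurement in the quantum average pooling entry above can be illustrated classically. The following is only a minimal sketch of the two-qubit core, assuming a 2x2 window is amplitude-encoded in two qubits; it does not model the exchange gate D_2n or the full 2n-qubit circuit, and the function names are hypothetical. Applying H to both qubits produces sums and differences of the four amplitudes, and the |00> amplitude is half their sum, from which the mean follows.

```python
import math


def hadamard_on_two_qubits(amplitudes):
    """Apply H (x) H to a two-qubit state given as [c00, c01, c10, c11]."""
    c00, c01, c10, c11 = amplitudes
    return [
        (c00 + c01 + c10 + c11) / 2,  # amplitude of |00>
        (c00 - c01 + c10 - c11) / 2,  # amplitude of |01>
        (c00 + c01 - c10 - c11) / 2,  # amplitude of |10>
        (c00 - c01 - c10 + c11) / 2,  # amplitude of |11>
    ]


def average_pool_2x2(window):
    """Average a 2x2 window via the |00> amplitude (classical simulation)."""
    flat = [window[0][0], window[0][1], window[1][0], window[1][1]]
    norm = math.sqrt(sum(v * v for v in flat))
    if norm == 0:
        return 0.0
    state = [v / norm for v in flat]           # assumed amplitude encoding
    amp_00 = hadamard_on_two_qubits(state)[0]  # equals sum(state) / 2
    # Undo the normalization and the factor 1/2 to recover the classical mean.
    return amp_00 * norm / 2
```

On real hardware the amplitude is not read out directly; it would have to be estimated from repeated measurements, which this sketch ignores.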

Content recommendation method and system, computer equipment and storage medium

Active | CN112231590A | Avoid consumption | Guaranteed recommendation efficiency | Digital data information retrieval | Energy efficient computing | Engineering | Extreme scale computing

The invention provides a content recommendation method and system, computer equipment and a readable storage medium. The method comprises the steps of creating a recommendation queue for each user; establishing, according to behavior information about the content a user browses, an association between the user and the publisher that recommends the content; when the publisher recommends content, writing the recommended content into the recommendation queue of each user associated with the publisher; and recommending the content in a user's recommendation queue to that user. Under this scheme, one recommendation queue is maintained per user and its content is updated in real time based on user behavior. Each time a user requests recommendations, the data is taken directly from the queue, so recommendations are adjusted in real time while large-scale computing resource consumption is avoided and recommendation efficiency is guaranteed.

Owner:CHINA UNITED NETWORK COMM GRP CO LTD

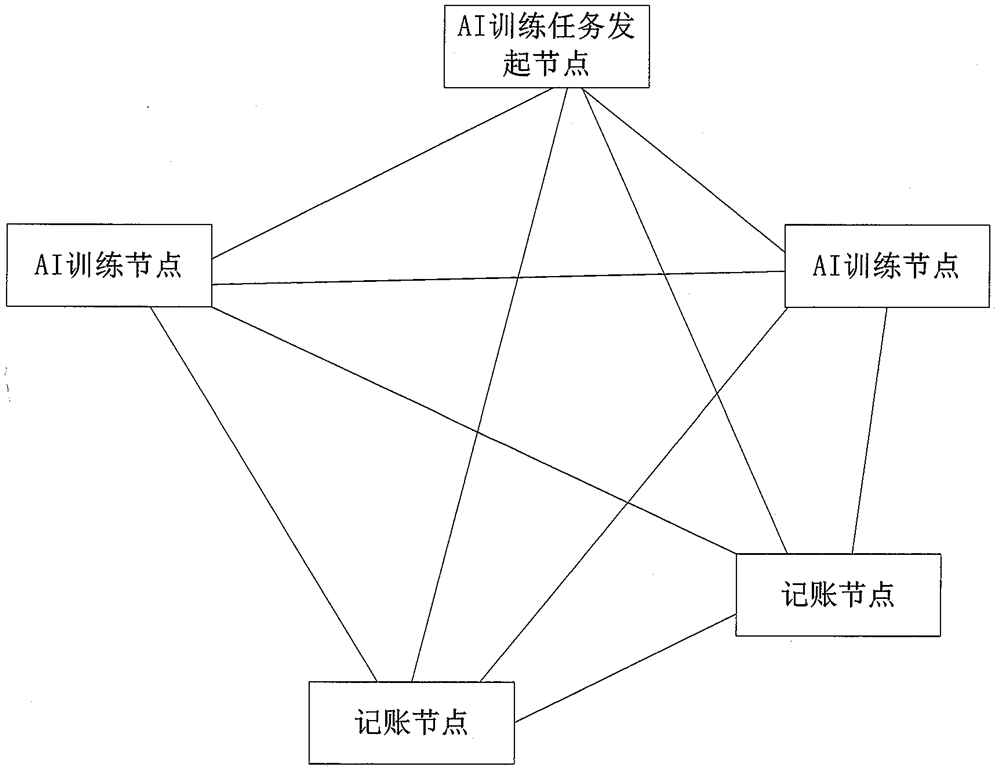

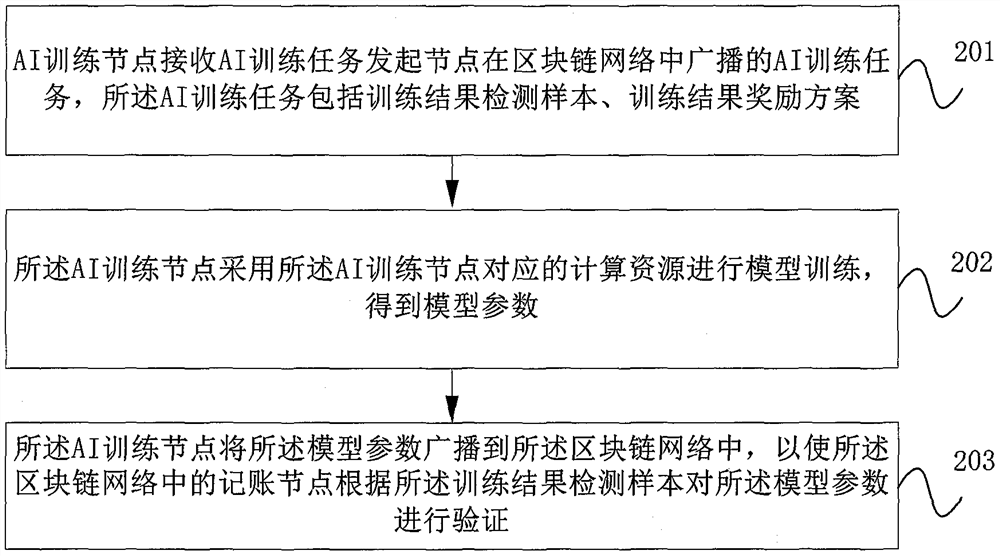

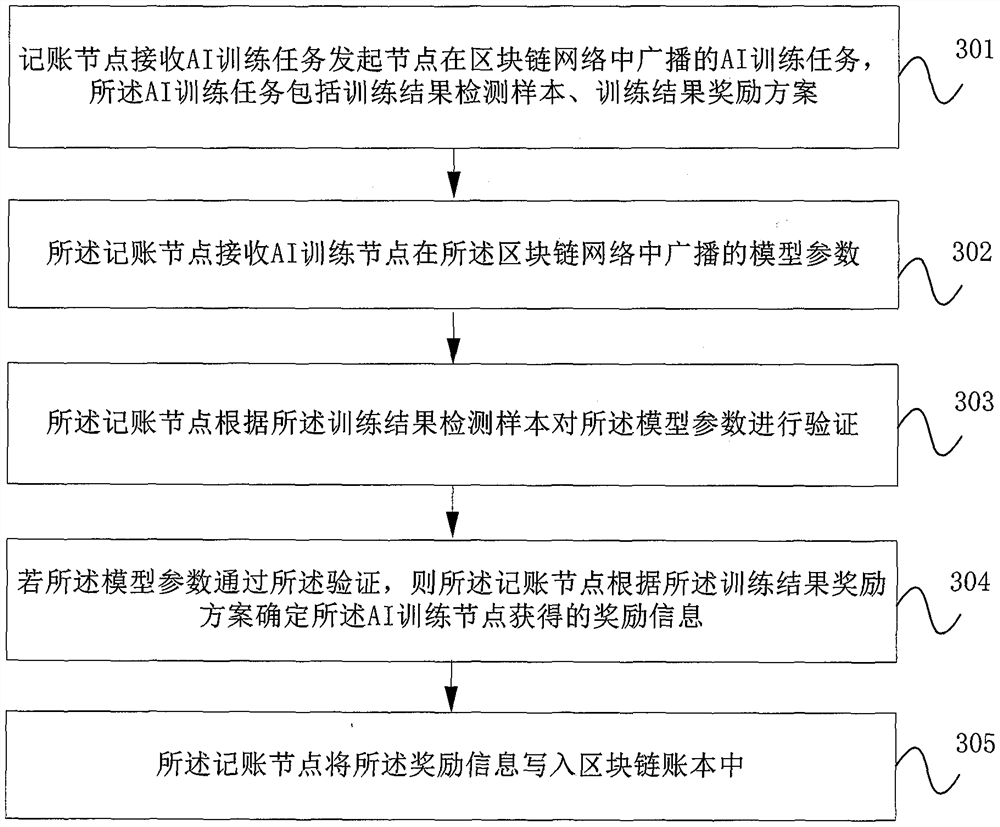
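
The per-user queue scheme in the content recommendation entry above can be sketched as follows. This is a minimal in-memory illustration, not the patented implementation: the class name, method names, and the queue length cap are all assumptions, and a real system would presumably persist the queues in a shared store.

```python
from collections import defaultdict, deque


class RecommendationService:
    """Per-user recommendation queues filled when a publisher posts content."""

    def __init__(self, max_queue_len=100):
        # max_queue_len is an assumed cap; the abstract does not specify one.
        self.max_queue_len = max_queue_len
        self.queues = defaultdict(lambda: deque(maxlen=self.max_queue_len))
        self.followers = defaultdict(set)  # publisher -> associated users

    def record_browse(self, user, publisher):
        """Associate a user with the publisher of content the user browsed."""
        self.followers[publisher].add(user)
        self.queues[user]  # make sure the user's queue exists

    def publish(self, publisher, content):
        """Write new content into the queue of every associated user."""
        for user in self.followers[publisher]:
            self.queues[user].append(content)

    def recommend(self, user, count=10):
        """Take up to `count` items straight from the user's queue."""
        queue = self.queues[user]
        return [queue.popleft() for _ in range(min(count, len(queue)))]
```

The work is done at publish time (one append per associated user), so serving a request is a plain queue read with no ranking computation.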

Artificial intelligence training method and device based on block chain and storage medium

Pending | CN111858752A | Increase profit | Low cost | Database distribution/replication | Machine learning | Computer cluster | Engineering

An embodiment of the invention provides a blockchain-based artificial intelligence training method, device and storage medium. In the method, the node initiating an AI training task broadcasts the task in the blockchain network; an AI training node with computing resources in the network performs model training with its own computing resources to obtain model parameters, and then broadcasts the model parameters to the blockchain network. In other words, idle computing resources can be pooled for model training through the blockchain network. For example, an AI training task is issued on the blockchain network so that nodes with computing resources or training samples can jointly complete it, which saves the cost of building a large-scale computer cluster and increases the utilization rate of computing resources.

Owner:全链通有限公司

Techniques for modifying a rules engine in a highly-scaled computing environment

One embodiment of the present invention sets forth a technique for modifying a rules engine implemented in a highly-scaled computing environment. The technique includes receiving rules data that include a first operation, wherein the first operation is from a set of pre-defined operations and includes at least one dimension that is from a set of pre-defined dimensions and building a list of rules based on the rules data, wherein the list of rules filters an extended list of entries based on the first operation and on a first value that corresponds to the at least one dimension. The technique further includes receiving a request to generate a filtered list of entries, wherein the request references the first value, and, in response to receiving the request, applying the list of rules to the extended list of entries based on the first value to generate the filtered list of entries.

Owner:NETFLIX

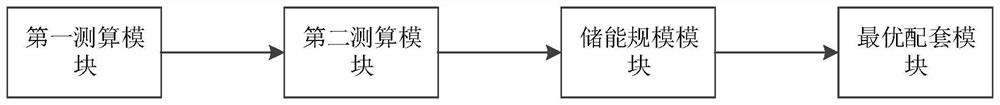
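
The rules engine entry above describes rules built from pre-defined operations and dimensions and applied to an extended list of entries for a given request value. A minimal sketch of that idea follows; the operation names, dimension names, and dictionary layout are illustrative assumptions, not details from the patent.

```python
# Pre-defined operations and dimensions; both sets are illustrative assumptions.
OPERATIONS = {
    "equals": lambda entry_value, request_value: entry_value == request_value,
    "not_equals": lambda entry_value, request_value: entry_value != request_value,
}
DIMENSIONS = {"country", "device"}


def build_rules(rules_data):
    """Validate raw rules data and return a list of (operation, dimension) rules."""
    rules = []
    for item in rules_data:
        op, dim = item["operation"], item["dimension"]
        if op not in OPERATIONS or dim not in DIMENSIONS:
            raise ValueError(f"unsupported rule: {item}")
        rules.append((op, dim))
    return rules


def apply_rules(rules, entries, request):
    """Keep entries that pass every rule whose dimension the request references."""
    filtered = []
    for entry in entries:
        keep = all(
            OPERATIONS[op](entry.get(dim), request[dim])
            for op, dim in rules
            if dim in request
        )
        if keep:
            filtered.append(entry)
    return filtered
```

Because rules are plain data checked against fixed sets, the rule list can be replaced at runtime without deploying new code, which is one plausible reading of "modifying a rules engine" at scale.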

Method and system for measuring and calculating reasonable energy storage scale matched with new energy power station of power system

Pending | CN114142470A | Profit maximization | Accuracy Electricity Cost | Generation forecast in ac network | Single network parallel feeding arrangements | Power station | Electricity system

The invention discloses a method and system for calculating the reasonable scale of energy storage matched to a new energy power station in a power system. The method comprises: running an hour-level production simulation of the target power system with the lowest operating cost as the objective function, and calculating the system's total annual new energy output and energy storage power consumption; running the production simulation on the target power system including the incremental new energy power station, calculating the system's total annual new energy output and energy storage power losses, and calculating the true cost per kilowatt-hour of the incremental station; given a unit energy storage scale, calculating the annual cost converted from total investment and operation and maintenance cost, and sorting the candidate storage scales from small to large to obtain a sequence; and running the production simulation for the power system under each storage scale in the sequence, calculating the cost per kilowatt-hour of the incremental station with storage one by one, and comparing the results to obtain the optimal matched storage scale. The method helps an incremental new energy power station determine a reasonable scale of matched energy storage and maximize the station's income.

Owner:ECONOMIC RES INST OF STATE GRID GANSU ELECTRIC POWER +1

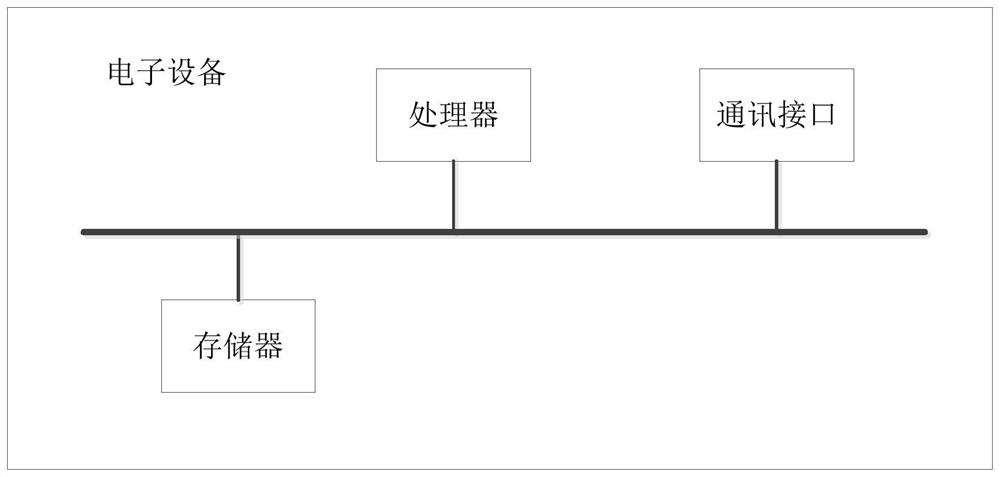

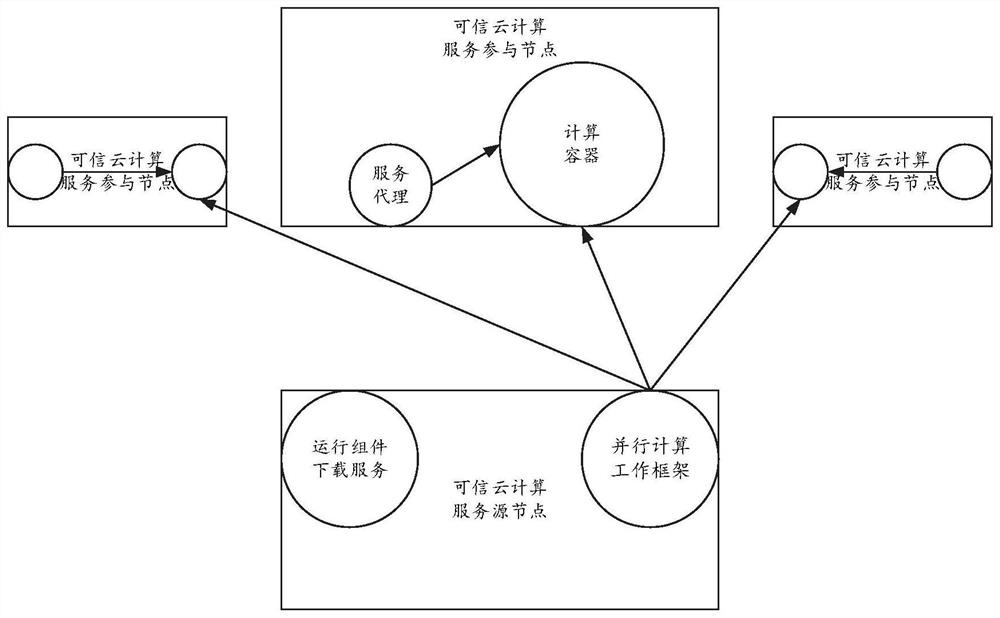

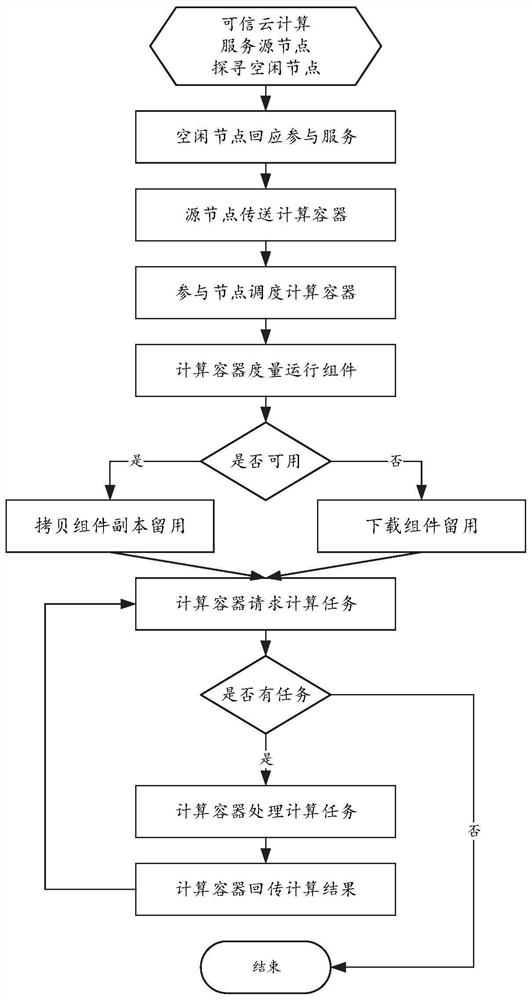
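
The scale sweep in the energy storage entry above can be sketched as a simple loop. This is a hedged illustration only: `simulate` is a hypothetical callback standing in for the hour-level production simulation, the capital recovery factor is a standard way to annualize an investment but is not confirmed as the patent's conversion, and the units and parameter names are assumptions.

```python
def capital_recovery_factor(rate, years):
    """Standard factor converting an up-front investment to equal annual payments."""
    if rate == 0:
        return 1 / years
    growth = (1 + rate) ** years
    return rate * growth / (growth - 1)


def annual_storage_cost(scale, invest_per_unit, om_per_unit, rate, years):
    """Annualized investment plus yearly O&M for a storage system of size `scale`."""
    return scale * (invest_per_unit * capital_recovery_factor(rate, years) + om_per_unit)


def best_storage_scale(unit_scale, steps, simulate, plant_annual_cost, **cost_args):
    """Sweep scales from small to large; return (scale, cost_per_kwh) with lowest cost."""
    best = None
    for k in range(steps + 1):
        scale = k * unit_scale
        energy_kwh = simulate(scale)  # assumed: annual kWh delivered, always positive
        total_cost = plant_annual_cost + annual_storage_cost(scale, **cost_args)
        cost_per_kwh = total_cost / energy_kwh
        if best is None or cost_per_kwh < best[1]:
            best = (scale, cost_per_kwh)
    return best
```

An exhaustive sweep is affordable here because the number of candidate scales is small compared with the cost of each production simulation run.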

Trusted cloud-based large-scale computing service configuration method and system

Active | CN112597502A | Increase profit | Implement Trusted Computing Services | Platform integrity maintenance | Software simulation/interpretation/emulation | Concurrent computation | Parallel computing

The invention provides a trusted cloud-based large-scale computing service configuration method and system. The method comprises the following steps: a computing service network comprising a plurality of computing nodes is defined in advance, and one trusted computing node among them serves as the source node of a computing service; the source node queries for an idle computing node and transmits a computing container to it; after the idle computing node receives the computing container, the container is started through the virtual trusted root, and the source node together with all started computing containers forms a trusted cloud that can be flexibly expanded; and the source node decomposes a computing task into a plurality of sub-tasks based on a parallel computing framework, allocates the sub-tasks to the computing containers in the computing service network, and completes the computing task. By making use of the many idle computing nodes in the network that do not themselves implement trusted computing, the method realizes a large-scale trusted computing service and effectively improves the utilization rate of computing resources in the network.

Owner:MASSCLOUDS +2

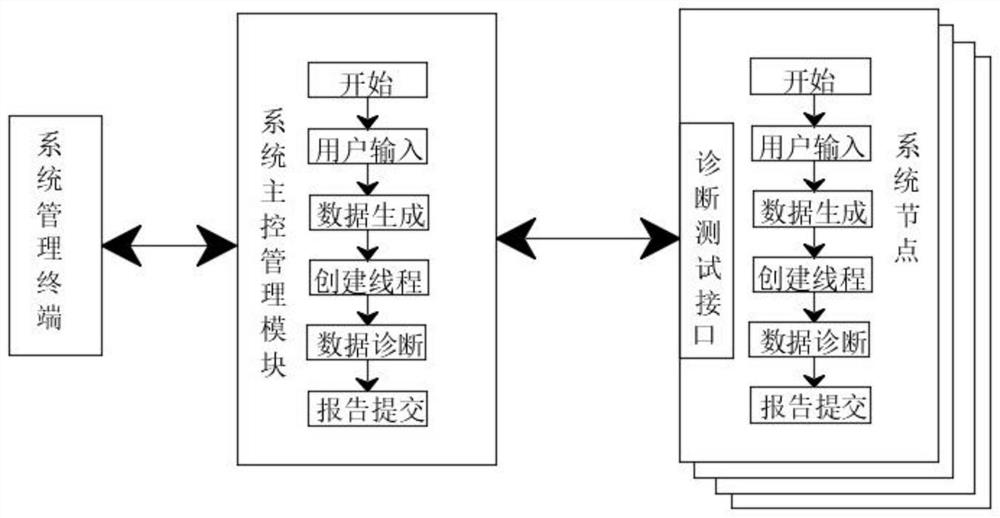

Parallel boundary scanning method based on large-scale computer system

Inactive | CN113254280A | Improve good performance | Improve parallelism | Faulty hardware testing methods | Concurrent computation | Systems management

The invention relates to the technical field of boundary scanning of computer systems, and discloses a parallel boundary scanning method based on a large-scale computer system, which comprises the following steps: 1, a system management terminal performs system-level parallel boundary scanning on the system main control management module, creating a number of threads corresponding to the number of parallel connections on that module; 2, the running system main control management module performs out-of-band diagnostic tests on the parallel computer nodes. A diagnostic test module and a serial port terminal module are arranged in the system main control management module, so that it can dynamically debug and manage the node controller without affecting the normal operation of the system nodes, and can access the memory storage unit, the internal registers and external equipment. Dynamic implicit faults and some explicit static faults occurring during system operation can thereby be detected.

Owner:杨婷婷
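
Step 1 of the parallel boundary scanning entry above (one thread per parallel connection) can be sketched with a thread pool. This is only an illustration: `scan_node` is a hypothetical callback standing in for the out-of-band diagnostic test, and the connection identifiers are assumed to be hashable values such as node names.

```python
from concurrent.futures import ThreadPoolExecutor


def scan_all(connections, scan_node):
    """Run scan_node on every connection in parallel, one worker thread each."""
    if not connections:
        return {}
    # The thread count matches the number of parallel connections.
    with ThreadPoolExecutor(max_workers=len(connections)) as pool:
        results = pool.map(scan_node, connections)  # preserves input order
        return dict(zip(connections, results))
```

Threads fit this workload because each scan mostly waits on out-of-band I/O rather than competing for the CPU.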
