Parallel computing method, device, equipment and medium for a natural language processing model

The technology relates to natural language processing and computing equipment and is applied in the field of parallel computing methods, devices, equipment and media for natural language processing models. It addresses two problems of existing approaches: the acceleration they provide is only modest, and their applicability is low. It reduces computing time and improves efficiency.



Problems solved by technology

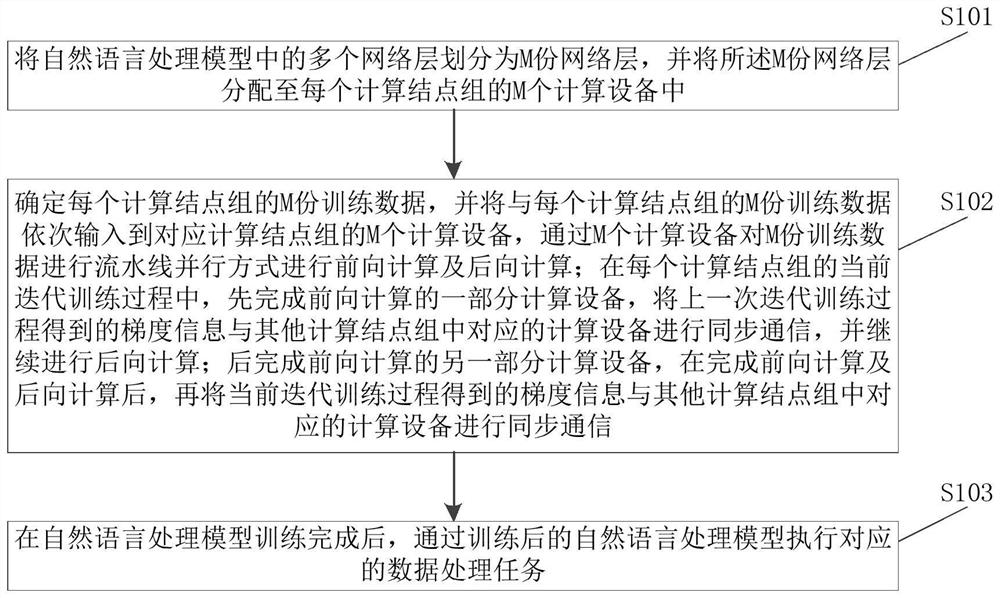


Embodiment Construction

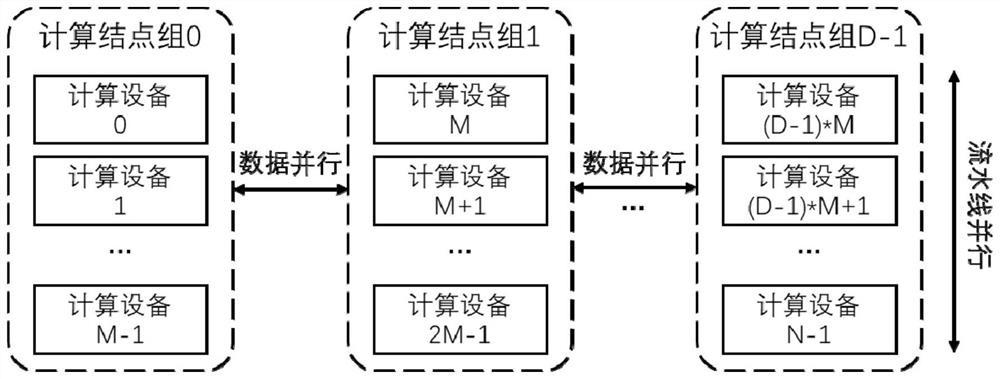

[0040] It should be noted that, for deep neural network models in the field of natural language processing, the common acceleration methods for distributed training are data parallelism, model parallelism, and pipeline parallelism:

[0041] In data parallelism, each device stores a full copy of the model parameters. The training data is divided into several parts, each part is fed to one copy of the model for training, and the training results of all devices are synchronized periodically so that the devices train collaboratively. The disadvantage of this method is that a large amount of data must be communicated at each synchronization, and this overhead slows down training; the effect is more pronounced in distributed training across a large number of nodes. In addition, each device must have more storage than the size of the model, which places high demands on the hardware.
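The data-parallel scheme described in [0041] can be sketched as follows. This is a minimal, hypothetical illustration, not the patent's method: it assumes a linear model trained with mean-squared-error SGD, simulates the devices in a single process, and treats the "training results" that are synchronized as gradients averaged by an all-reduce. The names `local_gradient`, `all_reduce_mean` and `data_parallel_step` are invented for this sketch. It shows the two costs the paragraph names: every device holds a full copy of the parameters, and every synchronization moves one parameter-sized vector per device.

```python
"""Hypothetical data-parallel SGD sketch with simulated devices (pure Python)."""
from typing import List, Tuple

Sample = Tuple[List[float], float]  # (feature vector, target)


def local_gradient(w: List[float], shard: List[Sample]) -> List[float]:
    """MSE gradient of a linear model, computed on one device's data shard."""
    grad = [0.0] * len(w)
    for x, y in shard:
        err = sum(wi * xi for wi, xi in zip(w, x)) - y
        for j, xj in enumerate(x):
            grad[j] += 2.0 * err * xj / len(shard)
    return grad


def all_reduce_mean(grads: List[List[float]]) -> List[float]:
    """Average the per-device gradients (stands in for the synchronization step)."""
    k = len(grads)
    return [sum(g[j] for g in grads) / k for j in range(len(grads[0]))]


def data_parallel_step(w: List[float], data: List[Sample],
                       num_devices: int, lr: float) -> Tuple[List[float], int]:
    """One synchronized step; returns new weights and floats communicated."""
    # Equal shard sizes make the mean of shard means equal the full-batch mean.
    assert len(data) % num_devices == 0, "assumes equally sized shards"
    size = len(data) // num_devices
    shards = [data[i * size:(i + 1) * size] for i in range(num_devices)]
    # Every device keeps a full copy of the parameters (the storage cost).
    grads = [local_gradient(list(w), shard) for shard in shards]
    # Each device sends a parameter-sized gradient at every sync (the communication cost).
    comm_floats = num_devices * len(w)
    g = all_reduce_mean(grads)
    return [wi - lr * gi for wi, gi in zip(w, g)], comm_floats


if __name__ == "__main__":
    # Toy data for y = 1 + 2x, split across 4 simulated devices.
    data = [([1.0, float(i)], 1.0 + 2.0 * i) for i in range(8)]
    w = [0.0, 0.0]
    for _ in range(2000):
        w, comm = data_parallel_step(w, data, num_devices=4, lr=0.02)
    print("weights:", w, "floats per sync:", comm)
```

Because the averaged gradient equals the full-batch gradient, the replicas stay identical after each step. The cost of that guarantee is that communication per step grows with both the number of devices and the model size, which is the overhead [0041] describes.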

[0042] Model parallelism is a parallel method for large-scale neura...
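Paragraph [0042] is truncated in this copy, so the sketch below is only a generic illustration of one common form of model parallelism (splitting a network's layers across devices). It is not the patent's method. It assumes a small ReLU network with plain nested-list weights. The class `Device` and the functions `partition_layers` and `model_parallel_forward` are hypothetical names. Each simulated device stores only its own slice of the layers, so per-device storage is smaller than the whole model. This addresses the storage requirement noted for data parallelism in [0041], and only activations pass between devices.

```python
"""Hypothetical layer-wise model-parallel forward pass (pure Python)."""
from typing import List

Matrix = List[List[float]]


def matvec(W: Matrix, x: List[float]) -> List[float]:
    """Dense matrix-vector product."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in W]


def relu(v: List[float]) -> List[float]:
    return [max(0.0, a) for a in v]


class Device:
    """A simulated device holding only a contiguous slice of the model's layers."""

    def __init__(self, name: str, layers: List[Matrix]):
        self.name = name
        self.layers = layers

    def num_params(self) -> int:
        return sum(len(W) * len(W[0]) for W in self.layers)

    def forward(self, x: List[float]) -> List[float]:
        for W in self.layers:
            x = relu(matvec(W, x))
        return x


def partition_layers(layers: List[Matrix], num_devices: int) -> List[Device]:
    """Split layers into contiguous, nearly equal groups, one group per device."""
    base, extra = divmod(len(layers), num_devices)
    devices, start = [], 0
    for i in range(num_devices):
        count = base + (1 if i < extra else 0)
        devices.append(Device(f"dev{i}", layers[start:start + count]))
        start += count
    return devices


def model_parallel_forward(devices: List[Device], x: List[float]) -> List[float]:
    """Run devices in order; only activations are passed between them."""
    for device in devices:
        x = device.forward(x)
    return x


if __name__ == "__main__":
    layers = [[[0.5, 0.1], [0.2, 0.4]] for _ in range(4)]
    devices = partition_layers(layers, num_devices=2)
    print(model_parallel_forward(devices, [1.0, 2.0]),
          [d.num_params() for d in devices])
```

In this naive form, each device waits idle while the others compute. Splitting a batch into micro-batches so that devices can work concurrently is the idea behind pipeline parallelism, the third method listed in [0040].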
