104 results about "Task mapping" patented technology

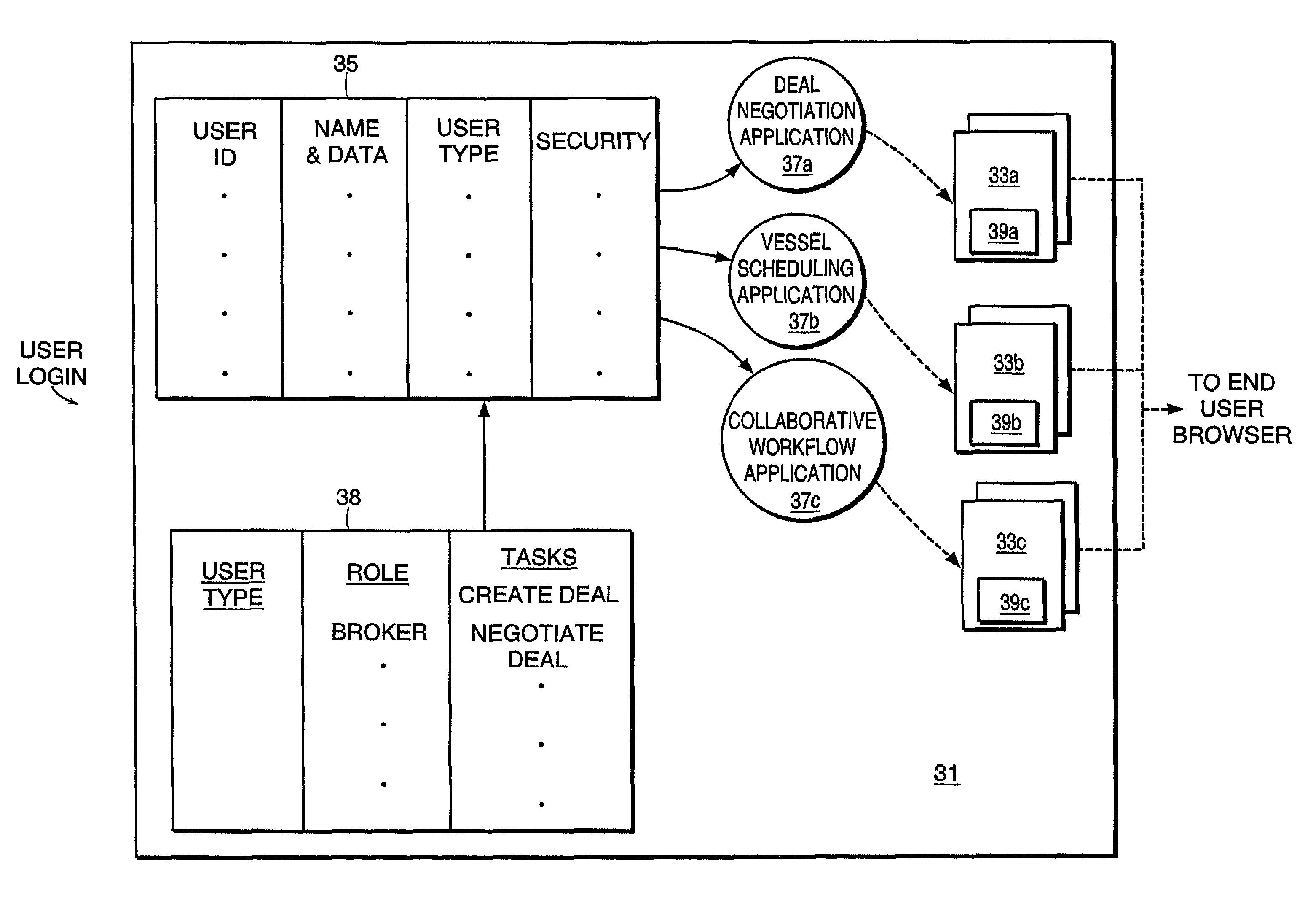

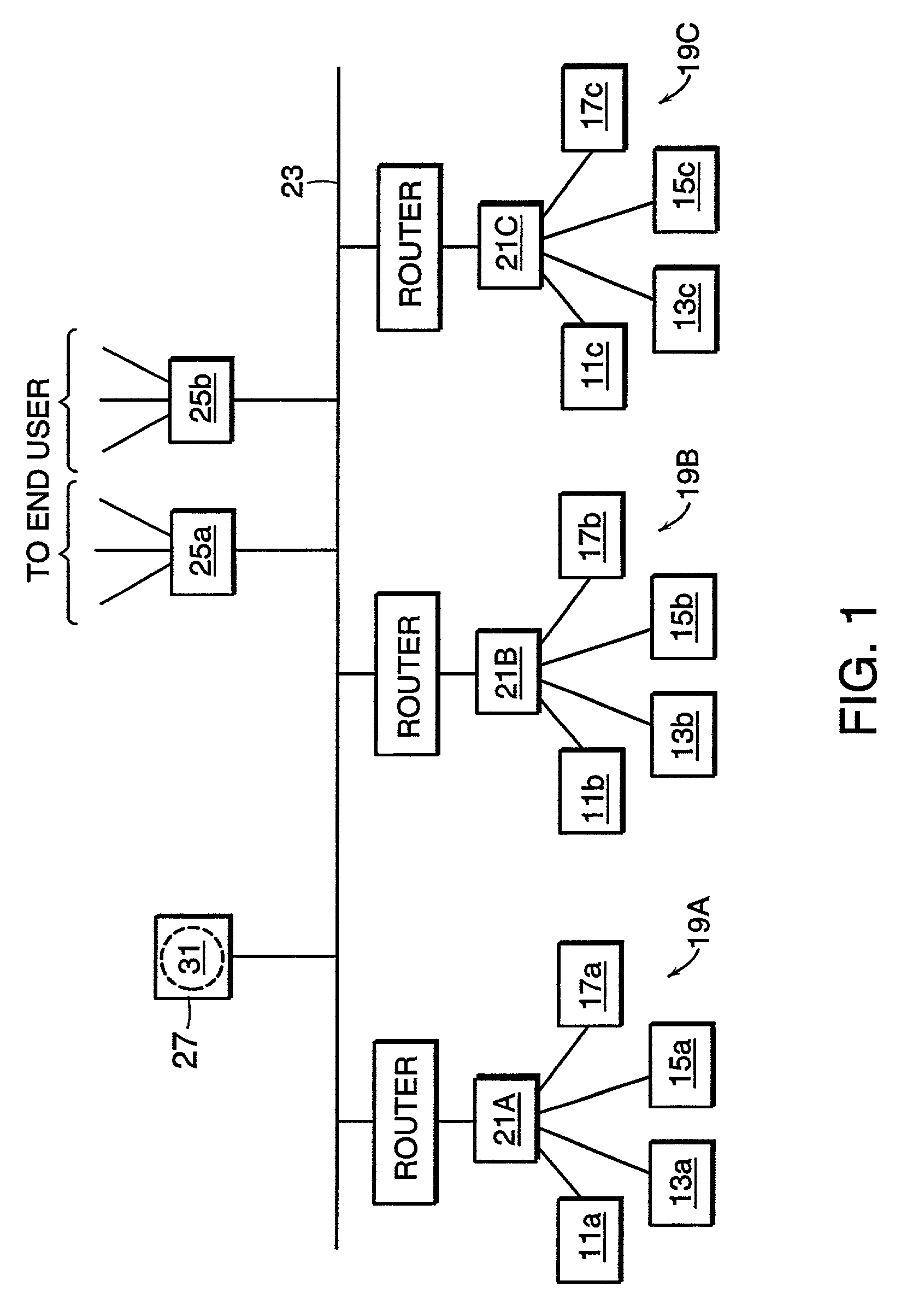

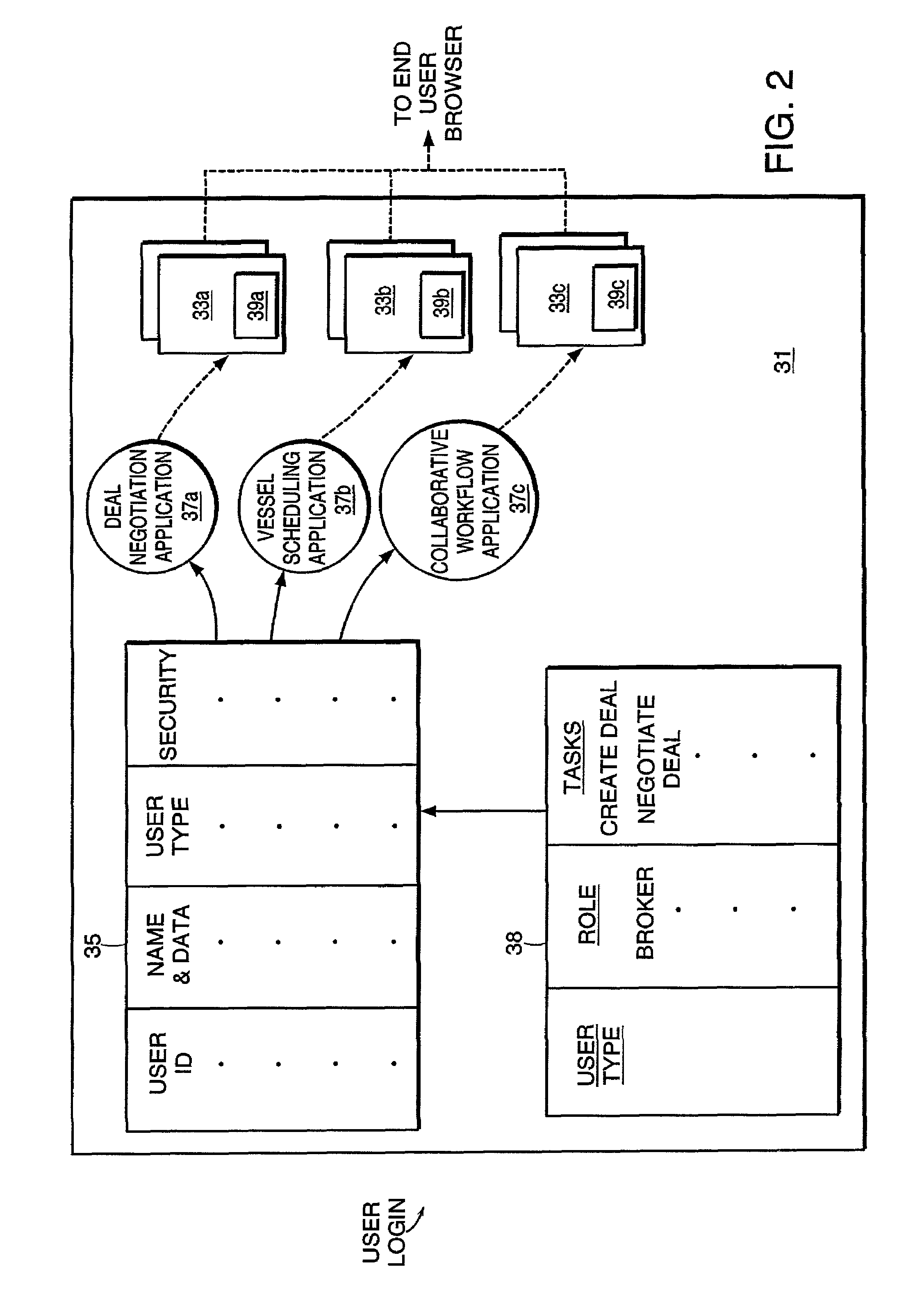

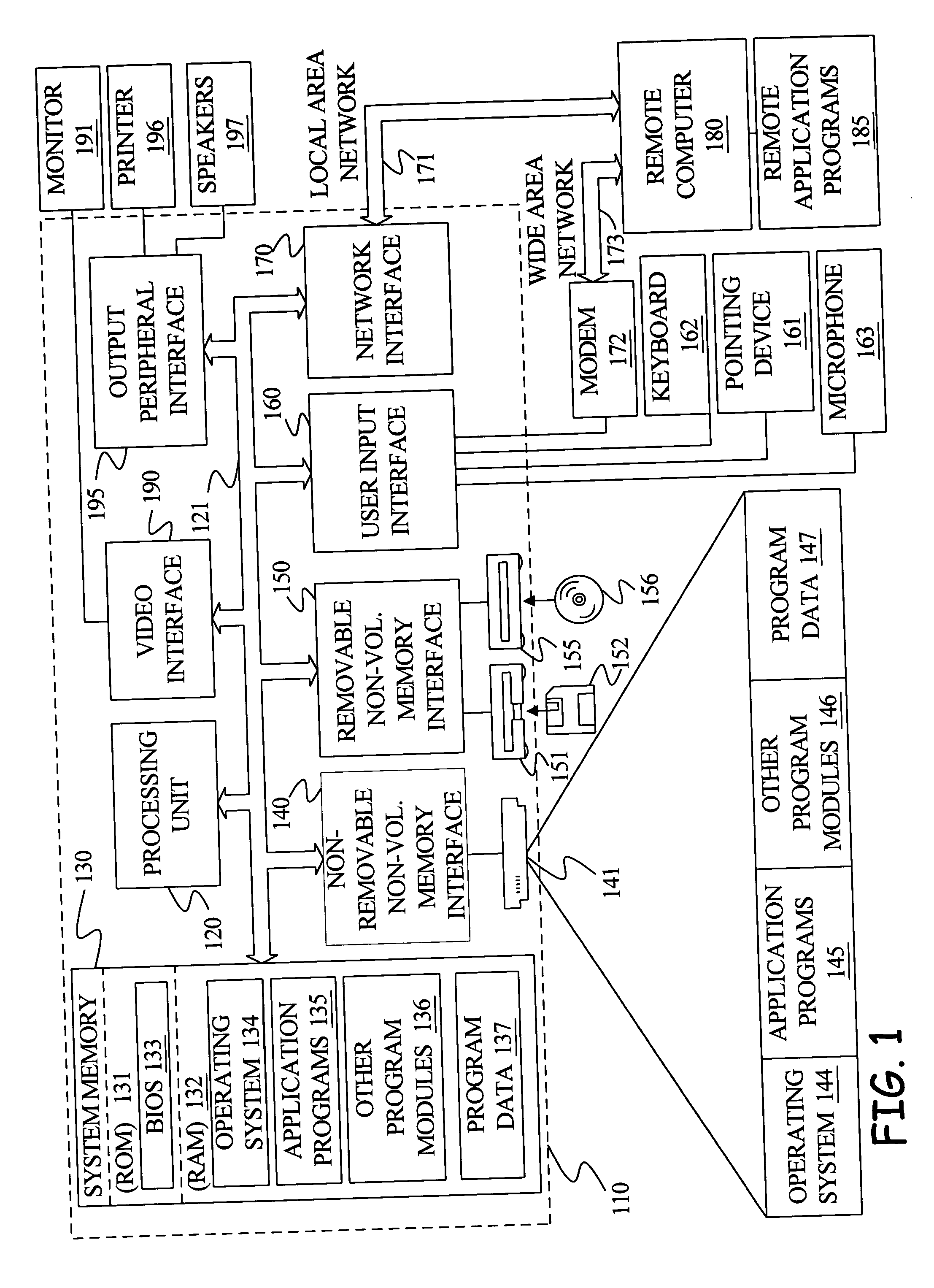

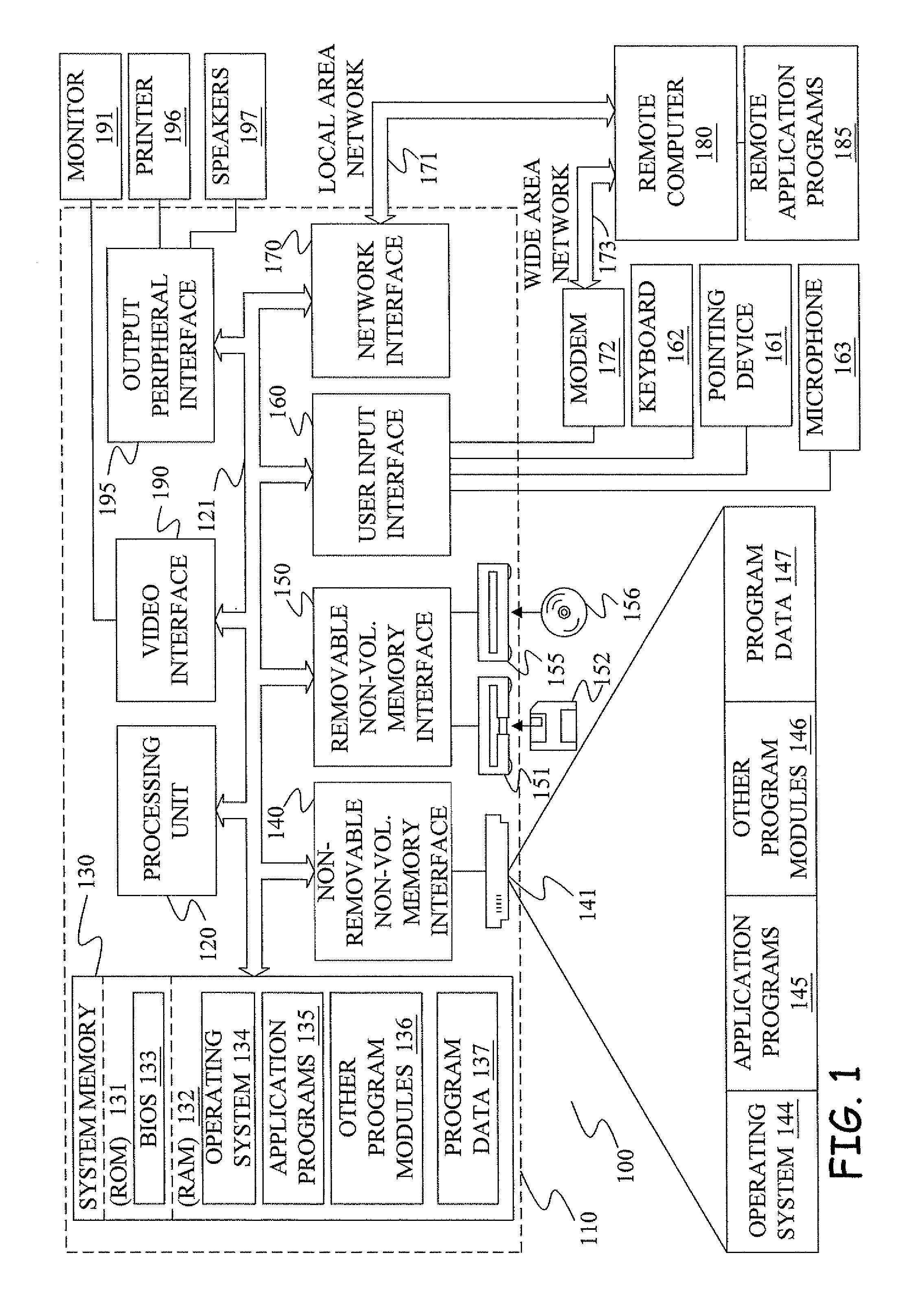

Computer system for providing a collaborative workflow environment

Inactive | US7448046B2 | Easy to use | Accurate data | Finance | Multiprogramming arrangements | Logistics management | Task mapping

Currently lacking are effective and accurate tools to help petroleum traders and logistics personnel to make better decisions, collaborate in real-time and negotiate deals in a private and secure environment. The present invention addresses this and other needs in the industry. In particular, the present invention provides automated workflow management for a series of workflow tasks by mapping the workflow tasks to a collaborative workflow process comprising: roles, users, business processes and computer executable activities. A workflow object is received that supplies information used to set particular attributes of the roles, the users, the business activities and the computer executable activities of the collaborative workflow process. Information and data objects are shared electronically among the users performing certain of the roles. At least one of the activities is automatically executed, such that the workflow is automatically managed.

Owner:ASPENTECH CORP

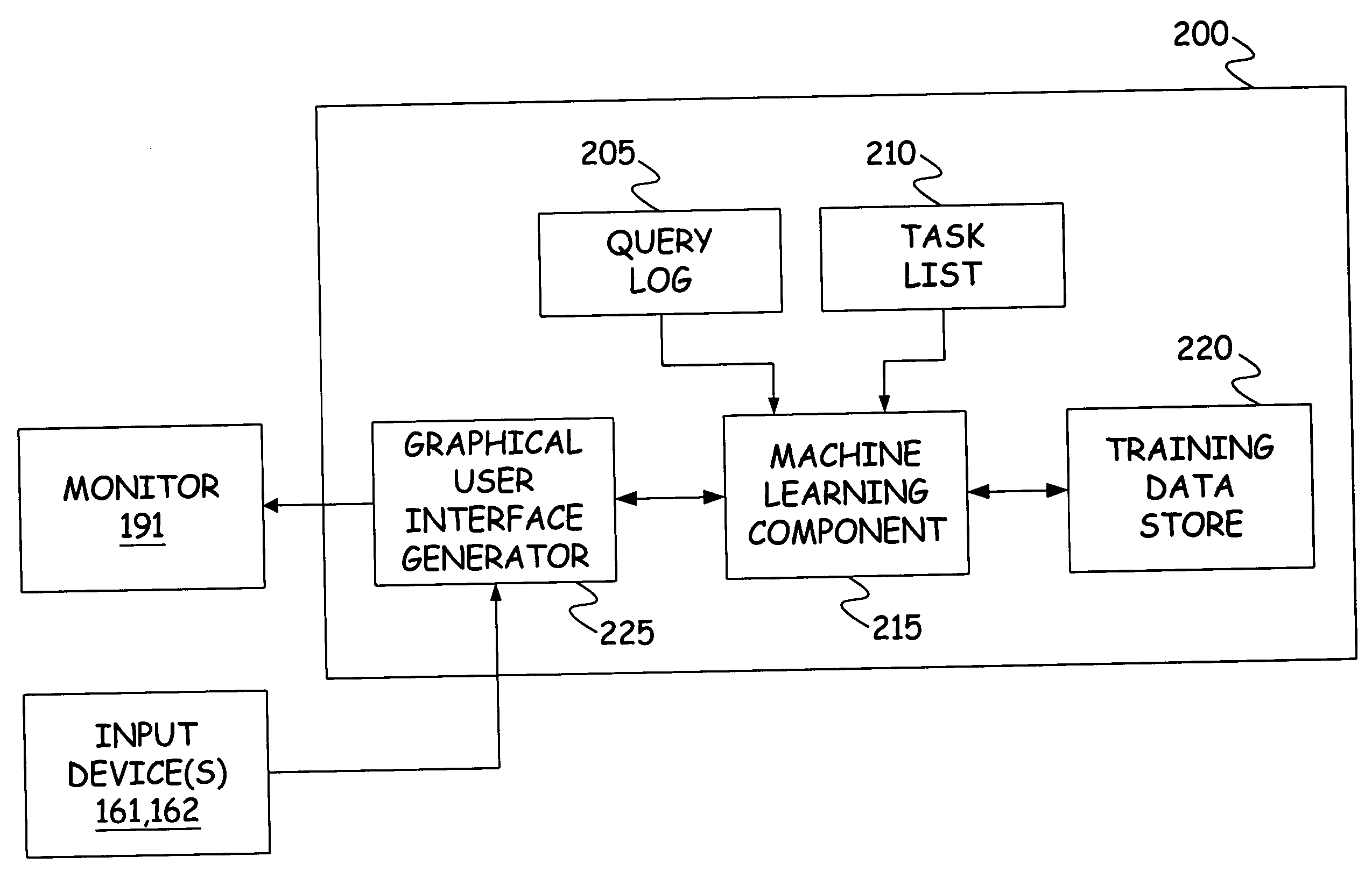

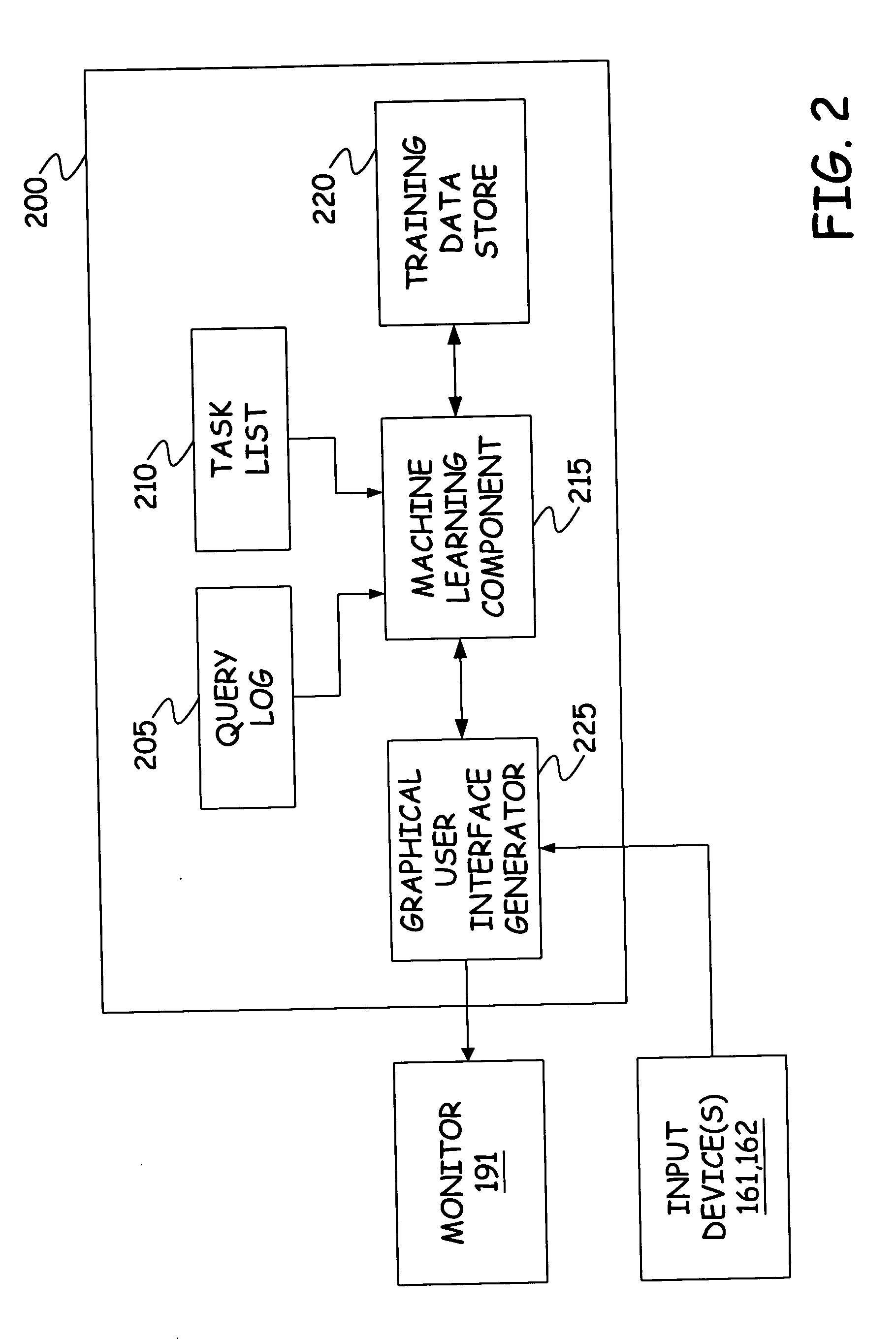

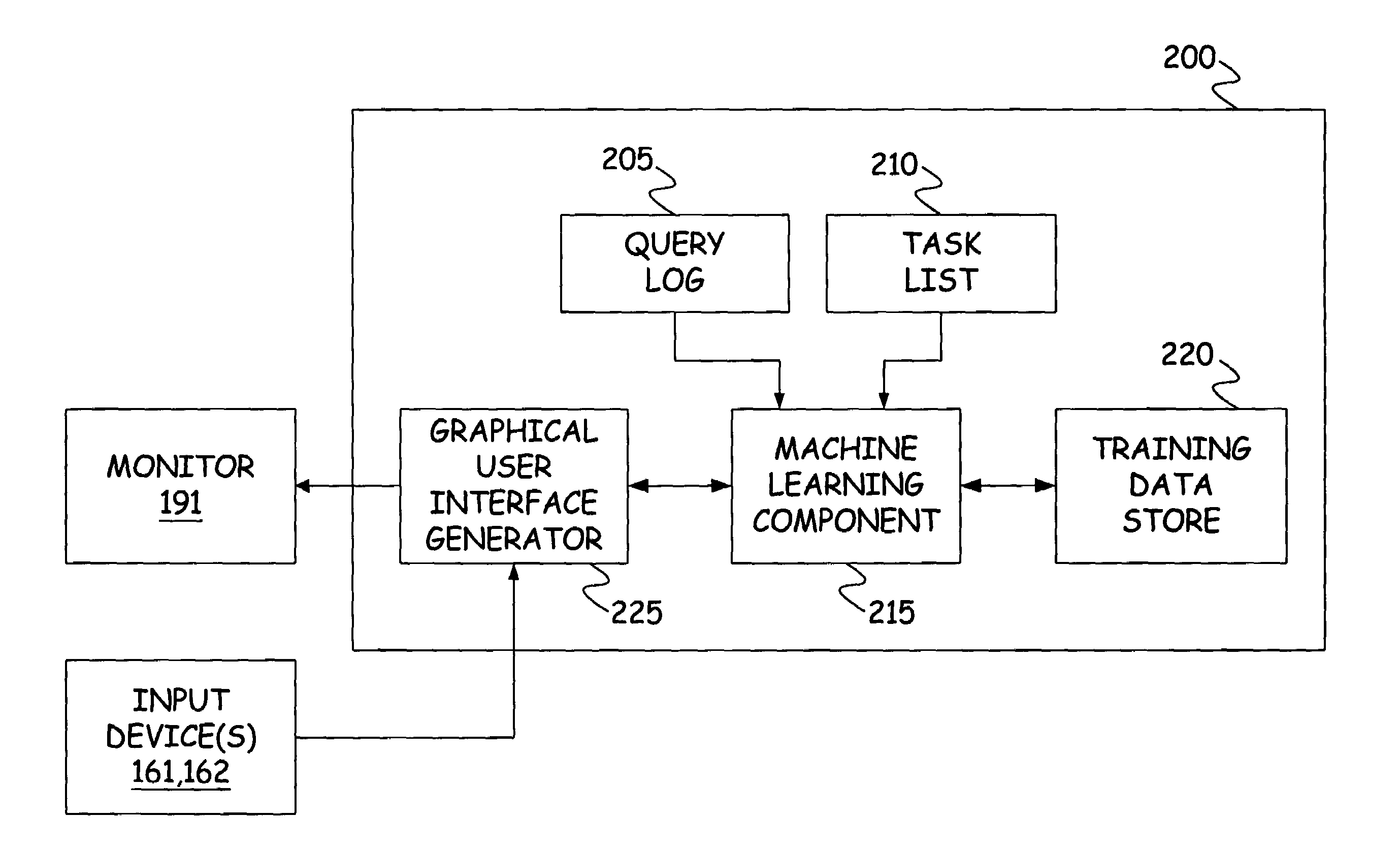

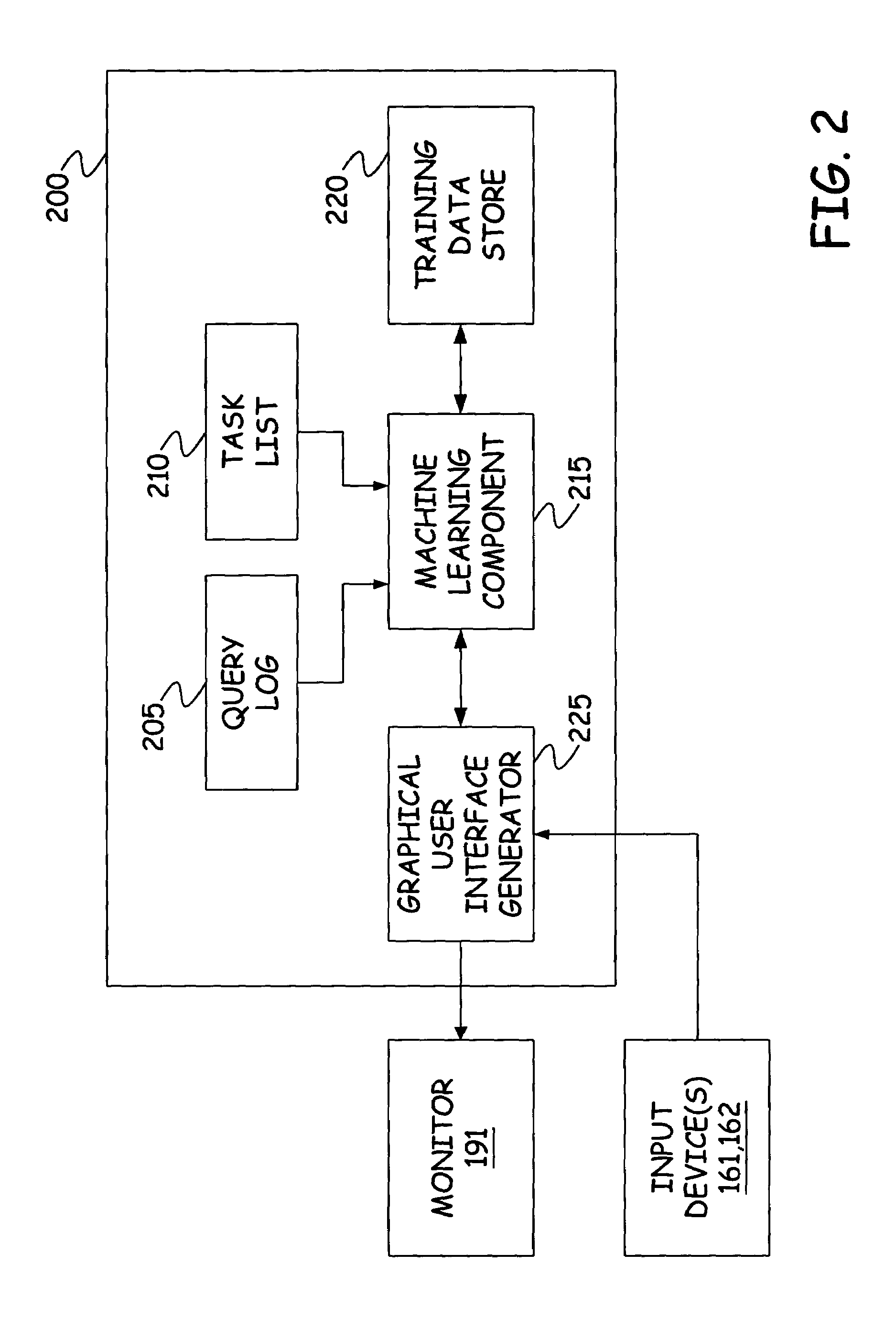

Computer aided query to task mapping

Inactive | US20050080782A1 | Huge task | Metadata text retrieval | Digital data processing details | Graphics | Graphical user interface

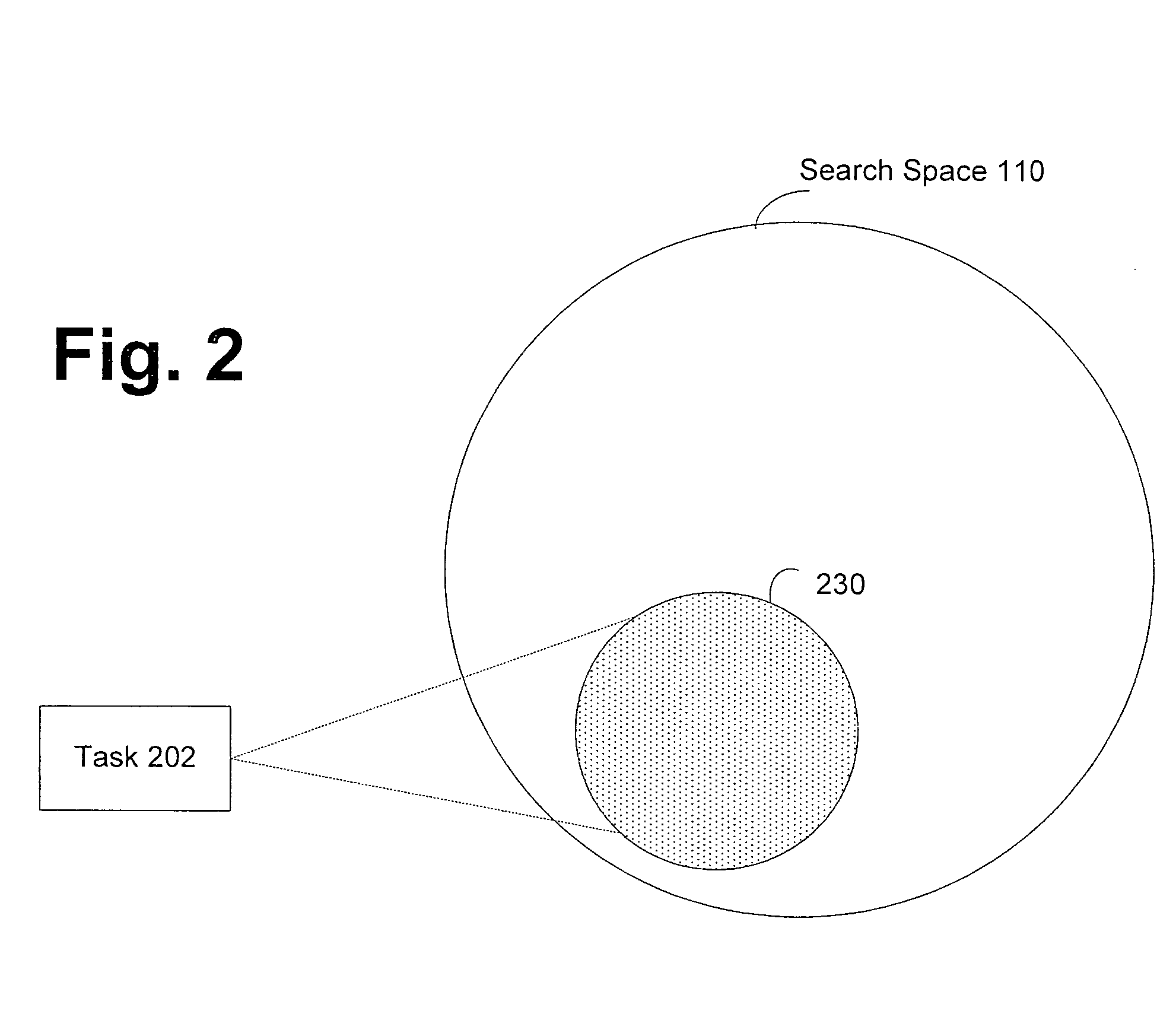

An annotating system aids a user in mapping a large number of queries to tasks to obtain training data for training a search component. The annotating system includes a query log containing a large quantity of queries which have previously been submitted to a search engine. A task list containing a plurality of possible tasks is stored. A machine learning component processes the query log data and the task list data. For each of a plurality of query entries corresponding to the query log, the machine learning component suggests a best guess task for potential query-to-task mapping as a function of the training data. A graphical user interface generating component is configured to display the plurality of query entries in the query log in a manner which associates each of the displayed plurality of query entries with its corresponding suggested best guess task.

Owner:MICROSOFT TECH LICENSING LLC
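As a rough illustration of the "best guess task" suggestion step described in the abstract above, the sketch below trains a word-count model from already-annotated queries and proposes a task for a new query. The scoring rule (word overlap), the task names and the sample queries are all assumptions made for illustration; the abstract does not say which machine learning component the patent uses.

```python
from collections import Counter, defaultdict


def train(labeled_queries):
    """Build per-task word counts from (query, task) training pairs."""
    model = defaultdict(Counter)
    for query, task in labeled_queries:
        model[task].update(query.lower().split())
    return model


def suggest(model, query):
    """Return the task whose training words overlap most with the query."""
    words = query.lower().split()
    return max(model, key=lambda task: sum(model[task][w] for w in words))


# Hypothetical annotated queries (not from the patent).
training_data = [
    ("change desktop background", "set_wallpaper"),
    ("set wallpaper picture", "set_wallpaper"),
    ("add new printer", "install_printer"),
]
model = train(training_data)
best_guess = suggest(model, "printer setup")
print(best_guess)
```

In an annotation tool of the kind described, each suggestion would be shown next to its query so that a human annotator can accept or correct it, and the corrected pairs would be fed back into `train`.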

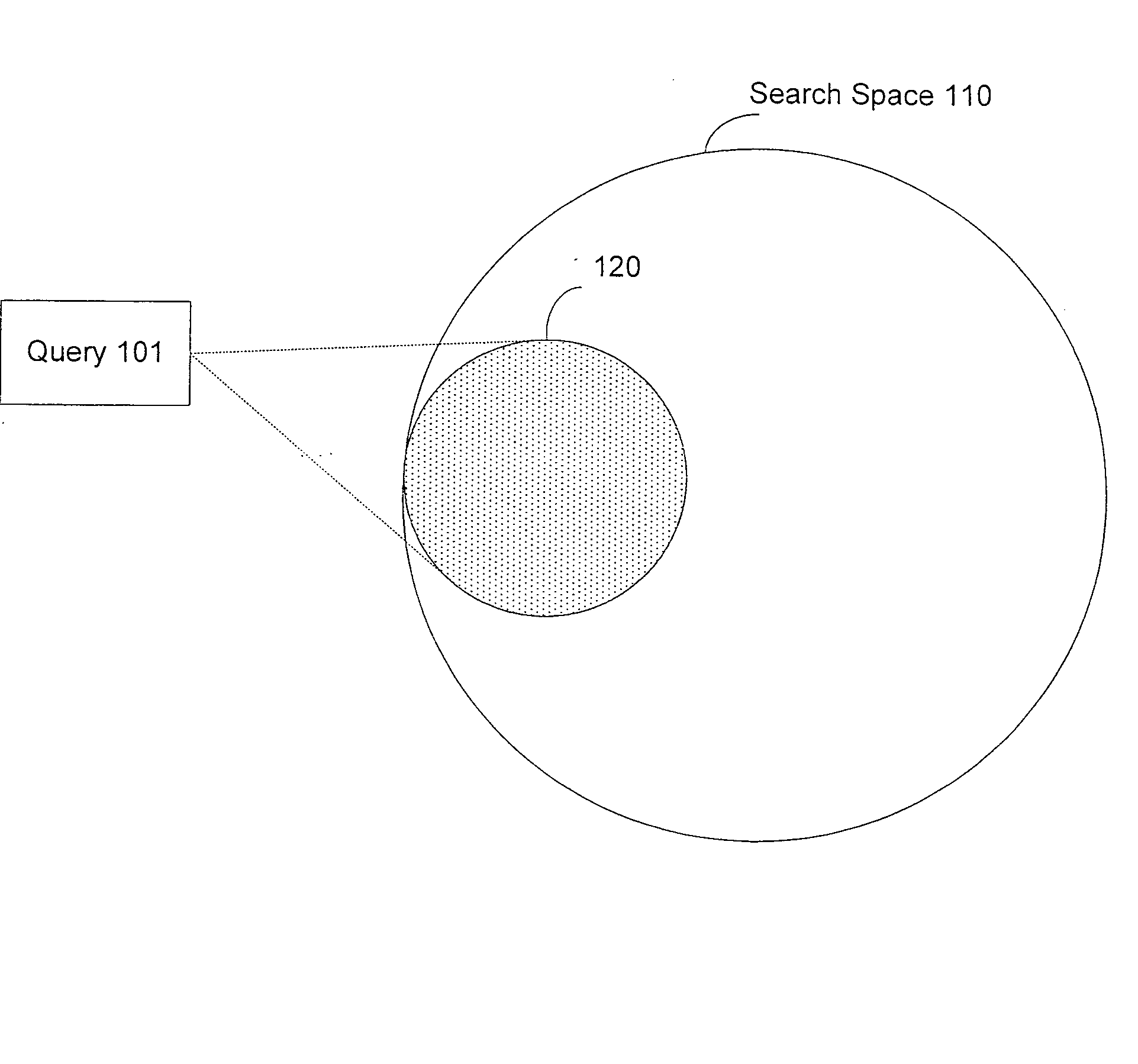

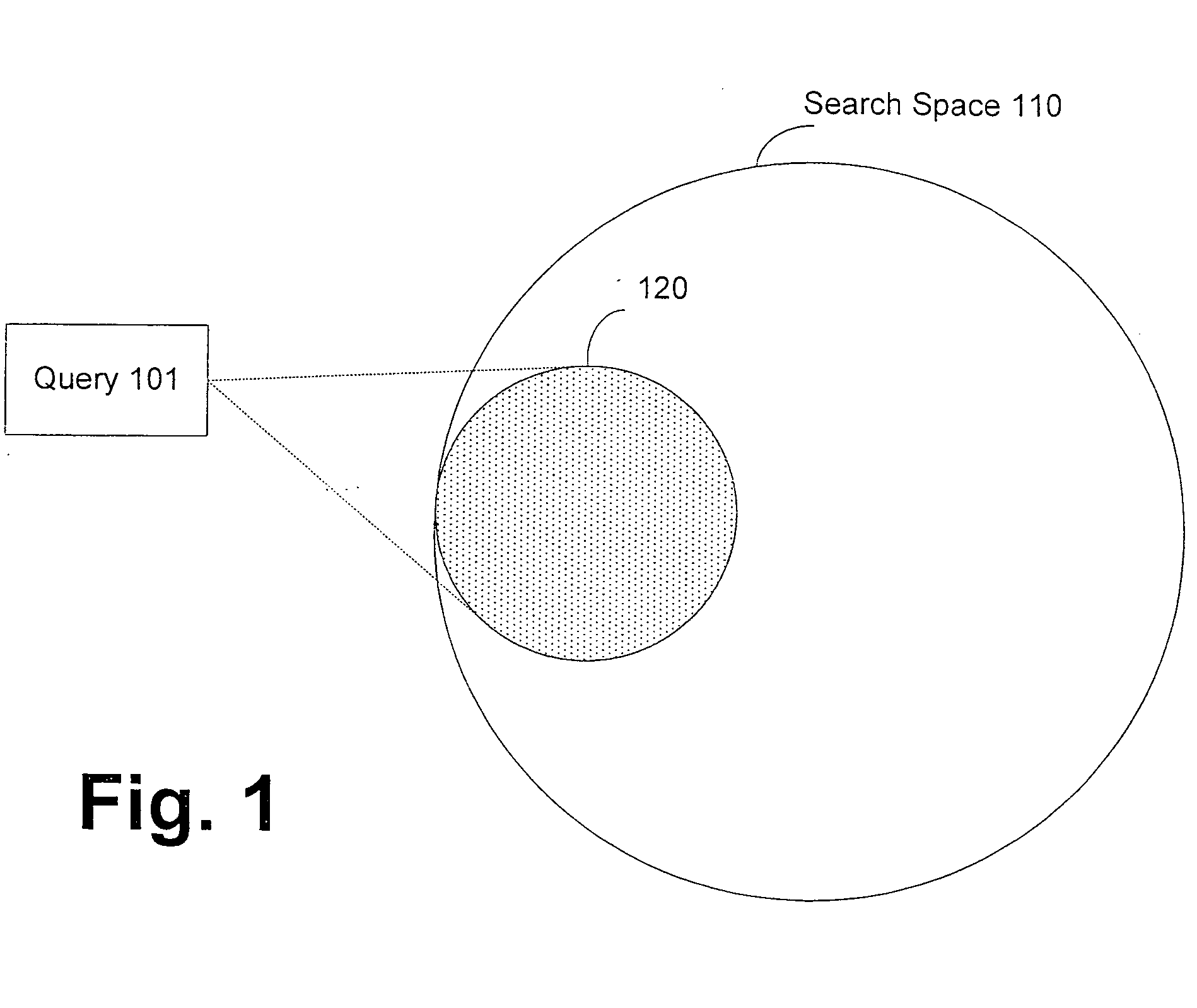

Query to task mapping

Inactive | US20050262058A1 | Great mapping quality | Quality improvement | Metadata text retrieval | Digital data processing details | Short string | Task mapping

Owner:MICROSOFT TECH LICENSING LLC

Comprehensive scheduling and load balancing method for intelligent massive video analysis system

Inactive | CN104581423A | Short analysis time | Increase usage | Selective content distribution | Task mapping | Data file

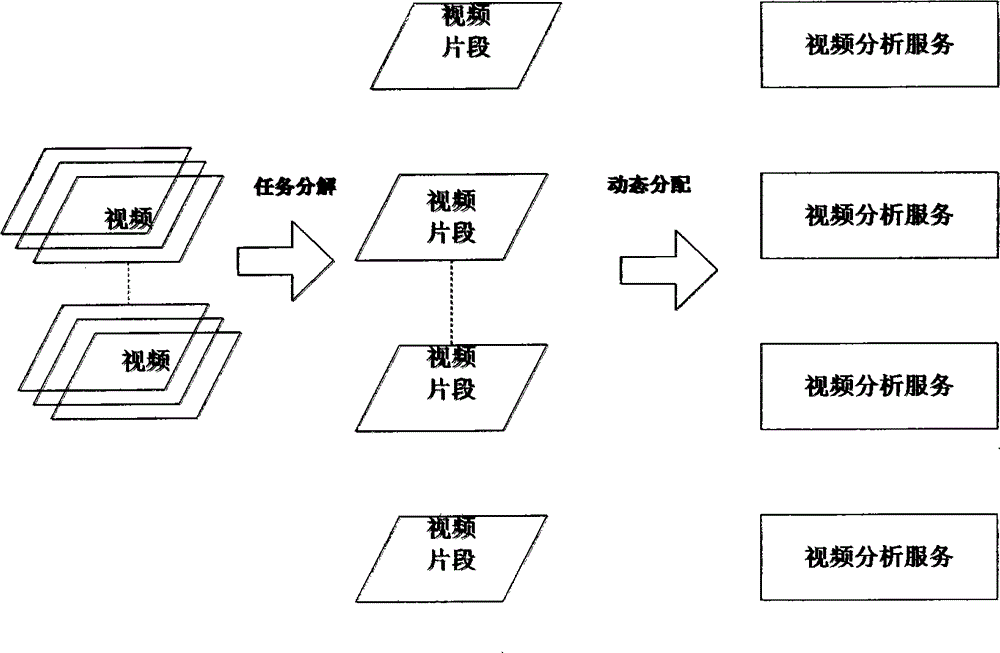

The invention relates to a comprehensive scheduling and load balancing method for an intelligent massive video analysis system. The front end is a client, and the back end comprises a comprehensive scheduling server, multiple video analysis servers and a file transfer server. The comprehensive scheduling server receives analysis tasks from the client, decomposes large data files according to file size, quantity and duration, and builds a task mapping model; the video analysis servers regularly report their operating states to the comprehensive scheduling server; the comprehensive scheduling server allocates tasks to suitable video analysis servers according to the priority of the analysis tasks and the states of the video analysis servers, and dynamically adjusts the allocation as those states change; the file transfer server receives the analysis results from the video analysis servers, combines them and transfers them to the client.

Owner:CHINA CHANGFENG SCI TECH IND GROUPCORP
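The allocation step in the abstract above (tasks ordered by priority, servers chosen by reported state) can be sketched with a min-heap of server loads. This is only a minimal illustration: the task tuples, the cost estimates and the rule "give the next task to the least-loaded server" are assumptions, not the patent's task mapping model.

```python
import heapq


def allocate(tasks, server_loads):
    """Assign tasks to servers.

    tasks: list of (priority, name, cost); a lower priority number is more urgent.
    server_loads: dict mapping server name to its last reported load.
    """
    heap = [(load, server) for server, load in server_loads.items()]
    heapq.heapify(heap)
    assignment = {}
    for _priority, task, cost in sorted(tasks):
        load, server = heapq.heappop(heap)       # least-loaded server
        assignment[task] = server
        heapq.heappush(heap, (load + cost, server))
    return assignment


# Hypothetical tasks and server states.
tasks = [(2, "clip-b", 5), (1, "clip-a", 3), (3, "clip-c", 1)]
assignment = allocate(tasks, {"s1": 0, "s2": 4})
print(assignment)
```

Re-running `allocate` whenever servers report new loads is one simple way to approximate the dynamic adjustment the abstract mentions.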

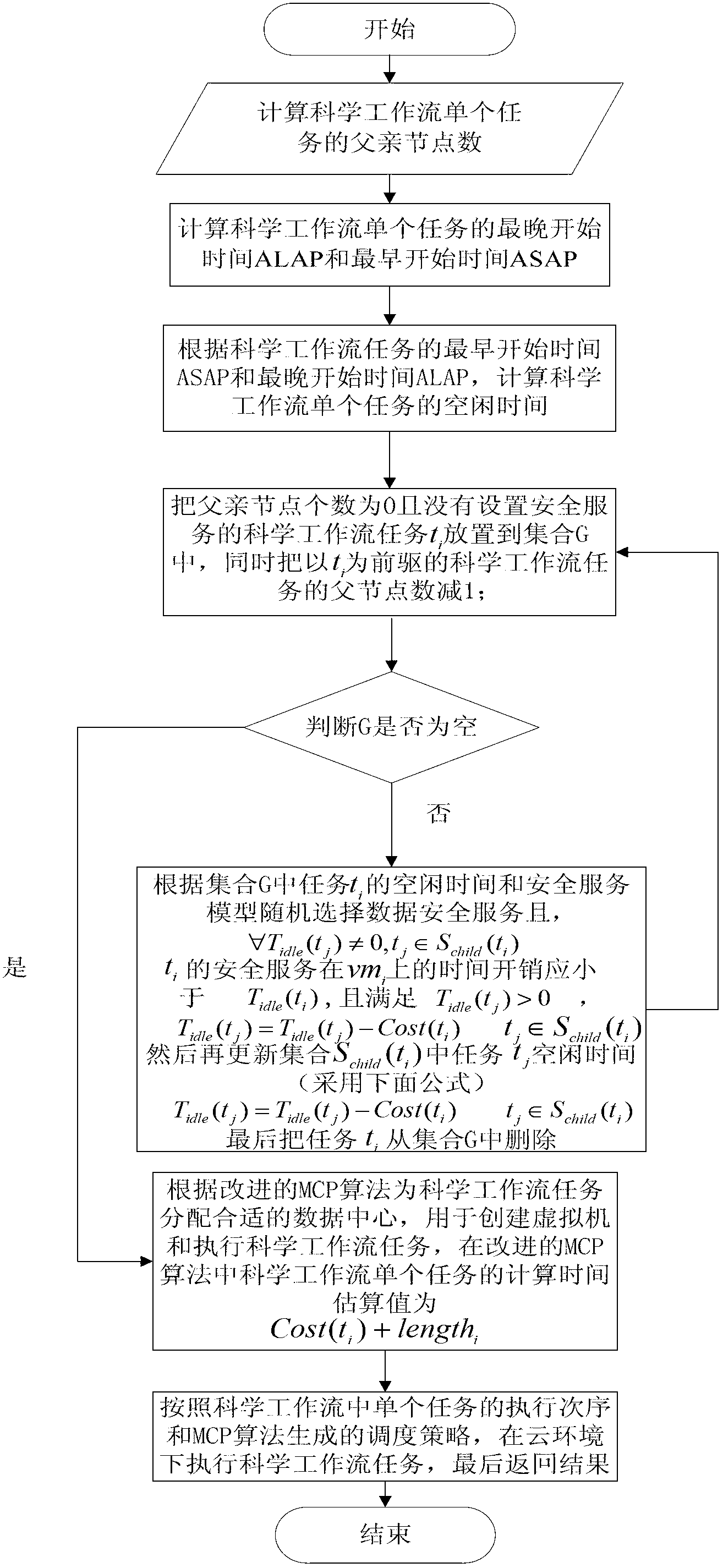

Scientific work flow scheduling method with safe perception under cloud calculation environment

Inactive | CN102799957A | Does not affect scheduling performance | Security service is reliable | Resources | Service model | Data center

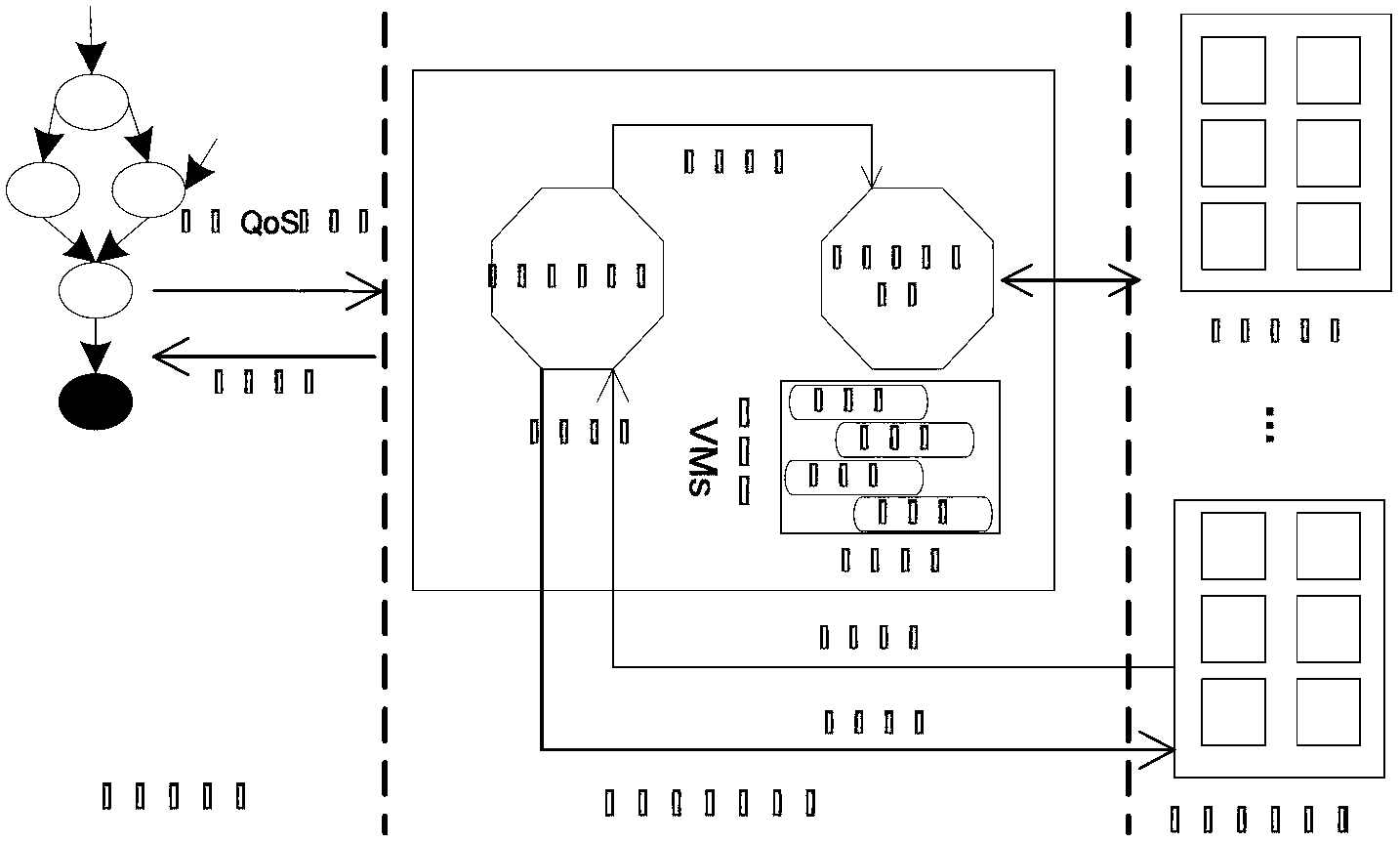

The invention relates to a security-aware scientific workflow scheduling method for cloud computing environments. The method comprises the following steps: first, calculating the idle time of each task in a scientific workflow from the computation time of each task and the data transmission time between tasks; randomly assigning to each task a security service that fits within its idle time, according to the security service model, the idle time and the dependency relations among the tasks; adding the overhead introduced by each task's security service to the predicted task execution time, and incorporating this improved overhead calculation into the MCP (Modified Critical Path) algorithm; and finally, mapping a resource to each task according to the resource conditions in the data center of the cloud computing environment, and using that resource to create a virtual machine and execute the task. The method raises the overall security service level without degrading the scheduling performance of the scientific workflow, and reduces the security risk of deployment in a cloud computing environment.

Owner:WUHAN UNIV OF TECH
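The first step of the abstract above, computing each task's idle time from computation times and inter-task transfer times, can be sketched as a forward and backward pass over the workflow graph. The sketch assumes the tasks are listed in topological order and that idle time means latest start minus earliest start; the example workflow is hypothetical.

```python
def idle_times(duration, transfer):
    """Idle time (slack) of each task in a workflow DAG.

    duration: dict task -> computation time, keys given in topological order.
    transfer: dict (u, v) -> data transmission time from task u to task v.
    """
    order = list(duration)
    # Forward pass: earliest start of each task.
    earliest = {t: 0.0 for t in order}
    for v in order:
        for (u, w), cost in transfer.items():
            if w == v:
                earliest[v] = max(earliest[v], earliest[u] + duration[u] + cost)
    makespan = max(earliest[t] + duration[t] for t in order)
    # Backward pass: latest start that does not delay the workflow.
    latest = {t: makespan - duration[t] for t in order}
    for u in reversed(order):
        for (x, v), cost in transfer.items():
            if x == u:
                latest[u] = min(latest[u], latest[v] - cost - duration[u])
    return {t: latest[t] - earliest[t] for t in order}


# Hypothetical four-task workflow: a feeds b and c, which both feed d.
duration = {"a": 2, "b": 3, "c": 1, "d": 2}
transfer = {("a", "b"): 1, ("a", "c"): 1, ("b", "d"): 1, ("c", "d"): 1}
slack = idle_times(duration, transfer)
print(slack)
```

A task with positive idle time (here `c`) could absorb the overhead of a security service without lengthening the workflow, which is the property the method relies on.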

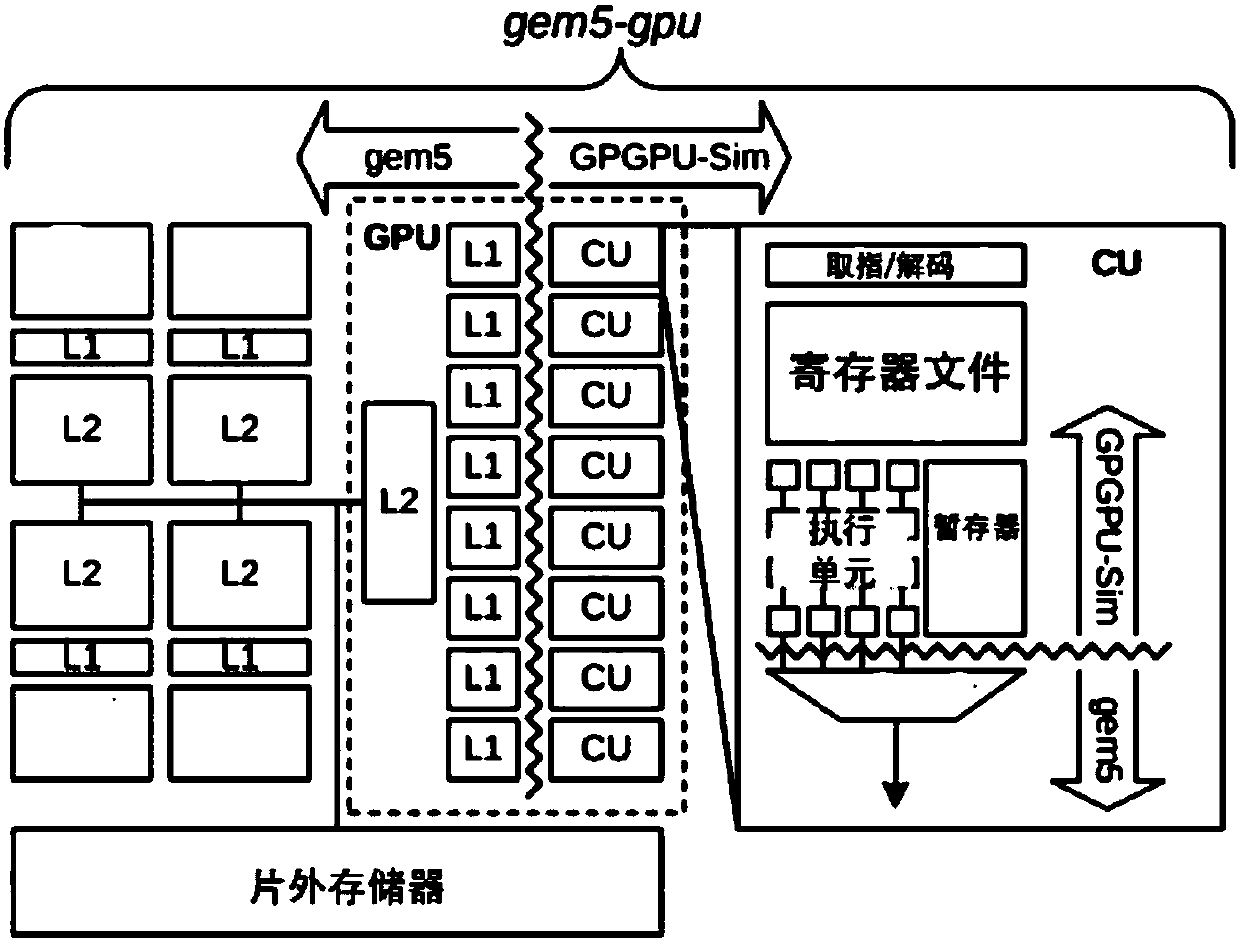

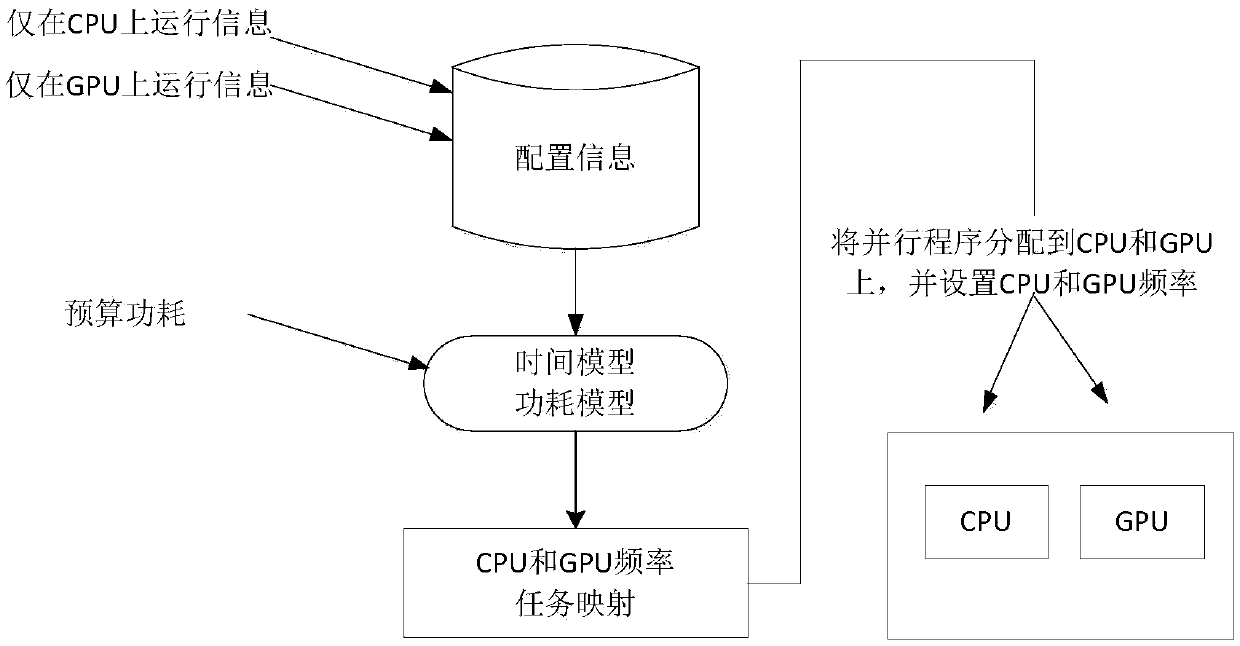

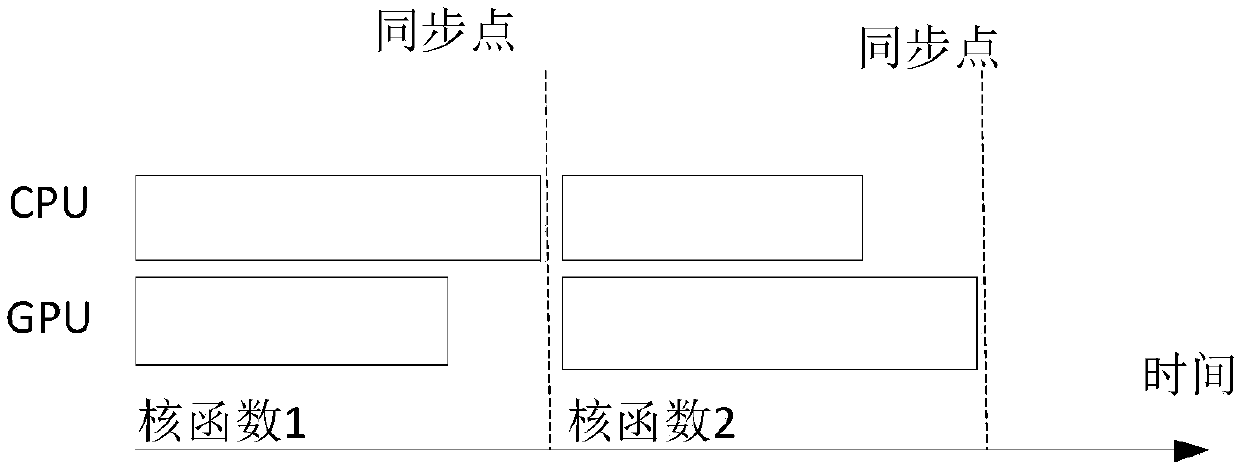

Heterogeneous multi-kernel power capping method through coordination of DVFS and task mapping

Inactive | CN107861606A | Guaranteed performance | Take advantage of computing power | Power supply for data processing | Greedy algorithm | Parallel computing

The invention discloses a heterogeneous multi-kernel power capping method through coordination of DVFS and task mapping. The method comprises the following steps: first, scripts are prepared for the heterogeneous system that measure compute-node, CPU and GPU power consumption once program execution completes; then, selected parallel benchmark programs are modified to obtain the execution time of each kernel function; different frequencies are set for the CPU and the GPU, and the applications are run on the CPU alone and on the GPU alone to collect detailed run information, including the total execution time, the execution time of each kernel function, and the compute-node, CPU and GPU power consumption; based on this run information, a prediction model is designed, comprising an execution-time model and a power-consumption model; finally, the prediction model is used to fill a configuration table with the system power consumption and execution time under different CPU frequencies, GPU frequencies and task distribution schemes, and an improved greedy algorithm finds the best configuration. The method keeps system power consumption within the budget while improving system performance.

Owner:BEIJING UNIV OF TECH
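The final step of the abstract above, filling a configuration table from the prediction models and picking the best entry under a power budget, might look like the sketch below. Two things are assumptions: the toy time and power models, and the use of a plain exhaustive scan in place of the patent's "improved greedy algorithm", whose details the abstract does not give.

```python
from itertools import product


def best_config(cpu_freqs, gpu_freqs, gpu_shares, time_model, power_model, cap):
    """Return (time, power, (cpu_freq, gpu_freq, gpu_share)) of the fastest
    configuration whose predicted power stays within the cap, or None."""
    table = []
    for f_cpu, f_gpu, share in product(cpu_freqs, gpu_freqs, gpu_shares):
        power = power_model(f_cpu, f_gpu, share)
        if power <= cap:
            table.append((time_model(f_cpu, f_gpu, share), power, (f_cpu, f_gpu, share)))
    return min(table) if table else None


# Toy models (hypothetical): CPU and GPU work in parallel on their shares.
def time_model(f_cpu, f_gpu, share):
    return max((1 - share) * 100 / f_cpu, share * 100 / f_gpu)


def power_model(f_cpu, f_gpu, share):
    return 10 * f_cpu + 20 * f_gpu


result = best_config([1.0, 2.0], [1.0, 2.0], [0.5, 0.75], time_model, power_model, cap=50)
print(result)
```

With these toy models the highest frequency pair exceeds the cap, so the scan settles on a lower CPU frequency combined with a larger GPU share.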

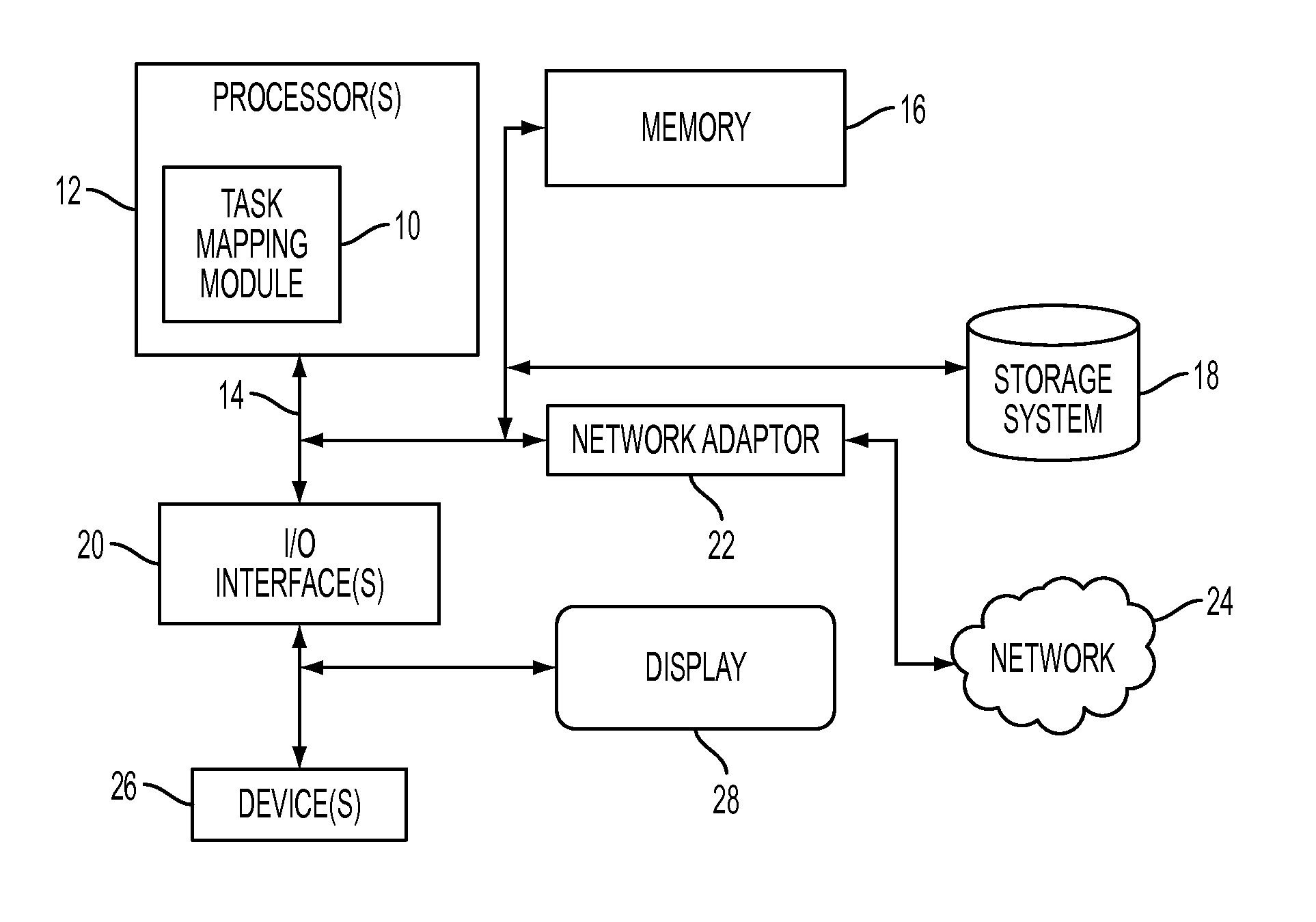

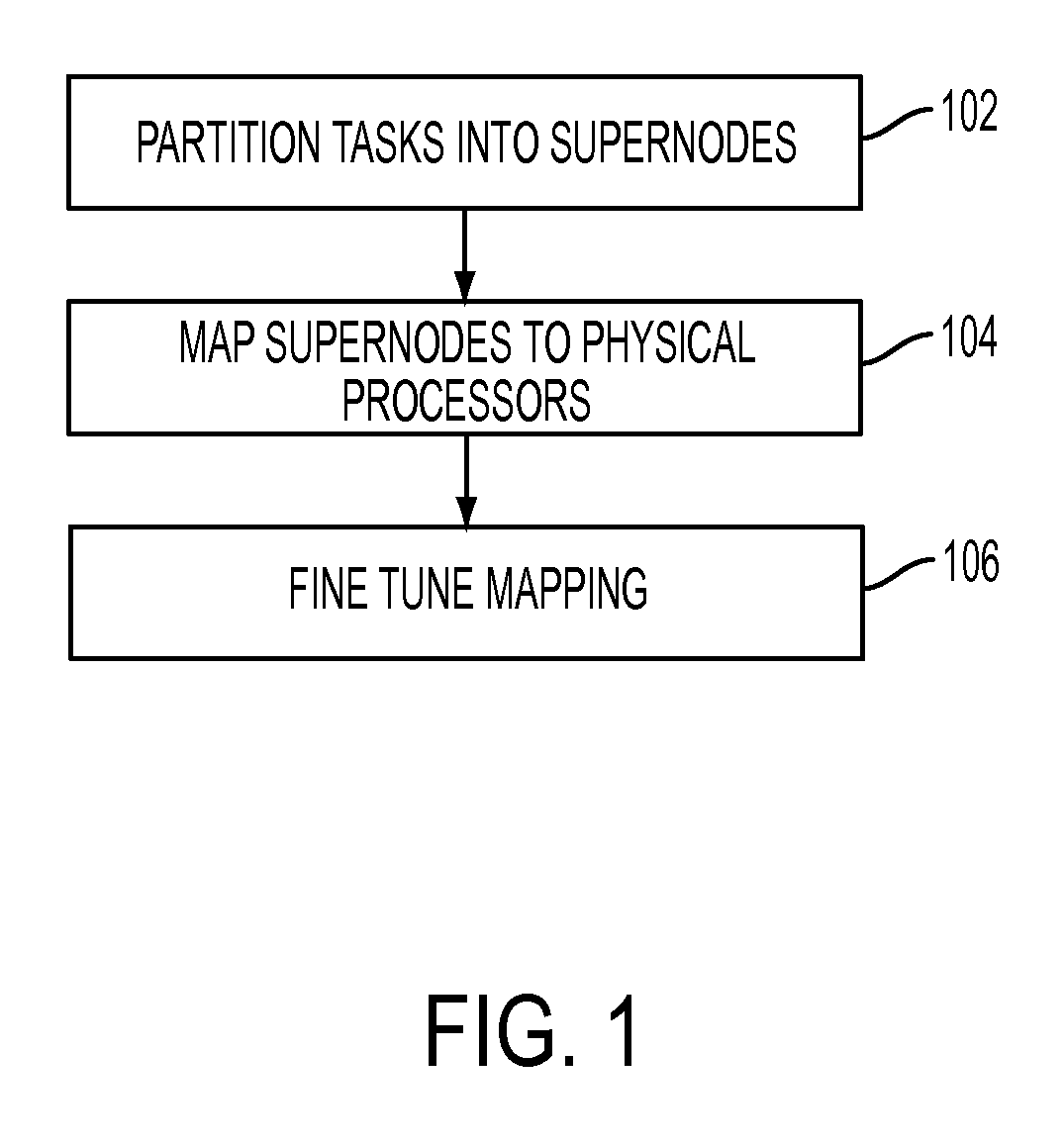

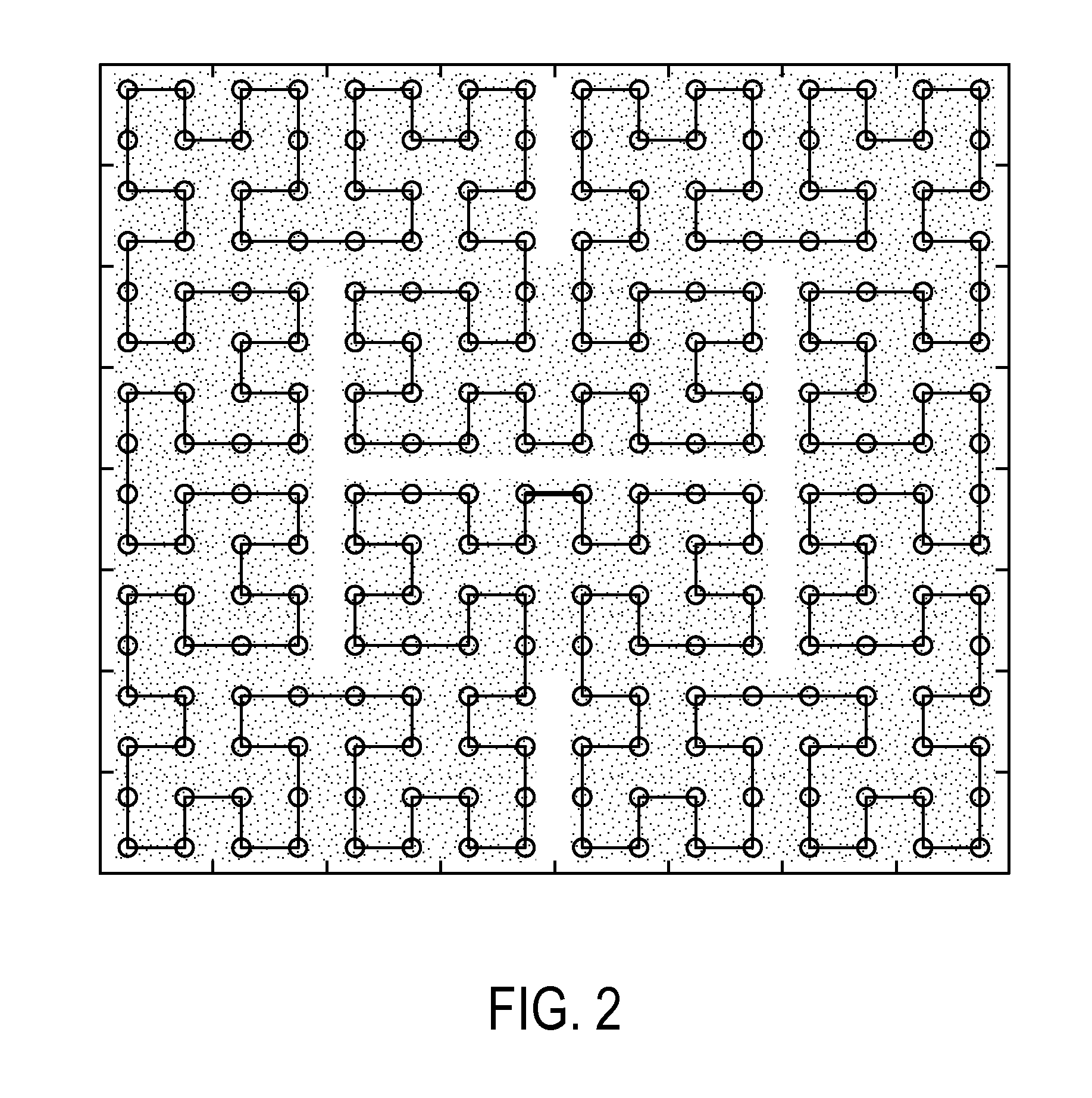

Hierarchical task mapping

Mapping tasks to physical processors in a parallel computing system may include partitioning tasks in the parallel computing system into groups of tasks, the tasks being grouped according to their communication characteristics (e.g., pattern and frequency); mapping, by a processor, the groups of tasks to groups of physical processors, respectively; and fine tuning, by the processor, the mapping within each of the groups.

Owner:IBM CORP

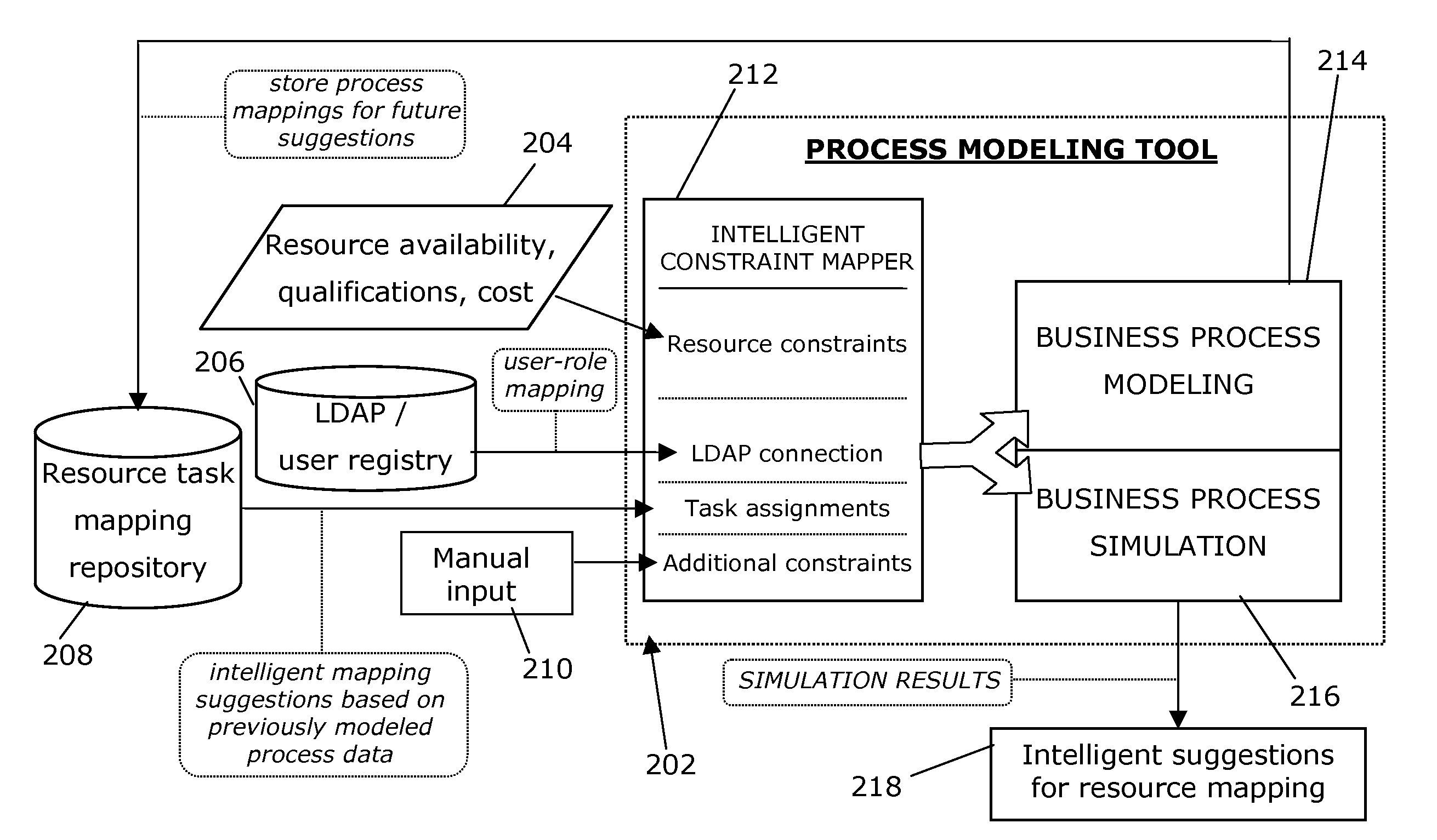

System and Method for Dynamic Optimal Resource Constraint Mapping in Business Process Models

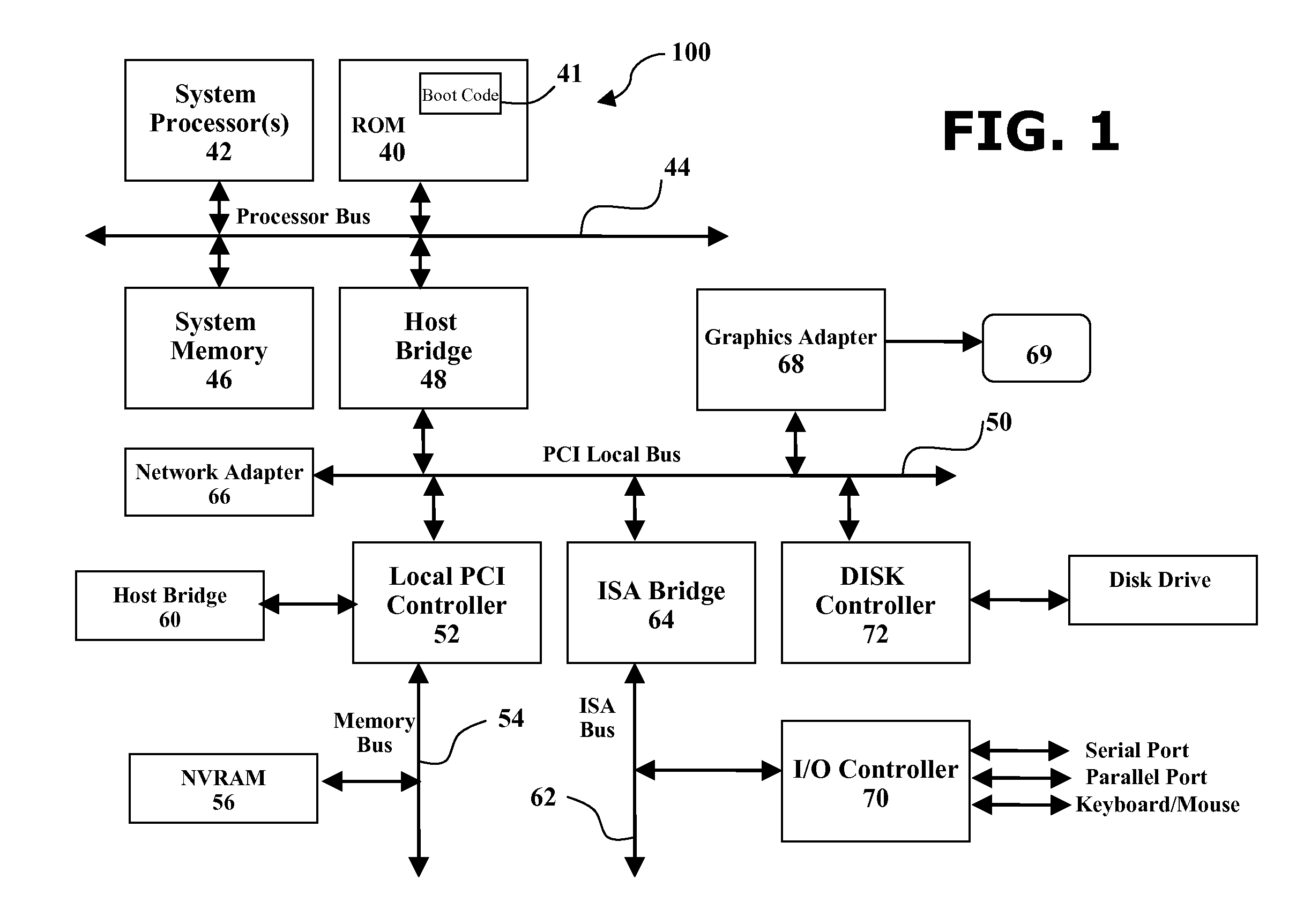

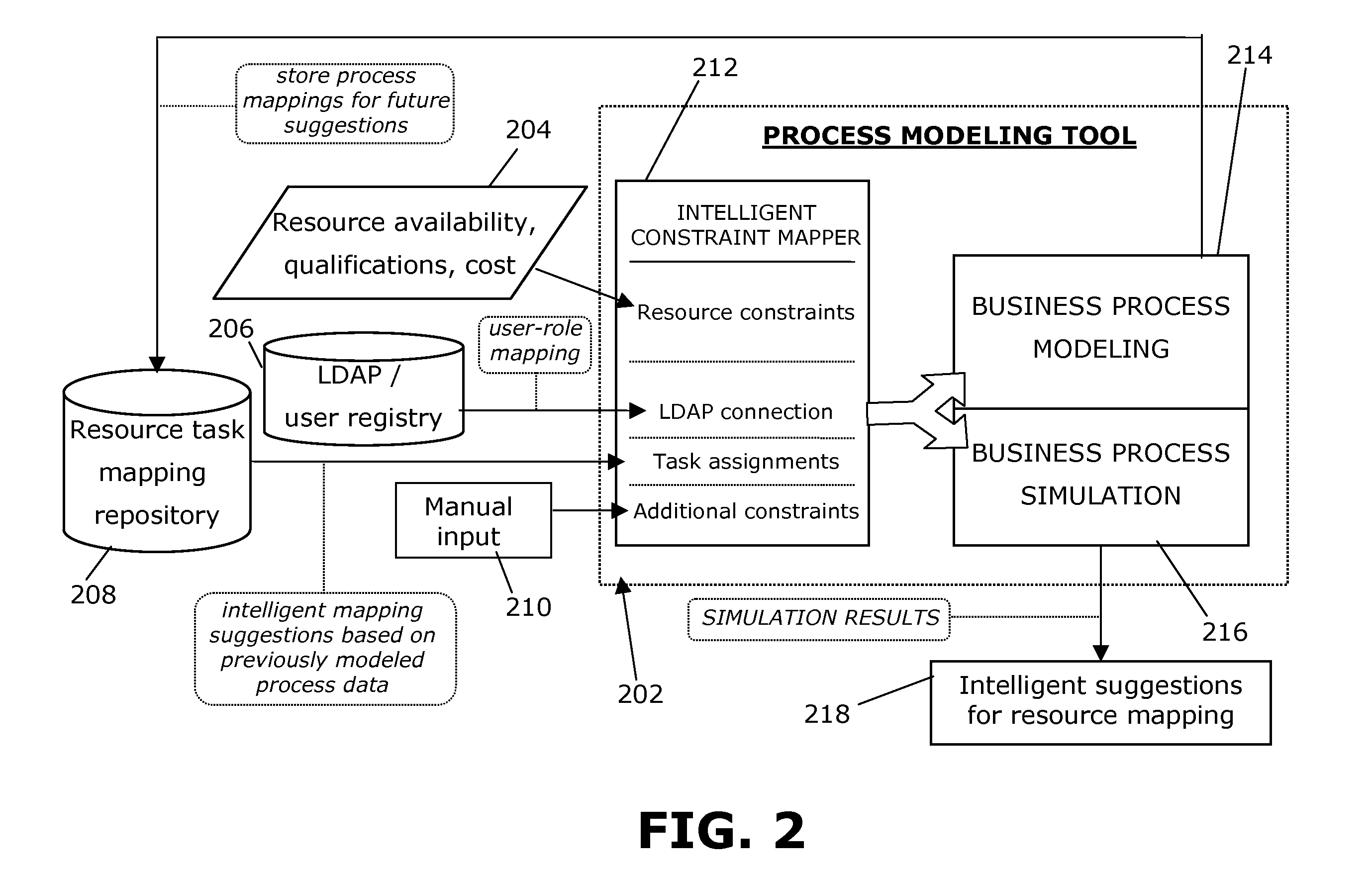

Electronic communication has made it increasingly easy for large companies, such as those with thousands or even tens of thousands of workers and/or consultants, to maintain steady streams of workflow across scattered offices and locations. In such a context, there is broadly contemplated herein business process modelling comprising the receipt of multiple business process models and resource constraints for each of the models. The models and constraints are then consolidated to more fully optimize business process modelling. Additional resource constraints governing goals of the business unit or organization are also received in embodiments of the invention, as well as resource registry information (e.g., LDAP information), in addition to resource information such as resource availability and cost. In embodiments of the invention, historical resource-to-task mapping is also assimilated, as well as performance characteristics of resources. In other embodiments, dynamic changes to resource information are also considered for resource assignment.

Owner:IBM CORP

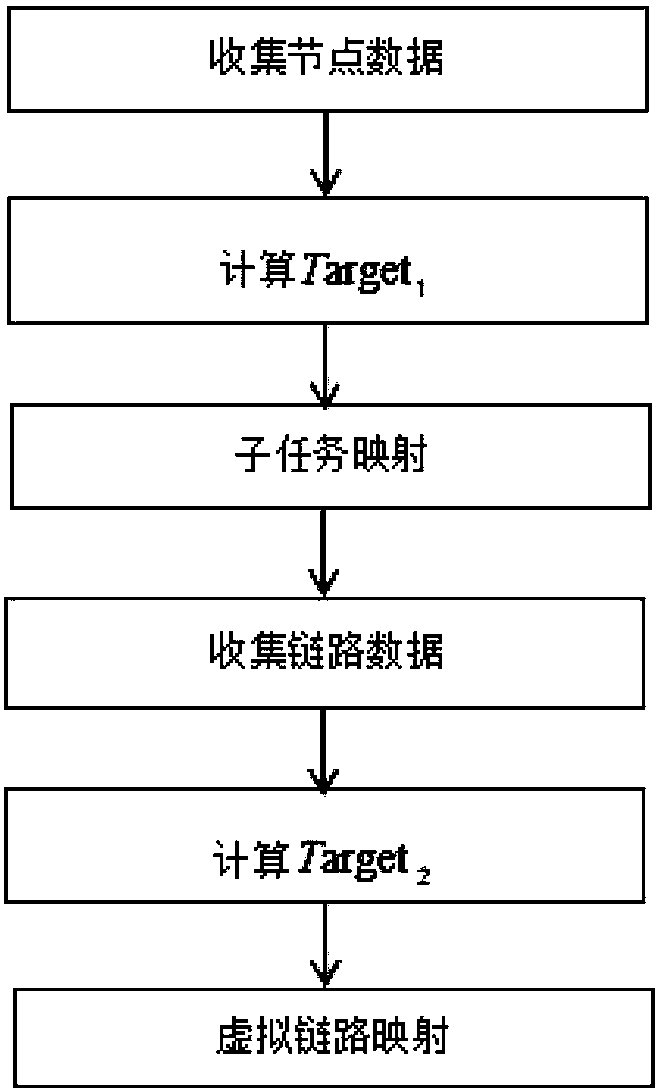

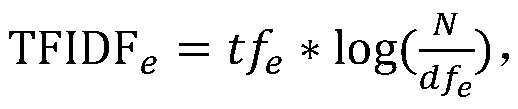

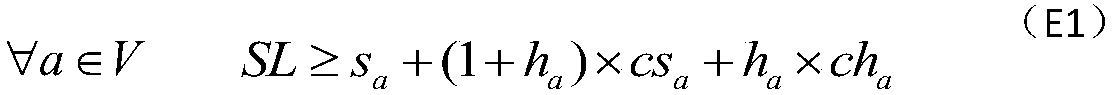

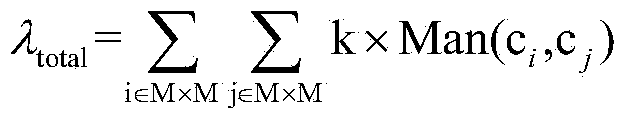

Dynamic resource allocation algorithm of SDN/NFV network based on load balance

Active | CN107770096A | Improve QoS | Load balancing | Program control | Data switching networks | Local optimum | Problem description

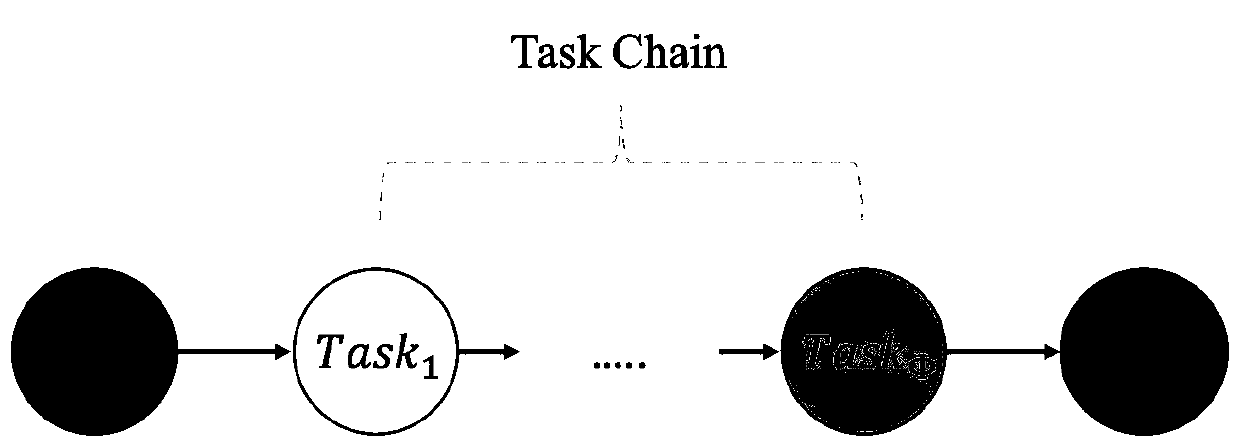

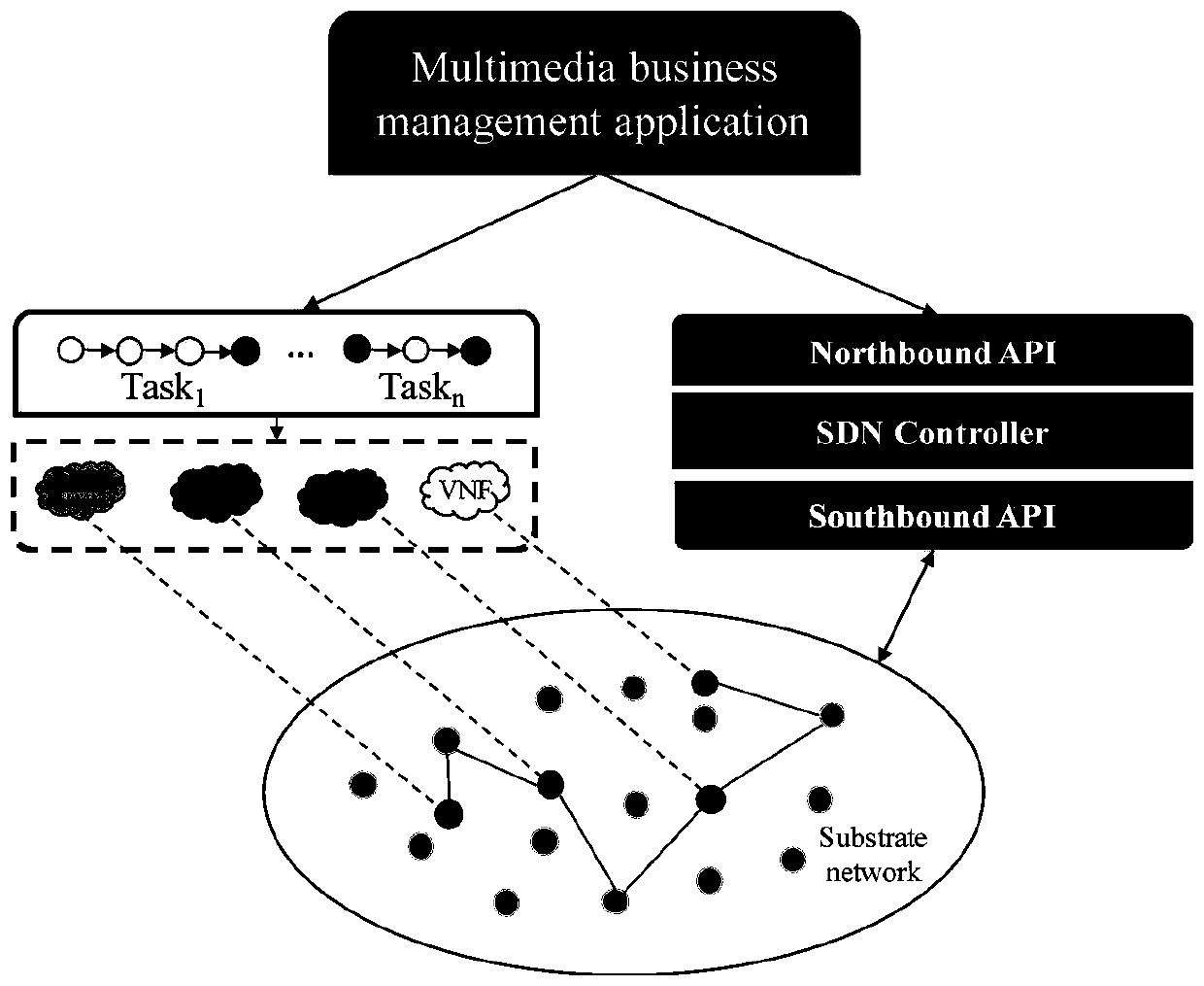

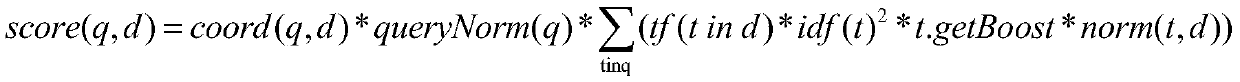

The invention provides a dynamic resource allocation algorithm of an SDN/NFV network based on load balance. For multimedia services with different requirements, virtual link mapping objectives, constraints and physical link load states are associated; sub-tasks are adaptively mapped to network nodes according to the physical link load states; and the used and remaining resources of physical nodes and links are effectively distinguished, so as to balance the load, improve the utilization of network resources and avoid local or merely current optima. The scheme is as follows: sub-task mapping is performed to find, for each sub-task in a service request, a server node satisfying the constraints, wherein the mapping model of the sub-task is described as (the formula is described in the specification); then virtual link mapping is performed to find, for each virtual link in the service request, a physical path satisfying that link's capacity constraints, wherein the dynamic virtual mapping problem is described as (the formula is described in the specification).

Owner:STATE GRID HENAN INFORMATION & TELECOMM CO +1

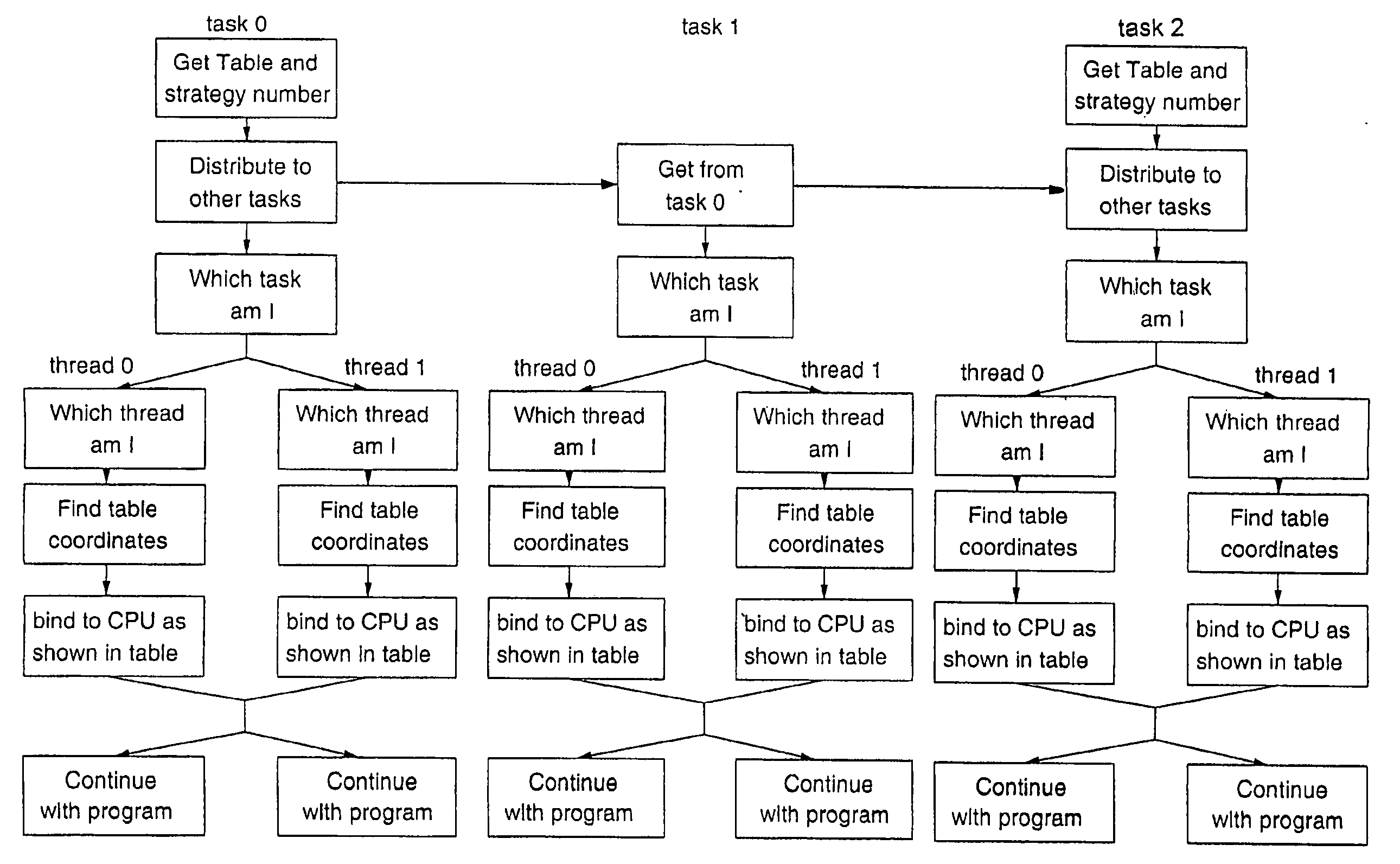

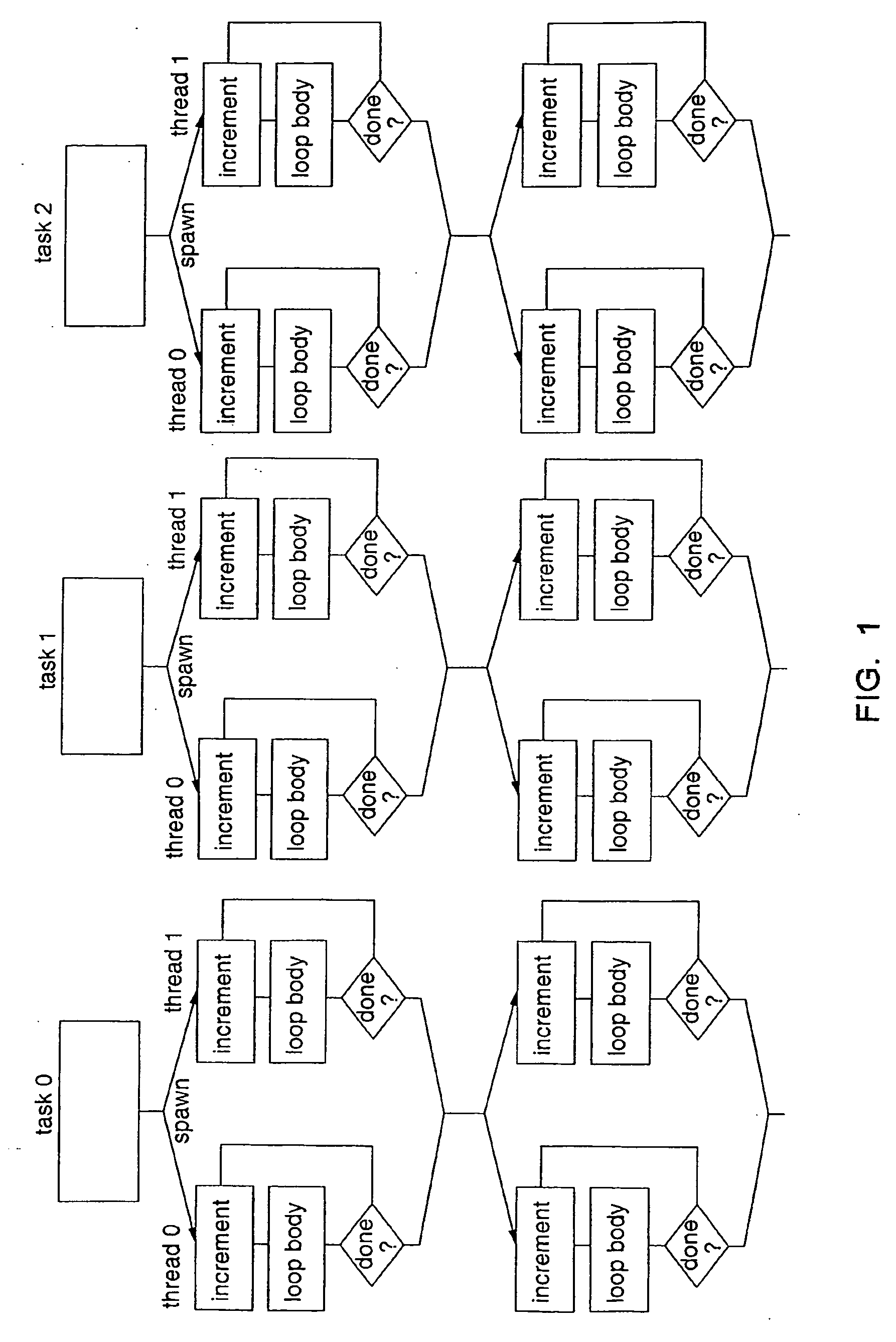

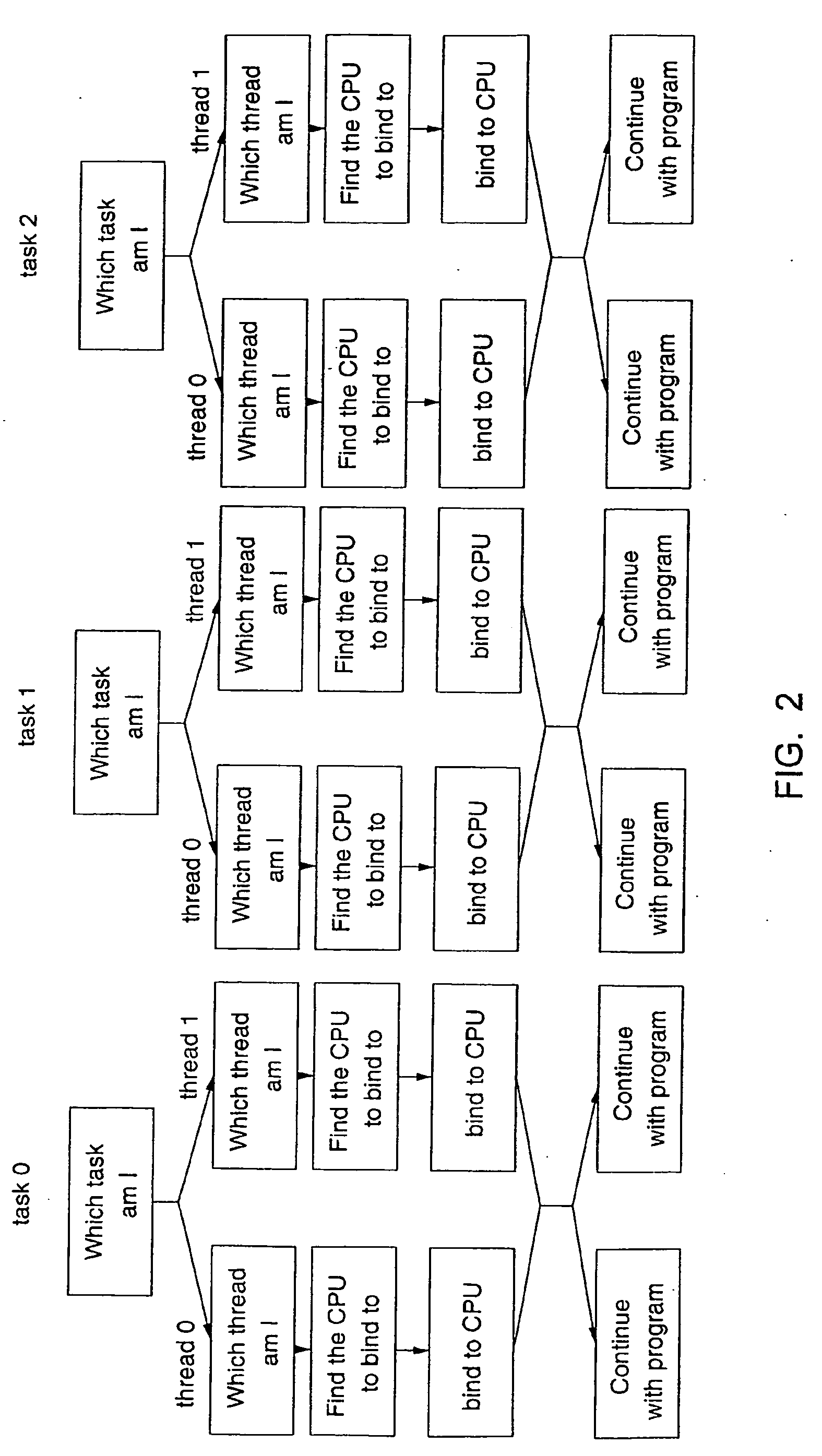

Method and system for mapping threads or tasks to CPUs in a parallel computer

Inactive | US20060080663A1 | Multiprogramming arrangements | Memory systems | Concurrent computation | Parallel computing

The present invention provides a new method and system to provide a flexible and easily reconfigurable way to map threads or tasks of a parallel program to CPUs of a parallel computer. The inventive method replaces the necessity of coding the mapping of threads or tasks to CPUs in the parallel computer by looking up in a mapping description which is preferably presented in the form of at least one table that is provided at runtime. The mapping description specifies various mapping strategies of tasks or threads to CPUs. Selecting a certain mapping strategy, or switching from a specified mapping strategy to a new one, can be done manually or automatically at runtime without any decompilation of the parallel program.

Owner:IBM CORP
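The table lookup described in the abstract above can be pictured as follows. The strategy names, the table contents and the `cpu_for` helper are hypothetical; the point of the sketch is only that the mapping lives in data supplied at runtime, so switching strategy needs no change to the program itself.

```python
# Hypothetical mapping description: one table per strategy,
# indexed by thread id, giving the CPU that thread should run on.
mapping_description = {
    "compact": [0, 0, 1, 1],   # fill each CPU before moving to the next
    "scatter": [0, 1, 2, 3],   # spread threads across CPUs
}


def cpu_for(thread_id, strategy, description=mapping_description):
    """Look up the CPU for a thread under the currently selected strategy."""
    return description[strategy][thread_id]


active_strategy = "compact"
placement_before = [cpu_for(t, active_strategy) for t in range(4)]

# Switching strategy at runtime only changes which table is consulted.
active_strategy = "scatter"
placement_after = [cpu_for(t, active_strategy) for t in range(4)]
print(placement_before, placement_after)
```

In a real system the tables would typically be read from a file or environment setting at startup, and the returned CPU id would be passed to the platform's thread-affinity facility.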

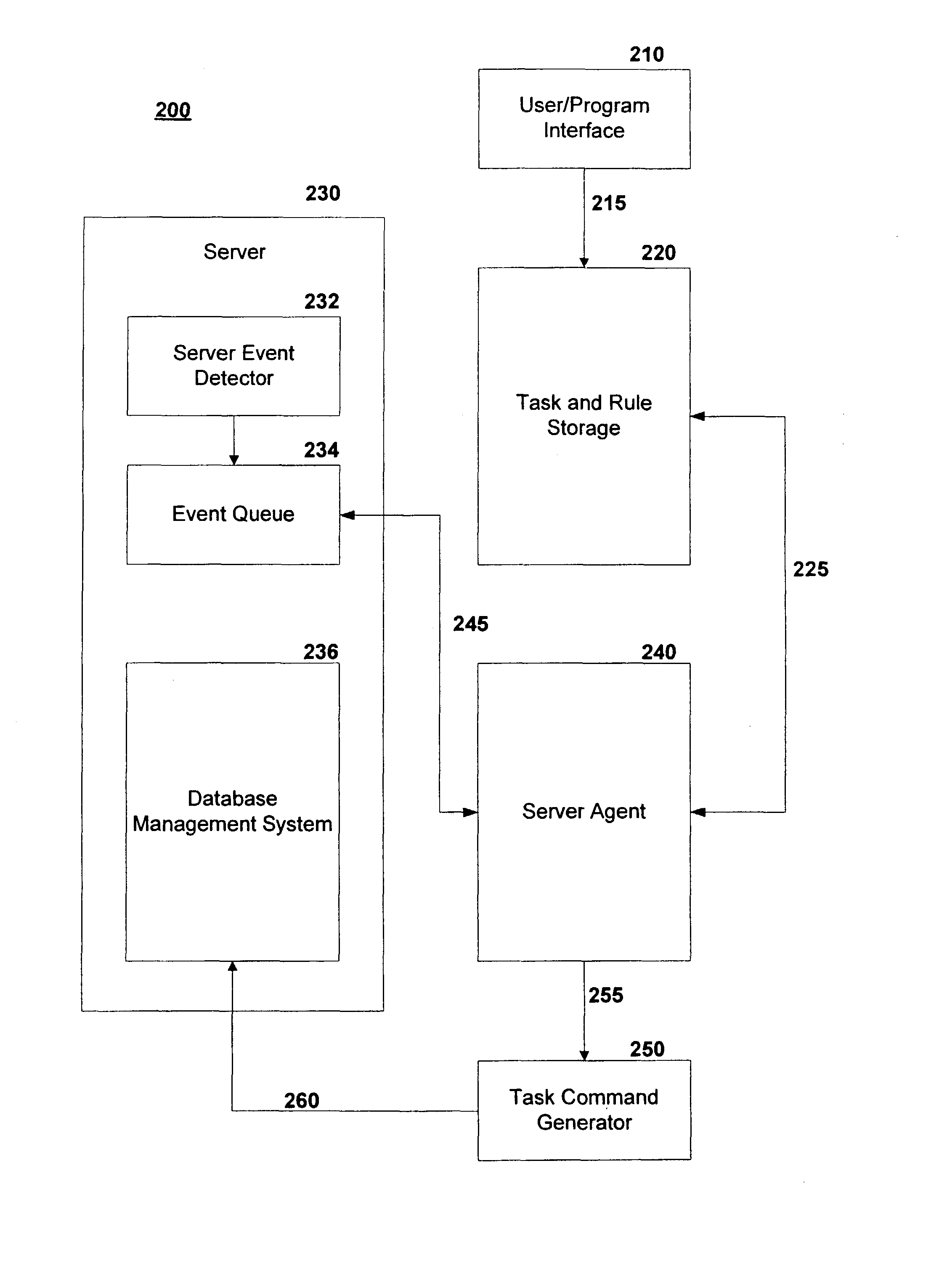

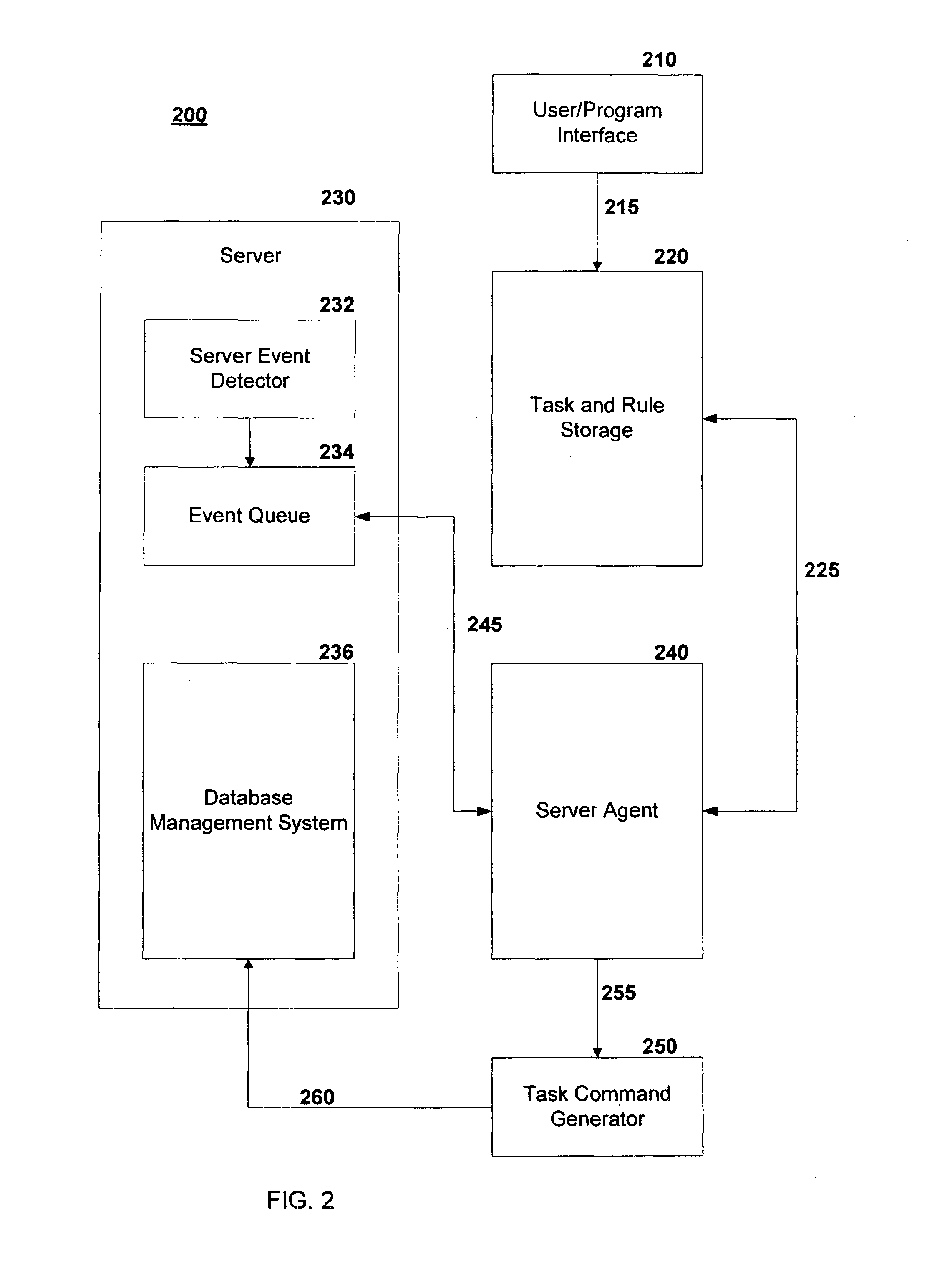

Automatic task generator method and system

Active | US7912820B2 | Digital data information retrieval | Digital data processing details | Task mapping | Computerized system

An automatic task generation mechanism provides generation of tasks such as maintenance tasks for a computer system. A set of tasks is set up along with rules for performing the tasks. The rules may be associated with metadata that allow the tasks to be mapped to the tasks. Events may be detected that are related to database operations such as a create, modify, delete or add command. Upon event detection by the system, the system may store the event and associated metadata. Another process may query the event and metadata storage and compare those items to the rules previously set up. If there is a match between the rules and the event, one or more tasks may be established which correspond to an action that is desired to be taken. The task may then be inserted into a computer system for subsequent execution.

Owner:SERVICENOW INC
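A minimal sketch of the event-to-task matching described in the abstract above: stored events carry metadata, rules describe which metadata they apply to, and a match produces a task. The rule format, field names and task names here are invented for illustration and are not taken from the patent.

```python
# Hypothetical rules: each rule lists the metadata an event must carry
# and the task to generate when it matches.
rules = [
    {"match": {"table": "server", "op": "create"}, "task": "schedule initial backup"},
    {"match": {"table": "server", "op": "delete"}, "task": "release licenses"},
]


def generate_tasks(events, rules):
    """Compare stored events against the rules and emit one task per match."""
    tasks = []
    for event in events:
        for rule in rules:
            if all(event.get(key) == value for key, value in rule["match"].items()):
                tasks.append({"task": rule["task"], "event": event})
    return tasks


# Hypothetical event log produced by database operations.
events = [
    {"table": "server", "op": "create", "id": 17},
    {"table": "user", "op": "modify", "id": 4},
]
generated = generate_tasks(events, rules)
print(generated)
```

Only the first event matches a rule, so a single task is produced; the generated tasks would then be queued for later execution.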

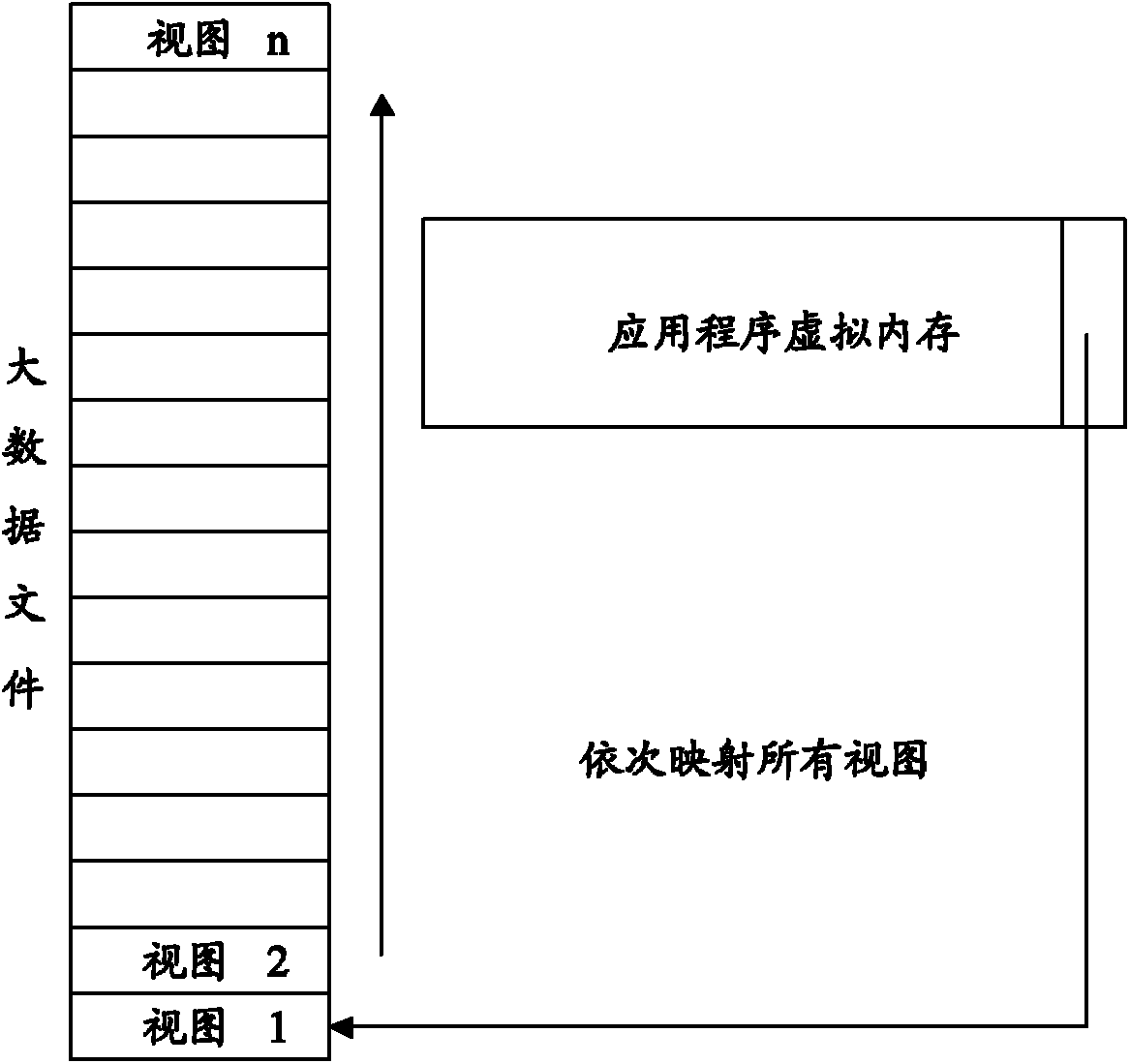

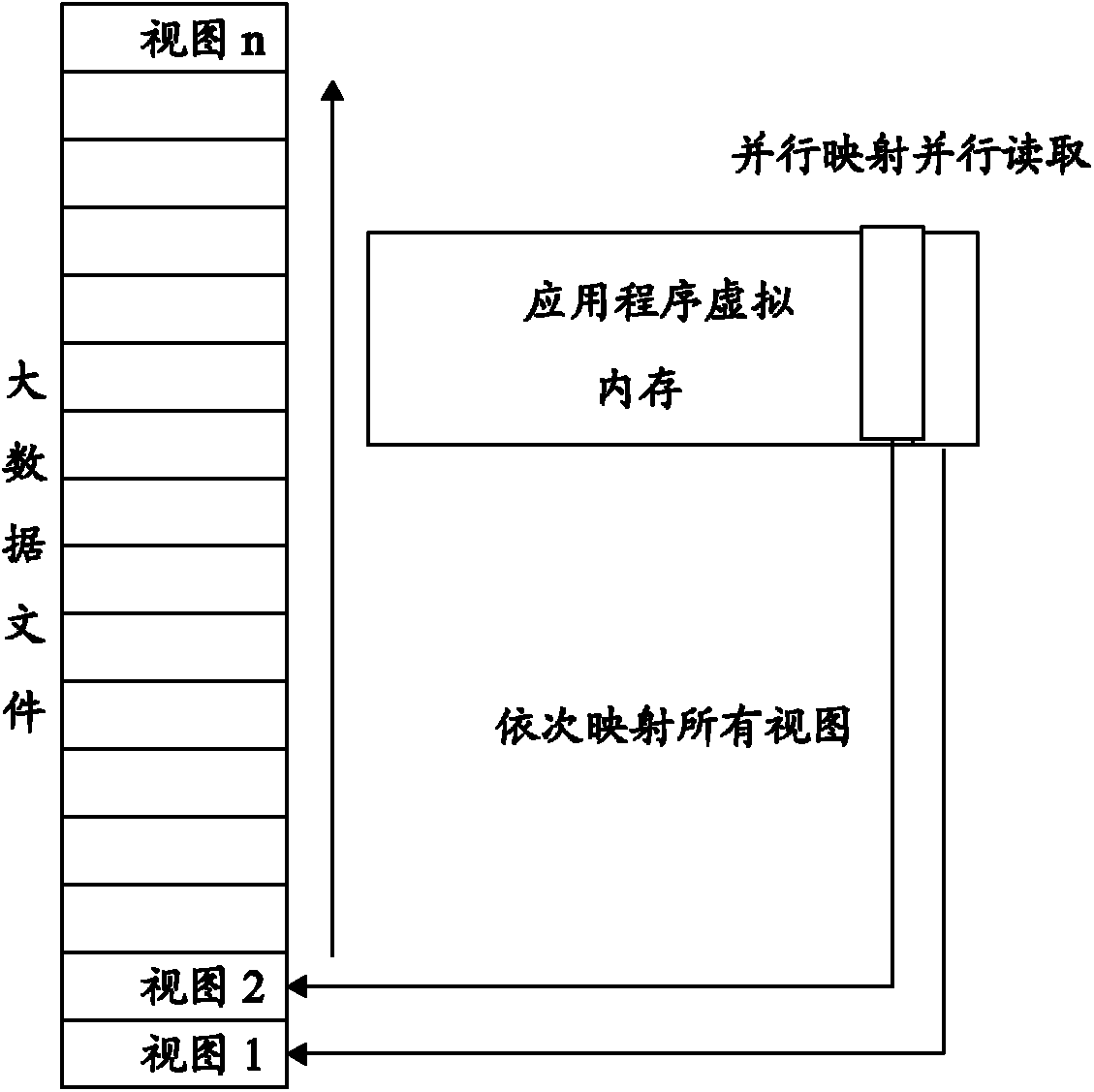

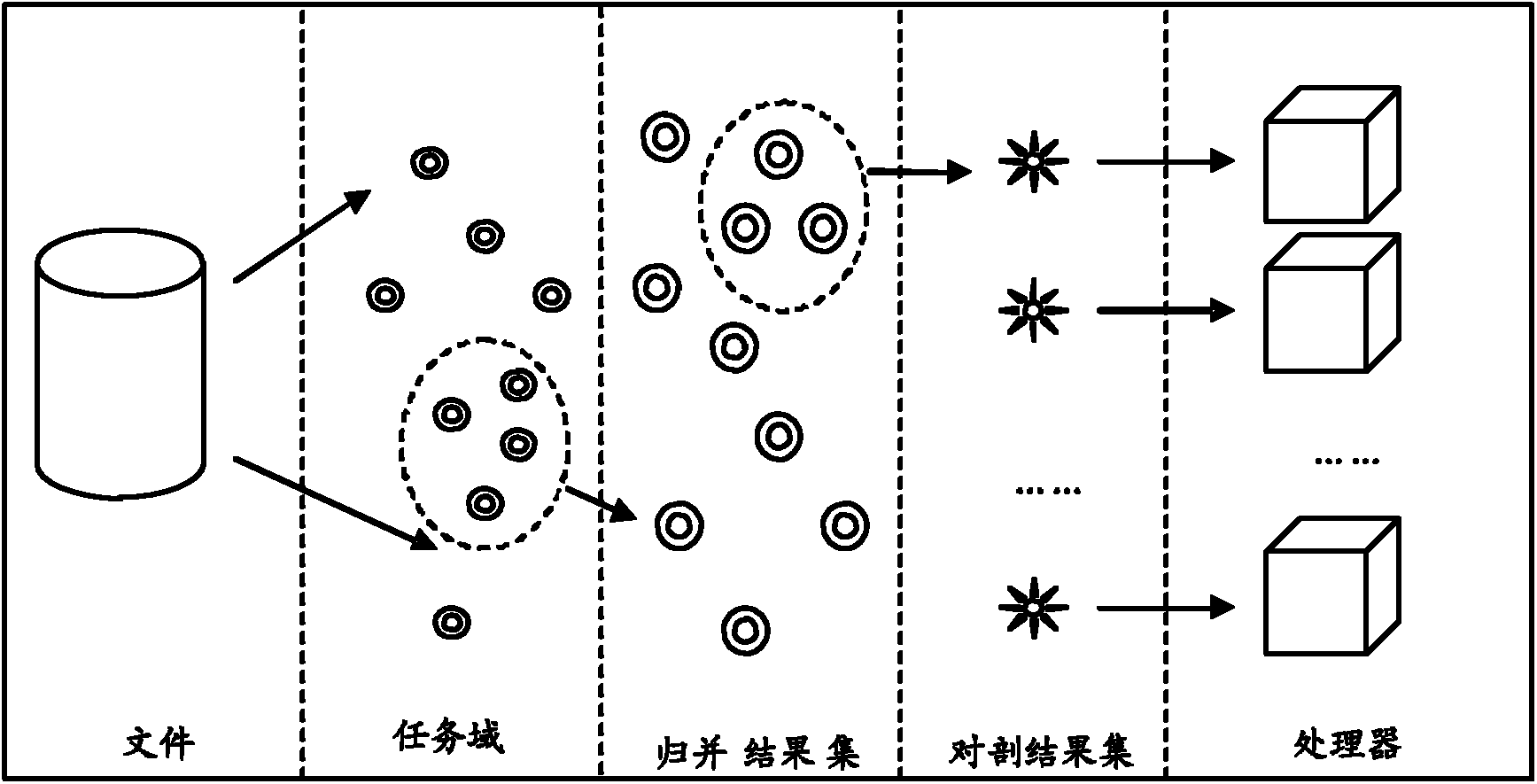

Method for rapidly extracting massive data files in parallel based on memory mapping

Inactive | CN102231121A | Improve efficiency | Break through the bottleneck of processing speed | Multiprogramming arrangements | Special data processing applications | Internal memory | Task mapping

The invention discloses a method for rapidly extracting massive data files in parallel based on memory mapping. The method comprises the following steps: generating a task domain, which is formed of task blocks, the task blocks being the elements of the task domain; generating a task pool, by merging elements of the task domain into sub-task domains according to a rule of low communication cost, taking the resulting set of elements as the task pool for task scheduling, and extracting tasks for execution by a processor according to the scheduling selection; scheduling the tasks, by determining the scheduling granularity from the number of remaining tasks, extracting tasks that meet the requirements from the task pool, and preparing them for mapping; and mapping to a processor, by mapping the extracted tasks to a currently idle processor for execution. The method exploits the advantages of multi-core processors and makes memory mapping of files more efficient; it is applicable to reading a single massive file smaller than 4 GB, effectively increases the reading speed of such files, and increases the I/O (input/output) throughput of disk files.

Owner:NORTH CHINA UNIVERSITY OF TECHNOLOGY
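To make the idea in the abstract above concrete, the sketch below memory-maps a file, splits it into fixed-size task blocks and lets a pool of workers process the blocks in parallel. It is a simplification under stated assumptions: block size is fixed rather than chosen from the number of remaining tasks, there is no merging by communication cost, and the per-block work (counting newlines) is just a placeholder.

```python
import mmap
import os
import tempfile
from concurrent.futures import ThreadPoolExecutor


def count_newlines(path, block_size=1 << 16, workers=4):
    """Count newline bytes in a file by scanning memory-mapped blocks in parallel."""
    size = os.path.getsize(path)
    if size == 0:
        return 0  # an empty file cannot be memory-mapped
    # Task blocks: (start, end) byte ranges covering the whole file.
    blocks = [(start, min(start + block_size, size)) for start in range(0, size, block_size)]
    with open(path, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        with ThreadPoolExecutor(max_workers=workers) as pool:
            # Idle workers pick up the next block as soon as they finish one.
            return sum(pool.map(lambda b: mm[b[0]:b[1]].count(b"\n"), blocks))


# Small demonstration on a temporary file.
with tempfile.NamedTemporaryFile("wb", delete=False) as tmp:
    tmp.write(b"record\n" * 1000)
total = count_newlines(tmp.name, block_size=100)
os.remove(tmp.name)
print(total)
```

Threads are used here only to keep the example short; CPU-bound per-block work in Python would normally use processes instead.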

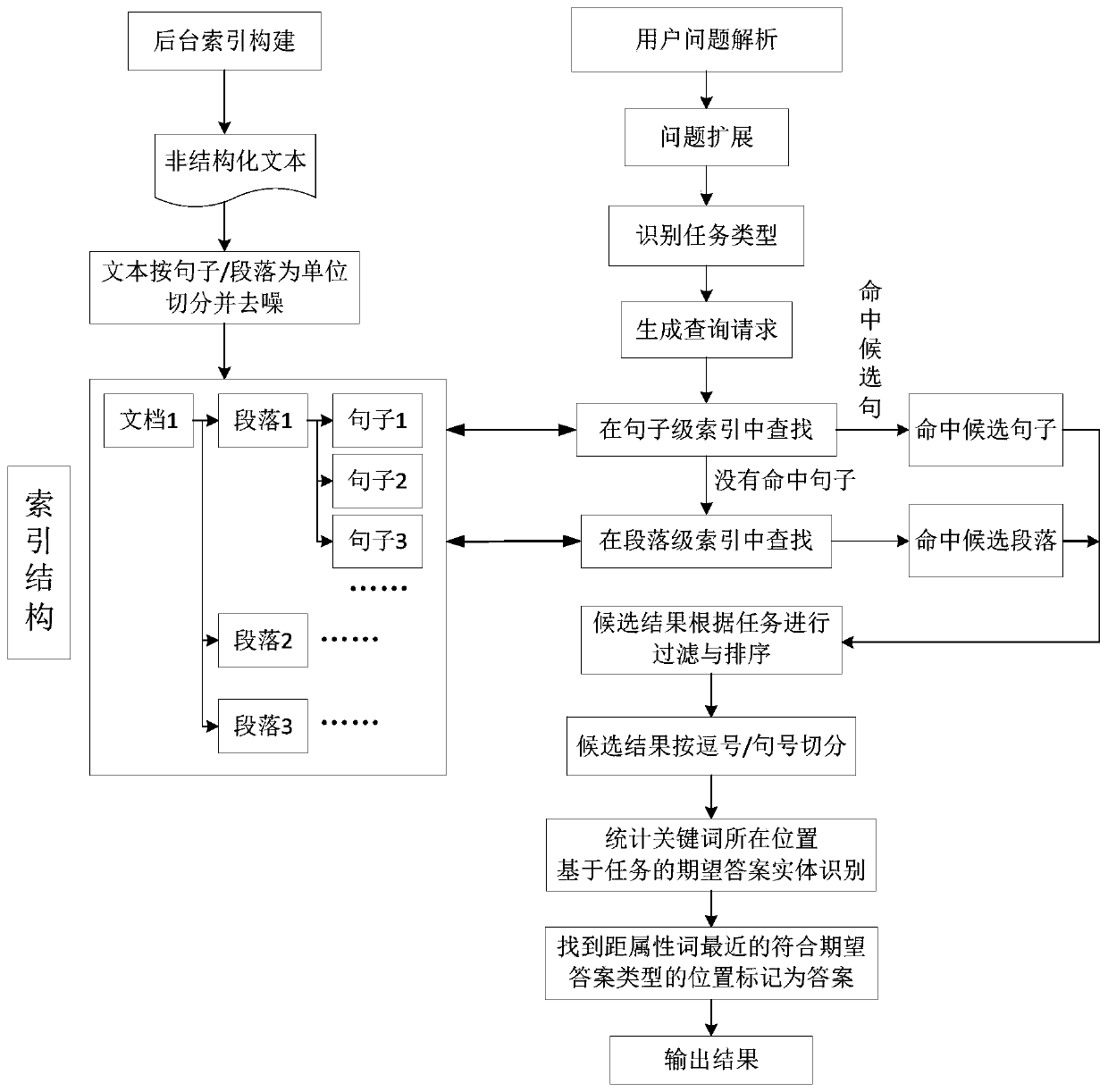

Task-oriented unstructured information intelligent question and answer system construction method

Active | CN109800284A | Work less | Return search results exact | Text database indexing | Special data processing applications | Named-entity recognition | Task mapping

The invention discloses a task-oriented unstructured information intelligent question and answer system construction method. A user request is processed with natural language processing: the keywords and sentence pattern of the request statement are identified, the task type to which the request belongs is matched, a query expression is generated, and different search and sorting conditions are formulated for different task types. Three expected answer types mapped from the tasks are defined: a weather type, a time type and a digital type; questions of these three types can be answered directly and accurately. The system queries sentence and paragraph indexes for candidate results that meet the conditions. Using named entity recognition results, the candidate sentences or paragraphs are screened for words that match the expected answer type of the task; results containing phrases of the target type are marked as target answers and highlighted, while results that do not contain such phrases are ranked lower. Finally, the answer is output.

Owner:THE 28TH RES INST OF CHINA ELECTRONICS TECH GROUP CORP

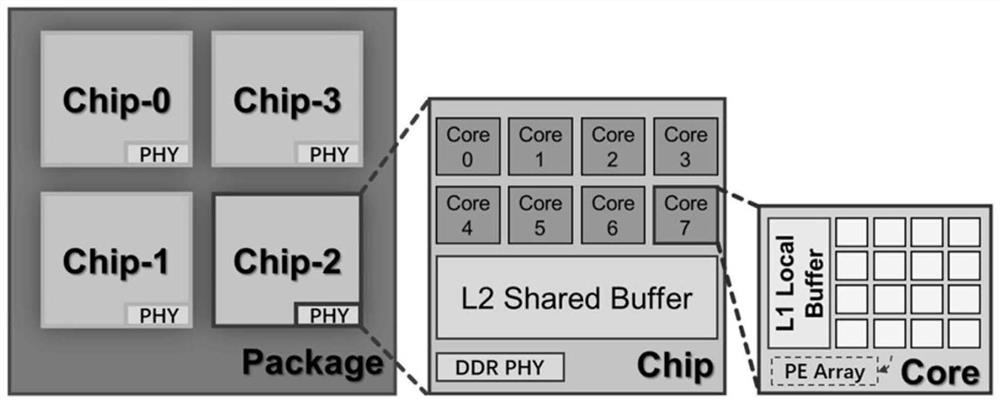

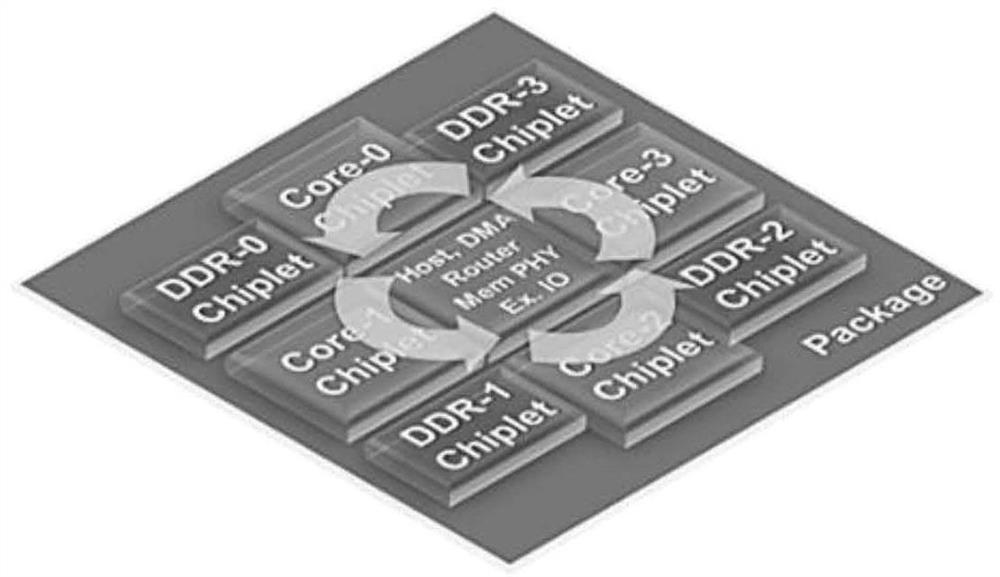

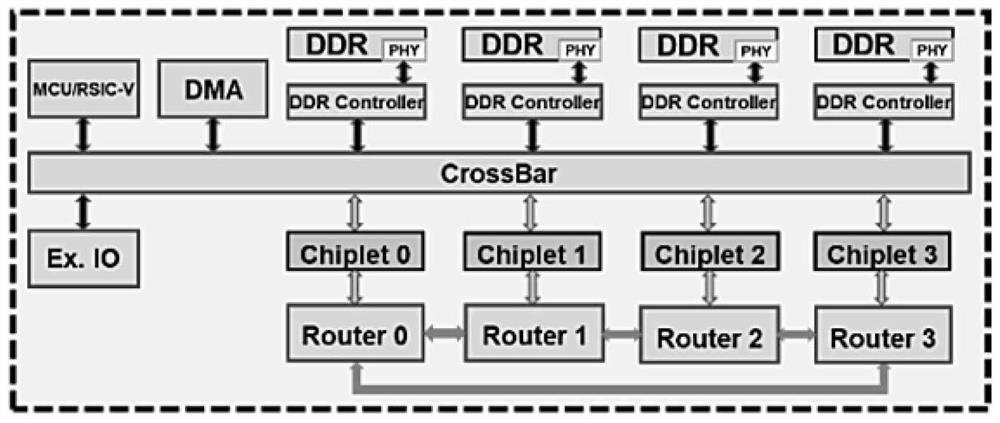

Multi-core package-level system based on core particle framework and task mapping method thereof for core particles

Pending | CN112149369A | Reduce occupancy | Improve processing efficiency | Dataflow computers | CAD circuit design | Computational science | Dram memory

The invention discloses a multi-core package-level system based on a core particle (chiplet) framework and a task mapping method for core particles. The system comprises a core unit, a core particle unit and a packaging unit. The core unit comprises a plurality of parallel processing units and an L1 local buffer unit shared by those processing units; the L1 local buffer unit is only used for storing weight data. The core particle unit comprises a plurality of parallel core units and an L2 shared buffer unit shared by those core units; the L2 shared buffer unit is only used for storing activation data. The packaging unit comprises a plurality of parallel, interconnected core particle units and a DRAM memory shared by those core particle units. The method searches over schemes covering computation mapping onto core particles, computation mapping between core particles, the data distribution template for PE array computation mapping within each core particle, and the scale allocation of each layer's computation, so as to achieve less inter-chip communication, less on-chip storage and fewer DRAM accesses.

Owner:INST FOR INTERDISCIPLINARY INFORMATION CORE TECH XIAN CO LTD

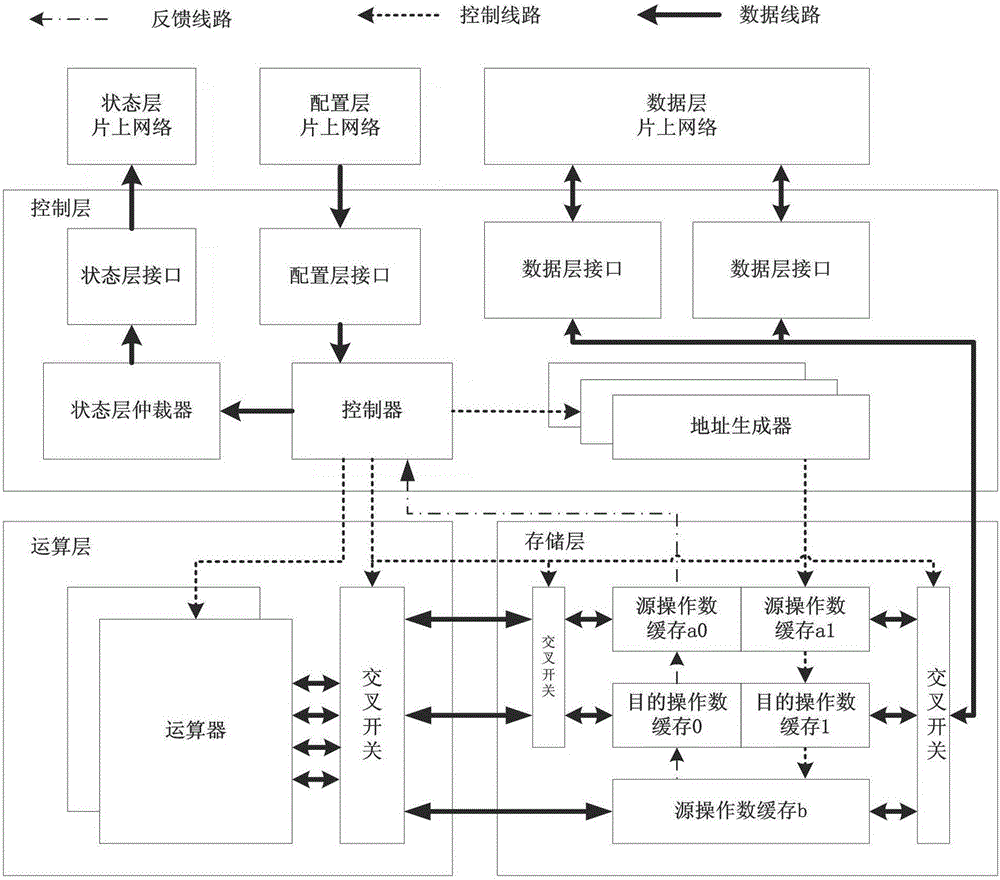

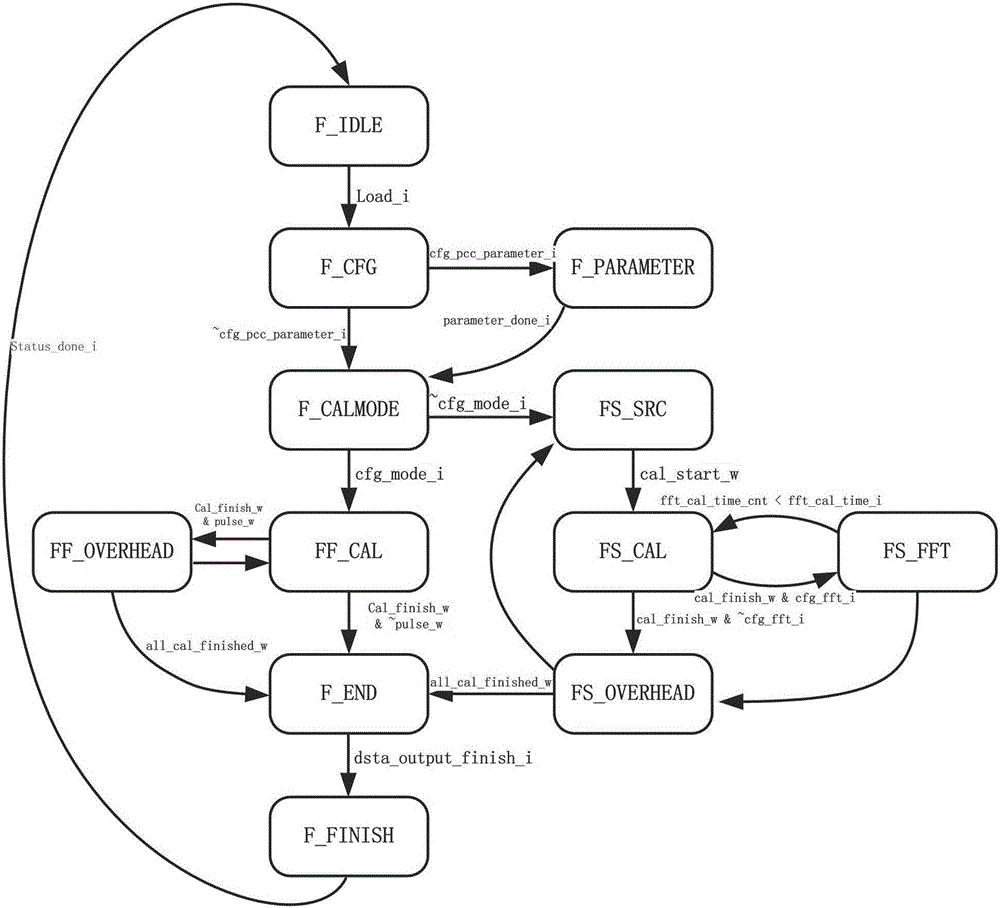

Reconfigurable arithmetic unit supporting multiple working modes and working modes thereof

Active | CN106155814A | Improve work efficiency | Easy to integrate | Resource allocation | Digital computer details | Control layer | Layer interface

The invention discloses a reconfigurable unit supporting multiple working modes and the working modes thereof. The reconfigurable unit is characterized by comprising a control layer, an arithmetic layer and a storage layer; the control layer comprises a state layer interface, a configuration layer interface, a data layer interface, an address generator and a controller; the arithmetic layer comprises an arithmetic device; the storage layer comprises a source operand cache unit and a destination operand cache unit. The working modes of the reconfigurable arithmetic unit comprise the storage arithmetic mode, the pulse arithmetic mode and the stream arithmetic mode, and higher flexibility is provided for algorithm mapping of a computing system. When task mapping is carried out in the computing system, the specific working modes of the reconfigurable arithmetic unit can be selected according to specific features and the bottleneck of the algorithm to be mapped and in combination with specific conditions of network communication and storage bandwidth in the computing system, therefore, the arithmetic throughput capacity and network communication and storage access pressure are considered, and the working efficiency of the whole system is improved.

Owner:HEFEI UNIV OF TECH

Computer aided query to task mapping

Inactive | US7231375B2 | Huge task | Metadata text retrieval | Digital data processing details | Graphics | Graphical user interface

An annotating system aids a user in mapping a large number of queries to tasks to obtain training data for training a search component. The annotating system includes a query log containing a large quantity of queries which have previously been submitted to a search engine. A task list containing a plurality of possible tasks is stored. A machine learning component processes the query log data and the task list data. For each of a plurality of query entries corresponding to the query log, the machine learning component suggests a best guess task for potential query-to-task mapping as a function of the training data. A graphical user interface generating component is configured to display the plurality of query entries in the query log in a manner which associates each of the displayed plurality of query entries with its corresponding suggested best guess task.

Owner:MICROSOFT TECH LICENSING LLC

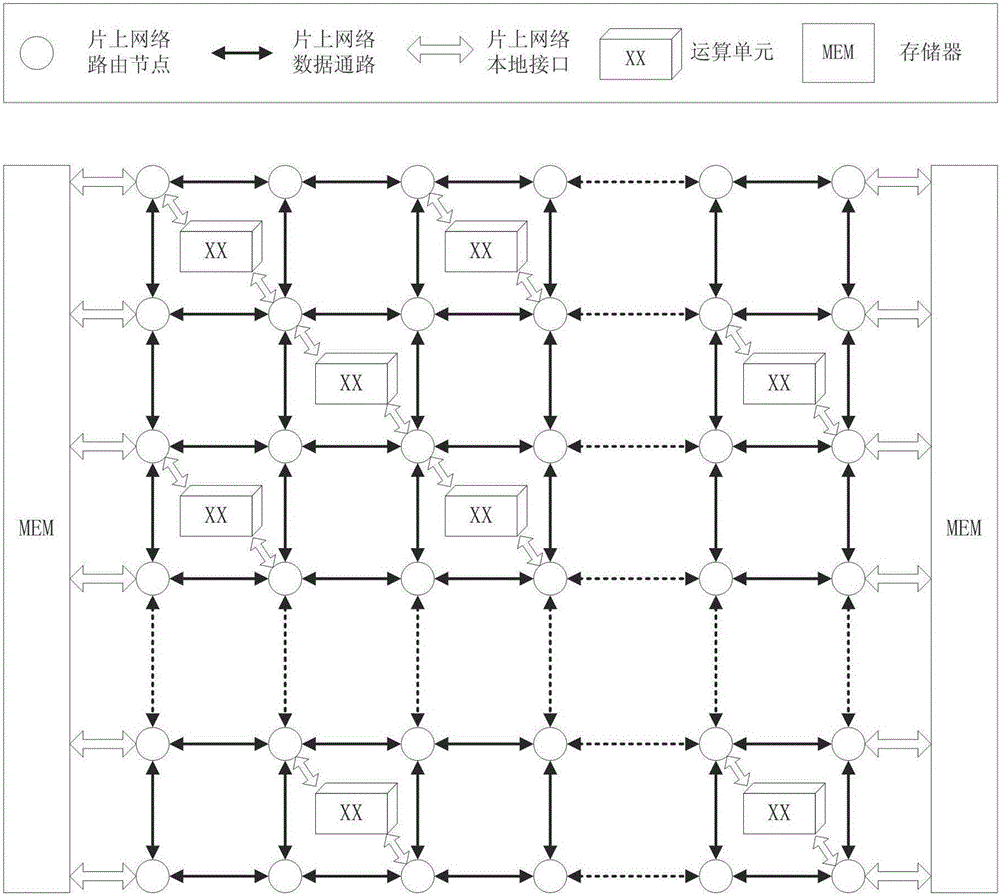

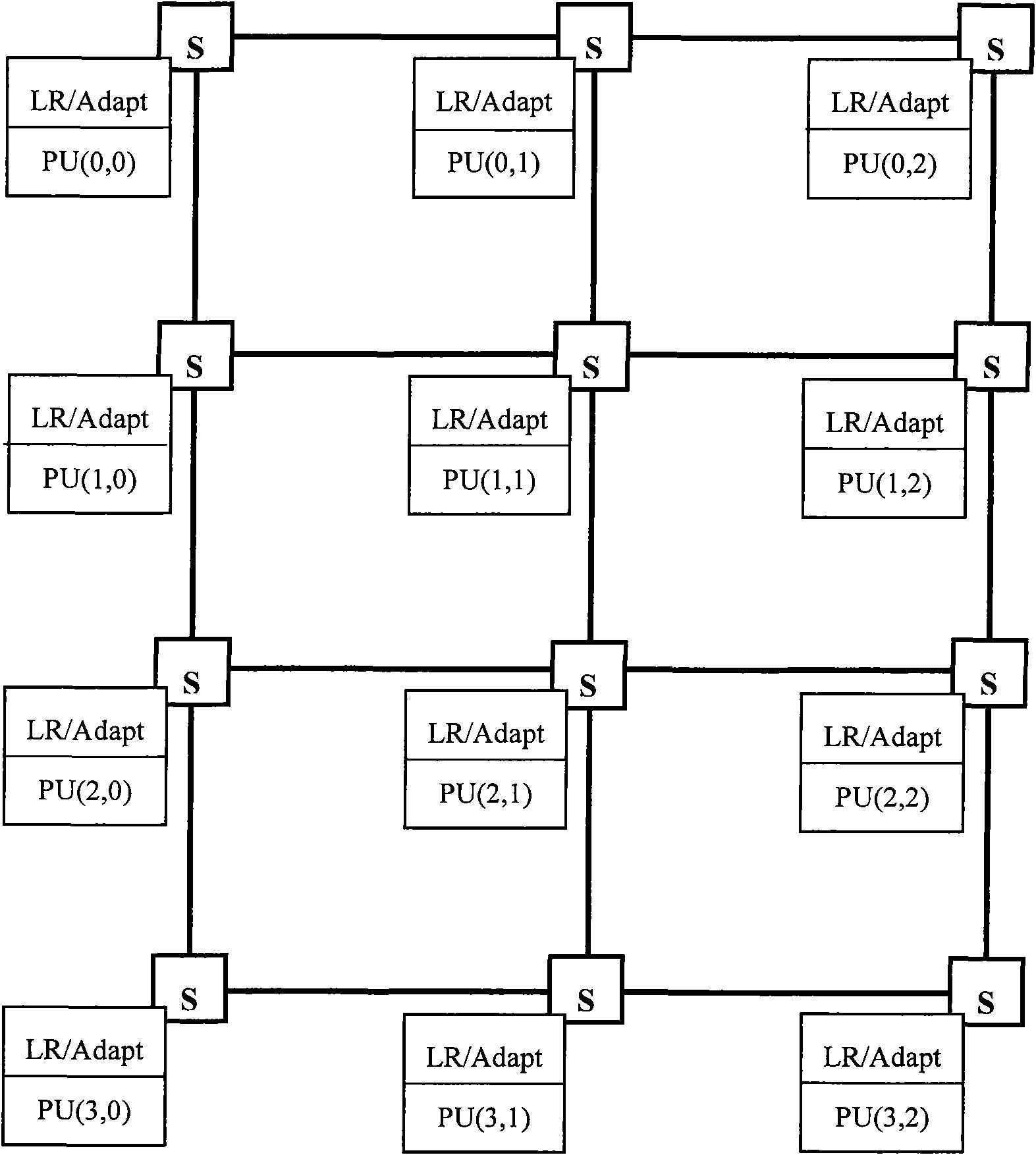

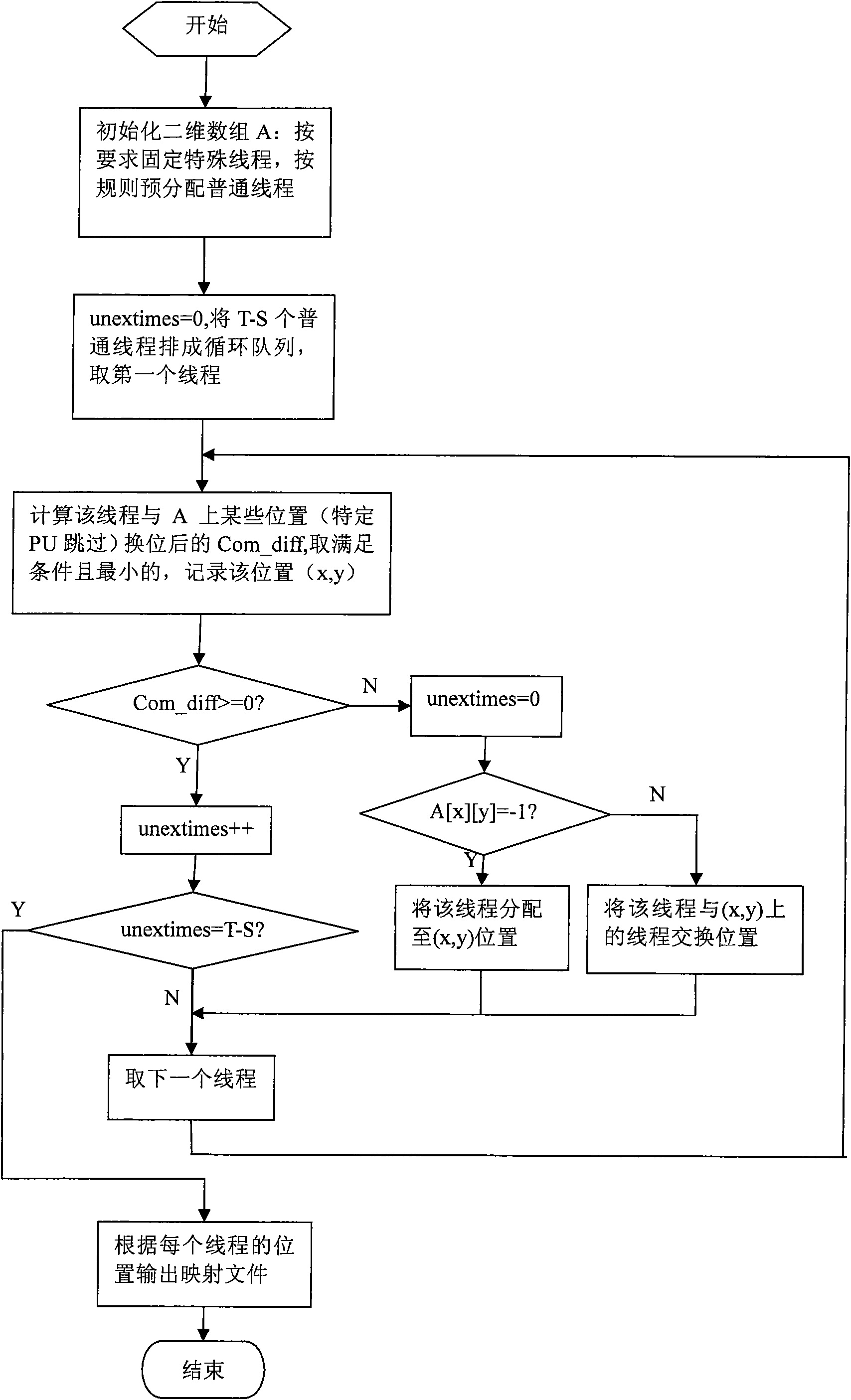

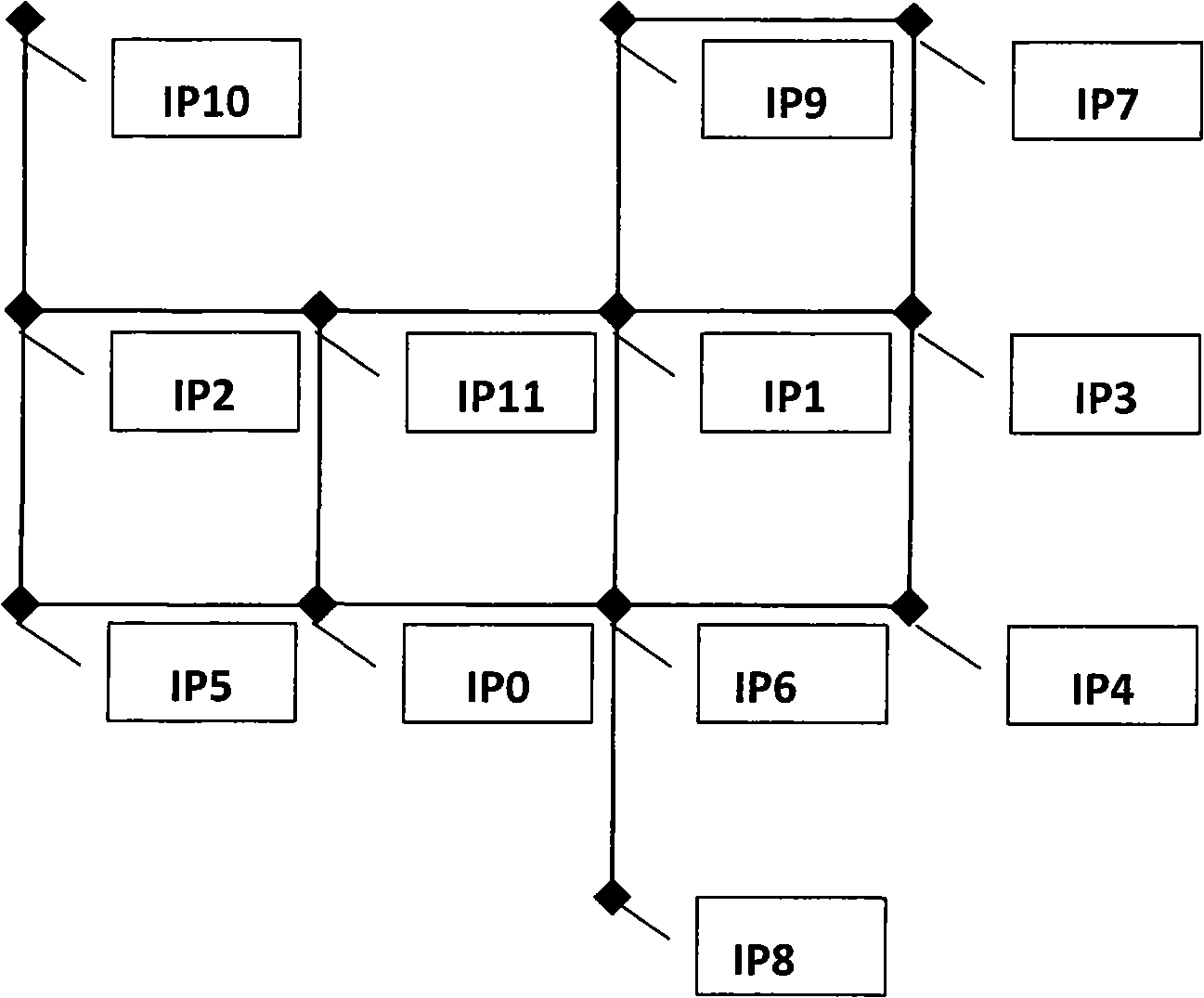

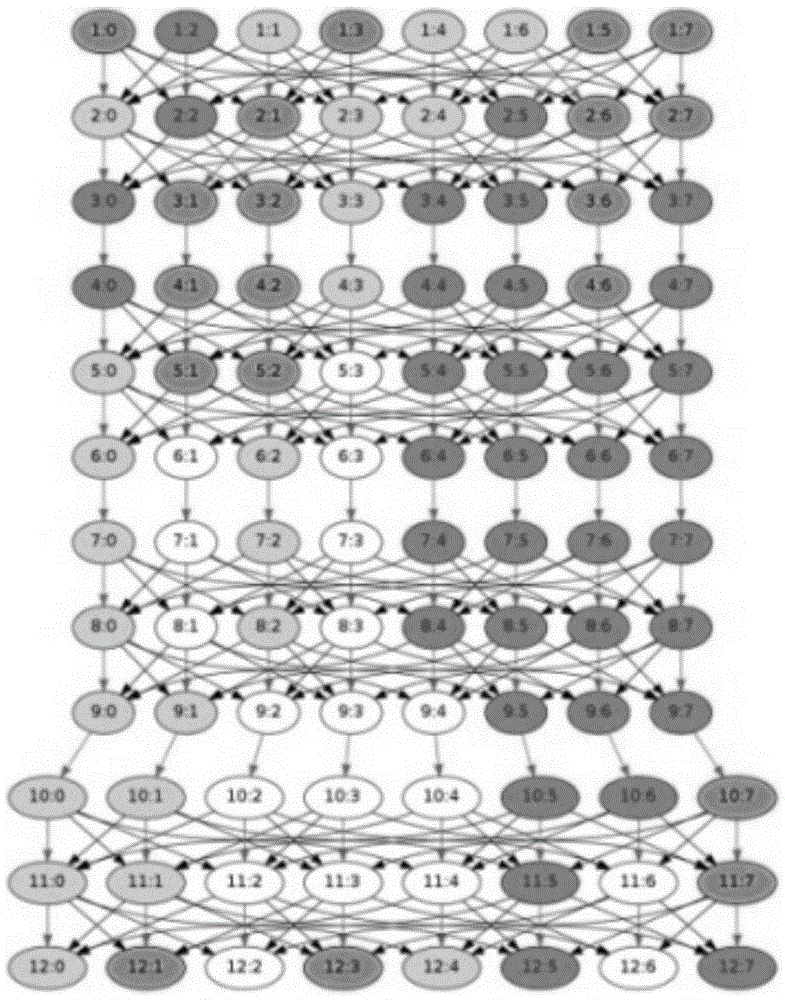

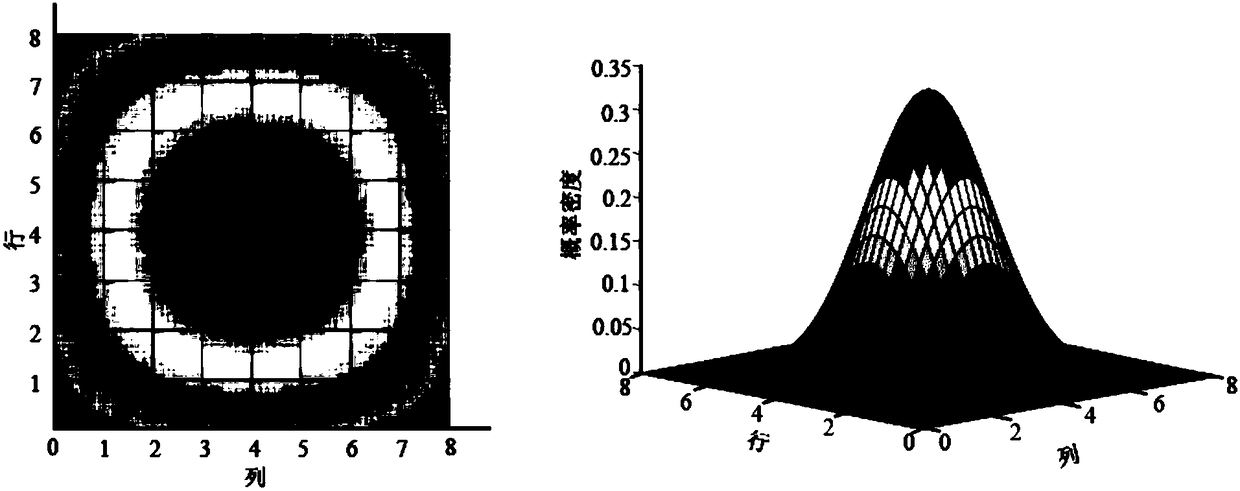

Method for mapping task of network on two-dimensional grid chip

Active | CN101625673A | Improve convenience | Reduce time complexity | Digital computer details | Multiprogramming arrangements | Network on | Task mapping

The invention discloses a task mapping method for a two-dimensional mesh network-on-chip. The method comprises the following steps: 1) pre-allocating expected positions for all threads on a two-dimensional grid, wherein the threads include common threads that can be mapped to any position; 2) for each common thread, calculating the variation Com_diff of the overall communication power consumption factor that would result from exchanging it with a nearby common thread, or with a nearby idle position, around its expected position, and executing the exchange that minimizes Com_diff, repeating until every such exchange for every common thread yields a Com_diff greater than or equal to zero; and 3) outputting a mapping file according to the positions of all the threads. The method achieves a high degree of optimization, and addresses part of the mapping problem by letting users adjust parameters themselves to control time complexity.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI
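The exchange loop in step 2 of the abstract above can be illustrated with a small local search. The sketch makes several assumptions: communication cost is traffic volume times Manhattan distance, every pair of threads is a swap candidate (the patent restricts candidates to nearby threads and idle positions), and any improving swap is accepted rather than the single best one.

```python
from itertools import combinations


def comm_cost(pos, traffic):
    """Total cost: traffic volume times Manhattan distance between endpoints."""
    return sum(
        volume * (abs(pos[a][0] - pos[b][0]) + abs(pos[a][1] - pos[b][1]))
        for (a, b), volume in traffic.items()
    )


def improve_mapping(pos, traffic):
    """Swap thread positions while some swap lowers the cost (diff < 0)."""
    pos = dict(pos)
    improved = True
    while improved:
        improved = False
        for a, b in combinations(sorted(pos), 2):
            before = comm_cost(pos, traffic)
            pos[a], pos[b] = pos[b], pos[a]
            if comm_cost(pos, traffic) - before < 0:
                improved = True                      # keep the swap
            else:
                pos[a], pos[b] = pos[b], pos[a]      # undo it
    return pos


# Hypothetical 2x2 mesh: communicating pairs start on opposite corners.
initial = {"t0": (0, 0), "t1": (1, 1), "t2": (0, 1), "t3": (1, 0)}
traffic = {("t0", "t1"): 10, ("t2", "t3"): 10}
final = improve_mapping(initial, traffic)
print(comm_cost(initial, traffic), comm_cost(final, traffic))
```

The loop stops when no swap has a negative cost difference, which mirrors the termination condition "Com_diff greater than or equal to zero" in the abstract.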

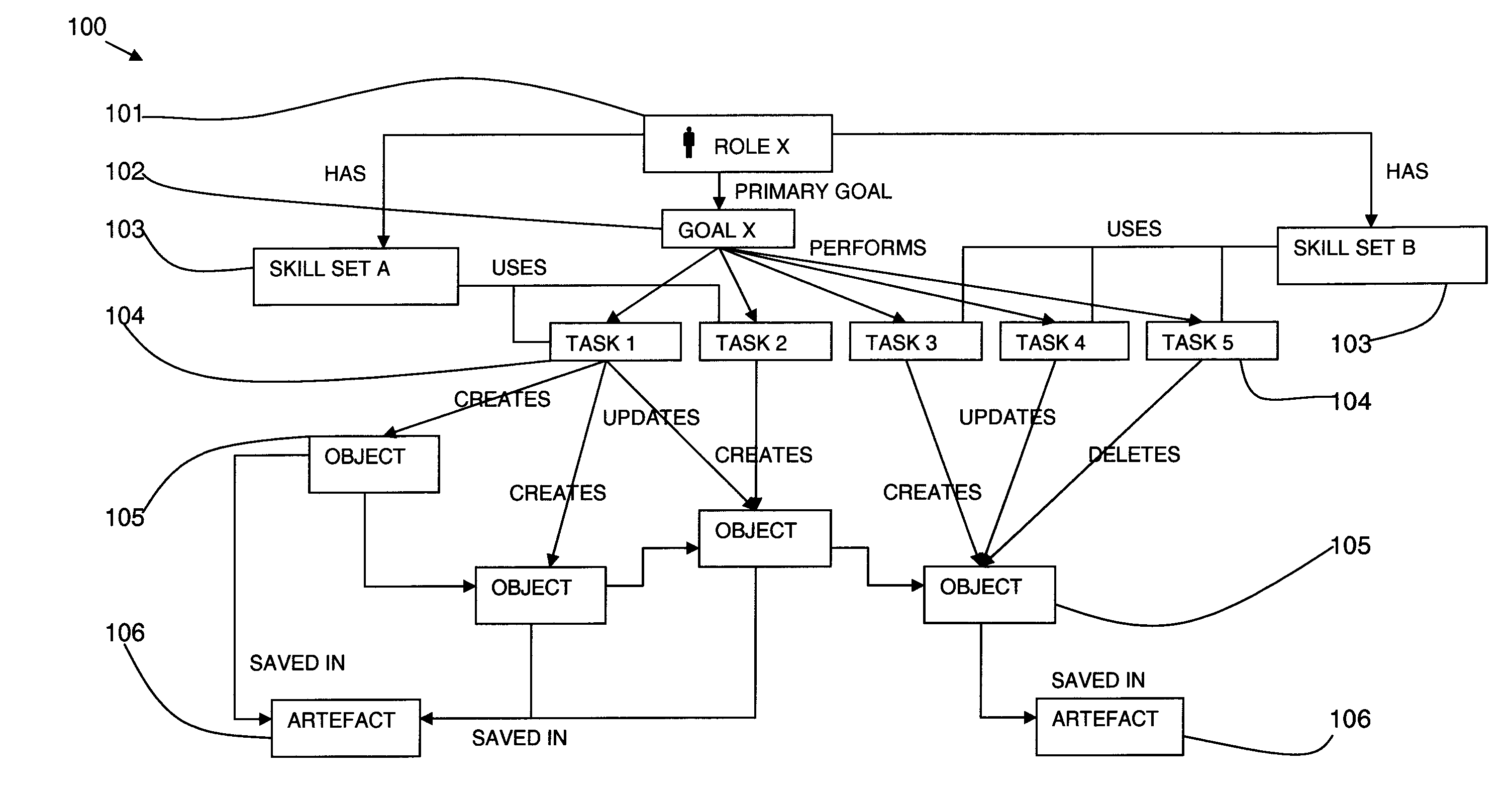

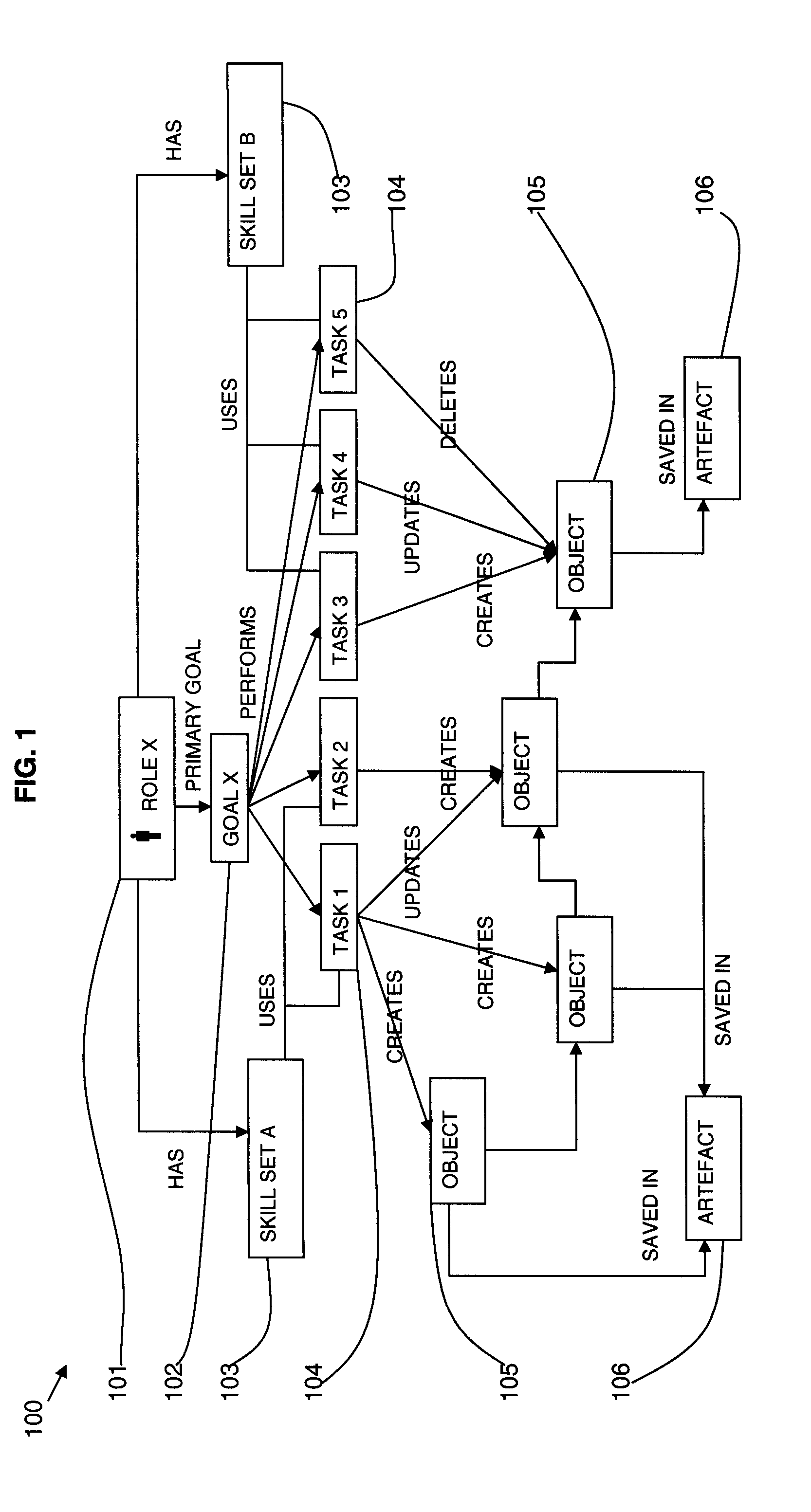

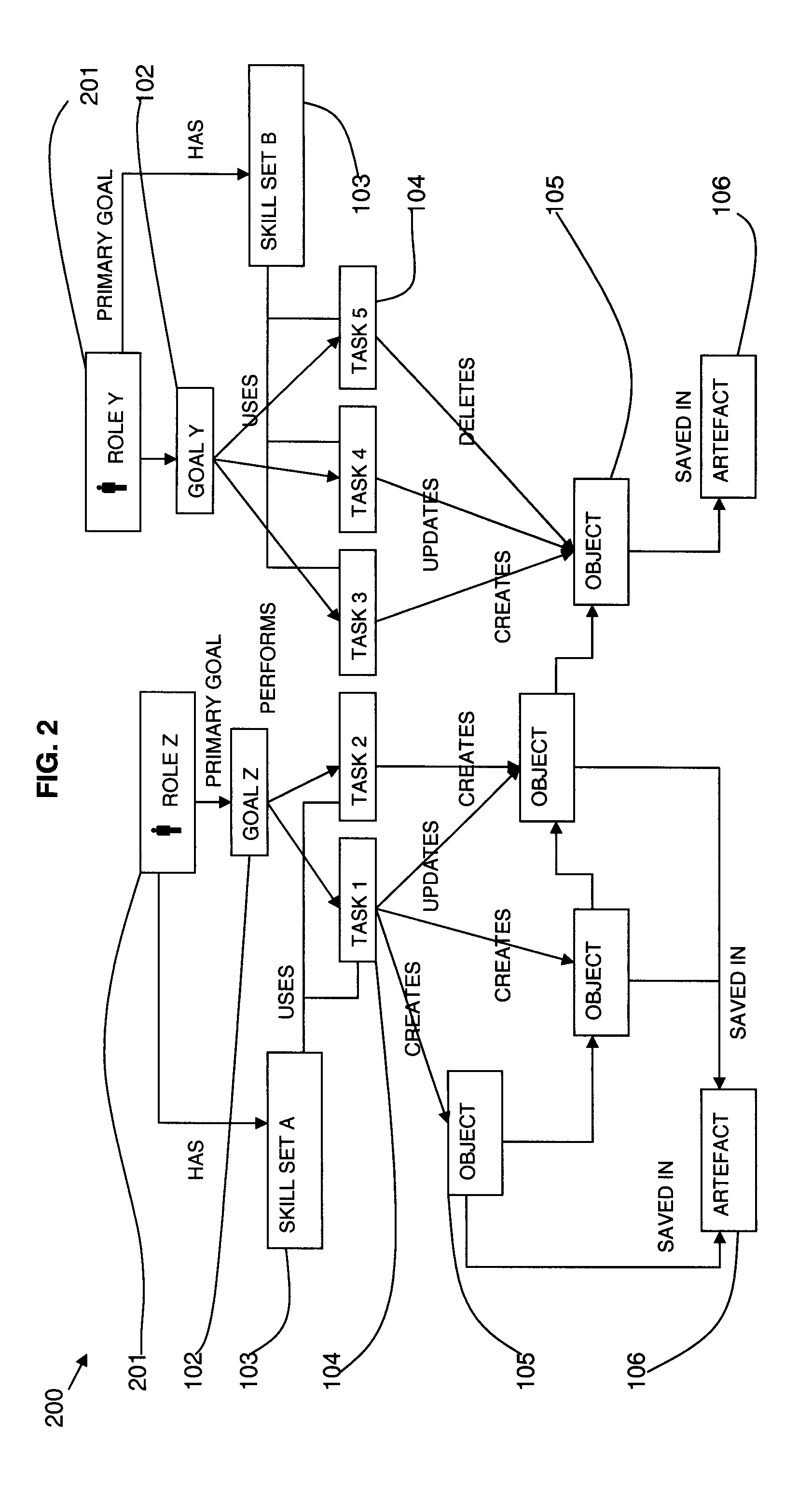

Method and system for configuring a user interface

Inactive | US20090024979A1 | Meet needs | Execution for user interfaces | Memory systems | Task mapping | Human–computer interaction

A method and system are provided for configuring a user interface to a user role. A user model defines one or more user roles and one or more tasks, each user role being linked to: one or more user tasks via one or more user goals, and a set of skills a user performing the role must have, wherein each task is also linked to one or more skills. A software product defines one or more software functions, and means are provided for linking each user task to a sequence of software function calls. The system includes an organization modeling tool including means for customizing the user model dynamically to alter the user role to task mapping to meet the current needs of an organization including validating the goals and sets of skills of the user model. The system also includes a display structure model including means for configuring a user interface to a user role, the display structure model being a runtime component for dynamically building control menus for groups of tasks depending on the user role of the logged on user.

Owner:IBM CORP

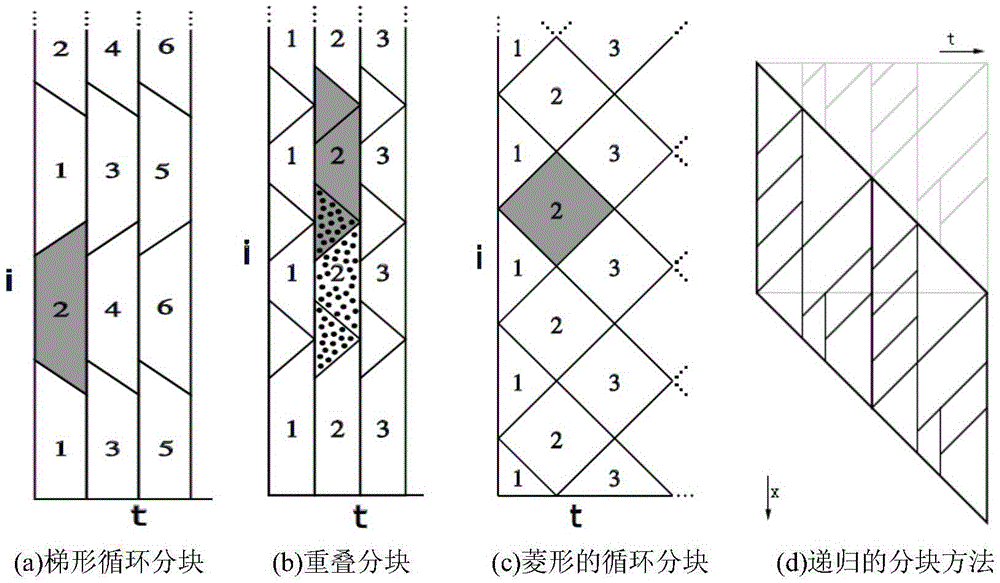

A priority packet scheduling method and system utilizing data topological information

Active | CN105528243A | Achieve reuse | Optimizing Cache Reuse | Program initiation/switching | Function pointer | Task mapping

The invention provides a priority packet scheduling method and system utilizing data topological information. The method comprises the steps of: obtaining an original grid space of data topological information, setting the grid sheet size and floating-point precision of the original grid space, and generating a new grid space; establishing a condensed task graph (in the four-dimensional time-space domain) according to the stencil format of the new grid space and a parallel zone, and calculating the priority packet serial number of each task in it; obtaining the data piece visited by the current task, determining through format abstraction or through function pointers and actual parameters whether neighbor data dependence is involved, generating a corresponding mark, and using the mark to identify the cycles that involve neighbor data dependence, these cycles being the effective time steps; and mapping the task on such a cycle to a task of the condensed task graph according to the current effective time step, and calculating the priority serial number of the current task from that of the mapped task. Priority packet scheduling of tasks is thereby supported.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

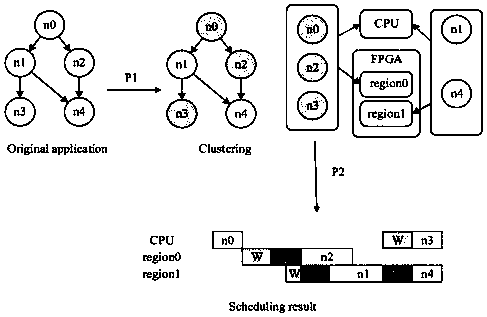

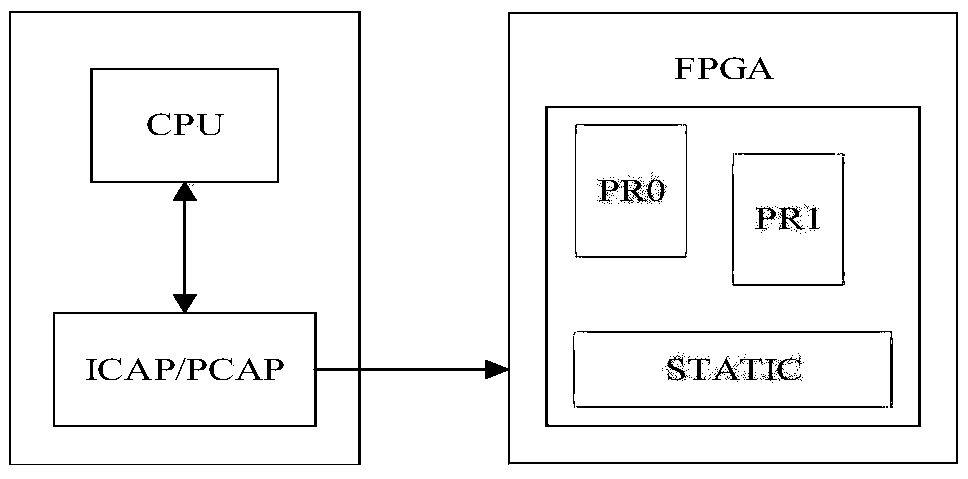

Software and hardware partitioning method for a dynamic part reconfigurable system-on-chip

Active | CN109656872A | Fast solution | Reduce complexity | Architecture with single central processing unit | Electric digital data processing | Computer hardware | Computer architecture

The invention relates to a software and hardware partitioning method for a dynamic part reconfigurable system-on-chip. The method addresses task mapping, task sequencing, task scheduling, task reconfiguration sequencing, inter-task communication and related issues in the software and hardware partitioning problem. The partitioning problem is described as a refined mathematical model, so that a solver can obtain an optimal solution with lower time complexity. The method also takes into account the reconfiguration time of all tasks allocated to the dynamically partially reconfigurable area of the FPGA, so that the results agree better with actual applications and the method has higher application value.

Owner:NAT UNIV OF DEFENSE TECH

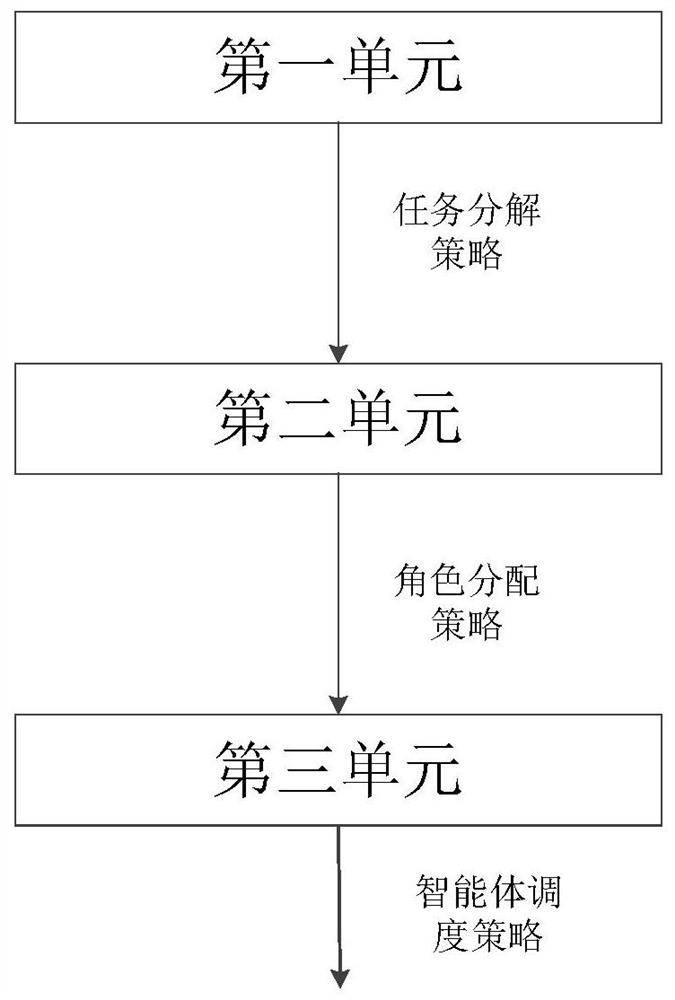

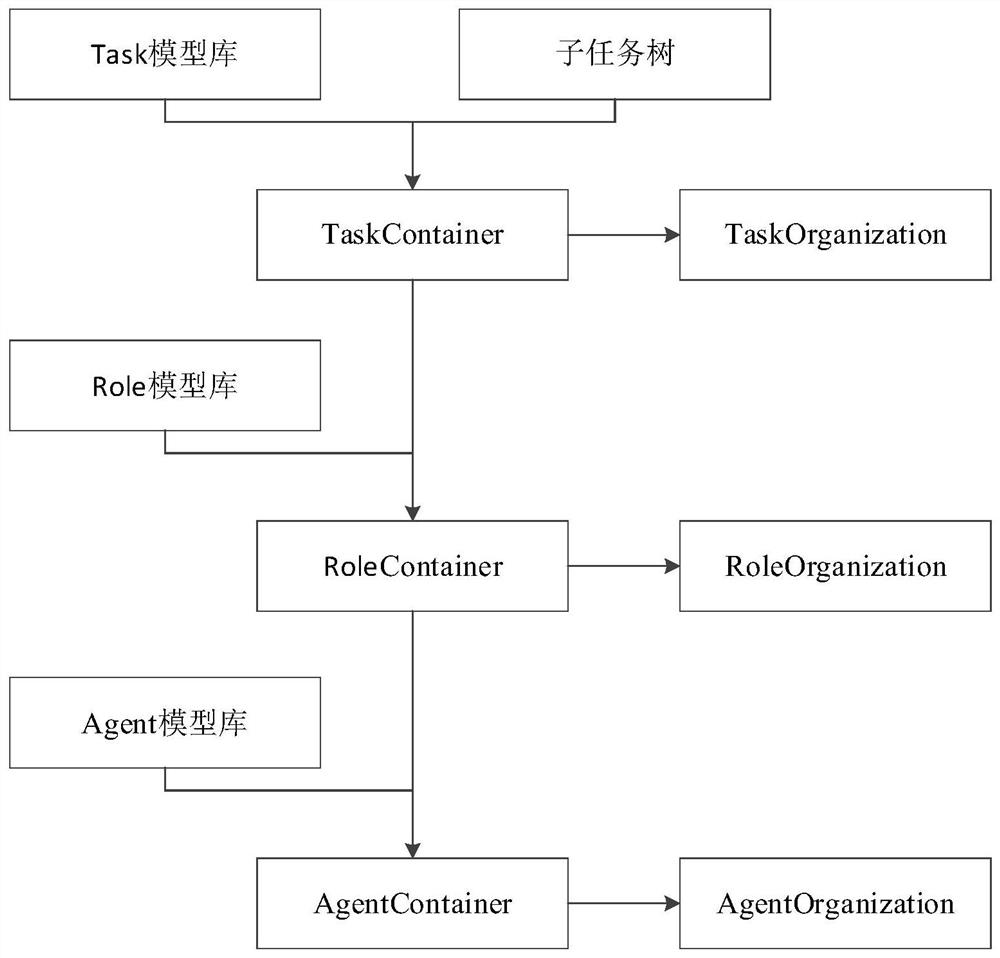

Role-based multi-agent task coordination system

The invention belongs to the field of multi-agent scheduling, particularly relates to a role-based multi-agent task cooperation system, and aims to solve the problem of low robustness in existing multi-agent task cooperation systems. The system comprises a first unit, a second unit and a third unit. The first unit is configured to split the input task into a group of sub-tasks, establish an organization relationship among the sub-tasks based on a behavior tree, obtain a task decomposition strategy and construct a sub-task tree. The second unit is configured to allocate roles to the sub-tasks according to the sub-task tree, based on a preset role-task mapping relationship and an allocation algorithm, to obtain a role allocation strategy. The third unit is configured to assign the roles, once tasks have been allocated to them, to agents based on a preset agent-role relationship and an allocation algorithm, and to output an agent scheduling strategy. The invention realizes task modeling and role allocation in complex environments and improves the robustness of the multi-agent task cooperation system.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI +1

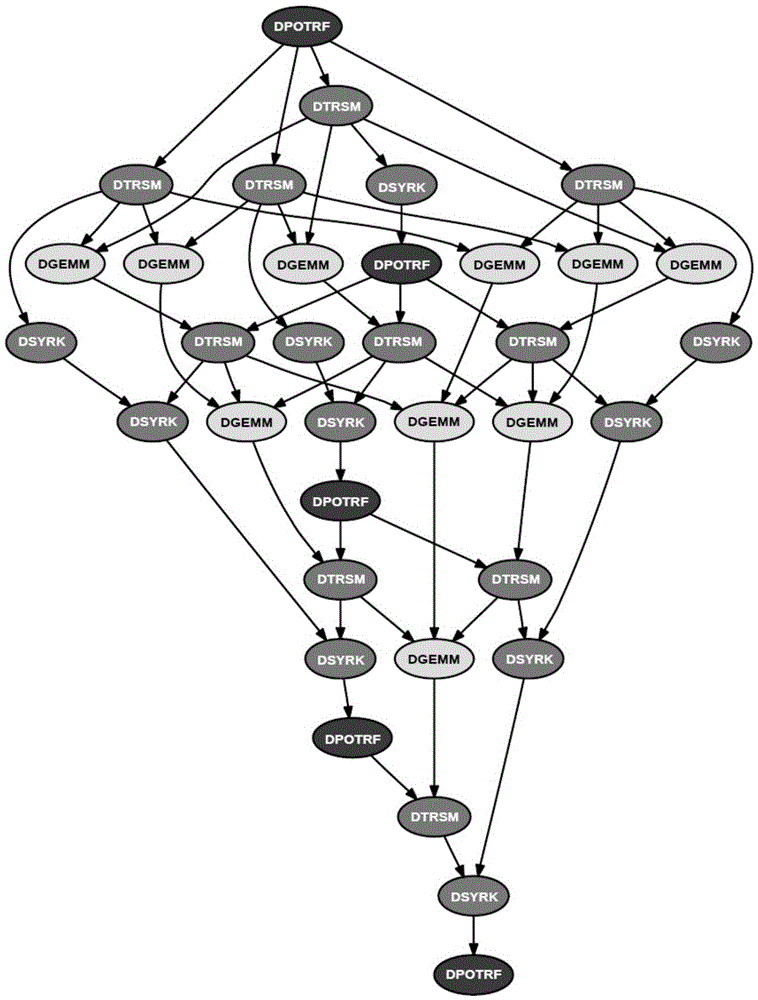

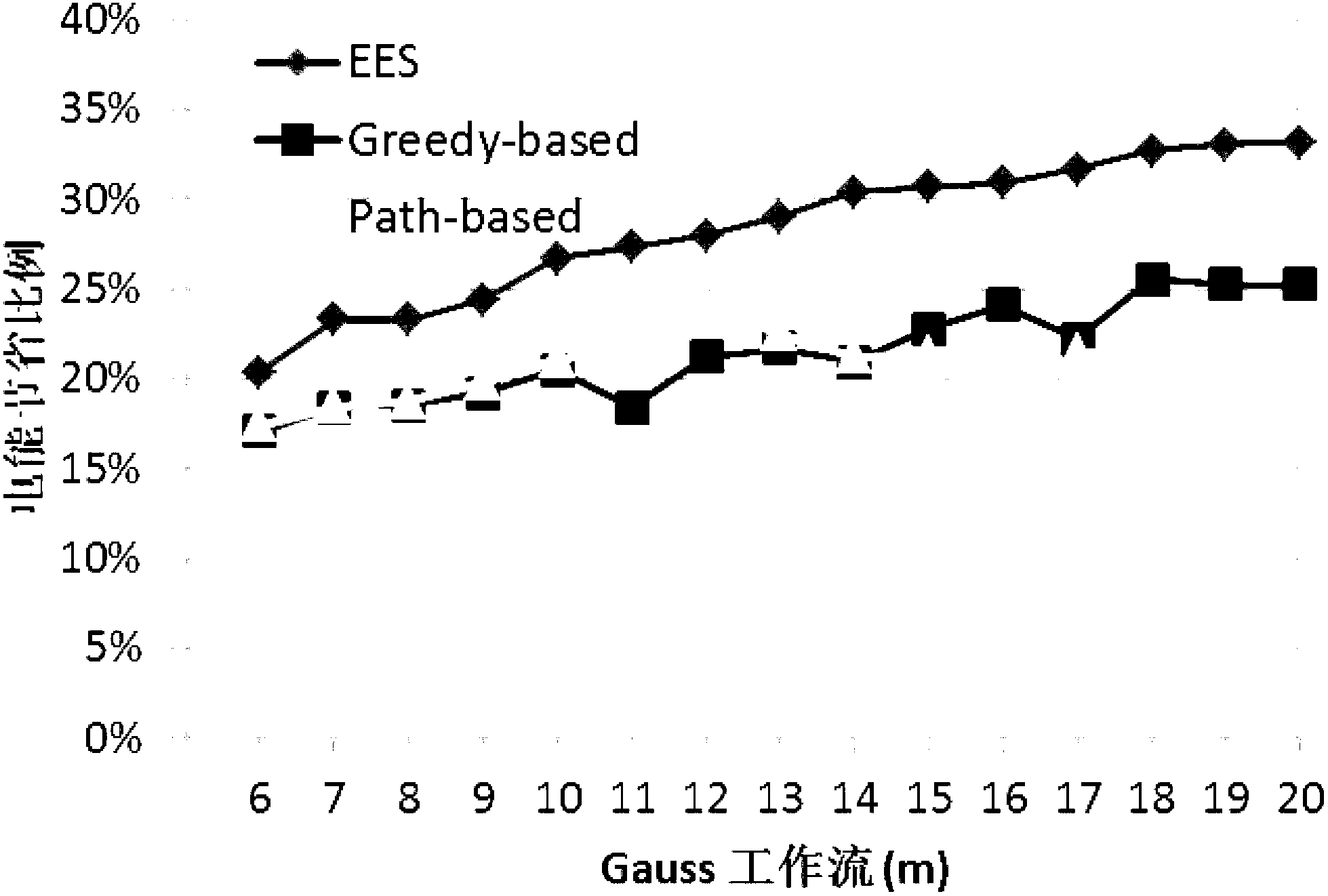

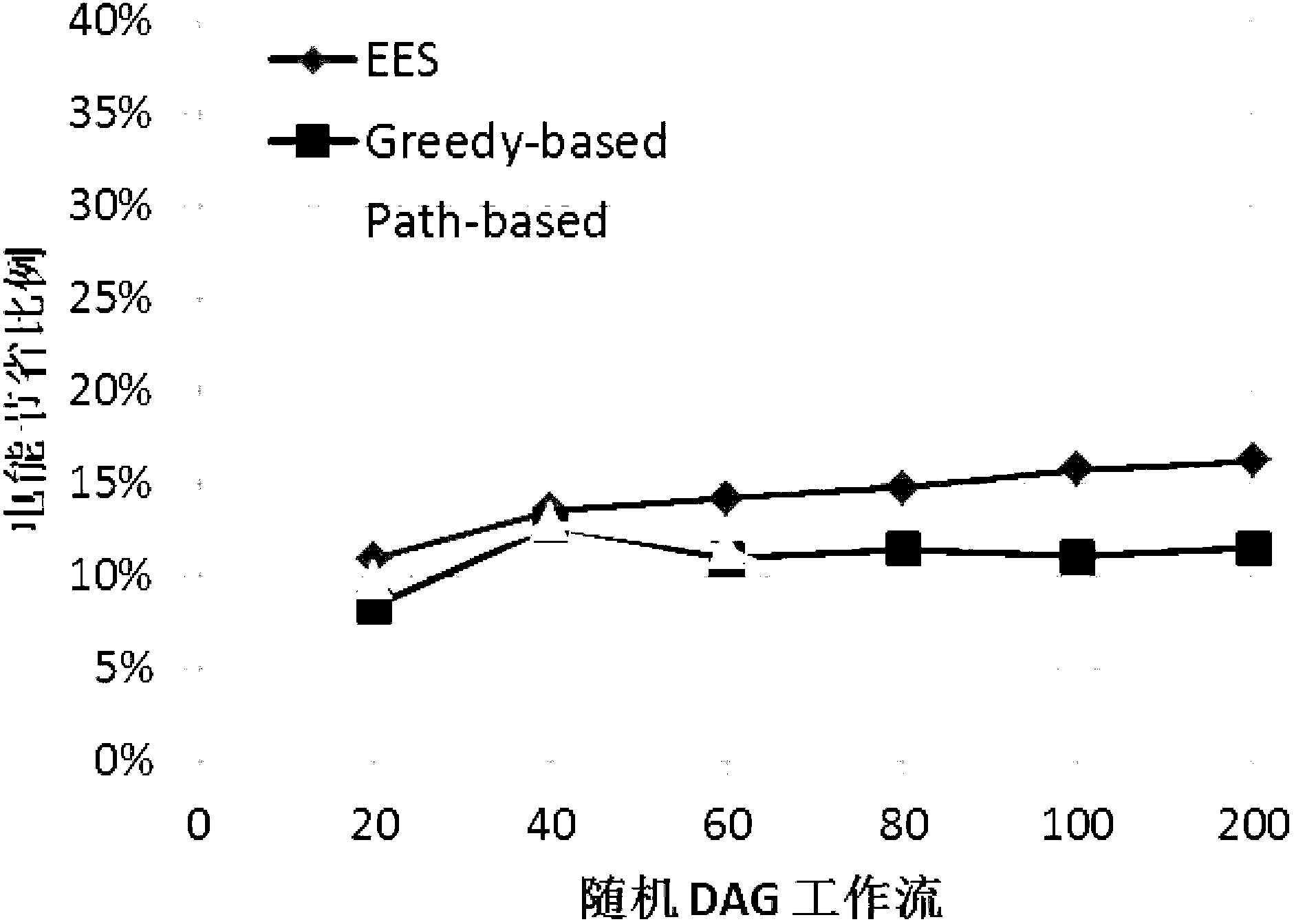

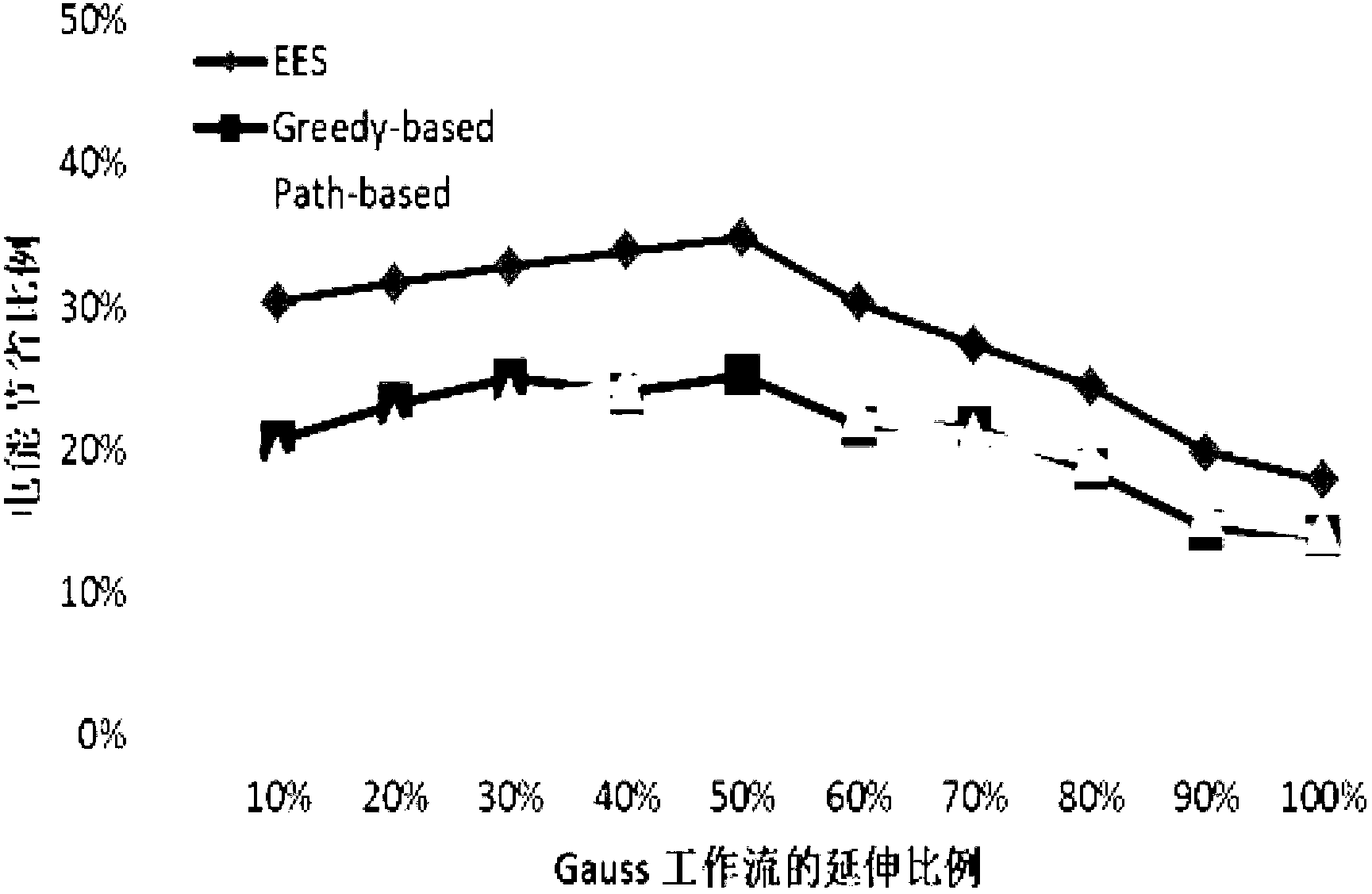

DVFS-based energy-saving dispatching method for large-scale parallel tasks

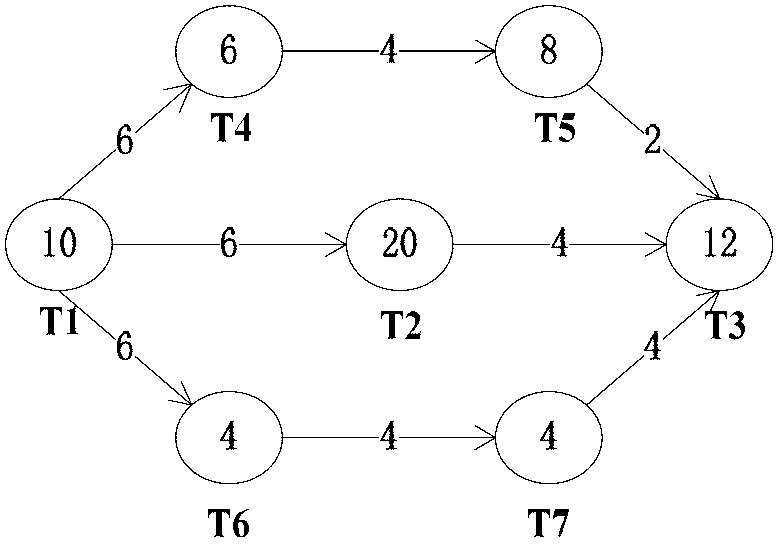

Inactive | CN103235640A | Does not affect execution time | Reduce energy costs | Energy efficient ICT | Resource allocation | Task mapping | Parallel computing

The invention provides a DVFS (Dynamic Voltage and Frequency Scaling)-based energy-saving dispatching method for large-scale parallel tasks, and belongs to the field of distributed computation. The method comprises two stages. First, in the task mapping stage, all processors are initially set to run at maximum voltage and maximum frequency, and the MHEFT algorithm is then used to compute the overall execution time of the schedule of the directed acyclic graph. Second, in the task expansion stage, the execution voltage and frequency of the tasks are optimized by expansion, lowering energy consumption and cost without affecting overall performance. The method can remarkably reduce the energy consumption and cost of parallel tasks without affecting the overall execution time of the large-scale parallel tasks.

Owner:BEIJING UNIV OF POSTS & TELECOMM
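The task expansion stage in the abstract above can be illustrated for a single task: given the slack a task has in the schedule, pick the lowest frequency level at which the stretched execution still fits. The sketch assumes execution time scales inversely with frequency and that slack per task is already known from the mapping stage; the MHEFT mapping itself is not reproduced, and the frequency levels are hypothetical.

```python
def pick_frequency(exec_time_at_max, slack, levels):
    """Lowest frequency level whose stretched run time fits in time + slack.

    Assumes run time is inversely proportional to frequency.
    """
    f_max = max(levels)
    feasible = [
        f for f in levels
        if exec_time_at_max * f_max / f <= exec_time_at_max + slack
    ]
    return min(feasible)  # f_max is always feasible when slack >= 0


# Hypothetical frequency levels (GHz) and a task taking 4 time units at 2.0 GHz.
levels = [1.0, 1.5, 2.0]
chosen = [pick_frequency(4.0, slack, levels) for slack in (0.0, 2.0, 4.0)]
print(chosen)
```

A task with no slack stays at the maximum frequency, while tasks with more slack drop to lower levels, which is how energy is saved without lengthening the overall schedule.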

Method for generating and assigning tasks and obtaining task completion assessment through Internet of Things

Inactive | CN108711011A | Realize intelligent application | Solve task execution | Resources | Task completion | Closed loop

The present invention discloses a method for generating and assigning tasks and obtaining task completion assessment through the Internet of Things. The method specifically comprises: establishing a rule set that maps information to tasks; establishing a rule set that maps tasks to the corresponding organizations or persons; regularly collecting Internet of Things data and generating a task set according to the information-to-task rule set; assigning each task to an organization or person according to the task-to-assignee rule set; receiving and starting the corresponding task; and forming a transaction block after the related task is received and confirmed. With the disclosed method, task completion, including remuneration, responsibility and credit information, can be comprehensively assessed; intelligent application of the integrated information of the Internet of Things is realized through closed-loop rolling management from information and task generation through task assignment to task completion assessment; the problems of task execution and information security in the intelligent application of the Internet of Things are solved; and Internet of Things task performers can obtain a reasonable ecological assessment.

Owner:HANGZHOU TRACESOFT

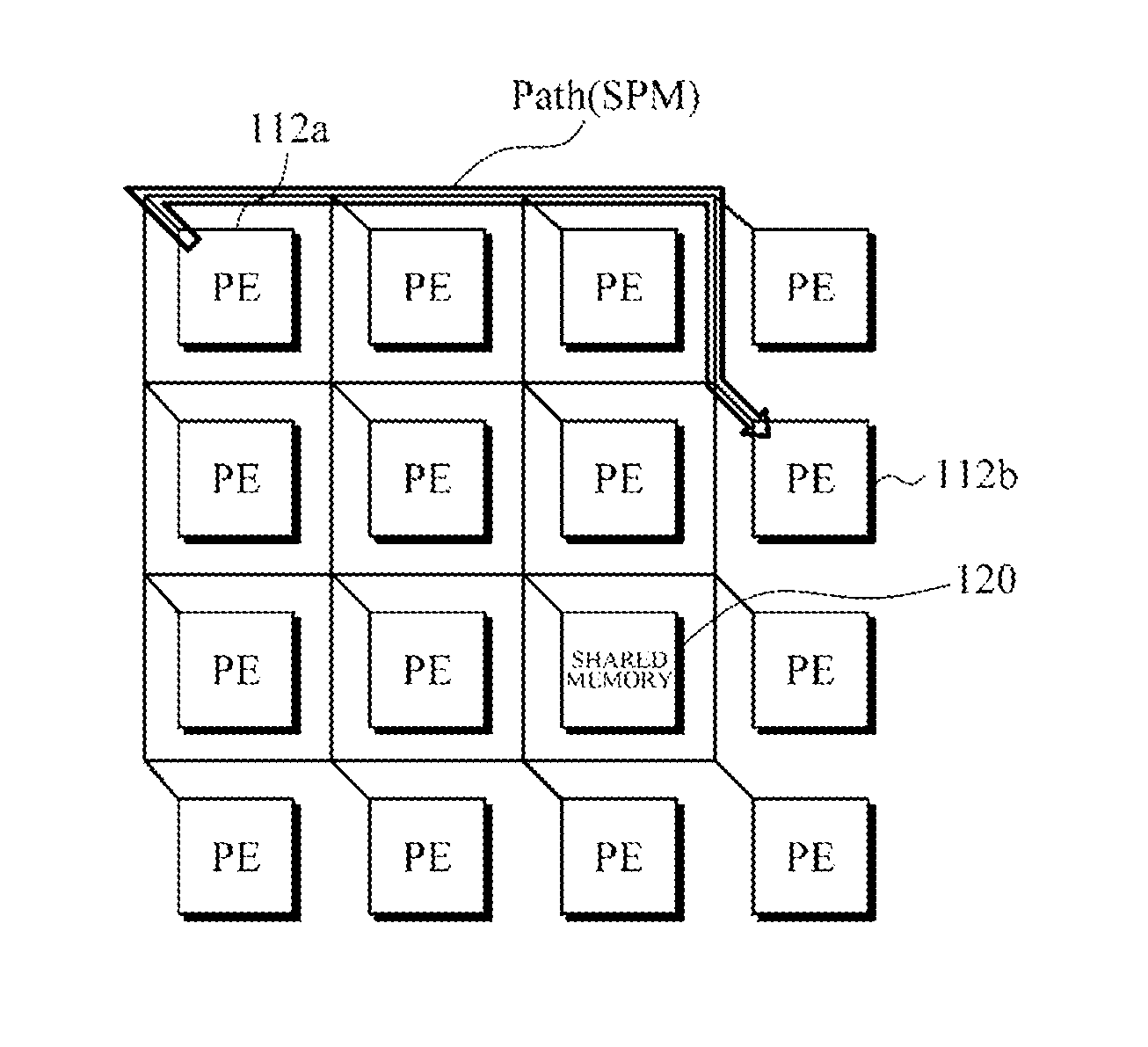

Method of compiling program to be executed on multi-core processor, and task mapping method and task scheduling method of reconfigurable processor

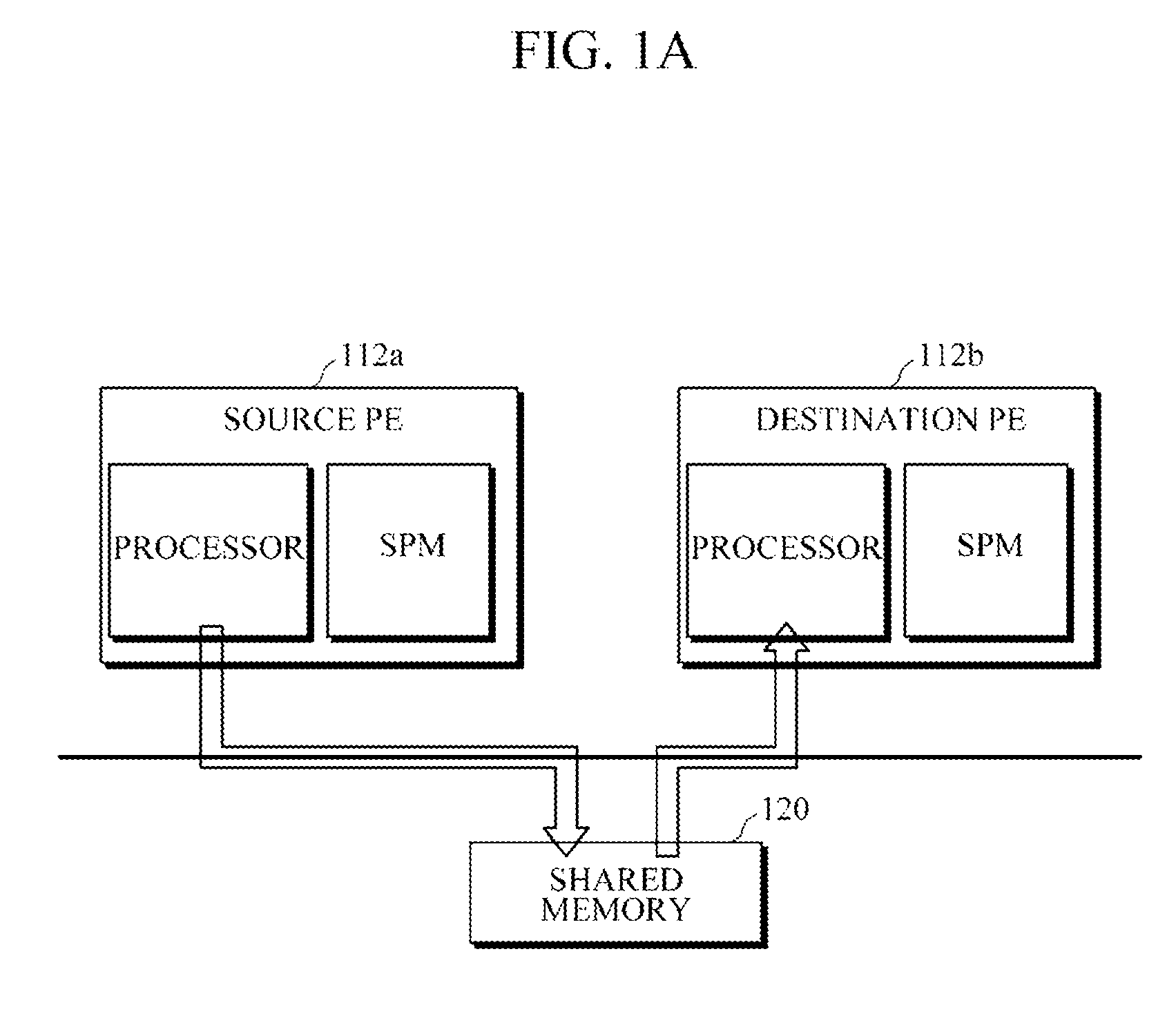

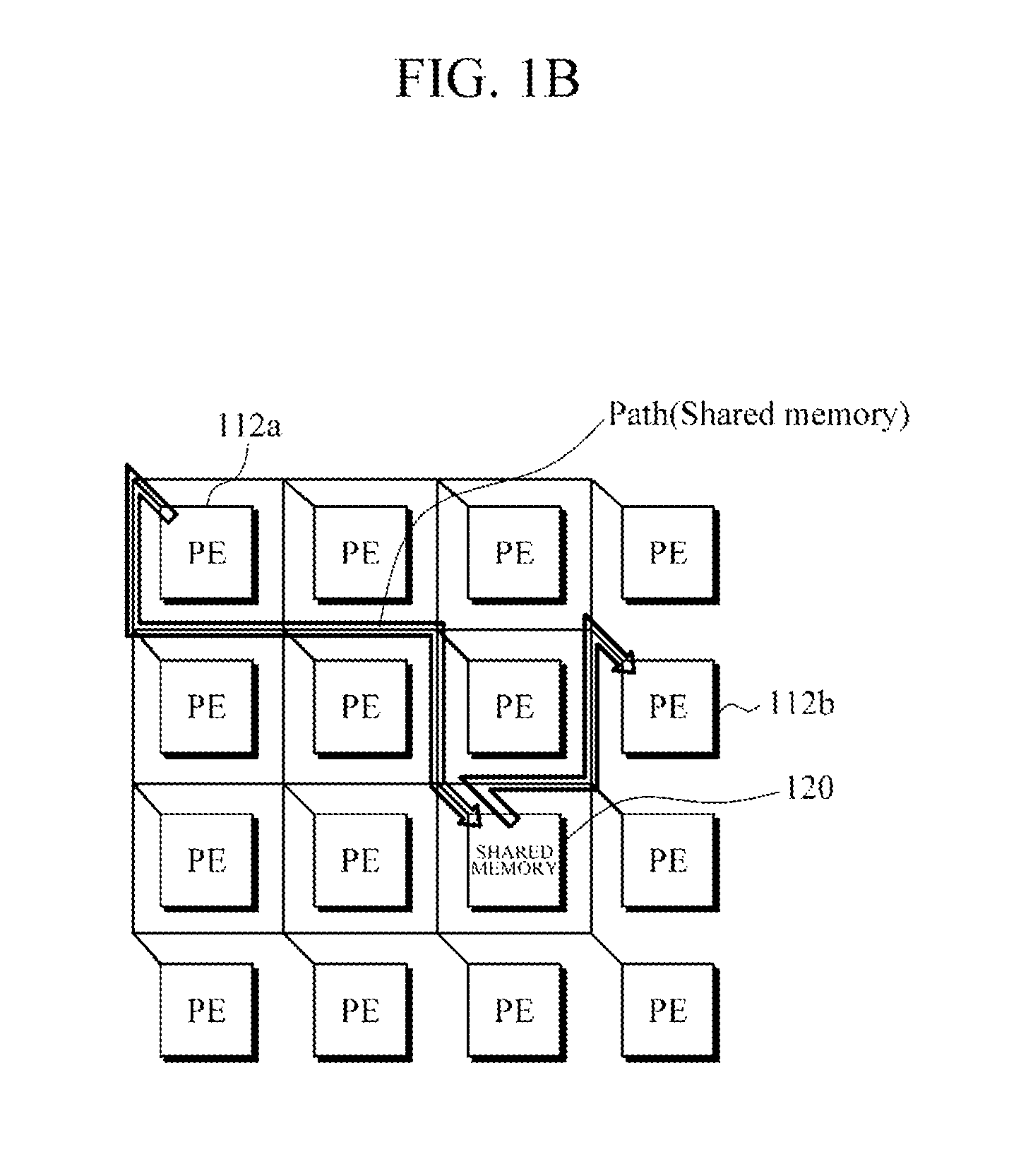

A method of compiling a program to be executed on a multicore processor is provided. The method may include generating an initial solution by mapping a task to a source processing element (PE) and a destination PE, and selecting a communication scheme for transmission of the task from the source PE to the destination PE, approximately optimizing the mapping and communication scheme included in the initial solution, and scheduling the task, wherein the communication scheme is designated in a compiling process.

Owner:SAMSUNG ELECTRONICS CO LTD +1

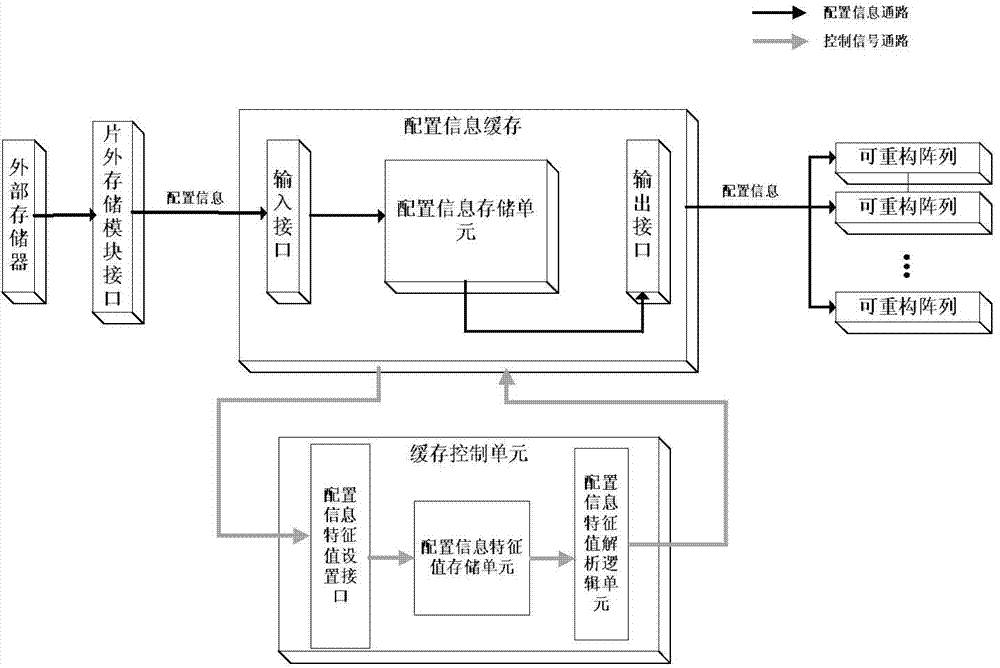

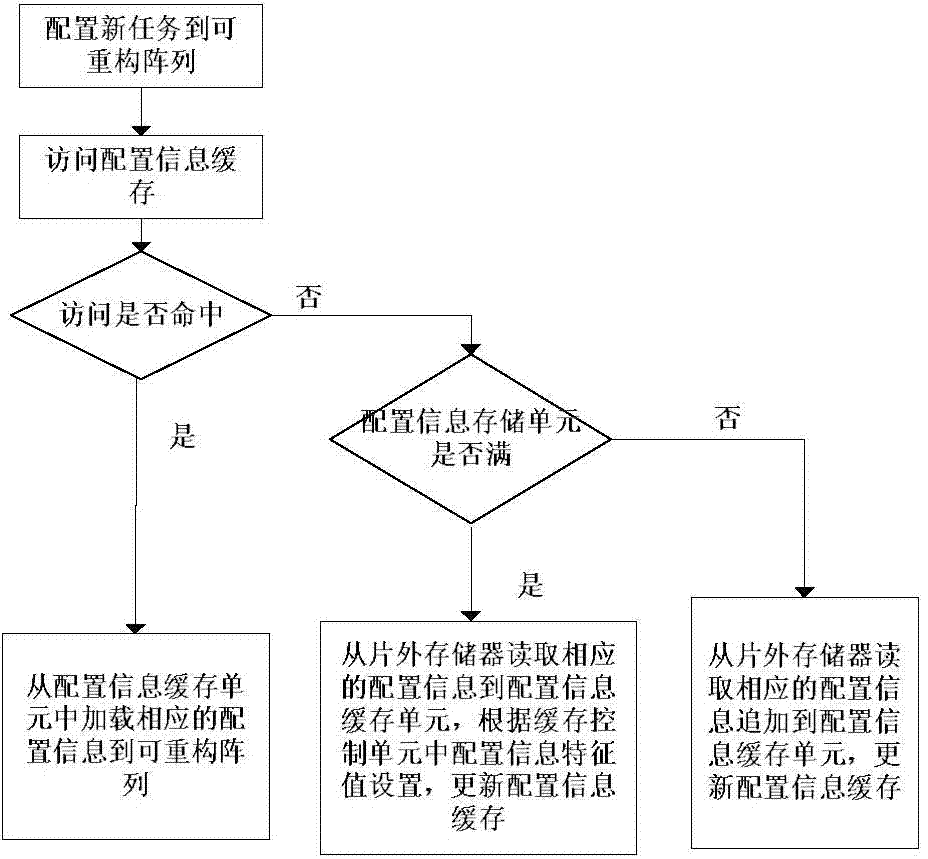

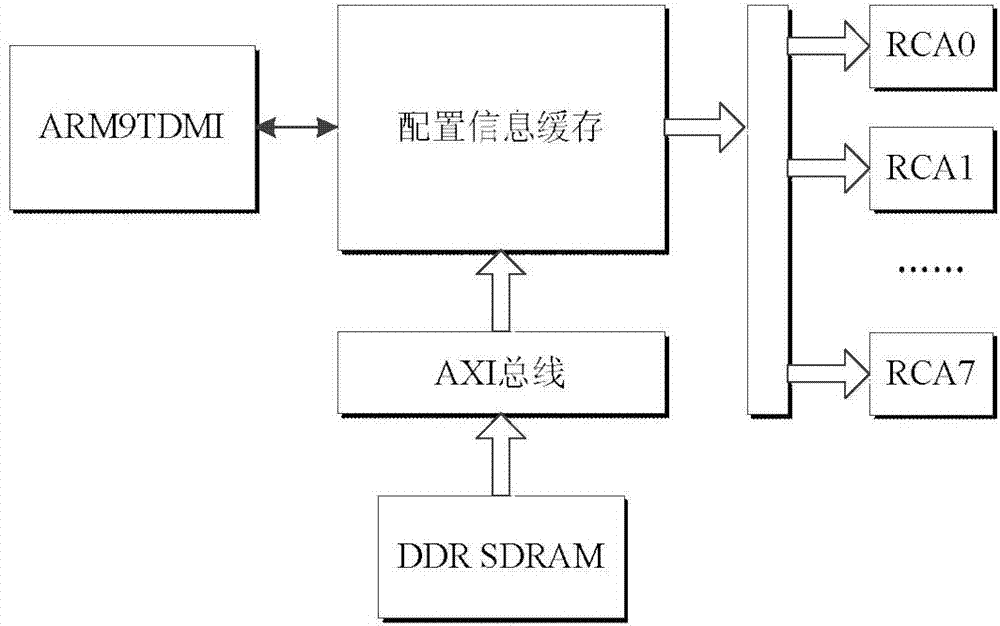

Controller for realizing configuration information cache update in reconfigurable system

Active | CN103488585A | Improve dynamic refactoring efficiency | Increase profit | Memory addressing/allocation/relocation | Program loading/initiating | External storage | Memory interface

The invention discloses a controller for realizing configuration information cache update in a reconfigurable system. The controller comprises a configuration information cache unit, an off-chip memory interface module and a cache control unit, wherein the configuration information cache unit is used for caching configuration information which can be used by a certain reconfigurable array or some reconfigurable arrays within a period of time, the off-chip memory interface module is used for reading the configuration information from an external memory and transmitting the configuration information to the configuration information cache unit, and the cache control unit is used for controlling the reconfiguration process of the reconfigurable arrays. The reconfiguration process includes: mapping subtasks in an algorithm application to a certain reconfigurable array; setting a priority strategy of the configuration information cache unit; replacing the configuration information in the configuration information cache unit according to an LRU_FRQ replacement strategy. The invention further provides a method for realizing configuration information cache update in the reconfigurable system. Cache is updated by the aid of the LRU_FRQ replacement strategy, a traditional mode of updating the configuration information cache is changed, and the dynamic reconfiguration efficiency of the reconfigurable system is improved.

Owner:SOUTHEAST UNIV

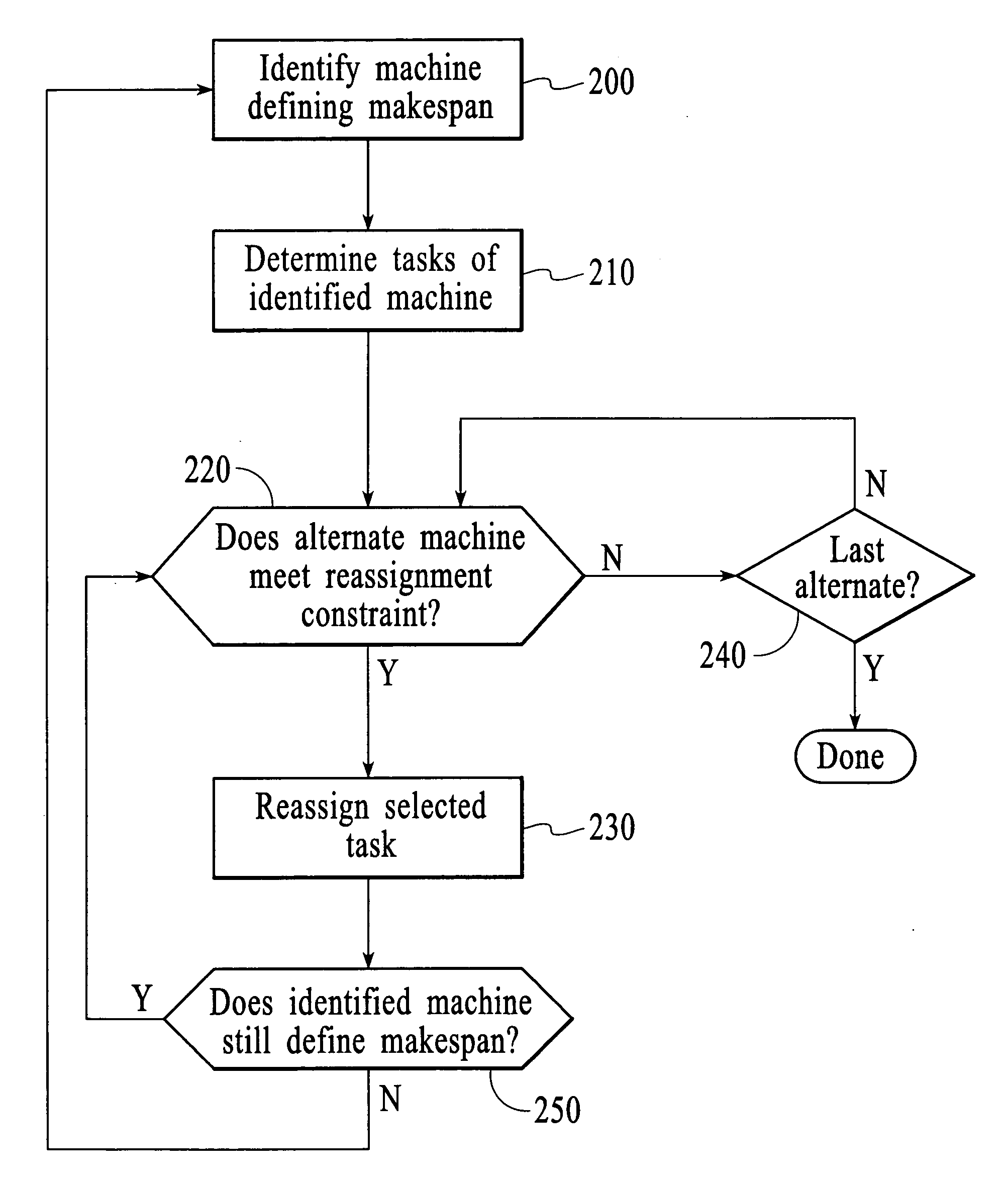

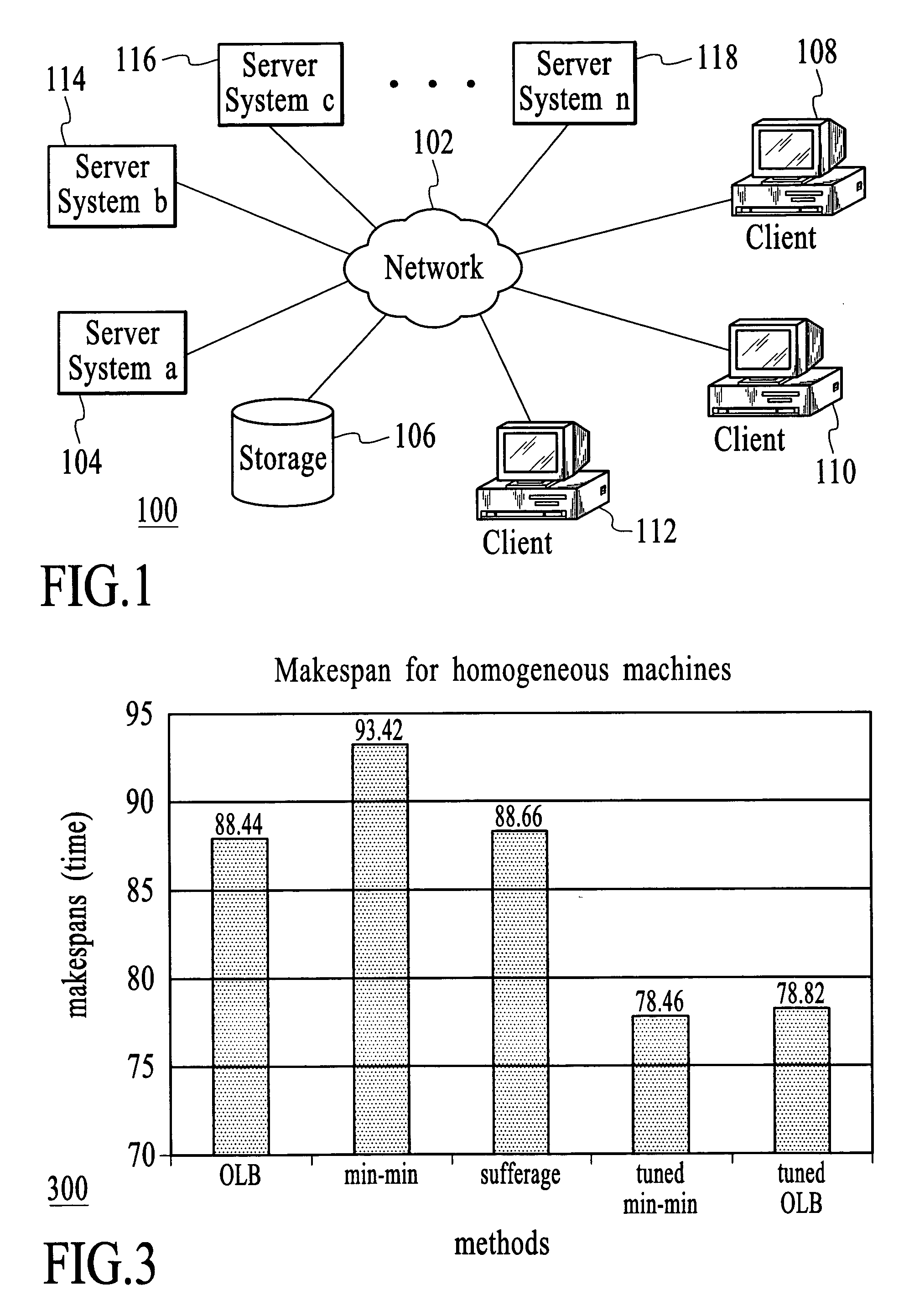

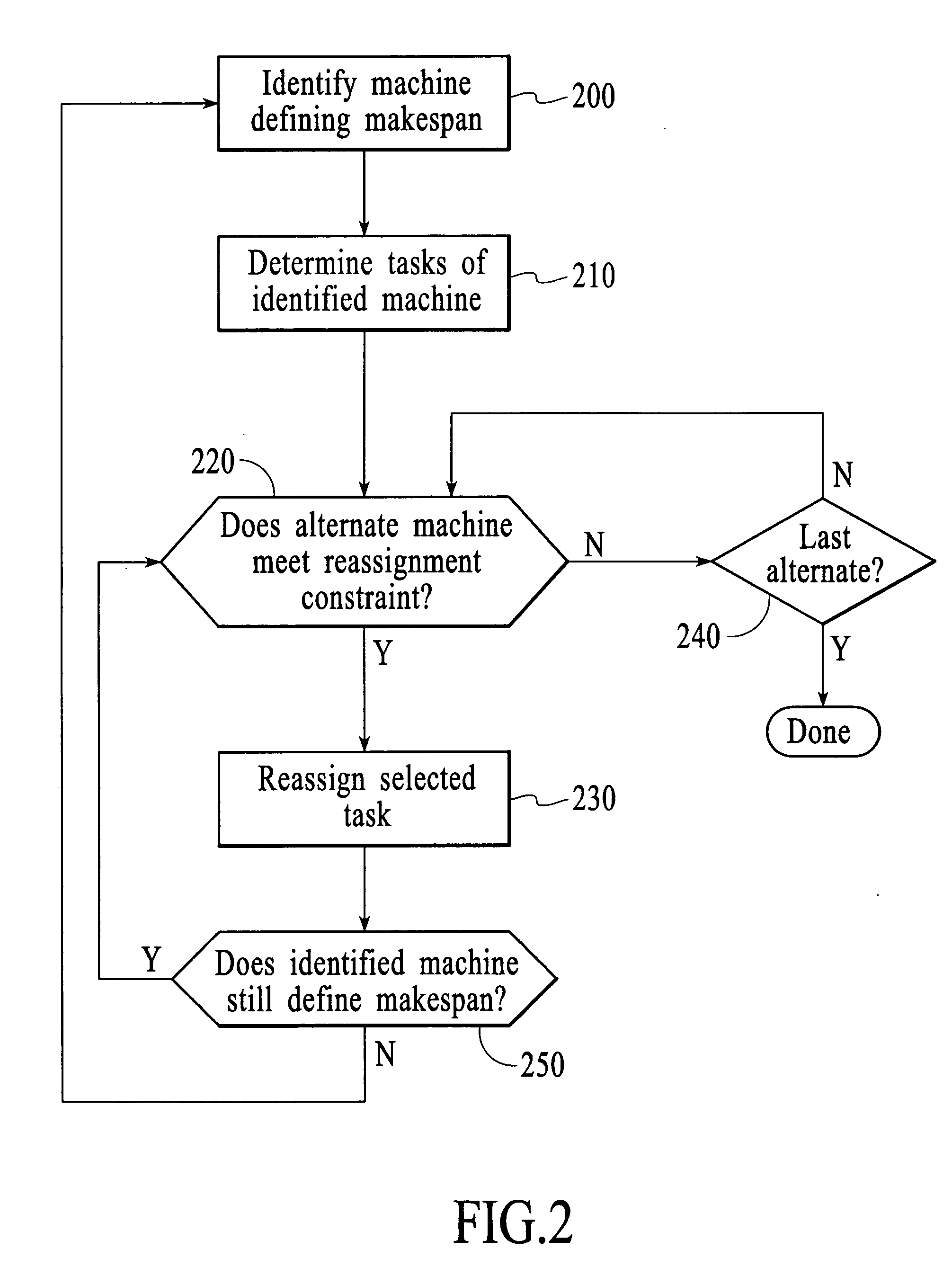

Method and system for task mapping to iteratively improve task assignment in a heterogeneous computing system

InactiveUS20060212875A1Improve task assignmentMultiprogramming arrangementsMemory systemsTask mappingSymmetric multiprocessor system

Method and system aspects for mapping tasks to iteratively improve task assignment in a heterogeneous computing (HC) system include identifying a current machine that defines a makespan in the HC system. Further included is the reassigning of at least one task from the current machine to at least one alternate machine in the HC system according to a predefined reassignment constraint. Reassigning also includes reassigning the at least one task when the at least one alternate machine can perform the at least one task in addition to previously assigned work while finishing in less time than the time of the makespan reduced by time required for the task being reassigned.
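A minimal sketch of the iterative reassignment described above, under stated assumptions: `etc[t][m]` is a hypothetical table of estimated execution times of task `t` on machine `m` (all positive), and the reassignment constraint is read literally as "the alternate machine's finishing time with the task is less than the makespan minus the task's time on the current machine". The function names are illustrative and do not come from the patent.

```python
from typing import List


def machine_loads(etc: List[List[float]], assignment: List[int]) -> List[float]:
    """Total assigned time per machine; assignment[t] is the machine of task t."""
    loads = [0.0] * len(etc[0])
    for task, machine in enumerate(assignment):
        loads[machine] += etc[task][machine]
    return loads


def makespan(etc: List[List[float]], assignment: List[int]) -> float:
    """Finishing time of the most heavily loaded machine."""
    return max(machine_loads(etc, assignment))


def improve_assignment(etc: List[List[float]], assignment: List[int]) -> List[int]:
    """Repeatedly move one task off the makespan-defining machine while allowed."""
    assignment = list(assignment)  # leave the caller's list untouched
    n_machines = len(etc[0])
    while True:
        loads = machine_loads(etc, assignment)
        current = max(range(n_machines), key=loads.__getitem__)
        span = loads[current]
        moved = False
        for task, machine in enumerate(assignment):
            if machine != current:
                continue
            for alt in range(n_machines):
                if alt == current:
                    continue
                # Assumed constraint: alternate finishes before makespan minus task time.
                if loads[alt] + etc[task][alt] < span - etc[task][current]:
                    assignment[task] = alt
                    moved = True
                    break
            if moved:
                break
        if not moved:
            return assignment
```

Each accepted move lowers the load of the machine that defines the makespan and keeps the alternate machine below the old makespan, so the loop cannot cycle and stops once no task satisfies the constraint.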

Owner:RICOH KK

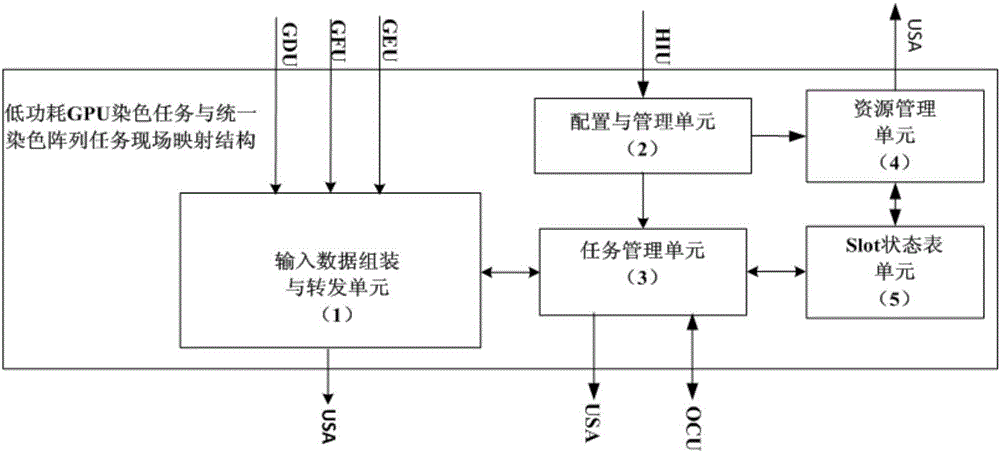

Low power consumption GPU (Graphics Processing Unit) shading task and unified shading array task field mapping structure

ActiveCN106651744AMapping implementationSet configuration parameters in real timeResource allocationProcessor architectures/configurationStainingTask mapping

The invention belongs to the field of GPU (Graphics Processing Unit) design, and discloses a low power consumption GPU shading task and unified shading array task field mapping structure. The structure comprises an input data assembling and forwarding unit (1), a configuration and management unit (2), a task management unit (3), a resource management unit (4) and a slot state table unit (5). The input data assembling and forwarding unit (1) receives vertex attribute data and pixel attribute data input by an external module, assembles them into Vertex warps and Pixel warps, and forwards the warps to a USA (Unified Shading Array) task field. The configuration and management unit (2) receives a configuration parameter input by an external HIU (Host Interface Unit), and sets and records the value of the configuration parameter. The task management unit (3) executes idle slot query, task mapping, task output and task submission on the basis of a depth-first algorithm. The resource management unit (4) updates the resource management method of the slots according to the configuration parameter. The slot state table unit (5) records the states and the task types of the m slots (task fields) in the USA.

Owner:XIAN AVIATION COMPUTING TECH RES INST OF AVIATION IND CORP OF CHINA

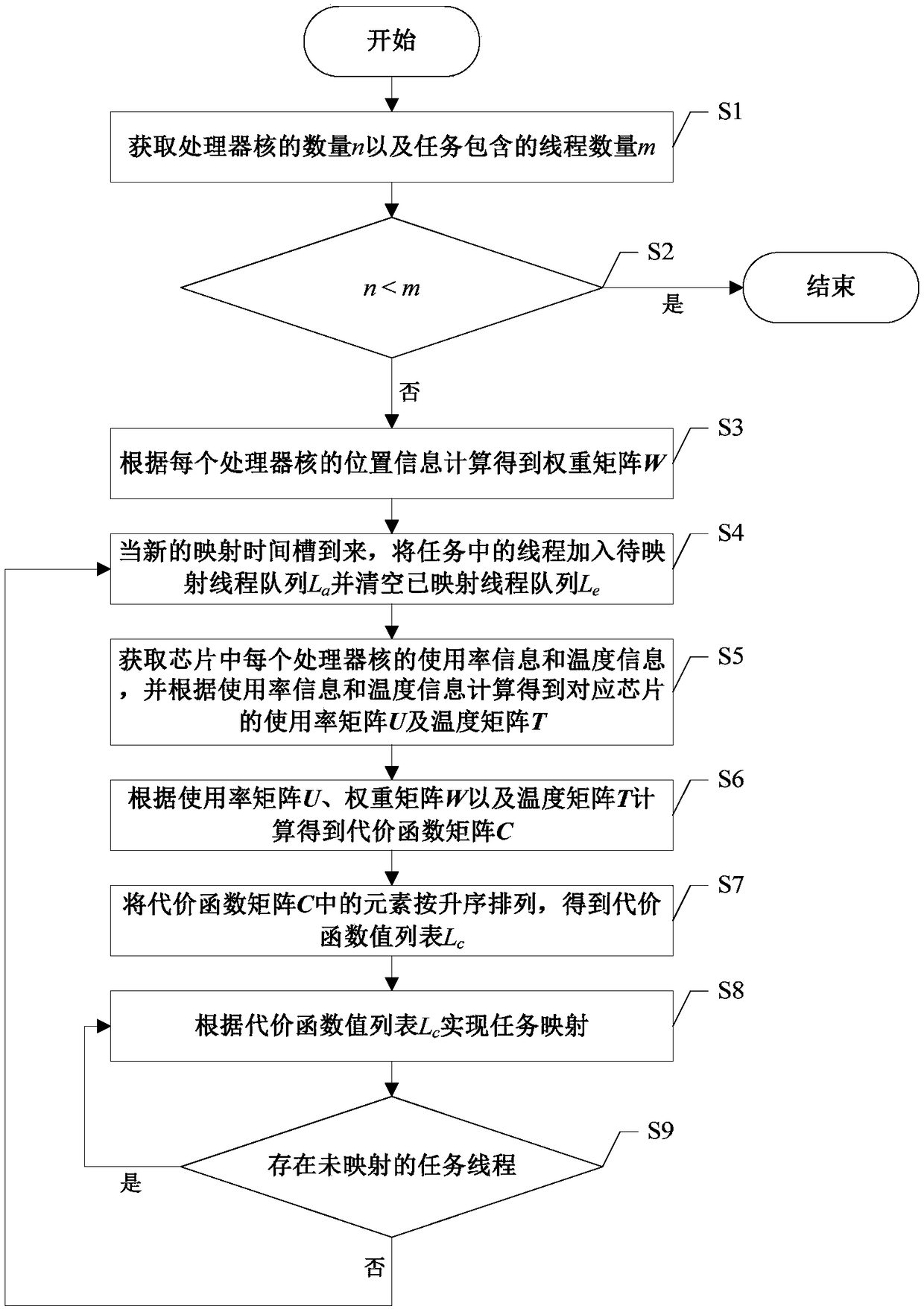

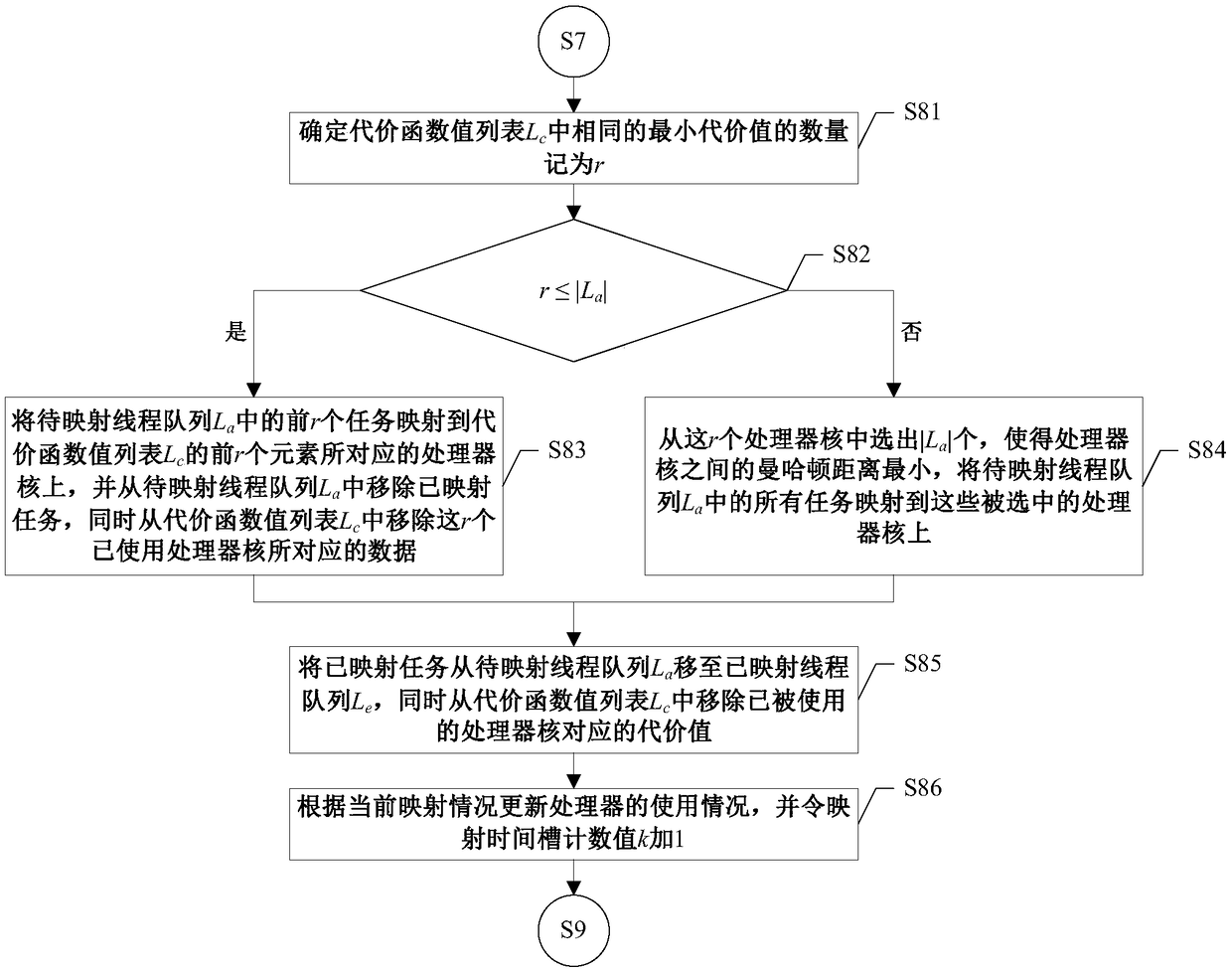

Task mapping method for information security of multi-core processor

ActiveCN108563949AReduce correlationLower success ratePlatform integrity maintainanceComputer architectureSide information

The invention discloses a task mapping method for the information security of a multi-core processor, and belongs to the technical field of on-chip multiprocessor task mapping algorithms and hardware security. To address thermal side-channel leakage in multi-core processors, a dynamic secure task mapping method is provided: a task thread is mapped to the optimal combination selected from a set of processor cores with the same cost function value. This reduces the correlation between the instruction information of the processor during execution and the transient and spatial temperature, and therefore reduces the success rate of an attacker stealing on-chip information through the thermal side channel.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

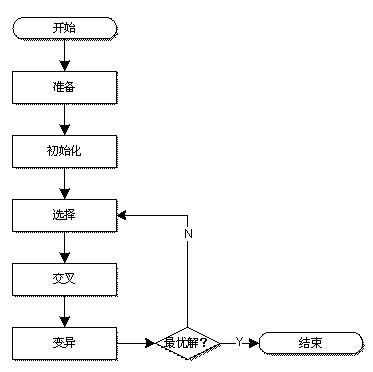

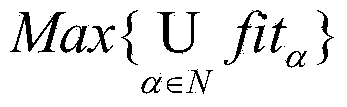

Task mapping method for global optimization of a network-on-chip with acceleration nodes

InactiveCN103885842AReasonableMapping method optimizationInterprogram communicationDigital computer detailsTask mappingTheoretical computer science

The invention discloses a task mapping method for globally optimizing a network-on-chip with acceleration nodes. The method first determines the feasible solution range of the subtasks and a fitness function, and selects from that range as many feasible solution regions as there are subtasks. Random selection within these regions generates a specified number of initial subtask mapping layouts. Selection and crossover are then applied to the initial layouts, and after crossover the mapping positions of any two tasks in a layout are exchanged. The method reduces the inter-core communication cost and the task migration cost after mapping, and improves system performance.
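The steps above resemble a genetic algorithm, which can be sketched as follows. This is an illustration under assumptions, not the patented method: the fitness is taken to be traffic volume times Manhattan hop count on a 2D mesh, selection is simple truncation, crossover is an order crossover, and the per-subtask feasible regions for acceleration nodes are not modeled. All function and parameter names are hypothetical.

```python
import random
from typing import Dict, List, Tuple

Traffic = Dict[Tuple[int, int], float]  # (source task, destination task) -> volume


def comm_cost(layout: List[int], traffic: Traffic, width: int) -> float:
    """layout[t] is the mesh node of task t; cost = sum of volume * Manhattan hops."""
    total = 0.0
    for (src, dst), volume in traffic.items():
        src_row, src_col = divmod(layout[src], width)
        dst_row, dst_col = divmod(layout[dst], width)
        total += volume * (abs(src_row - dst_row) + abs(src_col - dst_col))
    return total


def crossover(a: List[int], b: List[int], rng: random.Random) -> List[int]:
    """Order crossover: keep a slice of parent a, fill the rest in parent b's order."""
    i, j = sorted(rng.sample(range(len(a) + 1), 2))
    kept = set(a[i:j])
    filler = [node for node in b if node not in kept]
    return filler[:i] + a[i:j] + filler[i:i + len(a) - j]


def mutate(layout: List[int], rng: random.Random) -> None:
    """Exchange the mapping positions of two randomly chosen tasks."""
    i, j = rng.sample(range(len(layout)), 2)
    layout[i], layout[j] = layout[j], layout[i]


def evolve(traffic: Traffic, n_tasks: int, width: int, height: int,
           pop_size: int = 30, generations: int = 100, seed: int = 0) -> List[int]:
    """Return the best layout found; each task occupies a distinct mesh node."""
    rng = random.Random(seed)
    nodes = range(width * height)
    population = [rng.sample(nodes, n_tasks) for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=lambda layout: comm_cost(layout, traffic, width))
        parents = population[: pop_size // 2]  # truncation selection keeps the best half
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            child = crossover(a, b, rng)
            mutate(child, rng)
            children.append(child)
        population = parents + children
    return min(population, key=lambda layout: comm_cost(layout, traffic, width))
```

Because the better half of each generation is carried over unchanged, the best cost never gets worse from one generation to the next. A migration cost term could be added to `comm_cost` if a previous mapping is known.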

Owner:ZHEJIANG UNIV

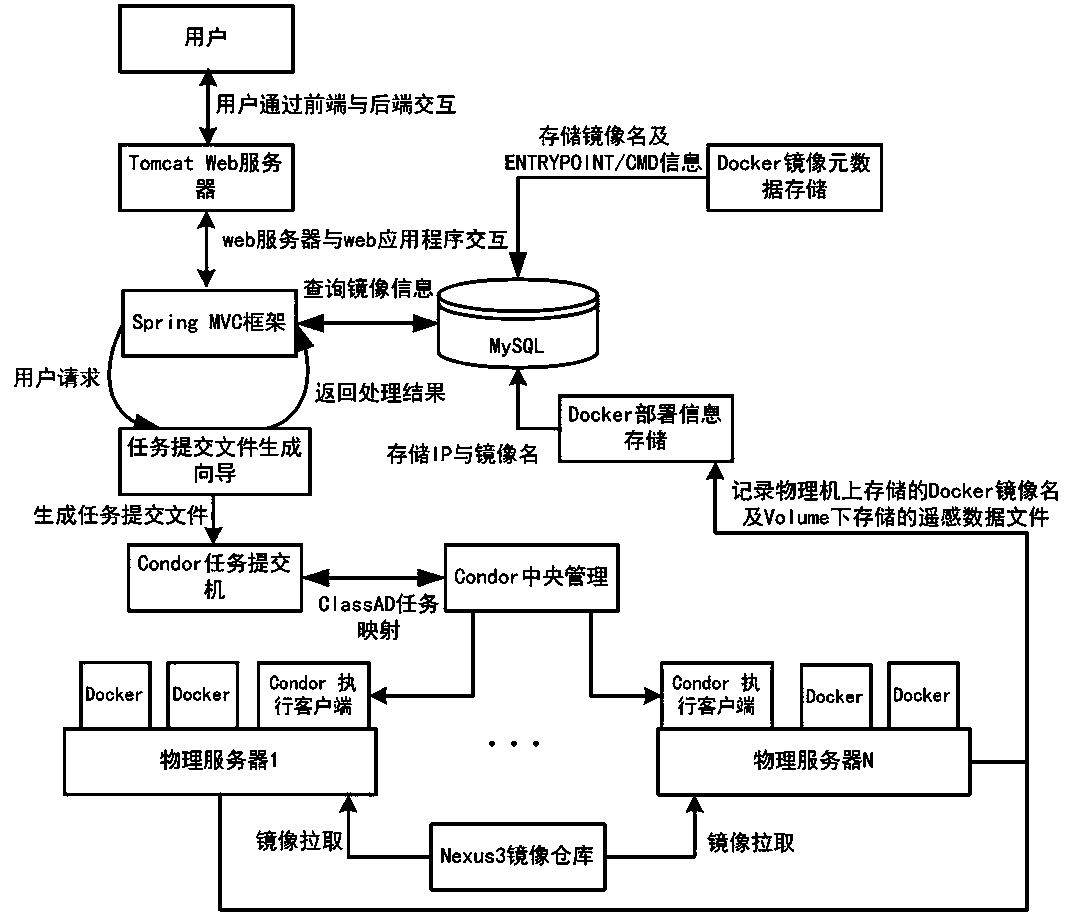

A Condor task mapping method of a remote sensing image processing Docker cluster

InactiveCN109614219AImprove resource utilizationResource allocationVisual data miningResource utilizationIp address

The invention relates to a Condor task mapping method for a remote sensing image processing Docker cluster, and belongs to the field of cloud computing. Throughout the process, the system automatically generates a Docker universe task submission description file that satisfies ClassAd resource matching, which associates Docker, Condor and the task. A Docker container is started on a Condor execute host to run the remote sensing image processing algorithm. To construct the Condor cluster environment, a Condor cluster is deployed on physical machines and docker-engine is deployed on the Condor execute clients. Metadata of each Docker image is stored, and the IP address information of the physical machine and the image name are stored in MySQL. Based on the Condor ClassAd task description method and the information recorded in MySQL, a visual front-end page guides the user through generating a task submission file that meets the user's requirements. Because the container mode is adopted, the resource utilization rate of remote sensing image processing nodes in a distributed environment is improved, and a plurality of remote sensing tasks can run on the same physical machine.
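As a rough illustration of what an automatically generated Docker universe submit description might look like, the sketch below builds one as text using standard HTCondor submit commands. The function name, its parameters, the image name and the script path in the usage note are hypothetical, and the abstract does not say which fields the patented system actually emits or how it reads them from MySQL.

```python
def docker_submit_description(image: str, executable: str, arguments: str = "",
                              cpus: int = 1, memory_mb: int = 1024,
                              job_name: str = "job") -> str:
    """Build the text of an HTCondor submit file for a Docker universe job."""
    lines = [
        "universe = docker",
        f"docker_image = {image}",          # image the execute host should run
        f"executable = {executable}",       # program started inside the container
        f"arguments = {arguments}",
        "should_transfer_files = YES",
        "when_to_transfer_output = ON_EXIT",
        f"output = {job_name}.out",
        f"error = {job_name}.err",
        f"log = {job_name}.log",
        f"request_cpus = {cpus}",           # resource requests used in matchmaking
        f"request_memory = {memory_mb}M",
        "queue 1",
    ]
    return "\n".join(lines) + "\n"
```

For example, `docker_submit_description("registry.example/rs-ndvi:1.0", "/opt/algo/run.sh", "scene.tif")` returns text that could be written to a file and handed to `condor_submit`. In the described system the image name and host information would presumably come from the stored metadata rather than from function arguments.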

Owner:DONGGUAN UNIV OF TECH
