40 results for "Guaranteed load balancing" patented technology

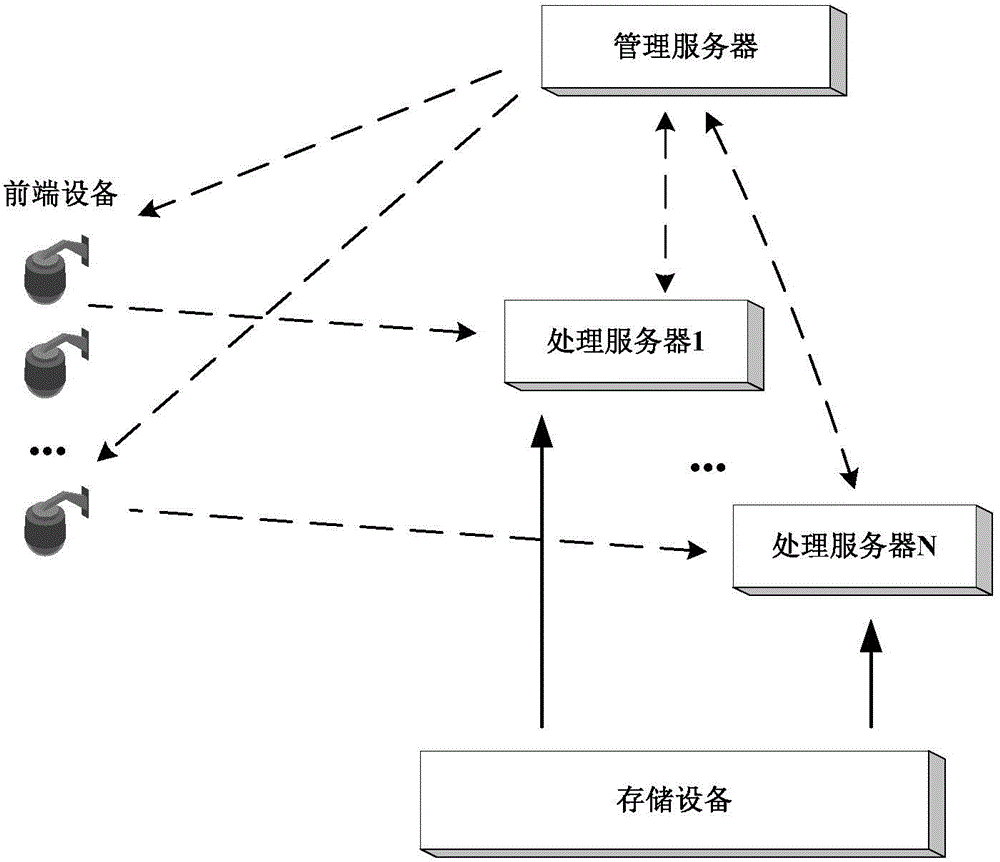

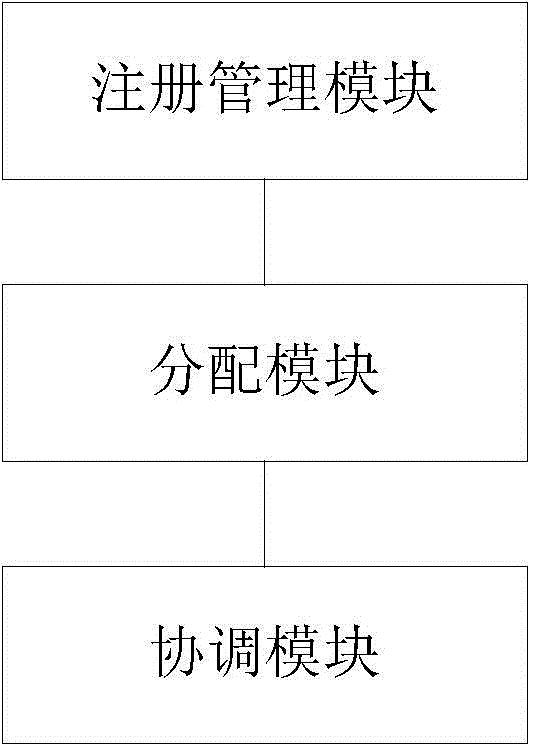

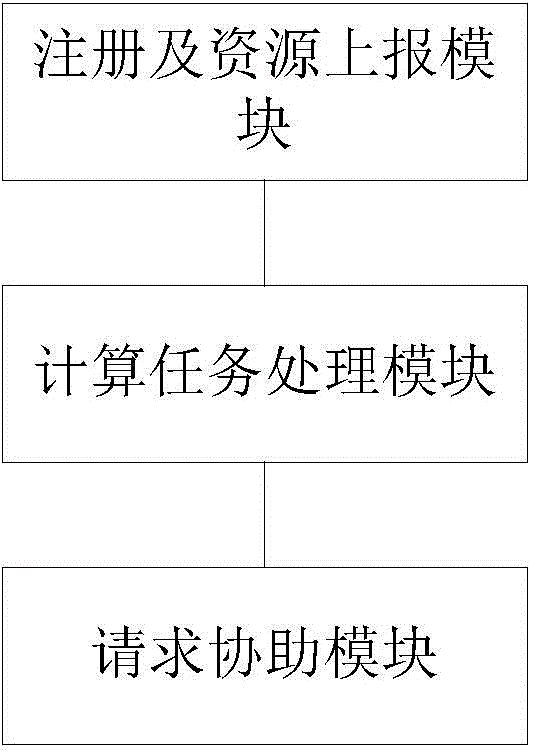

Compute task distributed dispatching device in server cluster

Active | CN104363300A | Reduce work stress | Guaranteed load balancing | Transmission | Distributed computing | Resource information

The invention discloses a distributed dispatching device for compute tasks in a server cluster. Compute tasks from front-end devices are dispatched to processing servers in the server cluster for processing. The device comprises a registration management module, a distributing module and a coordinating module. The registration management module receives registrations from the processing servers, together with the computing resource information and load information they report. The distributing module assigns the processing servers bound to each front-end device and issues the bound processing servers to the front-end devices. The coordinating module receives assistance requests sent by registered processing servers when they are overloaded and, according to the processing capacity and load information of the other processing servers, coordinates those with residual processing capacity to help complete the compute tasks contained in the assistance requests. The device reduces the work pressure of the management server, adjusts overloaded processing servers automatically, and guarantees the load balance of the server cluster.
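The coordinating step described above can be illustrated with a minimal sketch. The abstract does not say how helpers are ranked or how tasks are split, so the greedy "largest residual capacity first" rule, the `Server` fields, and the task-count units below are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    capacity: int  # hypothetical unit: max concurrent tasks
    load: int      # tasks currently running (as reported at registration)

    @property
    def residual(self) -> int:
        return max(self.capacity - self.load, 0)


def coordinate(overloaded: str, tasks: int, servers: dict) -> dict:
    """Spread `tasks` from the overloaded server over helpers with spare capacity.

    Assumption: helpers are tried in order of largest residual capacity.
    Returns {helper_name: number_of_tasks_assigned}.
    """
    plan = {}
    helpers = sorted(
        (s for s in servers.values() if s.name != overloaded and s.residual > 0),
        key=lambda s: s.residual,
        reverse=True,
    )
    for helper in helpers:
        if tasks == 0:
            break
        take = min(helper.residual, tasks)
        plan[helper.name] = take
        helper.load += take  # keep the load information up to date
        tasks -= take
    return plan
```

Any tasks that no helper can absorb simply stay with the requesting server in this sketch; the patent may handle that case differently.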

Owner:ZHEJIANG UNIVIEW TECH

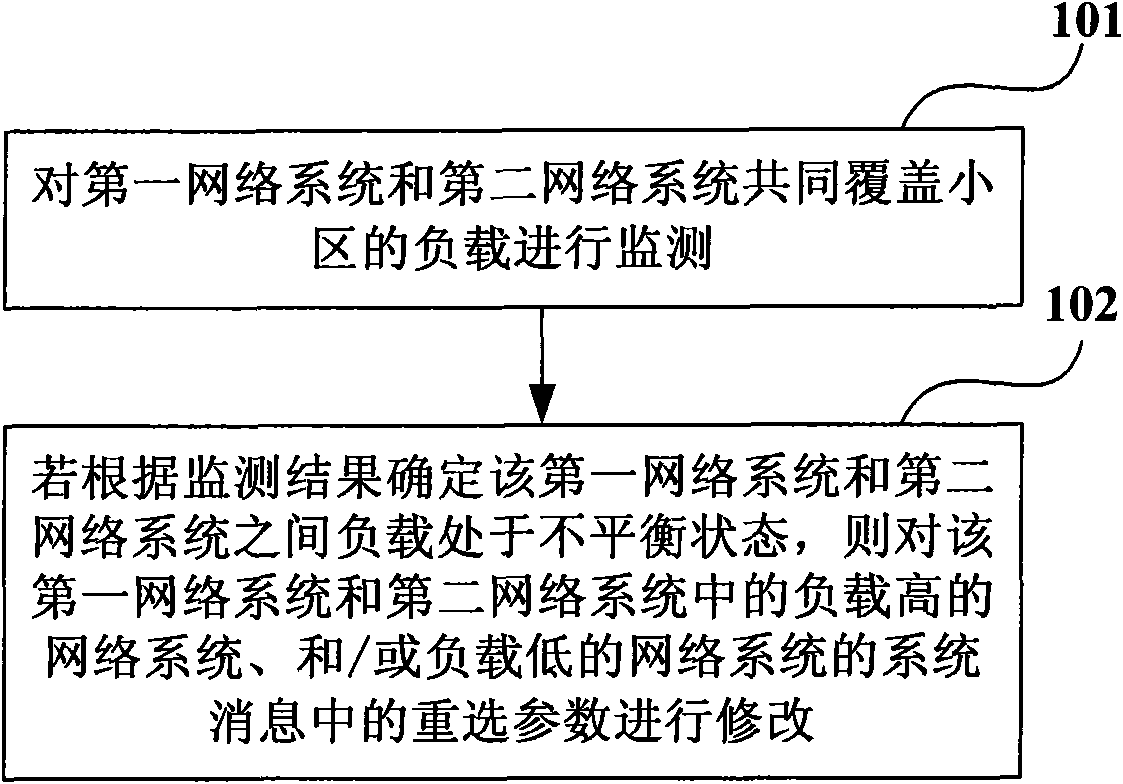

Load control method, apparatus and system

Active | CN101626589A | Guaranteed load balancing | Network traffic/resource management | Assess restriction | Low load | Networked system

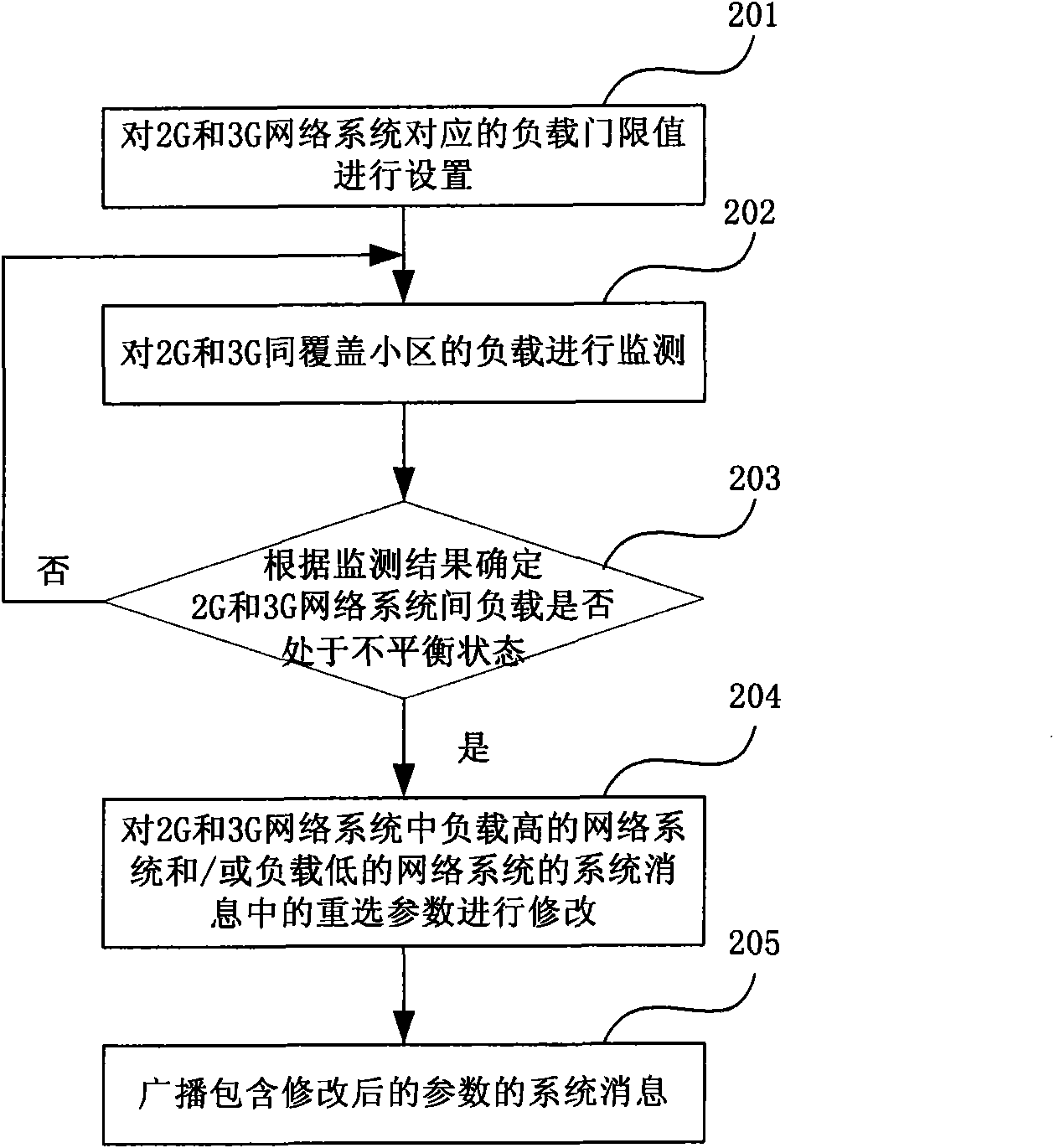

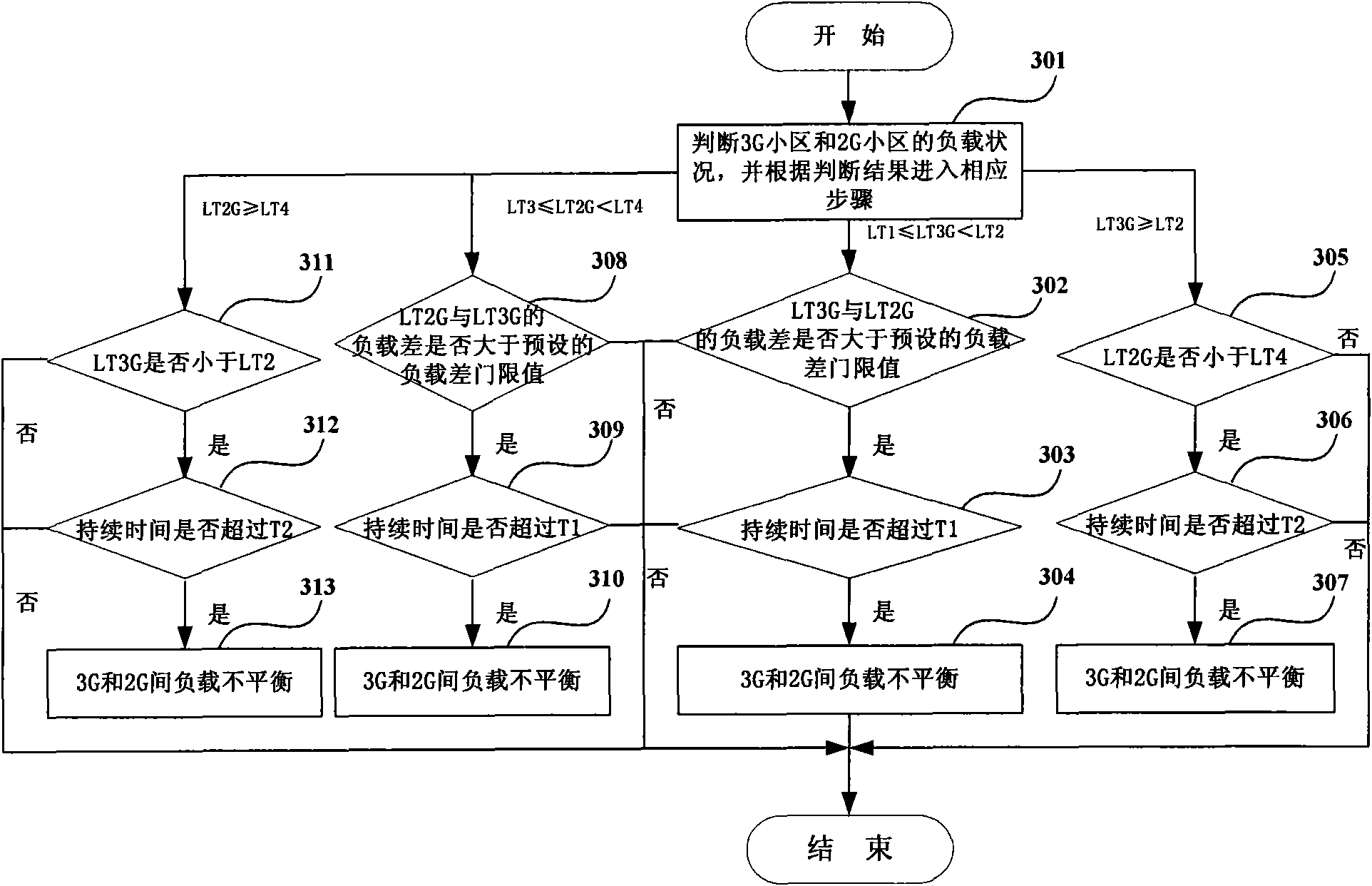

The invention provides a load control method, an apparatus and a system. The method comprises the following steps: monitoring the load in a common coverage cell of different network systems, wherein the different network systems comprise a first network system and a second network system; and, if the monitoring result shows that the load between the first network system and the second network system is imbalanced, modifying the reselection parameters in the system message of the network system with the higher load and/or of the network system with the lower load. The invention determines whether the different network systems are imbalanced by monitoring the load in their common coverage cell and, in the case of imbalance, adjusts the reselection parameters so that users can easily reselect a different network system, thereby reducing the load increment of the network system and guaranteeing the load balance of the different network systems.

Owner:HUAWEI TECH CO LTD

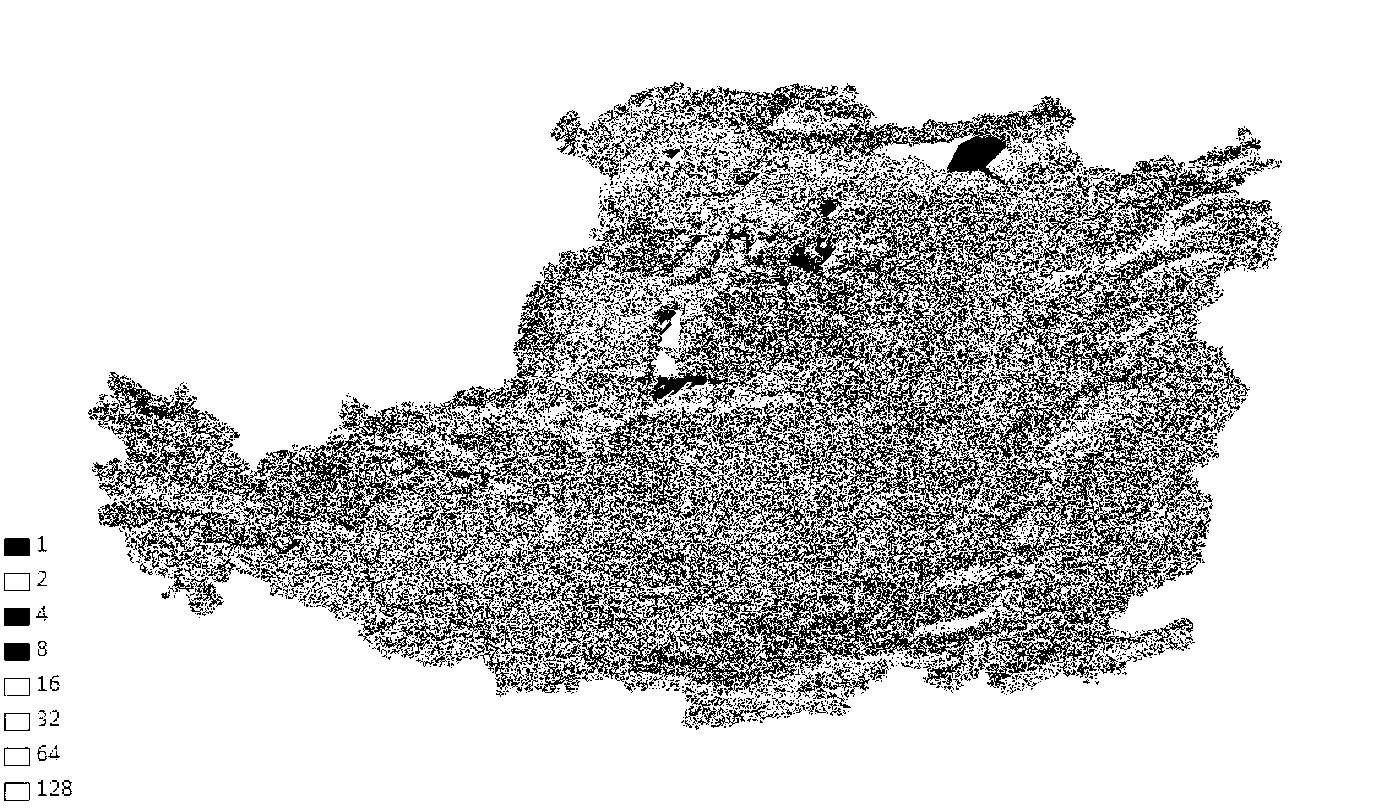

Parallel method for large-area drainage basin extraction

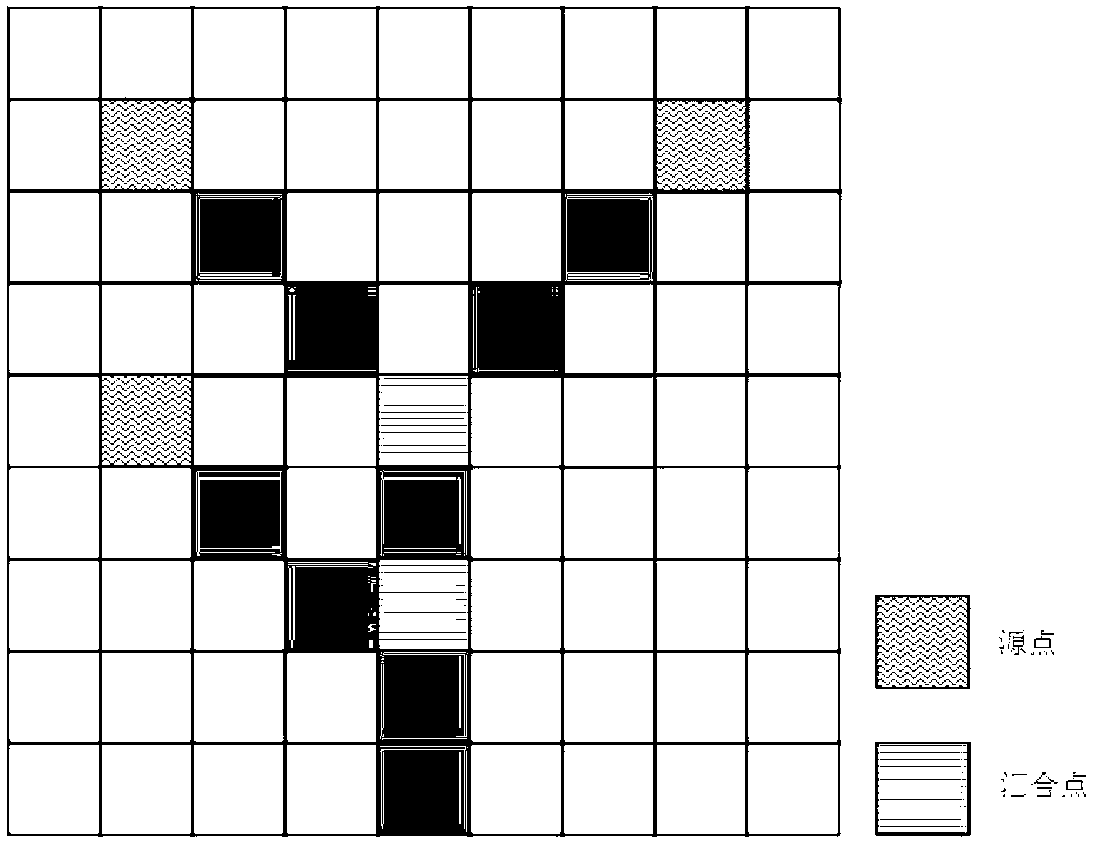

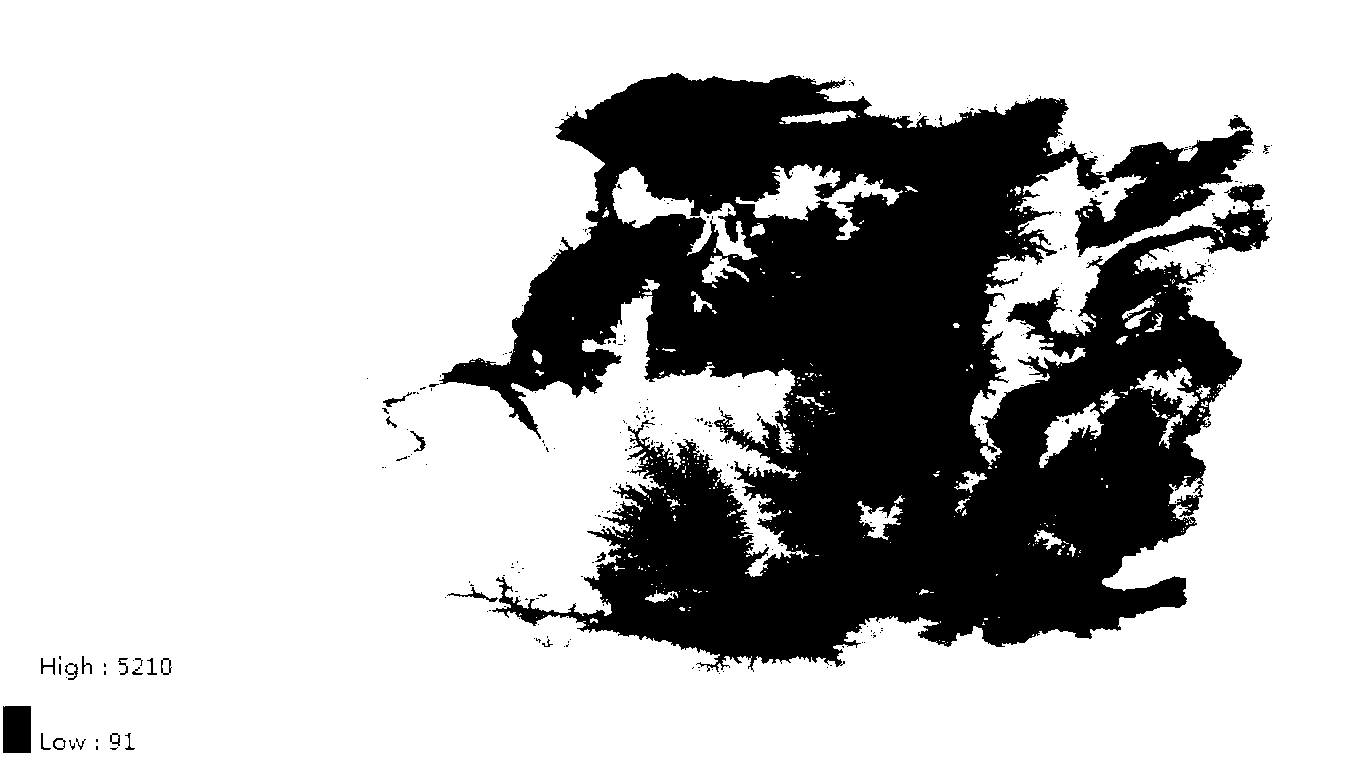

Inactive | CN102915227A | Guaranteed load balancing | Reduced Computational Efficiency Impact | Resource allocation | Concurrent instruction execution | Data partitioning | River network

The invention discloses a parallel method for large-area drainage basin extraction from massive DEM data. The parallel method comprises the following steps: step 1, evaluating the optimal partitioning granularity; step 2, carrying out swale filling calculation in accordance with data partitioning and fusion strategies; step 3, carrying out water flow direction parallel calculation in accordance with the data partitioning and fusion strategies on the basis of the swale filling results; step 4, carrying out confluence accumulation parallel calculation in accordance with the data partitioning and fusion strategies on the basis of the water flow direction data; step 5, setting confluence thresholds and carrying out river network water system parallel calculation in accordance with the data partitioning and fusion strategies on the basis of the water flow direction data and confluence accumulation data; and step 6, carrying out sub-drainage basin partitioning parallel calculation in accordance with the data partitioning and fusion strategies on the basis of the river network water system and water flow direction data so as to accomplish the drainage basin extraction. The method takes full account of the data partitioning granularity and the I/O (Input/Output) mechanism, and also considers the characteristics of the data itself when parallelizing the serial algorithm.

Owner:NANJING NORMAL UNIVERSITY

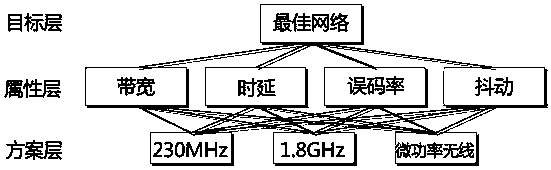

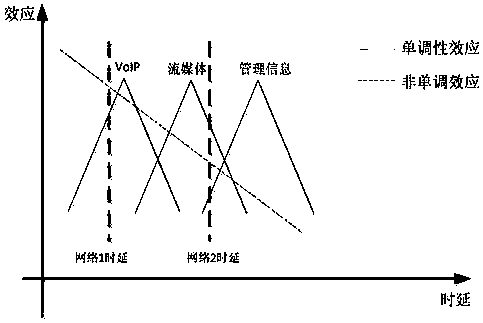

Network selection method based on grey relational analytic hierarchy

Active | CN107734512A | Adaptable | Solve the network selection problem | Assess restriction | Network planning | Correlation coefficient | Entropy weight method

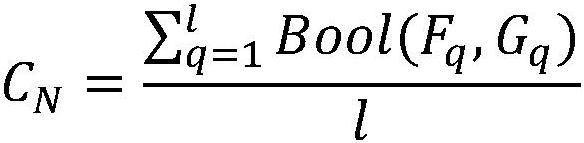

The invention discloses a network selection method based on grey relational analytic hierarchy. The network selection method comprises the following steps: (1) utilizing an analytic hierarchy, establishing a hierarchical order and constructing a judgment matrix (formula); (2) calculating a normalized weight ω_j; (3) utilizing an entropy weight method, constructing an evaluation matrix (formula) and normalizing the evaluation matrix; (4) solving the entropy E_j of each decision-making property and calculating the entropy weight φ_j; (5) utilizing a grey relational method, constructing a global decision matrix (formula) and finding a group of reference solutions (formula); and (6) calculating a grey relational grade (formula), carrying out grey multi-attribute judgment (formula) and selecting the network having the largest correlation coefficient with the reference network as the optimal network. Because the method selects the network that best matches the reference network according to the grey correlation method, it can effectively solve network selection problems in which the decision-making properties are not monotonic functions.
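Steps (3) and (4) can be illustrated with the textbook form of the entropy weight method. The patent's own formulas are elided in the abstract, so the sum-normalization and the formulas E_j = -(1/ln m) Σ p_ij ln p_ij and φ_j = (1 - E_j) / Σ_k (1 - E_k) used here are assumptions, as is the function name.

```python
import math


def entropy_weights(matrix):
    """Textbook entropy weights for an evaluation matrix.

    matrix[i][j] is the (positive) score of candidate network i on
    decision attribute j; at least two candidates are required.
    Returns one weight per attribute; the weights sum to 1.
    """
    m = len(matrix)
    n = len(matrix[0])
    divergence = []  # 1 - E_j for each attribute j
    for j in range(n):
        column = [row[j] for row in matrix]
        total = sum(column)
        shares = [x / total for x in column]  # normalize the column
        entropy = -sum(p * math.log(p) for p in shares if p > 0) / math.log(m)
        divergence.append(1.0 - entropy)
    spread = sum(divergence)
    if spread == 0:
        # Every attribute is uniform across candidates: fall back to equal weights.
        return [1.0 / n] * n
    return [d / spread for d in divergence]
```

An attribute on which all candidate networks score the same has entropy 1 and therefore weight 0, which is the behaviour the method relies on to discount uninformative attributes.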

Owner:NANJING NARI GROUP CORP +1

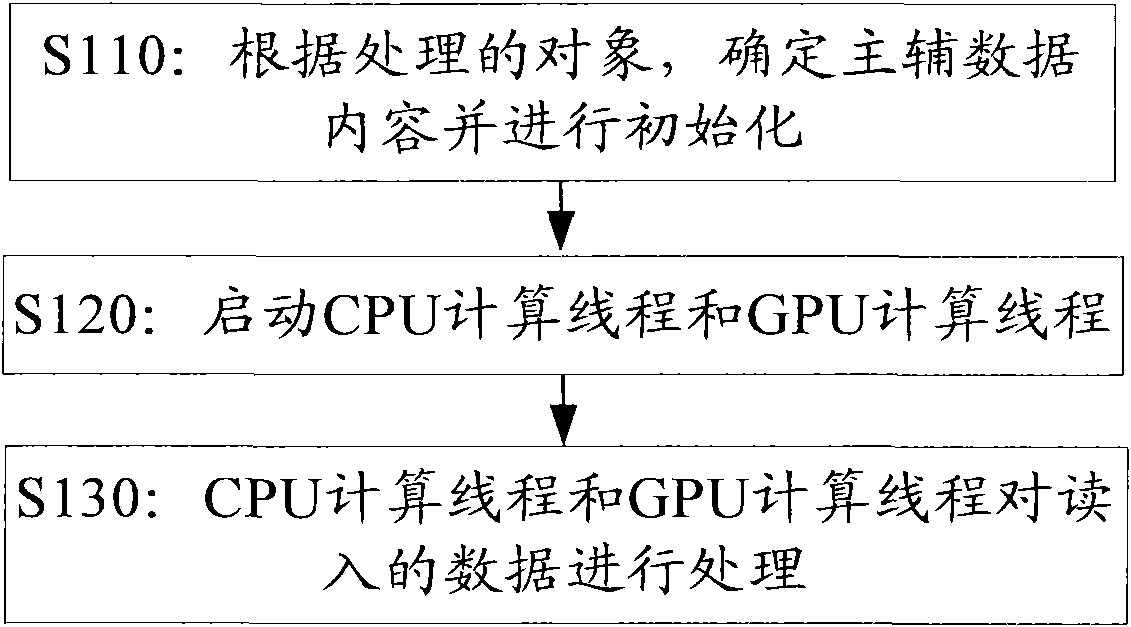

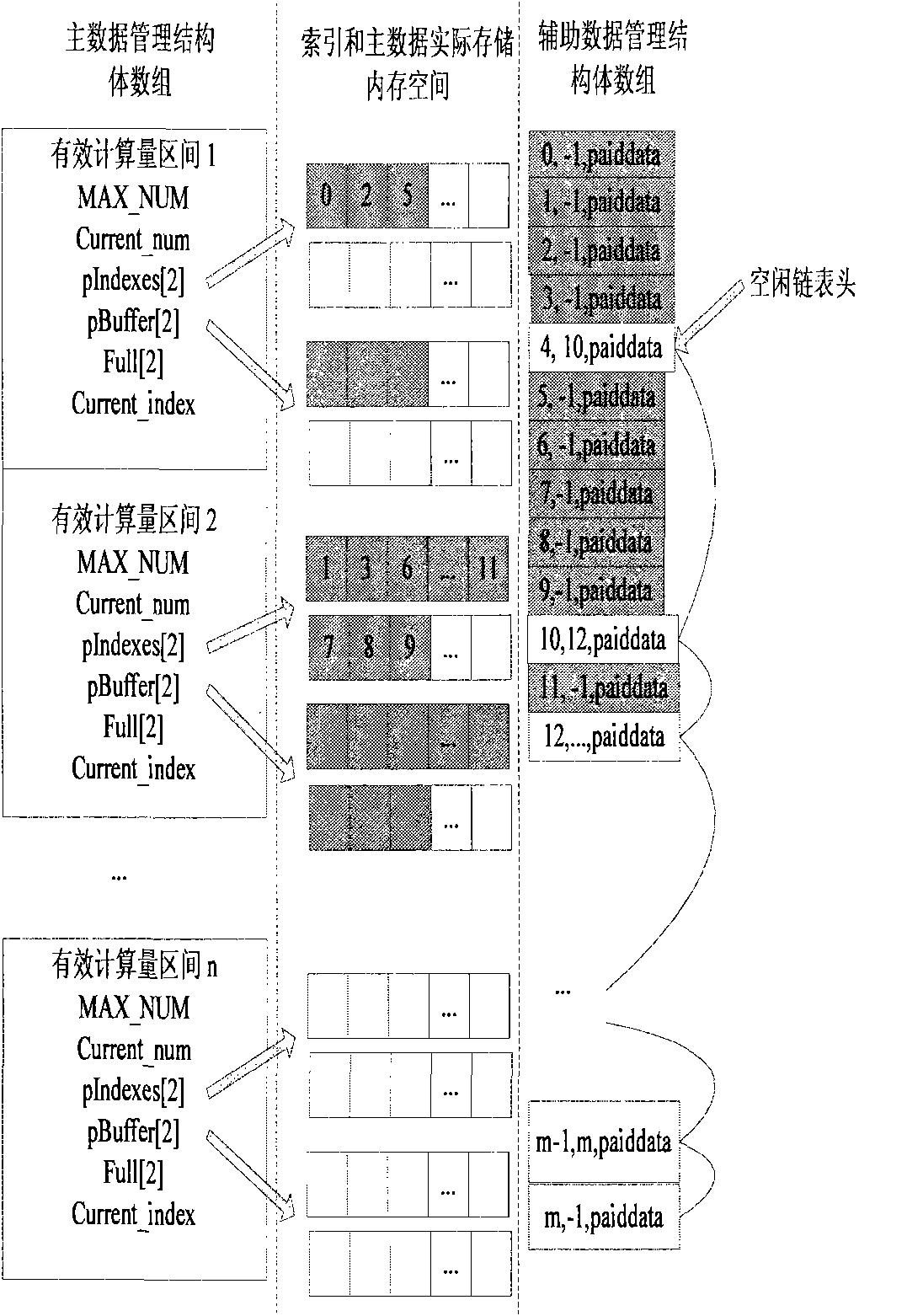

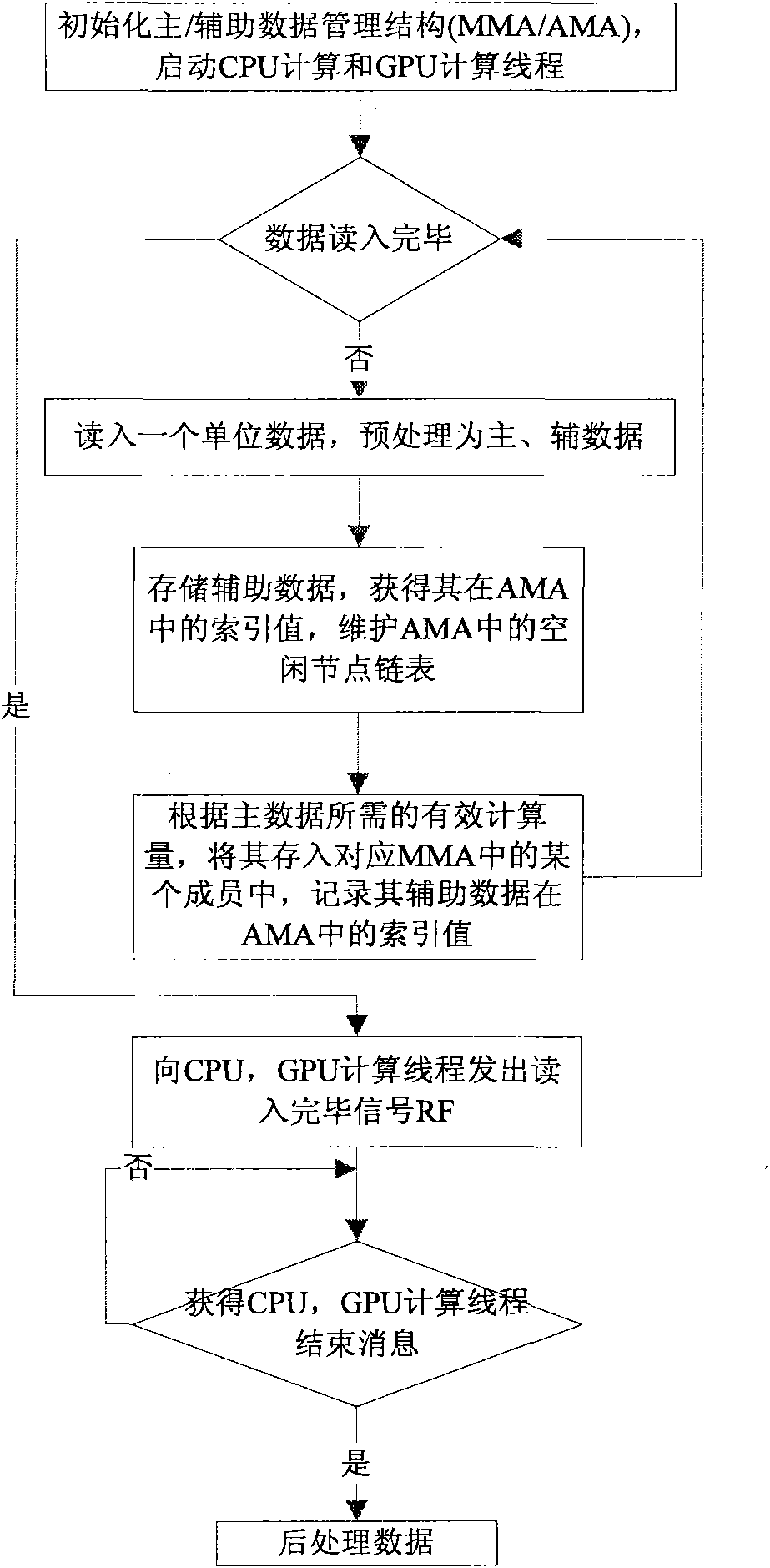

Primary and secondary data structure-based CPU-GPU cooperative computing method

Inactive | CN101894051A | Increase profit | Guaranteed load balancing | Resource allocation | Multiple digital computer combinations | Data content | Utilization rate

The embodiment of the invention provides a primary and secondary data structure-based CPU-GPU cooperative computing method, which comprises the following steps: according to the object to be processed, determining the primary and secondary data contents and initializing them; starting a CPU computing thread and a GPU computing thread; reading the data to be processed, storing the pre-processed data in a primary and secondary data structure, and processing the data in that structure with the CPU computing thread and the GPU computing thread until no data remains. According to the scheme provided by the invention, parallel data can be managed effectively, and the loads on the GPU threads can be kept balanced when a GPGPU platform processes databases with an unbalanced distribution of effective computation; the CPU and the GPU can compute fully in parallel and maintain a high utilization rate through a simply designed and reusable thread dividing method.

Owner:UNIV OF SCI & TECH OF CHINA

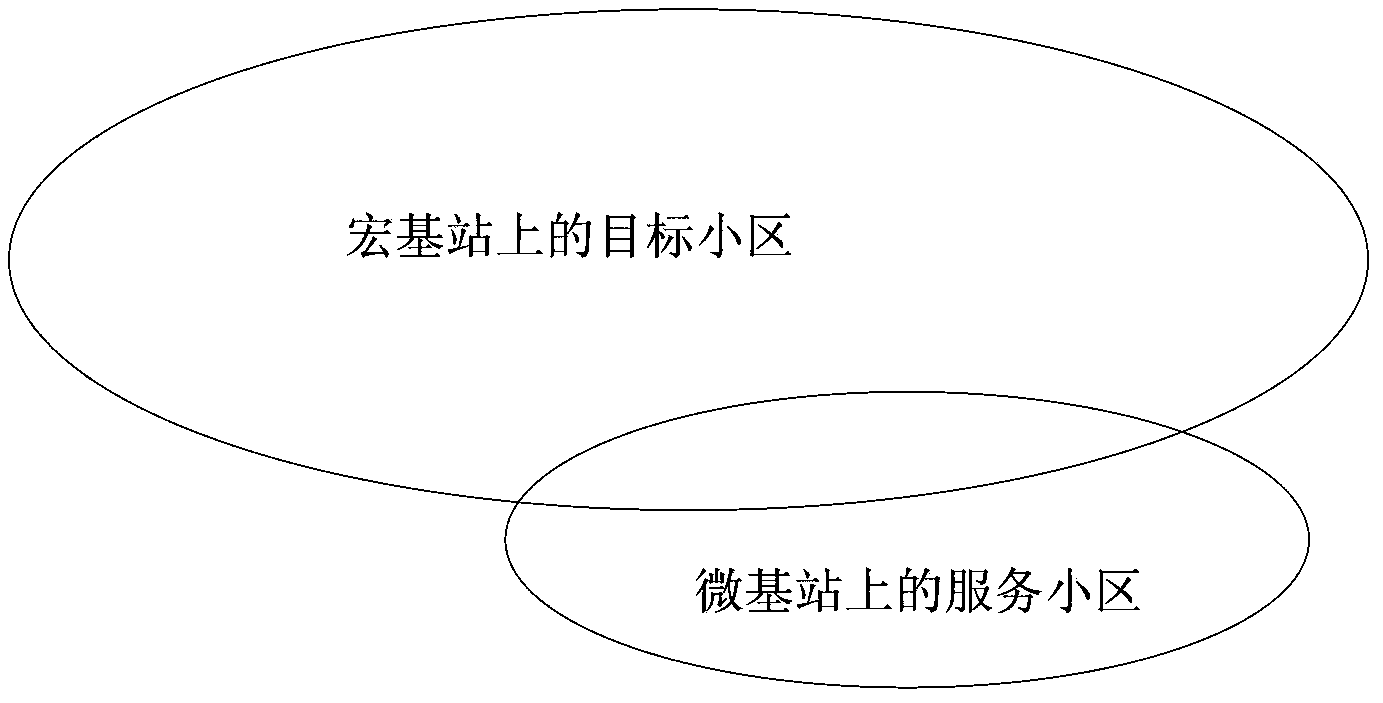

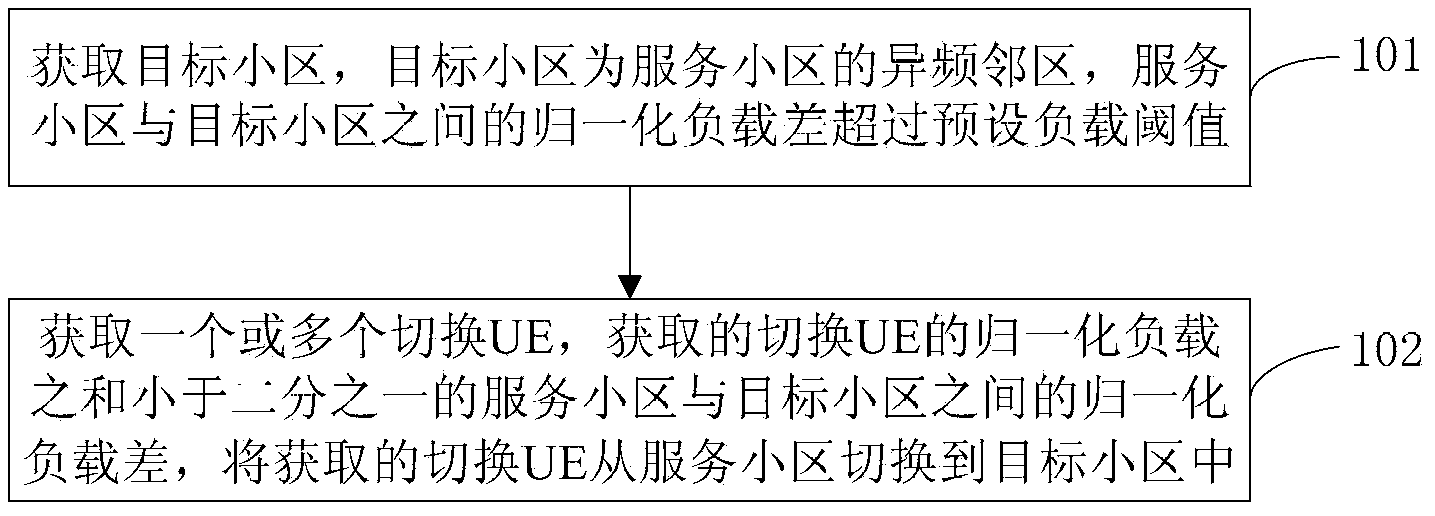

Load balancing method and apparatus

Active | CN103416089A | Guaranteed load balancing | Avoid ping-pong switching | Network traffic/resource management | Computer module | Computer science

The invention provides a load balancing method and an apparatus, which relate to the communication field. The method comprises: obtaining an object zone, wherein the object zone is an inter-frequency neighbor zone of a service zone and the normalized load difference between the service zone and the object zone exceeds a preset load threshold; obtaining one or more user equipments (UEs) to be switched, wherein the sum of their normalized loads is less than 1/2 of the normalized load difference between the service zone and the object zone; and switching the obtained UEs from the service zone to the object zone. The apparatus comprises a first obtaining module, a second obtaining module and a switching module. According to the invention, the "ping-pong" switching phenomenon is prevented.
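The selection rule above can be sketched as follows. The abstract only fixes the two constraints (the load difference must exceed the threshold, and the moved load must stay under half that difference); the greedy "heaviest UE first" order and all names here are assumptions.

```python
def pick_ues_to_switch(service_load, object_load, ue_loads, threshold):
    """Choose UEs to move from the service zone to the object zone.

    service_load, object_load: normalized loads of the two zones.
    ue_loads: {ue_id: normalized load contributed by that UE}.
    Returns a list of UE ids whose summed load is below half the load
    difference, or [] if the difference does not exceed `threshold`.
    """
    diff = service_load - object_load
    if diff <= threshold:
        return []
    budget = diff / 2  # moving less than this cannot invert the imbalance
    chosen, moved = [], 0.0
    # Assumption: consider the heaviest UEs first.
    for ue, load in sorted(ue_loads.items(), key=lambda kv: kv[1], reverse=True):
        if moved + load < budget:
            chosen.append(ue)
            moved += load
    return chosen
```

Because the moved load stays strictly below half the difference, the service zone remains at least as loaded as the object zone after the switch, so the same rule cannot immediately trigger a switch back; this is one way to read the ping-pong claim.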

Owner:HUAWEI TECH CO LTD

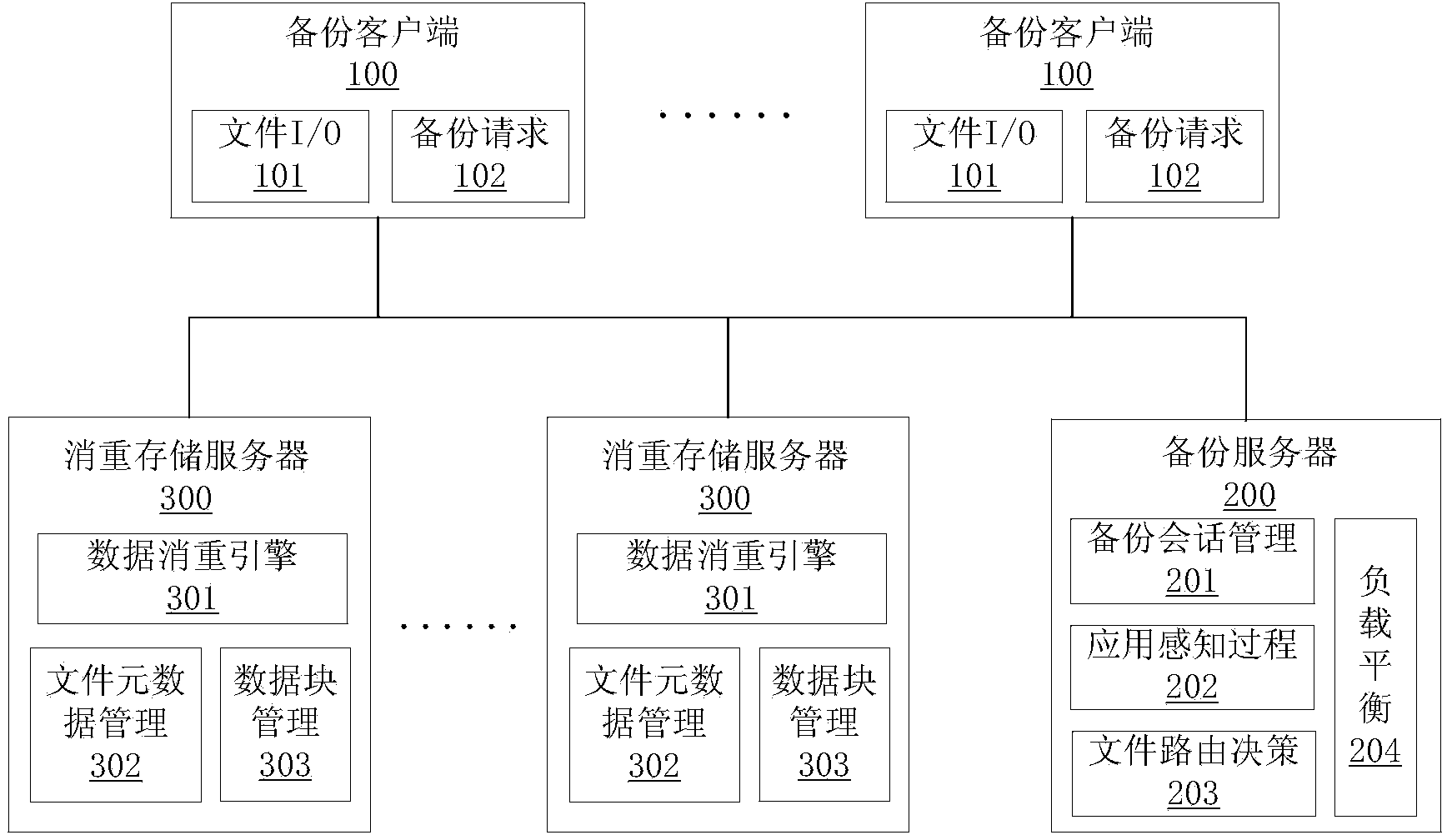

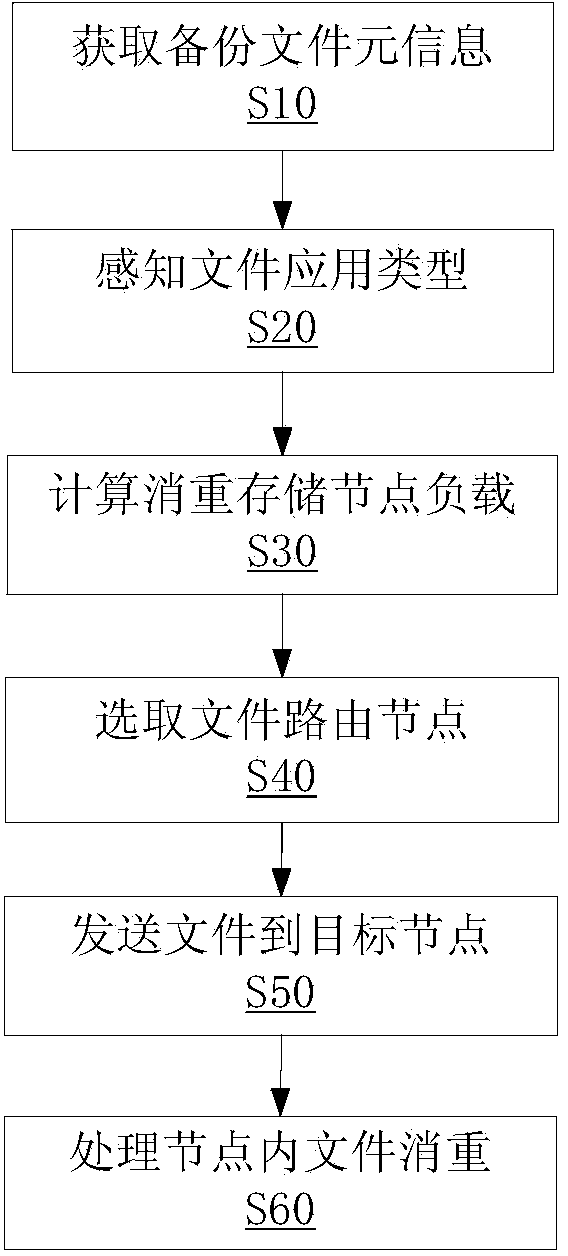

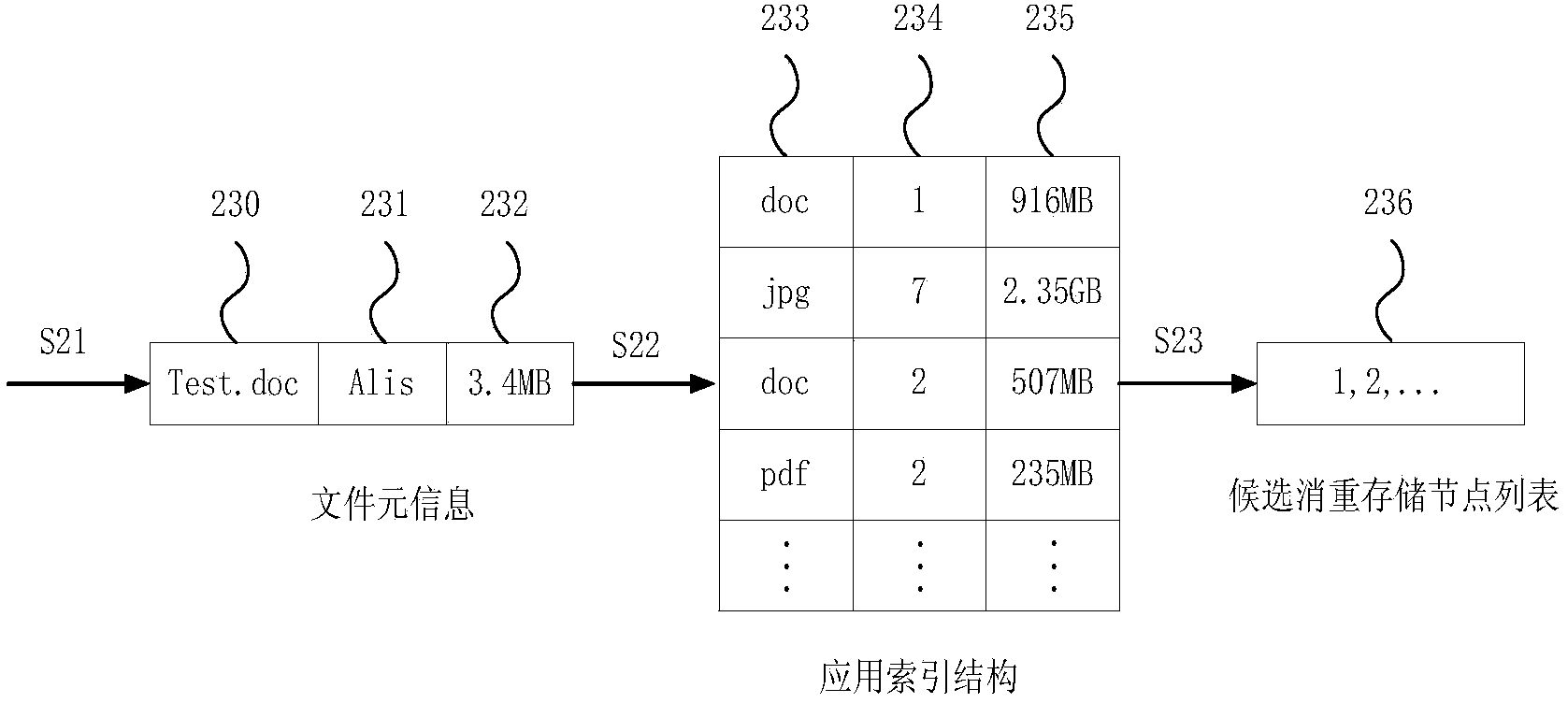

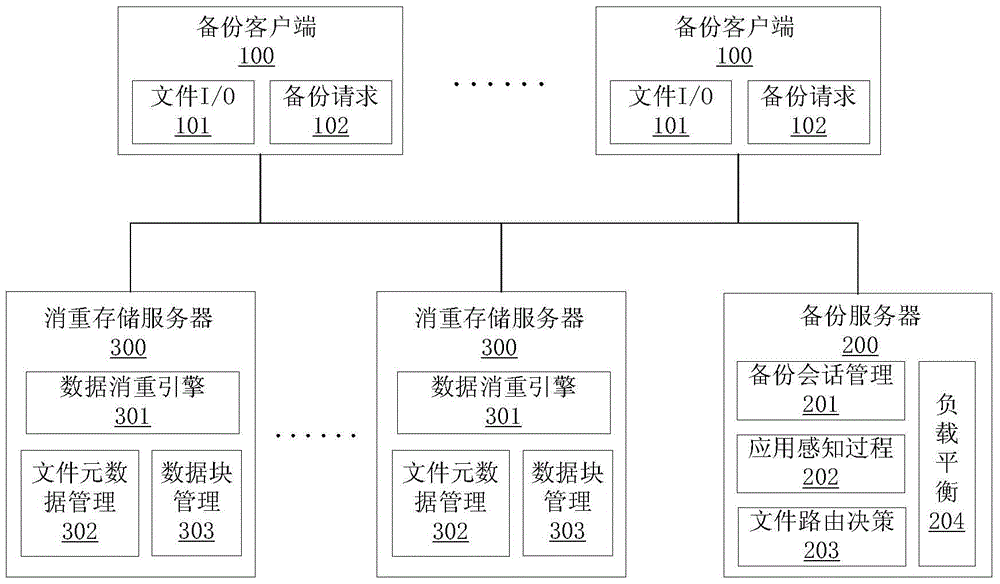

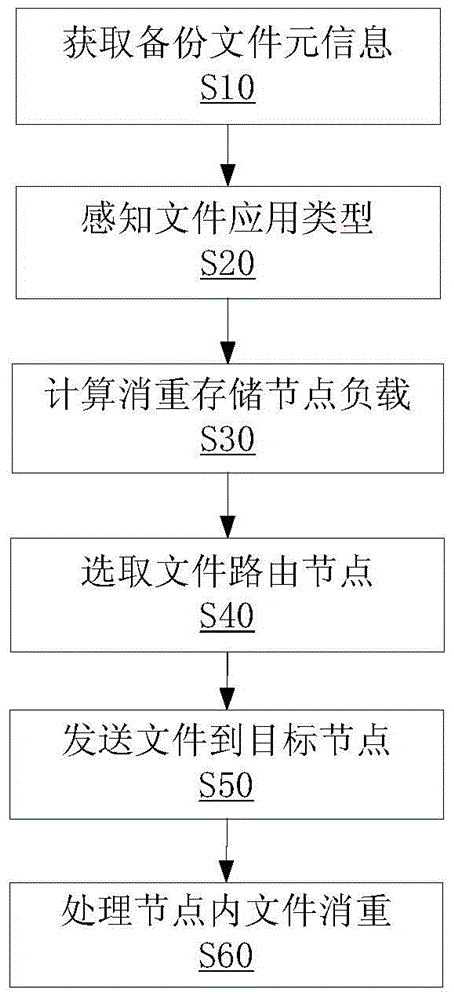

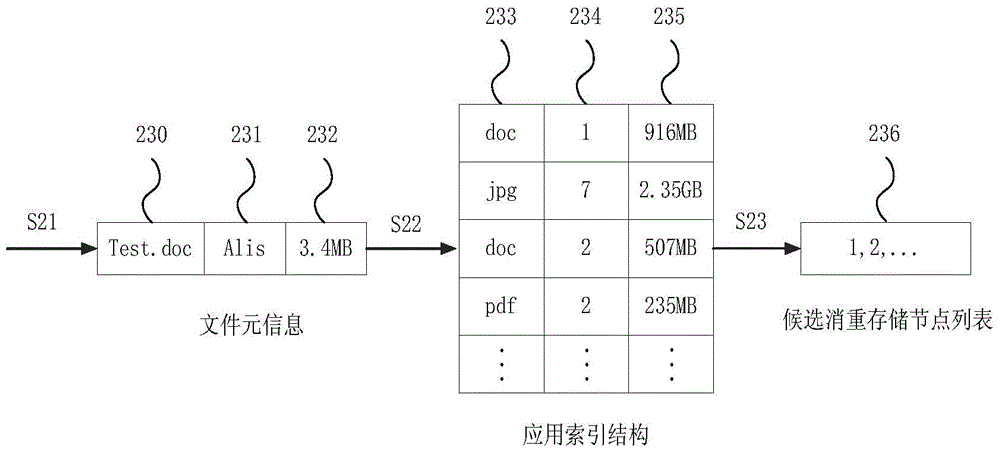

Application perception data routing method oriented to large-scale cluster deduplication and system

Active | CN103902735A | Reduce data overlap | Data deduplication rate is high | Transmission | Special data processing applications | Cluster systems | Pattern perception

The invention discloses an application perception data routing method oriented to large-scale cluster deduplication and a large-scale backup storage cluster system. The application perception data routing method comprises the steps of (S10) obtaining backup file meta-information, (S20) sensing a file application type, (S30) calculating deduplication storage node loads, (S40) selecting file routing nodes, (S50) sending files to target nodes, (S60) conducting deduplication on the files in the nodes, and the like. The large-scale backup storage cluster system comprises a plurality of backup clients, a backup server and a plurality of deduplication storage servers. The data routing method and the system have the advantages that the data deduplication rate is high, the node throughput rate is high, system communication overheads are low, and system loads are balanced.

Owner:PLA UNIV OF SCI & TECH

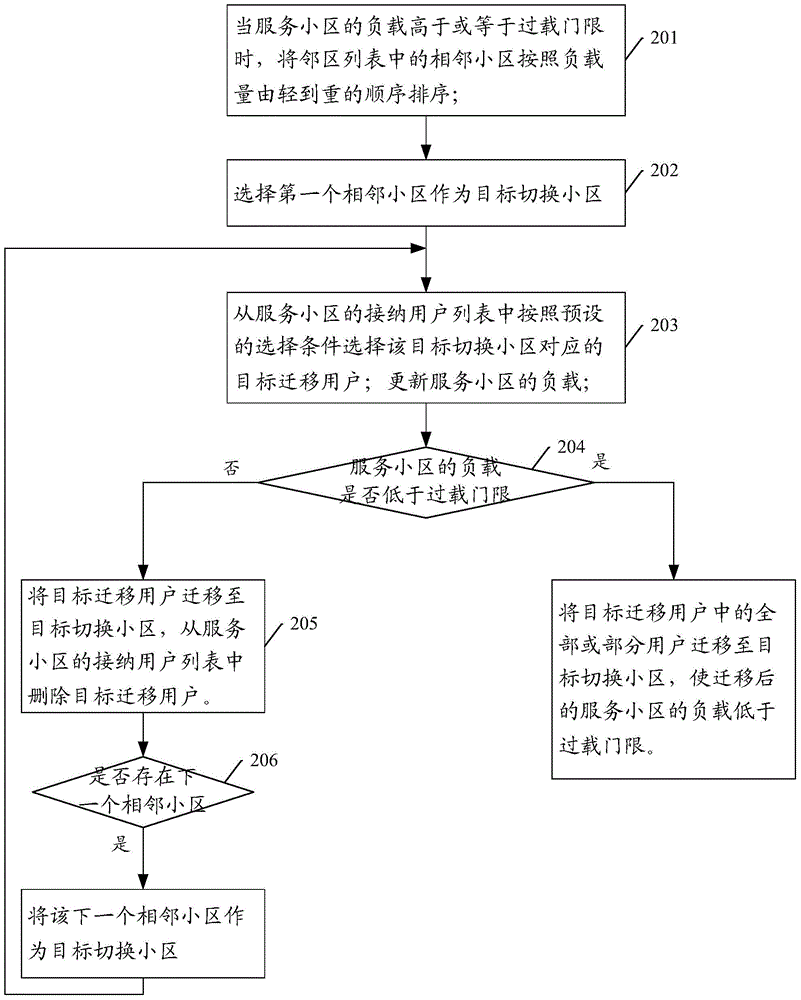

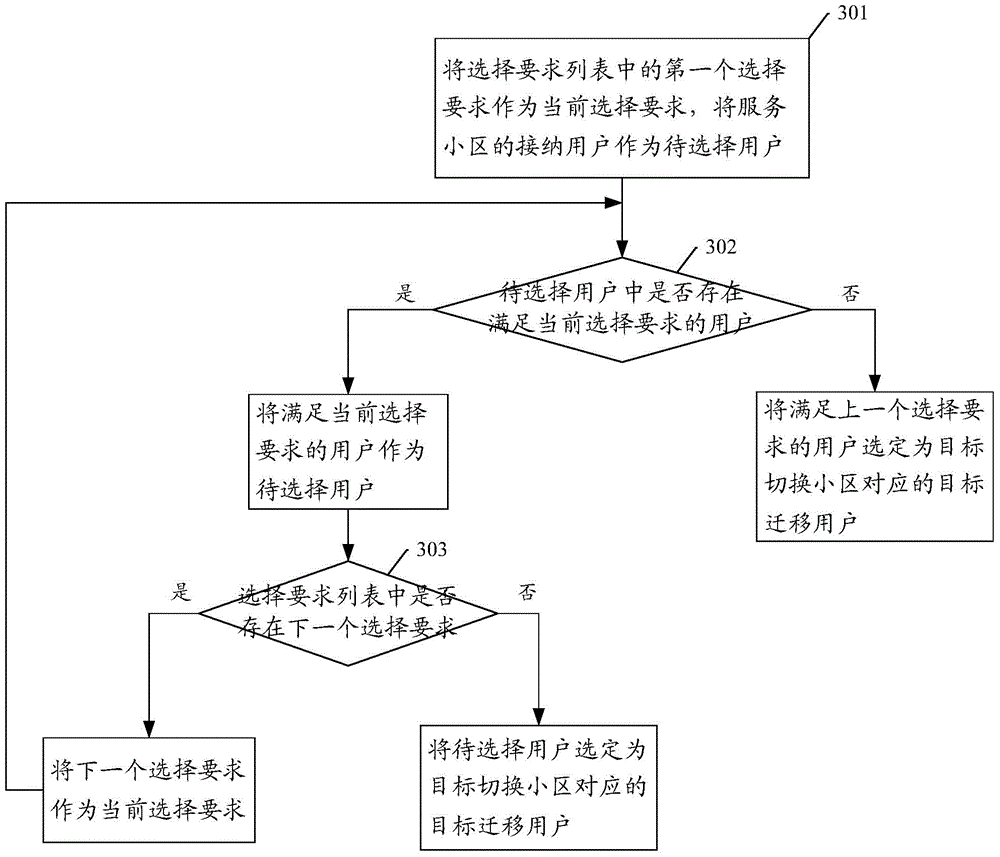

Load balancing method and base station

Inactive | CN105323798A | Guaranteed load balancing | Guaranteed switching success rate | Network traffic/resource management | Load capacity | Base station

The invention provides a load balancing method and a base station. According to the method, when the load of a service cell is greater than or equal to a preset overload threshold, the neighbor cells in a neighbor cell list are ordered from low load capacity to high load capacity; the cell with the lowest load capacity is preferentially selected as the target switching cell; after the target switching cell is selected, the target migration users corresponding to it are selected from an admission user list according to preset selection conditions; and target switching cells and their corresponding target migration users are selected one by one until the load of the service cell is lower than the overload threshold or all the neighbor cells in the neighbor cell list have been selected as target switching cells. Thus, the load balance between the cells is effectively ensured, and the success rate of switching users to the target switching cell is guaranteed.
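The selection loop above can be sketched roughly as below. The "preset selection conditions" are not given in the abstract, so they are modelled as a caller-supplied predicate `can_migrate`; the integer load units and every name are hypothetical.

```python
def plan_handover(service_load, overload_threshold, neighbor_loads, users, can_migrate):
    """Pick (user, target_cell) pairs until the service cell is no longer overloaded.

    neighbor_loads: {cell: current load}; users: {user: load contribution}.
    can_migrate(user, cell) stands in for the preset selection conditions.
    Returns (plan, remaining service-cell load).
    """
    plan = []
    if service_load < overload_threshold:
        return plan, service_load
    remaining = dict(users)
    # Visit neighbor cells from the least loaded to the most loaded.
    for cell in sorted(neighbor_loads, key=neighbor_loads.get):
        candidates = [u for u in remaining if can_migrate(u, cell)]
        for user in candidates:
            if service_load < overload_threshold:
                break
            plan.append((user, cell))
            service_load -= remaining.pop(user)
        if service_load < overload_threshold:
            break
    return plan, service_load
```

The loop ends either when the service cell drops below the threshold or when every neighbor cell has been tried, matching the two stopping conditions in the abstract.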

Owner:POTEVIO INFORMATION TECH

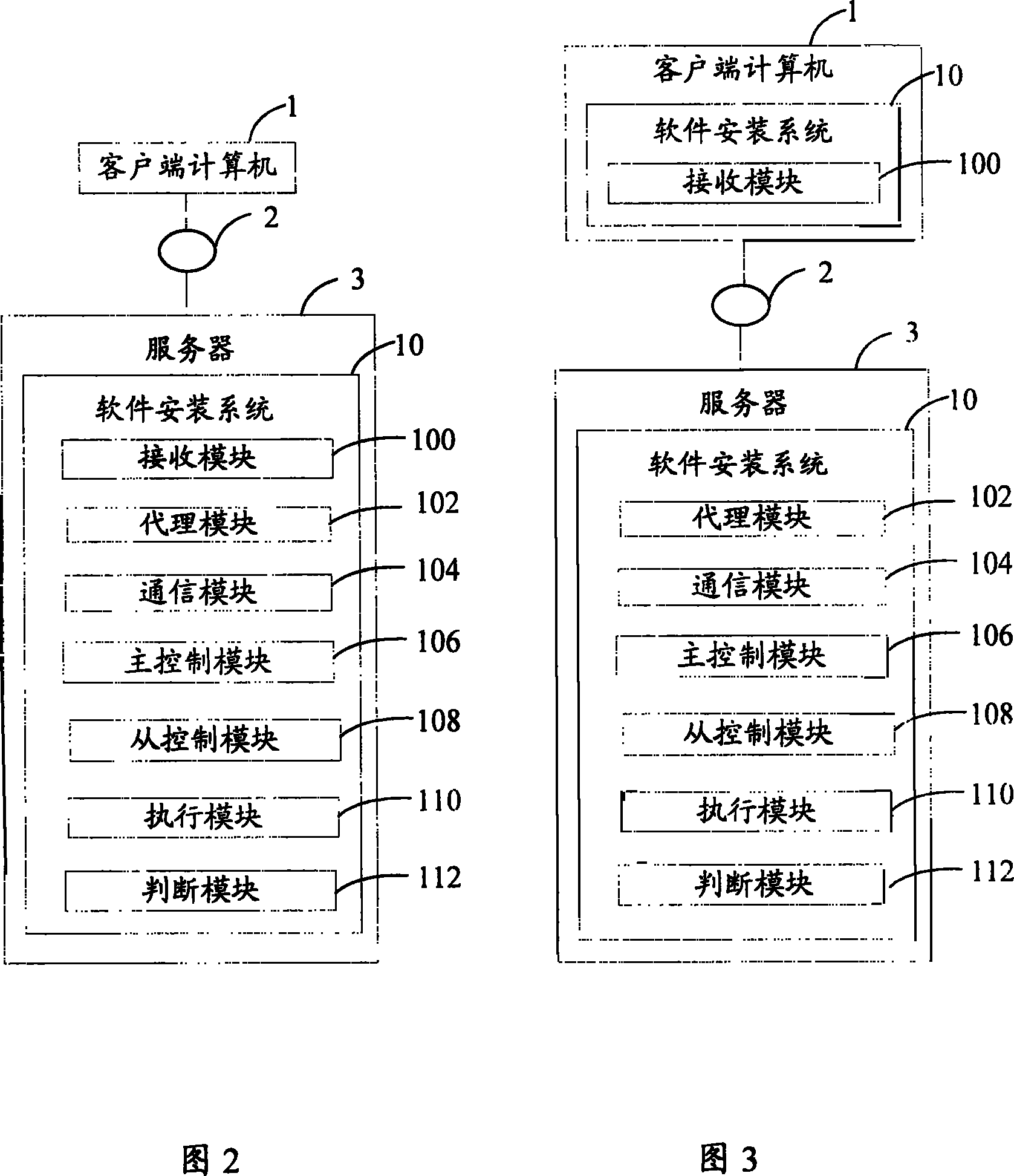

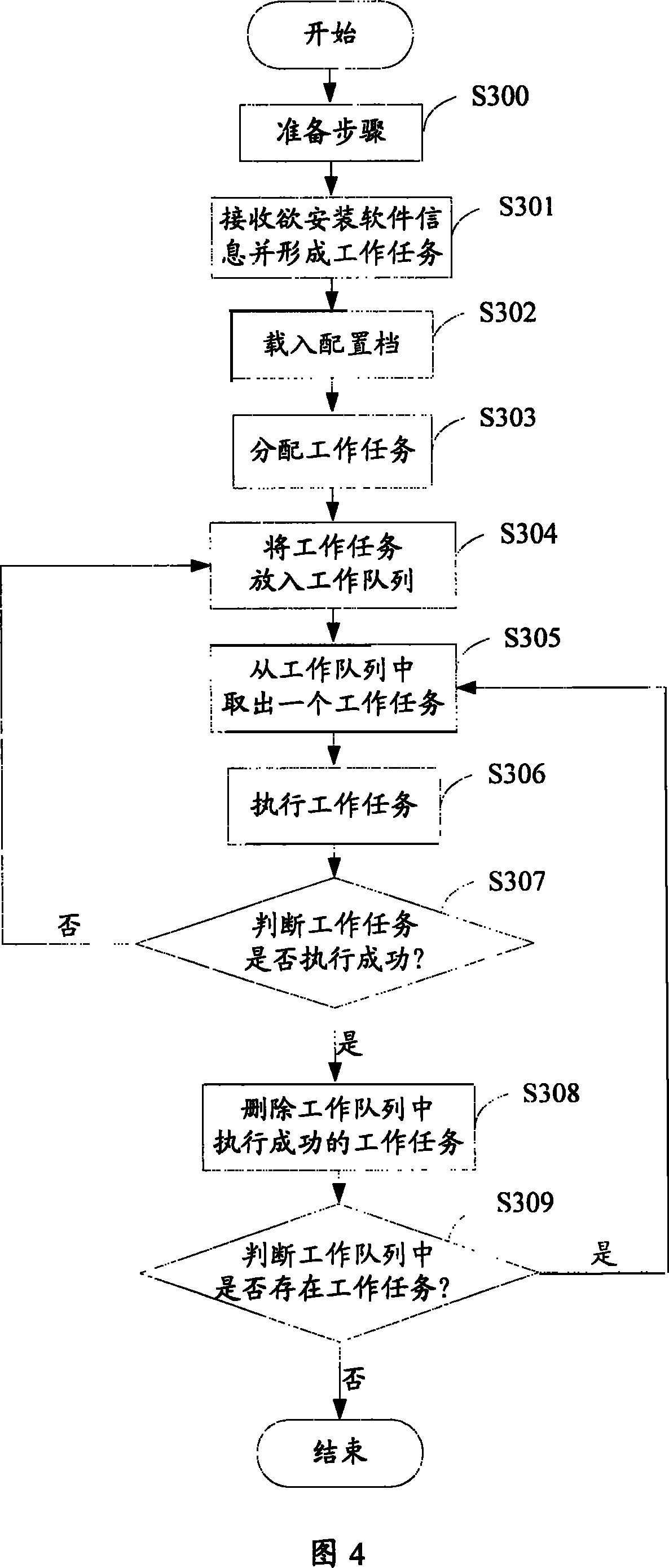

Software installation system and method

Inactive | CN101018152A | Improve work efficiency | Improve resource utilization | Program loading/initiating | Data switching networks | Work task | Software engineering

Owner:HONG FU JIN PRECISION IND (SHENZHEN) CO LTD +1

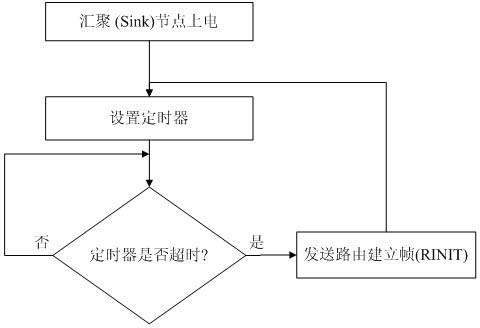

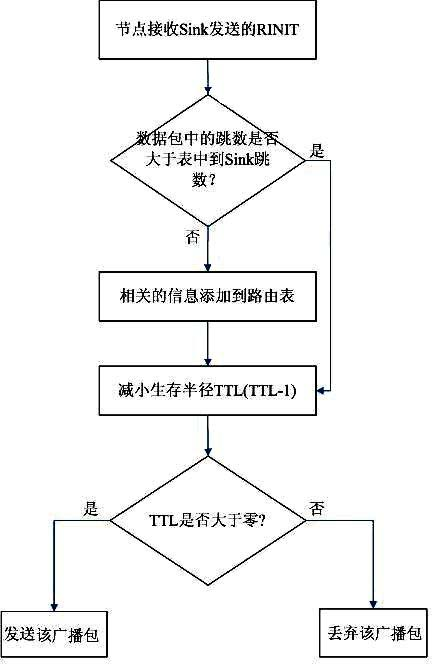

Time-delay constrained wireless sensor network routing method based on fuzzy gradient

Inactive | CN102111817A | Guaranteed load balancing | Balanced utilization | Energy efficient ICT | Network traffic/resource management | Wireless mesh network | Fuzzy classification

The invention relates to a time-delay constrained wireless sensor network routing implementation method based on a fuzzy gradient. The method comprises a proximate Sink node selection implementation step and a gradient fuzzy classification implementation step. Addressing the limited energy of a time-delay constrained wireless sensor network, each sensor node transmits data packets to an adjacent node in the direction of the base station Sink until the data reaches a Sink. Because some nodes in a sensor network are often used up prematurely while a large amount of surplus energy remains in other sensor nodes, the method focuses on the routing of the nodes and balances the energy consumption of each node, thereby effectively improving the network energy consumption efficiency and prolonging the network survival time.

Owner:SHANGHAI INST OF MICROSYSTEM & INFORMATION TECH CHINESE ACAD OF SCI +1

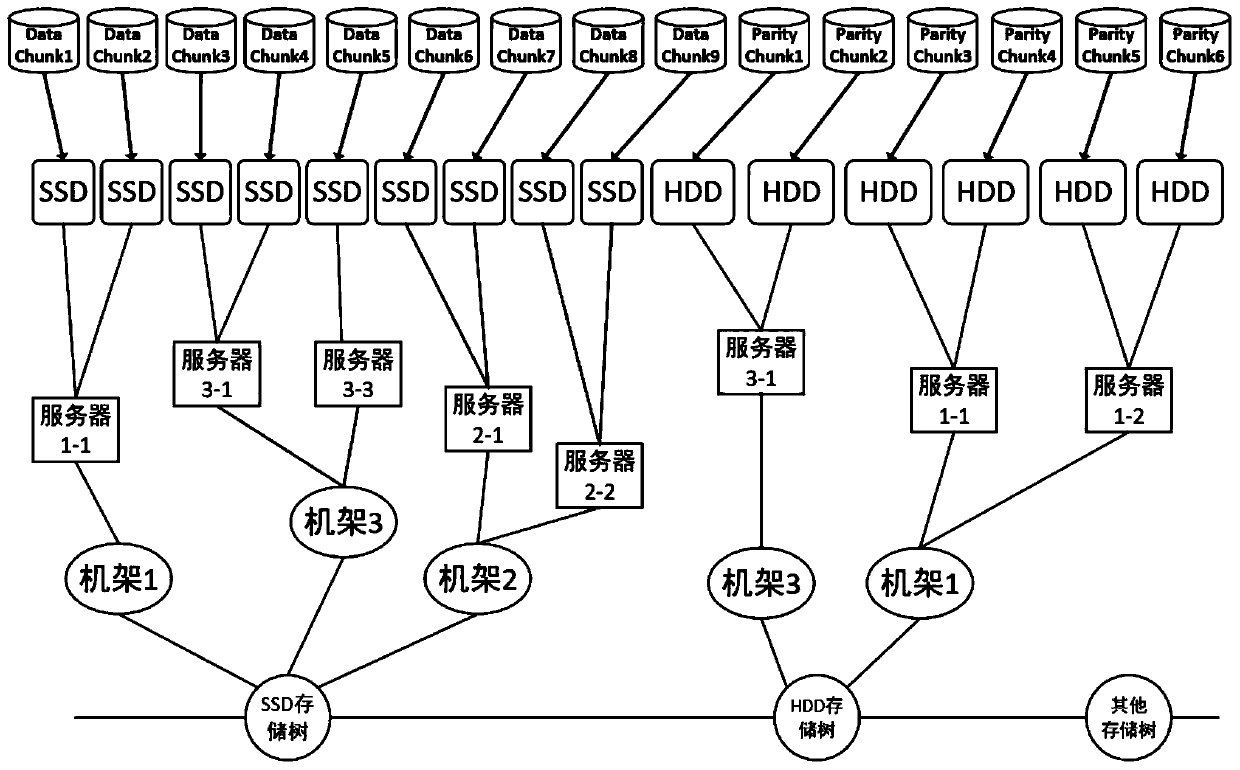

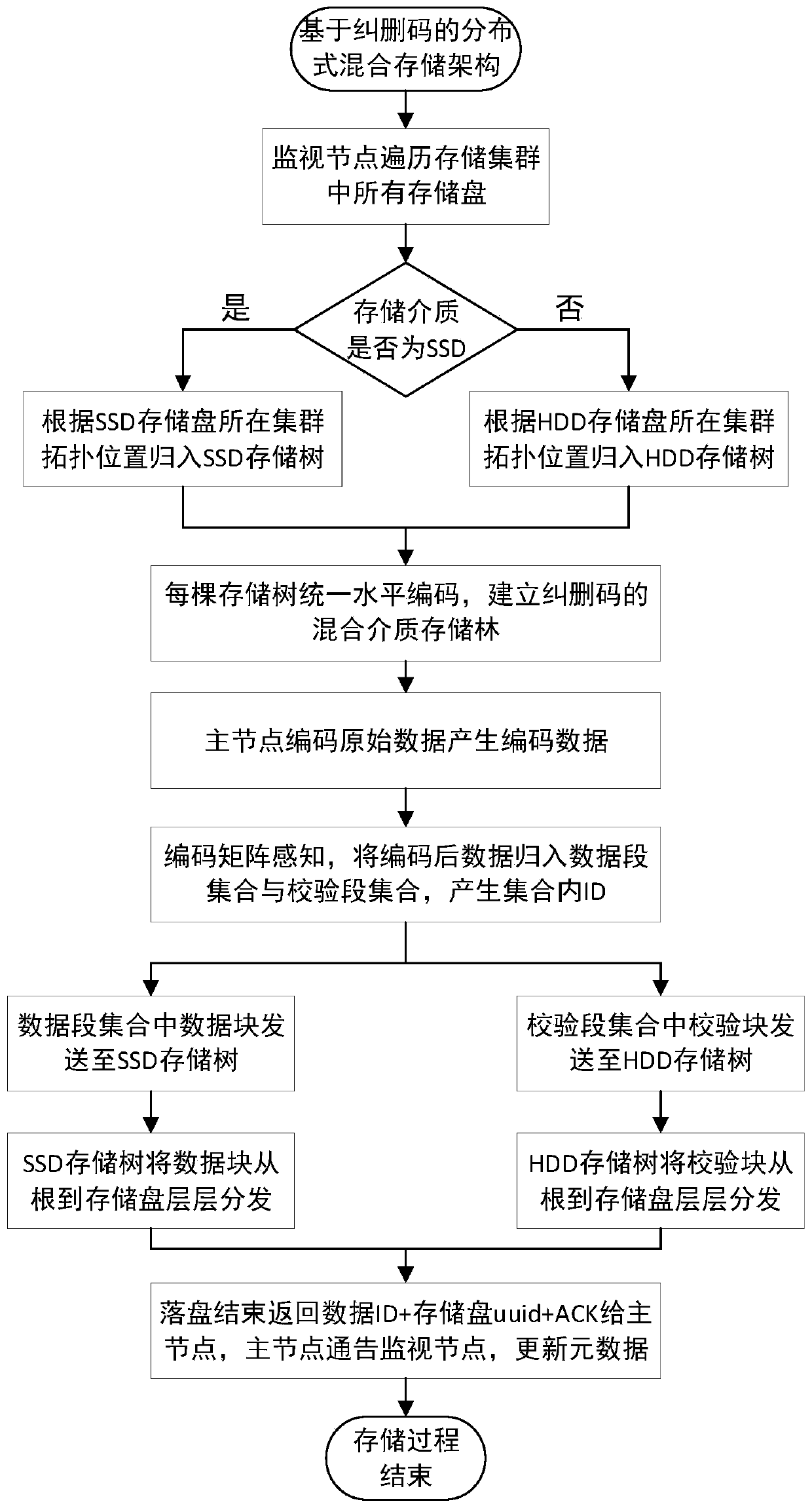

Forest type storage structure and method for distributed erasure code hybrid storage based on multiple storage media

Active | CN110531936A | Guaranteed accuracy | Guaranteed randomness | Input/output to record carriers | Write amplification | Solid-state drive

The invention discloses a forest type storage structure and a method for distributed erasure code hybrid storage based on multiple storage media, characterized in that, in a distributed storage system, the data blocks of erasure codes are stored on solid state disks and the check blocks of the erasure codes are stored on mechanical hard disks. The method comprises the following steps: (1) classifying the data storage media in the distributed storage system and establishing a forest type hybrid storage structure; (2) classifying the erasure code data in the distributed storage system into data block data and check block data, and marking both; and (3) placing the classified erasure code data on a specific tree of the forest type storage structure for distribution and persistence to disk. In this way, hybrid architecture storage of erasure code data on distributed storage based on multiple storage media is achieved. According to the invention, the excessive wear of SSDs caused by erasure code write amplification can be avoided, the system performance is improved at lower cost, the service life is prolonged, and the reliability is enhanced.

Owner:XI AN JIAOTONG UNIV

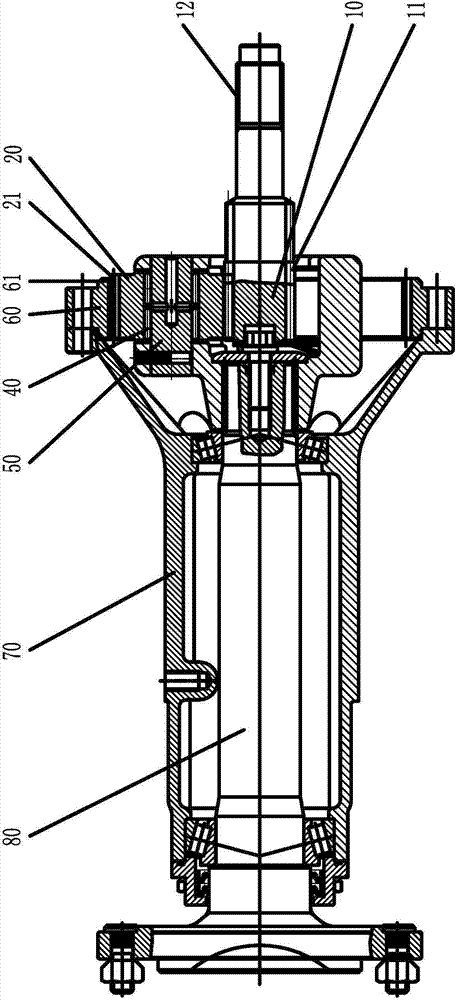

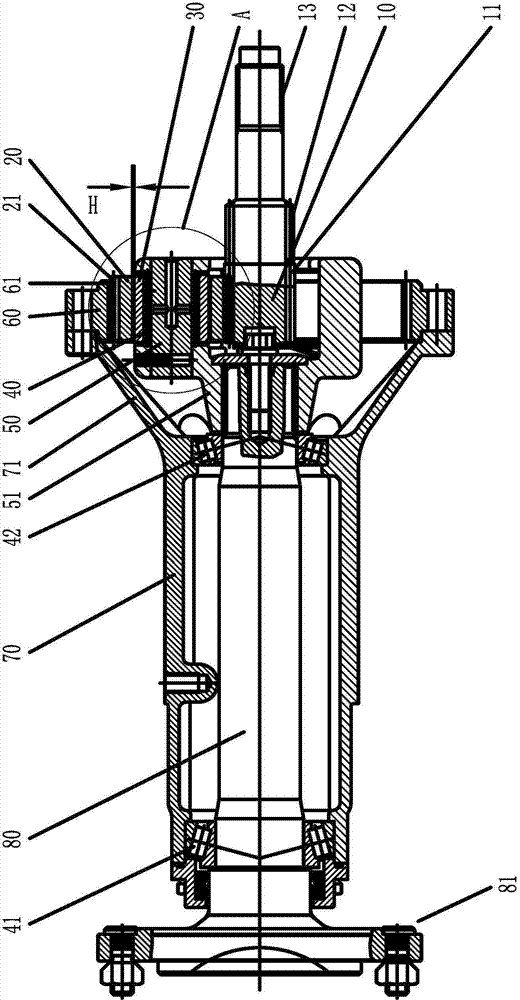

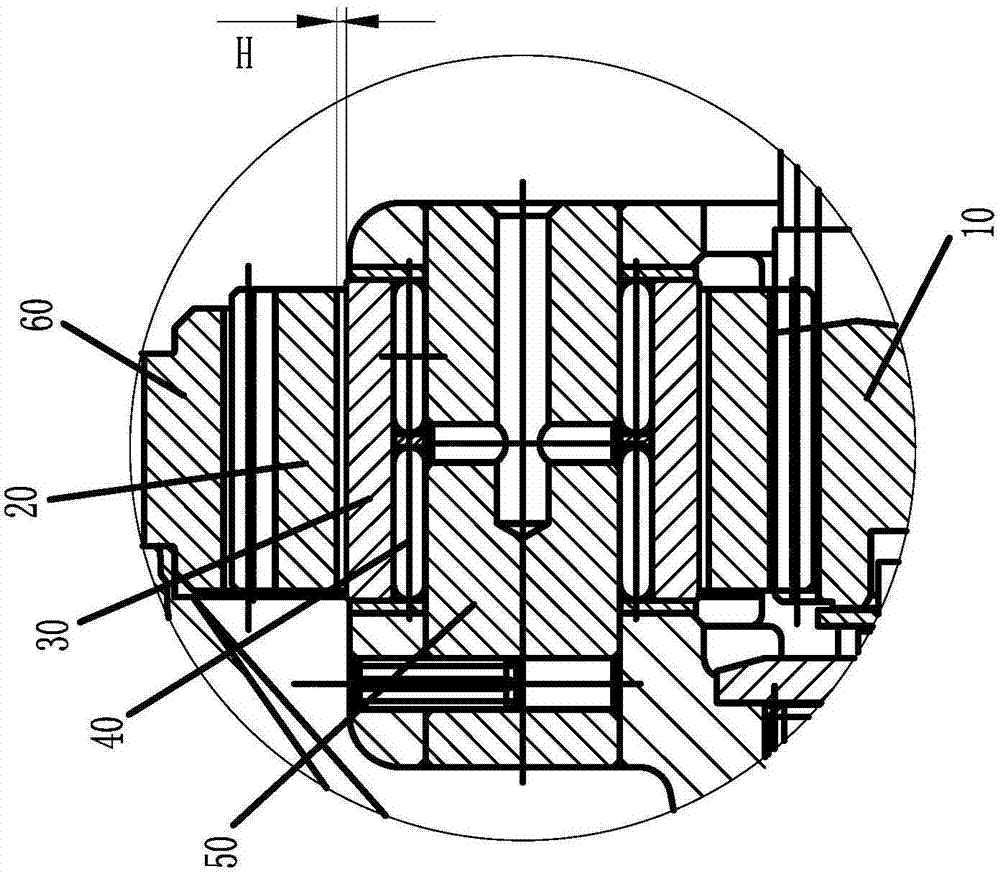

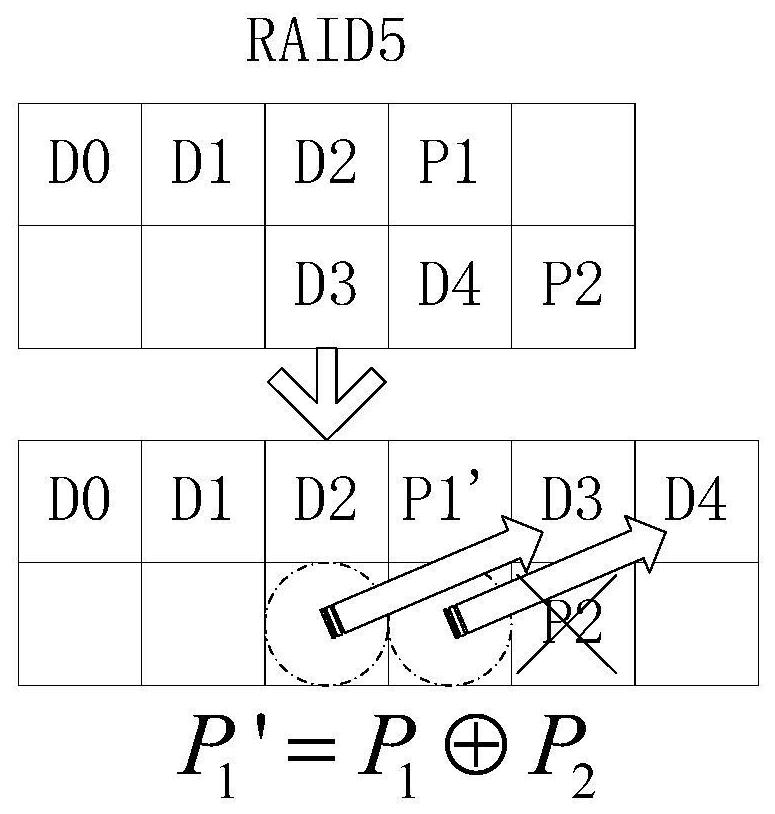

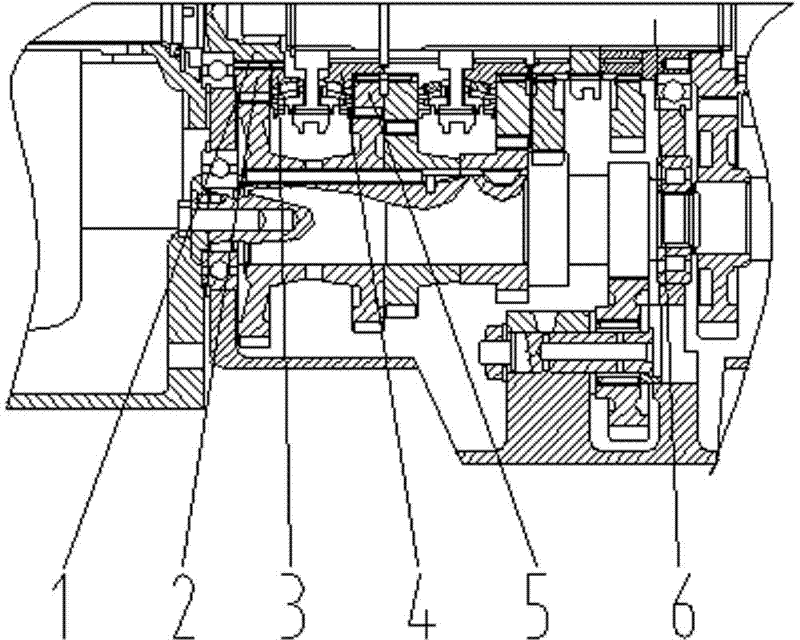

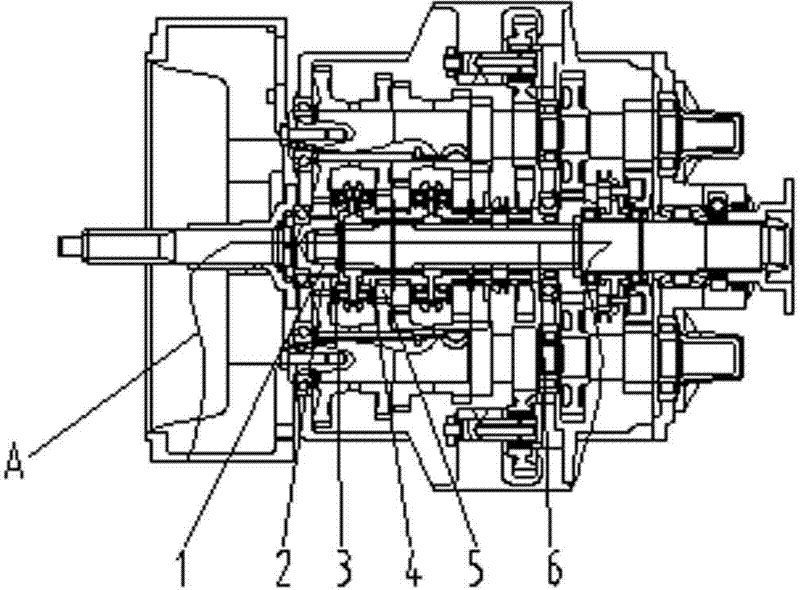

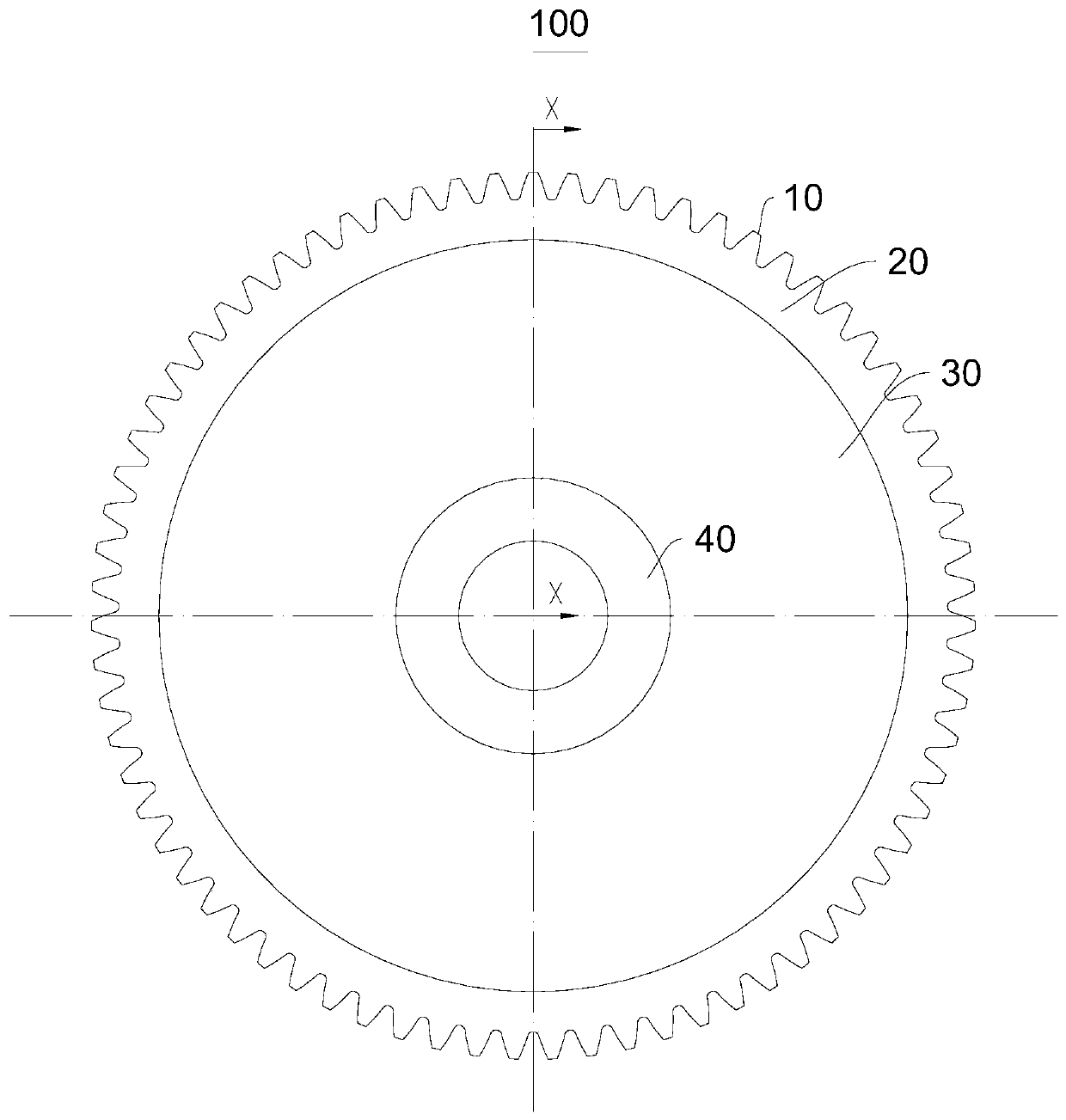

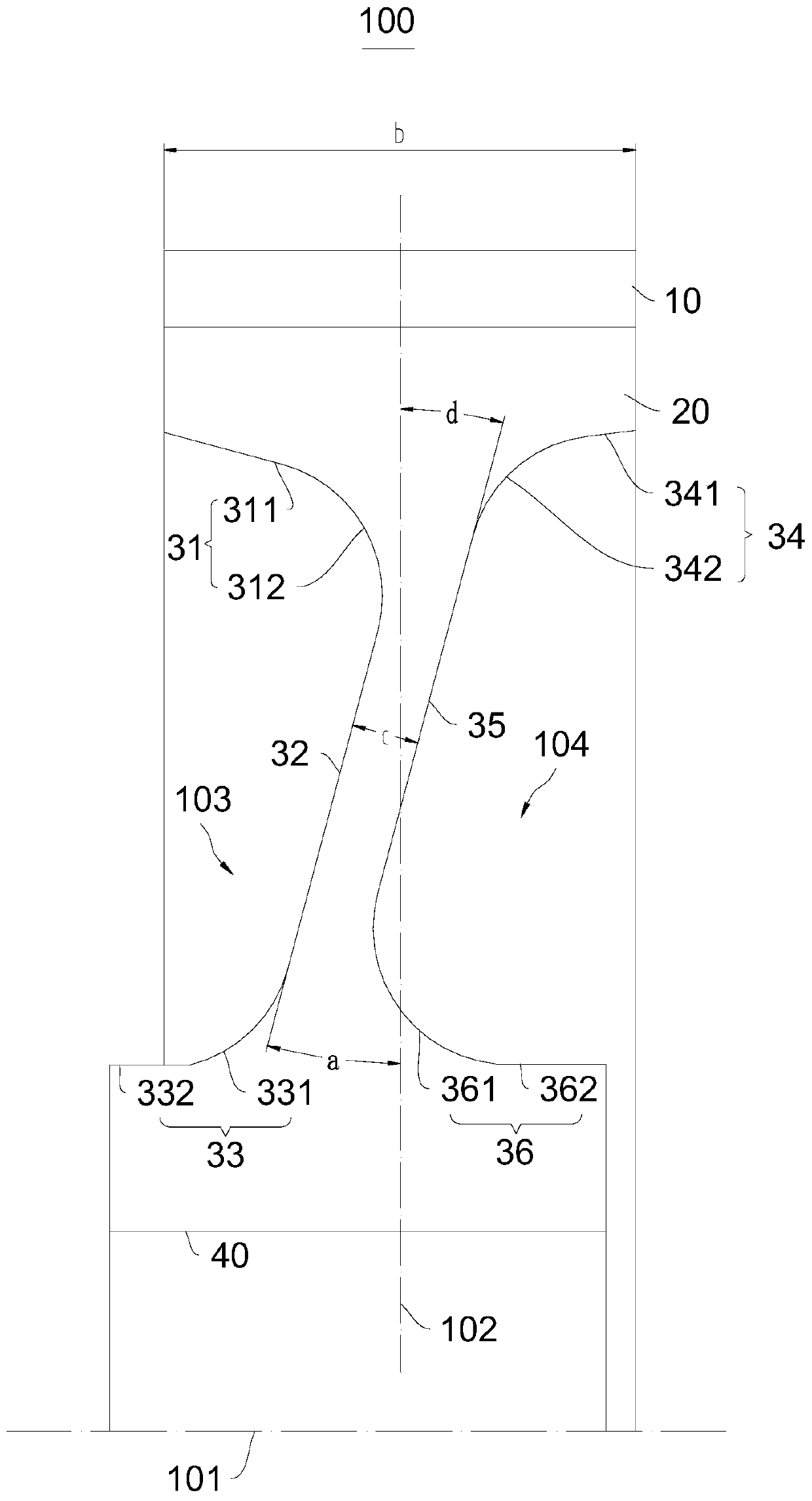

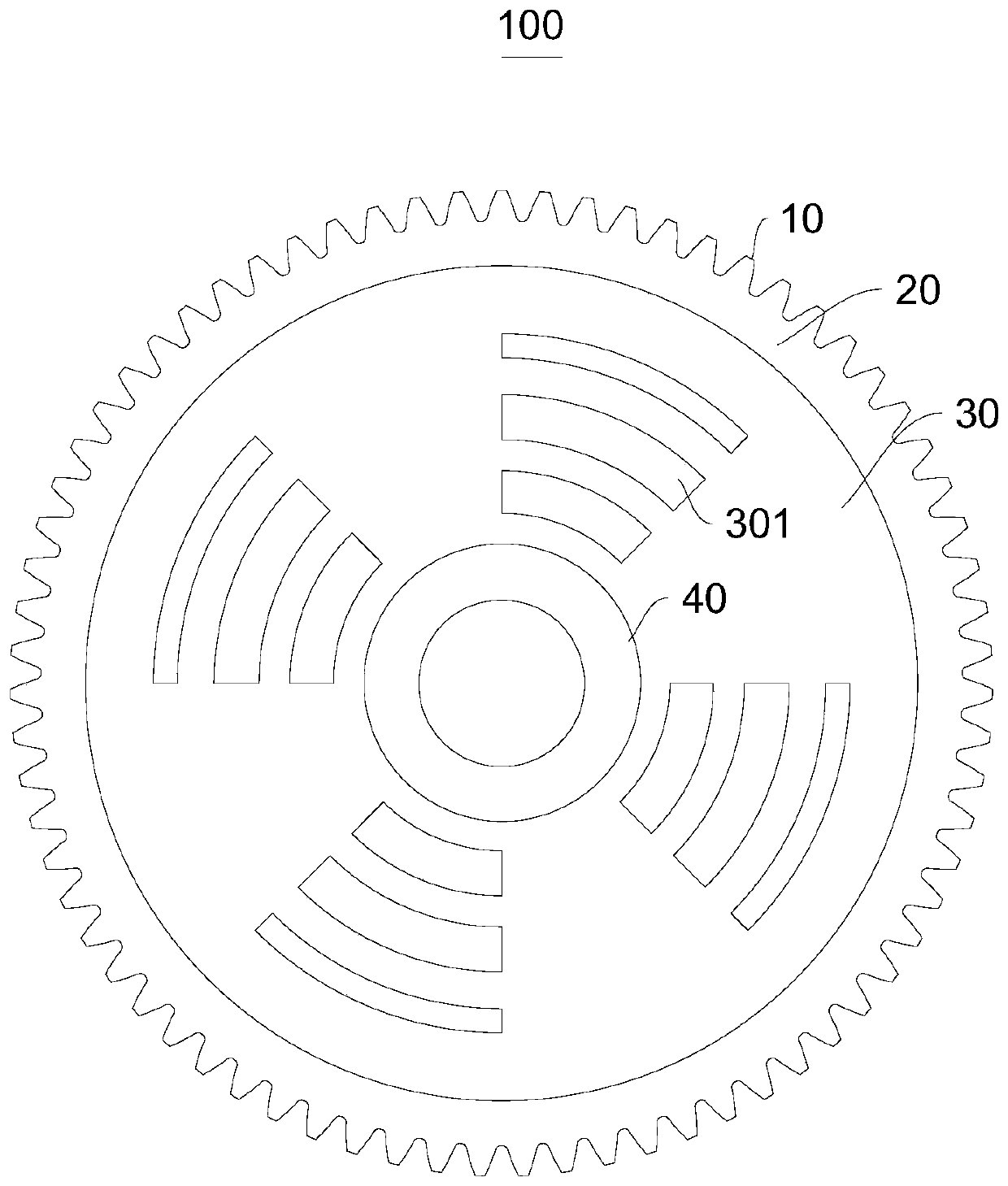

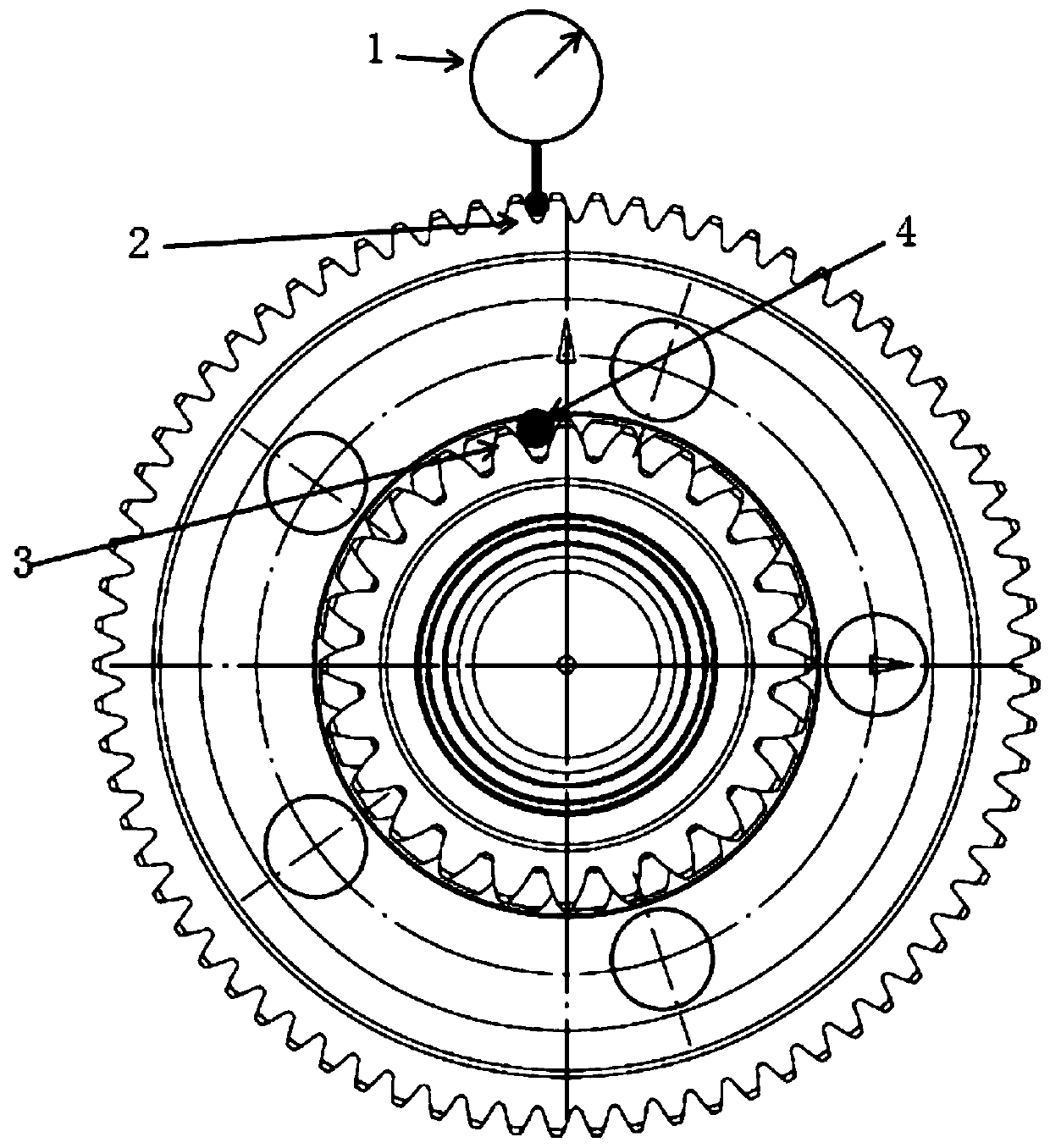

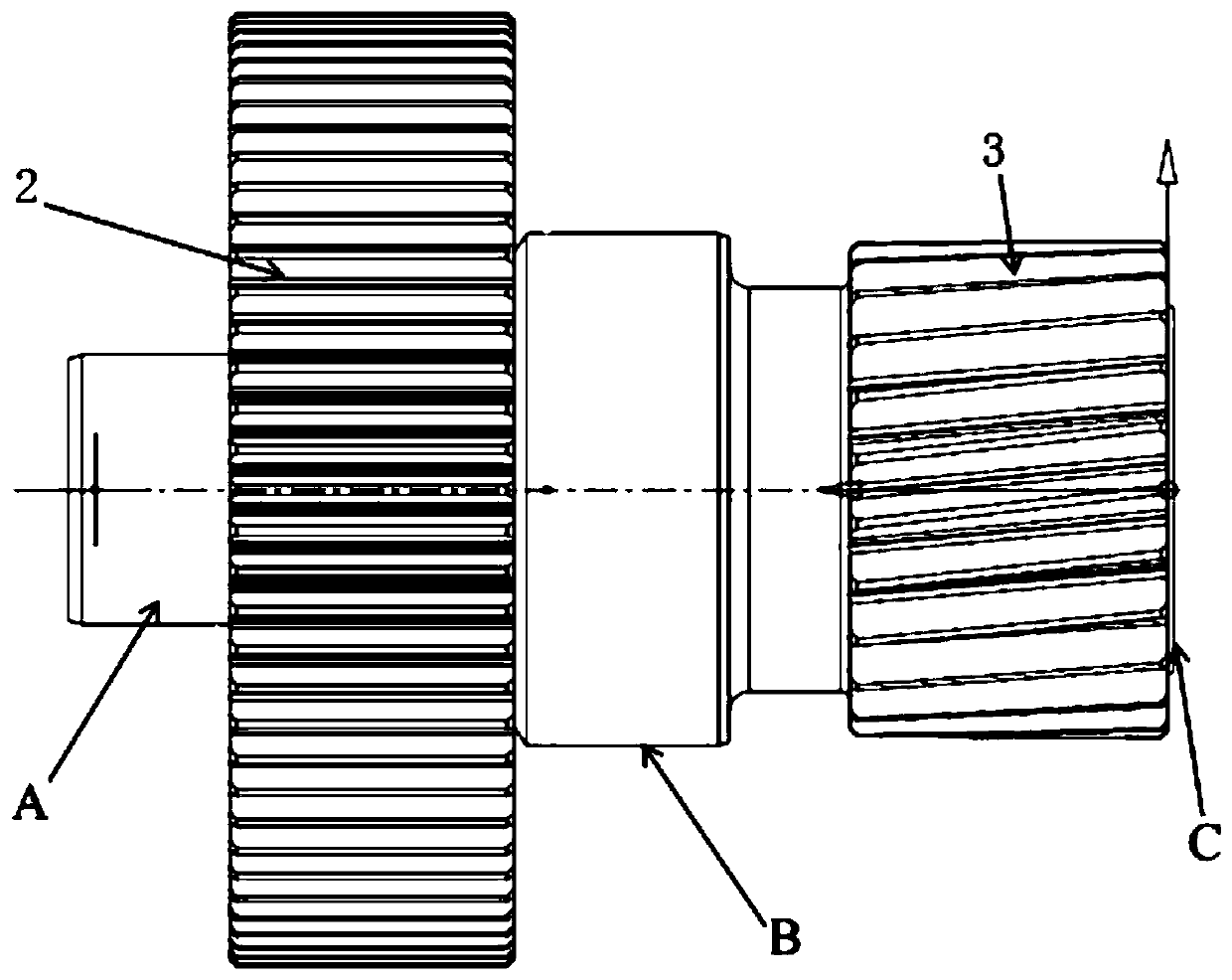

Improvement in planet final drive of tractor

Active | CN103883681A | Outstanding and Beneficial Technical Effects | Guaranteed load balancing | Toothed gearings | Gearing details | Drive shaft | Engineering

The invention belongs to the technical field of final drive of tractors, and relates to an improvement in planet final drive of a tractor. The improvement comprises an output shaft and a driving shaft, wherein the output shaft and the driving shaft are coaxially arranged. The output shaft is sleeved with a shaft sleeve, a sun wheel is arranged at one end of the driving shaft, planet wheels meshed with the sun wheel for drive are installed on planet wheel axles installed on the output shaft, a gear ring arranged on the shaft sleeve and provided with internal teeth is meshed with the planet wheels for drive, a bearing and an intermediate ring are sequentially arranged between each planet wheel axle and the corresponding planet wheel in a sleeved mode from inside to outside, and radial floating intervals are formed between the planet wheels and the corresponding intermediate rings. The improvement has the advantages that the intermediate rings are arranged, the radial floating intervals are formed between the planet wheels and the corresponding intermediate rings to form buffer oil films, the planet wheels can be connected in a suspension mode, uniform loads of the planet wheels in the tooth length direction in the working process are guaranteed, and the service life of the planet wheels is prolonged.

Owner:ZHEJIANG HAITIAN MACHINERY

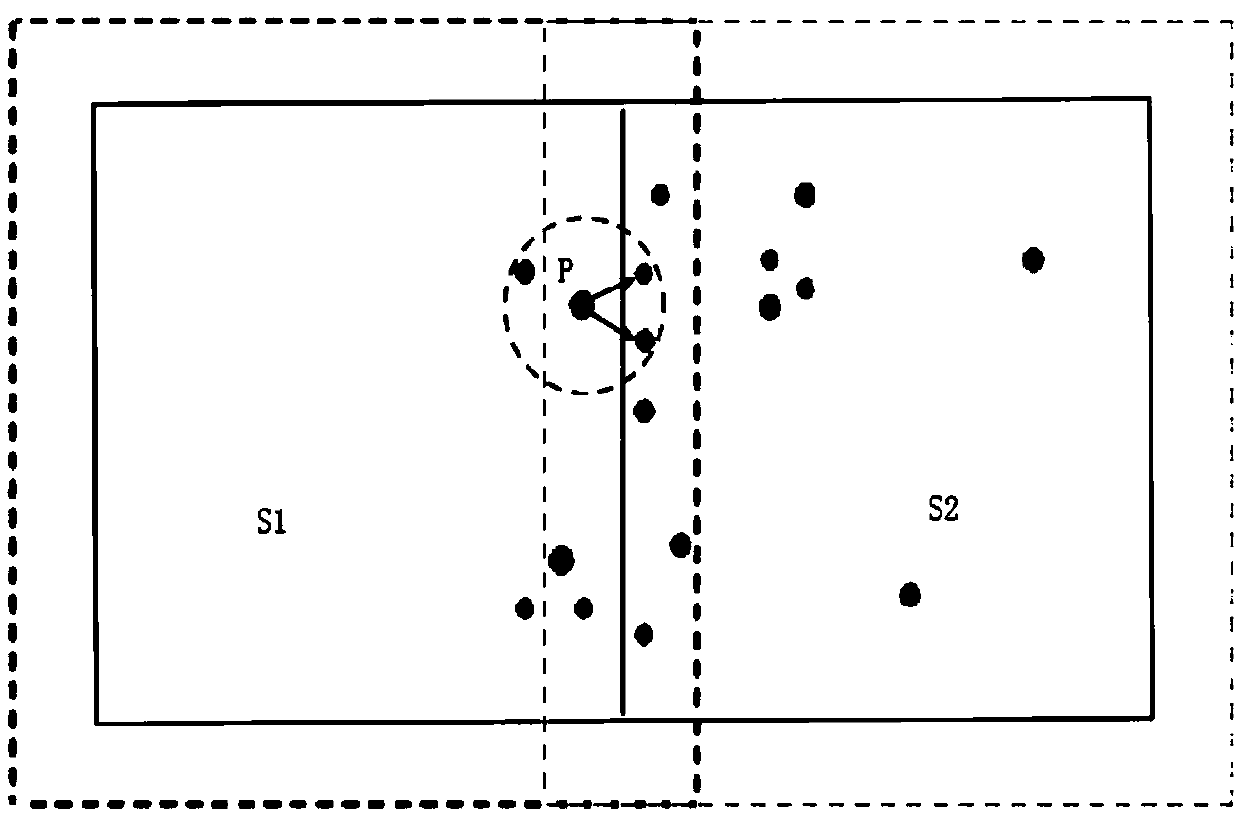

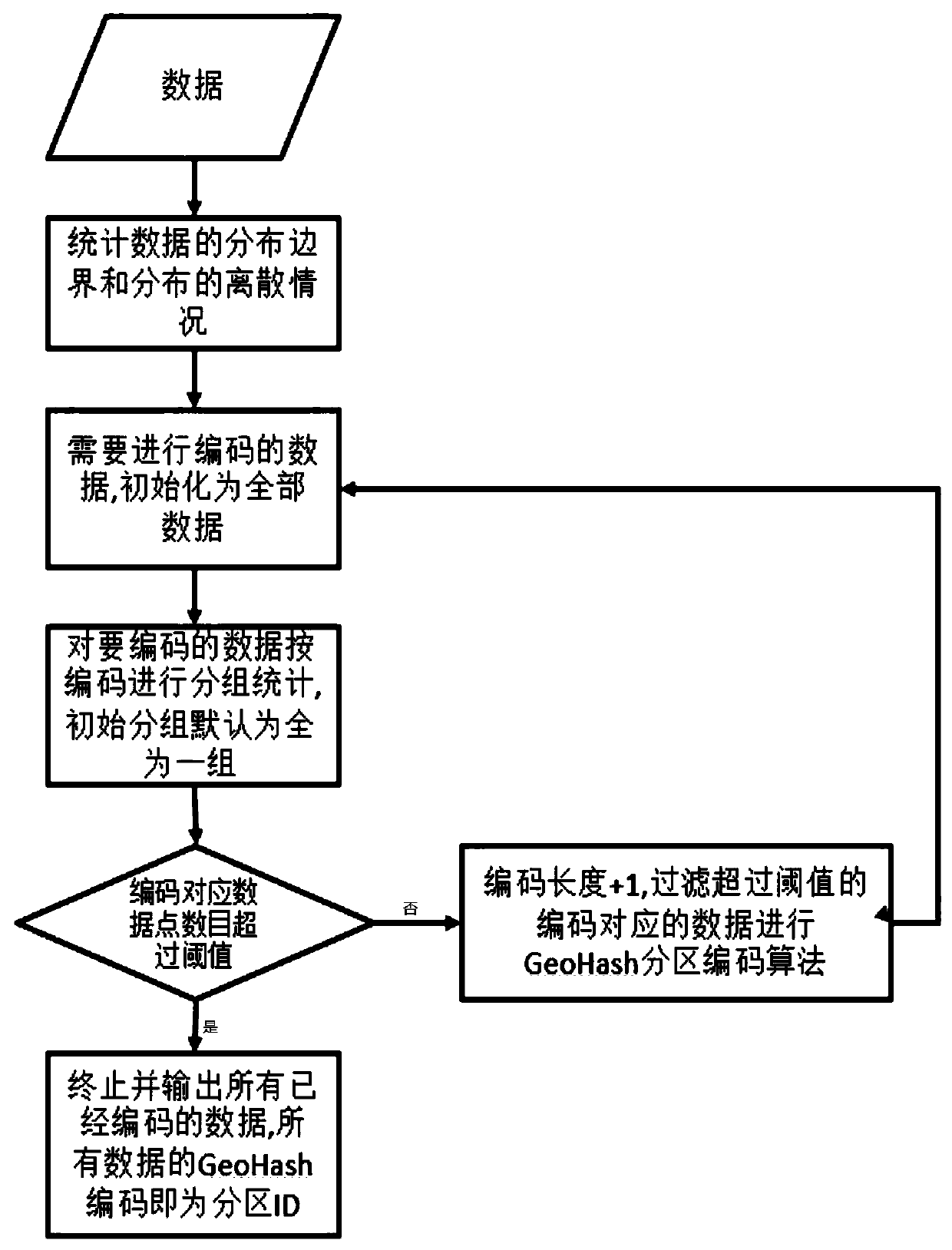

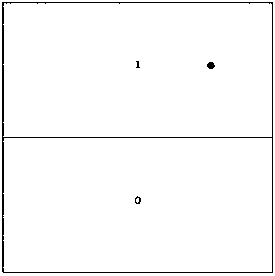

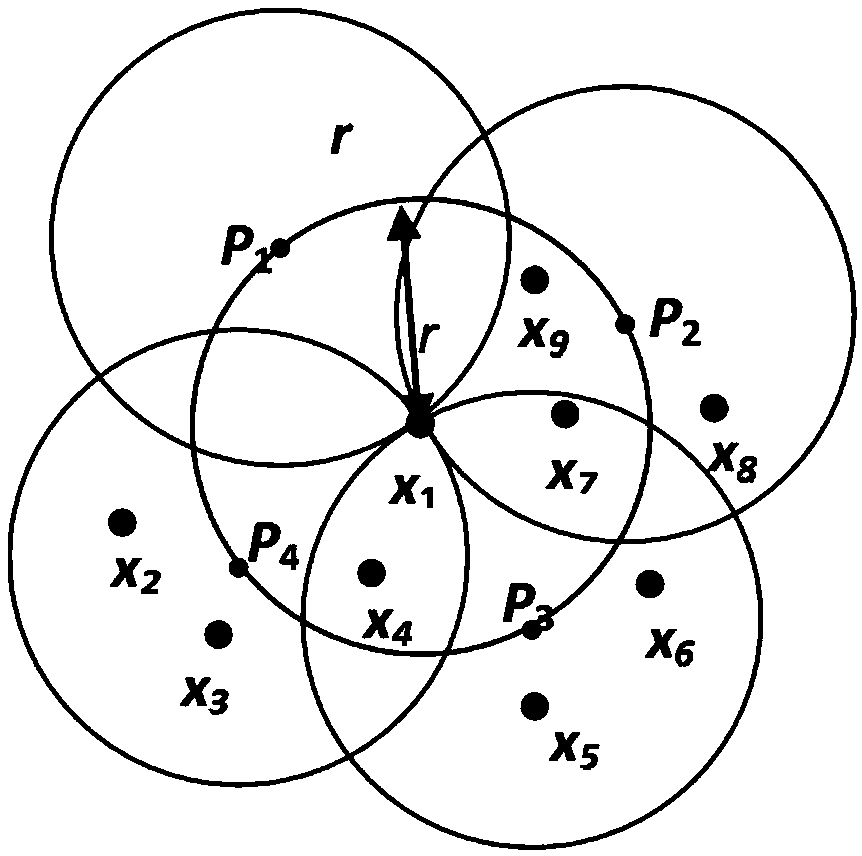

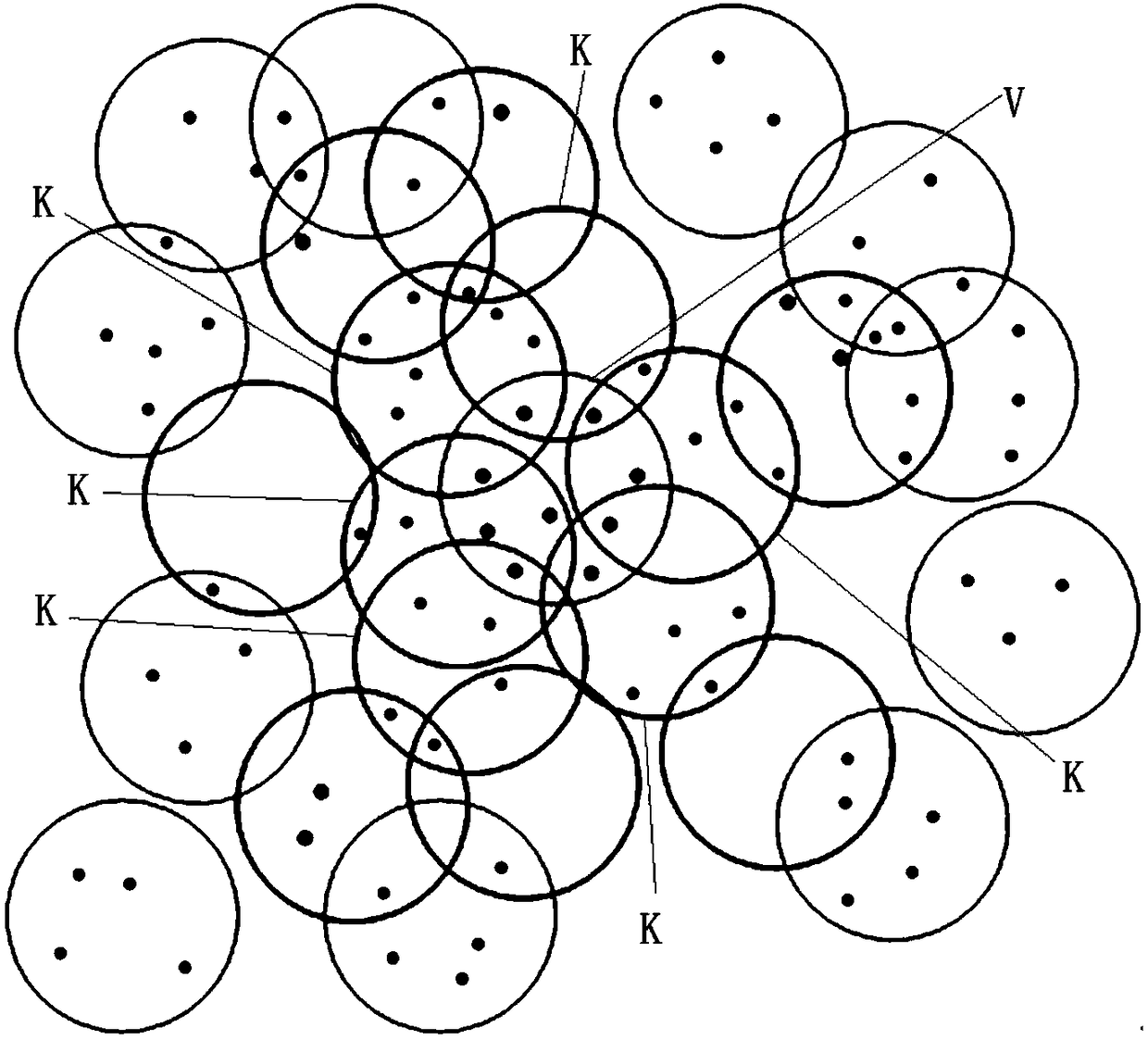

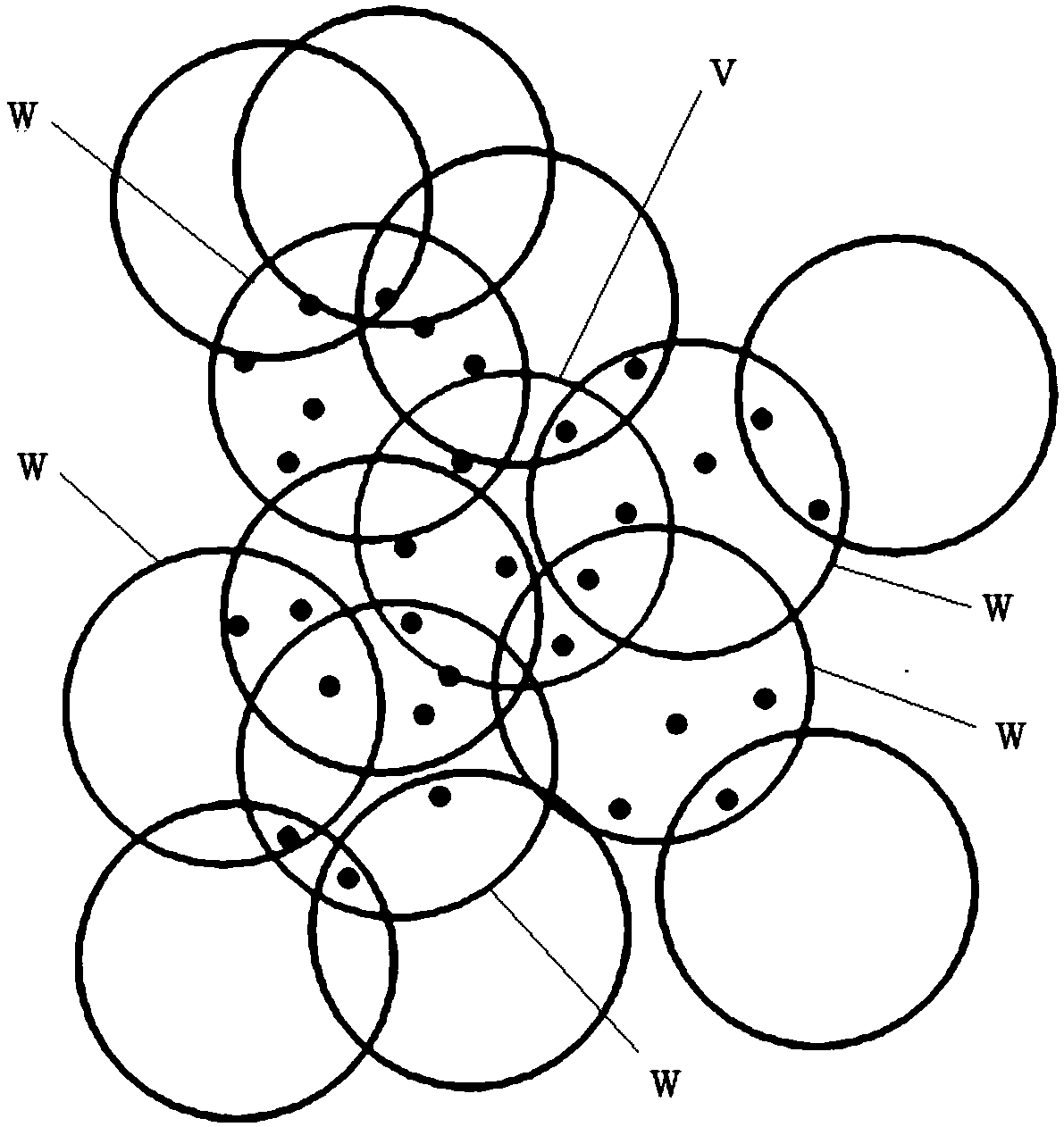

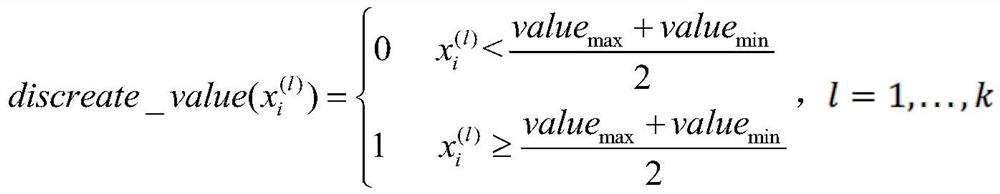

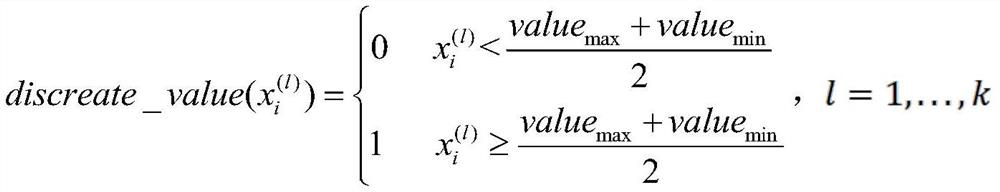

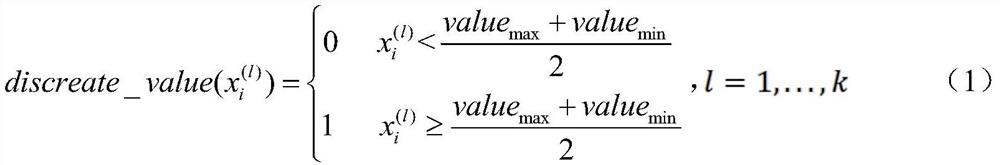

Mass data clustering analysis method and device

Inactive | CN110717086A | Guaranteed load balancing | Improve computing efficiency | Other databases indexing | Other databases clustering/classification | Load balancing (computing) | DBSCAN

The invention discloses a mass data clustering analysis method and a mass data clustering analysis device, which aim to realize a DBSCAN algorithm based on parallel computing and solve the problem that a traditional density clustering algorithm cannot perform mass data analysis. The invention provides an efficient overlapping partitioning and class cluster merging strategy, so that data splitting and class cluster merging can be carried out quickly. The method fully considers load balancing and can run efficiently under a distributed framework; it therefore supports clustering of mass data and efficiently solves the problem that mass data analysis cannot be conducted with a traditional DBSCAN, giving the method high performance and practical value.

Owner:CHENGDU SEFON SOFTWARE CO LTD

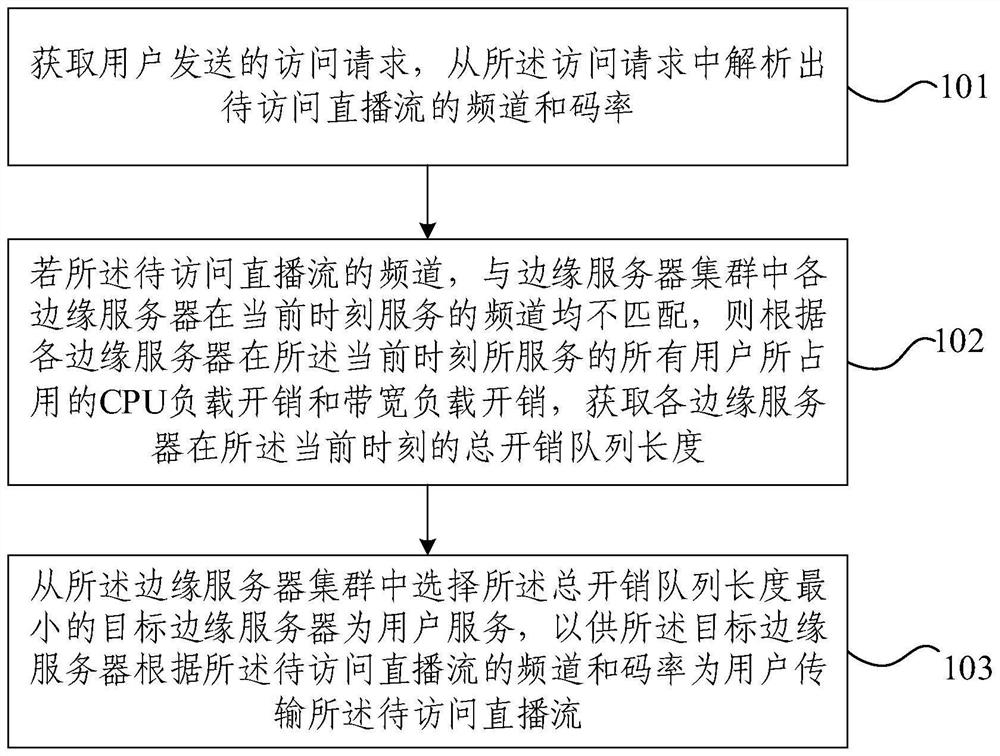

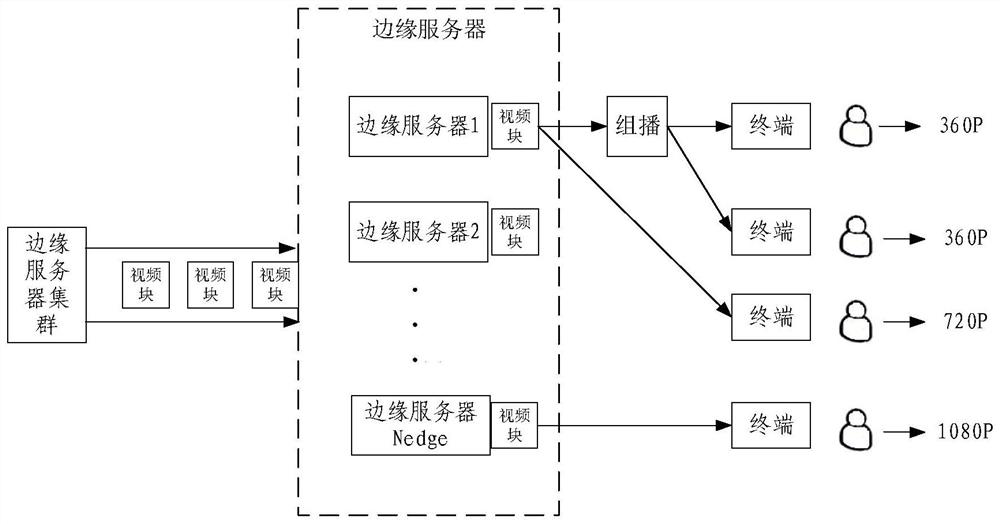

Load balancing method and device based on queue overhead under edge streaming media

Pending | CN114518956A | Guaranteed load balancing | Improve resource utilization | Resource allocation | Engineering | Cpu load

The invention provides a load balancing method and device based on queue overhead under edge streaming media, and the method comprises the steps: obtaining an access request sent by a user, and analyzing a channel and a code rate of a to-be-accessed live broadcast stream from the access request; if the channel of the to-be-accessed live stream is not matched with the channel served by each edge server in an edge server cluster at the current moment, determining the channel of the to-be-accessed live stream according to the CPU load overhead and bandwidth load overhead occupied by all users served by each edge server at the current moment; obtaining the total overhead queue length of each edge server at the current moment; and selecting a target edge server with the minimum total overhead queue length from the edge server cluster to serve a user, so that the target edge server transmits the to-be-accessed live broadcast stream to the user according to the channel and the code rate of the to-be-accessed live broadcast stream. According to the invention, load balancing is improved, and better service experience is provided for users.
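The server-selection step above can be illustrated with a minimal sketch. The abstract does not define how the total overhead queue length is computed from the CPU and bandwidth overheads, nor what happens when a server already serves the channel; summing the two overheads and preferring servers that already carry the channel are both assumptions, and all names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class EdgeServer:
    name: str
    channels: set = field(default_factory=set)  # channels currently served
    cpu_overhead: float = 0.0        # CPU load overhead of all served users
    bandwidth_overhead: float = 0.0  # bandwidth load overhead of all served users

    def total_queue_length(self) -> float:
        # Assumption: the total overhead queue is the sum of both overheads.
        return self.cpu_overhead + self.bandwidth_overhead


def select_server(servers, channel):
    """Return the edge server that should serve a request for `channel`."""
    # Assumption: reuse a server already serving the channel if one exists.
    serving = [s for s in servers if channel in s.channels]
    candidates = serving or servers
    # Pick the candidate with the minimum total overhead queue length.
    return min(candidates, key=lambda s: s.total_queue_length())
```

For a channel that no server carries yet, the choice reduces to the minimum total overhead queue length across the whole cluster, which is the case the abstract describes.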

Owner:BEIJING UNIV OF POSTS & TELECOMM

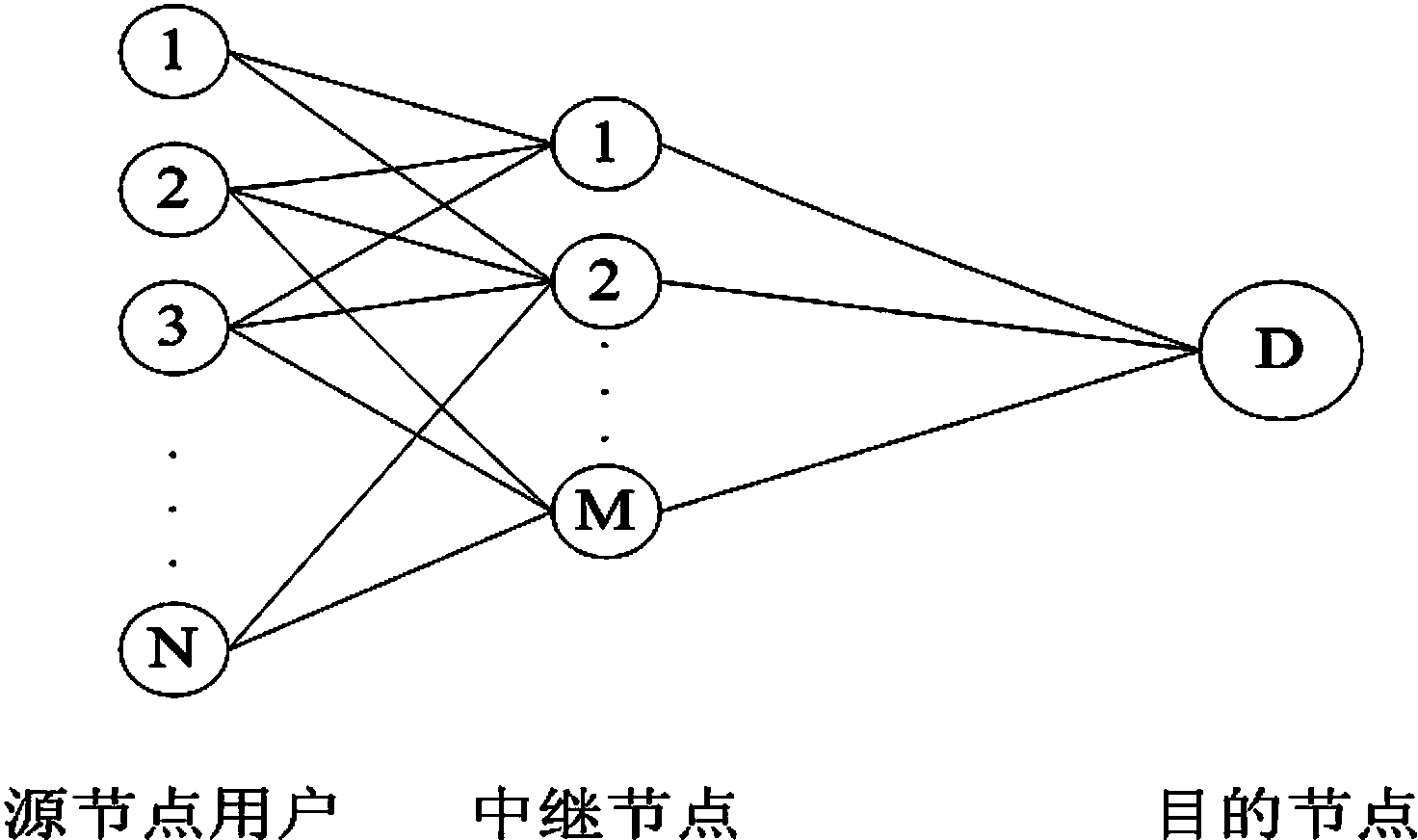

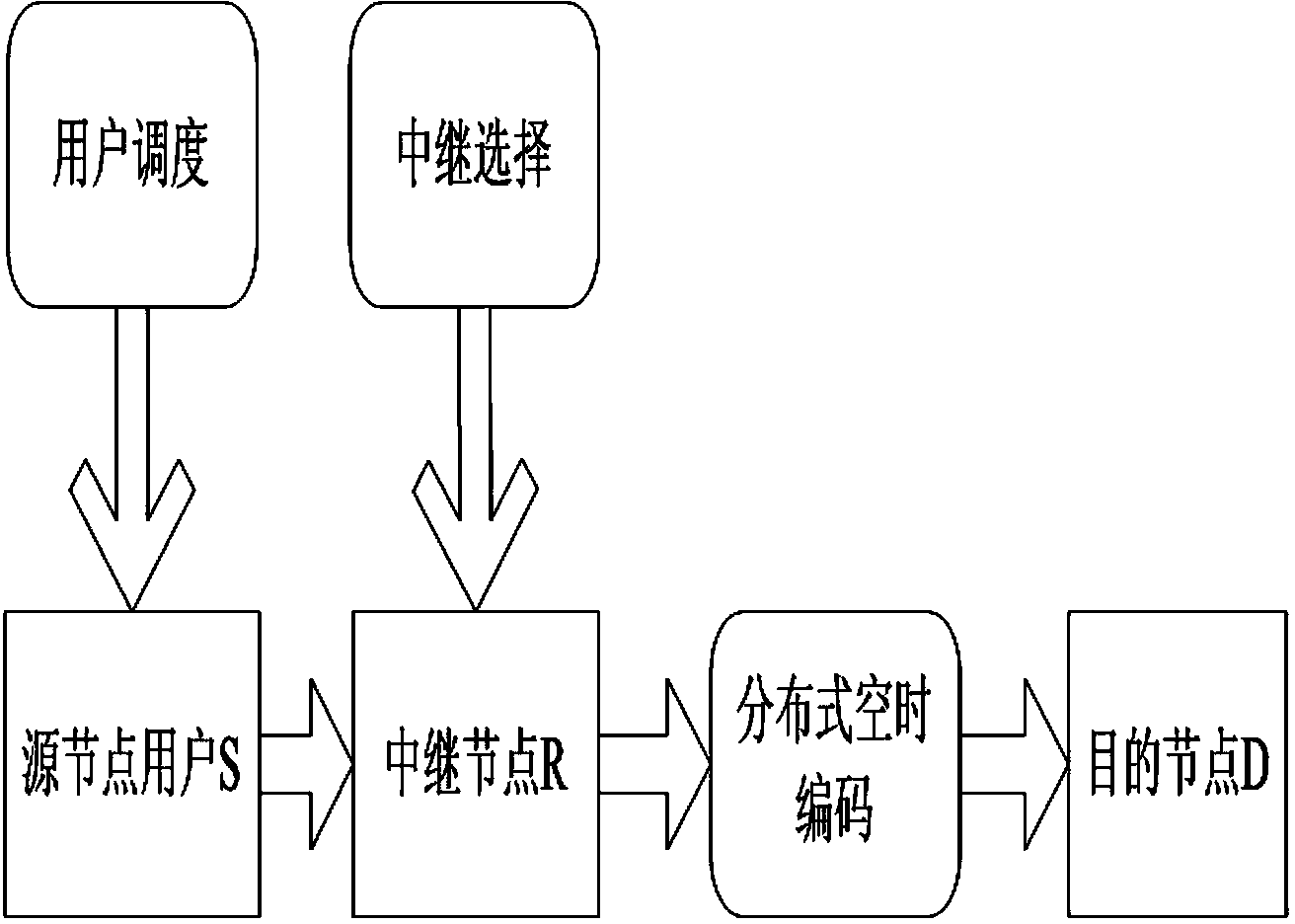

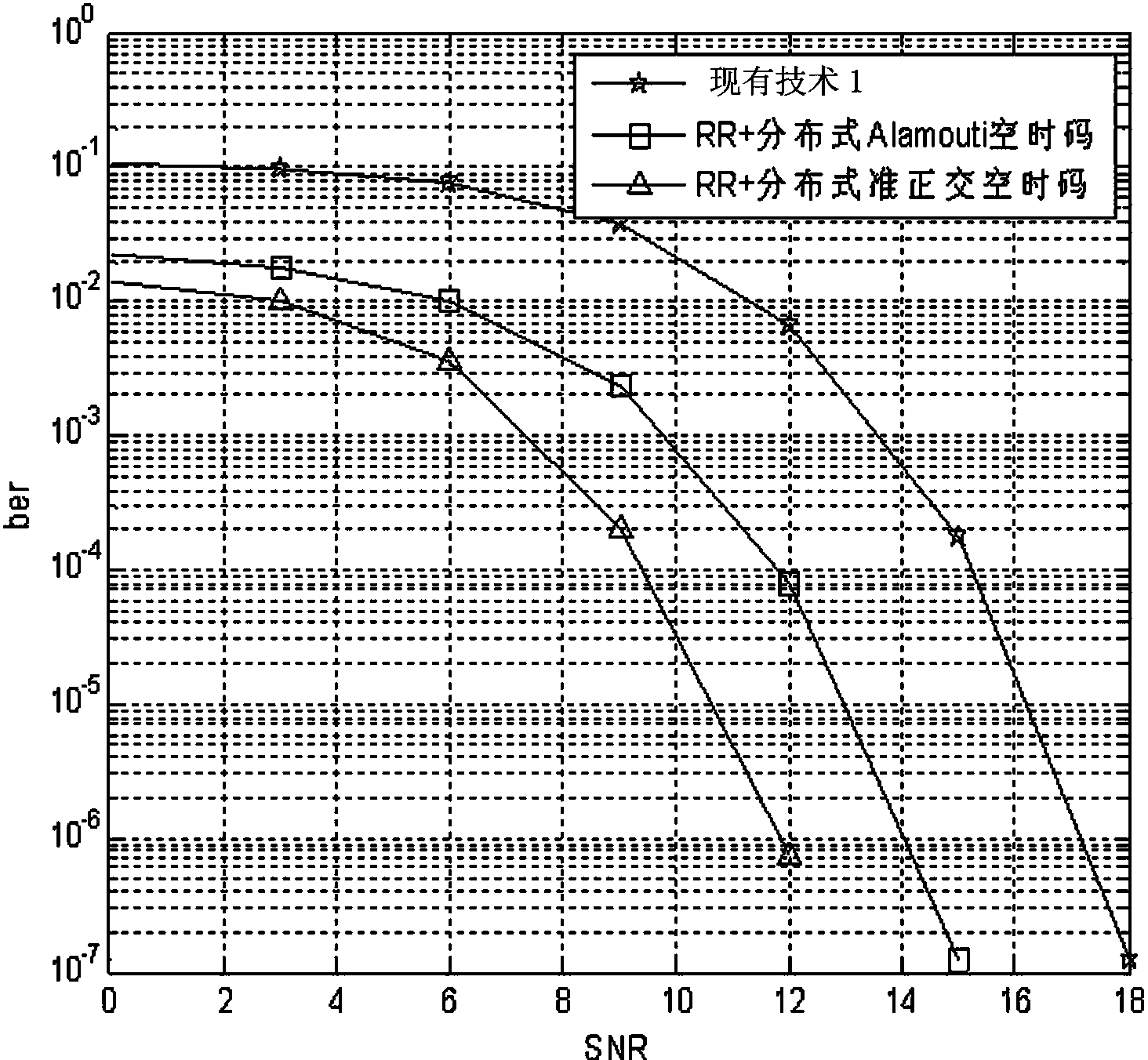

Polling grouping scheduling method in multi-source multi-relay cooperation network

Inactive | CN103354651A | Improve system performance | Guaranteed load balancing | Network traffic/resource management | Error prevention/detection by diversity reception | Distributed computing | Utilization rate

The invention discloses a polling grouping scheduling method in a multi-source multi-relay cooperation network. The method is combined with distributed time space coding. In the multi-source multi-relay cooperation network, there are two node cluster selections. A distributed two step node selection scheme is adopted. According to a polling grouping scheme and taking K relays as one group, the relay node polling is divided into several relay subsets. And the relay node reversely schedules an optimal source node user subset. During a scheduling process, fairness of all the users is guaranteed according to a polling scheduling algorithm. Through a coupling selection, a node selection process based on load balancing and the user fairness is realized. And then, the distributed time space coding is introduced to the selected relay node. According to the invention, on an aspect of relay selection grouping, extra signaling cost is not needed so that it is simple to realize; the load balancing of the relay is guaranteed and the transmission fairness of the users can be guaranteed too; practicality is possessed; after the distributed time space coding is introduced, a frequency spectrum utilization rate is increased and performance of the system is increased too.

Owner:CHINA JILIANG UNIV

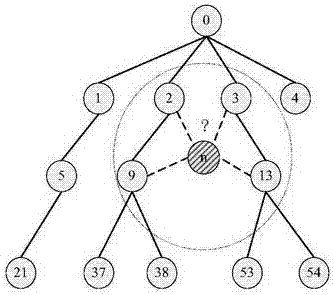

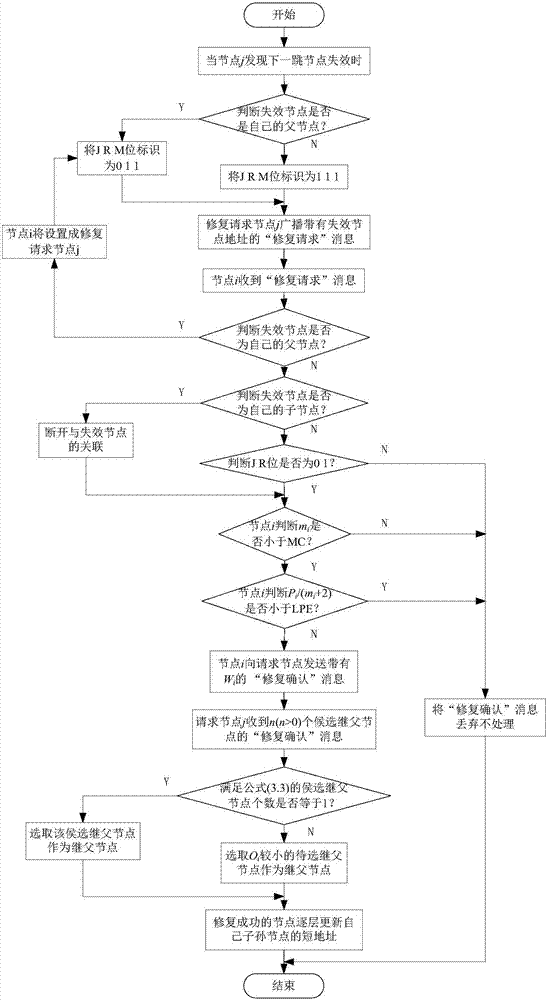

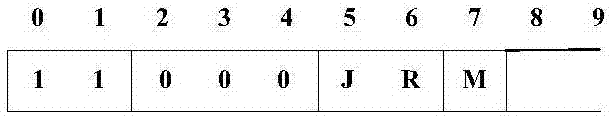

Low power consumption wireless personal area network tree-like routing method based on IPv6

Inactive | CN107197480A | Guaranteed load balancing | Improve survival rate | Network traffic/resource management | Network topologies | Uplink transmission | Self adaptive

The invention belongs to the field of wireless sensor networks, and particularly relates to a low power consumption wireless personal area network tree-like routing method based on IPv6. The method is divided into a node networking stage, a data transmission stage and a path repair stage during operation. The method uses the optimal parent node selection mechanism based on load balancing and an adaptive path prognosis repair mechanism. By reducing the redundancy of a control message and increasing the selected parameters in the parent node selection process, the problems of unreasonable selection, redundancy expenditure in the control message and the like of the existing low power consumption wireless personal area network tree-like routing method based on IPv6 are solved. During a path repair process, the relationship between a failure node and the previous hop node is determined, and then path repair is carried out in a targeted manner. Downlink path repair, the repair of the failure node and other descendant nodes in an uplink transmission process and the like of the existing low power consumption wireless personal area network tree-like routing method based on IPv6 are realized. The node survival rate is improved. The network survival time, the data transmission success rate and other performances are improved.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

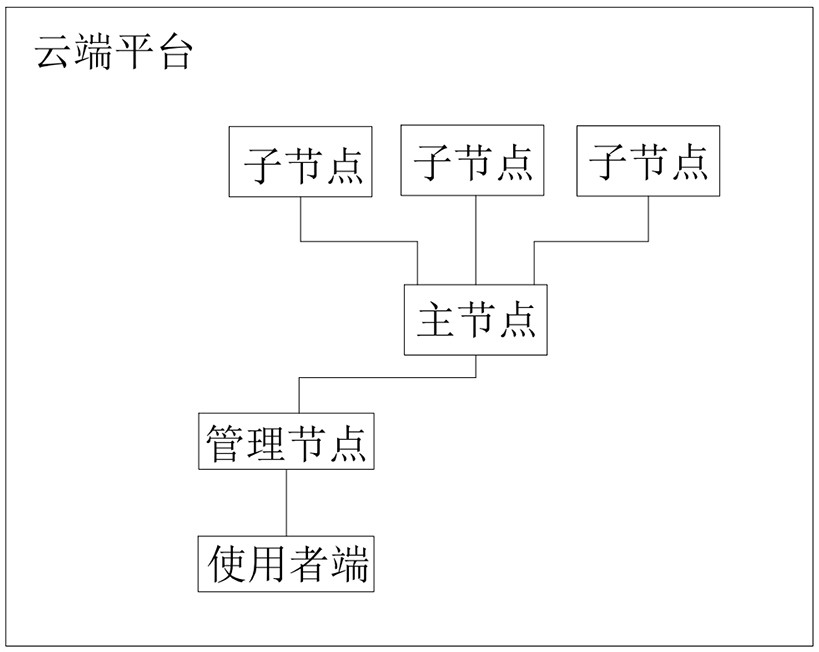

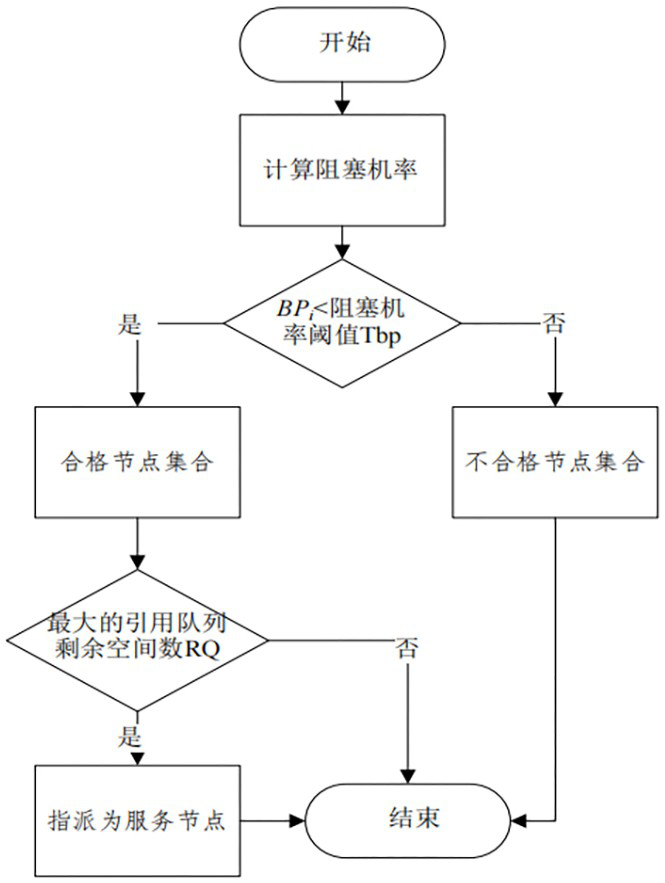

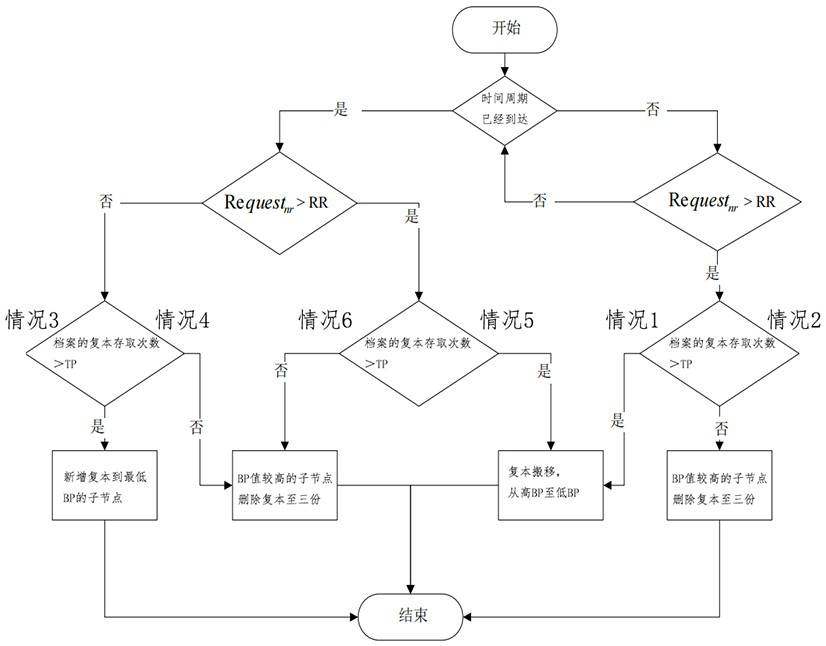

Distribution method for distributed storage of cloud data

Active | CN114827180A | Guaranteed load balancing | Reduce waiting time | Transmission | Engineering | Archival storage

A distribution method for distributed storage of cloud data belongs to the technical field of resource distribution and comprises the following steps: step S1, establishing a cloud platform; the cloud platform comprises a user side, a management node, a main node and a child node; the main node is used for classifying archives and setting the number of duplicates of the archives; the master node distributes the file replica to the child nodes and records the state of the file replica; step S2, a blocking pre-screening mechanism: the main node screens out child nodes of which the blocking probability BPi is smaller than a blocking probability threshold value Tbp, and a qualified node set is formed; and S3, a reference queue load balancing mechanism: in the qualified node set, the main node screens out a sub-node with the maximum reference queue residual space number RQ to serve as an optimal sub-node for file copy storage, and file copy storage is carried out. According to the scheme, three file storage configuration mechanisms with a progressive relationship are provided on the cloud platform, and dynamic copy configuration is carried out to maintain load balance between nodes.
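Steps S2 and S3 amount to a filter followed by an arg-max, which a short sketch makes concrete. The field names `bp` and `rq` mirror BPi and RQ from the abstract; how those values are measured is not described there, so they are simply taken as inputs.

```python
from dataclasses import dataclass


@dataclass
class ChildNode:
    name: str
    bp: float  # blocking probability BPi of this child node
    rq: int    # residual space count of its reference queue (RQ)


def choose_child_node(nodes, tbp):
    """Pick the child node that should store the next file replica.

    S2: keep only nodes whose blocking probability is below the threshold Tbp.
    S3: among those, take the node with the largest residual reference queue.
    Returns None when no node passes the pre-screening.
    """
    qualified = [n for n in nodes if n.bp < tbp]
    if not qualified:
        return None
    return max(qualified, key=lambda n: n.rq)
```

Note that a node with the most free queue space is still rejected if its blocking probability is at or above the threshold, since the pre-screening runs first.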

Owner:蒲惠智造科技股份有限公司

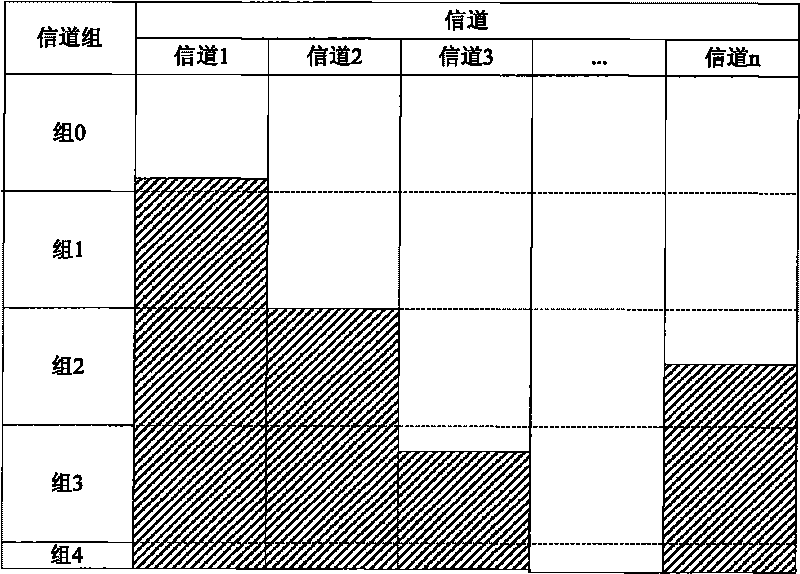

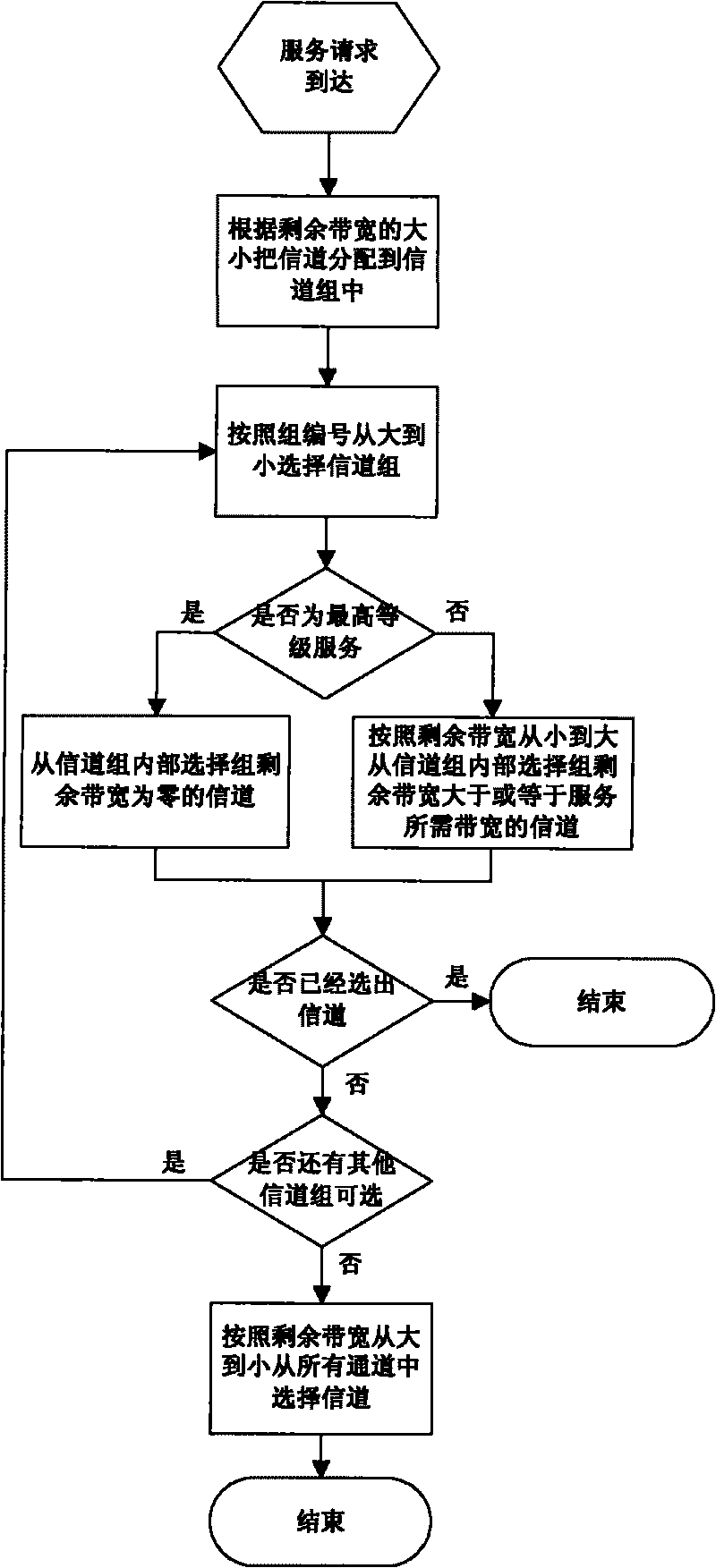

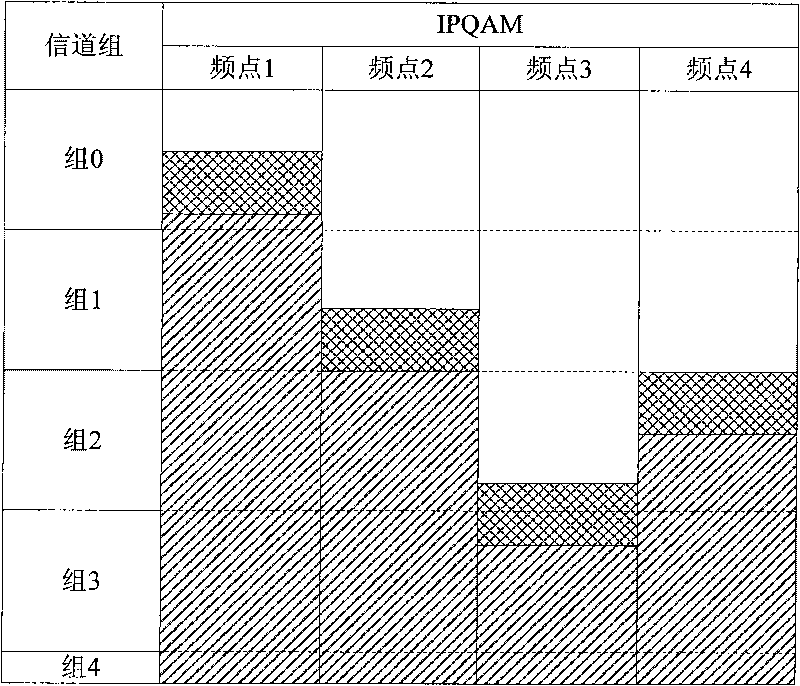

Channel bank-based bandwidth allocation method

Inactive | CN101742566A | Achieve effective use | Guaranteed load balancing | Network traffic/resource management | Telecommunications | Bandwidth allocation

The invention discloses a channel bank-based bandwidth allocation method, which comprises the following steps: determining the service request level; allocating all channels to a plurality of channel banks according to the size of residual bandwidth of each channel; selecting a channel bank from the plurality of channel banks, judging whether the selected channel bank has a proper channel for allocation until a proper bandwidth is allocated for the service, wherein all the channels are allocated to the channel banks according to the size of the residual bandwidth of the channels; the channel bank has a serial number; the serial number of the channel bank to which a certain channel belongs is equal to a value that the residual bandwidth of the channel divides exactly the needed bandwidth of the highest-level service. Firstly, a channel bank is selected from the larger one to the smaller one according to the serial number of the channel banks, and then, a channel is selected from the interior of the channel bank. When the channel is selected from the interior of the channel bank, the channel with the residual bandwidth of zero is selected for the high-level service, and the channel with the residual bandwidth of more than or equal to the needed bandwidth of the service is selected for the low-level service according to the order from the smaller residual bandwidth to the larger residual bandwidth.
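The bank numbering and the selection order for a low-level service can be sketched as follows. The serial number is taken as the integer quotient of a channel's residual bandwidth by the bandwidth needed by the highest-level service, as the abstract states; the rule for high-level services is not modelled here, and the function names and bandwidth units are assumptions.

```python
from collections import defaultdict


def build_banks(residual, top_level_bw):
    """Group channels into banks keyed by residual // top_level_bw.

    residual: {channel: residual bandwidth}; top_level_bw: bandwidth
    needed by the highest-level service.
    """
    banks = defaultdict(list)
    for channel, bandwidth in residual.items():
        banks[bandwidth // top_level_bw].append(channel)
    return dict(banks)


def allocate_low_level(residual, top_level_bw, needed):
    """Pick a channel for a low-level service needing `needed` bandwidth.

    Banks are scanned from the largest serial number to the smallest;
    inside a bank, the fitting channel with the smallest residual wins.
    Returns None if no channel can carry the service.
    """
    banks = build_banks(residual, top_level_bw)
    for serial in sorted(banks, reverse=True):
        fitting = [ch for ch in banks[serial] if residual[ch] >= needed]
        if fitting:
            return min(fitting, key=residual.get)
    return None
```

For example, with residual bandwidths of 10, 25, 7 and 22 and a highest-level requirement of 10, the channels fall into banks 1, 2, 0 and 2 respectively, and a request for 5 units is served from bank 2 by the channel with 22 units left.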

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI

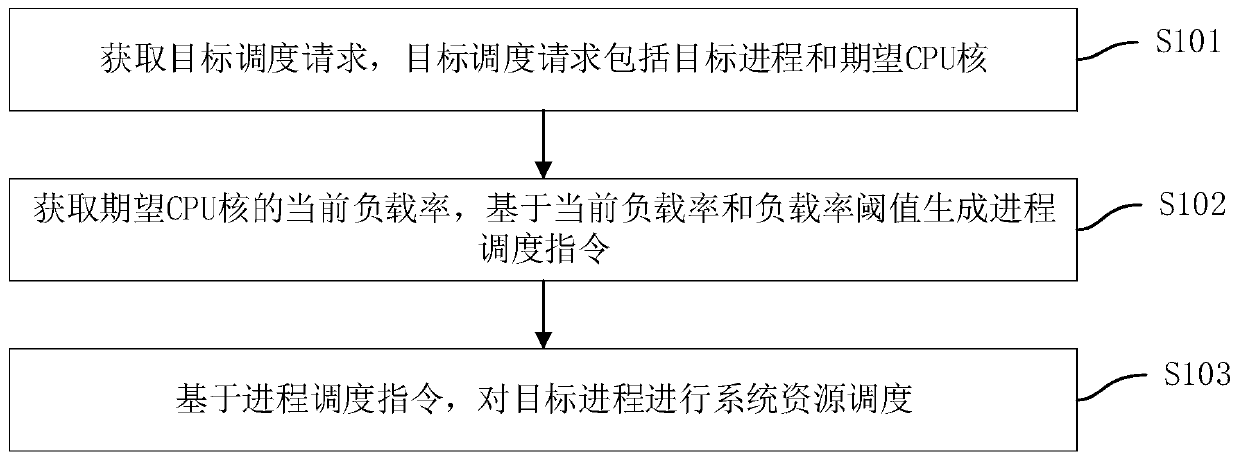

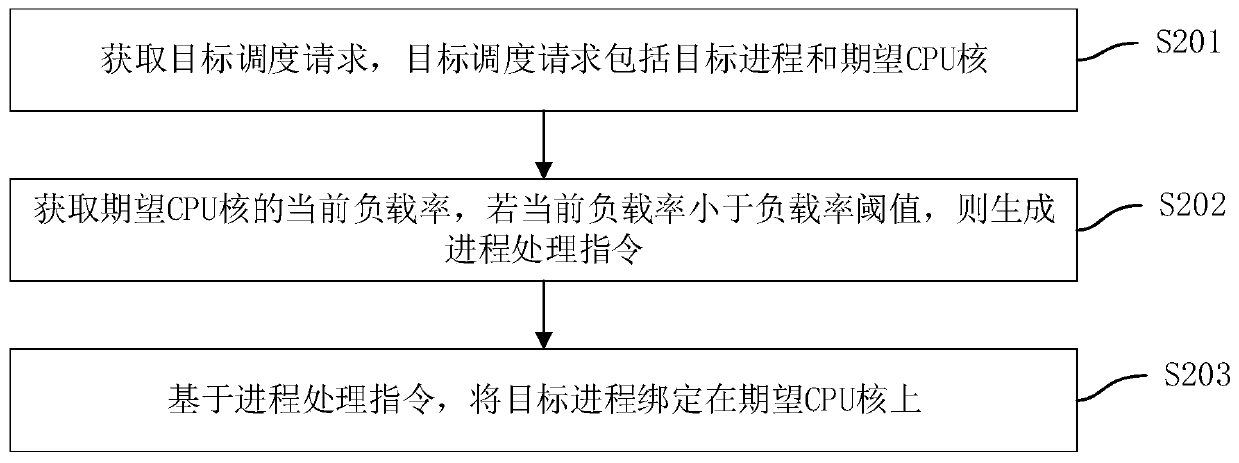

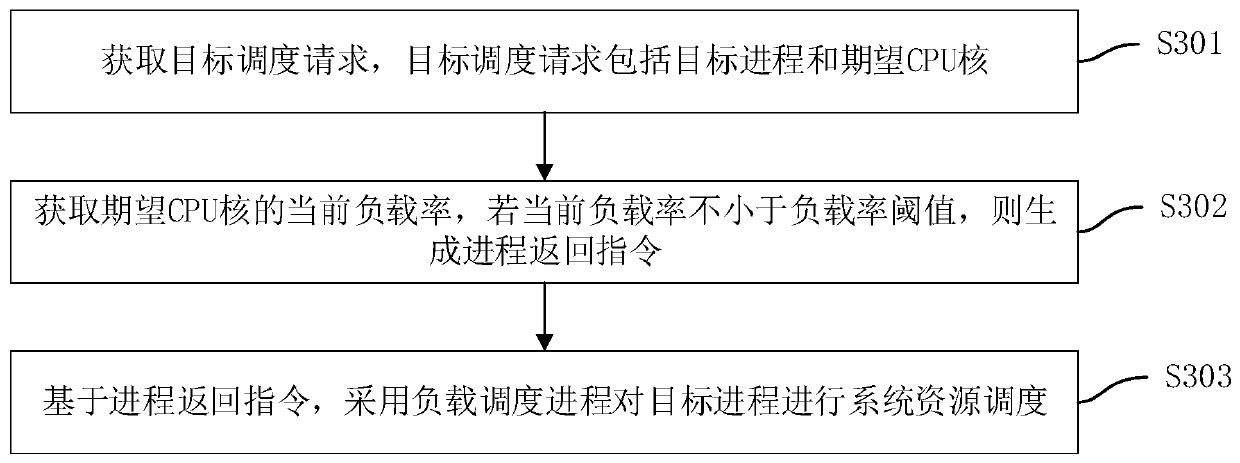

Process scheduling management method and device, computer equipment and storage medium

Pending | CN111104208A | Guaranteed rationality | Guaranteed load balancing | Program initiation/switching | Resource allocation | Process engineering | Computer equipment

The invention discloses a process scheduling management method and device, computer equipment and a storage medium. The method comprises the steps of: obtaining a target scheduling request which comprises a target process and an expected CPU core; obtaining the current load rate of the expected CPU core, and generating a process scheduling instruction based on the current load rate and a load rate threshold; and performing system resource scheduling on the target process based on the process scheduling instruction. Processes can thus be scheduled reasonably, and the problem that the characteristics of a first CPU core and a second CPU core are not fully utilized in the current system resource scheduling process is solved.
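A minimal sketch of the threshold check follows. The abstract only says the instruction is generated from the expected core's current load rate and a load rate threshold; granting the expected core when it is below the threshold and otherwise falling back to the least-loaded other core is an assumed policy, and the dictionary-based instruction format is hypothetical.

```python
def make_scheduling_instruction(process, expected_core, load_rates, threshold):
    """Build a scheduling instruction for `process`.

    load_rates: {core_name: current load rate in [0, 1]}.
    Assumed policy: honour the expected core if its load rate is below
    `threshold`; otherwise redirect to the least-loaded other core.
    """
    if load_rates[expected_core] < threshold:
        return {"process": process, "core": expected_core}
    others = [core for core in load_rates if core != expected_core]
    if not others:
        # Single-core system: nothing to redirect to.
        return {"process": process, "core": expected_core}
    fallback = min(others, key=load_rates.get)
    return {"process": process, "core": fallback}
```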

Owner:UBTECH ROBOTICS CORP LTD

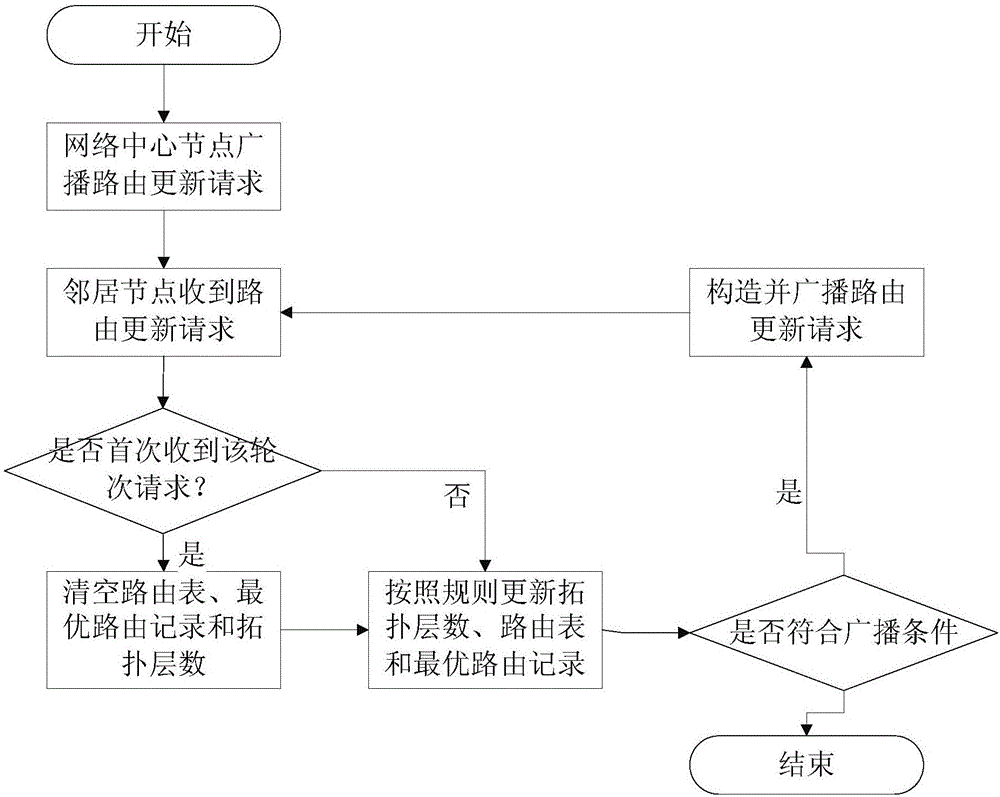

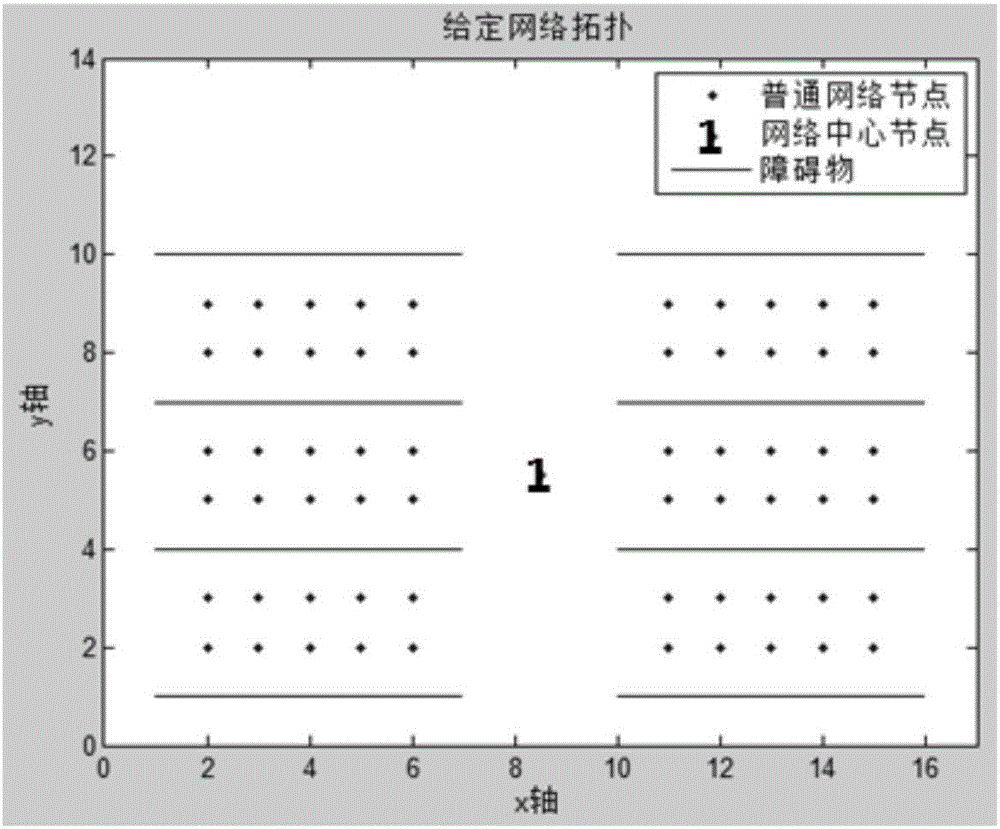

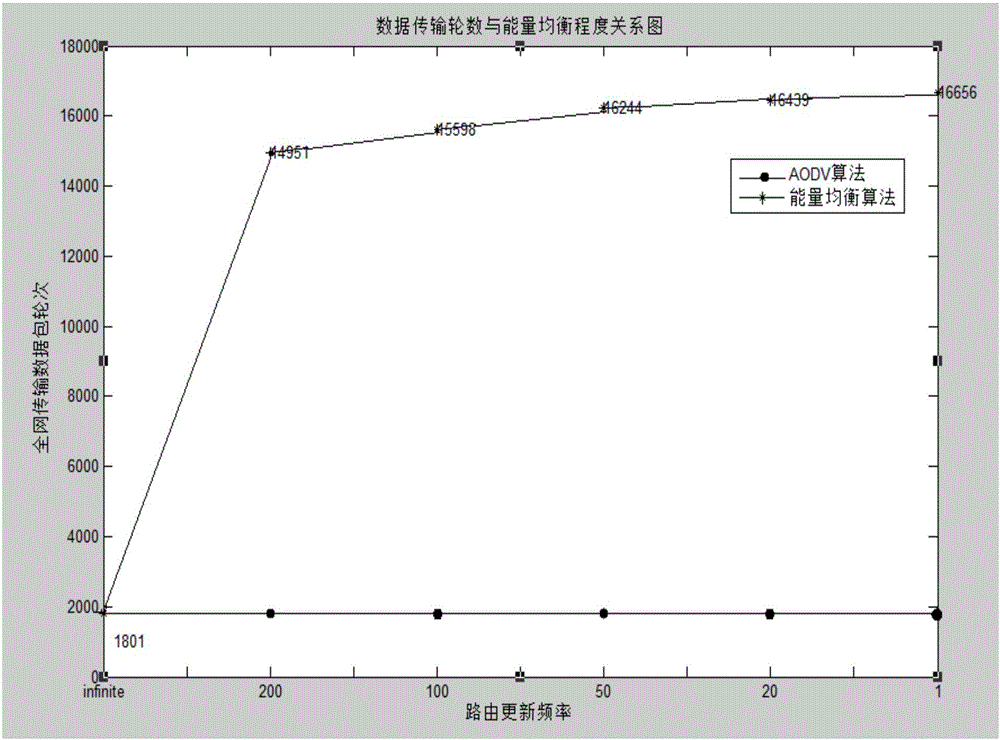

Method and device for improving route based on AODV

Active | CN106792912A | Guaranteed load balancing | Increase the number of rounds | Network traffic/resource management | High level techniques | Routing algorithm | Electricity

The embodiment of the invention discloses a method and device for improving route based on AODV. The method includes the steps that a current node receives a route updating request sent by a network central node, and a round is marked; the current node judges whether the route updating request is received for the first time or not; if yes, the hop count of a route with the optimal topology layer number and the minimum electricity quantity of the optimal route are set, a route table is emptied, and if not, the topology layer number is updated; if not, whether a next hop is a route item of a source node broadcasting the route updating request in the route table is judged, according to the topology layer number of the current node and the optimal route record, a route updating request of the same round is broadcast, and meanwhile returning is carried out to execute the current node repeatedly to judge whether the route updating request is received for the first time. By applying the embodiment, the load balance of the whole network is guaranteed; by adopting the energy-balanced routing algorithm, the limit that data packet length imposes on the topology depth of the network is avoided.

Owner:STATE GRID CORP OF CHINA +1

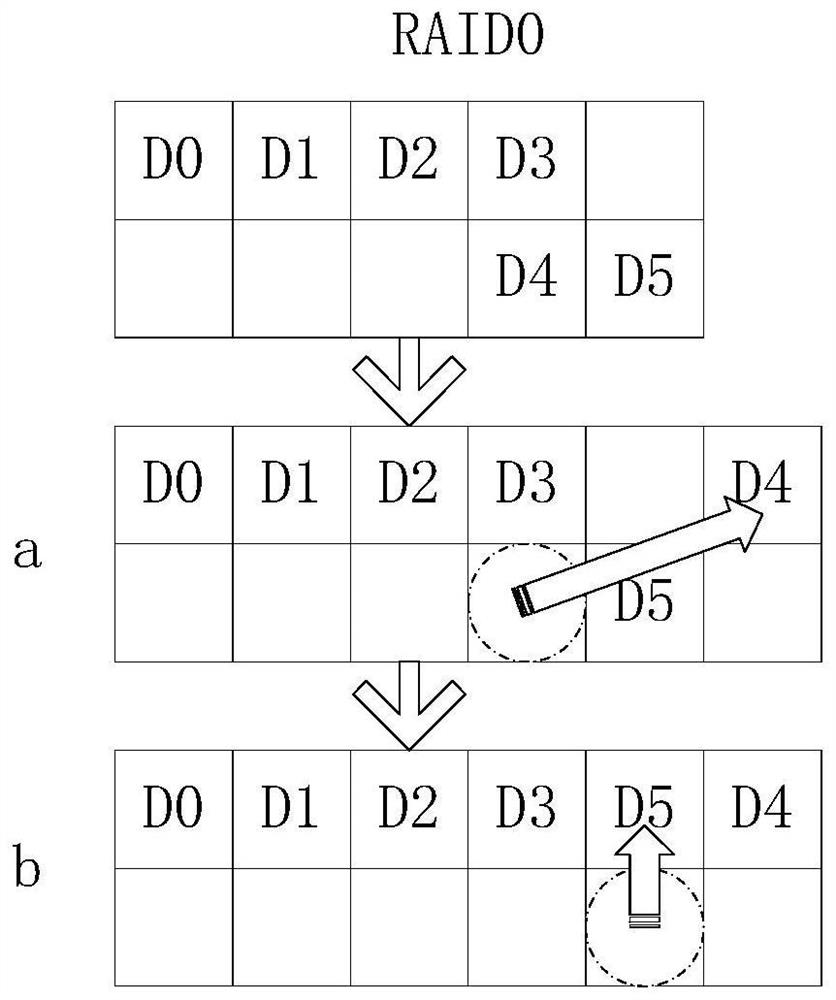

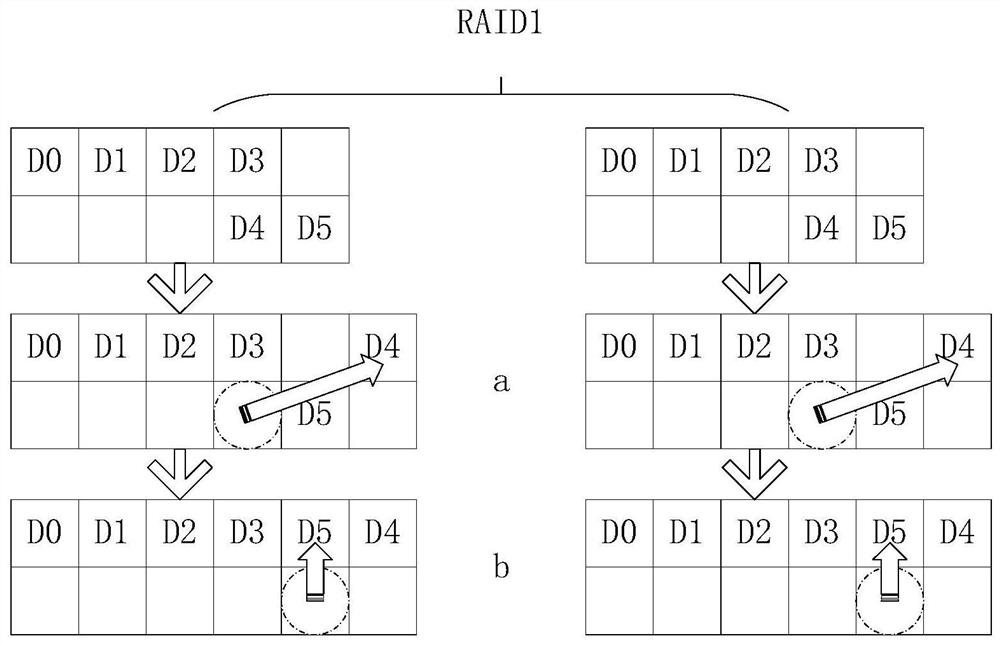

Efficient data migration method under RAID (Redundant Array of Independent Disks)

Pending | CN114115729A | Guaranteed load balancing | Reduce operation | Input/output to record carriers | Energy efficient computing | Data migration | RAID

According to the efficient data migration method under the RAID provided by the invention, the RAID0, the RAID1, the RAID5 and the RAID6 are planned respectively, a uniform scheduling scheme is used for a data migration part, the method is simple and effective for hardware and firmware, and load balance can be ensured. After data migration, verification and updating need to be carried out in RAID5 and RAID6, at the moment, an updating algorithm provided for a scheduling scheme does not need to carry out operation on all data, the simplest data updating operation method is provided for different scenes, operation can be effectively reduced, and the data migration efficiency is improved.

Owner:山东云海国创云计算装备产业创新中心有限公司

Device for simultaneously ensuring uniform load of transmission with double intermediate shafts and left and right concentricity of synchronizer

Inactive | CN102345710A | Guaranteed Radial Float | Guaranteed radial positioning | Toothed gearings | Physics | Needle roller bearing

The invention relates to a device for simultaneously ensuring uniform load of a transmission with double intermediate shafts and left and right concentricity of a synchronizer. The device comprises an input shaft, a normally engaged gear, joint teeth of the normally engaged gear, joint teeth of an all-gear output shaft gear, the all-gear output shaft gear and an output shaft, wherein the all-gear output shaft gear intermittently floats on the joint teeth of the all-gear output shaft gear through a spline; the joint teeth of the all-gear output shaft gear are centered on the output shaft through a needle roller bearing so as to ensure the characteristic of coaxiality of a synchronizer cone ring and two conical surfaces of the left and right joint teeth; the normally engaged gear intermittently floats on the input shaft; and the joint teeth of the normally engaged gear are centered on the input shaft. By split structural design of the output shaft gear and the gear shifting joint teeth, radial positioning of the gear shifting joint teeth on the shaft is ensured at the same time of ensuring radial floating of the output shaft gear, and uniform load of the double intermediate shafts and left and right concentricity of the synchronizer are ensured.

Owner:罗元月

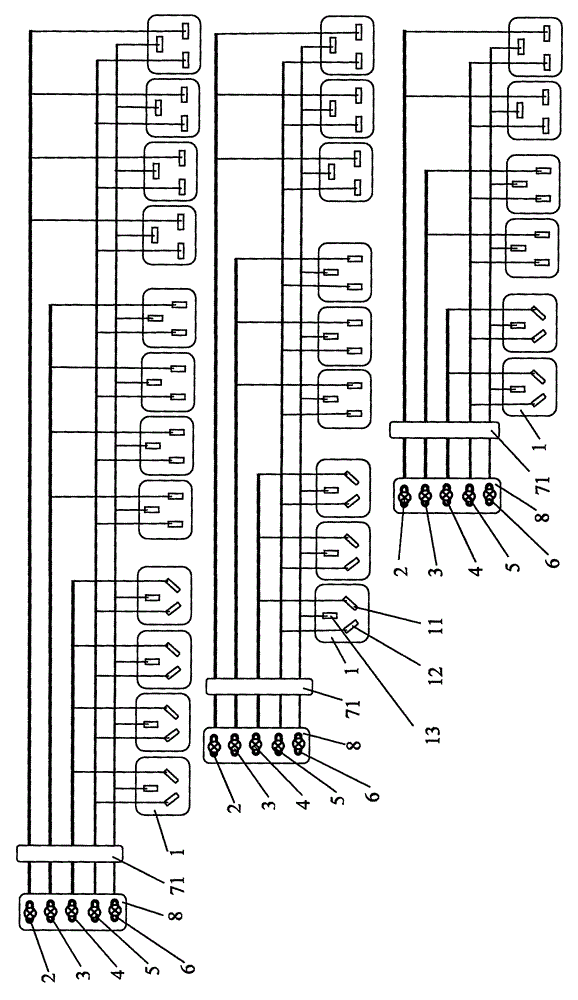

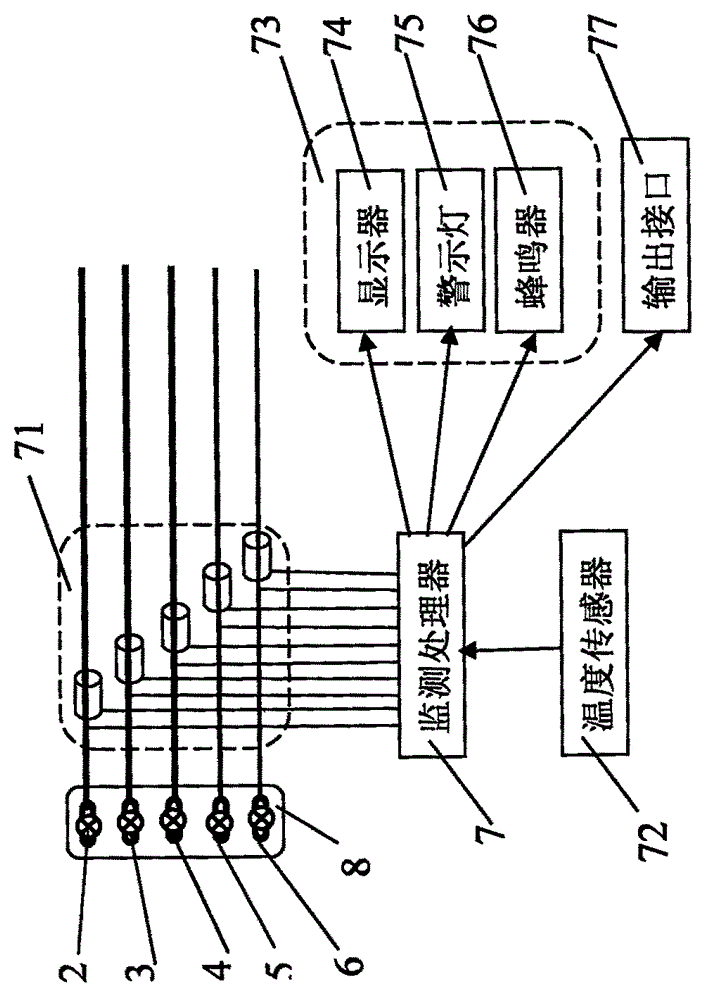

Power distribution unit

Inactive | CN106099544A | Guaranteed load balancing | Reduce wire consumption | Incorrect coupling prevention | Single phase | Interface circuits

A power distribution unit is used for power distribution in data centers and communication machine rooms. The input comes from a three-phase five-wire power supply and has an A phase line, a B phase line, a C phase line, a neutral line and a ground line; the output is a single-phase three-wire power supply with a live line, a zero line and a ground line. The output sockets of the three phases are arranged in sequence, so that the load of the three phases in a cabinet is balanced, the line loss and the temperature rise are reduced, the loading capacity and the reliability of the uninterruptible power supply (UPS) system are guaranteed, and the risk of power ripple interfering with weak-current signals is reduced. The power sockets of the three phases differ in structure and cannot be plugged interchangeably, and the shell carries phase sequence marks in the form of shapes, characters and colors, so the phase sequence is easy to identify during installation or maintenance of the wiring. The power distribution unit can monitor phase current and voltage, temperature rise, ground line current and three-phase load unbalance, and is provided with a corresponding warning and output interface circuit, which facilitates alarms and centralized monitoring in the event of overcurrent, overvoltage, overheating, device electric leakage and three-phase load unbalance. With the arrangement of an input junction box, adaptation delay is prevented, materials are saved, and the reliability is improved. Figure 1 is the abstract drawing.

Owner:厦门东方远景科技股份有限公司
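
The sequential socket arrangement and the unbalance monitoring described in this abstract can be illustrated with a short sketch. It is only an illustration under stated assumptions: the function names, the unbalance formula (largest deviation from the mean phase current, relative to the mean) and the 10% alarm limit are hypothetical and are not taken from the patent.

```python
PHASES = ("A", "B", "C")

def assign_phases(n_sockets):
    """Socket i is wired to phase A, B, C, A, B, C, ... in order."""
    return [PHASES[i % 3] for i in range(n_sockets)]

def phase_currents(socket_currents):
    """Sum the per-socket currents (amperes) onto their phases."""
    totals = dict.fromkeys(PHASES, 0.0)
    for phase, amps in zip(assign_phases(len(socket_currents)), socket_currents):
        totals[phase] += amps
    return totals

def unbalance_percent(totals):
    """Largest deviation from the mean phase current, as a percentage of the mean.

    This definition is an assumption; the abstract does not give a formula.
    """
    values = list(totals.values())
    mean = sum(values) / len(values)
    if mean == 0:
        return 0.0
    return 100.0 * max(abs(v - mean) for v in values) / mean

def unbalance_alarm(socket_currents, limit_percent=10.0):
    """Return True when the unbalance exceeds the (hypothetical) alarm limit."""
    return unbalance_percent(phase_currents(socket_currents)) > limit_percent
```

With equal loads on consecutive sockets the phases carry equal current, which is the point of arranging the sockets in phase order; the alarm only fires when the plugged-in loads themselves are uneven.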

Application-aware data routing method and system for large-scale cluster deduplication

Active · CN103902735B · Reduce data overlap · Data deduplication rate is high · Transmission · Redundant operation error correction · Engineering · Data mining

The invention discloses an application-aware data routing method for large-scale cluster deduplication and a large-scale backup storage cluster system. The method comprises steps including (S10) obtaining backup file meta-information, (S20) sensing the file's application type, (S30) calculating the loads of the deduplication storage nodes, (S40) selecting the routing node for the file, (S50) sending the file to the target node, and (S60) deduplicating the file within the node. The system comprises a plurality of backup clients, a backup server and a plurality of deduplication storage servers. The method and system offer a high data deduplication rate, high node throughput, low system communication overhead and balanced system load.

Owner:PLA UNIV OF SCI & TECH
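
Steps (S10) to (S60) of the routing method above can be sketched as follows. This is a minimal illustration, not the patented algorithm: the extension-based type detection, the `Node` class, the use of stored bytes as the load metric, and the rule "prefer nodes that already hold this application type, then pick the least loaded" are all assumptions made for the example.

```python
from dataclasses import dataclass, field
import hashlib
import os

# Hypothetical mapping from file extension to application type (S20).
APP_TYPES = {".doc": "office", ".xls": "office", ".vmdk": "vm", ".jpg": "media"}

@dataclass
class Node:
    name: str
    stored_bytes: int = 0                          # assumed load metric (S30)
    app_types: set = field(default_factory=set)
    fingerprints: set = field(default_factory=set)

    def store(self, app_type, data):
        """S60: deduplicate inside the node; return True if new data was stored."""
        fp = hashlib.sha256(data).hexdigest()
        self.app_types.add(app_type)
        if fp in self.fingerprints:
            return False
        self.fingerprints.add(fp)
        self.stored_bytes += len(data)
        return True

def sense_app_type(filename):
    """S10/S20: read the file name and infer the application type."""
    ext = os.path.splitext(filename)[1].lower()
    return APP_TYPES.get(ext, "other")

def route(filename, nodes):
    """S30/S40: prefer nodes already holding this type, then the lightest load."""
    app_type = sense_app_type(filename)
    candidates = [n for n in nodes if app_type in n.app_types] or nodes
    return min(candidates, key=lambda n: n.stored_bytes)

def backup(filename, data, nodes):
    """S50/S60: send the file to the chosen node and deduplicate it there."""
    target = route(filename, nodes)
    stored = target.store(sense_app_type(filename), data)
    return target.name, stored
```

Sending files of the same application type to the same node keeps likely duplicates together, while the load comparison spreads new types across nodes. A real system would also need to rebalance when one application type dominates, which this sketch does not attempt.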

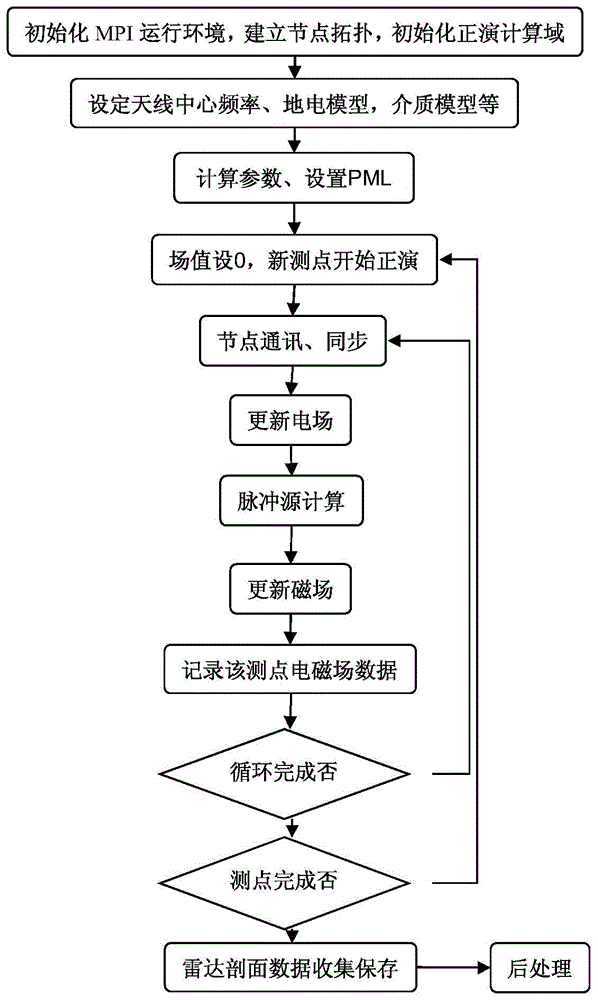

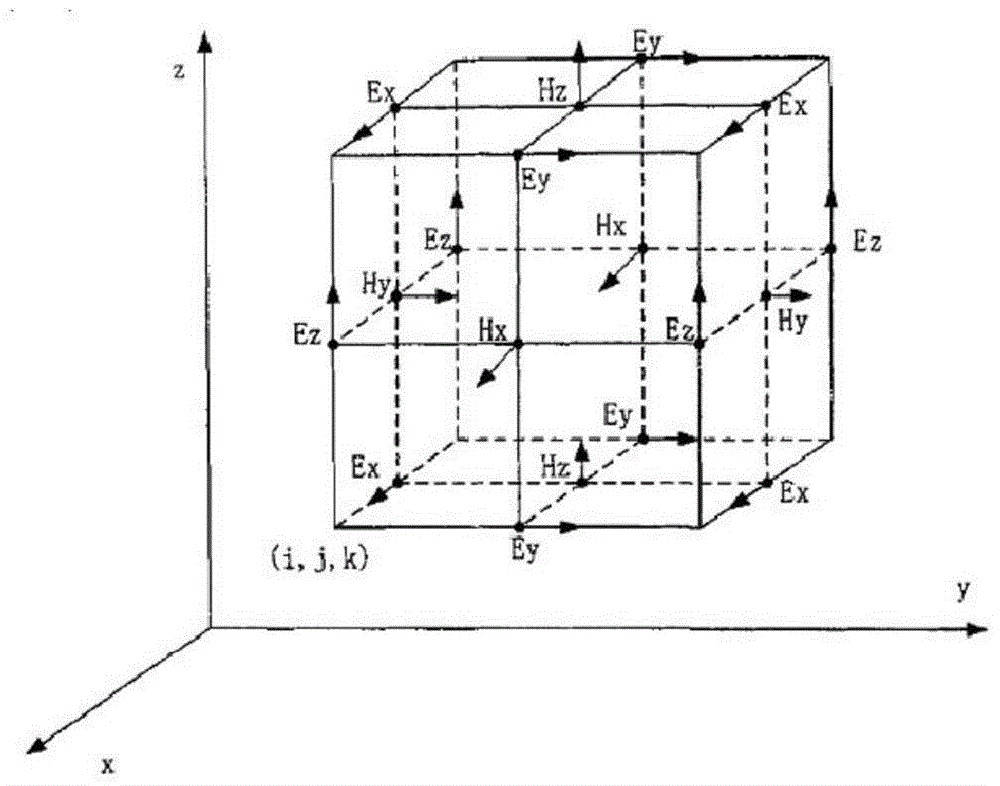

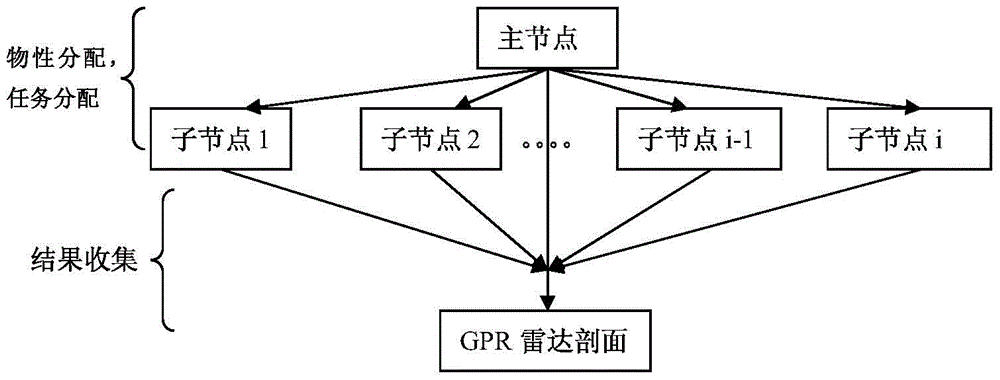

Large-Scale 3D Forward Modeling Method for Ground Penetrating Radar Based on FDTD

Active · CN103969627B · Guaranteed load balancing · Improve reliability · Radio wave reradiation/reflection · Dielectric · Electricity

The invention discloses a large-scale three-dimensional forward modeling method for ground penetrating radar based on FDTD (finite-difference time-domain). Nodes exchange data over a network to communicate and synchronize. A measuring point is selected and the FDTD forward modeling is calculated in parallel across the nodes: the electric field is calculated first; once that is finished, the excitation source is added and the magnetic field is calculated; communication, electric field calculation and magnetic field calculation are then iterated until the set total number of time steps is reached. The calculation then stops and the output data are stored as the radar reflection signal of that measuring point. All field values are re-initialized so that the whole computational domain returns to its state before the calculation, and the transmitting and receiving antennas are moved to the next measuring point to start forward modeling again. When all measuring points have been calculated, the data of all nodes are collected for imaging and image post-processing. The method is applicable to dielectrics and to models of complex scale, is highly general, and is especially suited to large-scale, high-precision forward modeling.

Owner:苏州市数字城市工程研究中心有限公司
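
The per-point loop described in this abstract (electric field update, source injection, magnetic field update, record, reset, move the antennas) can be shown with a deliberately simplified sketch. It is one-dimensional, single-process and in normalized units, so it leaves out the three-dimensional grid and the inter-node communication that the patent is about. The function names, the Gaussian source and the Courant number of 0.5 are assumptions for illustration.

```python
import math

def simulate_trace(eps_r, src, rec, n_steps, courant=0.5):
    """Return the signal at cell `rec` for a source at cell `src` (1D FDTD)."""
    n = len(eps_r)
    ez = [0.0] * n          # electric field at integer grid points
    hy = [0.0] * (n - 1)    # magnetic field at half grid points
    trace = []
    for t in range(n_steps):
        # Electric field update (interior cells only; both ends stay at zero,
        # which acts as a perfectly reflecting boundary).
        for i in range(1, n - 1):
            ez[i] += courant / eps_r[i] * (hy[i] - hy[i - 1])
        # Excitation source: a Gaussian pulse added at the transmitter cell.
        ez[src] += math.exp(-((t - 30) / 10.0) ** 2)
        # Magnetic field update.
        for i in range(n - 1):
            hy[i] += courant * (ez[i + 1] - ez[i])
        trace.append(ez[rec])
    return trace

def survey(eps_r, positions, offset, n_steps):
    """Move the antenna pair over the measuring points, one fresh run per point.

    Each call to simulate_trace starts from zeroed fields, which mirrors the
    re-initialization step between measuring points.
    """
    return [simulate_trace(eps_r, p, p + offset, n_steps) for p in positions]
```

For example, `survey([1.0] * 120 + [9.0] * 80, [40, 50], 5, 300)` returns two traces over a model whose relative permittivity jumps from 1 to 9 at cell 120. In a parallel version, each node would own a slab of the grid and exchange the boundary field values with its neighbours at every time step.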

Gears and Transmission Mechanisms

Active · CN107559405B · Reduce the amount of design modification · High strength · Portable lifting · Gearing details · Engineering · Gear tooth

Owner:CRRC QISHUYAN INSTITUTE CO LTD

A Robust Overlay Method for Relay Nodes in Two-layer Structured Wireless Sensor Networks

Active · CN105704732B · Lower deployment costs · Guaranteed load balancing · Position fixation · Network planning · Round complexity · Wireless sensor networking

The invention relates to a robust relay node coverage method for two-layer wireless sensor networks. It is a relay node 2-coverage deployment algorithm based on local search, which achieves optimal deployment while ensuring robustness by reducing the global deployment problem to local deployment problems. The method has two steps: a first 1-coverage and a second 1-coverage. The first 1-coverage comprises three steps: constructing candidate deployment locations for relay nodes, grouping the sensor nodes, and deploying relay nodes locally. The sensors are grouped by a novel grouping method, which reduces the complexity of the algorithm and keeps the deployment effective. The second 1-coverage adjusts the threshold, selects in each group the sensor nodes that are covered by only one relay node, and applies the 1-coverage method to these sensor nodes again. This ensures robustness while reducing the number of relay nodes deployed and shortening the problem-solving time.

Owner:SHENYANG INST OF AUTOMATION - CHINESE ACAD OF SCI
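
The two-pass idea in this abstract (cover every sensor once, then re-cover the sensors that only one relay reaches) can be sketched as below. A plain greedy cover stands in for the patent's grouping and local search, which the abstract does not detail, so the function names and the greedy rule are assumptions. Sensors and candidate locations are `(x, y)` tuples.

```python
import math

def coverage_counts(sensors, relays, radius):
    """Number of relays within `radius` of each sensor."""
    return [sum(math.dist(s, r) <= radius for r in relays) for s in sensors]

def singly_covered(sensors, relays, radius):
    """Sensors reached by exactly one relay (the targets of the second pass)."""
    counts = coverage_counts(sensors, relays, radius)
    return [s for s, c in zip(sensors, counts) if c == 1]

def greedy_cover(targets, candidates, radius):
    """Greedy 1-coverage: keep picking the location that covers the most targets."""
    uncovered = set(targets)
    chosen = []
    while uncovered and candidates:
        best = max(candidates,
                   key=lambda c: sum(math.dist(c, t) <= radius for t in uncovered))
        gained = {t for t in uncovered if math.dist(best, t) <= radius}
        if not gained:
            break  # the remaining targets cannot be reached from any candidate
        chosen.append(best)
        uncovered -= gained
    return chosen

def two_coverage(sensors, candidates, radius):
    """First 1-coverage, then a second 1-coverage over the singly covered sensors."""
    first = greedy_cover(sensors, candidates, radius)
    weak = singly_covered(sensors, first, radius)
    spare = [c for c in candidates if c not in first]  # do not reuse a location
    second = greedy_cover(weak, spare, radius)
    return first + second
```

Restricting the second pass to singly covered sensors is what keeps the relay count down: sensors that already have two relays in range after the first pass need no further work.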

Computing system load balancing method and device and storage medium

Inactive · CN111737001A · Improve efficiency · Guaranteed load balancing · Resource allocation · Knowledge based models · Load balancing (computing) · Computing systems

The invention discloses a load balancing method for a computing system. A decision tree dynamically assigns a weight to each GPU (graphics processing unit), and computing tasks are then dynamically allocated by a smooth weighted polling method, which effectively improves the efficiency of a cooperative CPU (central processing unit) and GPU computing system while ensuring the load balance of the system. The invention further provides a computing system load balancing device based on the method and a storage medium.

Owner:STATE GRID ELECTRIC POWER RES INST +3
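
The "smooth polling weighting method" in this abstract reads like smooth weighted round-robin, the scheduling rule popularized by nginx; that reading is an assumption. The sketch below shows that rule only. The weights are passed in directly, whereas the patent derives them from a decision tree, and the class and device names are hypothetical.

```python
class SmoothWeightedRoundRobin:
    """Pick devices in proportion to their weights without long runs of one device."""

    def __init__(self, weights):
        self.weights = dict(weights)                  # device name -> weight
        self.current = {name: 0 for name in self.weights}

    def set_weight(self, name, weight):
        """Update a weight at run time, e.g. after the weights are re-estimated."""
        self.weights[name] = weight
        self.current.setdefault(name, 0)

    def next(self):
        total = sum(self.weights.values())
        # Every device earns its weight, the current leader is chosen,
        # and the leader then pays back the total.
        for name, weight in self.weights.items():
            self.current[name] += weight
        chosen = max(self.current, key=self.current.get)
        self.current[chosen] -= total
        return chosen
```

With weights `{"gpu0": 5, "gpu1": 1, "gpu2": 1}`, seven calls to `next()` yield `gpu0, gpu0, gpu1, gpu0, gpu2, gpu0, gpu0`: the heavier device gets five of seven tasks, but the lighter ones are interleaved rather than pushed to the end of the cycle.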

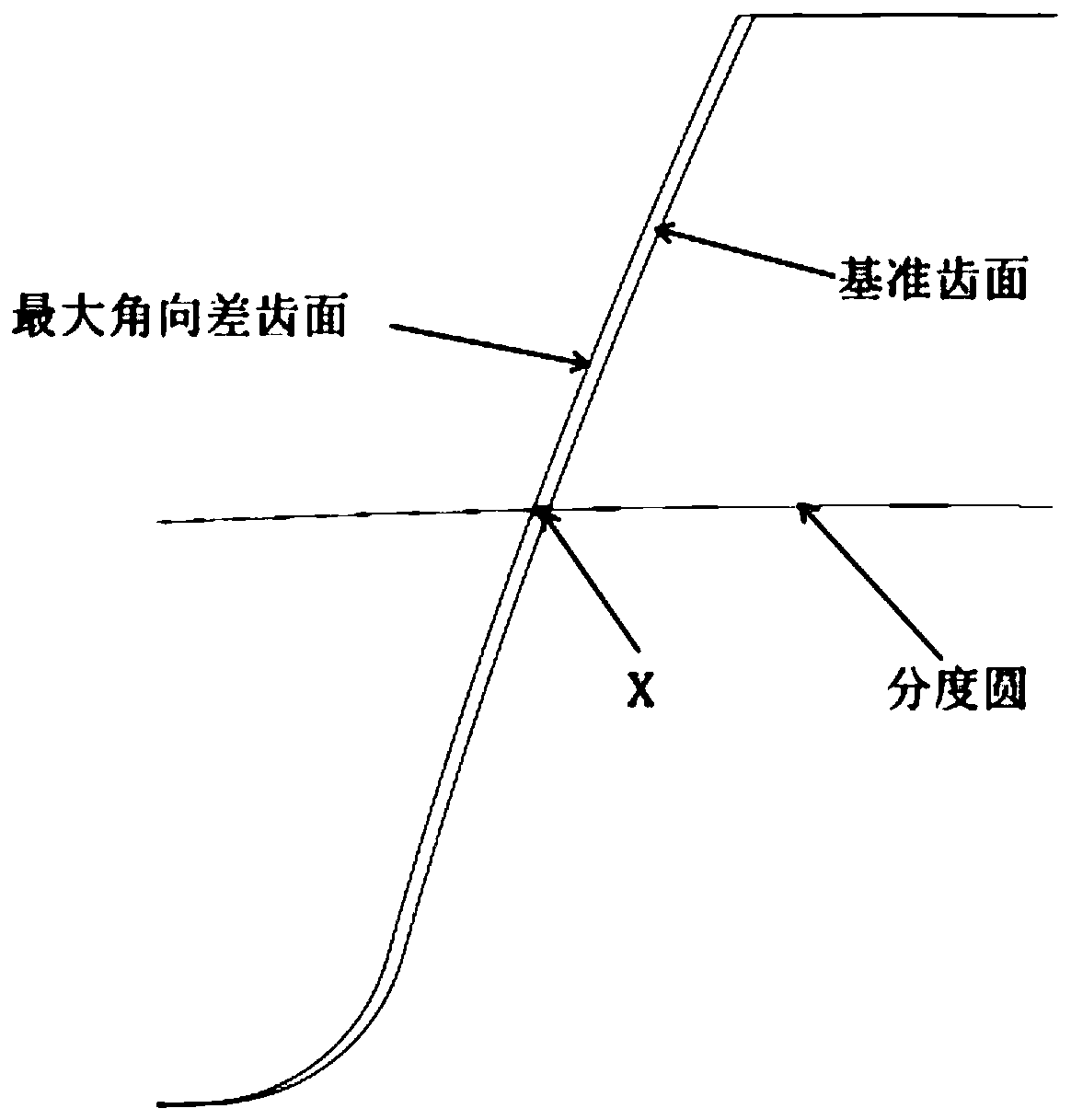

Grouping method for measurement of angular difference of common planetary gear

Inactive · CN110440658A · High measurement accuracy · Accelerate · Machine part testing · Mechanical measuring arrangements · Physics · Angular difference

The invention belongs to the technical field of mechanical processing and relates to a grouping method for measuring the angular difference of a common planetary gear. By comparing measurement principles, the method derives a way of grouping by angular difference that is based on converting the radial variation of the ball measurement value. It solves the problem of grouping common planetary gears by angular difference using a simple measuring tool. The measurement directly uses the reference specified in the part drawings, with no reference conversion, so it is precise, fast and convenient, and places no additional skill requirements on inspection and measurement personnel. The method avoids repeated matching and trial installation during assembly of the device, ensures uniform load on the common planetary gear and improves the operating life of the device.

Owner:HARBIN DONGAN ENGINE GRP

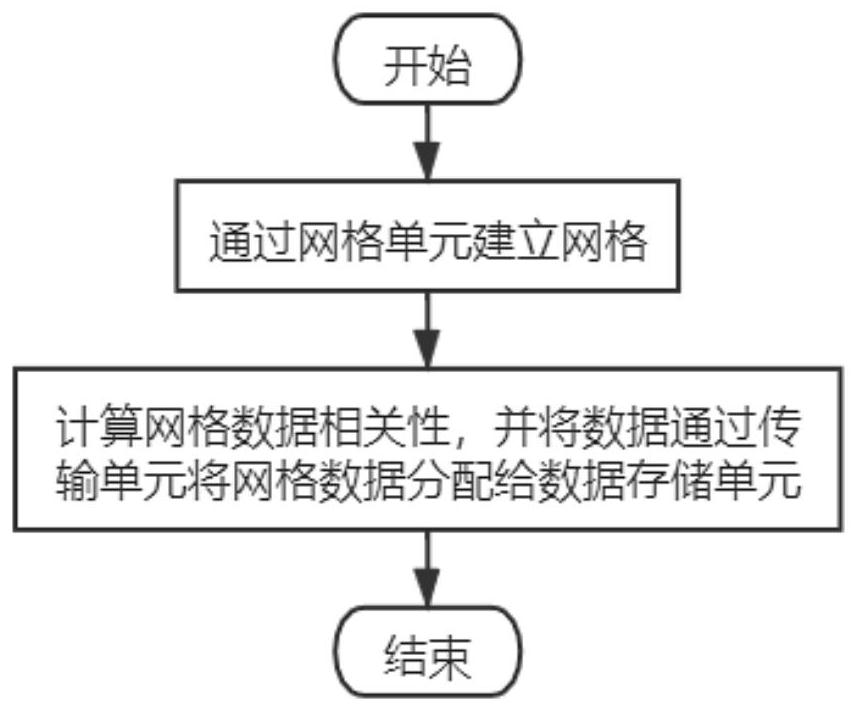

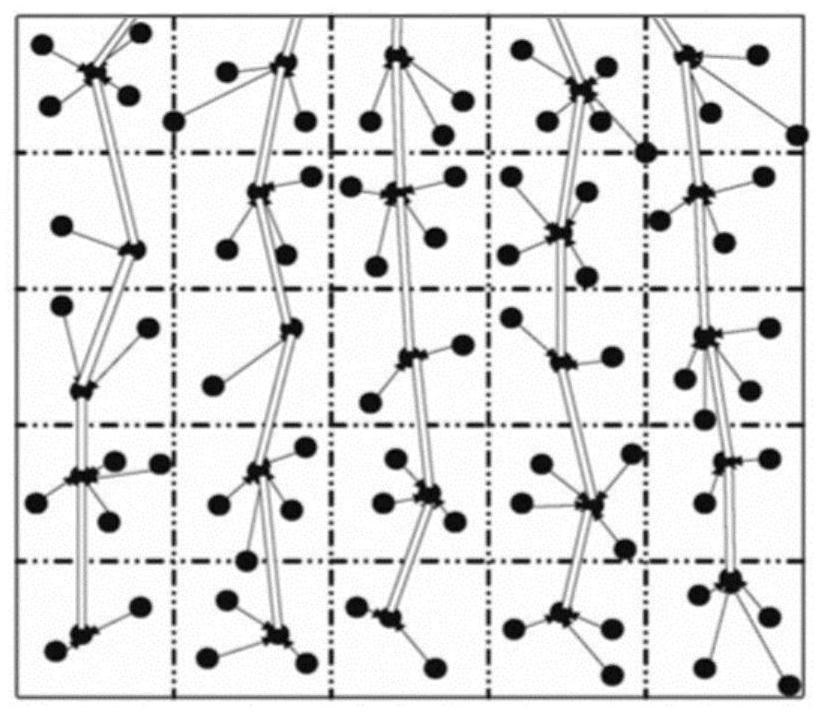

A parallel distributed big data architecture construction method and system

Active · CN113873031B · Improve query performance · Guaranteed load balancing · Database distribution/replication · Transmission · Computational science · Parallel computing

The invention discloses a method and system for constructing a parallel distributed big data architecture. The method comprises: establishing a grid through grid units and storing data into the grid sequentially according to time stamps; calculating the grid data correlation and the data node sampling time interval through the computing unit; and distributing the grid data to the data storage units through the transmission unit according to the grid data correlation. Planning transmission paths and allocating data storage space ensures the load balance of data nodes and greatly improves data query capability.

Owner:南京翌淼信息科技有限公司

© 2025 PatSnap. All rights reserved.