Computing system load balancing method and device and storage medium

A load balancing technology for computing systems, applied in the fields of knowledge-based computer systems, computing, and computing models. It addresses problems such as system crashes, degraded overall system performance, and reduced processing efficiency, with the effect of ensuring load balancing and improving efficiency.


Embodiment Construction

[0038] The technical solutions of the present invention will be further described below in conjunction with the embodiments.

[0039] The computing system load balancing method of the present invention comprises the following steps:

[0040] Assume that a CPU set unit consists of m CPU units of the same type and that a GPU set unit consists of n GPU cards of the same type, and that these set units are numbered.
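The setup in [0040] can be pictured as a list of numbered set units. The Python sketch below is a minimal illustration, assuming that several CPU and GPU set units may exist and that CPU set units are numbered before GPU set units, starting from 1; `SetUnit` and `number_set_units` are hypothetical names, not taken from the patent.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class SetUnit:
    """A numbered set unit made of identical devices of one kind."""
    number: int        # sequential number assigned to the set unit
    kind: str          # "CPU" or "GPU"
    device_count: int  # m CPU units or n GPU cards


def number_set_units(cpu_sizes: Sequence[int], gpu_sizes: Sequence[int]) -> List[SetUnit]:
    """Number CPU set units first, then GPU set units, starting from 1.

    Hypothetical scheme: the ordering and the 1-based start are assumptions.
    """
    units: List[SetUnit] = []
    for kind, sizes in (("CPU", cpu_sizes), ("GPU", gpu_sizes)):
        for size in sizes:
            if size <= 0:
                raise ValueError(f"{kind} set unit must contain at least one device")
            # The next number is one more than the count of units numbered so far.
            units.append(SetUnit(number=len(units) + 1, kind=kind, device_count=size))
    return units
```

For example, `number_set_units([4, 4], [2])` yields set units 1 and 2 (CPU, 4 devices each) and set unit 3 (GPU, 2 cards).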

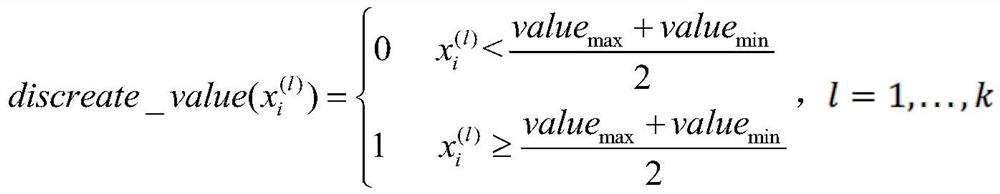

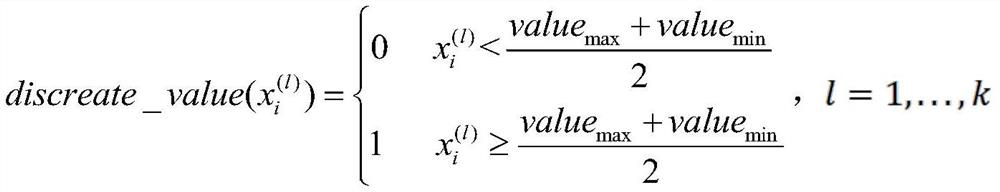

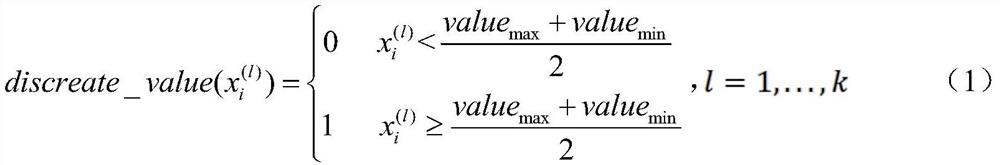

[0041] Let sample $s_i \equiv \{x_i, y_i\}$, where $x_i = (x_{i1}, x_{i2}, \ldots, x_{ik})$ is the feature vector obtained by feature extraction from the CPU set unit or the GPU set unit, $x_{ij}$ ($j = 1, \ldots, k$) is a feature value, $k$ is the number of features, and $i$ is the sample index; $y_i \in \{1, 2, 3, 4, 5, 6, 7, 8, 9\}$ denotes 9 different load levels, and the smaller $y_i$ is, the lower the load level. Experiments show that the features affecting the load of a processing unit include: cache size, number of threads, number of ALUs, the unit's current load, the unit's user-process occupancy, unit waiting fo...
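The sample definition in [0041] maps naturally onto a small record type. The Python sketch below is a minimal illustration under stated assumptions: the feature names are the ones listed above (the source list is truncated, so the tuple is partial), `Sample`, `make_sample`, and `least_loaded_unit` are hypothetical names, and dispatching work to the set unit with the lowest load level is an assumed use of the levels, not something this excerpt specifies.

```python
from dataclasses import dataclass
from typing import Mapping, Tuple

# Features named in the source; the source list is truncated, so this tuple is partial.
FEATURE_NAMES: Tuple[str, ...] = (
    "cache_size",
    "num_threads",
    "num_alus",
    "current_load",
    "user_process_occupancy",
)

MIN_LEVEL, MAX_LEVEL = 1, 9  # y_i in {1, ..., 9}; a smaller level means a lighter load


@dataclass(frozen=True)
class Sample:
    """One sample s_i = {x_i, y_i}."""
    x: Tuple[float, ...]  # feature vector x_i = (x_i1, ..., x_ik)
    y: int                # load level y_i

    def __post_init__(self) -> None:
        # Reject load levels outside the 9 defined levels.
        if not MIN_LEVEL <= self.y <= MAX_LEVEL:
            raise ValueError(f"y must be in {MIN_LEVEL}..{MAX_LEVEL}, got {self.y}")

    @property
    def k(self) -> int:
        """Number of features k."""
        return len(self.x)


def make_sample(features: Mapping[str, float], level: int) -> Sample:
    """Build a Sample with feature values in the fixed FEATURE_NAMES order (hypothetical helper)."""
    return Sample(x=tuple(float(features[name]) for name in FEATURE_NAMES), y=level)


def least_loaded_unit(levels: Mapping[int, int]) -> int:
    """Return the set-unit number with the lowest load level; ties go to the smaller number.

    Hypothetical dispatch rule: the excerpt does not say how load levels are used.
    """
    if not levels:
        raise ValueError("no set units to choose from")
    return min(levels, key=lambda unit: (levels[unit], unit))
```

For example, `least_loaded_unit({1: 5, 2: 2, 3: 2})` returns 2: units 2 and 3 share the lowest level, and the tie goes to the smaller number. How the patent obtains $y_i$ for a unit at run time is not shown in this excerpt.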
