205 results about How to "Reduce execution" patented technology

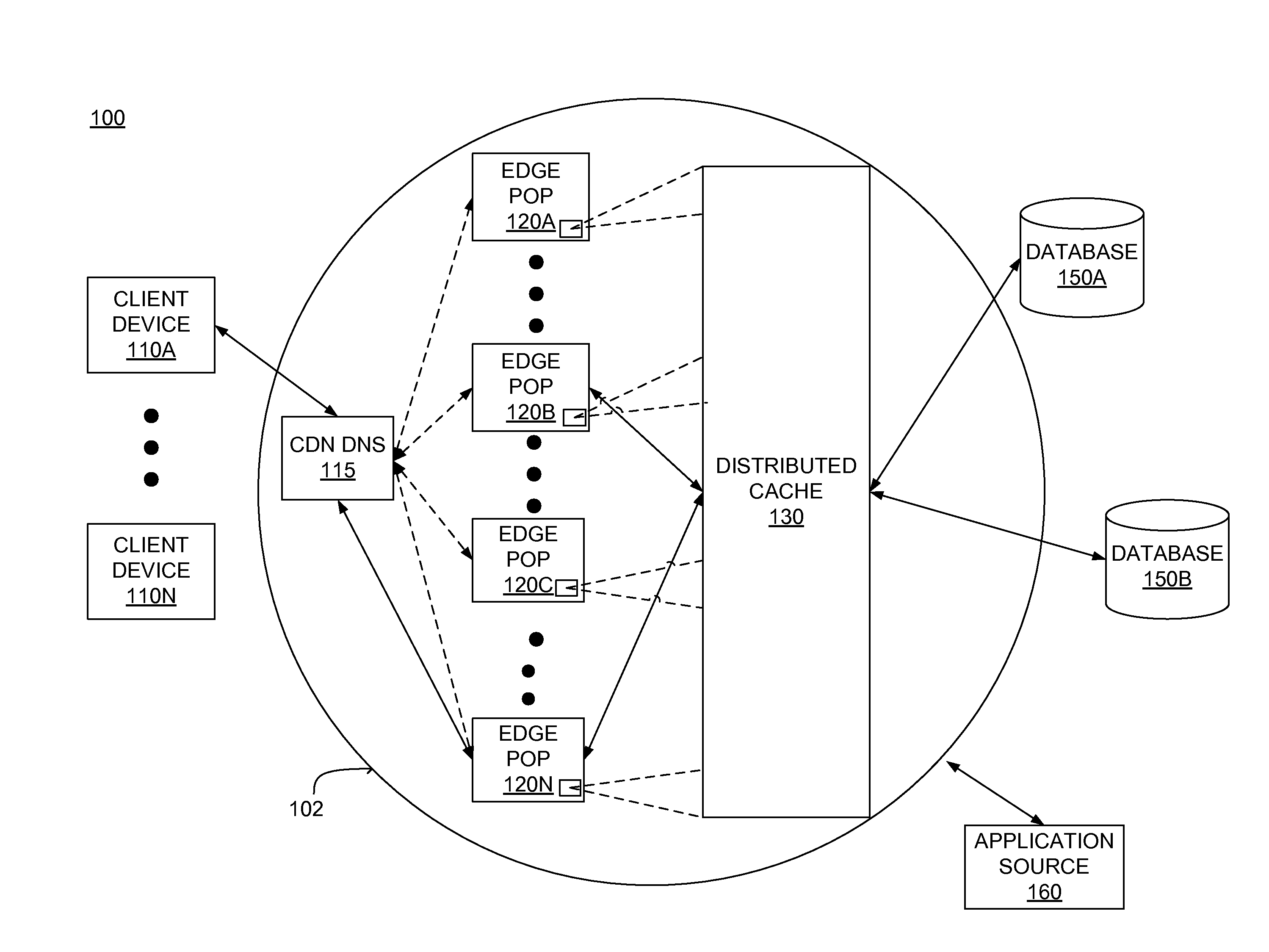

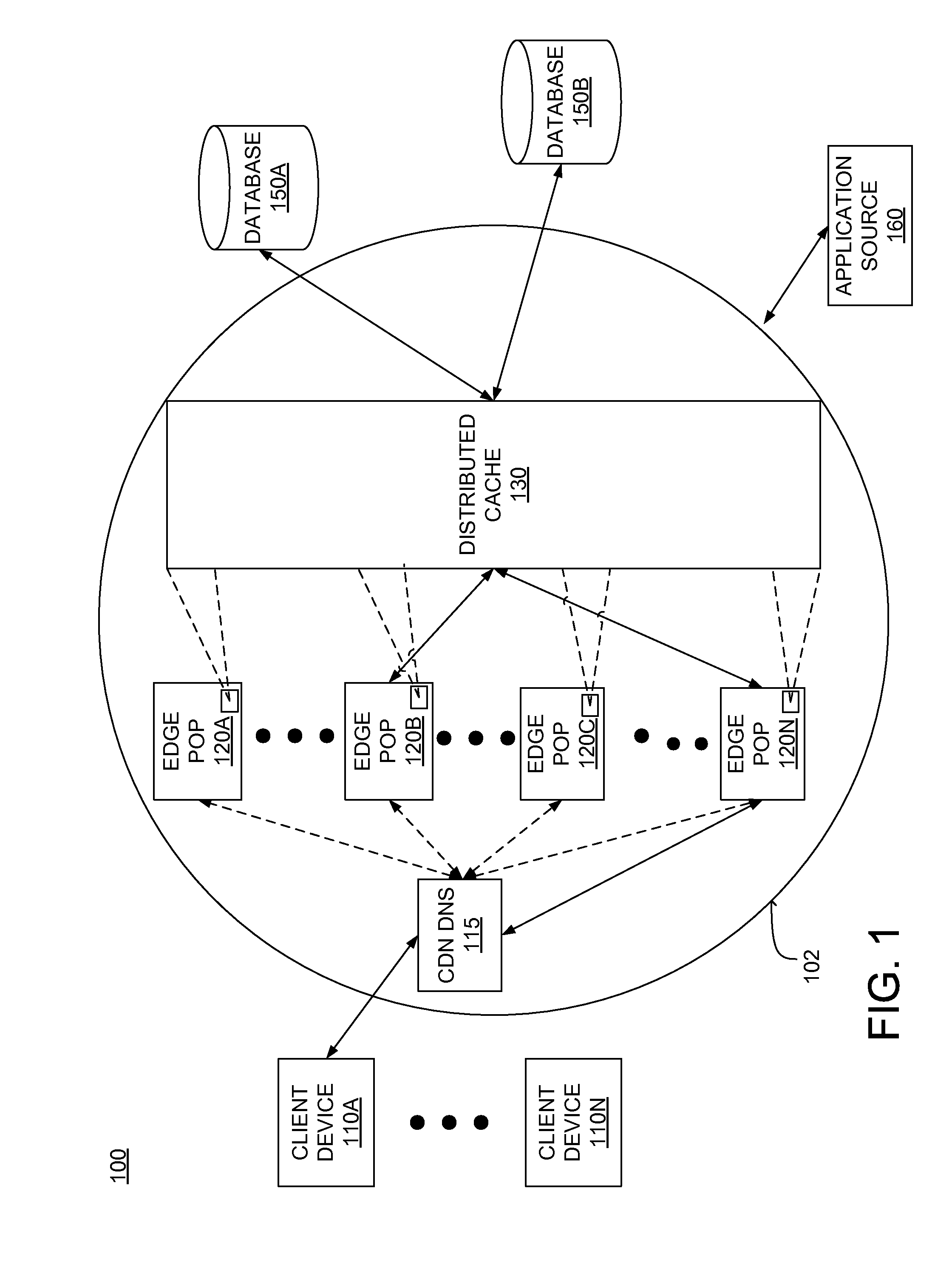

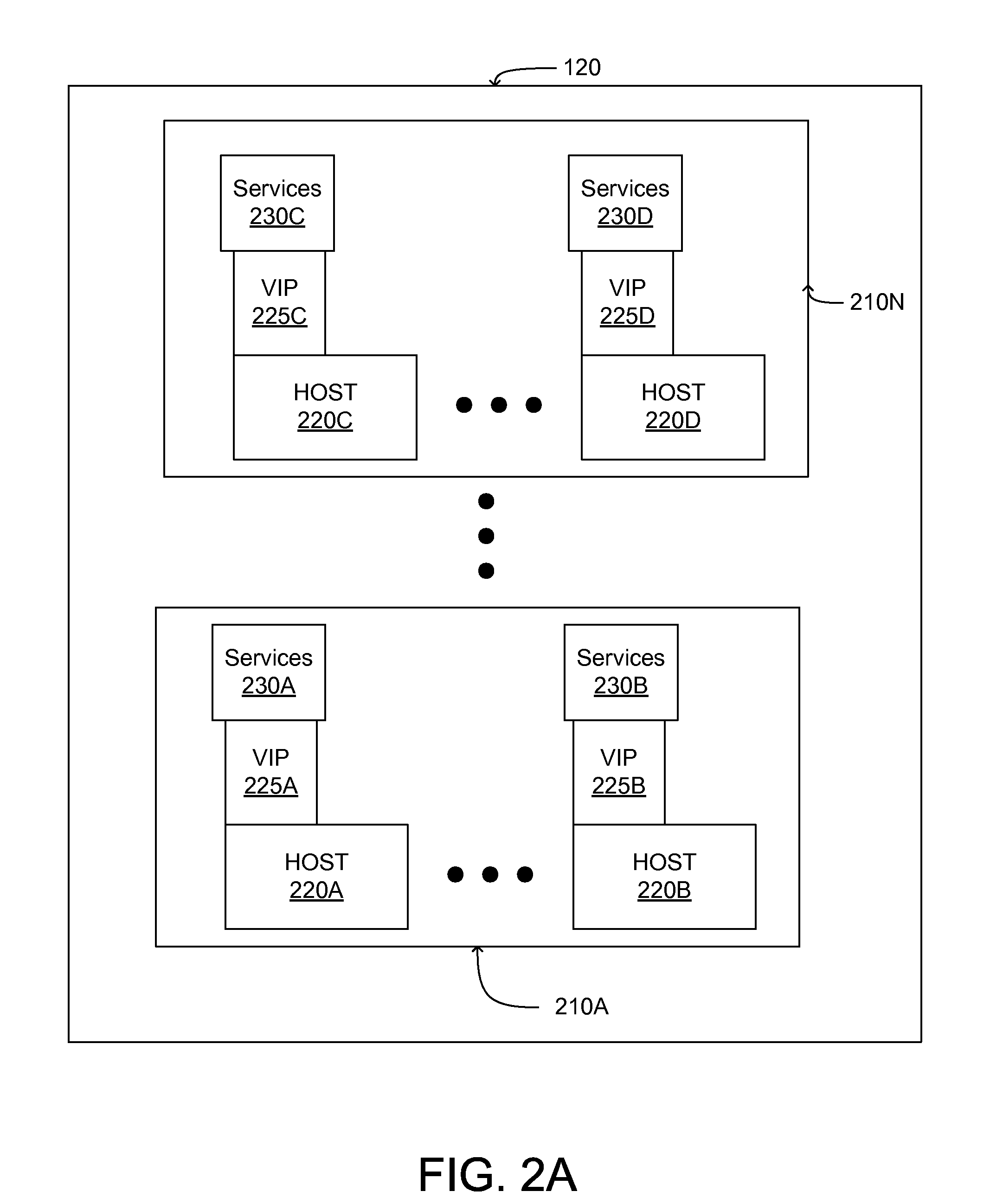

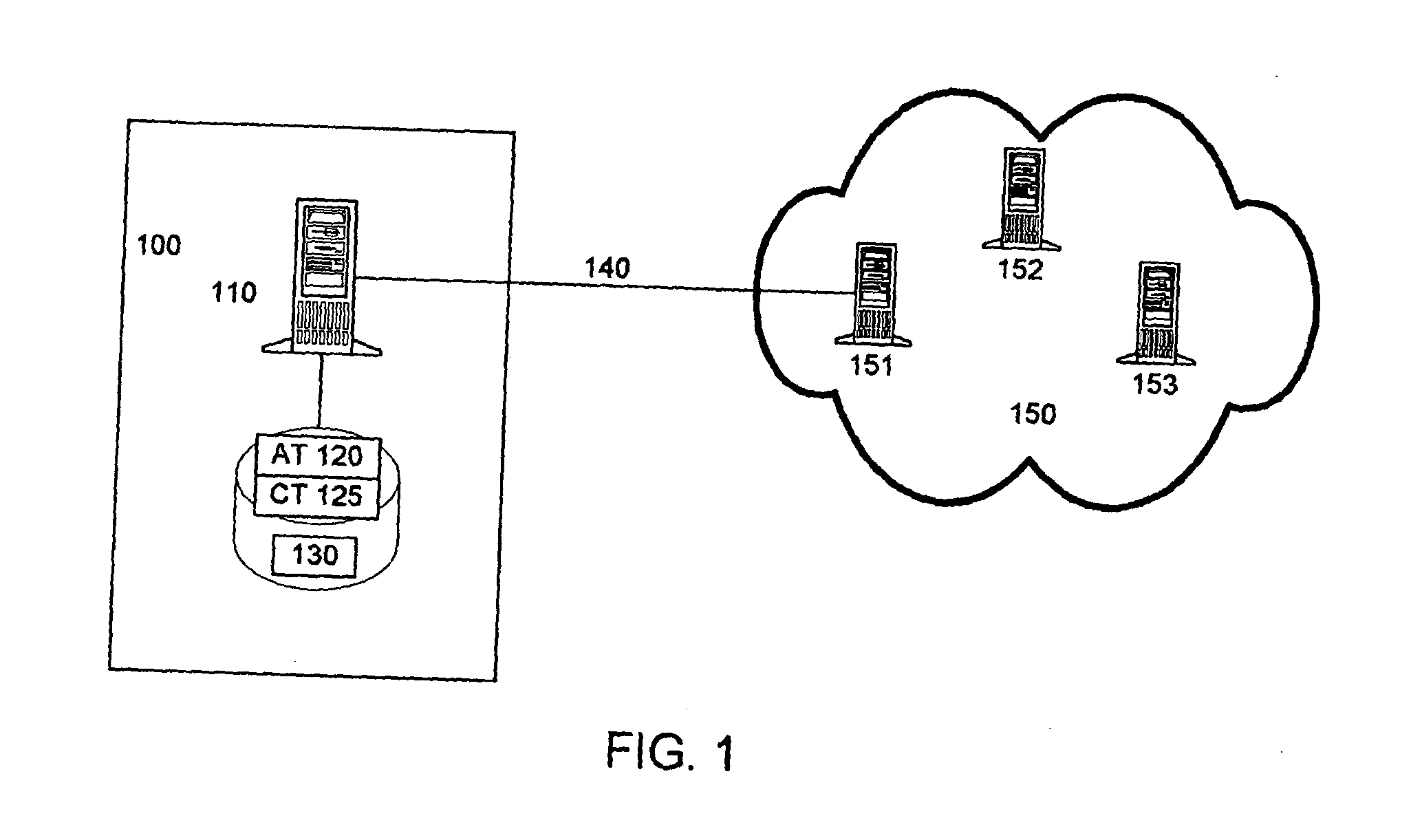

Distributed data cache for on-demand application acceleration

Active | US20120041970A1 | Decreasing application execution | Shorten the time | Digital data information retrieval | Digital data processing details | Application software | Distributed computing

A distributed data cache included in a content delivery network expedites retrieval of data for application execution by a server in a content delivery network. The distributed data cache is distributed across computer-readable storage media included in a plurality of servers in the content delivery network. When an application generates a query for data, a server in the content delivery network determines whether the distributed data cache includes data associated with the query. If data associated with the query is stored in the distributed data cache, the data is retrieved from the distributed data cache. If the distributed data cache does not include data associated with the query, the data is retrieved from a database and the query and associated data are stored in the distributed data cache to expedite subsequent retrieval of the data when the application issues the same query.

Owner:CDNETWORKS HLDG SINGAPORE PTE LTD
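The lookup flow in this abstract resembles a read-through cache. The following is a minimal single-process sketch of that flow, not the patented implementation: the `QueryCache` class, the `slow_database` function, and the hit/miss counters are all hypothetical names, and a plain dictionary stands in for storage distributed across servers.

```python
class QueryCache:
    """Toy stand-in for the distributed cache: maps a query to its result."""

    def __init__(self, fetch_from_database):
        self._store = {}                   # query -> cached data
        self._fetch = fetch_from_database  # fallback used on a cache miss
        self.hits = 0
        self.misses = 0

    def get(self, query):
        # Cache hit: return the stored data without touching the database.
        if query in self._store:
            self.hits += 1
            return self._store[query]
        # Cache miss: read from the database, then store the query and its
        # data so that the same query is served from the cache next time.
        self.misses += 1
        result = self._fetch(query)
        self._store[query] = result
        return result


def slow_database(query):
    # Hypothetical database call; here it just upper-cases the query text.
    return query.upper()


cache = QueryCache(slow_database)
first = cache.get("select name")   # miss, goes to the database
second = cache.get("select name")  # hit, served from the cache
```

After the two calls, the counters record one miss followed by one hit, which is the saving the abstract describes for repeated queries.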

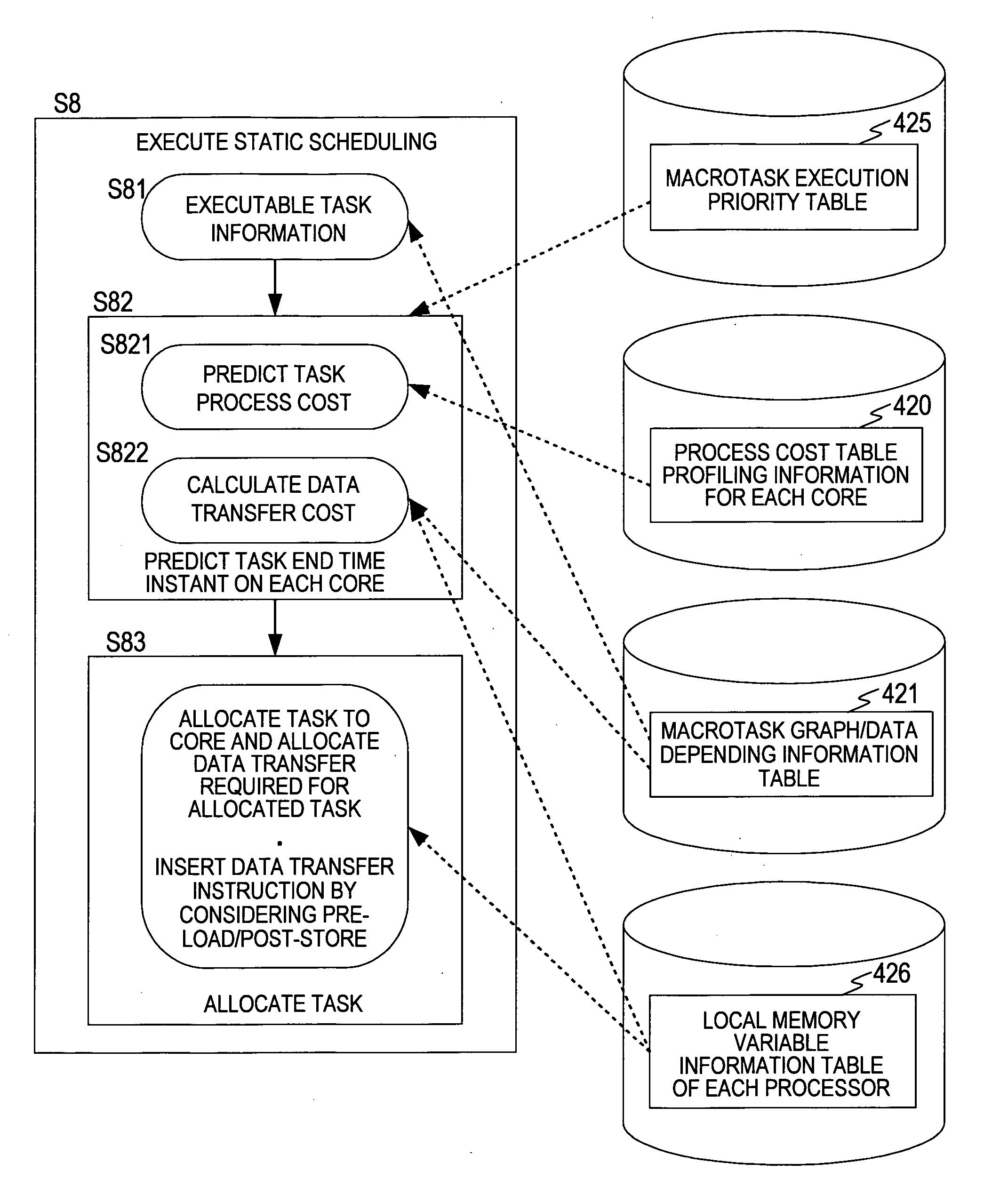

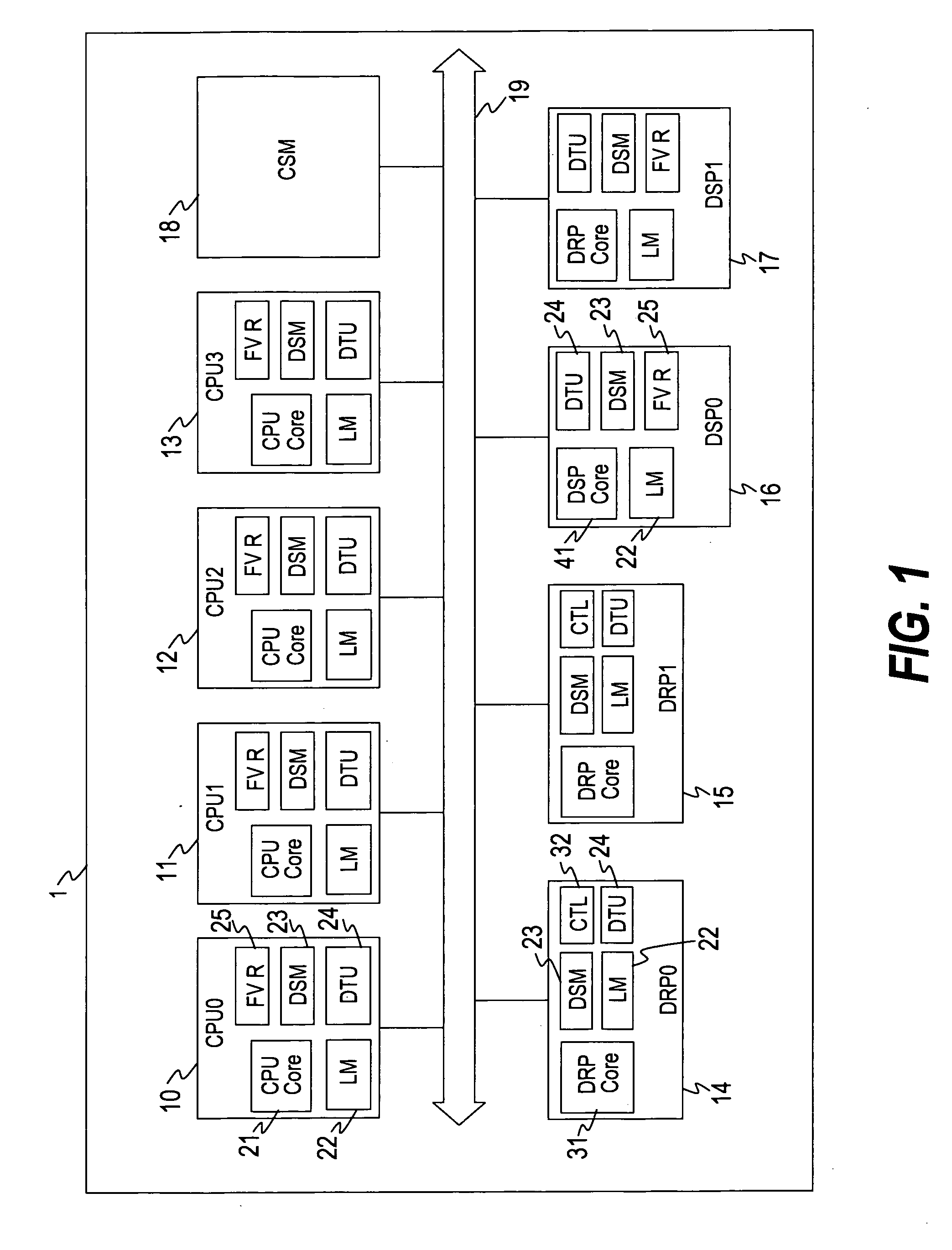

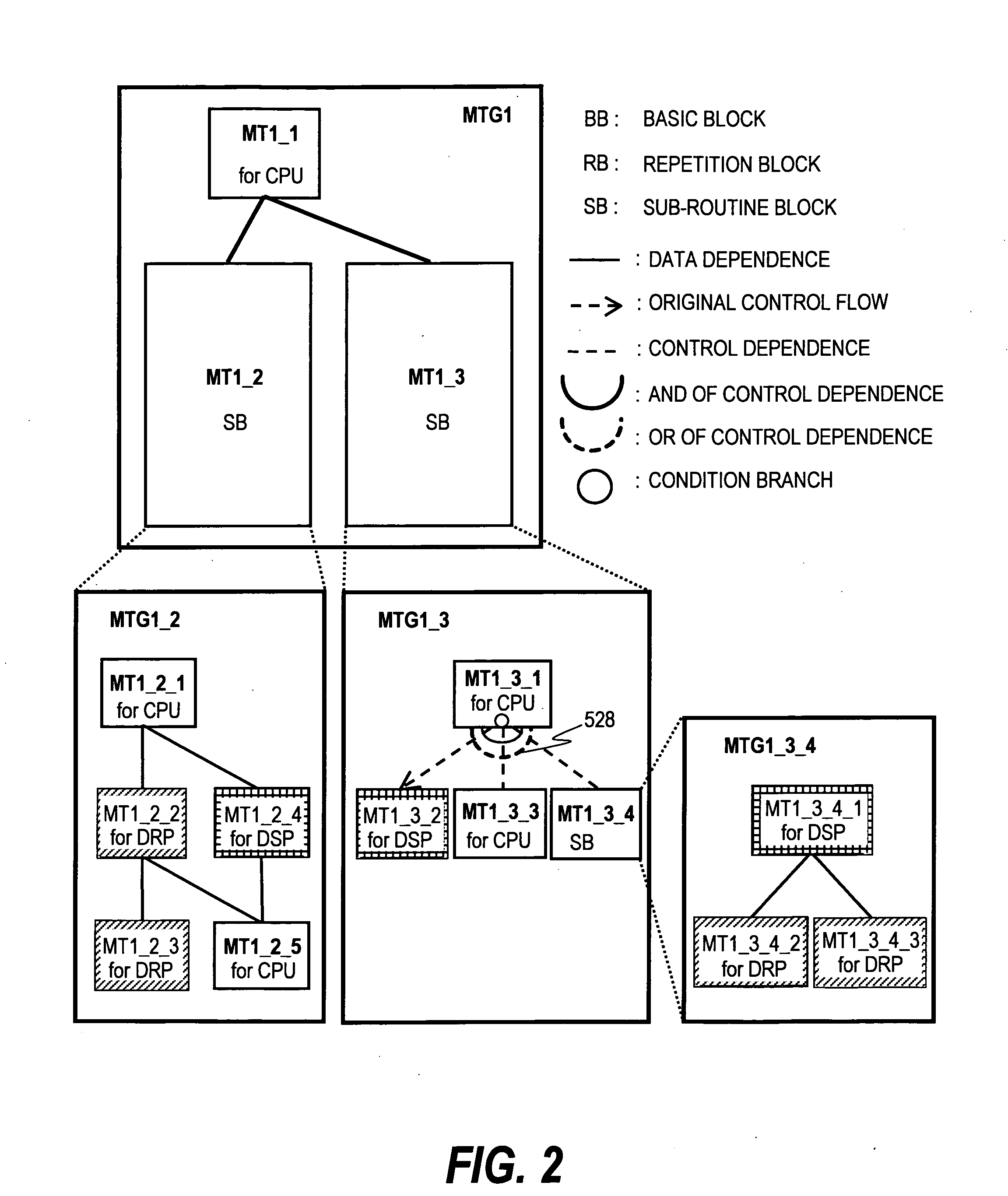

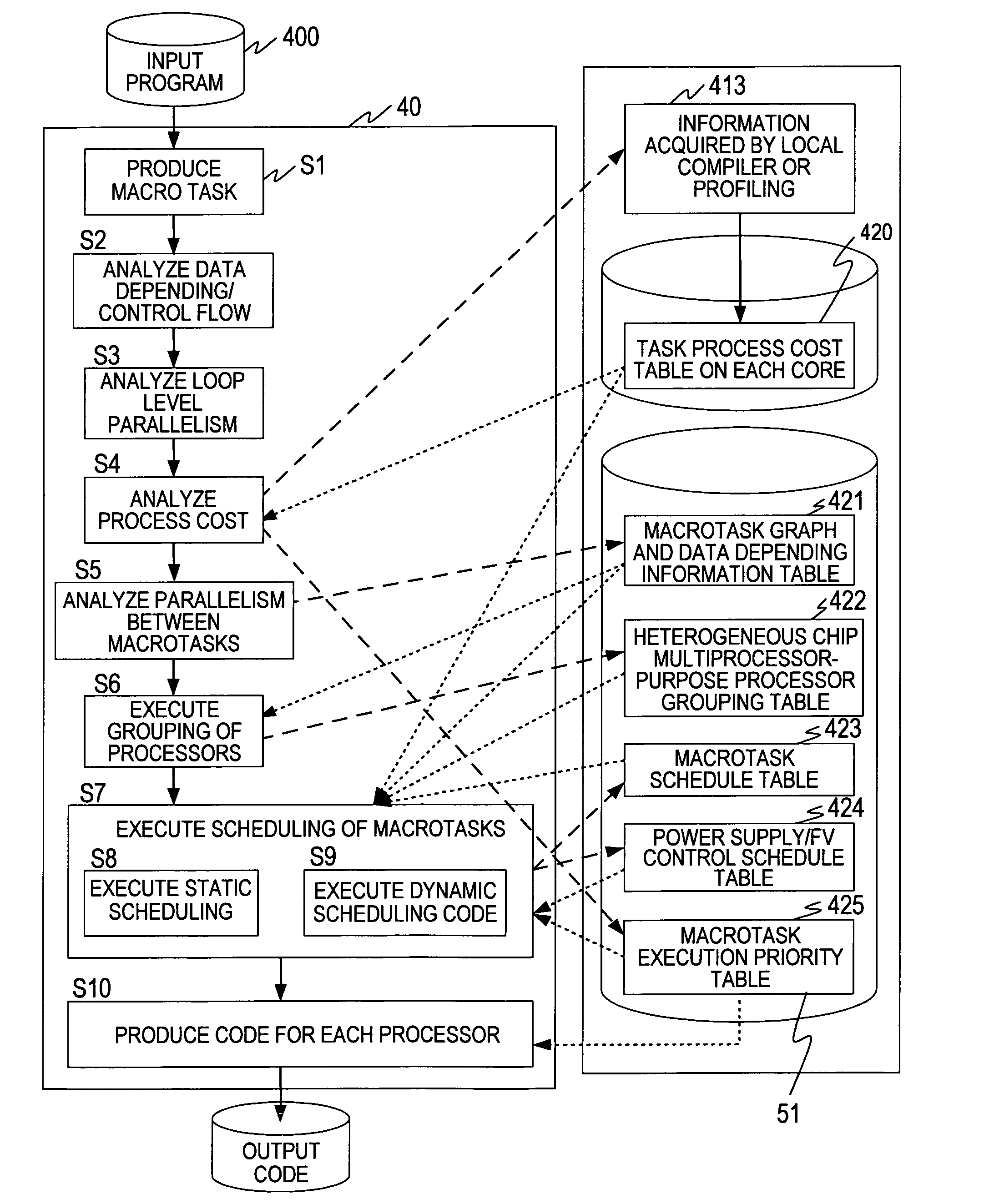

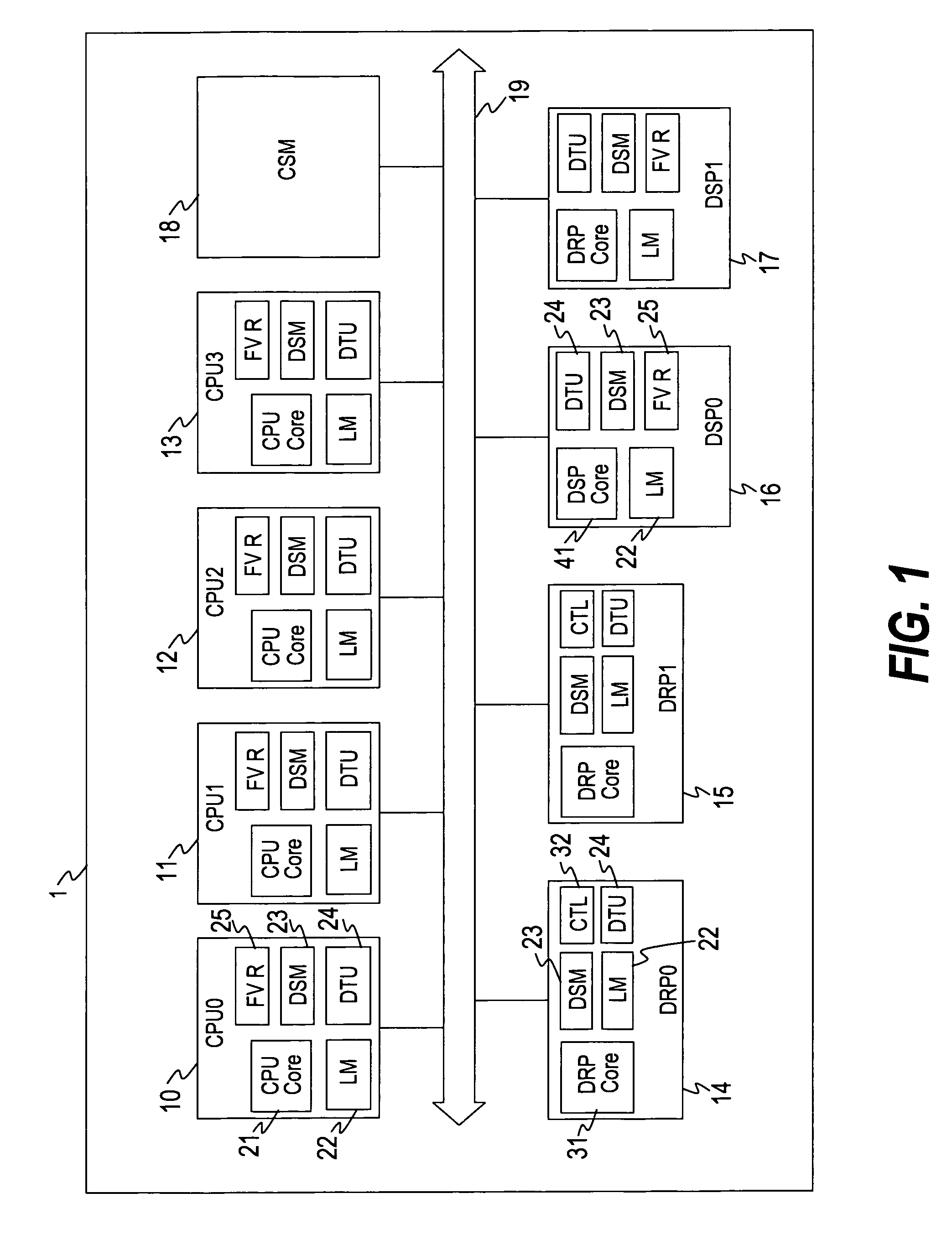

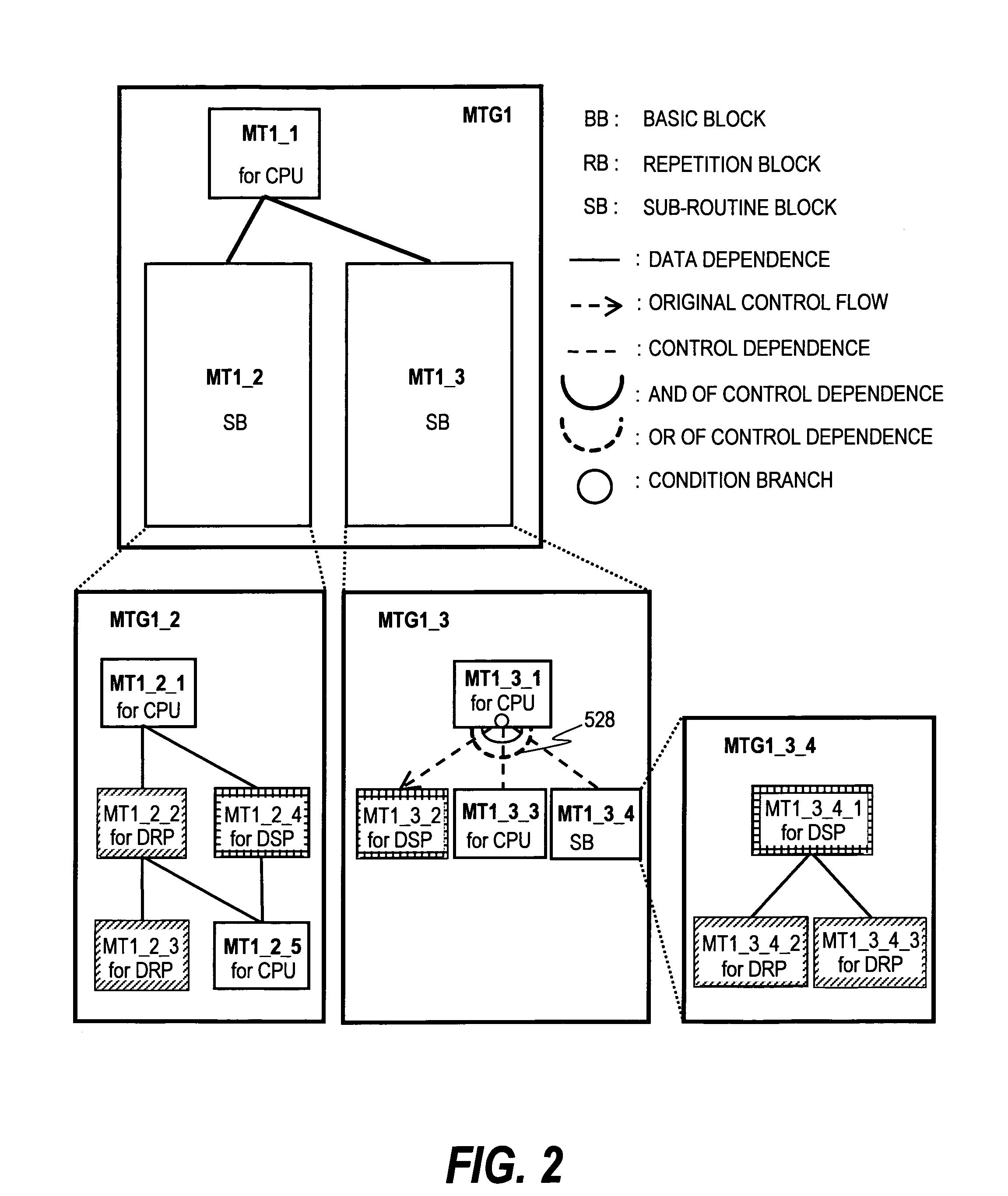

Method for controlling heterogeneous multiprocessor and multigrain parallelizing compiler

Active | US20070283358A1 | Improve efficiency | Overhead required can be suppressed | Energy efficient ICT | Resource allocation | General purpose | Cost comparison

A heterogeneous multiprocessor system including a plurality of processor elements having mutually different instruction sets and structures avoids a specific processor element from being short of resources to improve throughput. An executable task is extracted based on a preset depending relationship between a plurality of tasks, and the plurality of first processors are allocated to a general-purpose processor group based on a depending relationship among the extracted tasks. A second processor is allocated to an accelerator group, a task to be allocated is determined from the extracted tasks based on a priority value for each of tasks, and an execution cost of executing the determined task by the first processor is compared with an execution cost of executing the task by the second processor. The task is allocated to one of the general-purpose processor group and the accelerator group that is judged to be lower as a result of the cost comparison.

Owner:WASEDA UNIV
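The cost comparison at the end of this abstract can be illustrated with a small sketch. It assumes the executable tasks have already been extracted and that per-task cost estimates are given; the function name `allocate_tasks`, the example tasks, the cost numbers, and the tie-breaking rule are hypothetical and are not taken from the patent.

```python
def allocate_tasks(ready_tasks, cpu_cost, accel_cost):
    """Assign each ready task to the processor group with the lower cost.

    ready_tasks: list of (task_name, priority); a larger priority is handled first.
    cpu_cost / accel_cost: estimated execution cost of each task on each group.
    """
    assignment = {}
    for name, _priority in sorted(ready_tasks, key=lambda t: t[1], reverse=True):
        # Ties go to the general-purpose group (an arbitrary choice for this sketch).
        if cpu_cost[name] <= accel_cost[name]:
            assignment[name] = "general-purpose"
        else:
            assignment[name] = "accelerator"
    return assignment


ready = [("fft", 3), ("parse", 1), ("filter", 2)]
cpu = {"fft": 90, "parse": 5, "filter": 40}
accel = {"fft": 20, "parse": 30, "filter": 40}
plan = allocate_tasks(ready, cpu, accel)
```

In this example only `fft` is cheaper on the accelerator, so it is the only task placed in the accelerator group.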

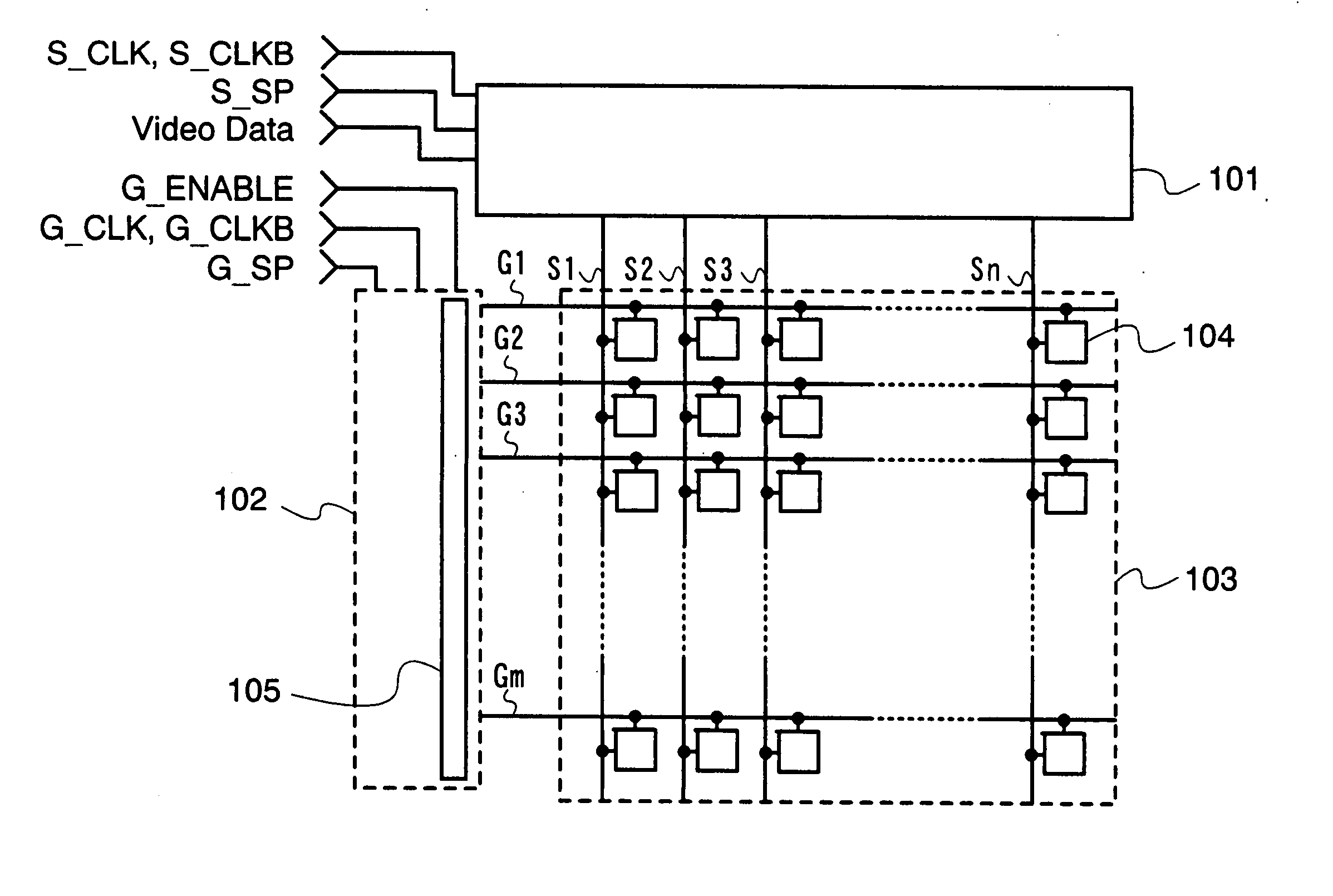

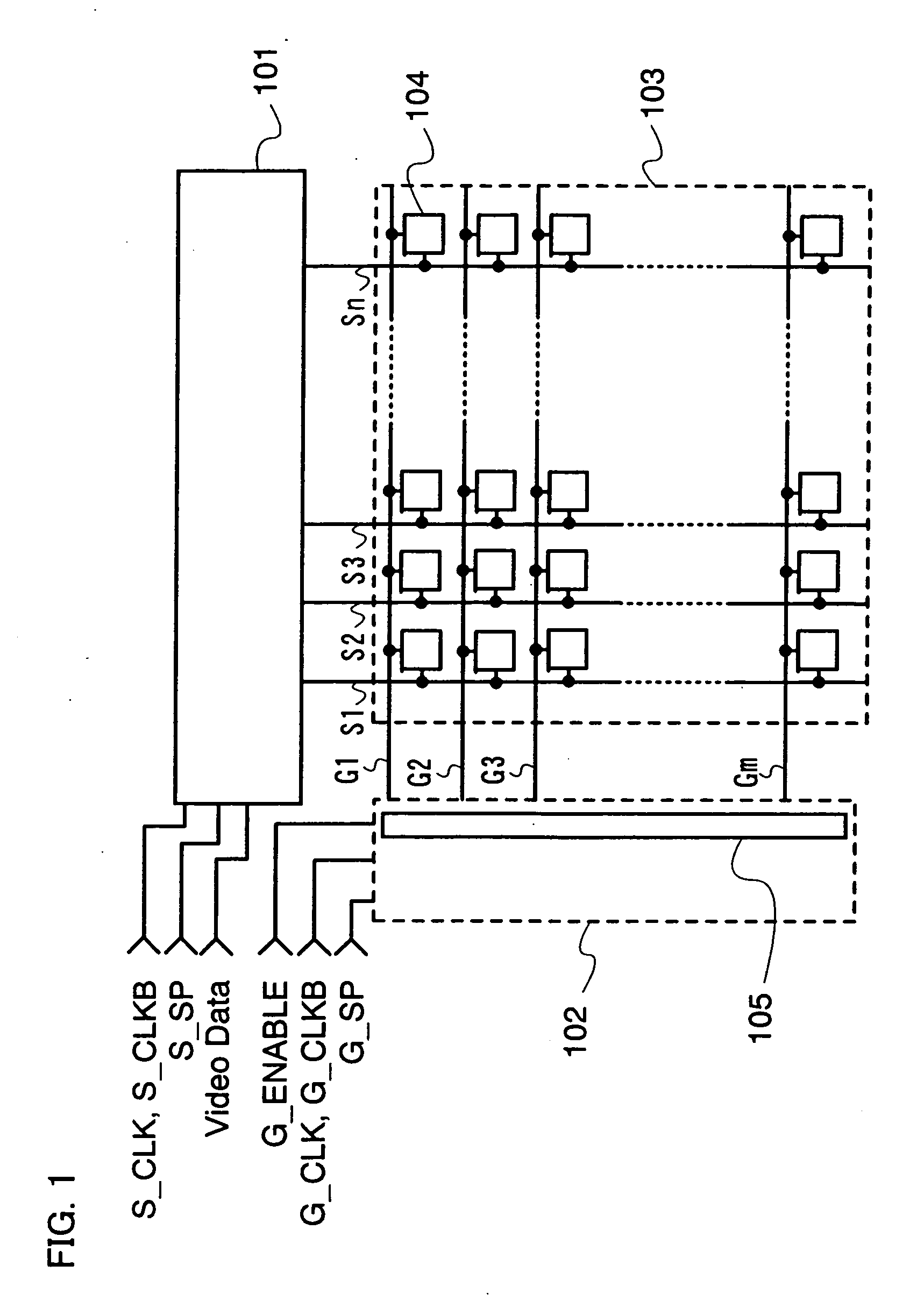

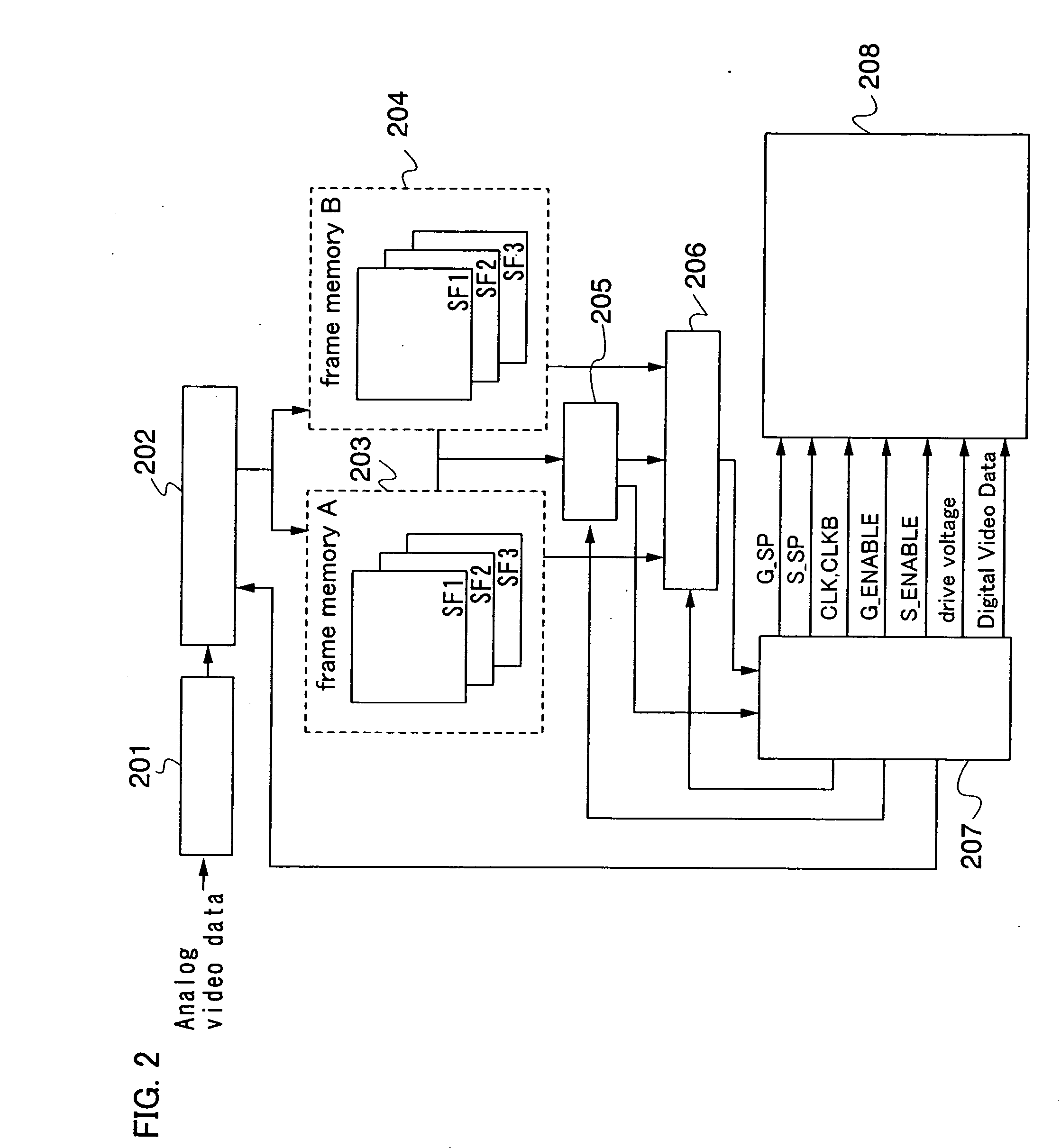

Active matrix display device, method for driving the same, and electronic device

Inactive | US20060267889A1 | Reduce the number of times | Power consumption can be provided | Electrical apparatus | Electroluminescent light sources | Driver circuit | Scan line

An object of the invention is to provide a display device that reduces both the number of times a signal is written to a pixel and power consumption. In an active matrix display device according to the invention, when a signal to be written to a pixel row is identical to the signal already stored in that pixel row, the scan line driver circuit does not output a selecting pulse to the scan line corresponding to the pixel row, and the signal line driver circuit either puts the signal lines in a floating state or leaves each signal line in its previous state.

Owner:SEMICON ENERGY LAB CO LTD
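The row-skipping rule can be modelled in software as follows. This is only an illustration of the comparison logic, assuming each pixel row is a list of values; the function `refresh_frame` and the sample data are hypothetical, and the actual invention is a driver circuit rather than code.

```python
def refresh_frame(stored_rows, incoming_rows):
    """Write only the rows whose incoming signal differs from the stored one.

    Returns the indices of the rows that received a selecting pulse.
    """
    selected = []
    for index, new_row in enumerate(incoming_rows):
        if stored_rows[index] == new_row:
            continue  # identical signal: no selecting pulse for this row
        stored_rows[index] = list(new_row)
        selected.append(index)
    return selected


panel = [[0, 0], [1, 1], [2, 2]]
written = refresh_frame(panel, [[0, 0], [9, 9], [2, 2]])
```

Only the middle row changes in this frame, so it is the only row written.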

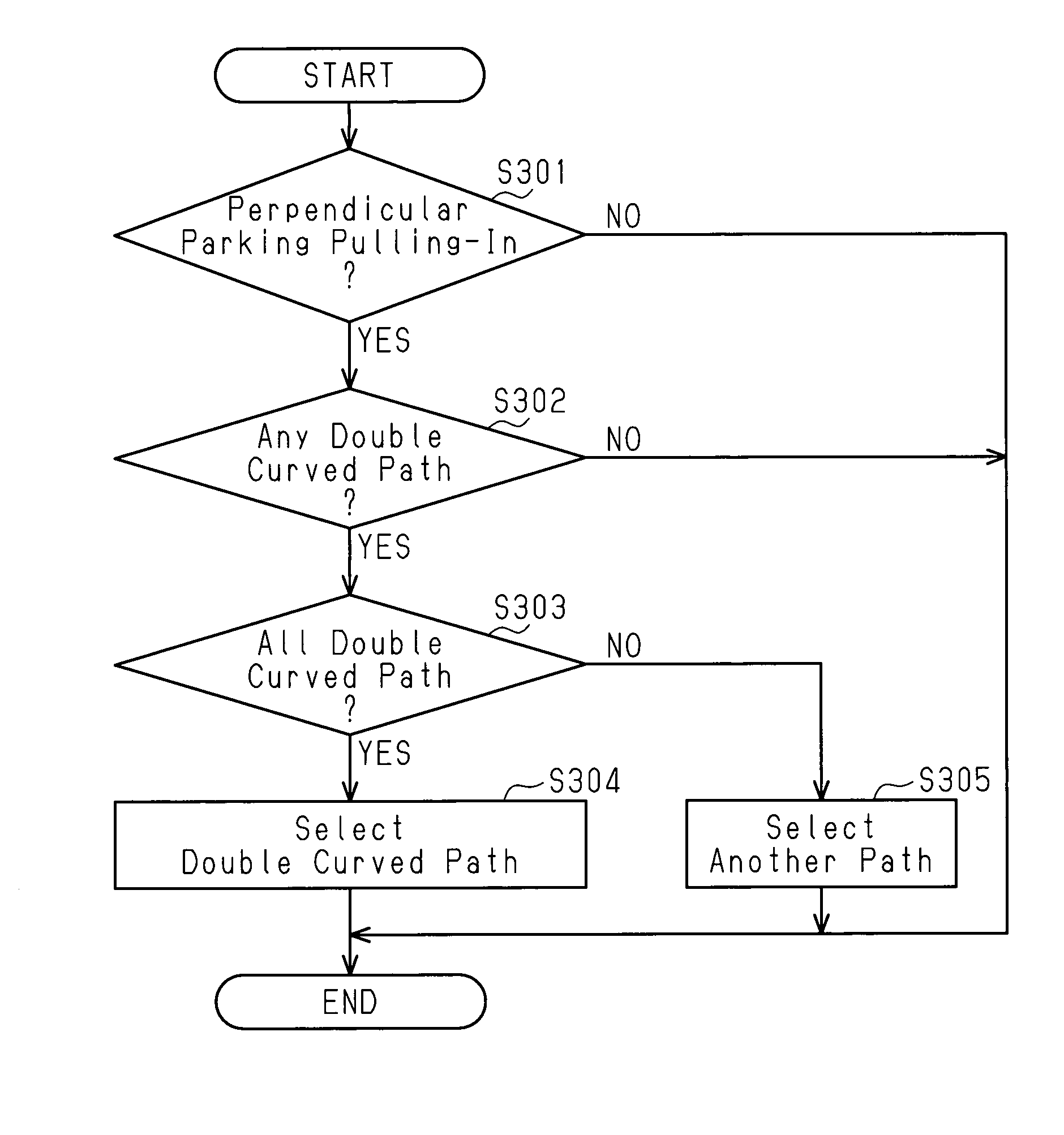

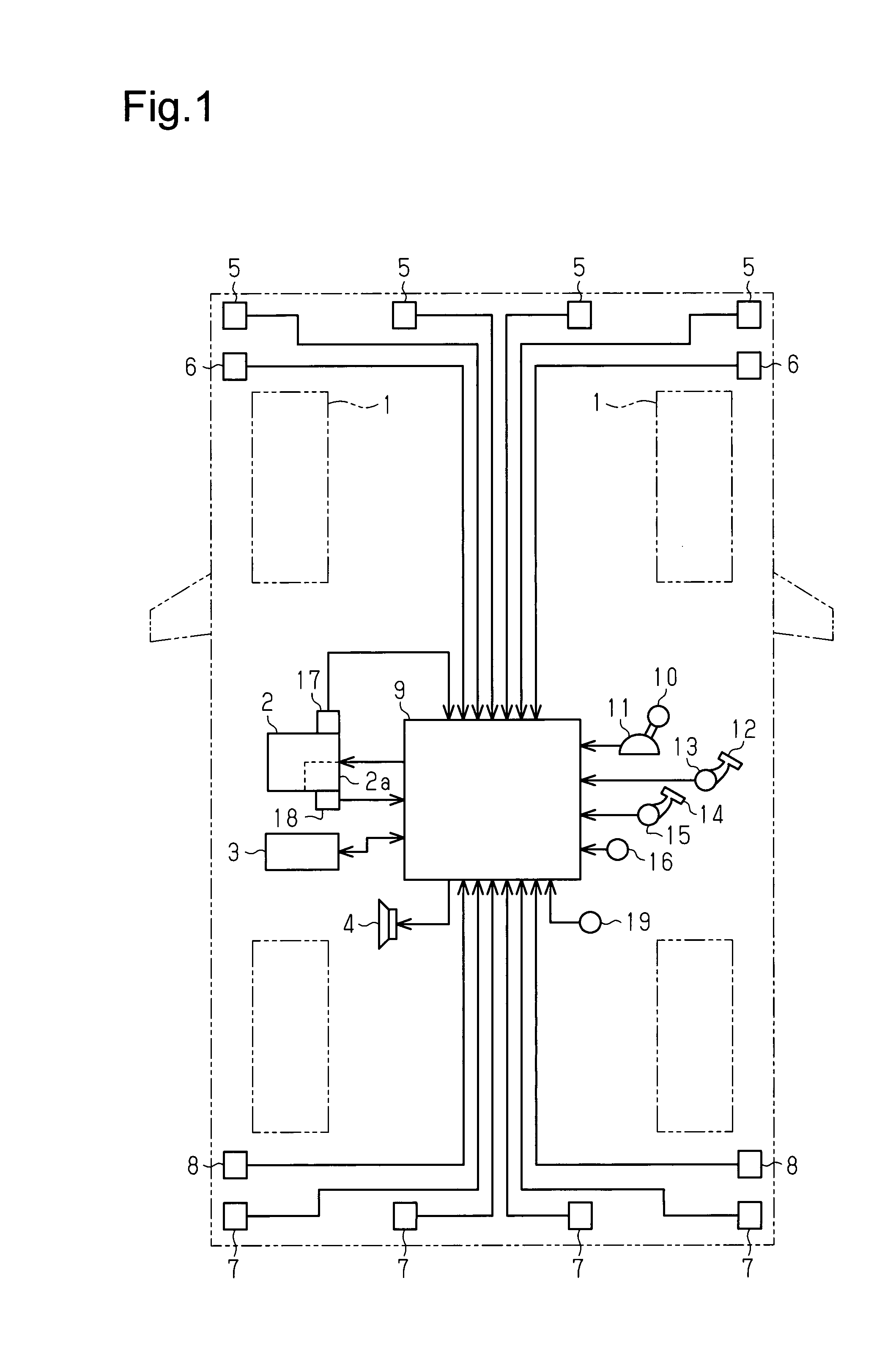

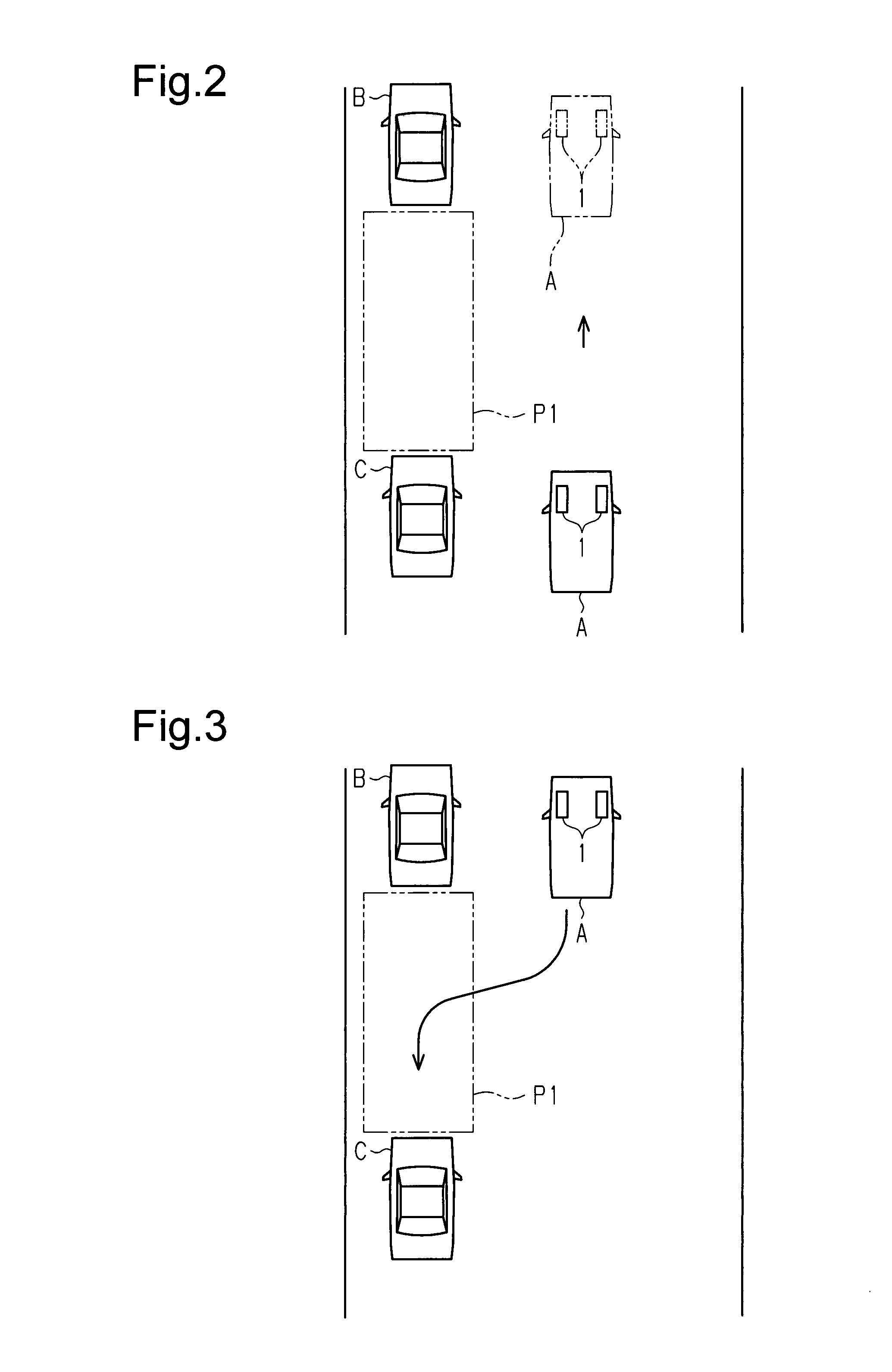

Parking Assist Device

Active | US20160185389A1 | Increase frequency of execution | Increase in number | Steering initiations | Digital data processing details | Automatic control | Electronic control unit

When performing automatic control of a steering device that assists a host vehicle to be pulled into or out of a parking space, an electronic control unit performs the following procedure. When there is, as a path for pulling the vehicle into or out of the parking space, a path in which a predicted temperature of the steering device for when the automatic control is performed to move the vehicle along the path would be lower than an allowable upper limit value, the electronic control unit starts the automatic control to move the vehicle along the path. In contrast, when there is no path in which the predicted temperature is lower than the allowable upper limit value, the electronic control unit prohibits the automatic control.

Owner:TOYOTA JIDOSHA KK
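The decision described here reduces to a filter over candidate paths. The sketch below assumes a temperature prediction function is available; `select_path`, `toy_model`, the path records, and all numbers are hypothetical and only illustrate the start-or-prohibit rule.

```python
def select_path(candidate_paths, predict_temperature, upper_limit):
    """Return the first path whose predicted steering temperature stays below
    the allowable upper limit, or None if automatic control must be prohibited."""
    for path in candidate_paths:
        if predict_temperature(path) < upper_limit:
            return path
    return None


def toy_model(path):
    # Hypothetical model: a 60 degree baseline plus 8 degrees per steering reversal.
    return 60 + 8 * path["reversals"]


paths = [{"name": "tight", "reversals": 6}, {"name": "wide", "reversals": 2}]
chosen = select_path(paths, toy_model, upper_limit=100)   # a path qualifies
blocked = select_path(paths, toy_model, upper_limit=70)   # no path qualifies
```

With a limit of 100 the "wide" path qualifies; with a limit of 70 neither path does, so the function returns `None` and automatic control would not start.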

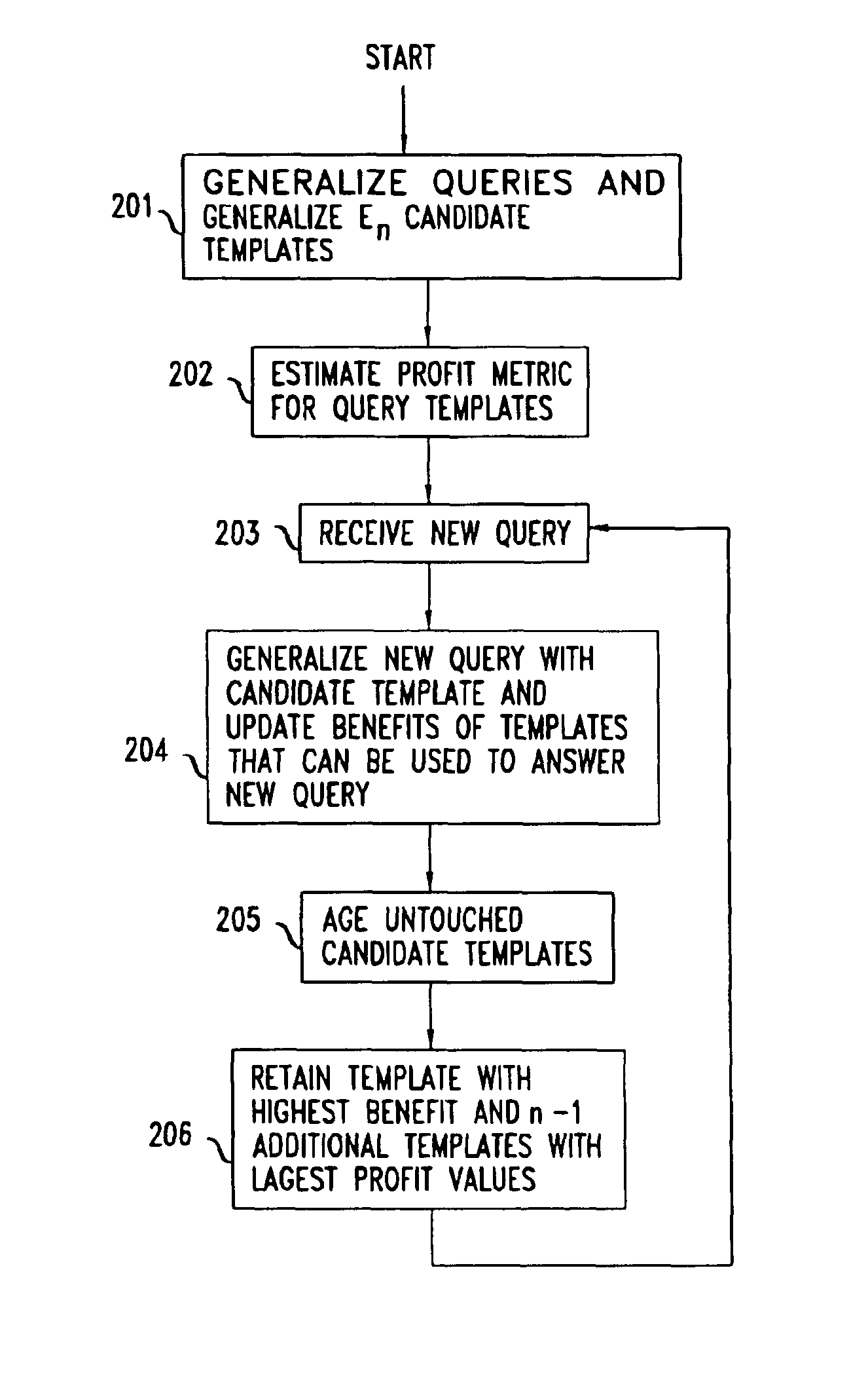

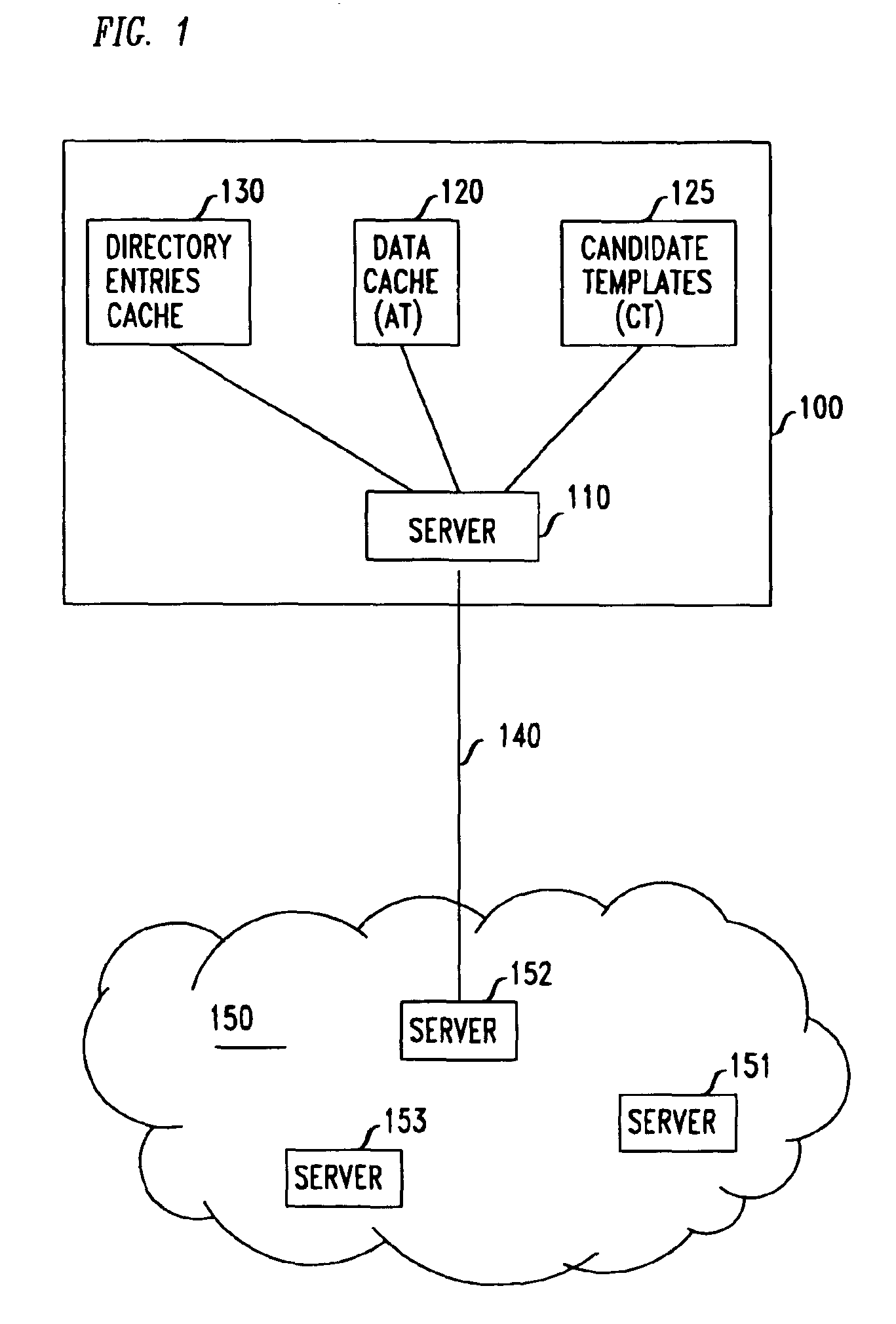

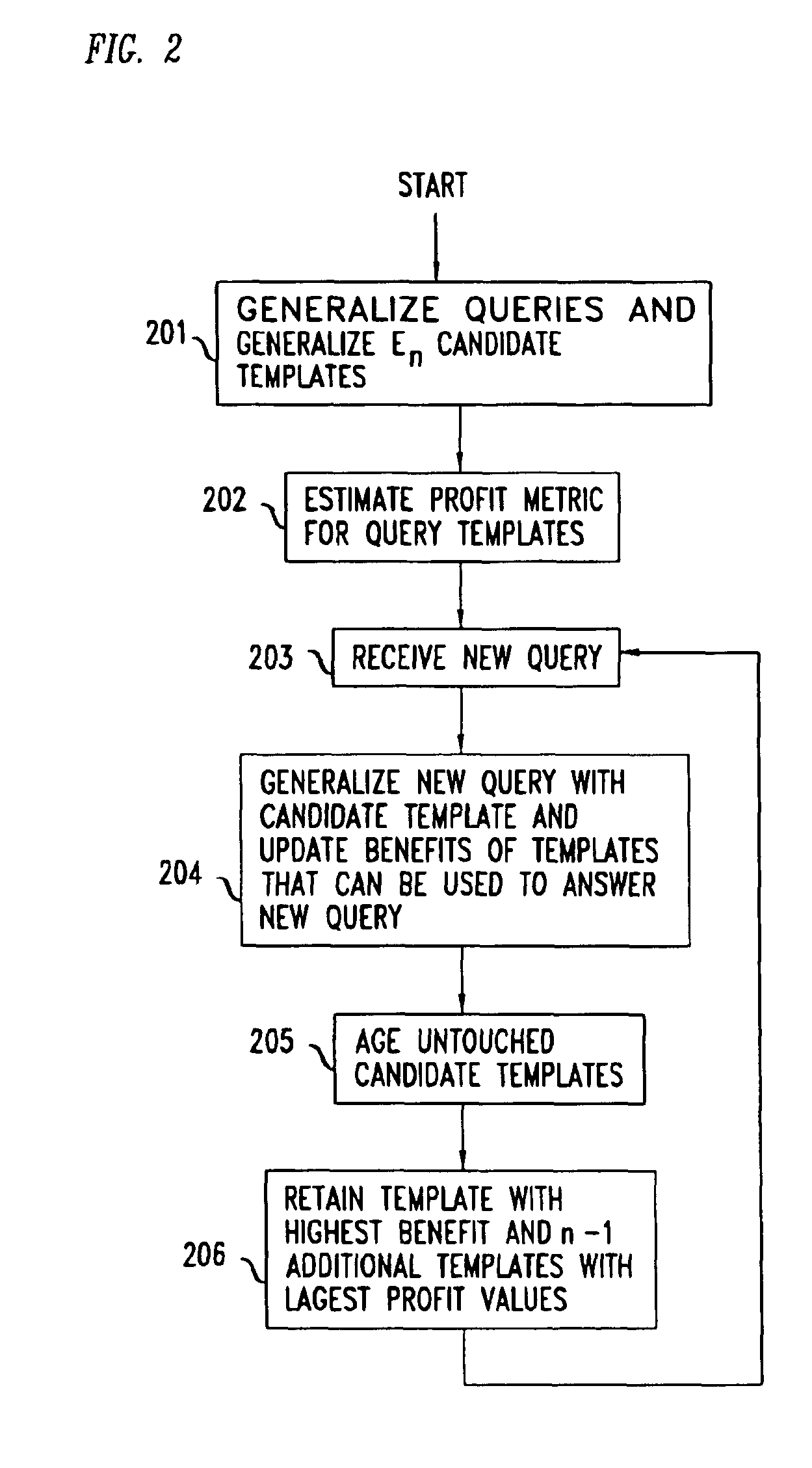

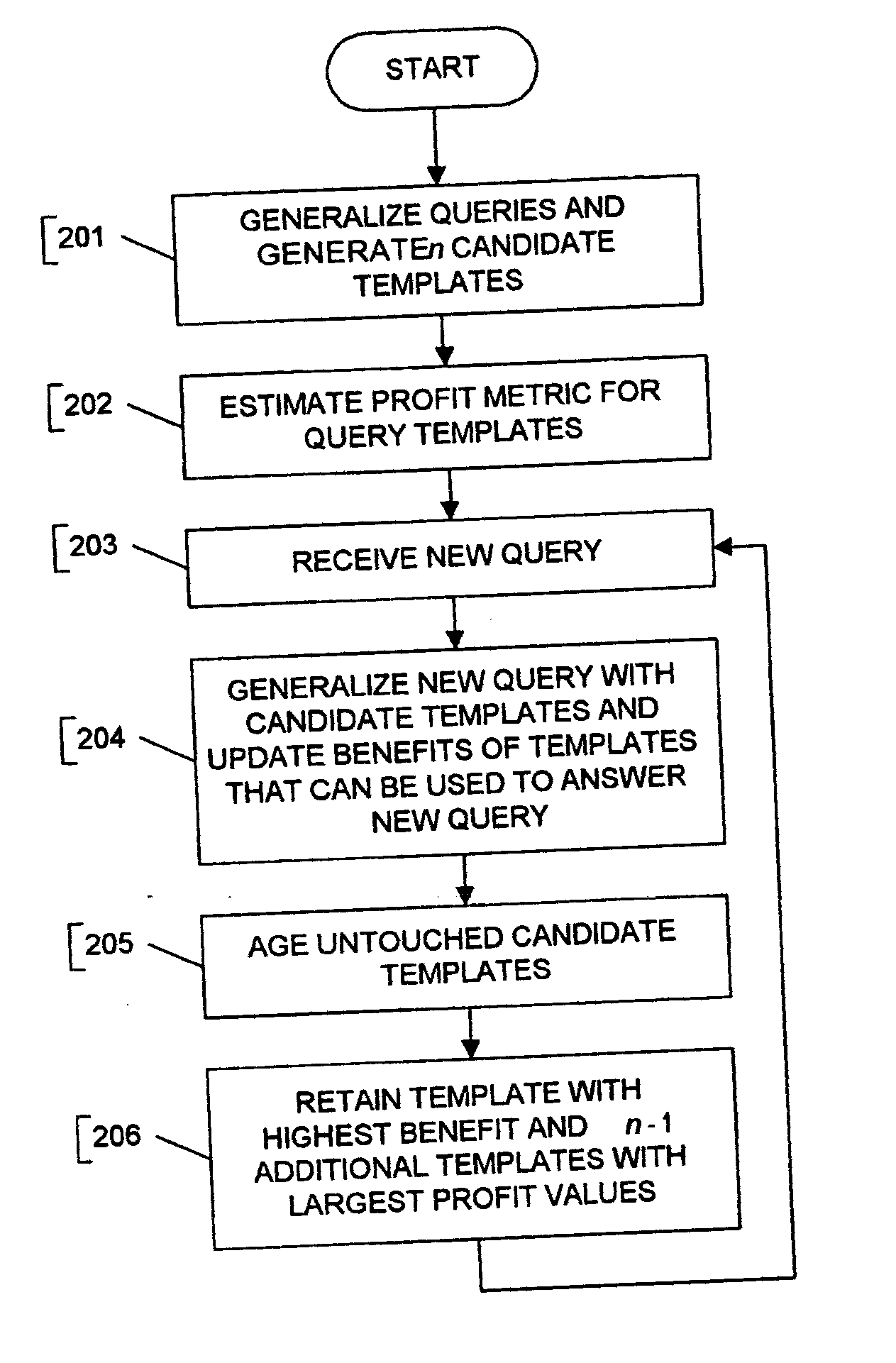

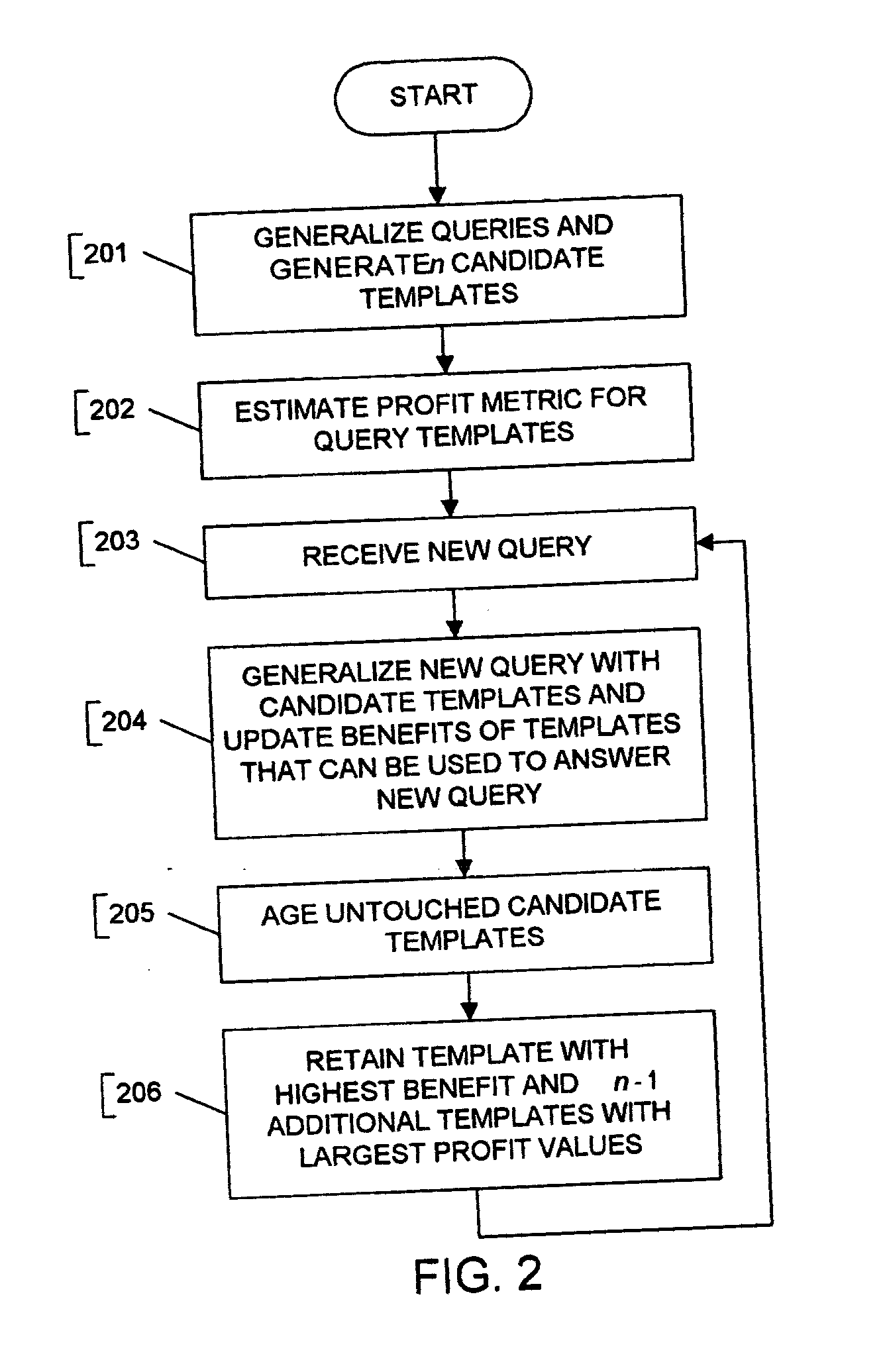

Method for using query templates in directory caches

Inactive | US6904433B2 | Improve effectiveness | Low overhead | Digital data information retrieval | Data processing applications | Low overhead | Server-side

The present invention discloses the use of generalized queries, referred to as query templates, obtained by generalizing individual user queries, as the semantic basis for low overhead, high benefit directory caches for handling declarative queries. Caching effectiveness can be improved by maintaining a set of generalizations of queries and admitting such generalizations into the cache when their estimated benefits are sufficiently high. In a preferred embodiment of the invention, the admission of query templates into the cache can be done in what is referred to by the inventors as a “revolutionary” fashion, followed by stable periods where cache admission and replacement can be done incrementally in an evolutionary fashion. The present invention can lead to considerably higher hit rates and lower server-side execution and communication costs than conventional caching of directory queries, while keeping the client-side computational overheads comparable to query caching.

Owner:AT&T INTPROP I L P

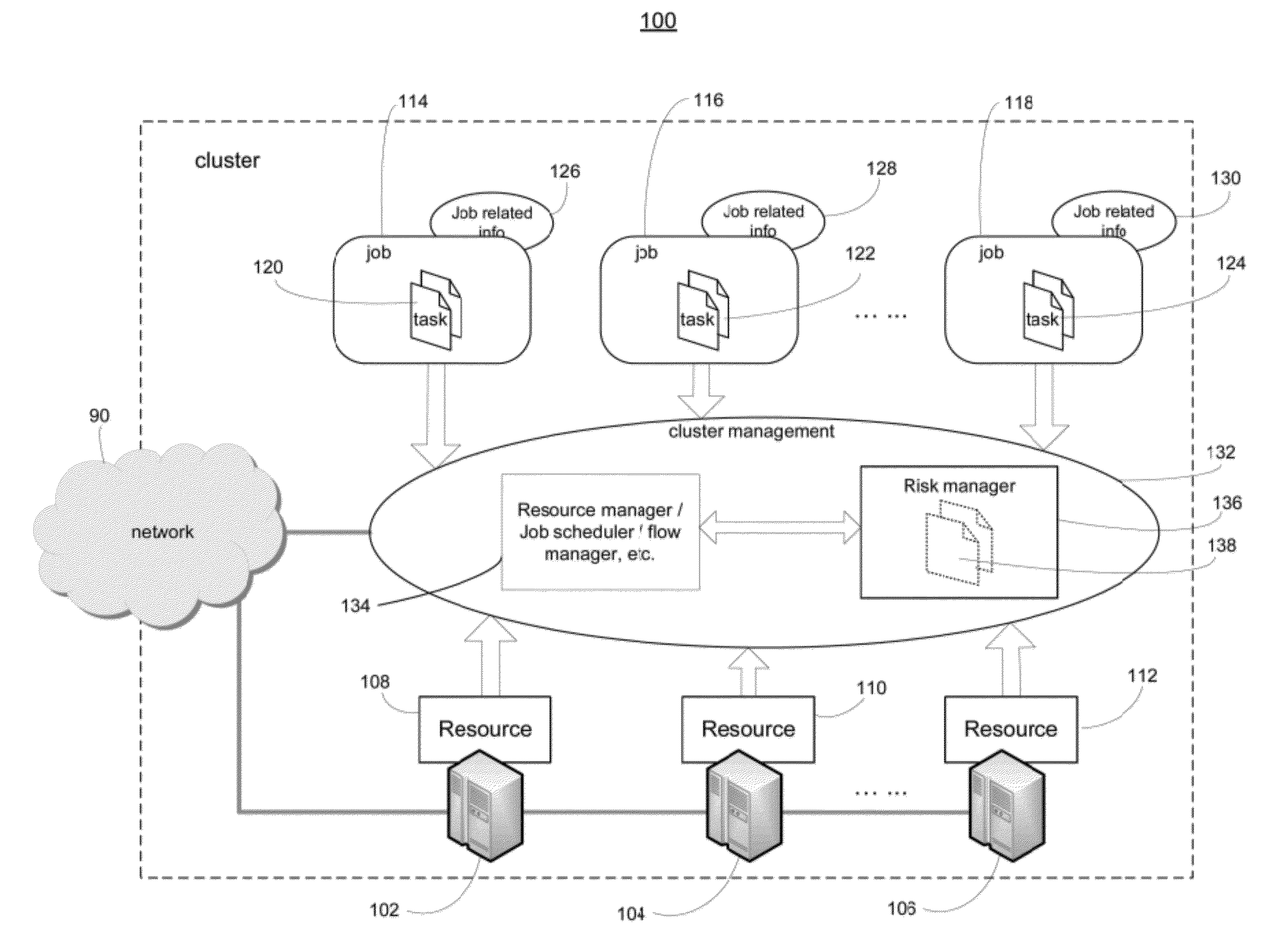

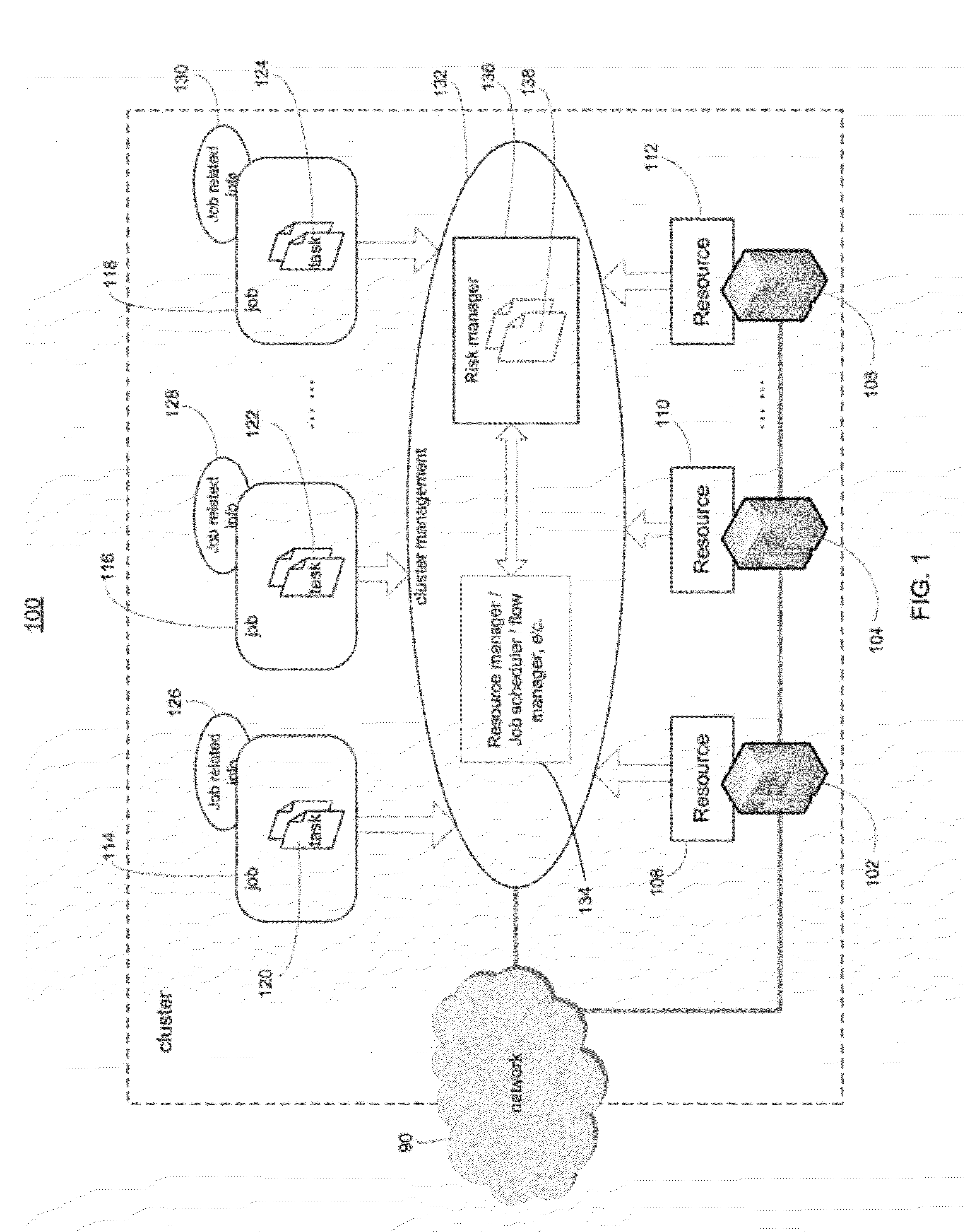

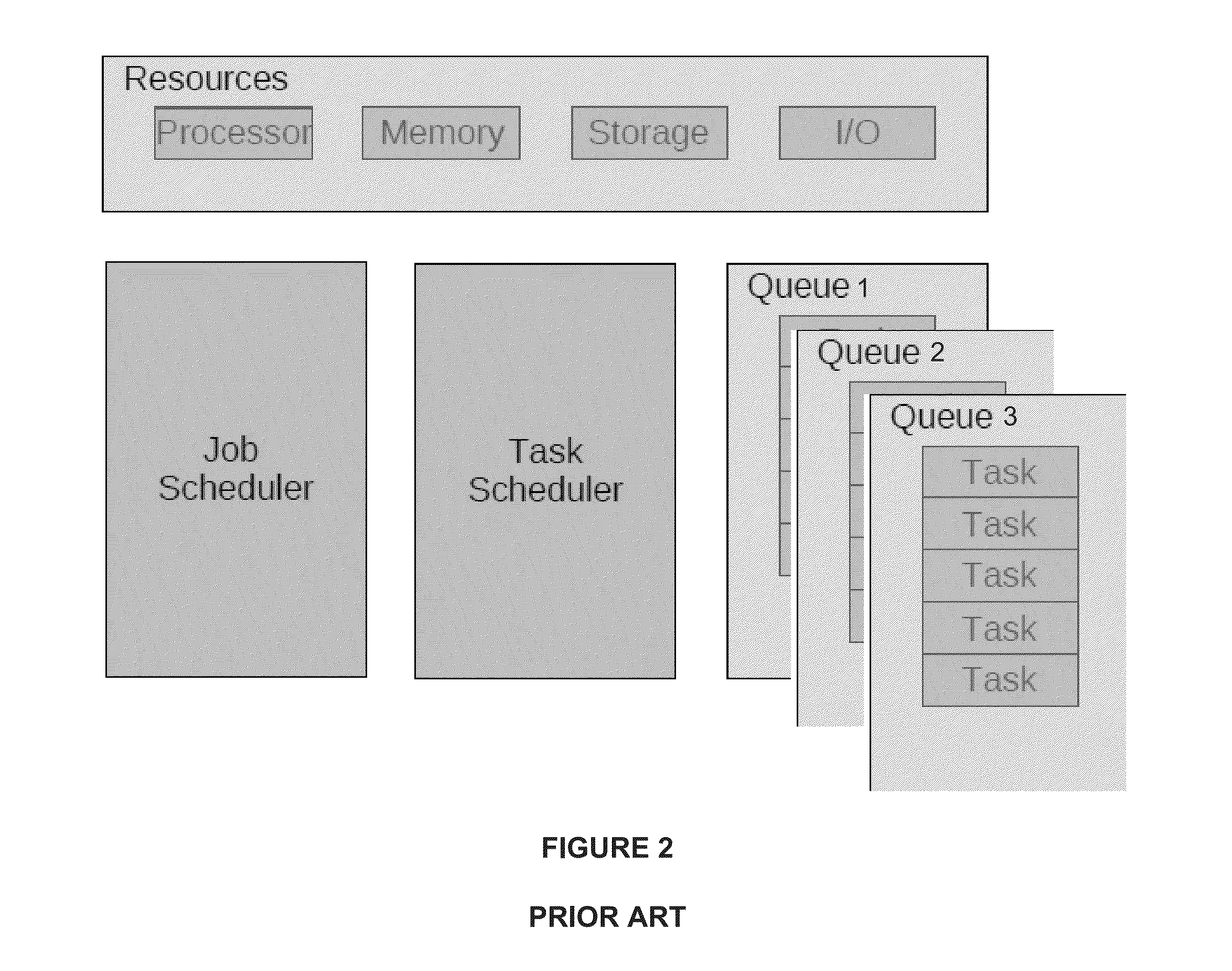

System and method of active risk management to reduce job de-scheduling probability in computer clusters

Active | US20120110584A1 | Minimize de-scheduling possibility | Reduce execution | Multiprogramming arrangements | Reliability/availability analysis | Operation scheduling | Computer cluster

Systems and methods are provided for generating backup tasks for a plurality of tasks scheduled to run in a computer cluster. Each scheduled task is associated with a target probability for execution, and is executable by a first cluster element and a second cluster element. The system classifies the scheduled tasks into groups based on resource requirements of each task. The system determines the number of backup tasks to be generated. The number of backup tasks is determined in a manner necessary to guarantee that the scheduled tasks satisfy the target probability for execution. The backup tasks are desirably identical for a given group. And each backup task can replace any scheduled task in the given group.

Owner:GOOGLE LLC

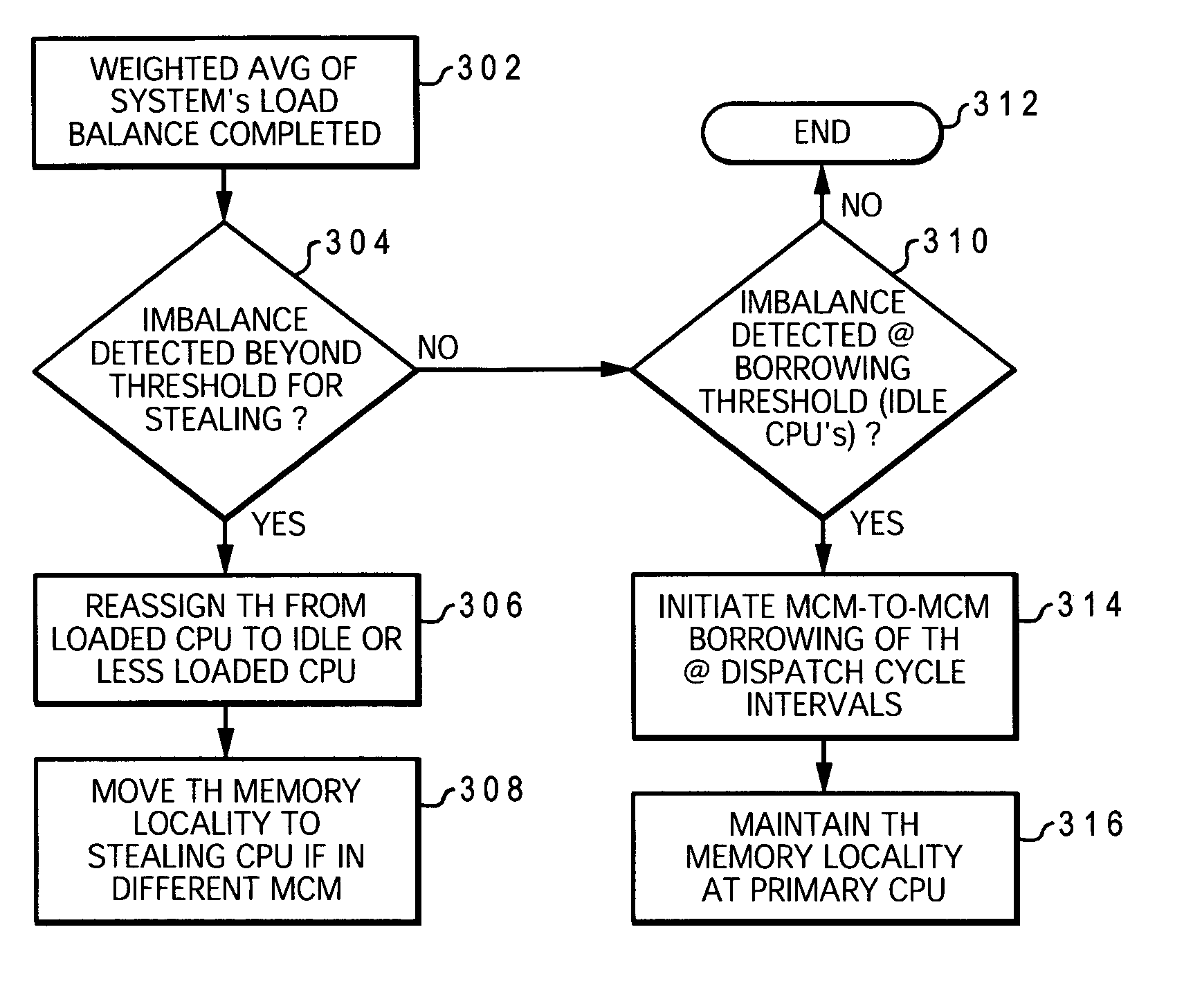

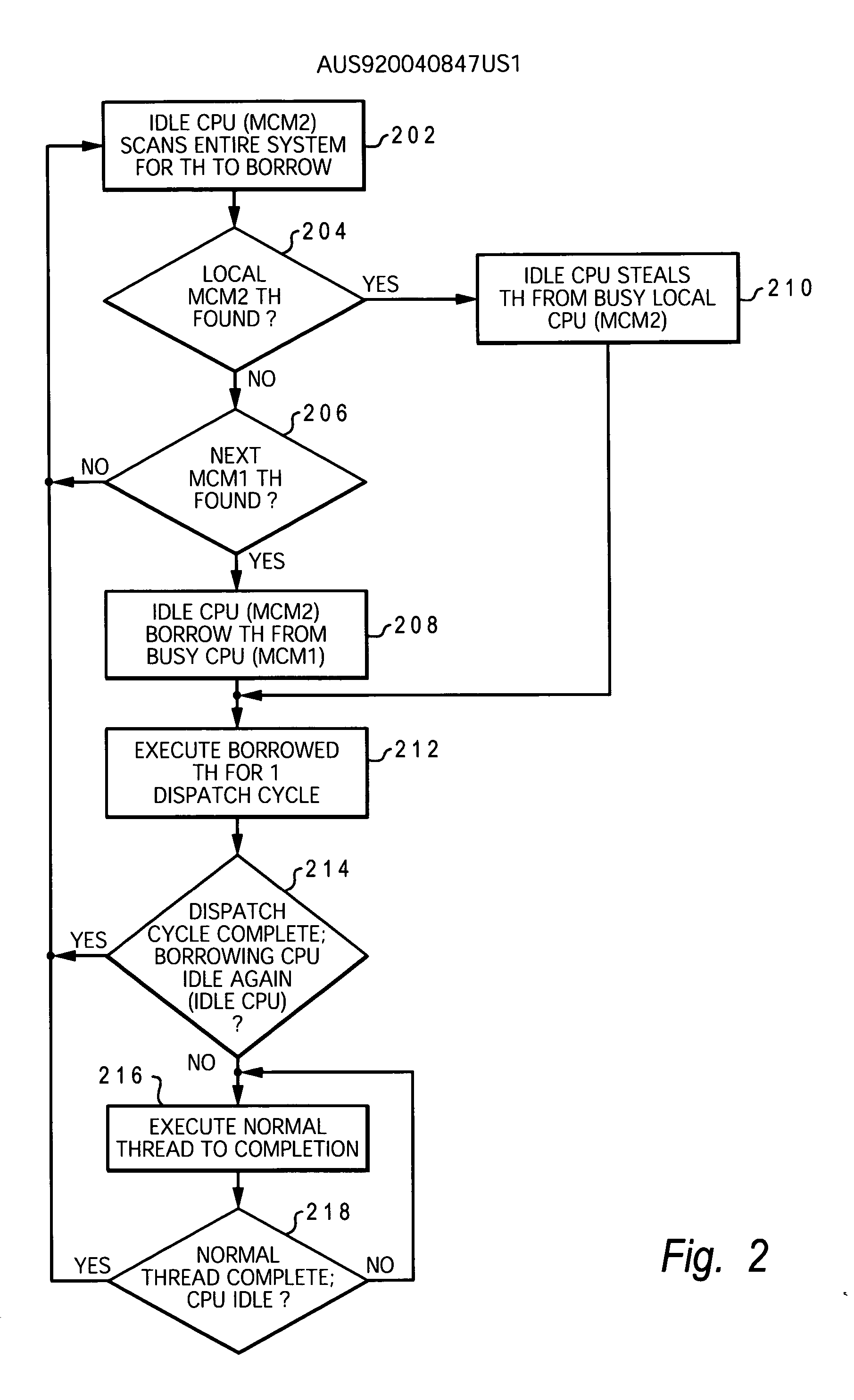

Borrowing threads as a form of load balancing in a multiprocessor data processing system

Inactive | US20060123423A1 | Efficient load balancing | Reduce execution | Multiprogramming arrangements | Memory systems | Load Shedding | Data processing system

A method and system in a multiprocessor data processing system (MDPS) that enable efficient load balancing between a first processor with idle processor cycles in a first MCM (multi-chip module) and a second busy processor in a second MCM, without significant degradation to the thread's execution efficiency when allocated to the idle processor cycles. A load balancing algorithm is provided that supports both stealing and borrowing of threads across MCMs. An idle processor is allowed to “borrow” a thread from a busy processor in another memory domain (i.e., across MCMs). The thread is borrowed for a single dispatch cycle at a time. When the dispatch cycle is completed, the thread is released back to its parent processor. No change in the memory allocation of the borrowed thread occurs during the dispatch cycle.

Owner:IBM CORP

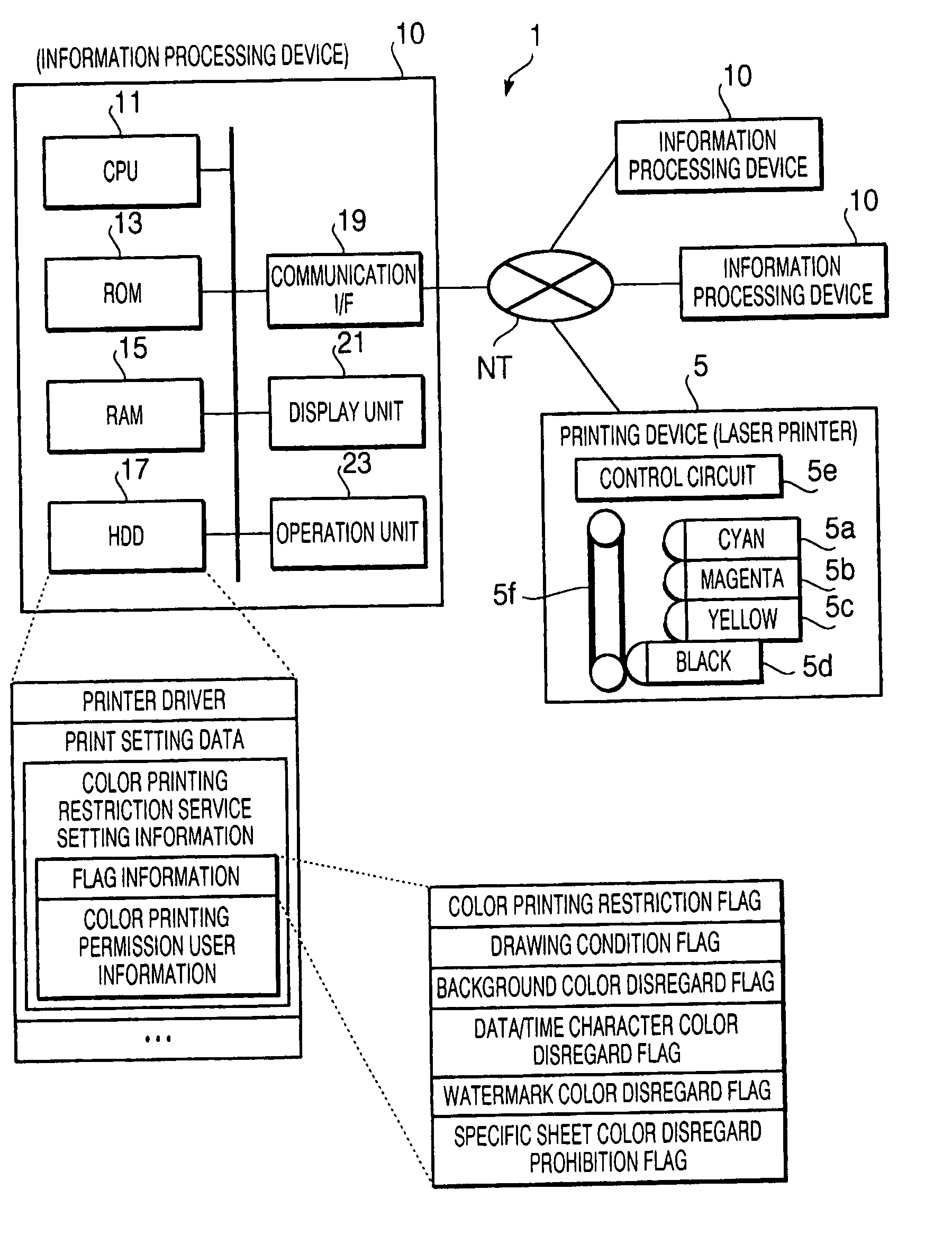

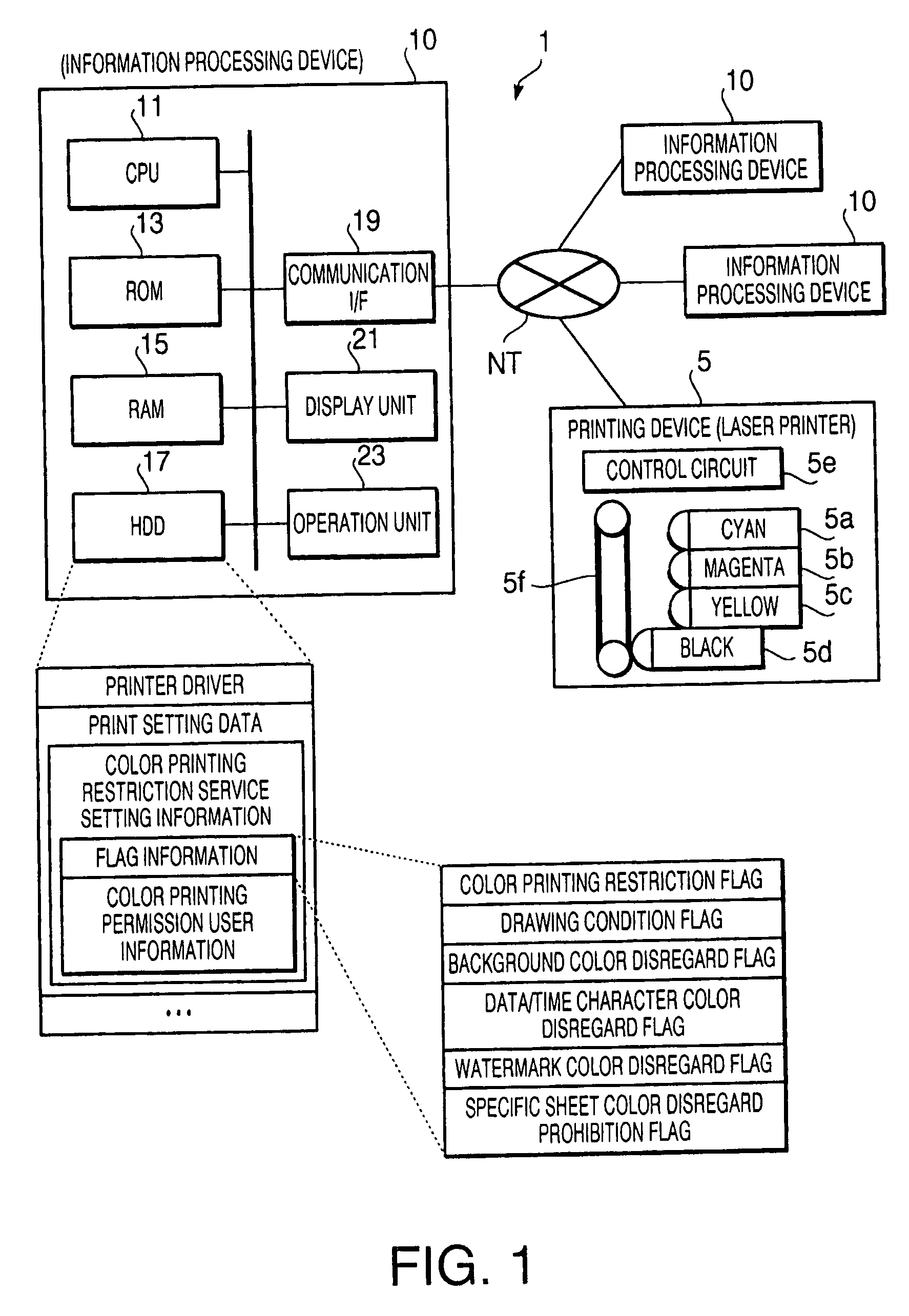

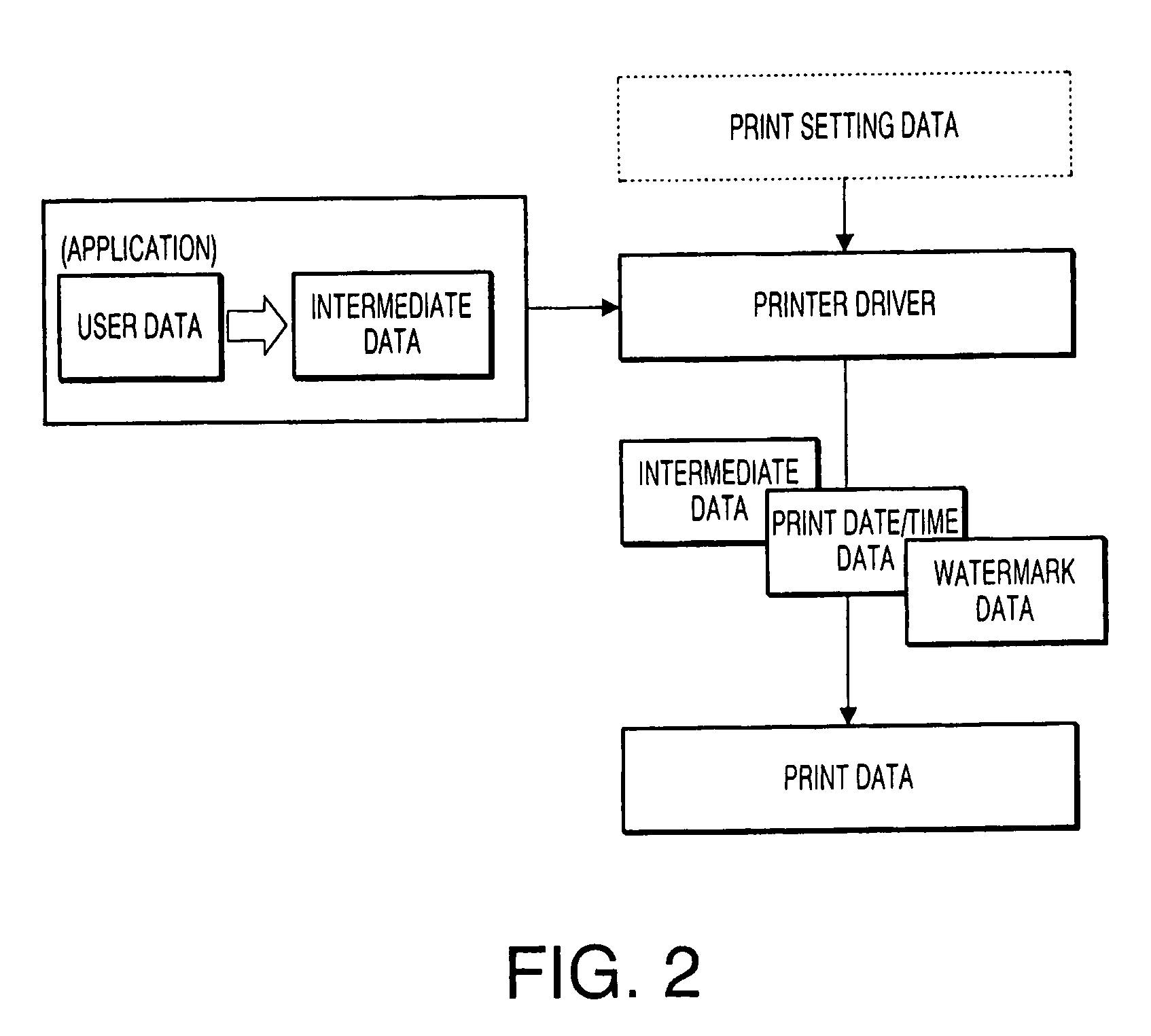

Print control device and program

Inactive | US20080080000A1 | Reduce execution | High operating costs | Digitally marking record carriers | Digital computer details | Color printing | Monochrome

A print control device, controlling a printing device (capable of color printing and monochrome printing) to make the printing device print an image corresponding to print target data, comprises: a main data color/monochrome judgment unit which judges whether main data (included in the print target data, which is made up of the main data and attached data) is color data or not when the print target data is specified, and a color restriction print control unit which makes the printing device execute the printing of the image corresponding to the print target data by the color printing when the main data is judged to be color data by the main data color/monochrome judgment unit, while making the printing device execute the printing by the monochrome printing irrespective of whether the attached data is color data or not when the main data is judged not to be color data.

Owner:BROTHER KOGYO KK

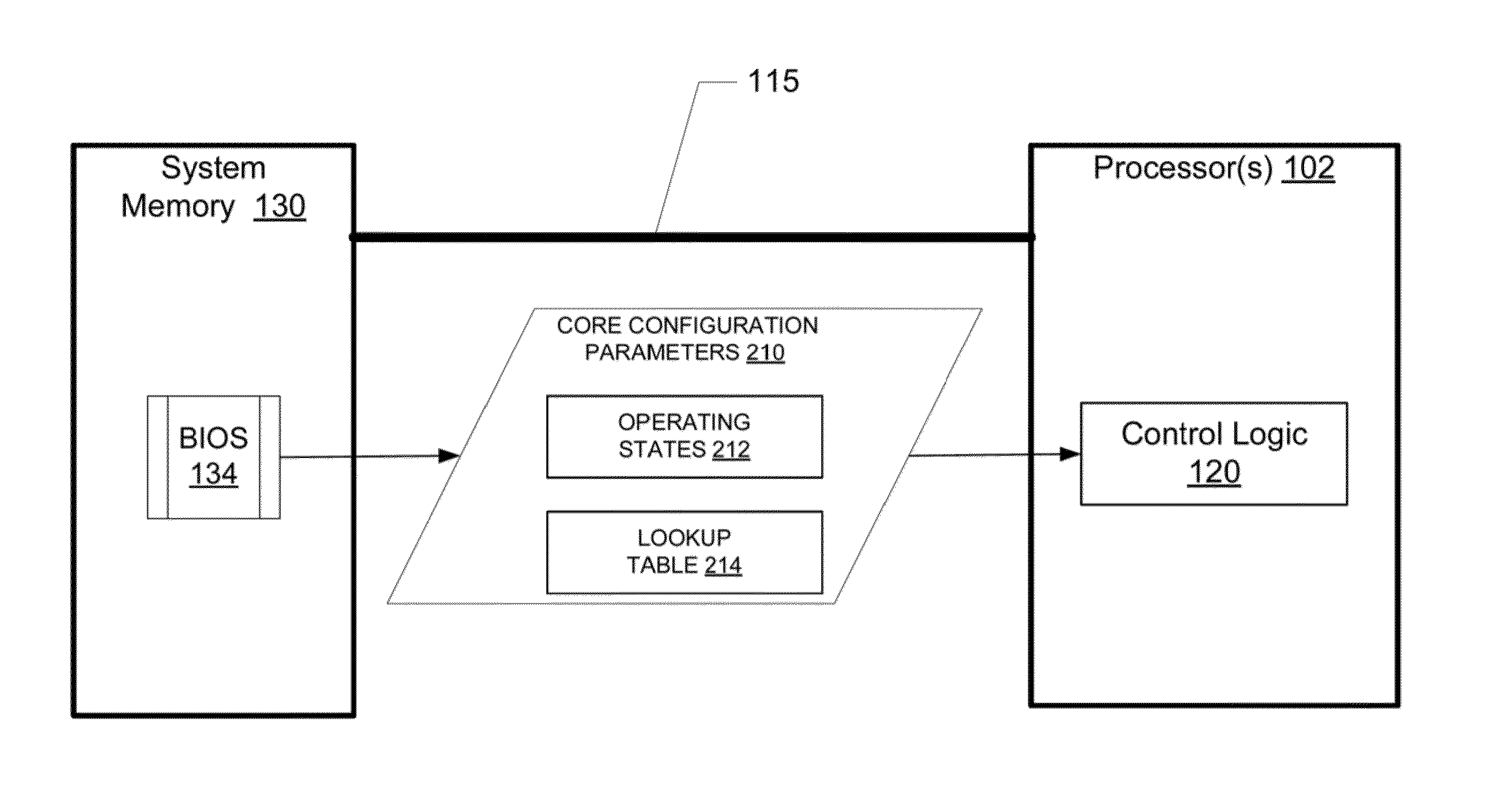

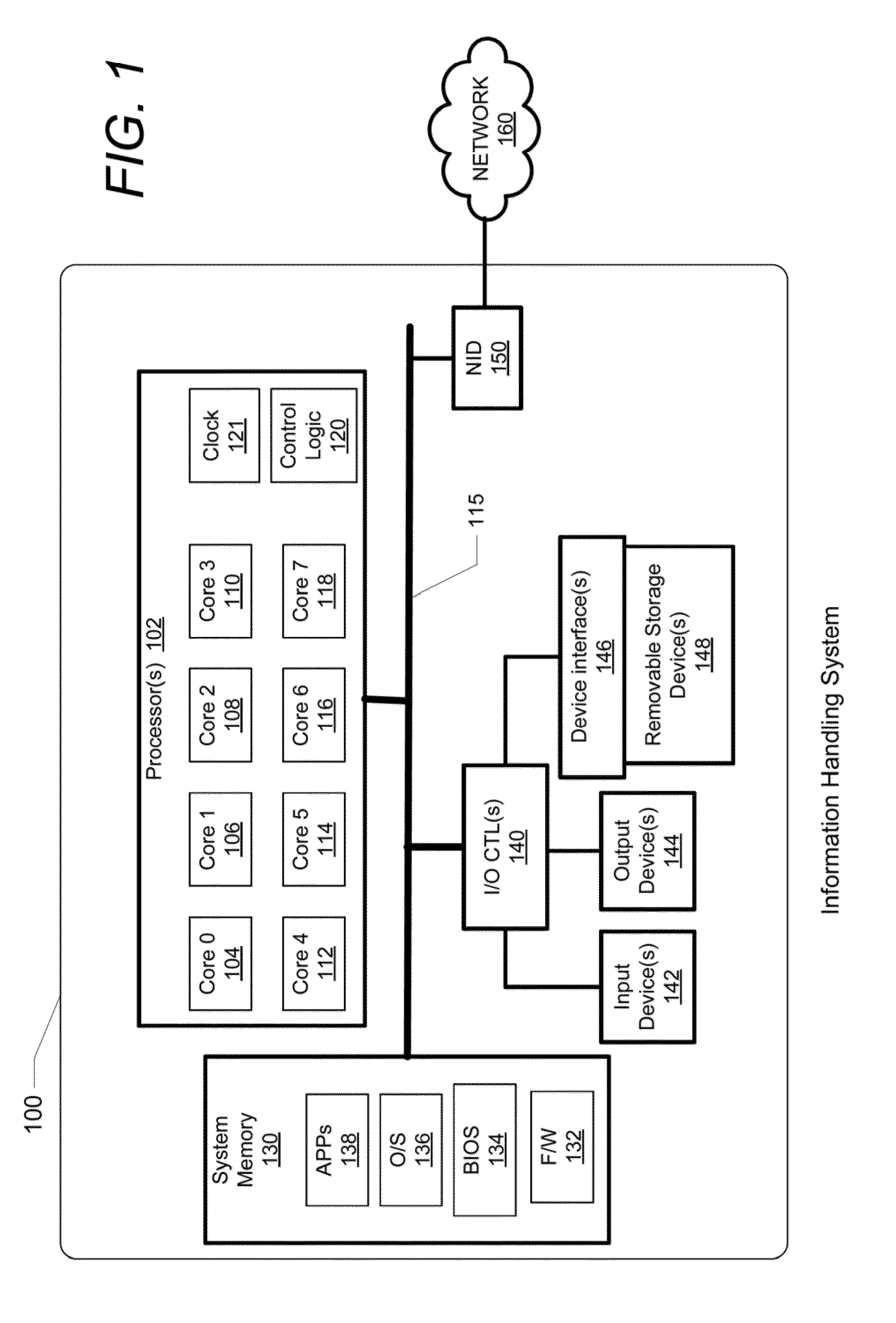

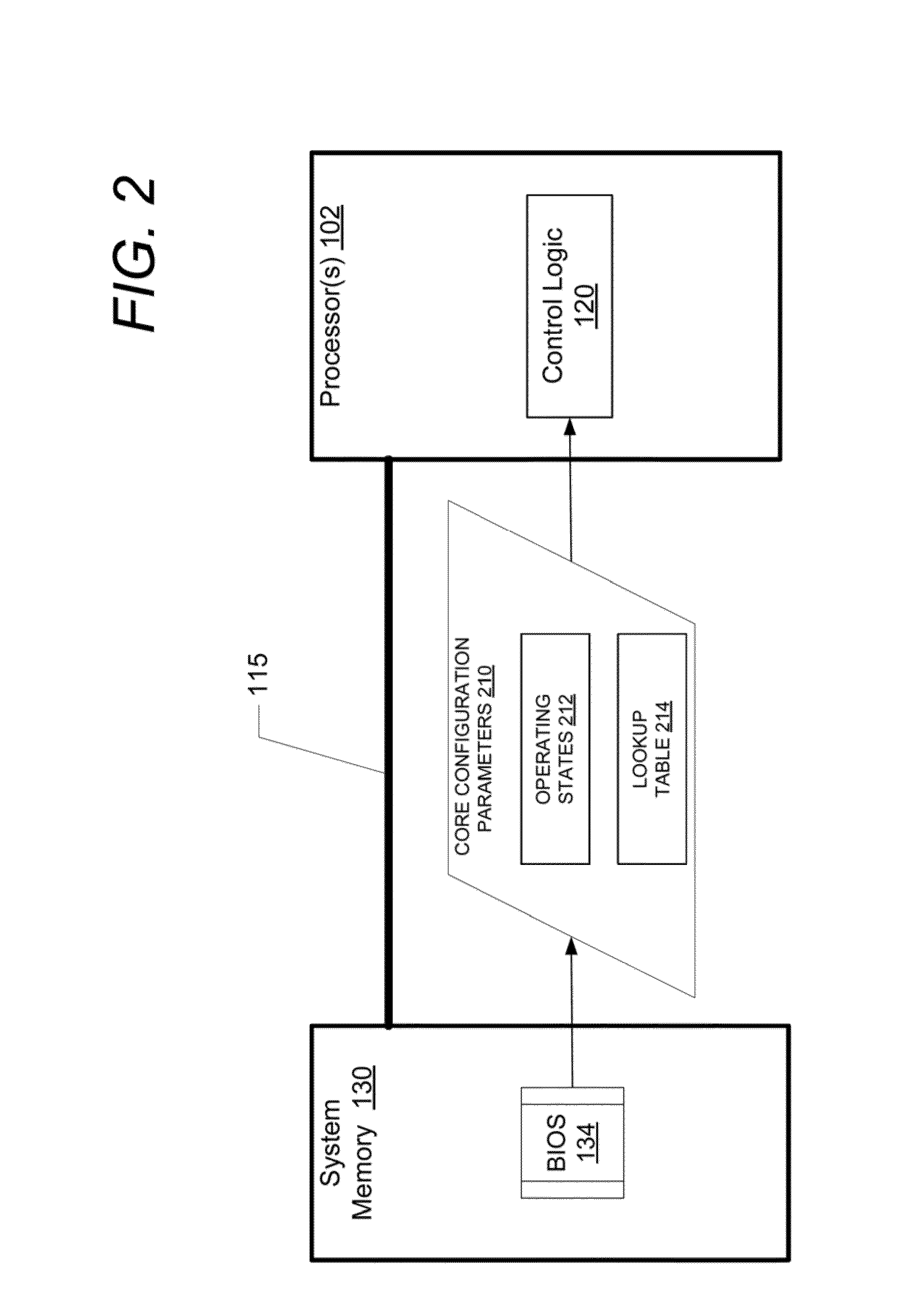

Method for Reducing Execution Jitter in Multi-Core Processors Within an Information Handling System

Active | US20140108778A1 | Reduce execution jitter | Reduce execution | Program control using stored programs | Data resetting | Handling system | Multi-core processor

A method of reducing execution jitter includes a processor having several cores and control logic that receives core configuration parameters. Control logic determines if a first set of cores are selected to be disabled. If none of the cores is selected to be disabled, the control logic determines if a second set of cores is selected to be jitter controlled. If the second set of cores is selected to be jitter controlled, the second set of cores is set to a first operating state. If the first set of cores is selected to be disabled, the control logic determines a second operating state for a third set of enabled cores. The control logic determines if the third set of enabled cores is jitter controlled, and if the third set of enabled cores is jitter controlled, the control logic sets the third set of enabled cores to the second operating state.

Owner:DELL PROD LP

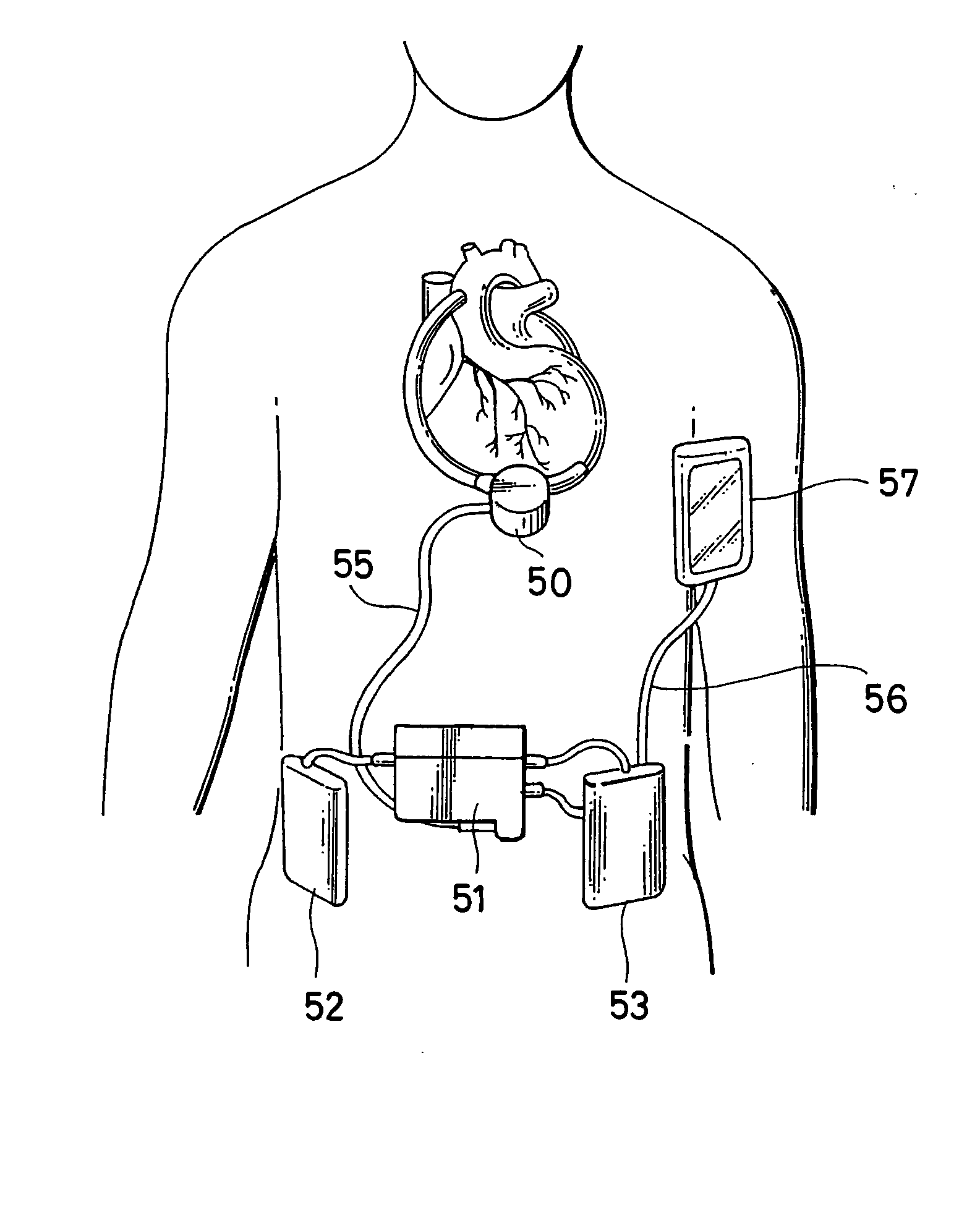

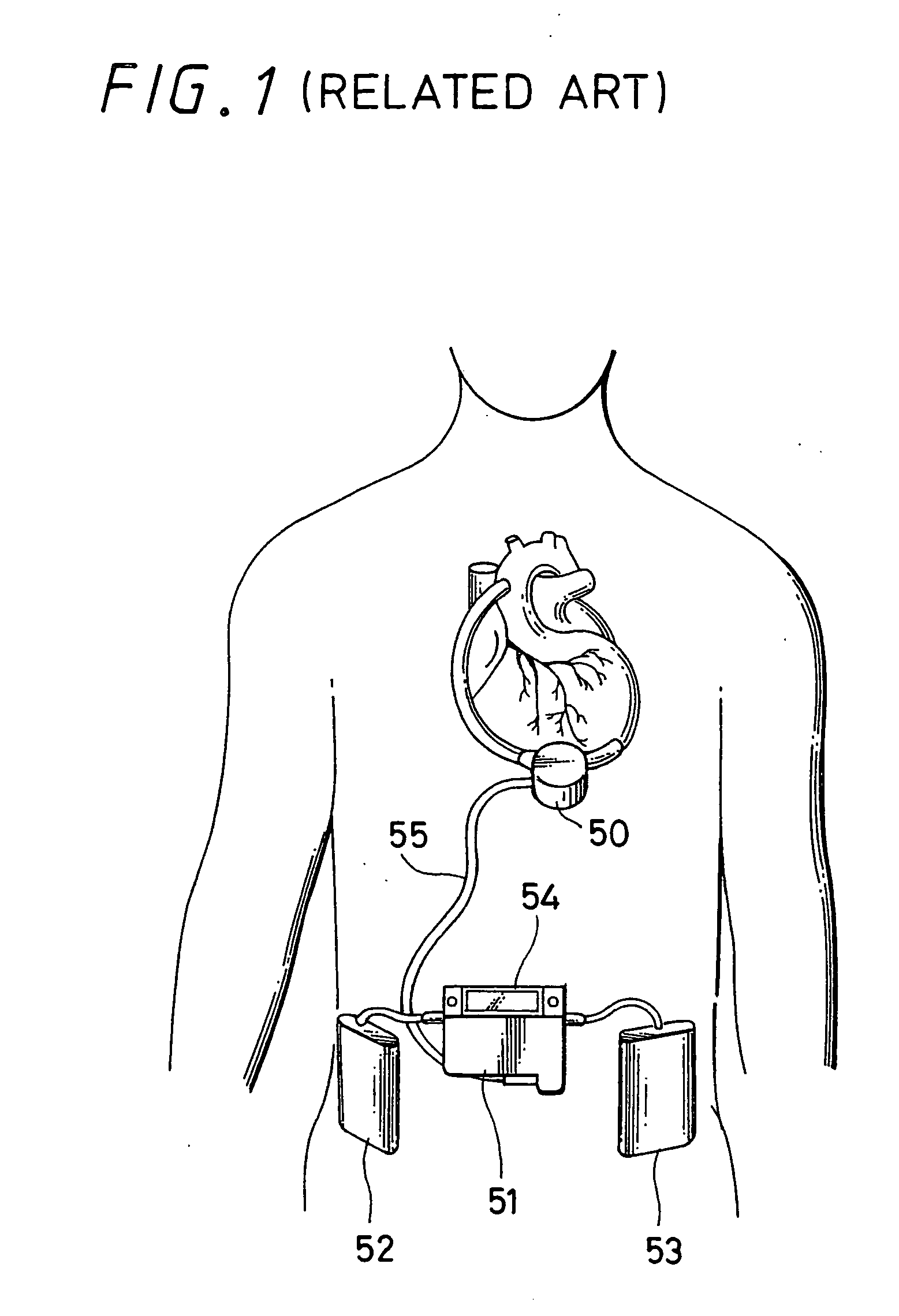

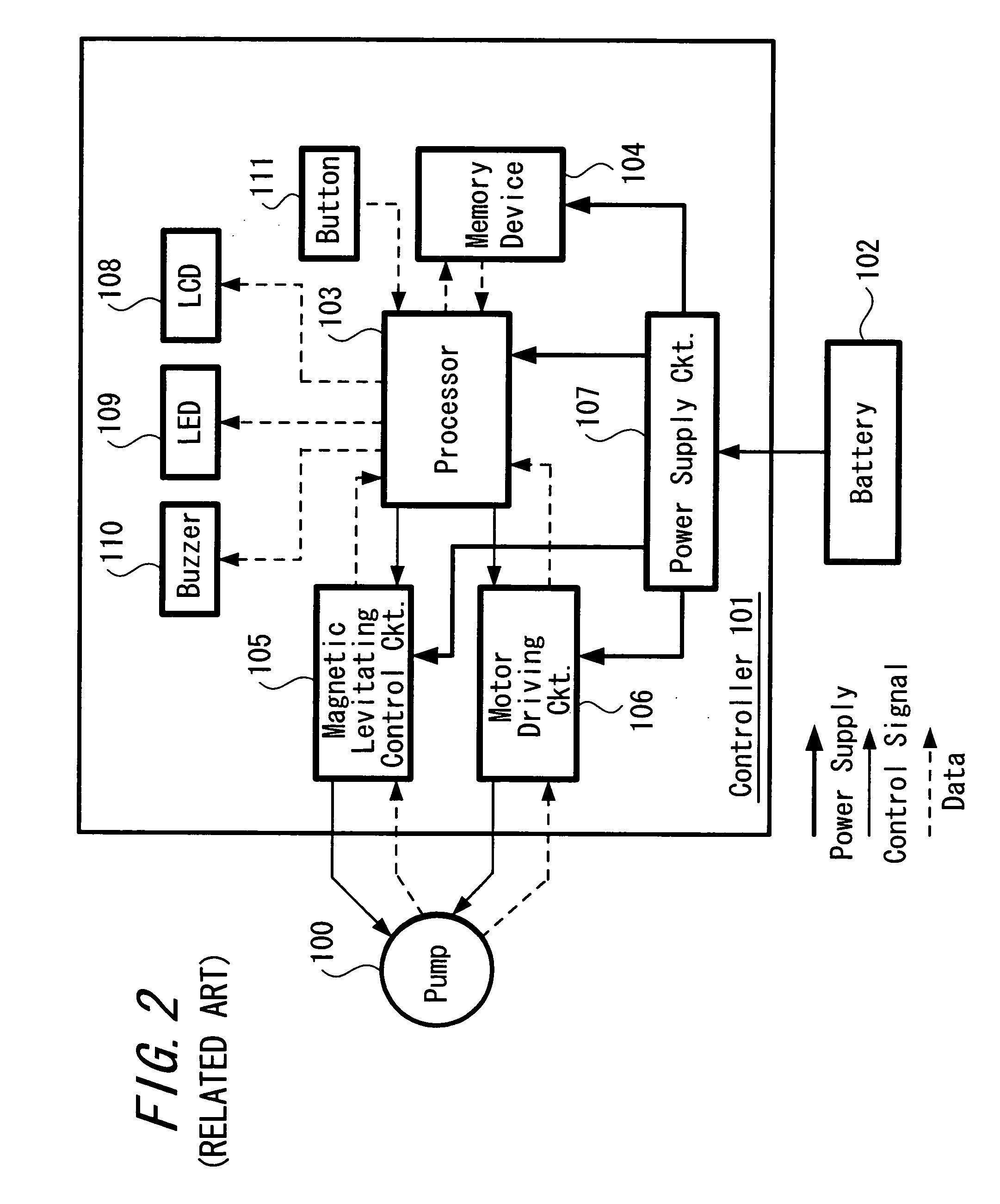

Artificial heart pump system and its control apparatus

Active | US20050014991A1 | Improve hardware performance | Reduce execution | Control devices | Blood pumps | Blood pump | Engineering

An artificial heart pump system is disclosed in which the load on the processor in a controller used for a blood pump system is reduced and the performance of the hardware is maintained or guaranteed. The artificial heart pump system is provided independently with a controlling processor, which controls the operation status of a blood pump so that it performs in a preset condition, and with an observing processor, which controls a display unit displaying the operation status and operation condition of the blood pump. In addition, a memory device is mounted in an outside-the-body type battery pack included in the artificial heart pump system; data such as pump data and event logs are stored therein; and the data are collected and managed automatically by a battery charger when the battery pack is charged as part of daily use. Further, a user interface unit of an artificial heart pump is proposed with which a user can confirm the displayed contents of the controller without exposing the controller externally.

Owner:TC1 LLC

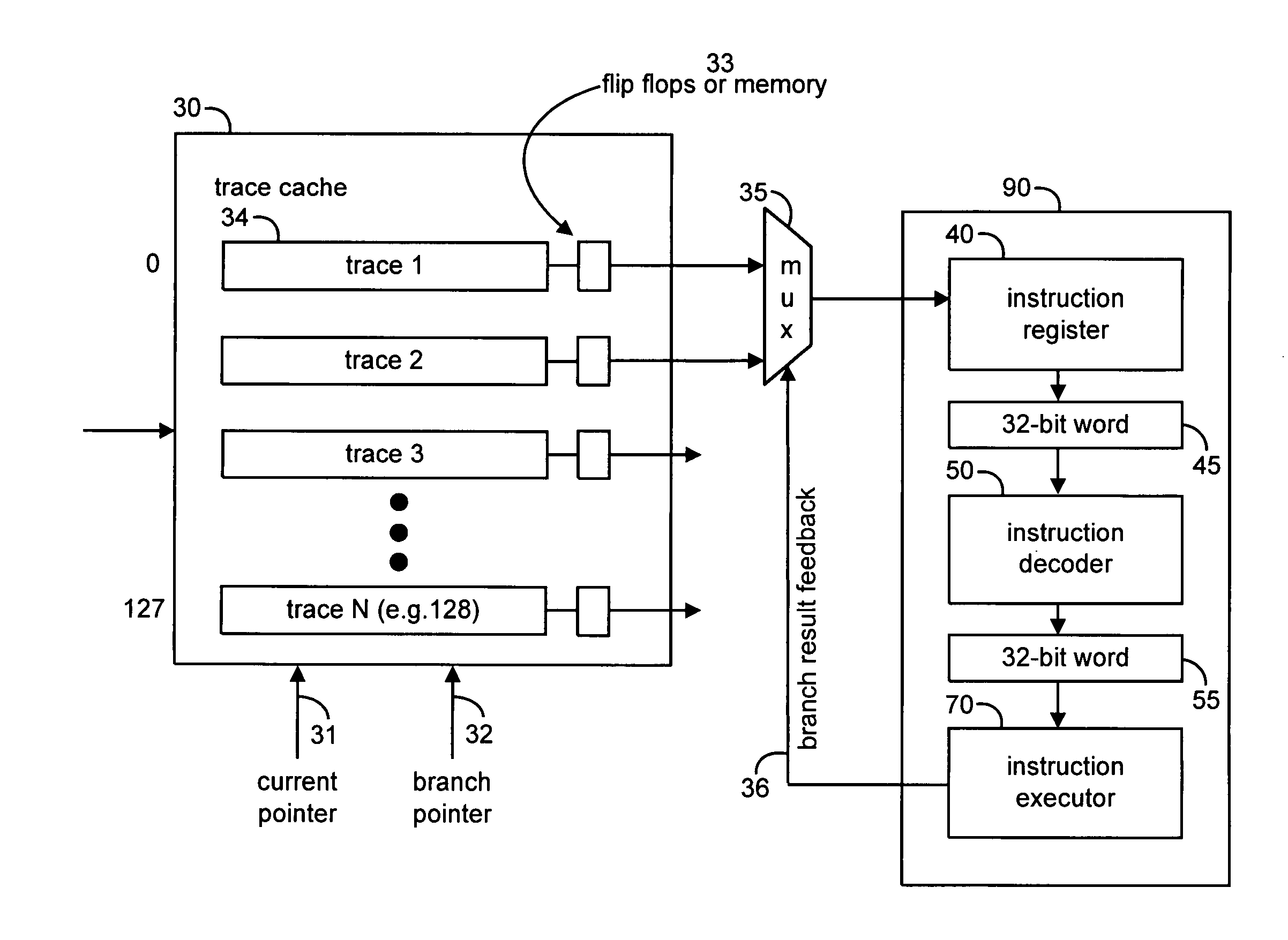

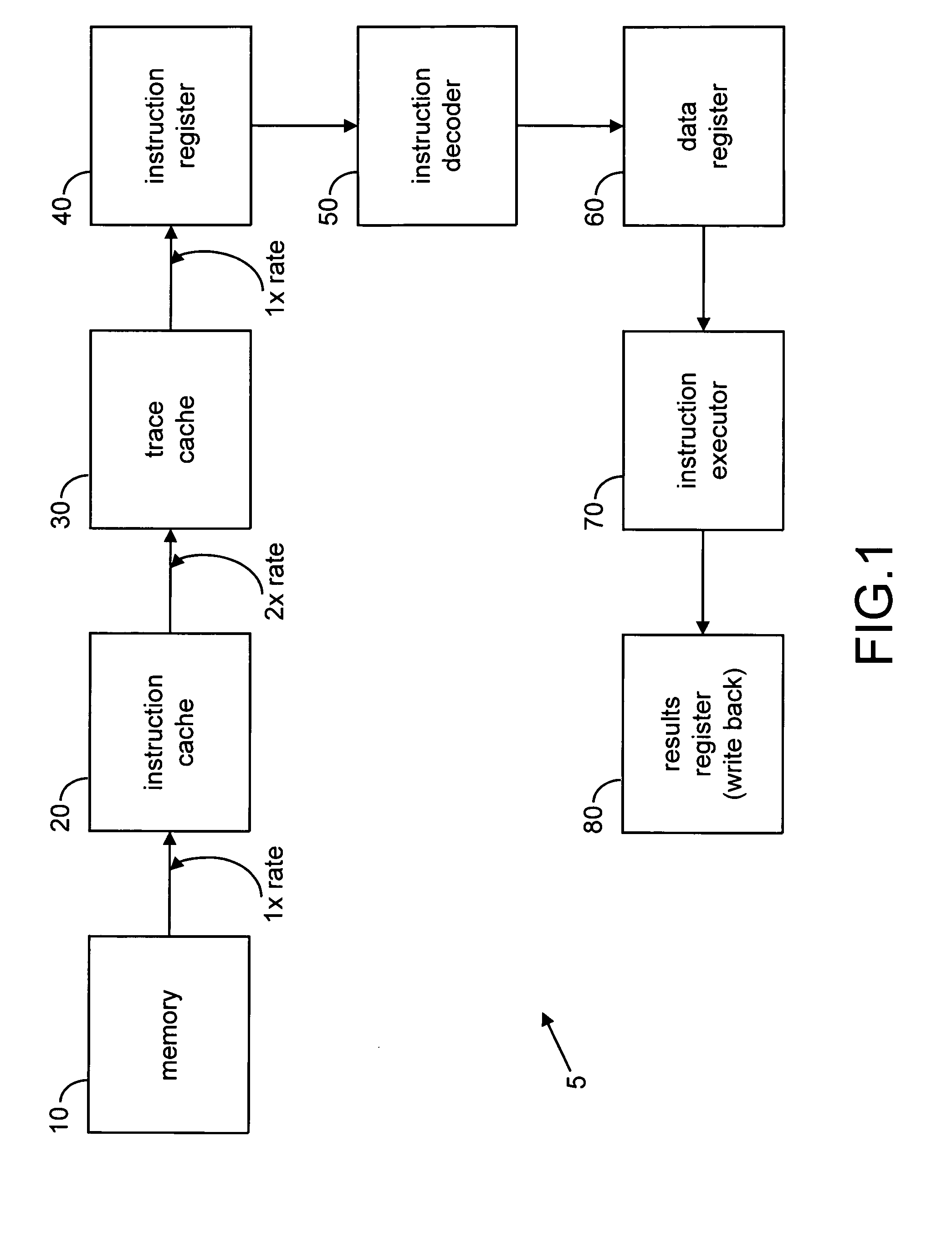

Implementation of an efficient instruction fetch pipeline utilizing a trace cache

Active | US7139902B2 | Improve computing power | Reducing instruction execution pipeline stall | Digital computer details | Next instruction address formation | Parallel computing | Trace Cache

A method and apparatus are disclosed for enhancing the pipeline instruction transfer and execution performance of a computer architecture by reducing instruction stalls due to branch and jump instructions. Trace cache within a computer architecture is used to receive computer instructions at a first rate and to store the computer instructions as traces of instructions. An instruction execution pipeline is also provided to receive, decode, and execute the computer instructions at a second rate that is less than the first rate. A mux is also provided between the trace cache and the instruction execution pipeline to select a next instruction to be loaded into the instruction execution pipeline from the trace cache based, in part, on a branch result fed back to the mux from the instruction execution pipeline.

Owner:QUALCOMM INC

Method for controlling heterogeneous multiprocessor and multigrain parallelizing compiler

Active | US8250548B2 | Reduce execution | Reduce process | Energy efficient ICT | Multiprogramming arrangements | General purpose | Cost comparison

A heterogeneous multiprocessor system including a plurality of processor elements having mutually different instruction sets and structures avoids a specific processor element from being short of resources to improve throughput. An executable task is extracted based on a preset depending relationship between a plurality of tasks, and the plurality of first processors are allocated to a general-purpose processor group based on a depending relationship among the extracted tasks. A second processor is allocated to an accelerator group, a task to be allocated is determined from the extracted tasks based on a priority value for each of tasks, and an execution cost of executing the determined task by the first processor is compared with an execution cost of executing the task by the second processor. The task is allocated to one of the general-purpose processor group and the accelerator group that is judged to be lower as a result of the cost comparison.

Owner:WASEDA UNIV

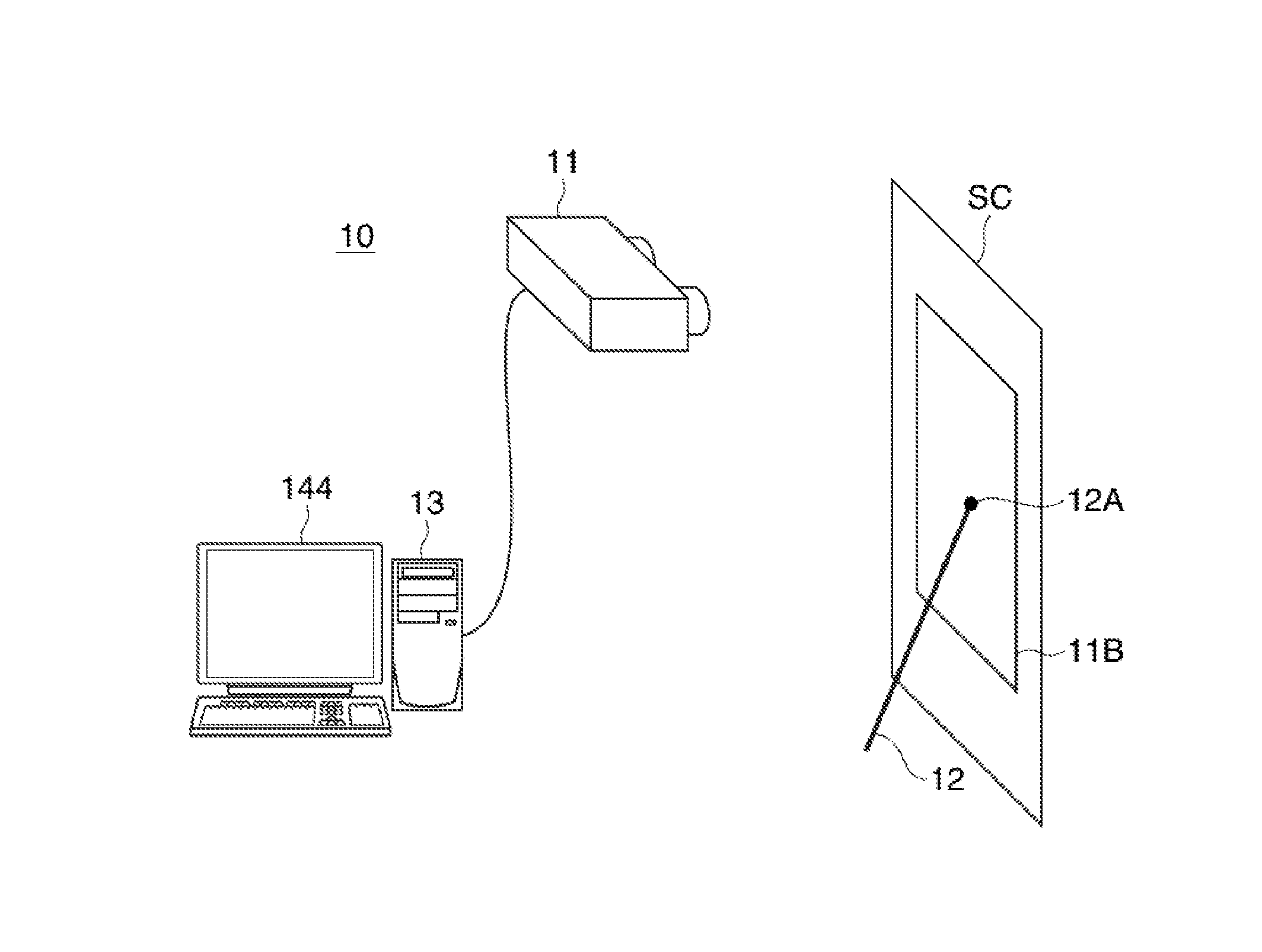

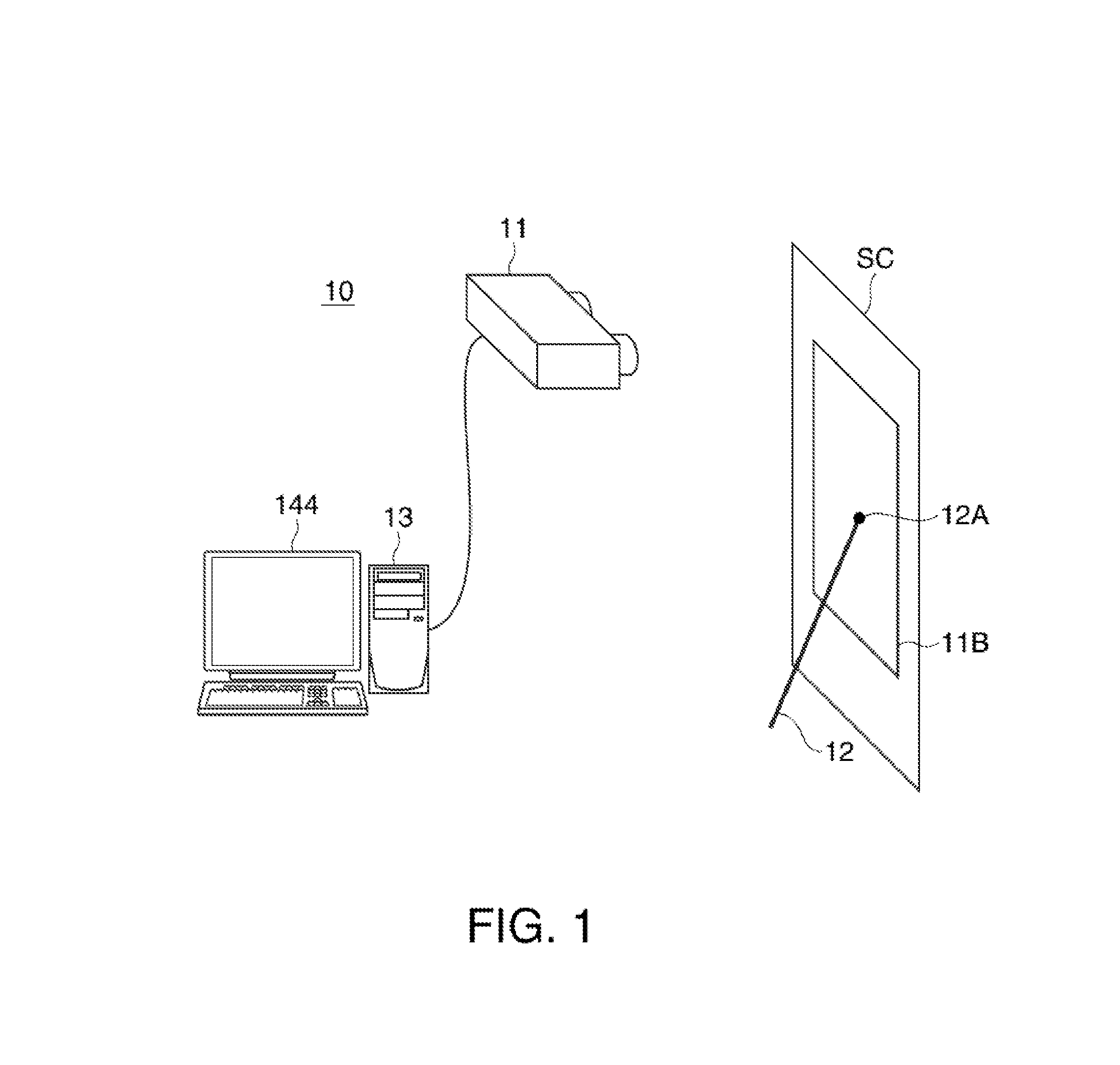

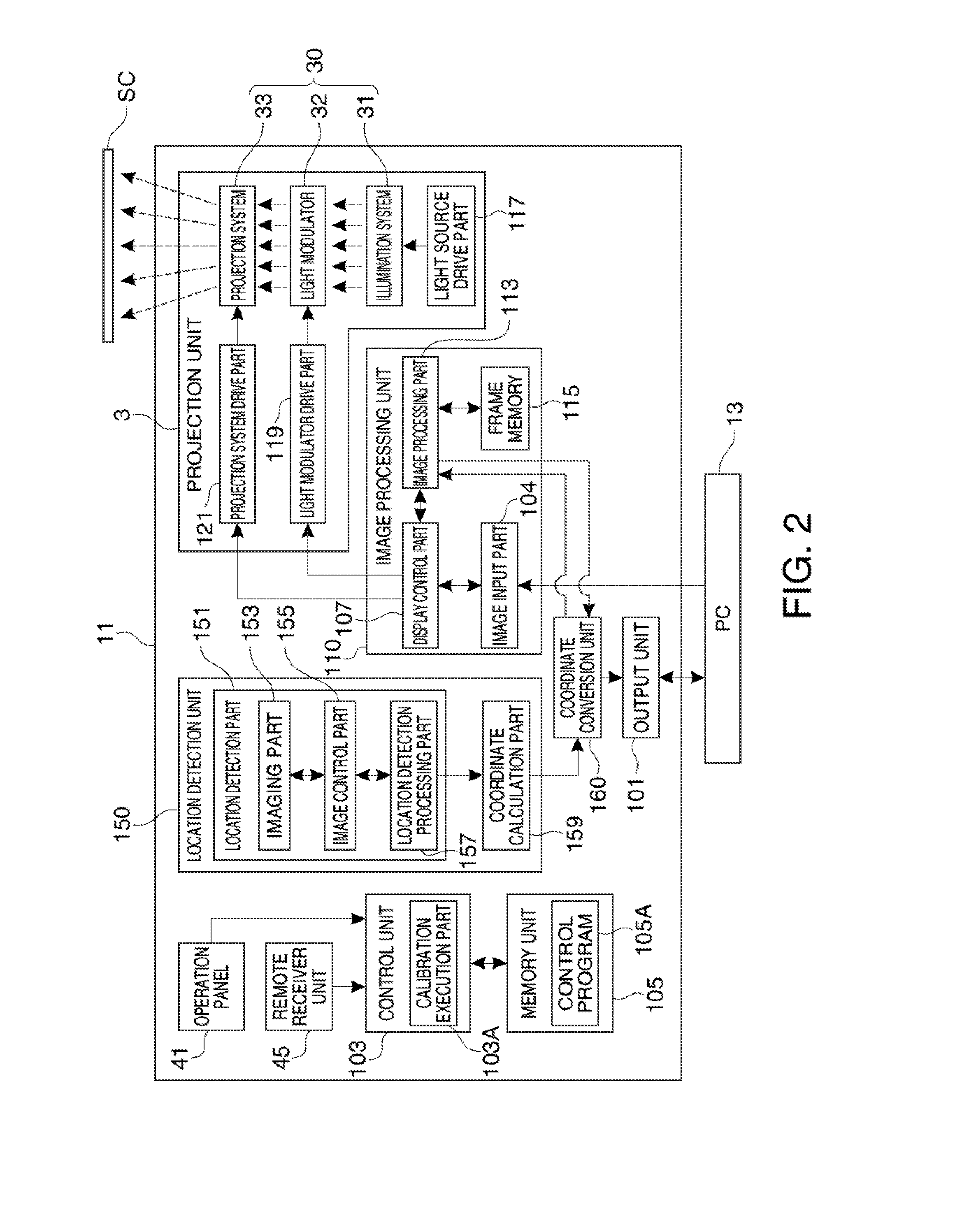

Display device, projector, and display method

Active | US20130069870A1 | Reduce the frequency of execution | Reduce frequency | Cathode-ray tube indicators | Input/output processes for data processing | Location detection | Computer graphics (images)

A display device includes a display unit that displays a display image on a display surface based on image data, a location detection unit that detects a pointed location with respect to the display image on the display surface, a coordinate calculation unit that calculates first coordinates as coordinates of the pointed location in a displayable area within the display surface, a coordinate conversion unit that converts the first coordinates calculated by the coordinate calculation unit into second coordinates as coordinates in the image data, and an output unit that outputs the second coordinates obtained by the coordinate conversion unit.

Owner:SEIKO EPSON CORP

Method for using query templates in directory caches

Inactive | US20050203897A1 | Improve efficiency | Reduce overhead | Digital data information retrieval | Data processing applications | Parallel computing | Client-side

The present invention discloses the use of generalized queries, referred to as query templates, obtained by generalizing individual user queries, as the semantic basis for low overhead, high benefit directory caches for handling declarative queries. Caching effectiveness can be improved by maintaining a set of generalizations of queries and admitting such generalizations into the cache when their estimated benefits are sufficiently high. In a preferred embodiment of the invention, the admission of query templates into the cache can be done in what is referred to by the inventors as a “revolutionary” fashion, followed by stable periods where cache admission and replacement can be done incrementally in an evolutionary fashion. The present invention can lead to considerably higher hit rates and lower server-side execution and communication costs than conventional caching of directory queries, while keeping the client-side computational overheads comparable to query caching.

Owner:AT & T INTPROP II LP

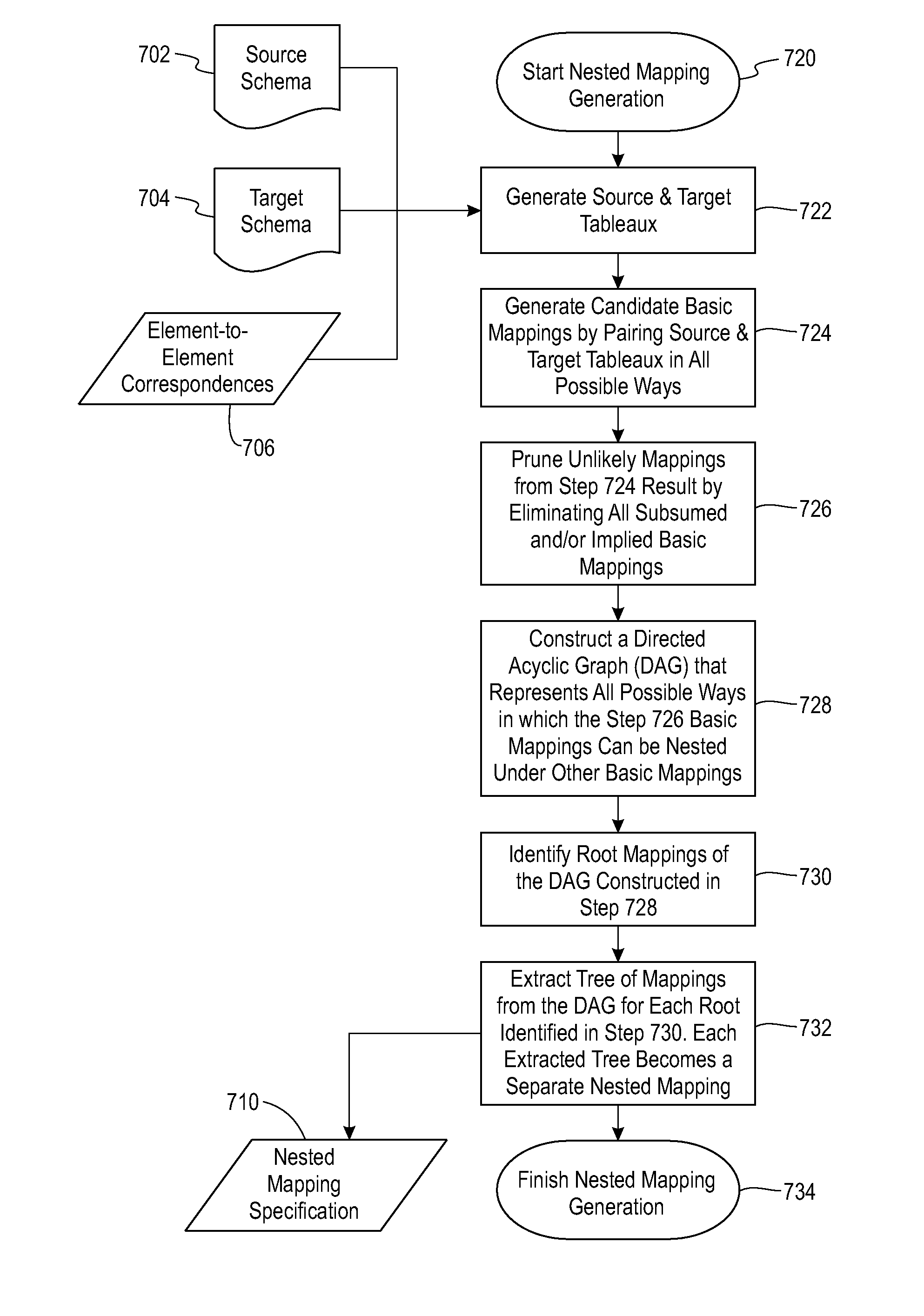

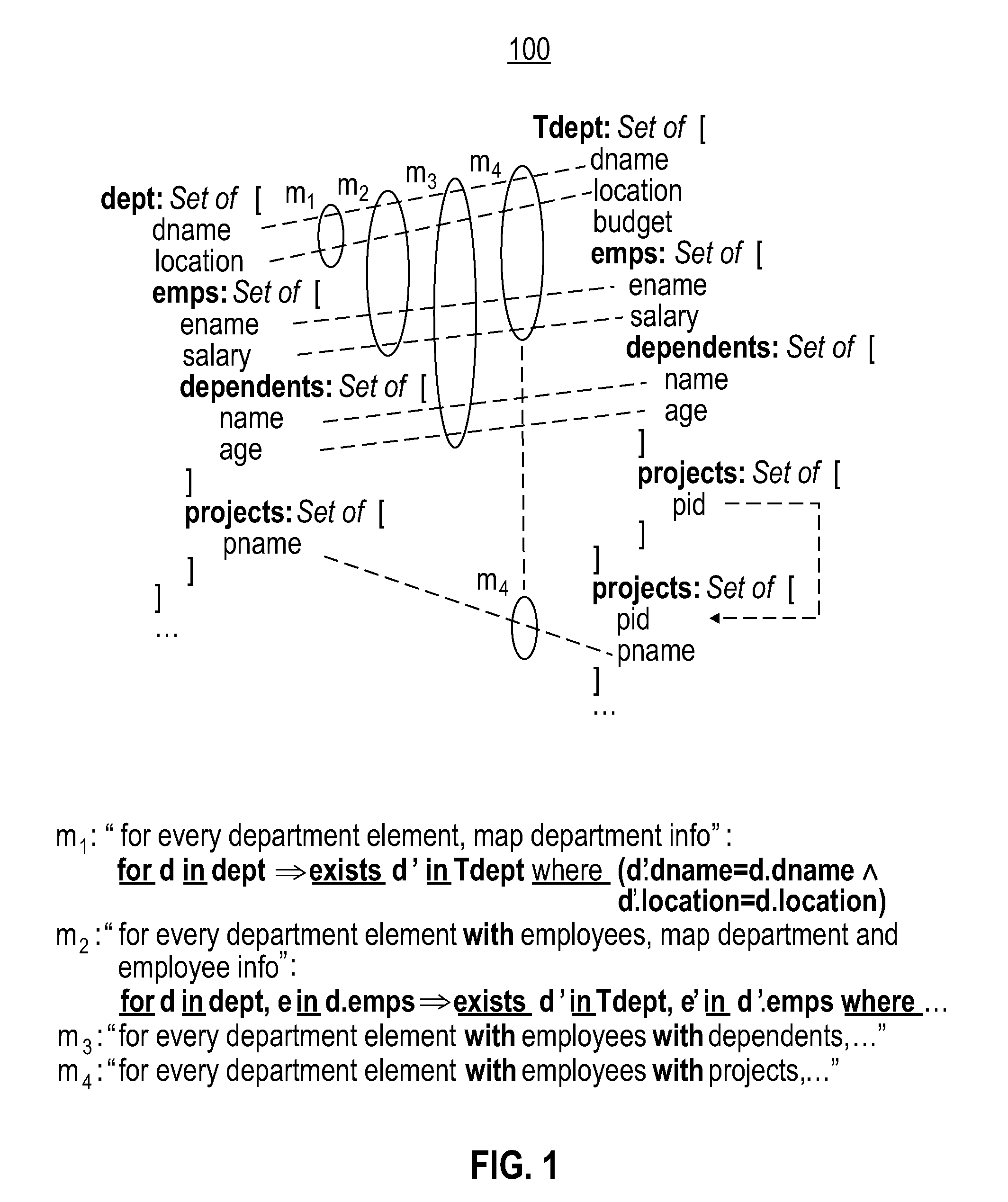

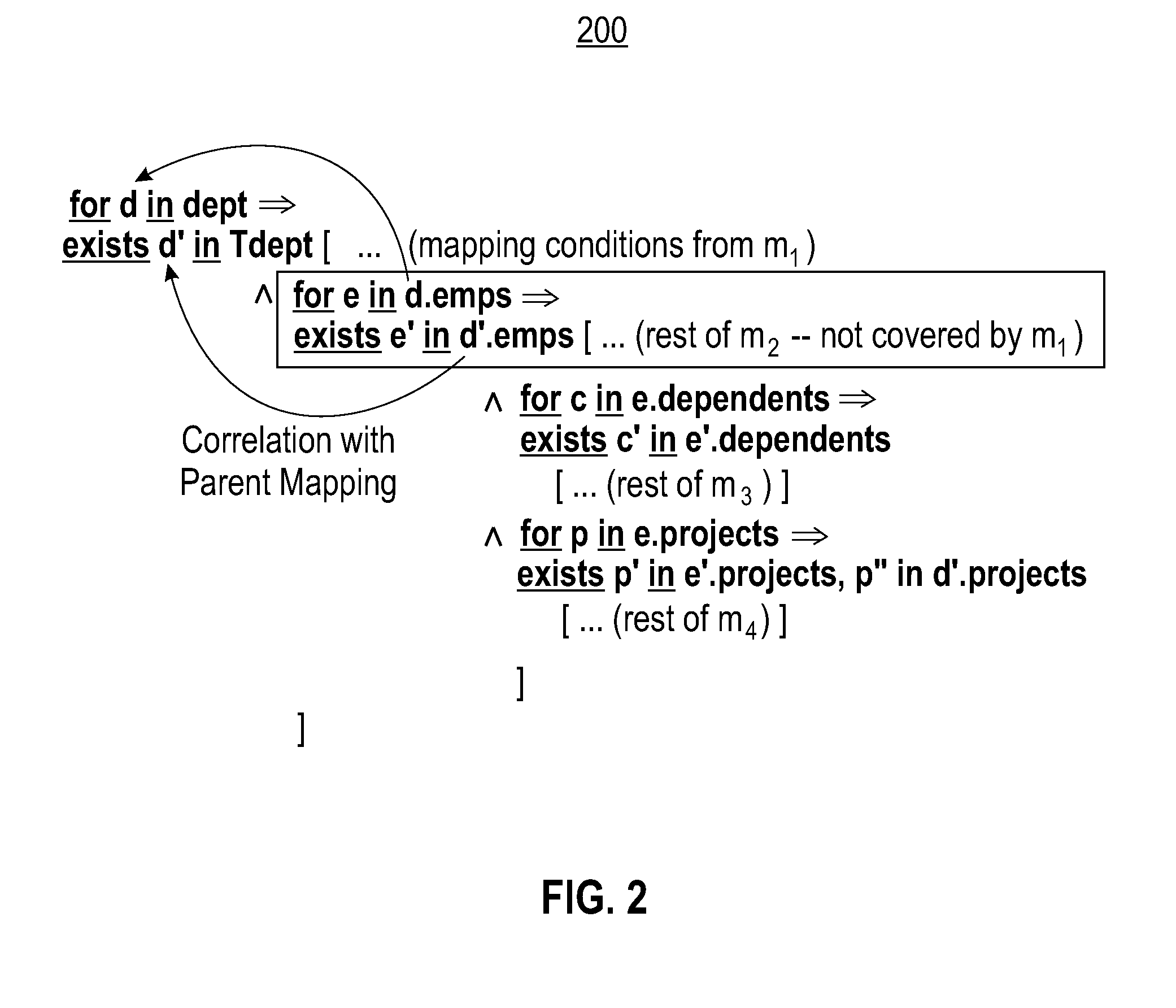

Method and sytsem for generating nested mapping specifications in a schema mapping formalism and for generating transformation queries based thereon

Inactive | US20080243772A1 | Accurate specifications | Quality improvement | Semantic analysis | Special data processing applications | Schema mapping | Theoretical computer science

A method and system for generating nested mapping specifications and transformation queries based thereon. Basic mappings are generated based on source and target schemas and correspondences between elements of the schemas. A directed acyclic graph (DAG) is constructed whose edges represent ways in which each basic mapping is nestable under any of the other basic mappings. Any transitively implied edges are removed from the DAG. Root mappings of the DAG are identified. Trees of mappings are automatically extracted from the DAG, where each tree of mappings is rooted at a root mapping and expresses a nested mapping specification. A transformation query is generated from the nested mapping specification by generating a first query for transforming source data into flat views of the target and a second query for nesting flat view data according to the target format. Generating the first query includes applying default Skolemization to the specification.

Owner:FUXMAN ARIEL +5
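Two steps in this abstract, removing transitively implied edges and identifying root mappings, are standard graph operations. The sketch below shows them on a generic DAG. It assumes each edge is a (parent, child) pair, which is an interpretation rather than something the abstract states, and the names `remove_implied_edges`, `find_roots`, and `m1` to `m3` are hypothetical.

```python
def remove_implied_edges(edges):
    """Drop every edge (u, v) of a DAG for which another path from u to v exists."""
    graph = {}
    for u, v in edges:
        graph.setdefault(u, set()).add(v)
        graph.setdefault(v, set())

    def reachable_without(src, dst, skipped_edge):
        # Depth-first search from src to dst that never uses skipped_edge.
        stack, seen = [src], set()
        while stack:
            node = stack.pop()
            for nxt in graph[node]:
                if (node, nxt) == skipped_edge or nxt in seen:
                    continue
                if nxt == dst:
                    return True
                seen.add(nxt)
                stack.append(nxt)
        return False

    return {(u, v) for u, v in edges if not reachable_without(u, v, (u, v))}


def find_roots(edges):
    """Roots are nodes that never appear as the target of an edge."""
    nodes = {n for edge in edges for n in edge}
    targets = {v for _, v in edges}
    return nodes - targets


edges = {("m1", "m2"), ("m2", "m3"), ("m1", "m3")}
reduced = remove_implied_edges(edges)
roots = find_roots(reduced)
```

Here the edge from `m1` to `m3` is implied by the path through `m2`, so it is removed, and `m1` is the only root.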

Efficient method for the scheduling of work loads in a multi-core computing environment

Inactive | US20130061233A1 | Maximize use | Reduce execution | Resource allocation | Memory systems | Computer resources | Multi core computing

A computer in which a single queue is used to implement all of the scheduling functionalities of shared computer resources in a multi-core computing environment. The length of the queue is determined uniquely by the relationship between the number of available work units and the number of available processing cores. Each work unit in the queue is assigned an execution token. The value of the execution token represents an amount of computing resources allocated for the work unit. Work units having non-zero execution tokens are processed using the computing resources allocated to each one of them. When a running work unit is finished, suspended or blocked, the value of the execution token of at least one other work unit in the queue is adjusted based on the amount of computing resources released by the running work unit.

Owner:EXLUDUS
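The token mechanism can be pictured with a toy queue. This sketch assumes one simple policy, handing all released resources to the first waiting work unit; the abstract only says that at least one other token is adjusted, so the policy, the function names, and the sample queue are hypothetical.

```python
def release_resources(queue, finished_id):
    """Remove a finished work unit and hand its resources to the next waiting one."""
    finished = next(unit for unit in queue if unit["id"] == finished_id)
    queue.remove(finished)
    freed = finished["token"]
    for unit in queue:
        if unit["token"] == 0:  # a zero token means the unit holds no resources yet
            unit["token"] = freed
            break
    return queue


def runnable(queue):
    # Only work units with a non-zero execution token are processed.
    return [unit["id"] for unit in queue if unit["token"] > 0]


queue = [
    {"id": "w1", "token": 2},
    {"id": "w2", "token": 2},
    {"id": "w3", "token": 0},
]
before = runnable(queue)
release_resources(queue, "w1")
after = runnable(queue)
```

Before the release, `w1` and `w2` are runnable; once `w1` finishes, its two resource units move to `w3`, which becomes runnable alongside `w2`.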

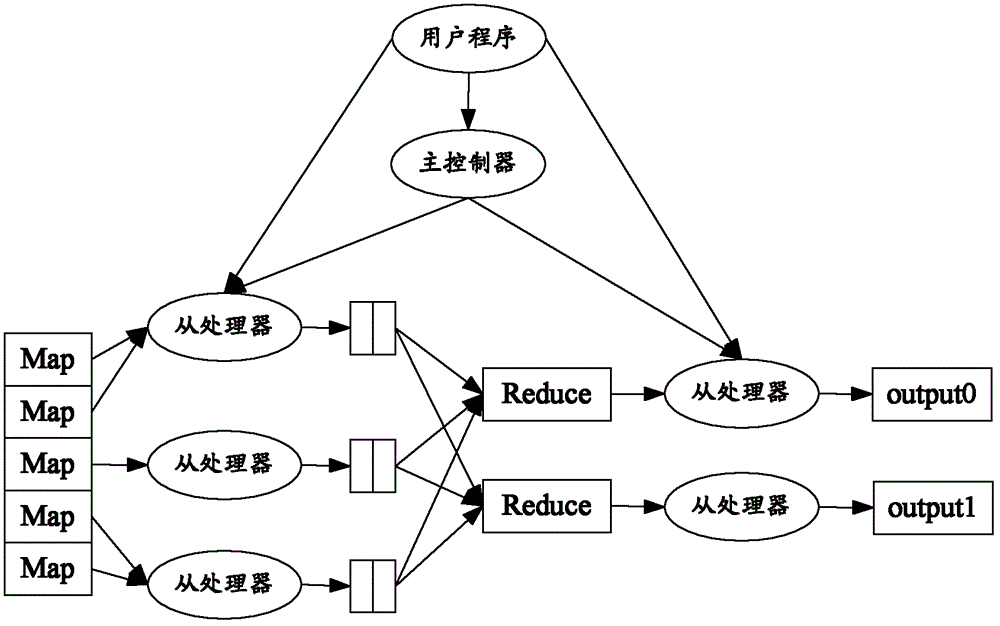

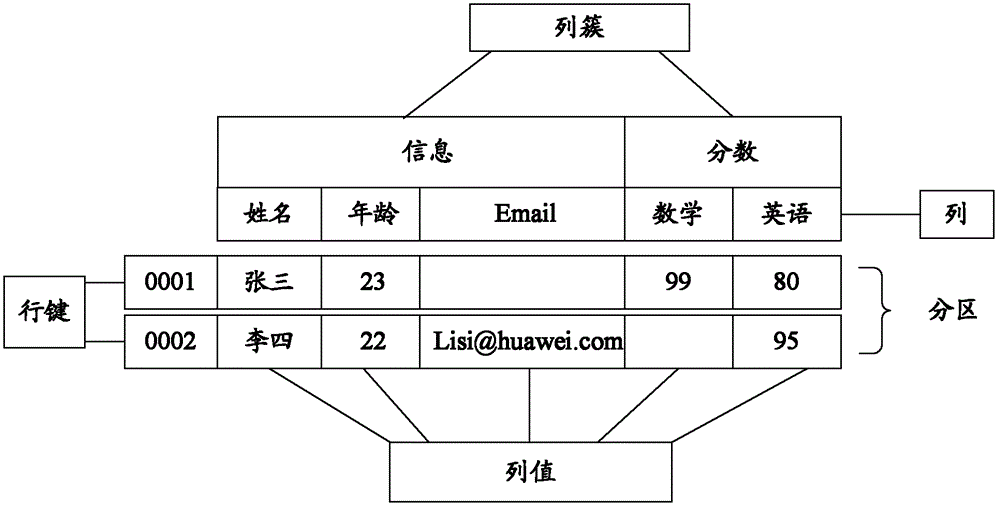

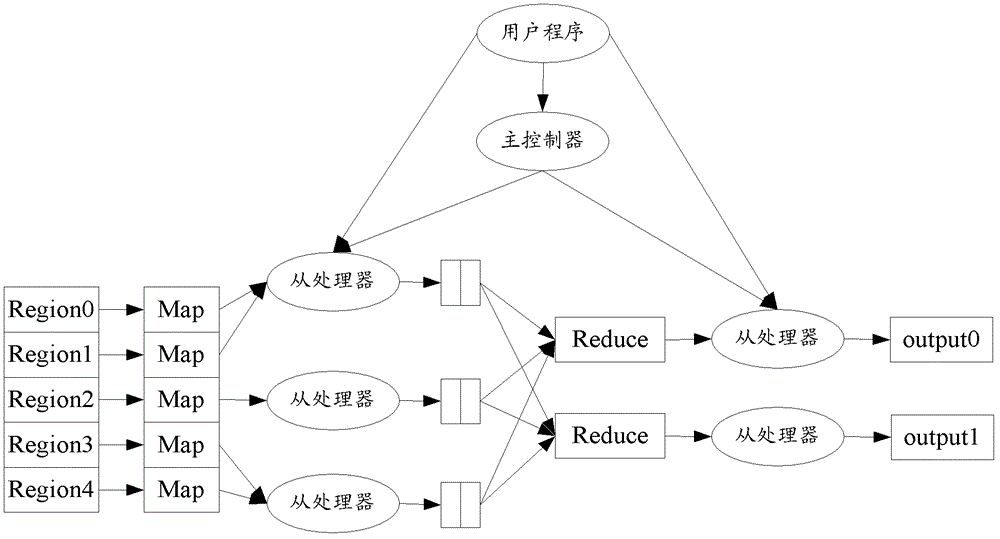

Method and apparatus for optimizing data access, method and apparatus for optimizing data storage

Active | CN102725753A | Improve processing efficiency | Reduce read | Memory addressing/allocation/relocation | Special data processing applications | Data access | Data entry

Provided are a method and an apparatus for optimizing data access, and a method and an apparatus for optimizing data storage. The method for optimizing data access comprises that: a host controller receives a request that a user accesses a data table in HBASE (Hadoop Database), wherein the request carries information of data input ranges, and the data input ranges comprise a plurality of data input ranges; input partitioning information is determined according to partitioning information of the data table and the data input range information; the number of Map tasks is determined on the basis of the input partitioning information; data in the data table, which is read from a processor, is distributed according to the number of the Map tasks; and the data read from the processor is returned to the user.

Owner:HUAWEI CLOUD COMPUTING TECH CO LTD

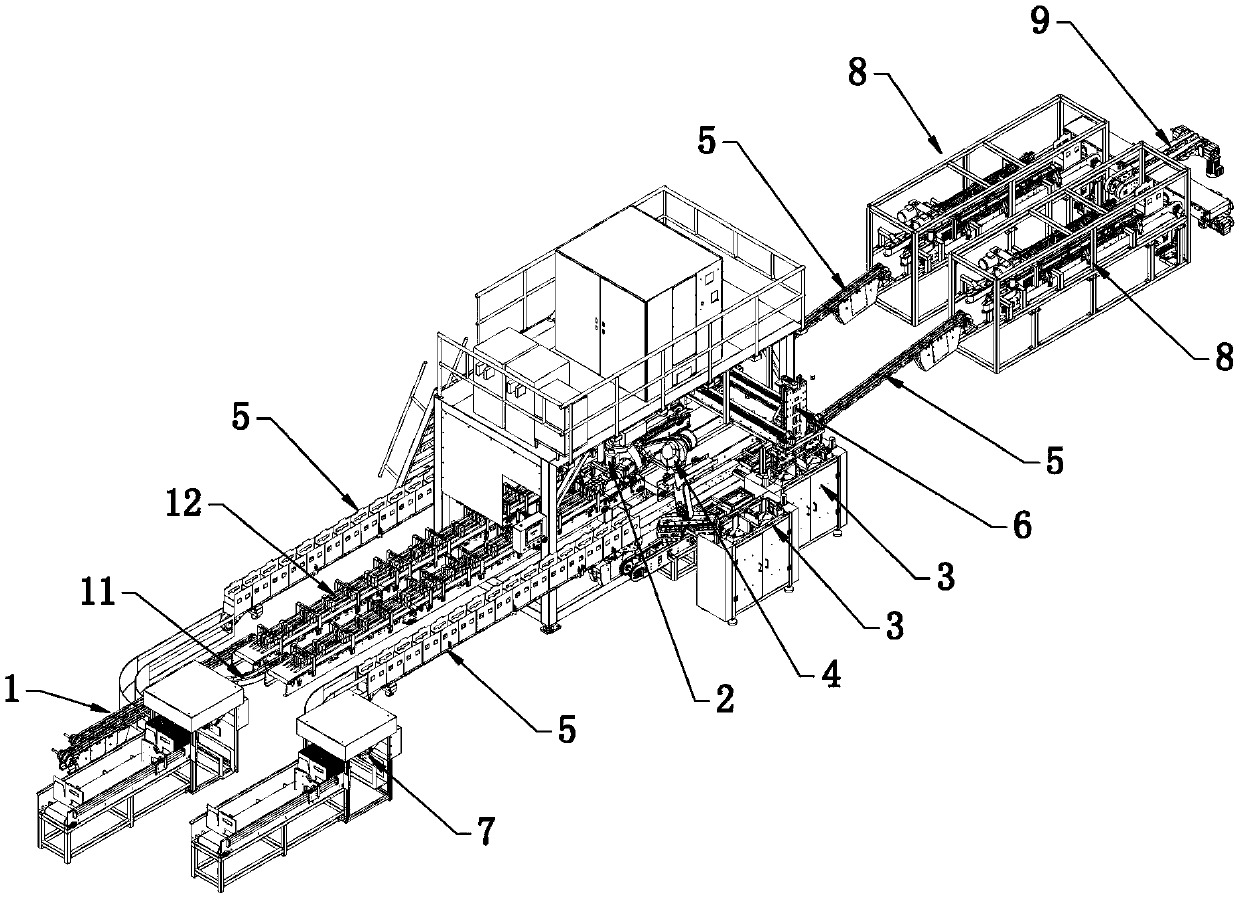

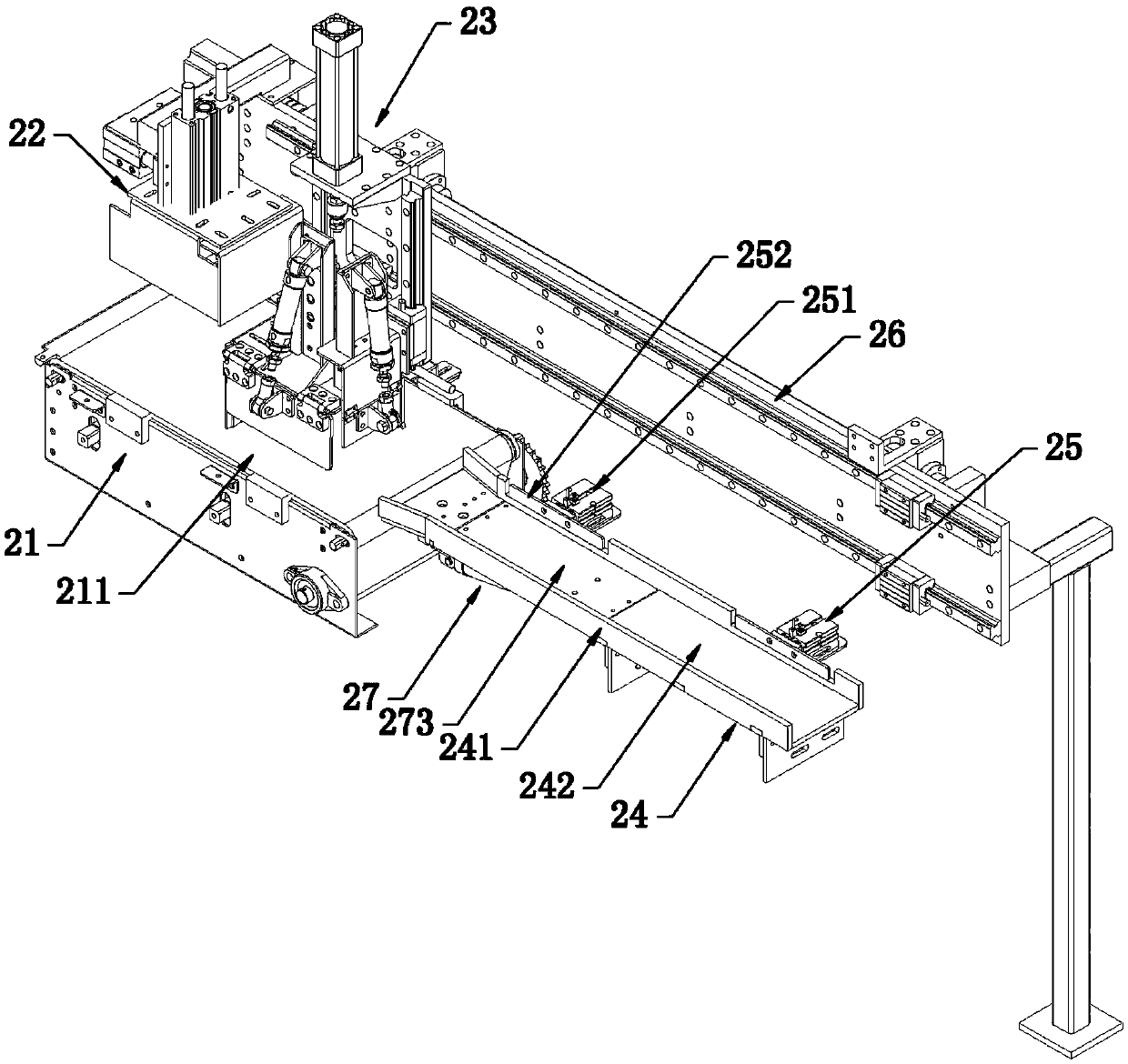

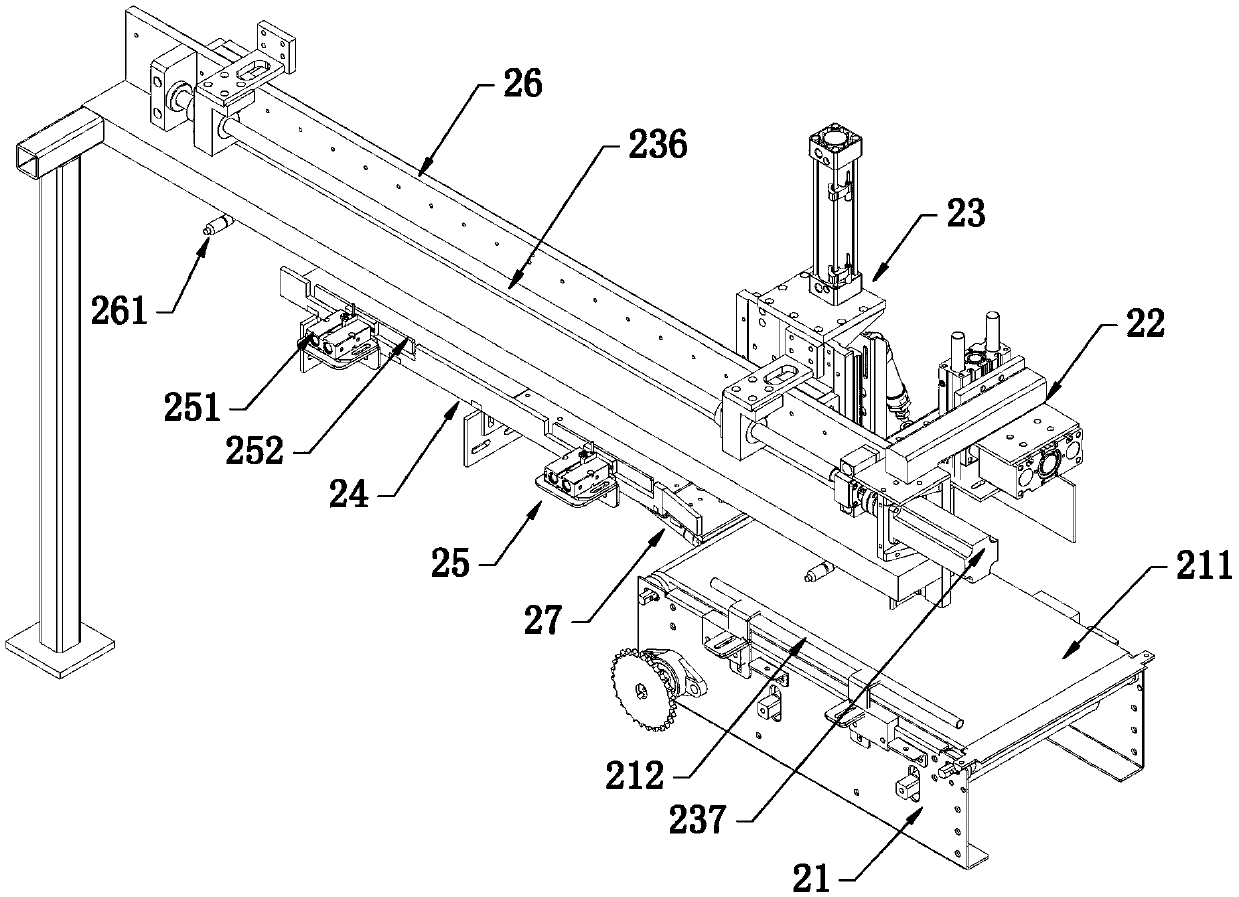

Automatic boxing system for second generation of tetra brik milk bag gift box

Active | CN105501526A | Improve the operating environment | Improve stability | Conveyors | Packaging | Engineering | Mechanical engineering

The invention discloses an automatic boxing system for a second generation of tetra brik milk bag gift box. The automatic boxing system comprises a separation mechanism (1), wherein the separation mechanism (1) comprises a primary separation mechanism (11) and two secondary separation mechanisms (12); an integration device (2) is correspondingly arranged at the tail end of each secondary separation mechanism (12), and two groups of partition board feeding mechanisms (3) and a milk box conveying line (5) which are corresponding are arranged beside each integration device (2) successively, the two groups of partition board feeding mechanisms (3) are respectively sleeved with lower partition boards, and a milk bag unit inbox mechanism (4) corresponds to an upper partition board straight-line module (6). A milk box sleeved with the partition board is conveyed to a corresponding lid buckling and box sealing machine (8) for lid buckling and box sealing through the milk box conveying line (5), and a two-in-one conveying mechanism (9) on the rear side of the lid buckling and box sealing machine (8) continues to convey the milk box. The automatic boxing system carries out automatic boxing in a two-in-one way, therefore reducing the speed, reducing links and increasing the output.

Owner:苏州澳昆智能机器人技术有限公司

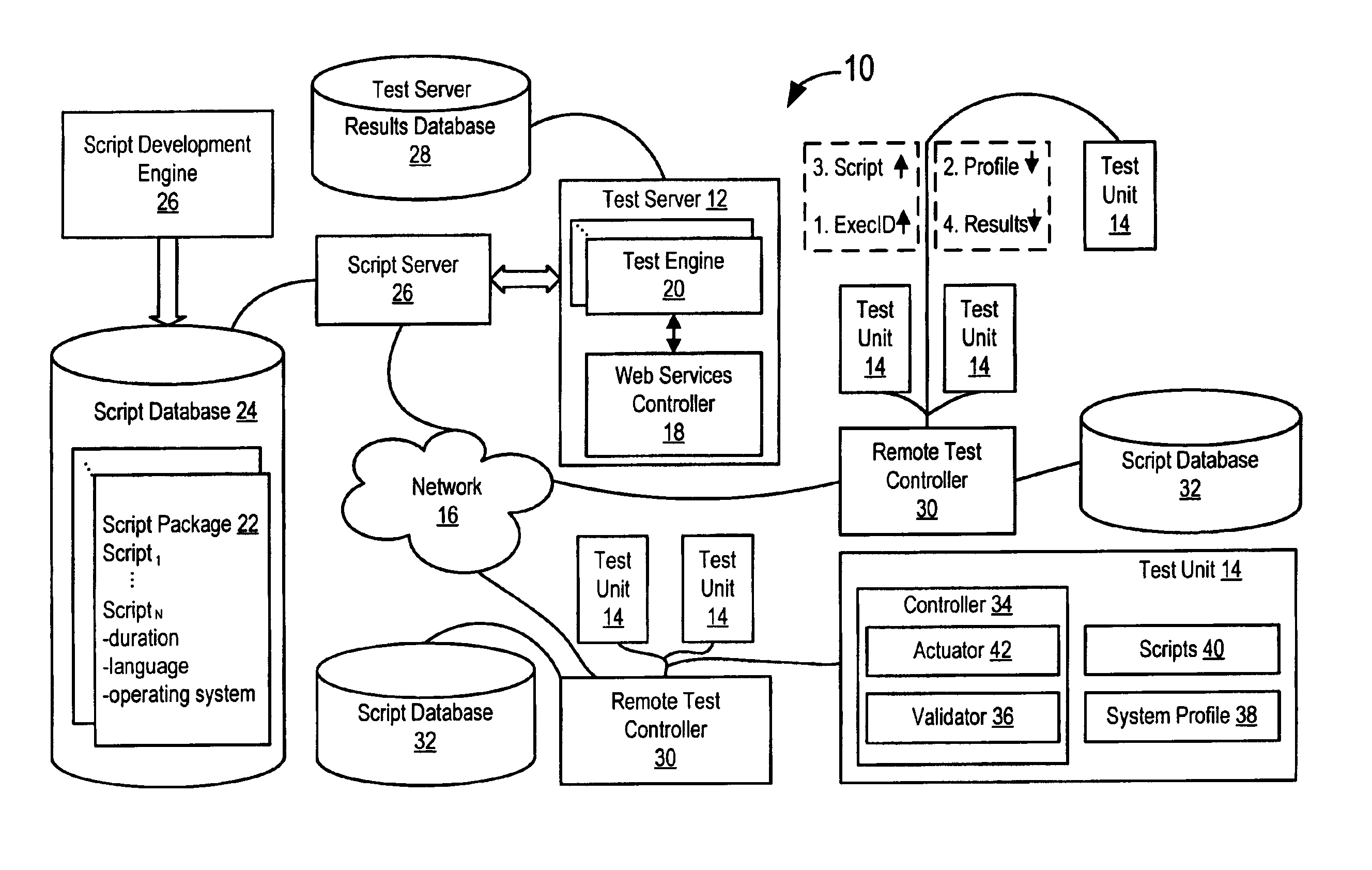

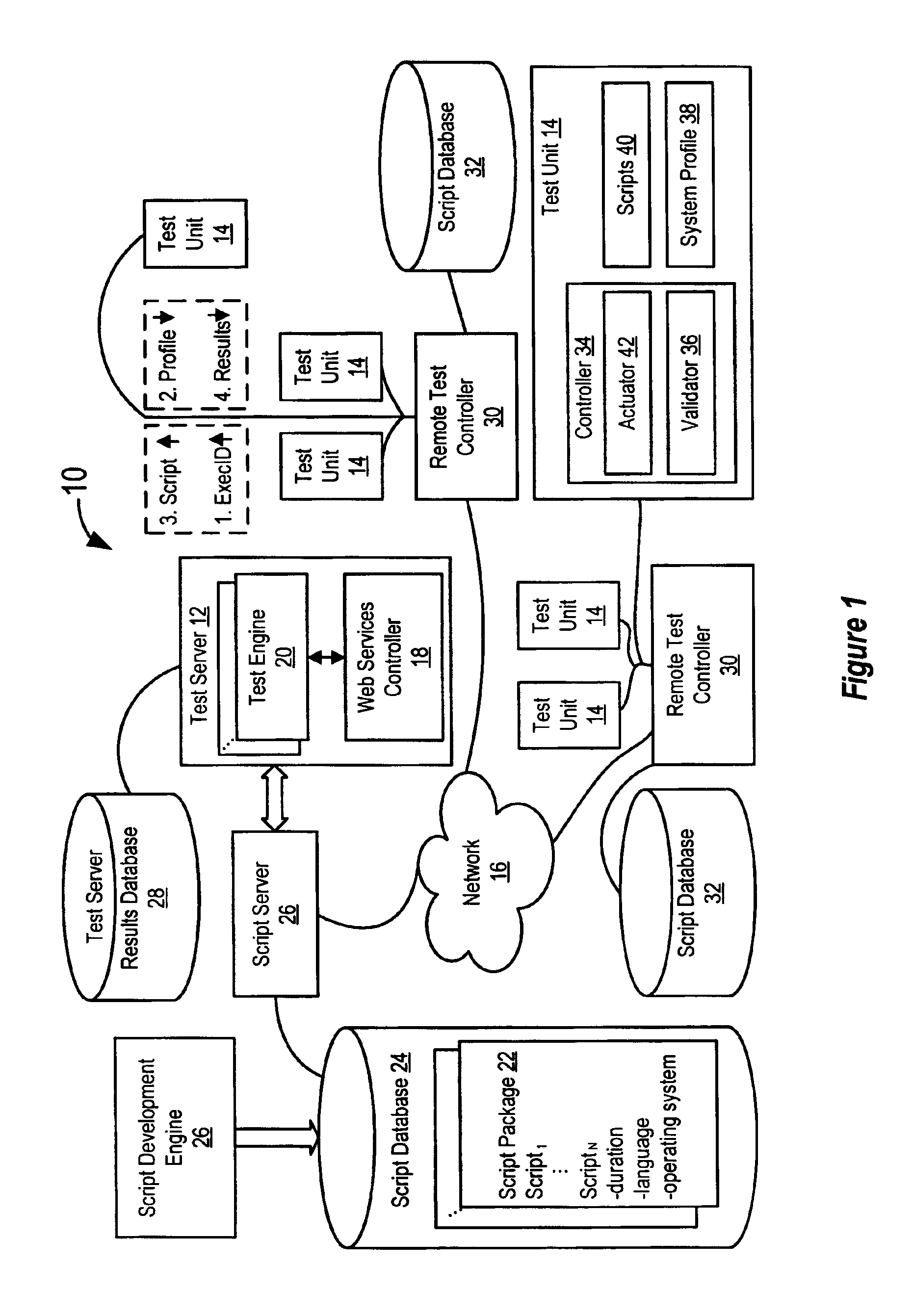

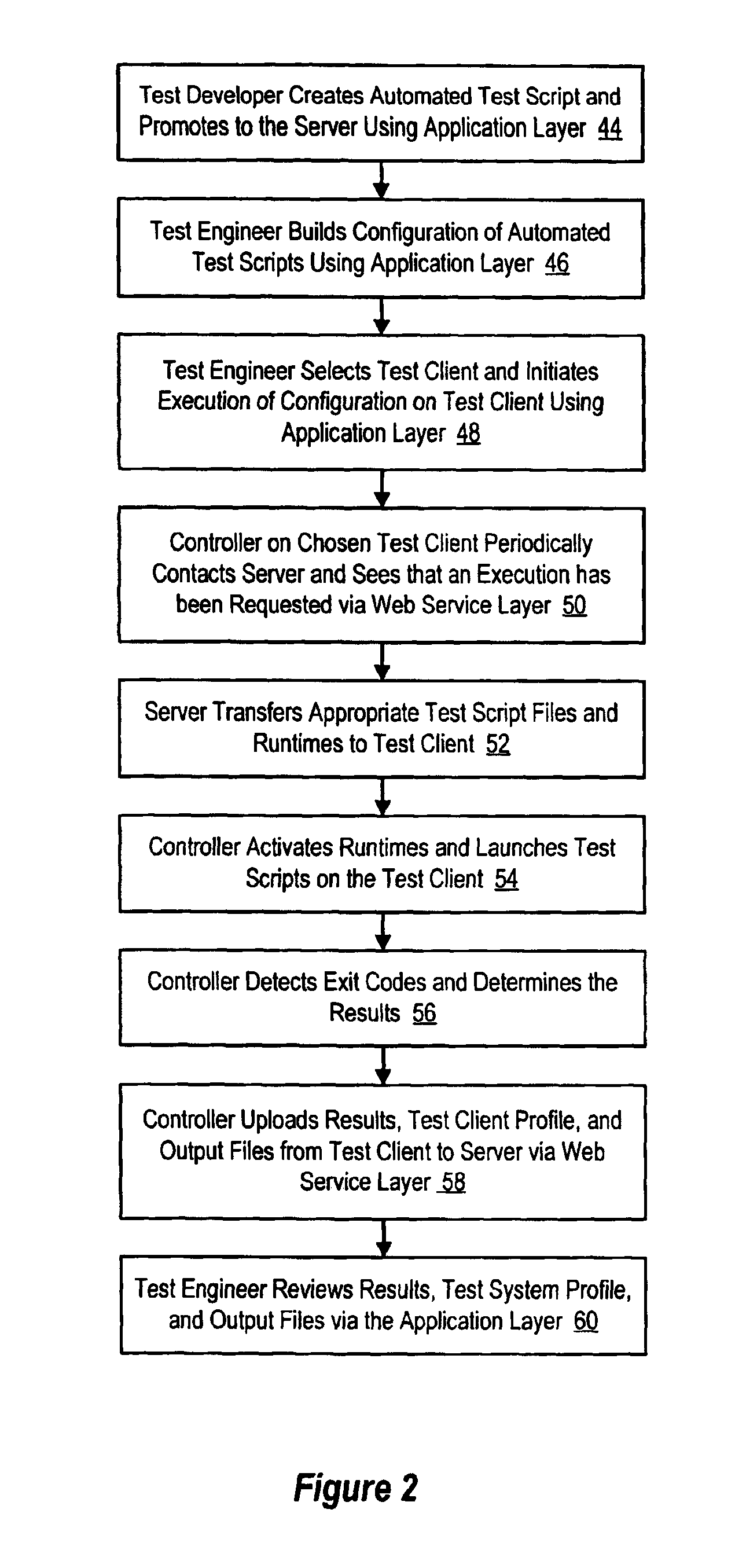

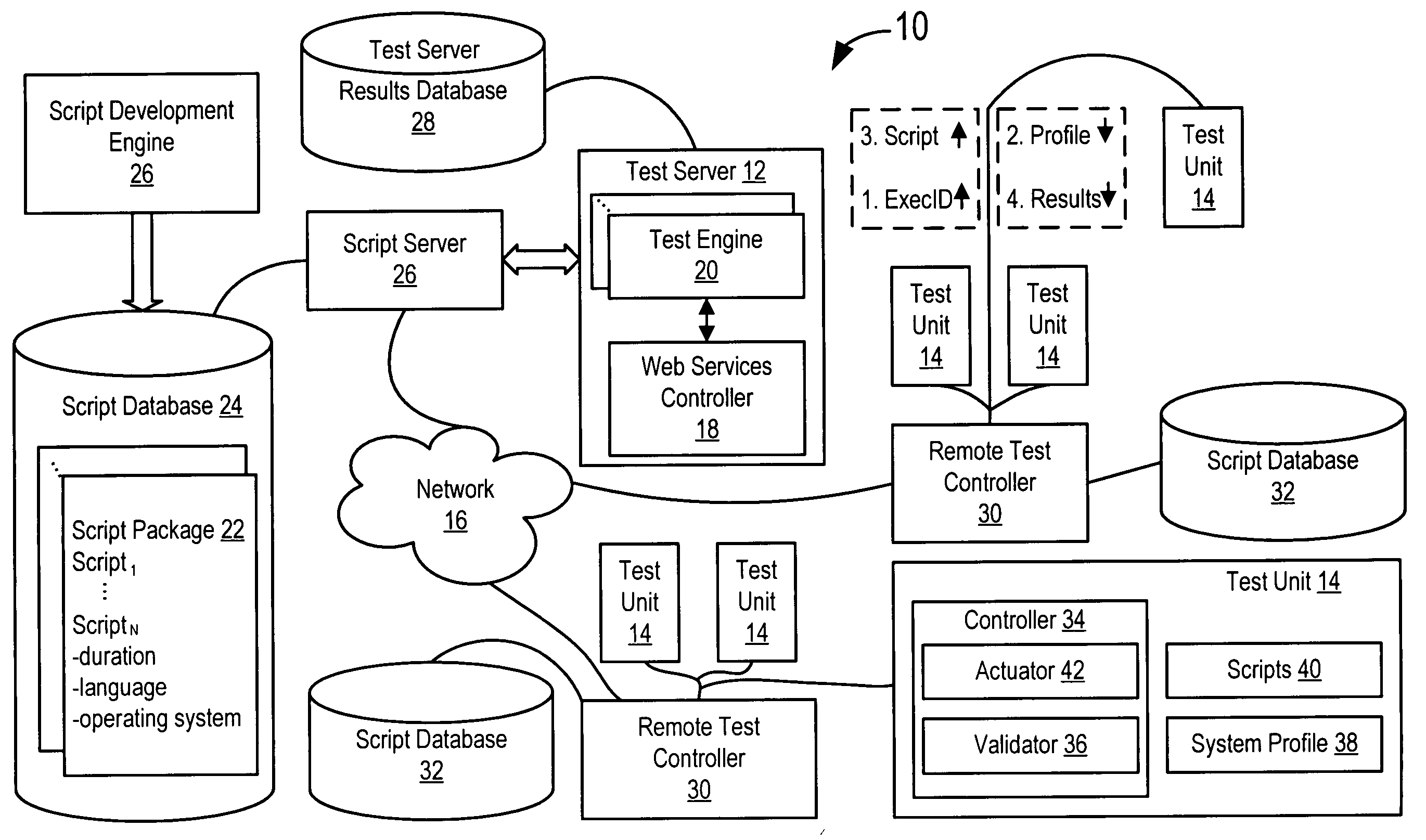

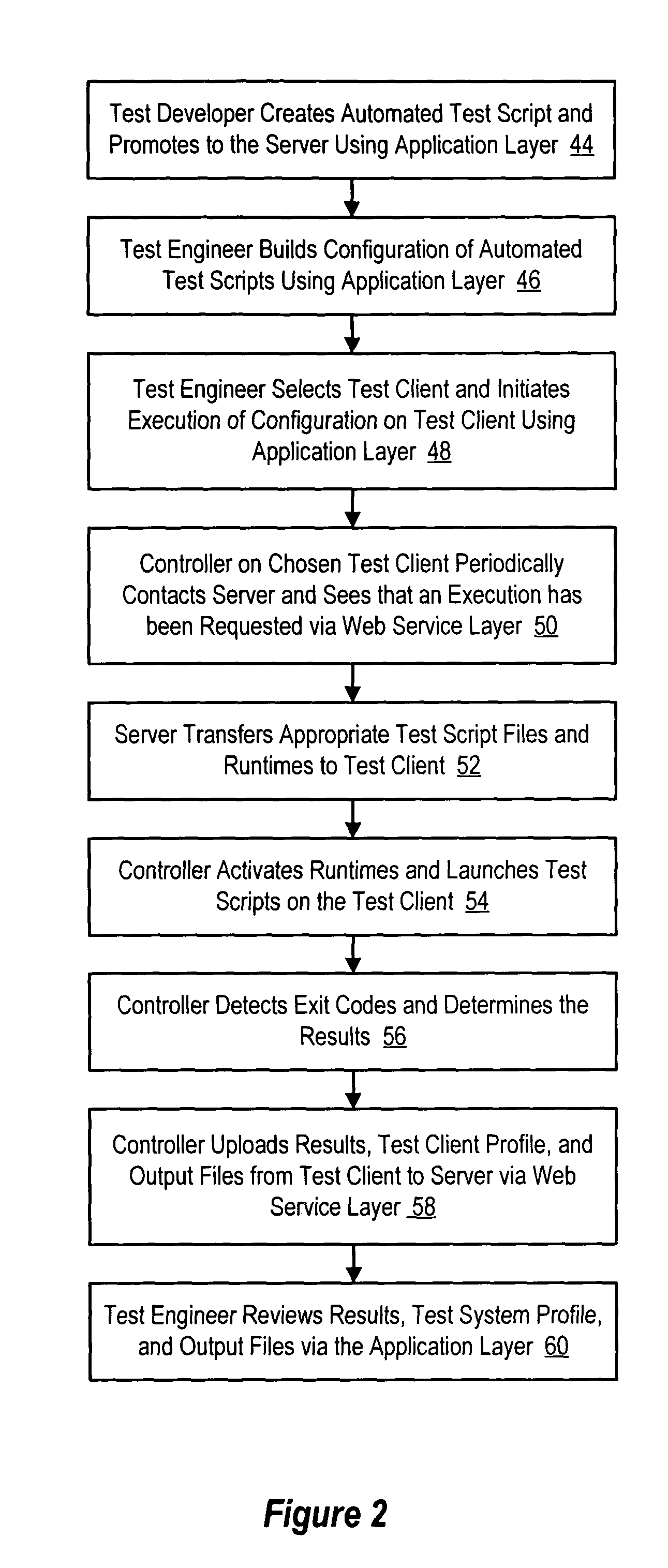

Method and system for information handling system automated and distributed test

Inactive | US6882951B2 | Easy to test | Quick test | Error detection/correction | Structural/machines measurement | Abstraction layer | Remote control

A system and method for testing information handling systems distributes test units across a network to communicate with a test server. The test server communicates test executables and runtimes to the test units according to scripts of a script package, test engines associated with the test server and a test unit profile. To load and execute an executable and runtime on a test unit, a test controller associated with the test server sends an execution identifier to a test unit process abstraction layer controller. A validator of the test unit controller responds to the execution identifier with test unit configuration profile information that the test server controller applies to one or more test engines and a script to generate an executable and runtime that is sent to the test unit controller. An activator of the test unit process abstraction layer controller activates the executable scripts through the runtime and tracks test results. The test unit process abstraction layer controller provides improved stability during test unit failures and remote control of runtime functions, such as dynamic runtime duration, pause and abort functionality.

Owner:DELL PROD LP

Method and system for information handling system automated and distributed test

Inactive | US20050021274A1 | Improves test unit stability | Quick test | Error detection/correction | Structural/machines measurement | Abstraction layer | Information processing

A system and method for testing information handling systems distributes test units across a network to communicate with a test server. The test server communicates test executables and runtimes to the test units according to scripts of a script package, test engines associated with the test server and a test unit profile. To load and execute an executable and runtime on a test unit, a test controller associated with the test server sends an execution identifier to a test unit process abstraction layer controller. A validator of the test unit controller responds to the execution identifier with test unit configuration profile information that the test server controller applies to one or more test engines and a script to generate an executable and runtime that is sent to the test unit controller. An activator of the test unit process abstraction layer controller activates the executable scripts through the runtime and tracks test results. The test unit process abstraction layer controller provides improved stability during test unit failures and remote control of runtime functions, such as dynamic runtime duration, pause and abort functionality.

Owner:DELL PROD LP

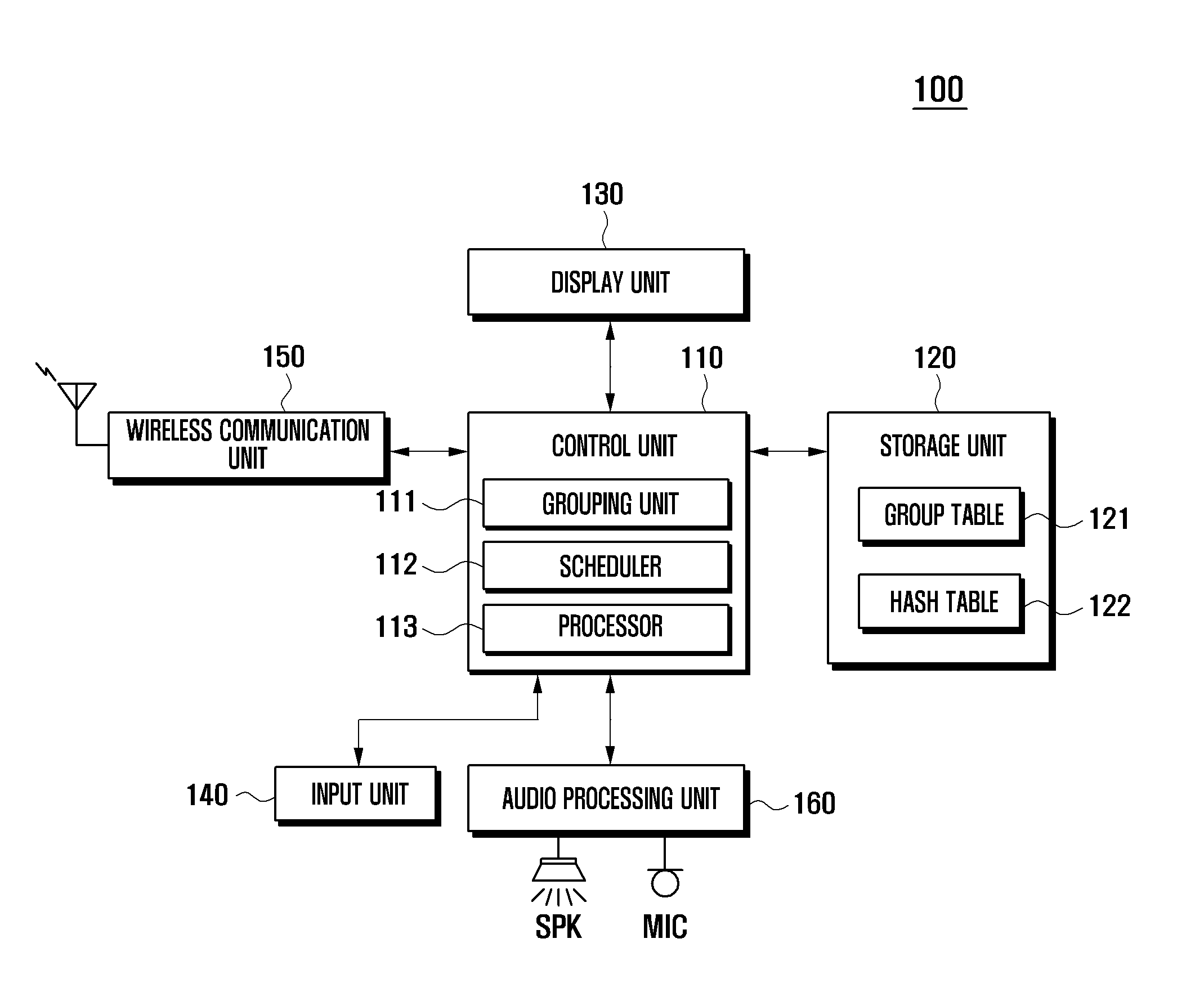

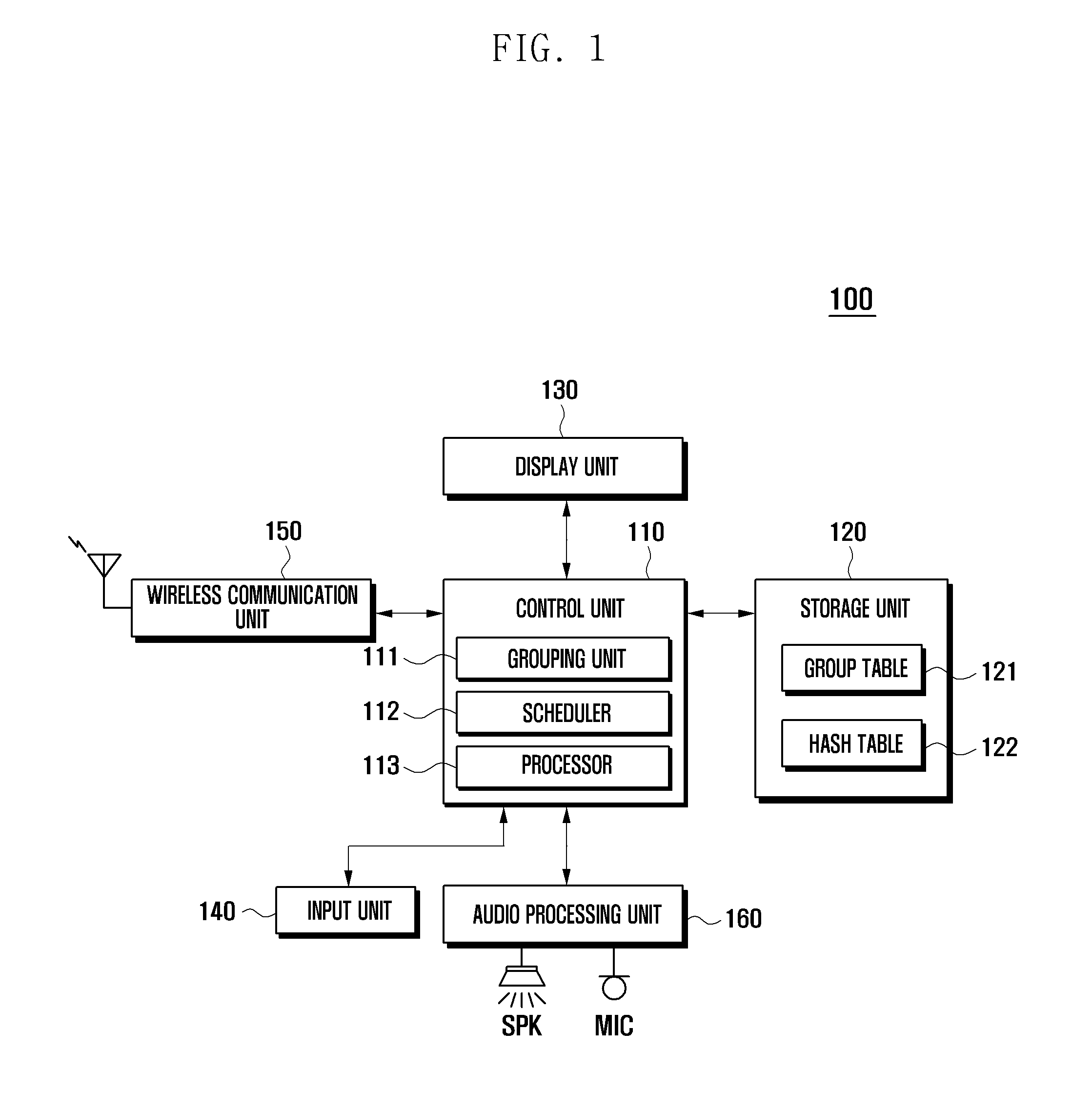

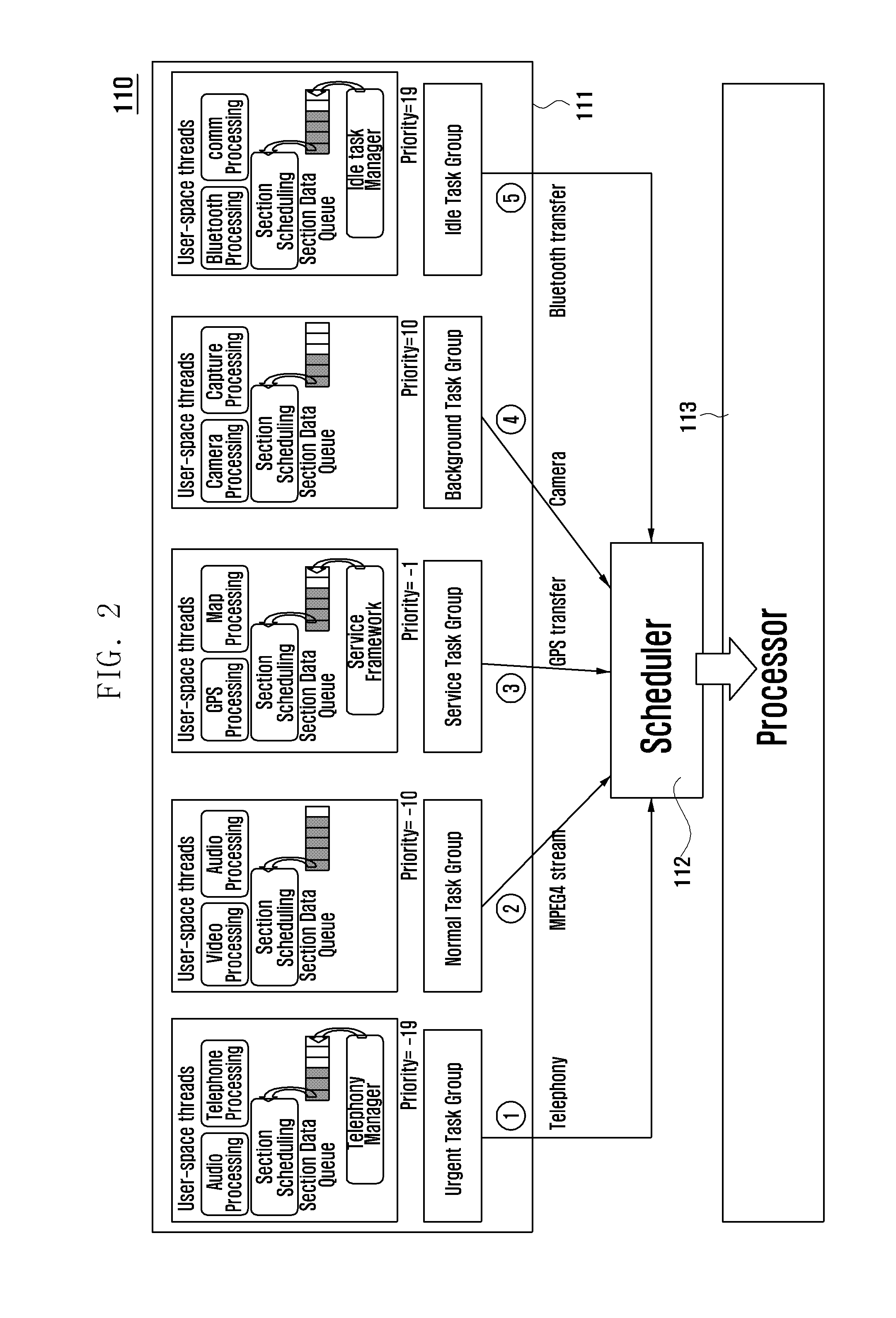

Method and apparatus for improving application processing speed in digital device

Active | US20130042250A1 | Improve processing speed | Reduce execution | Program initiation/switching | Specific program execution arrangements | Application software | Operating system

A method and apparatus for improving application processing speed in a digital device which improve application processing speed for a digital device running in an embedded environment where processor performance may not be sufficiently powerful by detecting an execution request for an application, identifying a group to which the requested application belongs, among preset groups with different priorities and scheduling the requested application according to the priority assigned to the identified group, and executing the requested application based on the scheduling result.

Owner:SAMSUNG ELECTRONICS CO LTD
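The group-based scheduling in this abstract can be sketched with a priority queue. The group names, the application-to-group mapping, and the `Scheduler` class are hypothetical; the sketch only shows requests being ordered by the priority of the group an application belongs to.

```python
import heapq
import itertools

# Hypothetical preset groups: a lower number means a higher priority.
GROUP_PRIORITY = {"foreground": 0, "system": 1, "background": 2}
# Hypothetical assignment of applications to groups.
APP_GROUP = {"dialer": "foreground", "indexer": "system", "sync": "background"}


class Scheduler:
    def __init__(self):
        self._heap = []
        self._arrival = itertools.count()  # keeps arrival order within a group

    def request(self, app):
        # Identify the group of the requested application and queue it
        # under that group's priority.
        priority = GROUP_PRIORITY[APP_GROUP[app]]
        heapq.heappush(self._heap, (priority, next(self._arrival), app))

    def run_next(self):
        # Execute the pending request with the highest-priority group.
        _, _, app = heapq.heappop(self._heap)
        return app


scheduler = Scheduler()
for app in ["sync", "indexer", "dialer"]:
    scheduler.request(app)
execution_order = [scheduler.run_next() for _ in range(3)]
```

Although `sync` was requested first, the foreground `dialer` runs first because its group has the highest priority.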

System and method for optimizing large database management systems using bloom filter

Active | US20180349364A1 | Shorten the time | Reduce data volume | Database updating | Other databases indexing | Query optimization | Relational database management system

A large highly parallel database management system includes thousands of nodes storing a huge volume of data. The database management system includes a query optimizer for optimizing data queries. The optimizer estimates the column cardinality of a set of rows based on estimated column cardinalities of disjoint subsets of the set of rows. For a particular column, the actual column cardinality of the set of rows is the sum of the actual column cardinalities of the two subsets of rows. The optimizer creates two respective Bloom filters from the two subsets, and then combines them to create a combined Bloom filter using logical OR operations. The actual column cardinality of the set of rows is estimated using a computation from the combined Bloom filter.

Owner:OCIENT INC
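Combining two Bloom filters with a bitwise OR and estimating cardinality from the result can be sketched as follows. The abstract does not give the estimation formula, so this sketch assumes the common fill-ratio estimate n ≈ -(m/k)·ln(1 - X/m), where X is the number of set bits. The filter size `M_BITS`, the hash count `K_HASHES`, the SHA-256 based hashing, and the sample values are all assumptions.

```python
import hashlib
import math

M_BITS = 4096  # filter size in bits (assumed)
K_HASHES = 3   # hash functions per item (assumed)


def bloom_filter(items):
    """Build a Bloom filter, stored as a Python int used as a bit array."""
    bits = 0
    for item in items:
        for seed in range(K_HASHES):
            digest = hashlib.sha256(f"{seed}:{item}".encode()).digest()
            bits |= 1 << (int.from_bytes(digest[:8], "big") % M_BITS)
    return bits


def estimate_cardinality(bits):
    """Estimate the number of distinct items from the fraction of set bits."""
    set_bits = bin(bits).count("1")
    if set_bits == M_BITS:
        raise ValueError("filter is saturated; the estimate is undefined")
    return -(M_BITS / K_HASHES) * math.log(1 - set_bits / M_BITS)


subset_a = [f"user{i}" for i in range(0, 120)]
subset_b = [f"user{i}" for i in range(120, 200)]
# OR-ing the two filters gives the filter of the combined set of values.
combined = bloom_filter(subset_a) | bloom_filter(subset_b)
estimate = estimate_cardinality(combined)
```

Because both filters use the same size and hash functions, the OR of the two equals the filter that would be built from all 200 values at once, and the estimate should land close to 200.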

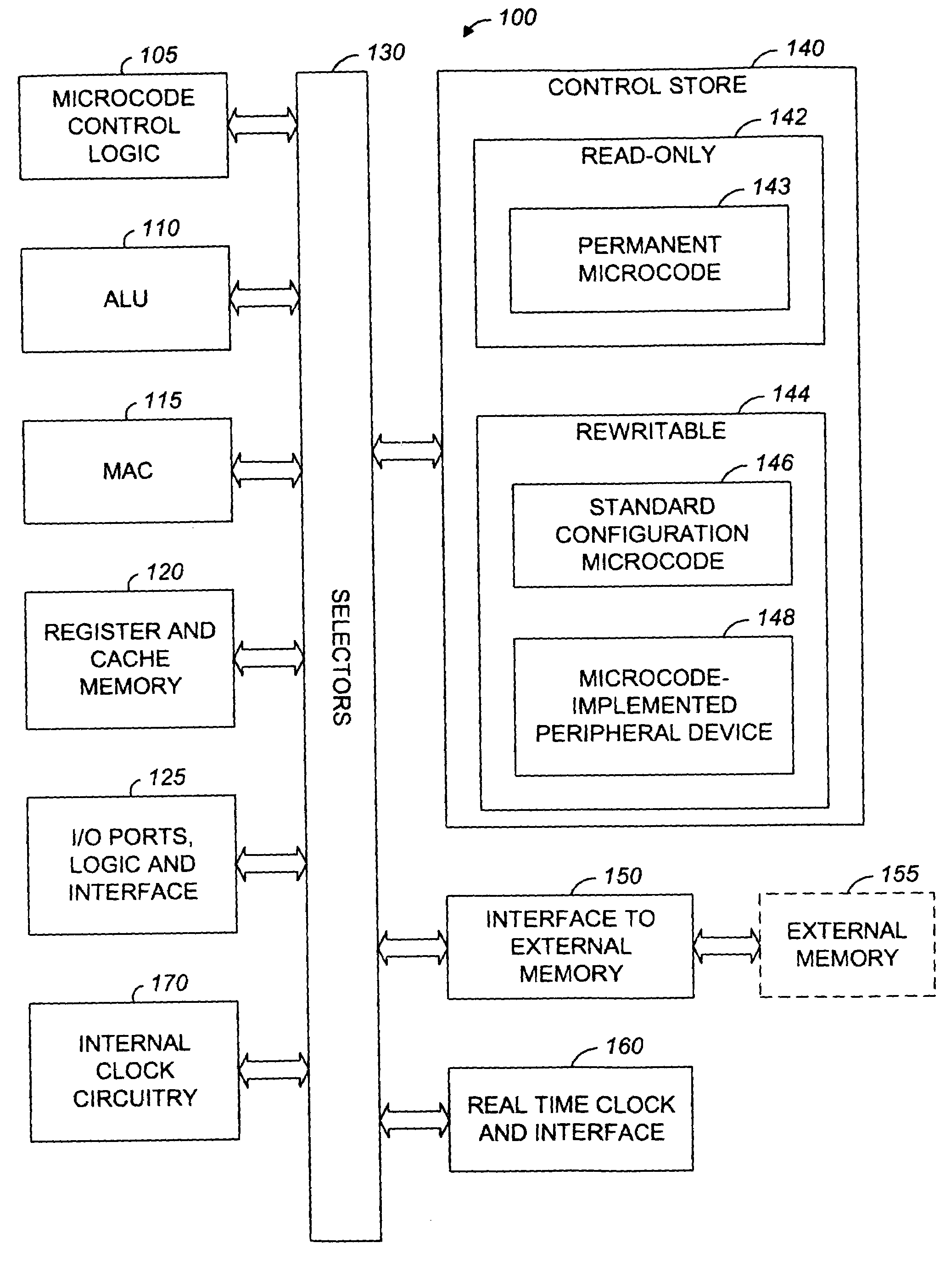

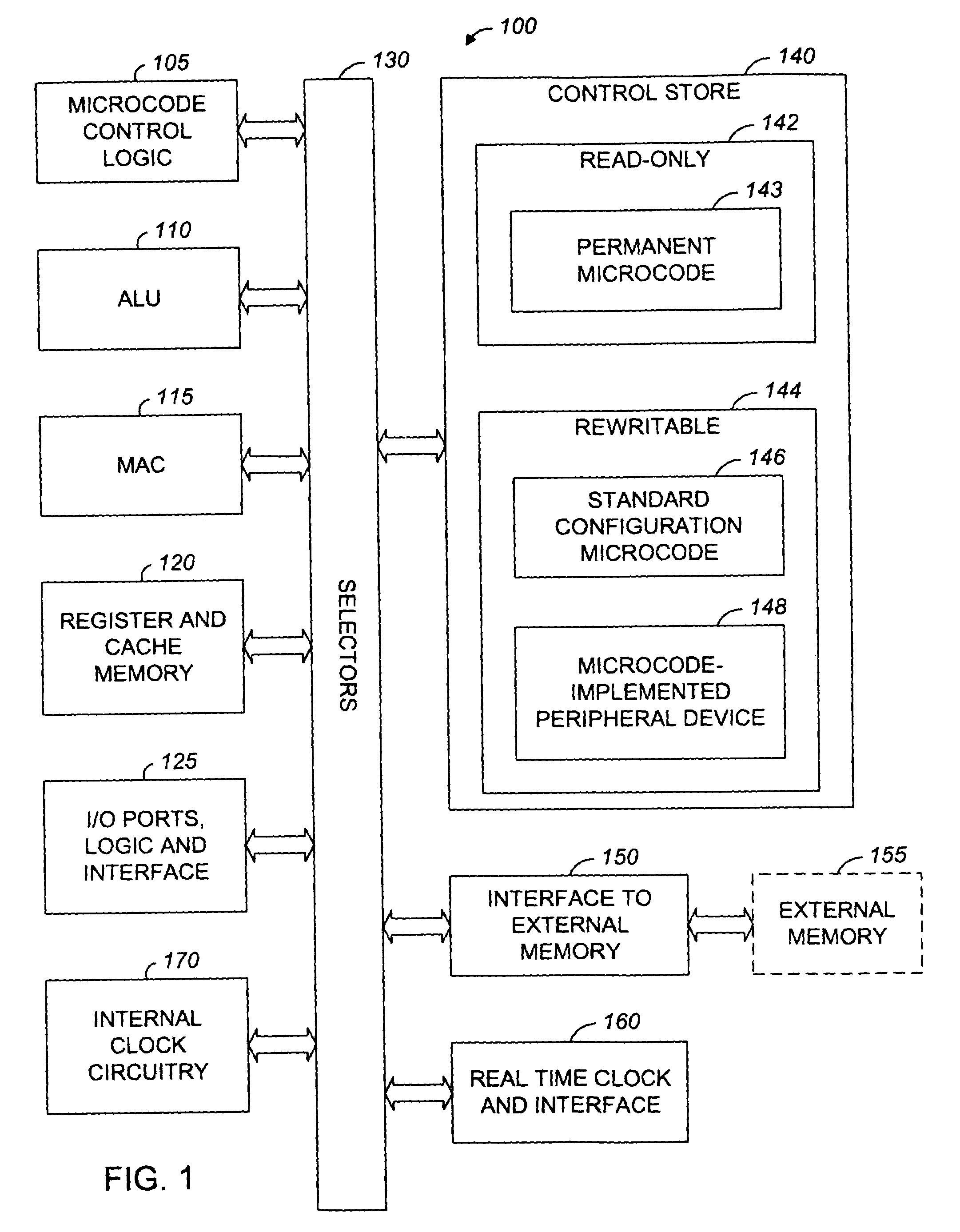

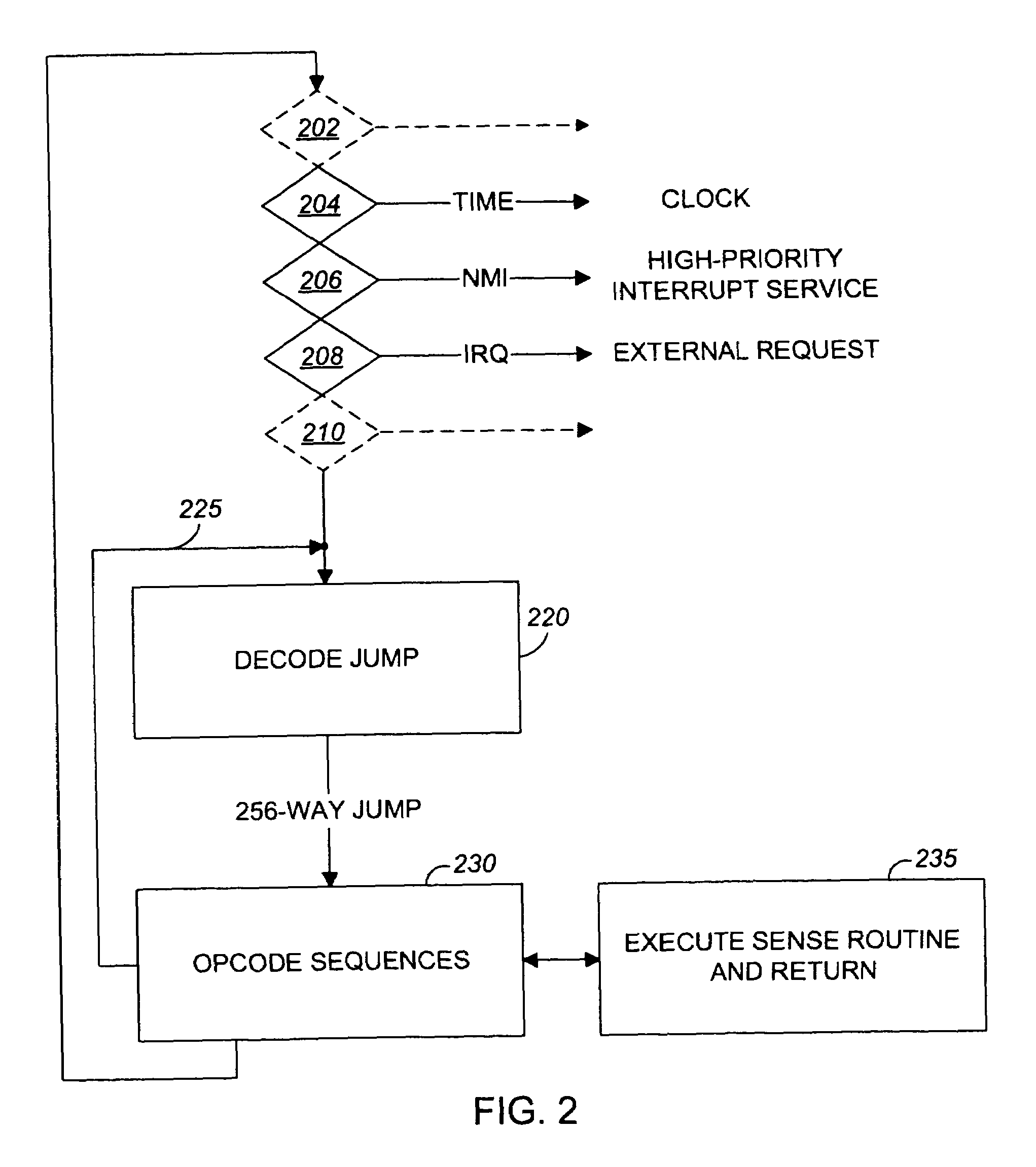

Microcontroller architecture supporting microcode-implemented peripheral devices

Inactive | US7103759B1 | Reduce demand | Low cost | Digital computer details | Concurrent instruction execution | Microcontroller | Controller architecture

Methods and apparatus for creating microcode-implemented peripheral devices for a microcontroller core formed in a monolithic integrated circuit. The microcontroller core has a control store for storing microcode instructions; execution circuitry operable to execute microcode instructions from the control store; and means for loading a suite of one or more microcode-device modules defining an optional peripheral device, the optional peripheral device being implemented by microcode instructions executed by the execution circuitry in accordance with the definition provided by the microcode-device modules.

Owner:CONEMTECH +1

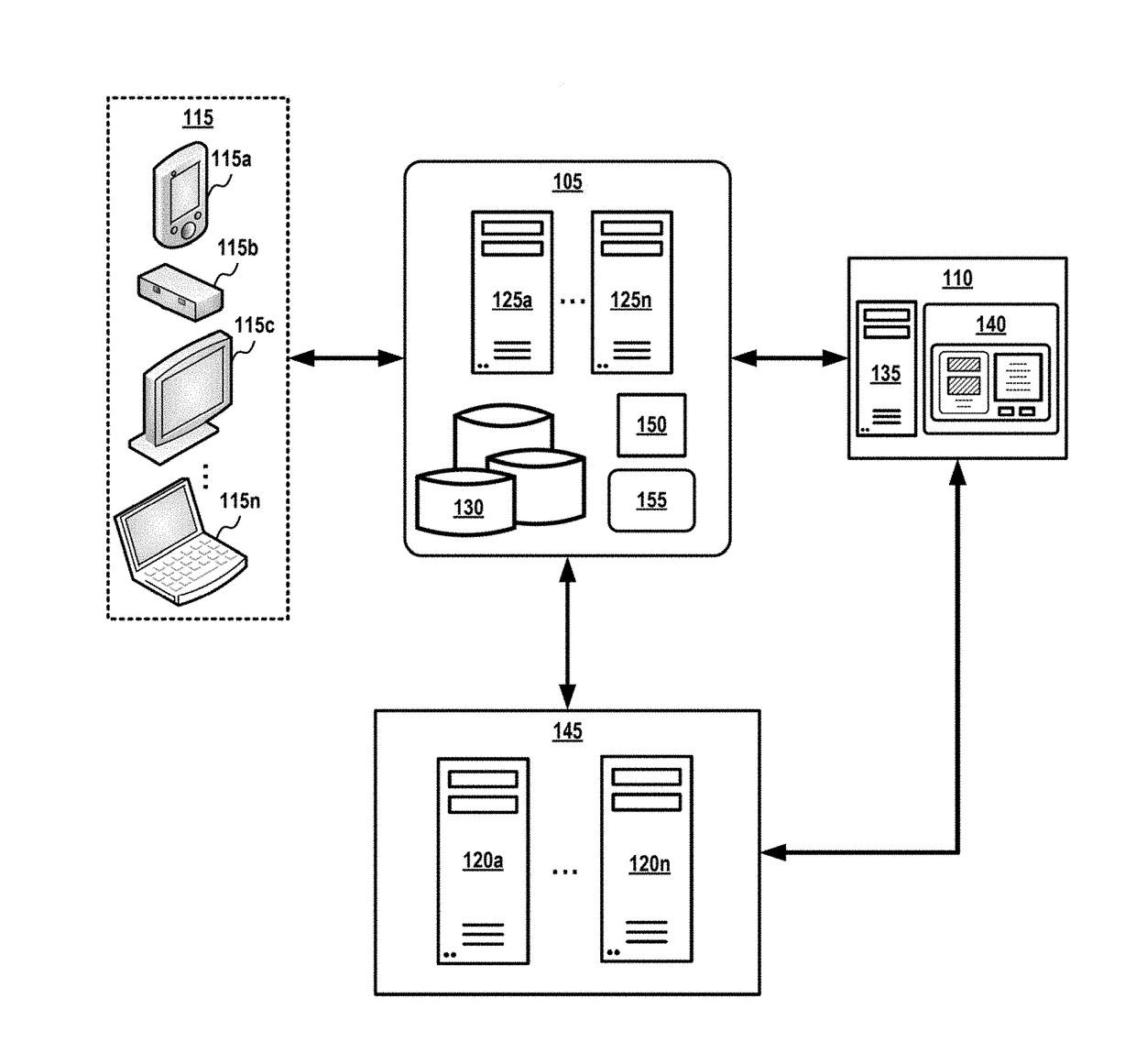

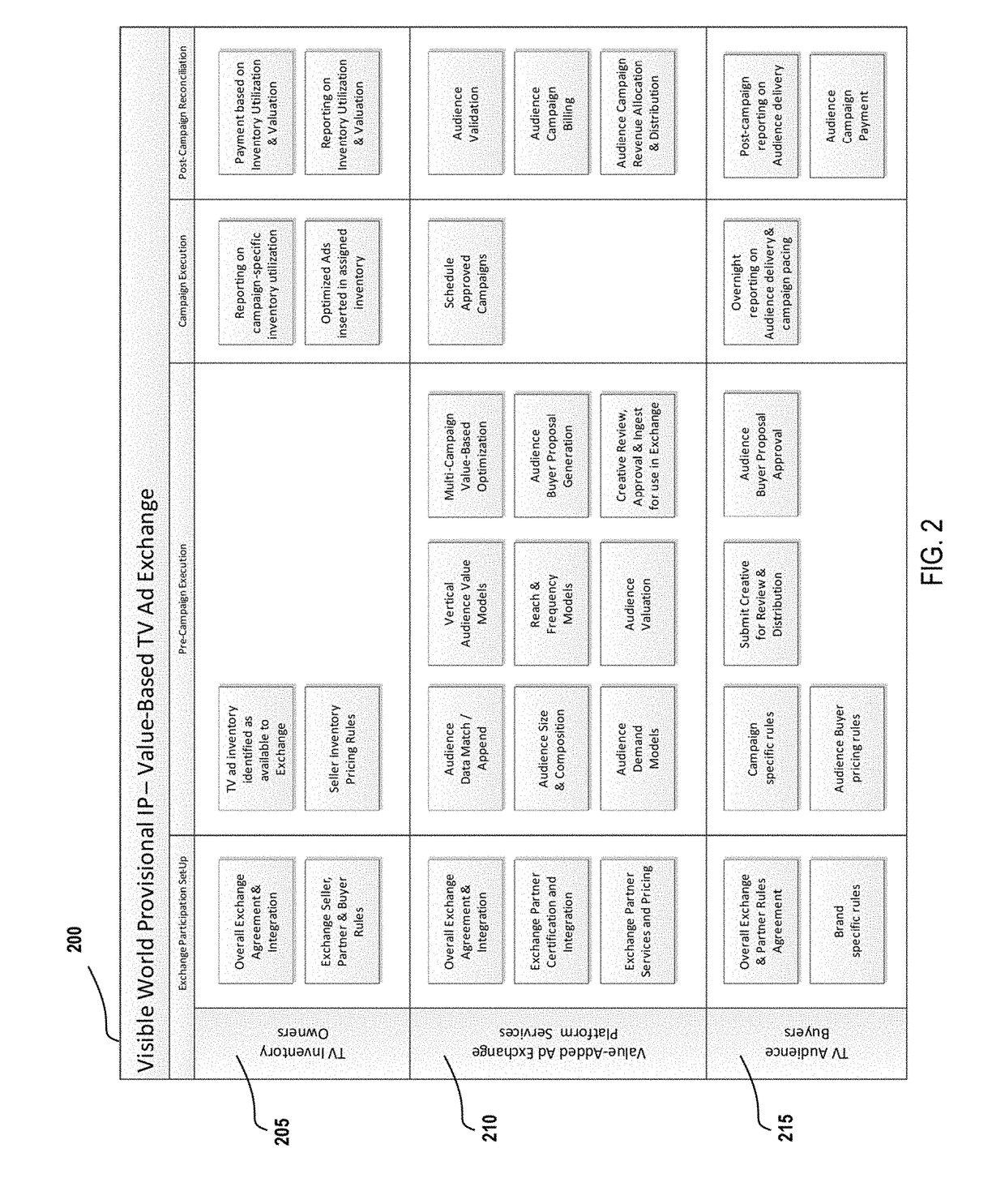

Value-based TV advertising audience exchange

Active | US20170195747A1 | Lower current transaction | Lower execution barrier | Selective content distribution | Application software | Trusted system

Systems, methods and computer-readable media for a decentralized application system that enables participating parties to automate the buying and selling of TV media units and/or aggregated TV and premium video audiences are described. The value-based TV/premium video media exchange application system allows the participants to interact with the system directly and/or automate transactions and execution between systems, while ensuring proper governance over each participant's own rules and economics associated with the transactions, as well as individual campaign constraints and requirements. The decentralized application system significantly lowers current transaction and execution barriers, timing and costs, while providing a highly accountable and trusted system across all of the exchange participants.

Owner:FREEWHEEL MEDIA

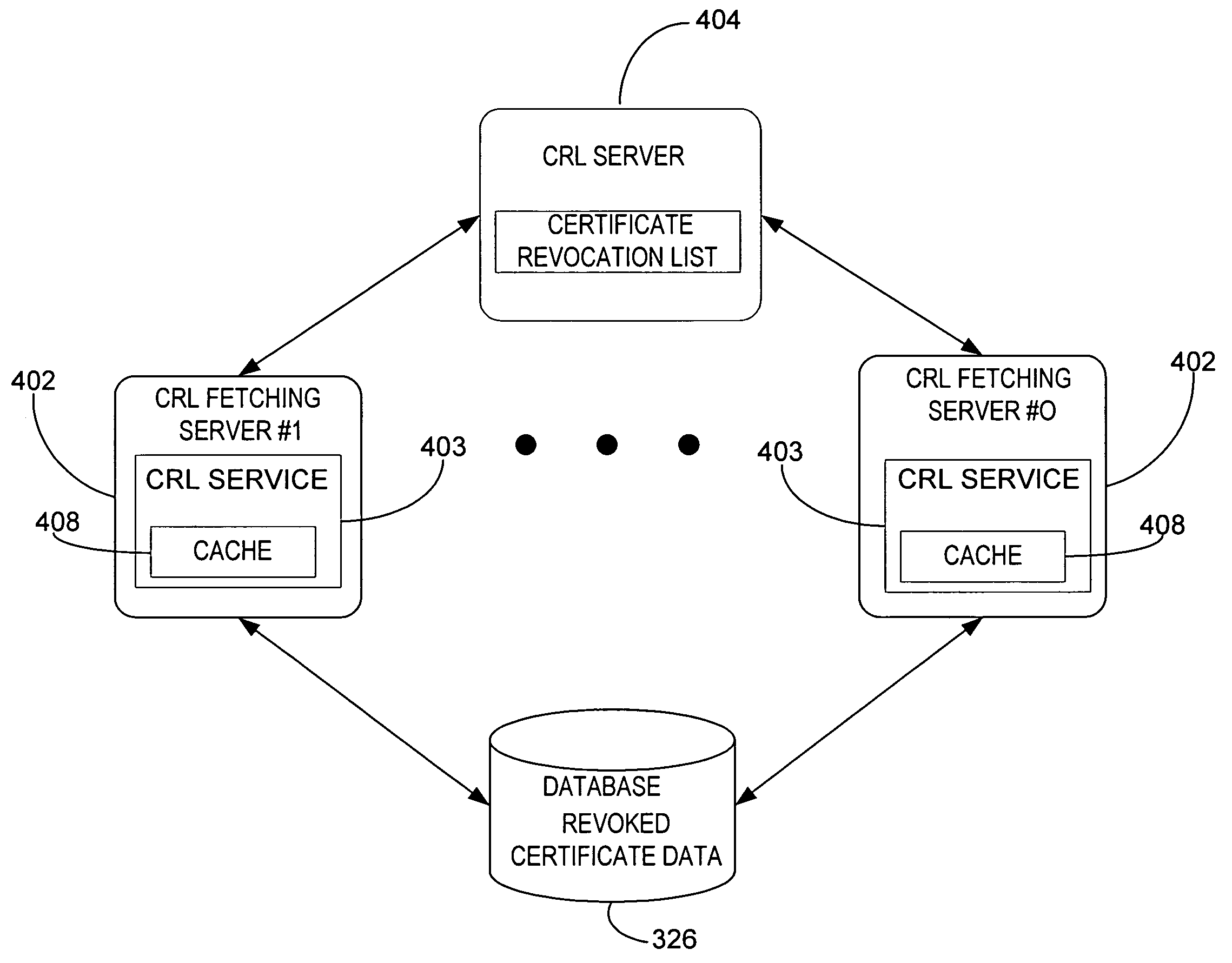

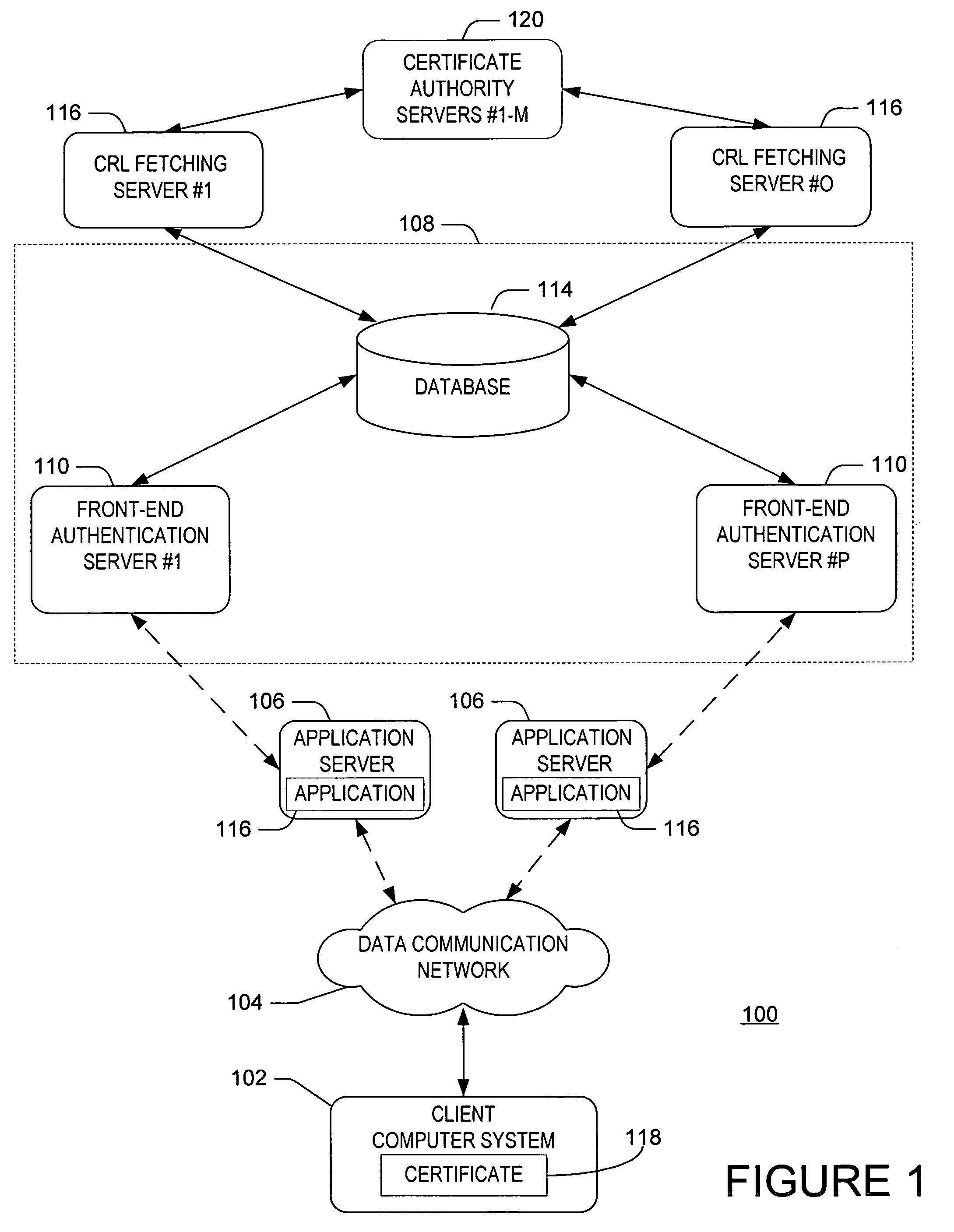

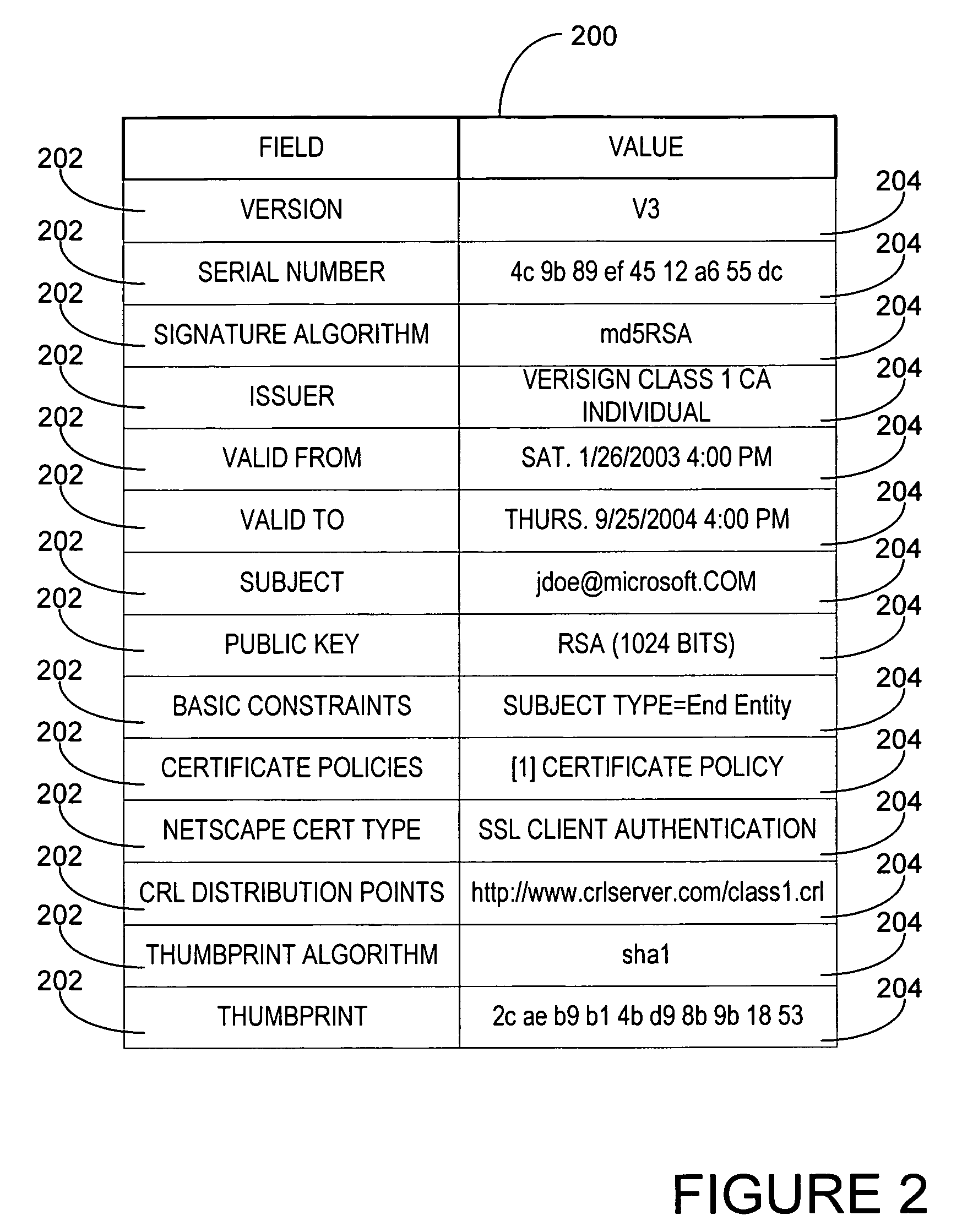

Public key infrastructure scalability certificate revocation status validation

Inactive | US7437551B2 | Traffic minimization | Reduce execution | User identity/authority verification | Central database | Authentication server

A system and method for retrieving certificate of trust information for a certificate validation process. Fetching servers periodically retrieve certificate revocation lists (CRLs) from servers maintained by various certificate issuers. The revoked certificate data included in the retrieved CRLs are stored in a central database. An authentication server receives a request from a client for access to a secure service and initiates a validation process. The authentication server retrieves revoked certificate data from the central database and compares the retrieved revoked certificate data to certificate of trust information received from the client along with the request. The authentication server denies access to the secure information if the certificate of trust information matches revoked certificate data from the central database, allows access if the certificate of trust information does not match revoked certificate data from the central database.

Owner:MICROSOFT TECH LICENSING LLC
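The validation flow can be reduced to a set lookup once the revocation lists have been merged. The sketch below assumes each CRL is a list of revoked serial numbers keyed by issuer; `build_central_database`, `authorize`, and the sample issuers and serials are hypothetical, and real CRL parsing and signature checks are omitted.

```python
def build_central_database(crls):
    """Merge periodically fetched CRLs into one set of (issuer, serial) pairs."""
    revoked = set()
    for issuer, serials in crls.items():
        revoked.update((issuer, serial) for serial in serials)
    return revoked


def authorize(certificate, revoked):
    """Deny access if the client's certificate matches revoked certificate data."""
    return (certificate["issuer"], certificate["serial"]) not in revoked


crls = {"CA-1": ["1001", "1002"], "CA-2": ["2001"]}
central_db = build_central_database(crls)
allowed = authorize({"issuer": "CA-1", "serial": "1003"}, central_db)
denied = authorize({"issuer": "CA-2", "serial": "2001"}, central_db)
```

The authentication step then needs only a local lookup per request instead of contacting each certificate issuer.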

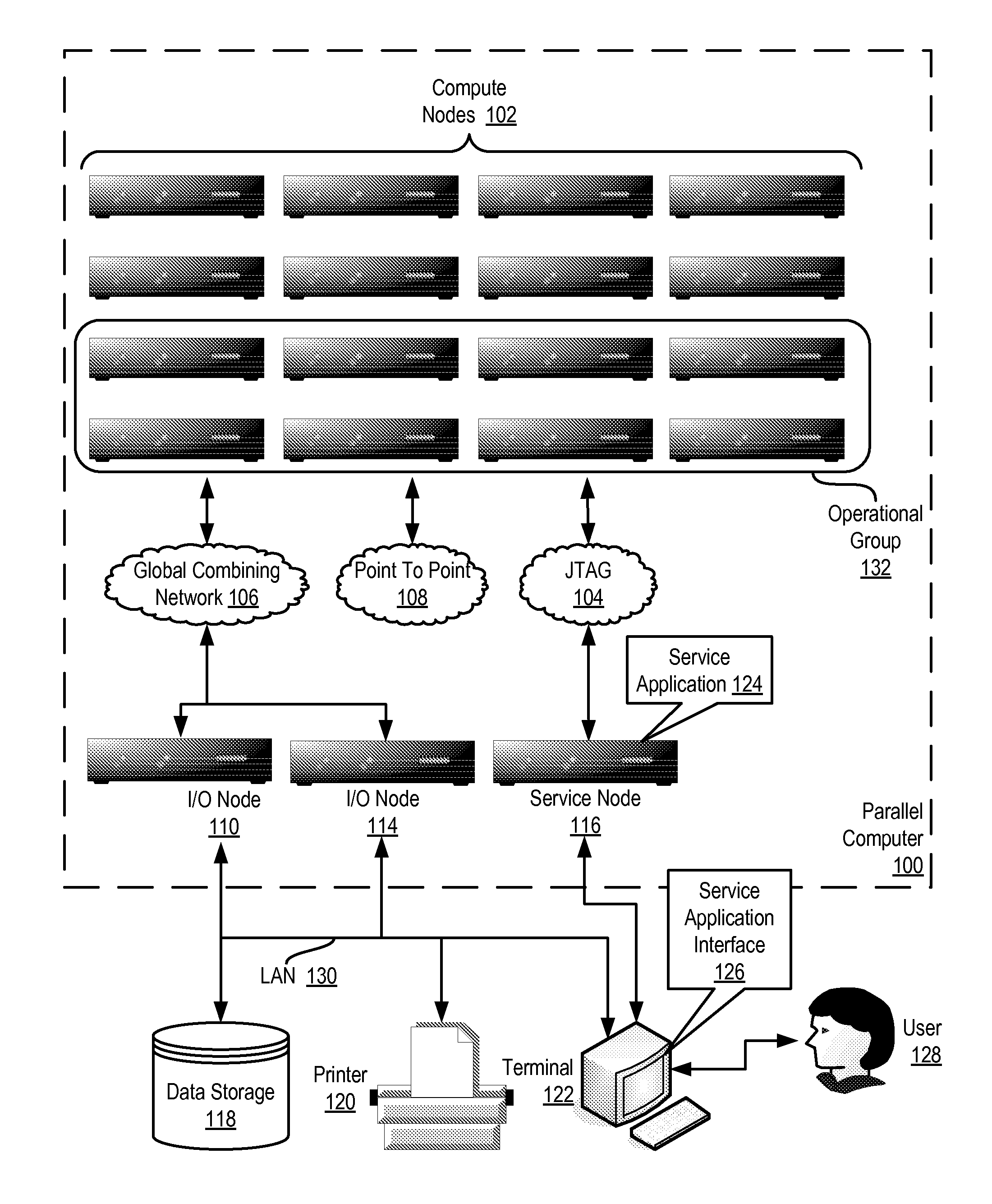

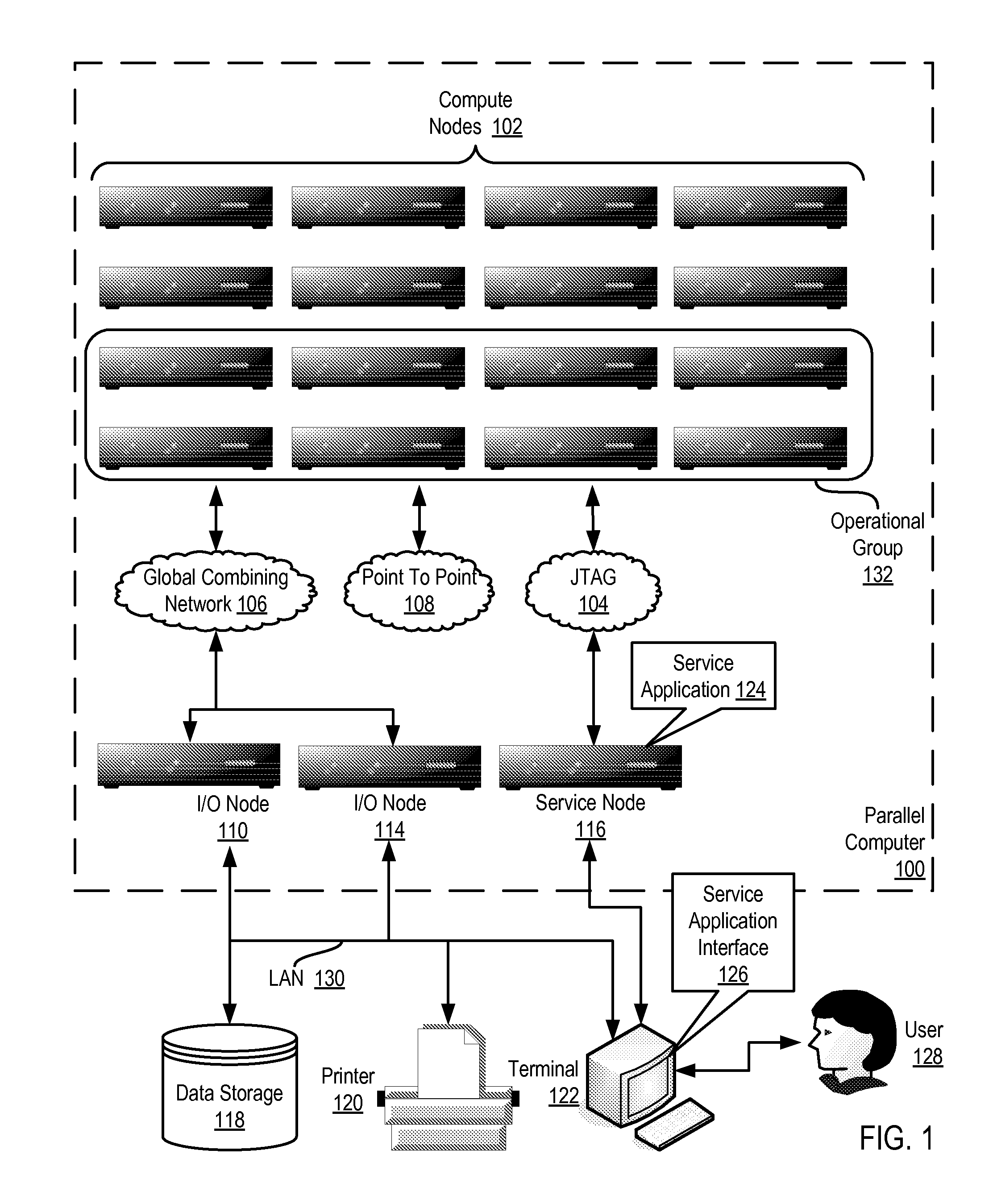

Reducing power consumption during execution of an application on a plurality of compute nodes

Inactive | US20090300386A1 | Reduce execution | Energy efficient ICT | Volume/mass flow measurement | Electricity | Operational system

Methods, apparatus, and products are disclosed for reducing power consumption during execution of an application on a plurality of compute nodes that include: powering up, during compute node initialization, only a portion of computer memory of the compute node, including configuring an operating system for the compute node in the powered up portion of computer memory; receiving, by the operating system, an instruction to load an application for execution; allocating, by the operating system, additional portions of computer memory to the application for use during execution; powering up the additional portions of computer memory allocated for use by the application during execution; and loading, by the operating system, the application into the powered up additional portions of computer memory.

Owner:IBM CORP

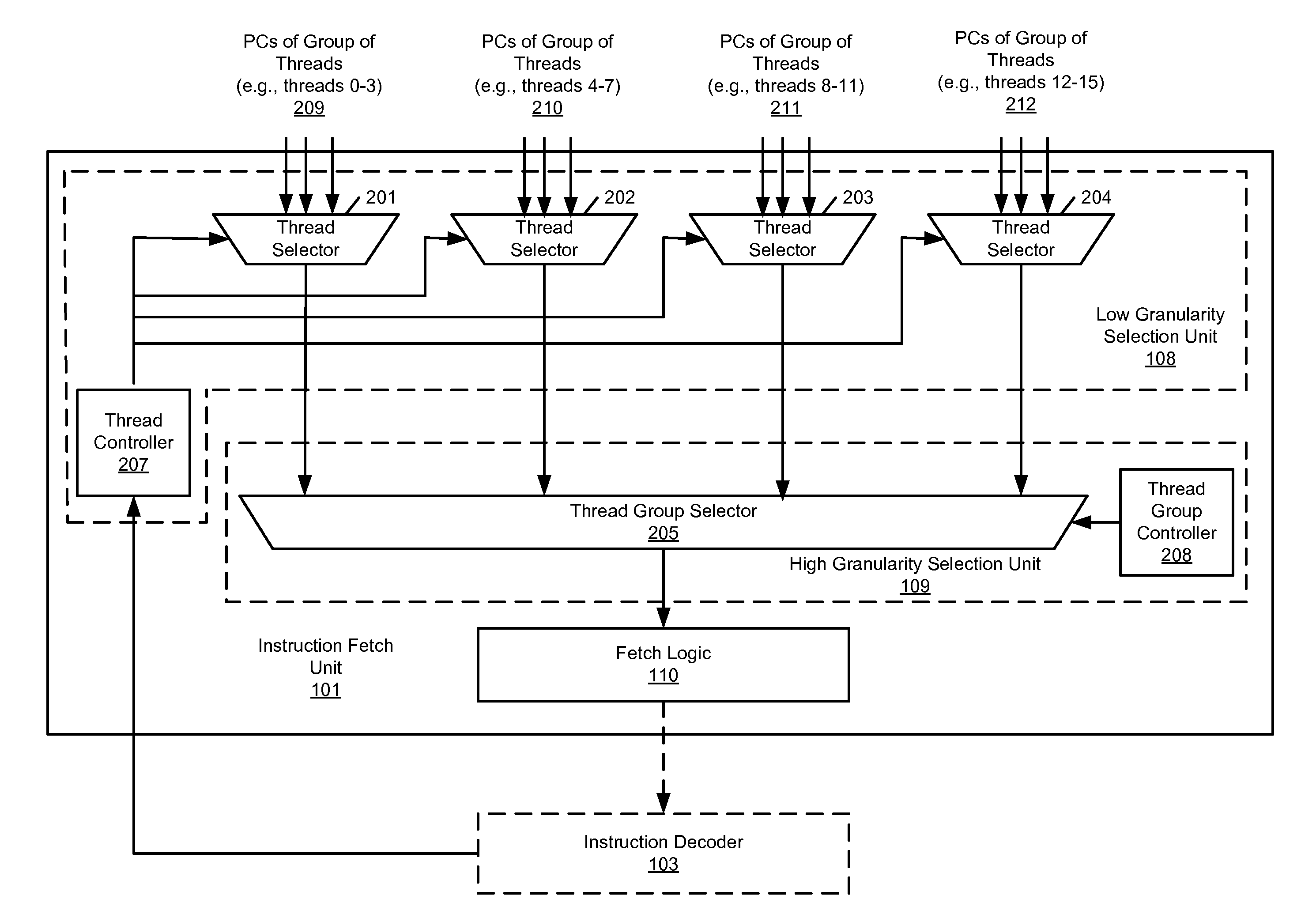

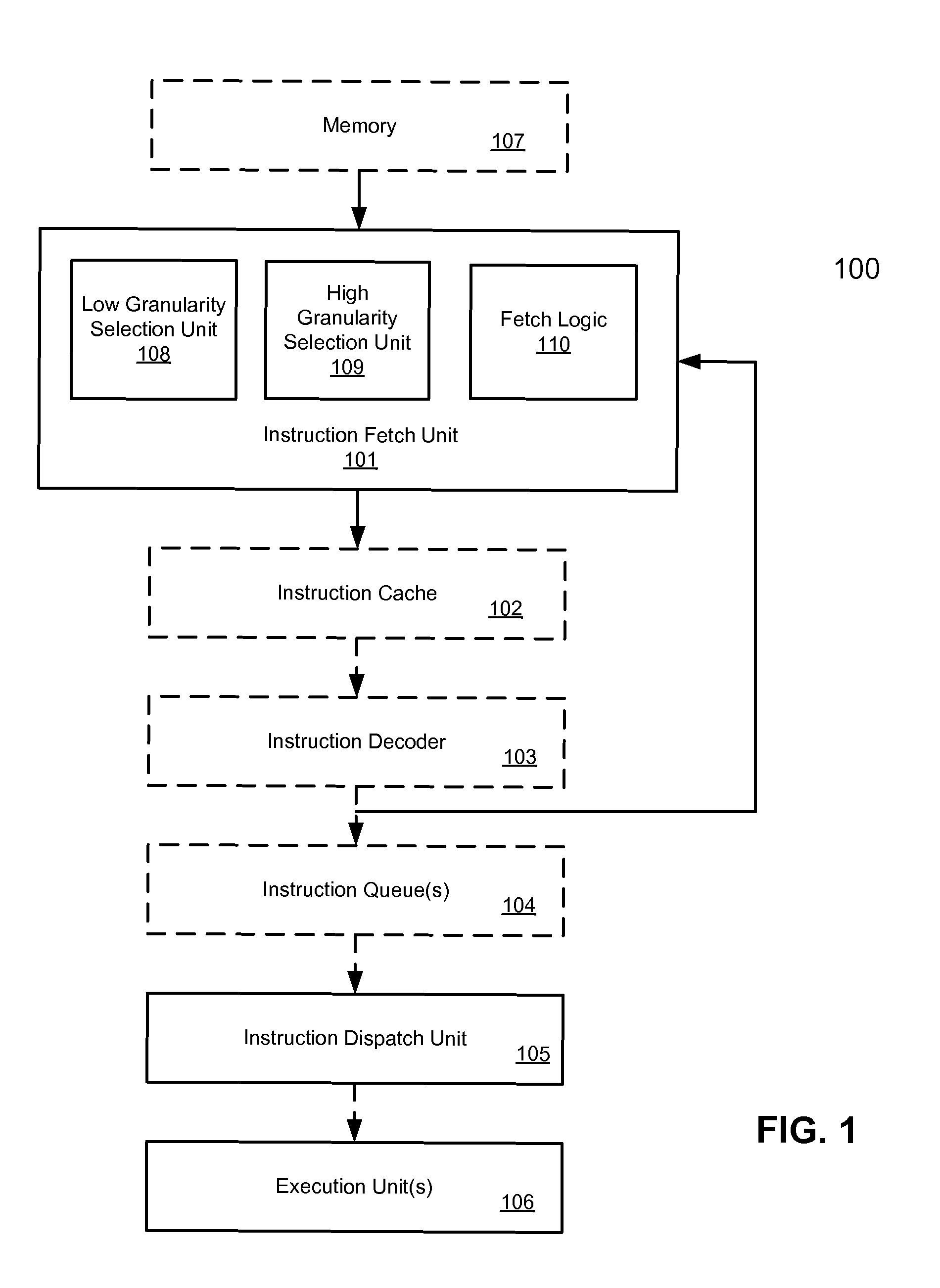

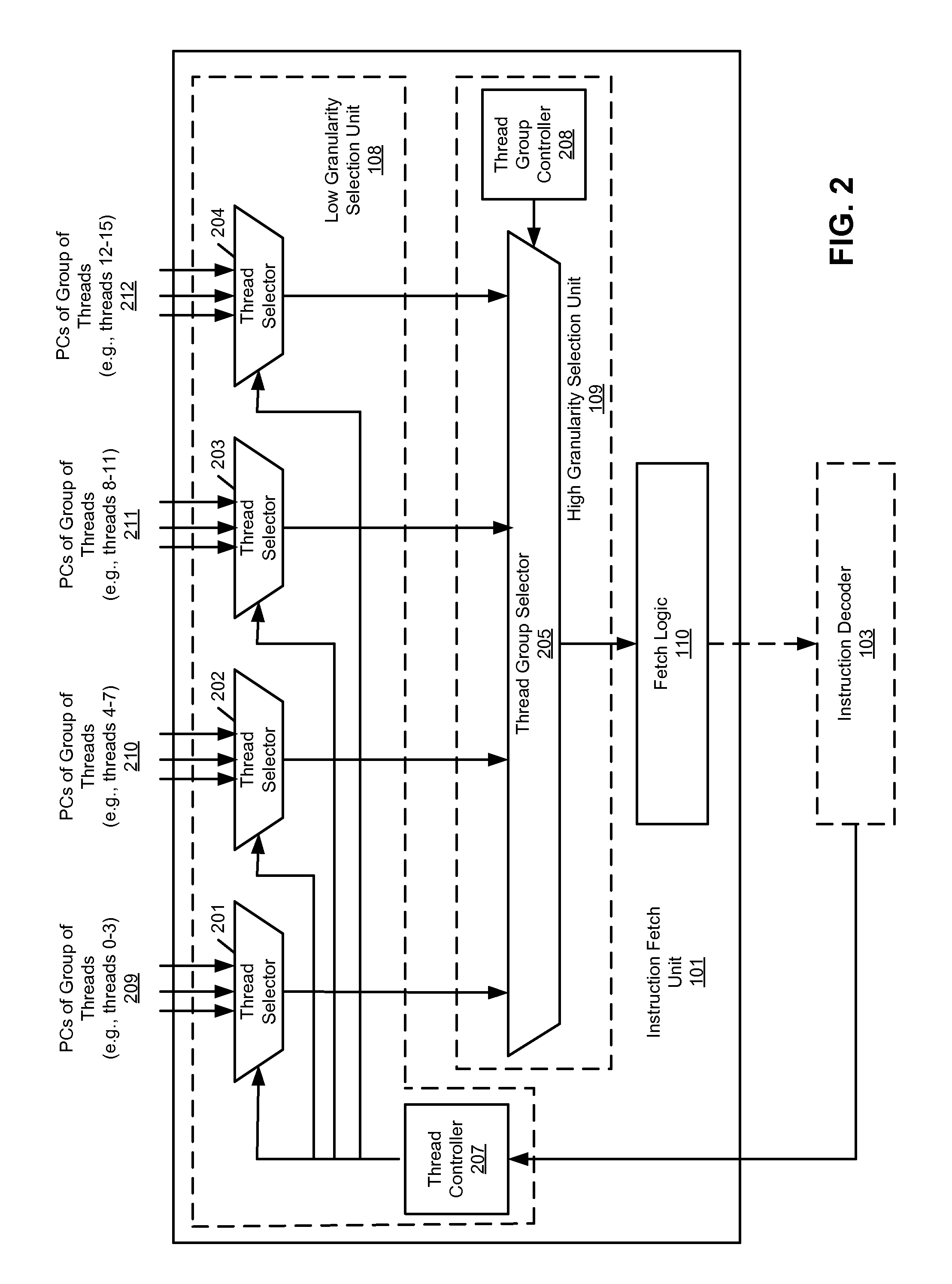

Hierarchical multithreaded processing

Inactive | US20110276784A1 | High selectivity | Reduce execution | Digital computer details | Memory systems | Granularity | Parallel computing

In one embodiment, a current candidate thread is selected from each of multiple first groups of threads using a low granularity selection scheme, where each of the first groups includes multiple threads and first groups are mutually exclusive. A second group of threads is formed comprising the current candidate thread selected from each of the first groups of threads. A current winning thread is selected from the second group of threads using a high granularity selection scheme. An instruction is fetched from a memory based on a fetch address for a next instruction of the current winning thread. The instruction is then dispatched to one of the execution units for execution, whereby execution stalls of the execution units are reduced by fetching instructions based on the low granularity and high granularity selection schemes.

Owner:TELEFON AB LM ERICSSON (PUBL)
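
The two-level selection in the abstract above can be sketched as follows. The abstract does not say what the low granularity and high granularity schemes are, so this example assumes round-robin within each first group and highest-priority-wins across the candidates. The `Thread`, `Group`, and `select_winner` names and the `priority` field are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Thread:
    tid: int
    priority: int       # hypothetical field used by the second-level scheme
    fetch_address: int  # address of the thread's next instruction


class Group:
    """One of the mutually exclusive first groups of threads."""

    def __init__(self, threads: List[Thread]):
        self.threads = threads
        self._next = 0

    def select_candidate(self) -> Thread:
        # Assumed first-level scheme: plain round-robin within the group.
        thread = self.threads[self._next]
        self._next = (self._next + 1) % len(self.threads)
        return thread


def select_winner(groups: List[Group]) -> Thread:
    # Form the second group from one candidate per first group, then apply
    # the assumed second-level scheme: the highest priority wins.
    candidates = [g.select_candidate() for g in groups]
    return max(candidates, key=lambda t: t.priority)


groups = [
    Group([Thread(0, 1, 0x100), Thread(1, 5, 0x200)]),
    Group([Thread(2, 3, 0x300), Thread(3, 2, 0x400)]),
]
first = select_winner(groups)   # candidates are threads 0 and 2
second = select_winner(groups)  # candidates are threads 1 and 3
print(first.tid, hex(first.fetch_address))
print(second.tid, hex(second.fetch_address))
```

The winner's `fetch_address` is what a fetch stage would use to read the next instruction before dispatching it to an execution unit.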

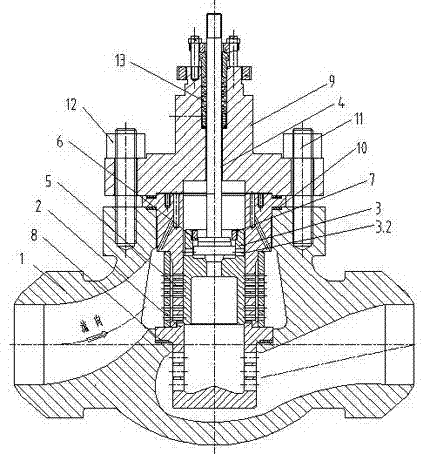

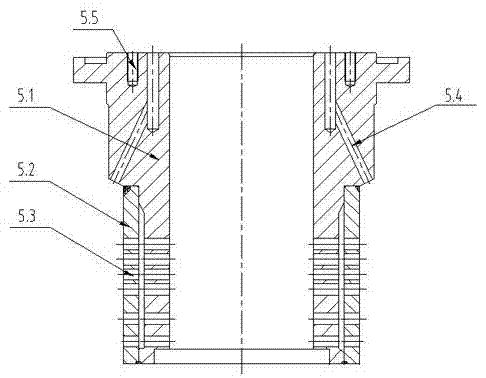

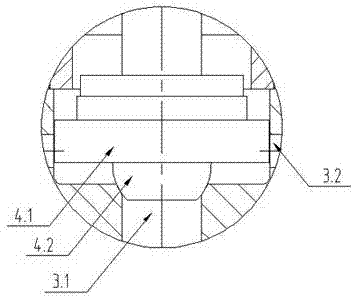

Anti-air-erosion dewatering regulating valve

Active | CN103244748A | Avoid direct impact | Reduce noise | Pressure relieving devices on sealing faces | Valve members for absorbing fluid energy | Washout | Engineering

The invention relates to an anti-air-erosion dewatering regulating valve comprising a valve body, a valve seat, valve clacks and a valve rod. The valve seat is fixed in a valve cavity enclosed by the valve body, and the sealing surfaces of the valve clacks and the valve seat form the main sealing pair. The valve rod drives the valve clacks straight up and down. An orifice sleeve is fixed in the valve cavity at the medium inlet, with its lower end abutting the non-sealing surface at the upper end of the valve seat, so that the orifice sleeve and the valve seat divide the valve cavity at the medium inlet into an inner portion and an outer portion. The orifice sleeve is formed from multiple sleeves nested layer by layer, with a gap between adjacent layers in its lower portion and a plurality of radial orifices in the wall of each sleeve layer. The outer diameter surfaces of the valve clacks are in sliding fit with the inner diameter surface of the orifice sleeve. The valve's advantages include freedom from erosion, a small required actuating force, reliable sealing, wear resistance, washout resistance, simple structure, convenient mounting and long service life. Erosion is avoided under working conditions with a large pressure differential, and the valve can be driven by a small actuator.

Owner:HARBIN SONGLIN POWER STATION EQUIP CO LTD

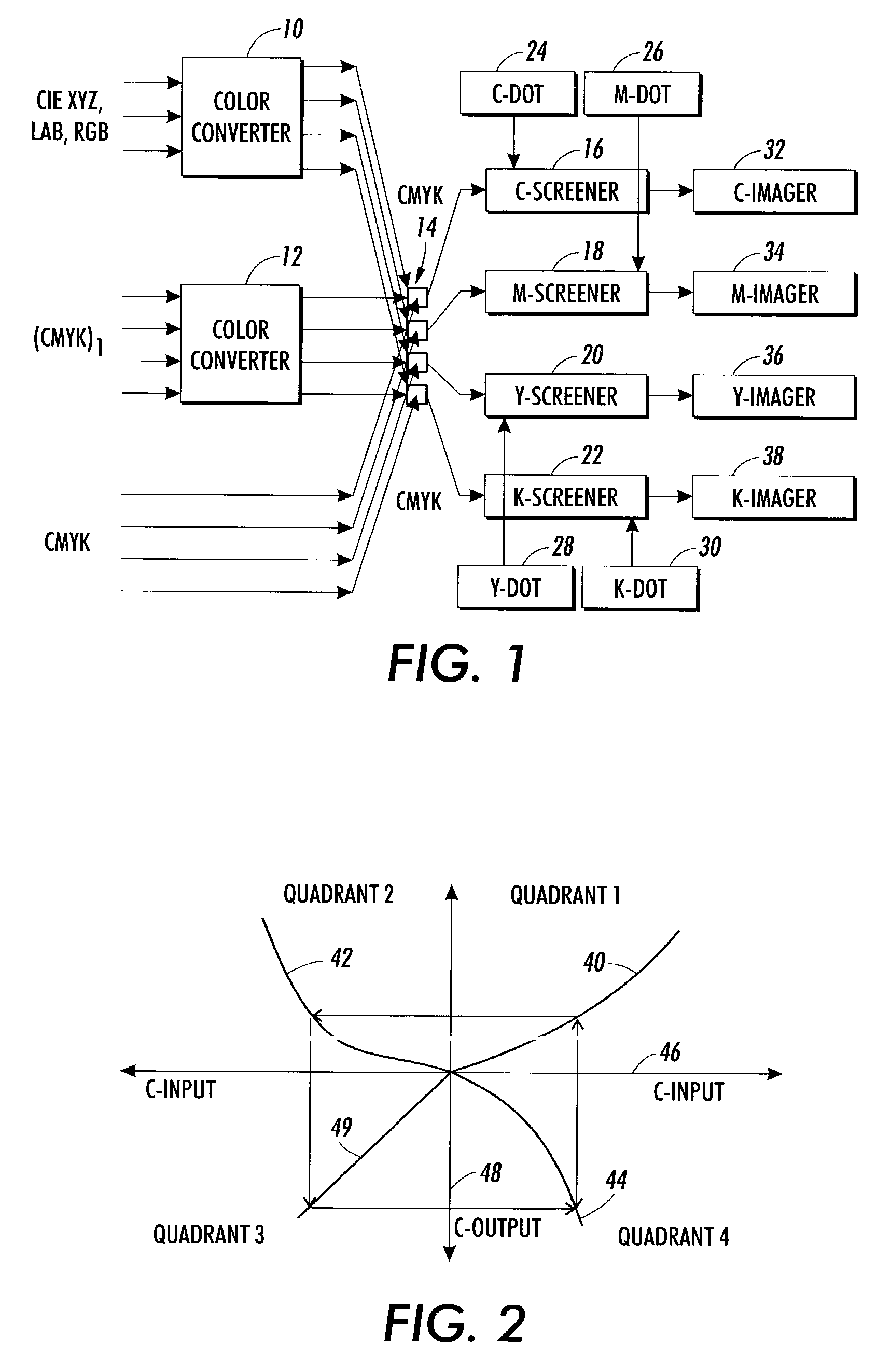

Iterative printer control and color balancing system and method using a high quantization resolution halftone array to achieve improved image quality with reduced processing overhead

Active | US7277196B2 | Reducing printing space | Reduced execution time | Digitally marking record carriers | Digital computer details | Image resolution | Imaging quality

A system and method for printer control and color balance calibration. The system and method address the image quality problems of print engine instability, low quality of color balance and contouring from the calibration. The method includes defining combinations of colorants, such as inks or toners that will be used to print images, defining a desired response for the combinations that are to be used and, in real time, iteratively printing CMY halftone color patches, measuring the printed patches via an in situ sensor and iteratively performing color-balance calibration based on the measurements, accumulating corrections until the measurements are within a predetermined proximity of the desired response. The calibration is performed on the halftones while they are in a high quantization resolution form.

Owner:XEROX CORP
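
The iterative loop in the abstract above (print patches, measure them, accumulate corrections until the measurement is close enough to the desired response) can be sketched with a simulated printer. Everything here is an assumption made for illustration: the linear engine model in `simulated_print_and_measure`, the tolerance, and the function names do not come from the patent. Corrections are kept as floats rather than rounded to device levels, loosely mirroring the idea of calibrating at a high quantization resolution.

```python
def simulated_print_and_measure(cmy):
    # Hypothetical stand-in for printing a patch and reading an in situ sensor:
    # the engine under-prints each colorant and adds a small fixed offset.
    return [0.8 * level + 0.05 for level in cmy]


def calibrate(desired, tolerance=1e-3, max_iterations=50):
    """Accumulate per-colorant corrections until the measured response is
    within `tolerance` of the desired response."""
    corrections = [0.0] * len(desired)
    for iteration in range(1, max_iterations + 1):
        commanded = [d + c for d, c in zip(desired, corrections)]
        measured = simulated_print_and_measure(commanded)
        errors = [d - m for d, m in zip(desired, measured)]
        if max(abs(e) for e in errors) <= tolerance:
            return corrections, iteration
        # Add the new error to the running correction instead of replacing it.
        corrections = [c + e for c, e in zip(corrections, errors)]
    raise RuntimeError("calibration did not converge")


desired = [0.50, 0.40, 0.30]  # desired C, M, Y response
corrections, iterations = calibrate(desired)
final = simulated_print_and_measure([d + c for d, c in zip(desired, corrections)])
print(iterations, corrections, final)
```

With this particular engine model each pass shrinks the remaining error by a constant factor, so the loop stops after a handful of iterations. A real print engine would need a measured response model and a damped update.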

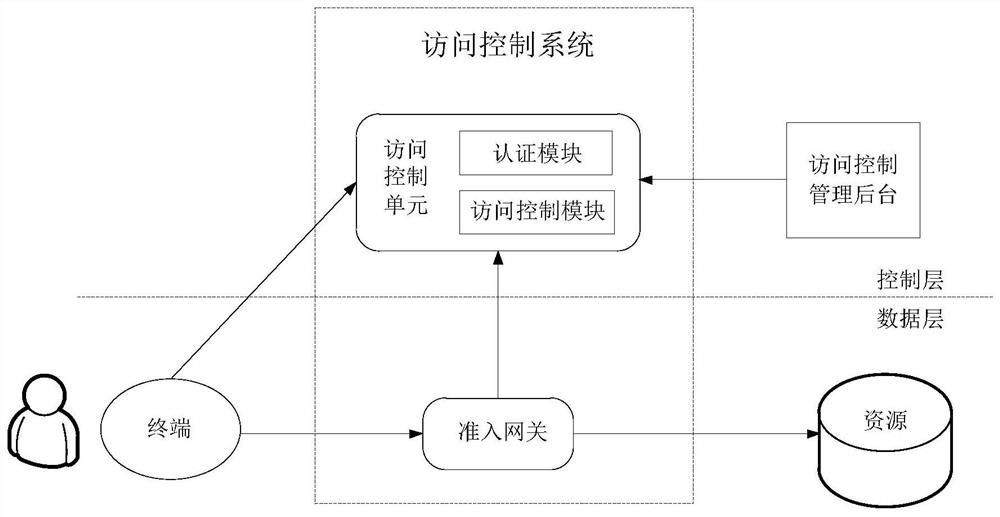

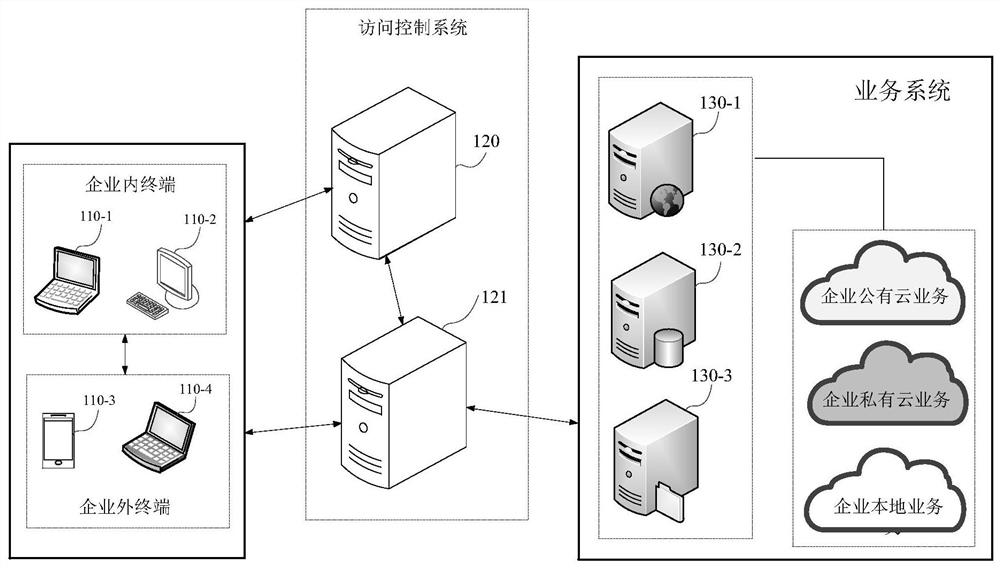

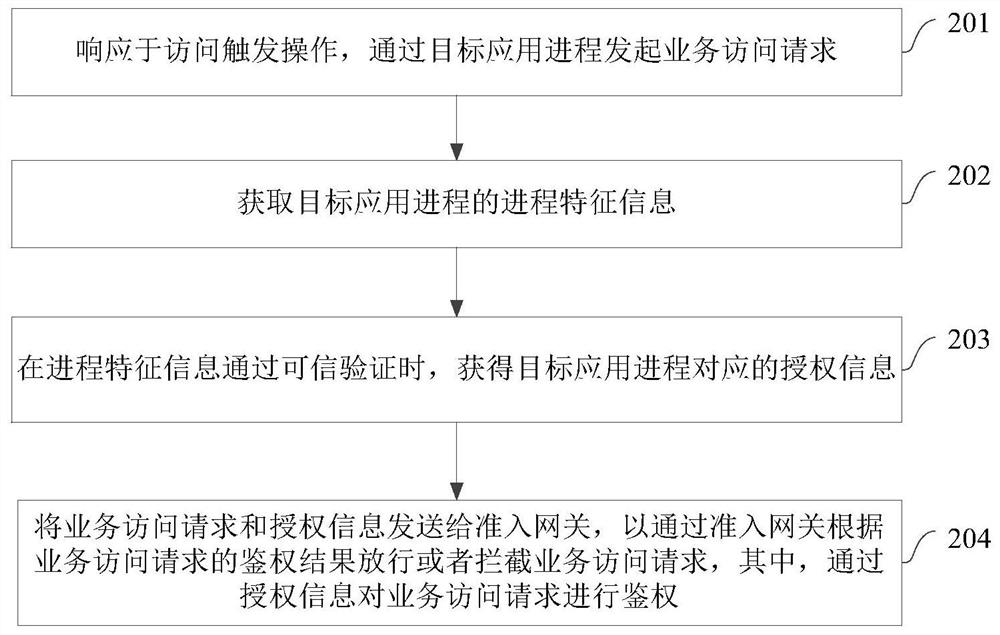

Access control method, system and device and computing equipment

The invention provides an access control method, system and device and computing equipment. It relates to cloud technology, in particular to cloud security, and is used to improve the effectiveness of access control and reduce network security risks. The method comprises the following steps: in response to an access triggering operation, initiating a service access request through a target application process; obtaining process feature information of the target application process, wherein the process feature information is used for identifying the target application process; obtaining process authorization information corresponding to the target application process when the process feature information passes trusted verification; and sending the service access request and the process authorization information to an admission gateway, so that the admission gateway authenticates the service access request using the authorization information and releases or intercepts the request according to the authentication result.

Owner:TENCENT CLOUD COMPUTING BEIJING CO LTD
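
The steps in the abstract above can be sketched end to end. This is a simplified illustration, not the patented design: using a SHA-256 hash of the executable as the process feature information, an HMAC as the process authorization information, and the `SECRET`, `TRUSTED_FINGERPRINTS`, and function names are all assumptions. For brevity the sketch also forwards unverified requests to the gateway with no authorization attached, where they are intercepted.

```python
import hashlib
import hmac

SECRET = b"demo-secret"  # hypothetical key shared by the issuer and the gateway
TRUSTED_FINGERPRINTS = {hashlib.sha256(b"trusted-app-binary").hexdigest()}


def process_features(binary: bytes) -> str:
    # Process feature information: here, just a hash of the executable image.
    return hashlib.sha256(binary).hexdigest()


def issue_authorization(fingerprint: str):
    # Authorization is issued only if the features pass trusted verification.
    if fingerprint not in TRUSTED_FINGERPRINTS:
        return None
    return hmac.new(SECRET, fingerprint.encode(), hashlib.sha256).hexdigest()


def admission_gateway(request: dict) -> str:
    # Authenticate the request with the authorization info, then release
    # or intercept it according to the result.
    token = request.get("authorization")
    if token is None:
        return "intercept"
    expected = hmac.new(
        SECRET, request["fingerprint"].encode(), hashlib.sha256
    ).hexdigest()
    return "release" if hmac.compare_digest(token, expected) else "intercept"


def access(binary: bytes, resource: str) -> str:
    fingerprint = process_features(binary)
    request = {
        "resource": resource,
        "fingerprint": fingerprint,
        "authorization": issue_authorization(fingerprint),
    }
    return admission_gateway(request)


print(access(b"trusted-app-binary", "/orders"))  # prints "release"
print(access(b"unknown-binary", "/orders"))      # prints "intercept"
```

Binding the authorization to the process fingerprint means a token copied to a different process fails the gateway check, which is the property the abstract relies on.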

© 2025 PatSnap. All rights reserved.