47 results about "Trace Cache" patented technology

A trace cache (also known as an execution trace cache) is a specialized instruction cache that stores dynamic sequences of instructions, known as traces, in the order in which they executed. By storing traces of instructions that have already been fetched and decoded, it increases instruction fetch bandwidth and, in the case of the Intel Pentium 4, decreases power consumption. A trace processor is an architecture designed around the trace cache that processes instructions at trace-level granularity.

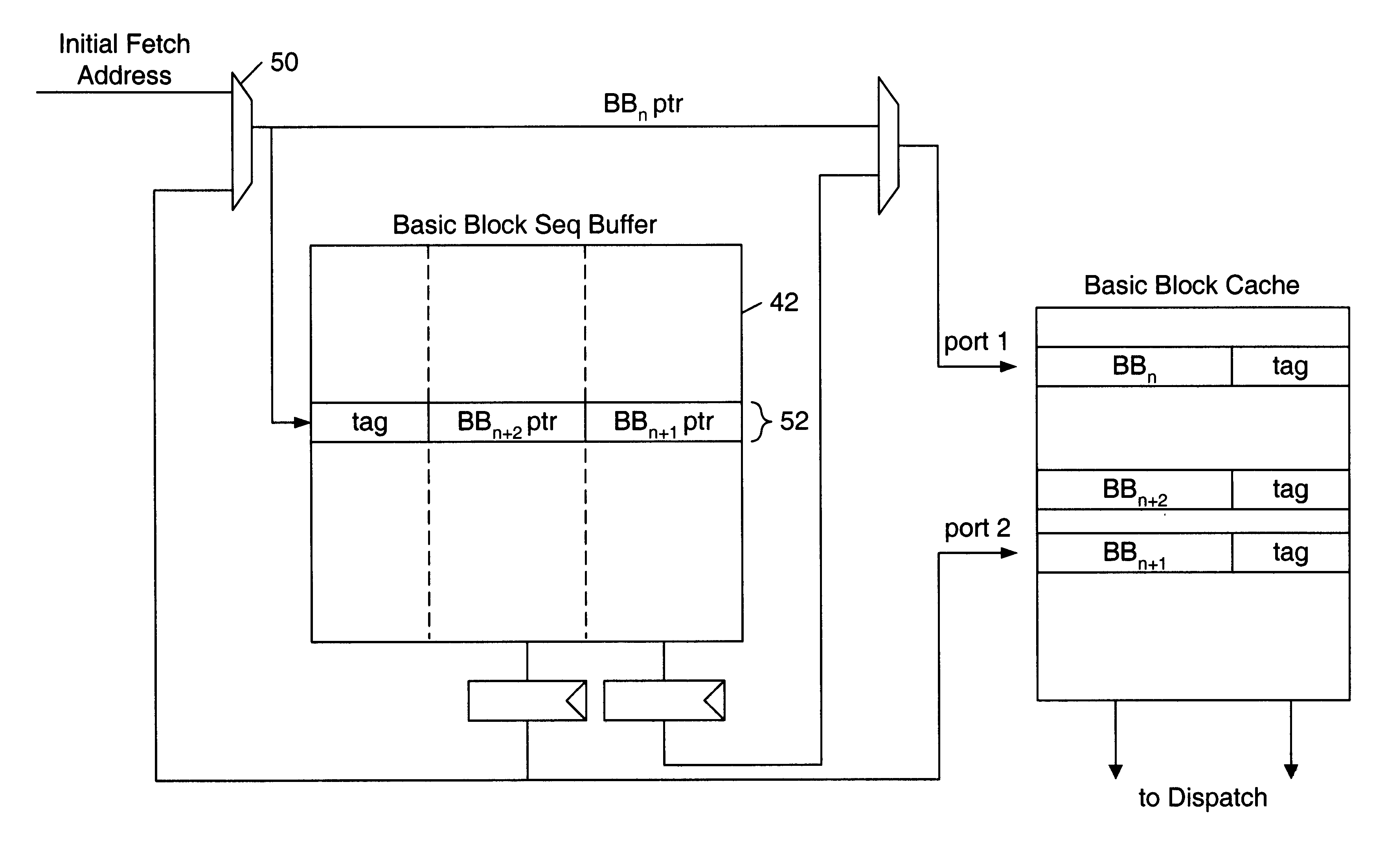

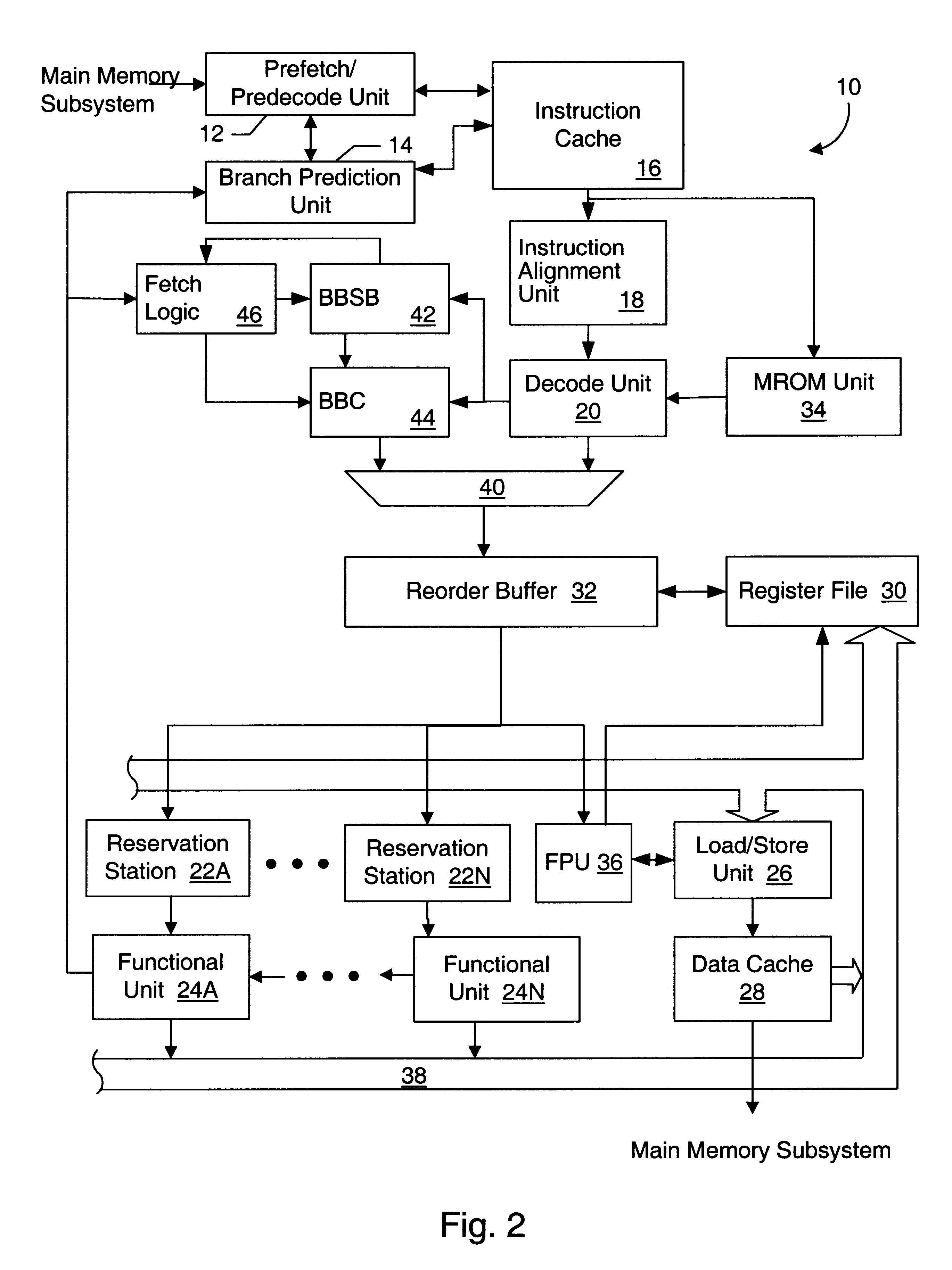

Basic block oriented trace cache utilizing a basic block sequence buffer to indicate program order of cached basic blocks

A cache memory configured to access stored instructions according to basic blocks is disclosed. Basic blocks are natural divisions in instruction streams resulting from branch instructions. The start of a basic block is a target of a branch, and the end is another branch instruction. A microprocessor configured to use a basic block oriented cache may comprise a basic block cache and a basic block sequence buffer. The basic block cache may have a plurality of storage locations configured to store basic blocks. The basic block sequence buffer also has a plurality of storage locations, each configured to store a block sequence entry. The block sequence entry may comprise an address tag and one or more basic block pointers. The address tag corresponds to the fetch address of a particular basic block, and the pointers point to basic blocks that follow the particular basic block in a predicted order. A system using the microprocessor and a method for caching instructions in a block oriented manner rather than conventional power-of-two memory blocks are also disclosed.

Owner:GLOBALFOUNDRIES INC
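
The relationship between the basic block cache and the basic block sequence buffer in the abstract above can be illustrated with a small software model. This is only a sketch under assumptions: the class and method names (`BasicBlockCache`, `BlockSequenceEntry`, `store`, `fetch`) are hypothetical, dictionaries stand in for the hardware storage locations, and a fetch simply follows the predicted pointers until one is missing.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class BlockSequenceEntry:
    """Hypothetical model of one sequence-buffer entry."""
    address_tag: int                                       # fetch address of a basic block
    next_blocks: List[int] = field(default_factory=list)  # predicted successors, in order


class BasicBlockCache:
    """Toy model: basic blocks keyed by fetch address plus a sequence buffer."""

    def __init__(self):
        self.blocks = {}    # fetch address -> list of instructions
        self.sequence = {}  # fetch address -> BlockSequenceEntry

    def store(self, addr, instructions, predicted_successors=()):
        self.blocks[addr] = list(instructions)
        self.sequence[addr] = BlockSequenceEntry(addr, list(predicted_successors))

    def fetch(self, addr):
        """Return the block at addr followed by its cached predicted successors."""
        entry = self.sequence.get(addr)
        if entry is None or addr not in self.blocks:
            return None  # miss in this toy model
        out = list(self.blocks[addr])
        for nxt in entry.next_blocks:
            if nxt not in self.blocks:
                break  # stop at the first successor that is not cached
            out.extend(self.blocks[nxt])
        return out
```

For example, storing a block at `0x100` with predicted successor `0x200` and then fetching `0x100` returns the instructions of both blocks in predicted program order.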

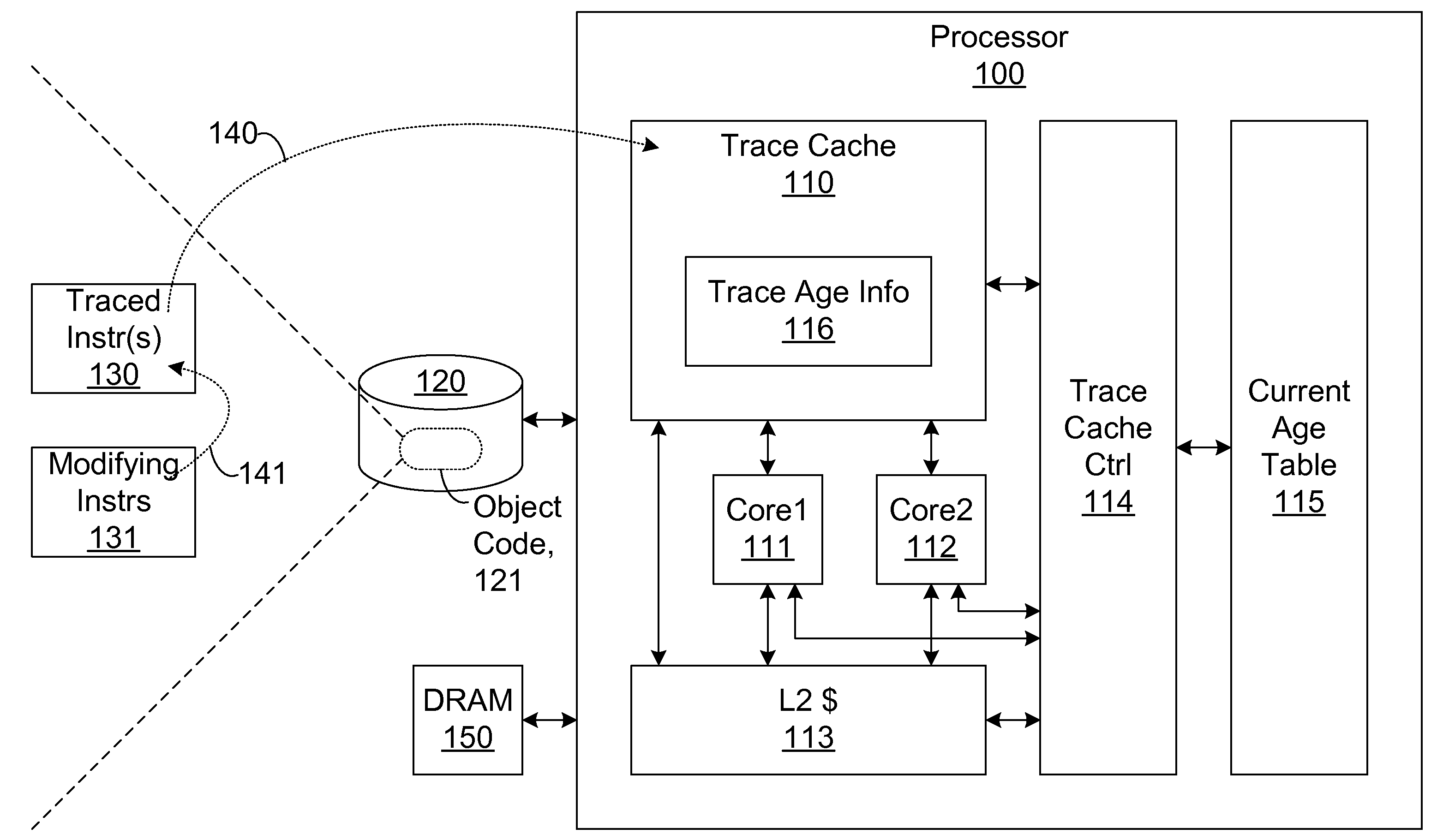

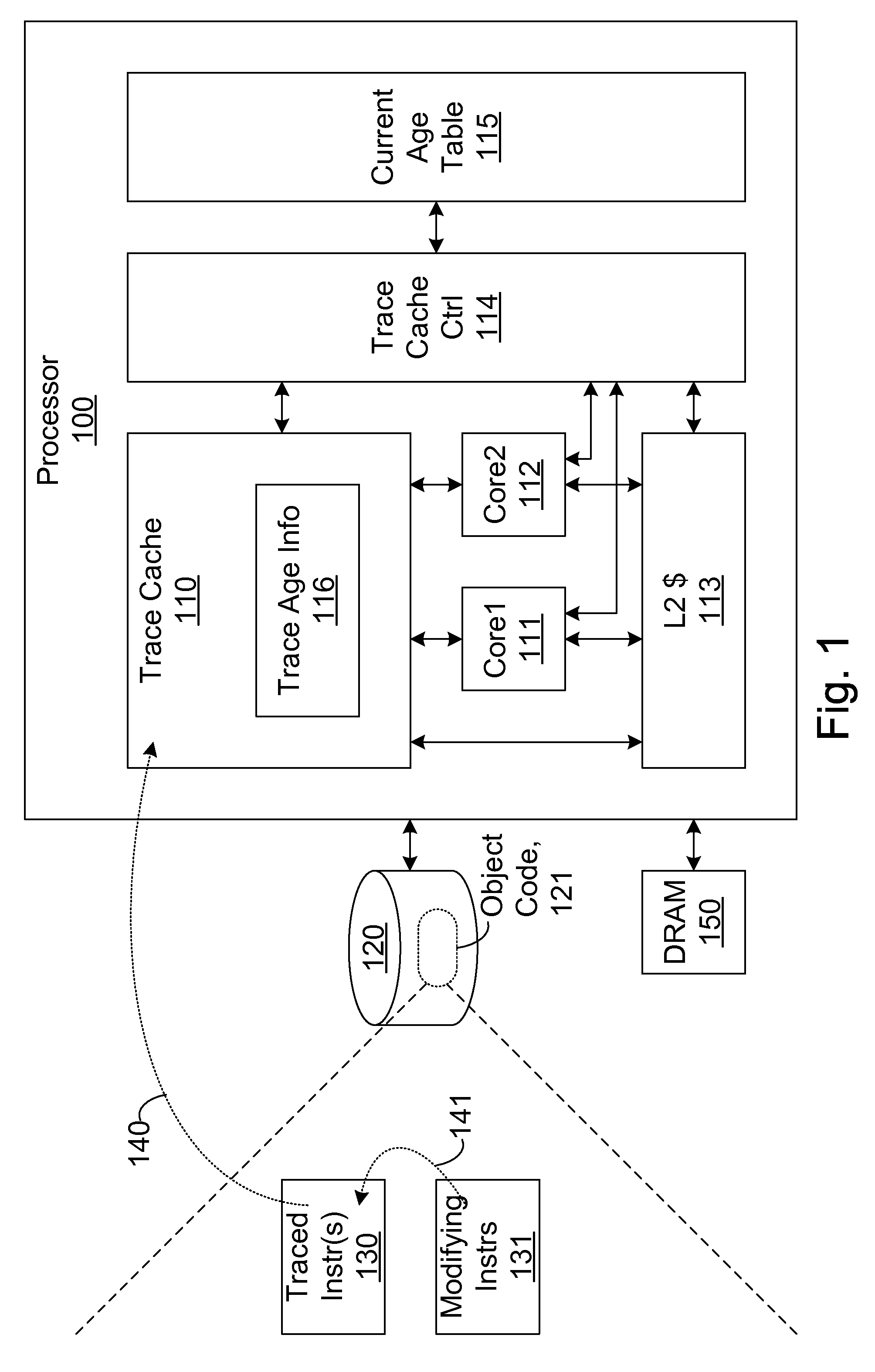

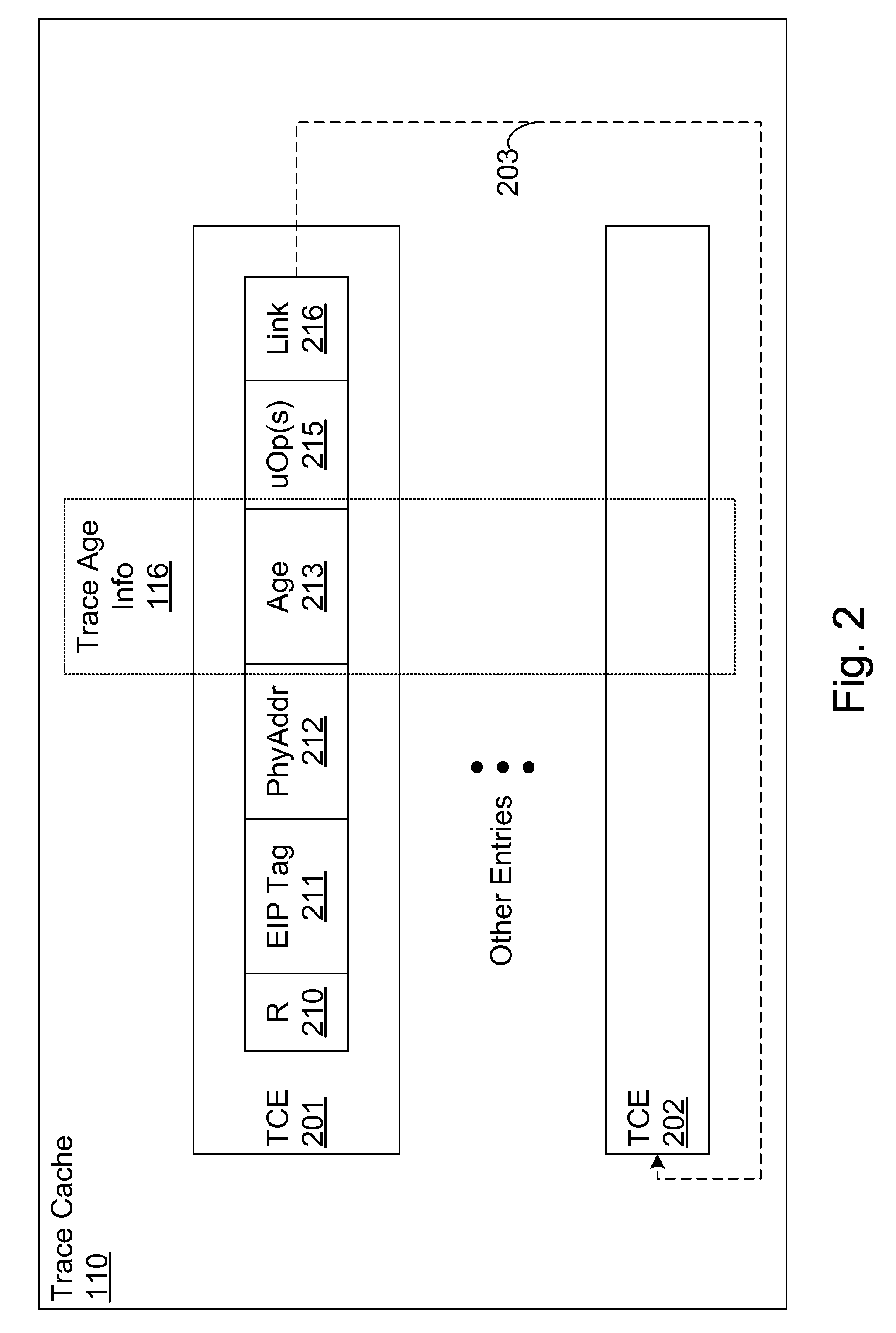

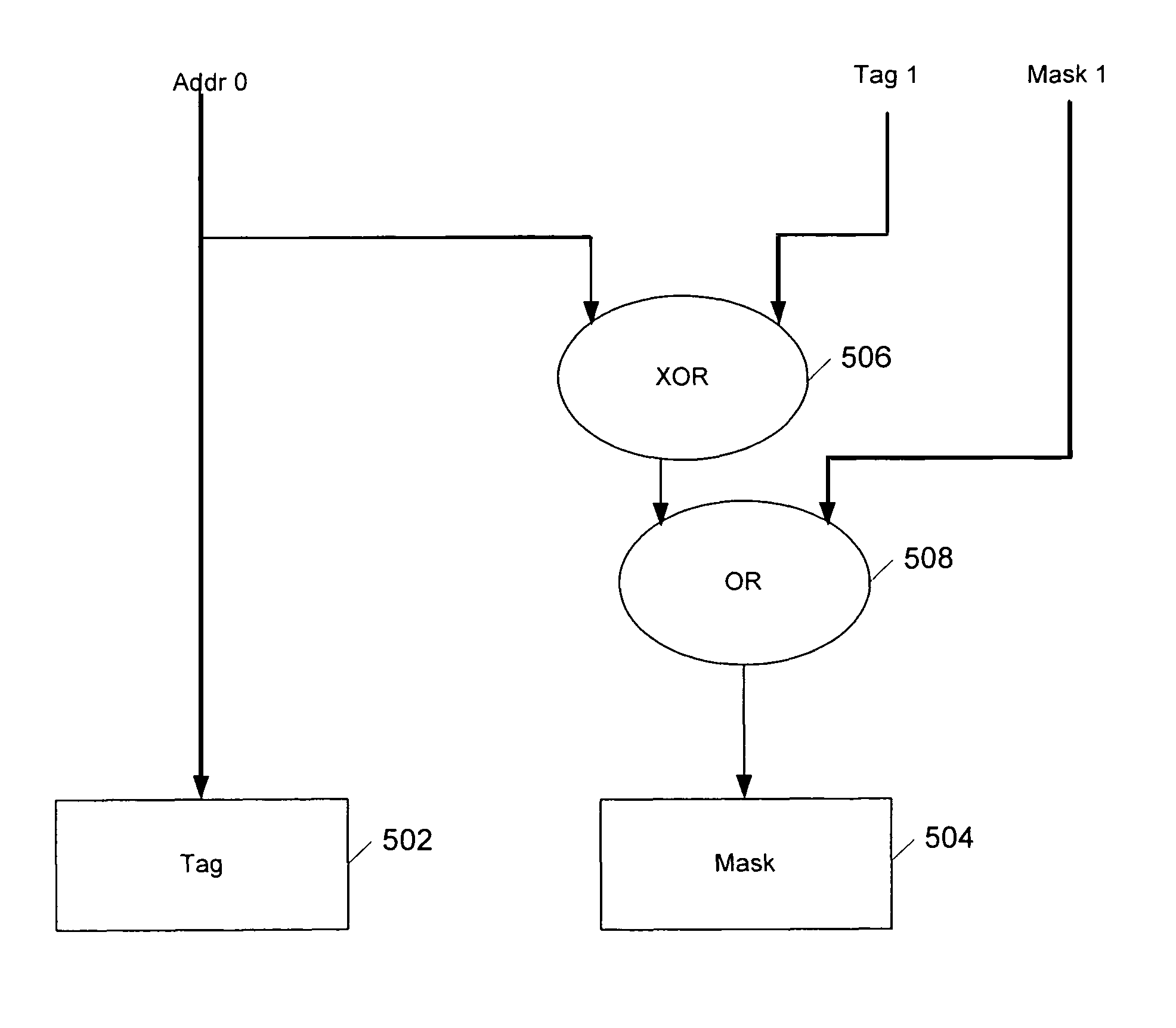

Efficient trace cache management during self-modifying code processing

Status: Active | Patent: US7546420B1 | Tags: Process less-efficient, More hardware, Energy efficient ICT, Program control, Self-modifying code, Micro-operation

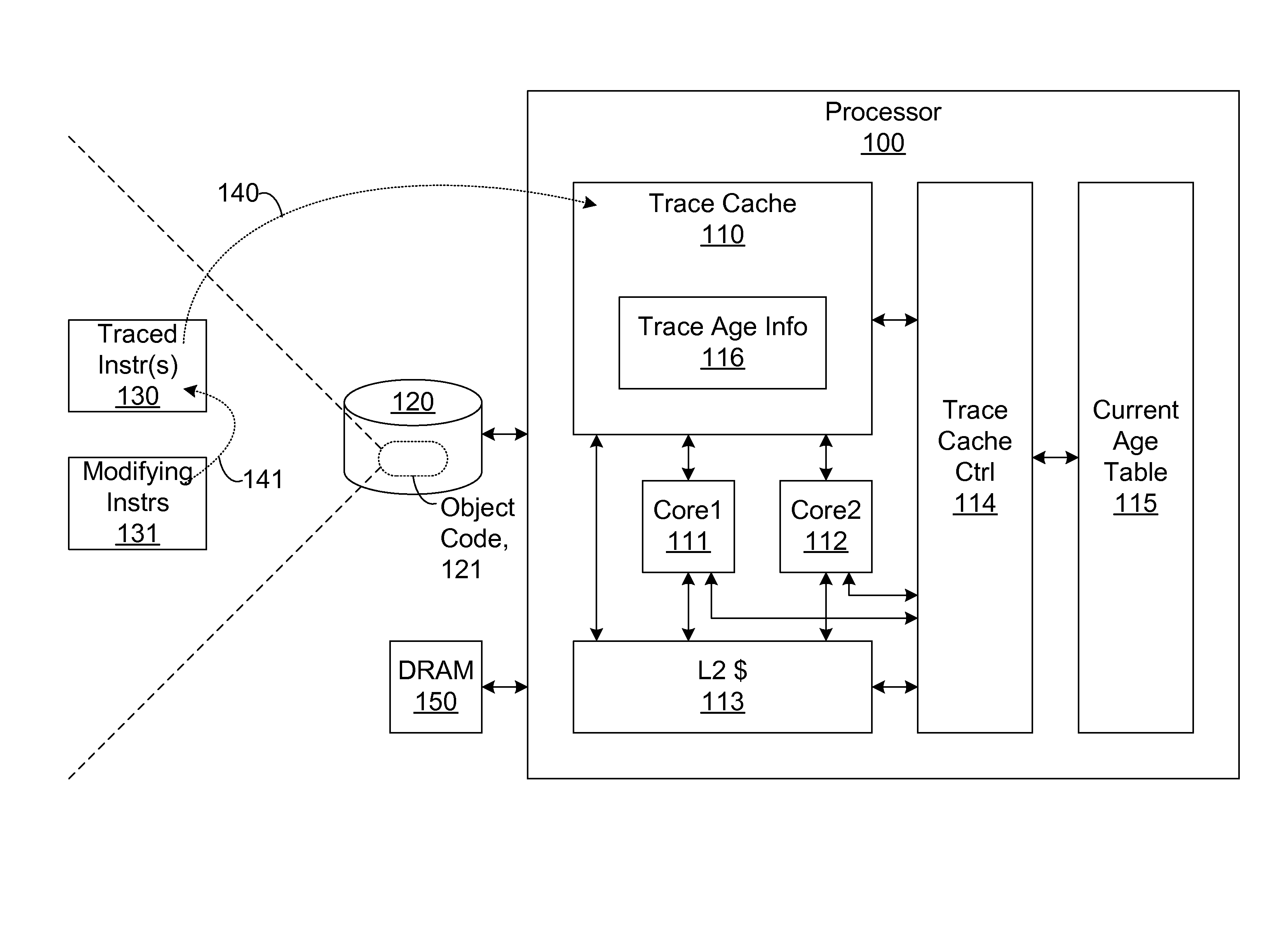

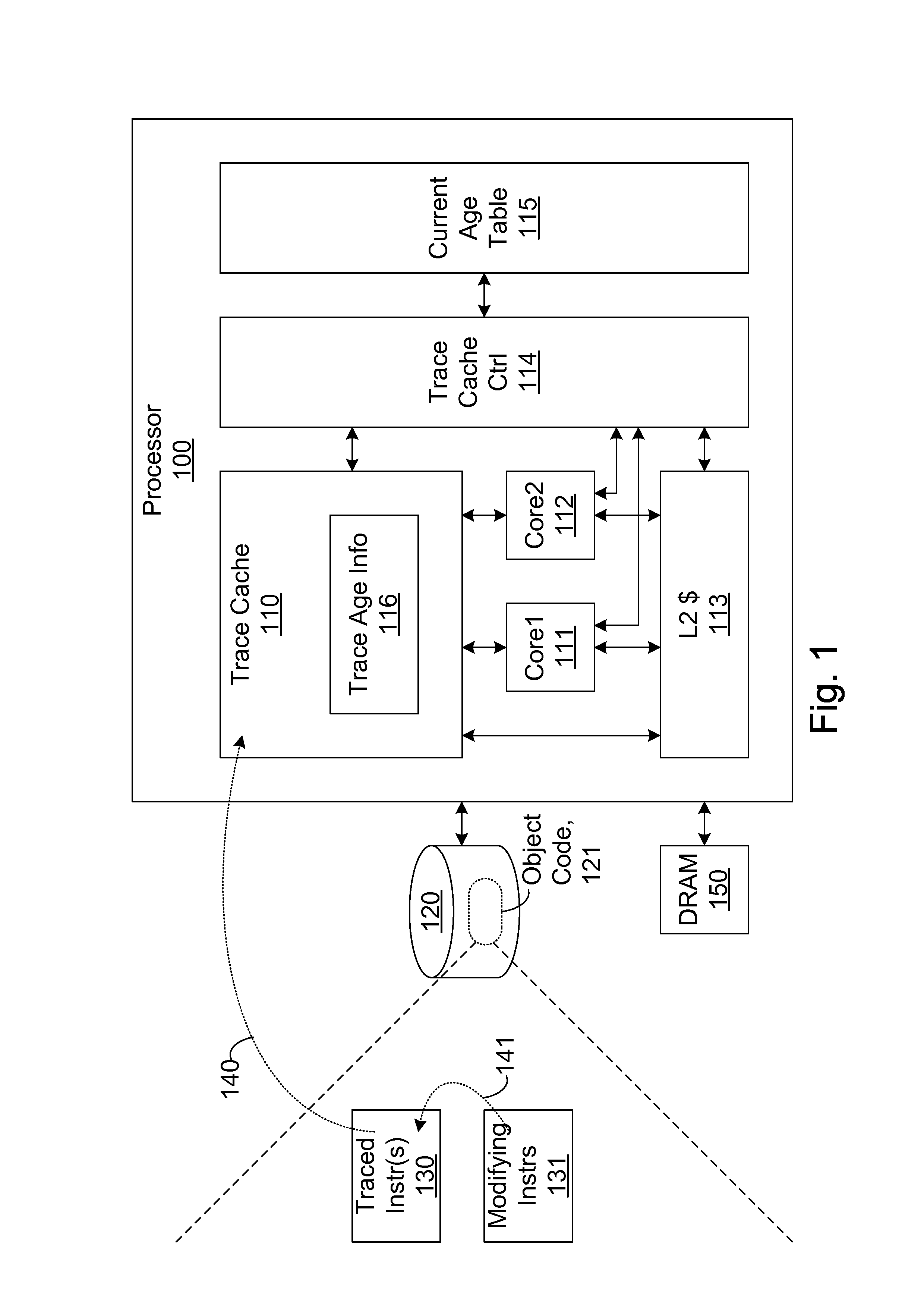

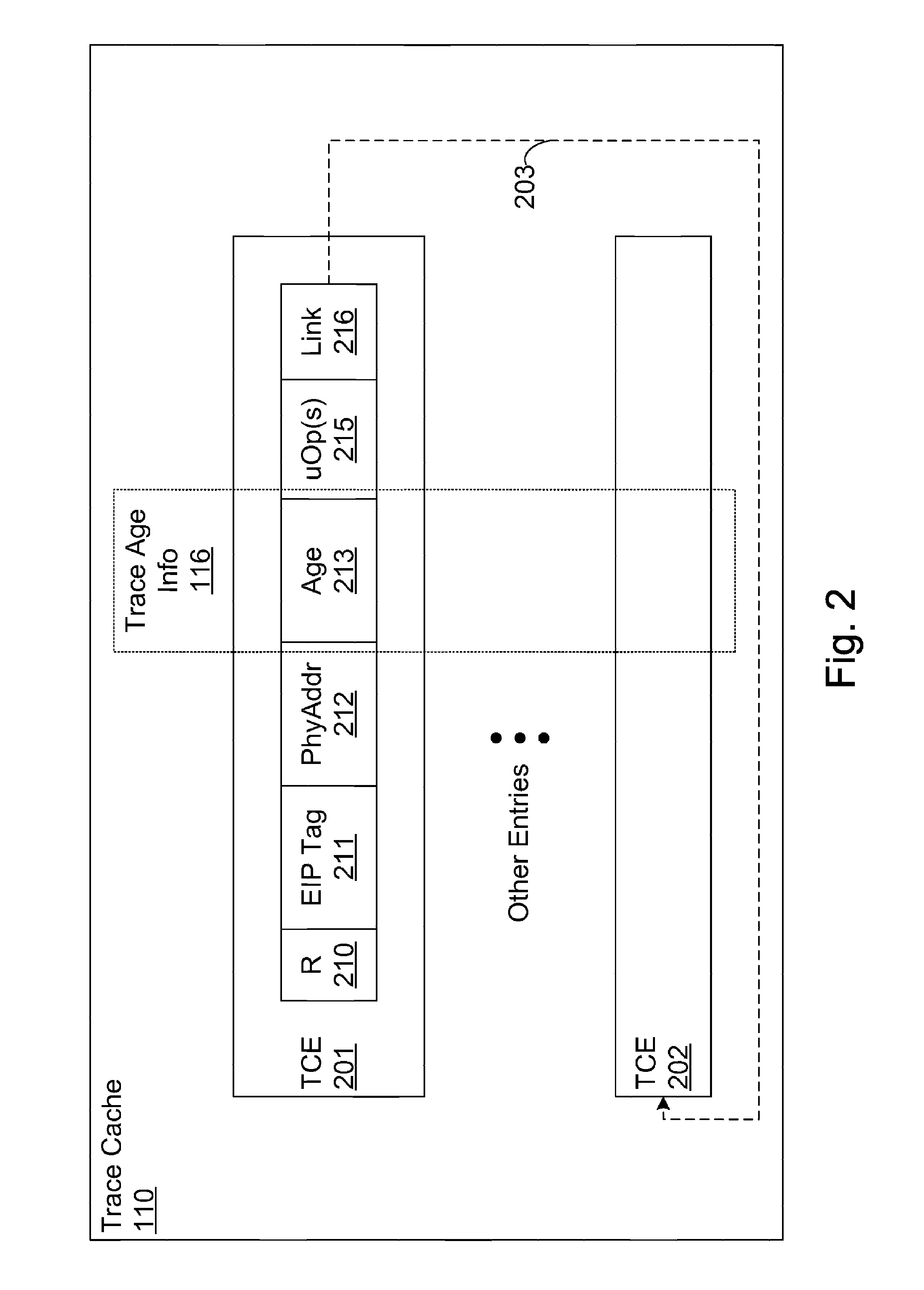

Efficient trace cache management during self-modifying code processing enables selective invalidation of entries of the trace cache, advantageously retaining some of the entries in the trace cache even during self-modifying code events. Instructions underlying trace cache entries are monitored for modification in groups, enabling advantageously reduced hardware. One or more translation ages are associated with each trace cache entry, and are determined when the entry is built by sampling current ages of memory blocks underlying the entry. When the entry is accessed and micro-operations therein are processed, the translation ages of the accessed entry are compared with the current ages of the memory blocks underlying the accessed entry. If any of the age comparisons fail, then the micro-operations are aborted and the entry is invalidated. When any portion of a memory block is modified, the current age of the modified memory block is incremented.

Owner:ORACLE INT CORP
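
The age-comparison scheme in the abstract above can be modelled in a few lines. The following is a minimal sketch, not the patented design: `AgedTraceCache`, its method names, and the 64-byte block size are assumptions chosen for illustration, and ages are unbounded integers rather than fixed-width hardware counters.

```python
class AgedTraceCache:
    """Toy model of selective invalidation using per-block ages."""

    def __init__(self, block_size=64):
        self.block_size = block_size  # assumed monitoring granularity
        self.current_age = {}         # memory block number -> current age
        self.entries = {}             # trace start -> (micro_ops, {block: age at build})

    def _block(self, addr):
        return addr // self.block_size

    def build(self, start, micro_ops, source_addrs):
        """Build an entry and sample the current ages of its underlying blocks."""
        blocks = {self._block(a) for a in source_addrs}
        ages = {b: self.current_age.get(b, 0) for b in blocks}
        self.entries[start] = (list(micro_ops), ages)

    def write_memory(self, addr):
        """Any write to a block increments that block's current age."""
        b = self._block(addr)
        self.current_age[b] = self.current_age.get(b, 0) + 1

    def access(self, start):
        """Return micro-ops, or invalidate the entry if any age comparison fails."""
        entry = self.entries.get(start)
        if entry is None:
            return None
        micro_ops, ages = entry
        if any(self.current_age.get(b, 0) != age for b, age in ages.items()):
            del self.entries[start]  # abort and invalidate only this entry
            return None
        return micro_ops
```

In this model a write to one memory block invalidates only the entries built from that block, and only when they are next accessed; entries built from other blocks are retained.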

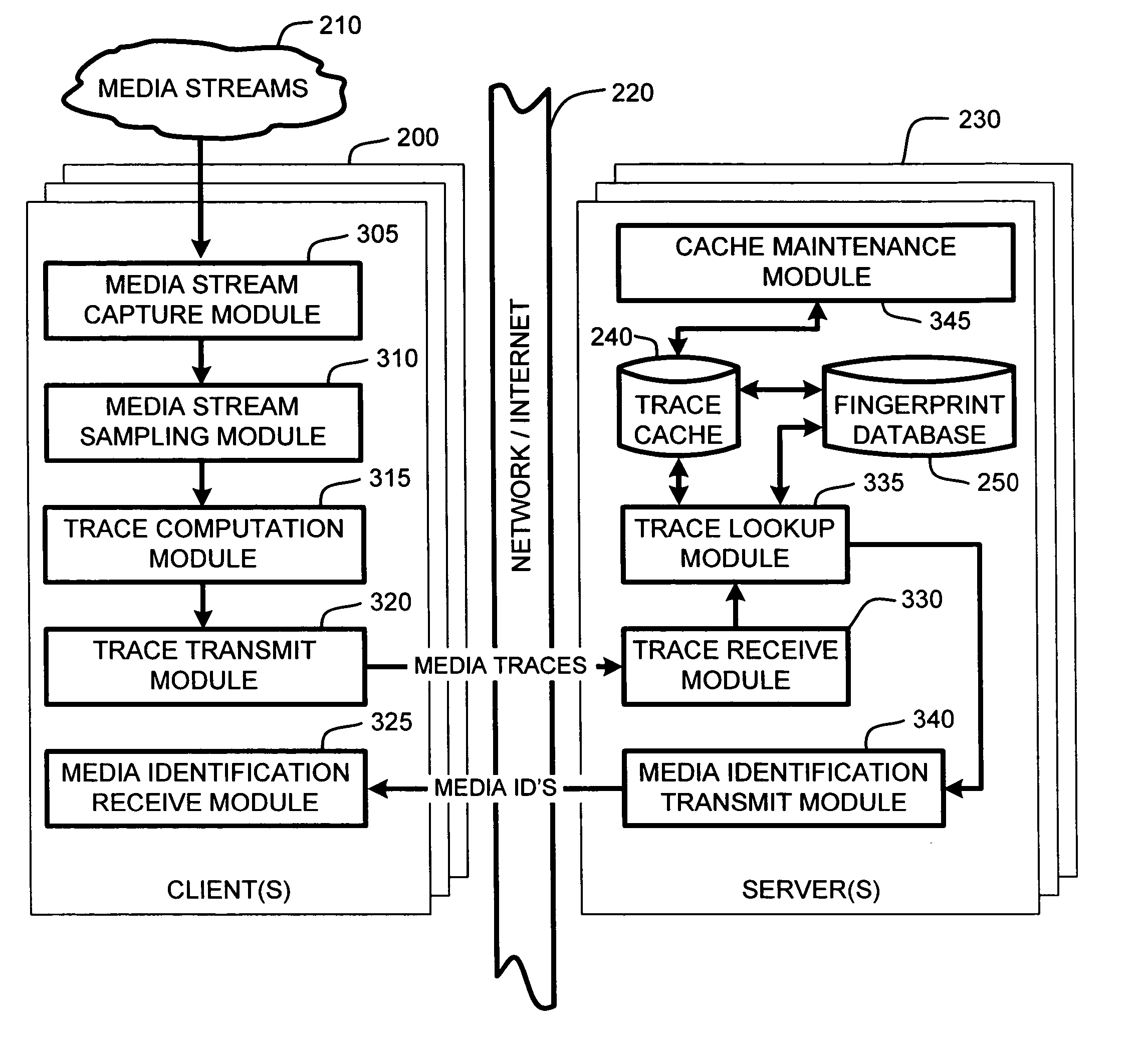

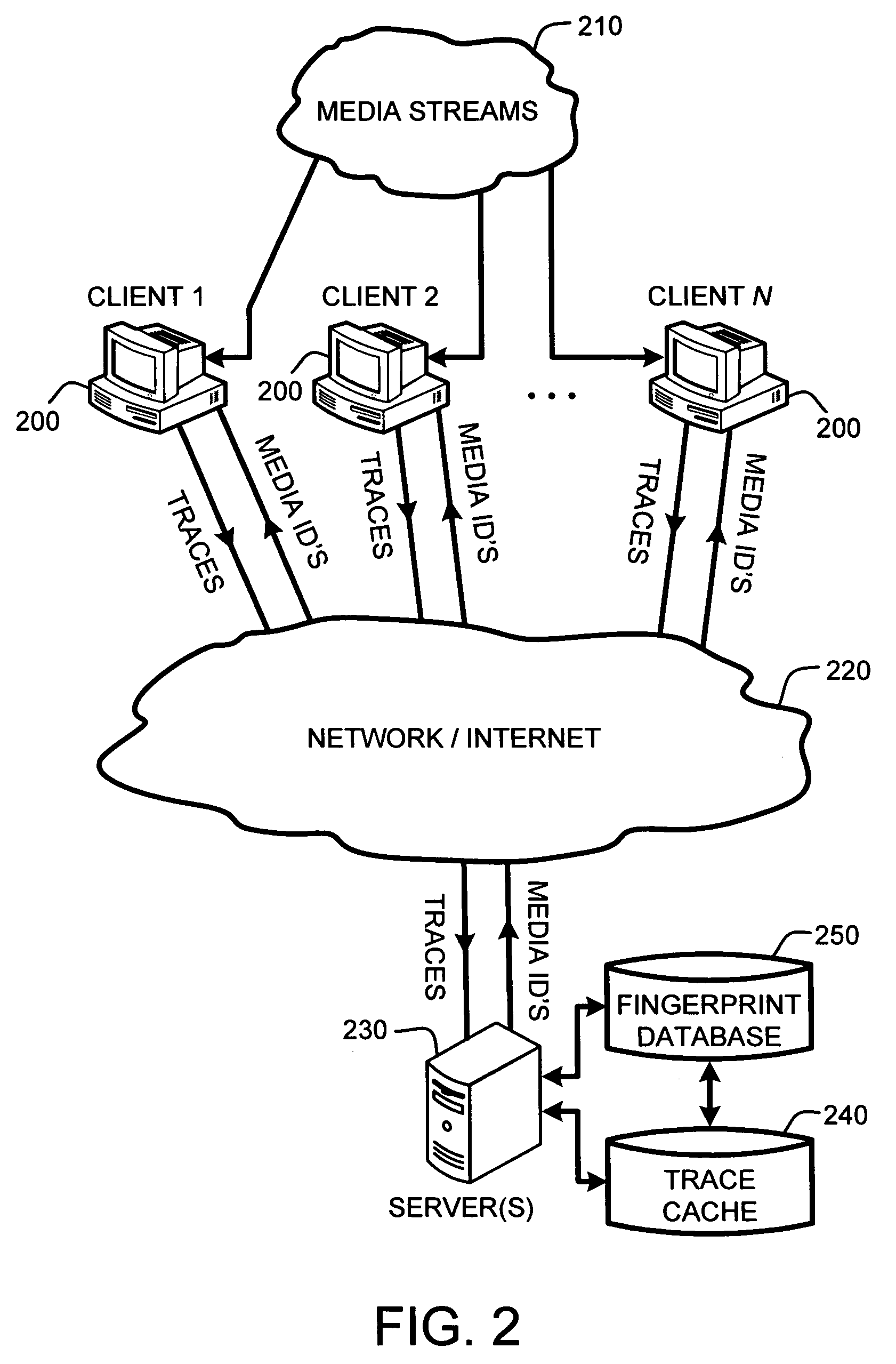

System and method for speeding up database lookups for multiple synchronized data streams

Status: Inactive | Patent: US20060106867A1 | Tags: Increase load, Improve accuracy, Data processing applications, Digital data information retrieval, Data stream, Real time services

A “Media Identifier” operates on concurrent media streams to provide large numbers of clients with real-time server-side identification of media objects embedded in streaming media, such as radio, television, or Internet broadcasts. Such media objects may include songs, commercials, jingles, station identifiers, etc. Identification of the media objects is provided to clients by comparing client-generated traces computed from media stream samples to a large database of stored, pre-computed traces (i.e., “fingerprints”) of known identification. Further, given a finite number of media streams and a much larger number of clients, many of the traces sent to the server are likely to be almost identical. Therefore, a searchable dynamic trace cache is used to limit the database queries necessary to identify particular traces. This trace cache caches only one copy of recent traces along with the database search results, either positive or negative. Cache entries are then removed as they age.

Owner:MICROSOFT TECH LICENSING LLC
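
The idea of a searchable trace cache placed in front of the fingerprint database can be sketched as follows. Everything here is an assumption made for illustration: traces are modelled as tuples of floats, "almost identical" is approximated by a per-component tolerance `tol`, the database is a caller-supplied `lookup` function, and time is passed in explicitly as `now`.

```python
class FingerprintTraceCache:
    """Toy model: cache recent traces with their (positive or negative) results."""

    def __init__(self, lookup, max_age=10.0, tol=0.05):
        self.lookup = lookup    # slow database query: trace -> identifier or None
        self.max_age = max_age  # entries older than this are removed
        self.tol = tol          # assumed closeness tolerance per component
        self.entries = []       # list of (trace, result, timestamp)
        self.db_queries = 0

    def _close(self, a, b):
        return len(a) == len(b) and all(abs(x - y) <= self.tol for x, y in zip(a, b))

    def identify(self, trace, now):
        # Remove entries that have aged out.
        self.entries = [e for e in self.entries if now - e[2] <= self.max_age]
        # Reuse a cached result when a nearly identical trace was seen recently.
        for cached, result, _ in self.entries:
            if self._close(cached, trace):
                return result
        # Otherwise query the database and cache one copy, even for a negative result.
        self.db_queries += 1
        result = self.lookup(trace)
        self.entries.append((tuple(trace), result, now))
        return result
```

With many clients listening to a few streams, most calls to `identify` in this model are answered from the cache, so `db_queries` grows far more slowly than the number of client requests.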

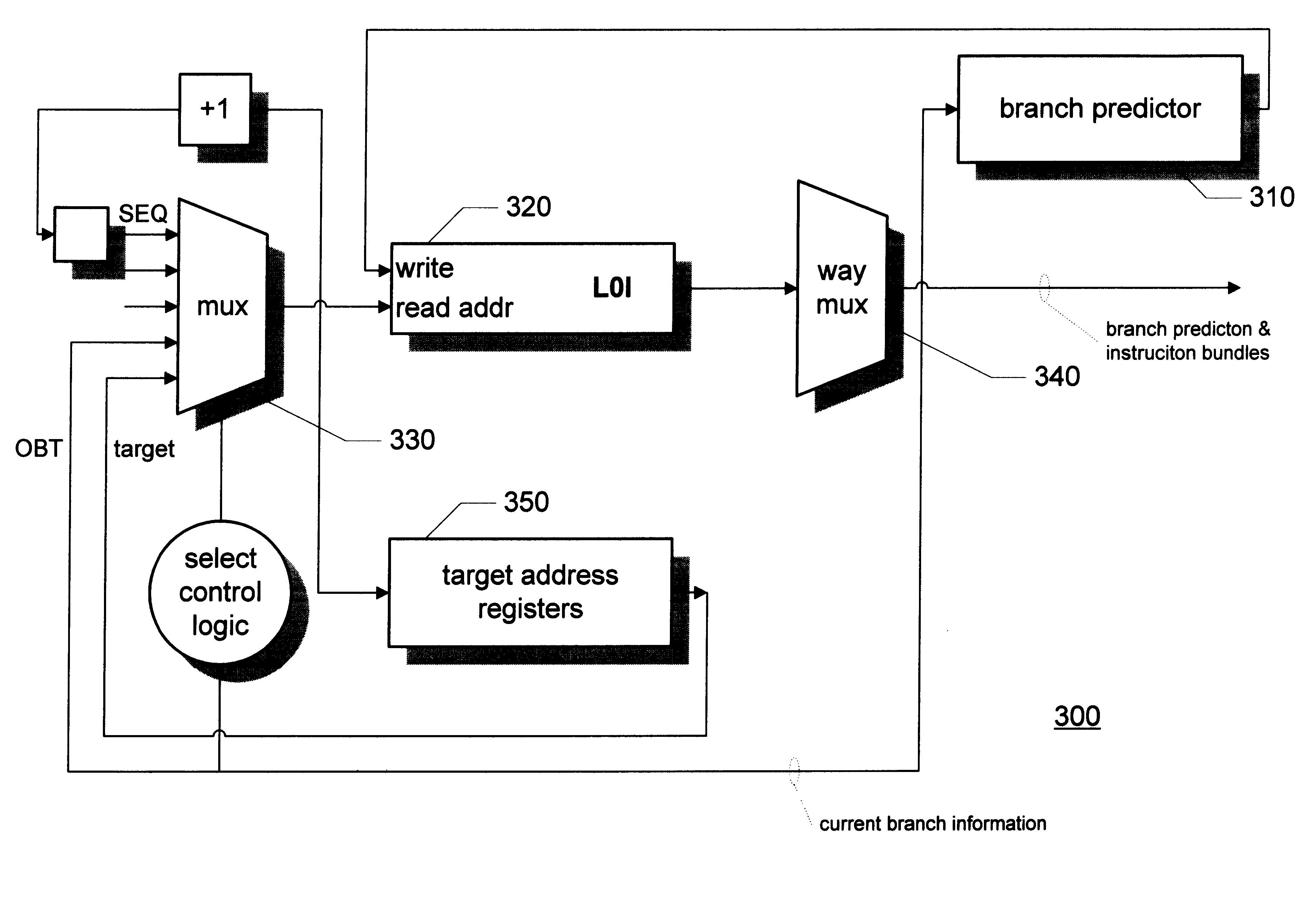

Branch prediction architecture

Status: Inactive | Patent: US6332189B1 | Tags: Digital computer details, Next instruction address formation, Processor register, Trace Cache

A branch prediction architecture is disclosed, having a branch predictor, a target address register, first and second multiplexors, a cache memory, and a trace cache. The branch predictor may advantageously be a series-parallel branch predictor, and alternatively may be a serial-BLG branch predictor or a choosing branch predictor. The first multiplexor receives an input from the target address register, and provides an output to the cache memory. The cache memory receives output from both the branch predictor and the first multiplexor, and provides an output to the second multiplexor. The trace cache receives the output from the branch predictor, and provides an output received by the second multiplexor. The second multiplexor, receiving input from both the trace cache and the cache memory, outputs branch predictions and instruction bundles.

Owner:INTEL CORP

Method and apparatus for dynamic branch prediction utilizing multiple stew algorithms for indexing a global history

Status: Inactive | Patent: US7143273B2 | Tags: Digital computer details, Next instruction address formation, Parallel computing, Instruction sequence

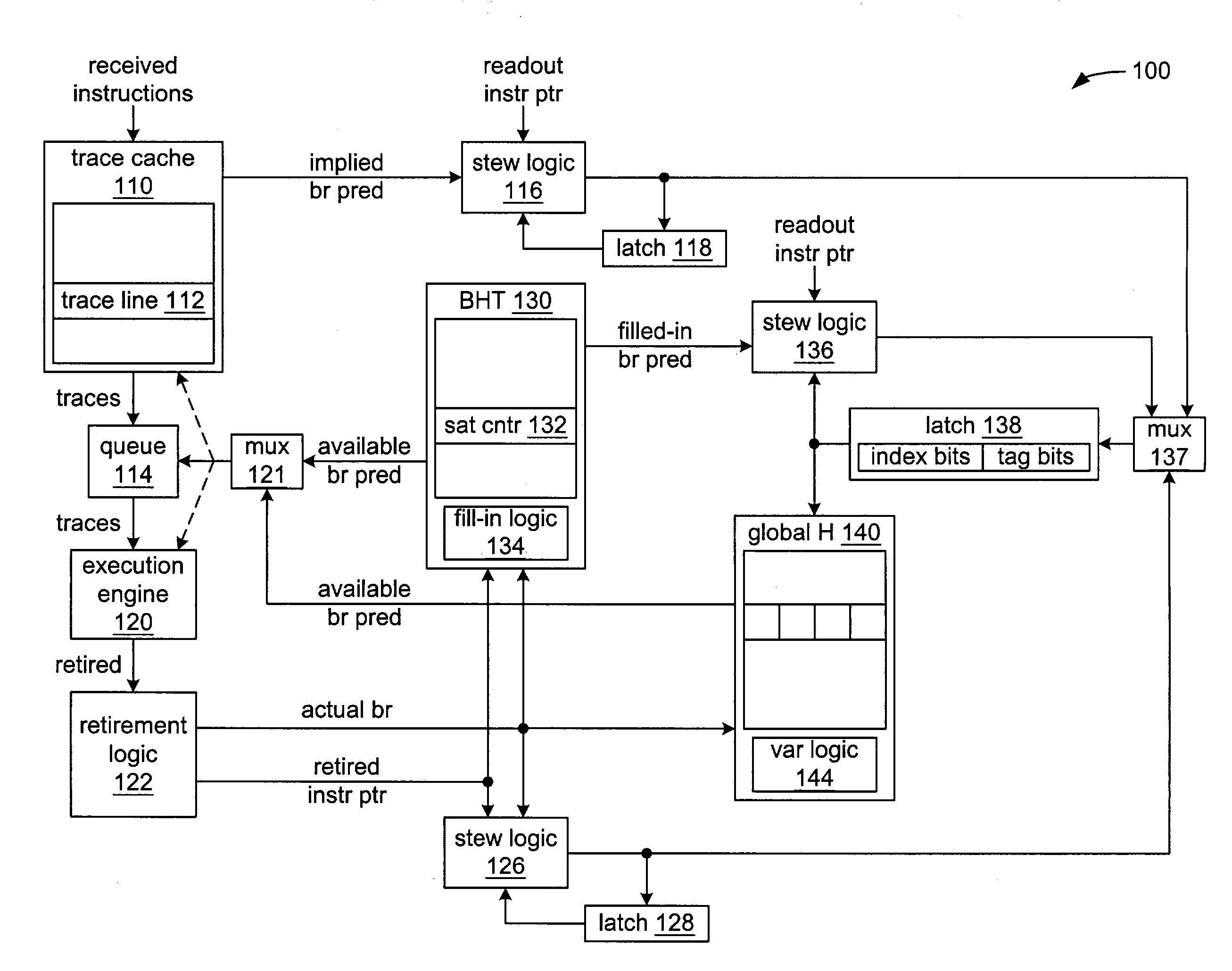

Toggling between accessing an entry in a global history with a stew created from branch predictions implied by the ordering of instructions within a trace of a trace cache when a trace is read out of a trace cache, and accessing an entry in a global history with repeatable variations of a stew when there is more than one branch instruction within a trace within the trace cache and at least a second branch instruction is read out.

Owner:INTEL CORP

Transitioning from instruction cache to trace cache on label boundaries

Status: Active | Patent: US20050125632A1 | Tags: Digital computer details, Concurrent instruction execution, Parallel computing, Trace Cache

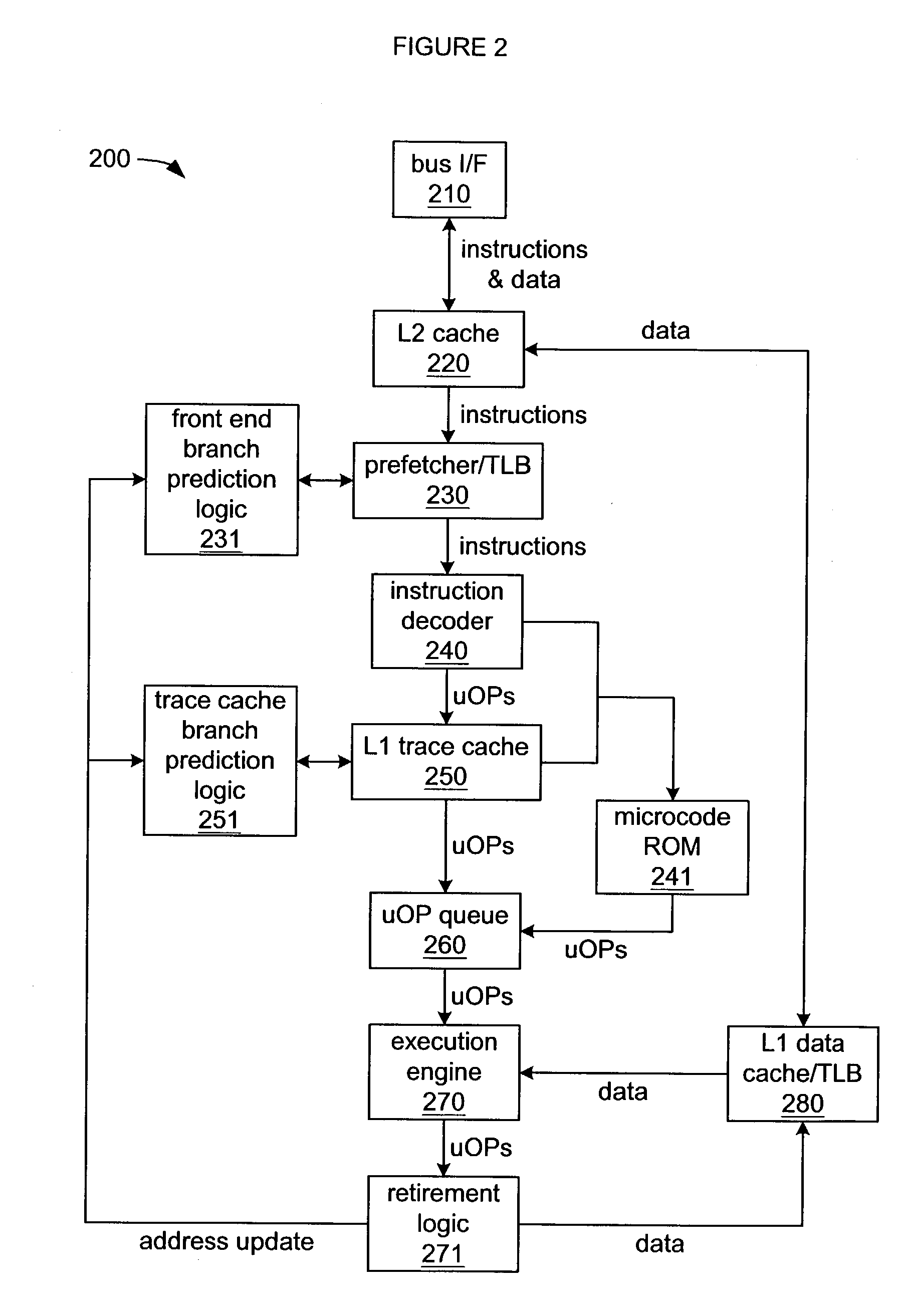

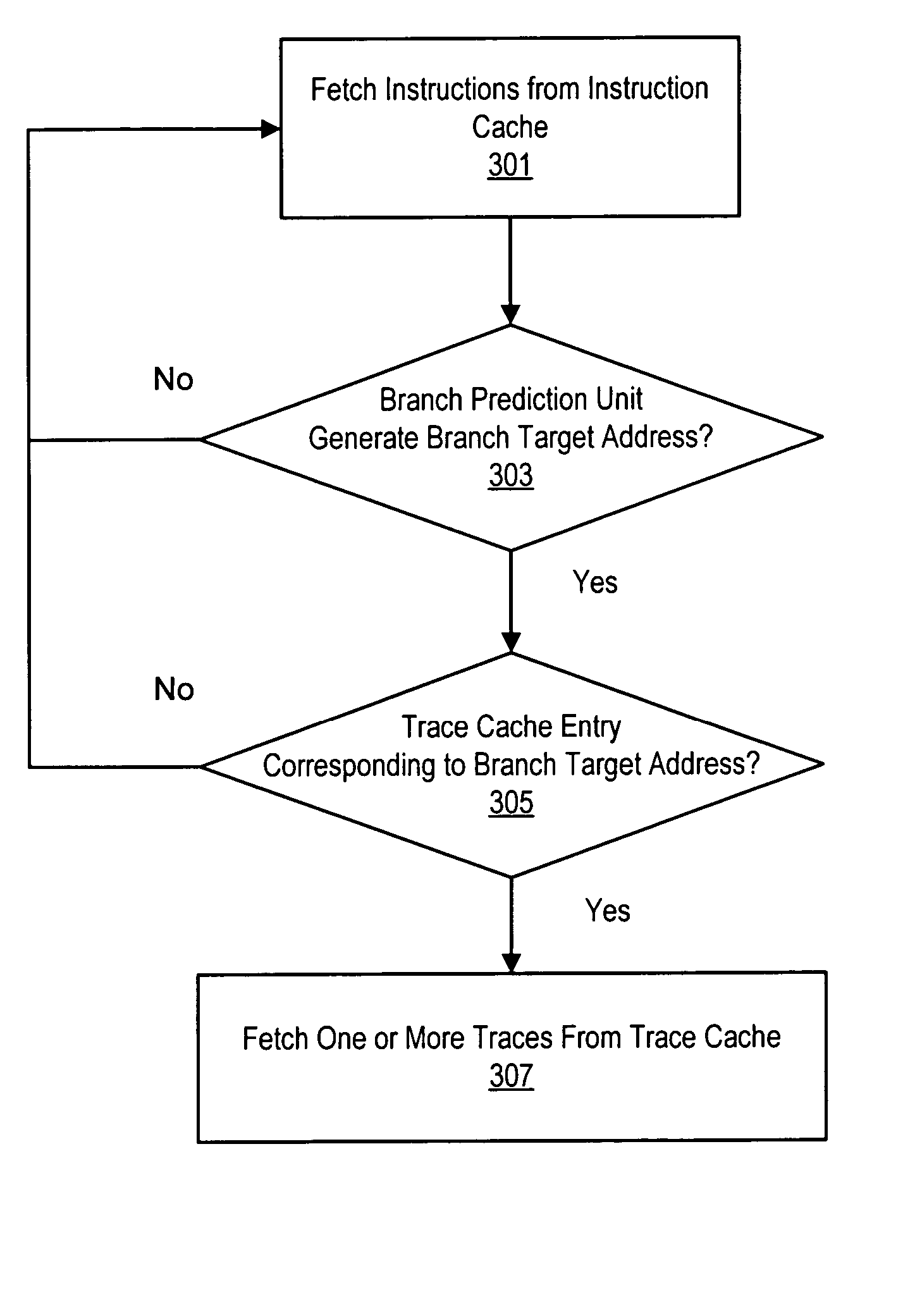

Various embodiments of methods and systems for implementing a microprocessor that includes a trace cache and attempts to transition fetching from instruction cache to trace cache only on label boundaries are disclosed. In one embodiment, a microprocessor may include an instruction cache, a branch prediction unit, and a trace cache. The prefetch unit may fetch instructions from the instruction cache until the branch prediction unit outputs a predicted target address for a branch instruction. When the branch prediction unit outputs a predicted target address, the prefetch unit may check for an entry matching the predicted target address in the trace cache. If a match is found, the prefetch unit may fetch one or more traces from the trace cache in lieu of fetching instructions from the instruction cache.

Owner:MEDIATEK INC

Method of an address trace cache storing loop control information to conserve trace cache area

Status: Inactive | Patent: US6988190B1 | Tags: Shorten address decoding time, Reduce chip size, Digital computer details, Concurrent instruction execution, Loop control, Operating system

A branch prediction method using an address trace is described. An address trace corresponding to an executed instruction is itself stored in decoded form. After a start address and an end address of a repeated routine are designated, the current iteration count is compared with the total number of iterations to confirm the end of the routine, and address information of the next routine is stored. Access information for the repeated routine can therefore be stored using a small amount of trace cache.

Owner:SAMSUNG ELECTRONICS CO LTD

Performance of a cache by detecting cache lines that have been reused

Status: Active | Patent: US20060224830A1 | Tags: Improve performance, Cache hit may be improved, Memory systems, Micro-instruction address formation, Parallel computing, Trace Cache

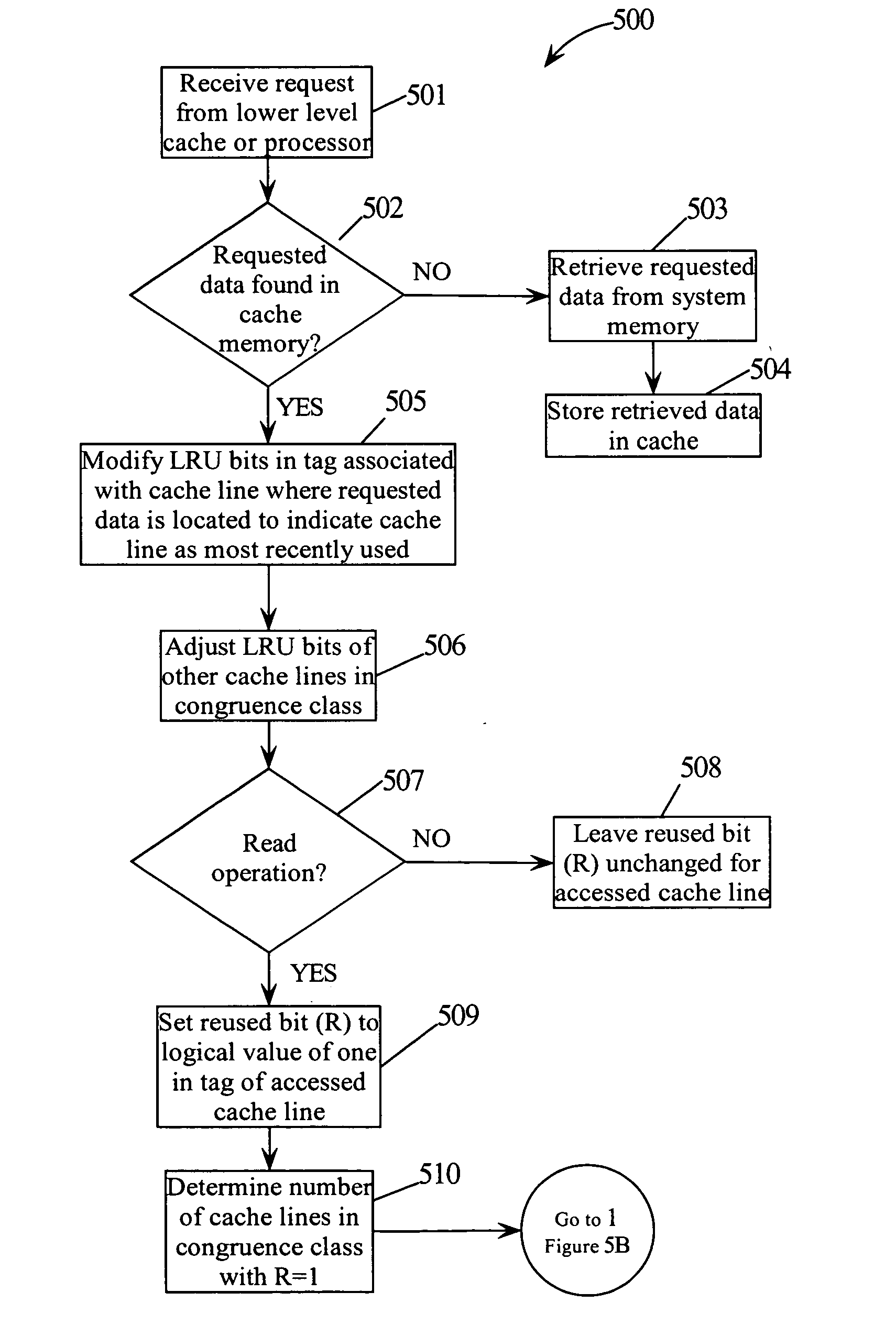

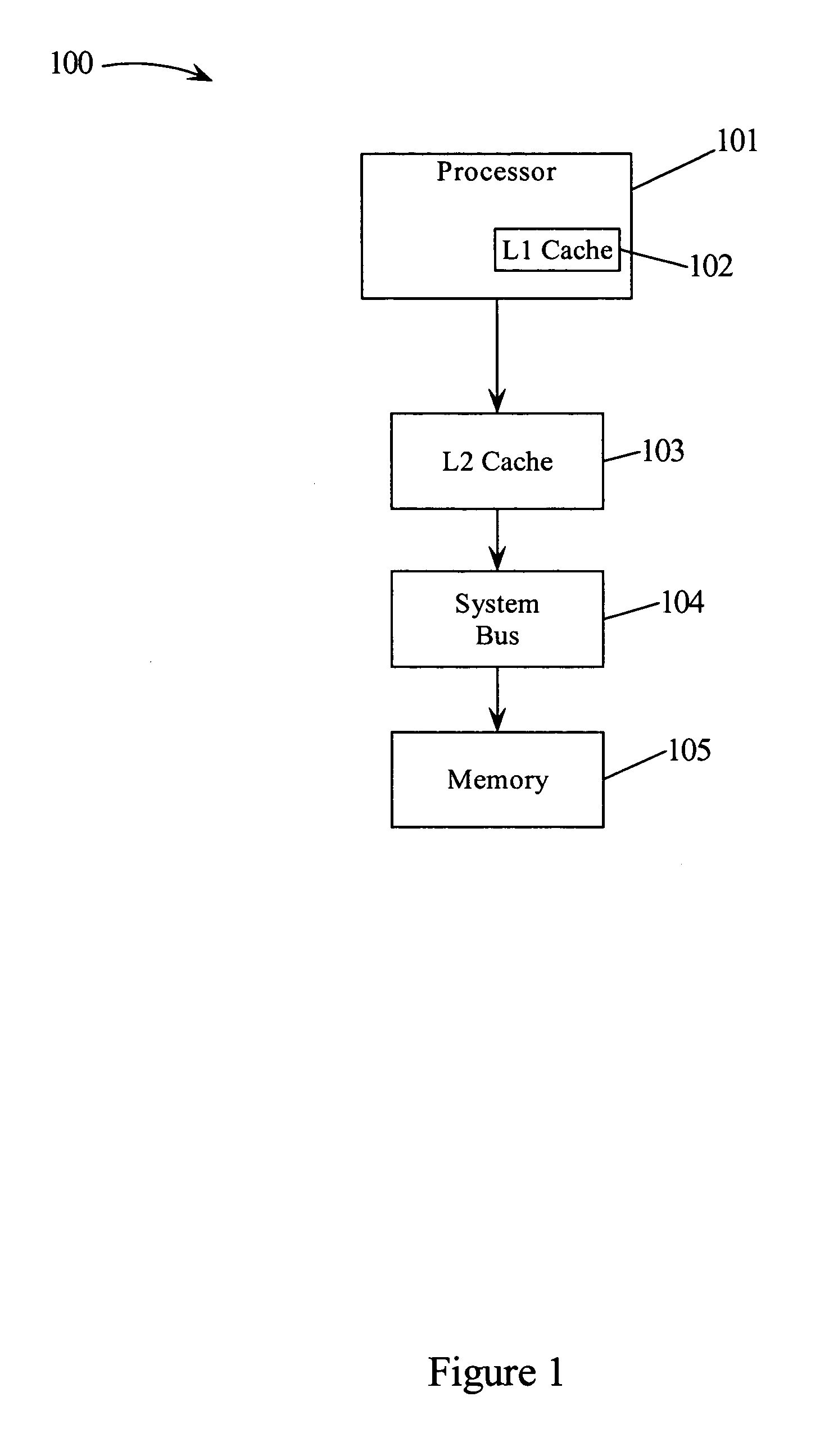

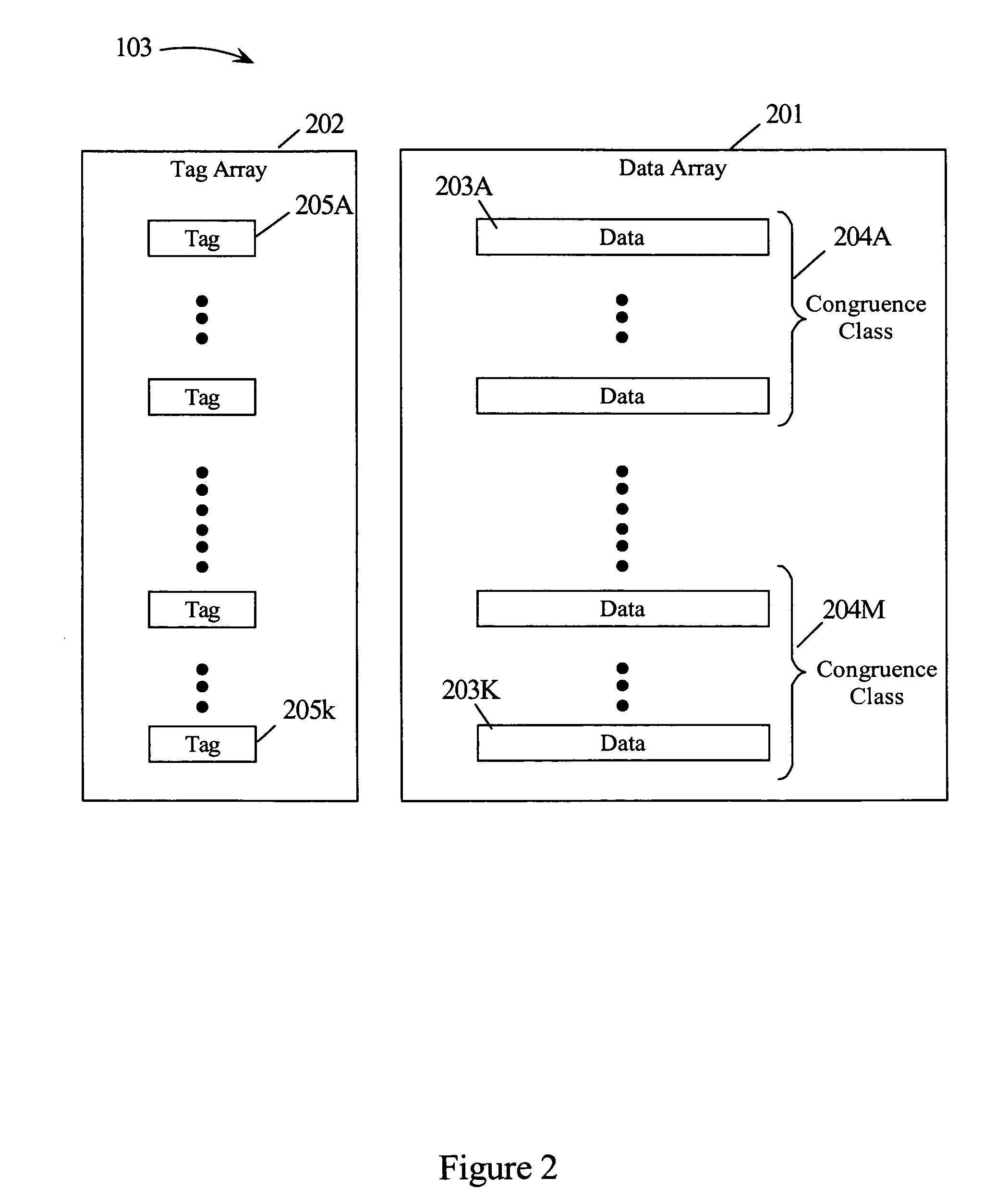

A method and system for improving the performance of a cache. The cache may include an array of tag entries where each tag entry includes an additional bit (“reused bit”) used to indicate whether its associated cache line has been reused, i.e., has been requested or referenced by the processor. By tracking whether a cache line has been reused, data (a cache line) that may not be reused may be replaced with the new incoming cache line prior to replacing data (a cache line) that may be reused. By replacing data in the cache memory that might not be reused prior to replacing data that might be reused, the cache hit rate may be improved, thereby improving performance.

Owner:META PLATFORMS INC
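
A small model of one cache set shows how a reused bit can steer replacement as the abstract above describes. This is a sketch under assumptions: `ReusedBitSet` is a hypothetical name, lines are kept in fill order, and ties among lines with the same reused bit are broken by evicting the oldest fill, which the abstract does not specify.

```python
from collections import OrderedDict


class ReusedBitSet:
    """Toy model of one cache set whose tag entries carry a reused bit."""

    def __init__(self, ways=4):
        self.ways = ways
        self.lines = OrderedDict()  # tag -> reused bit, oldest fill first

    def access(self, tag):
        """Return True on a hit, False on a miss (the line is then filled)."""
        if tag in self.lines:
            self.lines[tag] = True  # the line has now been reused
            return True
        if len(self.lines) >= self.ways:
            # Prefer a victim that has never been reused.
            victim = next((t for t, reused in self.lines.items() if not reused), None)
            if victim is None:
                victim = next(iter(self.lines))  # all reused: evict the oldest fill
            del self.lines[victim]
        self.lines[tag] = False  # a new line starts with the reused bit clear
        return False
```

In a two-way set, a line that has been hit once survives the next fill, while a line that was filled but never referenced again is replaced first.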

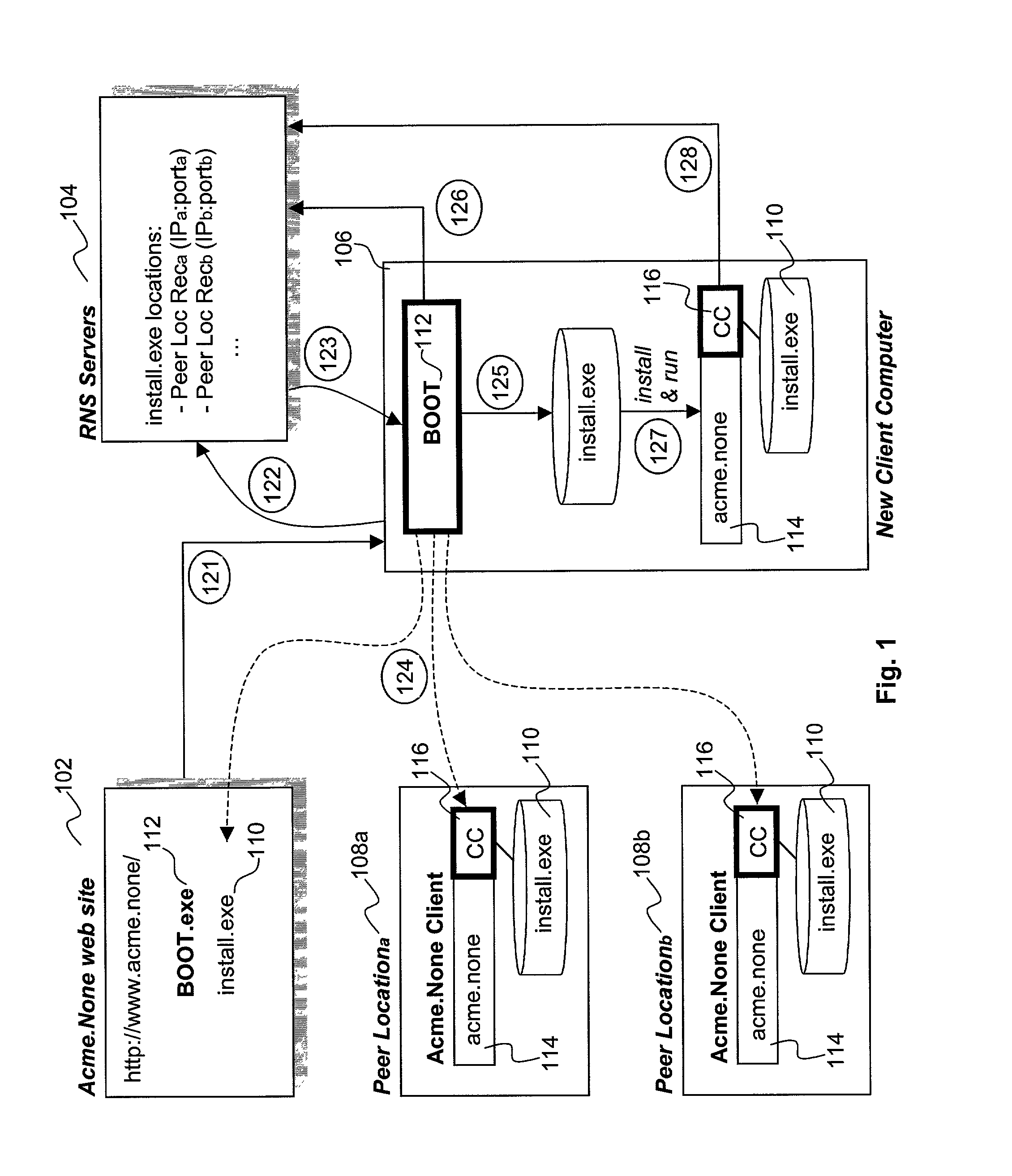

Distribution of binary executables and content from peer locations/machines

Status: Inactive | Patent: US7584261B1 | Tags: Spoofing becomes difficult, Prevent overload, Multiple digital computer combinations, Program control, Parallel computing, Client-side

Binary executables are distributed in a distributed manner by equipping a server with a bootstrap program. The server provides the bootstrap program to a client computer in response to the client's request for the binary executables. The bootstrap program is designed to enable the client computer to obtain the binary executables in one or more portions from one or more peer locations that have already downloaded the binary executables. In one embodiment, the bootstrap program also monitors the performance associated with obtaining the portions of the binary executables, and reports the performance data to a resource naming service that tracks peer locations that cache the binary executables. In one embodiment, the binary executables also include a component that registers the client computer as a peer location that caches the binary executables, and provides the binary executables to other client computers responsive to their requests. In various embodiments, content is distributed in like manner.

Owner:MICROSOFT TECH LICENSING LLC

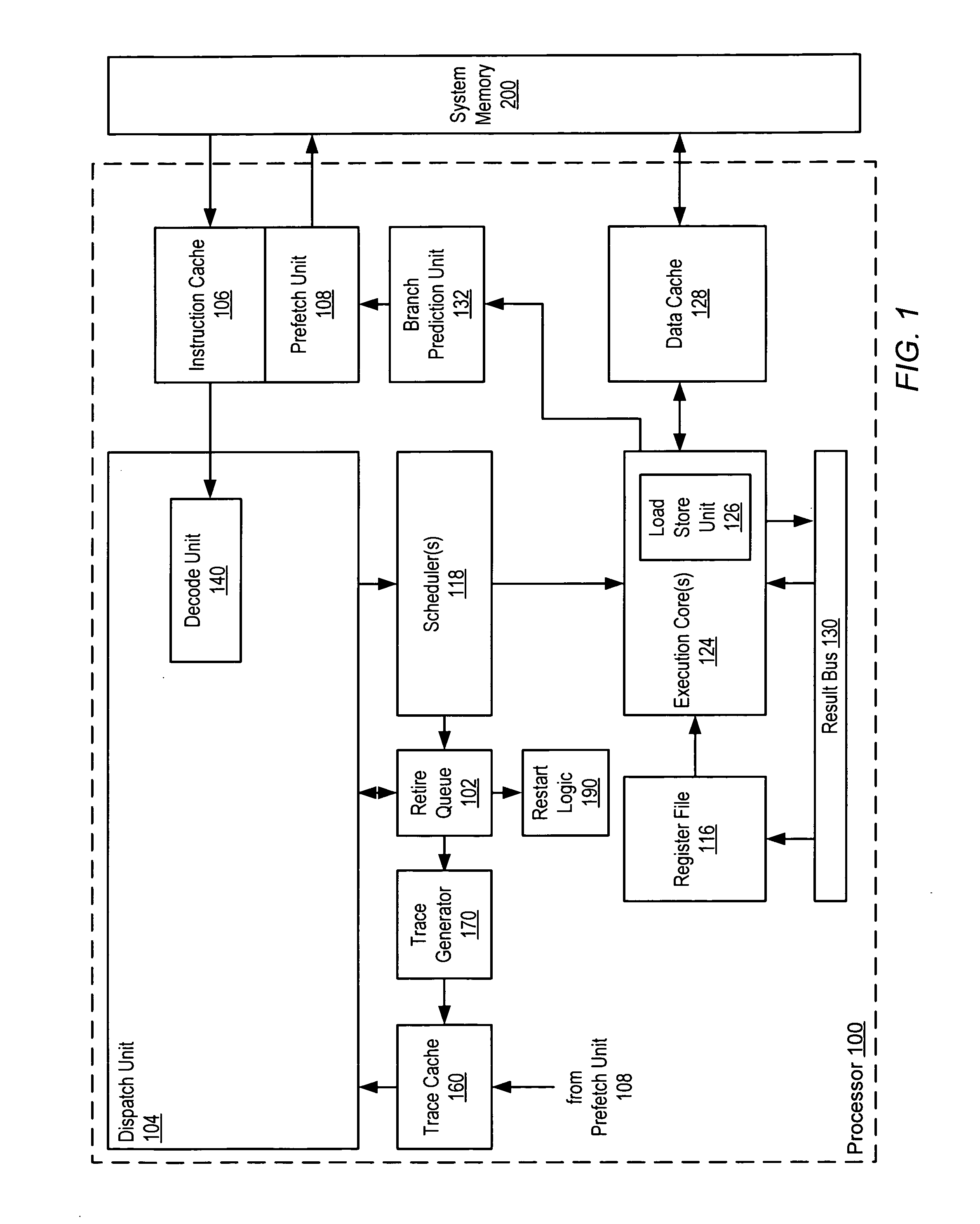

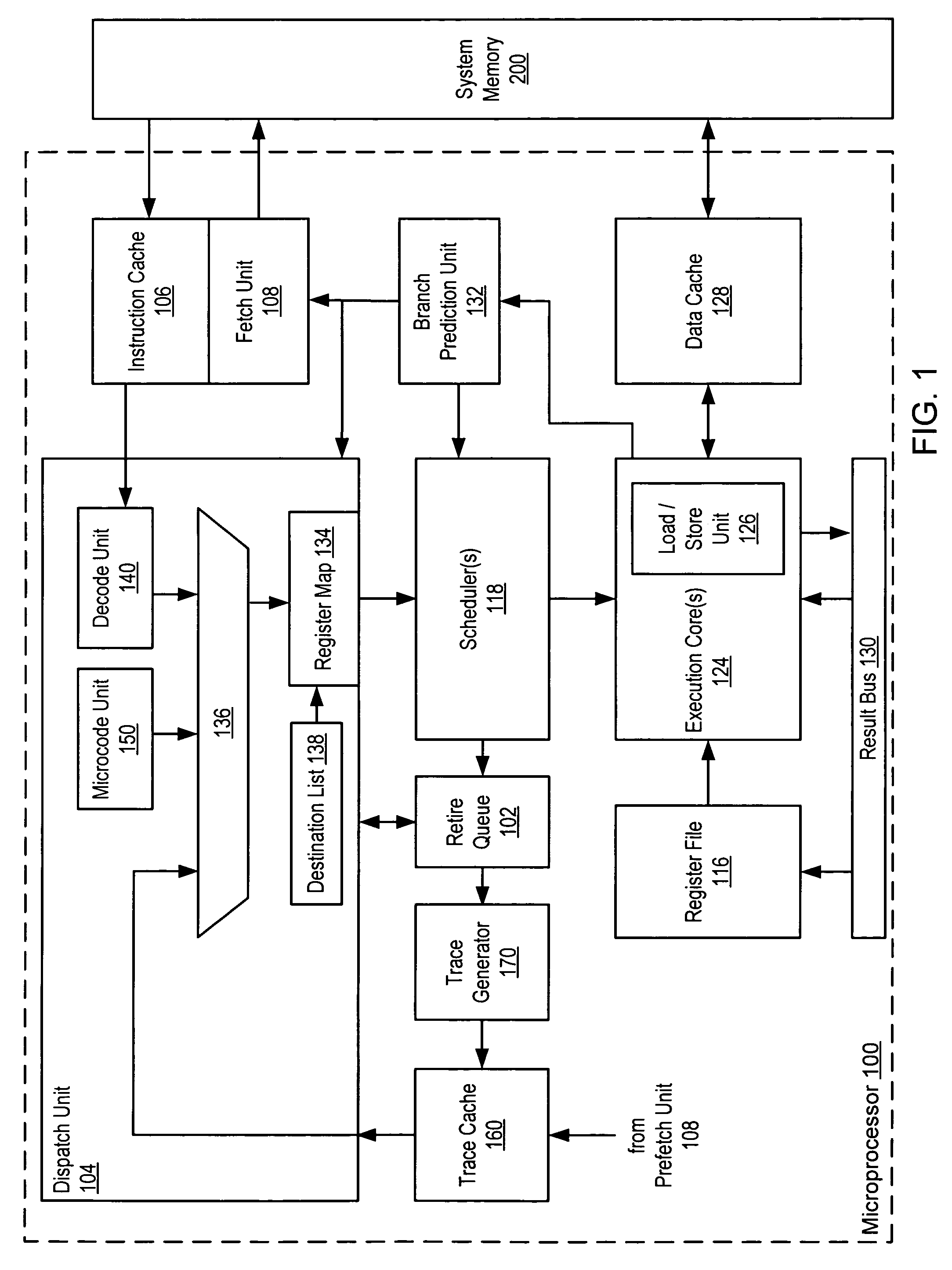

Method and processor including logic for storing traces within a trace cache

Status: Inactive | Patent: US7213126B1 | Tags: Memory addressing/allocation/relocation, Digital computer details, Parallel computing, Trace Cache

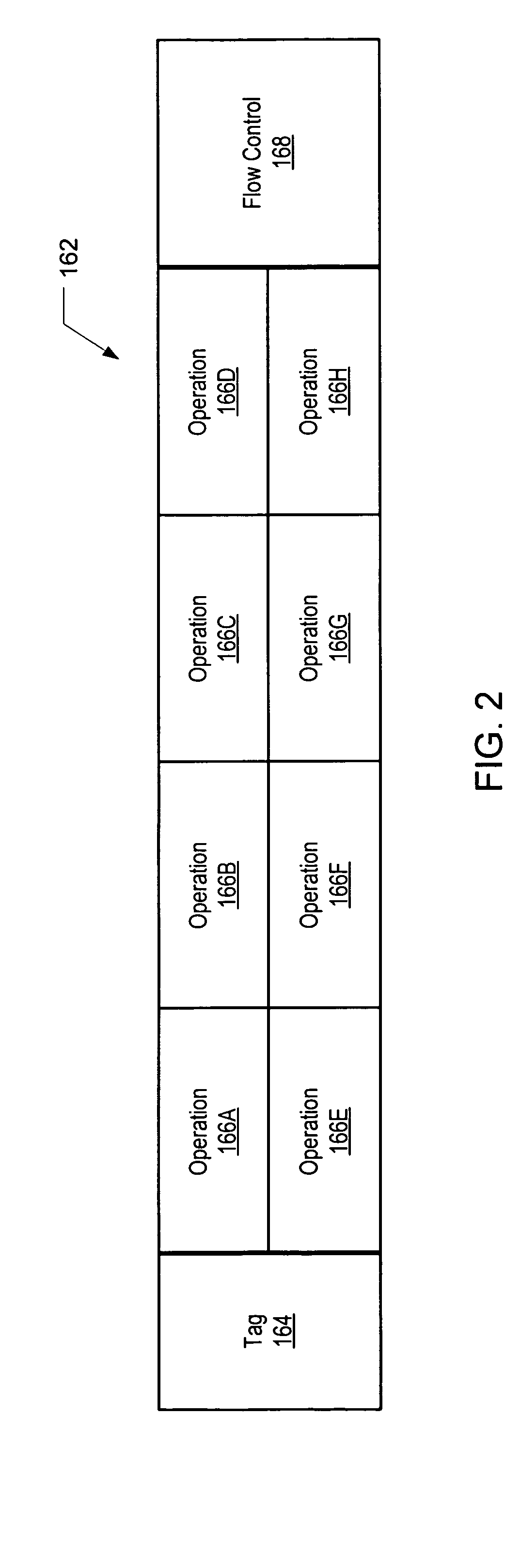

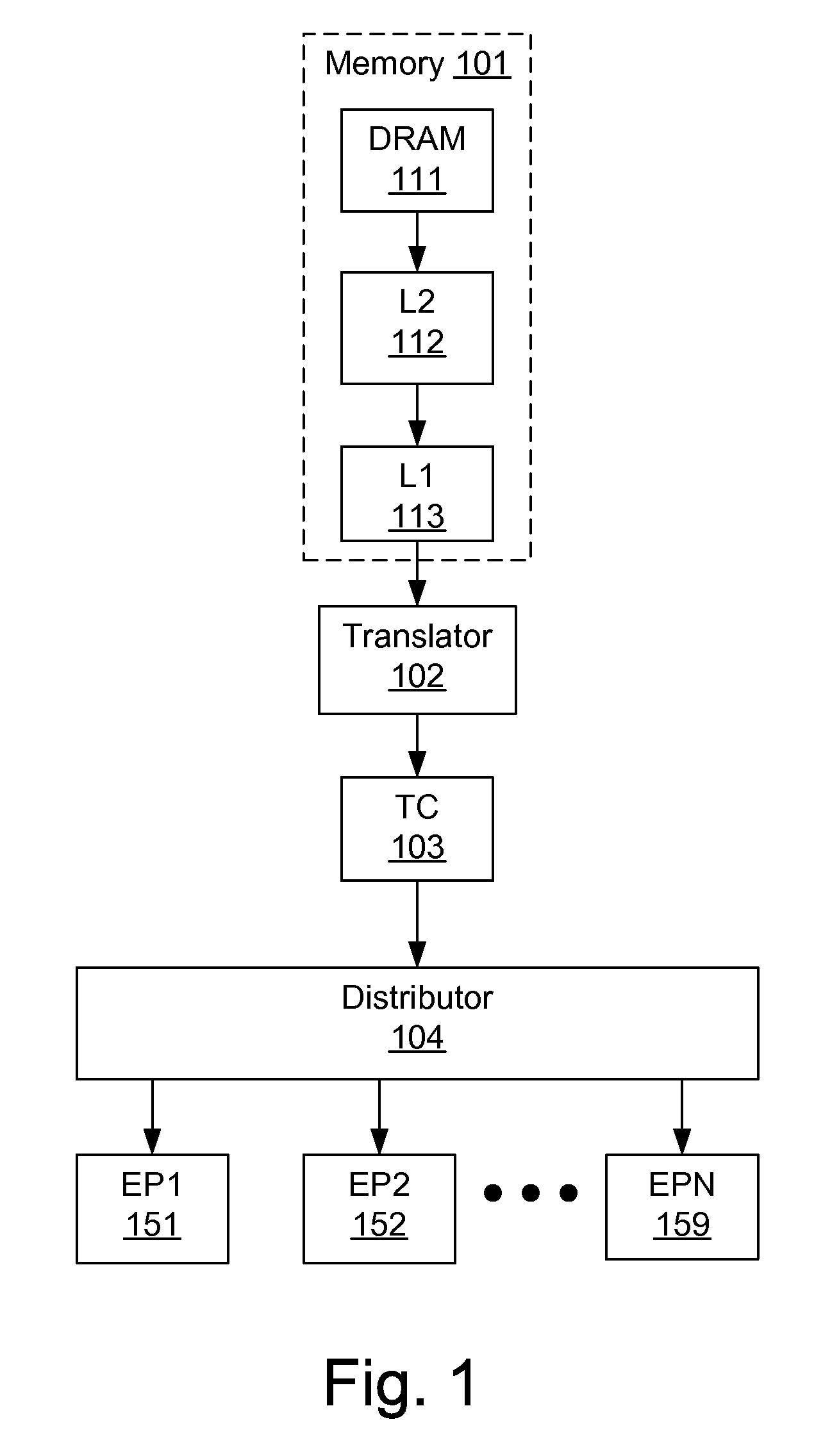

A processor includes a trace cache memory coupled to a trace generator. The trace generator may be configured to generate a plurality of traces each including one or more operations that may be decoded from one or more instructions. Each of the operations may be associated with a respective address. The trace cache memory is coupled to the trace generator and includes a plurality of entries each configured to store one of the traces. The trace generator may be further configured to restrict each of the traces to include only operations having respective addresses that fall within one or more predetermined ranges of contiguous addresses.

Owner:GLOBALFOUNDRIES INC

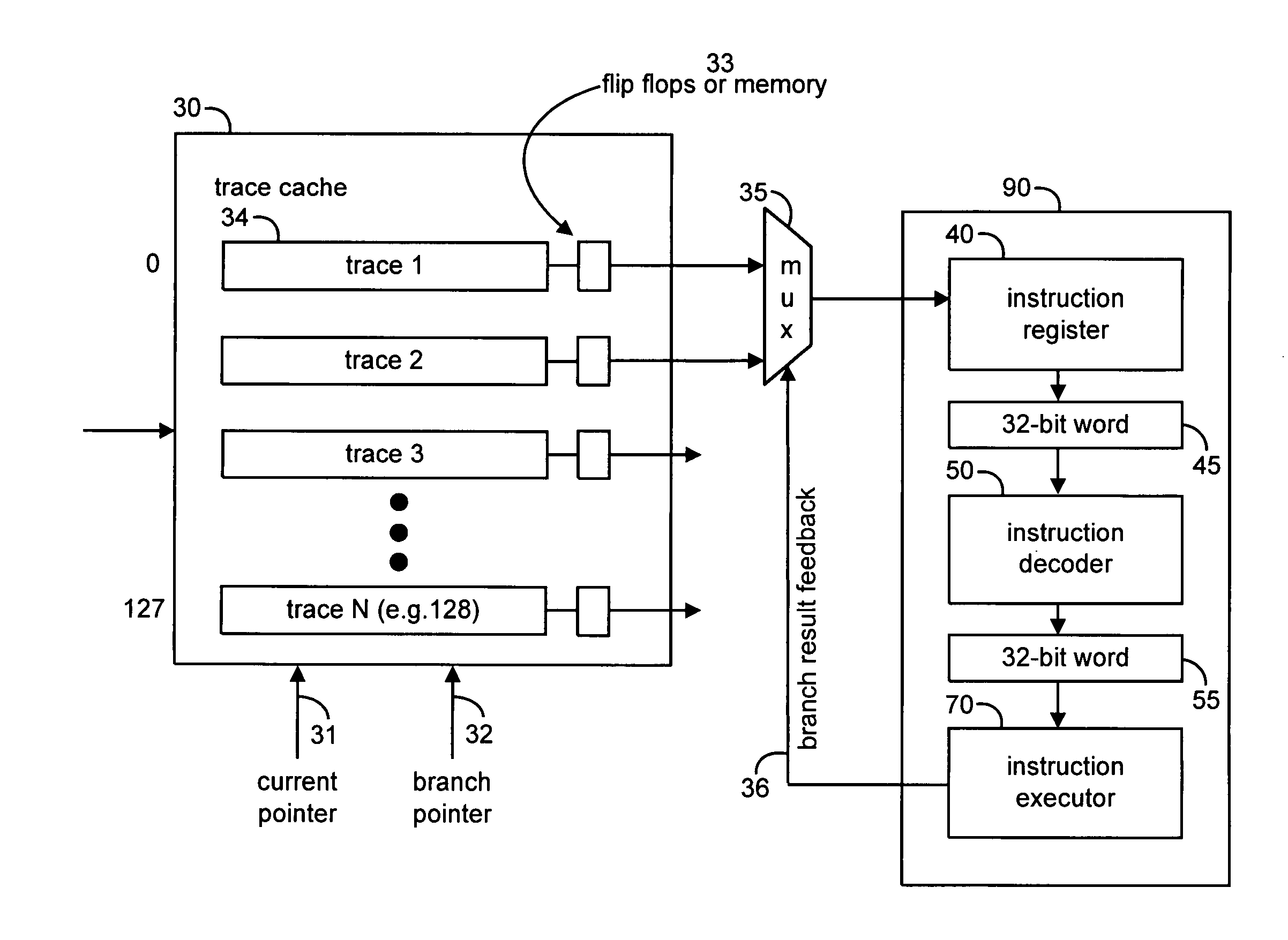

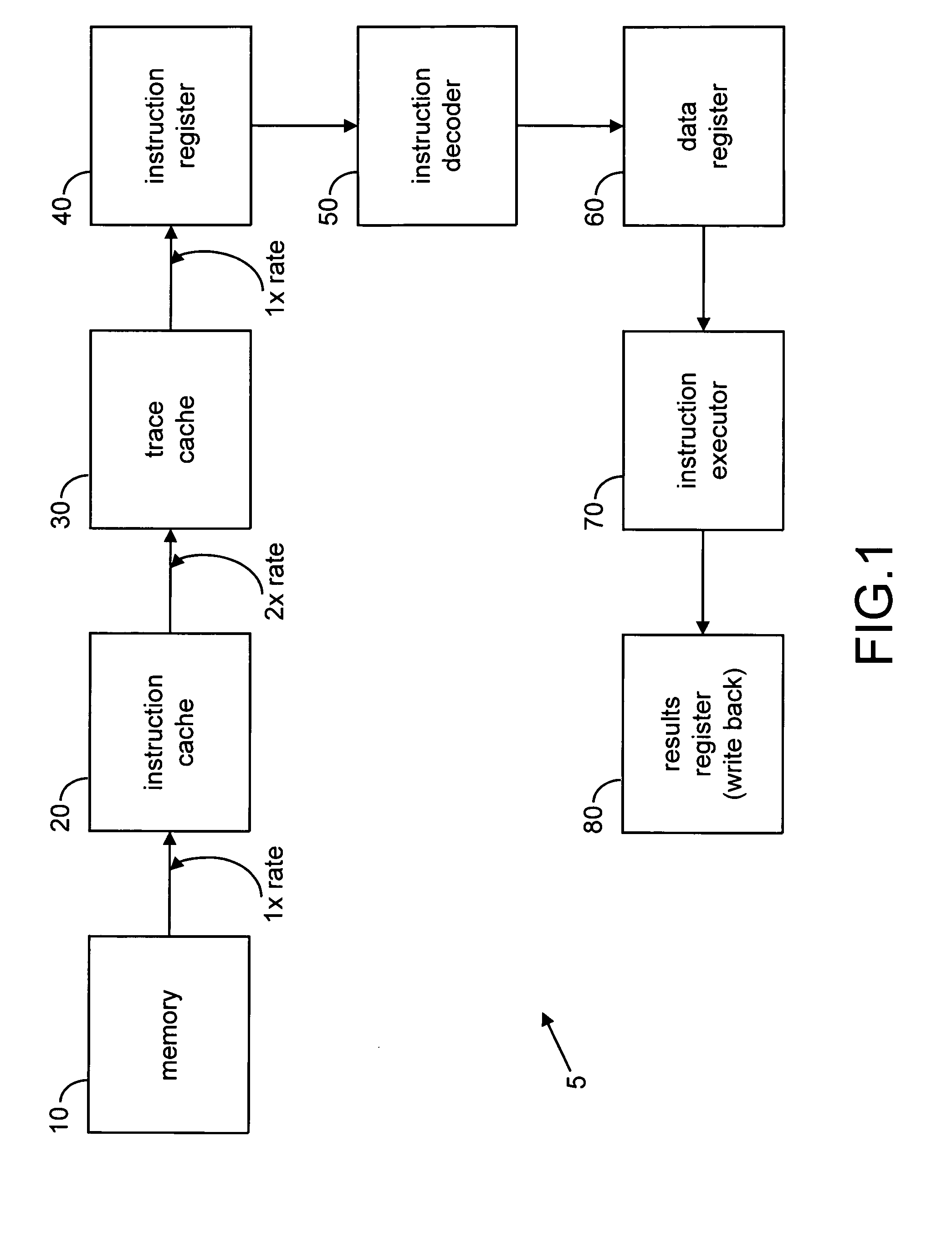

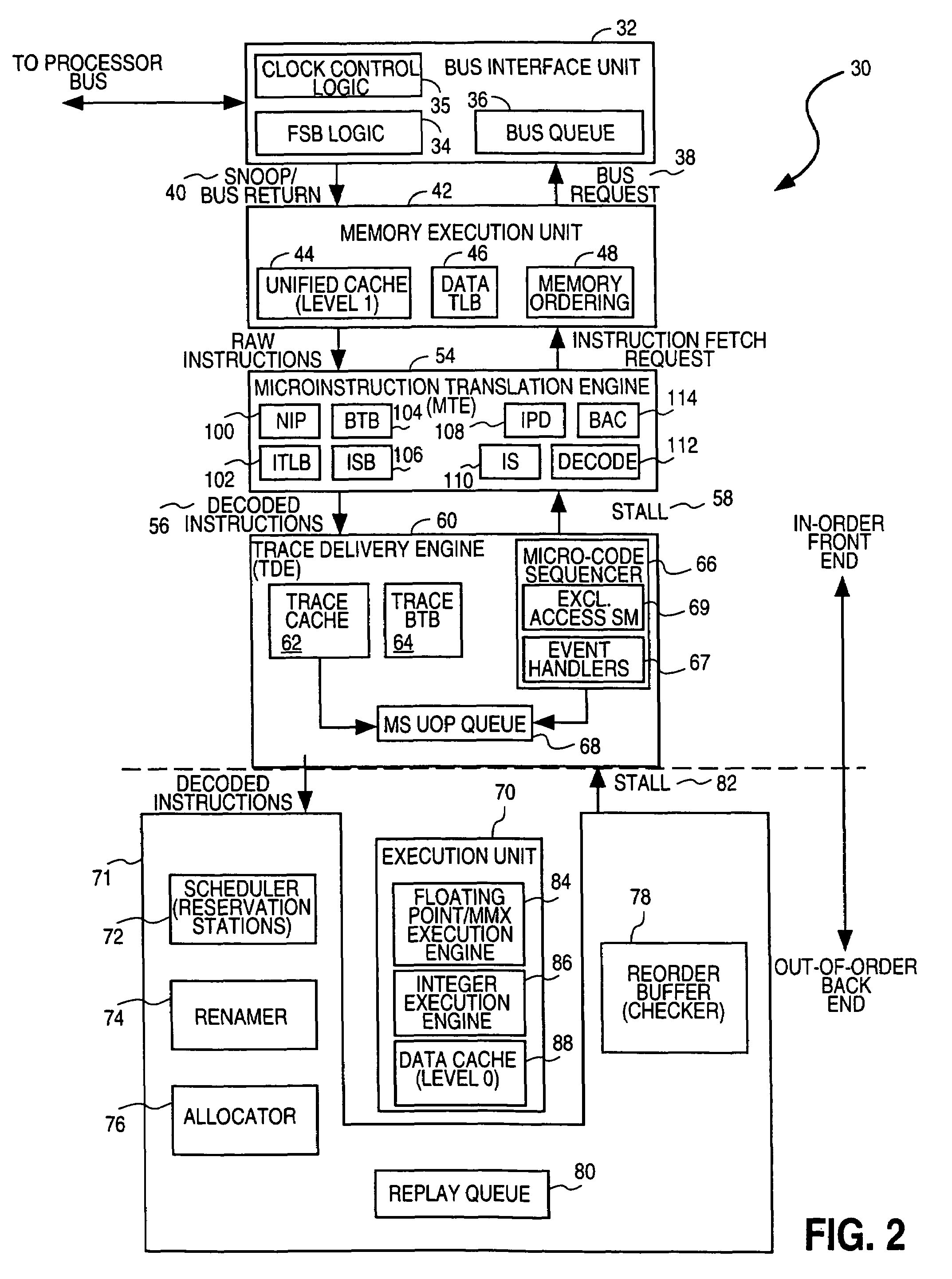

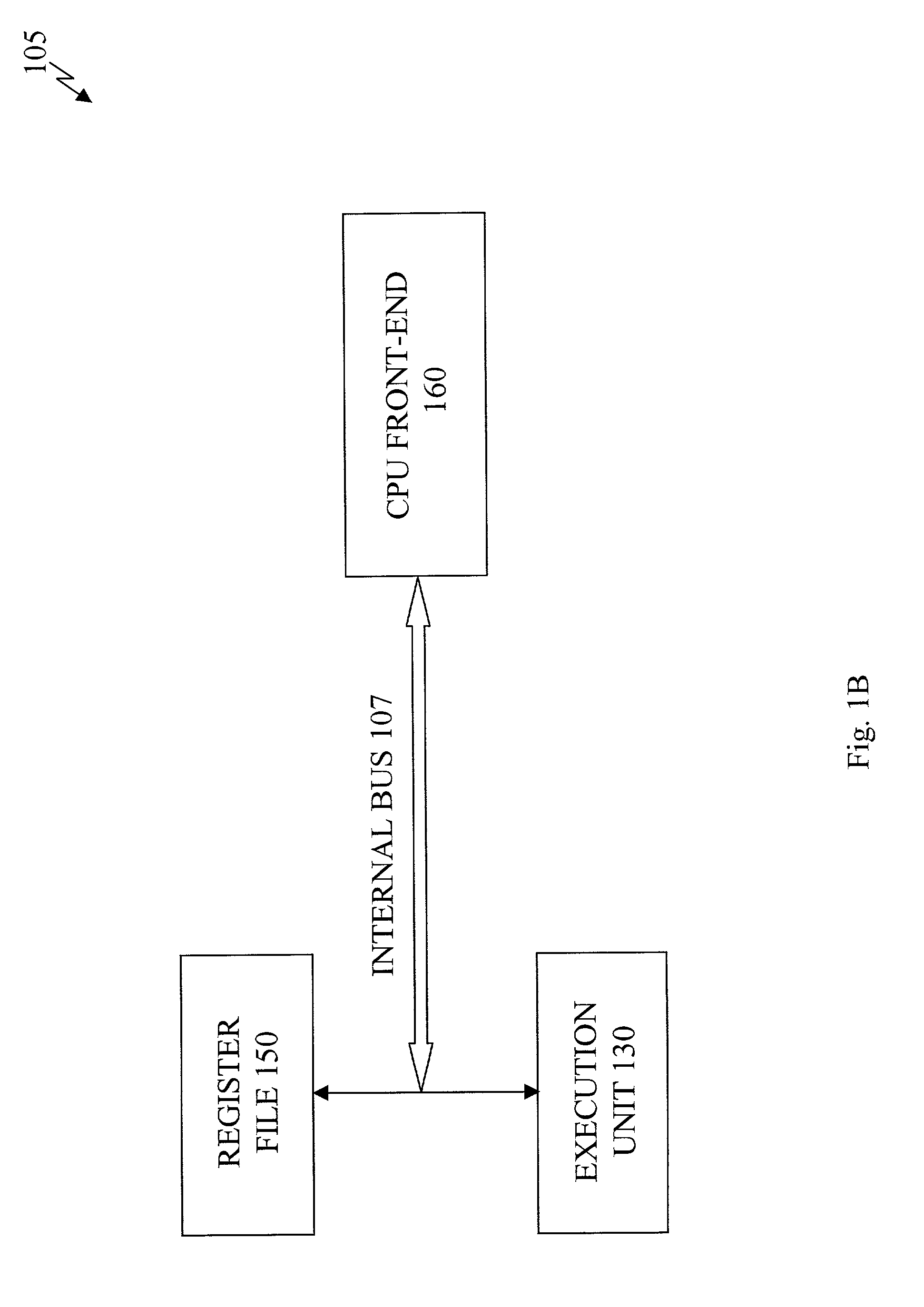

Implementation of an efficient instruction fetch pipeline utilizing a trace cache

Status: Active | Patent: US7139902B2 | Tags: Improve computing power, Reducing instruction execution pipeline stall, Digital computer details, Next instruction address formation, Parallel computing, Trace Cache

A method and apparatus are disclosed for enhancing the pipeline instruction transfer and execution performance of a computer architecture by reducing instruction stalls due to branch and jump instructions. Trace cache within a computer architecture is used to receive computer instructions at a first rate and to store the computer instructions as traces of instructions. An instruction execution pipeline is also provided to receive, decode, and execute the computer instructions at a second rate that is less than the first rate. A mux is also provided between the trace cache and the instruction execution pipeline to select a next instruction to be loaded into the instruction execution pipeline from the trace cache based, in part, on a branch result fed back to the mux from the instruction execution pipeline.

Owner:QUALCOMM INC

Method and system for changing the executable status of an operation following a branch misprediction without refetching the operation

Status: Active | Patent: US7197630B1 | Tags: Digital computer details, Specific program execution arrangements, Branch predication, Operating system

A method and system for changing the executable status of an operation following a branch misprediction. In one embodiment, a method may include predicting an execution path of a first conditional branch operation stored in an entry of a trace cache, and in response to predicting the execution path, if a first operation stored in the entry of the trace cache is not in the execution path according to the prediction, assigning to the first operation a non-executable status indicative that the first operation is not in the execution path. The method may further include detecting that the prediction is incorrect subsequent to assigning the non-executable status to the first operation and assigning an executable status to the first operation in response to detecting the incorrect prediction, where the executable status is indicative that the first operation is in the execution path.

Owner:ADVANCED MICRO DEVICES INC

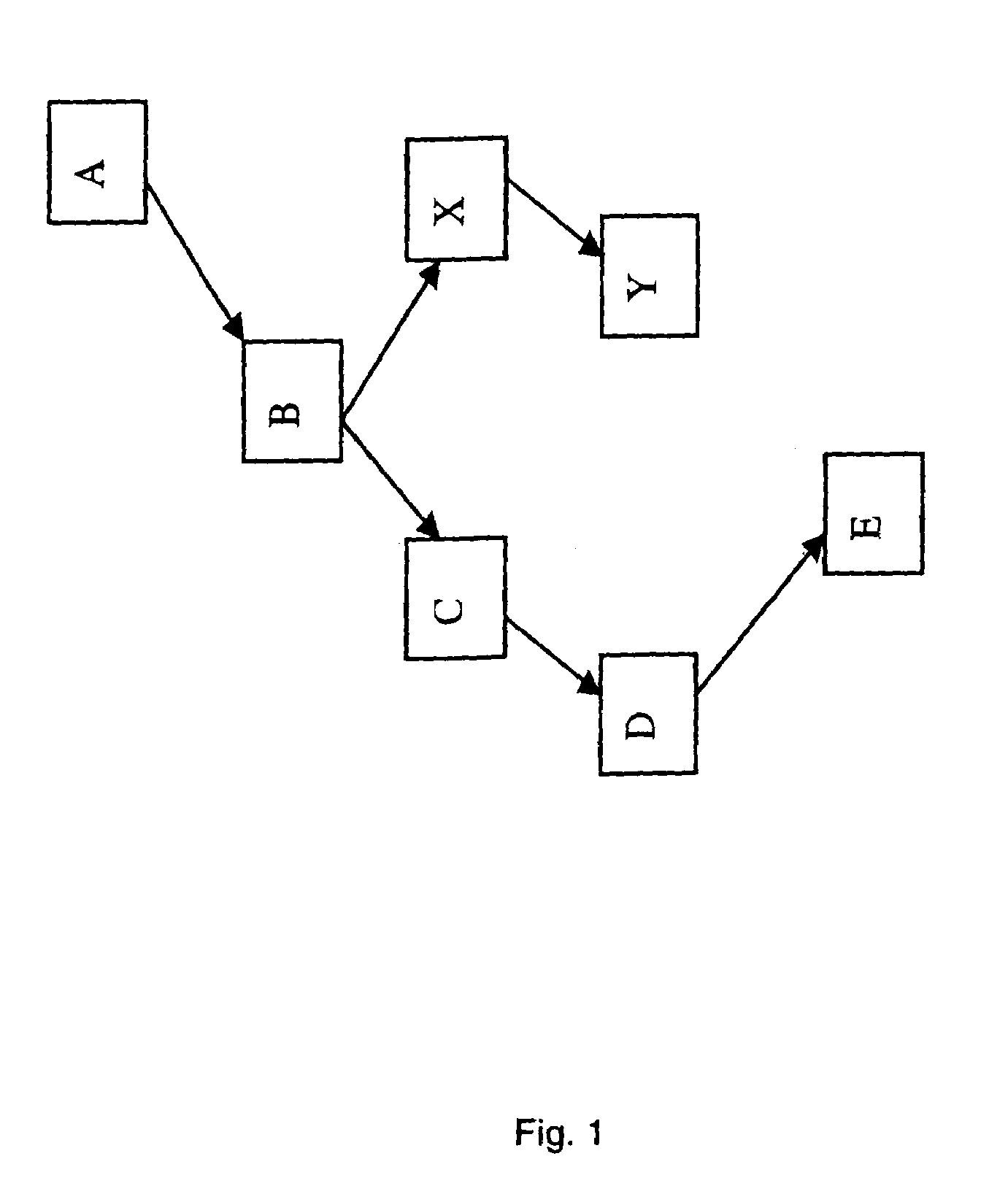

Method and apparatus for an efficient multi-path trace cache design

Status: Inactive | Patent: US7366875B2 | Tags: Efficiently store and retrieve, Memory architecture accessing/allocation, Digital computer details, Parallel computing, Multi path

A novel trace cache design and organization to efficiently store and retrieve multi-path traces. A goal is to design a trace cache, which is capable of storing multi-path traces without significant duplication in the traces. Furthermore, the effective access latency of these traces is reduced.

Owner:INT BUSINESS MASCH CORP

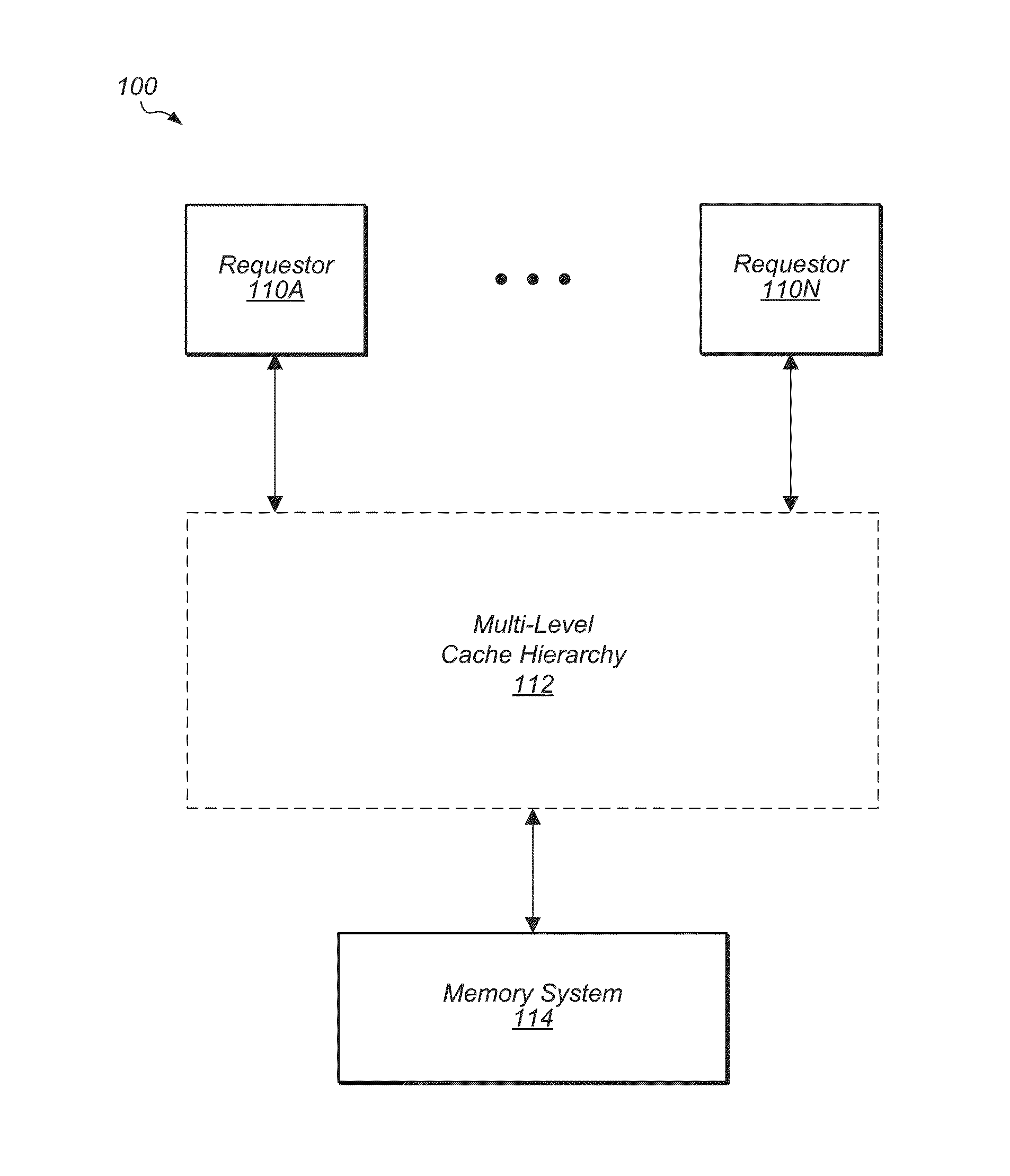

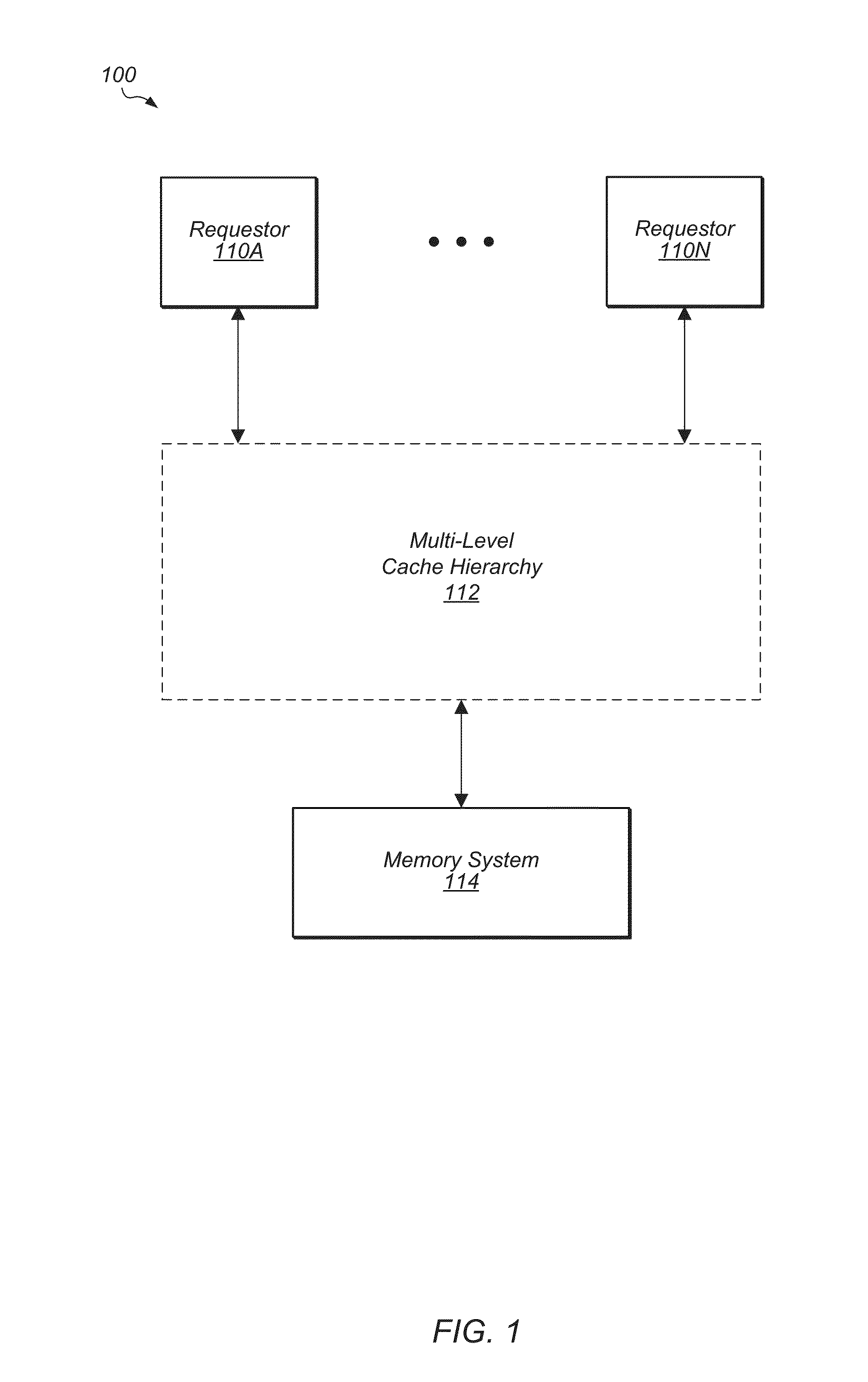

Selective victimization in a multi-level cache hierarchy

Status: Active | Patent: US20150149721A1 | Tags: Reduce in quantity, Memory addressing/allocation/relocation, Energy efficient computing, Cache hierarchy, Parallel computing

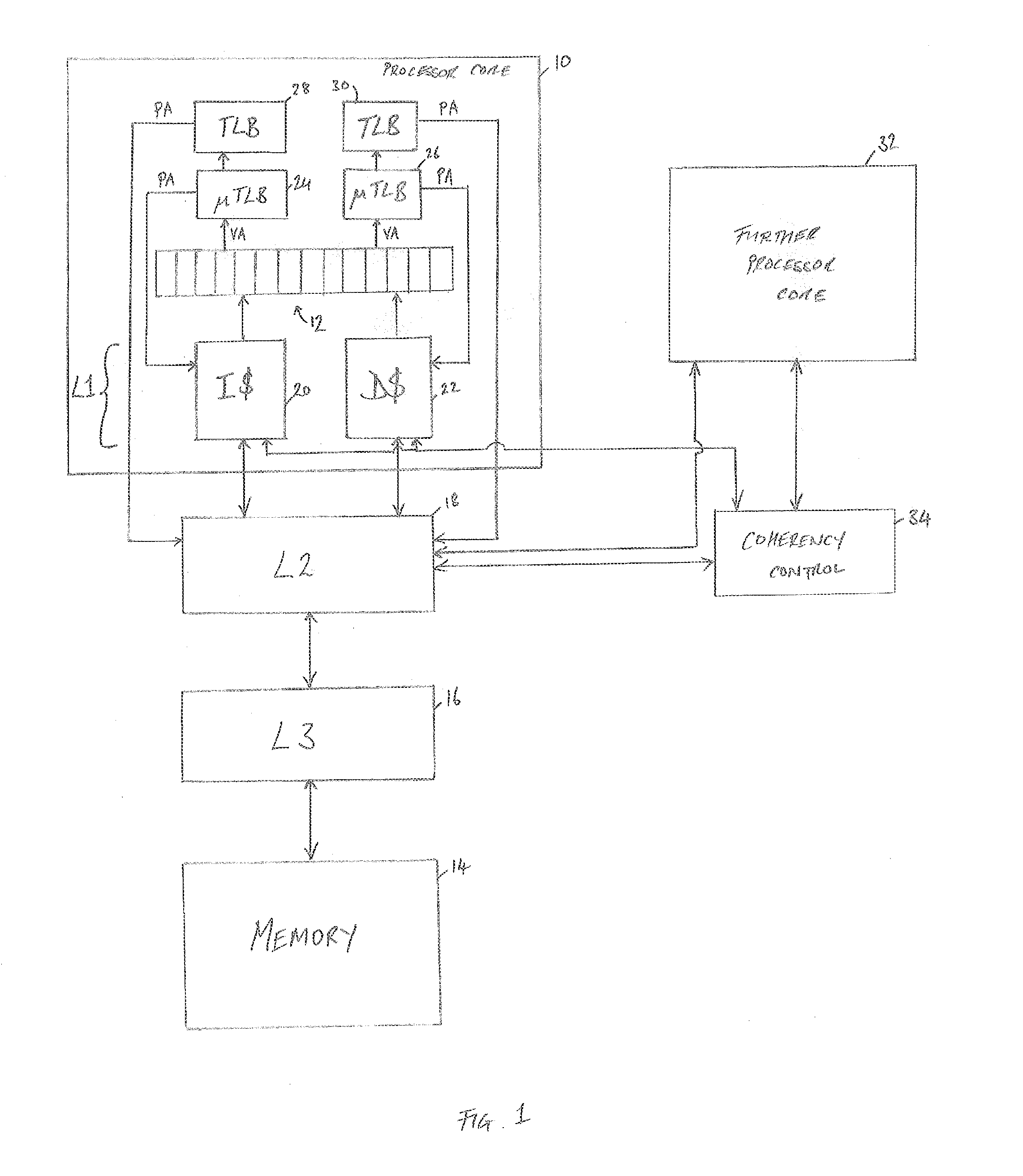

Systems, methods, and apparatuses for implementing selective victimization to reduce power and utilized bandwidth in a multi-level cache hierarchy. Each set of an upper-level cache includes a counter that keeps track of the number of times the set was accessed. These counters are periodically decremented by another counter that tracks the total number of accesses to the cache. If a given set counter is below a certain threshold value, clean victims are dropped from this given set instead of being sent to a lower-level cache. Also, a separate counter is used to track the total number of outstanding requests for the cache as a proxy for bus-bandwidth in order to gauge the total amount of traffic in the system. The cache will implement selective victimization whenever there is a large amount of traffic in the system.

Owner:APPLE INC
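
The counter-based decision in the abstract above can be sketched as a small policy object. The names and thresholds below (`VictimPolicy`, `period`, `set_threshold`, `traffic_threshold`) are assumptions for illustration, and "periodically decremented" is modelled as decrementing every set counter once per `period` total accesses.

```python
class VictimPolicy:
    """Toy model of selective victimization for an upper-level cache."""

    def __init__(self, num_sets, period=8, set_threshold=2, traffic_threshold=4):
        self.set_counters = [0] * num_sets  # per-set access counters
        self.total_accesses = 0             # global counter that drives the decay
        self.outstanding = 0                # outstanding requests (traffic proxy)
        self.period = period
        self.set_threshold = set_threshold
        self.traffic_threshold = traffic_threshold

    def record_access(self, set_index):
        self.set_counters[set_index] += 1
        self.total_accesses += 1
        if self.total_accesses % self.period == 0:
            # Periodic decay so that counters reflect recent activity.
            self.set_counters = [max(0, c - 1) for c in self.set_counters]

    def should_drop(self, set_index, dirty):
        """True if a victim from this set is dropped rather than sent down a level."""
        if dirty:
            return False  # only clean victims may be dropped
        if self.outstanding < self.traffic_threshold:
            return False  # light traffic: victimize normally
        return self.set_counters[set_index] < self.set_threshold
```

Under heavy traffic, clean victims from rarely accessed sets are dropped in this model, which saves the bandwidth and power of writing them to the lower-level cache.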

System and method for speeding up database lookups for multiple synchronized data streams

Status: Inactive | Patent: US7574451B2 | Tags: Increase load, Improve accuracy, Data processing applications, Digital data information retrieval, Data stream, Real time services

Owner:MICROSOFT TECH LICENSING LLC

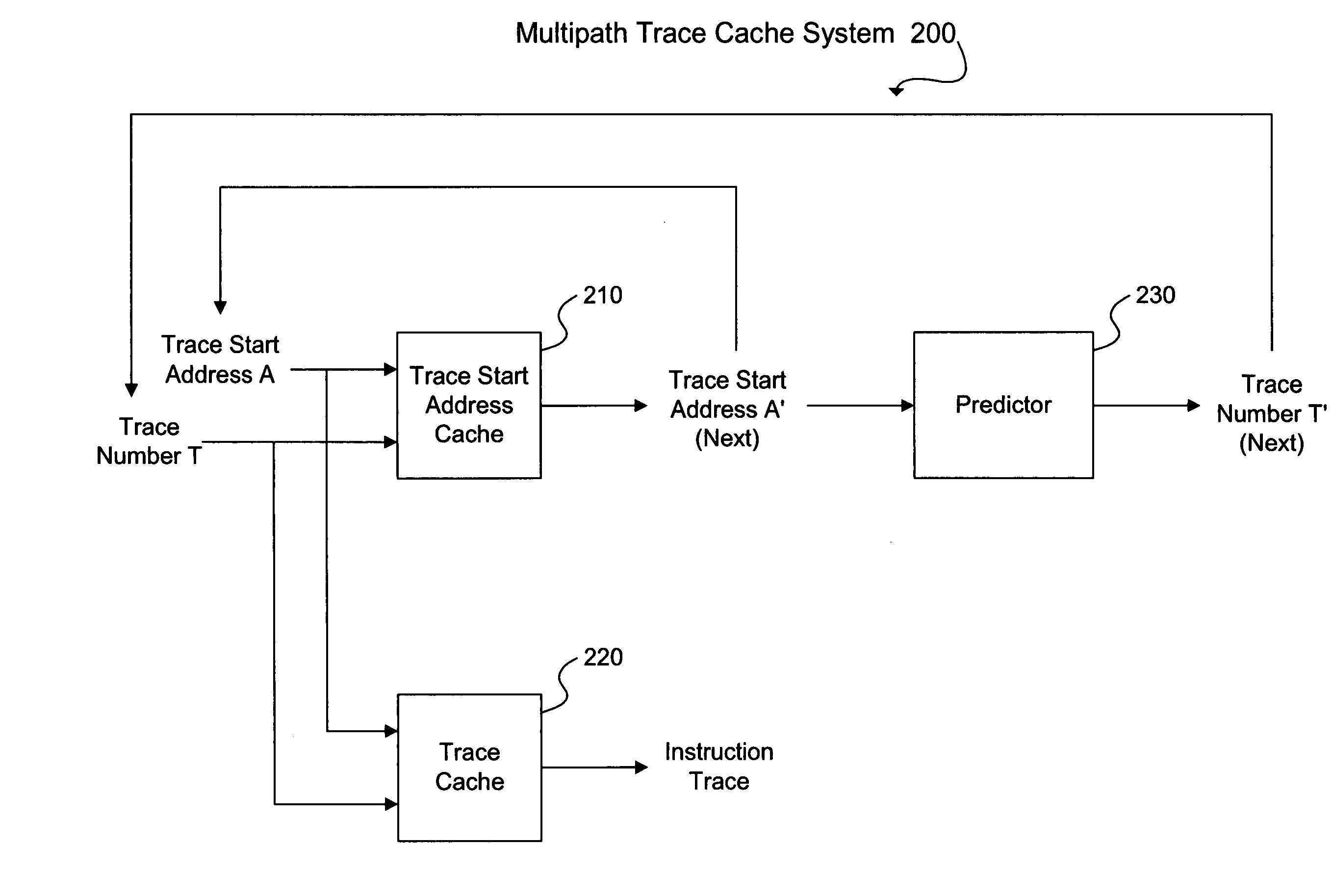

Mechanism and method for two level adaptive trace prediction

Status: Inactive | Patent: US20070162895A1 | Tags: Specific program execution arrangements, Memory systems, Shift register, Parallel computing

A trace cache system is provided comprising a trace start address cache for storing trace start addresses with successor trace start addresses, a trace cache for storing traces of instructions executed, a trace history table (THT) for storing trace numbers in rows, a branch history shift register (BHSR) or a trace history shift register (THSR) that stores histories of branches or traces executed, respectively, a THT row selector for selecting a trace number row from the THT, the selection derived from a combination of a trace start address and history information from the BHSR or the THSR, and a trace number selector for selecting a trace number from the selected trace number row and for outputting the selected trace number as a predicted trace number.

Owner:IBM CORP

Systems and Methods to Manage Cache Data Storage in Working Memory of Computing System

Status: Active | Patent: US20160041927A1 | Tags: Memory architecture accessing/allocation, Memory addressing/allocation/relocation, Metadata management, Parallel computing

Systems and methods for managing records stored in a storage cache are provided. A cache index is created and maintained to track where records are stored in buckets in the storage cache. The cache index maps the memory locations of the cached records to the buckets in the cache storage and can be quickly traversed by a metadata manager to determine whether a requested record can be retrieved from the cache storage. Bucket addresses stored in the cache index include a generation number of the bucket that is used to determine whether the cached record is stale. The generation number allows a bucket manager to evict buckets in the cache without having to update the bucket addresses stored in the cache index. In an alternative embodiment, non-contiguous portions of computing system working memory are used to cache data instead of a dedicated storage cache.

Owner:PERNIXDATA
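
The role of the generation number in the abstract above can be shown with a short model. This is a sketch under assumptions: `BucketCache` and its methods are hypothetical names, buckets are plain dictionaries, and a bucket address is reduced to a `(bucket, generation)` pair.

```python
class BucketCache:
    """Toy model: a cache index whose bucket addresses carry a generation number."""

    def __init__(self, num_buckets):
        self.generation = [0] * num_buckets             # current generation per bucket
        self.data = [dict() for _ in range(num_buckets)]
        self.index = {}                                 # record key -> (bucket, generation)

    def put(self, key, value, bucket):
        self.data[bucket][key] = value
        self.index[key] = (bucket, self.generation[bucket])

    def evict_bucket(self, bucket):
        """Evict a whole bucket without touching the cache index."""
        self.generation[bucket] += 1
        self.data[bucket] = {}

    def get(self, key):
        addr = self.index.get(key)
        if addr is None:
            return None
        bucket, gen = addr
        if gen != self.generation[bucket]:
            return None  # stale: the bucket was evicted after this record was indexed
        return self.data[bucket].get(key)
```

Evicting a bucket here is a constant-time generation bump; stale index entries are simply detected on the next lookup instead of being cleaned up eagerly.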

Trace cache for efficient self-modifying code processing

A trace cache for efficient self-modifying code processing enables selective invalidation of entries of the trace cache, advantageously retaining some of the entries in the trace cache even during self-modifying code events. Instructions underlying trace cache entries are monitored for modification in groups, enabling advantageously reduced hardware. Associated with trace cache entries are one or more translation ages, determined when the entry is built by sampling current ages of memory blocks underlying the entry. When the entry is accessed and micro-operations therein are processed, each of the translation ages of the accessed entry is compared with the current ages of the memory blocks underlying the accessed entry. If any of the age comparisons fail, then the micro-operations are aborted and the entry is invalidated. When any portion of a memory block is modified, the current age of the modified memory block is incremented.

Owner:SUN MICROSYSTEMS INC

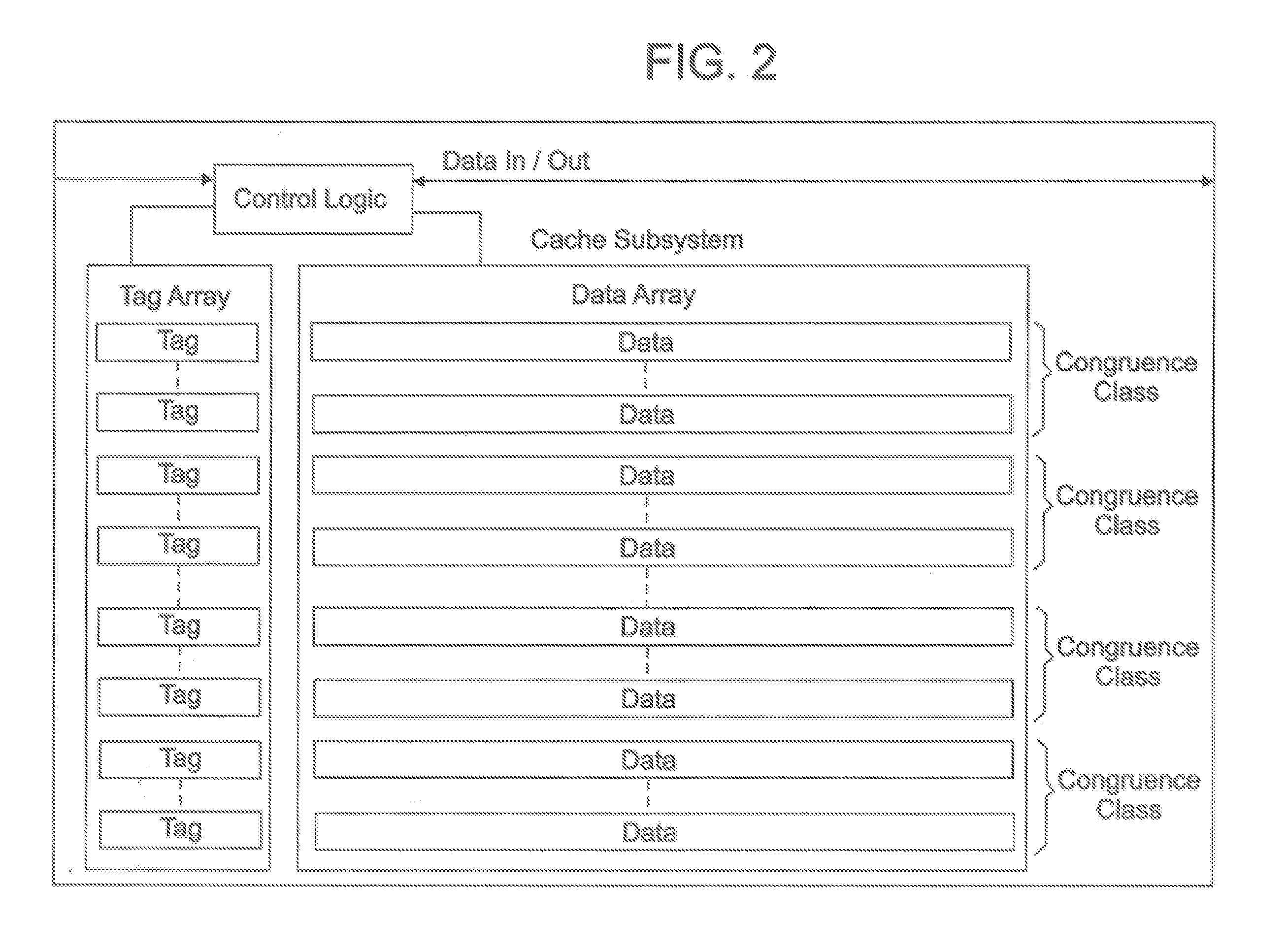

Apparatus and Method for Cache Maintenance

Status: Inactive | Patent: US20080114964A1 | Tags: Avoid inefficiency, Lose weight, General purpose stored program computer, Program control, Control flow, Parallel computing

A single unified level one instruction cache in which some lines may contain traces and other lines in the same congruence class may contain blocks of instructions consistent with conventional cache lines. Control is exercised over which lines are contained within the cache. This invention avoids inefficiencies in the cache by removing trace lines experiencing early exits from the cache, or trace lines that are short, by maintaining a few bits of information about the accuracy of the control flow in a trace cache line and using that information in addition to the LRU (Least Recently Used) bits that maintain the recency information of a cache line, in order to make a replacement decision.

Owner:IBM CORP

Maintaining memory coherency with a trace cache

Status: Active | Patent: US7747822B1 | Tags: Energy efficient ICT, Memory addressing/allocation/relocation, Power efficient, Cache invalidation

A method and system for maintaining memory coherence in a trace cache is disclosed. The method and system comprise monitoring a plurality of entries in a trace cache and selectively invalidating at least one trace cache entry based upon detection of a modification of the at least one trace cache entry. If modifications are detected, then the corresponding trace cache entries are selectively invalidated (rather than invalidating the entire trace cache). Trace cache coherency is thus maintained with respect to memory in a performance- and power-efficient manner. The monitoring further accounts for situations where more than one trace cache entry is dependent on a single cache line, such that modifications to the single cache line result in invalidations of a plurality of trace cache entries.

Owner:SUN MICROSYSTEMS INC

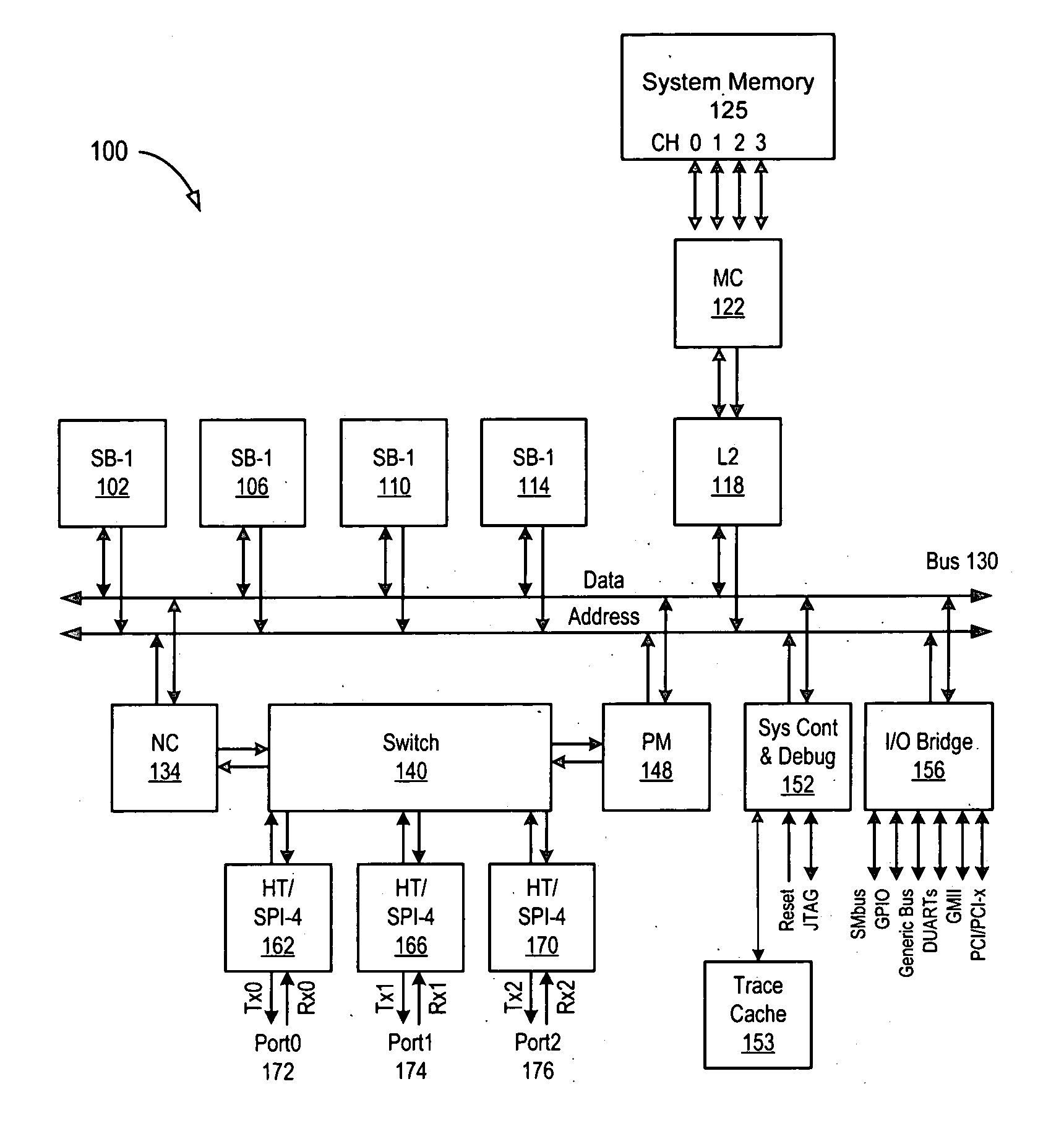

Hypertransport exception detection and processing

In accordance with the present invention, a system for detecting transaction errors in a system comprising a plurality of data processing devices using a common system interconnect bus comprises a node controller operably connected to said system interconnect bus and a plurality of interface agents communicatively coupled to said node controller. Errors corresponding to transactions between said interface agents and other processing modules in said system are directed to said node controller, and transaction errors that would not normally be communicated to said system interconnect bus are communicated by said node controller to said system interconnect bus to be available for detection. In an embodiment of the present invention, the interface agents operate in accordance with the HyperTransport protocol. A system control and debug unit and a trace cache operably connected to the system bus can be used to diagnose and store error conditions.

Owner:AVAGO TECH INT SALES PTE LTD

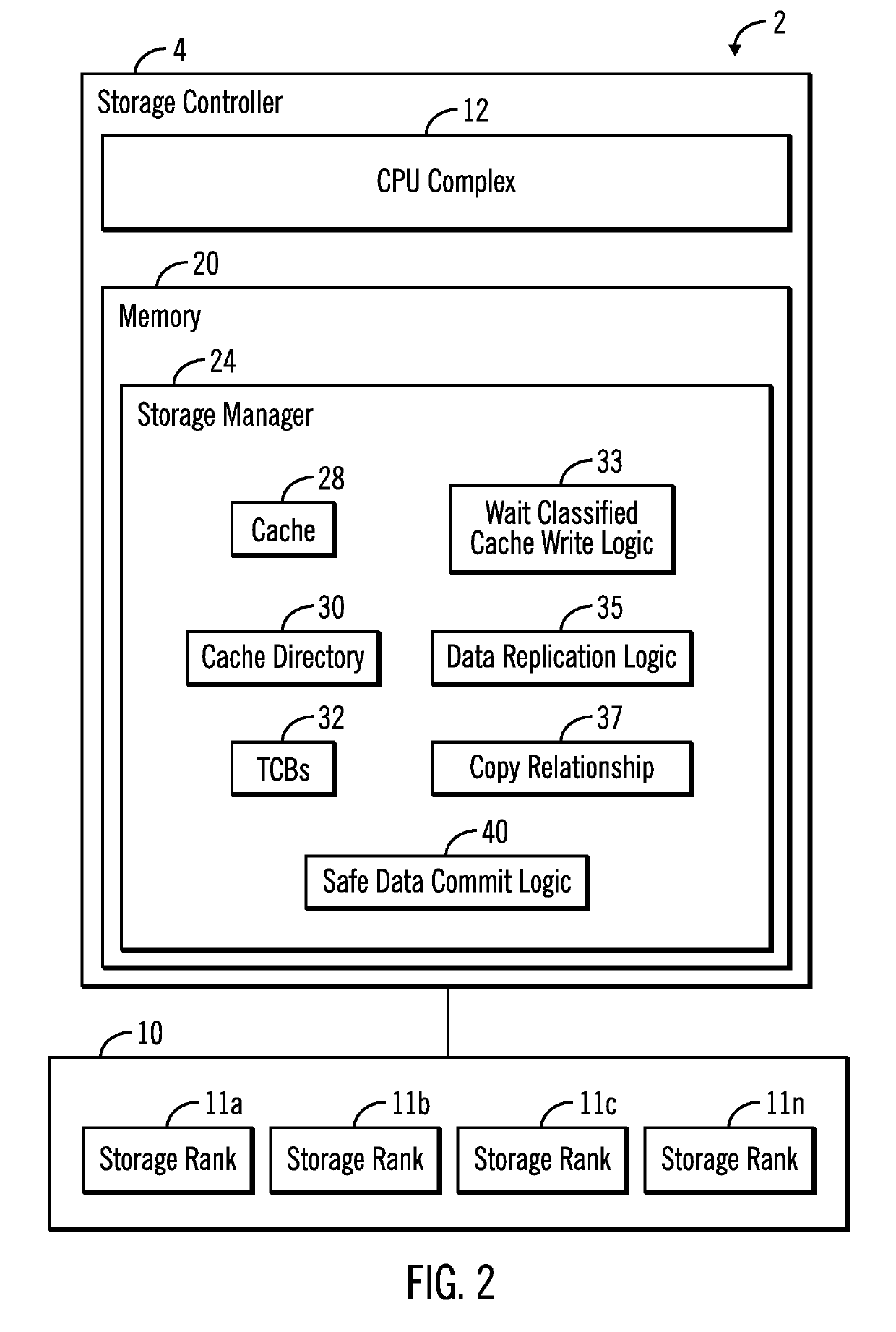

Wait classified cache writes in a data storage system

Status: Active | Patent: US20190179761A1 | Tags: Memory architecture accessing/allocation, Input/output to record carriers, Parallel computing, Waiting period

In one embodiment, a task control block (TCB) for allocating cache storage such as cache segments in a multi-track cache write operation may be enqueued in a wait queue for a relatively long wait period, the first time the task control block is used, and may be re-enqueued on the wait queue for a relatively short wait period, each time the task control block is used for allocating cache segments for subsequent cache writes of the remaining tracks of the multi-track cache write operation. As a result, time-out suspensions caused by throttling of host input-output operations to facilitate cache draining, may be reduced or eliminated. It is appreciated that wait classification of task control blocks in accordance with the present description may be applied to applications other than draining a cache. Other features and aspects may be realized, depending upon the particular application.

Owner:IBM CORP

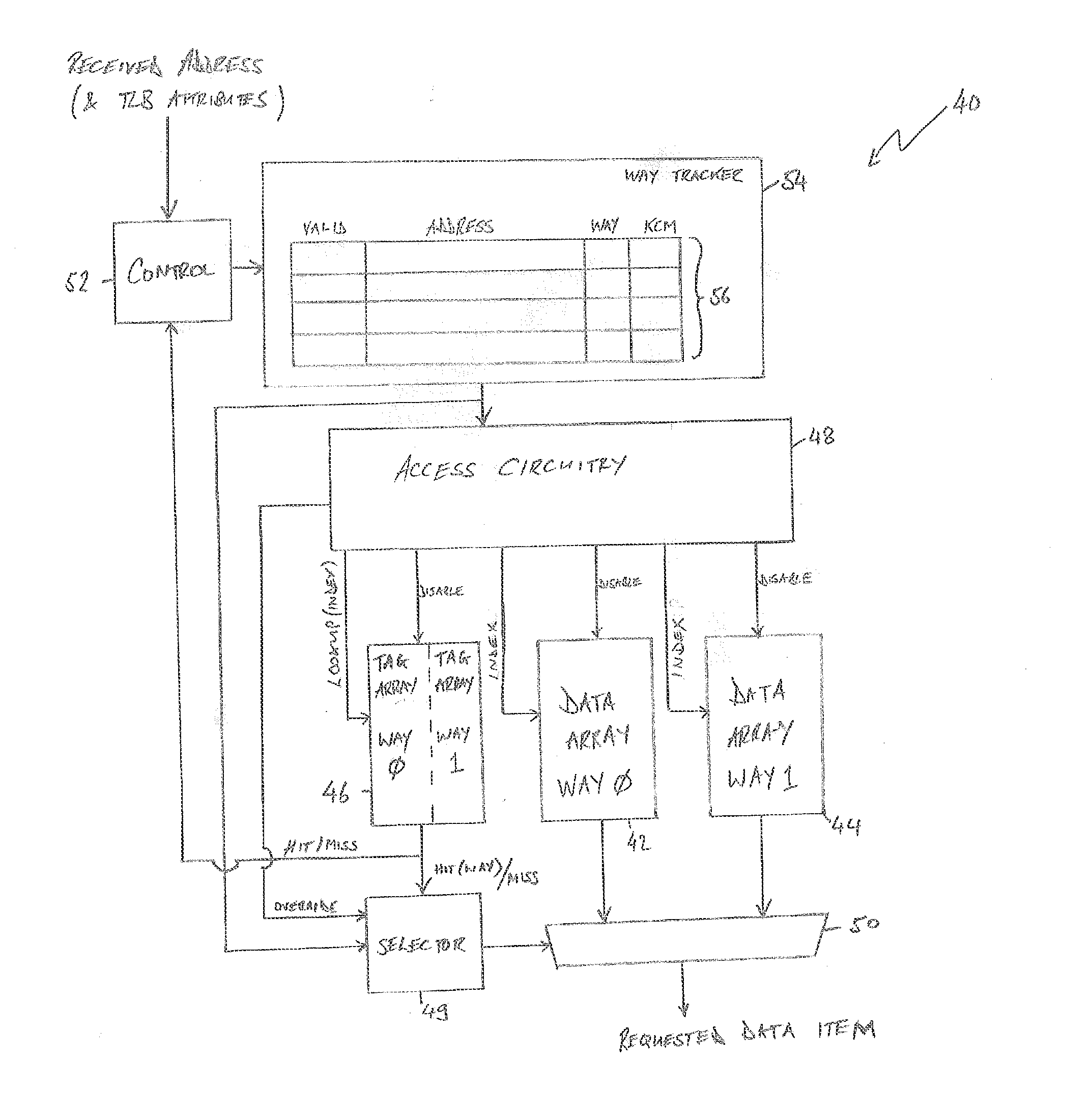

Tracking the content of a cache

Status: Active | Patent: US20160328320A1 | Tags: Memory architecture accessing/allocation, Power network operation systems integration, Missed Indicator, Trace Cache

A cache is provided comprising a plurality of ways, each way of the plurality of ways comprising a data array, wherein a data item stored by the cache is stored in the data array of one of the plurality of ways. A way tracker of the cache has a plurality of entries, each entry of the plurality of entries for storing a data item identifier and for storing, in association with the data item identifier, an indication of a selected way of the plurality of ways to indicate that a data item identified by the data item identifier is stored in the selected way. Each entry of the way tracker is further for storing a miss indicator in association with the data item identifier, wherein the miss indicator is set by the cache when a lookup for a data item identified by that data item identifier has resulted in a cache miss. A corresponding method of caching data is also provided.

Owner:ARM LTD
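
A way tracker with a miss indicator can be modelled as below. The abstract above states what each tracker entry stores; how the miss indicator is then used is not spelled out there, so this sketch assumes one plausible use: a set miss indicator lets a later lookup skip the data arrays entirely. The class name `WayTrackedCache` and the `array_lookups` counter are hypothetical.

```python
class WayTrackedCache:
    """Toy model of a multi-way cache with a way tracker and miss indicators."""

    def __init__(self, num_ways=4):
        self.ways = [dict() for _ in range(num_ways)]  # one data array per way
        self.tracker = {}       # item id -> (way or None, miss indicator)
        self.array_lookups = 0  # number of data-array probes (a rough energy proxy)

    def lookup(self, item_id):
        entry = self.tracker.get(item_id)
        if entry is not None:
            way, missed = entry
            if missed:
                return None  # assumed use: a known miss probes no arrays
            self.array_lookups += 1  # probe only the recorded way
            return self.ways[way].get(item_id)
        # No tracker entry yet: probe every way.
        for way, array in enumerate(self.ways):
            self.array_lookups += 1
            if item_id in array:
                self.tracker[item_id] = (way, False)
                return array[item_id]
        self.tracker[item_id] = (None, True)  # record the miss
        return None

    def fill(self, item_id, value, way):
        self.ways[way][item_id] = value
        self.tracker[item_id] = (way, False)  # a fill clears the miss indicator
```

In this model a repeated lookup for an absent item costs no array probes after the first miss, and a lookup for a tracked item probes a single way.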

Linked instruction buffering of basic blocks for asynchronous predicted taken branches

Status: Inactive | Patent: US20050257035A1 | Tags: Preventing unnecessary fetching, Overcomes shortcoming, Digital computer details, Concurrent instruction execution, Basic block, Trace Cache

A method and apparatus for providing the capability to create a dynamic based buffer structure that takes an instruction addresses organized instruction cache and through the interaction of an asynchronous branch target buffer (BTB) and branch history table (BHT) forms a series of instructions that resembles a trace cache in the buffer structure. By allowing the dynamic creation of a predicted code sequence trace in the buffer structure, based on the past behavior of the instruction code, the usage of fetching is utilized and the instruction cache makes optimal use of area while reducing latency penalties associated with taken branches and branches which are predicted in the improper direction.

Owner:IBM CORP

Disk page swap-in method of virtual platform based on dual tracking

Status: Inactive | Patent: CN101859282A | Tags: Improve continuity, Change the number of swaps, Input/output to record carriers, Memory addressing/allocation/relocation, Virtualization, Internal memory

The invention discloses a disk page swap-in method for a virtual platform based on dual tracking, comprising the following steps: (1) establishing a tracking cache used for tracking the page swap-in operations of each process, wherein the tracking cache comprises a plurality of items and each item records the page swap-in operation information of one process in the two hot-spot areas in which swap-in operations happen; (2) tracking the page swap-in operations of each client process and, when a process is interrupted by a page fault, finding the matching item in the tracking cache according to the identifier of the interrupted process; and (3) calculating the number of prefetched pages required for the current page swap-in. The method increases the page continuity of each client's memory in the swap partition and can dynamically change the number of pages swapped in on each paging operation, thereby substantially reducing the number of disk I/O accesses while preserving the hit rate of the disk swap cache, and improving system efficiency.

Owner:ZHEJIANG UNIV

Prediction based indexed trace cache

Status: Inactive | Patent: US20050149709A1 | Tags: Digital computer details, Concurrent instruction execution, Trace Cache, Branch predictor

A system and method for compensating for branching instructions in trace caches is disclosed. A branch predictor uses the branching behavior of previous branching instructions to select between several traces beginning at the same linear instruction pointer (LIP) or instruction. The fetching mechanism of the processor selects the trace that most closely matches the previous branching behavior. In one embodiment, a new trace is generated only if a divergence occurs within a predetermined location. A divergence is a branch that is recorded as following one path (i.e. taken) and during execution follows a different path (i.e. not taken).

Owner:INTEL CORP
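
Selecting among several traces that begin at the same linear instruction pointer can be sketched as a closest-match search. The model below is an assumption-laden illustration: `PredictionIndexedTraceCache` is a hypothetical name, each trace is stored with the tuple of branch outcomes recorded when it was built, and "most closely matches" is approximated by counting agreeing positions against the supplied history.

```python
class PredictionIndexedTraceCache:
    """Toy model: several traces per starting LIP, chosen by branch behavior."""

    def __init__(self):
        self.traces = {}  # lip -> list of (recorded branch outcomes, trace)

    def add(self, lip, outcomes, trace):
        self.traces.setdefault(lip, []).append((tuple(outcomes), trace))

    def select(self, lip, history):
        """Return the trace whose recorded outcomes agree most with the history."""
        candidates = self.traces.get(lip)
        if not candidates:
            return None

        def score(candidate):
            outcomes, _ = candidate
            return sum(a == b for a, b in zip(outcomes, history))

        return max(candidates, key=score)[1]
```

Two traces starting at the same LIP, one built along a taken path and one along a not-taken path, are then distinguished by whichever recent branch history is passed to `select`.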

Virtualized-platform-based method for swapping in disk pages

Status: Inactive | Patent: CN101853219A | Tags: Improve continuity, Change the number of swaps, Input/output to record carriers, Memory addressing/allocation/relocation, Virtualization, Internal memory

The invention discloses a method for swapping in disk pages on a virtualized platform, comprising the following steps: (1) establishing a trace cache used for tracing the page swap-in operations of each process, wherein the trace cache comprises a plurality of items and each item records information about one process's latest page swap-in operation; (2) tracing the page swap-in operations of each client process and, when a process is interrupted by a page fault, finding the matching item in the trace cache according to the identifier of the interrupted process; and (3) calculating, from the item obtained, the number of pages to prefetch for the current page swap-in. Through a page swapping mechanism based on the memory state of the client, this dynamic calculation improves the page continuity of each client's memory and can change the number of pages swapped in on each paging operation, thereby substantially reducing the frequency of disk I/O accesses while preserving the hit rate of the disk swap cache, and improving system efficiency.

Owner:ZHEJIANG UNIV

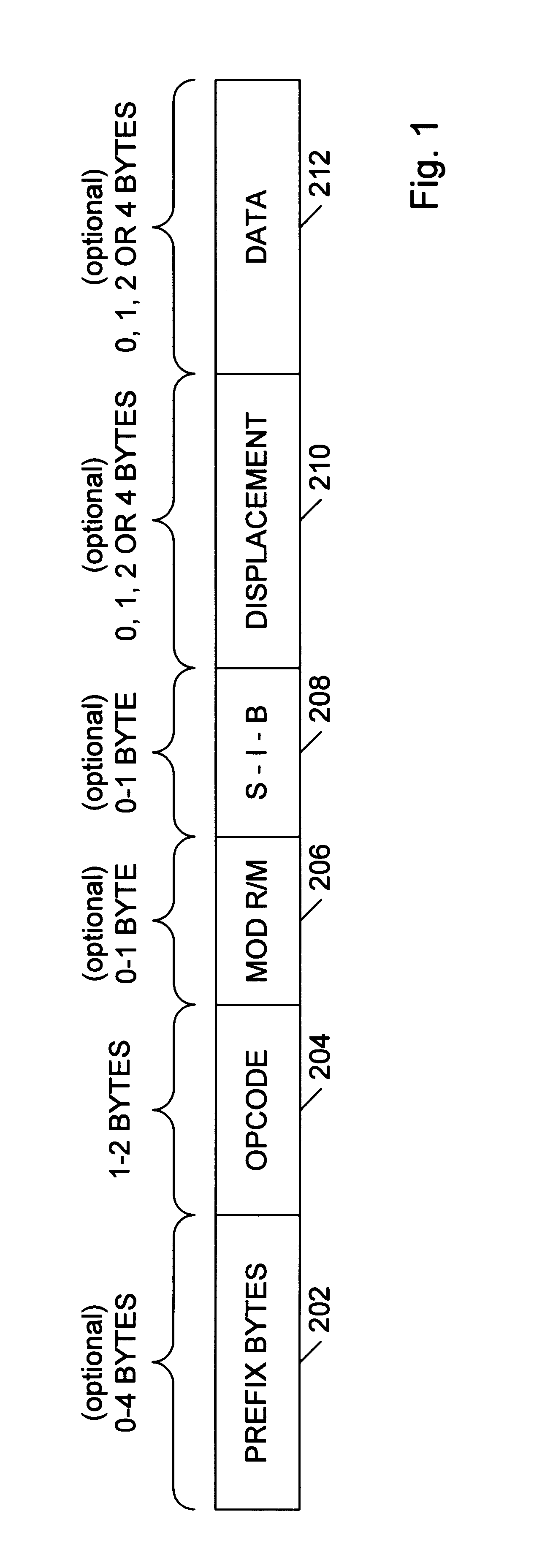

Microarchitecture for compact storage of embedded constants

An instruction stream having variable length instructions with embedded constants (e.g. immediate values and displacements) is translated into a stream of operations and a corresponding stream of bit fields, enabling advantageous compact storage of the embedded constants. The operations and the compact constants are optionally stored in entries in a trace cache and / or processed by execution pipelines. The compact constants are optionally formulated as a small constant field, a pointer, or both. The pointer of a particular one of the operations optionally references one of the bit fields within a window of the operations associated with the particular operation. A full-sized constant is constructed from one or more contiguous ones of the bit fields, starting with the referenced bit field, by unpacking and uncompressing information from the contiguous bit fields. An operation optionally includes a plurality of small constant fields and pointers to specify a respective plurality of constants.

Owner:ORACLE INT CORP

Method and apparatus selectively to advance a write pointer for a queue based on the indicated validity or invalidity of an instruction stored within the queue

Status: Inactive | Patent: US7149883B1 | Tags: Runtime instruction translation, Digital computer details, Invalid Data, Operating system

A buffer mechanism for buffering microinstructions between a trace cache and an allocator performs a compacting operation by overwriting entries within a queue, known not to store valid instructions or data, with valid instructions or data. Following a write operation to a queue included within the buffer mechanism, pointer logic determines whether the entries to which instructions or data have been written include the valid data or instructions. If an entry is shown to be invalid, the write pointer is not advanced past the relevant entry. In this way, an immediately following write operation will overwrite the invalid data or instruction with data or instruction. The overwriting instruction or data will again be subject to scrutiny (e.g., a qualitative determination) to determine whether it is valid or invalid, and will only be retained within the queue if valid.

Owner:MICRON TECH INC
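
The compacting behaviour in the abstract above comes down to one rule: advance the write pointer only past valid entries. A minimal sketch follows, assuming a fixed-size list for the queue and a validity flag supplied by the caller; `CompactingQueue` is a hypothetical name.

```python
class CompactingQueue:
    """Toy model: the write pointer advances only past valid entries."""

    def __init__(self, size):
        self.slots = [None] * size
        self.write_ptr = 0

    def write(self, item, valid):
        if self.write_ptr >= len(self.slots):
            raise OverflowError("queue full")
        self.slots[self.write_ptr] = item  # always write at the pointer
        if valid:
            self.write_ptr += 1            # keep the entry
        # If invalid, the pointer stays put and the next write overwrites the slot.

    def contents(self):
        return self.slots[:self.write_ptr]
```

Writing a valid item, then an invalid one, then another valid item leaves only the two valid items in the queue, stored contiguously.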

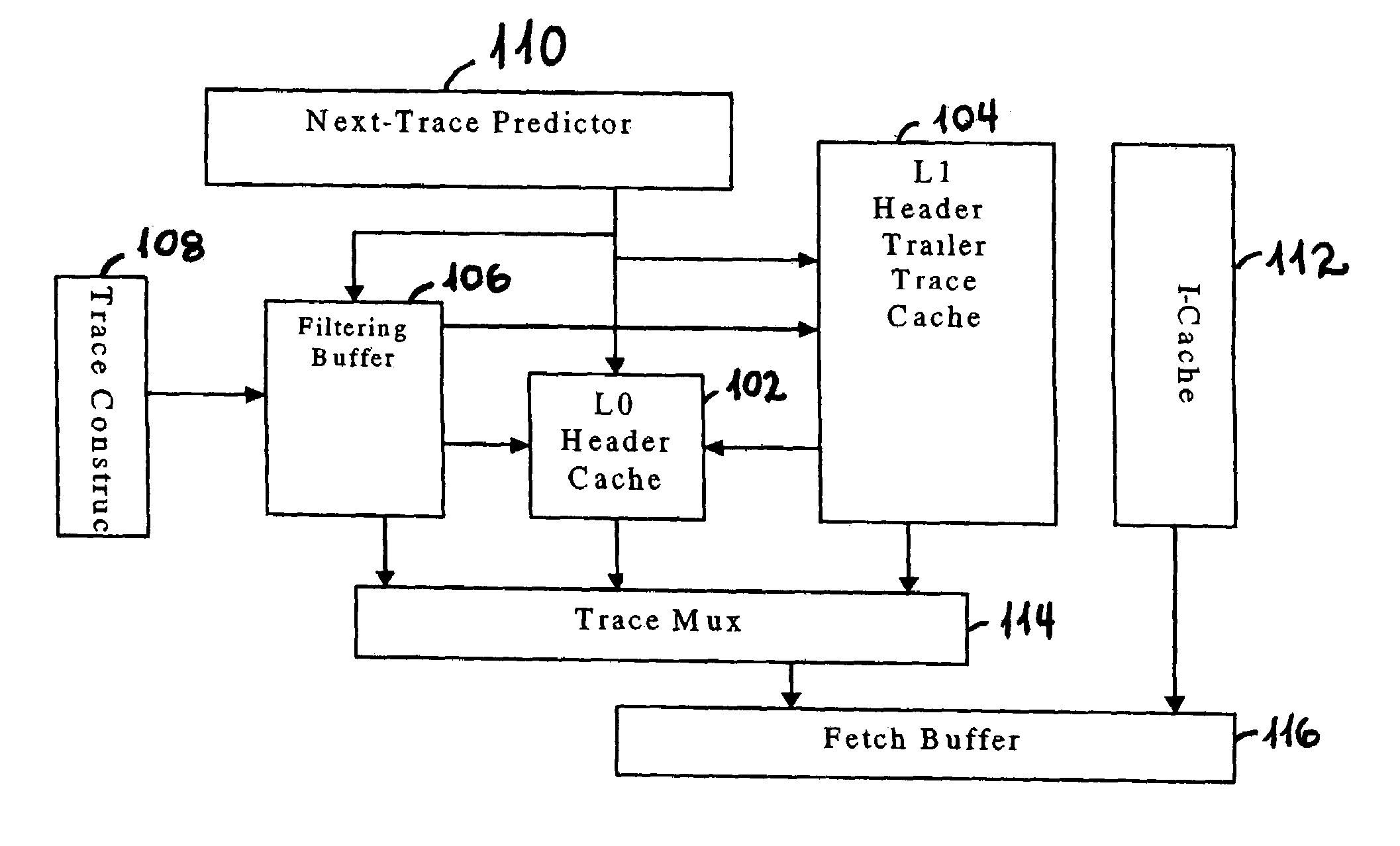

Trace cache filtering

Status: Inactive | Patent: US7260684B2 | Tags: Energy efficient ICT, Memory addressing/allocation/relocation, Logistics management, Parallel computing

Cache management logic controls the transfer of a trace. A first cache is coupled to the cache management logic to evict the trace based on a replacement mechanism. A second cache is coupled to the cache management logic to receive the trace based on a number of accesses to the trace.

Owner:INTEL CORP
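
The two-cache arrangement in the abstract above can be sketched as a small filter cache in front of a main cache. The details are assumptions made for illustration: the first cache uses LRU replacement, a trace is promoted to the second cache only if it was accessed at least `promote_after` times before eviction, and the second cache is an unbounded dictionary. `FilteredTraceCache` is a hypothetical name.

```python
from collections import OrderedDict


class FilteredTraceCache:
    """Toy model: a filter cache that promotes frequently accessed traces."""

    def __init__(self, filter_size=2, promote_after=2):
        self.filter_size = filter_size
        self.promote_after = promote_after
        self.filter = OrderedDict()  # start -> [trace, access count], LRU first
        self.main = {}               # second cache: start -> trace

    def insert(self, start, trace):
        if start in self.filter or start in self.main:
            return  # already cached
        if len(self.filter) >= self.filter_size:
            old_start, (old_trace, count) = self.filter.popitem(last=False)
            if count >= self.promote_after:
                self.main[old_start] = old_trace  # accessed often enough: promote
            # Otherwise the evicted trace is simply discarded.
        self.filter[start] = [trace, 0]

    def fetch(self, start):
        if start in self.main:
            return self.main[start]
        if start in self.filter:
            self.filter[start][1] += 1
            self.filter.move_to_end(start)  # mark as most recently used
            return self.filter[start][0]
        return None
```

Traces that are built once and never reused pass through the filter and are dropped, so they do not displace frequently used traces in the second cache.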
