91 results for "Reduce synchronization overhead" patented technology

Distributed type dynamic cache expanding method and system supporting load balancing

Inactive | CN102244685A | Reduce overhead | Improve performance | Data switching networks | Traffic capacity | Cache server

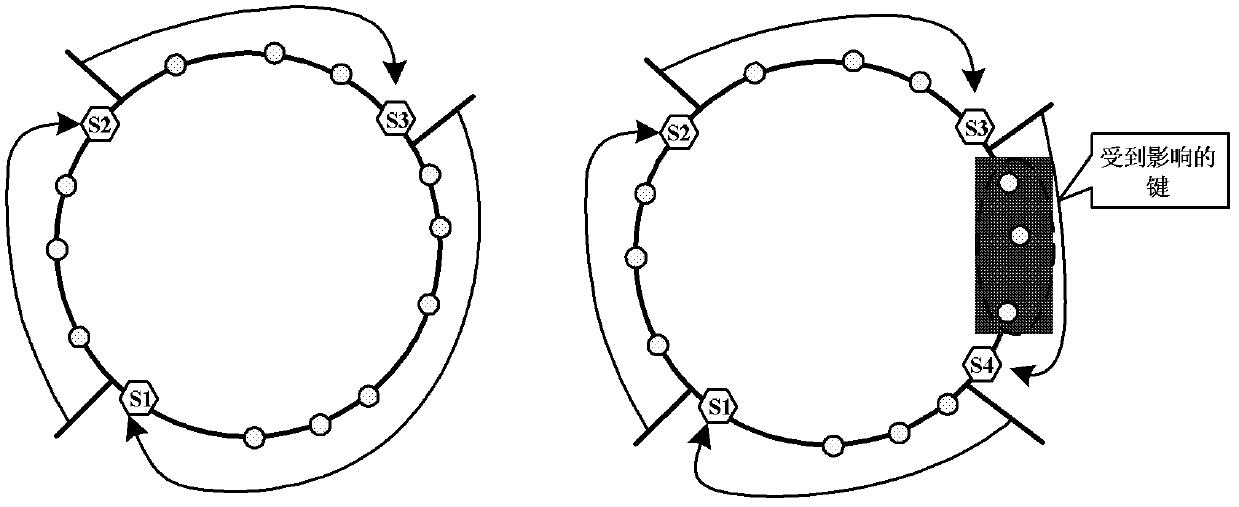

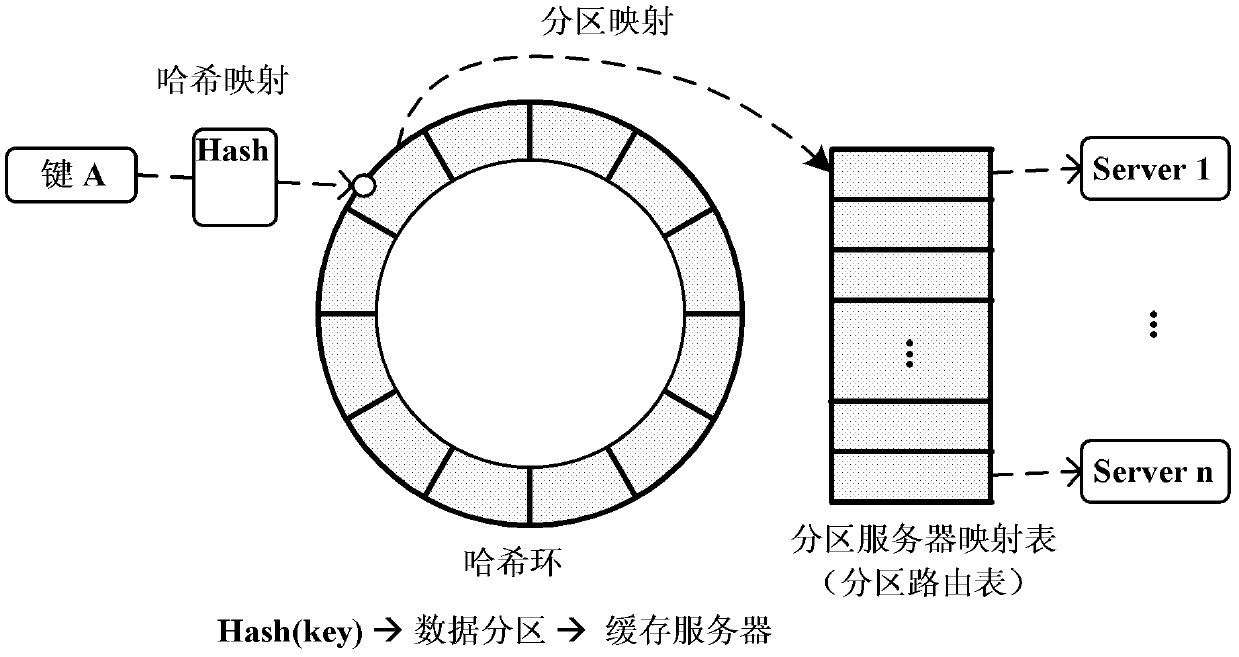

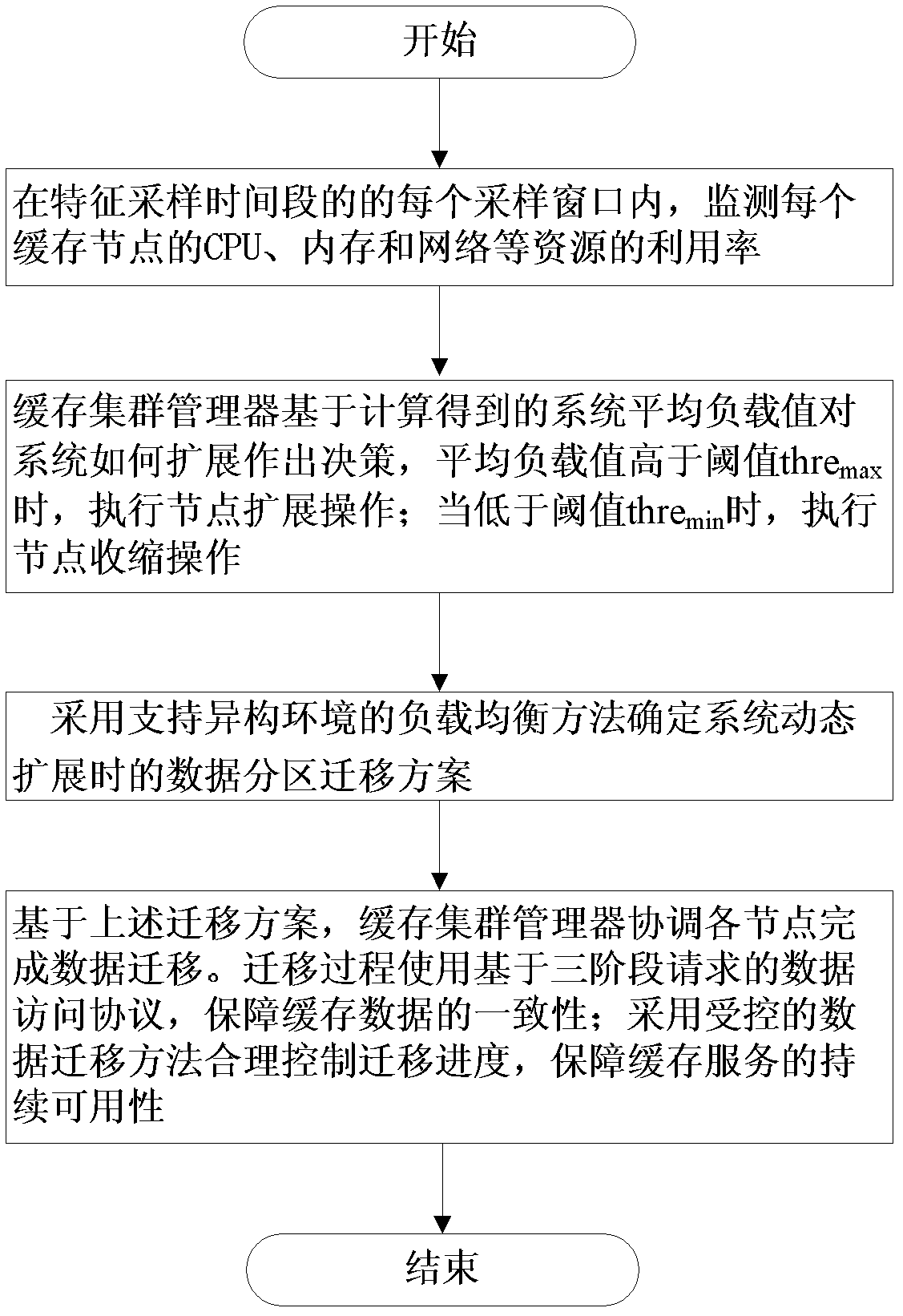

The invention discloses a distributed dynamic cache expanding method and system supporting load balancing, belonging to the technical field of software. The method comprises the following steps: 1) each cache server monitors its own resource utilization rate at regular intervals; 2) each cache server calculates its own weighted load value Li from the currently monitored resource utilization rate and sends Li to a cache cluster manager; 3) the cache cluster manager calculates the current average load value of the distributed cache system from the weighted load values Li, executes an expansion operation when the current average load value is higher than a threshold thremax, and executes a shrink operation when it is lower than a set threshold thremin. The system comprises the cache servers, a cache client and the cache cluster manager, wherein the cache servers are connected to the cache client and the cache cluster manager through the network. The invention ensures uniform distribution of network traffic among the cache nodes, optimizes the utilization of system resources, and addresses the problems of ensuring data consistency and continuous service availability.
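The three steps in the abstract can be sketched roughly as follows. This is a minimal illustration, not the patented implementation: the resource names, the weights and the numeric values chosen for thremax and thremin are all assumptions, since the abstract gives none of them.

```python
from statistics import mean

# Hypothetical weights and thresholds; the abstract does not state concrete values.
WEIGHTS = {"cpu": 0.4, "memory": 0.4, "network": 0.2}
THRE_MAX = 0.8
THRE_MIN = 0.3


def weighted_load(utilization):
    """Weighted load value L_i for one cache server (utilizations in [0, 1])."""
    return sum(WEIGHTS[name] * utilization[name] for name in WEIGHTS)


def scaling_decision(loads, thre_max=THRE_MAX, thre_min=THRE_MIN):
    """Return 'expand', 'shrink' or 'hold' from the reported L_i values."""
    average = mean(loads)
    if average > thre_max:
        return "expand"
    if average < thre_min:
        return "shrink"
    return "hold"


# Each cache server reports its own L_i; the cluster manager decides.
servers = [
    {"cpu": 0.90, "memory": 0.95, "network": 0.80},
    {"cpu": 0.85, "memory": 0.90, "network": 0.70},
]
loads = [weighted_load(u) for u in servers]
decision = scaling_decision(loads)
```

Keeping the decision in one function on the manager side mirrors the abstract's split between per-server monitoring and cluster-level scaling.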

Owner:济南君安泰投资集团有限公司

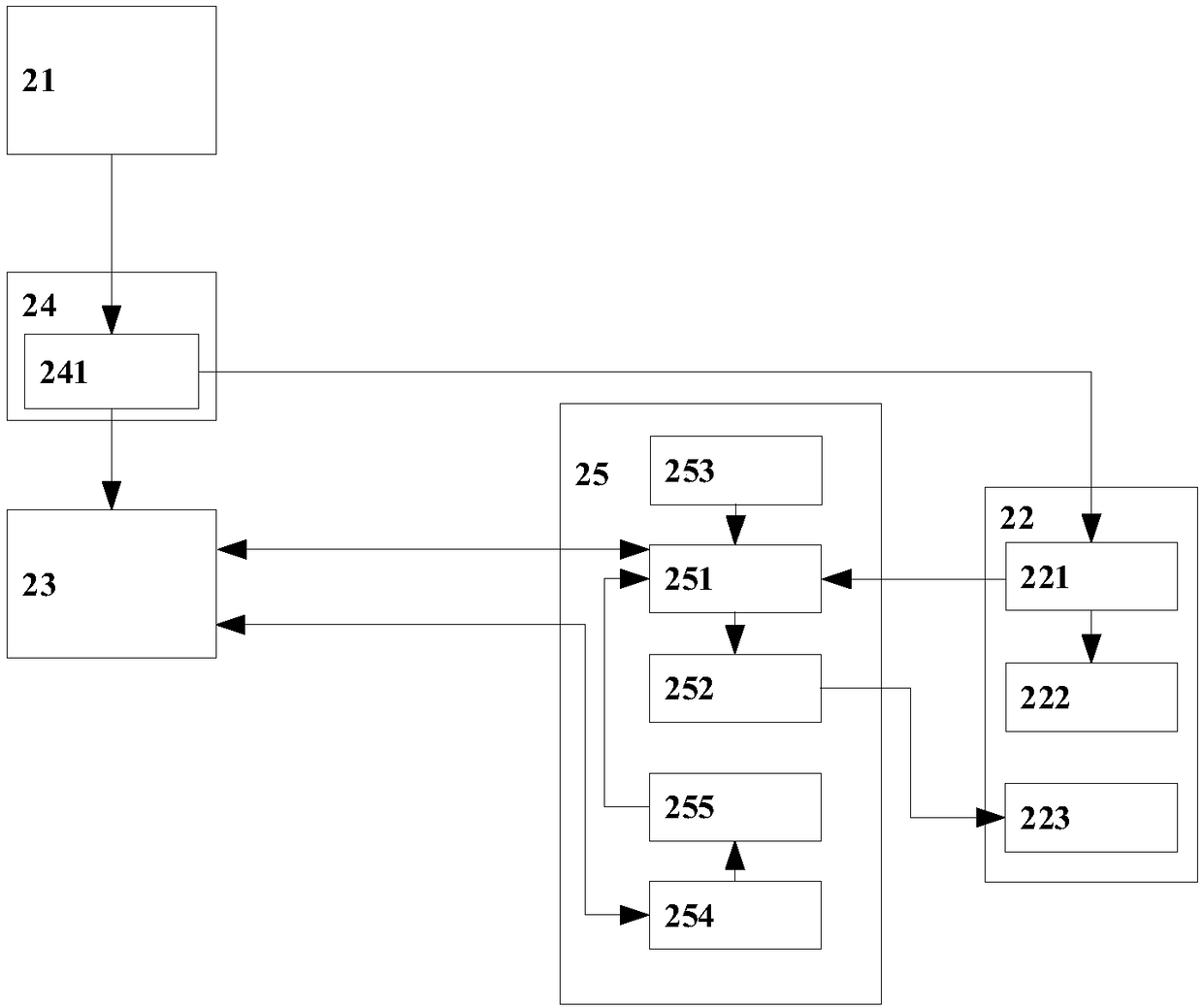

Cluster packet synchronization optimization method and system for distributed deep neural network

Active | CN107018184A | Improve resource utilization | Reduce synchronization overhead | Data switching networks | Neural learning methods | Network performance | Resource utilization

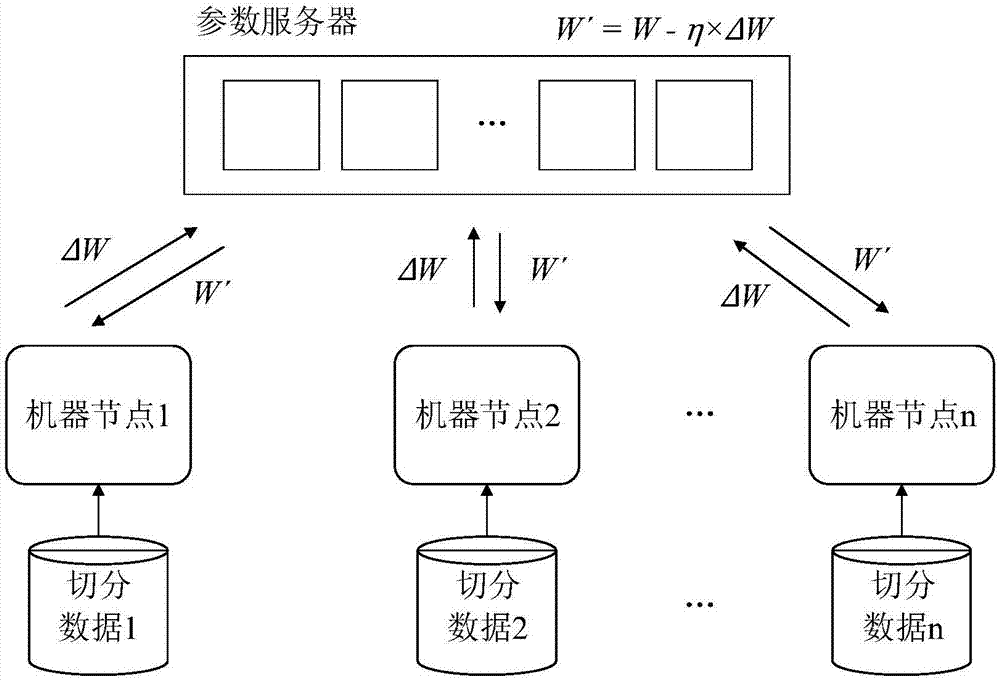

The invention discloses a cluster packet synchronization optimization method and system for a distributed deep neural network. The method comprises the following steps: grouping the nodes in a cluster according to their performance, allocating training data according to node performance, using a synchronous parallel mechanism within each group, using an asynchronous parallel mechanism between different groups, and using different learning rates for different groups. Nodes with similar performance are placed in one group, so that the synchronization overhead is reduced; more training data is allocated to the better-performing nodes, so that the resource utilization rate is improved; the synchronous parallel mechanism is used within groups, where the synchronization overhead is small, so that its good convergence behavior can be exploited; the asynchronous parallel mechanism is used between groups, where the synchronization overhead is large, so that this overhead is avoided; and the different groups use different learning rates to facilitate model convergence. According to the method and the system, a grouped synchronization method is applied to the parameter synchronization process of the distributed deep neural network in a heterogeneous cluster, so that the model convergence rate is greatly increased.
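The grouping and data-allocation steps can be illustrated with a short sketch. It assumes that node performance is summarized by a single throughput number and that groups are cut from the sorted node list; the abstract does not say how performance is measured or how group boundaries are chosen. The synchronous and asynchronous update mechanisms themselves are not shown.

```python
def group_by_performance(node_speeds, num_groups):
    """Sort nodes by speed and cut the sorted list into contiguous groups,
    so that nodes of similar performance end up together (assumed rule)."""
    ordered = sorted(node_speeds, key=node_speeds.get, reverse=True)
    size = -(-len(ordered) // num_groups)  # ceiling division
    return [ordered[i:i + size] for i in range(0, len(ordered), size)]


def allocate_samples(node_speeds, total_samples):
    """Give each node a share of the training data proportional to its speed."""
    total_speed = sum(node_speeds.values())
    shares = {
        node: int(total_samples * speed / total_speed)
        for node, speed in node_speeds.items()
    }
    # Hand any rounding remainder to the fastest node.
    fastest = max(node_speeds, key=node_speeds.get)
    shares[fastest] += total_samples - sum(shares.values())
    return shares


# Hypothetical node names and throughputs (samples per second).
speeds = {"a": 100.0, "b": 95.0, "c": 50.0, "d": 45.0}
groups = group_by_performance(speeds, 2)
shares = allocate_samples(speeds, 2900)
```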

Owner:HUAZHONG UNIV OF SCI & TECH

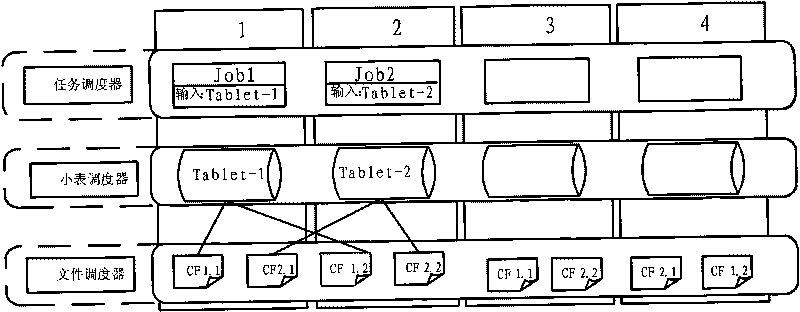

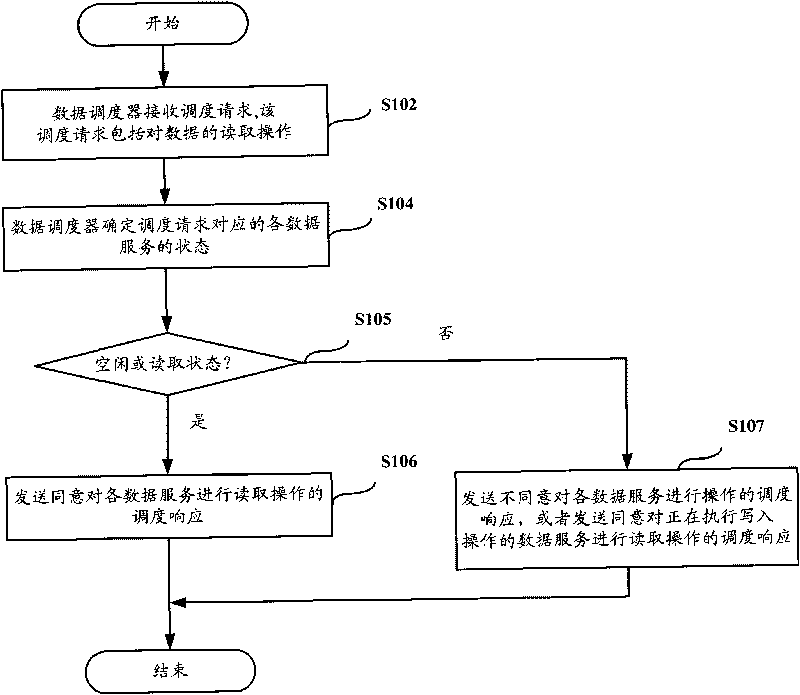

Dispatching method and system of distributed system

Active | CN101753608A | Avoid wasting | Improve processing efficiency | Transmission | Resource utilization | Data scheduling

The invention discloses a dispatching method and system for a distributed system. The method comprises the following steps: a data dispatcher receives a dispatching request comprising a data reading operation, where the data is stored in at least one entity providing a data service in the distributed system; the data dispatcher determines the status of each data service corresponding to the dispatching request, and sends a dispatching response consenting to the reading operation on each data service when each data service is in an idle or reading status; and each data service is at least one same data service. The invention effectively overcomes defects of prior-art distributed dispatching methods such as resource waste and low task processing efficiency, achieves reasonable resource utilization, and improves task processing efficiency.
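The admission rule for read requests described above can be sketched in a few lines. The `WRITING` state is an assumption added for contrast; the abstract only names the idle and reading states.

```python
from enum import Enum


class ServiceState(Enum):
    IDLE = "idle"
    READING = "reading"
    WRITING = "writing"  # assumed third state, not named in the abstract


def can_grant_read(states):
    """Consent to a read only when every data service it touches is idle or reading."""
    return all(s in (ServiceState.IDLE, ServiceState.READING) for s in states)


granted = can_grant_read([ServiceState.IDLE, ServiceState.READING])
refused = can_grant_read([ServiceState.READING, ServiceState.WRITING])
```

Allowing reads while other reads are in progress is what lets concurrent readers share a data service instead of queueing behind each other.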

Owner:CHINA MOBILE SUZHOU SOFTWARE TECH CO LTD +2

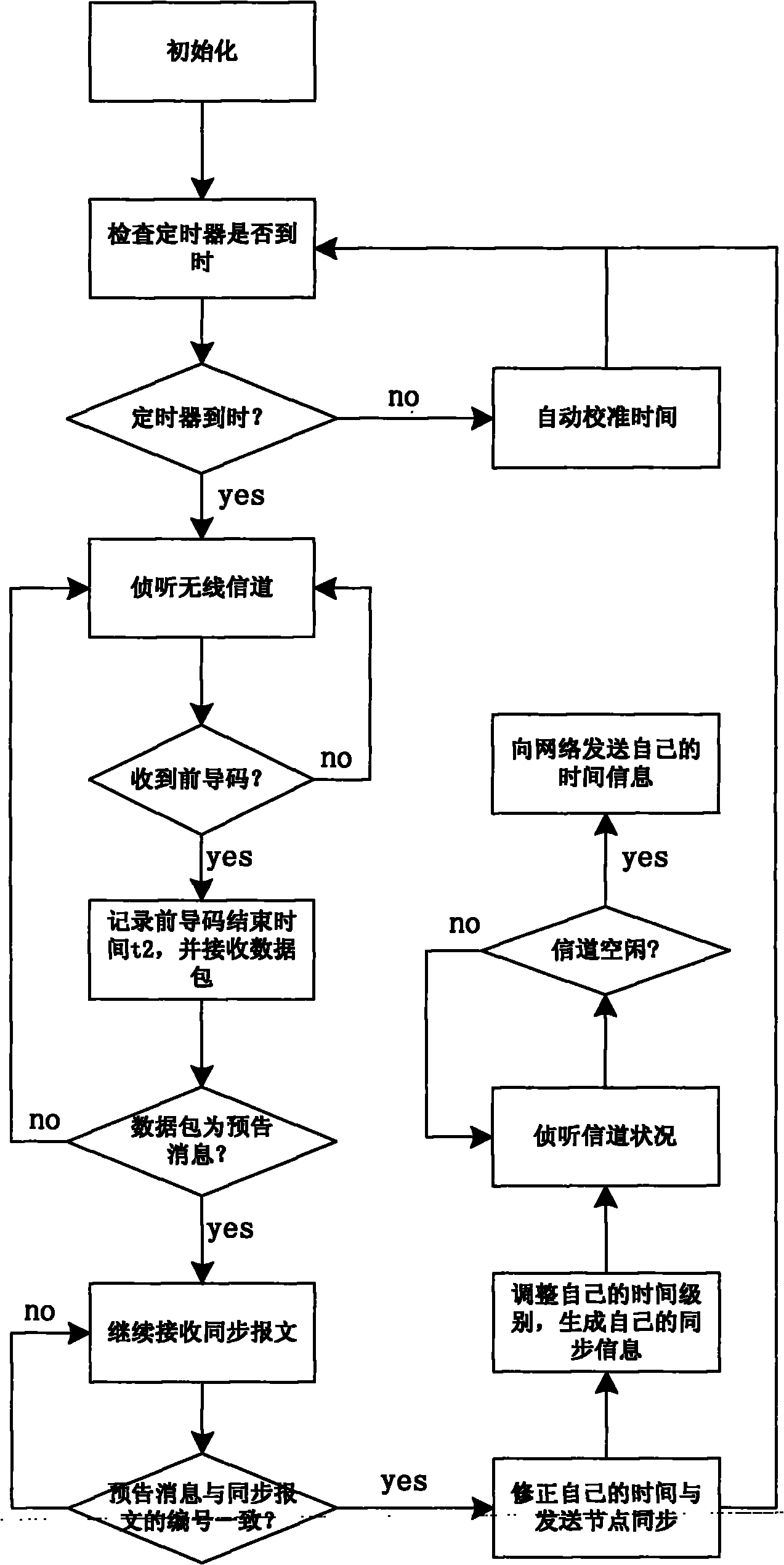

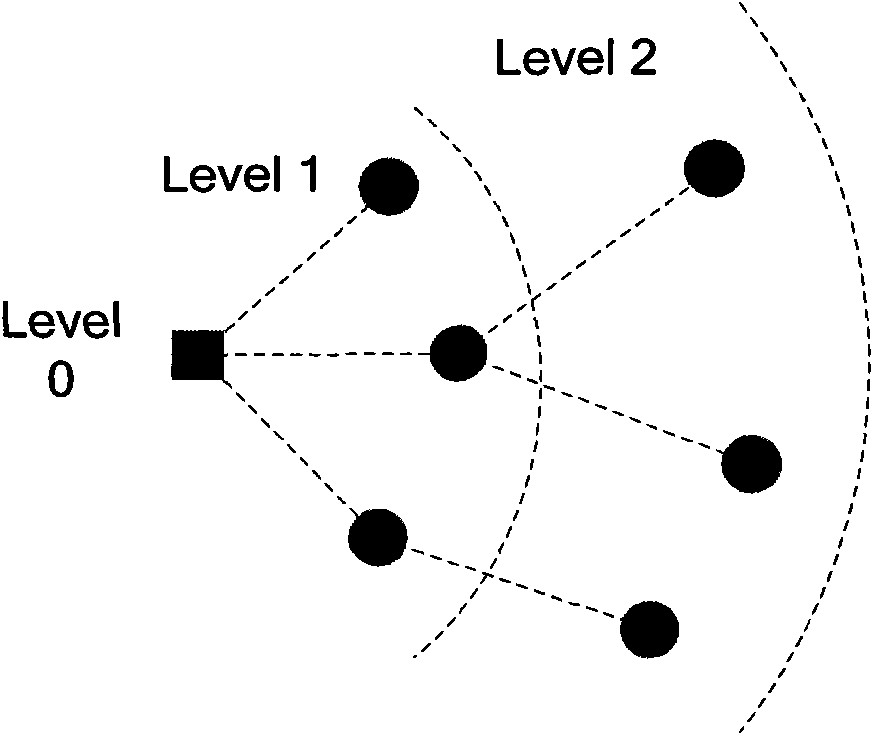

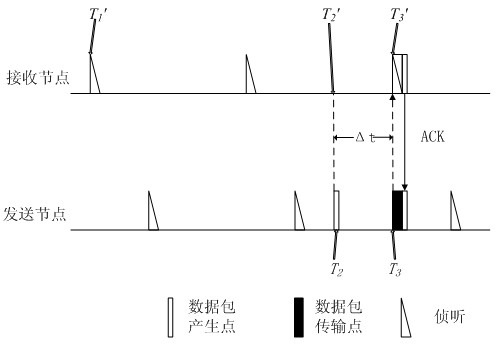

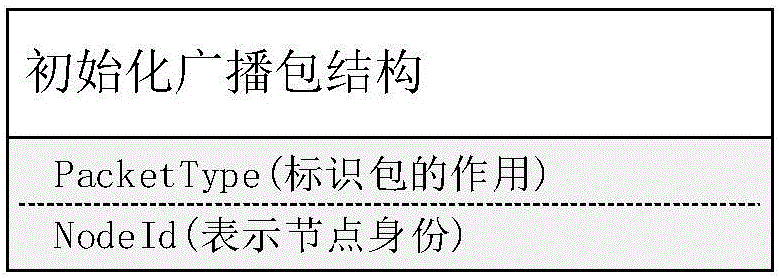

Method for synchronizing time of wireless sensor network

Inactive | CN101883420A | Eliminate delay errors | High synchronization accuracy | Synchronisation arrangement | Network topologies | Wireless sensor networking | Sensor node

The invention discloses a method for synchronizing the time of a wireless sensor network and belongs to the technical field of wireless sensor networks. The method comprises the following steps: 1) a central node broadcasts synchronization information to the network at a specific time interval and starts a synchronization process; 2) after receiving the synchronization information, a sensor node synchronizes its time with the source node of the synchronization information and broadcasts its own synchronization information to the network when a channel is free; and 3) after each synchronization process is completed, the sensor node predicts the working frequency of its own crystal oscillator in the current period by the Winters method and corrects its logical time according to the prediction result until the start of the next synchronization process. Compared with the prior art, the method can greatly lengthen the interval between periodic synchronizations, reduce synchronization overhead and wireless channel occupancy, and improve synchronization precision among nodes.

Owner:INST OF SOFTWARE - CHINESE ACAD OF SCI

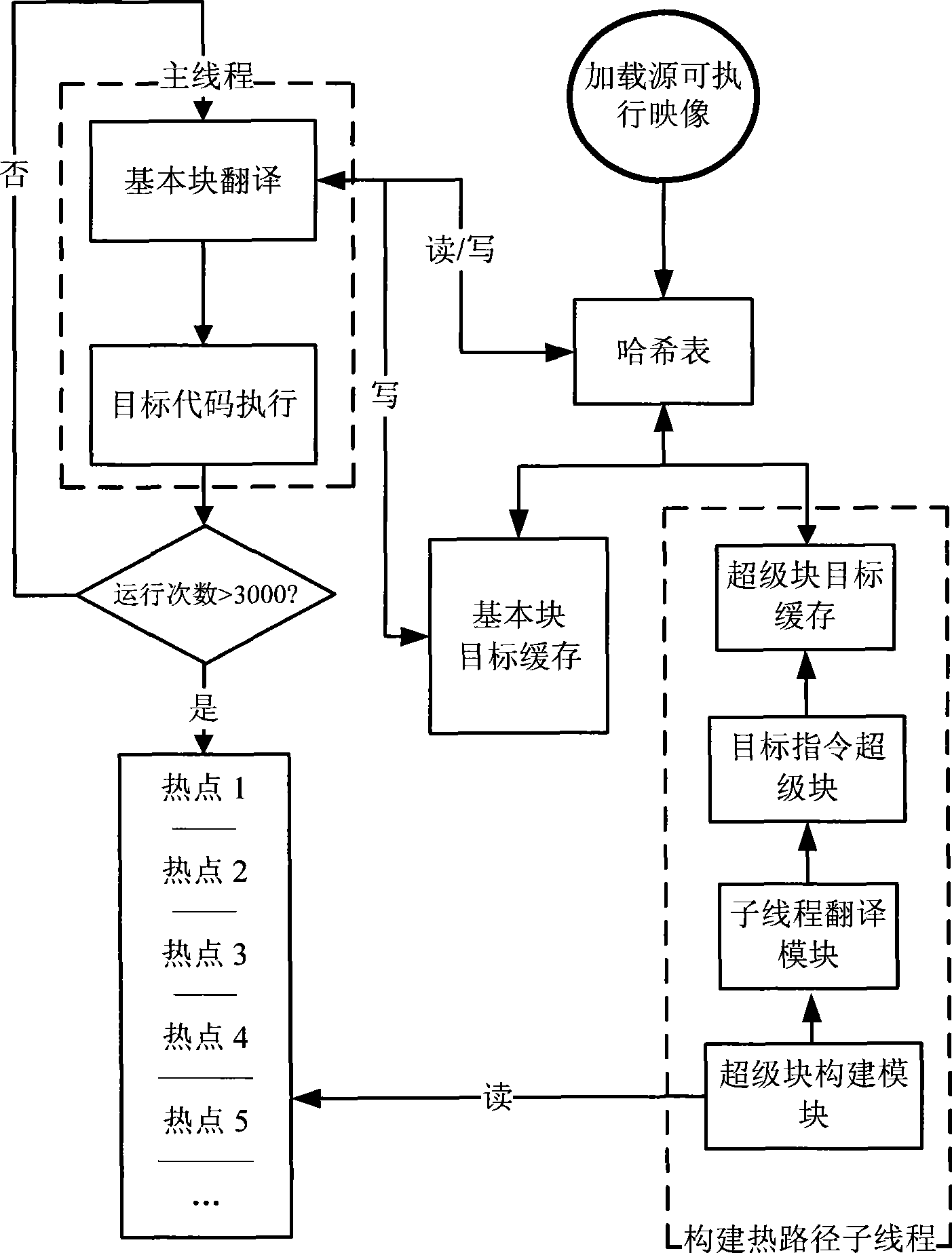

Multi-core multi-threading construction method for hot path in dynamic binary translator

Inactive | CN101477472A | Improve stability | Improve efficiency | Multiprogramming arrangements | Memory systems | Object code | Dual code

The invention discloses a multi-core, multi-thread construction method for hot paths in a dynamic binary translator. The method comprises the following steps: the basic block translation and object code execution part works as a main thread, and the hot path construction and super block translation part works as a sub-thread; the independent code cache structure in the dynamic binary translator adopts a dual code cache design, and the two code caches are managed uniformly through a hash table function, so that the main thread and the sub-thread can query and update data in parallel; and the main thread and the sub-thread are assigned to different cores of a multi-core processor by means of hard affinity, and a contiguous segment of memory together with two counters is used to simulate a queue, so as to carry out communication between the threads at the machine language level and the high-level language level. The invention offers high parallelism and low synchronization overhead, and provides a new concept and framework for future optimization of dynamic binary translators.
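The inter-thread queue built from a contiguous block of memory and two counters resembles a single-producer, single-consumer ring buffer. The sketch below shows that general technique under the assumption that one thread only writes and the other only reads; the class and method names are hypothetical, and a real translator would implement this with atomic counters in a lower-level language rather than in Python.

```python
class TwoCounterQueue:
    """Fixed-size queue over a contiguous slot array and two monotonic counters."""

    def __init__(self, capacity):
        self._slots = [None] * capacity
        self._capacity = capacity
        self._written = 0  # advanced only by the producer (e.g. the main thread)
        self._read = 0     # advanced only by the consumer (e.g. the sub-thread)

    def push(self, item):
        """Store an item; return False when the queue is full."""
        if self._written - self._read == self._capacity:
            return False
        self._slots[self._written % self._capacity] = item
        self._written += 1
        return True

    def pop(self):
        """Return the oldest item, or None when the queue is empty."""
        if self._read == self._written:
            return None
        item = self._slots[self._read % self._capacity]
        self._read += 1
        return item


queue = TwoCounterQueue(2)
queue.push("block-1")
queue.push("block-2")
first = queue.pop()
```

Because each counter has exactly one writer, the two sides never need a lock around the queue itself, which is the source of the low synchronization cost in this style of design.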

Owner:SHANGHAI JIAO TONG UNIV

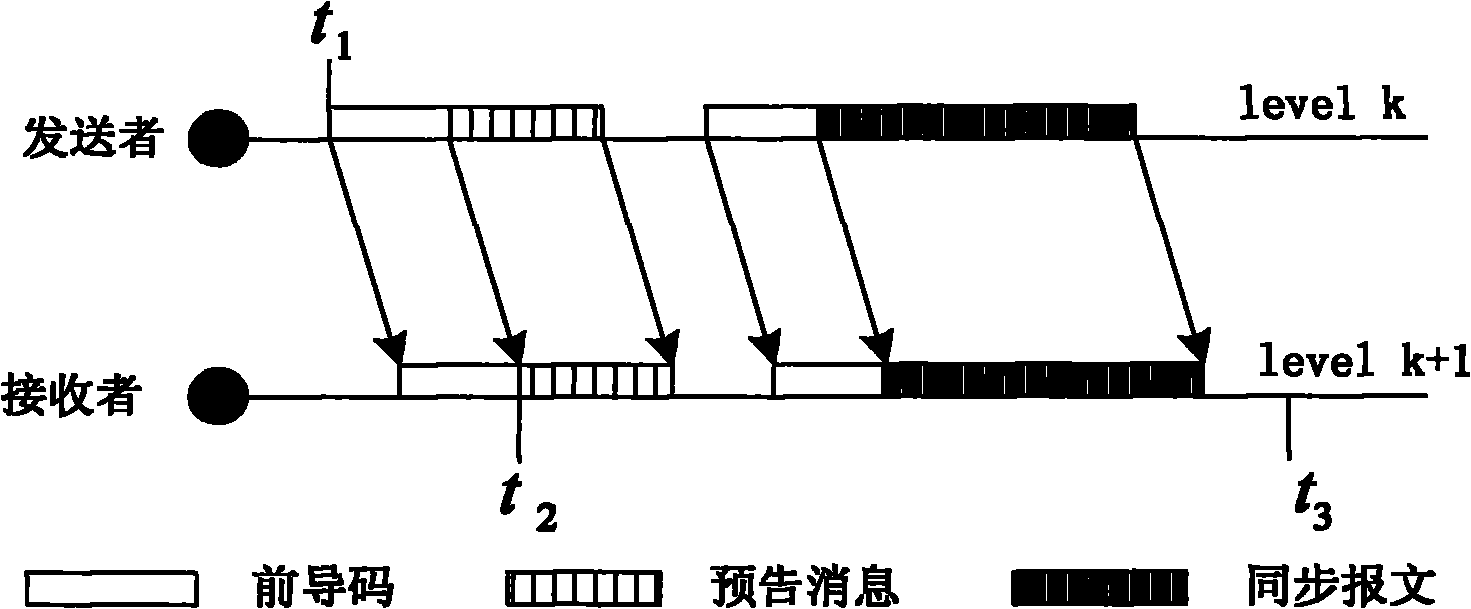

Low-energy consumption sleeping monitoring method synchronous relative to time of wireless sensor network

Inactive | CN102083183A | Reduce energy consumption | Reduce synchronization overhead | Power management | Energy efficient ICT | Clock drift | Wireless mesh network

The invention relates to a low-energy-consumption sleep monitoring method based on relative time synchronization for a wireless sensor network. Traditional methods have high energy consumption. The method comprises the following steps: in the stage of creating a relative synchronization table at a new node, the new node first broadcasts network join request packets to obtain synchronization information from its neighbour nodes; it then estimates the clock skew, and linearly fits multiple groups of synchronization information from each neighbour node to estimate the clock drift; finally it saves the sleep period of the neighbour node, the estimated clock skew and the clock drift into the relative synchronization table. In the stage of prediction and transmission of data packets, the node achieves relative synchronization with a target node according to the created relative synchronization table, then predicts the target's next wake-up time according to its sleep period, sets a transmission timer, and finally transmits the data packets with a short preamble when the transmission timer is triggered. According to the invention, the energy overhead of sending data packets is reduced, the idle time of the transmitting node is reduced, and its sleep time is increased.
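The linear fitting step can be illustrated with an ordinary least-squares fit over pairs of local and neighbour timestamps. This is a sketch under an assumed model, namely that the difference between the neighbour's clock and the local clock grows linearly with local time; the abstract does not specify the exact model, and the function names are hypothetical.

```python
def fit_offset_and_drift(local_times, neighbor_times):
    """Least-squares fit of (neighbor - local) = offset + drift * local."""
    n = len(local_times)
    diffs = [nb - lo for lo, nb in zip(local_times, neighbor_times)]
    mean_t = sum(local_times) / n
    mean_d = sum(diffs) / n
    var_t = sum((t - mean_t) ** 2 for t in local_times)
    cov = sum((t - mean_t) * (d - mean_d) for t, d in zip(local_times, diffs))
    drift = cov / var_t
    offset = mean_d - drift * mean_t
    return offset, drift


def to_neighbor_time(local_time, offset, drift):
    """Translate a local timestamp into the neighbour's clock."""
    return local_time + offset + drift * local_time


# Hypothetical synchronization samples: the neighbour is 5 s ahead and
# gains 0.1 ms per second of local time.
local = [0.0, 10.0, 20.0, 30.0]
neighbor = [5.0, 15.001, 25.002, 35.003]
offset, drift = fit_offset_and_drift(local, neighbor)
predicted = to_neighbor_time(40.0, offset, drift)
```

With the fitted pair stored per neighbour, a sender can convert the neighbour's next scheduled wake-up into its own clock and set its transmission timer accordingly.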

Owner:HANGZHOU DIANZI UNIV

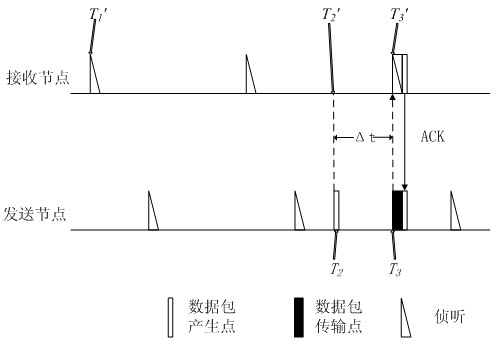

Video decoding macro-block-grade parallel scheduling method for perceiving calculation complexity

Inactive | CN105491377A | Reduce synchronization overhead | Improve parallel efficiency | Digital video signal modification | Core function | Round complexity

The invention discloses a video decoding macro-block-grade parallel scheduling method for perceiving calculation complexity. The method comprises two critical technologies: the first one involves establishing a macro-block decoding complexity prediction linear model according to entropy decoding and macro-block information after reordering such as the number of non-zero coefficients, macro-block interframe predictive coding types, motion vectors and the like, performing complexity analysis on each module, and fully utilizing known macro-block information so as to improve the parallel efficiency; and the second one involves combining macro-block decoding complexity with calculation parallel under the condition that macro-block decoding dependence is satisfied, performing packet parallel execution on macro-blocks according to an ordering result, dynamically determining the packet size according to the calculation capability of a GPU, and dynamically determining the packet number according to the number of macro-blocks which are currently parallel so that the emission frequency of core functions is also controlled while full utilization of the GPU is guaranteed and high-efficiency parallel is realized. Besides, parallel cooperative operation of a CPU and the GPU is realized by use of a buffer area mode, resources are fully utilized, and idle waiting is reduced.

Owner:HUAZHONG UNIV OF SCI & TECH

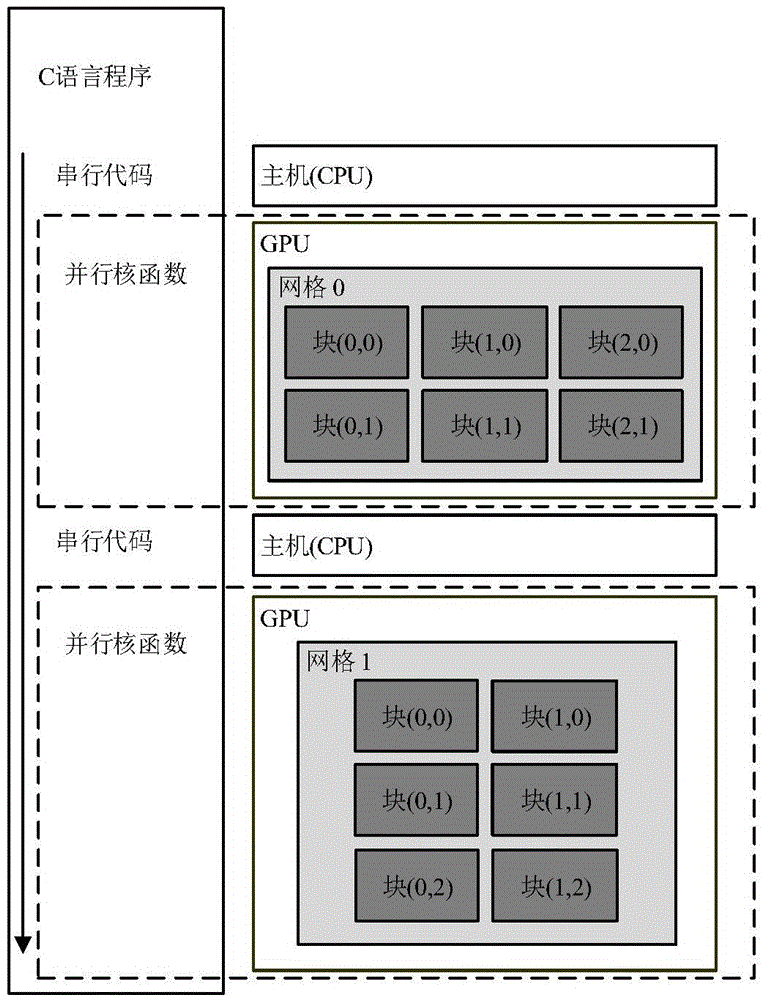

Redundant automation system for controlling a technical device, and method for operating the same

Inactive | CN1879068A | Reduce synchronization overhead | Low cost | Computer control | Simulator control | Process equipment | Storage cell

The invention relates to a redundant automation system (1), and a method for operating one such automation system (1). The inventive automation system comprises two automation appliances (3a, 3b) with which a common memory unit is associated, on which status data of the automation appliances (3a, 3b) can be stored. In this way, the automation appliances (3a, 3b) have direct access to a common database and a memory compensation is dispensed with in the event of an error during the switchover to the standby automation appliance (3b).

Owner:SIEMENS AG

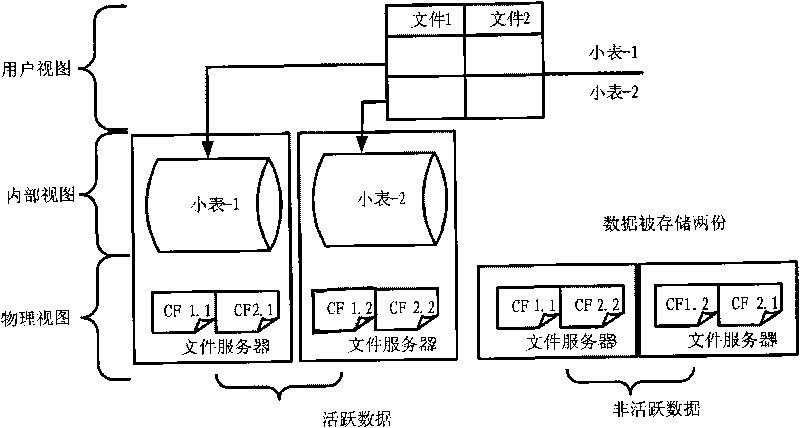

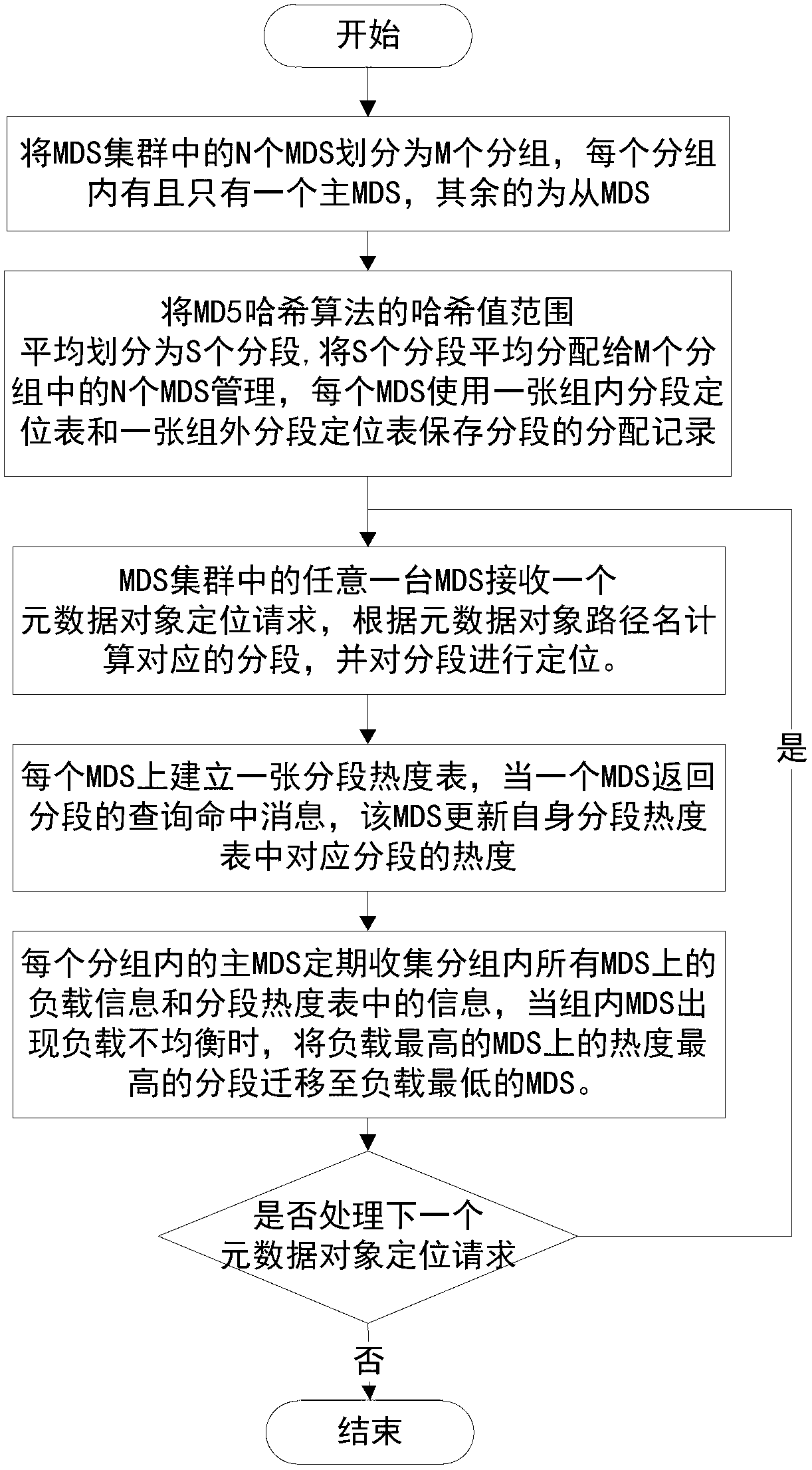

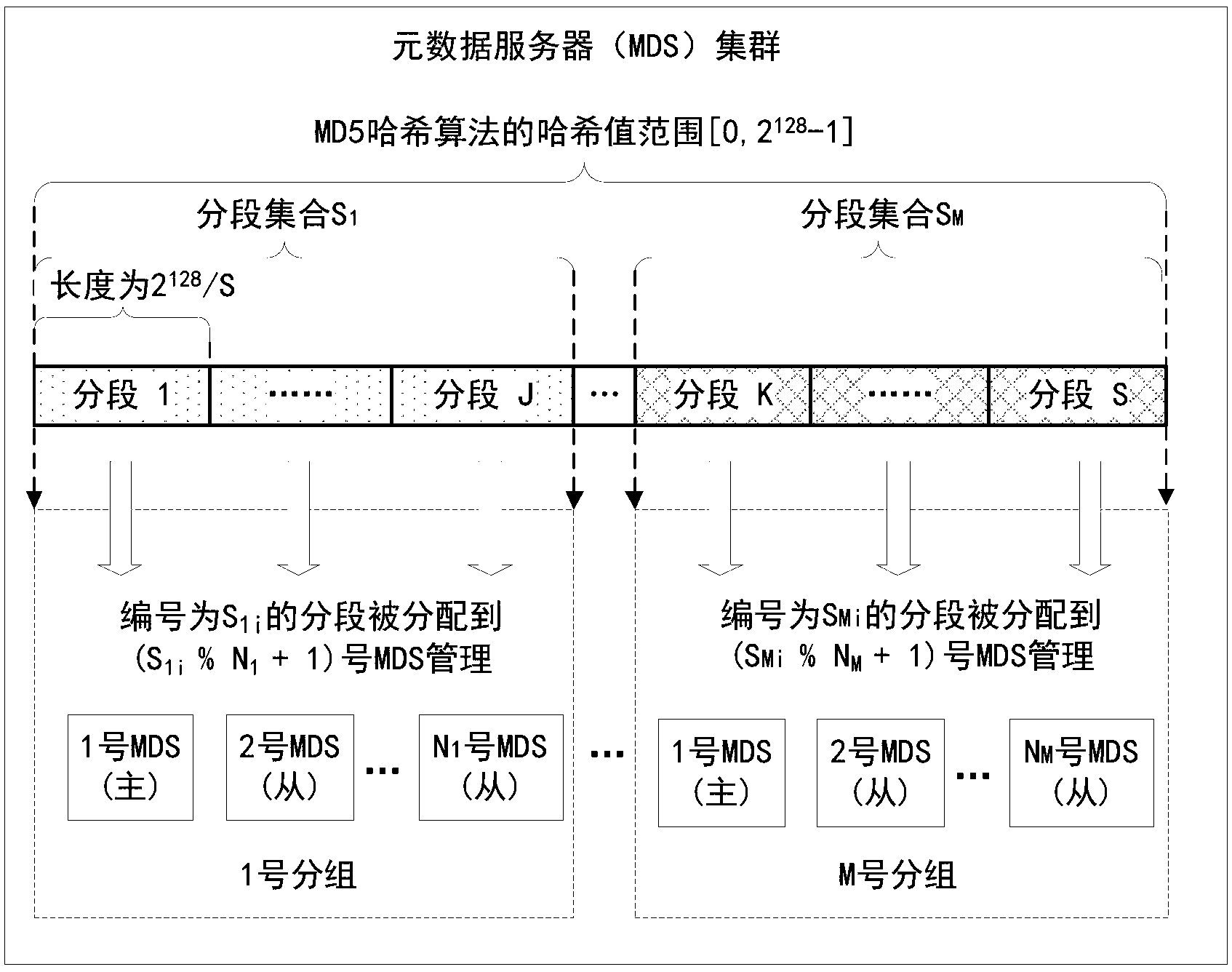

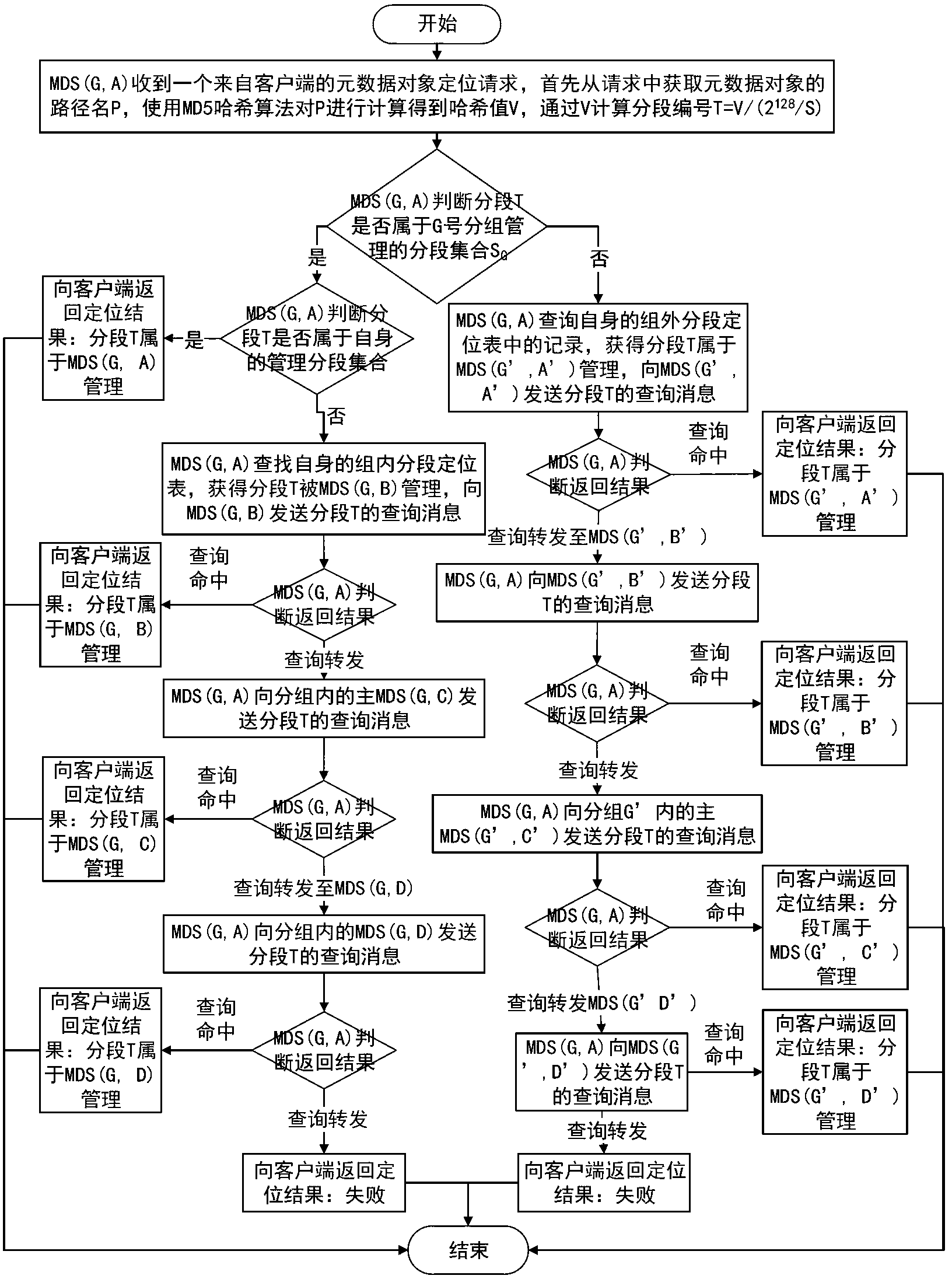

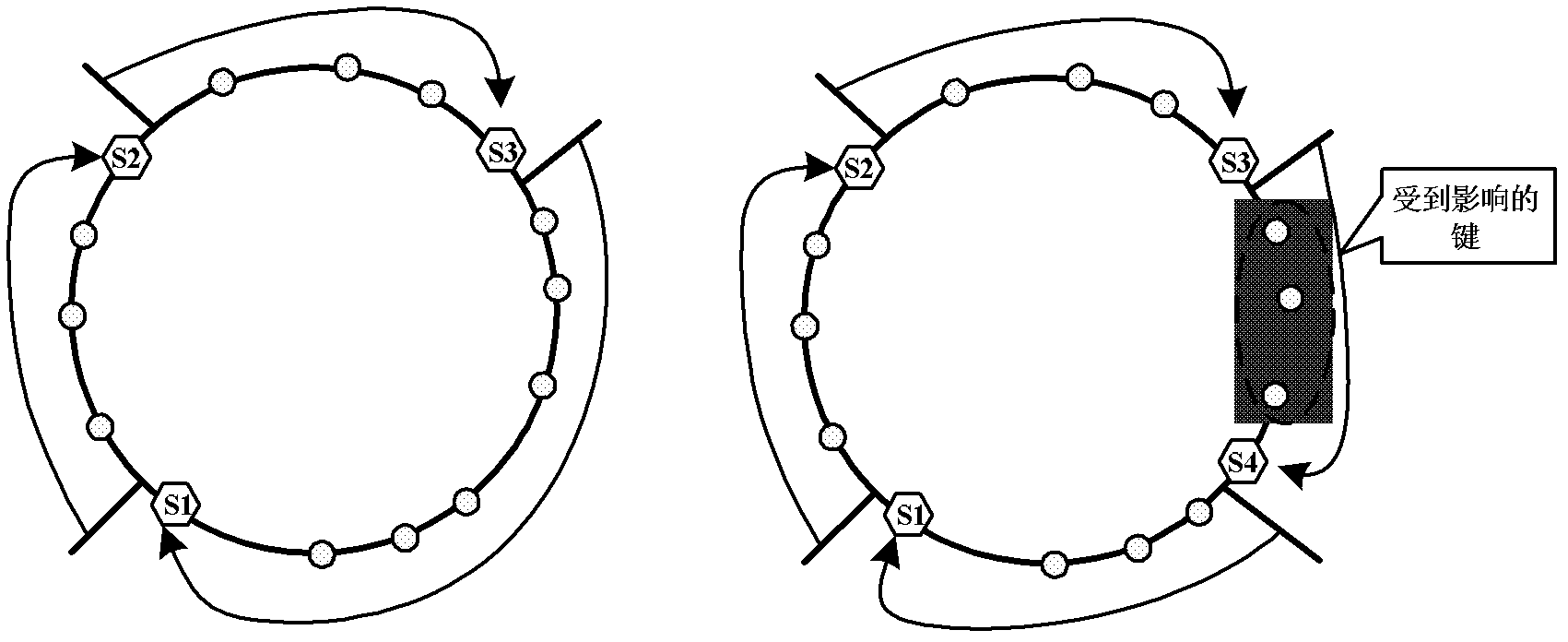

Packet-based metadata server cluster management method

Active | CN103294785A | Flexible mapping | Flexible modification | Special data processing applications | Data mining | Metadata server

The invention discloses a packet-based metadata server cluster management method. In the method, the N MDSs (metadata servers) in an MDS cluster are divided into M packets, each packet containing exactly one primary MDS, with the others being secondary MDSs; the hash value range of the MD5 hash algorithm is evenly divided into S subsections, which are evenly distributed among the N MDSs in the M packets for management; each MDS uses one intra-packet subsection positioning table and one inter-packet subsection positioning table to store the distribution records of the subsections; any MDS may receive a metadata object location request, and the corresponding subsection is calculated and located from the metadata object path name; a subsection heat table is stored in each MDS, and when an MDS returns query hit information for a subsection, it calculates and updates the heat of that subsection in its heat table. The method addresses the problems of existing methods, in which metadata mapping relations are fixed, metadata location efficiency is low and the MDS cluster load is unbalanced.
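The subsection lookup can be sketched with the standard `hashlib` module. The example assumes the 128-bit MD5 value space is cut into S equal ranges and that subsections are handed to MDSs round-robin; the round-robin rule and the server names are illustrative assumptions, and the packet structure and heat tables are not modeled.

```python
import hashlib

HASH_BITS = 128  # size of an MD5 digest in bits


def subsection_of(path, num_subsections):
    """Map a metadata path name to one of S equal slices of the MD5 value range."""
    digest = hashlib.md5(path.encode("utf-8")).digest()
    value = int.from_bytes(digest, "big")
    return (value * num_subsections) >> HASH_BITS


def assign_subsections(num_subsections, mds_ids):
    """Spread subsections evenly over the MDSs (round-robin, an assumed rule)."""
    return {s: mds_ids[s % len(mds_ids)] for s in range(num_subsections)}


def locate(path, table, num_subsections):
    """Return the MDS responsible for the given metadata path."""
    return table[subsection_of(path, num_subsections)]


# Hypothetical cluster of four MDSs managing 16 subsections.
table = assign_subsections(16, ["mds-0", "mds-1", "mds-2", "mds-3"])
owner = locate("/projects/data/file.txt", table, 16)
```

Because the mapping goes through a table rather than directly from hash to server, a subsection can be reassigned to another MDS without rehashing any path names.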

Owner:HUAZHONG UNIV OF SCI & TECH

Collaborative virtual network mapping method

Inactive | CN105939244A | Reduce load | Scalability problem mitigation | Networks interconnection | Virtual network | Network mapping

The invention provides a collaborative virtual network mapping method, comprising the following steps: (1) when a virtual network request arrives, it enters a central controller to wait for mapping; (2) the central controller selects the virtual network request with the highest priority in the queue for mapping; (3) if the number of virtual nodes in the request is smaller than a set threshold, the topology is considered simple and no partitioned mapping is needed, so mapping is completed according to a simple network mapping method; otherwise, the central controller carries out topology preprocessing on the virtual network request to decompose it into a plurality of virtual sub-networks, and maps each virtual sub-network according to the simple network mapping method. The method combines the relative advantages of centralized and distributed algorithms to achieve better mapping performance.

Owner:BEIJING UNIV OF POSTS & TELECOMM

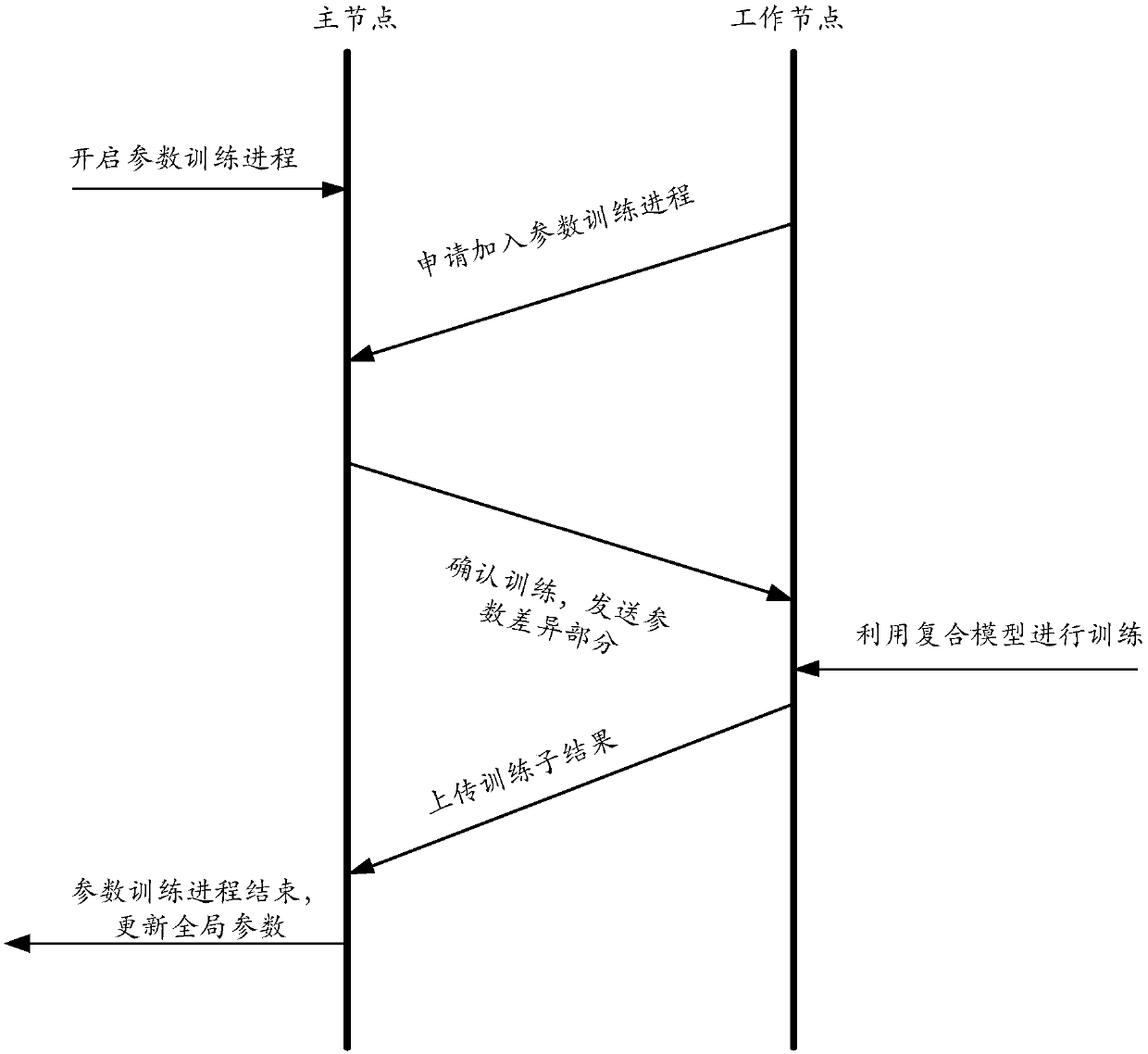

Machine learning method, master node, work nodes and system

Active | CN107944566A | Control time | Reduce synchronization overhead | Machine learning | Time range | Time information

The embodiment of the present invention provides a machine learning method, a master node, work nodes and a distributed machine learning system, which are used to reduce the synchronization overhead of machine learning. The master node of the distributed machine learning system starts a parameter training process, determines the work nodes that join the parameter training process, and sends time information corresponding to the parameter training process to those work nodes, wherein the time information includes an end time of the parameter training process. After a work node receives notification that the master node has admitted it to the parameter training process, it obtains the time information sent by the master node and carries out parameter training within the time range indicated by the time information. After the master node receives the training sub-results sent by the work nodes that joined the parameter training process, it updates the global parameters based on the obtained sub-results.
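A rough sketch of the deadline-driven round described above follows. The element-wise averaging used to update the global parameters is an assumption, since the abstract only says the update is "based on" the sub-results; the function names and the injectable `clock` parameter are likewise illustrative.

```python
import time


def train_until(end_time, step, clock=time.monotonic):
    """Work-node side: repeat `step()` until the end time sent by the master.

    Returns the last sub-result and the number of steps completed.
    """
    result, steps = None, 0
    while clock() < end_time:
        result = step()
        steps += 1
    return result, steps


def update_global(sub_results):
    """Master side: element-wise average of the sub-results (assumed rule)."""
    count = len(sub_results)
    return [sum(values) / count for values in zip(*sub_results)]


# Two work nodes report parameter vectors at the end of the time window.
new_global = update_global([[1.0, 2.0], [3.0, 4.0]])
```

Because every work node stops at the same end time, fast nodes simply complete more steps and the master does not wait for stragglers to finish a fixed amount of work.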

Owner:HANGZHOU CLOUDBRAIN TECH CO LTD

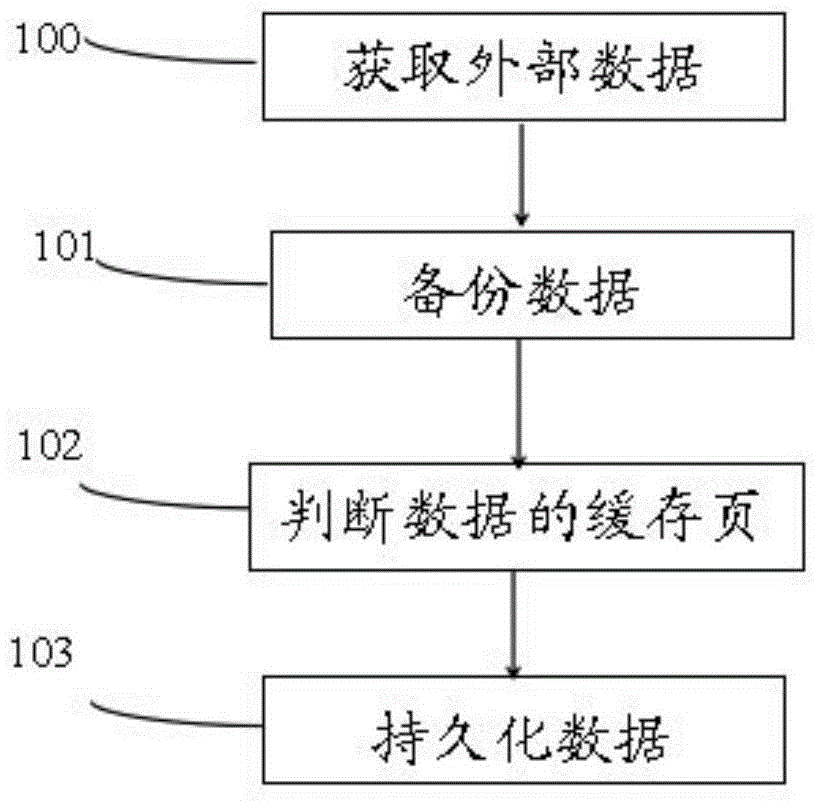

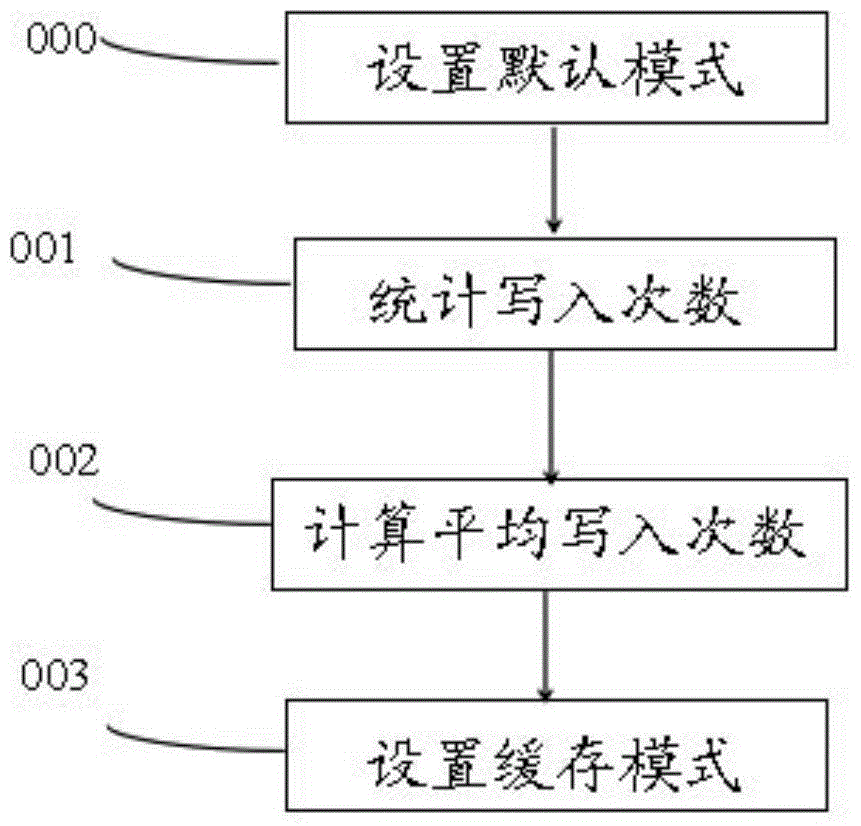

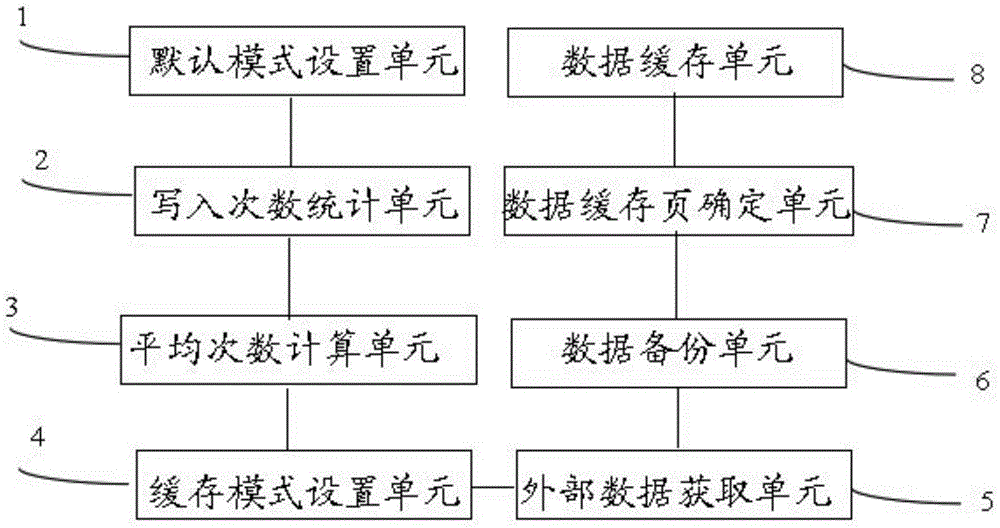

Data writing method and apparatus for reducing synchronization overheads

Inactive | CN105677511A | Eliminate synchronization overhead | Reduce the number of syncs | Input/output to record carriers | Redundant operation error correction | Scratch pad | Backup

The invention discloses a data writing method and apparatus for reducing synchronization overheads. The method comprises: acquiring data written from outside; copying the data to a log region for backup; determining the cached pages corresponding to the data; and making the data persistent in accordance with the cache mode of the cached page in which the data resides. The method and apparatus adopt different cache modes according to the frequency of data writes and store only frequently written data in the cache region, which reduces the synchronization overhead of writing to off-chip memory. In addition, the backup log is stored in an on-chip scratch pad memory (SPM) rather than being written to off-chip memory, which further reduces synchronization overheads.

Owner:CAPITAL NORMAL UNIVERSITY

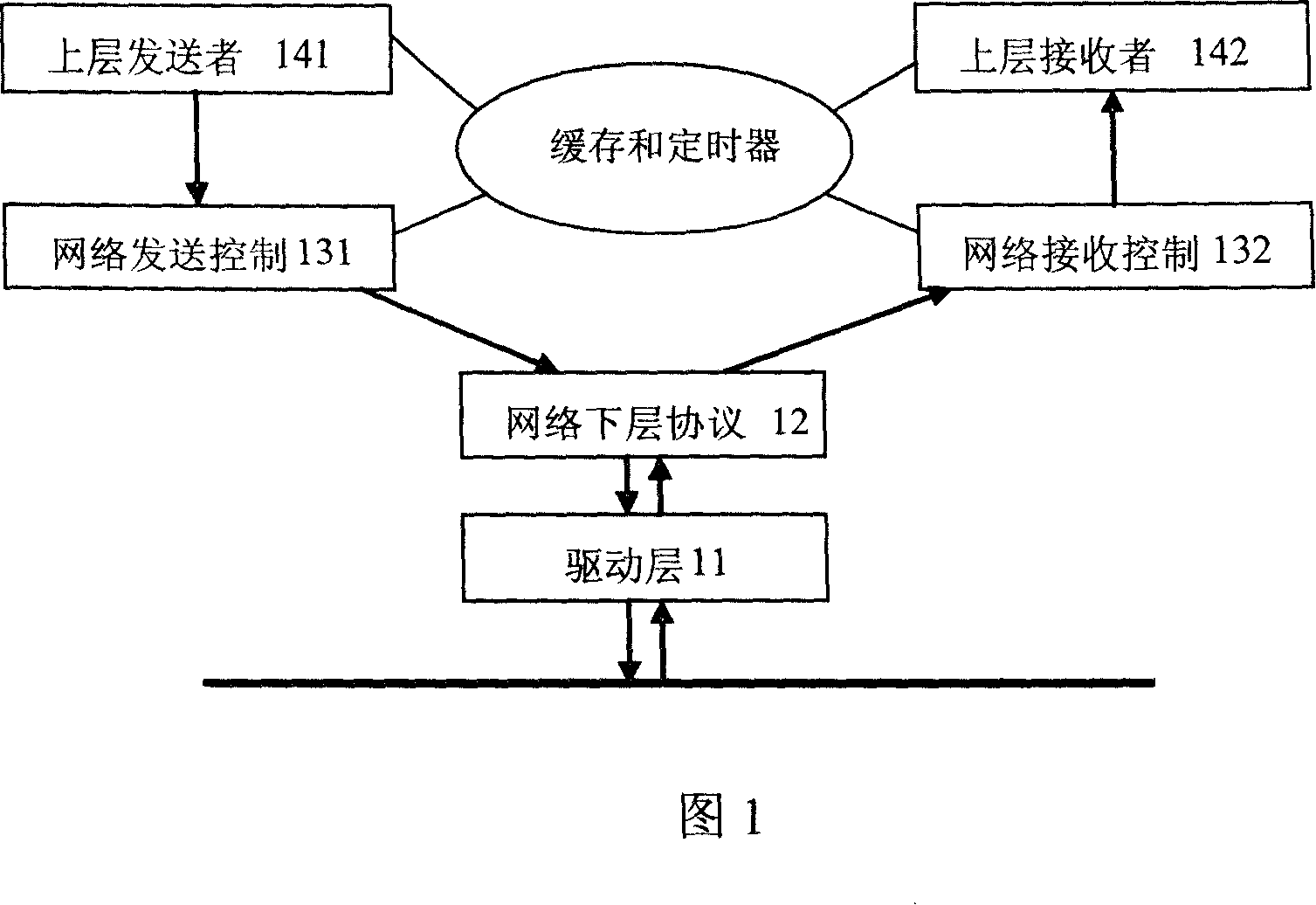

Indirect real-time flux control system and its method

Inactive | CN101155132A | Reduce flow control overhead | Reduce synchronization overhead | Data switching networks | Data transmission | Traffic volume

The invention relates to an indirect real-time flow control system and method. The system is composed of sending and receiving buffers and managed index queues. Each sending unit of a large data block produced by data generation is associated with a sending index queue. Because data generation and network sending do not happen at the same time and are not completed in one pass, the network layer sends using the sending index queue while the next large data block is being generated, and the sending index queue operates cyclically. Flow control is achieved by managing the proportion of the node's sending capacity and of the peer's receiving capacity that is in use. The receiving end operates in the same way: the network layer receives small data blocks, while the final consumer of the data works on large data blocks composed of small data blocks. The system and method can greatly reduce the cost of protocol-dependent flow control and the synchronization overhead within the node, improve the real-time performance of lower-layer data transmission in a packet network, and effectively improve the transmission quality of streaming media.

Owner:ZTE CORP

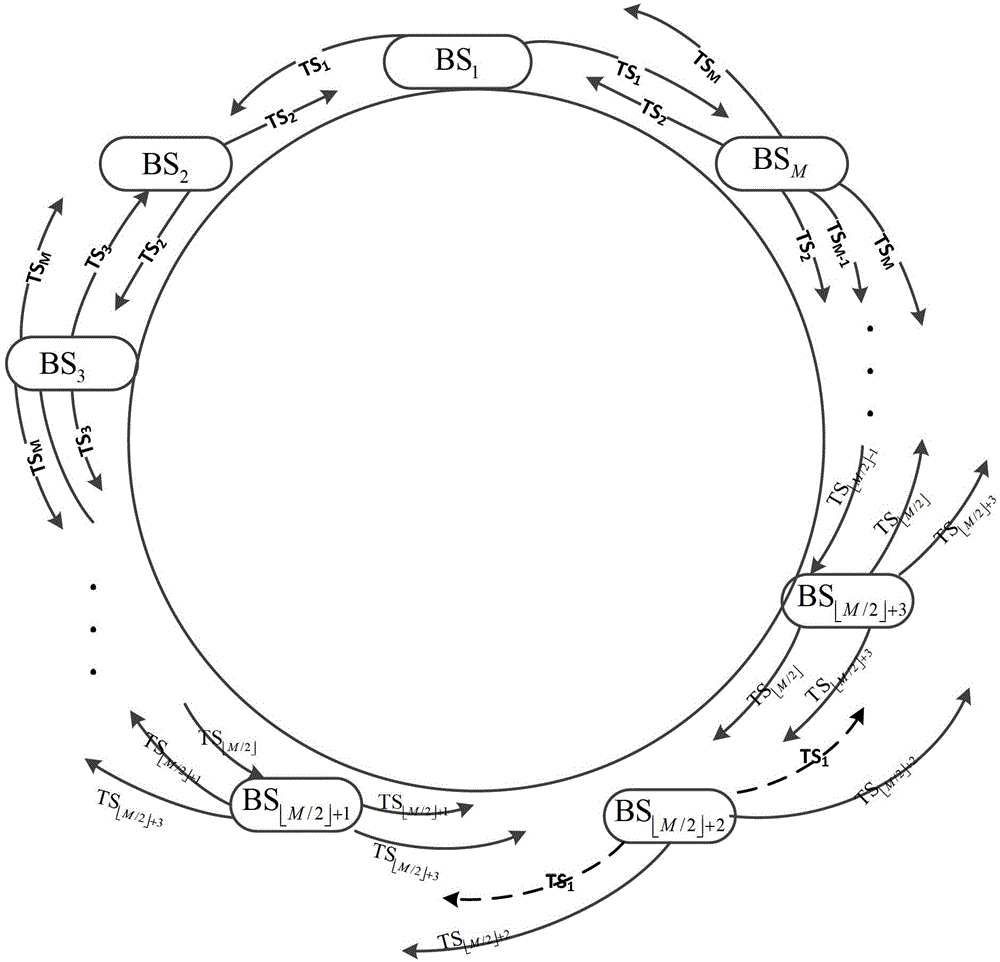

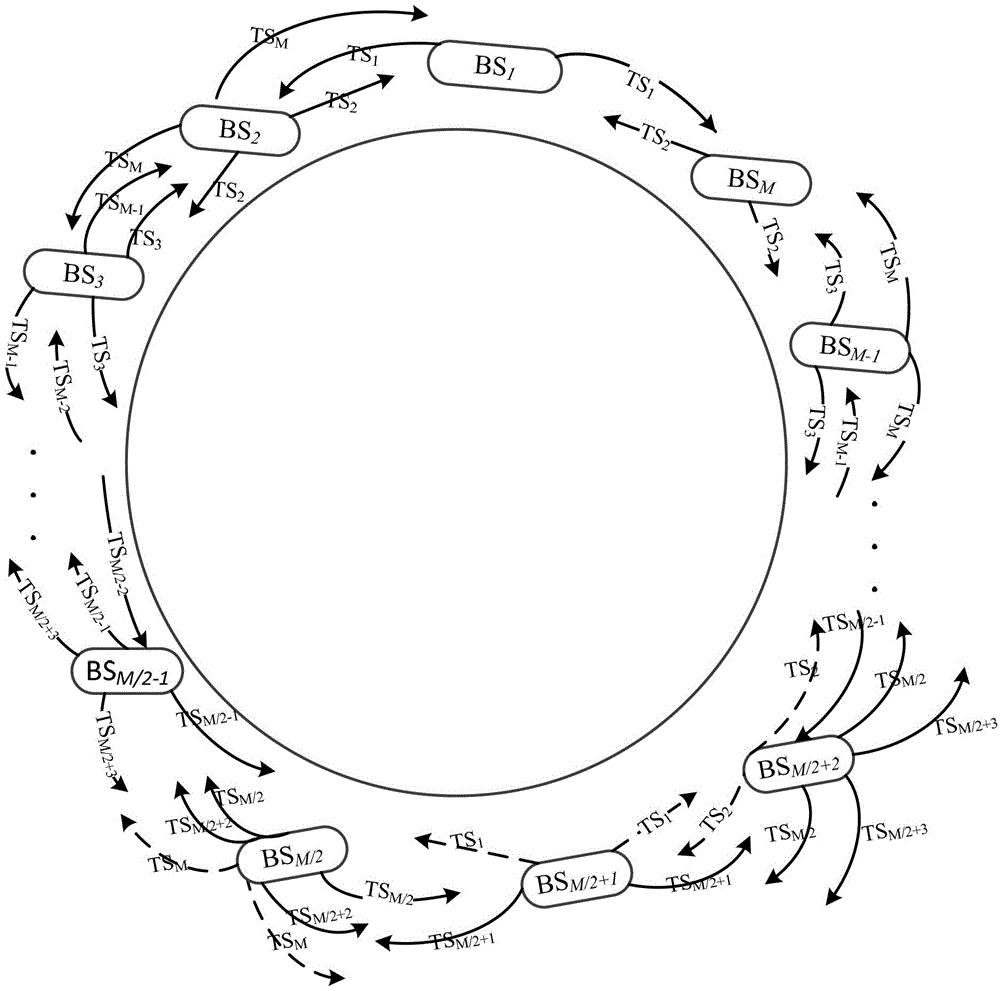

Distributed beam forming system and carrier synchronization method of transmitting antennas at source end of distributed beam forming system

Inactive | CN103152816A | Reduce synchronization overhead | Increase effective communication time | Synchronisation arrangement | Spatial transmit diversity | Phase shifted | Time delays

The invention is applicable to the technical field of wireless communication and provides a distributed beam forming system and a carrier synchronization method of transmitting antennas at the source end of the distributed beam forming system. The transmitting antennas at the source end of the distributed beam forming system can realize accurate time slot control, can conduct frequency and phase estimation to received signals and can estimate phase shift information caused by channel time delay. The carrier synchronization method fully utilizes the broadcasting characteristics of wireless links and realizes the accurate synchronization of carrier frequency and phase of the distributed beam forming system through accurate time slot control and channel estimation. Compared with the traditional carrier synchronization method, the carrier synchronization method provided by the invention has the advantages that the synchronization overhead is greatly reduced, the time slot needed for synchronization is decreased to M from 2M-1 (wherein M is the number of source-end base stations) and the effective communication time of the system is increased.

Owner:SHENZHEN UNIV

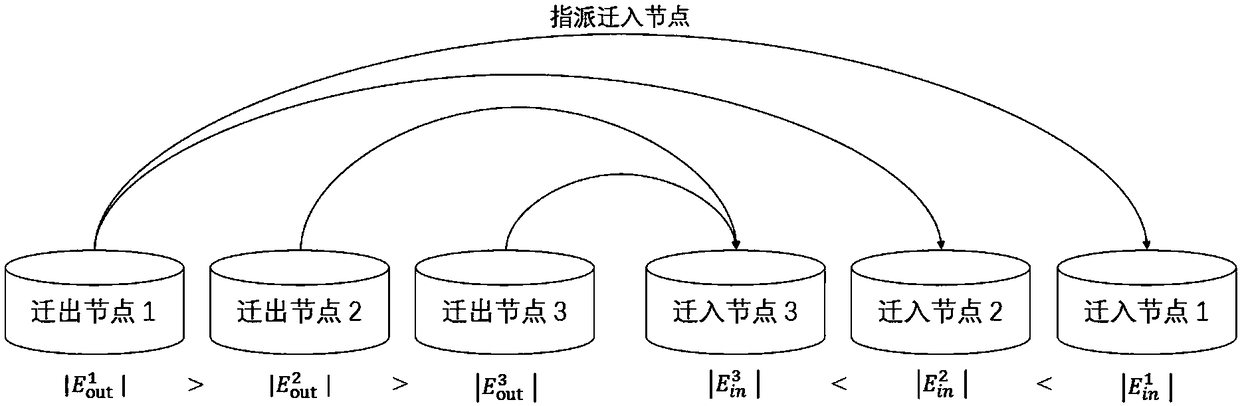

Heterogeneous server structure-oriented graph calculation load balancing method

Active | CN108089918A | Reduce synchronization overhead | Reduce running time | Program initiation/switching | Resource allocation | Resource utilization | Parallel computing

The invention discloses a heterogeneous server structure-oriented graph calculation load balancing method. The method includes: a main control node calculates the coefficient of variation of all processing times in the current super step from the times taken by all calculation nodes to process their graph calculation loads in that super step; if the coefficients of variation in two consecutive super steps are greater than a preset threshold value, it determines that the graph calculation loads of the running calculation nodes are not balanced and that the loads need to be re-assigned after the current super step ends; otherwise, all the calculation nodes continue with the next super step. The method uses vertex migration to balance the processing times of the calculation nodes in each super step, which effectively reduces the synchronization overhead of the calculation nodes in each super step, shortens the running time of a graph calculation task, and increases the resource utilization rate of each running calculation node.
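The imbalance test in the abstract can be sketched as follows. The coefficient of variation is the standard deviation divided by the mean; using the population standard deviation and a threshold of 0.3 are assumptions made for illustration.

```python
from statistics import mean, pstdev


def coefficient_of_variation(times):
    """Standard deviation of the per-node processing times divided by their mean."""
    return pstdev(times) / mean(times)


def needs_rebalance(cv_history, threshold):
    """True when the two most recent super steps both exceed the threshold."""
    return len(cv_history) >= 2 and all(cv > threshold for cv in cv_history[-2:])


# Hypothetical per-node processing times (seconds) for three super steps.
supersteps = [[10.0, 10.0, 10.0], [5.0, 15.0], [4.0, 16.0]]
history = [coefficient_of_variation(t) for t in supersteps]
rebalance = needs_rebalance(history, threshold=0.3)
```

Requiring two consecutive super steps above the threshold keeps a single slow step from triggering a costly vertex migration.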

Owner:HUAZHONG UNIV OF SCI & TECH

Thread division method for avoiding unrelated dependence on many-core processor structure

Inactive | CN103699365A | Reduce synchronization overhead | Improve effective utilization | Concurrent instruction execution | Basic block | Control flow diagram

The invention discloses a thread division method for avoiding unrelated dependence on a many-core processor structure. The method comprises the following steps: profiling to determine a loop structure with parallel potential; determining the control flow diagram of the loop iteration body; determining the data flow diagram of the loop iteration body; determining the number of derived speculative threads; performing thread division through dependence separation; and performing thread encapsulation. Existing many-core thread division mechanisms all introduce unrelated dependence because they take the instruction basic block as the minimum unit. The invention therefore provides a fine-grained thread division method based on dependence separation, which further refines the granularity of iterations inside the loop and divides a serial program into simple, independent threads for speculative execution, with no unrelated data dependence between the minimal threads, thereby reducing at the source the synchronization overhead caused by unnecessary dependence between speculative threads.

Owner:SOUTHWEAT UNIV OF SCI & TECH

Beidou/global position system (GPS) time signal-based time service system for communication network

Active | CN102882671A | Reduced synchronization setup time | Reduce synchronization overhead | Synchronous motors for clocks | Synchronising arrangement | Service system | VIT signals

The invention discloses a Beidou / global position system (GPS) time signal-based time service system for a communication network. The system comprises a power module 1, a Beidou module 2, a GPS module 3, a clock module 4, a master control module 5 and a panel module 6, which are combined into a whole. Beidou / GPS time is used for synchronizing the clock of a constant temperature crystal, the accuracy and stability of the clock frequency of the crystal are improved, high-accuracy and high-stability radio station time service signals and standard time service signals are output through the clock, and an external time service signal input interface is provided. A precision phase difference compensation technology and a time service error control technology are adopted, so that the synchronization time of a frequency hopping radio station is shortened, synchronization hold-in and synchronization maintenance during a radio silence period are ensured, and the synchronization overhead of the radio station is reduced. High-accuracy RS232-level and RS422-level standard time service signals are provided, so that the requirements of modern communication for the high accuracy of time signals can be met. The system has the characteristics of rational design, high reliability, high environmental adaptability, small size, convenience for use and the like.

Owner:武汉中元通信股份有限公司

Data synchronization method and system

Active | CN108108431A | Improve synchronization efficiency | Reduce synchronization overhead | Database distribution/replication | Special data processing applications | Data synchronization | Application software

The invention provides a data synchronization method and system. The method comprises the steps that a first database system acquires an SQL statement sent by an application program to a second database system; the first database system executes the database operation corresponding to the SQL statement; and according to the state records of the database operations executed by the first database system and the second database system, the first database system performs database operations on its first database, so that the first database is consistent with a second database of the second database system. The data synchronization method and system solve the problem that prior-art database synchronization technology has low efficiency in remote synchronization or does not support synchronization of heterogeneous databases; database synchronization efficiency is improved, database synchronization overhead is lowered, and heterogeneous databases are supported.

Owner:TRAVELSKY

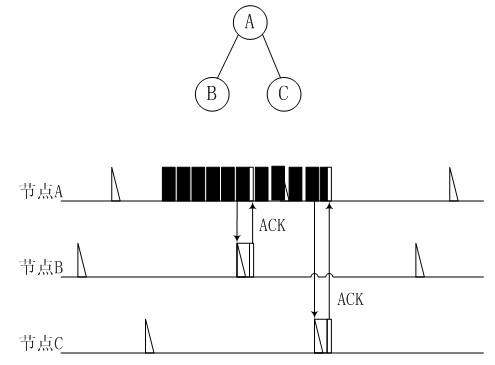

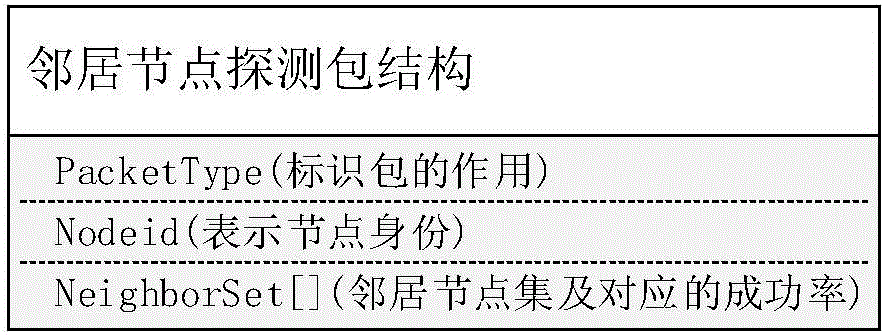

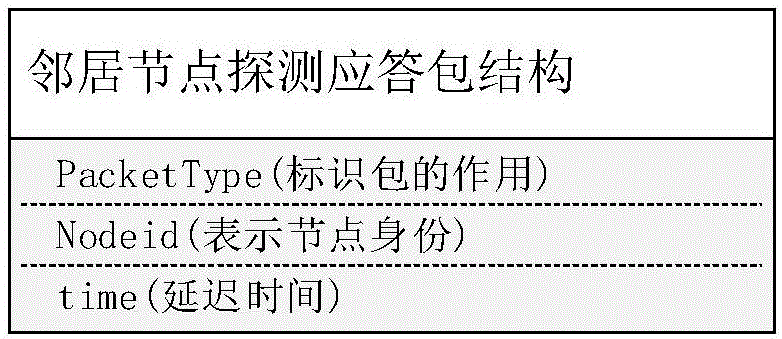

Wireless sensor network routing method with high robustness and low delay

Active | CN106851766A | Short average end-to-end latency | Good data transfer efficiency | Network topologies | Data needs | Mobile wireless sensor network

The invention provides a wireless sensor network routing method with high robustness and low delay. The wireless sensor network routing method comprises several stages of network initialization, period detection, path discovery and data forwarding, and comprises the following steps: sending an initialization packet by a base station, receiving the initialization packet by a node, establishing a neighbor node set, and periodically broadcasting a detection packet; replying a detection ACK packet by the node after waiting for a random time; updating the neighbor node set; and when data need to be sent, starting the path discovery, finding a fast path leading to a destination node, making response by the destination node after receiving the detection, determining assistance nodes on the way of the path, finally returning acknowledgement to a source node, sending the data by the source node according to a detection result, making decision by each node on the way according to the detected information and delay information, and finally sending the data to the destination node. According to the method, the average end-to-end delay is shortened by the reliable path discovery, the robustness is improved on the basis of ensuring the delay by using all kinds of fault-tolerant mechanisms, and no synchronization is required, therefore the synchronization cost is reduced.

Owner:NORTHWEST UNIV

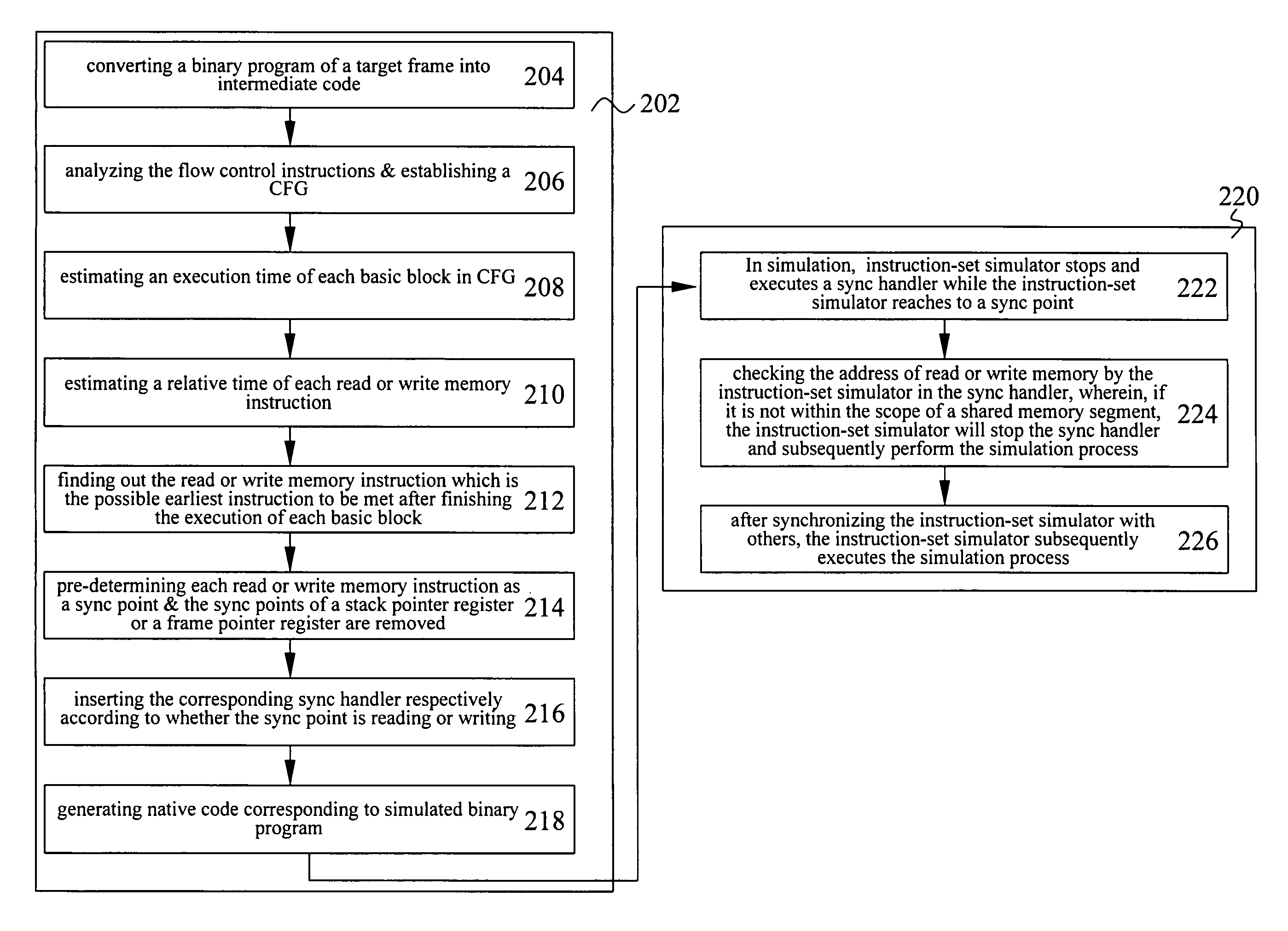

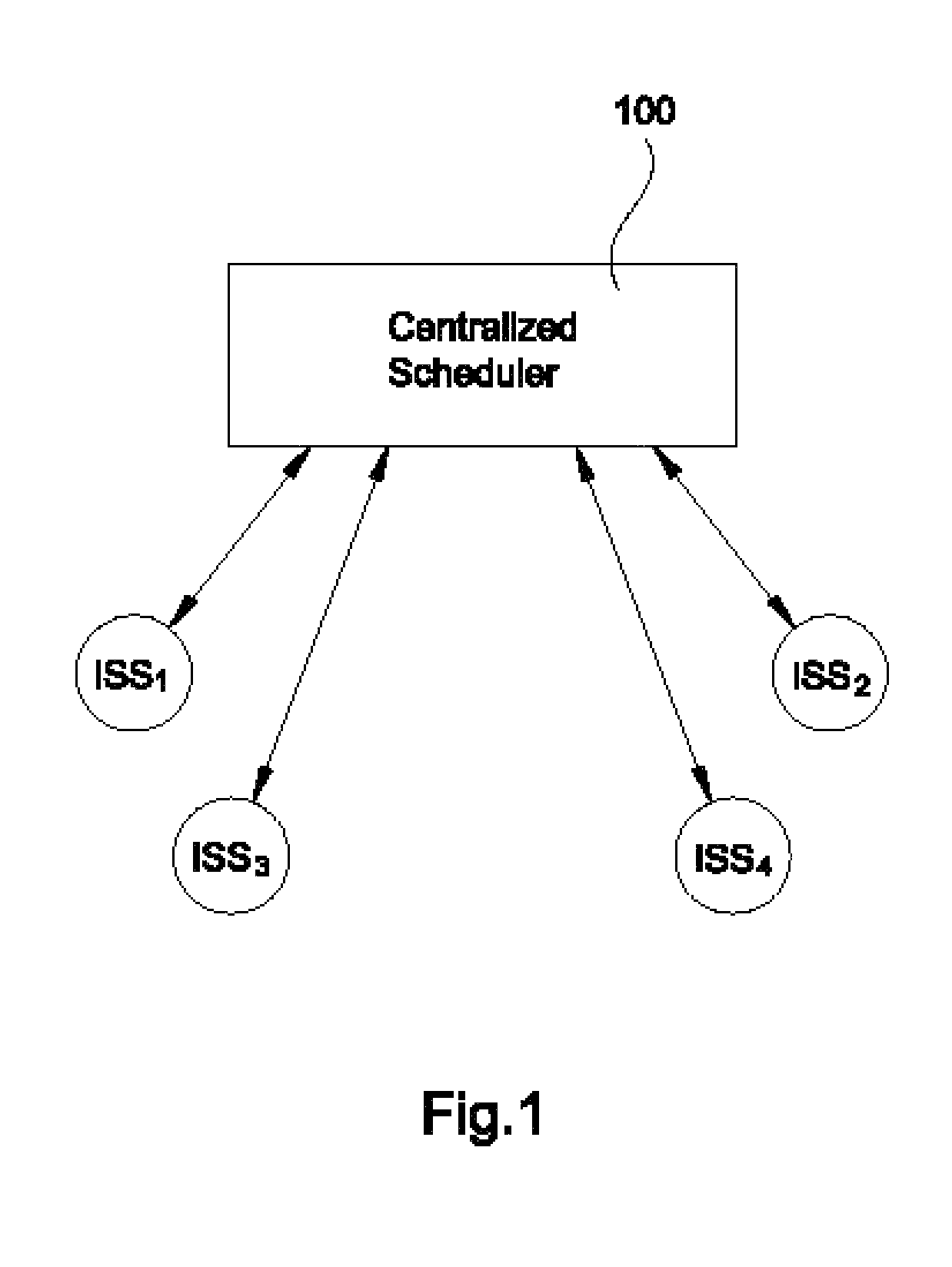

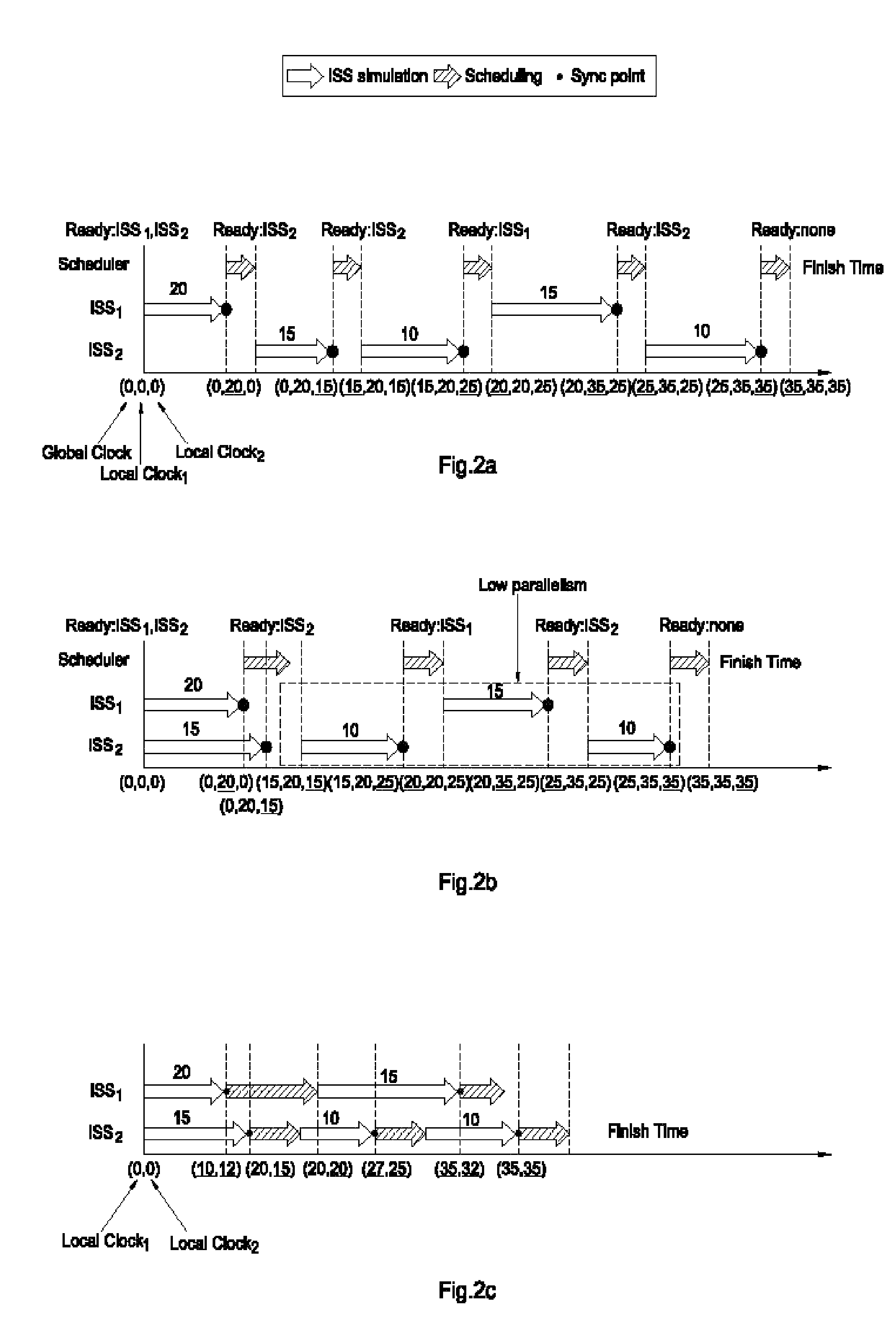

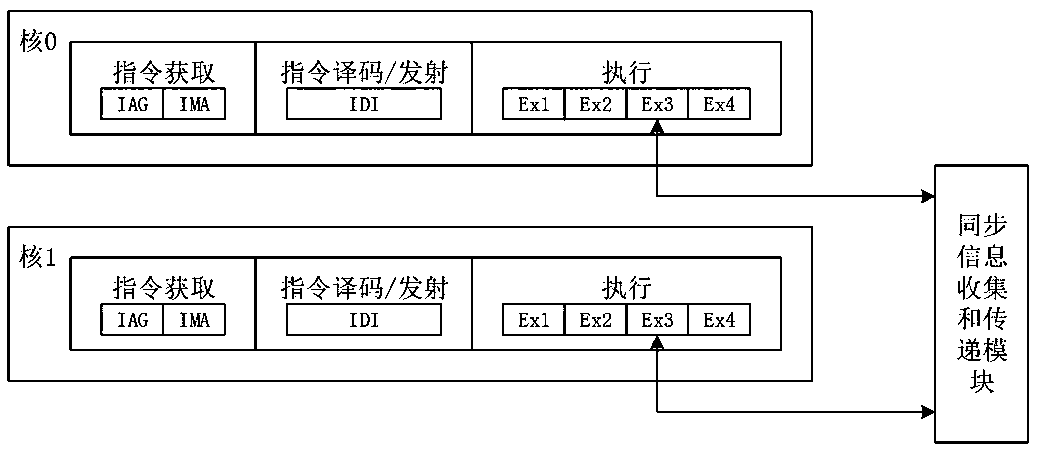

Method and device for multi-core instruction-set simulation

Inactive | US8352924B2 | Maintain accuracy | Increase speed | Decompilation/disassembly | CAD circuit design | Data segment | Parallel computing

The present invention discloses a method for multi-core instruction-set simulation. The proposed method identifies the shared data segment and the dependency relationship between the different cores and thus effectively reduces the number of sync points and lowers the synchronization overhead, allowing multi-core instruction-set simulation to be performed more rapidly while ensuring that the simulation results are accurate. In addition, the present invention also discloses a device for multi-core instruction-set simulation.

Owner:NATIONAL TSING HUA UNIVERSITY

Distributed type dynamic cache expanding method and system for supporting load balancing

Inactive | CN102244685B | Evenly distributed | Add support | Data switching networks | Cache server | Internet traffic

The invention discloses a distributed cache dynamic scaling method and system supporting load balancing, belonging to the technical field of software. The method is as follows: 1) each cache server regularly monitors its own resource utilization rate; 2) each cache server calculates its own weighted load value Li according to the currently monitored resource utilization rate and sends it to the cache cluster manager; 3) the cache cluster manager calculates the current average load value of the distributed cache system according to the weighted load values Li; when it is higher than the threshold value thremax, it performs an expansion operation, and when it is lower than the set threshold value thremin, it performs a contraction operation. The system includes a cache server, a cache client and a cache cluster manager; the cache server is connected to the cache client and to the cache cluster manager through a network. The invention guarantees the balanced distribution of network traffic among the cache nodes, optimizes the utilization rate of system resources, and solves the problems of guaranteeing data consistency and continuous availability of services.

Owner:济南君安泰投资集团有限公司

Carrier frequency synchronization method for UFMC system based on FPGA

Active | CN108494712A | Save Spectrum Resources | Reduce synchronization overhead | Carrier regulation | Multi-frequency code systems | Communication quality | System requirements

The invention relates to a carrier frequency synchronization method for a UFMC system based on an FPGA, and belongs to the field of wireless communication. According to the method, a UFMC symbol is used as a training sequence, and the training sequence contains m identical parts, where m is not less than 2. The whole frequency offset estimation process consists of a fractional frequency offset estimate and an integer frequency offset estimate: the fractional frequency offset is obtained from the autocorrelation of two identical data parts, one earlier and one later in the training sequence, and the integer frequency offset is obtained by multiplying the training sequence, after fractional frequency offset compensation, by a local training sequence and taking the FFT. The fractional frequency offset estimation guarantees the estimation precision and meets the system requirements, while the integer frequency offset estimation greatly increases the overall estimation range. The system frequency offset value is effectively estimated, the bit error rate of the system is reduced, and the communication quality is improved.
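The fractional-offset step can be illustrated with the classic repeated-half correlation estimator. This is a noise-free sketch for m = 2 using only the standard library; UFMC filtering, the integer-offset stage and the FPGA implementation are not modeled, the offset is normalized to the spacing 1/N of an N-sample training sequence, and the training values are arbitrary placeholders.

```python
import cmath
import math


def apply_cfo(samples, epsilon):
    """Rotate samples by a carrier frequency offset of `epsilon` (units of 1/N)."""
    n_total = len(samples)
    return [
        s * cmath.exp(2j * math.pi * epsilon * n / n_total)
        for n, s in enumerate(samples)
    ]


def estimate_fractional_cfo(received):
    """Estimate the offset from the correlation of the two identical halves.

    The second half lags the first by N/2 samples, so the correlation phase
    is pi * epsilon, which is unambiguous for |epsilon| < 1.
    """
    half = len(received) // 2
    corr = sum(received[n].conjugate() * received[n + half] for n in range(half))
    return cmath.phase(corr) / math.pi


# Hypothetical training sequence: 16 arbitrary symbols repeated twice.
half_sequence = [1 + 1j, -1 + 1j, 1 - 1j, -1 - 1j] * 4
training = half_sequence + half_sequence
received = apply_cfo(training, 0.3)
estimate = estimate_fractional_cfo(received)
```

Offsets outside the unambiguous range wrap around, which is why a separate integer-offset stage is needed to extend the overall estimation range.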

Owner:CHONGQING UNIV OF POSTS & TELECOMM

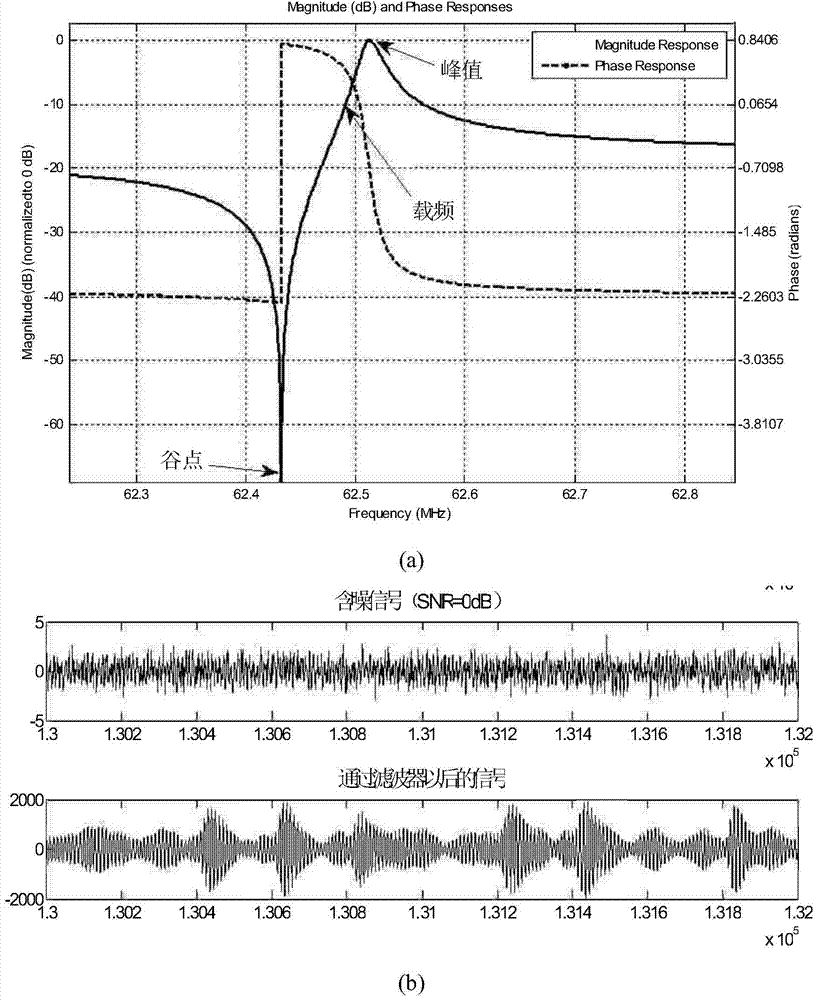

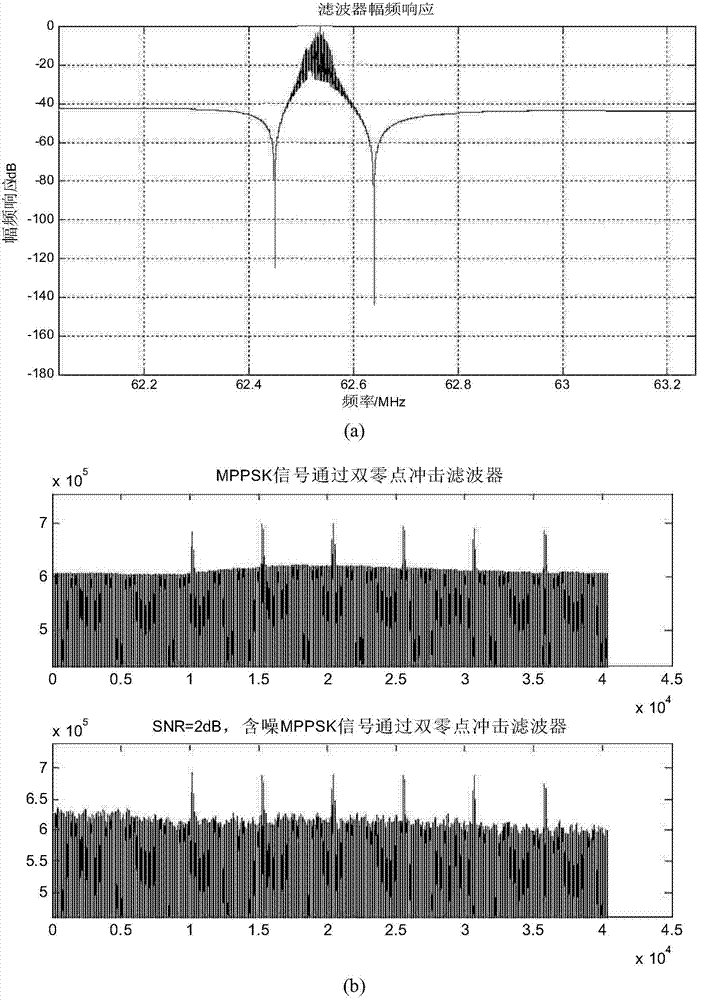

Simultaneous frame and bit combined estimation method for EBPSK (extended binary phase shift keying) communication system

Active | CN104506472A | Improve synchronization efficiency | Shorten the time | Phase-modulated carrier systems | High level techniques | Data stream | Communications system

The invention discloses a simultaneous frame and bit combined estimation method for an EBPSK (extended binary phase shift keying) communication system. The method comprises the steps: framing sending data at a transmitting end, and then performing EBPSK modulation; sending a receiving signal into an impact filter at a receiving end, and converting the receiving signal into parasitic amplitude modulation impact at a code element '1'; performing down sampling on output data of the impact filter according to a relative relation with a baseband code element rate; calculating peak values of 13 continuous data points of each group in a data stream subjected to down sampling, marking the moment that the peak values are greater than a certain threshold value, calculating a relevance value to a frame header, and marking the moment, in which four times of the peak value is smaller than the relevance value, in the marked moments; searching the first peak value of the relevance value in the marked moment in the step, wherein the moment corresponding to the first peak value is a synchronous moment. According to the simultaneous frame and bit combined estimation method, frame and bit are simultaneously finished by one step, so that the synchronization efficiency is improved, the synchronization precision is improved, the demodulation performance is high, the synchronization expense is reduced, the transmission efficiency is high, the hardware expenses are reduced, and the receiving machine implementation cost is reduced.

Owner:苏州东奇信息科技股份有限公司

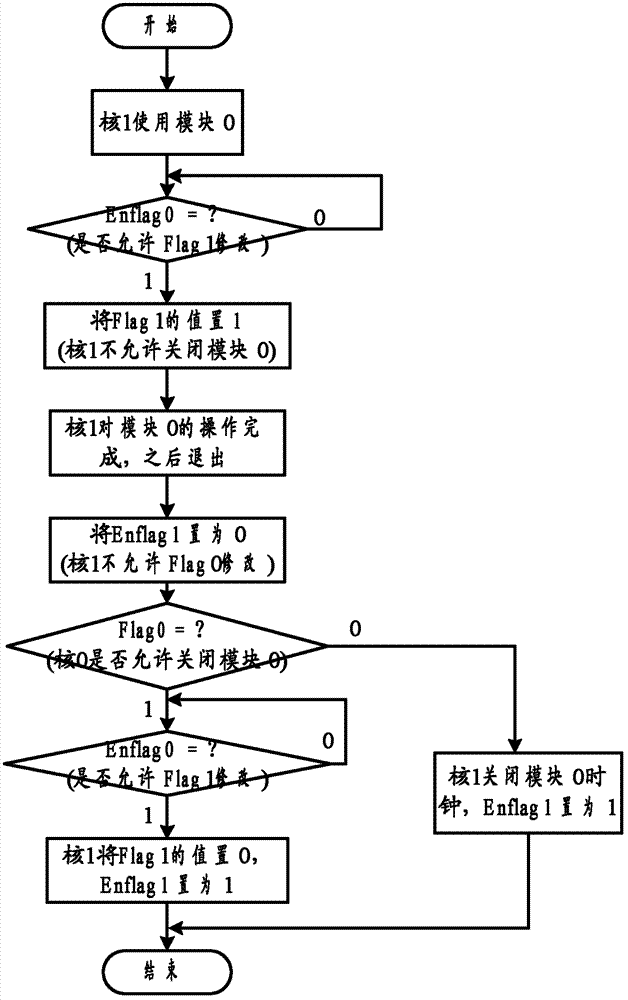

Clock control method for slaves and baseband chip

ActiveCN103092803AAchieve openImplementor closesEnergy efficient ICTPower supply for data processingTerminal equipmentMemory controller

The invention relates to terminal equipment and discloses a clock control method for slaves and a baseband chip. The clock of a slave is no longer switched on and off by software; instead, it is controlled according to the states of the HSEL and HTRANS signals. Because control is performed in the underlying hardware, modules that the traditional software method cannot switch off, such as the memory modules storing system code and the external memory controller modules, can also be switched off. The process is simple, efficient and riskless, and avoids the delay that software needs for its decision. When the slave does not need to be accessed, its clock can be switched off promptly, so that system power consumption is greatly reduced.
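A truth-table model of such a hardware gate might look like the sketch below. The HTRANS encodings follow the AMBA AHB convention (IDLE, BUSY, NONSEQ, SEQ), but the gating rule itself is an assumption, because the abstract does not state the exact condition; `slave_clock_enable` is a hypothetical name.

```python
# HTRANS encodings as defined for AMBA AHB transfers.
HTRANS_IDLE = 0b00
HTRANS_BUSY = 0b01
HTRANS_NONSEQ = 0b10
HTRANS_SEQ = 0b11


def slave_clock_enable(hsel, htrans):
    """Assumed rule: clock the slave only while it is selected and the
    bus is not idle. BUSY keeps the clock running so an ongoing burst
    is not interrupted."""
    return bool(hsel) and htrans != HTRANS_IDLE
```

In real silicon this decision would drive a glitch-free clock-gating cell rather than a software function; the model only shows which signal combinations would keep the clock running under the assumed rule.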

Owner:SHANGHAI LINKCHIP SEMICON TECH CO LTD

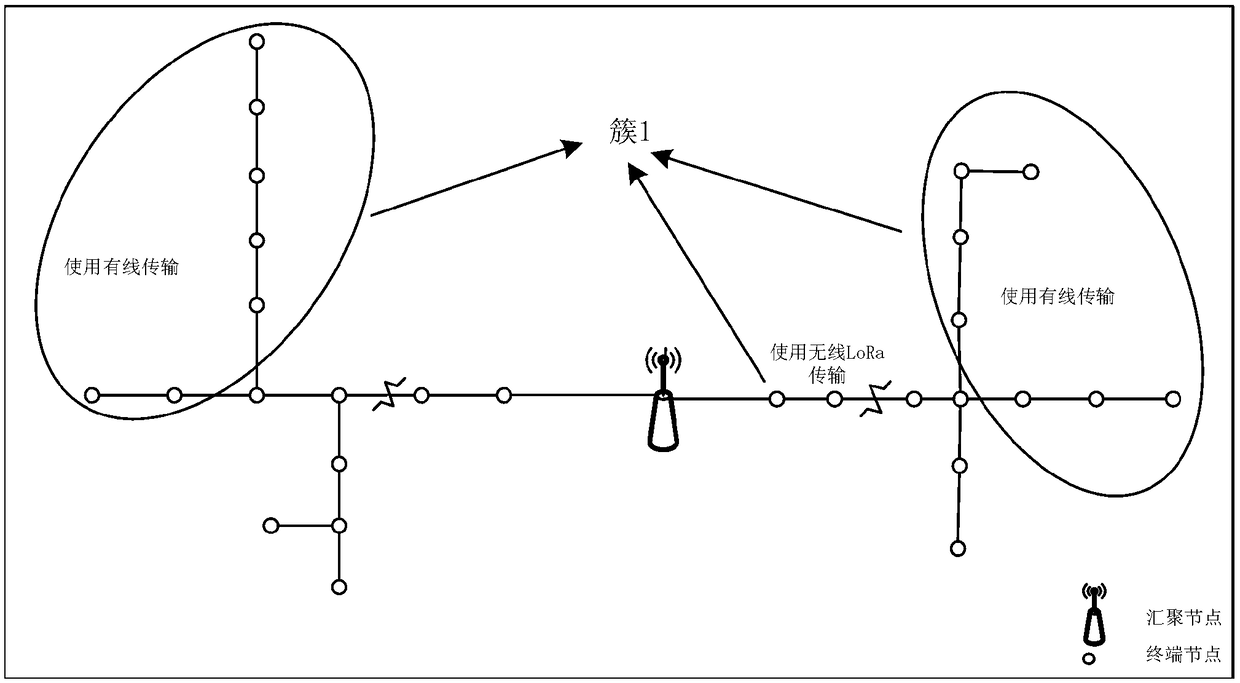

Wired and wireless hybrid Internet of Things system and clustering method

ActiveCN109361598AIncrease upload rateImprove reliabilityData switching networksComputer networkSpecific time

The invention belongs to the technical field of wireless communication networks and discloses a wired and wireless hybrid Internet of Things system and a clustering method. The method comprises the steps of initializing the network, clustering the terminal nodes, determining the size of the time block occupied by each cluster, determining the size of a time slot, and updating data by the terminal nodes. Clustering is combined with a network optimization method that takes into account the number of terminal nodes in the network, the amount of data each terminal node needs to upload, and the channel conditions from the terminal nodes to the convergence node; each cluster is allocated a specific period of time slots, which reduces the number of terminal nodes in conflict. This overcomes a deficiency of the standard LoRaWAN protocol provided by the LoRa Alliance, which does not account for the frequent conflicts caused when many terminal nodes upload simultaneously and which therefore limits the upload rate of the terminal nodes. The upload rate from the terminal nodes to the convergence node in the wired and wireless hybrid Internet of Things is effectively improved.
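To make the time-block and time-slot steps concrete, here is a deliberately simplified sketch. It replaces the network optimization mentioned in the abstract with a plain proportional rule (each cluster's block is proportional to the data it must upload, split evenly among its nodes) and ignores channel conditions. The function name `build_schedule` and the input layout are hypothetical.

```python
def build_schedule(clusters, period):
    """Split one upload period among clusters.

    clusters : dict mapping cluster name -> list of per-node payload sizes
    period   : length of one full upload period (any time unit)
    Returns a dict mapping cluster name ->
    (block_start, block_length, slot_length).
    """
    total = sum(sum(sizes) for sizes in clusters.values())
    schedule, start = {}, 0.0
    for name, sizes in clusters.items():
        # Assumed rule: block length proportional to the cluster's data volume.
        block = period * sum(sizes) / total
        # Assumed rule: equal slots for the nodes inside one cluster.
        slot = block / len(sizes)
        schedule[name] = (start, block, slot)
        start += block
    return schedule
```

Because every cluster transmits only inside its own block, nodes in different clusters can no longer collide; contention is confined to the nodes that share a block.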

Owner:XIDIAN UNIV

High-parallelism synchronization approach for multi-core instruction-set simulation

ActiveUS8423343B2Maintain accuracyIncrease speedProgram synchronisationComputation using non-denominational number representationPredictive methodsDegree of parallelism

The present invention discloses a high-parallelism synchronization method for multi-core instruction-set simulation. The proposed method utilizes a new distributed scheduling mechanism for a parallel compiled MCISS. The proposed method can enhance the parallelism of the MCISS so that the computing power of a multi-core host machine can be effectively utilized. The distributed scheduling with the present invention's prediction method significantly shortens the waiting time which an ISS spends on synchronization.

Owner:NATIONAL TSING HUA UNIVERSITY

Method for executing dynamic allocation command on embedded heterogeneous multi-core

InactiveCN101923492BGain transparencyImprove execution efficiencyResource allocationMultiple digital computer combinationsProcessing coreStatic dispatch

The invention discloses a method for executing a dynamic allocation command on embedded heterogeneous multi-core in the technical field of computers. The method comprises the following steps: partitioning a binary code program to obtain a plurality of basic blocks; selecting, for each basic block, a target processing core on which to execute it; translating each basic block for its target processing core to obtain translated binary code for that core; and counting the execution frequency of each basic block, marking any basic block whose execution frequency is greater than a threshold value T as a hot-spot basic block, and storing the translated binary code of the hot-spot basic block in the cache. The method dynamically allocates commands to the heterogeneous cores for execution according to the processing capacity and load condition of each core in the system, so that it overcomes the inability of static scheduling to allocate resources dynamically and also reduces the complexity of dynamic thread division. The execution efficiency of the program on the heterogeneous multi-core is therefore further improved.
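The hot-spot step can be sketched as a frequency-counted translation cache. This is an illustrative model only: `HotBlockCache`, `fetch` and the `translate` callback are hypothetical names, and core selection is left out entirely.

```python
class HotBlockCache:
    """Cache translated code for blocks executed more than `threshold` times."""

    def __init__(self, translate, threshold):
        self.translate = translate  # hypothetical binary translator callback
        self.threshold = threshold  # the threshold value T from the abstract
        self.counts = {}            # execution frequency per basic block
        self.cache = {}             # translated code of hot-spot blocks

    def fetch(self, block_id, code):
        """Return translated code for a block, translating only if needed."""
        self.counts[block_id] = self.counts.get(block_id, 0) + 1
        if block_id in self.cache:
            return self.cache[block_id]
        translated = self.translate(code)
        # A block becomes a hot spot once its frequency exceeds T.
        if self.counts[block_id] > self.threshold:
            self.cache[block_id] = translated
        return translated
```

Cold blocks are retranslated on every execution, which keeps the cache small; once a block crosses the threshold, later executions skip translation altogether.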

Owner:SHANGHAI JIAO TONG UNIV

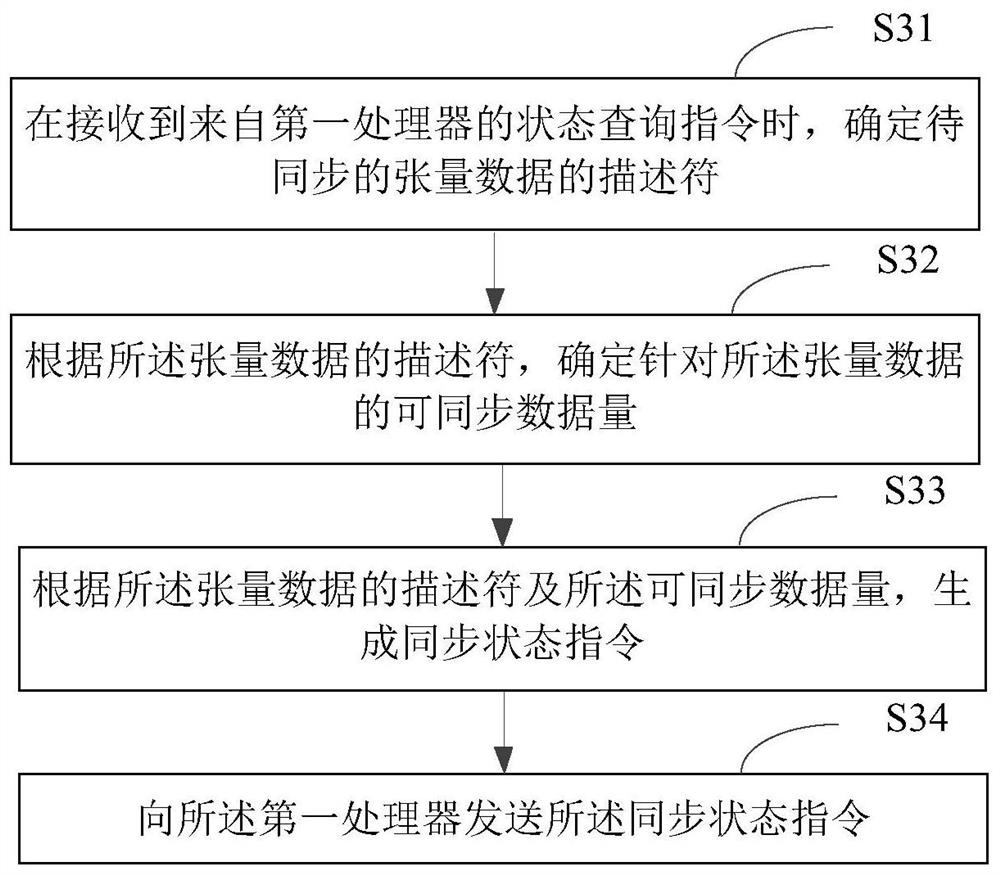

Data synchronization method and device and related product

ActiveCN112347186AImprove synchronization efficiencyAchieve synchronizationDatabase distribution/replicationSpecial data processing applicationsData synchronizationInstruction processing unit

The invention relates to a data synchronization method and device and a related product. The product comprises a control module, and the control module comprises an instruction caching unit, an instruction processing unit and a storage queue unit. The instruction caching unit is used for storing calculation instructions associated with the artificial neural network operation; the instruction processing unit is used for parsing a calculation instruction to obtain a plurality of operation instructions; the storage queue unit is used for storing an instruction queue, which comprises a plurality of operation instructions or calculation instructions to be executed in the order of the queue. By means of the method, the operation efficiency of the related product when running a neural network model can be improved.

Owner:ANHUI CAMBRICON INFORMATION TECH CO LTD

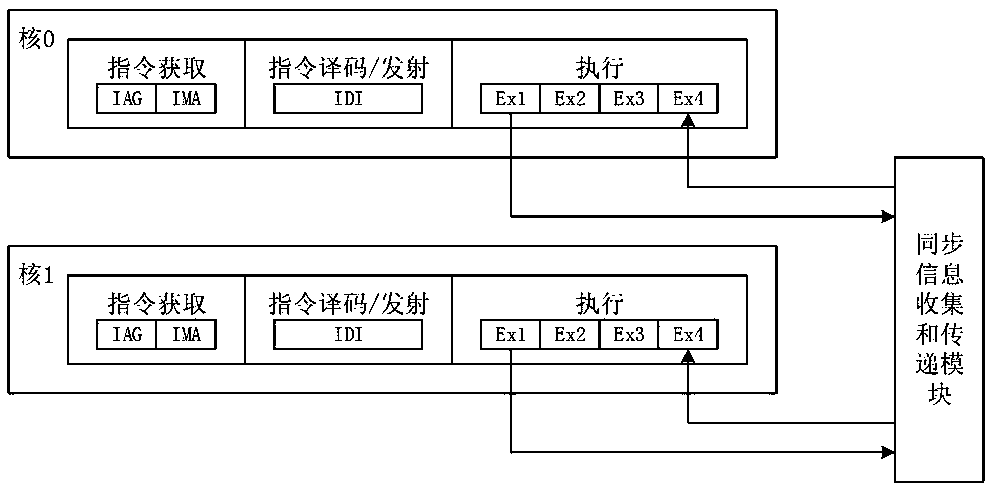

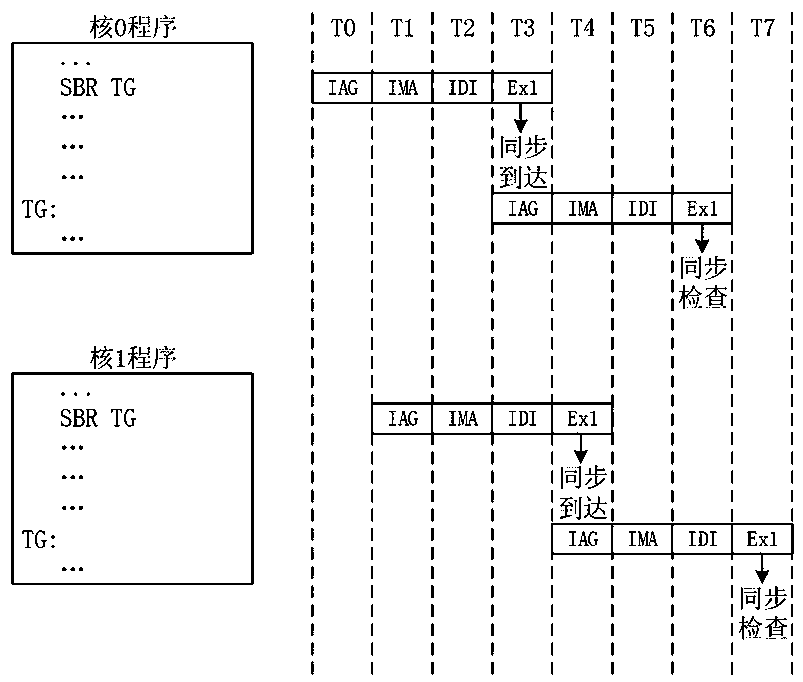

Multi-core processor synchronization method based on delay fence synchronization operation instruction

ActiveCN110147253AReduce synchronization operationsImprove synchronization efficiencyConcurrent instruction executionWait stateMulti-core processor

The invention discloses a multi-core processor synchronization method based on a delay fence synchronization operation instruction. Each participating core processor sends its own synchronization arrival signal at a synchronization arrival station and, at a synchronization check station, confirms whether all the other core processors participating in the fence synchronization have arrived. If so, the core processor continues executing; otherwise it enters a waiting state until the arrival signal of the last core processor to arrive has been observed. The synchronization arrival station is the pipeline station at which a core updates its own synchronization state; the synchronization check station is the pipeline station at which the synchronization state of the other cores is queried. When the check station and the arrival station are not the same pipeline station, this non-adjacent mode is called delay synchronization. The method can be implemented on the basis of any instruction type, reduces extra fence synchronization operations, and improves the transaction synchronization efficiency.
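A software analogue of separating the arrival point from the check point is a split-phase barrier: a thread announces arrival without blocking, does unrelated work, and only later checks whether everyone has arrived. The sketch below models that idea with Python threads; it is not the patented pipeline mechanism, and `SplitPhaseBarrier` and `run_demo` are hypothetical names.

```python
import threading


class SplitPhaseBarrier:
    """Barrier whose arrival and check steps are separate calls."""

    def __init__(self, parties):
        self.parties = parties
        self.count = 0
        self.generation = 0
        self.cond = threading.Condition()

    def arrive(self):
        """Signal arrival without blocking; return a token for check()."""
        with self.cond:
            token = self.generation
            self.count += 1
            if self.count == self.parties:
                # Last arrival: open the barrier for this generation.
                self.count = 0
                self.generation += 1
                self.cond.notify_all()
            return token

    def check(self, token):
        """Block only if some party has not yet arrived."""
        with self.cond:
            while self.generation == token:
                self.cond.wait()


def run_demo(n_cores):
    barrier = SplitPhaseBarrier(n_cores)
    arrived = []
    seen = [0] * n_cores

    def core(i):
        arrived.append(i)         # result the other cores must observe
        token = barrier.arrive()  # arrival point: does not stall
        _ = sum(range(1000))      # independent work overlaps the wait
        barrier.check(token)      # check point: may stall here
        seen[i] = len(arrived)    # every core now sees all arrivals

    threads = [threading.Thread(target=core, args=(i,))
               for i in range(n_cores)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return seen
```

The further apart the arrival and check points are, the more likely it is that all parties have already arrived by the time a thread checks, so the check returns without waiting.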

Owner:NAT UNIV OF DEFENSE TECH

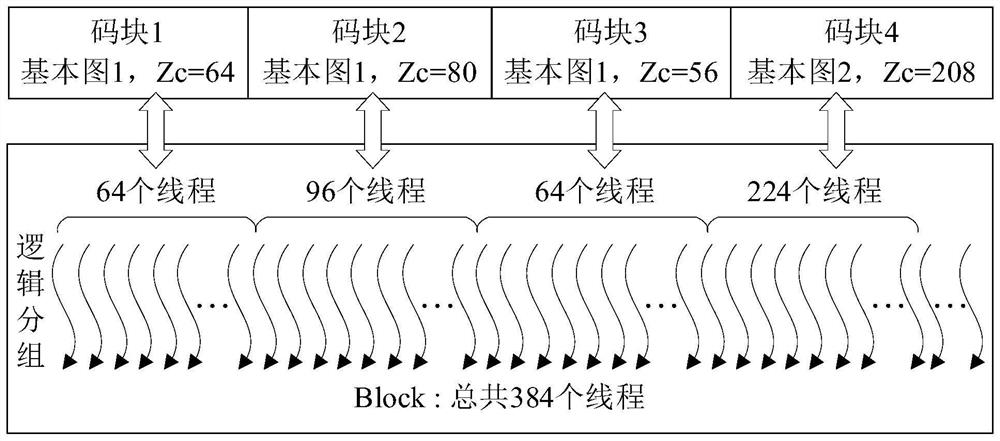

GPU-based 5G multi-user LDPC code high-speed decoder and decoding method thereof

PendingCN114884618AIncrease flexibilityImprove practicalityError preventionError correction/detection using multiple parity bitsCoding blockComputer architecture

The invention provides a GPU-based 5G multi-user LDPC code high-speed decoder and a decoding method thereof, comprising a high-speed decoder architecture and a high-speed decoding method. The decoding method comprises the following steps: 1, initializing the storage space of the host end; 2, initializing the GPU device; 3, carrying out the LDPC basis matrix information structure weight description; 4, dispatching GPU decoding from the host end; 5, copying LLR information; 6, at the GPU end, allocating a corresponding number of threads according to the user code block information, selecting the corresponding basis matrix information structure, and performing iterative decoding based on a layered (hierarchical) min-sum algorithm; 7, symbol judgment; and 8, returning the result to the host end. The method fully combines the characteristics of the layered decoding algorithm with the architectural characteristics of the GPU and makes full use of GPU on-chip resources. Memory access efficiency and the utilization rate of the data calculation units are improved, the decoding time of a single code block is shortened while its resource consumption is reduced, and the overall information throughput is improved. The decoding mode is better suited to decoding multi-cell, multi-user LDPC code blocks in practical scenarios.
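The core arithmetic of a min-sum decoder is the check-node update, sketched below for a single check node on the CPU. This shows only the textbook min-sum rule, not the layered scheduling, the 5G basis matrices or the GPU thread mapping described in the abstract; `check_node_update` is a hypothetical name.

```python
def check_node_update(incoming):
    """Min-sum update for one check node.

    incoming : LLR messages from the connected variable nodes (at least 2)
    Returns one outgoing message per edge; each is computed from all
    inputs except the one that arrived on that same edge.
    """
    out = []
    for i in range(len(incoming)):
        others = incoming[:i] + incoming[i + 1:]
        # The sign is the product of the other signs (zero counts as positive).
        sign = 1.0
        for v in others:
            if v < 0:
                sign = -sign
        # The magnitude is the smallest of the other magnitudes.
        out.append(sign * min(abs(v) for v in others))
    return out
```

In a layered schedule this update would be applied to one group of rows at a time, with the variable-node totals refreshed before the next group is processed.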

Owner:BEIHANG UNIV
