
165 results about "Parallel scheduling" patented technology

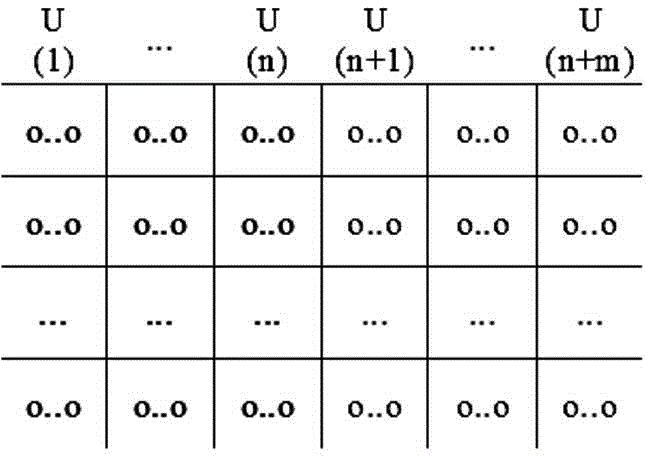

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

Resource state information-based grid task scheduling processor and grid task scheduling processing method

Inactive | CN101957780A | Avoid failure | Improve scheduling efficiency | Resource allocation | Transmission | Grid resources | Resource based

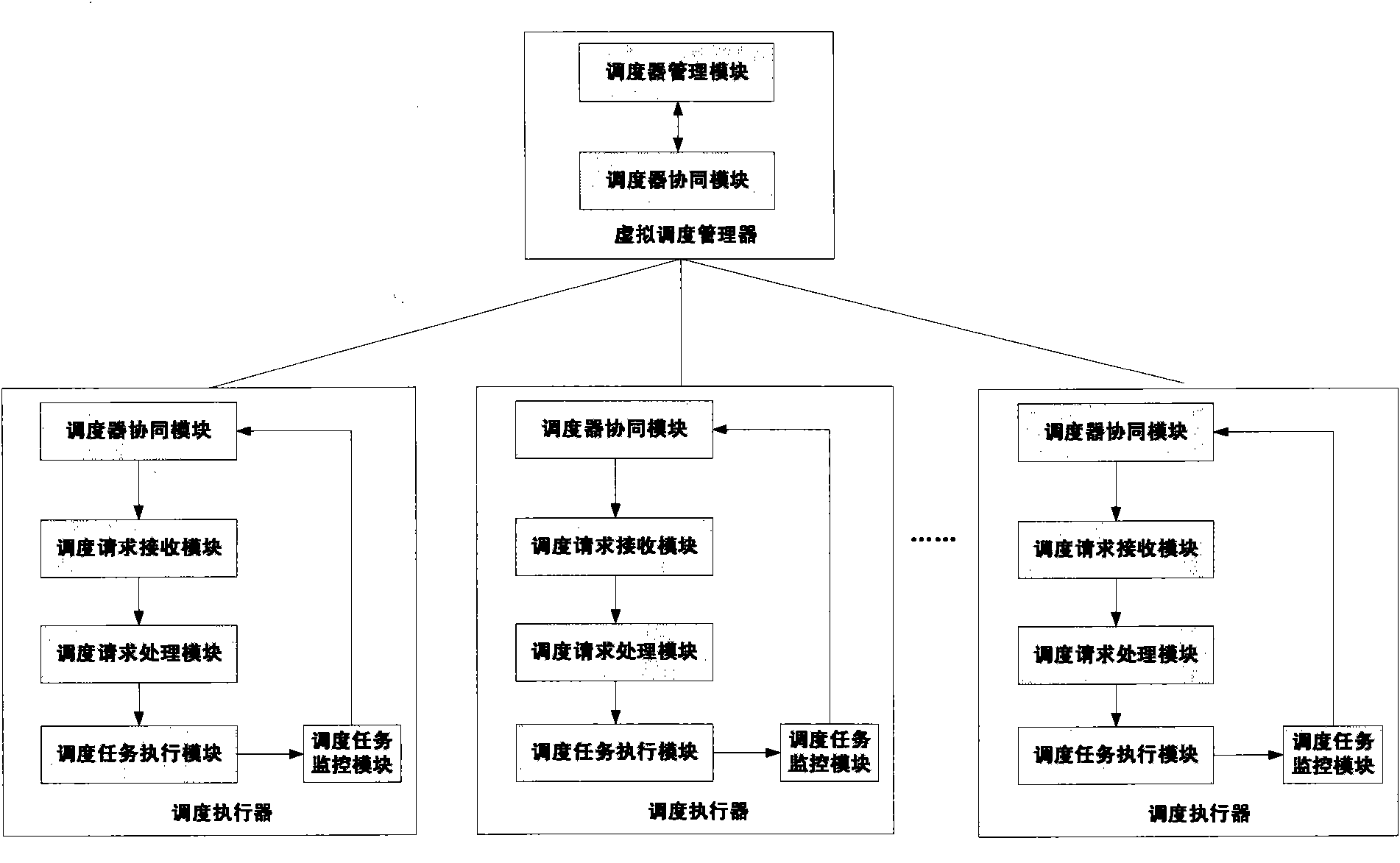

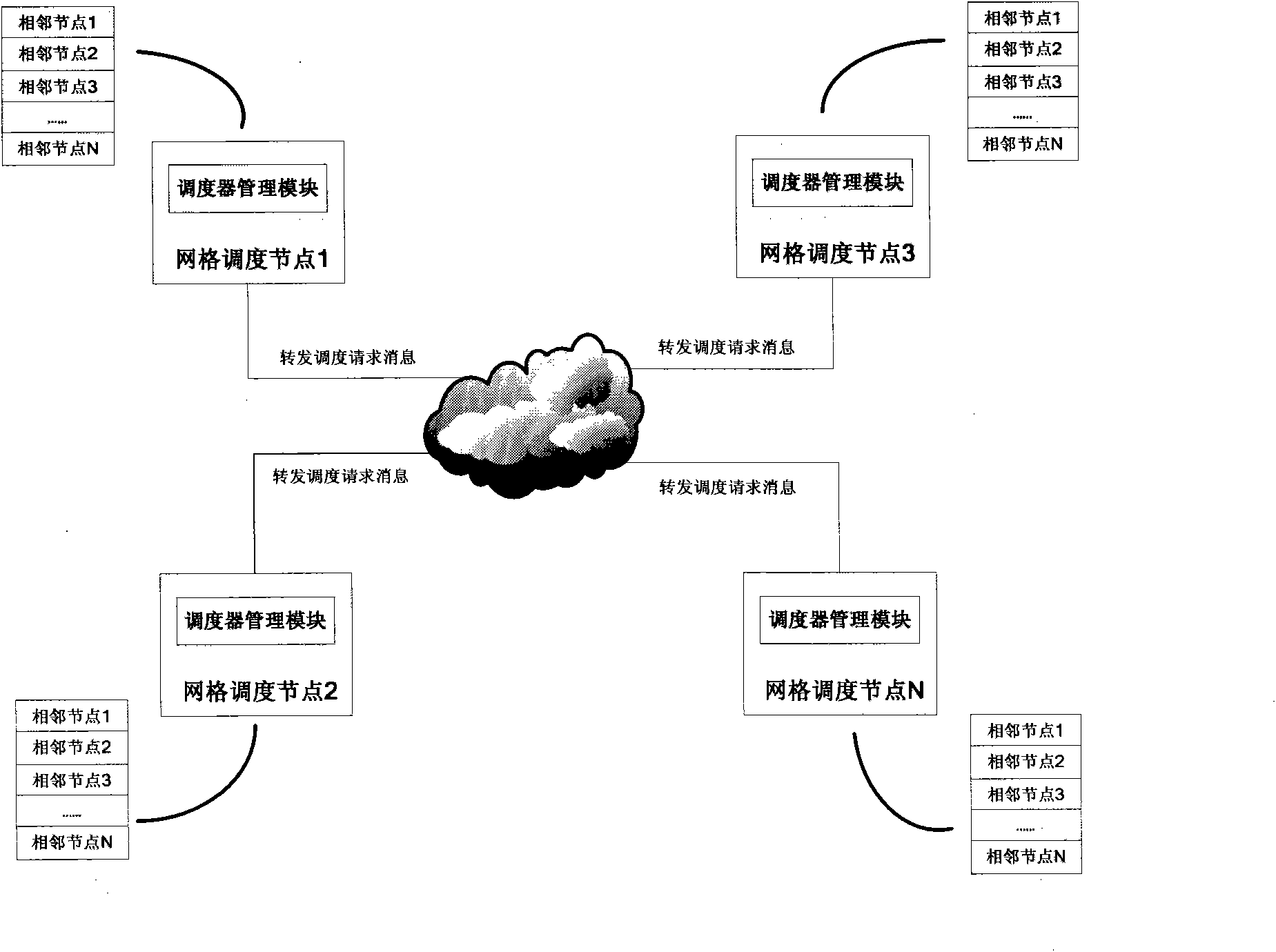

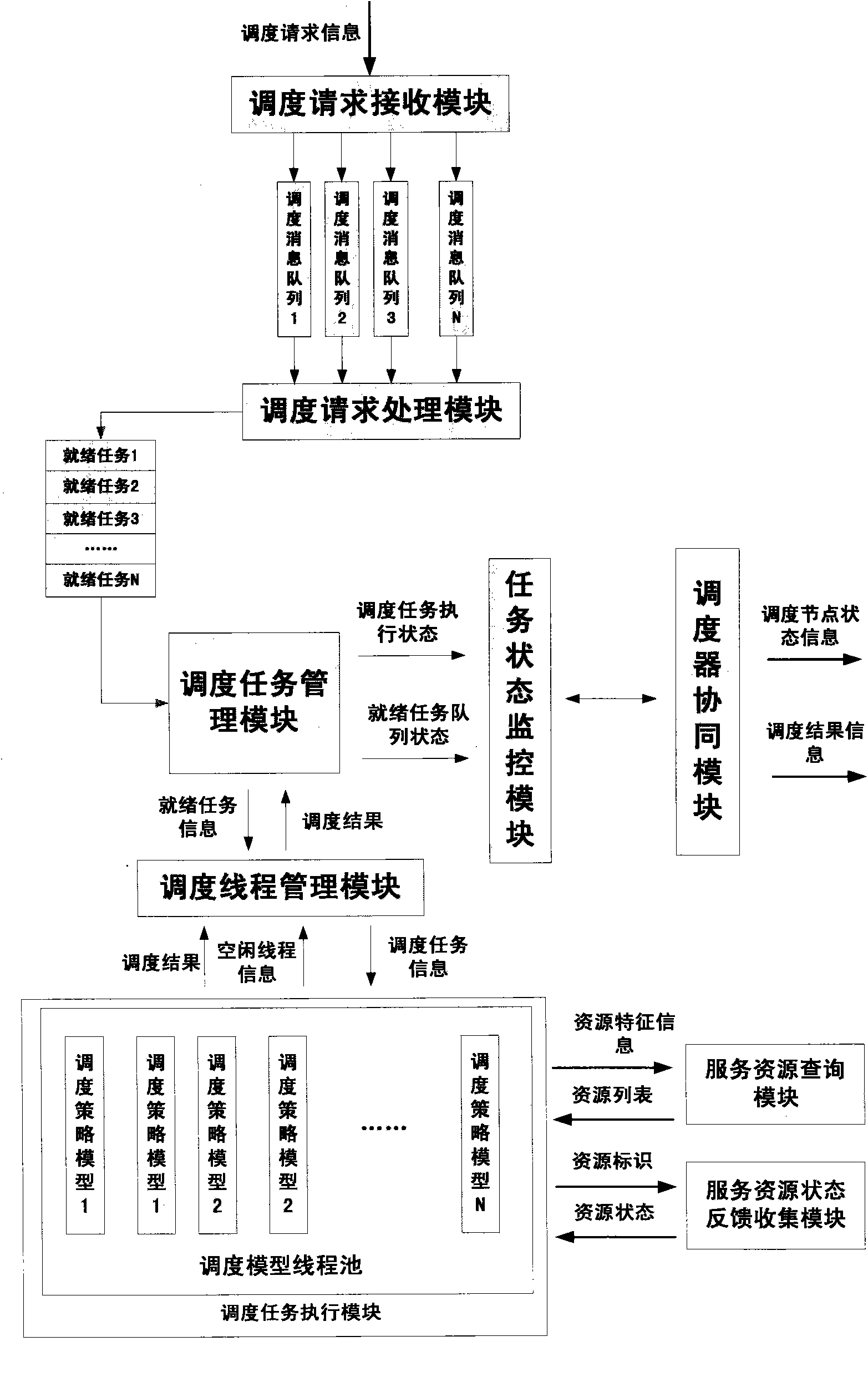

The invention discloses a grid task scheduling processor based on resource state information. The processor comprises a plurality of distributed grid scheduling nodes, each connected with the other grid scheduling nodes through an allocation mode. Each grid scheduling node has a two-layer structure: the top layer holds a virtual scheduling manager and the bottom layer holds a plurality of parallel scheduling executors. The invention also provides a grid task scheduling processing method. Together, the processor and the method establish a distributed grid resource scheduling system. A second-level scheduling node management method unifies the management and coordination of local scheduling executors, so that the failure of any single scheduling executor is avoided and a scheduling task does not wait too long on any one executor. Local resource state feedback is acquired by an analysis and evaluation method based on resource node properties, which reduces the delay caused by acquiring resource state over the network and further improves the scheduling efficiency of grid computing tasks.

Owner:THE 28TH RES INST OF CHINA ELECTRONICS TECH GROUP CORP

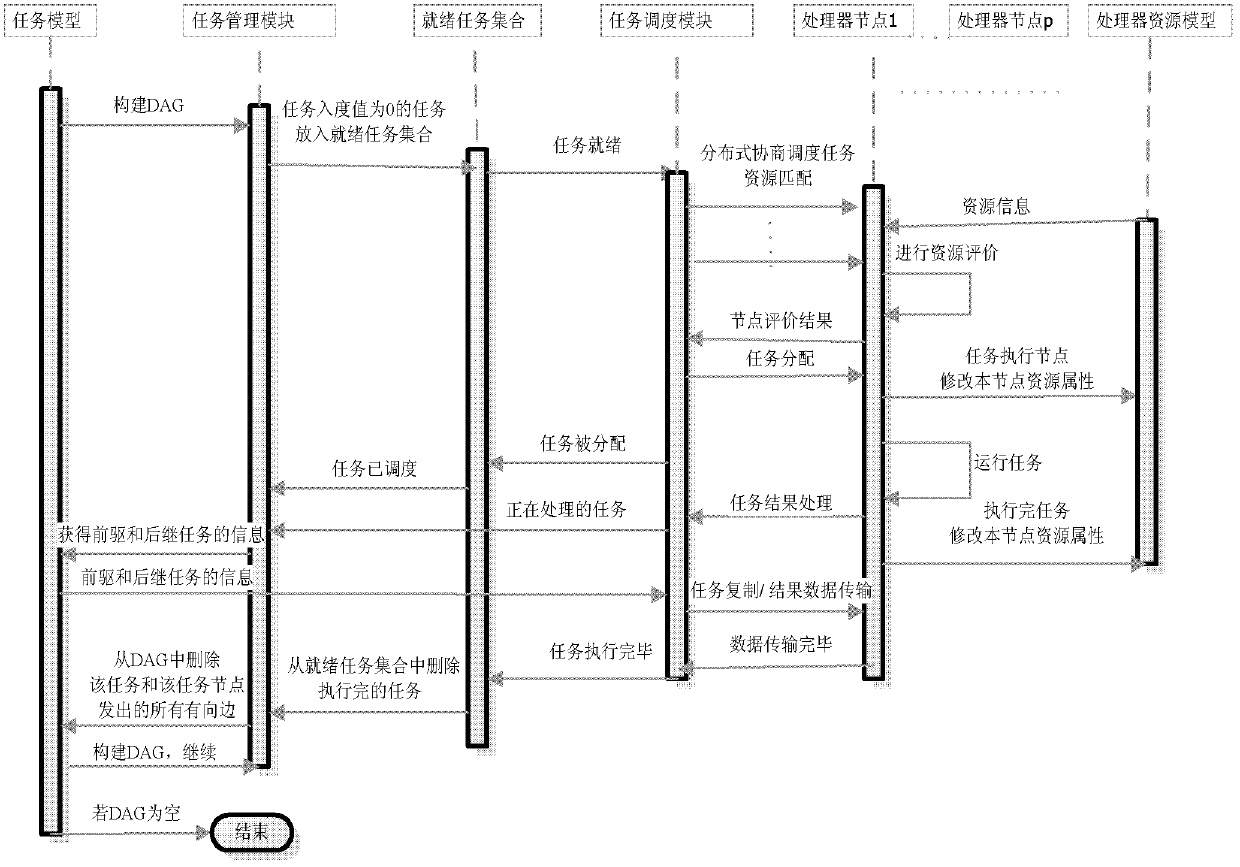

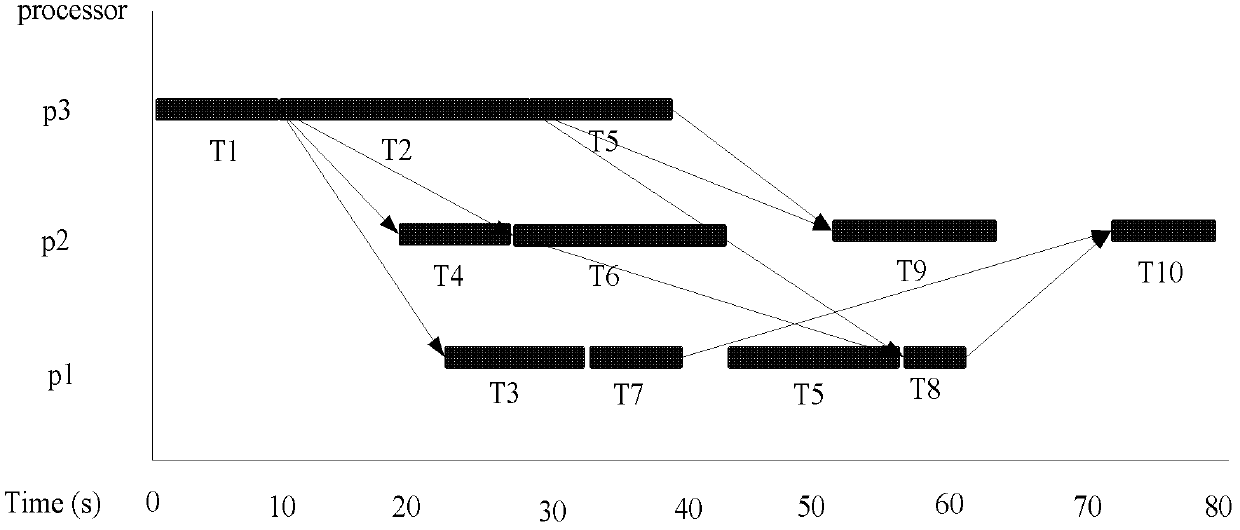

Decoupling parallel scheduling method for rely tasks in cloud computing

Inactive | CN102591712A | Strong parallelism | Load balancing | Multiprogramming arrangements | Transmission | Parallel computing | Performance index

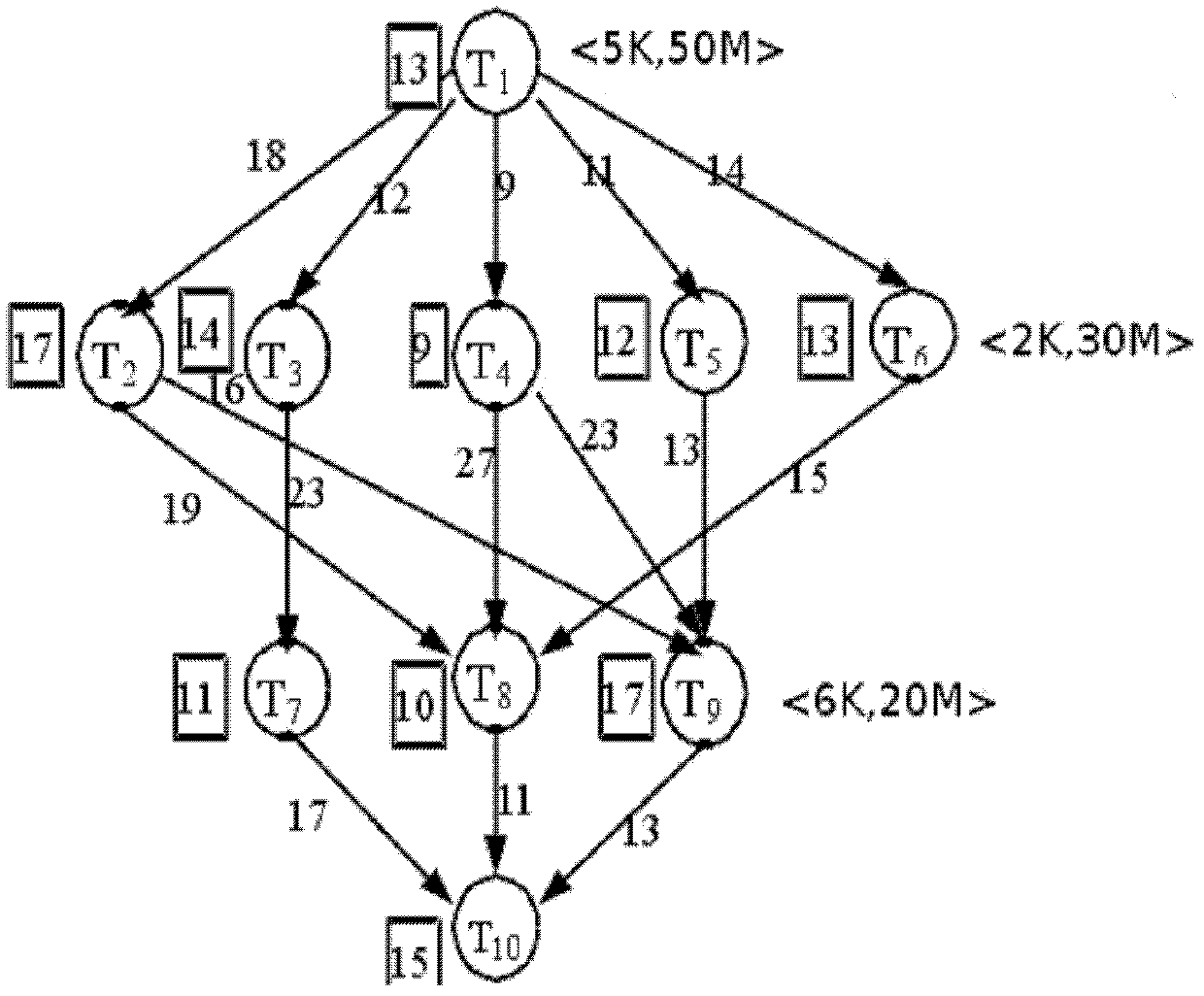

The invention belongs to the field of cloud computing applications and relates to methods for describing, decoupling, and scheduling in parallel the dependency relations between tasks in cloud services. Task dependency relations are defined, and a decoupling parallel scheduling method for dependent tasks is constructed. The method first decouples the dependency relations of tasks whose in-degree is zero to build a set of ready tasks, which dynamically describes the tasks that can be scheduled in parallel at a given moment. It then schedules the ready set in a distributed, multi-objective manner according to real-time resource access, which effectively improves scheduling parallelism. During task distribution, it further considers task execution cost and inter-task communication cost (E/C) to decide whether task duplication should replace the transmission of dependent data, so as to reduce communication cost. The whole scheduling method can schedule multiple tasks in the ready set dynamically and in parallel, takes into account performance indexes including real-time performance, parallelism, communication cost, and load balance, and improves the overall performance of the system through its dynamic scheduling strategy.

Owner:DALIAN UNIV OF TECH
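The ready-set idea in the abstract above (release every task whose in-degree is zero, then repeat) can be illustrated with a minimal Python sketch. This is not the patented method: the function name `ready_waves`, the input layout, and the alphabetical ordering inside a wave are assumptions made for illustration, and the E/C-based task duplication step is left out.

```python
from collections import defaultdict


def ready_waves(deps):
    """Group tasks into waves that could be dispatched in parallel.

    `deps` maps every task to the set of tasks it depends on. Each
    prerequisite must itself appear as a key (hypothetical input layout).
    """
    indegree = {task: len(prereqs) for task, prereqs in deps.items()}
    dependents = defaultdict(list)
    for task, prereqs in deps.items():
        for prereq in prereqs:
            dependents[prereq].append(task)

    # Tasks with in-degree zero form the first ready set.
    ready = sorted(t for t, n in indegree.items() if n == 0)
    waves = []
    while ready:
        waves.append(ready)
        next_ready = []
        for task in ready:
            # Finishing a task removes one incoming edge from each dependent.
            for dep in dependents[task]:
                indegree[dep] -= 1
                if indegree[dep] == 0:
                    next_ready.append(dep)
        ready = sorted(next_ready)

    if sum(len(w) for w in waves) != len(deps):
        raise ValueError("dependency cycle or unknown prerequisite")
    return waves
```

Each returned wave is one ready set; a real scheduler would hand the tasks of a wave to available resources rather than simply listing them.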

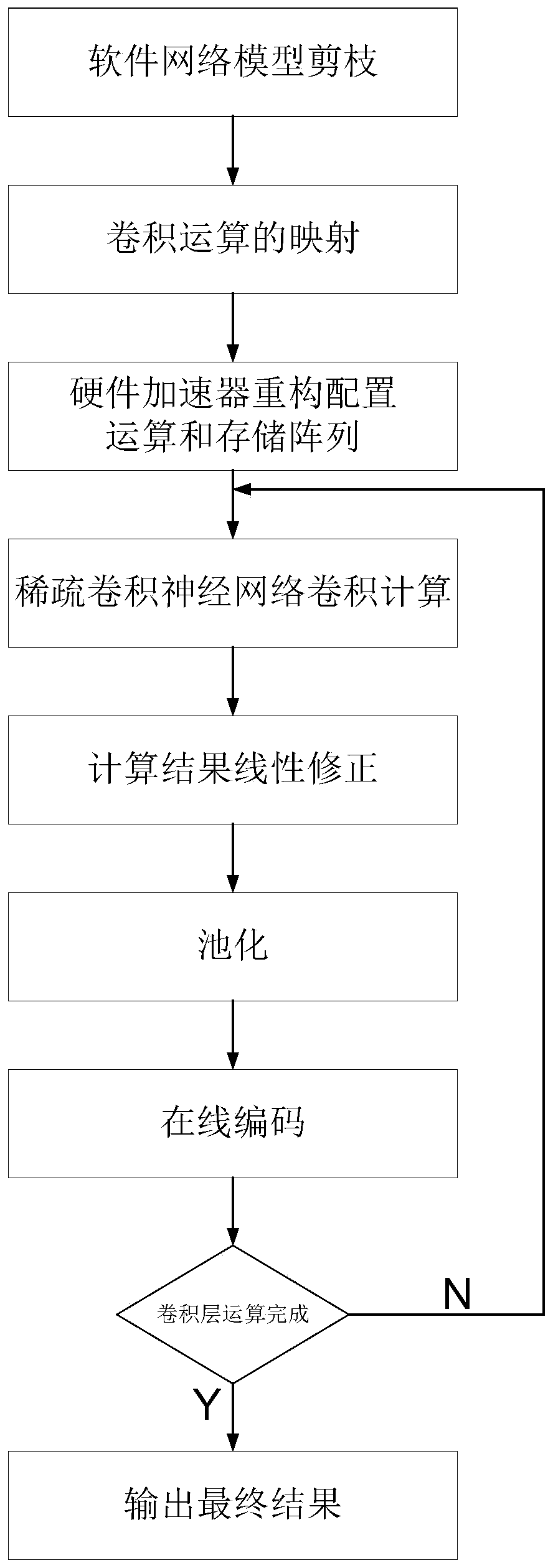

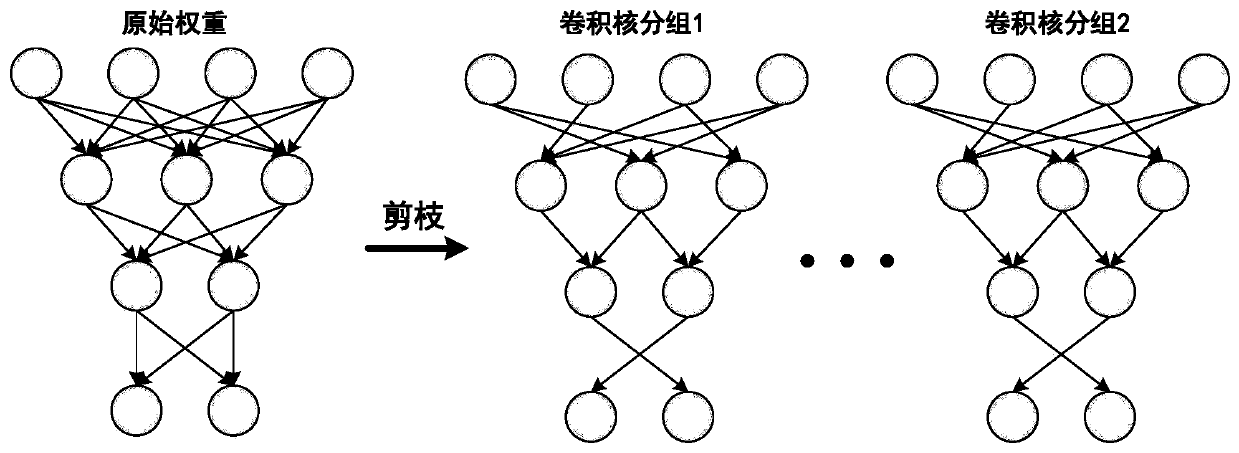

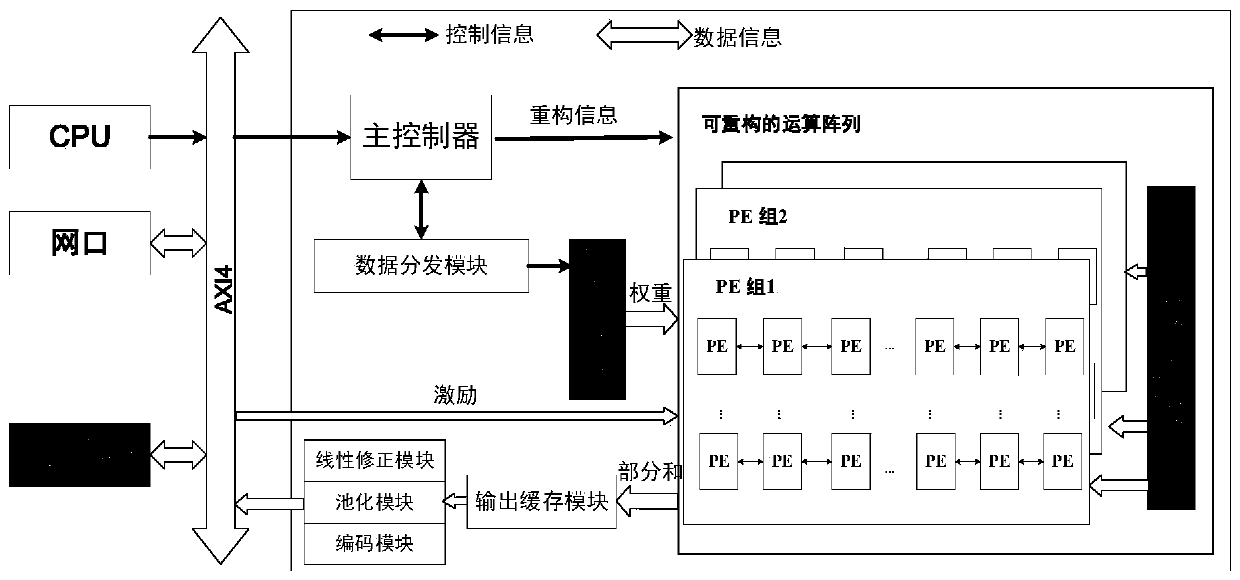

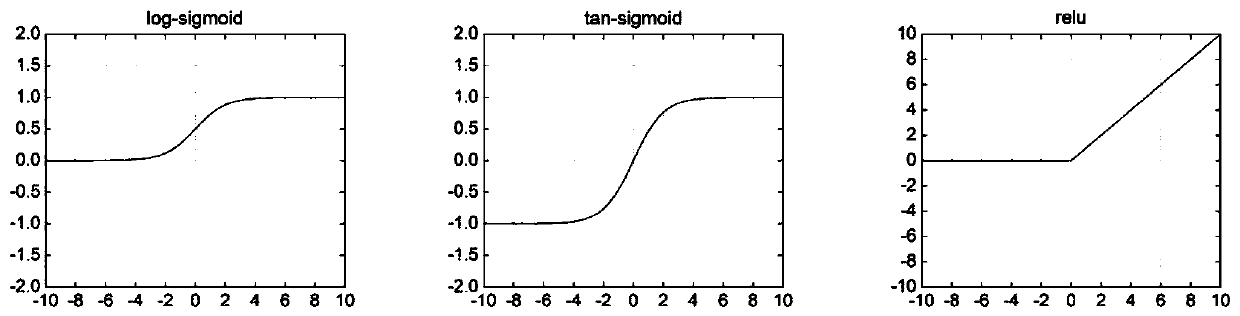

Load-balanced sparse convolutional neural network accelerator and acceleration method thereof

Pending | CN109993297A | Balanced operation | Meet the needs of low power consumption and high energy efficiency ratio | Neural architectures | Physical realisation | Parallel scheduling | Activation function

The invention discloses a load-balanced sparse convolutional neural network accelerator and an acceleration method thereof. The accelerator comprises a main controller, a data distribution module, a convolution operation calculation array, an output result caching module, a linear activation function unit, a pooling unit, an online coding unit and an off-chip dynamic memory. According to the scheme provided by the invention, the high-efficiency operation of the convolution operation calculation array can be realized under the condition of few storage resources, the high multiplexing rate of input excitation and weight data is ensured, and the load balance and high utilization rate of the calculation array are ensured; meanwhile, the calculation array supports convolution operations of different sizes and different scales and parallel scheduling of two layers between rows and columns and between different feature maps through a static configuration mode, and the method has very good applicability and expansibility.

Owner:南京吉相传感成像技术研究院有限公司

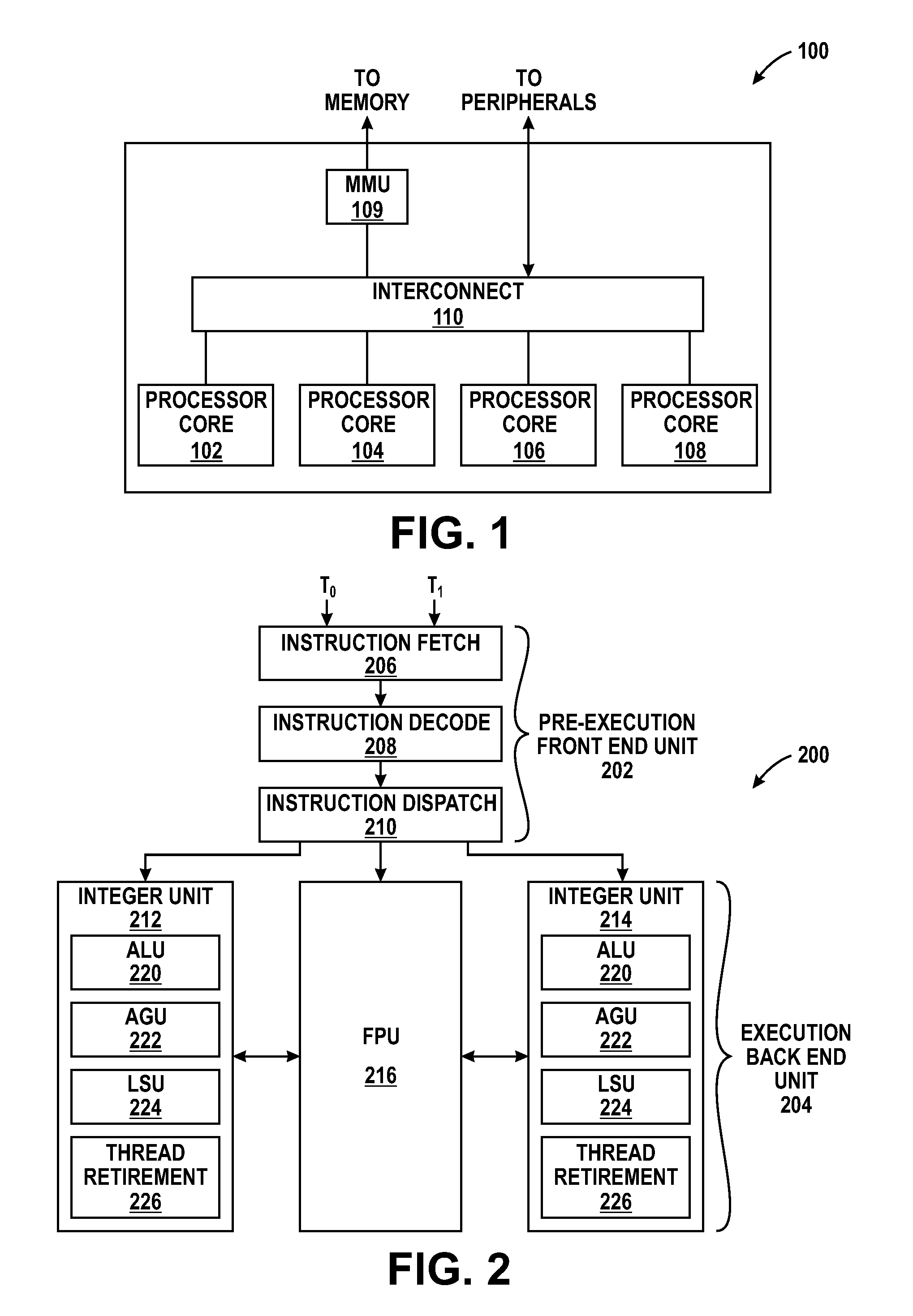

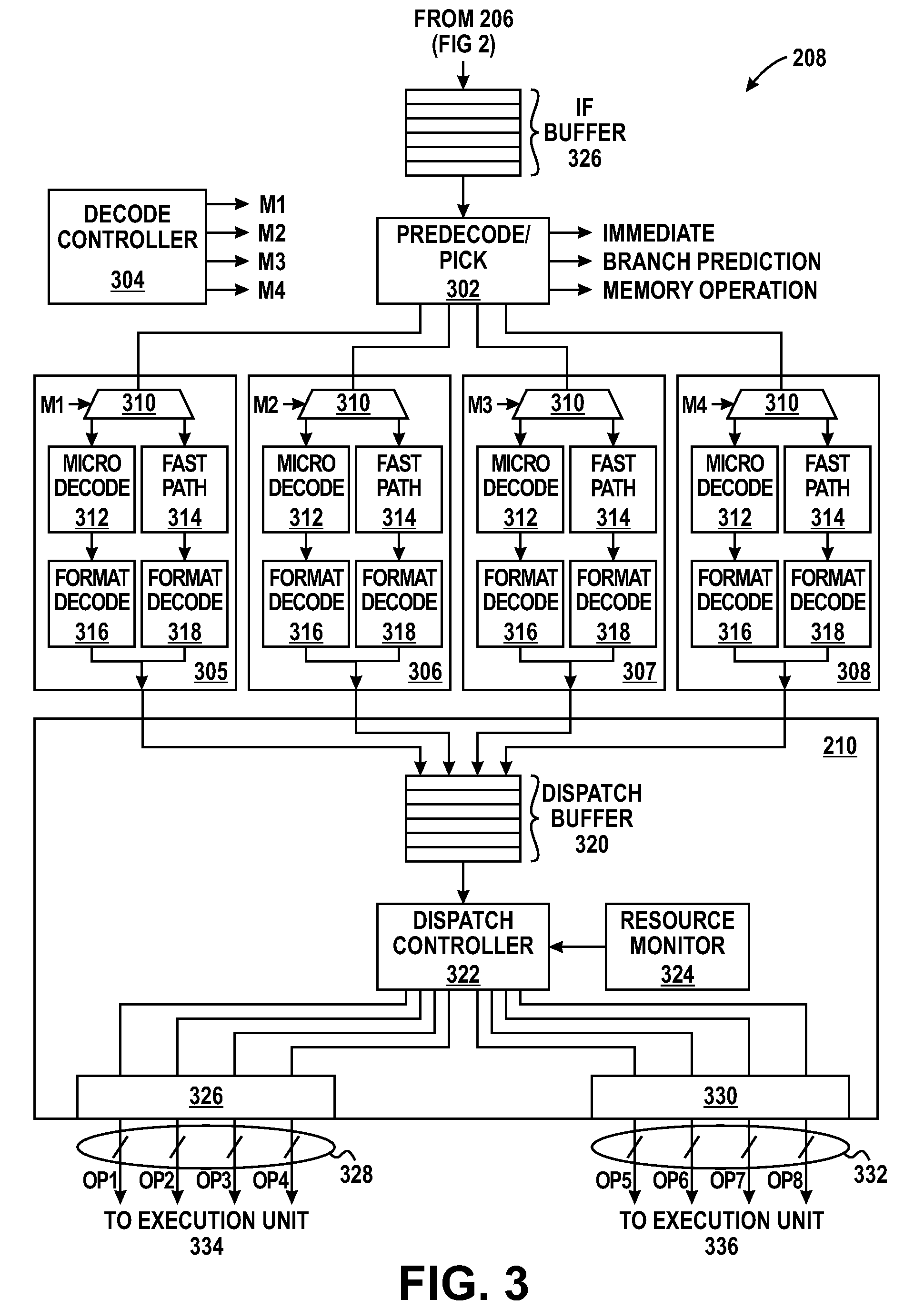

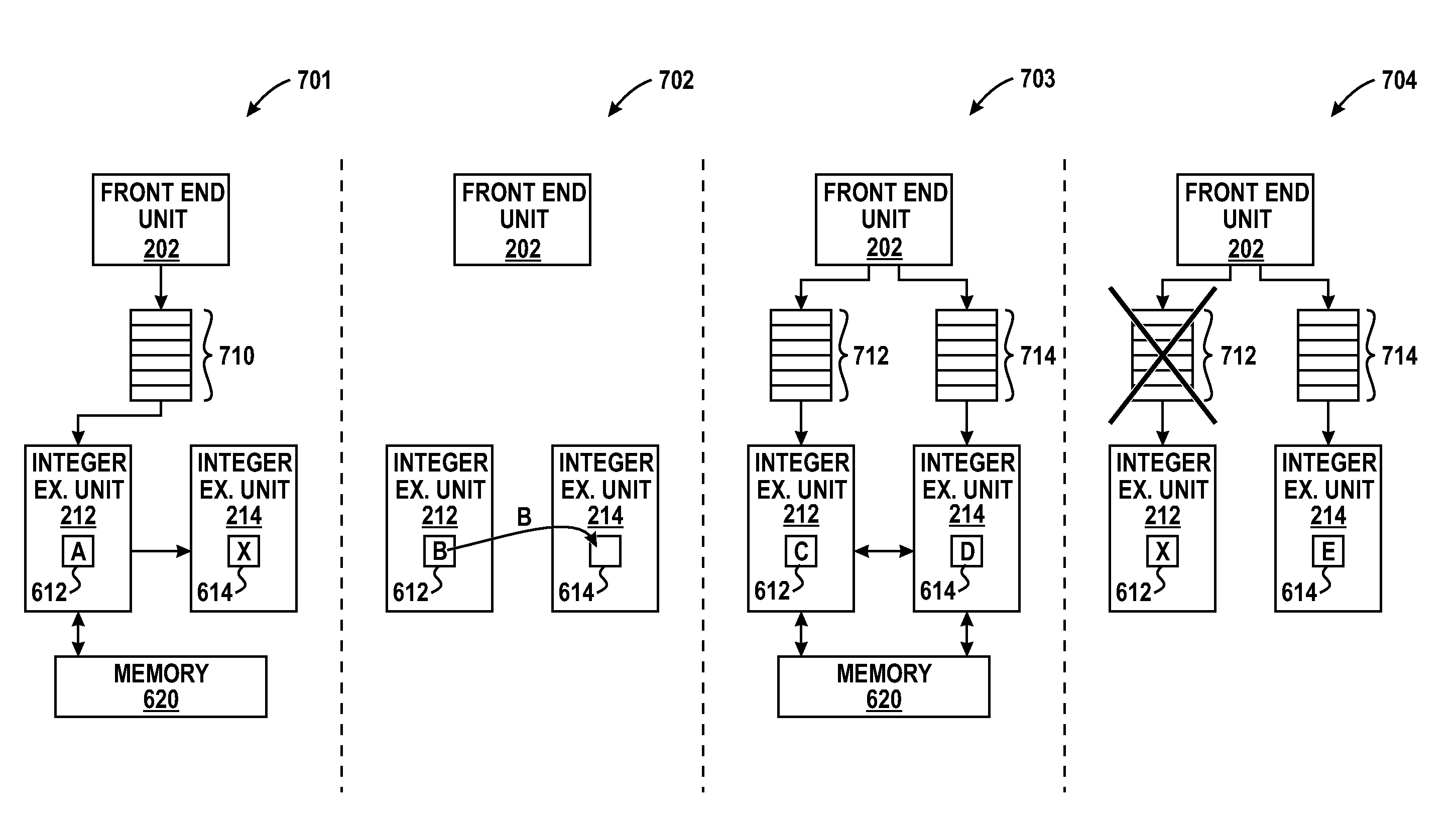

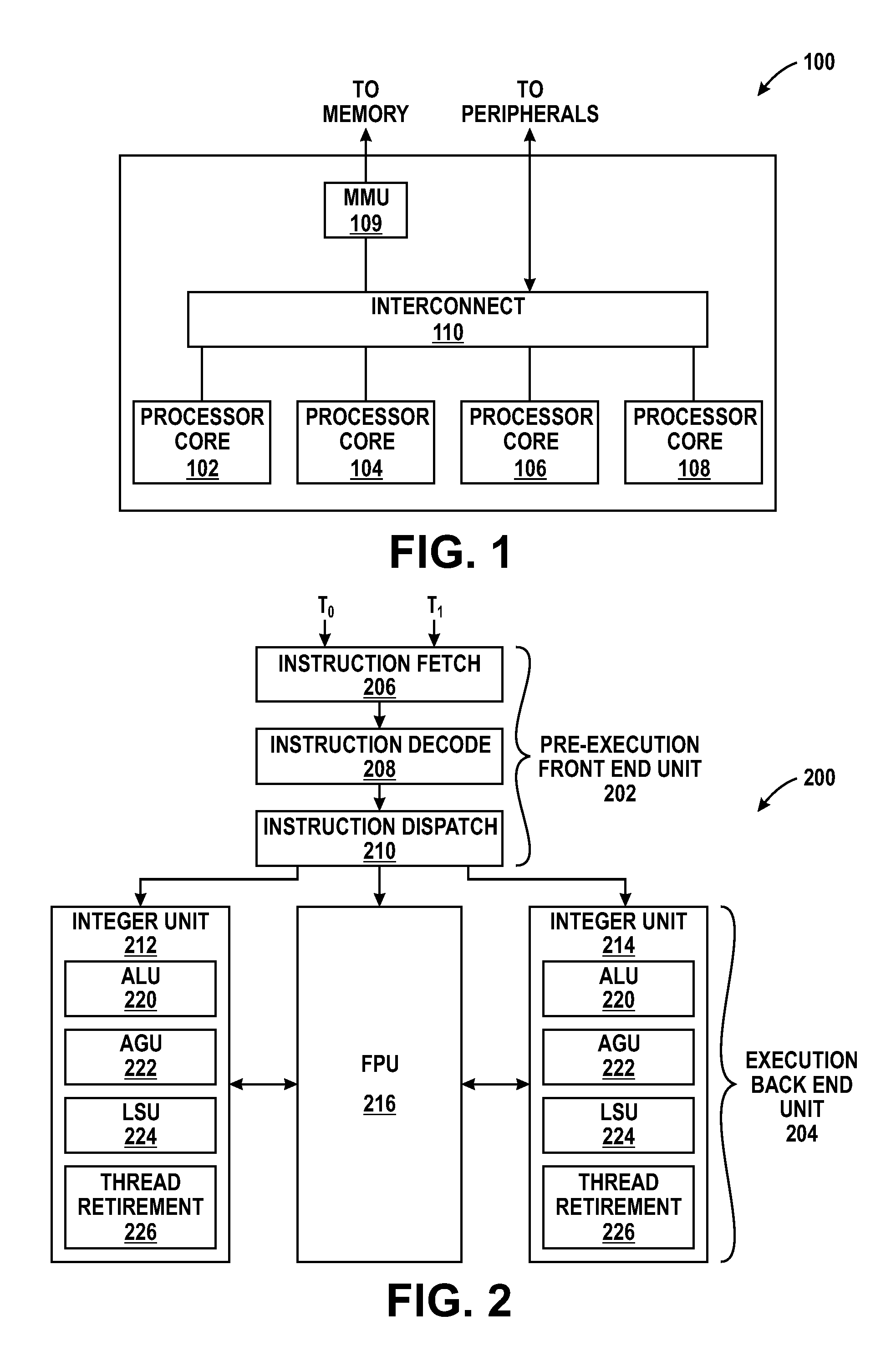

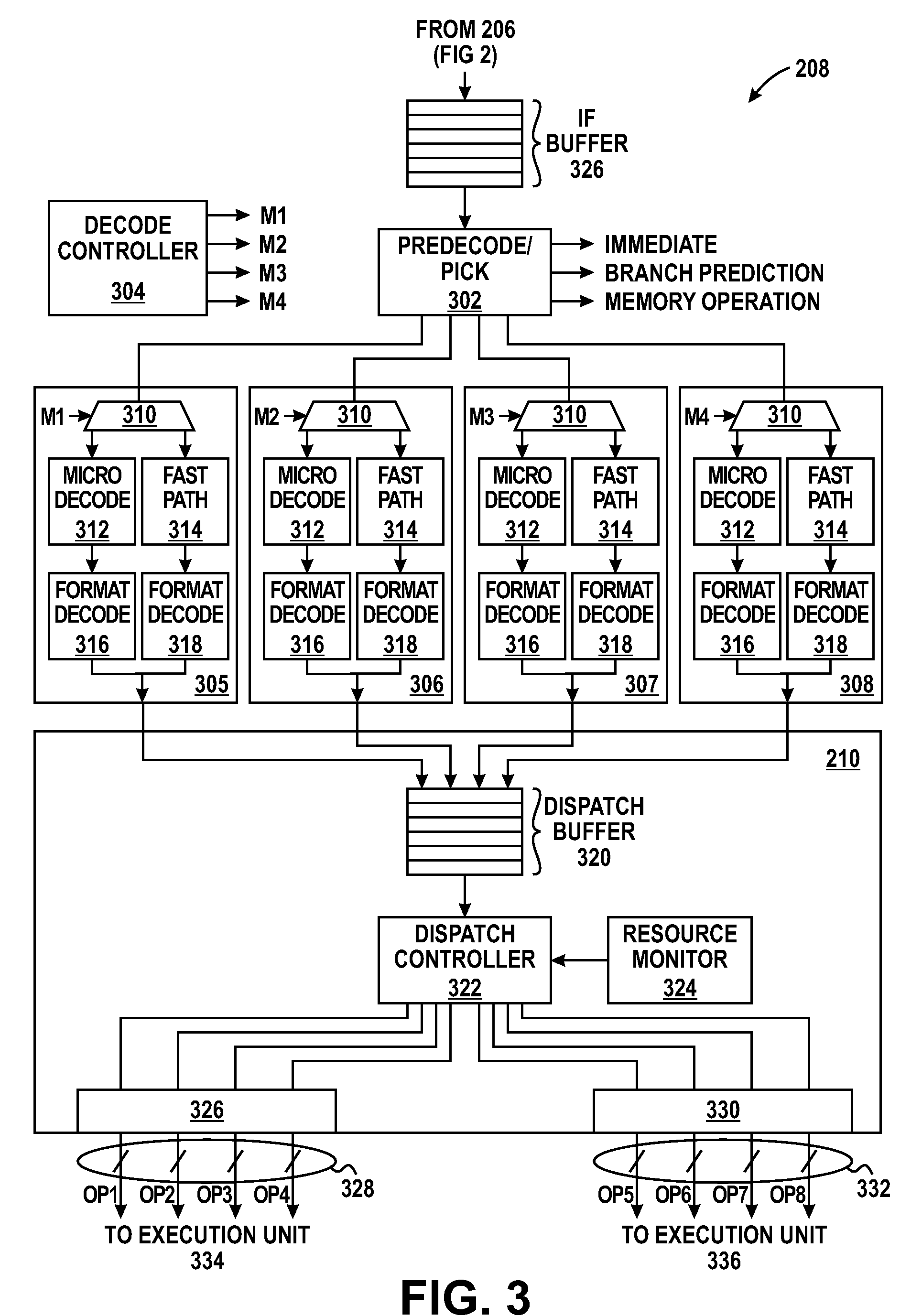

Processing pipeline having parallel dispatch and method thereof

Active | US20090172359A1 | General purpose stored program computer | Memory systems | Floating-point unit | Parallel computing

One or more processor cores of a multiple-core processing device each can utilize a processing pipeline having a plurality of execution units (e.g., integer execution units or floating point units) that together share a pre-execution front-end having instruction fetch, decode and dispatch resources. Further, one or more of the processor cores each can implement dispatch resources configured to dispatch multiple instructions in parallel to multiple corresponding execution units via separate dispatch buses. The dispatch resources further can opportunistically decode and dispatch instruction operations from multiple threads in parallel so as to increase the dispatch bandwidth. Moreover, some or all of the stages of the processing pipelines of one or more of the processor cores can be configured to implement independent thread selection for the corresponding stage.

Owner:MEDIATEK INC

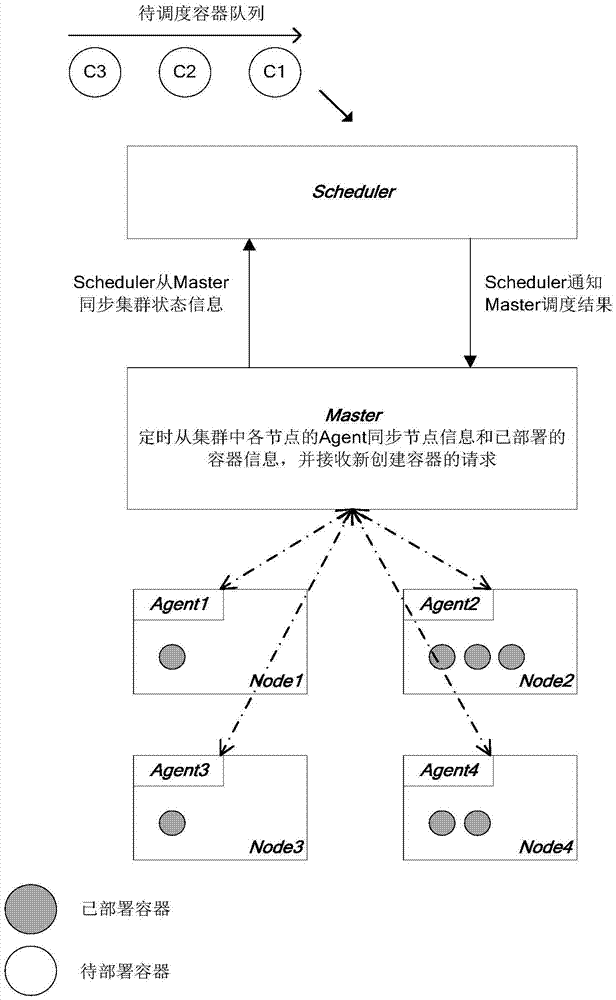

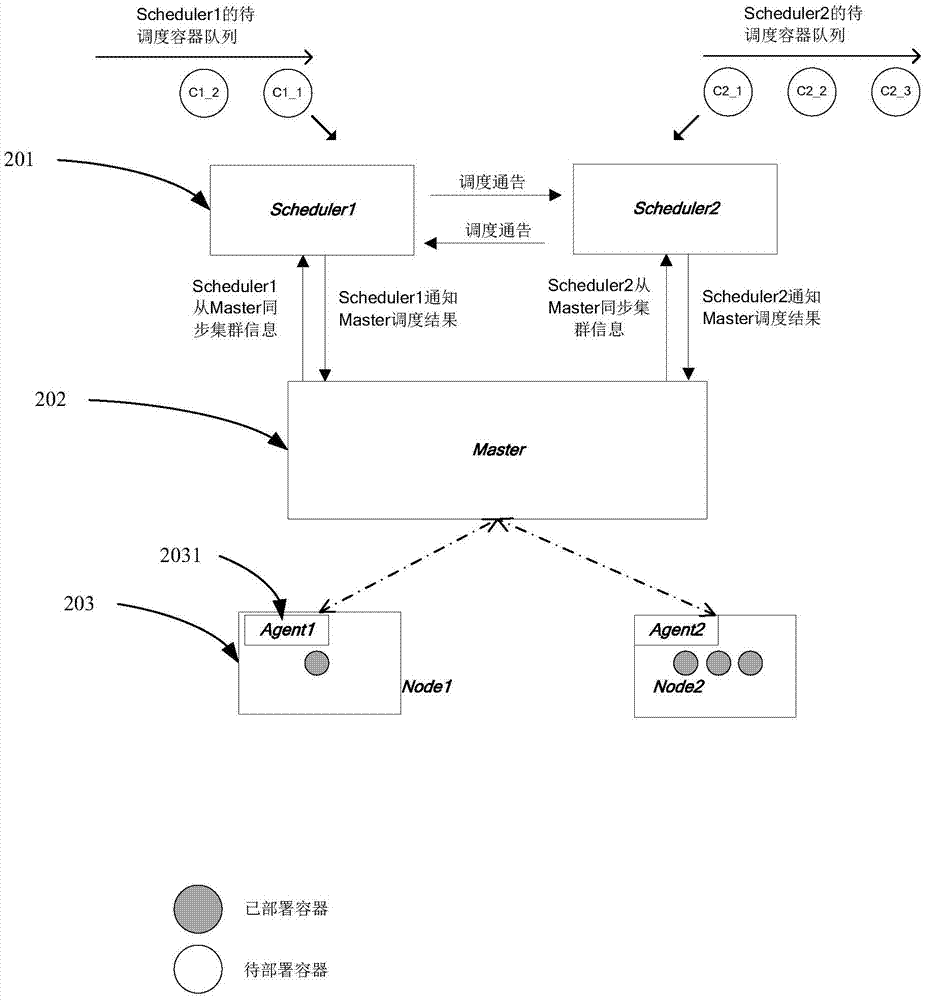

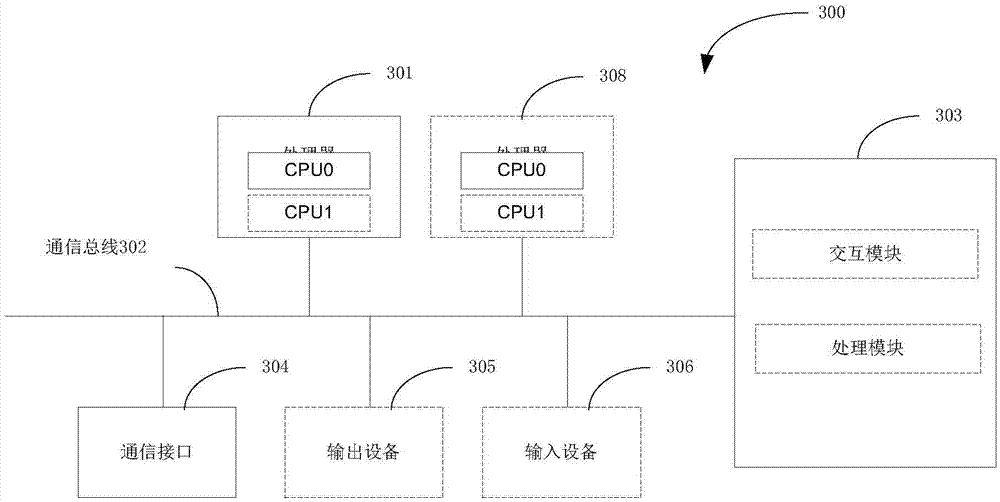

Method and apparatus for scheduling containers in parallel in cluster

Active | CN106919445A | Avoid resource conflicts | Reduce storage burden | Program initiation/switching | Resource information | Cluster state

The invention relates to the technical field of cluster resource scheduling, and provides a method for scheduling containers in parallel in a cluster. The method comprises the steps of periodically obtaining cluster state information by a scheduler, wherein the cluster state information comprises resource information of all nodes in the cluster and description information of all containers in the cluster, the description information of the containers comprises container IDs of the containers, resource demands of the containers and deployment node IDs of the containers, and the resource information of the nodes comprises IDs of the nodes and resource quantities of the nodes; and according to the cluster state information, the container information of the containers scheduled by the scheduler and the container information of the containers scheduled by other schedulers, scheduling to-be-deployed containers to the nodes in the cluster, wherein the scheduled container information comprises container IDs and deployment node IDs of the scheduled containers. Through the scheme, the problem of resource conflicts in parallel scheduling is effectively reduced.

Owner:HUAWEI TECH CO LTD

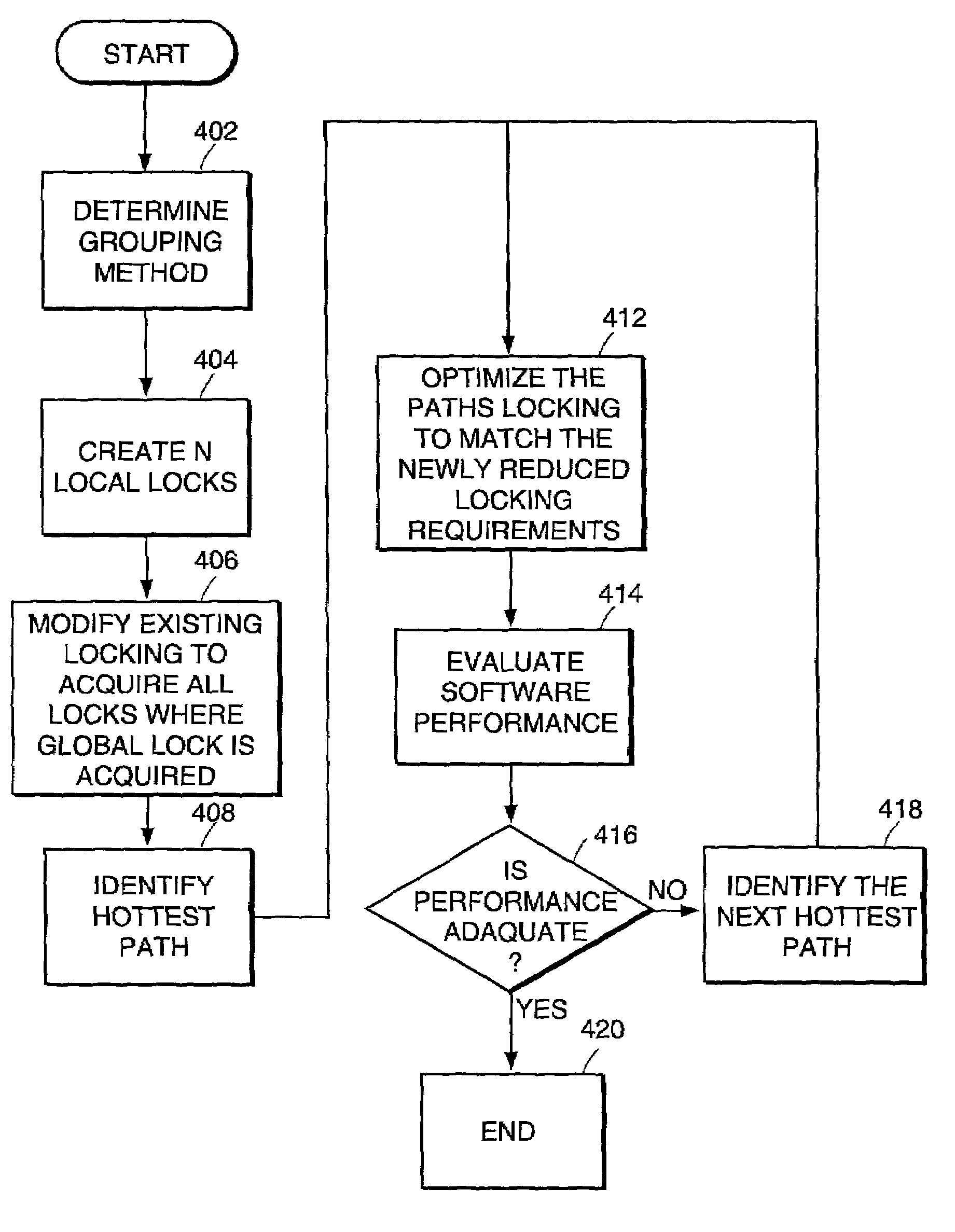

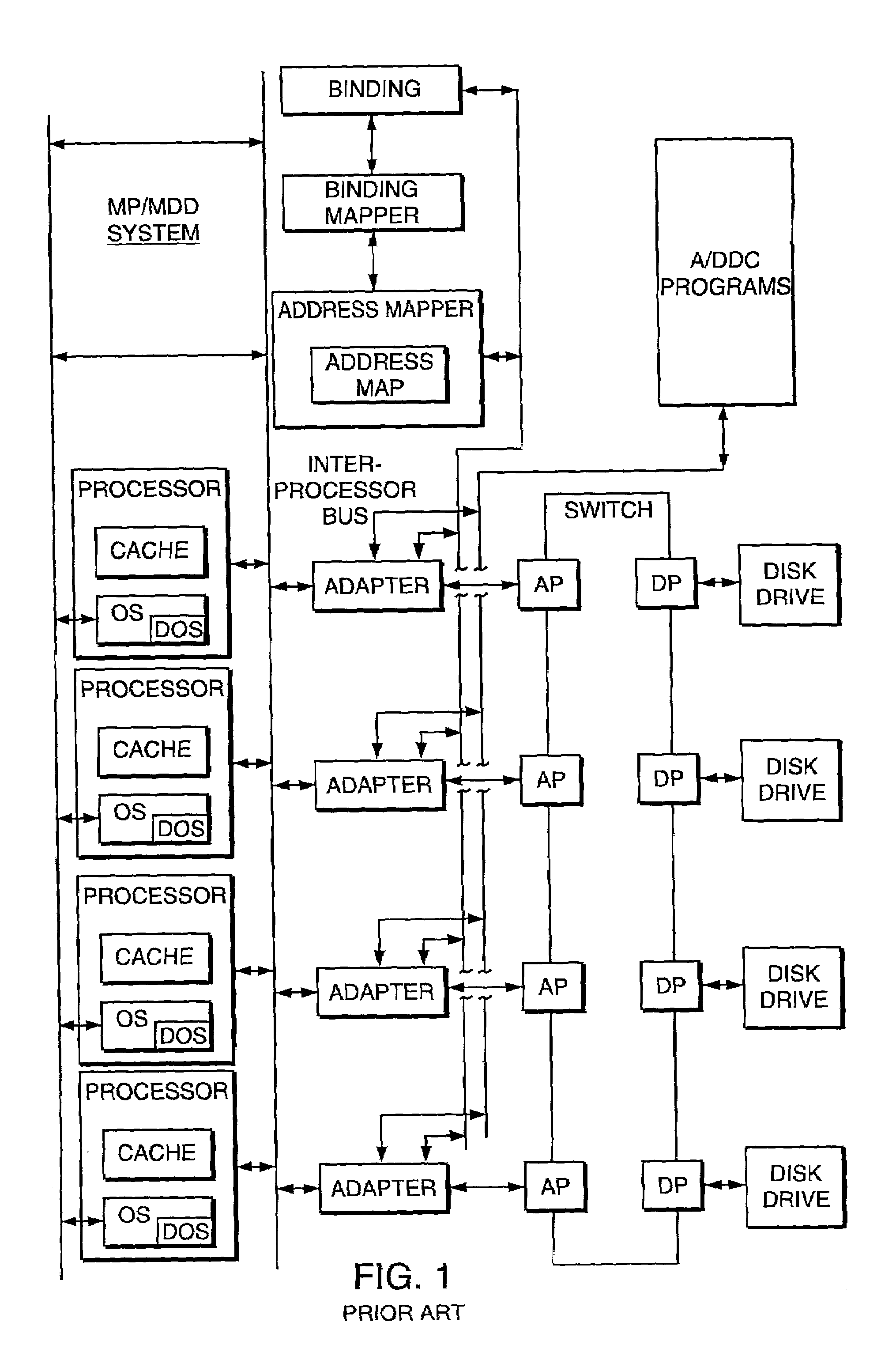

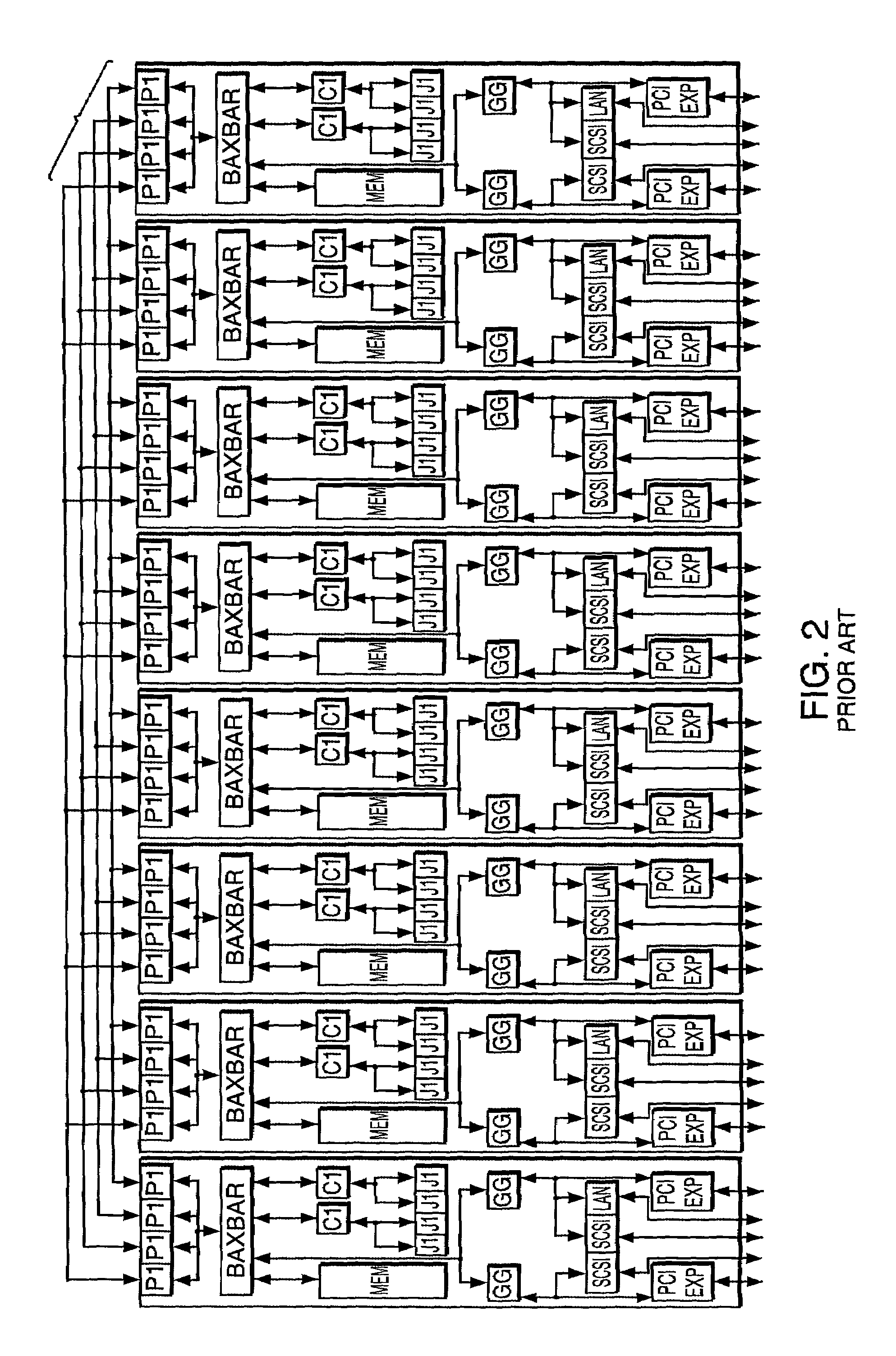

Parallel dispatch wait signaling method, method for reducing contention of highly contended dispatcher lock, and related operating systems, multiprocessor computer systems and products

Inactive | US7080375B2 | Reduce contention | Reduce need | Data processing applications | Program initiation/switching | Operational system | Multi processor

Featured is a method for reducing the contention of the highly contended global lock(s) of an operating system, hereinafter dispatcher lock(s) that protects all dispatching structures. Such a method reduces the need for acquiring the global lock for many event notification tasks by introducing local locks for event notifications that occur frequently among well defined, or consistent dispatcher objects. For these frequently occurring event notifications a subset of the dispatching structure is locked thereby providing mutual exclusivity for the subset and allowing concurrent dispatching for one or more of other data structure subsets. The method also includes acquiring one or more local locks where the level of protection of the data structure requires locking of a plurality or more of data structures to provide mutual exclusivity. The method further includes acquiring all local locks and / or acquiring a global lock of the system wide dispatcher data structures wherever a system wide lock is required to provide mutual exclusivity.

Owner:EMC IP HLDG CO LLC
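As a loose illustration of the local-lock idea in the abstract above, the following Python sketch protects subsets of a shared structure with separate locks and takes every local lock only for a system-wide operation. The class `StripedDispatcher`, its methods, and the hash-based partitioning are hypothetical; the patent concerns operating-system dispatcher structures, which this user-level sketch does not model.

```python
import threading


class StripedDispatcher:
    """Event counters split into subsets, each guarded by its own local lock."""

    def __init__(self, stripes=4):
        self._locks = [threading.Lock() for _ in range(stripes)]
        self._events = [{} for _ in range(stripes)]

    def _index(self, key):
        # Assumption: keys are assigned to subsets by hash.
        return hash(key) % len(self._locks)

    def signal(self, key):
        """Frequent operation: lock only the subset that holds `key`."""
        i = self._index(key)
        with self._locks[i]:
            self._events[i][key] = self._events[i].get(key, 0) + 1

    def snapshot(self):
        """System-wide operation: acquire all local locks in a fixed order."""
        for lock in self._locks:
            lock.acquire()
        try:
            merged = {}
            for part in self._events:
                merged.update(part)
            return merged
        finally:
            for lock in reversed(self._locks):
                lock.release()
```

Acquiring the local locks in one fixed order is a common way to avoid deadlock between two system-wide operations; signals on different subsets can proceed concurrently.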

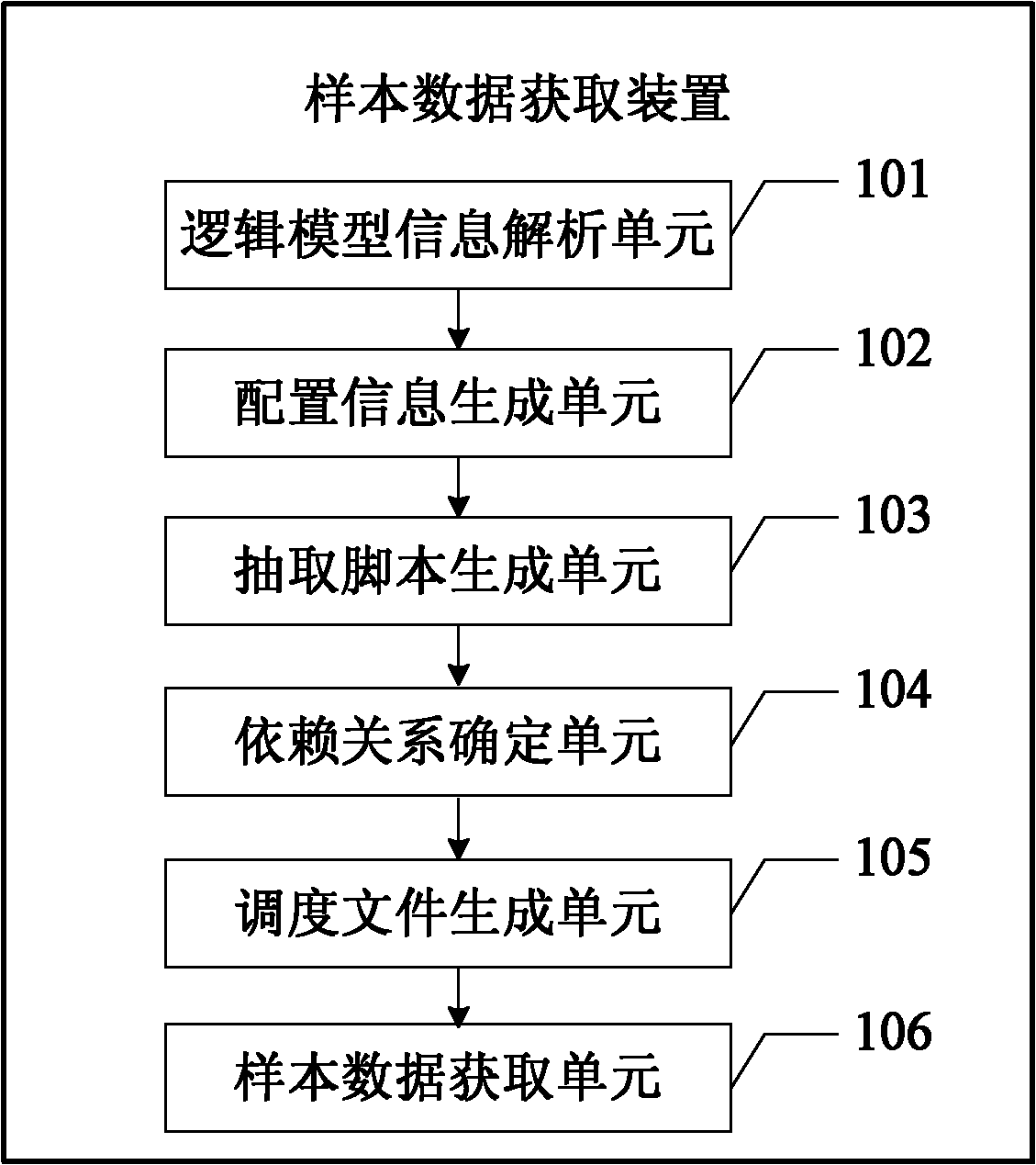

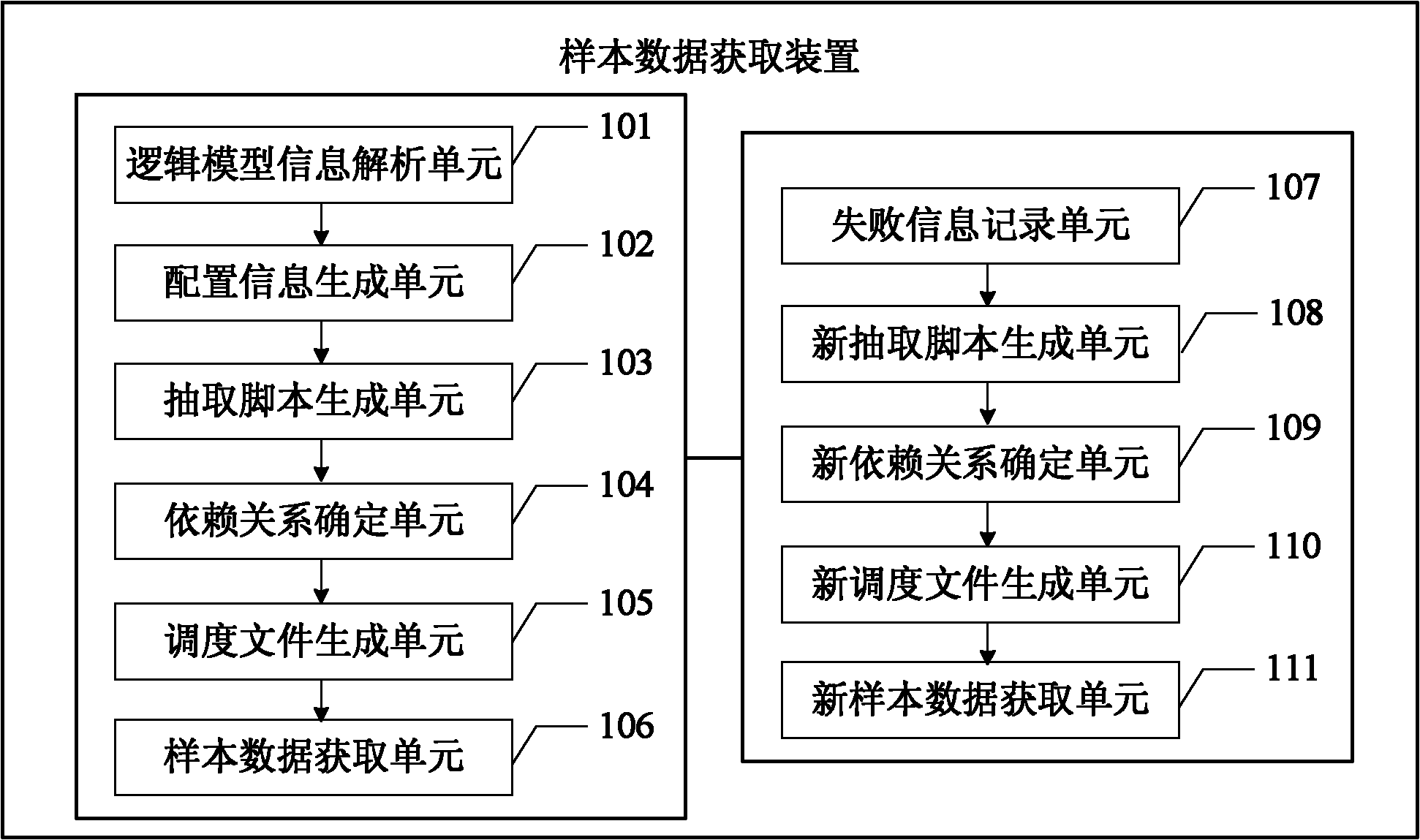

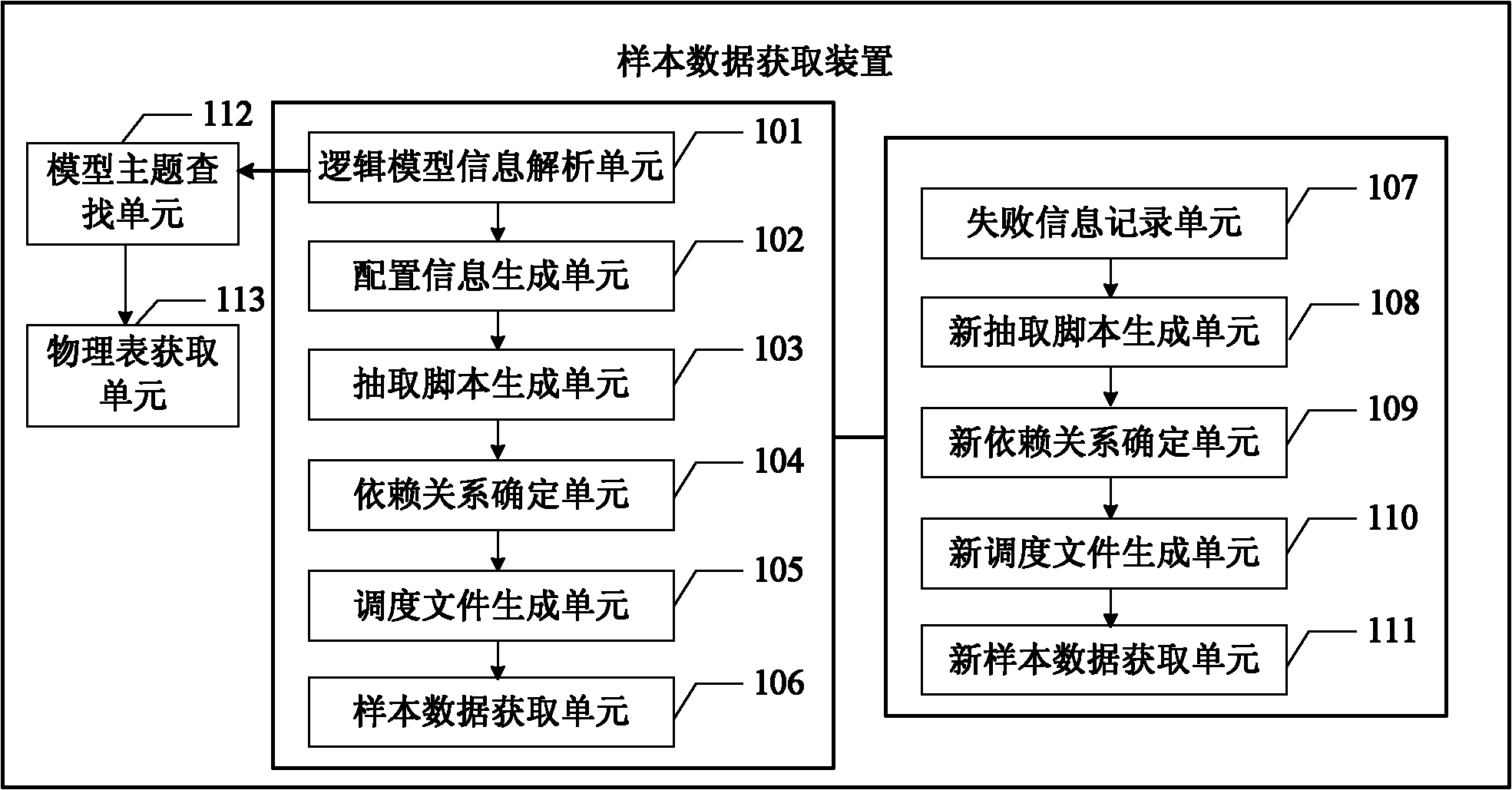

Sample data acquisition method and device for enterprise data warehouse system

The invention provides a sample data acquisition method and device for an enterprise data warehouse system. The method comprises the following steps: analyzing the logic model information of the enterprise data warehouse system, which includes the association relationships of the model, to obtain those association relationships; generating sample data extraction configuration information from the association relationships; generating a full extraction script from the sample data extraction configuration information and preset extraction parameters; iterating over the full extraction script to determine its scheduling dependencies; generating a scheduling file based on a directed graph data structure; and scheduling the full extraction script in parallel according to the scheduling file to acquire sample data. With this method and device, sample data of the enterprise data warehouse system can be acquired conveniently and quickly.

Owner:INDUSTRIAL AND COMMERCIAL BANK OF CHINA

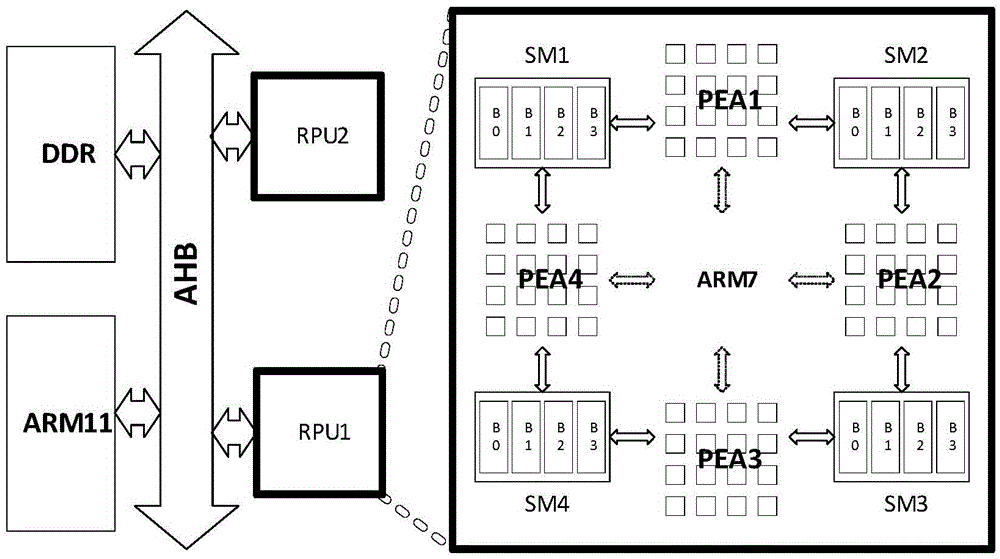

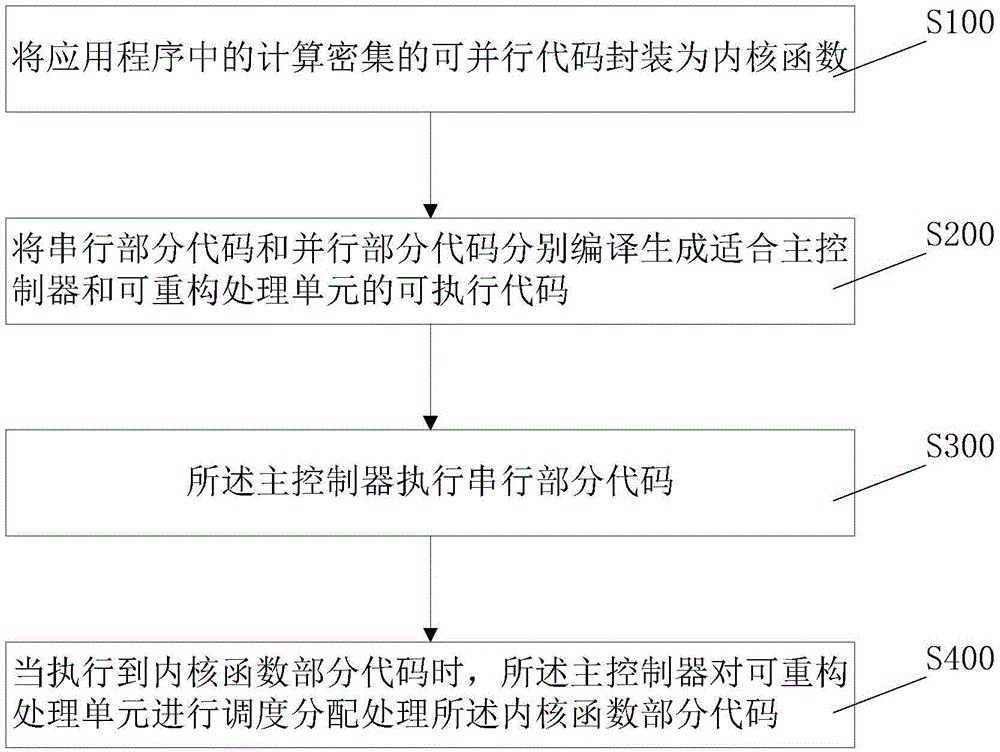

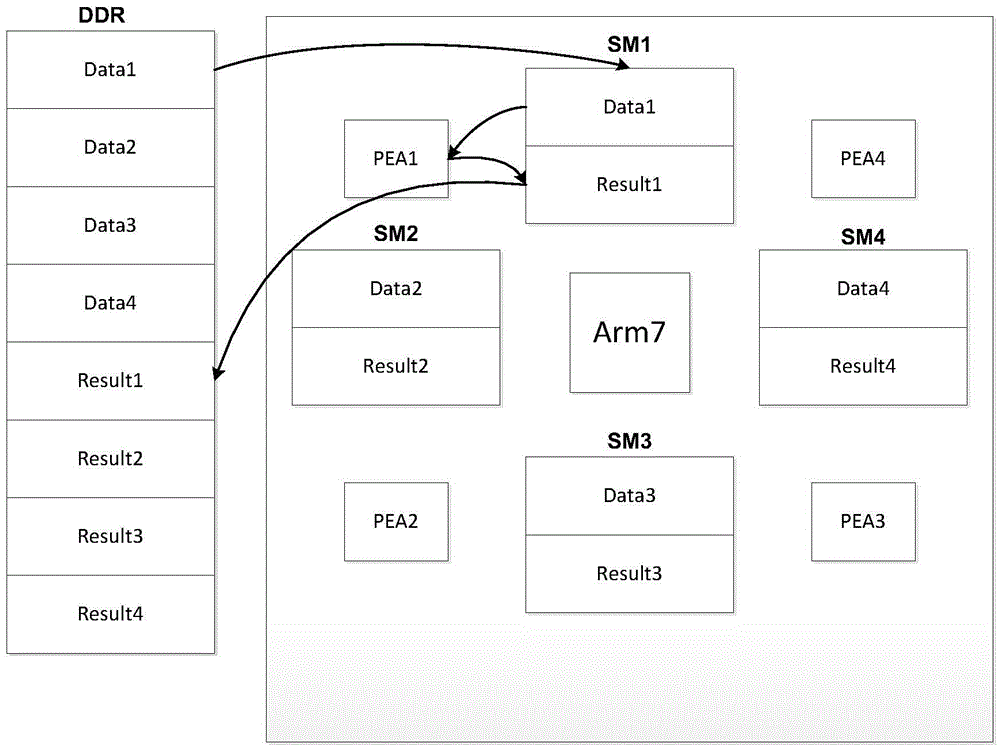

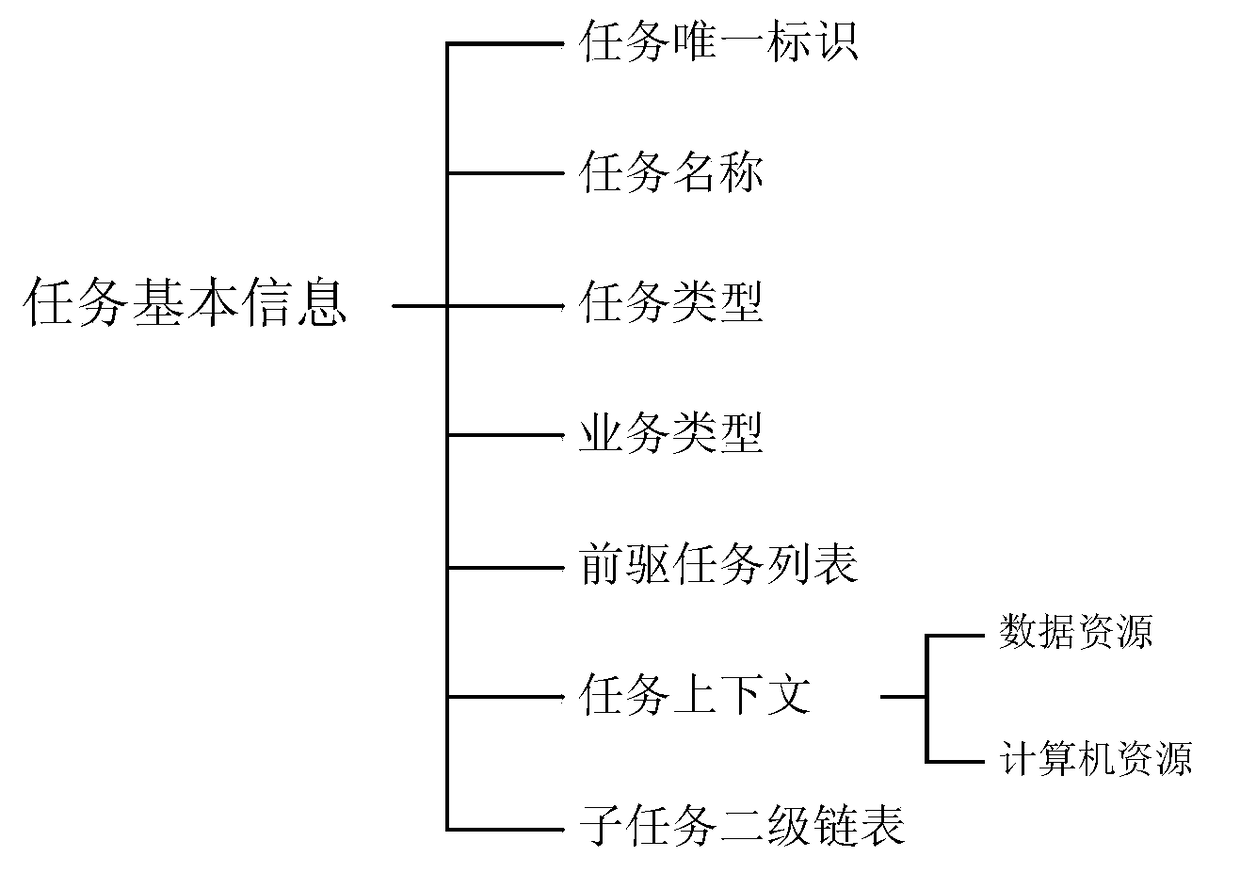

Task-level parallel scheduling method and system for dynamically reconfigurable processor

Active | CN105487838A | Make full use of parallel computing capabilities | Dispatch method extension | Concurrent instruction execution | Direct memory access | Processing element

The invention proposes a task-level parallel scheduling method and system for a dynamically reconfigurable processor. The system comprises a main controller, a plurality of reconfigurable processing units, a main memory, a direct memory access device, and a system bus. Each reconfigurable processing unit consists of a co-controller, a plurality of reconfigurable processing element arrays responsible for reconfigurable computation, and a plurality of shared memories used for data storage. The reconfigurable processing element arrays and the shared memories are arranged adjacently, and each shared memory can be read and written by the two neighbouring reconfigurable processing element arrays connected to it. By adjusting the scheduling method, different scheduling modes can be executed for different tasks, and basically all parallel tasks can be accelerated well in parallel on the reconfigurable processor.

Owner:SHANGHAI JIAO TONG UNIV

Processing pipeline having stage-specific thread selection and method thereof

Active | US20090172362A1 | Digital computer details | Specific program execution arrangements | Stage specific | Floating-point unit

One or more processor cores of a multiple-core processing device each can utilize a processing pipeline having a plurality of execution units (e.g., integer execution units or floating point units) that together share a pre-execution front-end having instruction fetch, decode and dispatch resources. Further, one or more of the processor cores each can implement dispatch resources configured to dispatch multiple instructions in parallel to multiple corresponding execution units via separate dispatch buses. The dispatch resources further can opportunistically decode and dispatch instruction operations from multiple threads in parallel so as to increase the dispatch bandwidth. Moreover, some or all of the stages of the processing pipelines of one or more of the processor cores can be configured to implement independent thread selection for the corresponding stage.

Owner:ADVANCED MICRO DEVICES INC

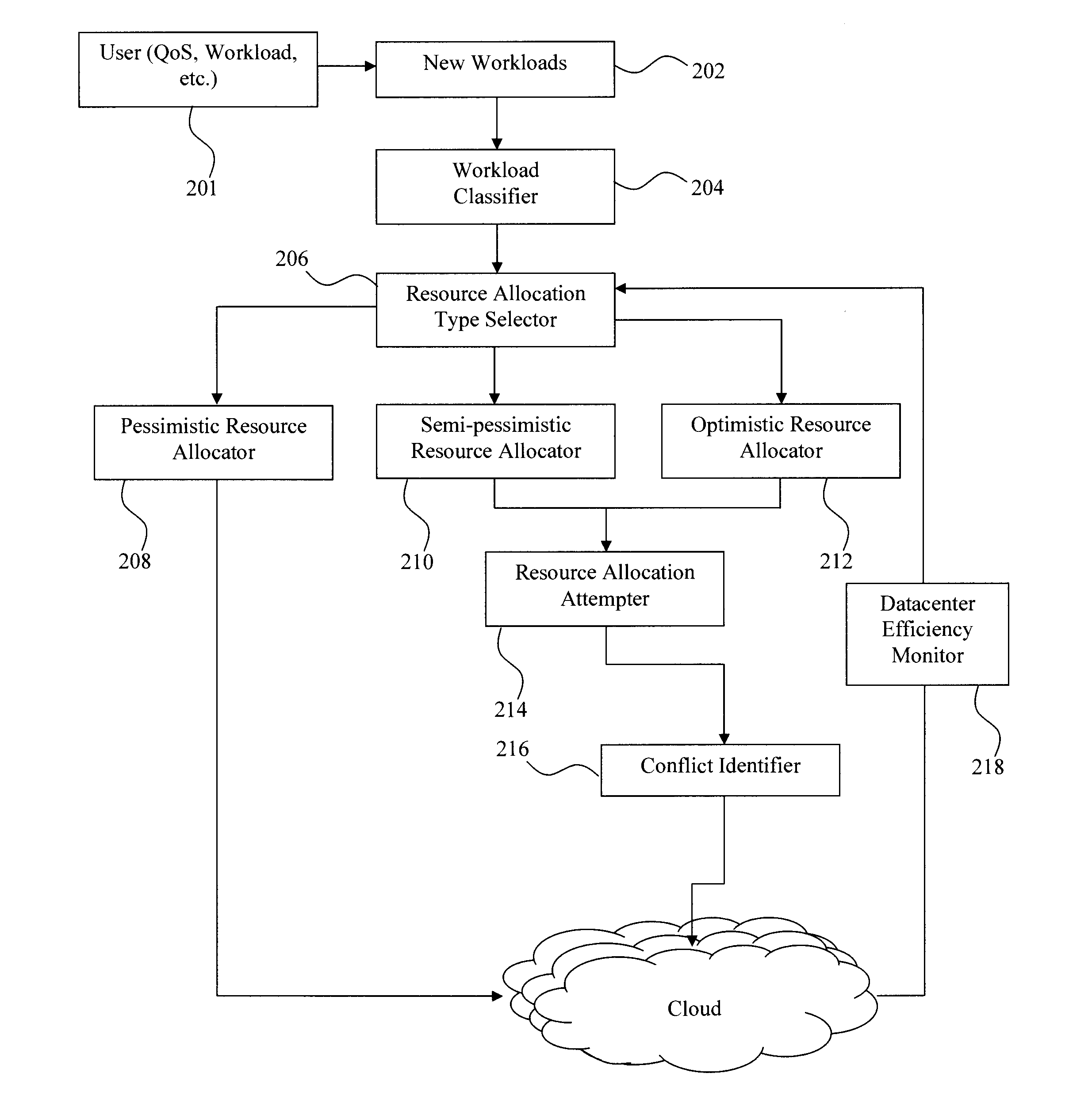

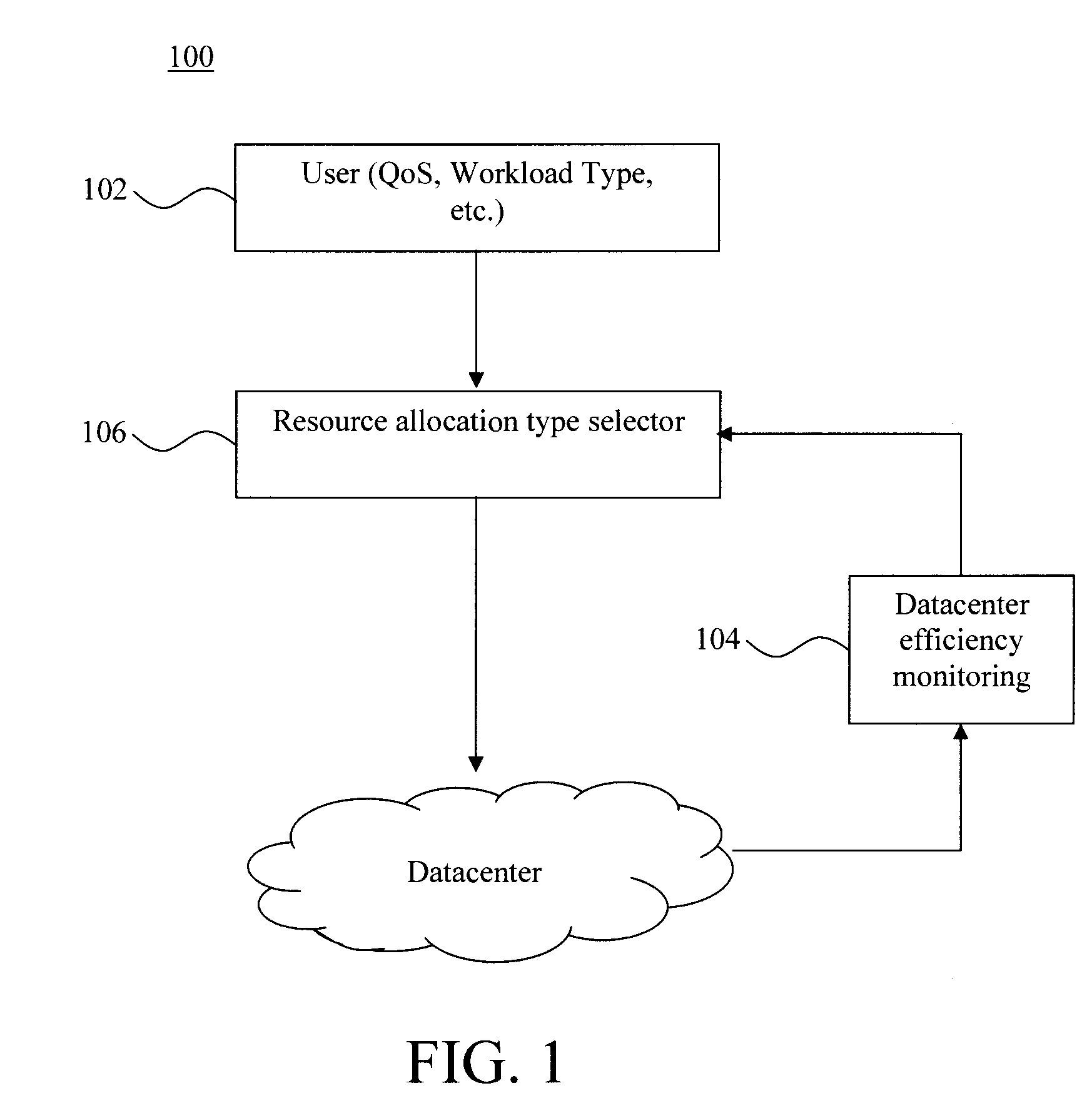

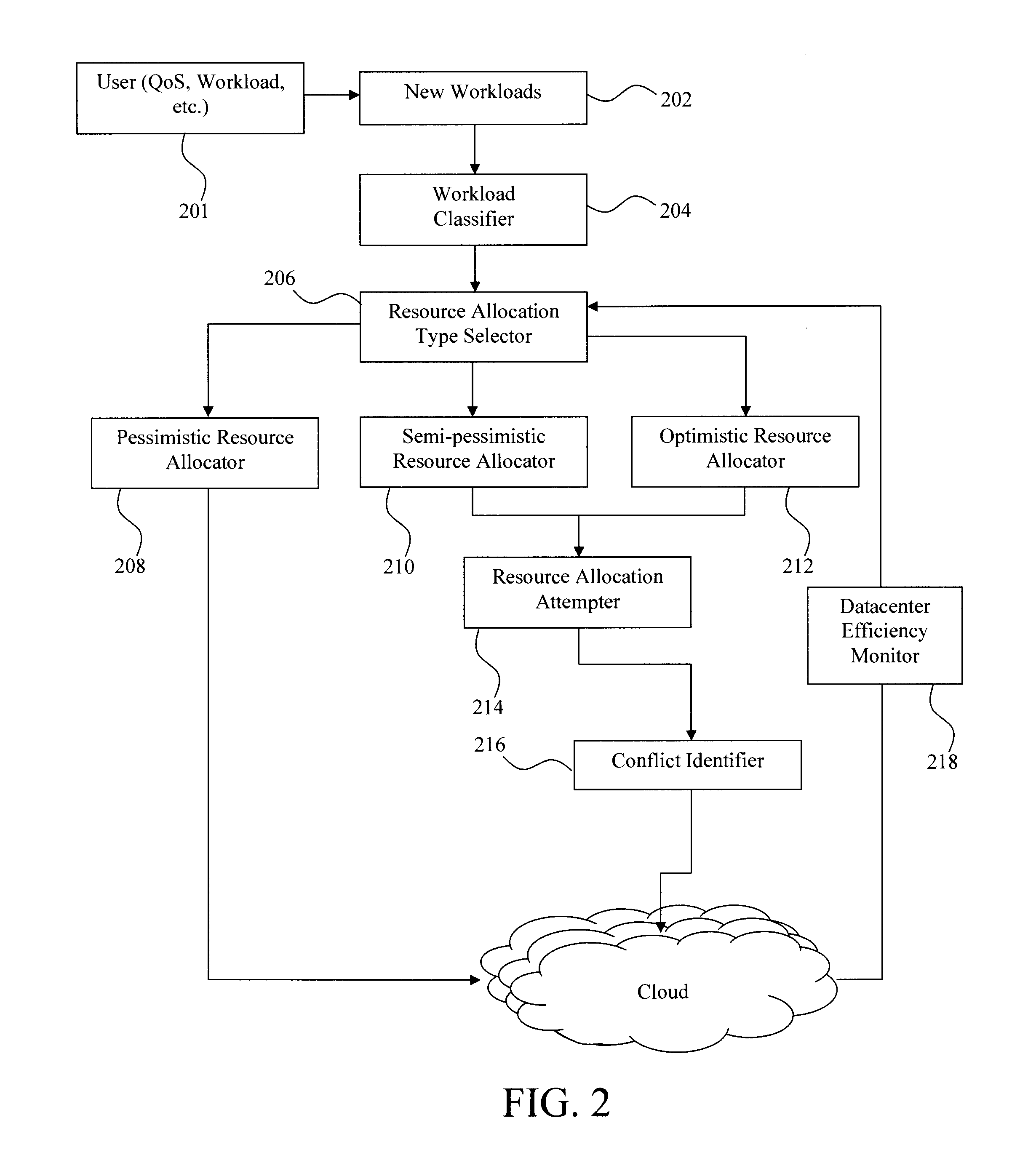

Selecting Resource Allocation Policies and Resolving Resource Conflicts

Techniques for workload management in cloud computing infrastructures are provided. In one aspect, a method for allocating computing resources in a datacenter cluster is provided. The method includes the steps of: creating multiple, parallel schedulers; and automatically selecting a resource allocation method for each of the schedulers based on one or more of a workload profile, user requirements, and a state of the datacenter cluster, wherein an optimistic resource allocation method is selected for at least a first one or more of the schedulers and a pessimistic resource allocation method is selected for at least a second one or more of the schedulers. Because of optimistic resource allocation, conflicts may arise. Methods to resolve such conflicts are also provided.

Owner:IBM CORP

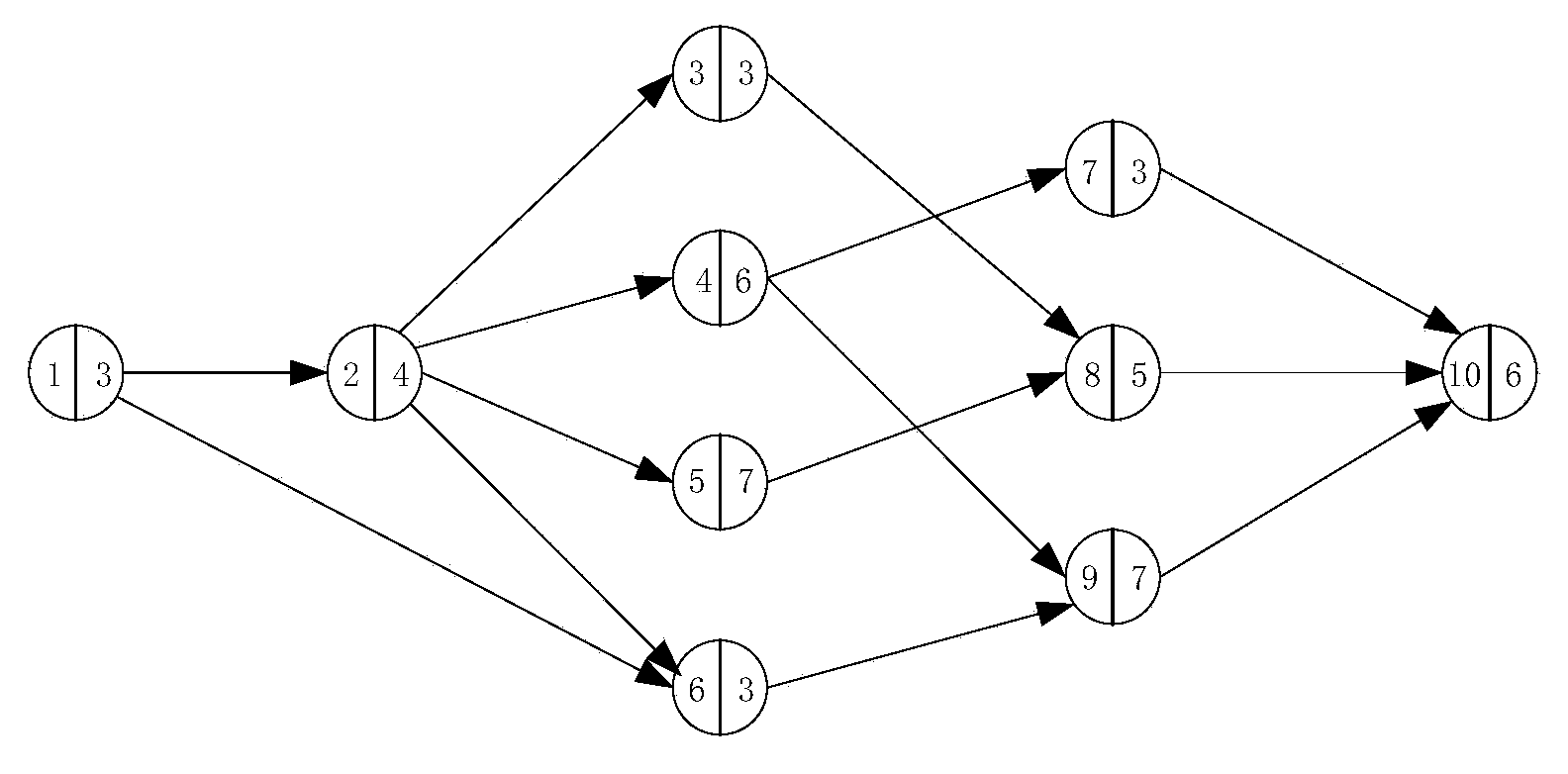

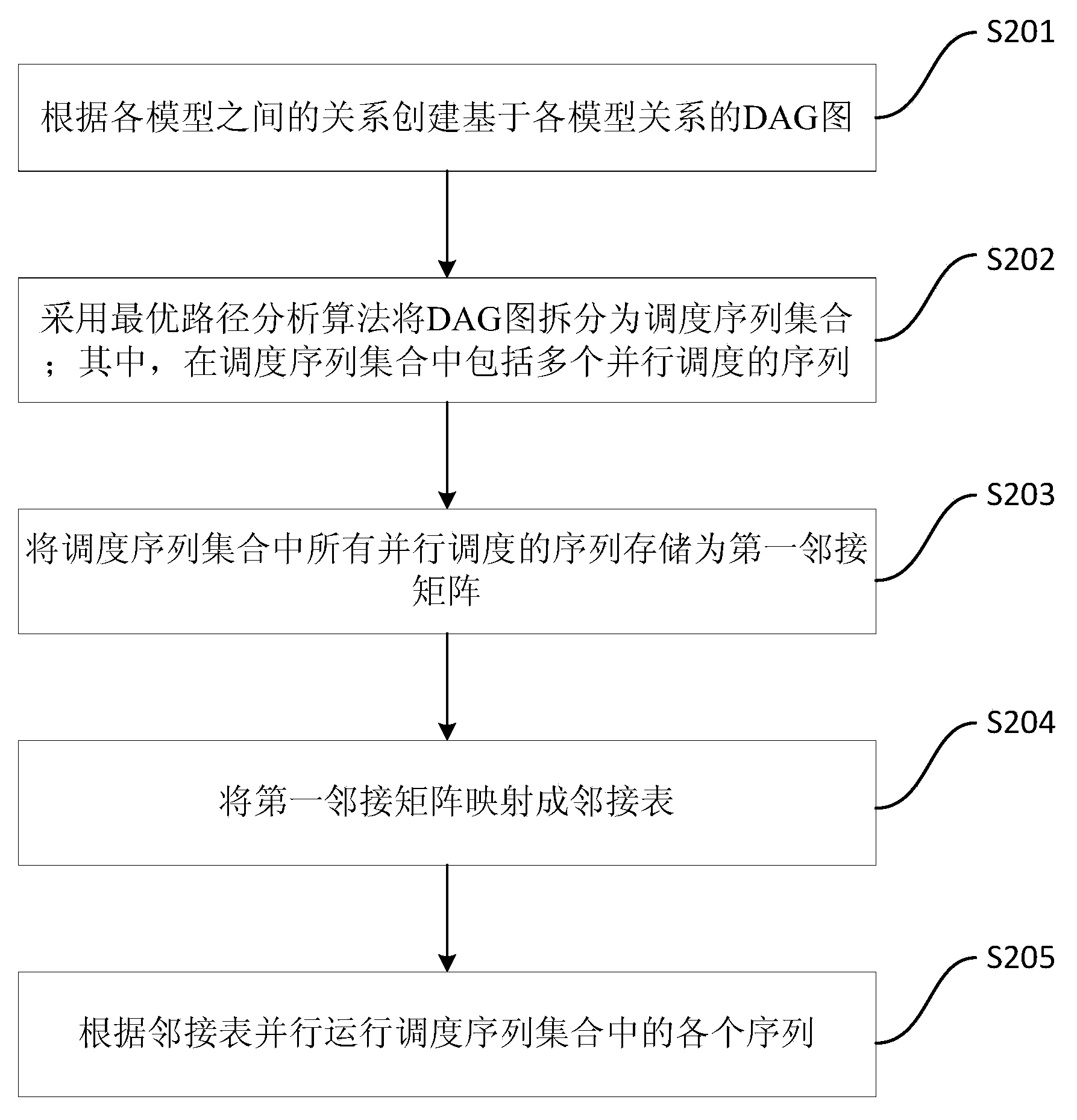

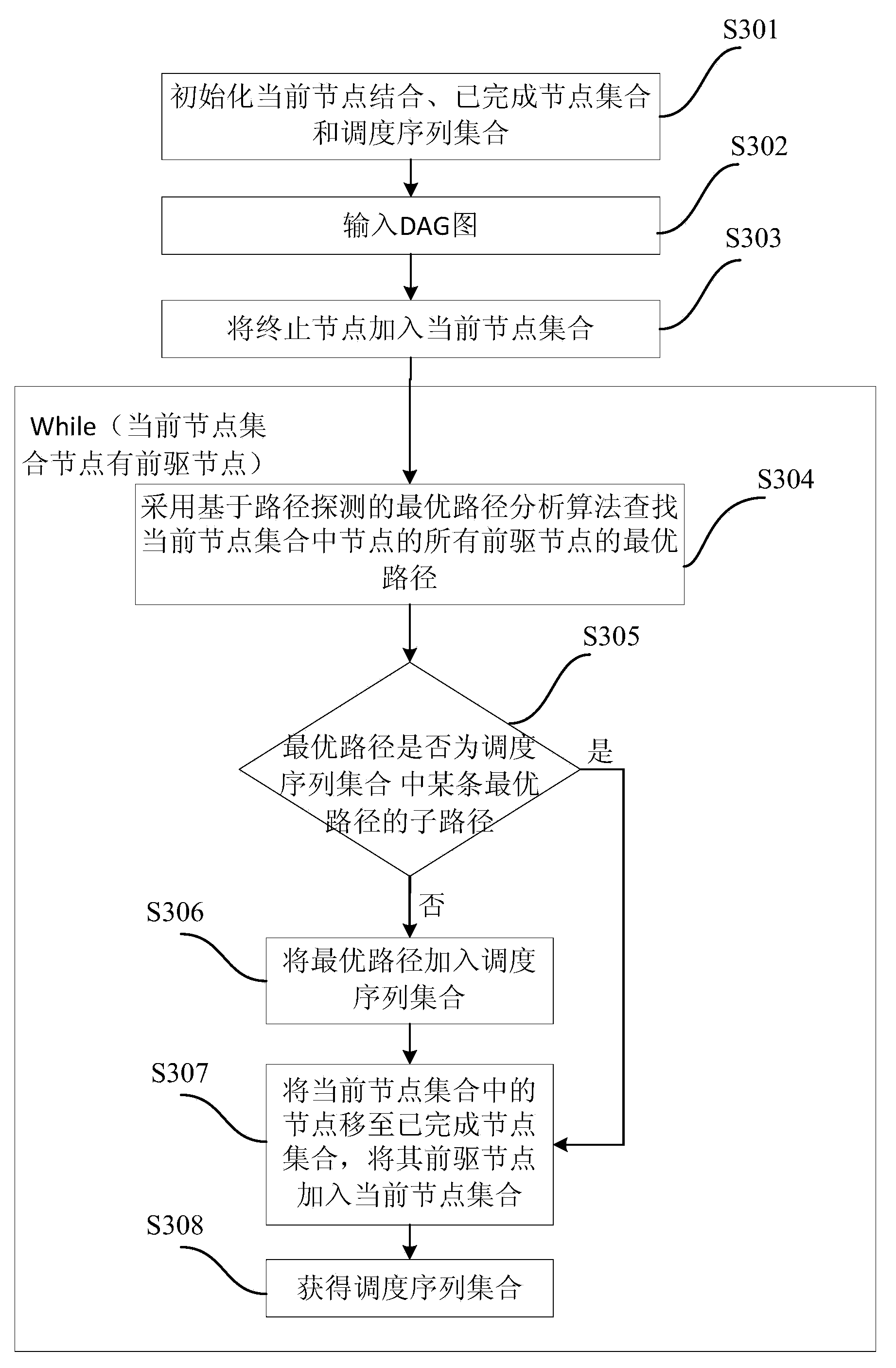

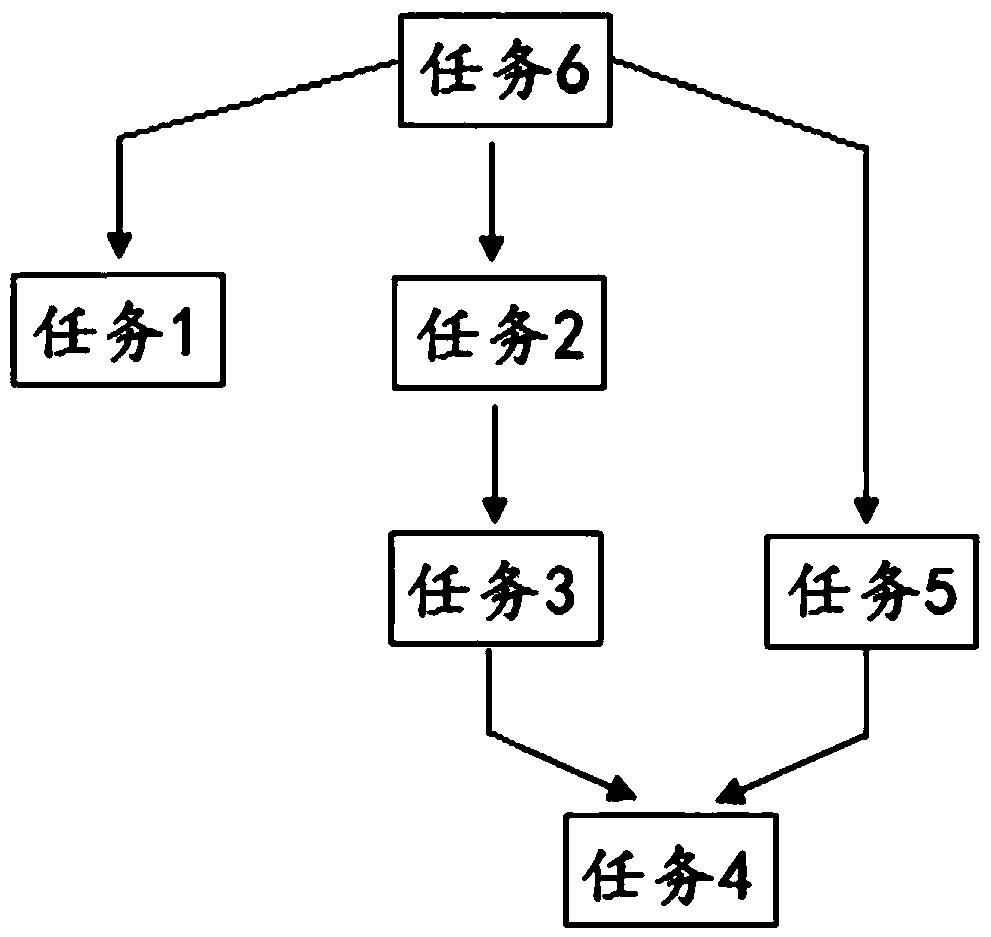

DAG (Directed Acyclic Graph) node optimal path-based multi-model parallel scheduling method and device

Active | CN104239137A | Solve complexity | Resolution time | Program initiation/switching | Resource allocation | NODAL | Running time

The invention provides a DAG (Directed Acyclic Graph) node optimal path-based multi-model parallel scheduling method and device. The method comprises the following steps of creating a DAG based on a relationship among models according to the relationship among the models; decomposing the DAG into a scheduling sequence set according to a path detection-based optimal path analysis algorithm, wherein the scheduling sequence set comprises a plurality of parallel scheduling sequences; saving all parallel scheduling sequences in the scheduling sequence set as a first adjacency matrix; mapping the first adjacency matrix into an adjacency list; running each sequence in the scheduling sequence set in parallel according to the adjacency list. According to the DAG node optimal path-based multi-model parallel scheduling method and device provided by the invention, the problems that the complexity is high and the running time is long in the existing decomposing method can be solved; the system resources can be fully utilized, and the multi-model scheduling running time is effectively shortened.

Owner:NEUSOFT CORP
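One step named in the abstract above, mapping an adjacency matrix to an adjacency list, is simple enough to sketch in Python. The function name and the 0/1 matrix layout are assumptions; the path-detection-based decomposition that the patent uses to produce the scheduling sequences is not reproduced here.

```python
def matrix_to_adjacency_list(matrix):
    """Convert a square 0/1 adjacency matrix into an adjacency list.

    matrix[i][j] is truthy when there is an edge from node i to node j
    (hypothetical layout chosen for illustration).
    """
    n = len(matrix)
    return {i: [j for j in range(n) if matrix[i][j]] for i in range(n)}
```

The adjacency list stores only the edges that exist, so walking the successors of a node costs time proportional to its out-degree rather than to the total number of nodes.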

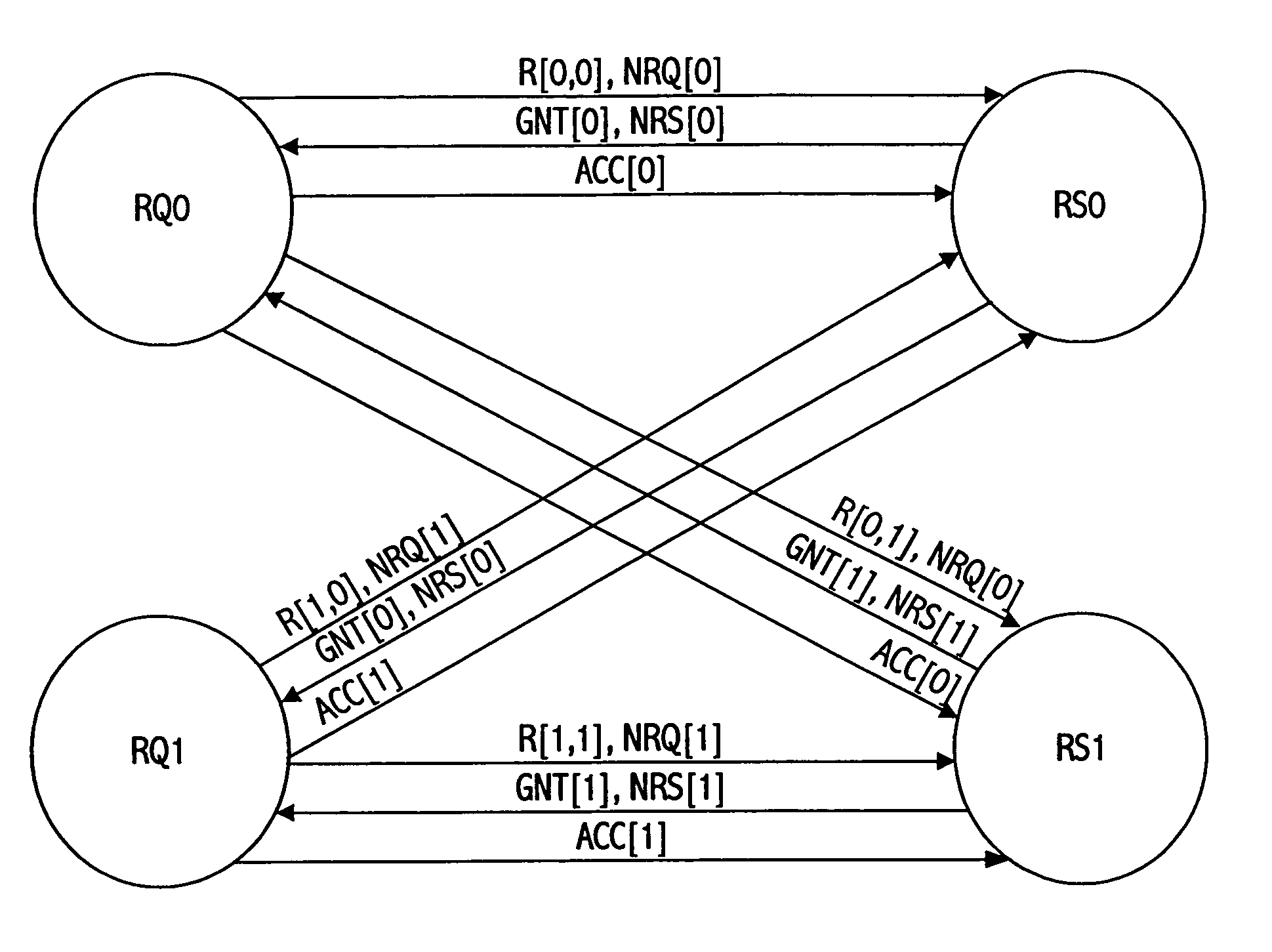

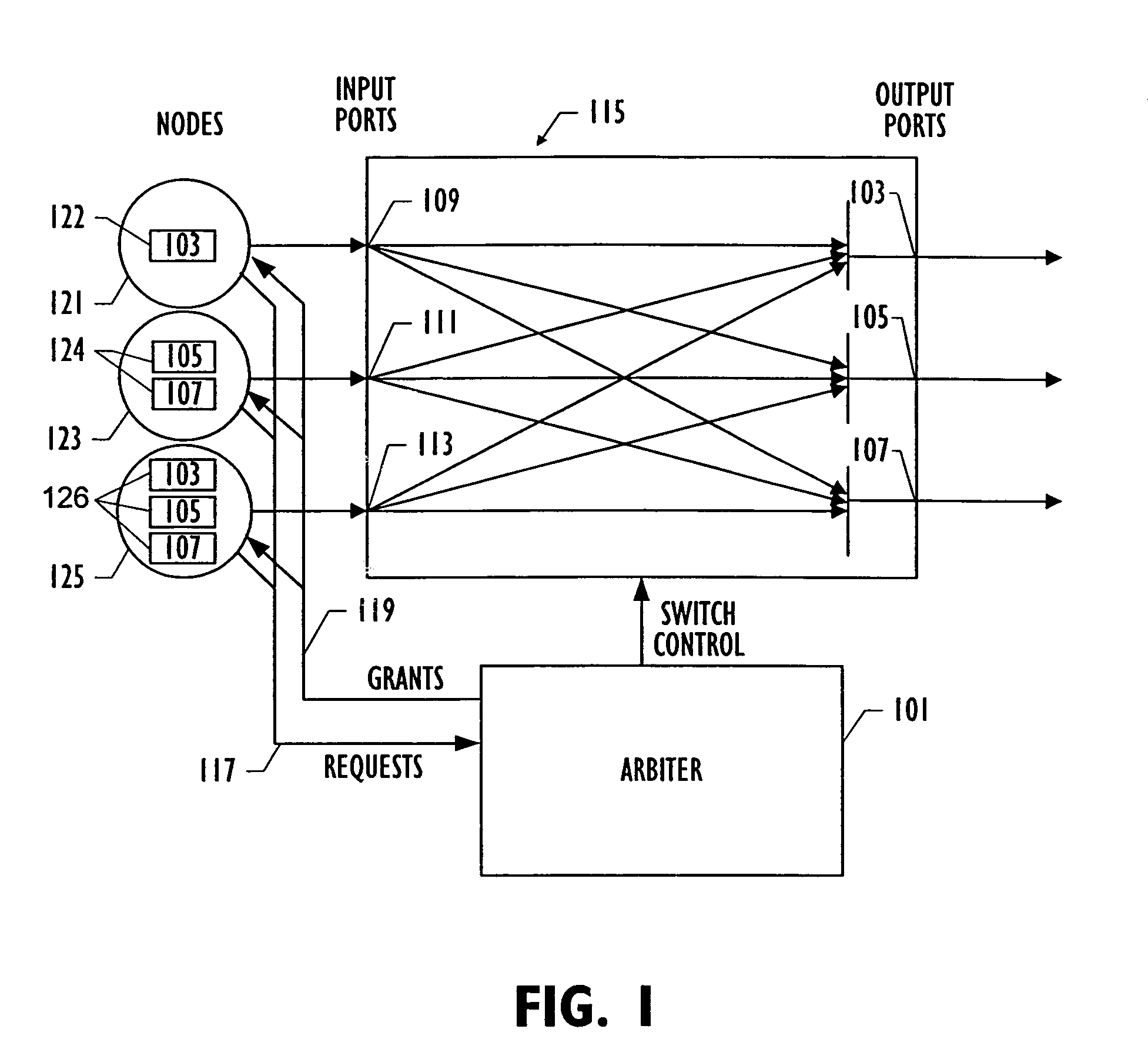

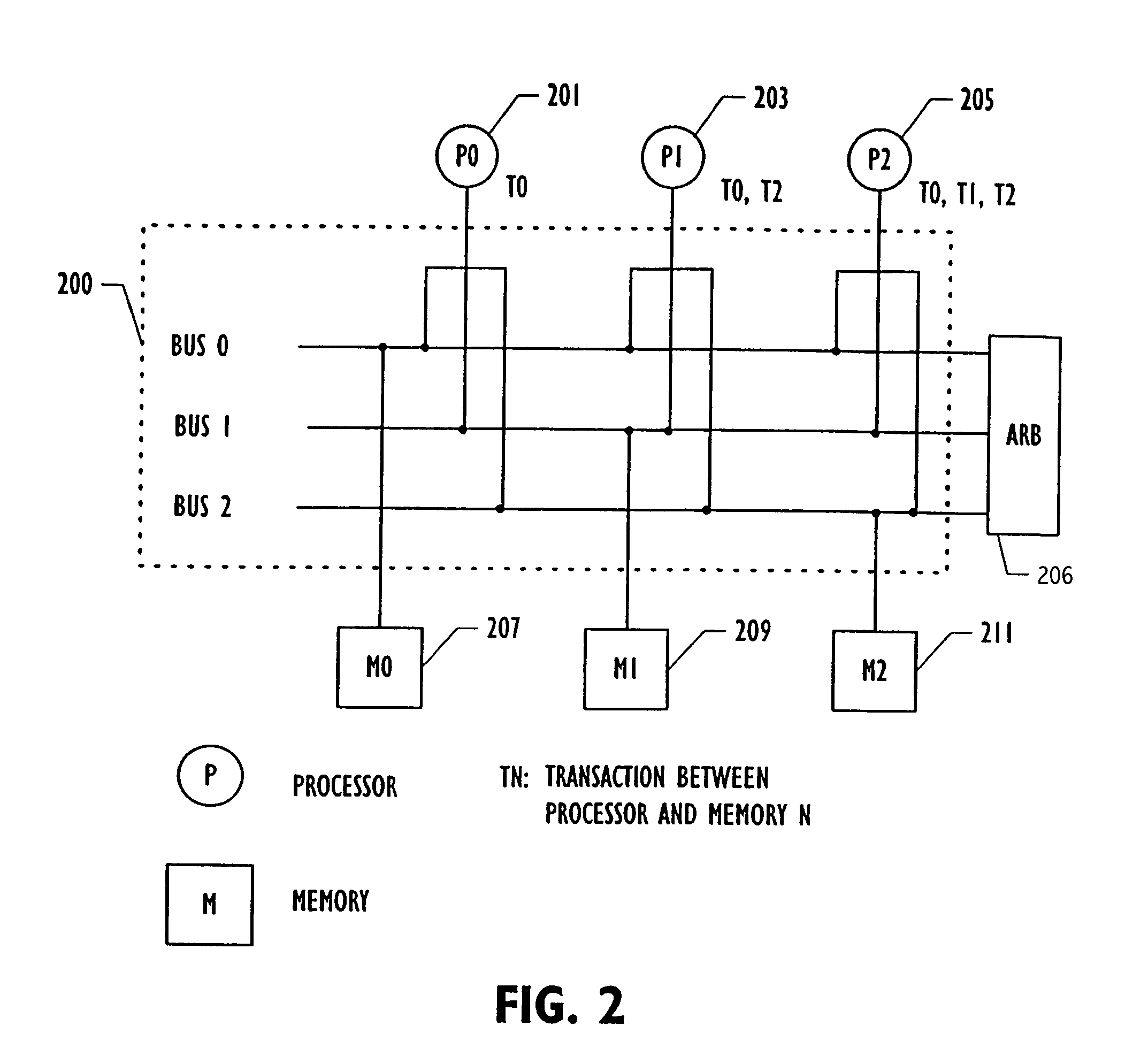

Distributed least choice first arbiter

Inactive | US7006501B1 | Increase the number of | Improve resource usage | Time-division multiplex | Data switching by path configuration | Resource based | Resource allocation

A distributed arbiter prioritizes requests for resources based on the number of requests made by each requester. Each resource gives the highest priority to servicing requests made by the requester that has made the fewest number of requests. That is, the requester with the fewest requests (least number of choices) is chosen first. Resources may be scheduled sequentially or in parallel. If a requester receives multiple grants from resources, the requester may select a grant based on resource priority, which is inversely related to the number of requests received by a granting resource. In order to prevent starvation, a round robin scheme may be used to allocate a resource to a requester, prior to issuing grants based on requester priority.

Owner:ORACLE INT CORP
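The "least choice first" rule in the abstract above can be sketched in Python for the sequential case. The function name, the input layout, the static request counts, and the tie-break on requester name are all assumptions made for illustration; the round robin starvation guard and the parallel variant are omitted.

```python
def least_choice_first(requests, resources):
    """Grant each resource to the eligible requester with the fewest requests.

    `requests` maps a requester name to the set of resources it asks for.
    Resources are visited one after another in the order given.
    """
    grants = {}
    served = set()
    for resource in resources:
        candidates = [
            requester
            for requester, wanted in requests.items()
            if resource in wanted and requester not in served
        ]
        if not candidates:
            continue
        # Fewest requests wins; ties are broken by name (an assumption).
        winner = min(candidates, key=lambda r: (len(requests[r]), r))
        grants[resource] = winner
        served.add(winner)
    return grants
```

Serving the requester with the fewest alternatives first leaves the more flexible requesters free to be matched with other resources.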

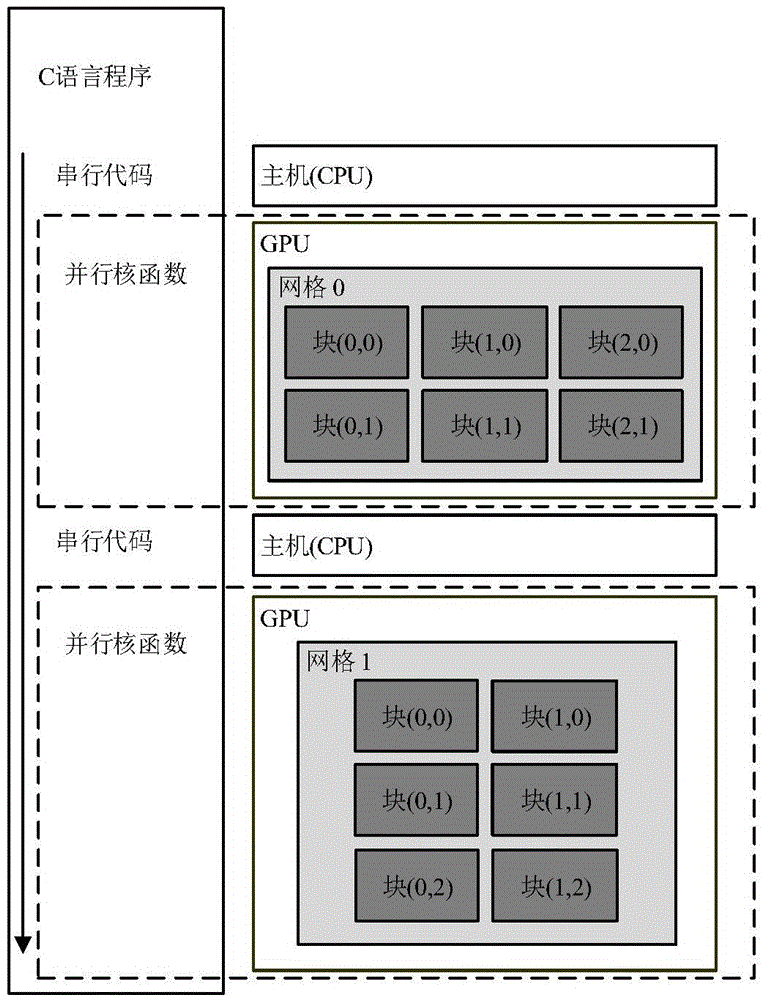

Video decoding macro-block-grade parallel scheduling method for perceiving calculation complexity

Inactive | CN105491377A | Reduce synchronization overhead | Improve parallel efficiency | Digital video signal modification | Core function | Round complexity

The invention discloses a macroblock-level parallel scheduling method for video decoding that is aware of computational complexity. The method rests on two key techniques. The first establishes a linear model for predicting macroblock decoding complexity from information available after entropy decoding and reordering, such as the number of non-zero coefficients, the inter-frame predictive coding type of the macroblock, and motion vectors; it performs complexity analysis on each module and makes full use of known macroblock information to improve parallel efficiency. The second combines macroblock decoding complexity with parallel computation while satisfying macroblock decoding dependences: macroblocks are executed in parallel in groups according to an ordering result, the group size is determined dynamically from the computing capability of the GPU, and the number of groups is determined dynamically from the number of macroblocks that can currently run in parallel, so that the launch frequency of kernel functions is controlled while the GPU is fully utilized and efficient parallelism is achieved. In addition, the CPU and GPU cooperate in parallel through a buffer, so resources are fully used and idle waiting is reduced.

Owner:HUAZHONG UNIV OF SCI & TECH

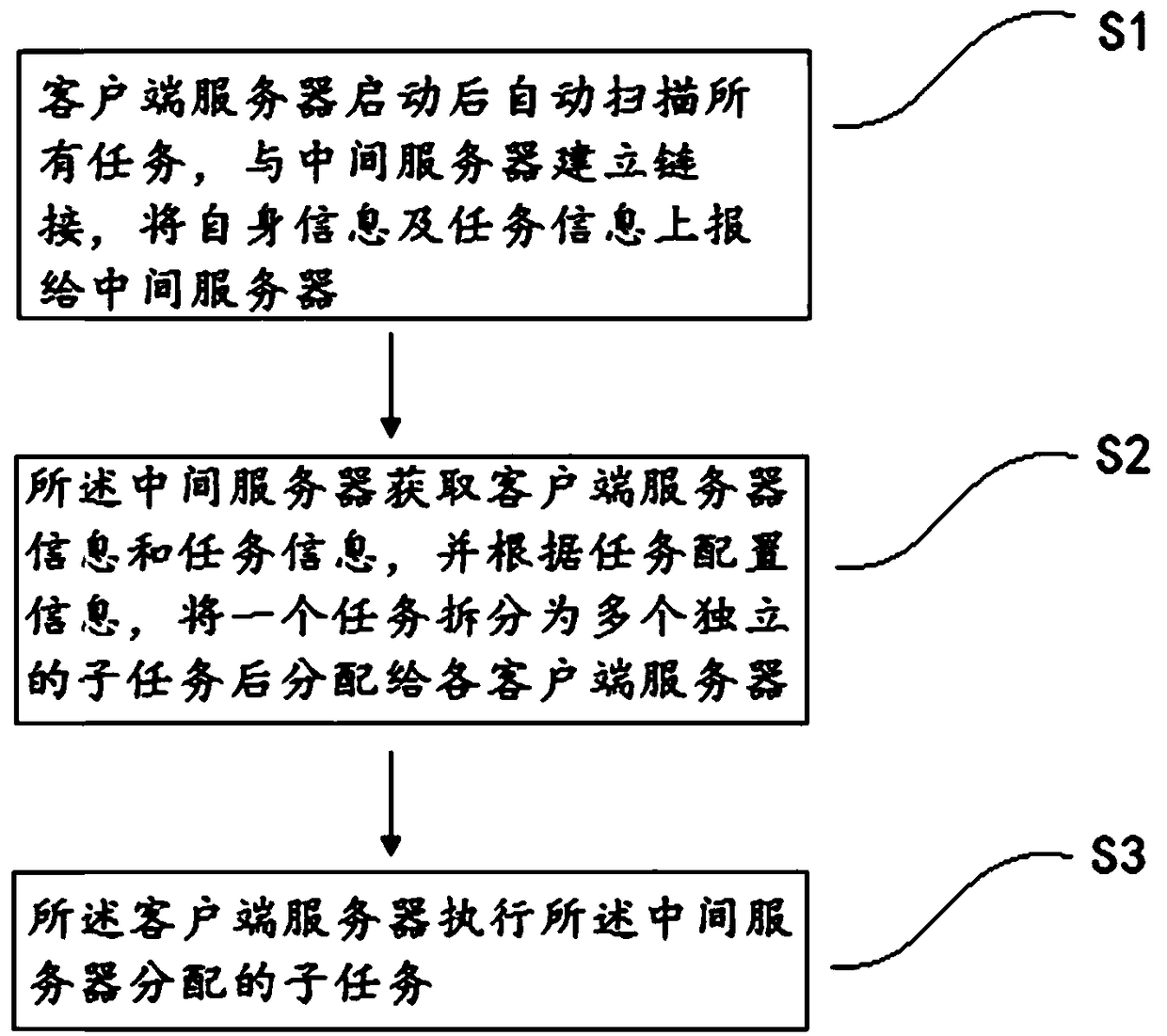

A distributed task scheduling method and system

Active | CN108958920A | Easy to manage | Implement parallel scheduling | Program initiation/switching | Client-side | Parallel scheduling

The invention discloses a distributed task scheduling method and system, belonging to the field of computer technology. The method comprises the following steps: S1, after a client server starts up, it automatically scans all tasks, establishes a link with the intermediate server, and reports its own information and task information to the intermediate server; S2, the intermediate server obtains the client server information and the task information, splits a task into a plurality of independent sub-tasks according to the task configuration information, and distributes the sub-tasks to the client servers; S3, each client server executes the sub-tasks assigned by the intermediate server. Integrating the task scheduling engine in the intermediate server makes the dependency relationships between tasks convenient to manage. The intermediate server divides a task into several independent sub-tasks and calls the client servers to execute their assigned items in parallel, thereby achieving parallel scheduling.

Owner:ZHONGAN ONLINE P&C INSURANCE CO LTD
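Steps S2 and S3 in the abstract above (split a task into independent sub-tasks, then run them in parallel on the clients) can be sketched in Python. Threads stand in for client servers here, and the names `split_task`, `run_distributed`, and `handler` are hypothetical; registration (S1) and networking are not modelled.

```python
from concurrent.futures import ThreadPoolExecutor


def split_task(items, parts):
    """Split a list of work items into at most `parts` independent sub-tasks."""
    chunks = [items[i::parts] for i in range(parts)]
    return [chunk for chunk in chunks if chunk]


def run_distributed(items, workers, handler):
    """Assign one sub-task per worker and run them in parallel.

    `workers` is a non-empty list of worker names and
    `handler(worker, subtask)` does the actual work; both are
    placeholders for real client servers.
    """
    subtasks = split_task(items, len(workers))
    with ThreadPoolExecutor(max_workers=len(workers)) as pool:
        futures = [
            pool.submit(handler, worker, subtask)
            for worker, subtask in zip(workers, subtasks)
        ]
        # Results come back in worker order, not completion order.
        return [future.result() for future in futures]
```

For example, `run_distributed(list(range(10)), ["c1", "c2", "c3"], lambda w, sub: (w, sum(sub)))` gives each of the three stand-in clients every third item and collects one partial sum per client.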

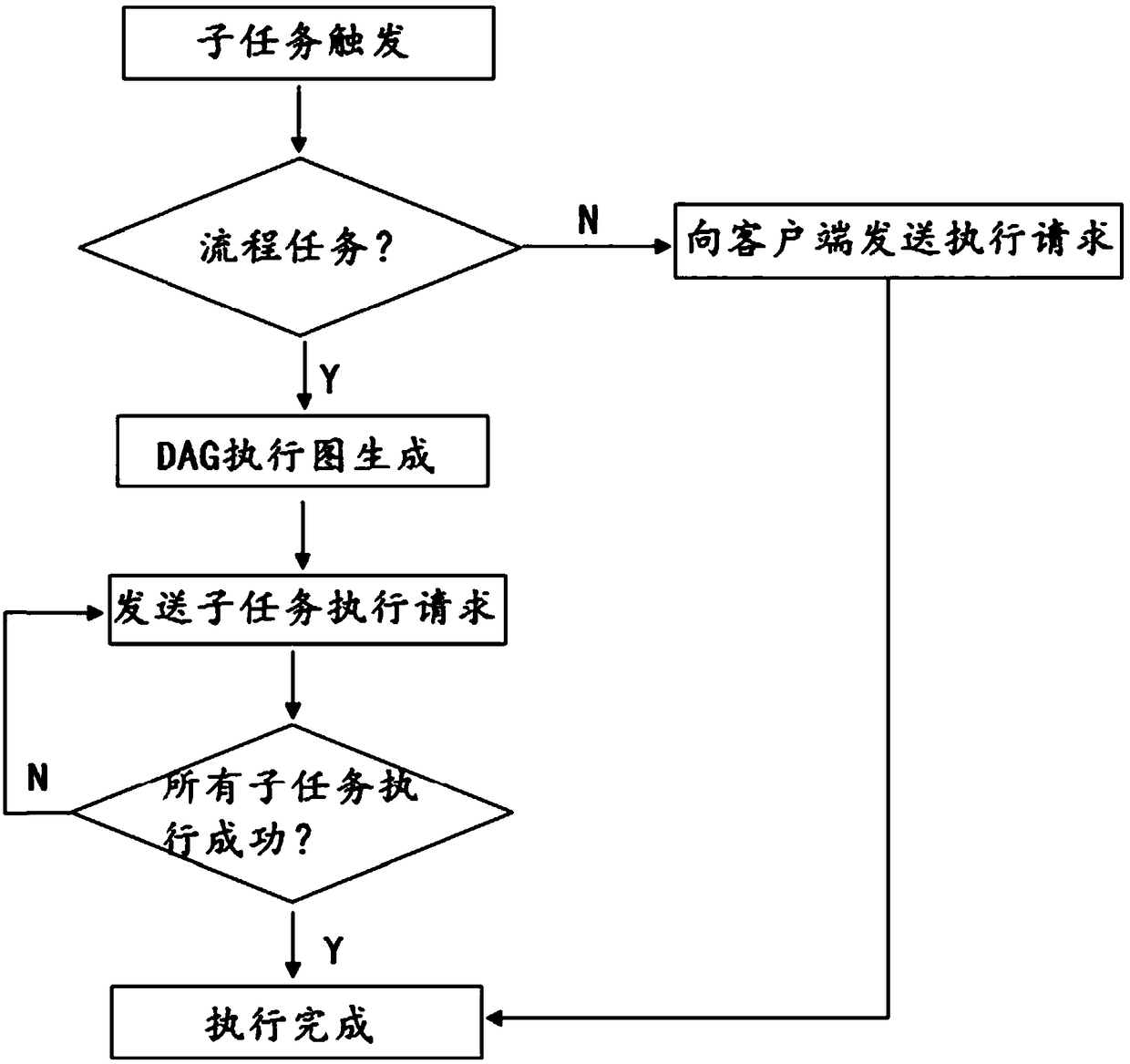

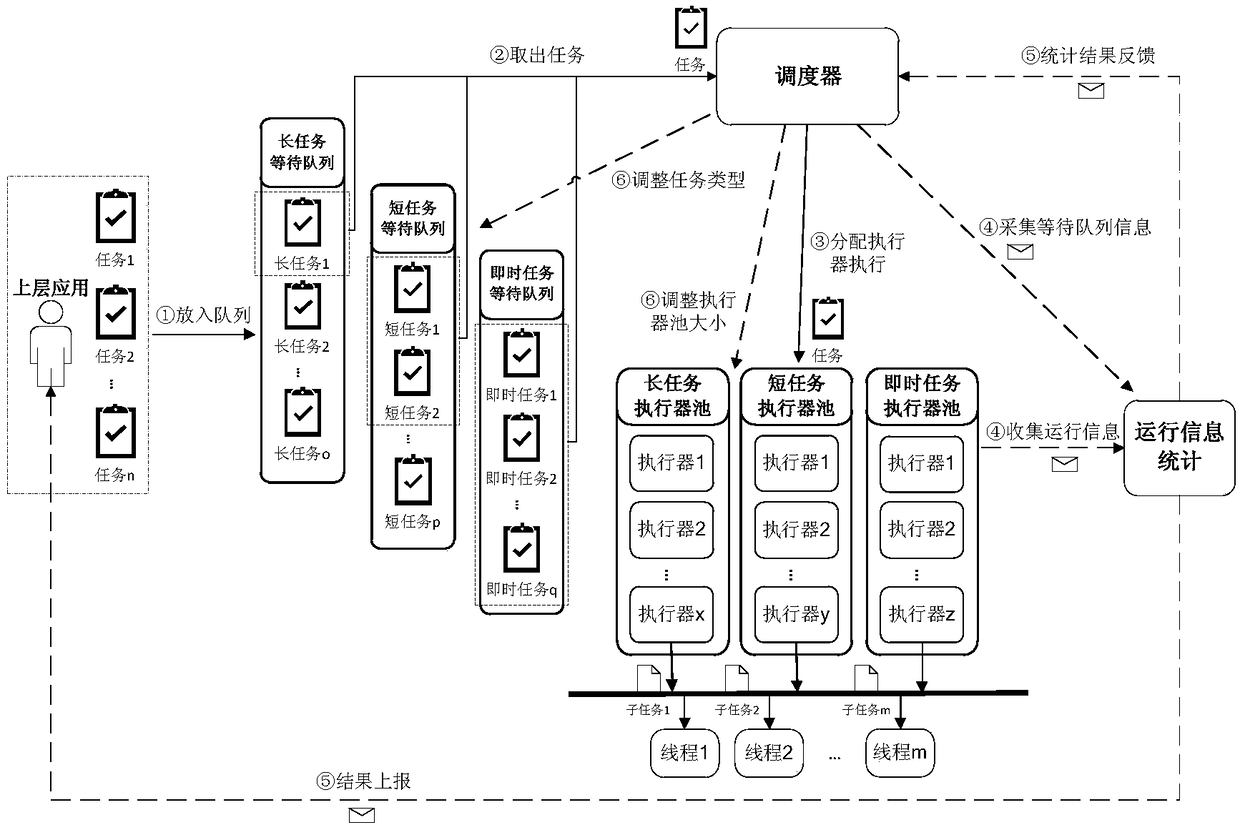

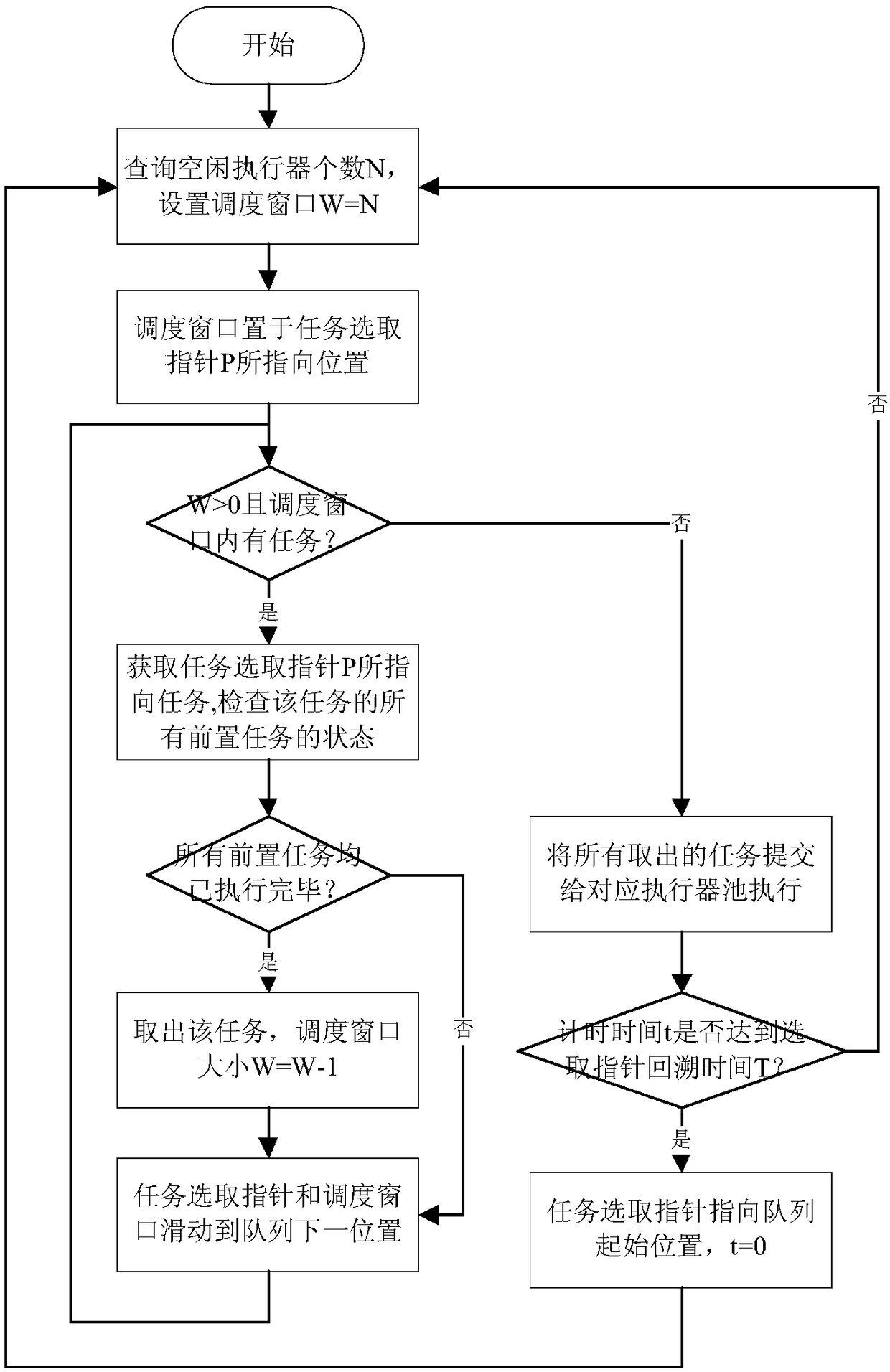

Two-stage self-adaptive scheduling method suitable for large-scale parallel data processing tasks

Active | CN108920261A | Take advantage of | Solve the difficult problem of parallel scheduling | Program initiation/switching | Actuator | Large scale data

The invention discloses a two-stage self-adaptive scheduling method suitable for large-scale parallel data processing tasks. Tasks are scheduled in two stages, at the task level and at the subtask level. The method effectively solves the difficulty of parallel scheduling caused by complex dependency relationships among tasks, increases the degree of parallelism, achieves orderly and efficient parallel processing of large-scale data processing tasks, reduces task waiting and execution time, and shortens overall execution time. In addition, executor operation statistics can be fed back to the scheduler, so that the executor pool size and task types are adjusted adaptively and scheduling is continually optimized, which improves the efficiency of system resource use.

Owner:中国航天系统科学与工程研究院

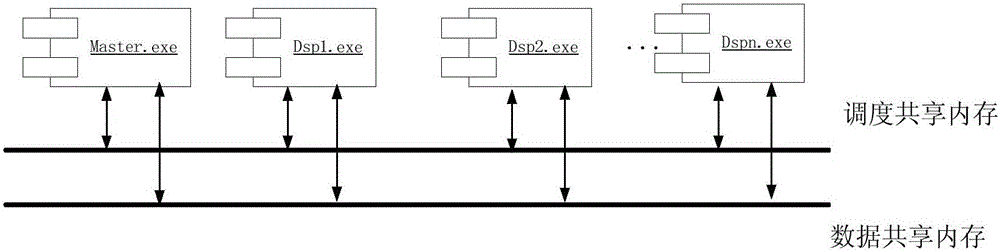

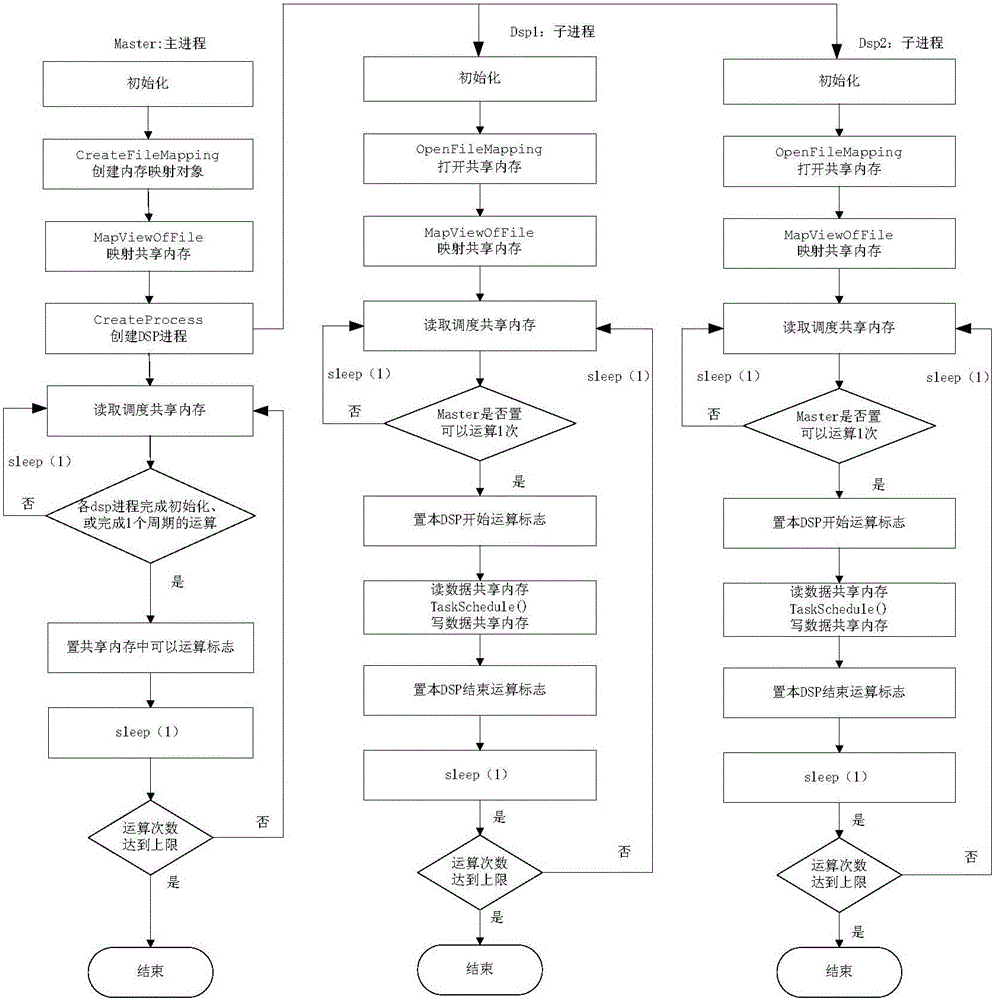

Simulation task parallel scheduling method based on progress

Active | CN105718305A | Solve the problem of naming conflicts when compiling with mixed | Resolve naming conflicts | Program initiation/switching | Software simulation/interpretation/emulation | Wait state | Data sharing

The invention discloses a process-based parallel scheduling method for simulation tasks. For an embedded device architecture with multiple processor plug-ins, a simulation management process is started on a PC as the main process, and the main process creates a plurality of sub-processes to virtualize the real-time processor plug-ins. The main process creates shared memory for scheduling and data. After the sub-processes are initialized, the main process sets the start mark for a single simulation period; each sub-process reads the start mark from the scheduling shared memory, runs its task, sets its task-end mark when the task finishes, and then enters a waiting state. After the main process has collected the end marks of all sub-processes, it issues the start mark for the next period. This cycle repeats until the configured upper limit on the number of simulation runs is reached, and the processes then exit.

Owner:NR ELECTRIC CO LTD +1
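The lock-step cycle in the abstract above (start mark, task run, end mark, wait) can be sketched in Python. This is an illustration under stated assumptions: threads replace the sub-processes, two `threading.Barrier` objects replace the start and end marks in shared memory, and the names `run_simulation` and `step` are hypothetical.

```python
import threading


def run_simulation(num_workers, cycles, step):
    """Run `cycles` lock-step periods across `num_workers` worker threads.

    `step(worker_id, cycle)` stands for one task run of a virtual plug-in.
    Returns a log of (cycle, worker_id, result) tuples.
    """
    # Each barrier has one extra party for the coordinating main thread.
    start = threading.Barrier(num_workers + 1)  # plays the role of the start mark
    done = threading.Barrier(num_workers + 1)   # plays the role of the end marks
    log = []
    log_lock = threading.Lock()

    def worker(worker_id):
        for cycle in range(cycles):
            start.wait()  # wait until the period is started
            result = step(worker_id, cycle)
            with log_lock:
                log.append((cycle, worker_id, result))
            done.wait()  # report completion, then wait for the next period

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(num_workers)]
    for t in threads:
        t.start()
    for _ in range(cycles):
        start.wait()  # issue the start of this period
        done.wait()   # collect every completion before moving on
    for t in threads:
        t.join()
    return log
```

Because no worker can pass the start barrier of period n+1 until all workers have reached the end barrier of period n, every log entry of one period precedes every entry of the next.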

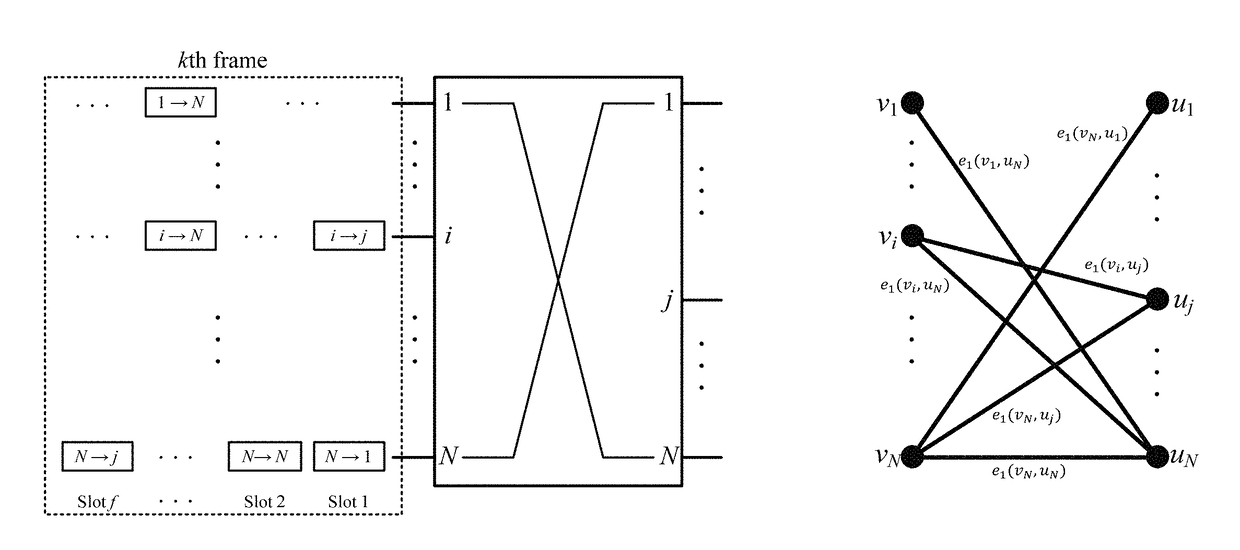

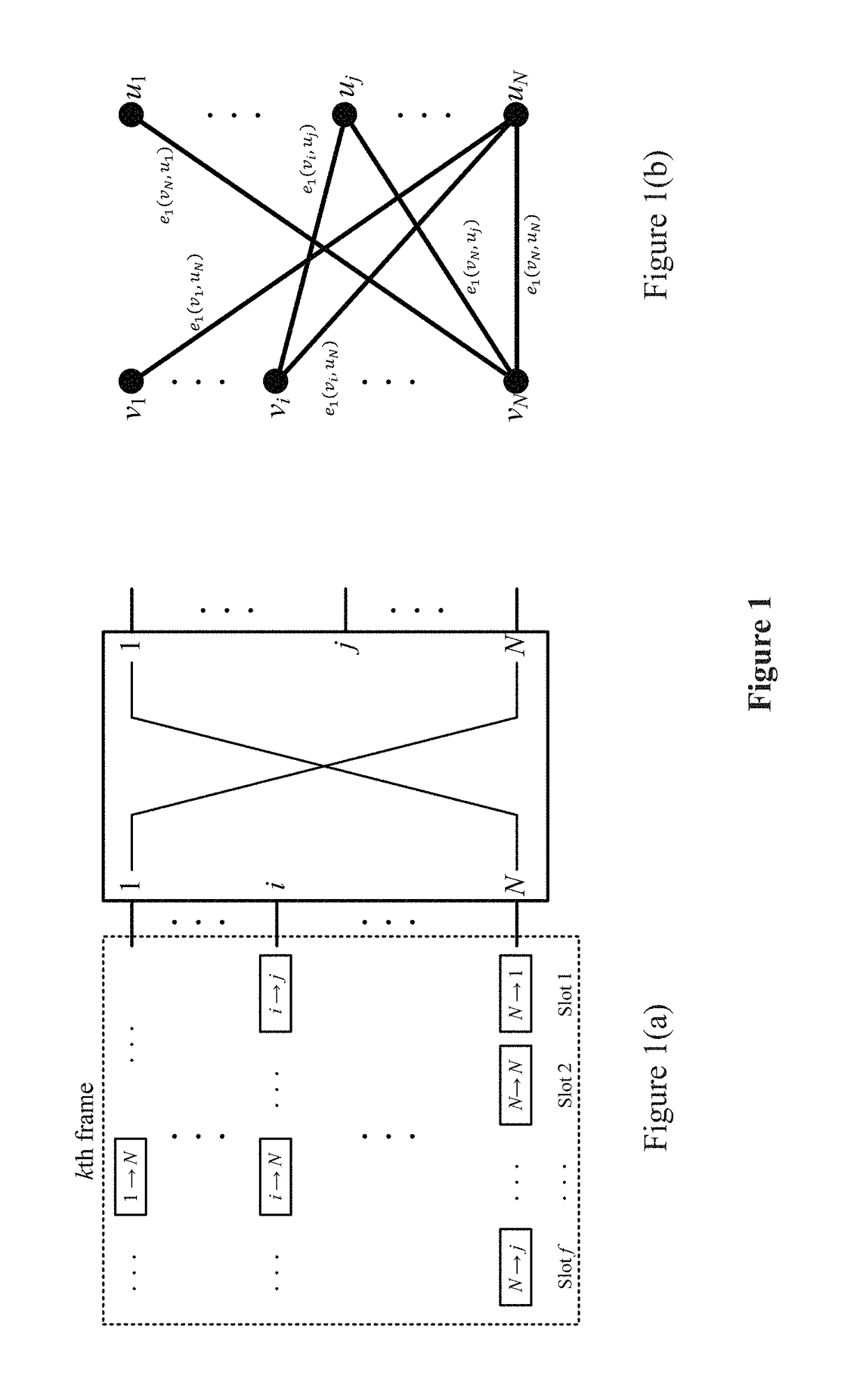

Method for complex coloring based parallel scheduling for switching network

Inactive | US20170195258A1 | Reduce complexity | Quick fix | Data switching networks | Traffic capacity | Optimal scheduling

Method for complex coloring based parallel scheduling for the switching network that is directed at traffic scheduling in the large scale high speed switching network. The parallel scheduling algorithm is on a frame basis. By introducing the concept of complex coloring which is optimal and can be implemented in a distributed and parallel manner, the algorithm can obtain an optimal scheduling scheme without knowledge of the global information of the switching system, so as to maximize bandwidth utilization of the switching system to achieve a nearly 100% throughput. The algorithm complexity is O(log2 N).

Owner:SHANGHAI JIAO TONG UNIV

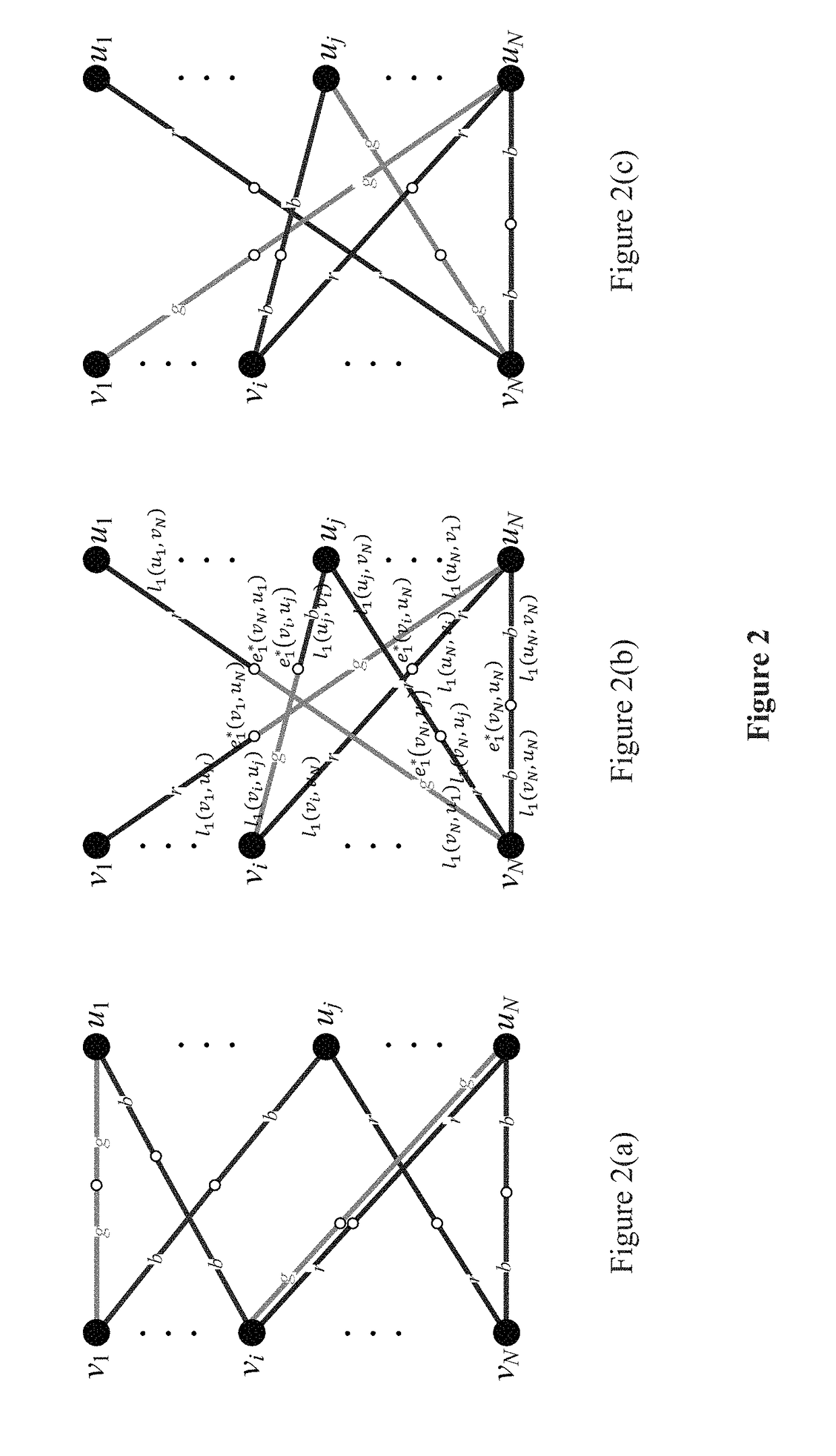

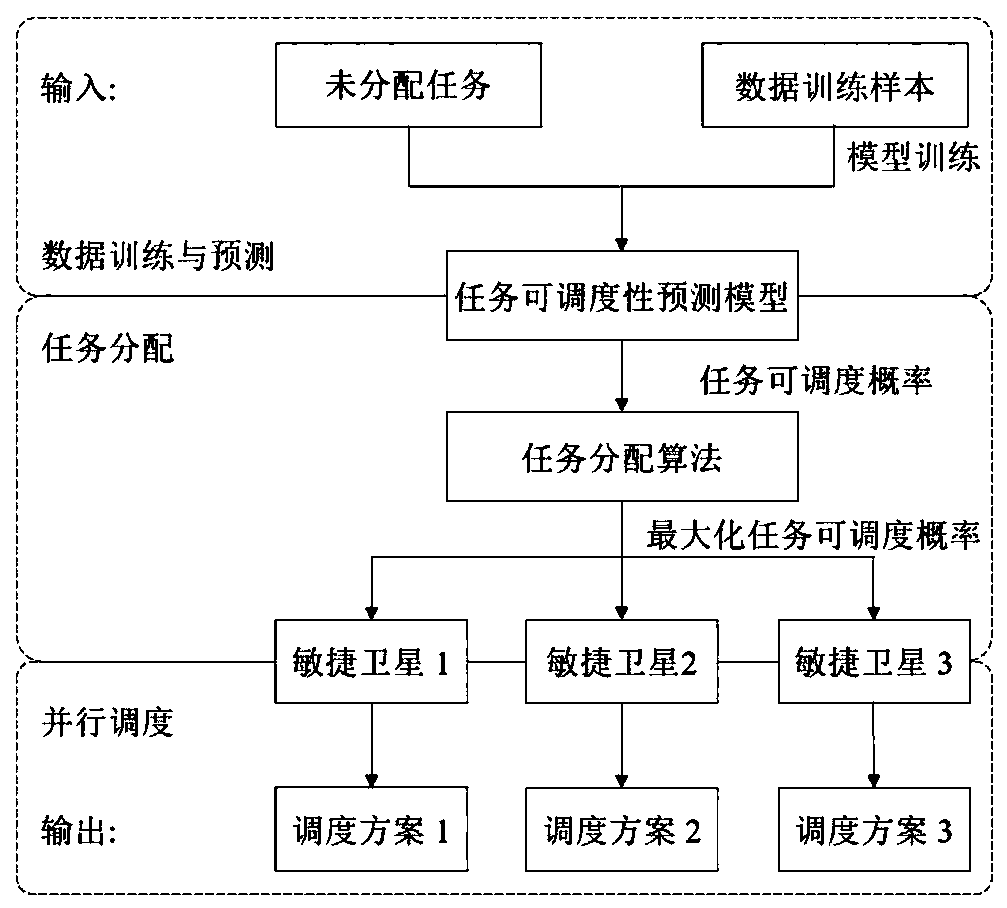

Agile satellite task parallel scheduling method based on data driving

Active | CN109960544A | Improve scheduling efficiency | Reduce dispatch time | Program initiation/switching | Artificial life | Data-driven | Scaling agile

The invention discloses a data-driven parallel scheduling method for agile satellite tasks. The method comprises the steps of: 1, obtaining historical task scheduling data for agile imaging satellites and a set of tasks to be allocated; 2, training a task prediction model based on a deep learning algorithm and predicting the scheduling probability of each task to be allocated; 3, according to the predicted probabilities, allocating each task to the satellite with the maximum scheduling probability, which converts a large-scale satellite task scheduling problem into a plurality of parallel single-satellite scheduling problems; 4, solving; and 5, outputting the agile imaging satellite task scheduling sequence for each task to be allocated. The task scheduling probability prediction model is obtained through a machine learning algorithm, and each task is allocated to the satellite with the maximum scheduling probability for execution. This simplifies the complex problem, shortens task scheduling time from hours to minutes, and effectively improves the scheduling efficiency of large-scale agile imaging satellite tasks.

Owner:NAT UNIV OF DEFENSE TECH
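Step 3 of the abstract above, allocating each task to the satellite with the highest predicted scheduling probability, amounts to an argmax followed by grouping. A minimal Python sketch follows; the function name and the nested-dictionary input are assumptions, and in the patent the probabilities would come from the trained prediction model rather than being supplied by hand.

```python
def partition_by_probability(probabilities):
    """Group tasks by the satellite with the highest predicted probability.

    `probabilities` maps task -> {satellite: predicted scheduling probability}
    (hypothetical layout). Returns satellite -> list of tasks, so that each
    satellite's list can be solved as an independent single-satellite problem.
    """
    plan = {}
    for task, per_satellite in probabilities.items():
        # max() keeps the first satellite seen when probabilities tie.
        best = max(per_satellite, key=per_satellite.get)
        plan.setdefault(best, []).append(task)
    return plan
```

Once the tasks are partitioned, the per-satellite subproblems share no tasks, which is what allows them to be solved in parallel.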

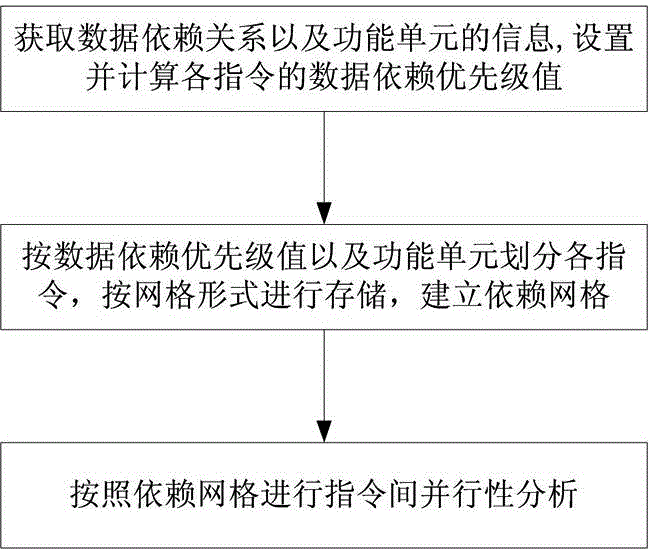

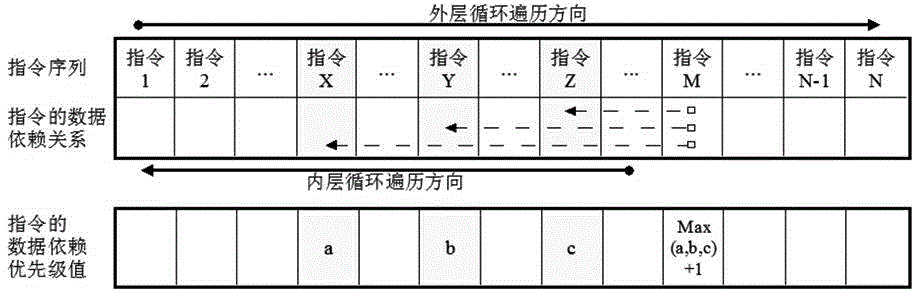

Dependency mesh based instruction-level parallel scheduling method

Active | CN104699464A | Improve parallelism | Implement Logical Parallelism Recognition | Concurrent instruction execution | Hardware structure | Theoretical computer science

The invention discloses a dependency mesh based instruction-level parallel scheduling method. The method includes the steps of 1), acquiring data dependency relations among instructions in a target basic block and information of function units corresponding to the instructions, and setting and computing data dependency priority values of the instructions according to the data dependency relations; 2), partitioning the instructions according to the data dependency priority values and the function units, storing partitioned results according to a mesh form, and establishing dependency meshes obtaining dependency relations between the instructions and data dependency priorities as well as between the instructions and the function units; 3), performing parallelism analysis among the instructions according to the relations, in the dependency meshes obtained in the step 2, between the instructions and the data dependency priorities as well as between the instructions and the function units. The dependency mesh based instruction-level parallel scheduling method has the advantages that the method is capable of describing parallel relations among the instructions and relativity between the instructions and hardware structures by combining the data dependency relations and function unit allocation relations, and is simple in implementation, wide in application range and high in instruction-level parallelism.

Owner:NAT UNIV OF DEFENSE TECH

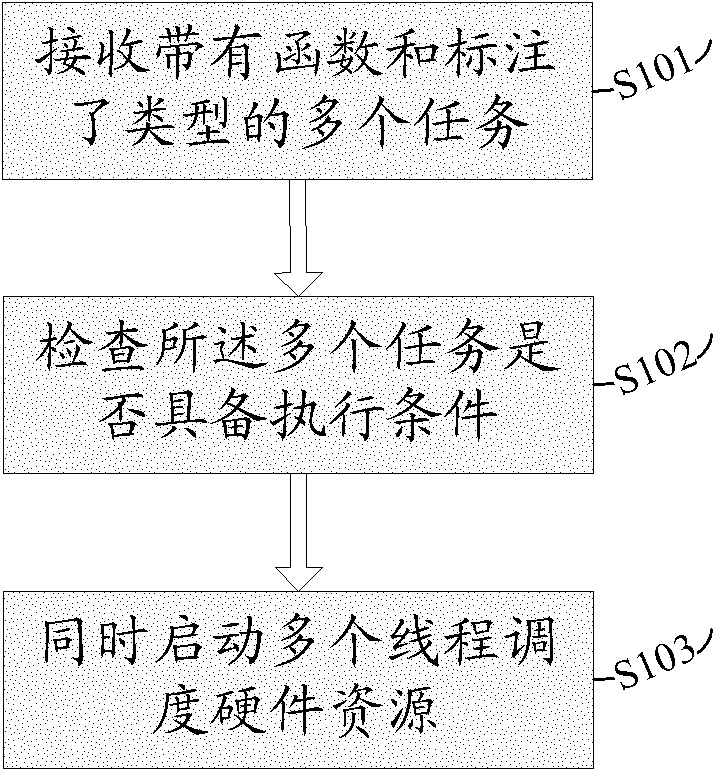

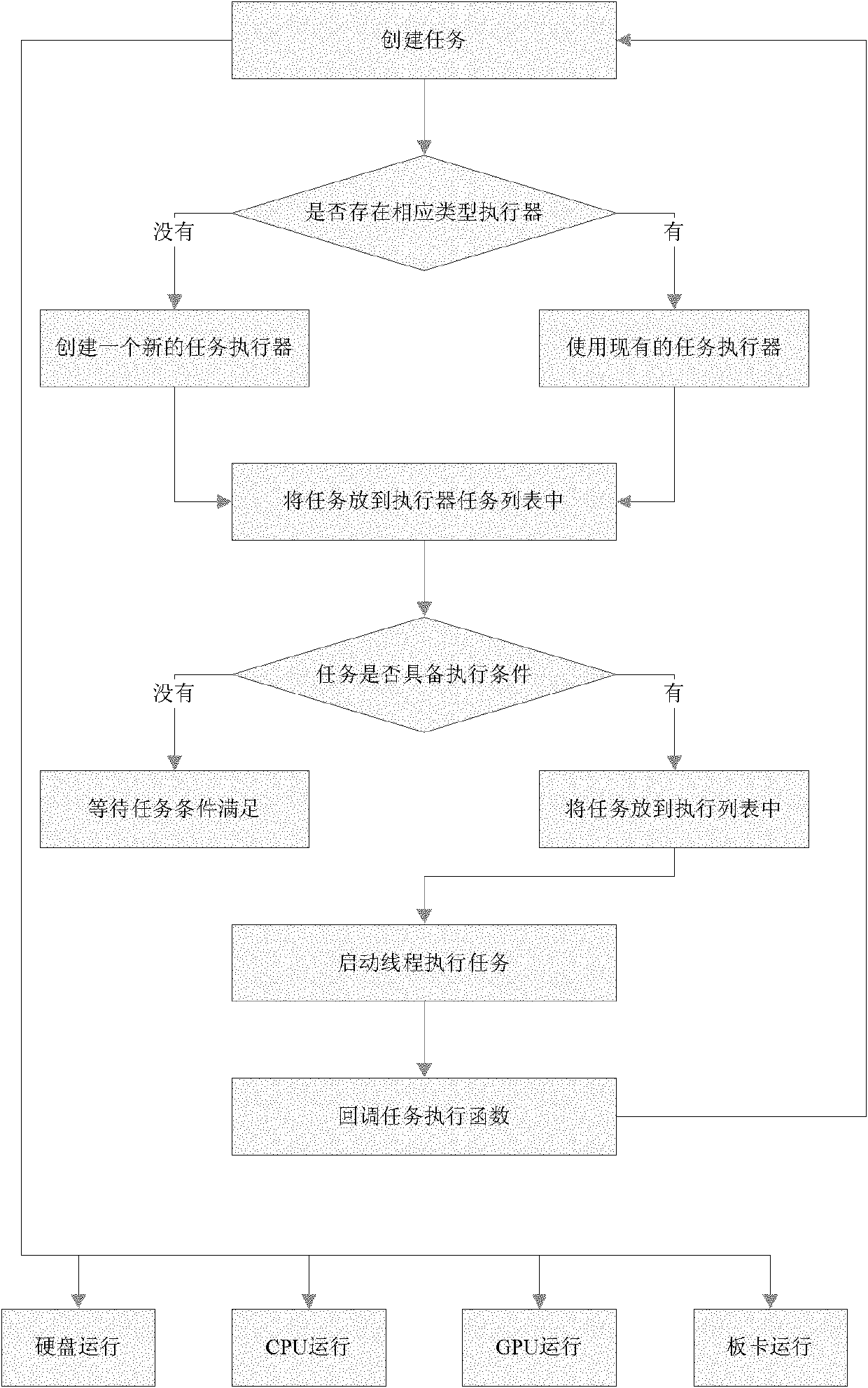

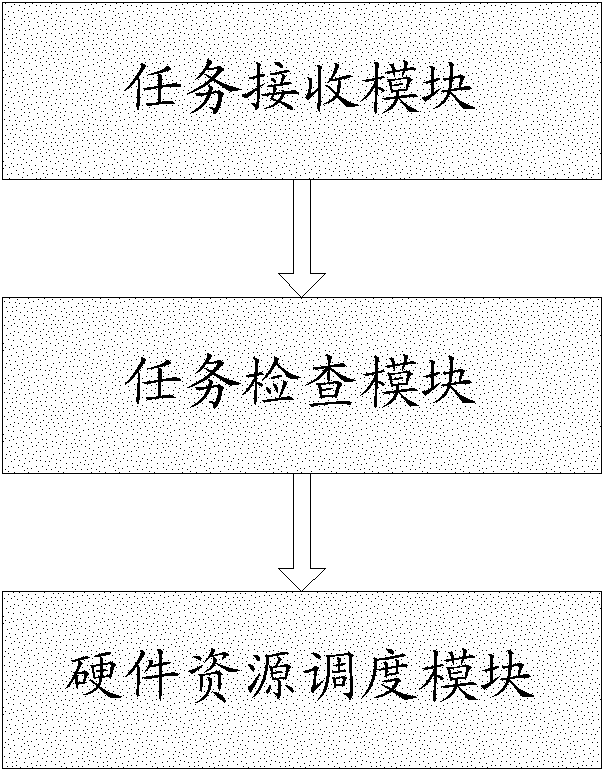

Multi-task parallel scheduling mechanism

Inactive | CN102567084A | Increase profit | Improve production efficiency | Multiprogramming arrangements | Main processing unit | Thread scheduling

The embodiment of the invention discloses a multi-task parallel scheduling method, which comprises the steps of: receiving a plurality of tasks that have functions and are marked with types; checking whether the tasks meet their execution conditions; and simultaneously starting a plurality of threads to schedule hardware resources. The invention further discloses a multi-task parallel scheduling device. With the method and the device, a central processing unit (CPU), a graphics processing unit (GPU), a high-speed hard disk, a video and audio card, and other hardware resources can be scheduled in parallel and work at the same time. The utilization rate of the resources is thereby greatly increased, so programming efficiency can be improved.

Owner:CHINA DIGITAL VIDEO BEIJING

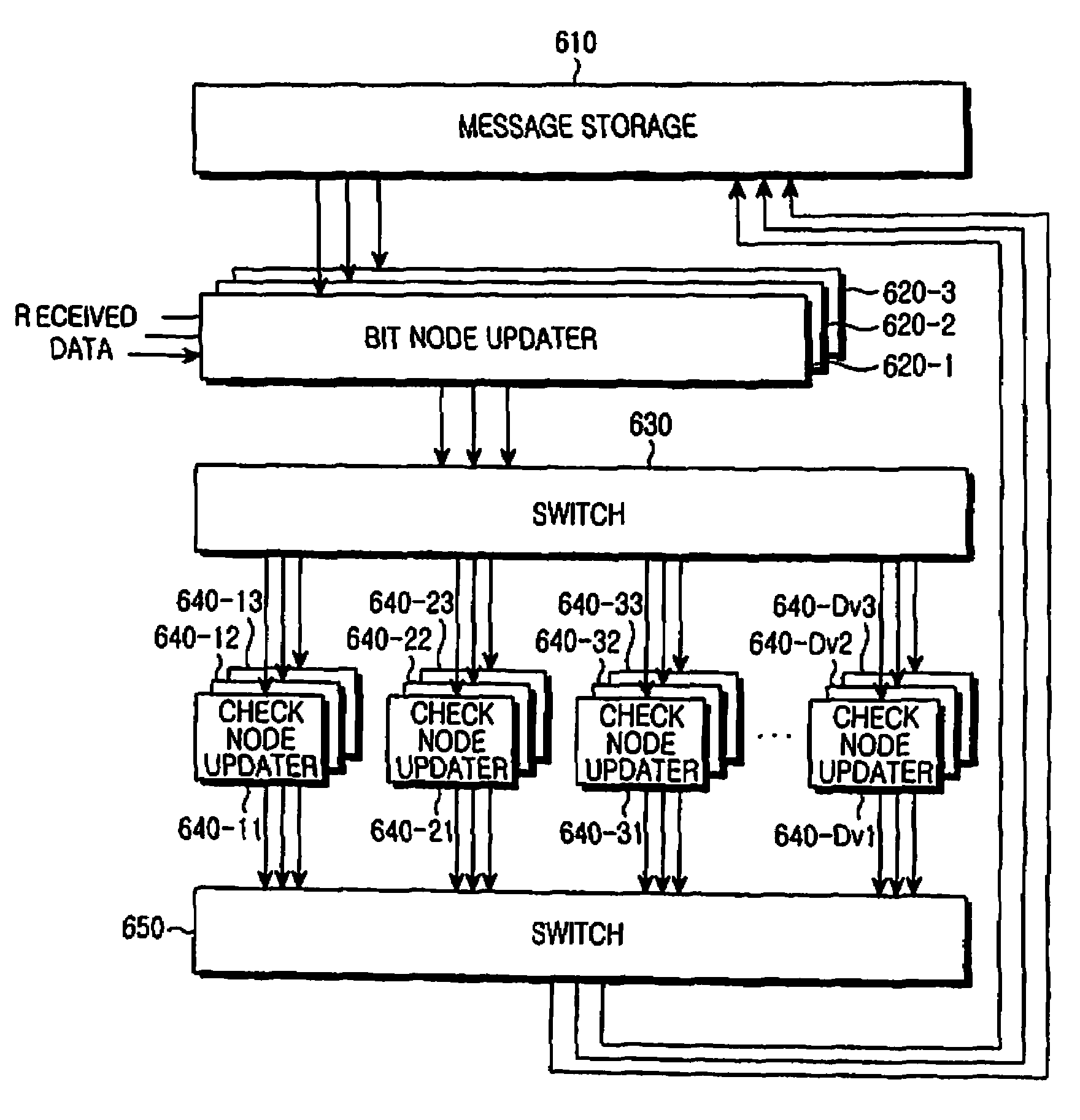

Apparatus and method for receiving signal in a communication system

Active | US20080189589A1 | Garment special features | Data representation error detection/correction | Communications system | Parity-check matrix

Provided are an apparatus and method for receiving a signal in a communication system, in which a signal is received and decoded by setting an offset indicating a start position of a node operation for each block column of a parity check matrix of a Low Density Parity Check (LDPC) code and scheduling an order of performing the node operation on a Partial Parallel Scheduling (PPS) group basis. Here, the parity check matrix includes p×L rows and q×L columns, the p×L rows are grouped into p block rows, the q×L columns are grouped into q block columns, and each PPS group includes one column from each of the q block columns.

Owner:SAMSUNG ELECTRONICS CO LTD

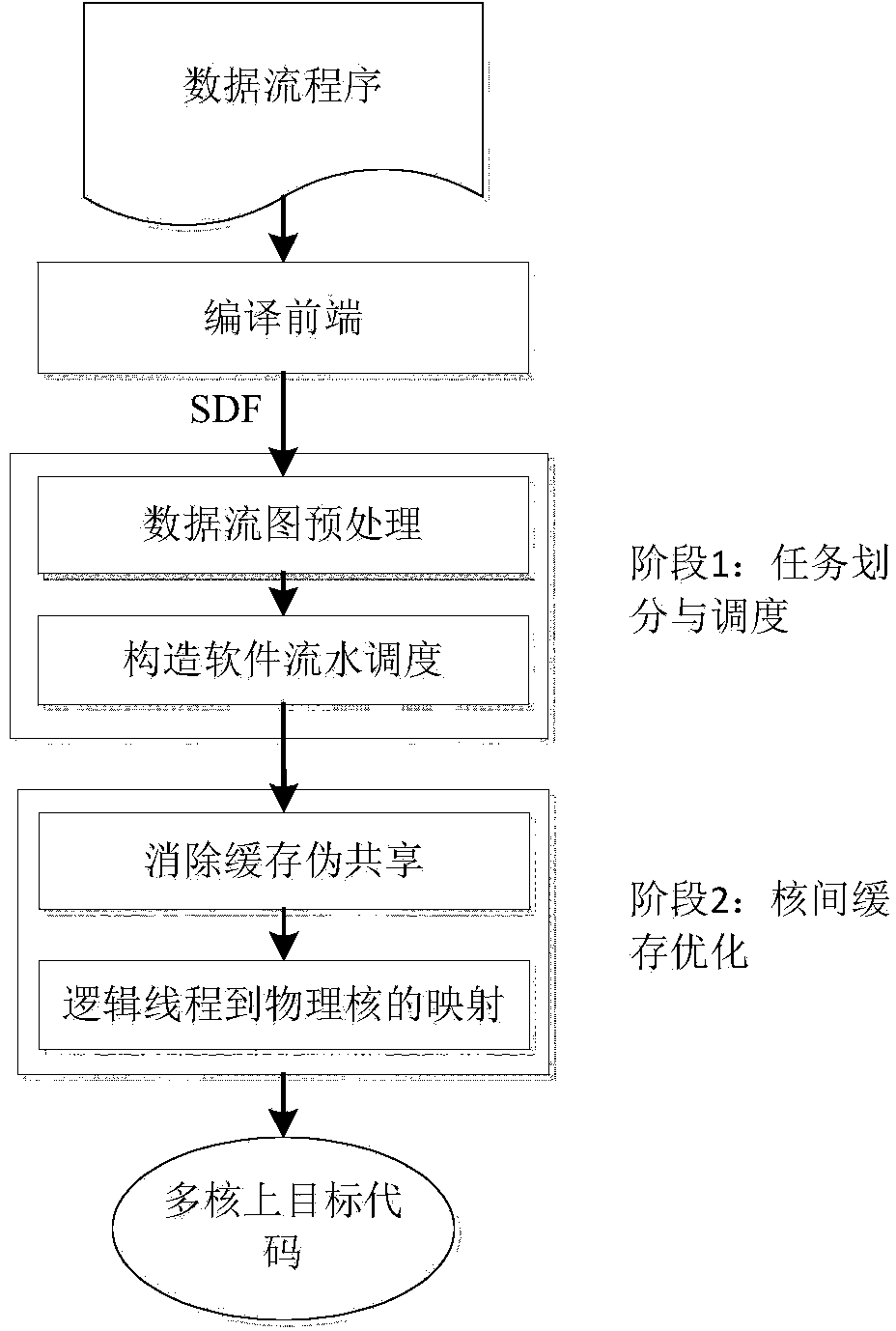

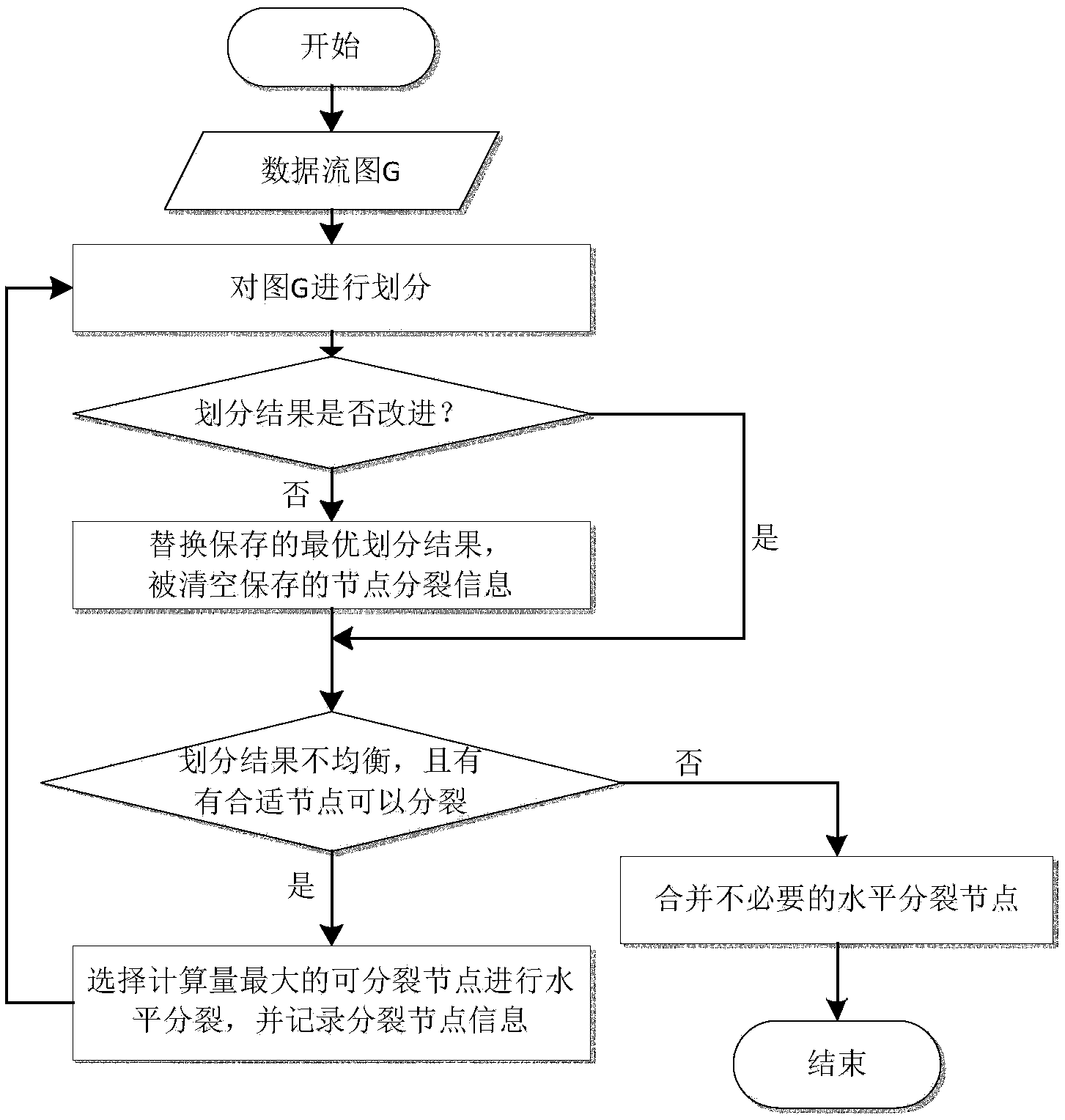

Data flow program scheduling method oriented to multi-core processor X86

Active | CN103970602A | Reduce distractions | Realize the mapping | Program initiation/switching | Resource allocation | X86 | Parallel scheduling

The invention discloses a data flow program scheduling method oriented to a multi-core system. The method comprises the following steps that task partitioning of mapping from calculation tasks to processor cores is determined, and software pipeline scheduling is constructed; according to structural characteristics of a multi-core processor and execution situations of a data flow program on the multi-core processor, cache optimization among the cores is conducted. According to the method, data flow parallel scheduling and optimization related to the cache structure of a multi-core framework are combined, and high parallelism of the multi-core processor is brought into full play; according to the hierarchical cache structure and the cache principle of the multi-core system, access of the calculation tasks to a communication cache region is optimized, and the throughput rate of a target program is further increased.

Owner:HUAZHONG UNIV OF SCI & TECH

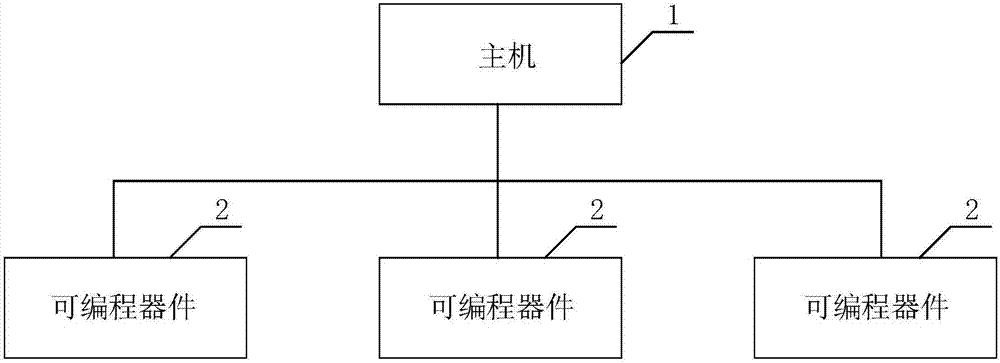

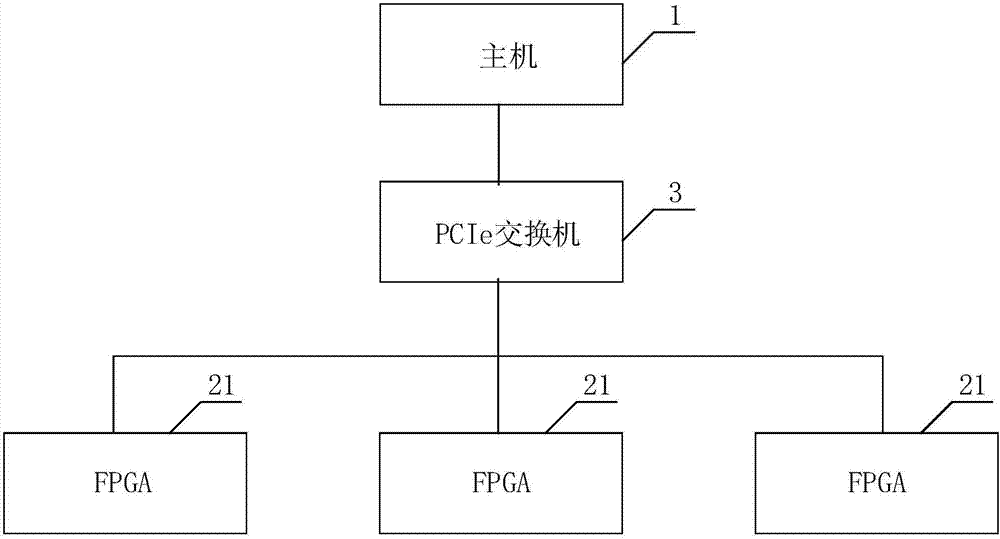

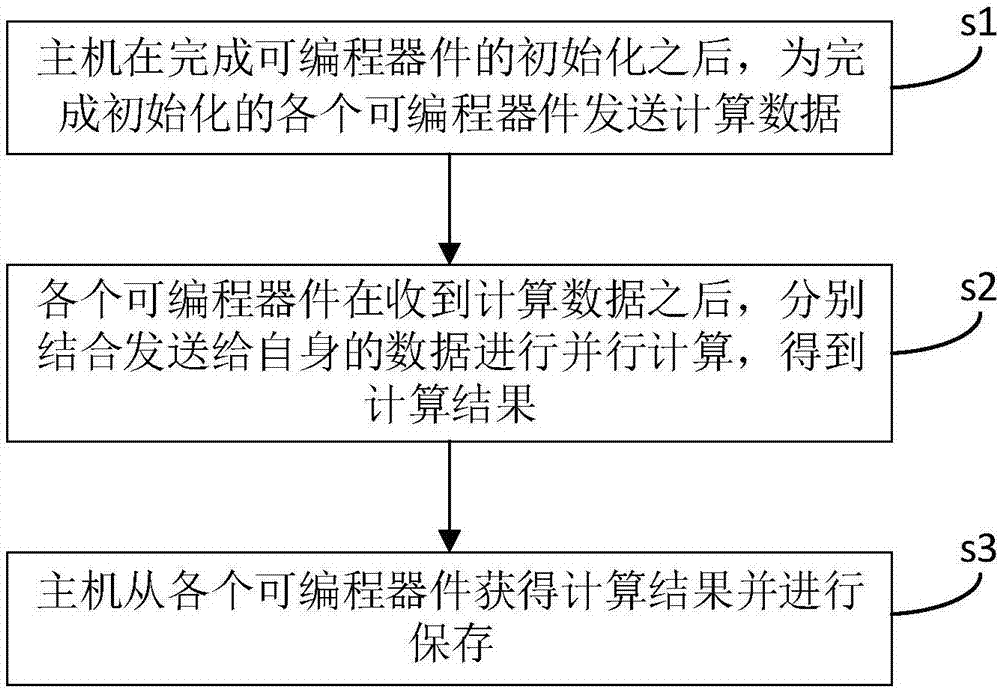

Heterogeneous computing platform and acceleration method on basis of heterogeneous computing platform

Inactive | CN107402902A | Increase computing speed | Improve parallelism | Multiple digital computer combinations | Architecture with single central processing unit | Parallel processing | Parallel scheduling

The invention discloses a heterogeneous computing platform. The platform comprises a host and a plurality of programmable devices, wherein the host is connected with each of the programmable devices; the host is used for initializing the programmable devices, carrying out parallel scheduling on each programmable device, sending computing data to each programmable device and obtaining a computing result; and each programmable device is used for processing the distributed computing data in parallel. The plurality of programmable devices of the heterogeneous computing platform can carry out computation at the same time, and the operation speed of the whole heterogeneous computing platform is equal to the sum of operation speeds of the programmable devices; compared with the heterogeneous computing platform with only one programmable device in the prior art, the whole operation speed and degree of parallelism of the heterogeneous computing platform are improved, so that the computing efficiency is improved, and the requirements, for the operation speed of the heterogeneous computing platform, of more and more complicated algorithms and data with larger and larger scales can be better satisfied. The invention furthermore provides an acceleration method on the basis of the heterogeneous computing platform.

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD

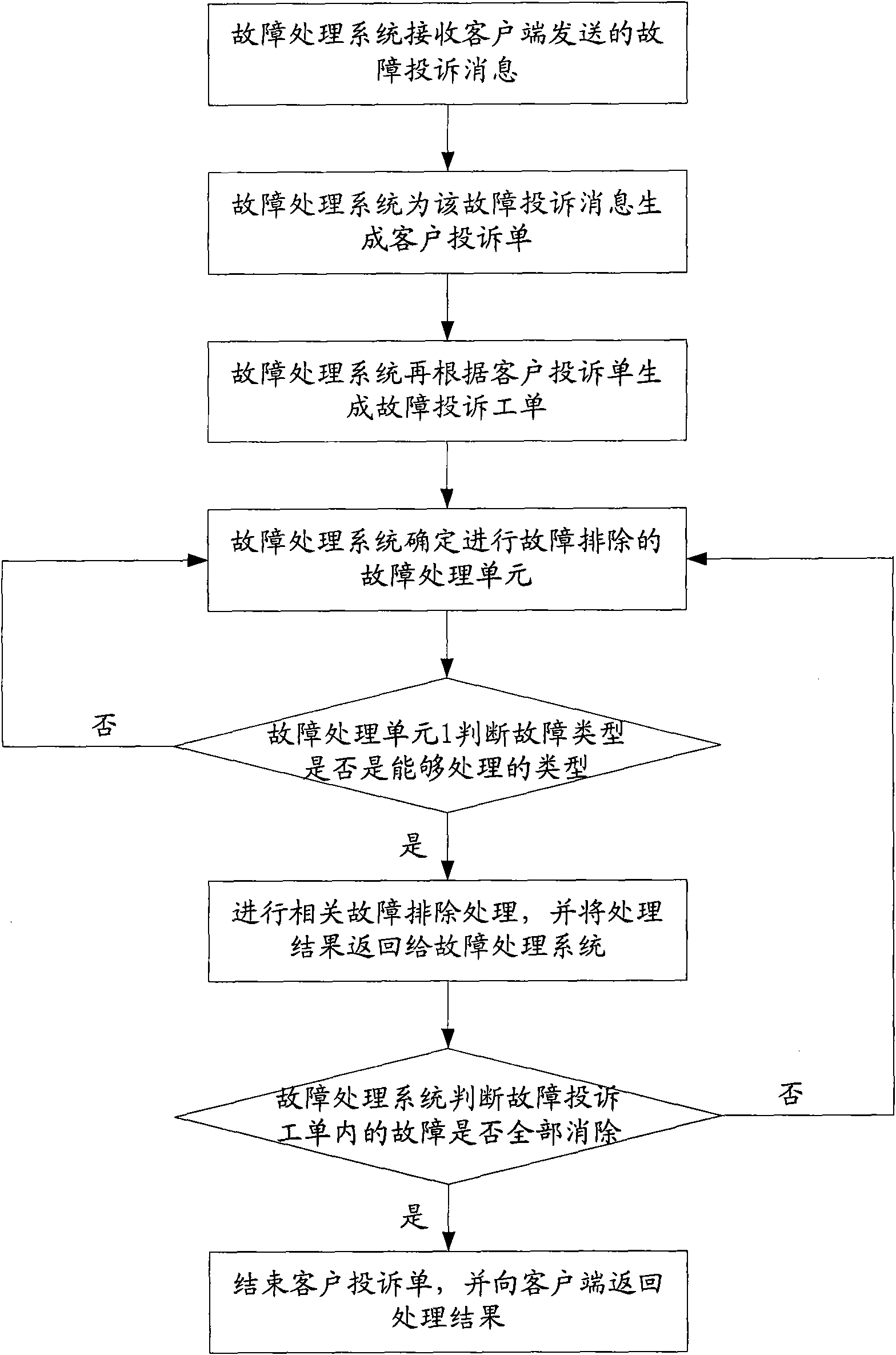

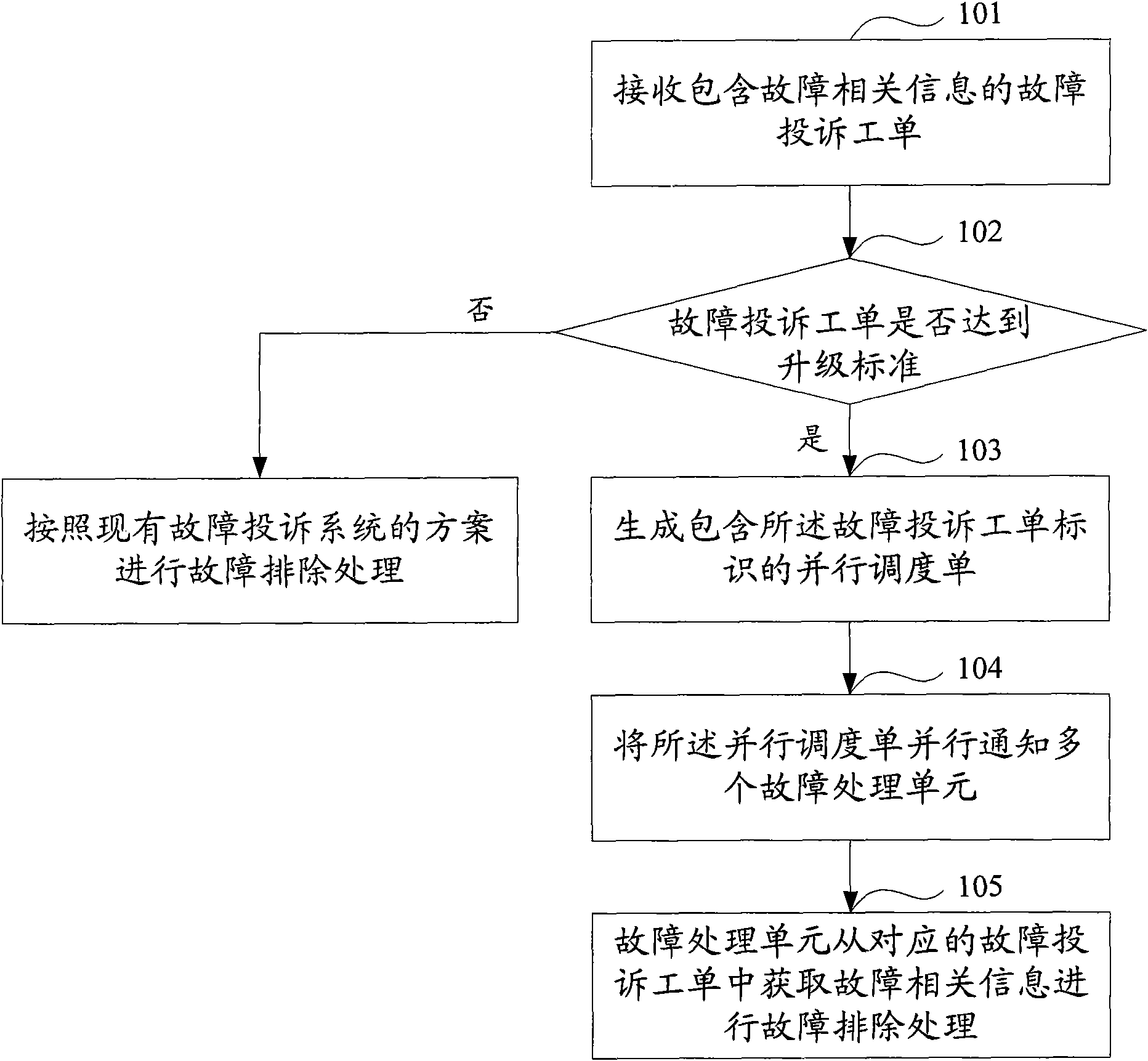

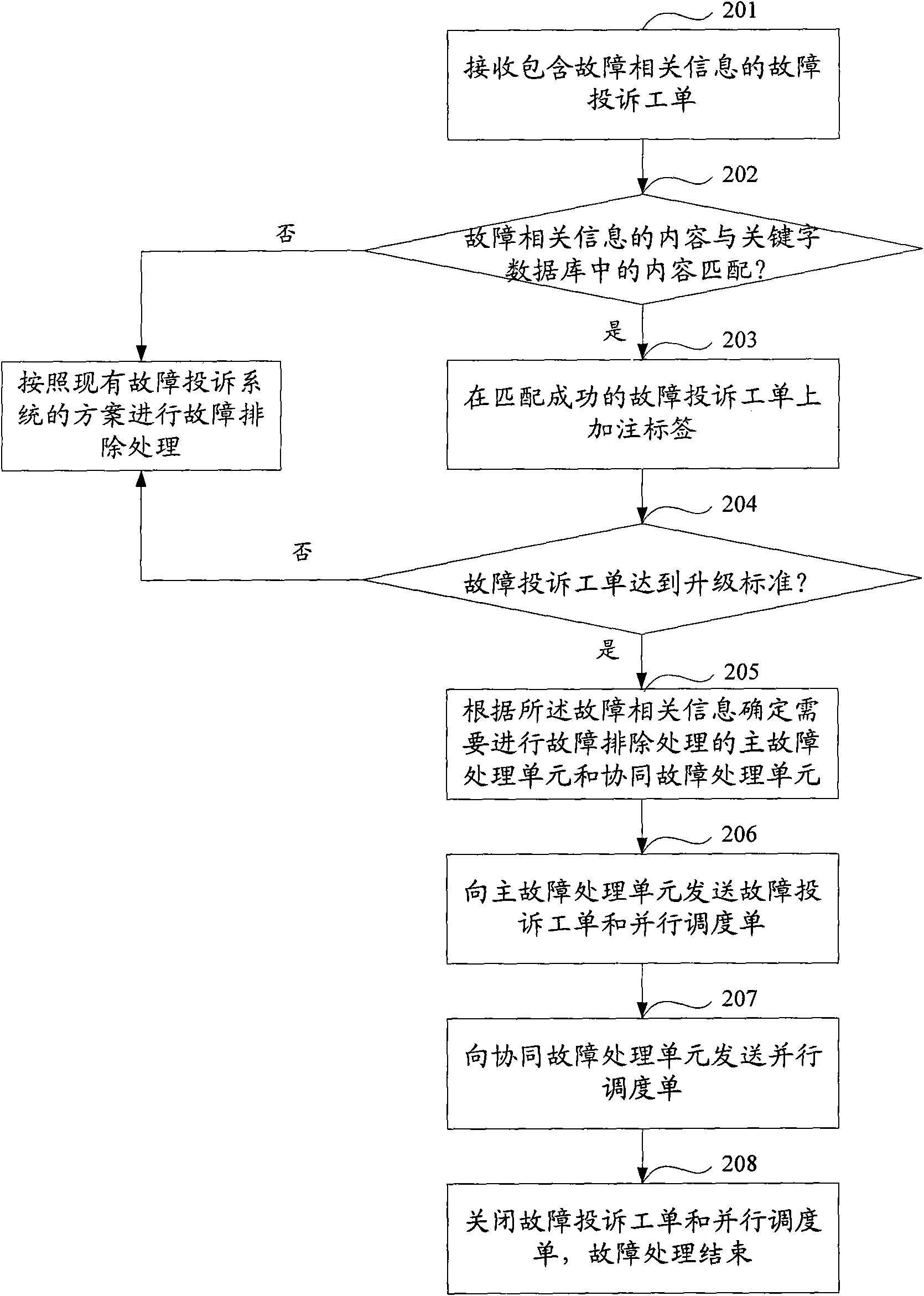

Fault processing method and system as well as fault scheduling equipment

Inactive | CN102111508A | Reduce processing time | Improve processing time | Supervisory/monitoring/testing arrangements | Relevant information | Fault handling

The invention discloses a fault processing method and system as well as fault scheduling equipment. The processing method comprises the following steps: evaluating the fault-related information in a fault complaint list; generating a parallel scheduling list containing the identifier of the fault complaint list when the complaint list meets the escalation criteria; notifying a plurality of fault processing units in parallel; and having the plurality of fault processing units eliminate the faults simultaneously. This shortens the overall processing time for fault complaint lists with special requirements and improves the timeliness of handling important fault complaints with special requirements.

Owner:CHINA MOBILE GROUP DESIGN INST
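
The escalate-then-notify-in-parallel flow can be sketched as below. The severity threshold, the `Complaint` fields and the serial fallback for non-escalated complaints are all assumptions made for illustration; the abstract does not specify them.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field

ESCALATION_SEVERITY = 3  # hypothetical threshold for the upgrade criteria


@dataclass
class Complaint:
    complaint_id: str
    severity: int                       # hypothetical field
    units: list = field(default_factory=list)


def build_parallel_schedule(complaint):
    # Produce a parallel scheduling list only when the complaint is escalated.
    if complaint.severity < ESCALATION_SEVERITY:
        return None
    return {"complaint_id": complaint.complaint_id,
            "units": list(complaint.units)}


def notify(unit, complaint_id):
    # Stand-in for sending a work order to one fault processing unit.
    return f"{unit} handling {complaint_id}"


def dispatch(complaint):
    schedule = build_parallel_schedule(complaint)
    if schedule is None:
        # Assumed fallback: non-escalated complaints are handled one by one.
        return [notify(u, complaint.complaint_id) for u in complaint.units]
    workers = max(1, len(schedule["units"]))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # All units are notified concurrently; map keeps the input order.
        return list(pool.map(
            lambda u: notify(u, schedule["complaint_id"]),
            schedule["units"]))
```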

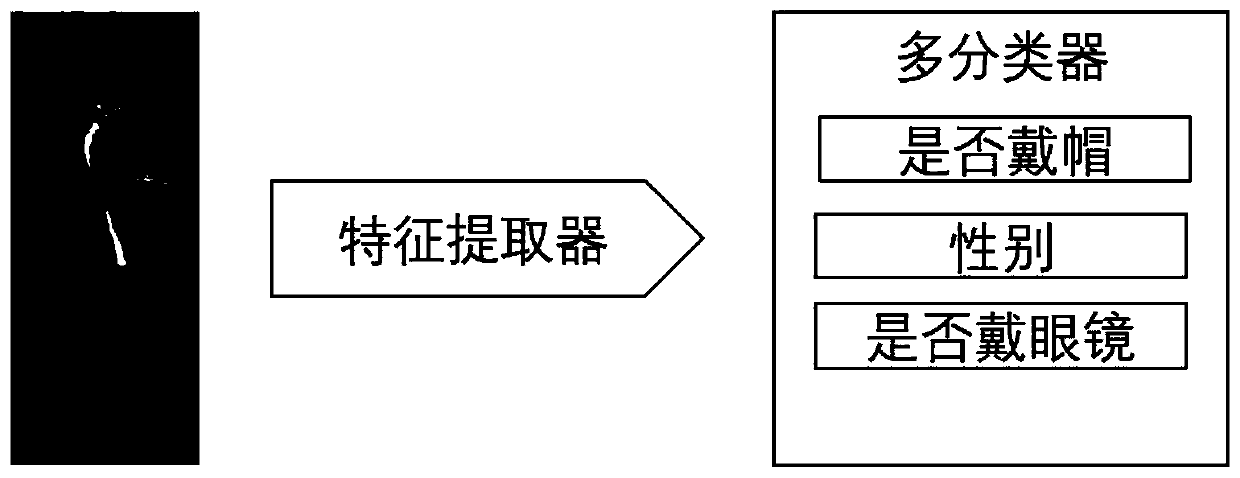

Monitoring video pedestrian real-time detection, attribute identification and tracking method and system based on deep learning

Inactive | CN110188596A | Solve the problem of single monitoring function and low monitoring efficiency | Ensure personal and property safety | Biometric pattern recognition | Protection system | System monitoring

The invention relates to a monitoring video pedestrian real-time detection, attribute identification and tracking method and system based on deep learning. The invention provides an efficient pedestrian detection, attribute identification and tracking method and designs an efficient scheduling method that performs series-parallel scheduling on the modules, so that they can handle as many video channels as possible in real time on limited computing resources. This solves the prior-art problems that road scene security and protection systems offer only a single monitoring function and have low monitoring efficiency, and provides an efficient multifunctional system for road scene security monitoring. In application, monitoring, attribute recognition and tracking of pedestrians strengthen surveillance, especially in important places such as airports, stations and subways, so that crime can be effectively deterred and the personal and property safety of the public is protected.

Owner:PEKING UNIV +1

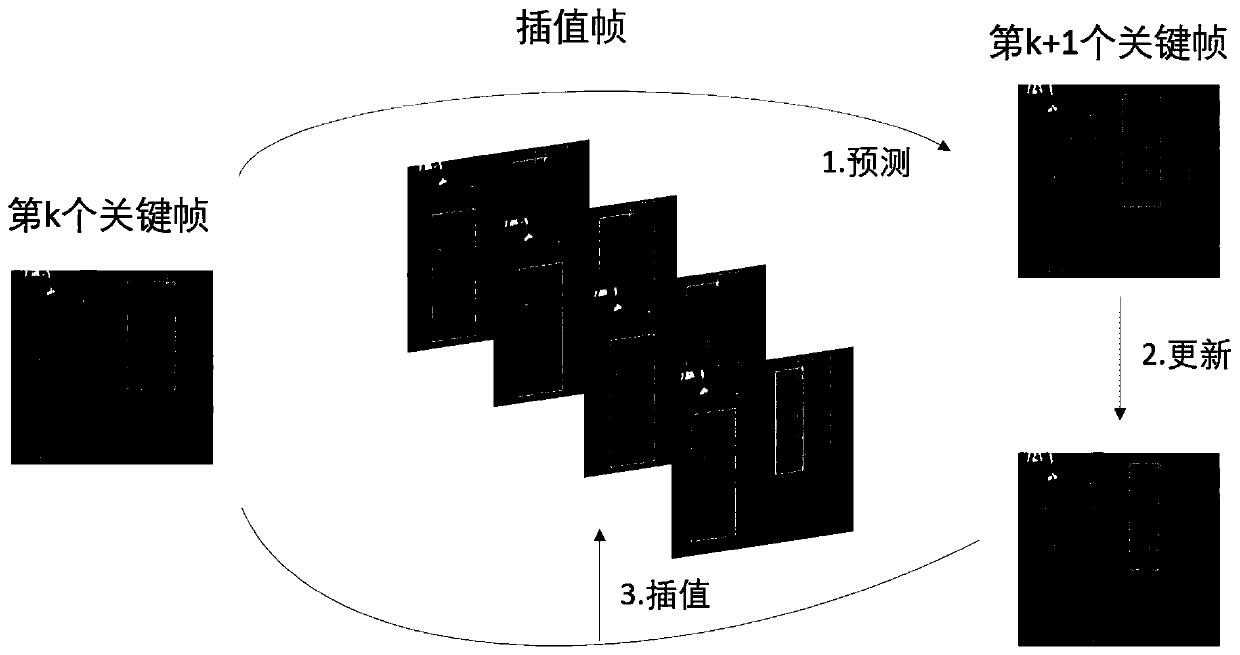

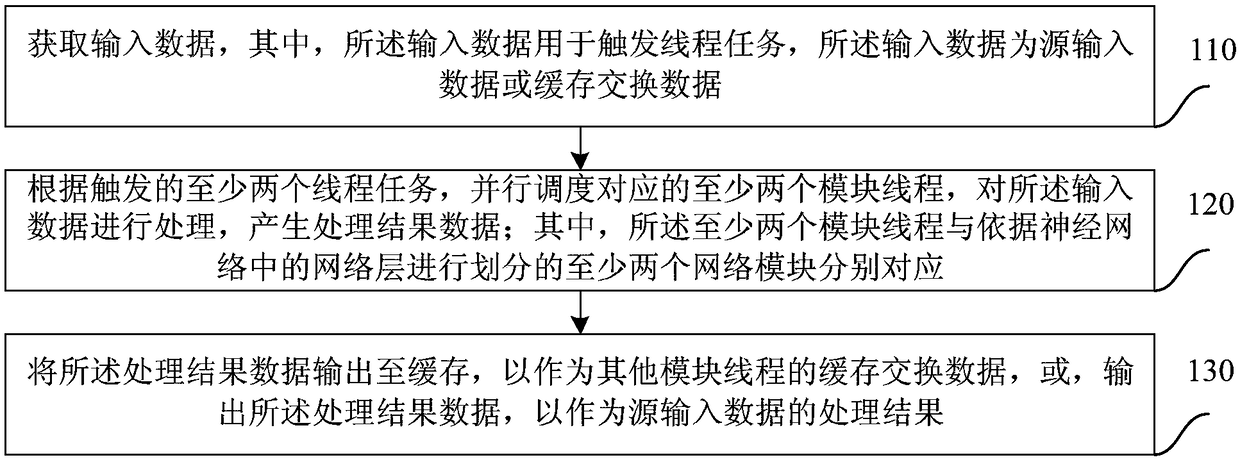

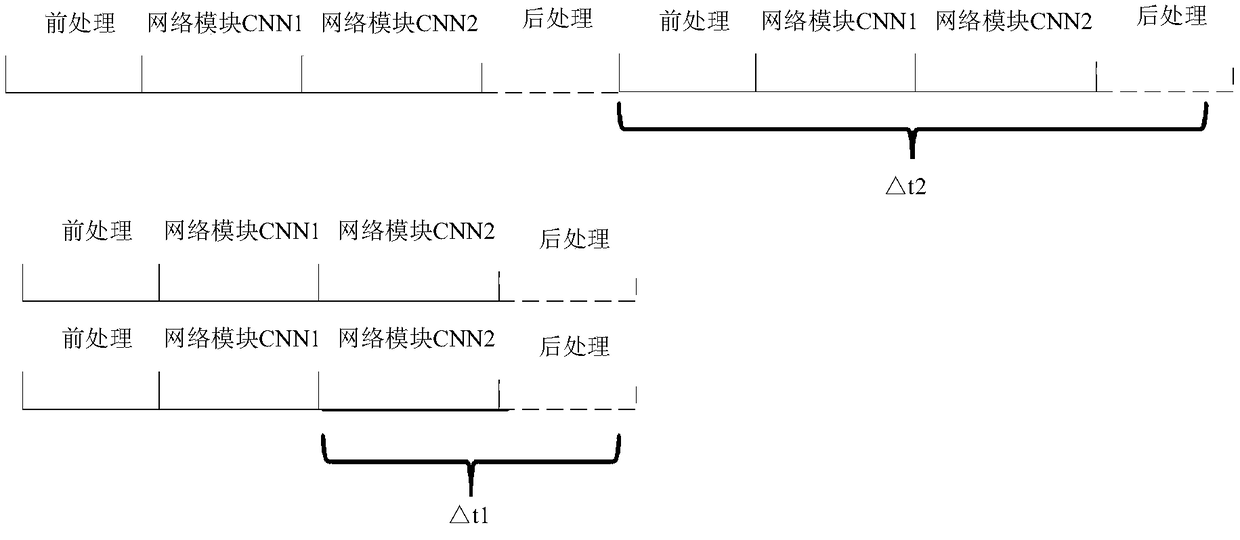

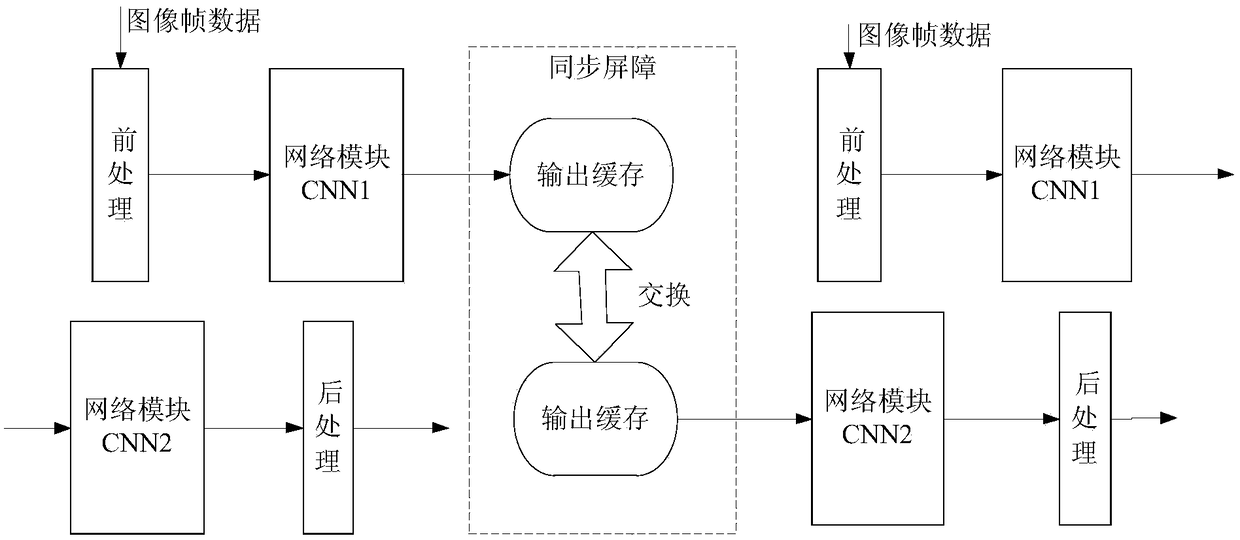

A task processing method based on a neural network and related equipment

Active | CN109409513A | Time-consuming masking operations | Improve operational efficiency | Program control | Physical realisation | Nerve network | Multi-core processor

The embodiment of the invention discloses a task processing method based on a neural network and related equipment, relating to the technical field of computer networks. The method comprises the following steps: obtaining input data, wherein the input data is used for triggering a thread task and is either source input data or cache exchange data; according to the at least two triggered thread tasks, scheduling the corresponding at least two module threads in parallel to process the input data and generate processing result data, wherein the at least two module threads correspond to at least two network modules obtained by dividing the neural network according to its network layers; and outputting the processing result data to the cache as cache exchange data for other module threads, or outputting it as the processing result for the source input data. The embodiment of the invention assigns the tasks of different network modules in the neural network to different module threads for parallel execution, improving the operating efficiency of neural-network-related applications on multi-core processors.

Owner:BIGO TECH PTE LTD
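
One way to picture the module-thread arrangement is a pipeline in which each network module runs in its own thread and passes results to the next through a buffer. The sketch below assumes a simple chain of modules and uses `queue.Queue` as the cache for exchange data; `module_thread`, `run_pipeline` and the sentinel-based shutdown are illustrative choices, not the patent's design.

```python
import queue
import threading

SENTINEL = object()  # marks the end of the input stream


def module_thread(stage_fn, inbox, outbox):
    # One module thread: consume input (source data or exchange data),
    # process it, and publish the result for the next module.
    while True:
        item = inbox.get()
        if item is SENTINEL:
            outbox.put(SENTINEL)
            break
        outbox.put(stage_fn(item))


def run_pipeline(stages, inputs):
    # queues[i] feeds stage i; the last queue collects final results.
    queues = [queue.Queue() for _ in range(len(stages) + 1)]
    threads = [
        threading.Thread(target=module_thread,
                         args=(fn, queues[i], queues[i + 1]))
        for i, fn in enumerate(stages)
    ]
    for t in threads:
        t.start()
    for x in inputs:
        queues[0].put(x)
    queues[0].put(SENTINEL)

    results = []
    while True:
        item = queues[-1].get()
        if item is SENTINEL:
            break
        results.append(item)
    for t in threads:
        t.join()
    return results
```

While one module thread works on frame n, the previous one can already process frame n+1. In CPython the global interpreter lock limits the speed-up for pure-Python stages, so this shows the structure rather than the multi-core gain the abstract claims.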

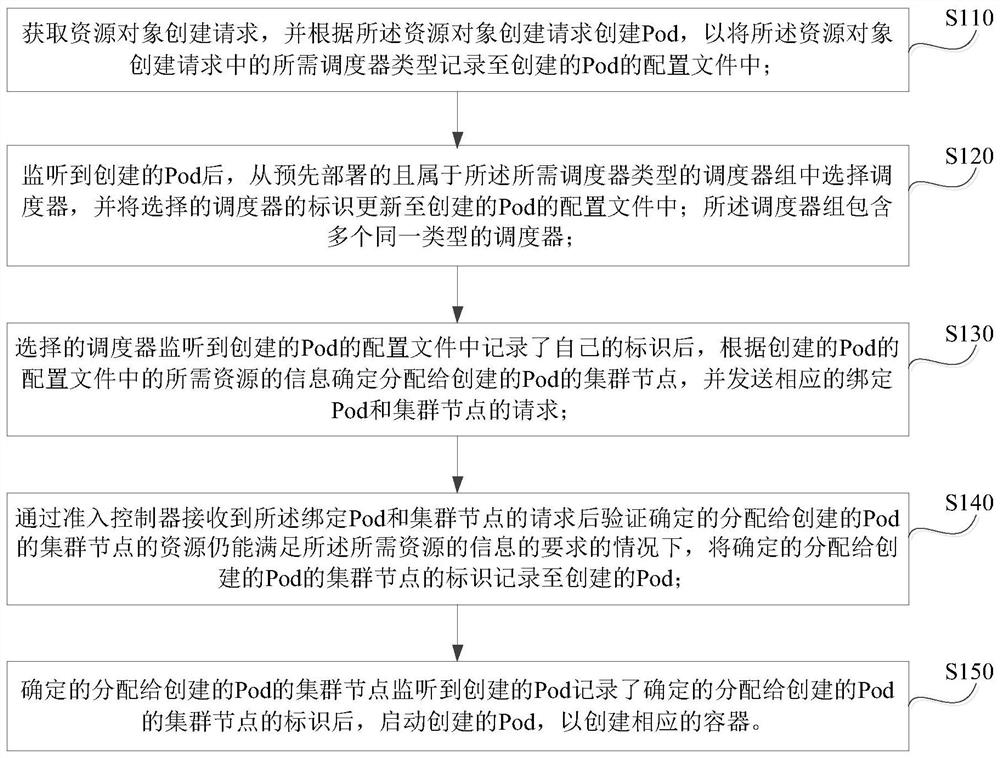

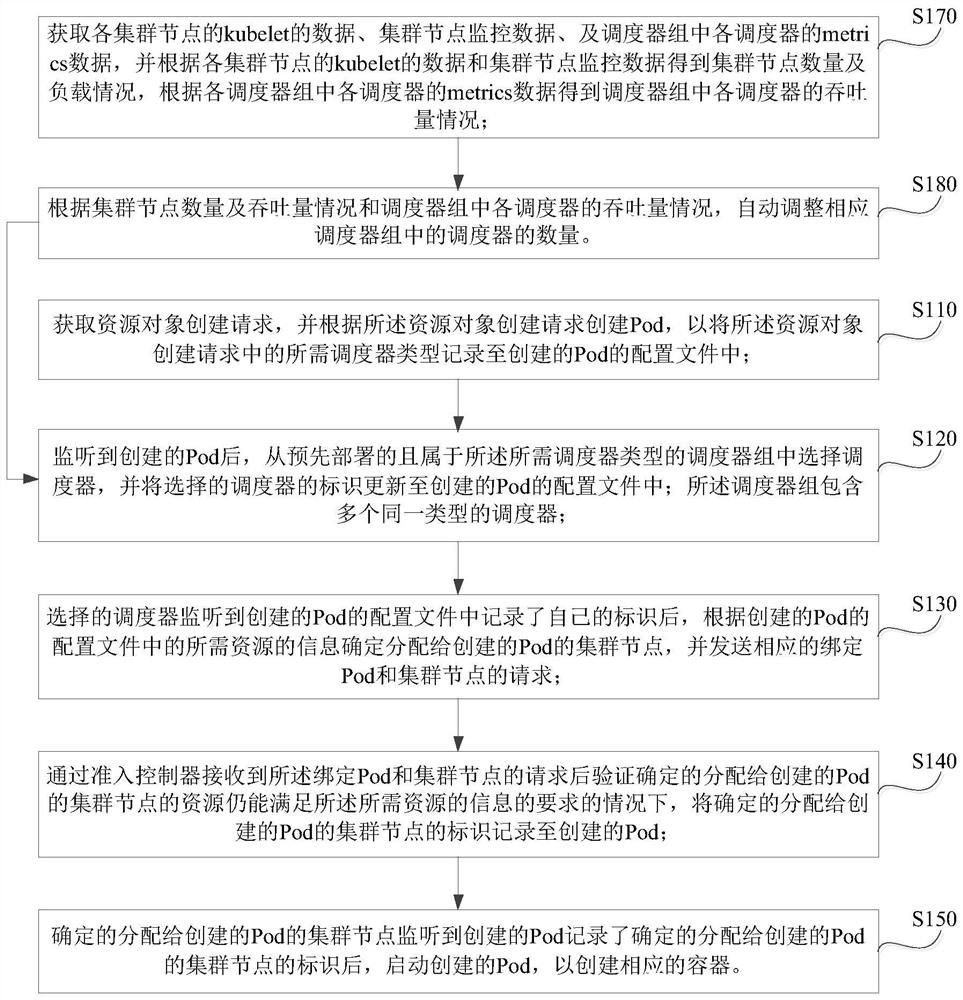

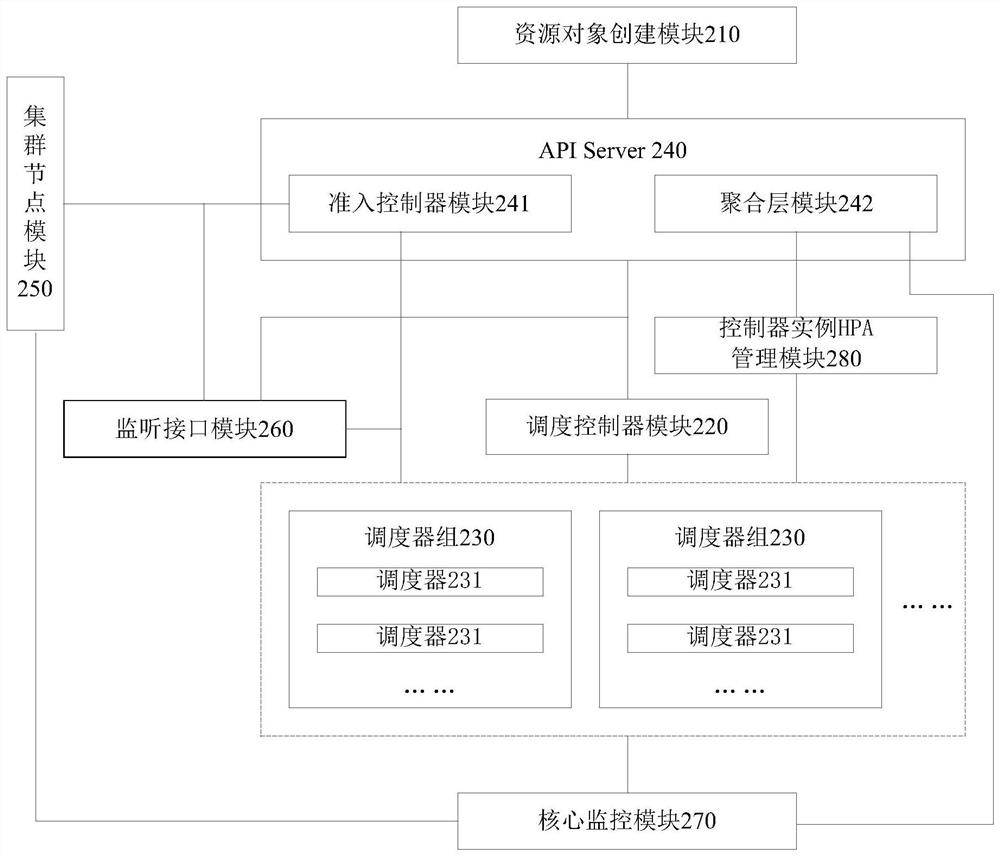

Cloud resource scheduling method and system based on Kubernetes

Pending | CN113918270A | Meeting throughput needs | Program initiation/switching | Resource allocation | Parallel computing | Engineering

The invention provides a cloud resource scheduling method and system based on Kubernetes. The method comprises the following steps: creating a Pod according to a resource object creation request, and recording the required scheduler type from the request in the configuration file of the created Pod; after the created Pod is detected, selecting a scheduler from a pre-deployed scheduler group of the required type and writing the identifier of the selected scheduler into the configuration file of the Pod, wherein the scheduler group comprises a plurality of schedulers of the same type; after the selected scheduler detects its own identifier, determining a cluster node to allocate to the Pod and sending a binding request; after the binding request is received through an access controller, verifying that the node's resources can still satisfy the required resources, and recording the identifier of the cluster node in the Pod; and after the corresponding cluster node detects that the Pod records its identifier, starting the Pod and creating the corresponding container. With this scheme, more schedulers can schedule cloud resources in parallel, and resource scheduling efficiency is improved.

Owner:电科云(北京)科技有限公司
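
The steps in this abstract can be modelled with a small in-memory simulation. Nothing below calls the real Kubernetes API: `Cluster`, `SchedulerGroup`, the round-robin choice of scheduler and the "most free CPU first" node choice are hypothetical stand-ins, and only the order of the steps follows the abstract.

```python
import itertools


class Cluster:
    def __init__(self, node_capacity):
        self.free = dict(node_capacity)  # node name -> free CPU units

    def bind(self, pod, node):
        # Admission-style check at bind time: another scheduler working in
        # parallel may already have consumed the resources.
        if self.free.get(node, 0) < pod["cpu"]:
            return False
        self.free[node] -= pod["cpu"]
        pod["node"] = node
        return True


class SchedulerGroup:
    def __init__(self, scheduler_type, names):
        self.scheduler_type = scheduler_type
        self._cycle = itertools.cycle(names)  # assumed selection policy

    def pick(self):
        return next(self._cycle)


def create_pod(name, cpu, scheduler_type):
    # The required scheduler type is recorded on the Pod at creation.
    return {"name": name, "cpu": cpu, "scheduler_type": scheduler_type,
            "scheduler": None, "node": None}


def assign_scheduler(pod, groups):
    # Write the chosen scheduler's identifier into the Pod record.
    pod["scheduler"] = groups[pod["scheduler_type"]].pick()


def schedule(pod, cluster):
    # The selected scheduler proposes nodes and asks for a binding.
    for node in sorted(cluster.free, key=cluster.free.get, reverse=True):
        if cluster.bind(pod, node):
            return node
    return None
```

Re-verifying resources at bind time is what makes it safe for several schedulers of the same type to propose nodes concurrently.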

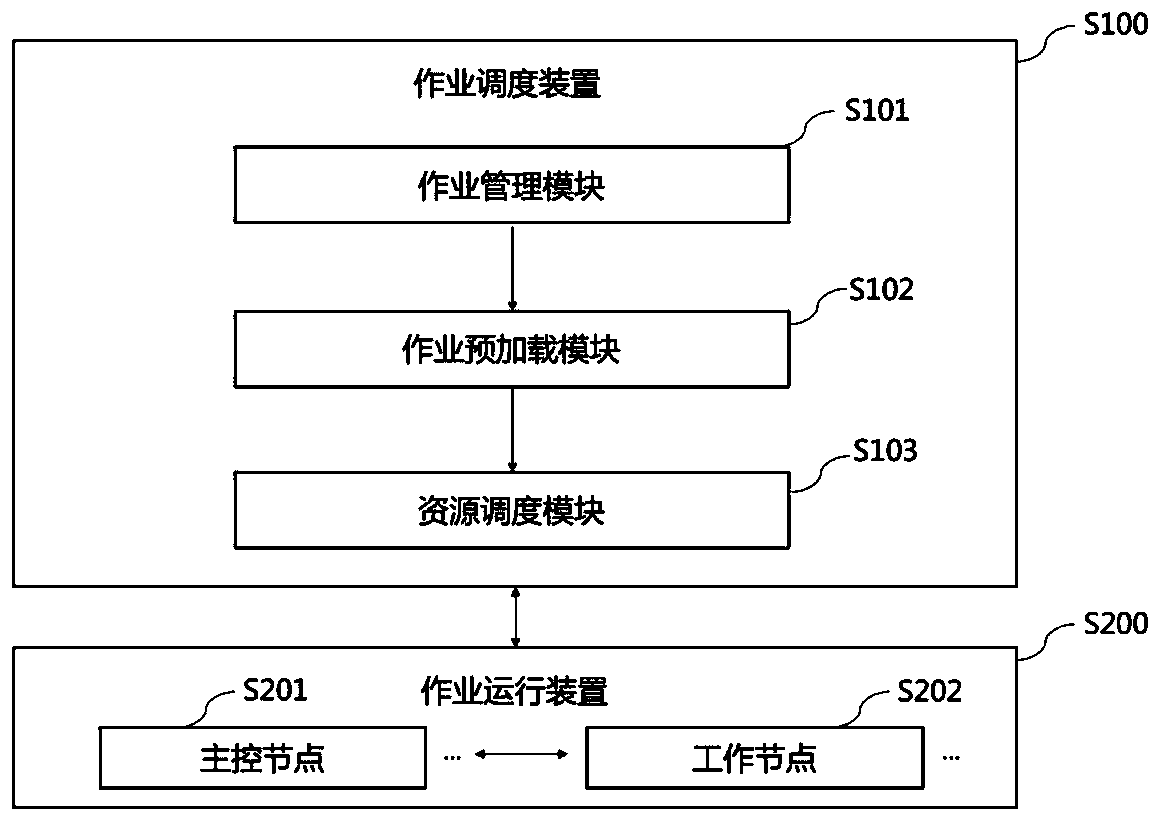

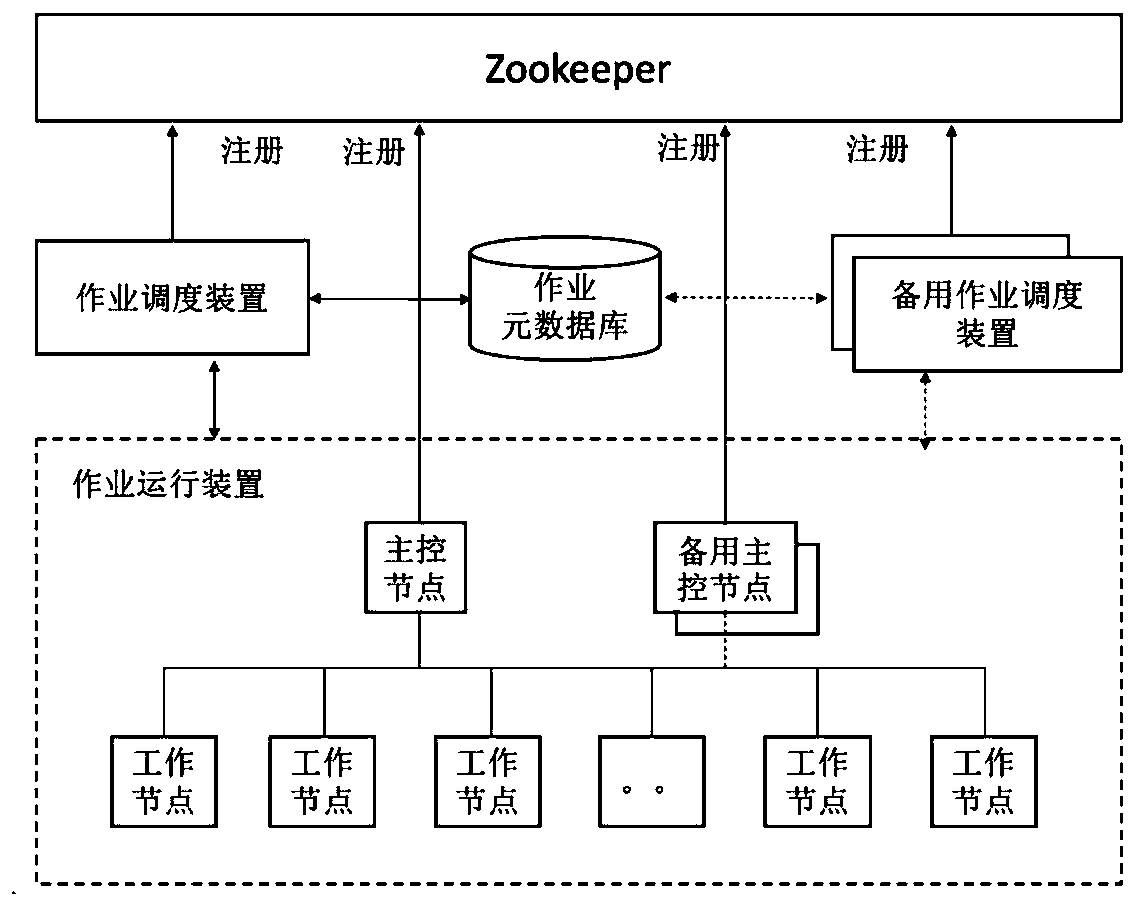

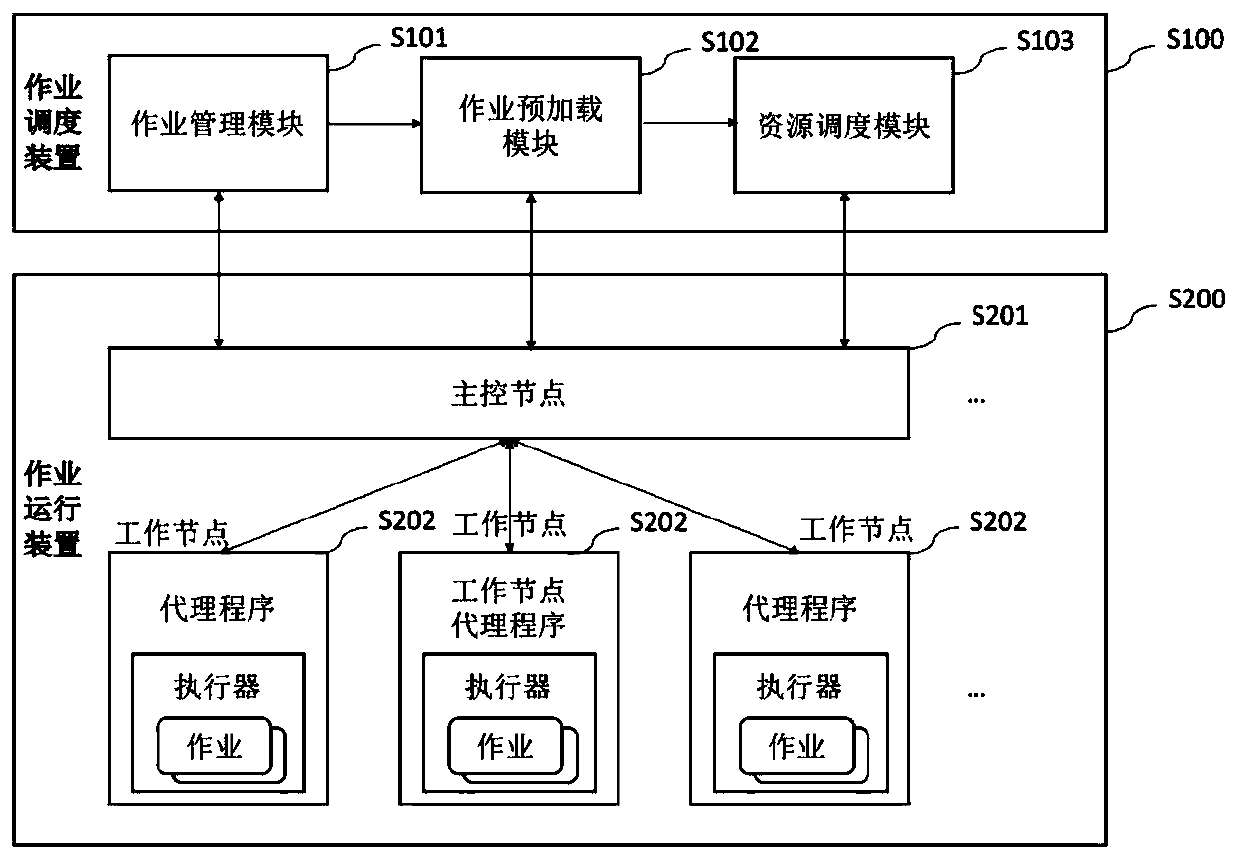

Distributed data integration job scheduling method and device

Active | CN110362390A | Ensure consistency | Lower latency | Program initiation/switching | Database distribution/replication | Fault tolerance | Concurrency control

The invention relates to a distributed data integration job scheduling method and device. The method targets special scenarios that data integration may face. A job scheduling device is responsible for issuing data integration jobs to the job operation device; the job operation device receives the scheduling task, starts job execution, feeds back job operation state information to the job management module, feeds back working node computing resources to the resource scheduling module, and feeds back loss or fault information to the job preloading module. The method has the following characteristics: (1) high availability, fault tolerance and weak consistency; (2) low latency for quasi-real-time job scheduling; (3) multi-tenant concurrency control oriented to cloud service applications; (4) computing resource isolation and multi-job parallel scheduling; and (5) a priority scheduling mechanism.

Owner:ENJOYOR COMPANY LIMITED
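
Two of the listed characteristics, multi-tenant concurrency control and priority scheduling, can be combined in a small queue. This is a sketch under assumptions: the per-tenant limit, the class name `JobScheduler` and its methods are hypothetical, and the abstract does not describe how the device actually implements either mechanism.

```python
import heapq
import itertools


class JobScheduler:
    def __init__(self, tenant_limit):
        self.tenant_limit = tenant_limit   # max concurrent jobs per tenant
        self._heap = []
        self._seq = itertools.count()      # tie-breaker keeps FIFO order
        self.running = {}                  # tenant -> running job count

    def submit(self, tenant, job, priority):
        # Higher priority values are served first (negated for the min-heap).
        heapq.heappush(self._heap, (-priority, next(self._seq), tenant, job))

    def next_job(self):
        # Pop the highest-priority job whose tenant is under its limit;
        # jobs of saturated tenants are put back untouched.
        deferred, picked = [], None
        while self._heap:
            entry = heapq.heappop(self._heap)
            tenant = entry[2]
            if self.running.get(tenant, 0) < self.tenant_limit:
                self.running[tenant] = self.running.get(tenant, 0) + 1
                picked = entry
                break
            deferred.append(entry)
        for entry in deferred:
            heapq.heappush(self._heap, entry)
        return None if picked is None else (picked[2], picked[3])

    def finish(self, tenant):
        # Called when one of the tenant's jobs completes.
        self.running[tenant] -= 1
```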

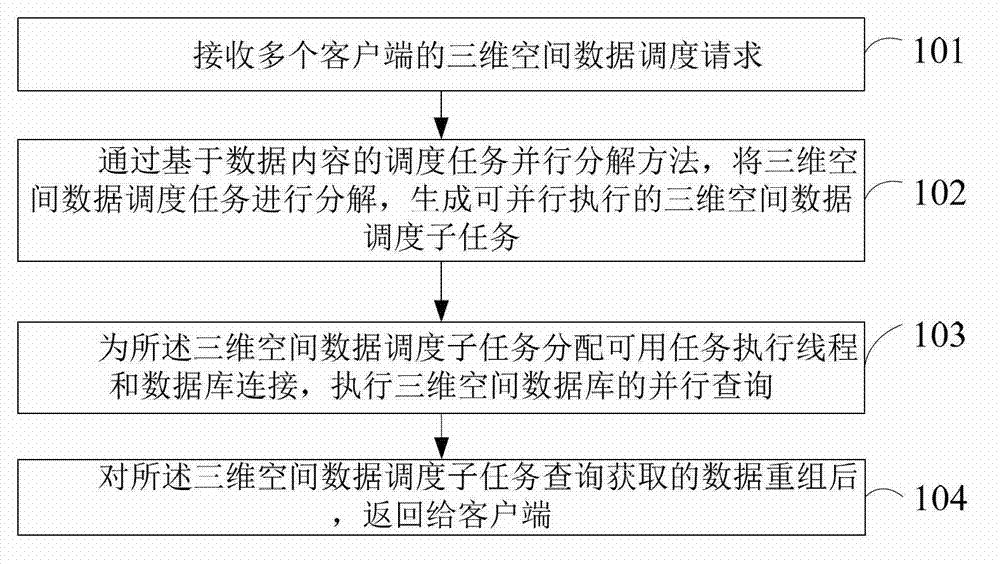

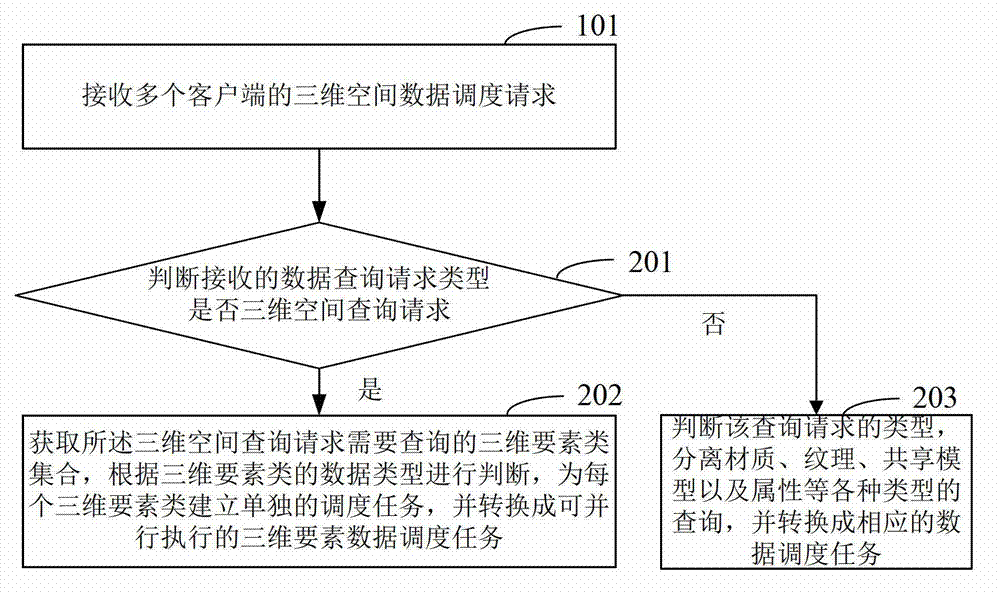

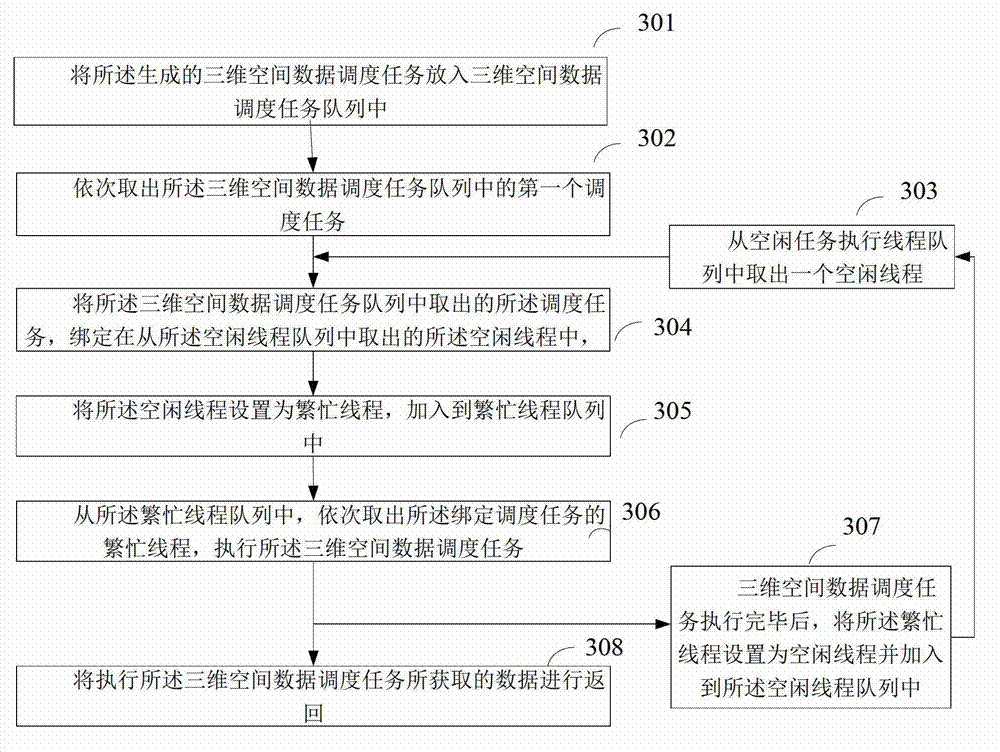

Three-dimensional space data parallel scheduling method and system

Active | CN103077074A | Improve scheduling efficiency | Improved ability to visualize real-time | Multiprogramming arrangements | Special data processing applications | Three-dimensional space | Spatial information systems

The invention relates to the technical field of geospatial information systems and provides a three-dimensional space data parallel scheduling method based on data content. The method comprises the steps of: receiving three-dimensional space data scheduling requests from a plurality of clients; decomposing each three-dimensional space data scheduling task, using a content-based parallel task decomposition method, into sub-tasks that can be executed in parallel; allocating an available task execution thread and a database connection to each sub-task and querying the three-dimensional space database in parallel; and recombining the data returned by the sub-task queries and returning it to the clients. By decomposing the scheduling task and executing the sub-tasks in parallel, the method improves the efficiency of scheduling different types of three-dimensional space data from the database and the real-time visualization ability of a three-dimensional geographic information system (GIS).

Owner:SHENZHEN INST OF ADVANCED TECH
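
The decompose, query-in-parallel and recombine steps can be sketched as follows. The example assumes that "data content" means the data type of each requested item, uses an in-memory dictionary in place of the spatial database, and models database connections as tokens in a queue; `FAKE_DB`, `decompose`, `run_subtask` and `schedule_request` are hypothetical names.

```python
import queue
from concurrent.futures import ThreadPoolExecutor

# Stand-in for a three-dimensional space database, keyed by data type.
FAKE_DB = {
    "terrain": {"t1": "terrain-tile-1", "t2": "terrain-tile-2"},
    "model": {"m1": "building-1"},
}


def decompose(request):
    # One sub-task per data type that actually has items requested.
    return [(dtype, ids) for dtype, ids in request.items() if ids]


def run_subtask(subtask, connections):
    dtype, ids = subtask
    conn = connections.get()          # block until a connection is free
    try:
        return dtype, [conn[dtype][i] for i in ids]
    finally:
        connections.put(conn)         # return the connection to the pool


def schedule_request(request, pool_size=2):
    connections = queue.Queue()
    for _ in range(pool_size):
        connections.put(FAKE_DB)      # each token models one DB connection
    subtasks = decompose(request)
    with ThreadPoolExecutor(max_workers=pool_size) as pool:
        parts = pool.map(lambda s: run_subtask(s, connections), subtasks)
        # Recombine the sub-task results into one response for the client.
        return dict(parts)
```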

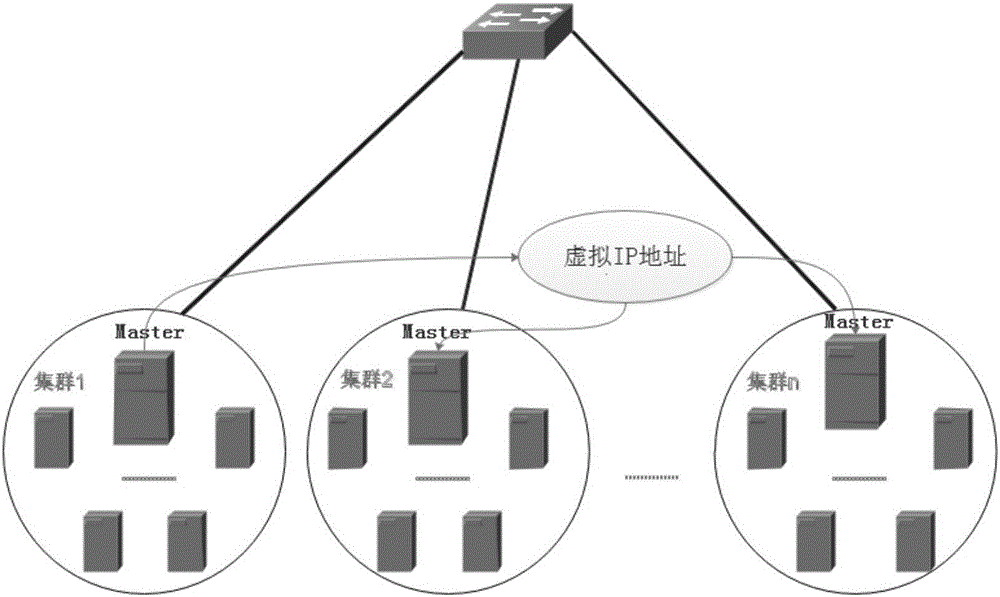

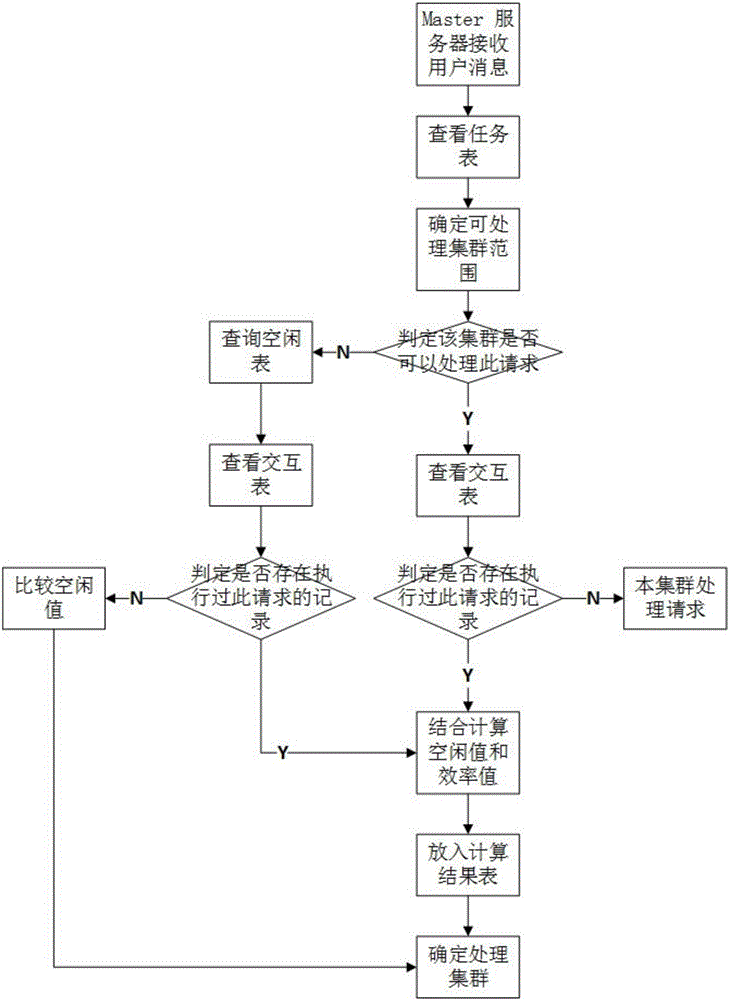

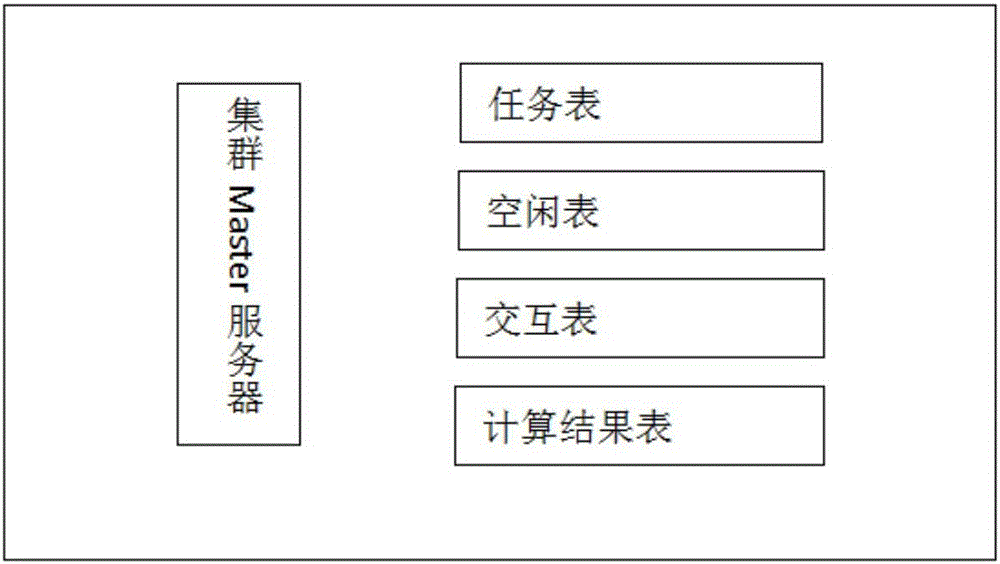

Parallel scheduling system and method for distributed cluster load balancing

The present invention discloses a parallel scheduling system and method for distributed cluster load balancing, relating to the technical field of load balancing scheduling, and characterized in that: by virtualizing an IP address to receive a user request message, master servers of all clusters exchange information with one another by sending a Hello packet, to select a cluster with the maximum idle value to bind the virtualized IP address, and the cluster sends a Statement packet to notify the whole network after binding; and a master server of the cluster looks up a task table and calculates data in an idle table and an interactive table according to a certain calculation method, to obtain an optimal response cluster, and pushes the request message to the optimal response cluster by sending a Push packet, so as to implement the load balancing in response to request messages among peer-to-peer clusters. The master server of the cluster is provided with four tables including a task table, an idle table, an interactive table and a calculation result table which are used for scheduling request messages, and four packets including a Hello packet, a Push packet, an Exchange packet and a Statement packet are used for maintaining relationships among the clusters.

Owner:XUZHOU MEDICAL UNIV
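
The two decisions in this abstract, which cluster binds the virtual IP and which cluster receives the pushed request, can be sketched as below. The abstract leaves the calculation method unspecified, so the scoring rule here (idle value minus pending tasks) is an assumed stand-in, and the function names and dictionary layout are hypothetical.

```python
def collect_hello(clusters):
    # Result of the Hello exchange: every master knows each cluster's
    # idle value.
    return {name: info["idle"] for name, info in clusters.items()}


def elect_vip_holder(idle_table):
    # The cluster with the maximum idle value binds the virtual IP.
    # Sorting first makes ties resolve to the alphabetically first name.
    return max(sorted(idle_table), key=idle_table.get)


def pick_response_cluster(clusters):
    # Assumed scoring rule: prefer high idle value and few pending tasks.
    def score(name):
        return clusters[name]["idle"] - clusters[name]["pending"]
    return max(sorted(clusters), key=score)


def handle_request(clusters):
    idle_table = collect_hello(clusters)
    holder = elect_vip_holder(idle_table)      # receives the user request
    target = pick_response_cluster(clusters)   # computed by the holder
    clusters[target]["pending"] += 1           # models the Push packet
    return holder, target
```

Keeping the virtual-IP election separate from the response-cluster choice mirrors the abstract, where the receiving cluster is not necessarily the one that serves the request.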


© 2025 PatSnap. All rights reserved.