50 results about how to "lower memory access latency" (patented technology)

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

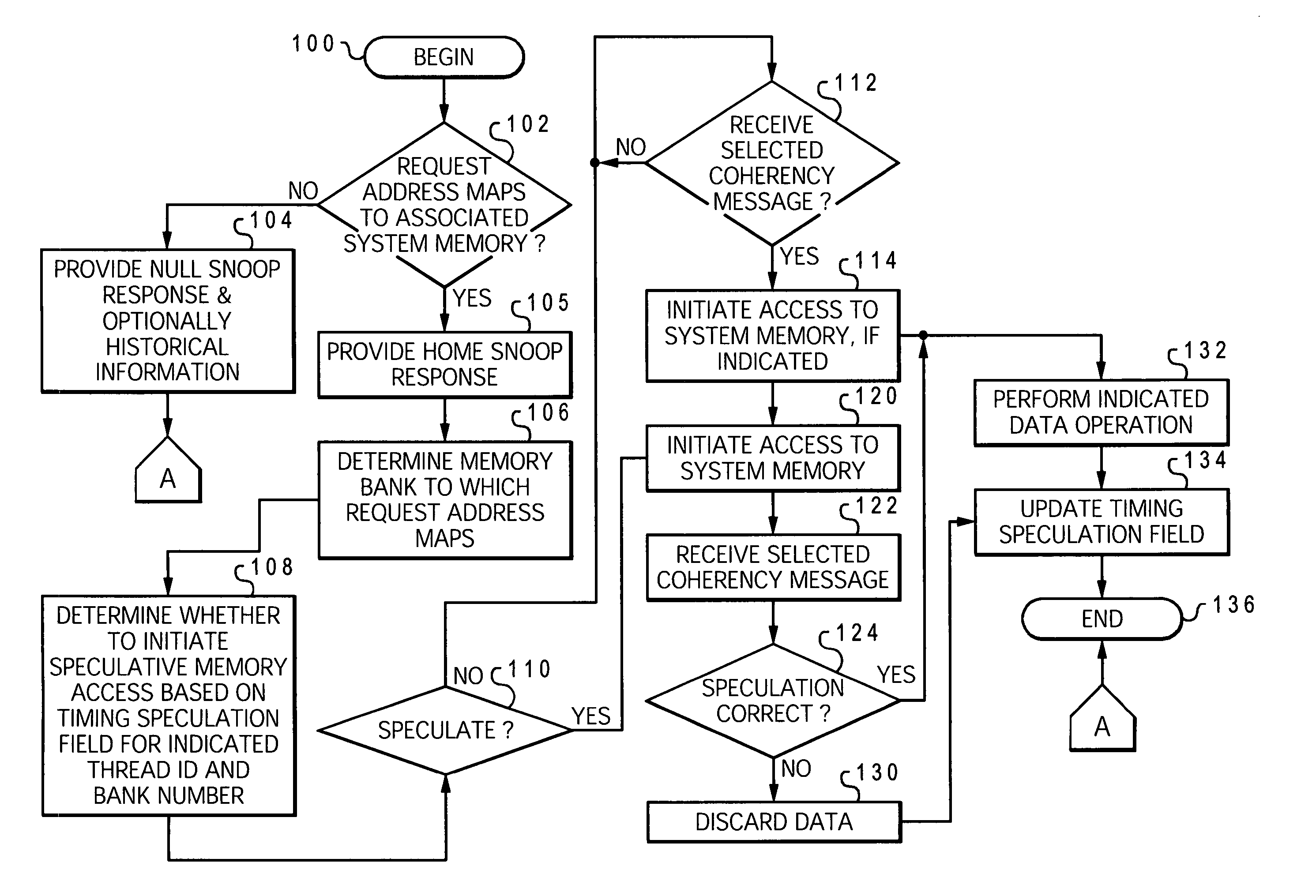

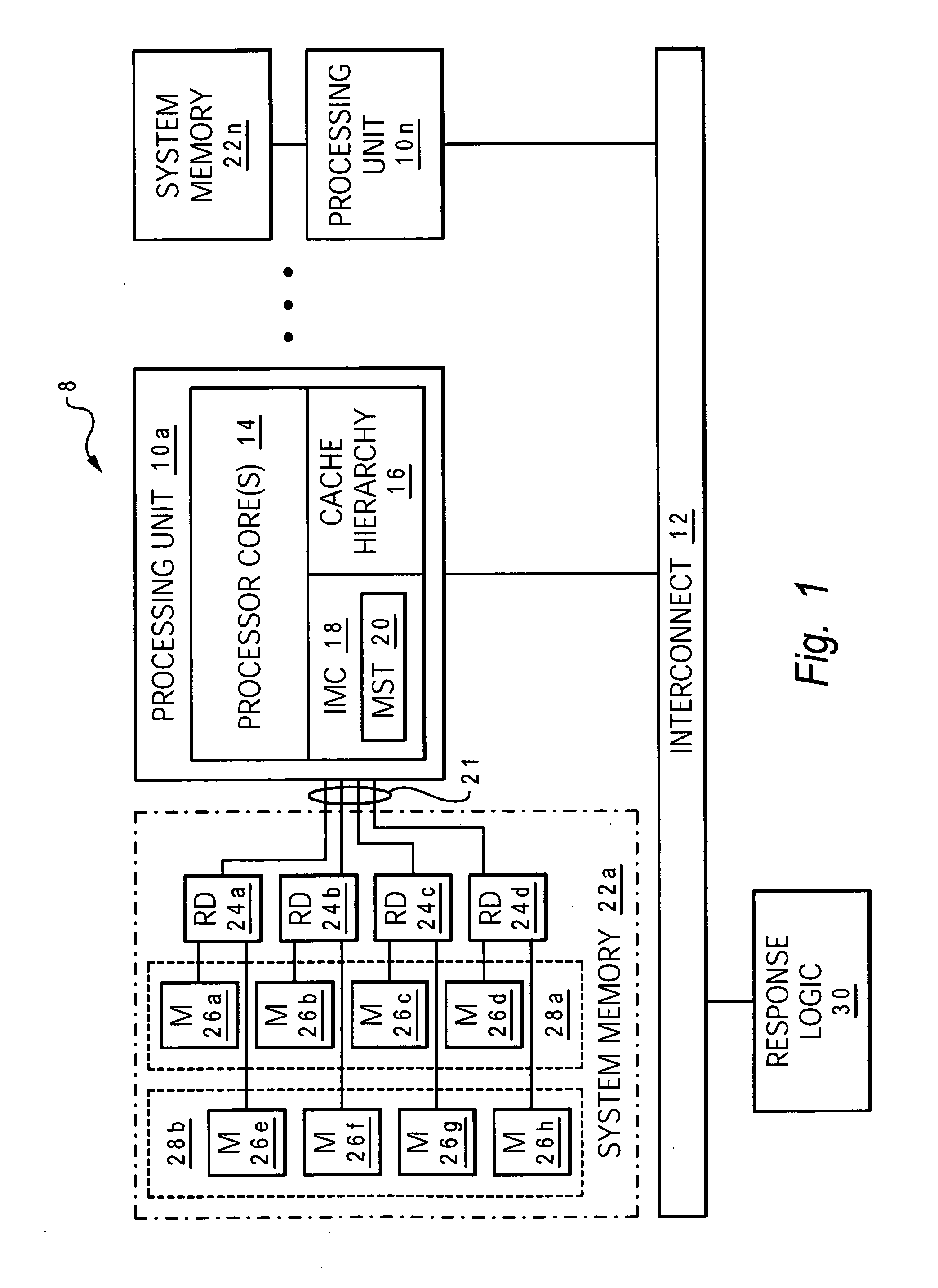

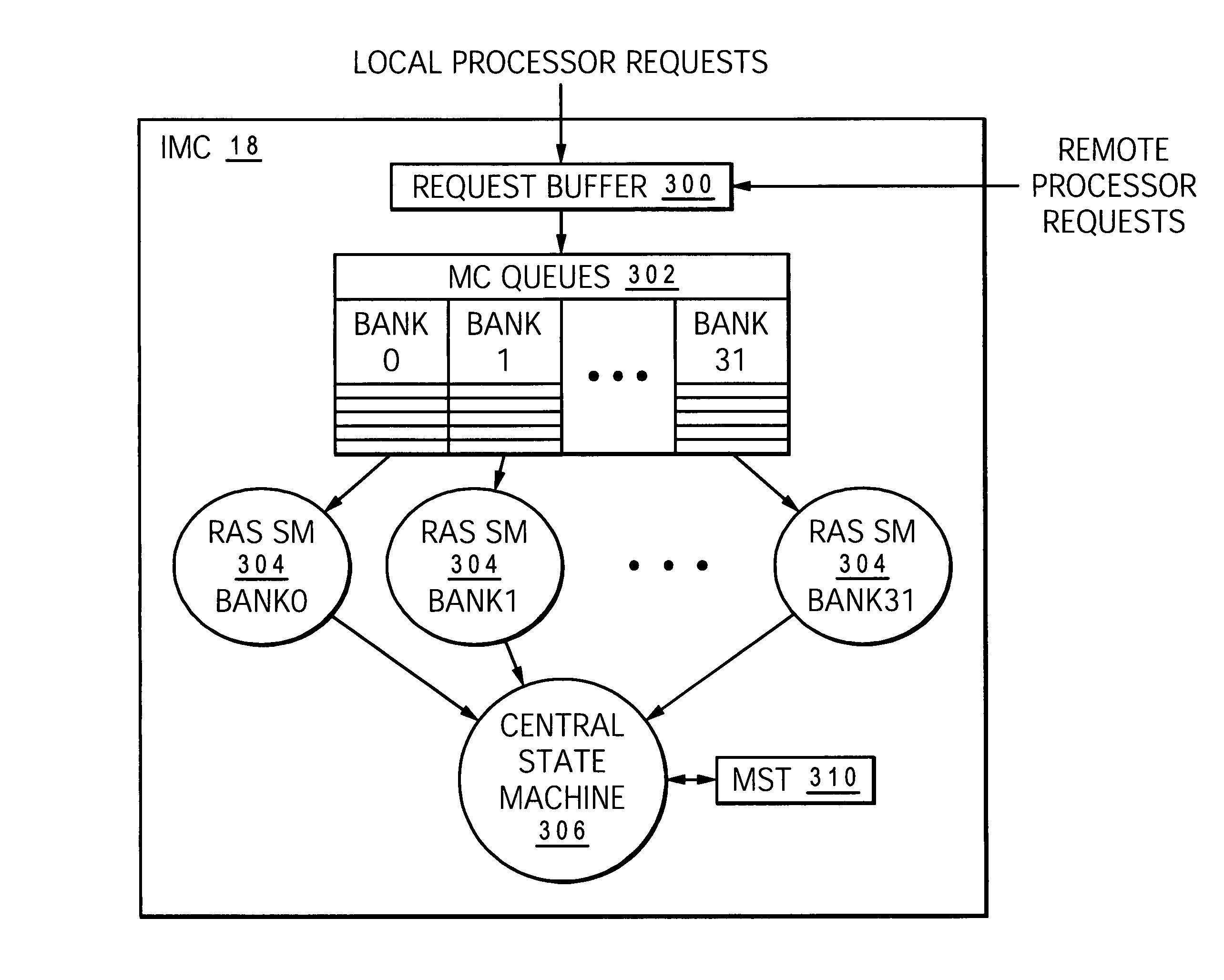

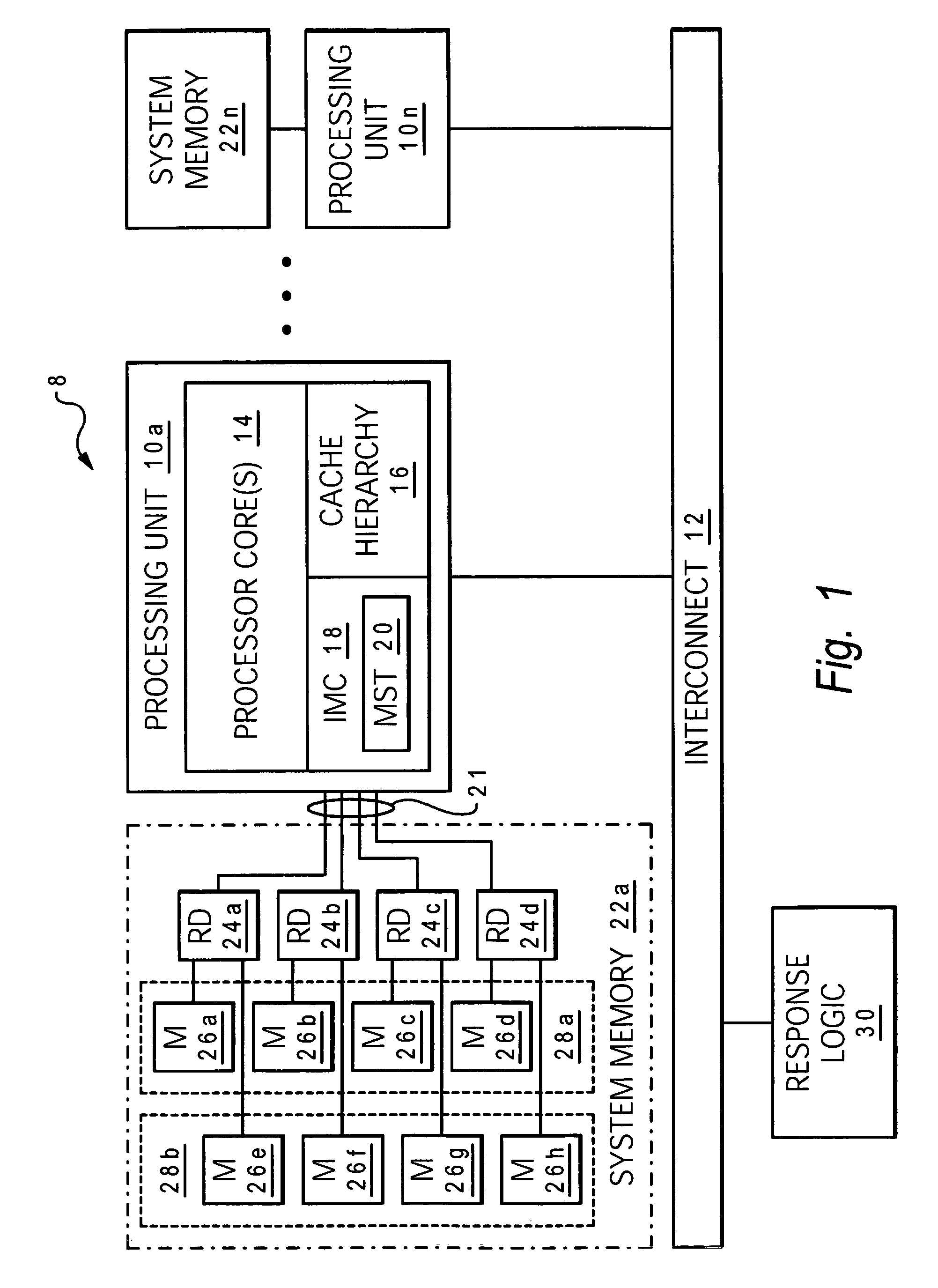

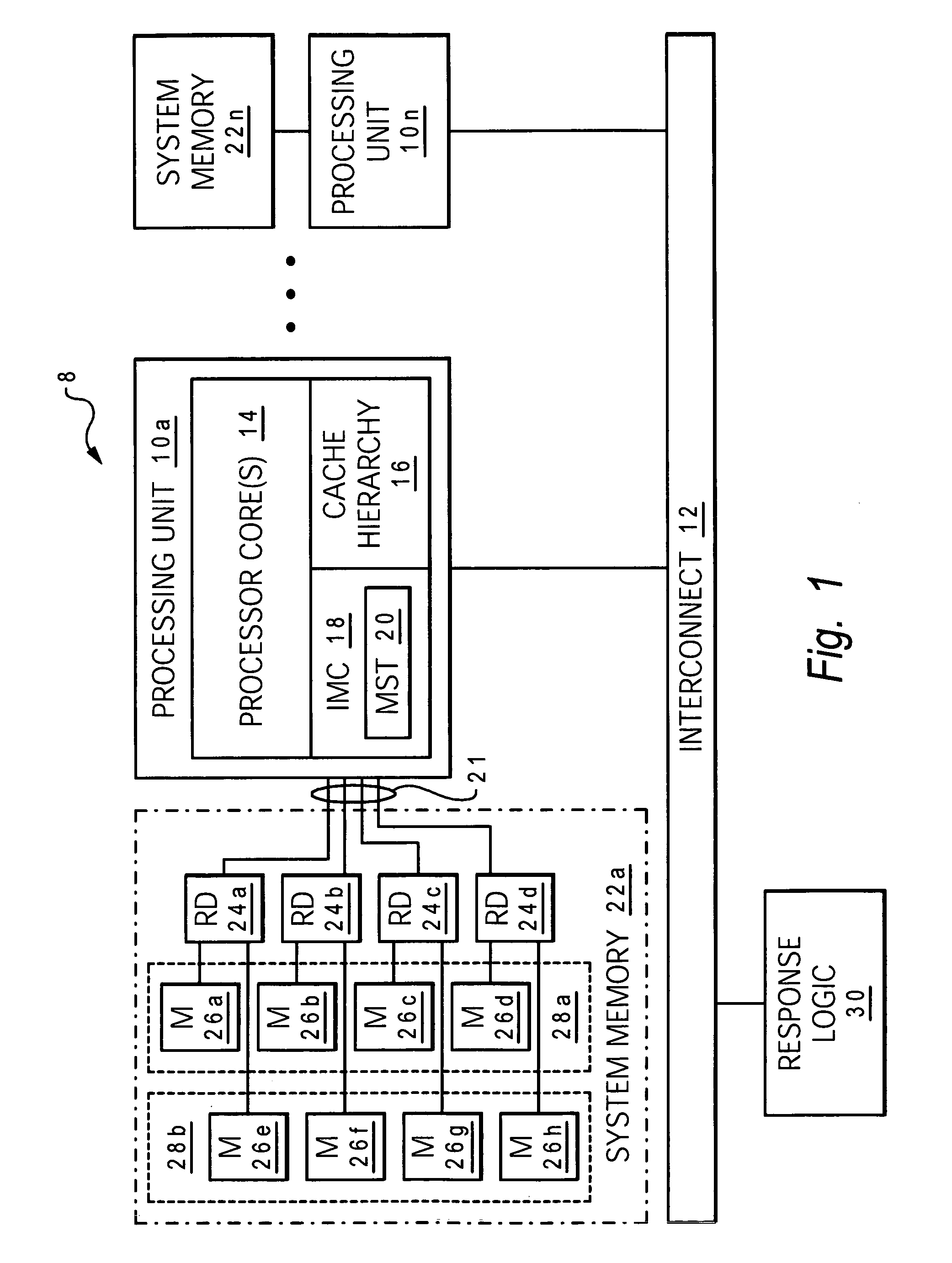

Method and system for thread-based memory speculation in a memory subsystem of a data processing system

Inactive | US20050132148A1 | Improvement in average memory access latency | Significant comprehensive benefits | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Data processing system | Processing core

A data processing system includes a system memory, one or more processing cores, and a memory controller that controls access to the system memory. The memory controller includes a memory speculation mechanism that stores historical information regarding prior memory accesses. In response to a memory access request, the memory controller speculatively initiates access to the system memory based upon the historical information in the memory speculation mechanism, in advance of receipt of a coherency message indicating that the memory access request is to be serviced by reference to the system memory.

Owner: IBM CORP
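
This is a minimal sketch of thread-based speculation. It assumes the history is kept as one 2-bit saturating counter per thread, although the abstract does not say how the history is encoded. `ThreadSpeculationTable`, `handle_request` and the `start_dram_read` callback are hypothetical names. The counter is trained once the coherency response reveals whether system memory actually supplied the data.

```python
from collections import defaultdict


class ThreadSpeculationTable:
    """Hypothetical per-thread history: one 2-bit saturating counter per thread."""

    def __init__(self) -> None:
        # Counters start at 0 ("memory rarely supplies this thread") and stay in 0..3.
        self.counters = defaultdict(int)

    def should_speculate(self, thread_id: int) -> bool:
        # Speculate when the counter sits in one of its two upper states.
        return self.counters[thread_id] >= 2

    def update(self, thread_id: int, serviced_by_memory: bool) -> None:
        # Train once the coherency response shows who actually supplied the data.
        c = self.counters[thread_id]
        self.counters[thread_id] = min(c + 1, 3) if serviced_by_memory else max(c - 1, 0)


def handle_request(table, thread_id, address, start_dram_read) -> bool:
    """Start the DRAM read early if history predicts memory will be the supplier."""
    speculate = table.should_speculate(thread_id)
    if speculate:
        start_dram_read(address)  # issued before the coherency message arrives
    return speculate
```

A saturating counter tolerates an occasional misprediction before flipping its decision. That limits wasted speculative DRAM reads for threads whose data usually comes from caches.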

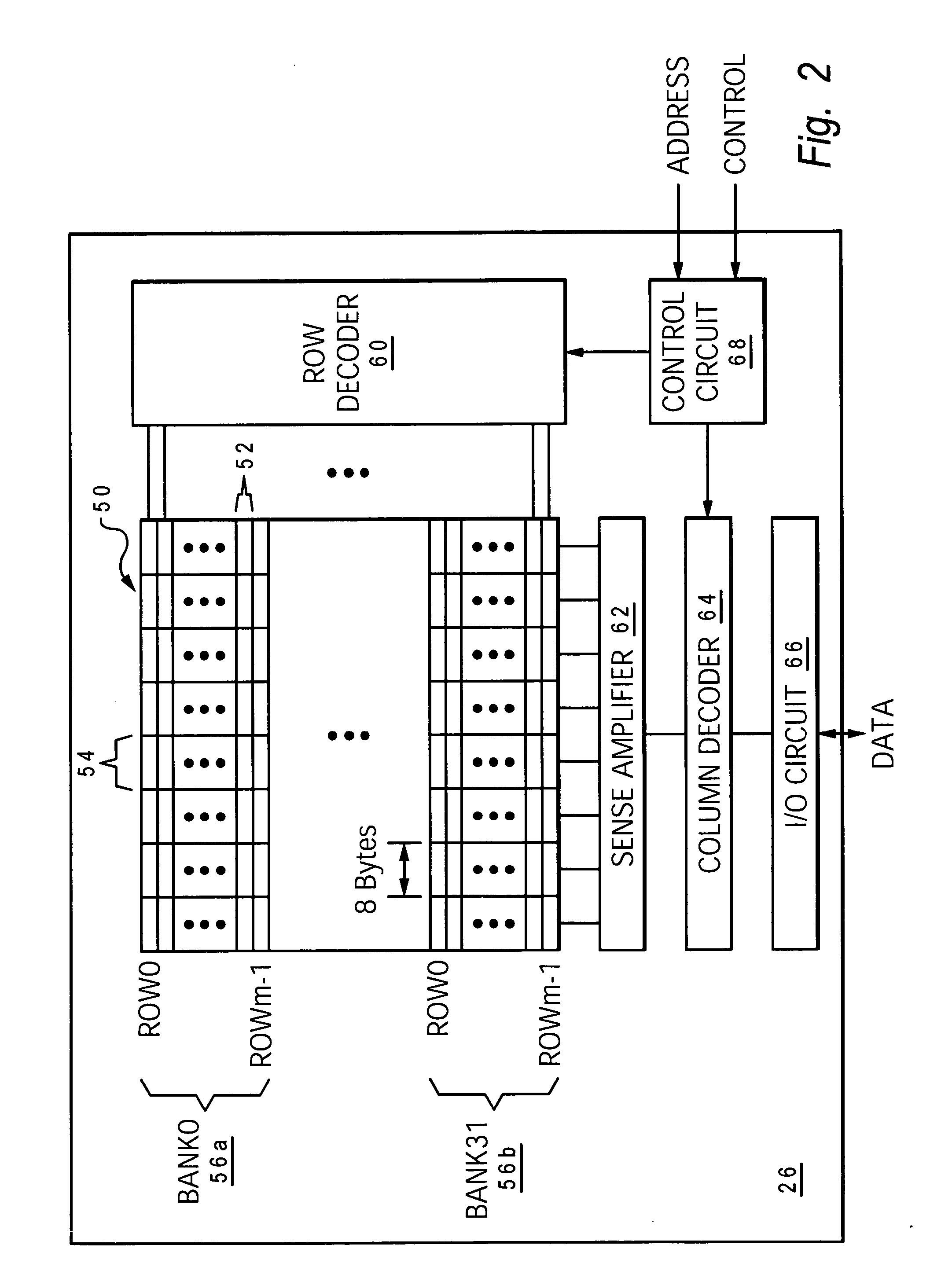

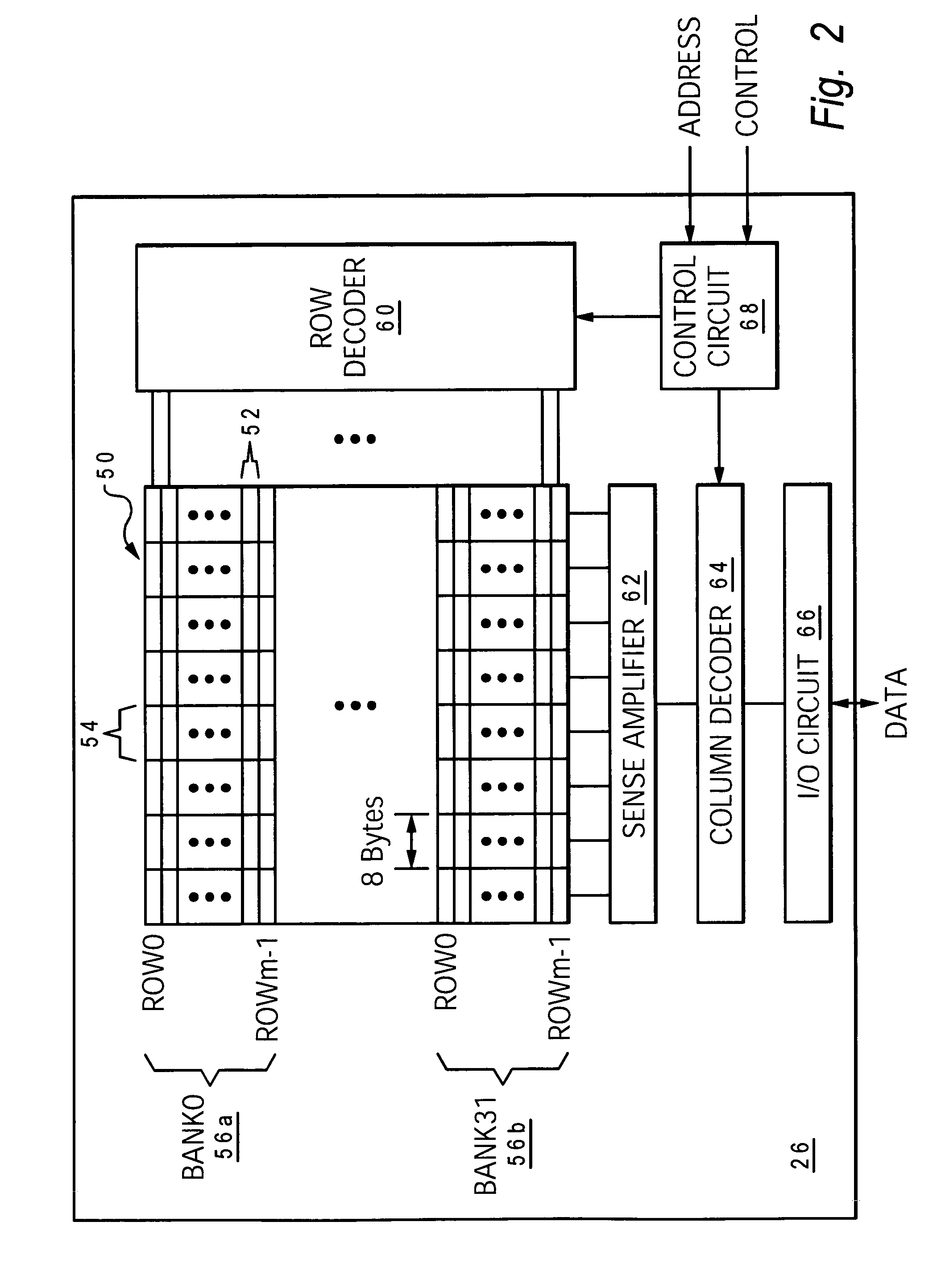

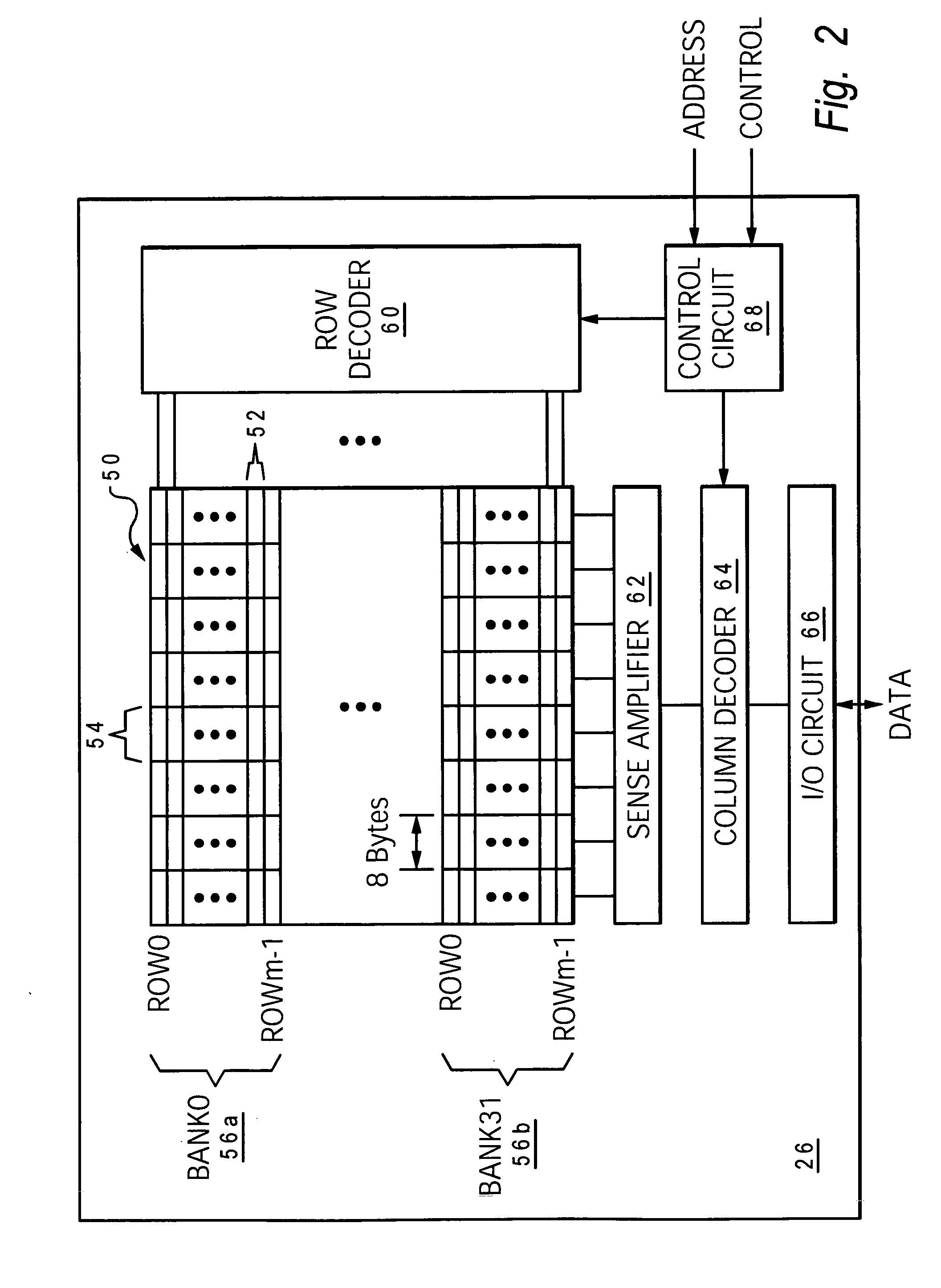

Method and system for supplier-based memory speculation in a memory subsystem of a data processing system

Inactive | US7130967B2 | Improvement in average memory access latency | Significant comprehensive benefits | Memory addressing/allocation/relocation | Concurrent instruction execution | Data processing system | Processing core

A data processing system includes one or more processing cores, a system memory having multiple rows of data storage, and a memory controller that controls access to the system memory and performs supplier-based memory speculation. The memory controller includes a memory speculation table that stores historical information regarding prior memory accesses. In response to a memory access request, the memory controller directs an access to a selected row in the system memory to service the memory access request. The memory controller speculatively directs that the selected row will continue to be energized following the access based upon the historical information in the memory speculation table, so that access latency of an immediately subsequent memory access is reduced.

Owner: INT BUSINESS MASCH CORP
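
This is a minimal sketch of supplier-based row speculation. It assumes one 2-bit saturating counter per bank, which learns whether consecutive accesses to that bank reuse the same row. The class and field names are hypothetical, and the real memory speculation table may hold richer history. A row is left energized (open) only when reuse has been common; otherwise it is treated as closed after the access.

```python
class RowSpeculationTable:
    """Hypothetical per-bank history deciding whether to leave a row open."""

    def __init__(self, num_banks: int) -> None:
        self.counters = [0] * num_banks     # 2-bit saturating counters, 0..3
        self.open_row = [None] * num_banks  # row left energized in each bank, if any
        self.last_row = [None] * num_banks  # row of the previous access to each bank

    def access(self, bank: int, row: int) -> bool:
        """Service an access; return True if it hit a row left open earlier."""
        hit = self.open_row[bank] == row
        # Train: did this access reuse the row touched by the previous access?
        if self.last_row[bank] is not None:
            reused = self.last_row[bank] == row
            c = self.counters[bank]
            self.counters[bank] = min(c + 1, 3) if reused else max(c - 1, 0)
        self.last_row[bank] = row
        # Speculate: keep the row energized only if reuse has been common.
        self.open_row[bank] = row if self.counters[bank] >= 2 else None
        return hit
```

In this sketch, repeated accesses to one row start hitting the open row after the counter has seen two reuses. A switch to a new row lowers the counter by only one step.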

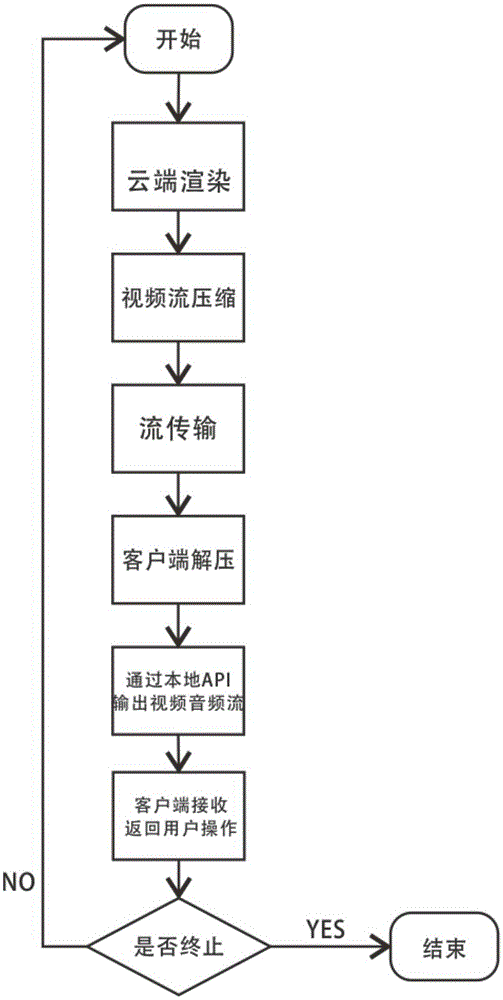

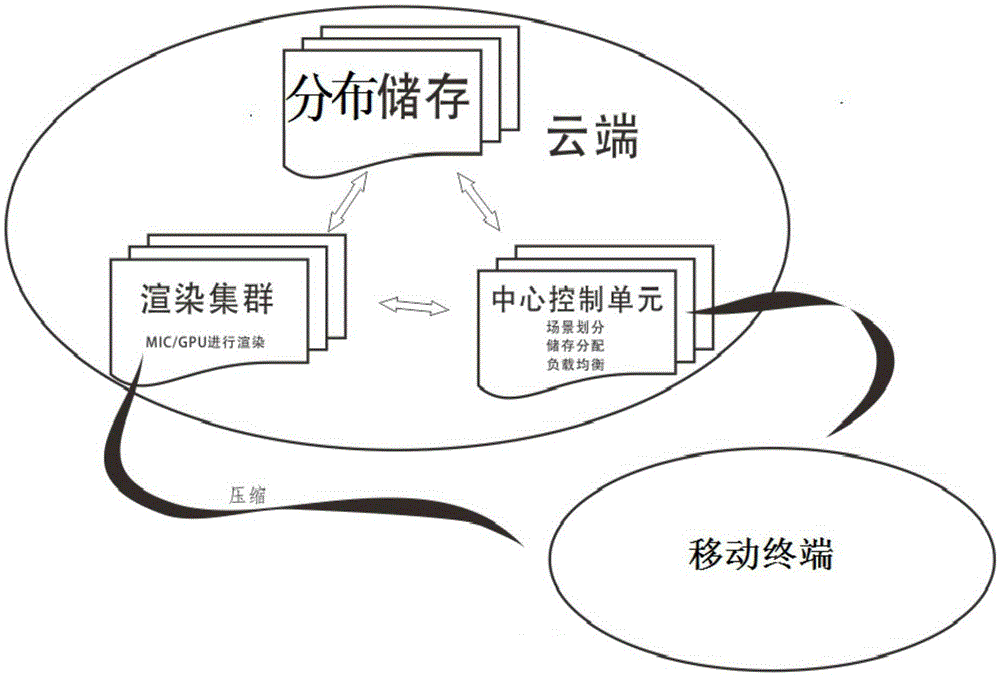

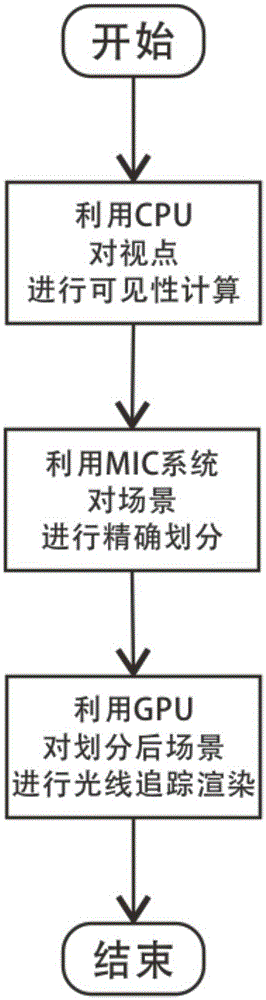

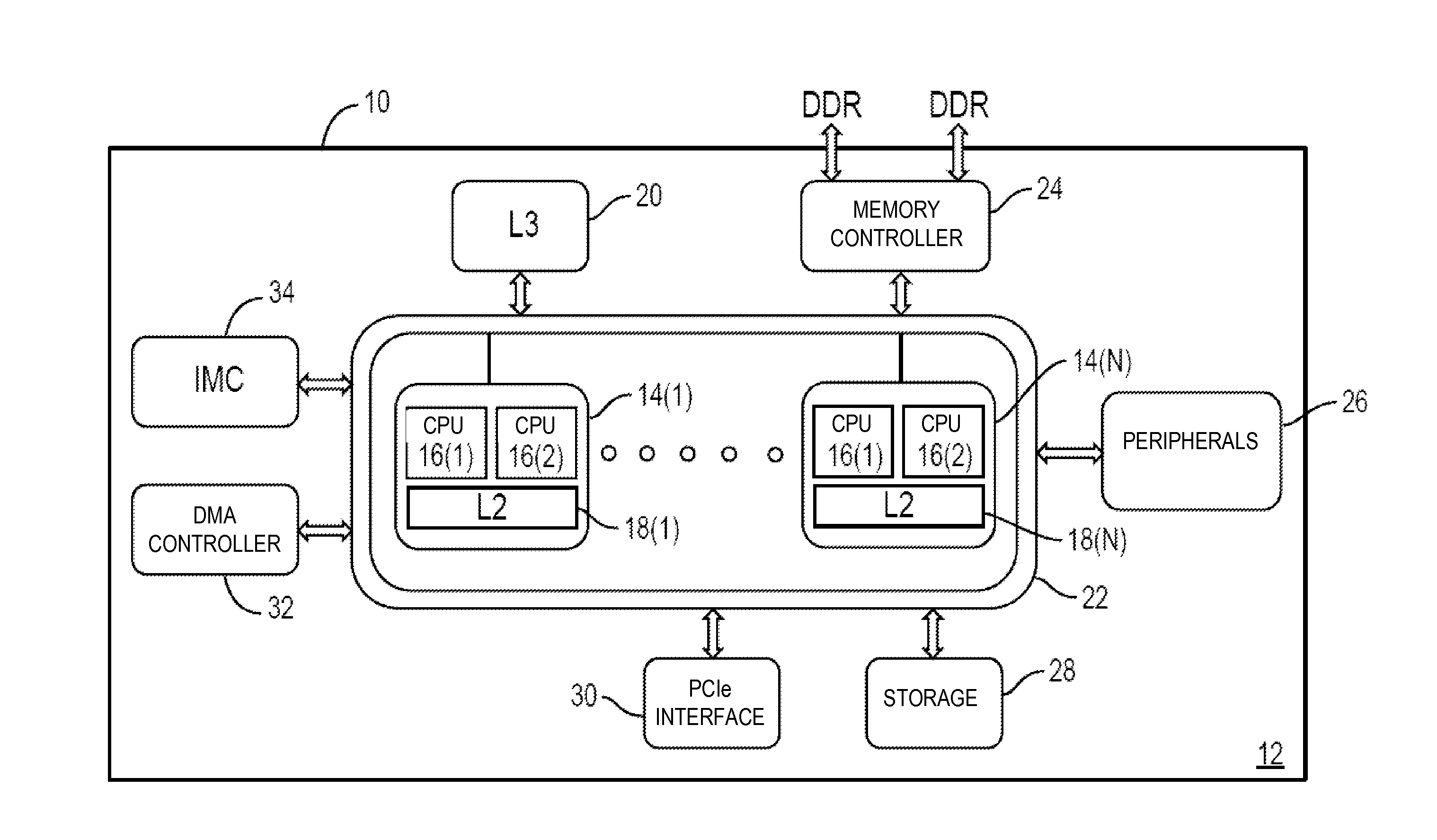

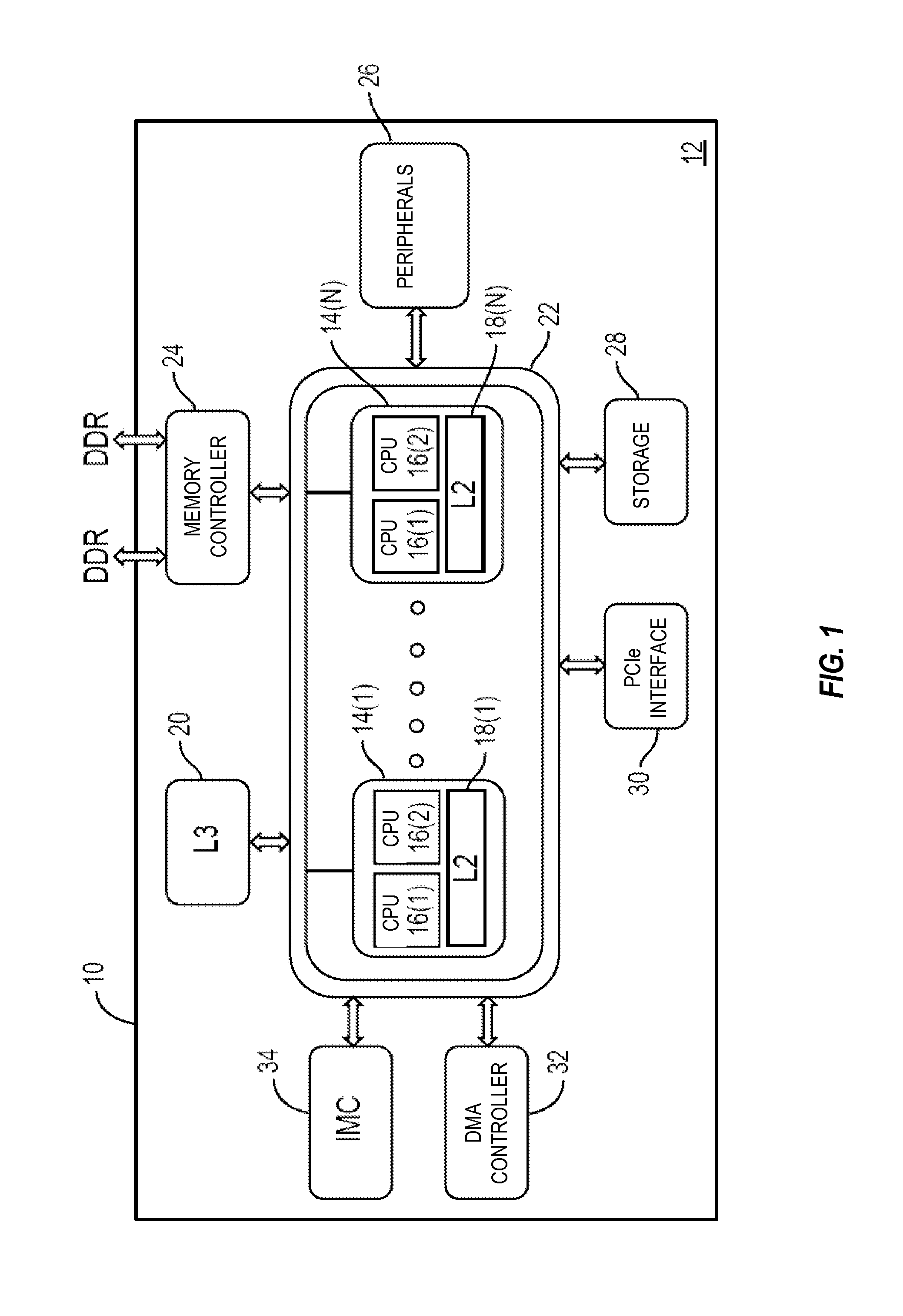

Mobile terminal real-time rendering system and method based on cloud platform

Active | CN105263050A | Lower memory access latency | Improve efficiency | Selective content distribution | Viewpoints | Computer graphics (images)

The invention discloses a cloud-platform-based real-time rendering system and method for mobile terminals. The method comprises the following steps:

- Receive the viewpoint and interaction information transmitted by a mobile terminal, query and read the model and scene files, and obtain three-dimensional scene data.
- Divide the scene data into three-dimensional scene model group data according to the types of model groups in the scene.
- Store the model group data, automatically adjusting its storage locations according to the different data demands of different scenes.
- Extract the model group data and build and manage MIC/GPU rendering tasks for the three-dimensional scene image. Compress the resulting rendering data and transmit it to the mobile terminal.
- Deploy and manage the MIC/GPU rendering tasks with a dynamic load strategy to keep the cloud servers load-balanced.

The method minimizes the consumption of the mobile client's computing capability and storage space.

Owner: SHANDONG UNIV

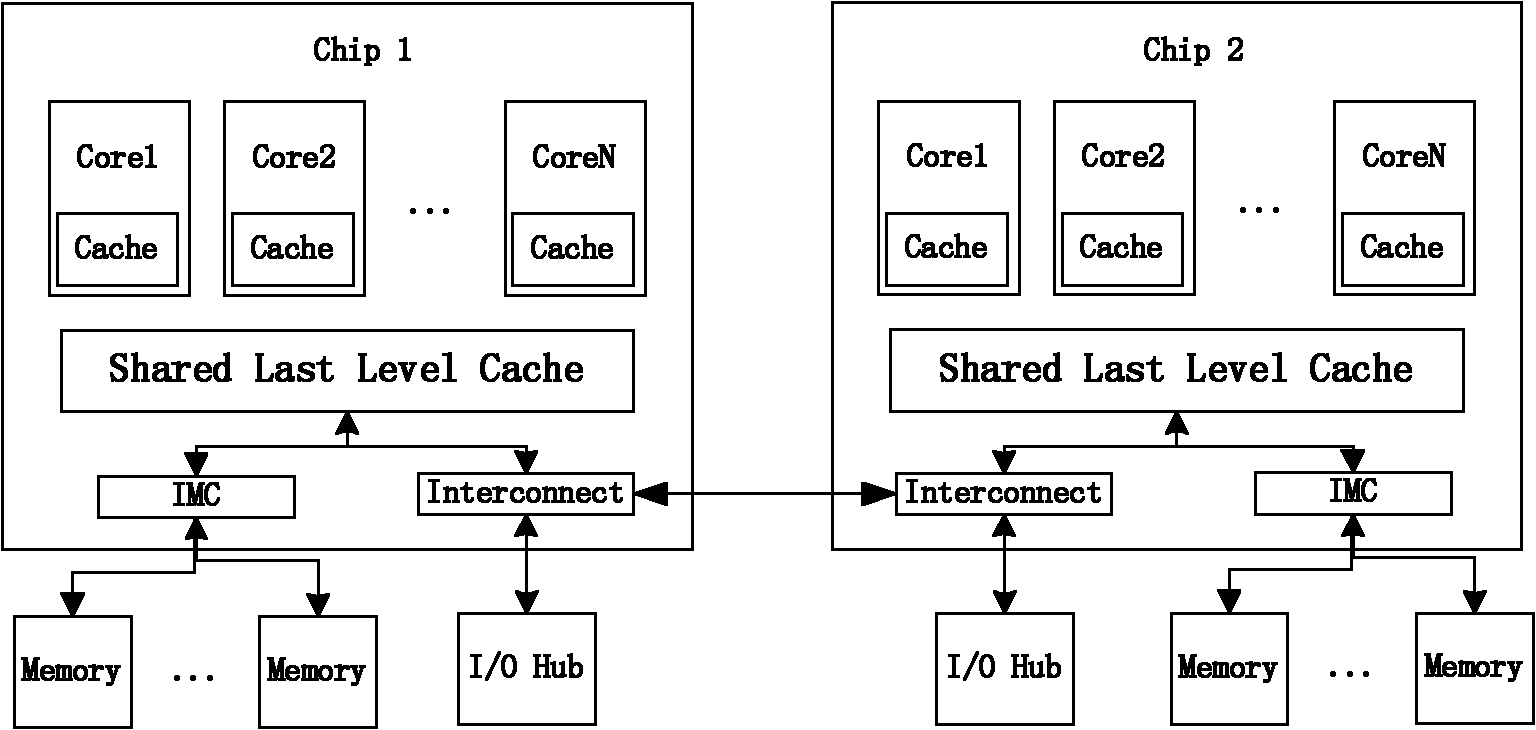

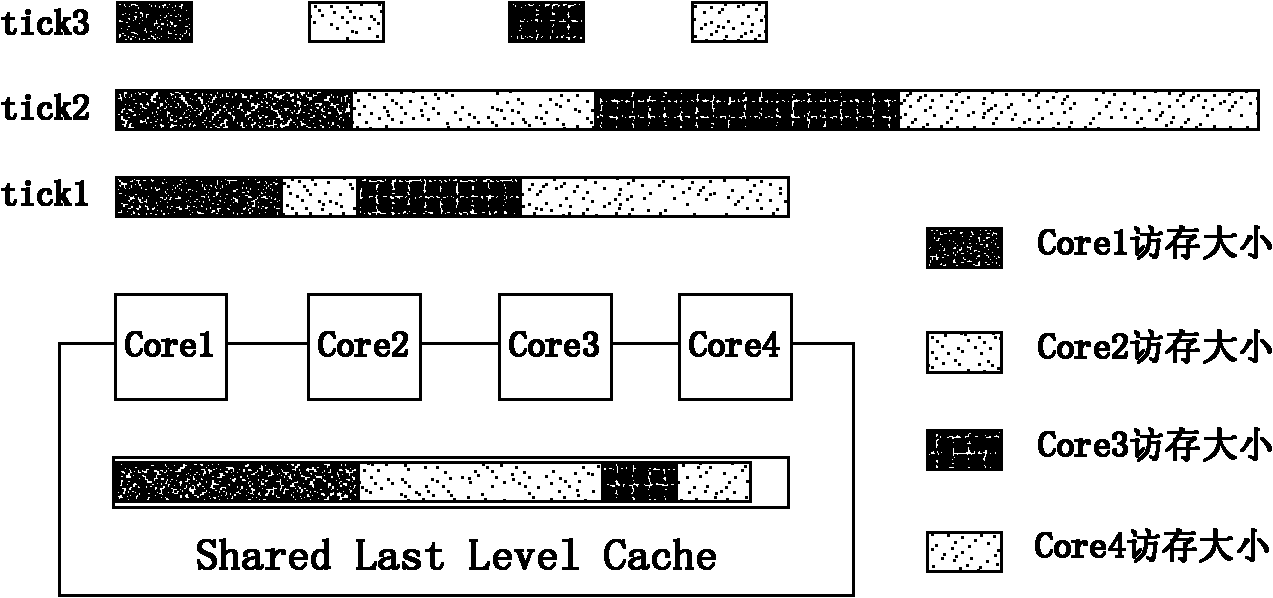

Micro-architecture sensitive thread scheduling (MSTS) method

Inactive | CN102081551A | Improve hit rate | Run fast | Multiprogramming arrangements | Operational system | Relevant information

The invention relates to a micro-architecture sensitive thread scheduling (MSTS) method that uses a two-level scheduling strategy:

- Within a node, cache-miss information is collected in real time according to the structural characteristics of the CMP. Threads that compete excessively for shared cache resources are staggered in time and space. Threads that can share data effectively run simultaneously or in succession.
- Between nodes, the memory regions each thread accesses frequently are sampled. Threads are then bound, as far as possible, to the nodes where their data reside, reducing remote memory accesses and inter-chip communication.

The method reduces the access delay caused by co-running multiple threads with heavy shared-cache contention, and it improves thread execution speed and system throughput.

Owner: NAT UNIV OF DEFENSE TECH

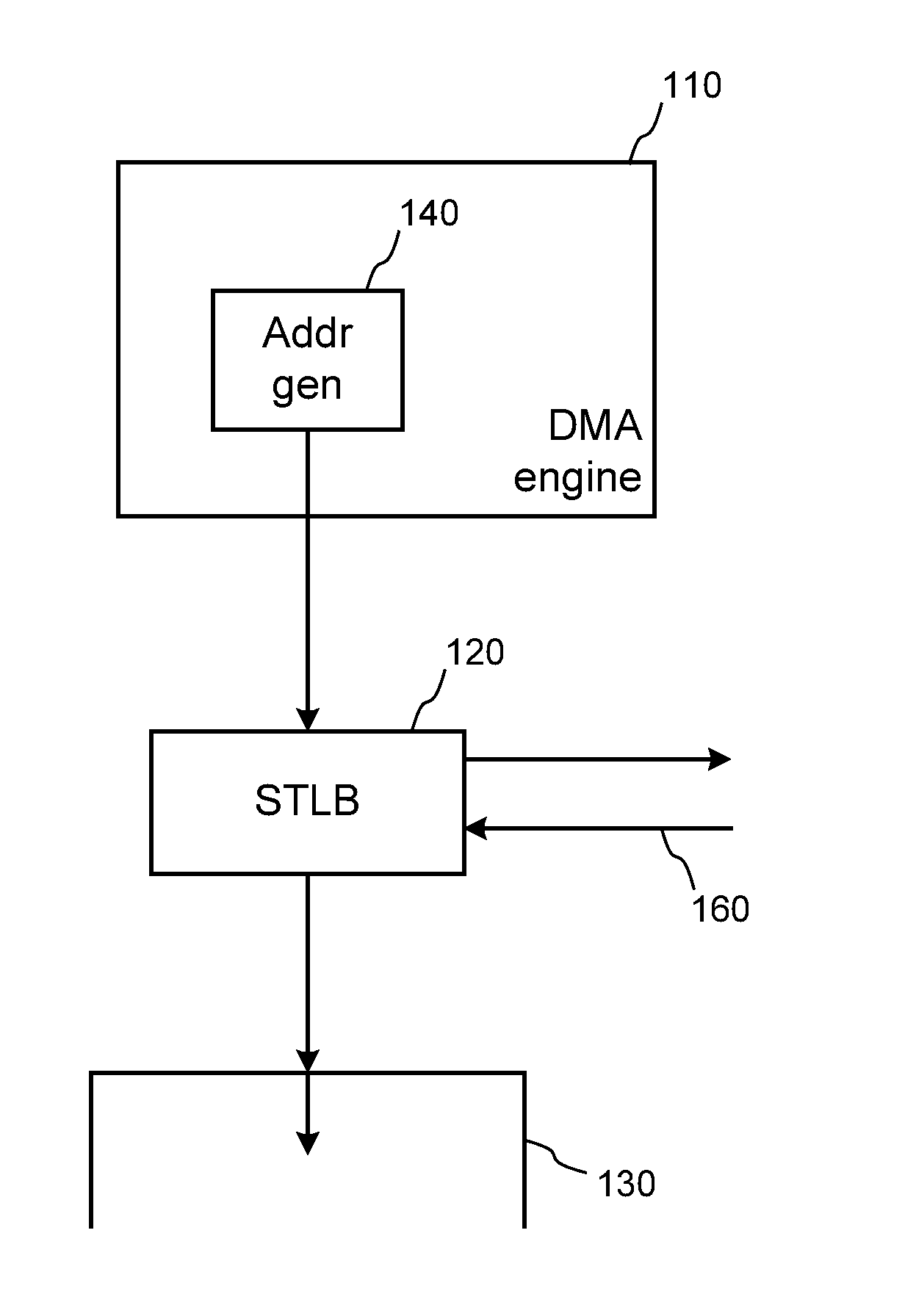

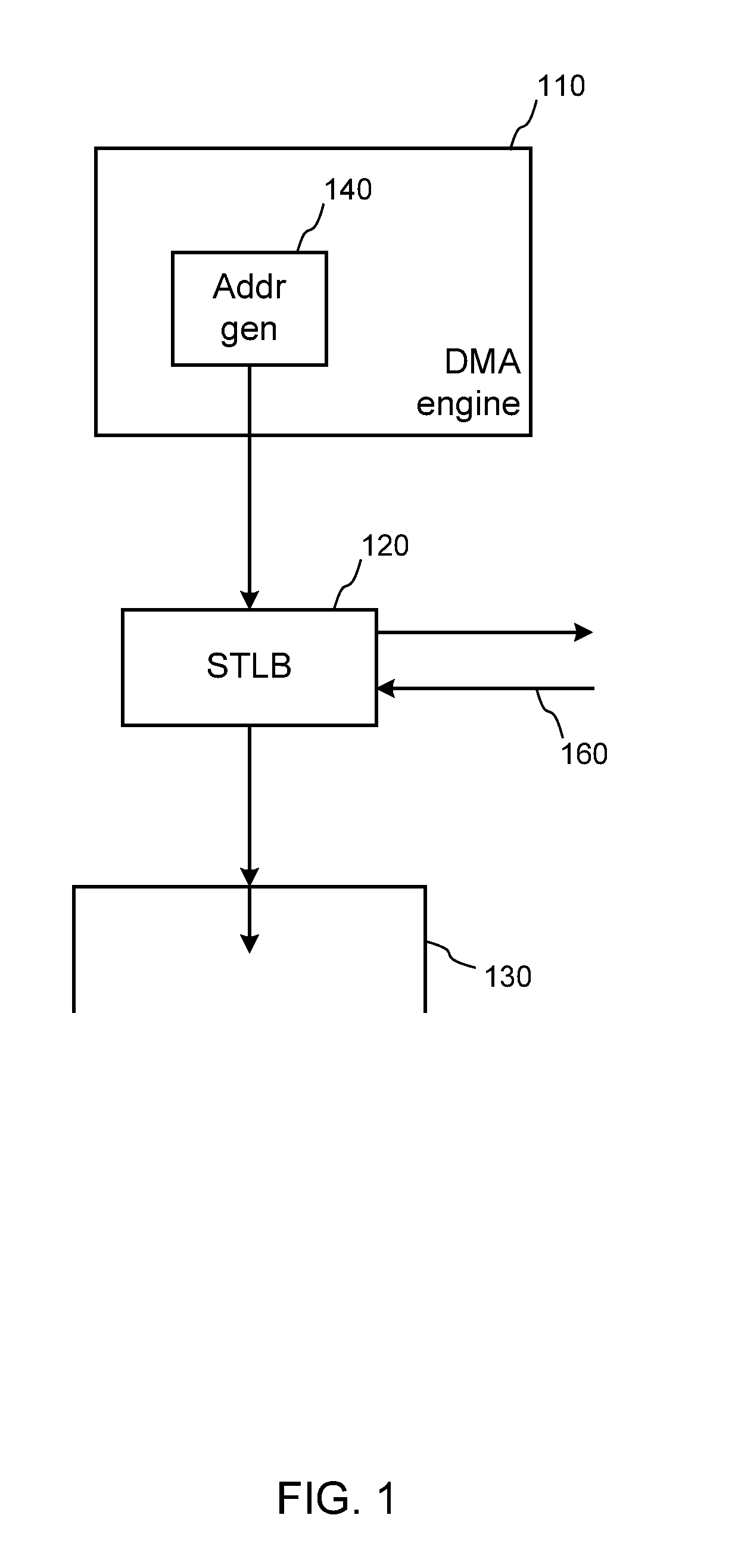

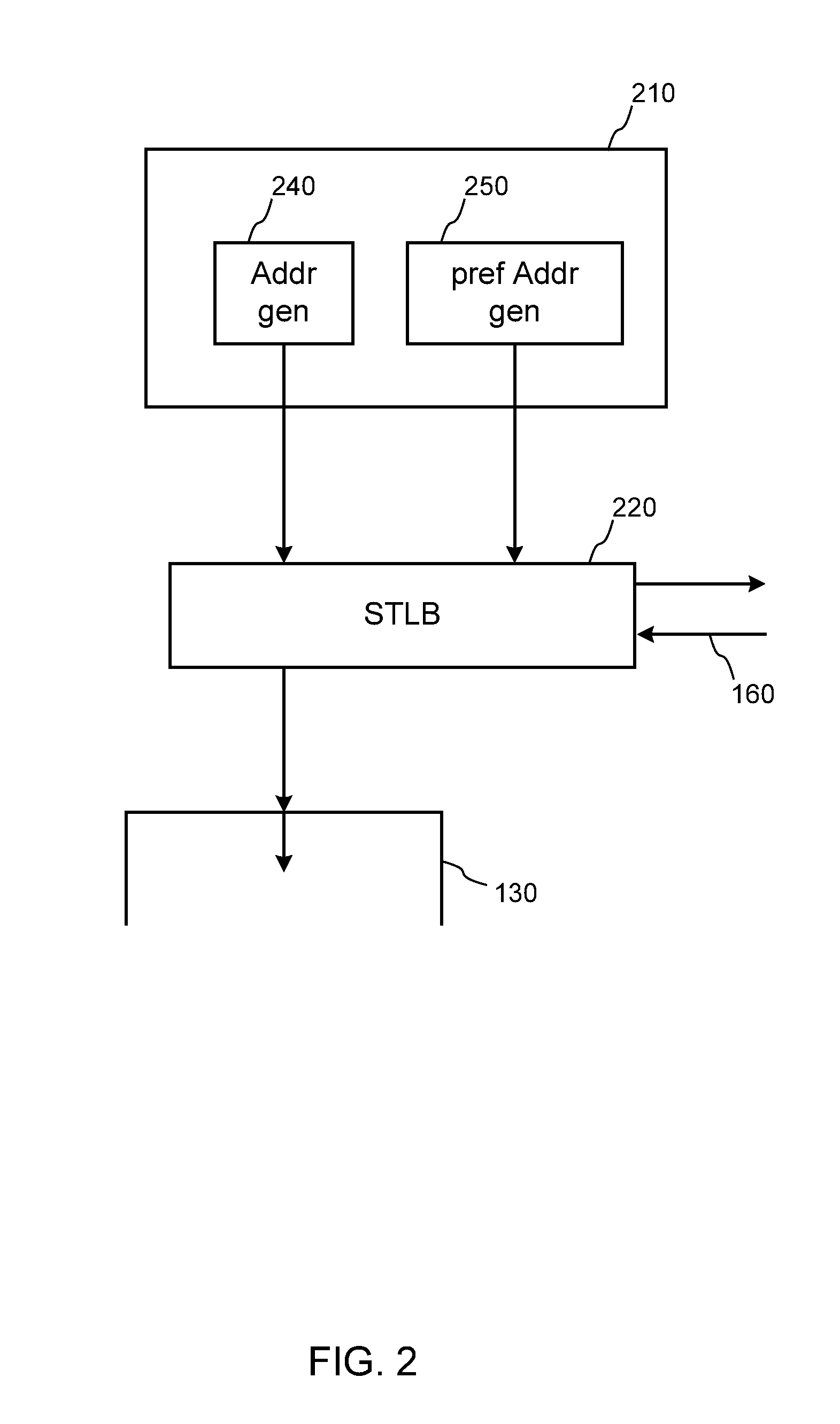

DMA engine with STLB prefetch capabilities and tethered prefetching

Active | US20140052955A1 | Lower memory access latency | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Address generator | Operating system

A system with a prefetch address generator coupled to a system translation look-aside buffer that comprises a translation cache. Prefetch requests are sent for page address translations for predicted future normal requests. Prefetch requests are filtered to only be issued for address translations that are unlikely to be in the translation cache. Pending prefetch requests are limited to a configurable or programmable number. Such a system is simulated from a hardware description language representation.

Owner: QUALCOMM TECHNOLOGIES INC
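
The filtering and throttling described above can be sketched under three stated assumptions:

- pages are 4 KiB;
- DMA streams walk upward through pages, so the next page is predicted;
- a short list of recently touched pages stands in for the "unlikely to be in the translation cache" test.

`PrefetchFilter` and its methods are hypothetical; none of these names come from the patent.

```python
from collections import deque

PAGE_SIZE = 4096  # assumption: 4 KiB pages


class PrefetchFilter:
    """Hypothetical STLB prefetch issuer with filtering and a pending-request cap."""

    def __init__(self, max_pending: int, history: int = 8) -> None:
        self.max_pending = max_pending       # configurable limit on outstanding prefetches
        self.pending = set()                 # pages whose prefetch is still in flight
        self.recent = deque(maxlen=history)  # pages recently translated or prefetched

    def on_normal_request(self, addr: int) -> list:
        """Record a normal request and return the pages (0 or 1) to prefetch."""
        page = addr // PAGE_SIZE
        self.recent.append(page)
        predicted = page + 1  # assumption: the stream walks upward through pages
        # Filter out translations that are probably cached already or in flight.
        if predicted in self.recent or predicted in self.pending:
            return []
        # Throttle: never exceed the configured number of pending prefetches.
        if len(self.pending) >= self.max_pending:
            return []
        self.pending.add(predicted)
        self.recent.append(predicted)
        return [predicted]

    def on_prefetch_complete(self, page: int) -> None:
        self.pending.discard(page)
```

Capping pending prefetches keeps speculative translation traffic from crowding out normal requests. The recency filter avoids spending that budget on translations that were just fetched.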

Method and system for supplier-based memory speculation in a memory subsystem of a data processing system

Inactive | US20050132147A1 | Improvement in average memory access latency | Reduce apparent memory access latency | Memory addressing/allocation/relocation | Concurrent instruction execution | Memory controller | Handling system

A data processing system includes one or more processing cores, a system memory having multiple rows of data storage, and a memory controller that controls access to the system memory and performs supplier-based memory speculation. The memory controller includes a memory speculation table that stores historical information regarding prior memory accesses. In response to a memory access request, the memory controller directs an access to a selected row in the system memory to service the memory access request. The memory controller speculatively directs that the selected row will continue to be energized following the access based upon the historical information in the memory speculation table, so that access latency of an immediately subsequent memory access is reduced.

Owner: IBM CORP

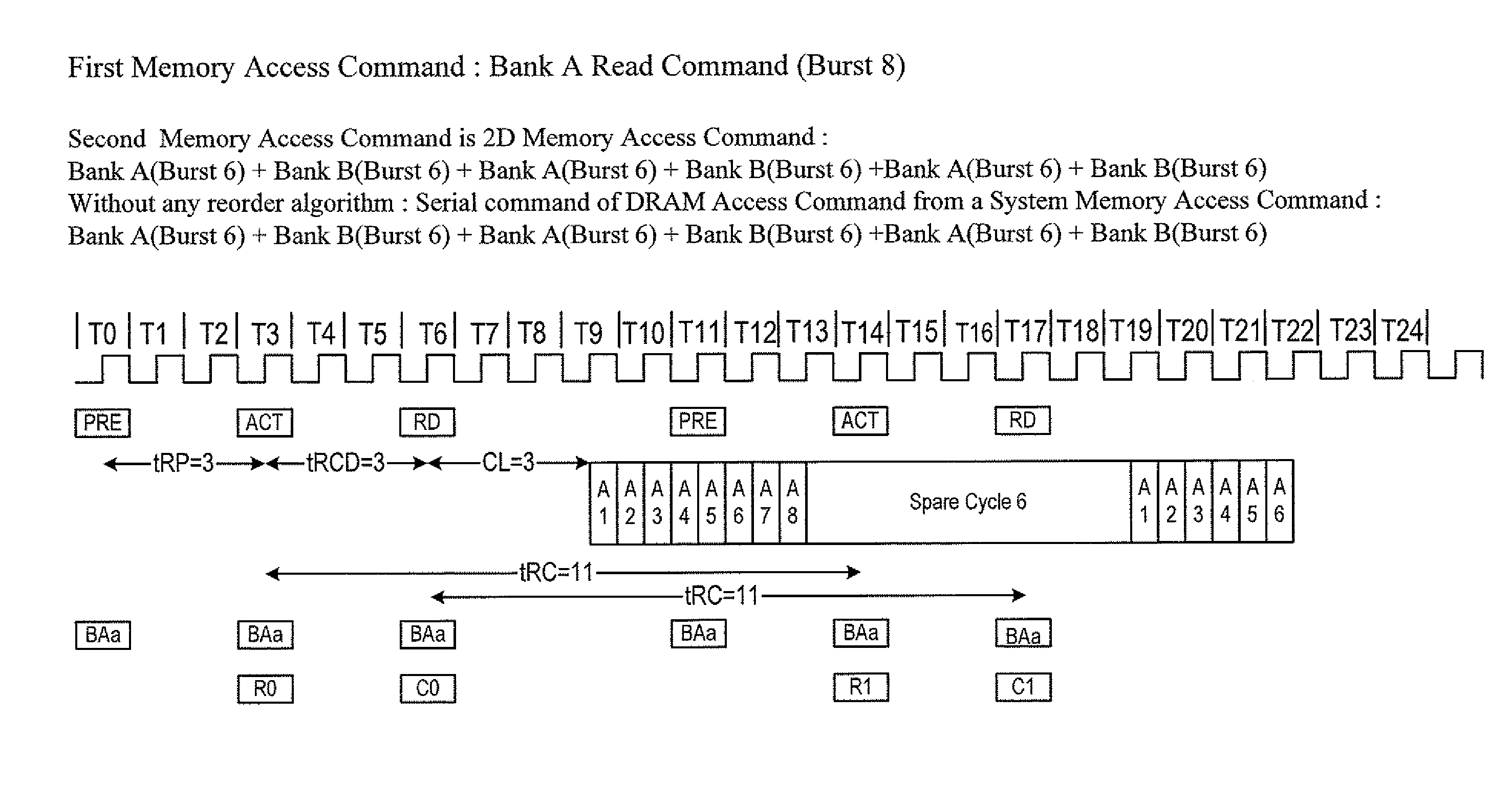

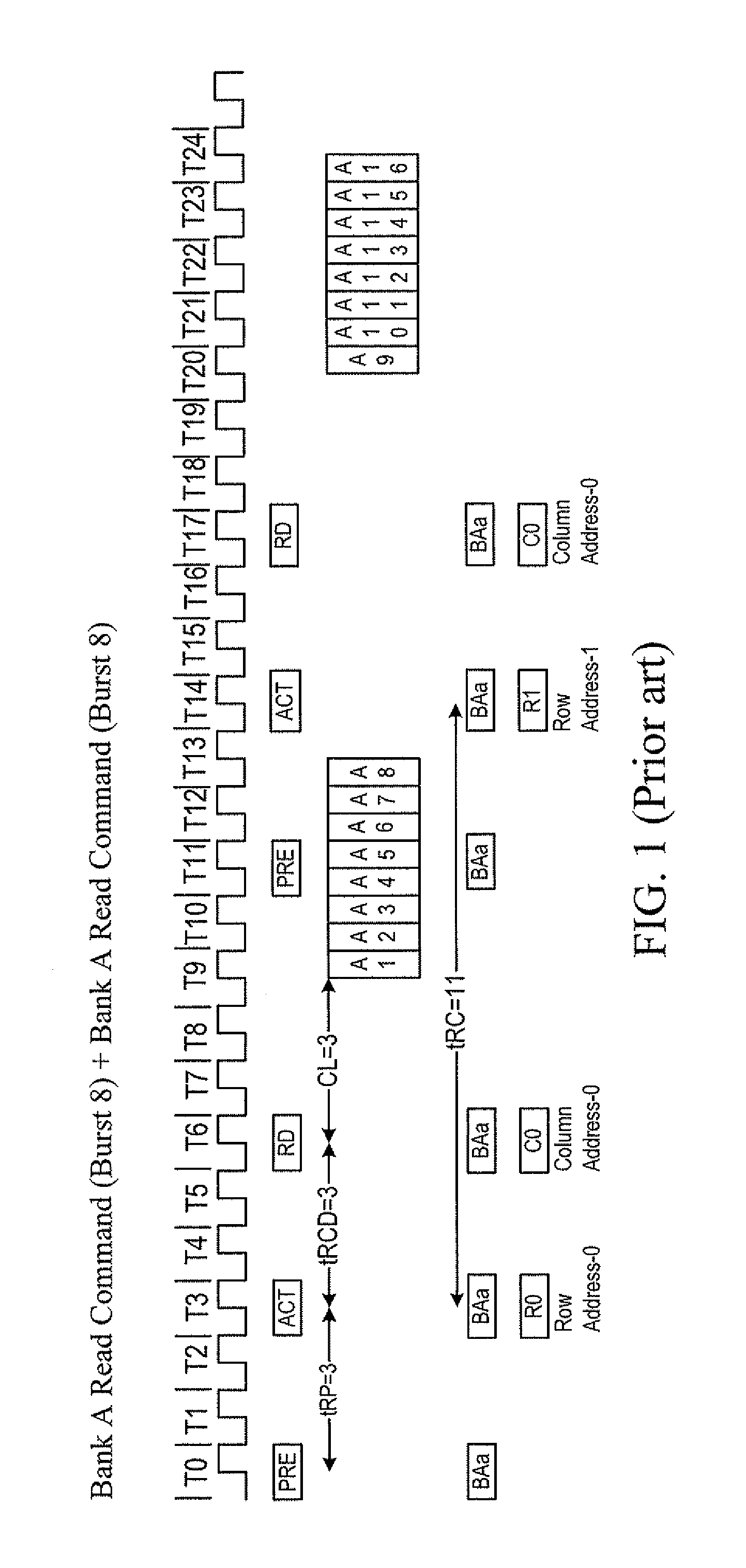

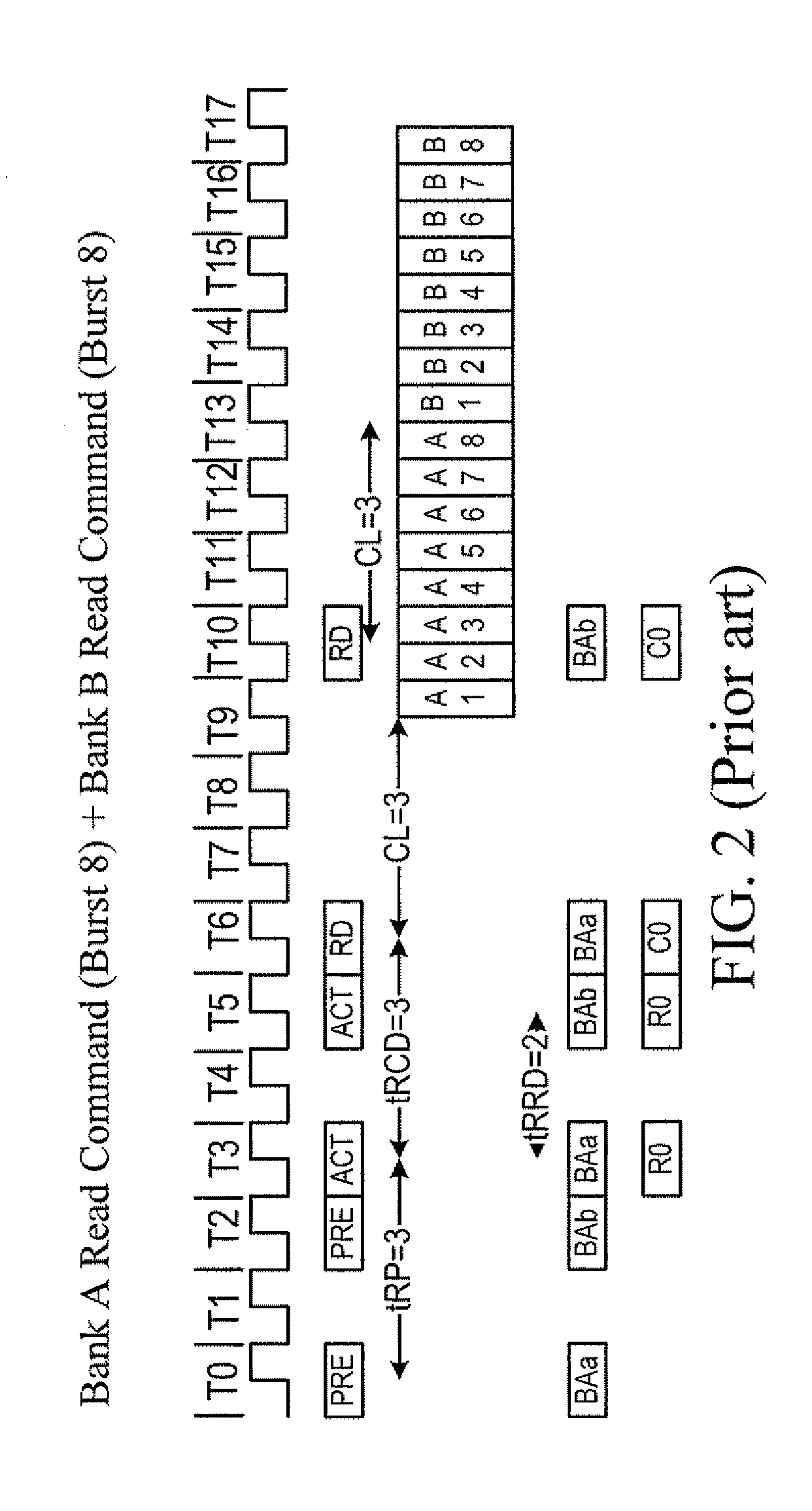

Control system and method for memory access

Inactive | US20100153636A1 | Reduce memory access latency | Raise bandwidth | Memory systems | Data buffer | Priority setting

A control system for memory access includes a system memory access command buffer, a memory access command parallel processor, a DRAM command controller and a read data buffer. The system memory access command buffer stores plural system memory access commands. The memory access command parallel processor is connected to the system memory access command buffer for fetching and decoding the system memory access commands to plural DRAM access commands, storing the DRAM access commands in DRAM bank command FIFOs, and performing priority setting according to a DRAM bank priority table. The DRAM command controller is connected to the memory access command parallel processor and a DRAM for receiving the DRAM access commands, and sending control commands to the DRAM. The read data buffer is connected to the DRAM command controller and the system bus for storing the read data and rearranging a sequence of the read data.

Owner: SUNPLUS TECH CO LTD
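
This is a minimal sketch of per-bank command FIFOs drained through a bank priority table, plus a read data buffer that restores the original command order. It assumes each system command has already been decoded to a single DRAM bank, and that the priority table is a fixed list of bank numbers. `BankScheduler` and `reorder_reads` are hypothetical names.

```python
from collections import deque


class BankScheduler:
    """Hypothetical per-bank command FIFOs drained in bank-priority order."""

    def __init__(self, priority: list) -> None:
        self.priority = priority                           # bank ids, highest priority first
        self.fifos = {bank: deque() for bank in priority}

    def enqueue(self, seq: int, bank: int) -> None:
        # seq is the command's position in the original system-command stream.
        self.fifos[bank].append(seq)

    def issue(self):
        """Pop the oldest command of the highest-priority non-empty bank FIFO."""
        for bank in self.priority:
            if self.fifos[bank]:
                return bank, self.fifos[bank].popleft()
        return None  # nothing pending


def reorder_reads(completions: list) -> list:
    """Read data buffer: return read data in original command order.

    completions holds (seq, data) pairs in the order the DRAM returned them.
    """
    return [data for _, data in sorted(completions)]
```

Commands inside one bank FIFO stay in order, while banks compete by priority. The read data buffer then hides this reordering from the system bus.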

Directory cache management method for big data application

Active | CN104461932A | Reduce conflict | Reduce the number of replacements | Memory addressing/allocation/relocation | Cache management | Data application

The invention discloses a directory cache management method for big data applications. Shared flag bits and data block pointers are added to the last-level shared cache. The shared flag bits distinguish private data from shared data, and the data block pointers track where private data sit in the private caches. Based on the last-level shared cache and the directory cache, data are classified as private or shared:

- Private data do not occupy directory cache space, and the private cache is used to maintain their coherence.
- Shared data occupy directory cache space, and the directory cache maintains their coherence.

The method reduces directory cache conflicts and replacements, shortens the memory access delay of private data, and improves the performance of multi-core processor systems.

Owner: LANGCHAO ELECTRONIC INFORMATION IND CO LTD

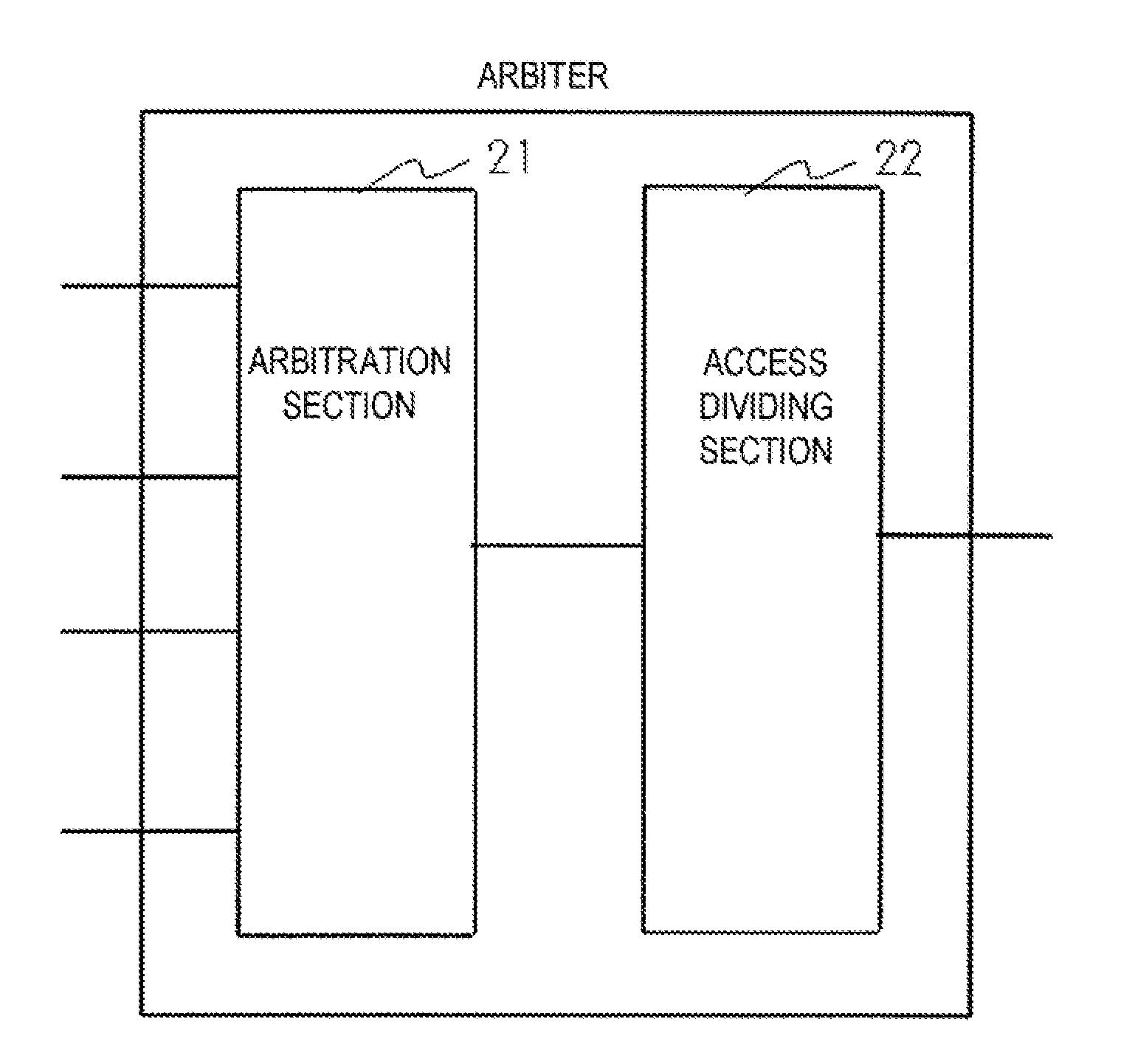

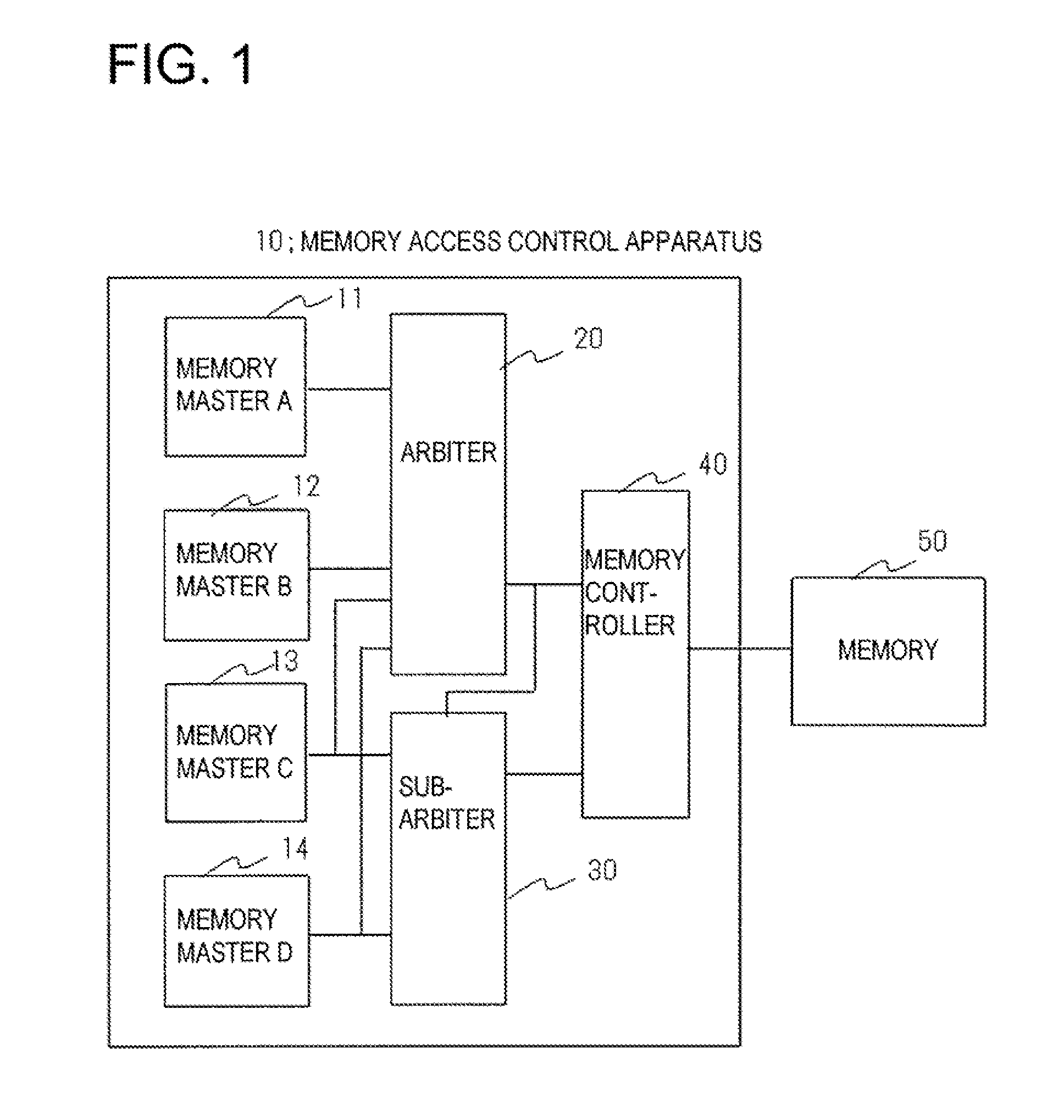

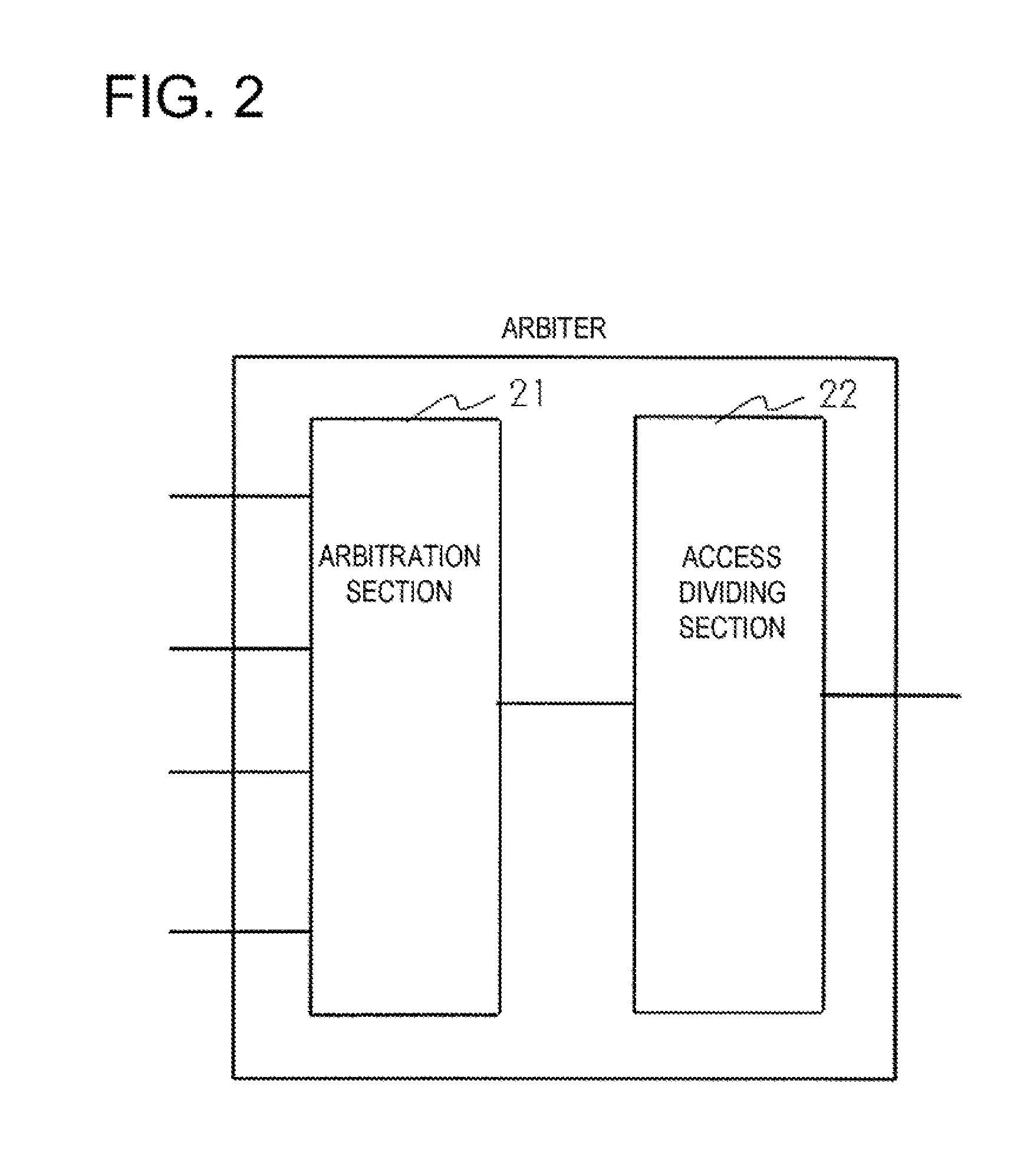

Memory access control apparatus

Inactive | US20100082877A1 | Improve memory access efficiency | Increase latency of memory access | Memory addressing/allocation/relocation | Memory controller | Access control

A memory access control apparatus includes an arbiter and a sub-arbiter that receive and arbitrate access requests from a plurality of memory masters, a memory controller, and a memory having a plurality of banks. Two conditions are checked against the access request that the arbiter has granted and that is currently executing:

- the sub-arbiter's access request targets a different bank;
- both requests are of the same access type.

When both conditions hold, access efficiency is judged not to decline. The arbiter's memory access is suspended, and the sub-arbiter's access is allowed to cut in (FIG. 1).

Owner: NEC CORP
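
The cut-in decision reduces to a predicate on the two requests, sketched below. The `Access` record is hypothetical. The rationale in the comment (no bank conflict and no read/write turnaround) is an assumption about why efficiency would not decline; the abstract does not say.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Access:
    master: str
    bank: int
    kind: str  # "read" or "write"


def allow_squeeze_in(current: Access, candidate: Access) -> bool:
    """Return True if the sub-arbiter's candidate may cut in ahead of `current`.

    Assumed rationale: a different bank avoids a row conflict, and the same
    access type avoids a read/write bus turnaround.
    """
    return current.bank != candidate.bank and current.kind == candidate.kind
```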

Command reordering based on command priority

Inactive | US8250322B2 | Lower memory access latency | High bandwidth | Memory systems | Control system | Priority setting

A control system for memory access includes a system memory access command buffer, a memory access command parallel processor, a DRAM command controller and a read data buffer. The system memory access command buffer stores plural system memory access commands. The memory access command parallel processor is connected to the system memory access command buffer for fetching and decoding the system memory access commands to plural DRAM access commands, storing the DRAM access commands in DRAM bank command FIFOs, and performing priority setting according to a DRAM bank priority table. The DRAM command controller is connected to the memory access command parallel processor and a DRAM for receiving the DRAM access commands, and sending control commands to the DRAM. The read data buffer is connected to the DRAM command controller and the system bus for storing the read data and rearranging a sequence of the read data.

Owner: SUNPLUS TECH CO LTD

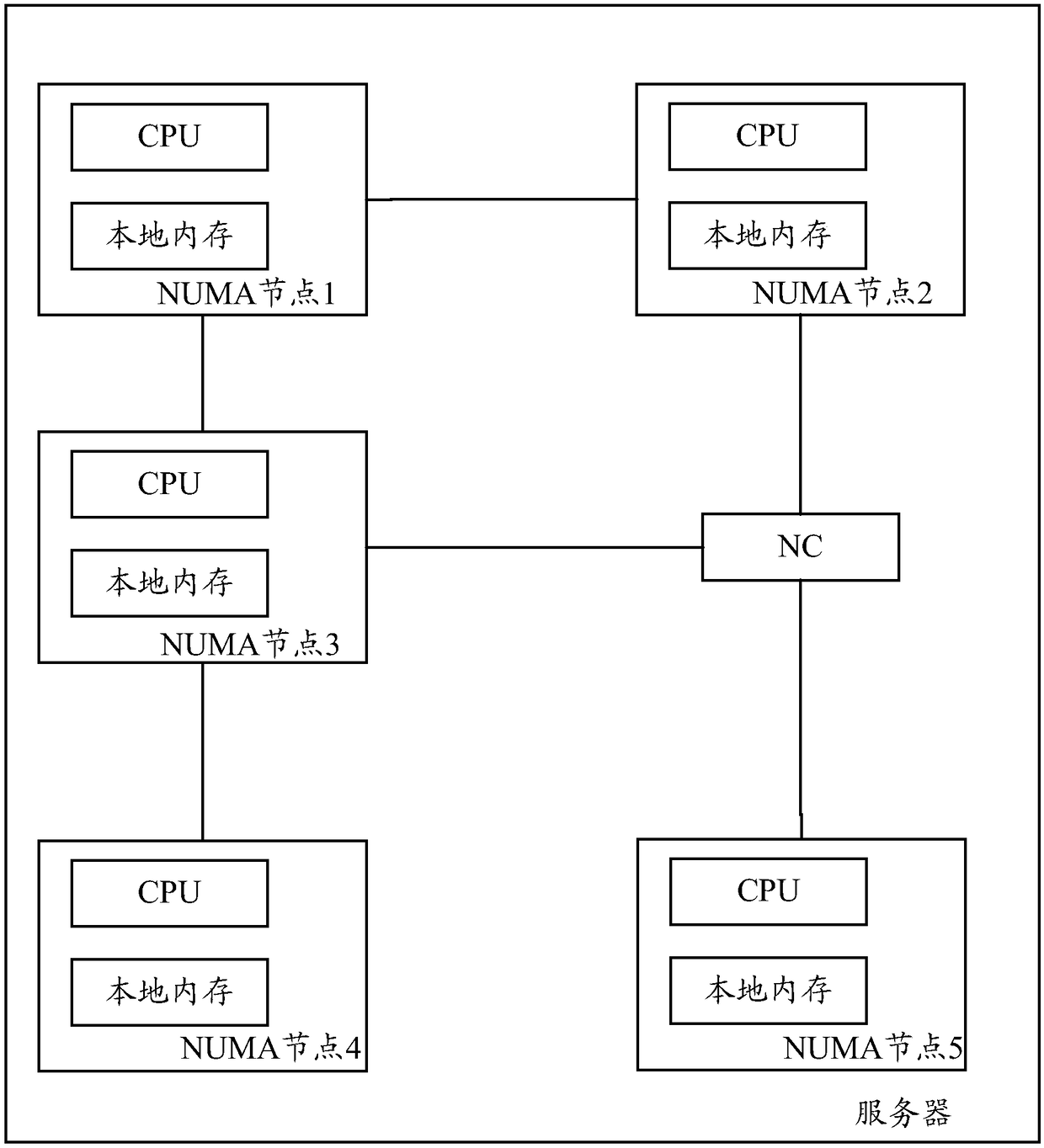

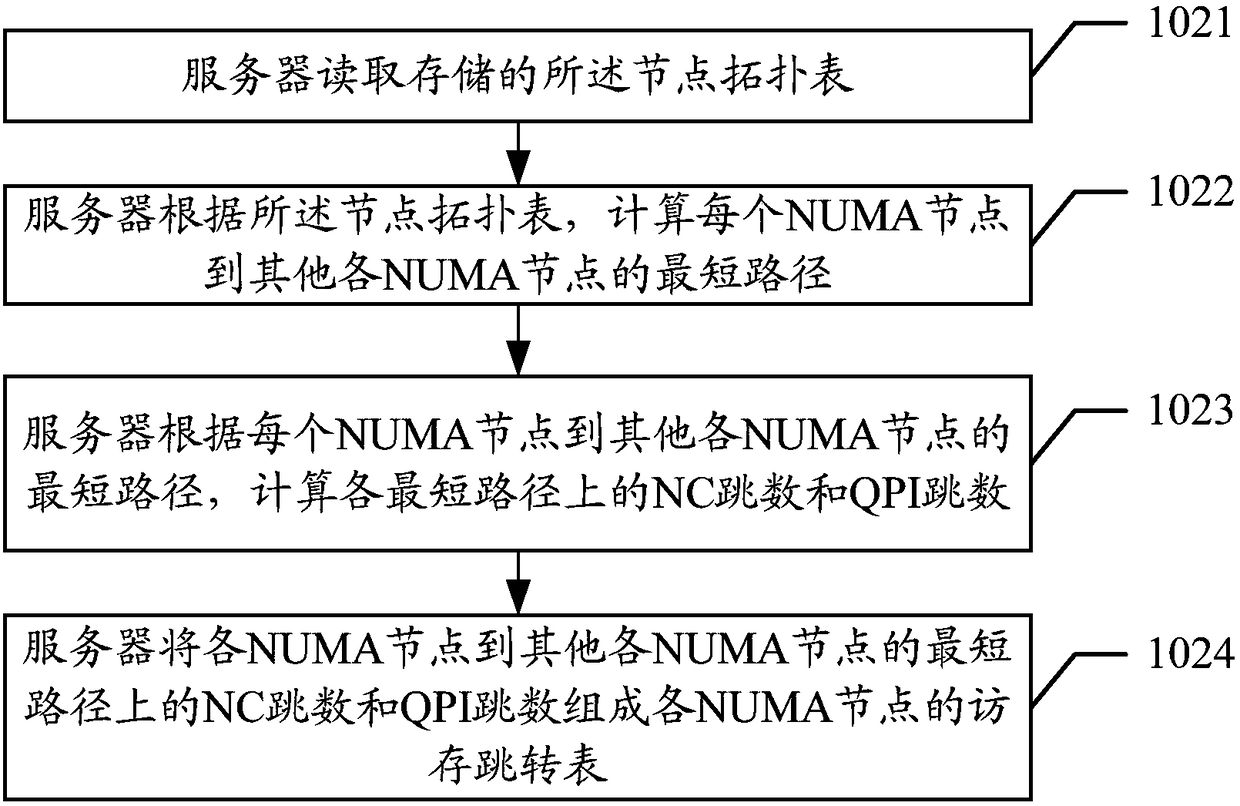

A memory allocation method and server

Active | CN109388490A | Improve performance | Reduce chance of allocating memory across NCs | Memory architecture accessing/allocation | Resource allocation | Topology table | Assignment methods

The embodiments of the present application disclose a memory allocation method and a server. They reduce the performance loss caused by NC latency during memory allocation and thereby improve server performance. The method works as follows:

- The server identifies a node topology table. The table records the connections between NUMA nodes, between NUMA nodes and NCs, and between NCs.
- From this table, a memory access hop table is generated for each NUMA node. For the shortest path to every other NUMA node, it records both the number of QPI hops and the number of NC hops.
- From each node's hop table, the memory access priority of every NUMA node is calculated. The NC hop count is an important parameter: the fewer the NC hops, the higher the priority.
- When a NUMA node requests memory, memory is allocated according to the memory access priority table, preferentially from the NUMA node with the highest priority.

Owner: XFUSION DIGITAL TECH CO LTD
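
This is a minimal sketch of the priority calculation and allocation. It assumes each hop-table entry is an `(nc_hops, qpi_hops)` pair, that NC hops dominate, and that QPI hops only break ties. The abstract calls NC hops "an important parameter" but gives no exact formula. The function names and the free-page bookkeeping are hypothetical.

```python
def access_priority(hops: dict) -> list:
    """Order NUMA nodes from highest to lowest memory access priority.

    hops maps node id -> (nc_hops, qpi_hops) on the shortest path from the
    requesting node. Assumption: fewer NC hops always wins; QPI hops, then the
    node id, only break ties.
    """
    return sorted(hops, key=lambda node: (hops[node][0], hops[node][1], node))


def allocate(hops: dict, free_pages: dict, requested: int) -> dict:
    """Take pages from nodes in priority order; return {node: pages_taken}."""
    taken = {}
    for node in access_priority(hops):
        if requested == 0:
            break
        n = min(requested, free_pages.get(node, 0))
        if n > 0:
            taken[node] = n
            free_pages[node] -= n
            requested -= n
    return taken
```

Sorting on the tuple makes the NC-hop count the primary key. A node one QPI hop further away is therefore still preferred over a node that sits behind an extra node controller.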

Method and system for thread-based memory speculation in a memory subsystem of a data processing system

Inactive | US8892821B2 | Improvement in average memory access latency | Significant comprehensive benefits | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Data processing system | Processing core

Owner: INT BUSINESS MASCH CORP

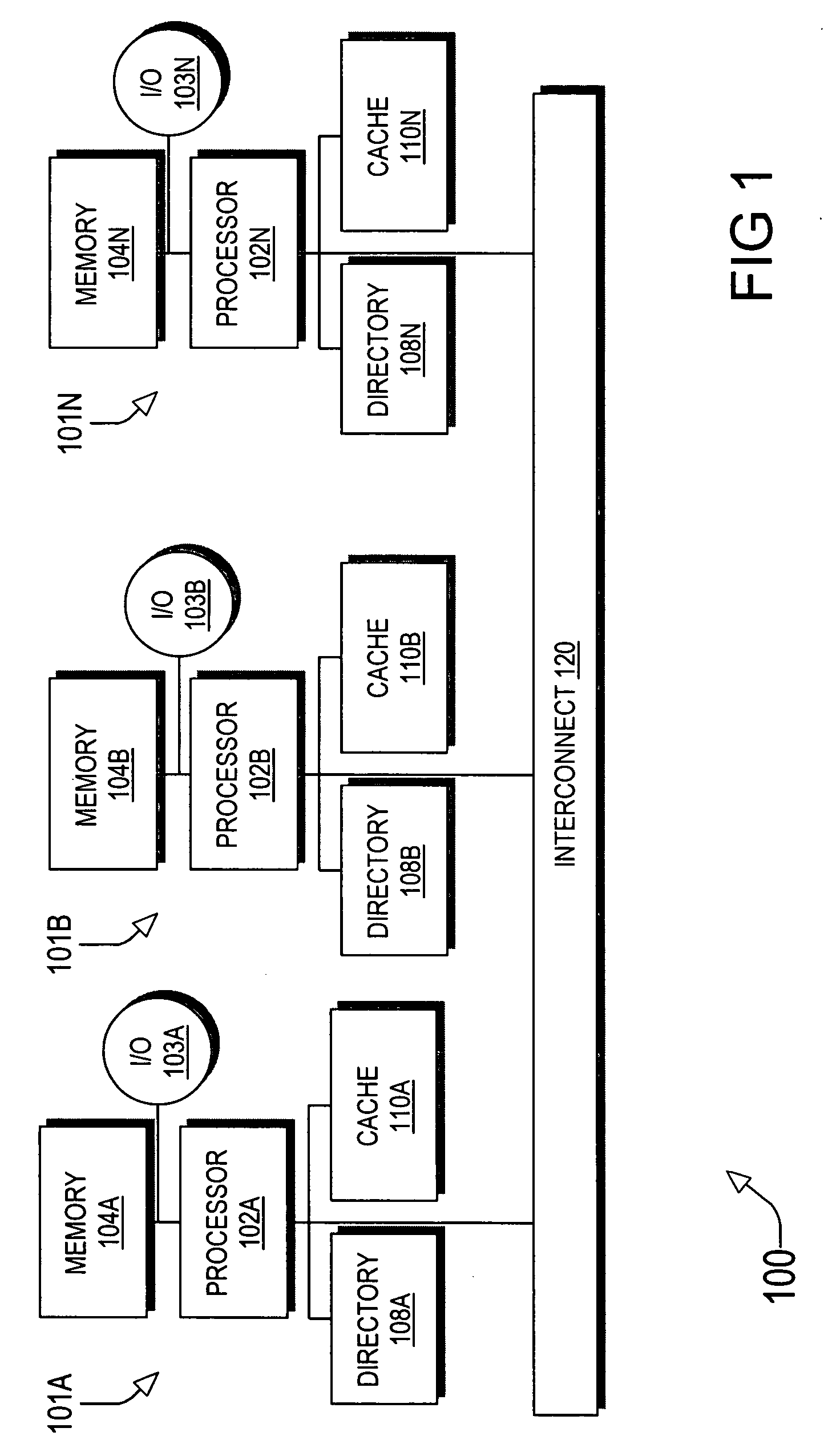

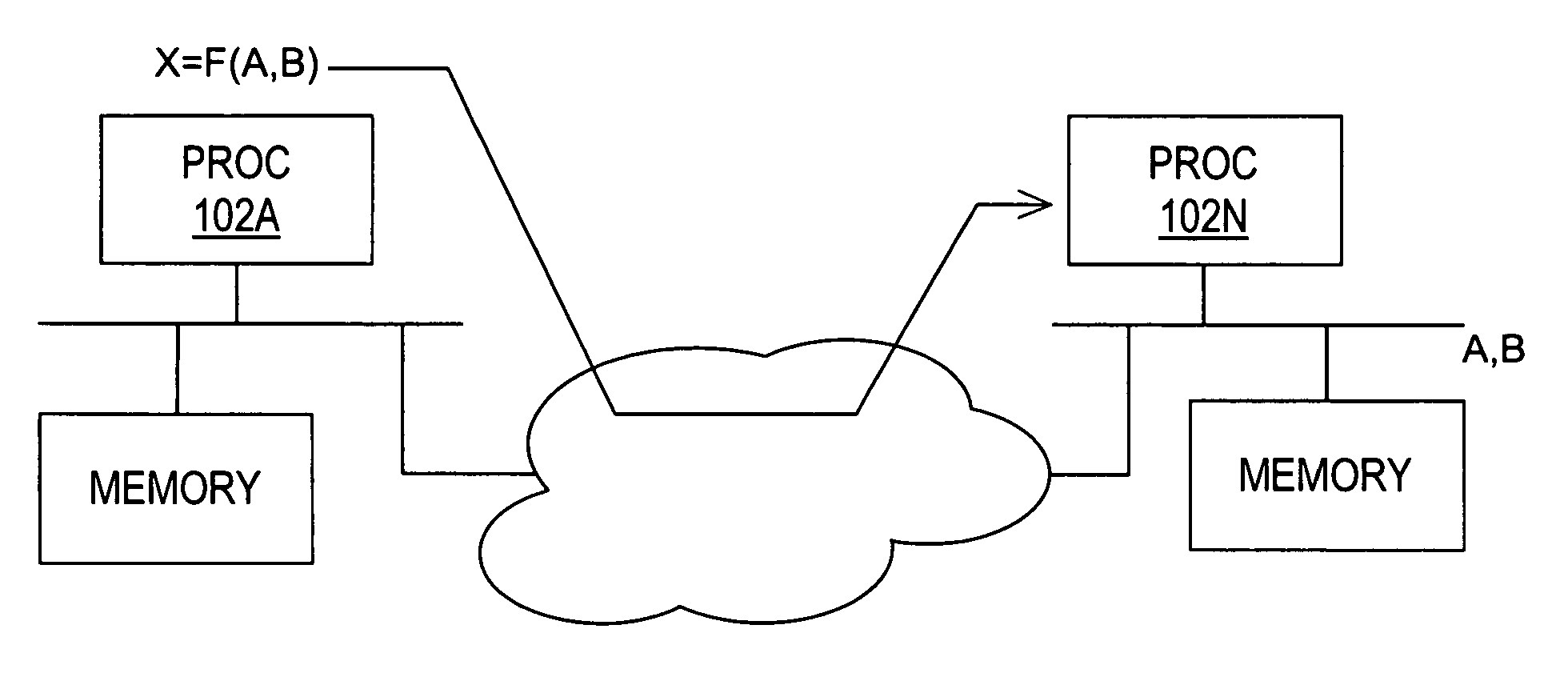

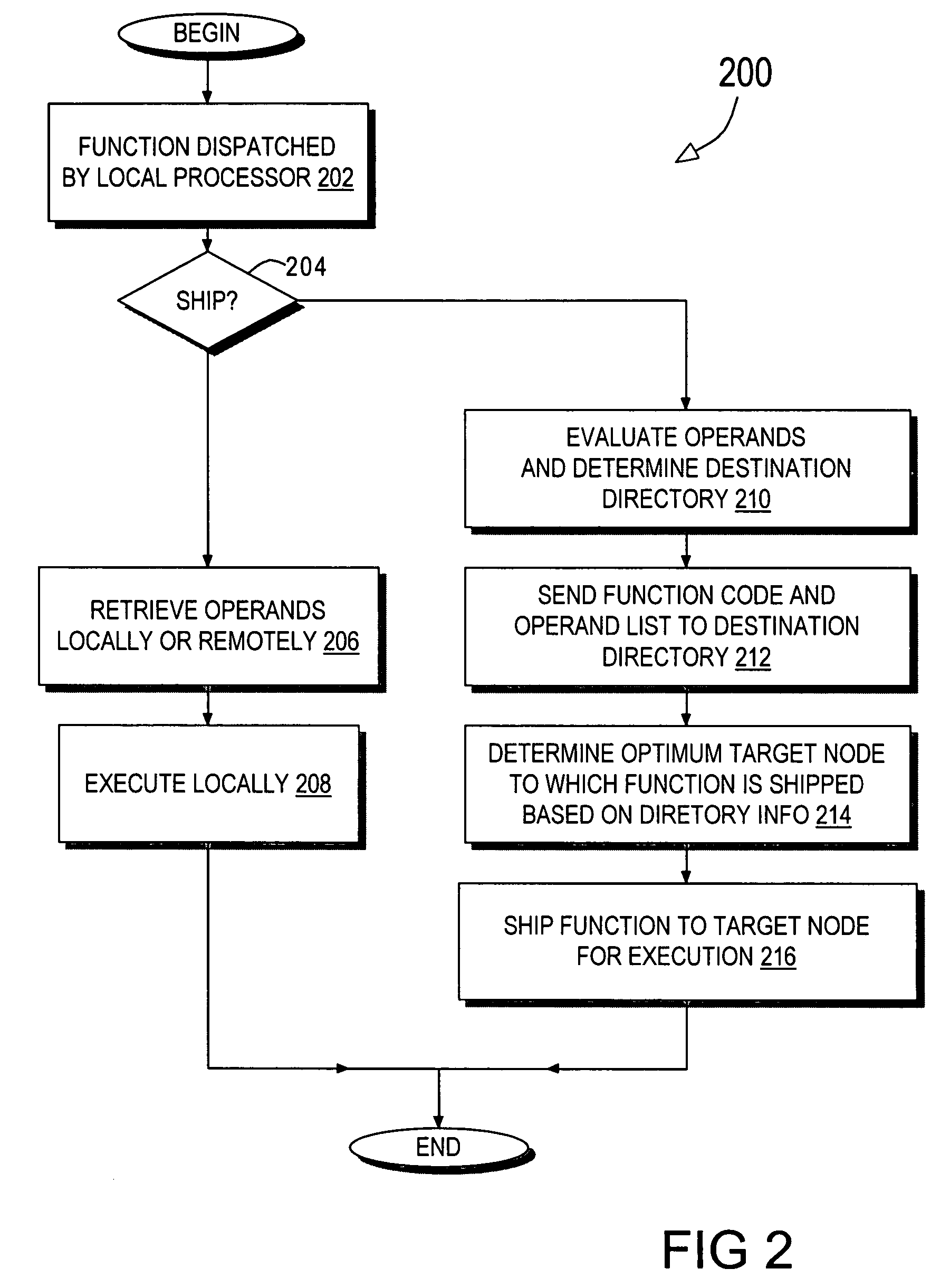

Directory based support for function shipping in a multiprocessor system

Inactive | US20050086438A1 | Lower memory access latency | Memory addressing/allocation/relocation | Memory address | Multi processor

A multiprocessor system includes a plurality of data processing nodes. Each node has a processor coupled to a system memory, a cache memory, and a cache directory. The cache directory contains cache coherency information for a predetermined range of system memory addresses. An interconnection enables the nodes to exchange messages. A node initiating a function shipping request identifies an intermediate destination directory based on a list of the function's operands and sends a message indicating the function and its corresponding operands to the identified destination directory. The destination cache directory determines a target node based, at least in part, on its cache coherency status information to reduce memory access latency by selecting a target node where all or some of the operands are valid in the local cache memory. The destination directory then ships the function to the target node over the interconnection.

Owner: IBM CORP
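
The destination directory's choice of target node can be sketched as a vote over operands. The sketch assumes the directory maps each operand address to the set of nodes holding a valid cached copy. Breaking ties by lowest node id and falling back to a default node are assumptions that keep the sketch deterministic.

```python
def choose_target(operands, directory, default_node):
    """Pick the node whose cache holds the most operands in a valid state.

    directory maps an operand address -> set of node ids holding a valid copy
    (hypothetical structure). Falls back to default_node when nothing is cached.
    """
    counts = {}
    for addr in operands:
        for node in directory.get(addr, ()):
            counts[node] = counts.get(node, 0) + 1
    if not counts:
        return default_node
    # Most valid operands wins; lowest node id breaks ties deterministically.
    return min(counts, key=lambda node: (-counts[node], node))
```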

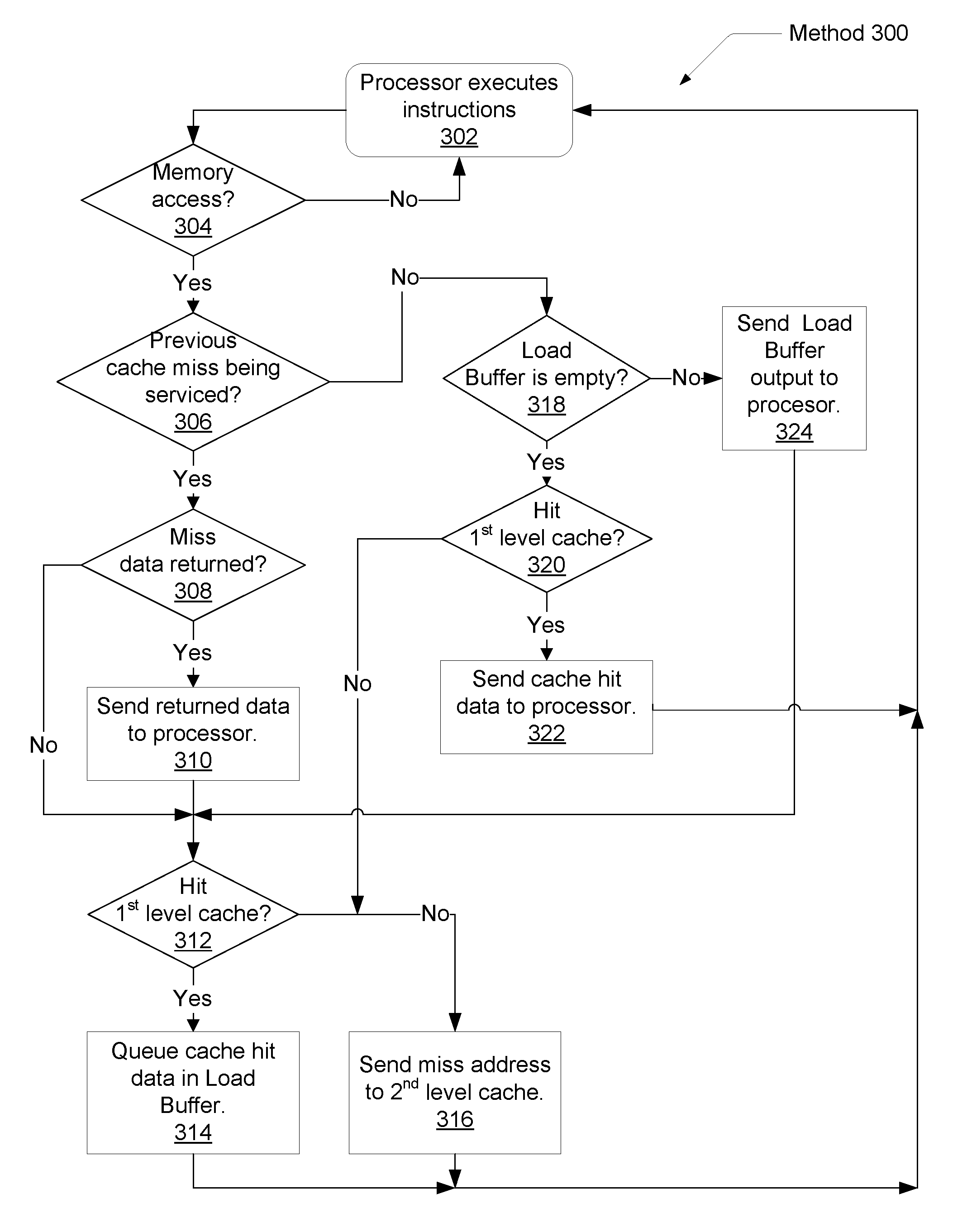

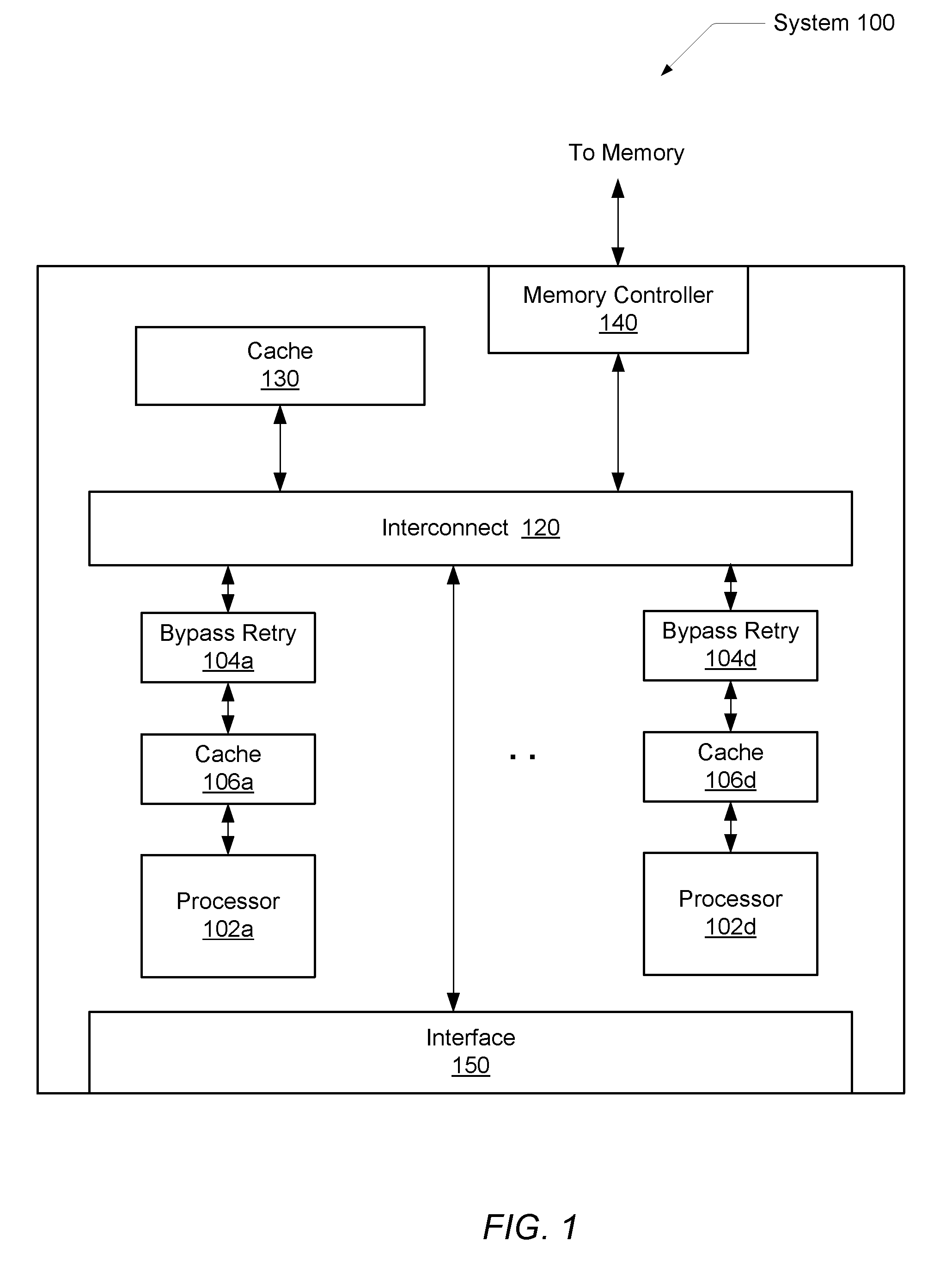

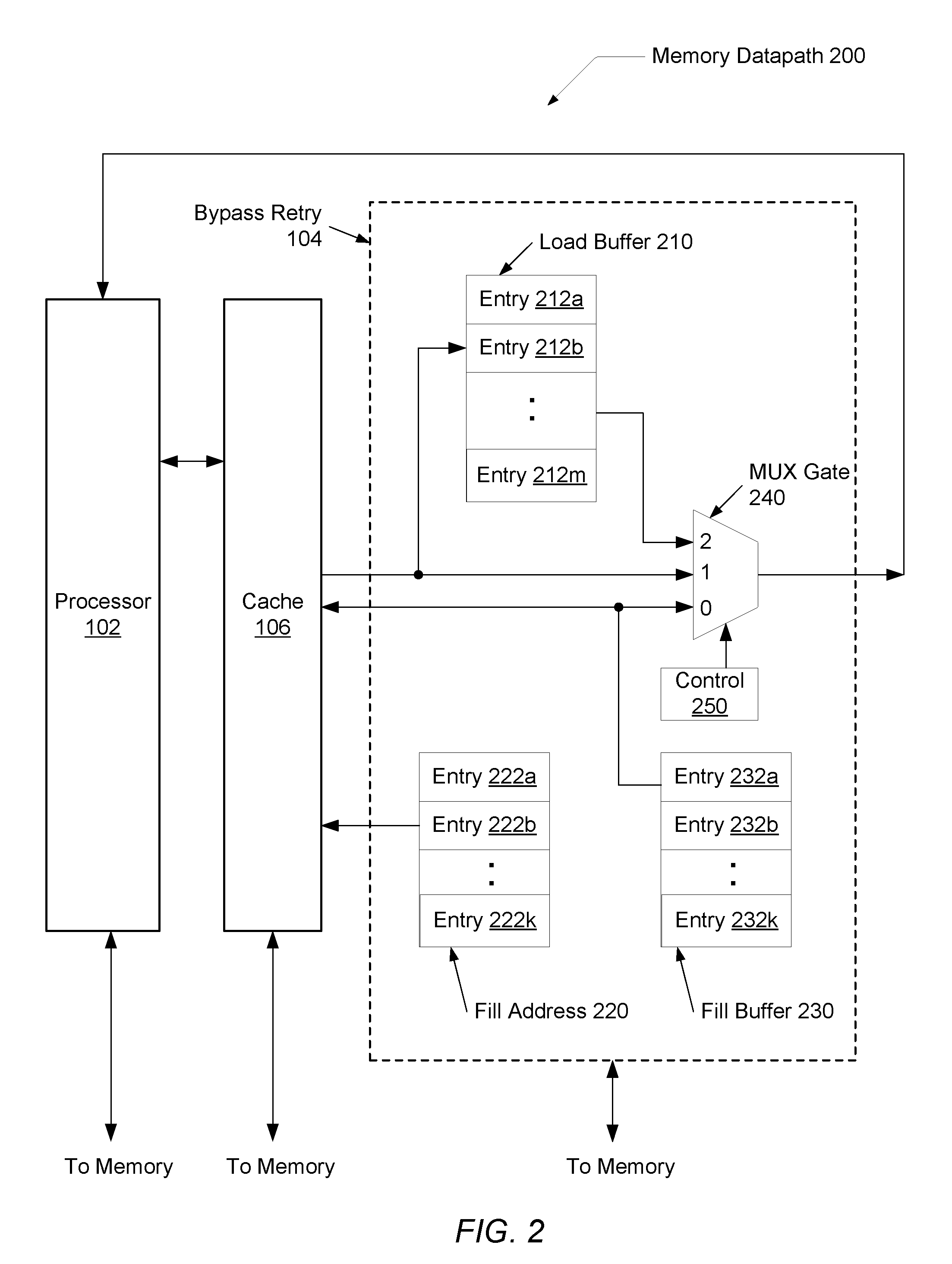

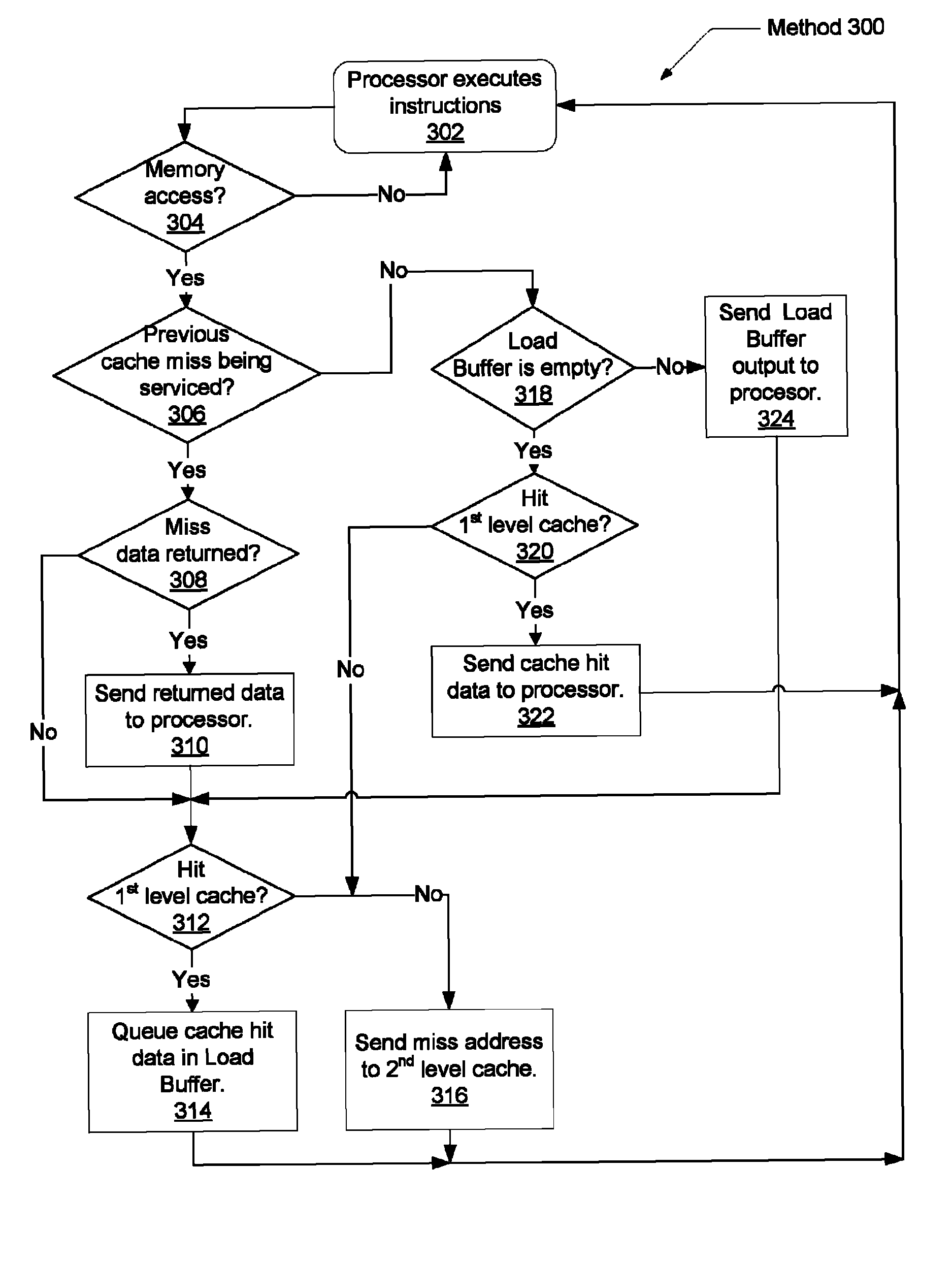

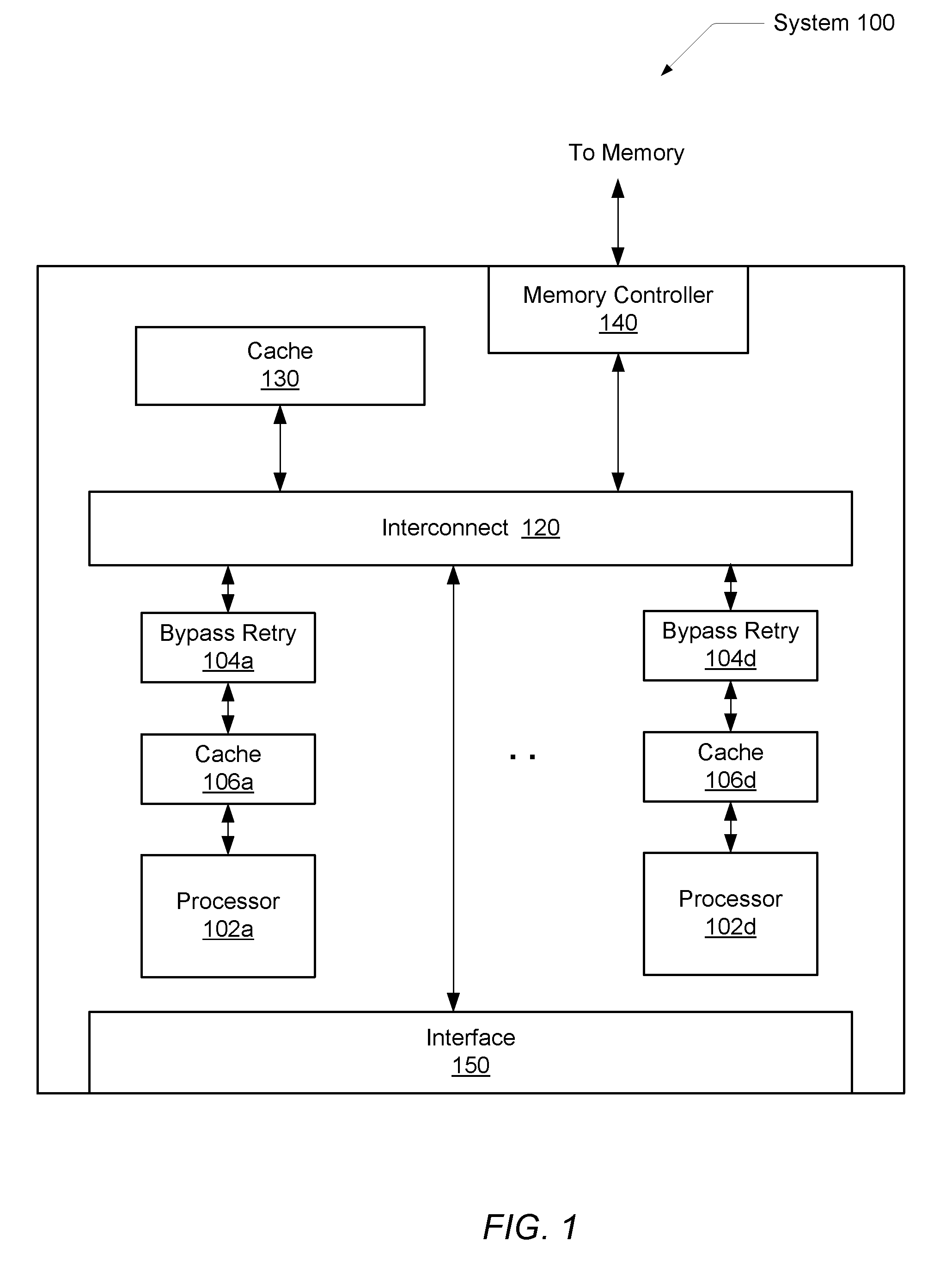

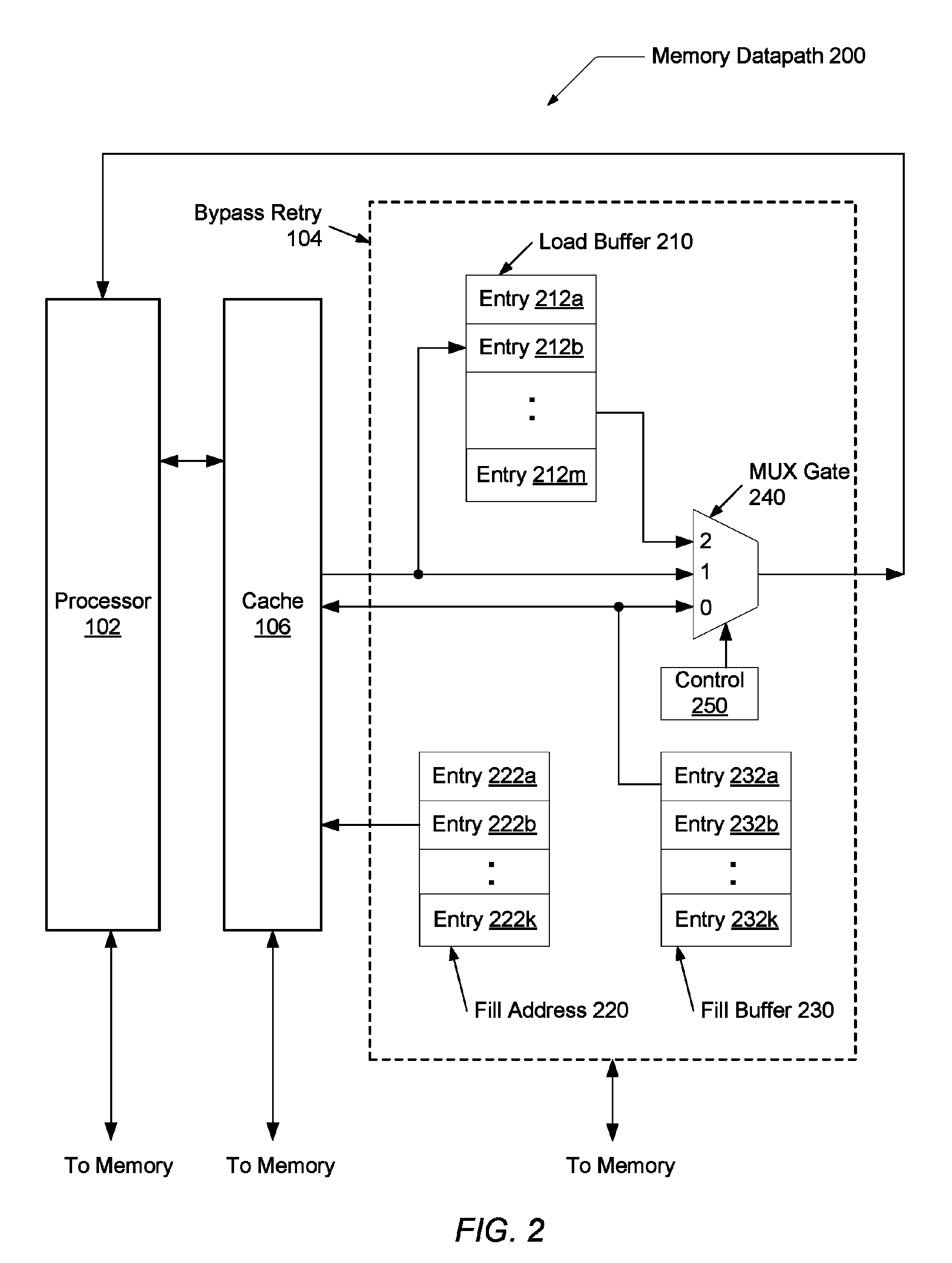

Floating point bypass retry

Inactive | US20090138662A1 | Lower memory access latency | Memory addressing/allocation/relocation | Floating point | Data store

A system and method for increasing processor throughput during cache misses. While the data for a cache miss is being retrieved, subsequent memory requests are generated and allowed to proceed to the cache. The data for subsequent cache hits are stored in a bypass retry device. The device may also store the cache miss address and memory line data when they are retrieved, and send them to the cache for a cache line replacement. The bypass retry device determines the priority for sending data to the processor. This lets data for memory requests reach the processor in the order the processor generated them, without delaying subsequent memory requests after a cache miss.

Owner: GLOBALFOUNDRIES INC
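
The ordering guarantee can be illustrated with a small reorder buffer keyed by request sequence number. This minimal sketch assumes every request carries such a number. It does not model the cache-line replacement path, and the class name is hypothetical.

```python
class BypassRetryBuffer:
    """Hypothetical buffer that returns data in program order despite a miss."""

    def __init__(self) -> None:
        self.next_seq = 0  # sequence number the processor expects next
        self.ready = {}    # seq -> data that arrived early (e.g. hits behind a miss)

    def complete(self, seq: int, data) -> list:
        """Record a completed request; return the data now deliverable in order."""
        self.ready[seq] = data
        out = []
        while self.next_seq in self.ready:
            out.append(self.ready.pop(self.next_seq))
            self.next_seq += 1
        return out
```

Take the case where request 0 misses while requests 1 and 2 hit. The hits are held until the miss data returns, and then all three are released in program order.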

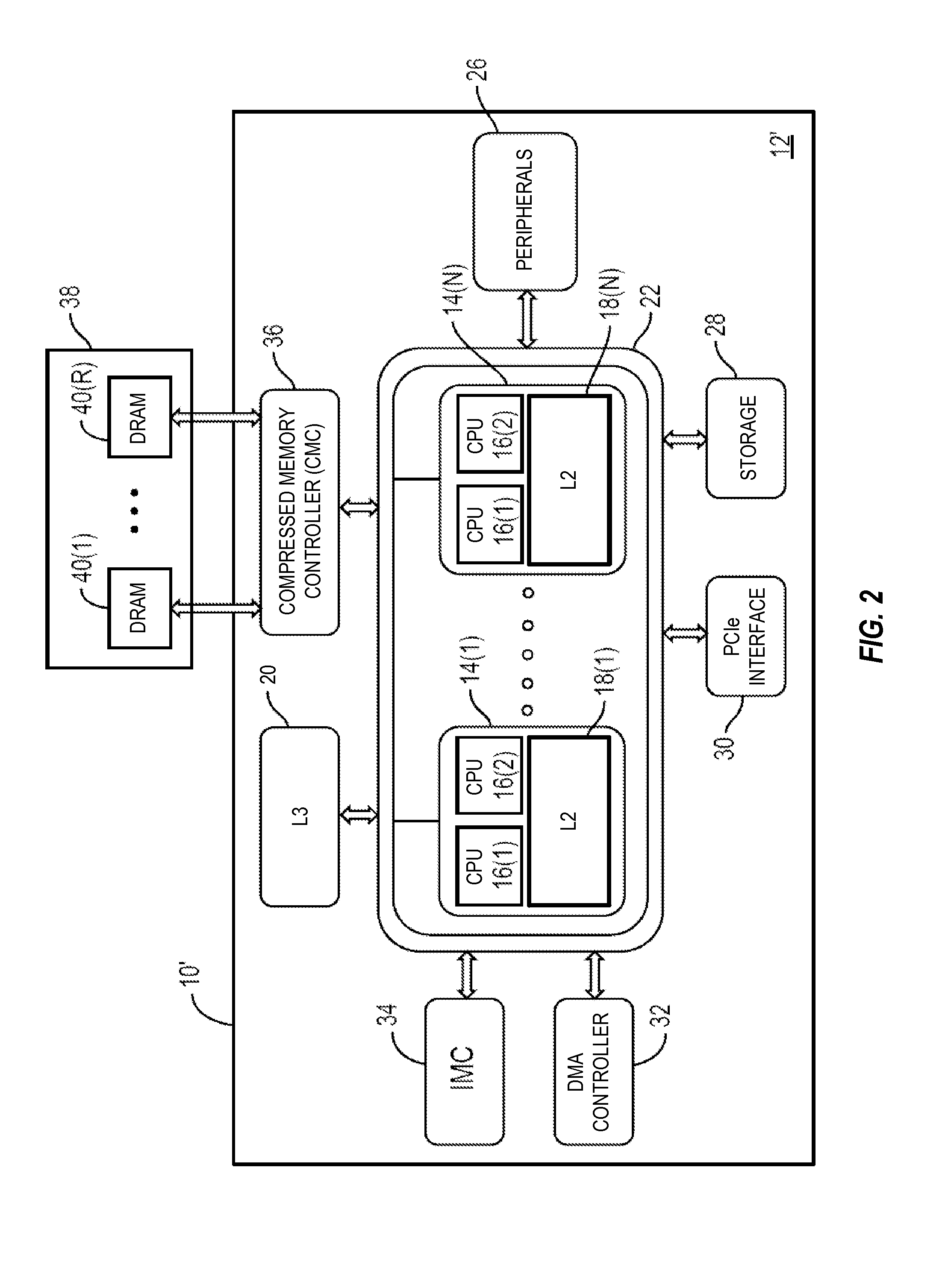

PROVIDING MEMORY BANDWIDTH COMPRESSION USING BACK-TO-BACK READ OPERATIONS BY COMPRESSED MEMORY CONTROLLERS (CMCs) IN A CENTRAL PROCESSING UNIT (CPU)-BASED SYSTEM

Inactive | US20160224241A1 | Improve latency | More data | Memory architecture accessing/allocation | Input/output to record carriers | Physical address | Memory controller

Providing memory bandwidth compression using back-to-back read operations by compressed memory controllers (CMCs) in a central processing unit (CPU)-based system is disclosed. In this regard, in some aspects, a CMC is configured to receive a memory read request to a physical address in a system memory, and read a compression indicator (CI) for the physical address from error correcting code (ECC) bits of a first memory block in a memory line associated with the physical address. Based on the CI, the CMC determines whether the first memory block comprises compressed data. If not, the CMC performs a back-to-back read of one or more additional memory blocks of the memory line in parallel with returning the first memory block. Some aspects may further improve memory access latency by writing compressed data to each of a plurality of memory blocks of the memory line, rather than only to the first memory block.

Owner: QUALCOMM INC

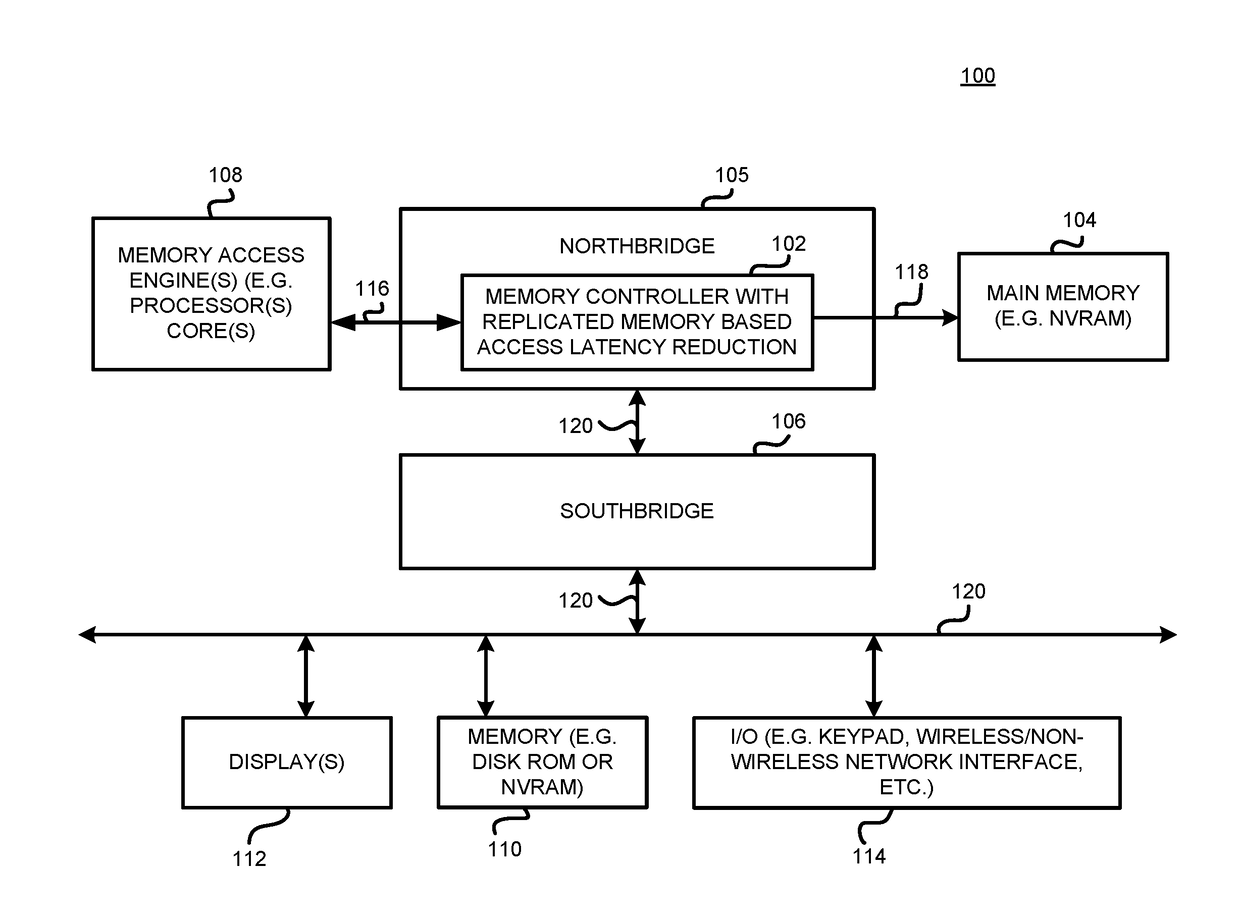

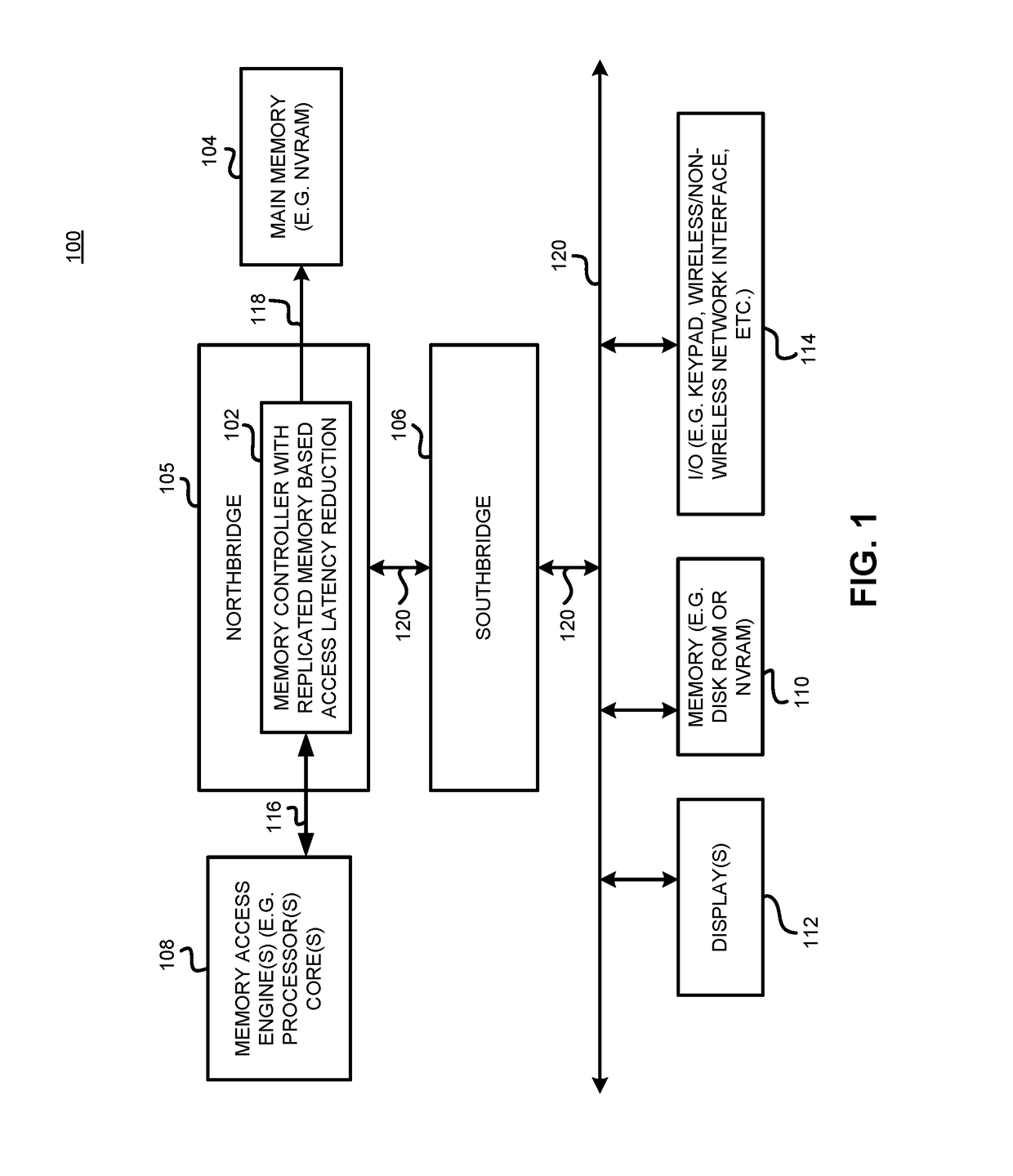

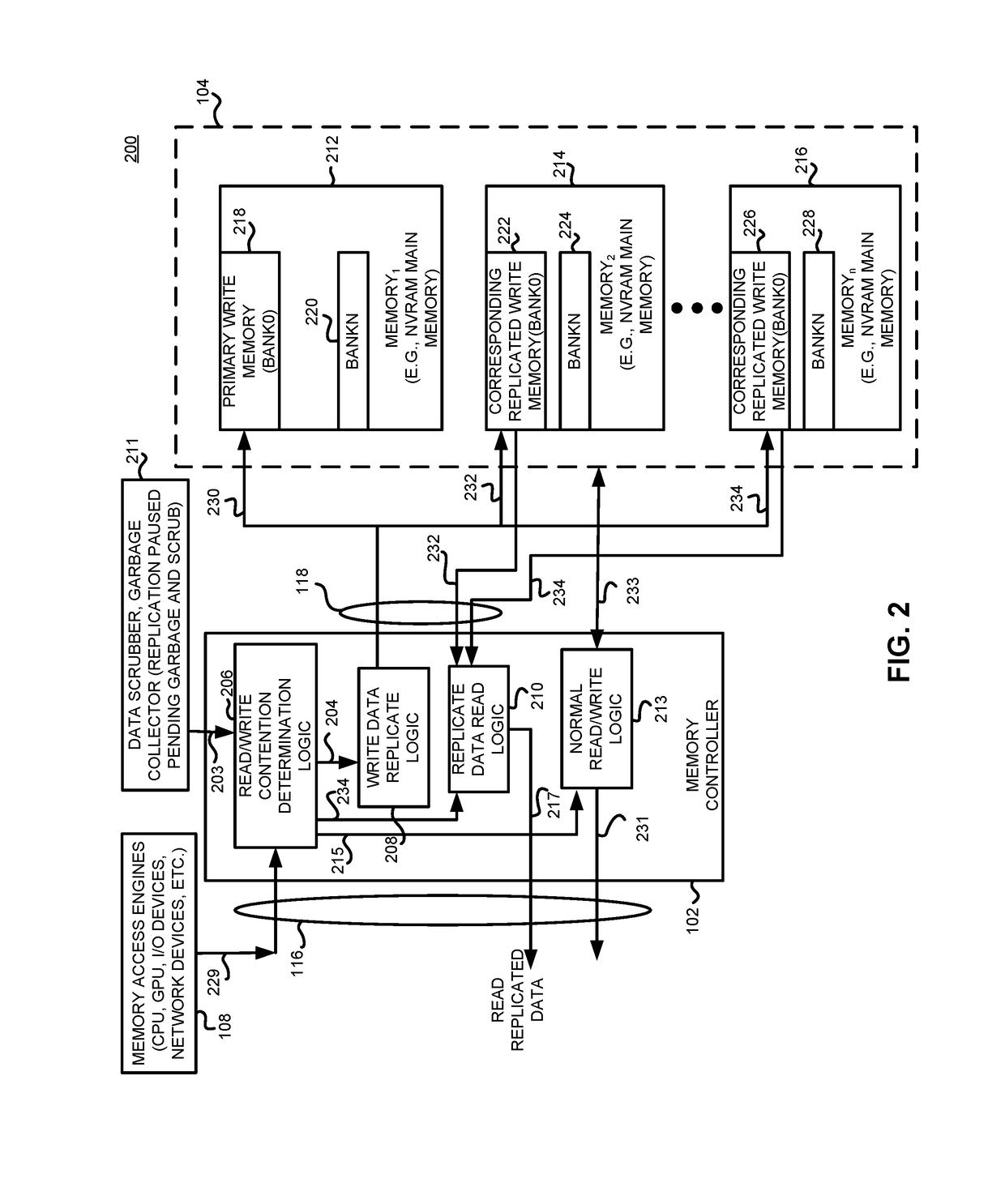

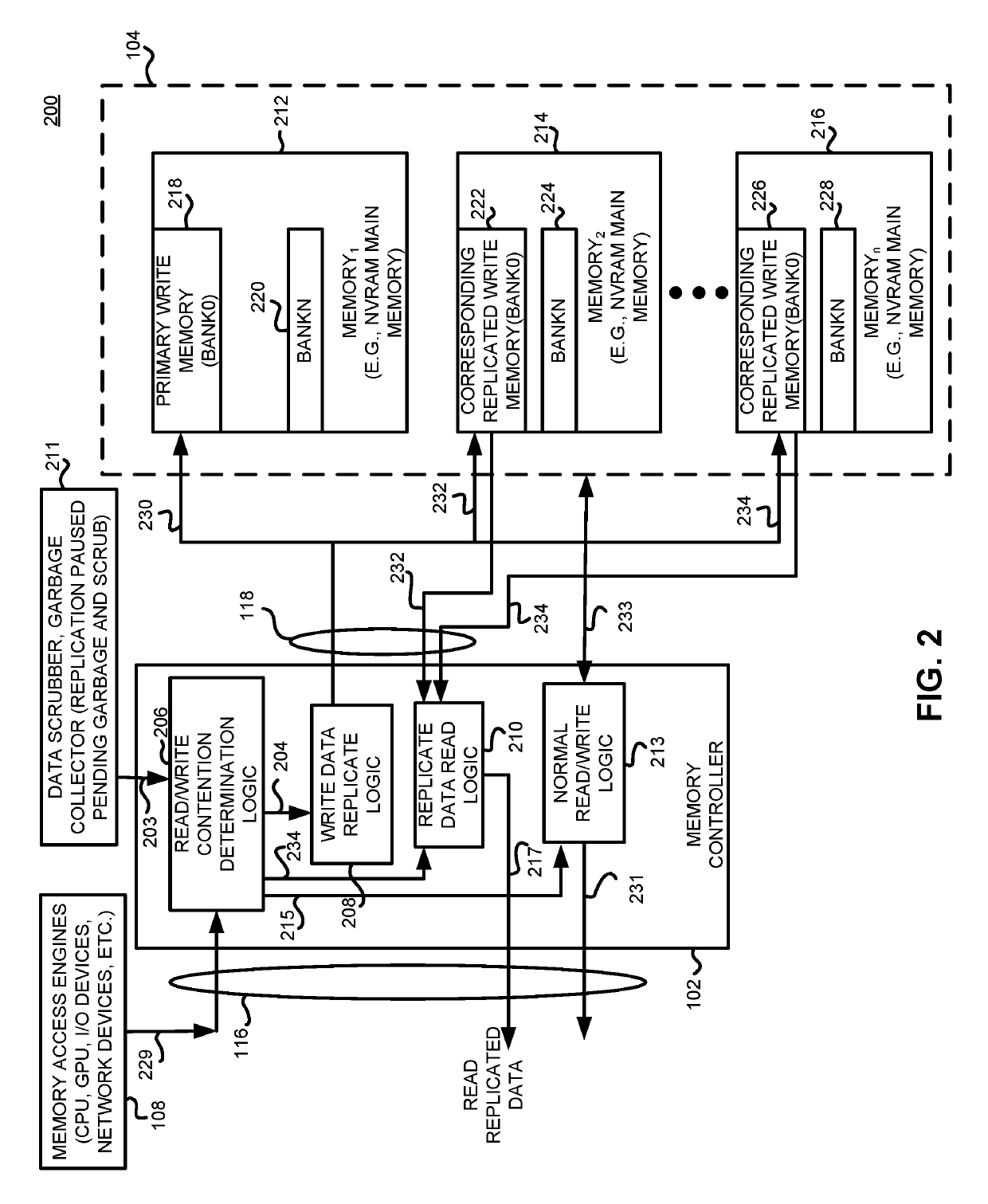

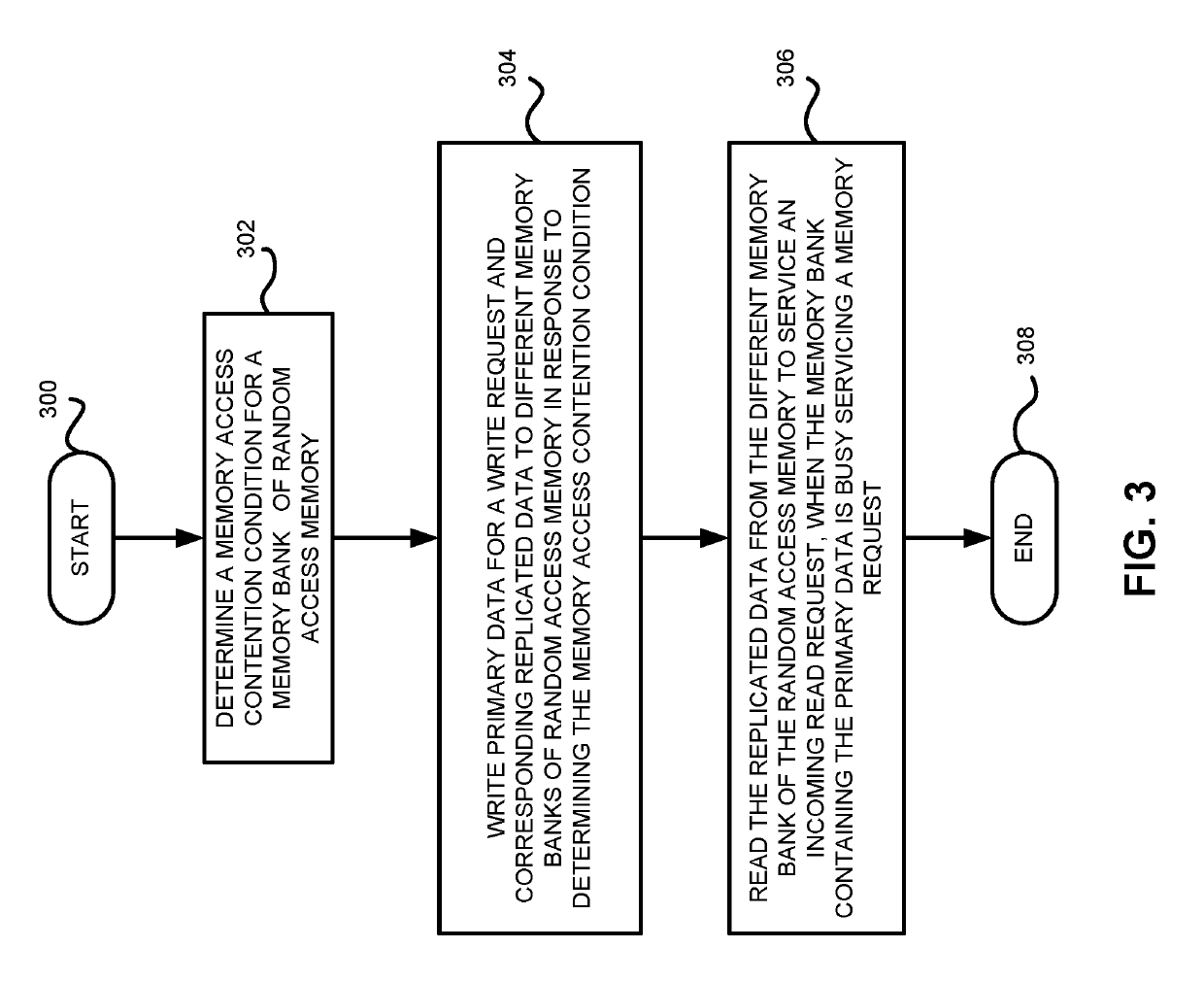

Method and apparatus for reducing memory access latency

Active | US20180081563A1 | Lower memory access latency | Input/output to record carriers | Digital storage | Memory bank | Random access memory

Logic such as a memory controller writes primary data from an incoming write request as well as corresponding replicated primary data (which is a copy of the primary data) to one or more different memory banks of random access memory in response to determining a memory access contention condition for the address (including a range of addresses) corresponding to the incoming write request. When the memory bank containing the primary data is busy servicing a write request, such as to another row of memory in the bank, a read request for the primary data is serviced by reading the replicated primary data from the different memory bank of the random access memory to service the incoming read request.

Owner: ADVANCED MICRO DEVICES INC
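
This is a minimal sketch of read servicing with replicated primary data, under stated assumptions. Banks are interleaved by address modulo the bank count, with at least two banks. The replica goes in the next bank over. Addresses already flagged as contended are held in a set, and how contention is detected is outside the sketch. All names are hypothetical.

```python
class ReplicatingController:
    """Hypothetical controller that mirrors contended addresses into a second bank."""

    def __init__(self, num_banks: int) -> None:
        self.num_banks = num_banks                   # assumption: at least 2 banks
        self.banks = [{} for _ in range(num_banks)]  # per-bank storage: {addr: data}
        self.busy = [False] * num_banks              # bank currently servicing a write
        self.contended = set()                       # addresses flagged as contended

    def home_bank(self, addr: int) -> int:
        return addr % self.num_banks  # assumption: simple modulo interleaving

    def replica_bank(self, addr: int) -> int:
        return (self.home_bank(addr) + 1) % self.num_banks  # assumption: next bank over

    def write(self, addr: int, data) -> None:
        self.banks[self.home_bank(addr)][addr] = data
        if addr in self.contended:
            # Replicated primary data goes to a different bank.
            self.banks[self.replica_bank(addr)][addr] = data

    def read(self, addr: int):
        home = self.home_bank(addr)
        replica = self.banks[self.replica_bank(addr)]
        if self.busy[home] and addr in replica:
            return replica[addr]  # serve from the copy instead of waiting on the busy bank
        return self.banks[home][addr]
```

Only contended addresses are duplicated. The extra write bandwidth and capacity are therefore spent where bank conflicts were actually observed.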

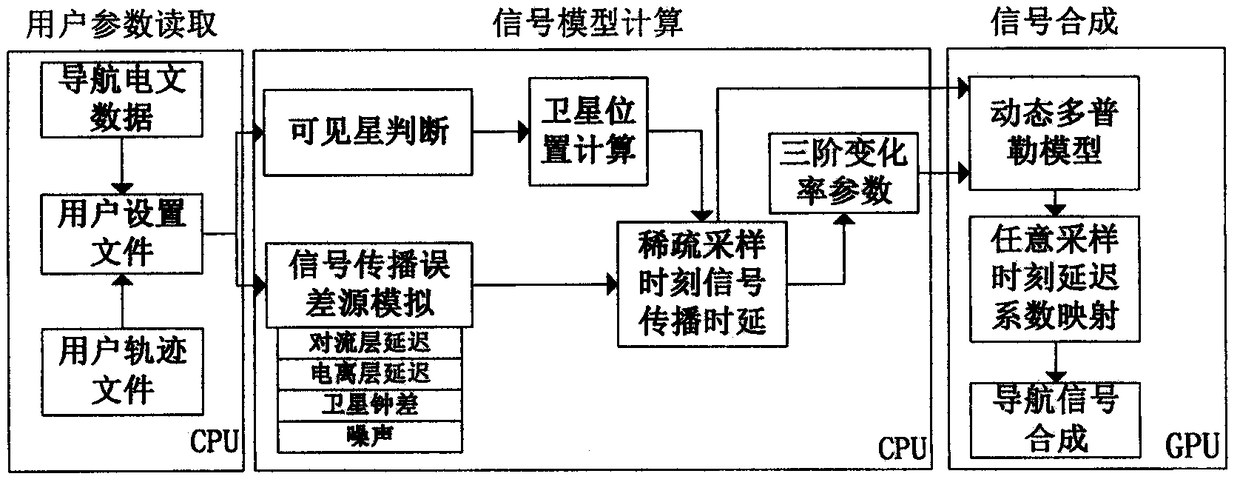

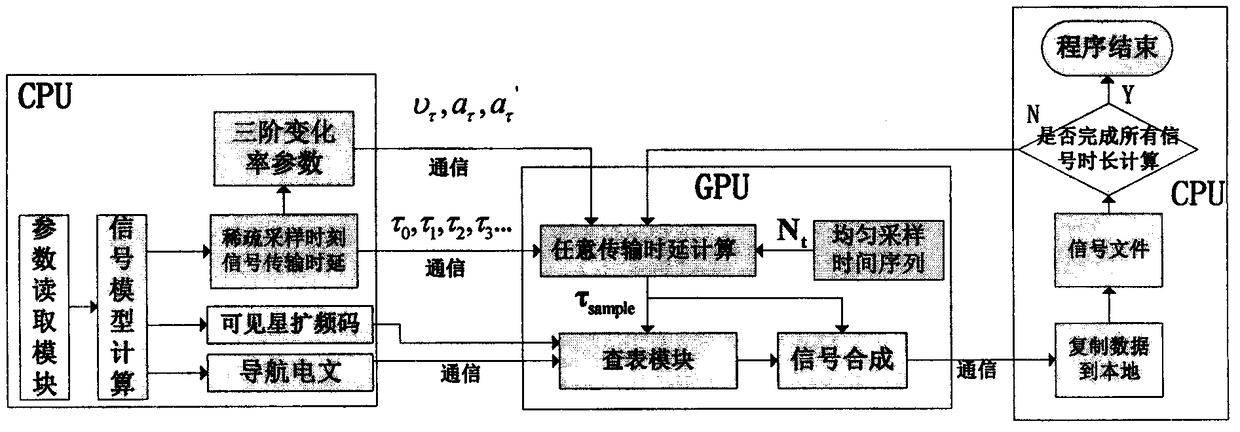

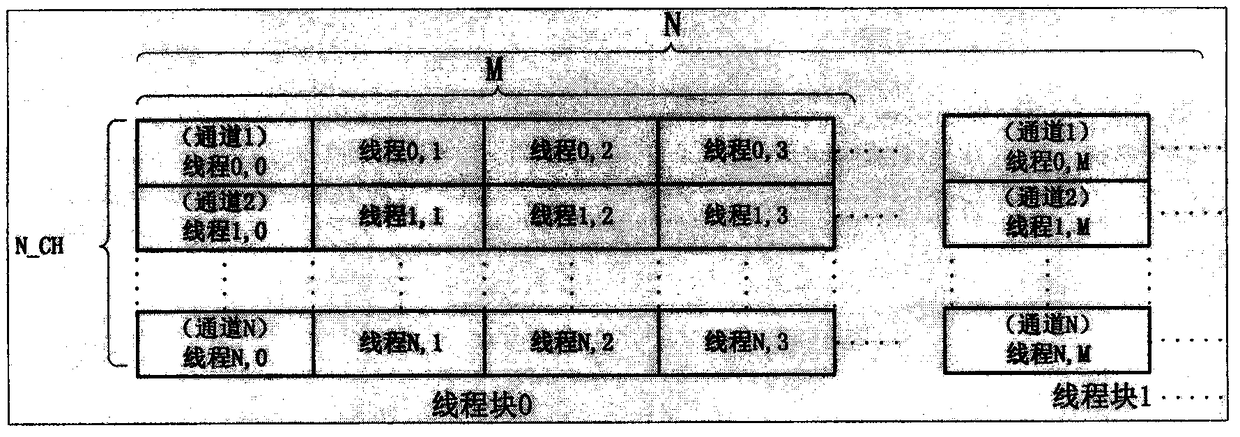

GPU-based parallel satellite navigation signal simulation method

Active | CN108073455A | Take full advantage of computing performance | Lower memory access latency | Resource allocation | Satellite radio beaconing | Generation process | Propagation delay

The invention discloses a GPU-based parallel satellite navigation signal simulation method. It addresses the slow computation of test-signal generation in satellite navigation. The method adopts a CPU+GPU hybrid heterogeneous computing mode: a GPU is introduced as an acceleration component for signal synthesis and works with the CPU to run the program. The work is divided as follows:

- The CPU handles user data reading, visible-satellite determination, model calculation and similar functions.
- The GPU handles propagation delay calculation, table lookup, and signal multiply-accumulate at high sampling rates.

For memory optimization, spread-spectrum codes and navigation messages are placed in texture memory. Third-order rate-of-change parameters and the sparsely sampled propagation delay are placed in constant memory. Together these placements improve the program's memory access speed, and satellite navigation signal simulation runs faster as a result.

Owner: NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

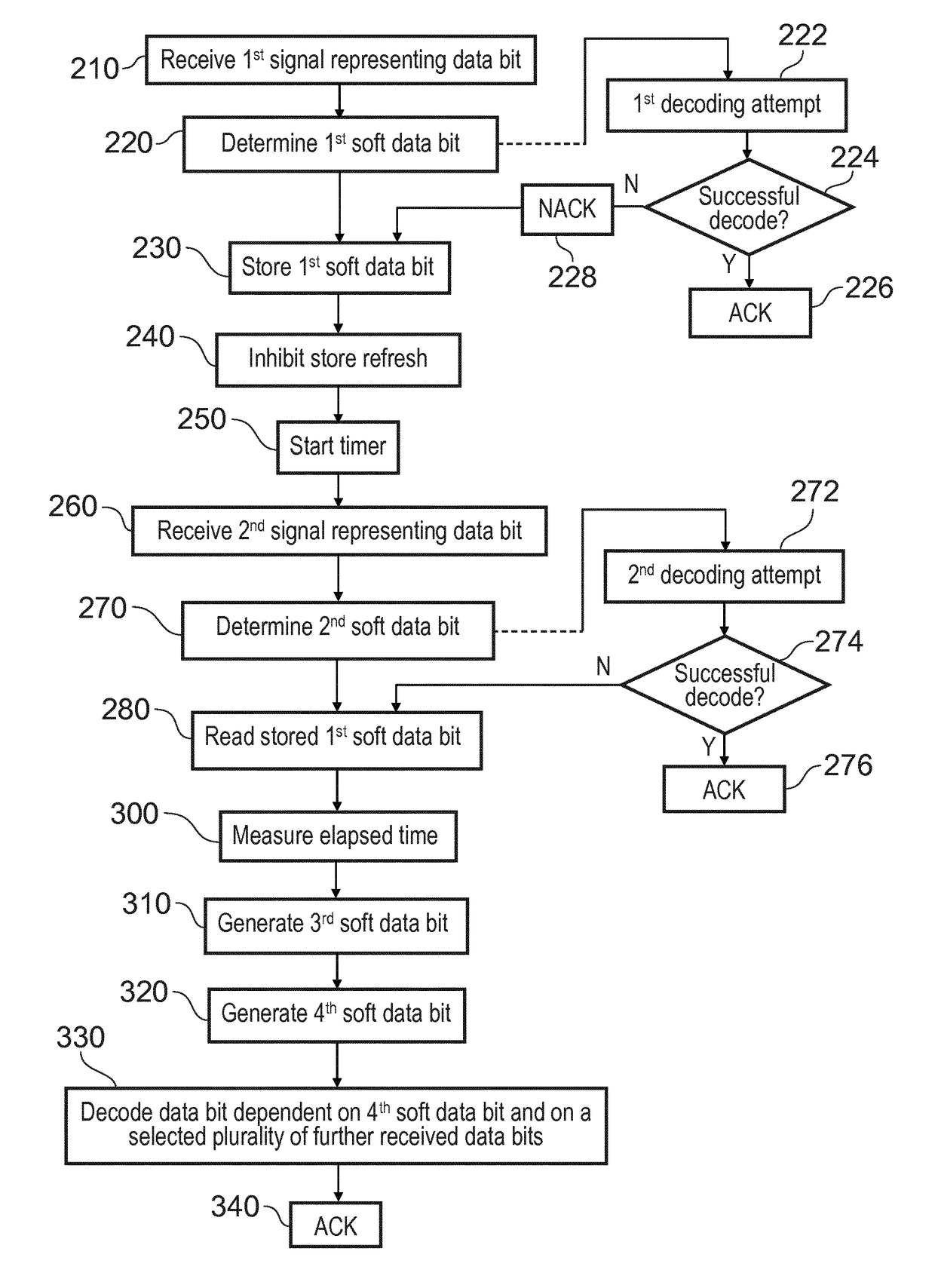

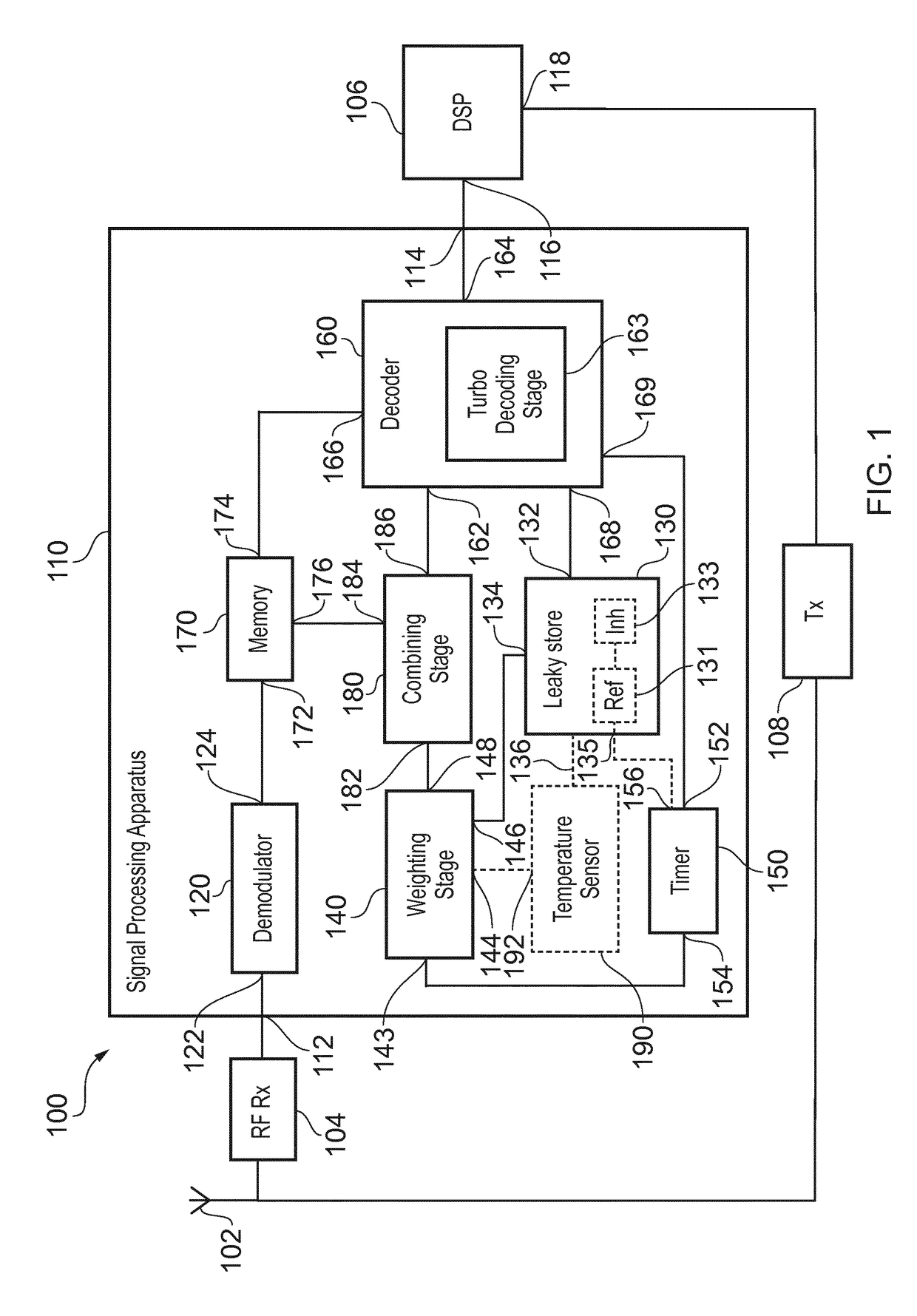

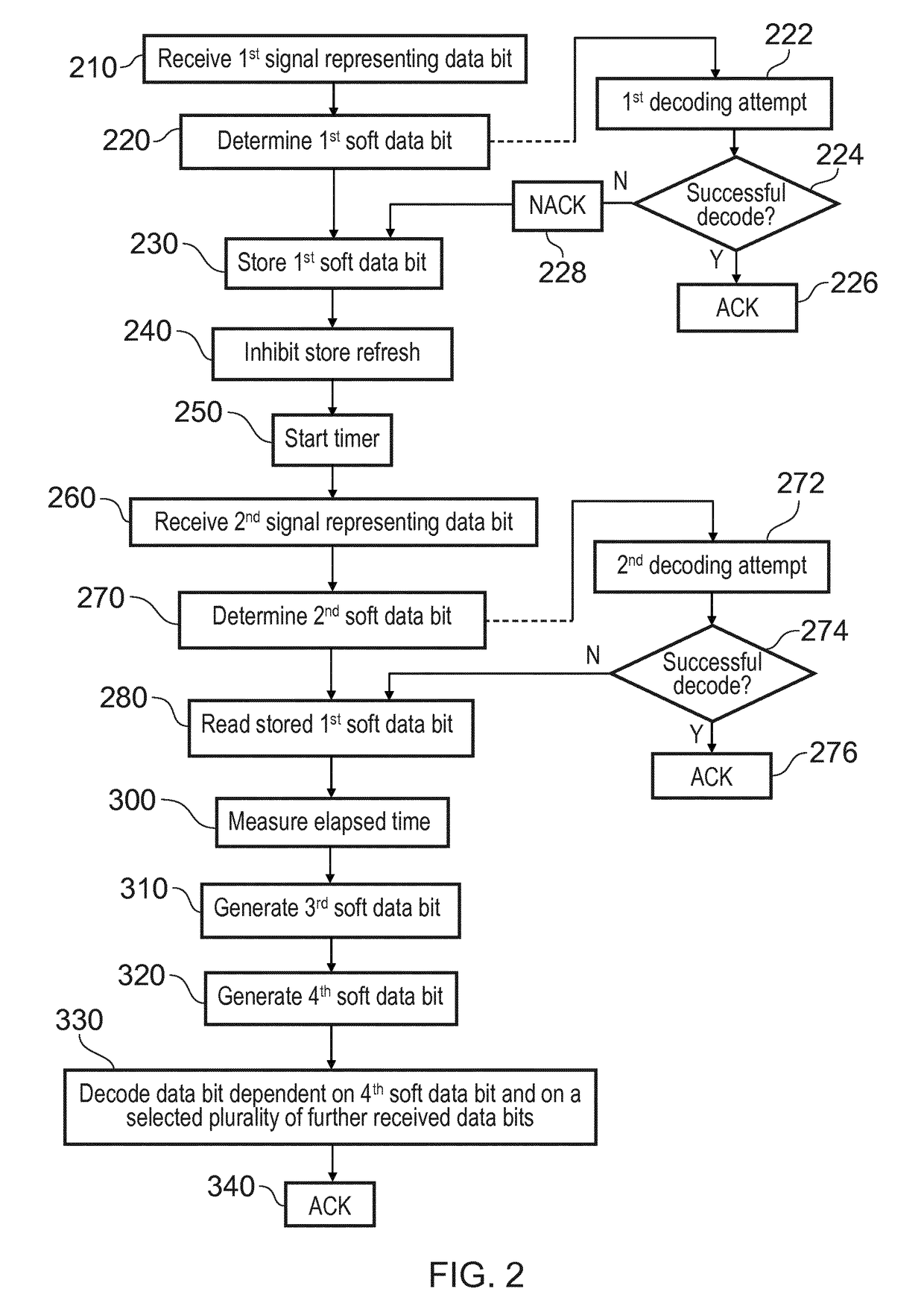

Signal Processing Apparatus and Method

Active | US20170331588A1 | Improve reliability | Low reliability | Error prevention/detection by using return channel | Network traffic/resource management | Computer hardware | Soft data

A method of operating a signal processing apparatus (110) comprises receiving a first signal representing a received data bit, determining from the first signal a first soft data bit, storing the first soft data bit in a leaky storage device (130), receiving a second signal representing the received data bit, and determining from the second signal a second soft data bit. The stored first soft data bit is read from the leaky storage device (130), an elapsed leakage time of the stored first soft data bit is measured, and a third soft data bit is generated dependent on the stored first soft data bit read from the leaky storage device (130) and on the elapsed leakage time. A fourth soft data bit is generated by combining the second soft data bit and the third soft data bit, and the received data bit is decoded dependent on the fourth soft data bit and on a selected plurality of further received data bits.

Owner: TELEFON AB LM ERICSSON (PUBL)

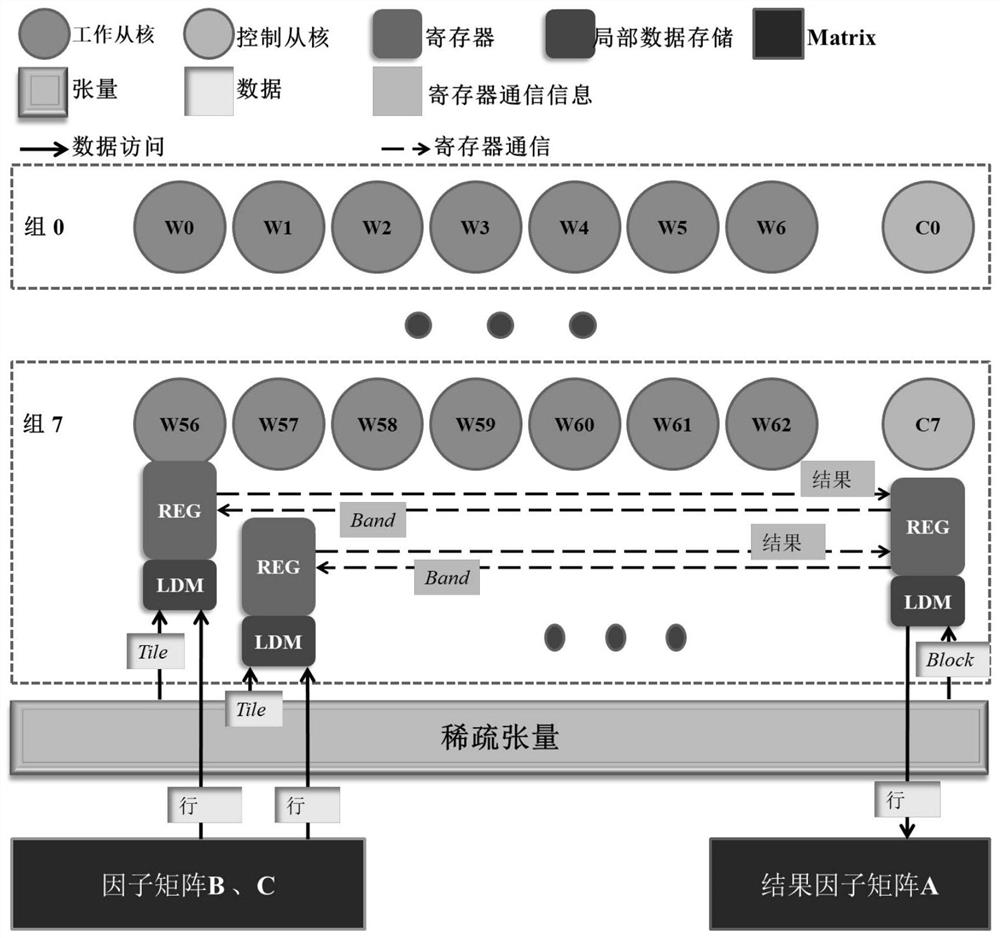

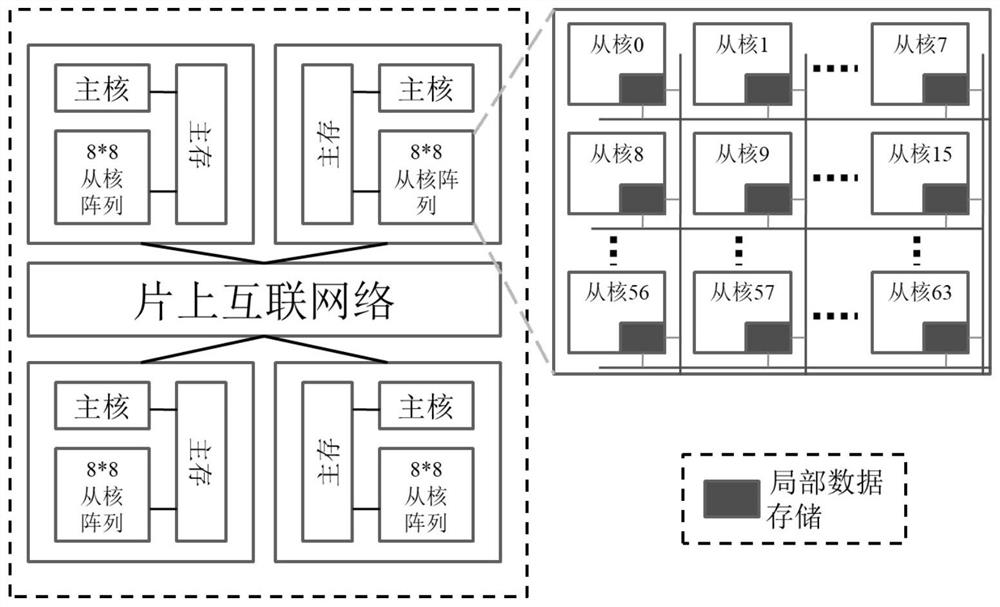

Sparse tensor canonical decomposition method based on data division and calculation distribution

Active | CN112765094A | Improve parallelism | Improve performance | Electric digital data processing | Architecture with multiple processing units | Processing core | Algorithm

The invention relates to a sparse tensor canonical decomposition method based on data division and task allocation. The method comprises the following steps:

- Perform multi-level division and task allocation across the processing cores of a core group, according to the many-core characteristics of the SW processor.
- Perform multi-level partitioning of the sparse tensor data.
- Design a communication strategy for sparse tensor canonical decomposition that uses the register communication feature of the SW26010 processor.
- Design different calculation schemes for the matricized tensor times Khatri-Rao product (MTTKRP), the common performance bottleneck of the different decomposition methods. The schemes exploit the SW processor's characteristics and depend on whether tensor elements must be randomly extracted during the computation.

The method fully exploits the SW architecture while accounting for the computational demands of sparse tensor decomposition. As a result, multiple sparse tensor canonical decomposition methods can run in parallel and efficiently on that architecture, with dynamic load balance maintained as far as possible.

Owner: BEIHANG UNIV
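
For reference, the MTTKRP kernel named above can be written as a sequential sketch for a 3-way sparse tensor stored in coordinate (COO) format. It shows only what is computed, not the SW26010-specific partitioning or register communication the abstract describes. For every nonzero x_ijk, mode-1 MTTKRP adds x_ijk * B[j][r] * C[k][r] to M[i][r] for each rank column r. The function name and the list-of-rows matrix layout are assumptions.

```python
def mttkrp_mode1(coords, values, B, C, num_rows):
    """Mode-1 MTTKRP for a sparse 3-way tensor in COO format.

    Computes M = X_(1) times the Khatri-Rao product of C and B, i.e.
    M[i][r] = sum over nonzeros x_ijk of x_ijk * B[j][r] * C[k][r].
    B and C are lists of rows, each of length R (the decomposition rank).
    """
    rank = len(B[0])
    M = [[0.0] * rank for _ in range(num_rows)]
    for (i, j, k), x in zip(coords, values):
        for r in range(rank):
            M[i][r] += x * B[j][r] * C[k][r]
    return M
```

The scattered writes to `M[i]` are what make parallel versions hard. Two nonzeros sharing an index `i` update the same output row, which is one reason partitioning strategies such as those in the abstract matter.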

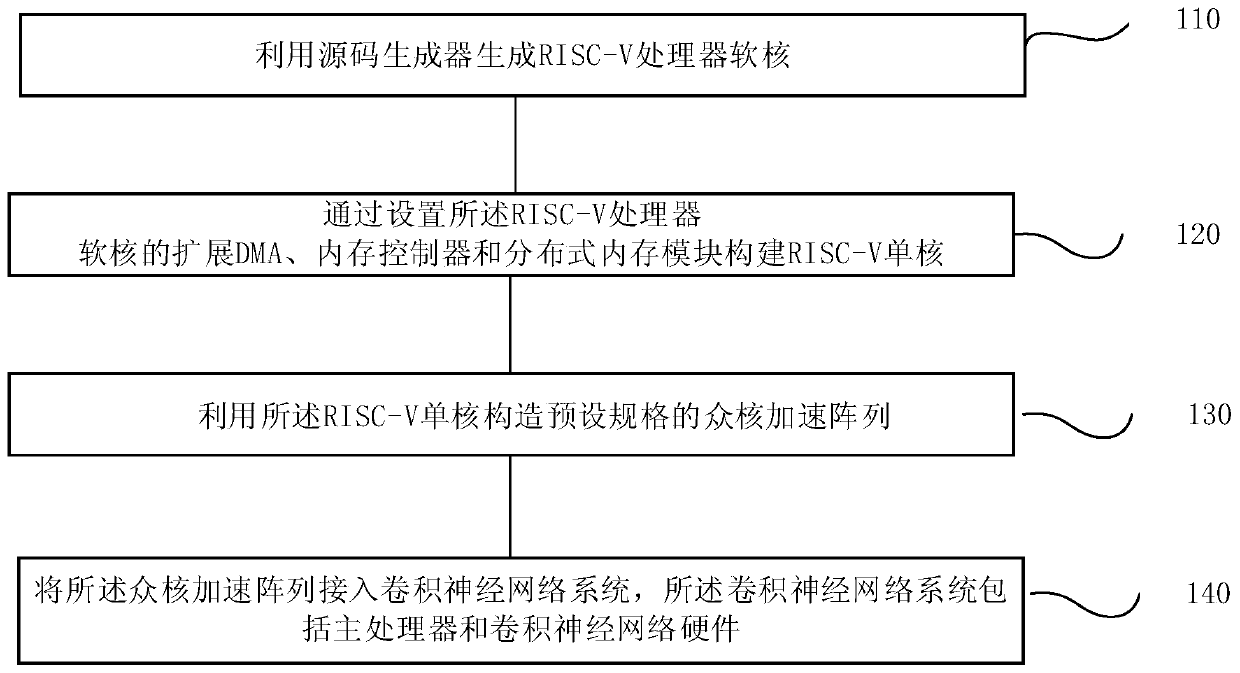

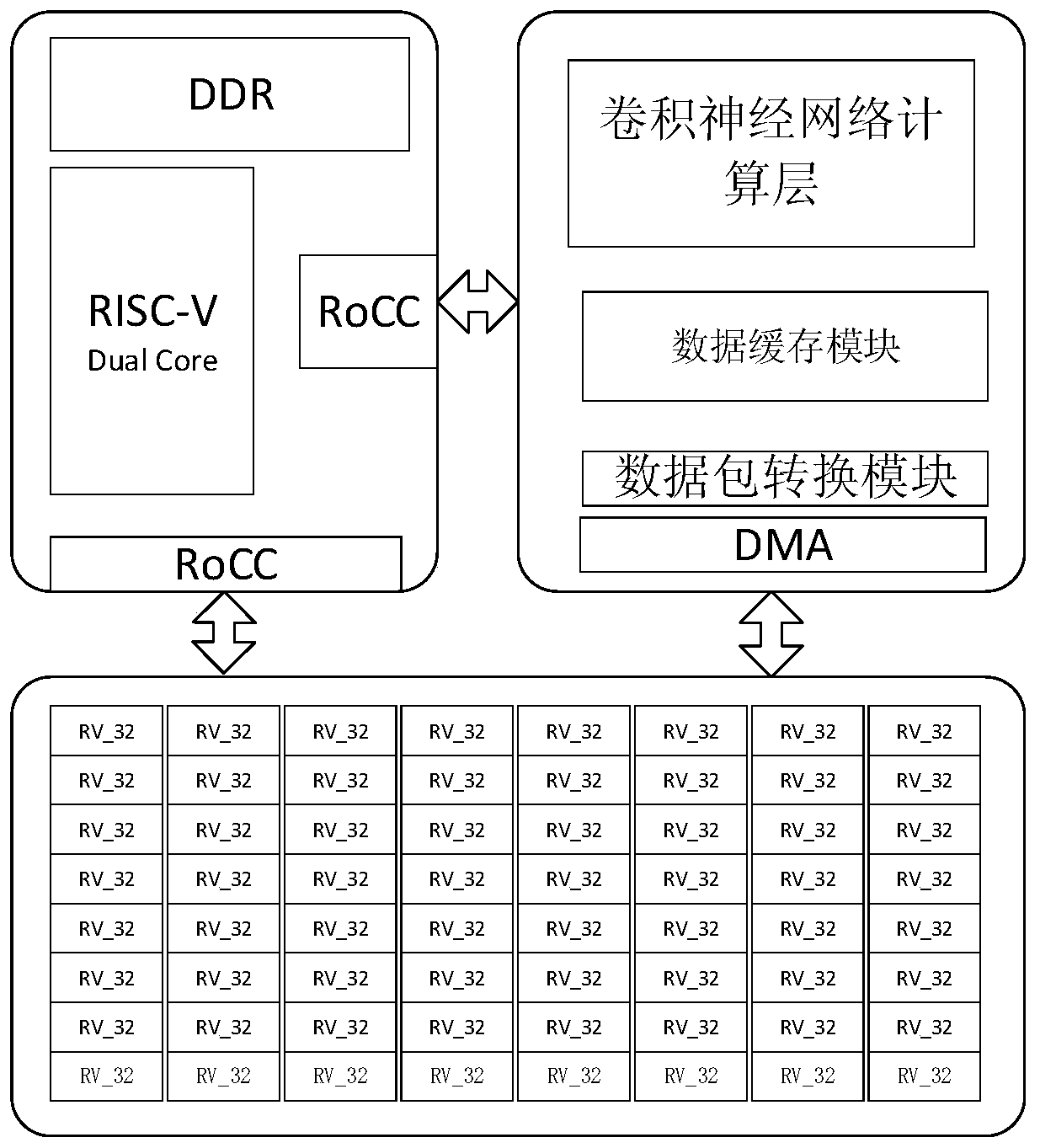

Convolutional neural network acceleration method and system, terminal and storage medium

Pending | CN111340185A | Improve computing power | Implement concurrent memory access | Neural architectures | Physical realisation | Networked system | Computation process

The invention provides a convolutional neural network acceleration method and system, a terminal, and a storage medium. The method comprises the following steps:

- Generate a RISC-V processor soft core with a source code generator.
- Build a RISC-V single core by configuring the soft core's extended DMA, a memory controller, and a distributed memory module.
- Use that single core to build a many-core acceleration array of a preset size.
- Connect the array to a convolutional neural network system comprising a main processor and convolutional neural network hardware.

The invention greatly increases memory access bandwidth during computation and reduces memory access delay, which improves the network's computational performance and accelerates its computation.

Owner: INSPUR SUZHOU INTELLIGENT TECH CO LTD

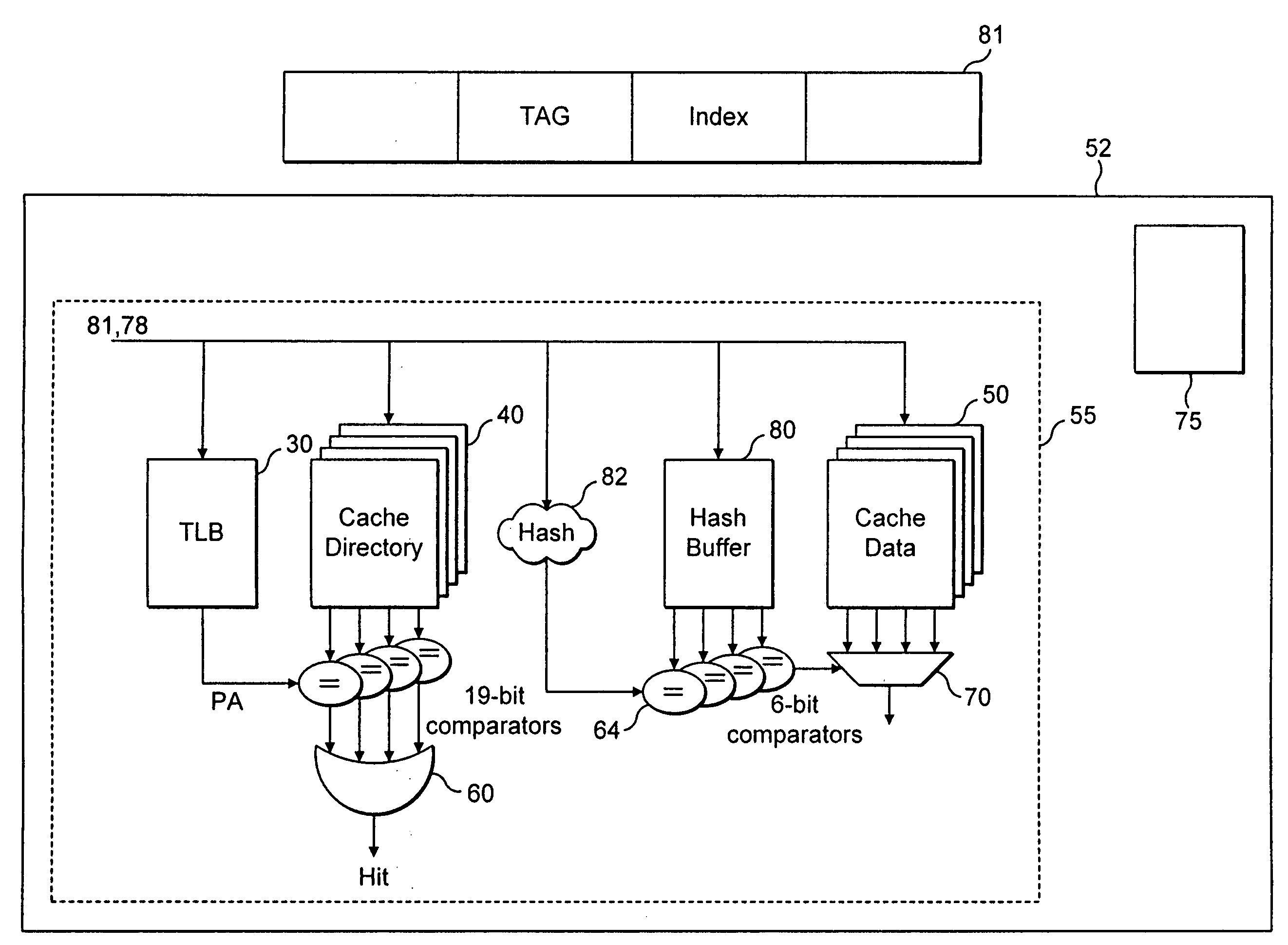

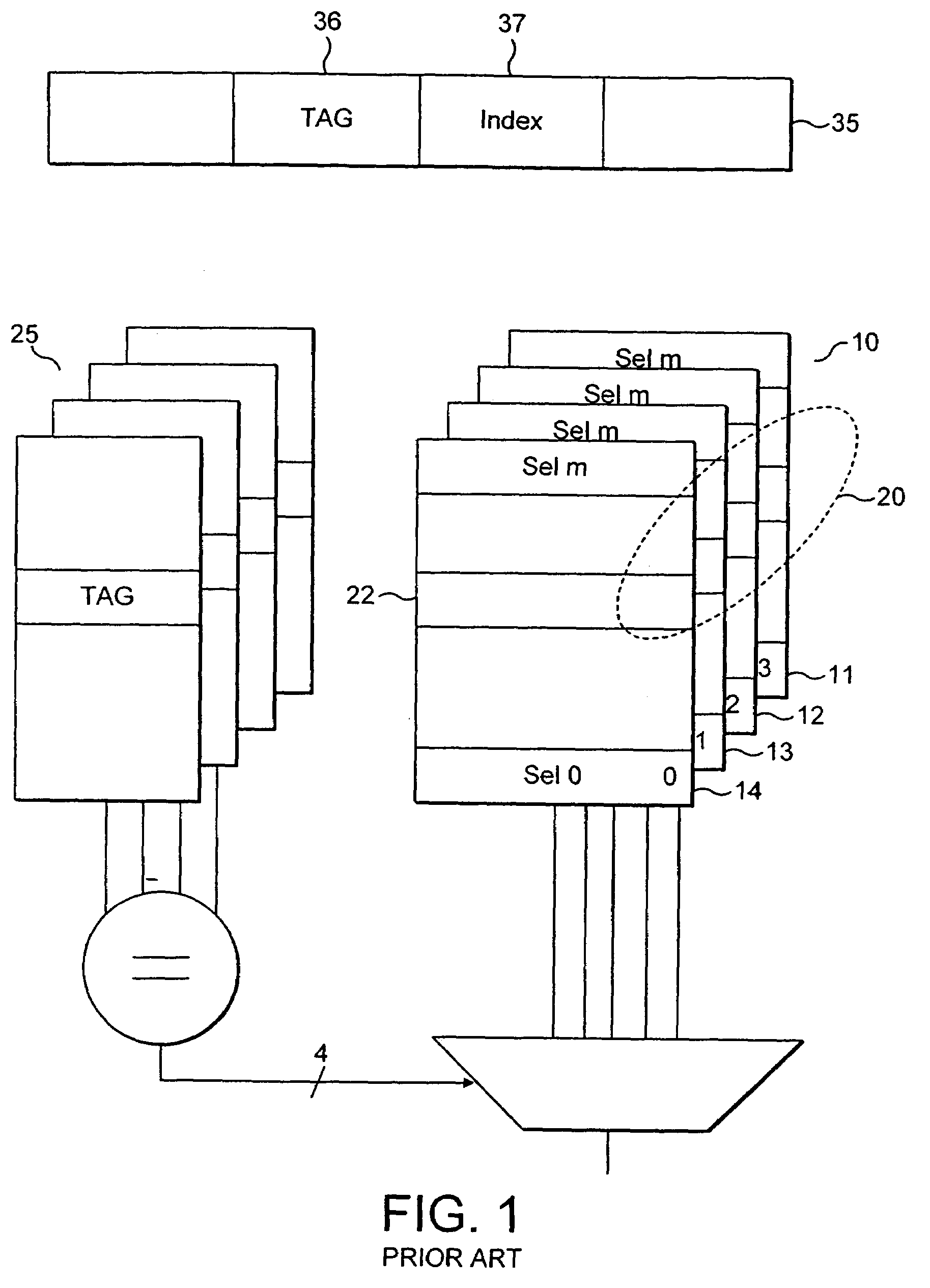

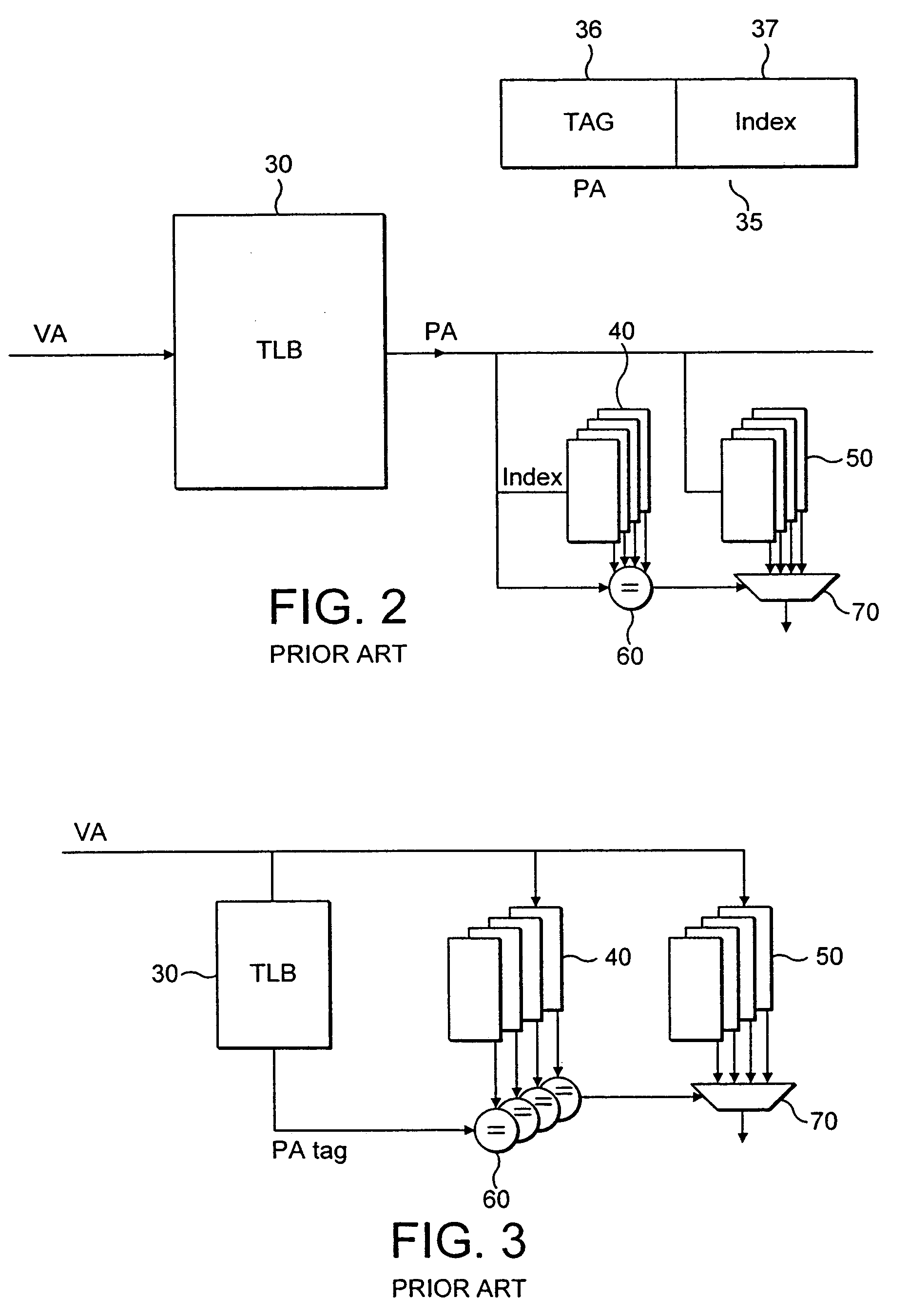

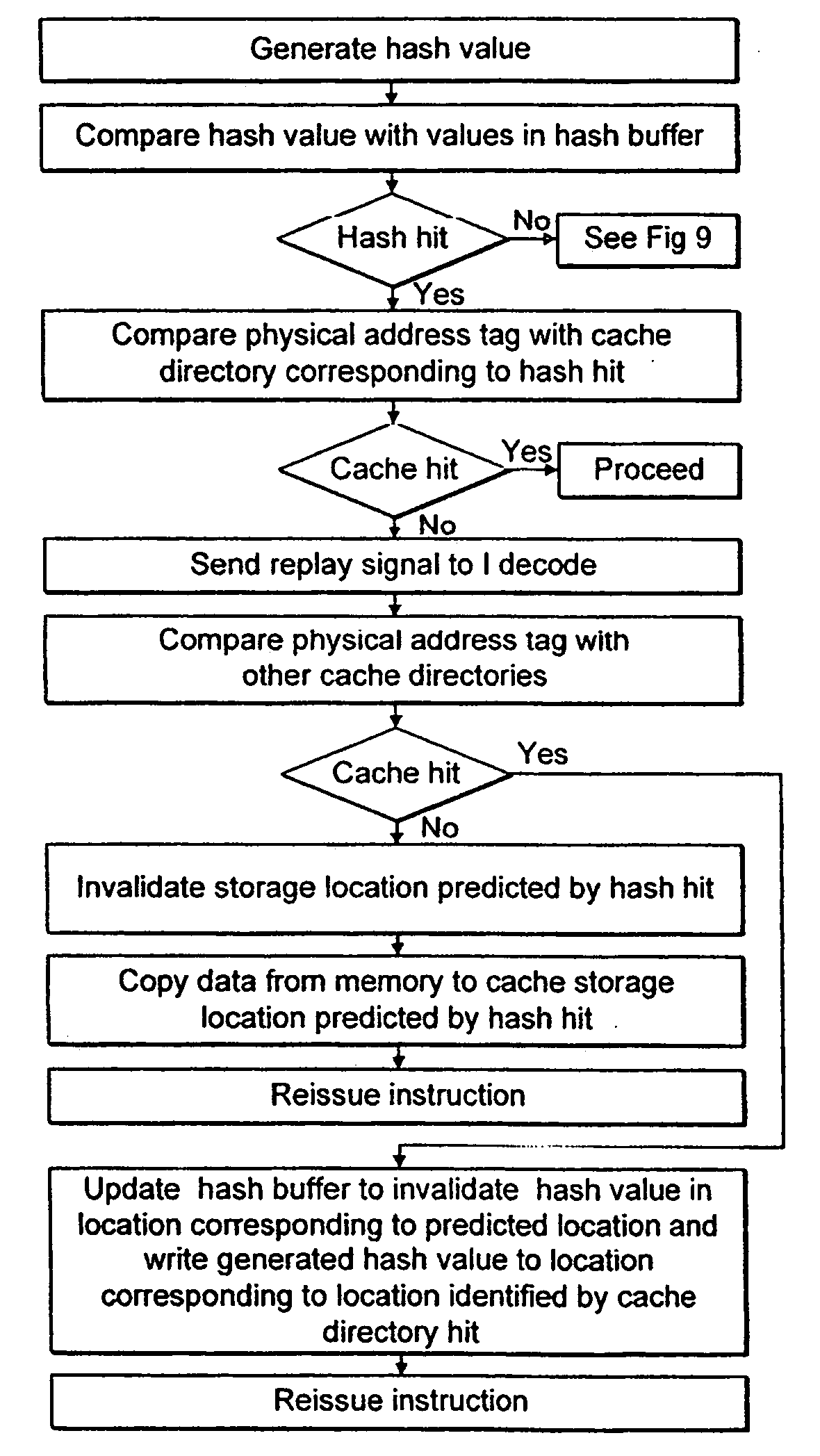

Correction of incorrect cache accesses

Active | US20080222387A1 | Reduce probability | Reduce power consumption | Energy efficient ICT | Memory addressing/allocation/relocation | Cache access | Physical address

Owner: ARM LTD +1

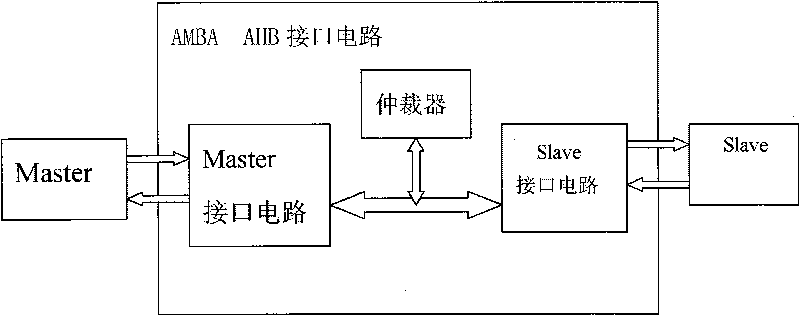

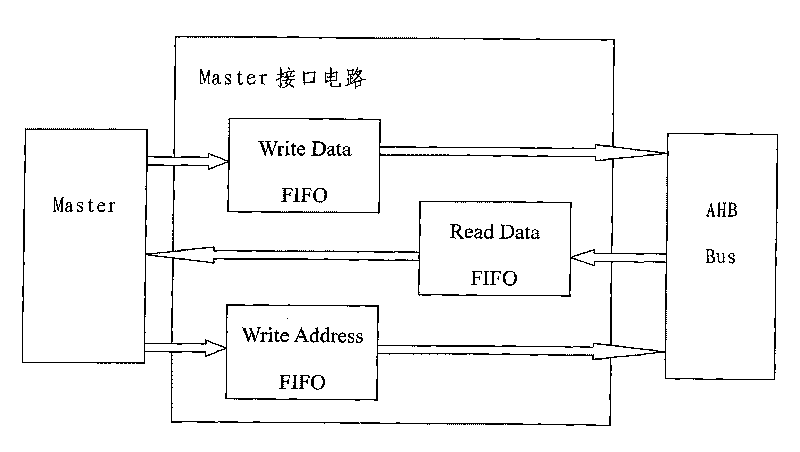

AMBA interface circuit

Inactive | CN101710310A | Reduce latency and memory access latency | Save resources | Electric digital data processing | Embedded system | Network on

The invention relates to an AMBA interface circuit characterized by three FIFOs arranged in the Master interface circuit:

- The Writer Data FIFO and the Writer Address FIFO receive the data and addresses transmitted by the master device. If the master device has not yet obtained the right to use the bus, the data or address is first written into the Writer Data FIFO or Writer Address FIFO. It is transmitted once the master obtains the bus.
- The Read Data FIFO sends data to the master device. When the master device is busy, data transmitted by a slave device can be held temporarily in the Read Data FIFO and the bus released. The data is delivered when the master device can receive it.

Compared with the prior art, the invention has three advantages. First, because the FIFOs sit in the Master interface circuit, the master and slave devices can keep operating while data or addresses are transmitted, shortening bus waiting time and memory access delay. Second, the FIFOs save resources while the master and slave devices transmit data or addresses. Third, data loss is avoided when the Master interface circuit is used for transmission over a network on chip.

Owner: EAST CHINA INST OF OPTOELECTRONICS INTEGRATED DEVICE

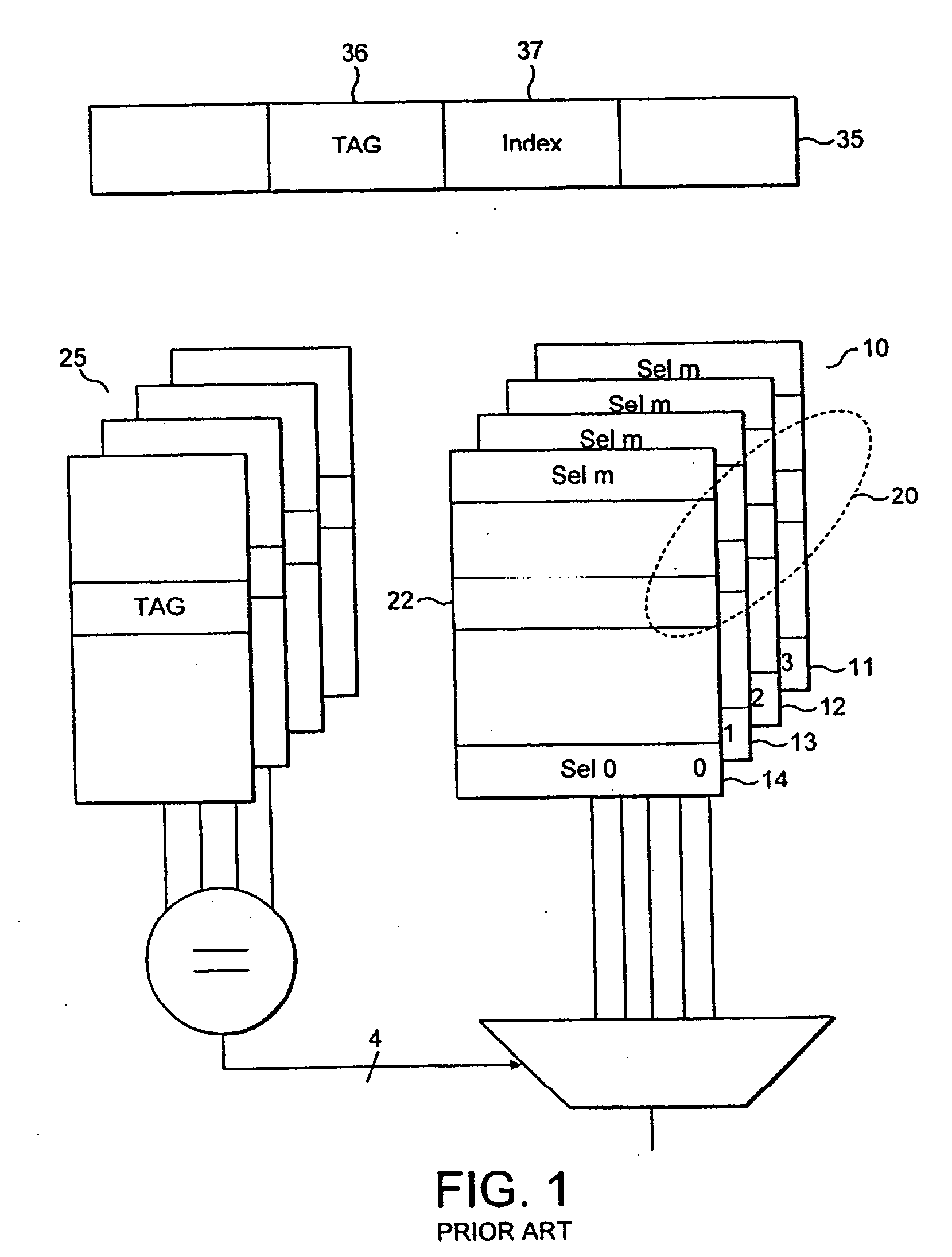

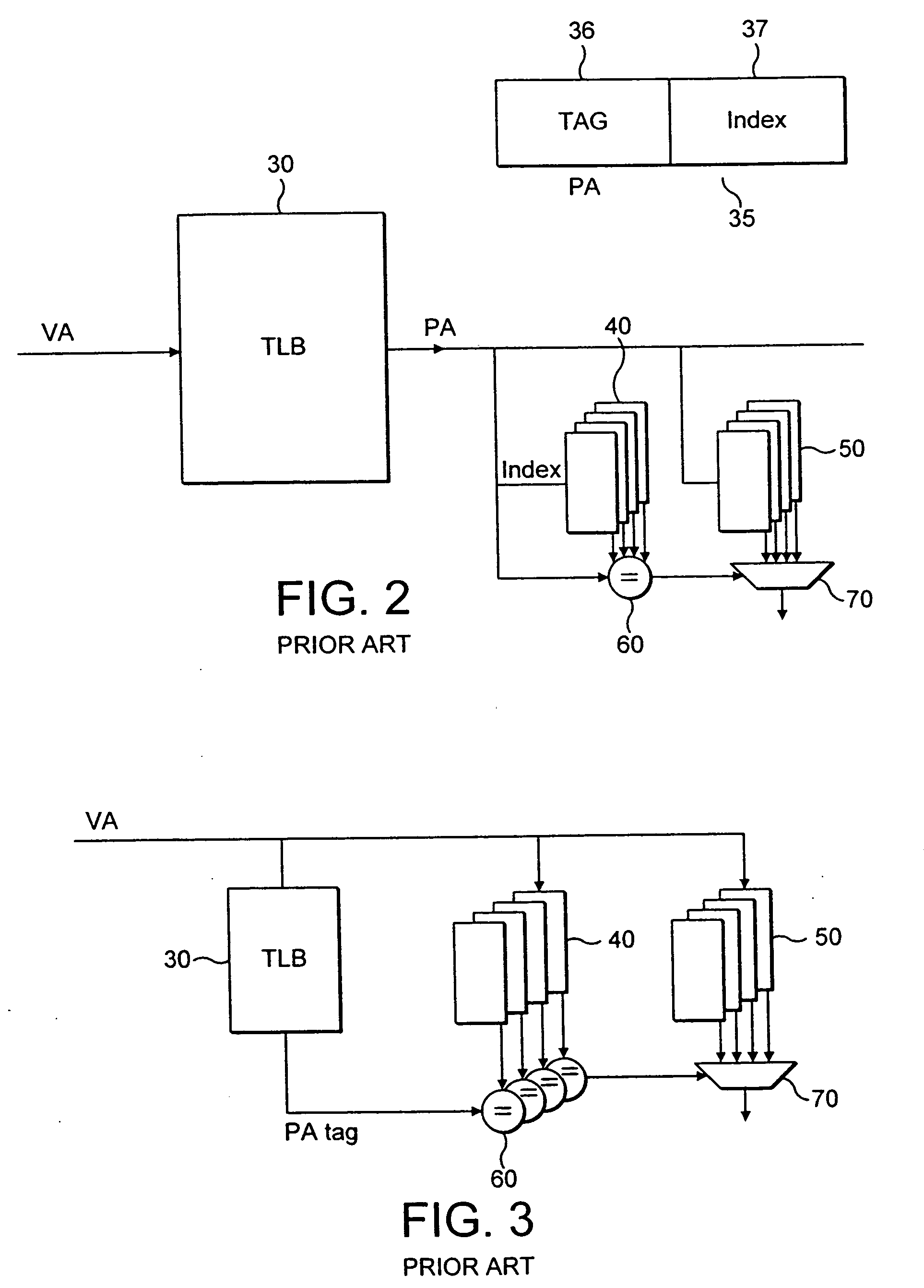

Correction of incorrect cache accesses

Inactive | US20070028047A1 | Lower memory access latency | Reduce probability | Energy efficient ICT | Memory systems | Cache access | Physical address

The application describes a data processor operable to process data, comprising:

- a cache in which the storage location of a data item is identified by an address, the cache comprising a plurality of storage locations;
- a cache directory operable to store a physical address indicator for each storage location that holds stored data;
- a hash value generator operable to generate a hash value from at least some bits of the address, the generated hash value having fewer bits than the address;
- a buffer operable to store a plurality of hash values relating to the storage locations within the cache.

In response to a request to access the data item, the data processor compares the generated hash value with at least some of the hash values stored in the buffer. On a match, it indicates the storage location of the data item. The data processor then reads the physical address indicator stored in the cache directory for that location. If that indicator does not match the address, the data processor invalidates the indicated storage location in the cache.

Owner: ARM LTD +1
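
This is a minimal sketch of the lookup-then-verify flow, under stated assumptions:

- the cache is fully associative;
- the hash is 8-bit XOR folding (the abstract only requires the hash to have fewer bits than the address);
- the directory stores the full address;
- hash-buffer entries are kept unique on fill.

`hash_address` and `HashedCache` are hypothetical names.

```python
def hash_address(addr: int, bits: int = 8) -> int:
    """Fold an address into a `bits`-wide hash by XOR-ing successive bit fields.

    Assumption: XOR folding; the abstract only requires fewer bits than the address.
    """
    mask = (1 << bits) - 1
    h = 0
    while addr:
        h ^= addr & mask
        addr >>= bits
    return h


class HashedCache:
    """Hypothetical fully associative cache located by hash, verified by directory."""

    def __init__(self, num_lines: int) -> None:
        self.hashes = [None] * num_lines     # hash buffer: one hash per storage location
        self.directory = [None] * num_lines  # full address stored for each location
        self.data = [None] * num_lines

    def _invalidate(self, line: int) -> None:
        self.hashes[line] = self.directory[line] = self.data[line] = None

    def fill(self, line: int, addr: int, data) -> None:
        h = hash_address(addr)
        # Assumption: keep hashes unique so a lookup matches at most one location.
        if h in self.hashes and self.hashes.index(h) != line:
            self._invalidate(self.hashes.index(h))
        self.hashes[line], self.directory[line], self.data[line] = h, addr, data

    def lookup(self, addr: int):
        """Return the cached data, or None on a miss or a detected hash alias."""
        h = hash_address(addr)
        if h not in self.hashes:
            return None                # no hash match: ordinary miss
        line = self.hashes.index(h)    # the hash match indicates a location
        if self.directory[line] == addr:
            return self.data[line]     # directory confirms the full address
        self._invalidate(line)         # alias: directory disagrees, so invalidate
        return None
```

With this hash, 0x0102 and 0x0003 alias to the same value. A lookup for 0x0003 therefore matches the hash entry of 0x0102, fails the directory check, and invalidates that location.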

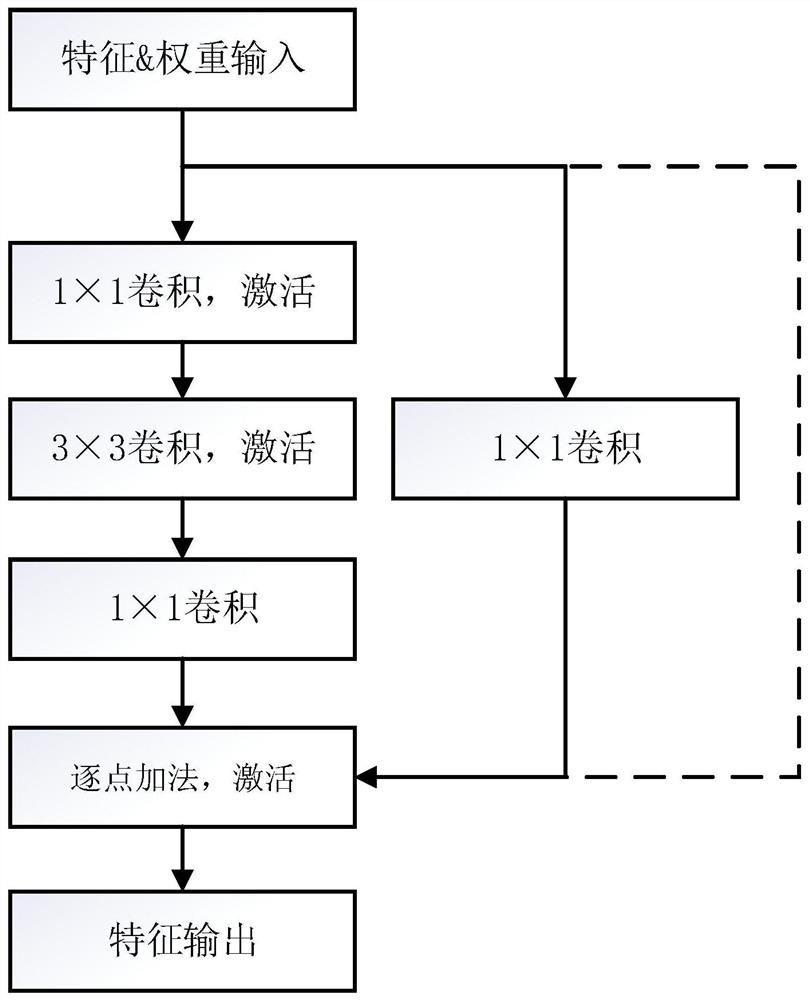

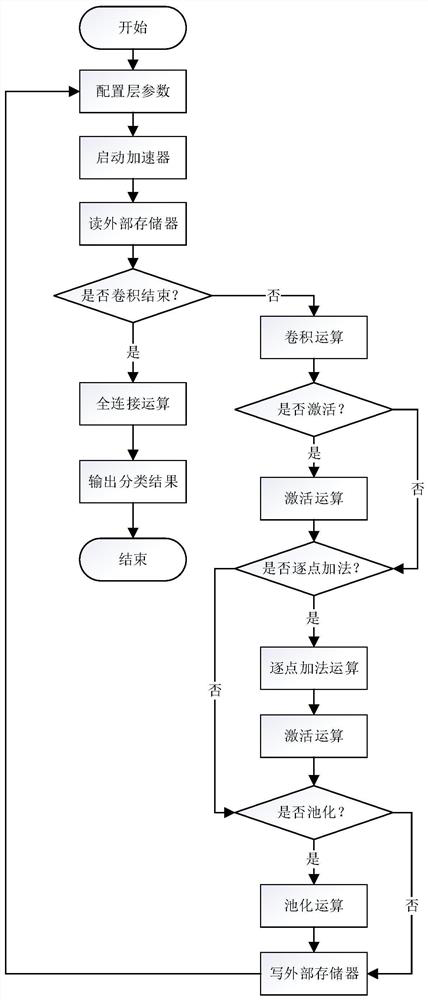

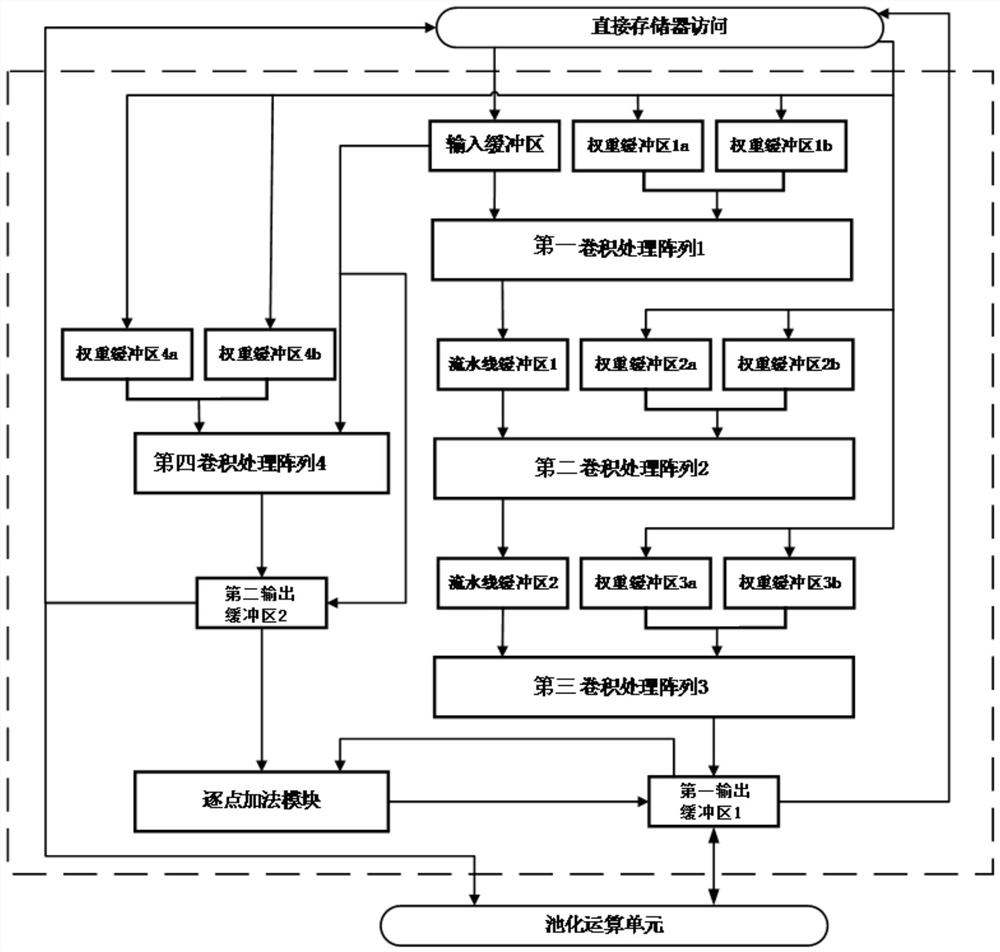

Streamlined convolution computing architecture design method and residual network acceleration system

Active | CN112862079A | Improve parallelism | Reduce computing latency | Neural architectures | Energy efficient computing | Computer architecture | Engineering

The invention provides a streamlined convolution computing architecture design method and a residual network acceleration system. The method divides the hardware acceleration architecture into three parts: on-chip buffers, convolution processing arrays, and a point-by-point addition module.

- The main path consists of three convolution processing arrays in series. Two pipeline buffers are inserted between them to pipeline the main path's three convolution layers across layers.
- A fourth convolution processing array processes, in parallel, the 1 × 1 convolution layers on the branches of the residual building blocks. By configuring a register in this array, its working mode can be changed so that it can also compute the head convolution layer of the residual network or a fully connected layer. When a residual block's branch has no convolution, the fourth array is bypassed and no convolution is executed.
- The point-by-point addition module adds, element by element, the output feature pixels of the residual block's main path and those of its shortcut branch.

Owner: SUN YAT SEN UNIV

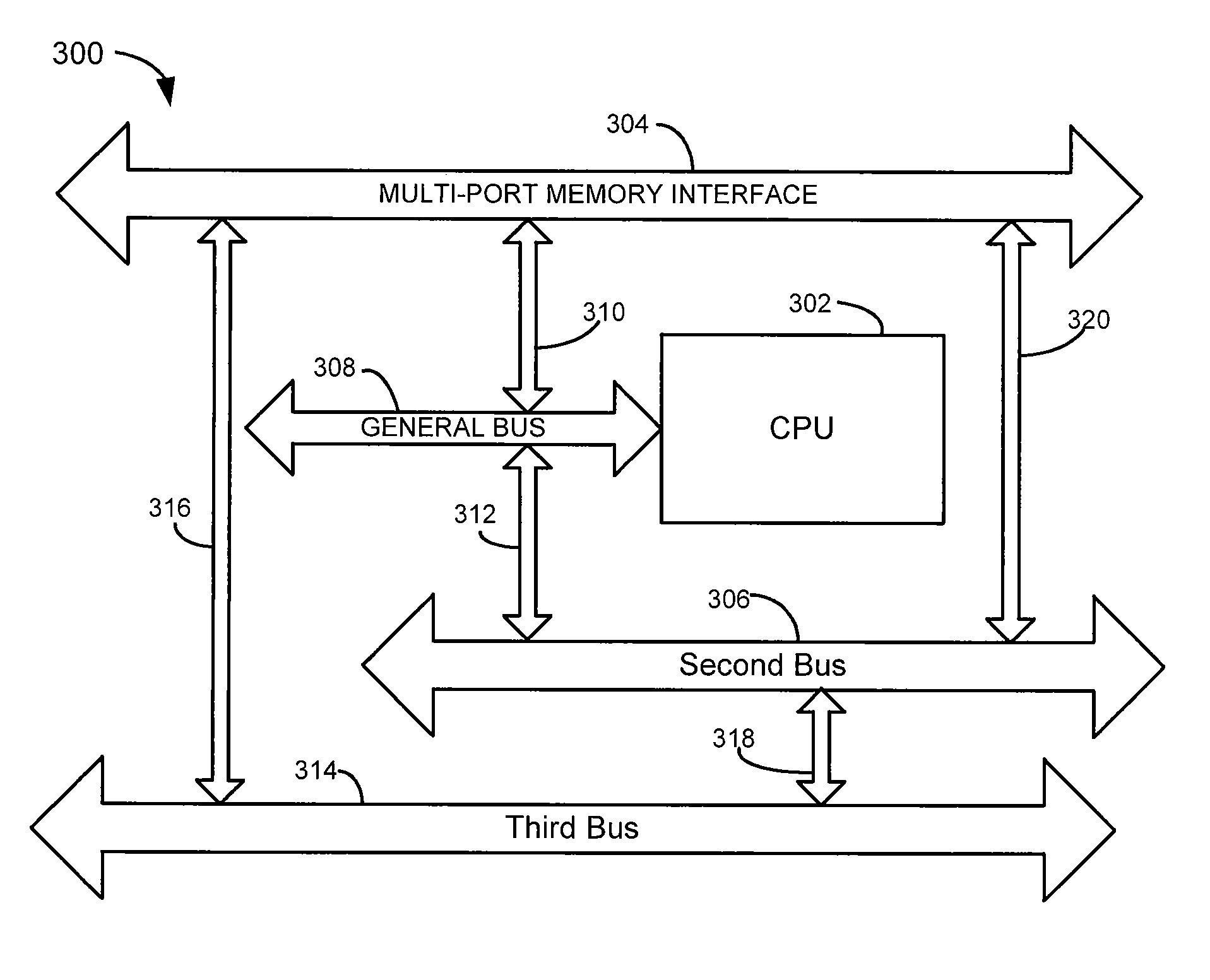

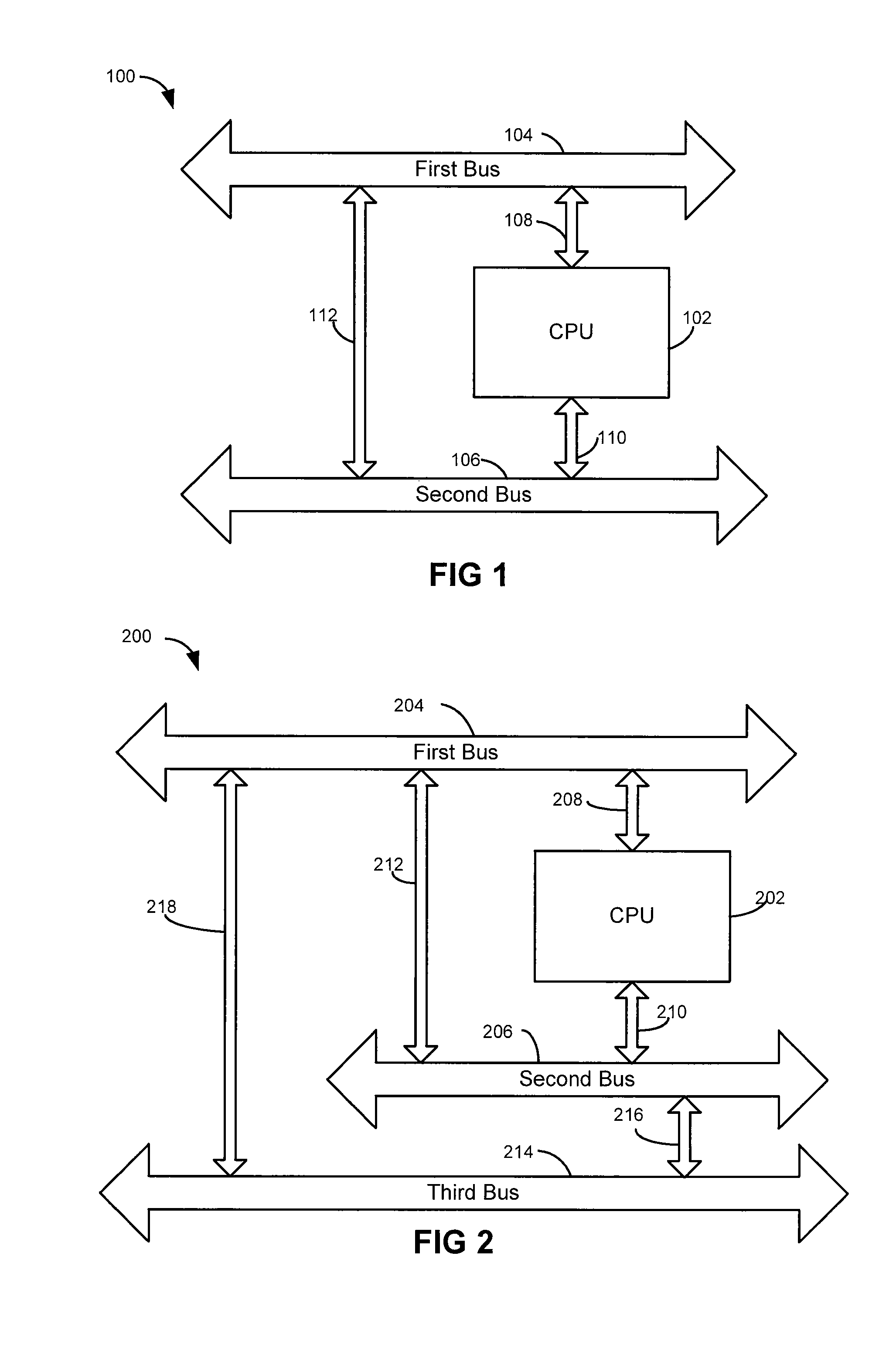

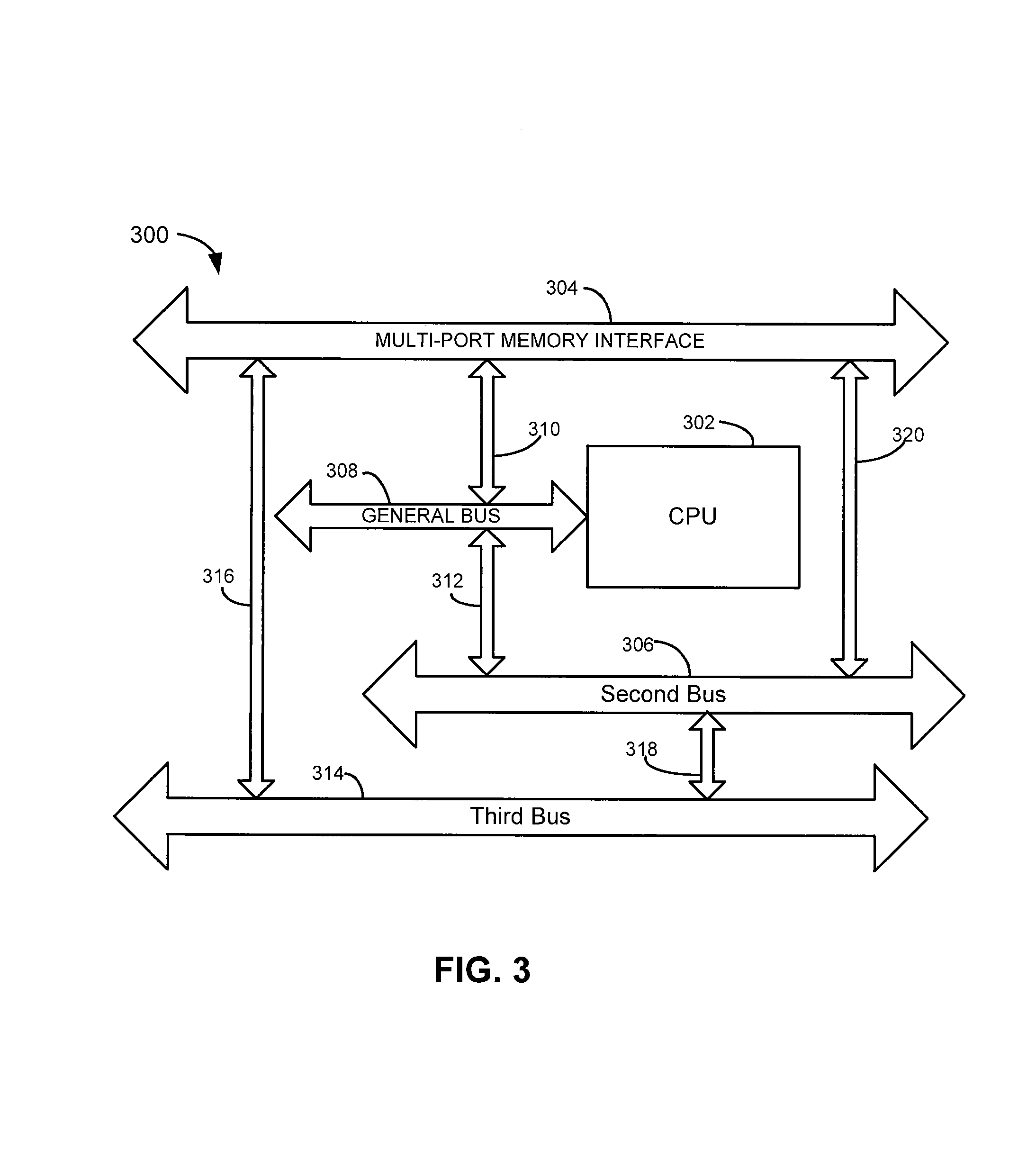

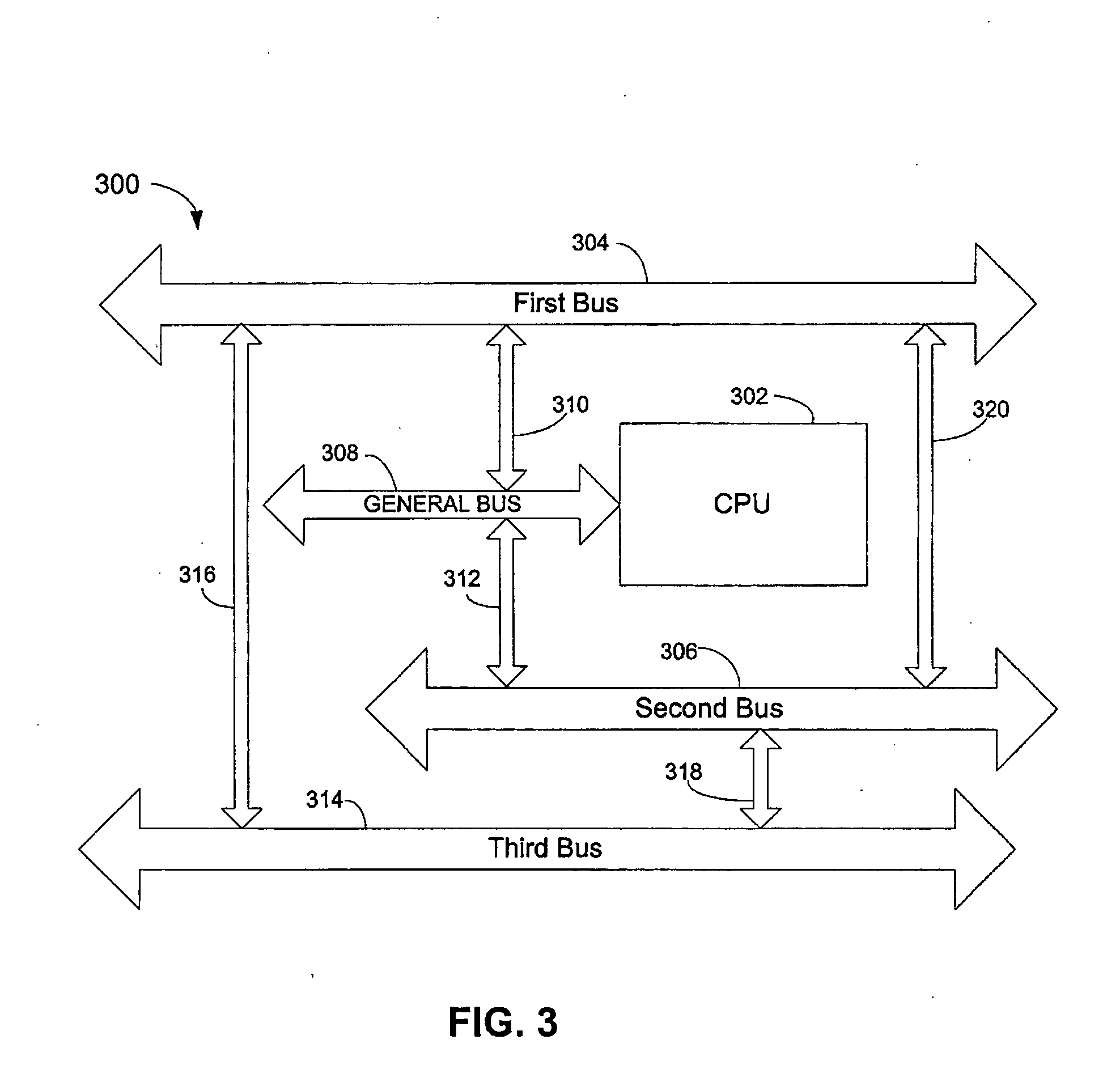

Multi-port processor architecture with bidirectional interfaces between busses

Inactive | US7373447B2 | Reducing memory bandwidth efficiency | Lower memory access latency | Electric digital data processing | Multi port | Embedded system

A multi-port processor architecture has a first bus, a second bus, and a central processing unit. The central processing unit has first and second ports coupled to the first and second buses, respectively. A first-bus-to-second-bus bidirectional interface couples the first bus to the second bus. Optionally, the first bus or the second bus can be connected to a memory. The architecture can include a third bus, connected to the first bus by a third-bus-to-first-bus bidirectional interface and to the second bus by a third-bus-to-second-bus bidirectional interface. If there are additional bus systems, the Nth port (where N is an integer greater than 2) is connected to the Nth bus. The buses use the bidirectional interfaces to communicate with each other without using CPU or memory resources, reducing memory access latency.

Owner: TOSHIBA AMERICA ELECTRONICS COMPONENTS

Directory based support for function shipping in a multiprocessor system

Inactive | US7080214B2 | Lower memory access latency | Memory addressing/allocation/relocation | Memory address | Multi processor

Owner: INT BUSINESS MASCH CORP

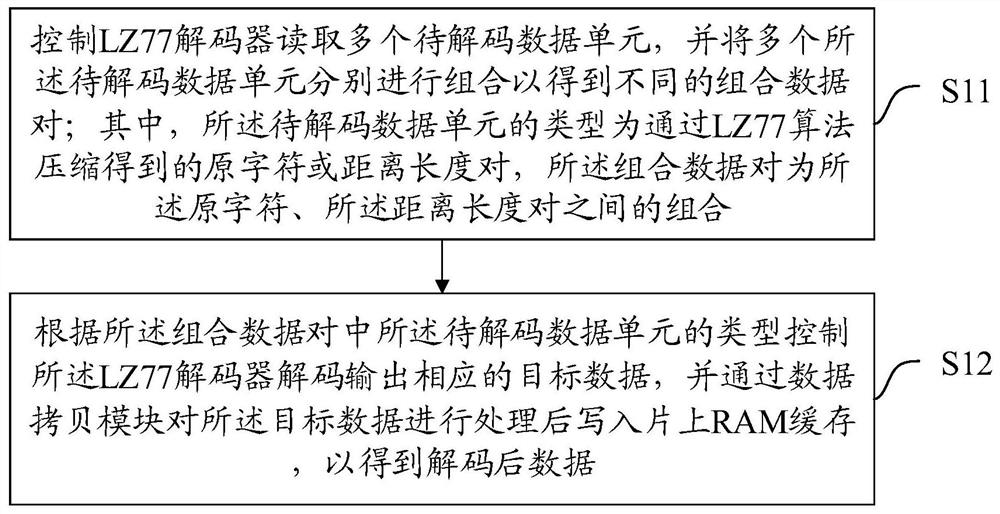

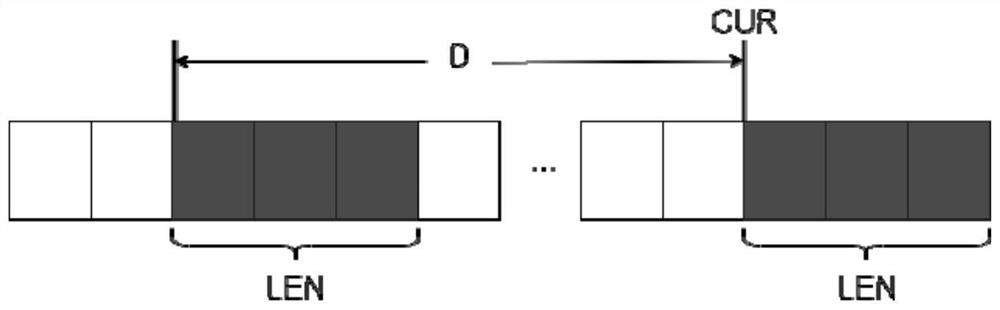

Parallel acceleration LZ77 decoding method and device

Pending | CN113890540A | Improve decompression performance | Reduce visit frequency | Code conversion | Access frequency | Computer engineering

The invention discloses a parallel-accelerated LZ77 decoding method and device. The method comprises the following steps:

- An LZ77 decoder reads a plurality of to-be-decoded data units and combines them into combined data pairs. Each unit is either an original character (literal) or a distance-length pair produced by LZ77 compression. A combined data pair combines a literal with a distance-length pair.
- The decoder decodes and outputs the corresponding target data according to the types of the units in each combined pair.
- A data copying module processes the target data.
- The processed target data is written into an on-chip RAM cache to obtain the decoded data.

Reading several to-be-decoded units at once avoids the excessive delay of serial copying. The added on-chip RAM cache greatly reduces accesses to off-chip memory, lowering access delay and bandwidth pressure and improving decompression performance.

Owner: INSPUR BEIJING ELECTRONICS INFORMATION IND
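
A sequential baseline of the decoding step is sketched below for reference. It handles the two token types named above, literals and distance-length pairs, but does not model the patent's pairing of several units per step. The token encoding is an assumption, and the output `bytearray` stands in for the on-chip RAM cache.

```python
def lz77_decode(tokens) -> bytes:
    """Decode a stream of LZ77 tokens.

    Each token is either an int (a literal byte) or a (distance, length) tuple
    meaning "copy `length` bytes starting `distance` bytes back in the output".
    """
    out = bytearray()  # stands in for the on-chip RAM cache holding recent output
    for tok in tokens:
        if isinstance(tok, int):
            out.append(tok)
        else:
            distance, length = tok
            if not 0 < distance <= len(out):
                raise ValueError("distance points before the start of the output")
            start = len(out) - distance
            # Byte-by-byte copy so overlapping matches (length > distance) work.
            for i in range(length):
                out.append(out[start + i])
    return bytes(out)
```

The byte-by-byte copy matters when the length exceeds the distance: `(2, 4)` after `ab` repeats the two-byte pattern to give `ababab`. This serial dependency is the copy delay the patent aims to hide.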

Multi port processor architecture

Inactive | US20060101187A1 | Avoids bus latency | Reduce memory latency | Electric digital data processing | Multi port | Embedded system

A multi-port processor architecture has a first bus, a second bus, and a central processing unit. The central processing unit has first and second ports coupled to the first and second buses, respectively. A first-bus-to-second-bus bidirectional interface couples the first bus to the second bus. Optionally, the first bus or the second bus can be connected to a memory. The architecture can include a third bus, connected to the first bus by a third-bus-to-first-bus bidirectional interface and to the second bus by a third-bus-to-second-bus bidirectional interface. If there are additional bus systems, the Nth port (where N is an integer greater than 2) is connected to the Nth bus. The buses use the bidirectional interfaces to communicate with each other without using CPU or memory resources, reducing memory access latency.

Owner: TOSHIBA AMERICA ELECTRONICS COMPONENTS

© 2025 PatSnap. All rights reserved.