Micro-architecture sensitive thread scheduling (MSTS) method

A technology in the fields of computer architecture and operating systems, applicable to multiprogramming devices and similar systems. It addresses the difficulty of providing users with unified analysis of cache miss rates and of memory access addresses, and has the effects of alleviating cache thrashing and mutual eviction, reducing memory access latency, and improving the cache hit rate.
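This excerpt does not describe how MSTS itself maps threads to cores. As a hedged illustration of the general idea of miss-rate-aware placement, the sketch below spreads memory-intensive threads across shared-cache domains using a greedy heuristic in the style of longest-processing-time scheduling. It is not the patented method. The `ThreadProfile` type, the MPKI (misses per kilo-instruction) metric, and `distribute_by_miss_rate` are hypothetical names chosen for this example.

```python
from dataclasses import dataclass


@dataclass
class ThreadProfile:
    """Hypothetical per-thread profile used only for this illustration."""
    name: str
    mpki: float  # assumed metric: last-level cache misses per 1000 instructions


def distribute_by_miss_rate(threads, num_domains):
    """Greedily spread cache-intensive threads across shared-cache domains.

    Threads are visited in descending MPKI order. Each one goes to the domain
    whose accumulated MPKI is currently lowest, so threads with high miss
    rates are less likely to contend for the same shared last-level cache.
    Returns (list of thread names per domain, accumulated MPKI per domain).
    """
    if num_domains <= 0:
        raise ValueError("num_domains must be positive")
    domains = [[] for _ in range(num_domains)]
    load = [0.0] * num_domains
    for t in sorted(threads, key=lambda p: p.mpki, reverse=True):
        # min() returns the first index on ties, so placement is deterministic
        target = min(range(num_domains), key=lambda d: load[d])
        domains[target].append(t.name)
        load[target] += t.mpki
    return domains, load


if __name__ == "__main__":
    demo = [ThreadProfile("A", 30.0), ThreadProfile("B", 25.0),
            ThreadProfile("C", 2.0), ThreadProfile("D", 1.0)]
    print(distribute_by_miss_rate(demo, 2))
```

With two domains and threads A (30 MPKI), B (25), C (2), and D (1), A is placed alone and B, C, and D share the other domain, which gives per-domain loads of 30 and 28. A real scheduler would also need online miss-rate measurement (for example, from hardware performance counters) and a mechanism to apply the placement, such as CPU affinity.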

Embodiment Construction

[0033] The present invention is described in further detail below with reference to the accompanying drawings and specific embodiments.

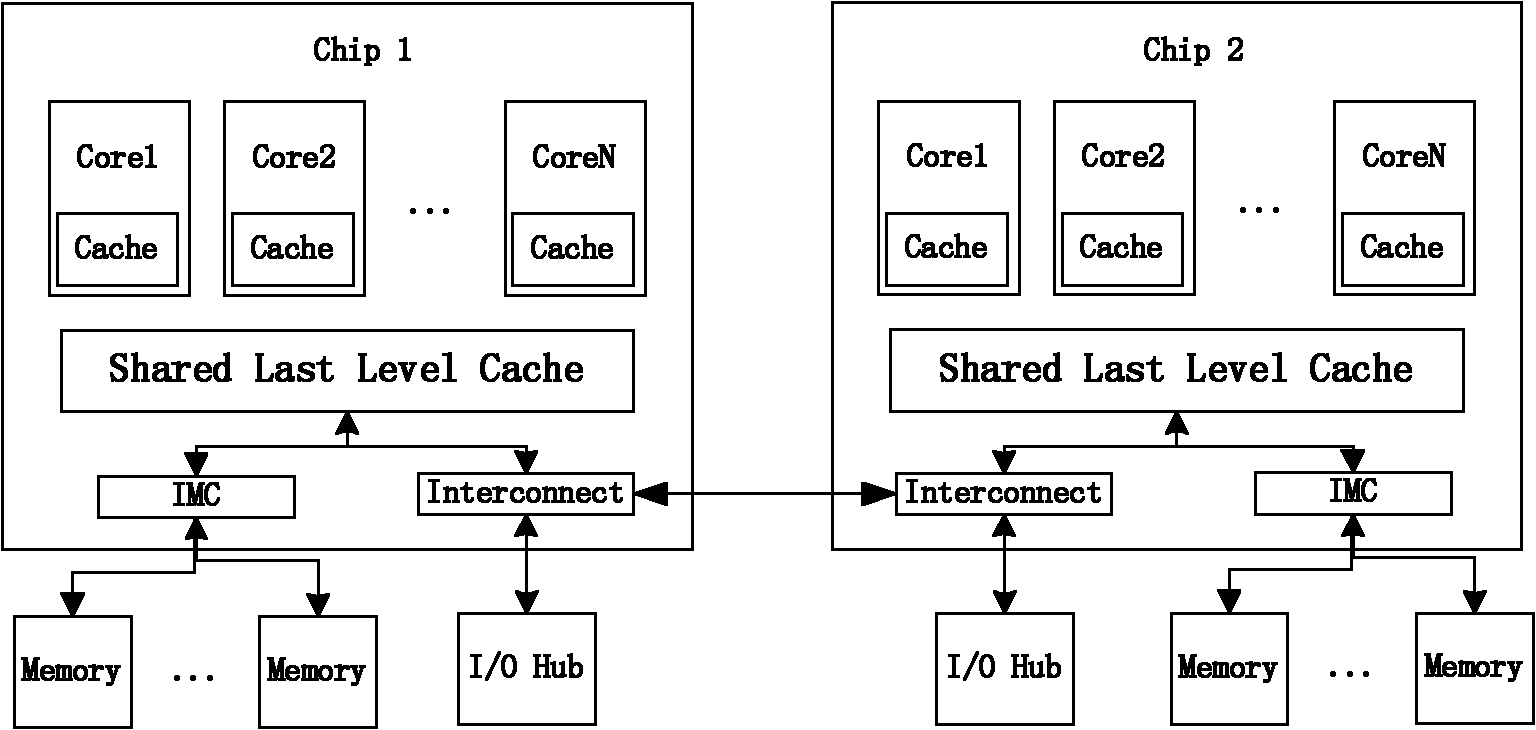

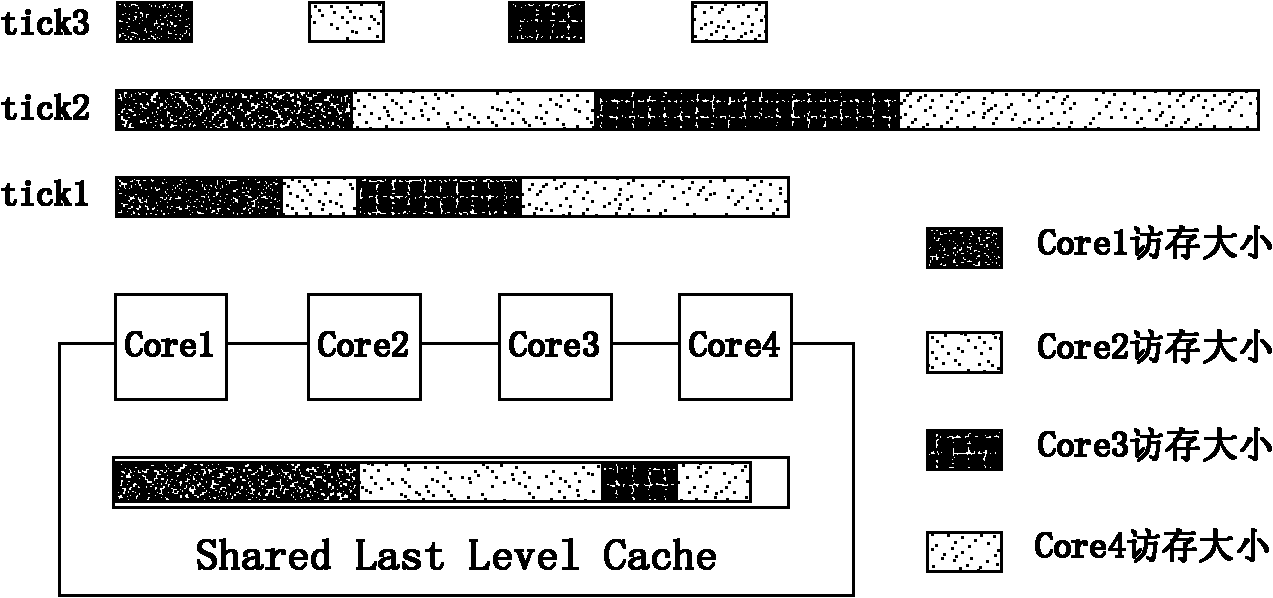

[0034] Traditional uniprocessors cannot meet the needs of modern commercial and scientific computing because they cannot overcome obstacles such as the memory wall and the power wall. To reduce power consumption and to improve memory access speed and bandwidth, most current systems use a ccNUMA parallel processor structure composed of CMP nodes. Figure 1 shows a parallel processor architecture consisting of two CMP nodes. Each core has its own local (private) cache, and all cores on a CMP node share a larger global cache at the last level. Each node has a built-in memory controller to improve memory access speed and is connected to the other CMPs through a high-speed interconnect for fast data transfer. In order to improve the scalability of the system, the inter...
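To make the latency argument concrete, the following is a minimal sketch of the two-node arrangement in Figure 1. It assumes that cores are numbered contiguously per node and that each memory page has a single home node. `CcNumaTopology` and its latency defaults are hypothetical placeholders, not figures from the patent. The expected-cost formula is the standard average-memory-access-time relation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CcNumaTopology:
    """Hypothetical model of a ccNUMA machine built from CMP nodes.

    Latencies are illustrative placeholders in cycles, not measurements.
    """
    num_nodes: int
    cores_per_node: int
    llc_hit_cycles: float = 40.0      # hit in the node's shared last-level cache (assumed)
    local_mem_cycles: float = 200.0   # DRAM behind the node's own memory controller (assumed)
    remote_mem_cycles: float = 350.0  # DRAM on another node, reached over the interconnect (assumed)

    def node_of(self, core: int) -> int:
        """Return the CMP node containing `core` (cores numbered node by node)."""
        if not 0 <= core < self.num_nodes * self.cores_per_node:
            raise ValueError(f"core {core} out of range")
        return core // self.cores_per_node

    def llc_access_cycles(self, core: int, home_node: int, llc_miss_rate: float) -> float:
        """Expected cycles for an access that misses the private cache and reaches the LLC.

        AMAT = hit_time + miss_rate * miss_penalty, where the miss penalty
        depends on whether the data's home node is the requesting core's node.
        """
        local = self.node_of(core) == home_node
        penalty = self.local_mem_cycles if local else self.remote_mem_cycles
        return self.llc_hit_cycles + llc_miss_rate * penalty


if __name__ == "__main__":
    topo = CcNumaTopology(num_nodes=2, cores_per_node=4)
    print(topo.node_of(5), topo.llc_access_cycles(5, 1, 0.25), topo.llc_access_cycles(5, 0, 0.25))
```

For `CcNumaTopology(num_nodes=2, cores_per_node=4)`, core 5 is on node 1. With an LLC miss rate of 0.25, an access whose data is homed locally costs 40 + 0.25 × 200 = 90 cycles, versus 127.5 cycles when the data lives on node 0. Under these assumed numbers, both a lower miss rate and local data placement reduce the expected cost, which matches the abstract's stated goals of improving the cache hit rate and reducing memory access latency.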
