30 results about "CPU-bound" patented technology

In computer science, a computer is CPU-bound (or compute-bound) when the time for it to complete a task is determined principally by the speed of the central processor: processor utilization is high, perhaps at 100% usage for many seconds or minutes. Interrupts generated by peripherals may be processed slowly, or indefinitely delayed.
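One rough way to see whether a piece of work is CPU-bound is to compare the CPU time it consumes with the wall-clock time it takes. The sketch below is an illustration only: the helper name `cpu_fraction` and the two sample workloads are hypothetical, and a ratio near 1.0 merely suggests that the processor was the limiting resource.

```python
import time


def cpu_fraction(task):
    """Return CPU time divided by wall-clock time for one call of task()."""
    wall_start = time.perf_counter()
    cpu_start = time.process_time()
    task()
    cpu_used = time.process_time() - cpu_start
    wall_used = time.perf_counter() - wall_start
    return cpu_used / wall_used if wall_used > 0 else 0.0


def busy():
    # Pure arithmetic: the processor is the limiting resource.
    total = 0
    for i in range(2_000_000):
        total += i * i
    return total


def idle():
    # Sleeping stands in for waiting on a peripheral or the network.
    time.sleep(0.2)


if __name__ == "__main__":
    print(f"busy: {cpu_fraction(busy):.2f}")
    print(f"idle: {cpu_fraction(idle):.2f}")
```

The exact numbers depend on the machine and on what else is running, so no expected output is given.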

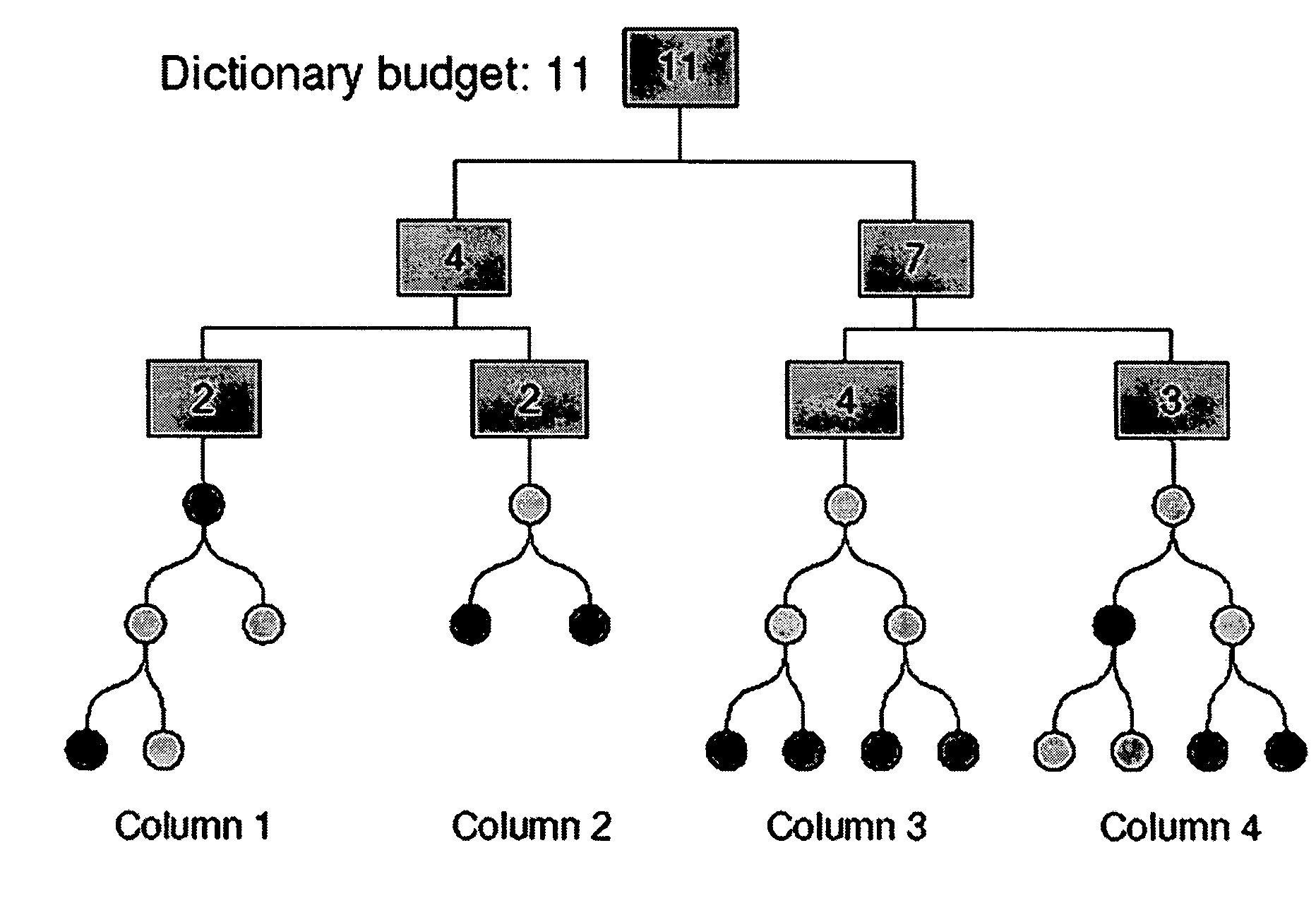

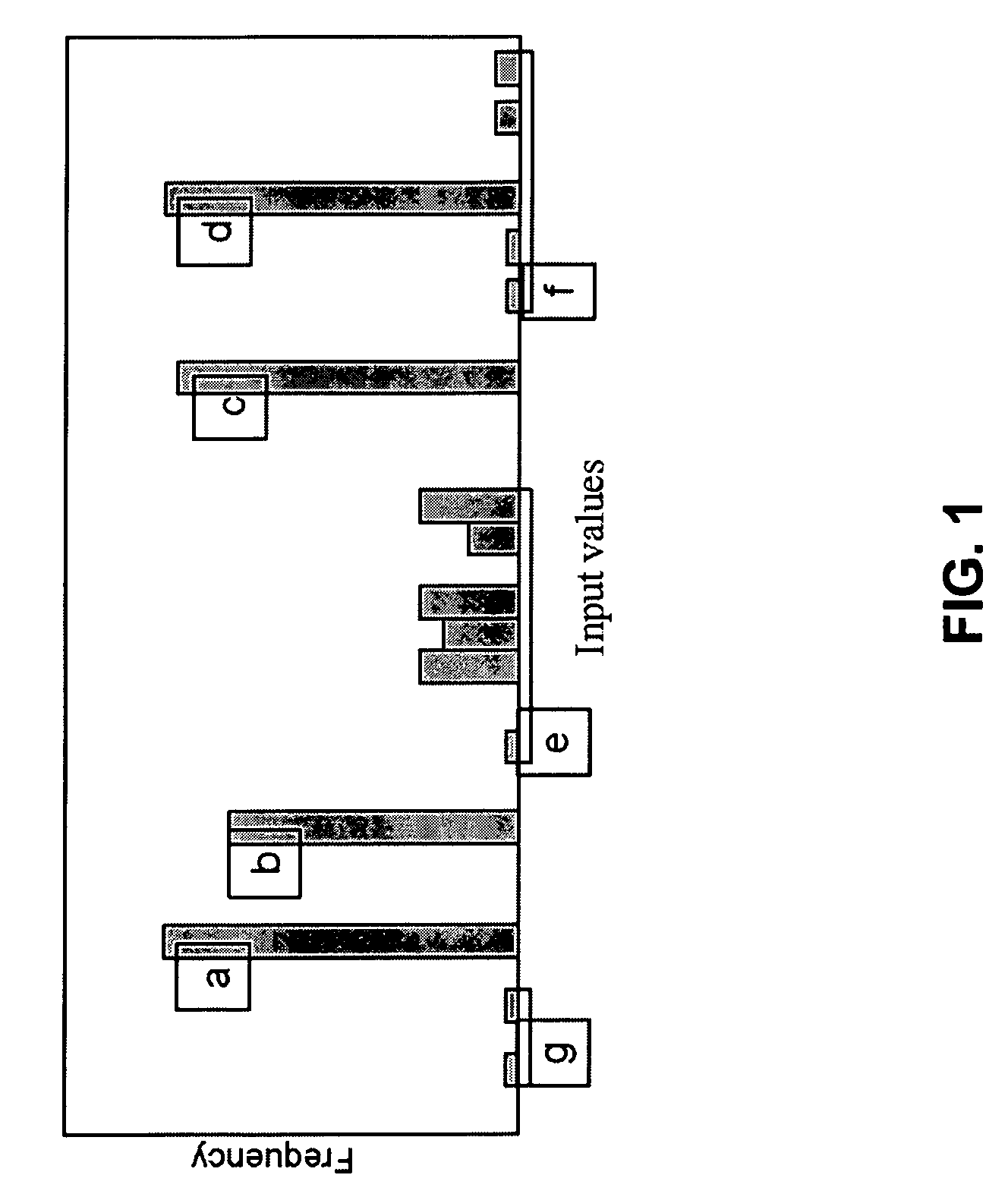

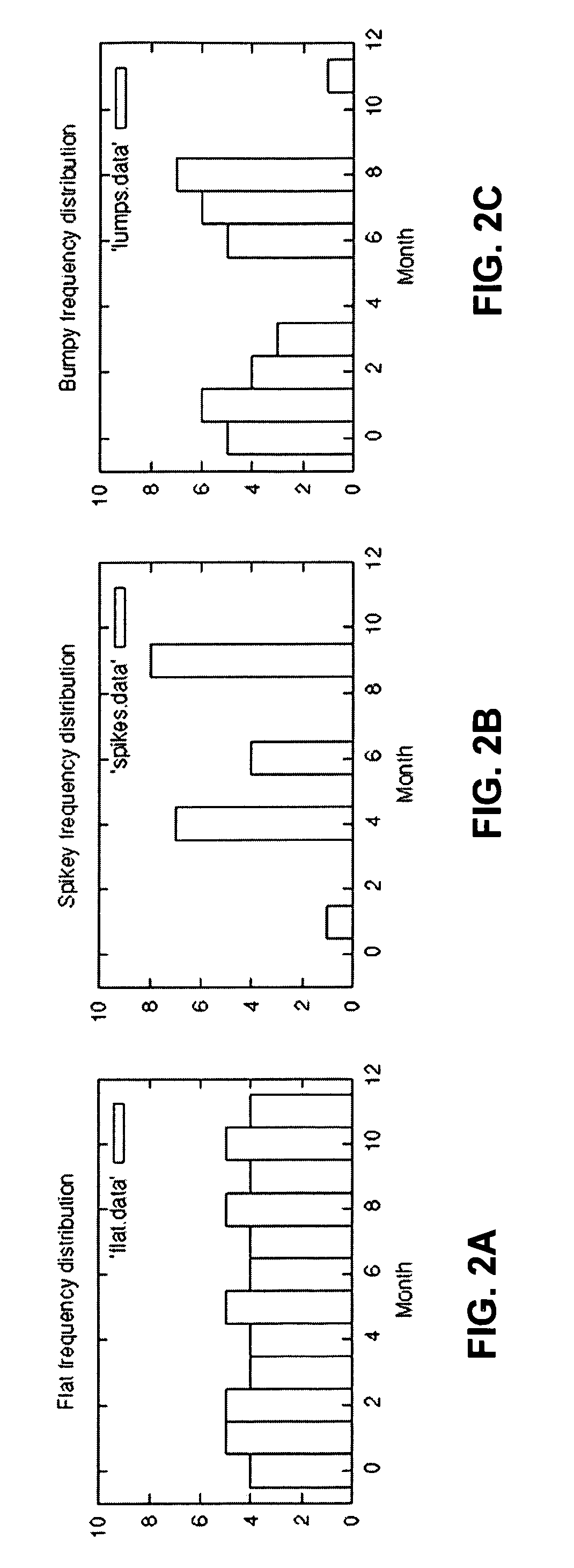

Method for compressed data with reduced dictionary sizes by coding value prefixes

The speed of dictionary-based decompression is limited by the cost of accessing random values in the dictionary. If the dictionary can be kept small enough to fit into cache, decompression becomes CPU bound rather than memory bound. To achieve this, a value prefix coding scheme is presented, wherein value prefixes are stored in the dictionary to get good compression from small dictionaries. Also presented is an algorithm that determines the optimal entries for a value prefix dictionary. Once the dictionary fits in cache, decompression speed is often limited by the cost of mispredicted branches during Huffman code processing. A novel way is presented to quantize Huffman code lengths to allow code processing to be performed with few instructions, no branches, and very little extra memory. Also presented is an algorithm for code length quantization that produces the optimal assignment of Huffman codes, and it is shown that the adverse effect of quantization on the compression ratio is quite small.
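To illustrate why bounded code lengths help avoid per-bit branching, here is a minimal sketch of table-driven prefix-code decoding. It is not the quantization scheme of the patent: it is the generic lookup-table technique, in which every window of `max_len` bits indexes a table that yields the symbol and its code length. The function names, the bit-string representation and the sample code are assumptions chosen for readability rather than speed.

```python
def build_decode_table(codes):
    """Build a lookup table for a complete prefix-free code.

    codes maps each symbol to its code as a bit string, e.g. {"a": "0"}.
    """
    max_len = max(len(bits) for bits in codes.values())
    table = [None] * (1 << max_len)
    for symbol, bits in codes.items():
        pad = max_len - len(bits)
        base = int(bits, 2) << pad
        # Every max_len-bit window that starts with this code maps to it.
        for suffix in range(1 << pad):
            table[base + suffix] = (symbol, len(bits))
    return table, max_len


def decode(bitstring, codes, count):
    """Decode `count` symbols with one table lookup per symbol."""
    table, max_len = build_decode_table(codes)
    # Pad so the final window can always be read in full.
    padded = bitstring + "0" * max_len
    out, pos = [], 0
    for _ in range(count):
        window = int(padded[pos:pos + max_len], 2)
        symbol, length = table[window]
        out.append(symbol)
        pos += length
    return out


if __name__ == "__main__":
    sample_codes = {"a": "0", "b": "10", "c": "110", "d": "111"}
    print(decode("0101101110", sample_codes, 5))  # ['a', 'b', 'c', 'd', 'a']
```

The table has 2^max_len entries, which is why keeping code lengths small matters if the table is also meant to stay in cache.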

Owner:IBM CORP +1

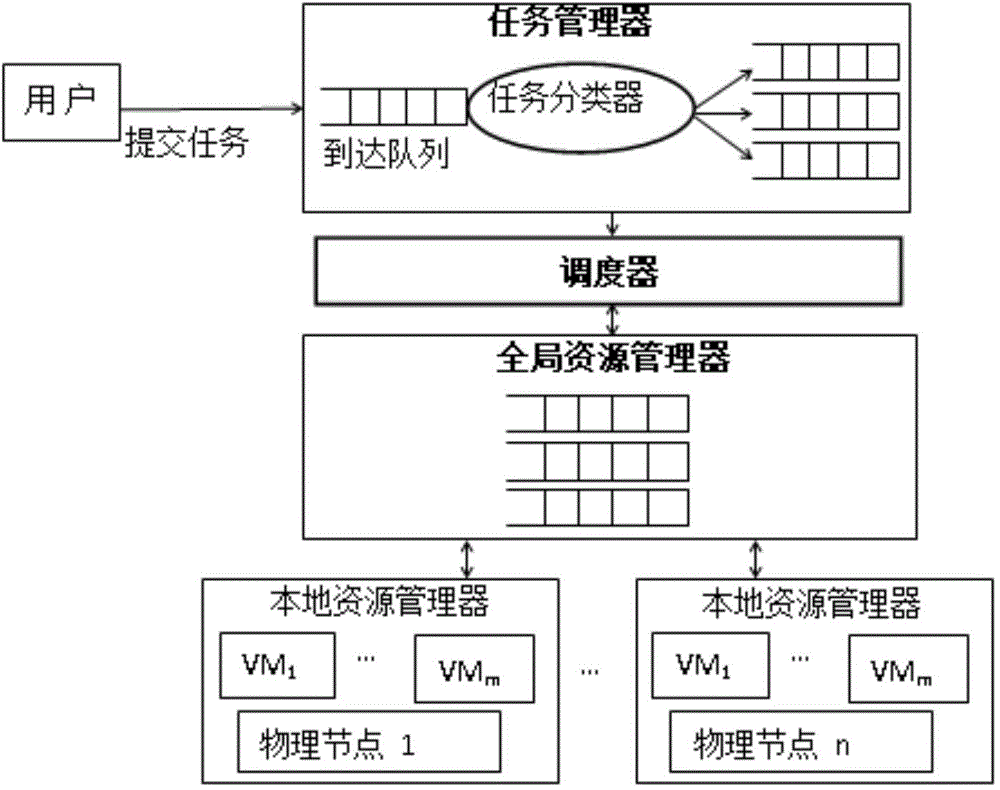

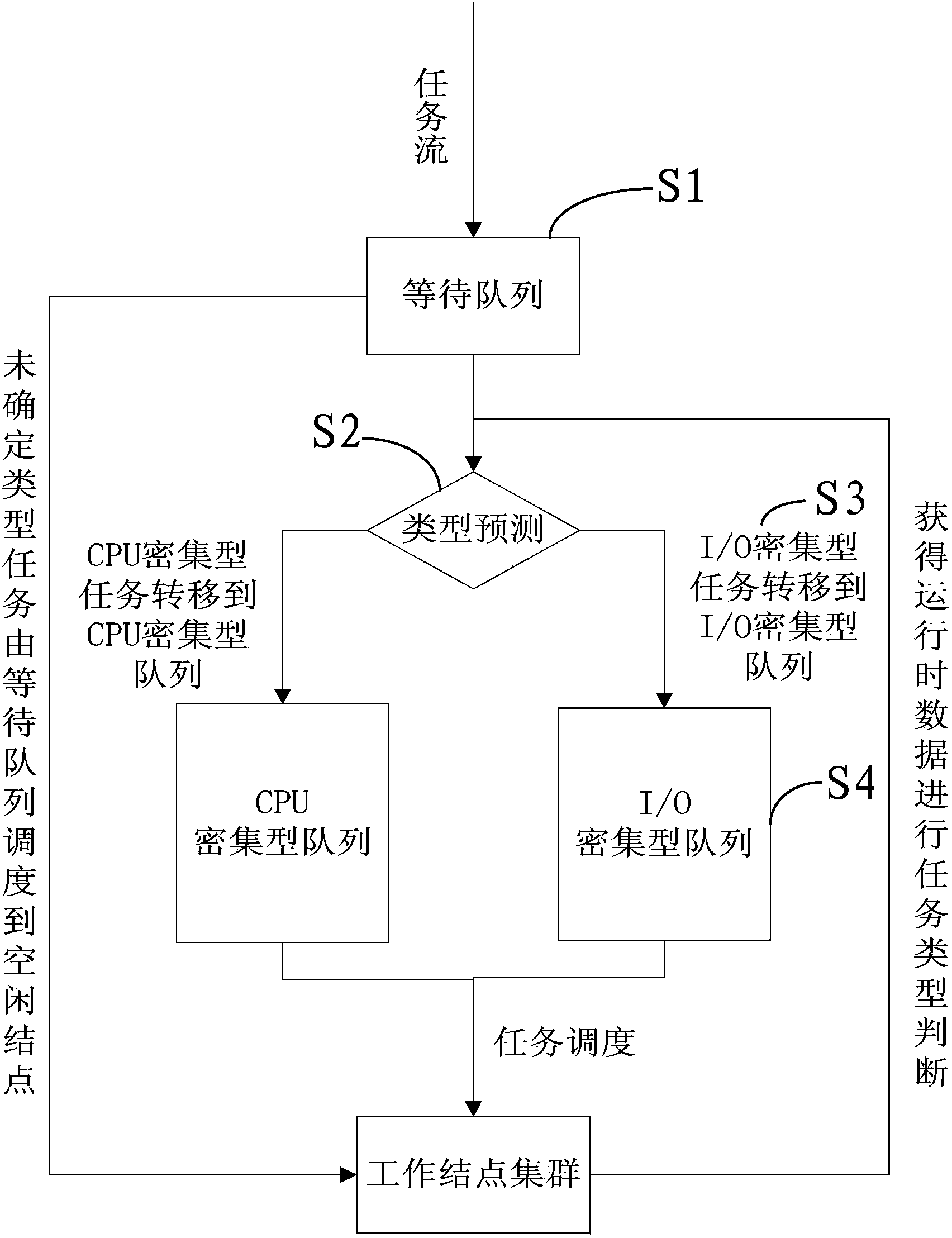

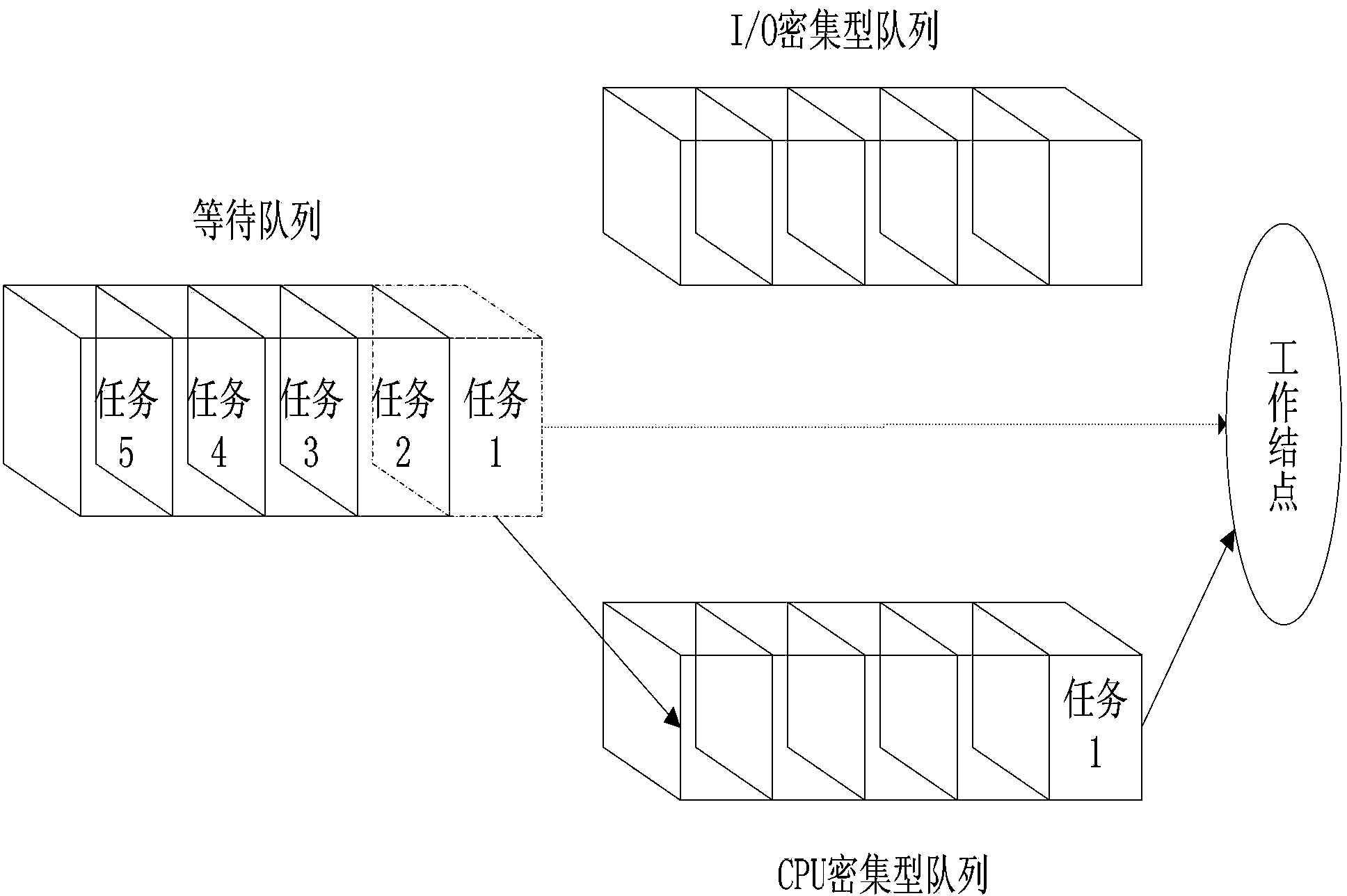

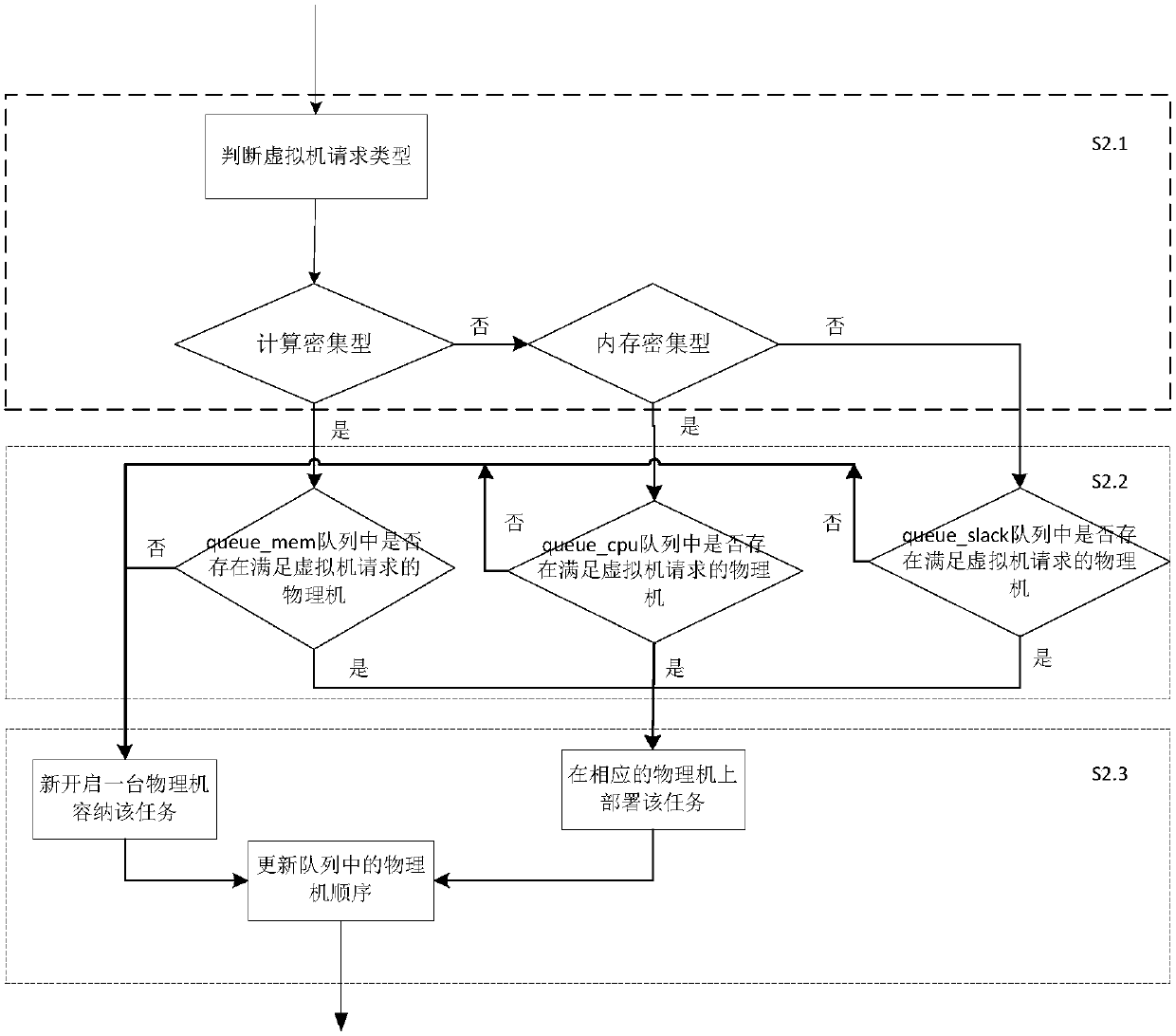

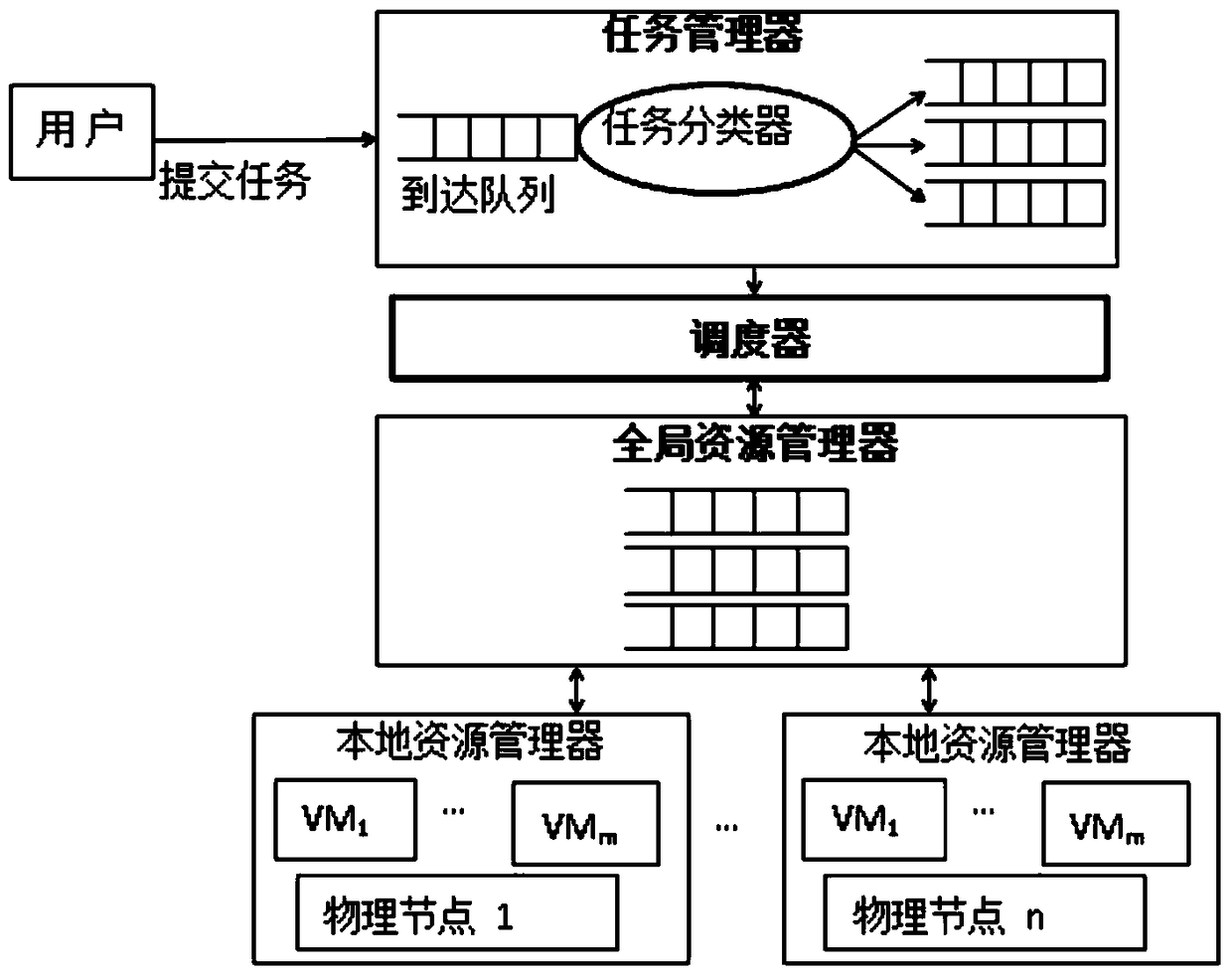

Multi-queue peak-alternation scheduling model and multi-queue peak-alternation scheduling method based on task classification in cloud computing

Active | CN104657221A | Simple method | No differentiation involved | Resource allocation | Resource utilization | Resource management

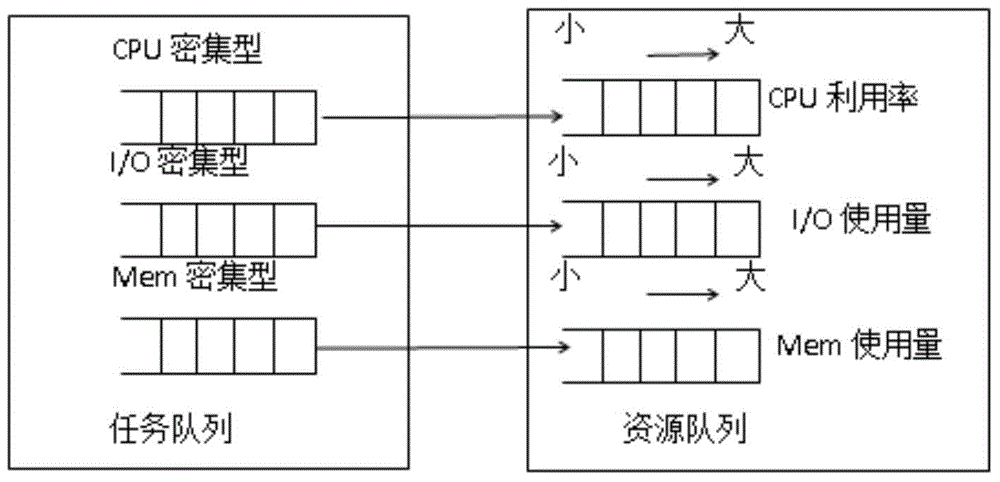

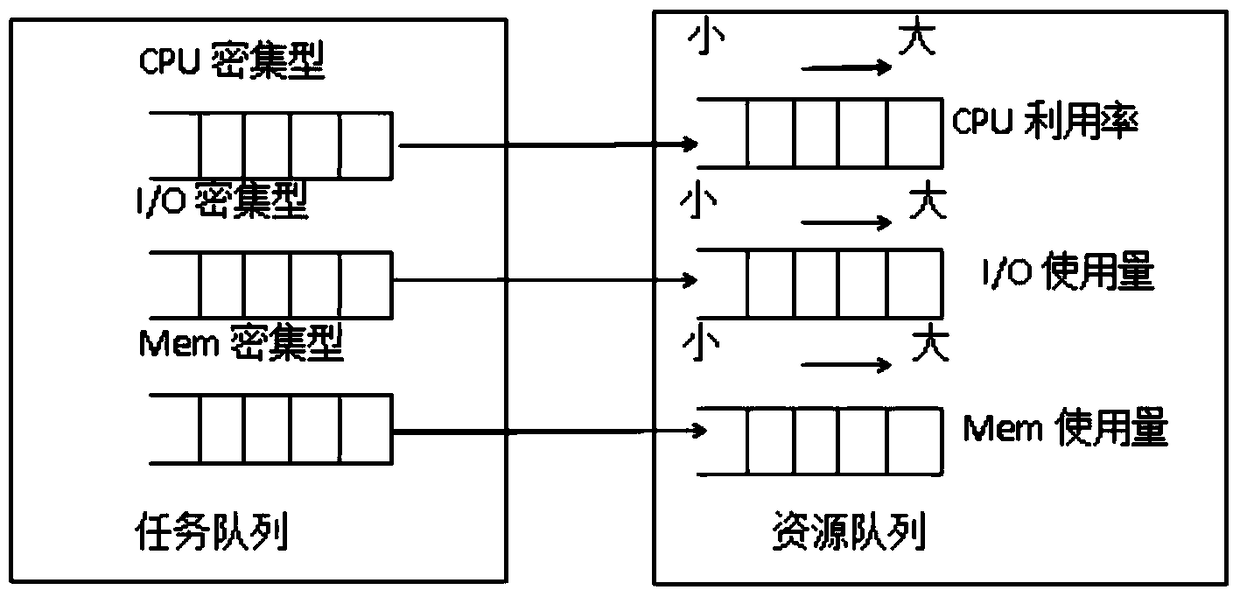

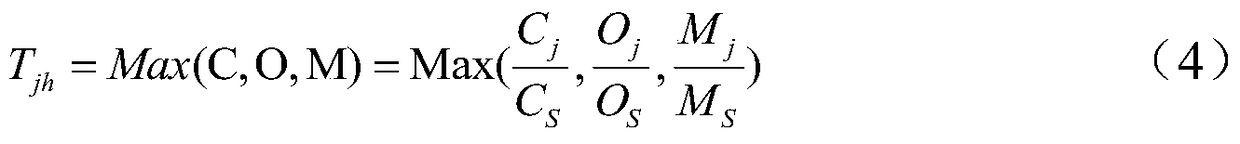

The invention discloses a multi-queue peak-alternation scheduling model and a multi-queue peak-alternation scheduling method based on task classification in cloud computing. The scheduling model comprises a task manager, a local resource manager, a global resource manager and a scheduler. The scheduling method comprises the following steps: first, according to each task's demand for resources, dividing tasks into a CPU (central processing unit) intensive type, an I/O (input/output) intensive type and a memory intensive type; sorting the resources according to their CPU, I/O and memory load, and staggering resource usage peaks during task scheduling; scheduling a task that is intensive in a given parameter to a resource whose load on that index is relatively light, for example scheduling a CPU intensive task to the resource with relatively low CPU utilization. With the disclosed model and method, load balancing can be effectively realized, scheduling efficiency is improved and the resource utilization rate is increased.
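The classify-then-stagger idea in this abstract can be sketched in a few lines. This is a simplified illustration, not the patented scheduler: the `Task` and `Resource` classes, the normalized demand figures and the rule "the largest demand decides the type" are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    name: str
    cpu: float  # hypothetical normalized demands in the range 0..1
    io: float
    mem: float

    def kind(self):
        # Assumption: a task is classified by its largest demand.
        demands = {"cpu": self.cpu, "io": self.io, "mem": self.mem}
        return max(demands, key=demands.get)


@dataclass
class Resource:
    name: str
    load: dict = field(
        default_factory=lambda: {"cpu": 0.0, "io": 0.0, "mem": 0.0}
    )


def schedule(tasks, resources):
    """Queue tasks by type, then place each on the resource that is
    least loaded on that type's index."""
    queues = {"cpu": [], "io": [], "mem": []}
    for task in tasks:
        queues[task.kind()].append(task)

    placement = {}
    for kind, queue in queues.items():
        for task in queue:
            target = min(resources, key=lambda r: r.load[kind])
            target.load["cpu"] += task.cpu
            target.load["io"] += task.io
            target.load["mem"] += task.mem
            placement[task.name] = target.name
    return placement


if __name__ == "__main__":
    r1 = Resource("r1", {"cpu": 0.9, "io": 0.0, "mem": 0.2})
    r2 = Resource("r2", {"cpu": 0.1, "io": 0.8, "mem": 0.2})
    jobs = [Task("render", 0.7, 0.1, 0.1), Task("backup", 0.1, 0.6, 0.1)]
    print(schedule(jobs, [r1, r2]))  # {'render': 'r2', 'backup': 'r1'}
```

The CPU-heavy task lands on the resource with the idle CPU and the I/O-heavy task on the one with idle I/O, so the two usage peaks do not coincide.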

Owner:GUANGDONG UNIV OF PETROCHEMICAL TECH

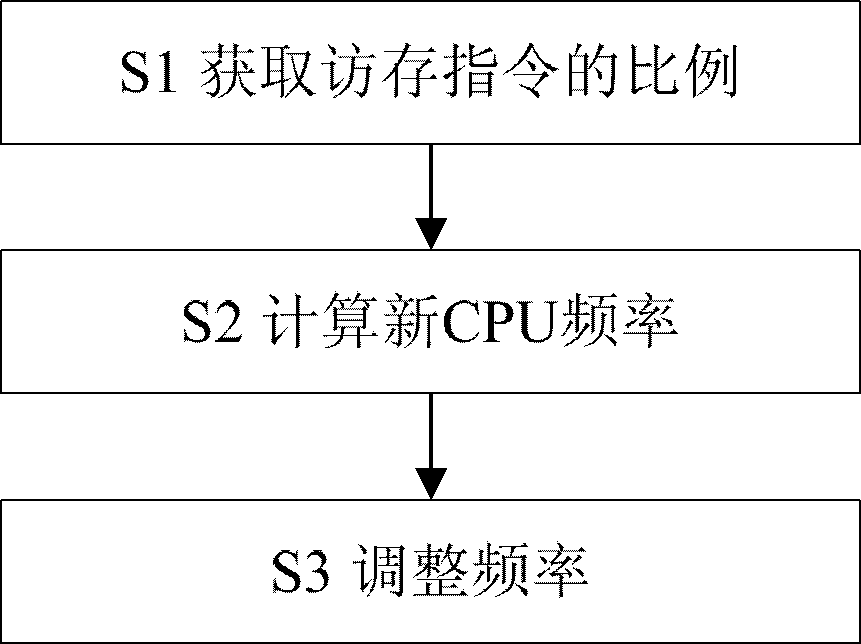

Device and method for dynamically adjusting frequency of central processing unit

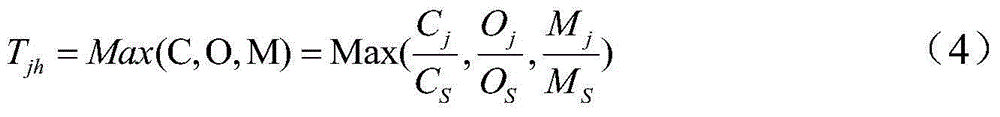

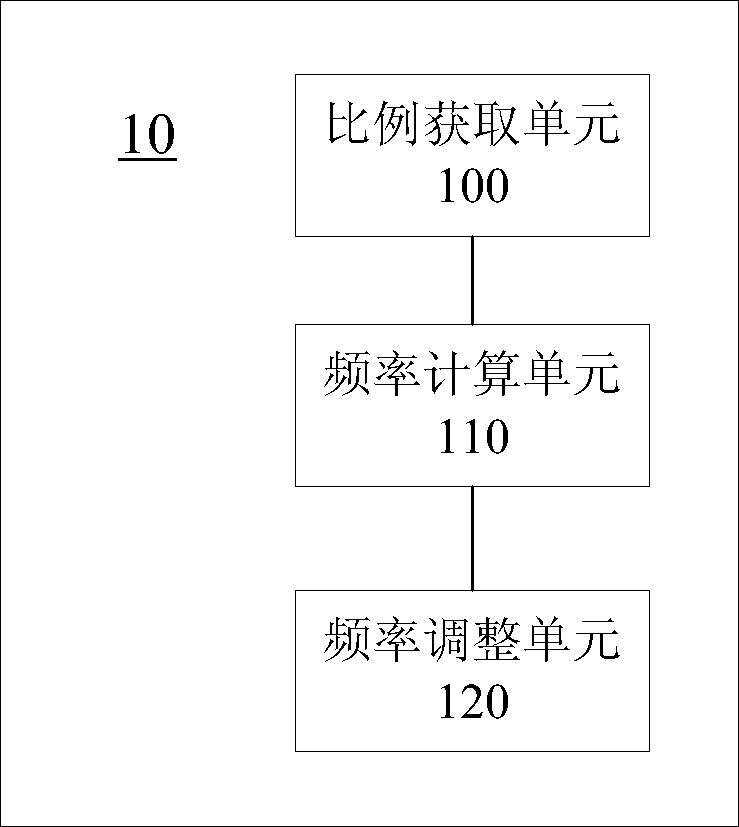

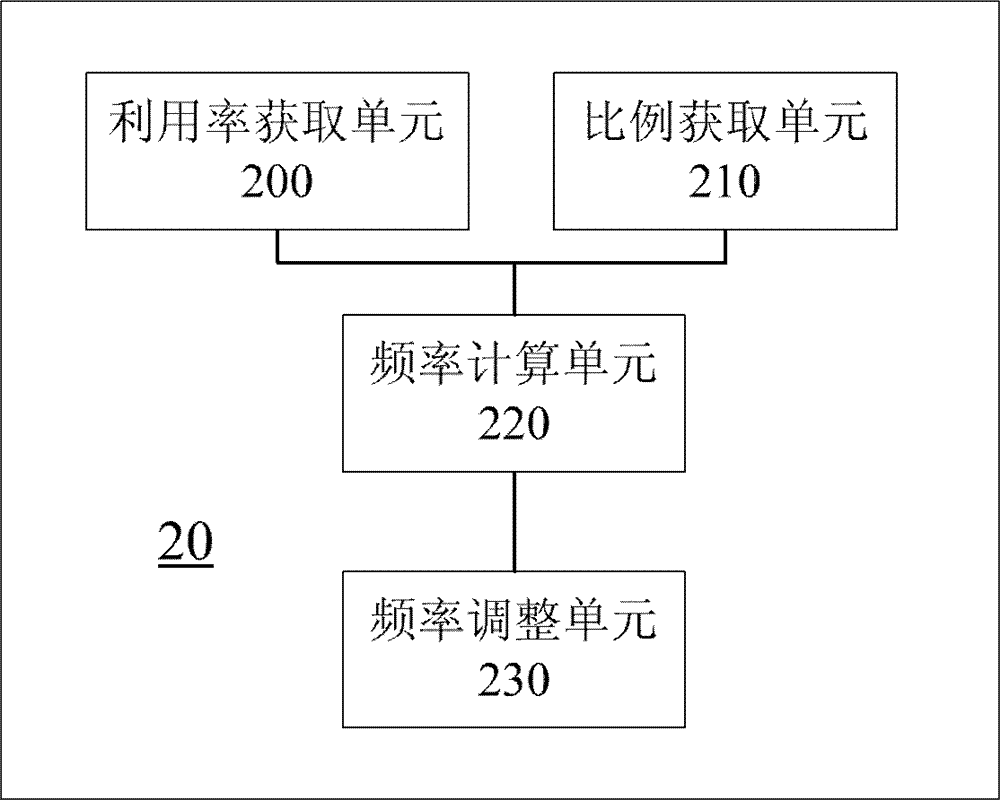

Inactive | CN103246340A | Frequency down | Reduce power consumption | Power supply for data processing | Energy efficient computing | Computerized system | Parallel computing

The invention provides a device and a method for dynamically adjusting the frequency of a central processing unit. The device is used on a computer system which executes central processing unit (CPU) intensive application programs and storage intensive application programs. The device comprises a proportion obtaining unit, a frequency calculating unit and a frequency adjusting unit, wherein the proportion obtaining unit is used for obtaining the proportion of memory-access instructions among the instructions executed for an application program task set; the frequency calculating unit is connected with the proportion obtaining unit and used for calculating a new CPU frequency in inverse proportion to the proportion of memory-access instructions obtained by the proportion obtaining unit; and the frequency adjusting unit is connected with the frequency calculating unit and used for adjusting the CPU frequency to the new CPU frequency. With this device and method, the frequency of the CPU can be turned down by taking the proportion of memory-access instructions into account, and accordingly the power consumption of the computer system can be reduced.
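The abstract does not give the formula that relates the memory-access proportion to the new frequency. As a minimal sketch, the function below assumes a simple linear mapping between a hypothetical minimum and maximum frequency: the larger the share of memory-access instructions, the lower the frequency. The function name, the parameters and the linear form are all assumptions.

```python
def new_cpu_frequency(mem_instr, total_instr, f_min_mhz, f_max_mhz):
    """Pick a frequency that falls as the share of memory-access
    instructions rises (assumed linear mapping, for illustration)."""
    if total_instr <= 0:
        raise ValueError("total_instr must be positive")
    ratio = mem_instr / total_instr
    ratio = min(max(ratio, 0.0), 1.0)  # clamp to the range 0..1
    return f_max_mhz - ratio * (f_max_mhz - f_min_mhz)


if __name__ == "__main__":
    # Half of the instructions access memory: midway between the limits.
    print(new_cpu_frequency(50, 100, 800, 2400))  # 1600.0
```

A purely CPU-intensive task set (ratio 0) keeps the maximum frequency, while a task set dominated by memory accesses is slowed down, since the processor would mostly be waiting anyway.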

Owner:SONY CORP

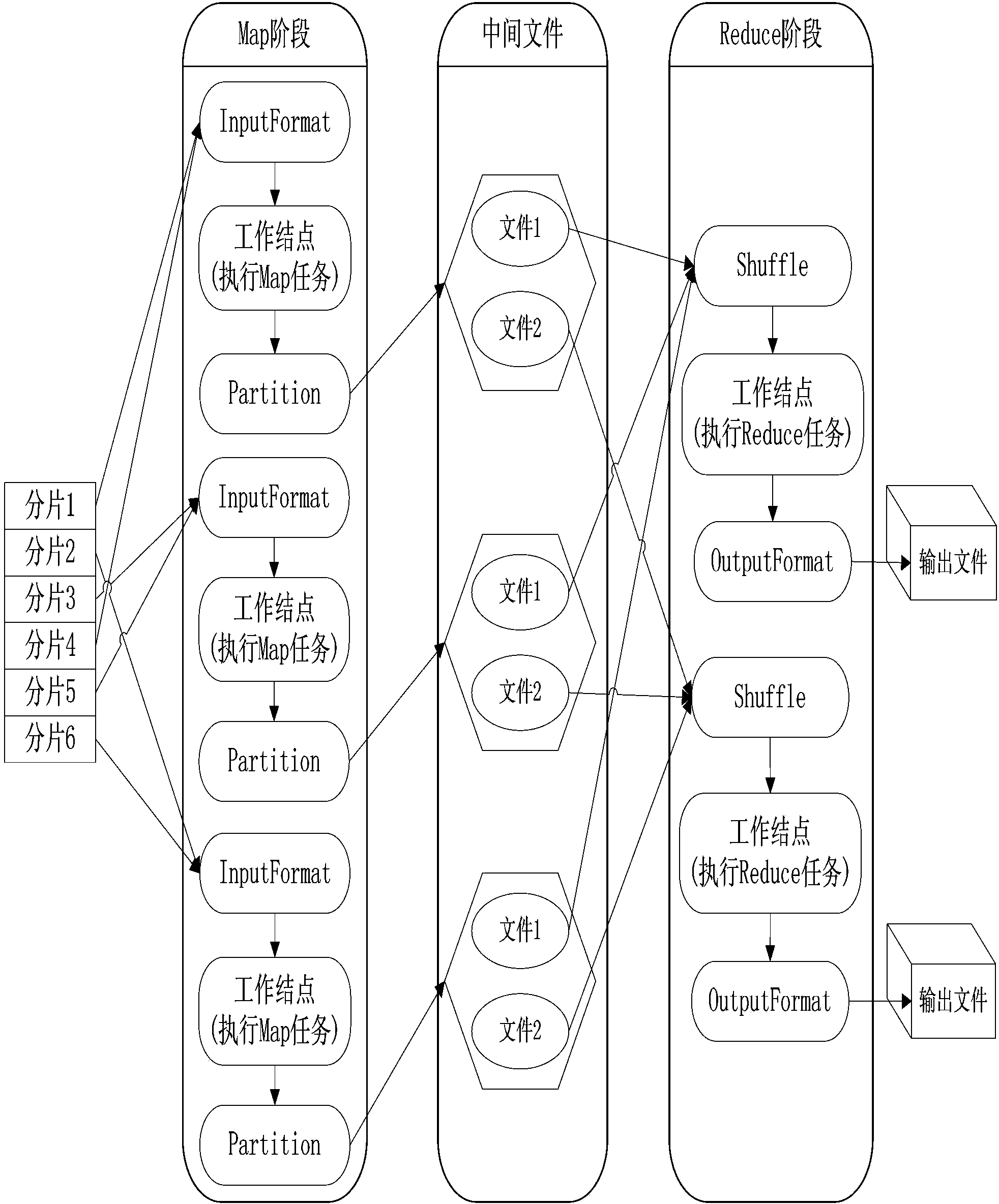

Dynamic MapReduce dispatching method and system based on task type

The invention provides a dynamic MapReduce dispatching method and system based on task type. The method comprises the following steps: A. the work task enters the waiting queue; B. the work task is classified; C. the work task is moved; and D. the work task is dispatched: a CPU (central processing unit) intensive queue and an I/O intensive queue are dispatched independently, and work tasks are dispatched to a cluster of work nodes and executed. The benefit of the method and system is that, by predicting the type of each work task, tasks of different types are placed in separate queues and the queues are dispatched independently, which improves the throughput of the cluster in an environment with tasks of various types.

Owner:HARBIN INST OF TECH SHENZHEN GRADUATE SCHOOL

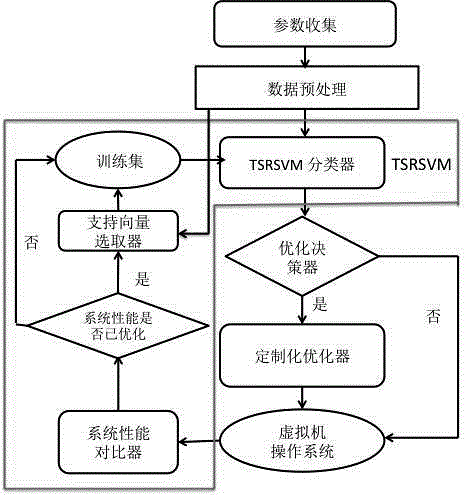

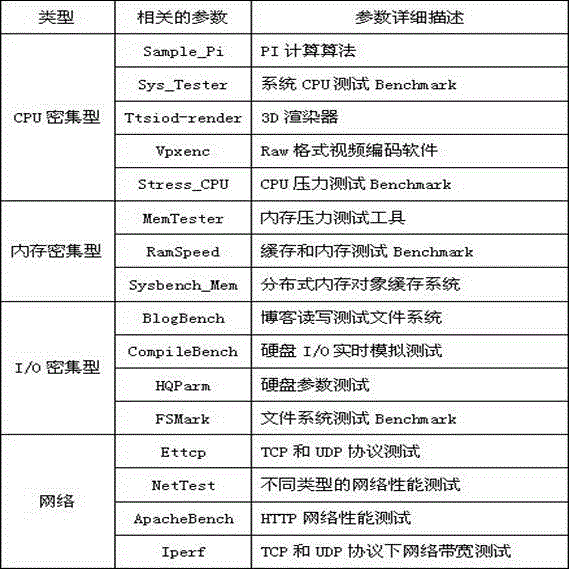

Method for classifying operated load in virtual machine under cloud computing environment

Active | CN103279392A | High load classification accuracy | Exclude the effects of misclassification | Resource allocation | Transmission | Operational system | Parallel computing

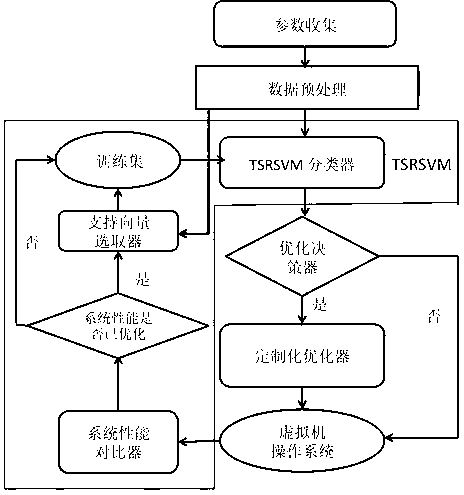

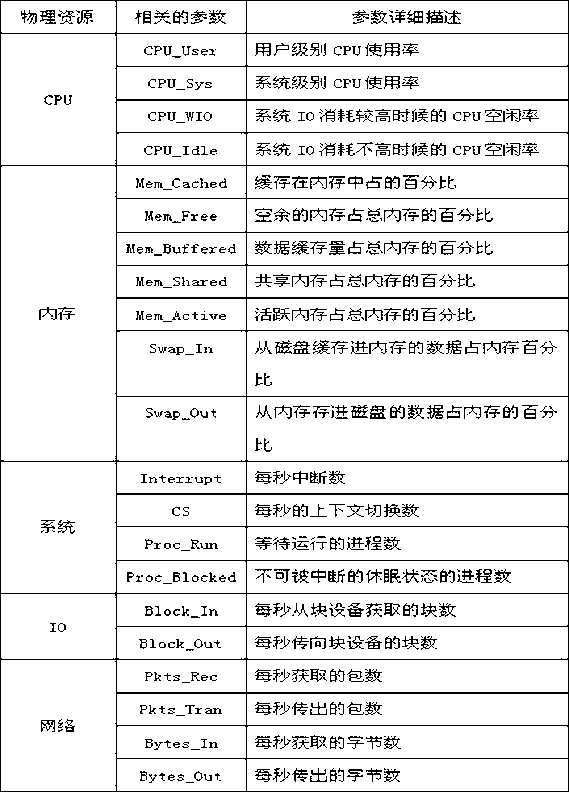

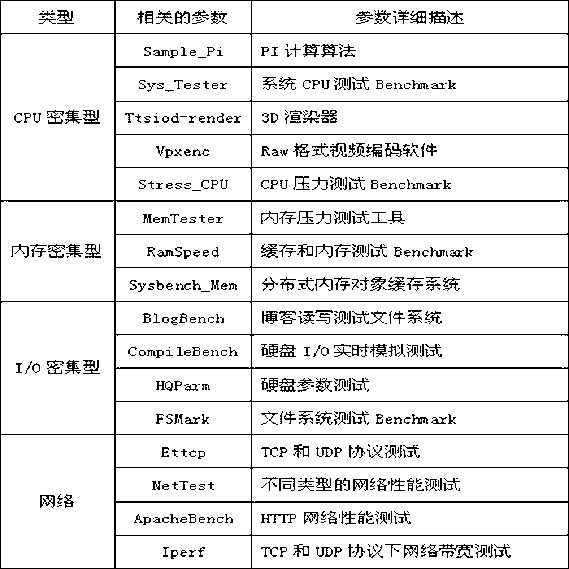

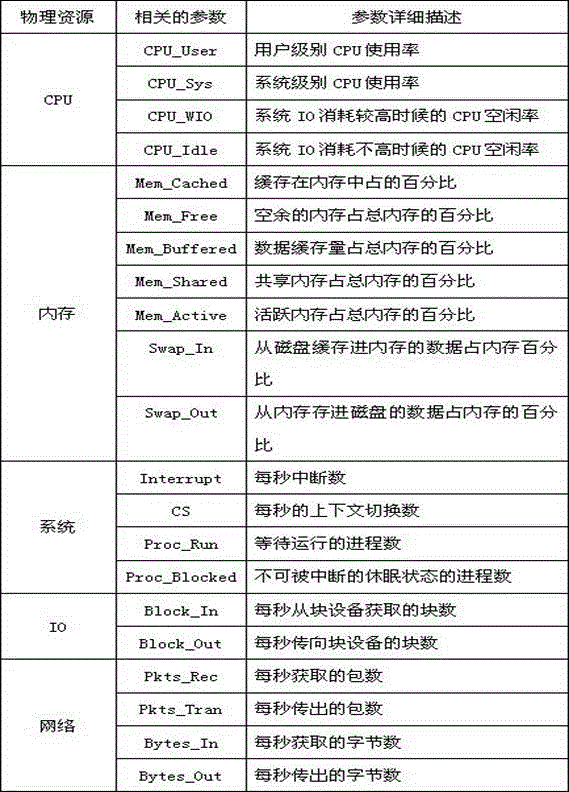

The invention discloses a method for classifying the load running in a virtual machine in a cloud computing environment. The method includes first acquiring monitoring parameters over 5 minutes of load operation and normalizing the monitored parameters; classifying the monitored load into four categories, namely CPU (central processing unit) intensive load, memory intensive load, I/O (input/output) intensive load and network intensive load, by means of TSRSVM (training sets refresh SVM); providing corresponding customized optimization strategies for the operating systems running the four categories of intensive loads, and monitoring the operating state of the systems through a performance comparison device; if system performance improves, the classification is deemed correct, otherwise it is deemed incorrect. With this method, the accuracy of load classification is high and system performance loss is low.

Owner:ZHEJIANG UNIV

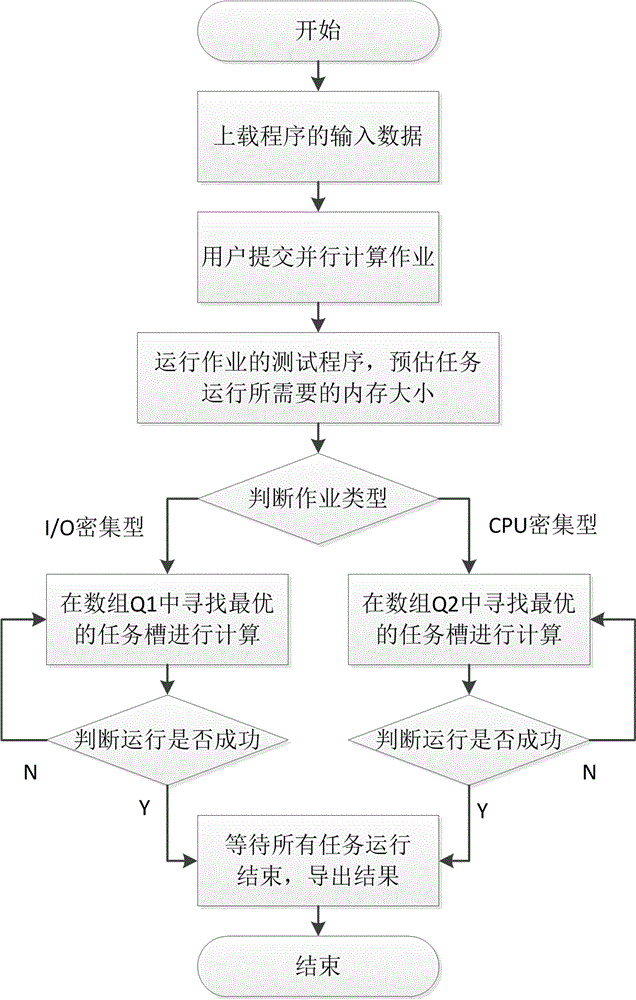

Parallel computation dispatch method in heterogeneous environment

Active | CN103500123A | Good technical effect | Improve resource utilization | Resource allocation | Array data structure | Resource utilization

The invention relates to the field of parallel computation, and discloses a parallel computation dispatch method for heterogeneous environments. According to the method, a plurality of JVM task slots with different memory sizes and sets of idle task slots are built, the tasks in a parallel computation are divided into I/O intensive and CPU intensive tasks, and the tasks are assigned to suitable task slots for calculation, so that parallel computation efficiency in the heterogeneous environment is optimized. The method has the advantages that the size and type of the memory required by each task are determined dynamically, the resource utilization of heterogeneous clusters is improved, the total running time of parallel computation jobs is shortened, and memory overflow during task execution is avoided.

Owner:ZHEJIANG UNIV

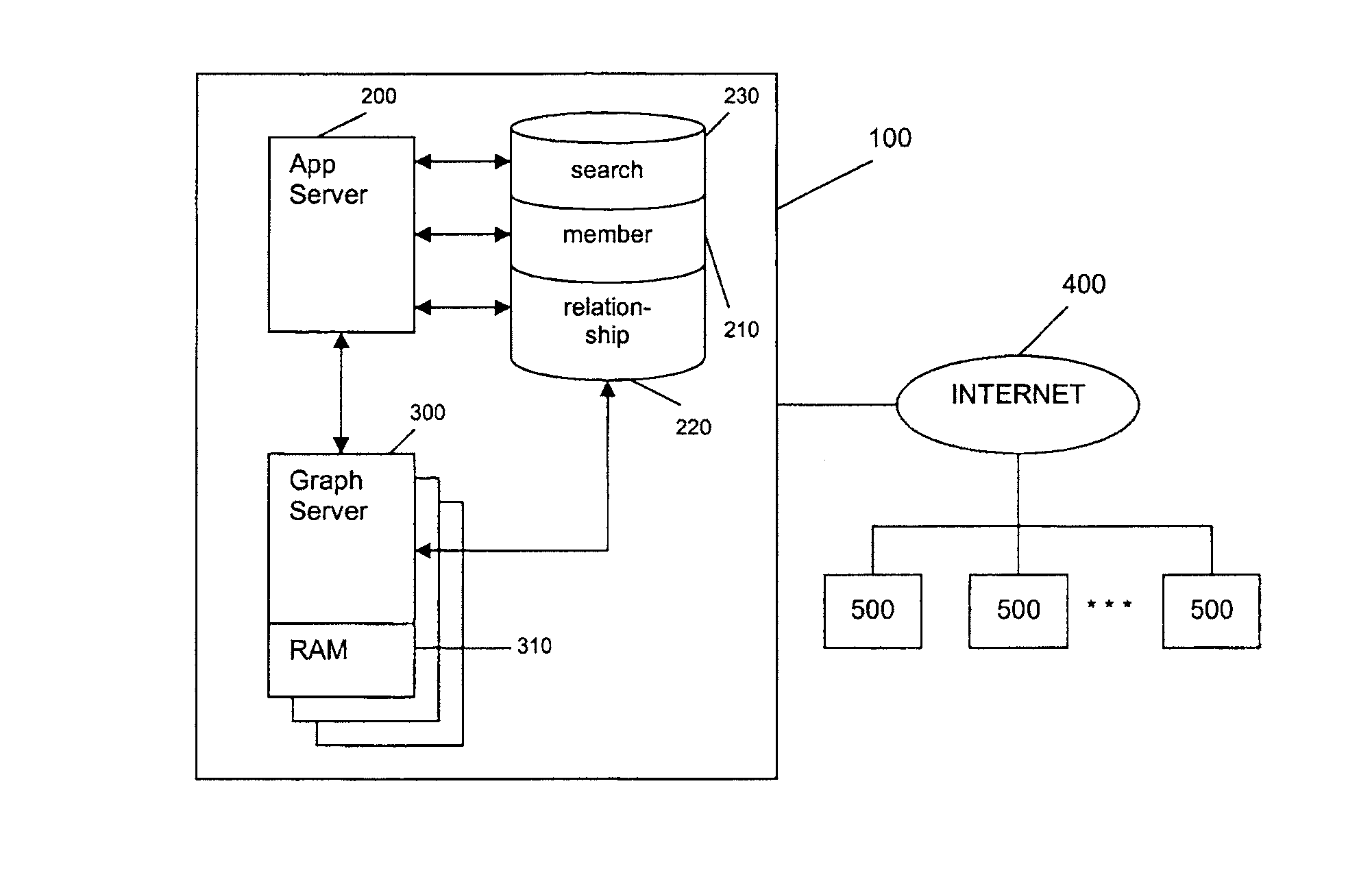

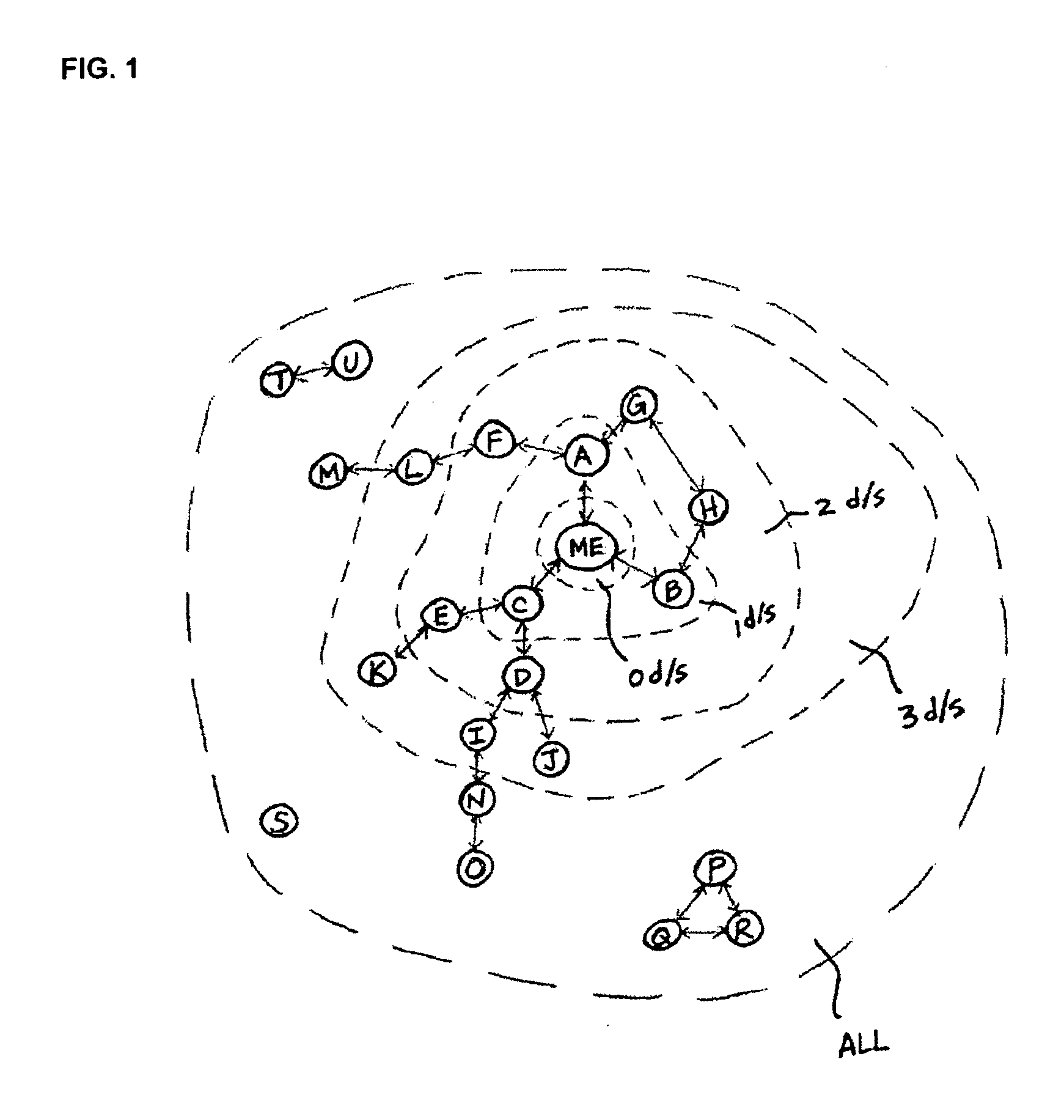

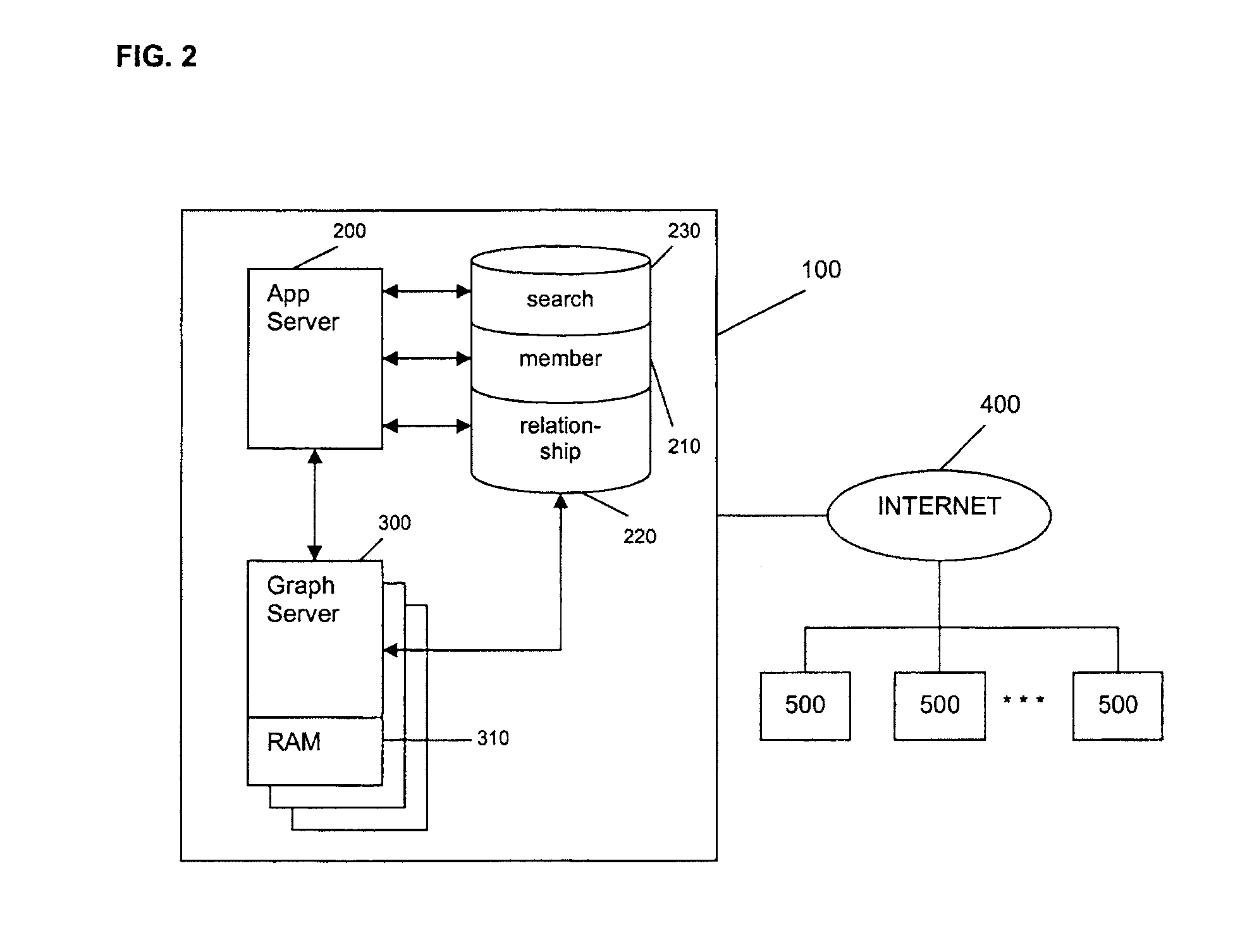

Graph Server Querying for Managing Social Network Information Flow

Active | US20110099167A1 | Improve processing efficiency | Reduce in quantity | Digital data processing details | Computer security arrangements | Graphics | Network information flow

An online social network is managed using one server for database management tasks and another server, preferably in a distributed configuration, for CPU-intensive computational tasks, such as finding a shortest path between two members or a degree of separation between two members. The additional server has a memory device containing relationship information between members of the online social network and carries out the CPU-intensive computational tasks using this memory device. With this configuration, the number of database lookups is decreased and processing speed is thereby increased.
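The degree of separation mentioned here is a shortest-path query on the friendship graph. A minimal sketch, assuming the relationship information is held in memory as an adjacency mapping (the data layout and the member names are hypothetical), is a breadth-first search:

```python
from collections import deque


def degrees_of_separation(graph, start, goal):
    """Return the number of hops between two members, or None if they
    are not connected. graph maps a member to an iterable of friends."""
    if start == goal:
        return 0
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        member, dist = queue.popleft()
        for friend in graph.get(member, ()):
            if friend == goal:
                return dist + 1
            if friend not in seen:
                seen.add(friend)
                queue.append((friend, dist + 1))
    return None


if __name__ == "__main__":
    friends = {
        "ann": {"bob"},
        "bob": {"ann", "cy"},
        "cy": {"bob", "dee"},
        "dee": {"cy"},
        "eve": set(),
    }
    print(degrees_of_separation(friends, "ann", "dee"))  # 3
```

Because the whole search runs against the in-memory mapping, it issues no database lookups, which is the benefit the abstract attributes to the dedicated server.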

Owner:META PLATFORMS INC

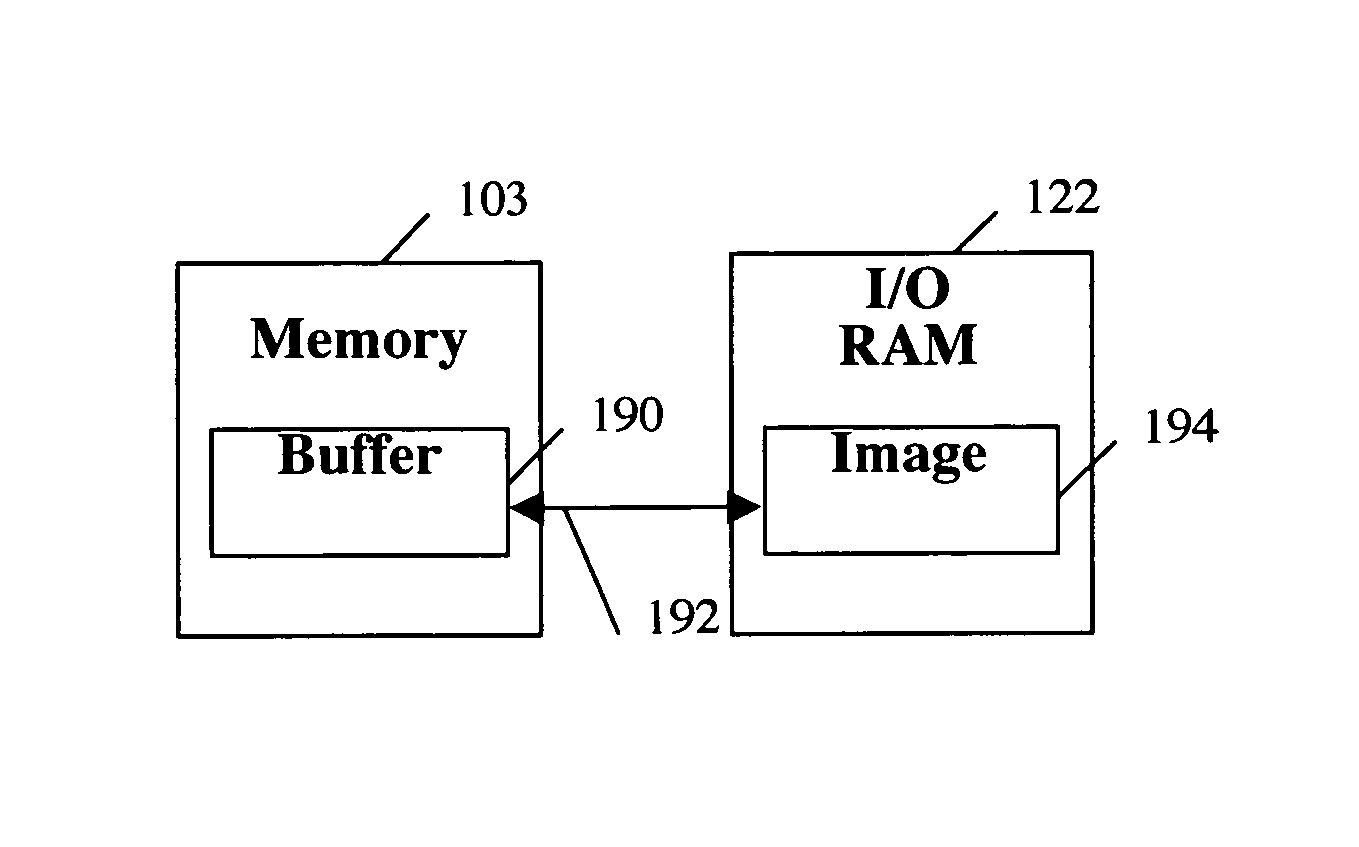

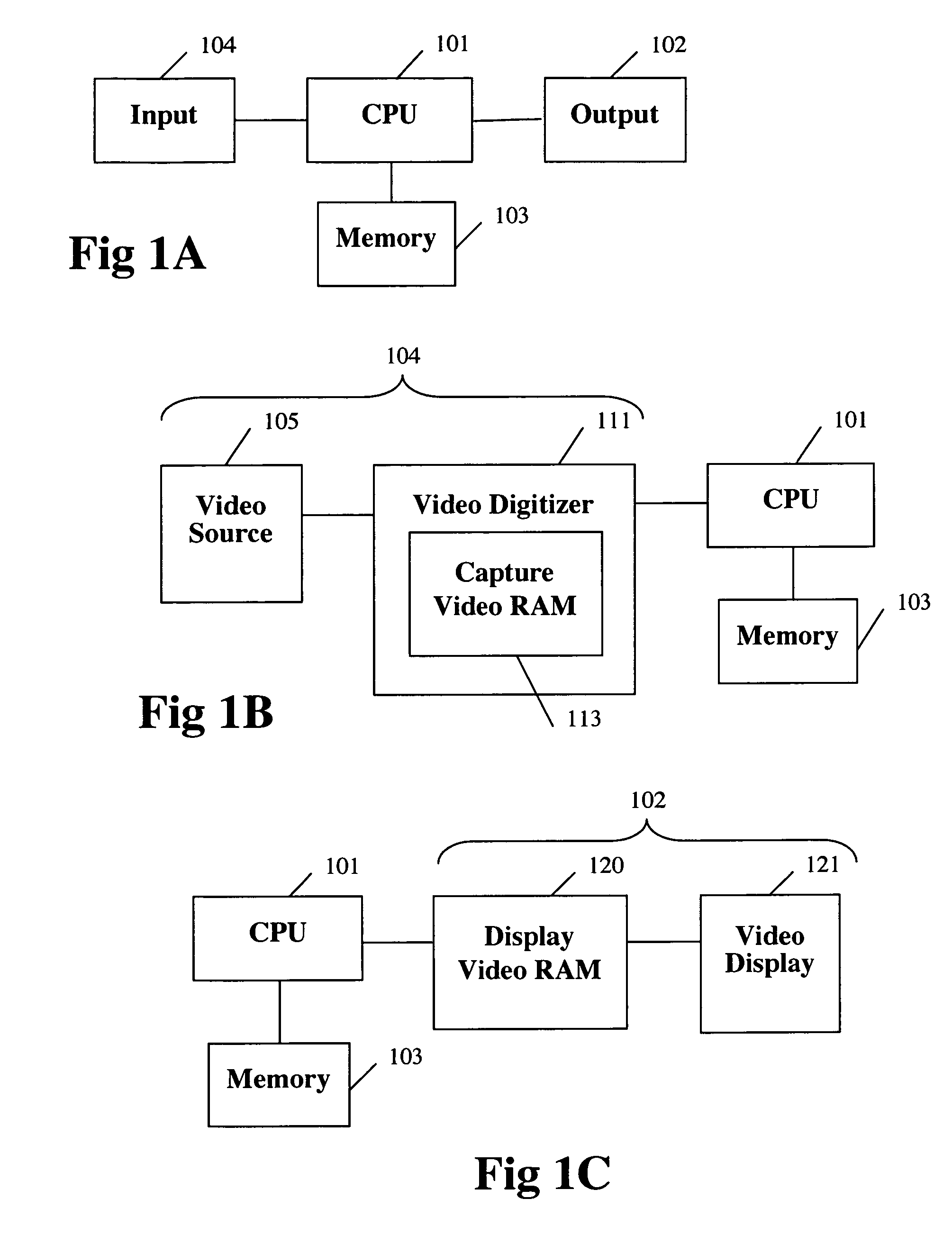

Faster image processing

Inactive | US8170095B2 | Color television with pulse code modulation | Color television with bandwidth reduction | Imaging processing | Computer graphics (images)

Methods, medium, and machines associated with image processing performance are disclosed. Image data may be copied from input memory to main memory before performing CPU intensive operations, and efficiently copied from memory thereafter. CPU intensive operations may include decryption, decompression, image enhancement, or reformatting. Real time compression may be achieved by sub-sampling each frame. A separate plane compression aspect may provide for distinguishing between regions of an image, separating and / or masking the original image into multiple image planes, and compressing each separated image plane with a compression method that is optimal for its characteristics.

Owner:MUSICQUBED INNOVATIONS LLC

Faster image processing

Inactive | US7671864B2 | Improve image processing capabilities | Memory addressing/allocation/relocation | Image memory management | Computer hardware | Imaging processing

Methods and machines which increase image processing performance by efficiently copying image data from input memory to main memory before performing CPU intensive operations, such as image enhancement, compression, or encryption, and by efficiently copying image data from main memory to output memory after performing CPU intensive operations, such as decryption, decompression, image enhancement, or reformatting.

Owner:MUSICQUBED INNOVATIONS LLC

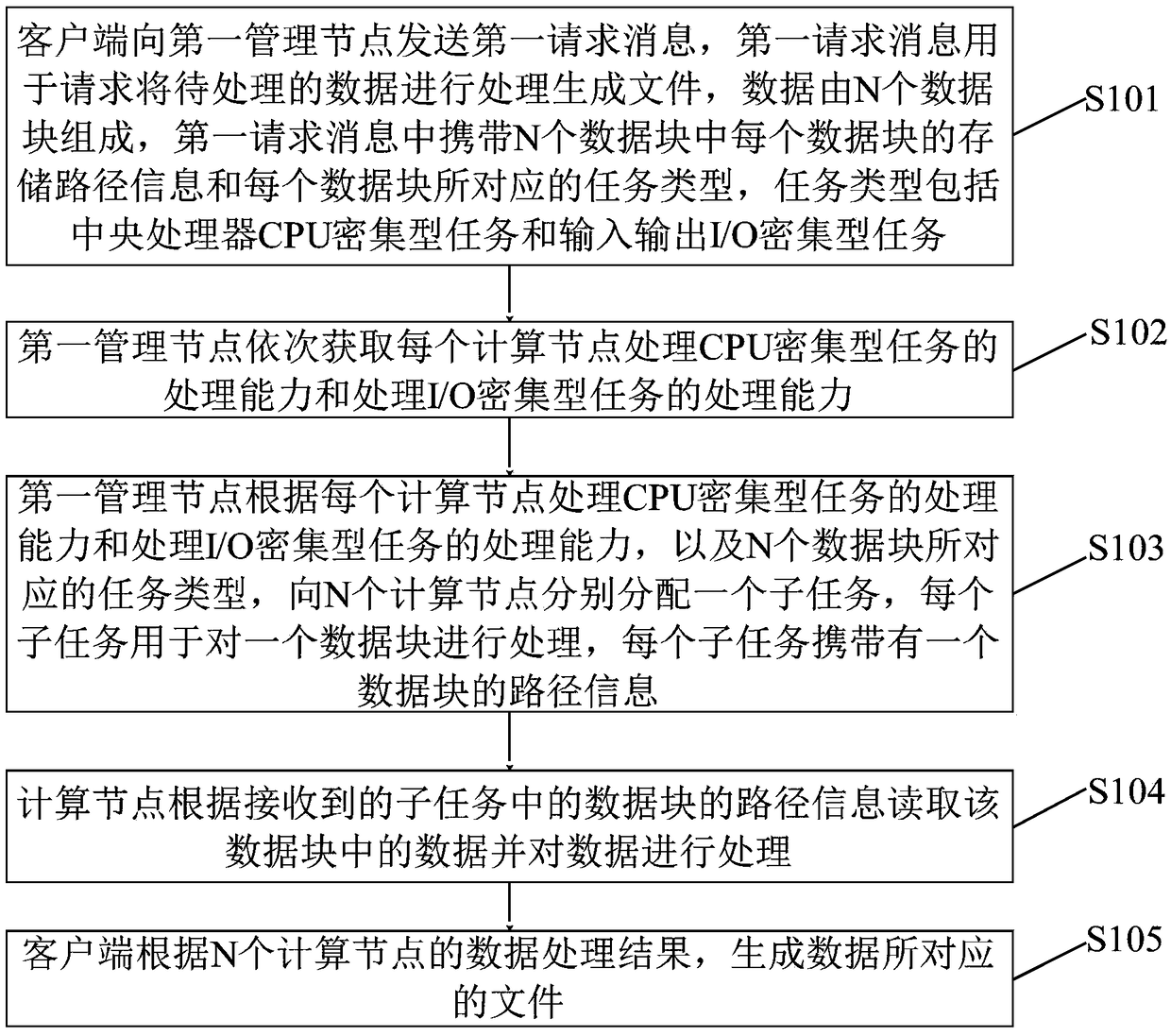

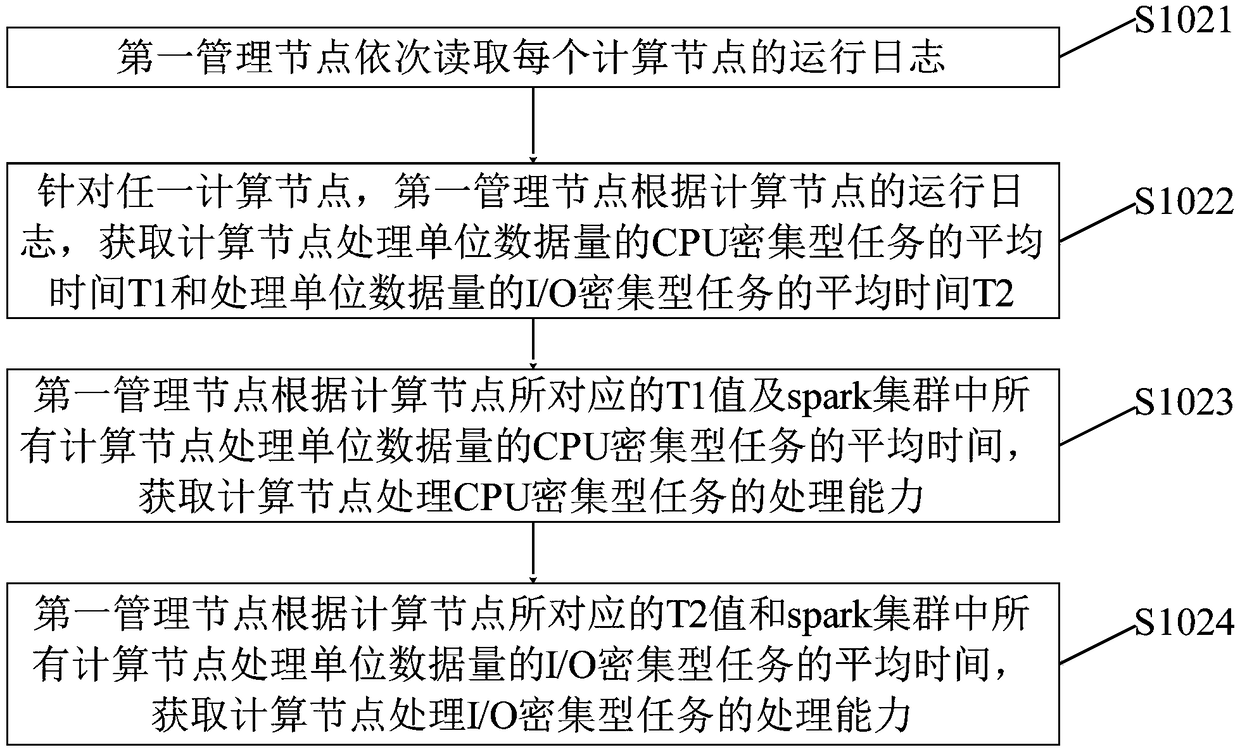

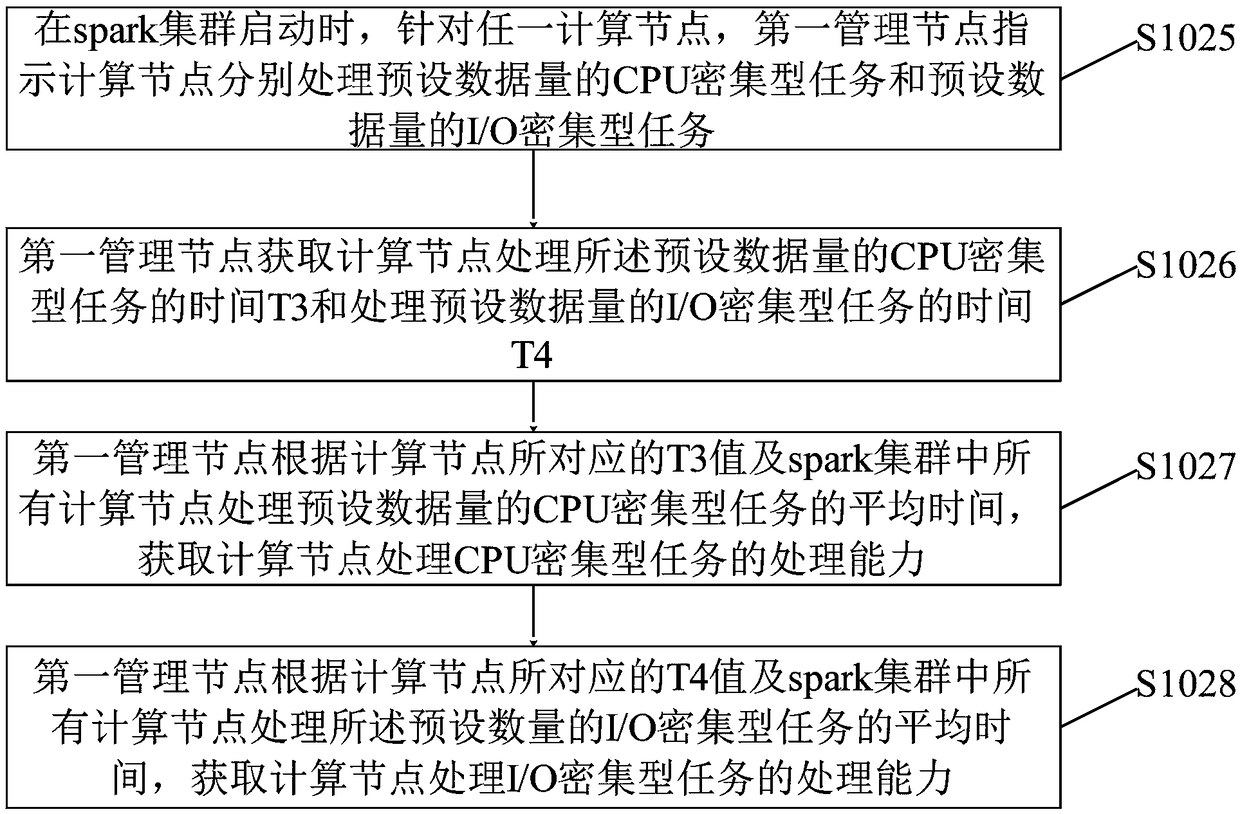

File generation method and system based on massive data

The invention provides a file generation method and system based on massive data. The method comprises the following steps: a client sends a first request message to a first management node, wherein the first request message carries the storage paths of N data blocks and the task type corresponding to each data block, the task types comprising CPU intensive tasks and I/O intensive tasks; the first management node obtains in turn the capability of each computing node to process the two types of tasks, and allocates a sub-task to each of N computing nodes according to the task types of the N data blocks; the computing nodes read the data in the data blocks and process the data; and the client generates a file corresponding to the data according to the data processing results of the N computing nodes. A plurality of computing nodes in a Spark cluster process the massive data in parallel to generate the file, and the management node in the Spark cluster allocates each data block to a computing node with high capability for processing that block's task type, so that data processing speed is improved while load balancing is achieved.

Owner:PING AN TECH (SHENZHEN) CO LTD

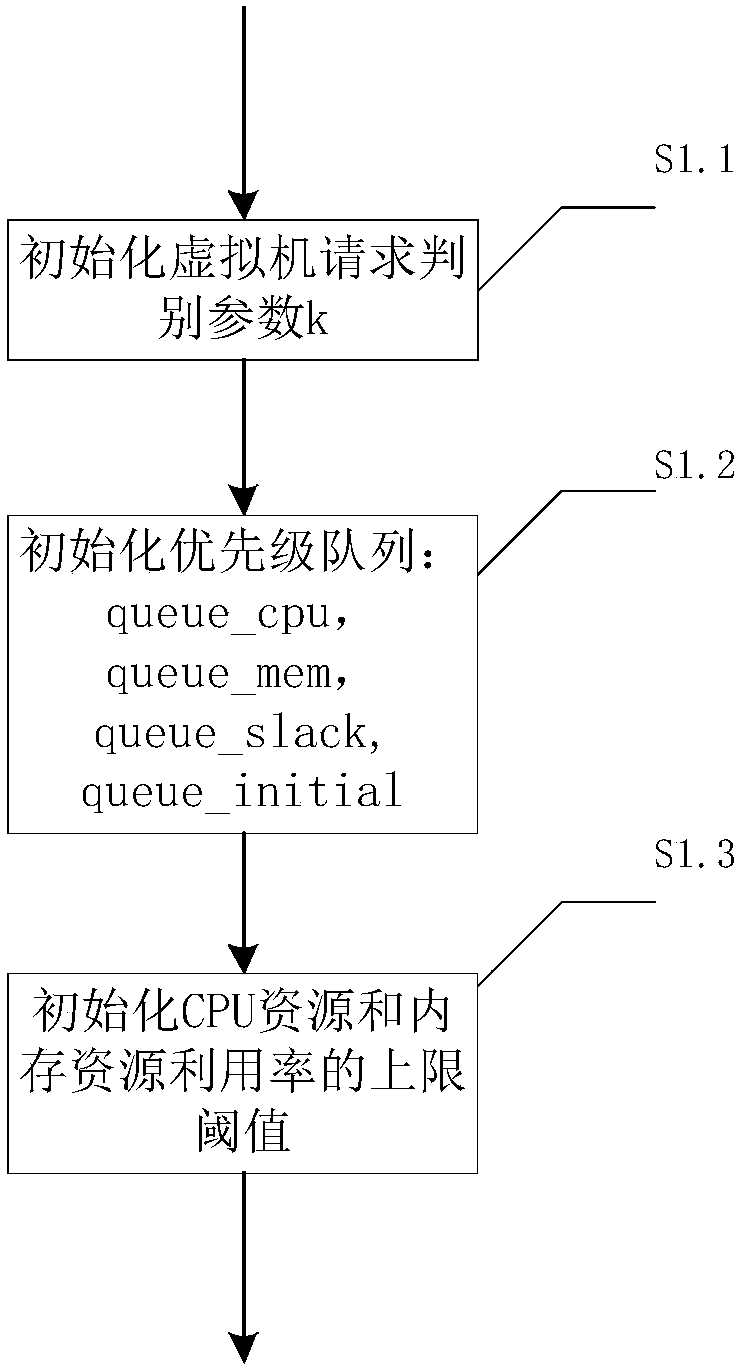

Cloud computing virtual machine deployment algorithm based on energy consumption

The invention discloses a cloud computing virtual machine deployment algorithm based on energy consumption, comprising a virtual machine initial deployment algorithm and a virtual machine re-deployment algorithm. The virtual machine initial deployment algorithm is a DRWMG (different resource with minimum gap) algorithm provided herein and includes: classifying newly arrived virtual machine requests into CPU (central processing unit) intensive requests, memory intensive requests and normal requests; applying different deployment strategies provided herein to the different types of virtual machine requests. The virtual machine re-deployment algorithm is an MCA (migration cost algorithm) provided herein, including: periodically re-deploying existing virtual machines according to virtual machine migration overhead (migration time, migration energy consumption and downtime). Compared with existing virtual machine deployment algorithms, the DRWMG algorithm provided herein can reduce the energy consumption of a cloud computing system and better balance the use of the different resources of physical machines; the MCA provided herein can reduce the energy consumption of the cloud computing system and the migration overhead of its virtual machines.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

Docker dynamic scheduling algorithm for typical containers

Active | CN108897627A | Simplify priority design | Meet the requirements of the SLA agreement | Resource allocation | Static dispatch | Distributed computing

The invention discloses a Docker dynamic scheduling algorithm for typical containers, which includes the following steps. S1: typical application container scenarios include CPU-intensive/batch, memory-intensive/batch, I/O-intensive/batch and CPU-intensive/real-time; a corresponding application container is selected for each scenario, and the resource usage and performance of each application container are analyzed when it runs alone and concurrently with others in the Docker environment. S2: the scheduling algorithm comprises a container static scheduling mode and a container dynamic scheduling mode based on runtime monitoring; the static or the dynamic scheduling mode is adopted according to the user's demand. The beneficial effect of the invention is that the dynamic scheduling algorithm can improve the utilization of system resources without affecting the running performance of the application containers.

Owner:NANJING SUPERSTACK INFORMATION TECH CO LTD

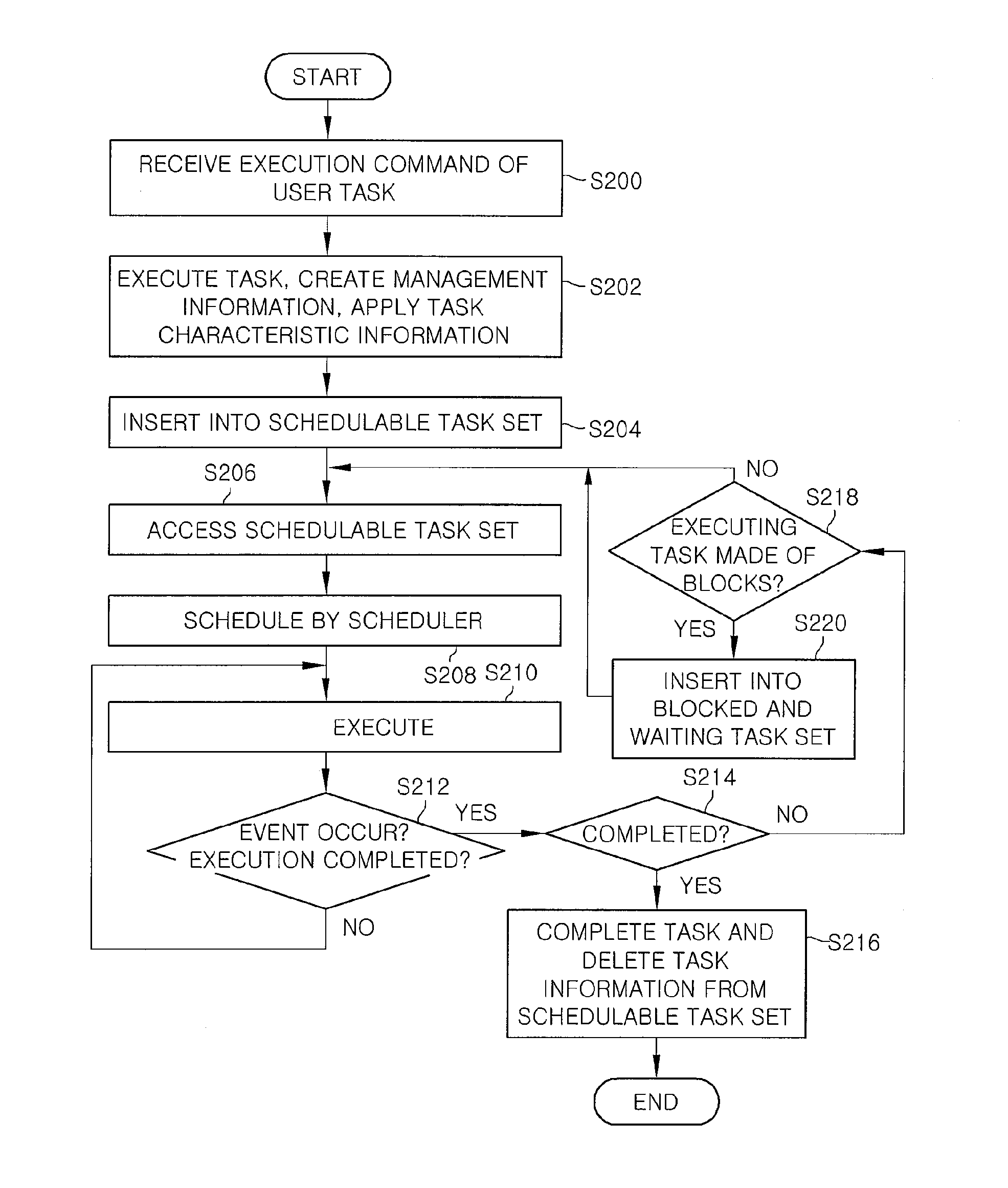

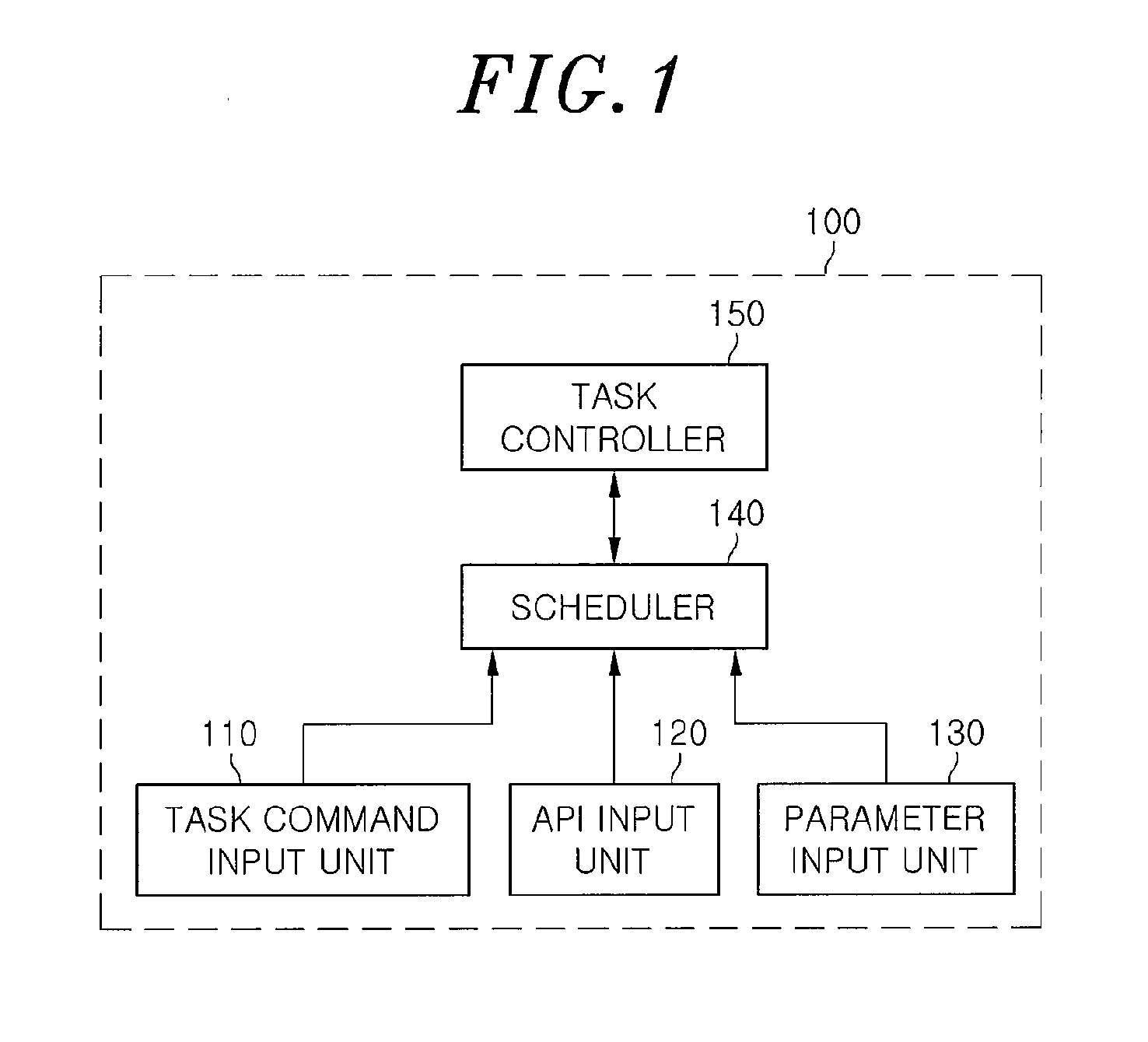

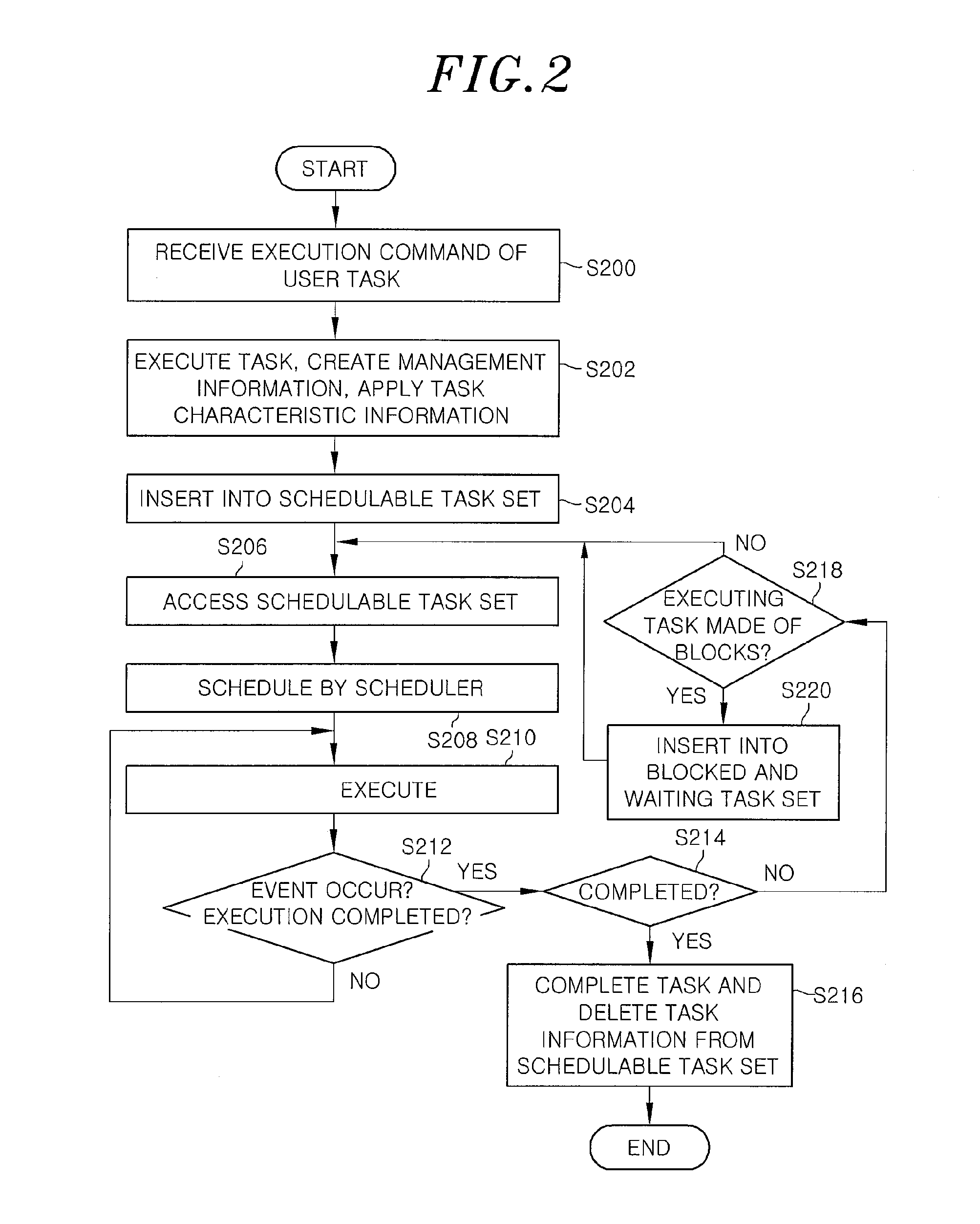

Method and apparatus for scheduling application programs

Inactive | US20130117757A1 | Improve performance | Performance advantage | Resource allocation | Memory systems | Operating system | I/O bound

Owner:ELECTRONICS & TELECOMM RES INST

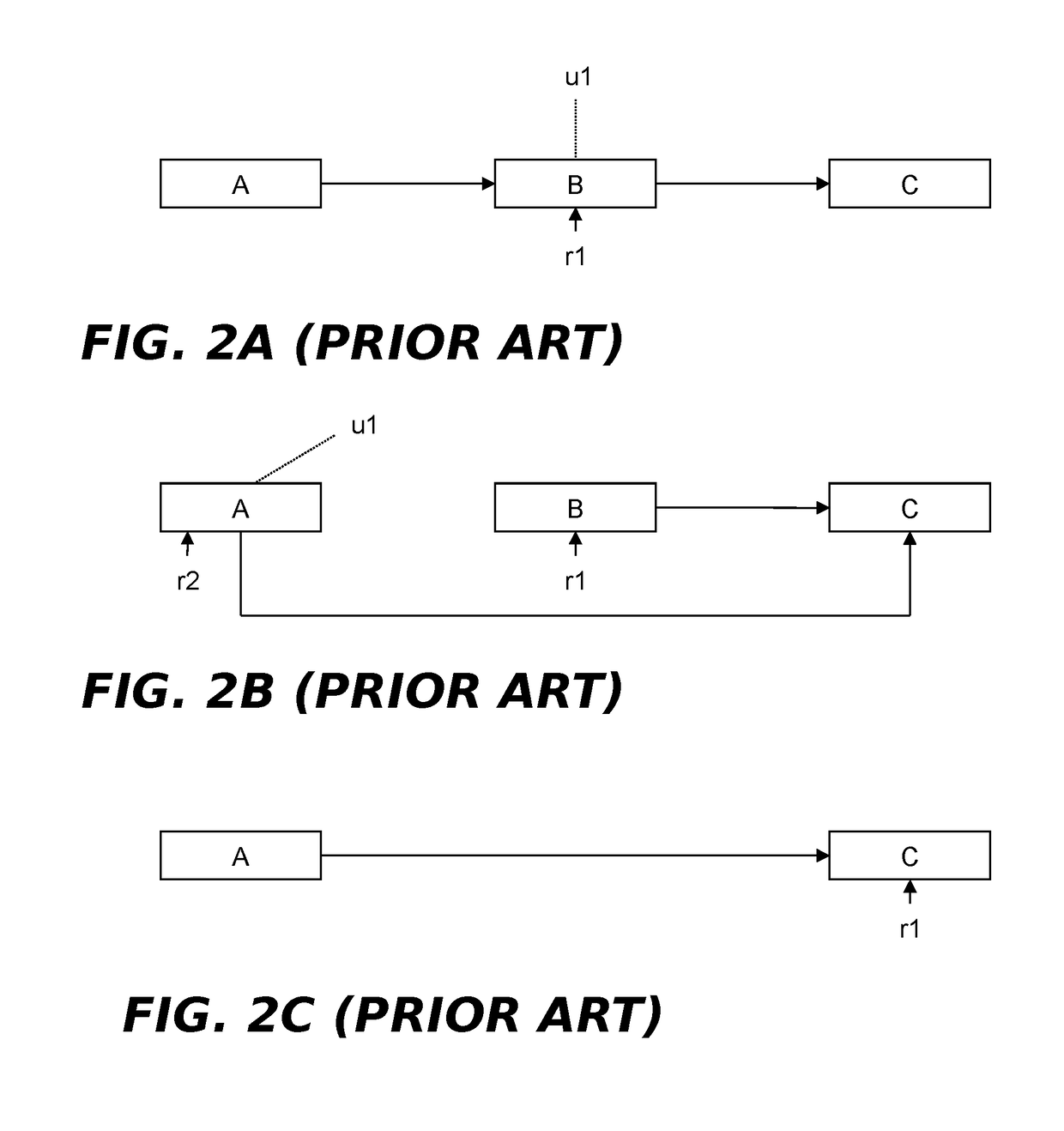

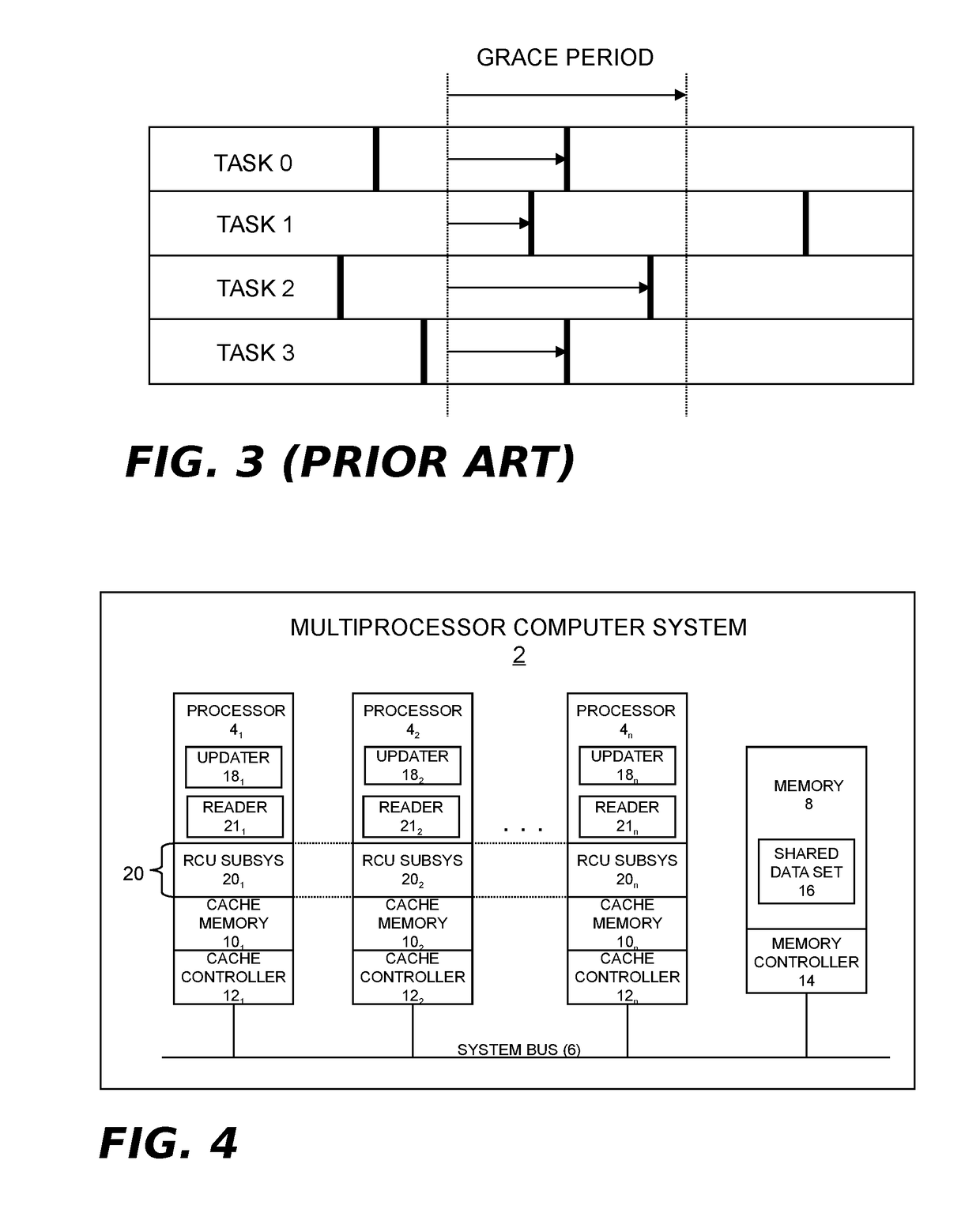

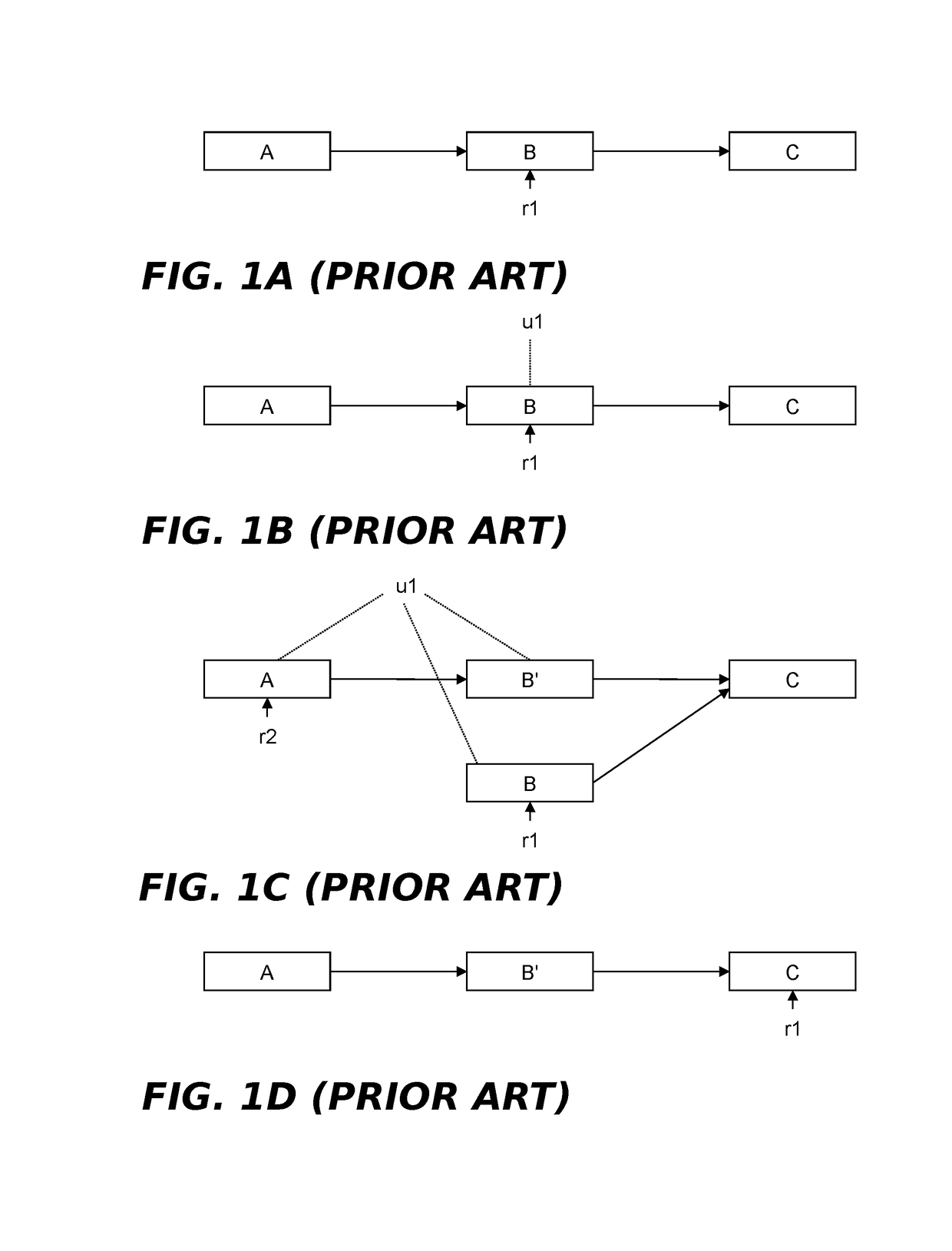

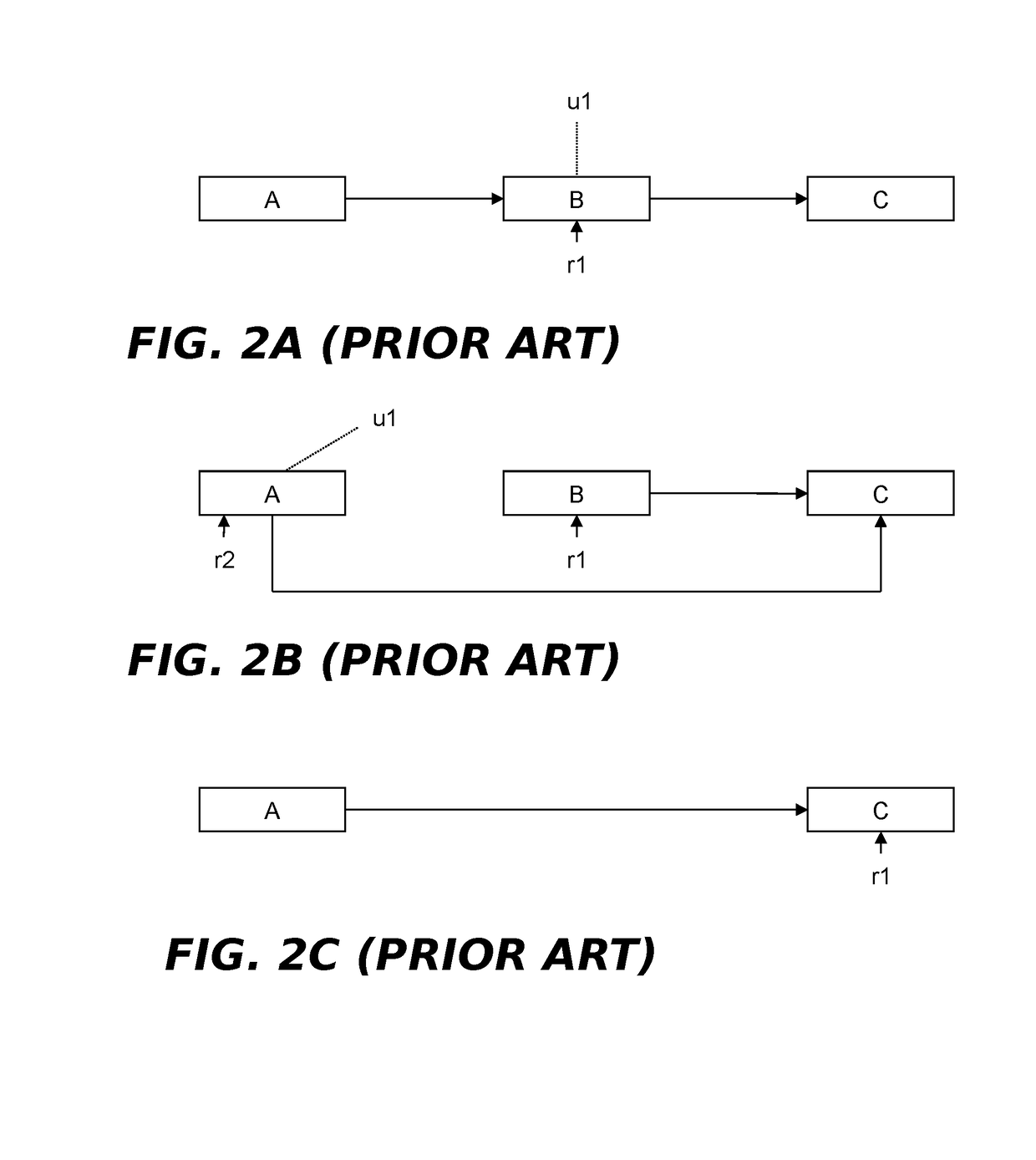

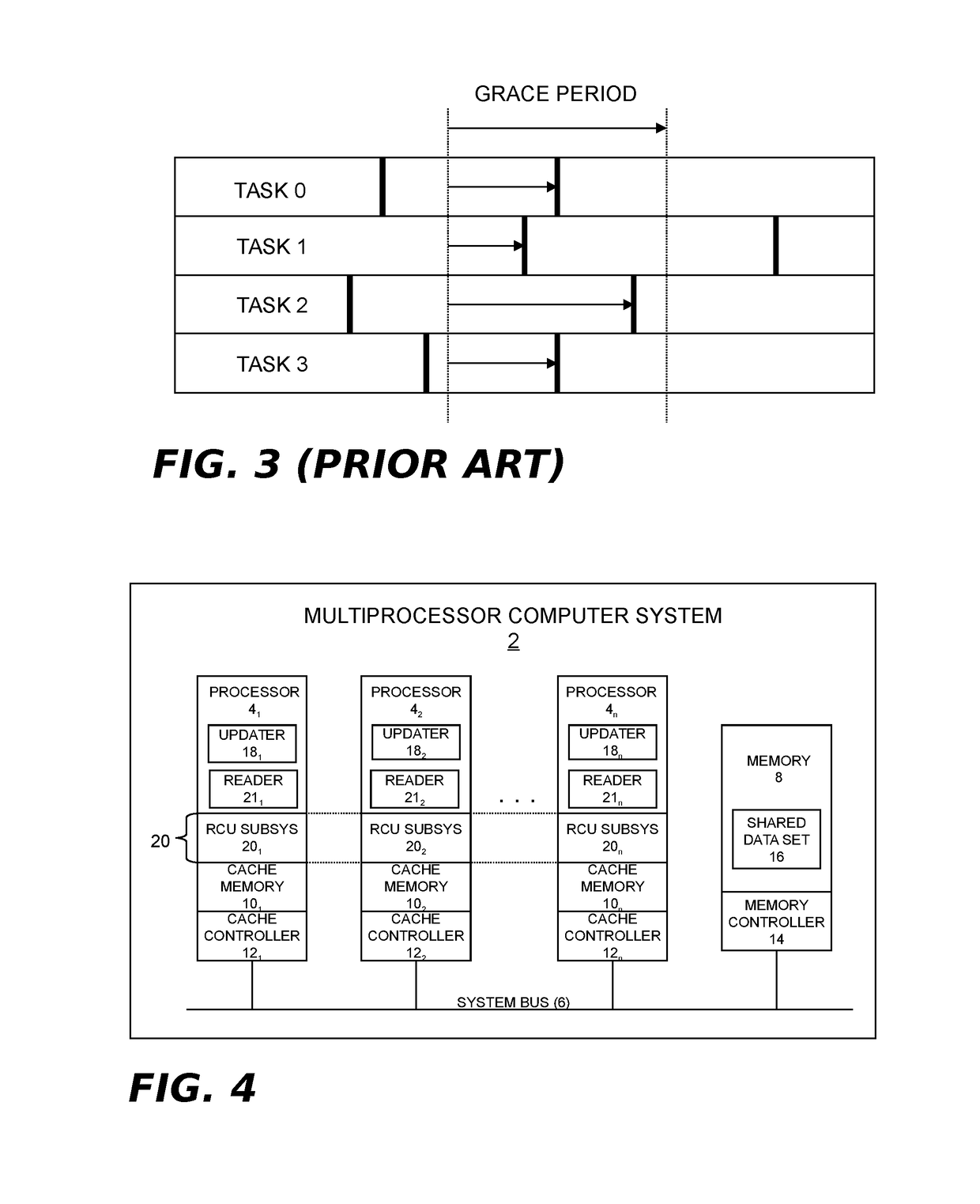

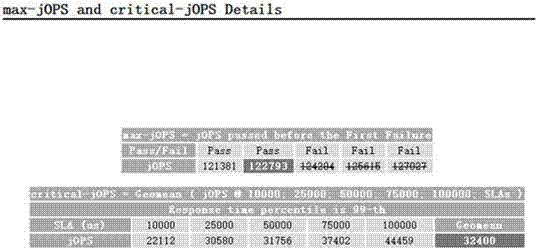

Enabling Real-Time CPU-Bound In-Kernel Workloads To Run Infinite Loops While Keeping RCU Grace Periods Finite

A technique for enabling real-time CPU-bound in-kernel workloads to run infinite loops while keeping read-copy update (RCU) grace periods finite. In an embodiment, a per-CPU indicator may be set to indicate that a CPU running the CPU-bound in-kernel workload has not reported an RCU quiescent state within a defined time. A function may be invoked from within the workload that causes an RCU quiescent state to be reported on behalf of the CPU if the per-CPU indicator is set. If the RCU quiescent state is not reported within a longer defined time, the CPU may be rescheduled.

Owner:IBM CORP

Enabling real-time CPU-bound in-kernel workloads to run infinite loops while keeping RCU grace periods finite

A technique for enabling real-time CPU-bound in-kernel workloads to run infinite loops while keeping read-copy update (RCU) grace periods finite. In an embodiment, a per-CPU indicator may be set to indicate that a CPU running the CPU-bound in-kernel workload has not reported an RCU quiescent state within a defined time. A function may be invoked from within the workload that causes an RCU quiescent state to be reported on behalf of the CPU if the per-CPU indicator is set. If the RCU quiescent state is not reported within a longer defined time, the CPU may be rescheduled.

Owner:IBM CORP

Enabling real-time CPU-bound in-kernel workloads to run infinite loops while keeping RCU grace periods finite

A technique for enabling real-time CPU-bound in-kernel workloads to run infinite loops while keeping read-copy update (RCU) grace periods finite. In an embodiment, a per-CPU indicator may be set to indicate that a CPU running the CPU-bound in-kernel workload has not reported an RCU quiescent state within a defined time. A function may be invoked from within the workload that causes an RCU quiescent state to be reported on behalf of the CPU if the per-CPU indicator is set. If the RCU quiescent state is not reported within a longer defined time, the CPU may be rescheduled.

Owner:INT BUSINESS MASCH CORP

Multi-JVM deployment method based on non-uniform memory accessing technology

Inactive | CN106897122A | Easy access | Improve performance | Resource allocation | Software simulation/interpretation/emulation | Access time | Parallel computing

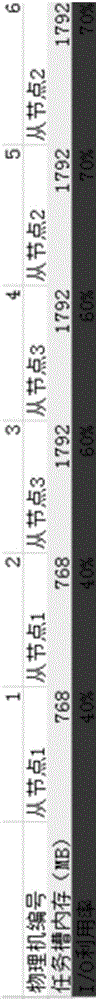

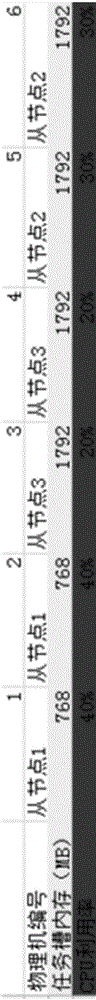

The invention relates to a multi-JVM deployment method based on non-uniform memory access (NUMA) technology. The method comprises the following steps: 1, confirming that NUMA is enabled in the BIOS options; 2, confirming that the numactl rpm package is installed in the Linux system; 3, using numactl --hardware to check the number of nodes and the CPU cores and memory contained in each node; 4, using the numactl command to carry out node binding. With a CPU binding strategy, a JVM is bound to a specific node or a specific CPU. This effectively avoids the problem that, when a JVM is not bound to a node, its memory is distributed randomly among the nodes, so that access time increases whenever the CPU accesses memory across nodes and Java application performance can suffer severely.
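The node-binding step can be sketched as a small helper that assembles the command line for one JVM. This is an illustration only: it builds the argument list and prints it without running anything, it relies on the `--cpunodebind` and `--membind` options of numactl, and the helper name and the `app.jar` argument are hypothetical.

```python
import shlex


def numactl_command(node, jvm_args):
    """Build an argv list that binds a JVM's CPUs and memory to one
    NUMA node. Nothing is executed here."""
    if node < 0:
        raise ValueError("node must be non-negative")
    return [
        "numactl",
        f"--cpunodebind={node}",  # run only on the CPUs of this node
        f"--membind={node}",      # allocate memory only from this node
        "java",
        *jvm_args,
    ]


if __name__ == "__main__":
    # One JVM per node; "app.jar" is a placeholder application.
    for node in (0, 1):
        print(shlex.join(numactl_command(node, ["-jar", "app.jar"])))
```

On a real machine the node numbers would come from the output of `numactl --hardware`, and the resulting list could be passed to `subprocess.run`.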

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD

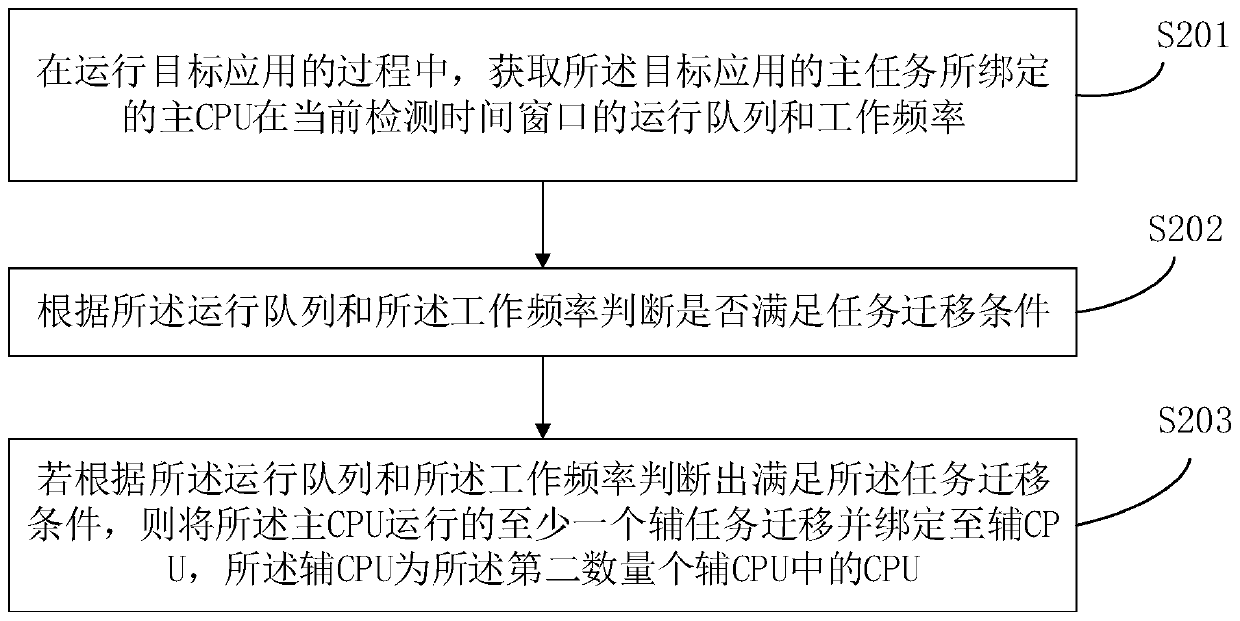

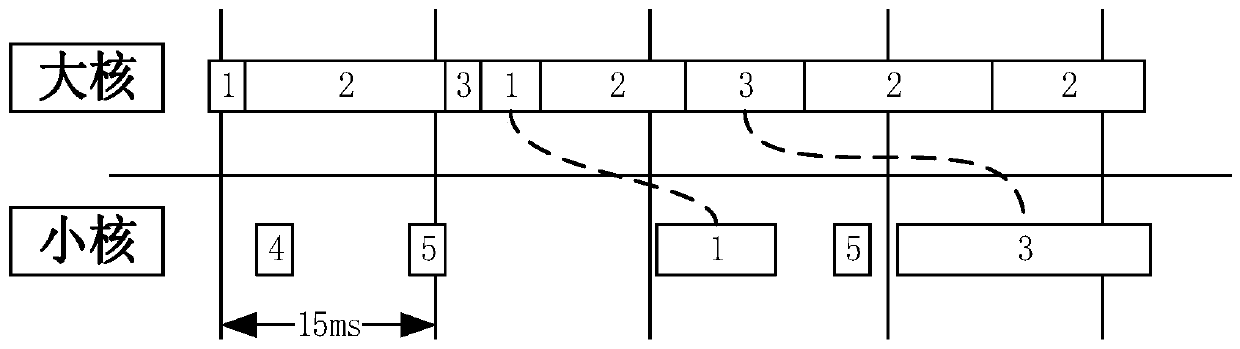

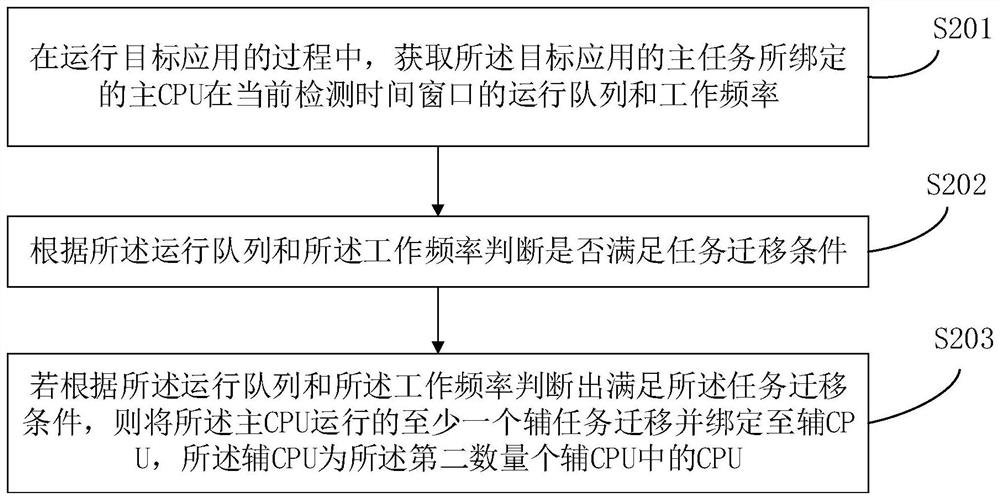

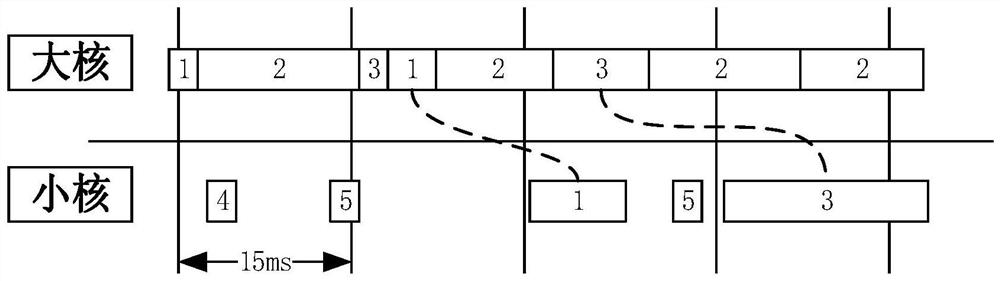

Application operation optimization control method and related product

Active | CN110825524A | Improve stability | Improve intelligence | Program initiation/switching | Resource allocation | Time windows | Embedded system

The invention discloses an application operation optimization control method and a related product. The method is applied to electronic equipment, a system-on-chip of the electronic equipment comprises a first number of main central processing units (CPUs) and a second number of auxiliary CPUs, and the method comprises the following steps: in the process of running a target application, obtaining a running queue and a working frequency of a main CPU bound with a main task of the target application in a current detection time window; judging whether a task migration condition is met according to the running queue and the working frequency; if it is judged that the task migration condition is met, migrating and binding at least one auxiliary task run by the main CPU to an auxiliary CPU, wherein the auxiliary CPU is one of the second number of auxiliary CPUs. The method provided by the embodiment of the invention enables the electronic equipment to detect the computing resource allocation of each task in real time while running the target application and to adjust the allocation in time, thereby reducing lag.
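The abstract does not state the migration condition itself. The sketch below assumes one plausible form: the main CPU's run queue is longer than a limit while the CPU is already running near its maximum frequency. The function names, the thresholds and the list-based task model are all hypothetical.

```python
def should_migrate(run_queue_len, freq_khz, max_freq_khz,
                   queue_limit=4, freq_ratio=0.9):
    """Assumed condition: the queue is long and the CPU cannot speed
    up much further, so adding frequency will not clear the backlog."""
    return (run_queue_len > queue_limit
            and freq_khz >= freq_ratio * max_freq_khz)


def migrate_one(main_cpu_tasks, aux_cpu_tasks, main_task):
    """Move one auxiliary task from the main CPU to an auxiliary CPU,
    leaving the application's main task where it is."""
    for task in main_cpu_tasks:
        if task != main_task:
            main_cpu_tasks.remove(task)
            aux_cpu_tasks.append(task)
            return task
    return None


if __name__ == "__main__":
    big_core = ["render", "audio", "net", "log", "gc"]
    little_core = []
    if should_migrate(len(big_core), 2_300_000, 2_400_000):
        moved = migrate_one(big_core, little_core, main_task="render")
        print(moved, big_core, little_core)
```

In a real system the rebinding would be done by the operating system scheduler or an affinity call rather than by moving names between lists.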

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD

A Load Classification Method Running on Virtual Machines in Cloud Computing Environment

Active | CN103279392B | High load classification accuracy | Exclude the effects of misclassification | Resource allocation | Transmission | Cloud computing | Performance comparison

The invention discloses a method for classifying the load running in a virtual machine in a cloud computing environment. The method includes first acquiring monitoring parameters over 5 minutes of load operation and normalizing the monitored parameters; classifying the monitored load into four categories, namely CPU (central processing unit) intensive load, memory intensive load, I/O (input/output) intensive load and network intensive load, by means of TSRSVM (training sets refresh SVM); providing corresponding customized optimization strategies for the operating systems running the four categories of intensive loads, and monitoring the operating state of the systems through a performance comparison device; if system performance improves, the classification is deemed correct, otherwise it is deemed incorrect. With this method, the accuracy of load classification is high and system performance loss is low.

Owner:ZHEJIANG UNIV

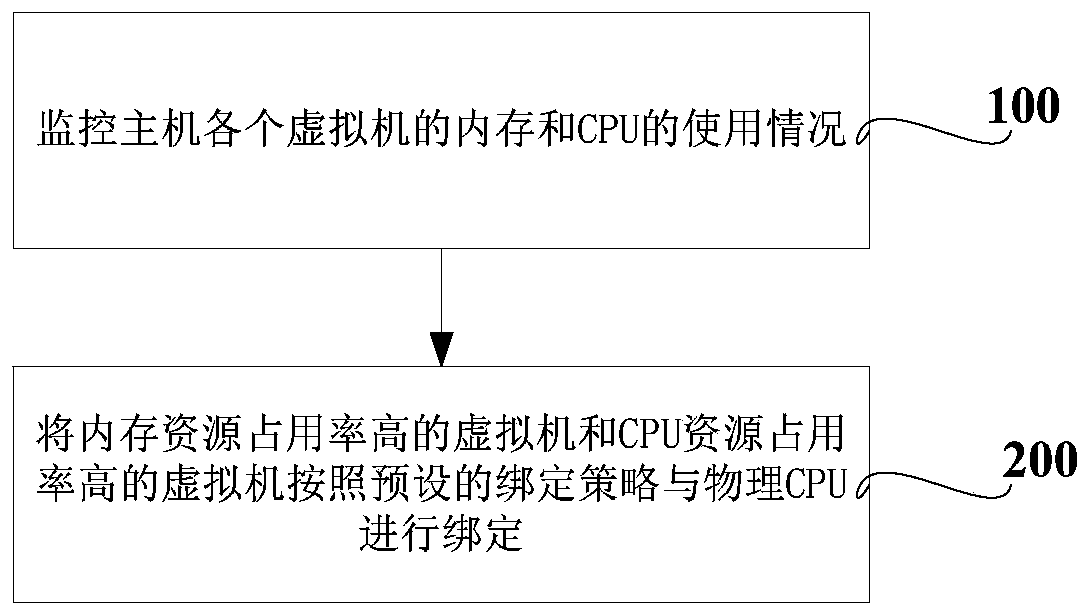

A virtual machine central processing unit (CPU) binding method and device

Active | CN107273188B | Avoid ability to decline | Improve performance | Software simulation/interpretation/emulation | Parallel computing | Processing element

The invention discloses a virtual machine central processing unit CPU binding method and device, and relates to the virtual machine technology. The virtual machine CPU binding method comprises the following step: binding virtual machines whose memory resource occupancy rate reaches or exceeds a first set threshold to a physical CPU that shares an L2 cache in accordance with a first binding strategy, wherein the first binding strategy comprises the following conditions: binding the virtual machines whose memory resource occupancy rate reaches or exceeds the first set threshold to the physical CPU that shares the L2 cache, and only one virtual machine whose memory resource occupancy rate reaches or exceeds the first set threshold is running on the bound physical CPU.
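The first binding strategy can be sketched as follows, under stated assumptions: memory occupancy is a fraction between 0 and 1, each group of physical CPUs sharing an L2 cache is given as a tuple of CPU ids, and the threshold value is hypothetical. Each memory-heavy virtual machine receives its own group, so that no two of them share a bound group.

```python
def bind_memory_heavy_vms(vm_mem_usage, l2_groups, threshold=0.8):
    """Give every VM at or above the threshold its own L2-sharing CPU
    group. VMs left over when the groups run out stay unbound."""
    heavy = [vm for vm, usage in vm_mem_usage.items() if usage >= threshold]
    free_groups = list(l2_groups)
    binding = {}
    for vm in heavy:
        if not free_groups:
            break
        binding[vm] = free_groups.pop(0)
    return binding


if __name__ == "__main__":
    usage = {"vm1": 0.9, "vm2": 0.5, "vm3": 0.8}
    groups = [(0, 1), (2, 3)]
    print(bind_memory_heavy_vms(usage, groups))
    # {'vm1': (0, 1), 'vm3': (2, 3)}
```

Keeping only one memory-heavy virtual machine per group is a simple way to model the stated condition that such machines do not compete for the same L2 cache.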

Owner:INSPUR SUZHOU INTELLIGENT TECH CO LTD

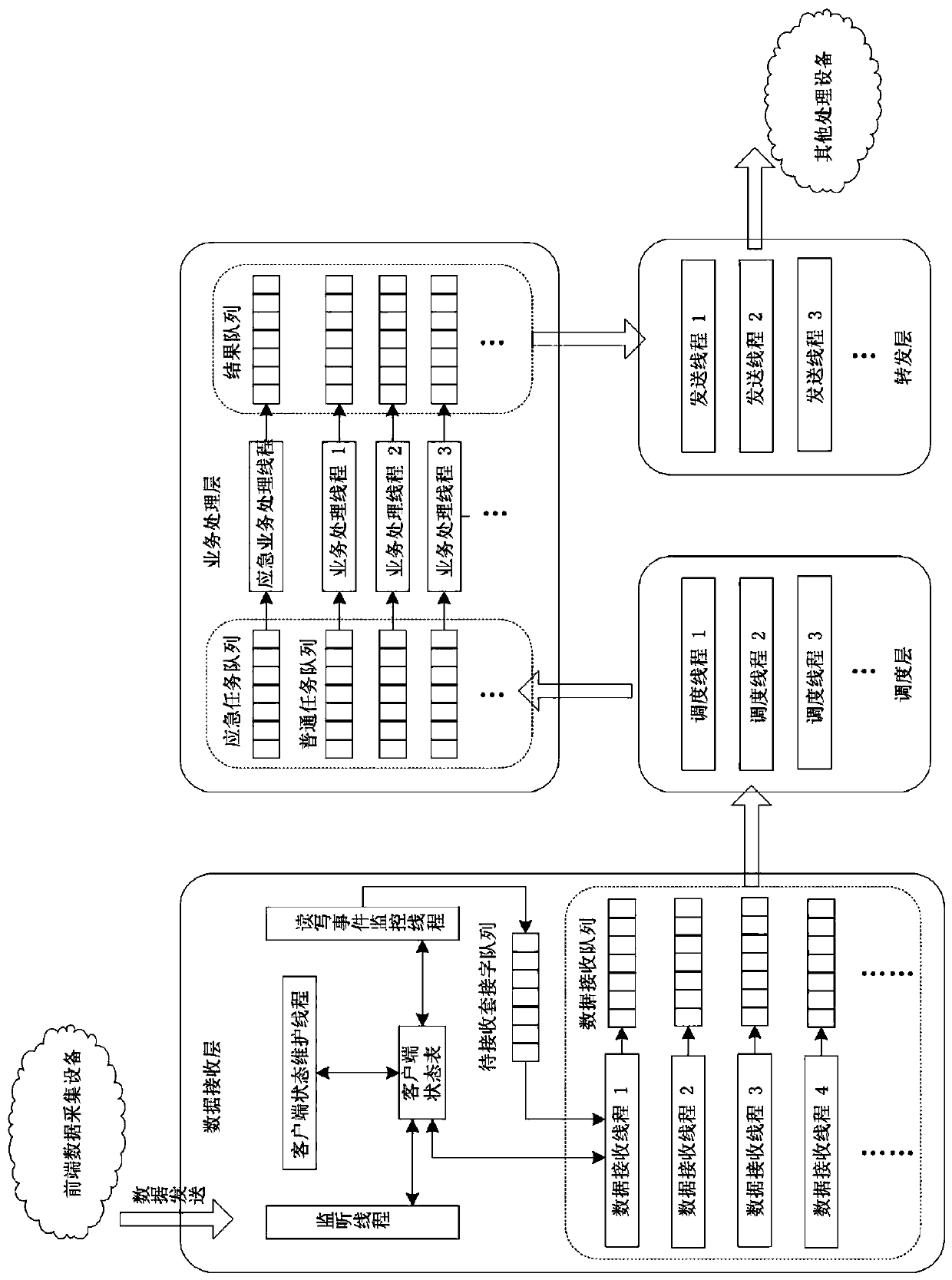

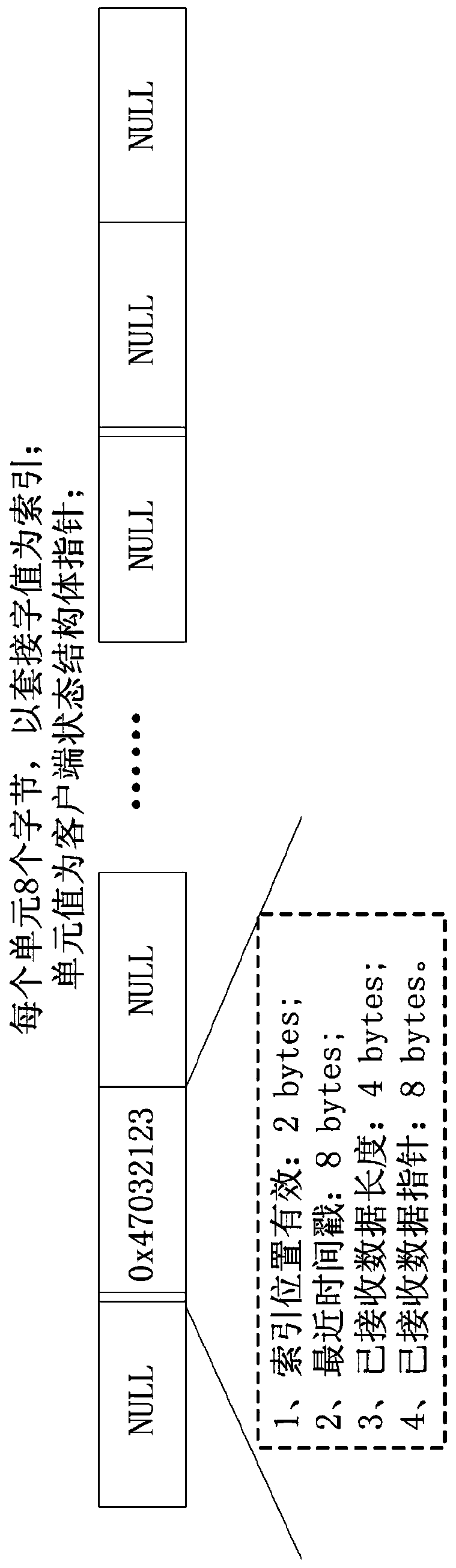

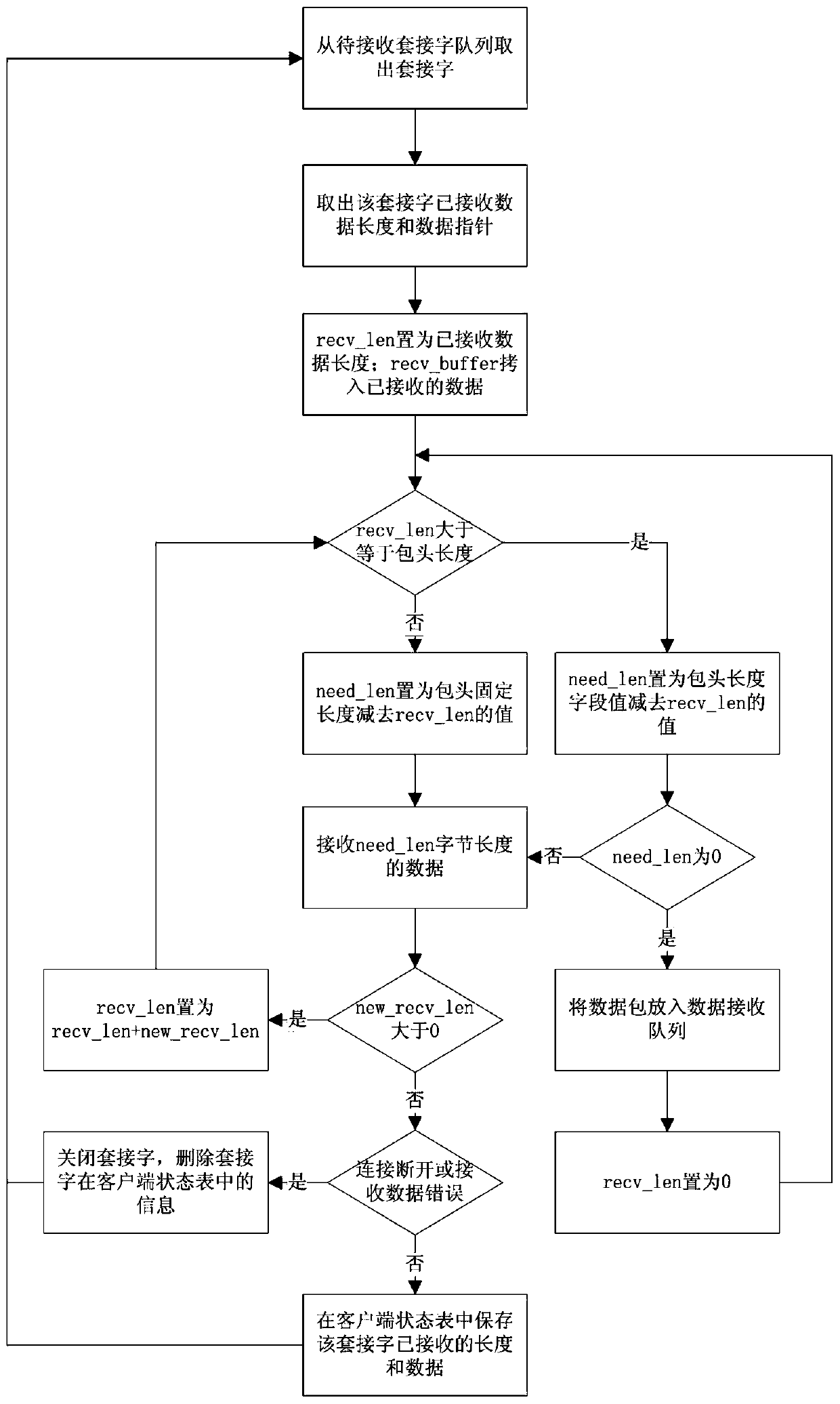

A High Throughput Data Stream Processing Method

Active | CN106850740B | Take advantage of | Avoid interference | Data switching networks | Data stream processing | CPU time

The invention discloses a high-throughput data stream processing method. Data is received asynchronously, so that a small number of receiving threads can serve requests from as many clients as possible, making full use of the CPU time of the receiving threads and improving the overall throughput of the system. Received data is handed to the business processing threads as quickly as possible through a lock-free task scheduling method, which reduces lock overhead and ensures real-time data processing. In addition, because data reception is an I/O-intensive operation and data processing is a CPU-intensive operation, the two layers are separated and run on different ranges of CPU cores. This ensures that receiving and processing do not interfere with each other, and avoids the situation in which data processing threads occupy the CPU for a long time so that the data receiving threads cannot be scheduled by the system. The ratio of the number of receiving threads to the number of processing threads can be configured and balanced according to business requirements, making full use of the system's CPU and memory resources.

Owner:INST OF INFORMATION ENG CHINESE ACAD OF SCI

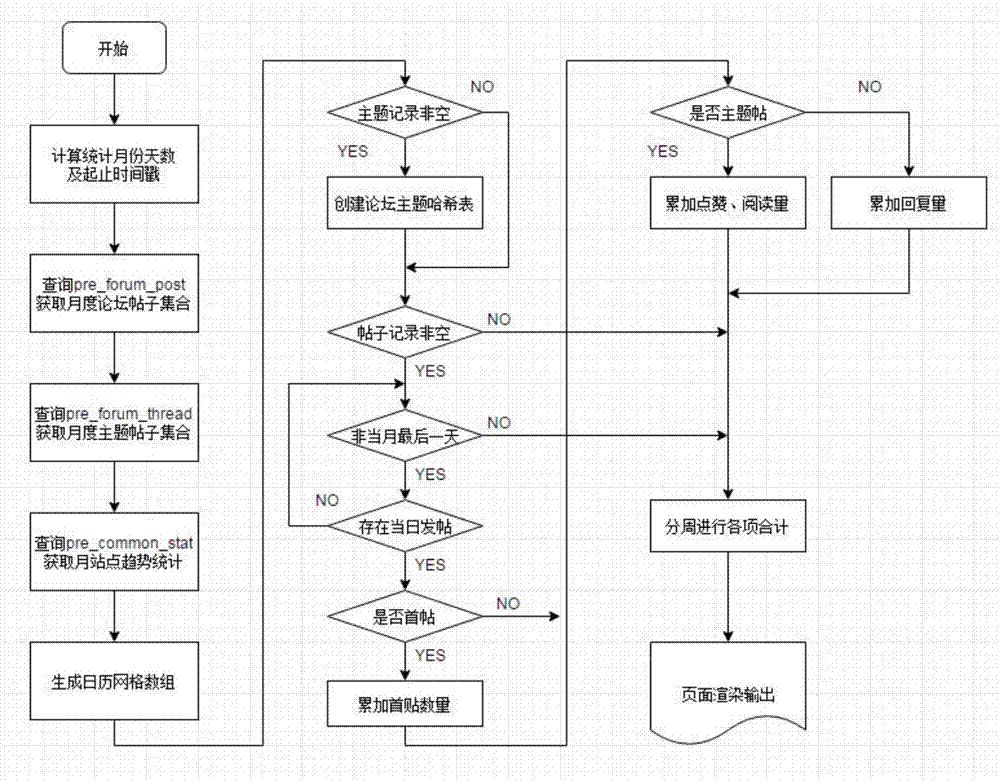

Quick statistical method based on Discuz community

Active | CN107958011A | Easy to handle | Reduce table locks such as exclusive locks | Data processing applications | Web data indexing | Personalization | Data memory

The invention relates to the technical field of real-time data processing, in particular to a quick statistical method based on a Discuz community. The method comprises the following steps: S1: constructing an in-memory data calculation mechanism; S2: constructing a forum topic hash table; and S3: carrying out statistical processing. With this method, the processing performance of a community platform with huge historical data can be remarkably improved: database-intensive operations are transferred into in-memory CPU (central processing unit) intensive operations, database resources such as MySQL are released in time, table locking such as exclusive locks is reduced, and the response success rate is improved. The method can be used in business fields with large processing volumes, such as high-frequency, small-to-medium-scale instant queries and statistics; it provides a solid guarantee for the timeliness and accuracy that users require; and it supports secondary extension development for personalized statistical items. The method opens a new approach for data statistics processing platforms and provides a new research idea for the research and development of community forum business applications.

Owner:北京聚通达科技股份有限公司

Application operation optimization control method and related products

Active | CN110825524B | Improve stability | Improve intelligence | Program initiation/switching | Resource allocation | Computer science | Time windows

The present application discloses an application operation optimization control method and related products, which are applied to electronic equipment. The system-on-chip of the electronic equipment includes a first number of main central processing units (CPUs) and a second number of auxiliary CPUs. The method includes: in the process of running a target application, obtaining the running queue and working frequency of the main CPU bound to the main task of the target application in the current detection time window; judging whether a task migration condition is satisfied according to the running queue and the working frequency; if it is determined that the task migration condition is satisfied, migrating and binding at least one auxiliary task run by the main CPU to an auxiliary CPU, the auxiliary CPU being one of the second number of auxiliary CPUs. The method of the embodiment of the present application enables the electronic device to detect the computing resource allocation of each task in real time while running the target application and to adjust the allocation in time, thereby reducing stalls.

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD

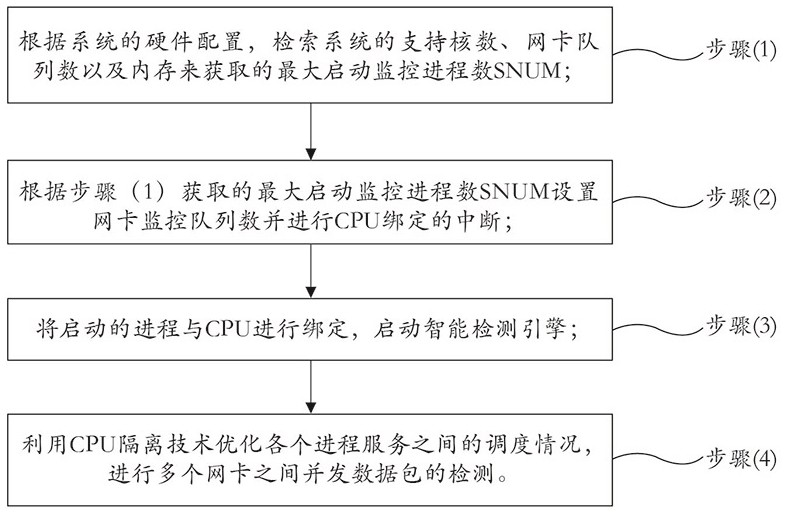

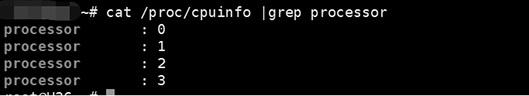

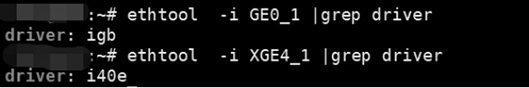

Firewall multi-core architecture intelligent detection method

Pending | CN114531285A | Suit one's needs | Optimize scheduling | Resource allocation | Securing communication | Data pack | Queue number

The invention discloses a firewall multi-core architecture intelligent detection method, and belongs to the technical field of multi-core architecture intelligent detection methods. The intelligent detection method comprises the following steps: (1) according to the hardware configuration of the system, retrieving the number of supported cores, the number of network card queues and the memory of the system to obtain the maximum number of monitoring processes to start, SNUM; (2) setting the number of network card monitoring queues according to the SNUM obtained in step (1), and binding interrupts to CPUs; (3) binding each started process to a CPU (central processing unit), and starting an intelligent detection engine; and (4) optimizing the scheduling among the process services by utilizing a CPU isolation technology, and detecting concurrent data packets across the plurality of network cards. Because the scheduling among the process services is optimized, a higher-performance detection process for concurrent data packets on multiple network cards is finally realized.

Owner:HANGZHOU GUYI NETWORK TECH CO LTD

A multi-queue off-peak scheduling model and method based on task classification in cloud computing

Active | CN104657221B | Simple method | Reduce complexity | Resource allocation | Resource utilization | Resource management

The invention discloses a multi-queue peak-alternation scheduling model and a multi-queue peak-alternation scheduling method based on task classification in cloud computing. The scheduling model comprises a task manager, a local resource manager, a global resource manager and a scheduler. The scheduling method comprises the following steps: first, according to each task's demand for resources, dividing tasks into a CPU (central processing unit) intensive type, an I/O (input/output) intensive type and a memory intensive type; sorting the resources according to their CPU, I/O and memory load, and staggering resource usage peaks during task scheduling; scheduling a task that is intensive in a given parameter to a resource whose load on that index is relatively light, for example scheduling a CPU intensive task to the resource with relatively low CPU utilization. With the disclosed model and method, load balancing can be effectively realized, scheduling efficiency is improved and the resource utilization rate is increased.

Owner:GUANGDONG UNIV OF PETROCHEMICAL TECH

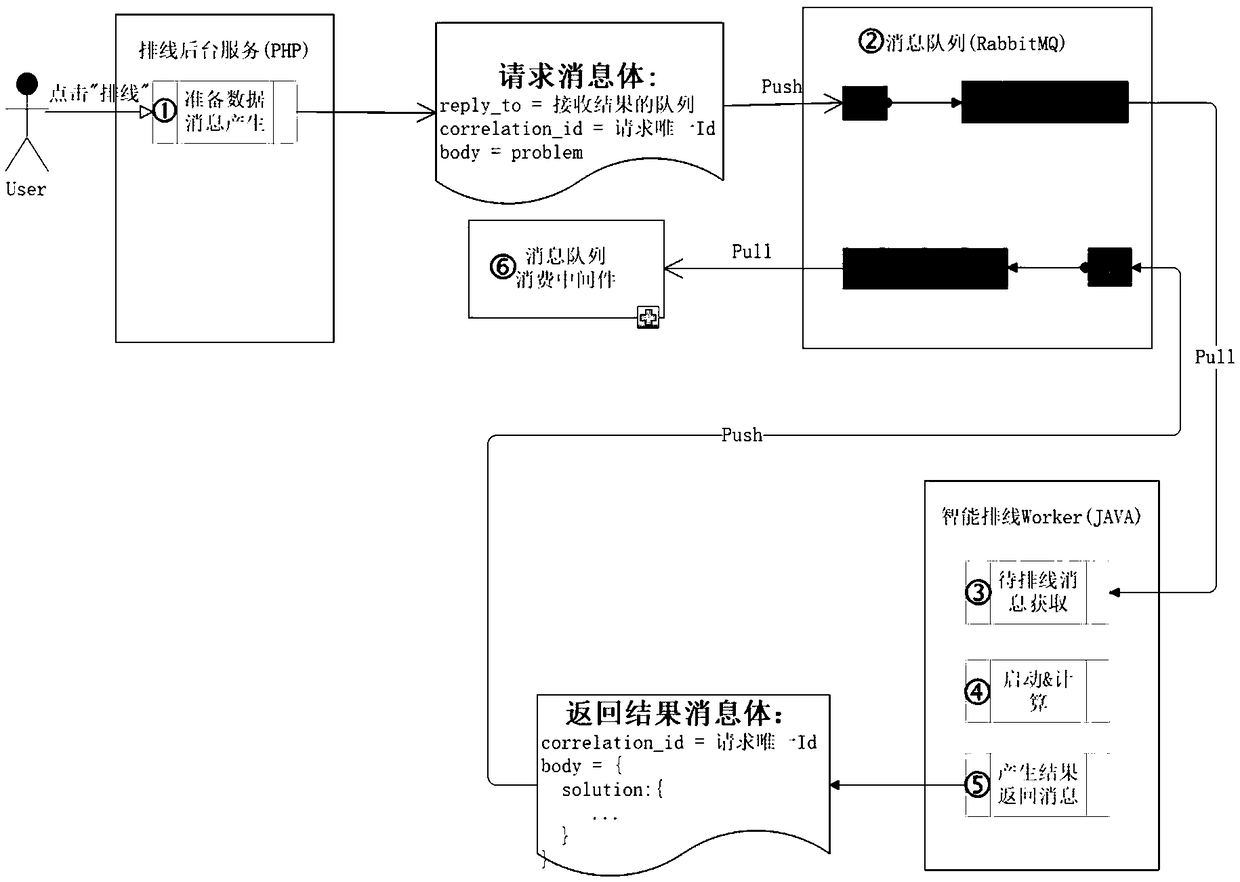

CPU-intensive request processing method and system for VRP cloud service system

Inactive | CN108874540A | Improve usability | Increase CPU utilization | Resource allocation | Interprogram communication | Message queue | High availability

The invention relates to a CPU-intensive request processing method and system for a VRP cloud service system, wherein the method comprises: a message generation step: receiving the different requests of the various requesting service systems in a unified manner, then generating solving messages of uniform format according to the client's pre-settings, and pushing the solving messages into a message queue; a message queue processing step: performing processing using the RabbitMQ message queue; a to-be-solved message obtaining step: starting in the role of a message consumer, and actively pulling a message from the message queue to solve whenever idle; a VRP system starting and calculating step: calculating a reasonable iteration time, used as the calculation parameter, based on the complexity of the client's problem; a solving result message generation step: organizing the solving results of the VRP system into a uniform result message, and pushing the result message into the message queue; and a message queue consumption middleware processing step: pulling a winding displacement result from the message queue at regular intervals, and then pushing the winding displacement result to the rear winding displacement end. Thereby, the availability of the service system is improved and its CPU utilization rate is increased.

Owner:北京云鸟科技有限公司

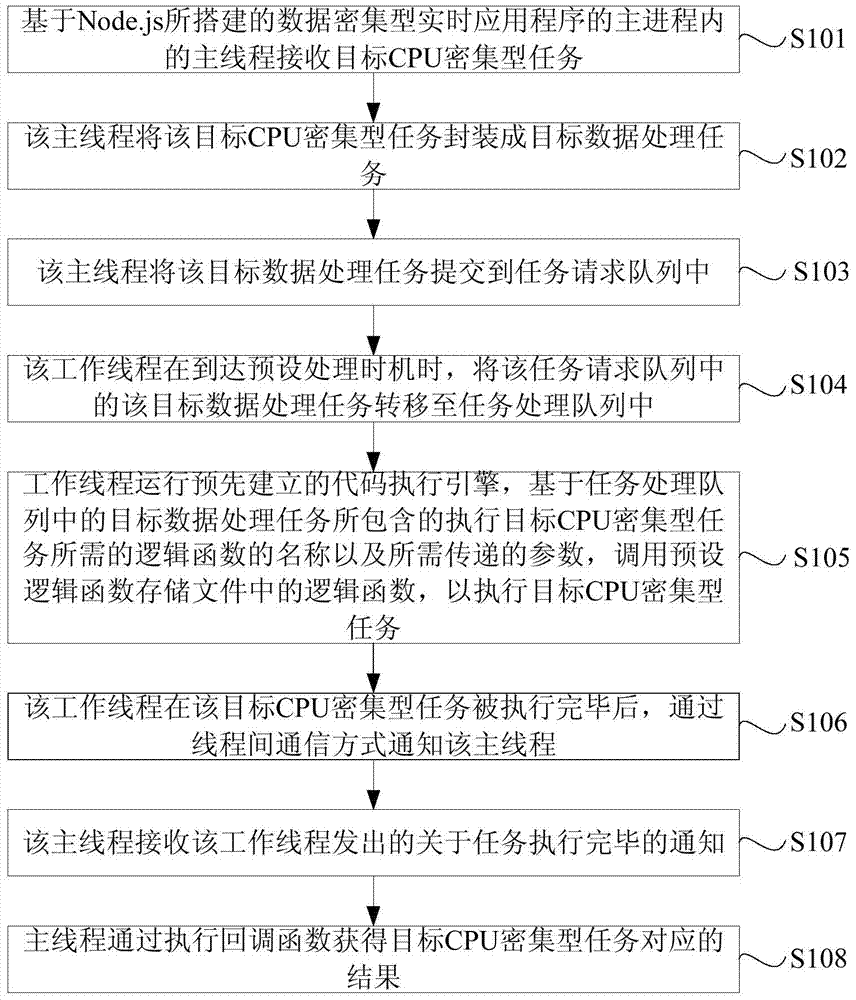

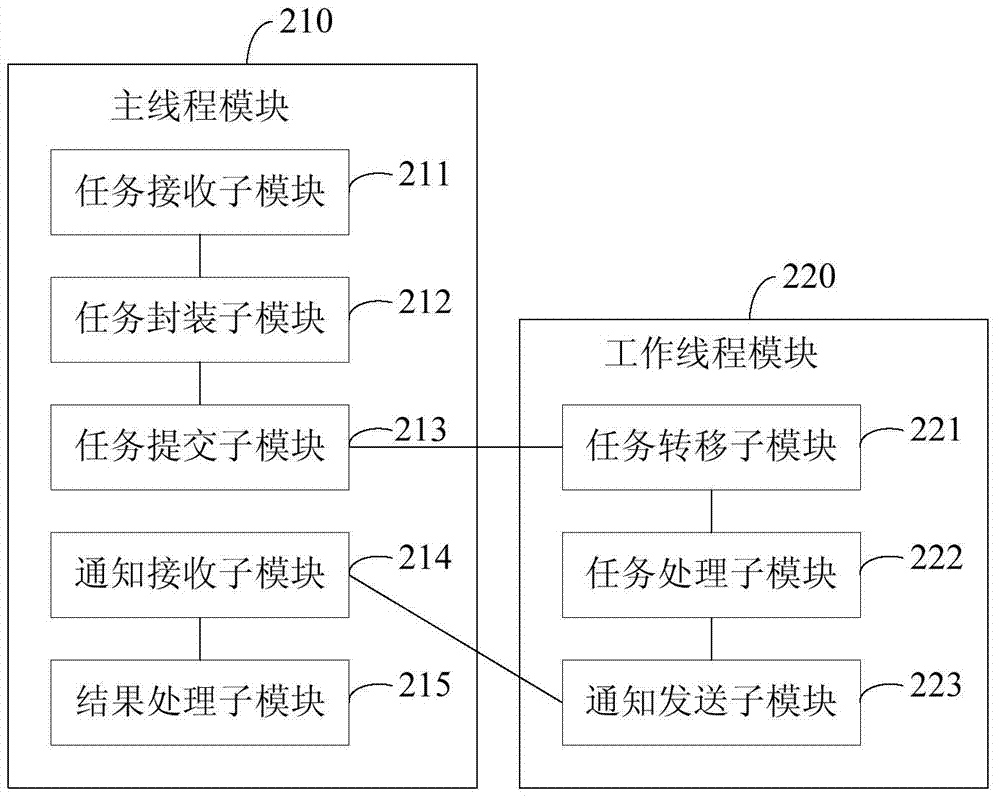
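A rough Python sketch of the pull-based pipeline described in the abstract above. To stay self-contained it substitutes the standard-library `queue.Queue` for RabbitMQ, and everything else is a hypothetical stand-in: `make_message`, `iteration_budget`, the trivial `solve_vrp` placeholder and the message fields are assumptions, not the patent's interfaces.

```python
import queue
import threading

request_queue = queue.Queue()   # stands in for the RabbitMQ request queue
result_queue = queue.Queue()    # stands in for the RabbitMQ result queue


def make_message(client_id, stops):
    # Message generation step: normalise every request into one format.
    return {"client": client_id, "stops": stops}


def iteration_budget(message):
    # Assumption: the iteration budget grows with problem size (number of stops).
    return 10 * len(message["stops"])


def solve_vrp(message, iterations):
    # Placeholder solver: a real VRP solver would search for good routes here.
    return sorted(message["stops"])


def consumer():
    # The solver pulls a message only when it is idle, so CPU-heavy solves
    # never pile up inside the request-handling service.
    while True:
        message = request_queue.get()
        if message is None:          # sentinel: shut down
            break
        route = solve_vrp(message, iteration_budget(message))
        result_queue.put({"client": message["client"], "route": route})


worker = threading.Thread(target=consumer)
worker.start()
request_queue.put(make_message("c1", ["C", "A", "B"]))
request_queue.put(make_message("c2", ["Z", "Y"]))
request_queue.put(None)
worker.join()

results = [result_queue.get() for _ in range(2)]
print(results)
```

The design point is that the consumer decides when to take work, so the rate of CPU-intensive solving is bounded by solver capacity rather than by the request arrival rate.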

A data processing method and device

Active | CN104216768B | Improve communication efficiency | Solve the blockage | Multiprogramming arrangements | Resource utilization | Encapsulated data

The embodiment of the invention discloses a data processing method and device. In the method, the main thread of the main process receives a target CPU-intensive task and submits the target data processing task, obtained by encapsulating the target CPU-intensive task, to a task request queue. A worker thread transfers the target data processing task from the task request queue to a task processing queue, runs a pre-established code execution engine and, based on the target data processing task in the task processing queue, calls the logic function held in a preset logic function storage file. When the target CPU-intensive task has finished executing, the worker thread notifies the main thread; the main thread receives the completion notification from the worker thread and obtains the result corresponding to the target CPU-intensive task. While solving the problem of task blocking, this solution reduces the occupancy of system resources and improves the efficiency of task processing.

Owner:ZHUHAI BAOQU TECH CO LTD

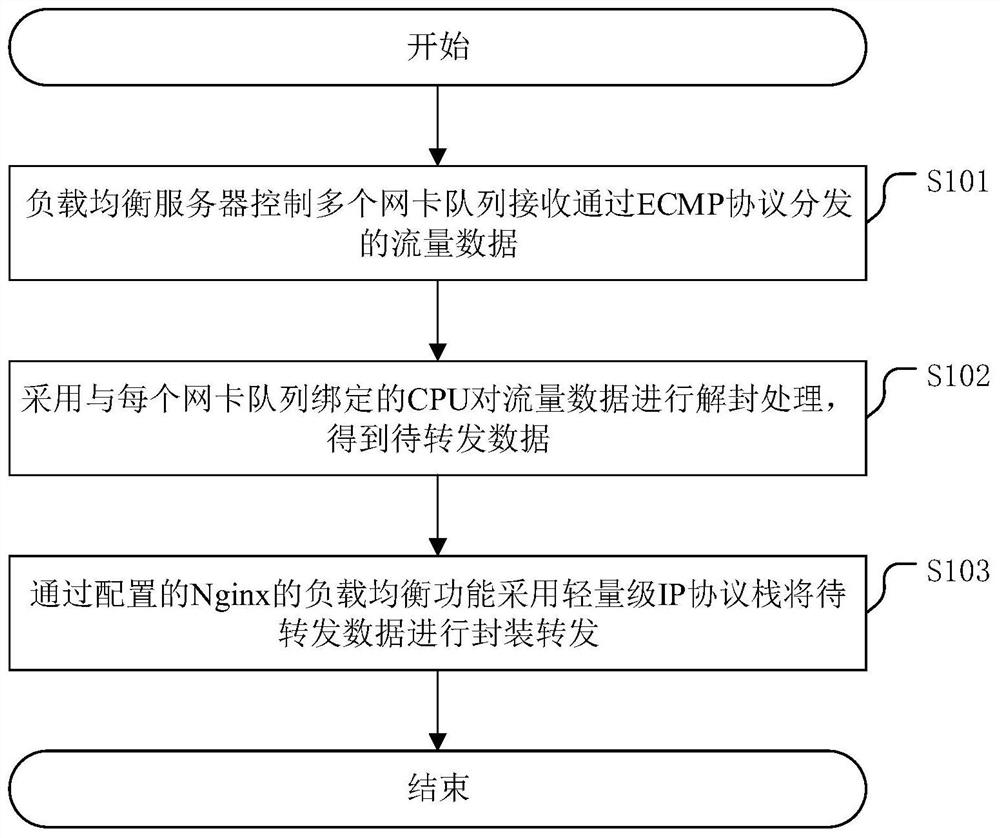

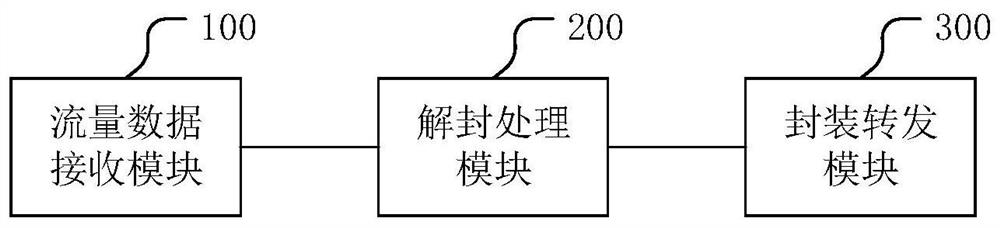

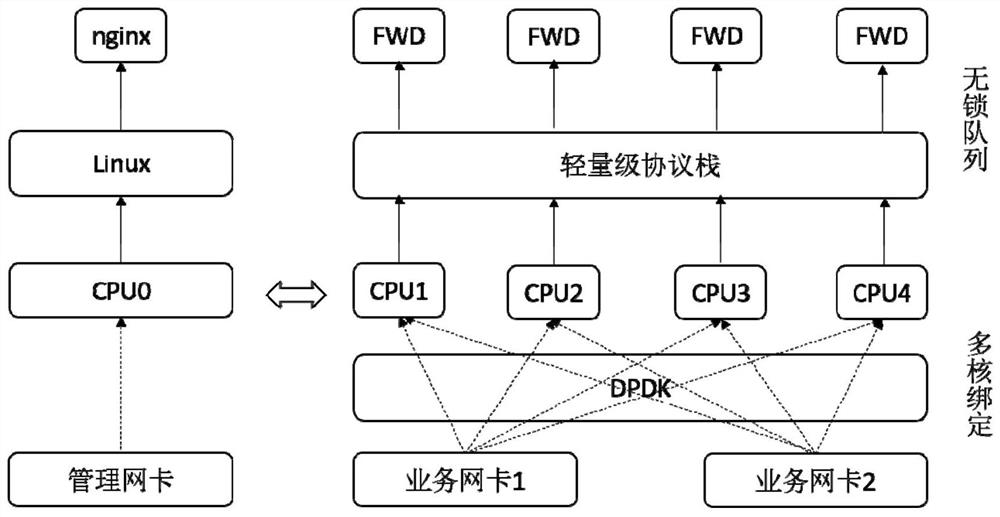
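The hand-off between main thread and worker thread described above might look roughly like the following Python sketch. The `LOGIC_FUNCTIONS` dictionary is a hypothetical stand-in for the logic function storage file, and the task tuple layout is an assumption. Note also that in standard CPython builds with the global interpreter lock a thread does not give true CPU parallelism, so this only illustrates the queueing and notification pattern.

```python
import queue
import threading

task_request_queue = queue.Queue()   # main thread -> worker
done_queue = queue.Queue()           # worker -> main thread notifications

# Hypothetical registry standing in for the "logic function storage file".
LOGIC_FUNCTIONS = {"sum_squares": lambda n: sum(i * i for i in range(n))}


def worker():
    task_processing_queue = queue.Queue()
    while True:
        task = task_request_queue.get()
        if task is None:             # sentinel: shut down
            break
        # Move the task from the request queue to the processing queue.
        task_processing_queue.put(task)
        task_id, func_name, arg = task_processing_queue.get()
        # "Code execution engine": look up and call the stored logic function.
        result = LOGIC_FUNCTIONS[func_name](arg)
        # Notify the main thread that the task has finished.
        done_queue.put((task_id, result))


thread = threading.Thread(target=worker)
thread.start()

# Main thread: wrap the CPU-intensive task and submit it without running it itself.
task_request_queue.put((1, "sum_squares", 1000))
task_id, result = done_queue.get()   # main thread receives the completion notification
task_request_queue.put(None)
thread.join()
print(task_id, result)
```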

Load balancing method and related device

Pending | CN112737966A | Reduce waiting time | Easy to handle | Resource allocation | Data switching networks | Networking protocol | Parallel computing

The invention discloses a load balancing method in which a load balancing server controls a plurality of network card queues to receive flow data distributed using the ECMP protocol; the CPU bound to each network card queue decapsulates the flow data to obtain the to-be-forwarded data; and the to-be-forwarded data is packaged and forwarded by a lightweight IP protocol stack through a configured Nginx load balancing function. Because the received flow data is decapsulated by the bound CPU, and the data is then encapsulated and forwarded through the Nginx load balancing function by the lightweight IP protocol stack rather than by the network protocol stack of the Linux kernel, the load balancing processing capacity, and with it the load balancing performance, is improved. The invention further discloses a load balancing device, a server and a computer readable storage medium, which have the same beneficial effects.

Owner:北京浪潮数据技术有限公司

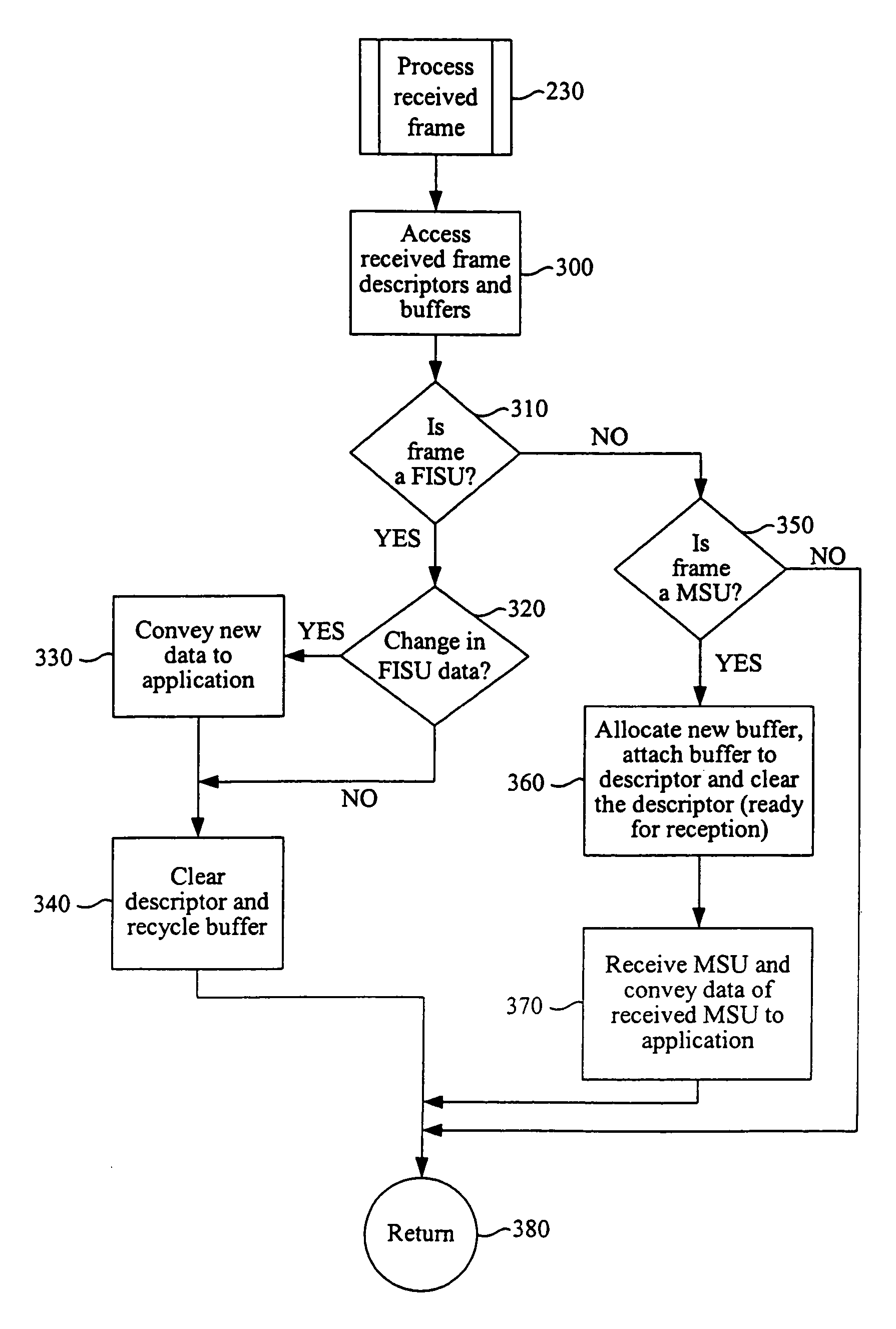

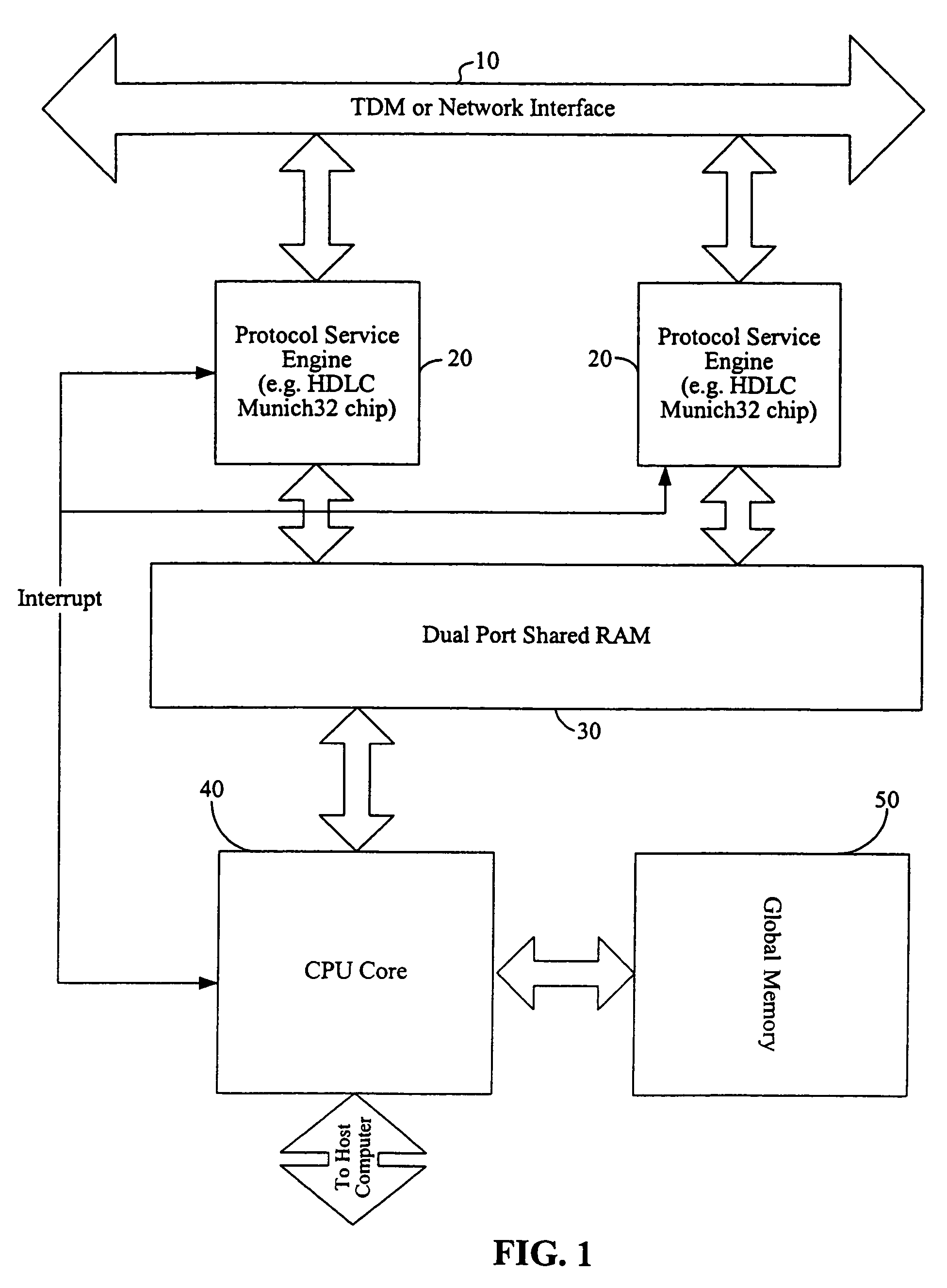

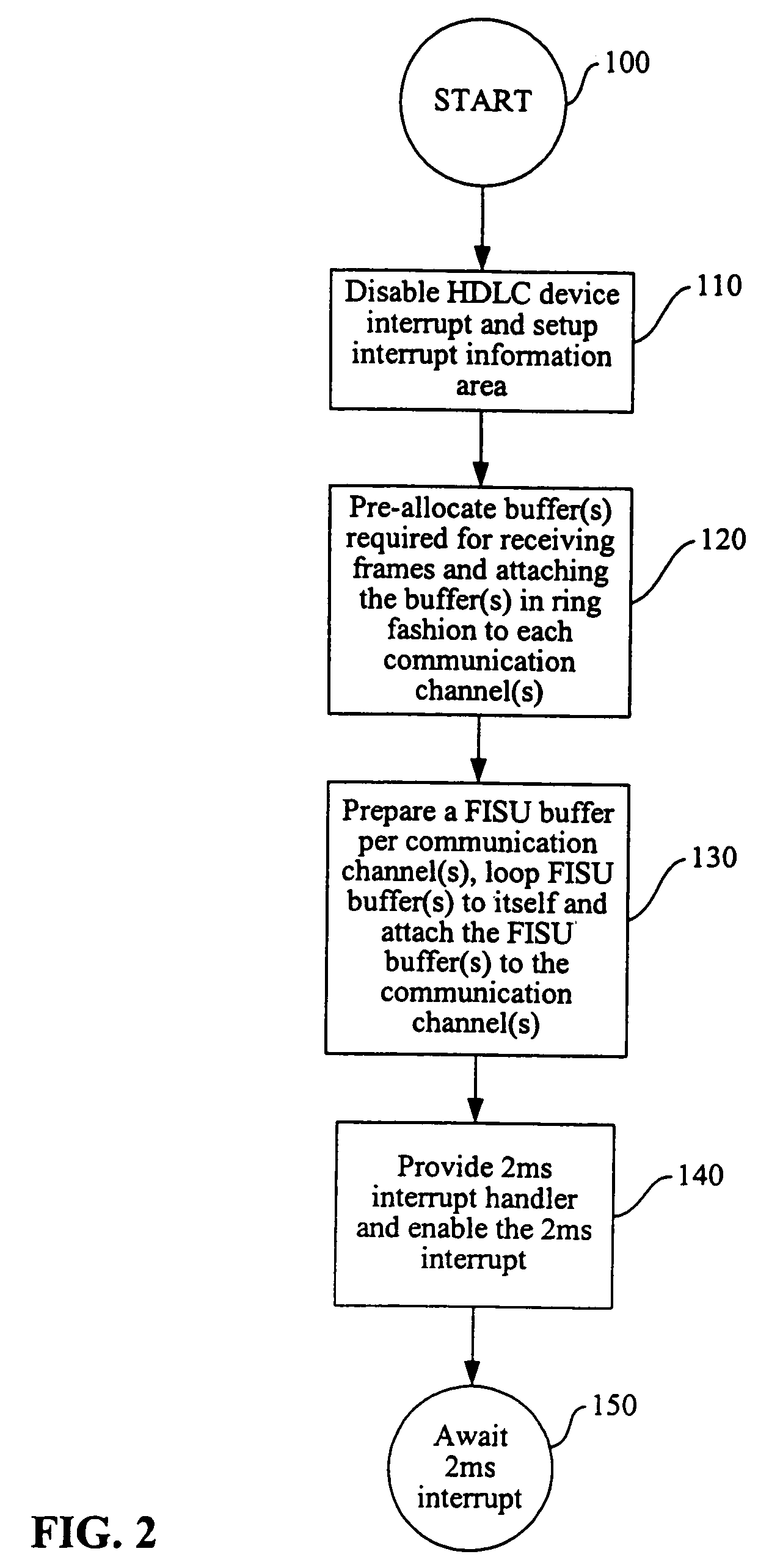
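To make the "one CPU bound to each network card queue" idea above concrete, here is a small Python sketch. It assumes, purely for illustration, that flows are mapped to queues by hashing the connection tuple and that queue `i` is served by core `i`; the abstract does not specify either detail, and the function names are hypothetical.

```python
import zlib

NUM_QUEUES = 4
# Assumption: queue i is bound to CPU core i (one fixed core per NIC queue).
QUEUE_TO_CPU = {q: q for q in range(NUM_QUEUES)}


def queue_for_flow(src_ip, src_port, dst_ip, dst_port, proto="tcp"):
    # Hash the flow's identifying tuple so every packet of a flow lands on one queue.
    key = f"{src_ip}:{src_port}-{dst_ip}:{dst_port}-{proto}".encode()
    return zlib.crc32(key) % NUM_QUEUES


def cpu_for_flow(*flow):
    # The CPU that decapsulates a flow is fixed by the queue the flow lands on.
    return QUEUE_TO_CPU[queue_for_flow(*flow)]


flow = ("10.0.0.1", 40000, "10.0.0.9", 80)
first = cpu_for_flow(*flow)
second = cpu_for_flow(*flow)
print(first == second)
```

Because the mapping is deterministic, all packets of one flow are handled by the same core, which avoids cross-core hand-offs for that flow's state.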

Method and system for improved processing of CPU intensive communications protocols

Inactive | US7043583B2 | Program initiation/switching | Time-division multiplex | Network Communication Protocols | Interrupt handler

A method of processing a frame of a CPU intensive communications protocol includes disabling per frame interrupts of a CPU; enabling a periodic interrupt handler to interrupt the CPU upon an interrupt period; and upon an interrupt of the periodic interrupt handler, determining and processing a frame received in the interrupt period. Further, a frame sent acknowledgment stored in the interrupt period may be processed during the interrupt. A method of processing the transmission of frames of a CPU intensive communications protocol includes, when no MSU frame is queued for transmission, sending FISU frames that each point to itself; and if a MSU frame is queued for transmission, updating the MSU frame to point to a new FISU frame, updating a current FISU frame to point to the MSU frame, and sending the current FISU frame, the MSU frame and the new FISU frame.

Owner:INTEL CORP
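The trade-off behind replacing per-frame interrupts with a periodic interrupt, as described in the abstract above, can be illustrated with a toy simulation in Python. The arrival times, the period and the function names are made up for the example; the saving only appears when frames arrive faster than the timer period.

```python
from collections import deque


def count_interrupts_per_frame(arrival_times):
    # Baseline: one CPU interrupt for every received frame.
    return len(arrival_times)


def process_with_periodic_interrupt(arrival_times, period, end_time):
    """Simulate a timer interrupt every `period` time units that drains all
    frames received since the previous tick."""
    pending = deque(sorted(arrival_times))
    interrupts = 0
    batches = []
    tick = period
    while tick <= end_time:
        interrupts += 1
        batch = []
        # Process every frame that arrived during this interrupt period.
        while pending and pending[0] <= tick:
            batch.append(pending.popleft())
        batches.append(batch)
        tick += period
    return interrupts, batches


arrivals = [1, 2, 3, 11, 12, 25, 26, 27, 28]   # made-up frame arrival times
per_frame = count_interrupts_per_frame(arrivals)
periodic, batches = process_with_periodic_interrupt(arrivals, period=10, end_time=30)
print(per_frame, periodic, batches)
```

In this example nine per-frame interrupts collapse into three timer ticks, each handling a batch of frames.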

Parallel Computing Scheduling Method in Heterogeneous Environment

Active | CN103500123B | Improve resource utilization | Increase job throughput | Resource allocation | Array data structure | Resource utilization

The invention relates to the field of parallel computing, and discloses a parallel computing scheduling method in a heterogeneous environment. By constructing a variety of JVM task slots with different memory sizes and an array of idle task slots, the method divides the tasks in a parallel computing job into I/O-intensive and CPU-intensive tasks and assigns them to the appropriate task slots for calculation, so as to optimize parallel computing efficiency in heterogeneous environments. The invention has the advantages of dynamically determining the size and type of memory required by each task, improving the resource utilization rate of heterogeneous clusters, reducing the overall running time of parallel computing jobs, and avoiding memory overflow while tasks are running.

Owner:ZHEJIANG UNIV
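One way to picture the slot assignment described in the abstract above is the following Python sketch. It is hedged throughout: the `Slot` fields, the rule that a task is CPU-intensive when it computes longer than it waits on I/O, the idea that each slot has a kind, and the best-fit memory choice are all assumptions made for illustration, not details from the patent.

```python
from dataclasses import dataclass


@dataclass
class Slot:
    slot_id: int
    memory_mb: int
    kind: str          # "cpu" or "io" (hypothetical slot type)
    busy: bool = False


def classify(cpu_seconds, io_seconds):
    # Assumption: a task is CPU-intensive when it computes longer than it waits on I/O.
    return "cpu" if cpu_seconds > io_seconds else "io"


def assign(task_kind, memory_needed_mb, slots):
    """Pick the smallest idle slot of the right kind that still fits the task."""
    candidates = [
        s for s in slots
        if not s.busy and s.kind == task_kind and s.memory_mb >= memory_needed_mb
    ]
    if not candidates:
        return None    # no suitable idle slot; the task has to wait
    best = min(candidates, key=lambda s: s.memory_mb)
    best.busy = True
    return best.slot_id


slots = [Slot(0, 512, "io"), Slot(1, 1024, "cpu"), Slot(2, 2048, "cpu"), Slot(3, 1024, "io")]
a = assign(classify(cpu_seconds=9, io_seconds=1), 800, slots)
b = assign(classify(cpu_seconds=8, io_seconds=2), 800, slots)
c = assign(classify(cpu_seconds=1, io_seconds=5), 4096, slots)
print(a, b, c)
```

Choosing the smallest slot that fits keeps larger slots free for tasks that need them, and refusing a slot that is too small is what guards against memory overflow in this sketch.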

© 2025 PatSnap. All rights reserved.