Parallel Computing Scheduling Method in Heterogeneous Environment

A parallel computing scheduling method in the field of resource allocation and multiprogramming. It addresses the low resource utilization of the data-parallel model, and it increases job throughput, speeds up execution, and improves resource utilization.

Examples

Embodiment 1

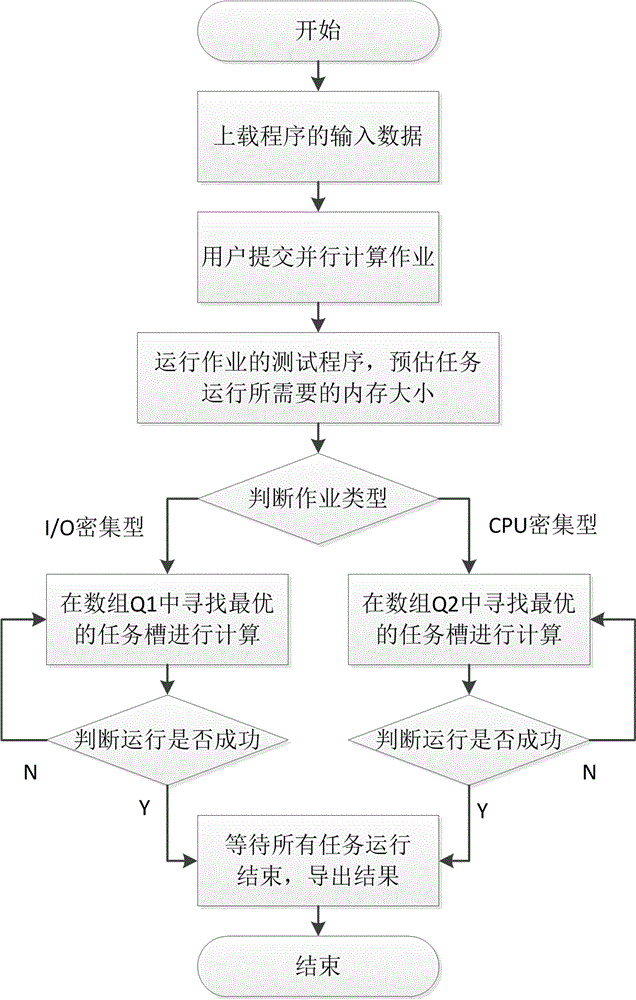

[0034] The parallel computing scheduling method in a heterogeneous environment follows the process shown in Figure 1 and includes the following steps:

[0035] 1) Build multiple JVM task slots on a heterogeneous cluster. The JVM task slots consist of memory spaces of the same or different sizes. The heterogeneous cluster includes a master node and slave nodes, and the JVM task slots are located on the slave nodes;

[0036] 2) The master node monitors the I/O utilization and CPU utilization of all slave nodes and builds two arrays of idle task slots, Q1 and Q2, each consisting of one or more JVM task slots;

[0037] 3) The distributed file system built on the heterogeneous cluster receives the input data to be processed uploaded by the user, and stores the input data in the form of data blocks on the nodes of the heterogeneous cluster; the distributed file system receives the parallel data subm...

Embodiment 2

[0053] A specific parallel computing scheduling test was carried out following the steps of Embodiment 1. The specific steps are as follows:

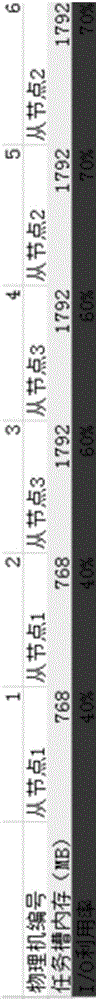

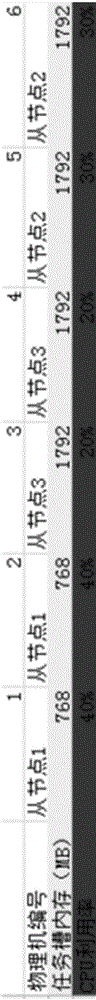

[0054] Step 1): The heterogeneous cluster contains four physical nodes: one master node and three slave nodes (slave node 1, slave node 2, and slave node 3). Each node has a single-core 64-bit Xeon processor clocked at 2.00GHz, and the three slave nodes have 4GB, 8GB, and 8GB of memory, respectively. All machines are connected to the same Gigabit LAN and have the same disk read and write speeds. Two JVM task slots are constructed on each slave node; the memory of each JVM task slot, calculated according to the formula, is 768MB, 1792MB, and 1792MB for the three slave nodes, respectively;
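The slot layout in Step 1 can be written down as a small data model. The following is a minimal sketch, not the patent's implementation: the class `SlaveNode` and the helper `slot_memory_share` are hypothetical names, and the per-slot sizes are copied from the text rather than computed, because the sizing formula is not reproduced in this excerpt.

```python
from dataclasses import dataclass

MB_PER_GB = 1024


@dataclass(frozen=True)
class SlaveNode:
    """Hypothetical record for one slave node of the test cluster."""
    name: str
    memory_mb: int       # physical memory of the node
    slot_count: int      # JVM task slots built on the node
    slot_memory_mb: int  # memory per slot, as stated in the text


def slot_memory_share(node: SlaveNode) -> float:
    """Fraction of the node's memory reserved by its task slots."""
    return node.slot_count * node.slot_memory_mb / node.memory_mb


# Values taken from Step 1 of Embodiment 2.
cluster = [
    SlaveNode("slave node 1", 4 * MB_PER_GB, 2, 768),
    SlaveNode("slave node 2", 8 * MB_PER_GB, 2, 1792),
    SlaveNode("slave node 3", 8 * MB_PER_GB, 2, 1792),
]

for node in cluster:
    print(f"{node.name}: {slot_memory_share(node):.2%} of memory in task slots")
```

With these figures, the two slots reserve 37.5% of memory on slave node 1 and 43.75% on slave nodes 2 and 3, so slot size grows with node memory rather than being fixed across the cluster.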

[0055] Step 2): At a certain moment, the master node's monitoring shows that the I/O utilization of the three slave nodes is 40%, 70% and 60%, and the CPU utilization is 40%, 30% and 20%, respectively. At this time, all task slots are in the idle stat...
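The monitoring snapshot in Step 2 can be turned into candidate orderings of the idle task slots. The visible text does not state how Q1 and Q2 are populated, so the rule used below (Q1 ordered by ascending I/O utilization, Q2 by ascending CPU utilization) is purely an illustrative assumption, as are the names `NodeLoad` and `idle_slot_queue`.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class NodeLoad:
    """Hypothetical snapshot of one slave node as seen by the master."""
    name: str
    io_util: float   # I/O utilization, 0.0 to 1.0
    cpu_util: float  # CPU utilization, 0.0 to 1.0
    idle_slots: int  # number of idle JVM task slots on the node


def idle_slot_queue(
    nodes: List[NodeLoad], key: Callable[[NodeLoad], float]
) -> List[Tuple[str, int]]:
    """Return (node name, slot index) pairs, least-loaded node first by `key`."""
    ordered = sorted(nodes, key=key)
    return [(n.name, i) for n in ordered for i in range(n.idle_slots)]


# Utilization values from Step 2; every node has both of its slots idle.
snapshot = [
    NodeLoad("slave node 1", io_util=0.40, cpu_util=0.40, idle_slots=2),
    NodeLoad("slave node 2", io_util=0.70, cpu_util=0.30, idle_slots=2),
    NodeLoad("slave node 3", io_util=0.60, cpu_util=0.20, idle_slots=2),
]

# ASSUMPTION: Q1 favors low I/O load, Q2 favors low CPU load.
q1 = idle_slot_queue(snapshot, key=lambda n: n.io_util)
q2 = idle_slot_queue(snapshot, key=lambda n: n.cpu_util)

print("Q1:", q1)
print("Q2:", q2)
```

Under this assumed rule, Q1 starts with the slots of slave node 1 (lowest I/O utilization) and Q2 starts with the slots of slave node 3 (lowest CPU utilization). A different population rule, such as splitting the slots into two disjoint arrays, would only change the `key` and filtering logic.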
