65 results about How to "Improve I/O efficiency" patented technology

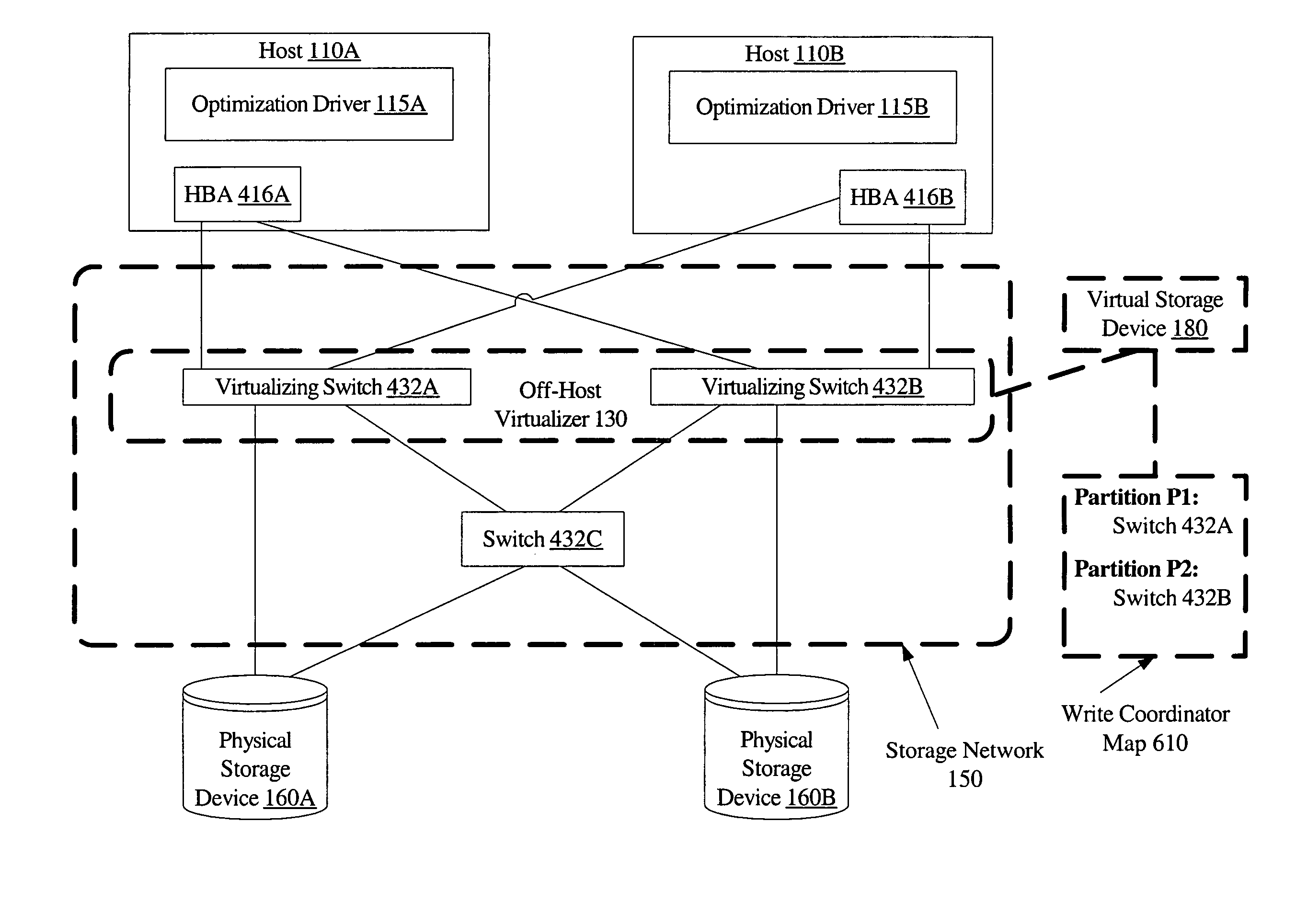

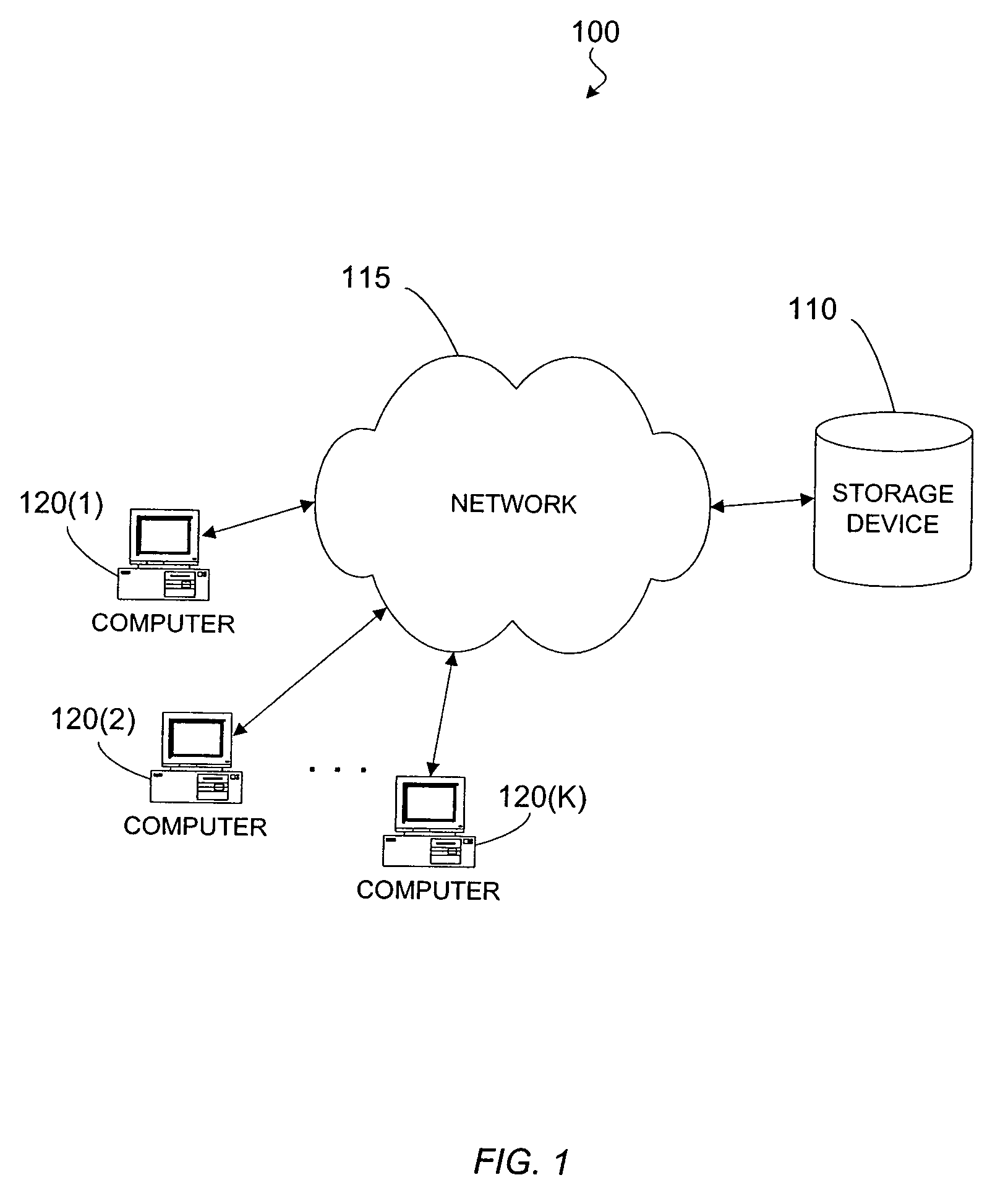

Host-based virtualization optimizations in storage environments employing off-host storage virtualization

Inactive | US20060112251A1 | Efficient response | Fast response | Error detection/correction | Memory systems | Storage virtualization | Control data

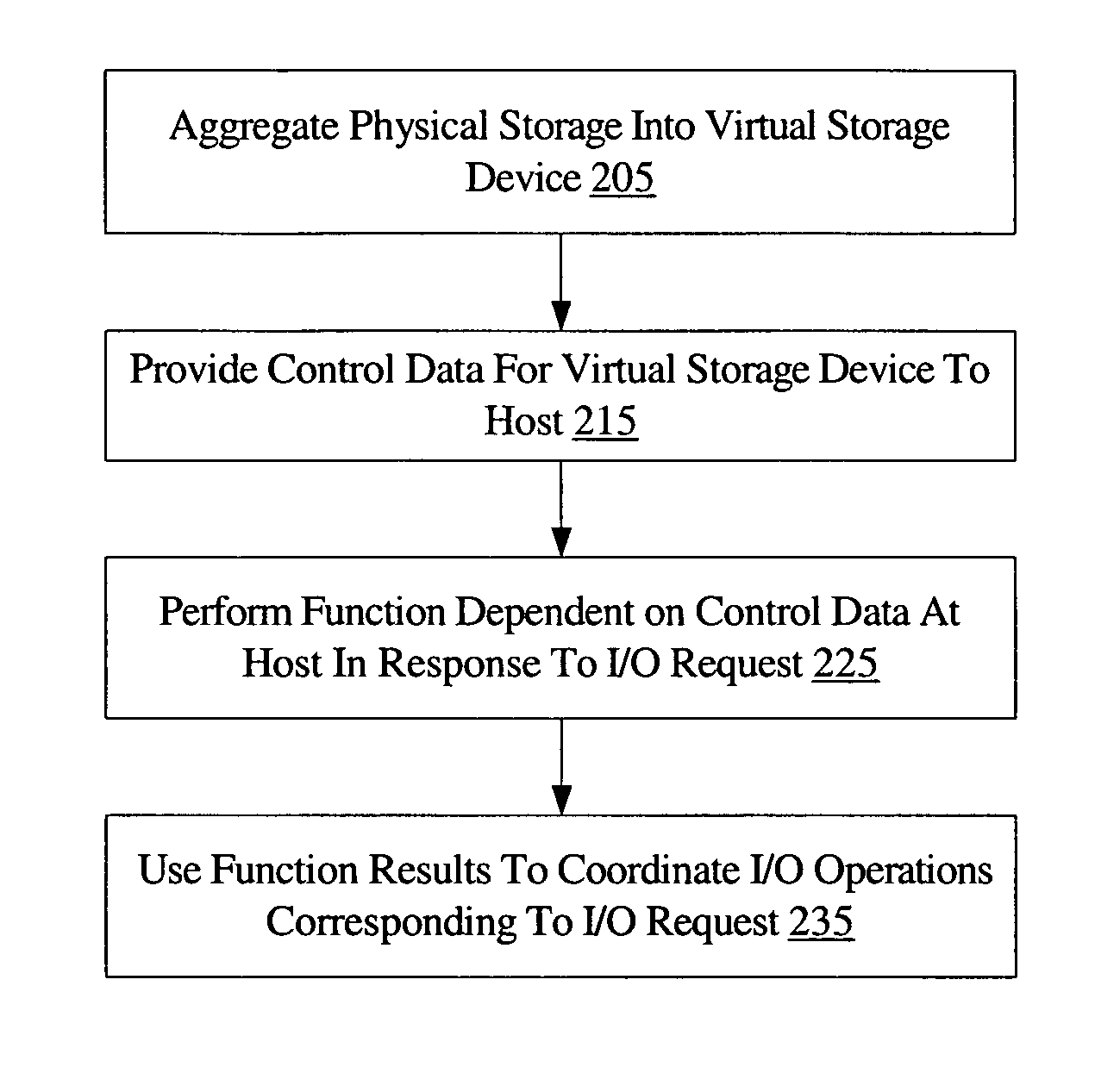

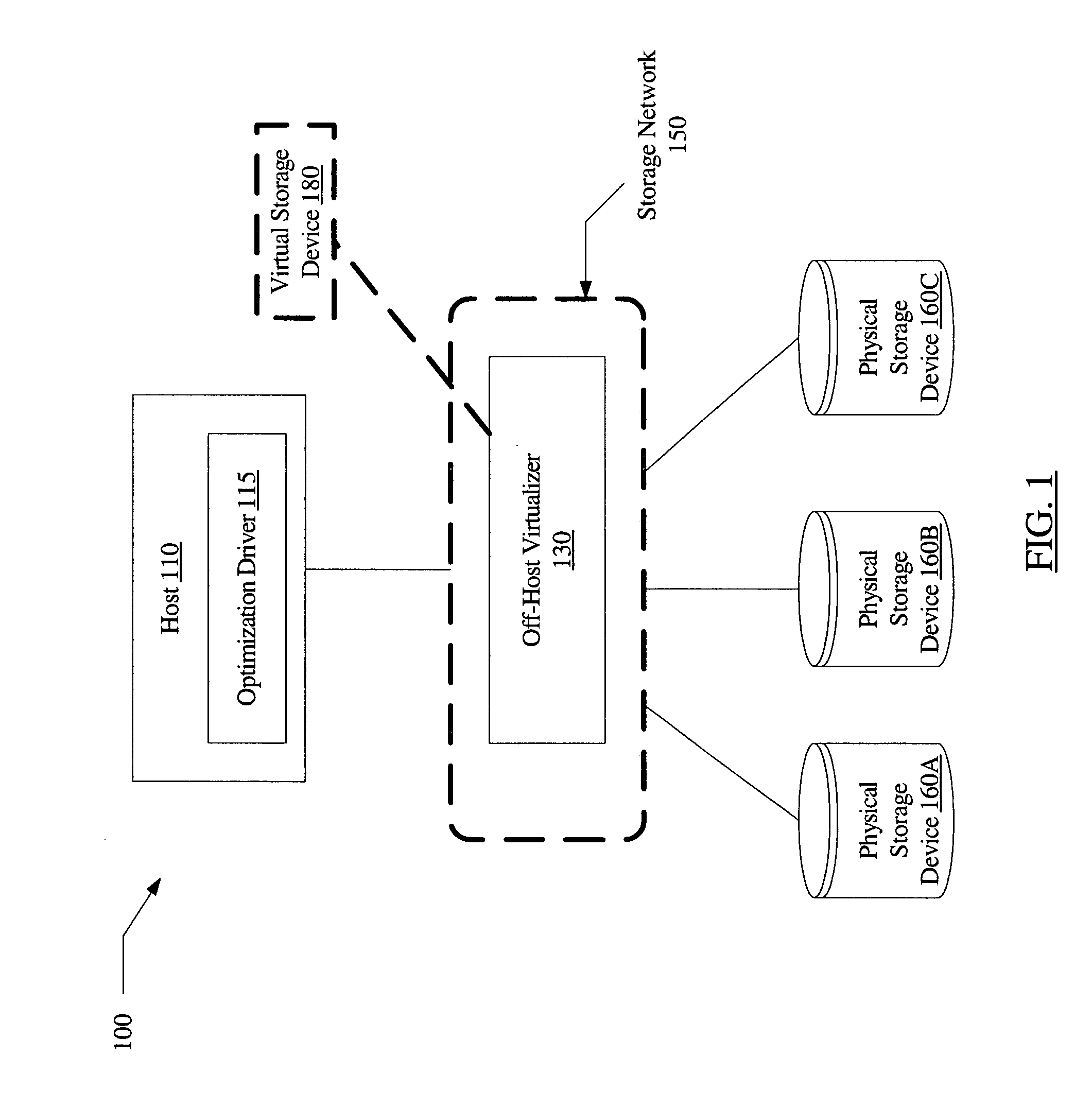

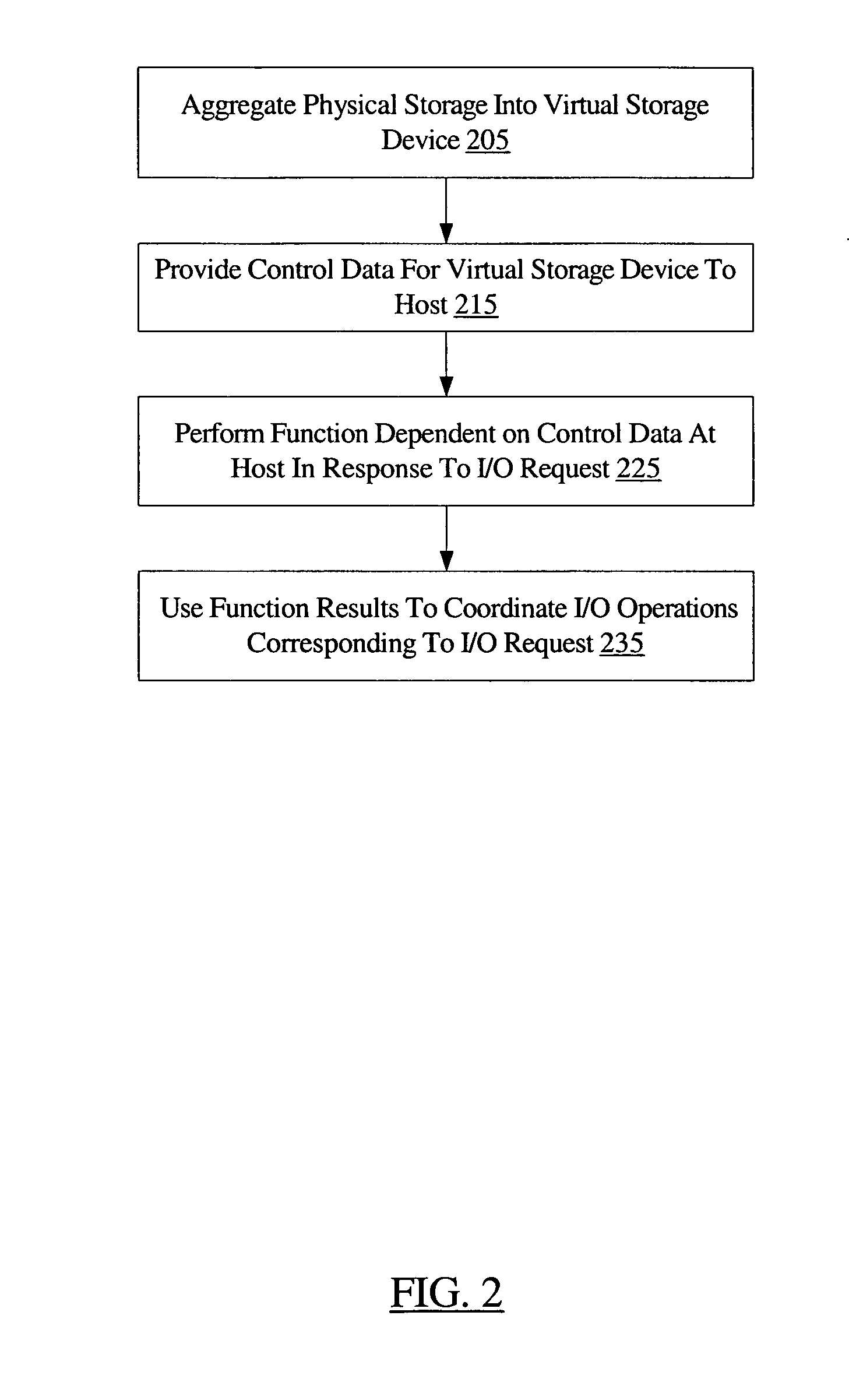

A system for host-based virtualization optimizations in storage environments employing off-host virtualization may include a host, one or more physical storage devices, and an off-host virtualizer such as a virtualizing switch. The off-host virtualizer may be configured to aggregate storage within the one or more physical storage devices into a virtual storage device such as a logical volume, and to provide control data for the virtual storage device to the host. The host may be configured to use the control data to perform a function in response to an I/O request from a storage consumer directed at the virtual storage device, and to use a result of the function to coordinate one or more I/O operations corresponding to the I/O request.

Owner:SYMANTEC OPERATING CORP

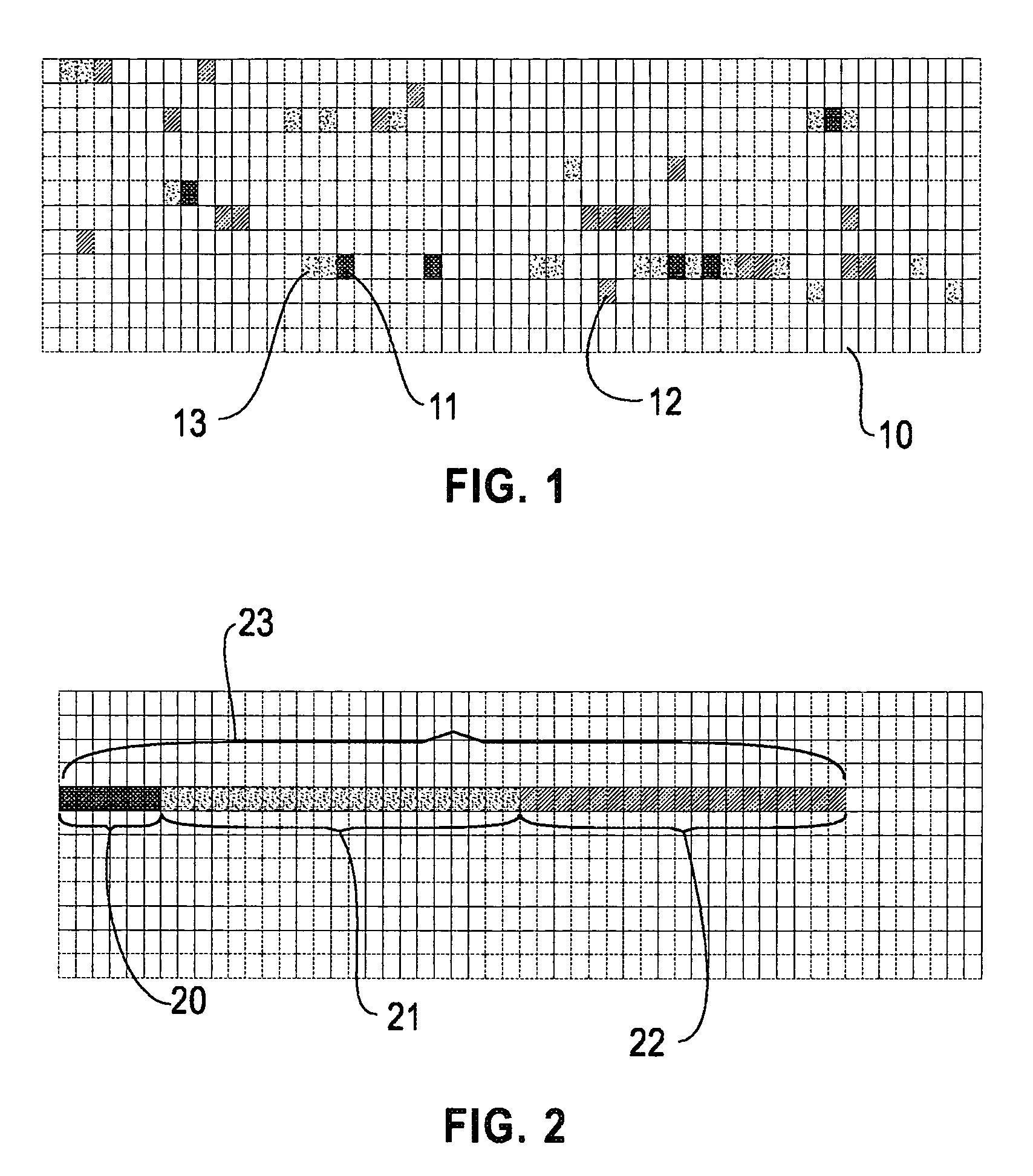

Storage system and method for reorganizing data to improve prefetch effectiveness and reduce seek distance

Inactive | US20060026344A1 | Improve spatial locality | Improve system performance | Input/output to record carriers | Memory addressing/allocation/relocation | Parallel computing | Computer science

Owner:INT BUSINESS MASCH CORP

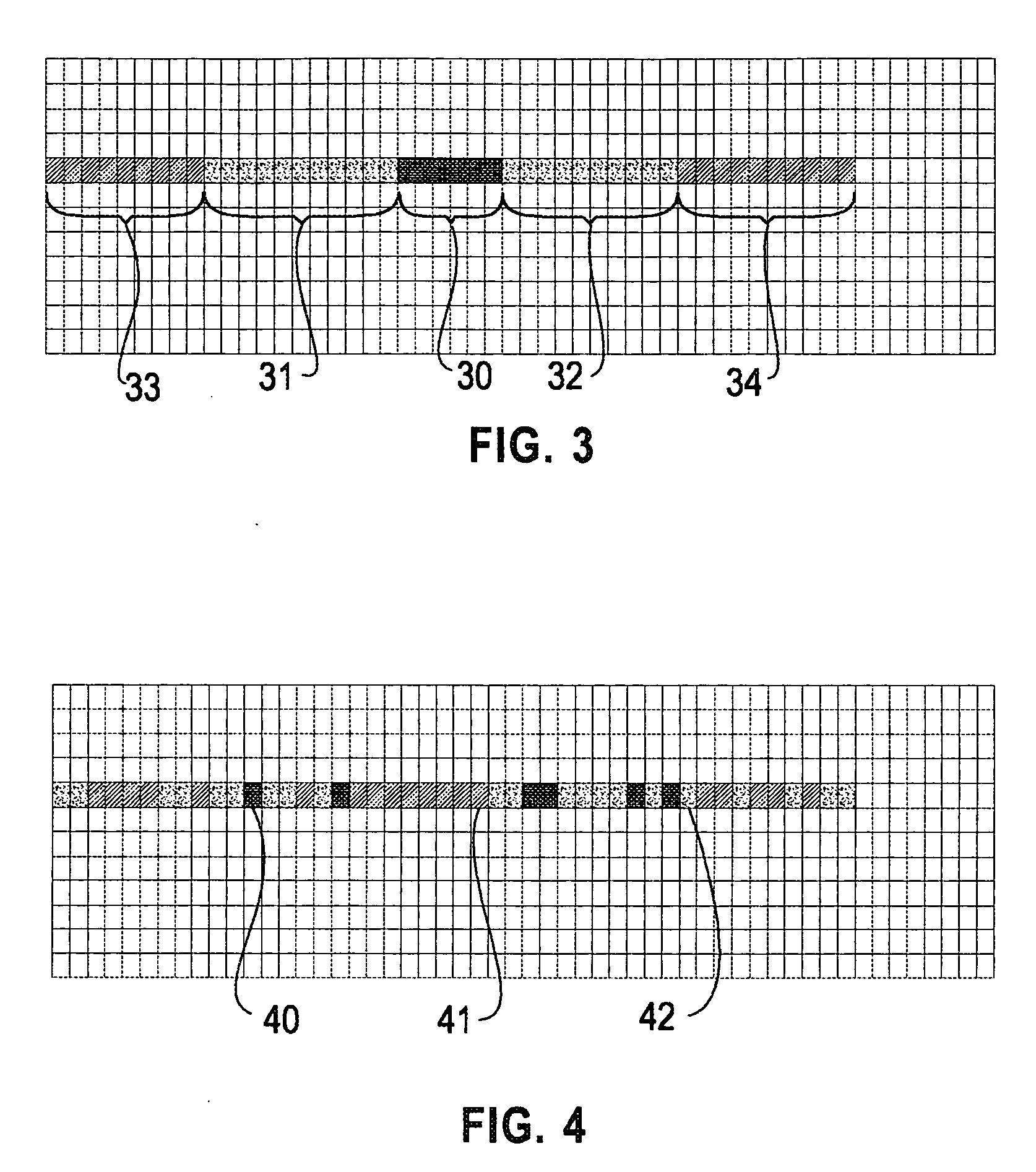

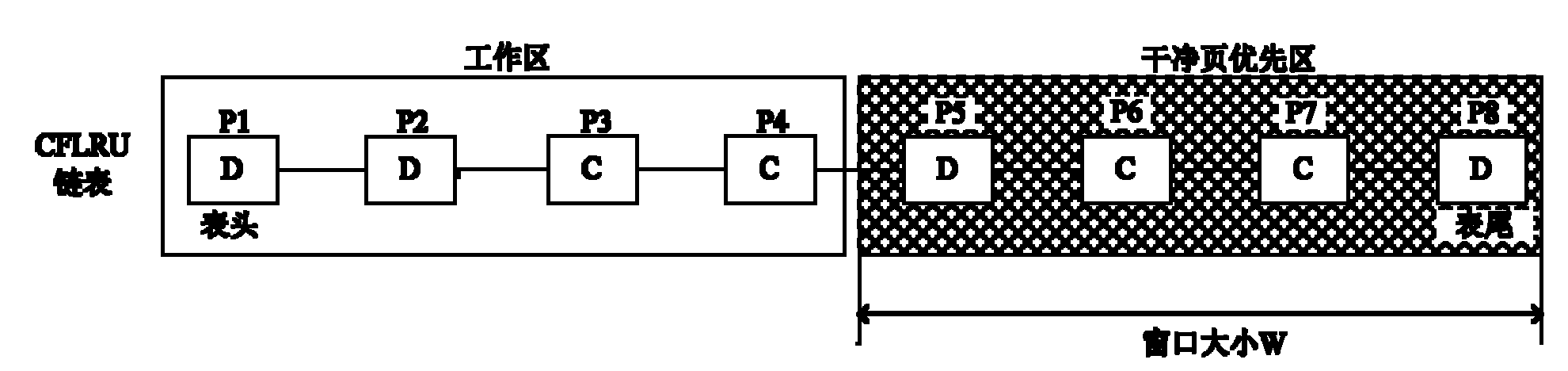

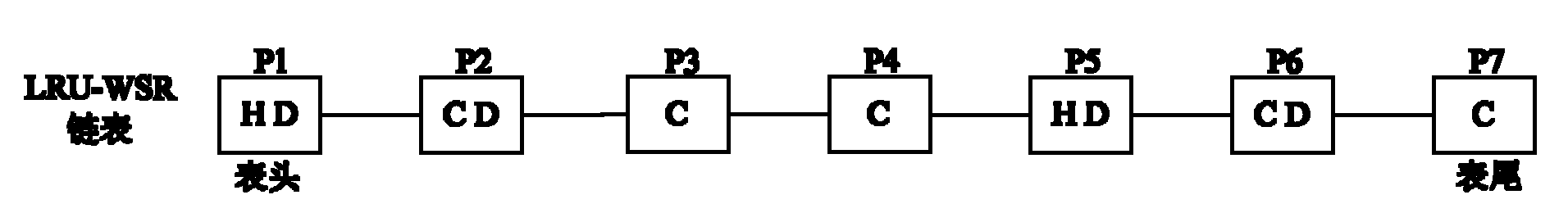

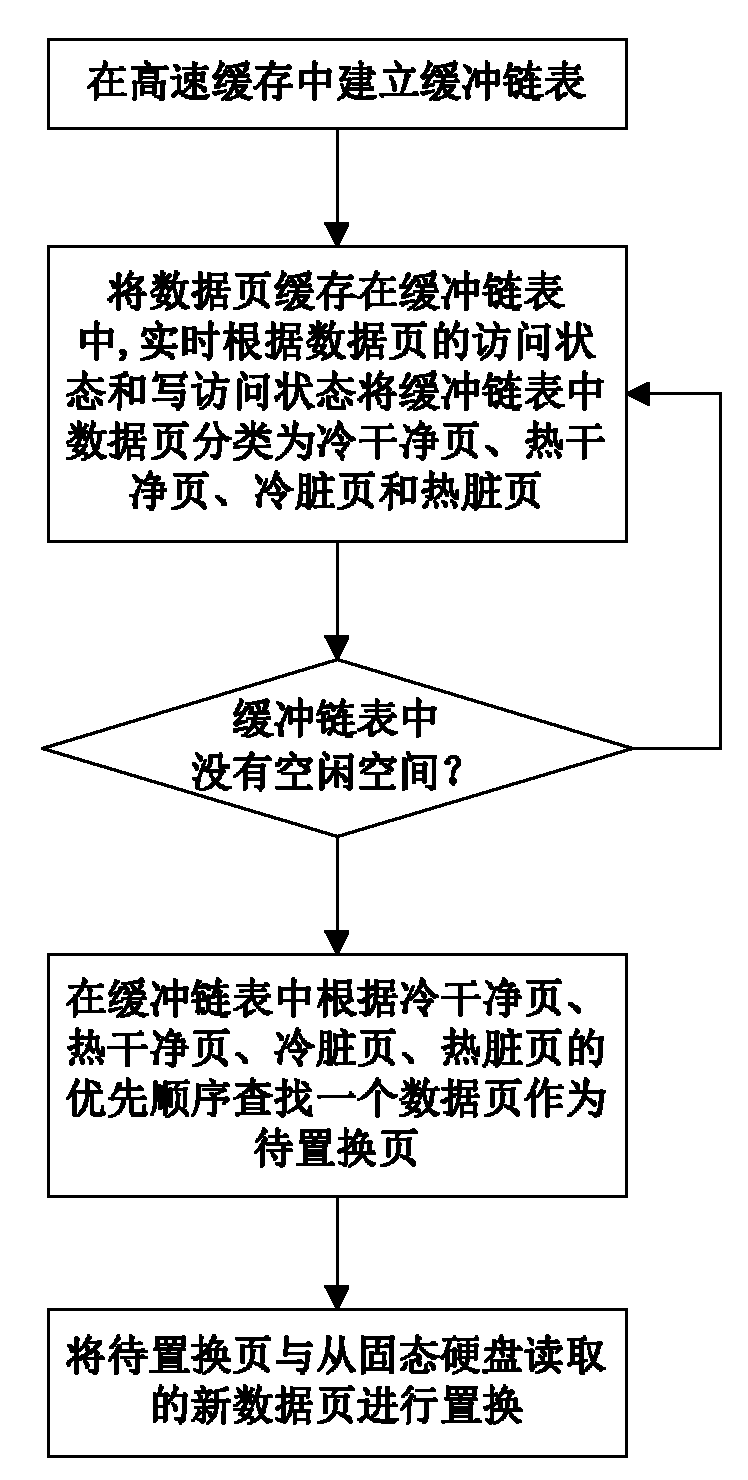

Data page caching method for file system of solid-state hard disc

Active | CN102156753A | Reduce overhead | Improve hit rate | Special data processing applications | Dirty page | External storage

The invention discloses a data page caching method for a file system on a solid-state hard disc, comprising the following steps: (1) establishing a buffer linked list in a high-speed cache for caching data pages; (2) caching the data pages read from the solid-state hard disc in the buffer linked list, and classifying them in real time into cold clean pages, hot clean pages, cold dirty pages and hot dirty pages according to their access states and write-access states; (3) when the buffer linked list has no free space, searching it for a page to be replaced in the priority order cold clean, hot clean, cold dirty, hot dirty, and replacing that page with the new data page read from the solid-state hard disc. The method makes full use of the characteristics of the solid-state hard disc, relieves the performance bottleneck of external storage, and improves the storage processing performance of the system; it also offers good I/O (input/output) performance, low replacement cost for cached pages, low overhead and a high hit rate.

Owner:NAT UNIV OF DEFENSE TECH
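
The victim-selection order described in the data page caching abstract above can be sketched as follows. This is only an illustration under stated assumptions, not the patented implementation: the class `PageCache`, the rule that a second access makes a page "hot", and the use of an ordered dictionary in place of the buffer linked list are all hypothetical choices.

```python
from collections import OrderedDict

# Eviction priority from the abstract, expressed as (hot, dirty) pairs:
# cold clean first, then hot clean, cold dirty, and hot dirty last.
EVICTION_ORDER = [(False, False), (True, False), (False, True), (True, True)]


class PageCache:
    """Toy page cache; names and the 'hot' rule are assumptions for illustration."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.pages = OrderedDict()  # page_id -> {"hot": bool, "dirty": bool}

    def access(self, page_id, write=False):
        """Touch a page, loading it if needed. Returns the evicted page id or None."""
        evicted = None
        if page_id in self.pages:
            state = self.pages.pop(page_id)
            state["hot"] = True  # assumption: any repeat access marks the page hot
            state["dirty"] = state["dirty"] or write
        else:
            if len(self.pages) >= self.capacity:
                evicted = self._pick_victim()
                del self.pages[evicted]
            state = {"hot": False, "dirty": write}
        self.pages[page_id] = state
        return evicted

    def _pick_victim(self):
        # Scan classes in priority order; within a class take the oldest entry.
        for hot, dirty in EVICTION_ORDER:
            for pid, st in self.pages.items():
                if st["hot"] == hot and st["dirty"] == dirty:
                    return pid
        raise RuntimeError("cache is empty")
```

Evicting clean pages before dirty ones avoids a write-back to the solid-state device, which is the cost the abstract says it aims to reduce.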

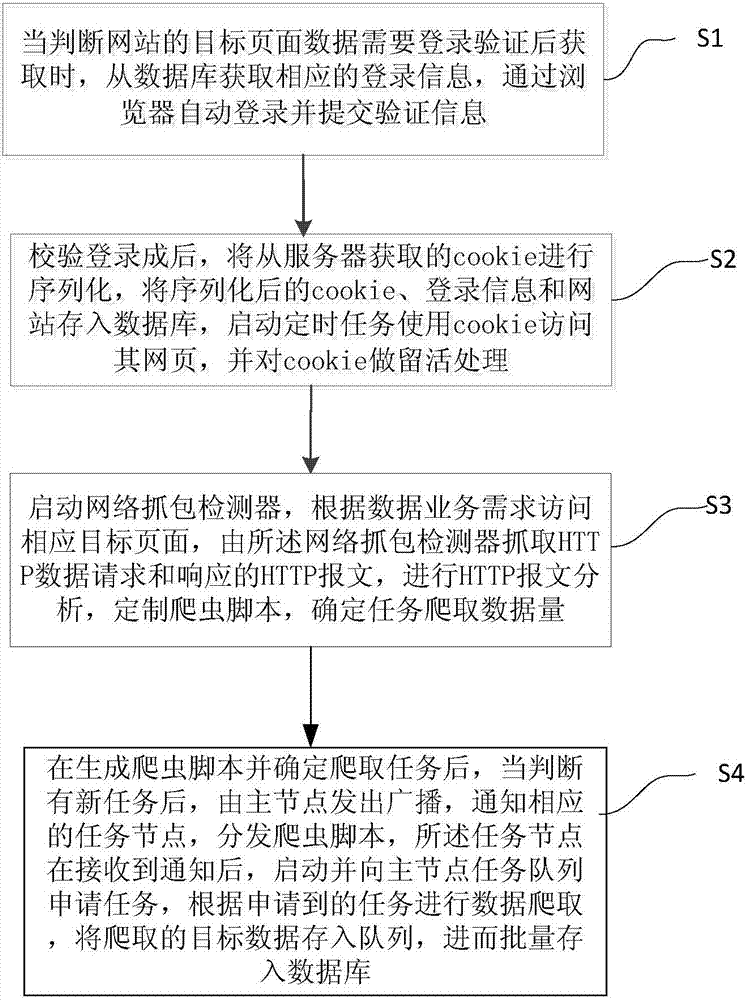

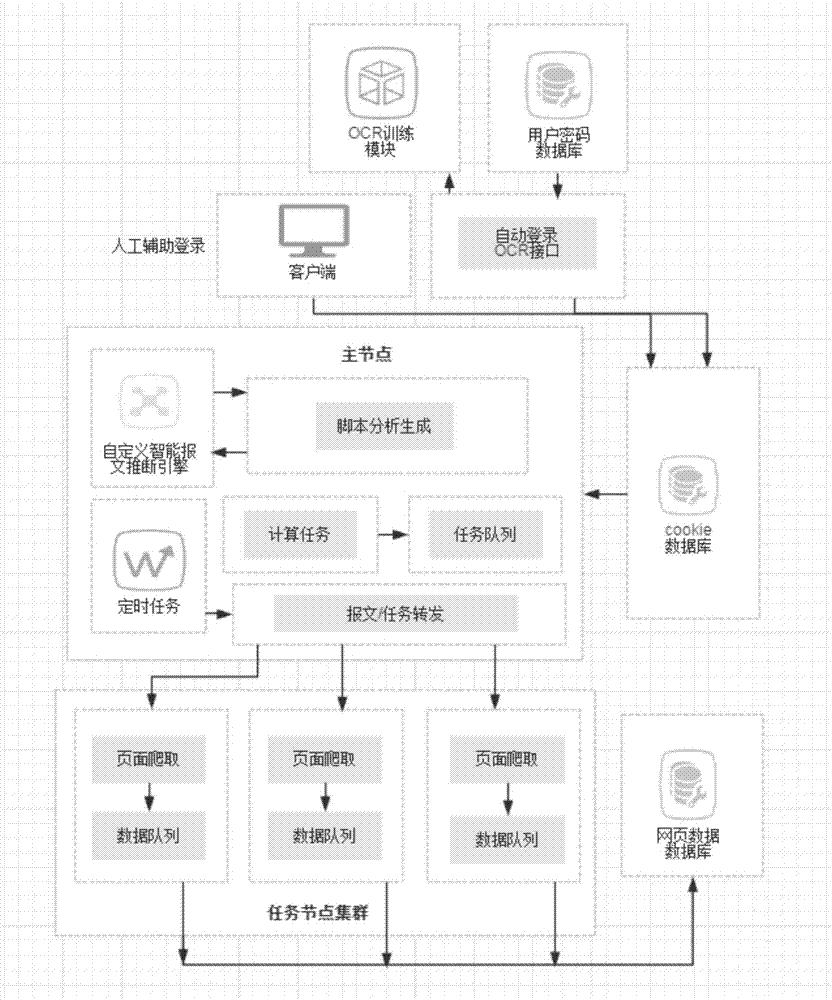

Method with verification for intelligently crawling network information in distributed way

Active | CN106897357A | Improve I/O efficiency | Efficient retention | Resource allocation | Web data indexing | Web site | Distributed intelligence

The invention proposes a method with verification for intelligently crawling network information in a distributed way. The method comprises the following steps: when it is determined that the target page data of a website can be obtained after login verification, obtaining the corresponding login information from a database, logging in automatically through a browser, and submitting the verification information; starting a timed task that uses the cookie to access the web page for keep-alive processing; starting a network packet capture detector, accessing the corresponding target page according to business requirements, analyzing the HTTP (Hypertext Transfer Protocol) messages, customizing a crawler script, and determining the data size of the crawling task; and broadcasting from a main node to notify the corresponding task nodes, distributing the crawler script, and starting the task nodes, which apply for tasks from the main node's task queue, crawl data according to the assigned tasks, and store the crawled target data in a queue so that it is written to the database in batches. With this method, protected pages can be logged in to and accessed automatically, and a fast, scalable, integrated distributed web crawler framework capable of mining scripts is generated automatically.

Owner:北京京拍档科技股份有限公司

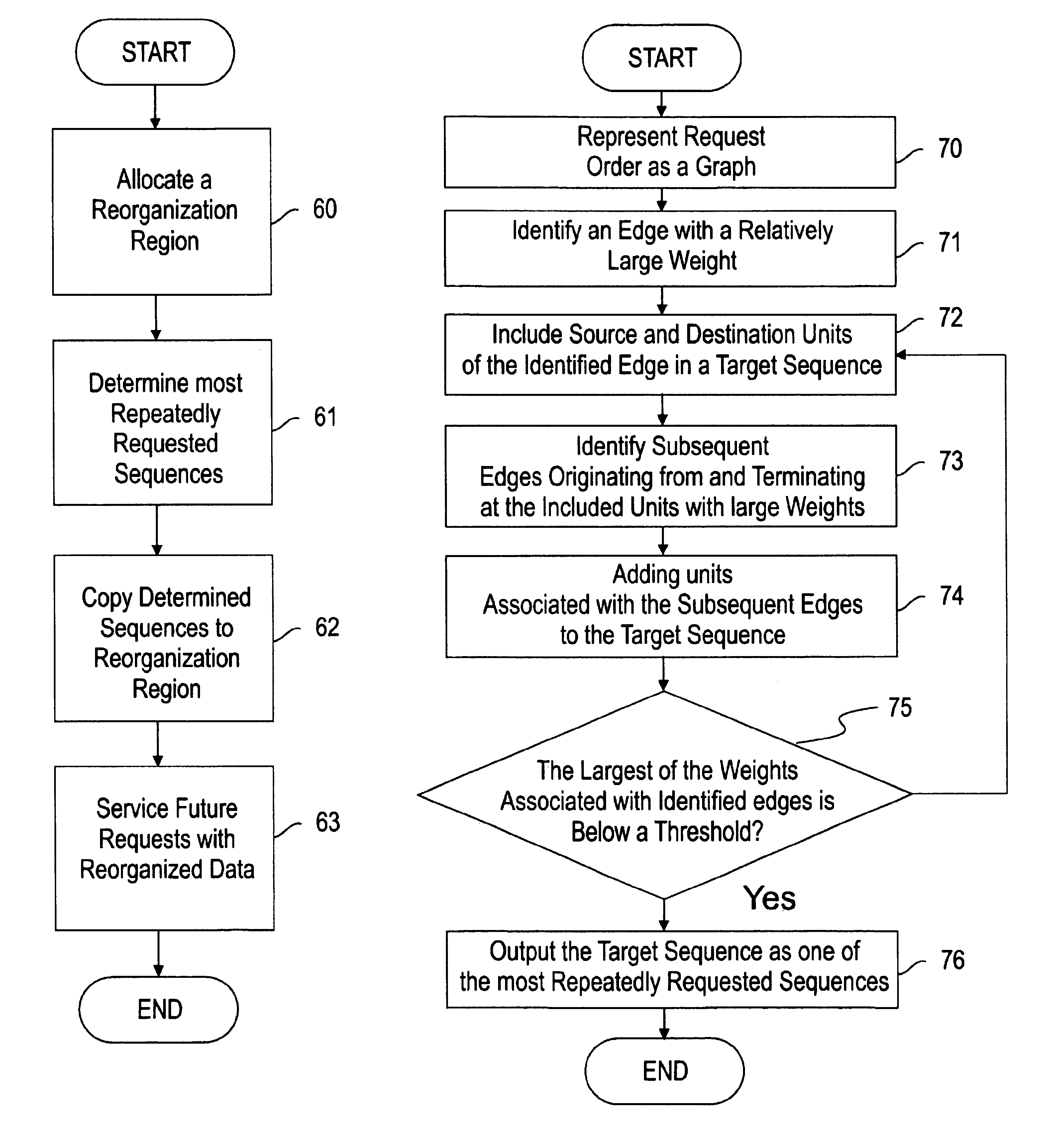

Storage system and method for reorganizing data to improve prefetch effectiveness and reduce seek distance

Inactive | US6963959B2 | Improve spatial locality | Improve system performance | Input/output to record carriers | Memory addressing/allocation/relocation | Data reorganization | Database

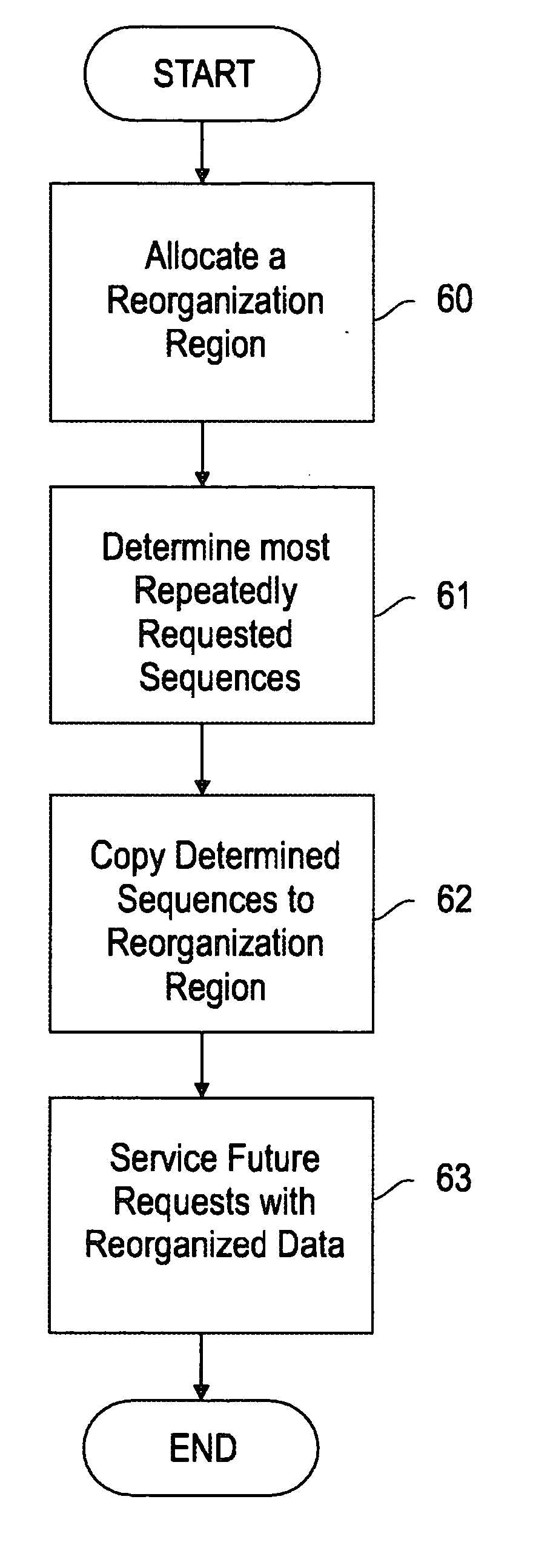

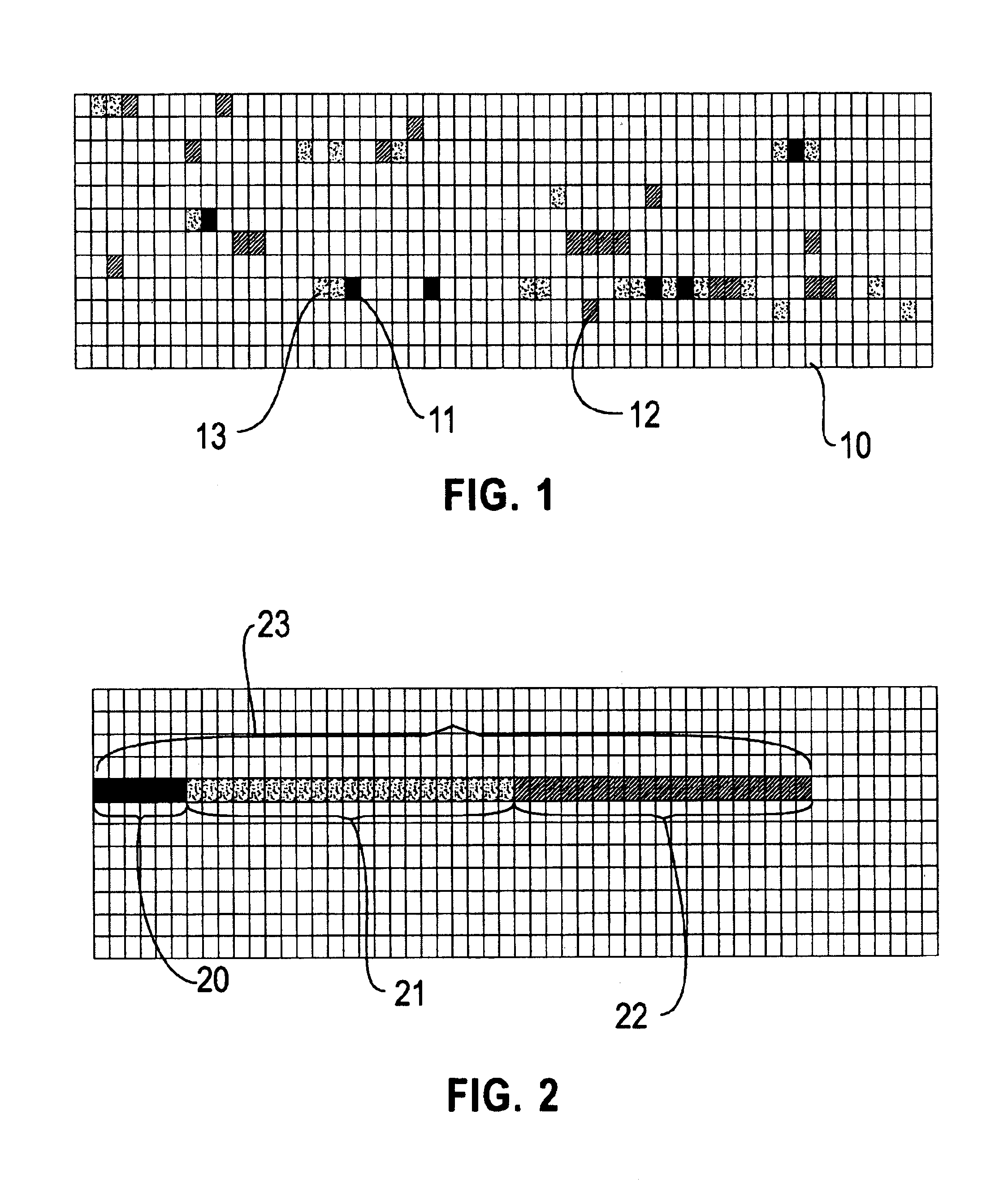

A data storage system and method for reorganizing data to improve the effectiveness of data prefetching and reduce the data seek distance. A data reorganization region is allocated in which data is reorganized to service future requests for data. Sequences of data units that have been repeatedly requested are determined from a request stream, preferably using a graph in which each vertex represents a requested data unit and each edge represents that a destination unit is requested shortly after a source unit, together with the frequency of this occurrence. The most frequently requested data units are also determined from the request stream. The determined data is copied into the reorganization region and reorganized according to the determined sequences and most frequently requested units. The reorganized data may then be used to service future requests for data.

Owner:IBM CORP
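
The request graph described in the abstract above (vertices are data units, edges count how often one unit is requested shortly after another) can be approximated with a small counter. This is a rough sketch only: the function names and the `window` parameter that defines "shortly after" are assumptions, not details from the patent.

```python
from collections import Counter, deque


def build_request_graph(requests, window=1):
    """Count edges (src, dst) where dst is requested within `window` requests after src."""
    edges = Counter()
    recent = deque(maxlen=window)  # the last `window` requested units
    for unit in requests:
        for src in recent:
            if src != unit:
                edges[(src, unit)] += 1
        recent.append(unit)
    return edges


def hottest_units(requests, n):
    """Return the n most frequently requested data units."""
    return [unit for unit, _ in Counter(requests).most_common(n)]
```

Edges with high counts suggest sequences worth laying out contiguously in the reorganization region, while `hottest_units` corresponds to the separately tracked most frequently requested units.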

Hash table concurrent access performance optimization method under multi-core environment

Active | CN104536724A | Improve I/O efficiency | Processing will not affect | Memory addressing/allocation/relocation | Concurrent instruction execution | Asynchronous network | Hash table

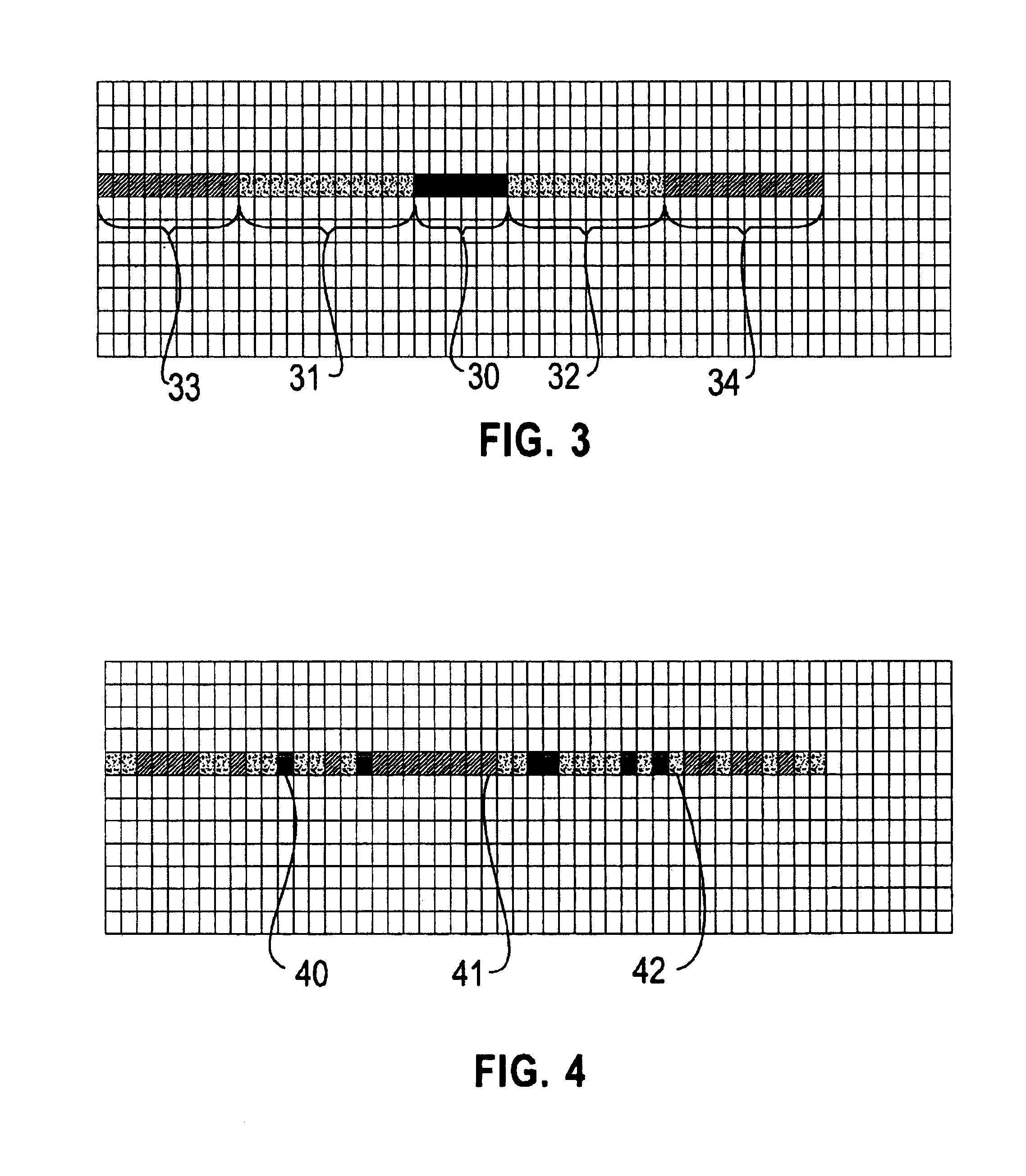

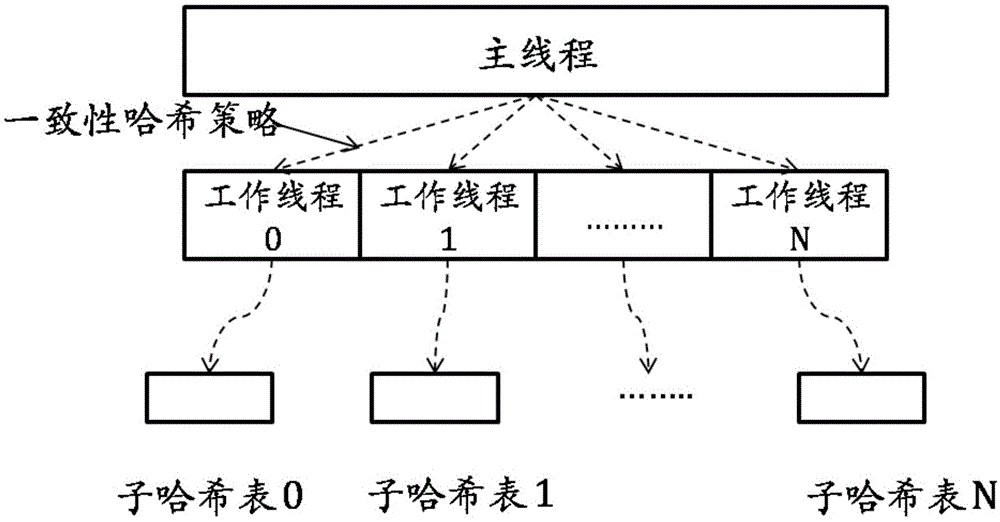

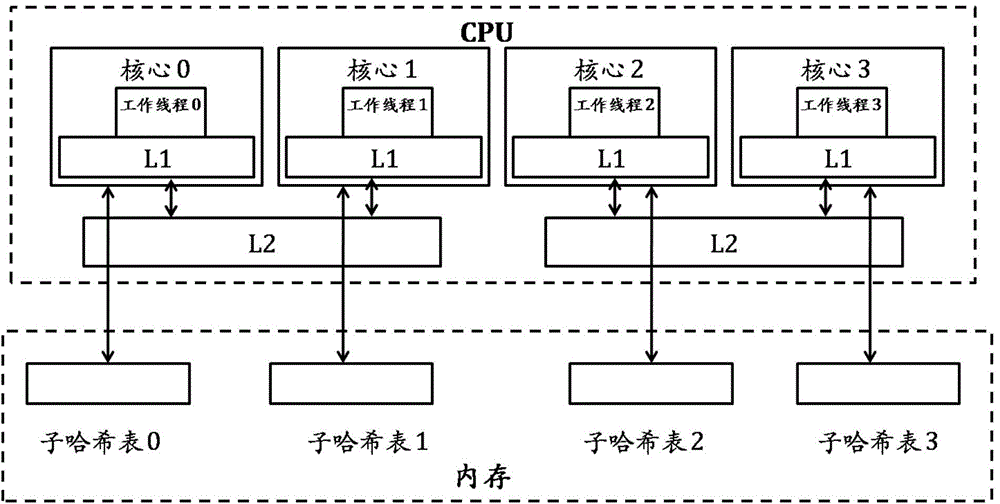

The invention discloses a method for optimizing concurrent hash table access performance in a multi-core environment. For concurrent connection handling under highly concurrent access to the hash table, a half-synchronous, half-asynchronous network connection handling mechanism is adopted. For concurrent data handling, the hash table is divided into multiple independent sub hash tables according to the number of CPU cores; working threads correspond one-to-one to the sub hash tables, and each working thread is responsible only for the data of its own sub hash table. A main thread uses a consistent hashing strategy on the key of each datum to select the working thread that processes it. Each sub hash table maintains one LRU queue; when memory space is insufficient, cold data are deleted and new elements are inserted. The method improves the concurrent connection handling capacity and the concurrent access capacity of the hash table, and it resolves the synchronization overhead and cache consistency overhead that arise when multiple threads access a shared hash table.

Owner:HUAZHONG UNIV OF SCI & TECH
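
The partitioning idea in the abstract above, independent sub hash tables that each keep their own LRU queue, can be illustrated with a single-threaded sketch. The class names are hypothetical, plain modulo hashing with `zlib.crc32` stands in for the consistent hashing strategy the abstract mentions, and the one-thread-per-shard dispatch is omitted.

```python
import zlib
from collections import OrderedDict


class LruShard:
    """One sub hash table with its own LRU order (oldest entry first)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = OrderedDict()

    def put(self, key, value):
        if key in self.items:
            self.items.move_to_end(key)
        self.items[key] = value
        if len(self.items) > self.capacity:
            self.items.popitem(last=False)  # delete the coldest entry

    def get(self, key):
        if key not in self.items:
            return None
        self.items.move_to_end(key)  # mark as recently used
        return self.items[key]


class ShardedTable:
    """Routes each key to exactly one shard, so shards never share data."""

    def __init__(self, num_shards, shard_capacity):
        self.shards = [LruShard(shard_capacity) for _ in range(num_shards)]

    def _shard_for(self, key):
        # Assumption: simple modulo routing instead of consistent hashing.
        return self.shards[zlib.crc32(key.encode()) % len(self.shards)]

    def put(self, key, value):
        self._shard_for(key).put(key, value)

    def get(self, key):
        return self._shard_for(key).get(key)
```

Because every key maps to one shard, a worker that owns a shard would need no locks for its data, which is the synchronization saving the abstract claims.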

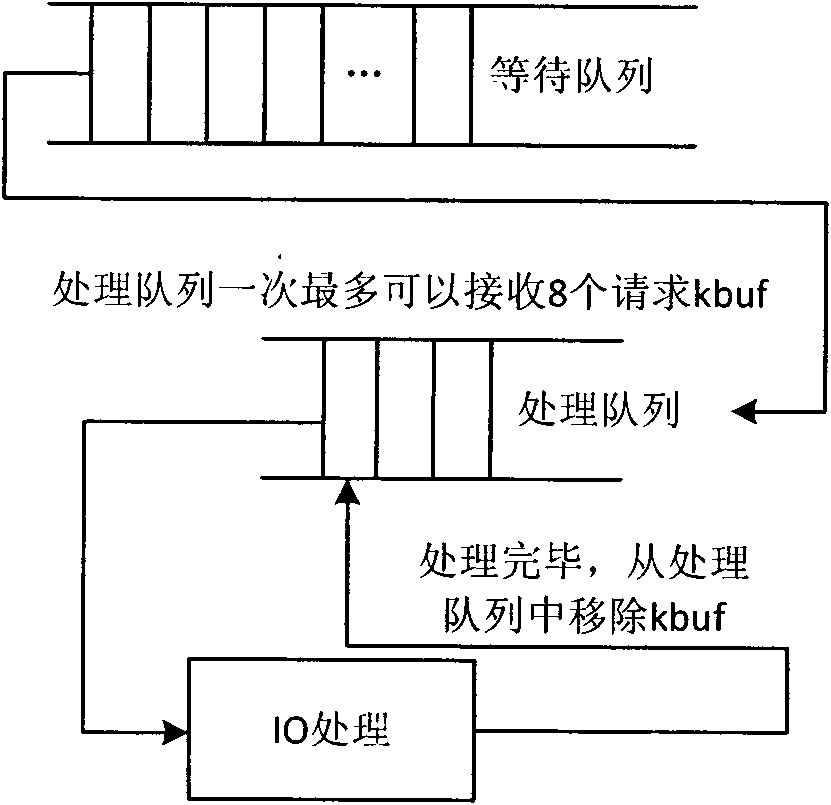

Method for storage interface bypassing Bio layer to access disk drive

Active | CN102073605A | Improve efficiency | Reduce processing time | Electric digital data processing | Application software | Thread

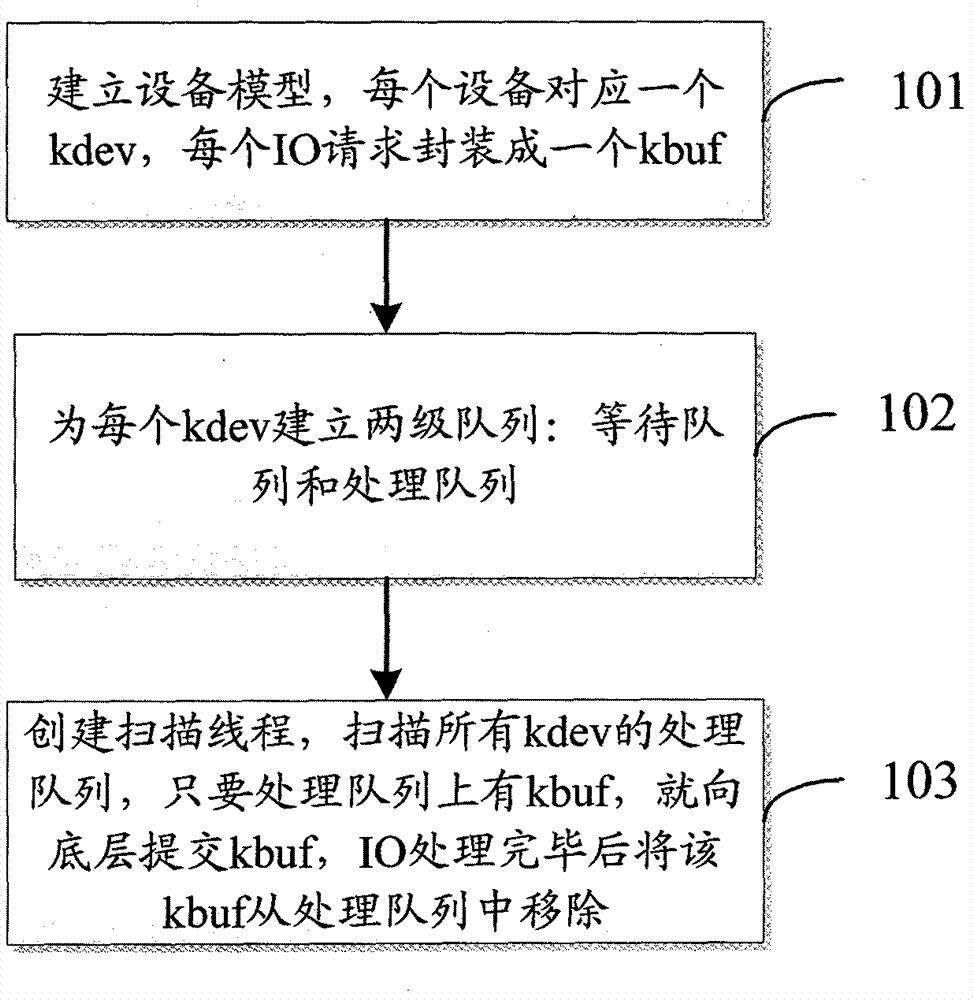

The invention provides a method for a storage interface to access a disk drive while bypassing the Bio layer, comprising the following steps: setting up a device module in which each disk device corresponds to a disk device object; packing each IO (input/output) request initiated by an application program into an IO request object; setting up a two-stage queue for each disk device, consisting of a waiting queue and a processing queue, where the waiting queue receives IO request objects in order and, whenever the processing queue has a vacancy, IO request objects are moved from the waiting queue to the processing queue; and creating a scanning thread that scans the waiting queues of all disk device objects, so that if a waiting queue holds IO request objects, the IO request objects are extracted from the processing queue and submitted to the bottom layer, and after IO processing is finished they are removed from the processing queue.

Owner:深圳市安云信息科技有限公司
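
A minimal sketch of the two-stage queue from the abstract above: each device has a waiting queue and a bounded processing queue, and a scan pass promotes and submits requests. The names `DiskDevice`, `scan_once` and `max_in_flight` are assumptions, and the `submit` callback stands in for the hand-off to the bottom layer, which the abstract does not detail.

```python
from collections import deque


class DiskDevice:
    """One disk device object with a waiting queue and a processing queue."""

    def __init__(self, name, max_in_flight):
        self.name = name
        self.max_in_flight = max_in_flight  # processing-queue capacity (assumed)
        self.waiting = deque()
        self.processing = deque()

    def enqueue(self, request):
        self.waiting.append(request)

    def promote(self):
        """Move requests to the processing queue while it has a vacancy."""
        promoted = []
        while self.waiting and len(self.processing) < self.max_in_flight:
            request = self.waiting.popleft()
            self.processing.append(request)
            promoted.append(request)
        return promoted

    def complete(self, request):
        """Called when the bottom layer finishes a request."""
        self.processing.remove(request)


def scan_once(devices, submit):
    """One pass of the scanning thread over all devices."""
    for device in devices:
        for request in device.promote():
            submit(device, request)
```

In a real system `scan_once` would run in a loop on a dedicated thread; here it is a plain function so the queue behavior is easy to follow.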

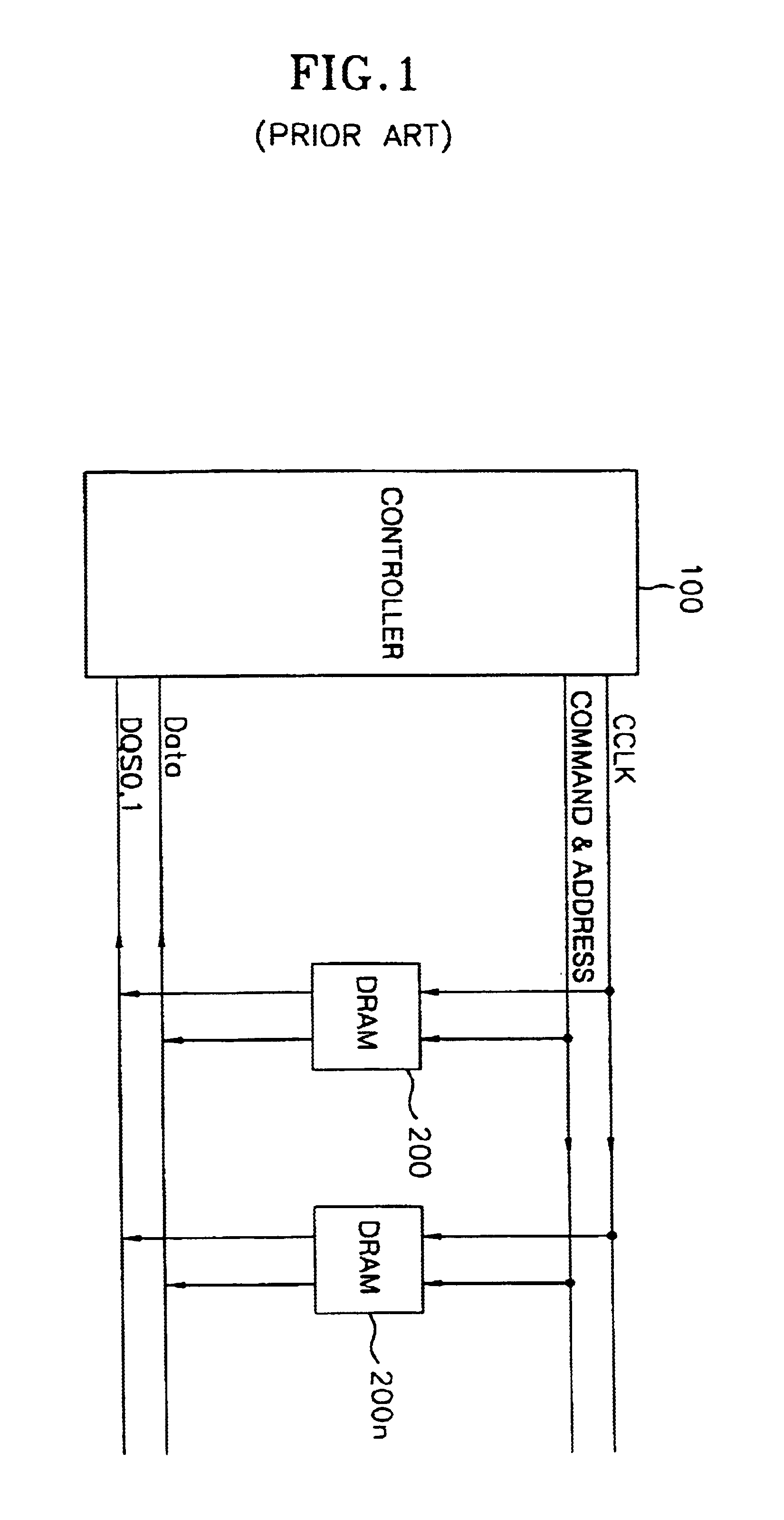

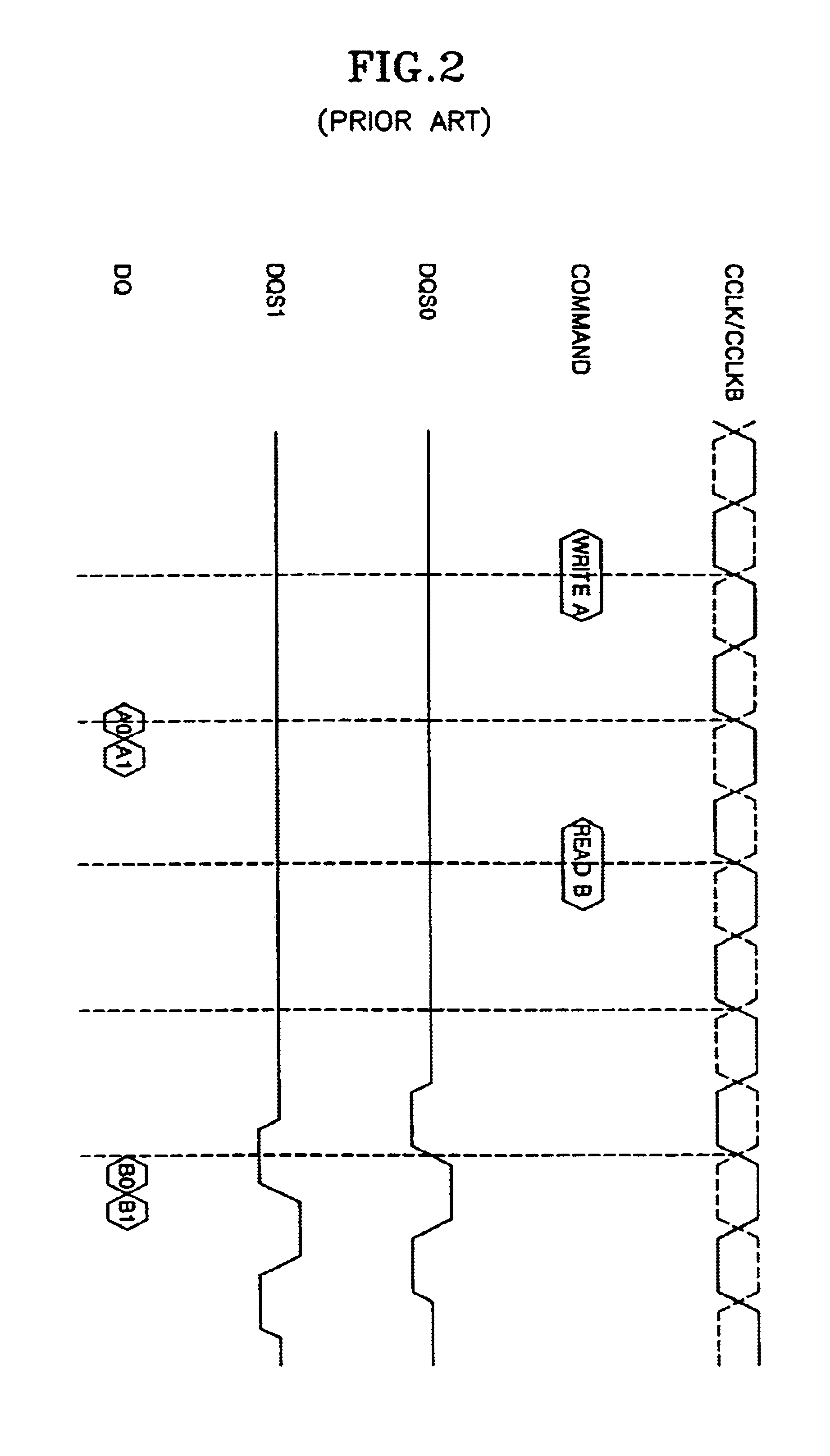

High speed interface device for reducing power consumption, circuit area and transmitting/receiving a 4 bit data in one clock period

Inactive | US6918046B2 | Improve data I/O efficiency | Precise synchronization of clock | Digital storage | Generating/distributing signals | Phase difference | Data signal

A high speed interface type device can reduce power consumption and a circuit area, and transmit / receive a 4 bit data in one clock period. The high speed interface type device includes a DRAM unit for generating first clock and clock bar signals which do not have a phase difference from a main clock signal, and second clock and clock bar signals having 90° phase difference from the first clock and clock bar signals in a write operation, storing an inputted 4 bit data in one period of the main clock signal according to the first clock to second clock bar signals, synchronizing the stored data with data strobe signals according to the first clock to second clock bar signals in a read operation, and outputting a 4 bit data in one period of the main clock signal, and a controller for transmitting a command, address signal and data signal synchronized with the main clock signal to the DRAM unit in the write operation, and receiving data signals from the DRAM unit in the read operation.

Owner:SK HYNIX INC

Host-based virtualization optimizations in storage environments employing off-host storage virtualization

Inactive | US7669032B2 | Efficient response | Improve I/O efficiency | Error detection/correction | Memory systems | Control data | Virtual storage

Owner:SYMANTEC OPERATING CORP

Method for supporting parallel input and output (I/O) of trace files in parallel simulation

Inactive | CN101526915A | Improve I/O efficiency | Improve efficiency | Hardware monitoring | Multiprogramming arrangements | Trace file | Parallel I/O

The invention discloses a method for supporting the parallel input and output (I/O) of trace files in parallel simulation, in particular a method for supporting distributed parallel I/O of Trace files in order to improve the I/O efficiency of the Trace files and the simulation precision in parallel simulation. The technical scheme comprises the following steps: a host machine for running parallel simulators is constructed, comprising a main control node and simulation nodes; a main configuration program is executed on the main control node for global configuration; scheduling software A on the main control node then schedules a simulator A on a simulation node to execute a simulation job; afterwards, Trace distribution software on the main control node generates Trace move scripts and sends them to all destination simulation nodes, and each destination simulation node executes its Trace move script to move a Trace file from the corresponding source node to its own node; finally, scheduling software B on the main control node schedules a simulator B on a simulation node to execute a simulation job. The method can greatly improve the I/O efficiency of the Trace files and the simulation precision.

Owner:NAT UNIV OF DEFENSE TECH

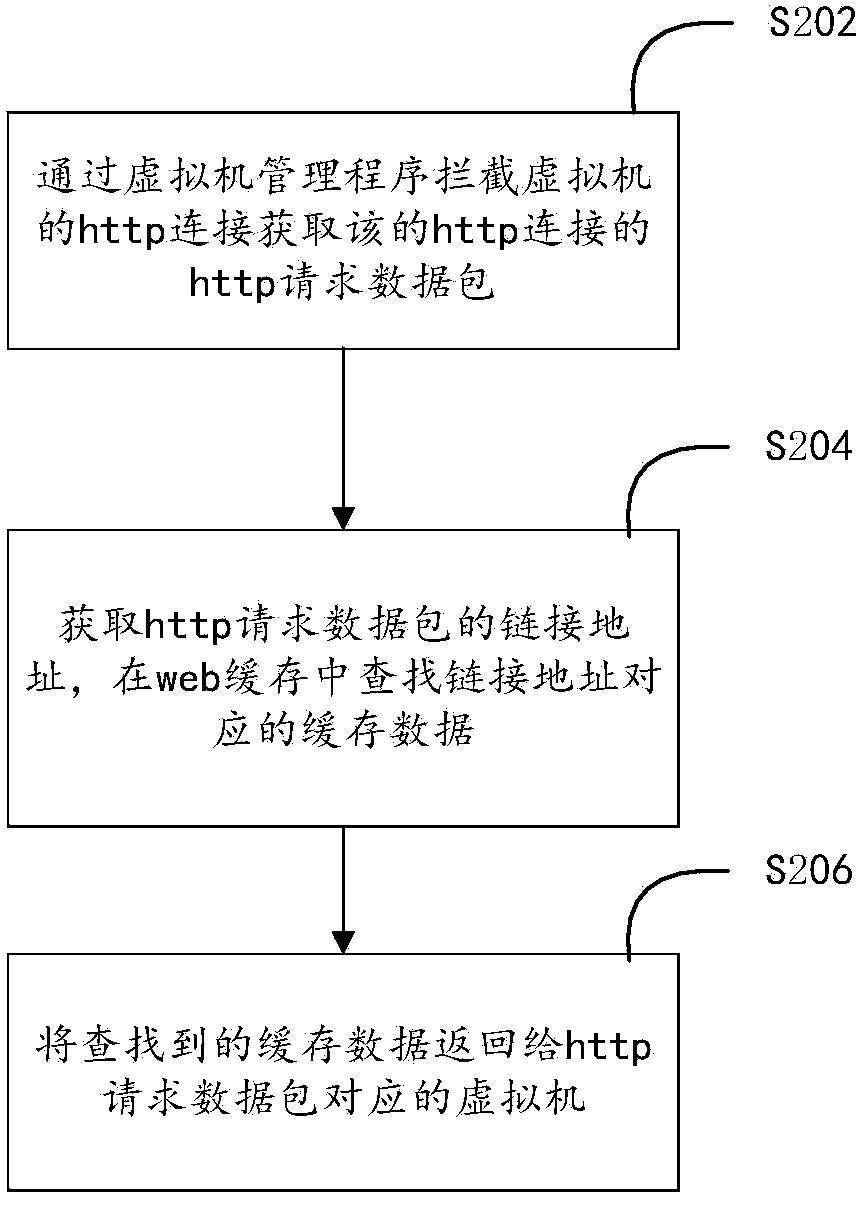

Web buffering method and device in virtual machine environment

Active | CN104021028A | Avoid caching | Reduce randomness | Memory addressing/allocation/relocation | Software simulation/interpretation/emulation | Network packet | Web cache

A web caching method in a virtual machine environment, comprising the following steps: the HTTP connection of a virtual machine is intercepted by the virtual machine management program to obtain the HTTP response data packet of that connection; the caching identifier in the HTTP response data packet is obtained; whether the caching identifier indicates that the response can be cached is judged, and if so, the link address corresponding to the HTTP response data packet is obtained, the data content of the packet is copied, and the extracted data content and the link address are stored together in a web cache memory set up by the virtual machine management program; the caching identifier in the HTTP response data packet is then set to non-cacheable and the packet is transmitted to the virtual machine corresponding to the HTTP connection. In addition, the invention provides a web caching device in the virtual machine environment. The web caching method and device in the virtual machine environment can improve I/O efficiency.

Owner:SANGFOR TECH INC
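
The interception step in the abstract above can be sketched as a small function. This is a loose illustration under assumptions: the abstract does not say which field the "caching identifier" is, so the `Cache-Control` header is used here as a stand-in; the function name, the dictionary used as the hypervisor-side cache, and the rule that a missing header means "cacheable" are all hypothetical.

```python
def intercept_response(url, headers, body, hypervisor_cache):
    """Cache a cacheable response on the hypervisor side, then forward it
    to the guest marked as non-cacheable so the guest does not cache it again."""
    directives = {
        part.strip().lower()
        for part in headers.get("Cache-Control", "").split(",")
    }
    # Assumption: these directives mean the response must not be stored.
    cacheable = not ({"no-store", "no-cache", "private"} & directives)

    forwarded = dict(headers)  # never mutate the caller's headers
    if cacheable:
        hypervisor_cache[url] = body
        forwarded["Cache-Control"] = "no-store"
    return forwarded, body
```

Keeping a single copy at the hypervisor level, rather than one per guest, is what the tags "Avoid caching" and "Reduce randomness" appear to refer to.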

Huge amount of data compacting storage method and implementation apparatus therefor

Inactive | CN1908932A | Improve I/O efficiency | Reduce storage size | Special data processing applications | Multiversion concurrency control | Data store

The compressed storage method for massive data comprises: detecting the CPU type to select a matching storage strategy; saving one record with complete information in the website as the reference for other records; recording the differences of the other records from the reference record incrementally; removing the last-version data in the history to realize dynamic conversion between history data and active data; and recoding data according to the frequency of data values so that calculation can be performed without decoding. The invention can improve query efficiency while using less storage space.

Owner:北京人大金仓信息技术股份有限公司 +2

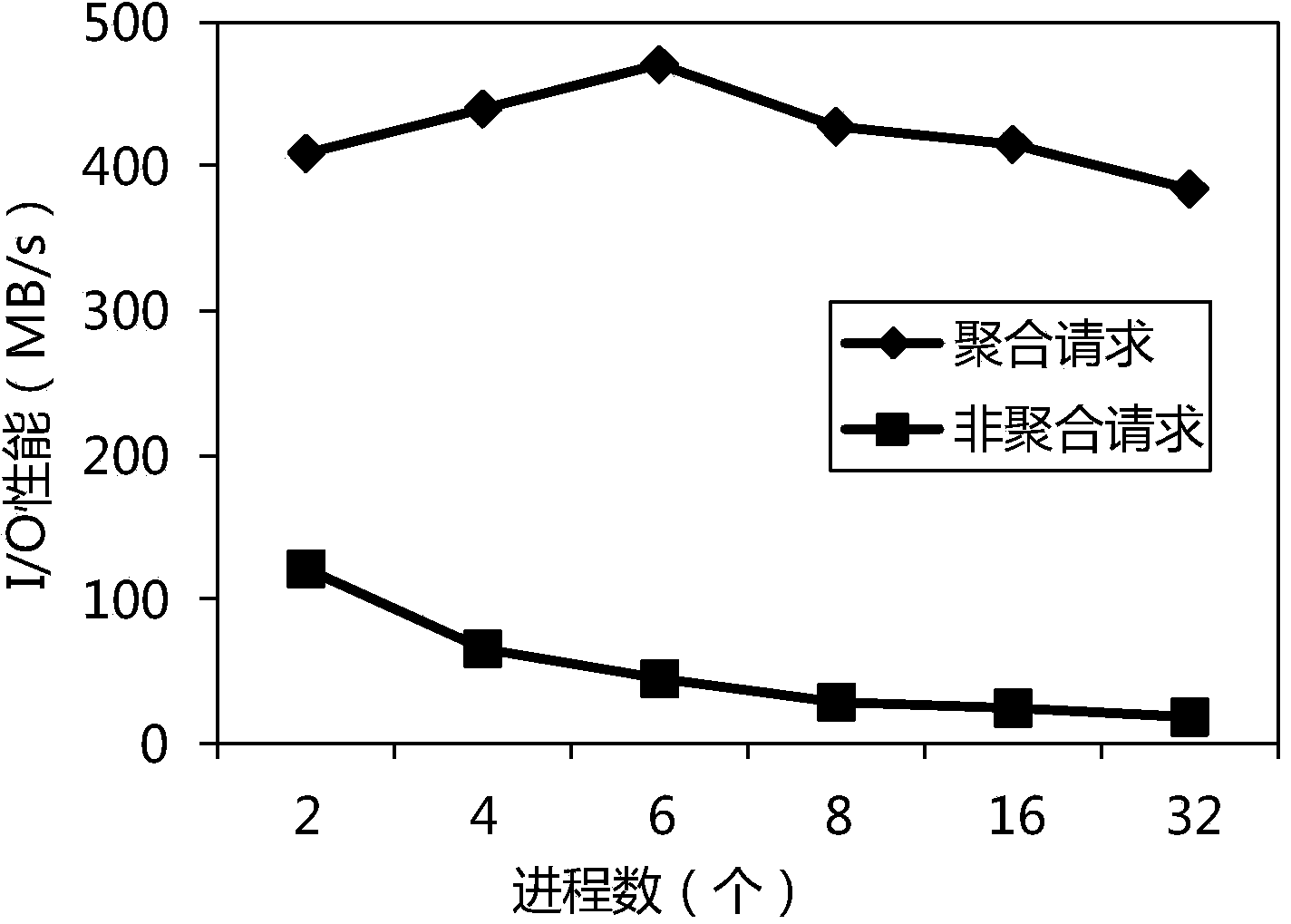

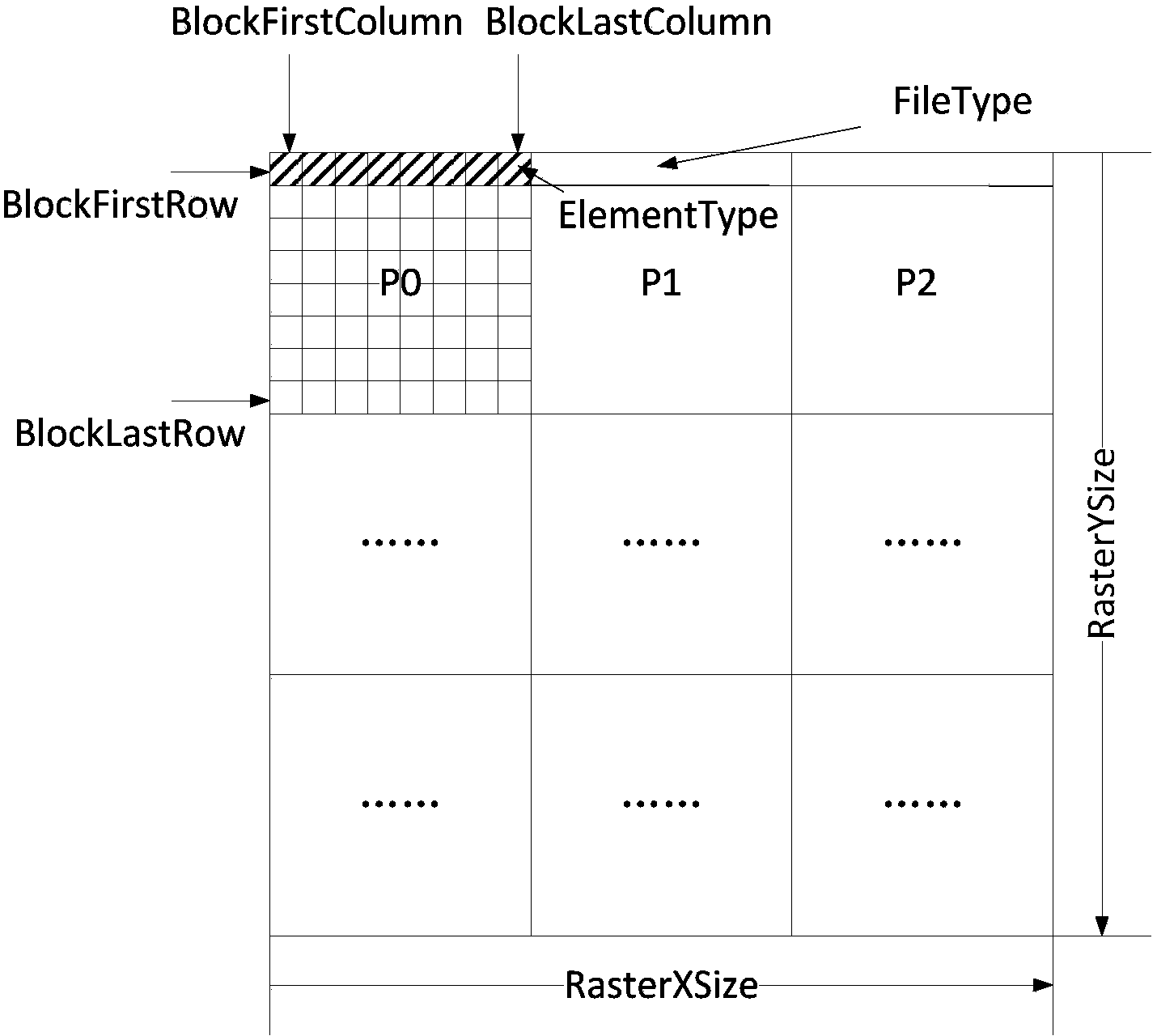

Geographical raster data parallel reading-writing method based on request aggregation

Inactive | CN103761291A | Unrestricted method of division | Waiting time is negligible | Concurrent instruction execution | Geographical information databases | Task completion | Data file

The invention provides a parallel read-write method for geographical raster data based on request aggregation. In the technical scheme, every process calls GDAL (the Geospatial Data Abstraction Library) to open the geographical raster data file to be processed and acquires the raster metadata from the file; using uniform data partitioning, each process calculates the partition size and offset of the raster data it needs to read from the file; one process is responsible for creating a GTIFF output file and, once creation is complete, broadcasts the completion status to the other processes; the other processes then read the raster data to be processed; each process completes its own calculation task and writes its results to the output file, again using uniform data partitioning. The method can process data in various formats, provides a sound parallel processing mechanism, and improves overall input/output efficiency.

Owner:NAT UNIV OF DEFENSE TECH
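
The "uniform data partitioning" step in the abstract above, where each process computes its own partition size and offset, can be worked through with a short helper. The function name and the choice to partition by rows are assumptions; the abstract does not say along which dimension the raster is split.

```python
def partition_rows(total_rows, num_procs, rank):
    """Return (offset, size) of the row block assigned to process `rank`.

    Rows are split as evenly as possible; the first `total_rows % num_procs`
    processes receive one extra row each.
    """
    base, extra = divmod(total_rows, num_procs)
    size = base + (1 if rank < extra else 0)
    offset = rank * base + min(rank, extra)
    return offset, size
```

For example, 10 rows over 3 processes gives blocks `(0, 4)`, `(4, 3)` and `(7, 3)`, which are contiguous and cover every row exactly once, so each process can read and later write its block without coordinating with the others.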

Method for partial data reallocation in a storage system

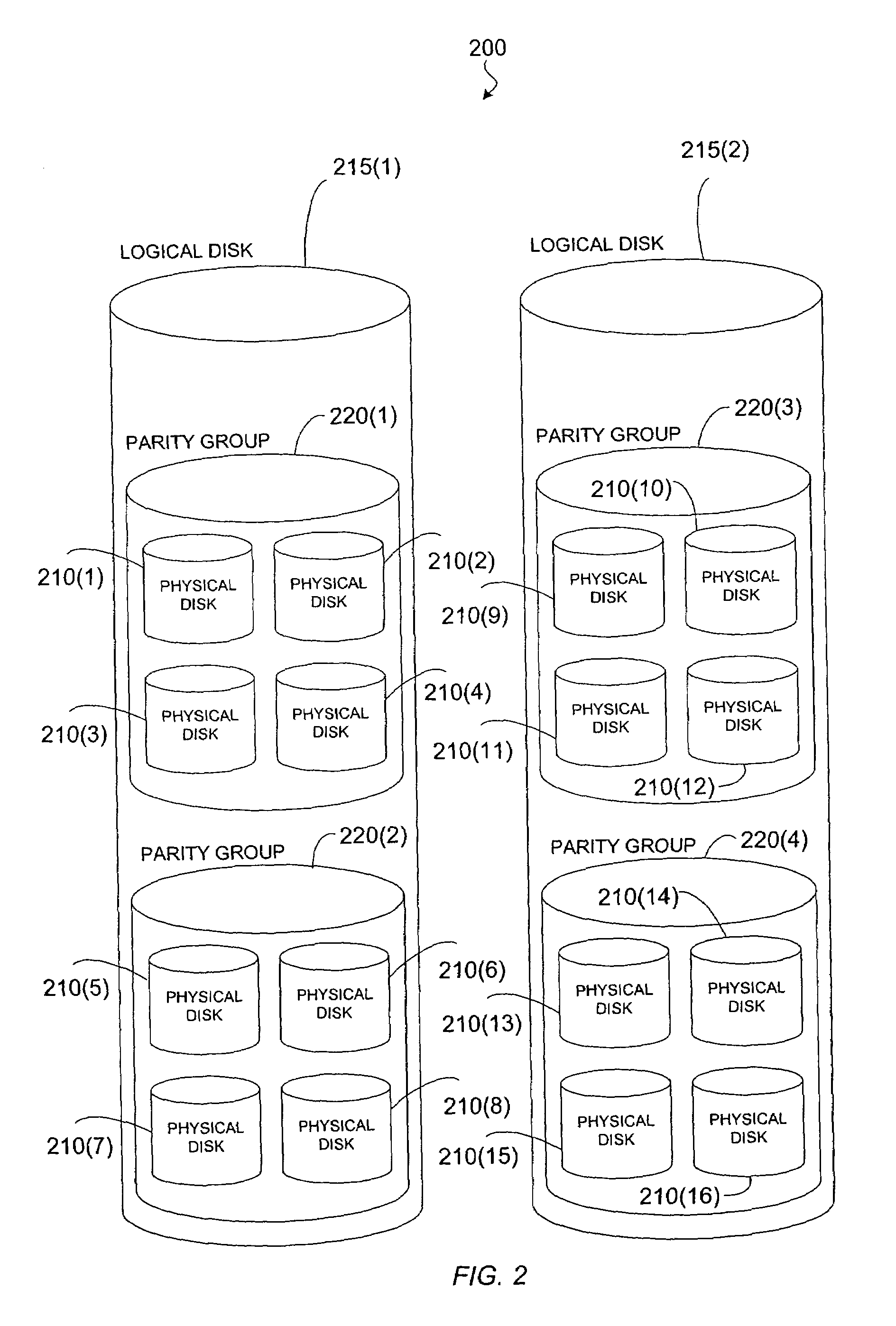

Inactive | US7032070B2 | Sacrificing disk capacity | Without decreasing I/O performance | Input/output to record carriers | Error detection/correction | Logical disk | Operating system

A method of reallocating data among physical disks corresponding to a logical disk is provided. A logical disk is partitioned into a plurality of groups, each group comprising at least one segment on at least one of a first plurality of physical disks corresponding to the logical disk. One group of the plurality of groups is partitioned into a plurality of sub-groups, and, for each sub-group of the plurality of sub-groups but one, the sub-group is copied to at least one segment on at least one of a second plurality of physical disks corresponding to the logical disk.

Owner:HITACHI LTD

Data processing equipment and data processing method thereof

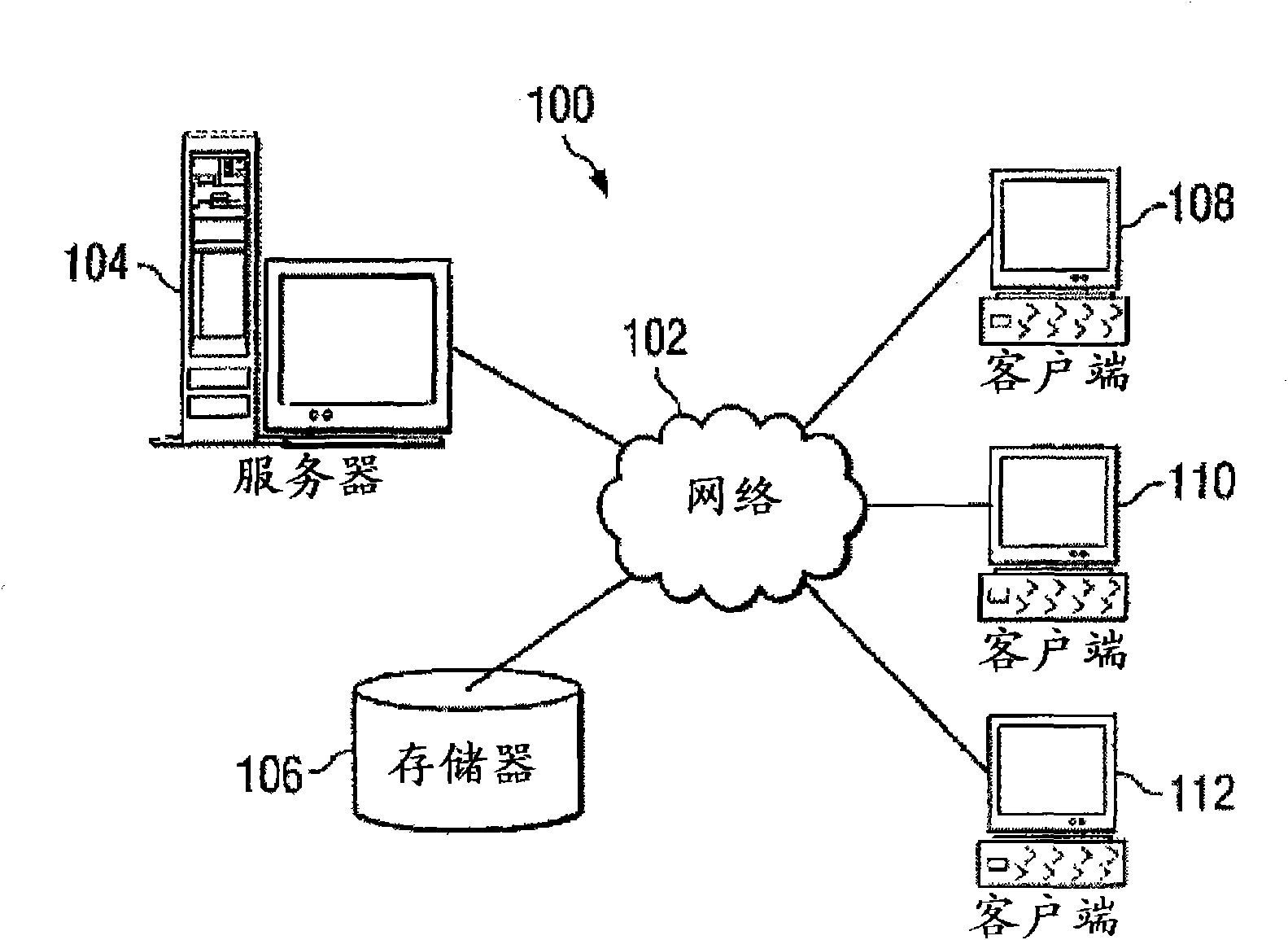

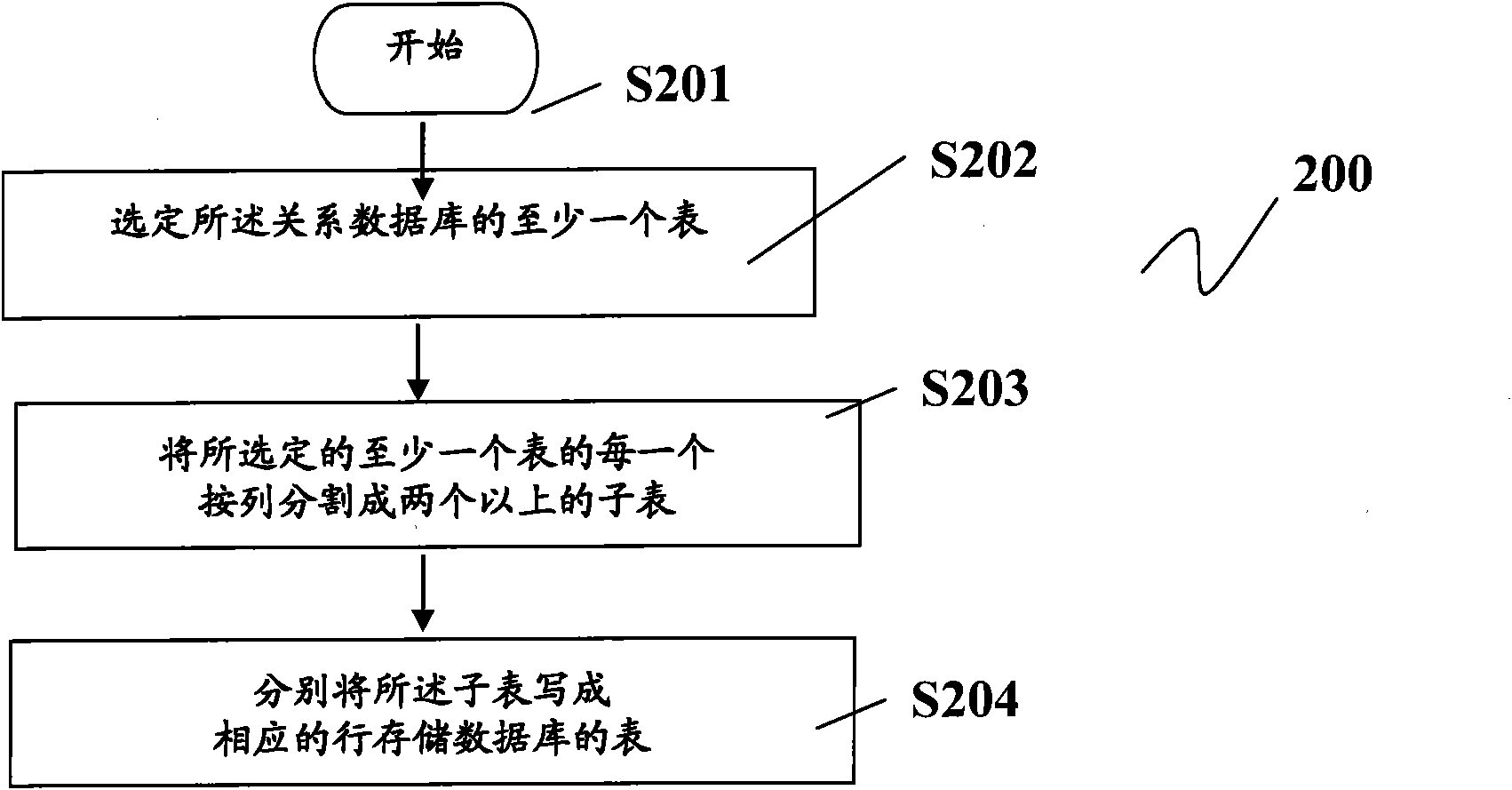

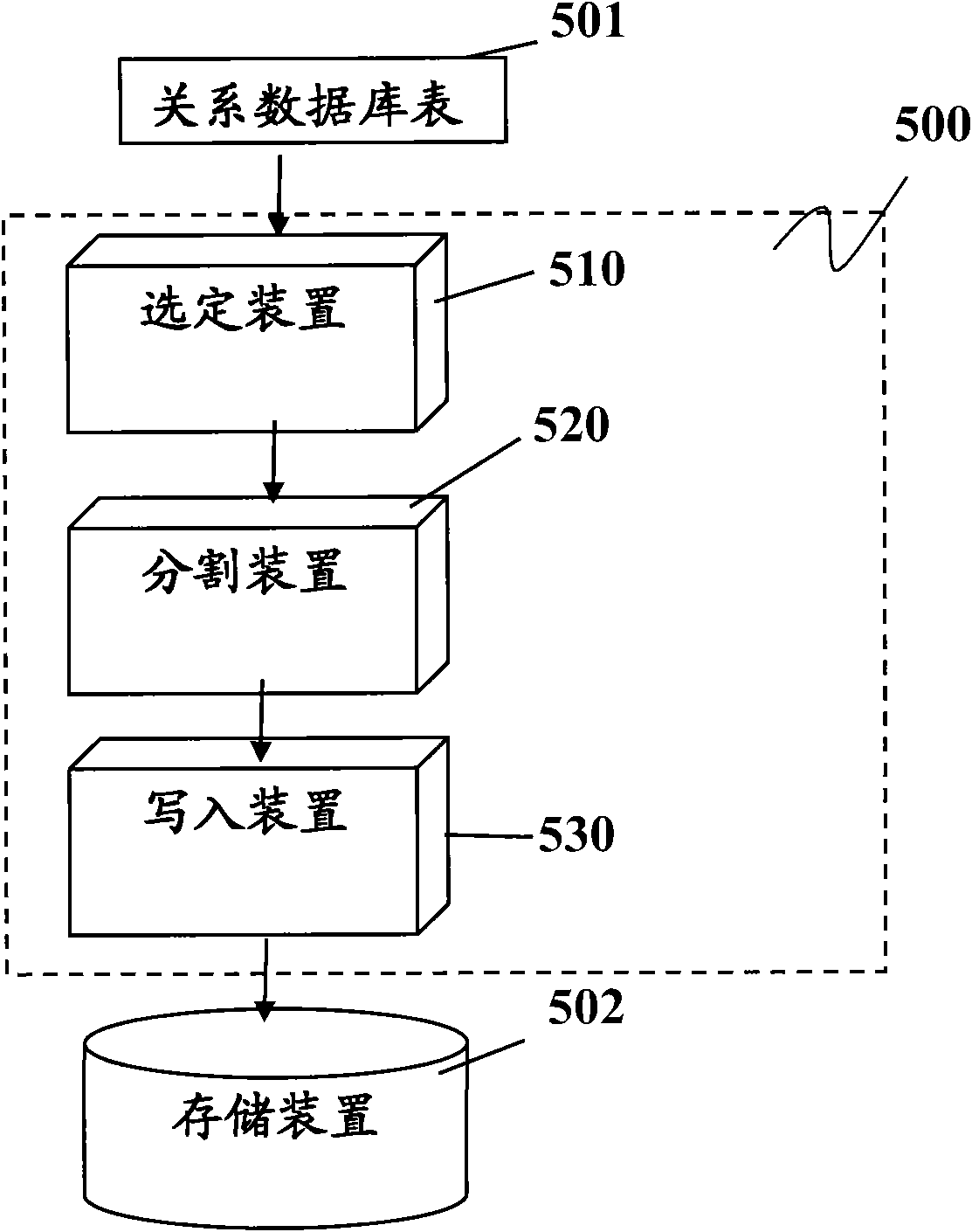

Active | CN102737033A | Good workload | Reduce workload | Multi-dimensional databases | Special data processing applications | Data processing | Relational database

The invention discloses data processing equipment and a data processing method thereof, and the data processing equipment and the data processing method are used in a relational database. The data processing method used in the relational database comprises the steps that: at least one table is selected from the relational database; each of the selected at least one table is divided into more than two sub-tables according to columns, wherein at least one of the sub-tables comprises at least two columns; and the sub-tables are written into tables of a corresponding row store database.

Owner:IBM CORP
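
The column-wise division described in the abstract above can be illustrated with plain dictionaries. This is a sketch under assumptions: the function name, the representation of a table as a list of dicts, and the `column_groups` mapping are hypothetical, and row order is used as the implicit link between sub-tables because the abstract does not say how rows are rejoined.

```python
def split_by_columns(rows, column_groups):
    """Split a table into sub-tables by column group.

    rows: list of dicts, one per table row.
    column_groups: dict mapping sub-table name -> list of column names.
    Returns a dict mapping sub-table name -> list of narrower row dicts.
    """
    return {
        name: [{col: row[col] for col in cols} for row in rows]
        for name, cols in column_groups.items()
    }
```

Grouping columns that are usually read together into one sub-table lets a row store fetch fewer bytes per query, which is the usual motivation for this kind of vertical partitioning.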

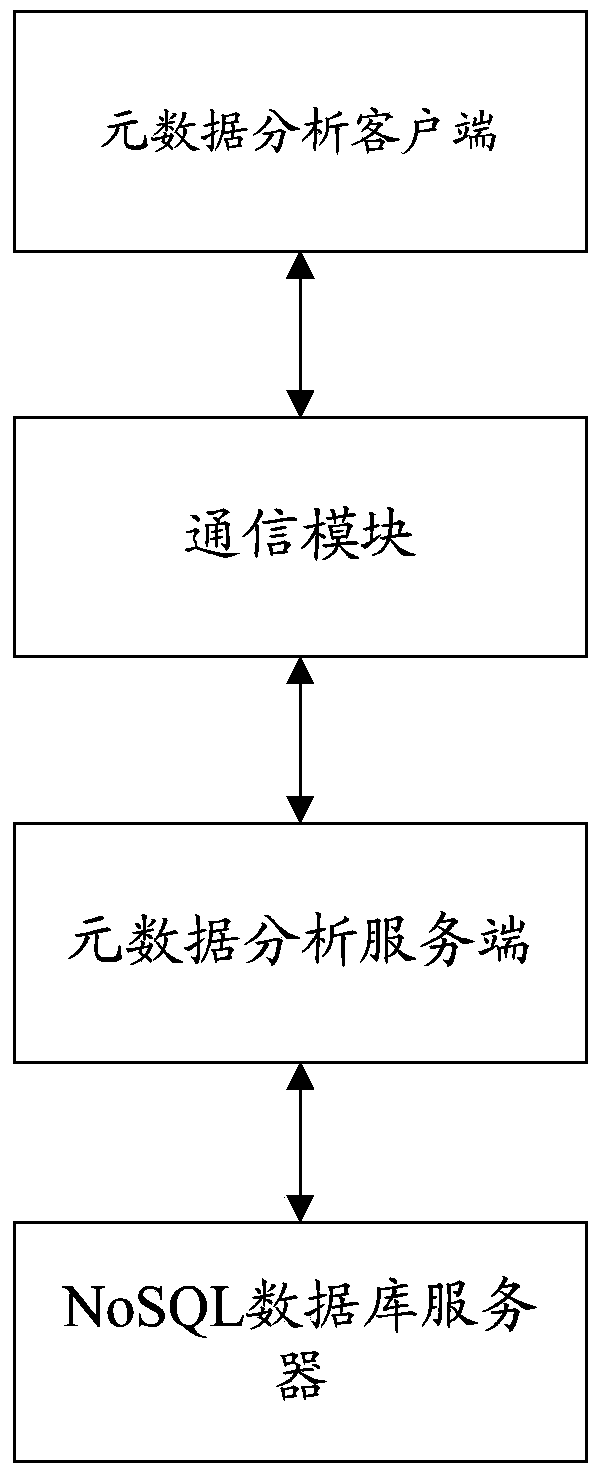

System and method for realizing metadata cache and analysis based on NoSQL

Inactive | CN104199978A | Reduce head seek time | Improve efficiency | Special data processing applications | Data buffer | Server-side

Owner:PRIMETON INFORMATION TECH

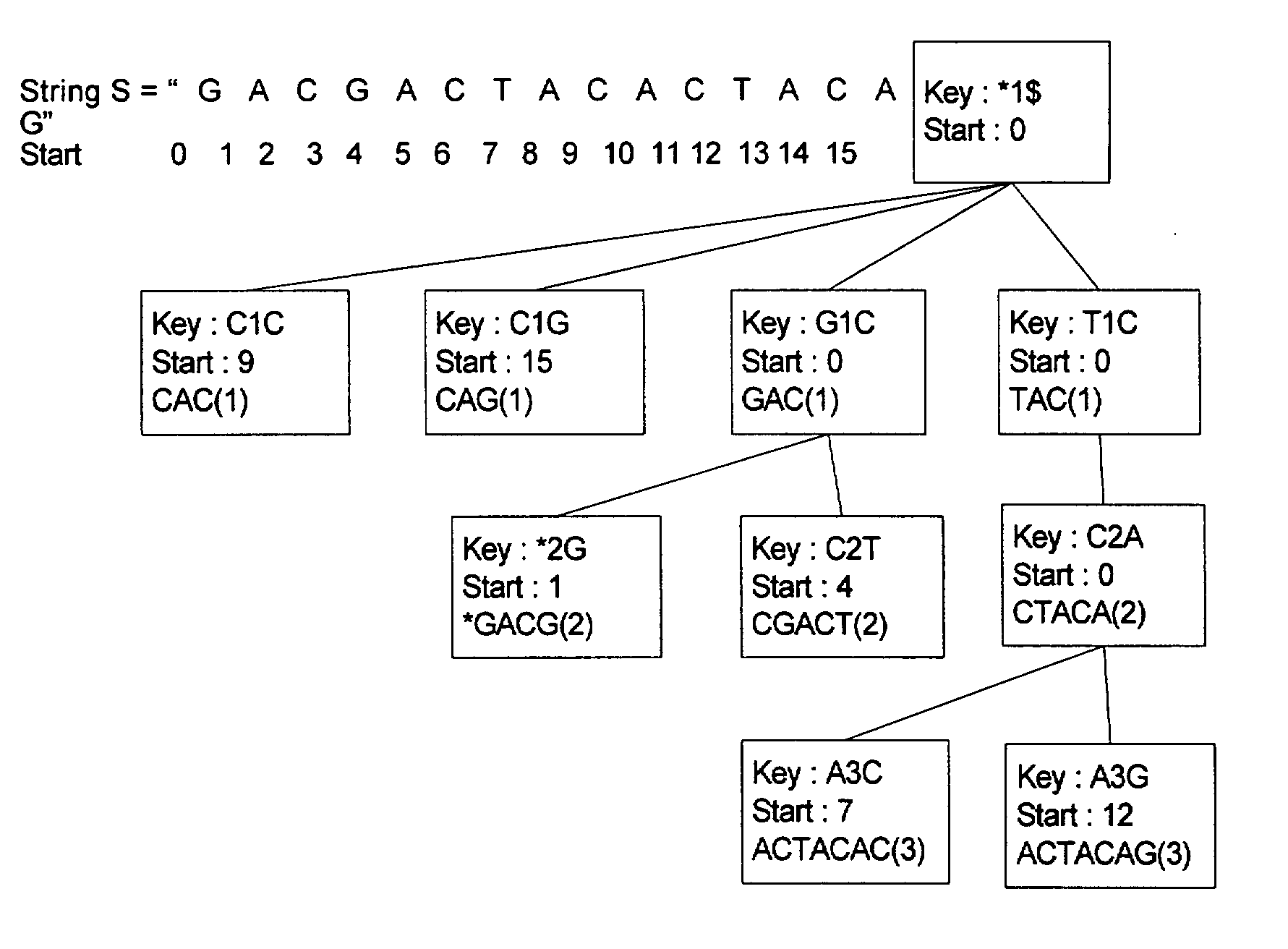

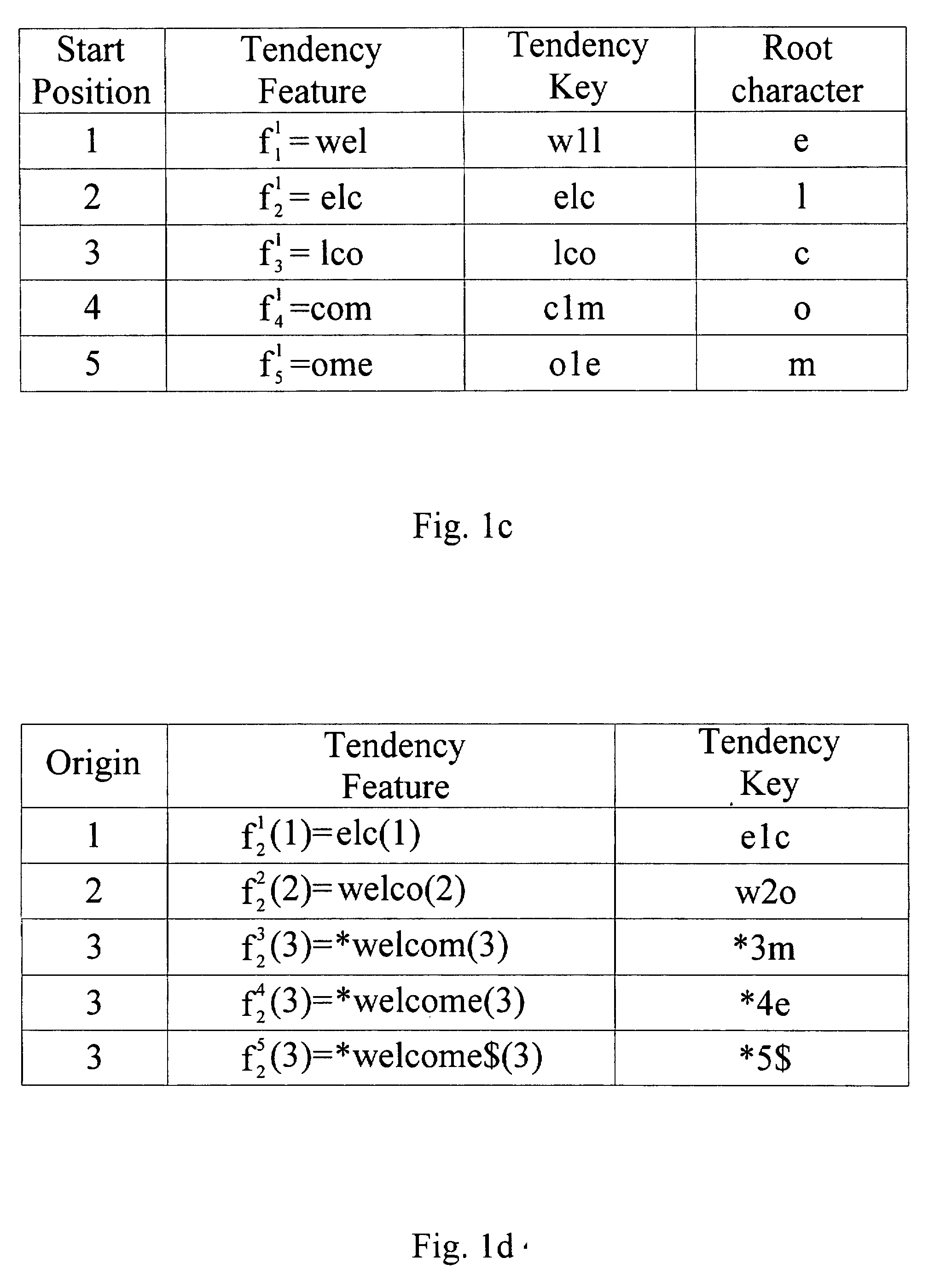

Method and structure for string partial search

Inactive | US20070282816A1 | Improve I/O efficiency | Reduce storage | Digital data information retrieval | Special data processing applications | Layered structure | Theoretical computer science

An index structure, tendency B trees, to alleviate the high cost of string partial search in large data sets is presented. A tendency B tree is a two-layered data structure, including a logical layer and a physical layer. In the logical layer, a tendency tree provides a hierarchical structure to group similar tendency features together to facilitate fast partial search for a given query. The physical layer is a B-tree-like structure. In addition, the balanced topology of B trees provides consistent I/O complexity. The tendency B tree is dynamically compressed during the construction process to reduce storage and enhance search efficiency. Experiments on both dictionary search and DNA sequence search using tendency B trees show that consistent, fast search times can be achieved in large data sets, requiring lower space usage and linear construction time.

Owner:TSAI SHING JUNG

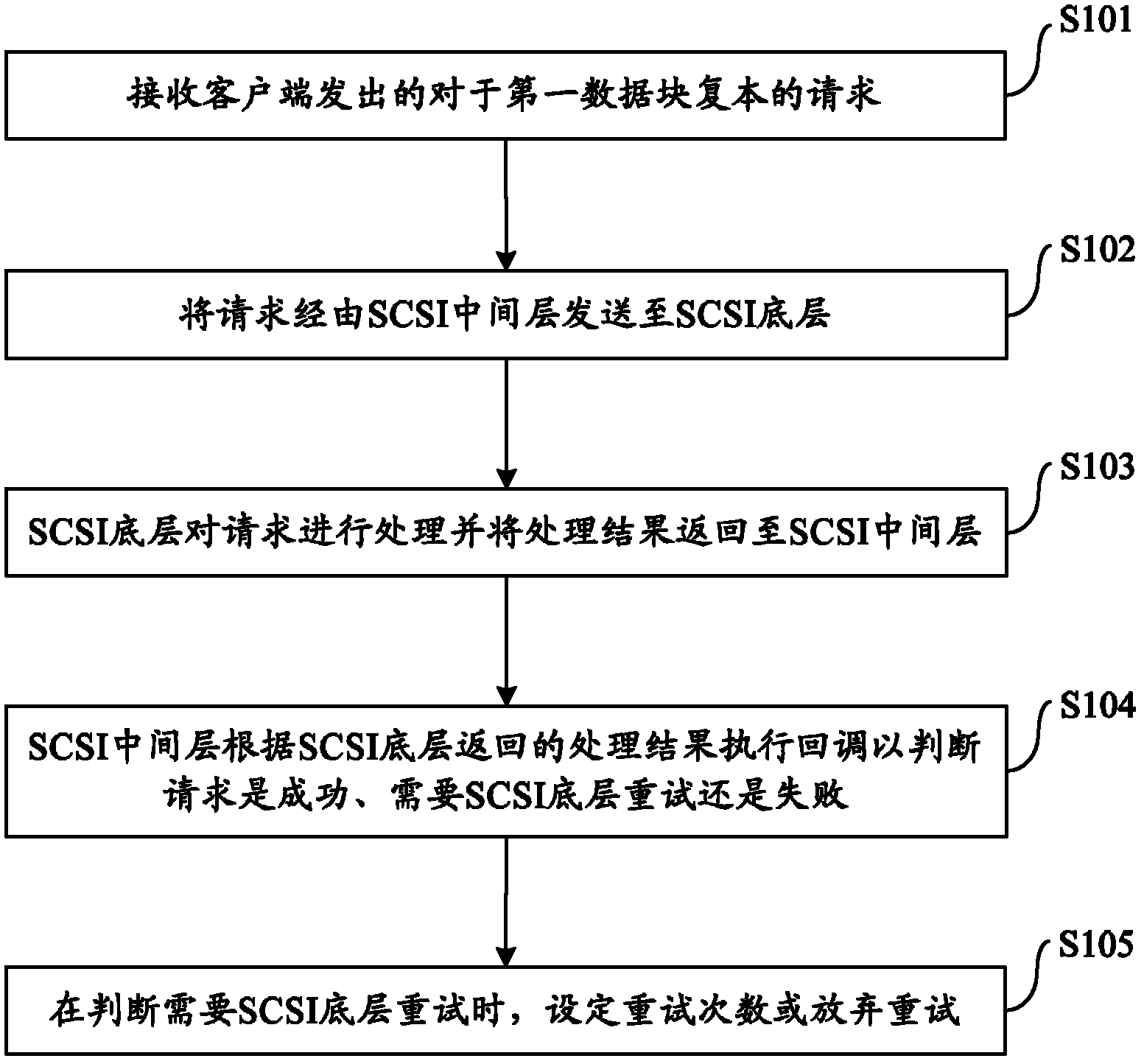

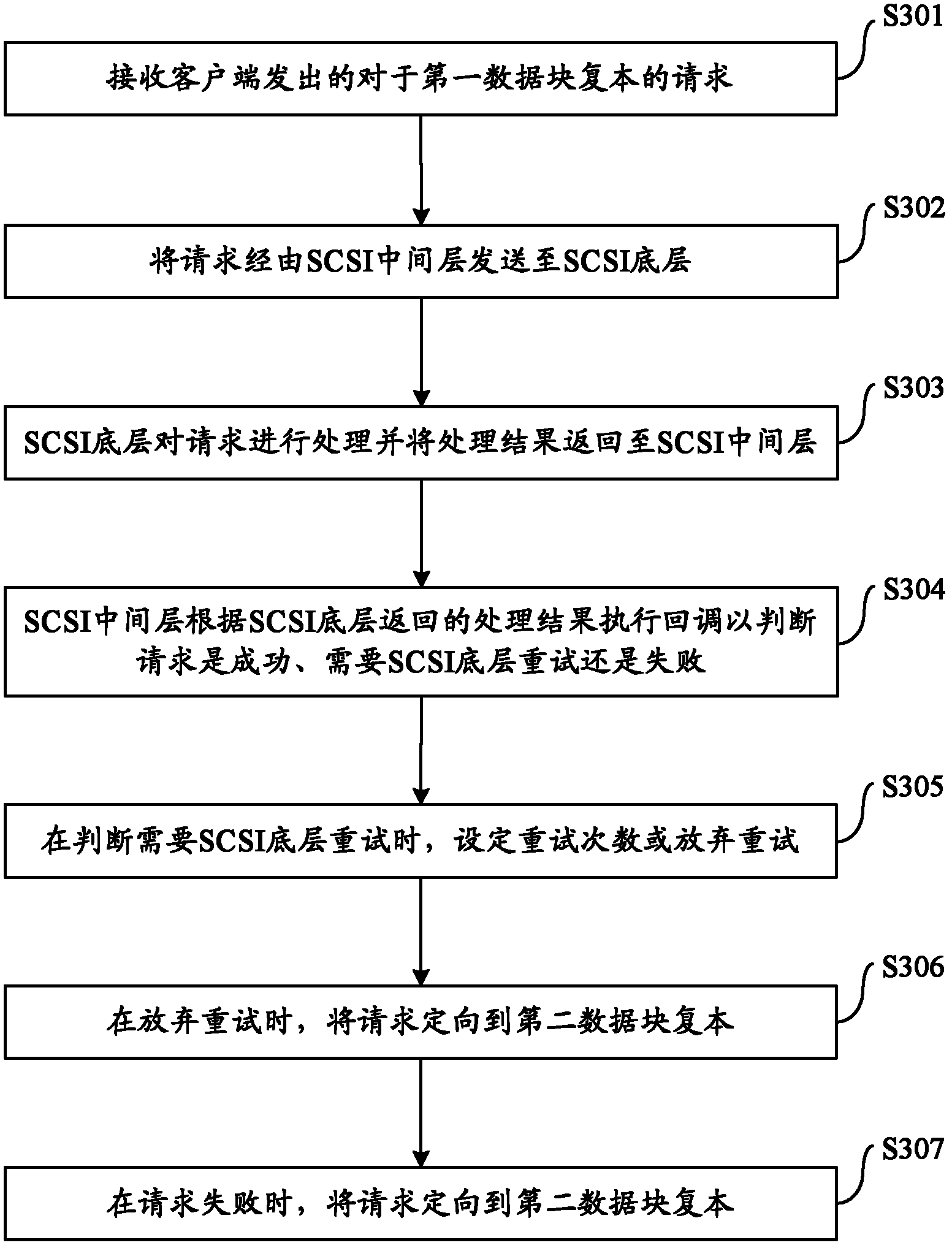

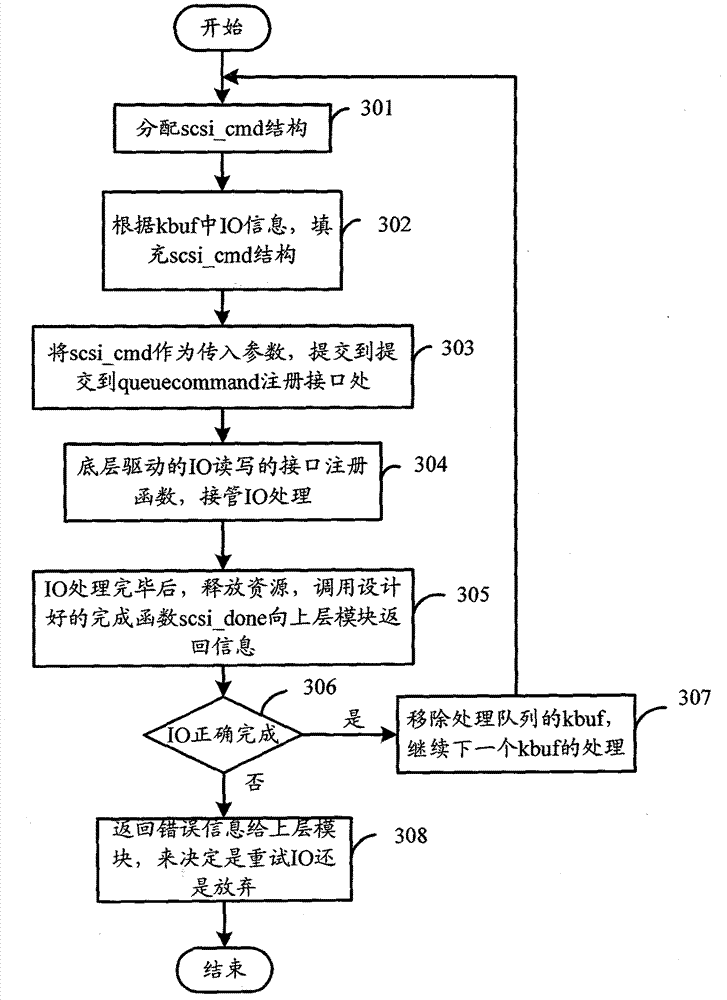

Small computer system interface (SCSI) fault-tolerant optimization method and device based on hadoop distributed file system (HDFS)

The invention provides a small computer system interface (SCSI) fault-tolerant optimization method and device based on the Hadoop distributed file system (HDFS). The method comprises the following steps: a request sent by a client concerning a first data block replica is received; the request is sent to the SCSI bottom layer through the SCSI middle layer; the SCSI bottom layer processes the request and returns the result to the SCSI middle layer; the SCSI middle layer executes a callback on the returned result to judge whether the request succeeded, needs to be retried at the SCSI bottom layer, or failed; and when a retry is judged to be needed, the number of retries is set or the retry is abandoned. In this way, when the result returned by the SCSI bottom layer indicates that the client request needs to be retried, the retry count is set or the retry is given up, so that error handling is optimized without affecting the client's actual request. IO efficiency is thereby improved and the hardware failure rate is lowered.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

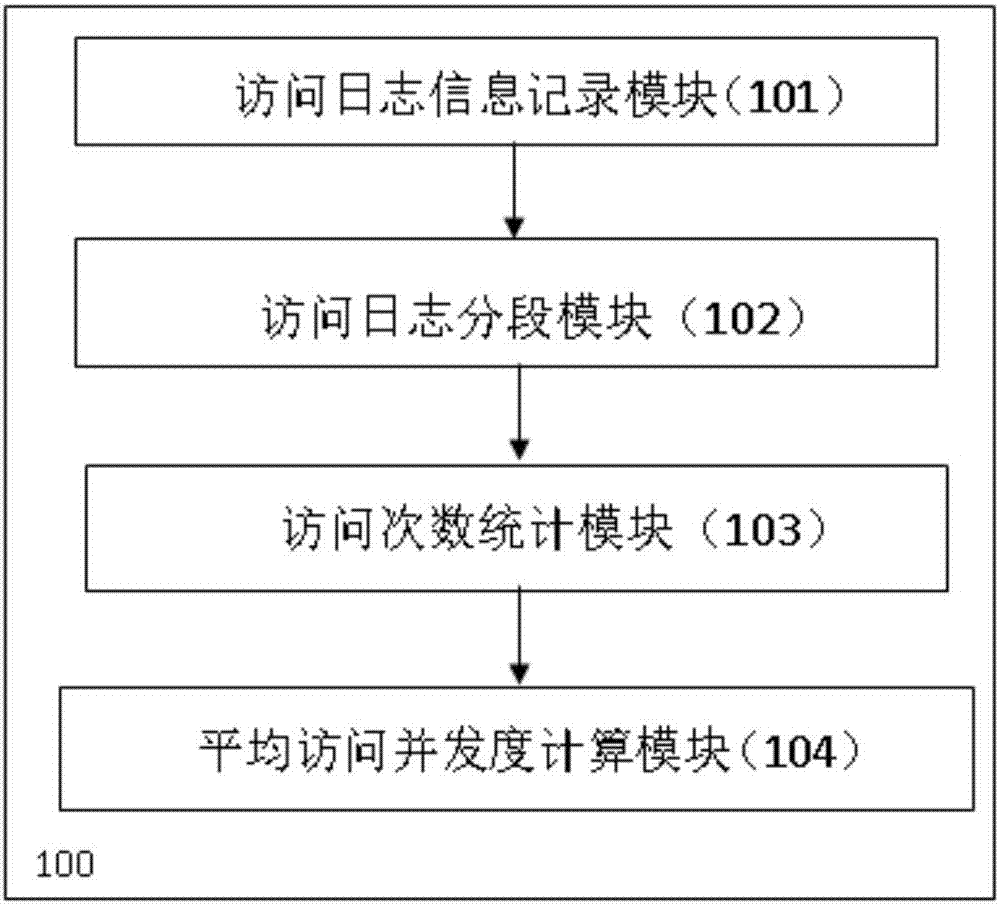

Spatial data storage organization method and system in consideration of load balancing and disc efficiency

Inactive | CN106933511A | Efficient bulk read | Improve I/O efficiency | Input/output to record carriers | Resource allocation | Natural science | Data file

The invention discloses a spatial data storage organization method and system in consideration of load balancing and disc efficiency. The method comprises the steps of counting an average access concurrency degree according to times of spatial data files accessed and requested by a user; distributively storing the spatial data files to a distributed geographical information system server according to the load balancing requirement of a distributed geographical information system and the average access concurrency degree; calculating the average access continuity of the spatial data files according to the average access concurrency degree and an average access spacing distance of the spatial data files; and carrying out consecutive storage organization on the spatial data files in the same server according to the average access continuity of the spatial data files. According to the spatial data storage organization method and the spatial data storage organization system in consideration of load balancing and disc efficiency, effective batch reading of continuous accessed space data is realized and the storage efficiency of the geographical information system is guaranteed while the load balancing requirement is satisfied. The project is completed by funding of Natural Science Foundation of China (Fund No: 41671382, 41271398).

Owner:WUHAN UNIV

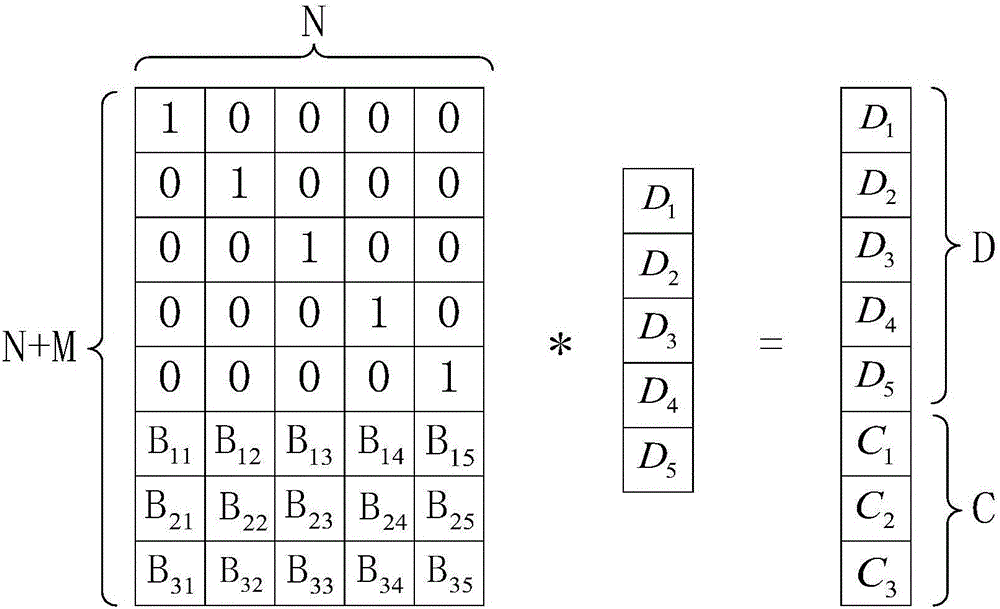

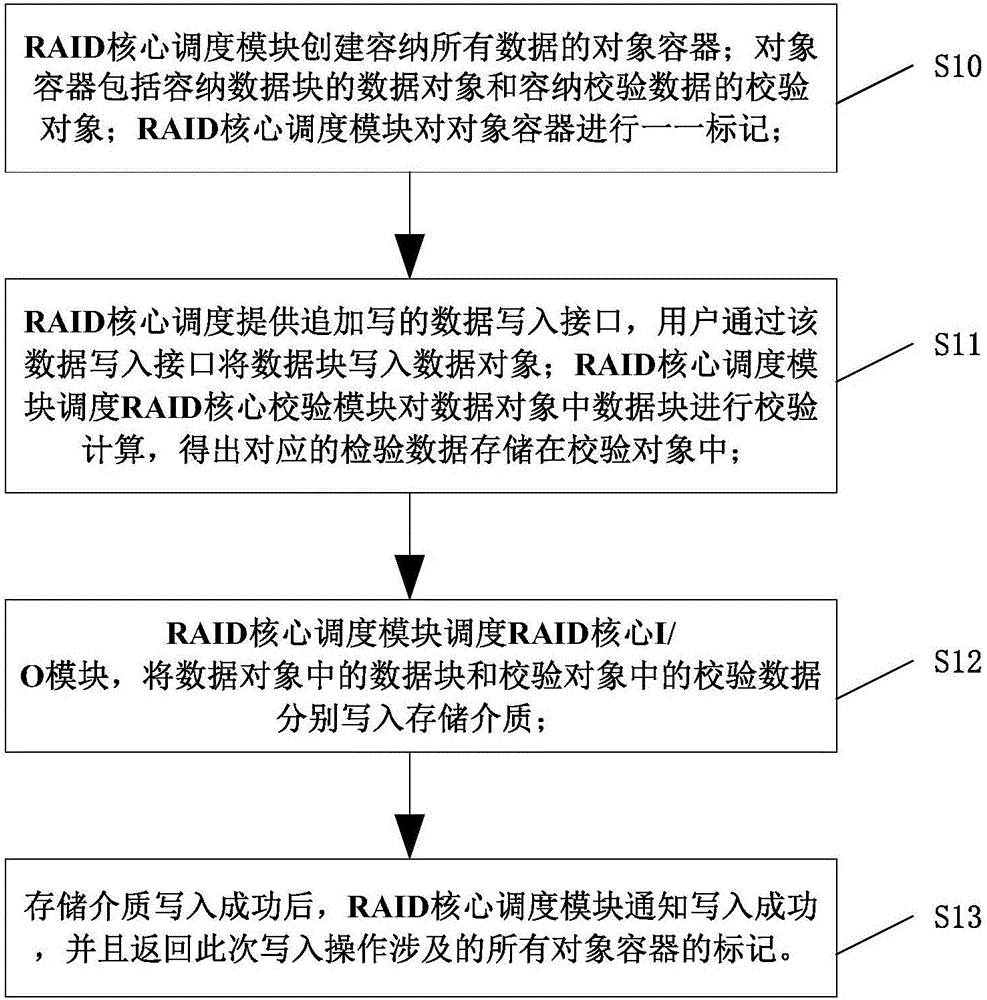

RAID model, data reading and writing and reconstruction method thereof

Active | CN106227463A | Increase redundancy | Collaborate efficiently | Input/output to record carriers | RAID | Computer architecture

The invention discloses a RAID model comprising a RAID core verification module and a RAID core scheduling module. The RAID core verification module adopts an erasure-code verification algorithm using RS codes based on a Vandermonde matrix, which supports generating M verification data from N data blocks. The RAID core scheduling module performs unified scheduling of all data read and write operations of the RAID model: it selects N correct data blocks through a scheduling algorithm, and the corresponding M verification data are calculated by the RAID core verification module. A RAID core I/O module executes the data read and write operations of the RAID, and the RAID core scheduling module creates an object container containing all data. Because the RAID core scheduling module schedules all data read and write operations in a unified way, the user does not need to cache data for length adaptation, the verification number can be customized, the model keeps no state about the data, overall reconstruction is not needed, and read/write efficiency is high.

Owner:SUZHOU KEDA TECH

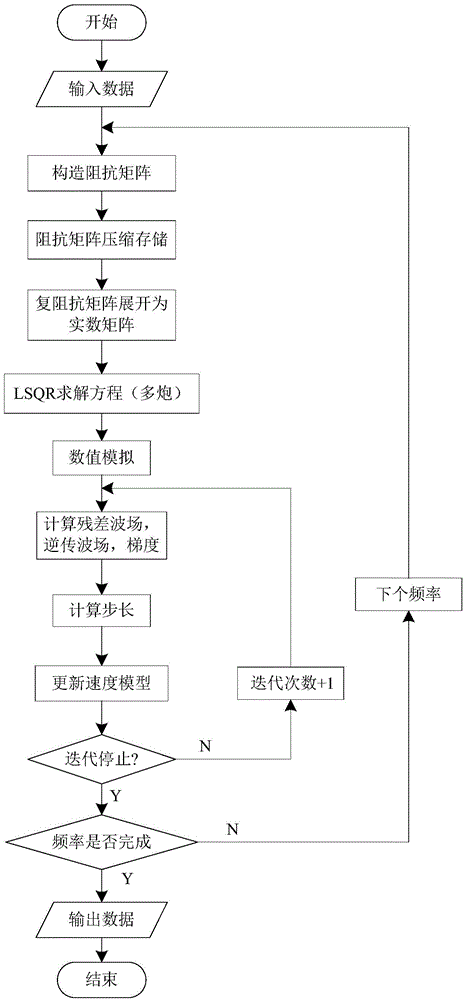

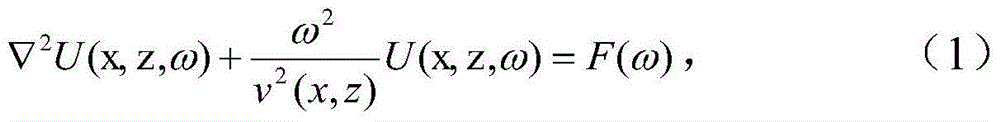

Storage method of LSQR algorithm-based frequency domain waveform inversion

Inactive | CN105589833A | Increase calculation rate | Improve I/O efficiency | Complex mathematical operations | LU decomposition | Base frequency

The invention discloses a storage method for LSQR algorithm-based frequency domain waveform inversion. The method comprises the following steps: 1) establishing a frequency domain acoustic wave equation; 2) converting the impedance matrix to real-number form; 3) compressing and storing the impedance matrix; 4) solving the acoustic wave equation with a GPU-accelerated LSQR algorithm; 5) calculating the related kernel functions on the GPU; 6) calculating a virtual focus, a gradient and a Hessian matrix after compression and storage; 7) calculating an approximate step length; and 8) carrying out the frequency domain waveform inversion. Compared with the traditional approach of solving the system of linear equations by LU decomposition, the disclosed method uses little memory, saves memory overhead, and improves calculation efficiency by thousands of times under the same hardware conditions.

Owner:SHAANXI ZHONGHAOYUAN HYDROPOWER ENG CO LTD

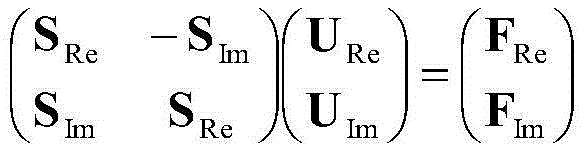

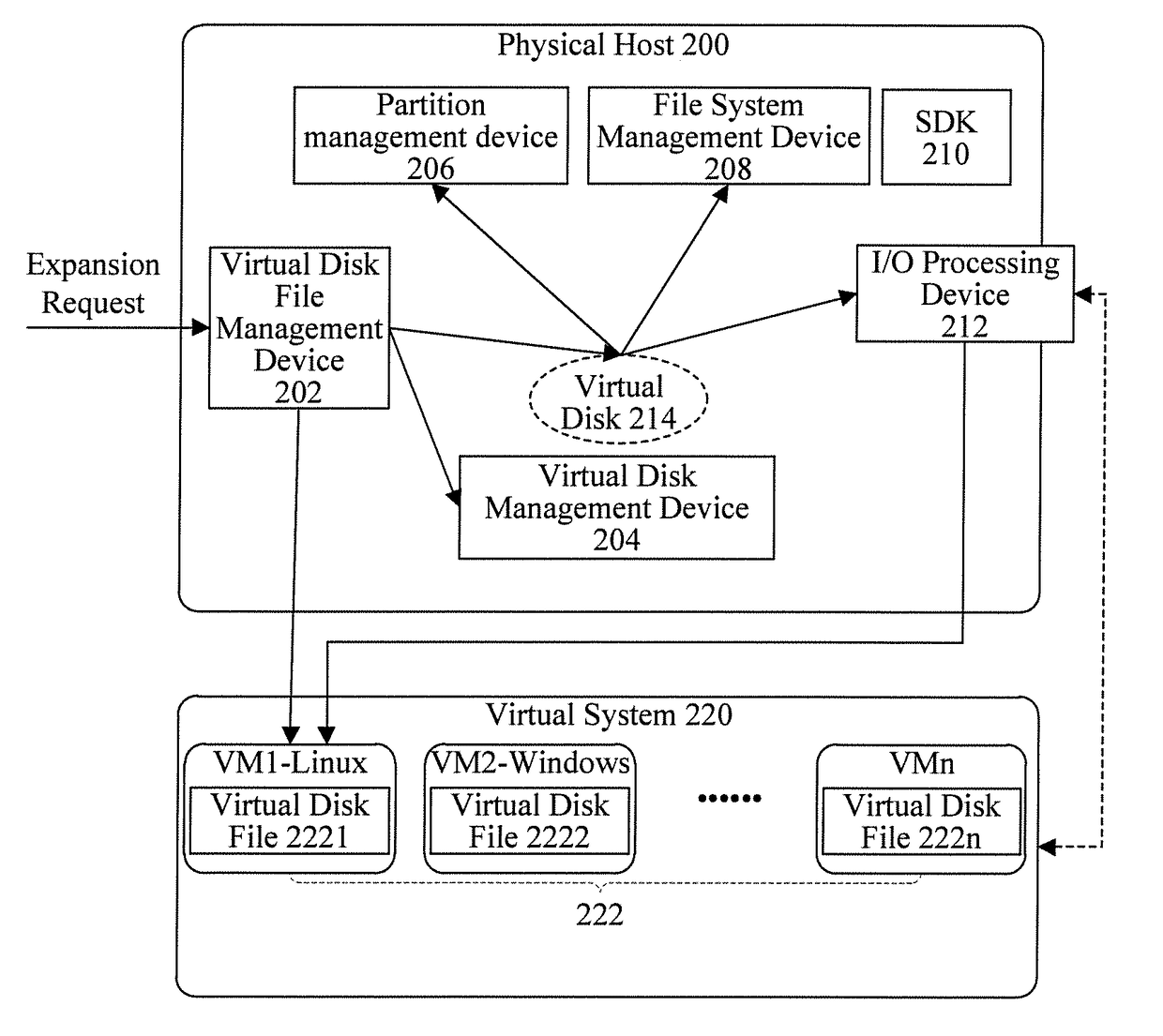

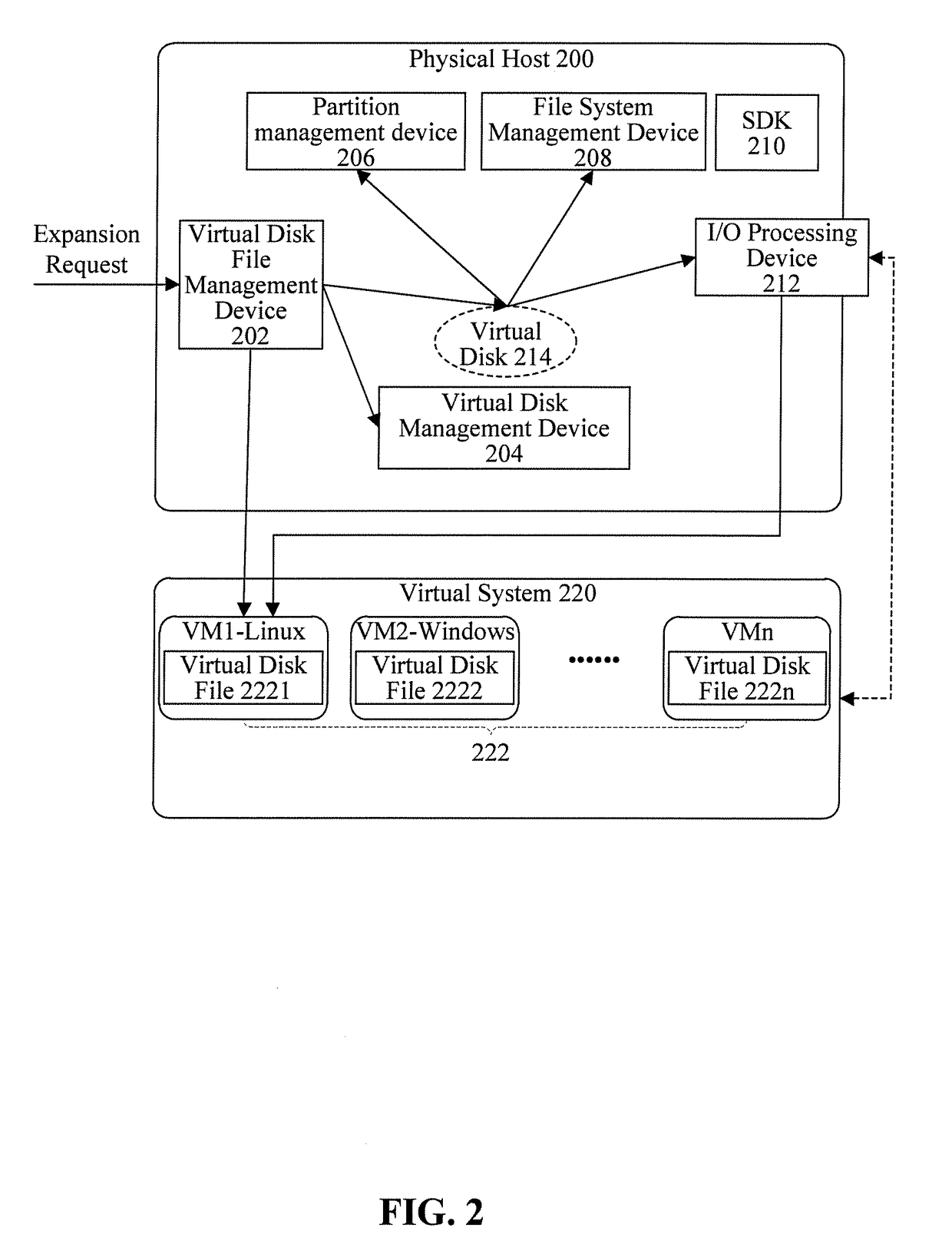

Method and device for expanding capacity of virtual disk

Active | CN107800730A | Reduce consumption | Improve I/O efficiency | Bootstrapping | Transmission | Capacity value | Coupling

The invention discloses a method for expanding the capacity of a virtual disk, which includes the following steps: creating a virtual disk file according to a preset system image and a target disk capacity value contained in a received capacity expansion request; mounting the virtual disk file to a physical host, and generating a corresponding virtual disk; reading the partition information of the virtual disk; deleting a to-be-expanded partition of the virtual disk, and creating a new partition according to the partition capacity value and the expansion value of the to-be-expanded partition; and reading the file system of the virtual disk, and expanding the capacity of the file system to make the file system adapt to the new partition. The method for expanding the capacity of a virtual disk is implemented based on a physical host, shortens the I/O path, and improves the I/O efficiency. Meanwhile, there is no need to start a virtual machine on a physical host in the capacity expansion process of a virtual disk, the capacity is expanded before the startup of the virtual machine, and the technological complexity and the coupling degree are reduced.

Owner:ALIBABA GRP HLDG LTD

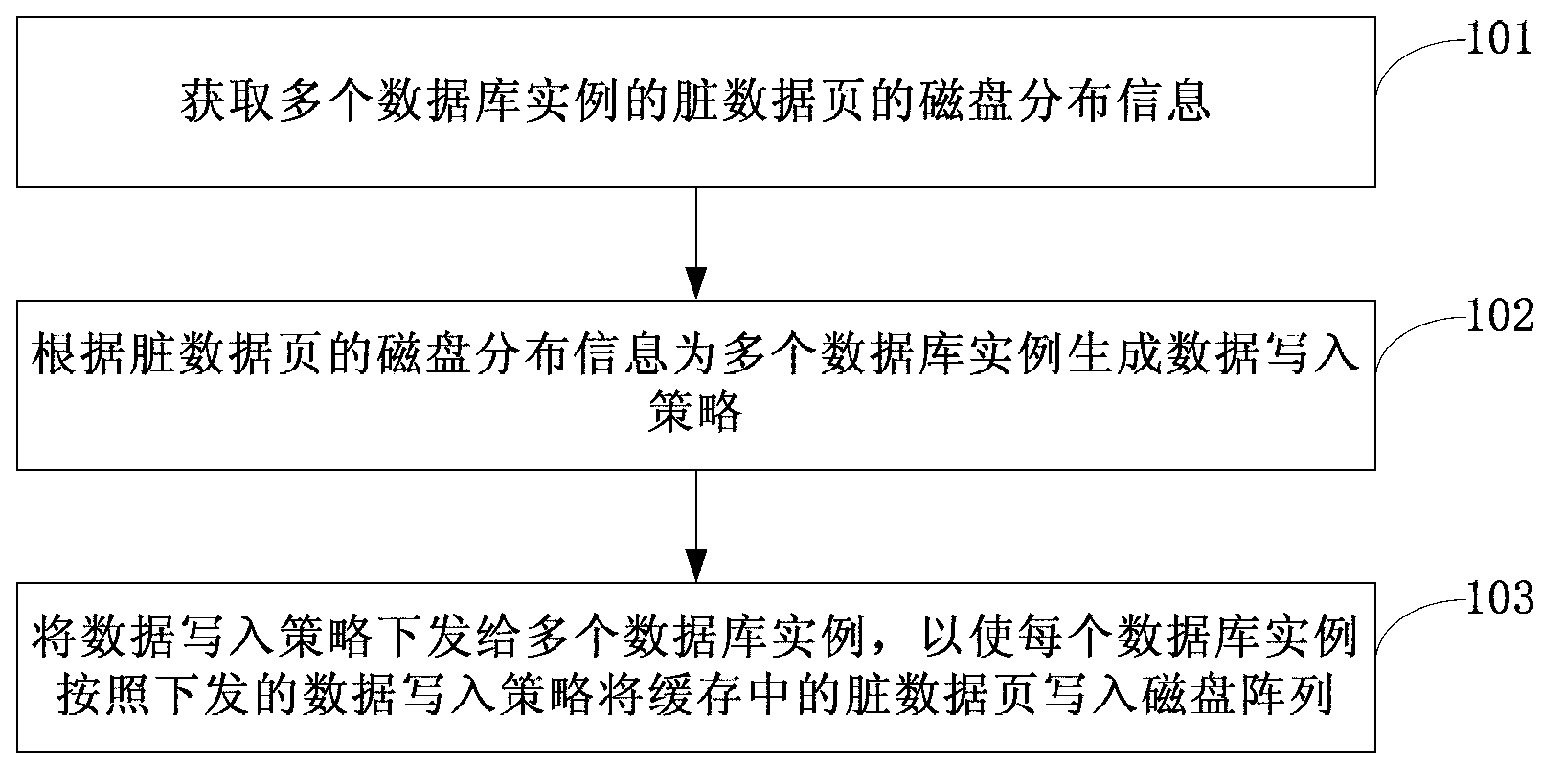

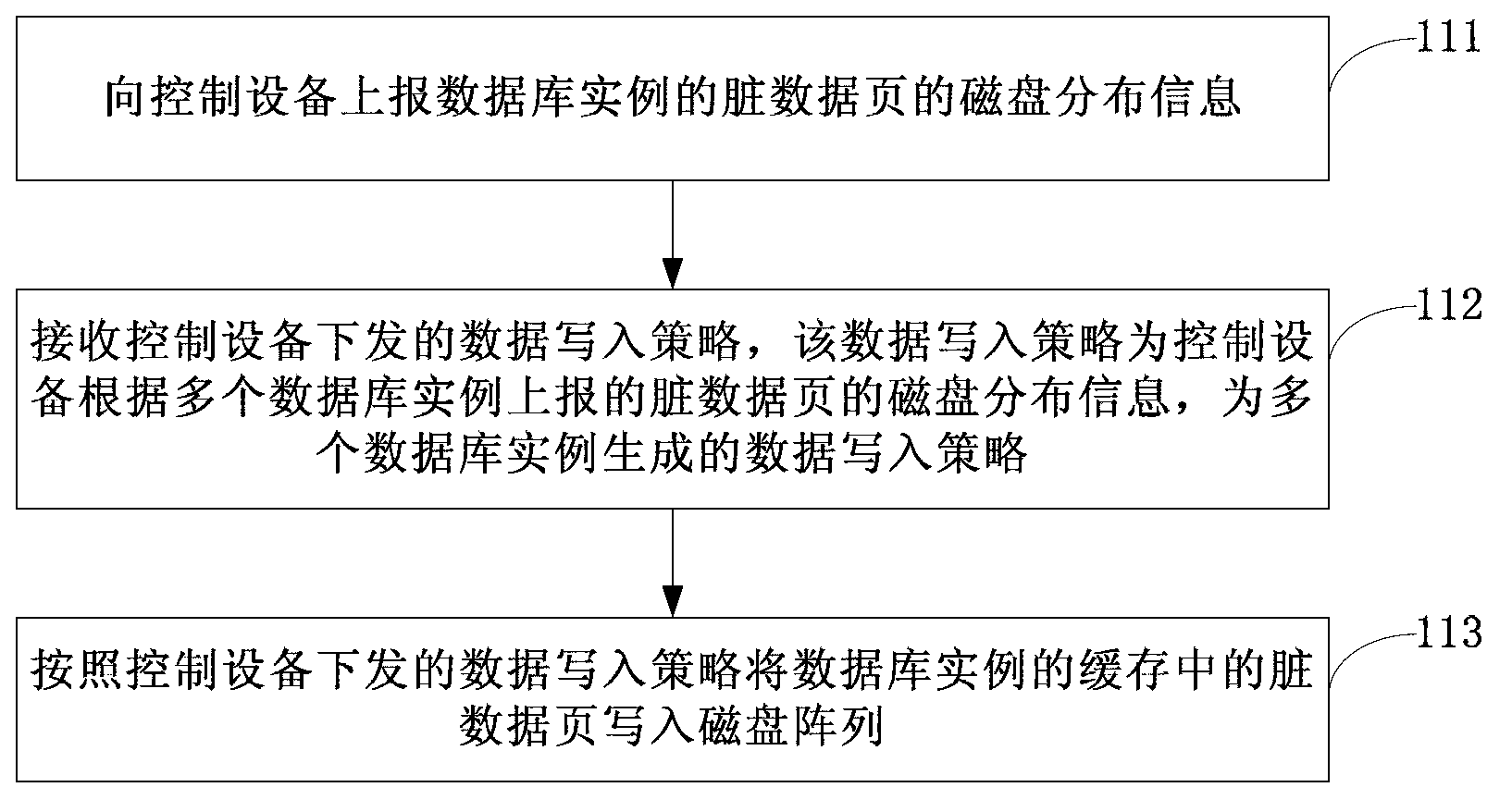

Method, system and equipment for controlling data writing

Owner:HUAWEI TECH CO LTD

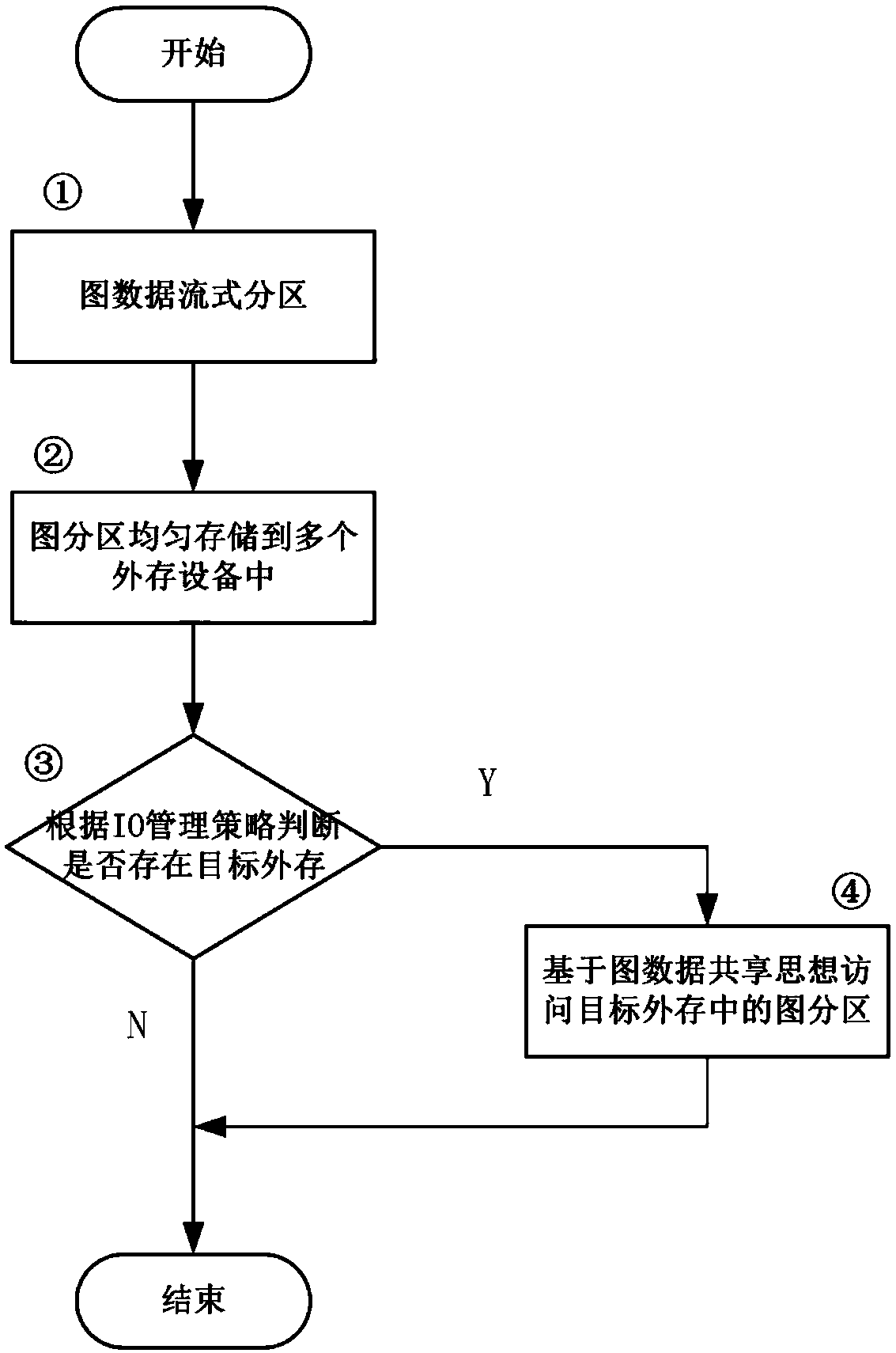

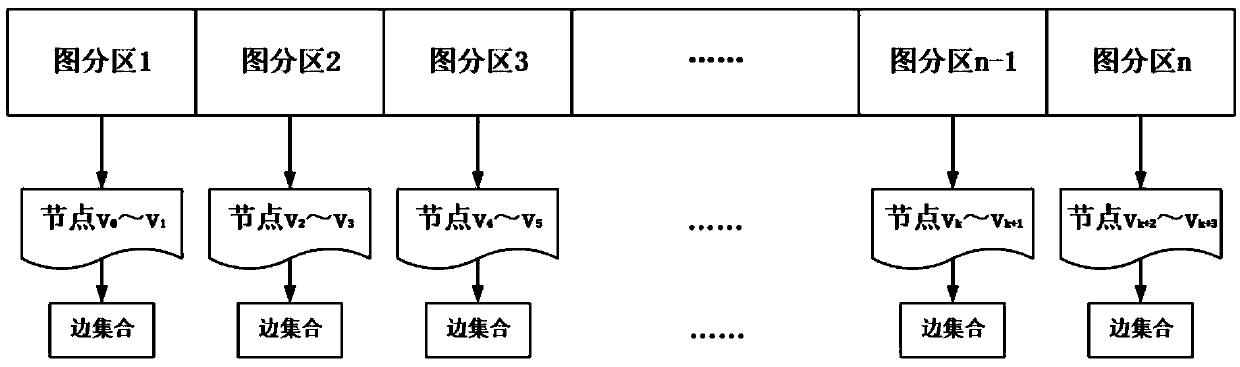

A multi-task external memory schema graph processing method based on I/O scheduling

Active | CN109522102A | Improve performance | Increase profit | Program initiation/switching | External storage | Computer architecture

The invention discloses a multi-task external-memory graph processing method based on I/O scheduling, comprising: partitioning graph data in a streaming manner to obtain graph partitions and placing the graph partitions evenly across multiple external storage devices; selecting a target external storage device from the multiple external storage devices based on I/O scheduling, and taking a graph partition in the target device that has not yet been accessed by the graph processing task as the designated partition; judging from the synchronization field of the designated partition whether the partition has not been mapped into memory; if so, mapping the designated partition from the external storage device into memory and updating its synchronization field; otherwise, accessing the graph partition data directly through the address information of the designated partition already mapped into memory. Through I/O scheduling, the invention selects the external storage device accessed by the fewest tasks, thereby controlling the order in which graph partition data in external storage is accessed and balancing I/O pressure. By using the synchronization field to share graph partition data, repeated loading of the same graph partition is reduced, which lowers total I/O bandwidth and improves I/O efficiency.

Owner:HUAZHONG UNIV OF SCI & TECH
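
Two ideas from the abstract above can be sketched briefly: choosing the storage device with the fewest active tasks, and loading a partition only once so that later tasks reuse it. The names `pick_device` and `PartitionCache` are hypothetical, and the `mapped` dictionary plays the role of the synchronization field only loosely; real memory mapping and inter-process sharing are not modeled.

```python
def pick_device(task_counts, unvisited):
    """Pick the device with the fewest active tasks that still holds
    a partition this task has not accessed.

    task_counts: device -> number of tasks currently accessing it.
    unvisited: device -> list of partition ids not yet accessed by this task.
    """
    candidates = [dev for dev, parts in unvisited.items() if parts]
    if not candidates:
        return None
    return min(candidates, key=lambda dev: task_counts.get(dev, 0))


class PartitionCache:
    """Loads each partition at most once and shares it afterwards."""

    def __init__(self, loader):
        self.loader = loader  # hypothetical callable: partition id -> data
        self.mapped = {}      # stands in for the per-partition sync field
        self.loads = 0

    def get(self, partition_id):
        if partition_id not in self.mapped:
            self.mapped[partition_id] = self.loader(partition_id)
            self.loads += 1
        return self.mapped[partition_id]
```

The load counter makes the saving visible: however many tasks ask for the same partition, it is read from external storage only once.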

Crawler system IO optimization method and device

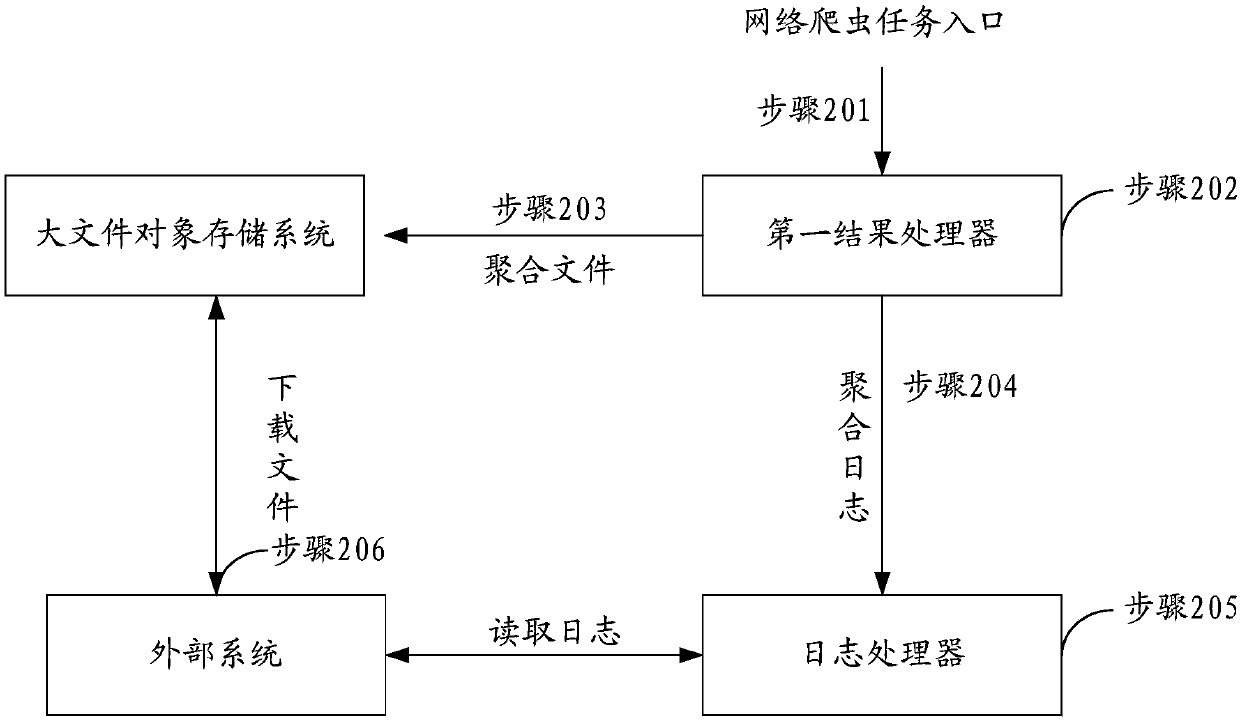

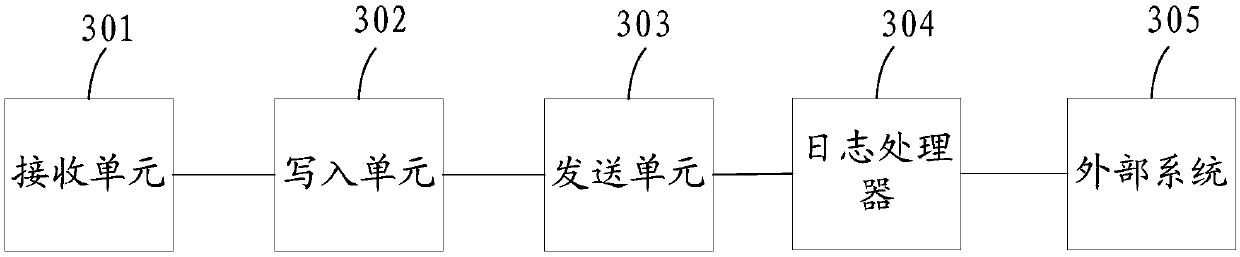

Inactive | CN107943858A | High speed | Reduce IO frequency | Web data indexing | Special data processing applications | Data mining | Object storage

The invention discloses a crawler system IO optimization method and device, relates to the field of software engineering, and aims to solve the problem that existing result storage, carried out with crawler tasks as the unit, has low IO efficiency and affects retrieval efficiency. The method comprises the following steps: caching received crawling results in a first result processor and, when the number of cached crawling results is determined to exceed an aggregation threshold, writing the crawling results into an aggregation file by end-to-end splicing and recording the position offset of each crawling result; generating, according to the content of the aggregation file, an aggregation path for storage in a big-file object storage system and sending the aggregation file to the aggregation path; and generating from the aggregation file an aggregation log comprising each crawling result, the position offset of each crawling result, the aggregation path and the number of each crawler, and sending the aggregation log to a log processor.

Owner:广州探迹科技有限公司
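
The aggregation step in the abstract above, concatenating many small crawl results into one file and remembering each result's offset, can be sketched as follows. The names `aggregate`, `read_result` and `ResultBuffer` are hypothetical; the object storage upload, the aggregation path and the log processor are not modeled.

```python
import io


def aggregate(results):
    """Concatenate (crawl_id, payload) pairs end to end.

    Returns (blob, index) where index maps crawl_id -> (offset, length).
    """
    buf = io.BytesIO()
    index = {}
    for crawl_id, payload in results:
        index[crawl_id] = (buf.tell(), len(payload))
        buf.write(payload)
    return buf.getvalue(), index


def read_result(blob, index, crawl_id):
    """Recover one crawl result from the aggregated blob."""
    offset, length = index[crawl_id]
    return blob[offset:offset + length]


class ResultBuffer:
    """Caches results and flushes once the count exceeds the threshold."""

    def __init__(self, threshold):
        self.threshold = threshold
        self.pending = []

    def add(self, crawl_id, payload):
        self.pending.append((crawl_id, payload))
        if len(self.pending) > self.threshold:
            batch, self.pending = self.pending, []
            return aggregate(batch)
        return None
```

Writing one large object instead of many small ones reduces the number of IO operations, and the offset index keeps each individual result retrievable.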

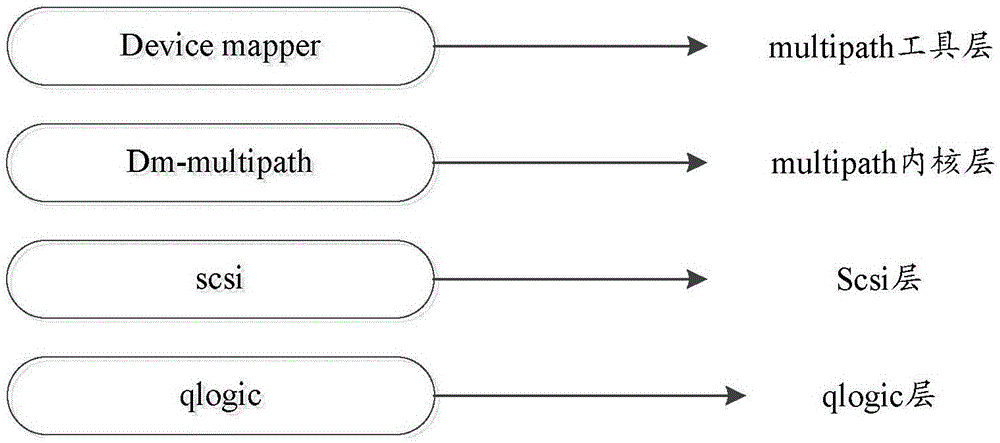

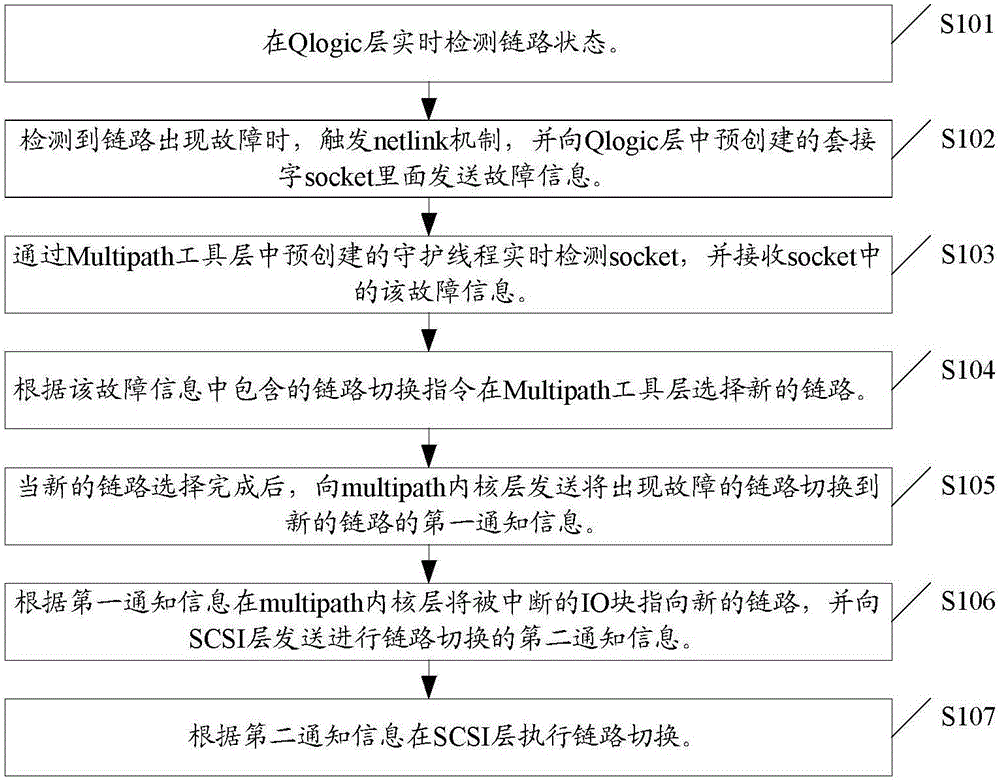

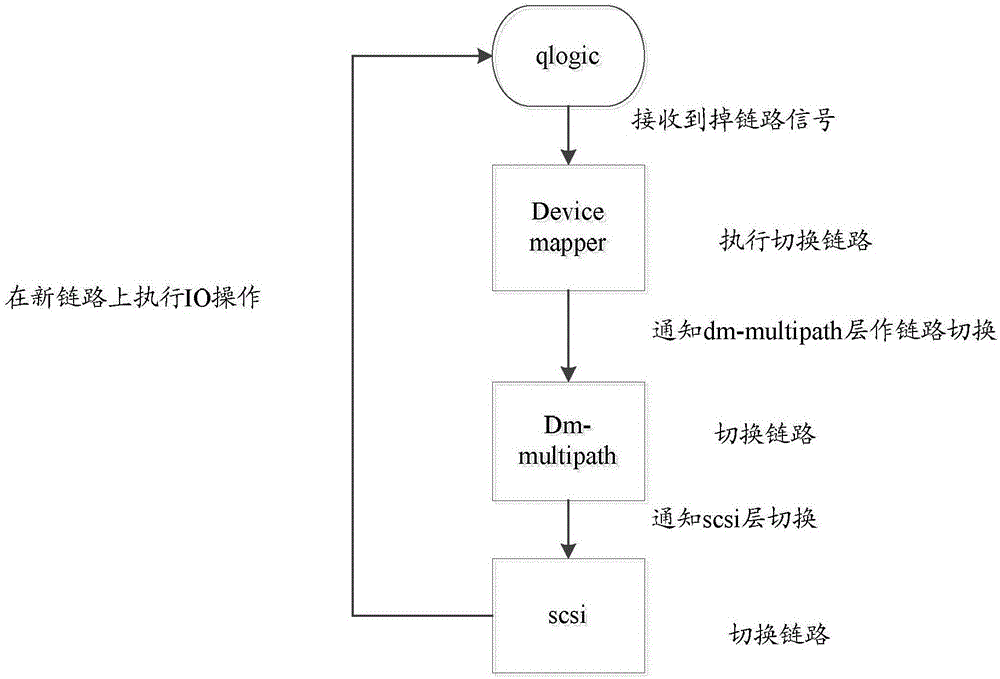

Link switching method and system

The invention relates to a link switching method and system. The switching method comprises: detecting the link state in real time at the Qlogic layer; when a fault is detected on the link, triggering the netlink mechanism and sending fault information to a socket established in advance in the Qlogic layer; monitoring the socket in real time with a daemon thread established in advance and receiving the fault information; selecting a new link at the multipath tool layer according to a link switching instruction contained in the fault information; after the new link is selected, sending a first notification message for switching from the faulty link to the new link; on the basis of the first notification message, pointing the interrupted IO block to the new link at the multipath kernel layer and sending a second notification message for link switching to the SCSI layer; and executing the link switching at the SCSI layer according to the second notification message. With this scheme, IO efficiency can be effectively improved.

Owner:INSPUR BEIJING ELECTRONICS INFORMATION IND

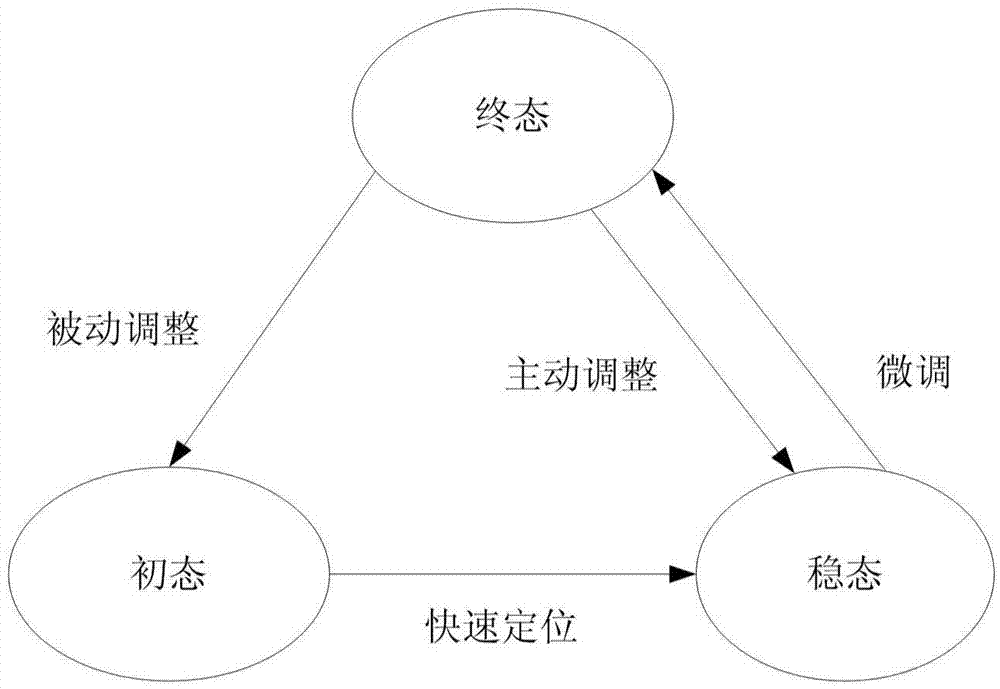

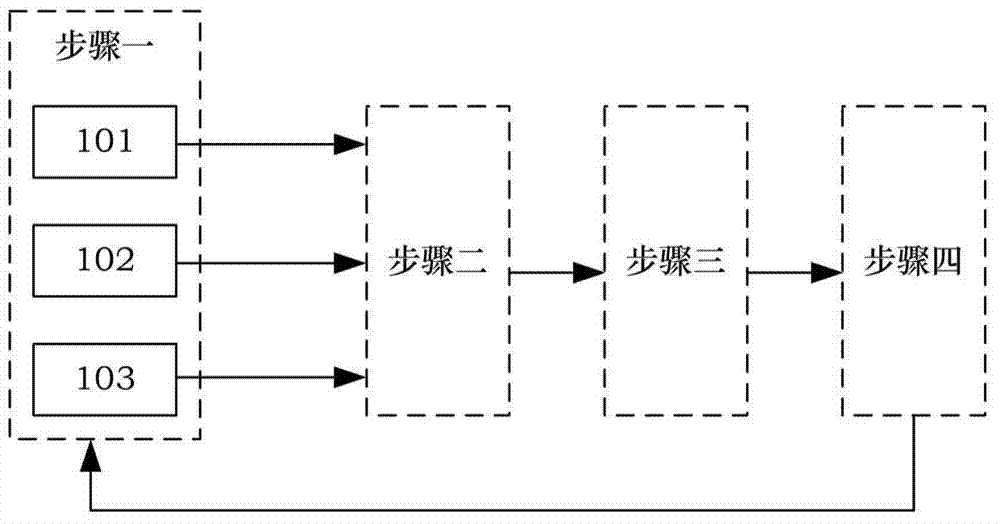

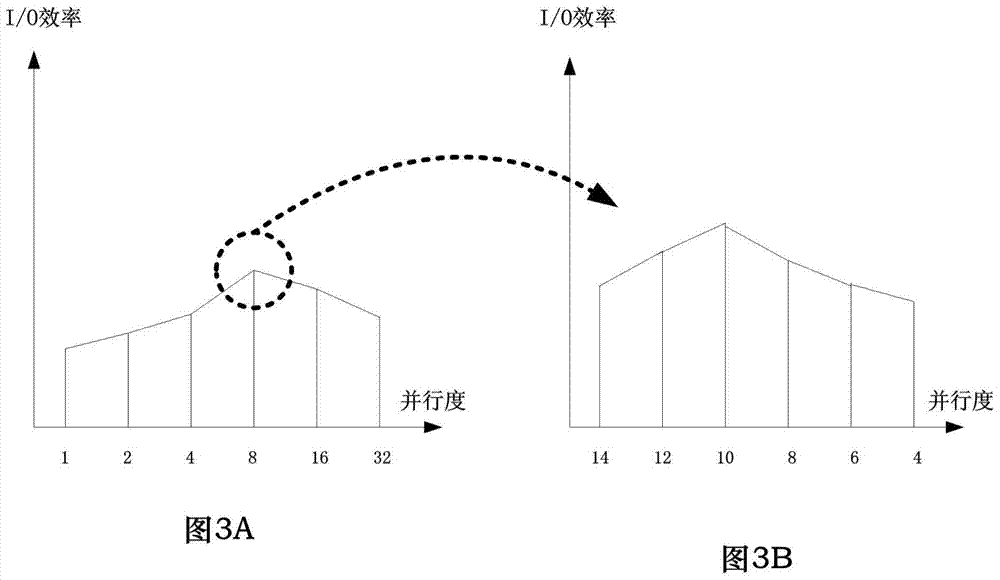

Method for controlling parallelism degree of program capable of sensing bandwidth of storage device

Inactive | CN103677757A | Improve acceleration performance | Improve throughput | Multiprogramming arrangements | Concurrent instruction execution | Degree of parallelism | Self adaptive

The invention discloses a method for controlling the parallelism degree of a program that senses the bandwidth of the storage device. The method dynamically and adaptively adjusts the parallelism degree of the application program, namely its number of processes or threads, according to the overall performance and real-time load of the storage device of the platform on which the application runs, so that the application obtains and keeps the parallelism degree with the best I/O efficiency. The I/O efficiency of the application program is monitored and recorded in real time and used as feedback to control and adjust the parallelism degree. The parallelism degree is probed step by step and increased gradually until it reaches the inflection point of the actual I/O efficiency, after which fine adjustment is carried out to obtain the optimal parallelism degree for the particular application platform. Further, according to the real-time load of the platform, periodic, dynamic and adaptive operation combining active and passive adjustment is carried out to keep the parallelism degree of the application program optimal.

Owner:凯习(北京)信息科技有限公司
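The probe-then-fine-tune loop described in the parallelism abstract above might look roughly like the following. This is a minimal sketch, not the patented algorithm: `tune_parallelism`, its parameters, and the `synthetic_throughput` device model are assumptions made for illustration, and the periodic re-tuning under changing load is left out.

```python
def synthetic_throughput(n, saturation=6):
    """Made-up device model: linear gain up to saturation, then contention loss."""
    if n <= saturation:
        return 100 * n
    return 100 * saturation - 50 * (n - saturation)


def tune_parallelism(measure, start=1, step=2, max_workers=64):
    """Return the worker count with the best measured I/O throughput.

    measure(n) is a caller-supplied function that runs the workload with
    n workers and returns the observed throughput (the feedback signal).
    """
    best_n, best_tp = start, measure(start)
    n = start
    # Coarse phase: grow step by step until throughput stops improving.
    while n + step <= max_workers:
        candidate = n + step
        tp = measure(candidate)
        if tp <= best_tp:
            break              # we have passed the inflection point
        best_n, best_tp = candidate, tp
        n = candidate
    # Fine phase: check the neighbours that the coarse step skipped over.
    center = best_n
    lo = max(1, center - step + 1)
    hi = min(max_workers, center + step - 1)
    for candidate in range(lo, hi + 1):
        if candidate == center:
            continue
        tp = measure(candidate)
        if tp > best_tp:
            best_n, best_tp = candidate, tp
    return best_n
```

With the synthetic model, whose throughput peaks at six workers, `tune_parallelism(synthetic_throughput)` settles on 6. In a real setting `measure` would run a short slice of the actual workload, and the whole routine would be rerun periodically as the device load changes.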

Virtual disk expansion method and apparatus

Inactive | US20180059978A1 | Reduced feasibility | High complexity | Input/output to record carriers | Bootstrapping | File system | Coupling

A virtual disk expansion method is disclosed in the present application, comprising: creating a virtual disk file according to system images and a target disk volume value contained in a received expansion request; mounting the virtual disk file to a physical host and generating a virtual disk; reading partition information of the virtual disk; deleting the partition to be expanded and creating a new partition according to the volume value of the partition to be expanded and an expansion value; and reading the file system of the virtual disk and expanding it to fit the new partition. Because the method runs on the physical host, it shortens the I/O path and improves I/O efficiency. In addition, the expansion is performed before the virtual machine starts, so no virtual machine needs to be started on the physical host during expansion, which reduces technical complexity and coupling.

Owner:ALIBABA GRP HLDG LTD
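The "delete the partition and create a new, larger one" step of the virtual disk expansion abstract above can be sketched as plain partition-table arithmetic. The `Partition` type and `expand_partition` function are hypothetical and operate on an in-memory table only. Mounting the disk file and resizing the file system are not modelled here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Partition:
    number: int
    start: int   # first sector
    size: int    # length in sectors


def expand_partition(table, number, extra_sectors, disk_sectors):
    """Return a new table in which partition `number` is recreated at the
    same start sector with its size increased by `extra_sectors`."""
    target = next(p for p in table if p.number == number)
    new_size = target.size + extra_sectors
    new_end = target.start + new_size
    if new_end > disk_sectors:
        raise ValueError("expanded partition exceeds the virtual disk")
    for p in table:
        overlaps = p.start < new_end and target.start < p.start + p.size
        if p.number != number and overlaps:
            raise ValueError("expanded partition would overlap another one")
    # "Delete" the old entry, then create the replacement at the same start.
    remaining = [p for p in table if p.number != number]
    remaining.append(Partition(number, target.start, new_size))
    return sorted(remaining, key=lambda p: p.start)
```

Keeping the start sector unchanged is what lets the existing file system survive the delete-and-recreate step. Only after the partition entry has grown can the file system be extended into the new space.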

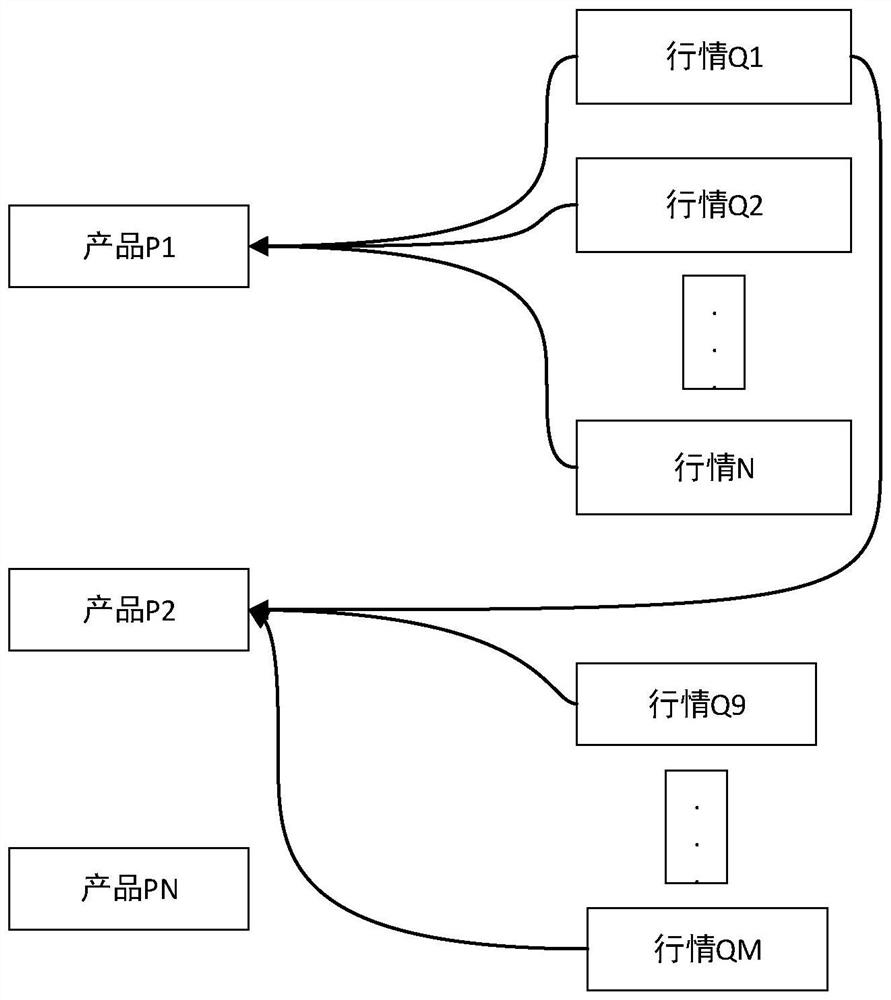

Data updating system and method based on resource mutual exclusion scheduling model

Pending | CN112783633A | Easy to use | Reduce tension | Database updating | Program initiation/switching | Data class | Distributed computing

The invention provides a data updating system and method based on a resource mutual exclusion scheduling model, applicable to the fields of data processing and finance. The system comprises three modules. A resource cache module obtains market information push data and stores each piece of market information data into a preset cache queue according to the receiving time of the push data and the data category of that piece. A coordination thread module obtains the resource type of each product and constructs characteristic data for each product according to the resource type; it then fetches market data from the cache queue, constructs a product set according to the resource types and characteristic data of the market data, performs mutual exclusion scheduling according to the resource types of the product set, distributes the market information data to different execution queues, and calculates the resource update data of each product through the working threads corresponding to the execution queues. A synthesis calculation module obtains the resource update data calculated by each working thread and uses it to update the corresponding resources in each product.

Owner:INDUSTRIAL AND COMMERCIAL BANK OF CHINA
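One way to read the mutual exclusion scheduling in the data updating abstract above is that all updates touching the same resource type are routed to the same execution queue, so a single worker handles them serially and no lock on the resource is required. The sketch below illustrates that reading only. The `run_updates` function and the `(resource_type, product, delta)` record shape are assumptions, not taken from the patent.

```python
import queue
import threading
from collections import defaultdict


def run_updates(market_data, num_workers=2):
    """Route (resource_type, product, delta) records to per-worker queues,
    compute partial results in parallel, then merge them."""
    exec_queues = [queue.Queue() for _ in range(num_workers)]
    assignment = {}            # resource_type -> execution queue index
    for item in market_data:
        rtype = item[0]
        # All items of one resource type share a queue, so they are never
        # processed concurrently (the mutual exclusion property).
        idx = assignment.setdefault(rtype, len(assignment) % num_workers)
        exec_queues[idx].put(item)

    results = [defaultdict(int) for _ in range(num_workers)]

    def worker(i):
        while True:
            try:
                rtype, product, delta = exec_queues[i].get_nowait()
            except queue.Empty:
                return
            results[i][(product, rtype)] += delta

    threads = [threading.Thread(target=worker, args=(i,))
               for i in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()

    # Synthesis step: keys are disjoint across workers, so a plain merge works.
    merged = {}
    for partial in results:
        merged.update(partial)
    return merged
```

For example, feeding in two `"fx"` updates for product `P1` and one `"rate"` update yields a single accumulated `("P1", "fx")` entry and a separate `("P1", "rate")` entry, each computed by exactly one worker.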

Method for storage interface bypassing Bio layer to access disk drive

Active | CN102073605B | Improve efficiency | Reduce processing time | Electric digital data processing | Tread | Input/output

The invention provides a method for a storage interface to access a disk drive while bypassing the Bio layer, comprising the following steps: setting up a device module in which each disk device corresponds to a disk device object; packing each IO (input/output) request initiated by an application program into an IO request object; setting up a two-stage queue for each disk device, consisting of a waiting queue and a processing queue, where the waiting queue receives IO request objects in order and, whenever the processing queue has a vacancy, IO request objects are moved from the waiting queue to the processing queue; and creating a scanning thread that scans the waiting queues of all disk device objects. If a waiting queue holds IO request objects, the IO request objects are extracted from the processing queue and then submitted to the bottom layer, and after the IO processing is finished they are removed from the processing queue.

Owner:深圳市安云信息科技有限公司
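The two-stage queue in the Bio-bypass abstract above can be modelled in a few lines. This is a sketch under assumptions: the `DiskDevice` class, the `max_in_flight` limit, and the `submit_to_driver` callback are hypothetical stand-ins, and a single `scan_once` call represents one pass of the scanning thread over one device.

```python
from collections import deque


class DiskDevice:
    """Per-disk object holding a waiting queue and a bounded processing queue."""

    def __init__(self, name, max_in_flight=2):
        self.name = name
        self.waiting = deque()       # requests accepted in arrival order
        self.processing = deque()    # requests handed to the bottom layer
        self.max_in_flight = max_in_flight

    def submit(self, request):
        """Called by the application side: enqueue an IO request object."""
        self.waiting.append(request)

    def scan_once(self, submit_to_driver):
        """One scanning pass: promote requests while there is a vacancy."""
        dispatched = []
        while self.waiting and len(self.processing) < self.max_in_flight:
            req = self.waiting.popleft()
            self.processing.append(req)
            submit_to_driver(self.name, req)
            dispatched.append(req)
        return dispatched

    def complete(self, request):
        """Called when the IO finishes: free a slot in the processing queue."""
        self.processing.remove(request)
```

With `max_in_flight=2` and three submitted requests, the first scan dispatches two, the second dispatches none, and the third request goes out only after one of the first two completes. Bounding the processing queue is what keeps the number of outstanding requests per disk under control.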

© 2025 PatSnap. All rights reserved.