180 results about "In-Memory Processing" patented technology

In computer science, in-memory processing is an emerging technology for processing of data stored in an in-memory database. Older systems have been based on disk storage and relational databases using SQL query language, but these are increasingly regarded as inadequate to meet business intelligence (BI) needs. Because stored data is accessed much more quickly when it is placed in random-access memory (RAM) or flash memory, in-memory processing allows data to be analysed in real time, enabling faster reporting and decision-making in business.

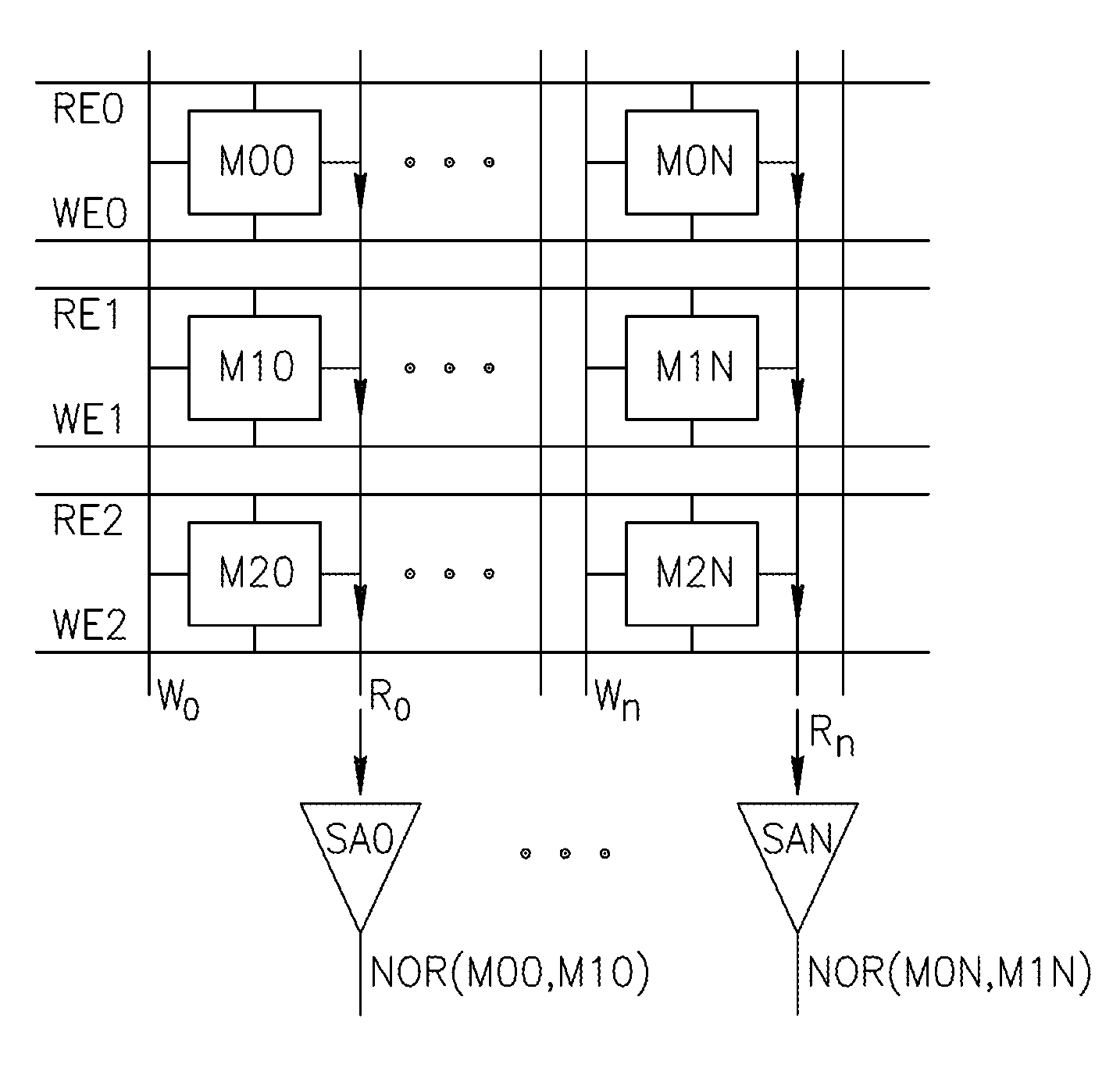

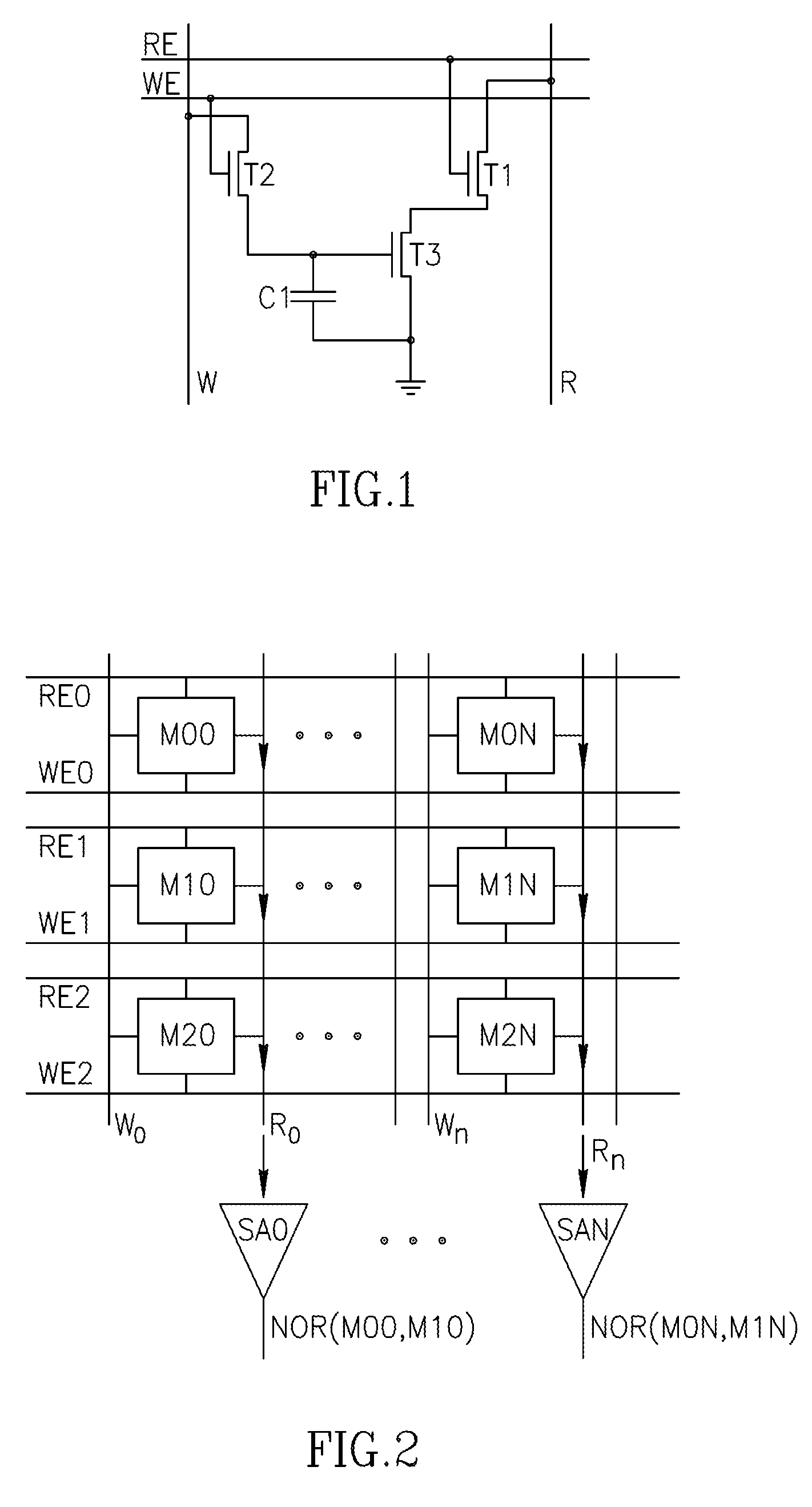

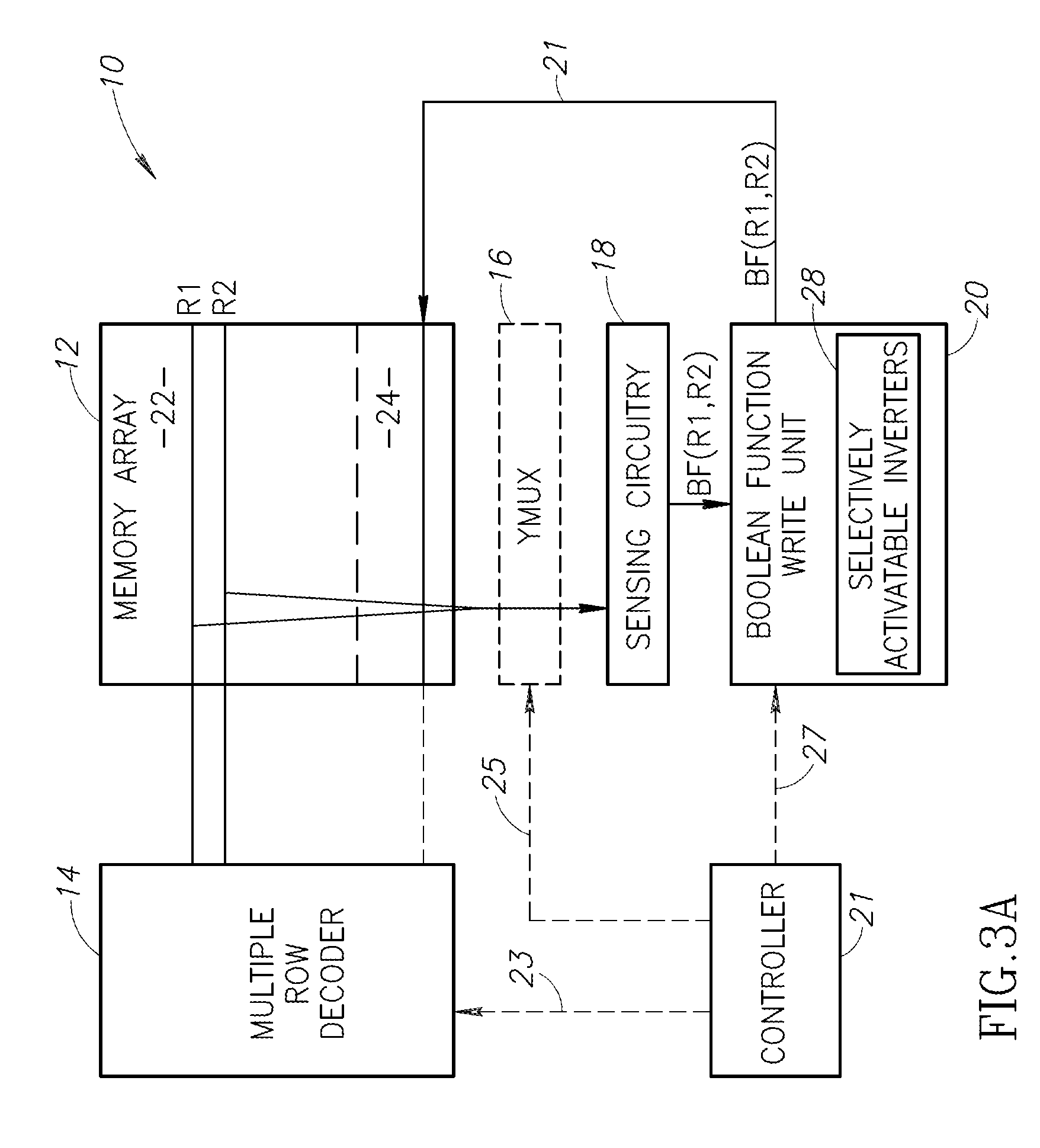

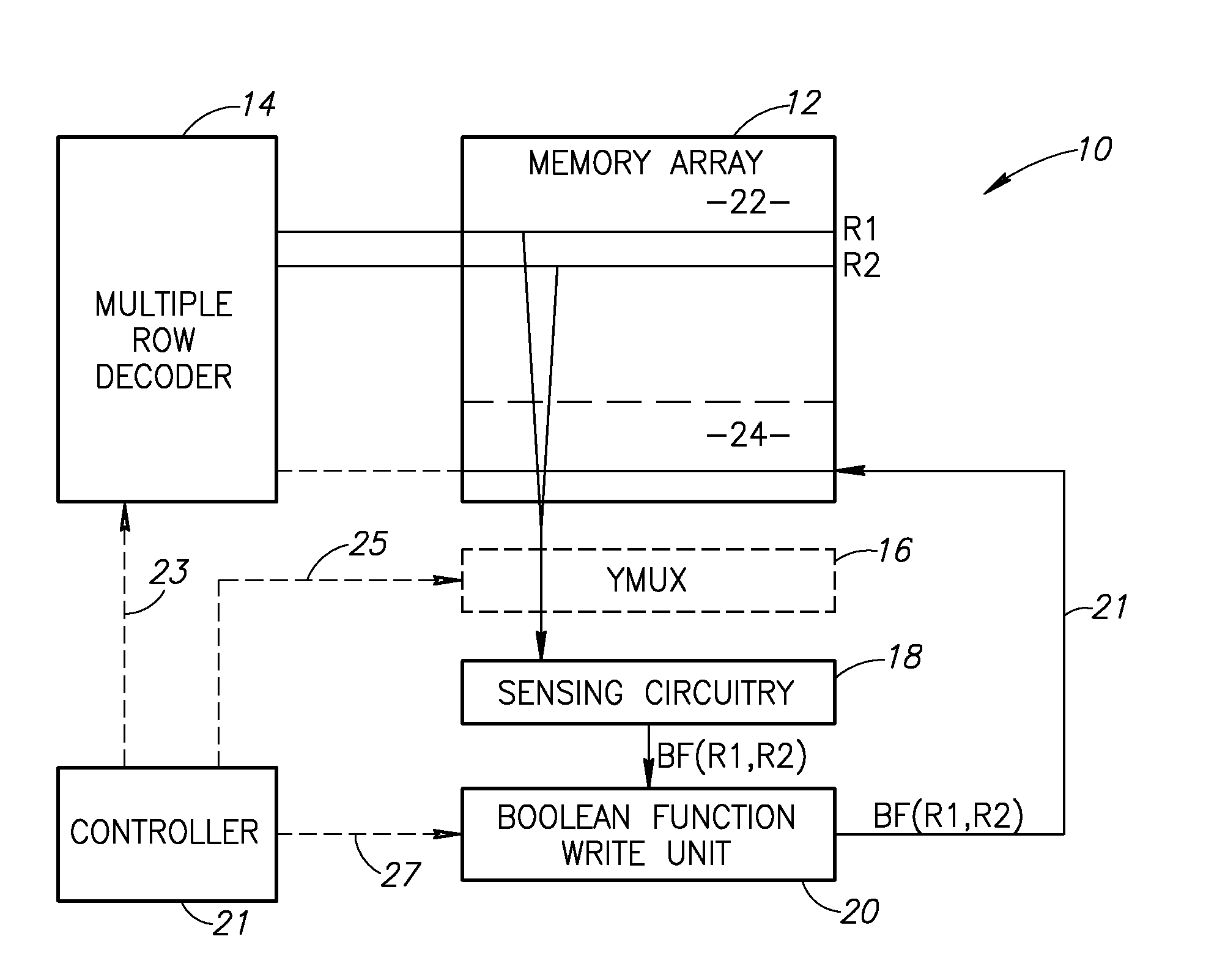

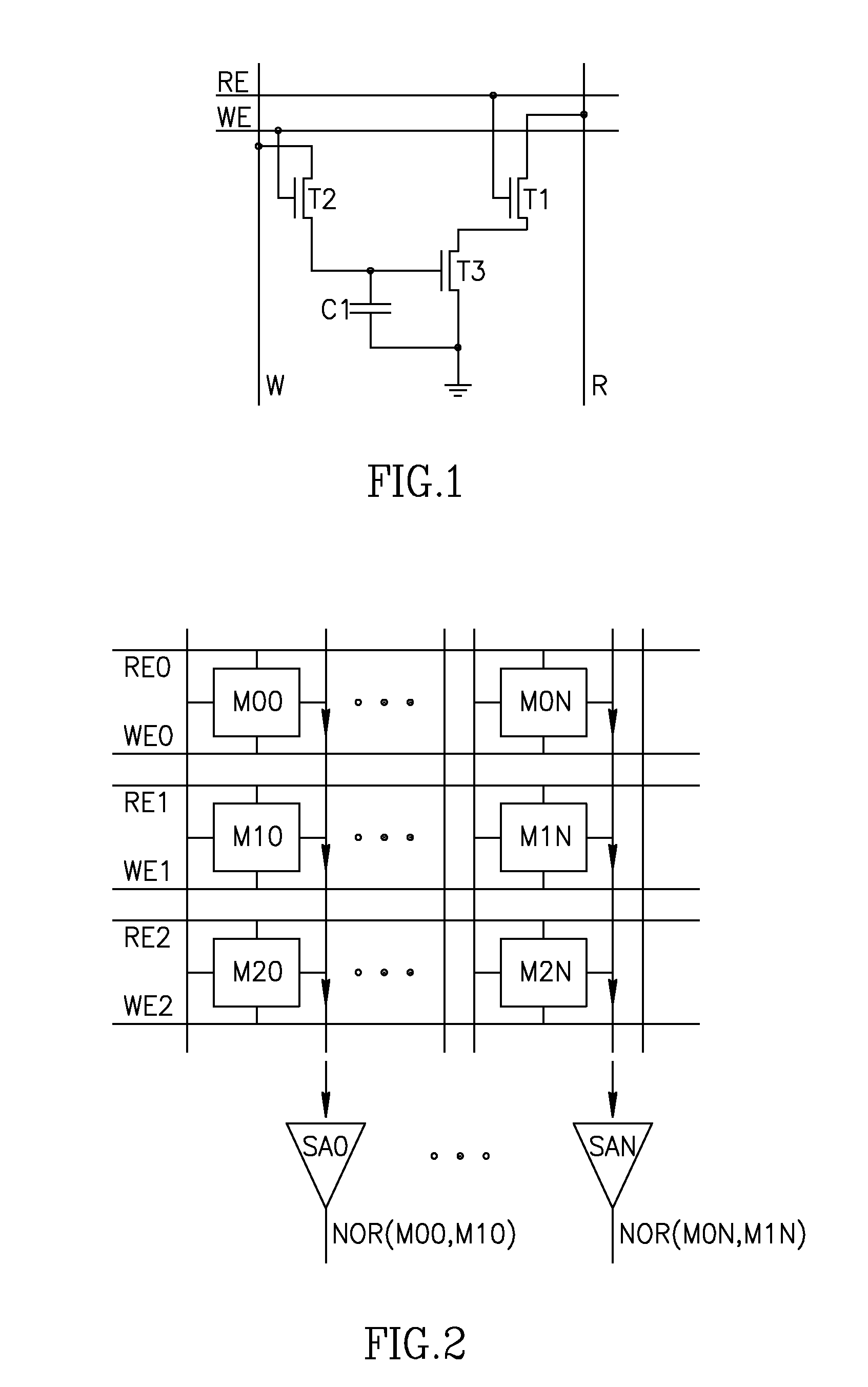

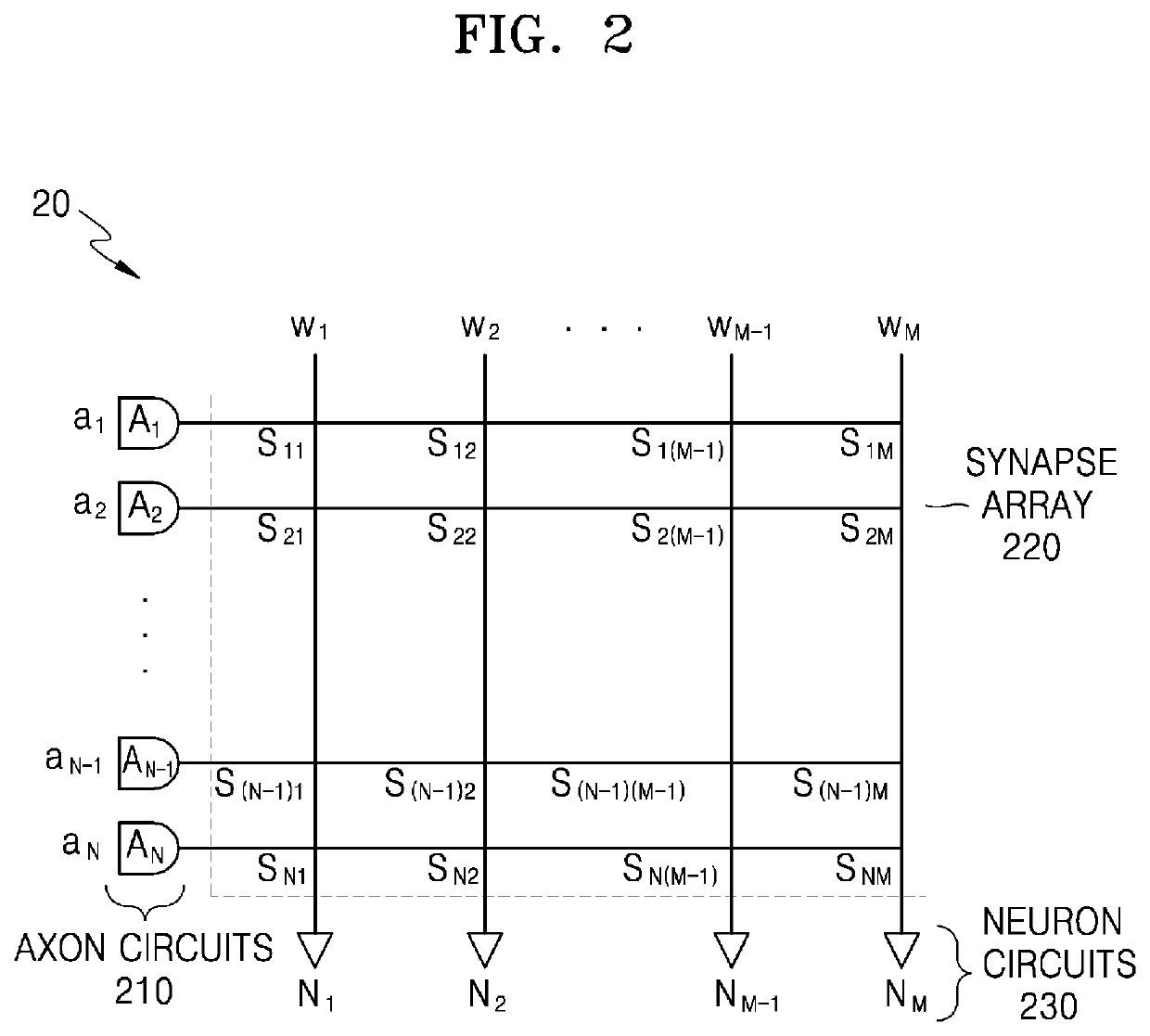

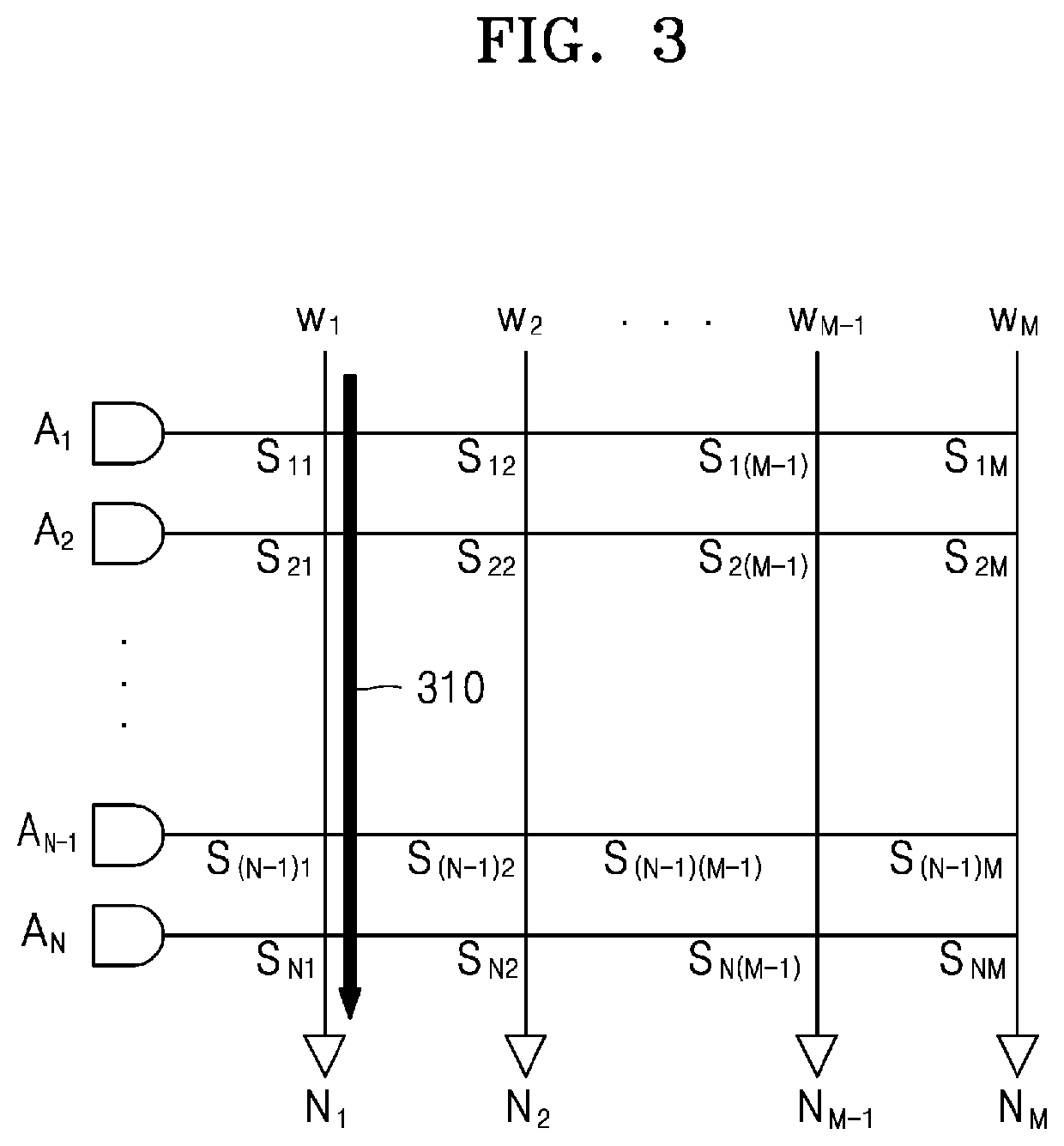

Using storage cells to perform computation

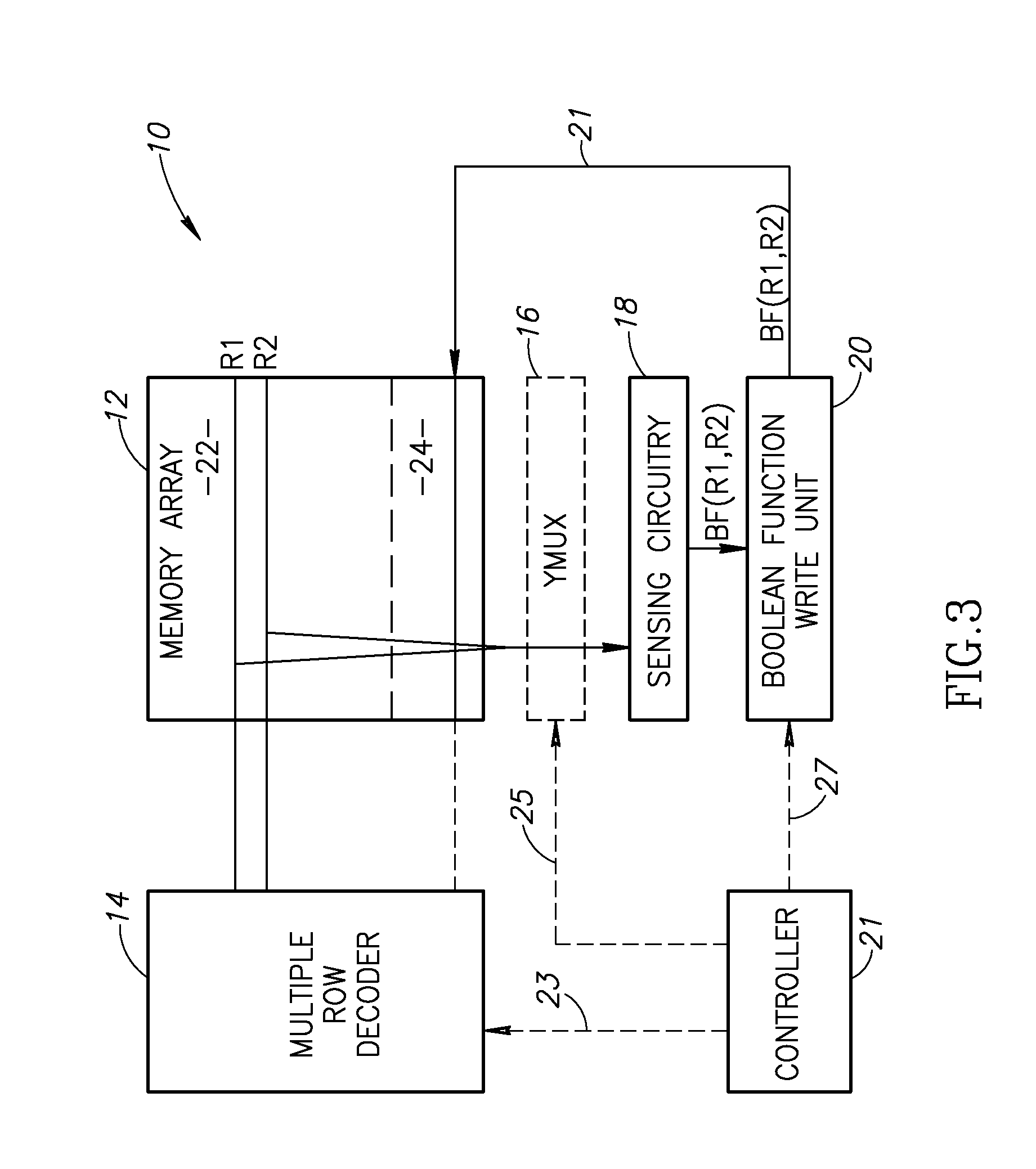

An in-memory processor includes a memory array which stores data and an activation unit to activate at least two cells in a column of the memory array at generally the same time thereby to generate a Boolean function output of the data of the at least two cells. Another embodiment shows a content addressable memory (CAM) unit without any in-cell comparator circuitry.

Owner:GSI TECH
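
As a rough illustration of computing with storage cells, the sketch below models a memory array as rows of bits and "activates" several rows at once. It assumes a simplified bit-line model in which the shared line reads 1 only when every activated cell stores 1 (AND), while a complementary line reads 1 only when every activated cell stores 0 (NOR). That model, and the names `activate_and` and `activate_nor`, are assumptions for illustration and are not taken from the patent.

```python
from typing import List, Sequence


def activate_and(array: List[List[int]], rows: Sequence[int]) -> List[int]:
    """Per-column AND of the activated rows (assumed bit-line behaviour)."""
    if len(rows) < 2:
        raise ValueError("activate at least two rows")
    n_cols = len(array[0])
    return [int(all(array[r][c] for r in rows)) for c in range(n_cols)]


def activate_nor(array: List[List[int]], rows: Sequence[int]) -> List[int]:
    """Per-column NOR of the activated rows (assumed complementary line)."""
    if len(rows) < 2:
        raise ValueError("activate at least two rows")
    n_cols = len(array[0])
    return [int(not any(array[r][c] for r in rows)) for c in range(n_cols)]


# Three stored words, four columns wide.
memory = [
    [1, 0, 1, 0],
    [1, 1, 0, 0],
    [0, 1, 1, 0],
]

# Activating rows 0 and 1 together yields one Boolean result per column.
and_01 = activate_and(memory, [0, 1])
nor_01 = activate_nor(memory, [0, 1])
```

Every column is evaluated in the same step, which is why such schemes are attractive for bulk bitwise operations.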

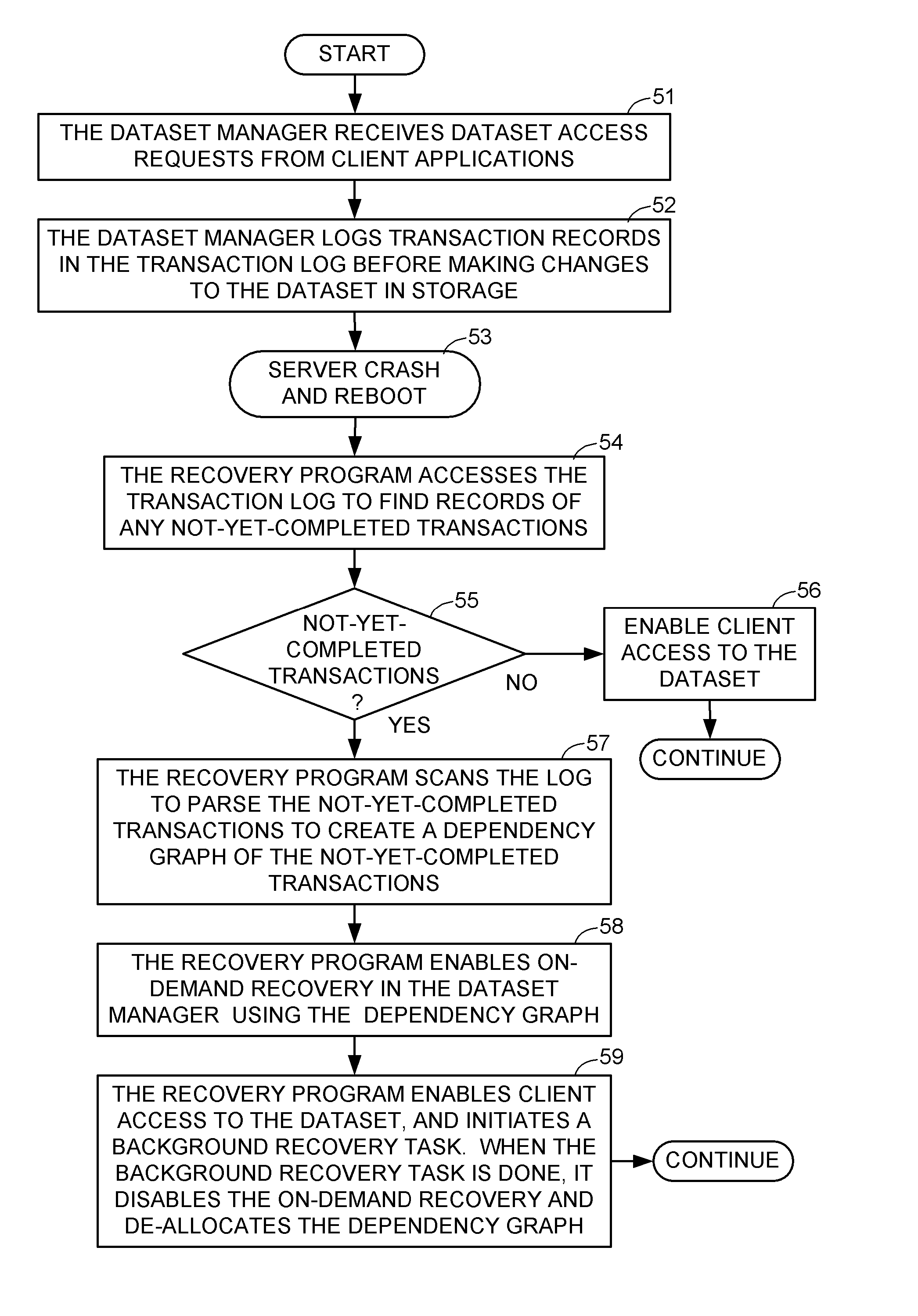

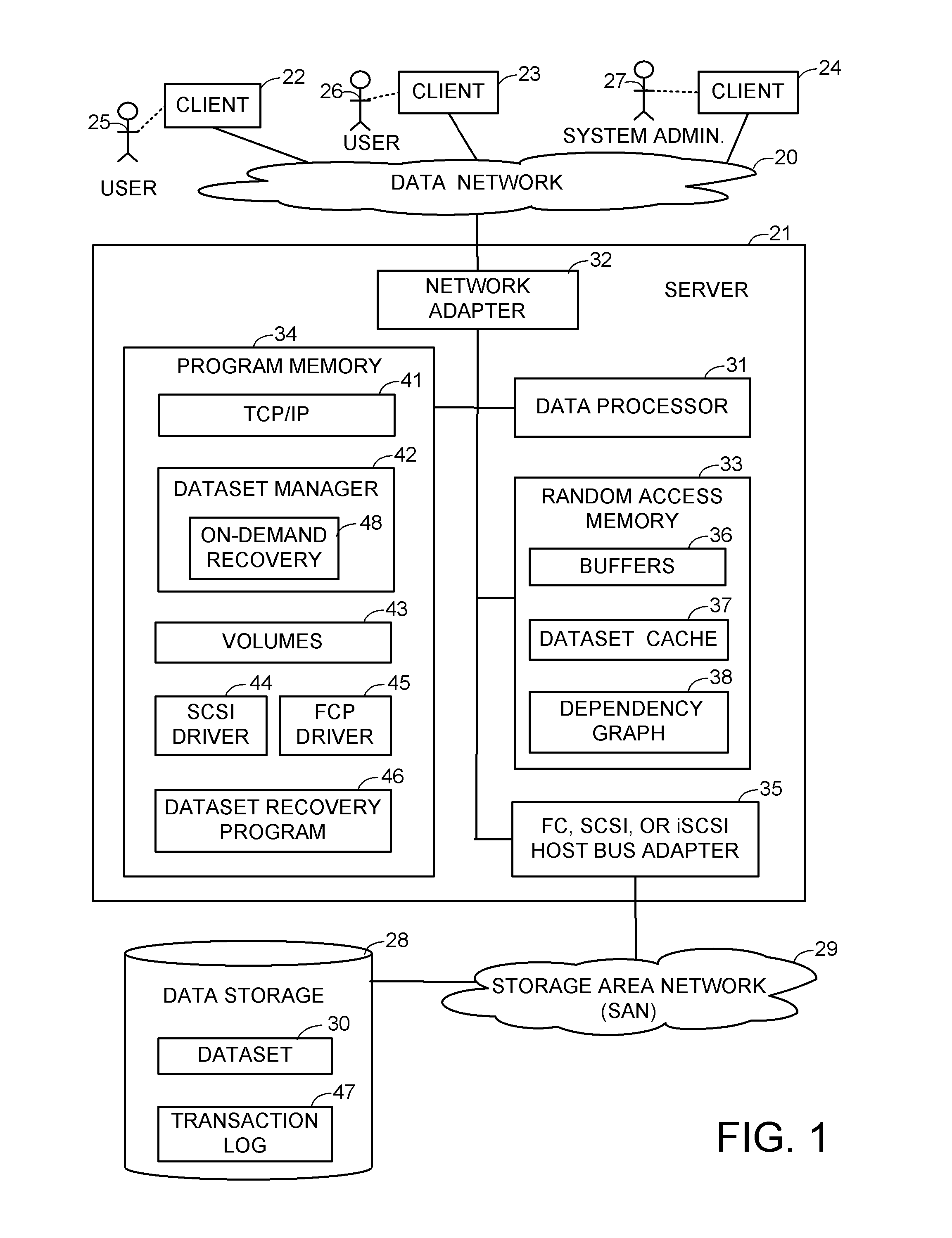

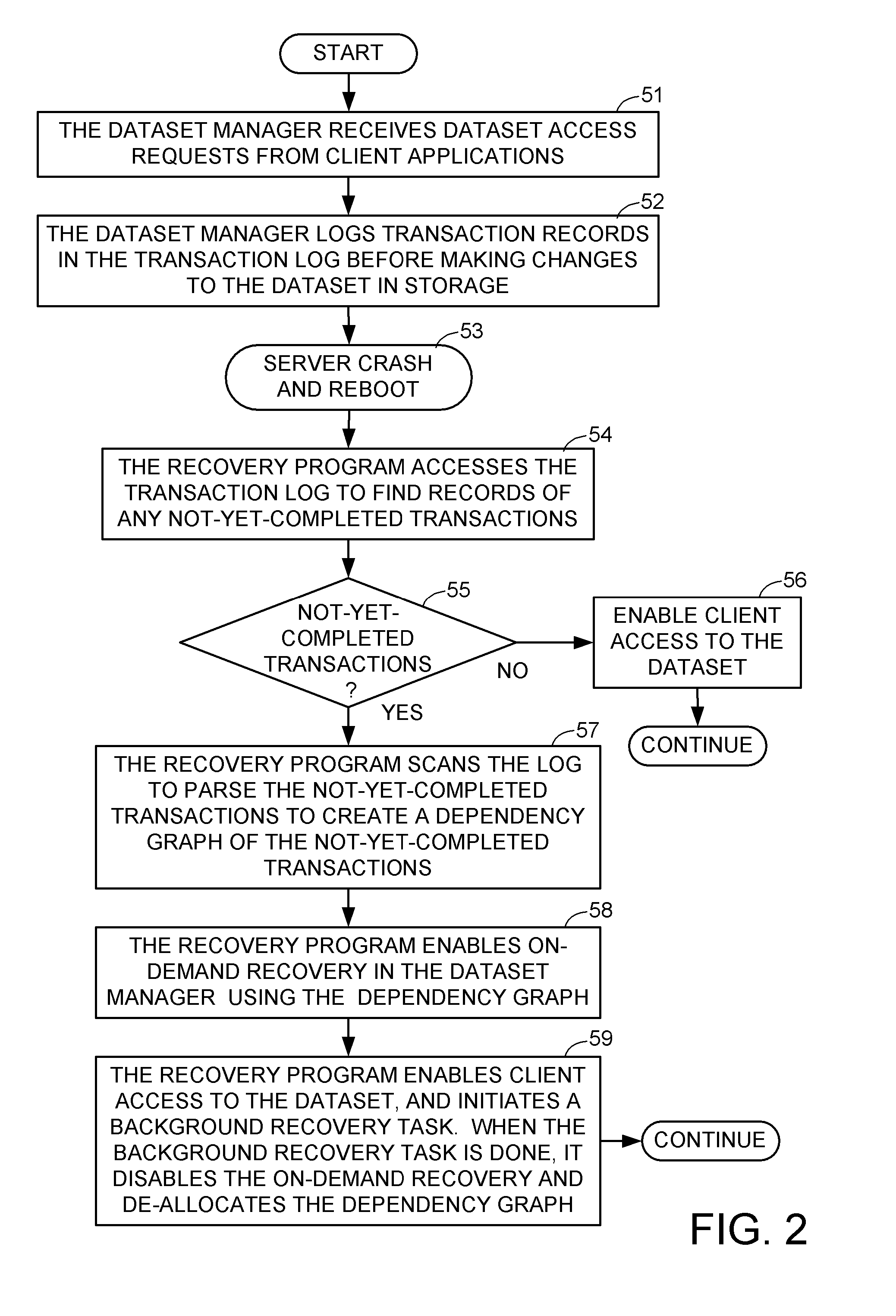

Multi-threaded in-memory processing of a transaction log for concurrent access to data during log replay

Active · US9021303B1 · Shorten the time · Redundant operation error correction · Client-side · In-Memory Processing

A dataset is recovered after a server reboot while clients access the dataset. In response to the reboot, not-yet-completed transactions in a log are parsed to create, for each of the dataset blocks modified by these active transactions, a respective block replay list of the active transactions that modify the block. Once the block replay lists have been created, clients may access specified blocks of the dataset after on-demand recovery of the specified blocks. The on-demand recovery is concurrent with a background recovery task that replays the replay lists. To accelerate log space recovery, the parsing of the log inserts each replay list into a first-in first-out queue serviced by multiple replay threads. The queue can also be used as the cache writeback queue, so that the cache index is used for lookup of the replay list and the recovery state of a given block.

Owner:EMC IP HLDG CO LLC
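
The recovery flow in the abstract above can be sketched in a few lines. This is a minimal model, assuming a log of `(txn_id, block_id, value)` tuples and a dictionary standing in for the dataset; the class name `Recovery` and its methods are hypothetical and not part of the patent. It builds one replay list per modified block, feeds the lists to a FIFO queue drained by several replay threads, and lets a client read trigger on-demand recovery of a single block.

```python
import queue
import threading


def build_replay_lists(log):
    """Group active transactions by the block they modify, in log order."""
    lists = {}
    for txn_id, block_id, value in log:
        lists.setdefault(block_id, []).append((txn_id, value))
    return lists


class Recovery:
    def __init__(self, log, dataset, workers=2):
        self.dataset = dataset
        self.replay_lists = build_replay_lists(log)
        self.locks = {b: threading.Lock() for b in self.replay_lists}
        self.recovered = {b: False for b in self.replay_lists}
        self.fifo = queue.Queue()  # first-in first-out queue of blocks to replay
        for block_id in self.replay_lists:
            self.fifo.put(block_id)
        self.threads = [threading.Thread(target=self._drain) for _ in range(workers)]

    def _recover_block(self, block_id):
        if block_id not in self.locks:
            return  # block was not touched by any active transaction
        with self.locks[block_id]:
            if not self.recovered[block_id]:
                for _txn_id, value in self.replay_lists[block_id]:
                    self.dataset[block_id] = value
                self.recovered[block_id] = True

    def _drain(self):
        # Background replay: each thread pulls blocks until the queue is empty.
        while True:
            try:
                block_id = self.fifo.get_nowait()
            except queue.Empty:
                return
            self._recover_block(block_id)

    def start_background(self):
        for t in self.threads:
            t.start()

    def wait(self):
        for t in self.threads:
            t.join()

    def read(self, block_id):
        """Client access: recover the requested block on demand, then read it."""
        self._recover_block(block_id)
        return self.dataset[block_id]


log = [(1, "A", 10), (2, "B", 20), (3, "A", 11)]
dataset = {"A": 0, "B": 0, "C": 5}
rec = Recovery(log, dataset)
on_demand = rec.read("A")  # served before background replay has started
rec.start_background()
rec.wait()
```

The per-block lock is what lets an on-demand read and the background threads race safely: whichever reaches a block first replays it, and the other finds it already recovered.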

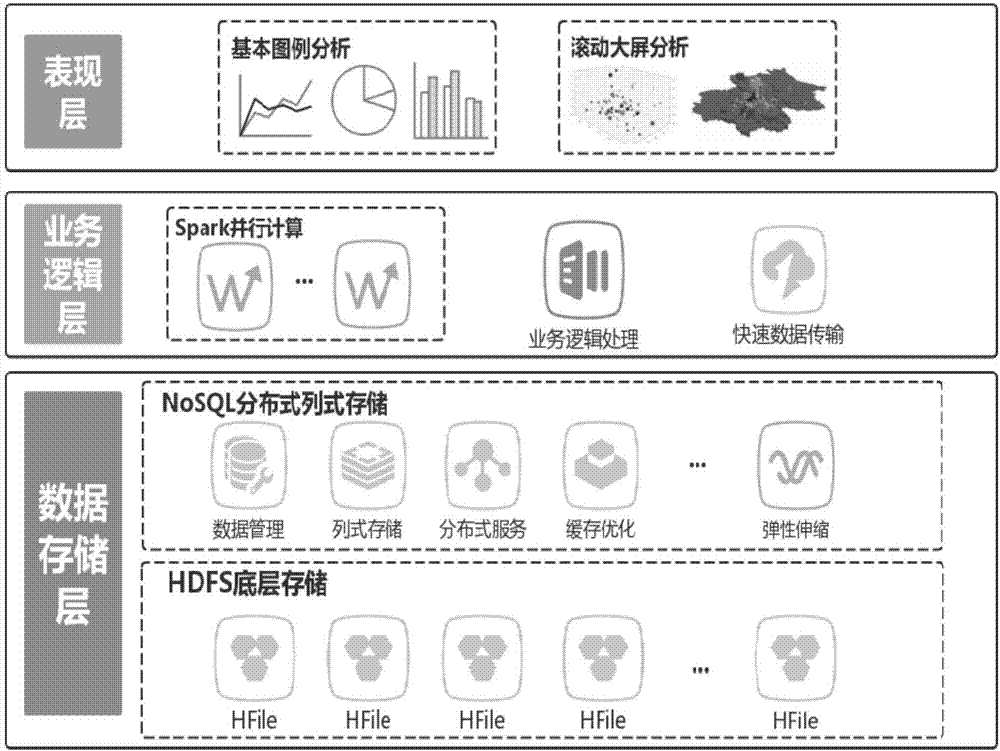

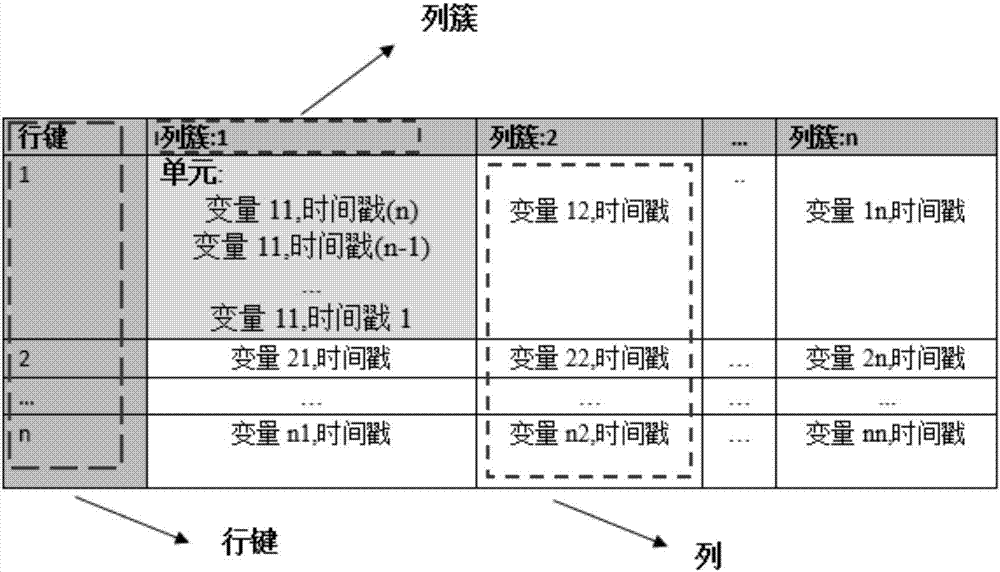

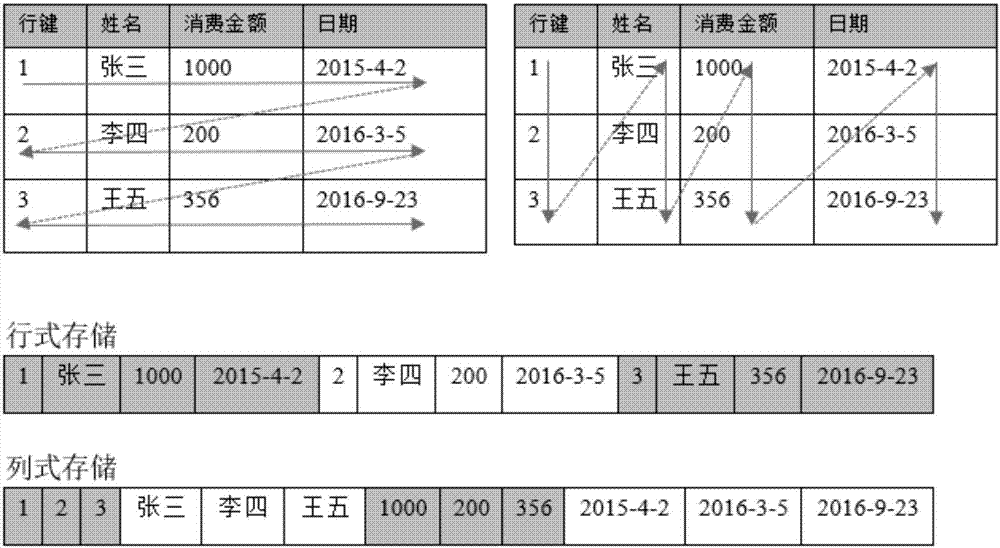

Big-data parallel computing method and system based on distributed columnar storage

Inactive · CN107329982A · Reduce the frequency of read and write operations · Short time · Resource allocation · Special data processing applications · Parallel computing · Large screen

The invention discloses a big-data parallel computing method and system based on distributed columnar storage. The data that is currently accessed most often is stored in memory-based NoSQL columnar storage, which provides cache optimization and quick data queries. A distributed cluster architecture meets big-data storage demands and makes the data storage capacity dynamically scalable. A parallel computing framework based on Spark handles data analysis and parallel operation of the business layer, which increases the computing speed. A graph and diagram engine provides real-time data visualization for large-screen rolling analysis. The method and system make full use of the memory processing performance and the parallel computing advantages of distributed cloud servers, overcome the bottlenecks of single-server and serial computing performance, avoid redundant data transmission between data nodes, increase the real-time response speed of the system, and achieve quick big-data analysis.

Owner:SOUTH CHINA UNIV OF TECH

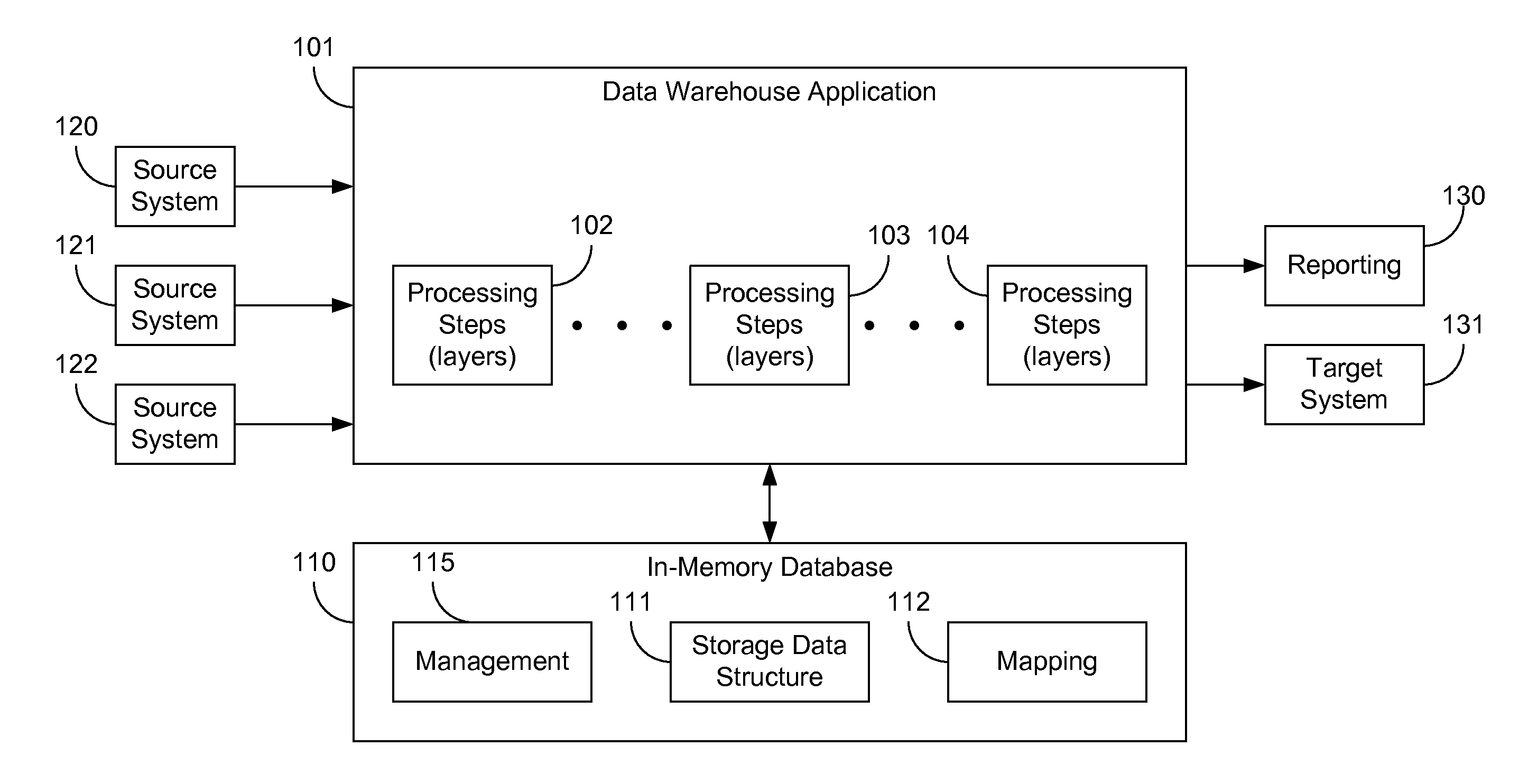

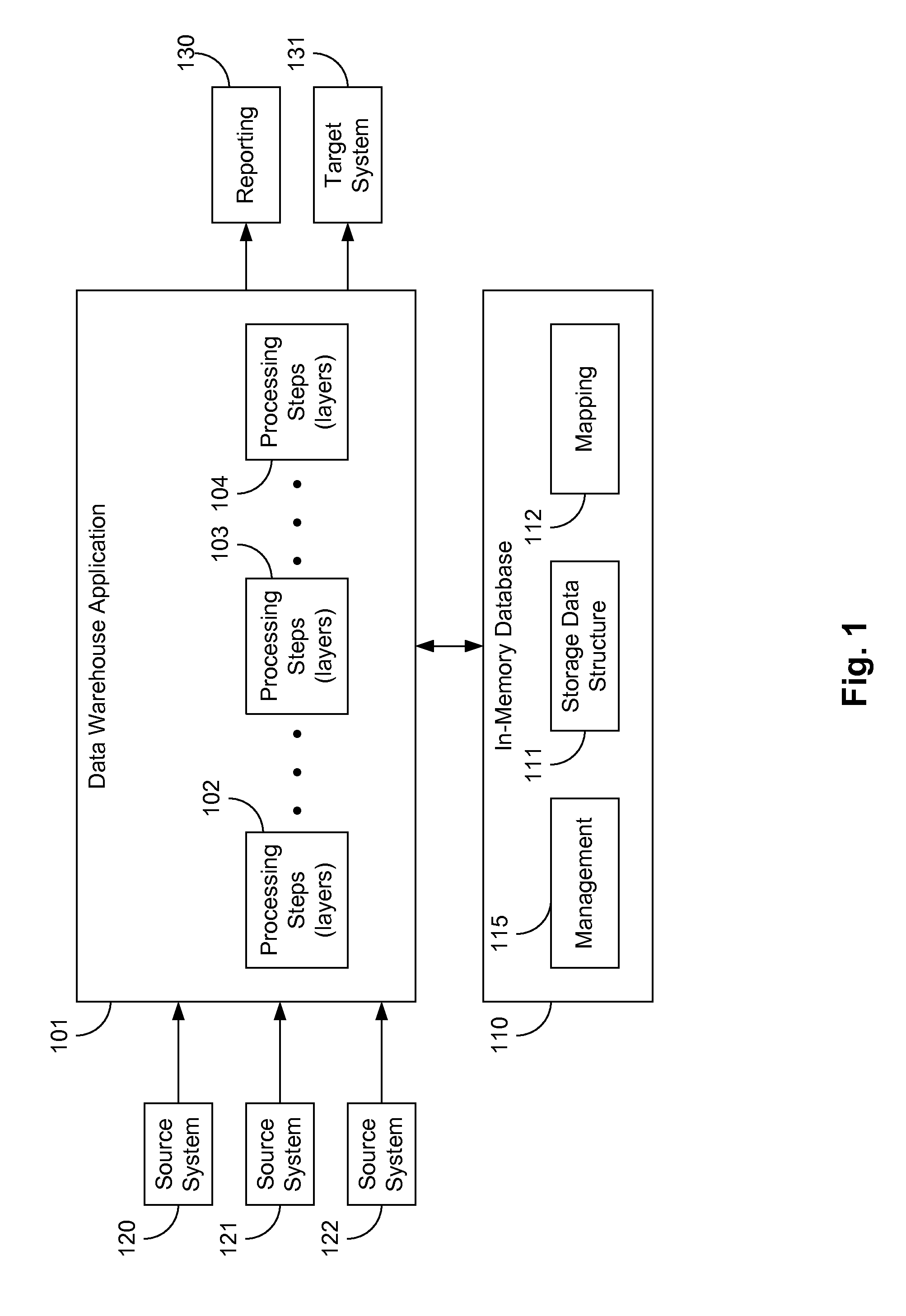

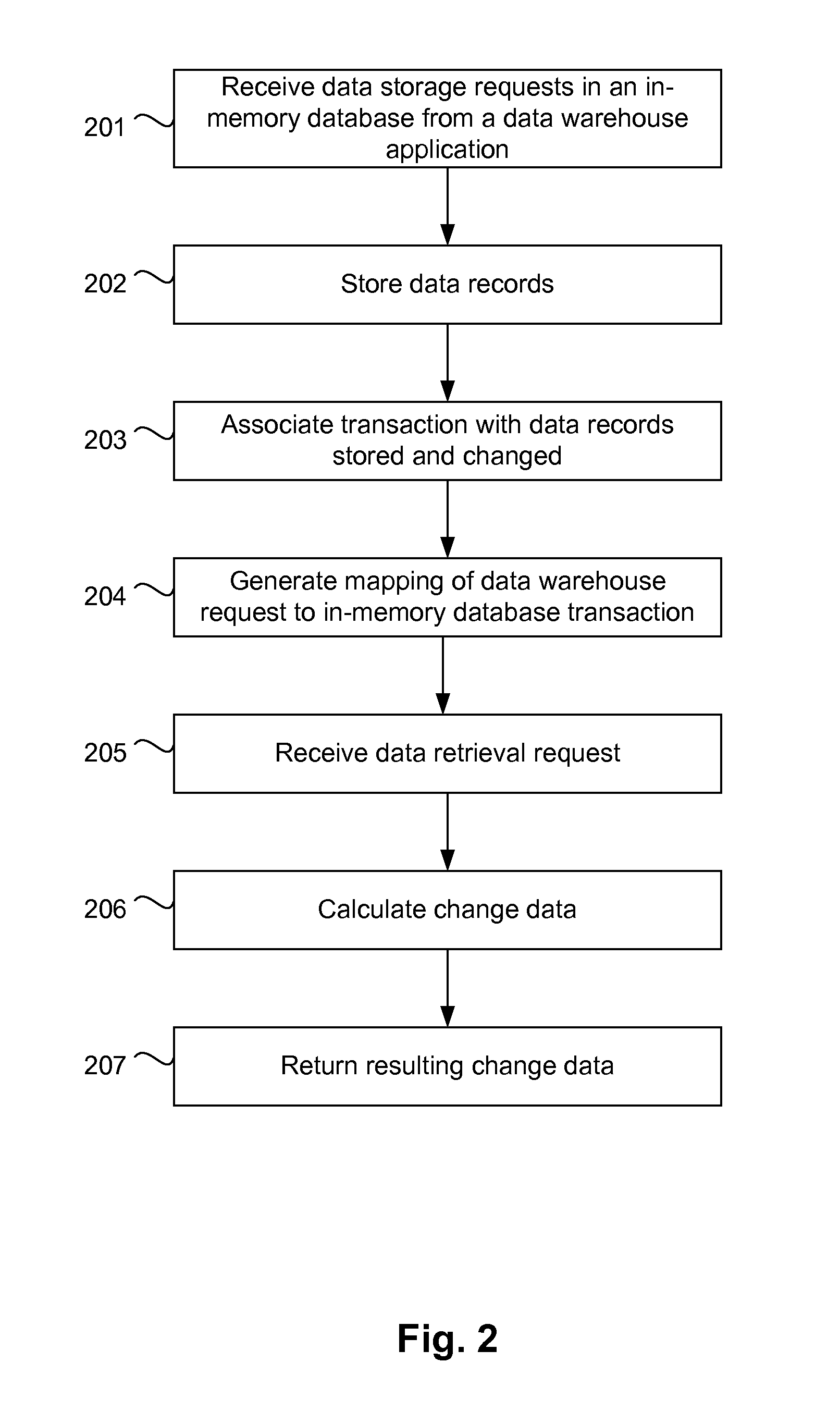

In-Memory Processing for a Data Warehouse

Active · US20120259809A1 · Digital data information retrieval · Digital data processing details · In-memory database · Data warehouse

Embodiments of the present invention include in-memory processing for data warehouse applications. In one embodiment, data records from a data warehouse application are stored in a data storage structure of an in-memory database. Data received from the data warehouse may be stored in a queue and loaded into the data storage structure according to predefined rules. Stored data records are associated with in-memory database transactions that caused the stored data record to be stored, and may further be associated with transactions that caused the stored data records to be changed. A mapping is generated to associate requests from the data warehouse application with in-memory database transactions. The data warehouse application may retrieve data in a change data format calculated on-the-fly.

Owner:SAP AG +1

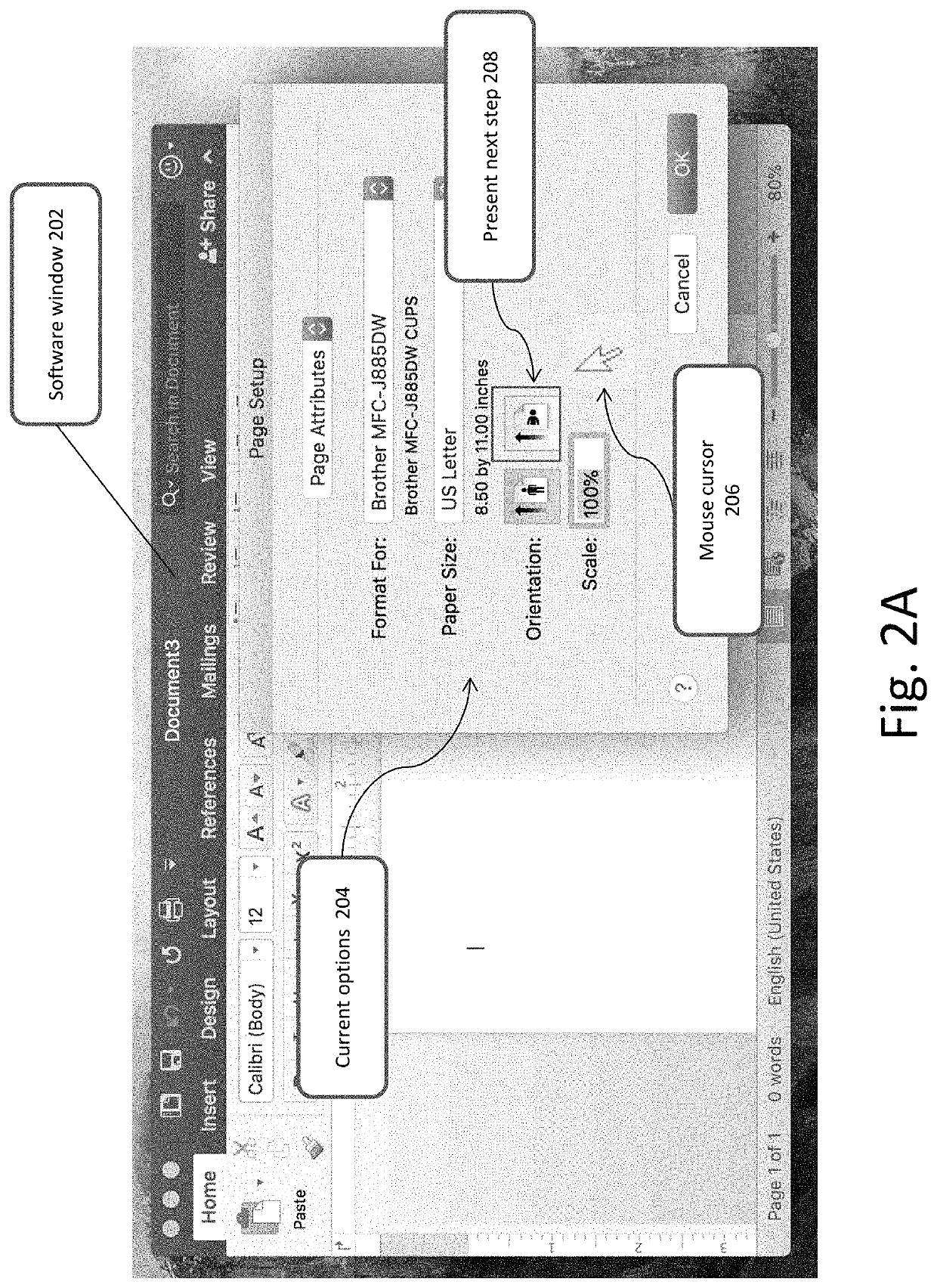

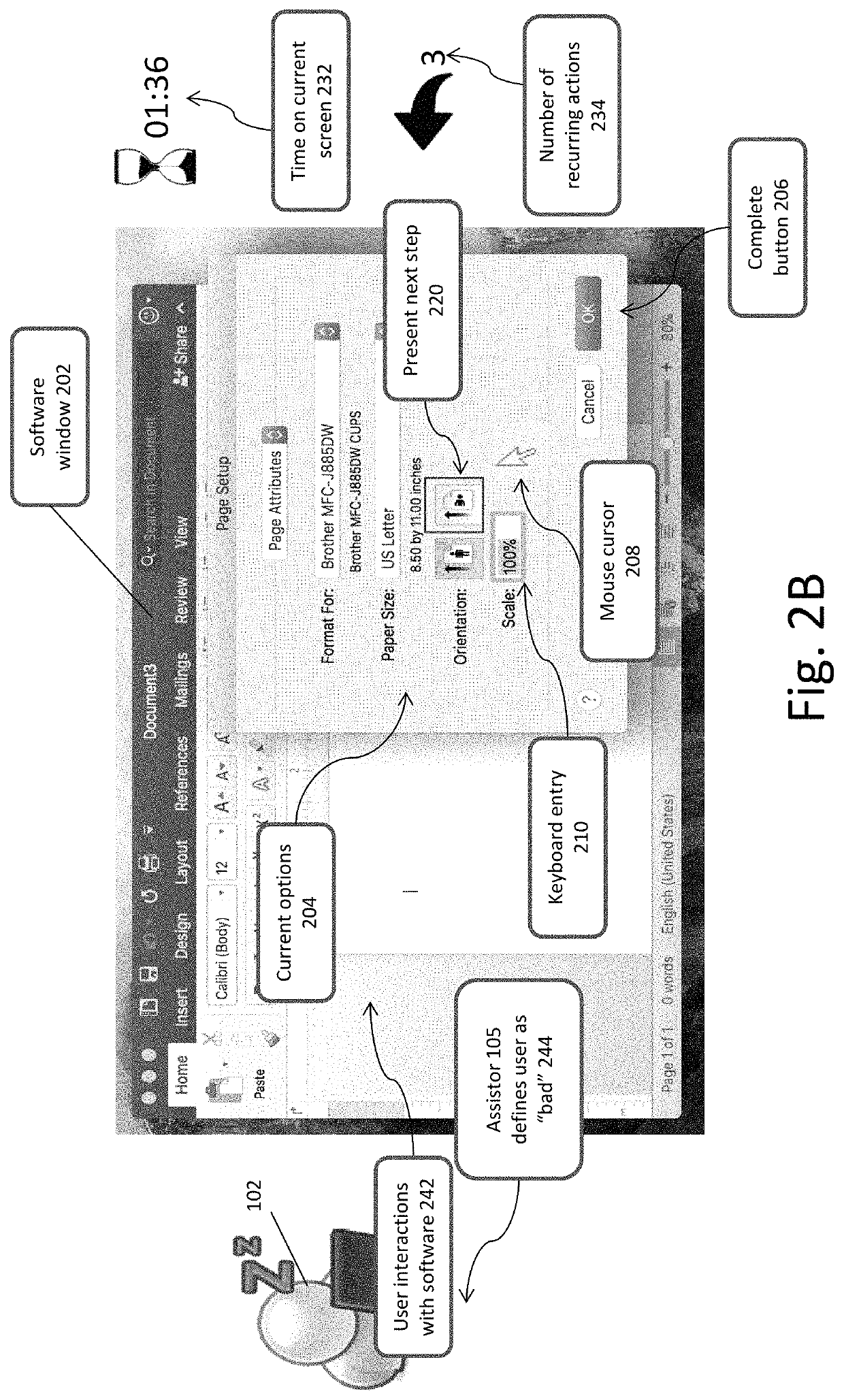

User interface advisor

Active · US10846105B2 · Input/output for user-computer interaction · Error detection/correction · Telecommunications link · In-Memory Processing

A server system, the server system including: a memory processor; and a communication link, where the server system includes a program designed to construct a user interface experience graph from a plurality of prior user experience interfacing with a specific software application, and where the prior user experience interfacing had been received into a memory of the memory processor by the communication link.

Owner:GRANOT ILAN YEHUDA +1

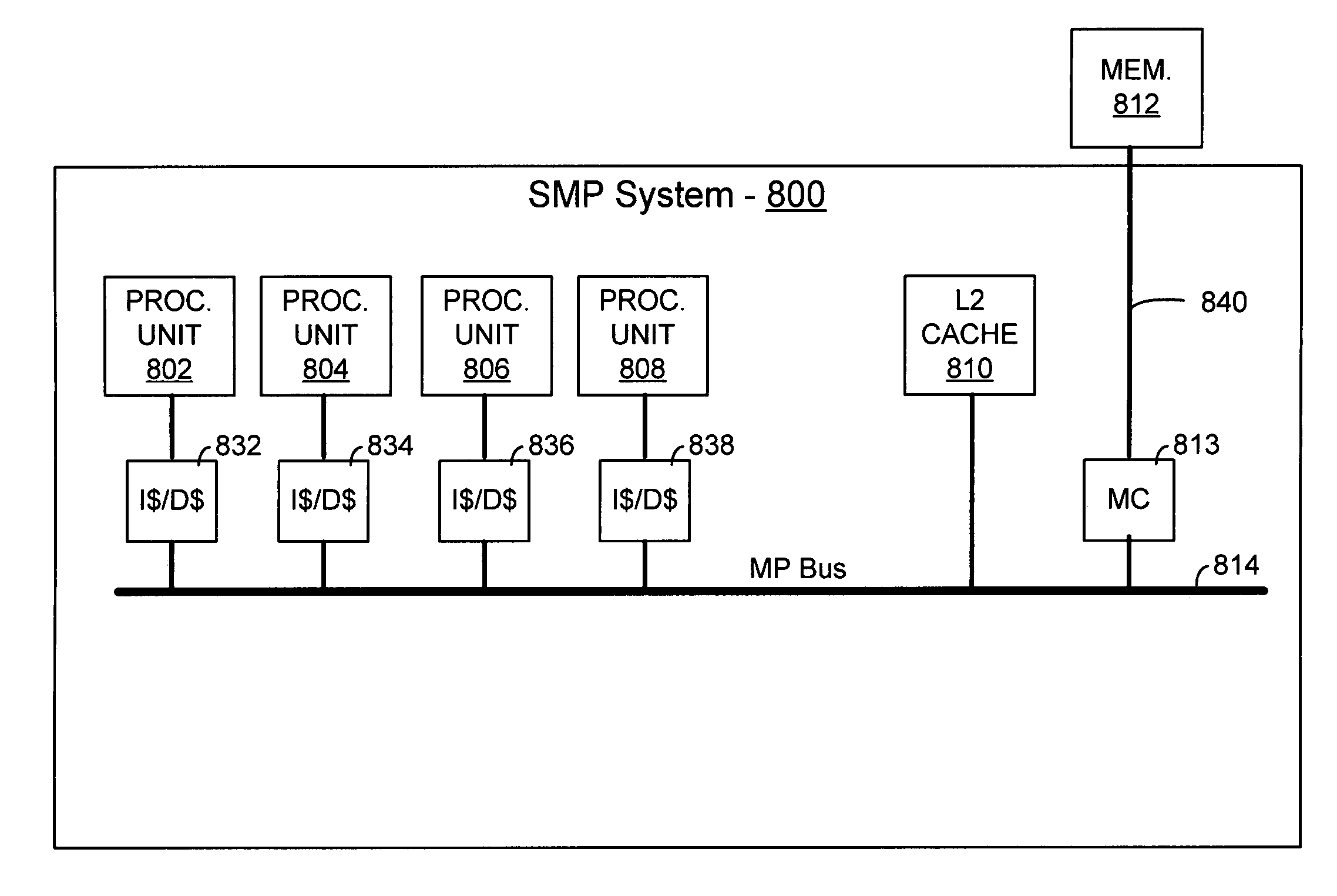

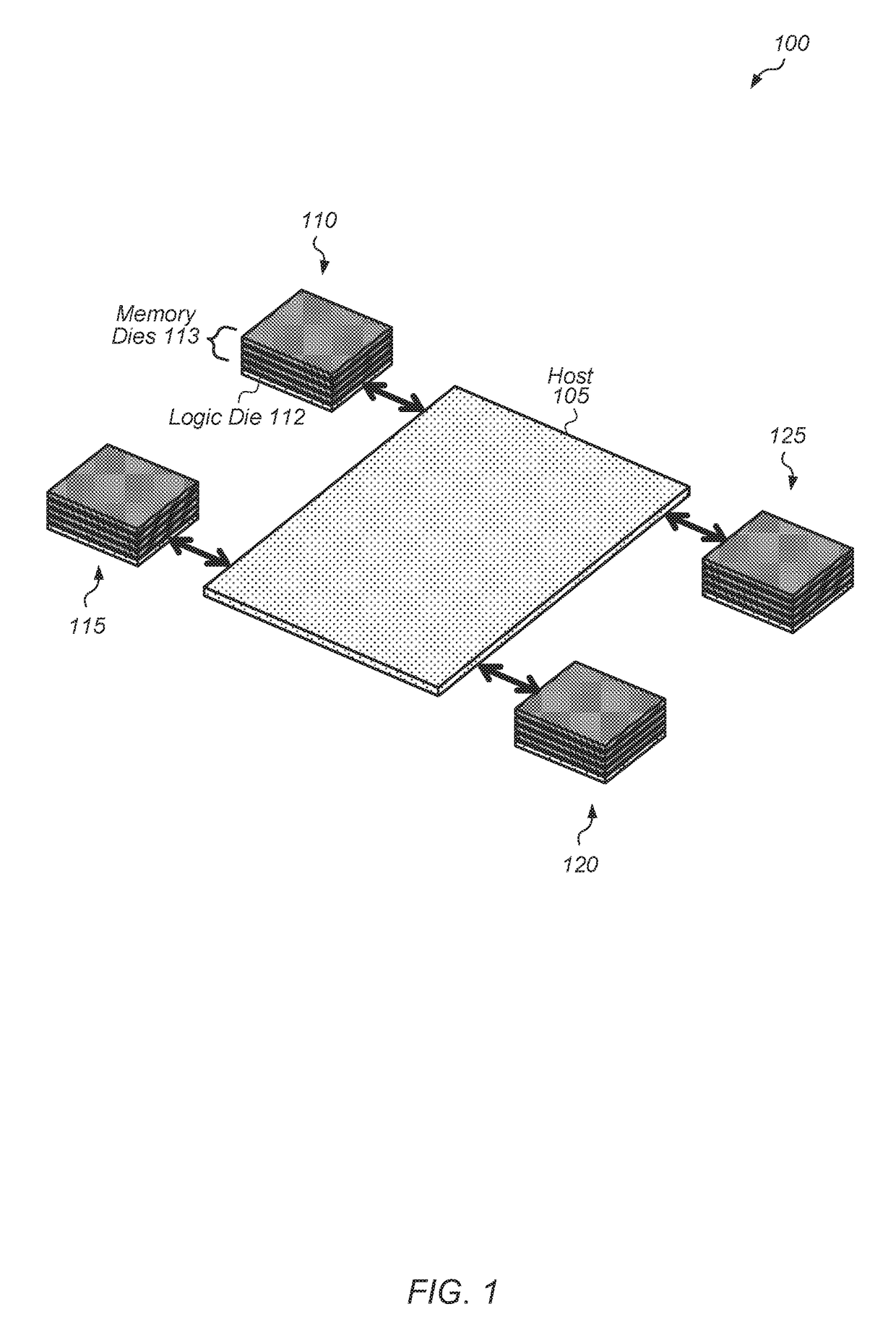

Coherent shared memory processing system

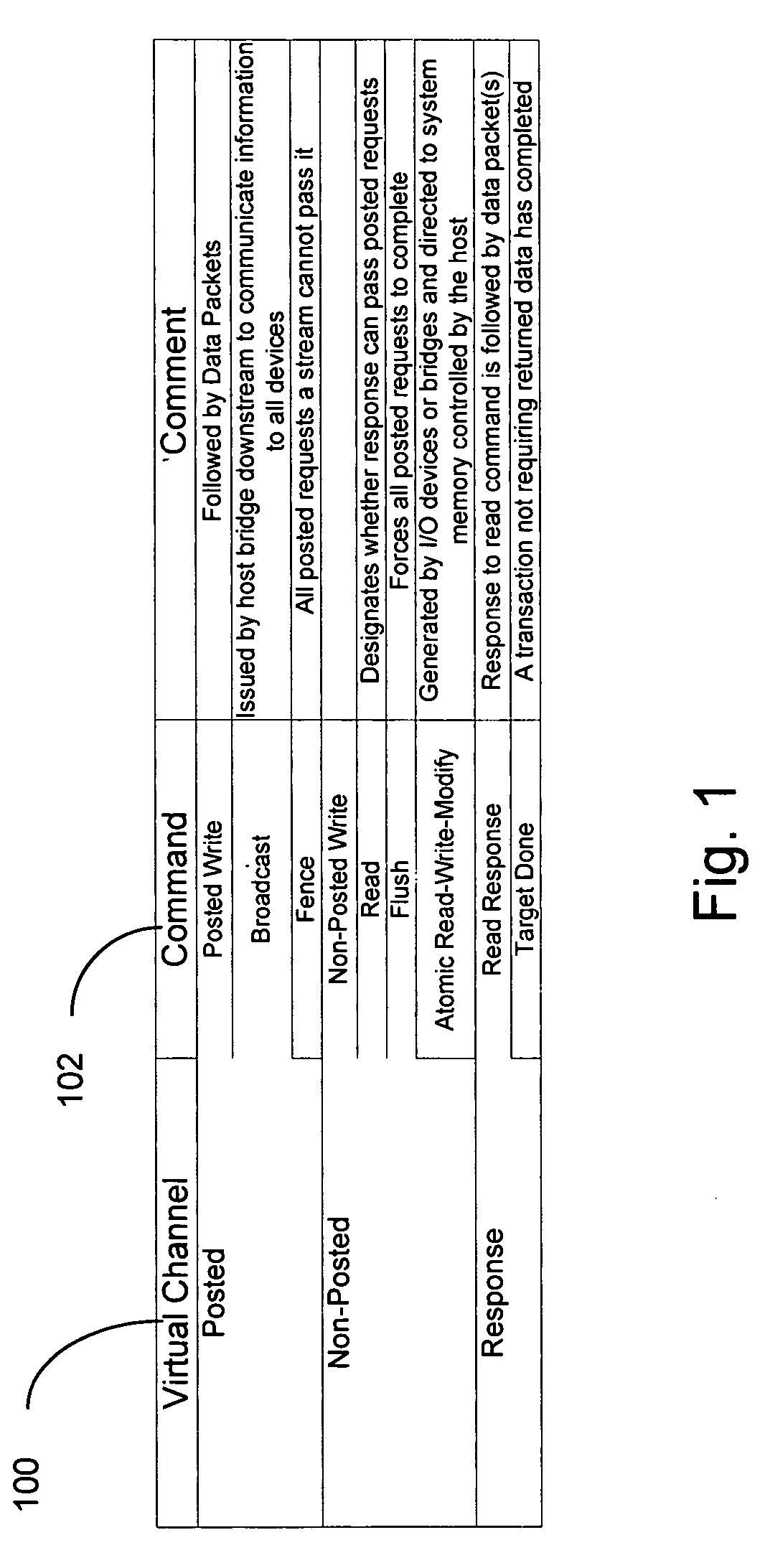

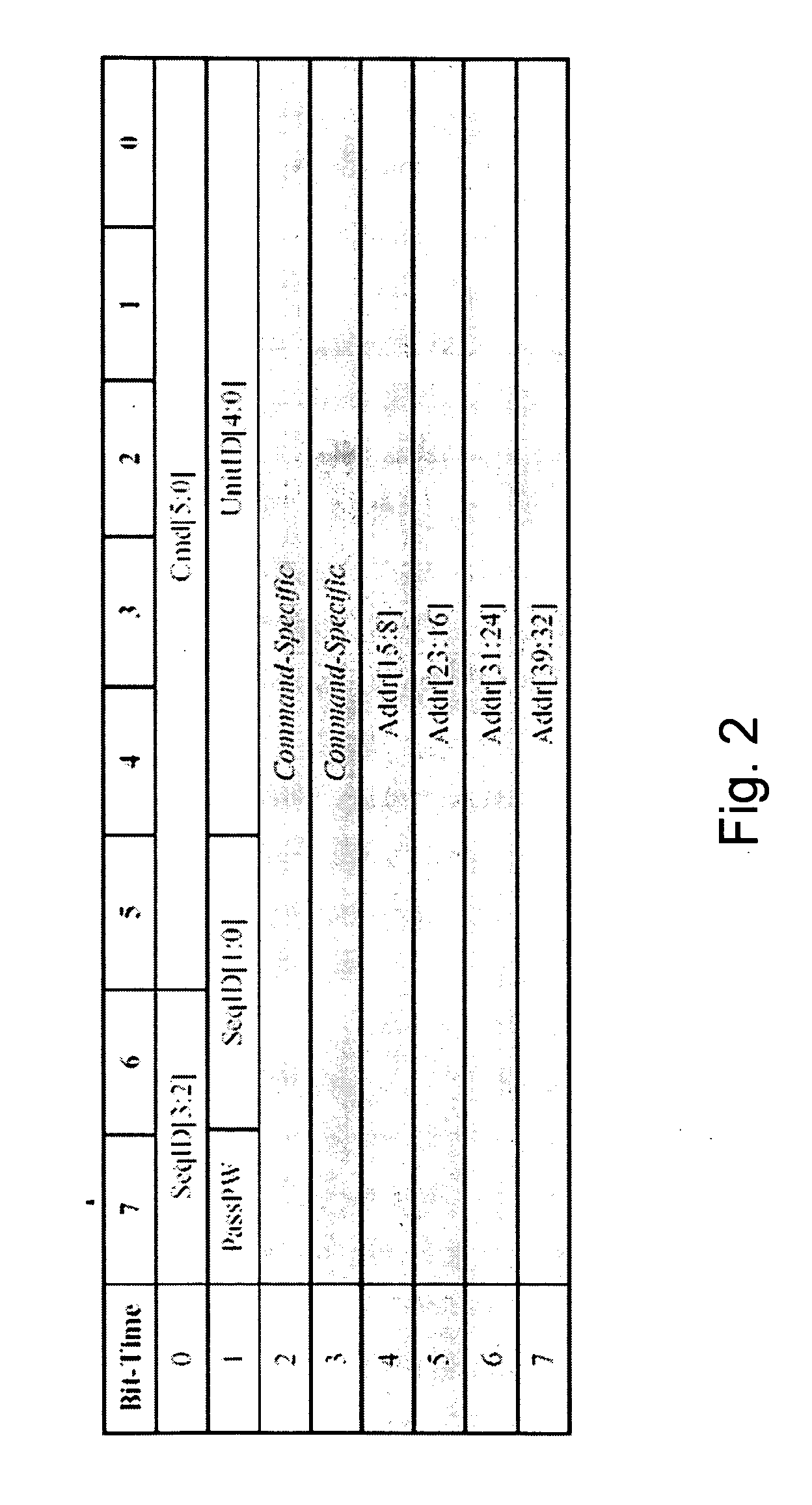

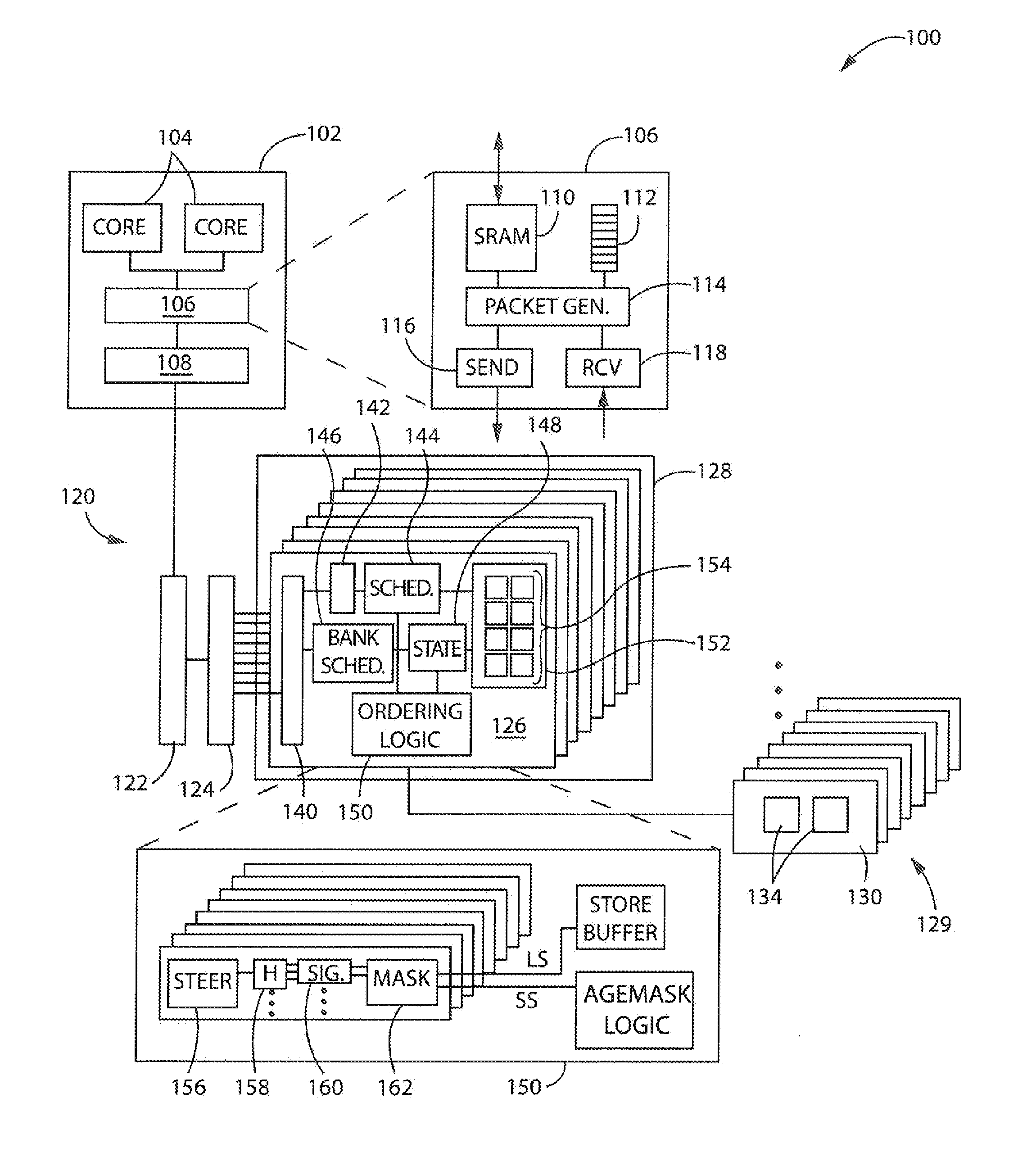

A shared memory system includes a plurality of processing nodes and a packetized input/output link. Each of the plurality of processing nodes includes a processing resource and memory. The packetized I/O link operably couples the plurality of processing nodes together. One of the plurality of processing nodes is operably coupled to: initiate coherent memory transactions such that another one of the plurality of processing nodes has access to a home memory section of the memory of the one of the plurality of processing nodes; and facilitate transmission of a coherency transaction packet between the memory of the one of the plurality of processing nodes and the another one of the plurality of processing nodes over the packetized I/O link.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

Method for mass model data dynamic scheduling and real-time asynchronous loading under virtual reality

Inactive · CN103914868A · Solve bottlenecks · The ability to expand the scale of the scene · 3D modelling · Computational science · Viewing frustum

The invention discloses a method for dynamic scheduling and real-time asynchronous loading of massive model data in virtual reality. The method includes the following steps: first, preprocessing the 3D model scene data; second, cutting and partitioning the whole model scene; third, loading the partitions in parallel across multiple threads; and fourth, view frustum clipping. A view frustum clipping algorithm that finds the intersection of the view frustum with a terrain region enables real-time, terrain-region-based clipping during dynamic scheduling. With a multithreaded mechanism built from a model-data partition scheduling and rendering pipeline, a dynamic scheduling and drawing pipeline, and a data processing scheduling pipeline, data can be loaded asynchronously between different media, so that loading and rendering performance for massive scene model data stay balanced in a hardware environment with limited memory, processor, and other resources.

Owner:柳州腾龙煤电科技股份有限公司

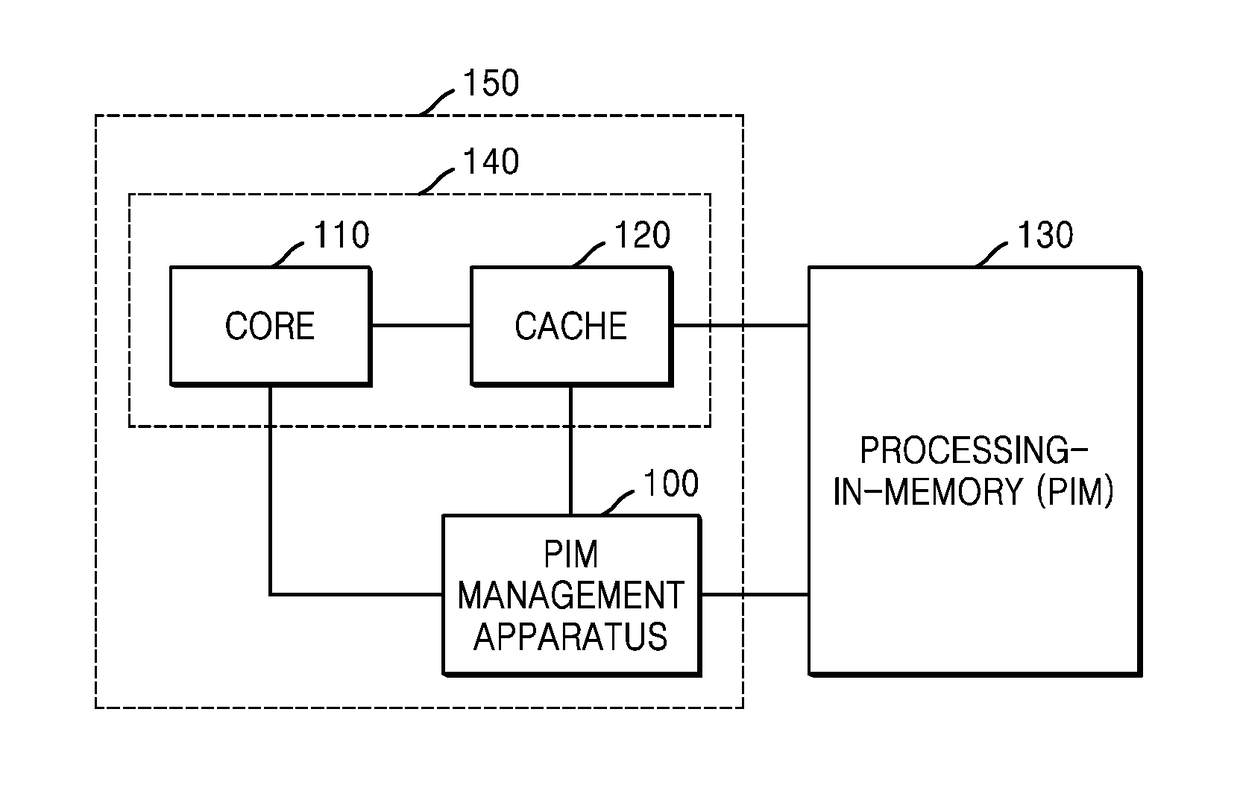

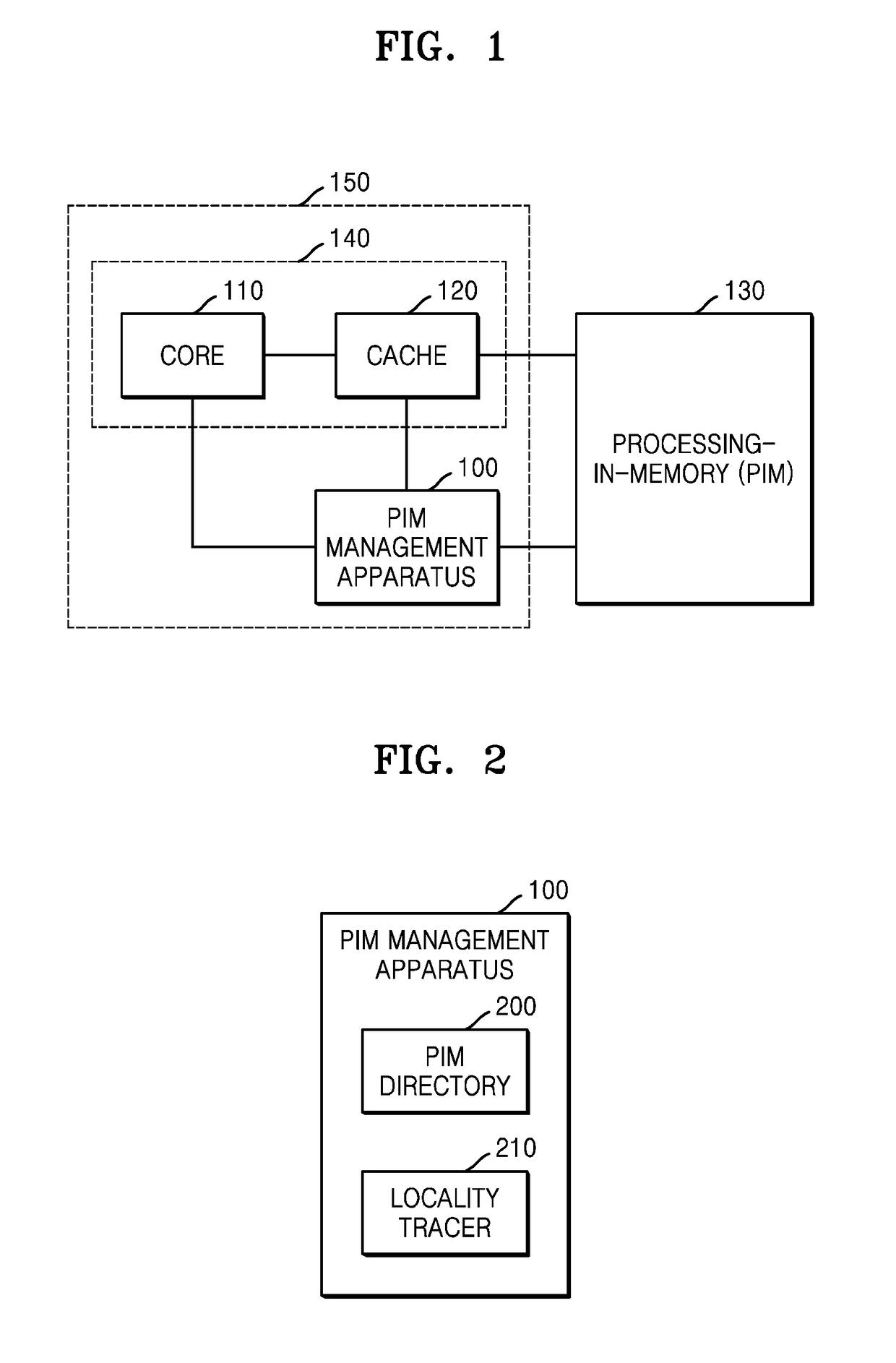

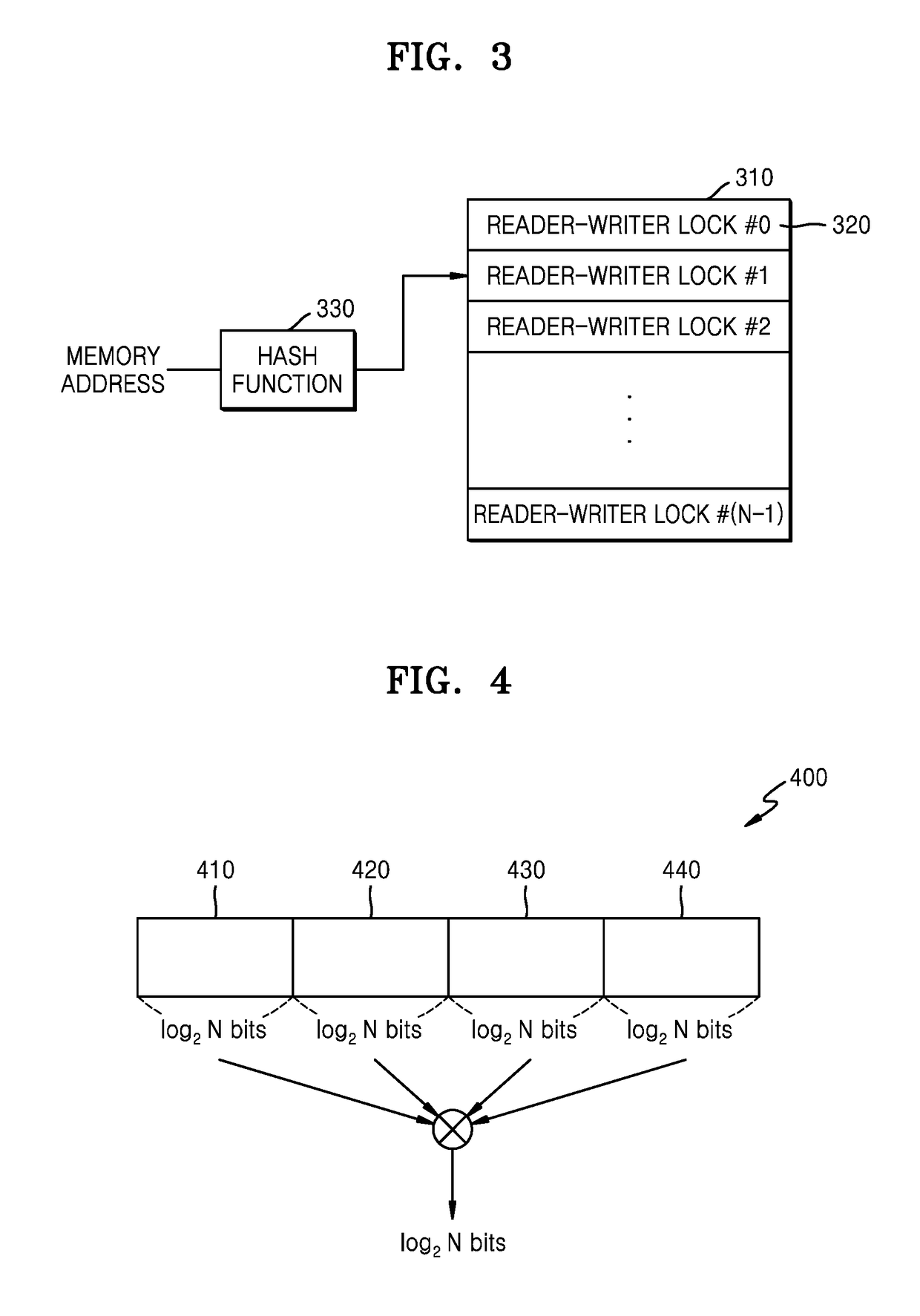

Method and apparatus for processing instructions using processing-in-memory

Active · US20180336035A1 · Control performance · Increased memory bandwidth · Memory architecture accessing/allocation · Program synchronisation · Memory address · Processing Instruction

Provided is a method and apparatus for processing instructions using a processing-in-memory (PIM). A PIM management apparatus includes: a PIM directory comprising a reader-writer lock regarding a memory address that an instruction accesses; and a locality tracer configured to figure out locality regarding the memory address that the instruction accesses and determine whether or not an object that executes the instruction is a PIM.

Owner:SAMSUNG ELECTRONICS CO LTD

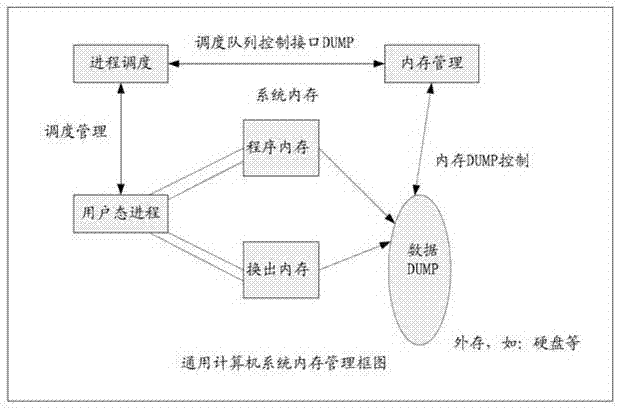

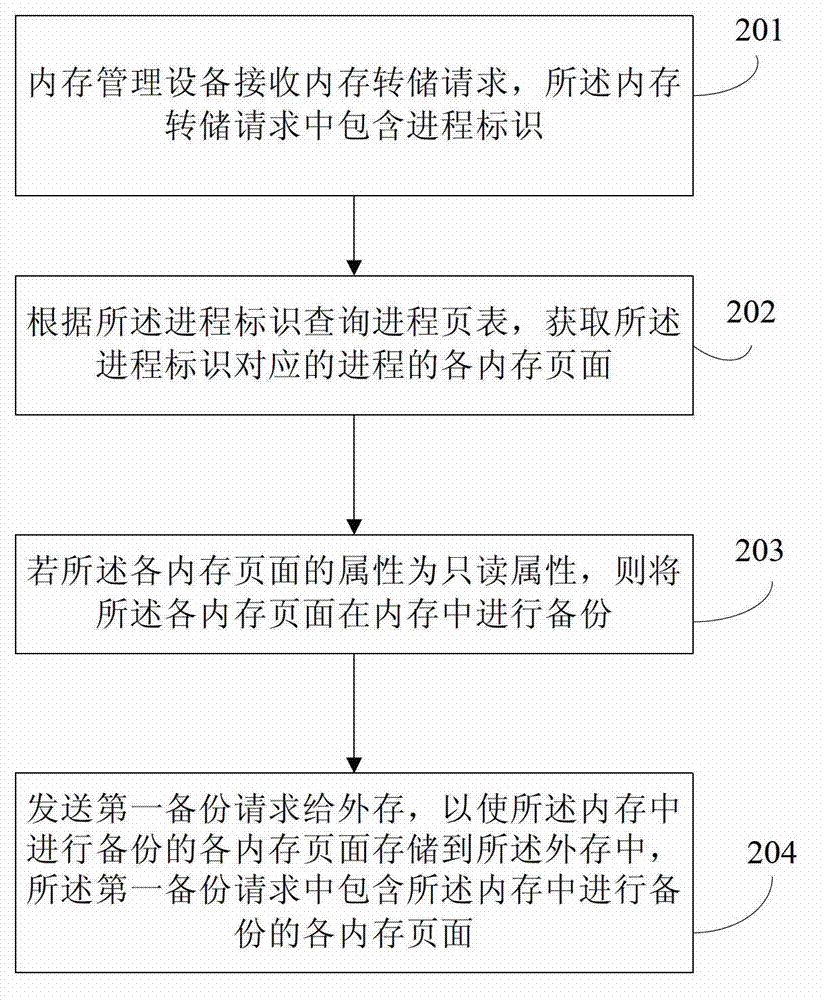

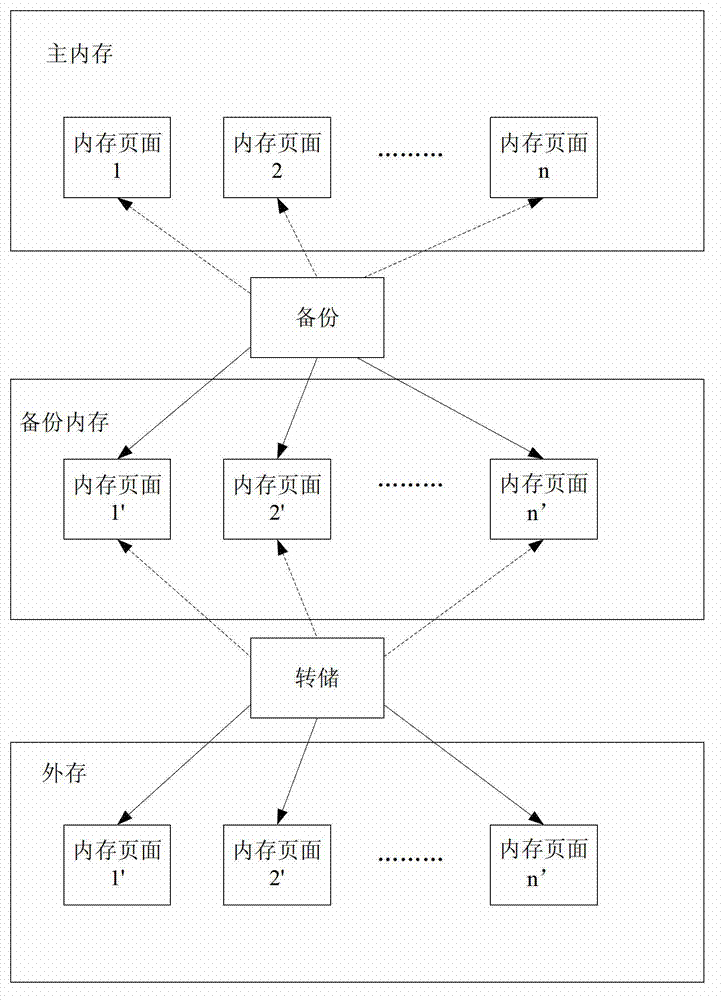

Memory processing method and memory management equipment

Inactive · CN102831069A · Ensure consistency · Memory addressing/allocation/relocation · Redundant operation error correction · Memory processing · In-Memory Processing

The embodiment of the invention discloses a memory processing method and memory management equipment. The method comprises the following steps: the memory management equipment receives a memory dump request that contains a process identification (PID), where the memory pages of the process corresponding to the PID are the pages to be dumped; according to the PID, the equipment queries a process page table and acquires the memory pages of that process; if the attributes of the memory pages are read-only, the equipment backs up the memory pages in memory; and the equipment sends a first backup request, containing the backed-up memory pages, to external memory so that the external memory copies them. The method ensures data consistency while realizing online memory dump.

Owner:HUAWEI TECH CO LTD

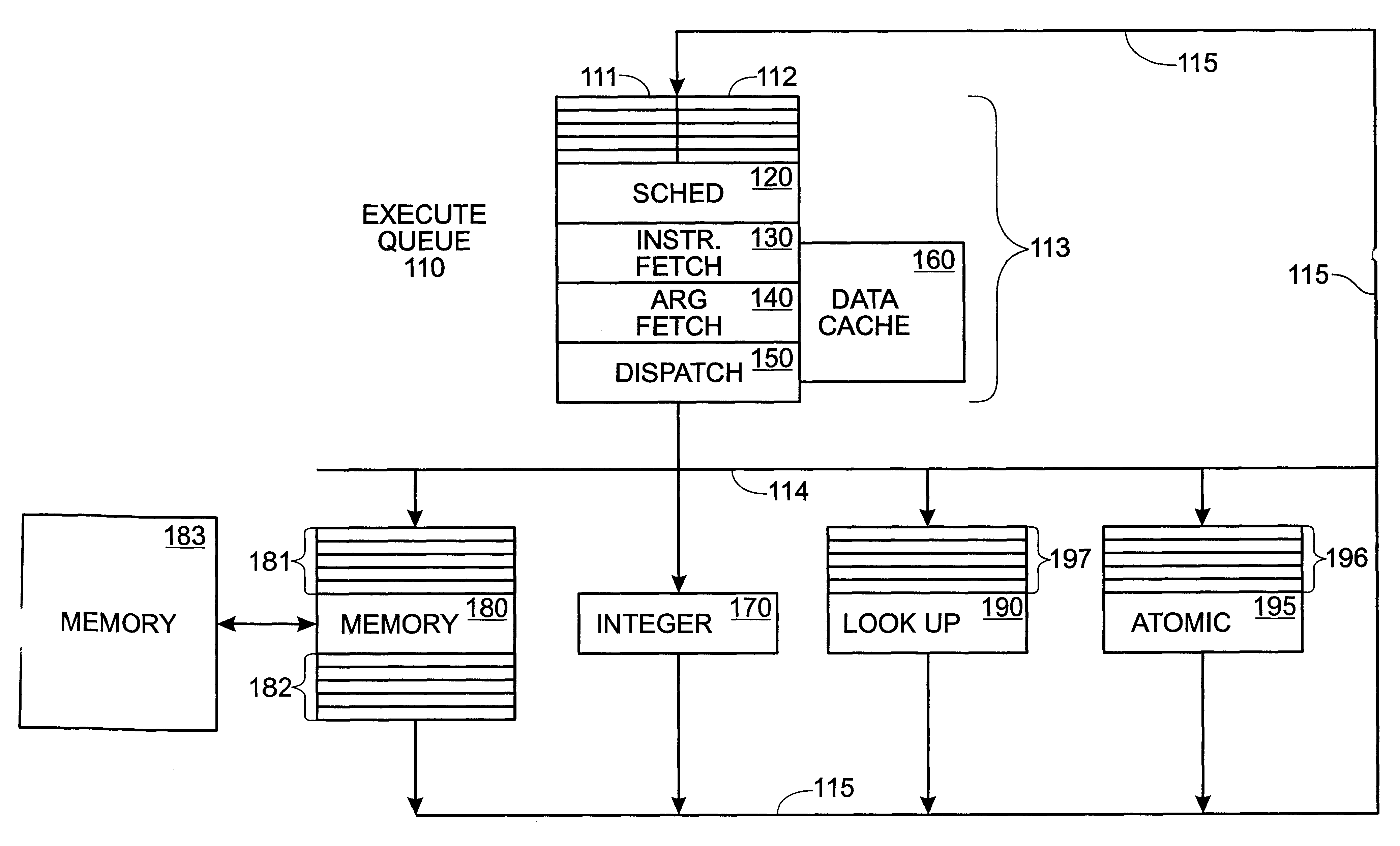

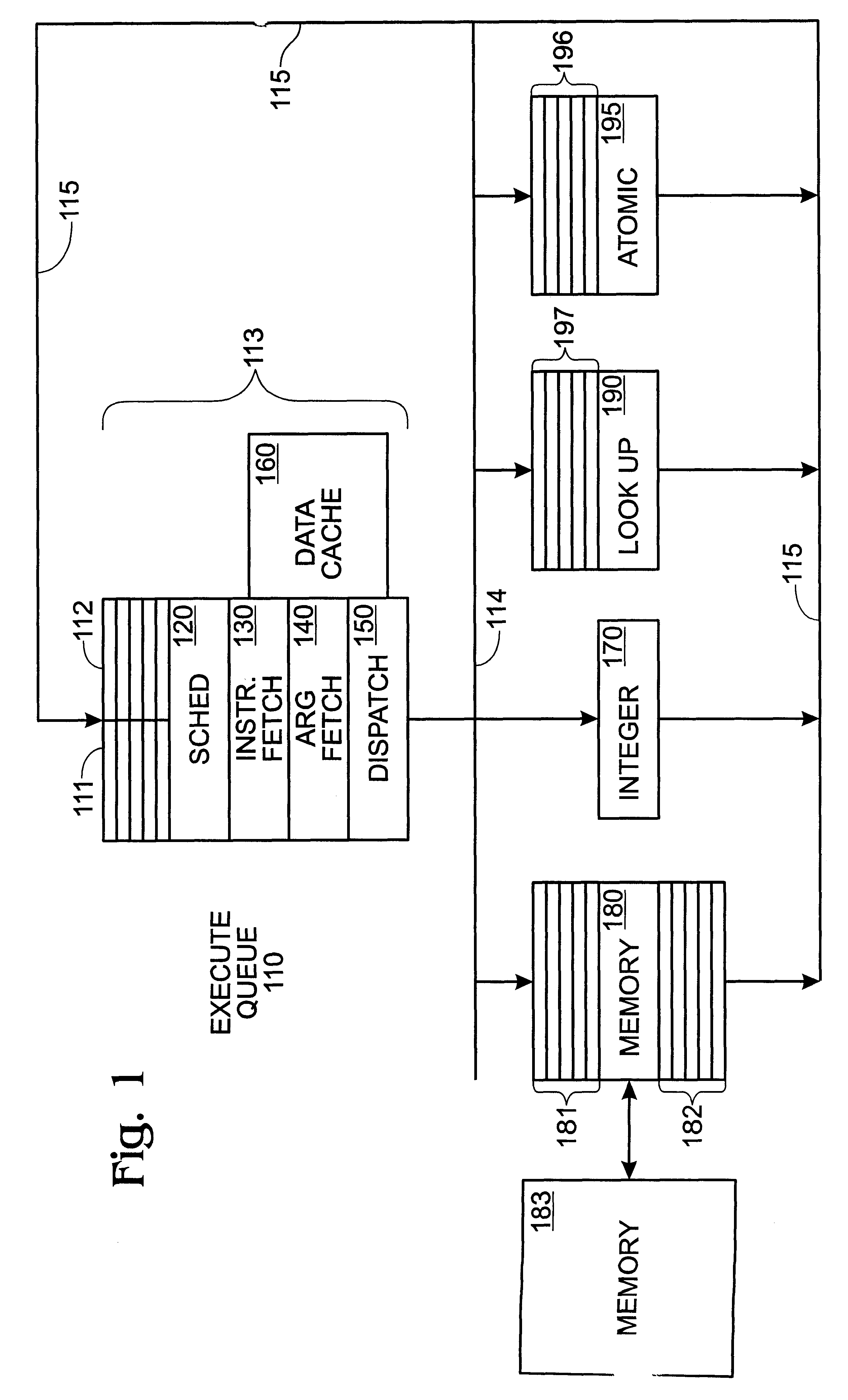

Method and apparatus for handling cache misses in a computer system

Inactive · US6272516B1 · Multiprogramming arrangements · Concurrent instruction execution · Computer architecture · Coprocessor

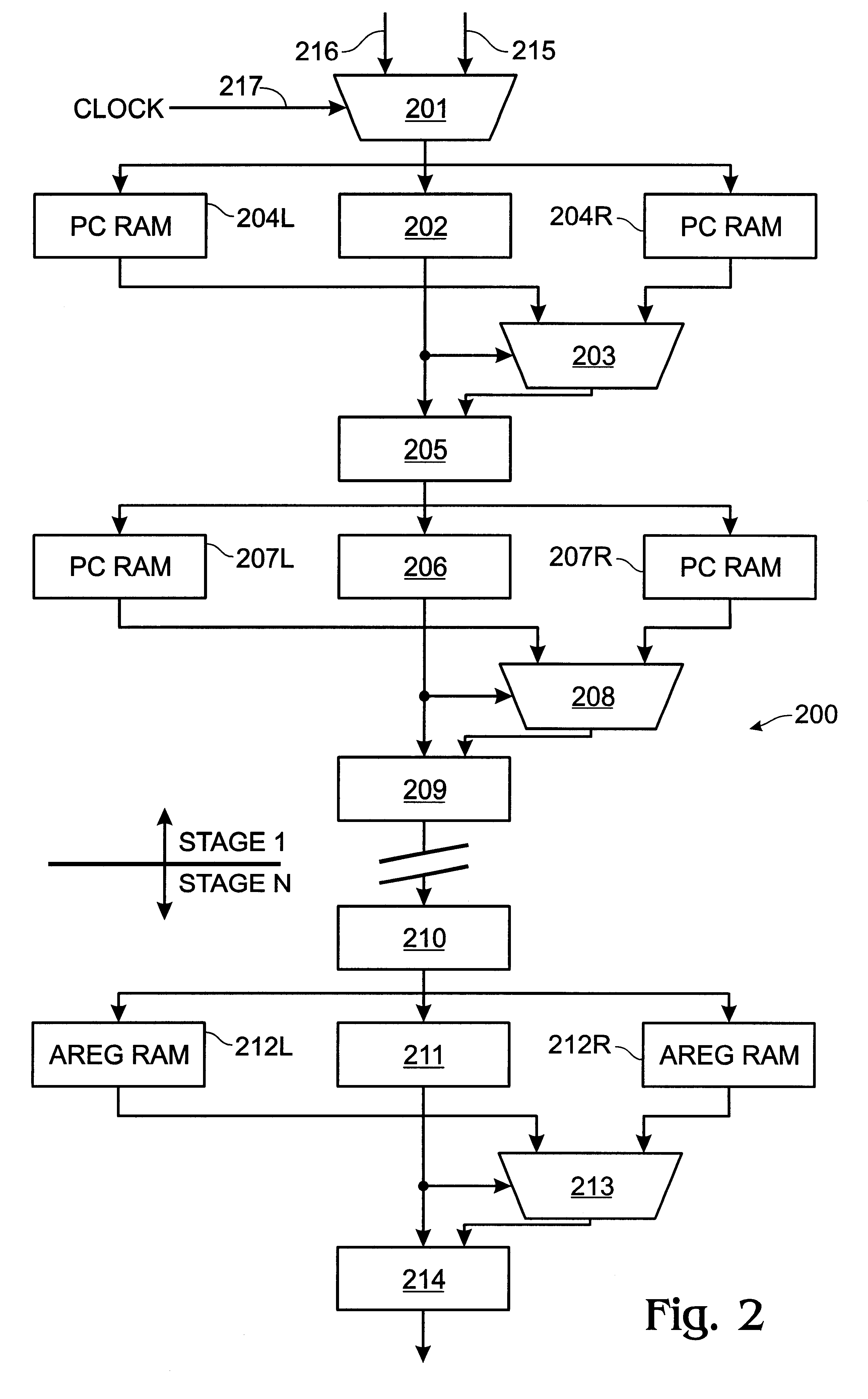

A method for handling cache misses in a computer system. A prefetch unit fetches an instruction for execution by one of a plurality of coprocessors. When the preferred embodiment of the present invention experiences a cache miss in the prefetch unit, the process for which an instruction is being fetched is passed off to a memory processor, which executes a read of the missing cache line in memory. While the process is executing in the memory processor, or is queued by the scheduler for execution of the same instruction, the prefetch unit continues to dispatch other processes from its queue to the other processors. Thus the computer system, including the processors, does not stall. Processors continue to execute processes. The prefetch unit continues to dispatch processes. When the memory read is completed, the process in which the cache miss occurred is rescheduled by the scheduler. The prefetch unit again attempts to fetch and decode the instruction and arguments. If another cache miss occurs, the process is again dispatched to the memory processor. Upon reading the cache line, the memory processor again sends the process to the scheduler's queue.

Owner:RPX CLEARINGHOUSE

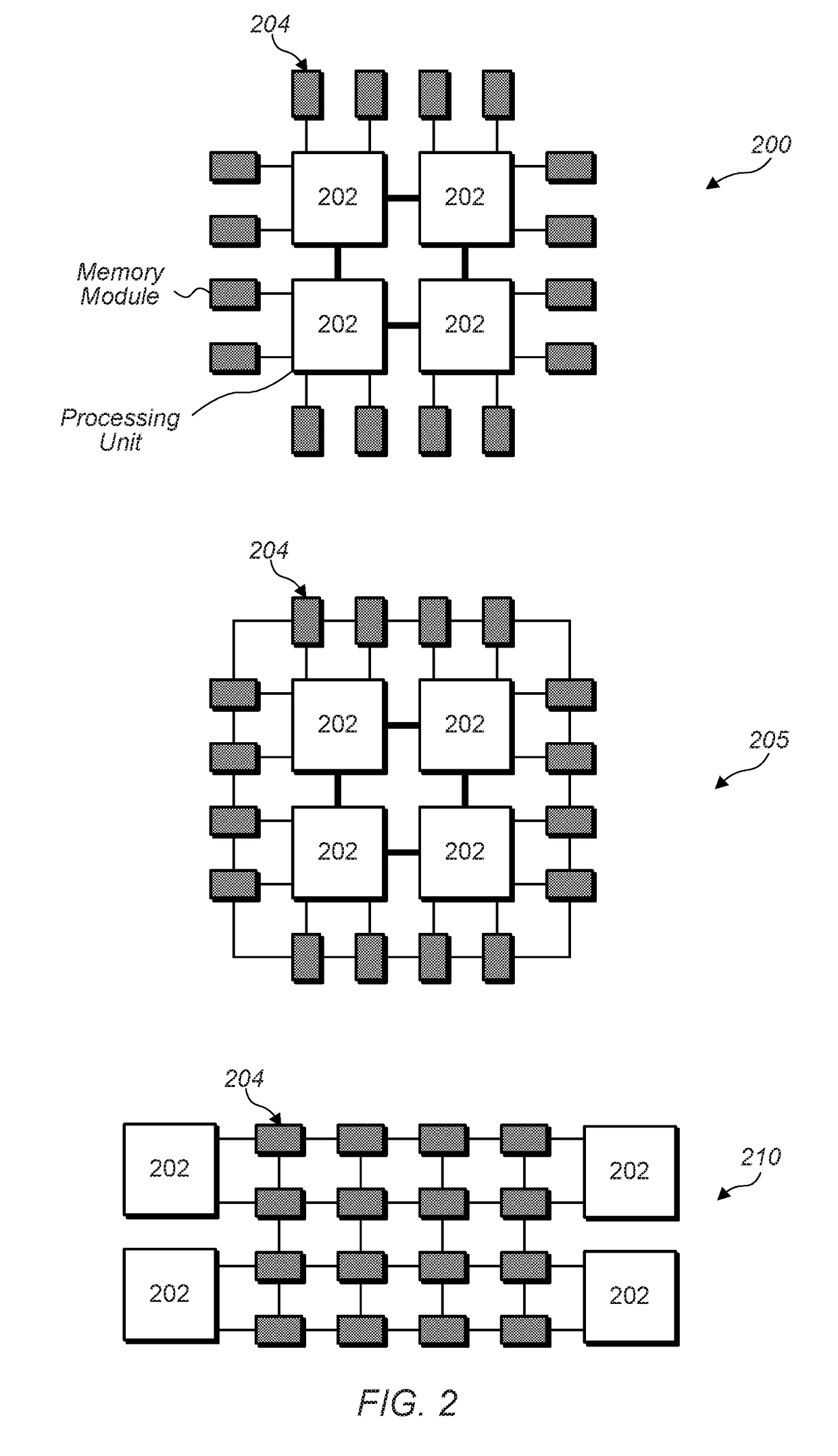

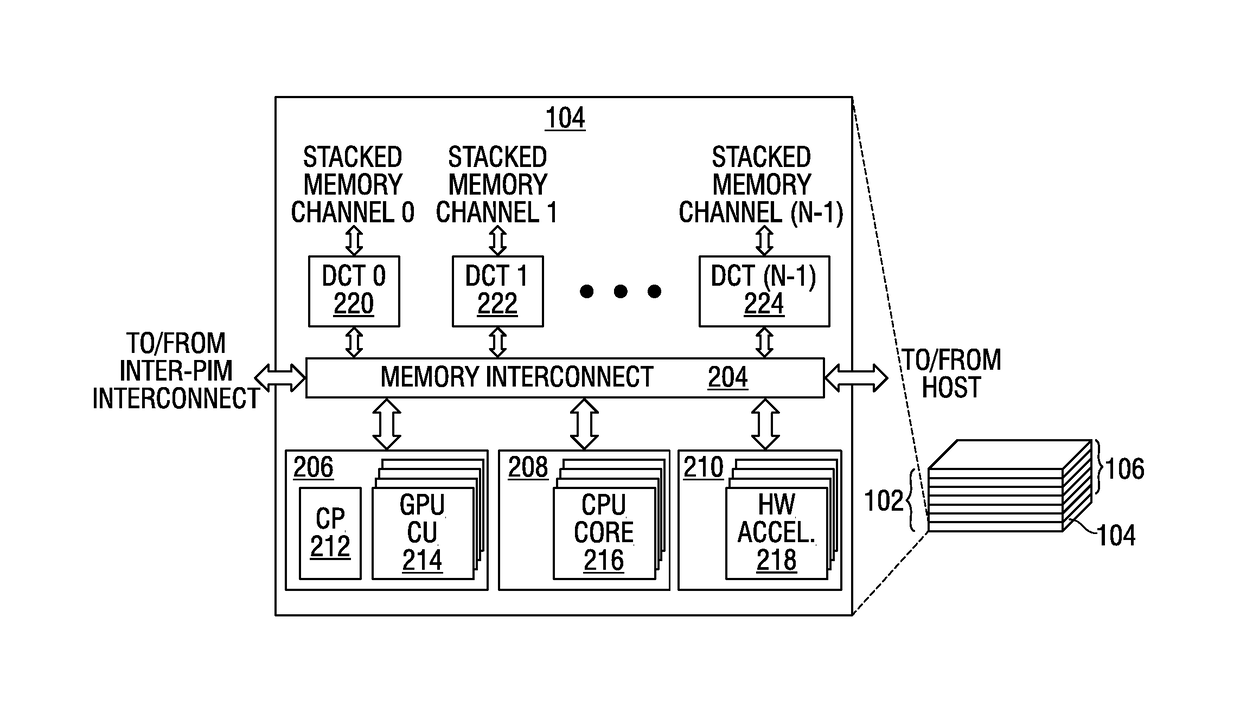

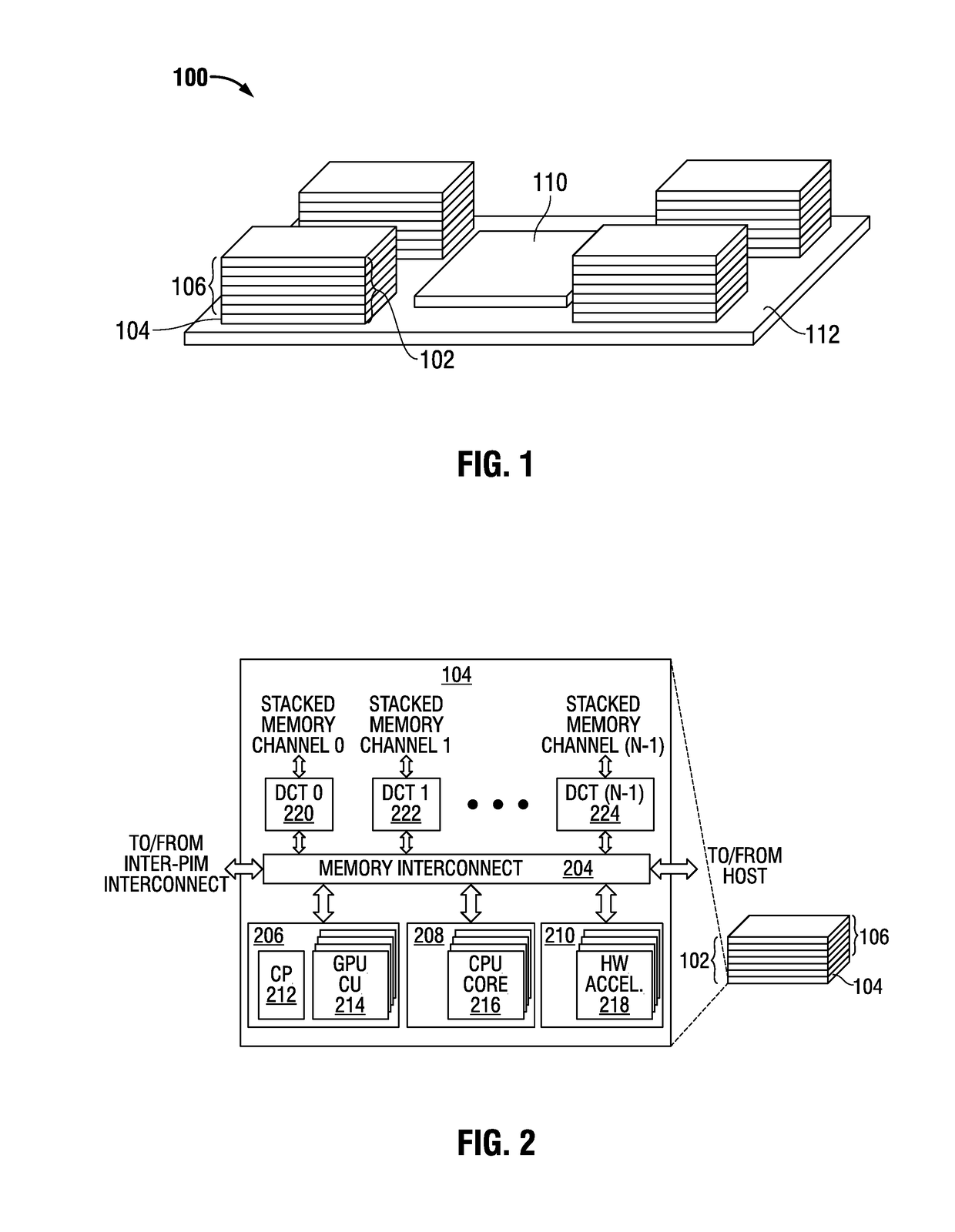

Memory Processing Core Architecture

Active · US20160041856A1 · Improve efficiency · Efficiently offload entire pieces · Interprogram communication · Digital computer details · Memory processing · Processing core

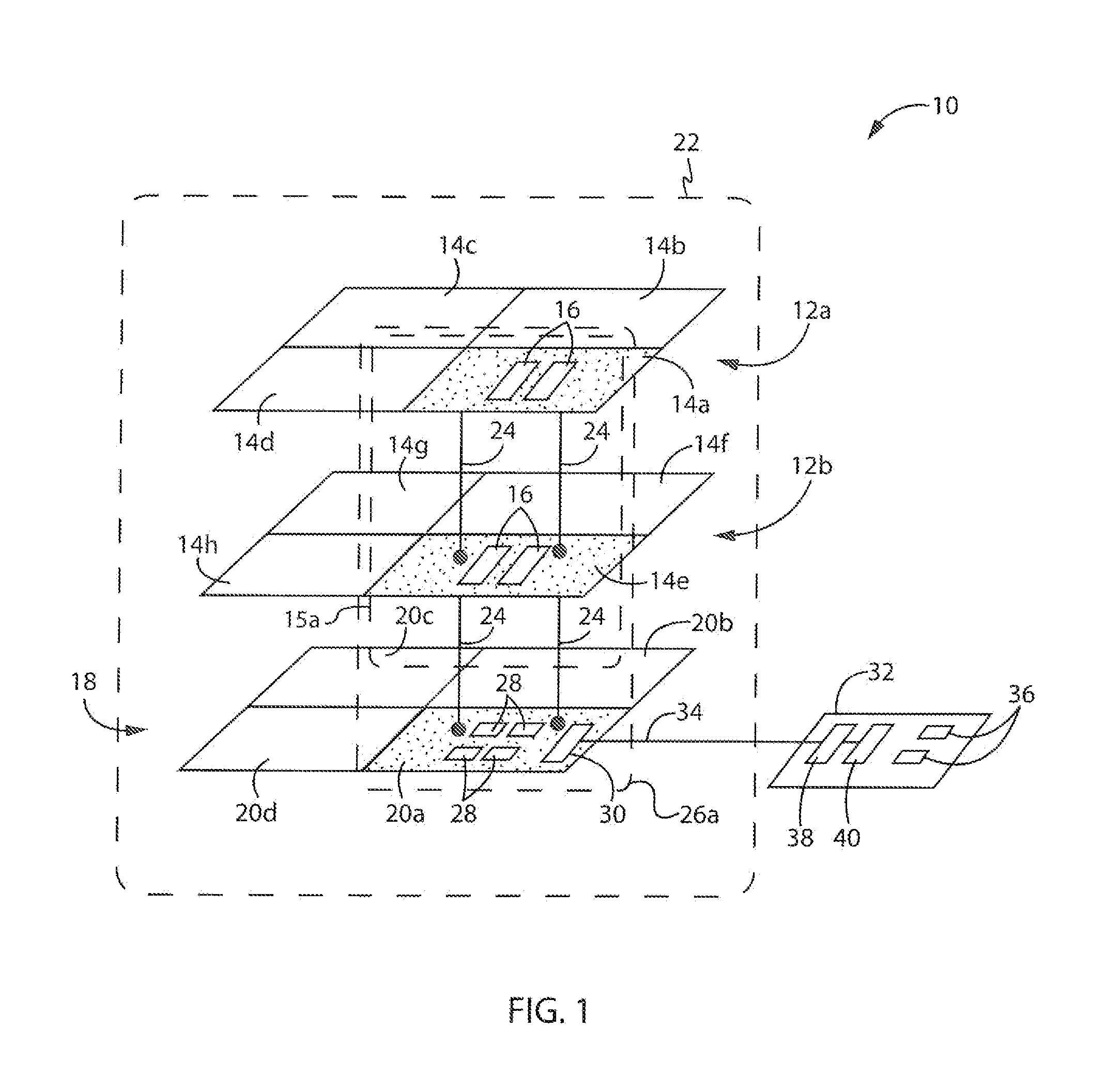

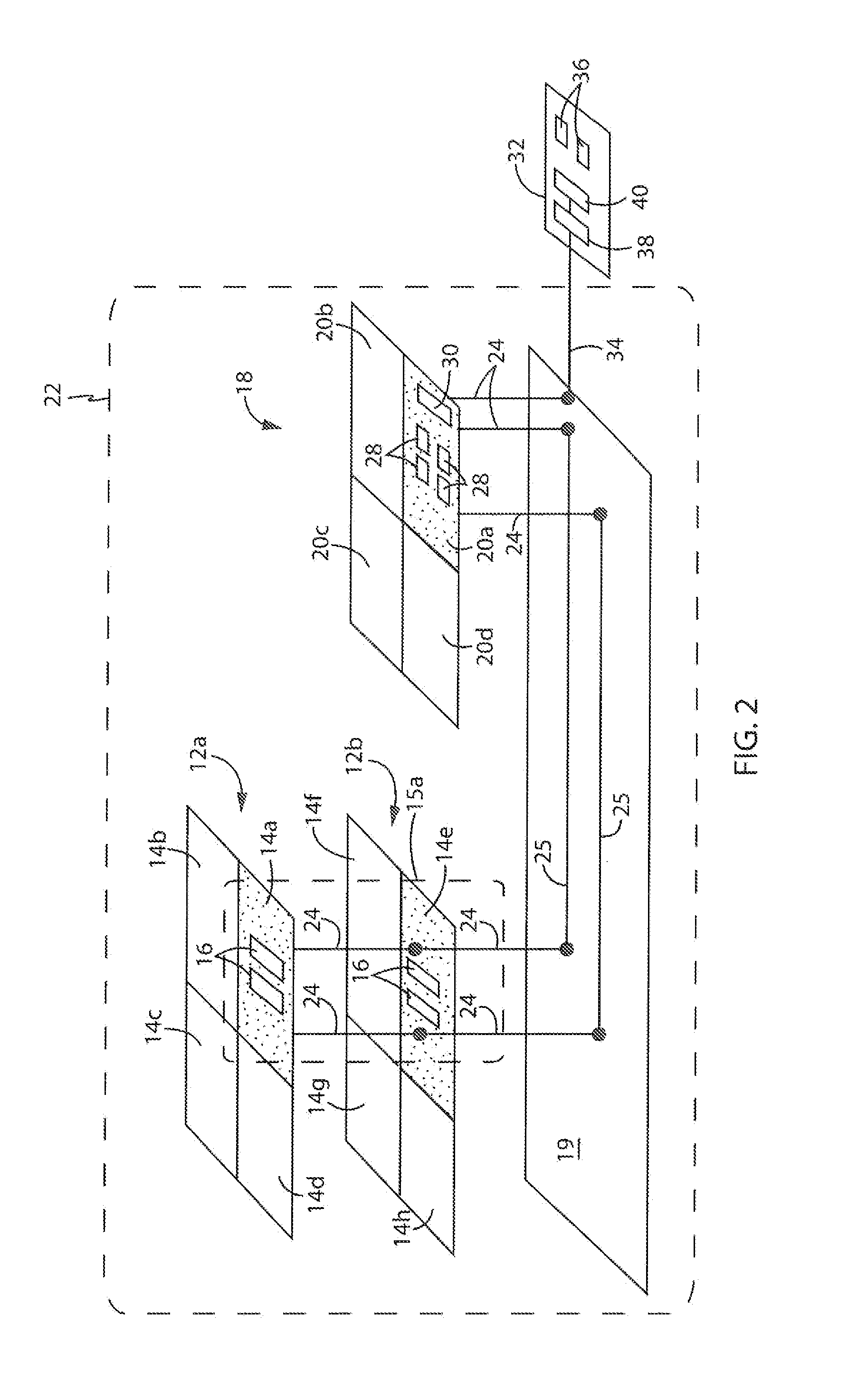

Aspects of the present invention provide a memory system comprising a plurality of stacked memory layers, each memory layer divided into memory sections, wherein each memory section connects to a neighboring memory section in an adjacent memory layer, and a logic layer stacked among the plurality of memory layers, the logic layer divided into logic sections, each logic section including a memory processing core, wherein each logic section connects to a neighboring memory section in an adjacent memory layer to form a memory vault of connected logic and memory sections, and wherein each logic section is configured to communicate directly or indirectly with a host processor. Accordingly, each memory processing core may be configured to respond to a procedure call from the host processor by processing data stored in its respective memory vault and providing a result to the host processor. As a result, increased performance may be provided.

Owner:WISCONSIN ALUMNI RES FOUND

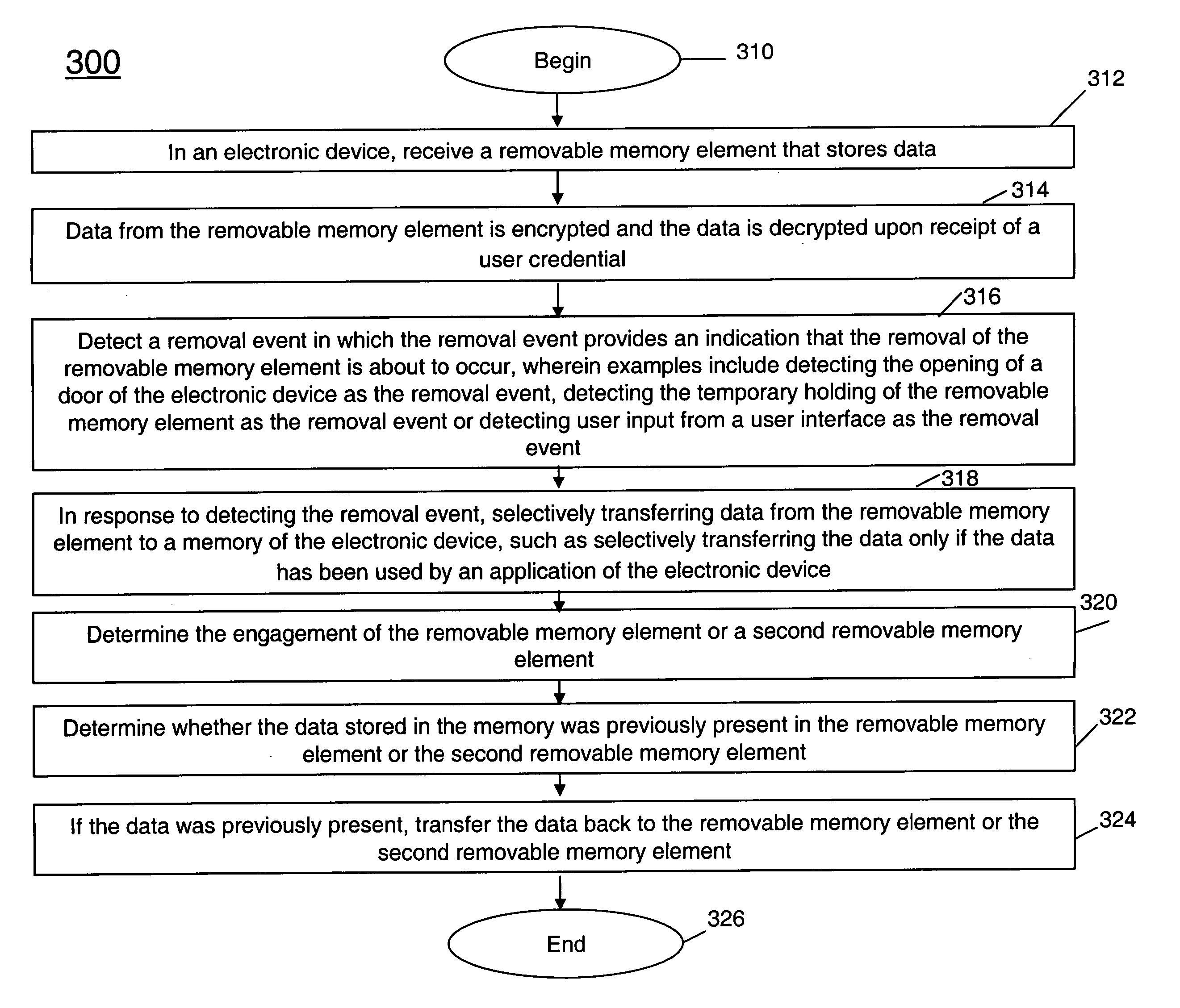

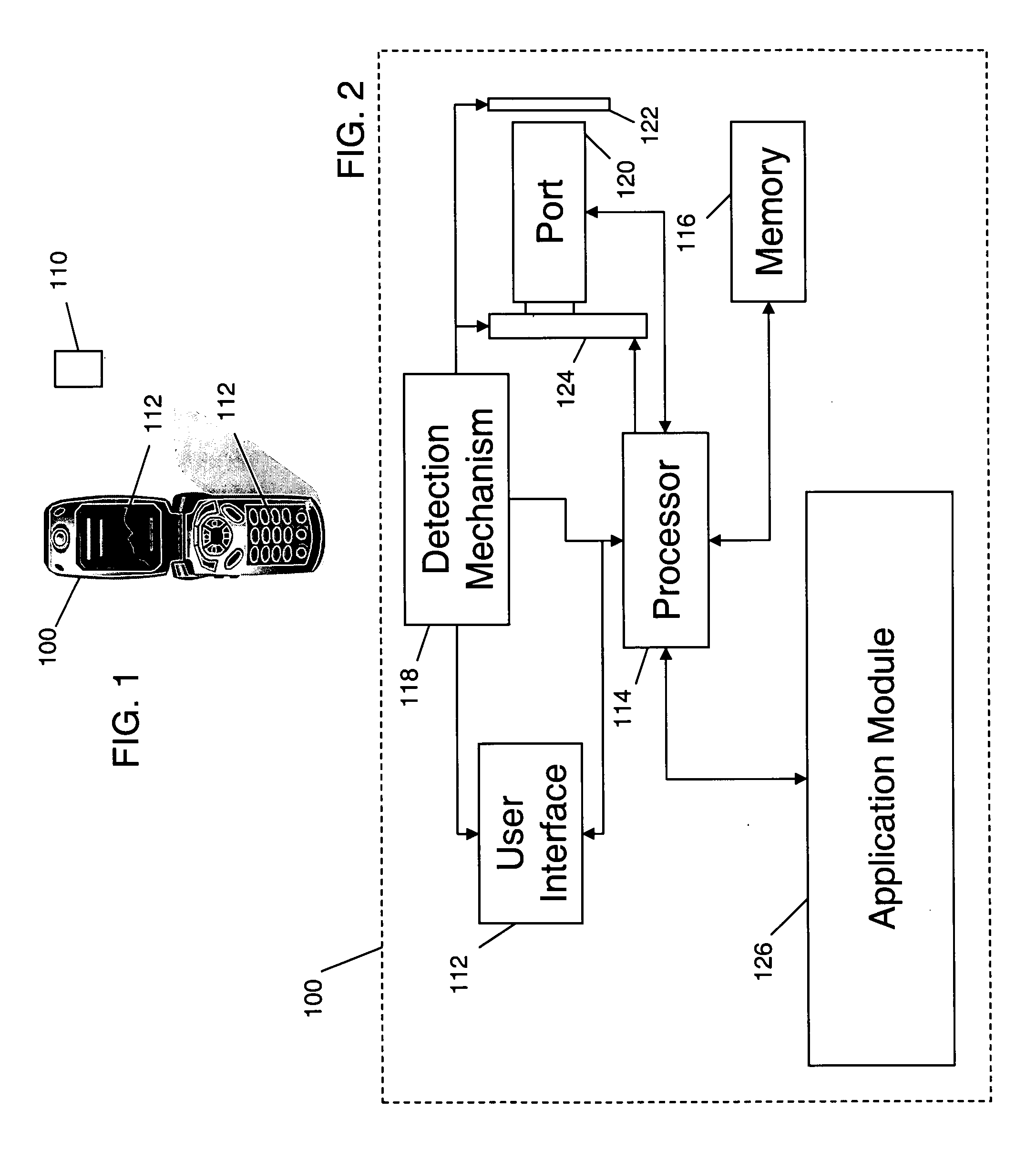

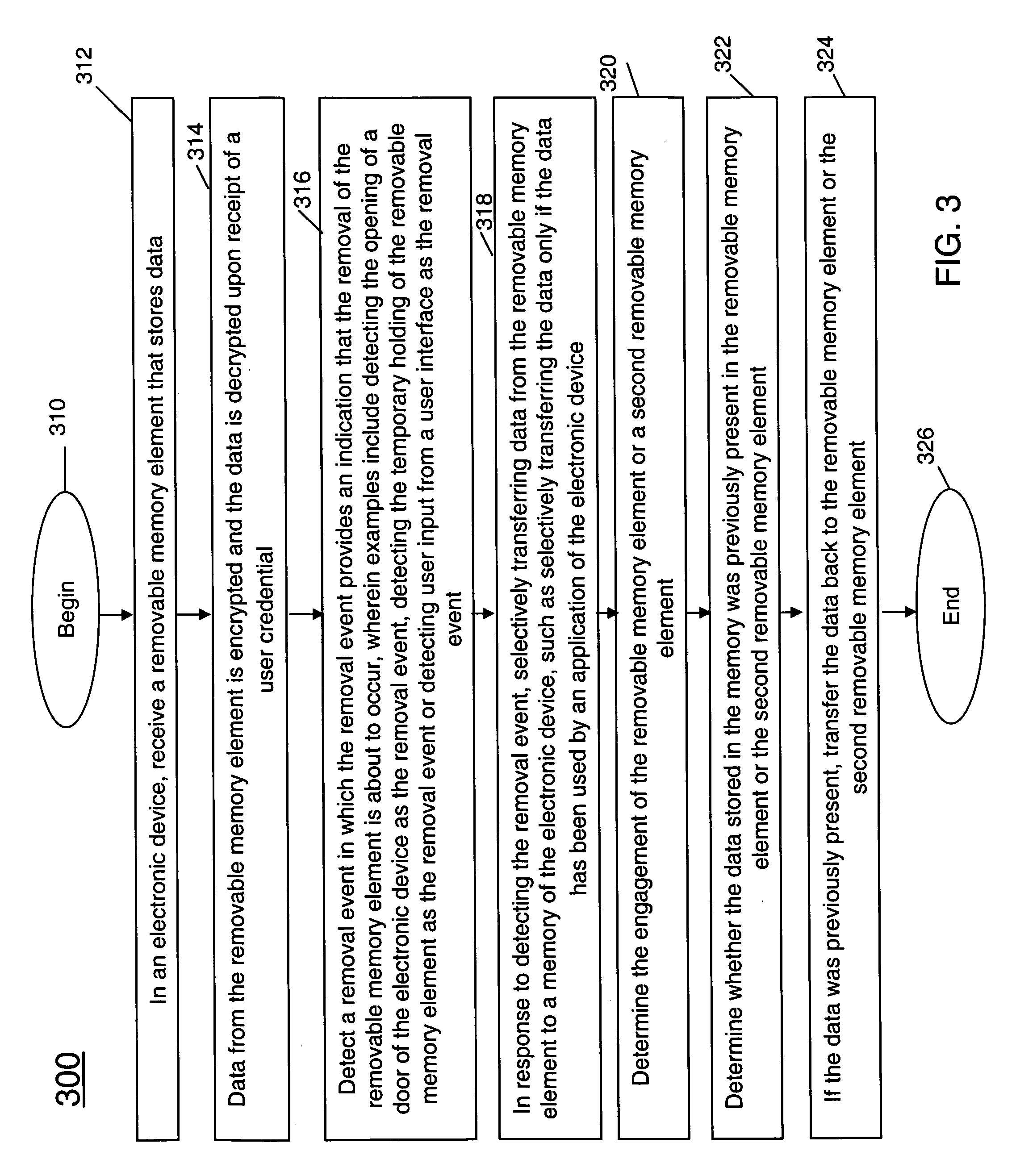

Method and electronic device for selective transfer of data from removable memory element

Inactive · US20070088914A1 · Memory addressing/allocation/relocation · Transmission · Computer hardware · In-Memory Processing

The invention concerns a method (300) and electronic device (100) for the selective transfer of data from a removable memory element (110). The electronic device can include a port (120) that can receive the removable memory element, a memory (116) that can selectively store data from the removable memory element and a processor (114) that can be coupled to the port and the memory. The processor can be programmed to detect (316) a removal event in which the removal event can provide an indication that the removal of the removable memory element is about to occur and in response to the detection, selectively transfer (318) data from the removable memory element to the memory. The processor can be further programmed to transfer data from the removable memory element to the memory only if the data has been used by an application of the electronic device.

Owner:MOTOROLA INC

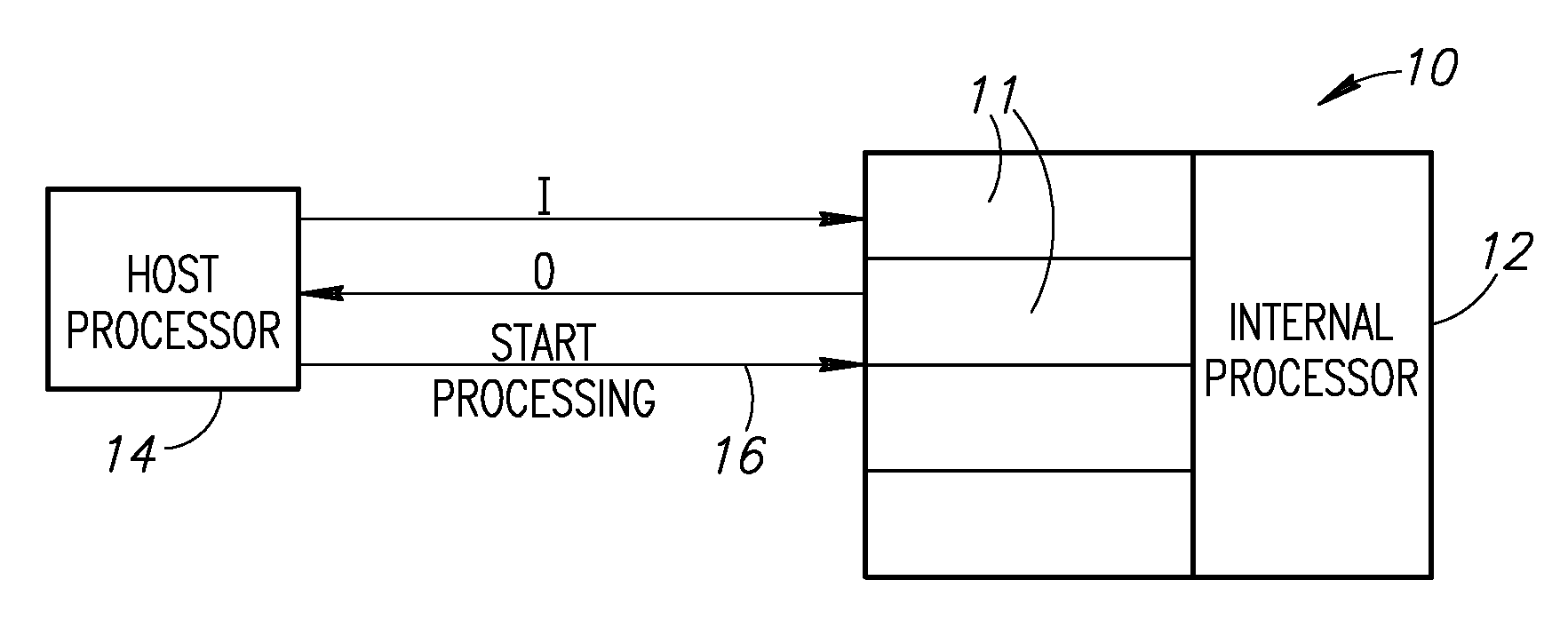

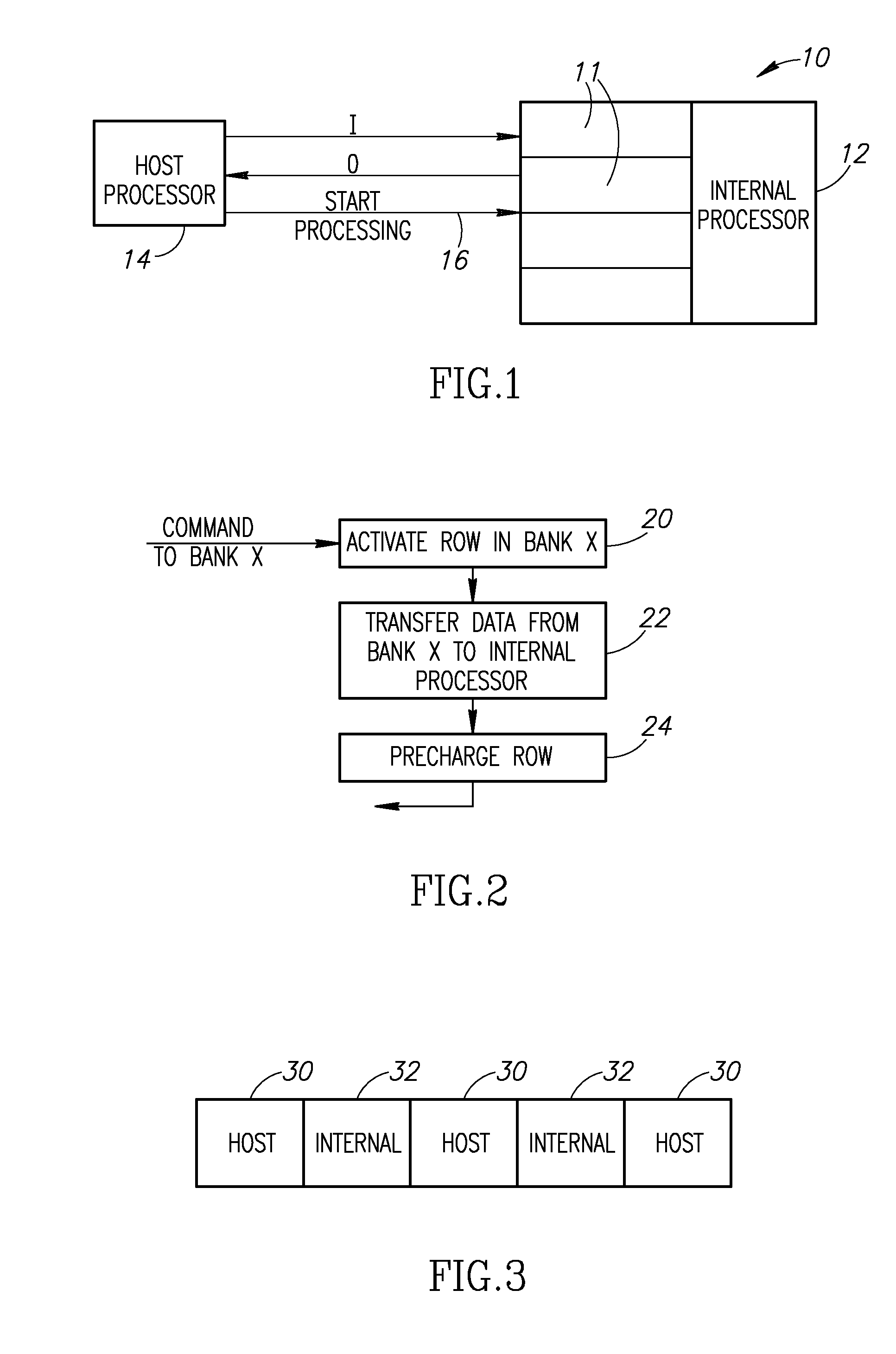

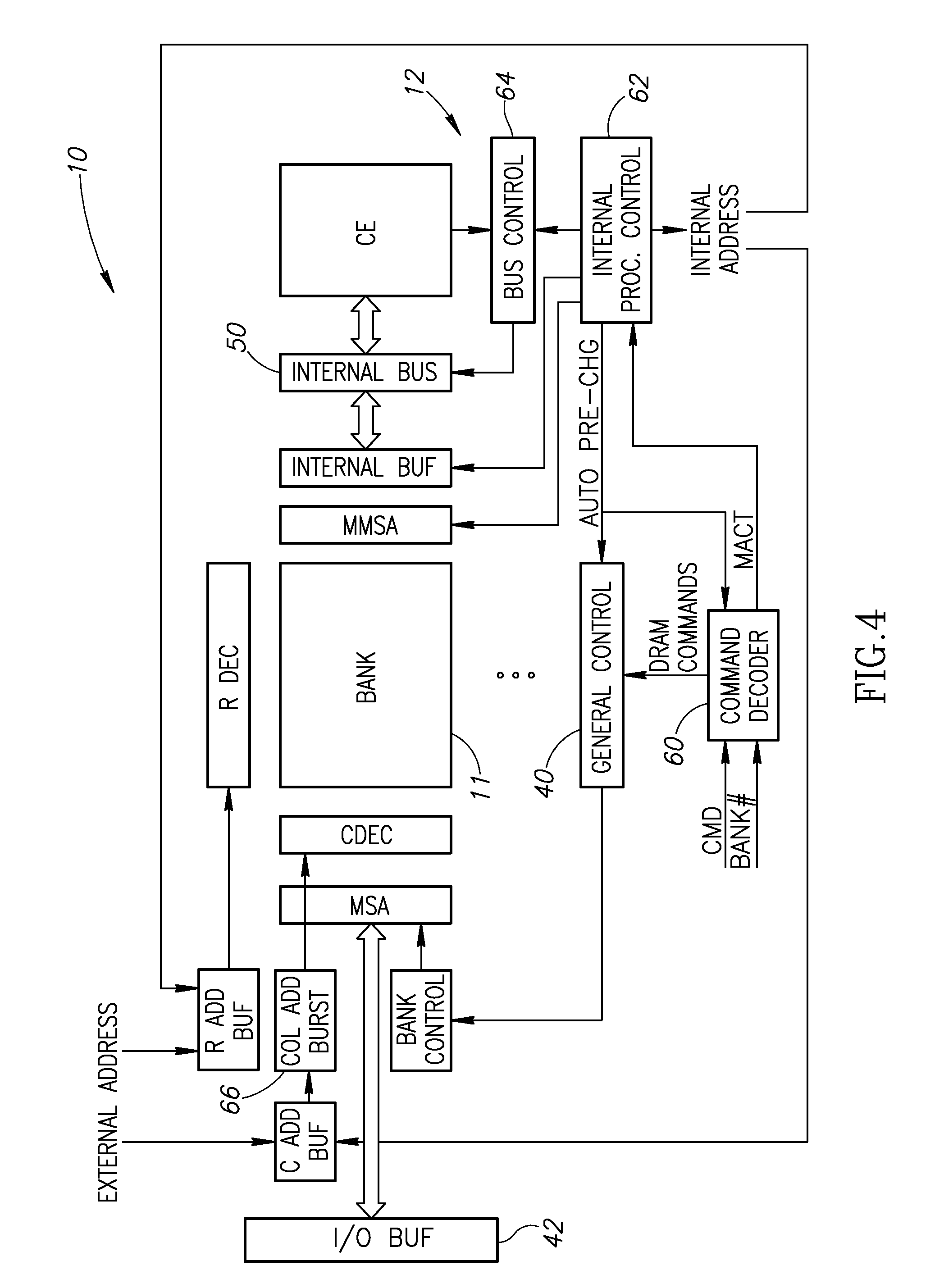

In-memory processor

A memory device includes at least two memory banks storing data and an internal processor. The at least two memory banks are accessible by a host processor. The internal processor receives a timeslot from the host processor and processes a portion of the data from an indicated one of the at least two banks of the memory array during the timeslot while the remaining banks are available to the host processor during the timeslot. A method of operating a memory device having banks storing data includes a host processor issuing per bank timeslots to an internal processor of a memory device, the internal processor operating on an indicated bank of the memory device during the timeslot and the host processor not accessing the indicated bank during the timeslot.

Owner:MIKAMONU GROUP

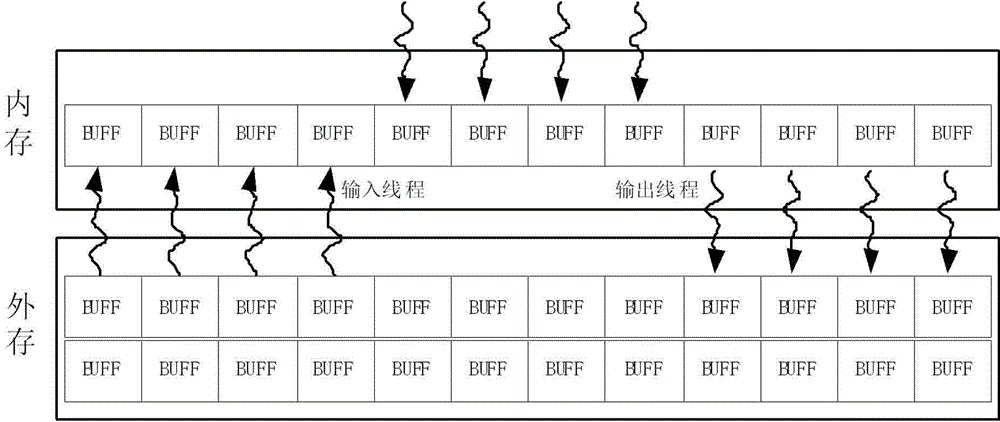

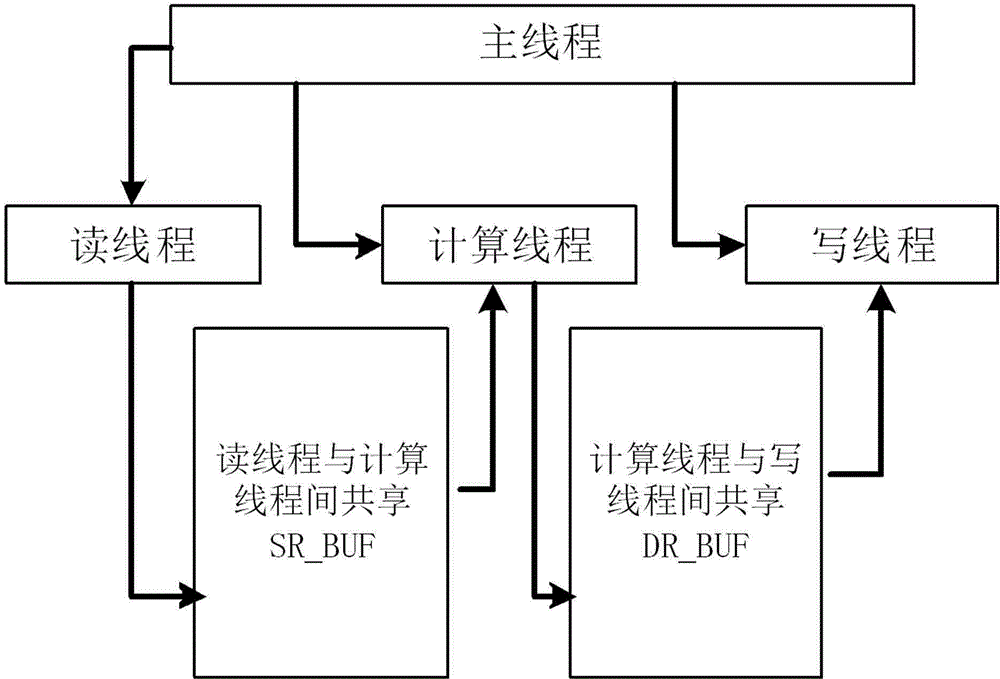

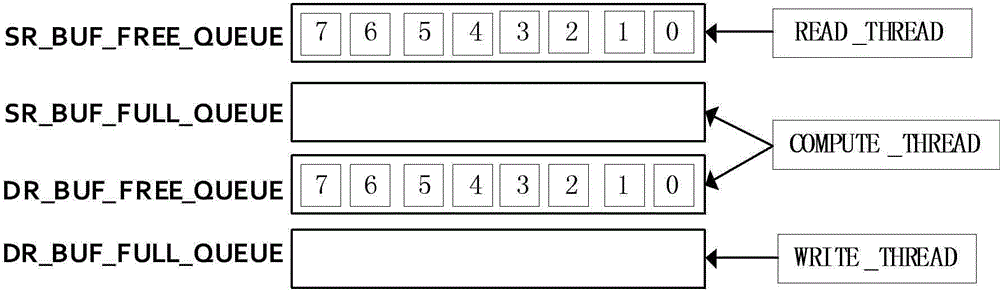

Concurrent program developing method for processing of large-scale data based on small memory

Inactive · CN104572106A · Easy to handle · Implement block processing · Concurrent instruction execution · Specific program execution arrangements · Computer architecture · Data space

The invention discloses a concurrent program developing method for processing large-scale data with a small memory. The method comprises the following steps: allocating data spaces in memory and on disk, dividing the data spaces into a plurality of data blocks, and reading the task data into the divided disk data blocks; setting up a reading thread, a calculating thread and a writing thread; and using the disk as a cache for the memory to hold data that is not yet being calculated. The entire calculation data is divided into suitably small data blocks and stored on disk, and a data block is dispatched to memory only when it needs to be calculated. The data is therefore dispatched dynamically, which satisfies the requirement of processing large-scale data with a small memory. Compared with the prior art, the method makes full use of the large capacity of the disk, dispatches calculation data dynamically to a calculation core and balances the calculation load; communication and calculation are also executed asynchronously, which improves the overall performance of the system.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD
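
The reading, calculating and writing threads described above map naturally onto a three-stage pipeline joined by bounded queues. The sketch below is one way to picture it, assuming integer data, one text file per disk block, and a hypothetical helper named `process_in_blocks`; none of these details come from the patent. The bounded queues cap how many blocks sit in memory at any time.

```python
import os
import queue
import tempfile
import threading


def process_in_blocks(values, block_size, func, max_in_memory=2):
    """Stage data on disk in blocks, then stream it through three threads."""
    with tempfile.TemporaryDirectory() as workdir:
        # Divide the task data into blocks and store each block on disk.
        paths = []
        for start in range(0, len(values), block_size):
            path = os.path.join(workdir, f"block_{start // block_size}.txt")
            with open(path, "w") as fh:
                fh.write("\n".join(str(v) for v in values[start:start + block_size]))
            paths.append(path)

        to_compute = queue.Queue(maxsize=max_in_memory)
        to_write = queue.Queue(maxsize=max_in_memory)
        out_path = os.path.join(workdir, "result.txt")

        def reader():
            # Bring a block into memory only when the pipeline has room for it.
            for path in paths:
                with open(path) as fh:
                    to_compute.put([int(line) for line in fh])
            to_compute.put(None)  # end-of-stream marker

        def computer():
            while (block := to_compute.get()) is not None:
                to_write.put([func(v) for v in block])
            to_write.put(None)

        def writer():
            with open(out_path, "w") as fh:
                while (block := to_write.get()) is not None:
                    fh.writelines(f"{v}\n" for v in block)

        threads = [threading.Thread(target=t) for t in (reader, computer, writer)]
        for t in threads:
            t.start()
        for t in threads:
            t.join()

        with open(out_path) as fh:
            return [int(line) for line in fh]


squares = process_in_blocks(list(range(10)), block_size=3, func=lambda v: v * v)
```

Because `put` blocks when a queue is full, the reader cannot run ahead of the calculating thread, so memory use stays near `max_in_memory` blocks per stage regardless of the total data size.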

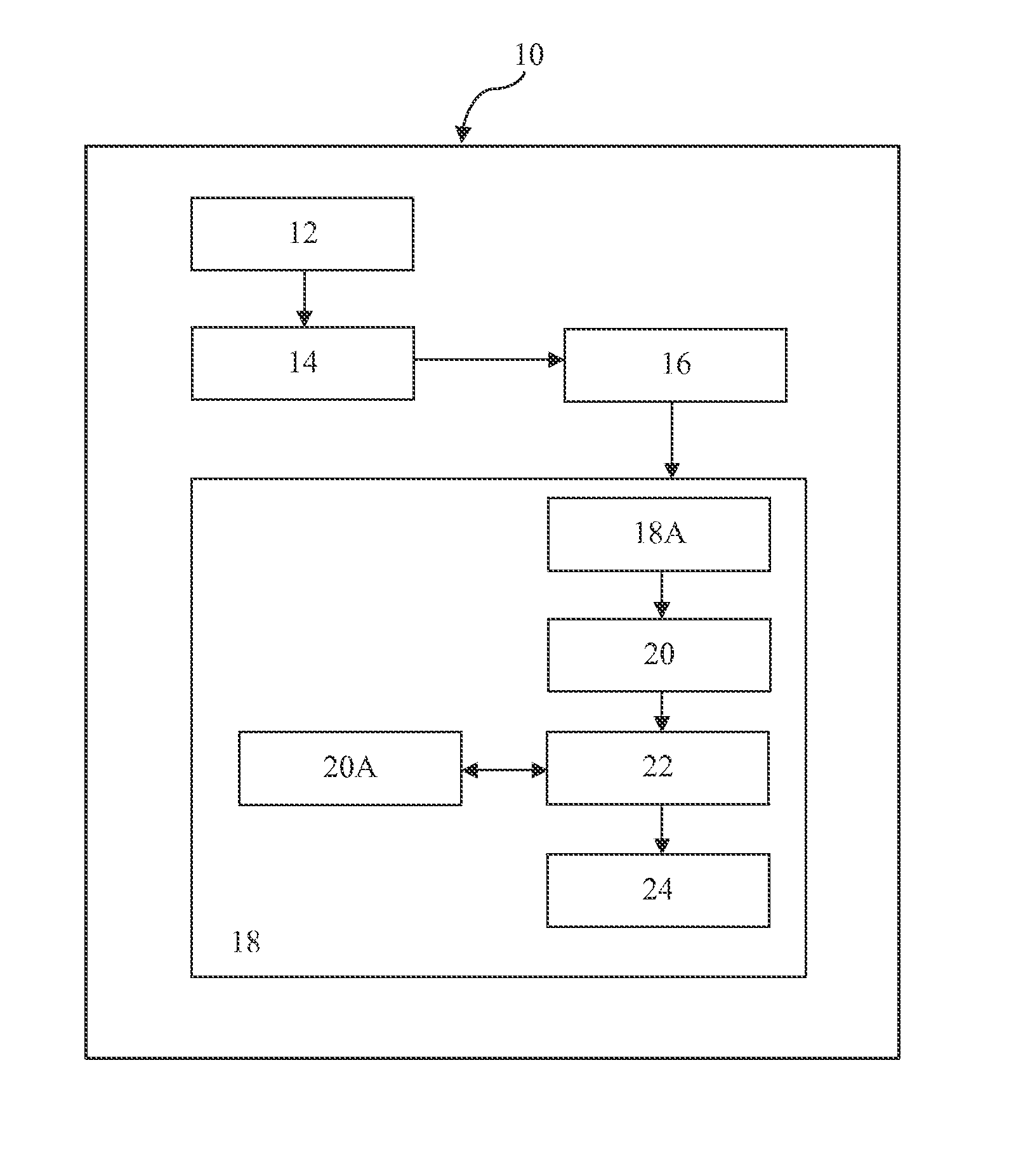

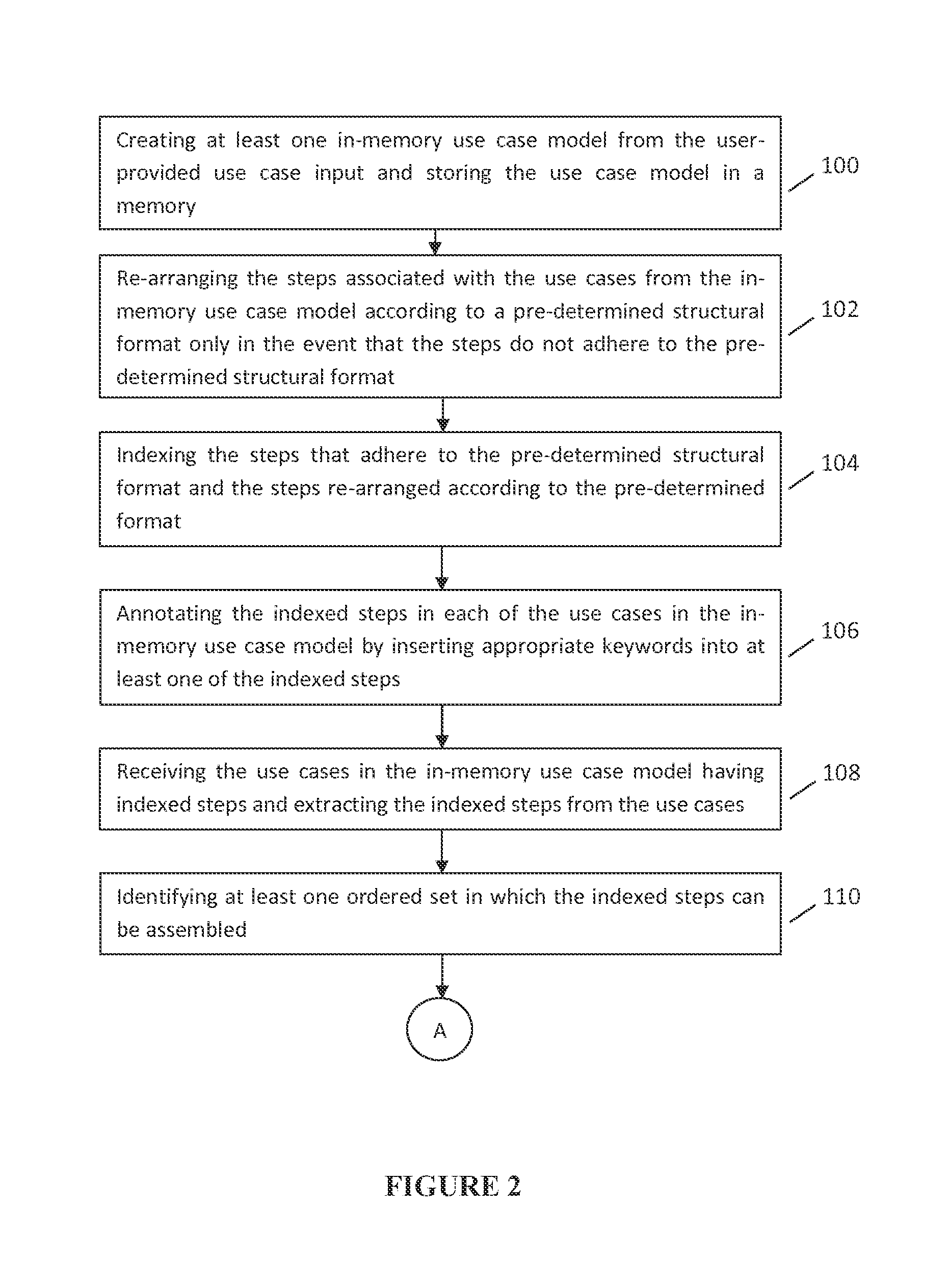

Computer implemented system and method for indexing and annotating use cases and generating test scenarios therefrom

Active · US20140331212A1 · Software testing/debugging · Specific program execution arrangements · In-Memory Processing · Order set

A system and method for indexing and annotating use cases and generating test scenarios using in-memory processing from the corresponding use case model includes a use case model creator to create/build an in-memory use case model for the use cases created by a user; a predetermined structural format, according to which the steps of the use cases are organized by an editor; an indexer to appropriately index the steps of the use case(s) in the use case model; a generator which facilitates extraction of the indexed steps from the use case and identification of at least one ordered set in which the indexed steps can be assembled; and a test scenario generator to generate a test scenario having the indexed steps arranged according to the ordered set identified by an identifier and being validated by a validator according to pre-determined validation criteria.

Owner:ZENSAR TECHNOLOGIES

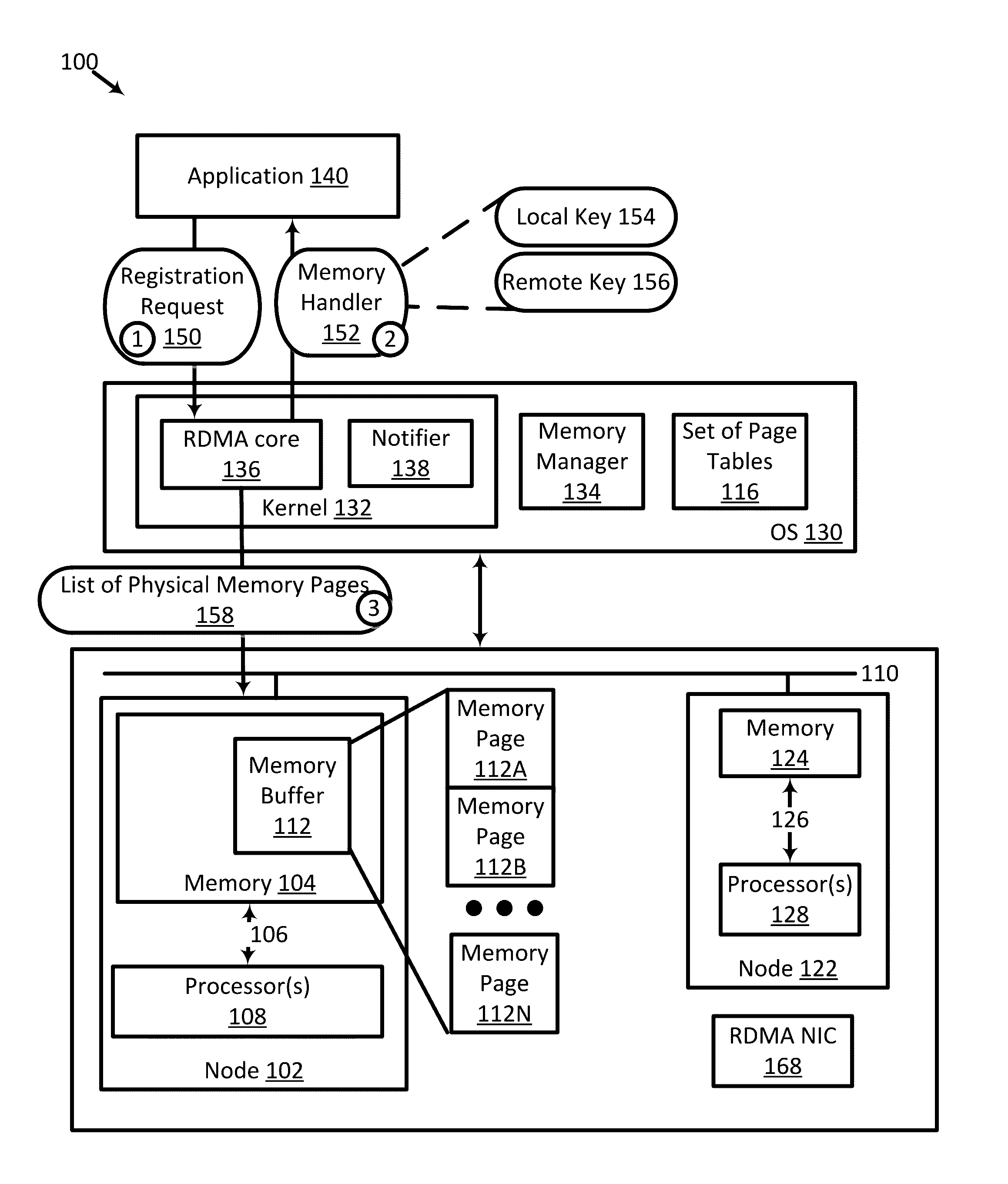

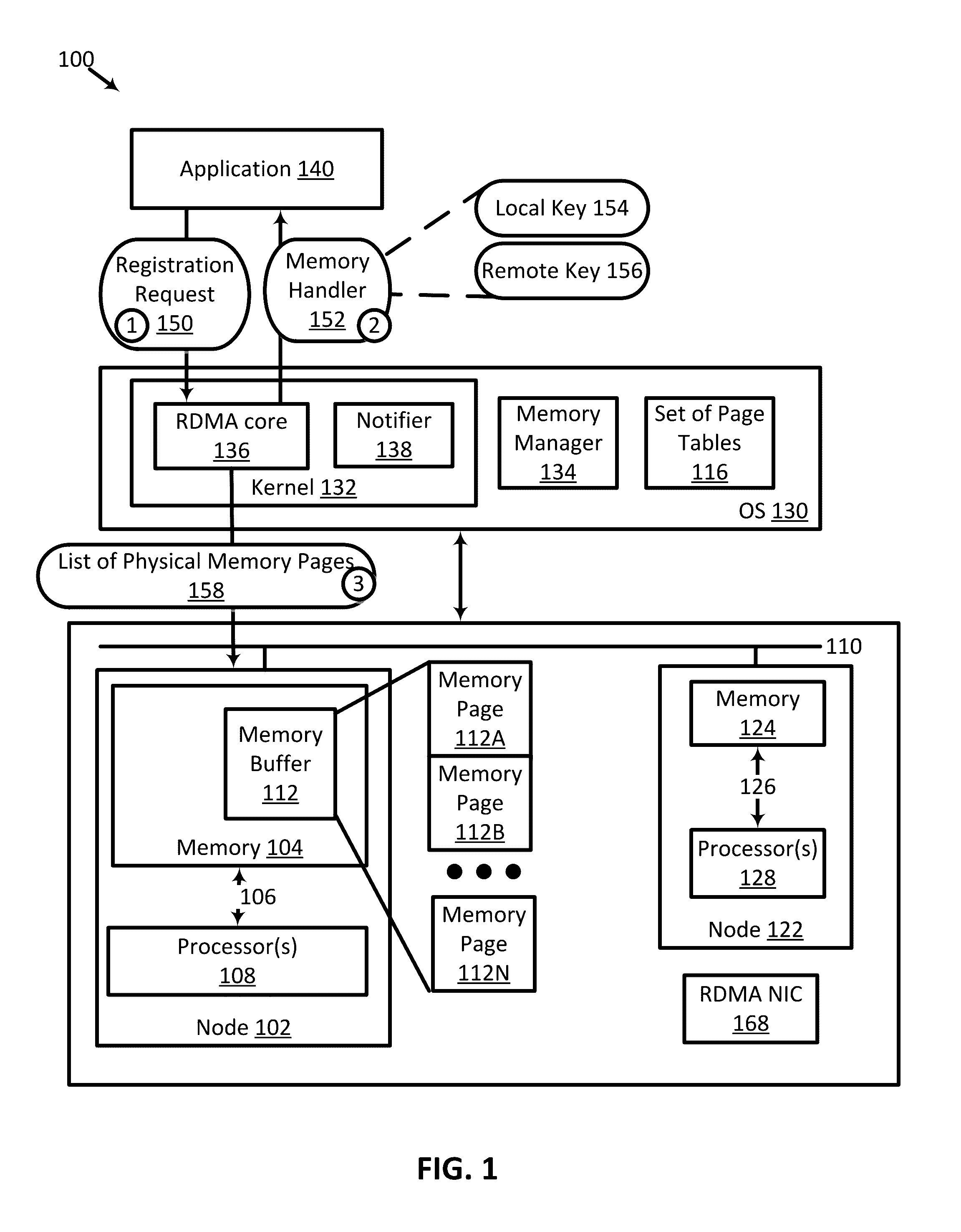

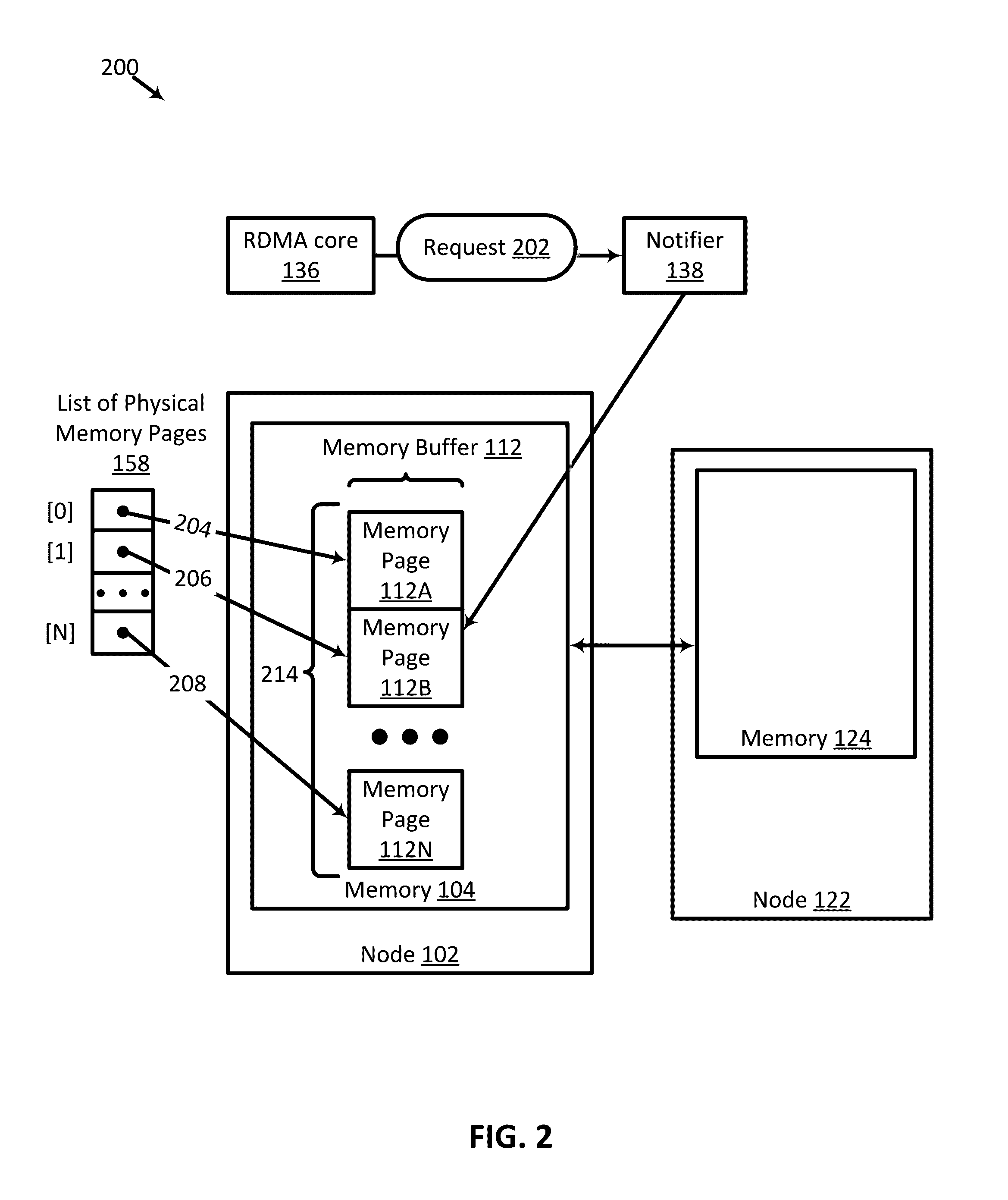

Dynamic Non-Uniform Memory Architecture (NUMA) Locality for Remote Direct Memory Access (RDMA) Applications

Active · US20160350260A1 · Digital computer details · Program control · In-Memory Processing · Remote direct memory access

An example method of moving RDMA memory from a first node to a second node includes protecting a memory region from write operations. The memory region resides on a first node and includes a set of RDMA memory pages. A list specifies the set of RDMA memory pages and is associated with a memory handler. The set of RDMA memory pages includes a first memory page. The method also includes allocating a second memory page that resides on a second node and copying data stored in the first memory page to the second memory page. The method also includes updating the list by replacing the first memory page specified in the list with the second memory page. The method further includes registering the updated list as RDMA memory. The updated list is associated with the memory handler after the registering.

Owner:RED HAT ISRAEL

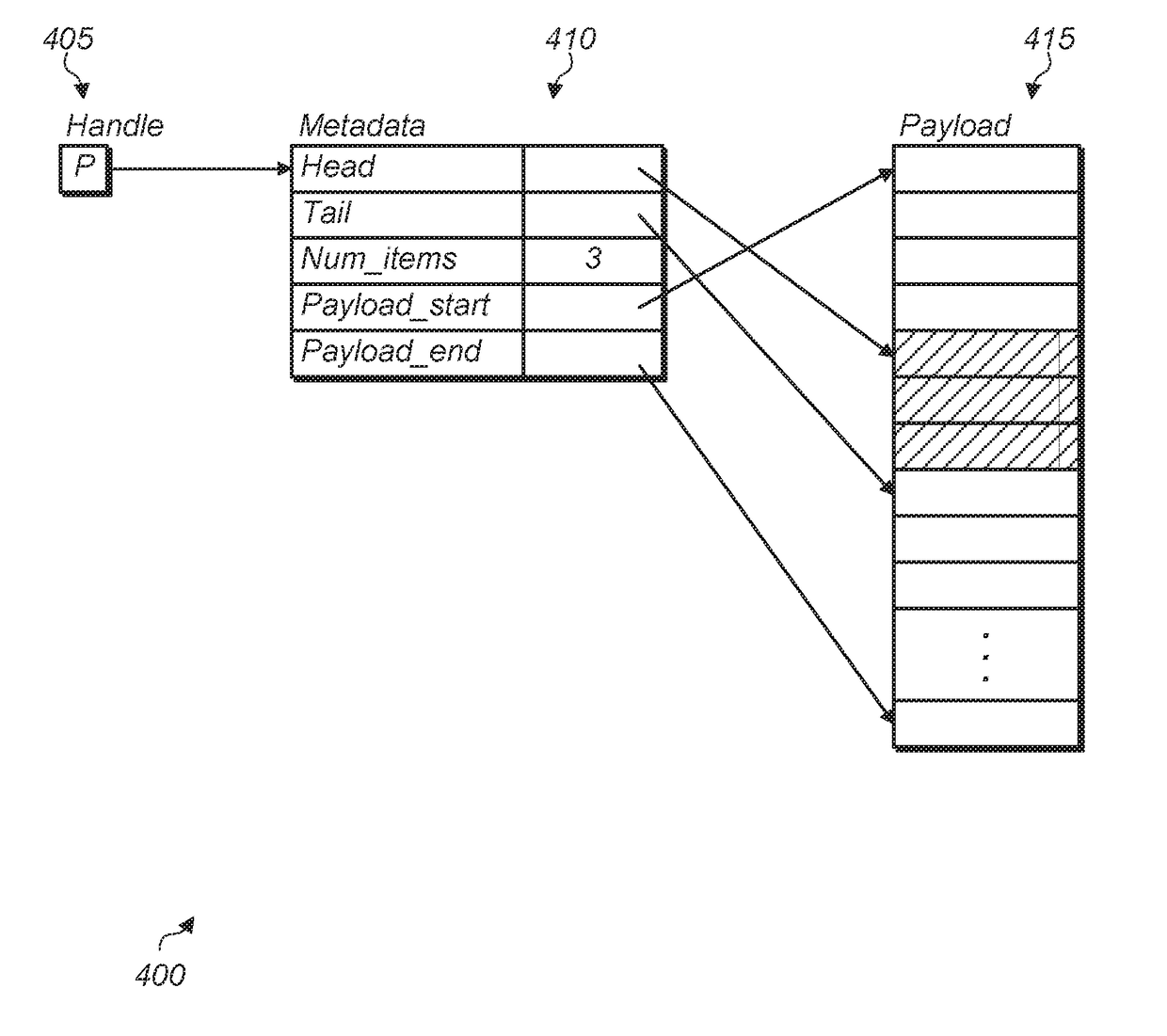

Efficient implementation of queues and other data structures using processing near memory

Active · US20170255397A1 · Avoid caching · Memory architecture accessing/allocation · Input/output to record carriers · Array data structure · Management unit

Systems, apparatuses, and methods for implementing efficient queues and other data structures. A queue may be shared among multiple processors and/or threads without using explicit software atomic instructions to coordinate access to the queue. System software may allocate an atomic queue and corresponding queue metadata in system memory and return, to the requesting thread, a handle referencing the queue metadata. Any number of threads may utilize the handle for accessing the atomic queue. The logic for ensuring the atomicity of accesses to the atomic queue may reside in a management unit in the memory controller coupled to the memory where the atomic queue is allocated.

Owner:ADVANCED MICRO DEVICES INC

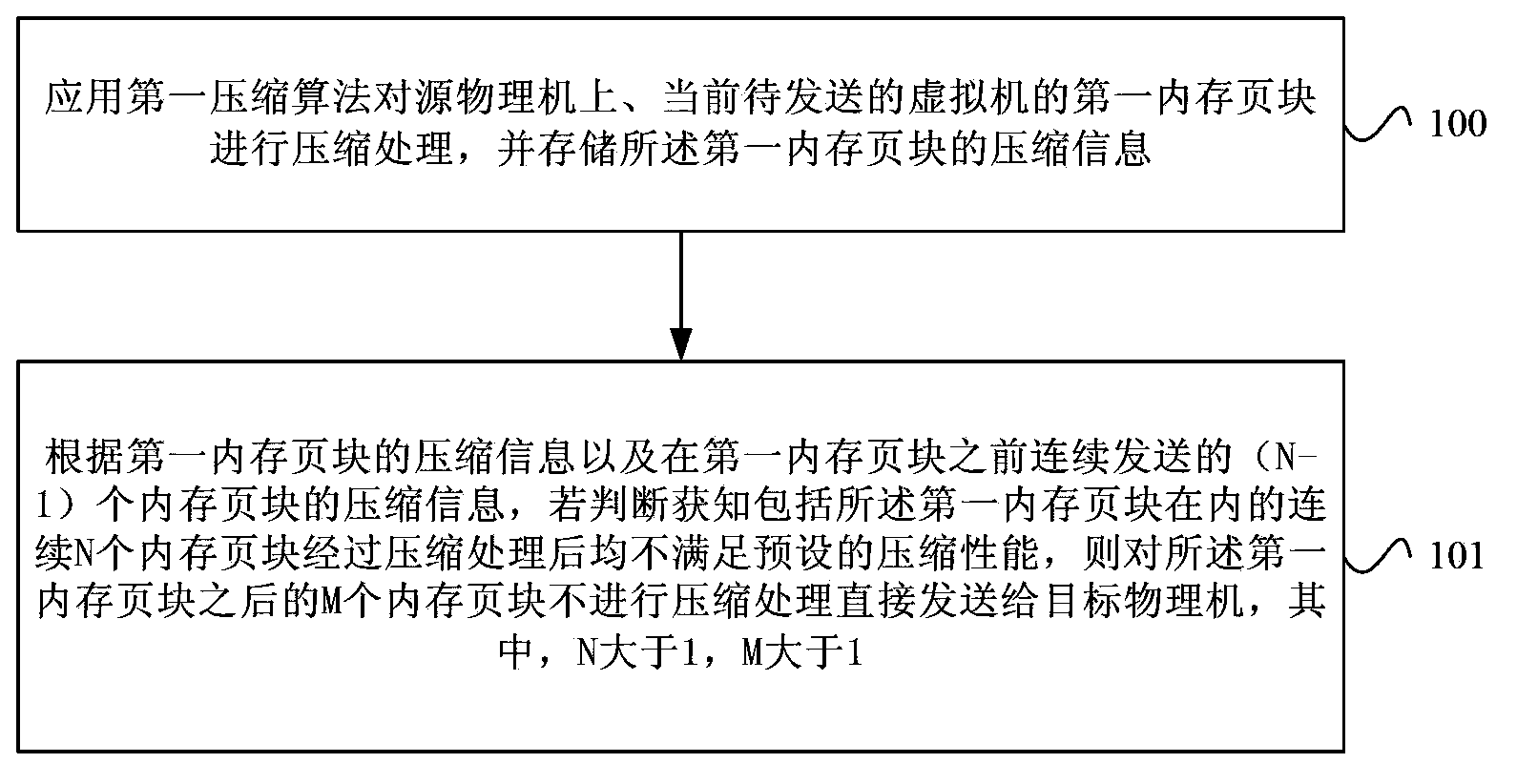

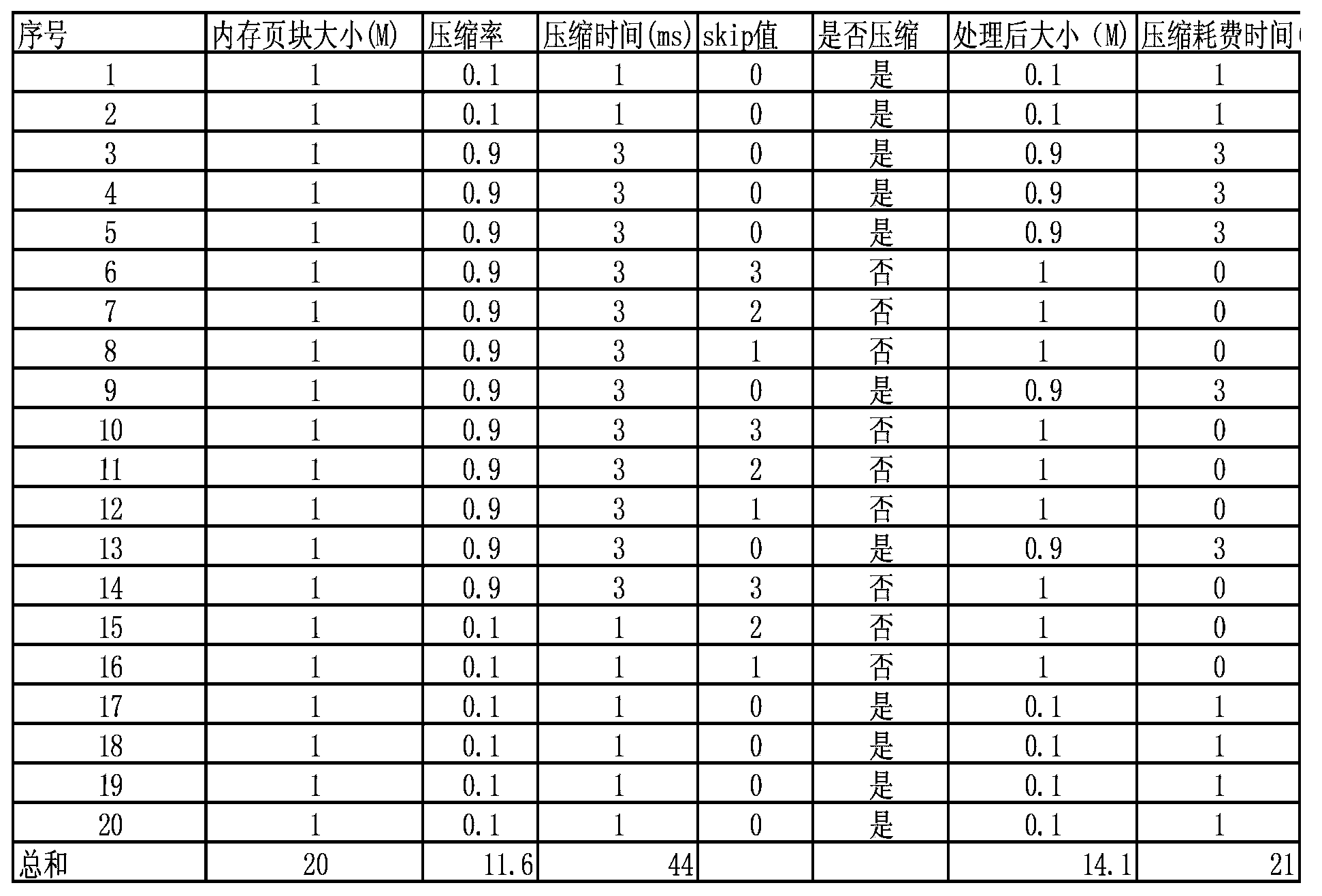

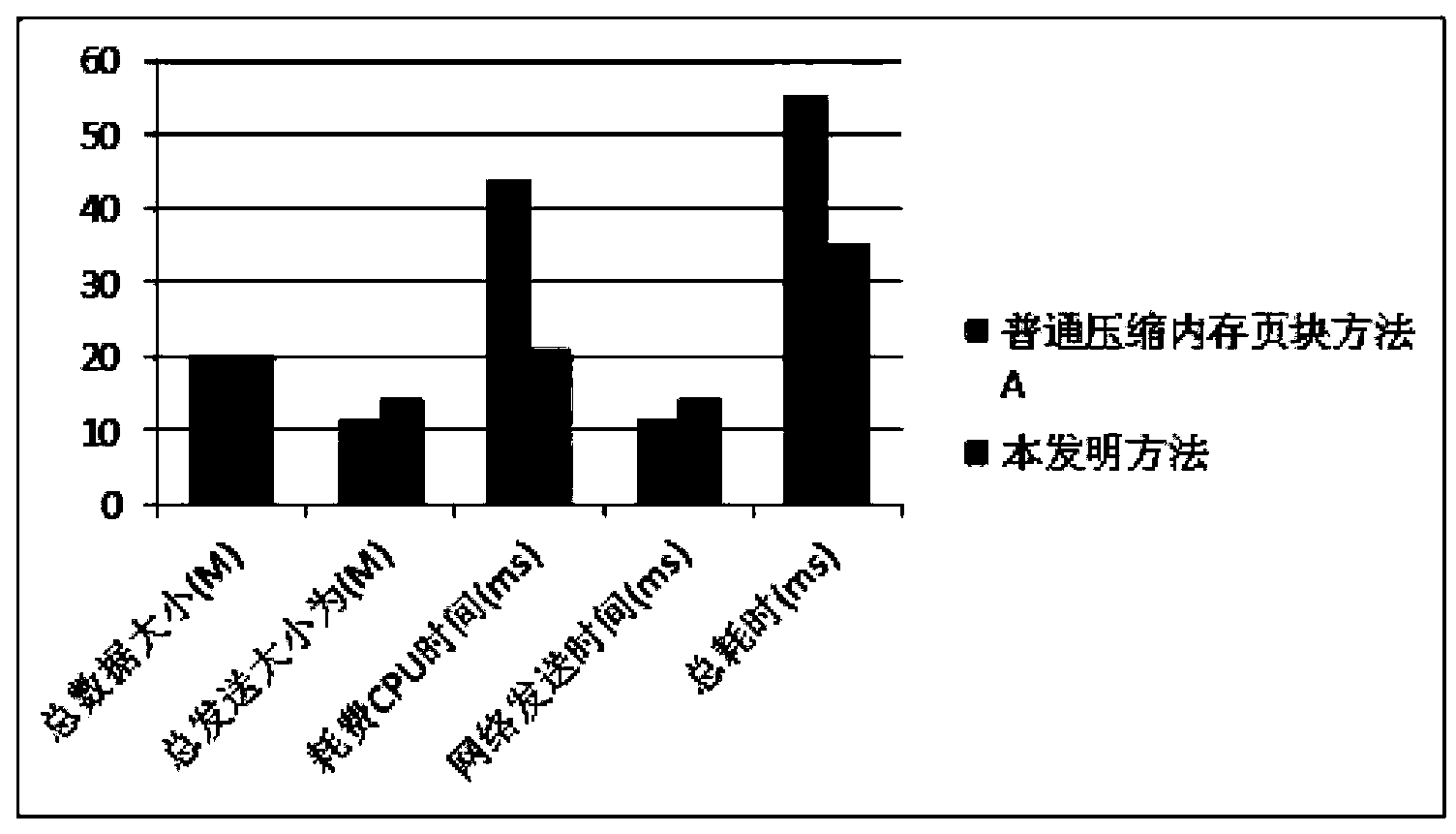

Virtual machine live migration memory processing method, device and system

Inactive · CN103353850A · Improve performance · Reduce CPU overhead · Program initiation/switching · Software simulation/interpretation/emulation · Memory processing · In-Memory Processing

The invention provides a virtual machine live migration memory processing method, device and system. The method comprises the following steps: a first memory page block of a virtual machine on a source physical machine, which is currently to be transmitted, is compressed using a first compression algorithm, and the compression information of the first memory page block is stored; if it is judged, according to the compression information of the first memory page block and of the (N-1) memory page blocks transmitted before it, that the N memory page blocks including the first memory page block did not meet a preset compression performance after compression, then the M memory page blocks after the first memory page block are not compressed and are transmitted directly to the target physical machine, where N is greater than 1 and M is greater than 1. The method, device and system improve the live migration performance of the virtual machine, reduce the CPU (Central Processing Unit) overhead of the source physical machine and save processing resources.

Owner:HUAWEI TECH CO LTD
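
The compress-or-skip decision in this abstract can be illustrated with a short sketch. It assumes `zlib` as the compression algorithm and reads "preset compression performance" as a minimum fraction of bytes saved per block; both are assumptions, as is the function name `plan_transfer`. After N consecutive compressed blocks all fall short of that saving, the next M blocks are sent uncompressed.

```python
import os
import zlib


def plan_transfer(blocks, n=2, m=2, min_saving=0.2):
    """Return a list of (payload, was_compressed) pairs in transmission order."""
    sent = []
    recent = []  # savings of the most recent compressed blocks
    skip = 0     # how many upcoming blocks to send without compression
    for block in blocks:
        if skip > 0:
            sent.append((block, False))
            skip -= 1
            continue
        packed = zlib.compress(block)
        recent.append(1 - len(packed) / len(block))
        recent = recent[-n:]
        sent.append((packed, True))
        # If the last n compressed blocks all saved too little, stop paying
        # the CPU cost of compression for the next m blocks.
        if len(recent) == n and all(s < min_saving for s in recent):
            skip = m
            recent = []
    return sent


compressible = bytes(4096)          # all zeros, compresses very well
incompressible = os.urandom(4096)   # random bytes, effectively incompressible
blocks = [compressible, incompressible, os.urandom(4096),
          compressible, compressible, compressible]

sent = plan_transfer(blocks, n=2, m=2)
flags = [was_compressed for _payload, was_compressed in sent]
```

In this run the two random blocks trigger the skip, so the two blocks after them go out raw even though they would have compressed well; that trade-off is inherent in predicting from recent history.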

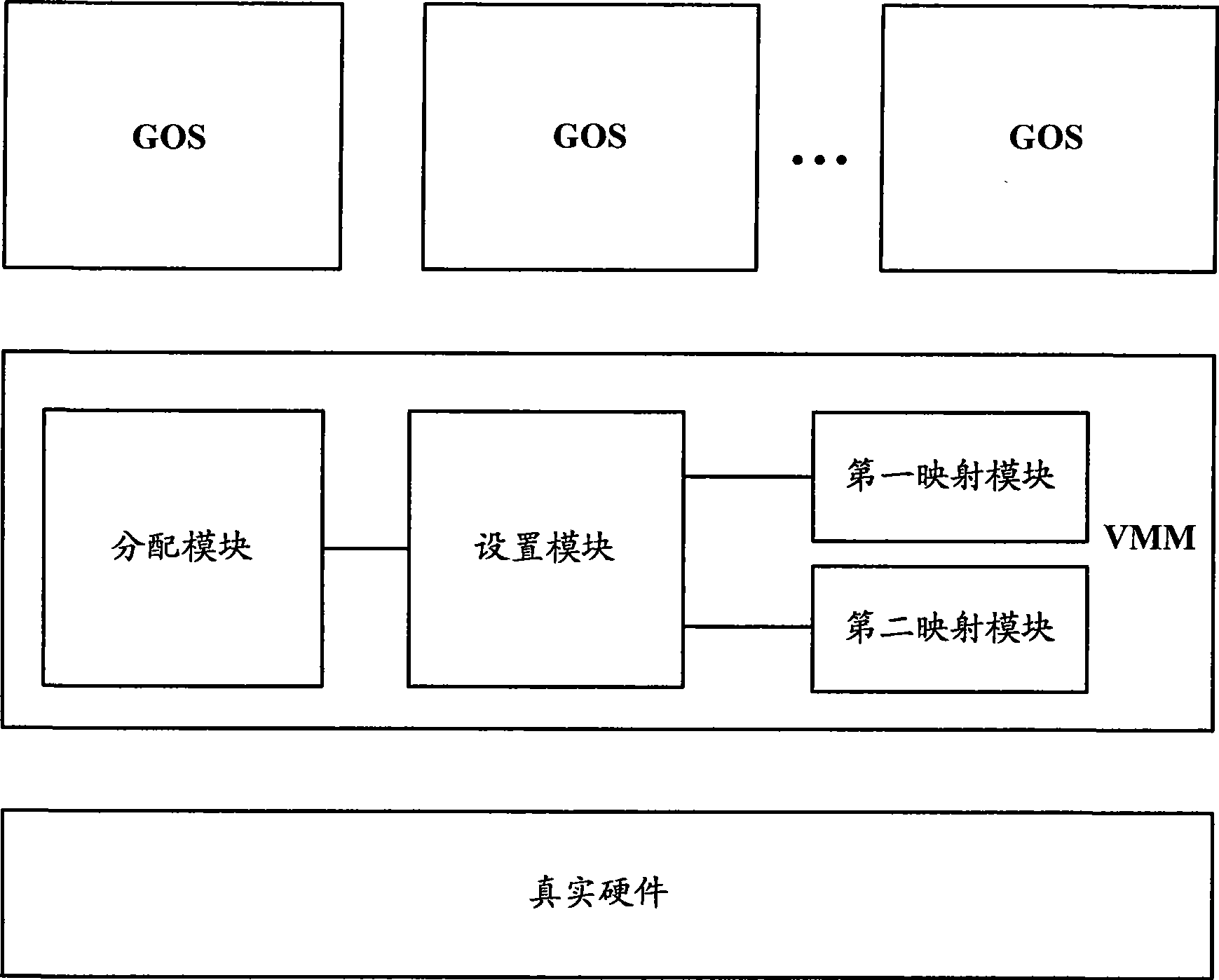

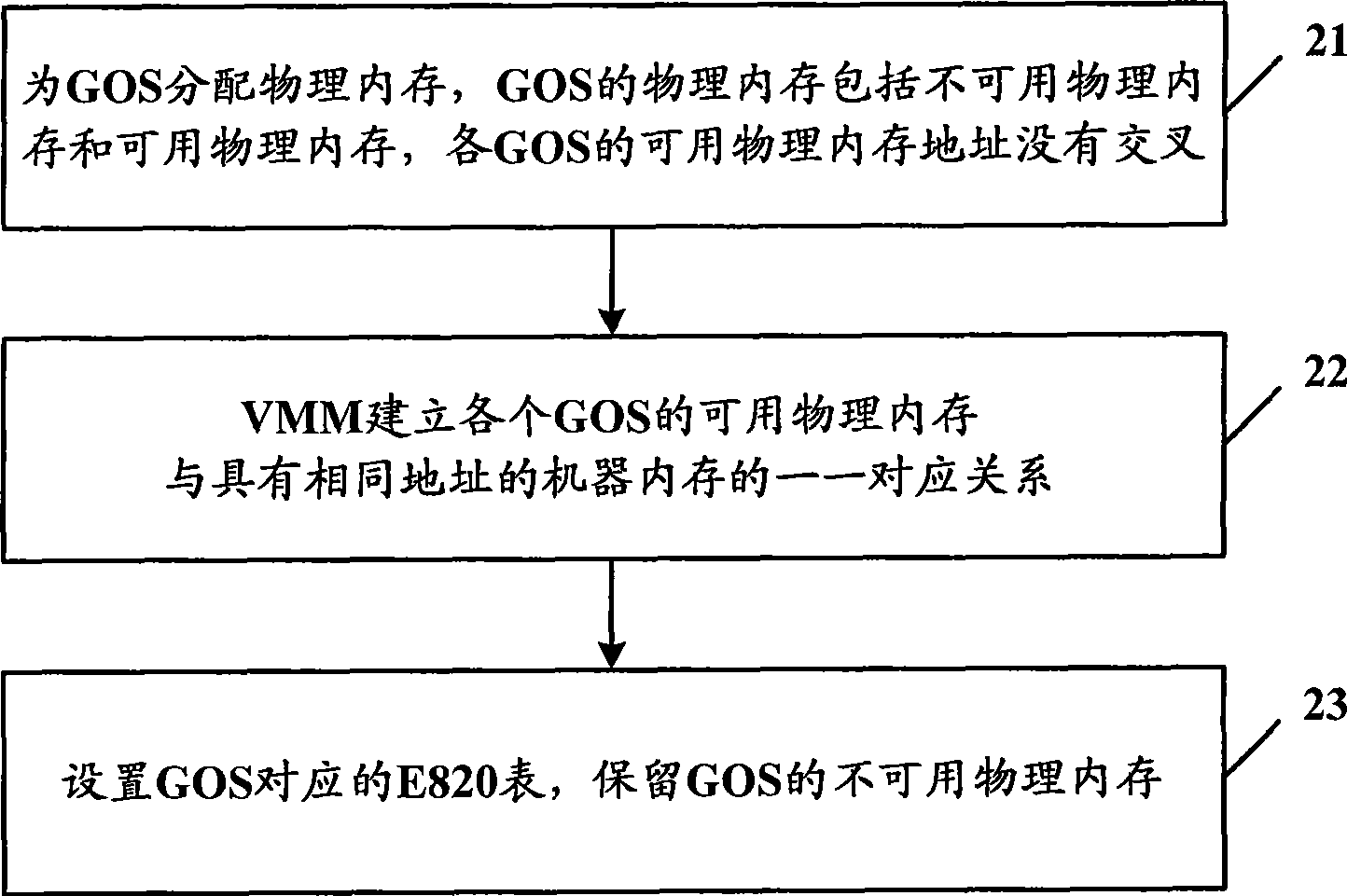

Virtual machine monitor, virtual machine system and its internal memory processing method

Active · CN101470633A · Achieve access · Resource allocation · Memory addressing/allocation/relocation · Internal memory · Operational system

The invention provides a virtual machine monitor, a virtual machine system and a memory processing method. The virtual machine monitor comprises a distribution module, a first setting module and a first mapping module. The distribution module allocates physical memory to each of a plurality of guest operating systems. The first setting module sets the available physical memory within the physical memory that corresponds to each guest operating system, such that the addresses of the available physical memory of the different guest operating systems do not overlap. The first mapping module builds a first mapping relation between the available physical memory that corresponds to each guest operating system and a first machine memory, where the address of the first machine memory is the same as the address of the available physical memory that corresponds to that guest operating system. The invention enables DMA access for a plurality of guest operating systems (GOS).

Owner:LENOVO (BEIJING) CO LTD

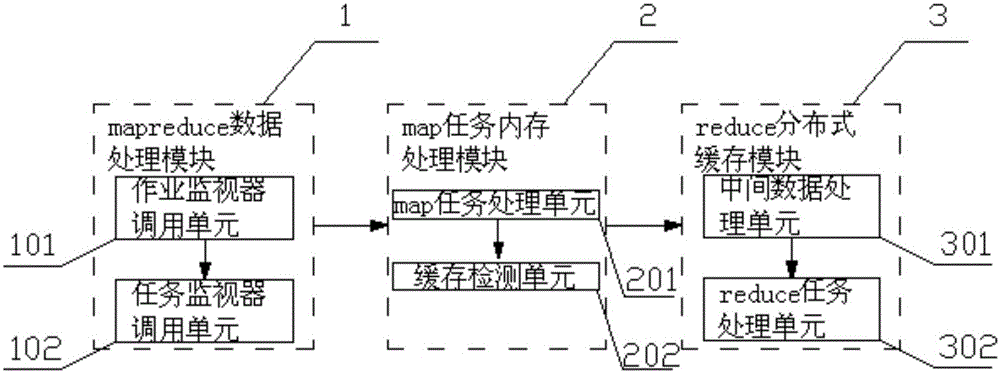

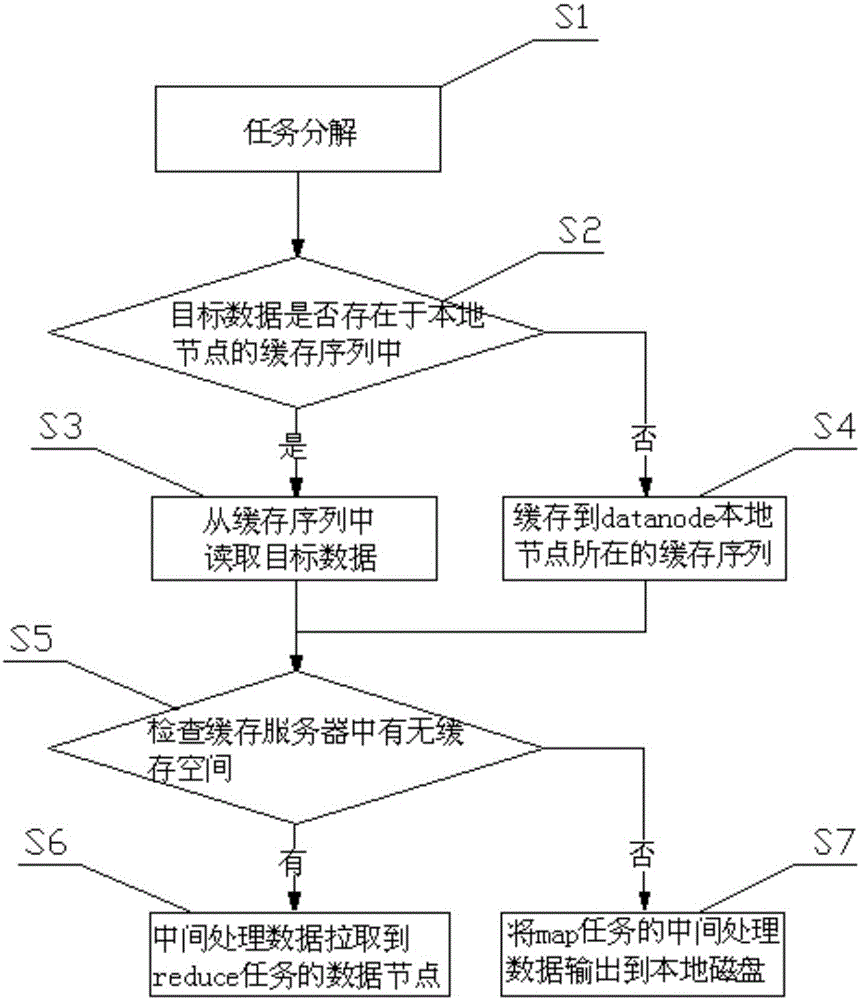

Data processing system and method based on distributed caching

Active · CN105138679A · Implement localization · Improve hit rate · Special data processing applications · Memory processing · Data processing system

The invention relates to a data processing system based on distributed caching. The data processing system comprises a mapreduce data processing module, a map task memory processing module and a reduce distributed caching module, wherein the mapreduce data processing module is used for decomposing submitted user jobs into multiple map tasks and multiple reduce tasks, the map task memory processing module is used for processing the map tasks, and the reduce distributed caching module is used for processing the map tasks through the reduce tasks. The invention further relates to a data processing method based on distributed caching. The data processing system and method have the advantages of mainly serving for the map tasks, optimizing map task processing data, ensuring that the map can find target data within the shortest time and transmitting an intermediate processing result at the highest speed; data transmission quantity can be reduced, the data can be processed in a localized mode, the data hit rate is increased, and therefore the execution efficiency of data processing is promoted.

Owner:GUILIN UNIV OF ELECTRONIC TECH

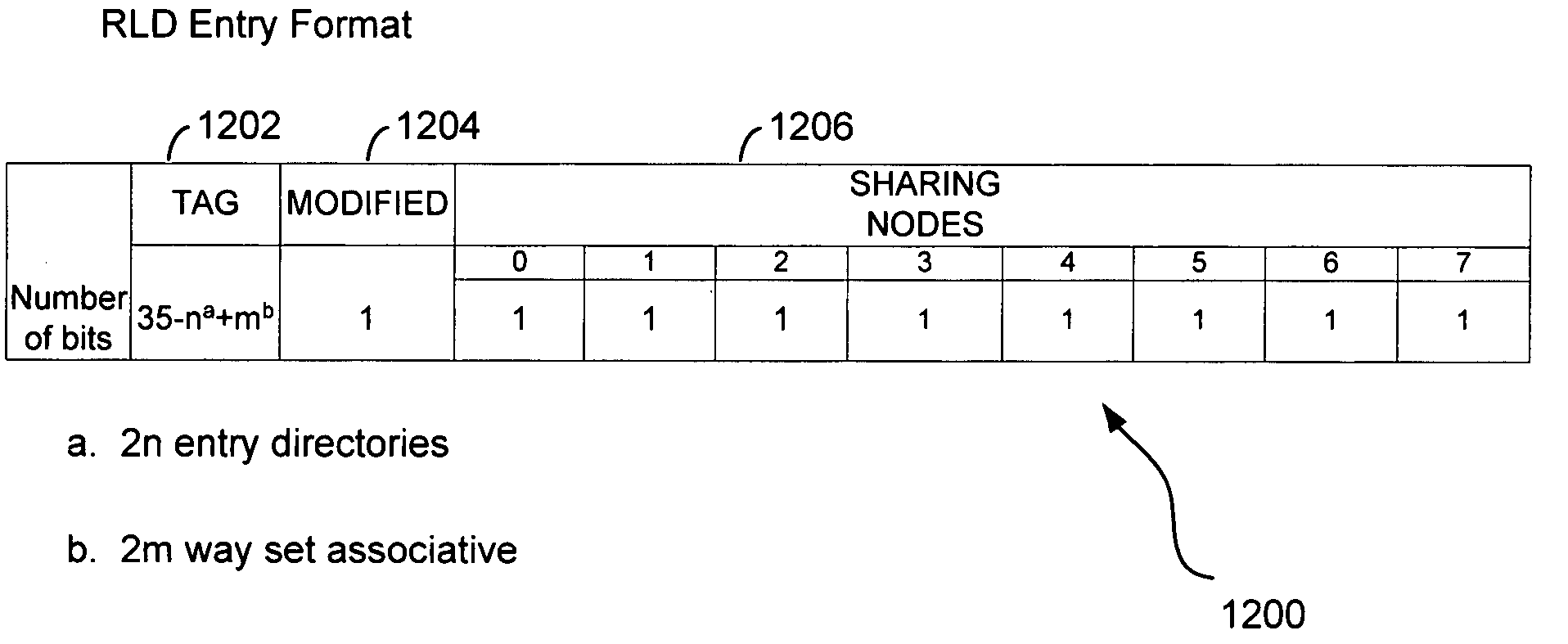

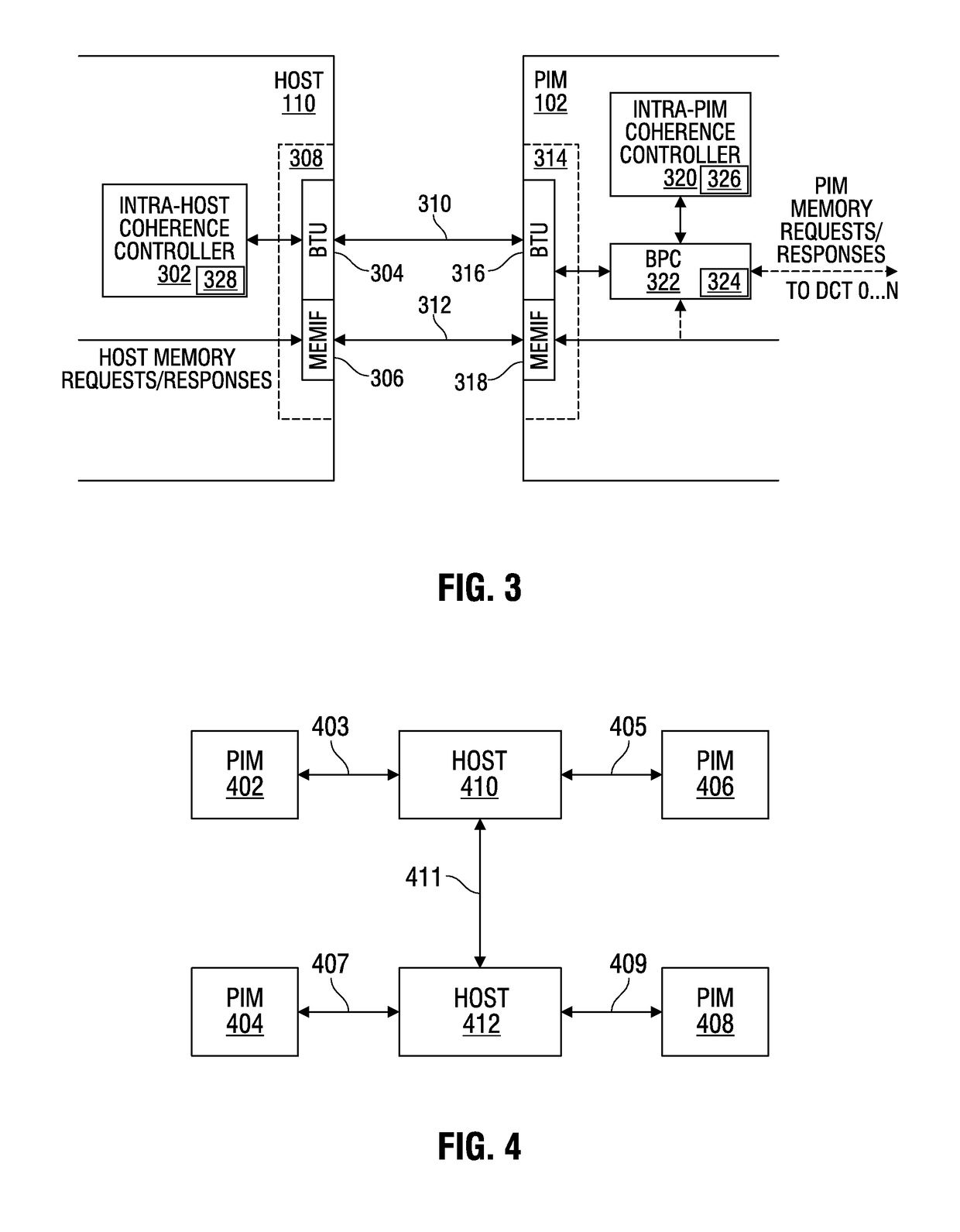

Cache coherence for processing in memory

Active · US20170344479A1 · Memory architecture accessing/allocation · Memory systems · Interoperability Problem · Cache coherence

A cache coherence bridge protocol provides an interface between a cache coherence protocol of a host processor and a cache coherence protocol of a processor-in-memory, thereby decoupling coherence mechanisms of the host processor and the processor-in-memory. The cache coherence bridge protocol requires limited change to existing host processor cache coherence protocols. The cache coherence bridge protocol may be used to facilitate interoperability between host processors and processor-in-memory devices designed by different vendors and both the host processors and processor-in-memory devices may implement coherence techniques among computing units within each processor. The cache coherence bridge protocol may support different granularity of cache coherence permissions than those used by cache coherence protocols of a host processor and/or a processor-in-memory. The cache coherence bridge protocol uses a shadow directory that maintains status information indicating an aggregate view of copies of data cached in a system external to a processor-in-memory containing that data.

Owner:ADVANCED MICRO DEVICES INC

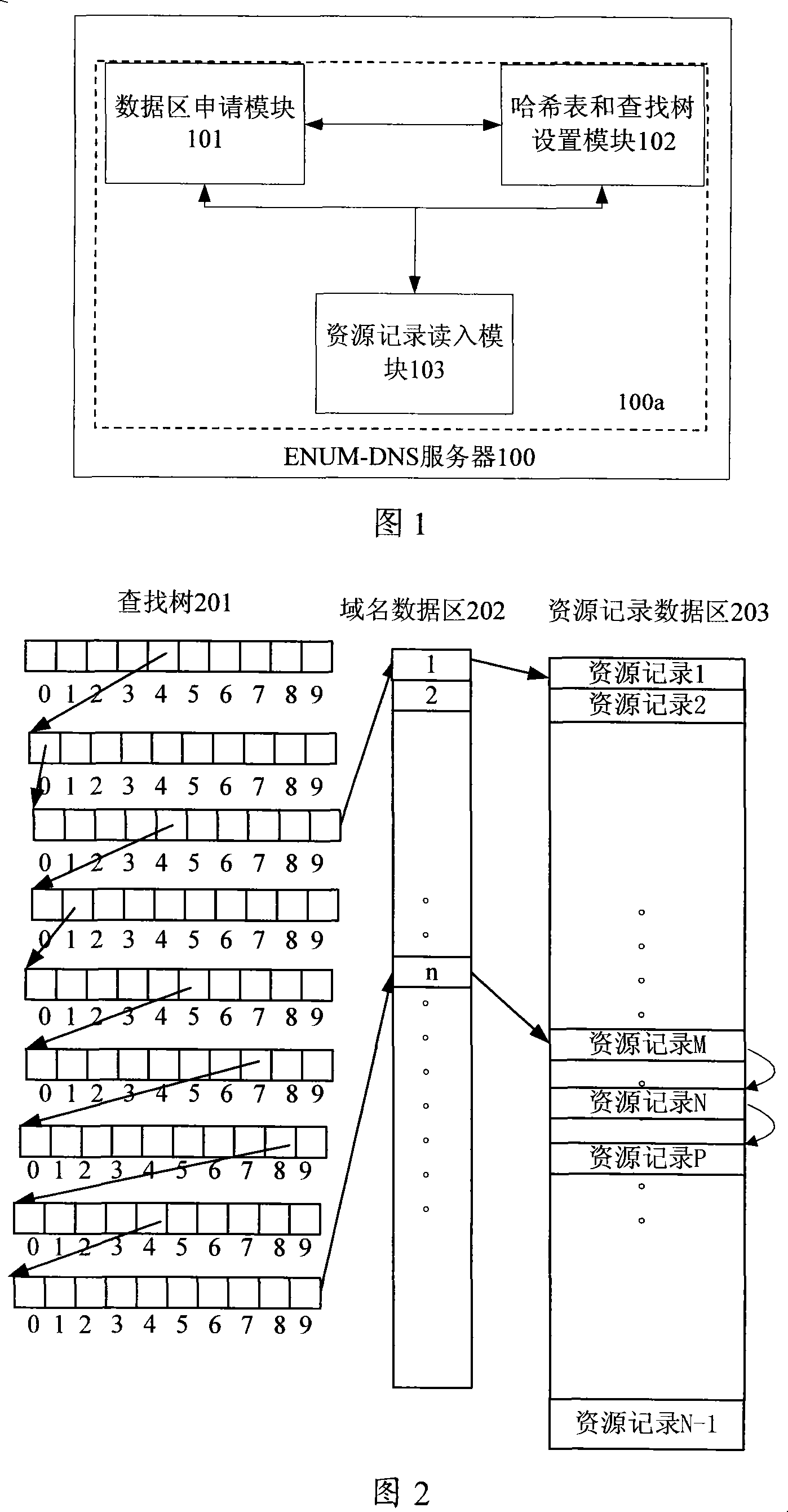

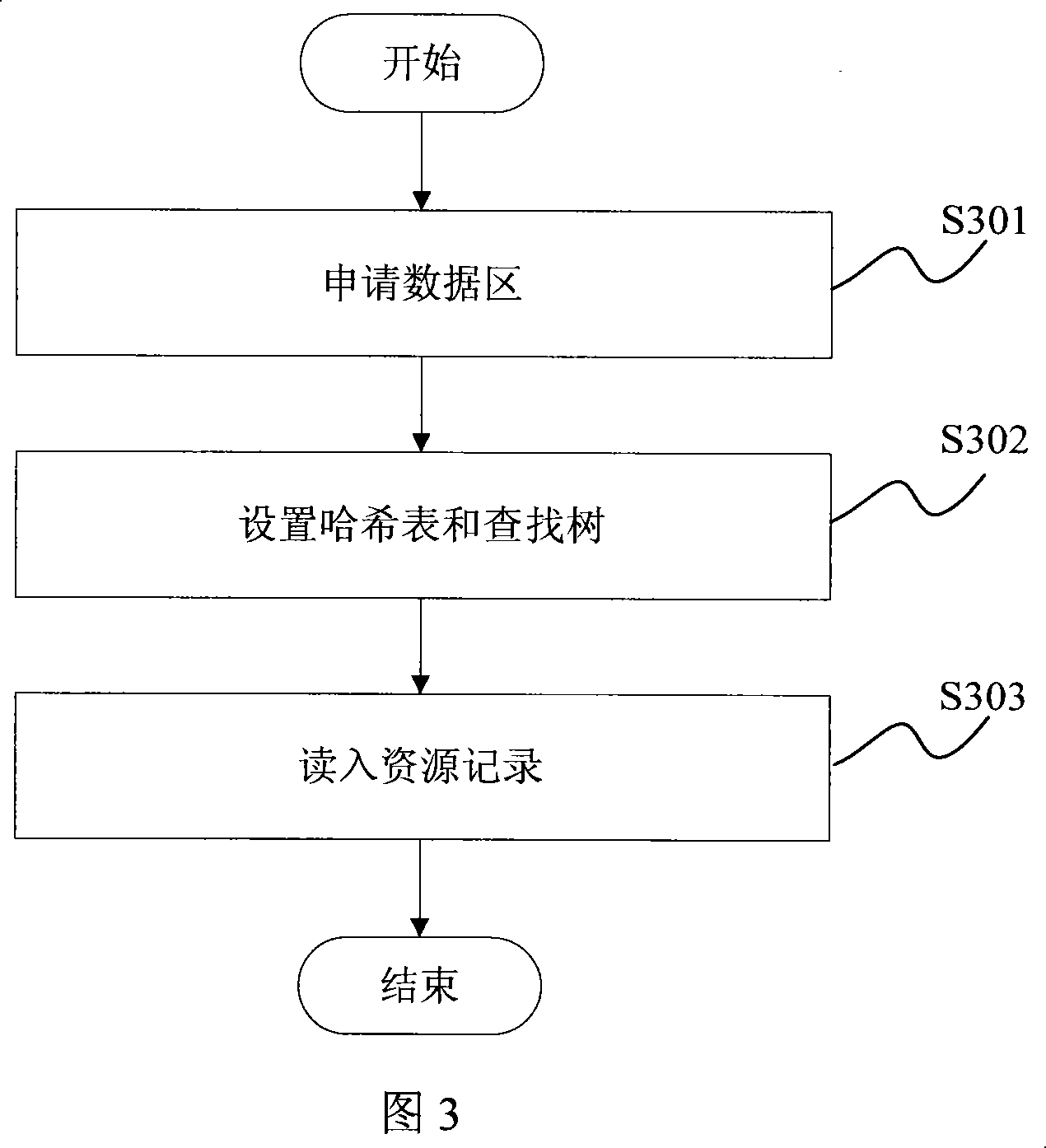

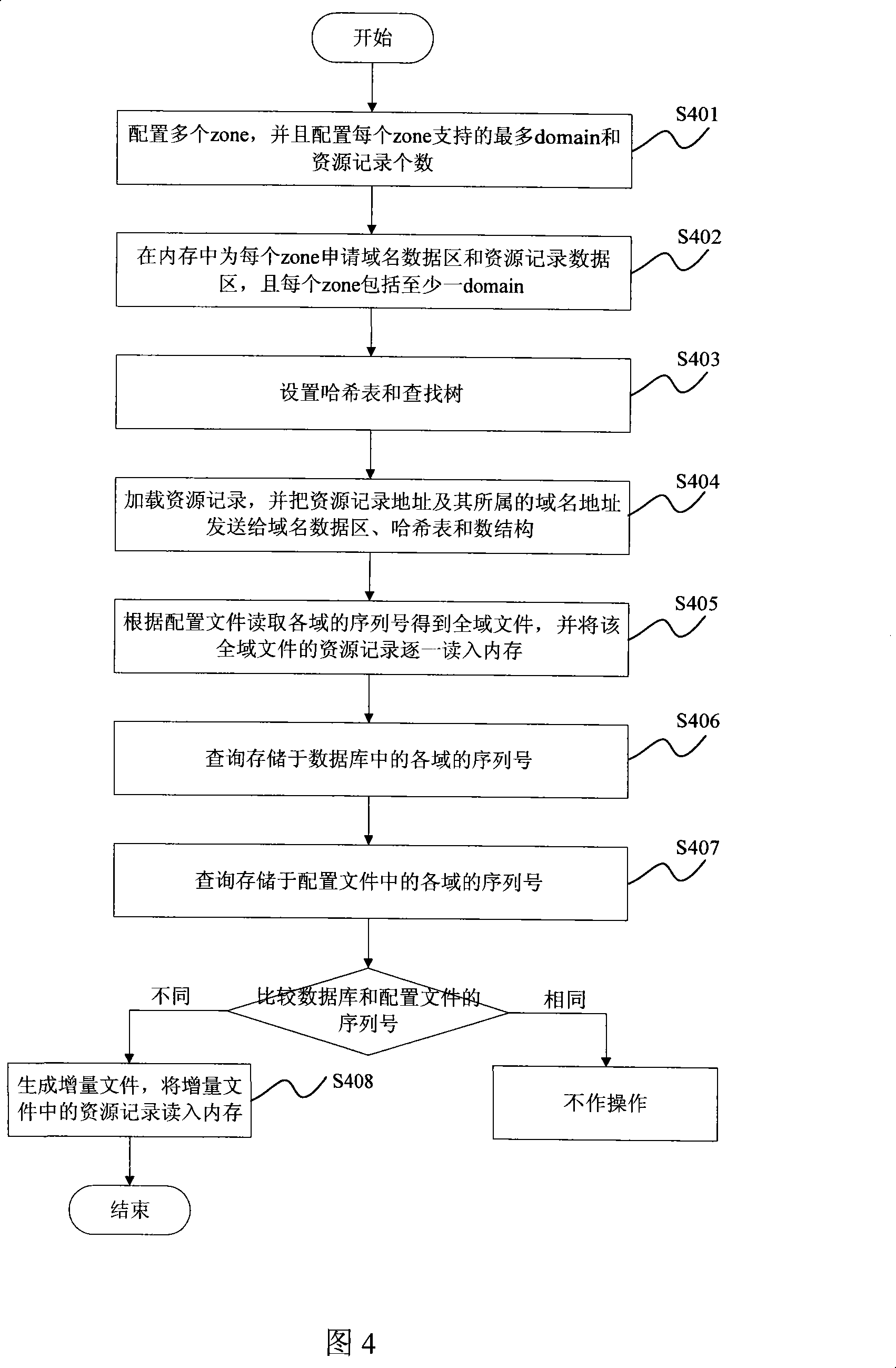

A memory processing method and device of telephone number and domain name mapping server

Inactive · CN101102336A · Meet the requirements for resource records · Rapid positioning · Transmission · Special data processing applications · Domain name · Memory processing

The method comprises: allocating, in memory, a domain name data area and a resource record data area for each domain, where each domain comprises at least one domain name; setting up, in memory, at least one hash table and a search tree, where a node of the hash table saves the non-digital portion of the domain name and the location of its corresponding search tree, and a node of the search tree saves the digital portion of the domain name and the location of its domain name data area; and dynamically allocating the resource records, saving the initial resource records corresponding to each domain name into the domain name data area, and saving the resource records corresponding to each domain name into the resource record data area. The invention also provides a corresponding telephone number and domain name mapping server.

Owner:ZTE CORP
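
The two-level lookup described above, a hash table keyed by the non-digit part of the name that leads to a search tree over the digits, can be sketched as follows. The sketch assumes ENUM-style names such as `4.3.2.1.e164.arpa`, in which the digit labels appear in reverse dialing order. The class names are hypothetical, and for brevity the tree nodes hold the records directly instead of a pointer into a separate data area.

```python
class DigitNode:
    """One node of the digit search tree."""

    def __init__(self):
        self.children = {}
        self.records = None


class EnumStore:
    def __init__(self):
        # Hash table: non-digit suffix (e.g. "e164.arpa") -> root of a digit tree.
        self.suffix_table = {}

    @staticmethod
    def _split(domain):
        labels = domain.lower().rstrip(".").split(".")
        i = 0
        while i < len(labels) and labels[i].isdigit():
            i += 1
        return labels[:i], ".".join(labels[i:])

    def add(self, domain, record):
        digits, suffix = self._split(domain)
        node = self.suffix_table.setdefault(suffix, DigitNode())
        # Walk the digits right to left so the tree follows dialing order.
        for digit in reversed(digits):
            node = node.children.setdefault(digit, DigitNode())
        if node.records is None:
            node.records = []
        node.records.append(record)

    def lookup(self, domain):
        digits, suffix = self._split(domain)
        node = self.suffix_table.get(suffix)
        for digit in reversed(digits):
            if node is None:
                return []
            node = node.children.get(digit)
        if node is None or node.records is None:
            return []
        return list(node.records)


store = EnumStore()
store.add("4.3.2.1.e164.arpa", "sip:alice@example.com")
store.add("4.3.2.1.e164.arpa", "mailto:alice@example.com")
found = store.lookup("4.3.2.1.E164.ARPA.")
missing = store.lookup("5.3.2.1.e164.arpa")
```

Numbers that share a prefix share a path in the tree, so lookup cost grows with the number of digits and not with the number of stored names.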

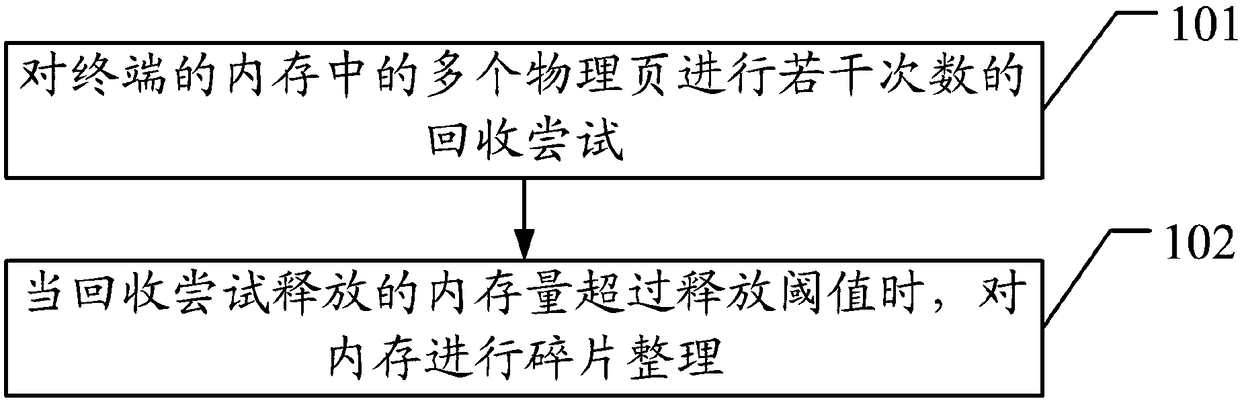

Memory processing method and apparatus, computer apparatus and computer readable storage medium

Active · CN108205473A · Improve finishing efficiency · Increase available memory space · Resource allocation · Memory addressing/allocation/relocation · Memory processing · Computational science

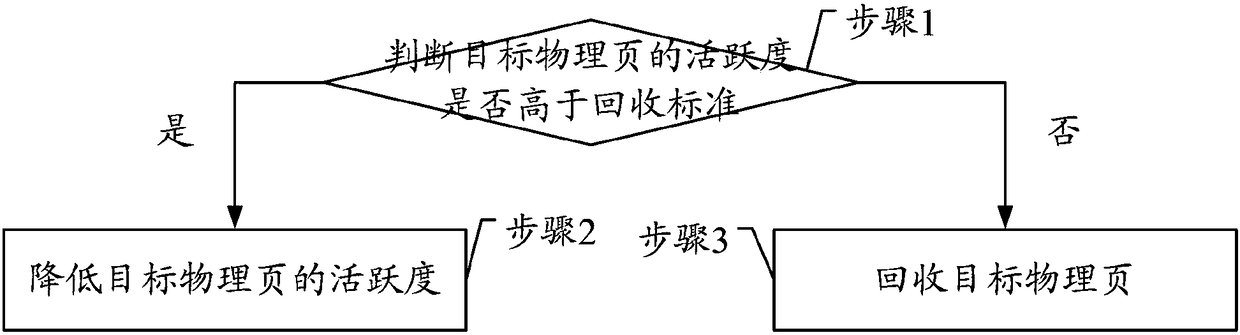

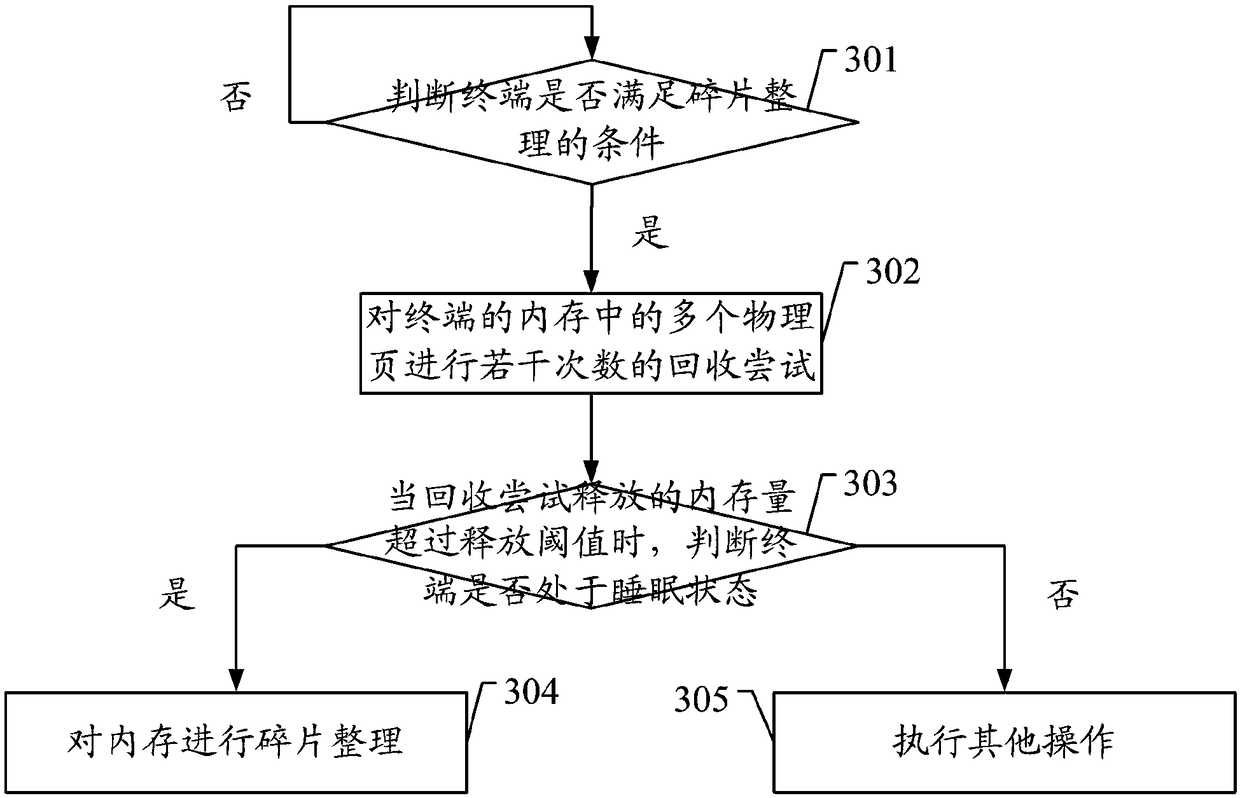

Embodiments of the invention disclose a memory processing method and apparatus, a computer apparatus and a computer readable storage medium, which relate to the technical field of computers and address the prior-art difficulty of making full use of defragmentation to expand the available memory space. The method comprises the steps of performing recycling attempts on multiple physical pages in a memory of a terminal multiple times; and, when the memory capacity released by the recycling attempts exceeds a release threshold, performing defragmentation on the memory. One recycling attempt on the physical pages comprises judging whether the activity degree of each physical page is higher than a recycling standard, where the activity degree marks the activity level of the physical page and its value is positively correlated with that level; if yes, reducing the activity degree of the physical page, otherwise recycling the physical page.

Owner:MEIZU TECH CO LTD
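
The recycling attempt described above amounts to an aging loop: pages above the recycling standard lose one unit of activity, pages at or below it are reclaimed, and defragmentation is triggered once enough memory has been released. A minimal sketch, assuming integer activity degrees, a fixed 4096-byte page size and hypothetical function names:

```python
def reclaim_pass(activity, standard):
    """One recycling attempt over all pages; returns the ids of reclaimed pages."""
    reclaimed = []
    for page in list(activity):
        if activity[page] > standard:
            activity[page] -= 1      # still active: lower its activity degree
        else:
            del activity[page]       # inactive enough: recycle the page
            reclaimed.append(page)
    return reclaimed


def reclaim_until(activity, standard, release_threshold, page_size=4096, max_passes=10):
    """Repeat recycling attempts until the released bytes exceed the threshold."""
    released = 0
    passes = 0
    while released <= release_threshold and passes < max_passes and activity:
        released += len(reclaim_pass(activity, standard)) * page_size
        passes += 1
    defragment = released > release_threshold
    return released, passes, defragment


# Activity degree per physical page; a higher value means a more active page.
activity = {"p0": 0, "p1": 1, "p2": 3, "p3": 2}
outcome = reclaim_until(activity, standard=0, release_threshold=8192)
```

With these inputs, three passes are needed before more than 8192 bytes are released, at which point the caller would go on to defragment; only the most active page, `p2`, survives.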

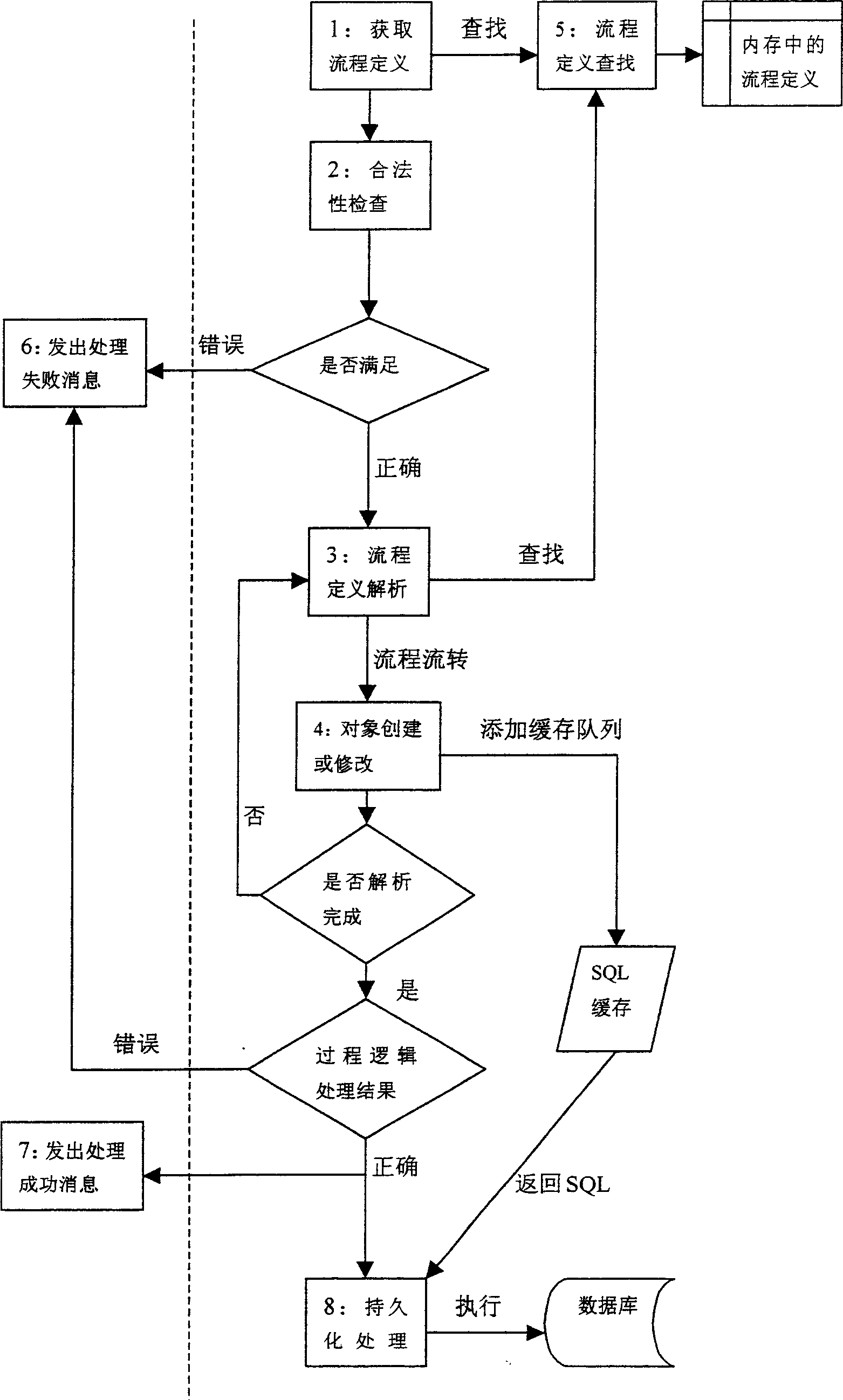

High-efficiency processing method for a workflow engine

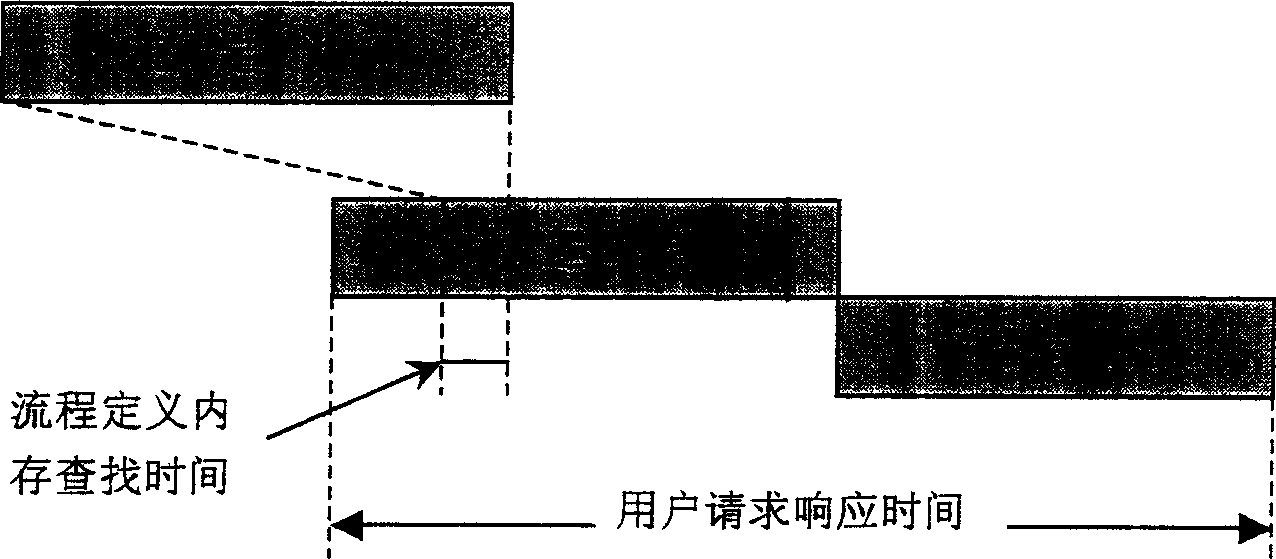

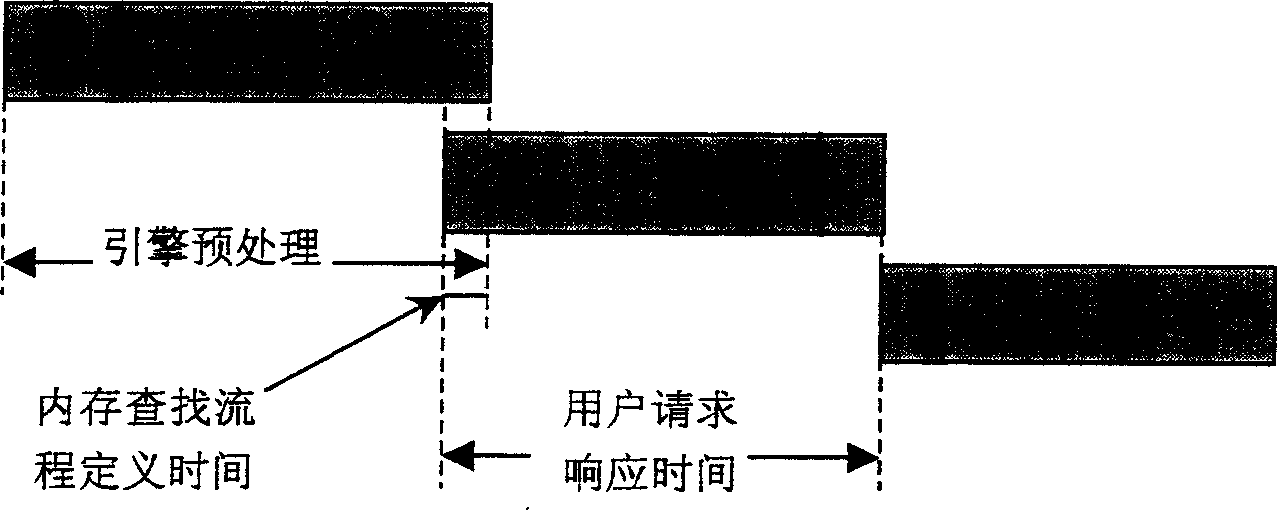

Inactive · CN1680923A · Extension of time · Short response time · Multiprogramming arrangements · Special data processing applications · Internal memory · Linear growth

A method for high-efficiency processing in a workflow engine includes running the workflow engine as an application program and dividing the engine's request processing into three parts: an external resource access part that uses in-memory lookups, an object logic conversion part that checks for all errors that may occur during execution, and an object persistence part that is executed when returning to the client request.

Owner:NEUSOFT CORP

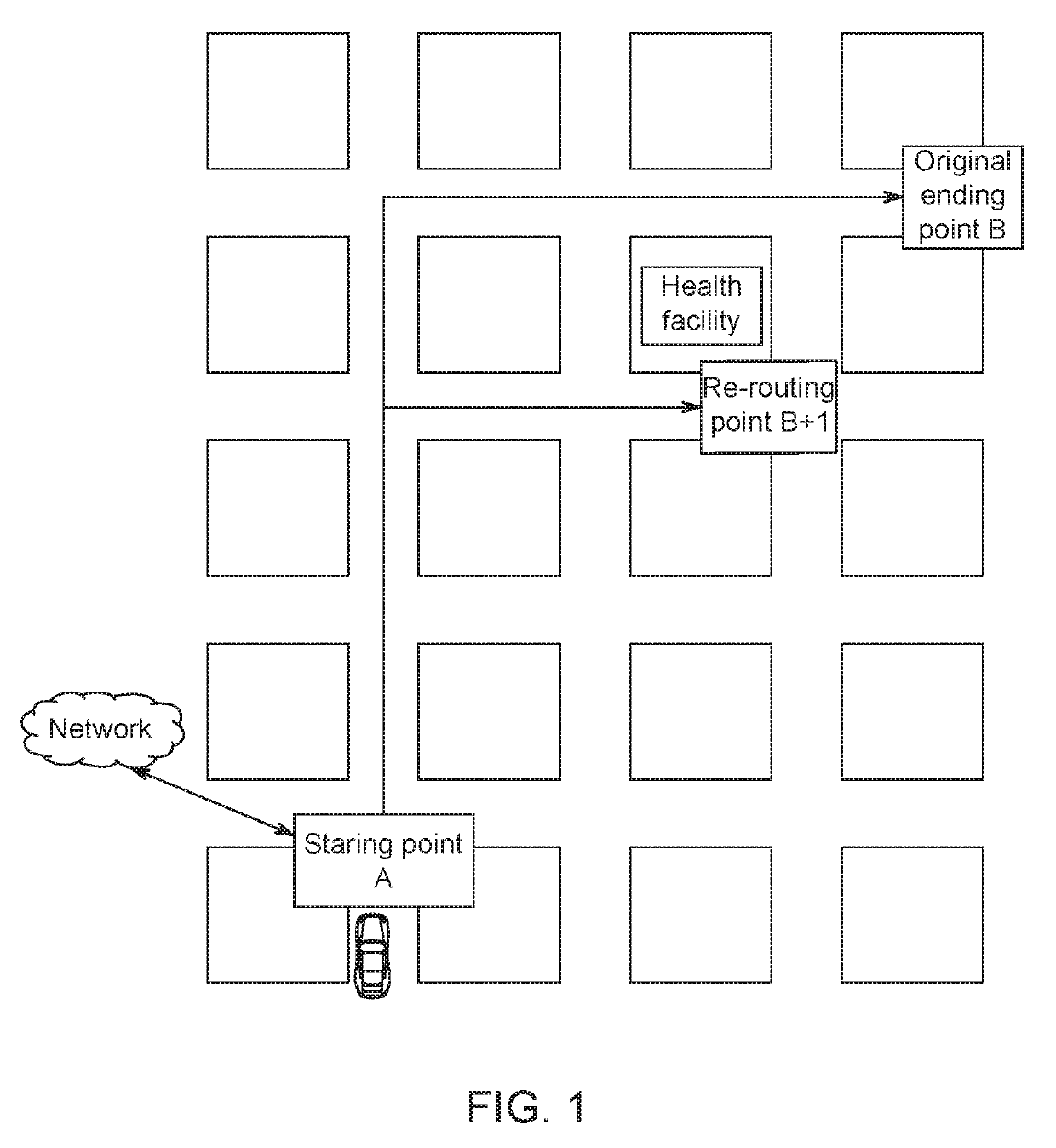

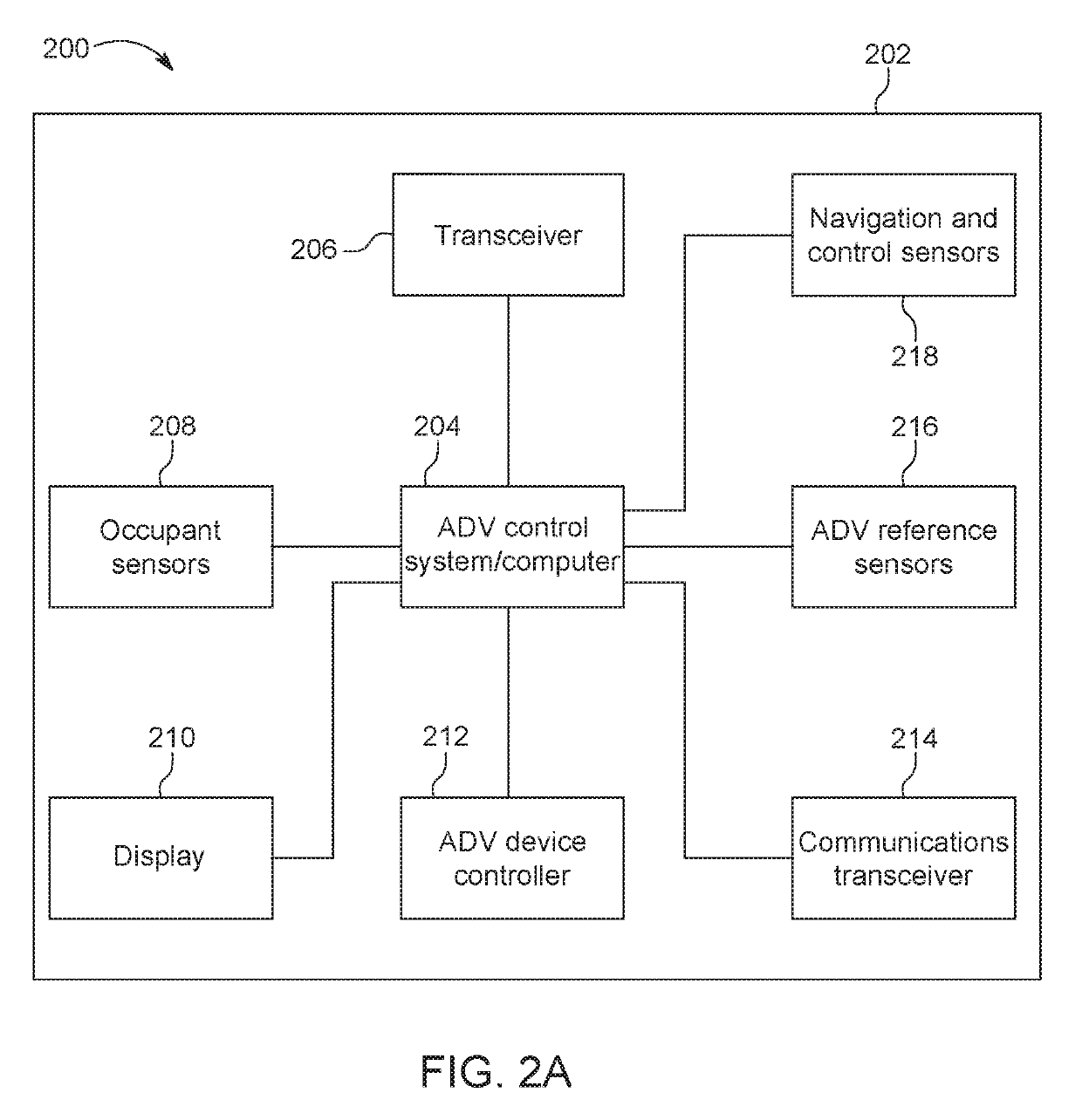

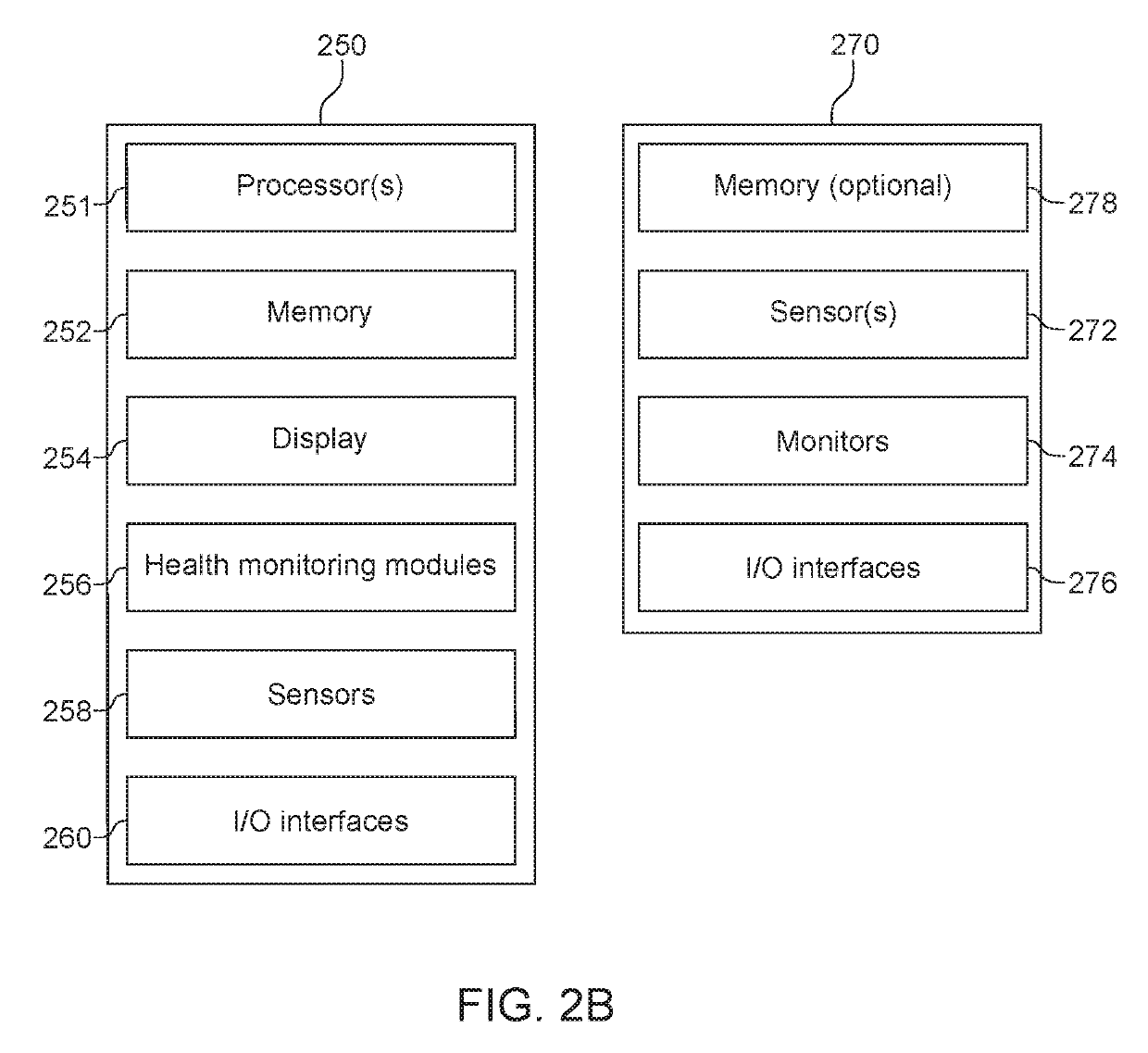

Re-Routing Autonomous Vehicles Using Dynamic Routing and Memory Management

Active · US20190277643A1 · Insufficient improvement · Instruments for road network navigation · Autonomous decision making process · Control system · On board

The invention relates to a system and method for navigating an autonomous driving vehicle (ADV) that utilizes an ADV on-board computer and/or one or more ADV control system nodes in an ADV network platform. The on-board computer receives sensor data concerning one or more occupants occupying an ADV and/or the ADV itself and, upon detection of an event, automatically initiates a dynamic routing algorithm that utilizes artificial intelligence to re-route the ADV to another destination, for example a healthcare facility. One or more embodiments of the system and method include an ADV on-board computer and/or one or more ADV network platform nodes that utilize in-memory processing to aid in the generation of routing, health and navigational information to advantageously navigate an ADV.

Owner:MICRON TECH INC

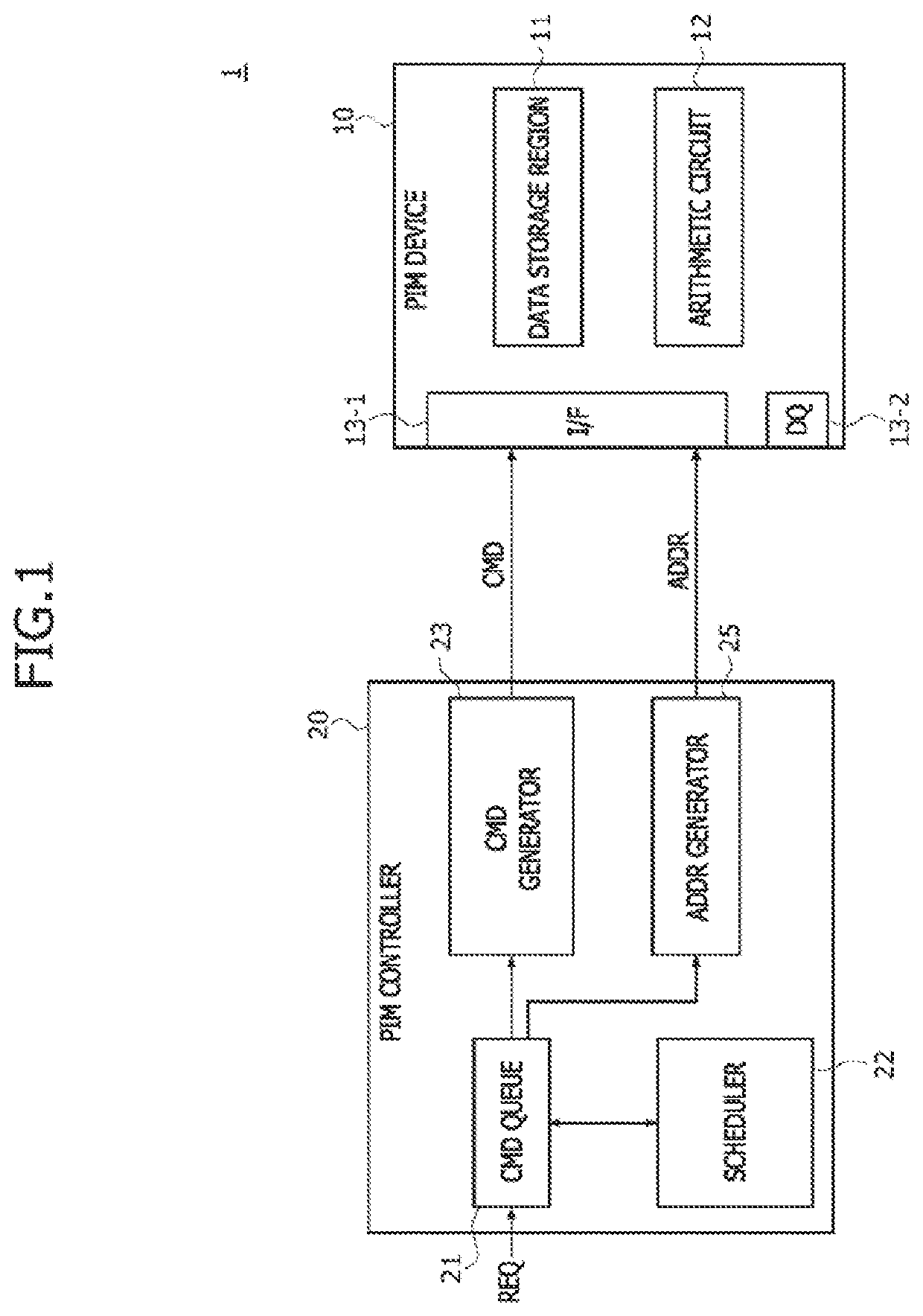

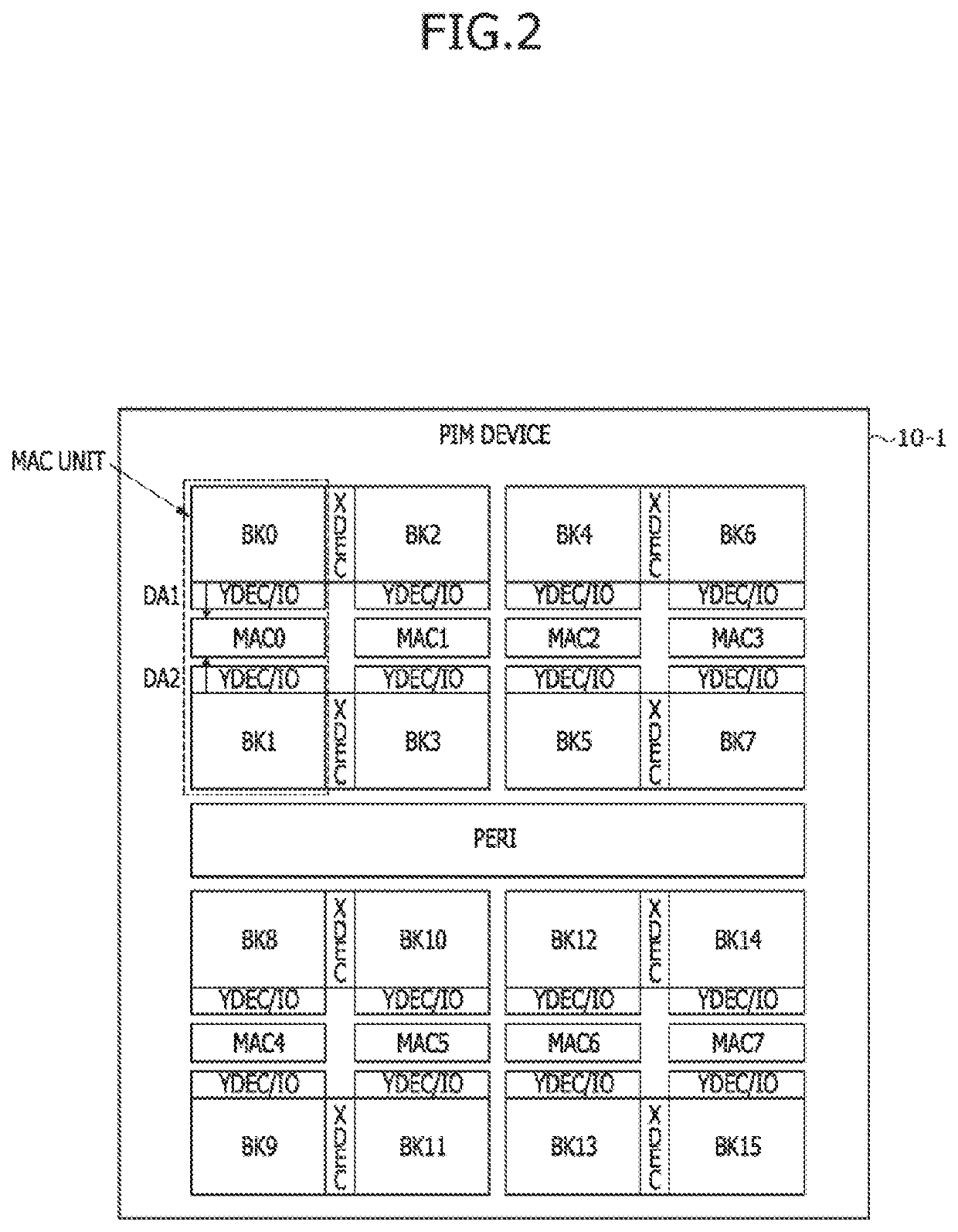

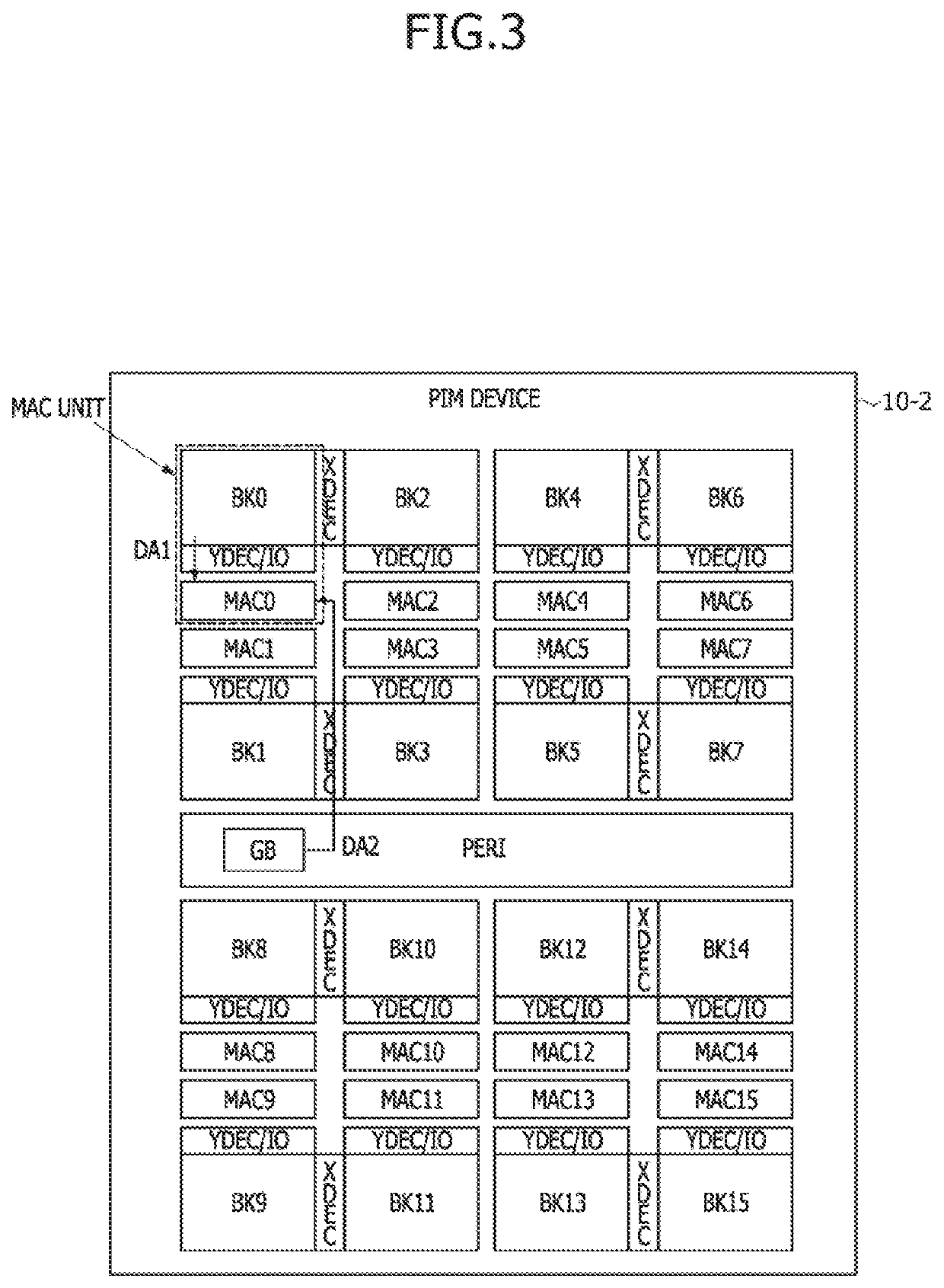

Processing-in-memory (PIM) devices

Active · US20210089390A1 · Computation using non-contact making devices · Code conversion · Computer hardware · Parallel computing

A Processing-In-Memory (PIM) device includes a first storage region and a multiplication/accumulation (MAC) operator. The first storage region is configured to store first data. The MAC operator is configured to execute a MAC calculation on the first data and second data in a MAC mode. When an error exists in the first data, the MAC operator compensates the multiplication result data generated by a multiplying calculation of the first data and the second data, and executes an adding calculation on the compensated multiplication result data.

Owner:SK HYNIX INC

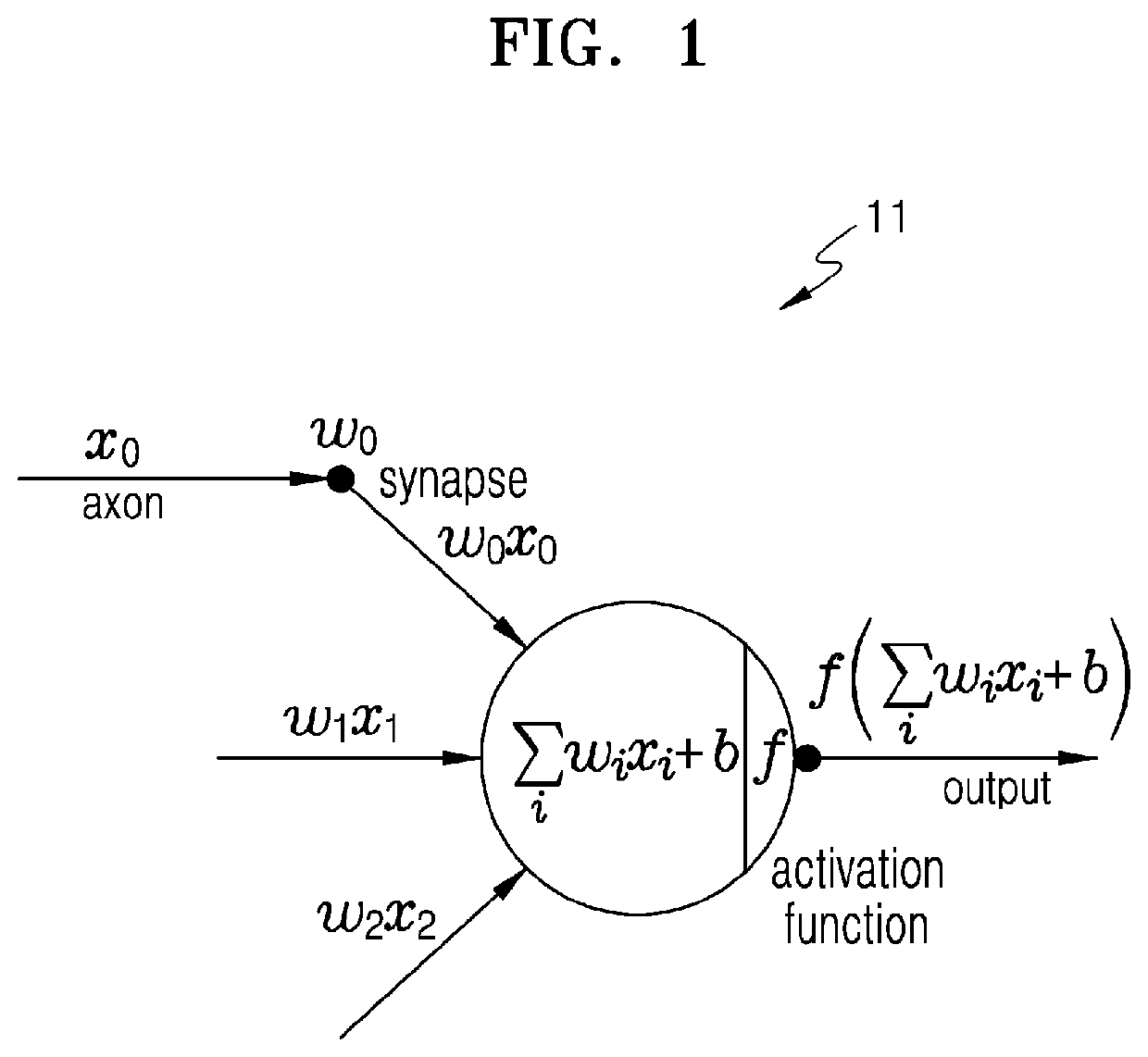

Apparatus and method with in-memory processing

An apparatus for performing in-memory processing includes a memory cell array of memory cells configured to output a current sum of a column current flowing in respective column lines of the memory cell array based on an input signal applied to row lines of the memory cells, a sampling circuit, comprising a capacitor connected to each of the column lines, configured to be charged by a sampling voltage of a corresponding current sum of the column lines, and a processing circuit configured to compare a reference voltage and a currently charged voltage in the capacitor in response to a trigger pulse generated at a timing corresponding to a quantization level, among quantization levels, time-sectioned based on a charge time of the capacitor, and determine the quantization level corresponding to the sampling voltage by performing time-digital conversion when the currently charged voltage reaches the reference voltage.

Owner:SAMSUNG ELECTRONICS CO LTD

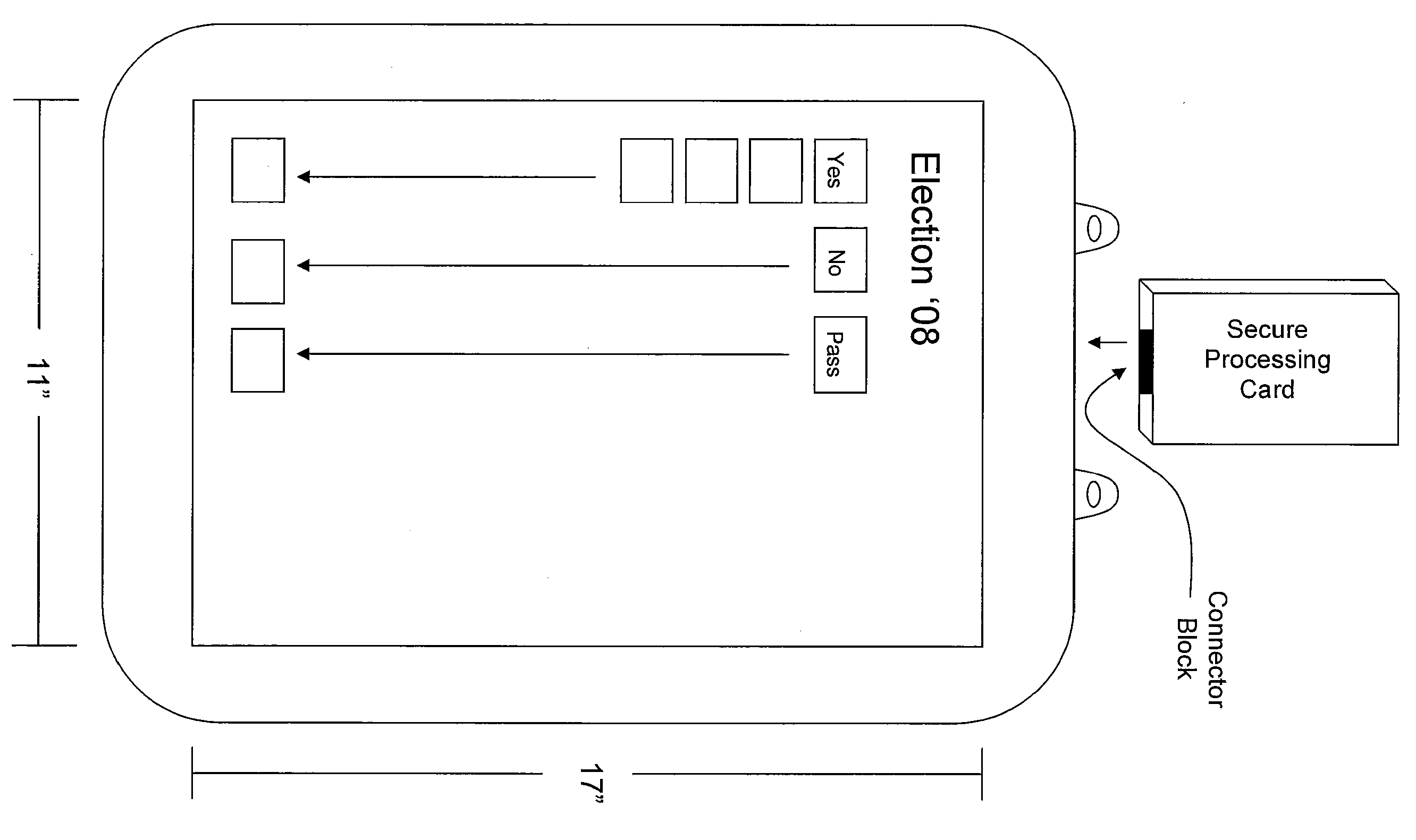

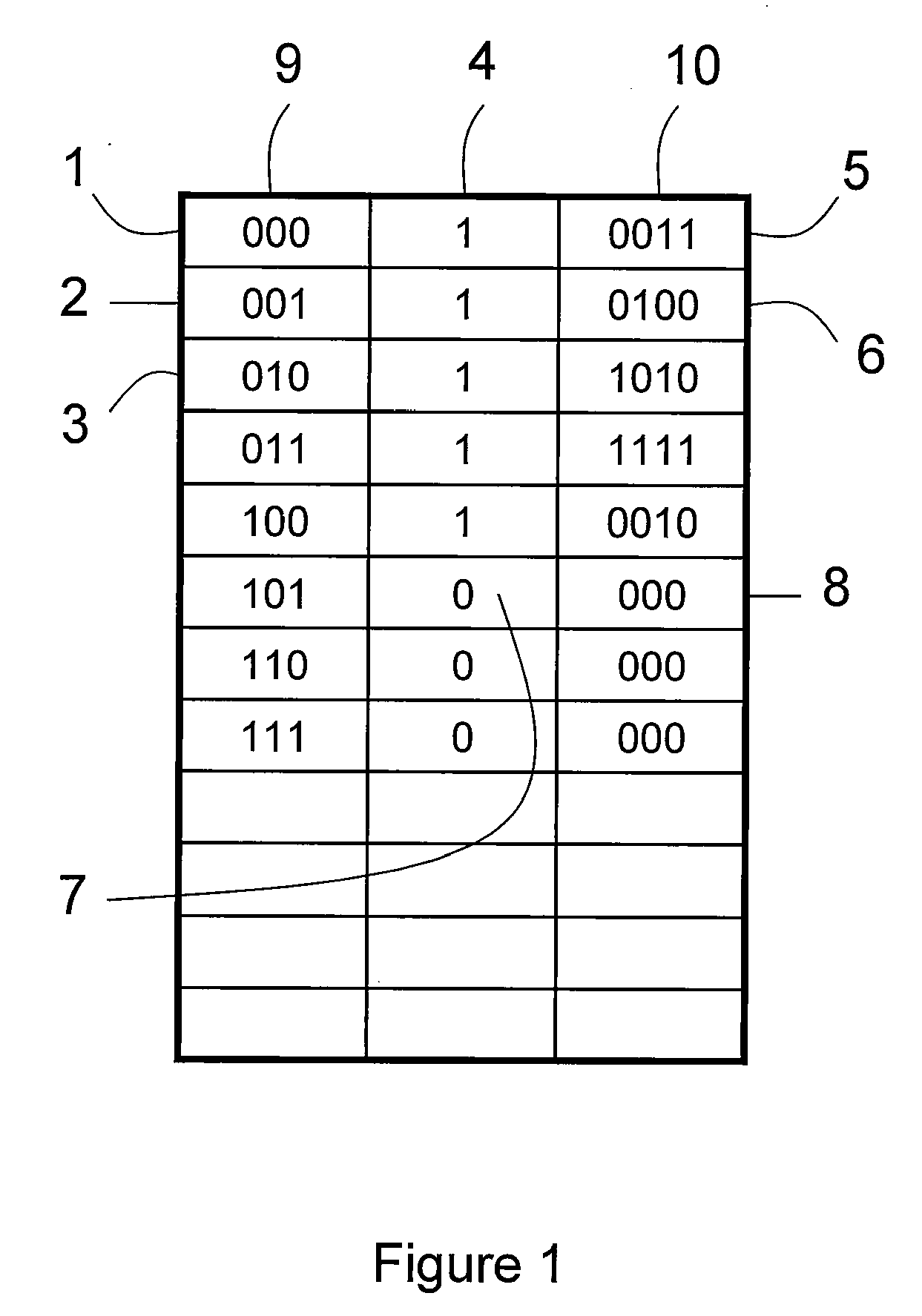

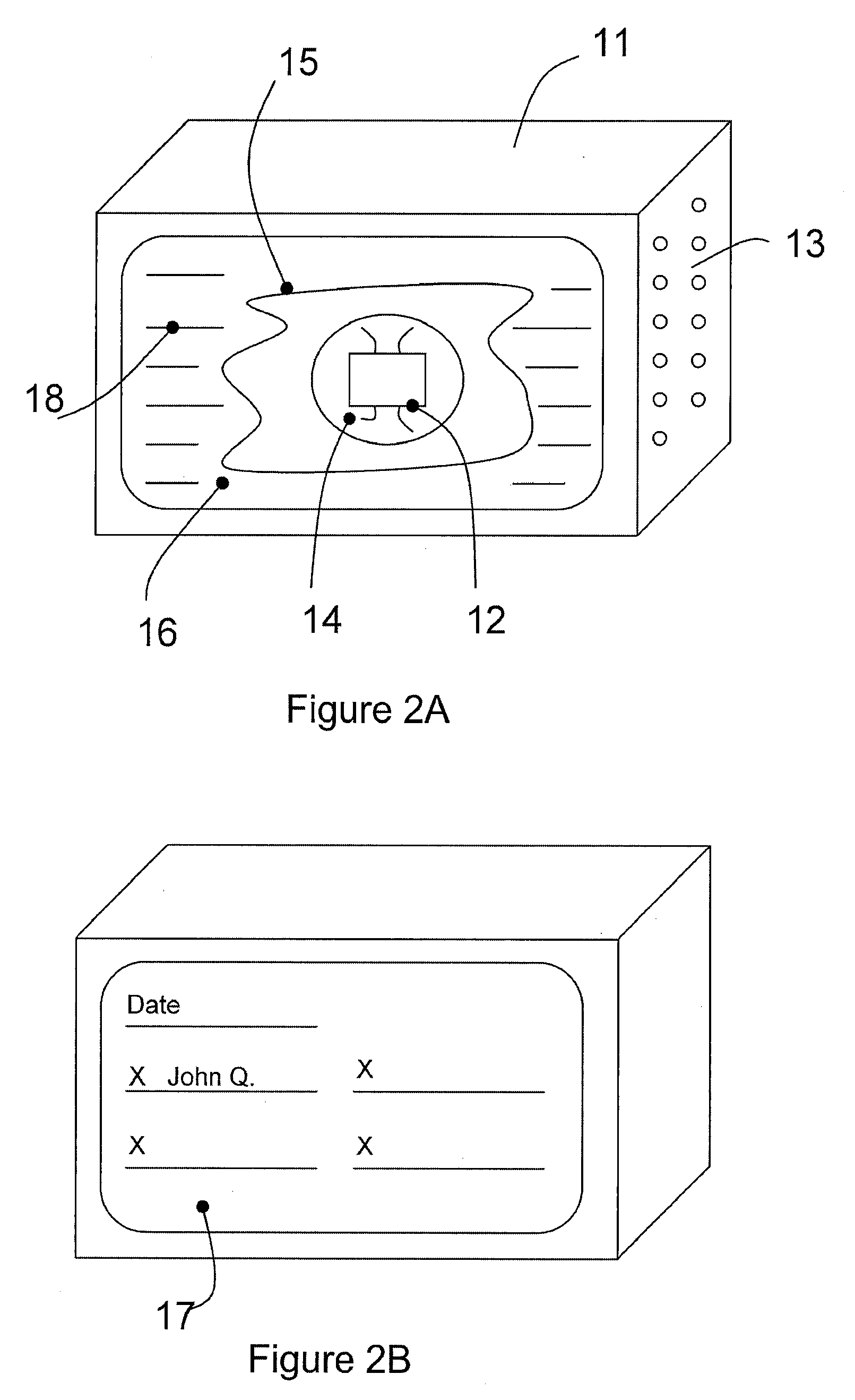

Voting Machine with Secure Memory Processing

Inactive · US20070170252A1 · Voting apparatus · Co-operative working arrangements · Computer hardware · Memory processing

An integrated voting system includes a memory element configured with multiple lock bits. Lock bits are arranged according to vote records, thereby allowing each individual vote cast to be recorded securely, providing a tamper-resistant record of the vote count. The system forms a complete, integrated, secure voting system that can be re-used on different elections without requiring any updates, changes, or other maintenance.

Owner:ORTON KEVIN R
