36 results about how to "relieve memory pressure" (patented technology)

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

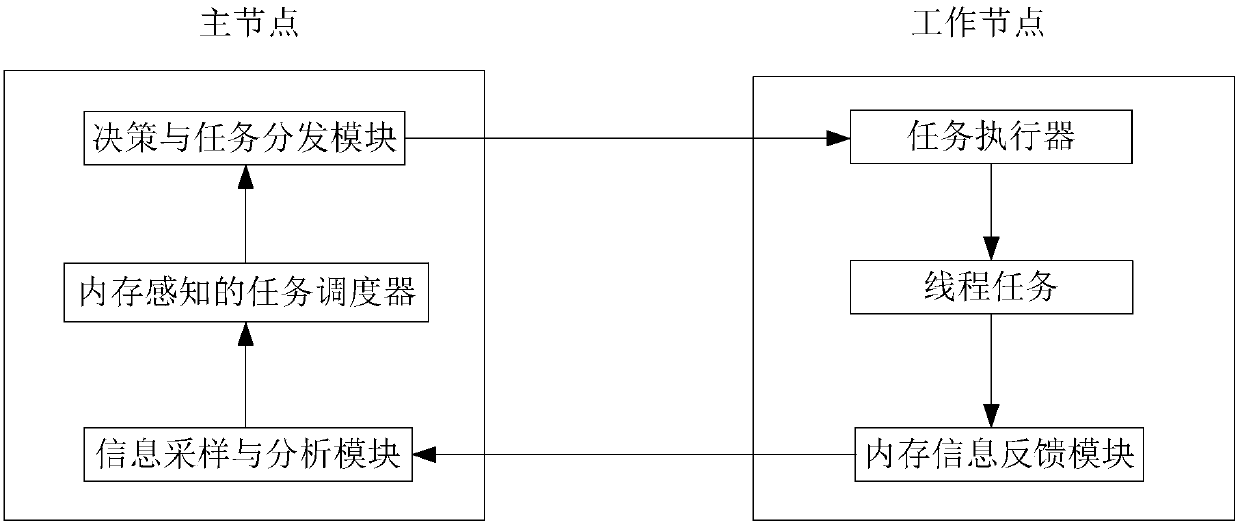

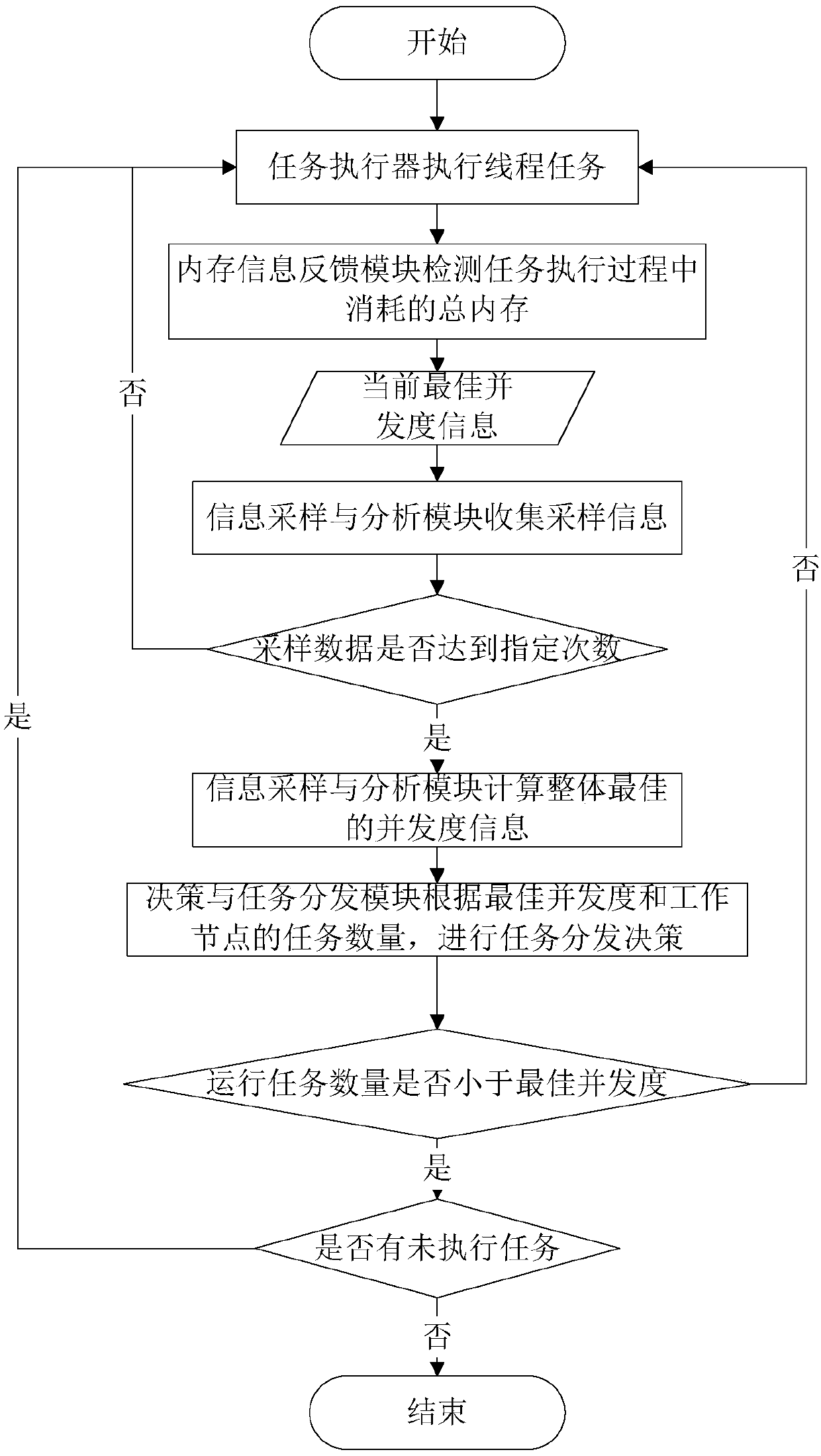

System for settling fierce competition of memory resources in big data processing system

Active | CN105868025A | Relieve memory pressure, Guaranteed uptime, Resource allocation, Data platform, Computer module

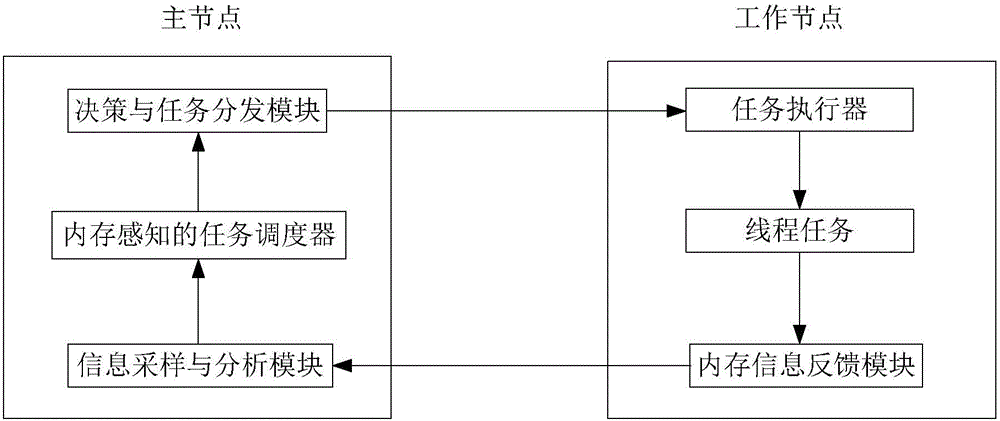

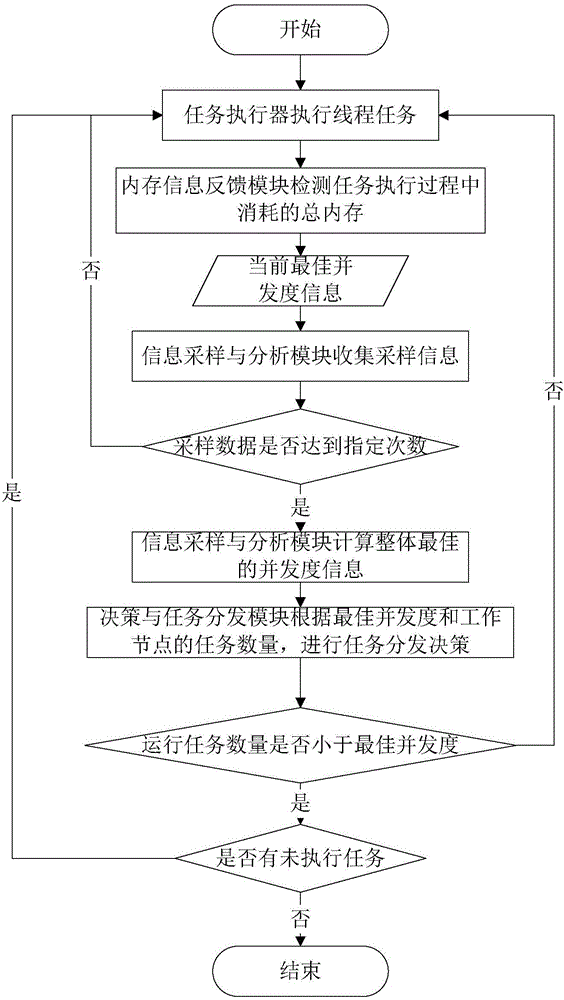

The invention discloses a system for resolving fierce competition for memory resources in a big data processing system. A memory information feedback module monitors the memory usage of each running thread task, converts the collected memory information, and feeds it back to an information sampling and analysis module. The information sampling and analysis module dynamically controls how many times information is sampled from each worker node, analyzes the data once the specified number of samples has been collected, and calculates the optimal CPU-to-memory ratio for the current worker node. A decision-making and task distribution module decides, based on the analysis results and the task running information of the current worker node, whether to distribute new tasks to that node for computation, thereby effectively constraining the relationship between CPU and memory use. The system provides a memory-aware task distribution mechanism on a general-purpose big data platform, reduces the I/O overhead incurred when data spills to disk because of fierce competition for memory, and effectively improves the overall performance of the system.

Owner:HUAZHONG UNIV OF SCI & TECH
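
The feedback, sampling, and decision steps above can be sketched as follows. This is a minimal illustration, not the patented implementation: it assumes the "optimal CPU-to-memory ratio" can be approximated by averaging memory per running task over a fixed number of samples, and the names `WorkerMemoryMonitor`, `required_samples`, and `task_limit` are hypothetical.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class WorkerMemoryMonitor:
    """Hypothetical per-worker-node monitor for memory-aware task dispatch."""

    total_memory_mb: float
    required_samples: int = 5                     # assumed sampling budget before analysis
    samples: list = field(default_factory=list)   # (running_tasks, used_memory_mb) pairs

    def record(self, running_tasks: int, used_memory_mb: float) -> None:
        # Feedback step: store one memory sample reported for this node.
        self.samples.append((running_tasks, used_memory_mb))

    def memory_per_task(self):
        # Analysis step: wait until enough samples exist, then average memory per task.
        if len(self.samples) < self.required_samples:
            return None
        per_task = [mem / tasks for tasks, mem in self.samples if tasks > 0]
        return mean(per_task) if per_task else None

    def task_limit(self, cpu_cores: int) -> int:
        # Assumed CPU-to-memory ratio: task slots that fit in memory, capped by core count.
        per_task = self.memory_per_task()
        if per_task is None or per_task <= 0:
            return cpu_cores          # not enough data yet: one task per core
        return max(1, min(cpu_cores, int(self.total_memory_mb // per_task)))

    def should_dispatch(self, running_tasks: int, cpu_cores: int) -> bool:
        # Decision step: accept a new task only while below the memory-aware limit.
        return running_tasks < self.task_limit(cpu_cores)
```

With 8000 MB on a 16-core node and samples averaging 1000 MB per task, the sketch caps the node at 8 concurrent tasks instead of 16, which is the kind of limit that keeps data from spilling to disk.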

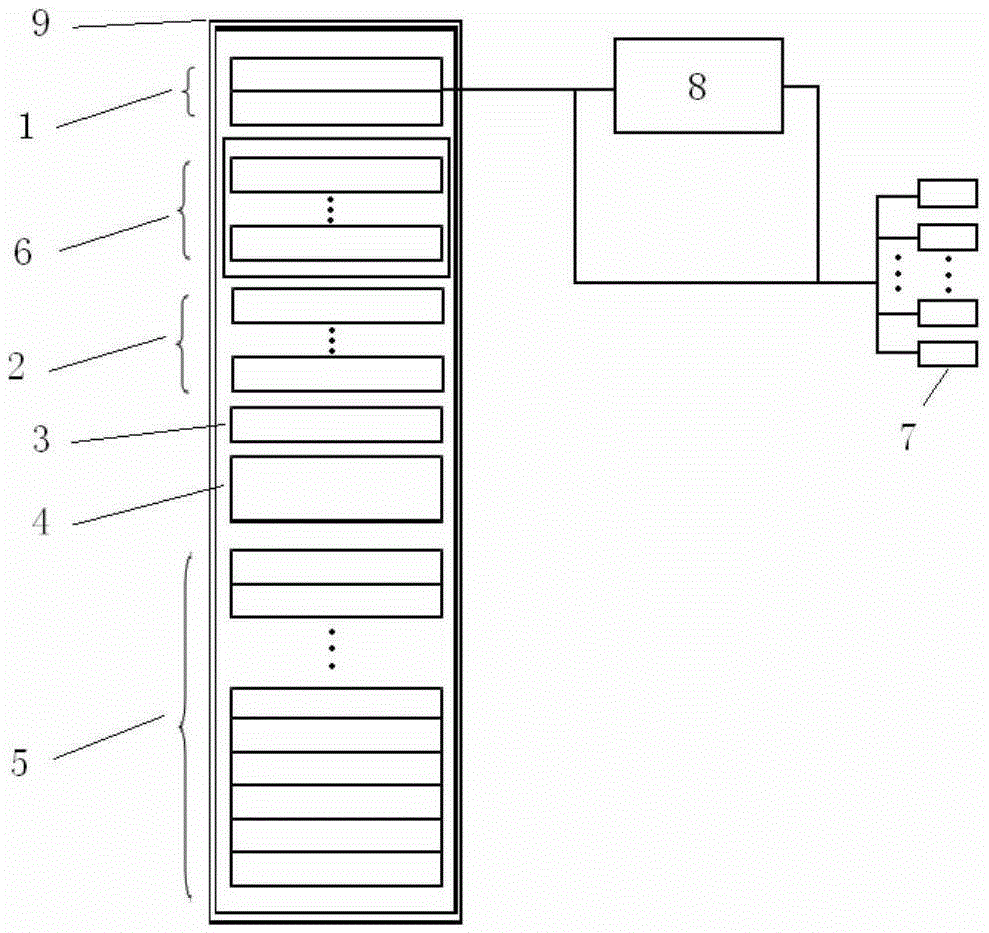

Analysis system and method for power equipment monitoring data

Inactive | CN104991958A | Performance tuning, Improve usability, Data processing applications, Special data processing applications, Power equipment, Electric power

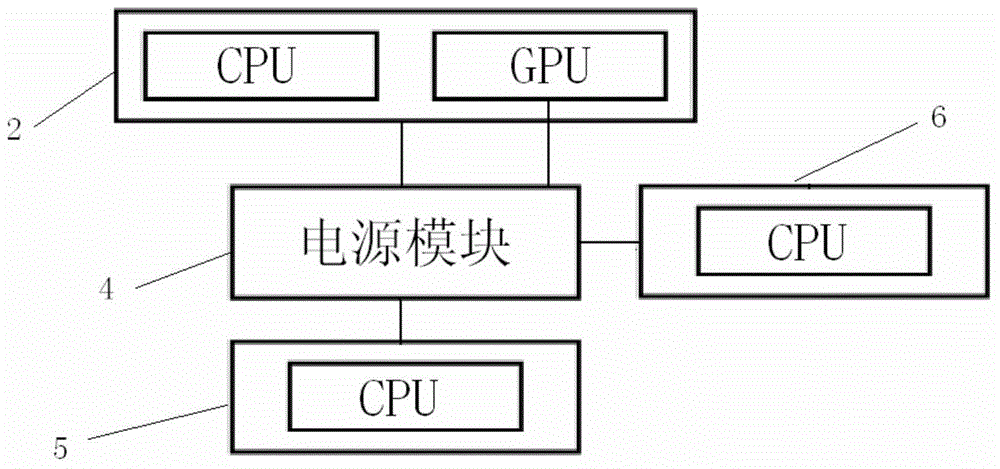

The invention provides an analysis system and method for power equipment monitoring data. The analysis system comprises a cabinet, an external server, an external management and analysis platform, and one or more measuring point sensors on power transformation equipment, and further comprises a lower computing and storage server, a power supply module, a gigabit management network module, a 10-gigabit optical fiber network module, an upper computing and storage server, and a high-performance computing and storage server. The external management and analysis platform comprises a big data analysis platform and a power application analysis module. The high-performance computing and storage server comprises a graphics processing unit (GPU), which is connected to its own power supply so that it is powered independently. The equipment has a high degree of integration, high availability, and good scalability, and the system performs stably and is simple and convenient to operate.

Owner:SHANDONG LUNENG SOFTWARE TECH

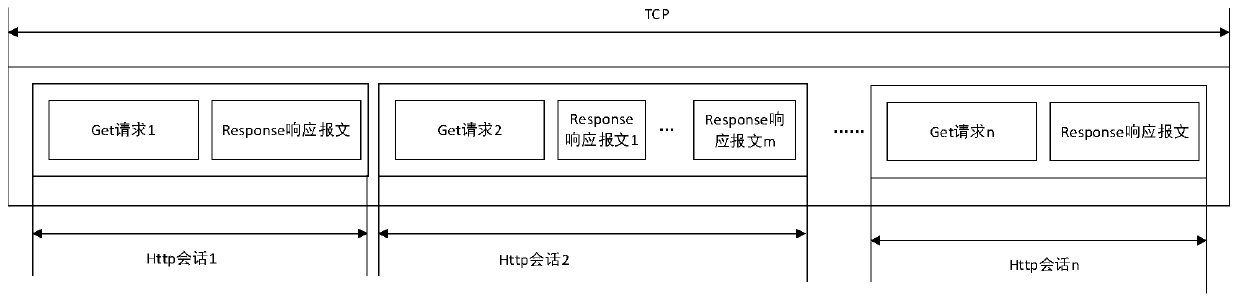

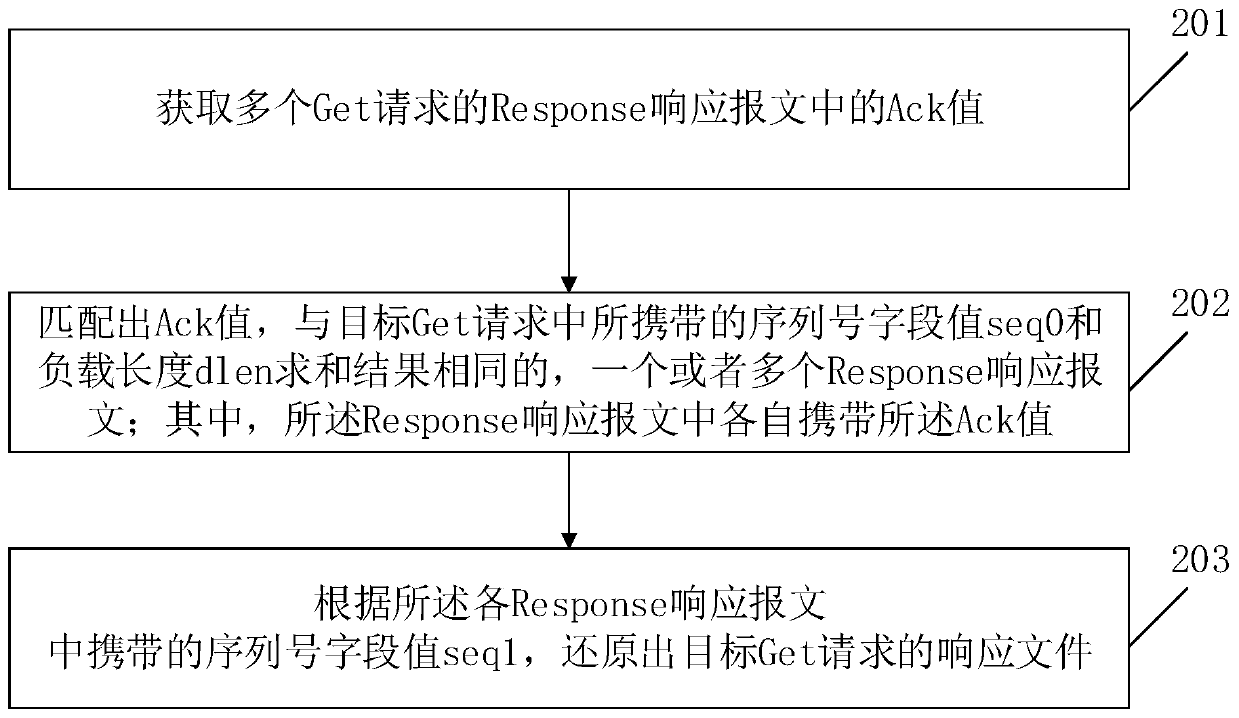

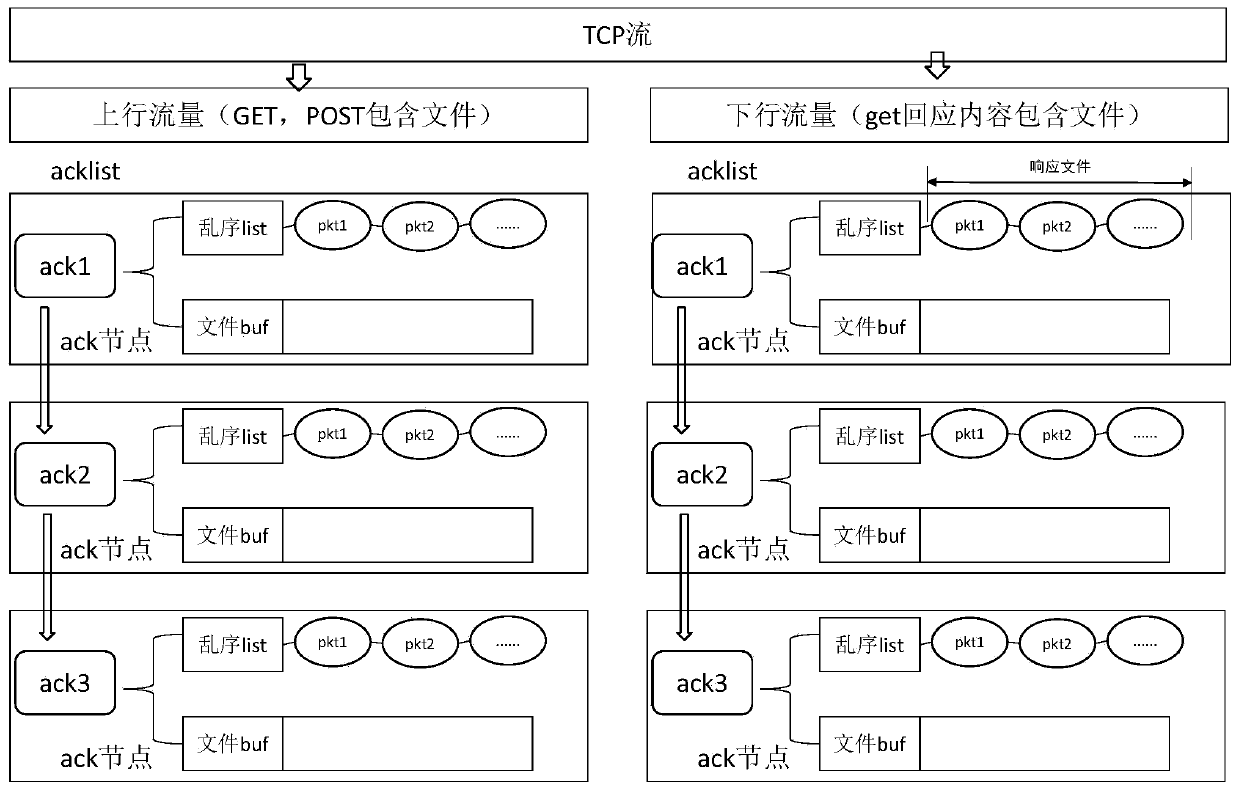

File restoration method and device for http multi-session in DPI scene

Active | CN110839060A | Eliminate distractions, Improve reduction efficiency, Transmission, Data pack, Serial code

The invention relates to the technical field of data packet reassembly and provides a file restoration method and device for multiple HTTP sessions in a deep packet inspection (DPI) scenario. The method comprises the following steps: acquiring the Ack values carried in the Response messages of a plurality of GET requests; matching one or more Response messages whose Ack value equals the sum of the sequence number field value seq0 and the payload length dlen carried in the target GET request; and restoring the response file of the target GET request according to the sequence number field value seq1 carried in each matched Response message. The invention can process multiple HTTP sessions within one TCP session in real time, eliminates interference from out-of-order delivery or retransmission between different HTTP sessions, and improves restoration efficiency.

Owner:WUHAN GREENET INFORMATION SERVICE
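
The Ack-matching rule (Ack = seq0 + dlen) and the reordering by seq1 can be illustrated with a small sketch. It assumes already-parsed TCP segments and ignores sequence-number wraparound and partially overlapping retransmissions; the `Segment` type and `restore_response` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    seq: int        # TCP sequence number field
    ack: int        # TCP acknowledgement number field
    payload: bytes  # application data carried by the segment


def restore_response(get_request: Segment, responses: list) -> bytes:
    """Reassemble the response body for one GET request (hypothetical sketch)."""
    expected_ack = get_request.seq + len(get_request.payload)  # seq0 + dlen
    # Keep only Response segments that acknowledge exactly this request.
    matched = [r for r in responses if r.ack == expected_ack]
    # Order by the responses' own sequence numbers (seq1) and skip exact retransmissions.
    body, seen = [], set()
    for r in sorted(matched, key=lambda s: s.seq):
        if r.seq in seen:
            continue
        seen.add(r.seq)
        body.append(r.payload)
    return b"".join(body)
```

Because each request's responses are selected by their Ack value, segments belonging to a second HTTP request on the same TCP connection never leak into the first request's file, even when they arrive interleaved.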

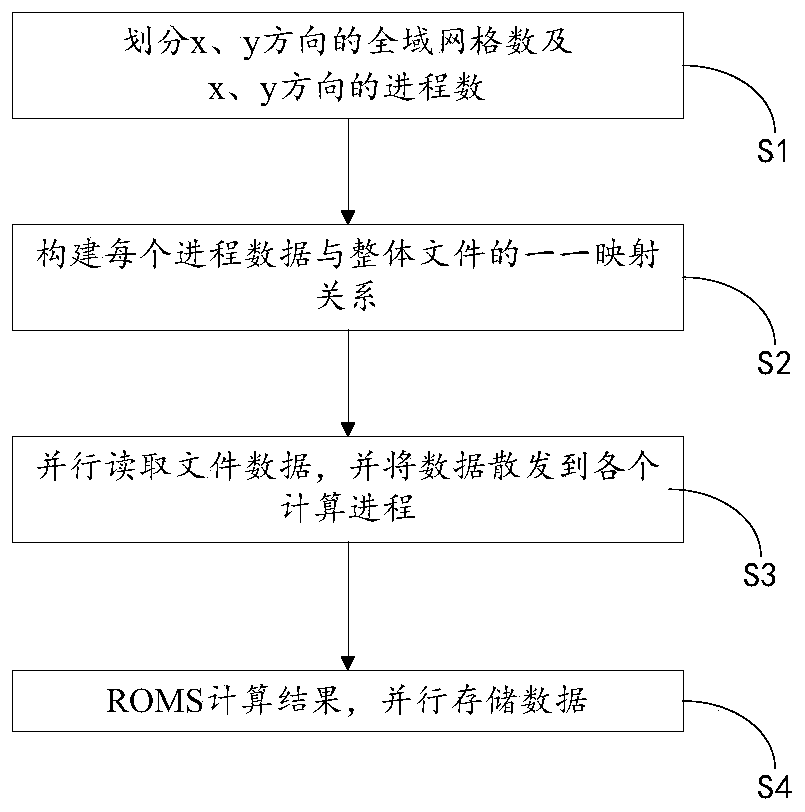

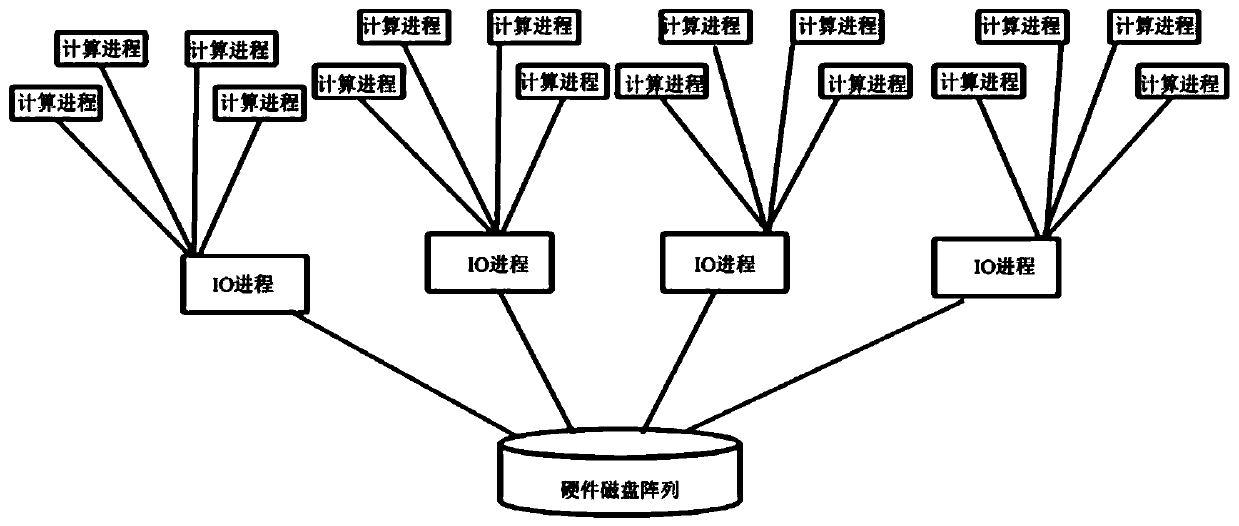

I/O parallel acceleration method and device for the ROMS model in a regional coupled forecasting system, and medium

Active | CN110209353A | Improve performance, Solving inefficiencies, Input/output to record carriers, ICT adaptation, Coupling, Global grid

The invention provides an I/O parallel acceleration method and device for the ROMS model in a regional coupled forecasting system, and a computer-readable storage medium, and relates to the technical field of regional coupled forecasting systems. The method comprises the following steps: determining the numbers of global grid points and of processes in the x and y directions; constructing a one-to-one mapping between the input and output data of each computing process and the overall input and output files, and constructing a mapping for data transfer between the computing processes and the I/O processes; having the I/O processes read the file data in parallel and distribute it to each computing process; and, after ROMS computes the results, having the I/O processes collect the data from each computing process and store it in parallel. The method designs parallel input and output for the ROMS ocean model, optimizes the underlying ROMS I/O framework, and improves the I/O performance of the ROMS ocean model.

Owner: Qingdao Marine Science and Technology Center (青岛海洋科技中心)
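
The mapping between each computing process's tile and the global file, and the I/O-process scatter and gather around it, can be sketched in plain Python. ROMS itself is Fortran with MPI; this sketch only shows the index bookkeeping, assumes a near-even block split of the grid in each direction, and uses hypothetical function names.

```python
def block_range(n, parts, index):
    """Half-open [start, end) range of block `index` when n points are split into `parts`."""
    base, extra = divmod(n, parts)
    start = index * base + min(index, extra)
    return start, start + base + (1 if index < extra else 0)


def build_mapping(nx, ny, px, py):
    """Map each compute rank (row-major over a px-by-py process grid) to its tile."""
    return {j * px + i: (block_range(nx, px, i), block_range(ny, py, j))
            for j in range(py) for i in range(px)}


def scatter(global_field, mapping):
    """I/O-process side: cut a global field (list of rows, indexed [y][x]) into tiles."""
    return {rank: [row[x0:x1] for row in global_field[y0:y1]]
            for rank, ((x0, x1), (y0, y1)) in mapping.items()}


def gather(tiles, mapping, nx, ny):
    """I/O-process side: reassemble per-rank tiles into the global field."""
    field = [[None] * nx for _ in range(ny)]
    for rank, ((x0, x1), (y0, y1)) in mapping.items():
        for dy, row in enumerate(tiles[rank]):
            field[y0 + dy][x0:x1] = row
    return field
```

Since the mapping is one-to-one, `gather(scatter(f, m), m, nx, ny)` returns the original field. In a real MPI code, the two I/O-side functions would become reads and writes of file hyperslabs plus sends and receives between the I/O and computing ranks.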

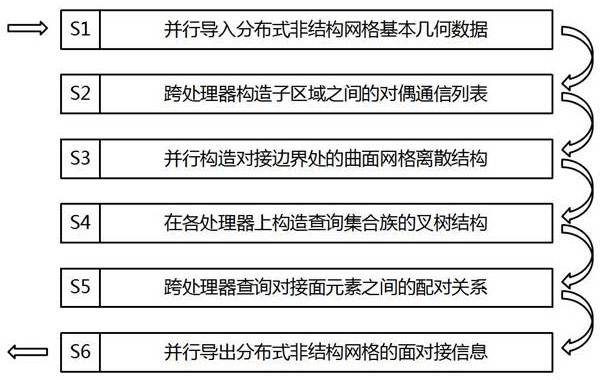

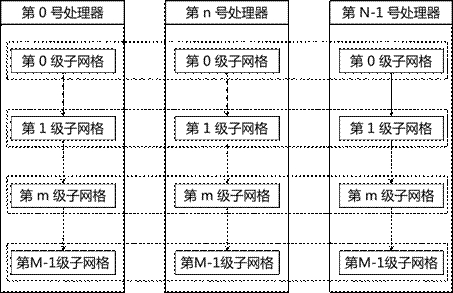

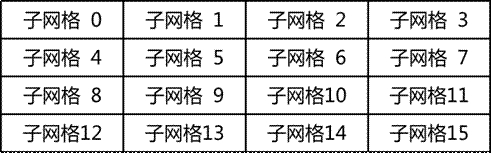

Distributed unstructured grid cross-processor face docking method and system

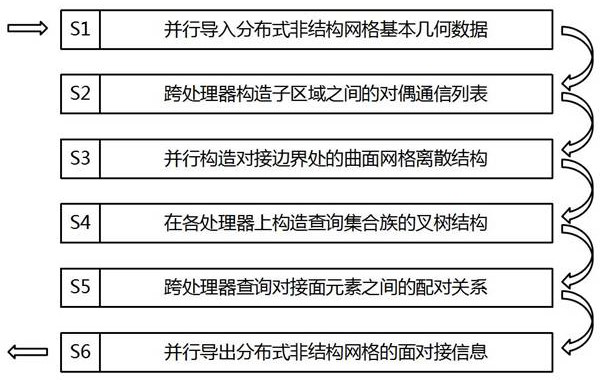

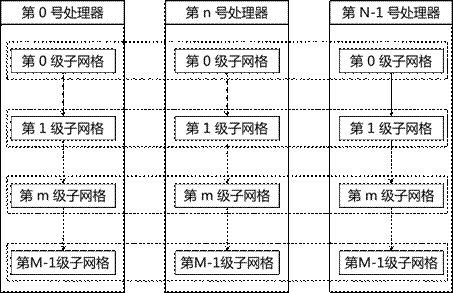

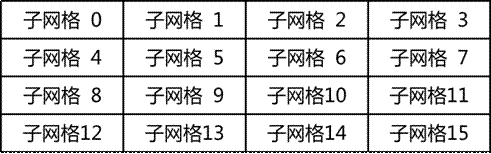

Active | CN114494650A | Quickly establish face-to-face mapping relationship, Relieve memory pressure, Other databases indexing, Other databases querying, Computational science, Parallel computing

The invention relates to the technical field of grid processing and discloses a cross-processor face docking method and system for distributed unstructured grids. The docking method uses a two-level index structure to identify, in parallel, the docking relationships between the faces on the two sides of a grid partition boundary; for any two docking surface elements, it checks in turn whether their centroid coordinates and their normalized grid point sequences are equivalent. The method comprises the following steps: S1, importing the basic geometric data of the distributed unstructured grid in parallel; S2, constructing dual communication lists between sub-regions across processors; S3, constructing the discrete structure of the surface grid at the docking boundary in parallel; S4, constructing the fork tree structure of the query set family on each processor; S5, querying the pairing relationships between docking surface elements across processors; and S6, exporting the face docking information of the distributed unstructured grid in parallel. The method solves problems of the prior art such as low processing efficiency and poor data processing capability when processing large-scale unstructured grids.

Owner:CALCULATION AERODYNAMICS INST CHINA AERODYNAMICS RES & DEV CENT
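
The two-stage equivalence test (centroid first, then normalized point sequence) can be sketched with hash maps standing in for the patent's index structures. Several things here are assumptions: faces carry global point ids, the first index level is keyed by the neighbouring partition, and the tree structure of step S4 is replaced by a dictionary on a rounded centroid (a production version would compare centroids within a tolerance instead). All function names are hypothetical.

```python
from collections import defaultdict


def normalize_points(point_ids):
    """Canonical vertex loop: start at the smallest global point id and choose the
    traversal direction that gives the smaller sequence, so both sides agree."""
    k = point_ids.index(min(point_ids))
    forward = list(point_ids[k:]) + list(point_ids[:k])
    backward = [forward[0]] + forward[1:][::-1]
    return tuple(min(forward, backward))


def centroid_key(coords, digits=6):
    """Rounded centroid, so floating-point noise does not break the equality test."""
    n = len(coords)
    return tuple(round(sum(c[axis] for c in coords) / n, digits) for axis in range(3))


def build_index(faces):
    """Two-level index: neighbouring partition -> centroid key -> [(face_id, points)]."""
    index = defaultdict(lambda: defaultdict(list))
    for face_id, neighbour, coords, point_ids in faces:
        index[neighbour][centroid_key(coords)].append((face_id, normalize_points(point_ids)))
    return index


def match_faces(rank_a, faces_a, rank_b, faces_b):
    """Pair boundary faces of partitions a and b: equal centroid first, then equal points."""
    side_a = build_index(faces_a)[rank_b]
    side_b = build_index(faces_b)[rank_a]
    pairs = []
    for key, candidates in side_a.items():
        for face_id, points in candidates:
            for other_id, other_points in side_b.get(key, []):
                if points == other_points:
                    pairs.append((face_id, other_id))
    return pairs
```

Normalizing the point loop is what lets the two partitions agree even though each side typically stores a shared face with opposite orientation.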

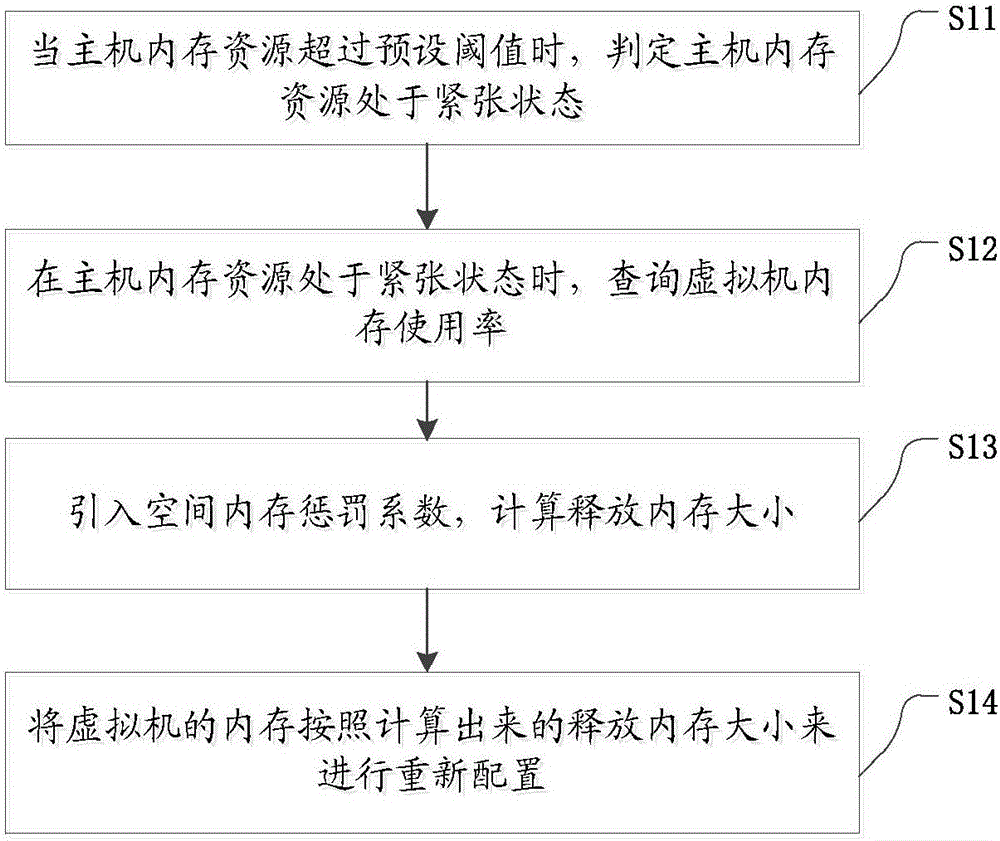

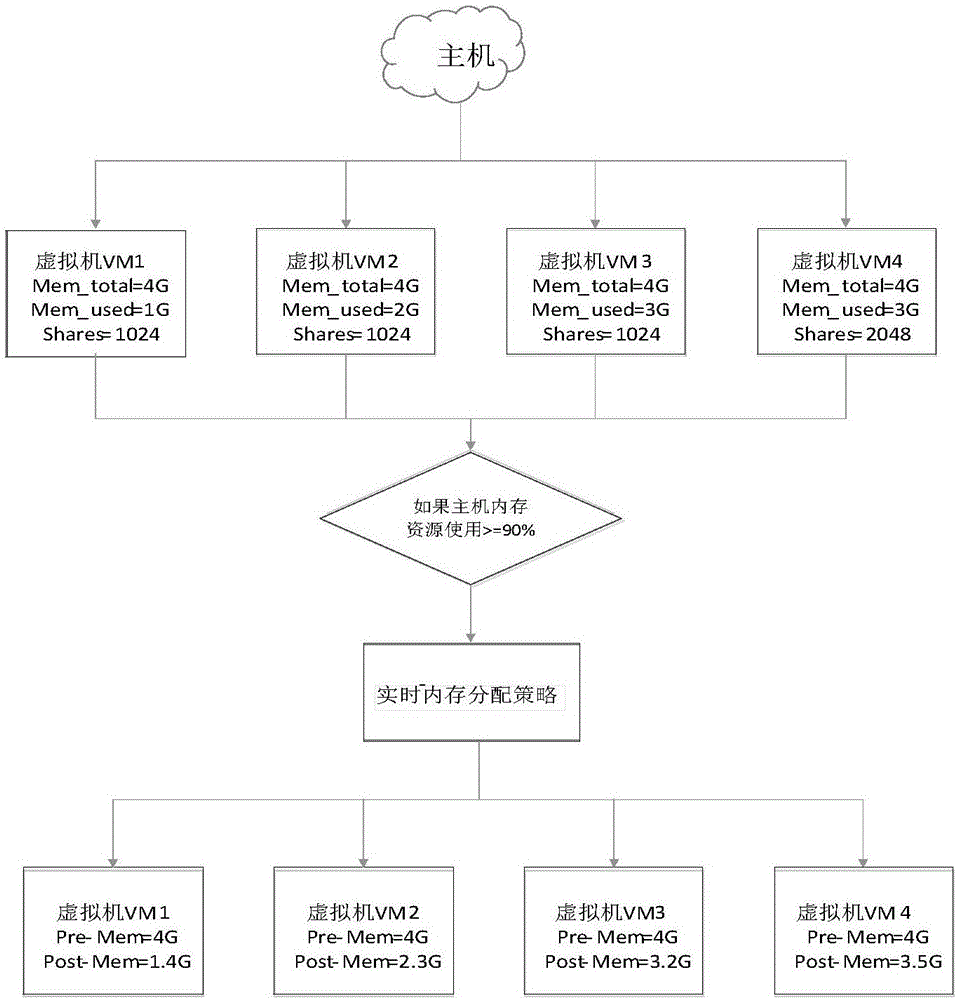

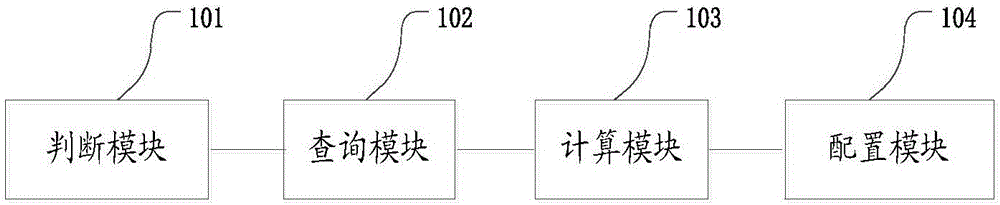

Real-time virtual machine memory scheduling method and device

Inactive | CN106776048A | Avoid wasting, Relieve memory pressure, Resource allocation, Software simulation/interpretation/emulation, Stressed state, Host memory

The invention discloses a real-time virtual machine memory scheduling method and device. The memory scheduling method comprises the following steps: when the memory resource usage of the host exceeds a preset threshold, determining that the host memory is in a stressed state; when the host memory is in a stressed state, querying the memory usage rate of each virtual machine; introducing a spatial memory penalty coefficient and calculating the amount of memory to be released; and reallocating the memory of the virtual machines according to the calculated amount. The method optimizes memory allocation and avoids wasting memory resources.

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD
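
The threshold check and release calculation can be sketched as below. The abstract does not give the penalty formula, so this sketch assumes one: each VM releases `penalty` times its idle memory (allocated minus used), never dropping below its usage plus a safety floor. The threshold test on committed memory, `plan_reclaim`, and all parameter defaults are likewise hypothetical.

```python
from dataclasses import dataclass


@dataclass
class VM:
    name: str
    allocated_mb: int
    used_mb: int


def plan_reclaim(host_total_mb, vms, threshold=0.9, penalty=0.5, floor_mb=256):
    """Return {vm_name: mb_to_release}; empty when host memory is not under stress."""
    committed = sum(vm.allocated_mb for vm in vms)
    if committed / host_total_mb <= threshold:
        return {}                                   # not in the stressed state
    plan = {}
    for vm in vms:
        idle = vm.allocated_mb - vm.used_mb          # allocated but unused memory
        release = int(penalty * idle)               # assumed penalty rule on idle memory
        release = min(release, max(0, idle - floor_mb))  # keep a safety margin above usage
        if release > 0:
            plan[vm.name] = release
    return plan
```

Penalizing idle memory means VMs that hoard unused allocations give back the most, while a nearly full VM is left alone.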

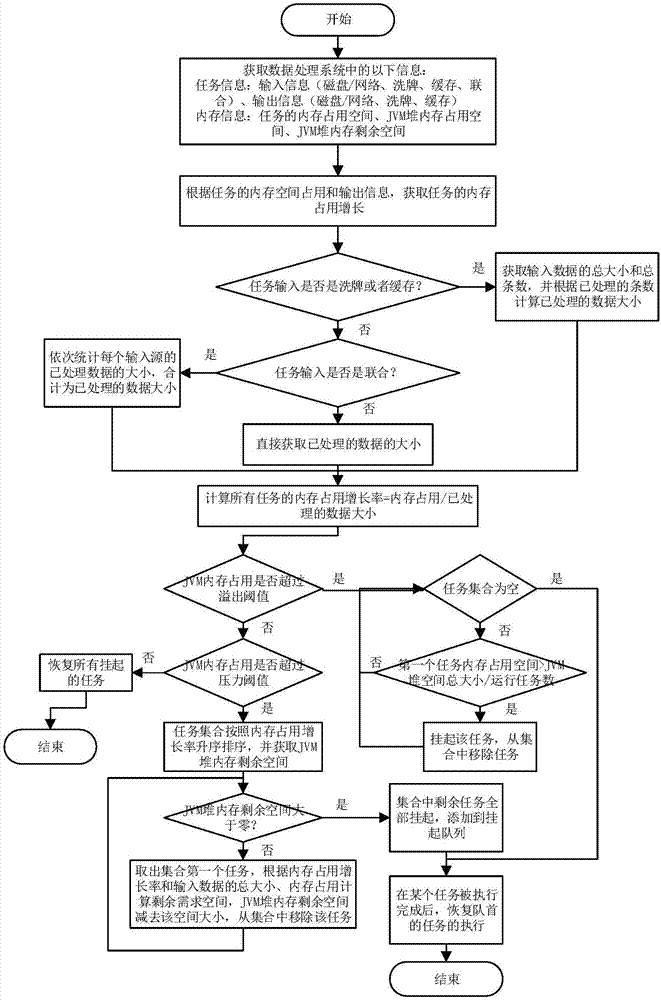

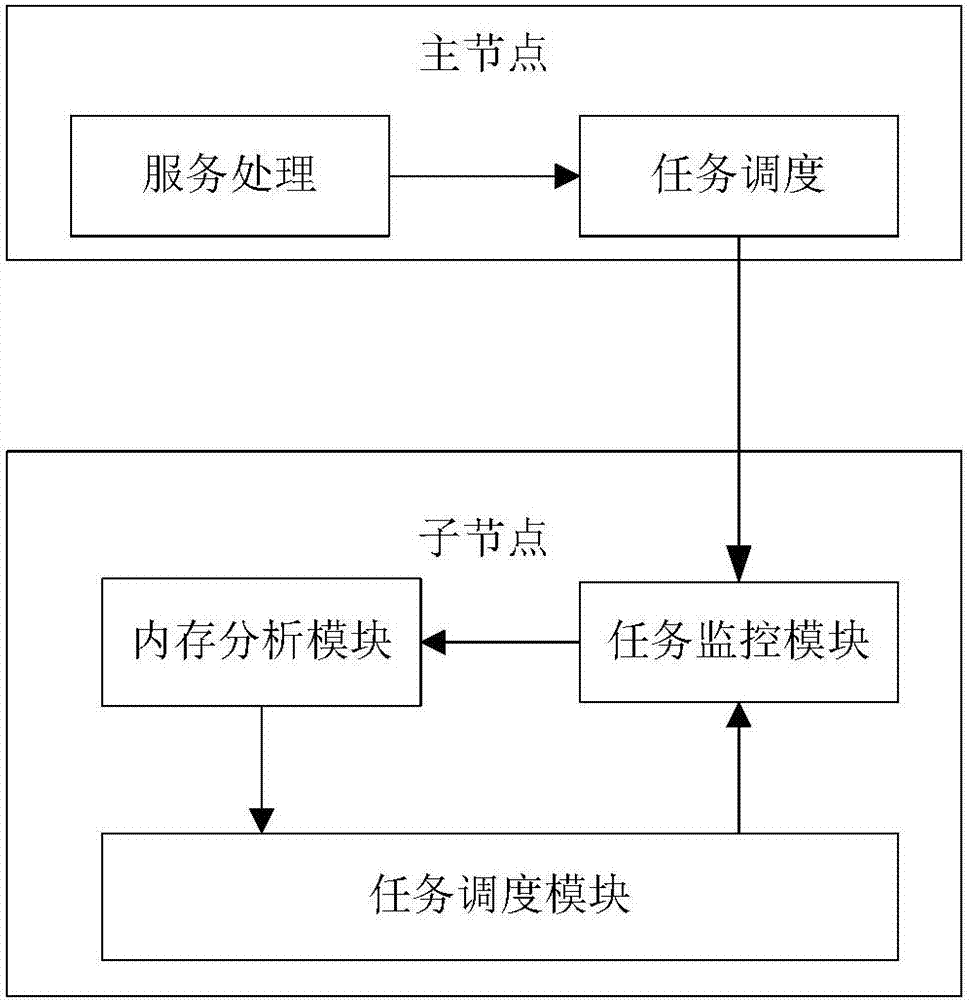

Scheduling method and system for relieving memory pressure in distributed data processing systems

Inactive | CN107066316A | Reduce memory pressure, Avoid waiting, Resource allocation, Distributed object oriented systems, Service system, Distributive data processing

The invention discloses a scheduling method for relieving memory pressure in distributed data processing systems. The method comprises the following steps: analyzing the memory usage pattern of each user programming interface according to the characteristics of the operations it performs on key-value pairs, and establishing a memory usage model for each user programming interface in the data processing system; inferring the memory usage model of each task from the sequence in which the task calls the programming interfaces; distinguishing between different models by their memory occupation growth rate; and estimating the impact of each task on memory pressure from its memory usage model and the size of the data it is currently processing, then suspending high-impact tasks until the low-impact tasks have finished executing or the memory pressure has been relieved. By monitoring and analyzing in real time how each running task affects memory pressure, the method improves the scalability of the service system.

Owner:HUAZHONG UNIV OF SCI & TECH
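
The final step, estimating each task's impact and suspending high-impact tasks, can be sketched as a greedy admission rule. It assumes a task's memory model has already been reduced to a single growth rate (MB of memory per MB of input). The names `Task`, `projected_mb`, and `schedule` are hypothetical, and the task ids in the test are only illustrative.

```python
from dataclasses import dataclass


@dataclass
class Task:
    task_id: str
    growth_rate: float         # assumed: MB of memory used per MB of input processed
    remaining_input_mb: float  # data this task still has to process

    def projected_mb(self) -> float:
        # Estimated extra memory the task will need before it completes.
        return self.growth_rate * self.remaining_input_mb


def schedule(tasks, free_memory_mb):
    """Return (running, suspended) task ids. Low-impact tasks are admitted first; a task
    is suspended when its projected demand exceeds the memory still available."""
    running, suspended = [], []
    budget = free_memory_mb
    for task in sorted(tasks, key=Task.projected_mb):
        need = task.projected_mb()
        if need <= budget:
            running.append(task.task_id)
            budget -= need
        else:
            suspended.append(task.task_id)
    return running, suspended
```

A scheduler would call this again whenever a running task finishes or free memory changes, so suspended tasks resume once there is room for them.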

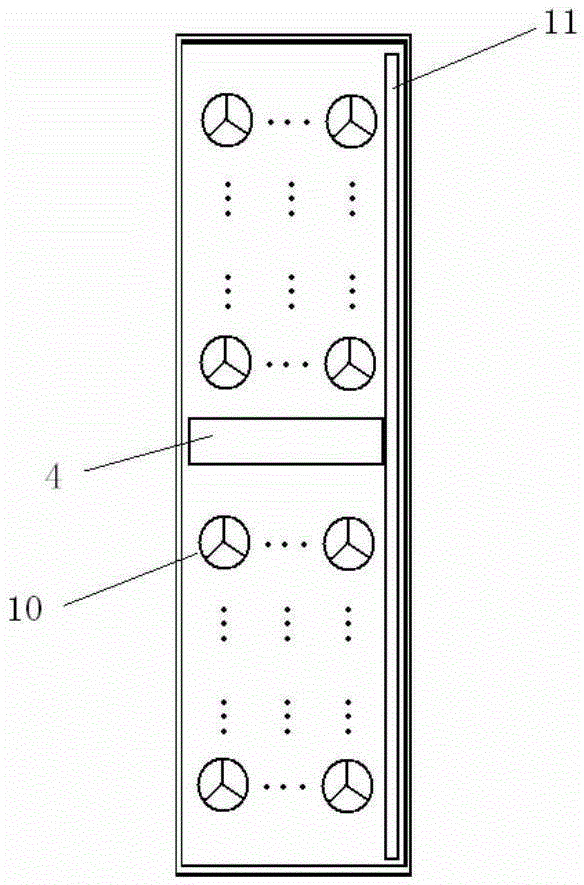

Electrical equipment monitoring method and device

Inactive | CN105045922A | Improve usability, Relieve memory pressure, Data processing applications, Special data processing applications, Reliability engineering, Real-time database

The invention provides an electrical equipment monitoring method and a monitoring device. The monitoring method comprises the following steps: initialization; parameter setting; establishing an electrical service analysis model; controlling the output power of the fan by level; acquiring measuring point information of power transformation equipment in real time; transmitting the acquired measuring point information to a real-time database, where it is packaged and stored; sending query, statistics, analysis and/or processing requests to the electrical equipment monitoring device according to user requests; and displaying the results to the user in real time after visualization. The monitoring method is real-time and effective, and the equipment offers a high degree of integration, high availability, good scalability, stable system performance, and simple, convenient operation.

Owner:SHANDONG LUNENG SOFTWARE TECH

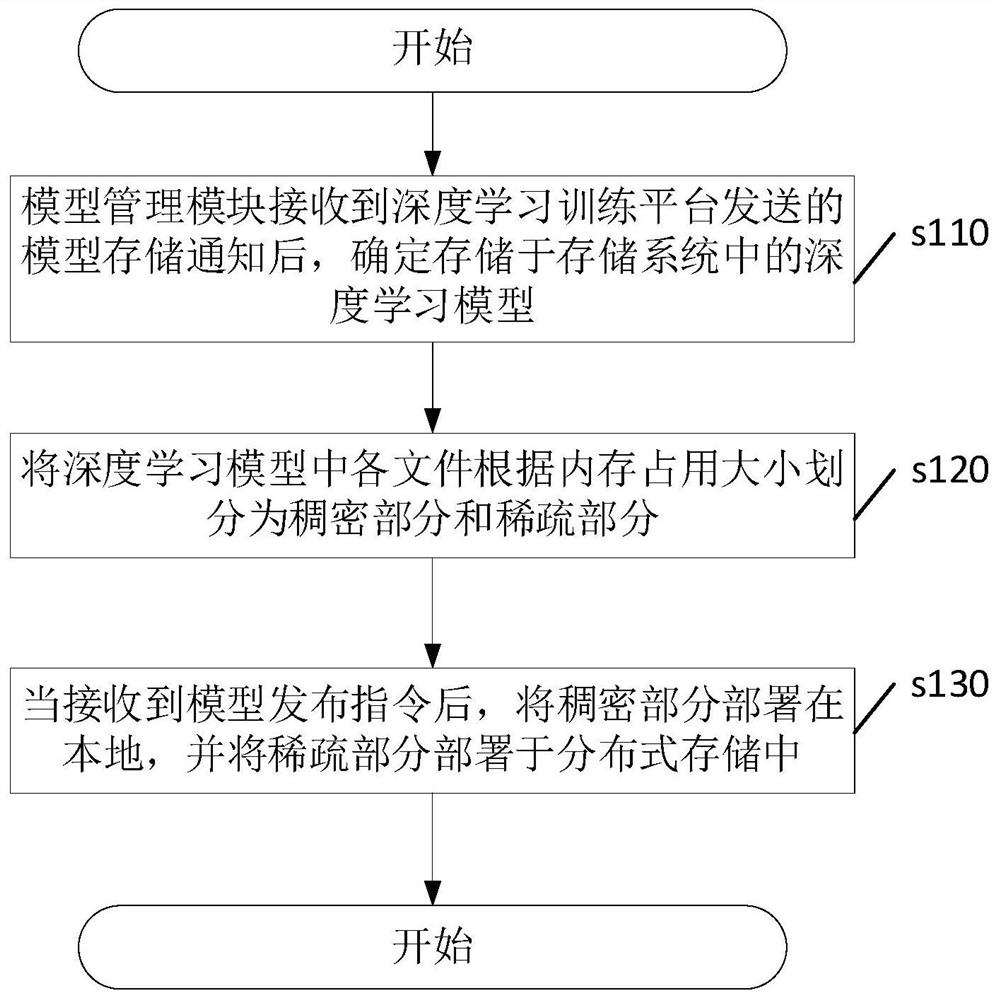

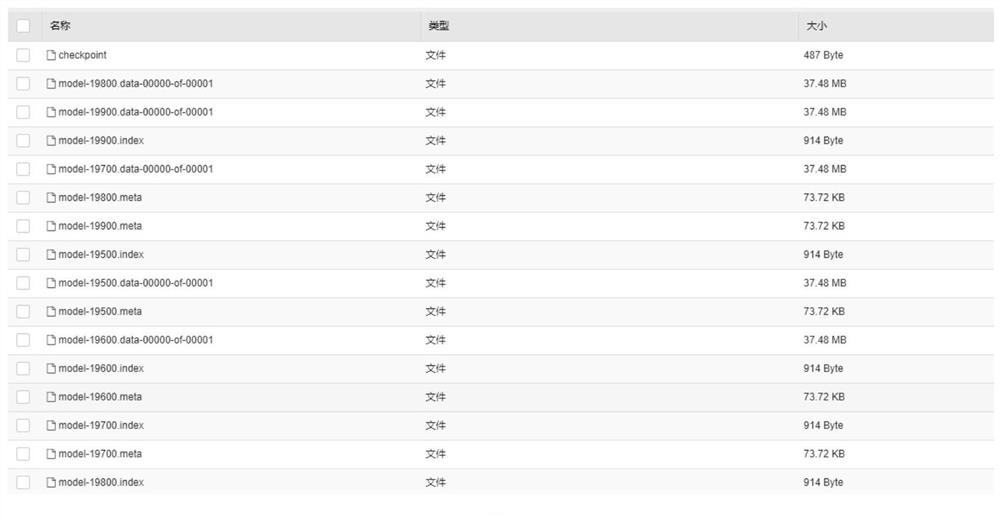

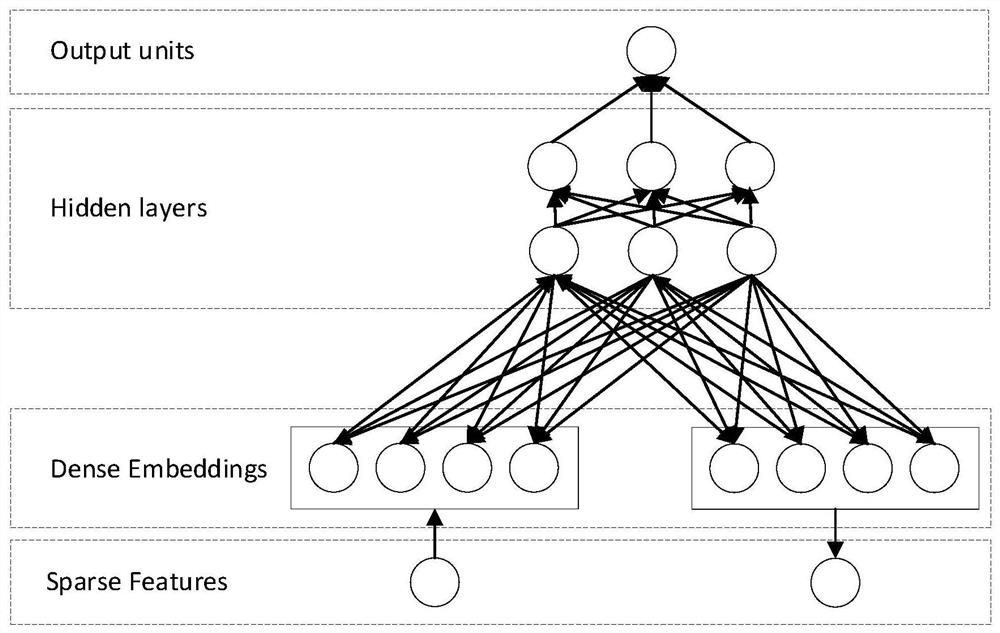

Model publishing method, device and equipment, and storage medium

Active | CN111857949A | Relieve memory pressure, Reduce occupancy, Software simulation/interpretation/emulation, Computational science, Term memory

The invention discloses a model publishing method that provides a local-plus-distributed storage scheme for model release. A large, complete deep learning model is split into a dense part and a sparse part. The sparse part, which occupies most of the space, is deployed on a distributed cluster; the dense part, which occupies little space, is deployed on the local computing cluster; and the sparse part is pulled in a distributed manner, which reduces the memory occupied by the model. This relieves memory pressure on the local computing cluster when the model is loaded and greatly reduces the memory consumption of computing nodes. The invention further provides a model publishing device, equipment, and a readable storage medium with the same beneficial effects.

Owner:SUZHOU LANGCHAO INTELLIGENT TECH CO LTD

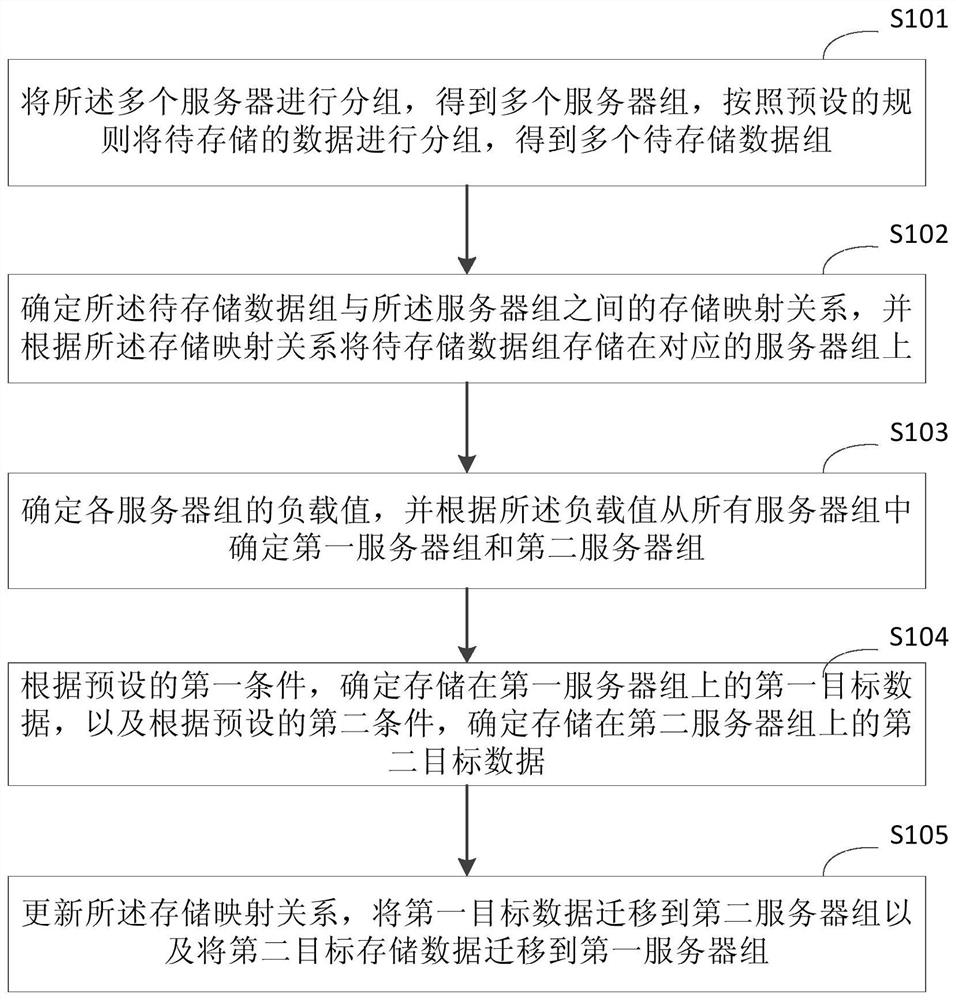

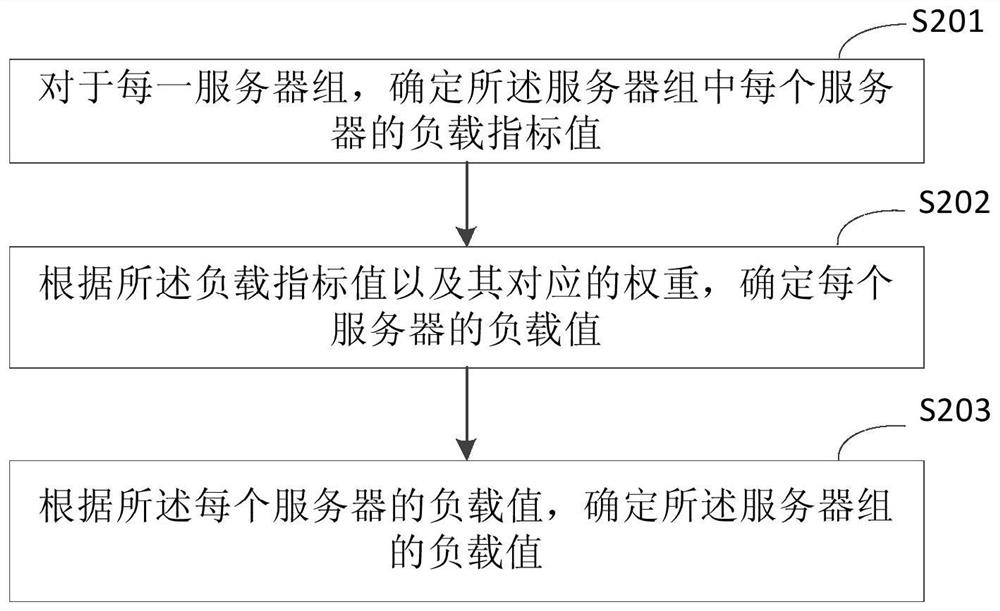

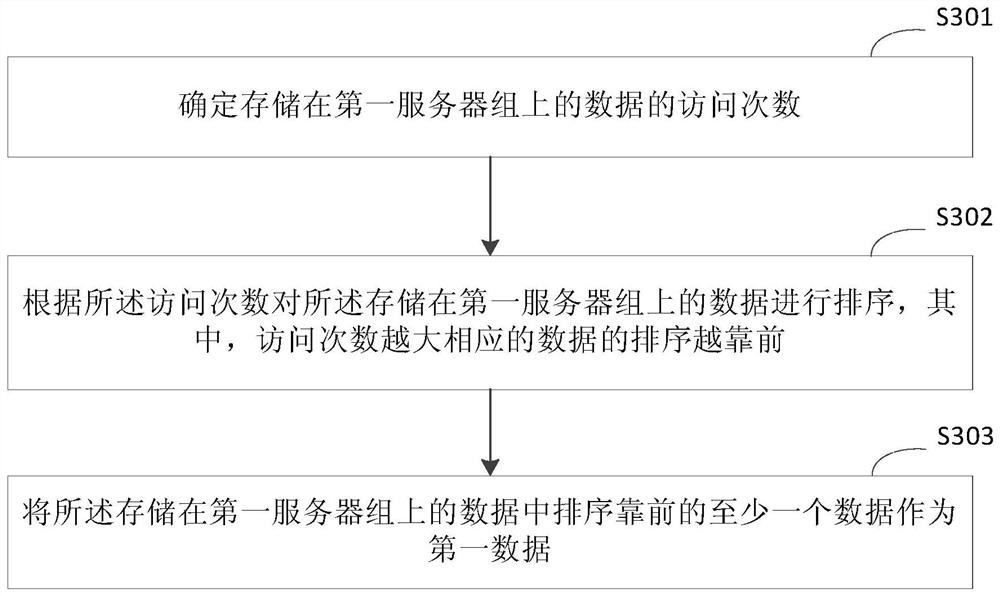

Data storage method and system

Pending | CN113407108A | Relieve memory pressure, Save resources, Input/output to record carriers, Resource allocation, Engineering, Data storing

The invention discloses a data storage method and system and relates to the field of computer technology. One embodiment of the method comprises the following steps: grouping a plurality of servers into server groups and grouping the data to be stored into data groups; determining a storage mapping between the data groups and the server groups and storing the data according to that mapping; determining a first server group and a second server group according to the load value of each server group; determining first target data stored on the first server group and second target data stored on the second server group; and updating the storage mapping, migrating the first target data to the second server group and the second target data to the first server group. This embodiment effectively relieves storage pressure and saves server resources. It also adjusts data storage locations dynamically, automatically spreads hotspot data and access pressure, and achieves load balancing.

Owner:BEIJING WODONG TIANJUN INFORMATION TECH CO LTD +1
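
The load-based swap between two server groups can be sketched as follows. The abstract does not say how the first and second target data are chosen, so this sketch assumes one rule: take the most and least loaded server groups and swap the pair of data groups that leaves the smallest load gap, skipping the swap if nothing improves. `group_loads` and `rebalance` are hypothetical names.

```python
from collections import defaultdict


def group_loads(mapping, data_load):
    """Load of a server group = sum of the loads of the data groups mapped to it."""
    loads = defaultdict(float)
    for data_group, server_group in mapping.items():
        loads[server_group] += data_load[data_group]
    return dict(loads)


def rebalance(mapping, data_load):
    """Swap one data group between the most and least loaded server groups, picking
    the pair that leaves the smallest load gap (hypothetical selection rule)."""
    loads = group_loads(mapping, data_load)
    hot, cold = max(loads, key=loads.get), min(loads, key=loads.get)
    if hot == cold:
        return dict(mapping)
    gap = loads[hot] - loads[cold]
    on_hot = [d for d, s in mapping.items() if s == hot]
    on_cold = [d for d, s in mapping.items() if s == cold]

    def gap_after(pair):
        # Moving a (hot -> cold) and b (cold -> hot) changes the gap by 2 * (a - b).
        first, second = pair
        return abs(gap - 2 * (data_load[first] - data_load[second]))

    first, second = min(((a, b) for a in on_hot for b in on_cold), key=gap_after)
    if gap_after((first, second)) >= gap:
        return dict(mapping)                   # no swap improves the balance
    new_mapping = dict(mapping)
    new_mapping[first], new_mapping[second] = cold, hot
    return new_mapping
```

Swapping, rather than only moving data off the hot group, keeps the number of data groups per server group constant, which matches the two-way migration the abstract describes.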

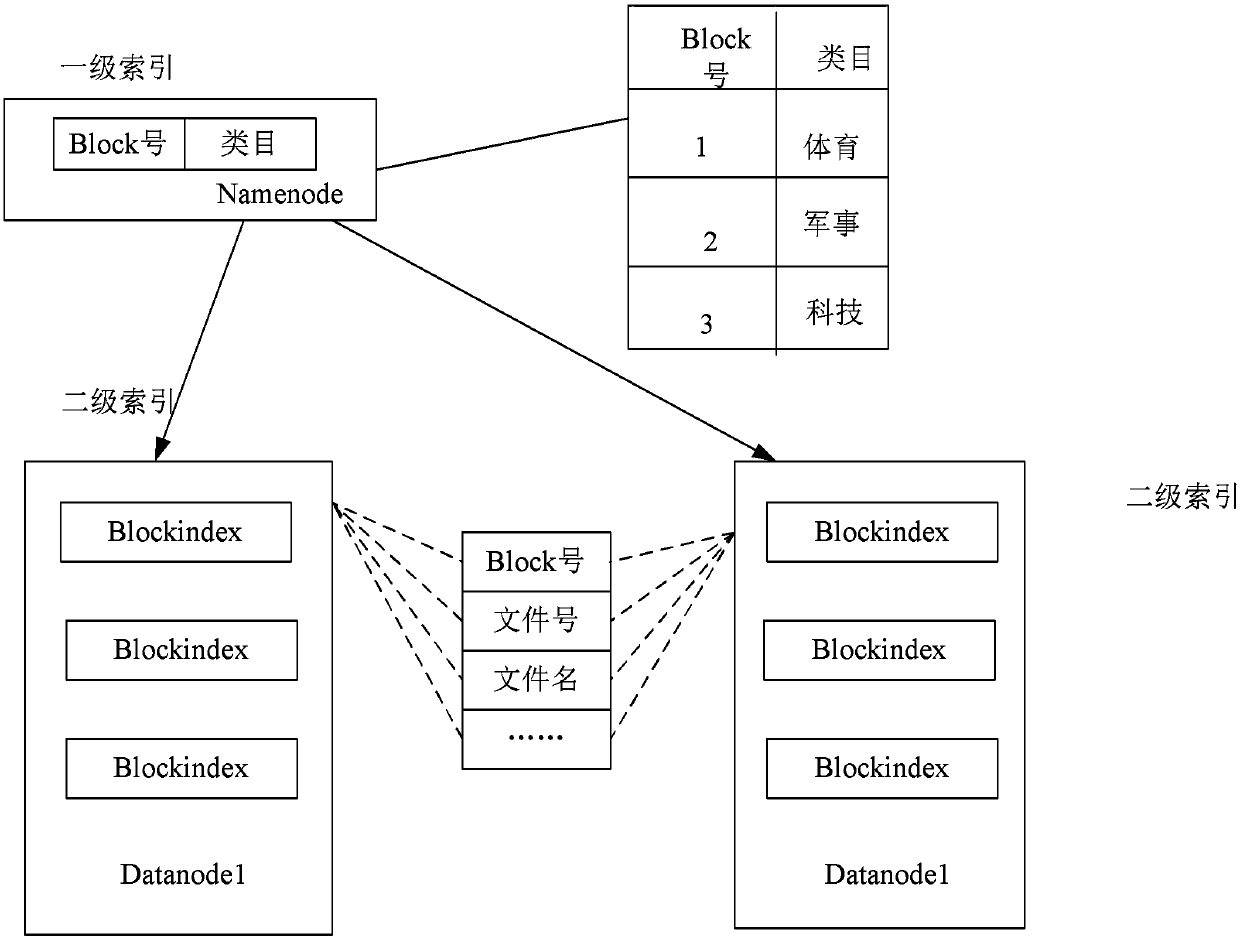

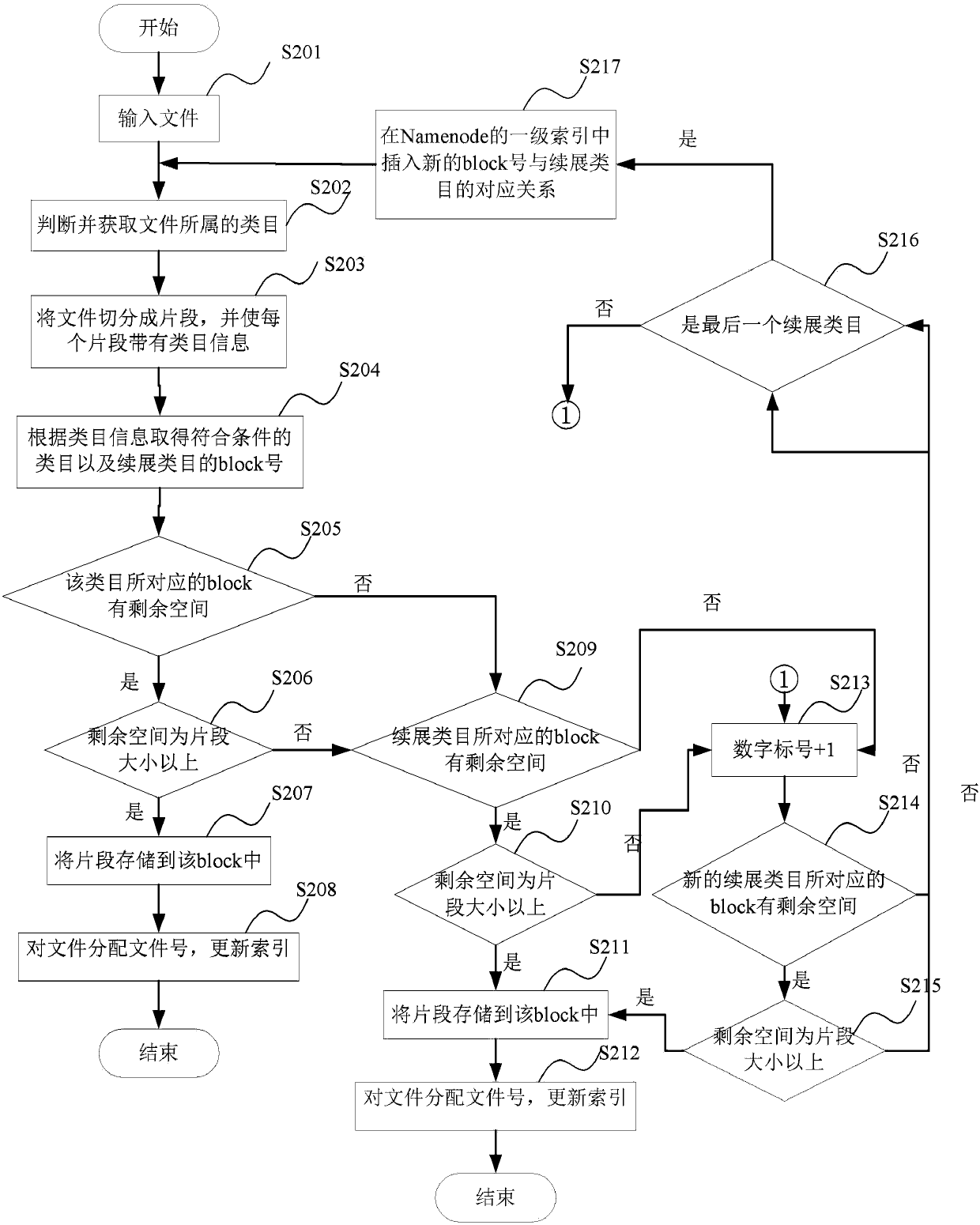

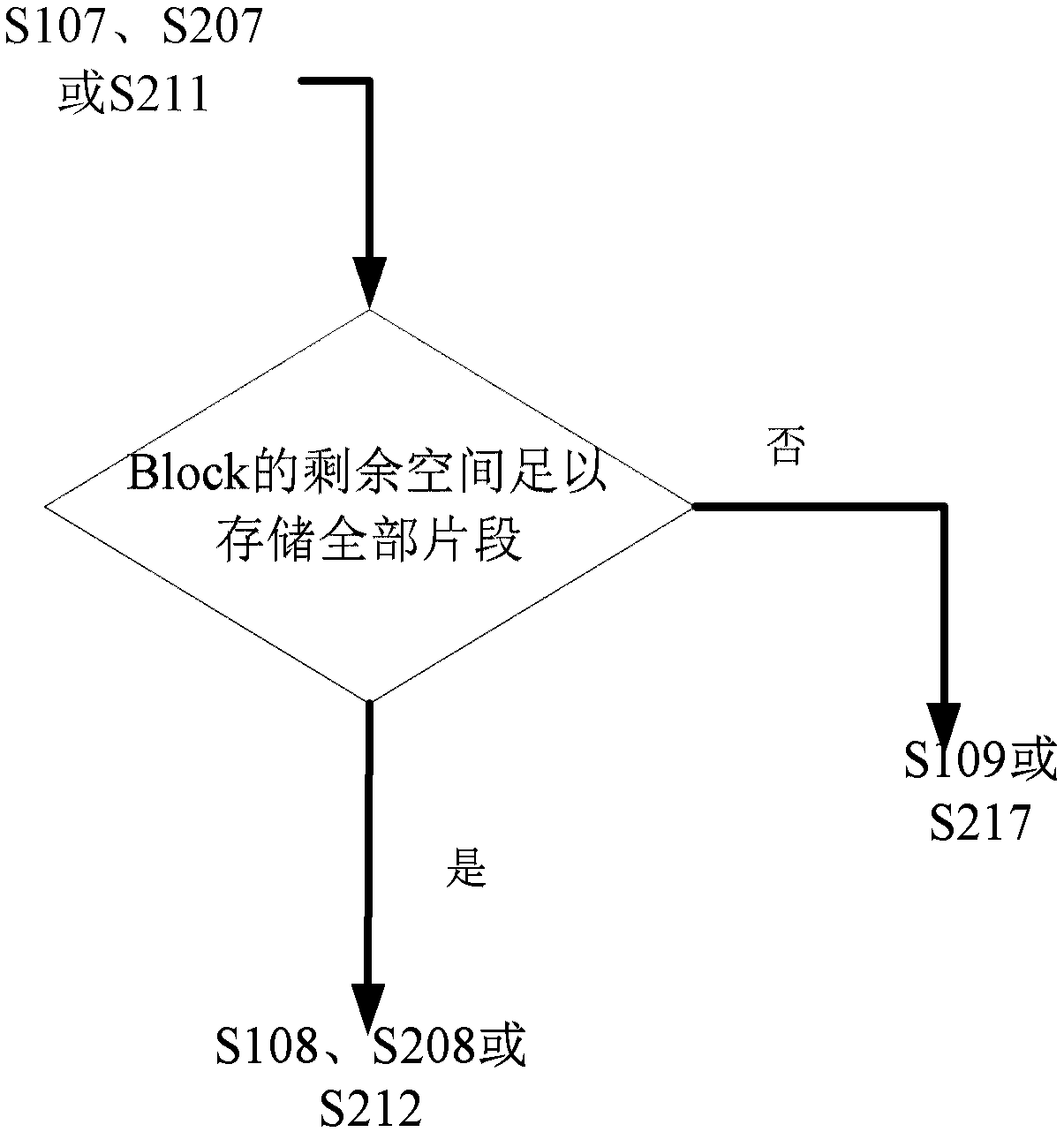

File system, file storage method, storage device and computer readable medium

Inactive | CN109947703A | Relieve memory pressure, Accelerate, Input/output to record carriers, File access structures, File system, Residual space

The invention provides a file system, a file storage method and a file storage device for the file system, and a computer-readable medium. The file system uses at least two levels of indexes, and the first-level index stores the relationship between the category of a file to be stored and the block information of the file system. The file storage method comprises the following operations: obtaining the category of the file from its category information; dividing the file into a plurality of fragments; querying the first-level index, according to the category information, for the block information associated with that category; when the corresponding block has no residual space, or its residual space is smaller than a fragment, inserting into the first-level index a relationship between new block information and a continuation category that represents the same category as the file, and storing the fragment in a block whose residual space is greater than or equal to the size of the fragment; and storing the relationship between the block information and the file information in the indexes other than the first-level index.

Owner:BEIJING JINGDONG SHANGKE INFORMATION TECH CO LTD +1
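
The continuation-category mechanism can be shown with a toy in-memory model. Here a continuation category is assumed to be represented as a `(category, n)` key in the first-level index, blocks are byte arrays of fixed capacity, and fragments are assumed to be no larger than a block. The class and its attributes are hypothetical.

```python
class CategoryFileStore:
    """Toy two-level index. Level 1: (category, continuation number) -> block id.
    Level 2: file name -> [(block id, offset, length)] for each stored fragment."""

    def __init__(self, block_size, fragment_size):
        assert 0 < fragment_size <= block_size
        self.block_size = block_size
        self.fragment_size = fragment_size
        self.blocks = []        # block id -> bytearray holding packed fragments
        self.level1 = {}        # first-level index
        self.level2 = {}        # other-level index: file -> fragment locations
        self.extensions = {}    # category -> number of level-1 entries created so far

    def _new_block(self, category):
        # Insert a (continuation) entry for this category pointing at a fresh block.
        ext = self.extensions.get(category, 0)
        self.blocks.append(bytearray())
        block_id = len(self.blocks) - 1
        self.level1[(category, ext)] = block_id
        self.extensions[category] = ext + 1
        return block_id

    def _latest_block(self, category):
        ext = self.extensions.get(category, 0)
        return self._new_block(category) if ext == 0 else self.level1[(category, ext - 1)]

    def store(self, name, category, data):
        size = self.fragment_size
        locations = []
        for start in range(0, len(data), size):
            fragment = data[start:start + size]
            block_id = self._latest_block(category)
            if self.block_size - len(self.blocks[block_id]) < len(fragment):
                block_id = self._new_block(category)     # residual space too small
            offset = len(self.blocks[block_id])
            self.blocks[block_id].extend(fragment)
            locations.append((block_id, offset, len(fragment)))
        self.level2[name] = locations

    def read(self, name):
        return b"".join(bytes(self.blocks[b][o:o + n]) for b, o, n in self.level2[name])
```

Files of the same category end up packed into the same run of blocks, so the first-level index stays small: it grows by one entry per block per category, not per file.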

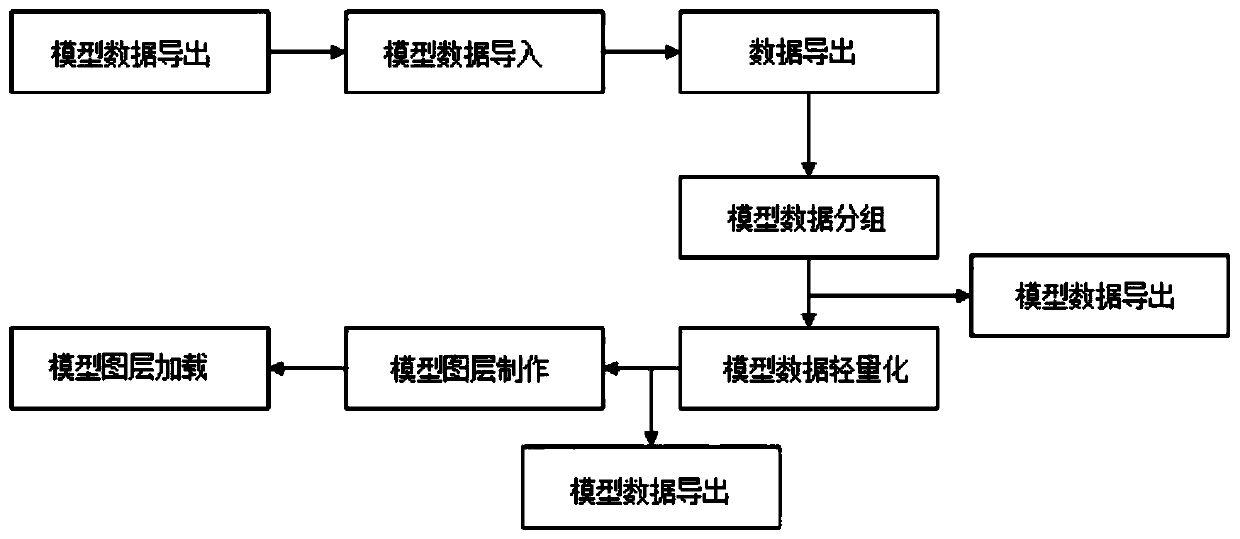

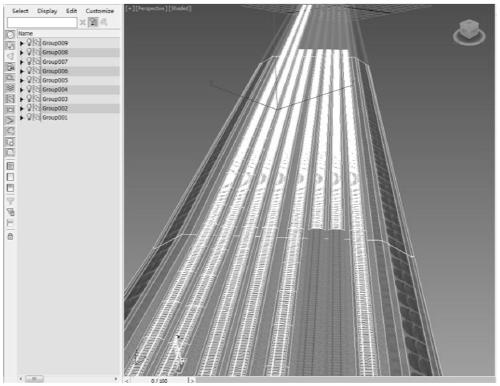

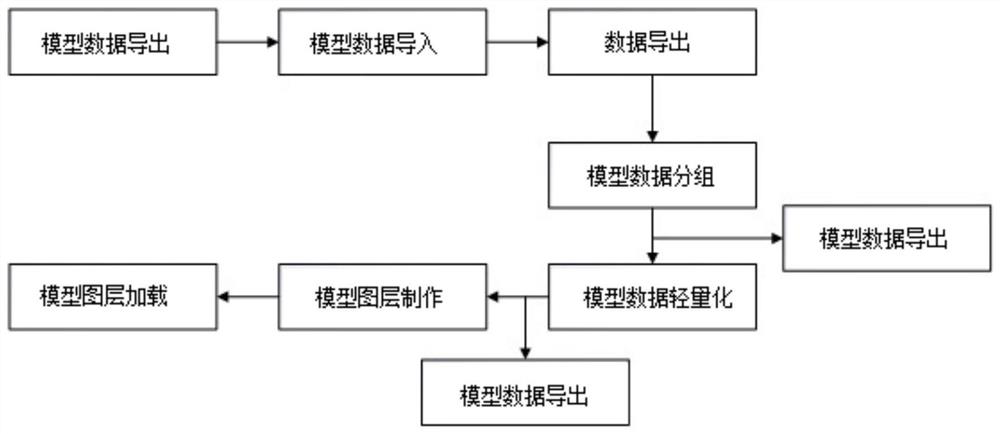

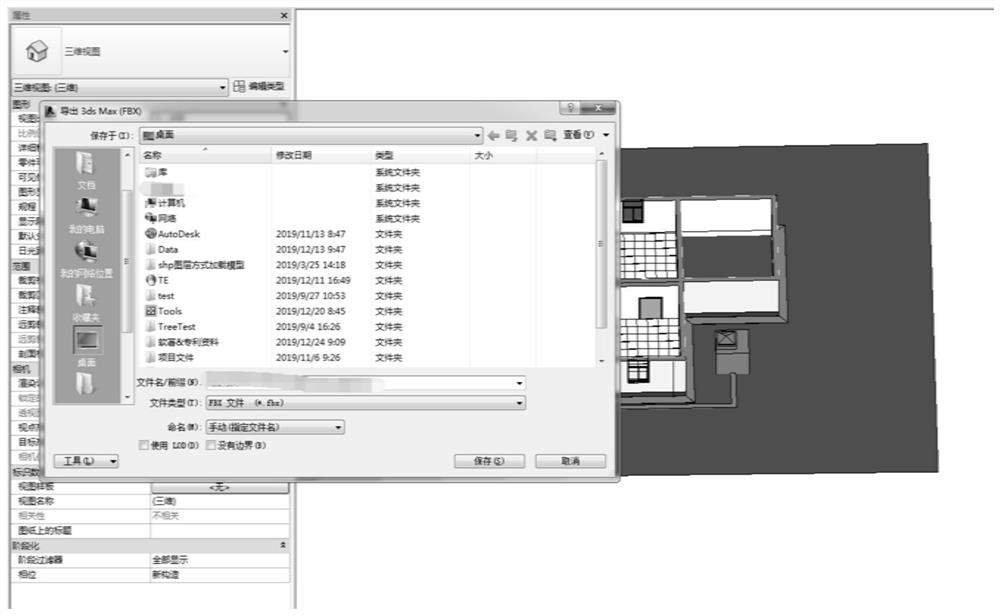

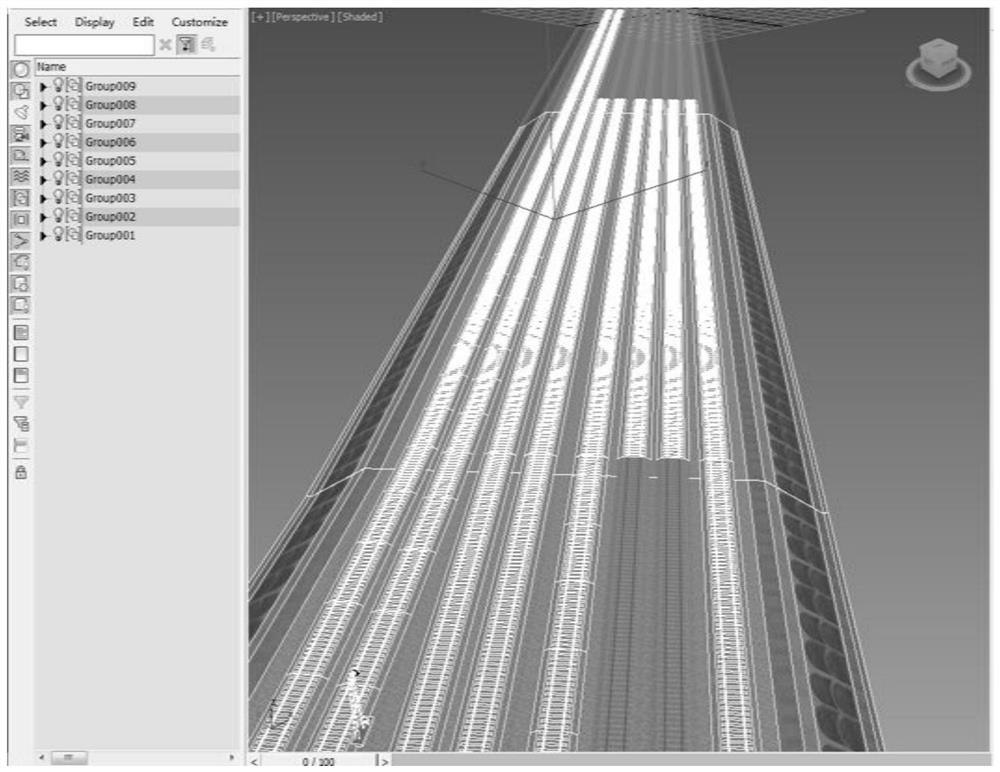

Large-volume BIM model data processing and loading method and device

Active | CN111259474A | Improve browsing speed, Relieve memory pressure, Geometric CAD, Data processing applications, Process engineering, Angle of view

The invention provides a method and device for processing and loading large-volume BIM model data and belongs to the technical field of BIM data processing. The device comprises a data export module, a model data grouping module, a model data export module, a model data lightweighting module, and a model layer production module. BIM loading follows a proximity principle: the scene models closest to the current viewing angle are displayed first, and when the number of loaded models reaches a set upper limit, models are unloaded automatically according to the loading state.

Owner: Shaanxi Xinxiang Information Technology Co., Ltd. (陕西心像信息科技有限公司)
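
The proximity loading rule with an upper limit can be sketched as below. It assumes each model is represented by a single centre point and that "closest to the current viewing angle" means Euclidean distance from the camera position. `plan_loading` and its parameters are hypothetical.

```python
import math


def plan_loading(models, camera, loaded, max_loaded):
    """models: id -> (x, y, z) centre point; loaded: ids currently in the scene.
    Returns (ids to load nearest-first, ids to unload farthest-first)."""
    dist = {model_id: math.dist(centre, camera) for model_id, centre in models.items()}
    wanted = sorted(models, key=dist.get)[:max_loaded]   # nearest models within the limit
    wanted_set = set(wanted)
    to_load = [m for m in wanted if m not in loaded]
    to_unload = sorted((m for m in loaded if m not in wanted_set), key=dist.get, reverse=True)
    return to_load, to_unload
```

Calling this each time the camera moves keeps memory bounded by `max_loaded` models while the nearest ones always appear first.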

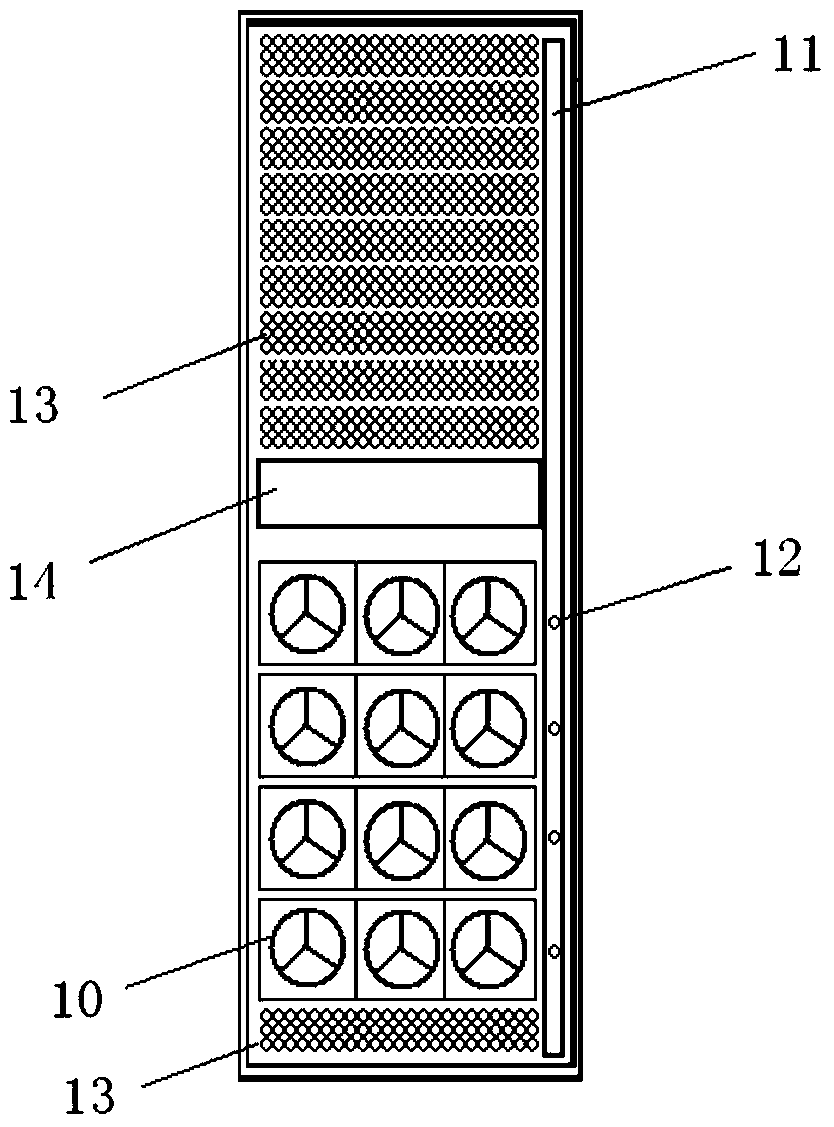

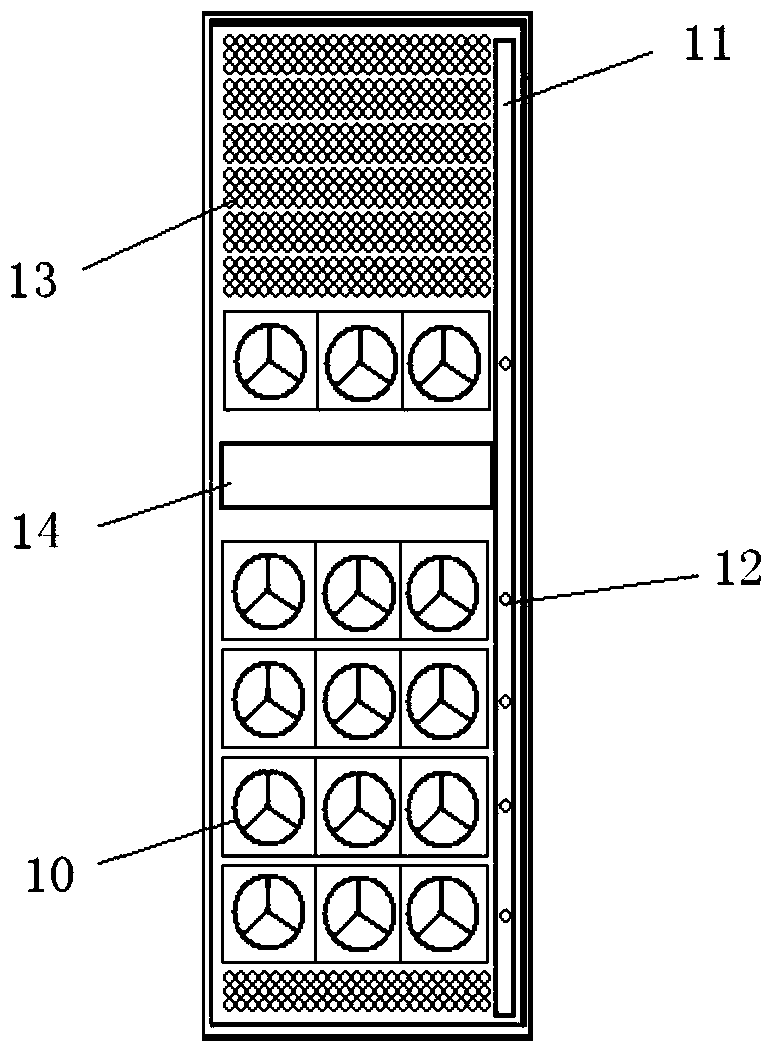

Power equipment monitoring device having intelligent heat-dissipation function and monitoring method thereof

The invention provides a power equipment monitoring device with an intelligent heat dissipation function and a monitoring method thereof. The method comprises the steps of initialization, parameter setting, establishing a power service analysis model, controlling the output power of the fans on each layer by level, acquiring measuring point information of the transformer in real time, sending the information to a real-time database for storage, sending search, statistics, analysis and/or processing requests to the power equipment monitoring device according to user requests, and displaying the results to users in real time after visualization. The device offers good real-time performance, high integration, good availability, excellent scalability, effective heat dissipation, stable performance, and simple, convenient operation.

Owner:SHANDONG LUNENG SOFTWARE TECH

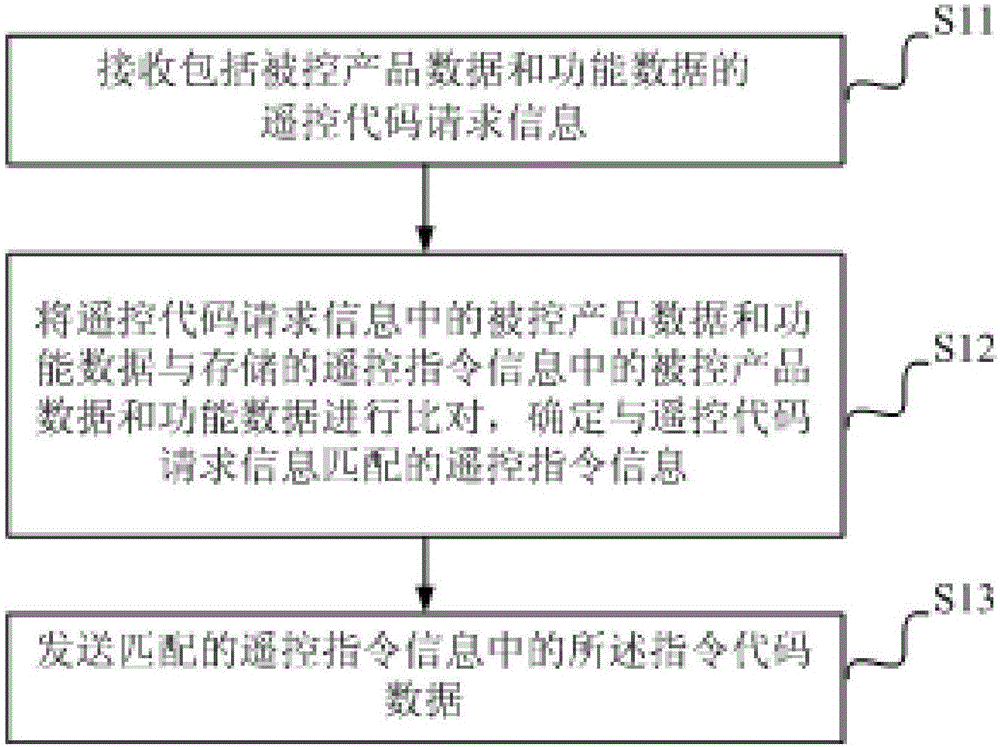

Method and system for achieving remote control function, server and remote control terminal

Inactive | CN106210113A | Meet needs, Improve processing efficiency, Non-electrical signal transmission systems, Transmission, Remote control, Computer terminal

The invention provides a method and system for implementing a remote control function, a server, and a remote control terminal. The method comprises the following steps: when remote control code request information containing controlled product data and function data is received, comparing it with the controlled product data and function data in the stored remote control instruction information to determine the matching remote control instruction information; and sending the instruction code data in the matched remote control instruction information. With this scheme, the instruction code data for a specific function of a controlled product can be sent according to the user's needs, instead of releasing all instruction codes for that product directly to the user's remote control terminal. While meeting the user's needs, the scheme keeps the storage space occupied on the remote control terminal as small as possible. Moreover, when processing instruction codes, the terminal only needs to handle the instruction code data relevant to the user's needs, which effectively improves processing efficiency.

Owner:LETV HLDG BEIJING CO LTD +1

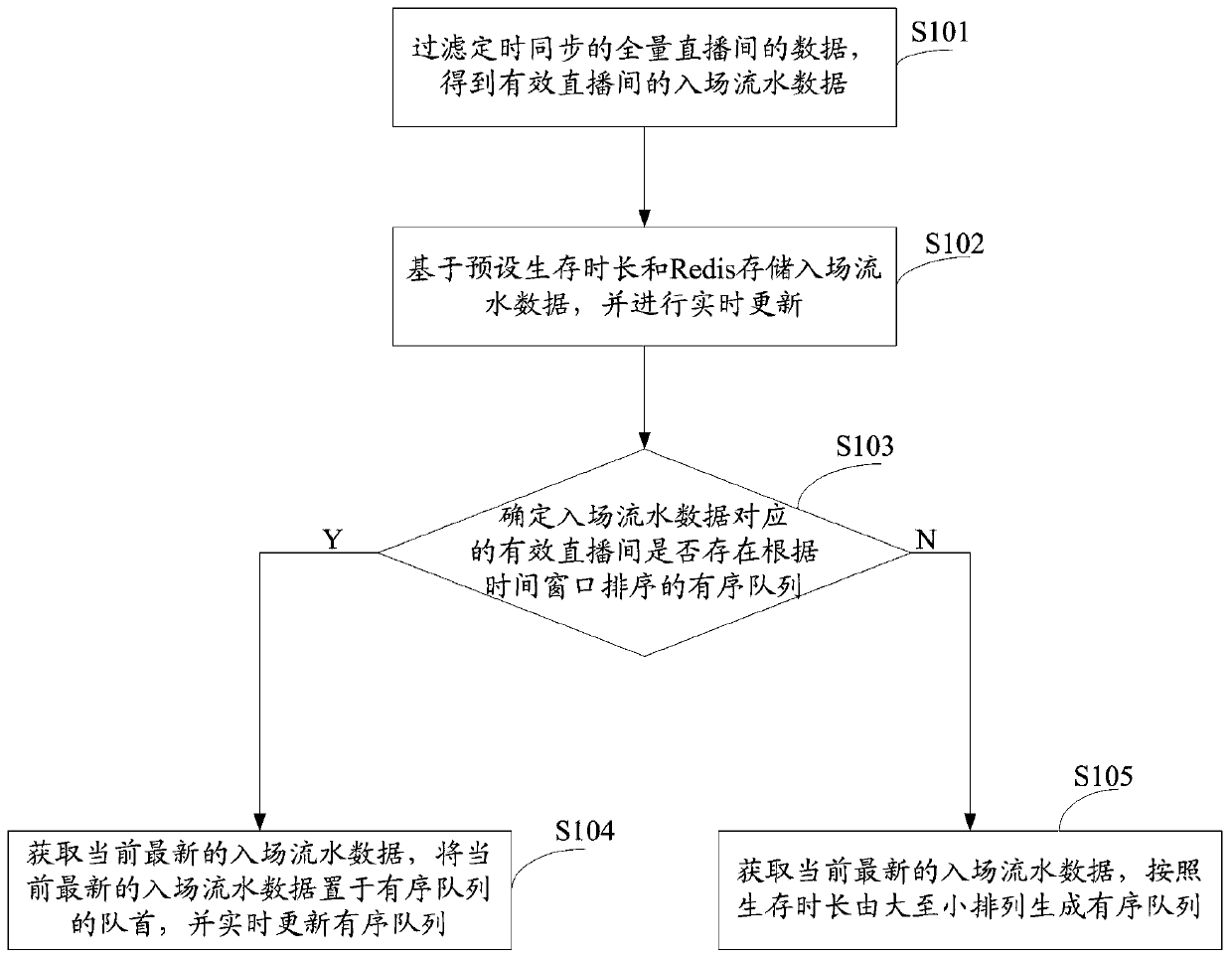

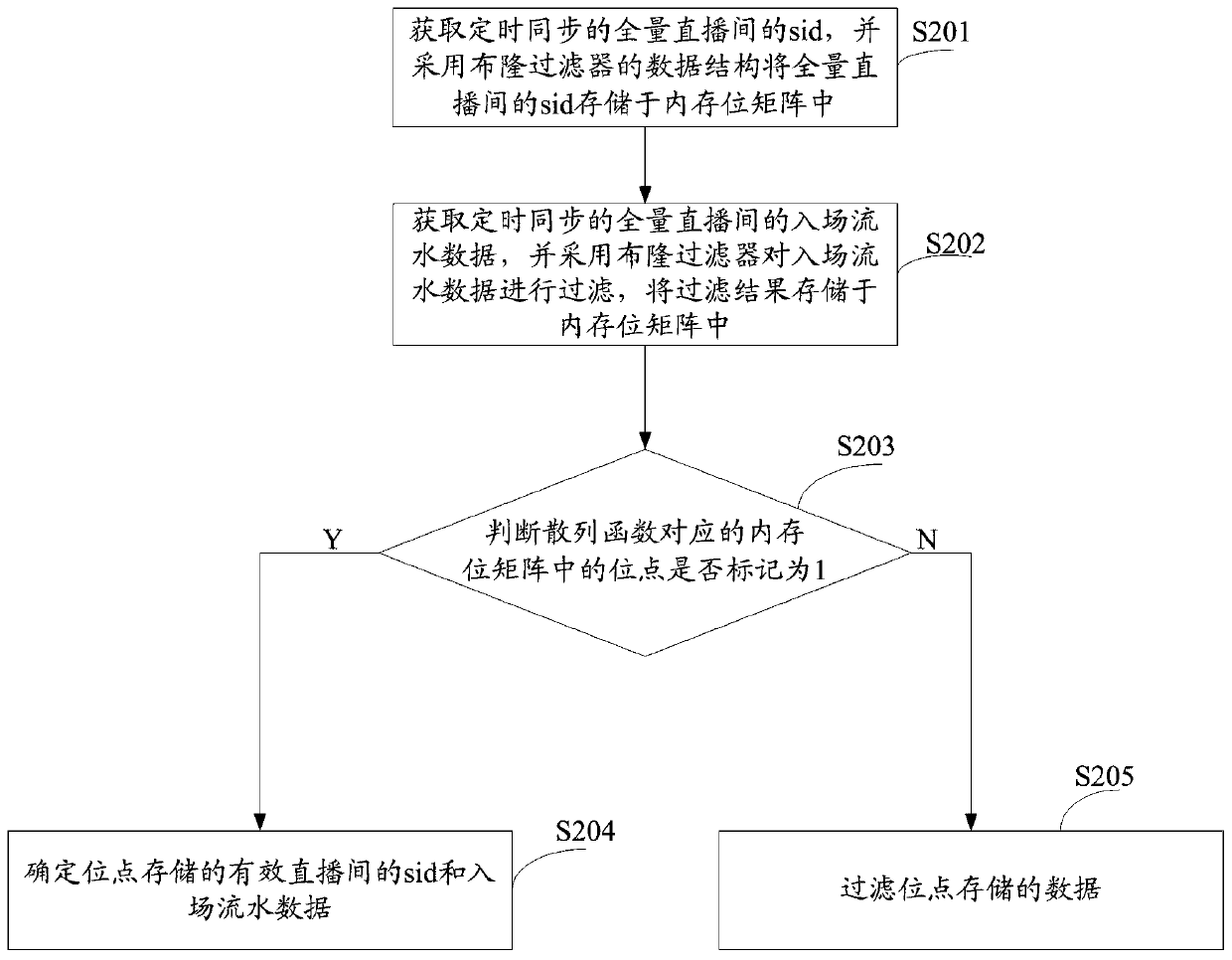

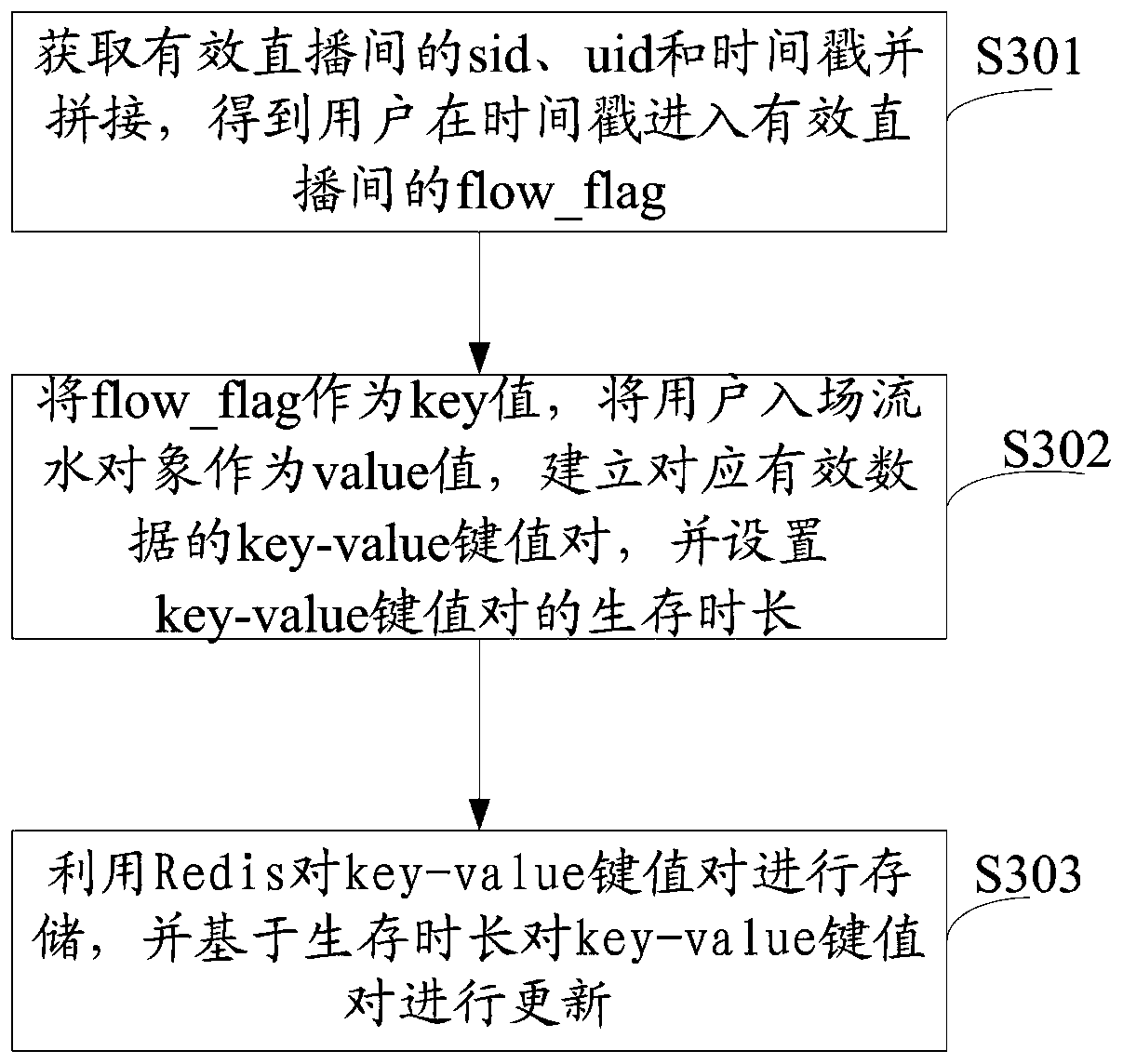

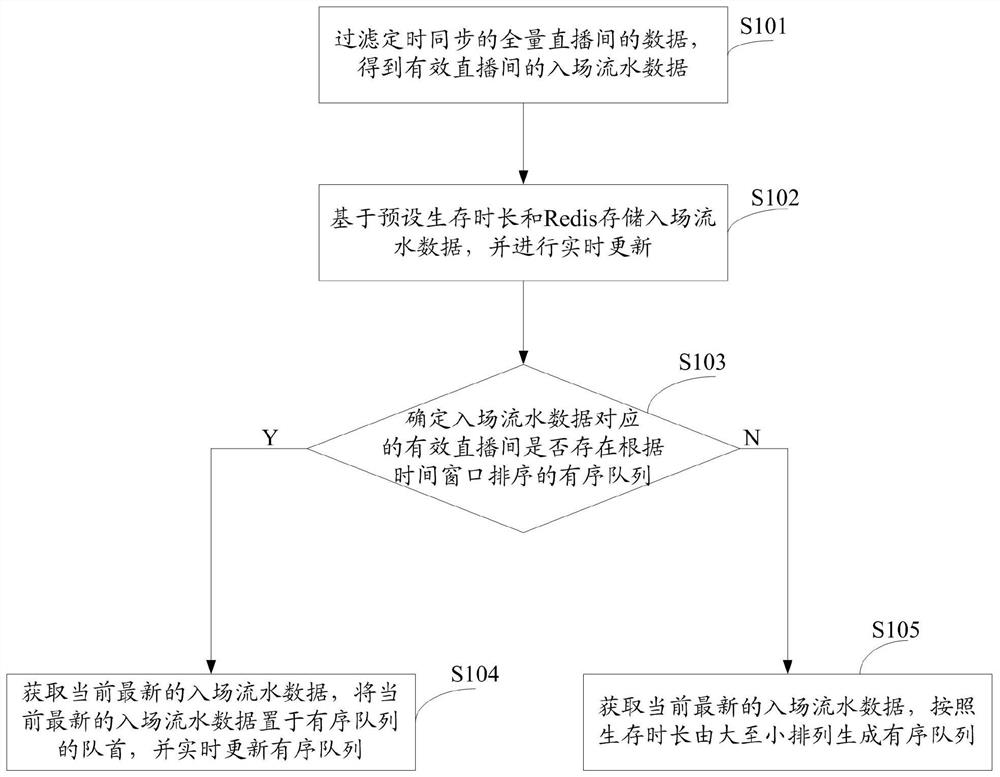

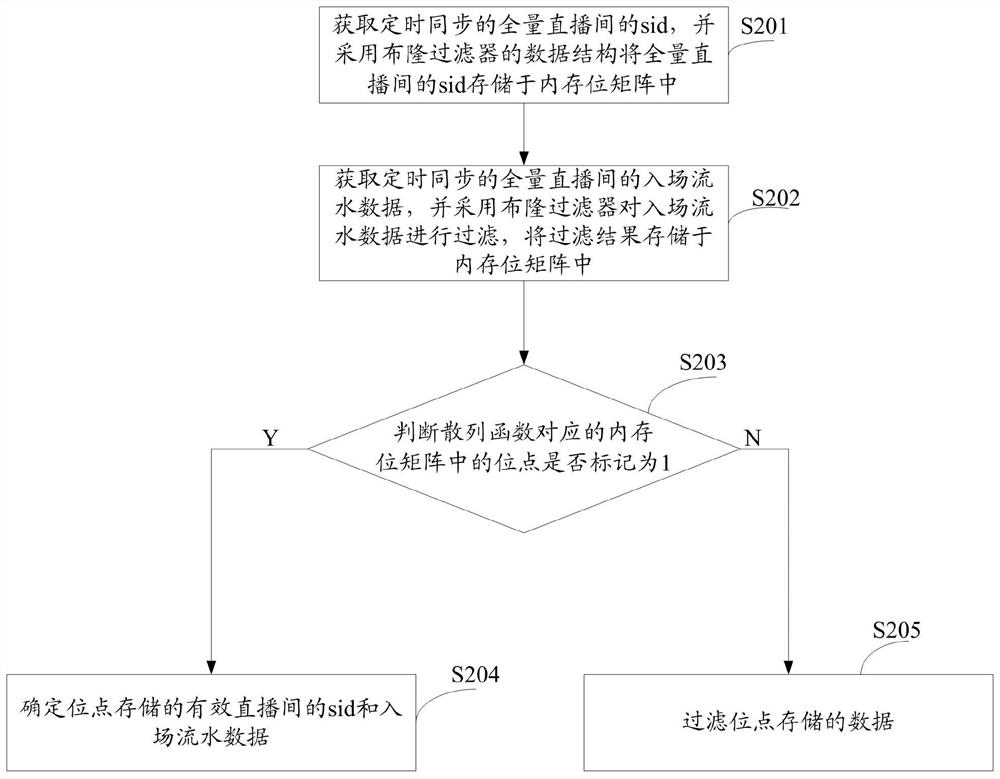

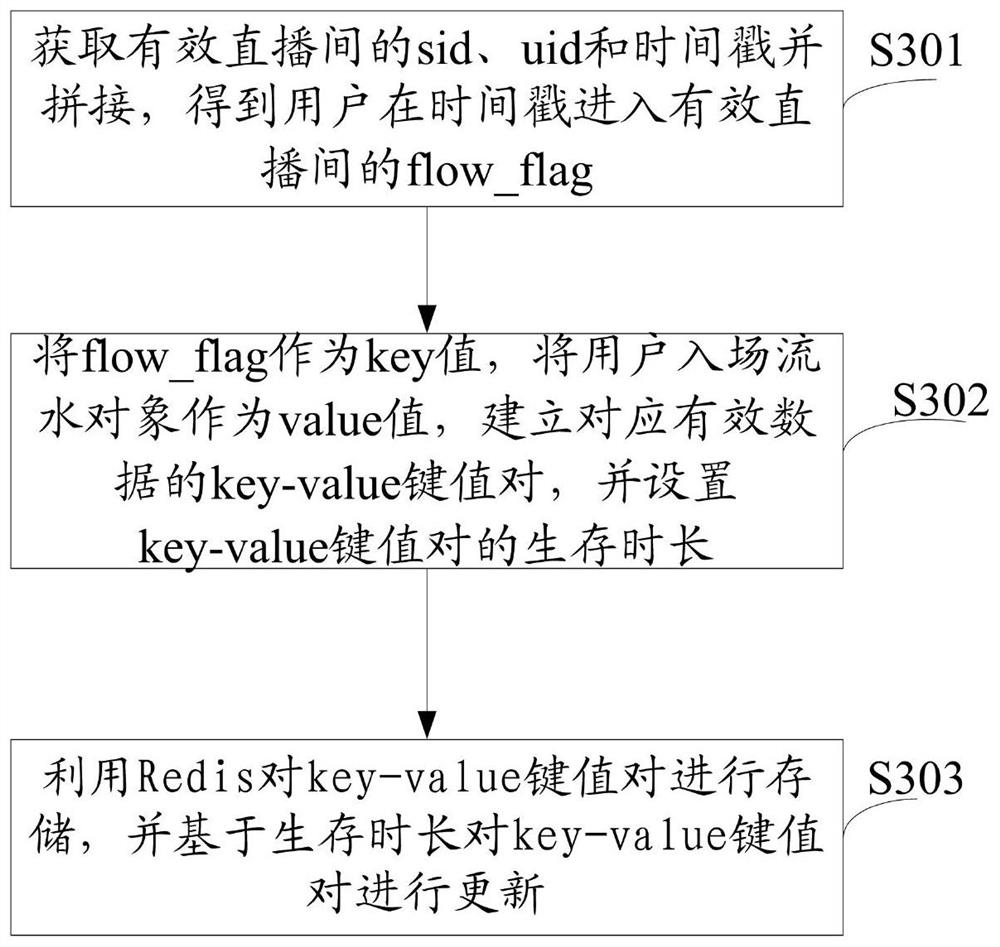

Live broadcast room admission flow data processing method and device, equipment and storage medium

Active | CN110784729A | Relieve memory pressure, Guaranteed treatment effect, Selective content distribution, Term memory, Distributed computing

The invention provides a method, device, equipment, and storage medium for processing admission flow data of live broadcast rooms. The method comprises the following steps: periodically synchronizing and filtering the data of all live broadcast rooms to obtain the admission flow data of valid live broadcast rooms; storing the admission flow data in Redis with a preset time-to-live and updating it in real time; determining whether the valid live broadcast room corresponding to the admission flow data already has an ordered queue sorted by time window; if so, obtaining the latest admission flow data, placing it at the head of the ordered queue, and updating the queue in real time; if not, obtaining the latest admission flow data and generating an ordered queue in descending order of time-to-live. By filtering the data of all live broadcast rooms down to the admission flow data of valid rooms and using Redis to store and update each valid room's ordered queue, the method relieves the memory pressure caused by massive admission flow data and ensures that the data is processed in real time.

Owner:GUANGZHOU HUADUO NETWORK TECH
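
The filter, time-to-live, and head-insertion behaviour can be illustrated without a Redis server by using an in-memory stand-in. The injectable clock, the `EntryFlowStore` class, and its methods are hypothetical. In Redis itself, one plausible layout (our assumption, not the patent's) would be a sorted set per room scored by expiry time and trimmed with `ZREMRANGEBYSCORE`.

```python
import time
from collections import defaultdict, deque


class EntryFlowStore:
    """In-memory stand-in for the Redis layout: each entry record expires after `ttl`
    seconds, and each valid room keeps a queue with the newest entry at the head."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self.queues = defaultdict(deque)     # room id -> deque of (expires_at, user id)

    def sync(self, events, valid_rooms):
        """Periodic sync: keep only admission events that belong to valid rooms."""
        for room_id, user_id in events:
            if room_id in valid_rooms:
                self.record_entry(room_id, user_id)

    def record_entry(self, room_id, user_id):
        # The newest record goes to the head, so the queue stays in descending TTL order.
        self.queues[room_id].appendleft((self.clock() + self.ttl, user_id))

    def recent_entries(self, room_id):
        queue = self.queues.get(room_id)
        if queue is None:
            return []
        now = self.clock()
        while queue and queue[-1][0] <= now:  # expired records sit at the tail
            queue.pop()
        return [user_id for _, user_id in queue]
```

Because all records share one TTL and the newest is always pushed to the head, expired records can only ever sit at the tail. Trimming is therefore a cheap pop loop rather than a scan.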

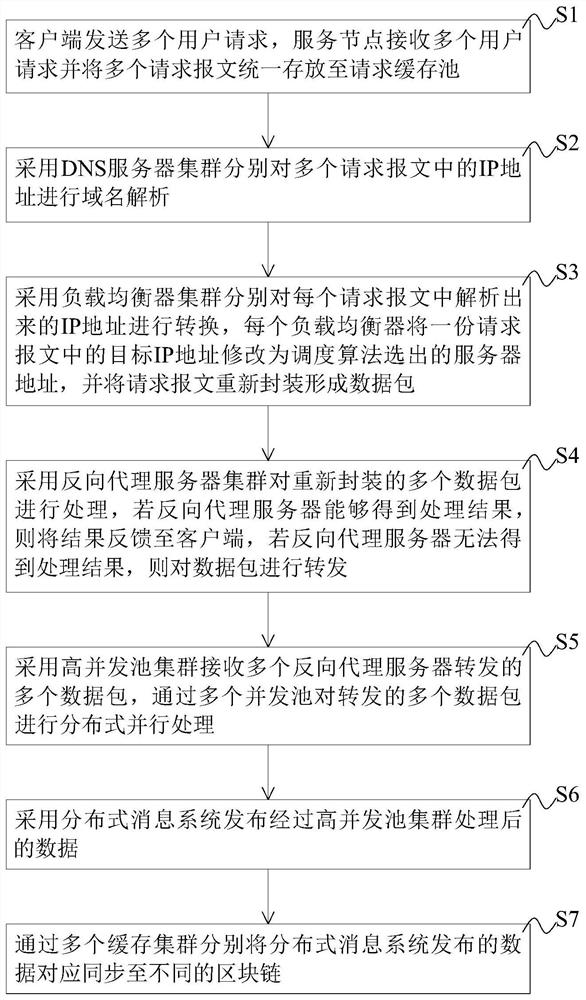

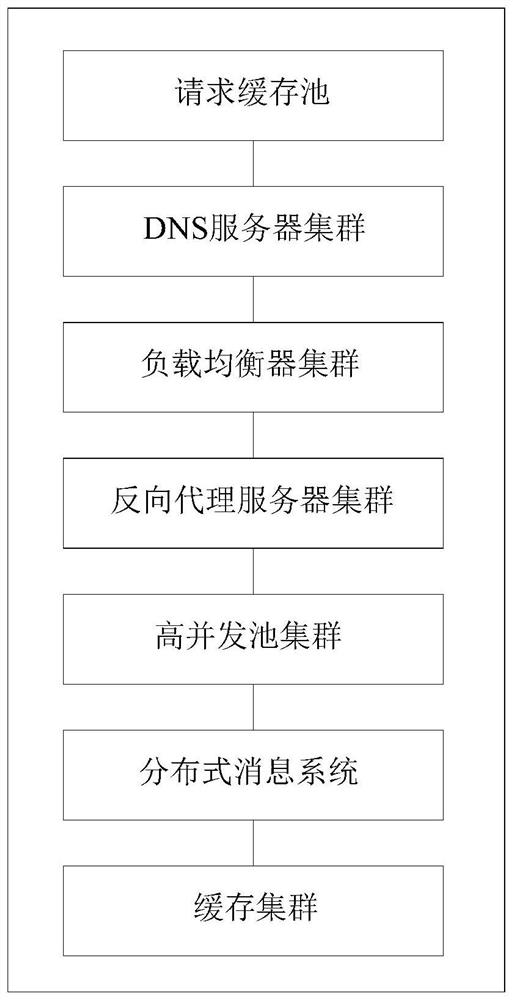

Distributed high-concurrency transaction processing method and system for block chain, equipment and storage medium

Active | CN113691611A | Prevent crash situations, Ease of batch processing, Transmission, Batch processing, Resource utilization

The invention discloses a distributed high-concurrency transaction processing method and system for a blockchain, equipment, and a storage medium. The method distributes user requests to a plurality of processing servers using multi-stage load balancing and multi-stage caching, which speeds up responses to user requests, improves throughput, and prevents processing servers from crashing under sudden surges in user requests. In addition, massive data is processed in a distributed, parallel manner by a high-concurrency pool cluster, which reduces scheduling pressure, effectively solves the large-scale scheduling problem, relieves memory pressure, and improves resource utilization. Finally, the data processed by the high-concurrency pool cluster is published through a distributed message system, which enables message persistence and facilitates batch processing, and the data is written into the corresponding blockchain in parallel at high speed through a plurality of cache clusters.

Owner:HUNAN UNIV

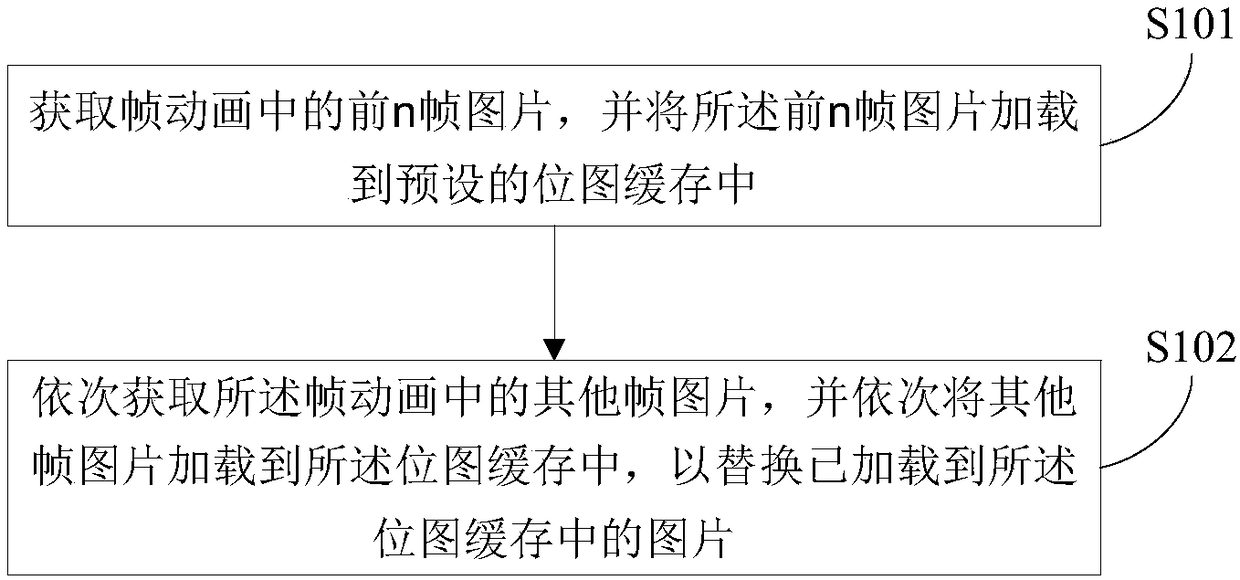

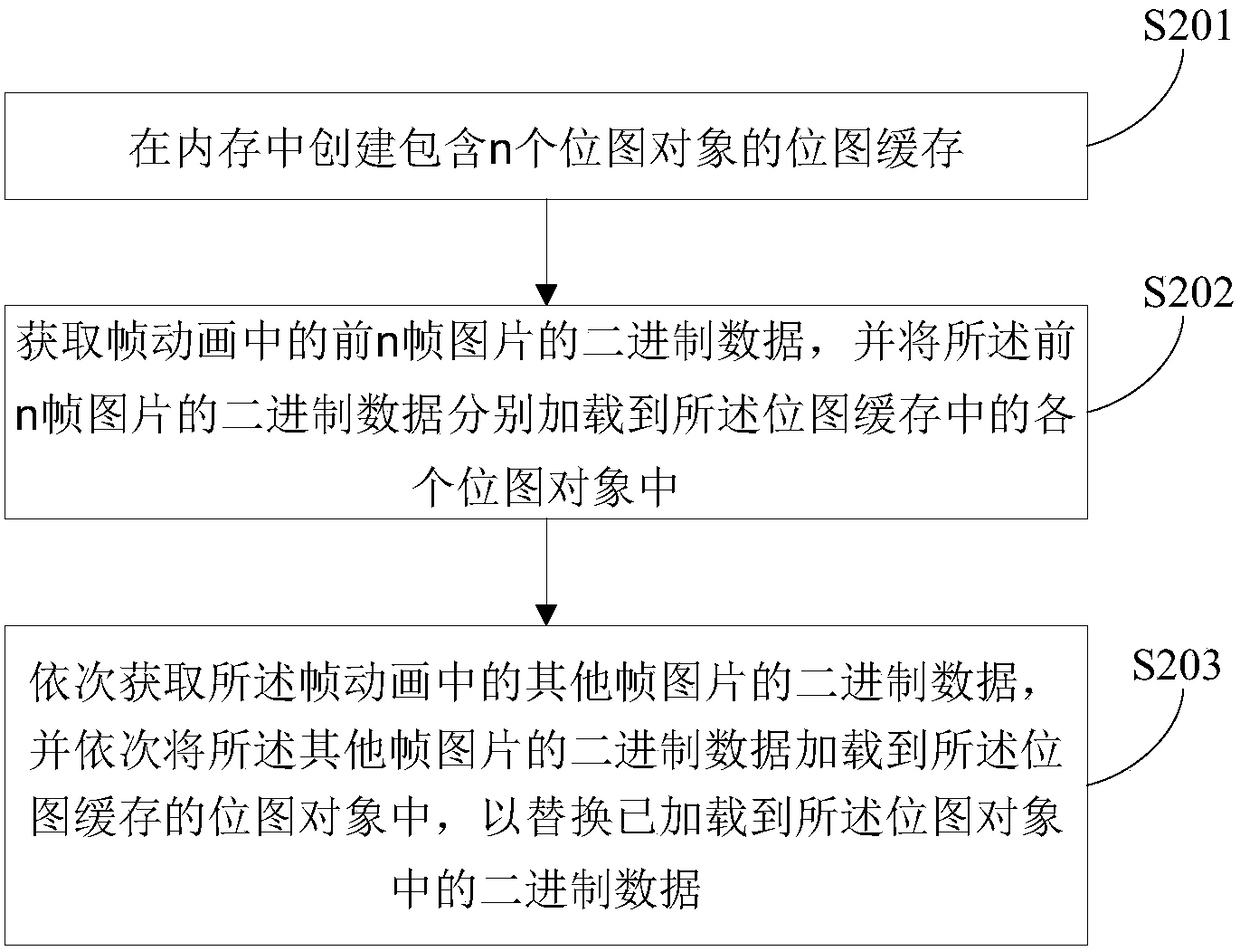

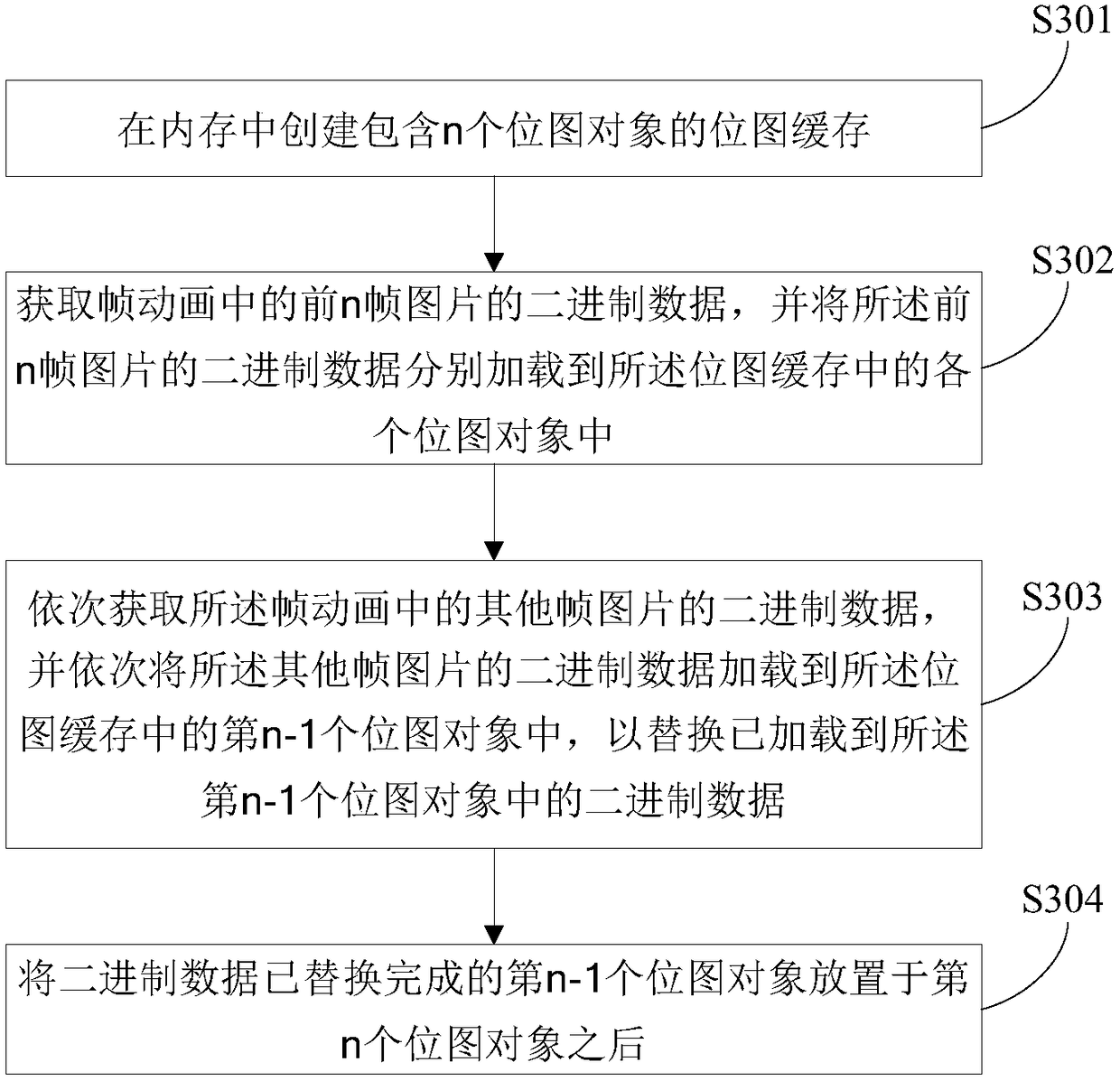

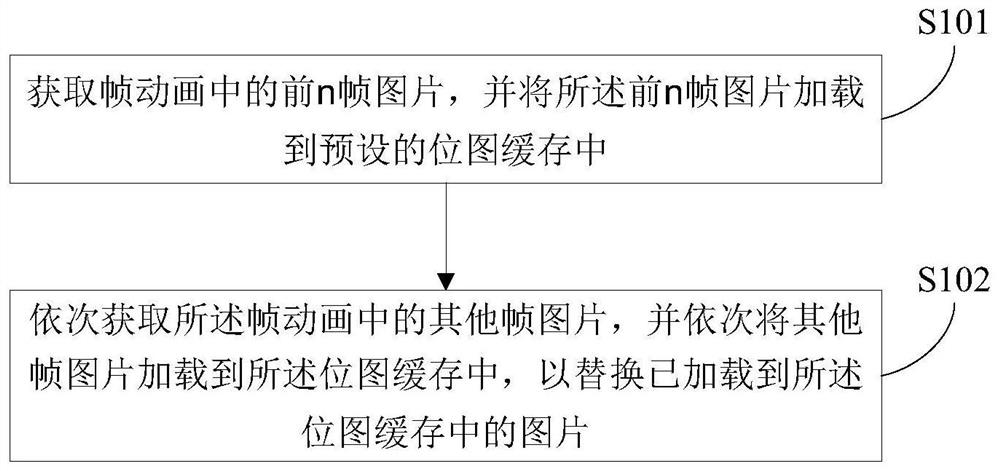

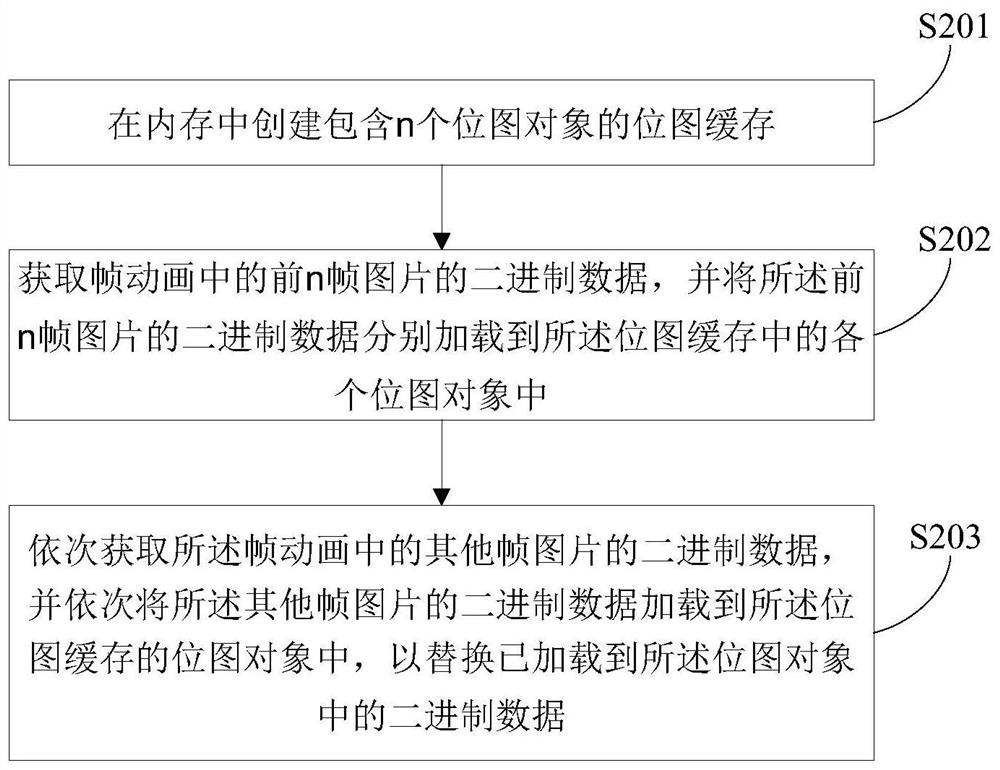

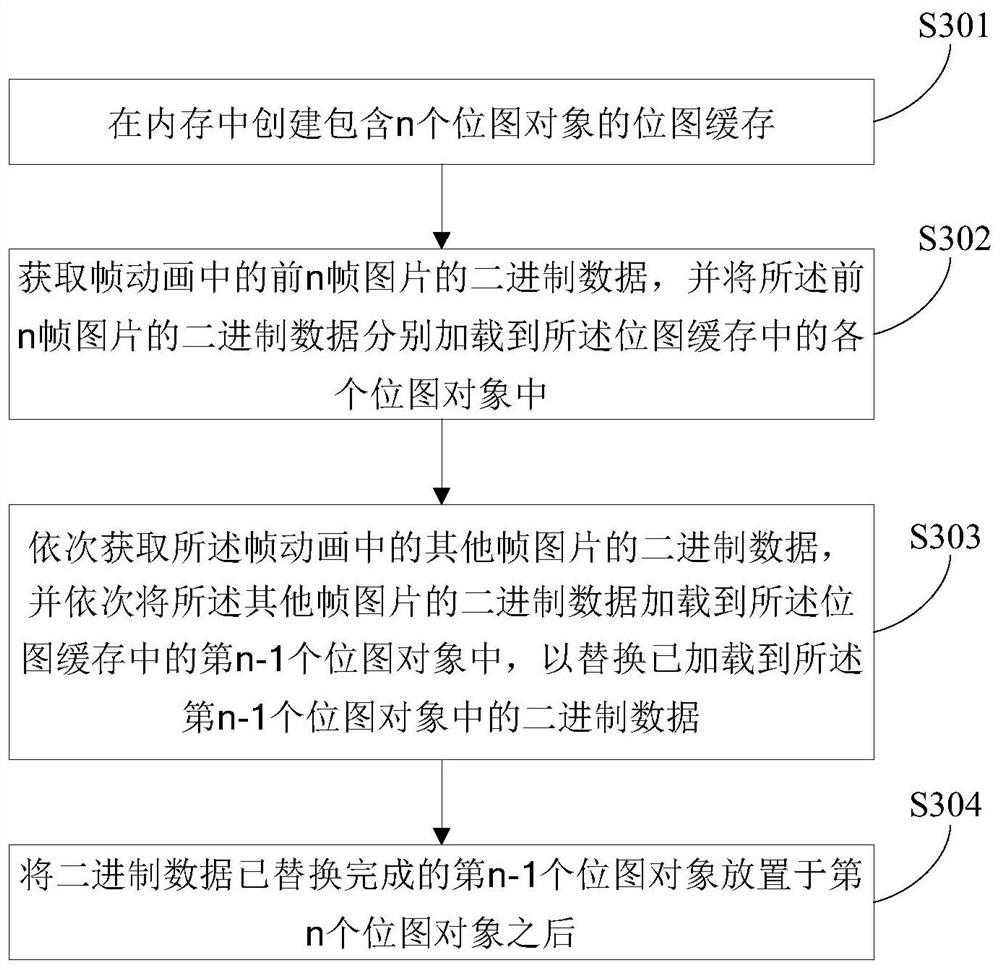

Frame animation caching method, apparatus and equipment, and computer readable storage medium

Active | CN108271070A | Relieve memory pressure, Reduce overhead, Selective content distribution, Animation, Raster graphics

The invention provides a frame animation caching method, apparatus, equipment, and computer-readable storage medium. The caching method comprises the following steps: acquiring the first n frames of a frame animation and loading them into a preset bitmap cache; then successively acquiring the remaining frames of the animation and loading them into the bitmap cache, each one replacing an image already loaded there. The method, apparatus, and equipment relieve the memory pressure of frame animations and avoid memory overflow while the animation plays.

Owner:BEIJING 58 INFORMATION TECH CO LTD
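
The preload-then-replace behaviour of the bitmap cache can be sketched with an `OrderedDict` used as a fixed-capacity FIFO. The `decode` callback stands in for whatever turns a frame into a bitmap and, like the class name, is hypothetical. The patent does not say which cached frame is replaced; evicting the oldest is our assumption.

```python
from collections import OrderedDict


class FrameBitmapCache:
    """Bitmap cache holding at most `capacity` decoded frames of a frame animation."""

    def __init__(self, decode, frame_count, capacity):
        self.decode = decode                  # hypothetical decoder: frame index -> bitmap
        self.frame_count = frame_count
        self.capacity = capacity
        self.cache = OrderedDict()            # frame index -> bitmap, oldest first
        for index in range(min(capacity, frame_count)):
            self.cache[index] = decode(index)  # preload the first n frames
        self.next_index = len(self.cache)

    def load_next(self):
        """Load the next frame in sequence, replacing the oldest cached frame when full."""
        if self.next_index < self.frame_count:
            self._put(self.next_index)
            self.next_index += 1

    def get(self, index):
        if index not in self.cache:
            self._put(index)
        return self.cache[index]

    def _put(self, index):
        if len(self.cache) >= self.capacity:
            self.cache.popitem(last=False)    # evict the oldest loaded frame
        self.cache[index] = self.decode(index)
```

Memory use stays at `capacity` bitmaps no matter how many frames the animation has, which is how the scheme avoids memory overflow for long animations.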

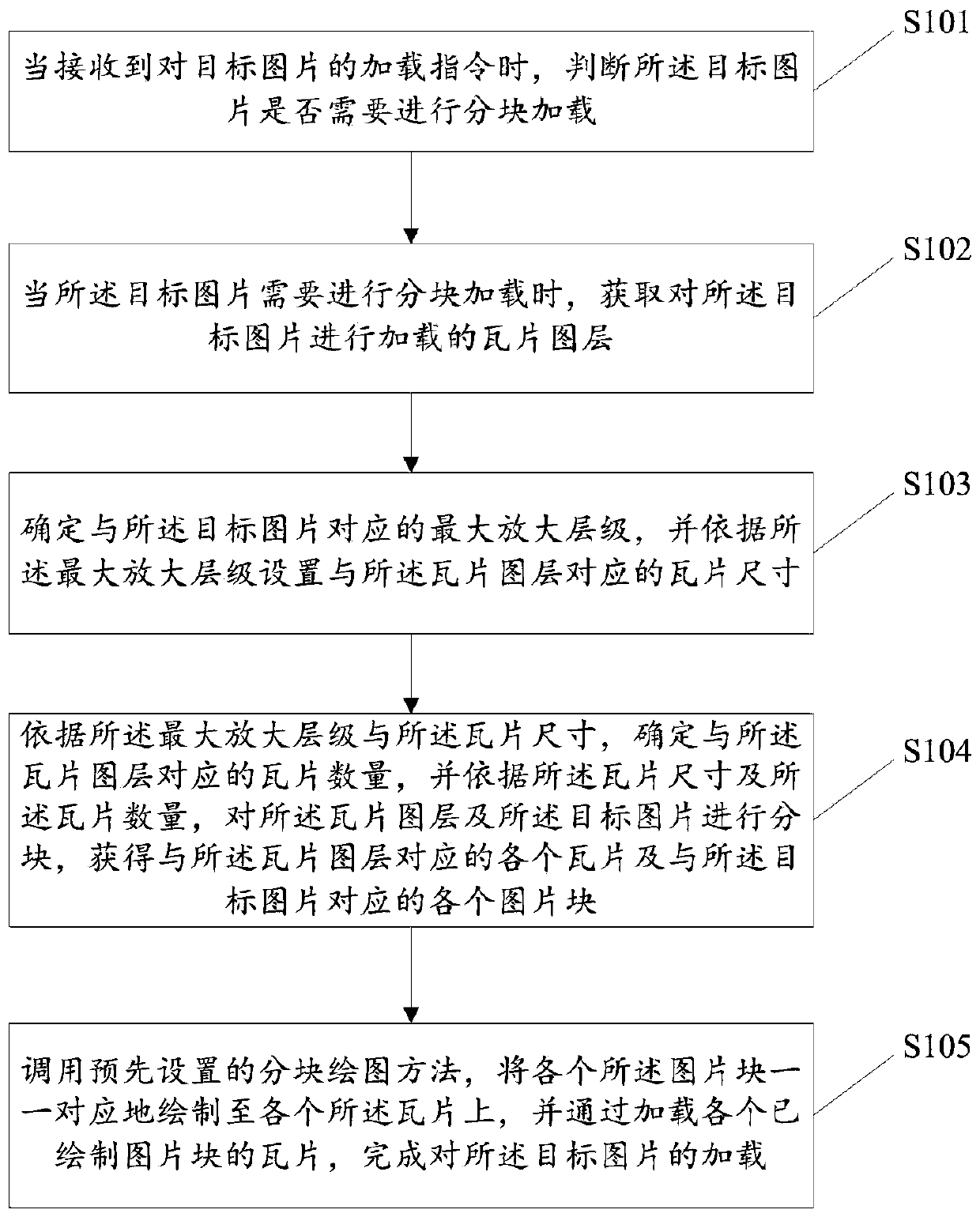

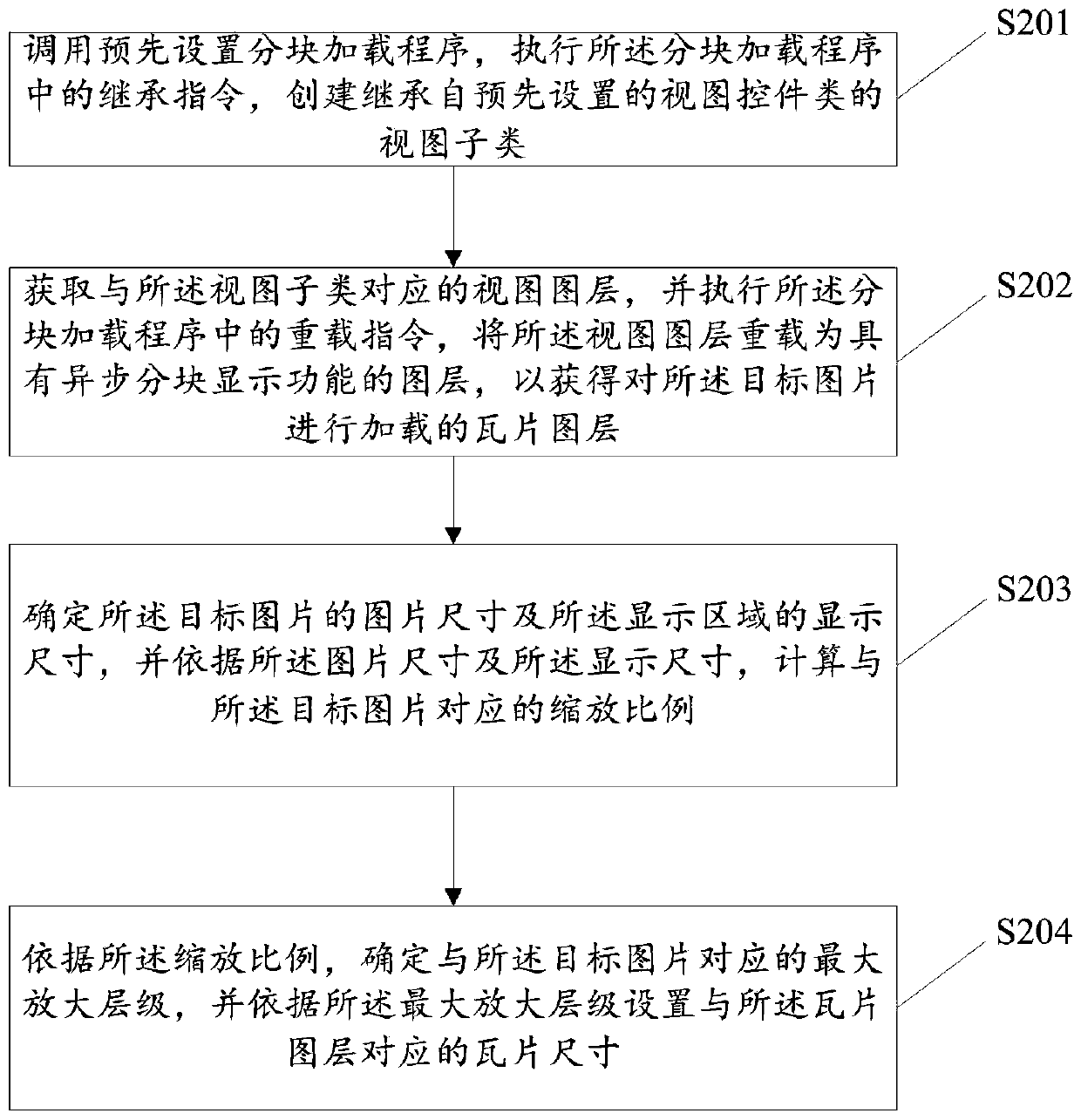

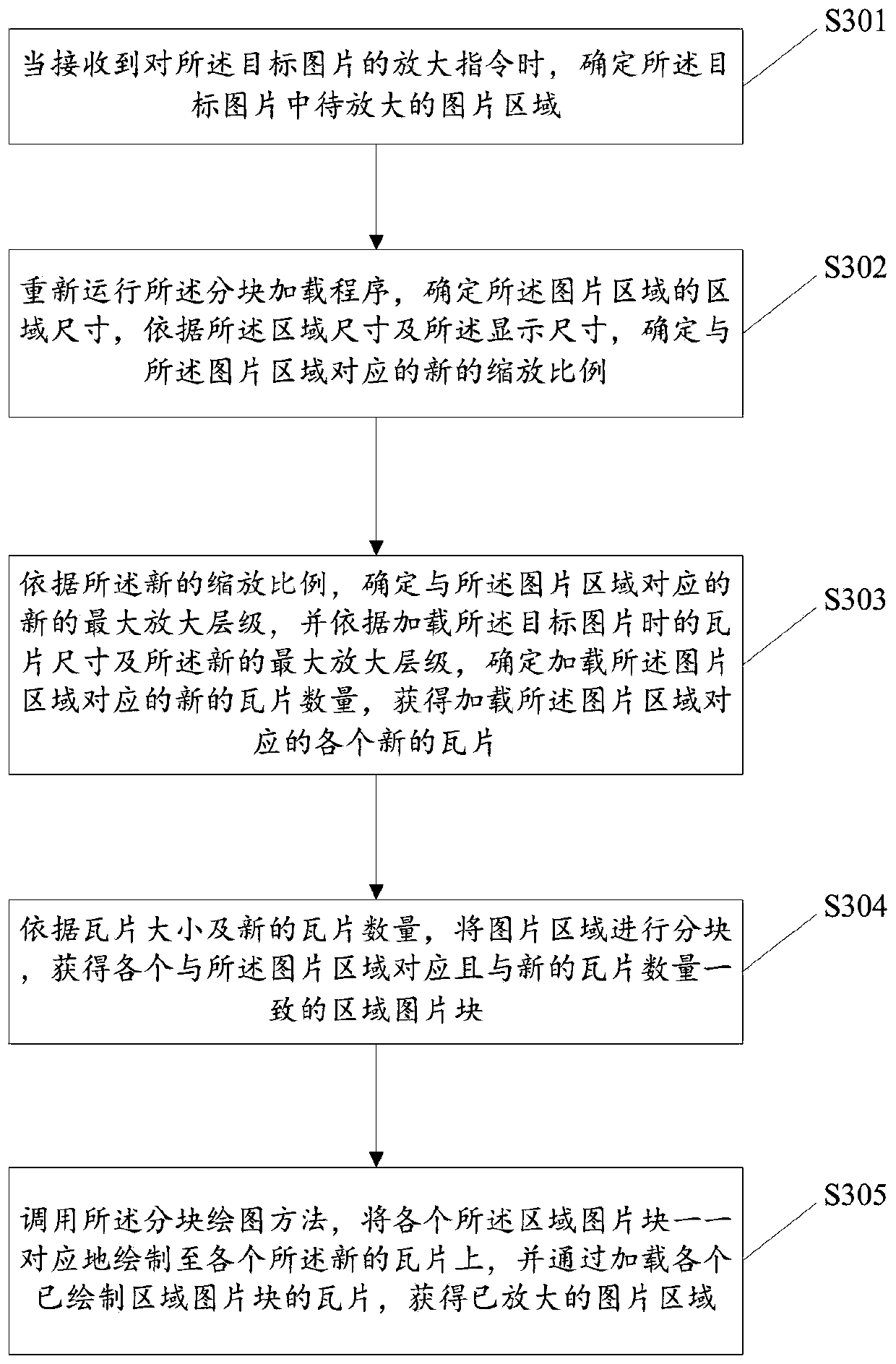

Picture loading method and device

Inactive | CN110187924A | Relieve memory pressure, Won't crash, Resource allocation, Bootstrapping, Load instruction, Size determination

The invention relates to the technical field of picture processing, and in particular to a picture loading method and device. The picture loading method comprises the following steps: when a loading instruction for a target picture is received, judging whether tiled loading is needed; if so, obtaining a tile layer; determining the maximum zoom level for the target picture and setting the tile size for the tile layer; determining the number of tiles from the maximum zoom level and the tile size; dividing the tile layer and the target picture into blocks to obtain the individual tiles and picture blocks; and calling a tiled drawing method to draw the picture blocks onto the tiles in one-to-one correspondence, then loading the drawn tiles to complete loading of the target picture. Because the target picture is split into blocks that are drawn onto tiles and loaded tile by tile, the method relieves memory pressure, prevents memory usage from spiking, and keeps the main thread that loads the picture from crashing.

Owner: Jilin Yillion Bank Co., Ltd. (吉林亿联银行股份有限公司)
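
The tile-count step can be made concrete with a short sketch. Two rules here are assumptions, since the abstract does not state them: tiling is needed when the fully decoded bitmap would exceed a memory budget, and the tile count is the picture size at maximum zoom divided by the tile size, rounded up. Function names and defaults are hypothetical.

```python
import math


def needs_tiling(width, height, bytes_per_pixel=4, budget_bytes=16 * 1024 * 1024):
    """Assumed rule: tile when the fully decoded bitmap would exceed a memory budget."""
    return width * height * bytes_per_pixel > budget_bytes


def tile_grid(width, height, max_zoom, tile_size):
    """Number of tiles from the maximum zoom level and tile size, plus the source-pixel
    rectangle (x0, y0, x1, y1) of the picture block drawn onto each tile."""
    cols = math.ceil(width * max_zoom / tile_size)
    rows = math.ceil(height * max_zoom / tile_size)
    block_w, block_h = width / cols, height / rows
    blocks = []
    for r in range(rows):
        for c in range(cols):
            blocks.append((round(c * block_w), round(r * block_h),
                           round((c + 1) * block_w), round((r + 1) * block_h)))
    return cols, rows, blocks
```

For example, a 1000 x 500 picture with a maximum zoom of 2 and 512-pixel tiles needs a 4 x 2 grid, and each tile draws a 250 x 250 source block. Only one such block has to be decoded at a time.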

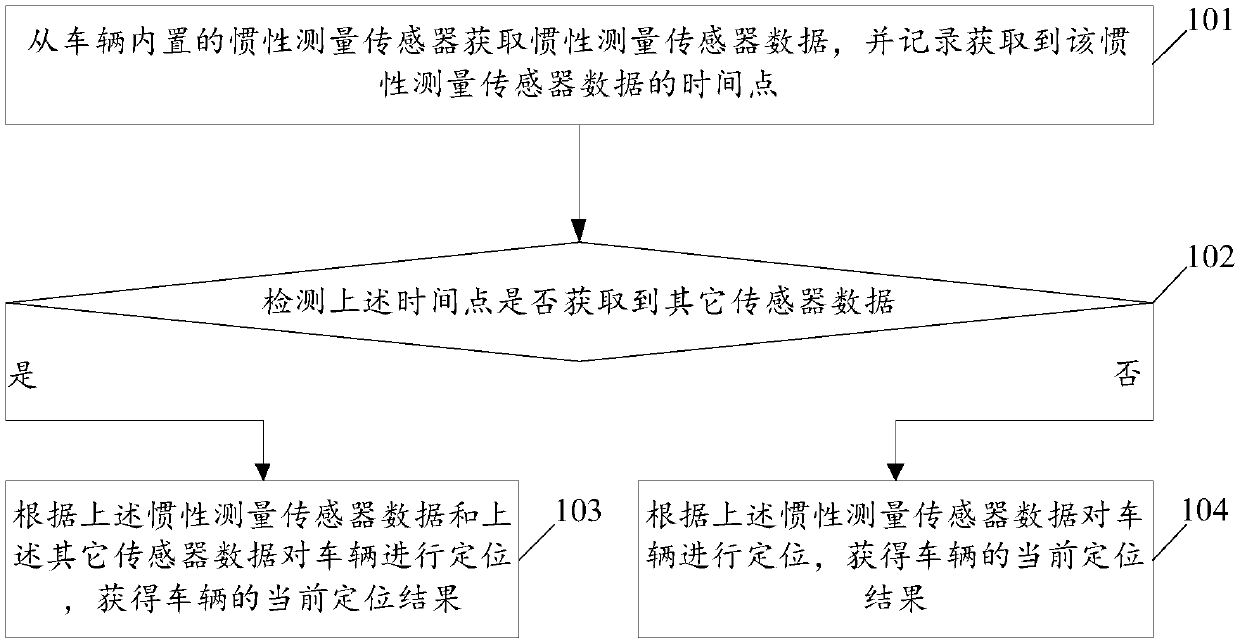

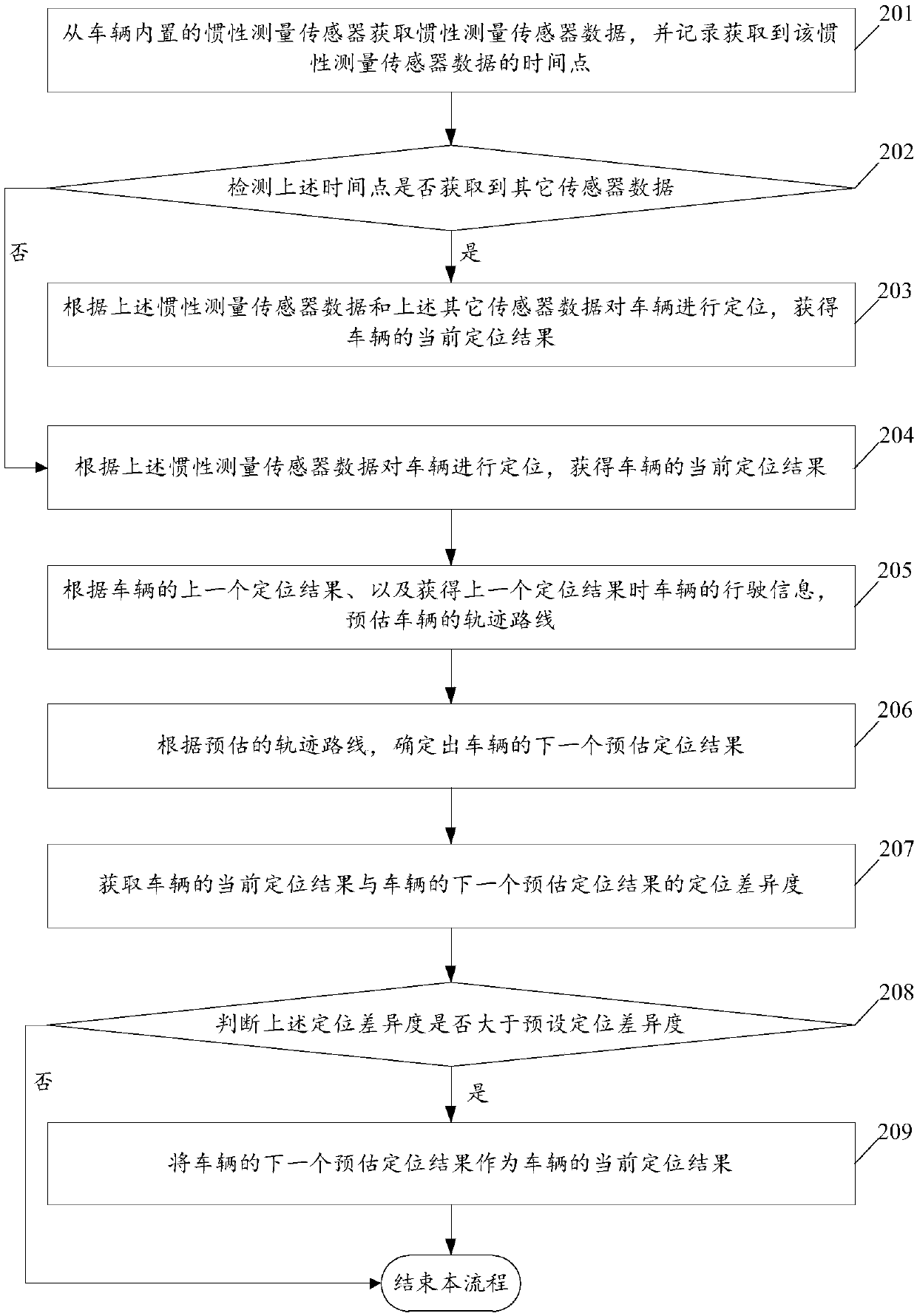

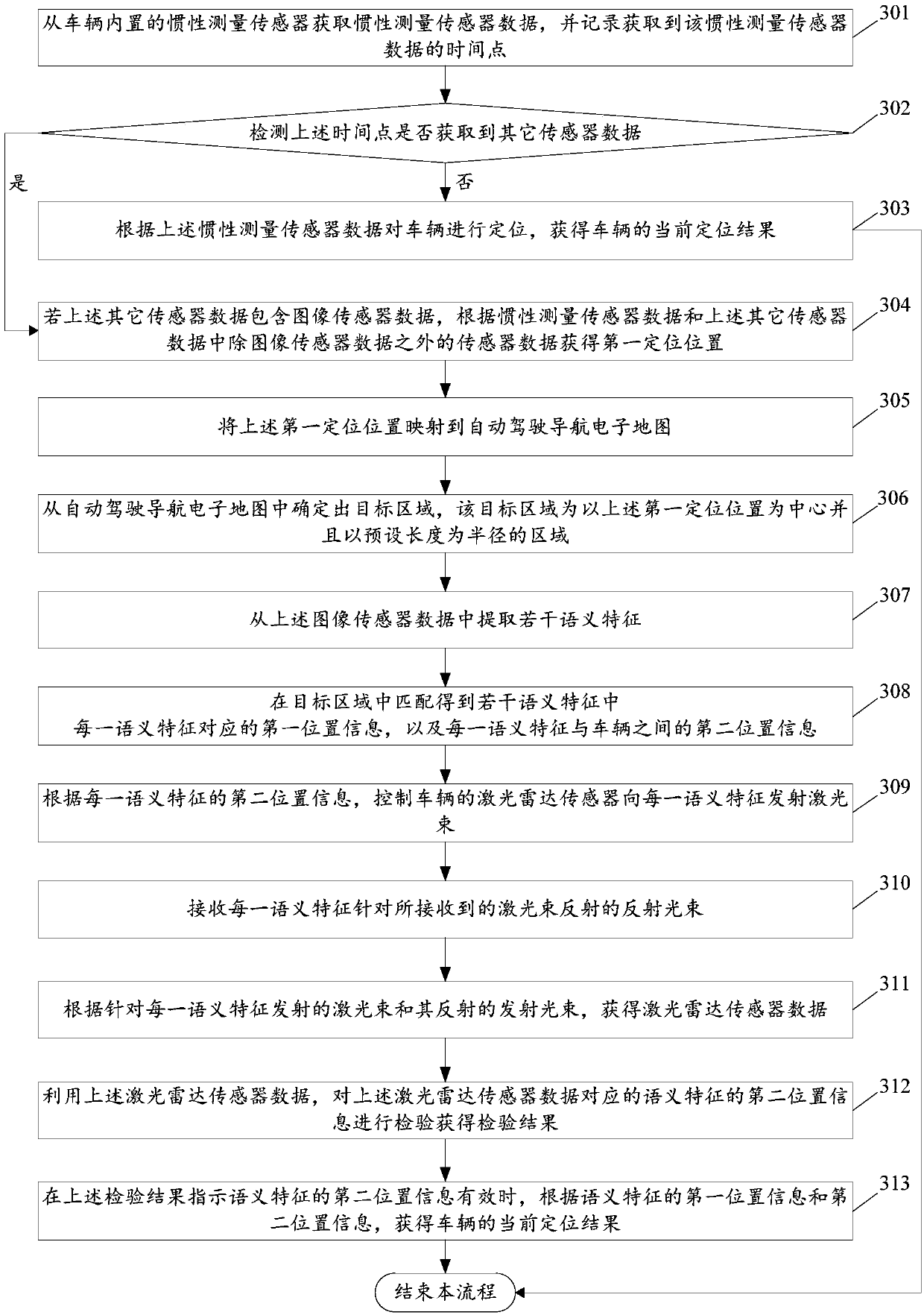

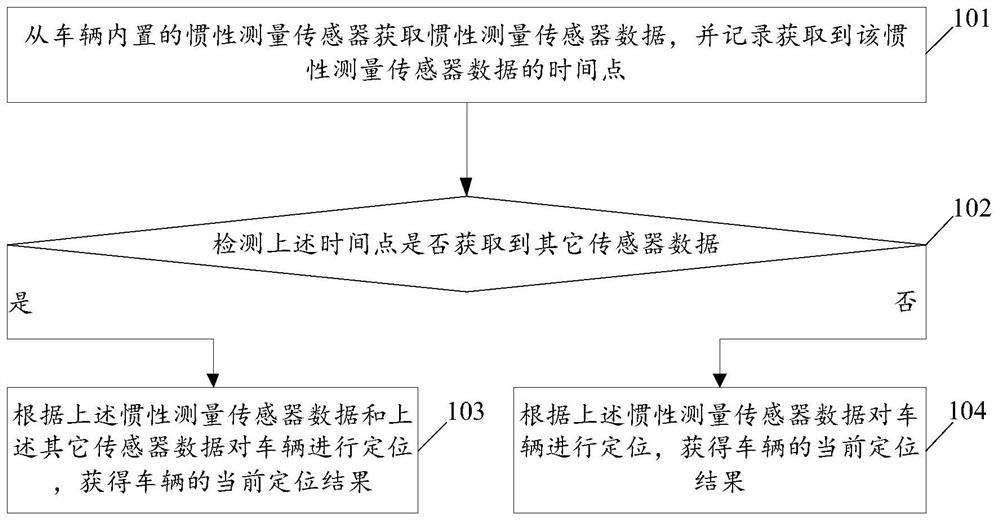

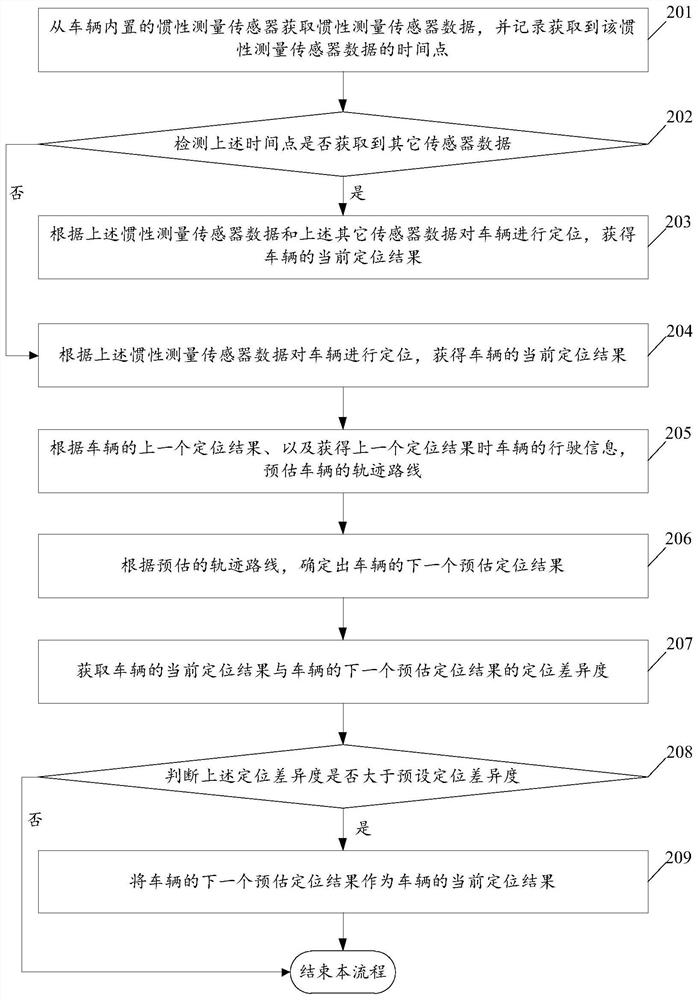

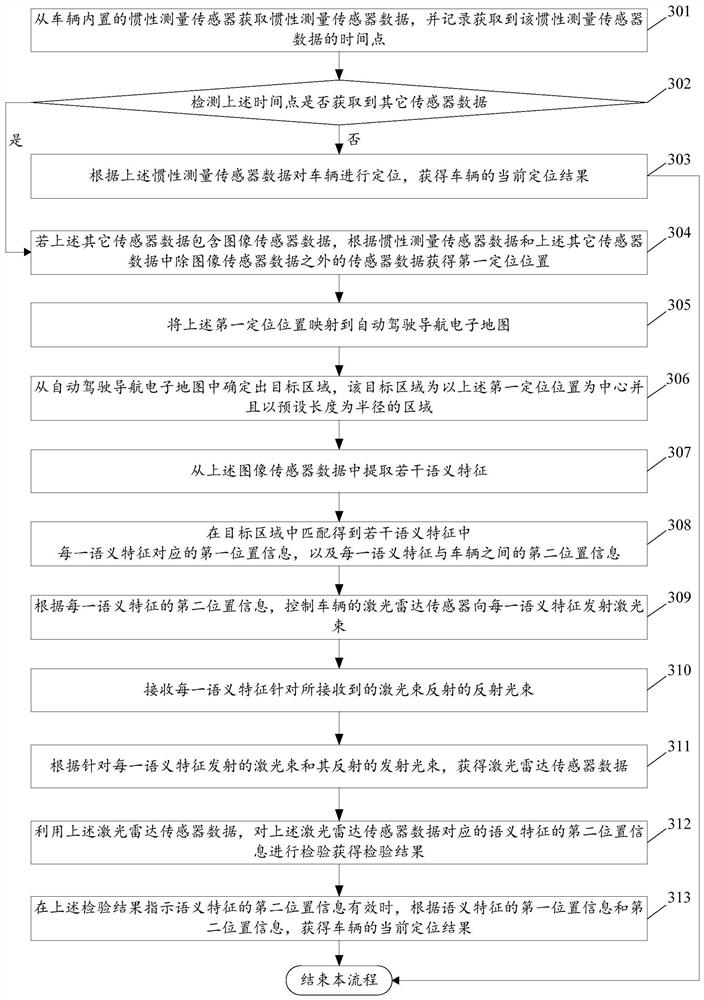

Real-time positioning method and device applied to automatic driving

Active | CN110146074A | High positioning accuracy, Solve the problem of reduced positioning accuracy, Navigation by speed/acceleration measurements, Electromagnetic wave reradiation, Computer science, Real-time computing

Embodiments of the invention disclose a real-time positioning method and device for automated driving. The method comprises the following steps: obtaining inertial measurement unit (IMU) data from an IMU built into a vehicle and recording the time point at which the data was obtained; when sensor data other than the IMU data is also obtained at that time point, positioning the vehicle based on both the IMU data and the other sensor data; and when no other sensor data is obtained at that time point, positioning the vehicle based on the IMU data alone. By using the time point at which IMU data is received as the time reference and combining it with the sensor data obtained at that time point, the method and device improve vehicle positioning accuracy.

Owner:BEIJING MOMENTA TECH CO LTD
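
The branching on "other sensor data present at this IMU time point or not" can be sketched with a one-dimensional state. This is a deliberate simplification: the fixed-gain blend stands in for a proper estimator such as a Kalman filter, and `Estimate`, `step`, and `run` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    position: float   # 1-D position (m), a simplification of a full vehicle pose
    velocity: float   # m/s


def step(state, accel, dt, other_position=None, gain=0.5):
    """Propagate with IMU acceleration; if another sensor reported a position at the
    same IMU time point, blend it in with a fixed gain (stand-in for a real filter)."""
    position = state.position + state.velocity * dt + 0.5 * accel * dt * dt
    velocity = state.velocity + accel * dt
    if other_position is not None:
        position += gain * (other_position - position)
    return Estimate(position, velocity)


def run(imu_samples, other_readings, start):
    """imu_samples: [(t, accel)] in time order; other_readings: {t: position}."""
    state, prev_t = start, imu_samples[0][0]
    for t, accel in imu_samples:
        # The IMU timestamp is the time reference for looking up other sensor data.
        state = step(state, accel, t - prev_t, other_readings.get(t))
        prev_t = t
    return state
```

With no other readings, the estimate is pure IMU dead reckoning. When a reading lines up with an IMU timestamp, it pulls the estimate toward the measured position.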

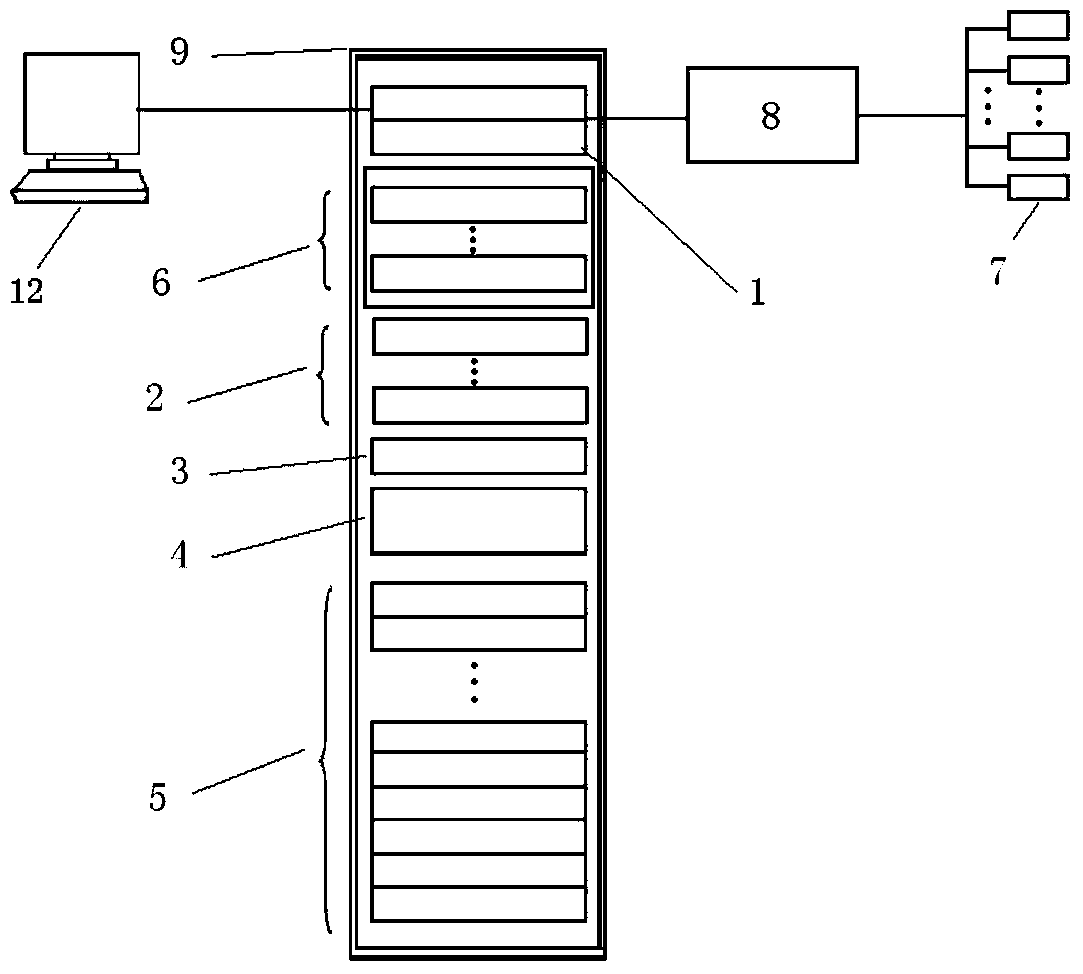

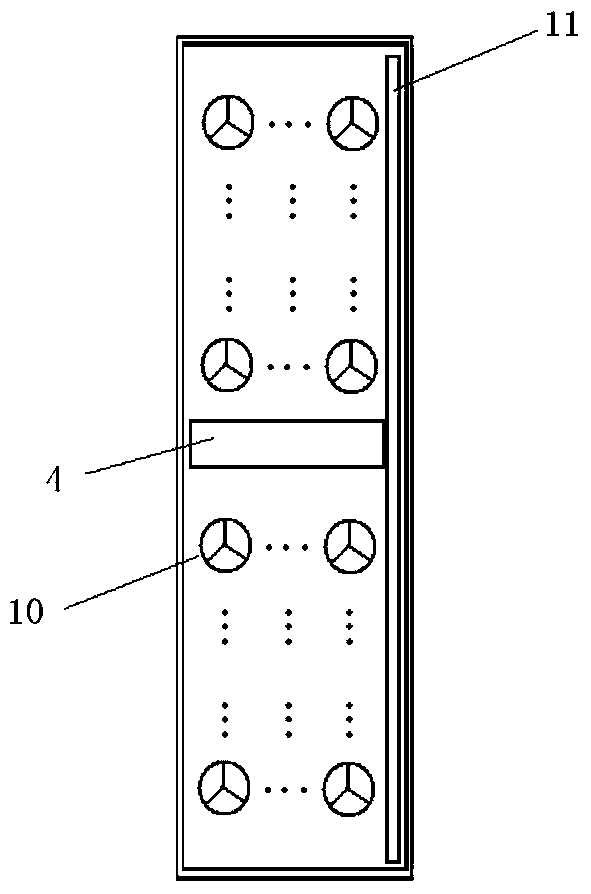

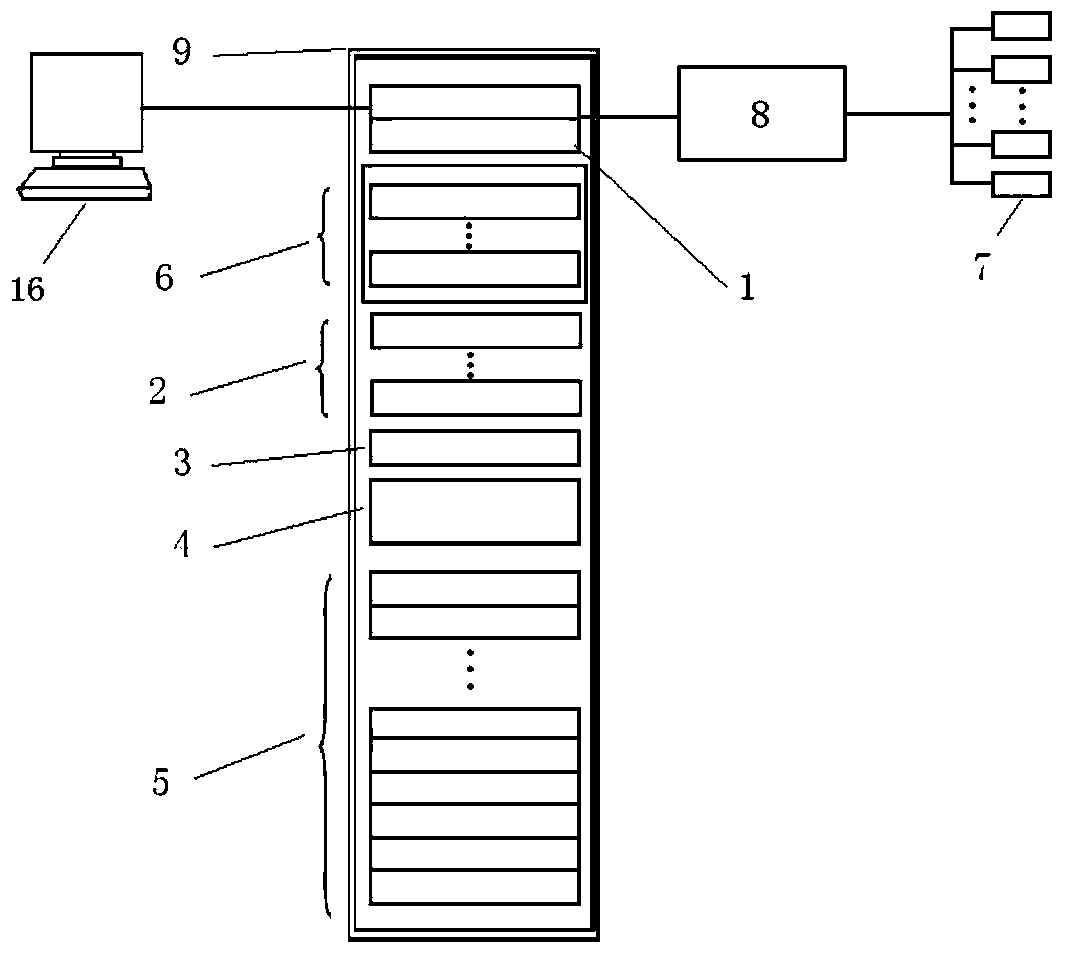

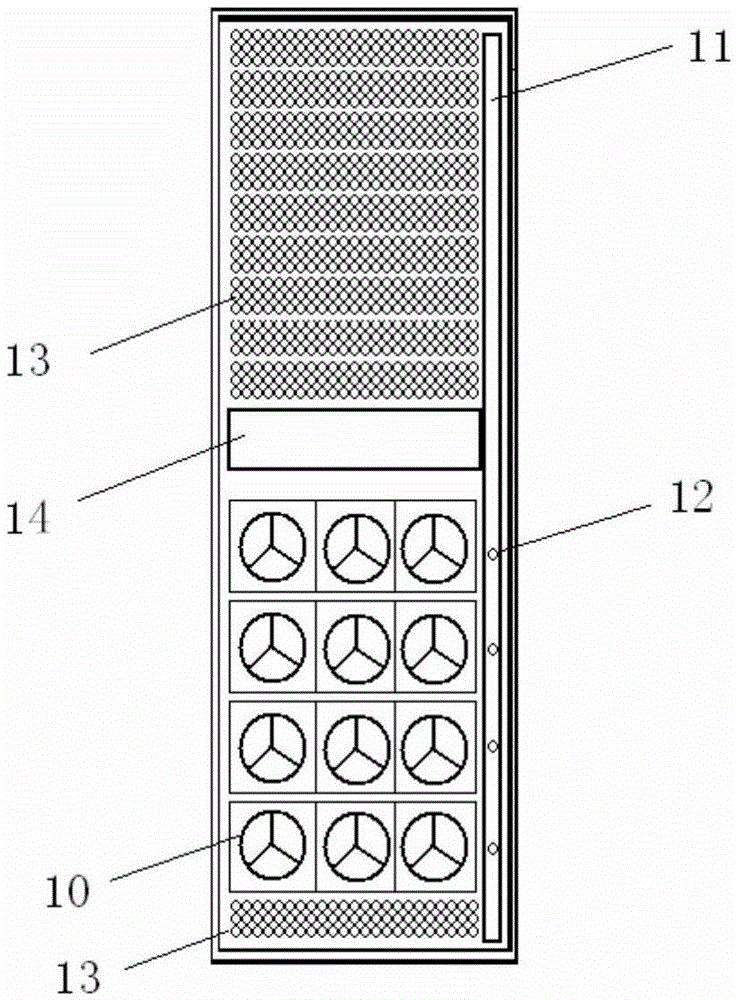

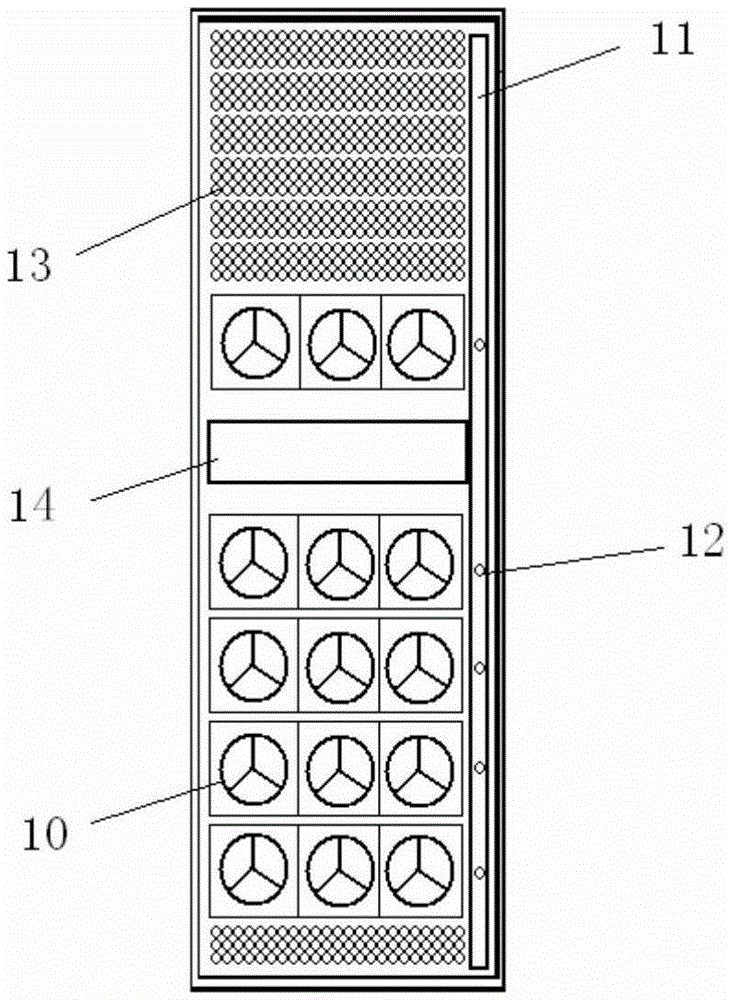

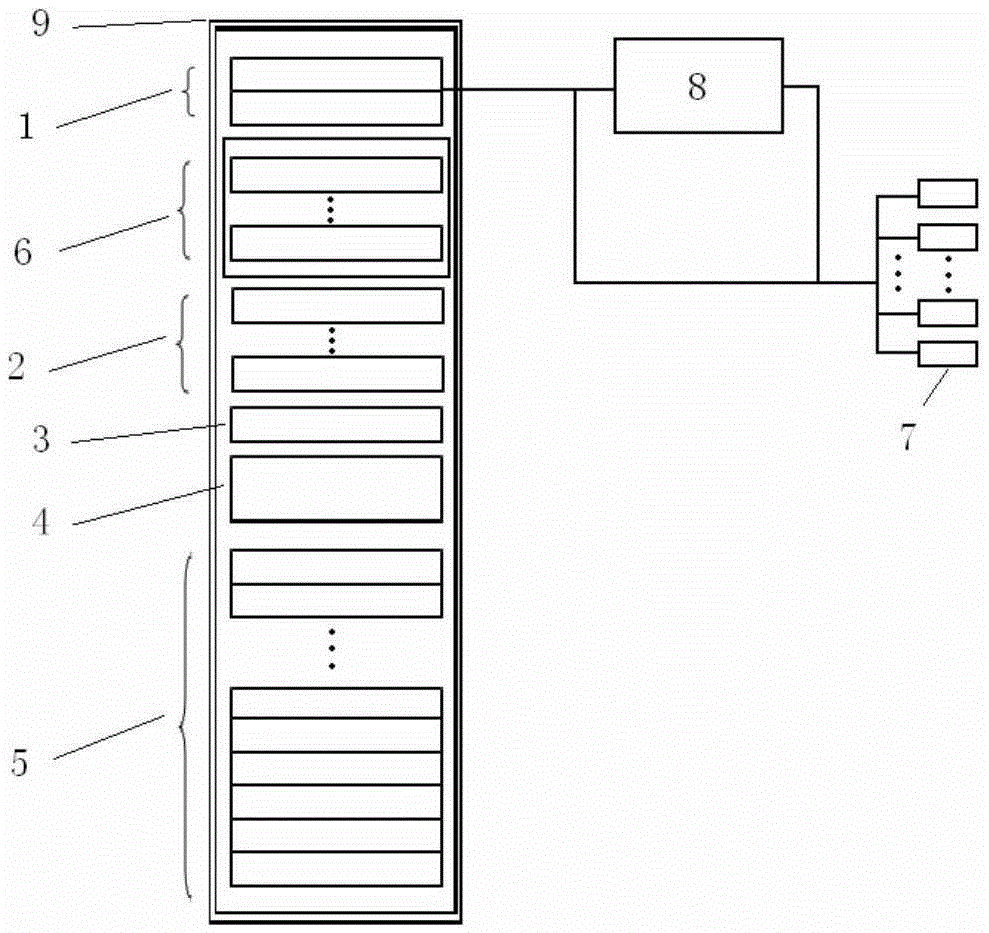

A cooling cabinet for a big data all-in-one machine for power applications

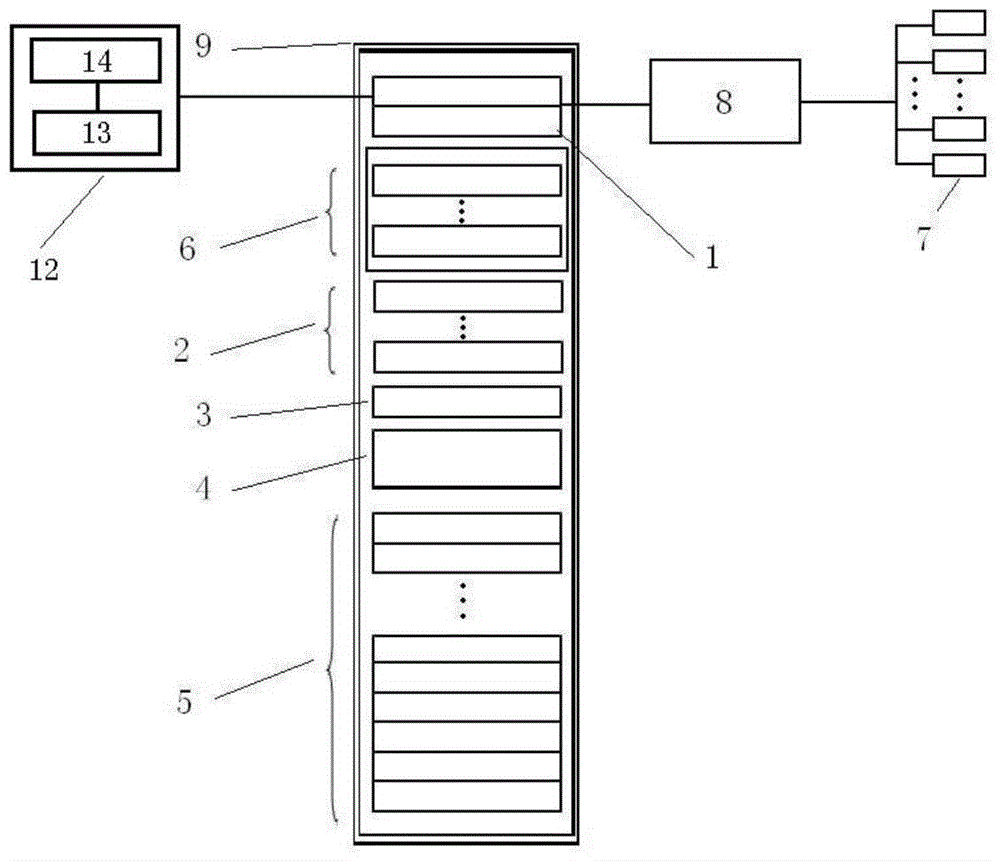

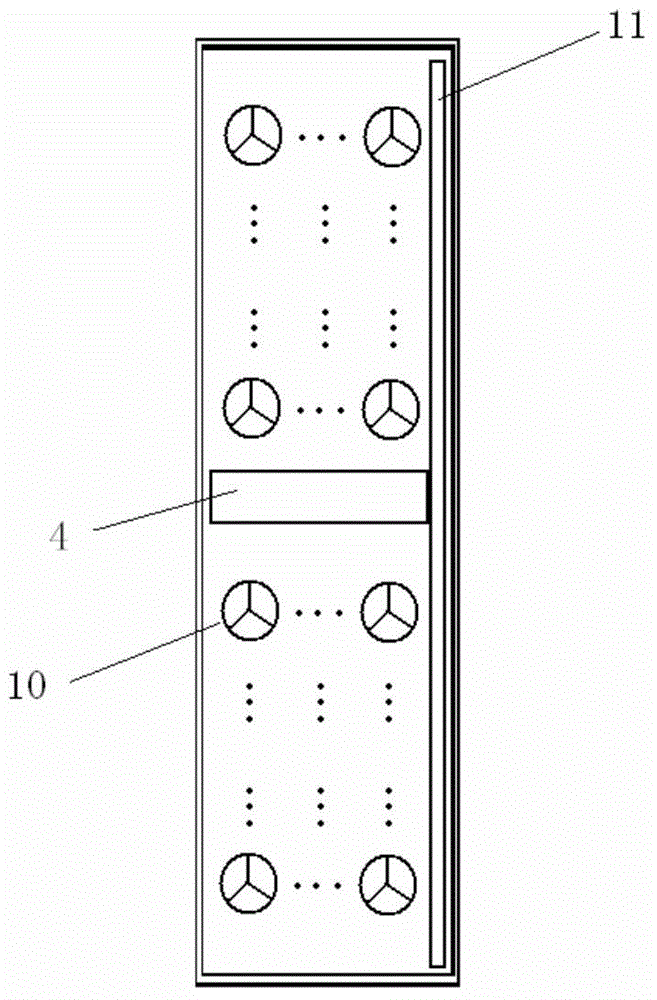

Active | CN104981136B | Performance tuning, Avoid functio, Digital data processing details, Cooling/ventilation/heating modifications, Fiber, Electric power

The present invention provides a heat-dissipation cabinet for a big data all-in-one machine for power applications. The cabinet comprises a cabinet body, an external server, one or more substation equipment measuring point sensors, one or more lower computing and storage servers arranged in the lower part of the cabinet body, and a power supply module and a Gigabit management network module arranged in the middle of the cabinet body. The cabinet further comprises a 10-Gigabit fiber data network module, one or more upper computing and storage servers, and one or more high-performance computing and storage servers, arranged in that order from top to bottom in the upper part of the cabinet. It also comprises a ventilation window, a fan window, and a fan controller arranged in the middle of the back of the cabinet, as well as a back plate on the back of the cabinet body and a side plate on its side. The cabinet offers high integration, high equipment availability, good scalability, good heat dissipation, and stable system performance.

Owner:SHANDONG LUNENG SOFTWARE TECH

Method, apparatus, device, and computer-readable storage medium for caching frame animation

Active | CN108271070B | Relieve memory pressure, Reduce overhead, Selective content distribution, Computational science, Animation

Owner:BEIJING 58 INFORMATION TECH CO LTD

A big data all-in-one machine based on electric power application

Active | CN104951024B | Performance tuning, Improve usability, Digital processing power distribution, Power application, Computer module

The invention provides a big data all-in-one machine for electric power applications. It comprises a cabinet, an external server, and one or more power transformation equipment measuring point sensors, and further comprises one or more lower computing and storage servers arranged in the lower part of the cabinet, a power module, a gigabit management network module, a 10-gigabit optical fiber data network module, one or more upper computing and storage servers, one or more high-performance computing and storage servers, fans, and a fan controller. The power module and the gigabit management network module are arranged in the middle of the cabinet. The 10-gigabit optical fiber data network module, the upper computing and storage servers, and the high-performance computing and storage servers are arranged in the upper part of the cabinet, in that order from top to bottom. The fans and the fan controller are arranged on the back of the cabinet. The machine offers high integration, high equipment availability, good scalability, and stable system performance.

Owner:SHANDONG LUNENG SOFTWARE TECH

A Distributed Unstructured Grid Cross-Processor Interface Method and System

Active | CN114494650B | Quickly establish face-to-face mapping relationship, Relieve memory pressure, Other databases indexing, Other databases querying, Computational science, Parallel computing

The invention relates to the technical field of grid processing and discloses a cross-processor face docking method and system for distributed unstructured grids. The docking method uses a two-level index structure to identify, in parallel, the face connection relationships on both sides of a grid partition boundary; for any two docking surface elements, it checks in turn whether their centroid coordinates and their normalized grid point sequences are equivalent. The method includes the following steps: S1, importing the basic geometric data of the distributed unstructured grid in parallel; S2, constructing dual communication lists between sub-regions across processors; S3, constructing the discrete structure of the surface grid at the docking boundaries in parallel; S4, constructing the fork tree structure of the query set family on each processor; S5, querying the pairing relationships between docking surface elements across processors; and S6, exporting the face docking information of the distributed unstructured grid in parallel. The invention solves problems of the prior art such as low processing efficiency and poor data processing capability when processing large-scale unstructured grids.

Owner:CALCULATION AERODYNAMICS INST CHINA AERODYNAMICS RES & DEV CENT

Method, device, equipment, and storage medium for data processing of entry flow in live broadcast room

Active | CN110784729B | Relieve memory pressure | Guaranteed treatment effect | Selective content distribution | Streaming data | Computer network

Owner:GUANGZHOU HUADUO NETWORK TECH

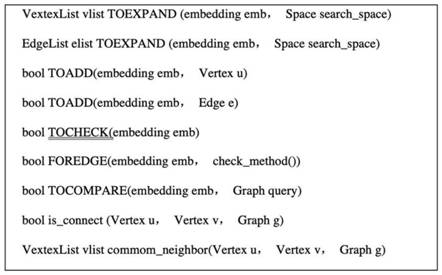

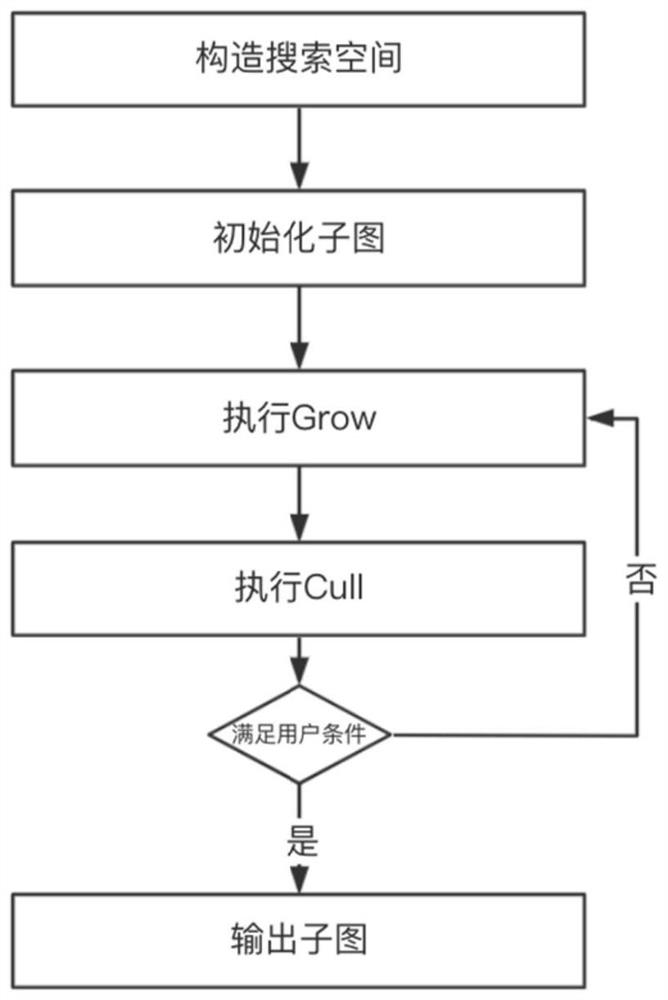

GPU-based high-performance graph mining method and system

Inactive | CN111831864A | Strong descriptive ability | Accelerating the graph mining process | Data mining | Other databases indexing | Theoretical computer science | Term memory

The invention discloses a GPU-based high-performance graph mining method and system. The method uses a cooperative GPU-CPU computing architecture: graph mining operations run on many GPU threads to improve search efficiency, while the large number of intermediate subgraphs generated during mining are stored in CPU memory. The system architecture follows a Grow-Cull execution model. In each round, a portion of the subgraphs is copied to the GPU to execute the Grow operation, which examines the relationships between subgraphs and vertices/edges, and the resulting candidate subgraphs are copied back to CPU memory. To check the legality of the candidate subgraphs, the CPU executes the Cull operation with multiple threads, and the qualifying subgraphs are stored in CPU main memory. The system repeats this iteration. Borrowing the idea of a pipeline, CPU and GPU computation can run concurrently within an iteration, as can data copies in both directions, so that computation and transfer latency are hidden.
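
A CPU-only Python sketch of the Grow-Cull loop described above, assuming subgraphs are connected, edge-induced, and represented as frozensets of undirected edges. It deliberately omits the GPU offload and the pipelined overlap of computation and copies; `grow`, `cull`, `mine`, and the `keep` predicate (a stand-in for the unspecified legality check) are hypothetical names.

```python
from typing import Callable, FrozenSet, Iterable, List, Set, Tuple

Edge = Tuple[int, int]
Subgraph = FrozenSet[Edge]


def grow(frontier: Iterable[Subgraph], edges: Set[Edge]) -> List[Subgraph]:
    """Grow: extend each subgraph by one edge touching one of its vertices
    (the step the abstract assigns to GPU threads)."""
    candidates = []
    for sub in frontier:
        verts = {v for e in sub for v in e}
        for e in edges:
            if e not in sub and (e[0] in verts or e[1] in verts):
                candidates.append(sub | {e})  # frozenset | set -> frozenset
    return candidates


def cull(candidates: Iterable[Subgraph], keep: Callable[[Subgraph], bool]) -> List[Subgraph]:
    """Cull: drop duplicate candidates and those failing the legality predicate
    (the step the abstract assigns to CPU threads)."""
    seen: Set[Subgraph] = set()
    result = []
    for c in candidates:
        if c not in seen and keep(c):
            seen.add(c)
            result.append(c)
    return result


def mine(edge_list, max_edges: int, keep=lambda s: True) -> List[Subgraph]:
    """Repeat Grow then Cull until subgraphs reach max_edges edges."""
    edges = {tuple(sorted(e)) for e in edge_list}  # undirected: store (low, high)
    frontier = [frozenset({e}) for e in edges]
    found = list(frontier)
    for _ in range(max_edges - 1):
        frontier = cull(grow(frontier, edges), keep)
        found.extend(frontier)
    return found
```

On a triangle, the loop finds three one-edge, three two-edge, and one three-edge subgraph; duplicates produced by Grow from different parents are removed in Cull.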

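Owner:中科院计算所西部高等技术研究院 (Western Institute of Advanced Technology, Institute of Computing Technology, Chinese Academy of Sciences)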

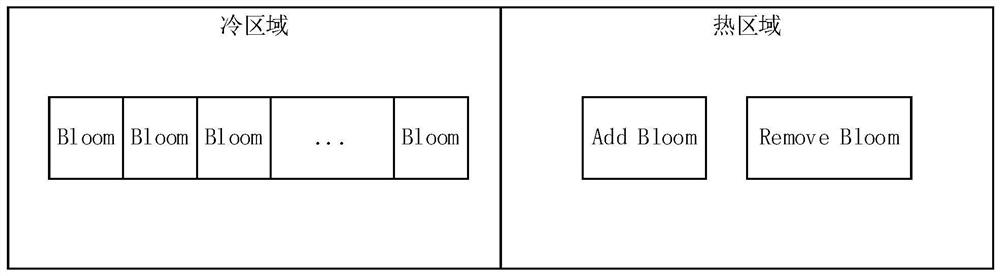

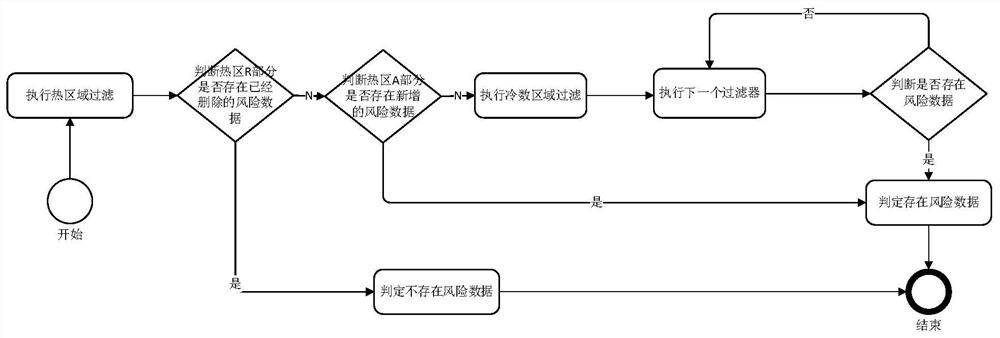

A method and device for intercepting risky SMS messages based on Bloom filters

Active | CN111629378B | Lower loading costs | Relieve memory pressure | Digital data information retrieval | Special data processing applications | Bloom filter | Term memory

The invention relates to the technical field of risky-message interception, and in particular to a method and device for intercepting risky SMS messages based on Bloom filters. Based on the characteristics of the risk data, memory is divided into two zones, which makes data loading very cheap: risk data that may be added or deleted at any time during the day is stored in the hot zone H, and historical risk data is stored in the cold zone C. Because a Bloom filter supports insertions but not deletions, and users may delete historical risk data, such deletions cannot be applied to the cold-zone data. The hot zone therefore holds two Bloom filters: H-A records additions to the hot zone and H-R records deletions. With this method, the invention relieves memory pressure, improves efficiency, and reduces cost.
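
A minimal Python sketch of the hot/cold layout described above. The query rule (an item present in H-R is treated as deleted, otherwise it is risky if it is in H-A or C) is an assumption, since the abstract does not spell out how the three filters are combined. The filter size, the SHA-256-based hashing, and the names `BloomFilter` and `RiskFilter` are illustrative choices.

```python
import hashlib


class BloomFilter:
    """Plain Bloom filter: insert-only, may return false positives."""

    def __init__(self, size_bits: int = 8192, num_hashes: int = 4):
        self.size = size_bits
        self.k = num_hashes
        self.bits = bytearray((size_bits + 7) // 8)

    def _positions(self, item: str):
        # Derive k bit positions from salted SHA-256 digests.
        for i in range(self.k):
            digest = hashlib.sha256(f"{i}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.size

    def add(self, item: str) -> None:
        for p in self._positions(item):
            self.bits[p // 8] |= 1 << (p % 8)

    def __contains__(self, item: str) -> bool:
        return all(self.bits[p // 8] & (1 << (p % 8)) for p in self._positions(item))


class RiskFilter:
    """Cold zone C for history; hot zone split into additions (H-A) and deletions (H-R)."""

    def __init__(self):
        self.cold = BloomFilter()         # C: historical risk data, loaded once
        self.hot_added = BloomFilter()    # H-A: risk data added today
        self.hot_removed = BloomFilter()  # H-R: risk data deleted today

    def load_history(self, items) -> None:
        for item in items:
            self.cold.add(item)

    def add_risk(self, item: str) -> None:
        self.hot_added.add(item)

    def remove_risk(self, item: str) -> None:
        # Deletion is recorded, not applied: Bloom filters cannot remove bits.
        self.hot_removed.add(item)

    def is_risky(self, item: str) -> bool:
        if item in self.hot_removed:  # assumed rule: deletion wins
            return False
        return item in self.hot_added or item in self.cold
```

A false positive in H-R would let a genuinely risky item through, so in this sketch H-R should be sized generously relative to the expected number of daily deletions.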

Owner:上海创蓝云智信息科技股份有限公司 (Shanghai Chuanglan Yunzhi Information Technology Co., Ltd.)

A system to solve fierce competition for memory resources in big data processing systems

Active | CN105868025B | Relieve memory pressure | Guaranteed uptime | Resource allocation | Data platform | Computer module

The invention discloses a system for resolving intense competition for memory resources in a big data processing system. A memory information feedback module monitors the memory usage of running thread tasks, converts the collected memory information, and feeds it back to an information sampling and analysis module. The information sampling and analysis module dynamically controls how many times information is sampled from each worker node, analyzes the data once the specified number of samples has been collected, and calculates the optimal CPU-to-memory ratio for the current worker node. A decision-making and task distribution module decides, based on the analysis results and the task running information of the current worker node, whether new tasks are distributed to that node for computation, thereby effectively constraining the relationship between CPU and memory use. The system enables a memory-aware task distribution mechanism on a general-purpose big data platform, reduces the I/O overhead incurred when data spills to disk because of intense memory contention, and effectively improves overall system performance.
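
One way to sketch the sample-analyze-decide loop in Python, under simplifying assumptions: the sample count is fixed (`sample_target`) rather than dynamically controlled; the "optimal CPU-to-memory ratio" is approximated as the number of concurrent tasks that fit within a hypothetical 90% memory headroom given the average observed memory per task, capped at the core count; and `WorkerNode`, `report_memory`, and `should_dispatch` are illustrative names, not the patent's modules.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import List, Optional

HEADROOM = 0.9  # hypothetical: keep 10% of node memory free to avoid spilling


@dataclass
class WorkerNode:
    cores: int
    memory_mb: float
    running_tasks: int = 0
    sample_target: int = 5             # fixed here; dynamic in the abstract
    task_limit: Optional[int] = None   # None until enough samples are analyzed
    _per_task_mb: List[float] = field(default_factory=list)

    def report_memory(self, used_mb: float) -> None:
        """Feedback step: record memory per running task; analyze once enough samples exist."""
        if self.running_tasks == 0:
            return
        self._per_task_mb.append(used_mb / self.running_tasks)
        if len(self._per_task_mb) >= self.sample_target:
            per_task = mean(self._per_task_mb)
            by_memory = int(HEADROOM * self.memory_mb // per_task)
            # Concurrency limit: bounded by both cores and estimated memory.
            self.task_limit = max(1, min(self.cores, by_memory))
            self._per_task_mb.clear()


def should_dispatch(node: WorkerNode) -> bool:
    """Decision step: send a new task only while the node is under its limit."""
    limit = node.cores if node.task_limit is None else node.task_limit
    return node.running_tasks < limit
```

For example, a node with 8 cores and 4000 MB that reports 2000 MB while running 4 tasks averages 500 MB per task, so its limit drops to 7 concurrent tasks rather than 8.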

Owner:HUAZHONG UNIV OF SCI & TECH

Large-volume BIM model data processing and loading method and equipment

Active | CN111259474B | Improve browsing speed | Relieve memory pressure | Geometric CAD | Data processing applications | Data mining | Industrial engineering

The present invention proposes a large-volume BIM model data processing and loading method and equipment in the technical field of BIM data processing. The equipment includes a model data import module, a data export module, a model data grouping module, a model data export module, a model data lightweighting module, and a model layer-building module. BIM model loading follows a nearest-first principle, so the scene model closest to the current viewing angle is displayed quickly. When the number of loaded models reaches a set upper limit, models are unloaded automatically according to the loading situation.
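
The nearest-first loading and automatic unloading described above can be sketched as follows, assuming each model group is summarized by a single center point, distance to the camera position stands in for "closest to the current viewing angle", and a fixed `max_loaded` cap stands in for the upper limit. `ModelBlock` and `NearestFirstLoader` are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import Iterable, List, Set, Tuple

Point = Tuple[float, float, float]


@dataclass(frozen=True)
class ModelBlock:
    name: str
    center: Point  # assumed: one representative point per model group


class NearestFirstLoader:
    def __init__(self, blocks: Iterable[ModelBlock], max_loaded: int):
        self.blocks = list(blocks)
        self.max_loaded = max_loaded
        self.loaded: Set[str] = set()

    def update(self, camera: Point) -> Tuple[List[str], List[str]]:
        """Return (to_load nearest-first, to_unload) for the new camera position."""
        ranked = sorted(self.blocks, key=lambda b: math.dist(b.center, camera))
        wanted = [b.name for b in ranked[: self.max_loaded]]
        to_load = [n for n in wanted if n not in self.loaded]
        # Anything outside the nearest max_loaded groups is released.
        to_unload = sorted(self.loaded - set(wanted))
        self.loaded = set(wanted)
        return to_load, to_unload
```

Moving the camera from one end of a row of three groups to the other, with a cap of two, loads the newly nearest group and unloads the one that fell out of range.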

Owner:陕西心像信息科技有限公司 (Shaanxi Xinxiang Information Technology Co., Ltd.)

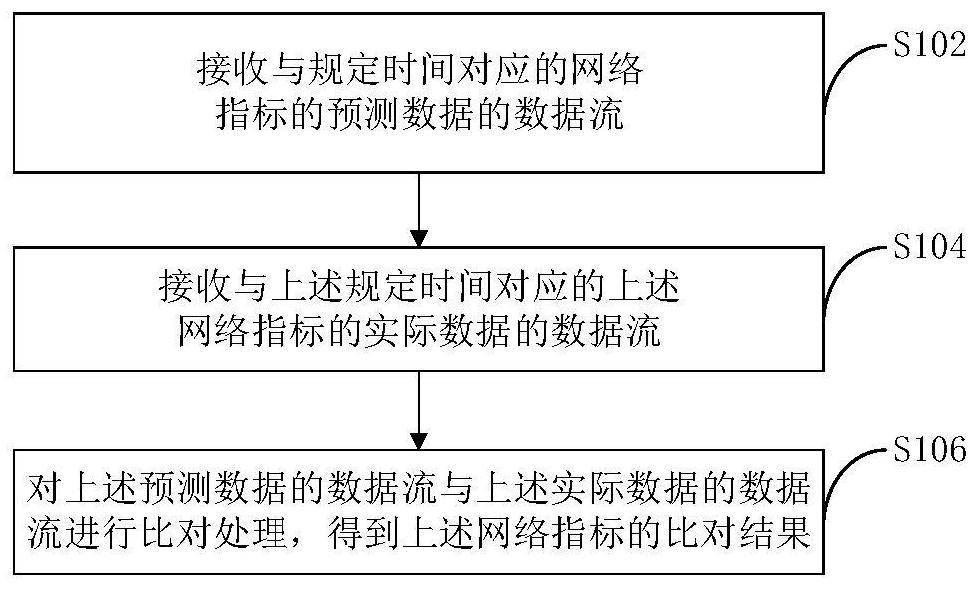

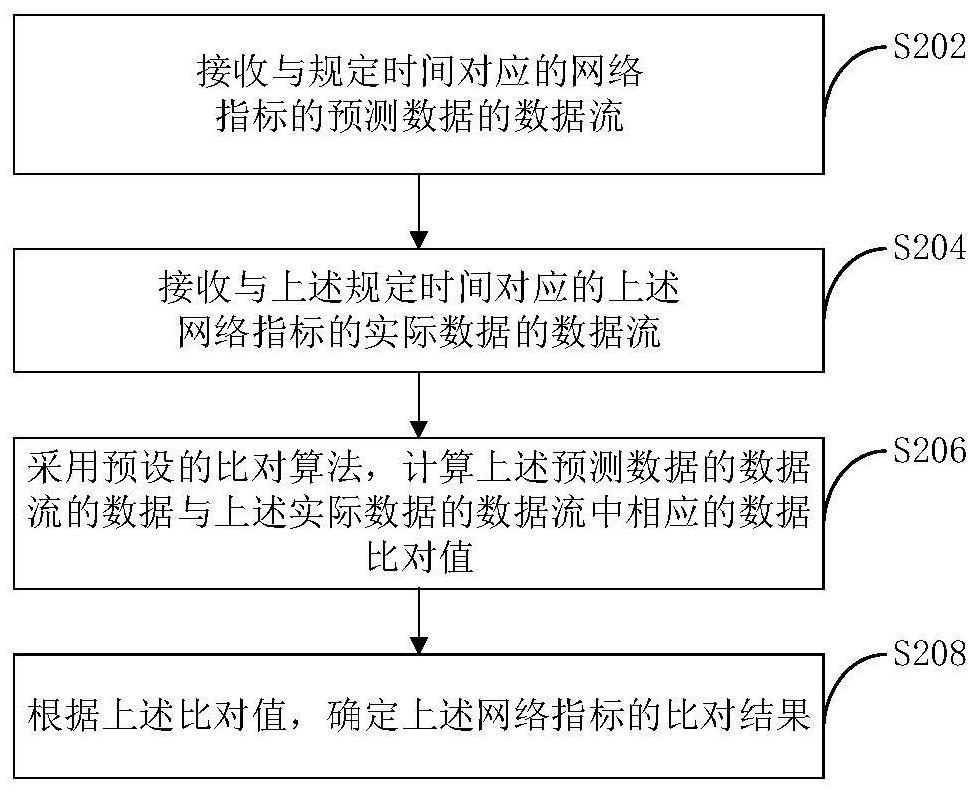

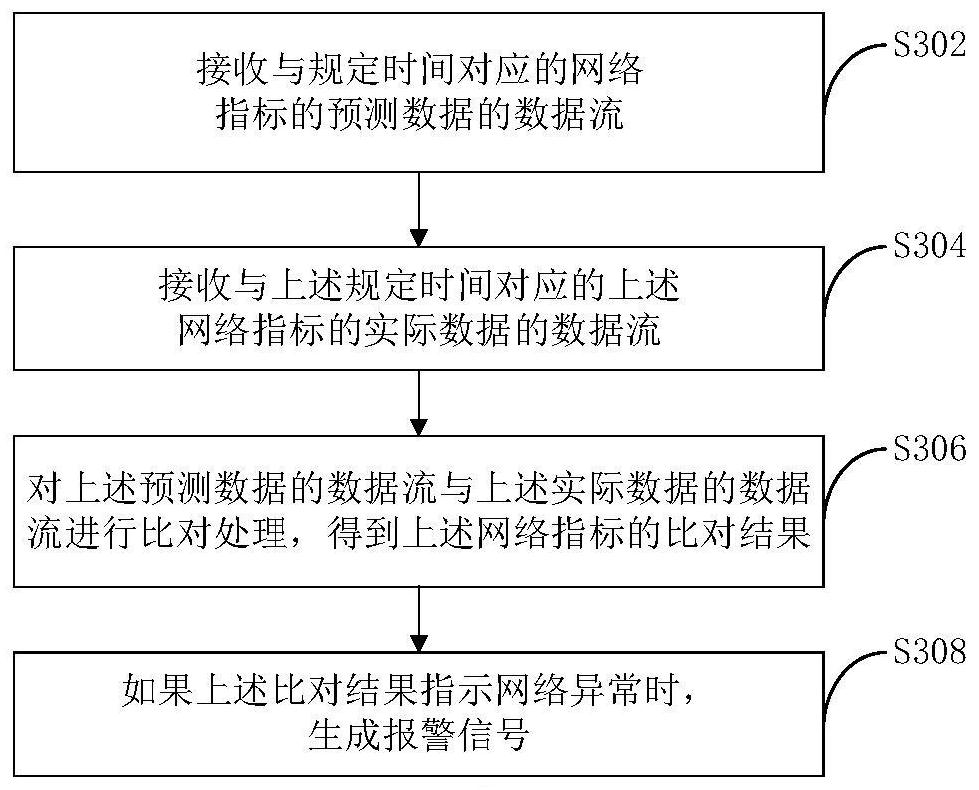

Network anomaly detection method and device and server

The invention provides a network anomaly detection method, device, and server. The method comprises: receiving a data stream of predicted data and a data stream of actual data for a network metric at a specified time; and comparing the two streams to obtain a comparison result for the network metric. The specified time is set in advance; once it arrives, the predicted and actual data streams for that time are received and compared. Because the data is consumed incrementally along the time dimension and compared as streams, a large volume of predicted data does not need to be cached, which relieves memory pressure.
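
A minimal Python sketch of the streaming comparison, assuming both streams yield `(timestamp, value)` pairs in the same time order and that an absolute deviation `threshold` (a hypothetical criterion; the abstract only says the streams are compared) marks an anomaly. `compare_streams` is an illustrative name.

```python
from typing import Iterable, Iterator, Tuple

Sample = Tuple[int, float]  # (timestamp, metric value)


def compare_streams(
    predicted: Iterable[Sample], actual: Iterable[Sample], threshold: float
) -> Iterator[Tuple[int, float, float, bool]]:
    """Walk both streams in lockstep, holding only the current pair in memory.

    Yields (timestamp, predicted, actual, is_anomaly). zip() stops at the
    shorter stream, so trailing unmatched samples are ignored.
    """
    for (t_pred, pred), (t_act, act) in zip(predicted, actual):
        if t_pred != t_act:
            raise ValueError(f"streams out of step: {t_pred} != {t_act}")
        yield t_pred, pred, act, abs(act - pred) > threshold
```

Because the function is a generator over `zip`, it can consume two network sockets or file readers directly without materializing either stream.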

Owner:BEIJING KINGSOFT CLOUD NETWORK TECH CO LTD

A real-time positioning method and device applied to automatic driving

Active | CN110146074B | High positioning accuracy | Solve the problem of reduced positioning accuracy | Navigation by speed/acceleration measurements | Electromagnetic wave reradiation | Engineering | Computer vision

Owner:BEIJING MOMENTA TECH CO LTD

© 2025 PatSnap. All rights reserved.