50 results about "Memory scheduling" patented technology

The Memory Scheduling Championship (MSC) invites contestants to submit their memory scheduling code to participate in this competition. Contestants must develop algorithms to optimize multiple metrics on a common evaluation framework provided by the organizing committee.

System and method for cooperative virtual machine memory scheduling

Active | US7716446B1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Virtual memory | Operational system

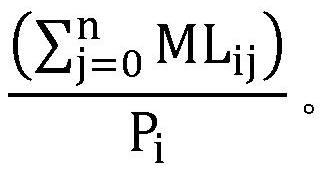

Memory assigned to a virtual machine is reclaimed. A resource reservation application running as a guest application on the virtual machine reserves a location in guest virtual memory. The corresponding physical memory can be reclaimed and allocated to another virtual machine. The resource reservation application allows detection of guest virtual memory page-out by the guest operating system. Measuring guest virtual memory page-out is useful for determining memory conditions inside the guest operating system. Given the determined memory conditions, memory allocation and reclaiming can be used to control memory conditions in the virtual machine, with the objective of achieving target memory conditions.

Owner:VMWARE INC

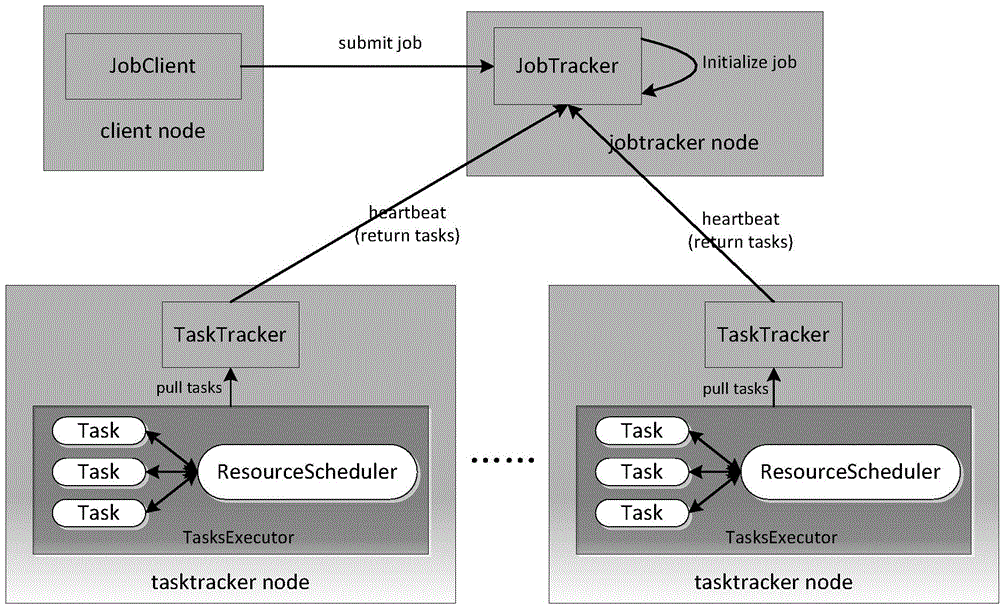

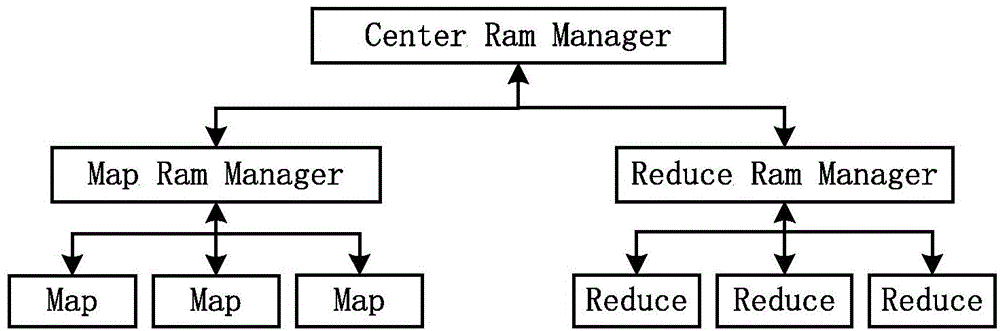

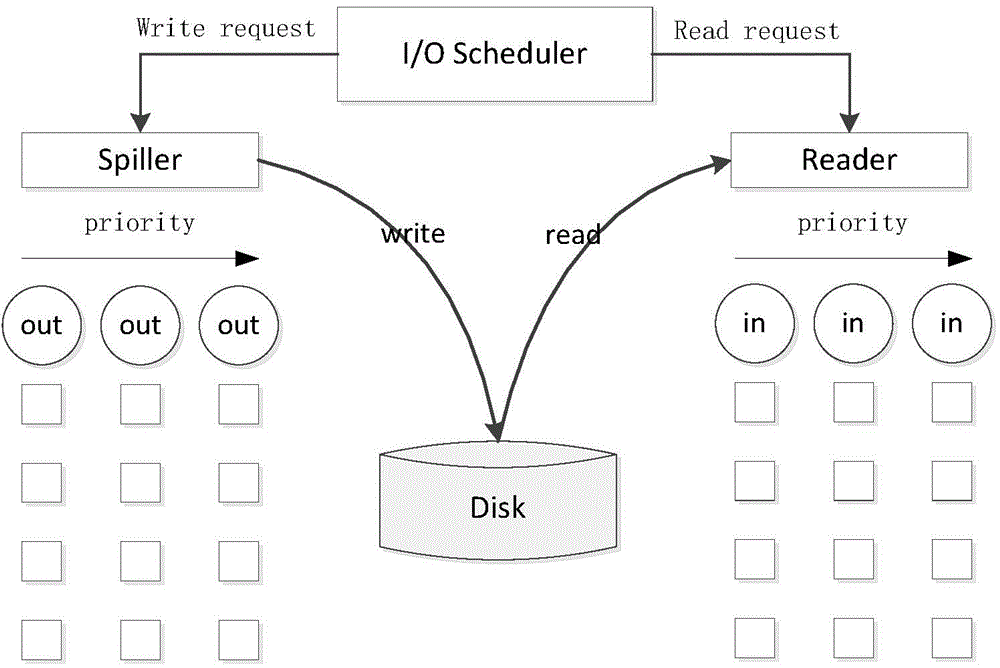

Multithreading-based MapReduce execution system

Active | CN103605576A | Reduce management pressure | Reduce competition | Resource allocation | Granularity | Parallel computing

The invention discloses a multithreading-based MapReduce execution system comprising a MapReduce execution engine implementing multithreading. The multi-process execution mode of Map/Reduce tasks in original Hadoop is changed into a multithreaded mode; details about memory usage are extracted from Map tasks and Reduce tasks, the MapReduce process is divided into multiple fine-grained phases according to these details, and the shuffle process in original Hadoop is changed from Reduce pull into Map active push; a unified memory management module and an I/O management module are implemented in the multithreaded MapReduce execution engine and used to centrally manage the memory usage of each task thread; a global memory scheduling and I/O scheduling algorithm is designed and used to dynamically schedule system resources during execution. The multithreading-based MapReduce execution system has the advantages that users can maximize memory usage without modifying the original MapReduce program, disk bandwidth is fully utilized, and the long-standing I/O bottleneck problem in original Hadoop is solved.

Owner:HUAZHONG UNIV OF SCI & TECH

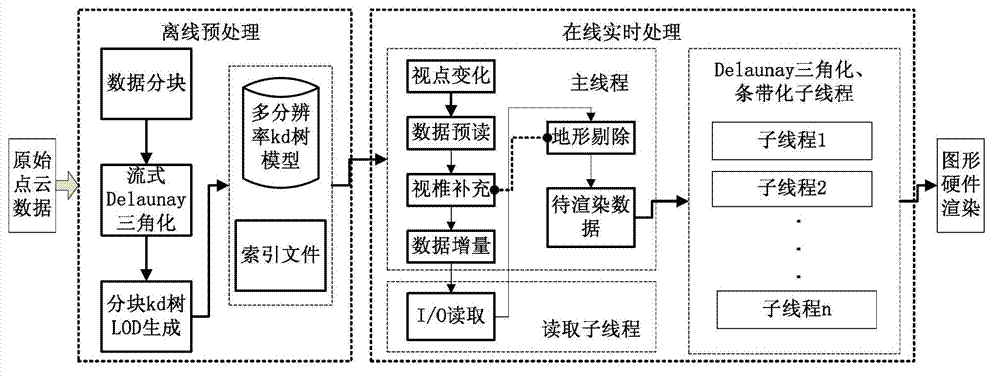

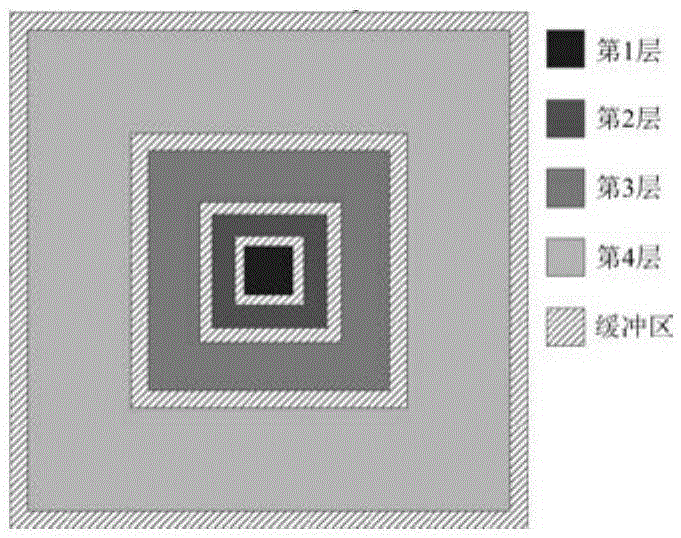

Real-time large-scale terrain visualization implementation method based on kd tree

The invention discloses a real-time large-scale terrain visualization implementation method based on a kd tree. The method comprises the following steps: partitioning the terrain according to the point cloud space; building an LOD (level of detail) model offline using kd-tree layering, where the LOD model derives multi-resolution model data from the terrain point cloud data and switches between the resolutions of the model; at run time, loading the offline-built LOD model data into memory using an external storage algorithm, which is responsible for scheduling the externally stored LOD model data into memory; applying a simplified terrain culling technique to the LOD model data in memory, which eliminates invisible LOD model data and so reduces the amount of data transmitted to the graphics hardware; and further reducing the data amount and calculation amount of the culled data transmitted to the graphics hardware by using three-dimensional engine optimization techniques. The method is simple to implement and efficient in display, and, being based on a triangulated irregular network visualization algorithm, it gives a good visual result and improves visualization speed.

Owner:SOUTH CHINA UNIV OF TECH

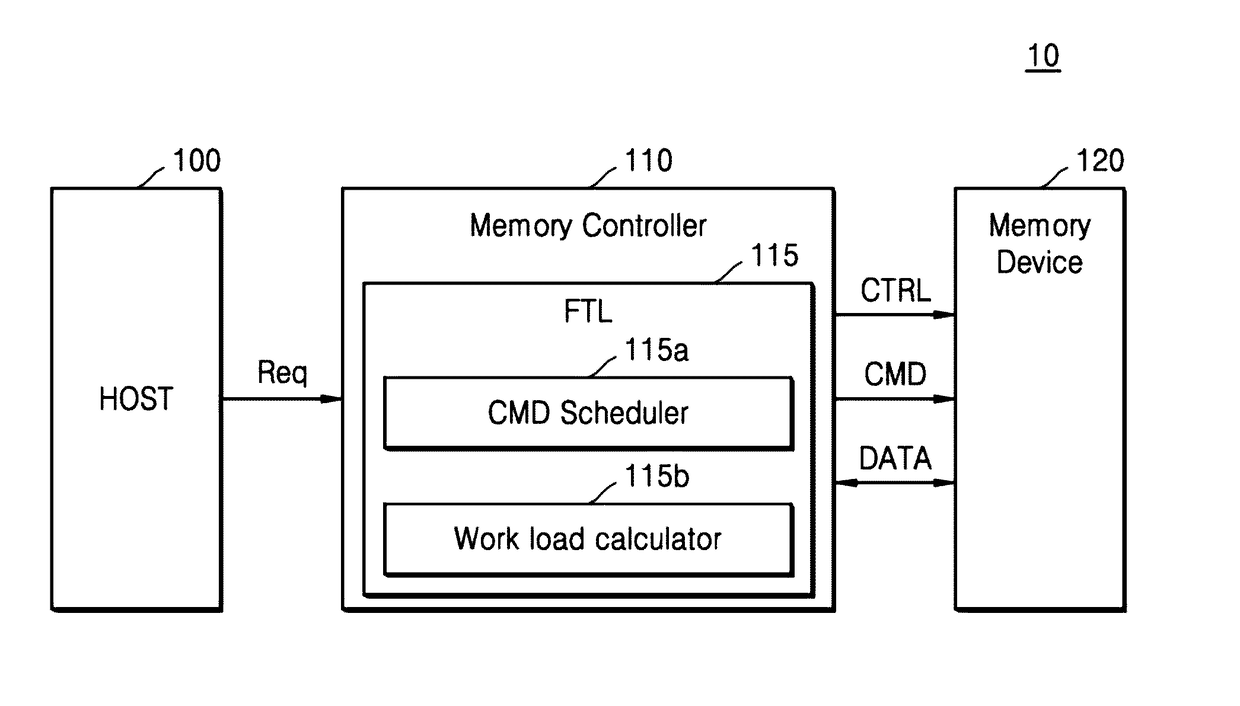

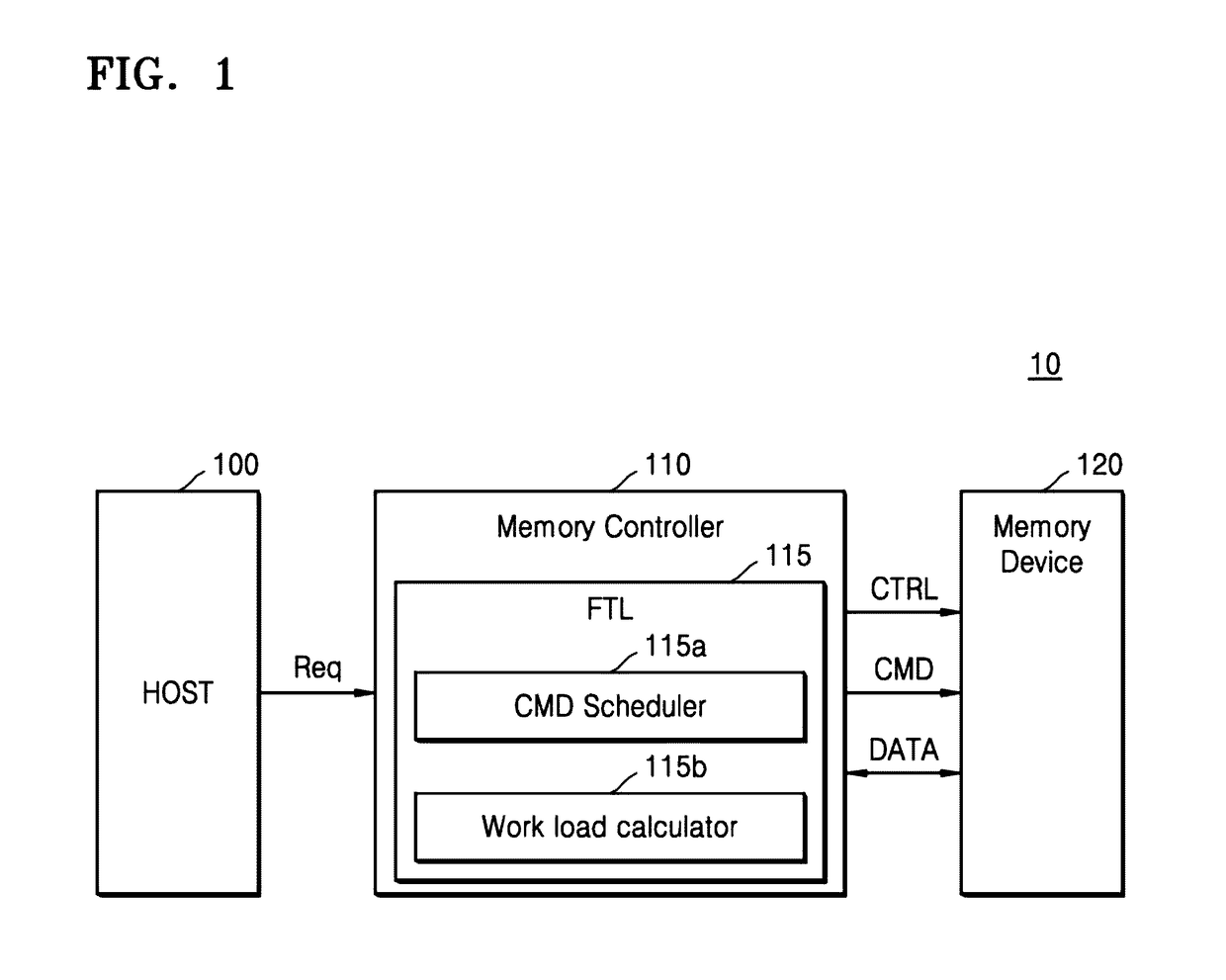

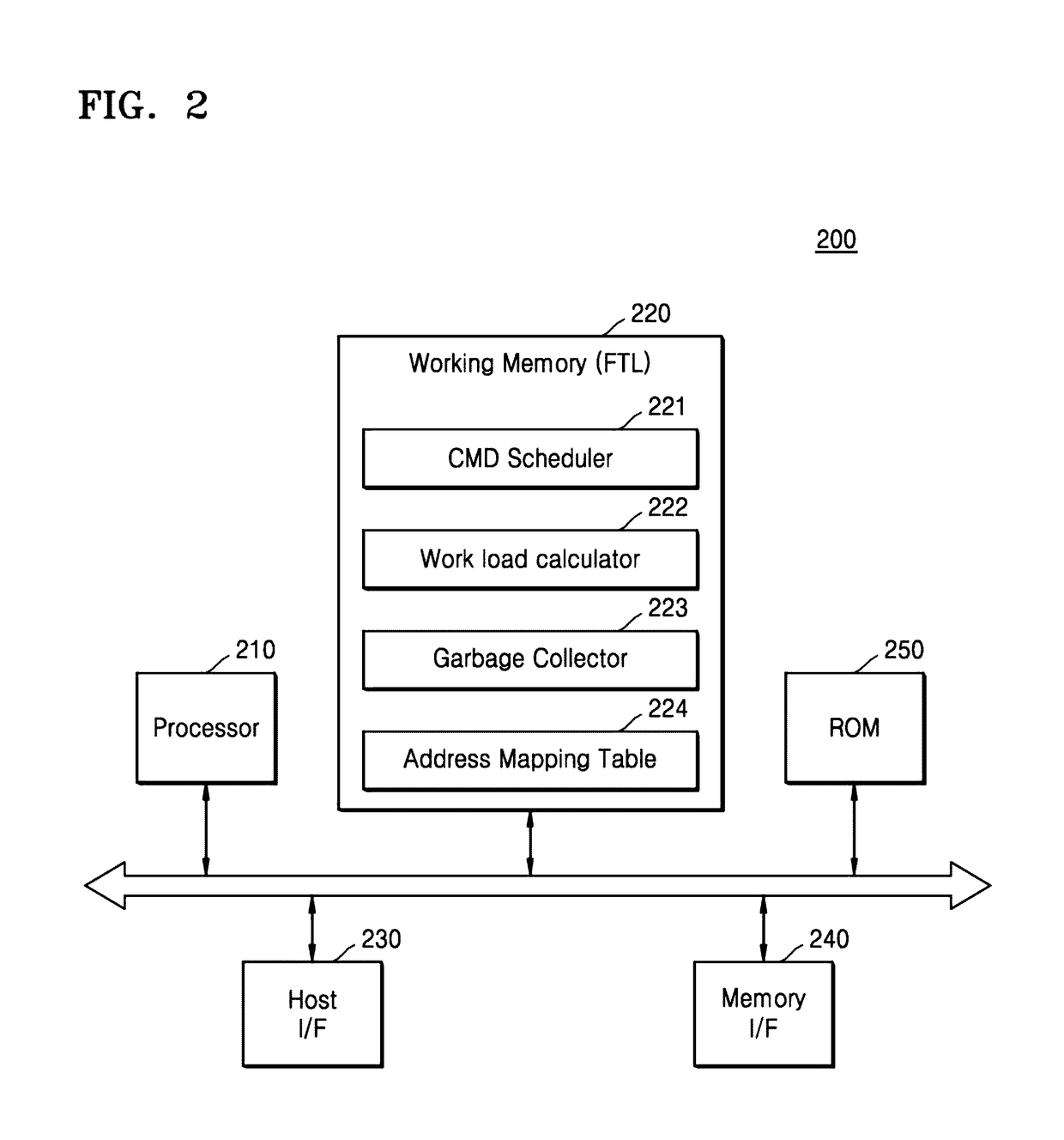

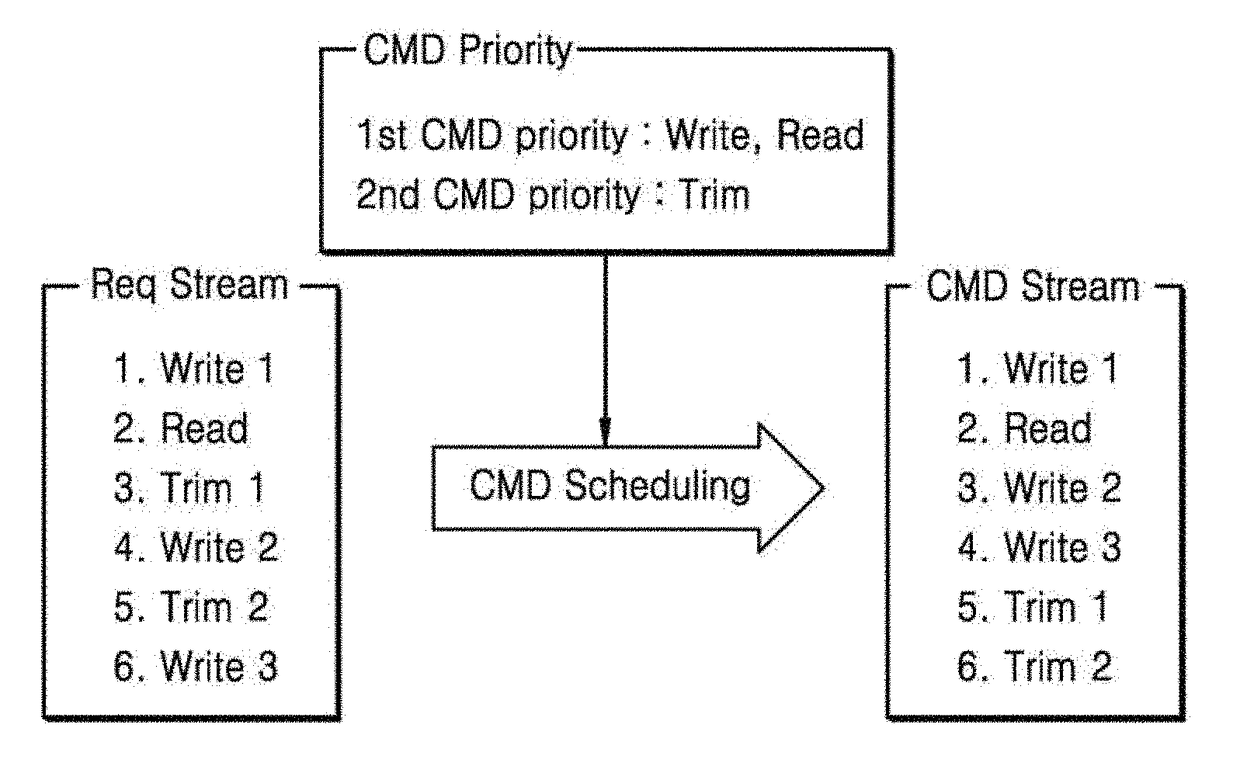

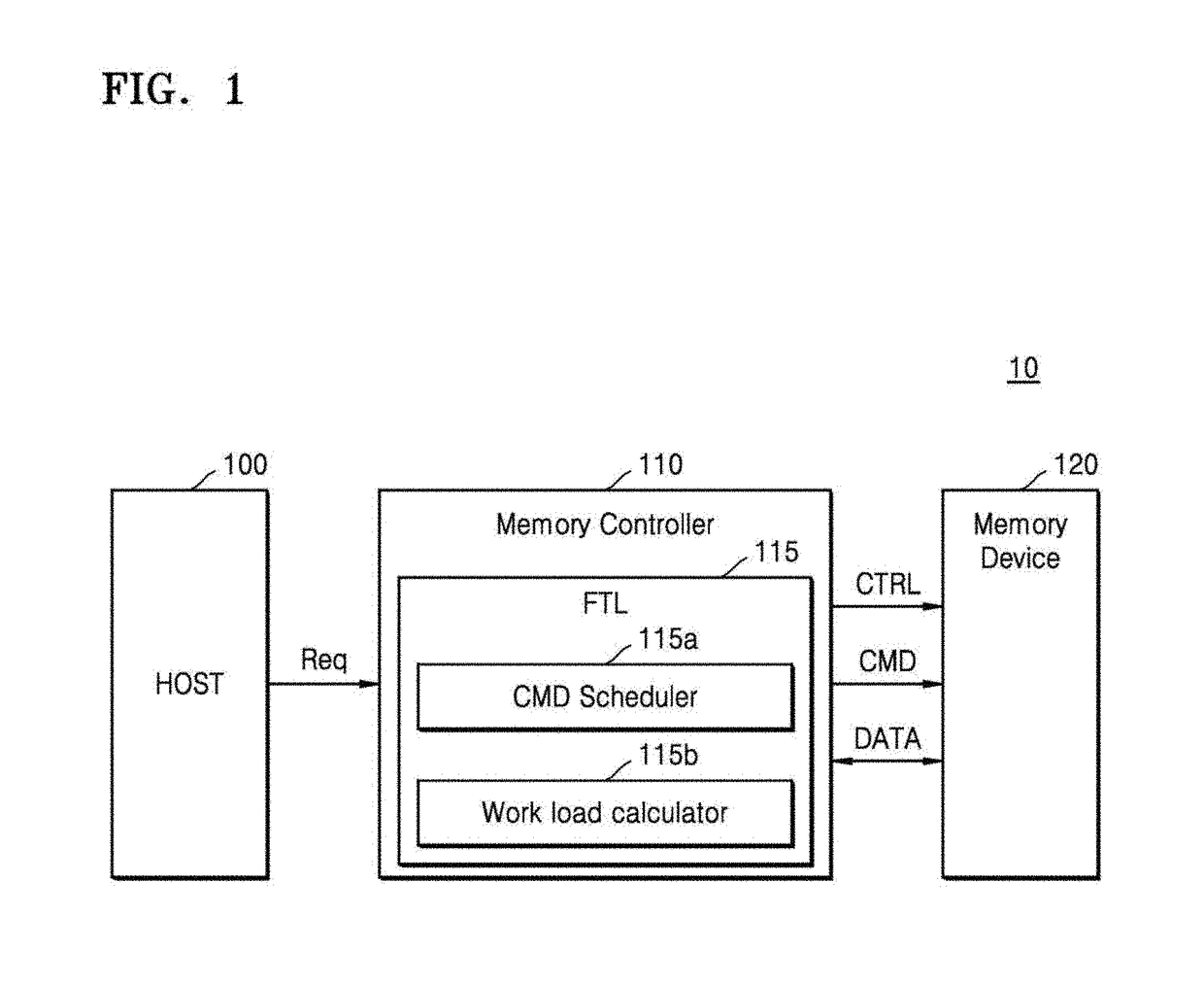

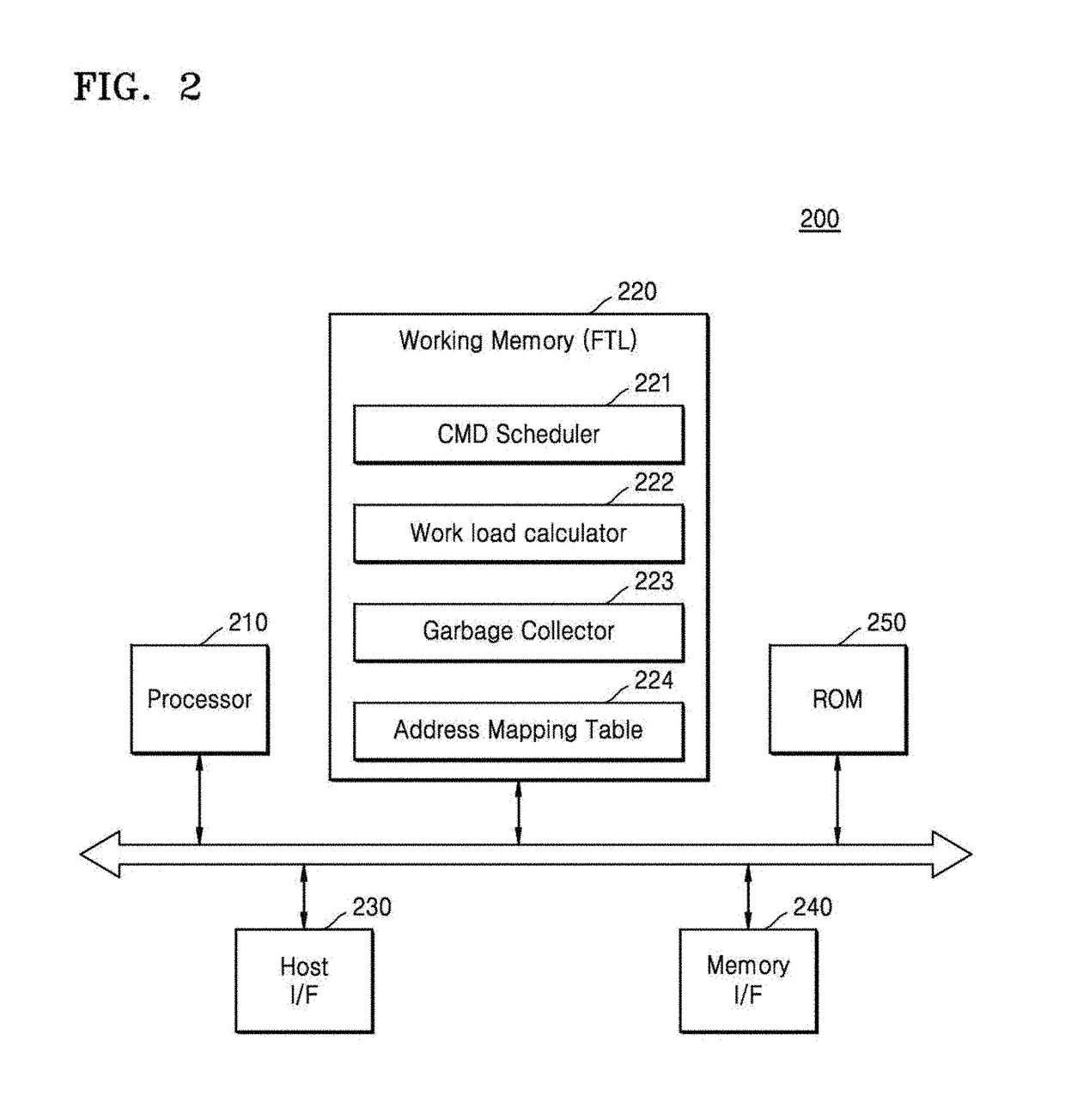

Memory scheduling method and method of operating memory system

Disclosed is a method of operating a memory system which executes a plurality of commands including a write command and a trim command. The memory system includes a memory device, which includes a plurality of blocks. The method further includes performing garbage collection for generating a free block, calculating a workload level in performing the garbage collection, and changing a command schedule based on the workload level.

Owner:SAMSUNG ELECTRONICS CO LTD
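
A minimal Python sketch of the idea in the abstract above: garbage collection reports a workload level, and the command schedule changes once that level crosses a threshold. The workload formula (valid pages copied per freed block), the threshold value, and the policy of moving trim commands ahead of writes are illustrative assumptions, not details taken from the patent.

```python
from dataclasses import dataclass


@dataclass
class Command:
    kind: str  # "write" or "trim"
    lba: int   # logical block address (illustrative field)


def gc_workload_level(valid_pages_copied: int, blocks_freed: int) -> float:
    """Assumed workload metric: valid pages copied per free block generated."""
    if blocks_freed == 0:
        return float("inf")
    return valid_pages_copied / blocks_freed


def schedule(commands, workload, threshold=32.0):
    """Return the execution order for the queued commands.

    Below the threshold the arrival order is kept. At or above it, trim
    commands are moved ahead of writes (assumed policy). The sketch assumes
    the queued commands touch disjoint addresses, so reordering is safe.
    """
    if workload < threshold:
        return list(commands)
    # sorted() is stable, so the order within each kind is preserved.
    return sorted(commands, key=lambda c: 0 if c.kind == "trim" else 1)


queue = [Command("write", 10), Command("trim", 40),
         Command("write", 20), Command("trim", 30)]
light = schedule(queue, gc_workload_level(valid_pages_copied=8, blocks_freed=2))
heavy = schedule(queue, gc_workload_level(valid_pages_copied=200, blocks_freed=2))
```

Under these assumptions `light` keeps the arrival order, while `heavy` runs both trims before the writes.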

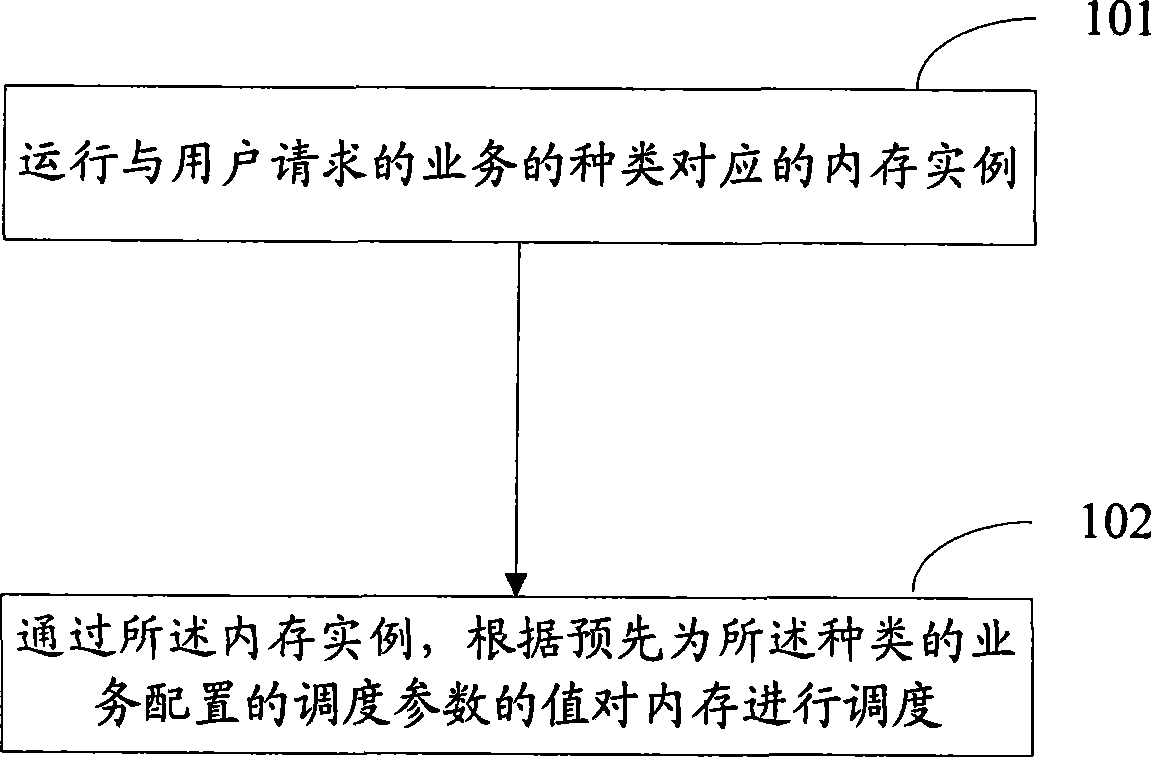

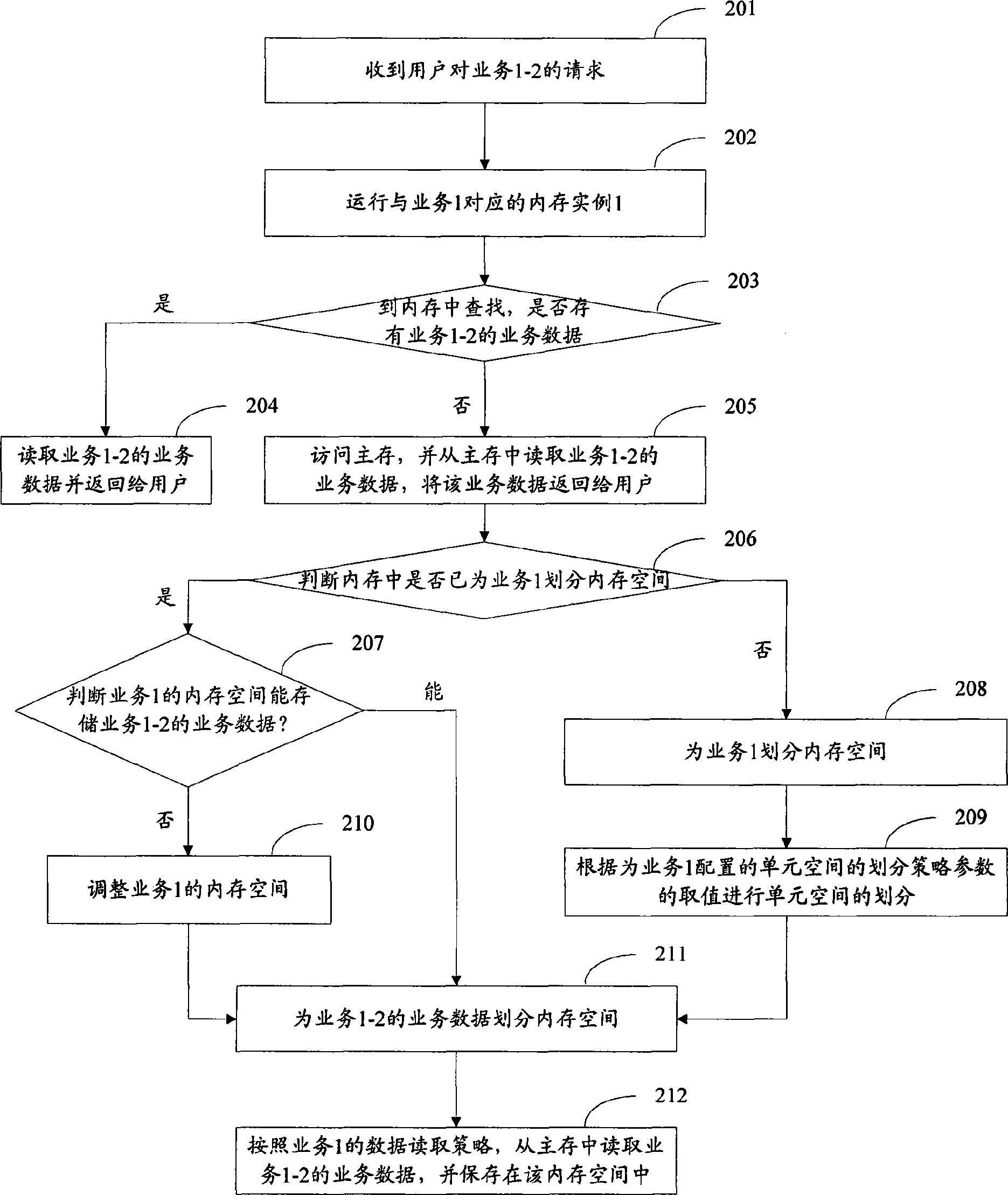

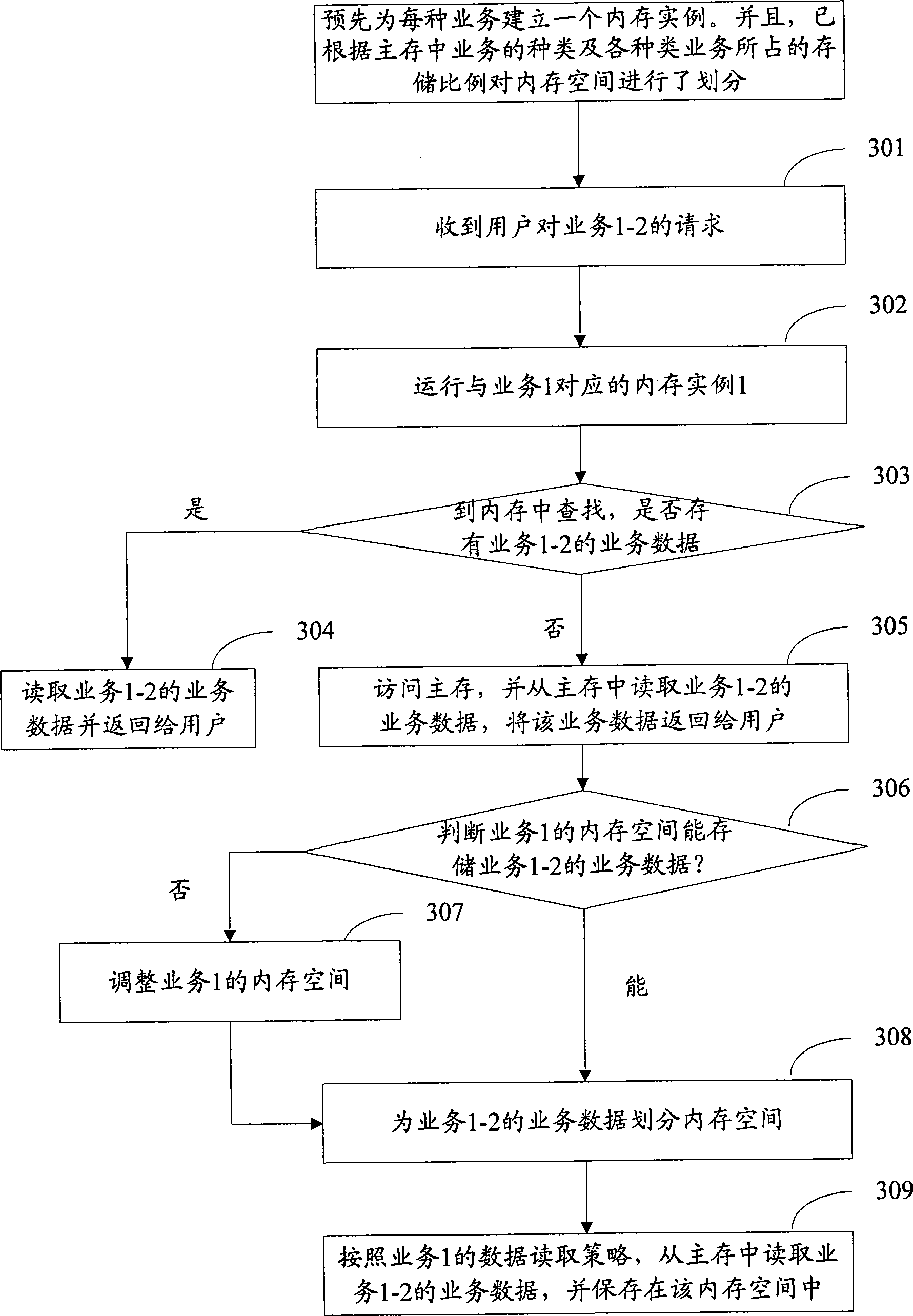

Method and apparatus for scheduling memory

Active | CN101373445A | Increase usage | High speed | Resource allocation | Memory addressing/allocation/relocation | Utilization rate | Memory scheduling

The invention discloses a memory dispatching method which is used for realizing the optimization of a memory (Cache) dispatching strategy and improving the utilization rate of Cache resources and the speed of data processing. The method comprises the steps of operating a Cache example corresponding to the type of the business required by users and dispatching Cache according to a value of a dispatching parameter configured for the business of the type in advance through the Cache example. The invention further discloses a device and a system used to realize the method.

Owner:UNITED INFORMATION TECH H K COMPANY

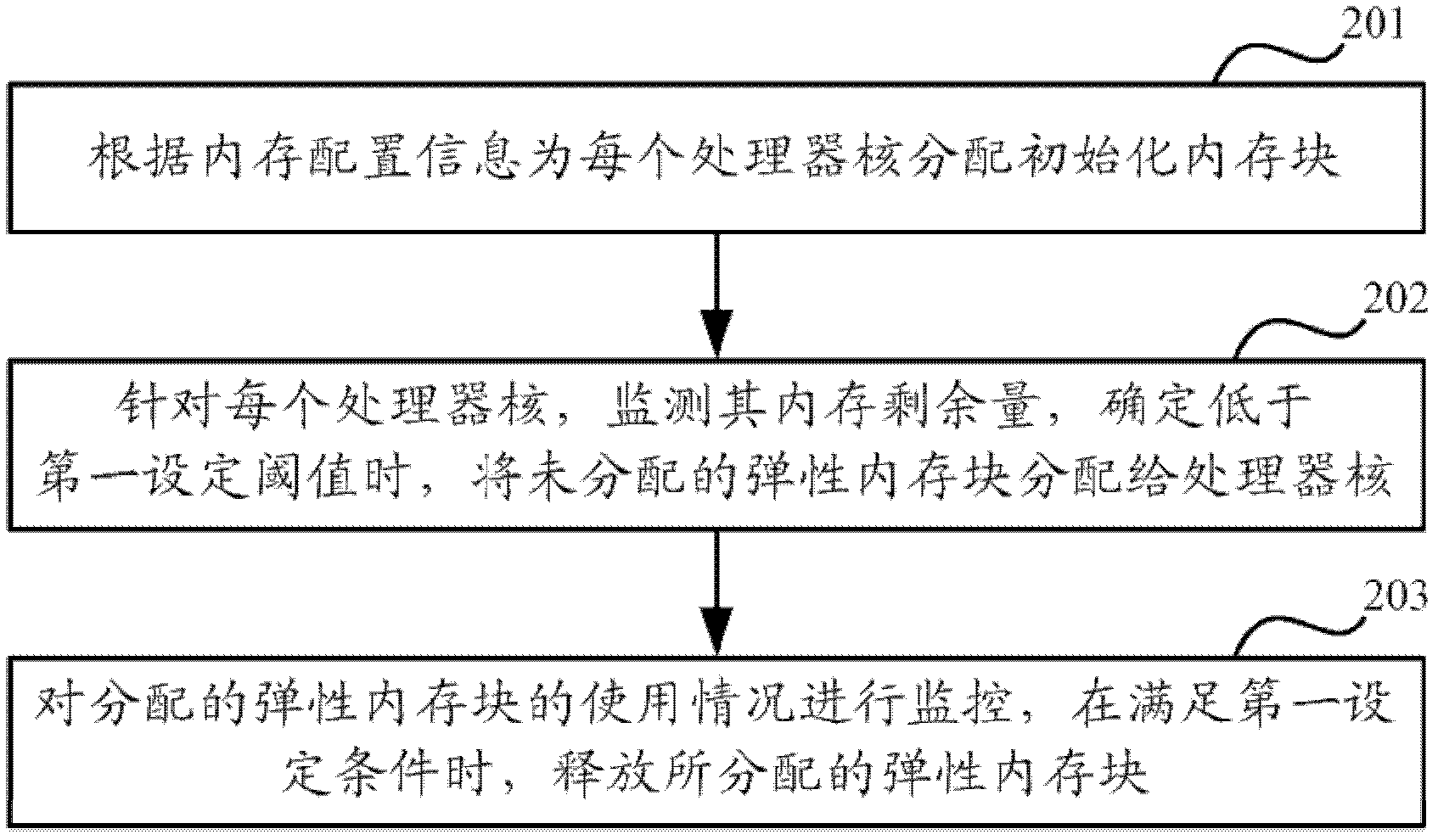

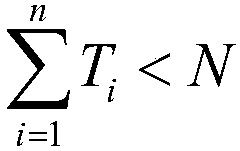

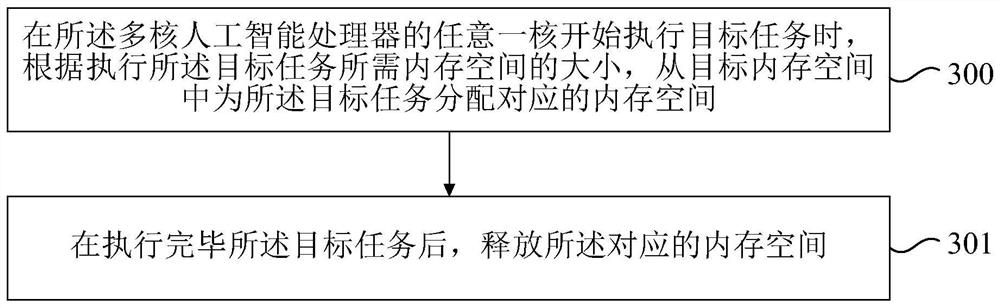

Memory scheduling method and memory scheduling device for multi-core processor

Active | CN102508717A | Increase profit | Improve dynamic performance | Resource allocation | Memory addressing/allocation/relocation | Service condition | Multi-core processor

The invention discloses a memory scheduling method and a memory scheduling device for a multi-core processor. The memory scheduling method comprises the following steps: distributing initialized memory blocks to each processor core according to memory configuration information, wherein the memory configuration information comprises information on a plurality of initialized memory blocks and a plurality of elastic memory blocks obtained by dividing the physical memory; for each processor core, monitoring the residual quantity of the core's memory; when the residual quantity is determined to be lower than a first set threshold, distributing undistributed elastic memory blocks to the processor core; and monitoring the service conditions of the distributed elastic memory blocks and releasing them when a first setting condition is met. With the disclosed method and device, memory is distributed more dynamically, the demand for additional memory caused by application changes can be met, and the overall utilization rate of the memory is effectively improved.

Owner:DATANG MOBILE COMM EQUIP CO LTD
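
The steps in the abstract above can be sketched in Python roughly as follows. The class name, the byte-based accounting, and especially the release condition (free space of at least one block plus the threshold) are assumptions made for illustration; the abstract only says that elastic blocks are released "when a first setting condition is met".

```python
class ElasticMemoryScheduler:
    """Per-core initial blocks plus a shared pool of elastic blocks (sketch)."""

    def __init__(self, init_blocks_per_core, elastic_blocks, block_size, low_threshold):
        self.block_size = block_size
        self.low_threshold = low_threshold        # the "first set threshold", in bytes
        self.free_elastic = list(elastic_blocks)  # undistributed elastic block ids
        self.capacity = {c: n * block_size for c, n in init_blocks_per_core.items()}
        self.used = {c: 0 for c in init_blocks_per_core}
        self.elastic_owned = {c: [] for c in init_blocks_per_core}

    def remaining(self, core):
        return self.capacity[core] - self.used[core]

    def monitor(self, core):
        """Distribute one elastic block if the core's remaining memory is low."""
        if self.remaining(core) < self.low_threshold and self.free_elastic:
            block = self.free_elastic.pop()
            self.elastic_owned[core].append(block)
            self.capacity[core] += self.block_size
            return block
        return None

    def release_idle(self, core):
        """Return elastic blocks while the assumed release condition holds."""
        released = []
        while (self.elastic_owned[core]
               and self.remaining(core) >= self.block_size + self.low_threshold):
            block = self.elastic_owned[core].pop()
            self.capacity[core] -= self.block_size
            self.free_elastic.append(block)
            released.append(block)
        return released


sched = ElasticMemoryScheduler({0: 2, 1: 2}, elastic_blocks=["e0", "e1"],
                               block_size=100, low_threshold=30)
sched.used[0] = 180               # core 0 has 20 bytes left, below the threshold
granted = sched.monitor(0)        # an elastic block is distributed to core 0
sched.used[0] = 50                # load on core 0 drops again
released = sched.release_idle(0)  # the elastic block goes back to the pool
```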

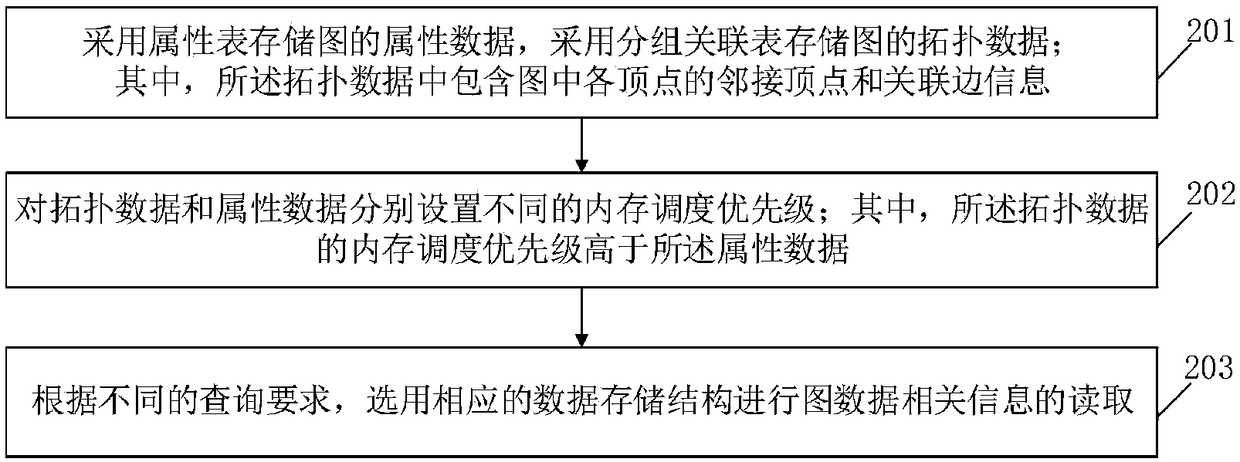

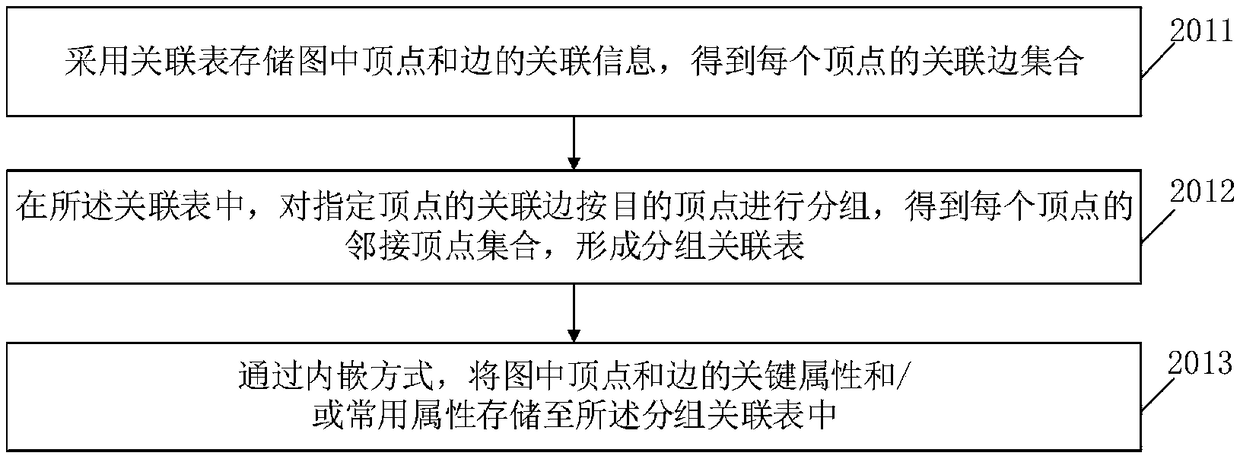

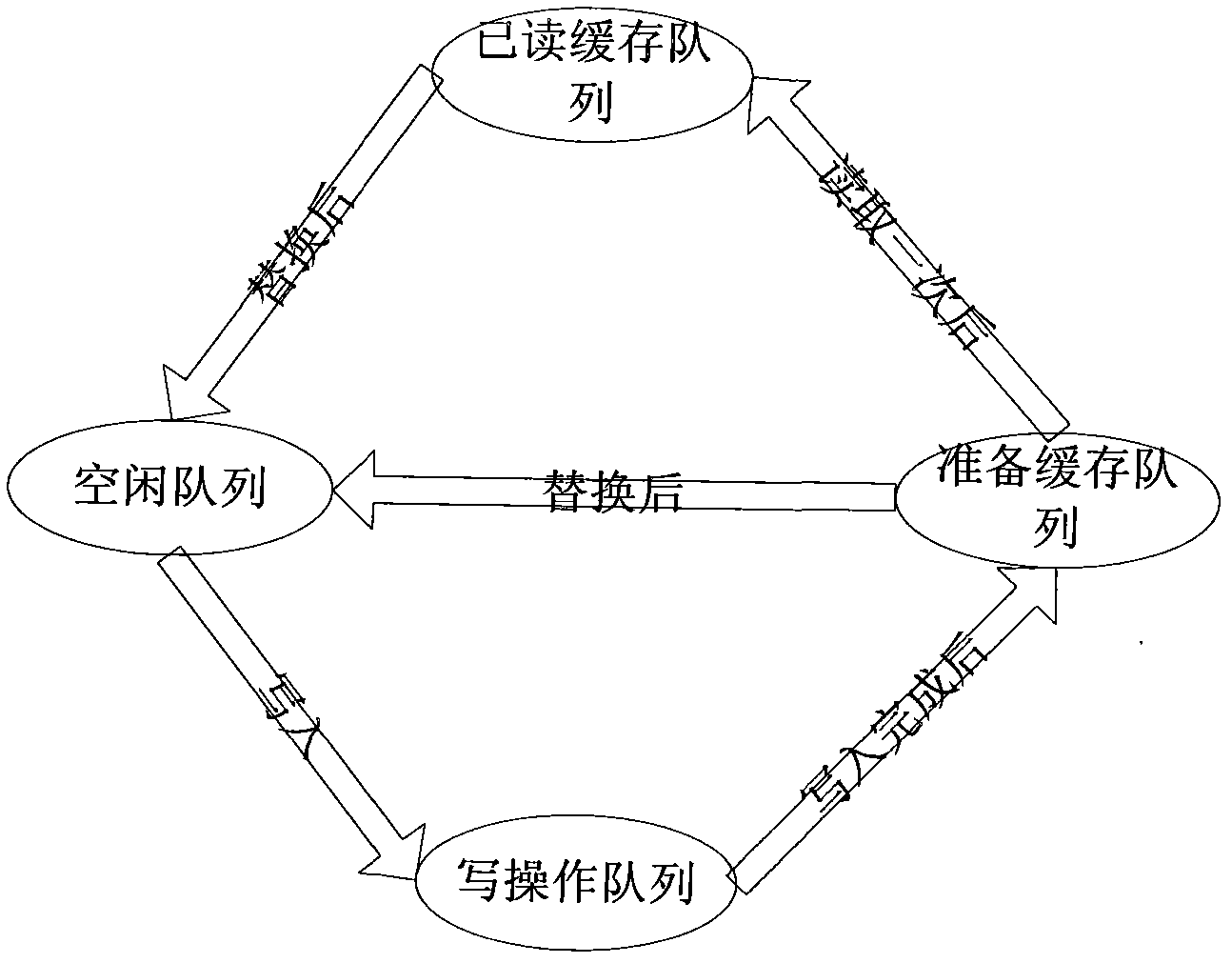

A method and device for accessing graph data based on a grouping association table

Active | CN109255055A | Improve traversal query efficiency | Improve traversal query performance | Other databases indexing | Other databases querying | Data information | Structure of Management Information

The invention relates to the field of data processing, in particular to a method and a device for accessing graph data based on a grouping association table. The method comprises the following steps: storing the attribute data of a graph in an attribute table; storing the topological data of the graph in a grouping association table, the topological data including information on the adjacent vertices and associated edges of each vertex; setting different memory scheduling priorities for topology data and attribute data, with the priority of topology data higher than that of attribute data; and selecting the corresponding data storage structure to read the graph data according to the query requirements. The invention can store the complete adjacent-vertex and associated-edge information of each vertex using only the grouping association table. When attribute information is not needed, only the grouping association table is accessed to complete a traversal query of the graph, which improves traversal query efficiency. At the same time, attribute data and topology data are stored separately, and different memory scheduling priorities are set according to weights, which further improves traversal query performance.

Owner:四川蜀天梦图数据科技有限公司 +1

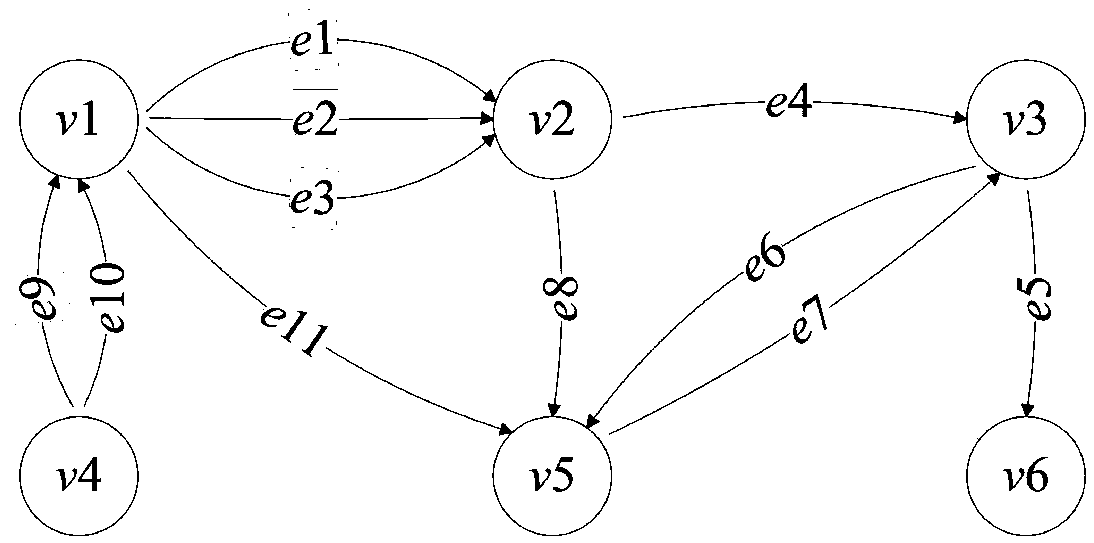

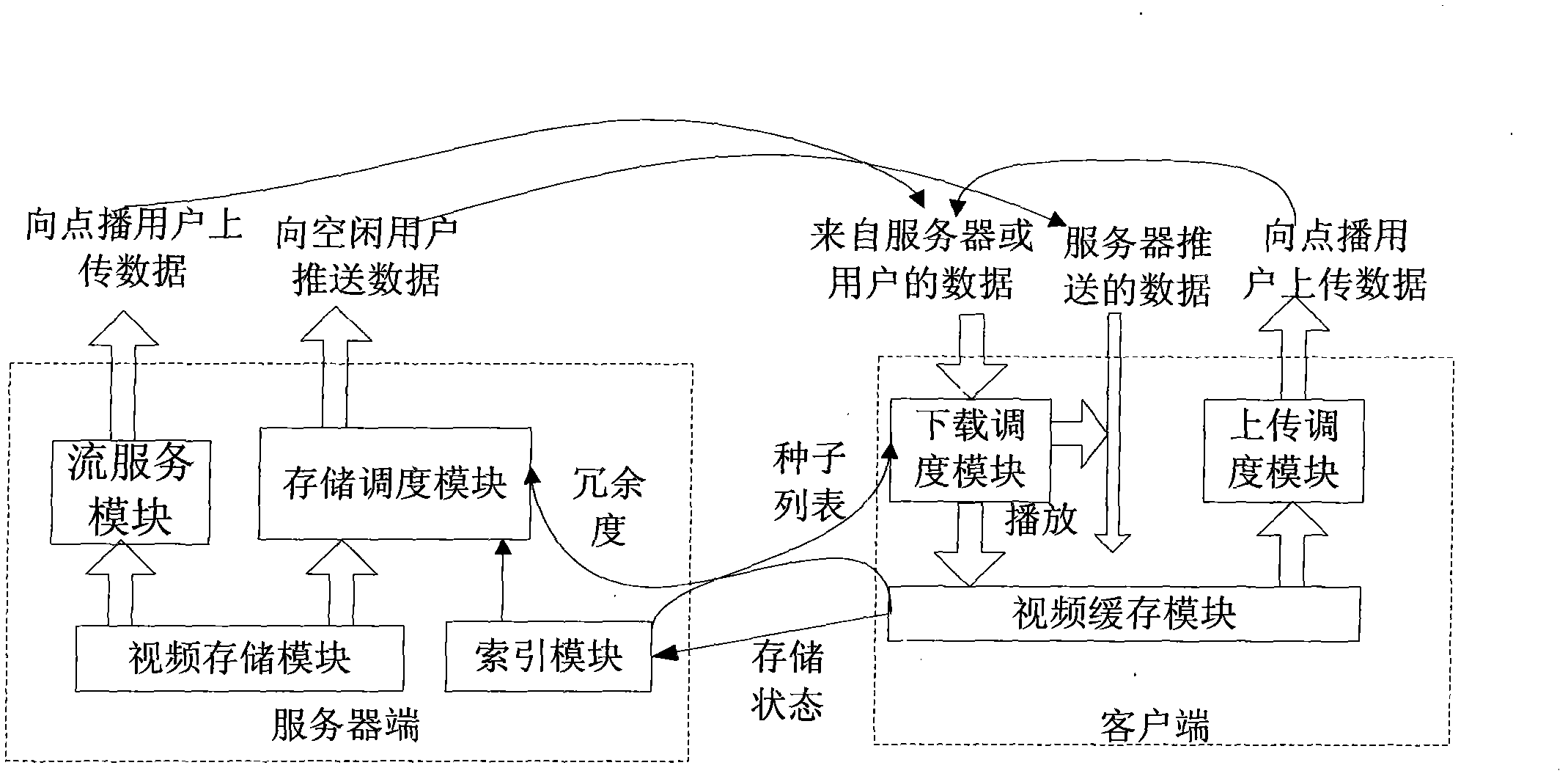

Video-on-demand system based on P2P (Peer-To-Peer)

Active | CN102256163A | Improve transmission efficiency | Reduce load | Transmission | Selective content distribution | Video memory | Extensibility

The embodiment of the invention discloses a video-on-demand system based on P2P (Peer-To-Peer). The system comprises a server side and a client side. The server side comprises a stream service module, a memory indexing module, a memory scheduling module and a video memory module. The client side comprises a downloading scheduling module, an uploading scheduling module and a video caching module: the downloading scheduling module downloads data from the server side and from other user nodes when a user requests a video, the uploading scheduling module provides data to requesting users after receiving data requests from other users, and the video caching module caches the data pushed by the server side. By implementing the embodiment of the invention, a P2P structure is used as the network structure of a streaming media service, so data transmission efficiency is improved and the load on bandwidth is reduced; the memory structure is divided between server and client, which coordinate with each other and have clearly assigned responsibilities; and the system is designed in a modular manner and has excellent expandability.

Owner:RES INST OF SUN YAT SEN UNIV & SHENZHEN

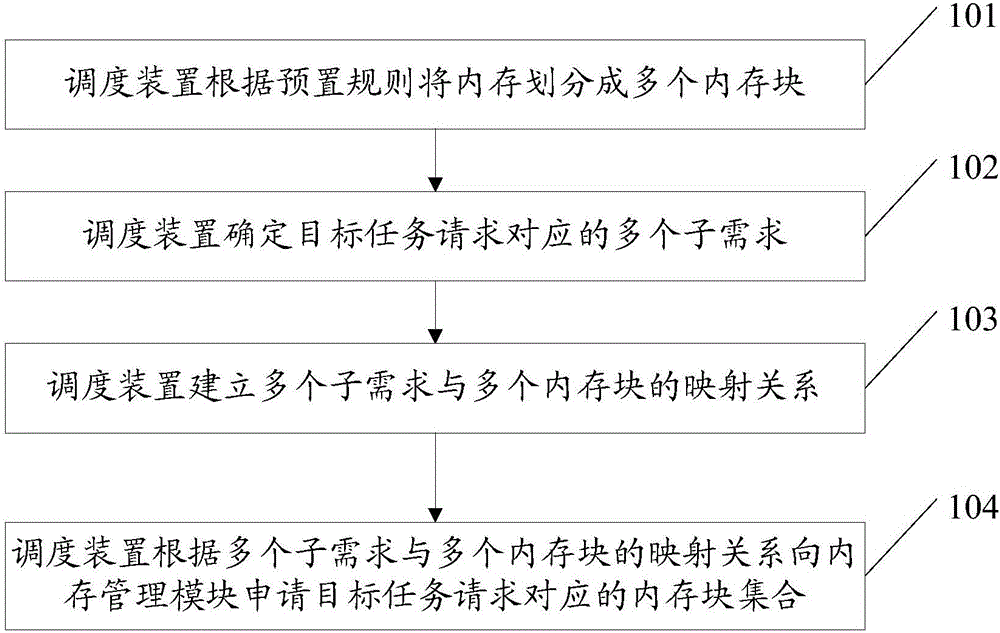

Memory scheduling method and device

Active | CN106502918A | Improve efficiency | Facilitated release | Memory addressing/allocation/relocation | Memory scheduling | Memory block

The embodiment of the invention discloses a memory scheduling method. The memory scheduling method is used for simplifying memory division and improving the scheduling efficiency. The method provided by the embodiment of the invention comprises the following steps: dividing a memory into a plurality of memory blocks according to a pre-set rule, wherein the size of each memory block is a fixed value; when receiving a target task request, determining a plurality of sub-requirements corresponding to the target task request, wherein the size of each sub-requirement is smaller than the size of the memory block with the maximum capacity in the plurality of memory blocks; establishing a mapping relation of the plurality of sub-requirements and the plurality of memory blocks; and according to the mapping relation of the plurality of sub-requirements and the plurality of memory blocks, applying for a corresponding memory block set corresponding to the target task request from a memory management module. The embodiment of the invention also provides a scheduling device which is used for simplifying the memory division and improving the scheduling efficiency.

Owner:SHANGHAI HUAWEI TECH CO LTD
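
A short Python sketch of the flow described in the abstract above: split a task request into sub-requirements, then map each sub-requirement to a fixed-size memory block. The greedy split, the best-fit mapping rule, and the function names are assumptions for illustration; the abstract does not say how the mapping is chosen, and the sketch allows a sub-requirement to equal the largest block size.

```python
def split_request(total_size, max_block):
    """Split a task request into sub-requirements no larger than max_block."""
    parts = []
    while total_size > 0:
        part = min(total_size, max_block)
        parts.append(part)
        total_size -= part
    return parts


def map_to_blocks(sub_requirements, free_blocks):
    """Map each sub-requirement to the smallest free block that fits it.

    free_blocks maps a block id to its fixed size. The result maps the index
    of each sub-requirement to the id of the block chosen for it.
    """
    available = dict(free_blocks)
    mapping = {}
    for i, need in enumerate(sub_requirements):
        candidates = [(size, bid) for bid, size in available.items() if size >= need]
        if not candidates:
            raise MemoryError(f"no free block for sub-requirement {i} ({need} bytes)")
        _, bid = min(candidates)  # best fit; ties broken by block id
        mapping[i] = bid
        del available[bid]
    return mapping


blocks = {"a": 64, "b": 64, "c": 256, "d": 256}  # fixed block sizes from a preset rule
subs = split_request(300, max_block=256)
mapping = map_to_blocks(subs, blocks)
```

The set of blocks in `mapping` is what would then be requested from the memory management module.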

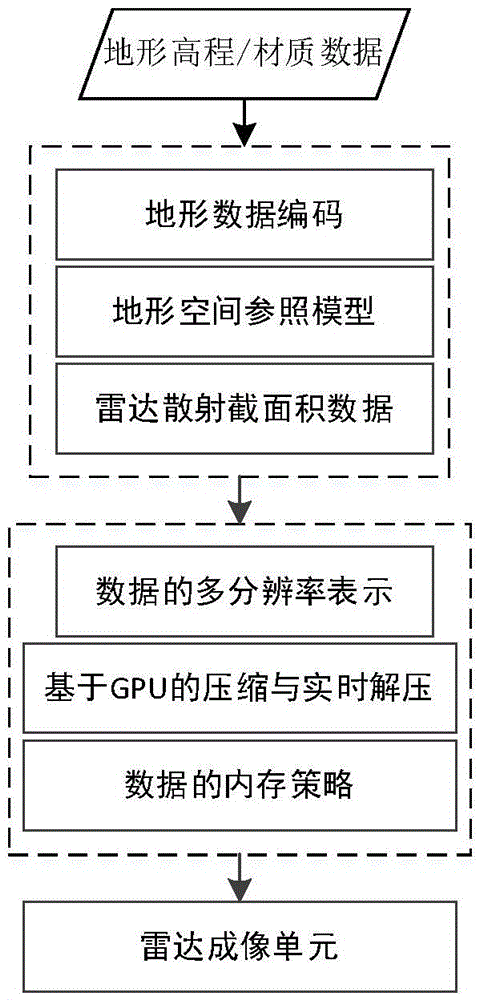

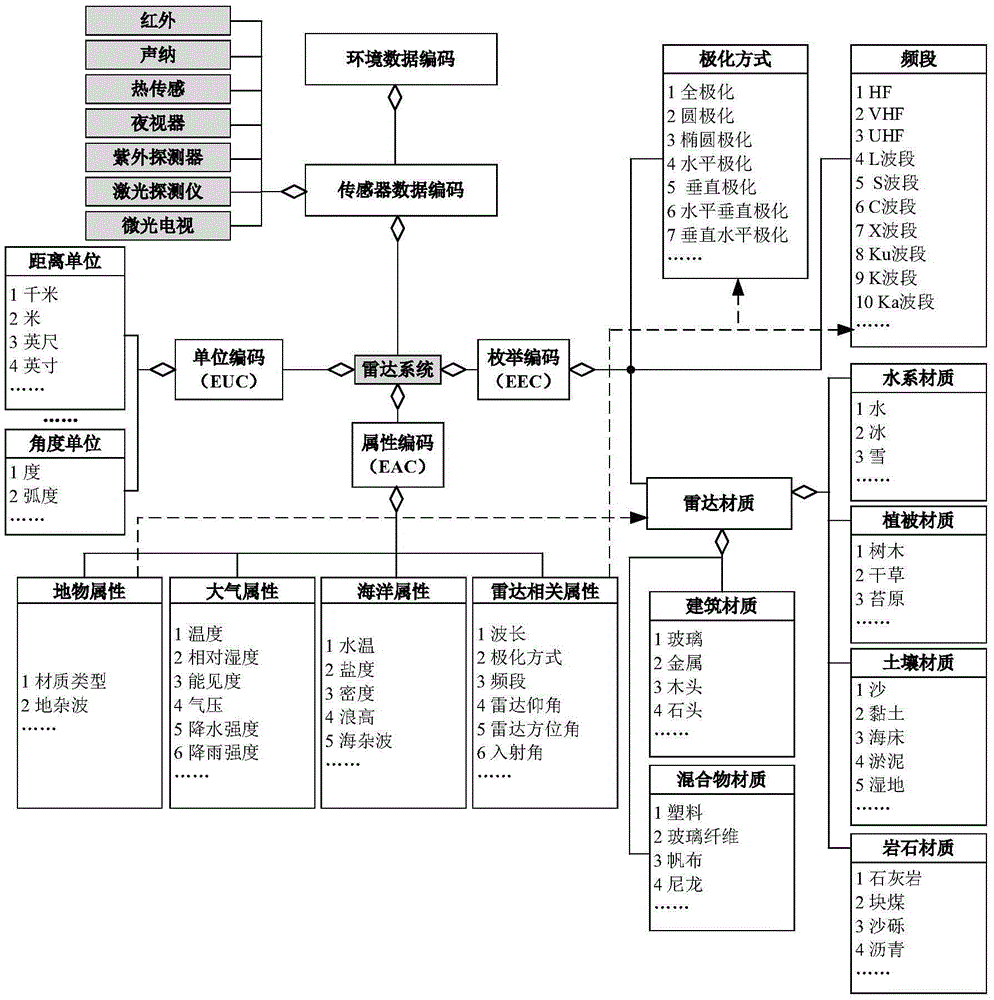

Radar image simulation-oriented terrain environment data representing method

The invention discloses a radar image simulation-oriented terrain environment data representing method and belongs to the technical field of simulations. The method is used for constructing radar terrain databases which have consistent representation and are used for simulating real environments. The method disclosed by the invention comprises the following steps: 1, constructing the static storage representation of terrain data, comprising a terrain data coding method, a terrain space reference model and a radar terrain scattering sectional area data representation; and 2, constructing simulation dynamic representation of the terrain data, comprising detail level structure constructing and a real-time memory scheduling method of data. The method disclosed by the invention is mainly used for the ground imaging simulation in an airborne radar simulation system, is an essential constitution for developing the real-time radar simulation system and has a broad application prospect in the aspects of flight simulation training and radar design.

Owner:NAVAL AERONAUTICAL & ASTRONAUTICAL UNIV PLA

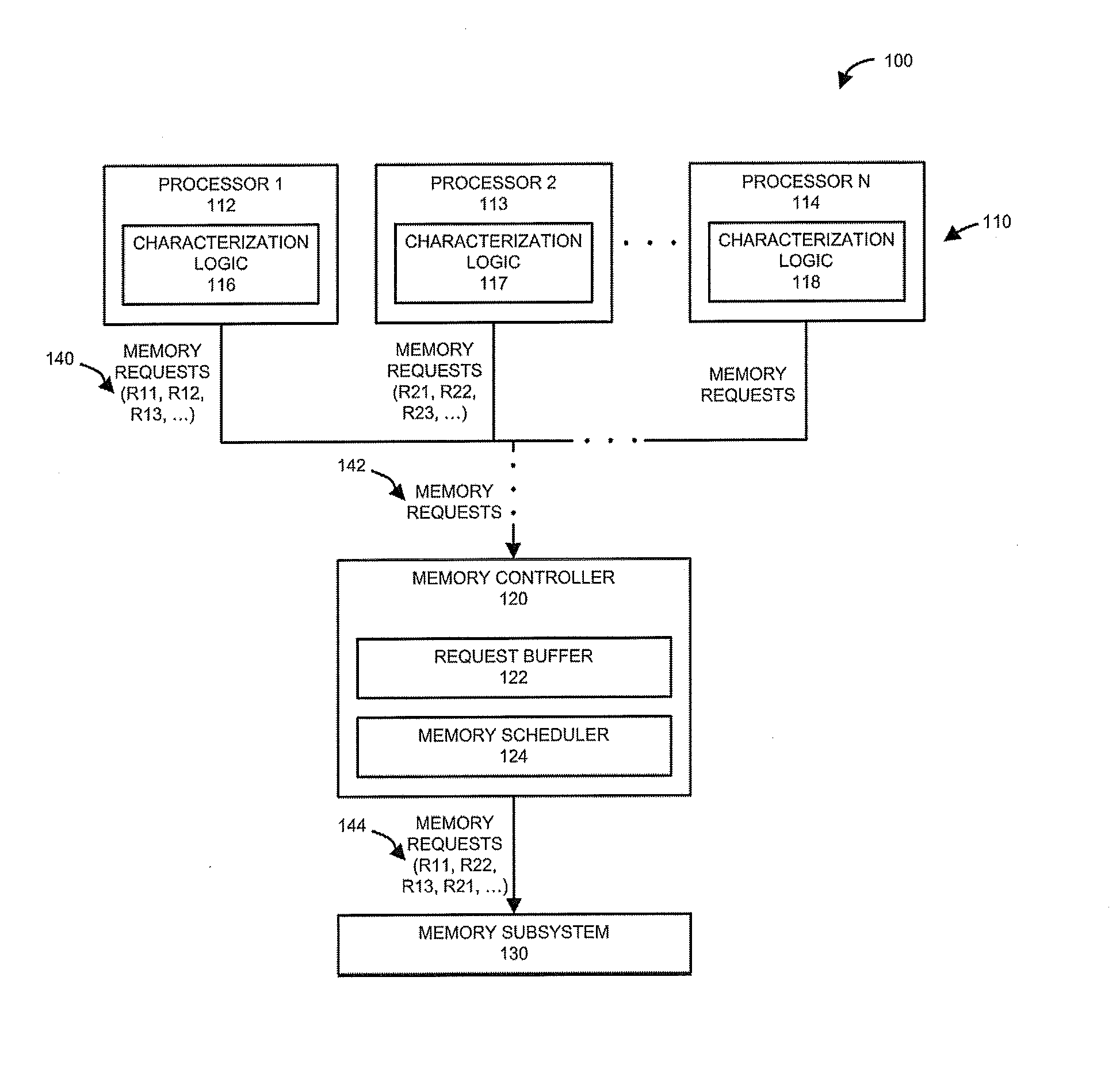

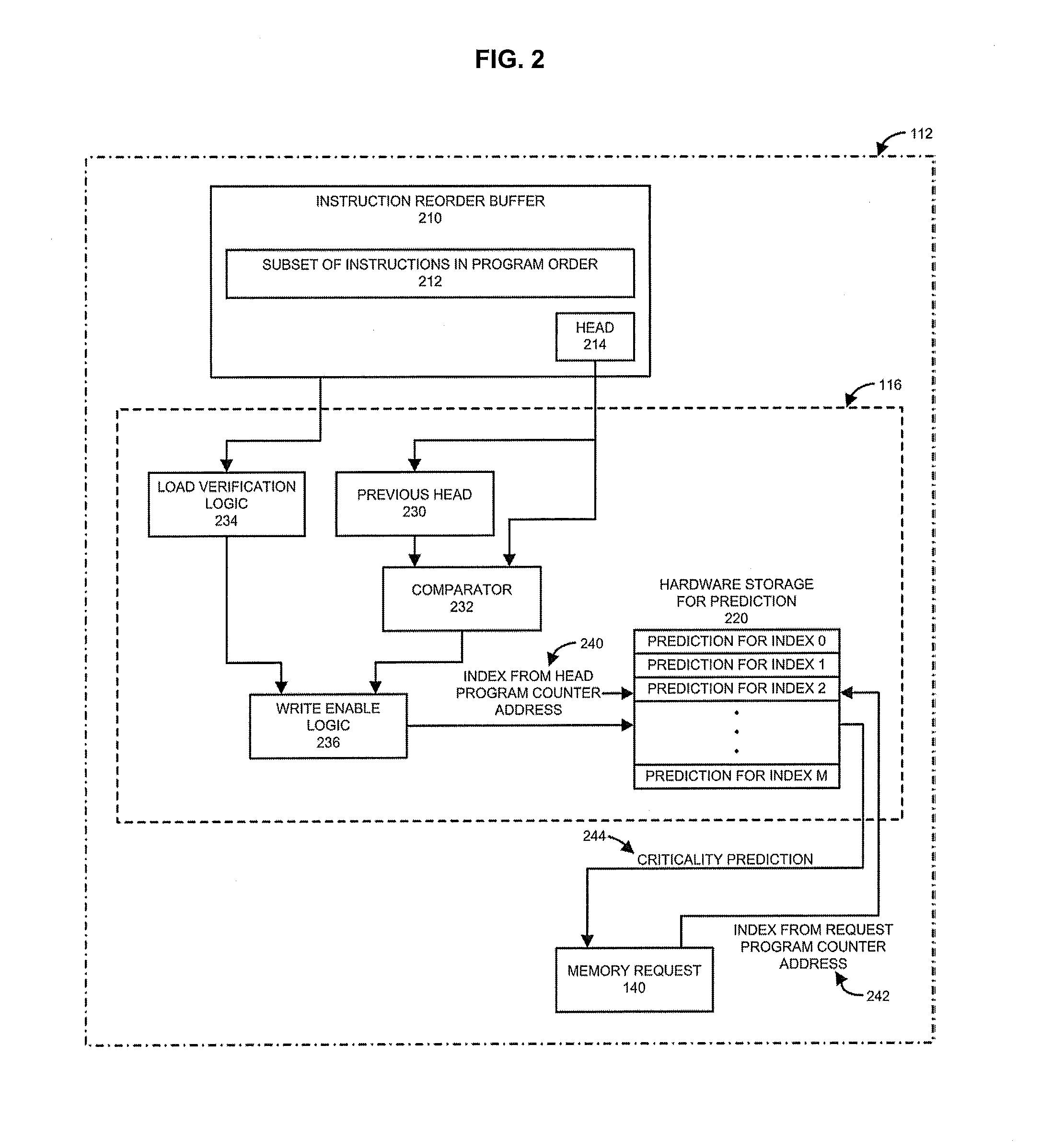

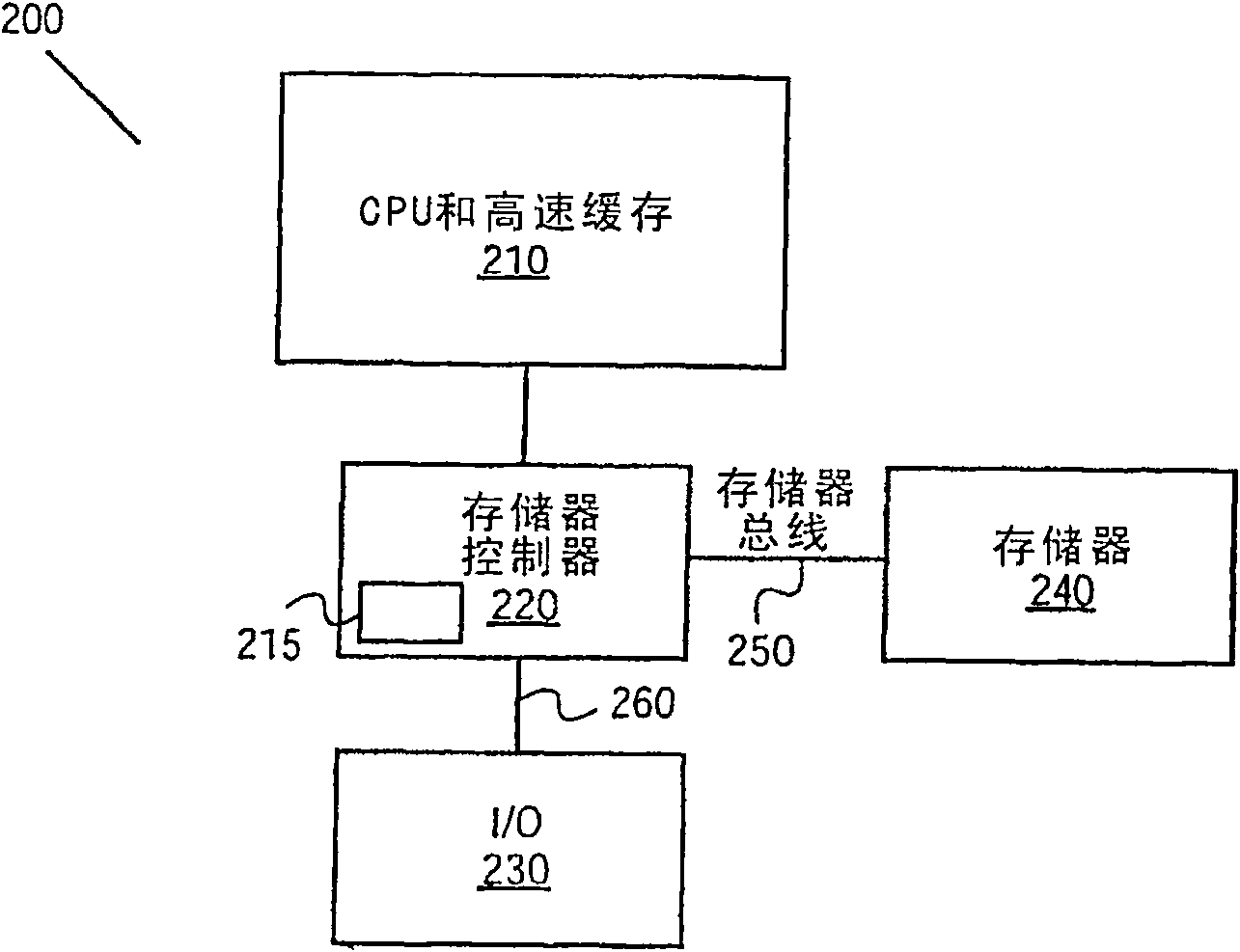

System and methods for processor-based memory scheduling

Inactive | US20160117118A1 | Input/output to record carriers | Microbiological testing/measurement | Operating system | Memory scheduling

The invention relates to a system and methods for memory scheduling performed by a processor using a characterization logic and a memory scheduler. The processor influences the order by which memory requests are serviced and provides associated hints to the memory scheduler, where scheduling actually takes place.

Owner:CORNELL UNIV CENT FOR TECH LICENSING CTL AT CORNELL UNIV

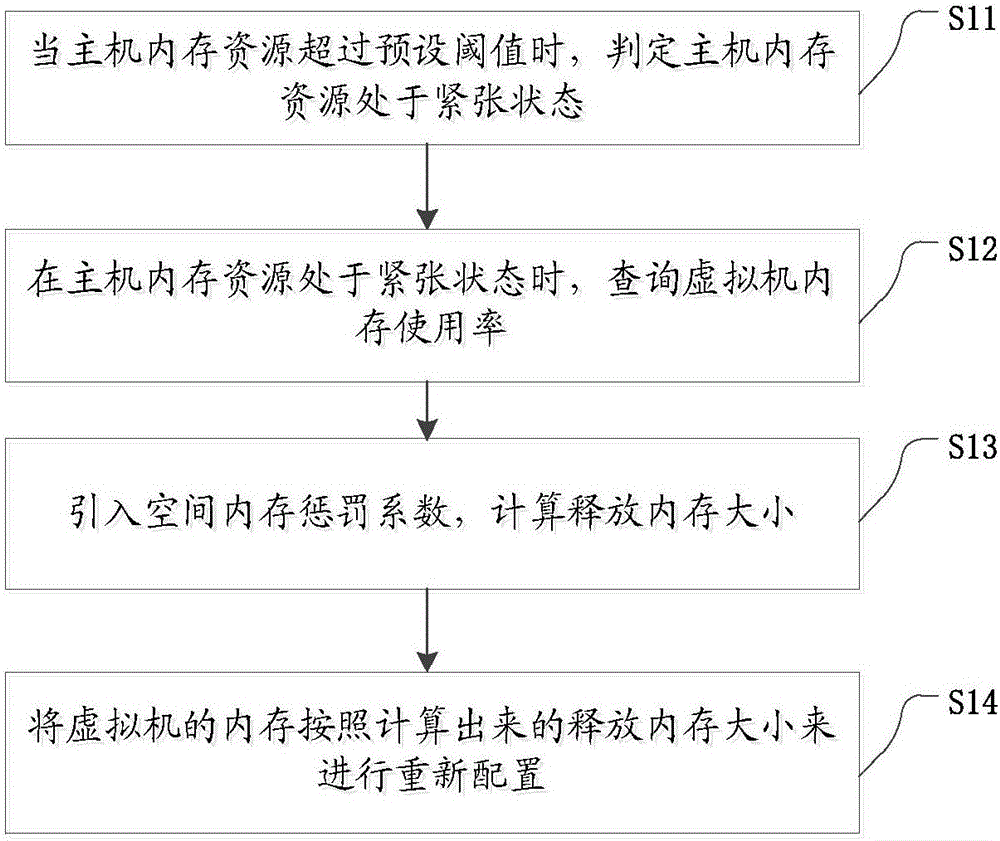

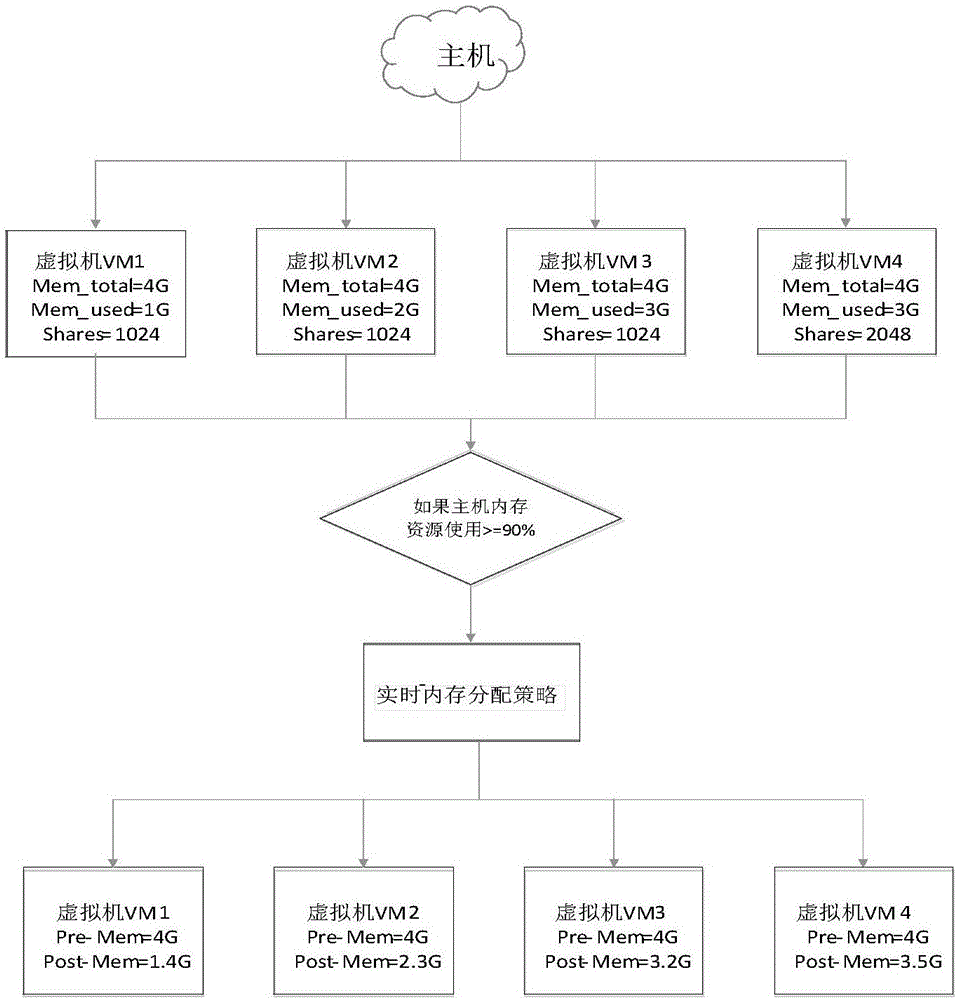

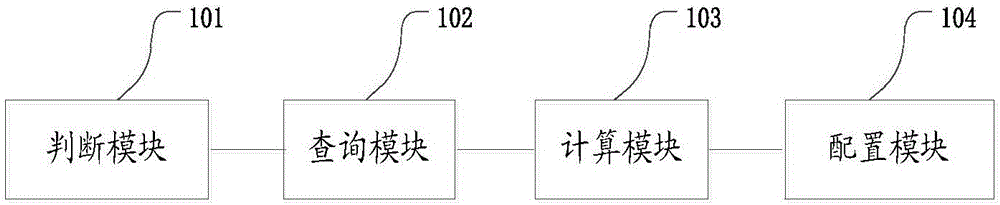

Real-time virtual machine memory scheduling method and device

Inactive | CN106776048A | Avoid wasting | Relieve memory pressure | Resource allocation | Software simulation/interpretation/emulation | Stressed state | Host memory

The invention discloses a real-time virtual machine memory scheduling method and device. The memory scheduling method comprises the following steps: when the memory resource of the host exceeds a preset threshold value, the host's memory resource is determined to be in a stressed state; when the host's memory resource is in a stressed state, the memory usage rate of a virtual machine is queried; a spatial memory penalty coefficient is introduced and the size of the memory to be released is calculated; and the memory of the virtual machine is reallocated according to the calculated size. The method optimizes memory allocation and avoids wasting memory resources.

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD

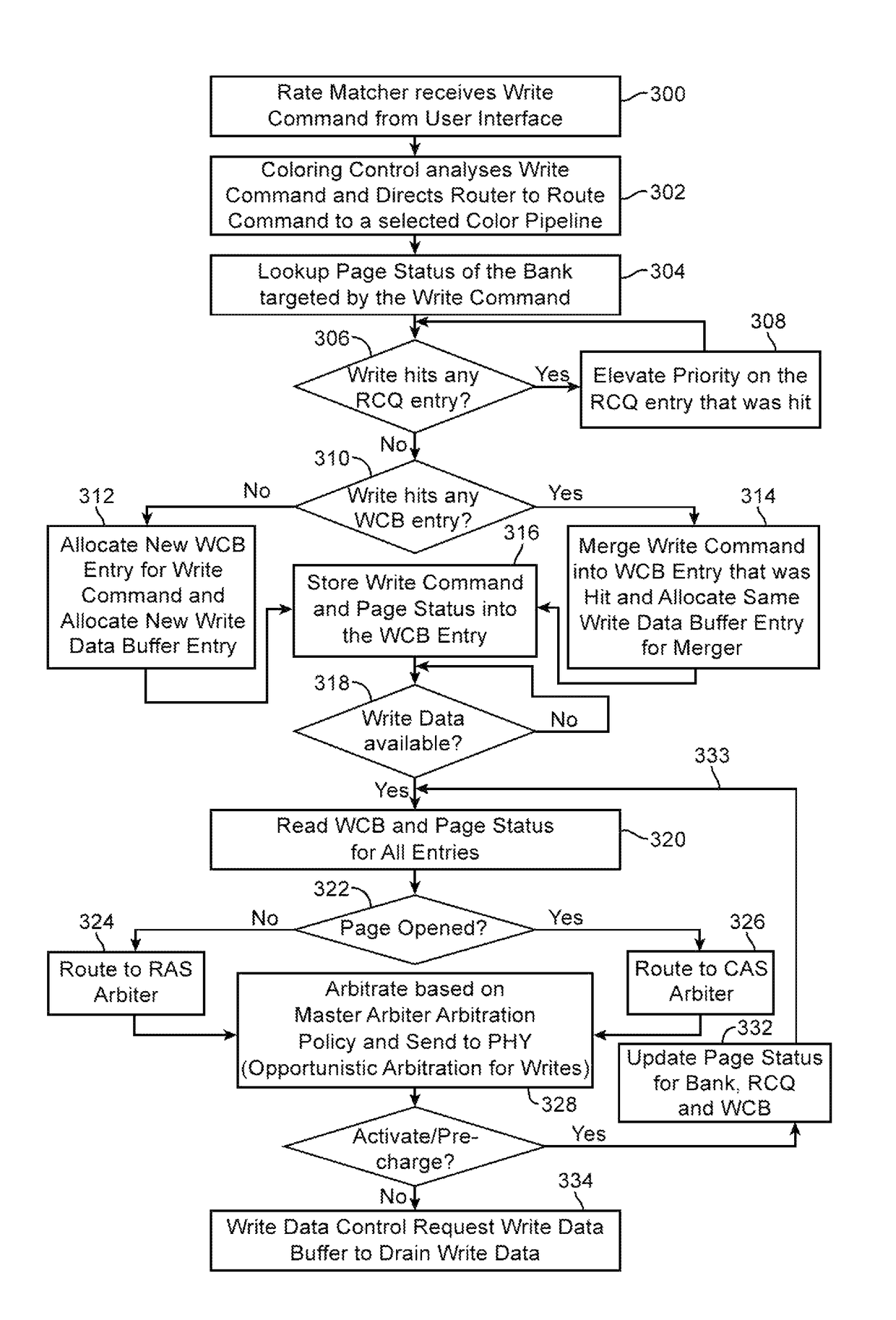

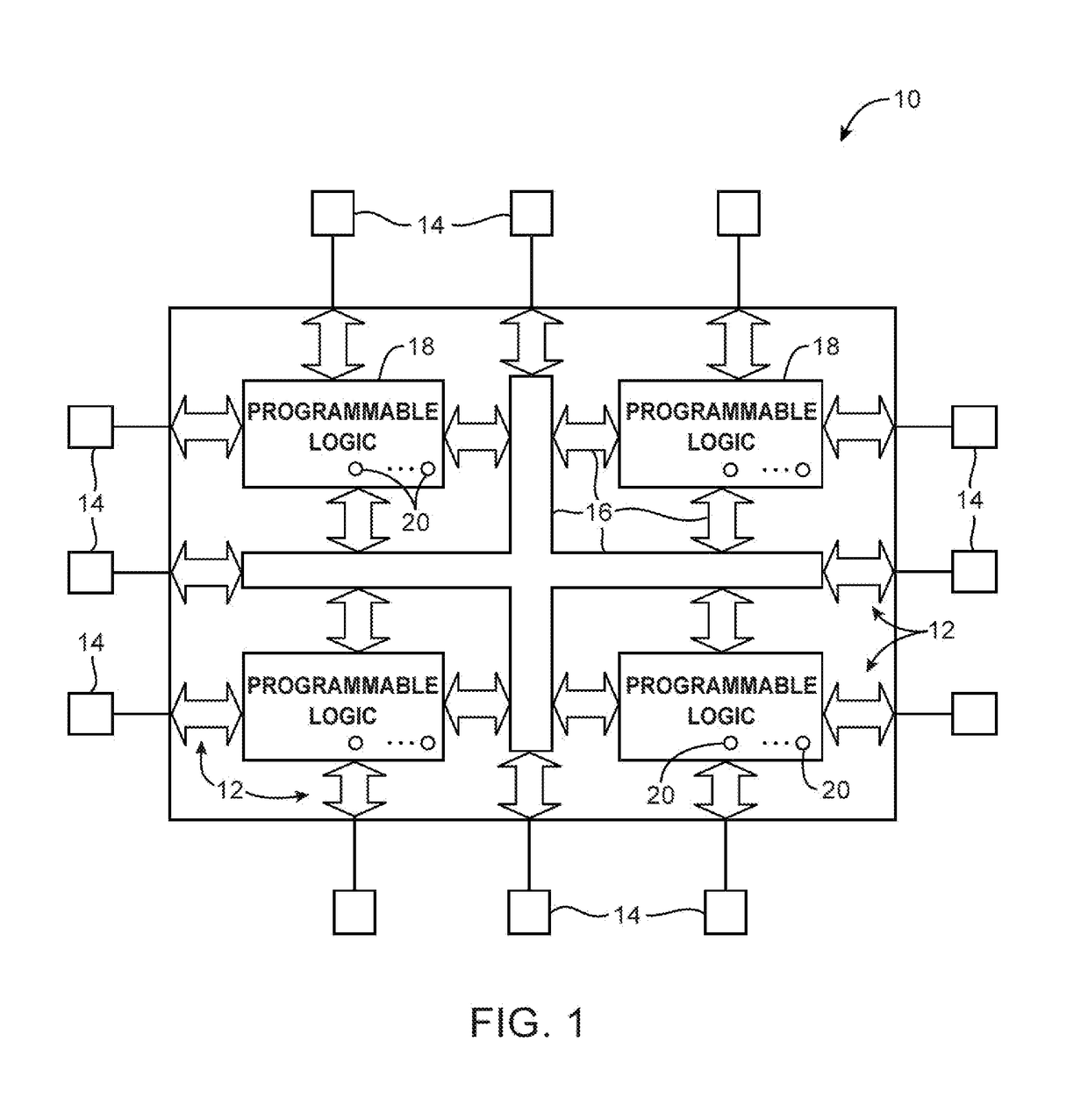

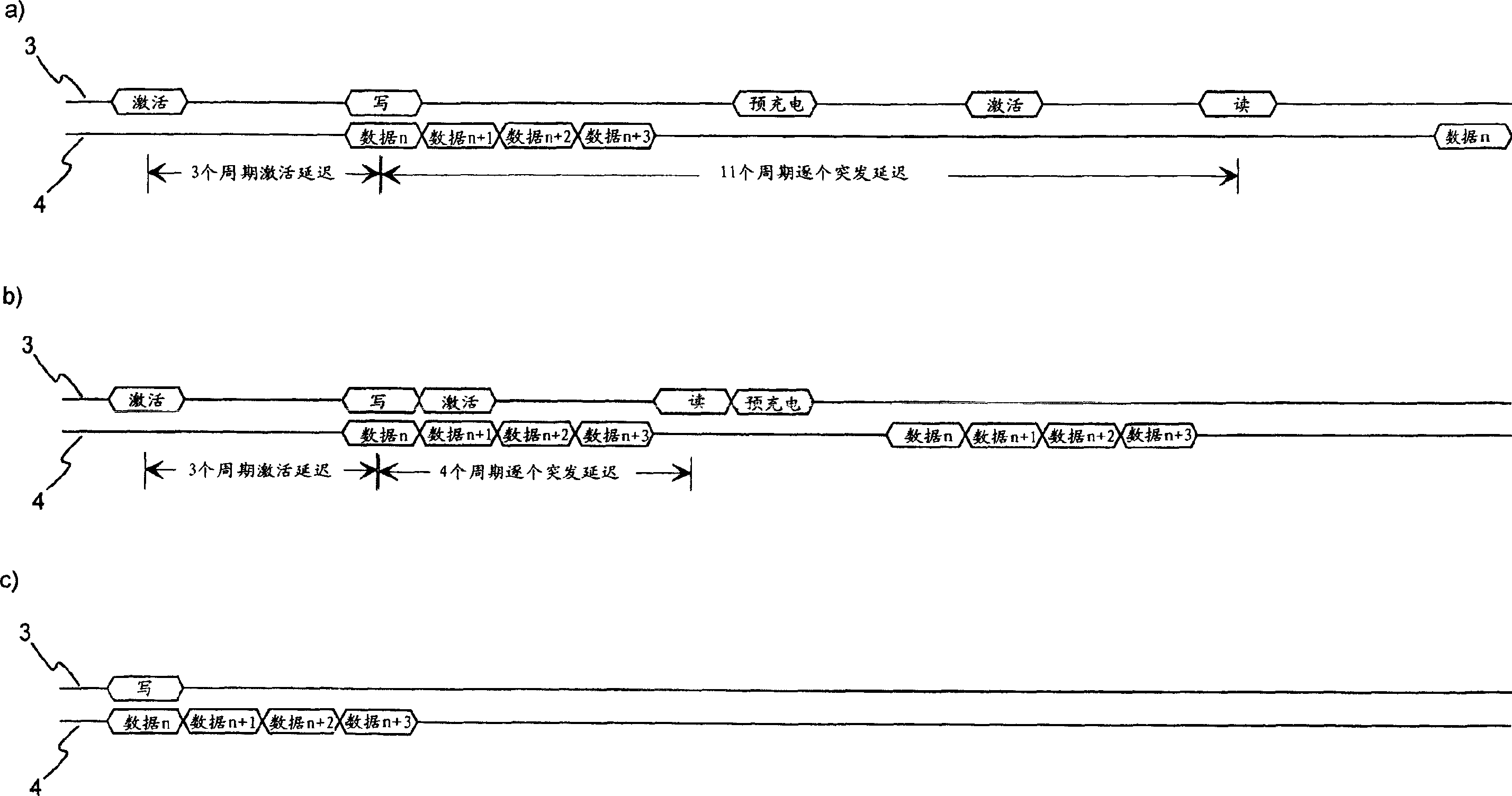

Memory controller architecture with improved memory scheduling efficiency

Integrated circuits that include memory interface and controller circuitry for communicating with external memory are provided. The memory interface and controller circuitry may include a user logic interface, a memory controller, and a physical layer input-output interface. The user logic interface may be operated in a first clock domain. The memory controller may be operated in a second clock domain. The physical layer interface may be operated in a third clock domain that is an integer multiple of the second clock domain. The user logic interface may include only user-dependent blocks. The physical layer interface may include memory protocol agnostic blocks and / or memory protocol specific blocks. The memory controller may include both memory protocol agnostic blocks and memory protocol dependent blocks. The memory controller may include one or more color pipelines for scheduling memory requests in a parallel arbitration scheme.

Owner:ALTERA CORP

Dynamic management method and system for virtual machine memory on the basis of memory and Swap space

Active | CN107463430A | Does not degrade performance | Reduce performance loss | Software simulation/interpretation/emulation | Computer module | Dynamic management

The invention relates to the field of virtual machine memory management, in particular to a dynamic management method and system for virtual machine memory on the basis of memory and Swap space. In order to eliminate the defects that system performance loss is caused when an existing virtual machine memory scheduling algorithm uses the Swap space and in-band monitoring is used in virtual machine memory monitoring to cause low virtual machine operation performance and high potential safety hazard, the invention puts forward the dynamic management method and system for the virtual machine memory on the basis of the memory and the Swap space. The system comprises a monitoring module, a calculation module and an execution module, wherein the monitoring module, the calculation module and the execution module are arranged in a privileged domain; the monitoring module is used for sending the address of to-be-read data to the virtual machine and receiving the to-be-read data returned from the virtual machine and is also used for sending the to-be-read data to the calculation module; the calculation module is used for calculating a target memory size which needs to be distributed to the virtual machine; and the execution module is used for regulating the memory of the virtual machine according to the target memory size. The system is suitable for the dynamic management tool of the memory.

Owner:HARBIN INST OF TECH

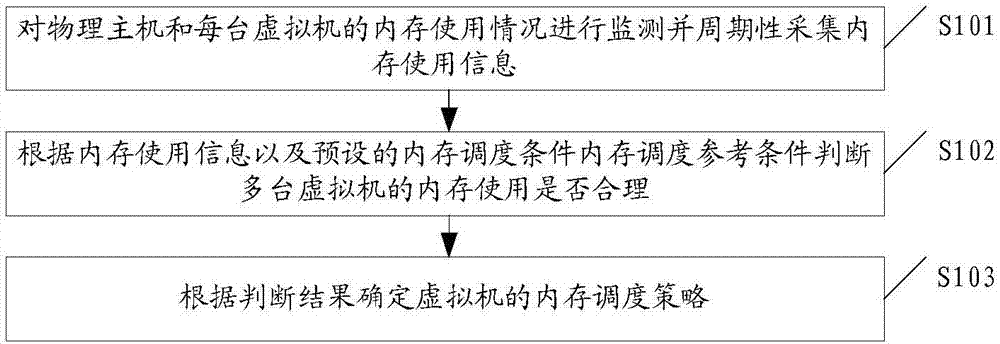

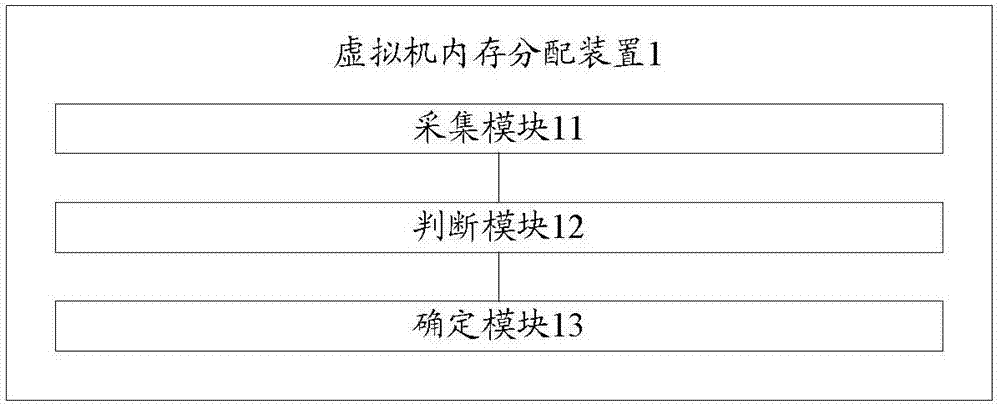

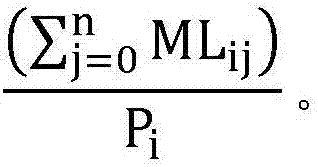

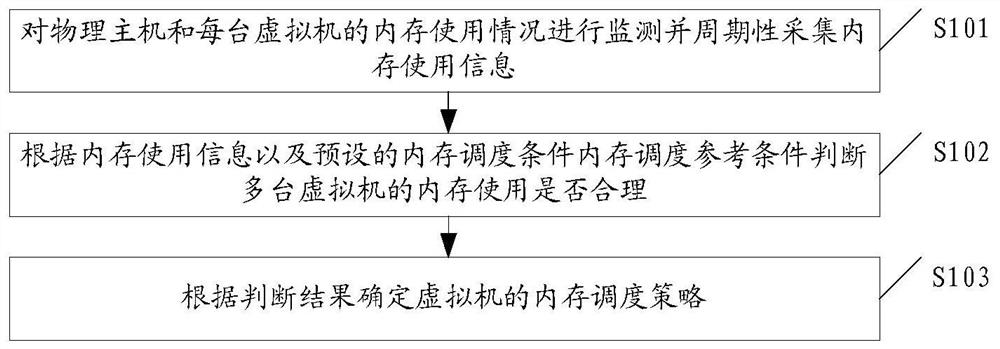

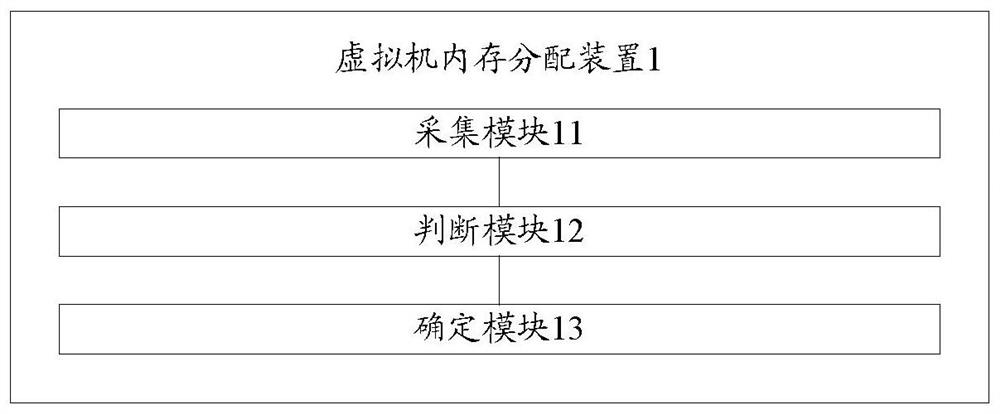

Virtual machine memory allocation method and device

Active | CN107341060A | Balanced memory utilization | Improve resource utilization efficiency | Resource allocation | Software simulation/interpretation/emulation | Distribution method | Resource utilization

The embodiment of the invention discloses a virtual machine memory allocation method. The method comprises the steps that memory use conditions of a physical host and each virtual machine are monitored, and memory use information is collected periodically; whether memory use of the virtual machines is reasonable is judged according to the memory use information and preset memory scheduling reference conditions; and a memory scheduling policy of the virtual machines is determined according to the judgment result. The embodiment of the invention furthermore discloses a virtual machine memory allocation device. Through the scheme in the embodiment, memory utilization rates of the virtual machines are kept balanced, and the resource utilization efficiency of the physical machine is improved.

Owner:INSPUR SUZHOU INTELLIGENT TECH CO LTD

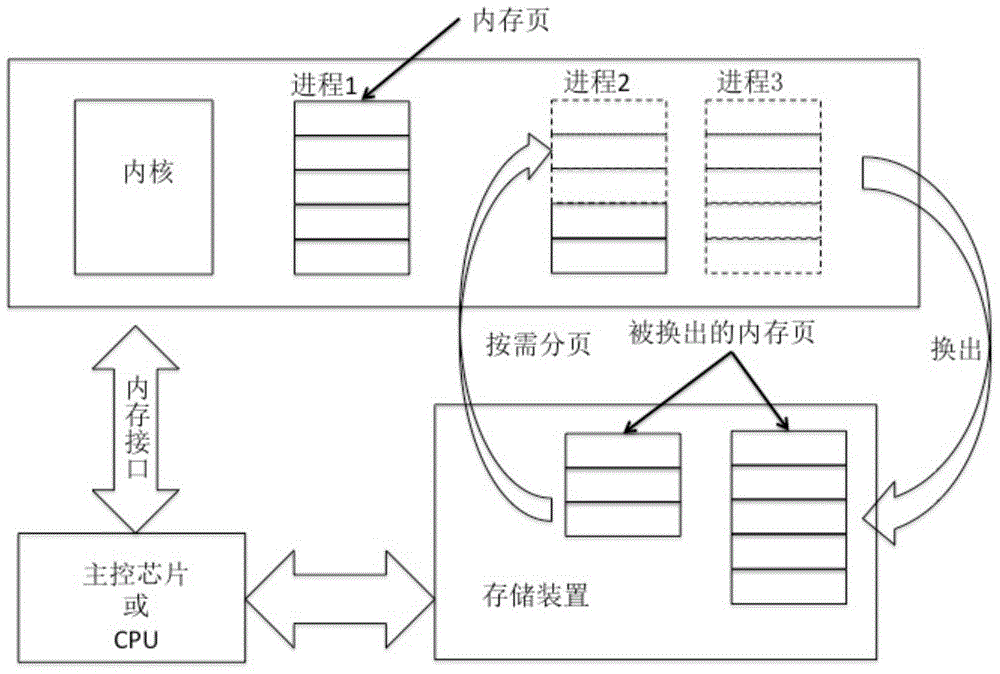

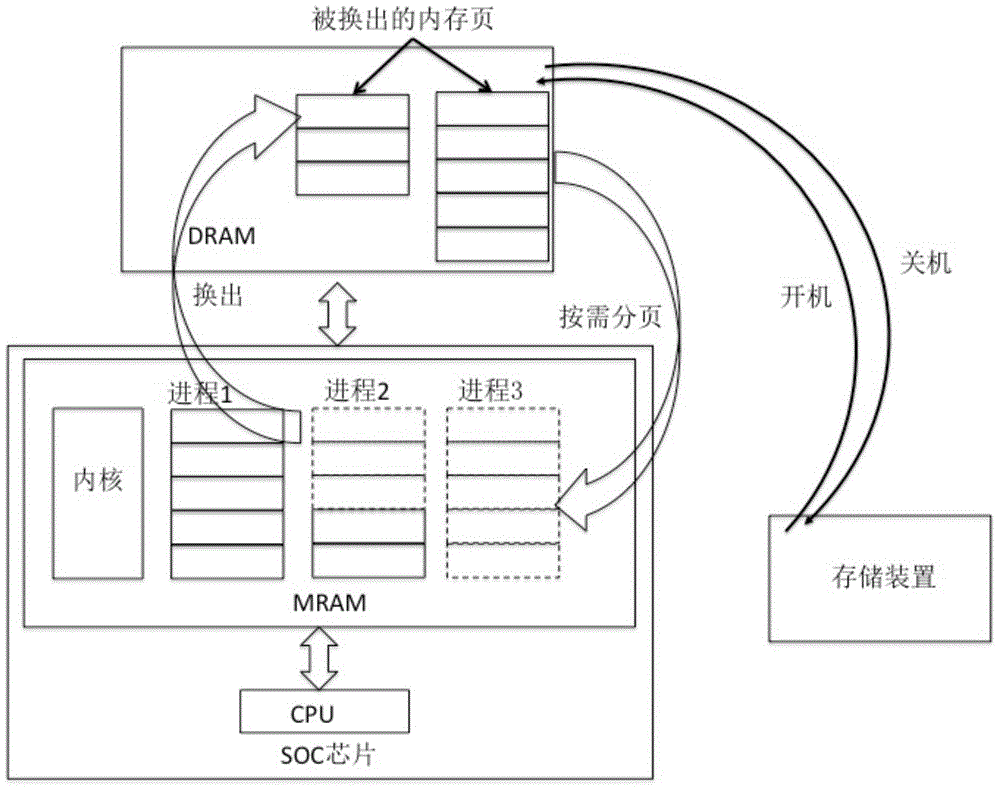

Computer system, memory scheduling method of operation system and system starting method

Inactive | CN105630407A | Input/output to record carriers | Program loading/initiating | Static random-access memory | Operational system

The invention discloses a computer system. The system comprises a CPU, a random access memory and a storage device. The CPU exchanges instructions and data with the random access memory through a bus and a random access memory interface, and with the storage device through a bus and an input/output interface. The computer system also comprises a nonvolatile random access memory, which can exchange instructions and data with the CPU and the random access memory interface and is integrated with the CPU. The invention also discloses a memory scheduling method of an operating system. When a process in the operating system keeps applying for new memory pages and the space of the nonvolatile random access memory becomes insufficient, the swap-out/demand-paging mechanism generally adopted in open operating systems is used to swap temporarily unneeded memory pages out to the peripheral random access memory instead of storing them in the storage device.

Owner:SHANGHAI CIYU INFORMATION TECH CO LTD

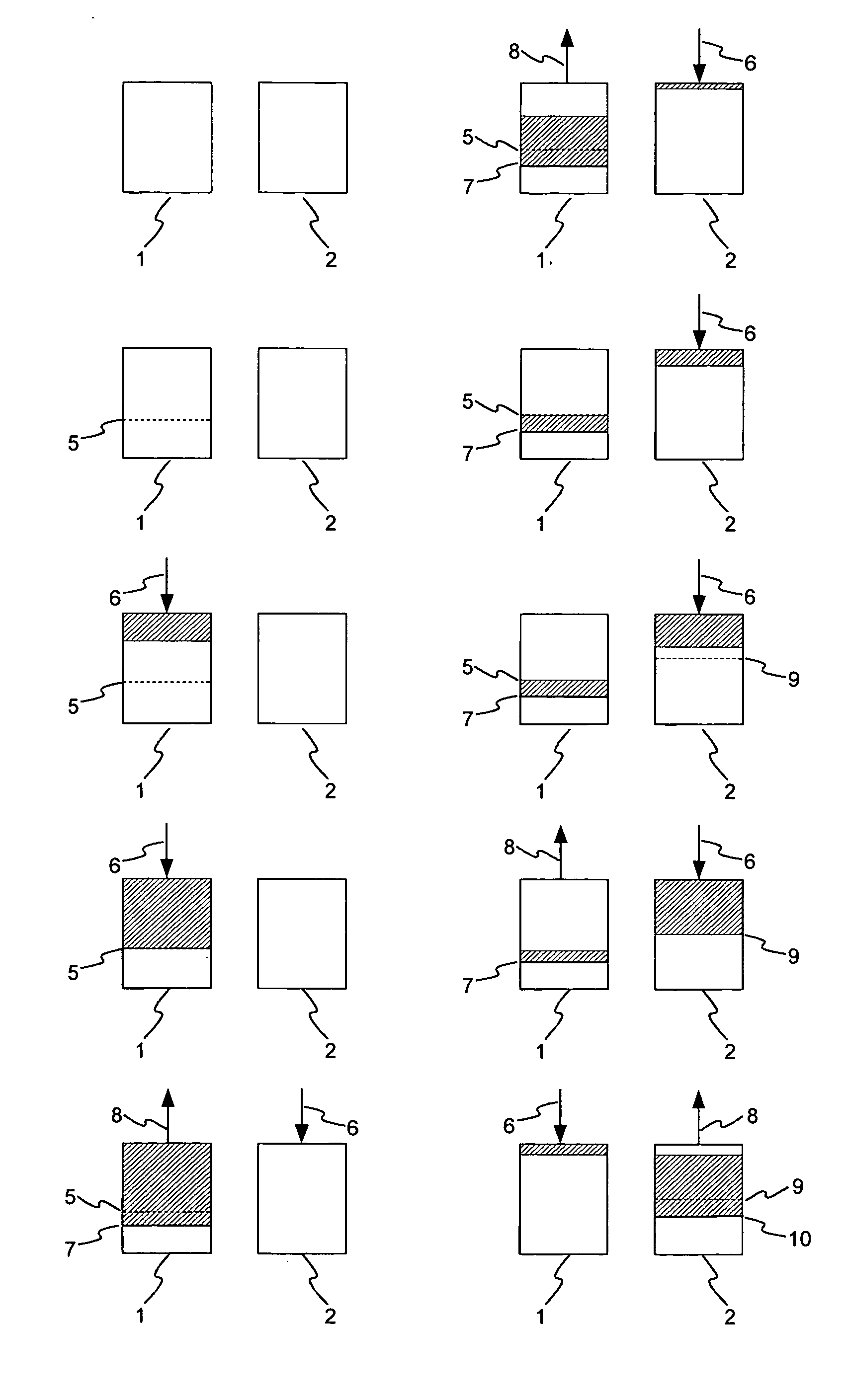

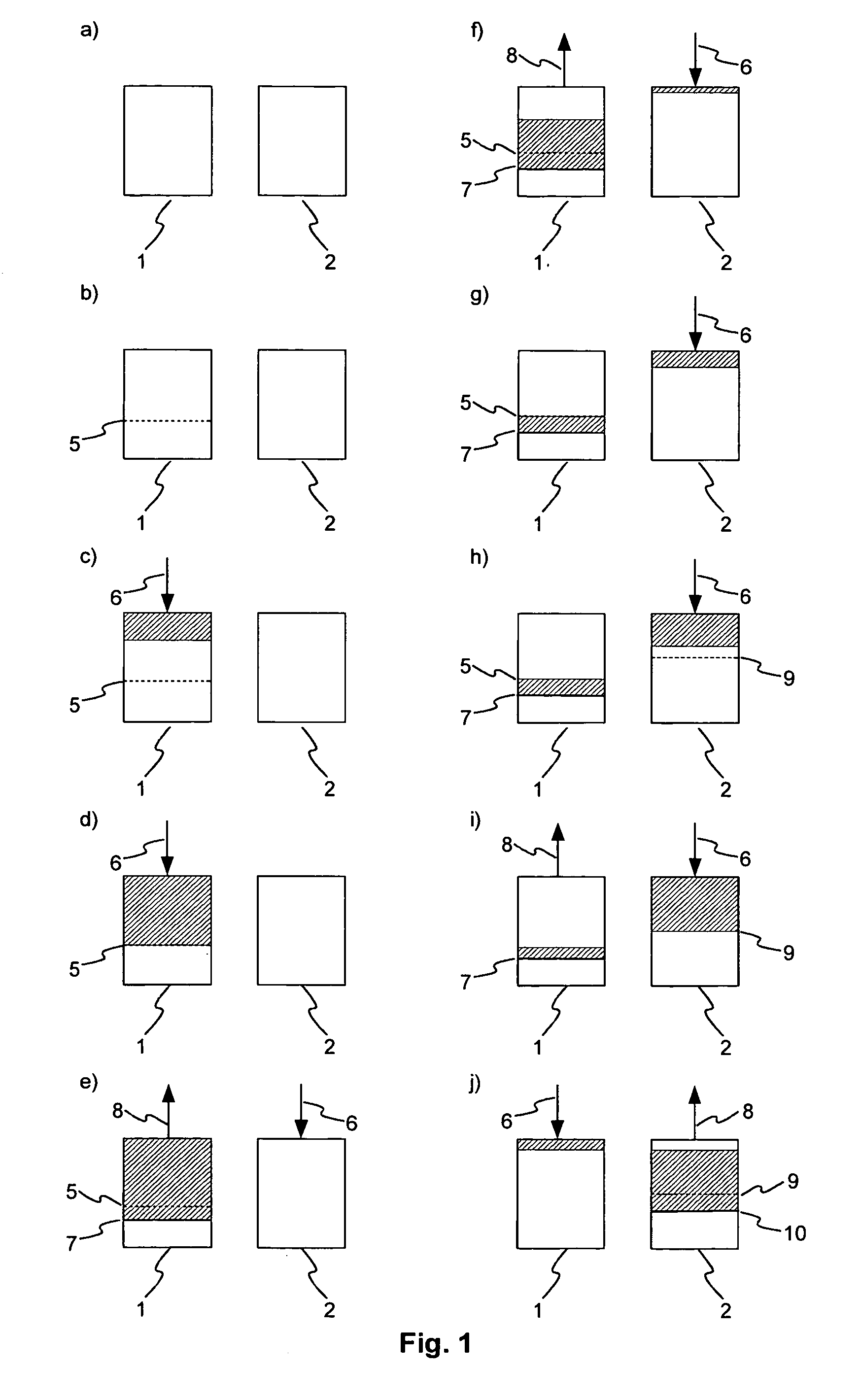

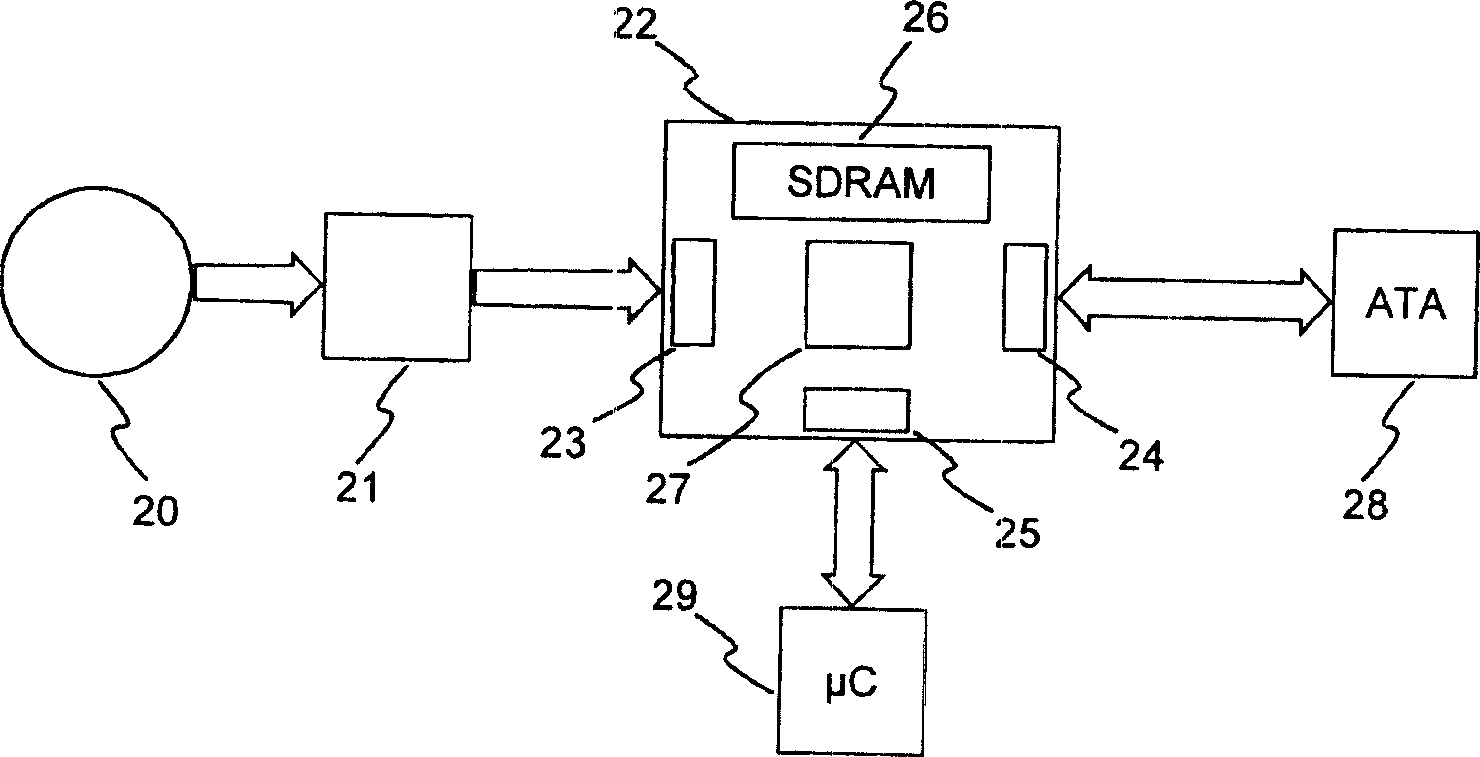

Method for multibank memory scheduling

Inactive | US20050050259A1 | Cheap and less complex solution | Simple switch control | Memory addressing/allocation/relocation | Digital storage | Operating system | Recording media

The present invention relates to a method for scheduling and controlling access to a multibank memory having at least two banks, and to an apparatus for reading from and/or writing to recording media using such method. According to the invention, the method comprises the steps of: writing an input stream to the first bank; switching the writing of the input stream to the second bank when a read command for the first bank is received; and switching the writing of the input stream back to the first bank when a read command for the second bank is received.

Owner:THOMSON LICENSING SA
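
The bank-switching rule in the abstract above can be illustrated with a small Python sketch. The class and method names are hypothetical, and draining a bank when it is read is an added assumption; the abstract only specifies when the write target switches.

```python
class TwoBankBuffer:
    """Two-bank memory: writes go to one bank while the other can be read."""

    def __init__(self):
        self.banks = ([], [])
        self.write_bank = 0  # the input stream starts on the first bank

    def write(self, item):
        self.banks[self.write_bank].append(item)

    def read(self, bank):
        """Handle a read command for `bank`.

        If the input stream is currently written to that bank, writing is
        switched to the other bank first. The bank is then returned and
        emptied (assumed behaviour).
        """
        if bank == self.write_bank:
            self.write_bank = 1 - bank
        data = list(self.banks[bank])
        self.banks[bank].clear()
        return data


buf = TwoBankBuffer()
buf.write("a")
buf.write("b")
first = buf.read(0)   # read command for the first bank: writing moves to bank 1
buf.write("c")
second = buf.read(1)  # read command for the second bank: writing moves back to bank 0
```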

Method for multibank memory scheduling

Inactive | CN1591670A | Memory addressing/allocation/relocation | Digital storage | Operating system | Recording media

The present invention relates to a method for scheduling and controlling access to a multibank memory having at least two banks (1, 2), and to an apparatus for reading from and/or writing to recording media using such method. According to the invention, the method comprises the steps of: writing an input stream (6) to the first bank (1); switching the writing of the input stream (6) to the second bank (2) when a read command for the first bank (1) is received; and switching the writing of the input stream (6) back to the first bank (1) when a read command for the second bank (2) is received.

Owner:THOMSON LICENSING SA

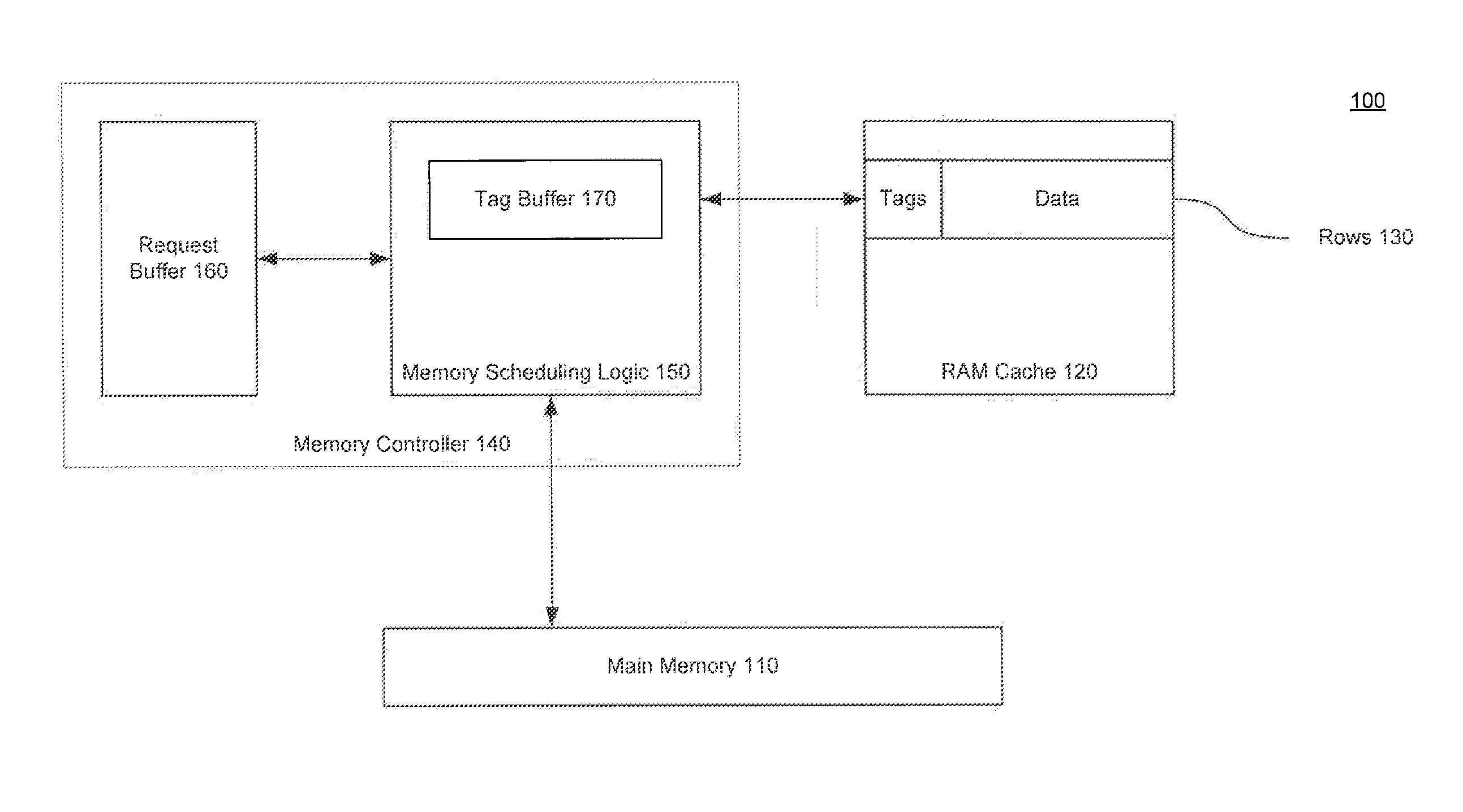

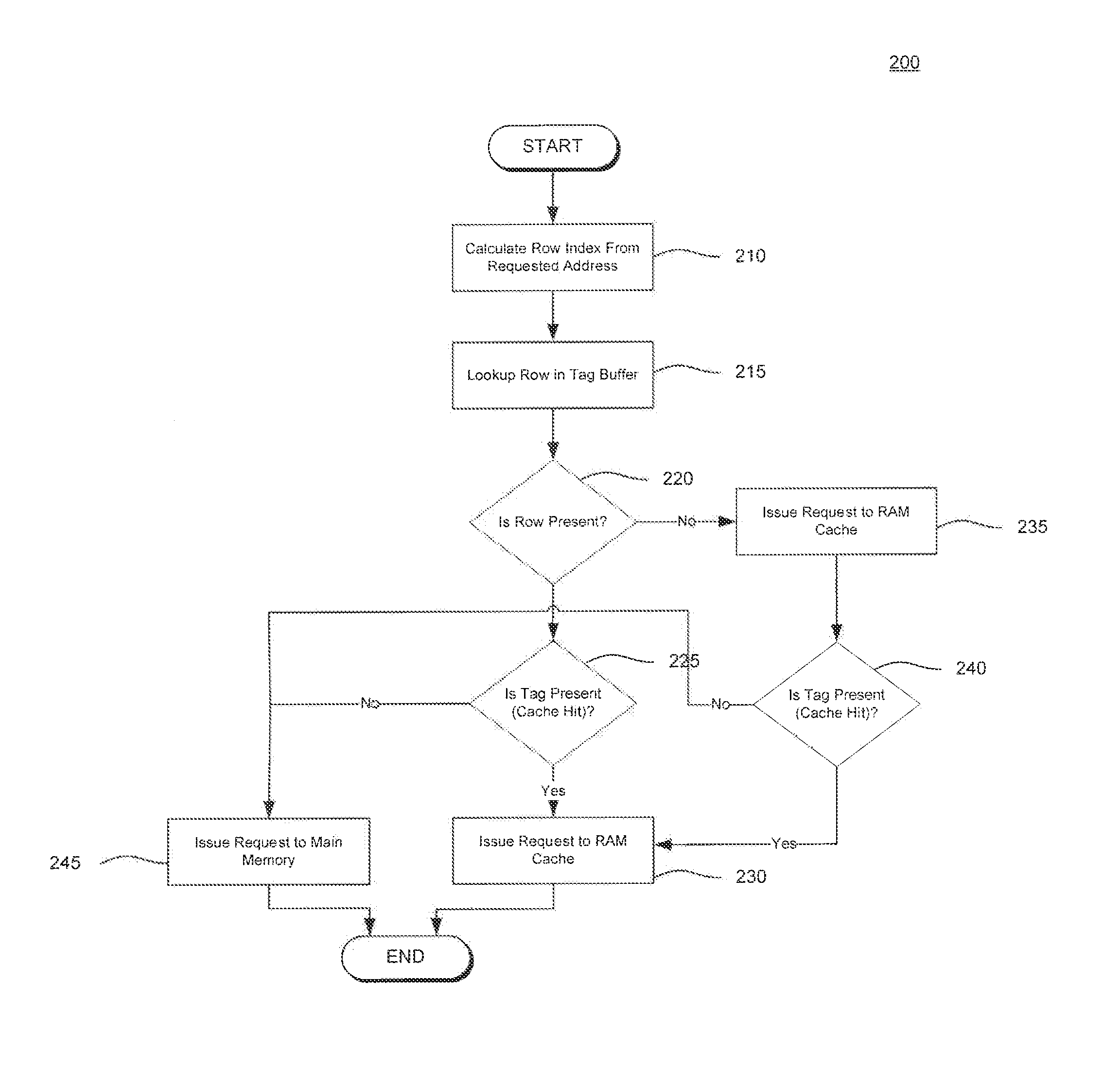

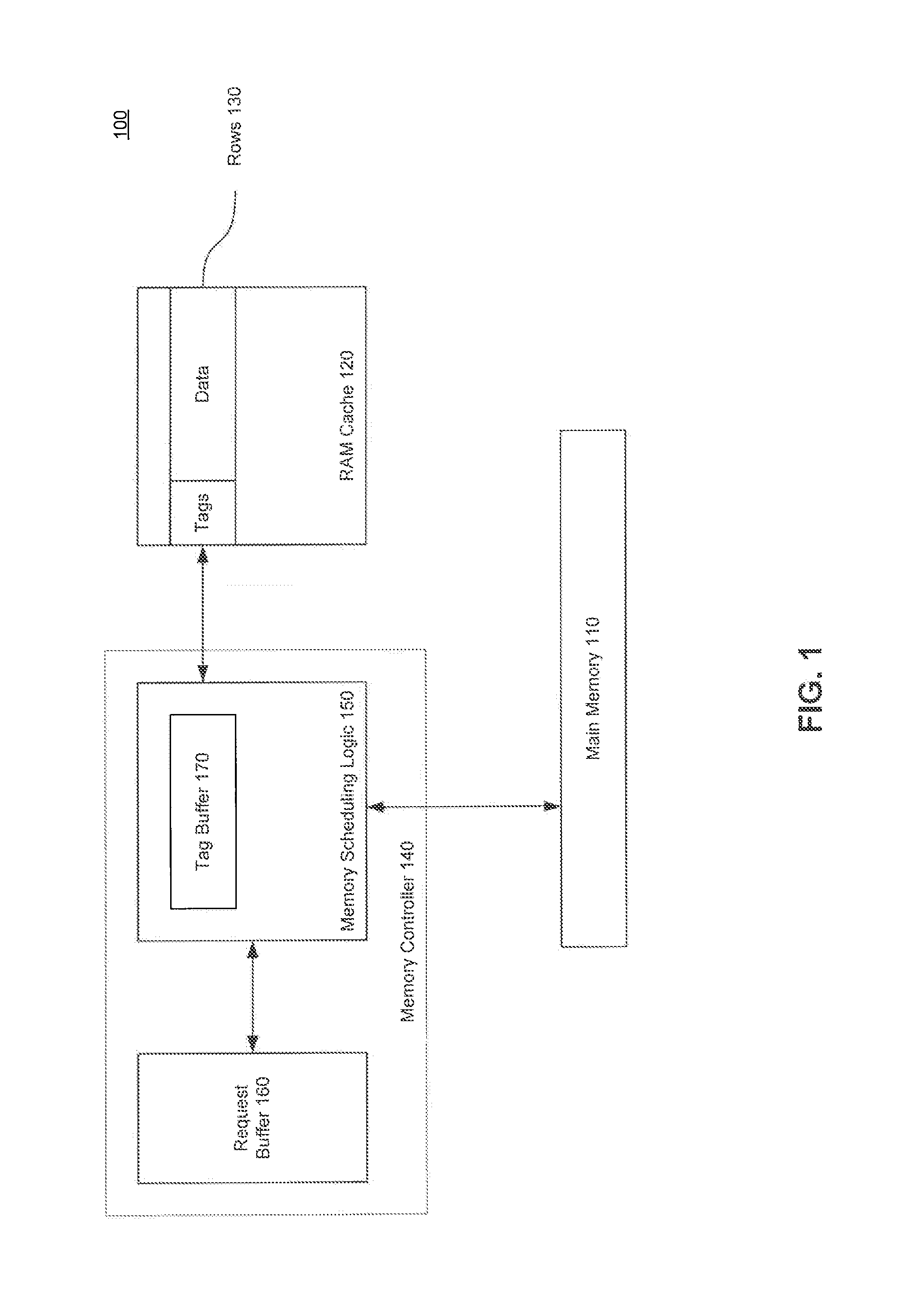

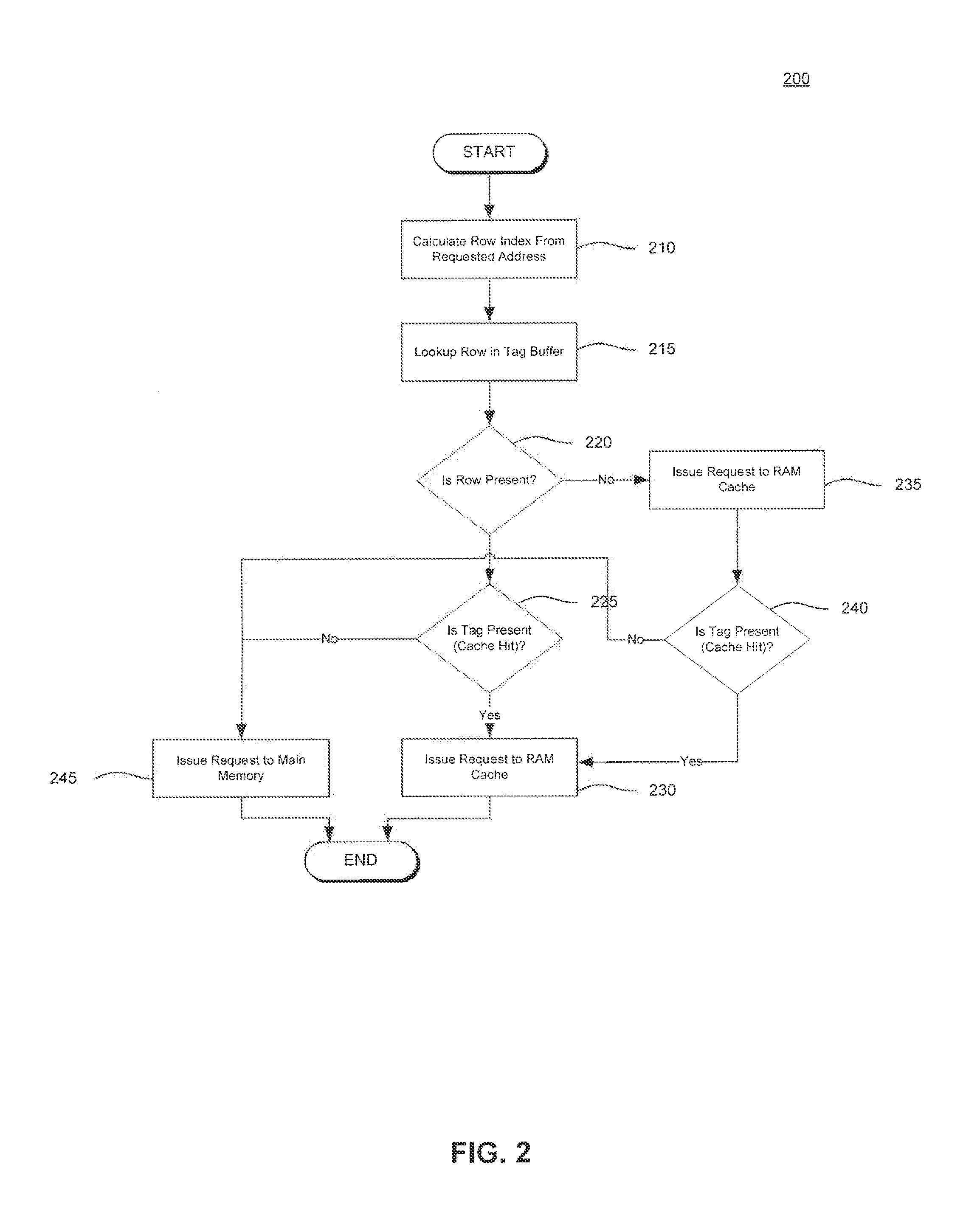

Memory Scheduling for RAM Caches Based on Tag Caching

Active | US20140181384A1 | Effective service | Efficient service | Digital storage | Energy efficient computing | Memory controller | Computer science

A system, method and computer program product to store tag blocks in a tag buffer in order to provide early row-buffer miss detection, early page closing, and reductions in tag block transfers. A system comprises a tag buffer, a request buffer, and a memory controller. The request buffer stores a memory request having an associated tag. The memory controller compares the associated tag to a plurality of tags stored in the tag buffer and issues the memory request stored in the request buffer to either a memory cache or a main memory based on the comparison.

Owner:ADVANCED MICRO DEVICES INC
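
As a rough illustration of the comparison step in the abstract above, the following Python sketch routes a request to the memory cache or to main memory based on a lookup in a tag buffer. The per-set layout of the tag buffer and the fallback for sets whose tag block is not buffered are assumptions made for the example, not details from the patent.

```python
from enum import Enum


class Target(Enum):
    MEMORY_CACHE = "memory cache"
    MAIN_MEMORY = "main memory"


class TagBuffer:
    """Holds copies of tag blocks, keyed by cache set index (assumed layout)."""

    def __init__(self):
        self.tag_blocks = {}  # set index -> tags currently resident in that set

    def load(self, set_index, tags):
        self.tag_blocks[set_index] = set(tags)


def route(request_tag, set_index, tag_buffer):
    """Decide where the memory controller issues the request."""
    tags = tag_buffer.tag_blocks.get(set_index)
    if tags is None:
        # Tag block not buffered: the tags have to be read from the cache
        # itself, so the request goes there (assumed fallback).
        return Target.MEMORY_CACHE
    if request_tag in tags:
        return Target.MEMORY_CACHE
    # The miss is known early, without a tag block transfer from the cache.
    return Target.MAIN_MEMORY


tag_buffer = TagBuffer()
tag_buffer.load(3, [0x1A, 0x2B])
hit = route(0x1A, 3, tag_buffer)
miss = route(0x99, 3, tag_buffer)
unknown = route(0x99, 7, tag_buffer)
```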

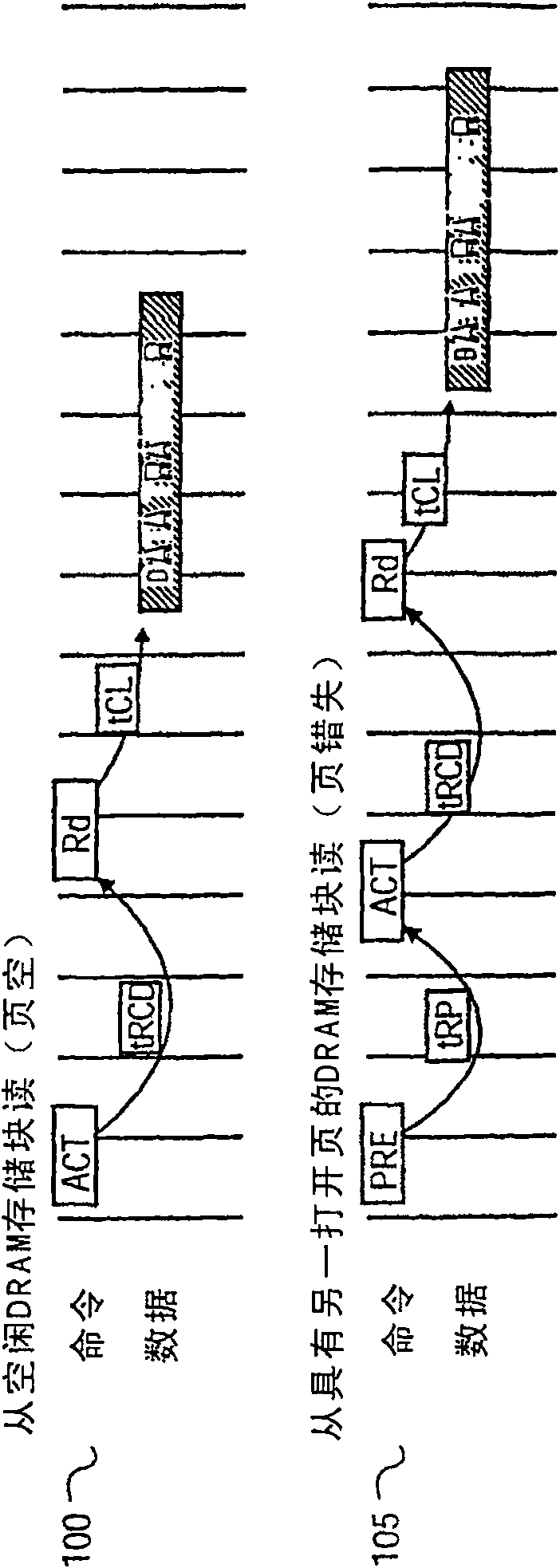

Method and apparatus for memory access scheduling to reduce memory access latency

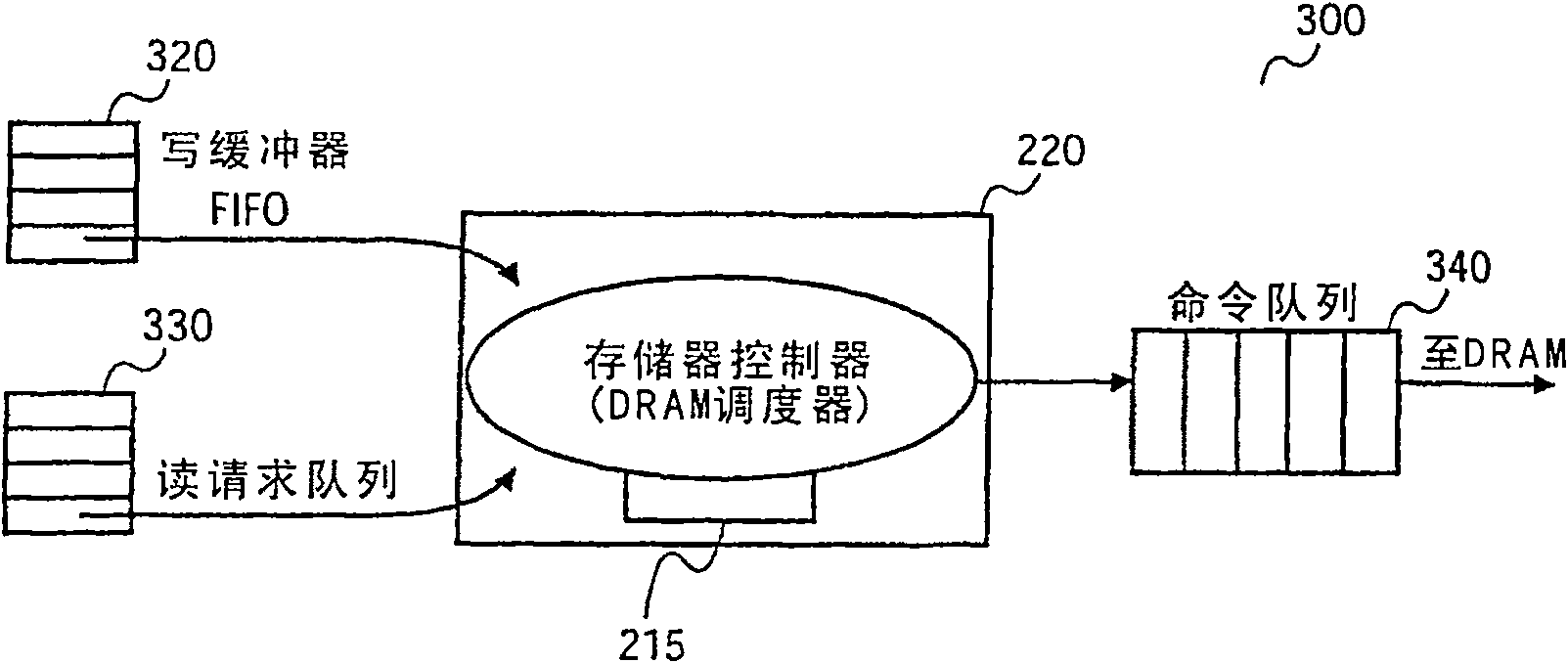

A device is presented including a memory controller. The memory controller is connected to a read request queue. A command queue is coupled to the memory controller. A memory page table is connected to the memory controller. The memory page table has many page table entries. A memory page history table is connected to the memory controller. The memory history table has many page history table entries. A pre-calculated lookup table is connected to the memory controller. The memory controller includes a memory scheduling process to reduce memory access latency.

Owner:INTEL CORP

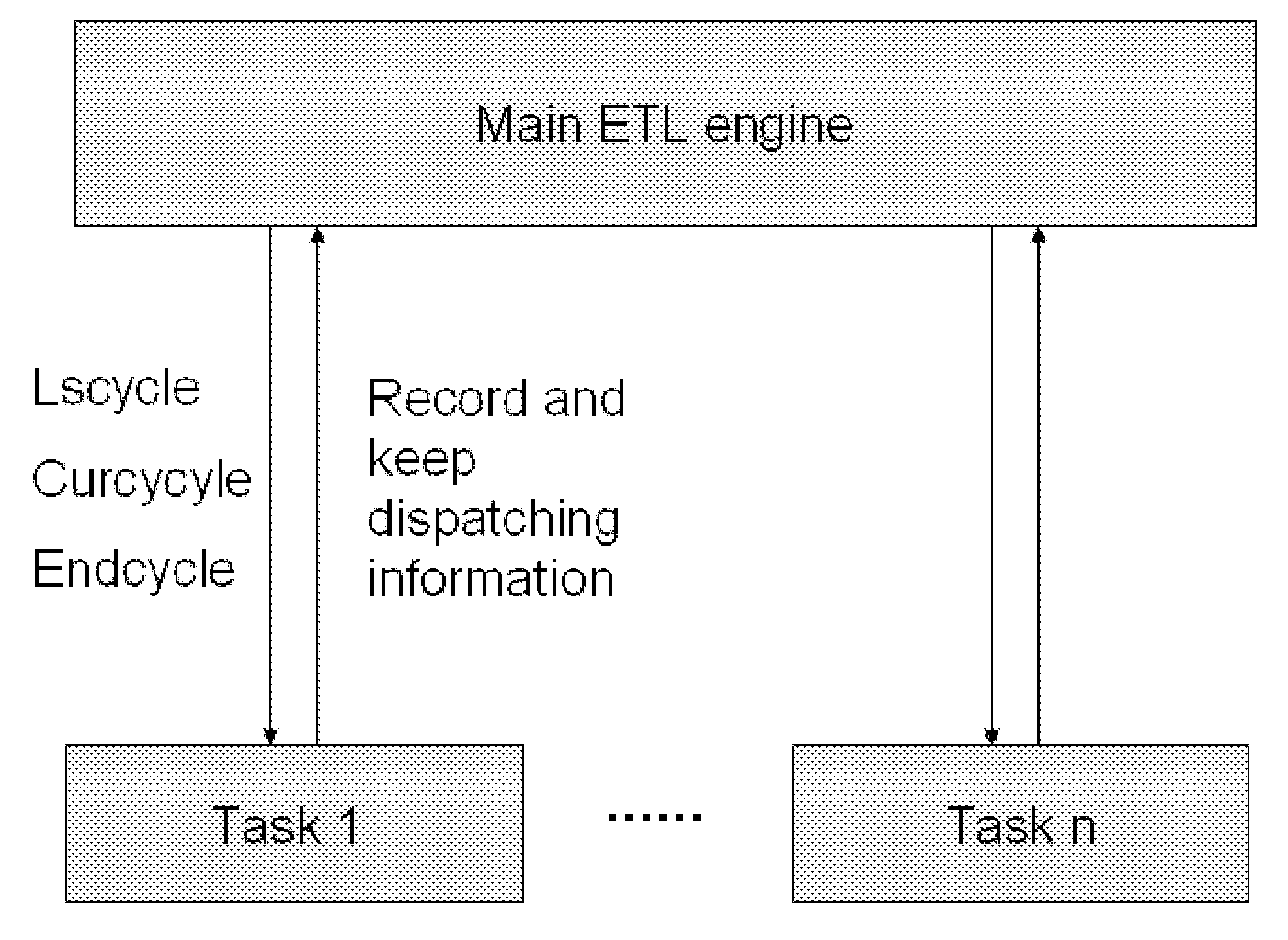

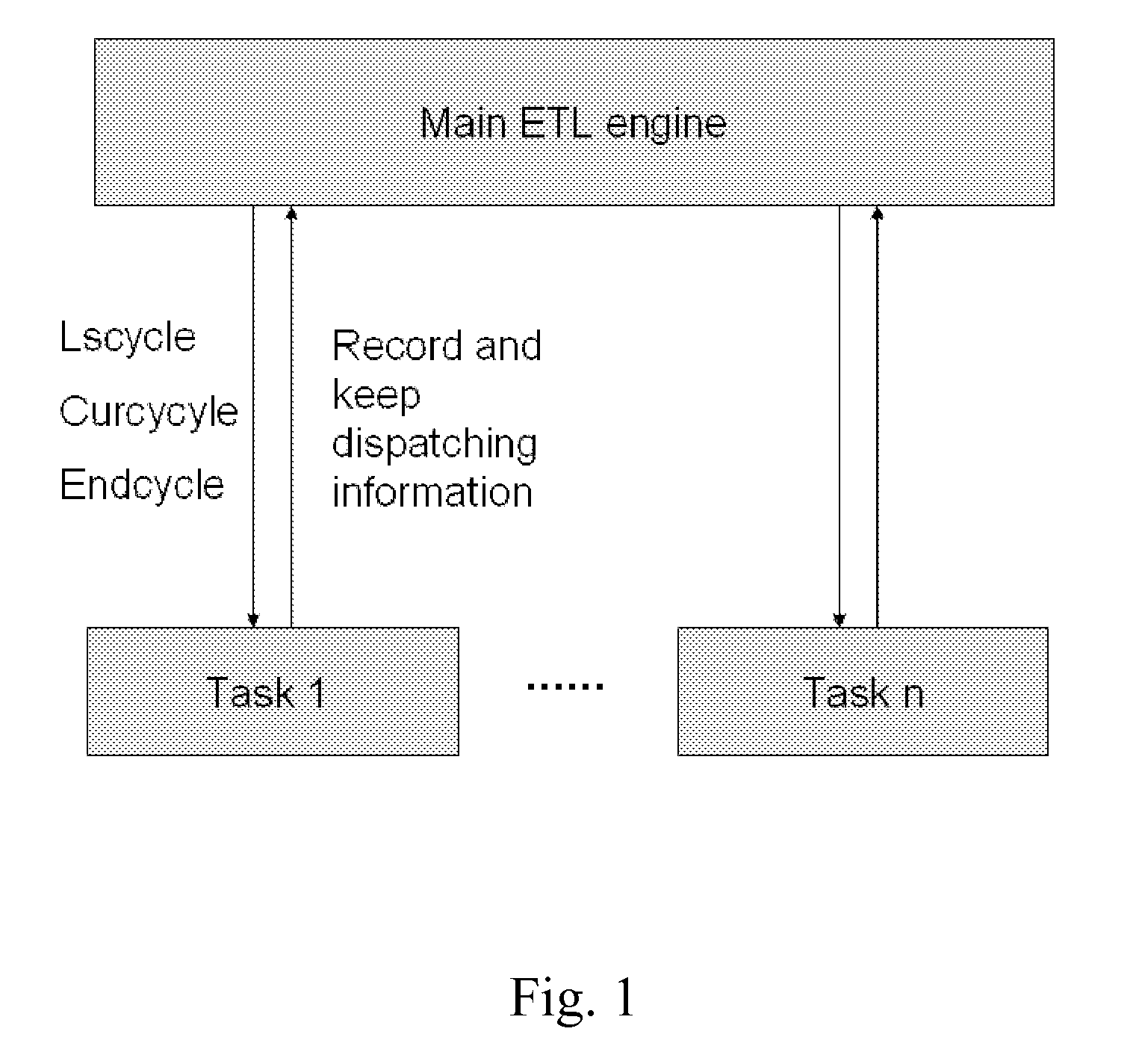

Memory Dispatching Method Applied to Real-time Data ETL System

Active | US20100180088A1 | Solve the real problem | Efficiency problem | Digital data information retrieval | Digital data processing details | Real-time data | Business logic

In the memory dispatching method applied to a real-time data ETL system, the main ETL dispatching program executes a task according to a preset sequence. During execution, the dispatching engine memorizes key information, such as lscycle (latest successful data cycle), curcycle (current processing data cycle), and endcycle (processing end data cycle), and passes it to the called program. After the called program finishes, the dispatching engine records and keeps the updated dispatching information. For data re-extraction, the memory dispatching method is used to automatically re-extract selected tasks and selected cycles within them. The memory dispatching method (a state-based dispatching method) overcomes the statelessness of traditional ETL dispatching programs, simplifies the called program so that it can rely on the memorized state and focus on its own business logic, saves considerable development time in the real-time data ETL field, and greatly improves the efficiency of project implementation.

Owner:LINKAGE TECH GROUP

A multi-thread based mapreduce execution system

Active | CN103605576B | Efficient resource sharing mechanism | Reduce management pressure | Resource allocation | Granularity | Parallel computing

The invention discloses a multithreading-based MapReduce execution system comprising a MapReduce execution engine implementing multithreading. The multi-process execution mode of Map/Reduce tasks in original Hadoop is changed into a multithreaded mode; details about memory usage are extracted from Map tasks and Reduce tasks, the MapReduce process is divided into multiple fine-grained phases according to these details, and the shuffle process in original Hadoop is changed from Reduce pull into Map active push; a unified memory management module and an I/O management module are implemented in the multithreaded MapReduce execution engine and used to centrally manage the memory usage of each task thread; a global memory scheduling and I/O scheduling algorithm is designed and used to dynamically schedule system resources during execution. The multithreading-based MapReduce execution system has the advantages that users can maximize memory usage without modifying the original MapReduce program, disk bandwidth is fully utilized, and the long-standing I/O bottleneck problem in original Hadoop is solved.

Owner:HUAZHONG UNIV OF SCI & TECH

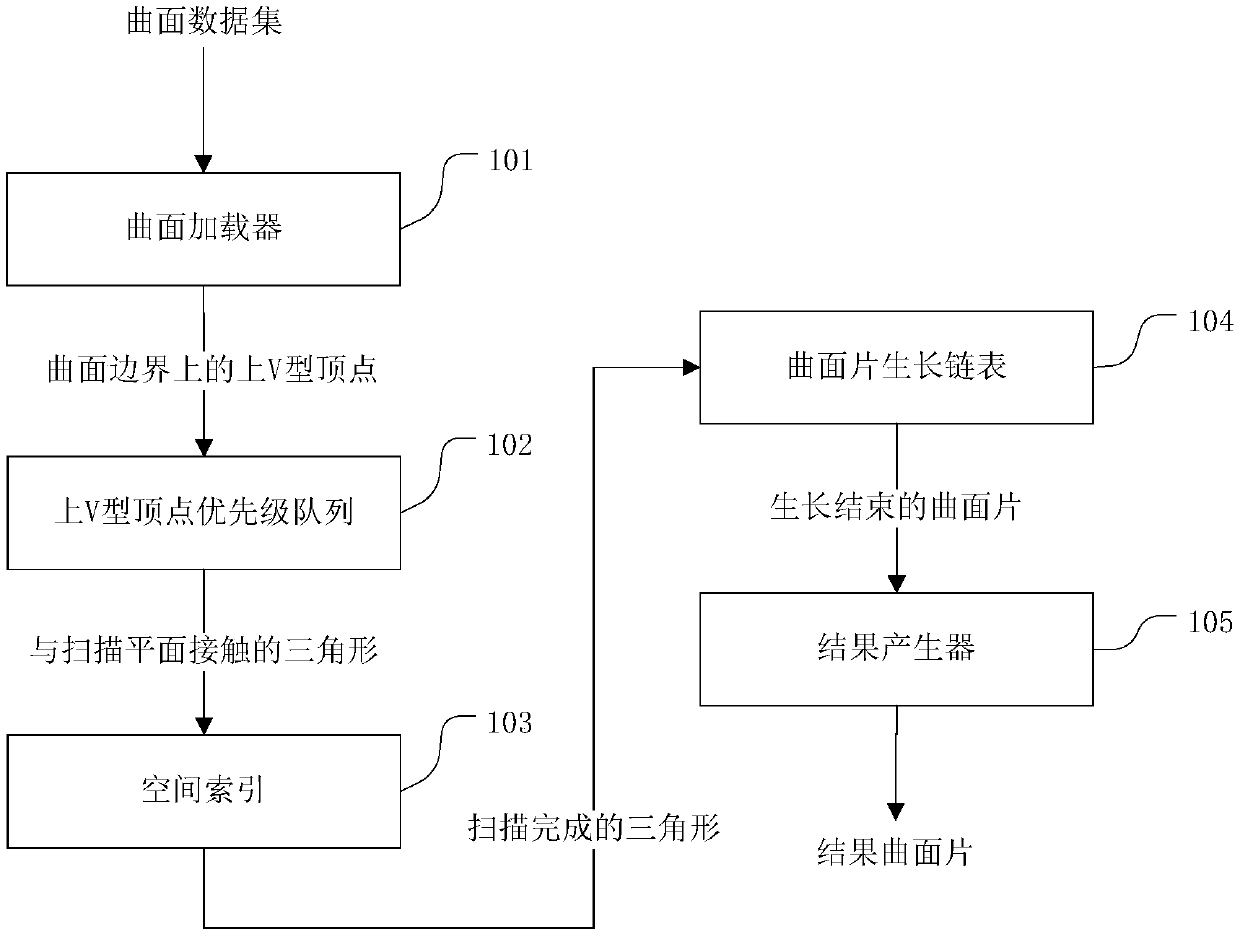

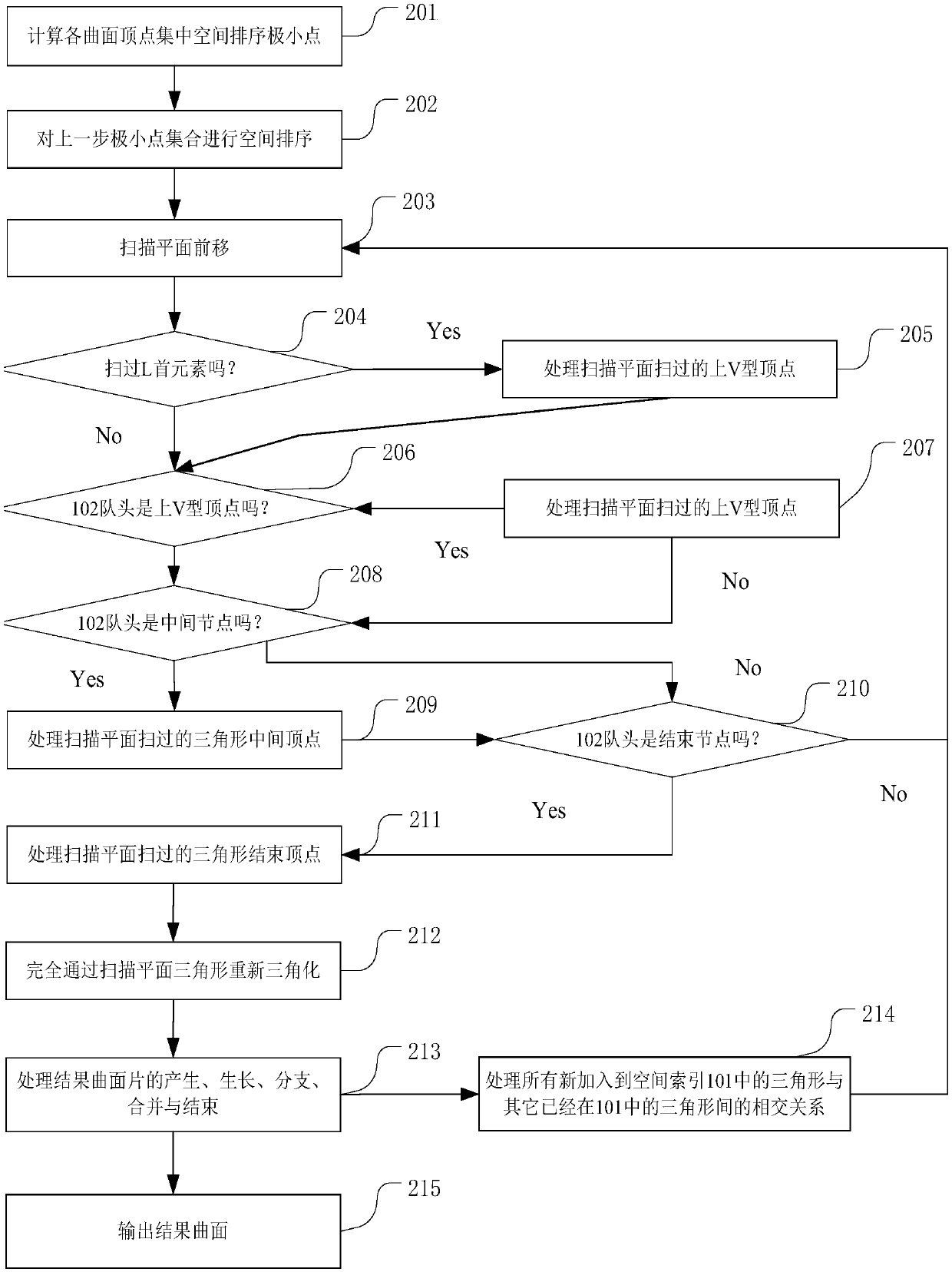

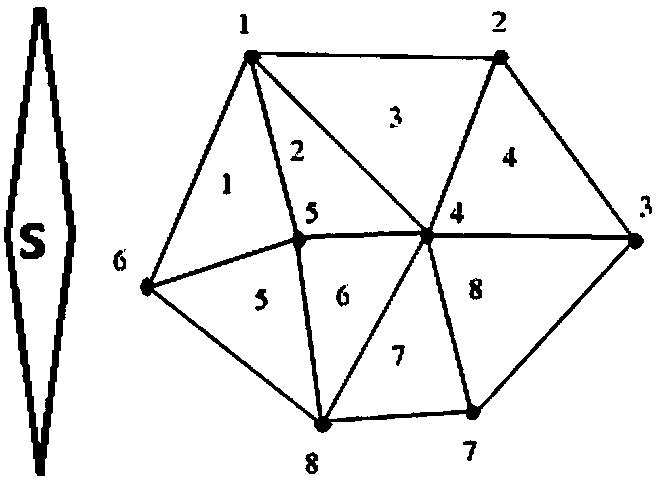

Surface set mutual cutting method and system based on space scanning

Inactive | CN107633555A | Reduce data volume | Reduce computing scale | Image analysis | 3D modelling | Triangulation | Multi method

The invention discloses a surface set mutual cutting method and system based on space scanning. The method and system give users an efficient technique for mutually cutting a large number of surfaces. Triangle intersection detection, memory scheduling, re-triangulation, generation of the result surface patches and other steps are completed in a single scanning pass, and the inconsistency problems that arise in pairwise cutting calculations, such as multiple surfaces sharing a point, collinearity, coplanarity and self-intersection, are overcome. By streaming the input, maintaining only the triangles that intersect the scanning plane, generating and storing finished surface patches as early as possible, and freeing the memory they occupy, the data volume involved in the calculation and the calculation scale are reduced. During cutting, each step no longer depends on the previous step having processed all of its data, so the steps can be processed in parallel, which makes the method and system especially suitable for high-performance computing environments.

Owner:CHINA UNIV OF GEOSCIENCES (WUHAN)

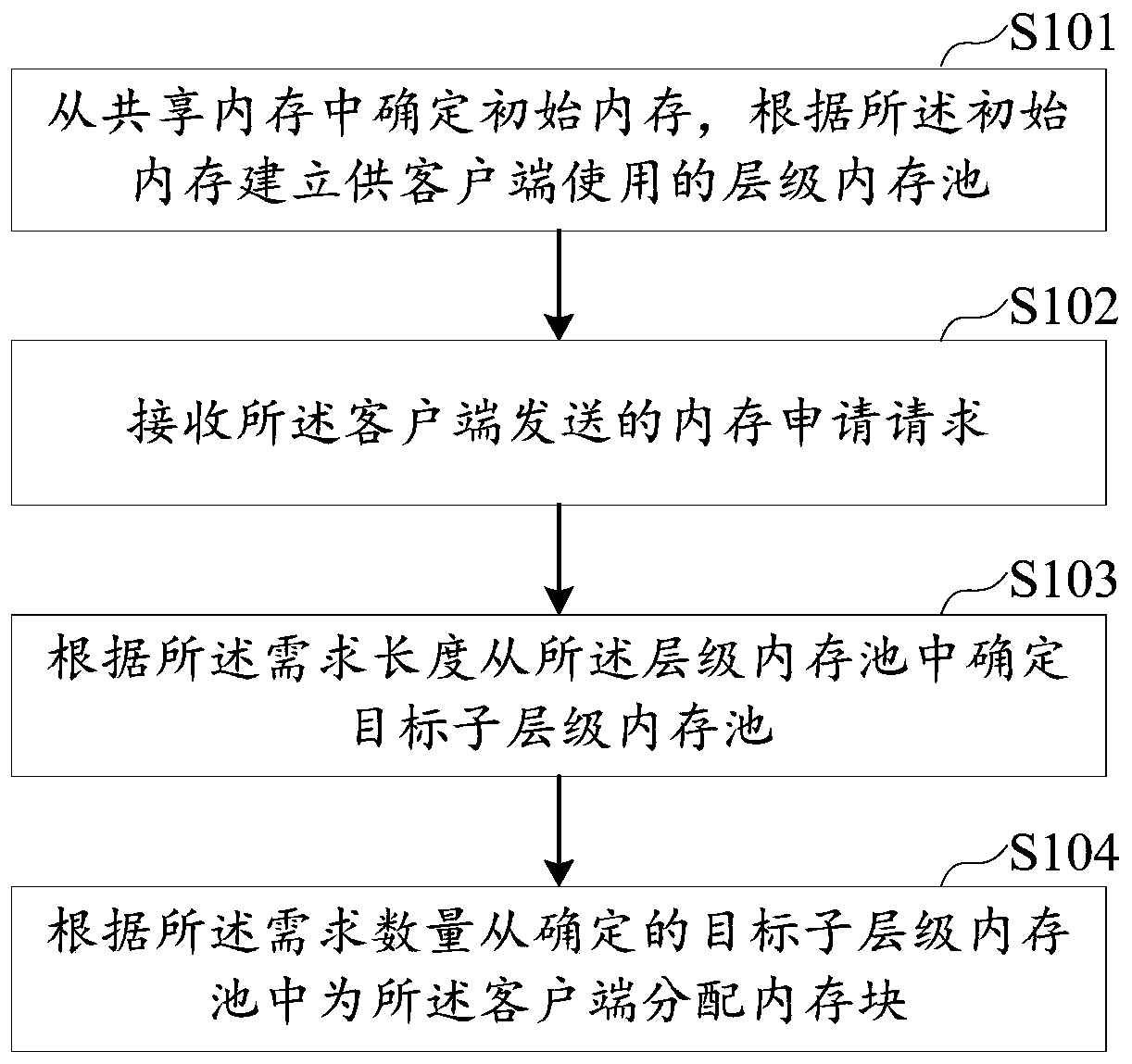

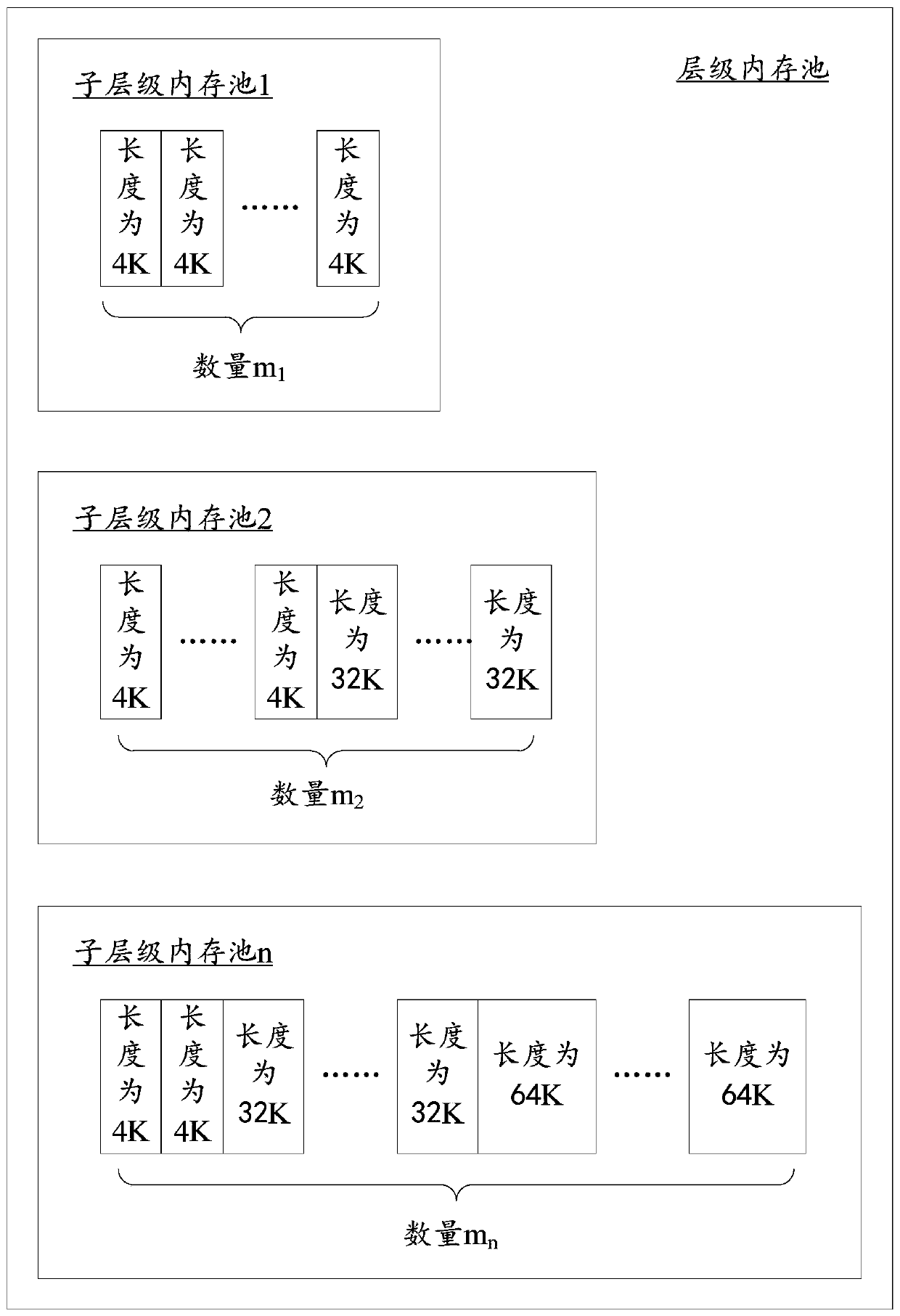

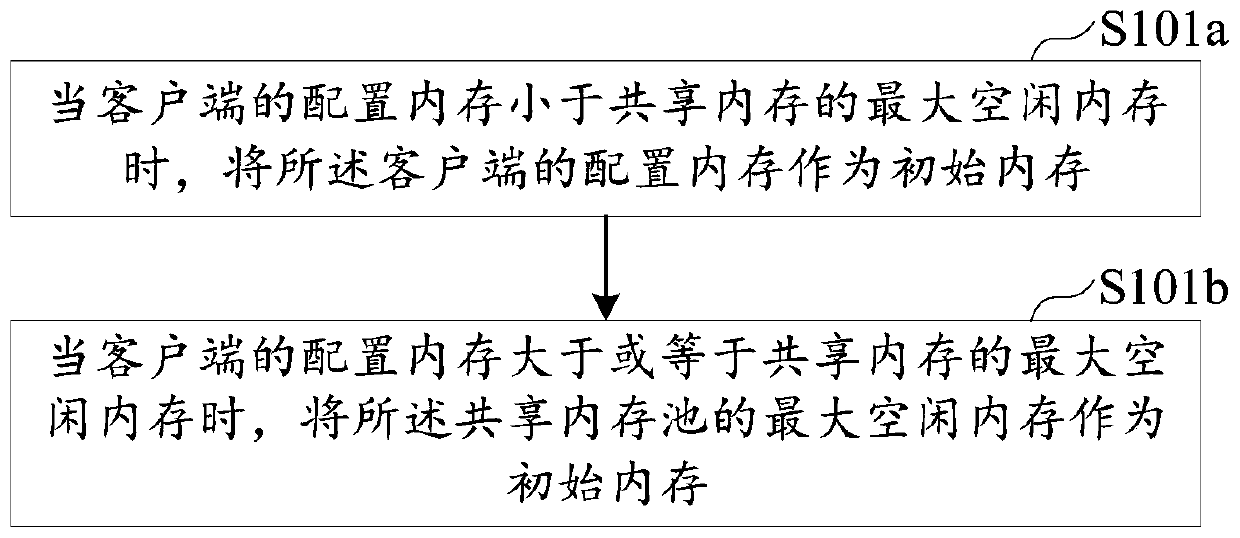

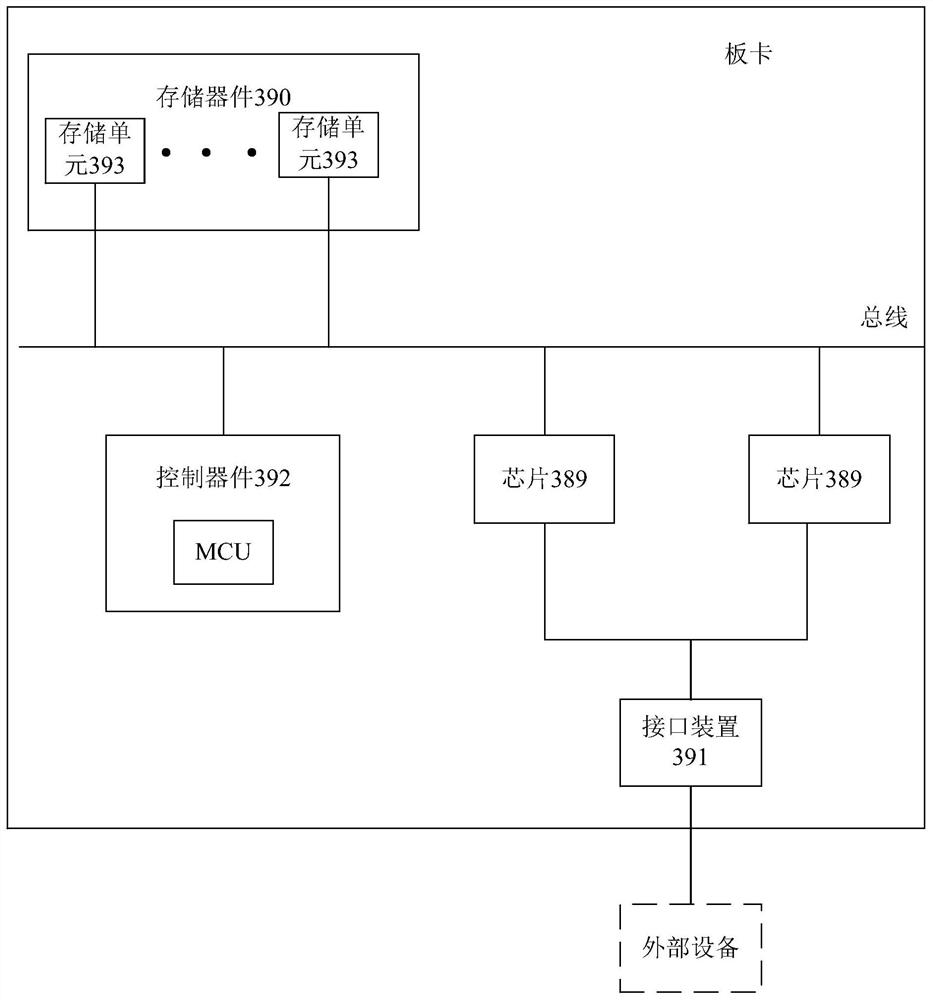

Memory scheduling method, apparatus and device, and storage medium

Pending | CN110427273A | Increase profit | Save memory | Program initiation/switching | Resource allocation | Computer science | Utilization rate

The invention relates to the field of data storage. Disclosed are a memory scheduling method, apparatus and device, and a storage medium. The method comprises: determining an initial memory from a shared memory and establishing, from the initial memory, a hierarchical memory pool for a client to use, the hierarchical memory pool comprising a plurality of sub-hierarchical memory pools, each of which comprises a plurality of memory blocks; receiving a memory application request sent by the client, the request comprising the required length and the required quantity of memory blocks; determining a target sub-hierarchical memory pool from the hierarchical memory pool according to the required length; and allocating memory blocks to the client from the target sub-hierarchical memory pool according to the required quantity. The memory utilization rate is improved and memory is saved.

Owner:PING AN TECH (SHENZHEN) CO LTD
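
The allocation path in the abstract above can be sketched in Python as below. The rule for choosing the target sub-pool (smallest block length that fits the required length) and the decision to fail rather than fall through to a larger sub-pool are assumptions; the class and method names are illustrative.

```python
class HierarchicalPool:
    """A pool made of sub-pools, each holding blocks of one fixed length."""

    def __init__(self, levels):
        # levels maps block length -> number of blocks in that sub-pool.
        # Sub-pools are stored in ascending order of block length.
        self.free = {length: [bytearray(length) for _ in range(count)]
                     for length, count in sorted(levels.items())}

    def allocate(self, required_length, required_count):
        """Take `required_count` blocks from the target sub-pool."""
        for length, blocks in self.free.items():
            if length >= required_length:  # first (smallest) sub-pool that fits
                if len(blocks) < required_count:
                    raise MemoryError(f"sub-pool {length} has too few free blocks")
                return [blocks.pop() for _ in range(required_count)]
        raise MemoryError(f"no sub-pool holds blocks of {required_length} bytes")

    def release(self, blocks):
        """Return blocks to the sub-pool matching their length."""
        for block in blocks:
            self.free[len(block)].append(block)


pool = HierarchicalPool({64: 4, 256: 2, 1024: 1})
got = pool.allocate(required_length=100, required_count=2)
free_after_alloc = len(pool.free[256])
pool.release(got)
free_after_release = len(pool.free[256])
```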

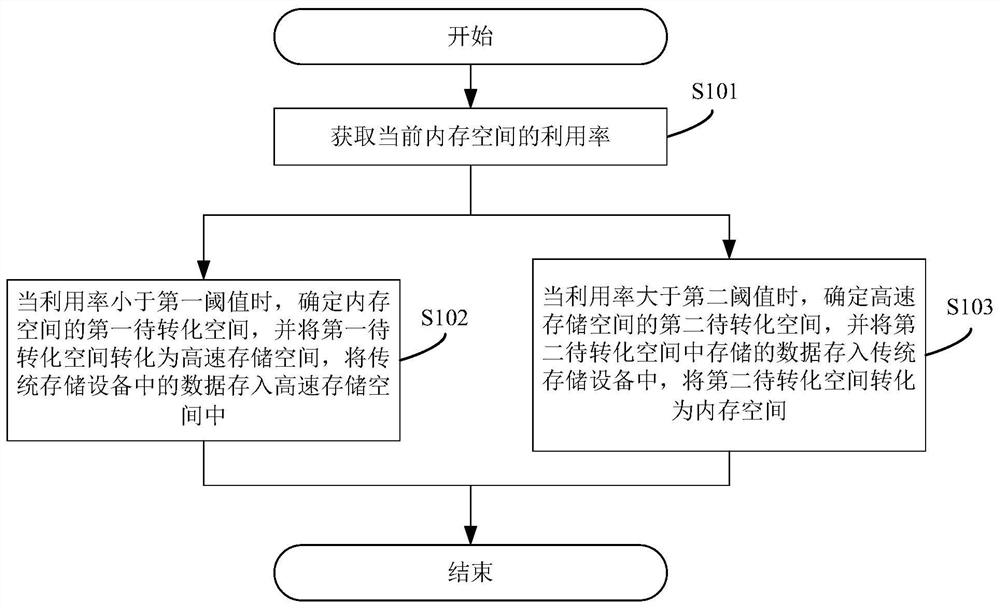

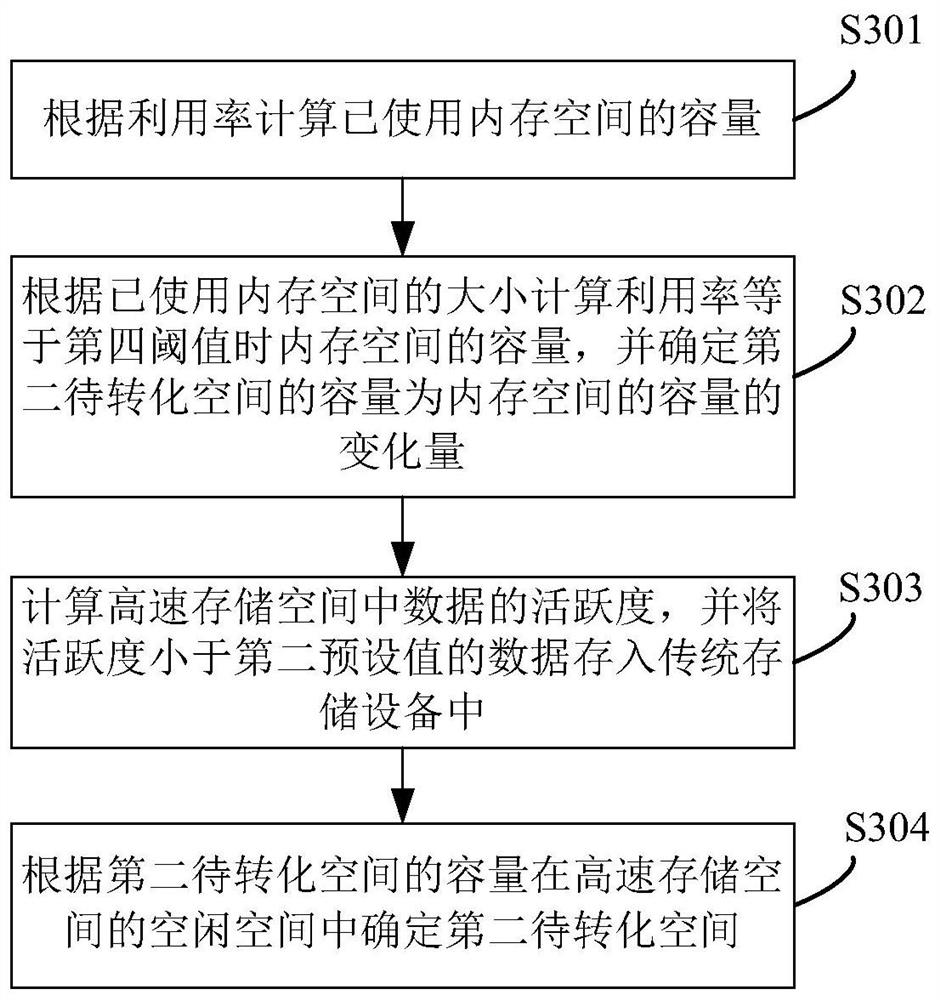

Non-volatile memory scheduling method, system and device and readable storage medium

Active | CN111722804A | Improve the speed of storage read and write | Avoid Caton | Input/output to record carriers | Energy efficient computing | Computer hardware | Memory footprint

The invention discloses a nonvolatile memory scheduling method. The method comprises the steps of: obtaining the utilization rate of the current memory space; when the utilization rate is smaller than a first threshold, determining a first to-be-converted space of the memory space, converting it into high-speed storage space, and storing data from traditional storage equipment in the high-speed storage space; and when the utilization rate is greater than a second threshold, determining a second to-be-converted space of the high-speed storage space, moving the data stored in it to the traditional storage device, and converting it into memory space. With this method, the proportion of the nonvolatile memory used as memory versus data storage is scheduled in real time, so the read-write speed of data storage is increased while the equipment utilization rate is raised, server jamming caused by excessive memory occupation is avoided, and the running speed of the server is guaranteed. The invention furthermore provides a nonvolatile memory scheduling system and device, and a readable storage medium, which have the above beneficial effects.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD
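
A minimal Python sketch of the two-threshold rule in the abstract above. The threshold values, the fixed conversion step, and the function name are assumptions chosen for illustration, and the data migration between the high-speed space and the traditional storage device is left out.

```python
def rebalance(memory_gb, storage_gb, used_gb, low=0.3, high=0.8, step_gb=16):
    """Return the new (memory_gb, storage_gb) split of a nonvolatile memory device.

    memory_gb:  capacity currently exposed as memory (assumed to be > 0)
    storage_gb: capacity currently exposed as high-speed storage
    used_gb:    memory currently in use
    """
    utilization = used_gb / memory_gb
    if utilization < low:
        # Memory is mostly idle: convert part of it into high-speed storage,
        # but never convert space that is in use.
        convert = min(step_gb, memory_gb - used_gb)
        return memory_gb - convert, storage_gb + convert
    if utilization > high:
        # Memory is under pressure: take space back from high-speed storage.
        # Its data would first be moved to the traditional storage device.
        convert = min(step_gb, storage_gb)
        return memory_gb + convert, storage_gb - convert
    return memory_gb, storage_gb


idle = rebalance(memory_gb=128, storage_gb=0, used_gb=16)
busy = rebalance(memory_gb=112, storage_gb=16, used_gb=100)
steady = rebalance(memory_gb=128, storage_gb=0, used_gb=64)
```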

Memory scheduling for RAM caches based on tag caching

Active | US9026731B2 | Efficient service | Reduction in tag block transfer | Memory addressing/allocation/relocation | Energy efficient computing | Memory controller | Computer program

A system, method and computer program product to store tag blocks in a tag buffer in order to provide early row-buffer miss detection, early page closing, and reductions in tag block transfers. A system comprises a tag buffer, a request buffer, and a memory controller. The request buffer stores a memory request having an associated tag. The memory controller compares the associated tag to a plurality of tags stored in the tag buffer and issues the memory request stored in the request buffer to either a memory cache or a main memory based on the comparison.

Owner:ADVANCED MICRO DEVICES INC
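
The routing decision described in the tag caching abstract above might look something like the sketch below. It assumes, for illustration only, that a match in the tag buffer means the requested line is present in the RAM cache and that any other outcome sends the request to main memory; the class names, field names, and target labels are hypothetical and are not taken from the patent.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass(frozen=True)
class MemoryRequest:
    address: int
    tag: int  # tag associated with the request


@dataclass
class TagBufferController:
    """Hypothetical memory controller with a tag buffer and a request buffer."""
    tag_buffer: set = field(default_factory=set)
    request_buffer: deque = field(default_factory=deque)

    def enqueue(self, request: MemoryRequest) -> None:
        # Store the incoming request until the controller issues it.
        self.request_buffer.append(request)

    def issue_next(self):
        """Issue the oldest request and return (target, request)."""
        request = self.request_buffer.popleft()
        # Compare the request's tag against the buffered tags.
        if request.tag in self.tag_buffer:
            return "memory_cache", request
        return "main_memory", request
```

For example, with `tag_buffer={0x1A}`, a request tagged `0x1A` is issued to the memory cache, while a request tagged `0x2B` goes to main memory without a cache lookup.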

Memory scheduling method for changing command order and method of operating memory system

Disclosed is a method of operating a memory system which executes a plurality of commands including a write command and a trim command. The memory system includes a memory device, which includes a plurality of blocks. The method includes performing garbage collection to generate a free block, calculating a workload level for performing the garbage collection, and changing a command schedule based on the workload level.

Owner:SAMSUNG ELECTRONICS CO LTD
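
One way to picture the command reordering abstract above is the sketch below. The abstract does not say how the workload level is computed or how the schedule changes, so both are assumptions here: the workload level is taken as the fraction of still-valid pages in the victim block, and a high level moves trim commands ahead of write commands. The function names and the 0.5 threshold are hypothetical.

```python
def gc_workload_level(valid_pages_in_victim: int, pages_per_block: int) -> float:
    """Assumed metric: share of victim-block pages that GC must copy."""
    return valid_pages_in_victim / pages_per_block


def schedule(commands, workload_level: float, threshold: float = 0.5):
    """Return the command order to execute for the given workload level.

    Each command is a (kind, logical_block_address) tuple, where kind is
    "write" or "trim".
    """
    if workload_level <= threshold:
        # Light garbage-collection load: keep the arrival order.
        return list(commands)
    # Heavy load: run trims first so pages are invalidated before GC copies
    # them. sorted() is stable, so relative order within each kind is kept.
    # A real controller would also have to respect dependencies between a
    # write and a trim that target the same address.
    return sorted(commands, key=lambda cmd: cmd[0] != "trim")
```

With commands `[("write", 1), ("trim", 2), ("write", 3), ("trim", 4)]`, a workload level of 0.25 leaves the order unchanged, while 0.75 yields both trims followed by both writes.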

Memory scheduling method and device

InactiveCN112214304AImprove the efficiency of accessing memoryIncrease profitResource allocationComputer architectureEngineering

The invention relates to a memory scheduling method and device. The device comprises a control module, which in turn comprises an instruction cache sub-module, an instruction processing sub-module, and a storage queue sub-module. The instruction cache sub-module stores calculation instructions associated with the artificial neural network operation; the instruction processing sub-module parses a calculation instruction to obtain a plurality of operation instructions; and the storage queue sub-module stores an instruction queue containing the operation instructions or calculation instructions to be executed in queue order. The method can improve the efficiency of related products when running neural network models.

Owner:CAMBRICON TECH CO LTD

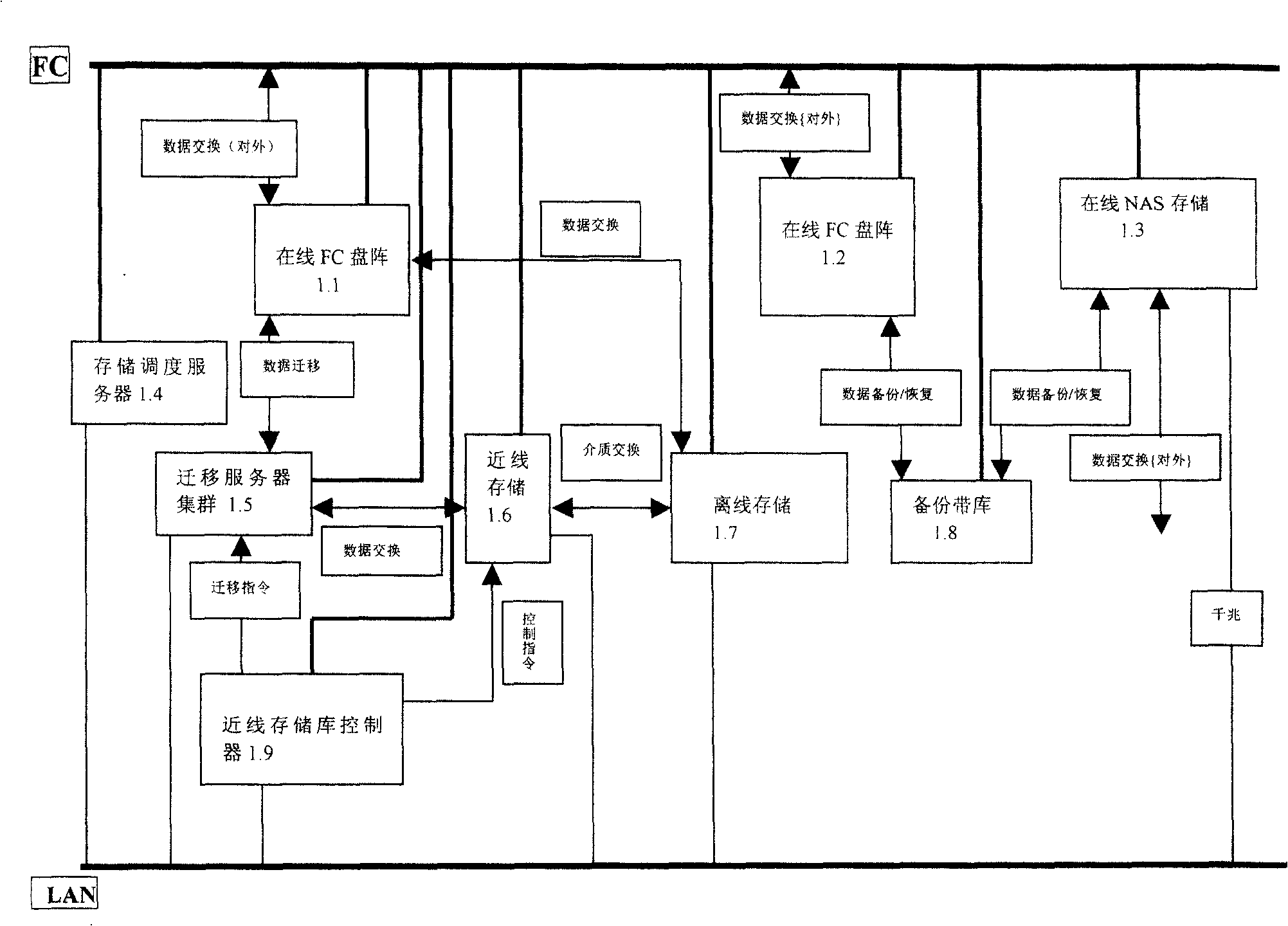

Graded memory management system

InactiveCN100452861CGuarantee opennessSimple interfaceTelevision system detailsColor television detailsDocumentation procedureSystems management

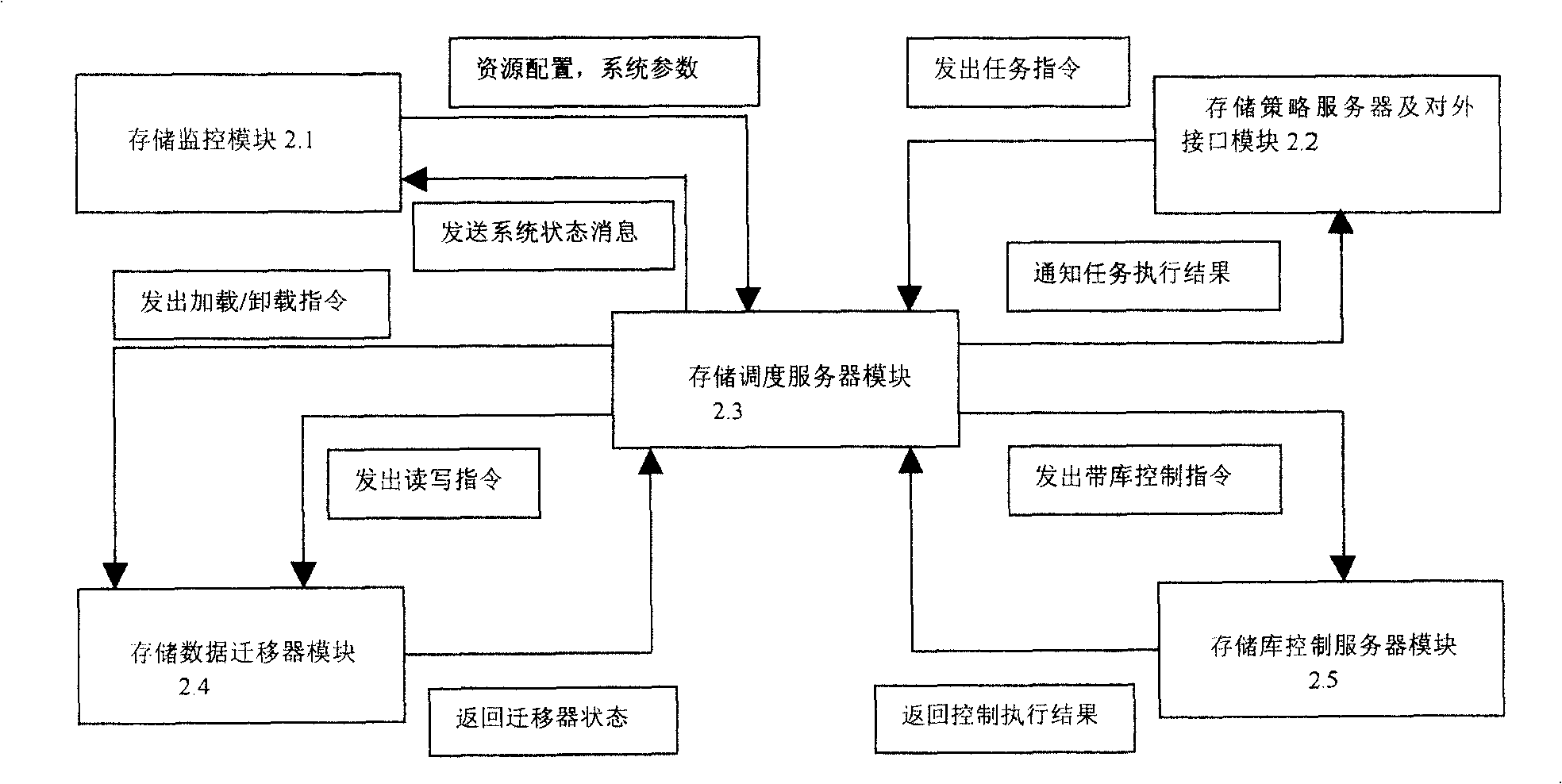

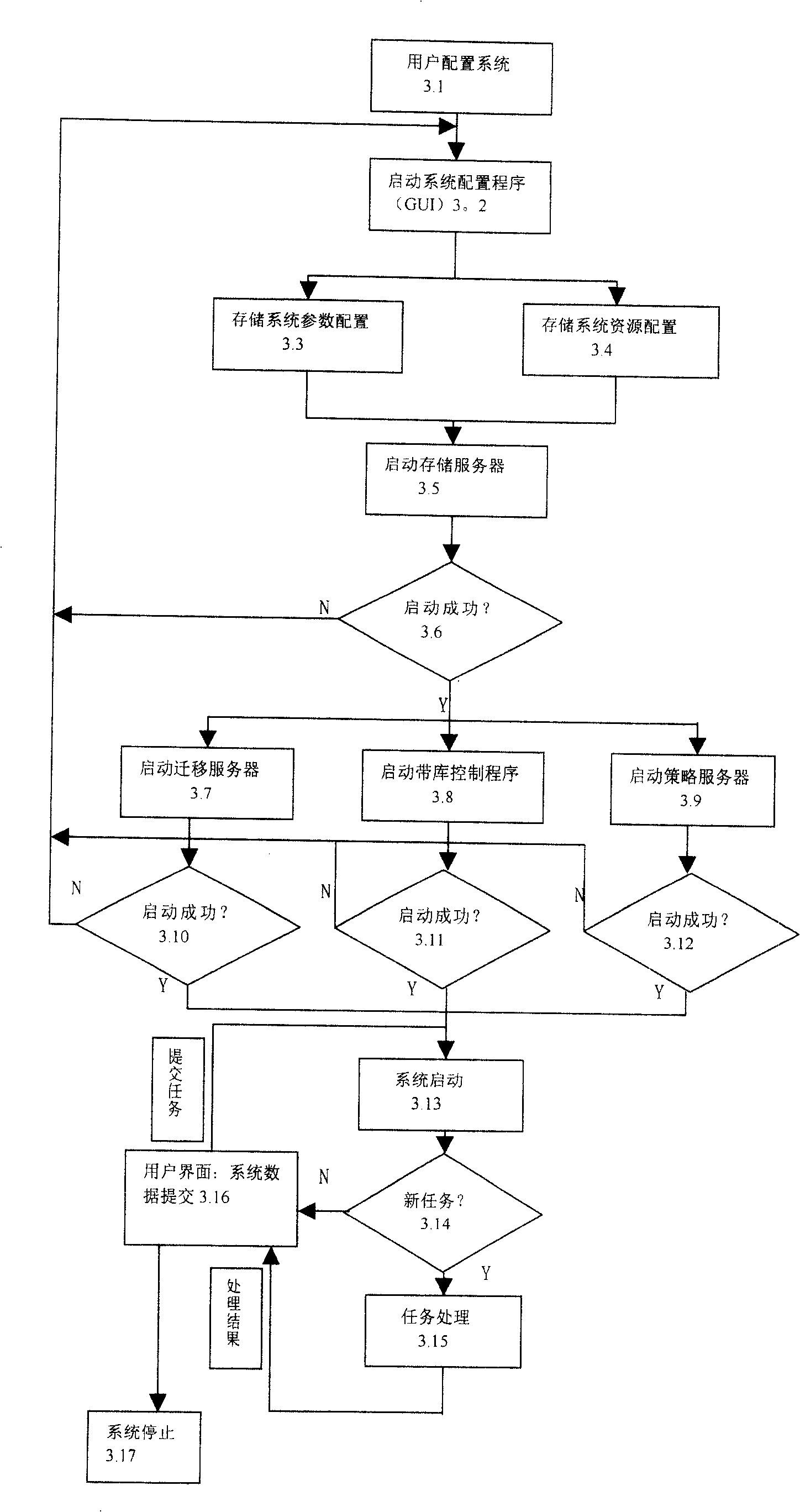

The present invention relates to a graded storage management system for the digitized media archives of a TV station. It is composed of a memory scheduling server, a data migration server assembly, a management control unit, a near-line storage controller with an FC disk array, NAS storage facilities, near-line storage, and an offline backup tape library. It adopts a distributed architecture of one server plus multiple transport devices, with the transport devices deployed in a SAN environment, so it can be extended as needed. It adopts an FC + LAN dual-network structure and object-oriented graded storage management technology, and uses graded storage management software to provide online, near-line, and offline data storage modes and data exchange between them. The invention has excellent disaster tolerance.

Owner:CHINA CENTRAL TELEVISION +1

A virtual machine memory allocation method and device

ActiveCN107341060BBalanced memory utilizationImprove resource utilization efficiencyResource allocationSoftware simulation/interpretation/emulationComputer hardwareTerm memory

The embodiment of the invention discloses a virtual machine memory allocation method. The method comprises the steps that memory use conditions of a physical host and each virtual machine are monitored, and memory use information is collected periodically; whether memory use of the virtual machines is reasonable is judged according to the memory use information and preset memory scheduling reference conditions; and a memory scheduling policy of the virtual machines is determined according to the judgment result. The embodiment of the invention furthermore discloses a virtual machine memory allocation device. Through the scheme in the embodiment, memory utilization rates of the virtual machines are kept balanced, and the resource utilization efficiency of the physical machine is improved.

Owner:INSPUR SUZHOU INTELLIGENT TECH CO LTD
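
The monitor, judge, and decide loop in the virtual machine memory allocation abstract above can be illustrated with a short sketch. The abstract does not specify the reference conditions or the resulting policies, so the utilization thresholds (0.4 and 0.85), the `VmSample` record, and the three policy labels below are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class VmSample:
    """One periodic memory-usage sample for a virtual machine (hypothetical)."""
    name: str
    allocated_mb: int
    used_mb: int


def decide_policy(samples, low: float = 0.4, high: float = 0.85) -> dict:
    """Map each VM name to a scheduling decision based on its usage rate."""
    policy = {}
    for sample in samples:
        rate = sample.used_mb / sample.allocated_mb
        if rate > high:
            policy[sample.name] = "grow"      # under pressure: give it memory
        elif rate < low:
            policy[sample.name] = "shrink"    # overprovisioned: reclaim memory
        else:
            policy[sample.name] = "keep"      # usage judged reasonable
    return policy
```

Memory reclaimed from the VMs marked `"shrink"` could then be handed to those marked `"grow"`, which is one way to keep utilization balanced across the host.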

© 2025 PatSnap. All rights reserved.