
47 results about "I/O scheduling" patented technology


Input/output (I/O) scheduling is the method that computer operating systems use to decide in which order the block I/O operations will be submitted to storage volumes. I/O scheduling is sometimes called disk scheduling.

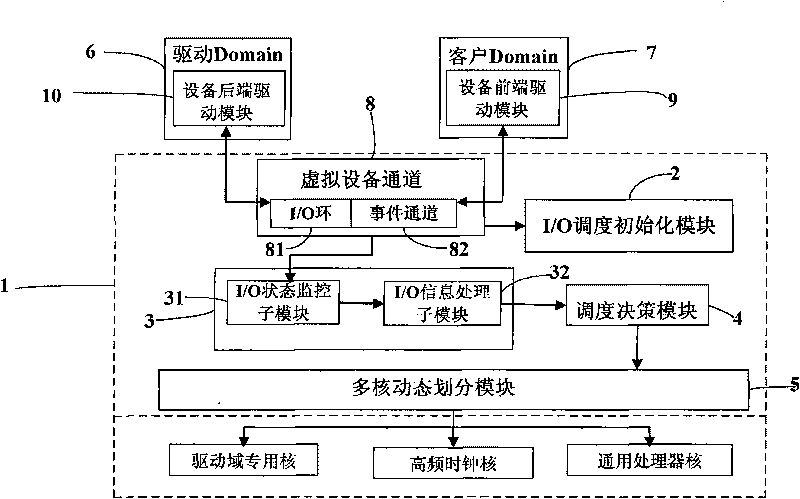

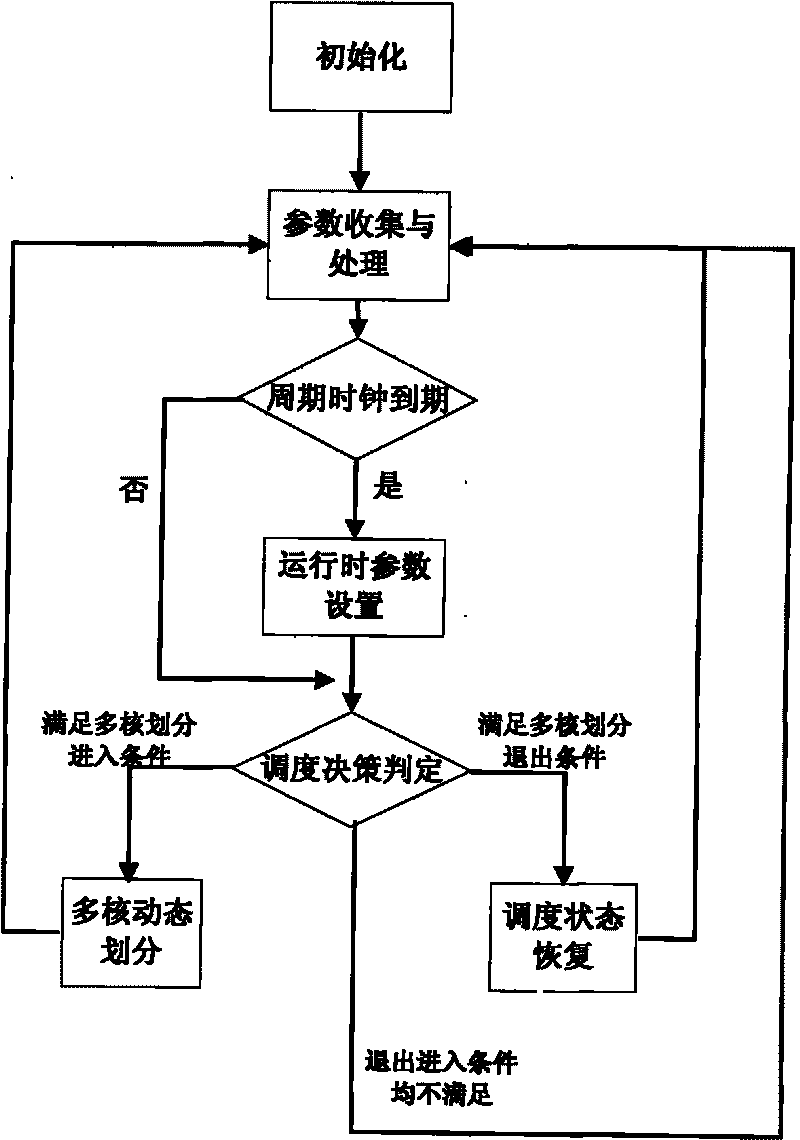

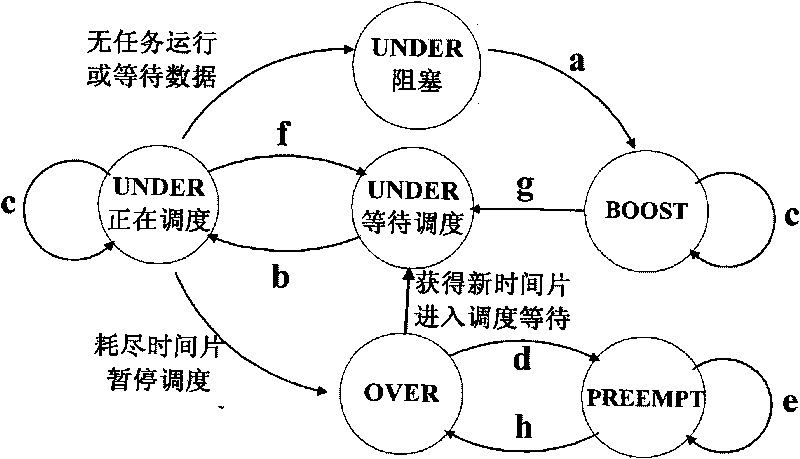

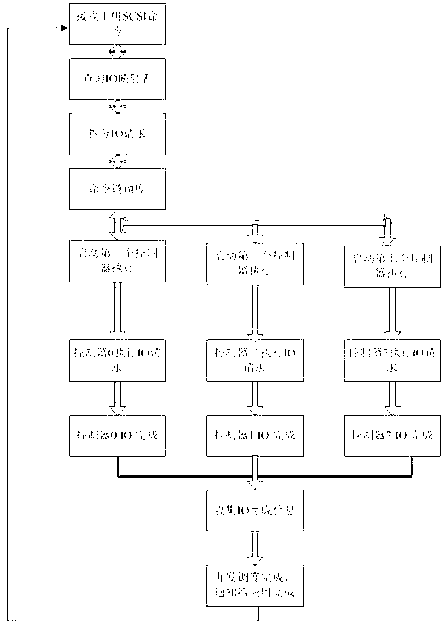

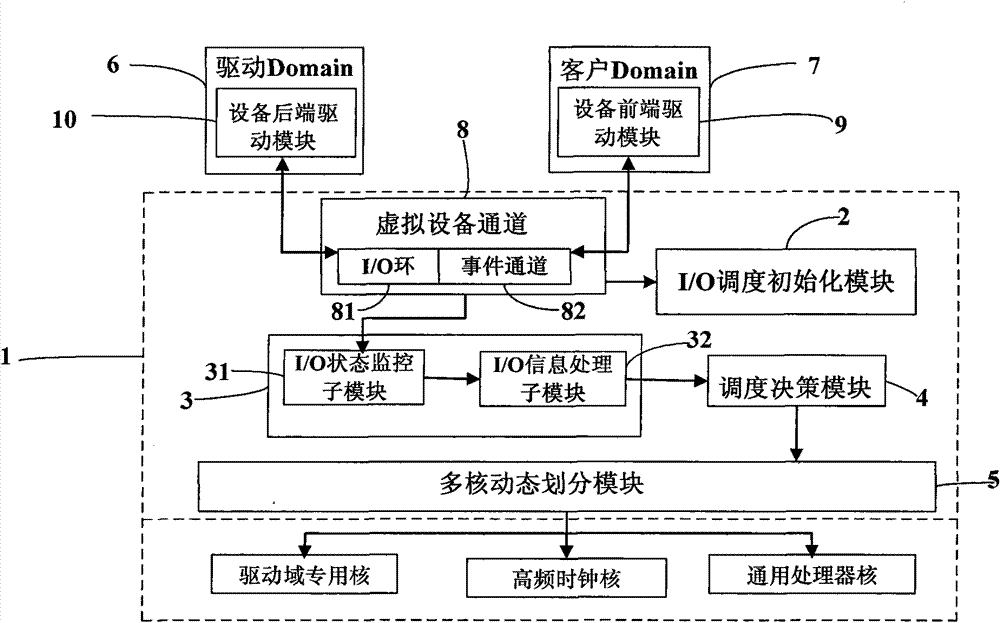

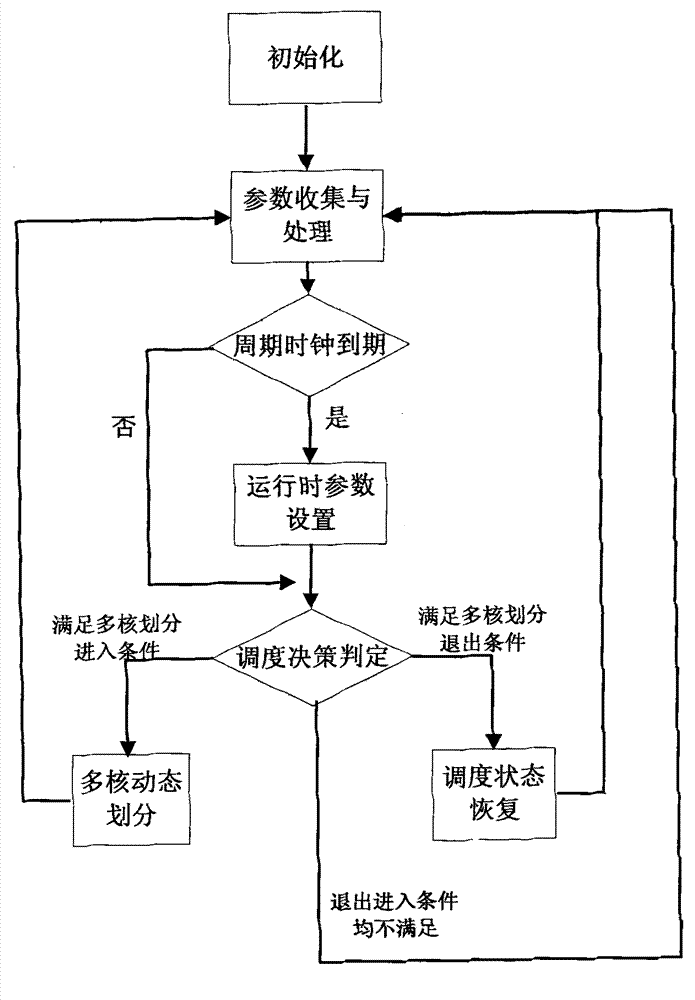

Method for dispatching I/O of asymmetry virtual machine based on multi-core dynamic partitioning

Inactive | CN101706742A | Reduce response delay | Reduce link time consumption | Resource allocation | Software simulation/interpretation/emulation | Information processing | Computer architecture

The invention provides a method for dispatching I/O of an asymmetric virtual machine based on multi-core dynamic partitioning, comprising the following steps. At system startup, an I/O dispatching initialization module configures the dispatching parameters and allocates a buffer. While the system runs, an I/O status monitoring and information processing module dynamically collects and processes the runtime parameters of the driver domain and the guest domains, compiles statistics such as the type, time and frequency of the I/O events that occur, and sends the results to a dispatching decision module. The dispatching decision module decides according to preset conditions and commands a multi-core dynamic partitioning module to partition or recover processor cores. This changes the core partitioning mode and dispatching status of the system's processor, so that the use of processor resources and the domain dispatching and switching strategy better fit the I/O load of the current system, which optimizes I/O performance.

Owner:BEIHANG UNIV

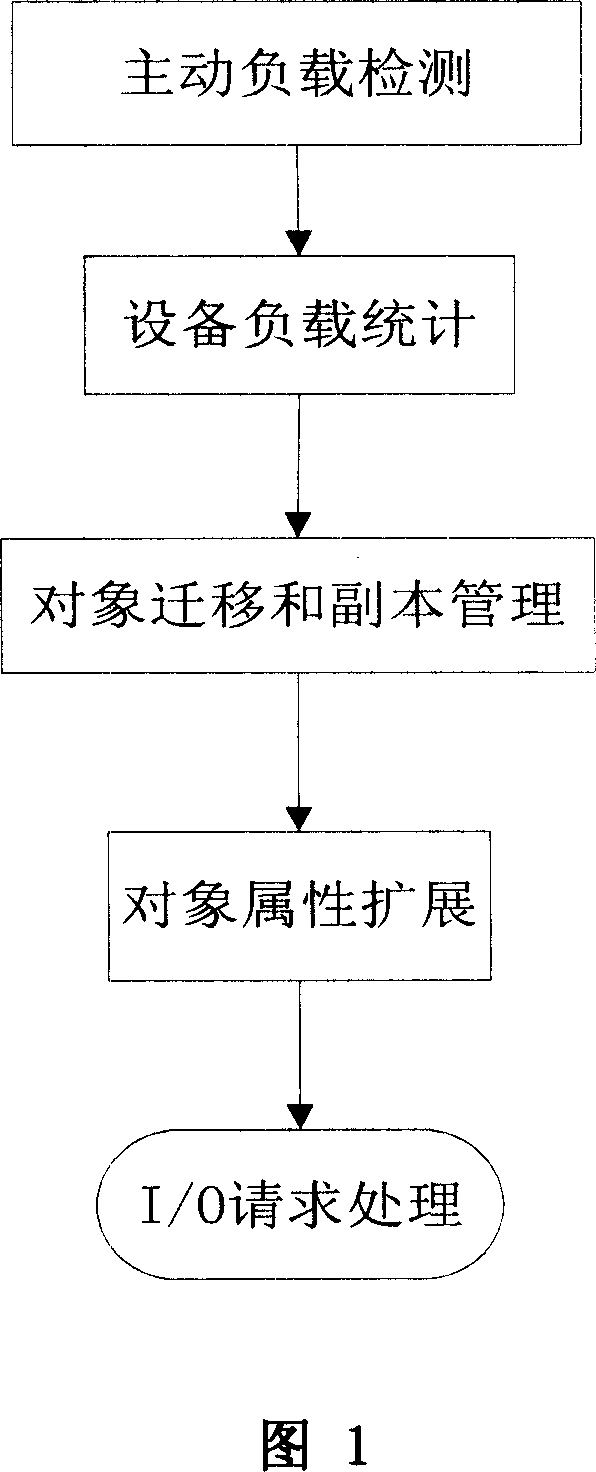

Load balancing method based on object storage device

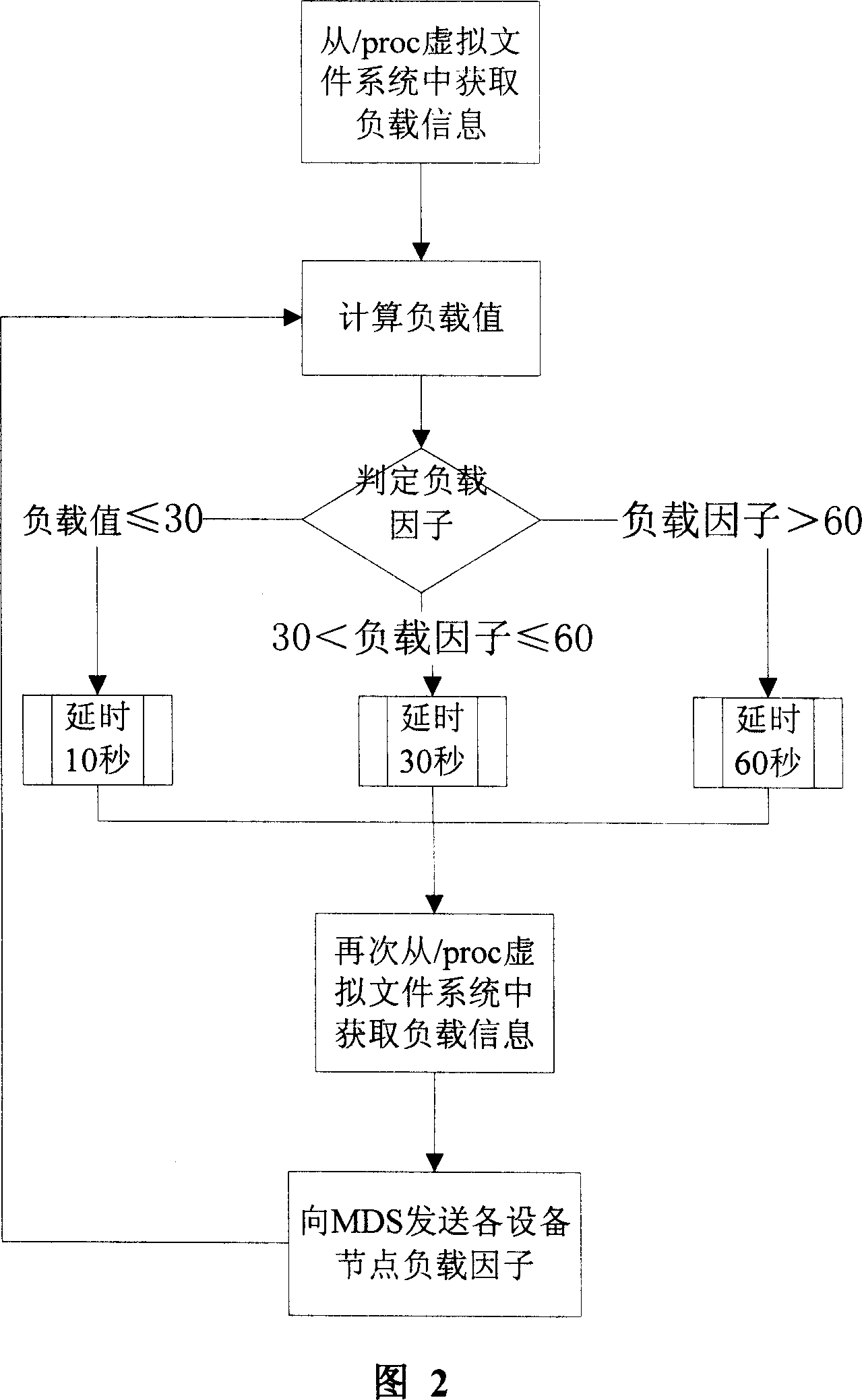

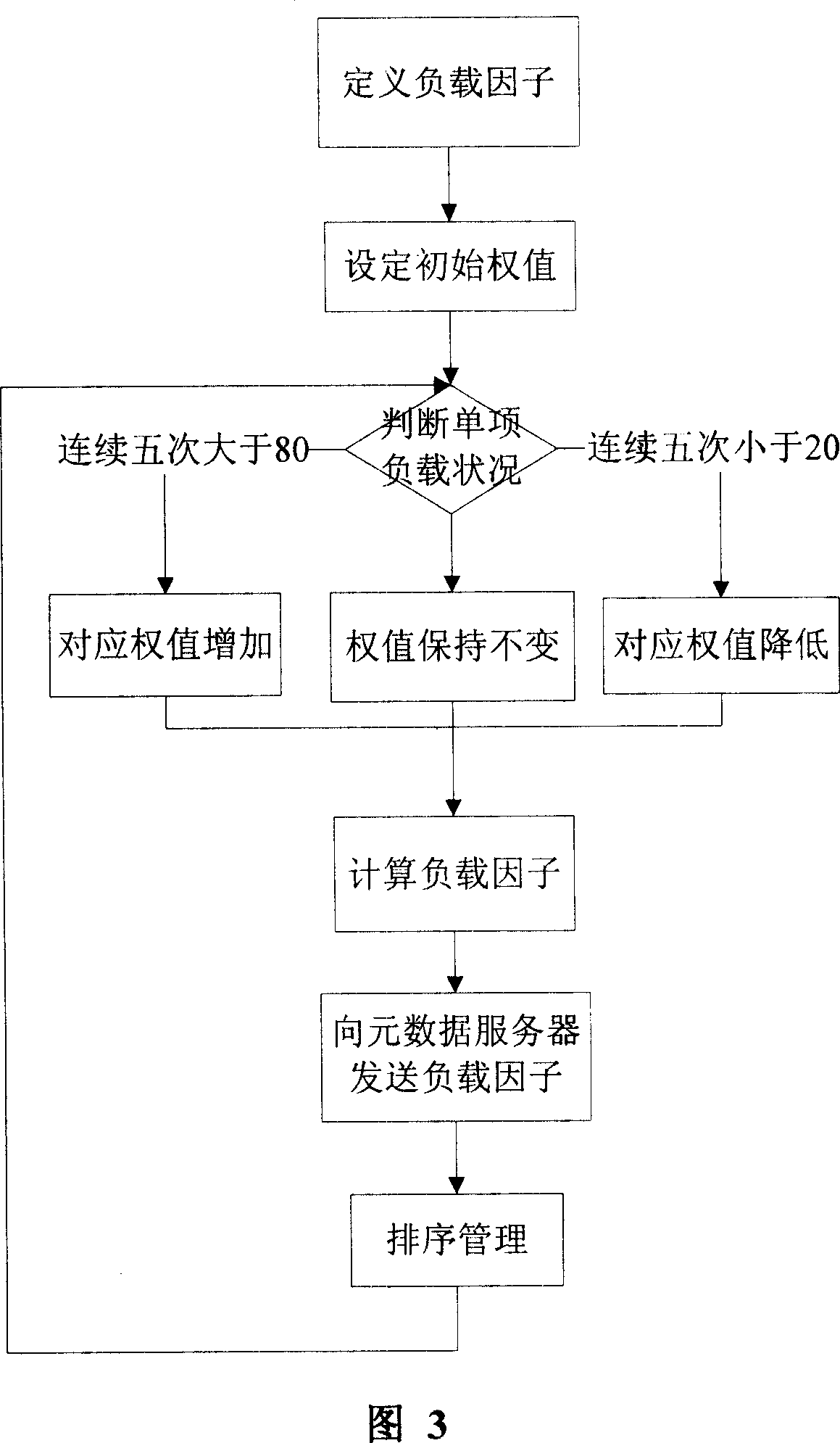

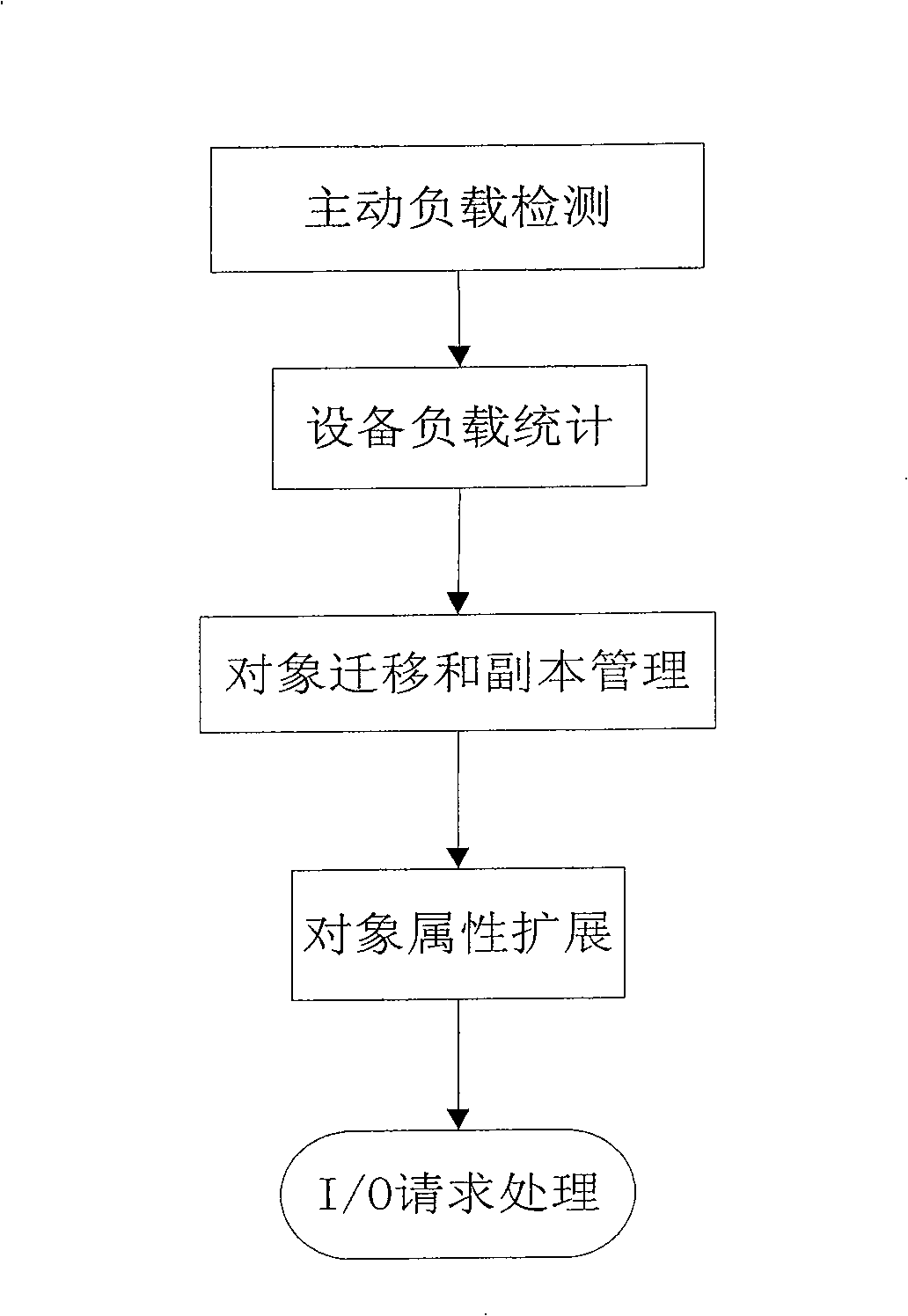

Inactive | CN101013387A | Implement load migration | Load balancing | Resource allocation | Special data processing applications | Object based | SCSI

The invention is a load balancing method for object-based storage devices, belonging to computer storage technology. It aims to balance the distribution of system load across the storage nodes through reasonable scheduling of I/O load and migration of hot data, so as to take full advantage of the high performance of the storage device nodes. The steps of the invention, in order, are: (1) active load detection; (2) equipment load statistics; (3) object migration and copy management; (4) object property expansion; (5) I/O request processing. The invention extends the SCSI protocol standard for object storage devices (OSD). Using the advantages of the object storage model, it provides a basis for I/O scheduling decisions and makes full use of the computing capacity of all storage nodes to balance load, reduce storage system response time and increase storage system throughput.

Owner:HUAZHONG UNIV OF SCI & TECH

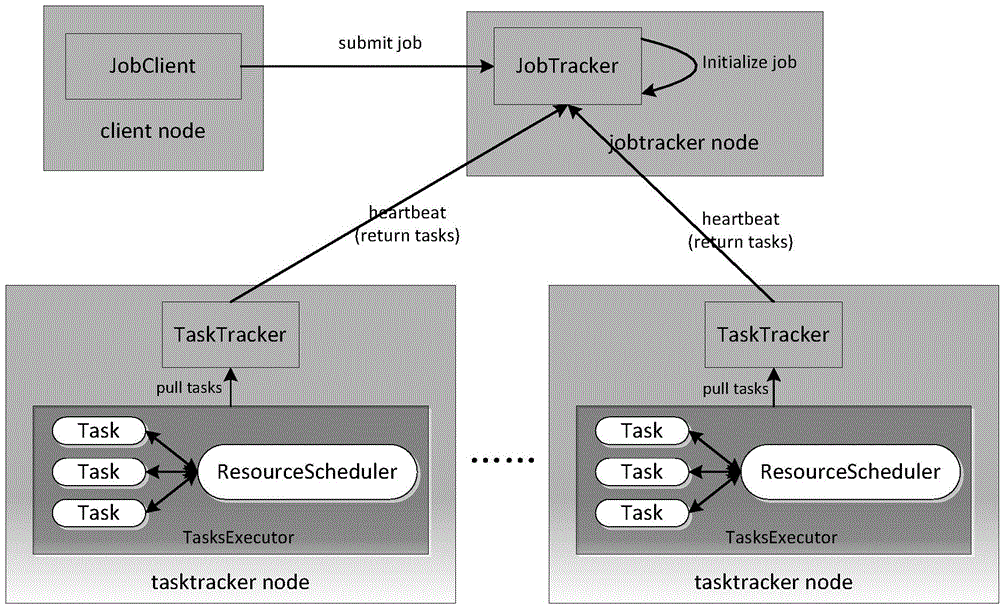

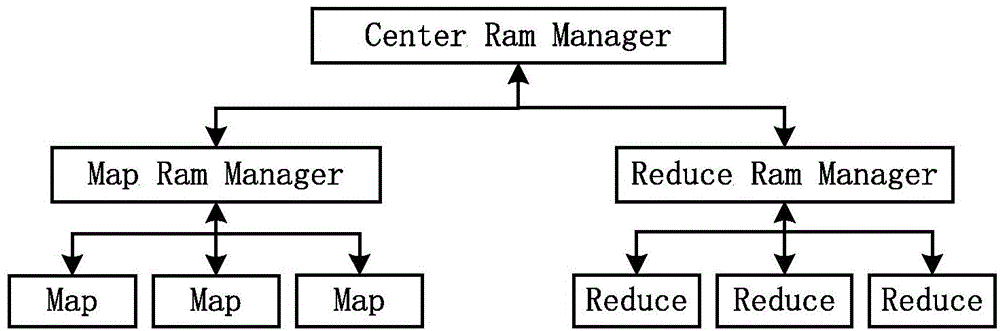

Multithreading-based MapReduce execution system

Active | CN103605576A | Reduce management pressure | Reduce competition | Resource allocation | Granularity | Parallel computing

The invention discloses a multithreading-based MapReduce execution system comprising a MapReduce execution engine that implements multithreading. The multi-process execution mode of Map/Reduce tasks in the original Hadoop is changed into a multithreaded mode; details about memory usage are extracted from Map tasks and Reduce tasks, the MapReduce process is divided into multiple fine-grained phases according to those details, and the shuffle process in the original Hadoop is changed from Reduce pull to Map active push. A unified memory management module and an I/O management module are implemented in the multithreaded MapReduce execution engine and centrally manage the memory usage of each task thread. A global memory scheduling and I/O scheduling algorithm dynamically schedules system resources during execution. The system has the advantages that users can maximize memory usage without modifying the original MapReduce program, disk bandwidth is fully utilized, and the long-standing I/O bottleneck in the original Hadoop is resolved.

Owner:HUAZHONG UNIV OF SCI & TECH

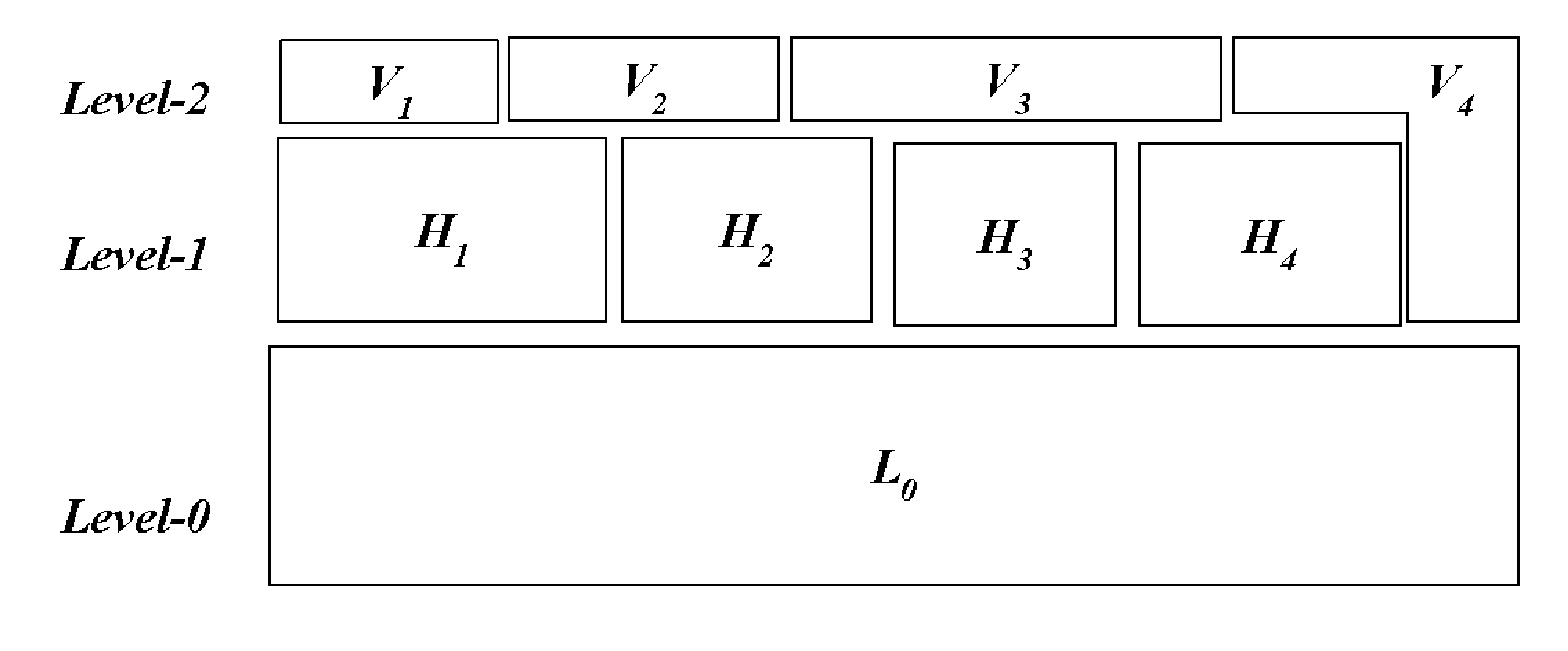

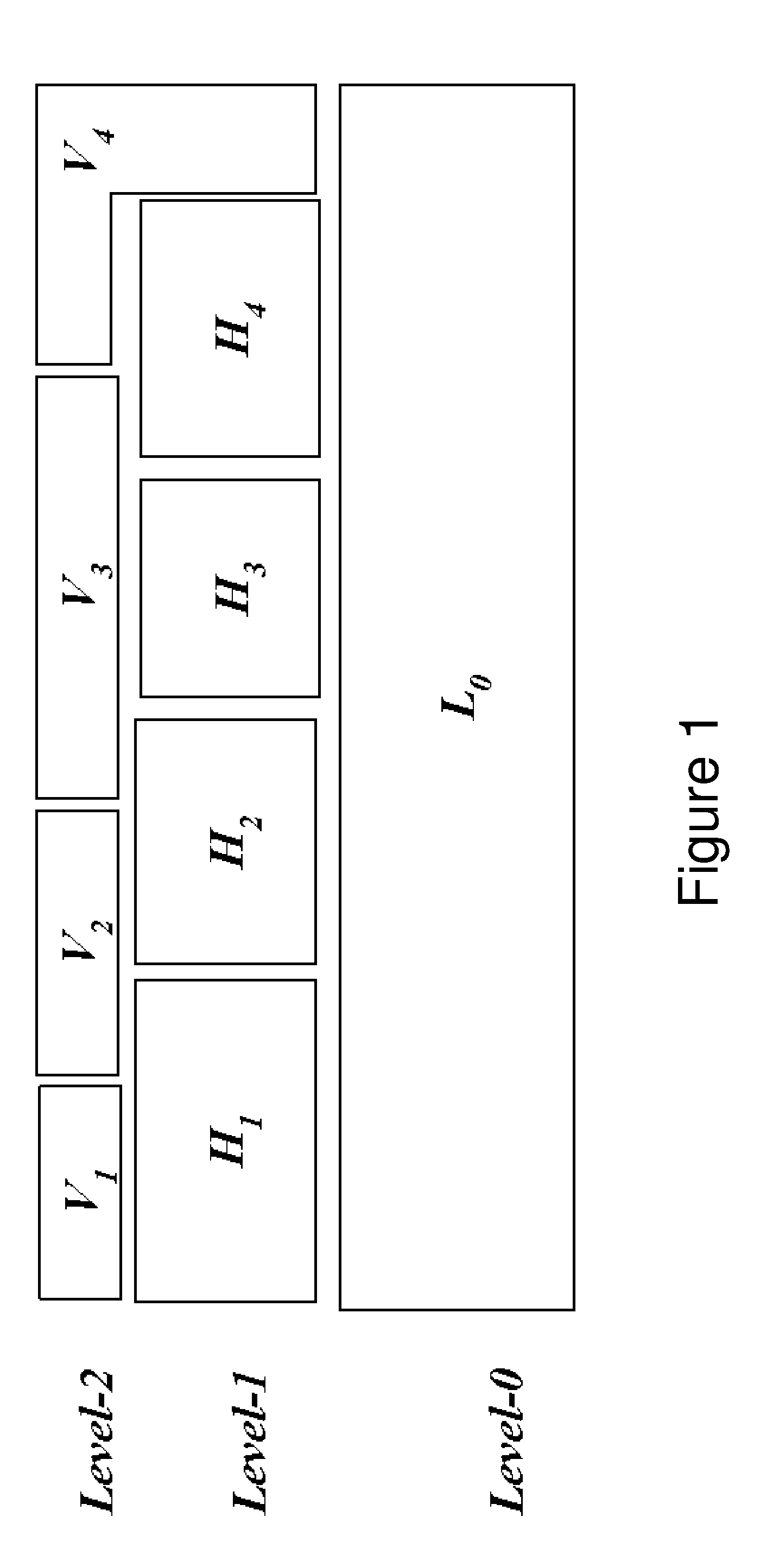

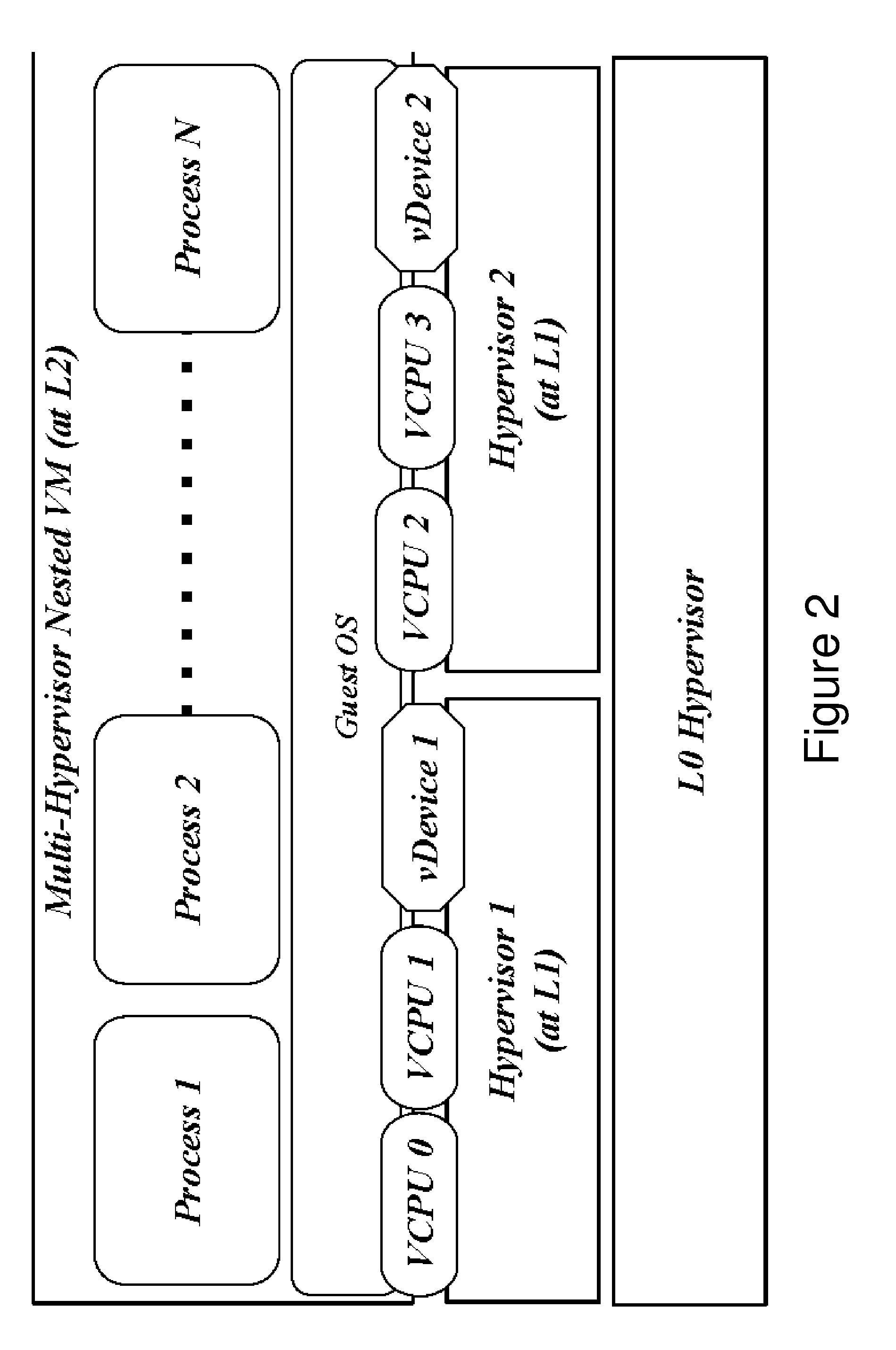

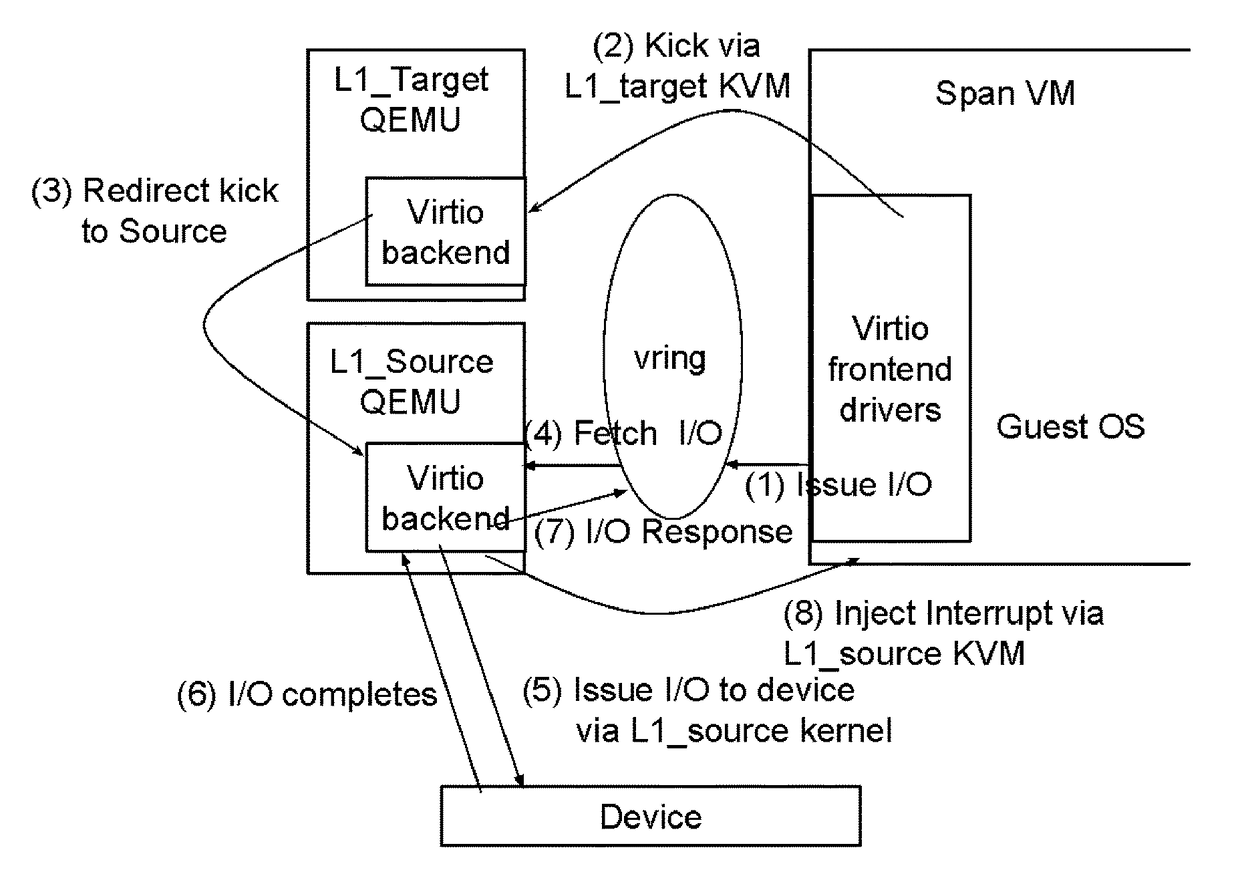

Multi-hypervisor virtual machines

Active | US20160147556A1 | Improve performance | Negatively impacts the I/O performance of the benchmarked system | Software simulation/interpretation/emulation | Memory systems | Memory footprint | Nested virtualization

Standard nested virtualization allows a hypervisor to run other hypervisors as guests, i.e. a level-0 (L0) hypervisor can run multiple level-1 (L1) hypervisors, each of which can run multiple level-2 (L2) virtual machines (VMs), with each L2 VM restricted to run on only one L1 hypervisor. Span provides a multi-hypervisor VM in which a single VM can simultaneously run on multiple hypervisors, which permits a VM to benefit from different services provided by multiple hypervisors that co-exist on a single physical machine. Span allows (a) the memory footprint of the VM to be shared across two hypervisors, and (b) the responsibility for CPU and I/O scheduling to be distributed among the two hypervisors. Span VMs can achieve performance comparable to traditional (single-hypervisor) nested VMs for common benchmarks.

Owner:THE RES FOUND OF STATE UNIV OF NEW YORK

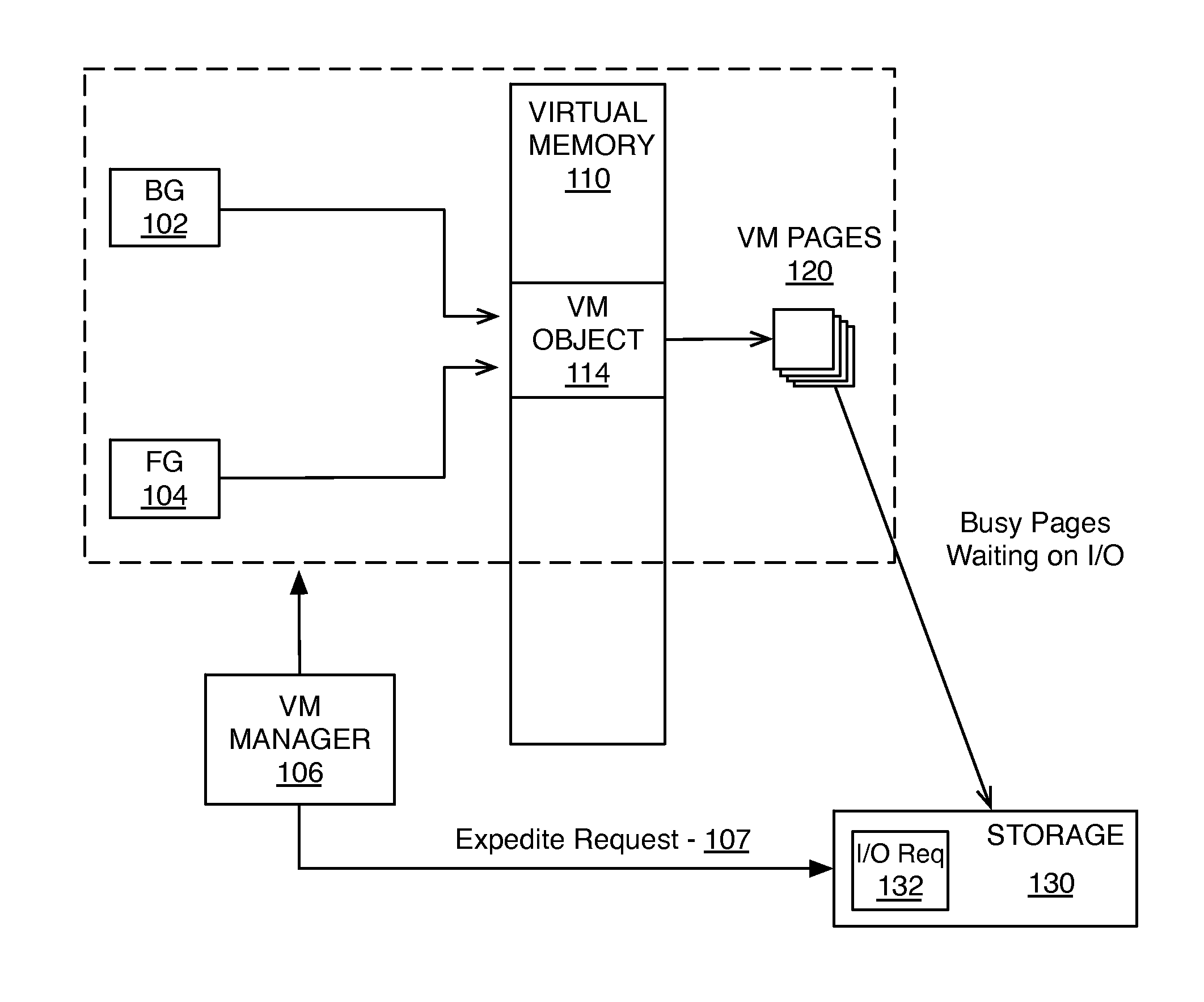

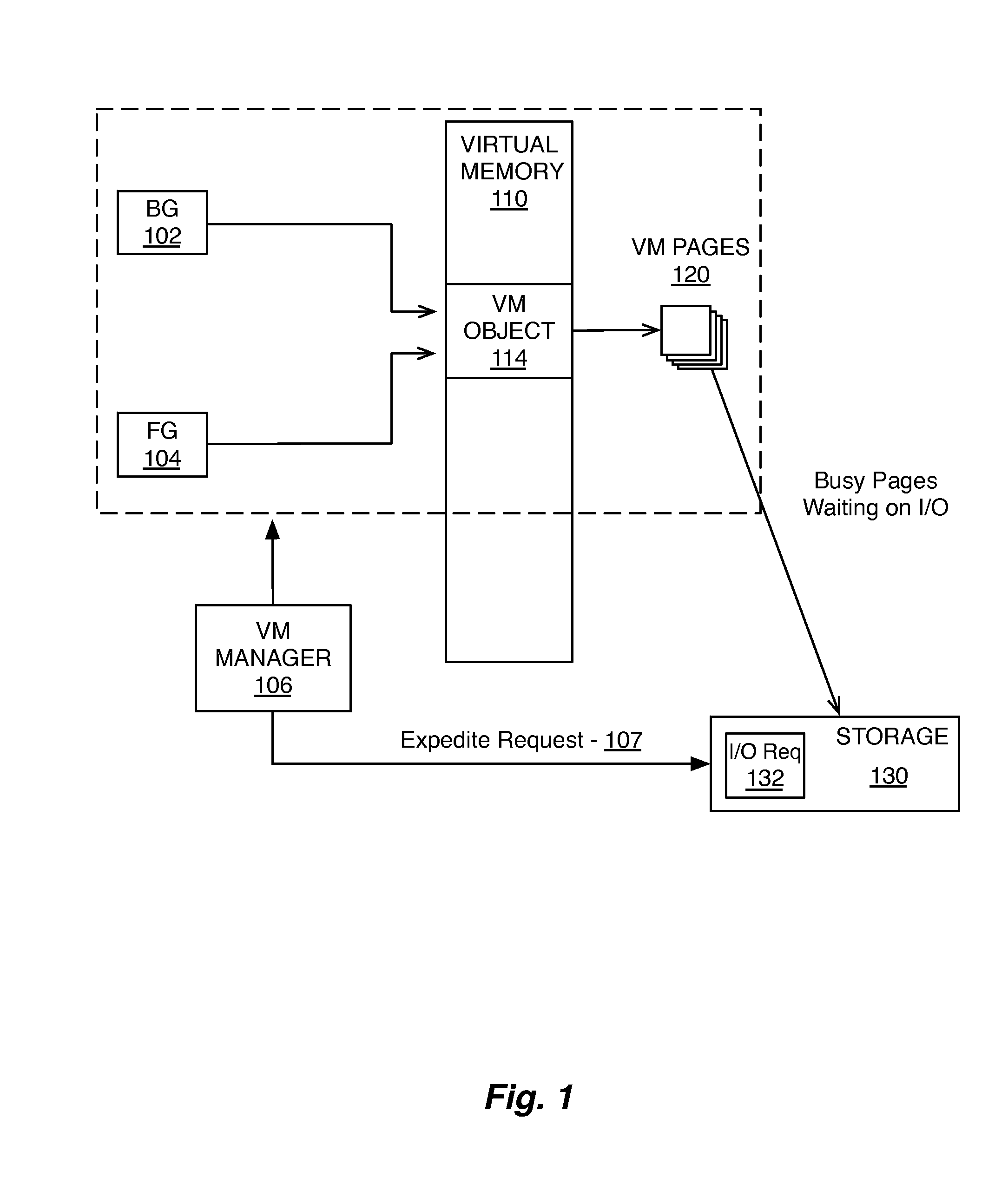

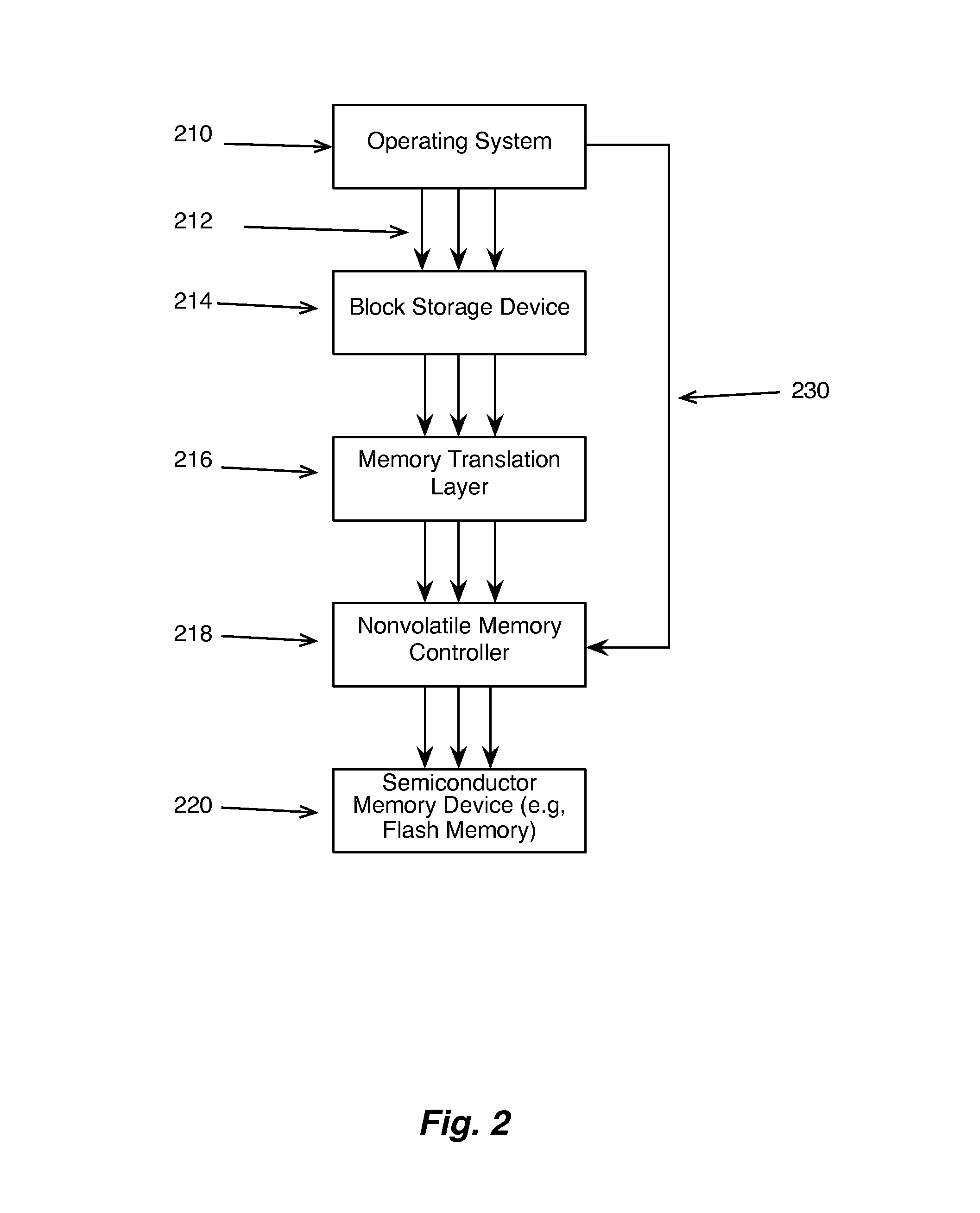

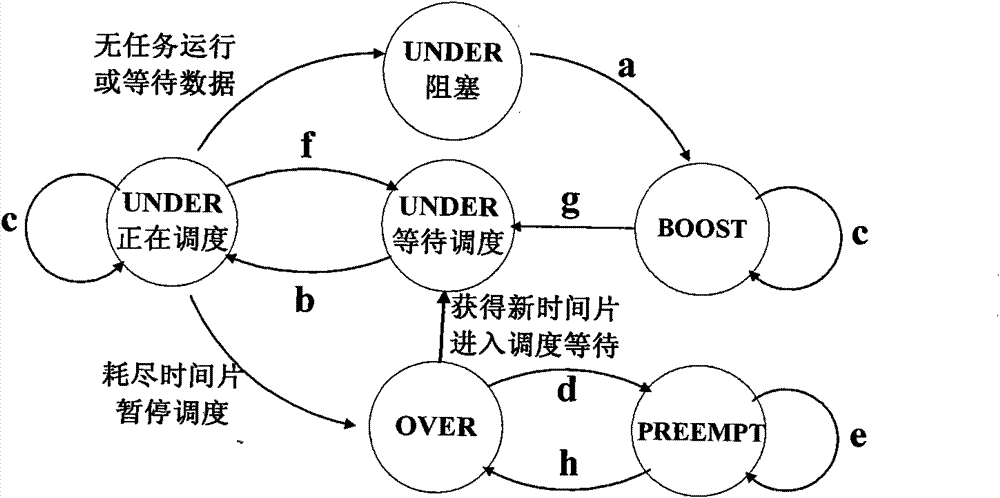

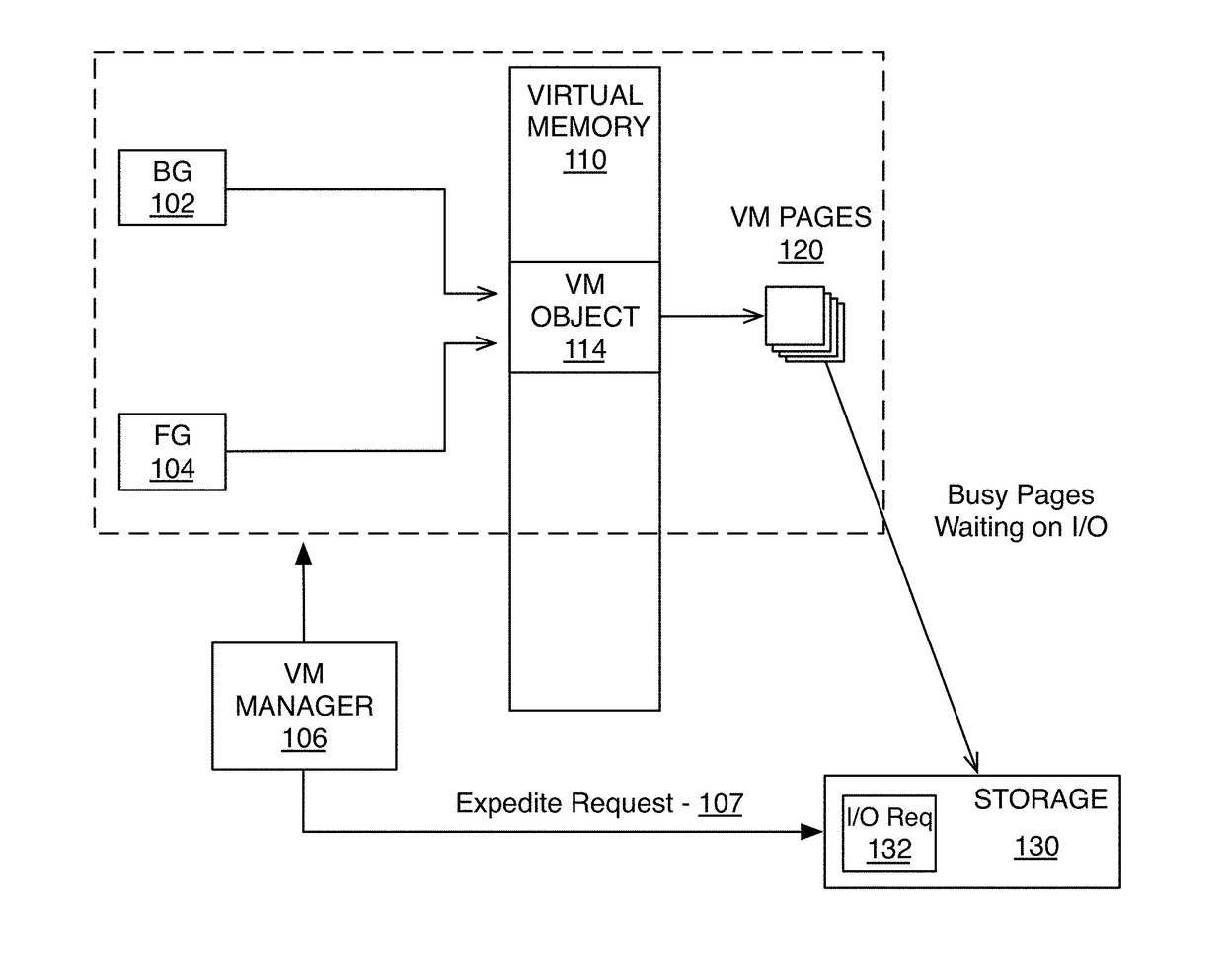

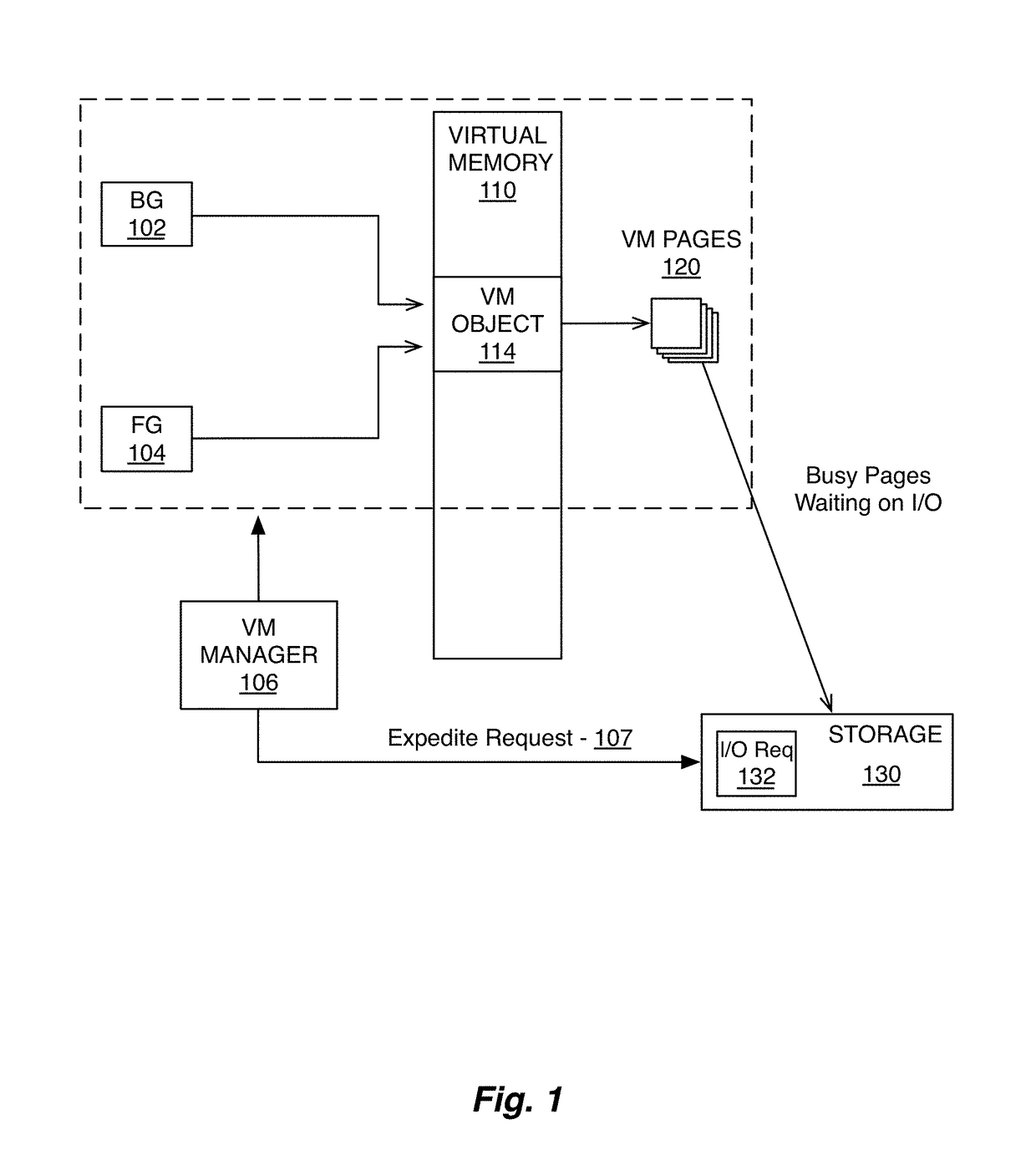

I/O scheduling

Active | US20150347327A1 | Input/output to record carriers | Program control | Computer hardware | Priority inversion

In one embodiment, an input/output (I/O) scheduling system detects and resolves priority inversions by expediting requests previously dispatched to an I/O subsystem. In response to detecting the priority inversion, the system can transmit a command to expedite completion of the blocking I/O request. The pending request can be located within the I/O subsystem and expedited to reduce the pendency period of the request.

Owner:APPLE INC
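The expediting step described in this abstract can be illustrated with a minimal Python sketch. It is not the patented implementation: the `Request` class, the `resolve_inversion` function and the convention that a lower number means a more urgent priority are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Request:
    req_id: int
    priority: int          # assumption: lower number = more urgent
    expedited: bool = False


def resolve_inversion(dispatched, waiter_priority, blocking_id):
    """Expedite an already-dispatched request that blocks a more urgent waiter.

    Returns the expedited request, or None when there is no inversion.
    """
    for req in dispatched:
        # An inversion exists only if the blocking request is less urgent
        # than the request waiting on it.
        if req.req_id == blocking_id and req.priority > waiter_priority:
            req.priority = waiter_priority   # raise it to the waiter's level
            req.expedited = True
            return req
    return None
```

In this sketch the blocking request simply inherits the waiter's priority; how a real I/O subsystem would act on an expedite command is not specified in the abstract.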

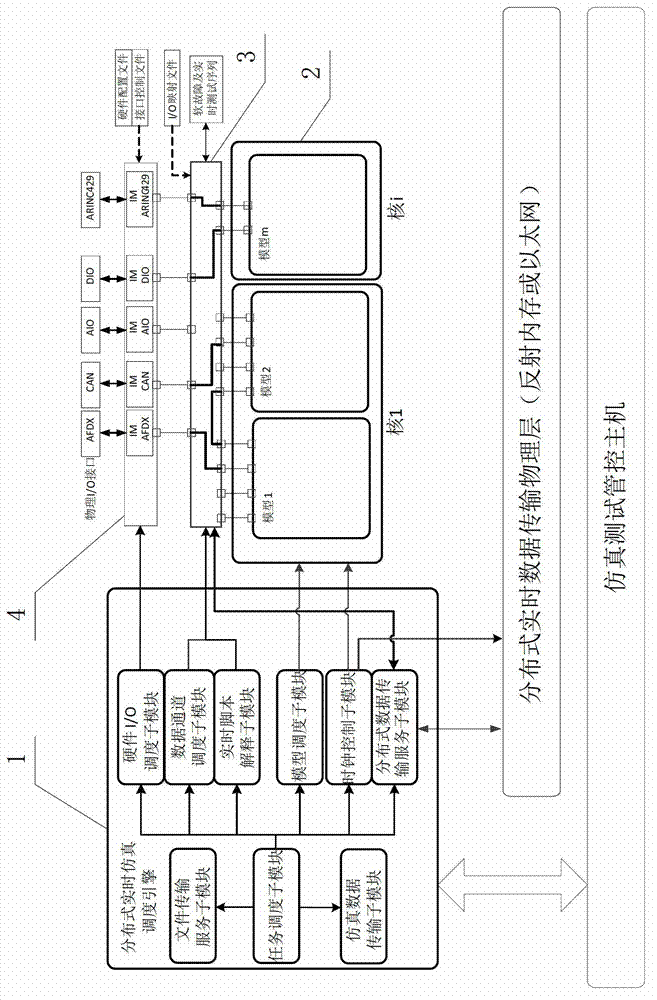

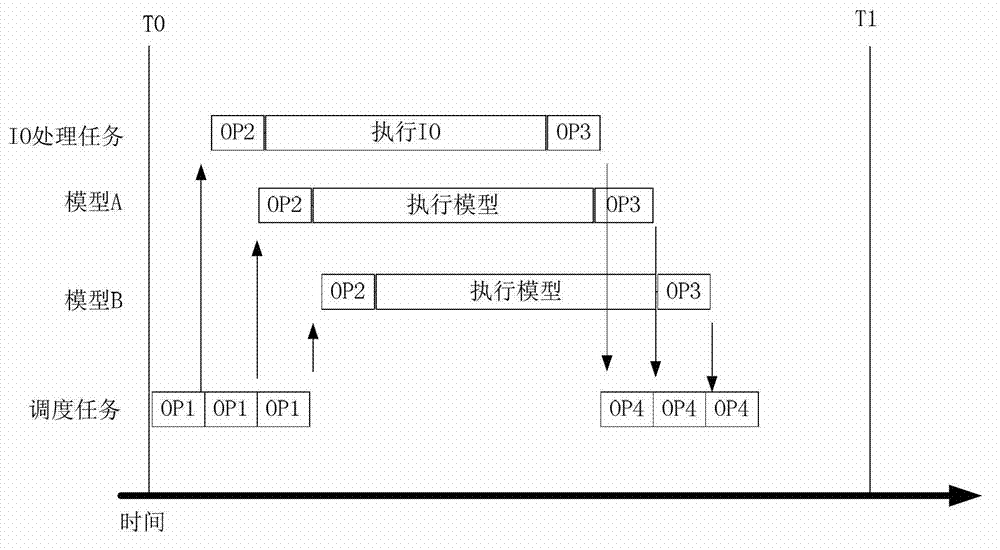

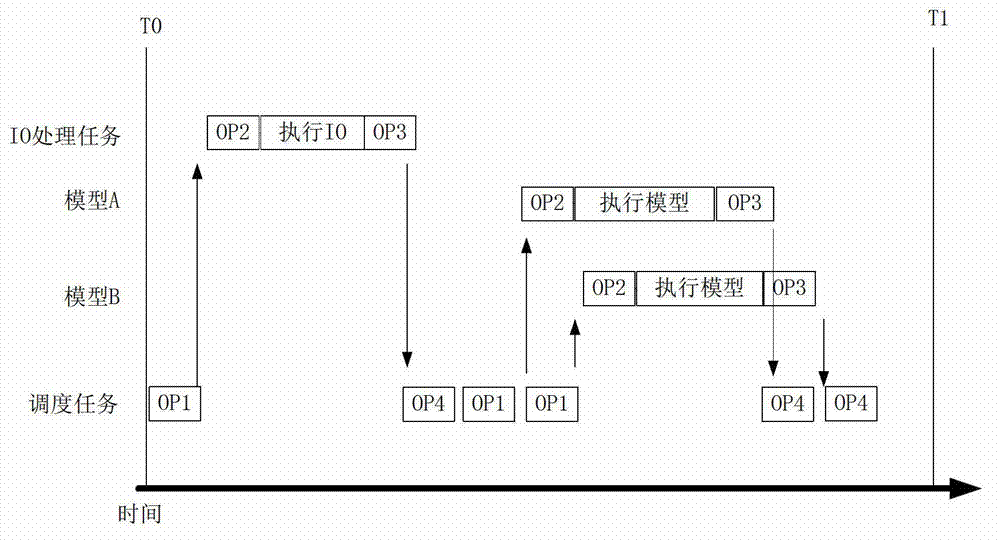

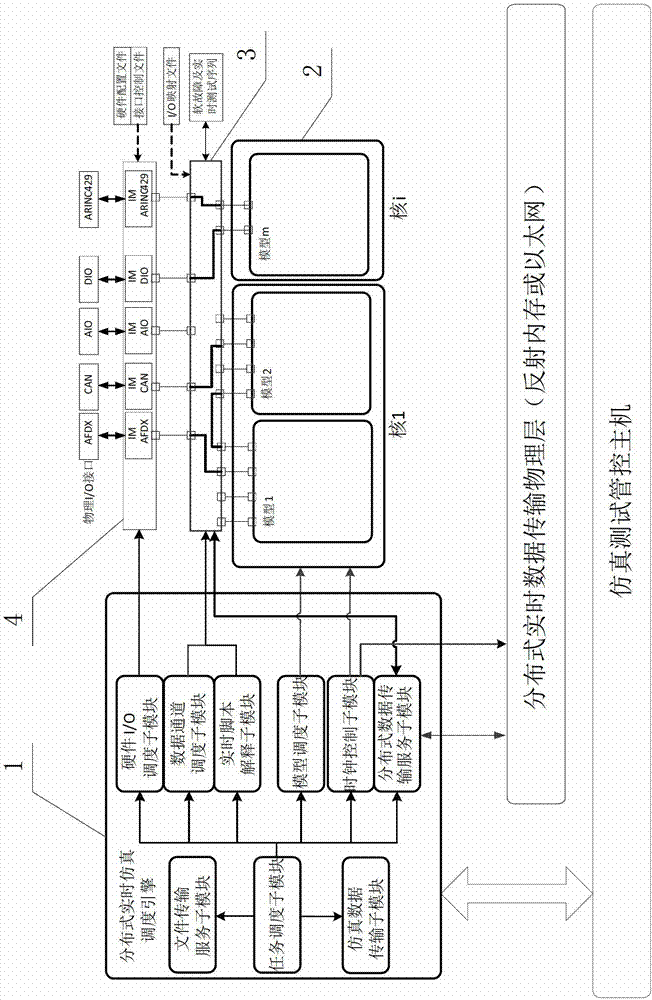

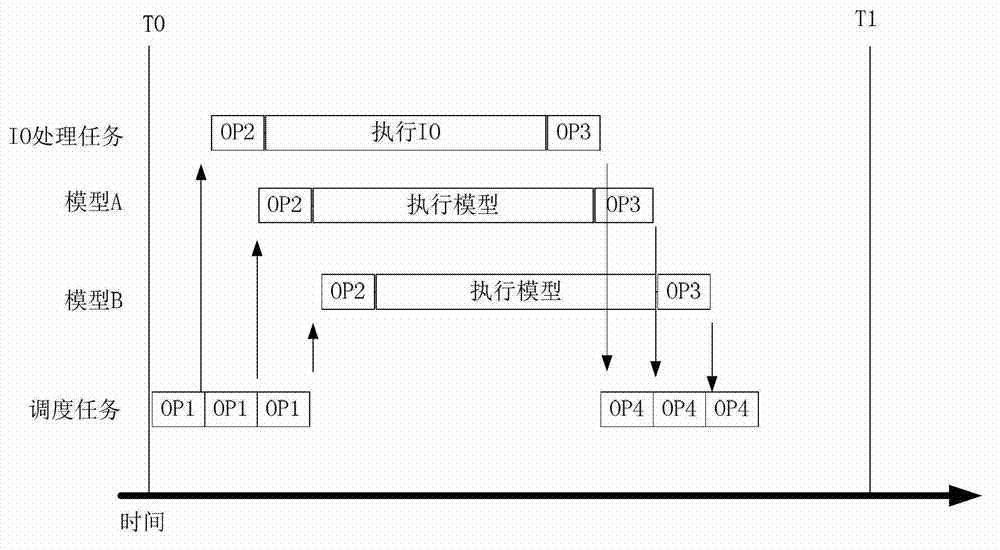

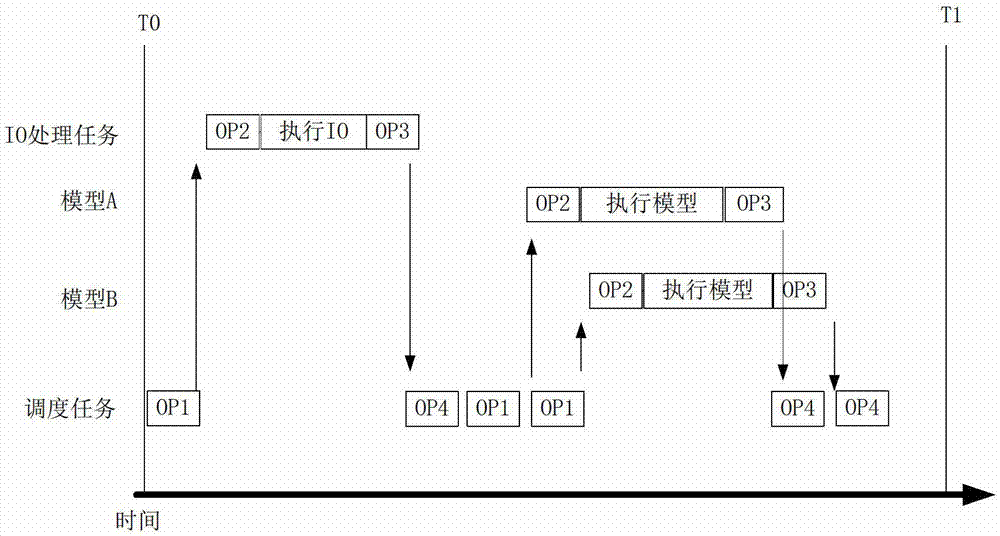

Multi-core multi-model parallel distributed type real-time simulation system

Active | CN102929158A | Improve applicability | Flexible configuration | Simulator control | Computer hardware | Real-time simulation

The invention provides a multi-core multi-model parallel distributed real-time simulation system comprising a main control module, a model module, a data channel control module and a hardware interface module. The main control module accomplishes its functions by invoking different simulation scheduling algorithms. In each simulation period, according to the hardware I/O (input/output) scheduling policies, it transmits to the data channel control module the instructions for data mapping in accordance with the simulation setup, transmits to the hardware interface module the instructions for reading and writing the I/O data within one period, and transmits to the model module the instructions that trigger its operation. The model module, following these instructions, acquires input data once, solves once and outputs data once in each simulation period. The data channel control module performs the data mapping and related program processing for the I/O of a model or of hardware in each period according to the instructions. The hardware interface module configures, reads and outputs the input/output streams of hardware I/O equipment according to the data configuration format of a file, and performs the read or write operations on the various hardware I/O equipment.

Owner:北京华力创通科技股份有限公司

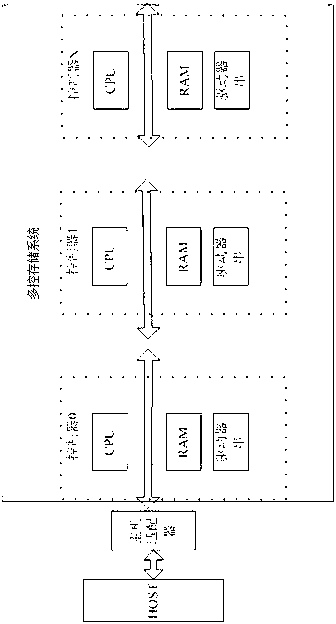

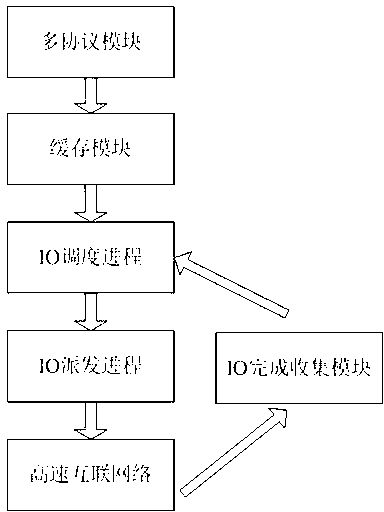

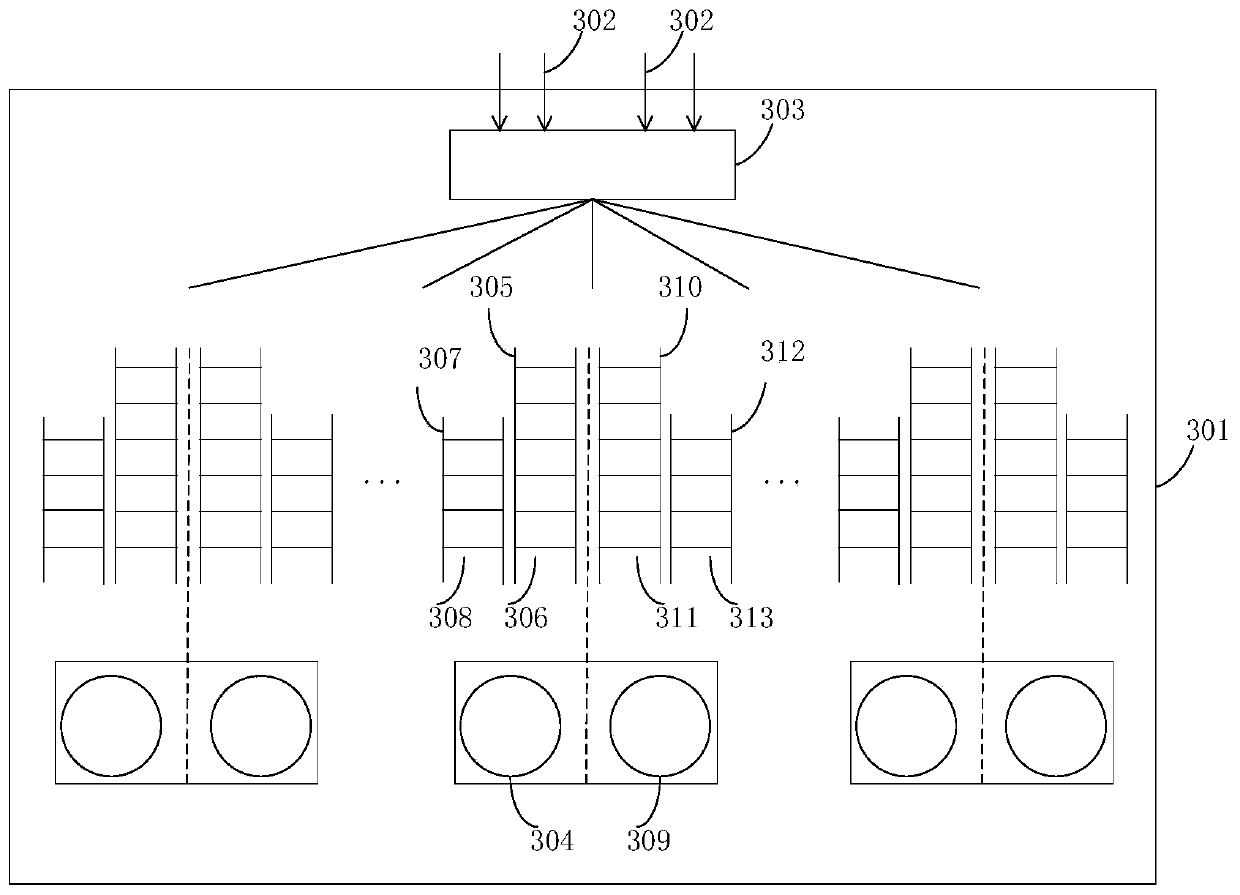

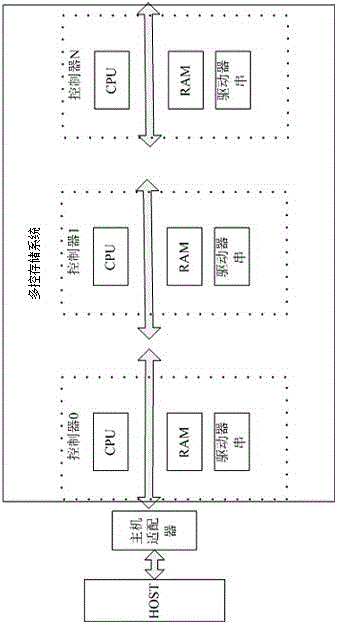

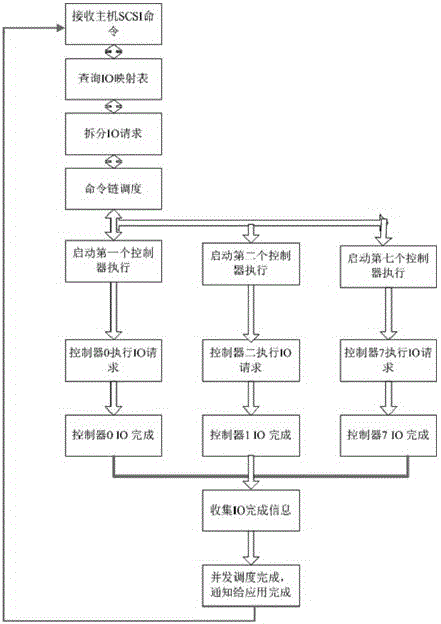

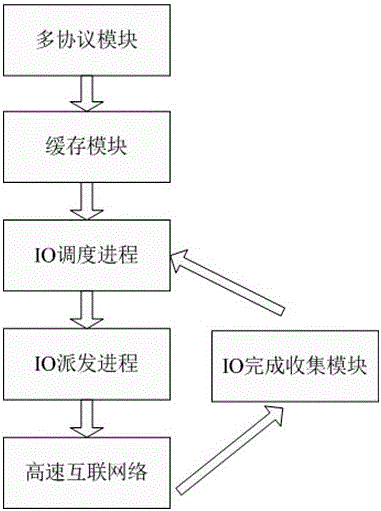

Self-adaptive IO (Input Output) scheduling method of multi-control storage system

Active | CN103135943A | Improve load status | Load balancing | Input/output to record carriers | Controller architecture | Network connection

The invention provides a self-adaptive I/O (input/output) scheduling method for a multi-control storage system. The method achieves load balancing among the controllers of a multi-controller architecture; avoids the risk and performance bottlenecks caused by a single-controller failure; supports a range of host connection interfaces, including iSCSI (Internet Small Computer System Interface), FC (Fibre Channel), InfiniBand and 10-gigabit network connections; can offer users high-bandwidth IB (InfiniBand) and 10-gigabit network connections at the same time; and meets customers' differing requirements for high bandwidth and high performance. The invention relates to I/O scheduling in a multi-control storage system and provides an I/O scheduling method among multiple controllers. When the multi-control storage system accepts an I/O request from the application layer, the method schedules the request across the controllers for concurrent execution. It allocates unallocated I/O requests to low-load controllers and also reschedules I/O requests from overloaded controllers to less loaded ones, so that the load state of every controller in the system improves, I/O load is scheduled and balanced across the control nodes, the potential of the equipment is fully used, and system performance improves.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD
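The two scheduling actions named in this abstract, placing new requests on a low-load controller and moving requests away from overloaded controllers, might be sketched as follows. This is a hedged illustration only: queue length is assumed to stand in for controller load, and `schedule`, `rebalance` and `overload_limit` are hypothetical names that do not come from the patent.

```python
def schedule(request, queues):
    """Place a new request on the controller with the shortest queue."""
    target = min(queues, key=lambda c: len(queues[c]))
    queues[target].append(request)
    return target


def rebalance(queues, overload_limit):
    """Move requests from overloaded controllers to the least loaded one.

    queues maps a controller name to its list of pending requests.
    Returns the number of requests that were moved.
    """
    moved = 0
    for ctrl, pending in queues.items():
        while len(pending) > overload_limit:
            target = min(queues, key=lambda c: len(queues[c]))
            # Stop if moving a request would not leave the system more even.
            if len(queues[target]) + 1 >= len(pending):
                break
            queues[target].append(pending.pop())
            moved += 1
    return moved
```

A real controller would measure load with richer signals (outstanding bytes, latency, link bandwidth); queue length is used here only to keep the sketch short.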

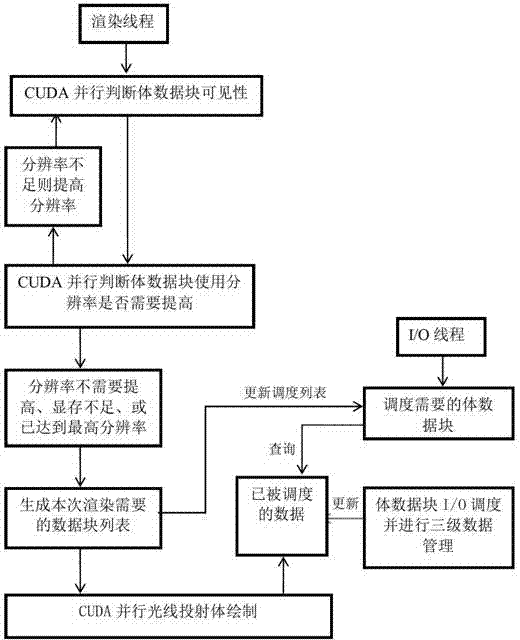

Real-time ray cast volume rendering method of three-dimensional earthquake volume data

Inactive | CN103198514A | Draw in real time | Easy to share | Seismic signal processing | 3D-image rendering | Video memory | Image resolution

Owner:NANJING UNIV

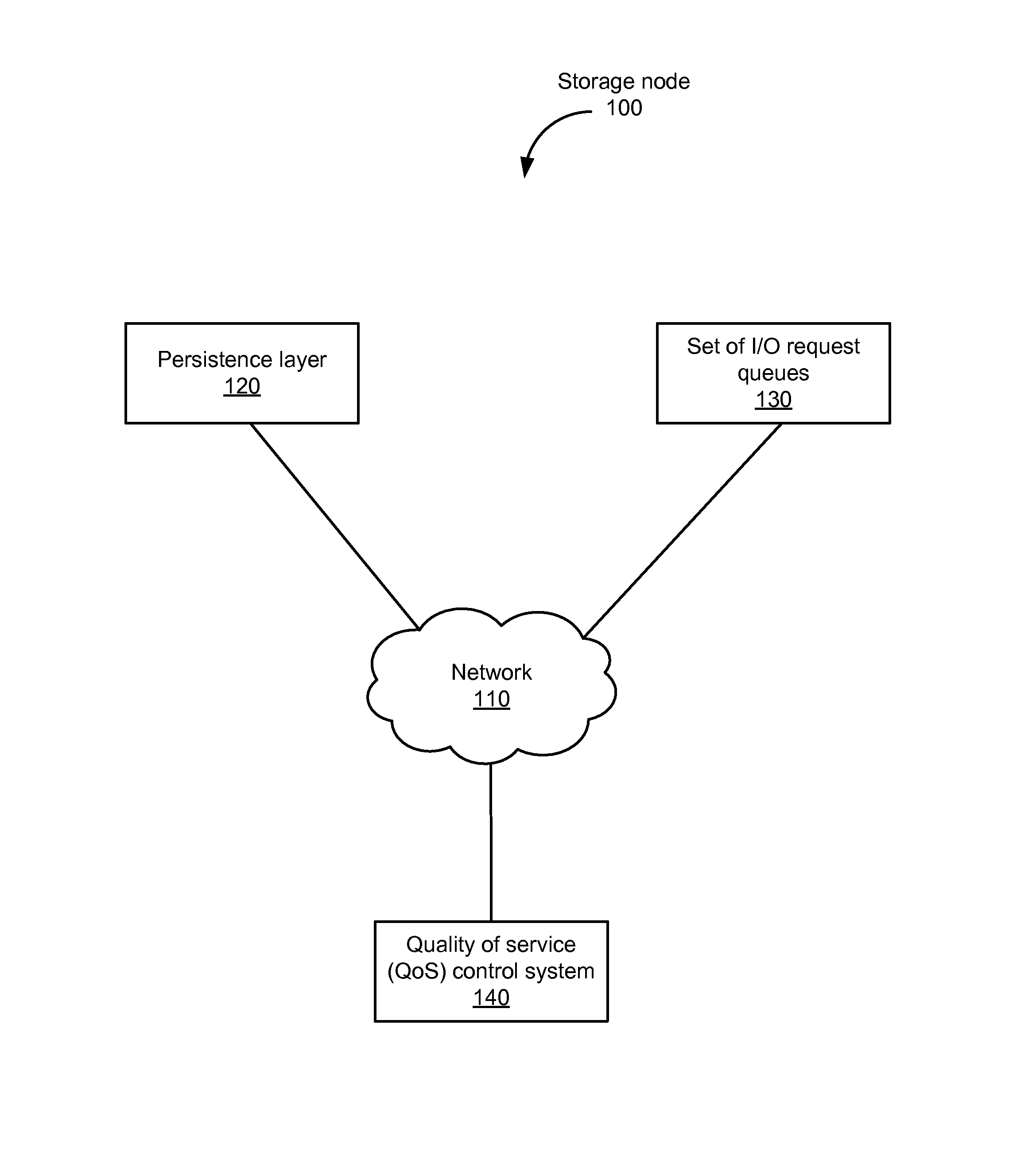

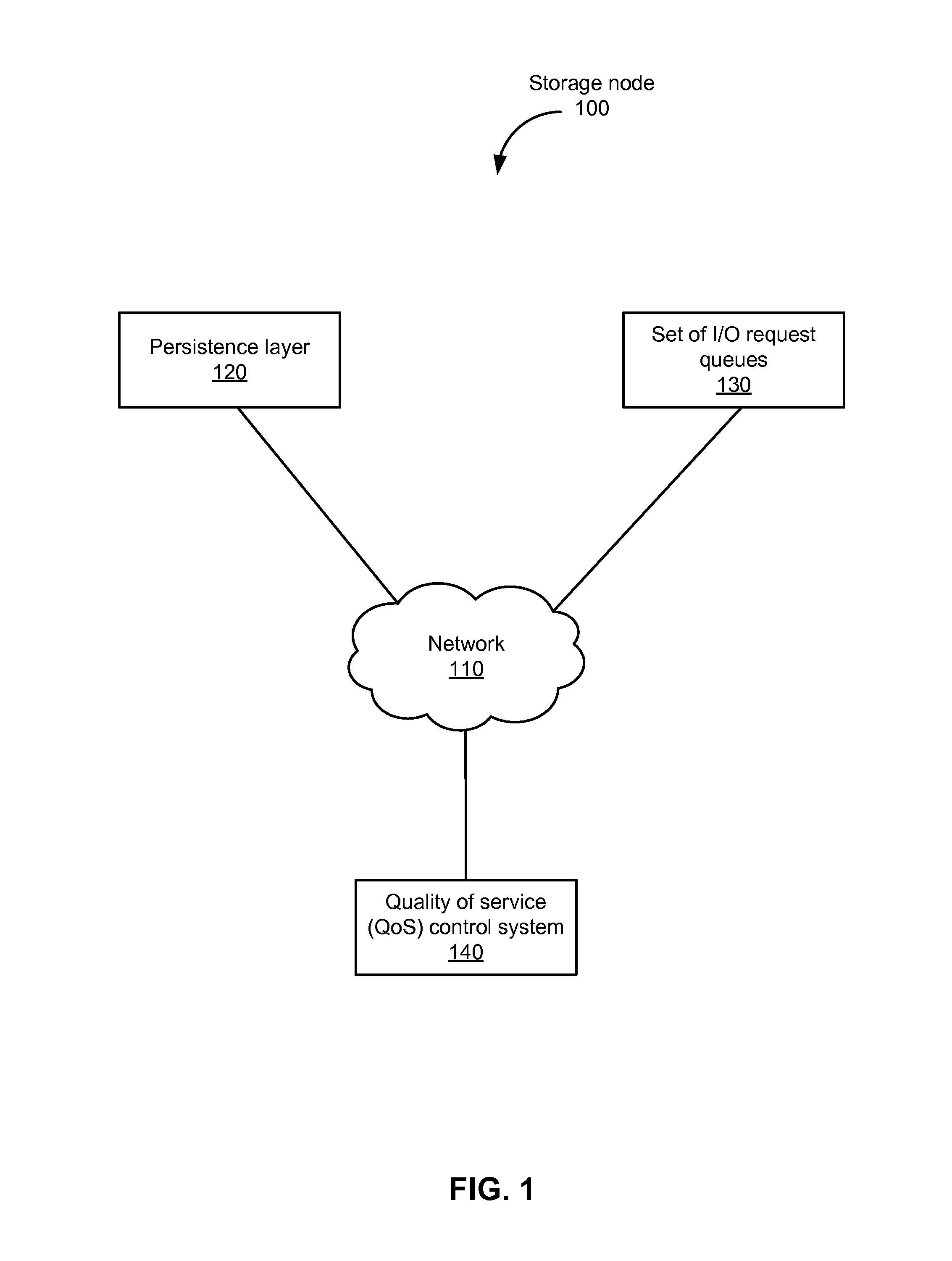

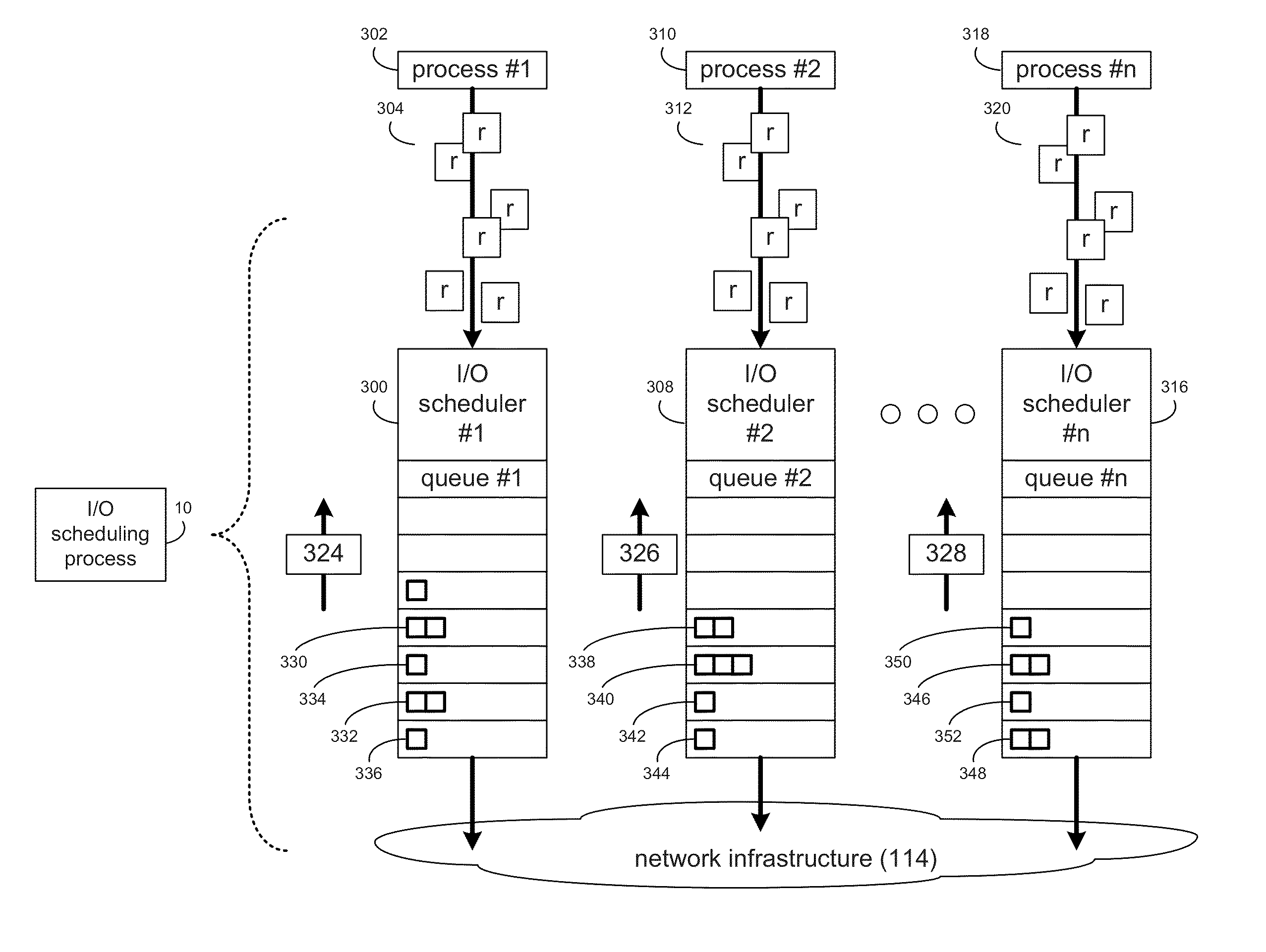

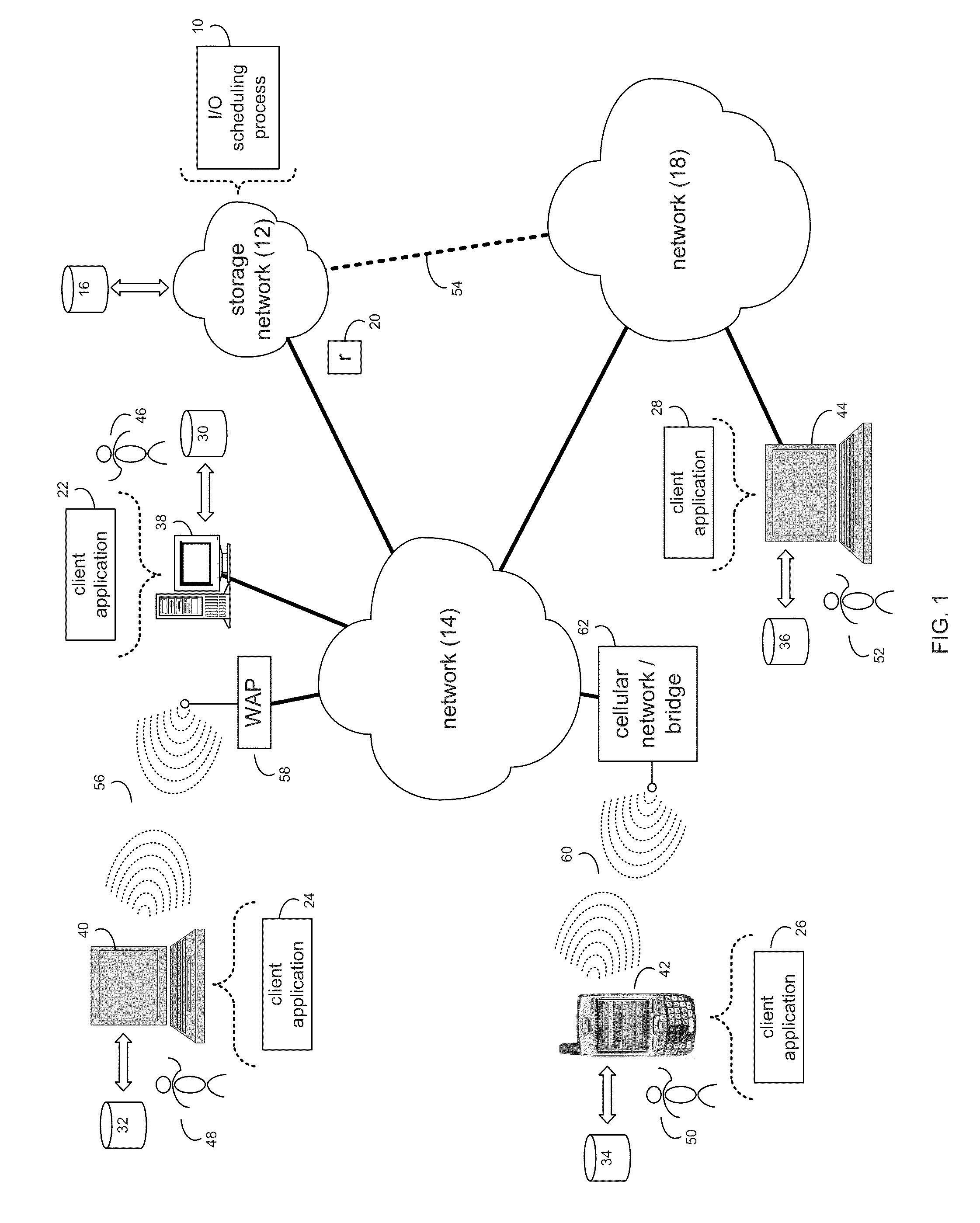

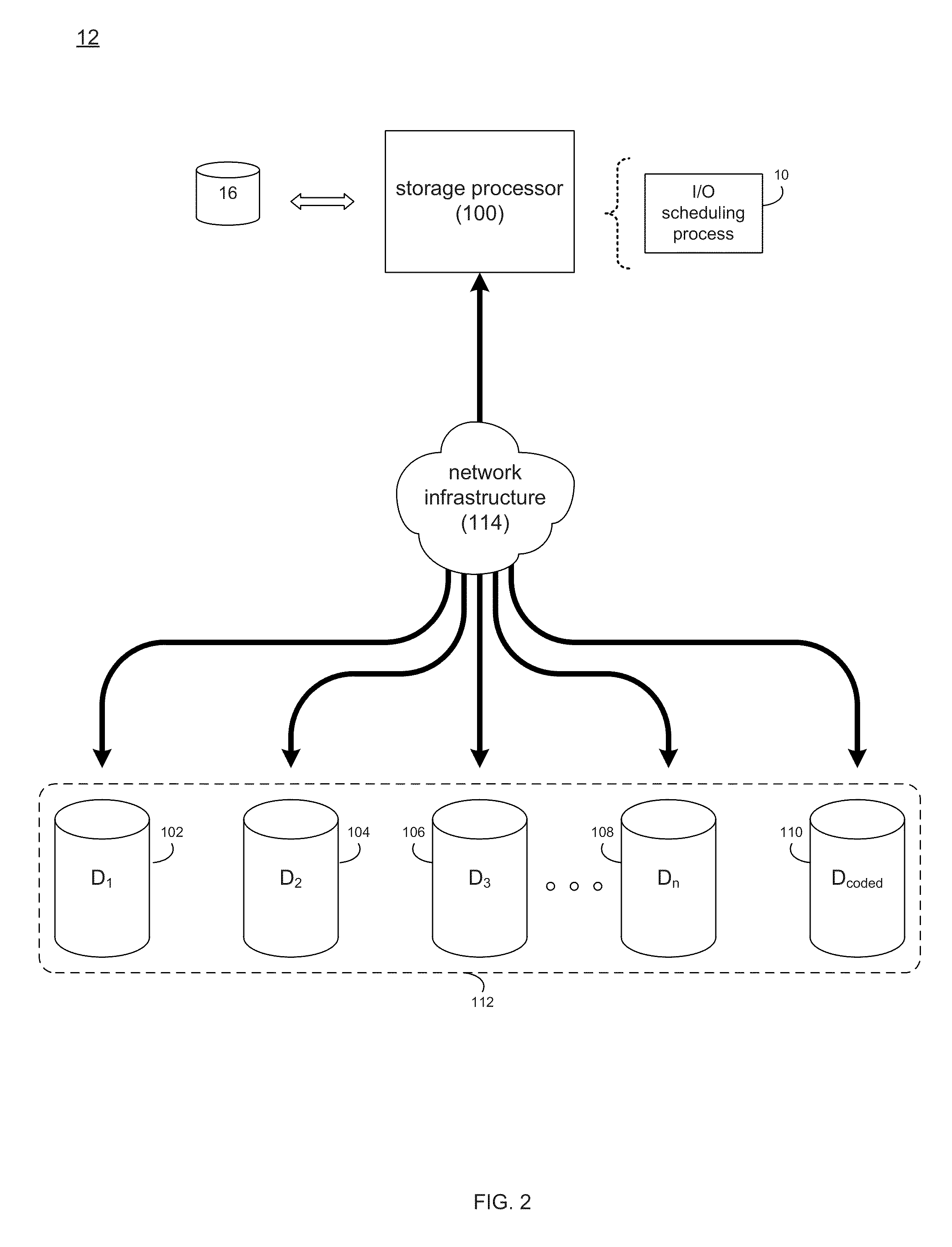

Efficient scalable I/O scheduling

An input/output (I/O) request is dispatched. A determination is made regarding a storage volume to service. A determination is made regarding whether an actual disk throughput exceeds a first threshold rate. The first threshold rate exceeds a reserved disk throughput. Responsive to determining that the actual disk throughput exceeds the first threshold rate, a first storage volume is selected based on credits or based on priority. Responsive to determining that the actual disk throughput does not exceed the first threshold rate, a second storage volume is selected based on guaranteed minimum I/O rate. An I/O request queue associated with the determined storage volume is determined. An I/O request is retrieved from the determined I/O request queue. The retrieved I/O request is sent to a persistence layer that includes the selected storage volume.

Owner:EBAY INC
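The two-branch volume selection in this abstract can be sketched in Python. The abstract does not say how credits or the guaranteed minimum rate are compared, so this sketch assumes the volume with the most credits wins above the threshold and the volume furthest below its guaranteed rate wins otherwise. The dictionary keys and the `dispatch` function are hypothetical.

```python
from collections import deque


def dispatch(volumes, queues, actual_throughput, threshold_rate):
    """Pick a volume, then pop one request from that volume's queue.

    volumes: list of dicts with keys name, credits, min_rate, observed_rate.
    queues:  dict mapping a volume name to a deque of pending requests.
    """
    candidates = [v for v in volumes if queues[v["name"]]]
    if not candidates:
        return None
    if actual_throughput > threshold_rate:
        # Above the threshold: select by credits (assumed: most credits first).
        vol = max(candidates, key=lambda v: v["credits"])
    else:
        # Otherwise: serve the volume furthest below its guaranteed minimum.
        vol = max(candidates, key=lambda v: v["min_rate"] - v["observed_rate"])
    return vol["name"], queues[vol["name"]].popleft()
```

The returned request would then be handed to the persistence layer that holds the selected volume; that step is outside this sketch.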

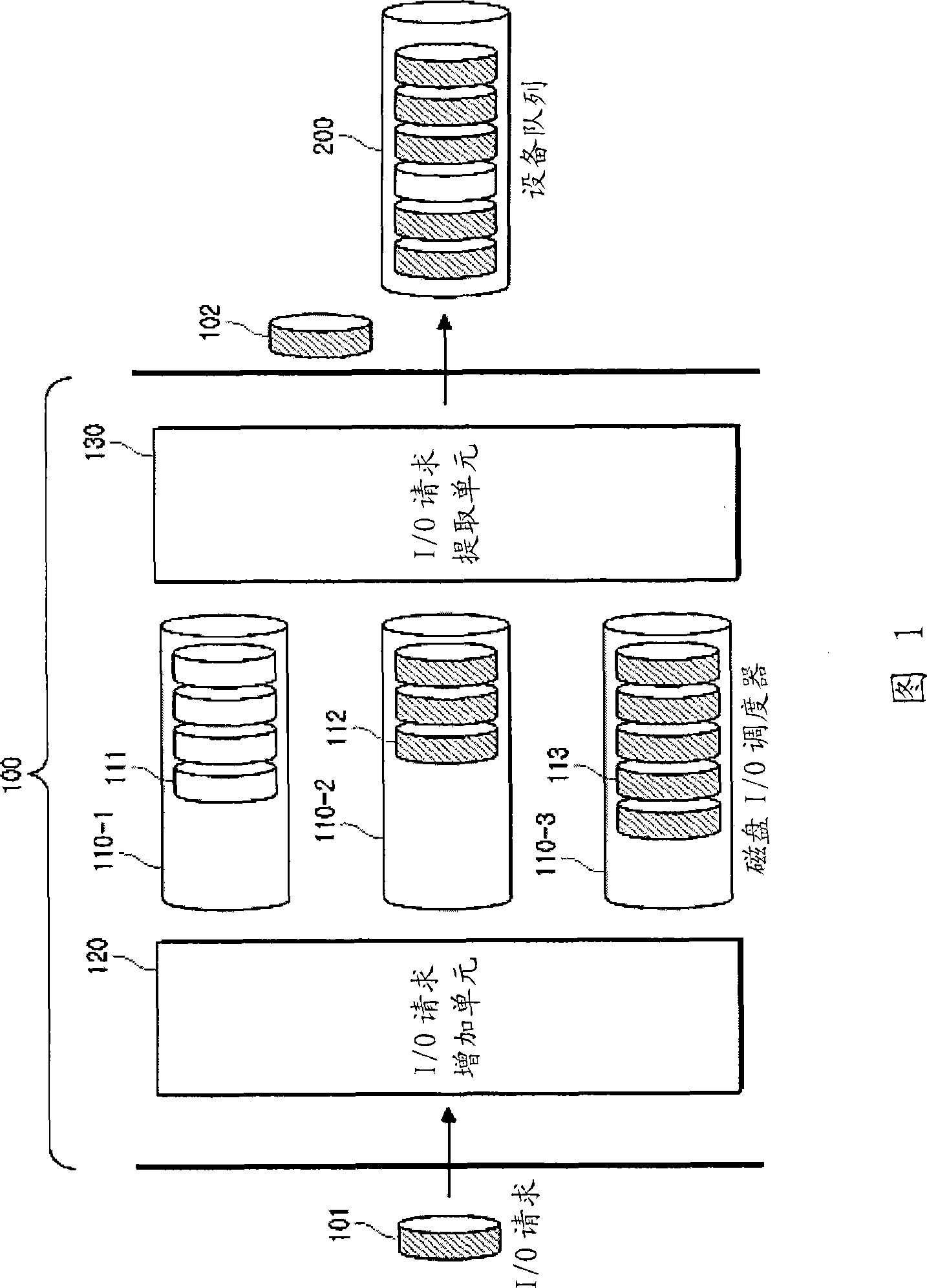

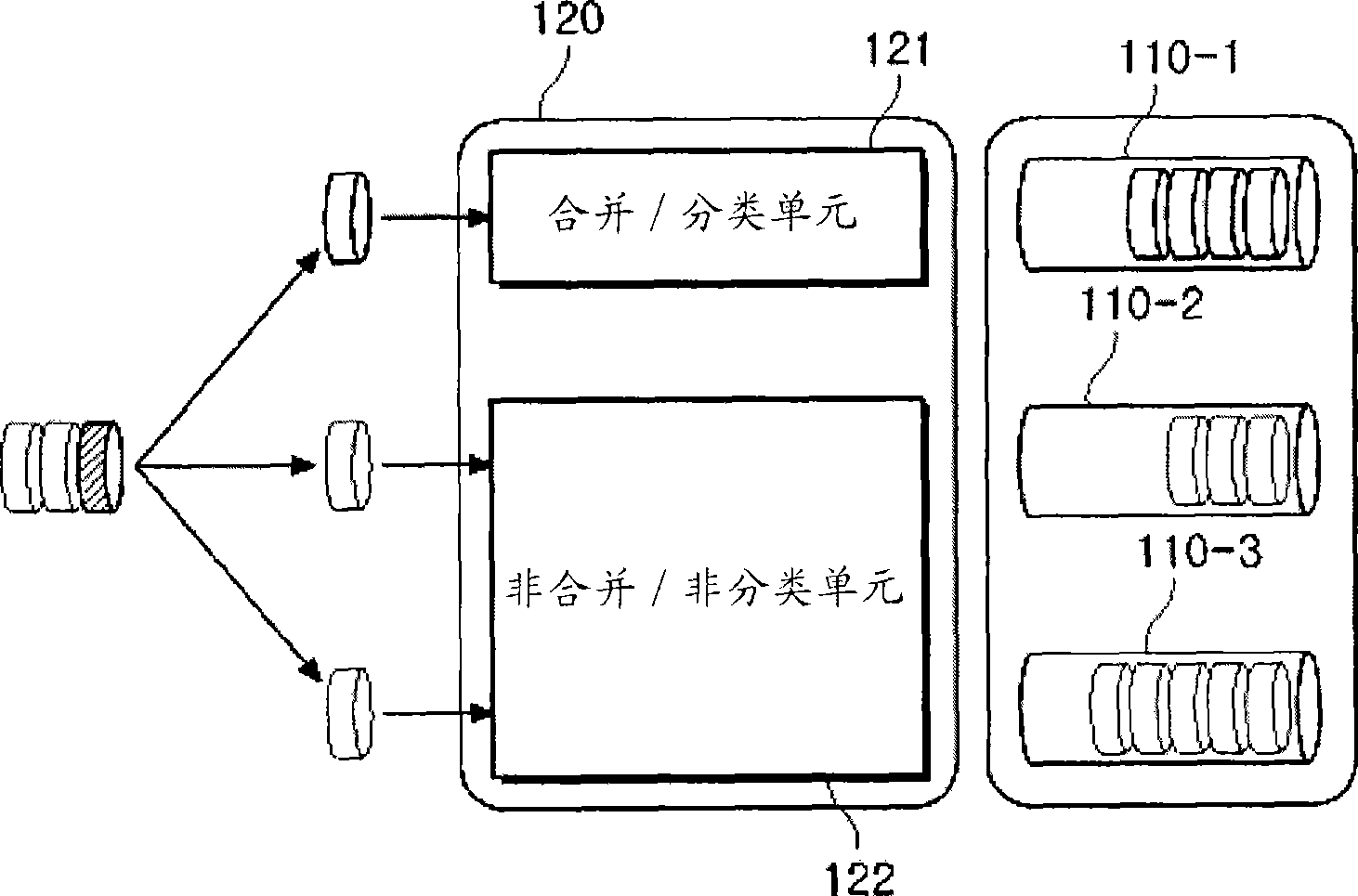

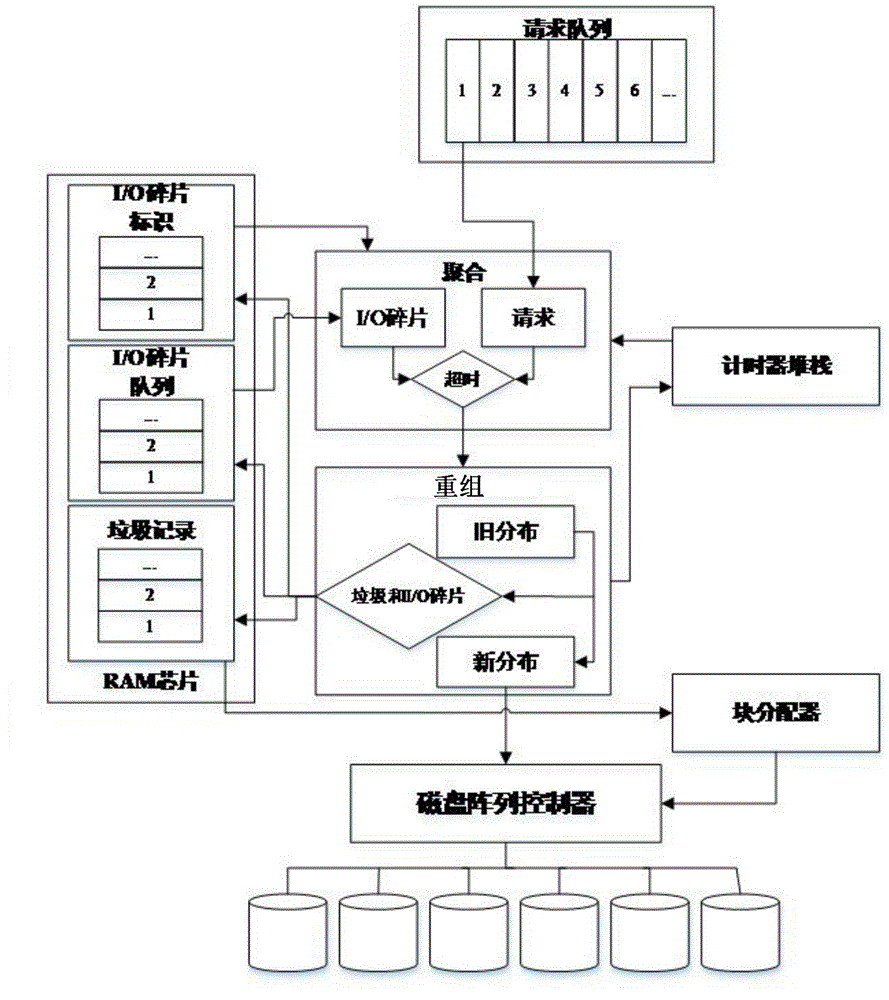

Disk I/O dispatcher under server virtual environment and dispatching method thereof

Inactive | CN101458635A | Guaranteed performance | Efficient use of | Multiprogramming arrangements | Input/output processes for data processing | Limited resources | Operating system

The invention provides a disk I/O dispatcher for a server virtual environment and a dispatching method thereof. Block I/O resources are allocated proportionally to each virtual system in the server virtual environment, and the I/O resources allocated to each virtual system are strictly isolated, so that the performance of each virtual system is ensured and the limited resources are used effectively. The disk I/O dispatcher comprises a plurality of queues for I/O requests, an I/O request adding unit and an I/O request extracting unit. The I/O request adding unit adds the I/O requests of each system to the corresponding queue. The I/O request extracting unit extracts I/O requests from the queues.

Owner:ELECTRONICS & TELECOMM RES INST
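A minimal sketch of the structure this abstract describes, per-system queues with an adding step and a proportional extracting step, is shown below. The abstract does not give the extraction rule, so the sketch assumes each extraction serves the non-empty queue with the smallest served-to-share ratio. `ProportionalDispatcher` and its method names are hypothetical.

```python
from collections import deque


class ProportionalDispatcher:
    """Per-VM request queues served in proportion to configured shares."""

    def __init__(self, shares):
        self.shares = dict(shares)                    # vm -> relative share
        self.queues = {vm: deque() for vm in shares}  # one isolated queue per vm
        self.served = {vm: 0 for vm in shares}        # requests served so far

    def add(self, vm, request):
        """Adding unit: append the request to its VM's own queue."""
        self.queues[vm].append(request)

    def extract(self):
        """Extracting unit: serve the VM that is furthest behind its share."""
        ready = [vm for vm, q in self.queues.items() if q]
        if not ready:
            return None
        vm = min(ready, key=lambda v: self.served[v] / self.shares[v])
        self.served[vm] += 1
        return vm, self.queues[vm].popleft()
```

With shares of 2 and 1, six consecutive extractions from two backlogged queues serve the first VM four times and the second twice.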

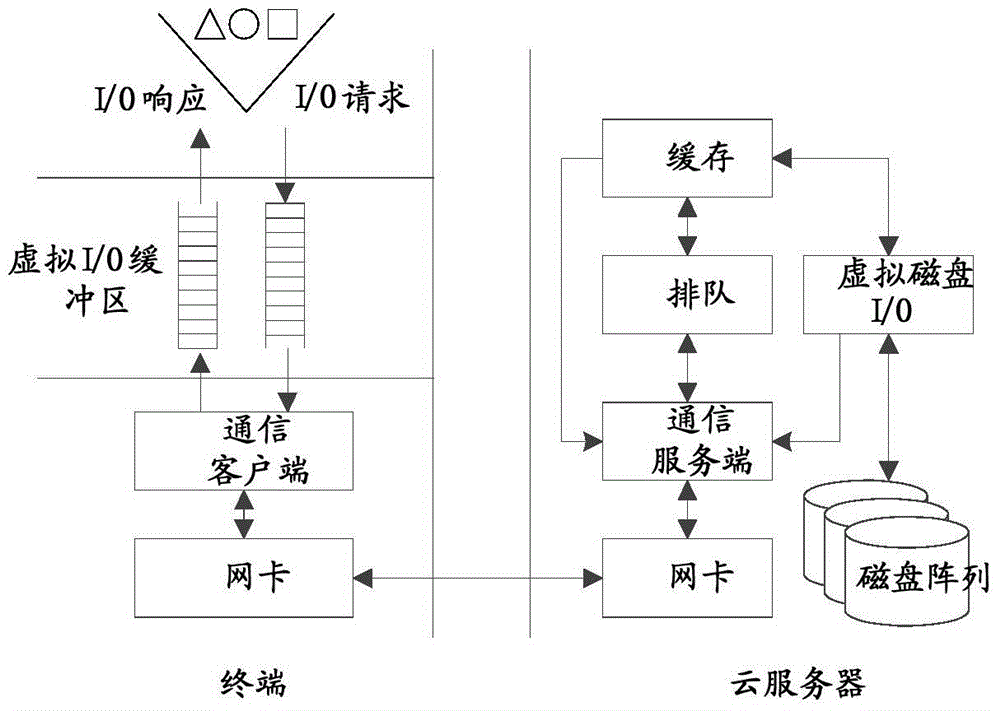

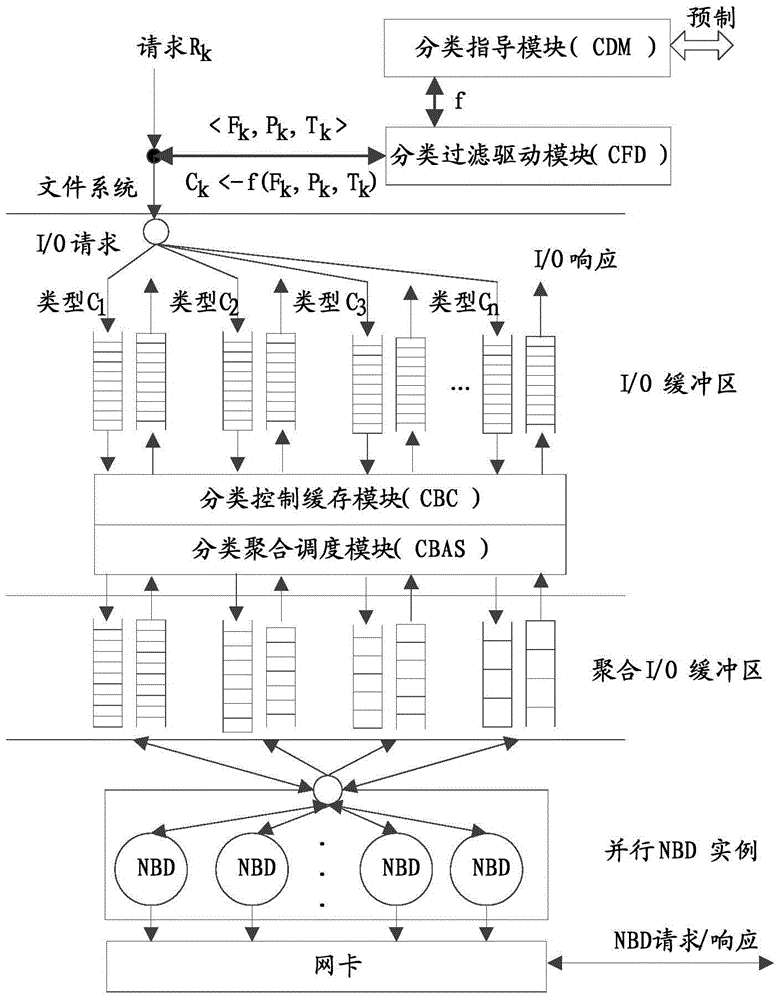

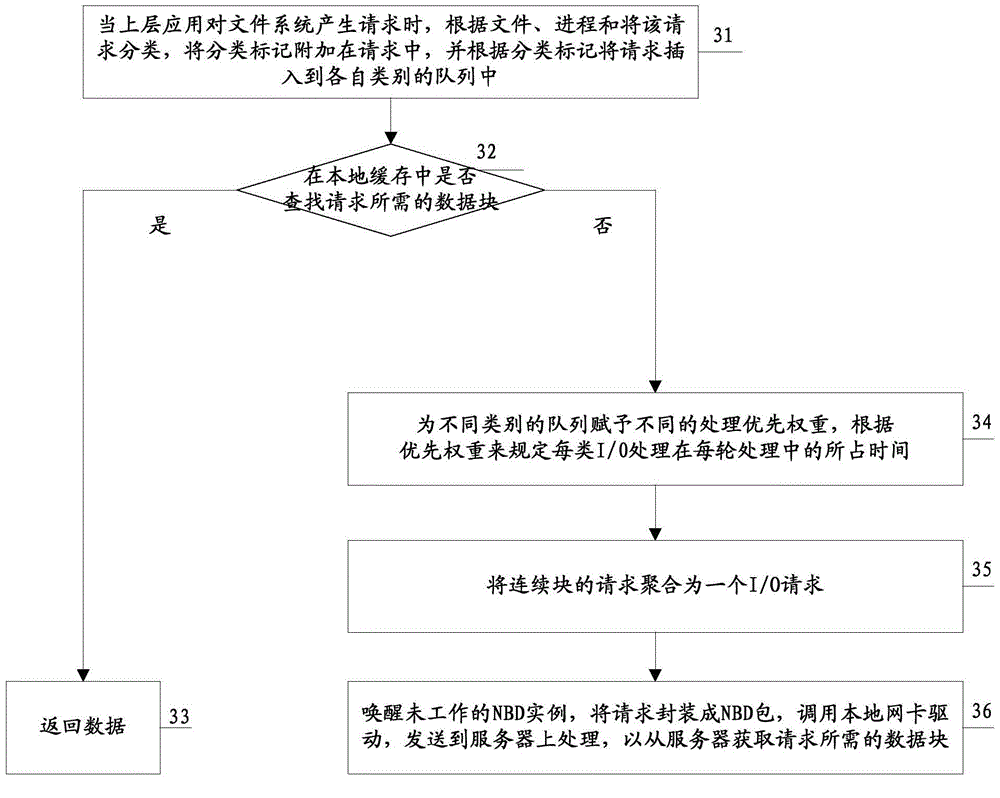

Virtual I/O scheduling method and system

Active | CN104636201A | Reduce modification | Reduce overhead | Resource allocation | Software simulation/interpretation/emulation | Operational system | Timestamp

The invention discloses a virtual I/O scheduling method and system. In the method, when an upper-level application generates an I/O request Rk for a file system, a CFD captures the request Rk through a filter driver of the file system, classifies the request according to a file Fk, a process Pk and a timestamp Tk, adds a class label Ck to the request Rk, and inserts the I/O request into the I/O queue of the corresponding class according to the added class label Ck. The data blocks needed by the I/O request are then searched for in a local cache, and if they are found, the process returns. The method and system incur low overhead and require little modification of the operating system, and they address the poor system performance caused in a cloud computing environment by the many mixed types of I/O generated by terminal equipment.

Owner:CHINA TELECOM CLOUD TECH CO LTD

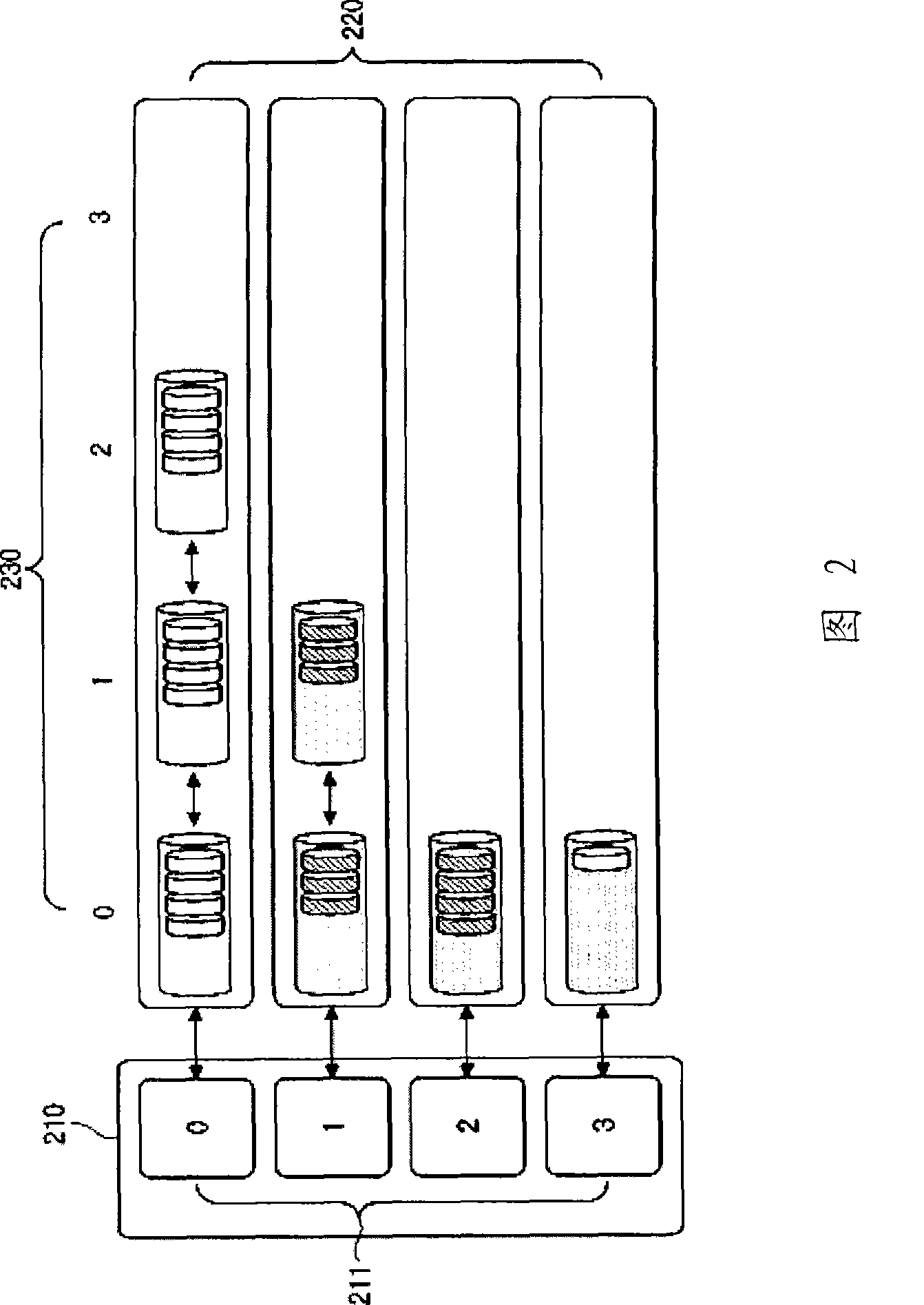

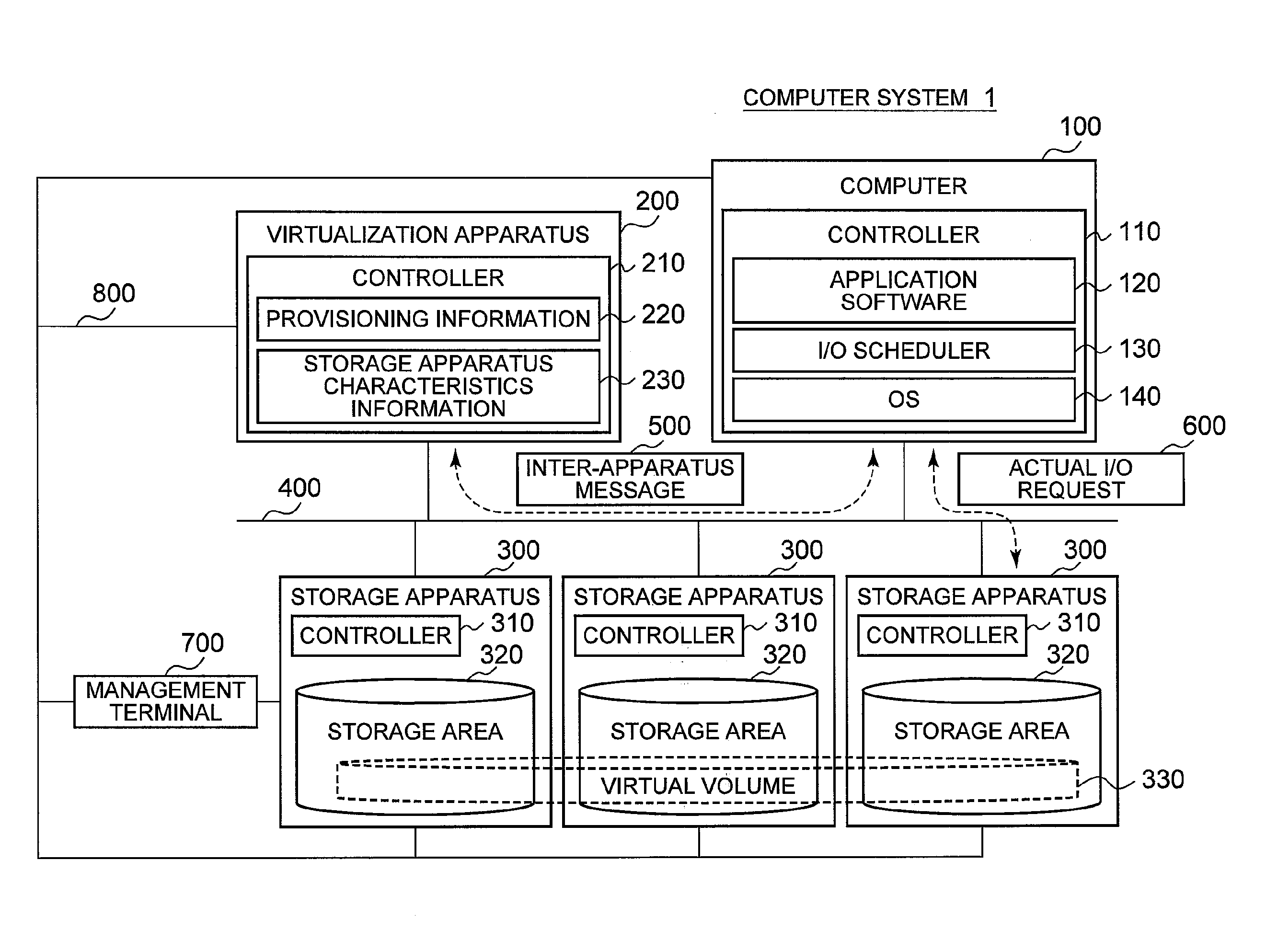

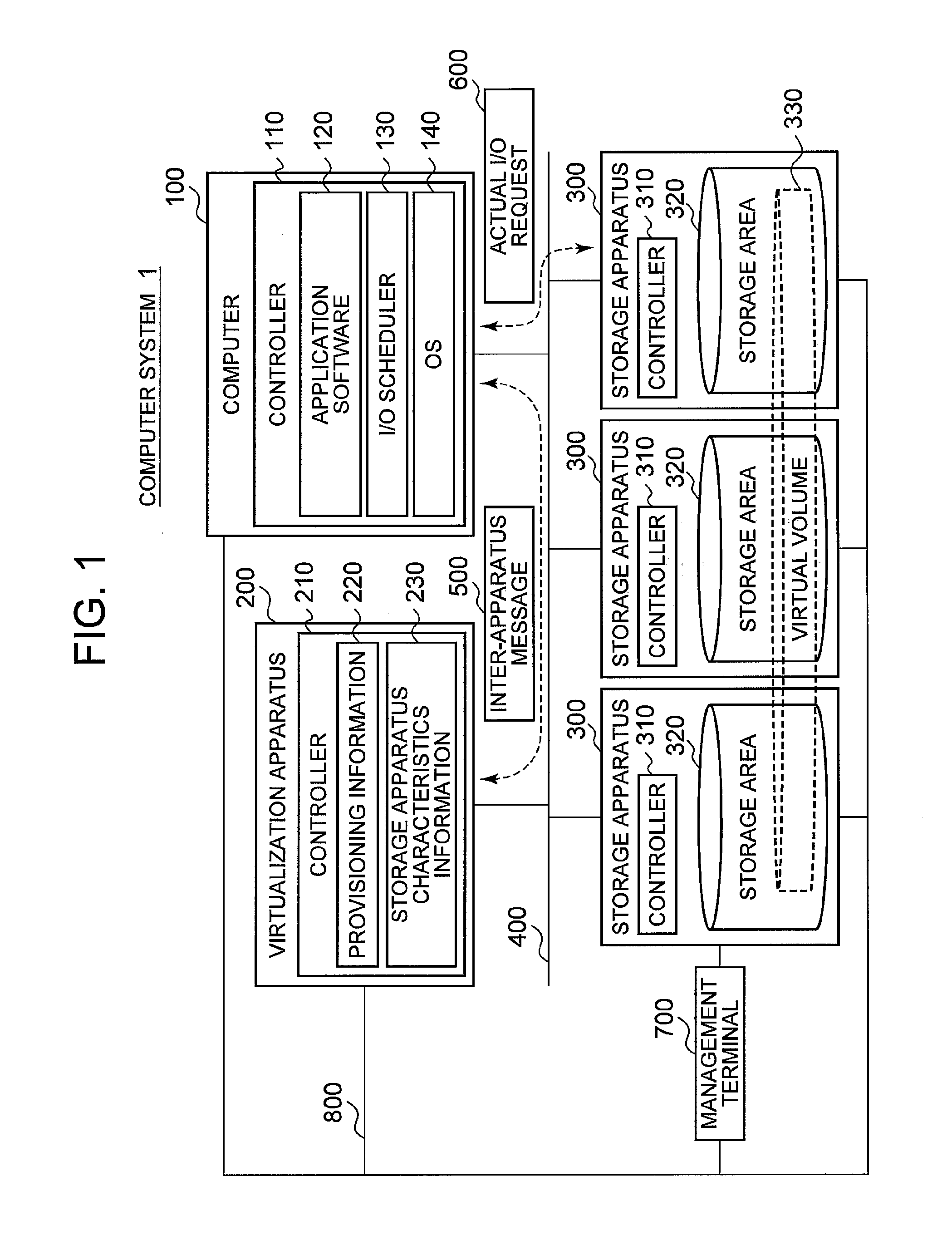

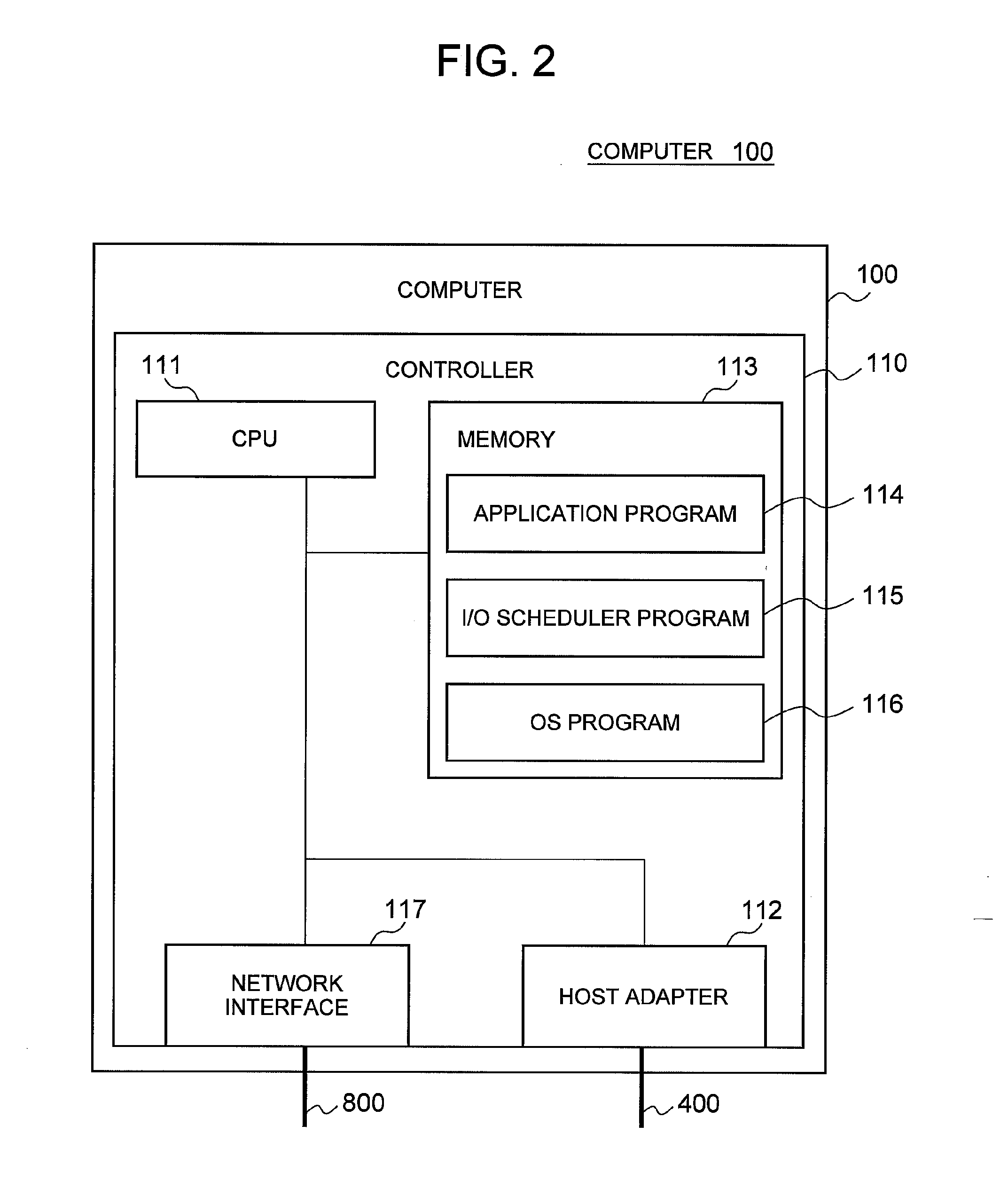

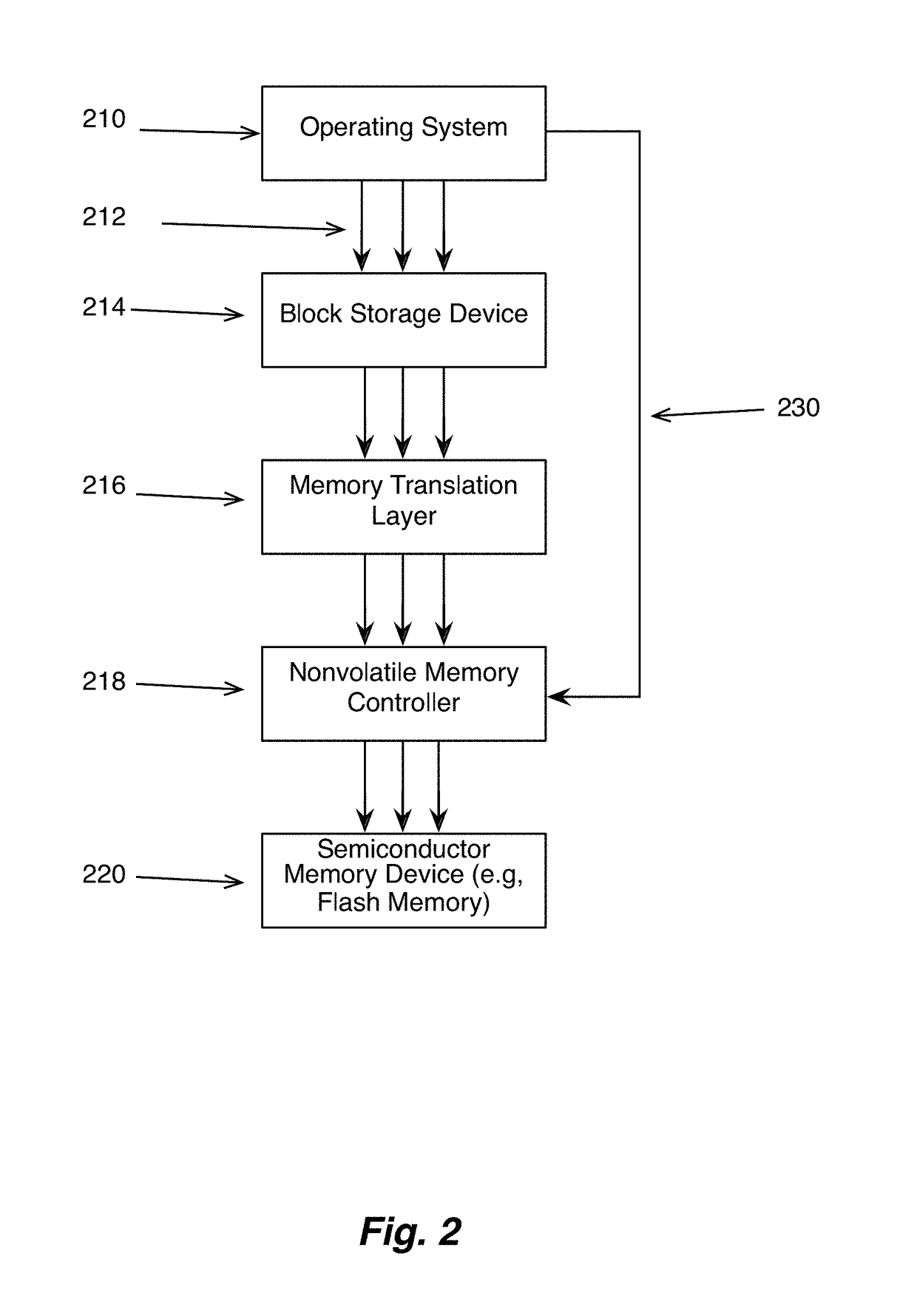

Computer system and computer system input/output method

Inactive | US20110082950A1 | Improve accuracy | Improve I/O performance | Input/output processes for data processing | Data conversion | Virtualization | Computerized system

Under the environment where a storage system is virtualized by Thin Provisioning technology or the like, it is difficult to statically estimate the I/O characteristics of the entire virtual volume, causing a problem that the effect of input/output control by a computer cannot be fully achieved by I/O scheduling that is based on the characteristics of a virtual volume unit. To solve the above problem, the computer system of the present invention acquires characteristics information of a storage apparatus in which there exists an actual storage area corresponding to a virtual volume storage area that is the access target when accessing the virtual volume, divides an I/O request with respect to the virtual volume by storage apparatus in a case where the I/O request spans multiple storage apparatuses, carries out I/O scheduling based on the acquired actual area characteristics information, and issues the I/O request directly to the storage apparatus in accordance with the I/O scheduling result.

Owner:HITACHI LTD
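The step of dividing a virtual-volume request by storage apparatus can be illustrated with a short sketch. The extent map format, a list of `(virtual_start, virtual_end, apparatus)` tuples with half-open ranges, and the `split_request` function are assumptions for illustration; the abstract does not describe the mapping structure.

```python
def split_request(offset, length, extents):
    """Split one virtual-volume request into per-apparatus sub-requests.

    extents: list of (virtual_start, virtual_end, apparatus) with
             half-open ranges [virtual_start, virtual_end).
    Returns a list of (apparatus, sub_offset, sub_length).
    """
    end = offset + length
    parts = []
    for start, stop, apparatus in extents:
        lo, hi = max(offset, start), min(end, stop)
        if lo < hi:  # the request overlaps this extent
            parts.append((apparatus, lo, hi - lo))
    return parts
```

Each sub-request could then be scheduled using the characteristics of the apparatus that actually backs it, which is the point the abstract makes about scheduling on actual rather than virtual areas.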

RAID-6 I/O scheduling method for balancing strip writing

Owner:SHANGHAI JIAO TONG UNIV

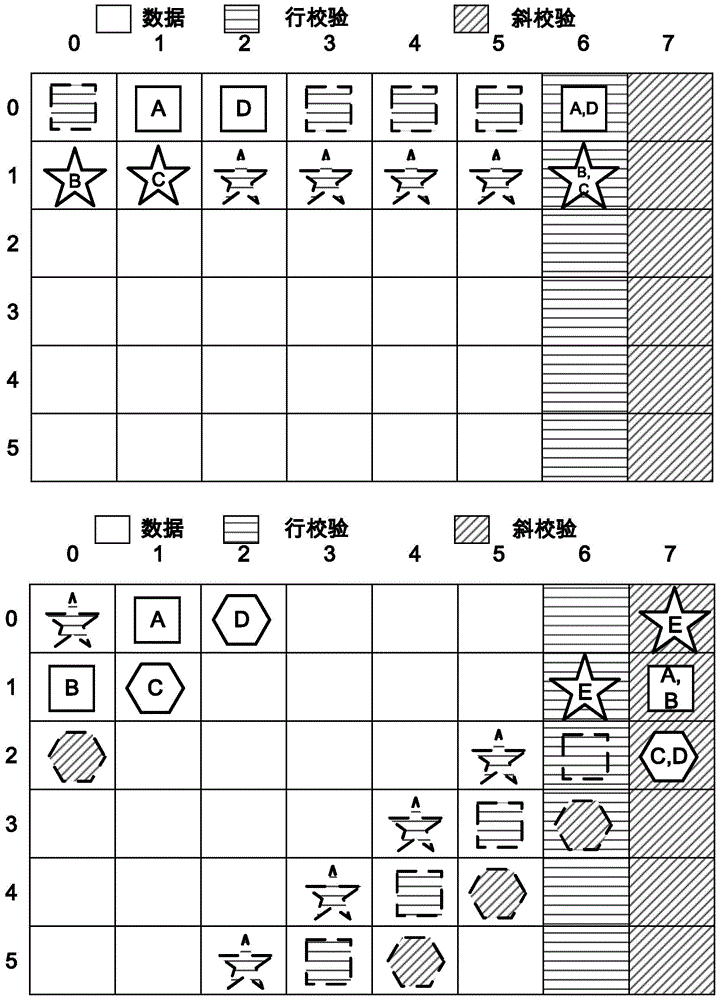

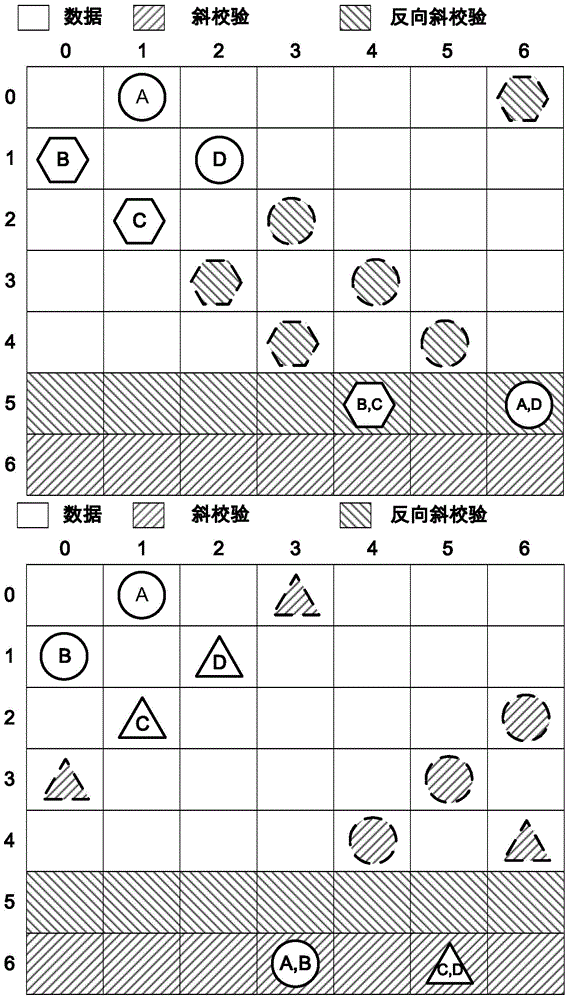

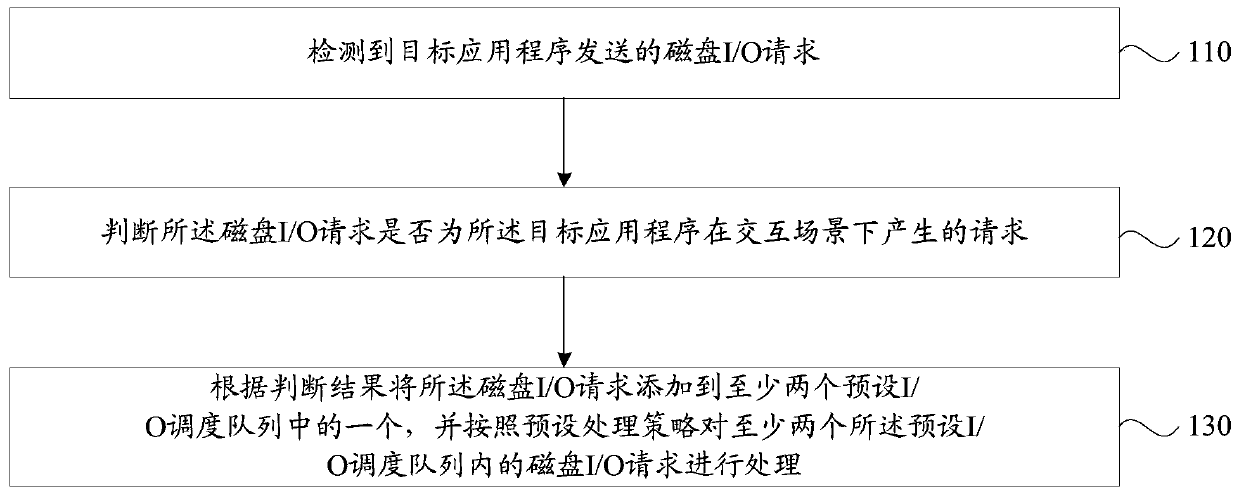

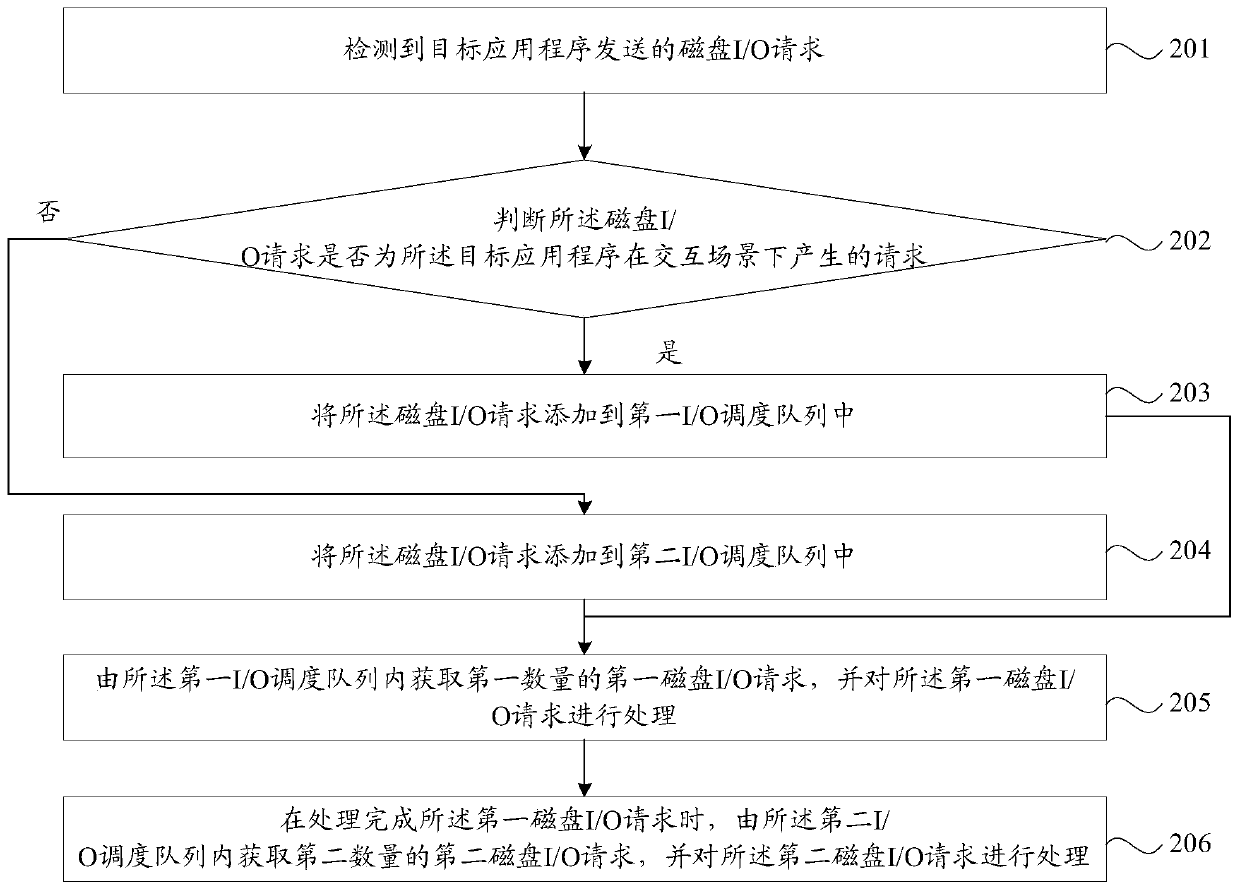

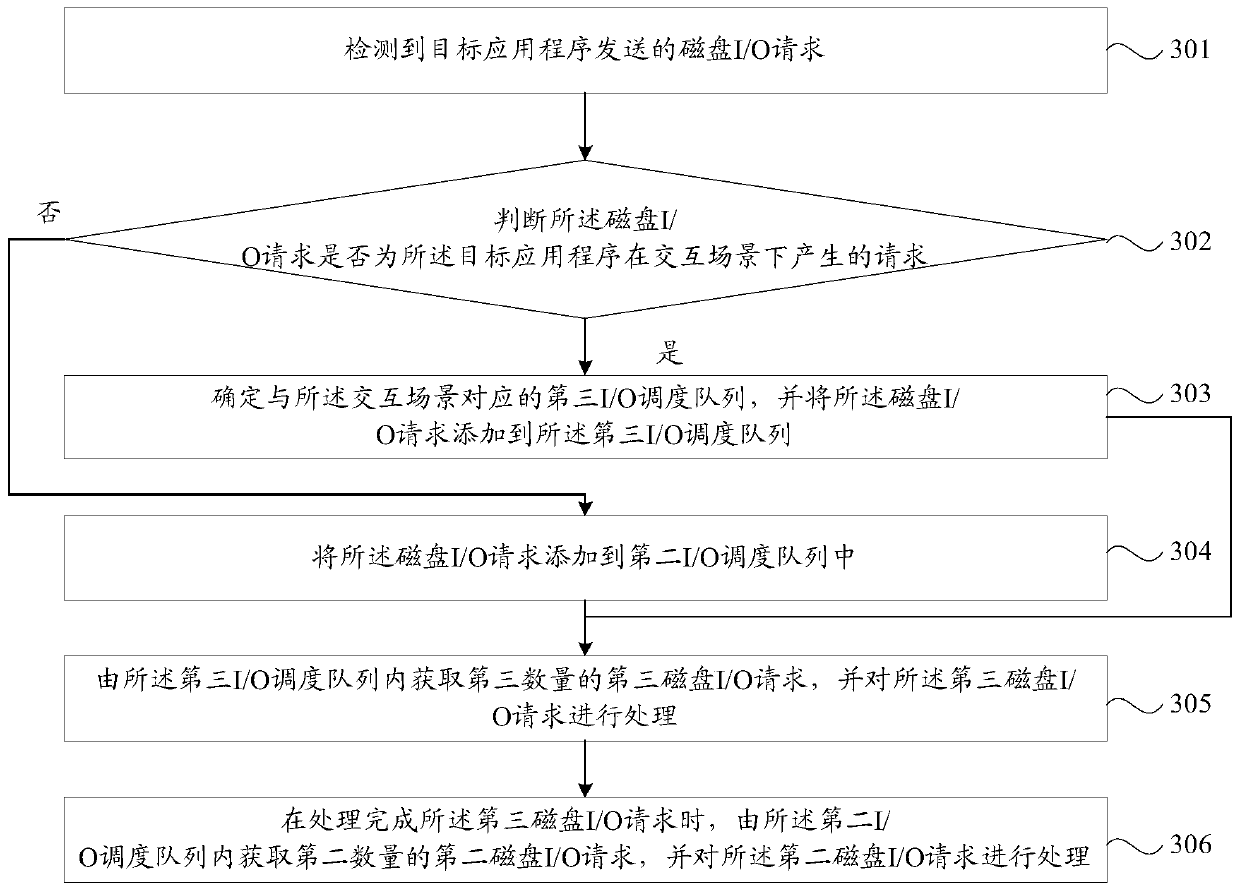

Optimization method and device for I/O scheduling, storage medium and intelligent terminal

Embodiments of the invention disclose an optimization method and device for I/O scheduling, a storage medium and an intelligent terminal. The method comprises: detecting a disk I/O request sent by a target application program; judging whether the disk I/O request was generated by the target application program in an interaction scene; adding the disk I/O request to one of at least two preset I/O scheduling queues according to the judgment result; and processing the disk I/O requests in the at least two preset I/O scheduling queues according to a preset processing strategy. With this technical scheme, disk I/O requests in an interactive scene are responded to in time, which reduces terminal stuttering in the interactive scene.

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD
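As a rough illustration of the queue selection in this abstract, the sketch below keeps two queues and assumes the "preset processing strategy" is strict priority for requests generated in an interaction scene. That strategy, and the names `TwoQueueScheduler`, `submit` and `next_request`, are assumptions, not details taken from the patent.

```python
from collections import deque


class TwoQueueScheduler:
    """Two scheduling queues: interaction-scene requests and everything else."""

    def __init__(self):
        self.interactive = deque()
        self.background = deque()

    def submit(self, request, in_interaction_scene):
        """Route the request according to the interaction-scene judgment."""
        if in_interaction_scene:
            self.interactive.append(request)
        else:
            self.background.append(request)

    def next_request(self):
        """Assumed strategy: drain interactive requests before background ones."""
        if self.interactive:
            return self.interactive.popleft()
        if self.background:
            return self.background.popleft()
        return None
```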

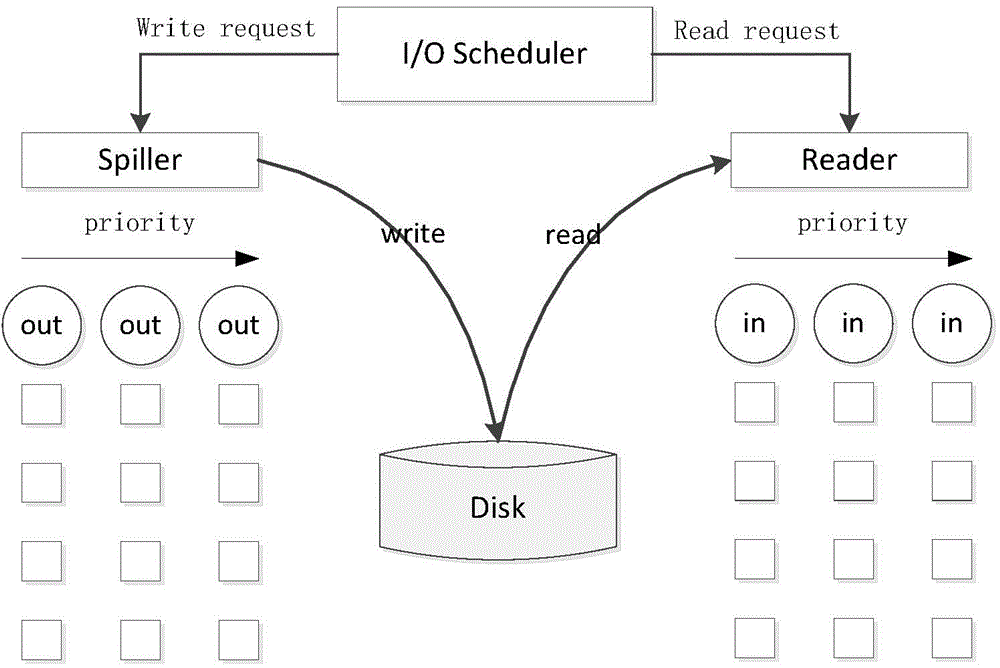

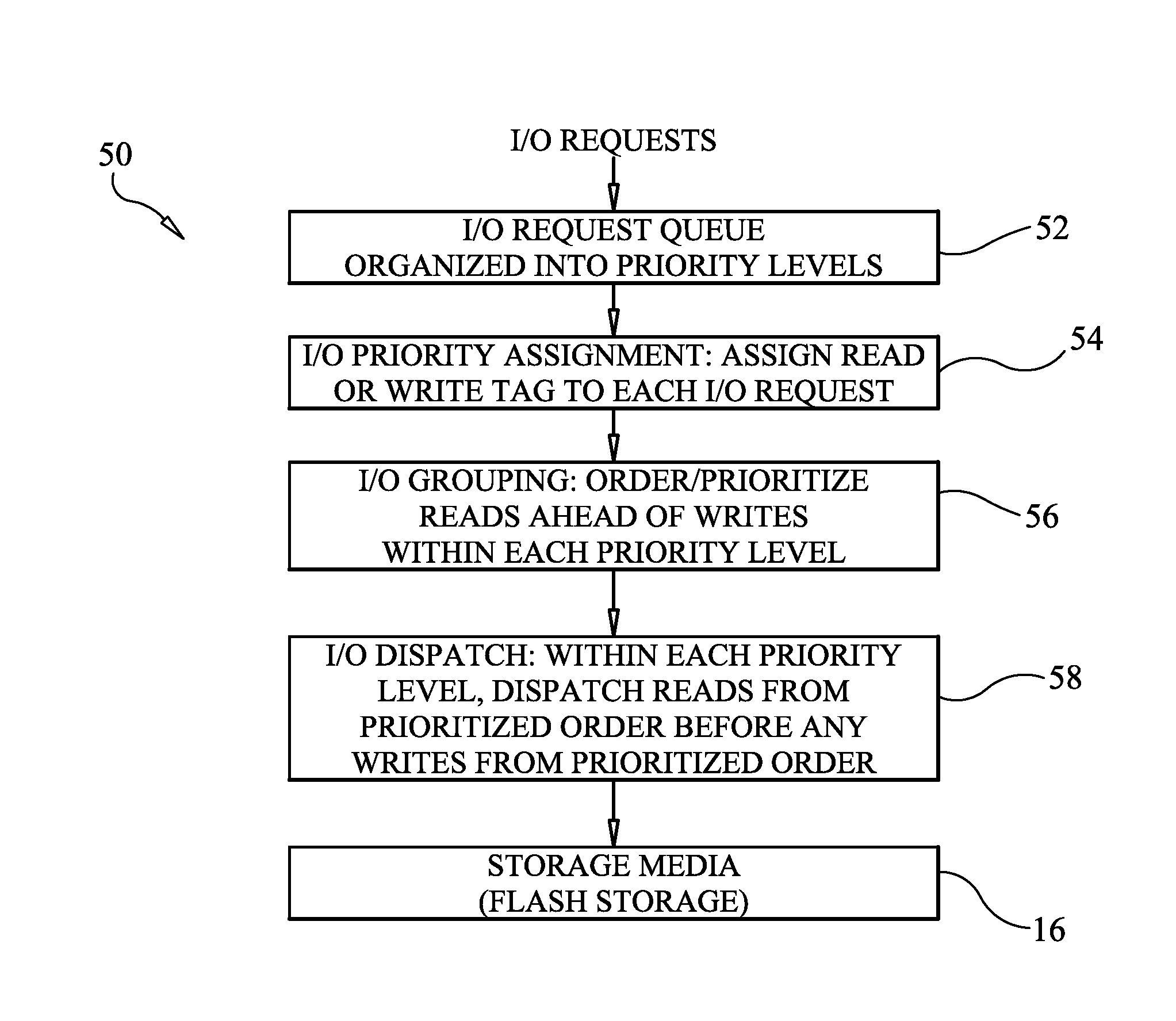

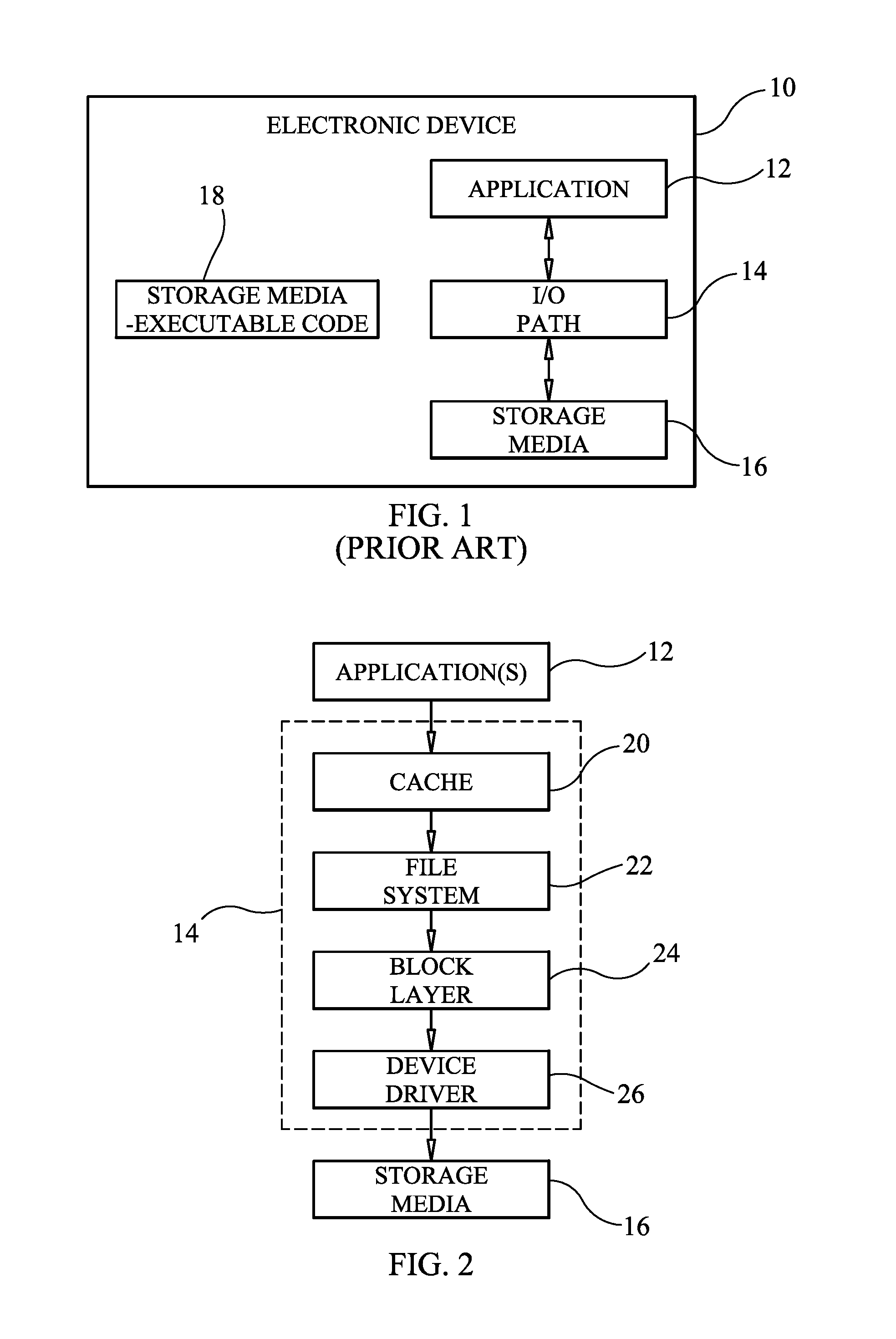

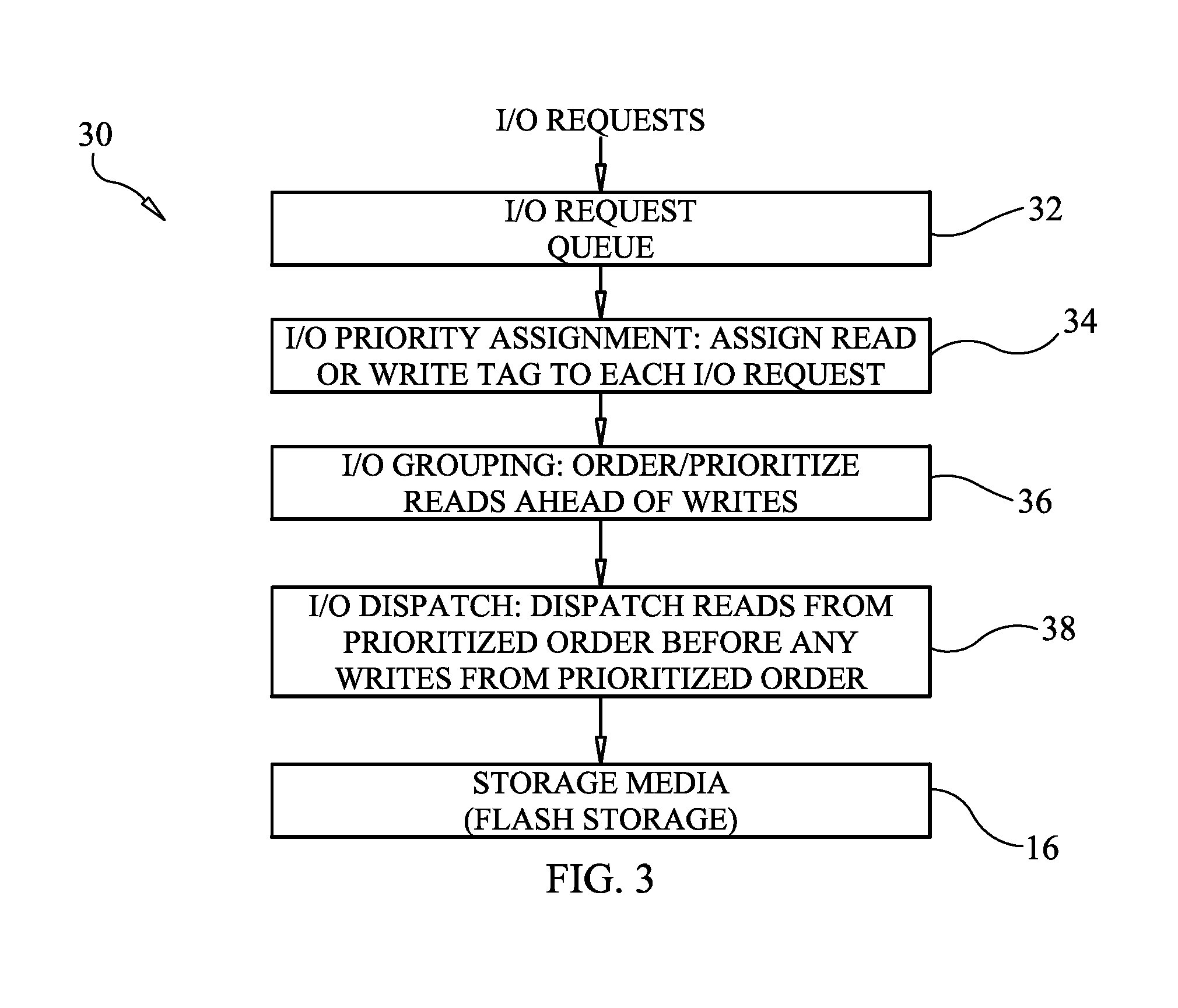

I/O scheduling method using read prioritization to reduce application delay

Inactive | US20160202909A1 | Reduce delays | Input/output to record carriers | Memory systems | Application software | Operating system

An I/O scheduler having reduced application delay is provided for an electronic device having storage media and running at least one application. Each application interfaces with the storage media through an I/O path. Each application issues I/O requests requiring access to the storage media. The I/O requests include reads from the storage media and writes to the storage media. The I/O requests are ordered in the I/O path such that the reads are assigned a higher priority than the writes. The I/O requests are dispatched from the I/O path to the storage media in accordance with the ordering step such that the reads are dispatched before the writes. The scheduler's dispatch can also apply concurrency parameters for the electronic device.

Owner:COLLEGE OF WILLIAM & MARY
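The read-before-write ordering can be sketched with a priority heap. This is an illustration under stated assumptions: `ReadFirstQueue` is a hypothetical name, arrival order is preserved within each request type, and the concurrency parameters mentioned in the abstract are not modeled.

```python
import heapq
import itertools

READ, WRITE = 0, 1  # smaller value = dispatched first


class ReadFirstQueue:
    """Orders pending requests so that reads are dispatched before writes."""

    def __init__(self):
        self._heap = []
        self._seq = itertools.count()  # tie-breaker that keeps arrival order

    def submit(self, kind, payload):
        heapq.heappush(self._heap, (kind, next(self._seq), payload))

    def dispatch(self):
        """Return the next request payload, or None if nothing is pending."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]
```

A strict ordering like this can starve writes under a steady stream of reads; the sketch does not attempt to address that.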

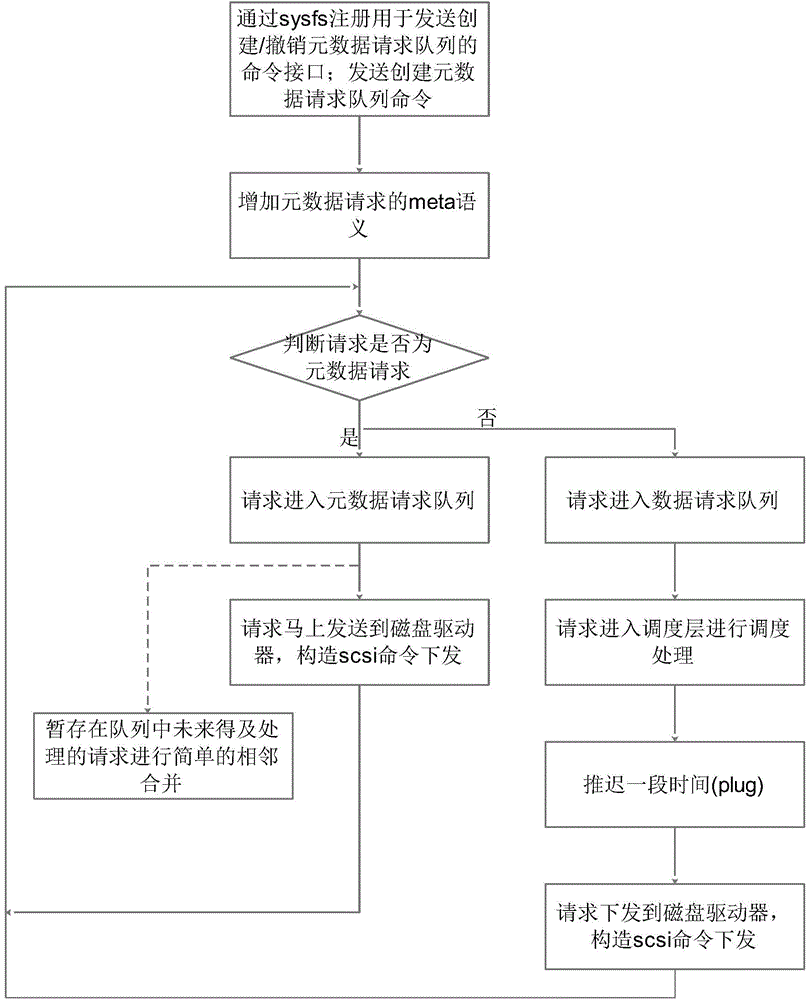

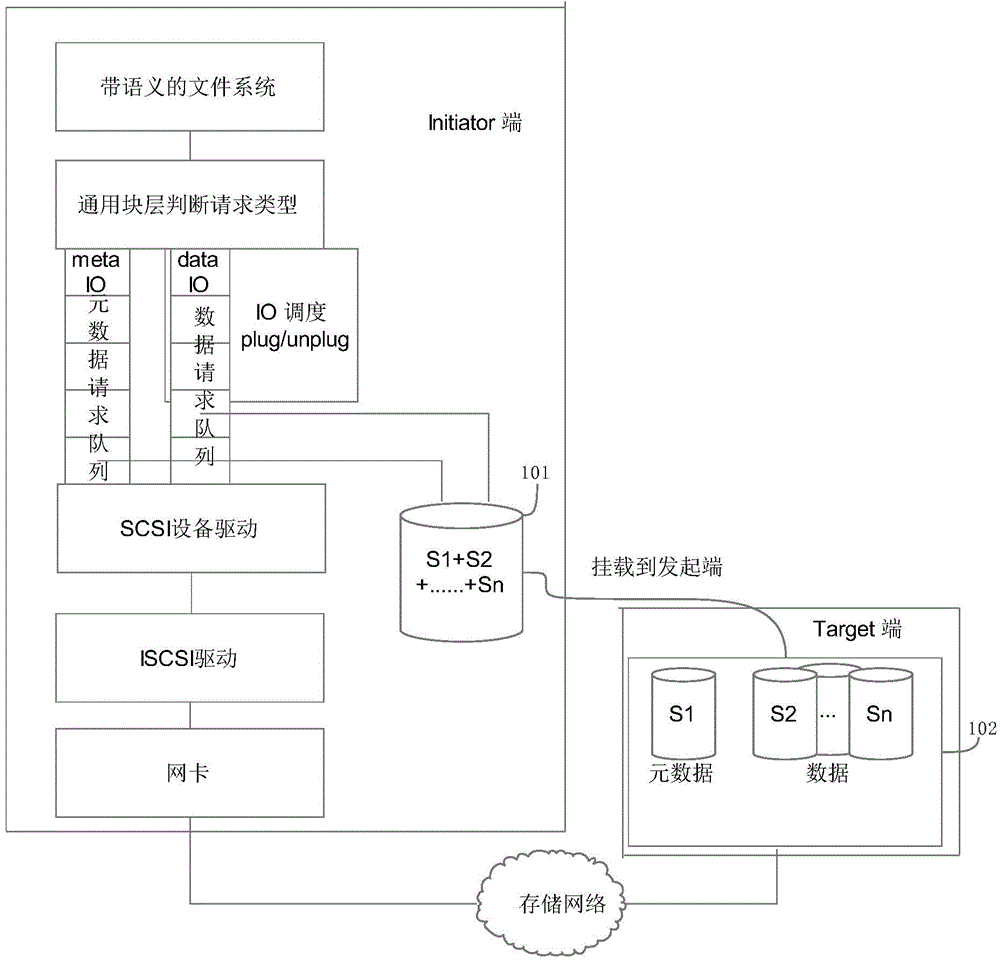

Method for separately processing data reading and writing requests and metadata reading and writing requests

Active | CN104571952A | Semantic increase | Differentiate between request types | Input/output to record carriers | SCSI | Semantics

Owner:HUAZHONG UNIV OF SCI & TECH

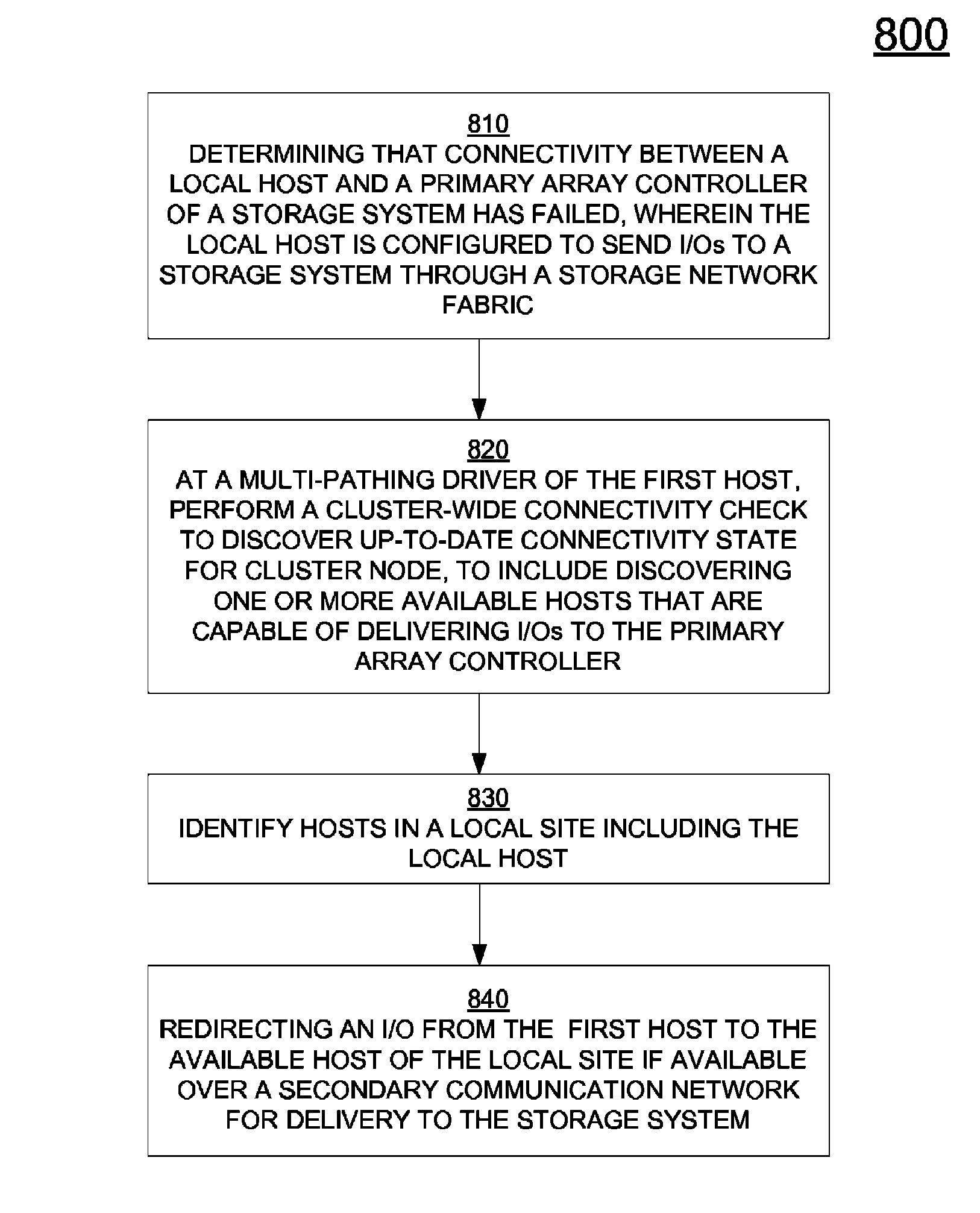

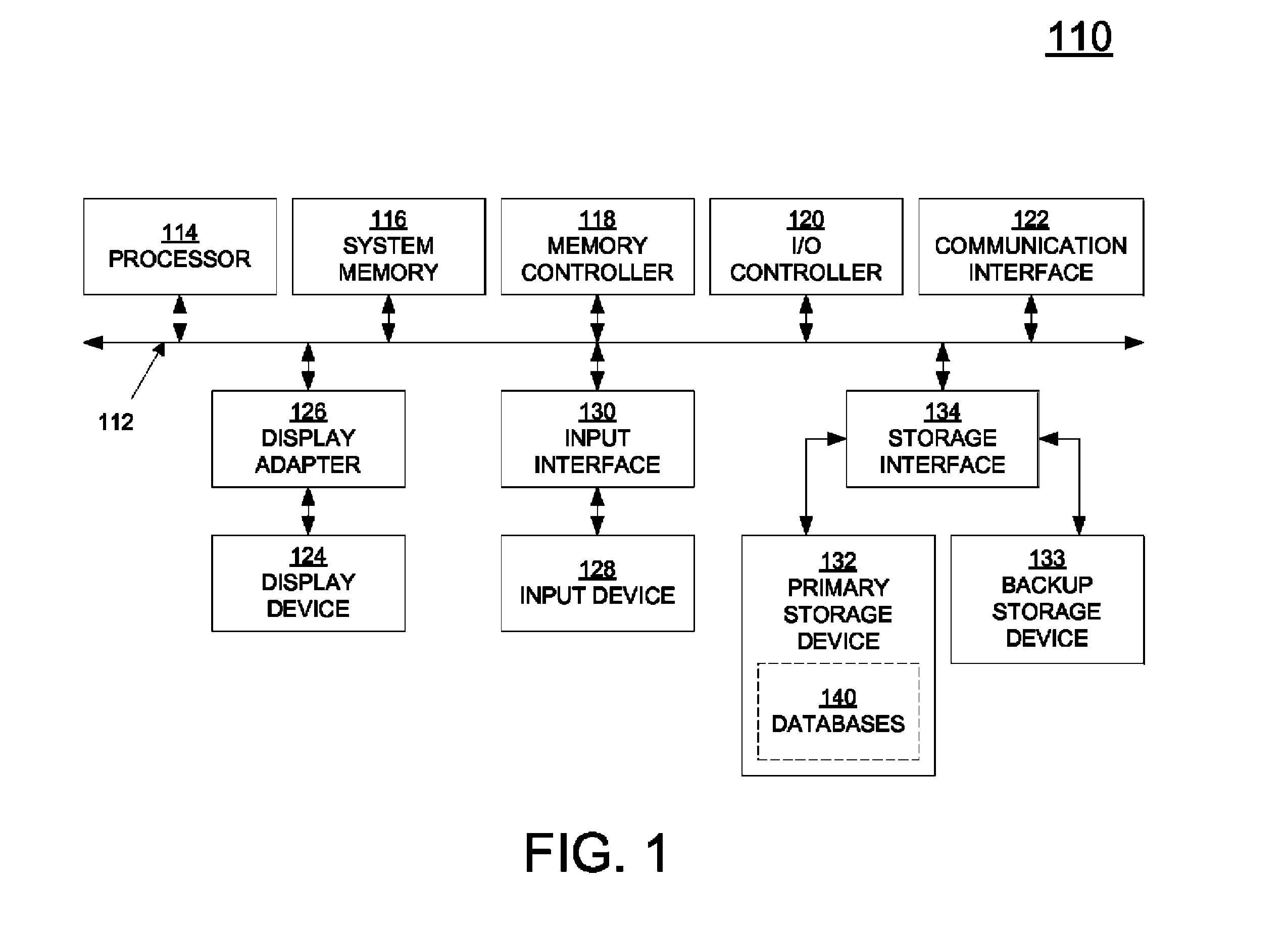

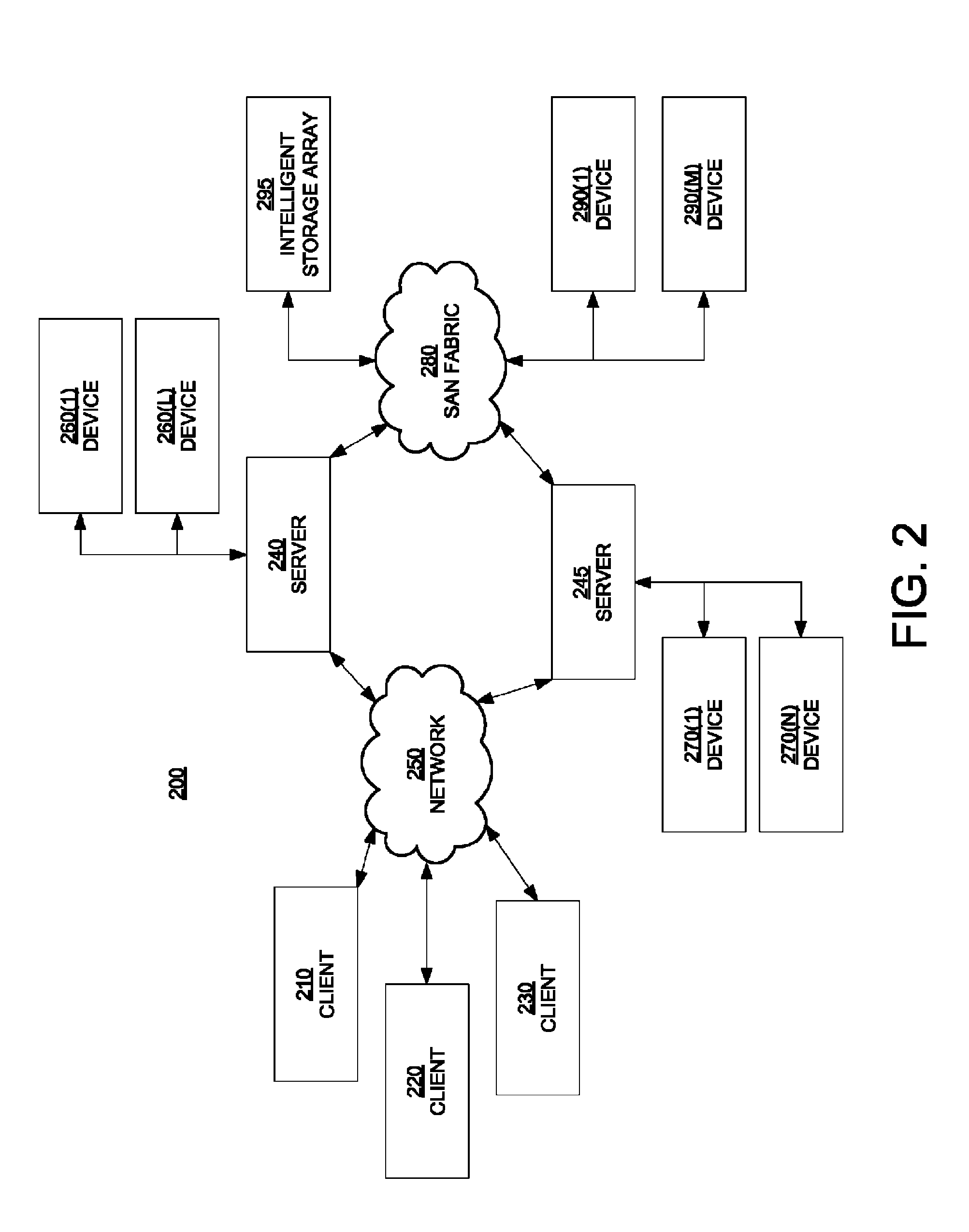

Method and system for cluster wide adaptive I/O scheduling by a multipathing driver

Active | US9015519B2 | Guaranteed economical operation | Expensive to operate | Multiple digital computer combinations | Data switching networks | Network structure | Distributed computing

A method and system for load balancing. The method includes determining that connectivity between a first host and a primary array controller of a storage system has failed. The first host is configured to send input/output messages (I/Os) to a storage system through a storage network fabric. An available host is discovered at a multi-pathing driver of the first host. The available host is capable of delivering I/Os to the primary array controller. An I/O is redirected from said first host to the available host over a secondary communication network for delivery to the storage system.

Owner:VERITAS TECH


Optimization method of I/O (input/output) performance of virtual machine

Inactive | CN104346212A | Reduce clock interrupt frequency | Reduce overhead | Software simulation/interpretation/emulation | Virtualization | Operational system

The invention discloses a method for optimizing the I/O (input/output) performance of a virtual machine. On one hand, the method reduces the clock interrupt frequency of the virtual machine by combining consecutive I/O instructions in the guest operating system, which lowers the context switching overhead. On the other hand, it eliminates redundant operations in the guest operating system, including I/O scheduling that is functionally redundant and ineffective in a virtualized environment and the virtual network card driver's support for NAPI (New API), so that the virtual machine performs only the necessary operations and system performance improves.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

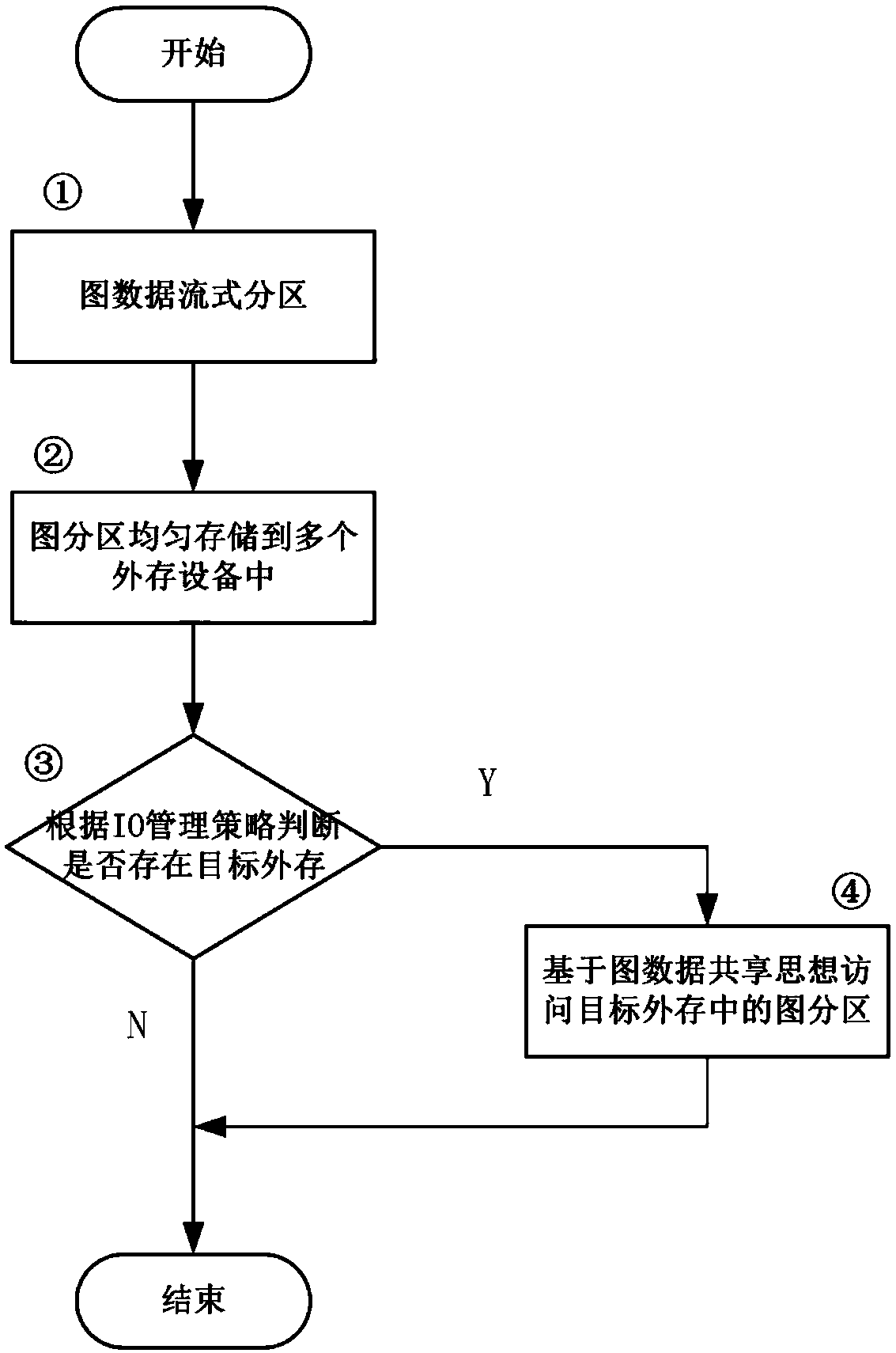

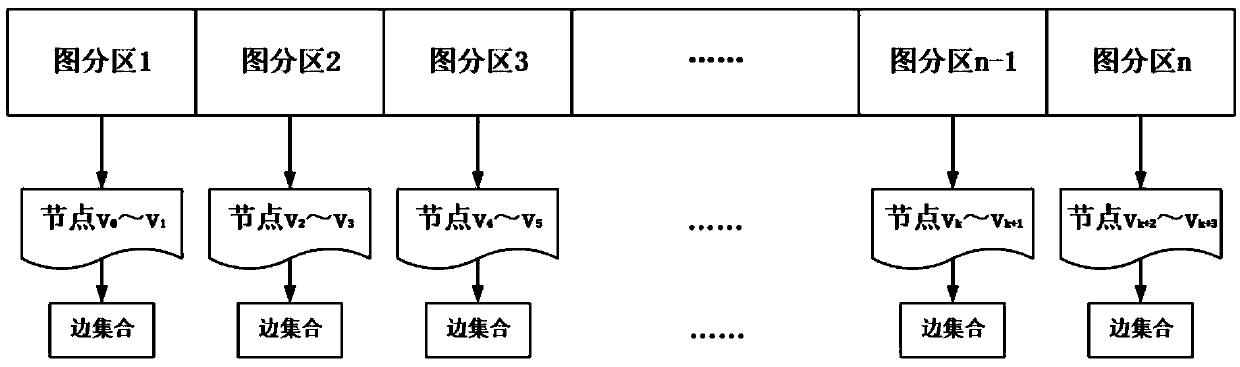

A multi-task external memory schema graph processing method based on I/O scheduling

Active | CN109522102A | Improve performance | Increase profit | Program initiation/switching | External storage | Computer architecture

The invention discloses a multi-task external-memory-mode graph processing method based on I/O scheduling. The method includes: partitioning the graph data in a streaming fashion to obtain graph partitions; placing the graph partitions evenly across multiple external storage devices; selecting a target external storage device from them based on I/O scheduling; and taking a graph partition on the target device that the graph processing task has not yet accessed as the designated partition. The synchronization field of the designated partition is then checked to judge whether the partition has not yet been mapped into memory. If so, the designated partition is mapped from the external storage device into memory and its synchronization field is updated; otherwise, the graph partition data is accessed directly through the address at which the designated partition is already mapped. Through I/O scheduling, the invention selects the external storage device being accessed by the fewest tasks, which controls the order in which the externally stored graph partitions are accessed and balances I/O pressure. Setting the synchronization field lets tasks share graph partition data, which reduces repeated loading of the same graph partition, lowers total I/O bandwidth and improves I/O efficiency.

Owner:HUAZHONG UNIV OF SCI & TECH
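The device selection rule stated at the end of this abstract, choosing the external storage device currently accessed by the fewest tasks, can be sketched as below. The data layout and the `pick_partition` function are hypothetical, and the memory mapping and synchronization field are left out of the sketch.

```python
def pick_partition(devices, active_tasks, visited):
    """Choose the next graph partition for one graph processing task.

    devices:      dict mapping a device name to the partition ids stored on it.
    active_tasks: dict mapping a device name to the number of tasks using it.
    visited:      set of partition ids this task has already processed.
    Returns (device, partition), or None when every partition was visited.
    """
    candidates = [
        dev for dev, parts in devices.items()
        if any(p not in visited for p in parts)
    ]
    if not candidates:
        return None
    # I/O scheduling step: prefer the device with the fewest accessing tasks.
    target = min(candidates, key=lambda dev: active_tasks[dev])
    partition = next(p for p in devices[target] if p not in visited)
    return target, partition
```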

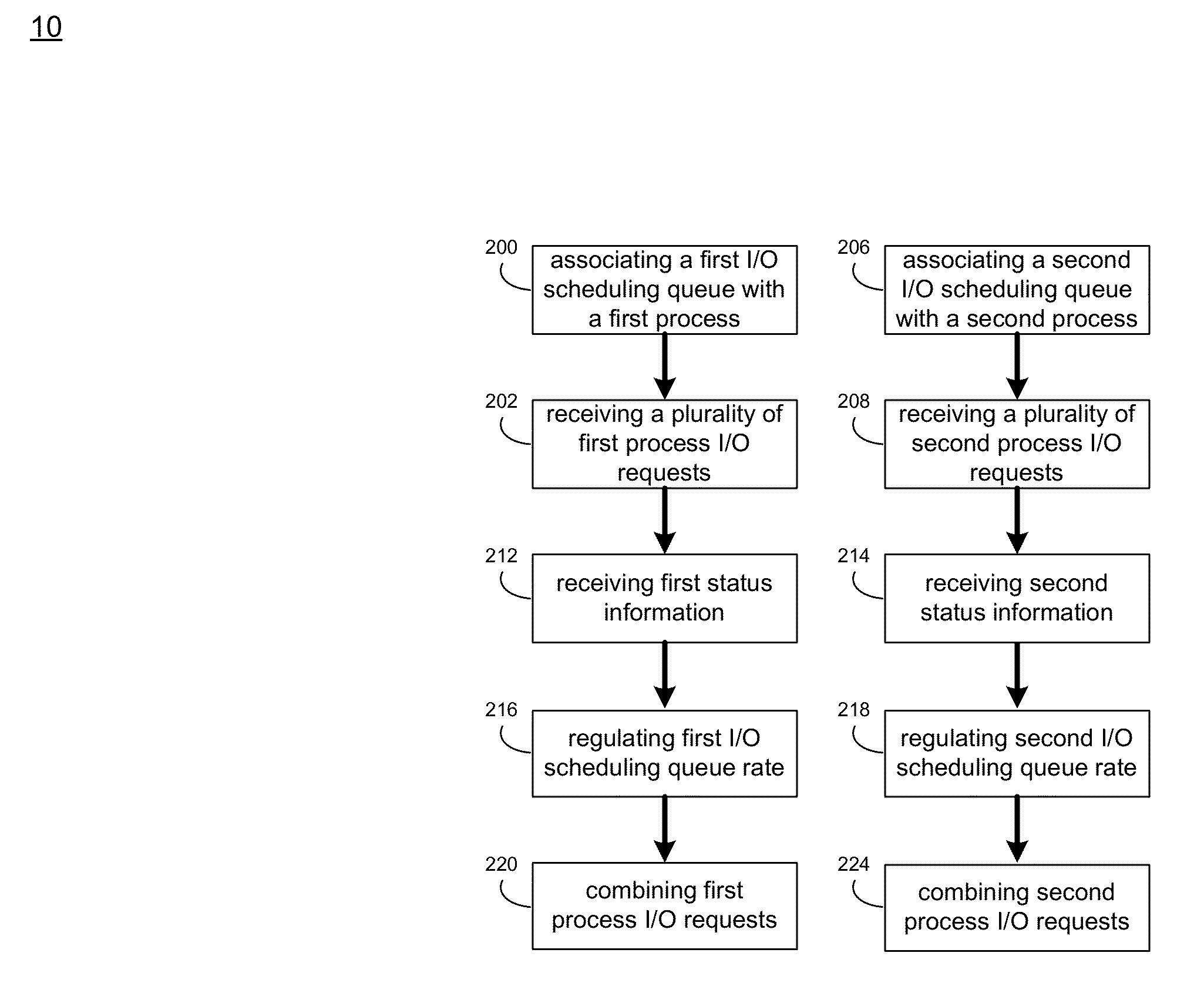

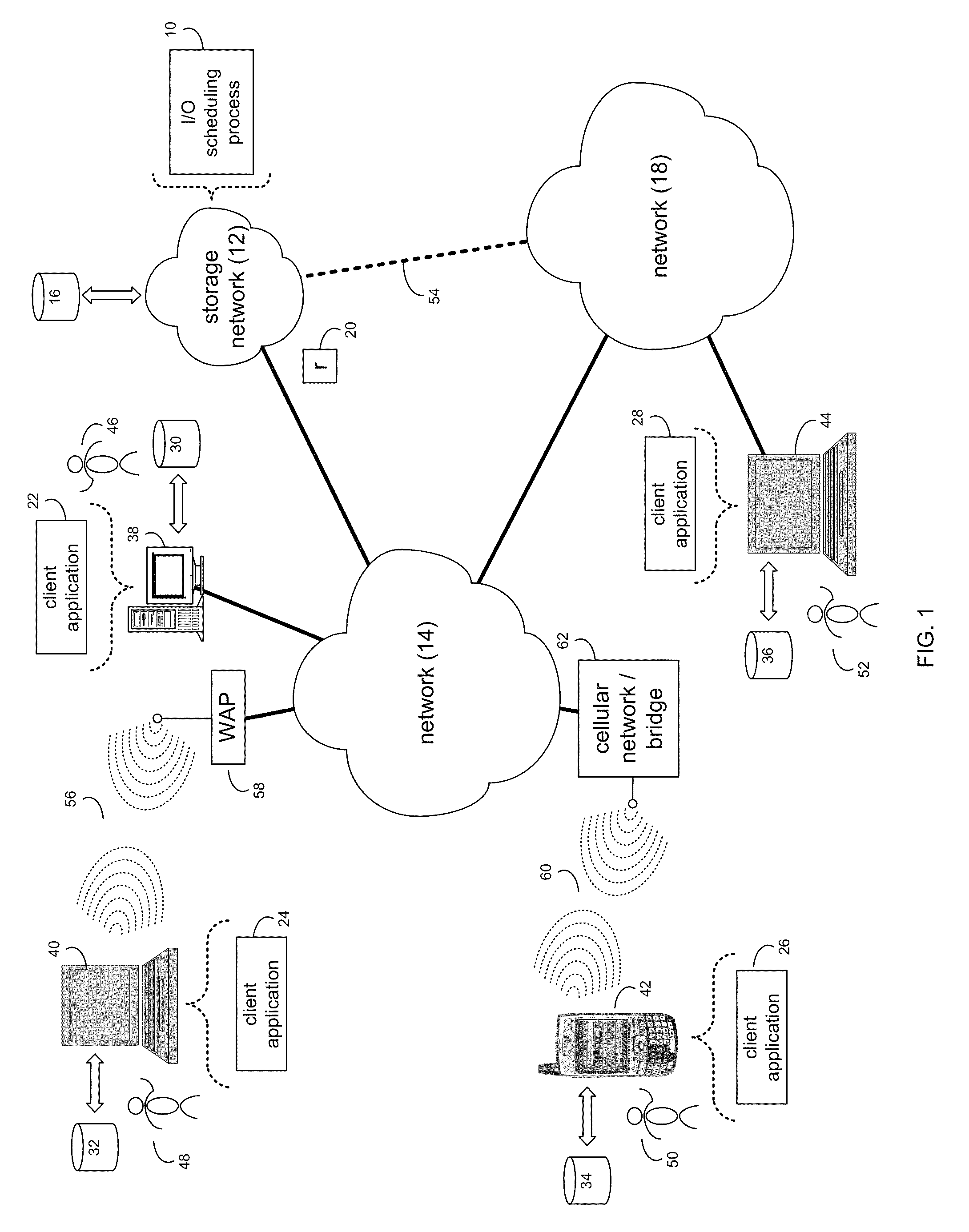

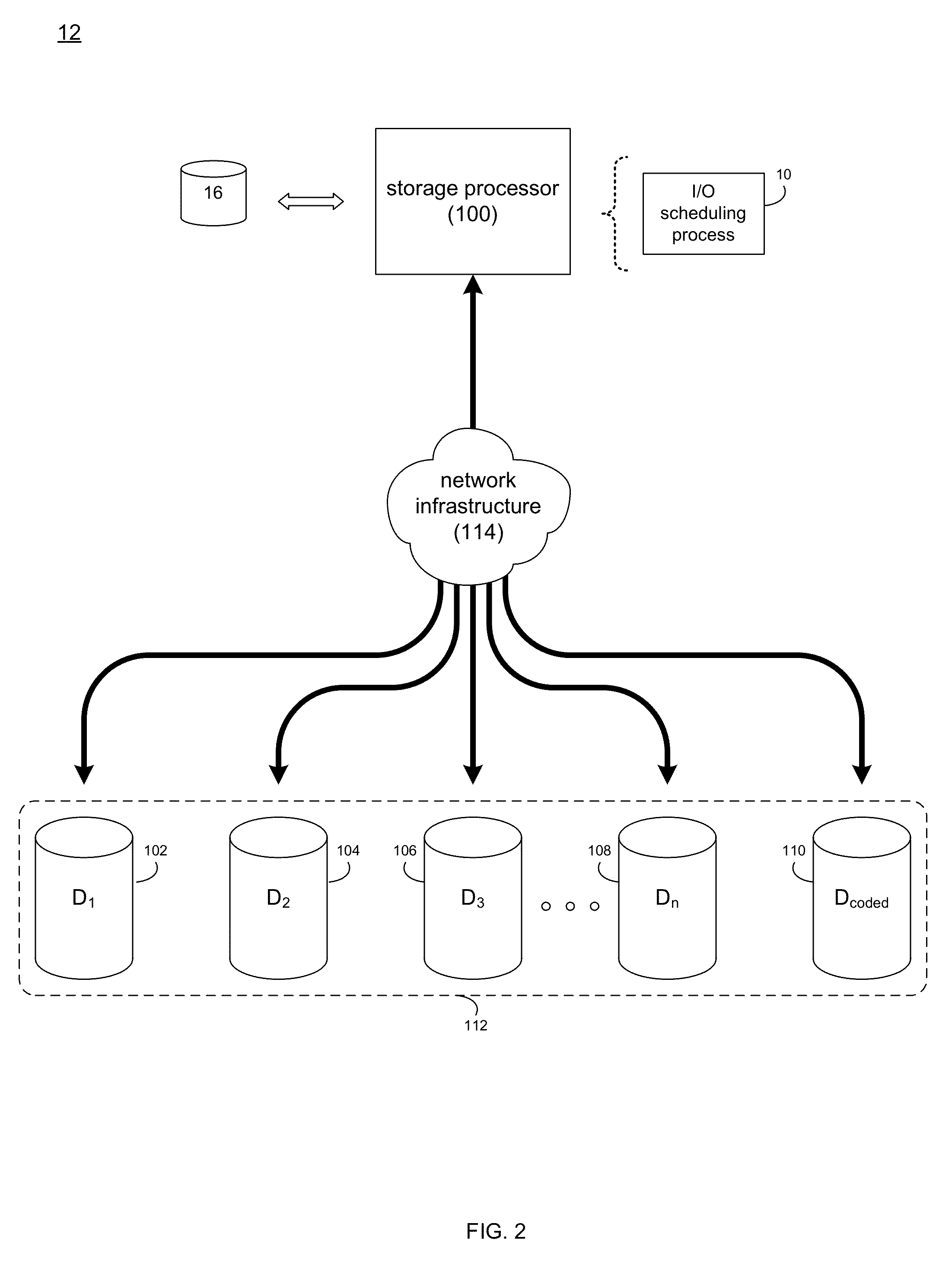

I/O scheduling system and method

Active | US8732342B1 | Error prevention | Frequency-division multiplex details | Computing systems | Distributed computing

A method, computer program product, and computing system for associating a first I/O scheduling queue with a first process accessing a storage network. The first I/O scheduling queue is configured to receive a plurality of first process I/O requests. A second I/O scheduling queue is associated with a second process accessing the storage network. The second I/O scheduling queue is configured to receive a plurality of second process I/O requests.

Owner:EMC IP HLDG CO LLC

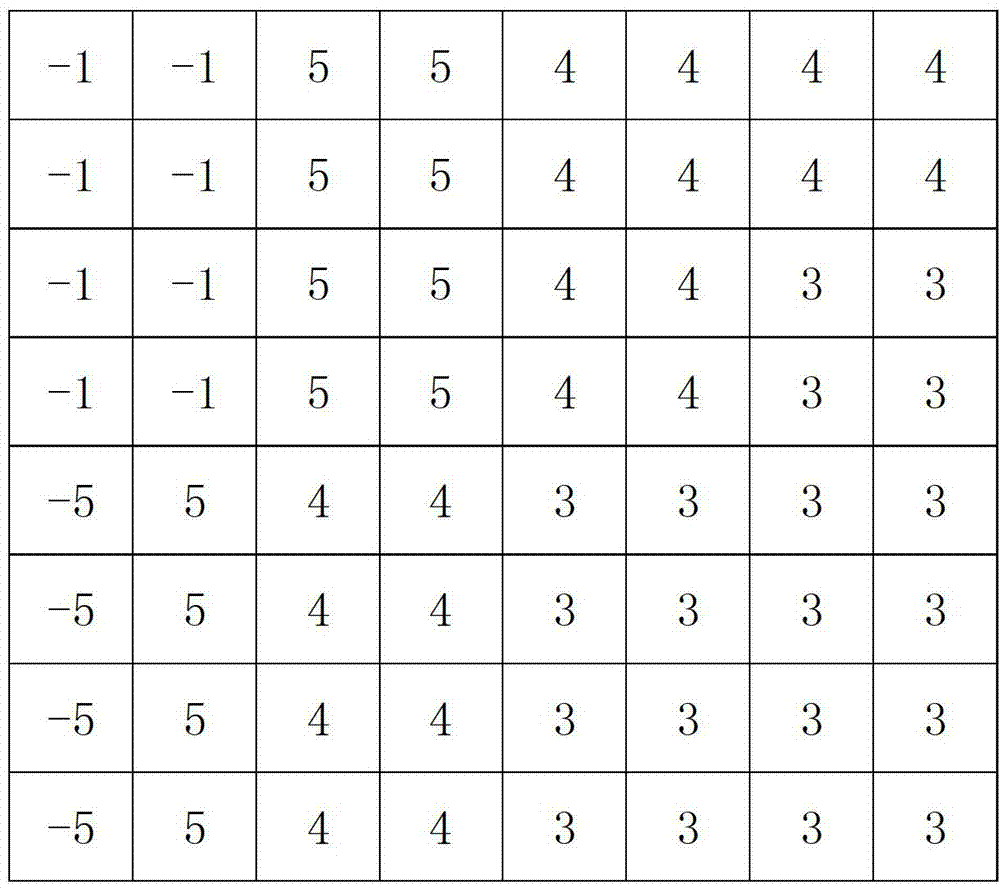

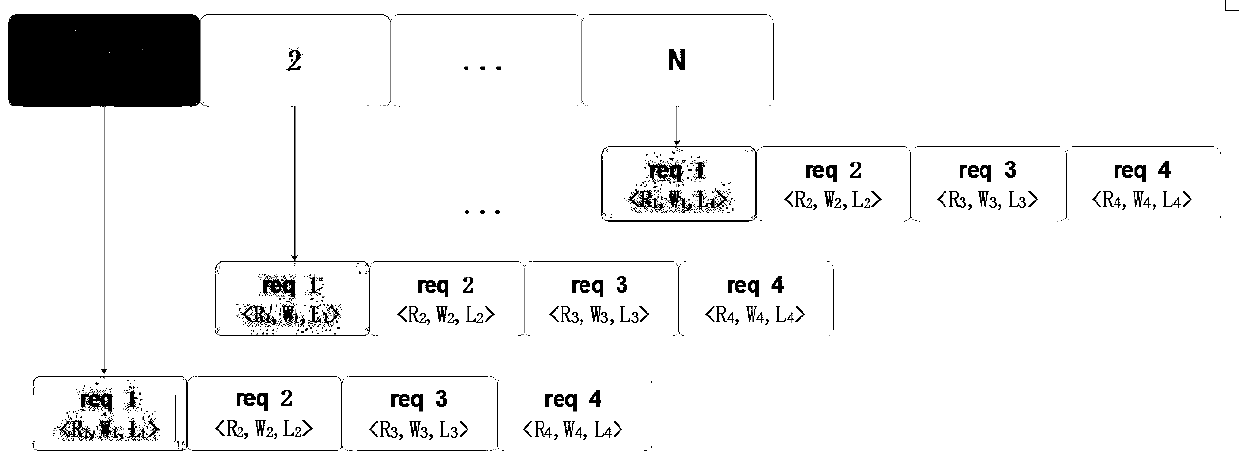

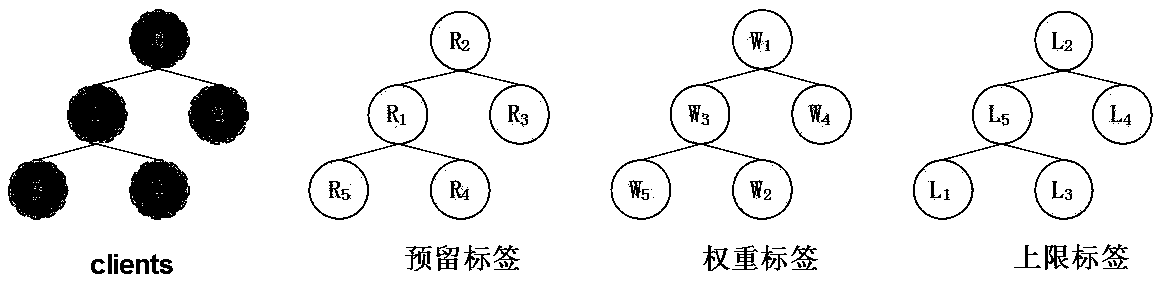

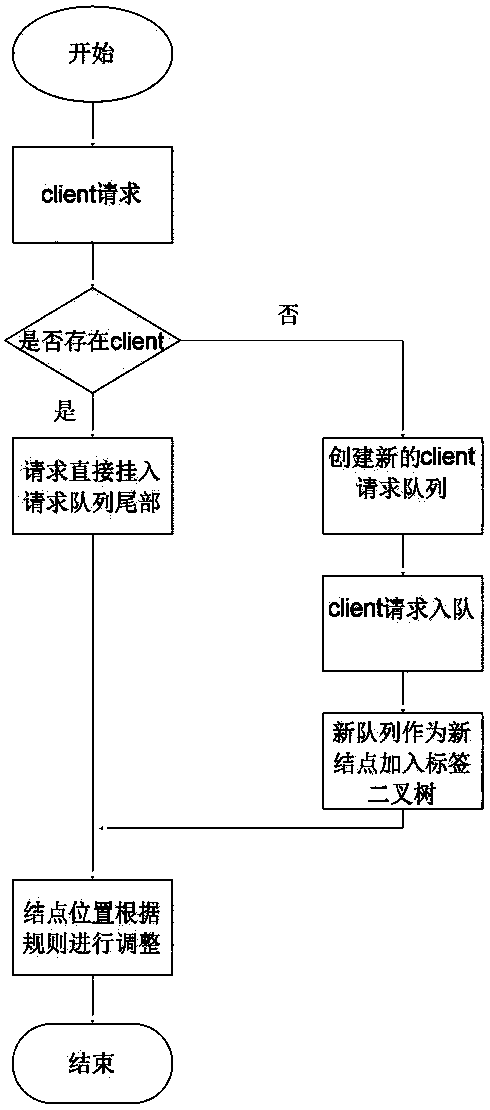

A time label based I/O scheduling QoS method

Inactive | CN109104463A | Reasonable distribution according to needs | Guaranteed reliability | Data switching networks | Systems design | Binary tree

A time-label-based I/O scheduling QoS method is provided. The design uses a two-level mapping queue: the first level is a per-client queue and the second level is the actual request queue. Each request carries three timestamps (Ri, Wi, Li), where i denotes the number of the client it belongs to, and a large amount of dynamic data is organized and managed by constructing full binary trees of the reservation timestamps, the weight timestamps and the upper-bound timestamps respectively. The method allocates limited I/O resources more reasonably according to demand, so that it can cope with flexible and changing application scenarios and ensure reliable system performance.

Owner:NANJING YUNCHUANG LARGE DATA TECH CO LTD
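The abstract names three timestamps per request but does not state how they are used to choose the next request. The sketch below assumes one plausible rule: serve requests whose reservation timestamp is due first, and otherwise serve, by smallest weight timestamp, only requests whose upper-bound timestamp has passed. The rule, the `Tagged` class and the `pick` function are all assumptions, and plain lists are used instead of the binary trees the abstract mentions.

```python
from dataclasses import dataclass


@dataclass
class Tagged:
    client: int
    r: float  # reservation timestamp (Ri)
    w: float  # weight timestamp (Wi)
    l: float  # upper-bound timestamp (Li)


def pick(requests, now):
    """Choose the next request under the assumed three-timestamp rule."""
    # Phase 1: honor reservations that are already due.
    due = [q for q in requests if q.r <= now]
    if due:
        return min(due, key=lambda q: q.r)
    # Phase 2: share spare capacity by weight among clients under their limit.
    allowed = [q for q in requests if q.l <= now]
    if allowed:
        return min(allowed, key=lambda q: q.w)
    return None
```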

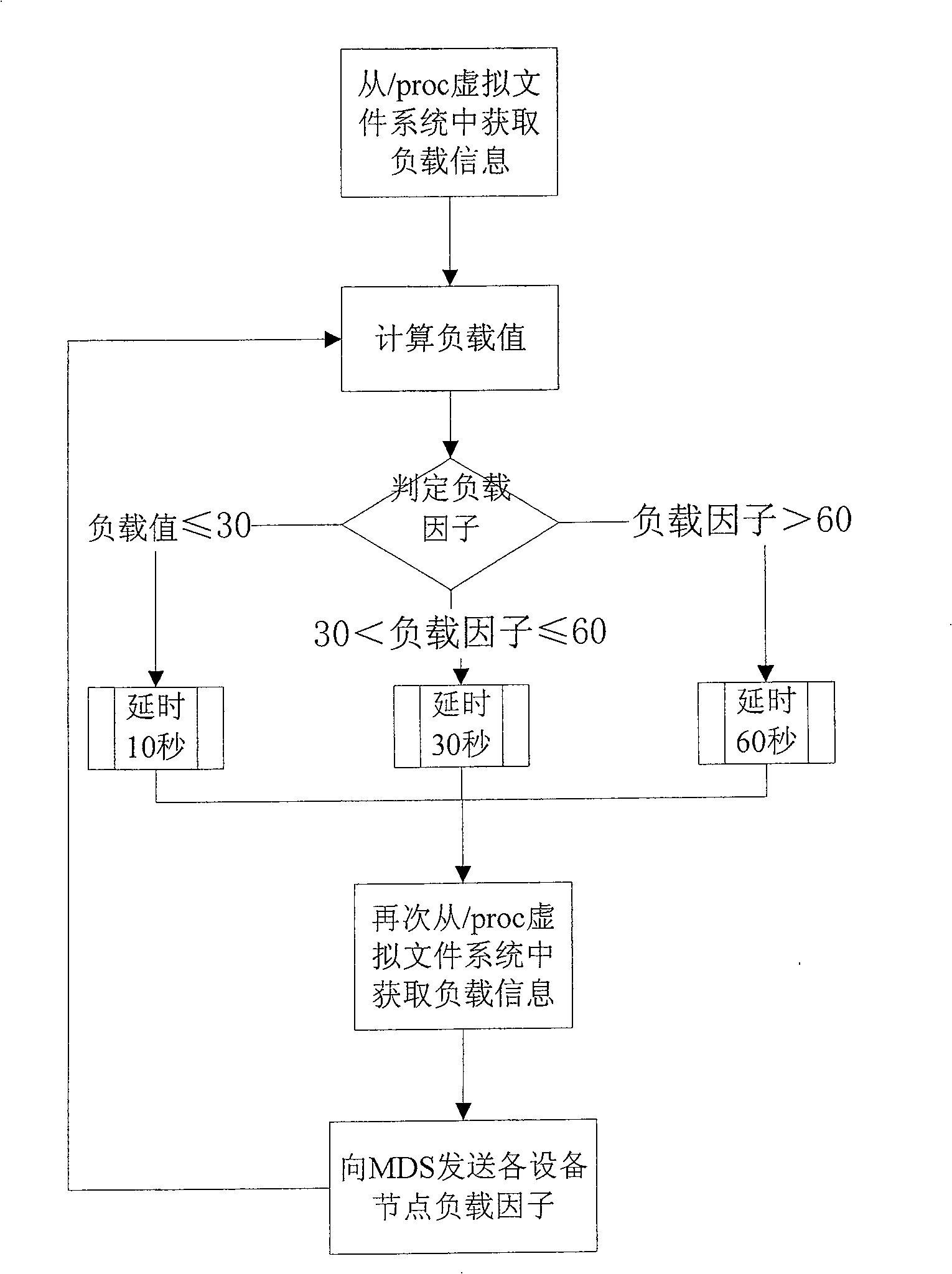

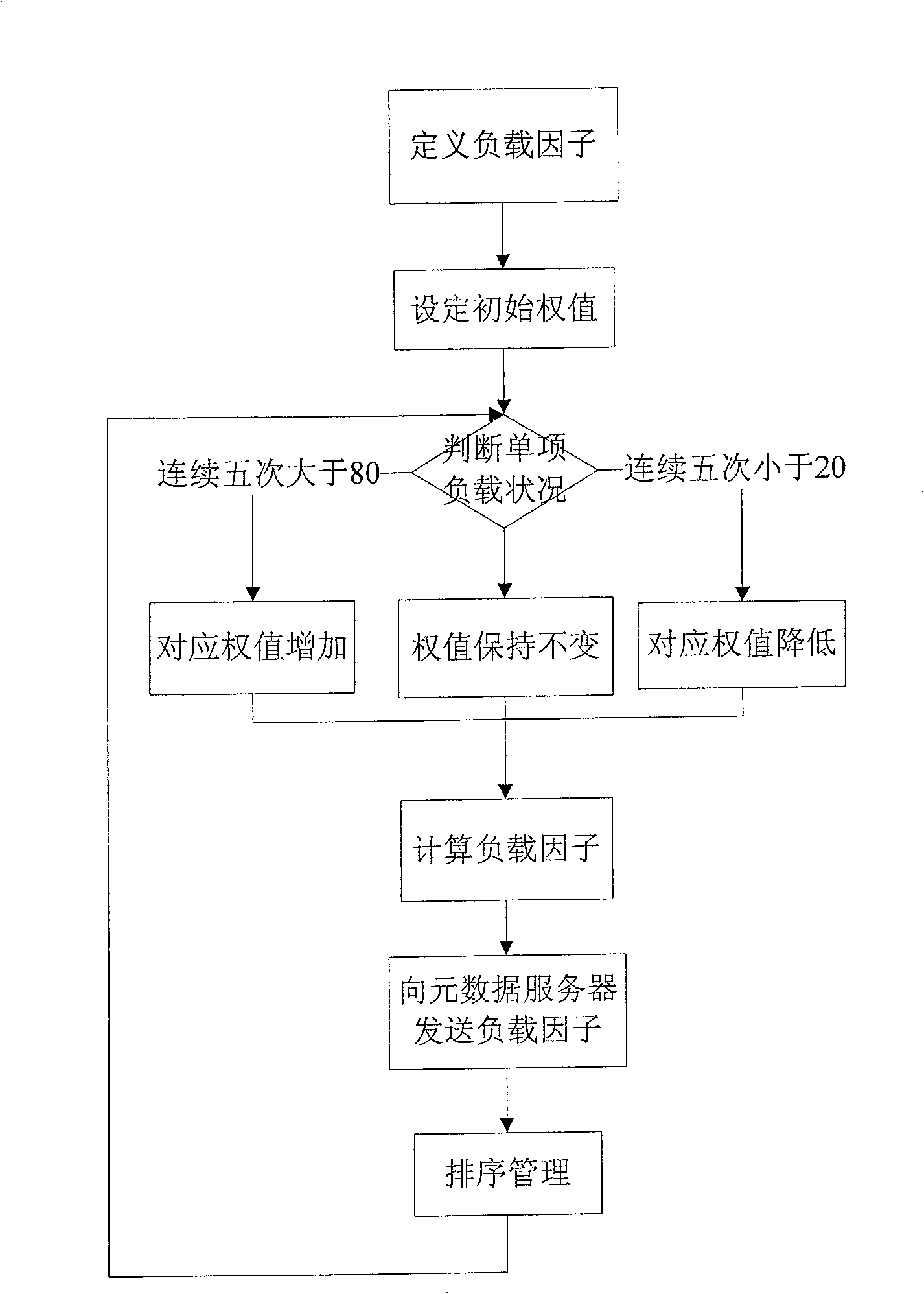

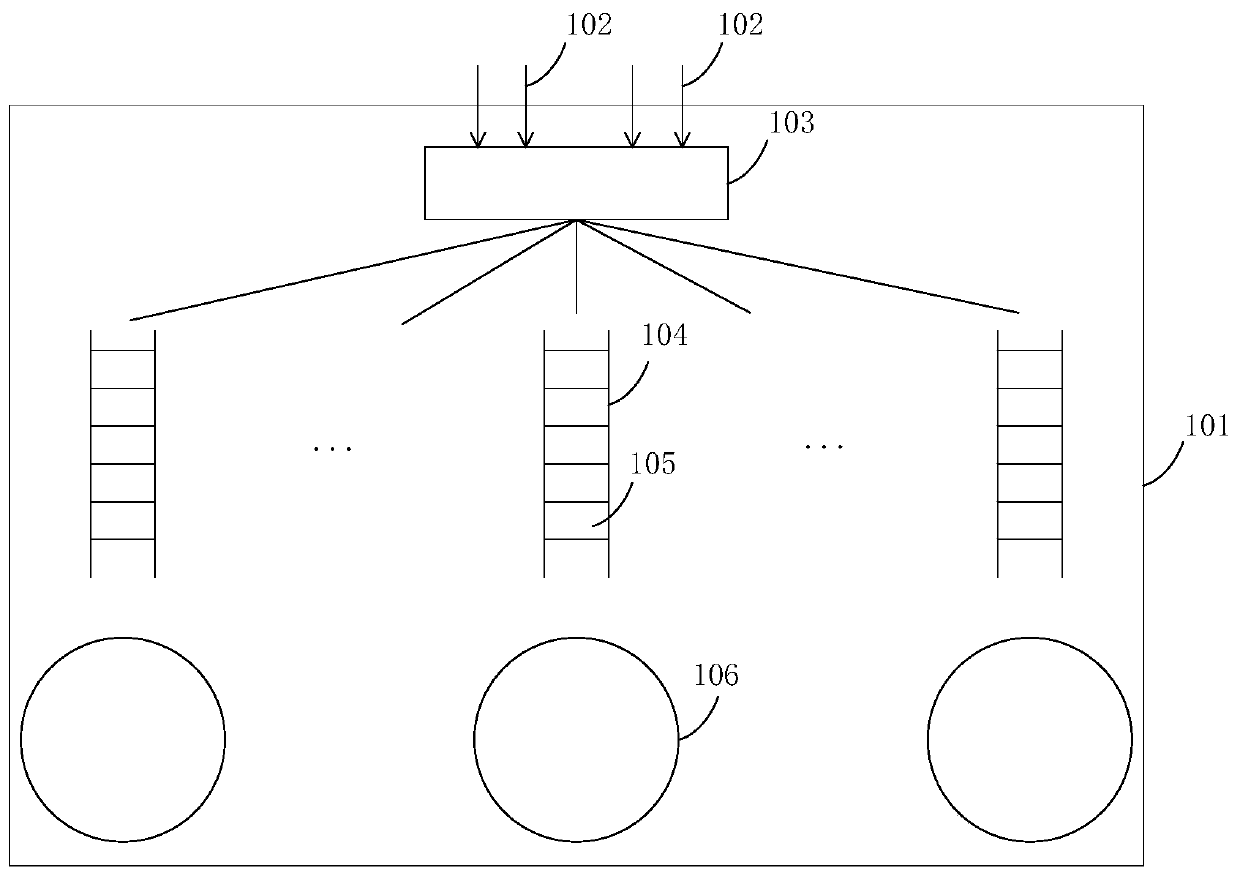

Load balancing method based on object storage device

Inactive | CN100476742C | Implement load migration | Load balancing | Resource allocation | Special data processing applications | Load Shedding | SCSI

The invention is a load balancing method for object-based storage devices, belonging to computer storage technology. It aims to balance the distribution of system load across the storage nodes through reasonable scheduling of I/O load and migration of hot data, so as to take full advantage of the high performance of the storage device nodes. The steps of the invention, in order, are: (1) active load detection; (2) equipment load statistics; (3) object migration and copy management; (4) object property expansion; (5) I/O request processing. The invention extends the SCSI protocol standard for object storage devices (OSD). Using the advantages of the object storage model, it provides a basis for I/O scheduling decisions and makes full use of the computing capacity of all storage nodes to balance load, reduce storage system response time and increase storage system throughput.

Owner:HUAZHONG UNIV OF SCI & TECH

A multi-thread based MapReduce execution system

Active | CN103605576B | Efficient resource sharing mechanism | Reduce management pressure | Resource allocation | Granularity | Parallel computing

The invention discloses a multithreading-based MapReduce execution system comprising a MapReduce execution engine that implements multithreading. The multi-process execution mode of Map/Reduce tasks in the original Hadoop is changed into a multithreaded mode; details about memory usage are extracted from Map tasks and Reduce tasks, the MapReduce process is divided into multiple fine-grained phases according to those details, and the shuffle process in the original Hadoop is changed from Reduce pull to Map active push. A unified memory management module and an I/O management module are implemented in the multithreaded MapReduce execution engine and centrally manage the memory usage of each task thread. A global memory scheduling and I/O scheduling algorithm dynamically schedules system resources during execution. The system has the advantages that users can maximize memory usage without modifying the original MapReduce program, disk bandwidth is fully utilized, and the long-standing I/O bottleneck in the original Hadoop is resolved.

Owner:HUAZHONG UNIV OF SCI & TECH

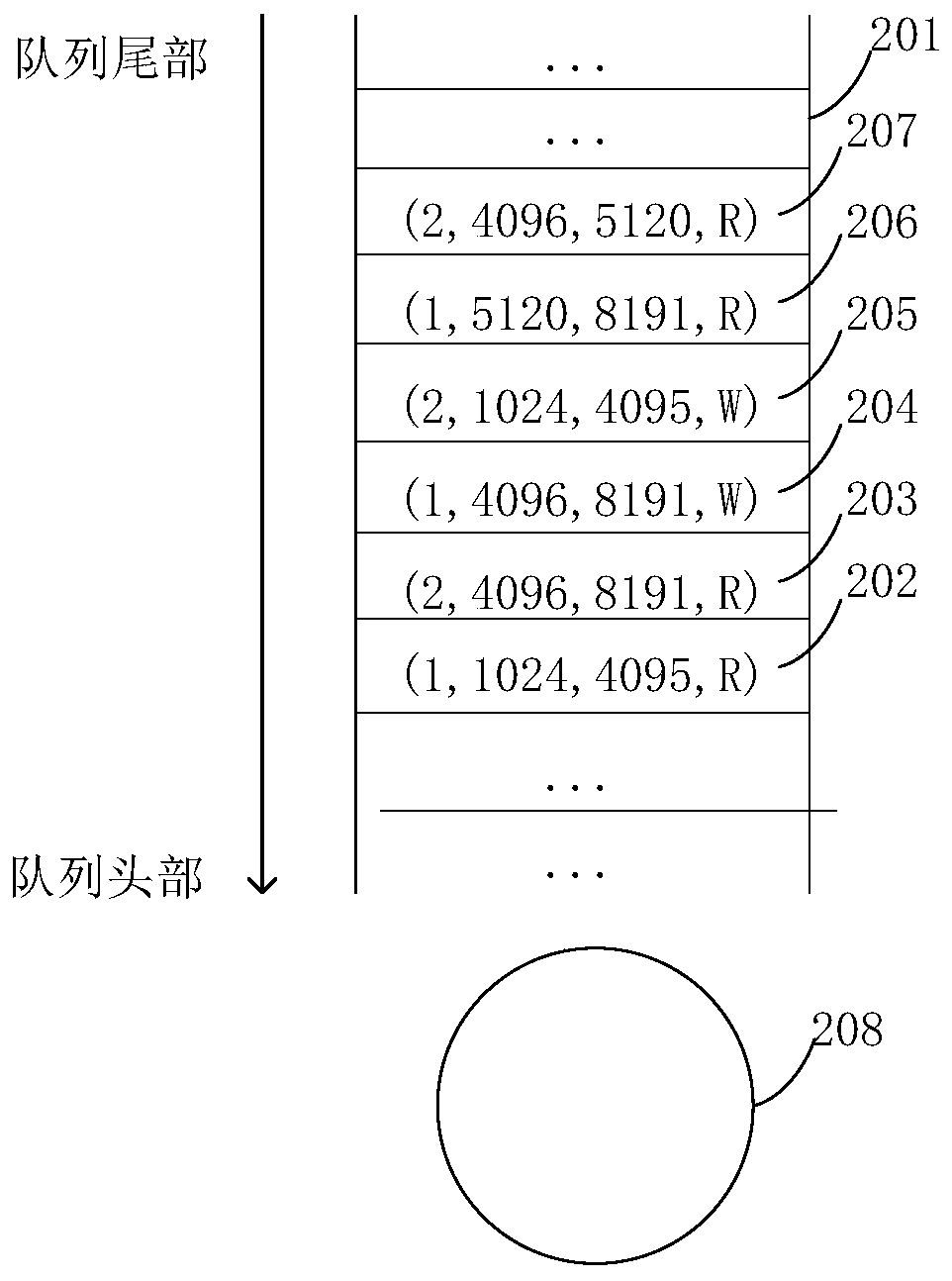

Internal concurrent I/O scheduling method and system for partitions of data server side

Active | CN110837411A | Program initiation/switching | Energy efficient computing | Parallel computing | Concurrency

The invention provides an intra-partition concurrent I/O scheduling method and system for a data server side. The method includes: receiving I/O requests; detecting whether a read request or a write request in the current I/O request conflicts with a previous request in the request conflict queue or the request execution queue; adding a conflicting read or write request to the corresponding request conflict queue; and adding a conflict-free read or write request to the corresponding request execution queue. The method avoids pointless waiting between requests that have no read-write or write-write conflict, effectively improves the concurrency and response latency of I/O within a partition on the data server side, and improves the I/O performance of the data server and therefore of the storage system as a whole.

Owner:敏博科技(武汉)有限公司
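The conflict check in this abstract can be sketched as follows. The abstract does not define a conflict precisely, so the sketch assumes two requests conflict when their byte ranges overlap and at least one of them is a write. `Req`, `conflicts` and `admit` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Req:
    op: str     # "r" for read, "w" for write
    start: int  # first byte offset (inclusive)
    end: int    # last byte offset (exclusive)


def conflicts(a, b):
    """Assumed rule: overlapping ranges conflict unless both are reads."""
    overlap = a.start < b.end and b.start < a.end
    return overlap and (a.op == "w" or b.op == "w")


def admit(req, executing, waiting):
    """Queue the request for execution, or park it if it conflicts."""
    if any(conflicts(req, prev) for prev in executing + waiting):
        waiting.append(req)   # request conflict queue
        return "conflict"
    executing.append(req)     # request execution queue
    return "execute"
```

Checking the waiting queue as well as the executing queue keeps a later request from overtaking an earlier conflicting one that is still parked.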

A self-adaptive IO scheduling method for a multi-controller storage system

Active | CN103135943B | Improve load status | Load balancing | Input/output to record carriers | Controller architecture | Network connection

The present invention provides an adaptive IO scheduling method for a multi-controller storage system. It offers a multi-controller architecture with load balancing between controllers, avoids the risks and performance bottlenecks caused by single-controller failures, supports a rich set of host connection interfaces (iSCSI, FC, InfiniBand and 10-gigabit network connections), and can provide users with high-bandwidth IB and 10-gigabit network connections to meet customers' differentiated needs for high bandwidth and high performance. The present invention relates to IO scheduling of a multi-control storage system and proposes an IO scheduling method among multiple controllers. When a multi-control storage system receives an IO request from the application layer, it can dispatch the IO request to multiple controllers for concurrent execution. The method allocates unallocated IO requests to low-load controllers and also reschedules IO requests from overloaded controllers to lighter-loaded controllers, which improves the load status of each controller in the system, achieves IO load scheduling and balancing across the control nodes, makes full use of the potential of the equipment, and improves system performance.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

Multi-core multi-model parallel distributed type real-time simulation system

Active | CN102929158B | Improve applicability | Flexible configuration | Simulator control | Real-time simulation | Computer module

The invention provides a multi-core multi-model parallel distributed real-time simulation system comprising a main control module, a model module, a data channel control module and a hardware interface module. The main control module accomplishes its functions by invoking different simulation scheduling algorithms. In each simulation period, according to the hardware I/O (input/output) scheduling policies, it transmits to the data channel control module the instructions for data mapping in accordance with the simulation setup, transmits to the hardware interface module the instructions for reading and writing the I/O data within one period, and transmits to the model module the instructions that trigger its operation. The model module, following these instructions, acquires input data once, solves once and outputs data once in each simulation period. The data channel control module performs the data mapping and related program processing for the I/O of a model or of hardware in each period according to the instructions. The hardware interface module configures, reads and outputs the input/output streams of hardware I/O equipment according to the data configuration format of a file, and performs the read or write operations on the various hardware I/O equipment.

Owner:北京华力创通科技股份有限公司

Method for dispatching I/O of asymmetry virtual machine based on multi-core dynamic partitioning

Inactive | CN101706742B | Good I/O performance | Reduce response delay | Resource allocation | Software simulation/interpretation/emulation | Information processing | Computer architecture

The invention provides a method for dispatching I/O of an asymmetric virtual machine based on multi-core dynamic partitioning, comprising the following steps. At system startup, an I/O dispatching initialization module configures the dispatching parameters and allocates a buffer. While the system runs, an I/O status monitoring and information processing module dynamically collects and processes the runtime parameters of the driver domain and the guest domains, compiles statistics such as the type, time and frequency of the I/O events that occur, and sends the results to a dispatching decision module. The dispatching decision module decides according to preset conditions and commands a multi-core dynamic partitioning module to partition or recover processor cores. This changes the core partitioning mode and dispatching status of the system's processor, so that the use of processor resources and the domain dispatching and switching strategy better fit the I/O load of the current system, which optimizes I/O performance.

Owner:BEIHANG UNIV

