Method and apparatus for memory access scheduling to reduce memory access latency

A memory access scheduling technique for reducing latency, applicable to memory systems, instruments, and memory addressing/allocation/relocation, that addresses reduced memory system efficiency and degraded performance.

Embodiment Construction

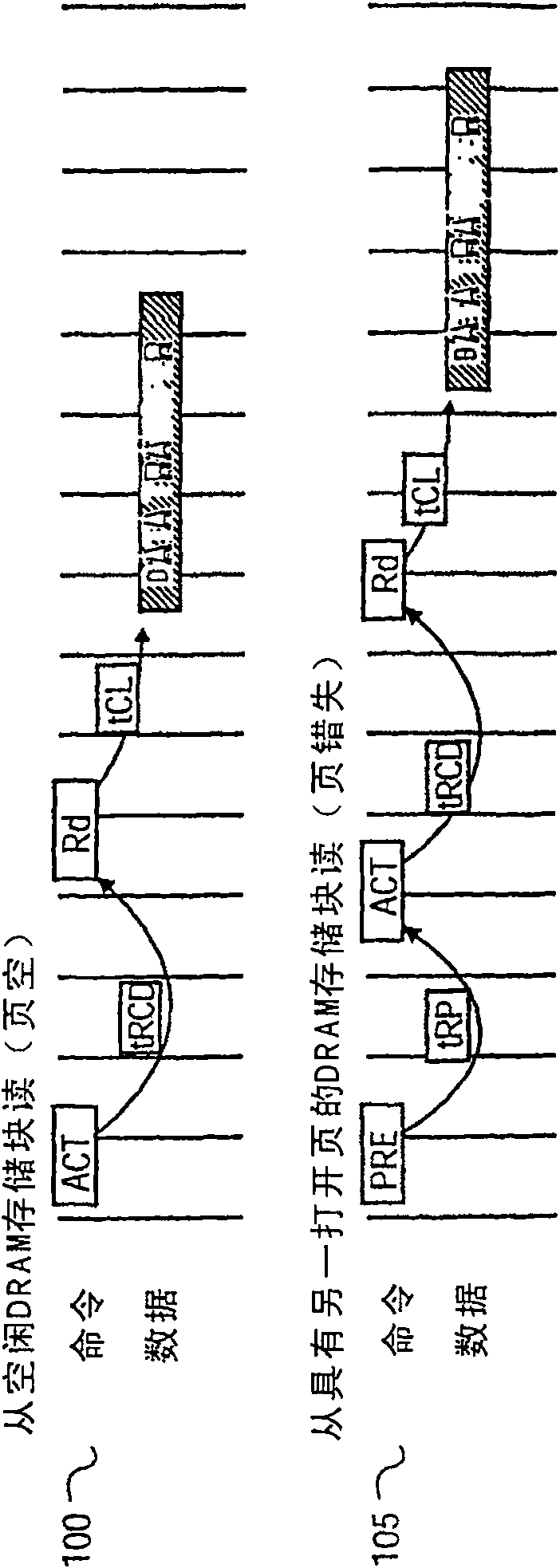

[0034] The present invention generally relates to methods and apparatus for reducing latency due to memory controller scheduling and memory accesses. Referring to the drawings, exemplary embodiments of the present invention will now be described. The exemplary embodiments are provided to illustrate the invention and should not be construed as limiting the scope of the invention.

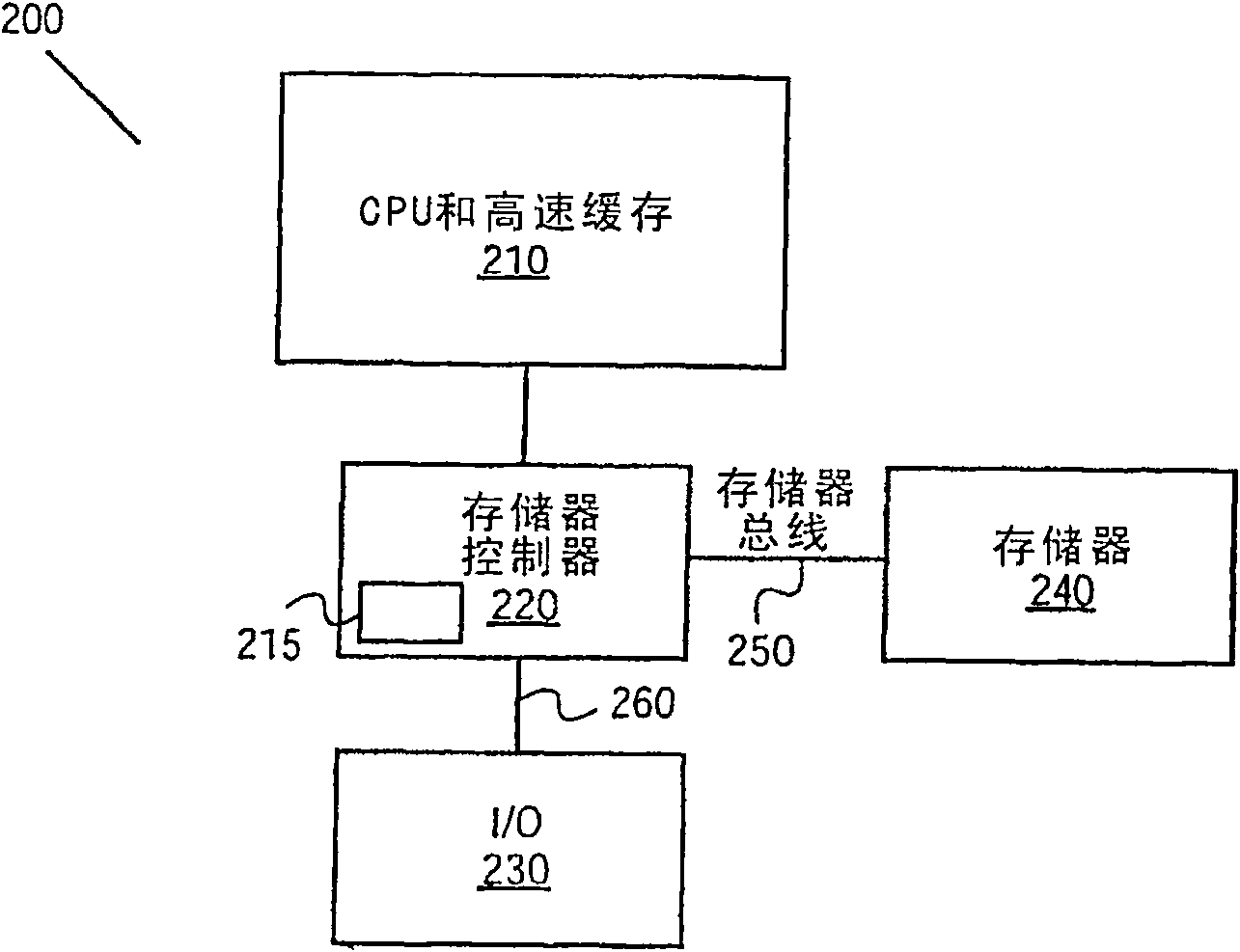

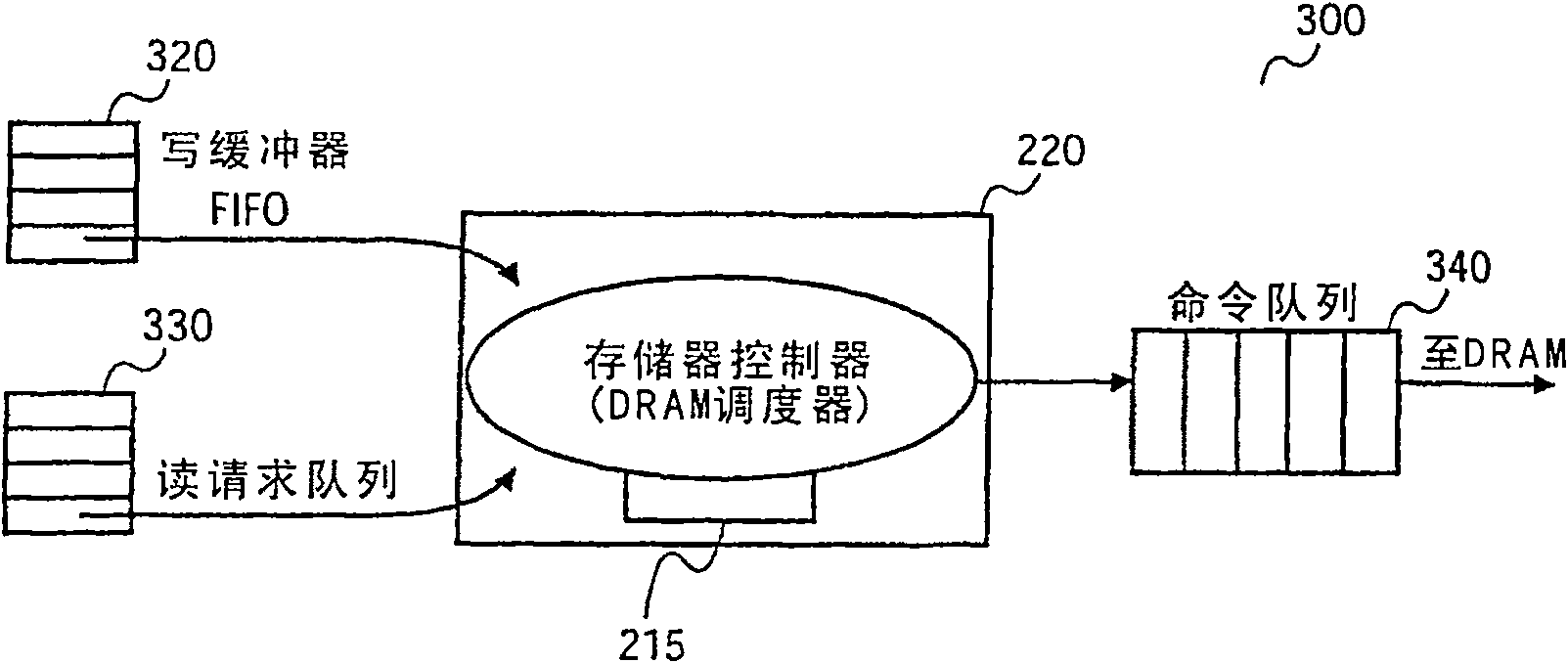

[0035] Figure 2 shows an embodiment of the invention comprising system 200. System 200 includes central processing unit (CPU) and cache memory 210, memory controller 220, input/output (I/O) 230, main memory 240, and memory bus 250. Note that the CPU and cache memory 210 and memory controller 220 may be on the same chip. The main memory 240 may be Dynamic Random Access Memory (DRAM), Synchronous DRAM (SDRAM), SDRAM/Double Data Rate (SDRAM/DDR), Rambus DRAM (RDRAM), or the like. In one embodiment of the invention, memory controller 220 includes an intelligent automatic precharge process 215 ...
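As general background on why precharge decisions in a memory controller affect access latency, the following is a minimal sketch of a single DRAM bank. It is not the automatic precharge process 215 of the embodiment, whose details are not given here. The timing values `T_RP`, `T_RCD`, and `T_CL`, the `Bank` class, and the three policy functions (including the `lookahead` heuristic) are all hypothetical names and numbers chosen for illustration. The sketch also assumes that an automatic precharge completes in otherwise idle time, so its cost is not charged to the next access.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence

# Illustrative timing parameters in clock cycles (assumed, not from the source).
T_RP = 3   # precharge: close the currently open row
T_RCD = 3  # activate: open a row
T_CL = 3   # column access: read from the open row


@dataclass
class Bank:
    """One DRAM bank; open_row is None when the bank is precharged."""
    open_row: Optional[int] = None

    def access(self, row: int, auto_precharge: bool) -> int:
        """Return the latency in cycles of reading `row` and update bank state."""
        if self.open_row == row:
            latency = T_CL                   # page hit: row already open
        elif self.open_row is None:
            latency = T_RCD + T_CL           # page empty: activate, then read
        else:
            latency = T_RP + T_RCD + T_CL    # page miss: precharge, activate, read
        # Assumption: an automatic precharge overlaps with idle time, so only
        # the resulting bank state (closed row) is modeled here.
        self.open_row = None if auto_precharge else row
        return latency


# A policy decides, per access, whether to precharge right after it.
Policy = Callable[[int, Optional[int]], bool]


def open_page(row: int, next_row: Optional[int]) -> bool:
    return False  # always leave the row open


def close_page(row: int, next_row: Optional[int]) -> bool:
    return True   # always precharge after the access


def lookahead(row: int, next_row: Optional[int]) -> bool:
    # Hypothetical heuristic: precharge only if the next queued request
    # is known to target a different row.
    return next_row is not None and next_row != row


def total_latency(rows: Sequence[int], policy: Policy) -> int:
    """Sum the access latencies for a queue of row requests to one bank."""
    bank = Bank()
    total = 0
    for i, row in enumerate(rows):
        next_row = rows[i + 1] if i + 1 < len(rows) else None
        total += bank.access(row, policy(row, next_row))
    return total
```

For the request sequence `[1, 1, 2, 2, 3]`, this model gives 30 cycles under `open_page`, 30 cycles under `close_page`, and 24 cycles under `lookahead`, because the selective policy keeps the page hits while avoiding the page misses. The figures depend entirely on the assumed timings and on the overlap assumption stated above.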
