System for resolving fierce competition for memory resources in a big data processing system

A technology relating to big data processing and memory resources, applied in the field of I/O performance optimization in computer system architecture. It addresses fierce competition for memory resources and the inability to dynamically adjust the ratio of CPU to memory resources, and achieves the effects of reduced spill-to-disk I/O operations, strong versatility and portability, and efficient use.


Embodiment Construction

[0027] To make the objectives, technical solution, and advantages of the present invention clearer, the invention is described in further detail below with reference to the accompanying drawings and embodiments. It should be understood that the specific embodiments described here serve only to explain the present invention and do not limit it.

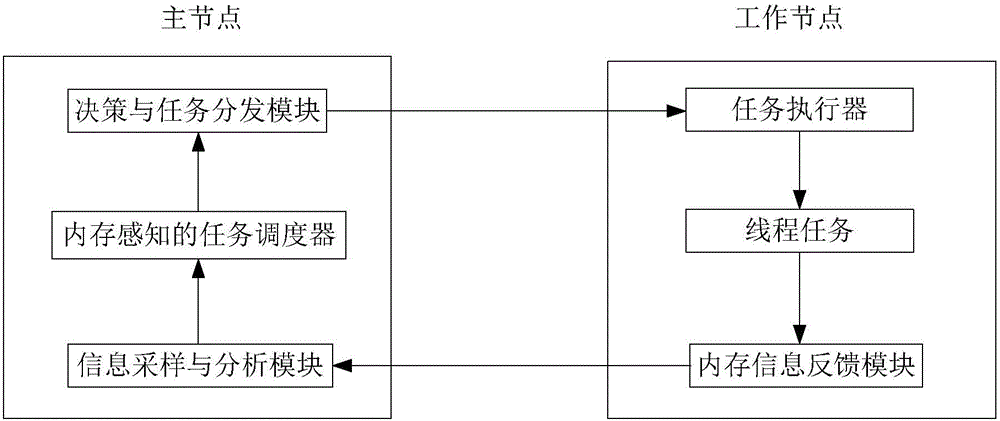

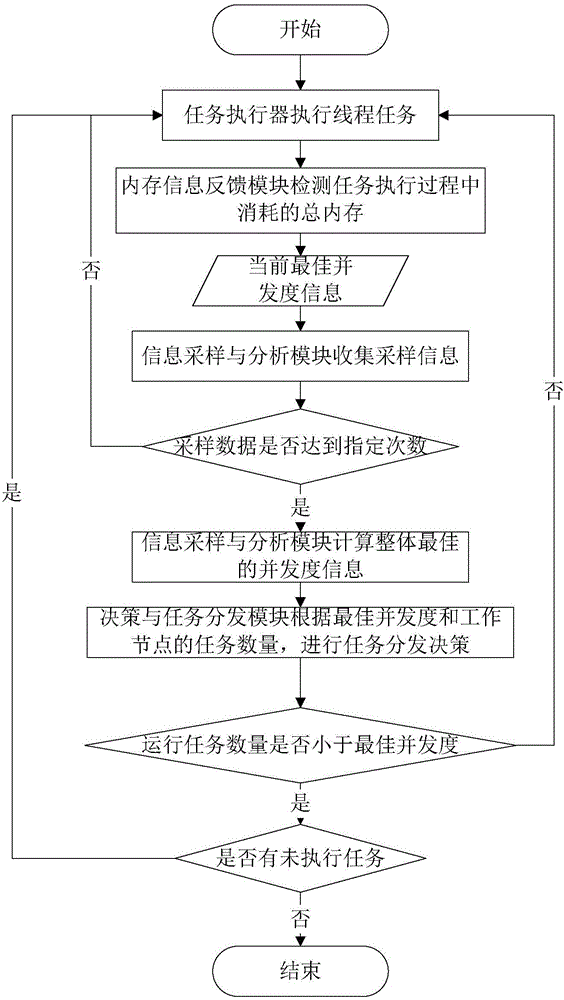

[0028] As shown in Figure 1, the present invention provides a system for resolving fierce competition for memory resources in a big data processing system. The system includes a memory information feedback module, an information sampling and analysis module, and a decision-making and task distribution module.

[0029] The memory information feedback module monitors the memory usage of running thread tasks, counts the amount of memory consumed during the execution of thread tasks, and also counts the amount of data spilled from memory to disk when memory is insufficient. Calculate th...
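The bookkeeping described for the memory information feedback module can be illustrated with a minimal Python sketch. It is not the patented implementation: the names `TaskMemoryStats`, `MemoryFeedbackMonitor`, `record_memory`, `record_spill`, and `snapshot` are hypothetical, and treating "memory consumed" as the peak observed footprint is an assumption. The sketch covers only the two counters named in the text (memory consumed per thread task and data spilled to disk) and does not attempt any further calculation.

```python
from dataclasses import dataclass
from threading import Lock
from typing import Dict


@dataclass
class TaskMemoryStats:
    """Per-task counters (hypothetical structure, not taken from the patent)."""
    peak_memory_bytes: int = 0  # assumption: "memory consumed" tracked as peak usage
    spilled_bytes: int = 0      # total data written to disk when memory ran short


class MemoryFeedbackMonitor:
    """Collects memory usage and spill volume reported by running thread tasks."""

    def __init__(self) -> None:
        self._lock = Lock()  # tasks report from multiple threads
        self._stats: Dict[str, TaskMemoryStats] = {}

    def record_memory(self, task_id: str, used_bytes: int) -> None:
        """Record the current in-memory footprint of a task, keeping the peak."""
        with self._lock:
            stats = self._stats.setdefault(task_id, TaskMemoryStats())
            stats.peak_memory_bytes = max(stats.peak_memory_bytes, used_bytes)

    def record_spill(self, task_id: str, spilled_bytes: int) -> None:
        """Accumulate the amount of data a task spilled from memory to disk."""
        with self._lock:
            stats = self._stats.setdefault(task_id, TaskMemoryStats())
            stats.spilled_bytes += spilled_bytes

    def snapshot(self) -> Dict[str, TaskMemoryStats]:
        """Return a copy of the counters for a downstream analysis step."""
        with self._lock:
            return {
                task_id: TaskMemoryStats(s.peak_memory_bytes, s.spilled_bytes)
                for task_id, s in self._stats.items()
            }
```

In this sketch each task would call `record_memory` as its footprint changes and `record_spill` whenever it writes overflow data to disk; a consumer such as the information sampling and analysis module could then read `snapshot()` periodically. The lock is there because several thread tasks report concurrently.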
