
64 results about "Interleaved memory" patented technology


In computing, interleaved memory is a design that compensates for the relatively slow speed of dynamic random-access memory (DRAM) or core memory by spreading memory addresses evenly across memory banks. Contiguous memory reads and writes then use each memory bank in turn, which raises memory throughput because less time is spent waiting for a bank to become ready for the next operation.
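As a rough illustration of the definition above, the following sketch maps a linear word address to a bank and an in-bank offset using low-order interleaving. The bank count and the function name are assumptions chosen for the example and are not taken from any patent listed here.

```python
NUM_BANKS = 4  # assumed bank count, for illustration only


def bank_and_offset(addr, num_banks=NUM_BANKS):
    """Low-order interleaving: consecutive addresses rotate through the banks."""
    return addr % num_banks, addr // num_banks


# Eight contiguous addresses visit banks 0, 1, 2, 3 and then wrap around,
# so no bank is asked for two accesses in a row.
banks_visited = [bank_and_offset(a)[0] for a in range(8)]
```

With this mapping a sequential burst gives each bank time to recover while the other banks are being accessed.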

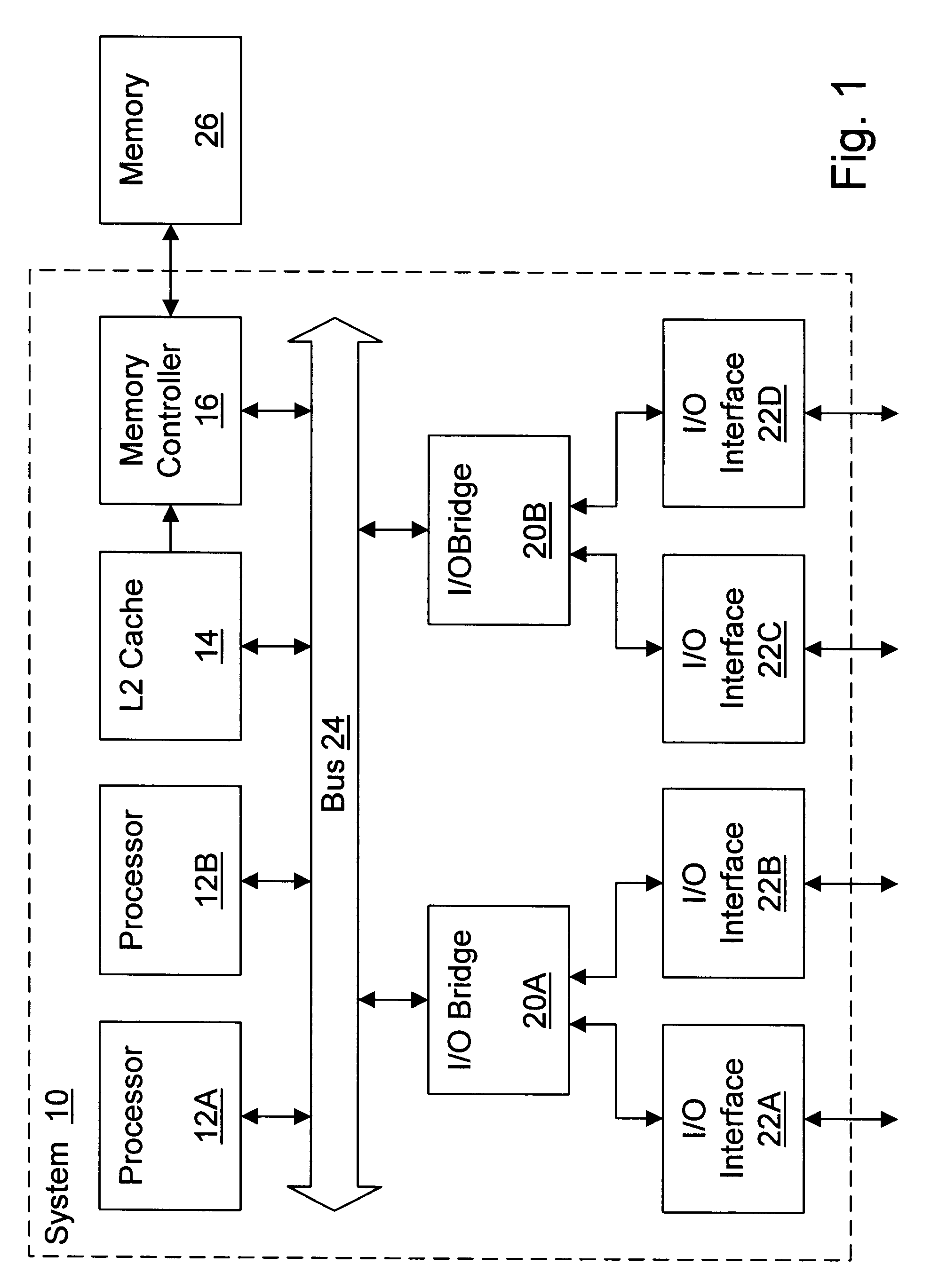

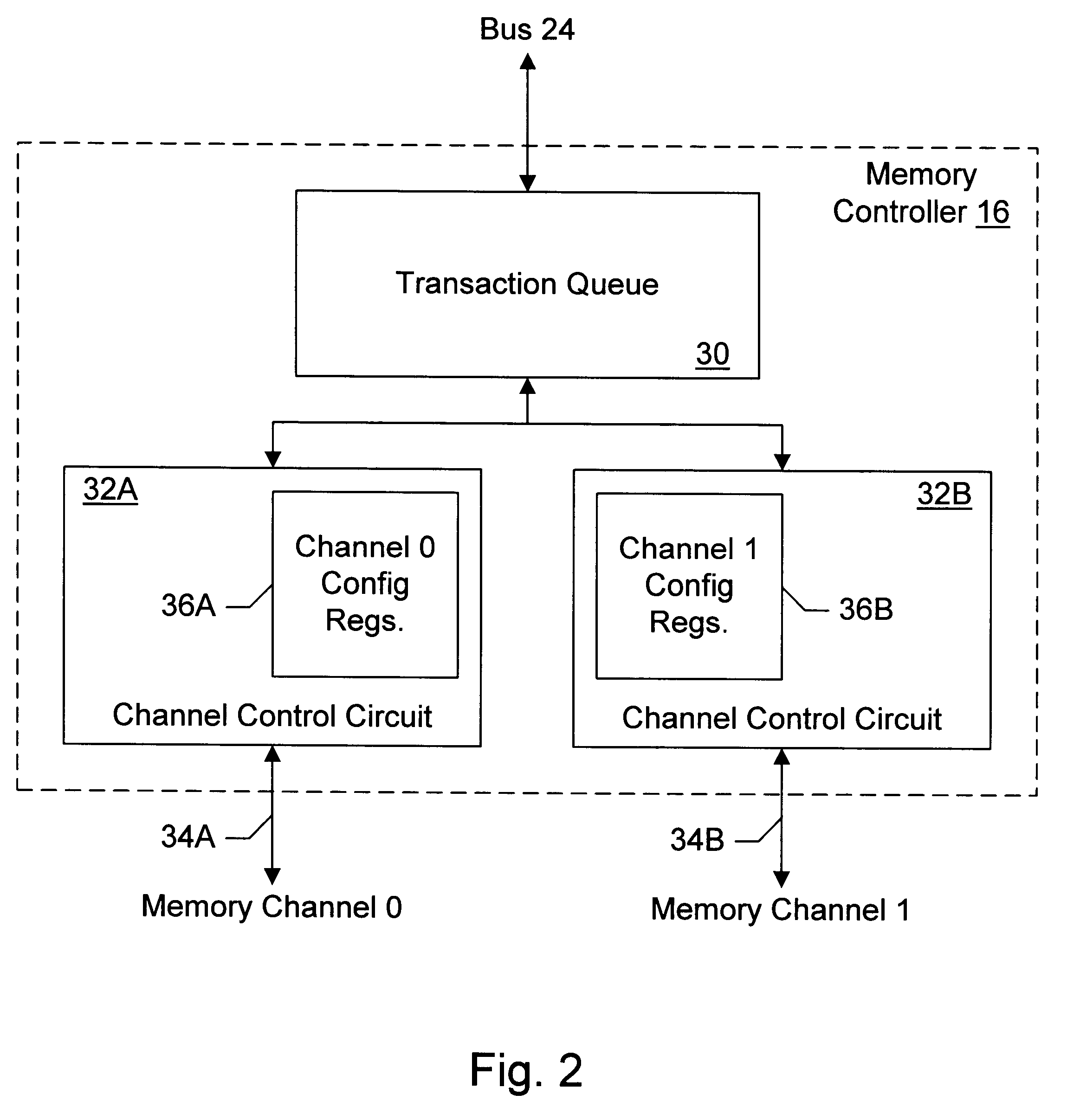

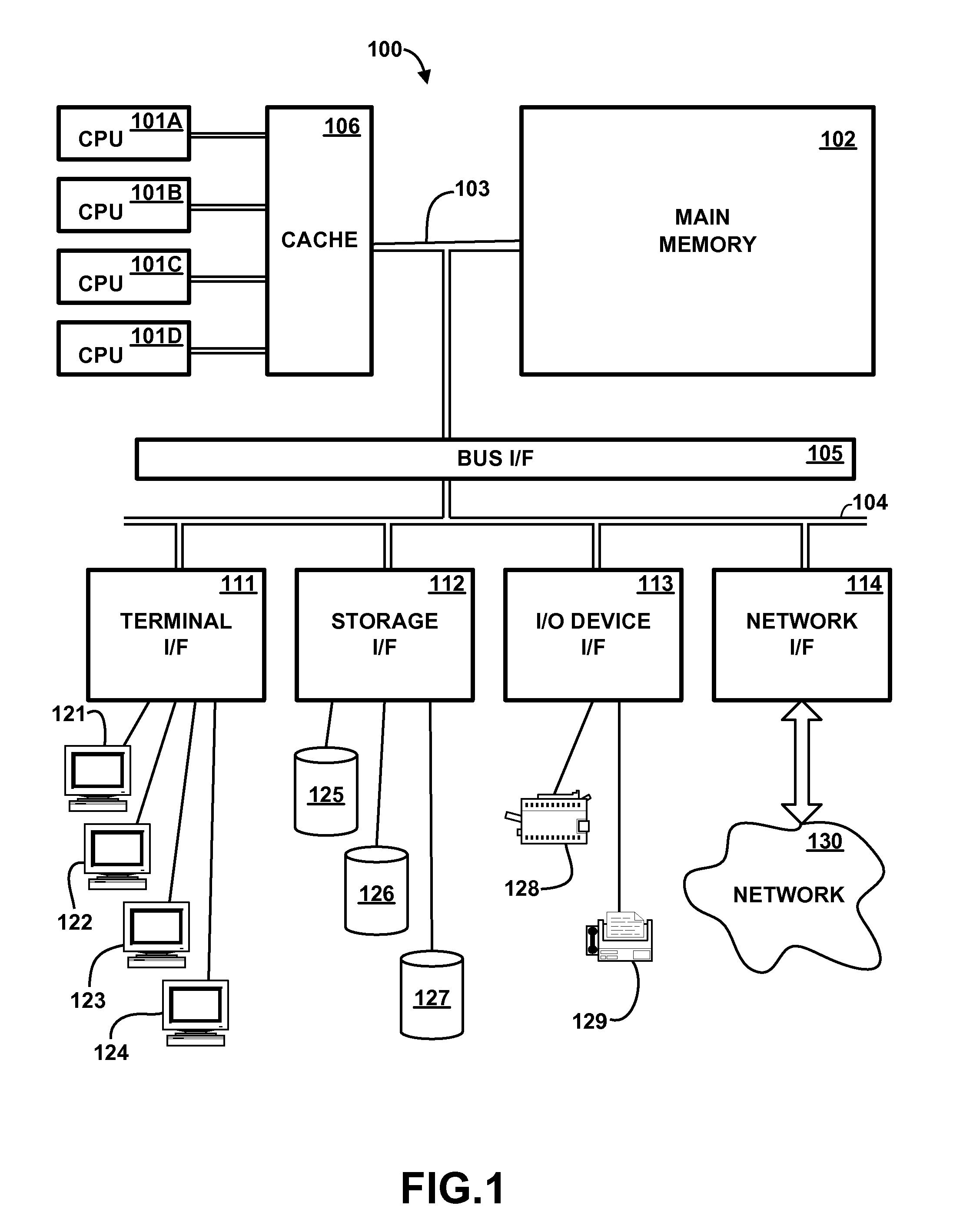

Memory controller with programmable configuration

Inactive | US6877076B1 | Flexible configuration | Open in time | Memory addressing/allocation/relocation | Micro-instruction address formation | Processor register | Parallel computing

Owner:AVAGO TECH INT SALES PTE LTD

Memory controller with programmable configuration

Inactive | US6625685B1 | Memory addressing/allocation/relocation | Micro-instruction address formation | Processor register | Parallel computing

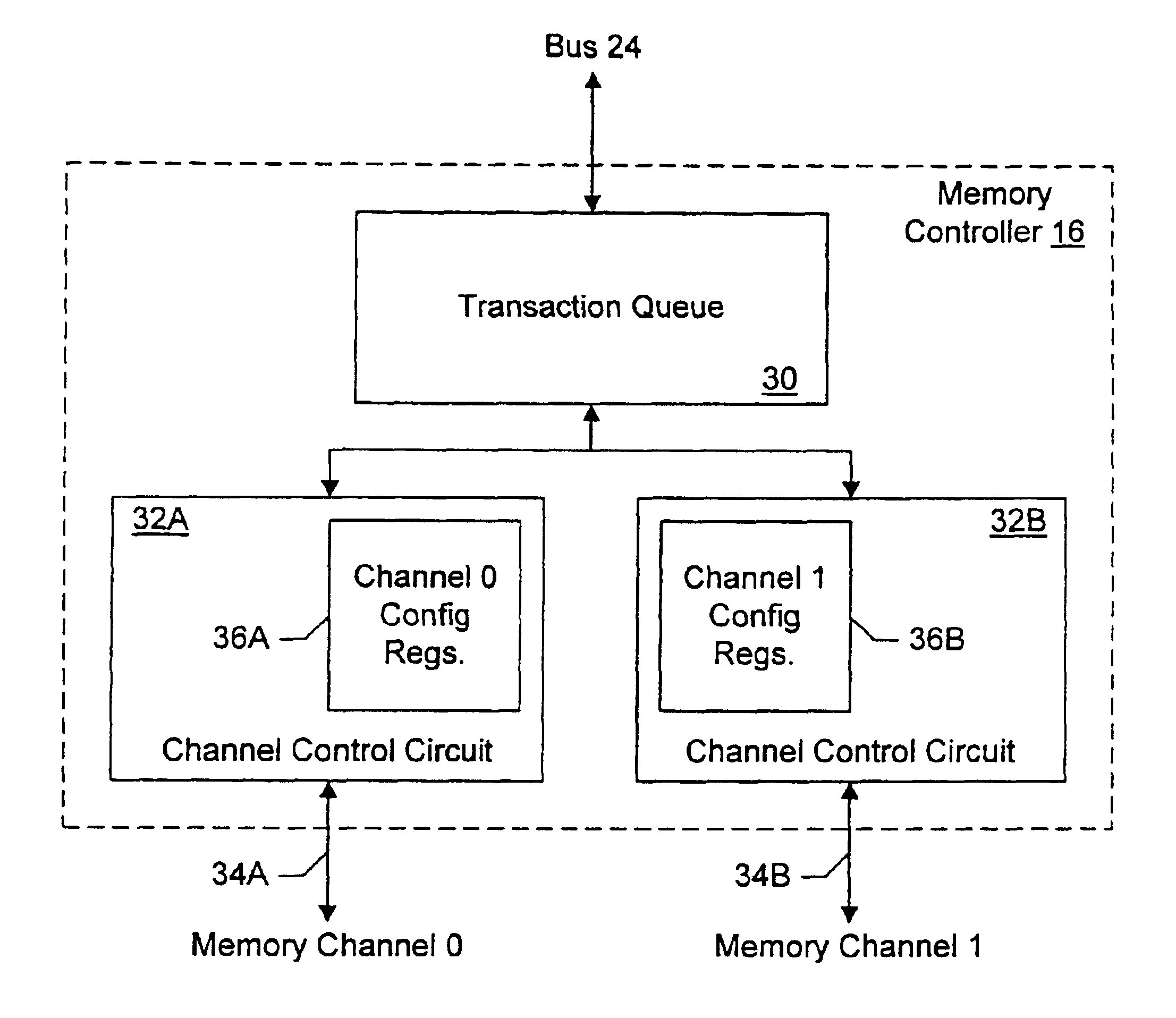

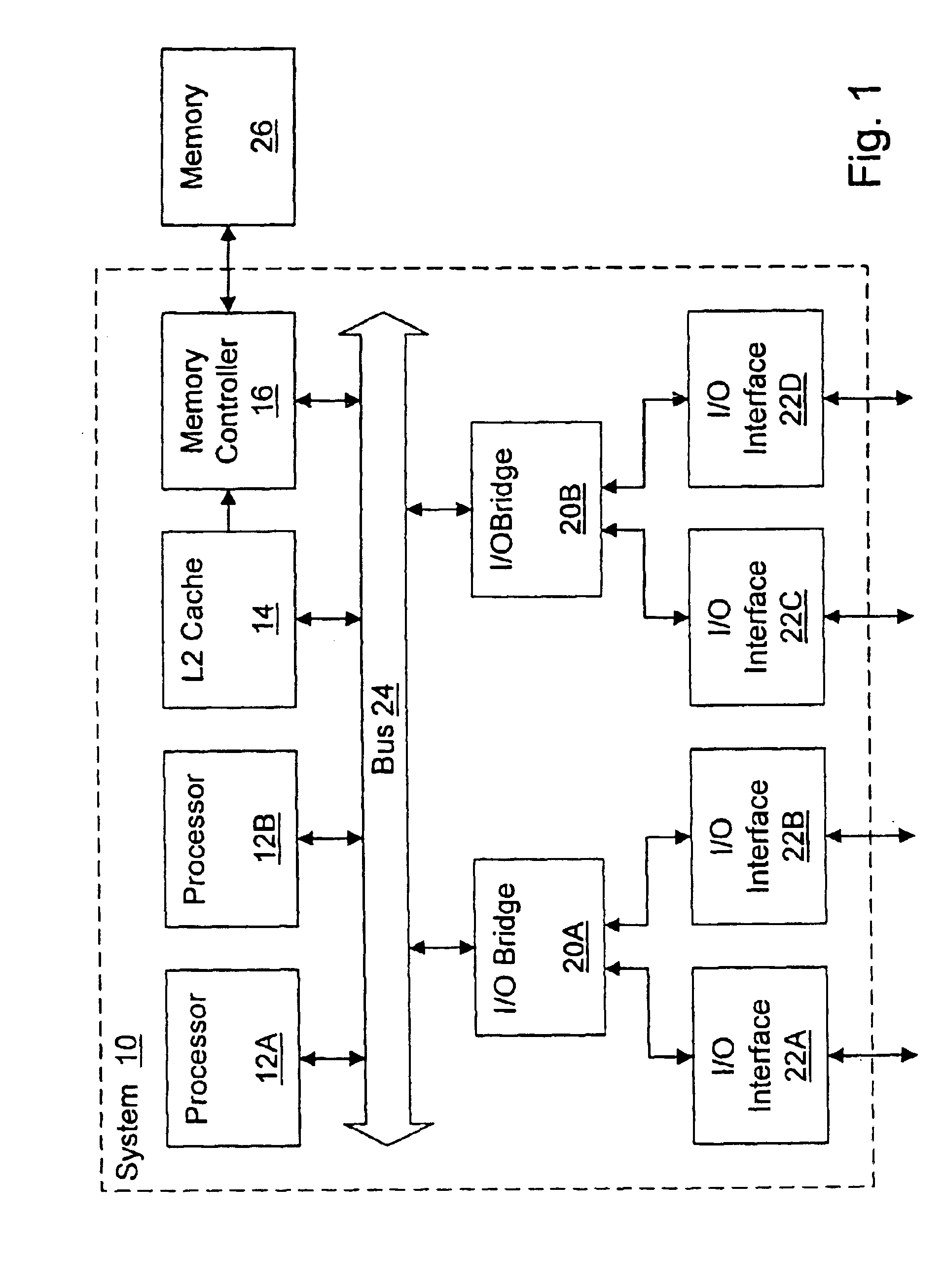

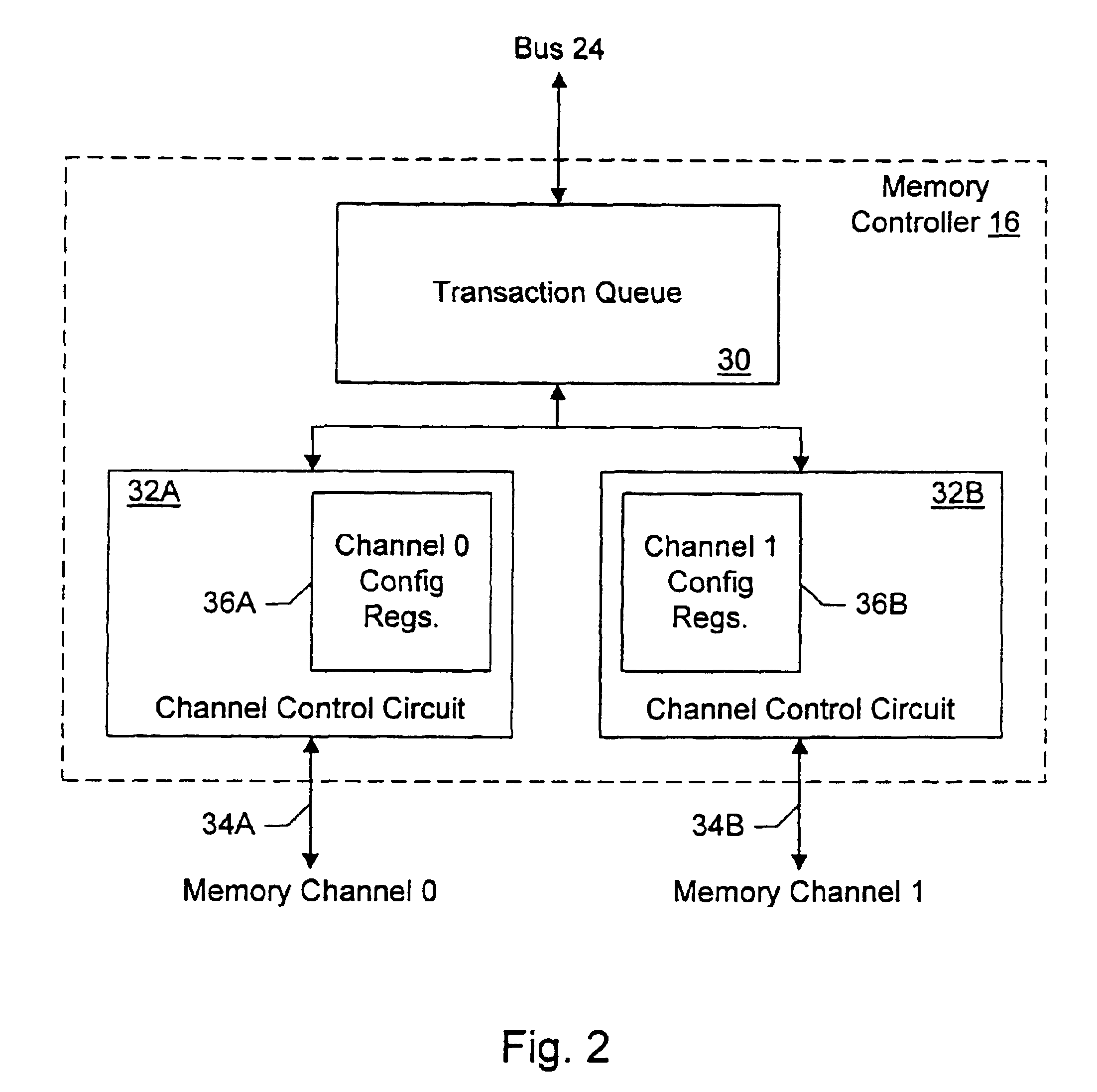

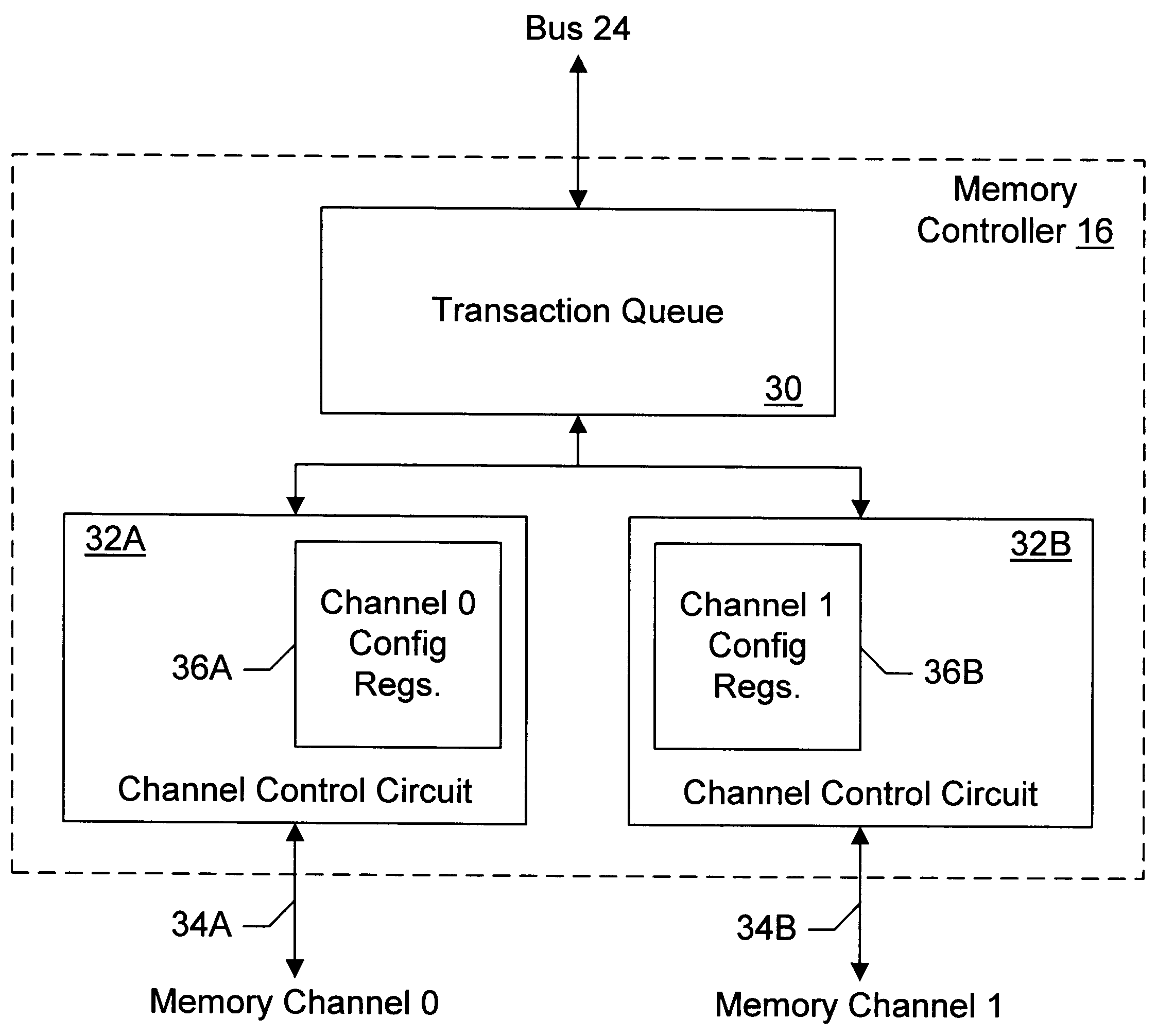

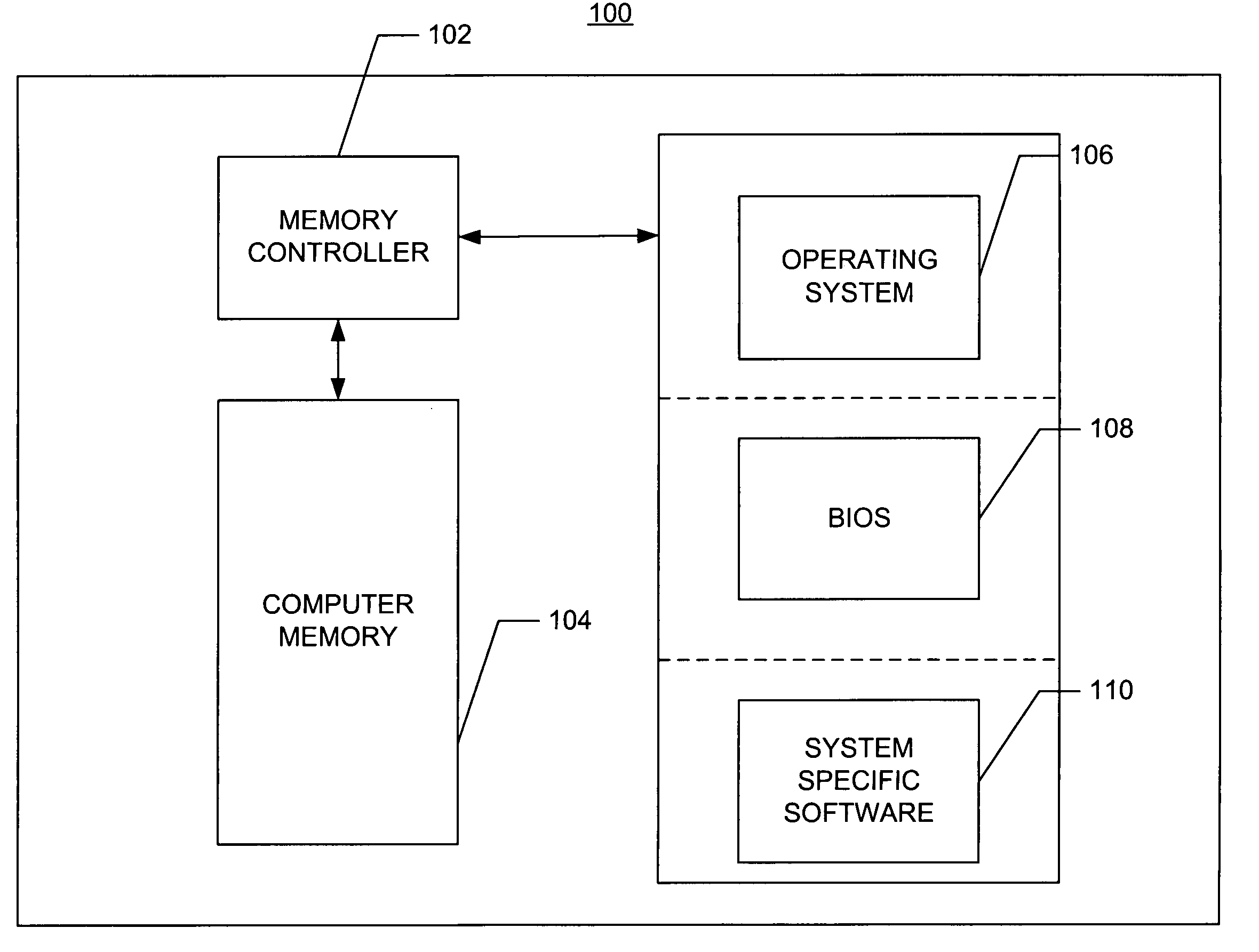

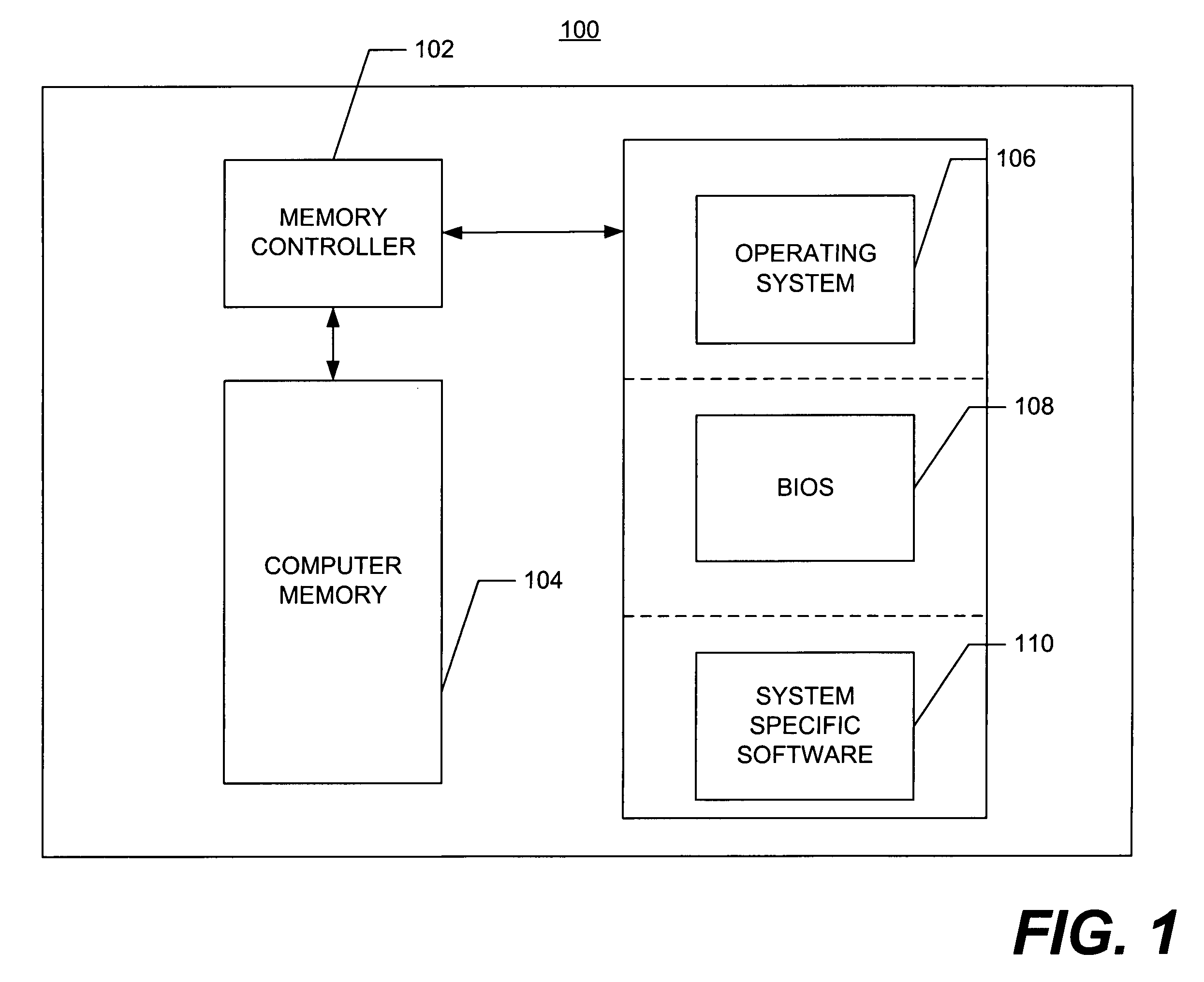

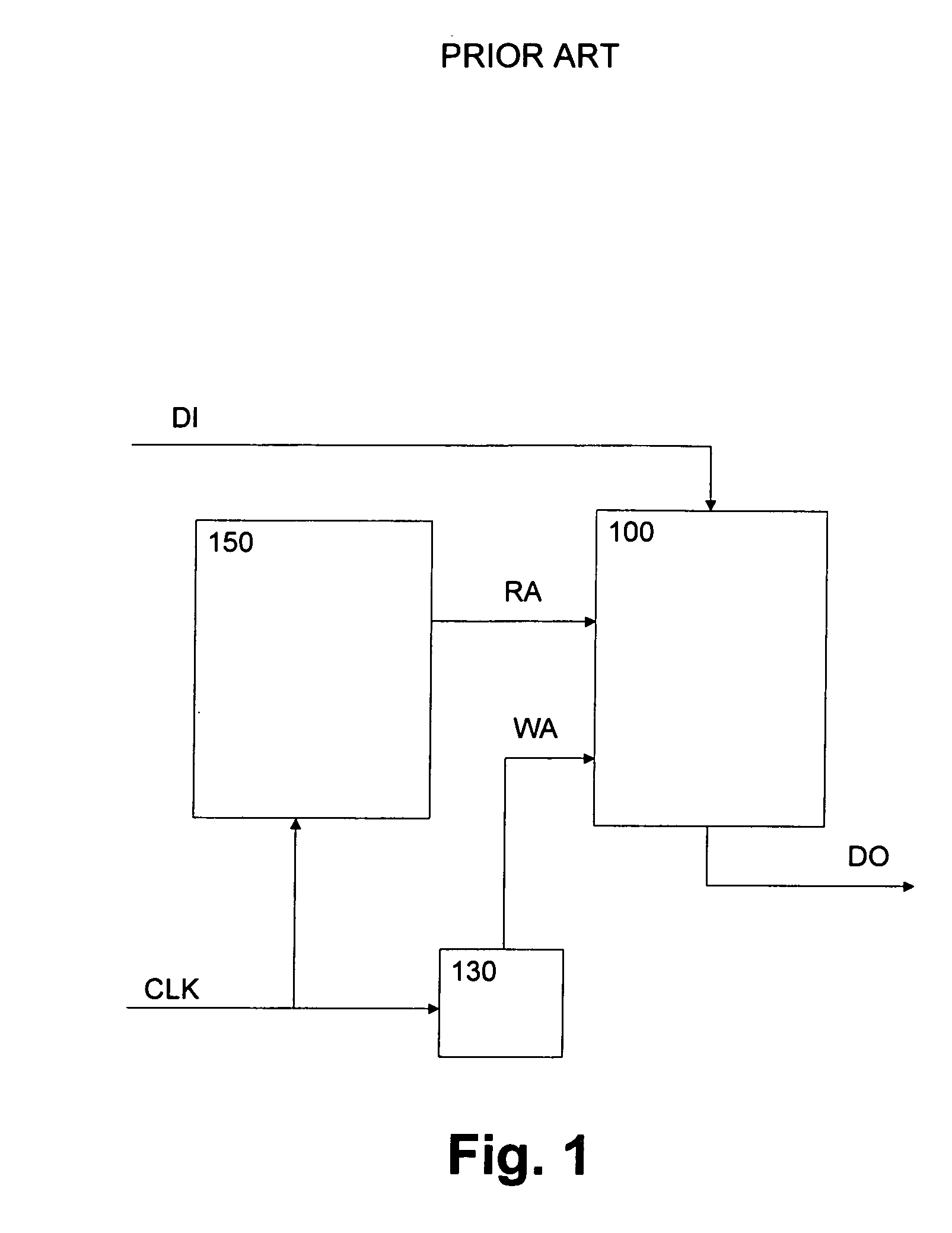

A memory controller provides programmable flexibility, via one or more configuration registers, for the configuration of the memory. The memory may be optimized for a given application by programming the configuration registers. For example, in one embodiment, the portion of the address of a memory transaction used to select a storage location for access in response to the memory transaction may be programmable. In an implementation designed for DRAM, a first portion may be programmably selected to form the row address and a second portion may be programmably selected to form the column address. Additional embodiments may further include programmable selection of the portion of the address used to select a bank. Still further, interleave modes among memory sections assigned to different chip selects and among two or more channels to memory may be programmable, in some implementations. Furthermore, the portion of the address used to select between interleaved memory sections or interleaved channels may be programmable. One particular implementation may include all of the above programmable features, which may provide a high degree of flexibility in optimizing the memory system.

Owner:AVAGO TECH INT SALES PTE LTD
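To make the programmable address mapping in the abstract above more concrete, here is a minimal sketch in which each DRAM field (row, bank, column) is taken from a configurable slice of the transaction address. The class names, field widths and bit positions are hypothetical; the abstract does not specify a register layout.

```python
from dataclasses import dataclass


@dataclass
class FieldSelect:
    """Hypothetical register field: which address bits feed one DRAM field."""
    lsb: int    # position of the lowest address bit used
    width: int  # number of address bits used

    def extract(self, addr: int) -> int:
        return (addr >> self.lsb) & ((1 << self.width) - 1)


@dataclass
class MemConfig:
    """Hypothetical set of configuration registers."""
    column: FieldSelect
    bank: FieldSelect
    row: FieldSelect


def decode(addr: int, cfg: MemConfig) -> dict:
    """Split an address into row, bank and column as the registers dictate."""
    return {
        "row": cfg.row.extract(addr),
        "bank": cfg.bank.extract(addr),
        "column": cfg.column.extract(addr),
    }


# Two assumed programmings: bank bits above the column, or bank bits at the bottom.
cfg_a = MemConfig(column=FieldSelect(0, 10), bank=FieldSelect(10, 2), row=FieldSelect(12, 14))
cfg_b = MemConfig(column=FieldSelect(2, 10), bank=FieldSelect(0, 2), row=FieldSelect(12, 14))
```

Reprogramming the registers changes which accesses fall in the same bank or row without changing the hardware, which is the kind of flexibility the abstract describes.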

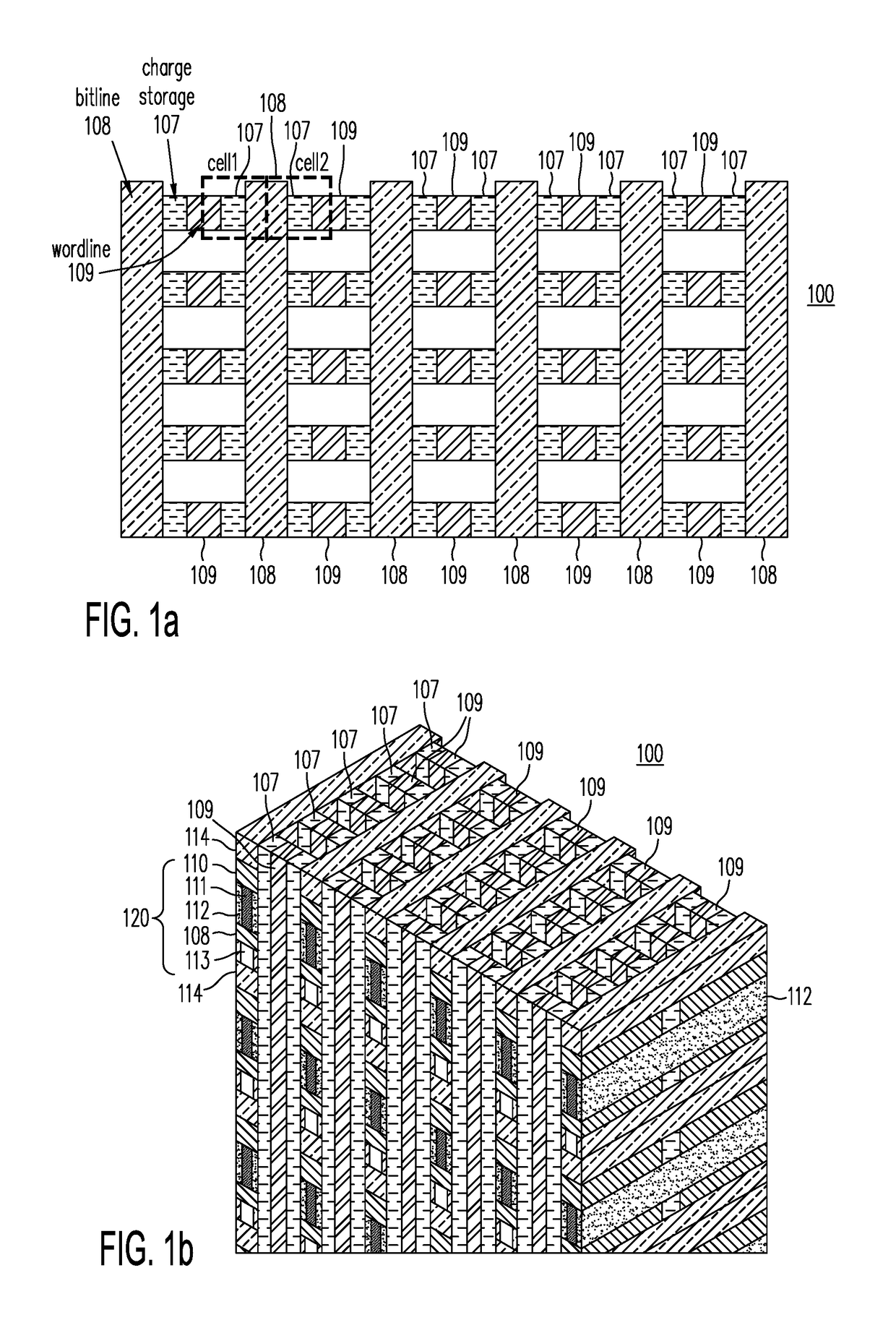

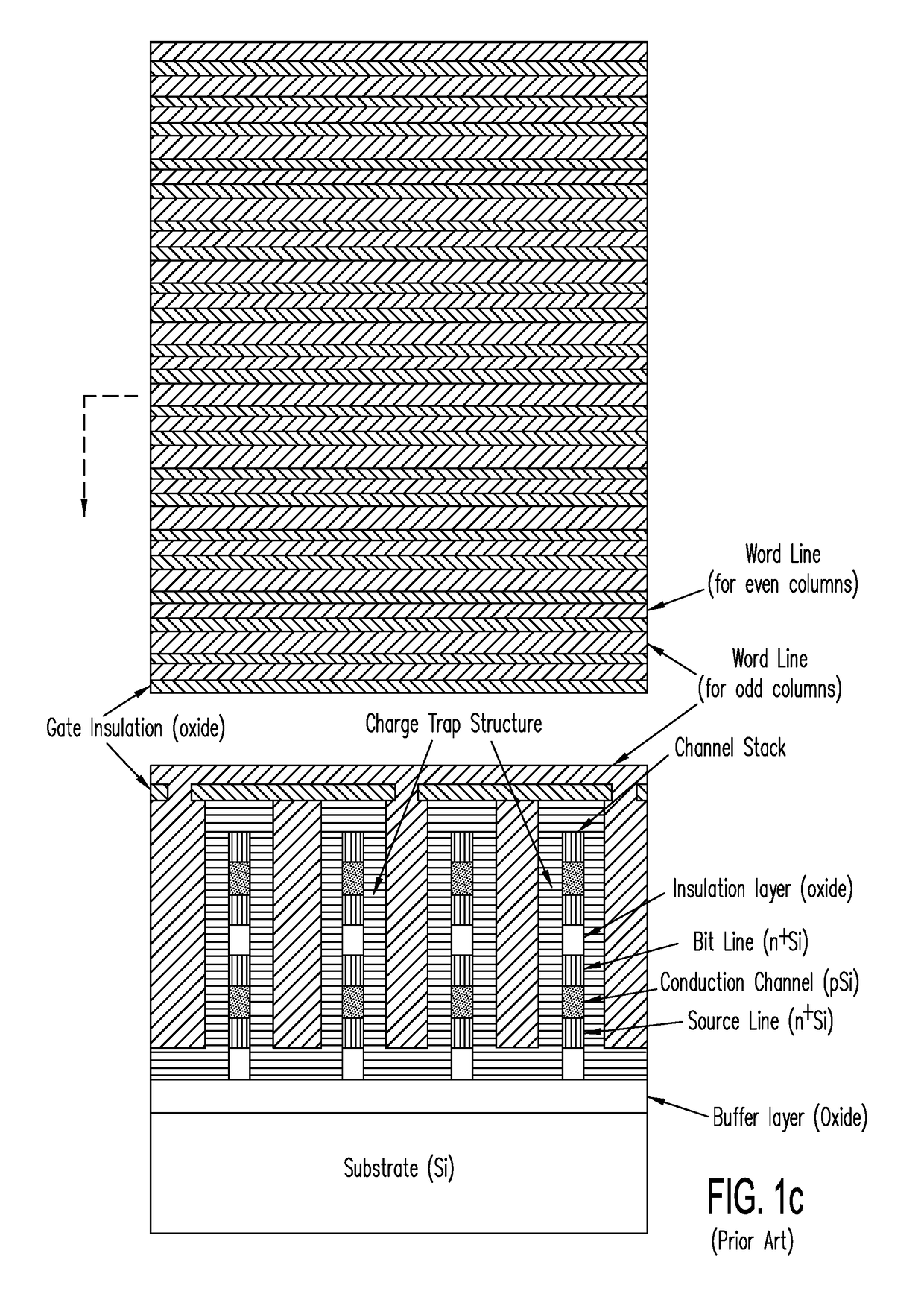

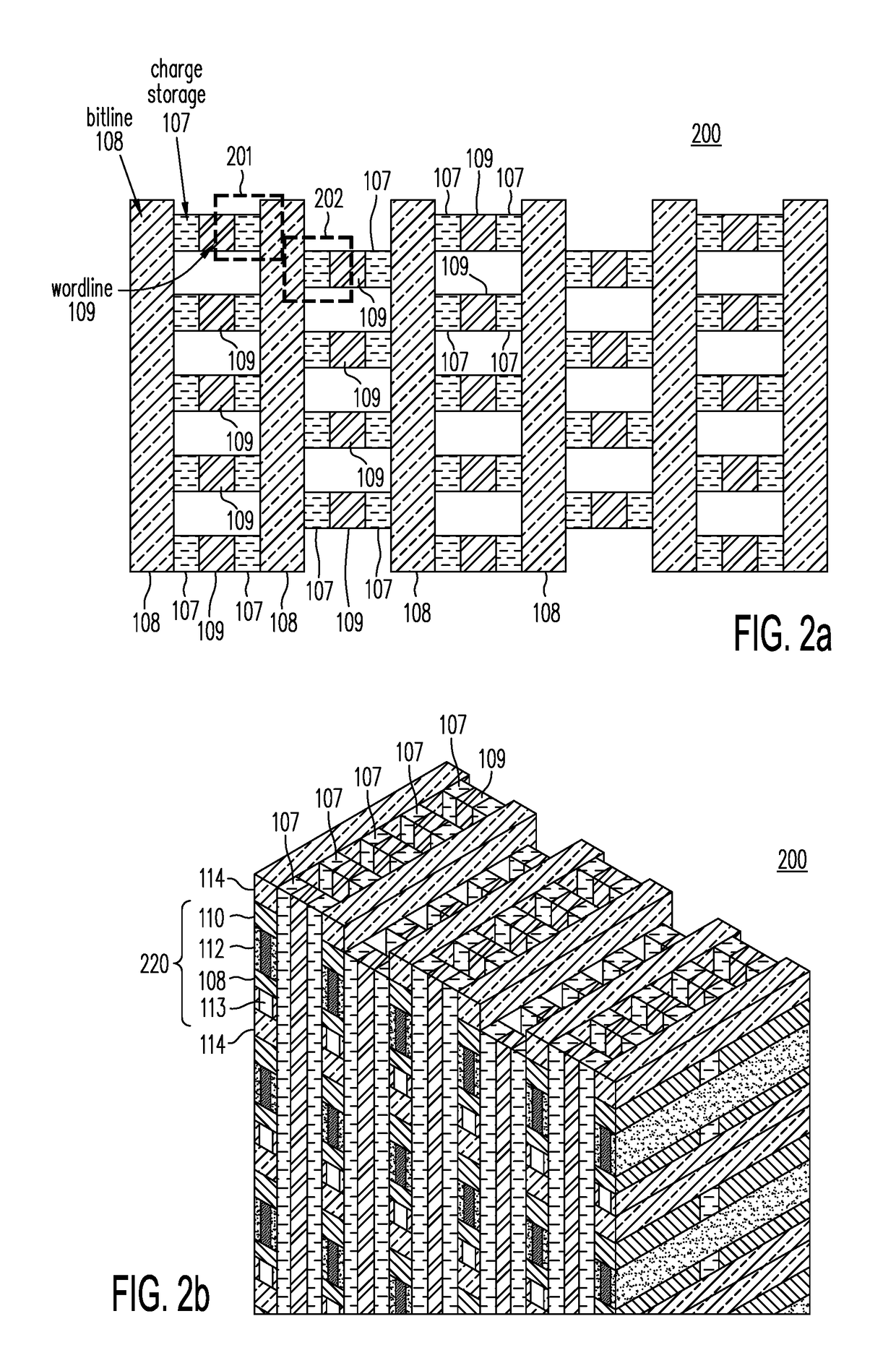

Staggered Word Line Architecture for Reduced Disturb in 3-Dimensional NOR Memory Arrays

Active | US20190067327A1 | Sacrificing memory cell density | Increase distance | Solid-state devices | Read-only memories | Bit line | Computer architecture

A staggered memory cell architecture staggers memory cells on opposite sides of a shared bit line, preserving memory cell density while increasing the distance between such memory cells and thereby reducing the possibility of a disturb. In one implementation, the memory cells along a first side of a shared bit line are connected to a set of global word lines provided underneath the memory structure, while the memory cells on the other side of the shared bit line, which are staggered relative to the memory cells on the first side, are connected to global word lines above the memory structure.

Owner:SUNRISE MEMORY CORP

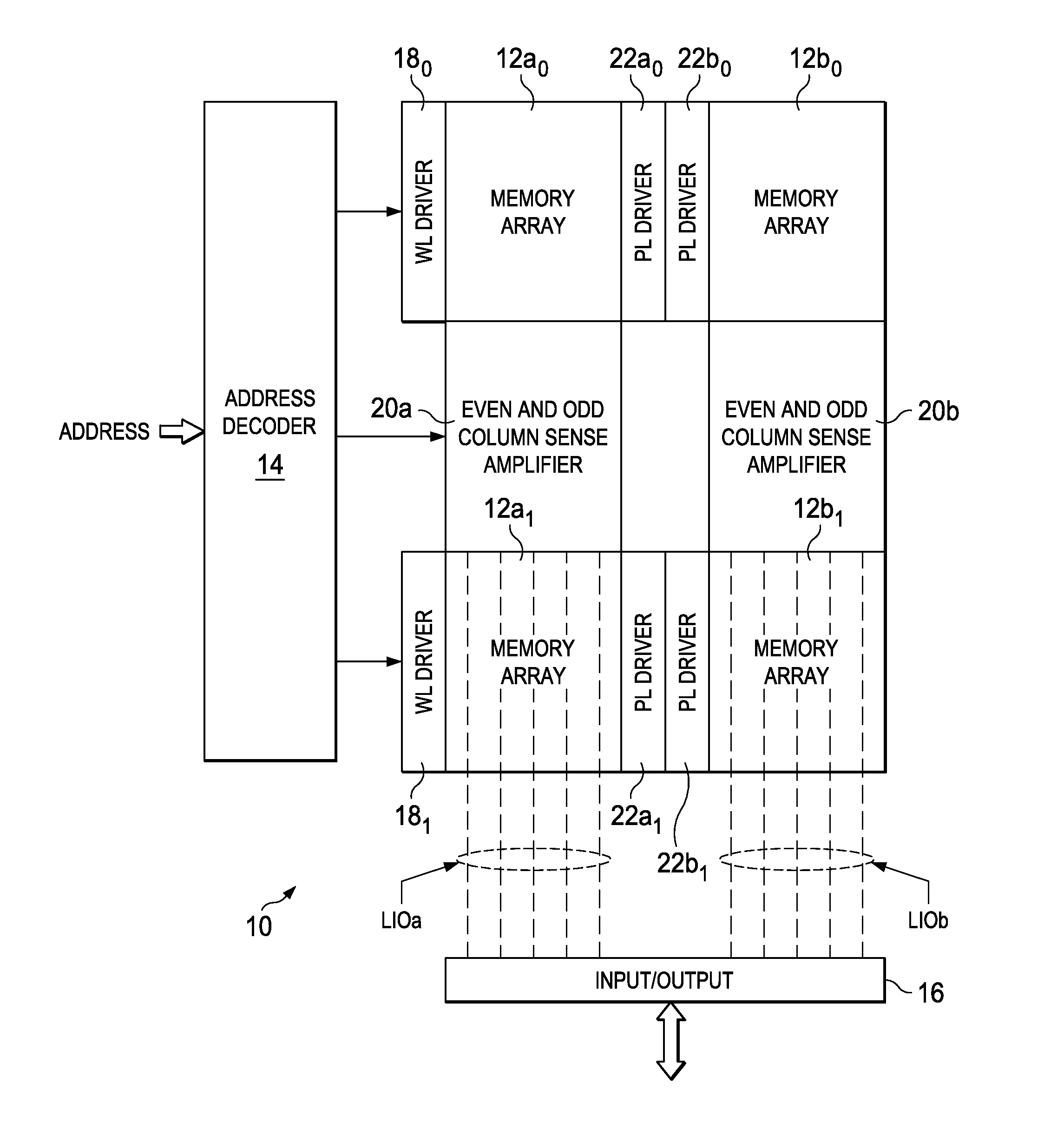

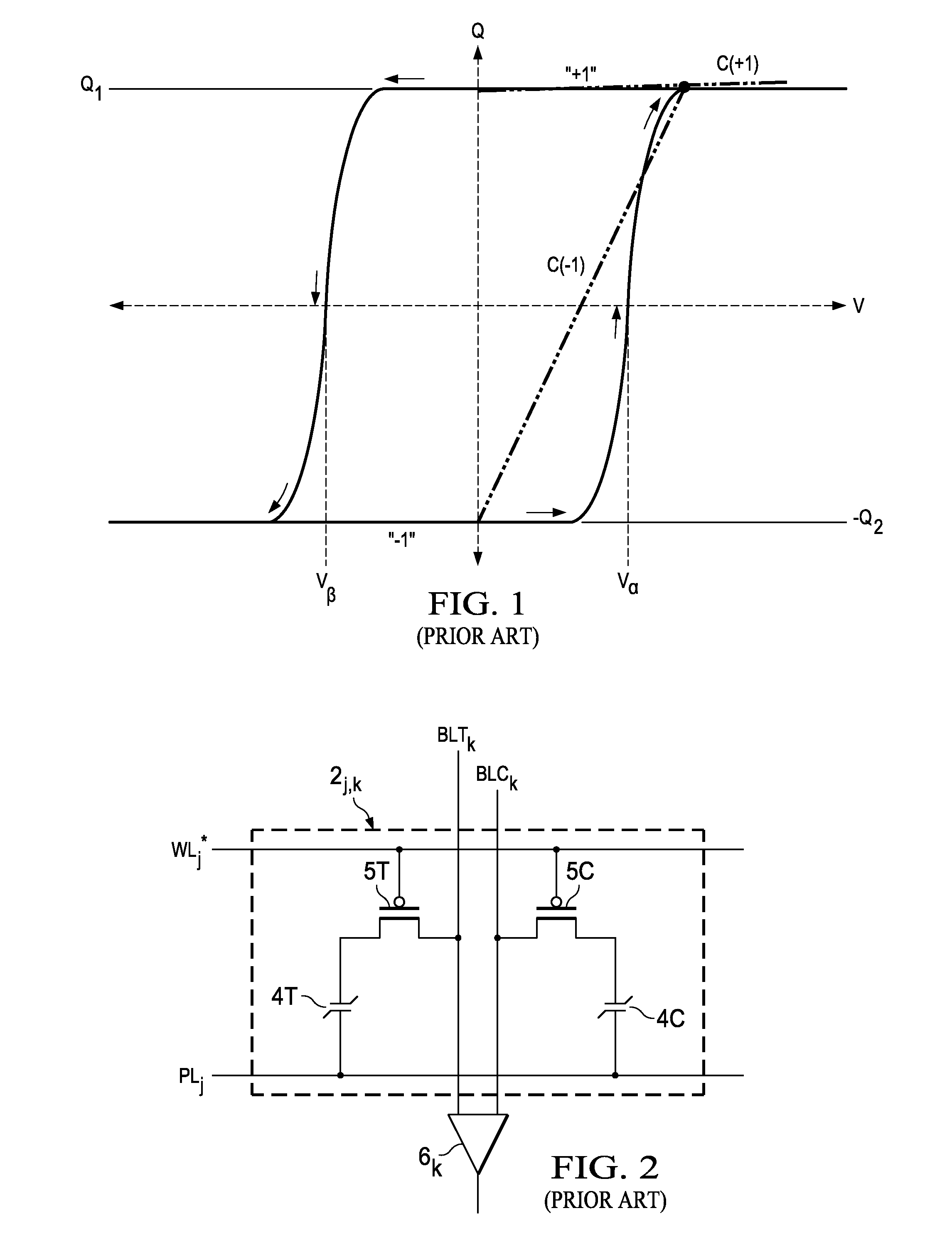

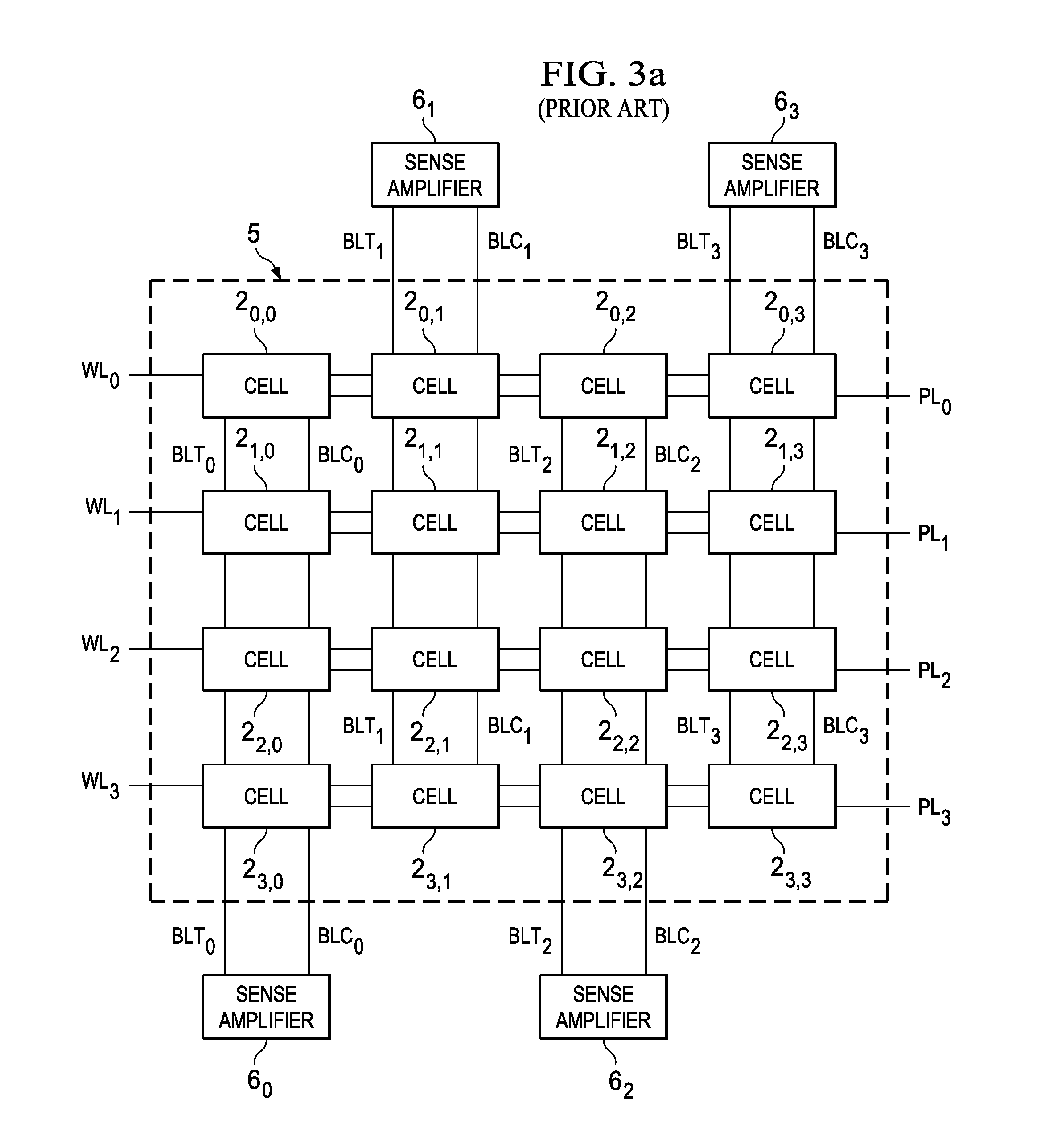

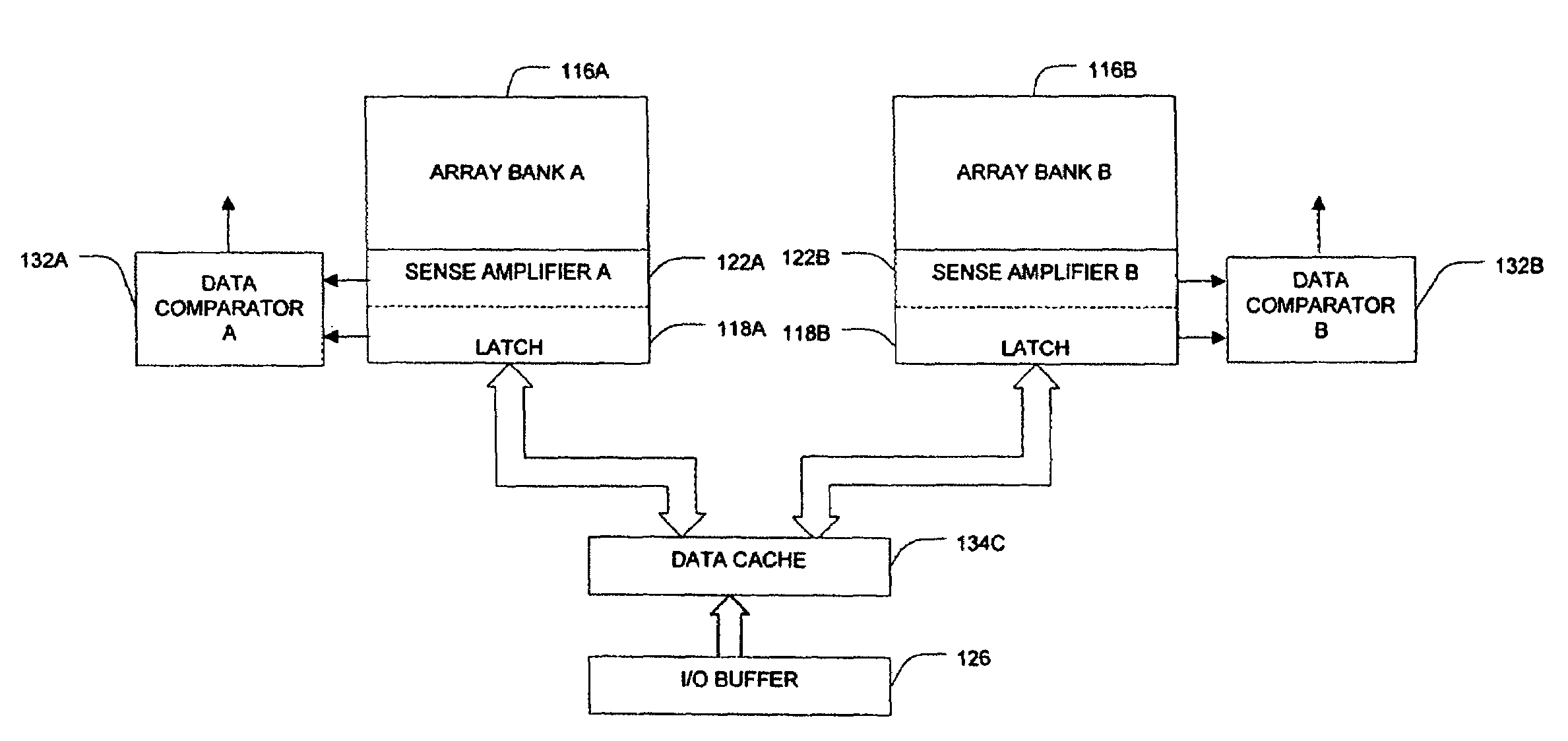

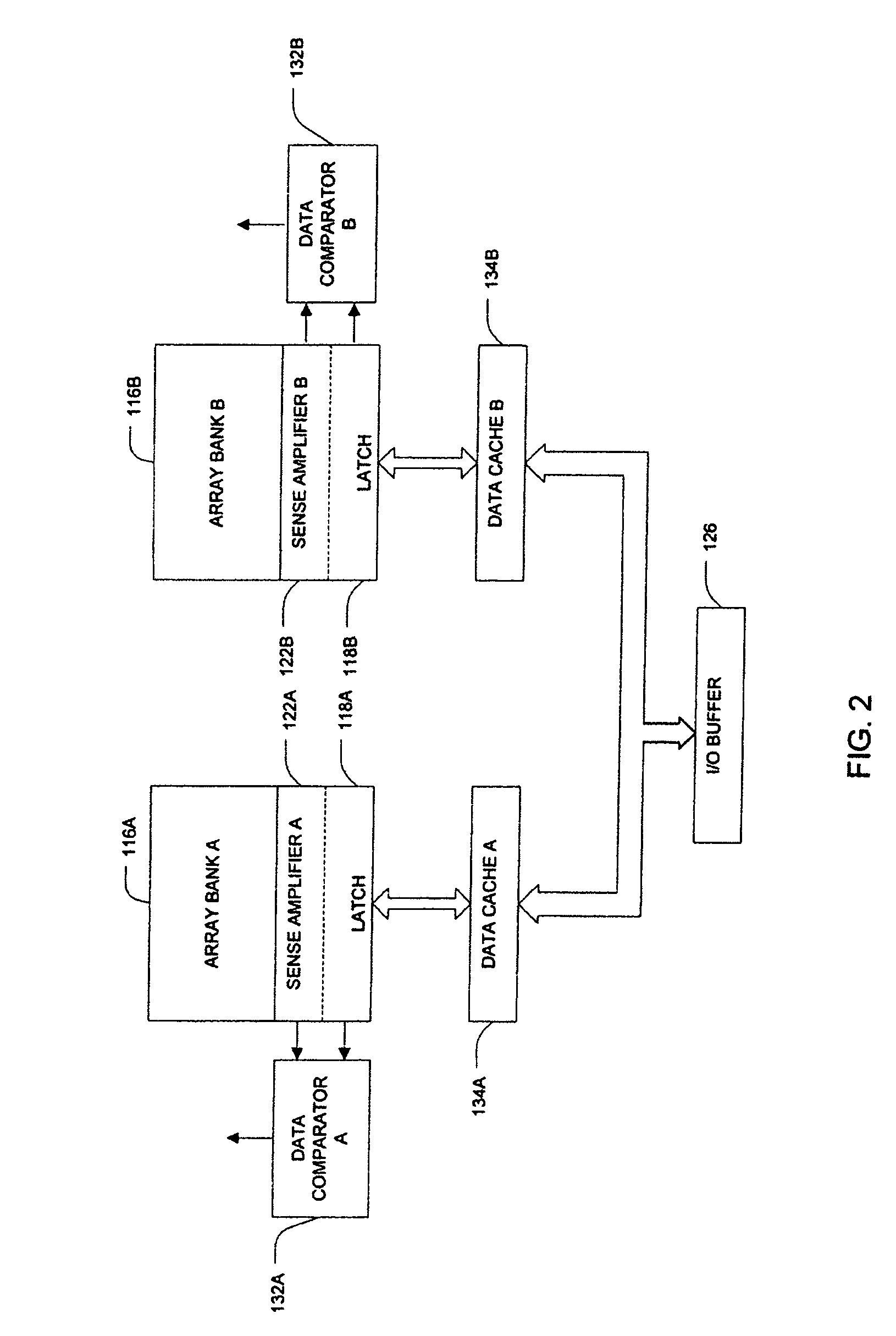

Interleaved Bit Line Architecture for 2T2C Ferroelectric Memories

Inactive | US20120307545A1 | Reduce coupling | Reduce in quantity | Digital storage | Audio power amplifier | Multiplexer

A ferroelectric memory with interleaved pairs of ferroelectric memory cells of the two-transistor, two-capacitor (2T2C) type. Each memory cell in a given pair is constructed as first and second portions, each portion including a transistor and a ferroelectric capacitor. Within each pair, a first portion of a second memory cell is physically located between the first and second portions of the first memory cell. As a result, complementary bit lines for adjacent columns are interleaved with one another. Each sense amplifier is associated with a multiplexer, so that the adjacent columns of the interleaved memory cells are supported by a single sense amplifier. Noise coupling among the bit lines is reduced, and the sense amplifiers can be placed along one side of the array, reducing the number of dummy cells required to eliminate edge cell effects.

Owner:TEXAS INSTR INC

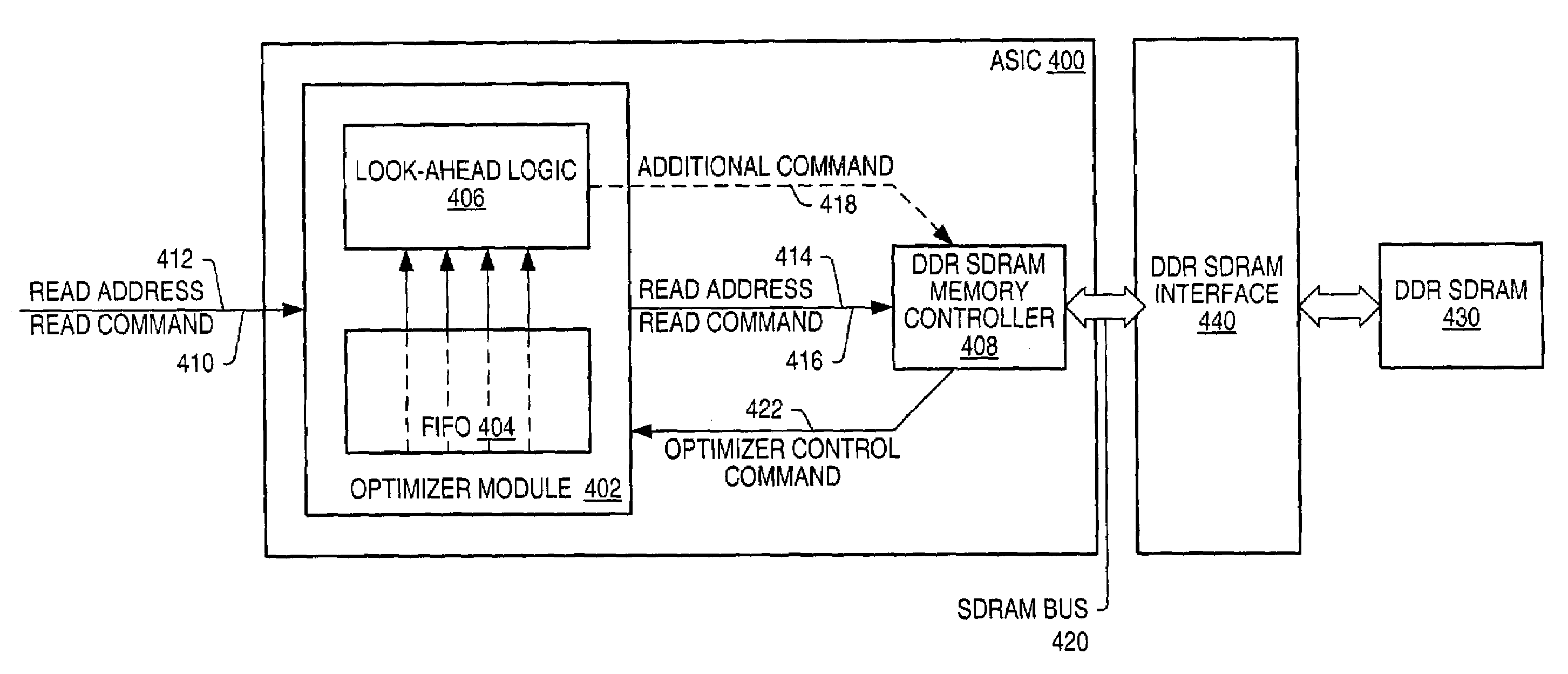

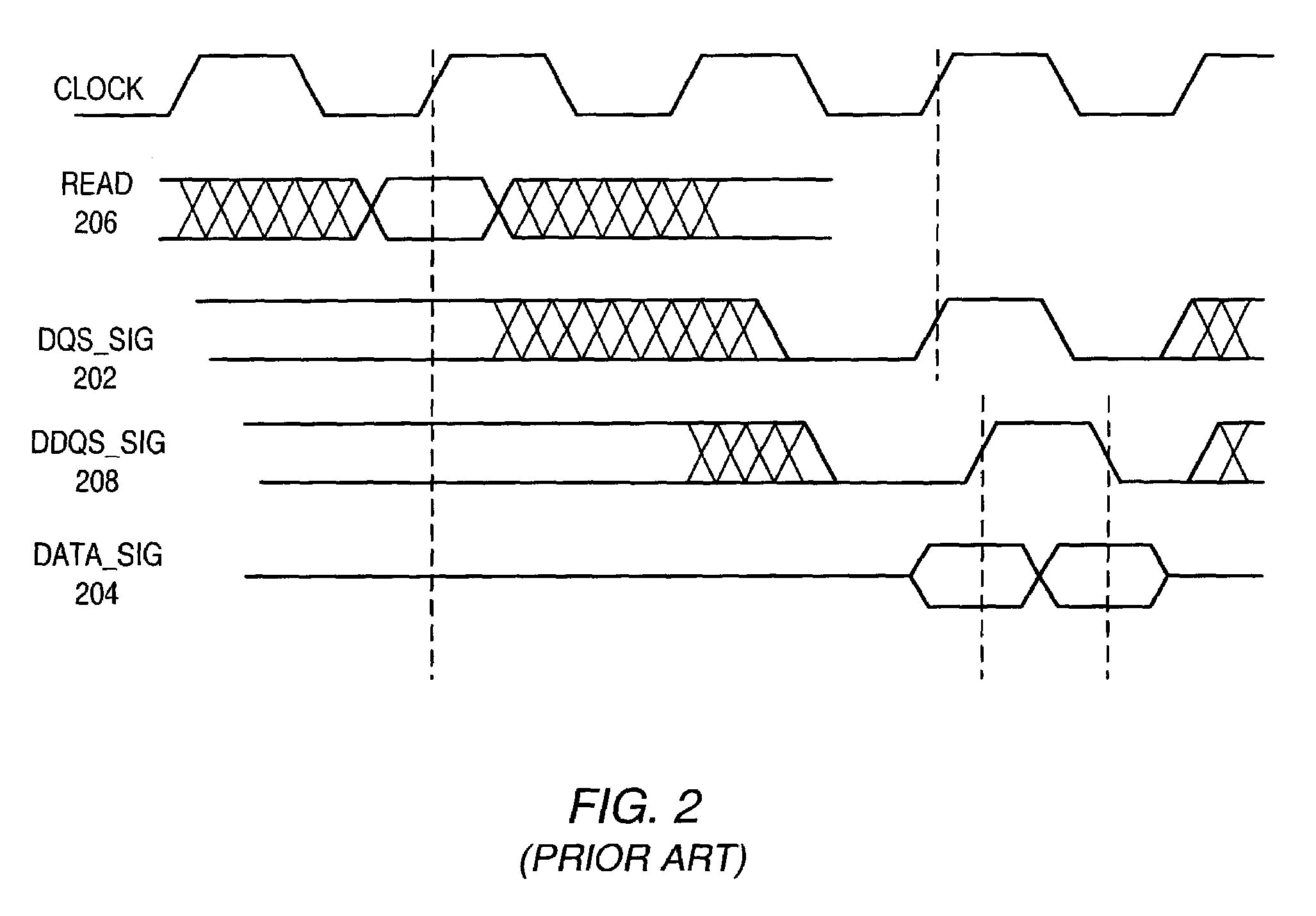

Method for optimizing utilization of a double-data-rate-SDRAM memory system

Active | US7089369B2 | Reduce in quantity | Memory addressing/allocation/relocation | Digital storage | Double data rate | Interleaved memory

A predictive memory performance optimizing unit for use with an interleaved memory, for example a DDR SDRAM memory, and suitable for use in a computer graphics system, among others, is described. The unit maintains a queue of pending requests for data from the memory, and prioritizes precharging and activating interleaves with pending requests. Interleaves which are in a ready state may be accessed independently of the precharging and activation of non-ready interleaves. The unit utilizes idle cycles occurring between consecutive requests to activate interleaves with pending requests.

Owner:ORACLE INT CORP

Interleaved memory program and verify method, device and system

Owner:ROUND ROCK RES LLC

Various methods and apparatus for configurable mapping of address regions onto one or more aggregate targets

Inactive | US20080320255A1 | Memory addressing/allocation/relocation | Digital computer details | Intellectual property | Interleaved memory

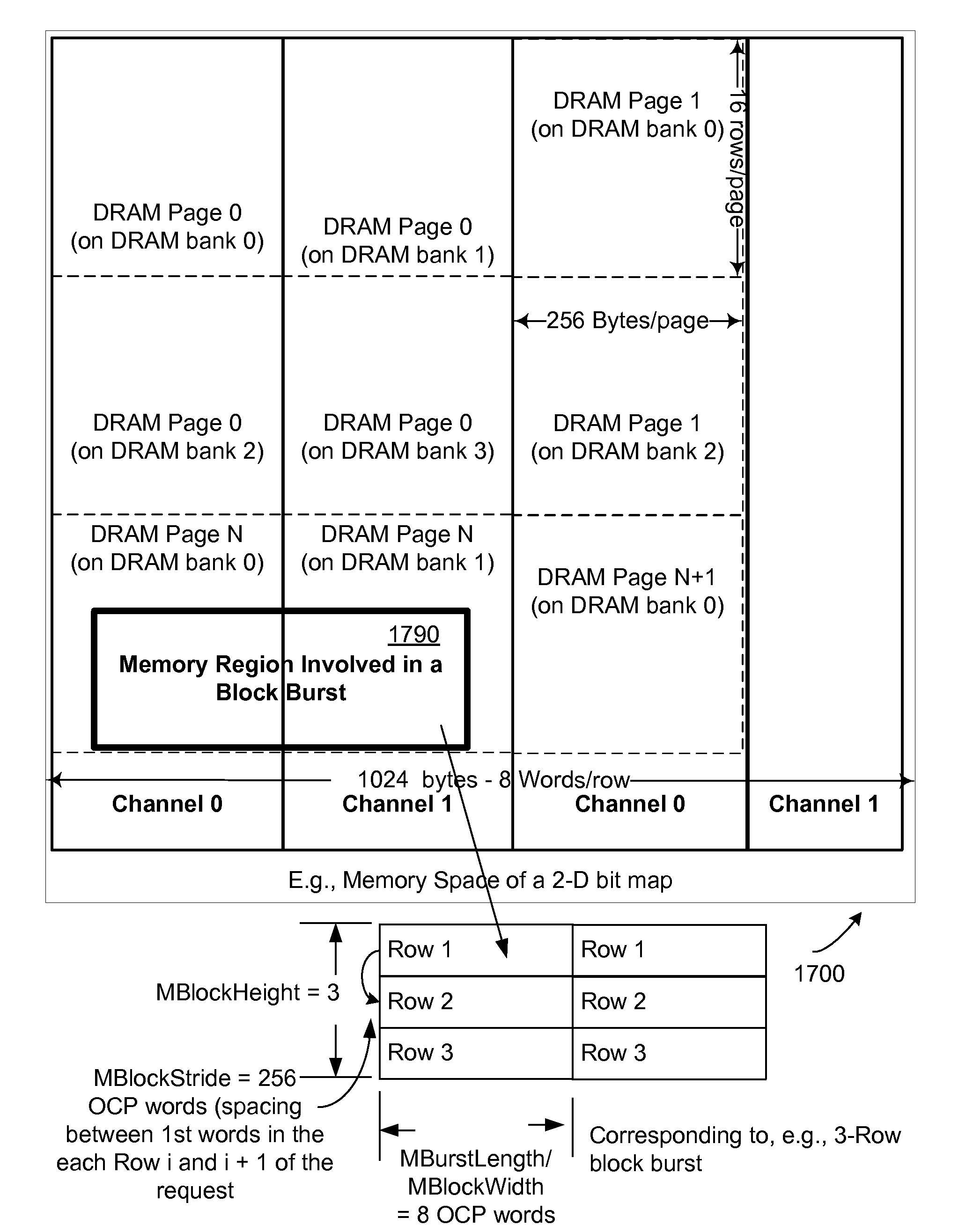

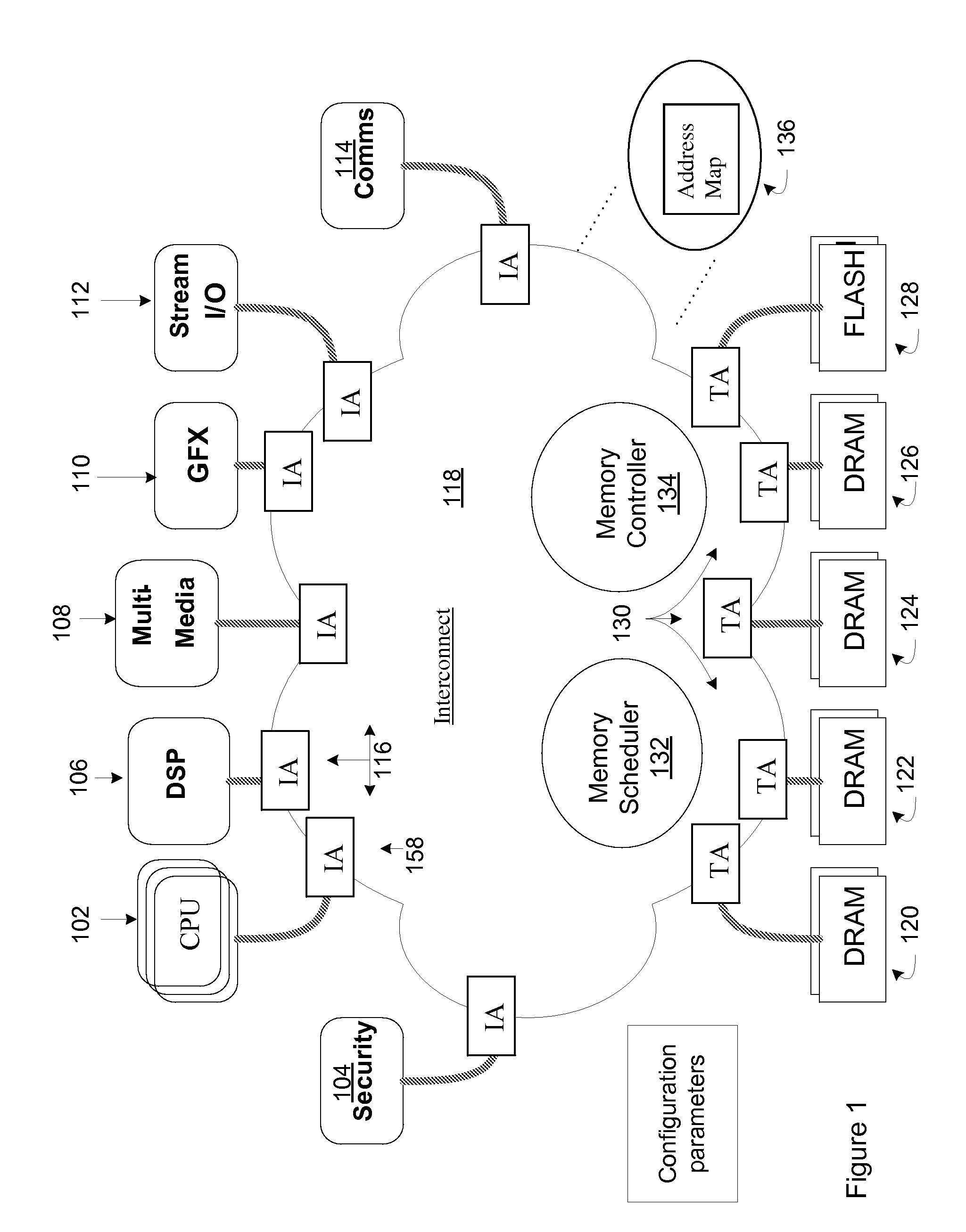

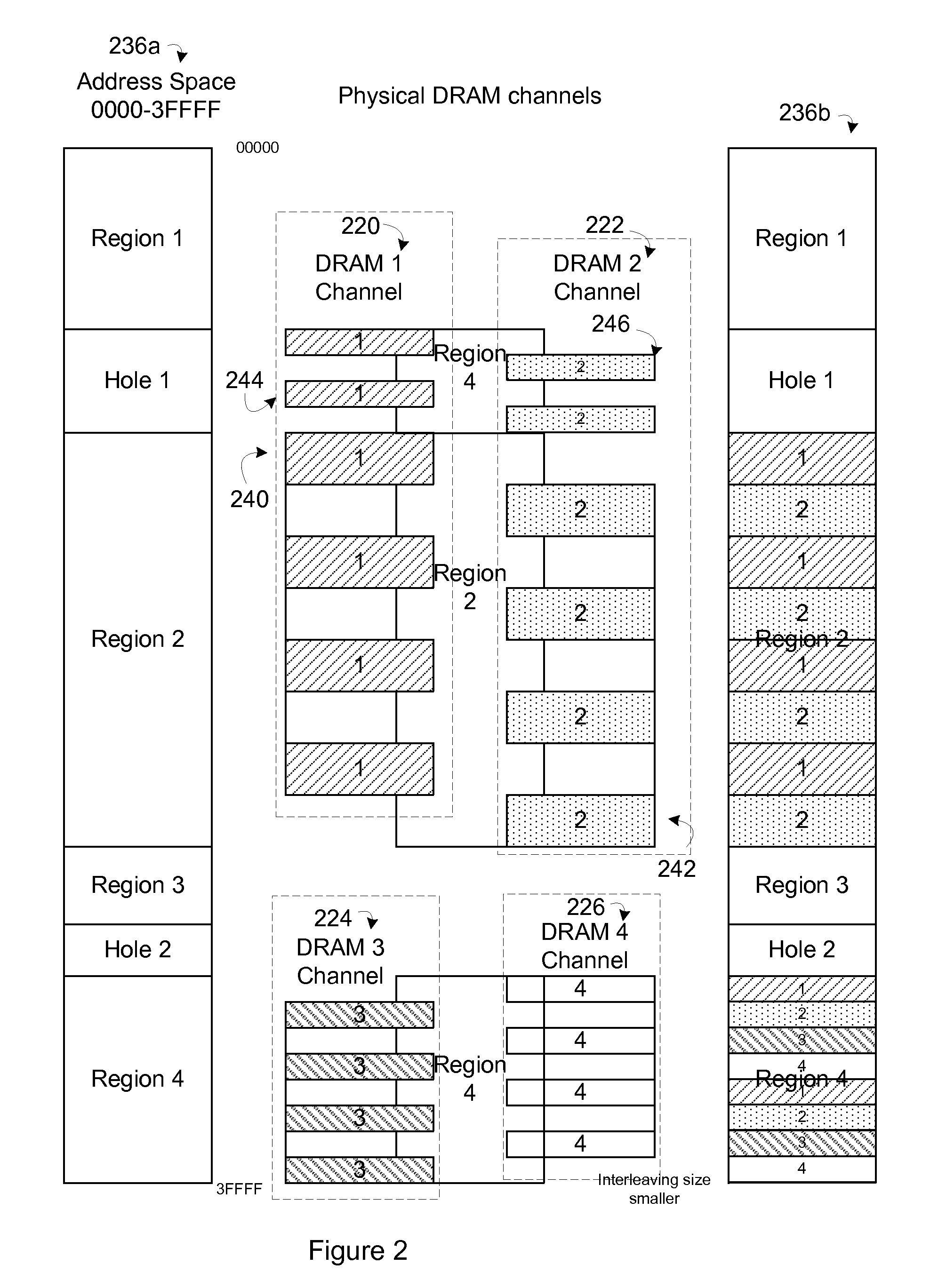

An interconnect for an integrated circuit communicating transactions between initiator Intellectual Property (IP) cores and multiple target IP cores coupled to the interconnect is generally described. The interconnect routes the transactions between the target IP cores and initiator IP cores in the integrated circuit. A first aggregate target of the target IP cores includes two or more memory channels that are interleaved in an address space for the first aggregate target in the address map. Each memory channel is divided up in defined memory interleave segments and then interleaved with memory interleave segments from other memory channels. An address map is divided up into two or more regions. Each interleaved memory interleave segment is assigned to at least one of those regions and populates the address space for that region, and parameters associated with the regions and memory interleave segments are configurable.

Owner:META PLATFORMS TECH LLC
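The segment-based channel interleaving described in the abstract above can be sketched roughly as follows. The `Region` class, its field names and the example sizes are assumptions made for illustration; the abstract only states that regions and interleave segments are configurable.

```python
from dataclasses import dataclass


@dataclass
class Region:
    """Hypothetical address-map region interleaved over several memory channels."""
    base: int
    size: int
    num_channels: int
    segment_size: int  # bytes per interleave segment (a configurable parameter)

    def route(self, addr: int):
        """Return (channel, channel-local address) for an address in this region."""
        if not (self.base <= addr < self.base + self.size):
            raise ValueError("address outside region")
        off = addr - self.base
        segment = off // self.segment_size
        channel = segment % self.num_channels
        local = (segment // self.num_channels) * self.segment_size + off % self.segment_size
        return channel, local


# Assumed example: a 16 KiB region spread over two channels in 1 KiB segments.
region = Region(base=0x1000, size=0x4000, num_channels=2, segment_size=0x400)
```

Consecutive segments alternate between the channels, and each channel sees its own segments packed back to back.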

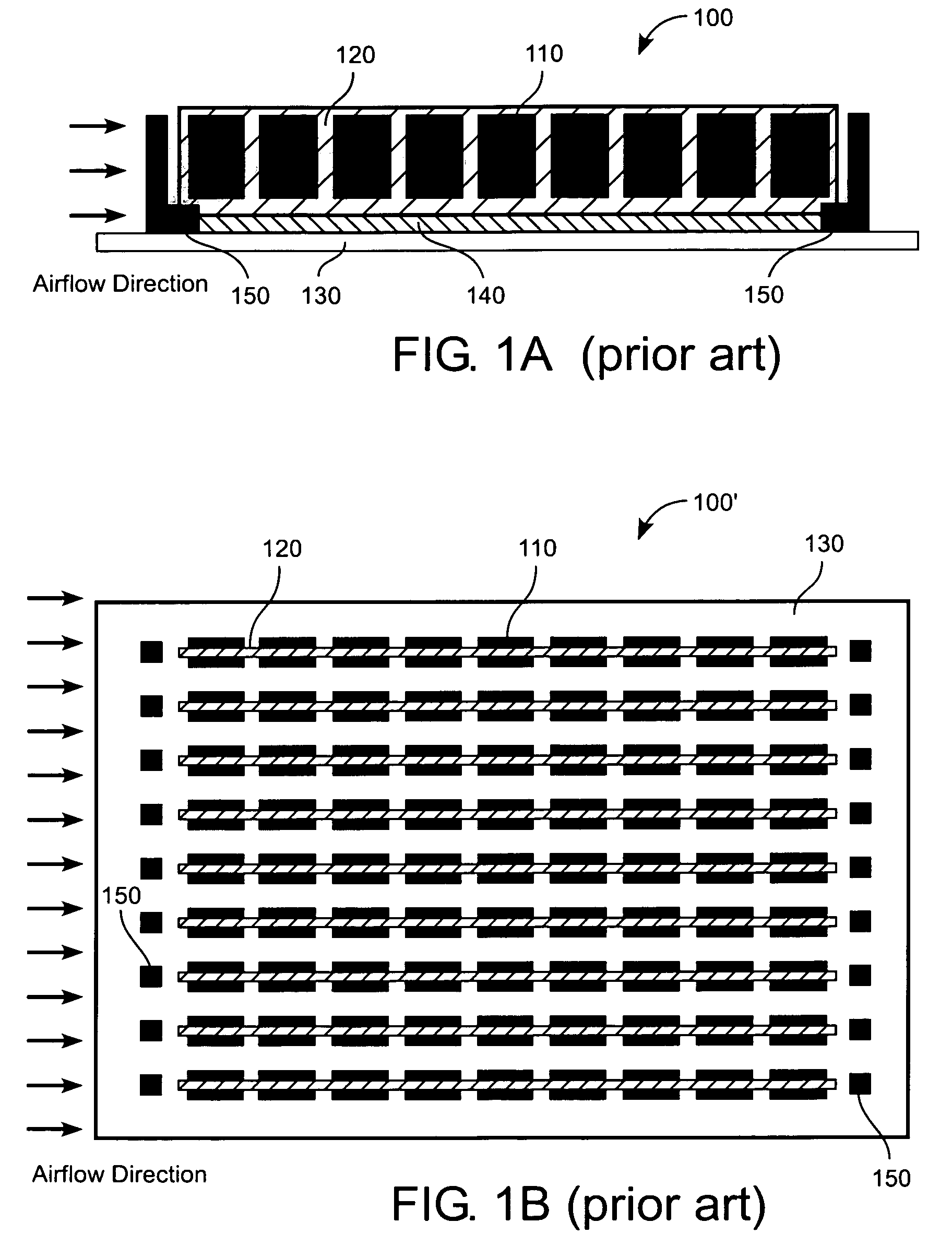

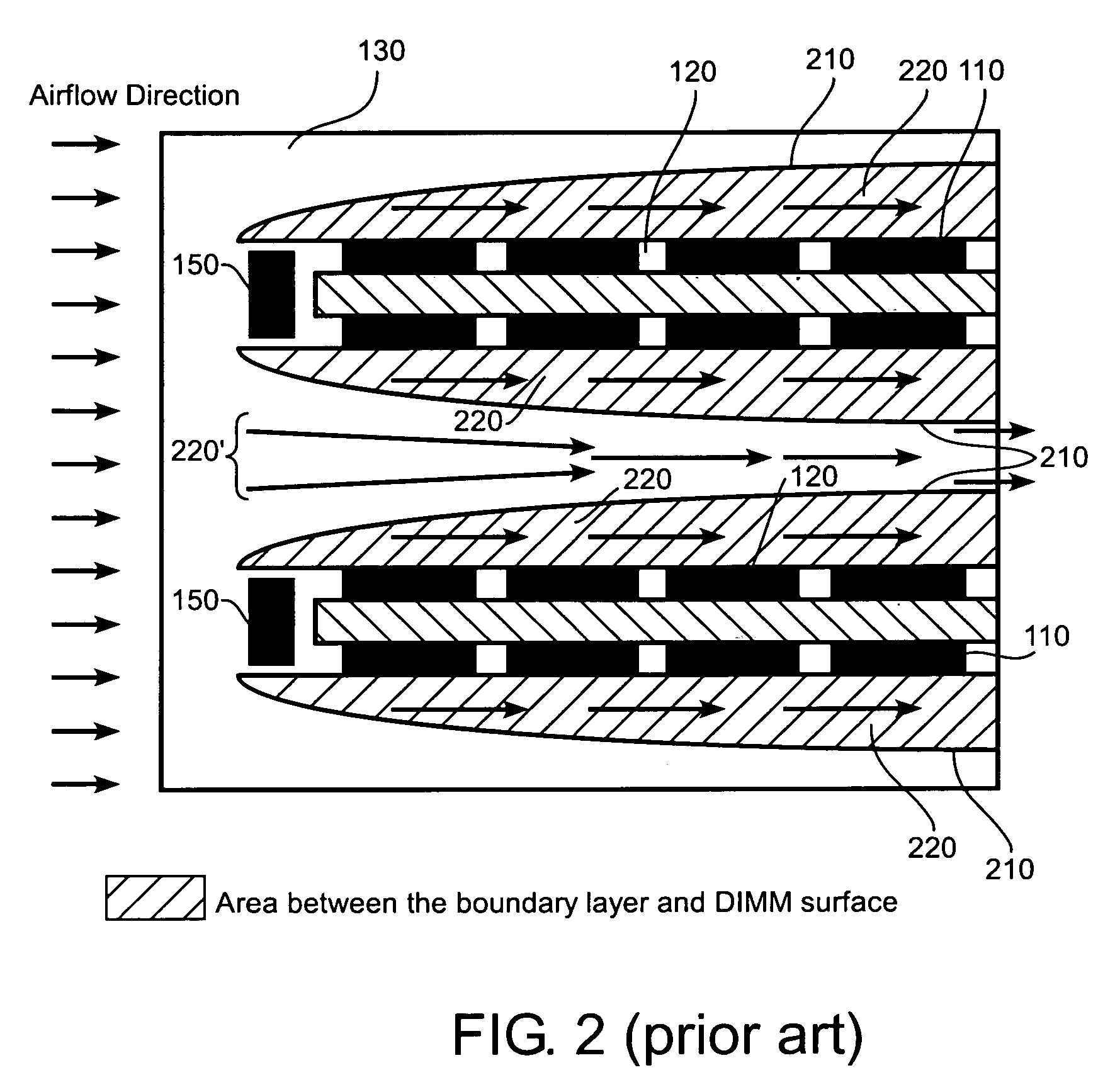

Interleaved memory heat sink

Active | US7233501B1 | Digital data processing details | Semiconductor/solid-state device details | Control system | Interleaved memory

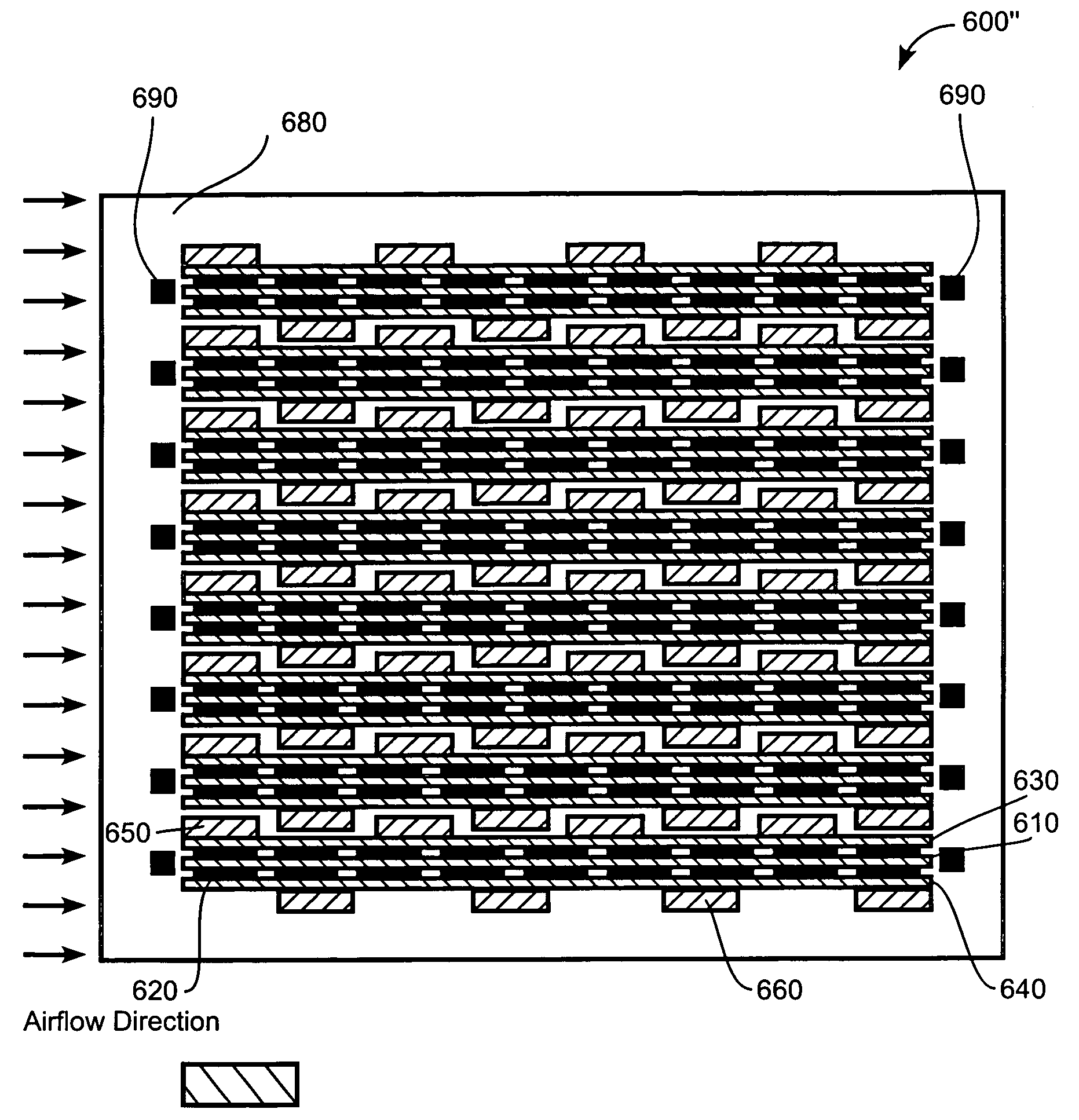

A heat sink comprises a heat sink base and a row of heat sink extensions that are attached to one side of the heat sink base. An interleaved heat sink structure includes a first row and a second row of heat sink extensions. The first row and the second row of heat sink extensions are coupled respectively to first and second heat sink bases. The first and the second heat sink bases are thermally coupled to a first plurality of memory packages and a second plurality of memory packages, respectively. The first row of heat sink extensions is parallel to and at least partially interleaved with the second row of heat sink extensions. A memory heat dissipation control system and a method for assembling a memory part that includes a DIMM and two heat sinks are also described.

Owner:ORACLE INT CORP

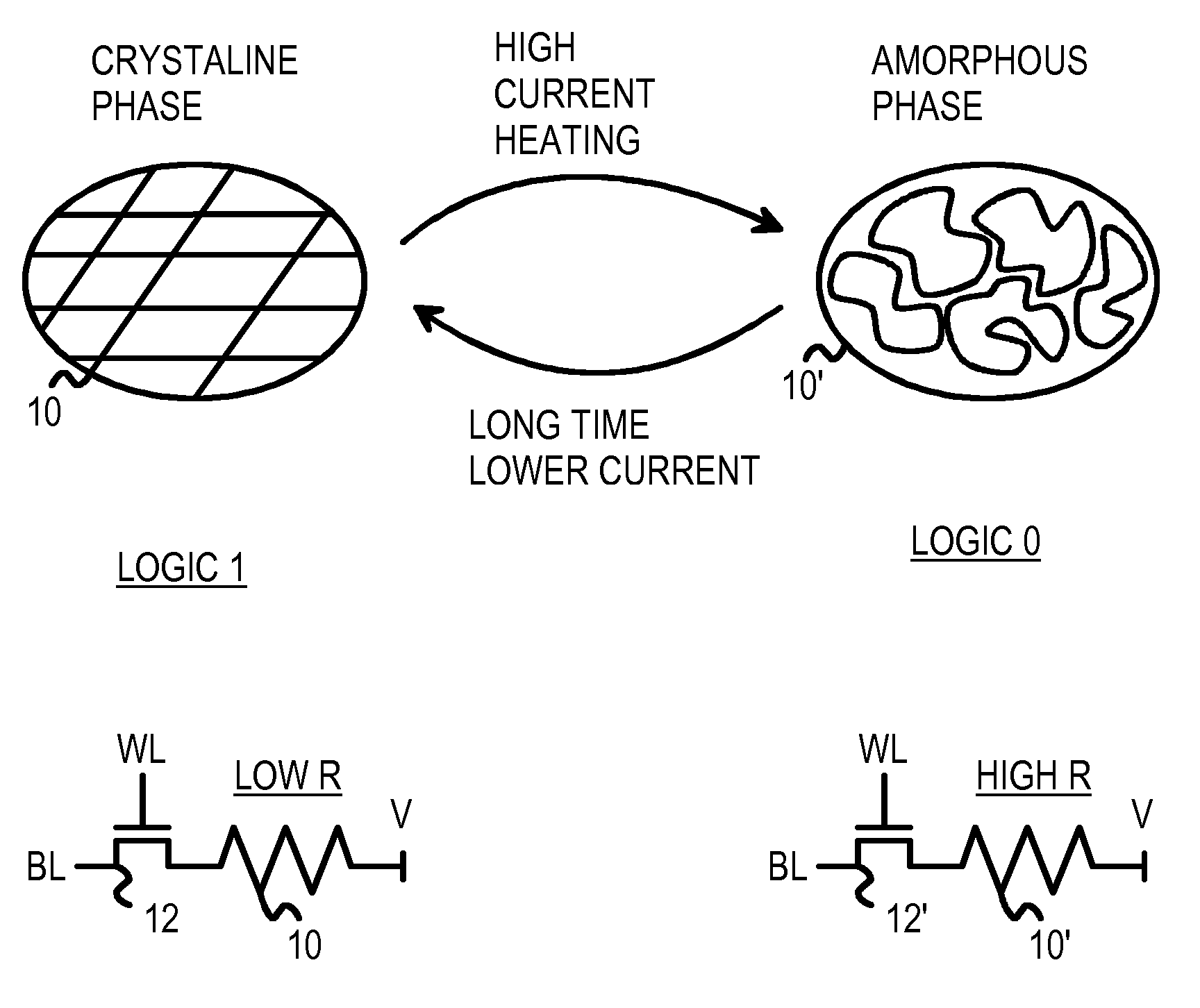

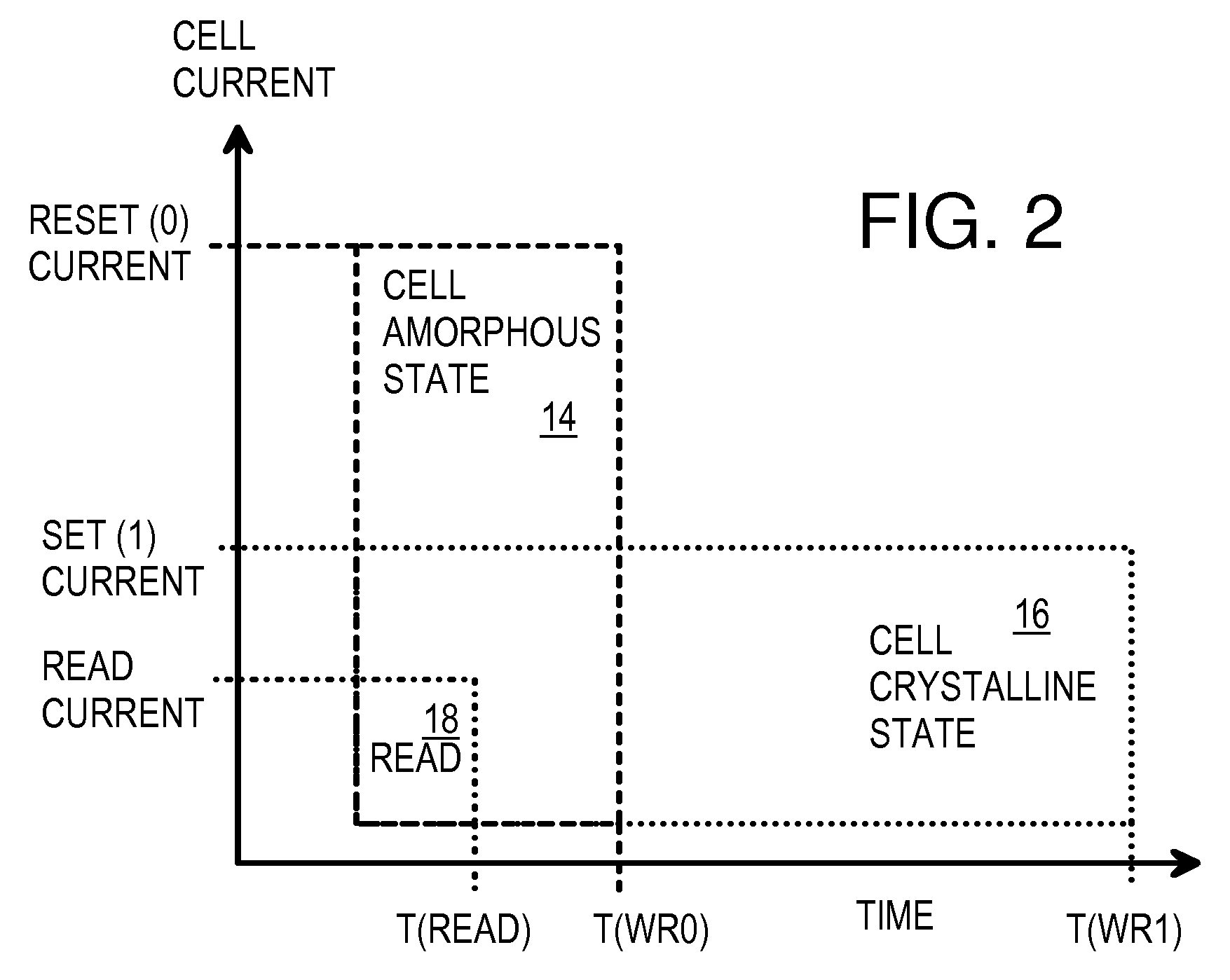

High-speed controller for phase-change memory peripheral device

Inactive | US7889544B2 | Memory architecture accessing/allocation | Digital storage | High resistance | Phase-change memory

Owner:SUPER TALENT TECH CORP

Interleaved memory program and verify method, device and system

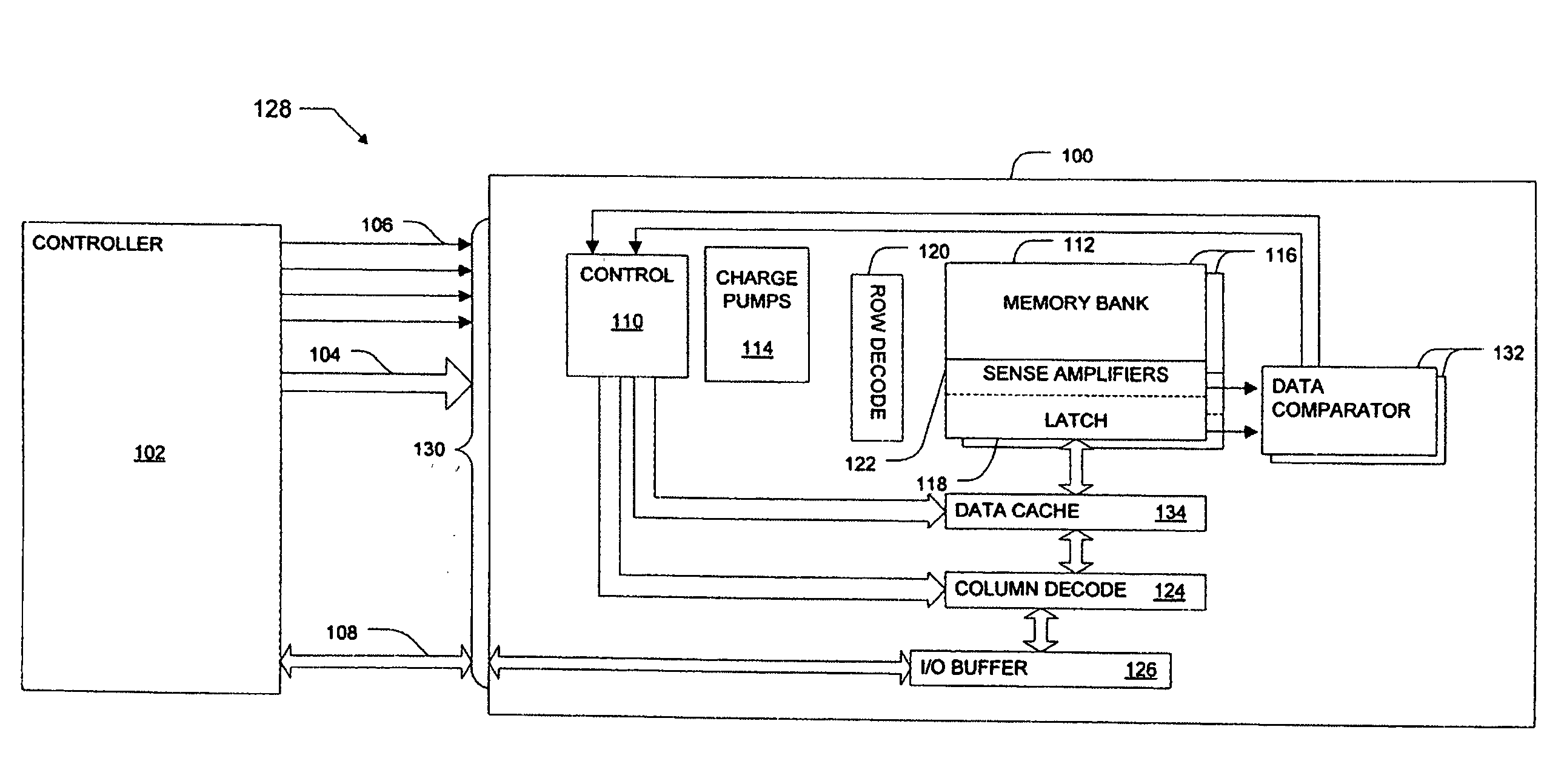

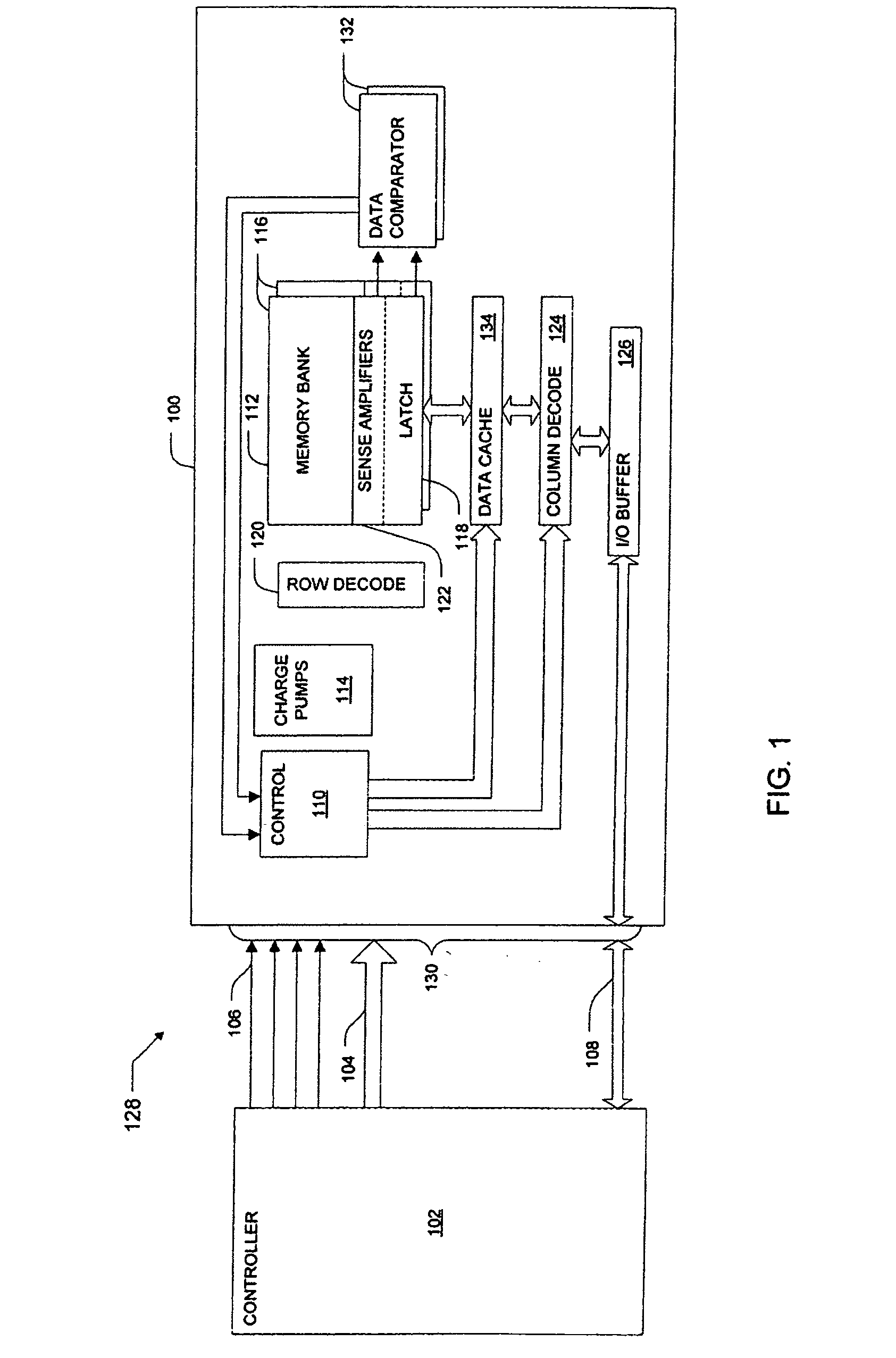

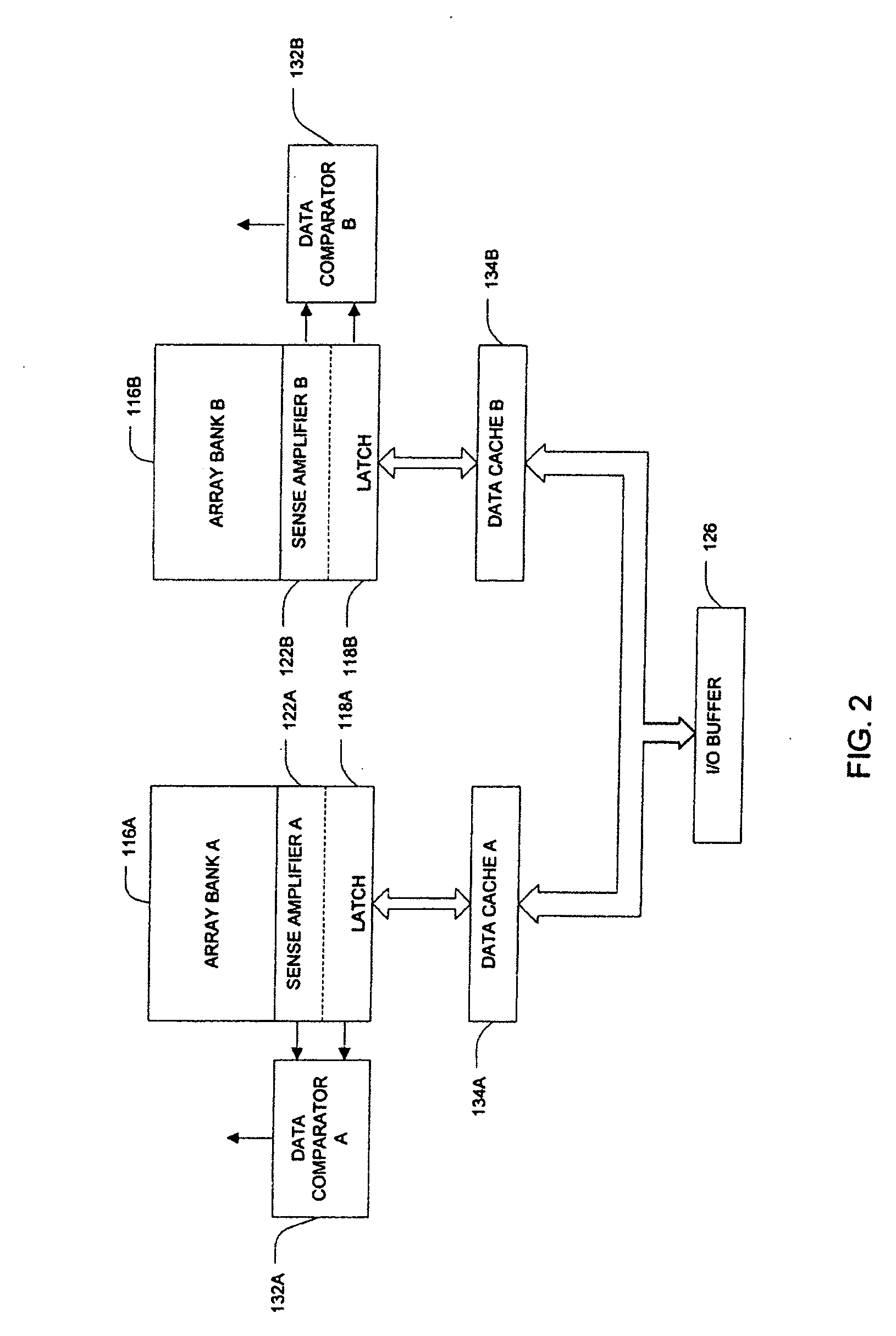

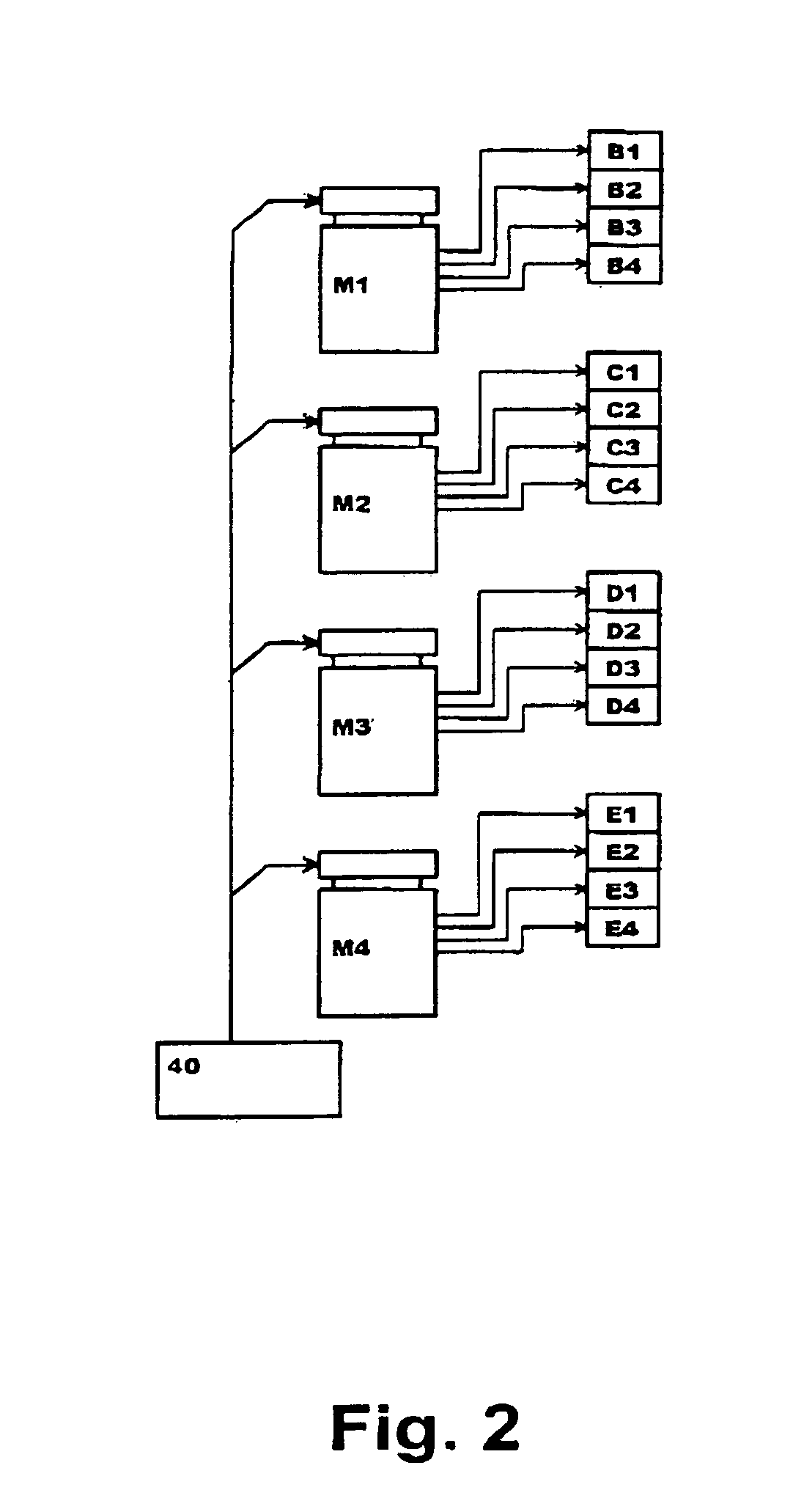

An interleaved memory programming and verification method, device and system include a memory array that includes first and second memory banks of memory cells. The memory device further includes a controller configured to concurrently program a first data into the first memory bank and a second data into the second memory bank using iterative programming and verification operations in each of the first and second memory banks, with the programming and verification operations in the second memory bank being offset from the programming and verification operations in the first memory bank.

Owner:ROUND ROCK RES LLC

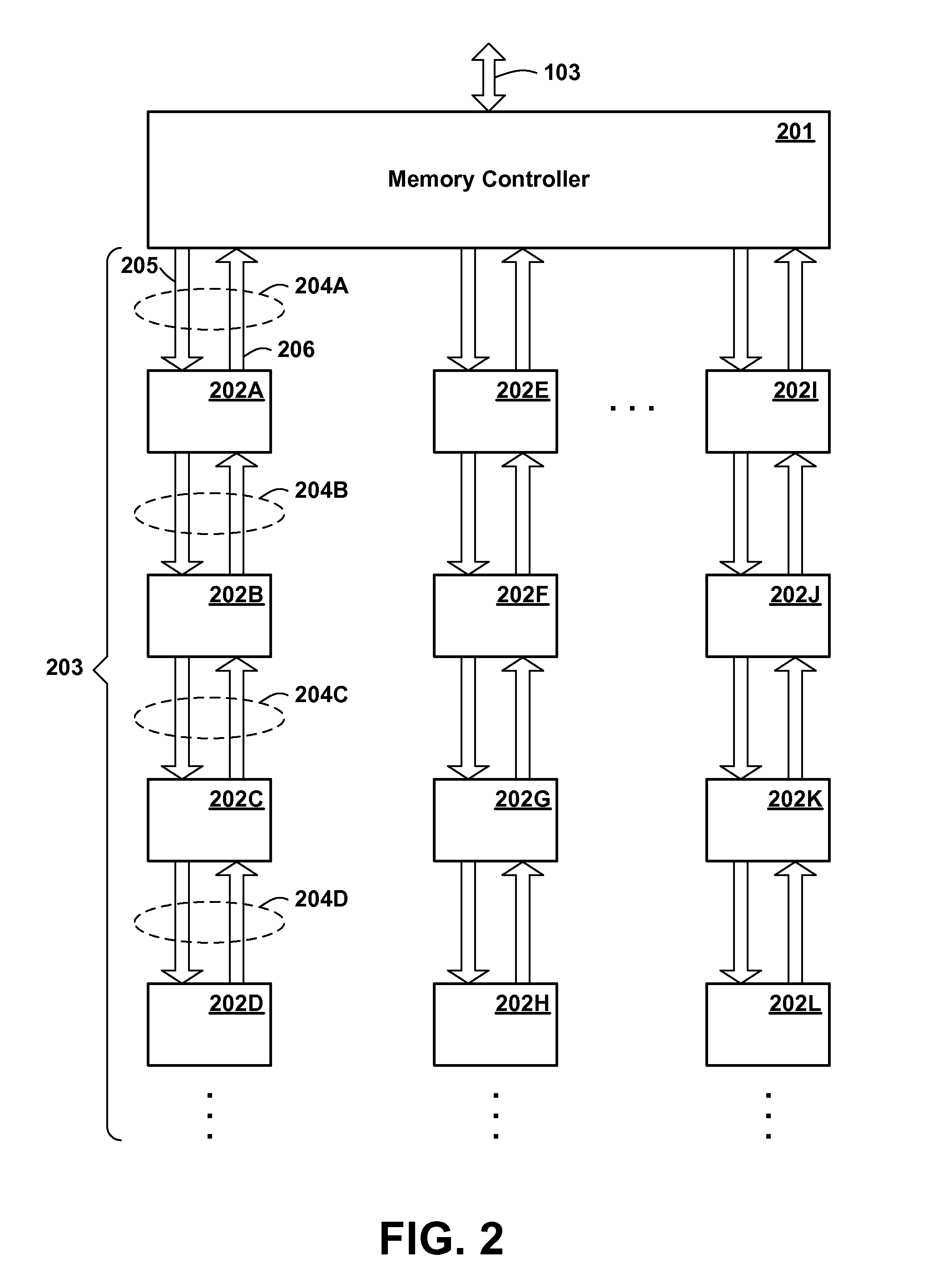

Dual-Mode Memory Chip for High Capacity Memory Subsystem

Active | US20090006775A1 | Significant power saving | Reducing frequency of bus | Energy efficient ICT | Energy efficient computing | Memory chip | Data access

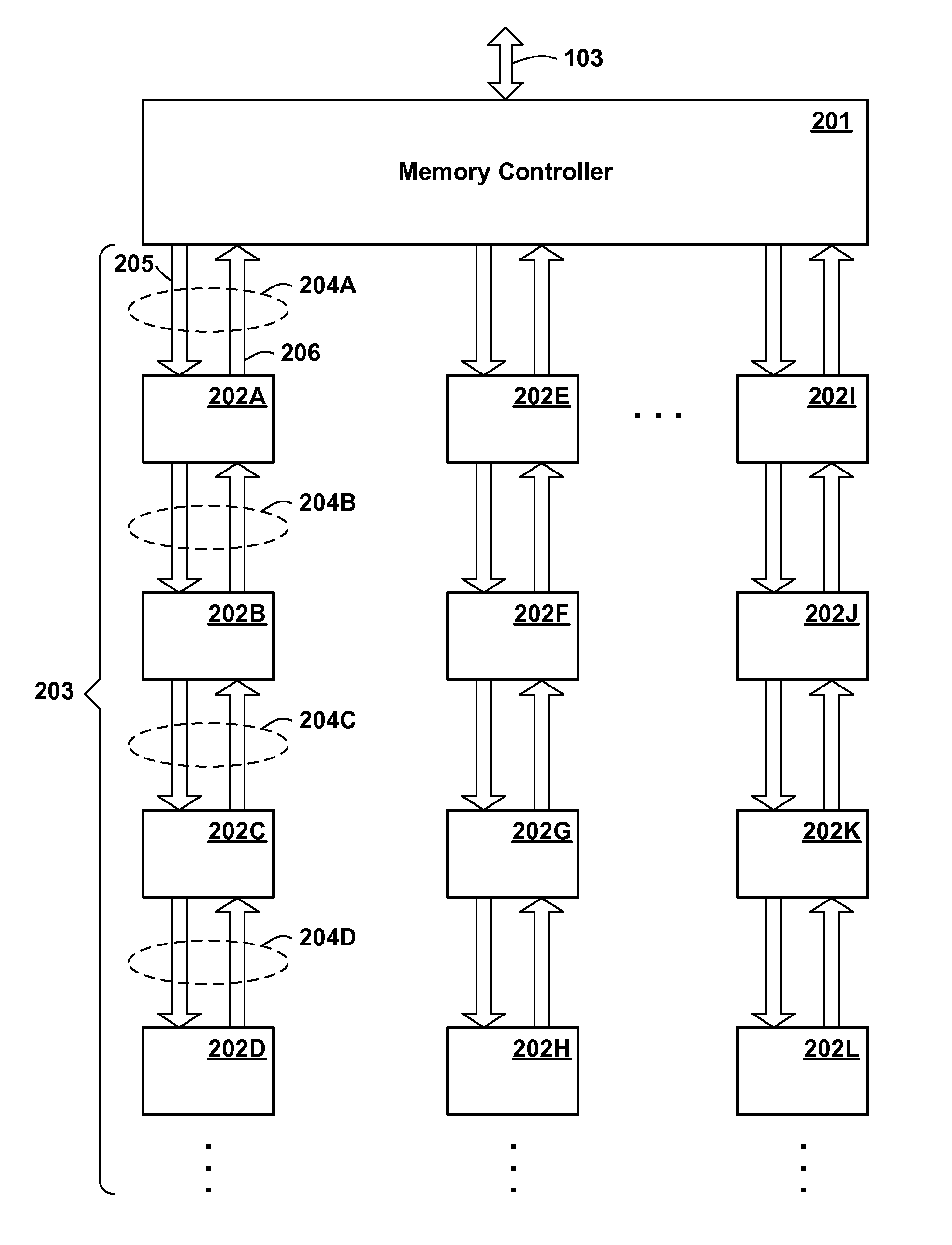

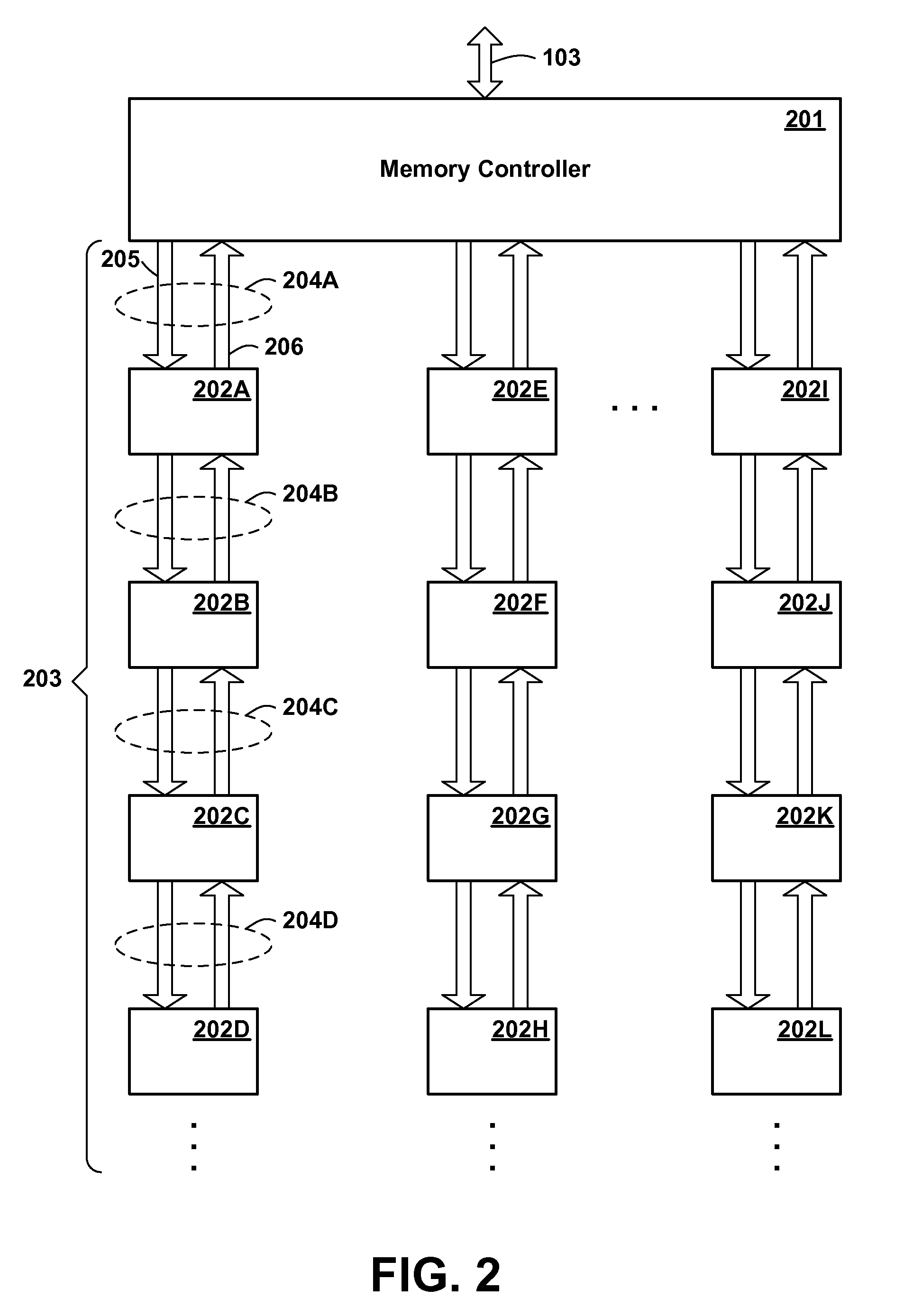

A dual-mode memory chip supports a first operation mode in which received data access commands contain chip select data to identify the chip addressed by the command, and control logic in the memory chip determines whether the command is addressed to the chip, and a second operation mode in which the received data access command addresses a set of multiple chips. Preferably, the first mode supports a daisy-chained configuration of memory chips. Preferably the second mode supports a hierarchical interleaved memory subsystem, in which each addressable set of chips is configured as a tree, command and write data being propagated down the tree, the number of chips increasing at each succeeding level of the tree.

Owner:LENOVO GLOBAL TECH INT LTD

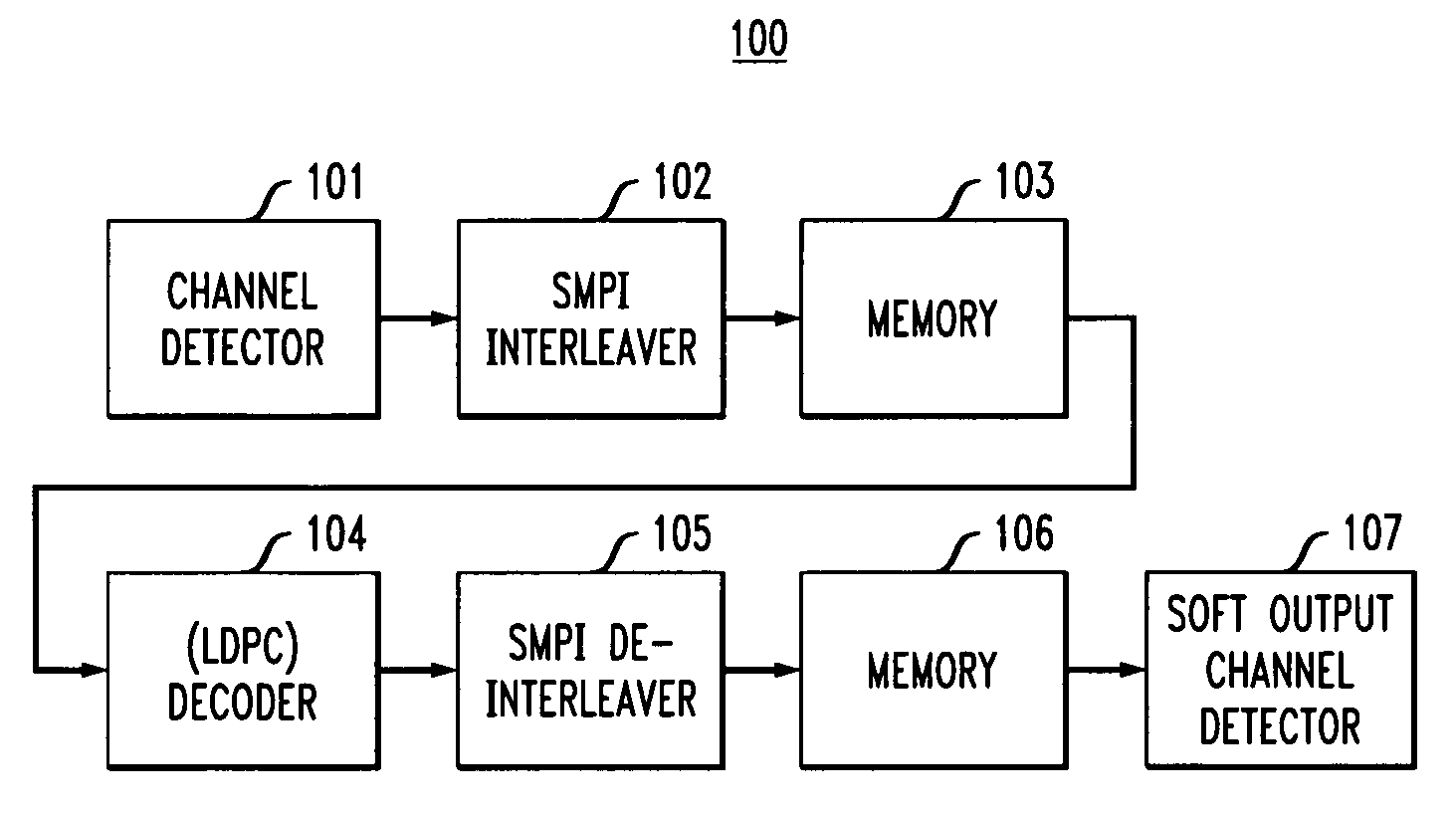

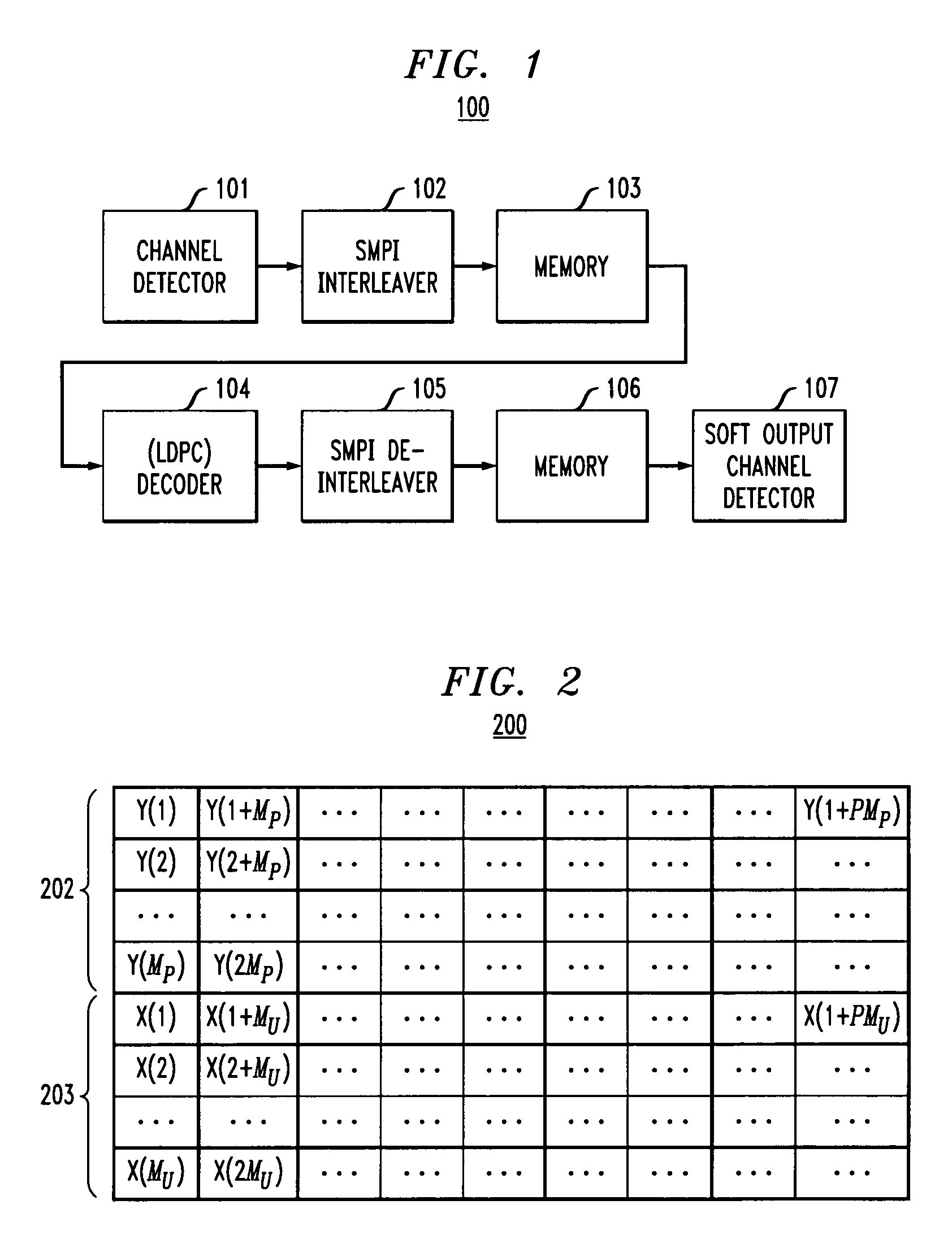

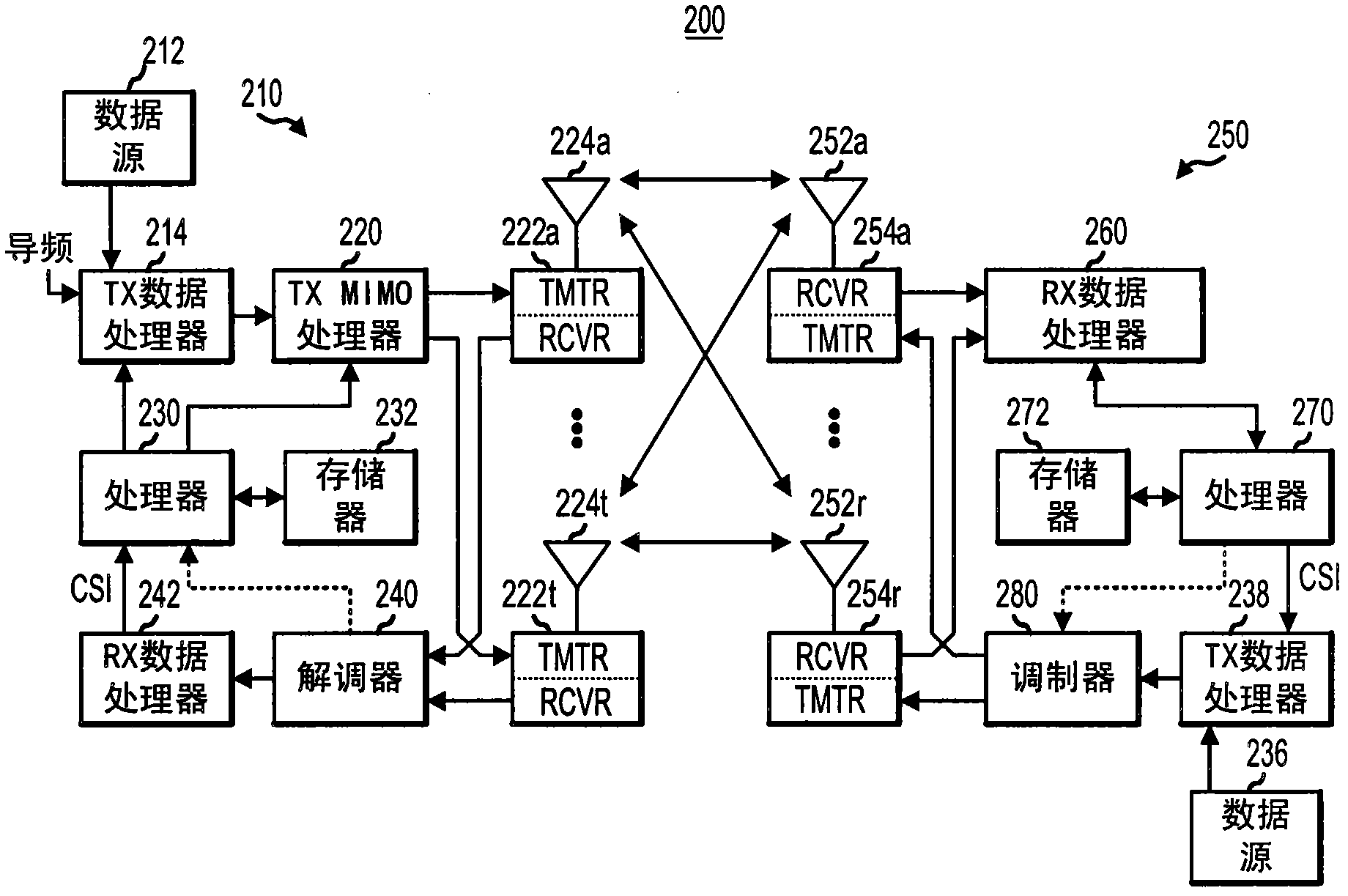

Interleaver and de-interleaver for iterative code systems

Inactive | US20100146229A1 | Fault response | Memory addressing/allocation/relocation | Algorithm | Interleaved memory

In exemplary embodiments, a skewed interleaving function for iterative code systems is described. The skewed interleaving function provides a skewed row and column memory partition and a layered structure for re-arranging data samples read from, for example, a first channel detector. An iterative decoder, such as an iterative decoder based on a low-density parity-check code (LDPC), might employ an element to de-skew the data from the interleaved memory partition before performing iterative decoding of the data, and then re-skew the information before passing decoded samples to the de-interleaver. The de-interleaver re-arranges the iterative decoded data samples in accordance with an inverse of the interleaver function before passing the decoded data samples to, for example, a second channel detector.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

Memory request reordering in a data processing system

Inactive | US6976135B1 | Increase speed | Memory addressing/allocation/relocation | Digital computer details | Data processing system | Parallel computing

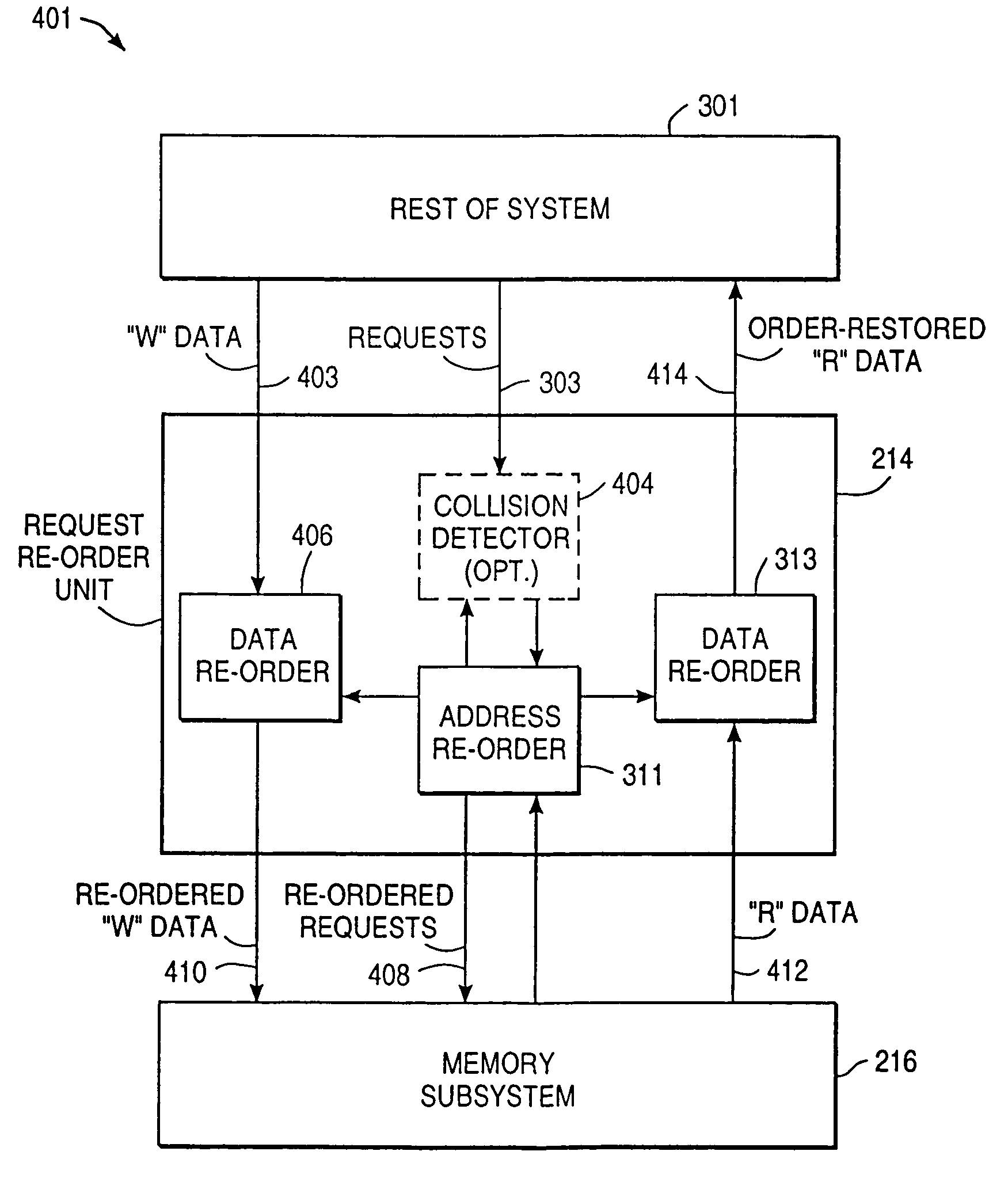

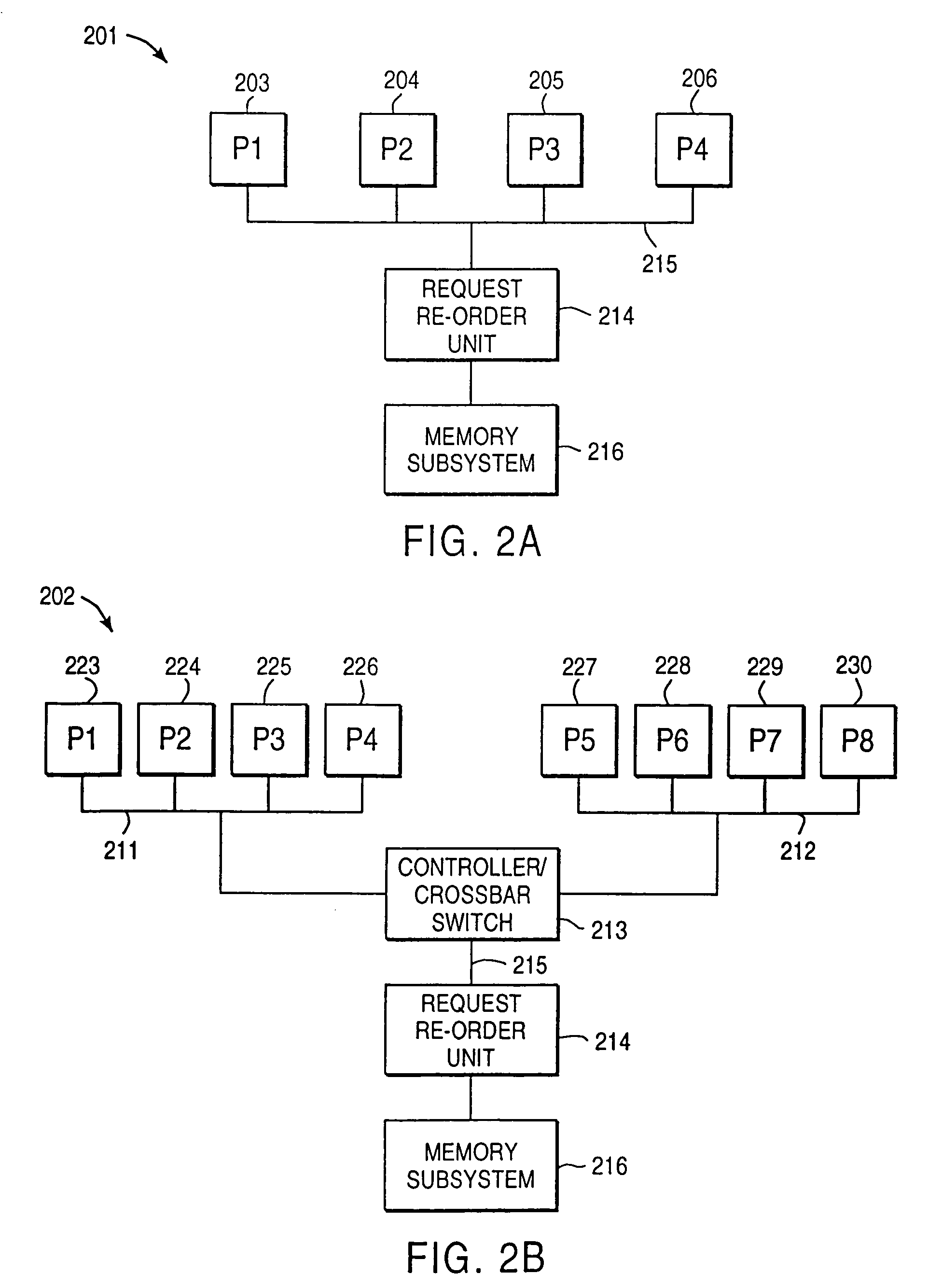

Memory transactions are carried out in an order that maximizes concurrency in a memory system such as a multi-bank interleaved memory system. Read data is collected in a buffer memory to be presented back to the bus in the same order as read transactions were requested. An adaptive algorithm groups writes to minimize overhead associated with transitioning from reading to writing into memory.

Owner:TAIWAN SEMICON MFG CO LTD

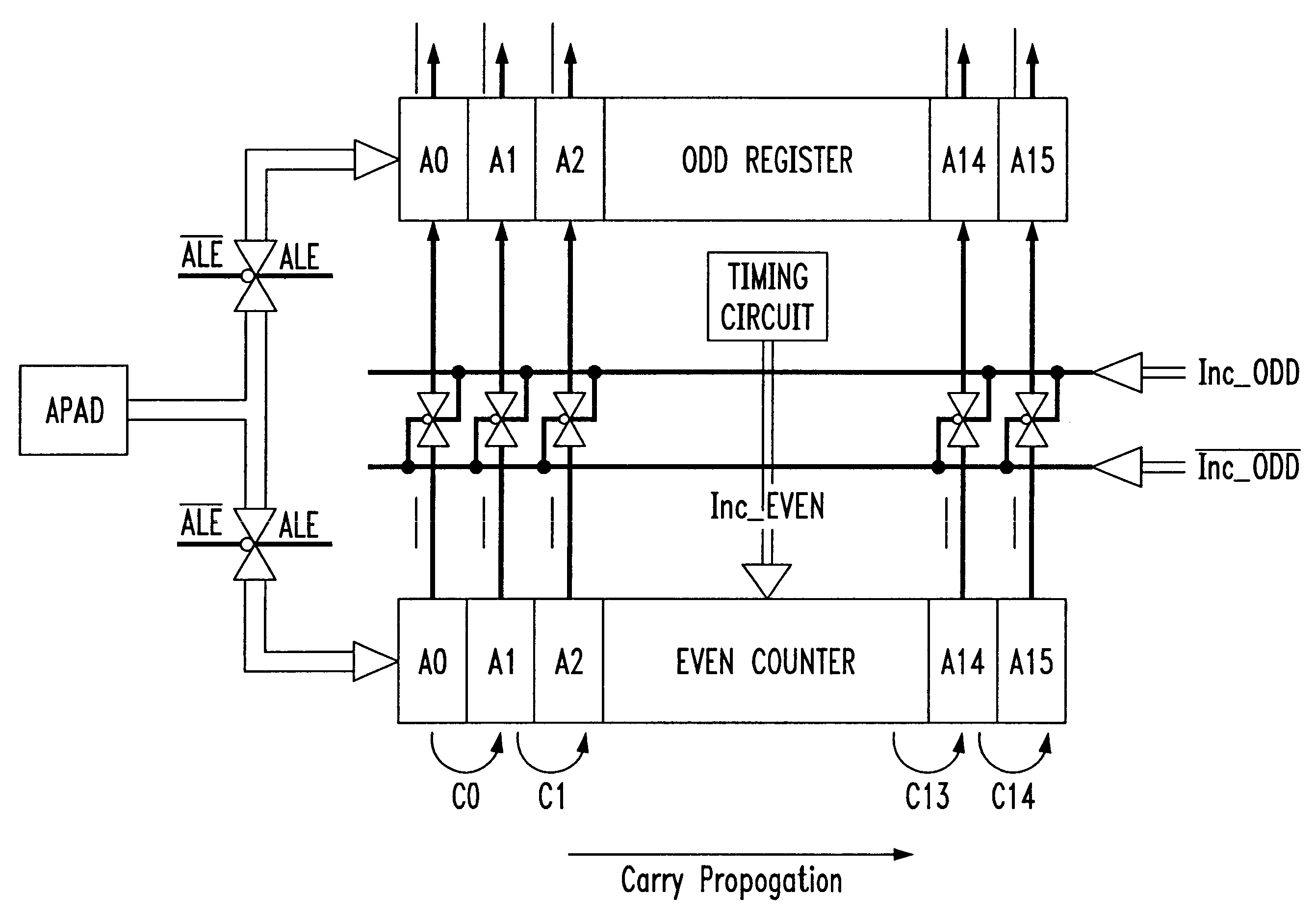

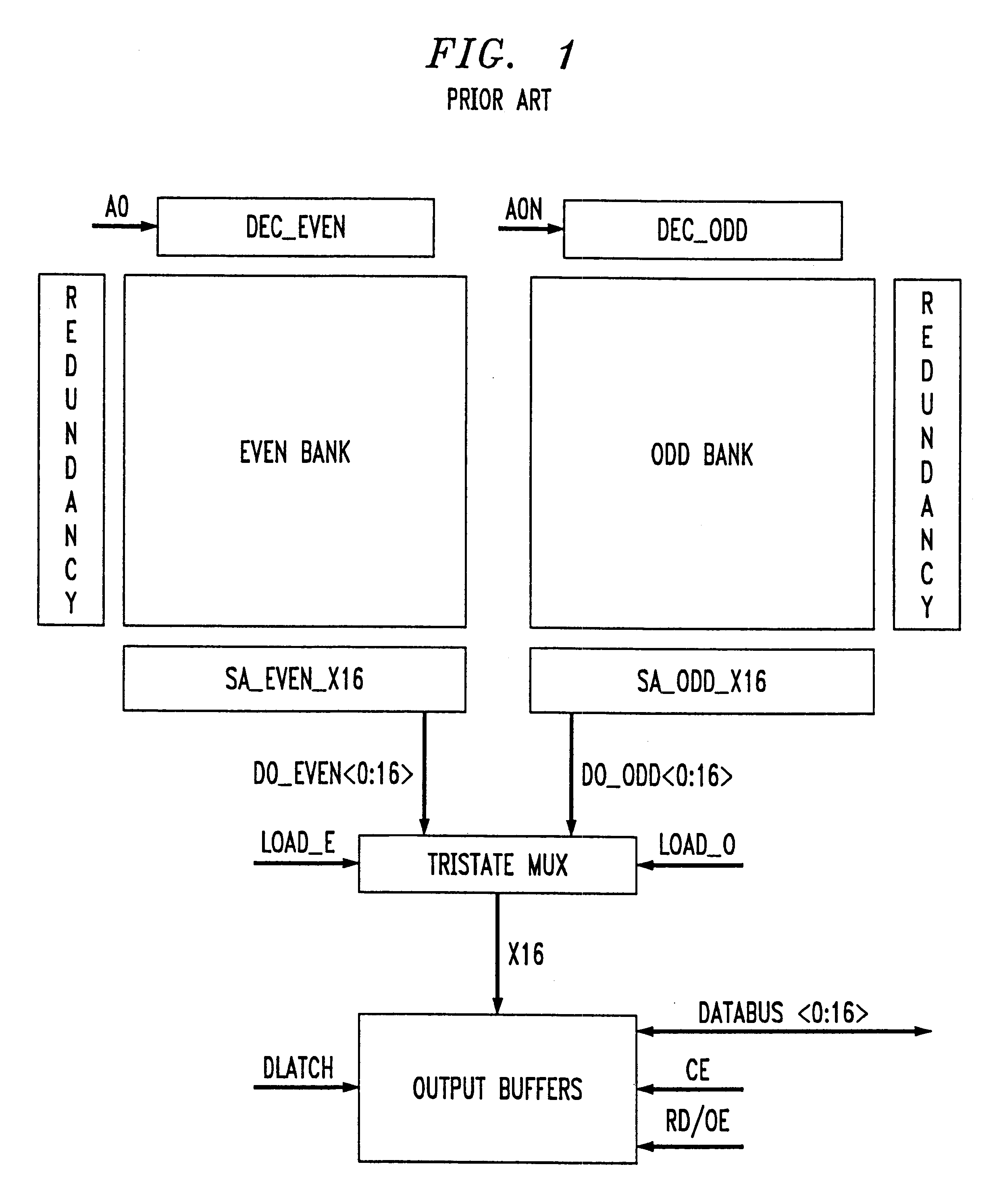

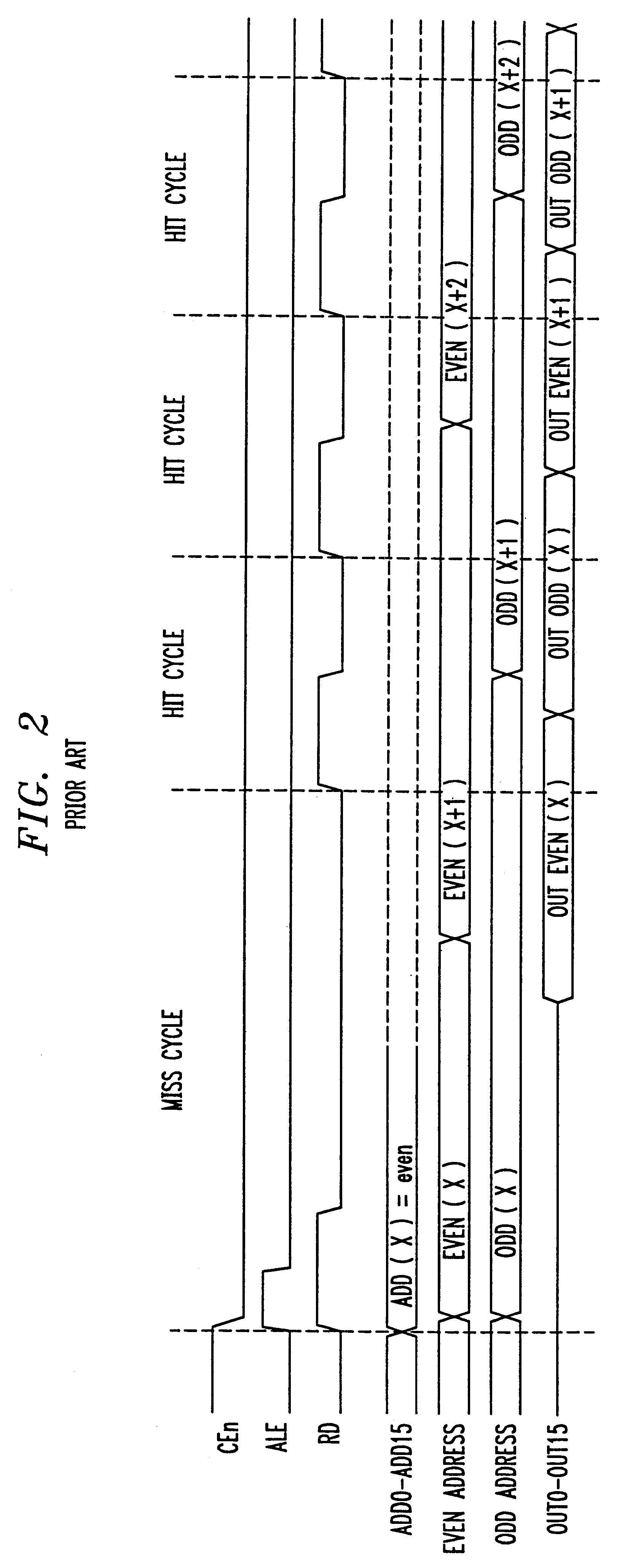

Interleaved memory device for sequential access synchronous reading with simplified address counters

An interleaved memory includes an array of memory cells divided into a first bank of memory cells and a second bank of memory cells. The interleaved memory operates in a burst access mode. A first address counter is coupled to the first bank of memory cells, and an address register is coupled to the first address counter and to the second bank of memory cells. A timing circuit generates increment pulses to the first address counter so that a first random access asynchronous read cycle starts with the first bank of memory cells. The function of an address counter for the second bank of memory cells is performed by copying the contents of the first address counter to the address register.

Owner:MICRON TECH INC

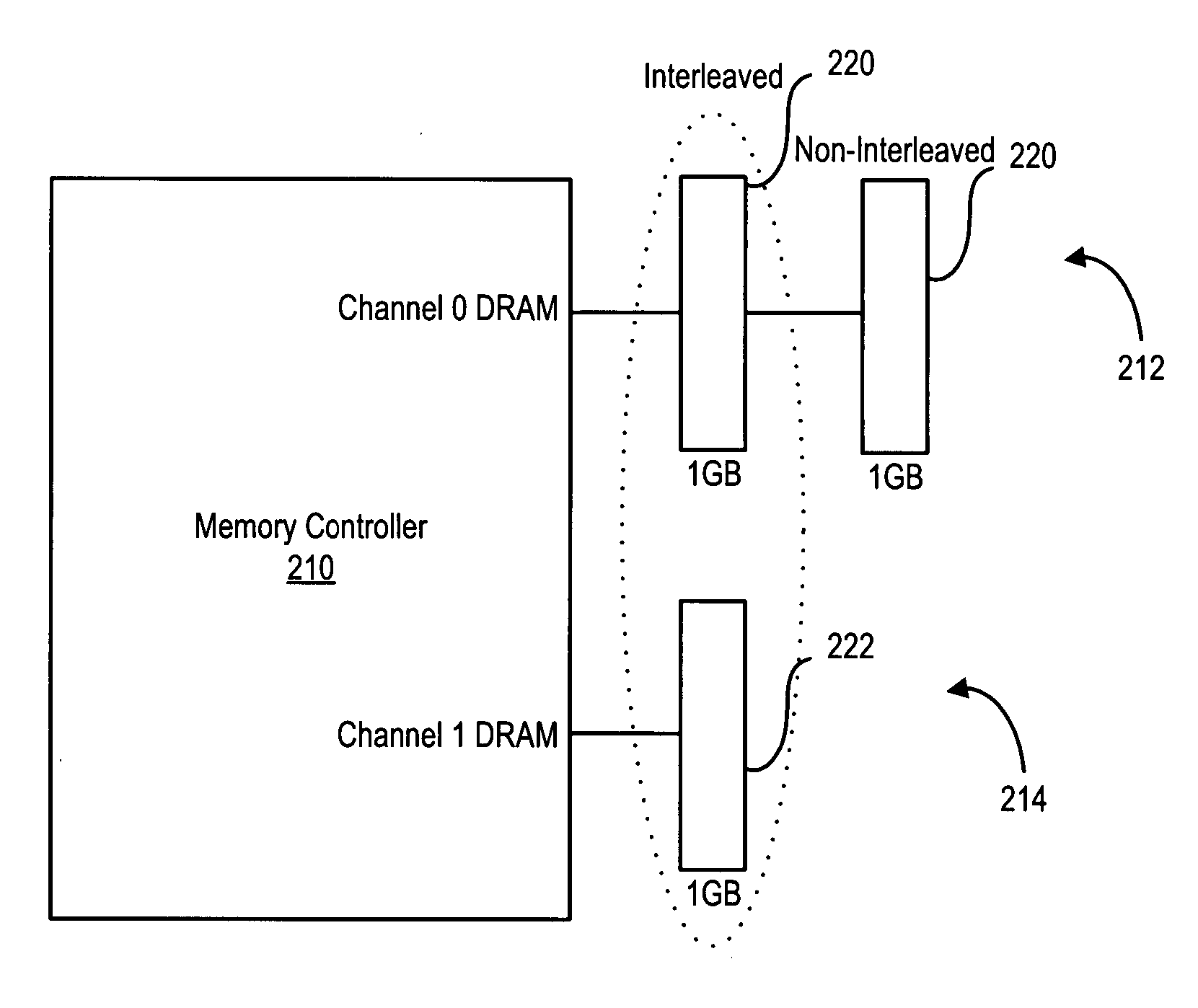

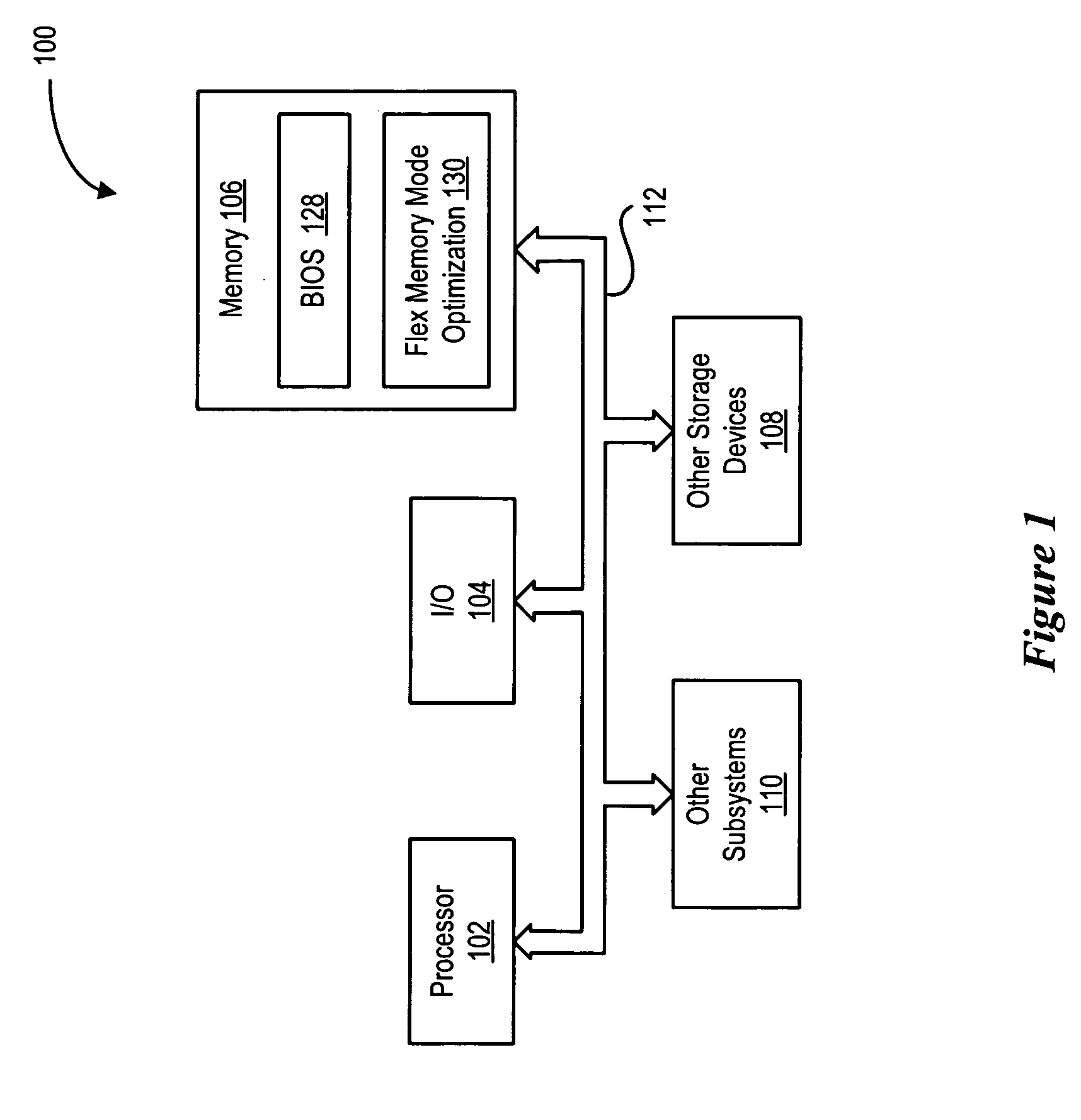

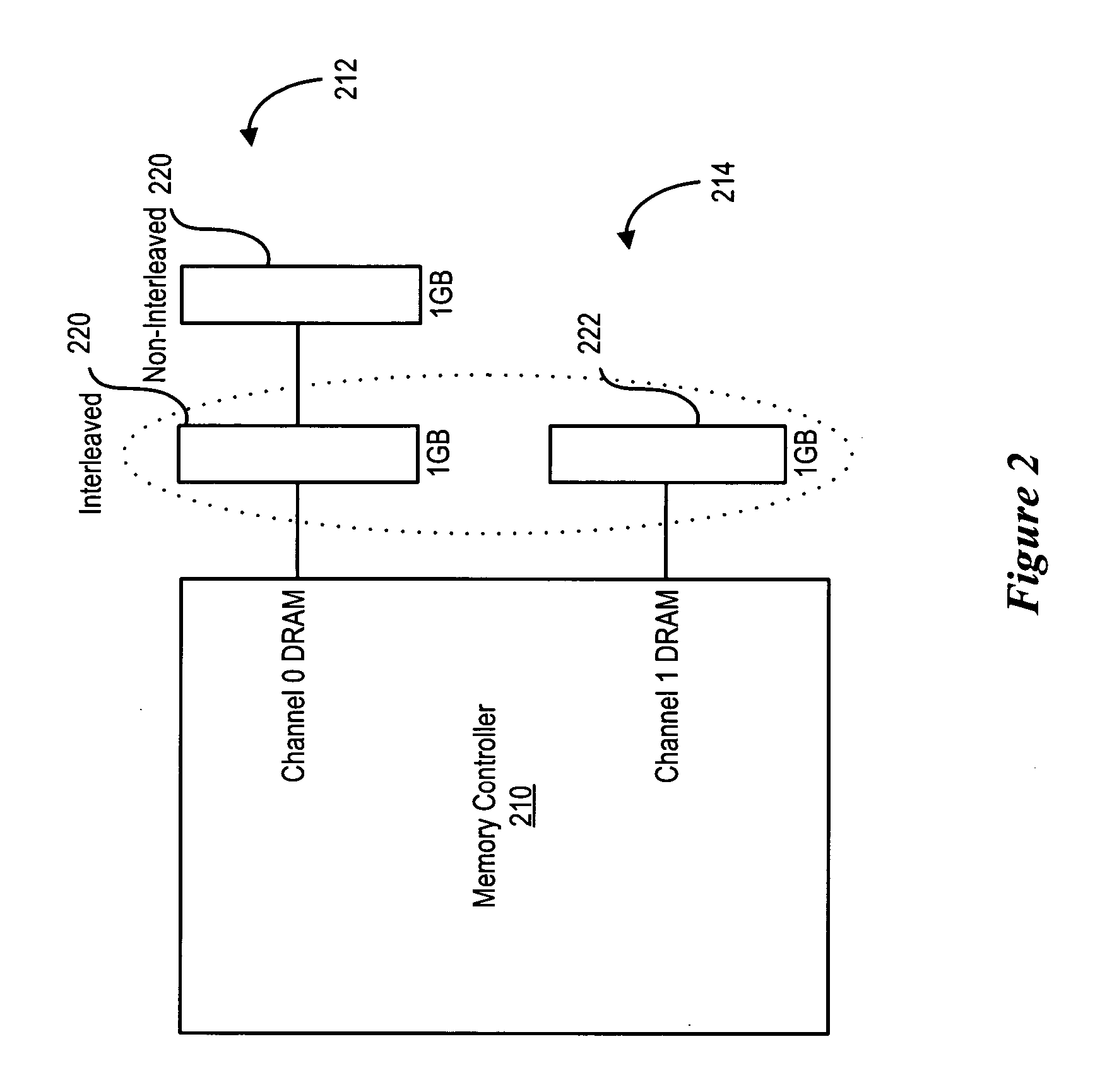

Optimizing system performance in flexible interleaving memory mode

A method for optimizing performance of memory in an information handling system is disclosed. The method includes determining whether memory within the information handling system is being accessed in a flexible interleaving memory mode of operation; when the memory is being accessed in the flexible interleaving memory mode of operation, identifying which of the memory is configured as interleaved memory and which of the memory is configured as non-interleaved memory; and configuring the memory such that the interleaved memory is accessed prior to the non-interleaved memory being accessed.

Owner:DELL PROD LP

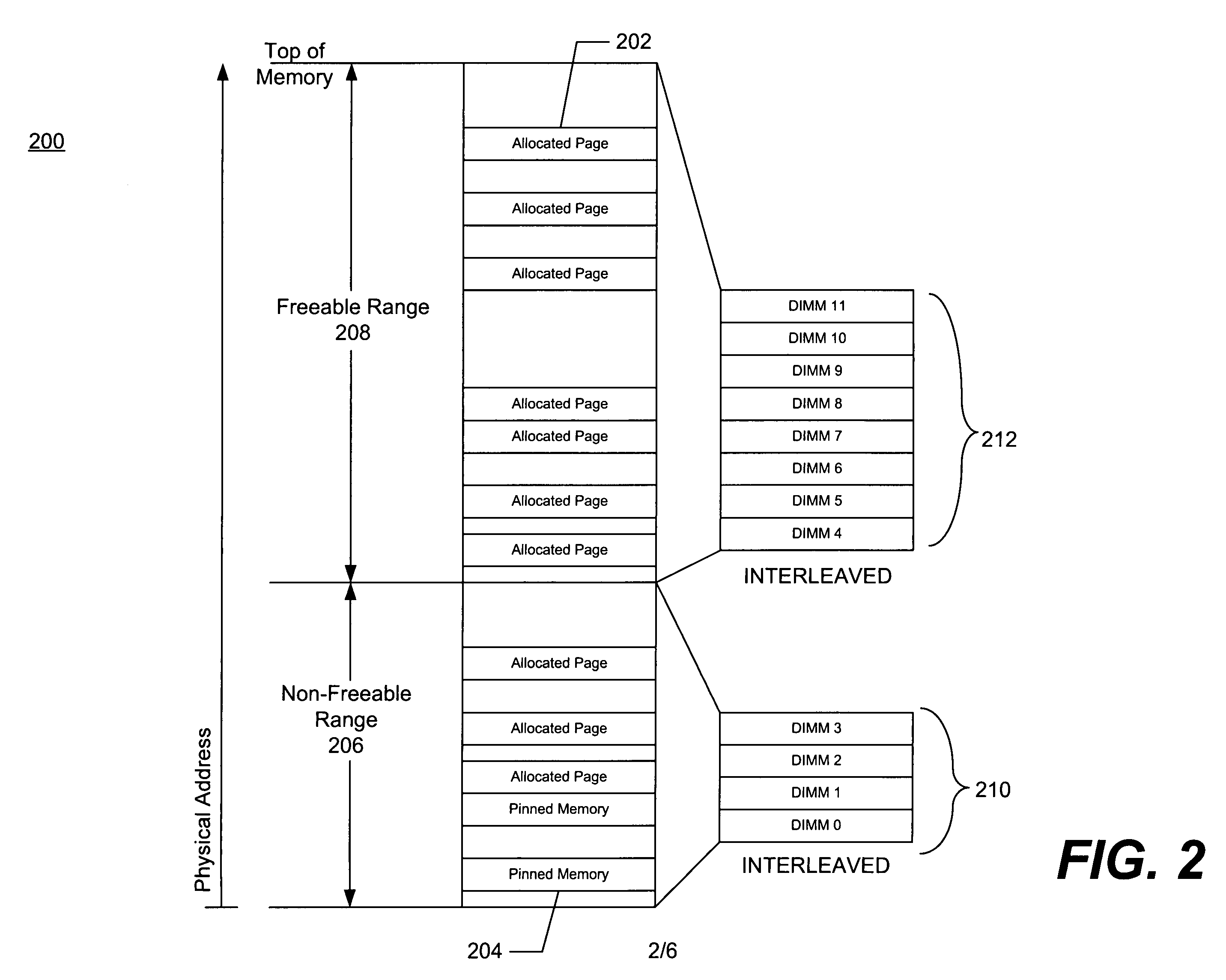

Method of sparing memory devices containing pinned memory

Embodiments of the present invention may provide a method of sparing and removing pinned or interleaved memory. When a memory device failure is predicted in a device containing pinned memory, a request may be made for the de-allocation of a freeable memory range (304). When the request for de-allocating the freeable range of memory is accepted (306), the memory data from the failing memory device may be copied to one or more de-allocated memory devices (308). Requests directed to the failing memory device may be re-routed to the replacement memory device(s) (310), and the memory without the deactivated memory device may be re-interleaved (312).

Owner:INTEL CORP

Interleaved memory cell with single-event-upset tolerance

Active | US7515452B1 | Digital storage | Reliability increase by circuit redundancy | Computer architecture | Interleaved memory

A memory array has a first memory cell with a plurality of transistors connected so as to restore a data value to a node of the memory cell to an initial value following an event upsetting the initial value. A first portion of the plurality of transistors is in a first cell portion and a second portion of the plurality of transistors is in a second cell portion. A second memory cell has a third cell portion and a fourth cell portion. The third cell portion is between the first cell portion and the second cell portion and adjacent to each of the first cell portion and the second cell portion. In a particular embodiment, the memory cell is a single-event-upset (“SEU”) tolerant memory cell and the first and second cell portions are each a half cell of a sixteen transistor memory cell.

Owner:XILINX INC

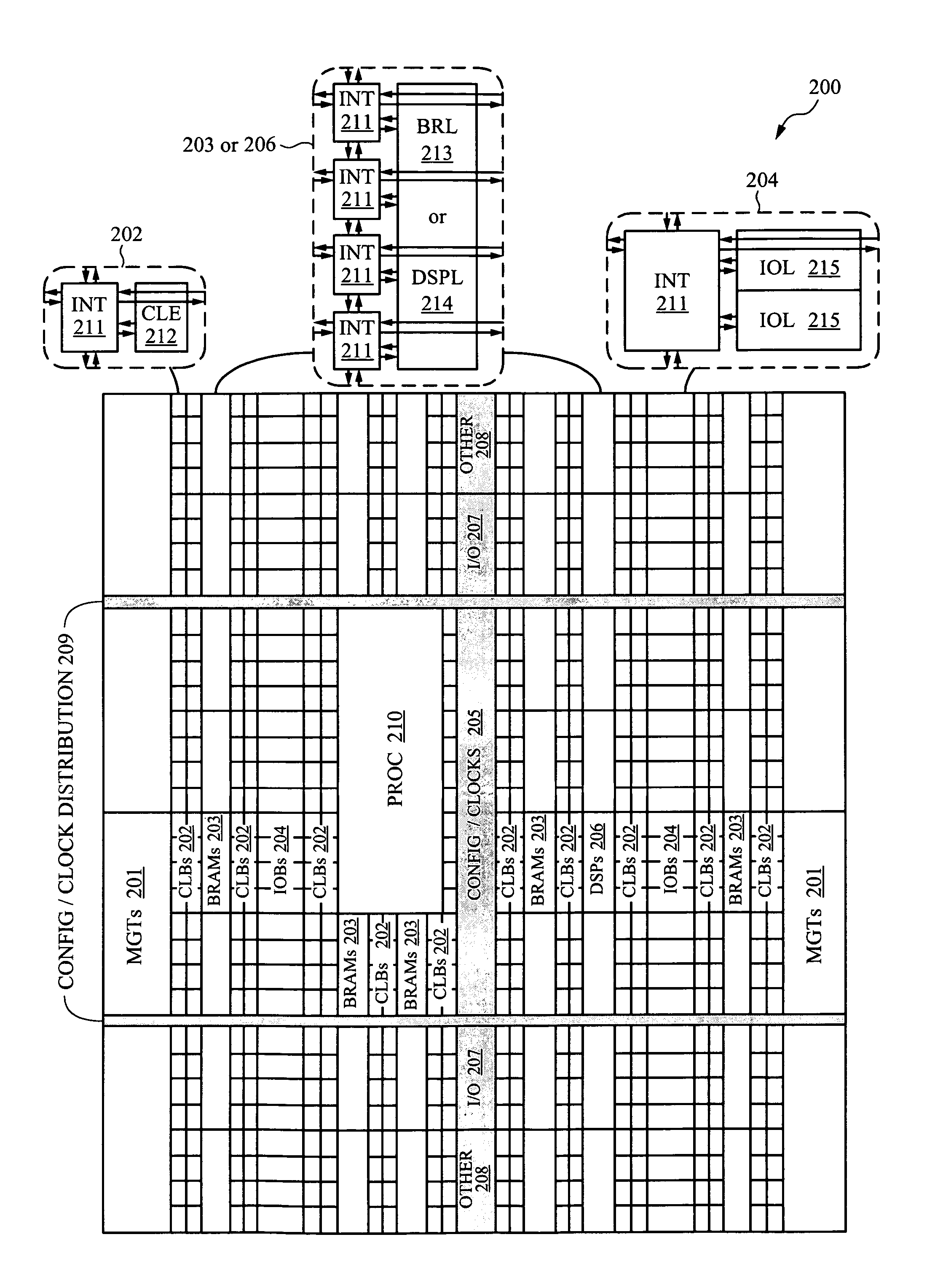

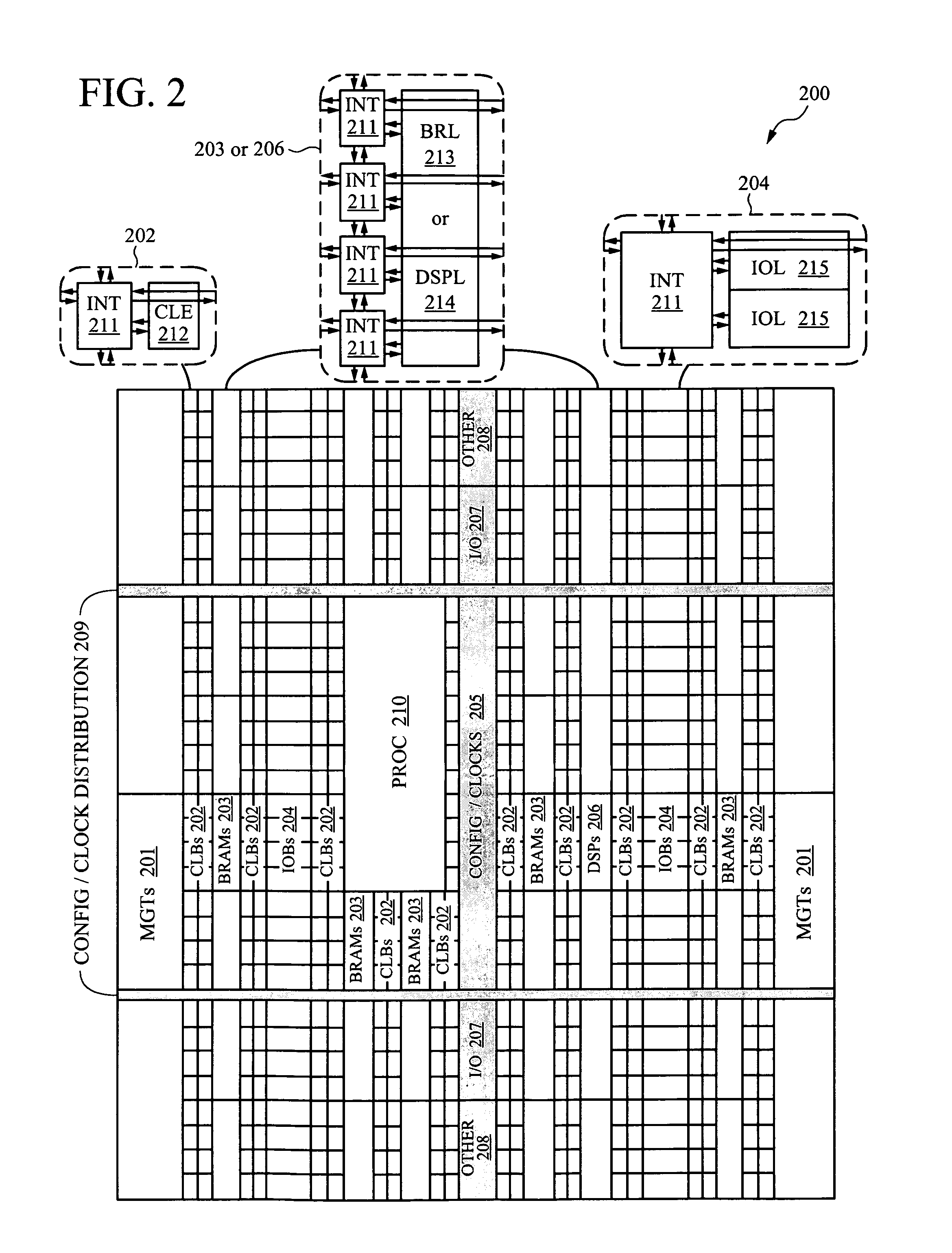

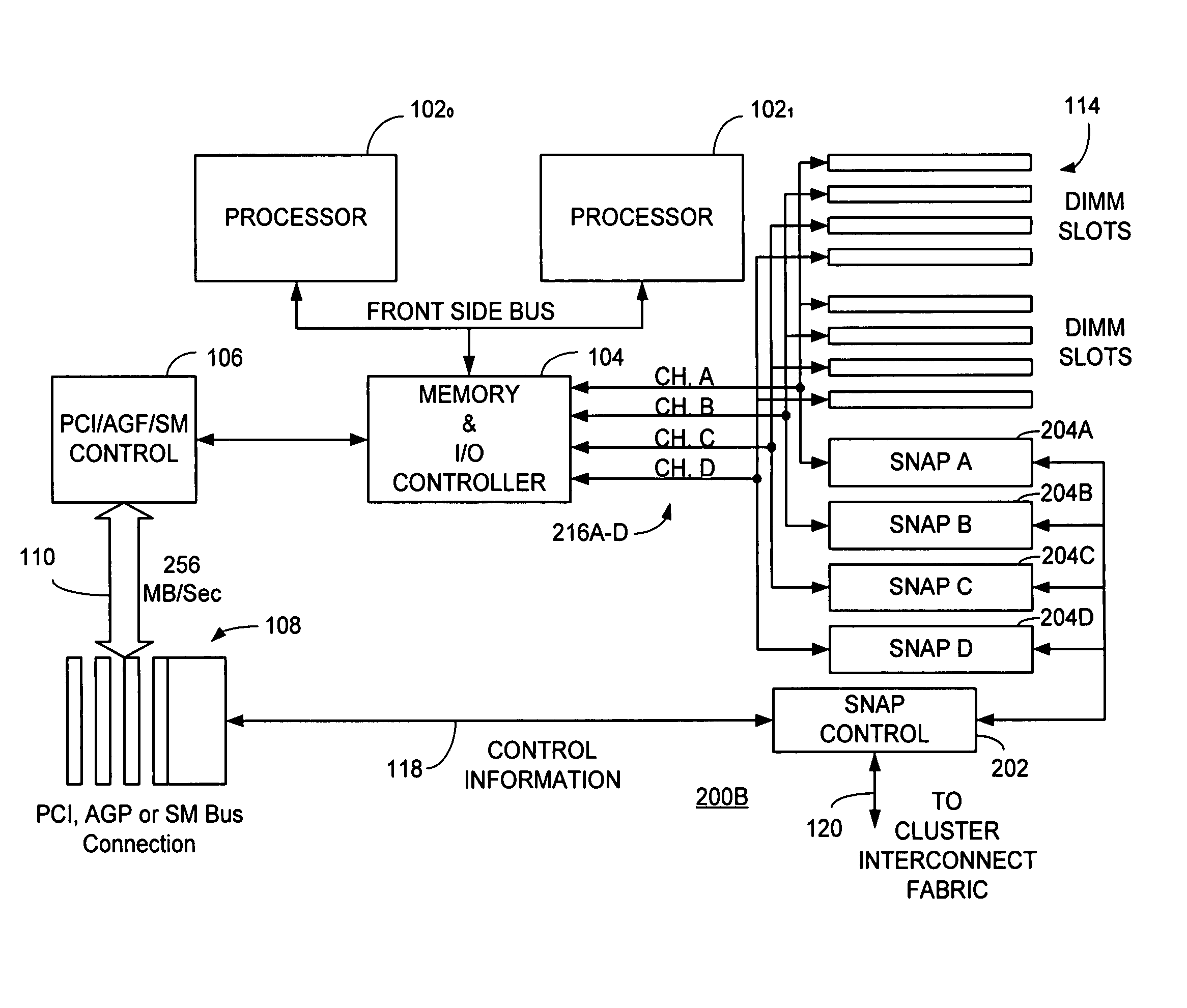

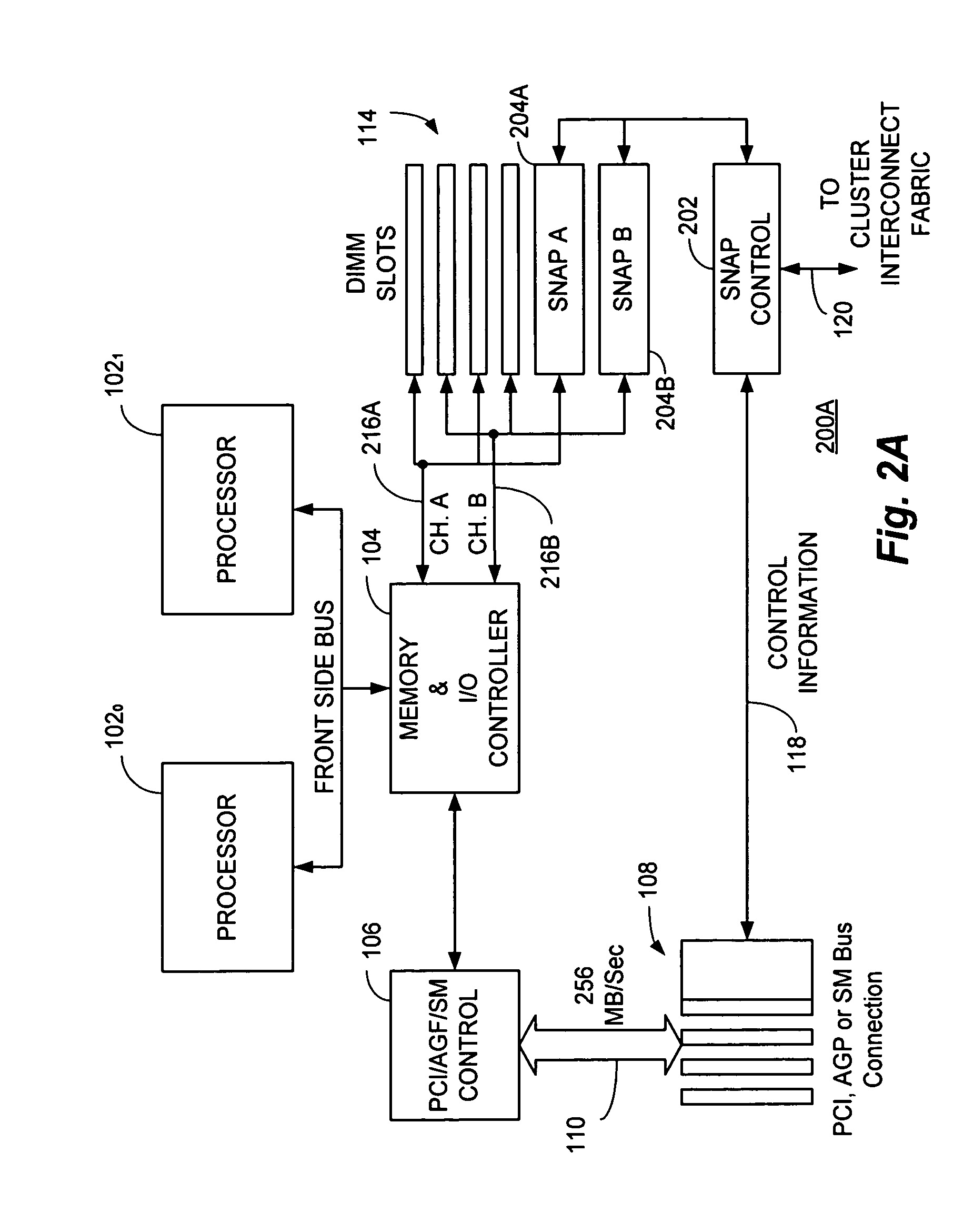

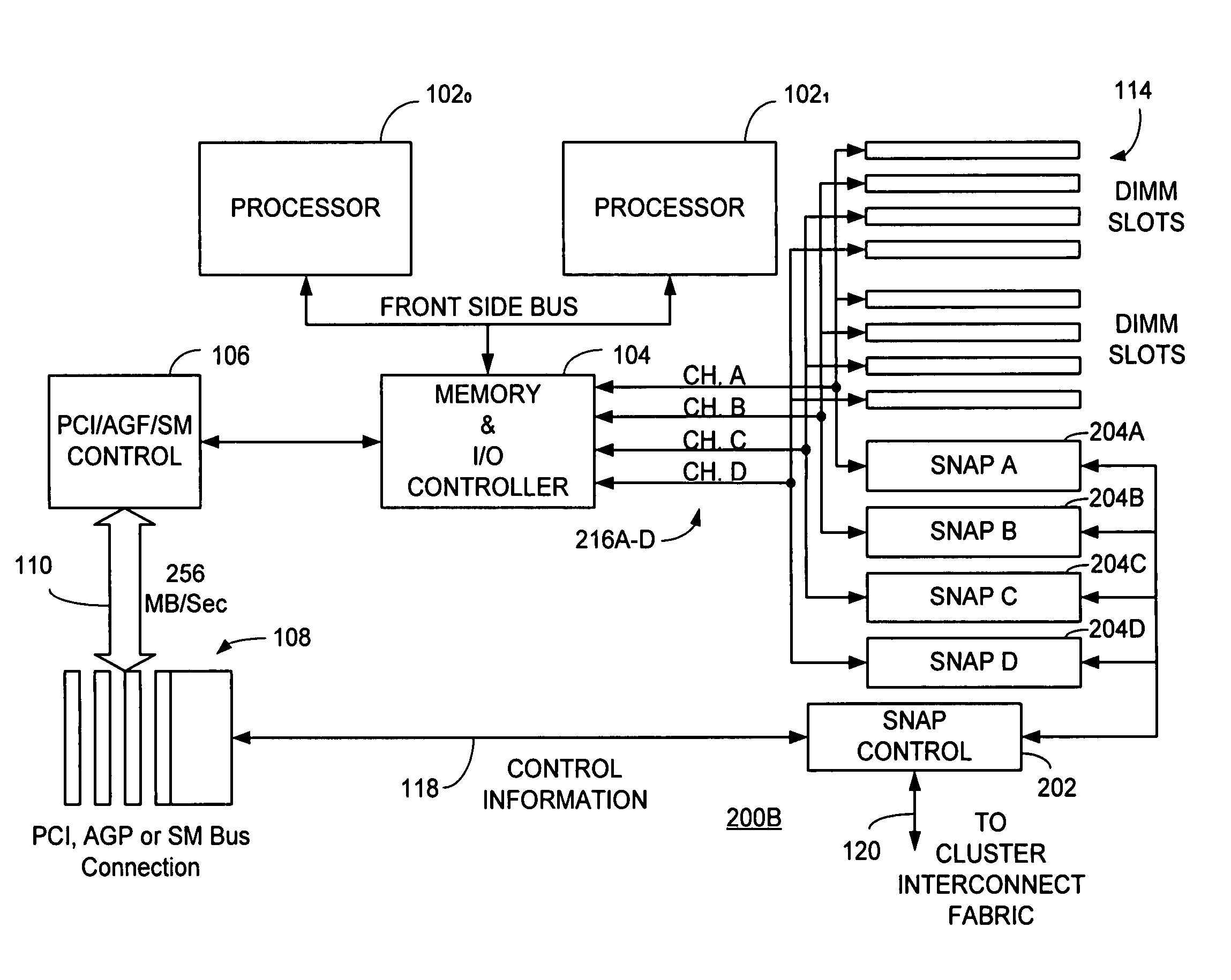

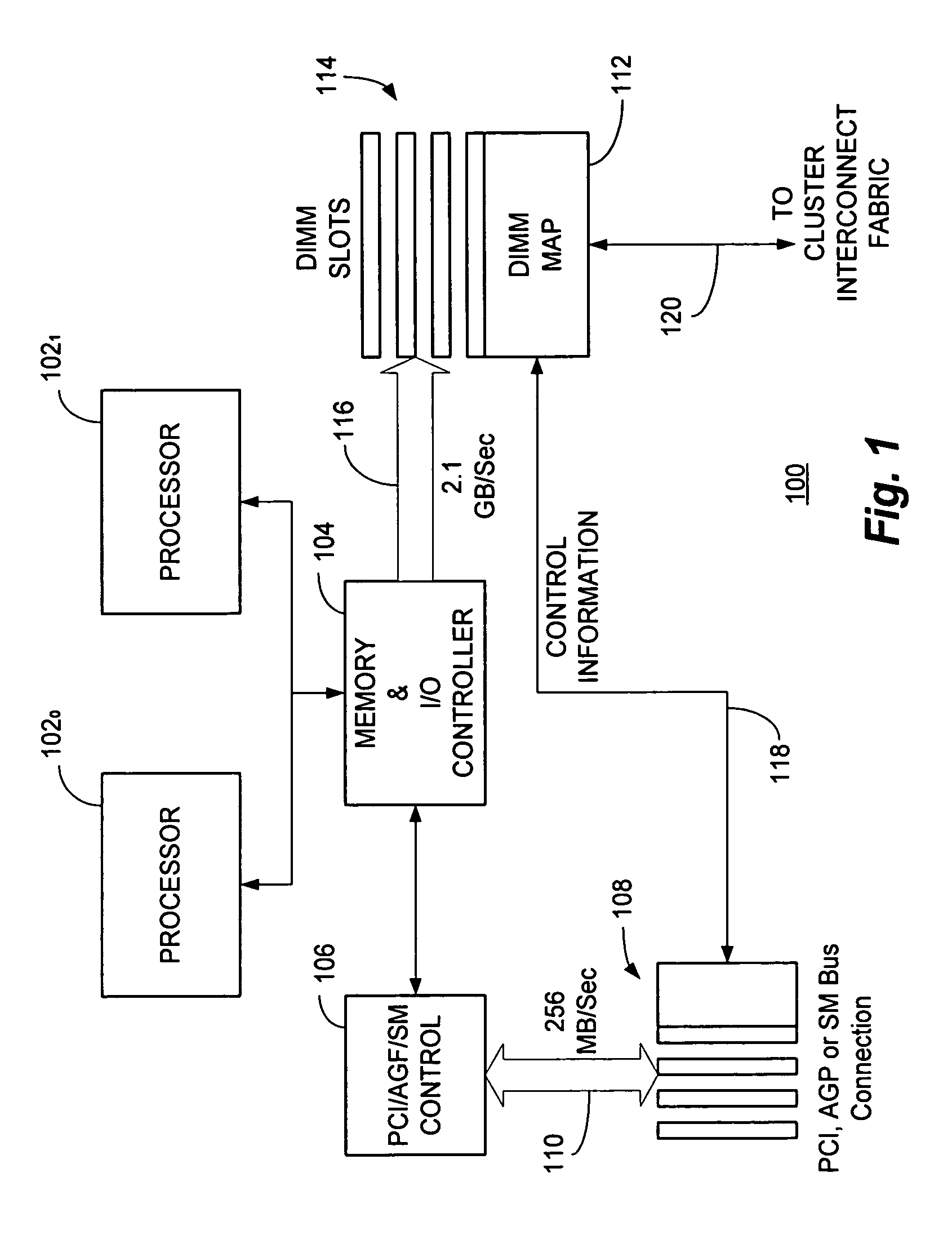

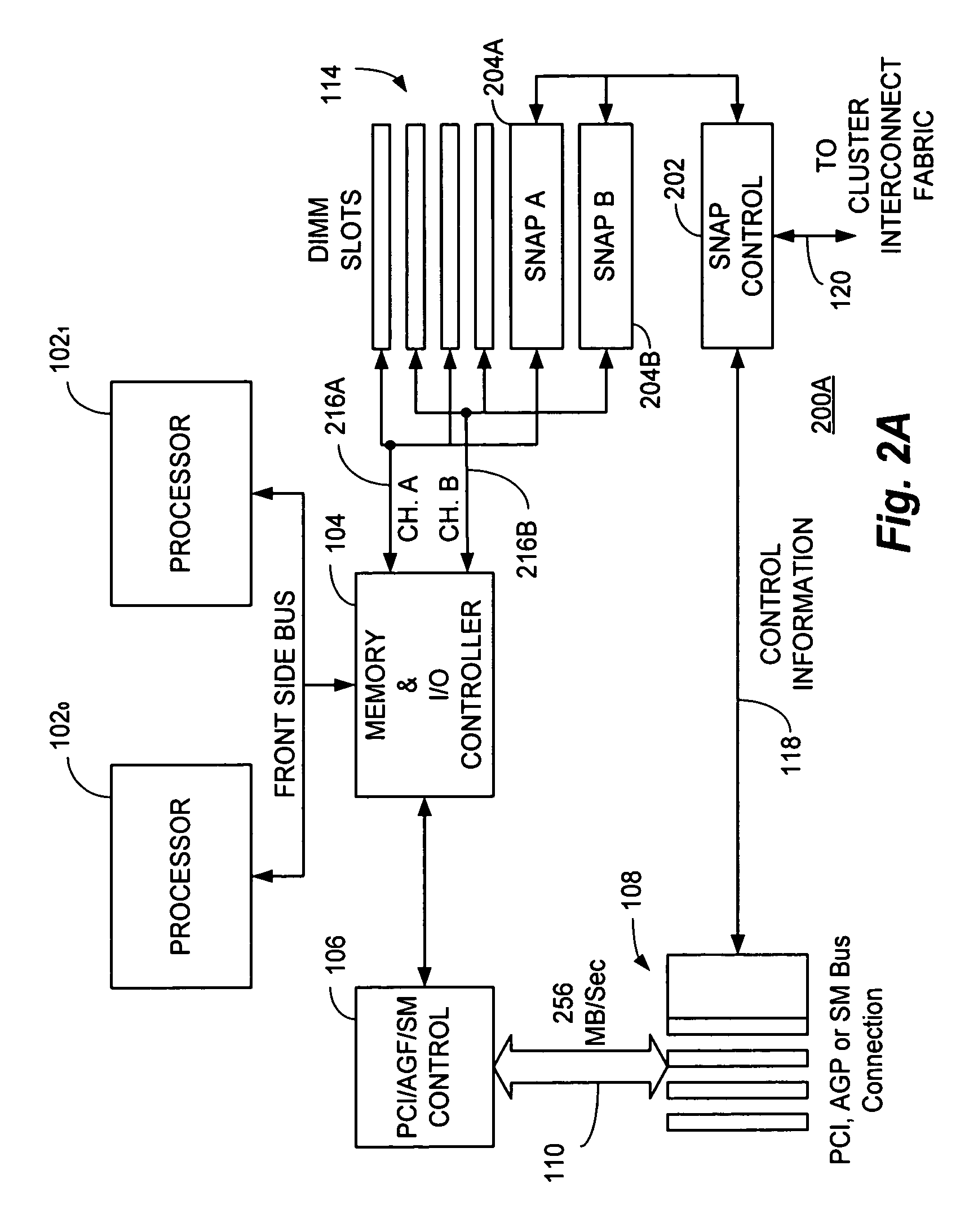

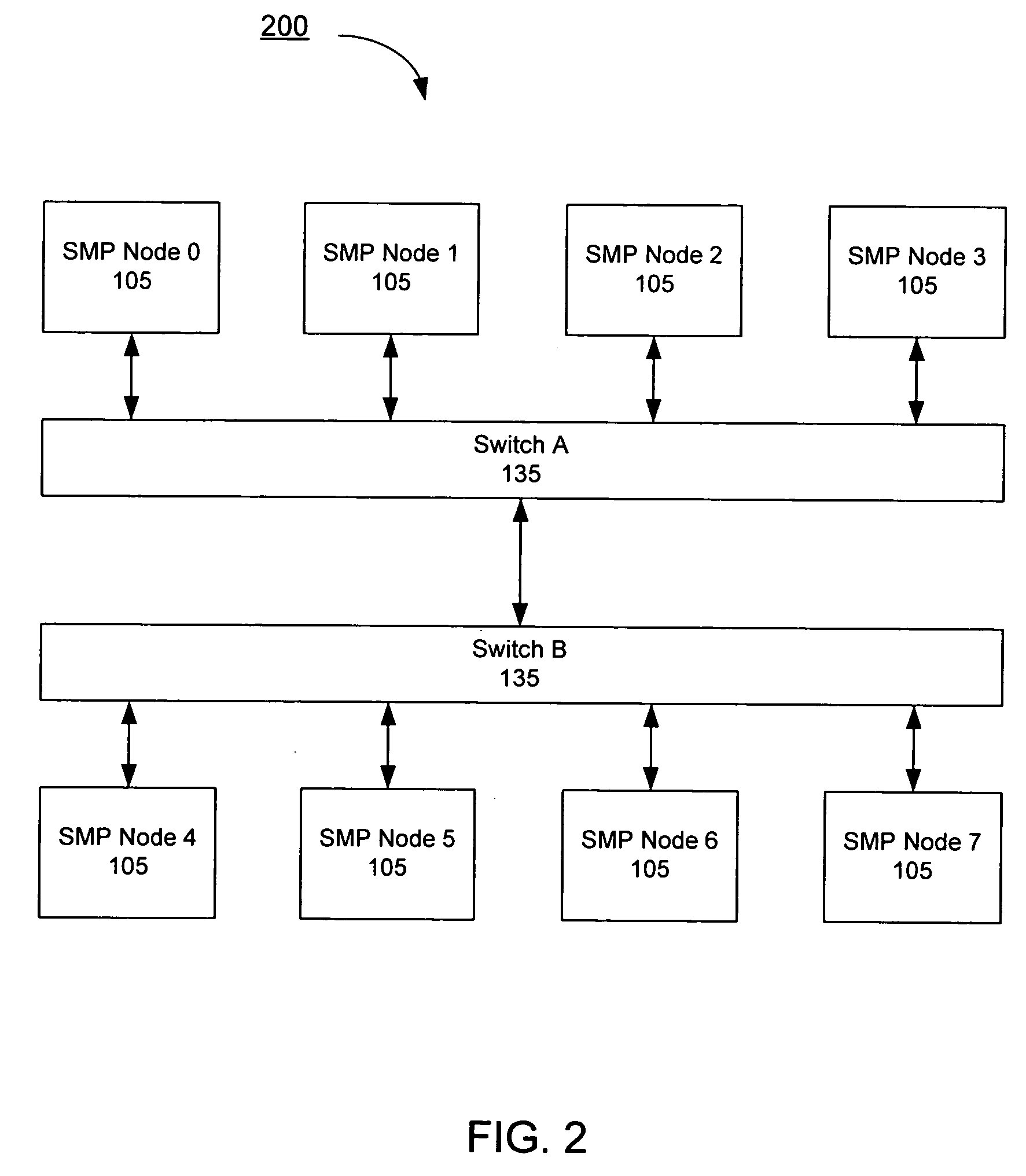

Switch/network adapter port coupling a reconfigurable processing element to one or more microprocessors for use with interleaved memory controllers

Inactive | US20050283546A1 | Conveniently provided | Multiple digital computer combinations | Input/output processes for data processing | Trademark | Control store

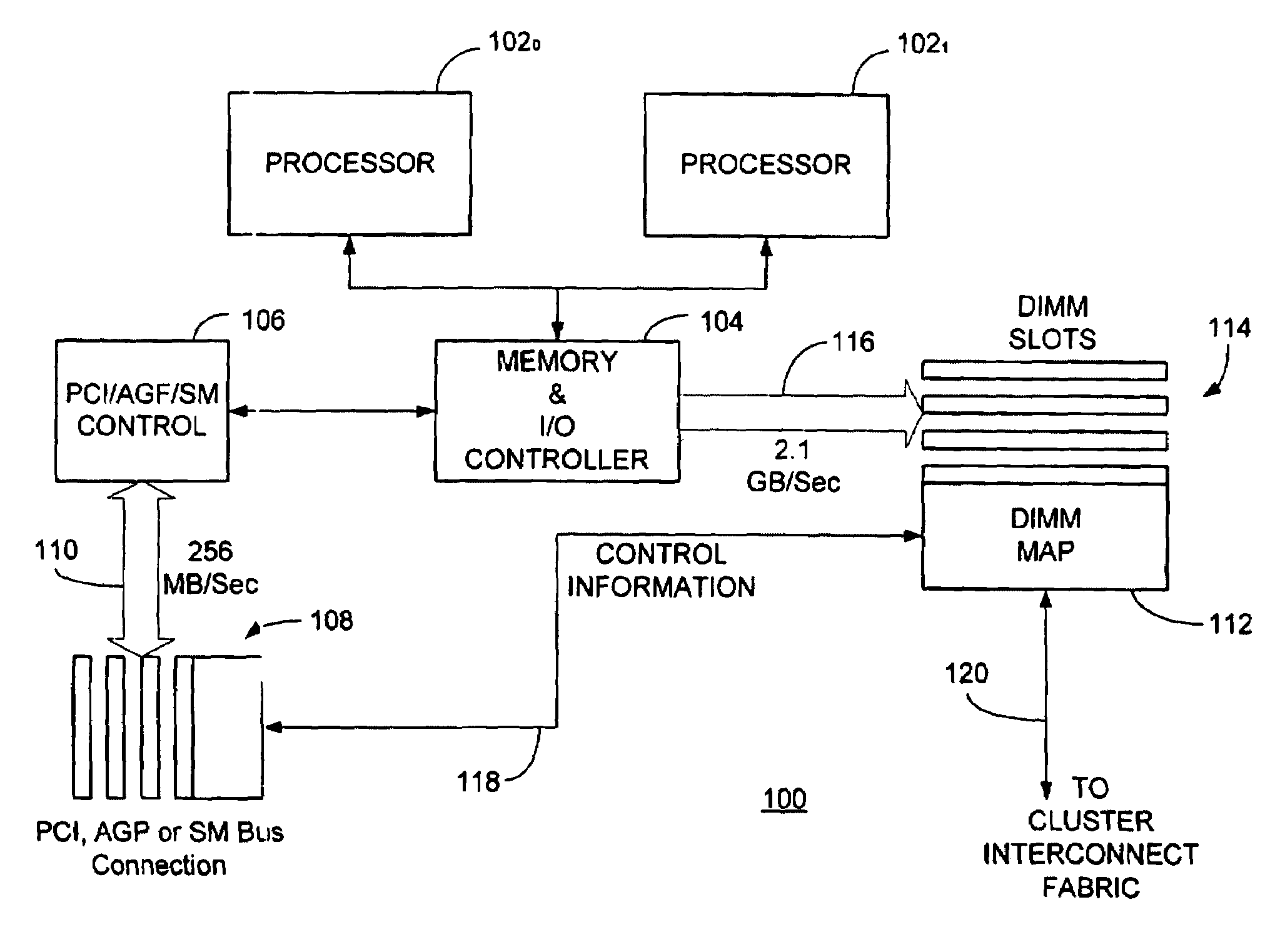

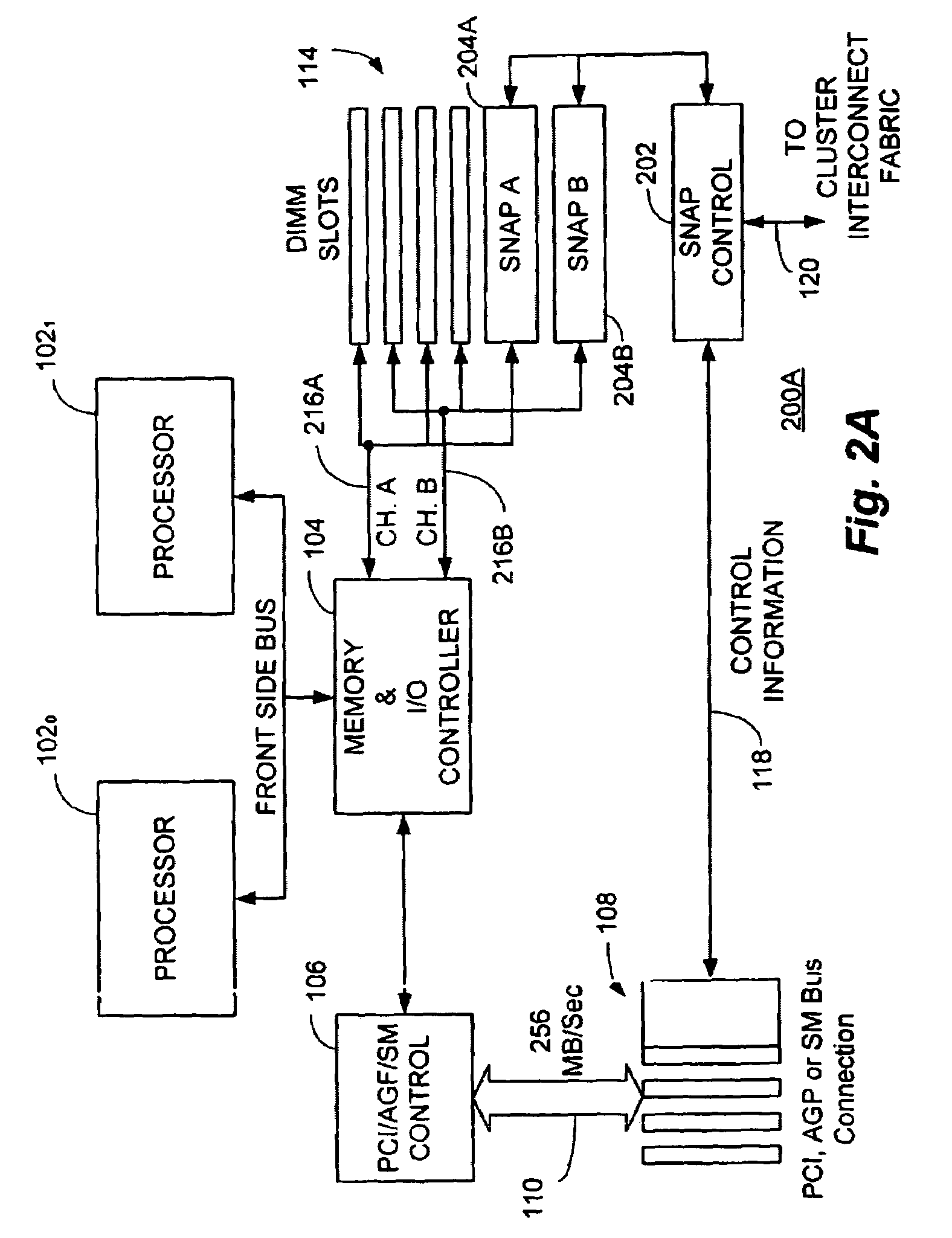

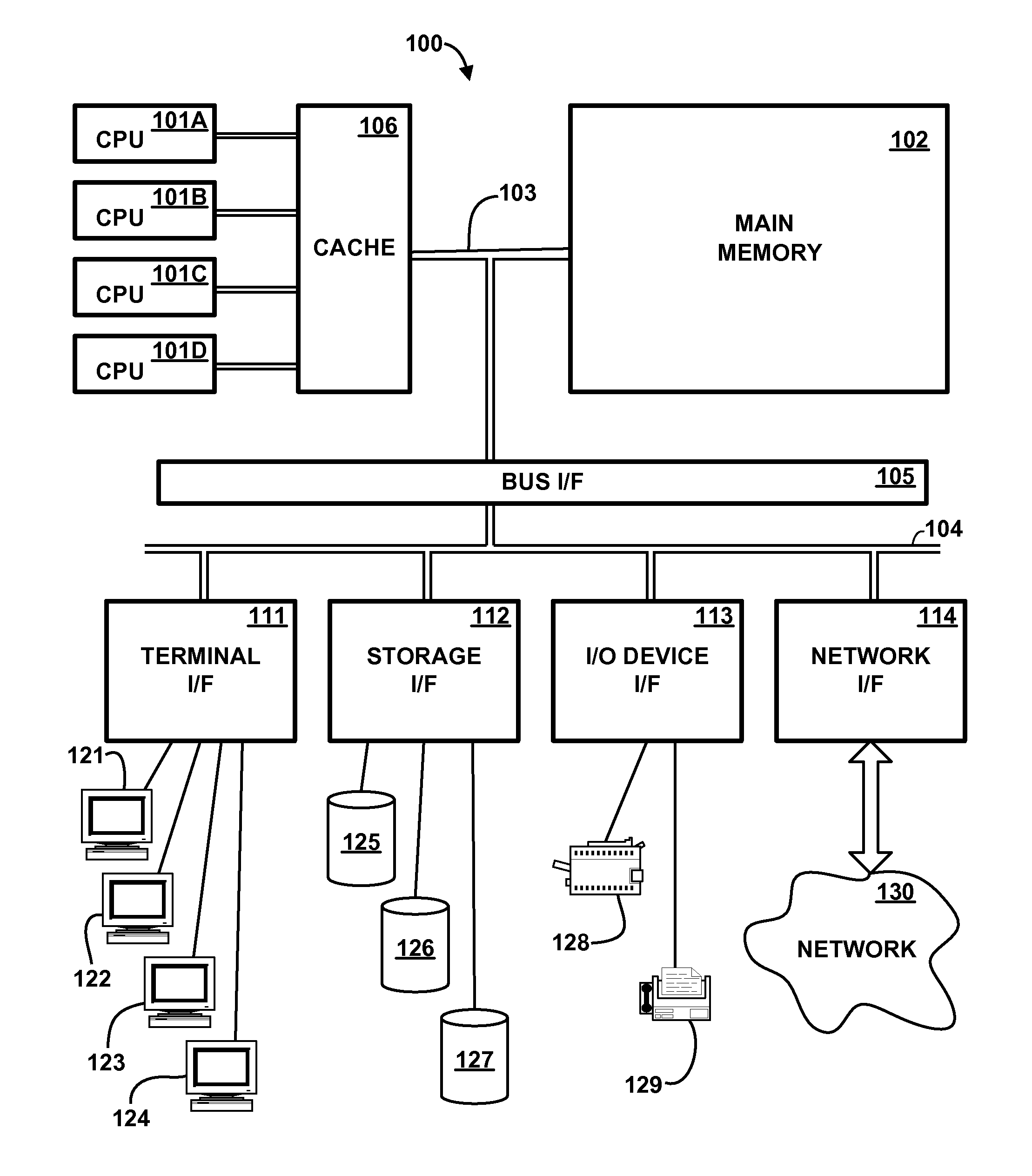

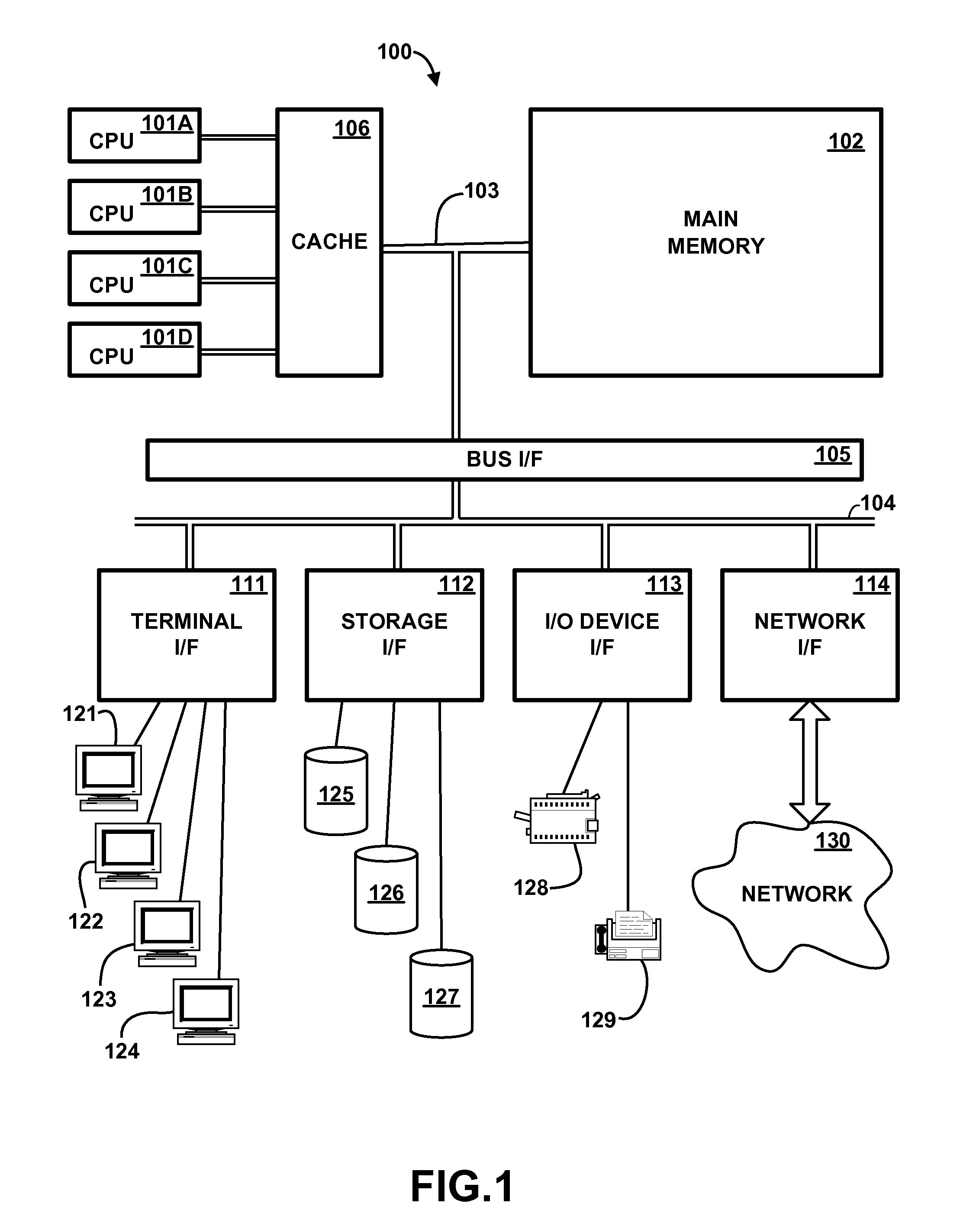

A switch/network adapter port (“SNAP™”) in a dual in-line memory module (“DIMM”) or Rambus™ in-line memory module (“RIMM”) format for clustered computers employing multi-adaptive processor (“MAP®”, both trademarks of SRC Computers, Inc.) elements for use with interleaved memory controllers. Particularly disclosed is a microprocessor based computer system utilizing either a DIMM or RIMM physical format adapter port coupled to a reconfigurable processor element for the purpose of implementing a connection to an external switch, network, or other device. In a particular embodiment, connections may be provided to either the PCI, accelerated graphics port (“AGP”) or system maintenance (“SM”) bus for purposes of passing control information to the host microprocessor or other control chips. The field programmable gate array (“FPGA”) based processing elements have the capability to alter data passing through them to and from an external interconnect fabric or device.

Owner:SRC COMP

Switch/network adapter port coupling a reconfigurable processing element to one or more microprocessors for use with interleaved memory controllers

Inactive | US7565461B2 | Multiple digital computer combinations | Input/output processes for data processing | Trademark | System maintenance

Owner:SRC COMP

Structure for Dual-Mode Memory Chip for High Capacity Memory Subsystem

Active | US20090006760A1 | Significant power saving | Reducing frequency of bus | Memory systems | Memory chip | Dual mode

A design structure is provided for a dual-mode memory chip supporting a first operation mode in which received data access commands contain chip select data to identify the chip addressed by the command, and control logic in the memory chip determines whether the command is addressed to the chip, and a second operation mode in which the received data access command addresses a set of multiple chips. Preferably, the first mode supports a daisy-chained configuration of memory chips. Preferably the second mode supports a hierarchical interleaved memory subsystem, in which each addressable set of chips is configured as a tree, command and write data being propagated down the tree, the number of chips increasing at each succeeding level of the tree.

Owner:LENOVO GLOBAL TECH INT LTD

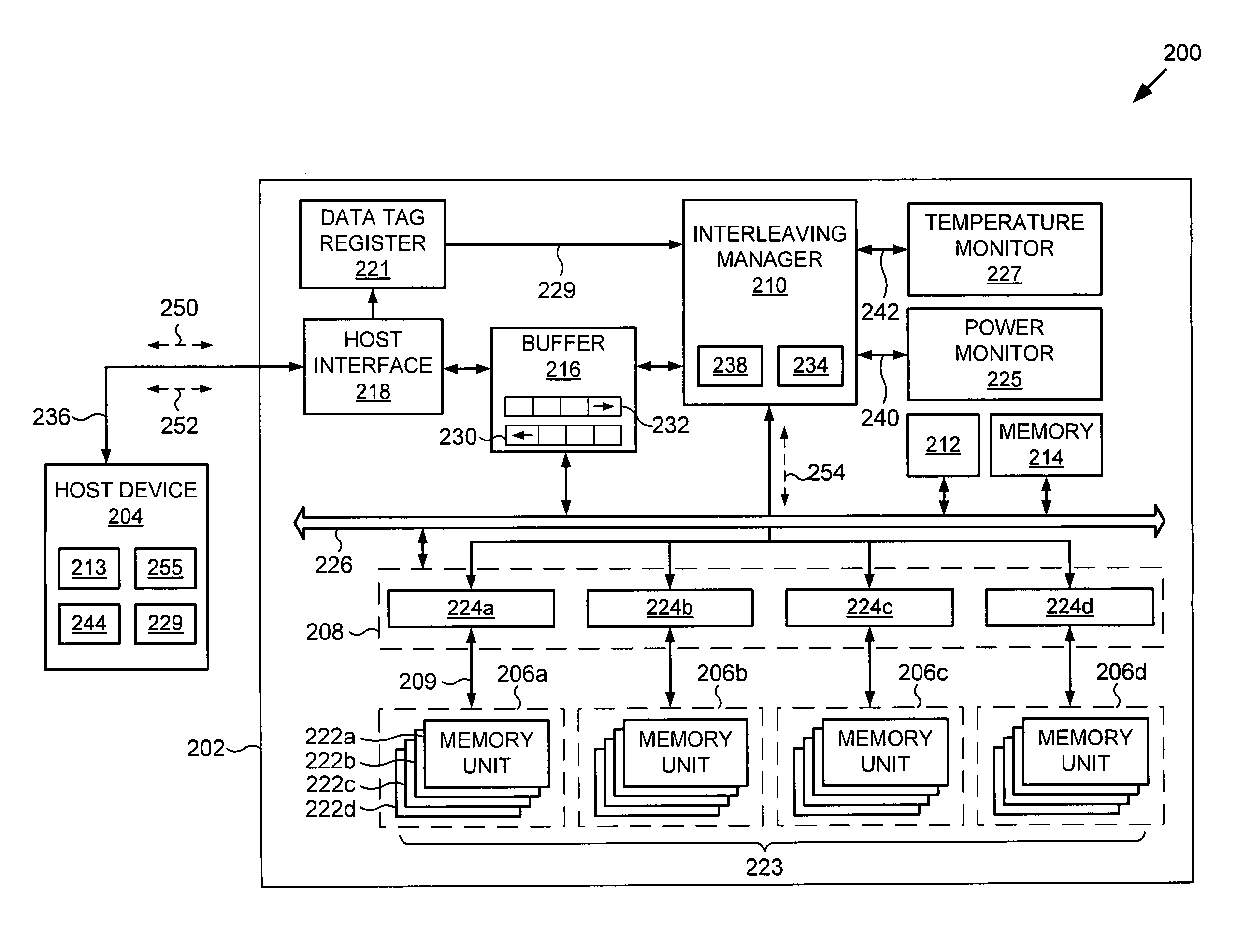

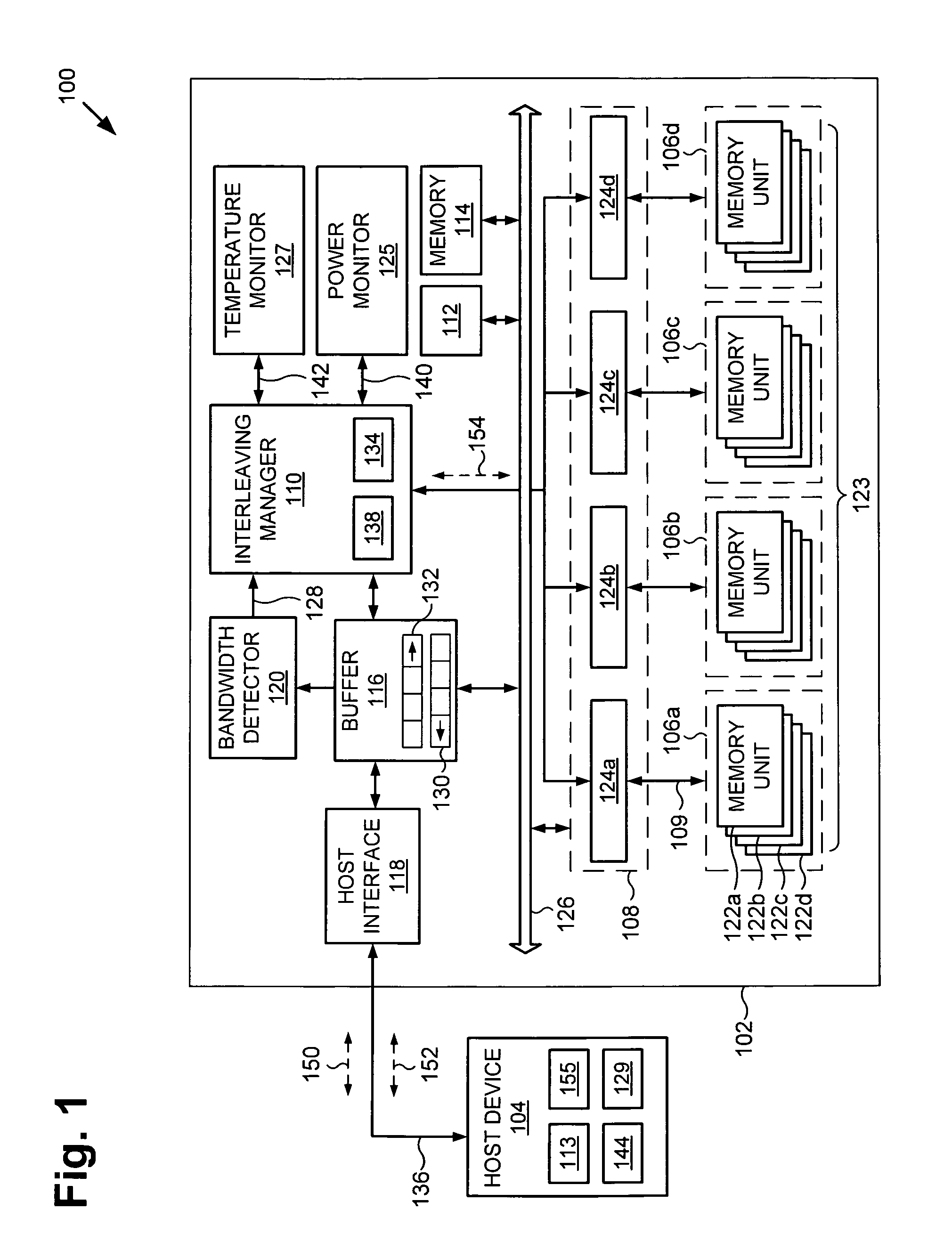

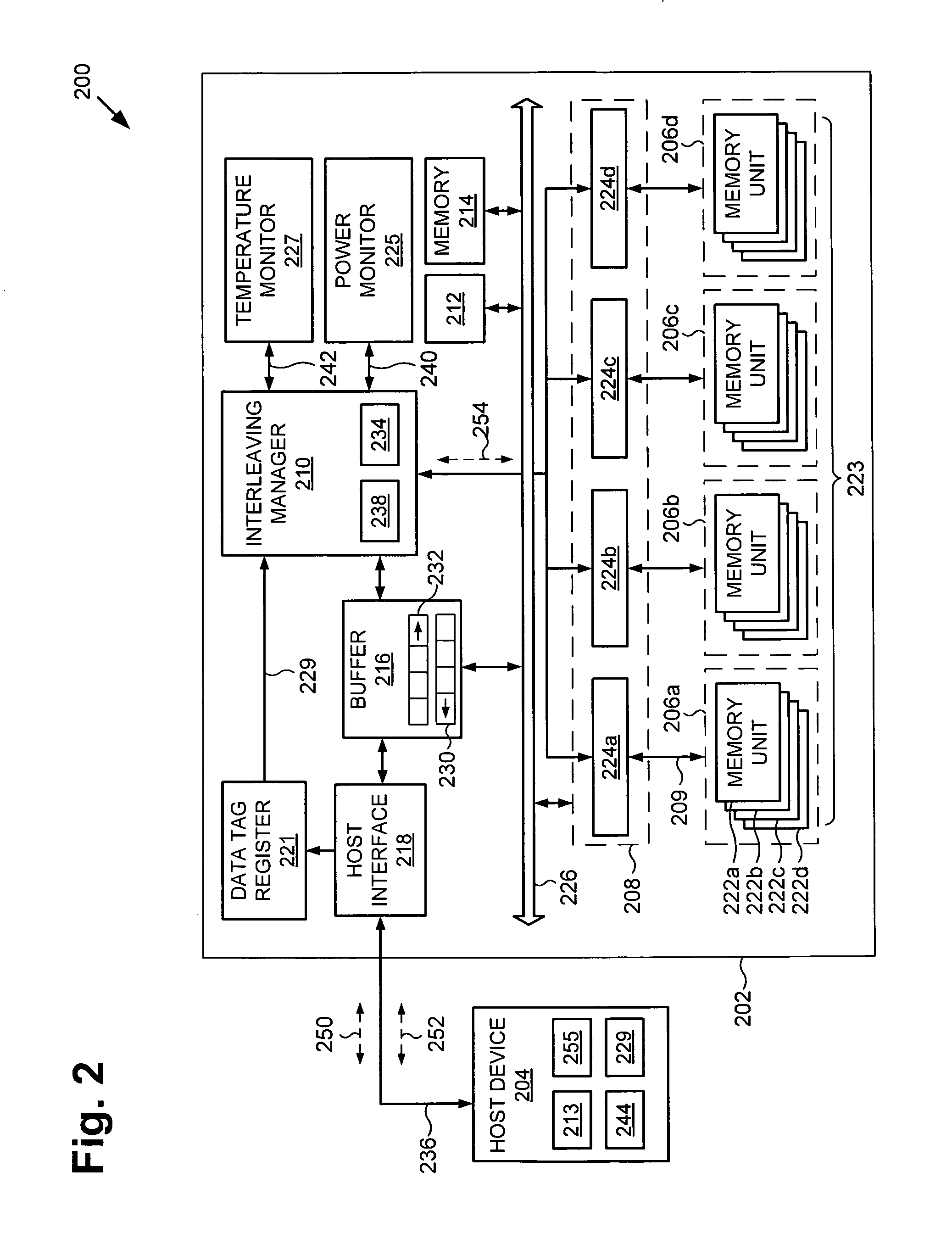

System and method for dynamically adjusting memory performance

According to an exemplary embodiment, a method for dynamically adjusting memory performance includes detecting a bandwidth of a data transfer. Detecting the bandwidth can comprise measuring a data stream of the data transfer and determining the bandwidth based on the measuring. The method further includes selecting a subset of a plurality of interleaved memory units based on the bandwidth. A device performing the data transfer can comprise a power supply and the selecting can be based on a power level of the power supply. The selecting can also be based on a temperature of the device performing the data transfer. The method also includes performing a data access for the data transfer using the subset of the plurality of interleaved memory units.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

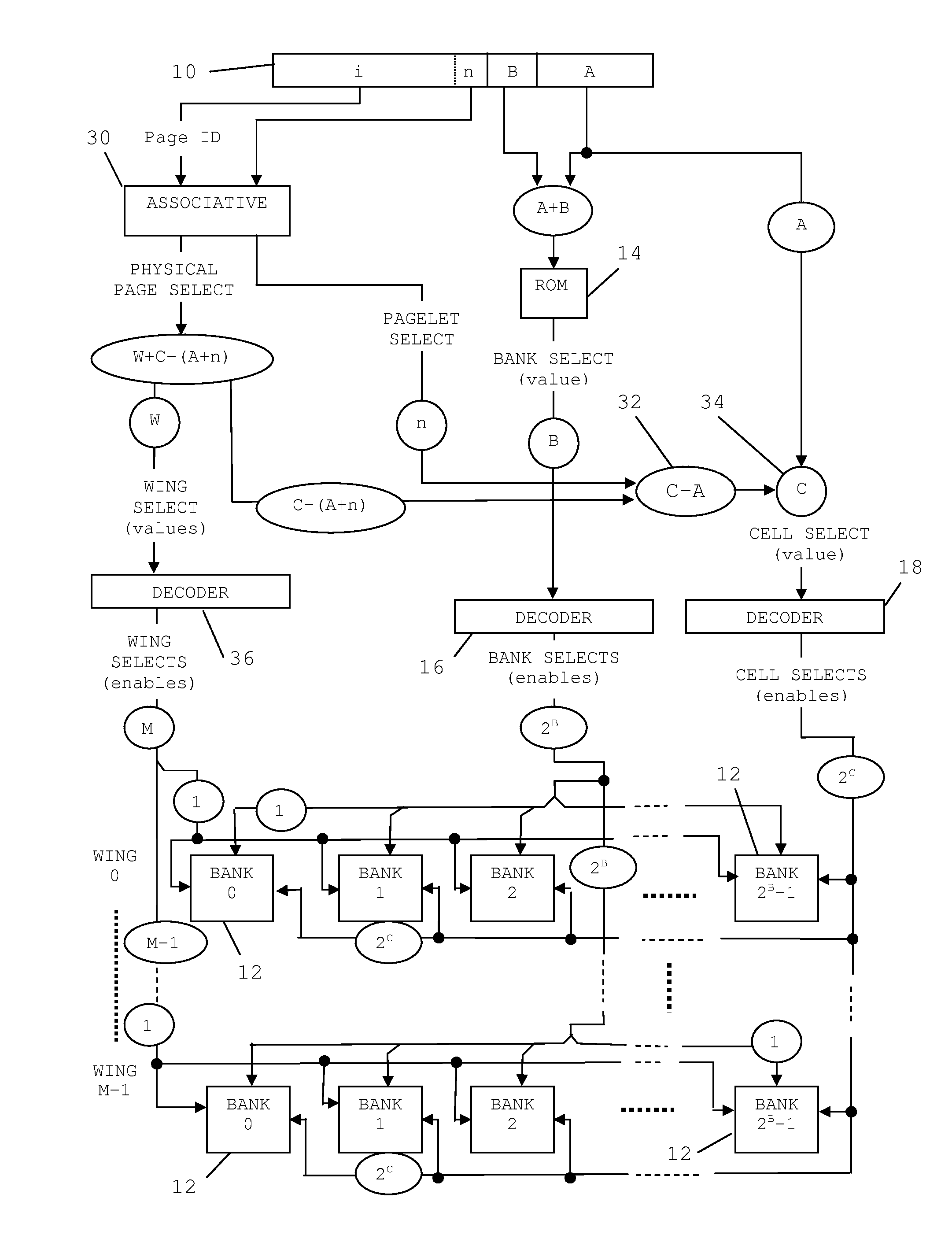

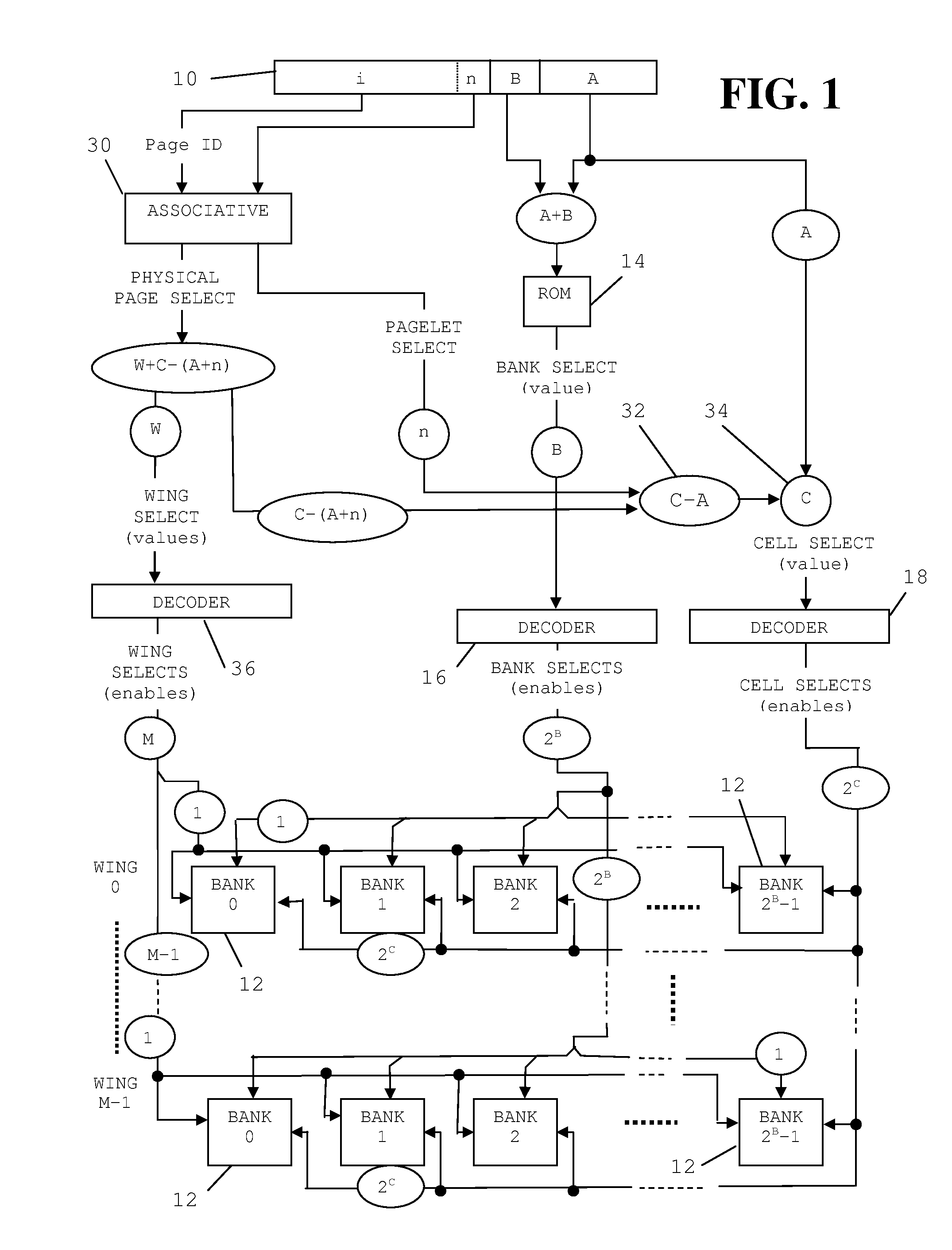

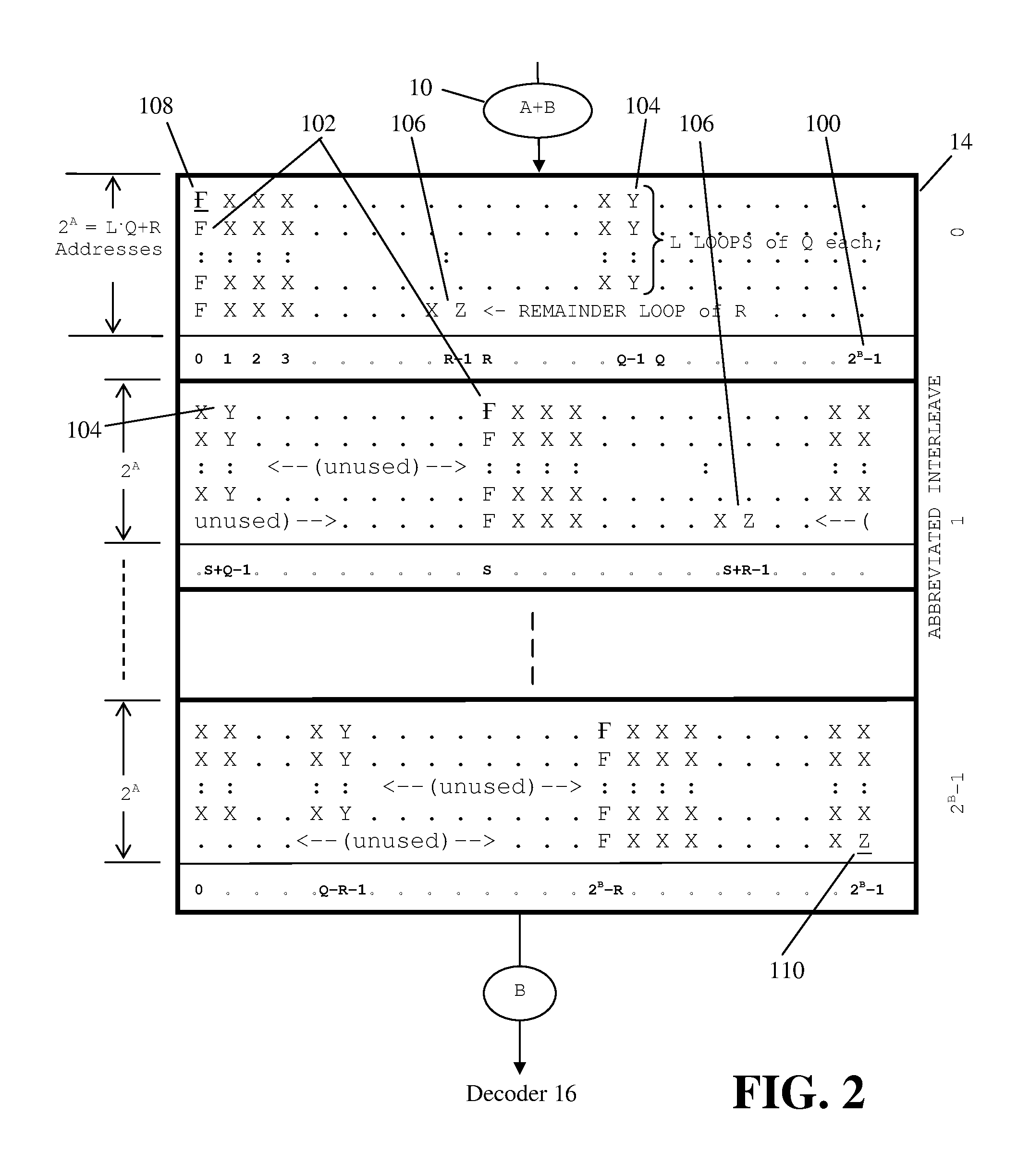

Intelligent memory banks for storing vectors

Inactive | US20100312945A1 | Computation using non-contact making devices | Memory addressing/allocation/relocation | Memory bank | Interleaved memory

An intelligent memory bank for use with interleaved memories storing plural vectors comprises setup apparatus (96) that receives an initial address (B+C+V+NMSK) and spacing data (D) for each vector. Addressing logic (90) associates a memory cell select (C) to each initial and subsequent address of each of the plurality of vectors. Cell select apparatus (98) accesses a memory cell (in 92) using a memory cell select (C) associated to a respective one of the initial and successive addresses of each vector.

Owner:EFFICIENT MEMORY TECH

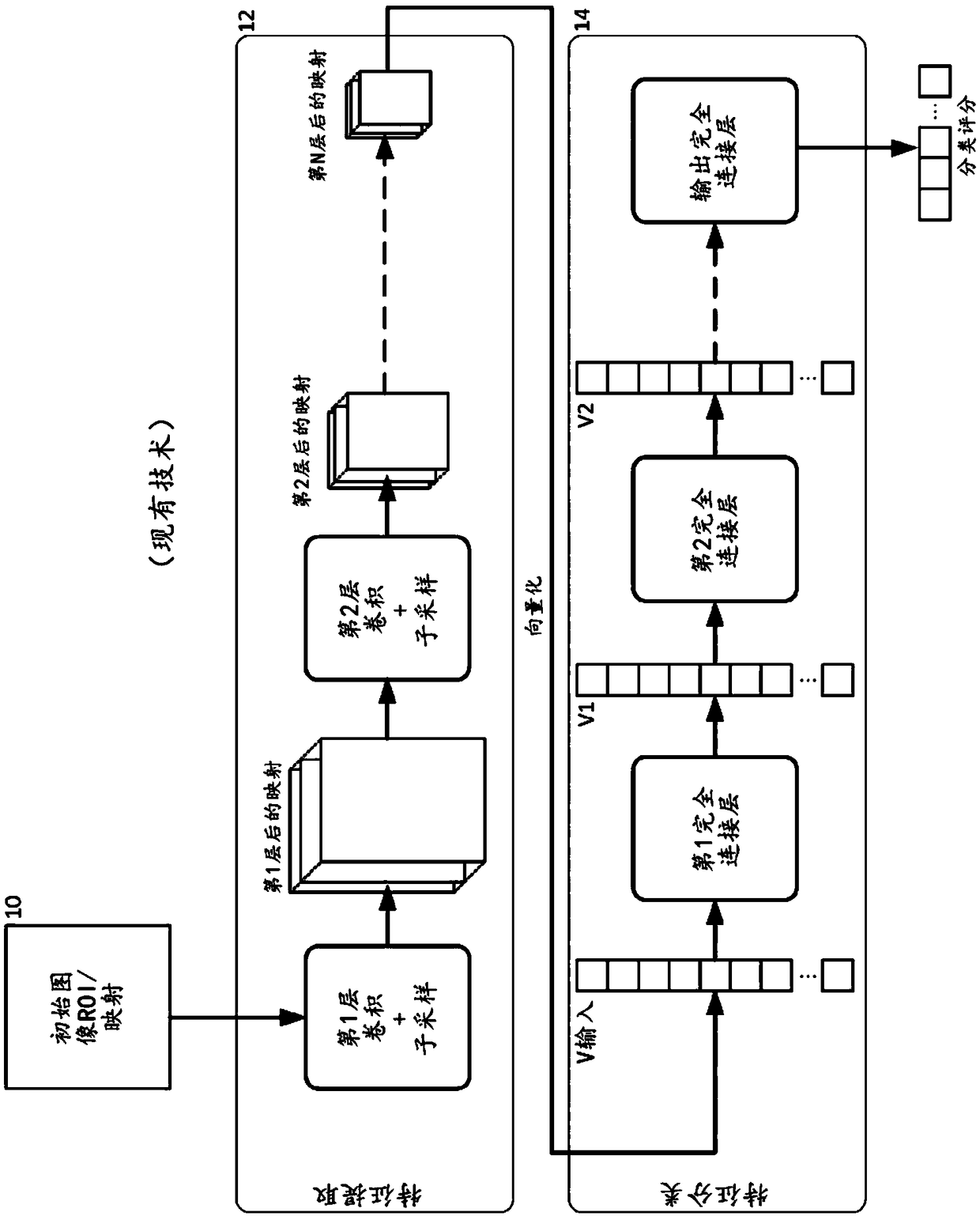

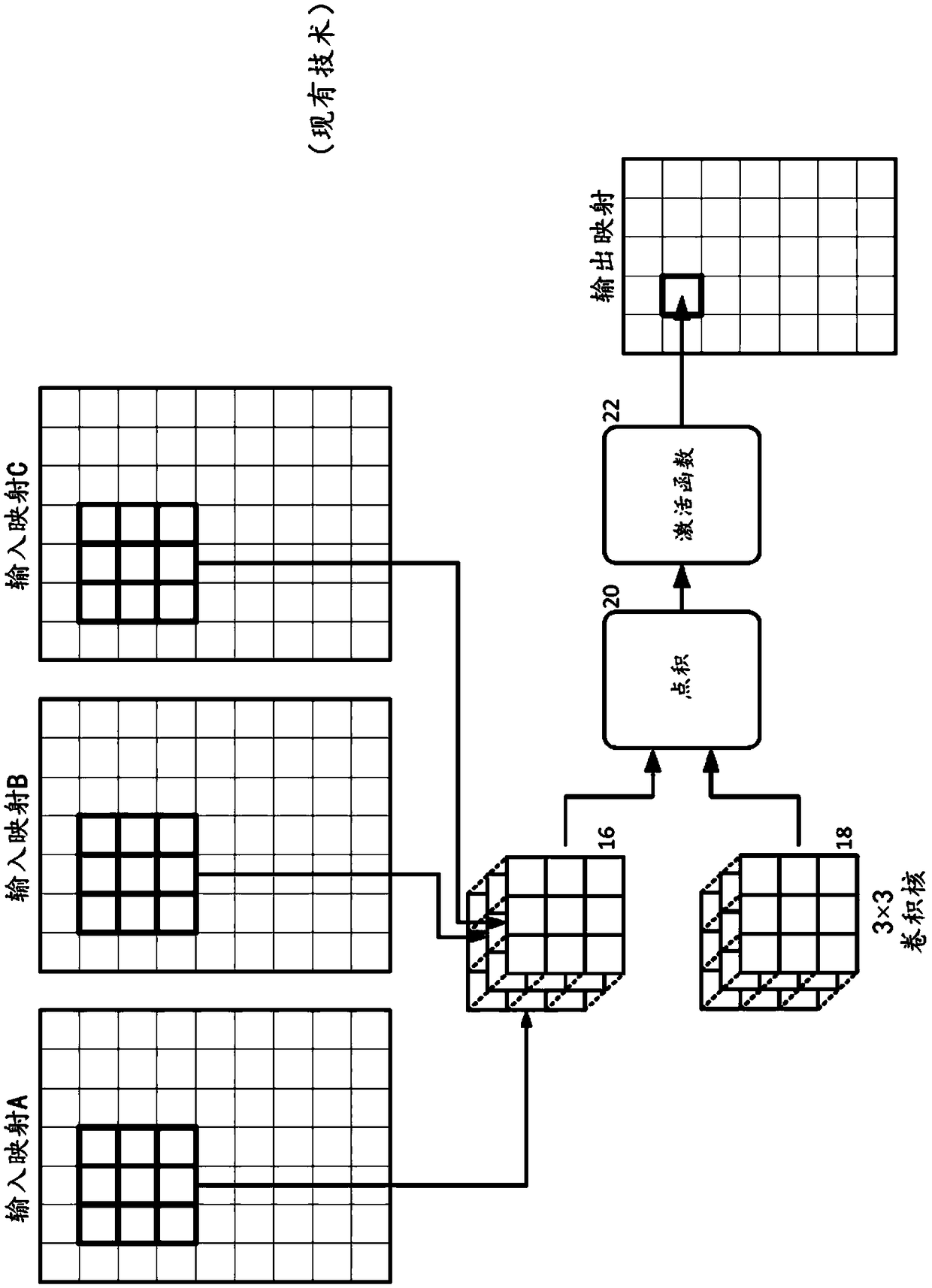

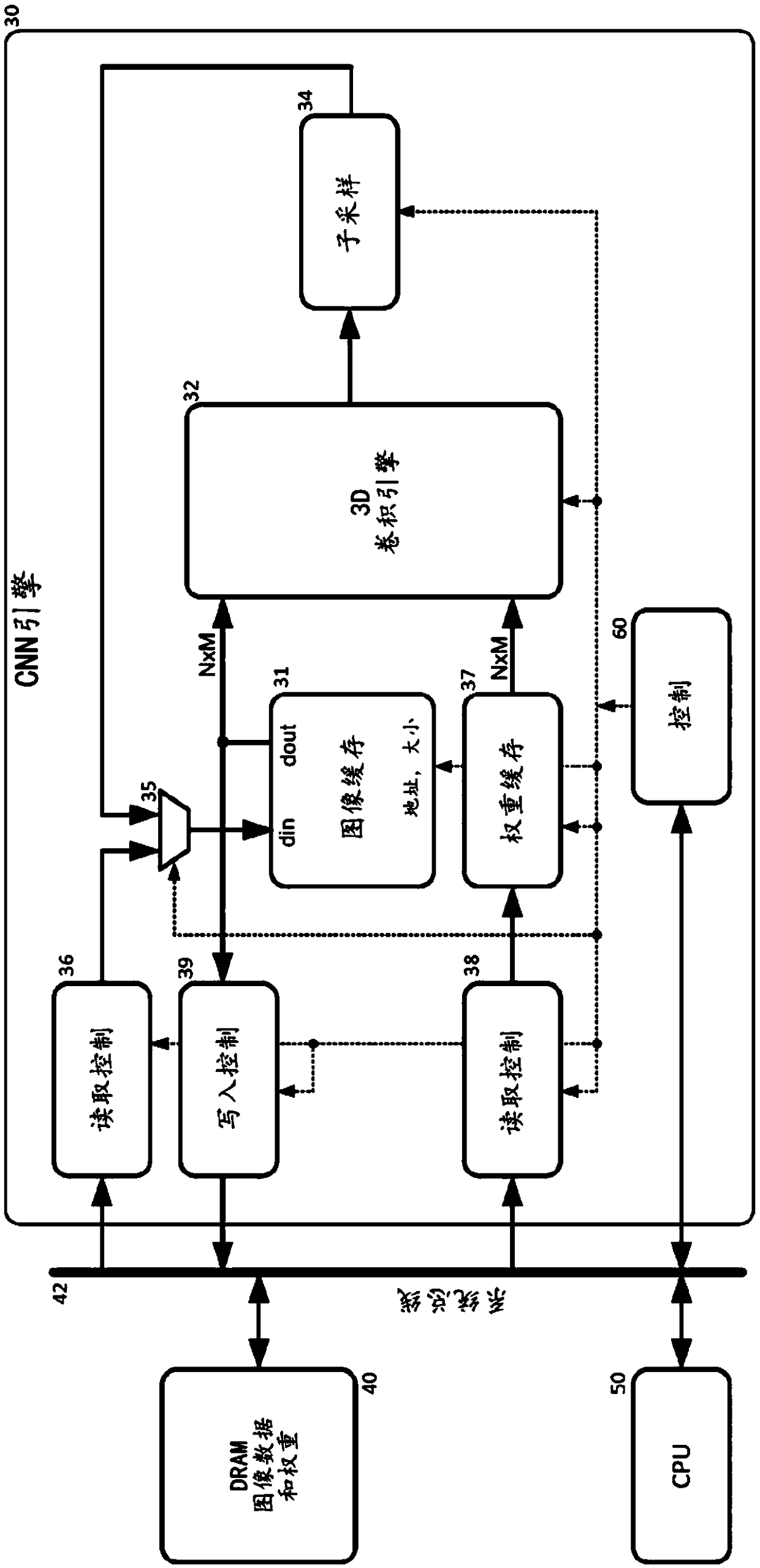

A convolutional neural network

Active | CN108701236A | Small footprint | Memory architecture accessing/allocation | Character and pattern recognition | Imaging processing | Activation function

A convolutional neural network (CNN) for an image processing system comprises an image cache responsive to a request to read a block of N*M pixels extending from a specified location within an input map to provide a block of N*M pixels at an output port. A convolution engine reads blocks of pixels from the output port, combines blocks of pixels with a corresponding set of weights to provide a product, and subjects the product to an activation function to provide an output pixel value. The image cache comprises a plurality of interleaved memories capable of simultaneously providing the N*M pixels at the output port in a single clock cycle. A controller provides a set of weights to the convolution engine before processing an input map, causes the convolution engine to scan across the input map by incrementing a specified location for successive blocks of pixels and generates an output map within the image cache by writing output pixel values to successive locations within the image cache.

Owner:FOTONATION LTD

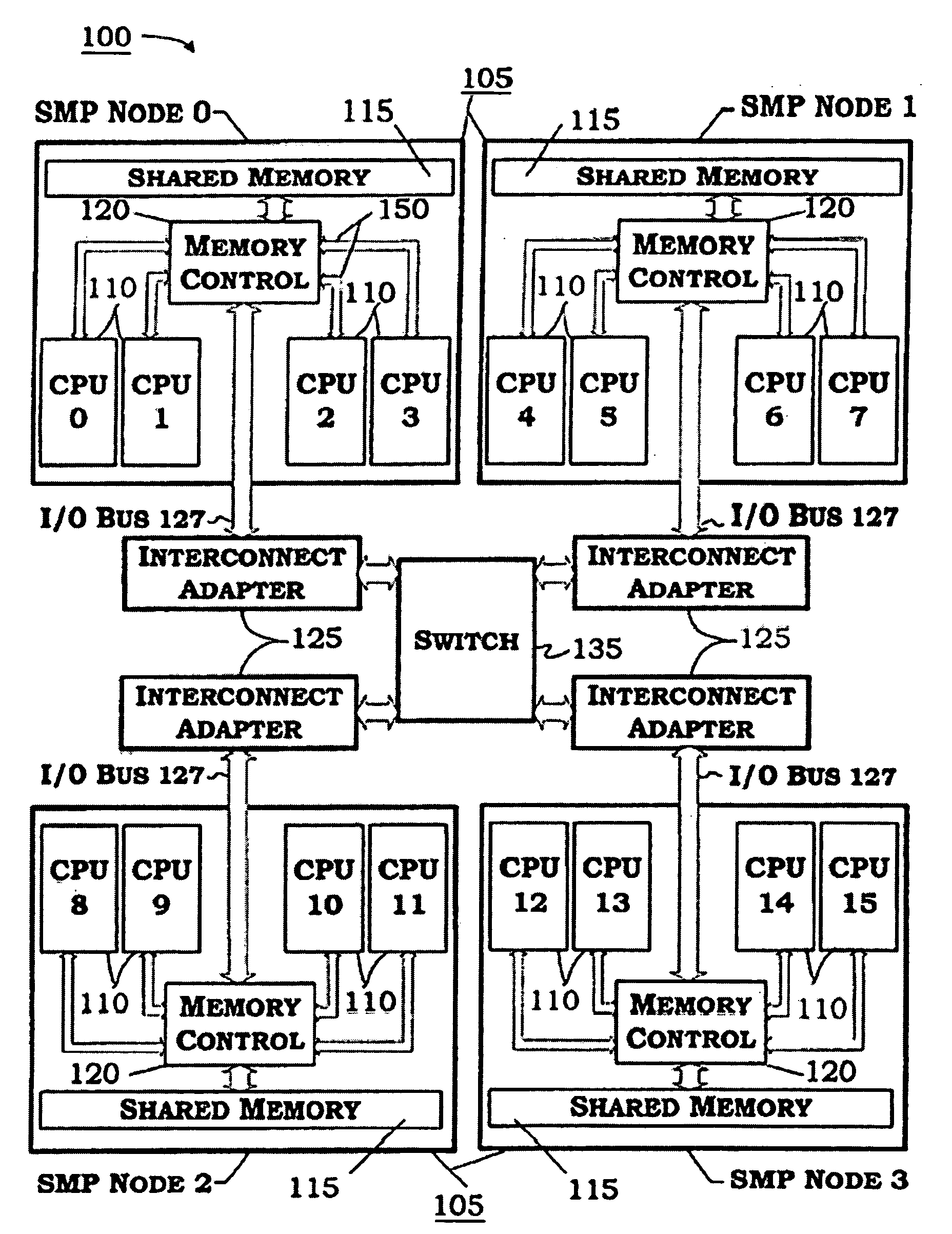

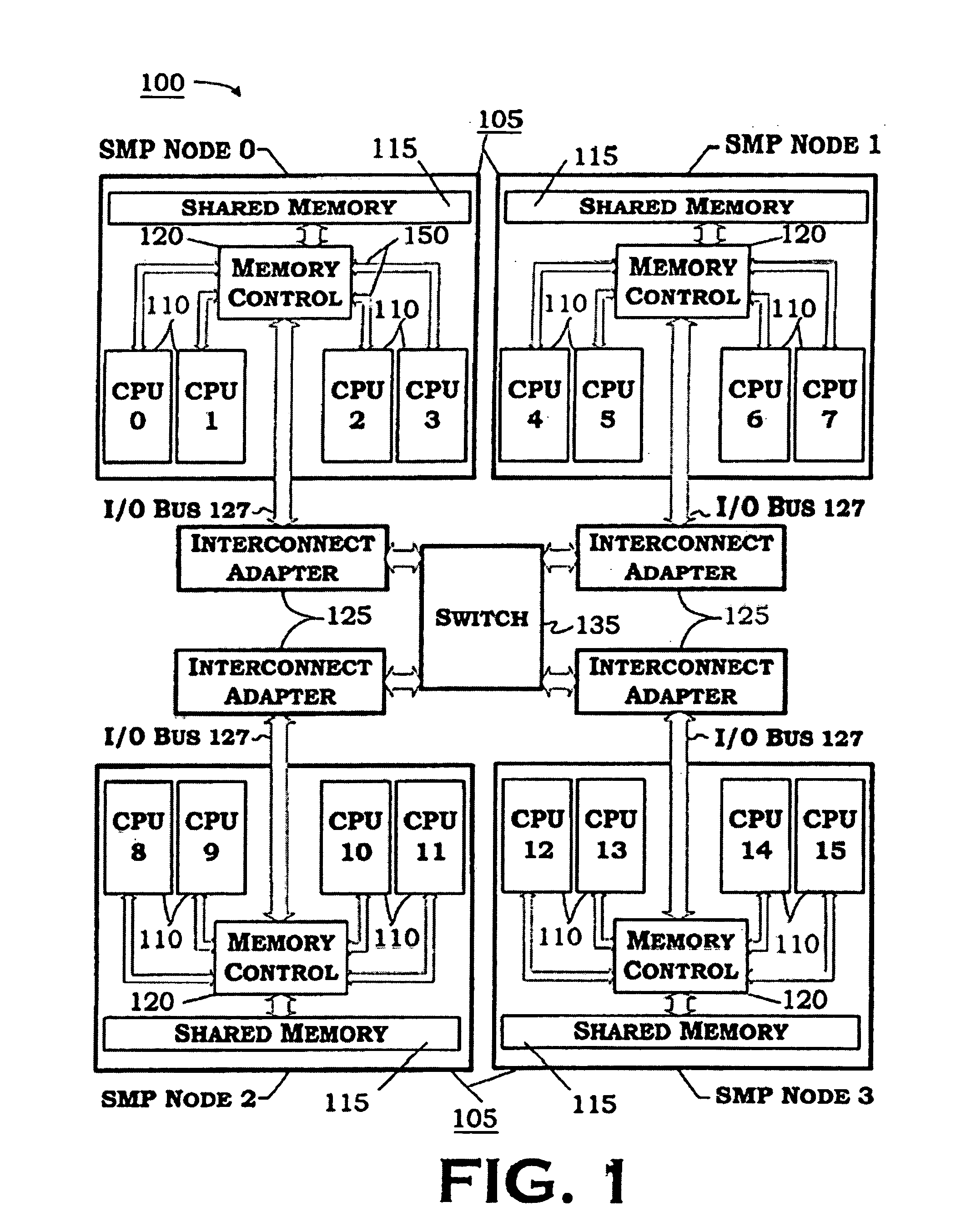

Default locality selection for memory objects based on determining the type of a particular memory object

Inactive | US7114040B2 | Memory addressing/allocation/relocation | Program control | Multi processor | Interleaved memory

One embodiment disclosed relates to a method of selecting a default locality for a memory object requested by a process running on a CPU in a multiprocessor system. A determination is made as to whether the memory object comprises a shared-memory object. If the memory object comprises said shared-memory object, then the default locality is selected to be within interleaved memory in the system. If not, a further determination may be made as to whether the memory object comprises a stack-type object. If the memory object comprises said stack-type object, then the default locality may be selected to be within local memory at a same cell as the requesting CPU. If not, a further determination may be made as to whether the requesting process has threads running on multiple cells.

Owner:HEWLETT PACKARD DEV CO LP

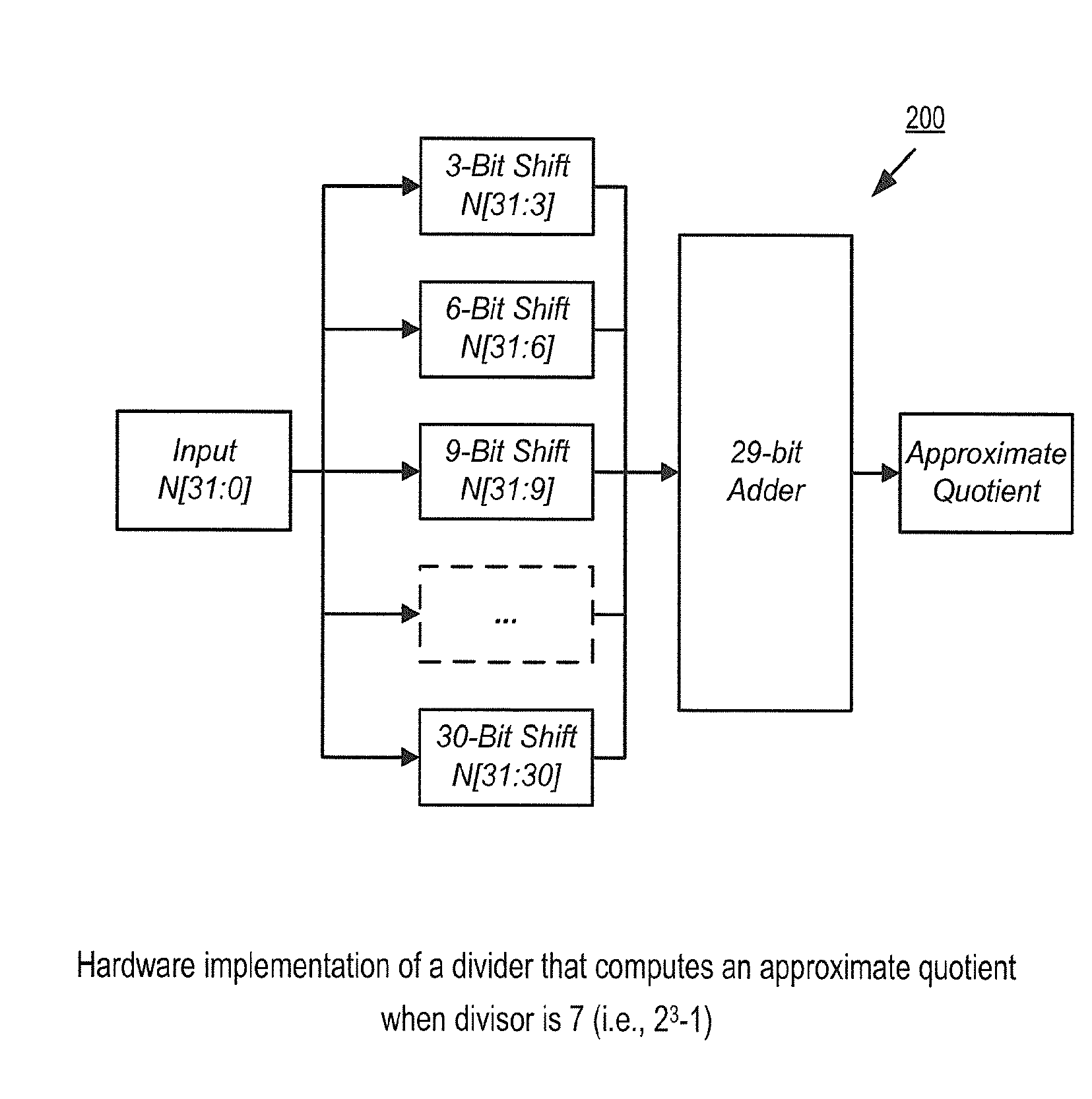

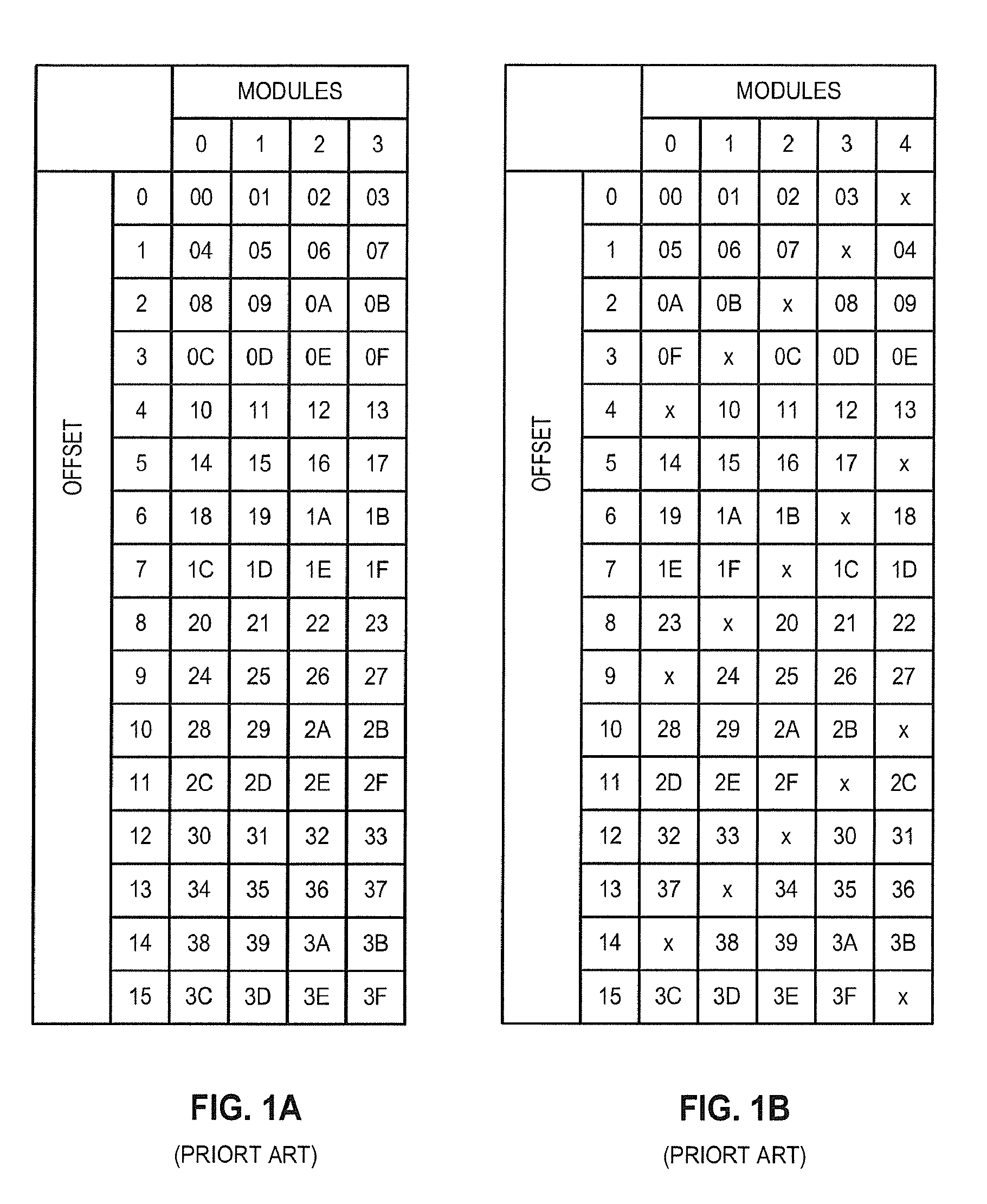

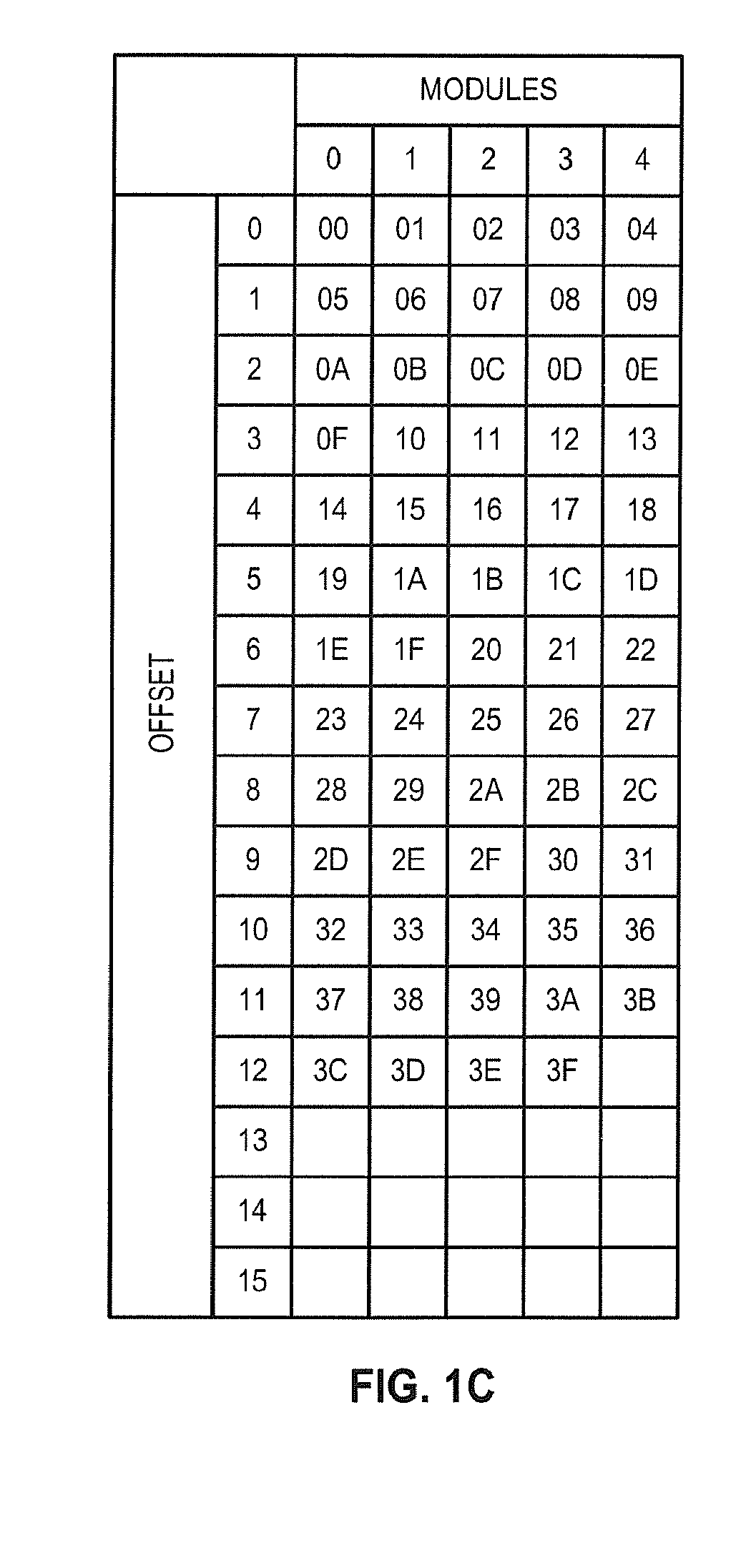

Fast mechanism for accessing 2^n±1 interleaved memory system

Inactive | US20140025908A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Shift-and-add | Interleaved memory

A mechanism implemented by a controller enables efficient access to an interleaved memory system that includes M modules, M being (2^n + 1) or (2^n − 1), n being a positive integer. Upon receiving an address N, the controller performs shift and add/subtract operations to obtain a quotient of N divided by M based on a binomial series expansion of N over M. The controller computes a remainder of N divided by M based on the quotient. The controller then accesses one of the modules in the memory based on the remainder.

Owner:INTEL CORP
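The abstract above relies on the series N/(2^n − 1) = N/2^n + N/2^(2n) + ... and N/(2^n + 1) = N/2^n − N/2^(2n) + ..., each term being a plain right shift. The sketch below is one way such a computation could look; the function name, the use of truncating shifts and the final correction loops are assumptions of this example, not details confirmed by the patent.

```python
def divmod_pow2_pm1(addr, n, sign):
    """Return (quotient, remainder) of addr divided by M = 2**n + sign.

    sign is +1 or -1 and n is a positive integer. Only shifts, adds and
    subtracts are applied to the address.
    """
    m = (1 << n) + sign
    q = 0
    shift = n
    add_term = True
    term = addr >> shift
    while term:
        # All terms add for M = 2**n - 1; they alternate in sign for M = 2**n + 1.
        if sign == -1 or add_term:
            q += term
        else:
            q -= term
        add_term = not add_term
        shift += n
        term = addr >> shift
    # q * M expressed as a shift plus one add or subtract.
    qm = (q << n) + q if sign == 1 else (q << n) - q
    r = addr - qm
    # Truncated shifts make q approximate (assumed fix-up step): adjust
    # until the remainder lies in the range 0 <= r < M.
    while r < 0:
        q -= 1
        r += m
    while r >= m:
        q += 1
        r -= m
    return q, r
```

In an interleaved system with M modules, the remainder would pick the module and the quotient would give the location inside it.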

Interleaving method and apparatus with parallel access in linear and interleaved order

Active | US7139862B2 | Reduce chip area | Low cost | Memory addressing/allocation/relocation | Code conversion | Parallel computing | Interleaved memory

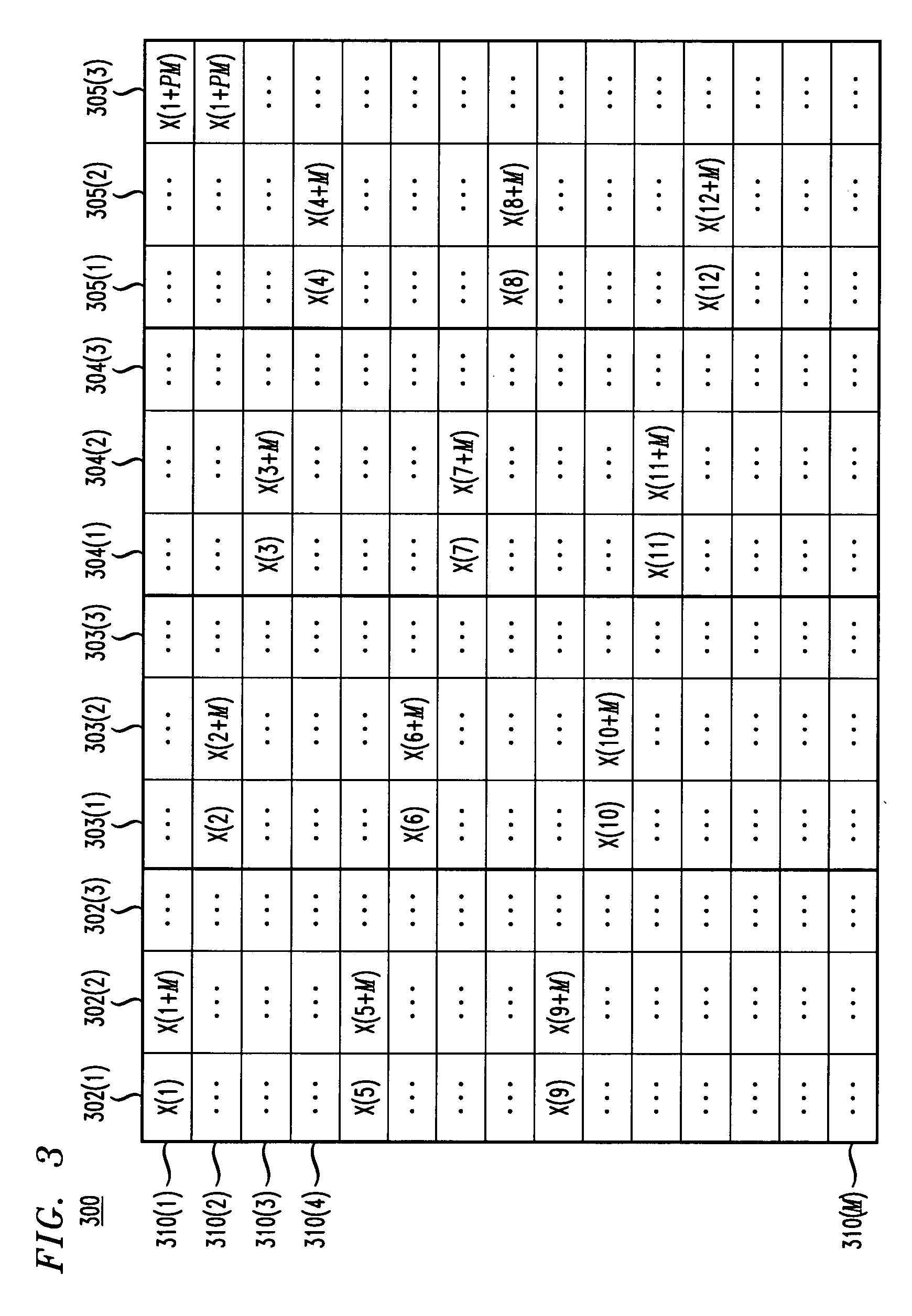

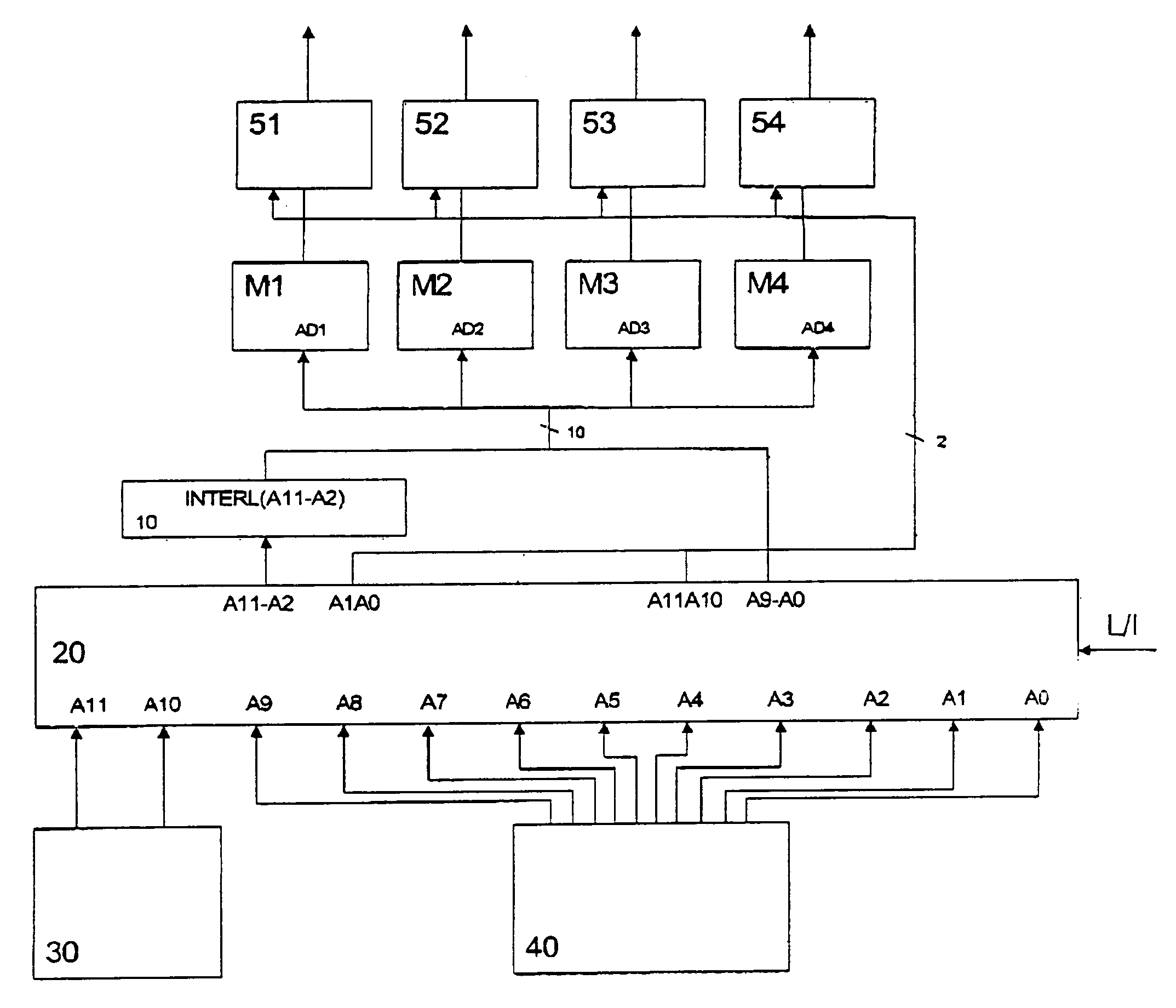

An interleaving method and apparatus provides parallel access in a linear and interleaved order to a predetermined number of stored data samples. A memory array with a plurality of memory devices is addressed by applying a first portion of an address to memory devices and by using a second portion of the address to select at least one memory device to be accessed, wherein the position of the first and second portions within the address is changed in response to a change between the linear order and the interleaved order. Due to the fact that the memory array is split into several individually addressable memory devices, each of these memory devices can be accessed in a linear and interleaved order by changing an allocation of a chip selection portion and a chip addressing portion of the address.

Owner:NOKIA TECHNOLOGIES OY
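The idea in the abstract above, changing where the device-select bits sit inside the address when switching between access orders, might be sketched as follows. The bit widths are assumed, and which bit position corresponds to which order is also an assumption, since the abstract does not say.

```python
SELECT_BITS = 2  # assumed: 4 memory devices
OFFSET_BITS = 4  # assumed: 16 words per device


def split_address(addr, interleaved):
    """Return (device, word) for an address of SELECT_BITS + OFFSET_BITS bits."""
    if interleaved:
        # Assumed interleaved order: the low bits select the device.
        device = addr & ((1 << SELECT_BITS) - 1)
        word = addr >> SELECT_BITS
    else:
        # Assumed linear order: the high bits select the device.
        device = addr >> OFFSET_BITS
        word = addr & ((1 << OFFSET_BITS) - 1)
    return device, word
```

The same stored samples can then be reached in either order simply by reassigning the select and addressing portions of the address.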

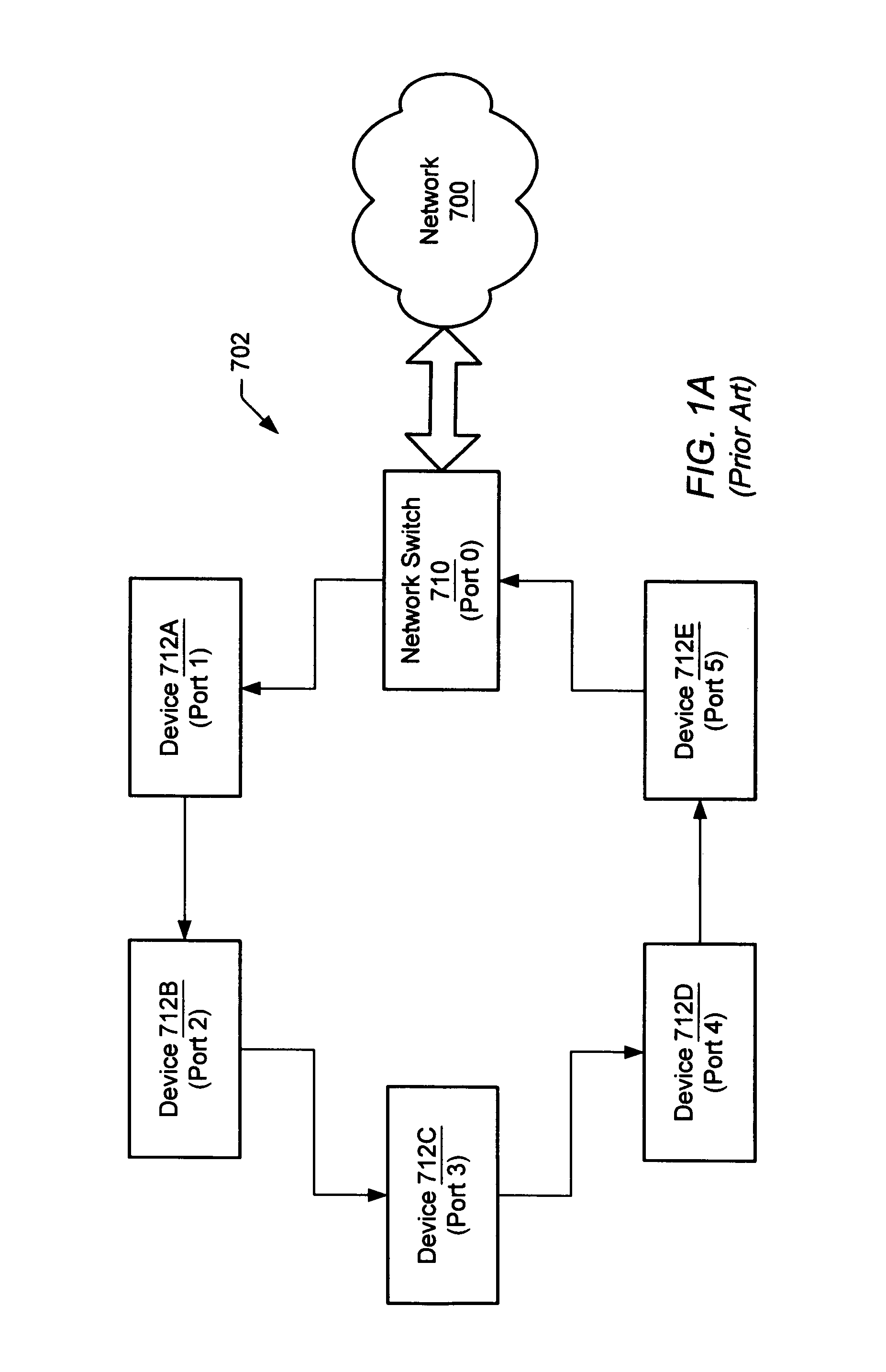

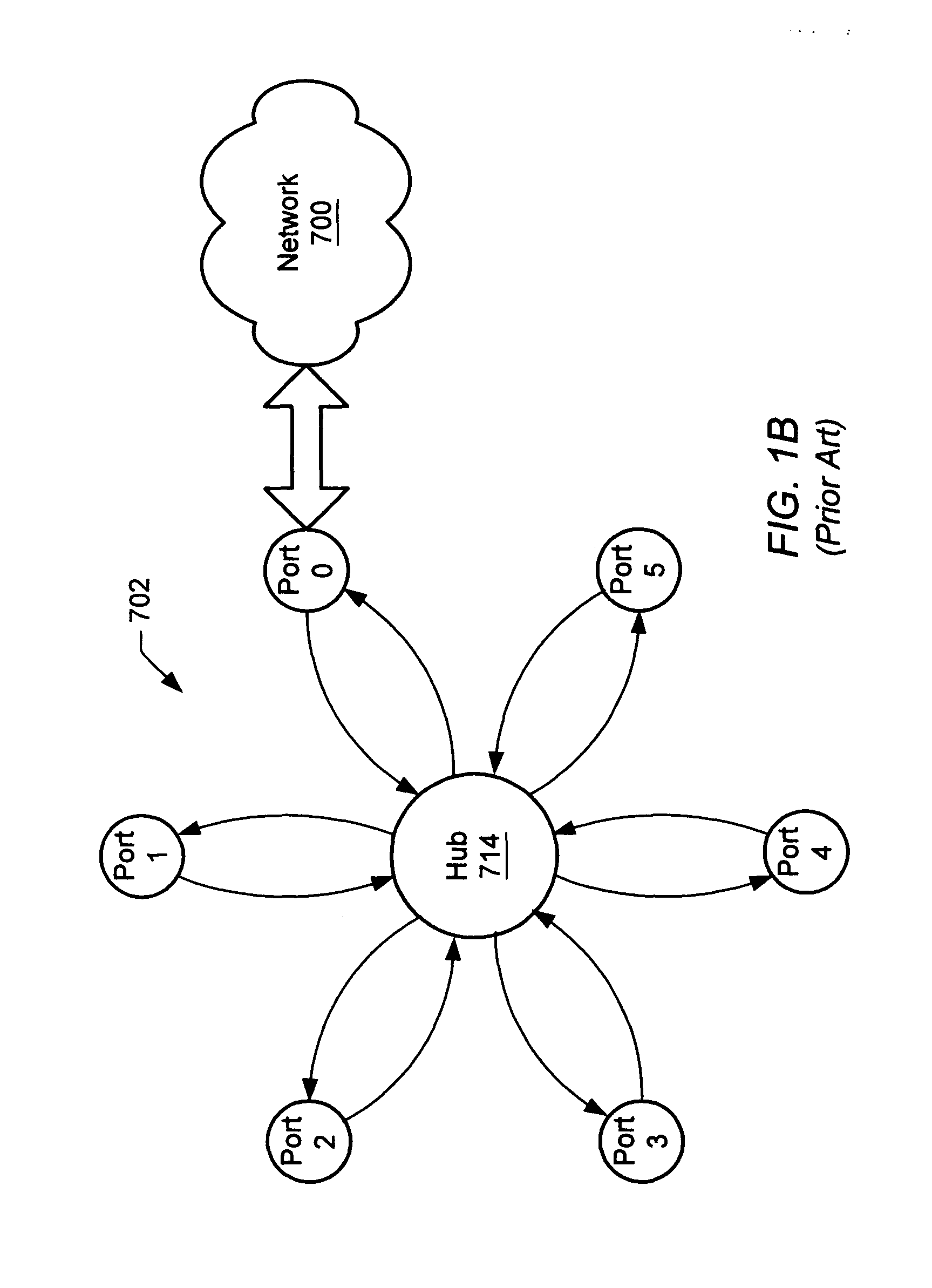

High jitter scheduling of interleaved frames in an arbitrated loop

Inactive | US7809852B2 | Multiple digital computer combinations | Data switching networks | Traffic capacity | Low jitter

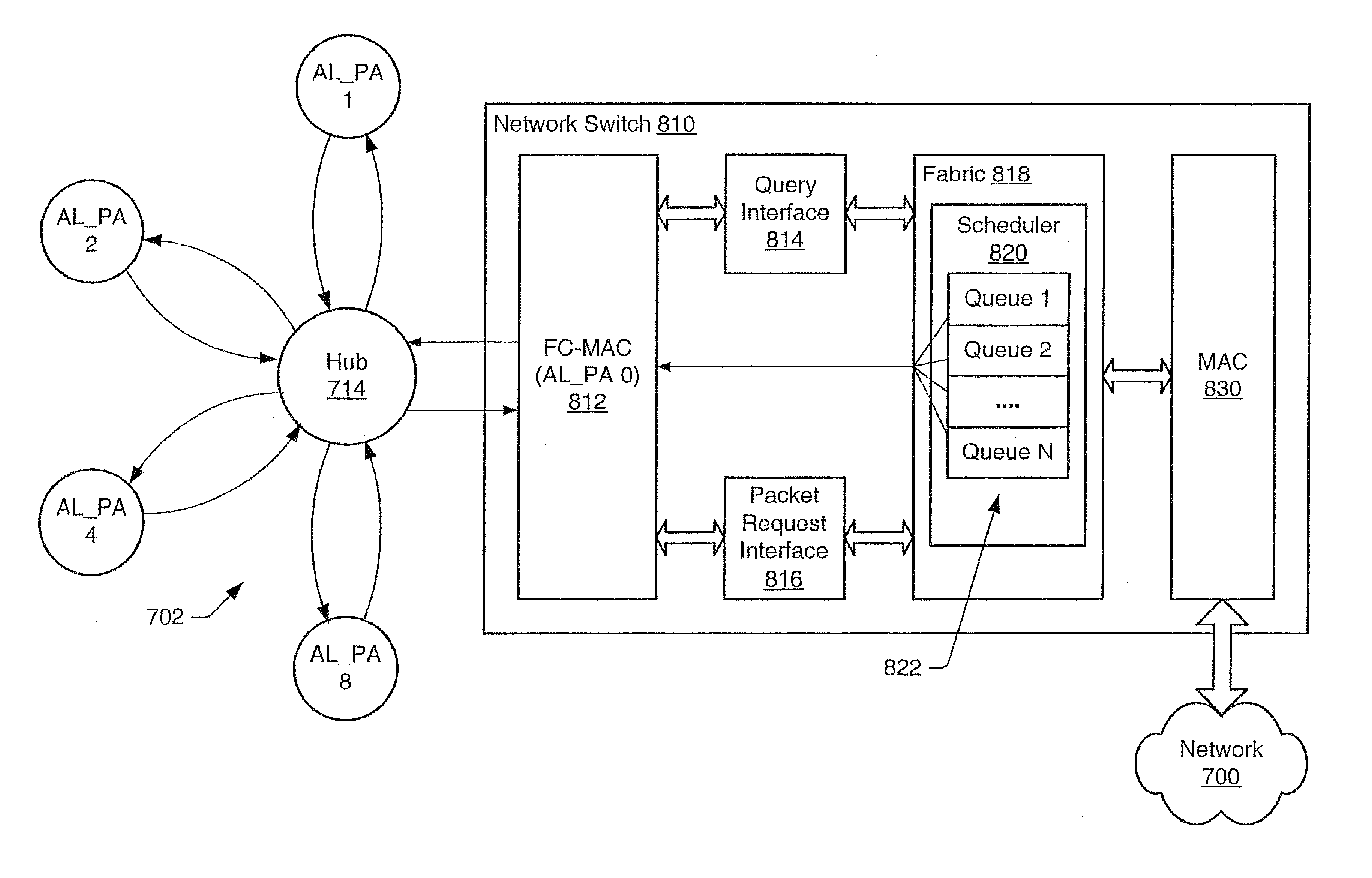

A system and method for converting low-jitter, interleaved frame traffic, such as that generated in an IP network, to high jitter traffic to improve the utilization of bandwidth on arbitrated loops such as Fibre Channel Arbitrated Loops. Embodiments of a high jitter scheduling algorithm may be used in devices such as network switches that interface an arbitrated loop with an IP network that carries low-jitter traffic. The high jitter algorithm may use a separate queue for each device on the arbitrated loop, or alternatively may use one queue for two or more devices. Incoming frames are distributed among the queues based upon each frame's destination device. The scheduling algorithm may then service the queues and forward queued frames to the devices from the queues. In one embodiment, the queues are serviced in a round-robin fashion. In one embodiment, each queue may be serviced for a programmed limit.

Owner:BROCADE COMMUNICATIONS SYSTEMS
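The per-device queueing and round-robin service described in the abstract above can be sketched as below. This is a simplified batch model: all frames are queued before service starts, and the "programmed limit" is assumed to be a frame count per queue visit, which the abstract does not specify.

```python
from collections import deque


def schedule(frames, limit):
    """Group interleaved frames by destination and emit them in bursts.

    frames: iterable of (destination, payload) pairs in arrival order.
    limit:  assumed maximum number of frames sent per queue visit.
    Returns the transmit order as a list of (destination, payload) pairs.
    """
    queues = {}
    for dest, payload in frames:
        queues.setdefault(dest, deque()).append((dest, payload))
    out = []
    while any(queues.values()):
        # Round-robin over the destinations in first-seen order.
        for dest in list(queues):
            q = queues[dest]
            for _ in range(min(limit, len(q))):
                out.append(q.popleft())
    return out
```

Frames that arrived alternating between devices leave in per-device bursts, so the loop has to arbitrate less often for the same amount of traffic.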

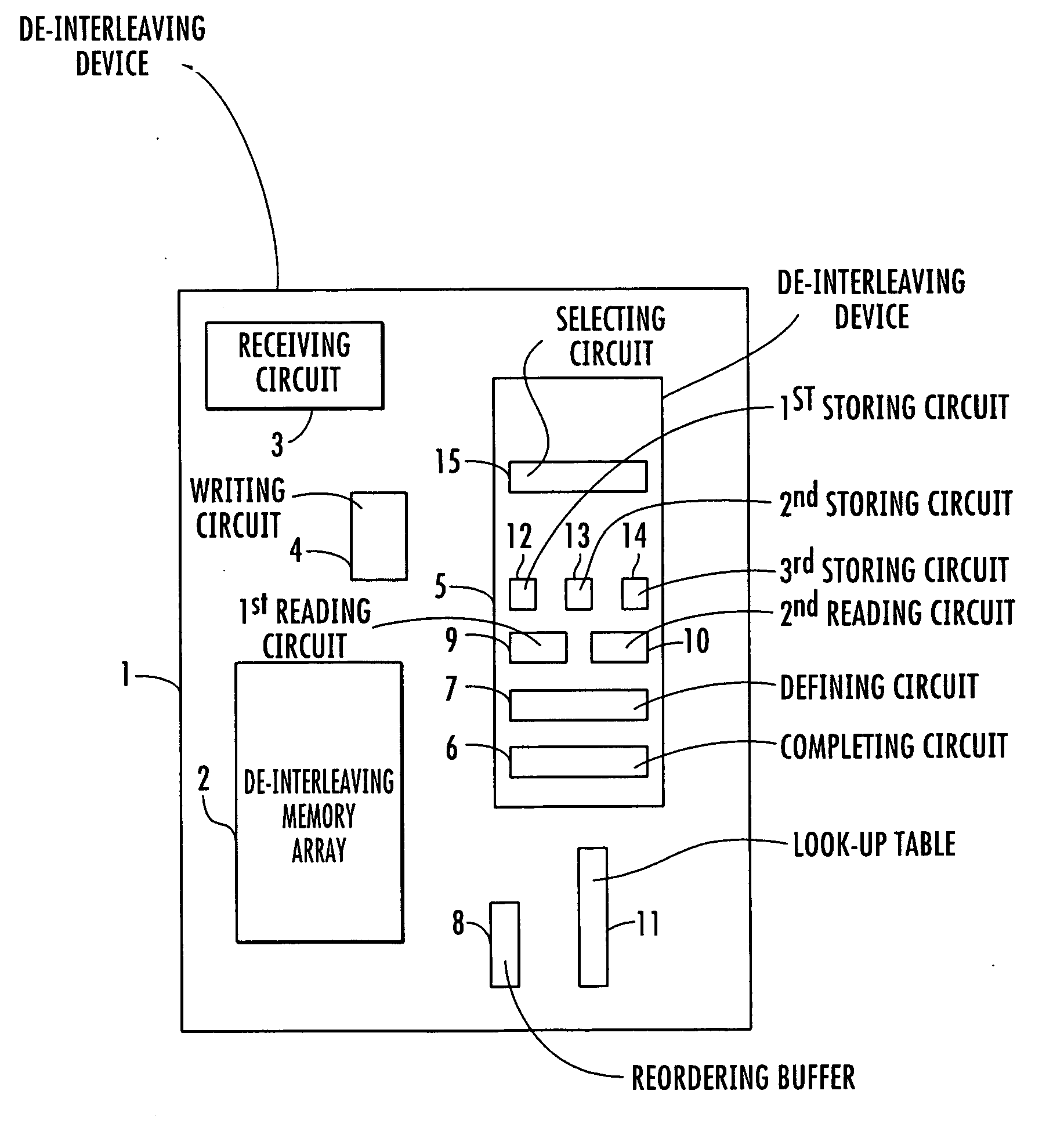

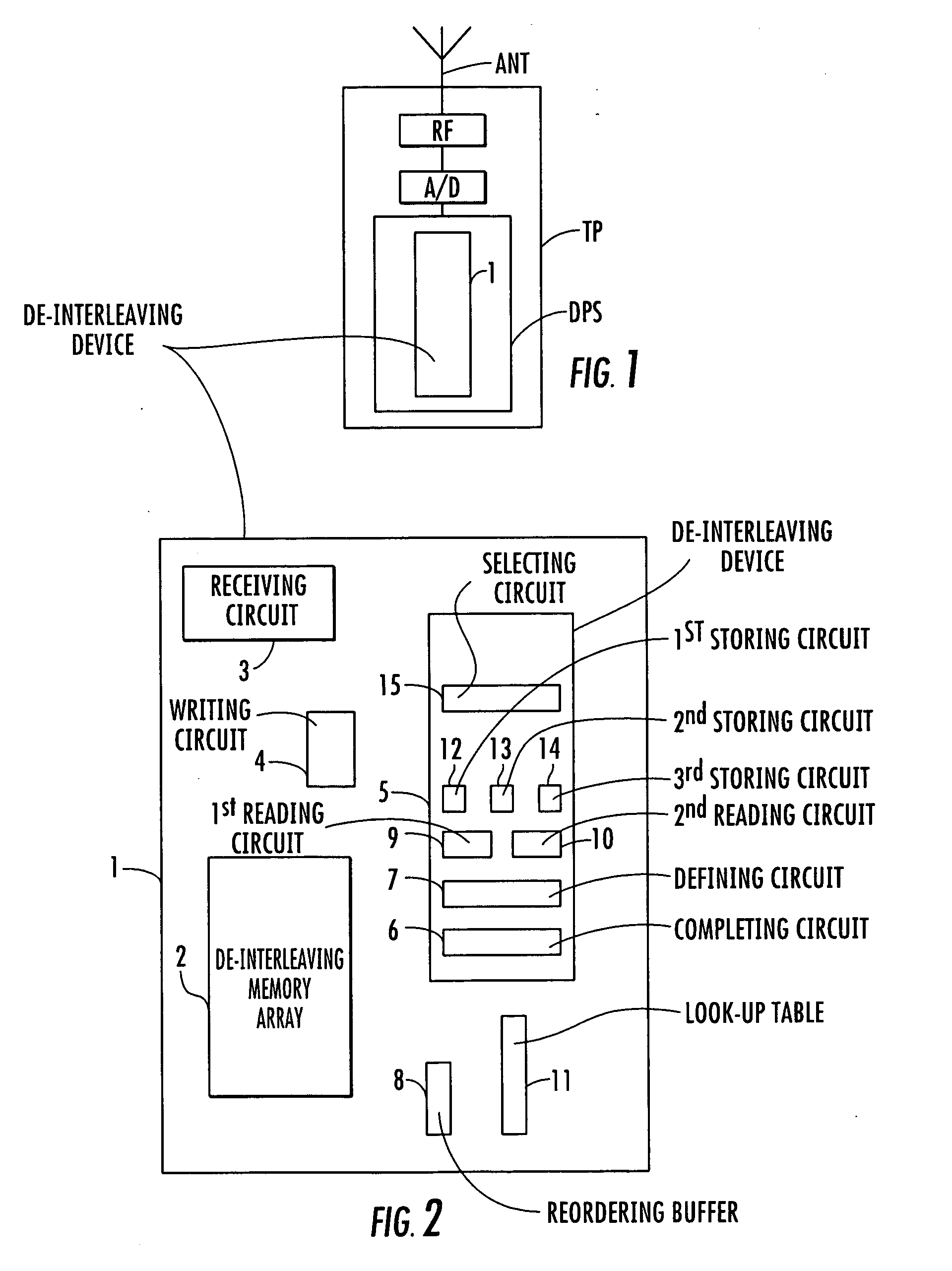

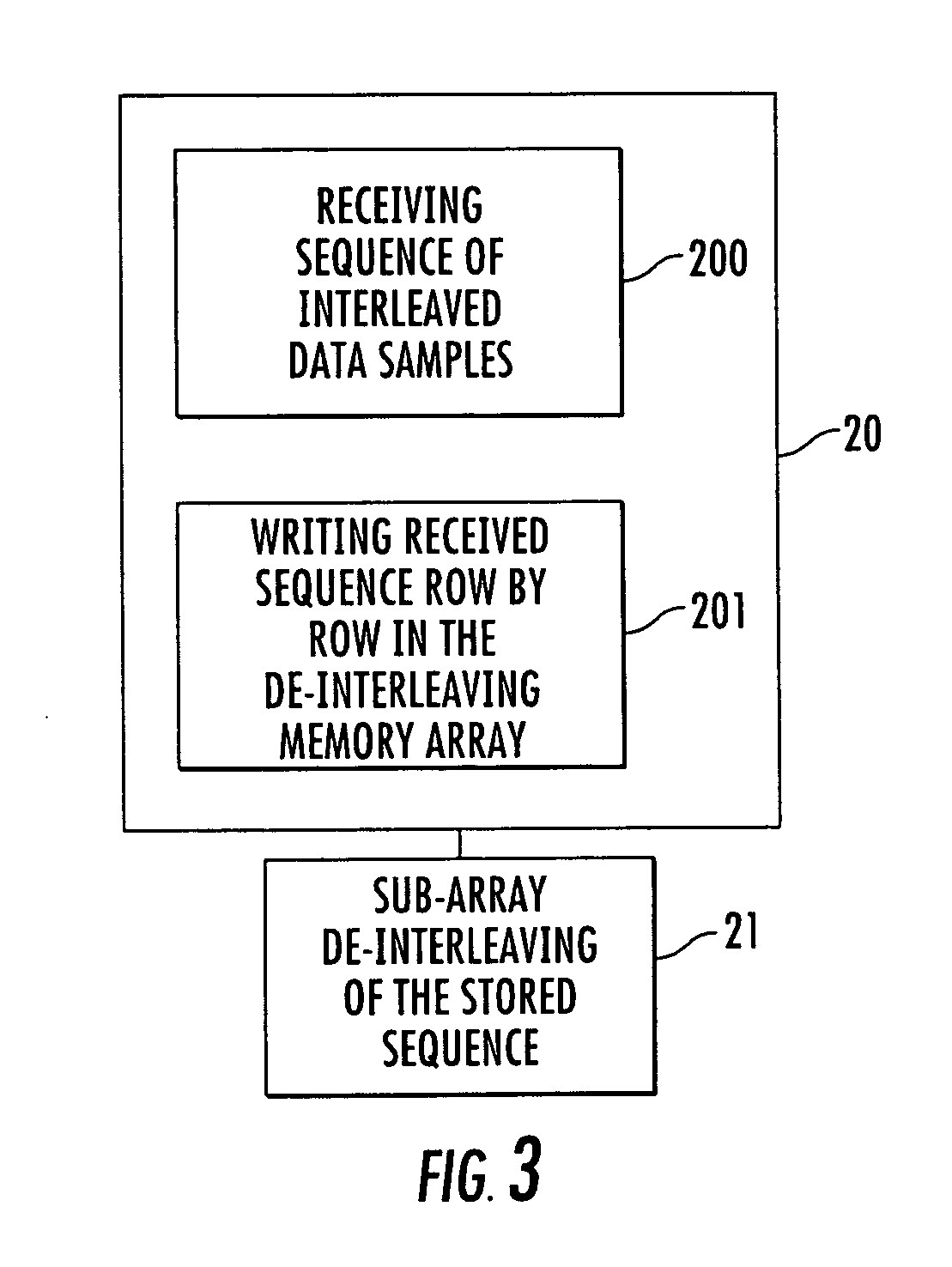

Method and device of de-interleaving successive sequences of interleaved data samples

Active | US20050160342A1 | Avoidance of memory re-use bottleneck | Decrease memory access rate | Data representation error detection/correction | Error detection/correction | Virtual memory | Parallel computing

Successive sequences of interleaved data samples extracted from a virtual memory having L0 columns and C0 rows are de-interleaved. The de-interleaving includes receiving each sequence of the interleaved data samples, and writing row by row the received sequences of interleaved data samples in a de-interleaving memory array having L rows and C columns, with L being greater than or equal to L0 and C being greater than or equal to C0. The data samples stored in the de-interleaving memory array are de-interleaved sub-array by sub-array. Each sub-array is a square cluster array having a number SQ of rows and columns. A cluster array is a row of the square cluster array comprising SQ data samples, with the number L of rows and the number C of columns of the de-interleaving memory array being multiples of the number SQ of rows and columns.

Owner:STMICROELECTRONICS INT NV

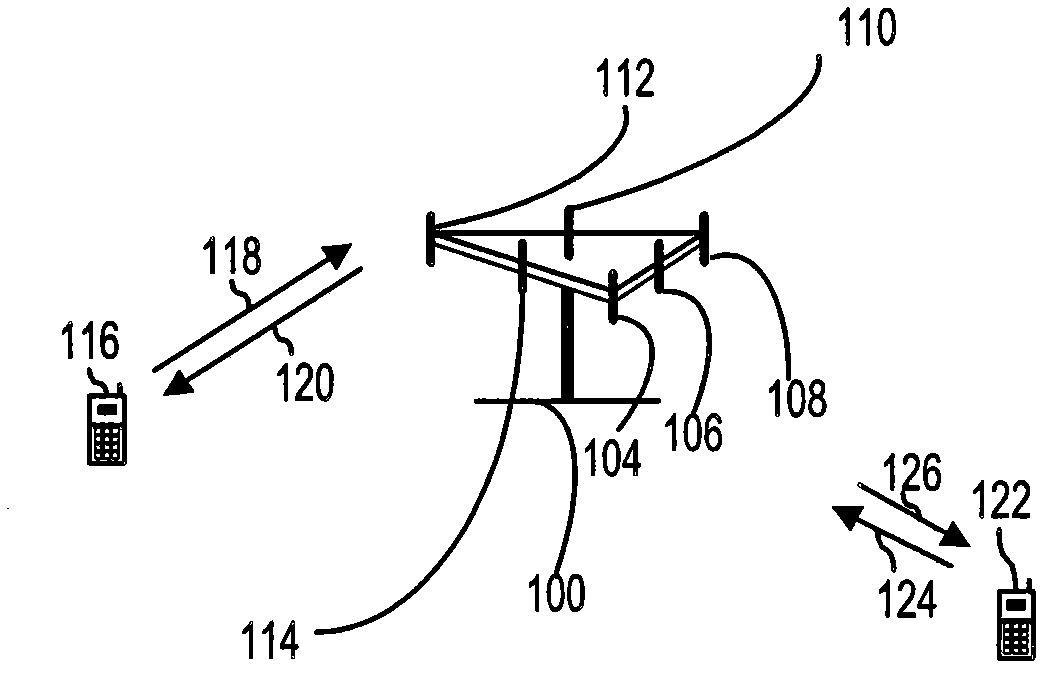

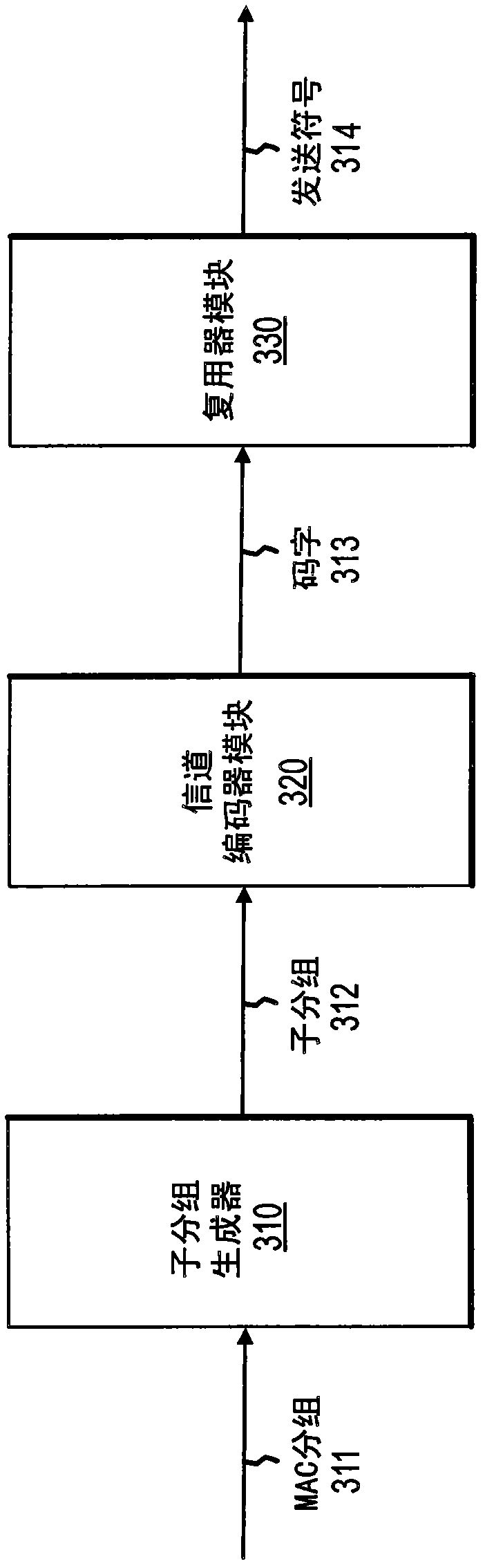

Method and apparatus for storing log likelihood ratios in interleaved form to reduce hardware memory

An apparatus and method for storing log likelihood ratios in an interleaved form comprising receiving a plurality of interleaved codewords (710); obtaining at least one log likelihood ratio (LLR) for the plurality of interleaved codewords (720); storing the at least one LLR in a memory (730); deinterleaving the plurality of interleaved codewords after the at least one LLR has been stored in the memory (740); and performing a bit decision of the deinterleaved codewords using the stored at least one LLR (750).

Owner:QUALCOMM INC
