31 results about "Busy waiting" patented technology

In computer science and software engineering, busy-waiting, busy-looping, or spinning is a technique in which a process repeatedly checks whether a condition is true, such as whether keyboard input or a lock is available. Spinning can also be used to generate an arbitrary time delay, a technique that was necessary on systems that lacked a method of waiting a specific length of time. Processor speeds vary greatly from computer to computer, especially as some processors are designed to adjust their speed dynamically based on external factors, such as the load on the operating system. Consequently, spinning as a time-delay technique can produce unpredictable or inconsistent results on different systems unless code is included to determine the time a processor takes to execute a "do nothing" loop, or the looping code explicitly checks a real-time clock.
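As a minimal sketch of the last point, the loop below spins until a clock deadline instead of counting iterations, so the delay does not depend on processor speed. The function name `spin_delay` and the 50 ms duration are illustrative assumptions, not taken from any of the listed patents.

```python
import time


def spin_delay(seconds: float) -> int:
    """Busy-wait for roughly `seconds`, checking a monotonic clock each pass.

    Returns the number of loop iterations, which varies with processor speed
    even though the elapsed time does not.
    """
    deadline = time.monotonic() + seconds
    iterations = 0
    while time.monotonic() < deadline:  # the "do nothing" loop
        iterations += 1
    return iterations


start = time.monotonic()
count = spin_delay(0.05)  # hypothetical 50 ms delay
elapsed = time.monotonic() - start
print(f"spun {count} times in {elapsed:.3f} s")
```

The iteration count differs from machine to machine, which is exactly why a fixed-count delay loop is unreliable without calibration.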

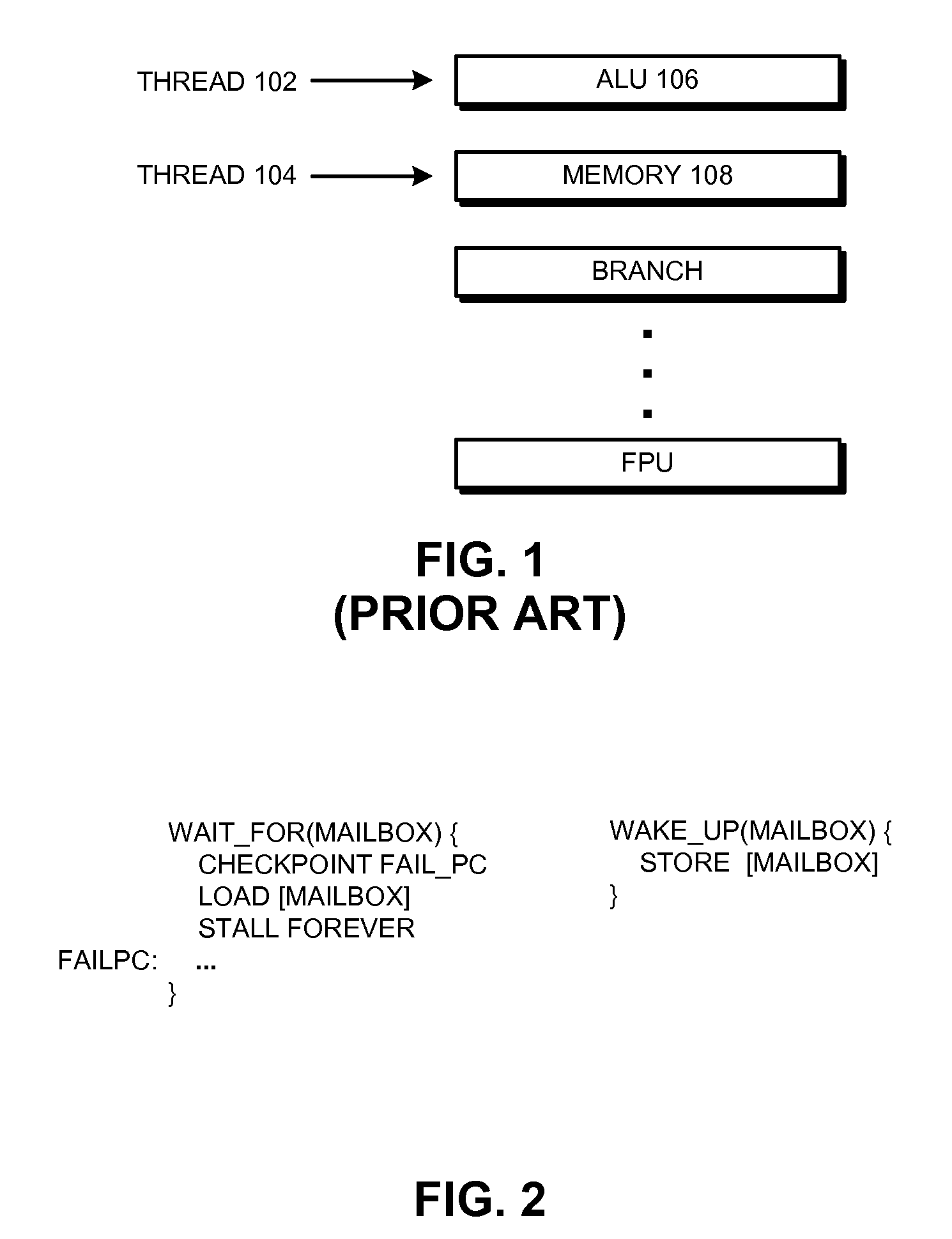

System and Method for Mitigating the Impact of Branch Misprediction When Exiting Spin Loops

Active | US20130198499A1 | None meet conditions | Quick response | Digital computer details | Concurrent instruction execution | Program instruction | Computerized system

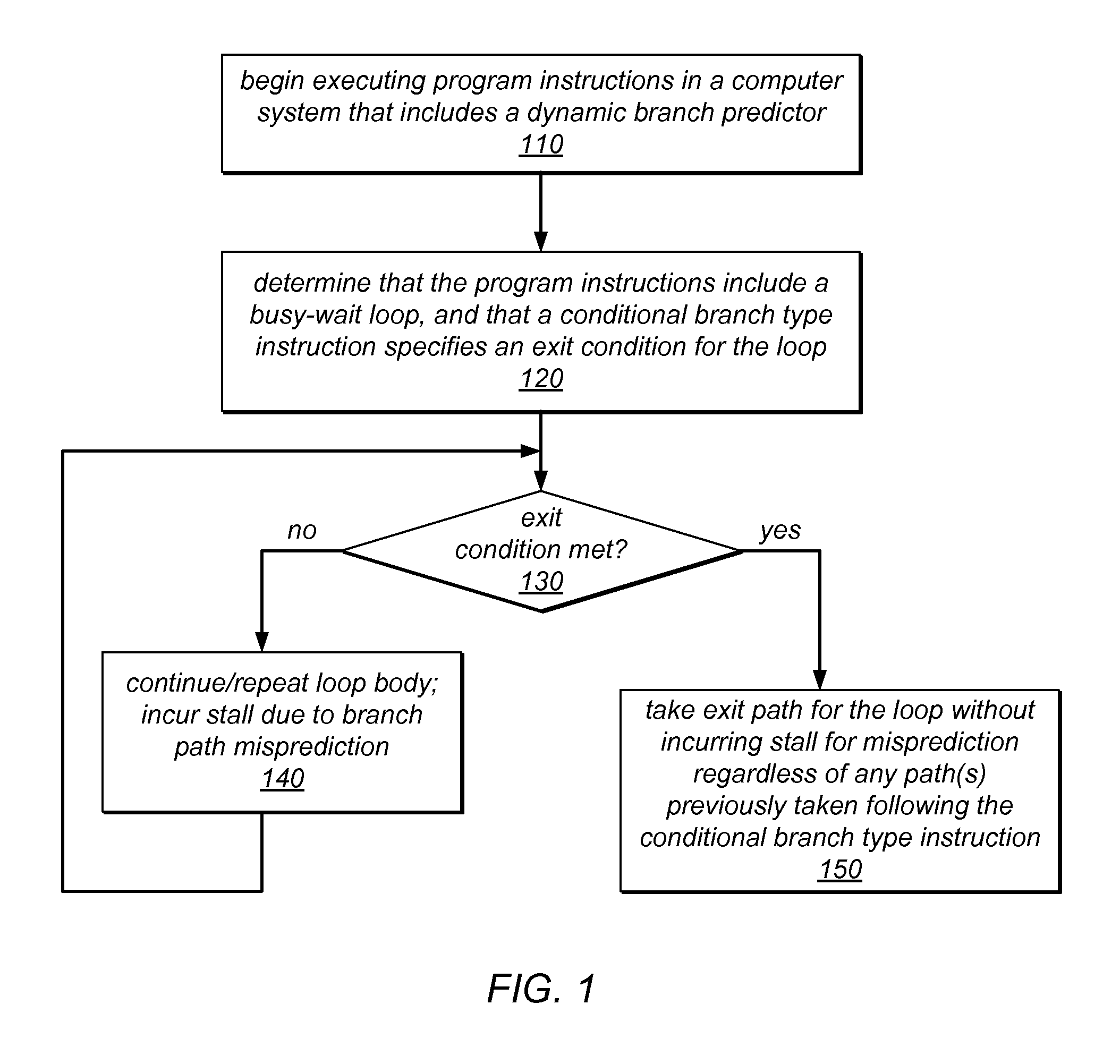

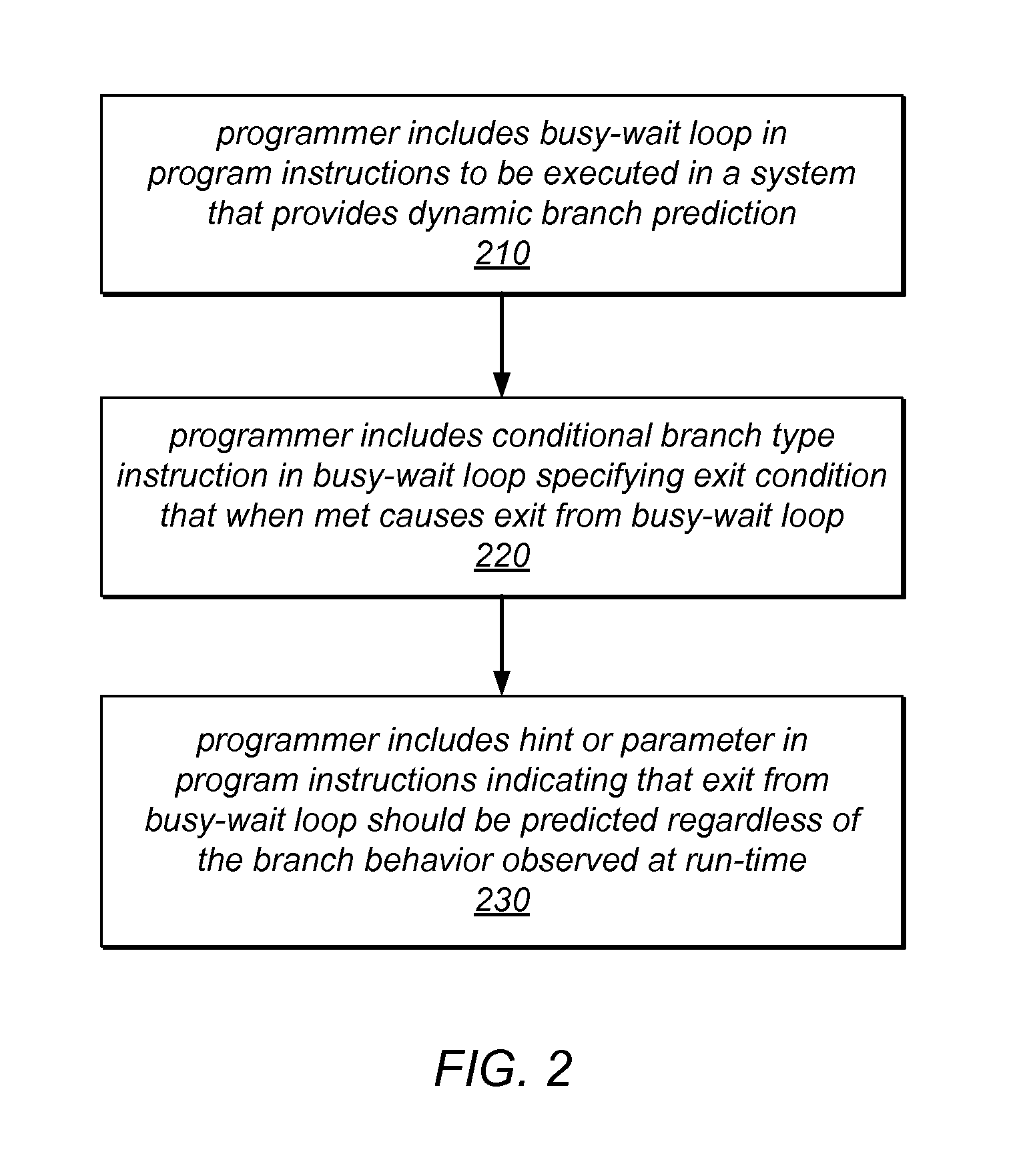

A computer system may recognize a busy-wait loop in program instructions at compile time and/or may recognize busy-wait looping behavior during execution of program instructions. The system may recognize that the exit condition for a busy-wait loop is specified by a conditional branch type instruction in the program instructions. In response to identifying the loop and the conditional branch type instruction that specifies its exit condition, the system may influence or override the prediction made by a dynamic branch predictor, so that the predictor predicts that the exit condition will be met and the loop will be exited, regardless of any observed behavior of that branch instruction. The looping instructions may implement waiting for an inter-thread communication event to occur or for a lock to become available. When the exit condition is met, the loop may be exited without incurring a misprediction delay.

Owner:ORACLE INT CORP

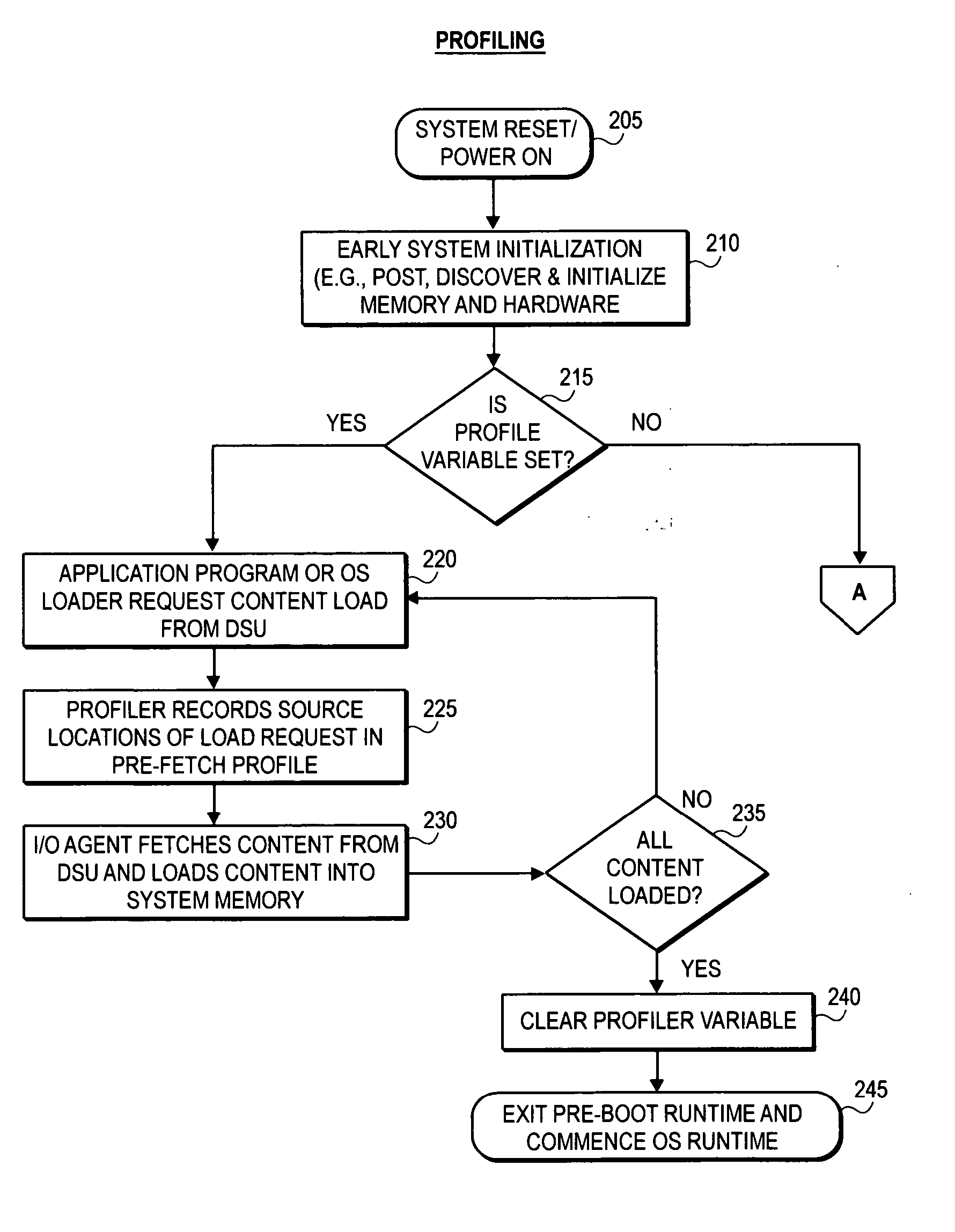

Aggressive content pre-fetching during pre-boot runtime to support speedy OS booting

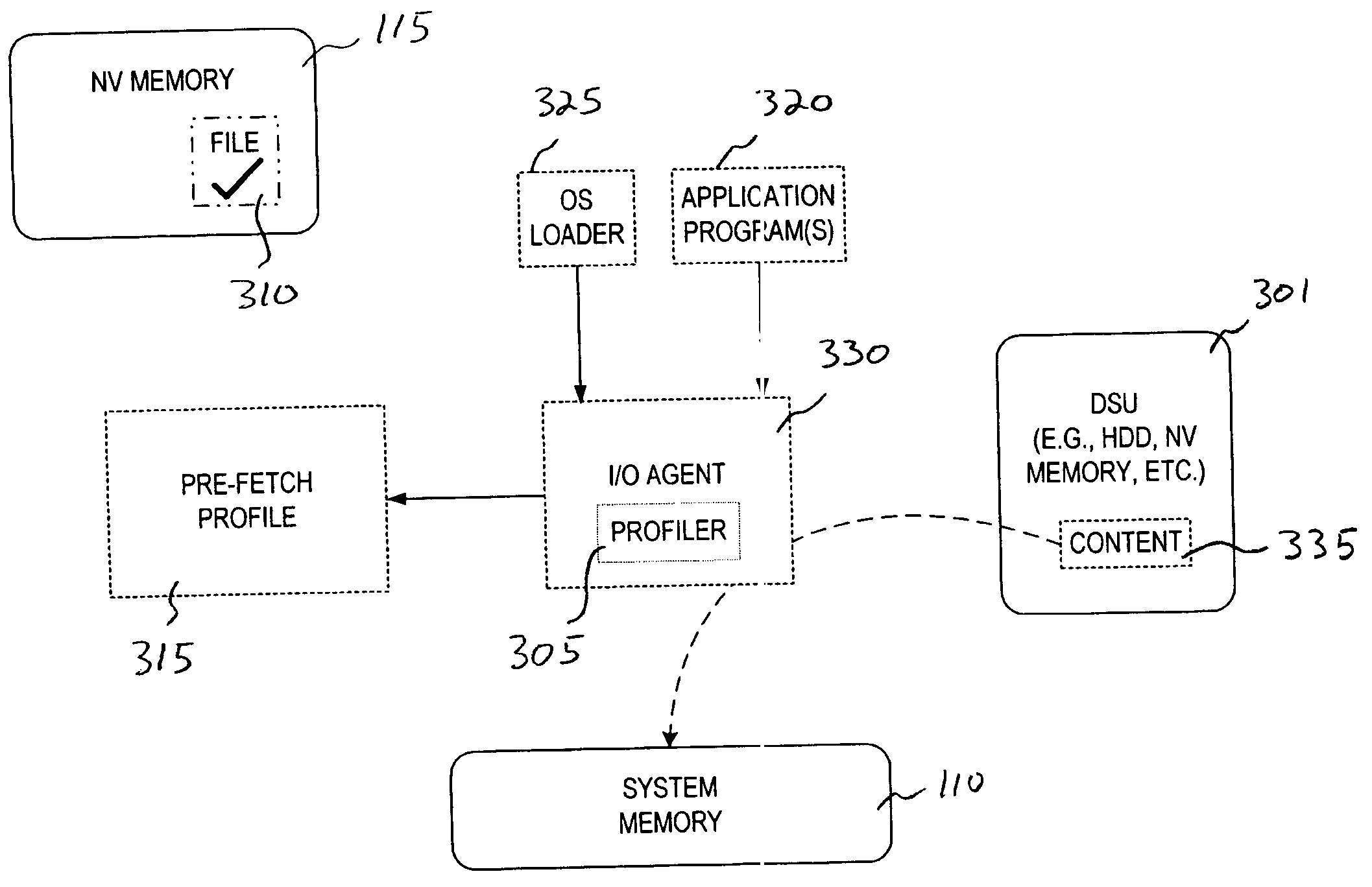

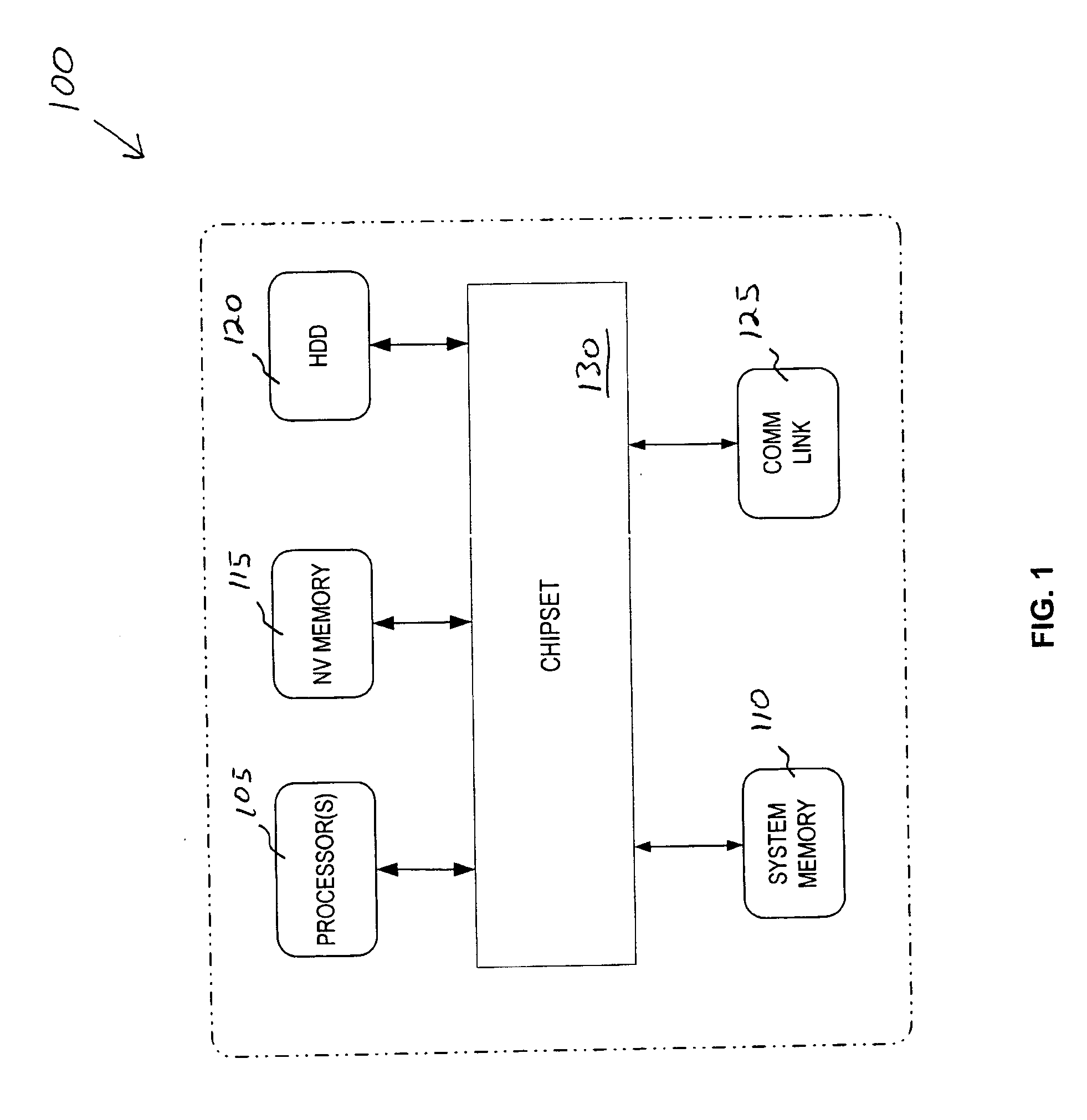

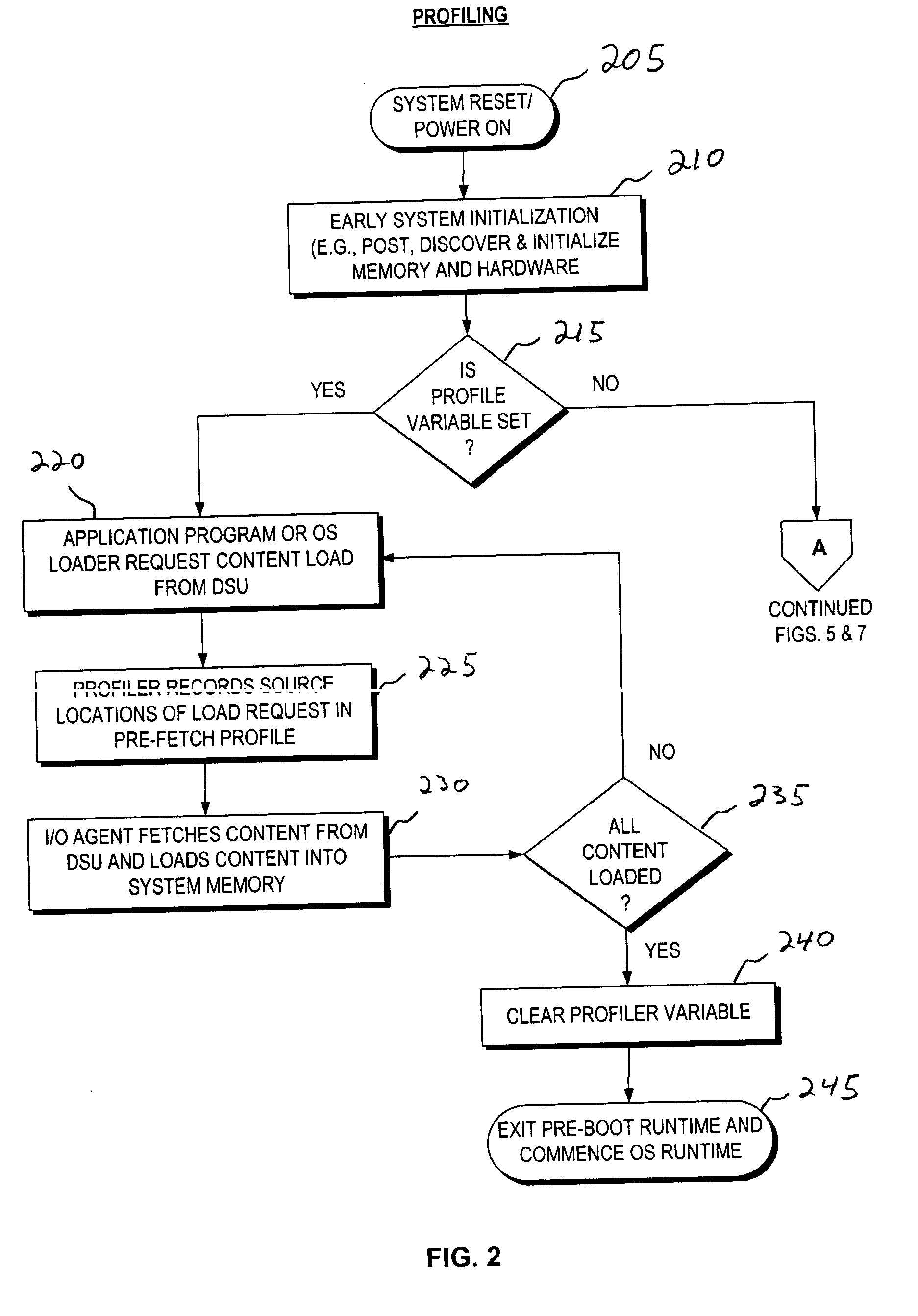

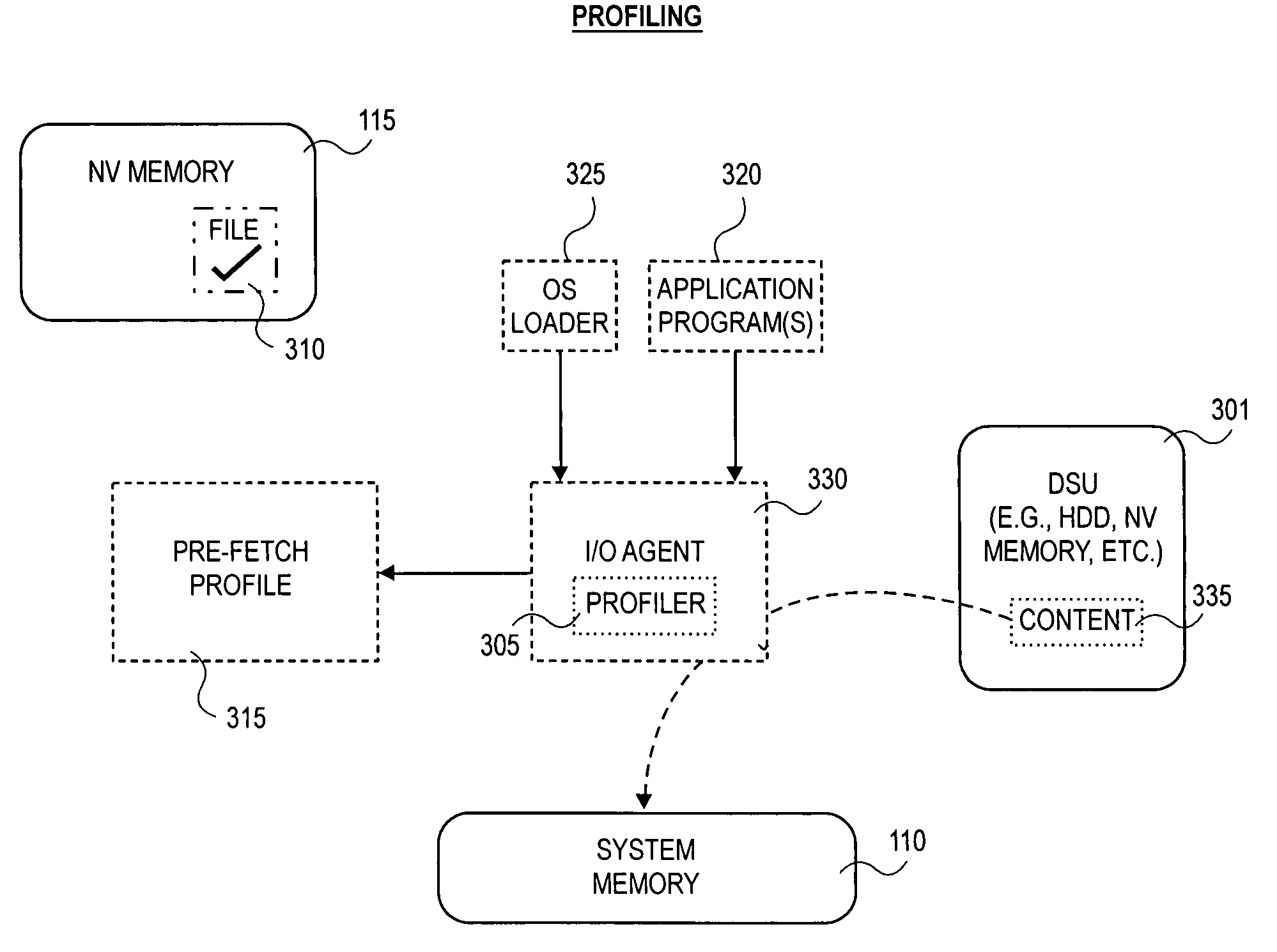

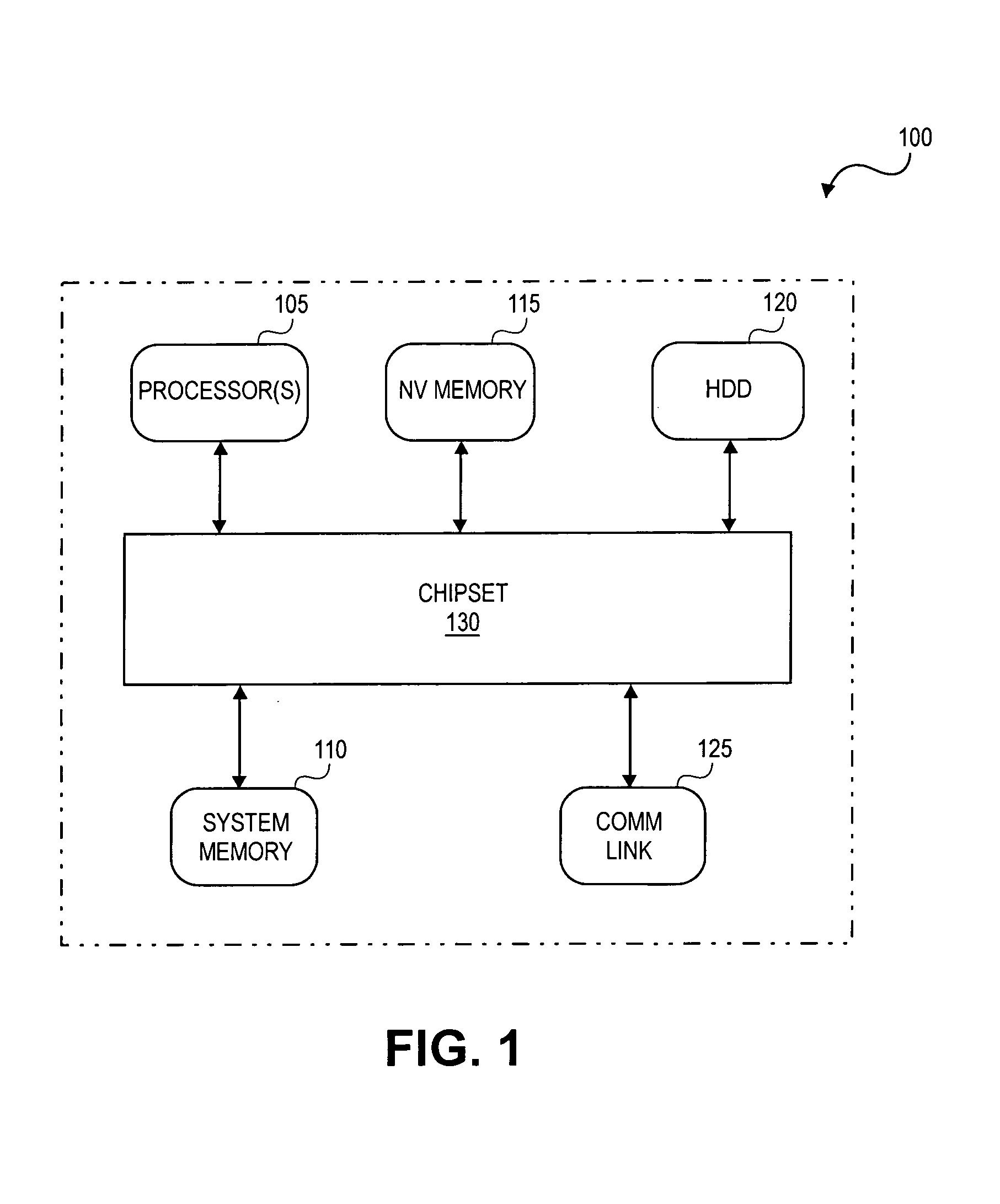

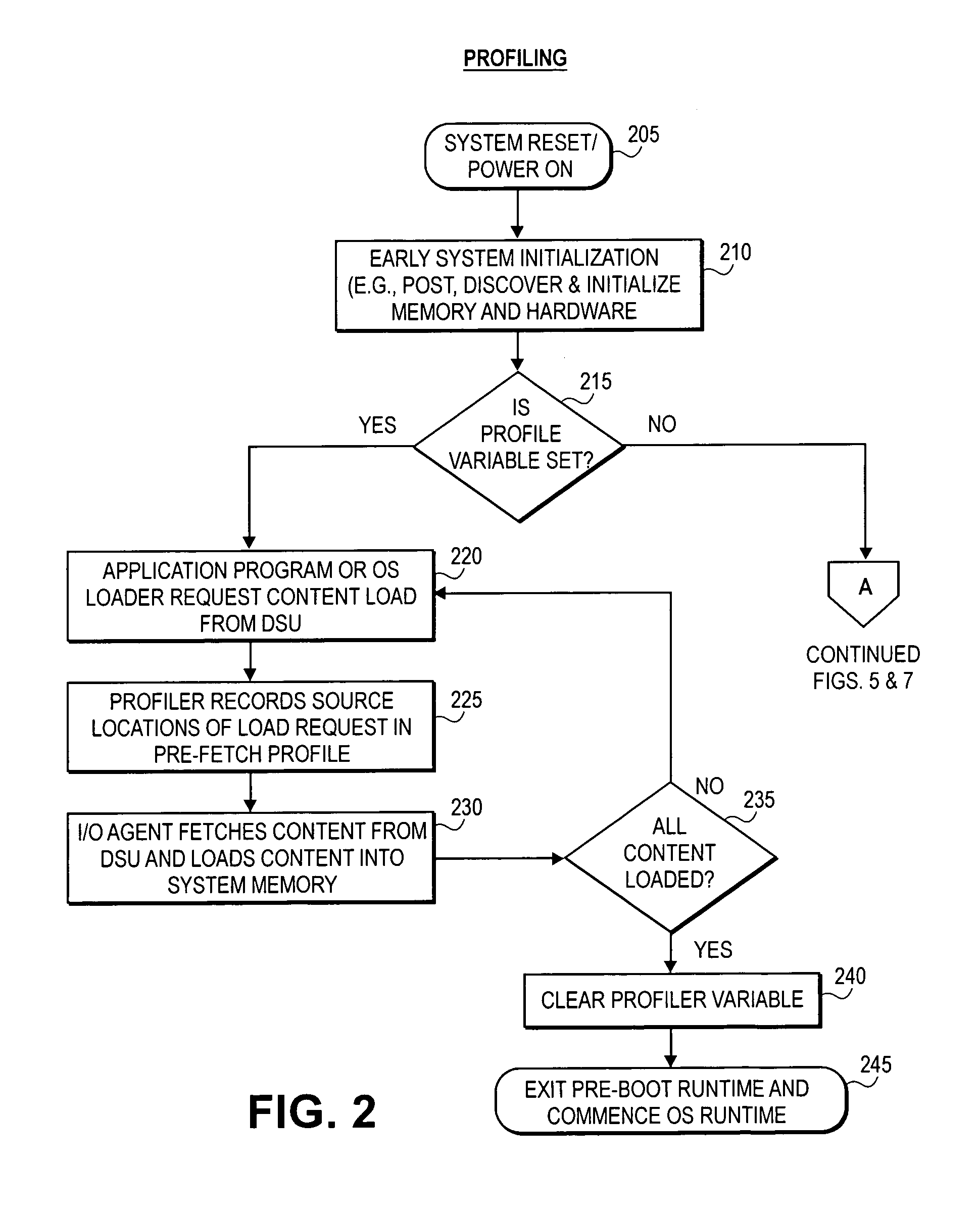

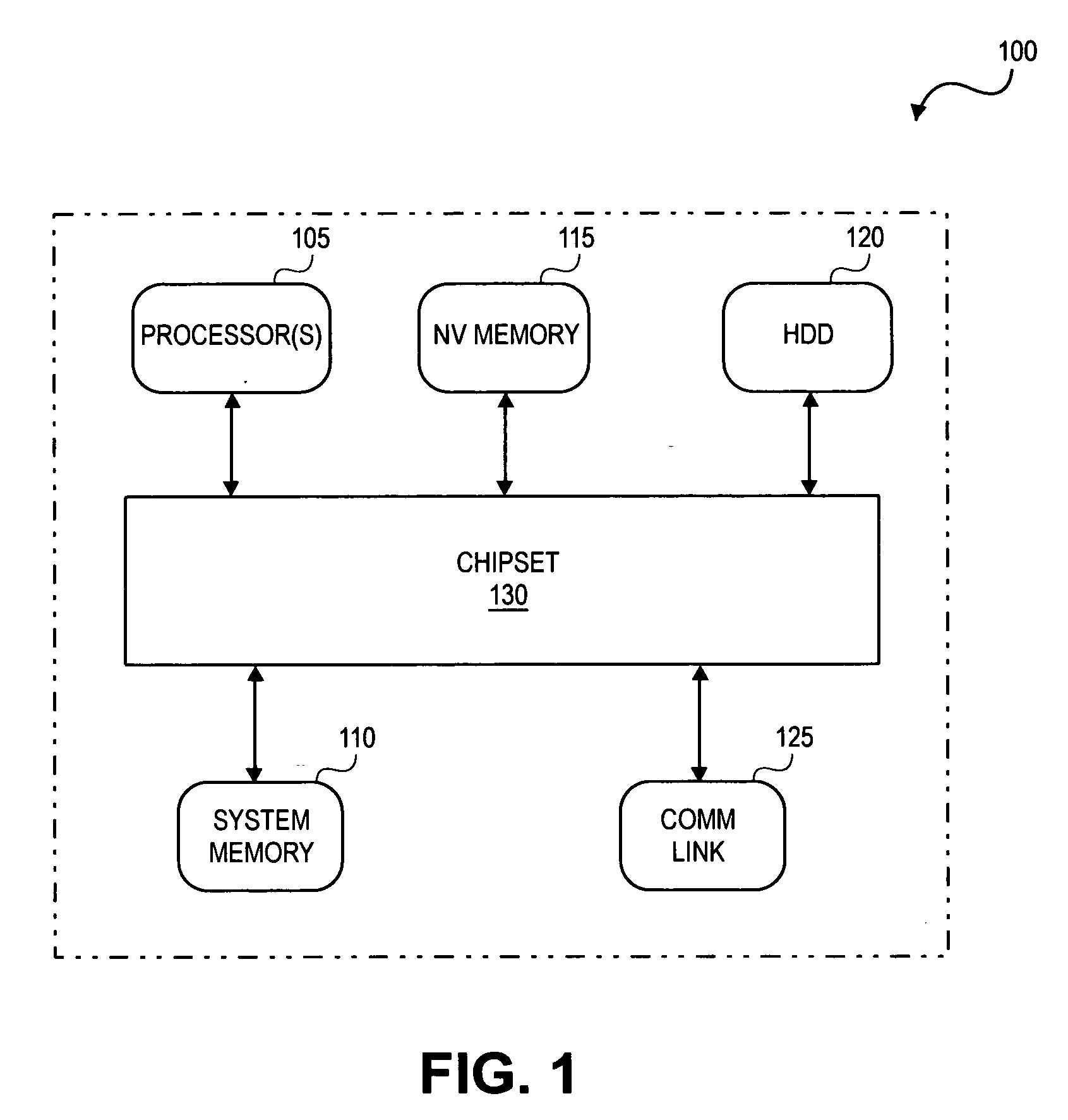

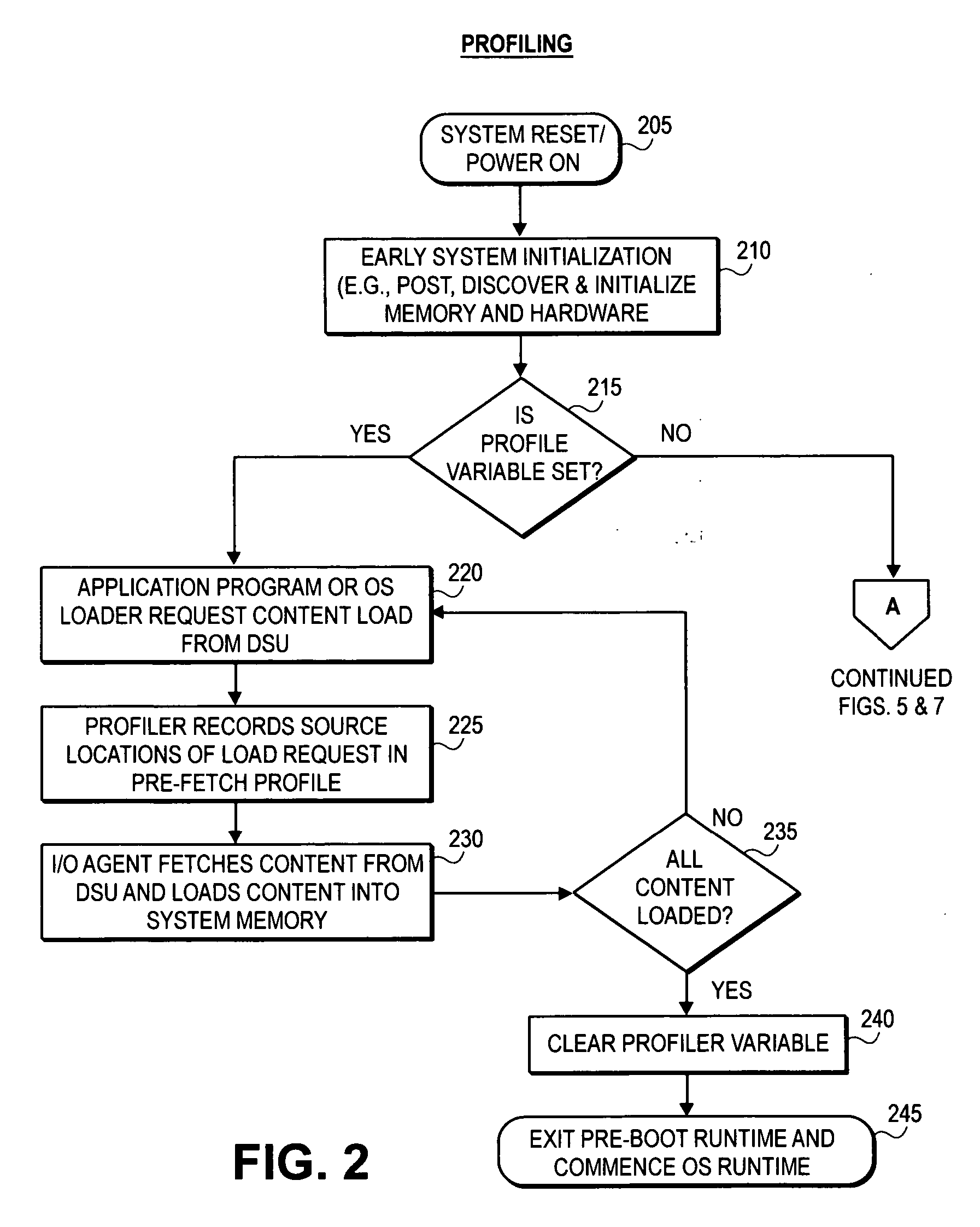

A method and system for content pre-fetching during a processing system pre-boot runtime. First, it is determined when a processor of a processing system is in one of a busy wait state and an idle state during a pre-boot runtime of the processing system. Then, content is pre-fetched from a data storage unit of the processing system. The content is pre-fetched based upon a pre-fetch profile. The content is loaded into system memory of the processing system.

Owner:INTEL CORP

Aggressive content pre-fetching during pre-boot runtime to support speedy OS booting

A method and system for content pre-fetching during a processing system pre-boot runtime. First, it is determined when a processor of a processing system is in one of a busy wait state and an idle state during a pre-boot runtime of the processing system. Then, content is pre-fetched from a data storage unit of the processing system. The content is pre-fetched based upon a pre-fetch profile. The content is loaded into system memory of the processing system.

Owner:INTEL CORP

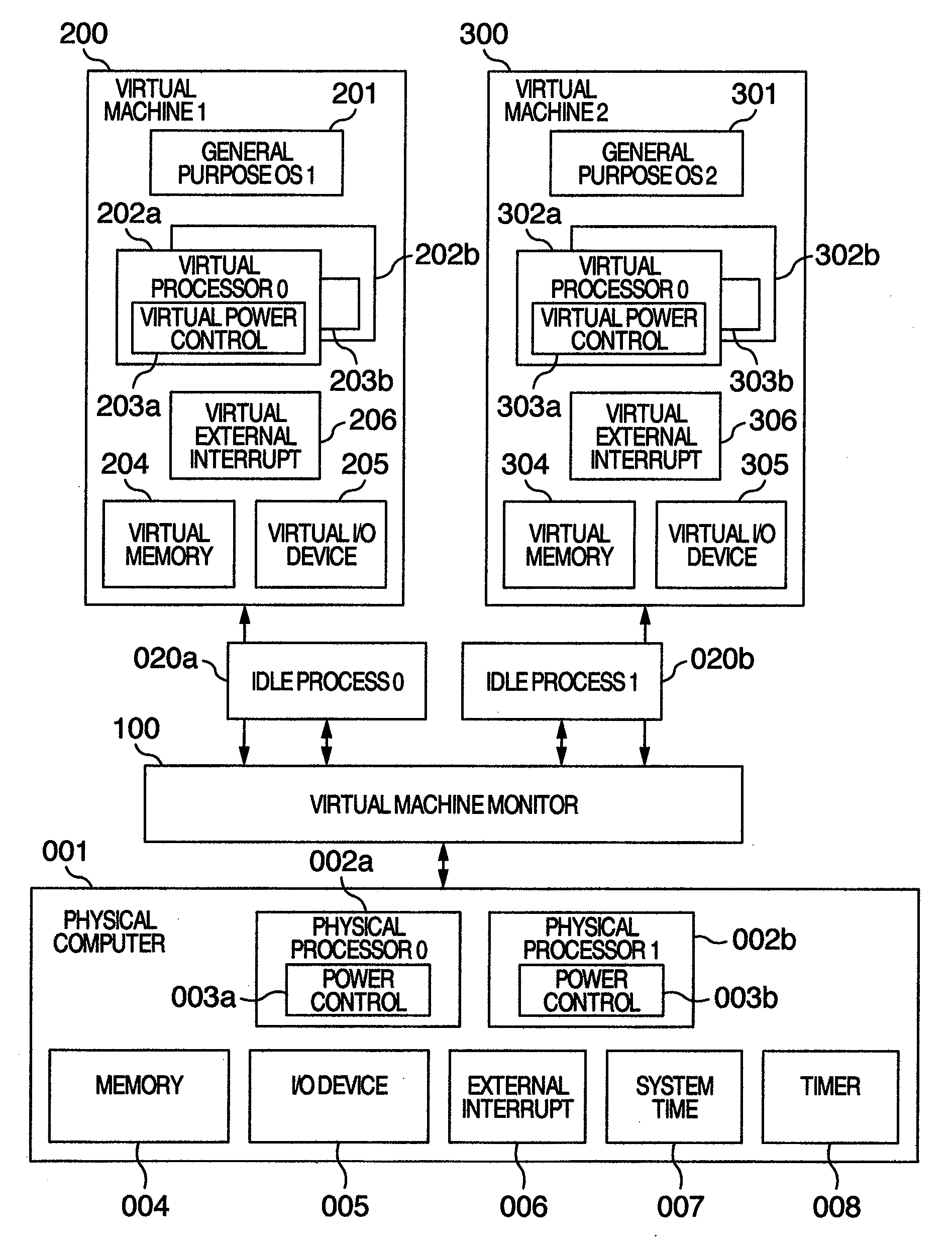

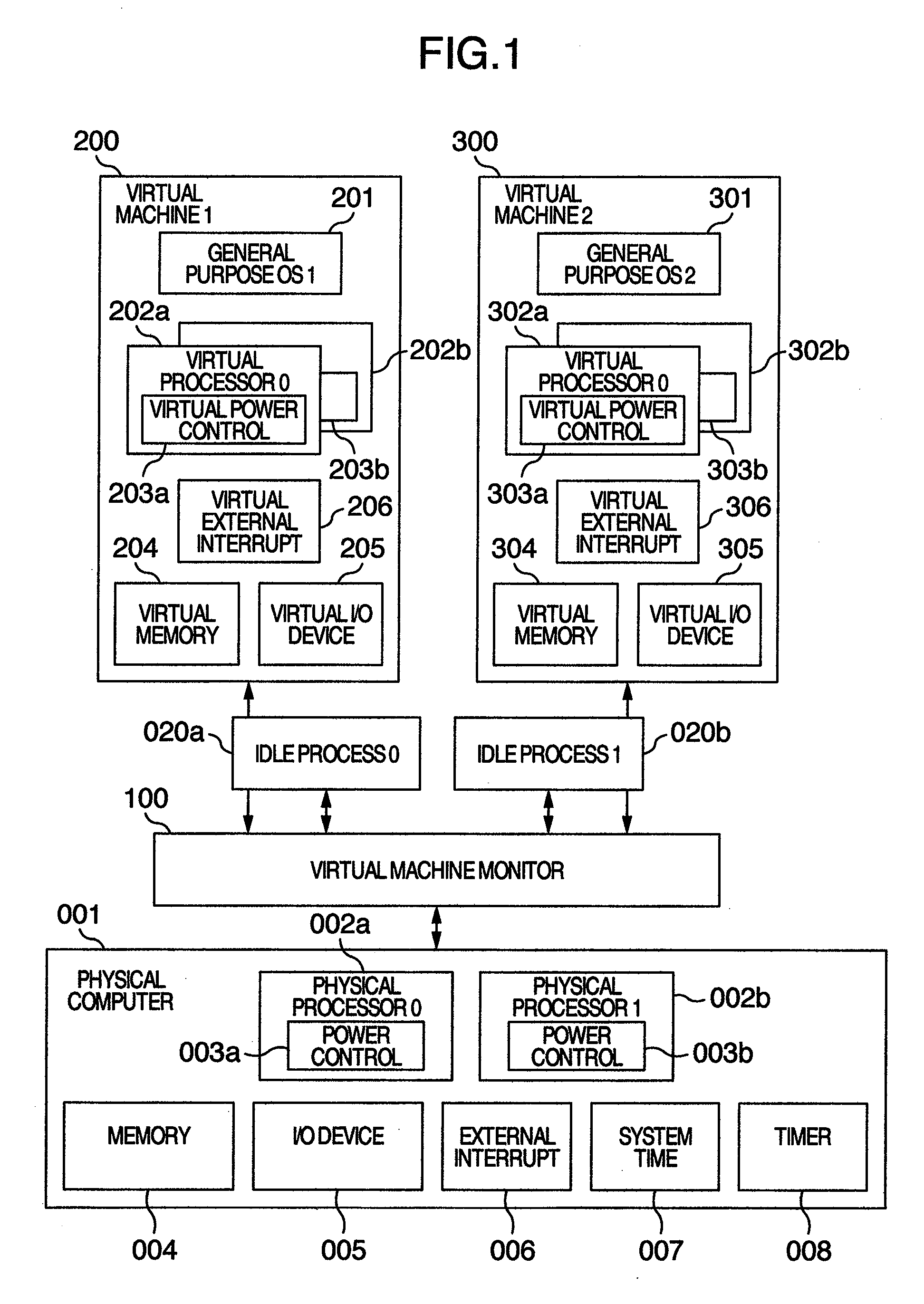

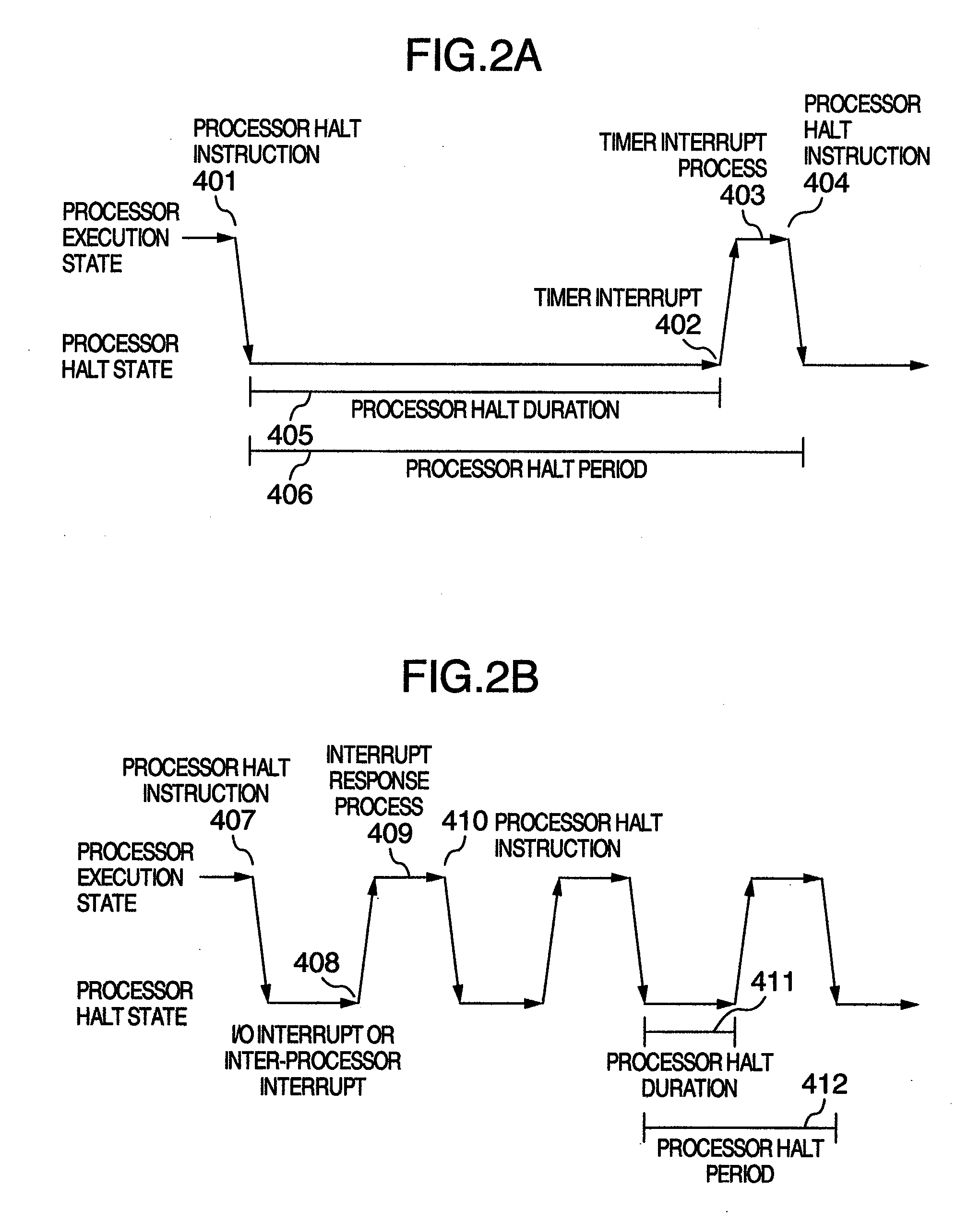

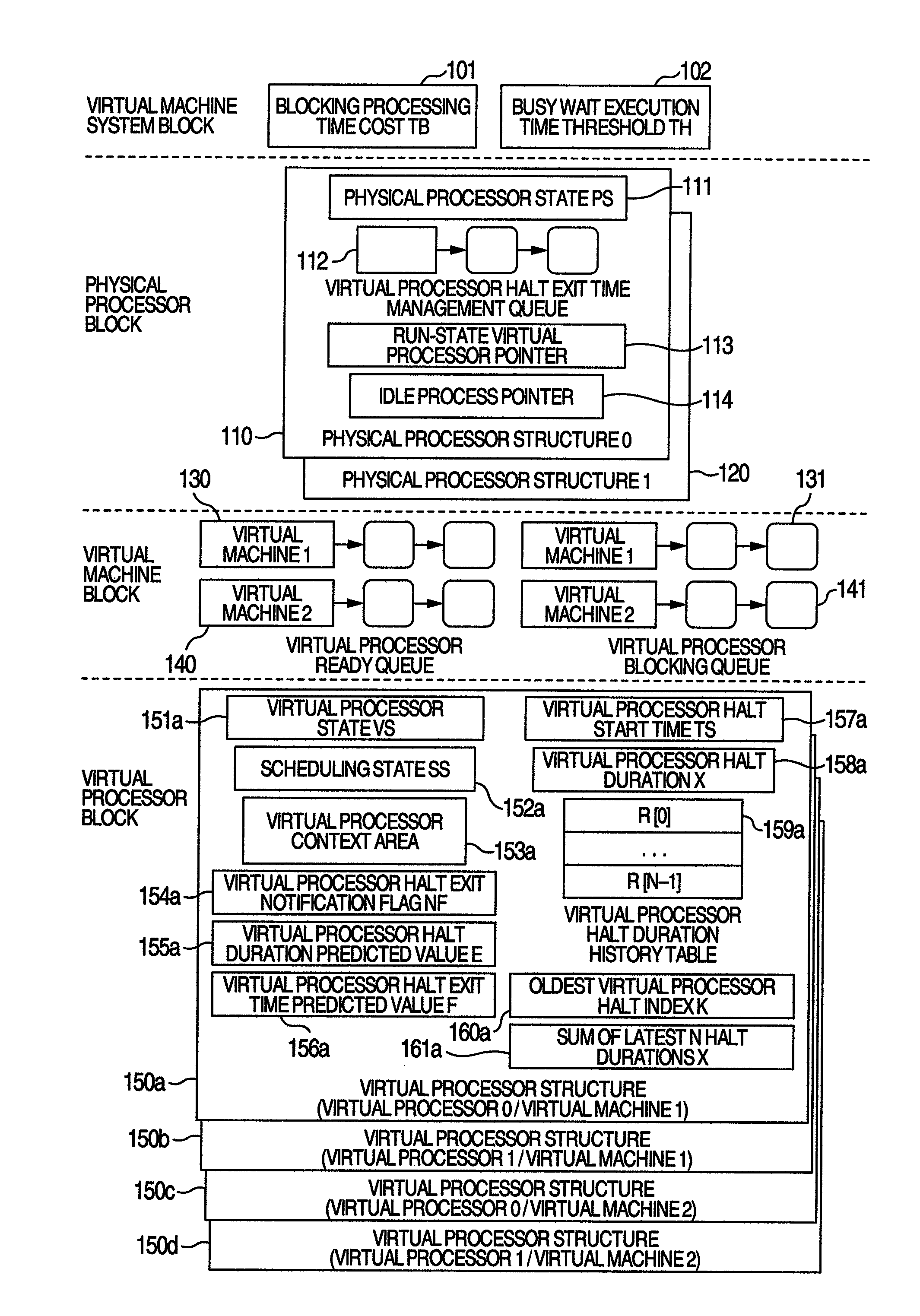

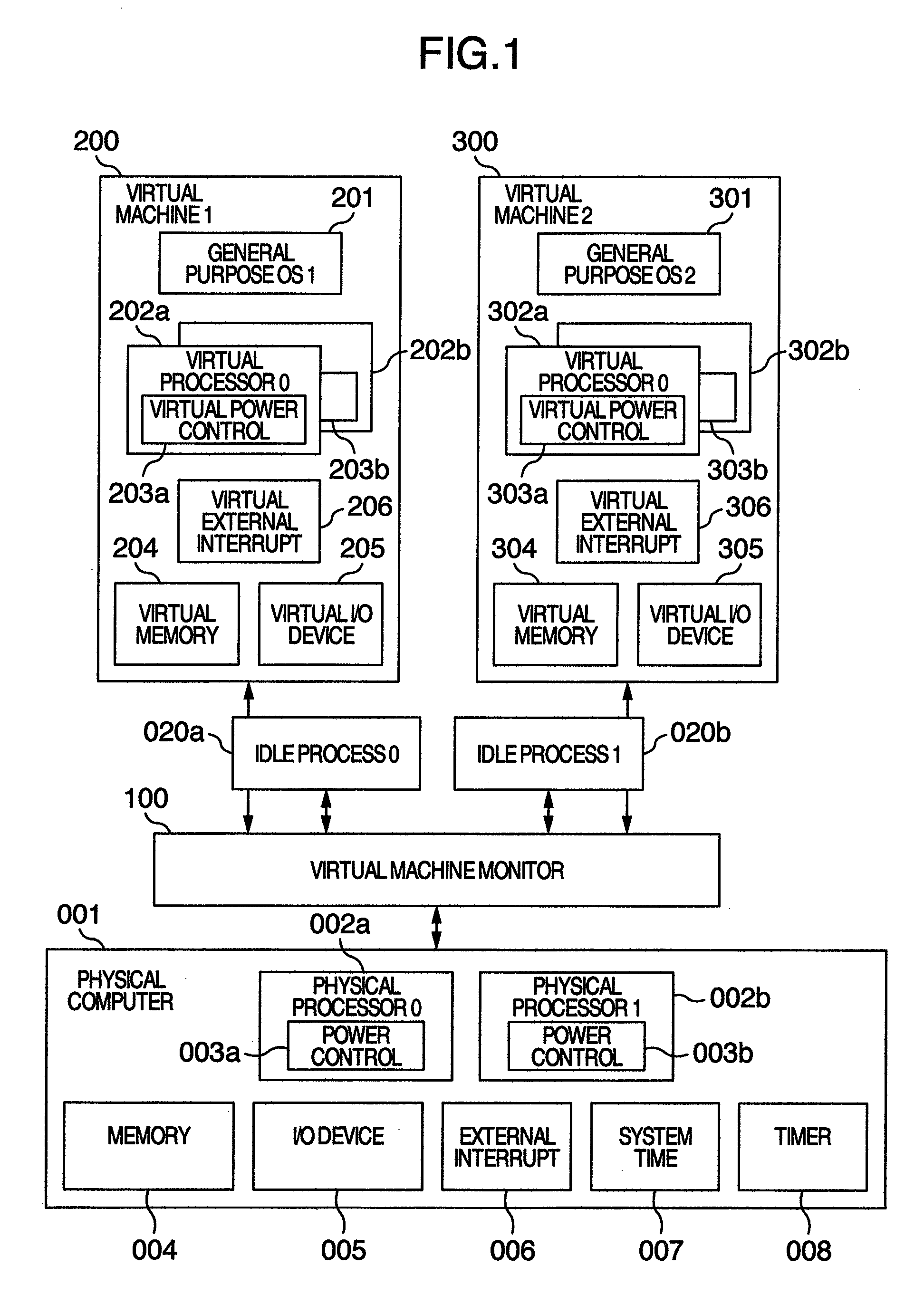

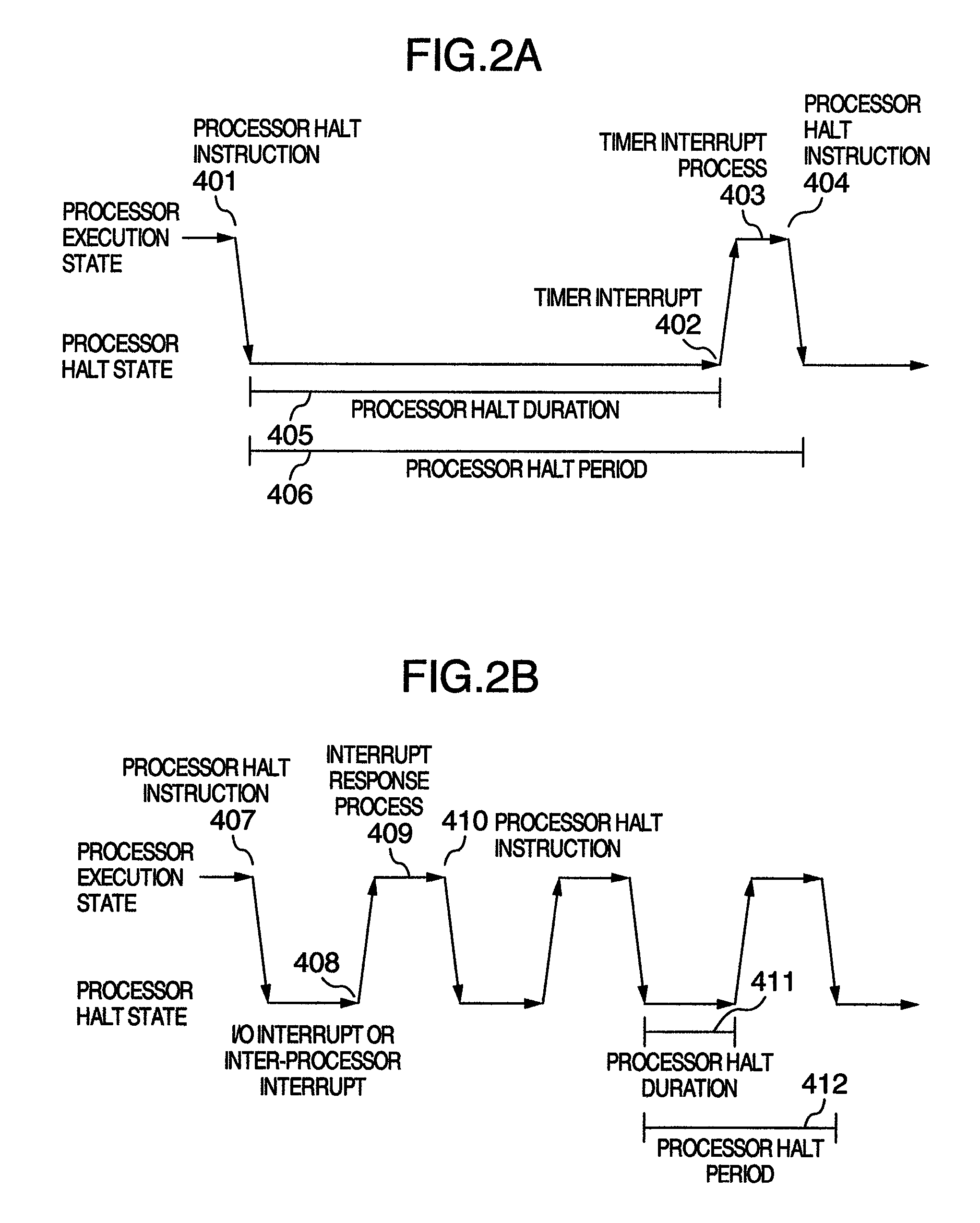

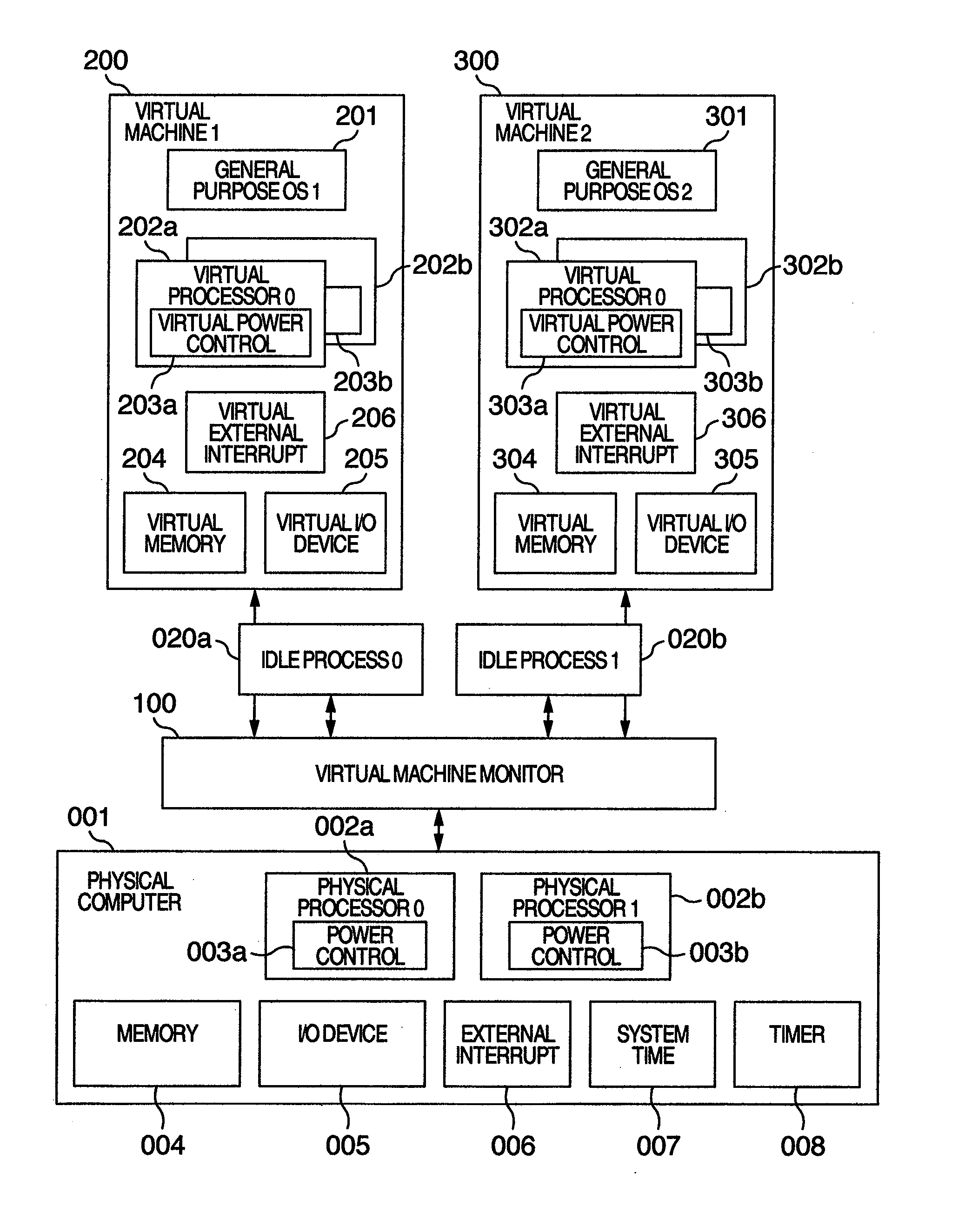

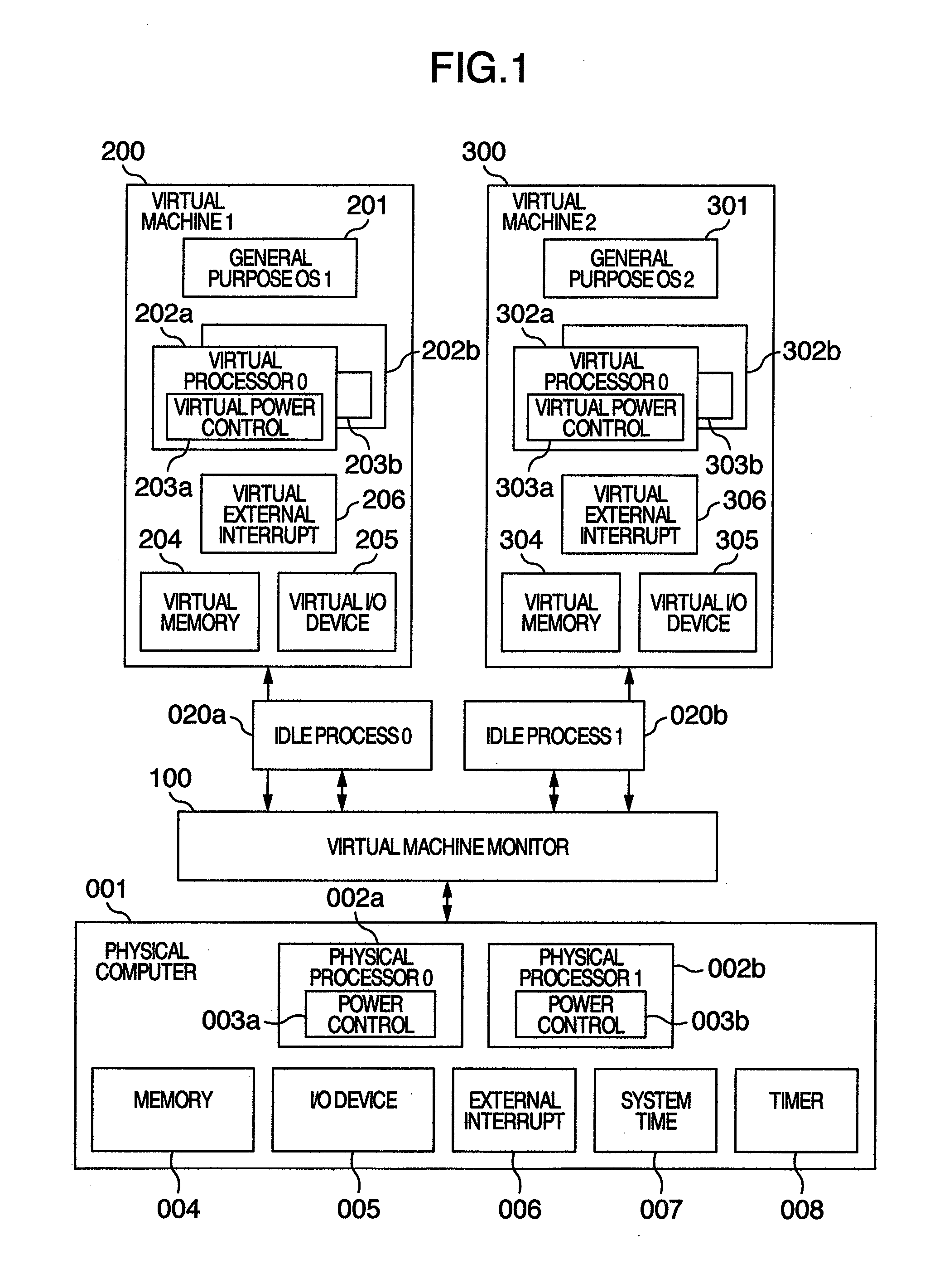

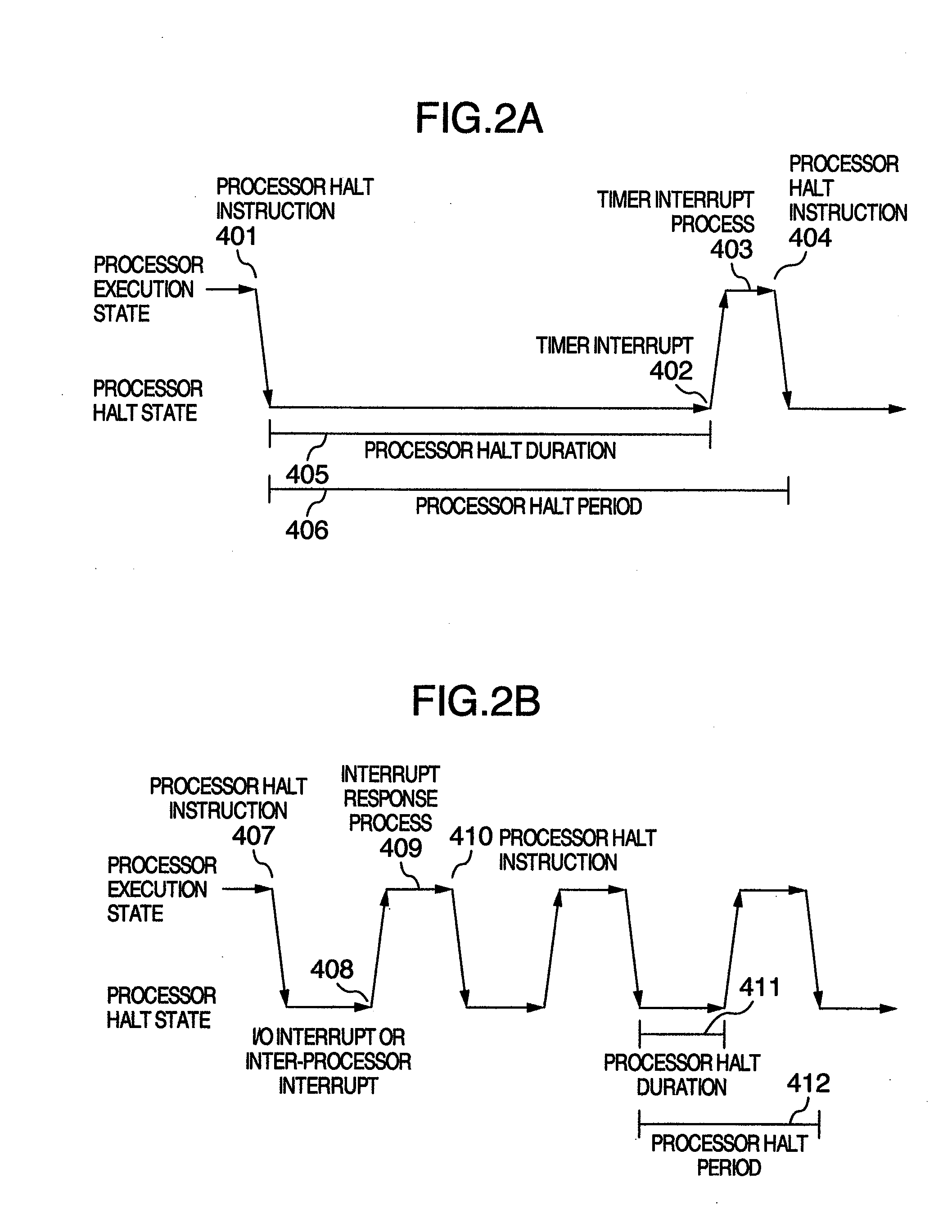

Computer system, virtual machine monitor and scheduling method for virtual machine monitor

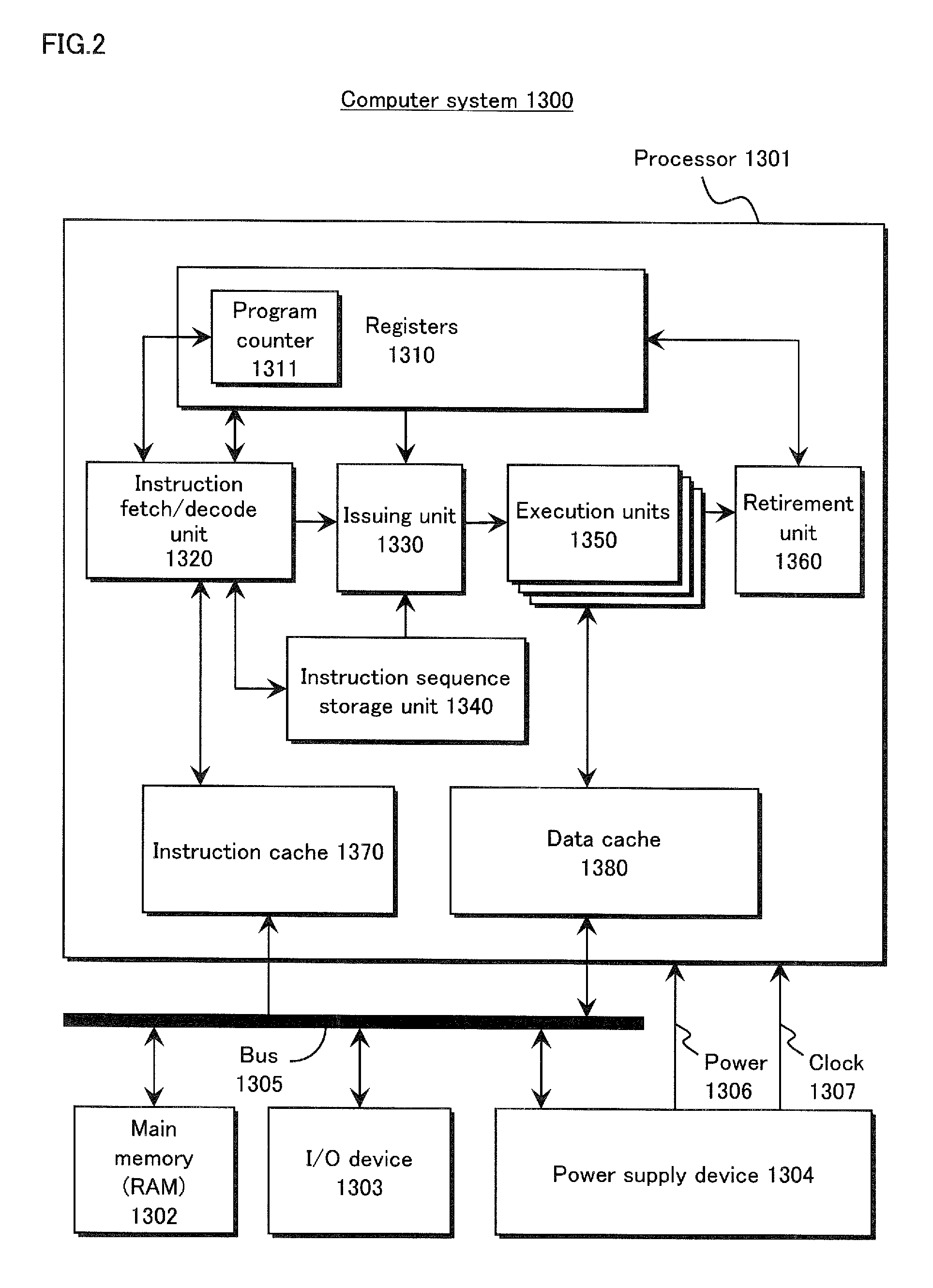

Active | US20110010713A1 | Reduce power consumption | Reduce processing time | Energy efficient ICT | Multiprogramming arrangements | Computerized system | Physics processing unit

In a computer system according to the background art, a virtual processor was blocked whenever a request to halt it was detected. With blocking, the latency of exiting the virtual halt state was long enough to cause a performance problem. Here, a virtual machine monitor selects between two methods: a busy-wait method, which repeatedly checks whether the virtual halt state has ended while the virtual processor stays on the physical processor, and a blocking method, which stops execution of the virtual processor, yields the physical processor, removes the virtual processor from scheduling on it, and schedules other virtual processors there. The selection is based on a predicted halt duration for the virtual processor, computed as the average of its latest N halt durations.
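The selection rule in this abstract can be sketched as a moving average over the latest N halt durations. The class name `HaltPolicy`, the microsecond units, and the threshold are hypothetical; in particular, the abstract does not say how the predicted value maps to a choice, so the rule "short predicted halt means busy wait" is an assumption.

```python
from collections import deque
from typing import Optional


class HaltPolicy:
    """Chooses busy waiting or blocking from recent virtual-halt durations."""

    def __init__(self, window: int = 4, spin_threshold_us: float = 50.0):
        # `window` is the N in "latest N halt durations".
        # `spin_threshold_us` is a hypothetical cutoff, not from the abstract.
        self.durations = deque(maxlen=window)
        self.spin_threshold_us = spin_threshold_us

    def record(self, halt_duration_us: float) -> None:
        """Store one observed halt duration, dropping the oldest beyond N."""
        self.durations.append(halt_duration_us)

    def predicted_duration_us(self) -> Optional[float]:
        """Average of the latest N durations, or None with no history."""
        if not self.durations:
            return None
        return sum(self.durations) / len(self.durations)

    def choose(self) -> str:
        predicted = self.predicted_duration_us()
        # Assumption: a short predicted halt favors spinning, because the
        # wake-up latency of blocking would dominate; otherwise block.
        if predicted is not None and predicted <= self.spin_threshold_us:
            return "busy_wait"
        return "block"


policy = HaltPolicy(window=4, spin_threshold_us=50.0)
for d in (10.0, 20.0, 30.0, 40.0):
    policy.record(d)
first_choice = policy.choose()   # average is 25.0 us
policy.record(500.0)             # window is now 20, 30, 40, 500
second_choice = policy.choose()  # average is 147.5 us
print(first_choice, second_choice)
```

With no history the sketch falls back to blocking, which matches the background-art behavior the abstract describes.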

Owner:HITACHI LTD

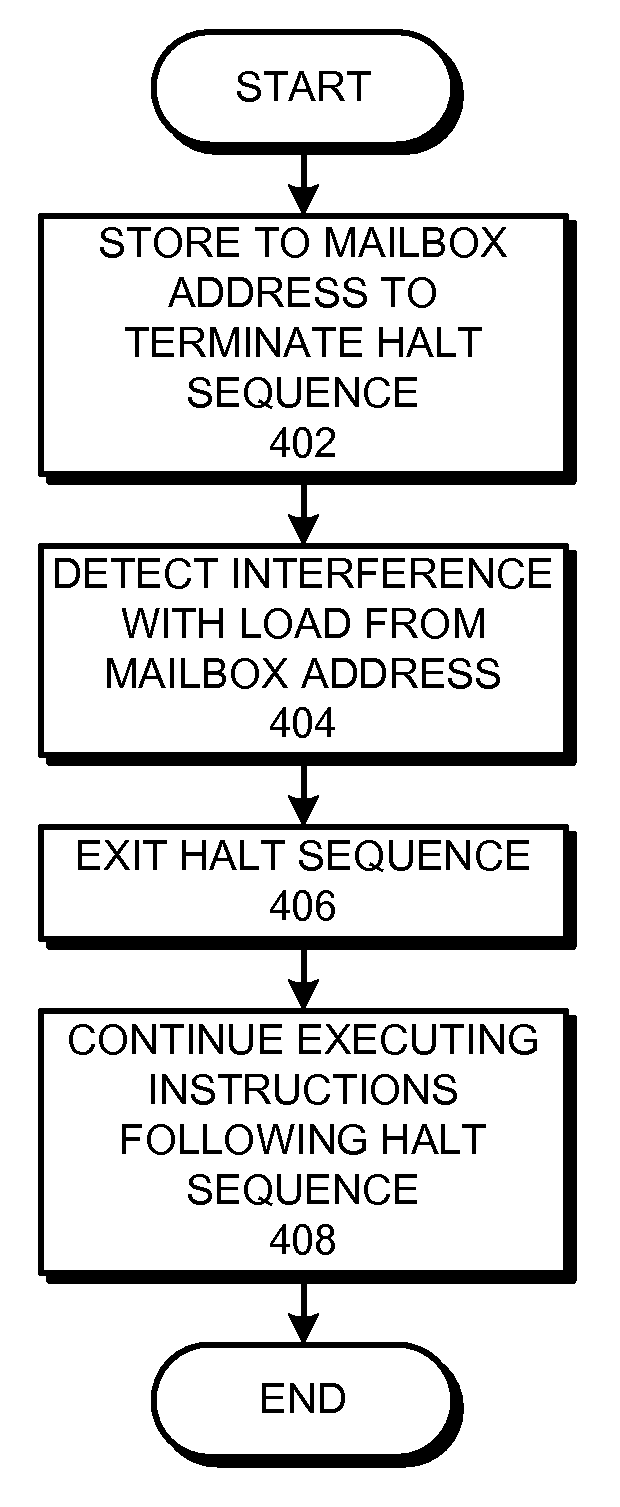

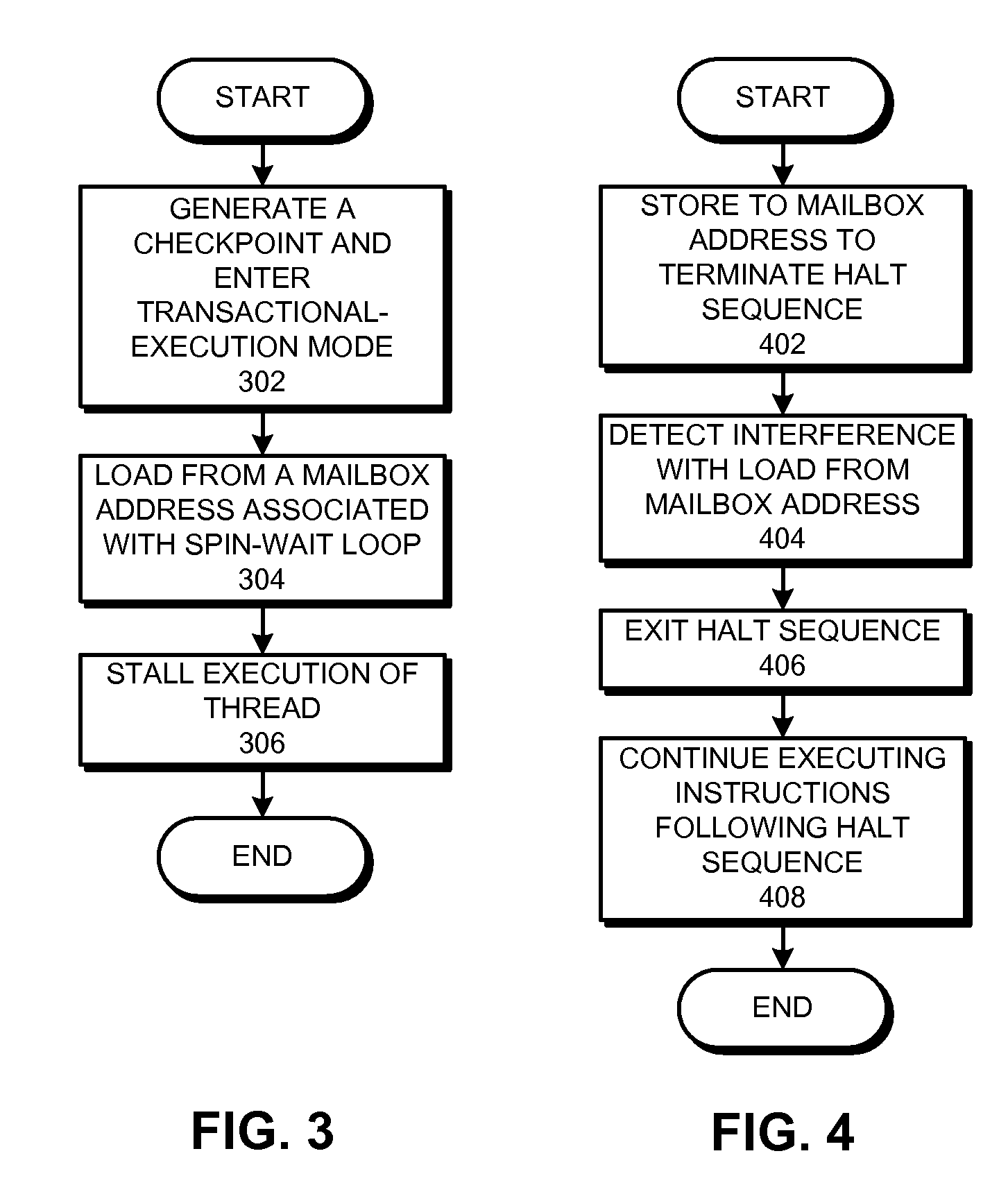

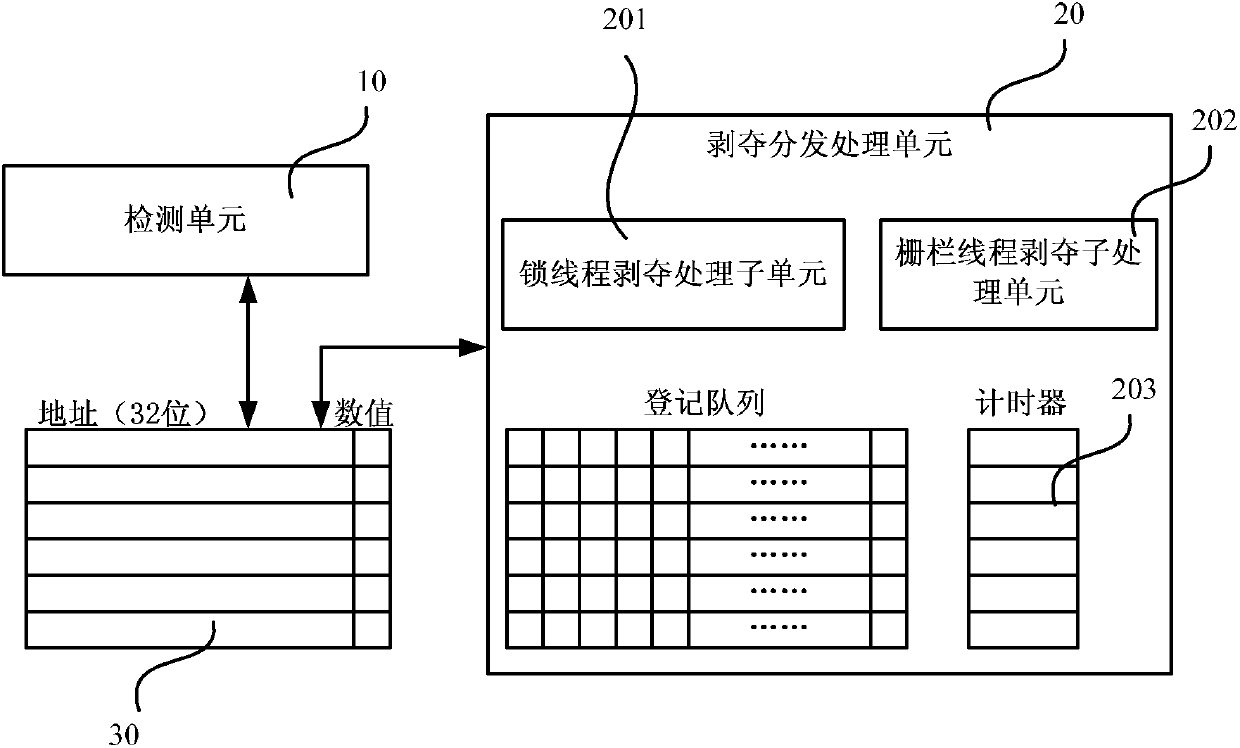

Using a transactional execution mechanism to free up processor resources used by a busy-waiting thread

Active | US7395418B1 | Improve system performance | Processor resource | Digital computer details | Specific program execution arrangements | Parallel computing | Transactional memory

A technique for improving the performance of a system that supports simultaneous multi-threading (SMT). When a first thread encounters a halt sequence, the system starts a transactional memory operation by generating a checkpoint and entering a transactional-execution mode. Next, the system loads from a mailbox address associated with the halt sequence. The system then stalls execution of the first thread, so that the first thread does not execute instructions within the halt sequence, thereby freeing up processor resources for other threads. To terminate the halt sequence, a second thread stores to the mailbox address, which causes a transactional-memory mechanism within the processor to detect an interference with the previous load from the mailbox address by the first thread and which causes the first thread to exit from the halt sequence. The system then continues executing instructions following the halt sequence.

Owner:ORACLE INT CORP

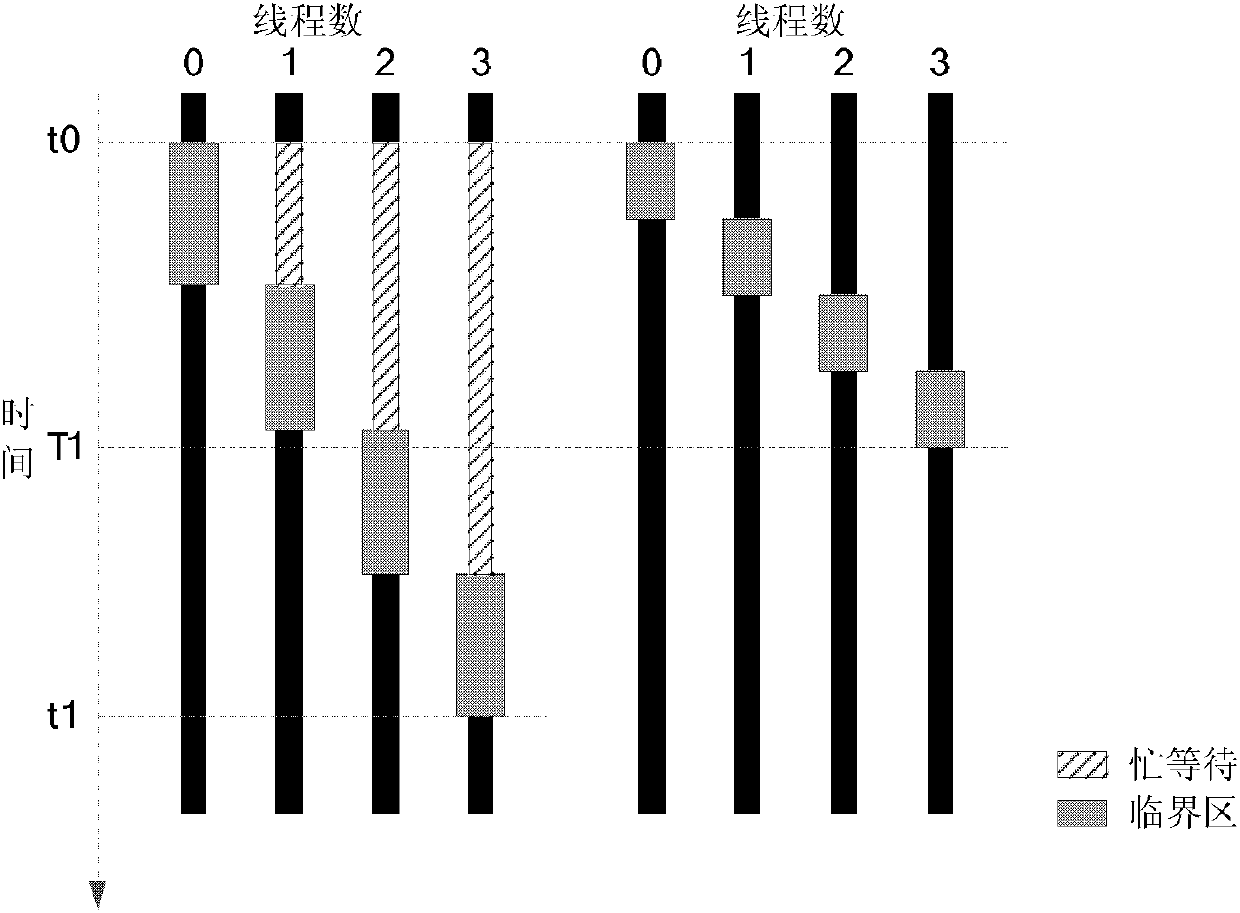

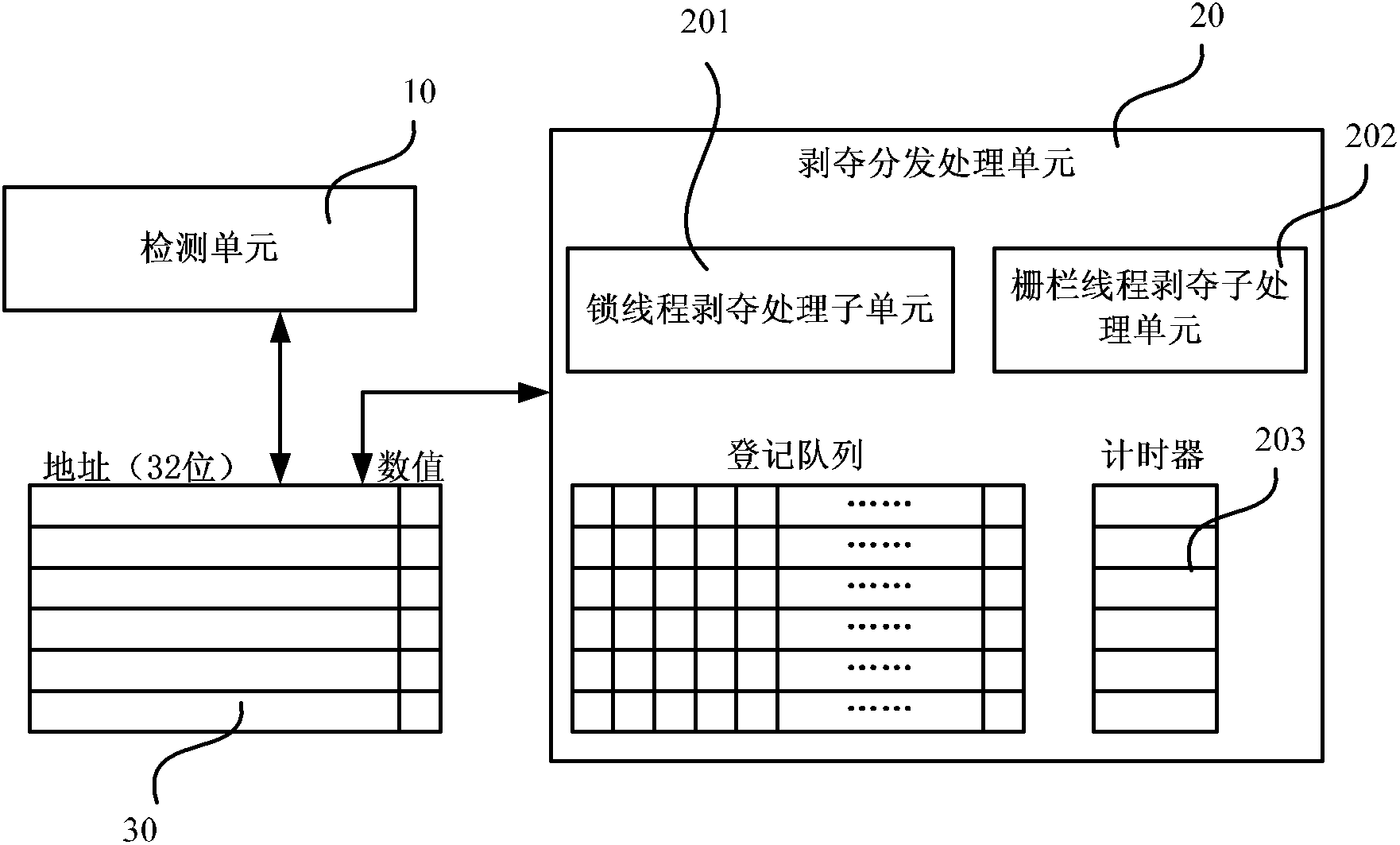

NoC (Network-on-Chip) multi-core processor multi-thread resource allocation processing method and system

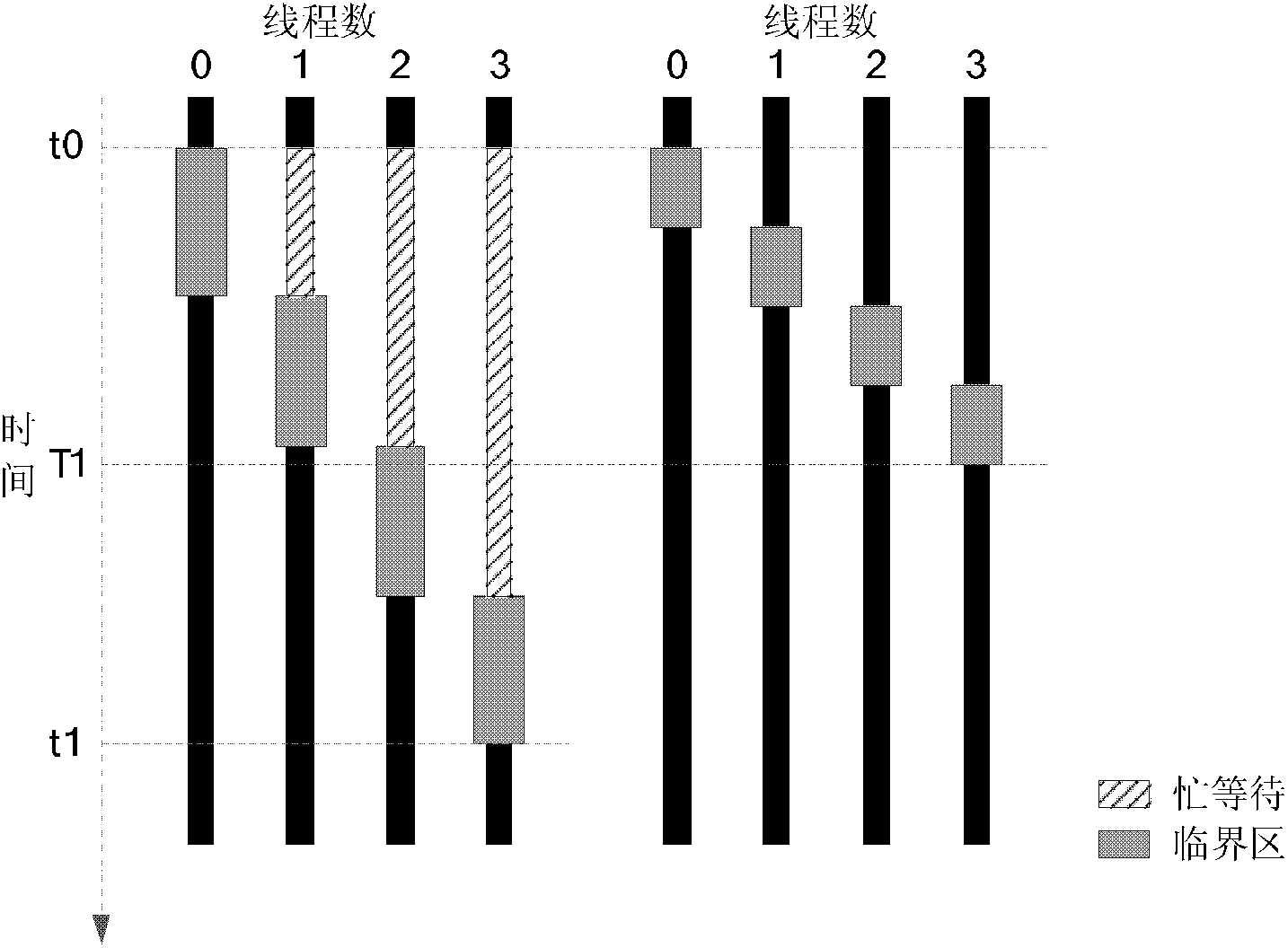

Active | CN102591722A | Improve performance | Improve acceleration performance | Resource allocation | Networks on chip | Resource allocation

The invention provides a NoC (Network-on-Chip) multi-core processor multi-thread resource allocation processing method and system. The system comprises a detection unit, an exclusive control index table, and a depriving and distributing processing unit. The detection unit detects the address and value of each exclusive control while a multi-thread program runs on the NoC multi-core processor and writes them into the exclusive control index table, which stores them. The depriving and distributing processing unit arranges threads whose exclusive control has the same address into a registration queue, takes away the core resources that some threads in the queue are spending on busy waiting, and distributes those resources to other threads. The overall performance and scalability of the multi-thread program on the multi-core processor are effectively improved.

Owner:LOONGSON TECH CORP

Computer system, virtual machine monitor and scheduling method for virtual machine monitor

Active | US8423999B2 | Reduce power consumption | Reduce processing time | Energy efficient ICT | Multiprogramming arrangements | Computerized system | Physics processing unit

In a computer system according to the background art, a virtual processor was blocked whenever a request to halt it was detected. With blocking, the latency of exiting the virtual halt state was long enough to cause a performance problem. Here, a virtual machine monitor selects between two methods: a busy-wait method, which repeatedly checks whether the virtual halt state has ended while the virtual processor stays on the physical processor, and a blocking method, which stops execution of the virtual processor, yields the physical processor, removes the virtual processor from scheduling on it, and schedules other virtual processors there. The selection is based on a predicted halt duration for the virtual processor, computed as the average of its latest N halt durations.

Owner:HITACHI LTD

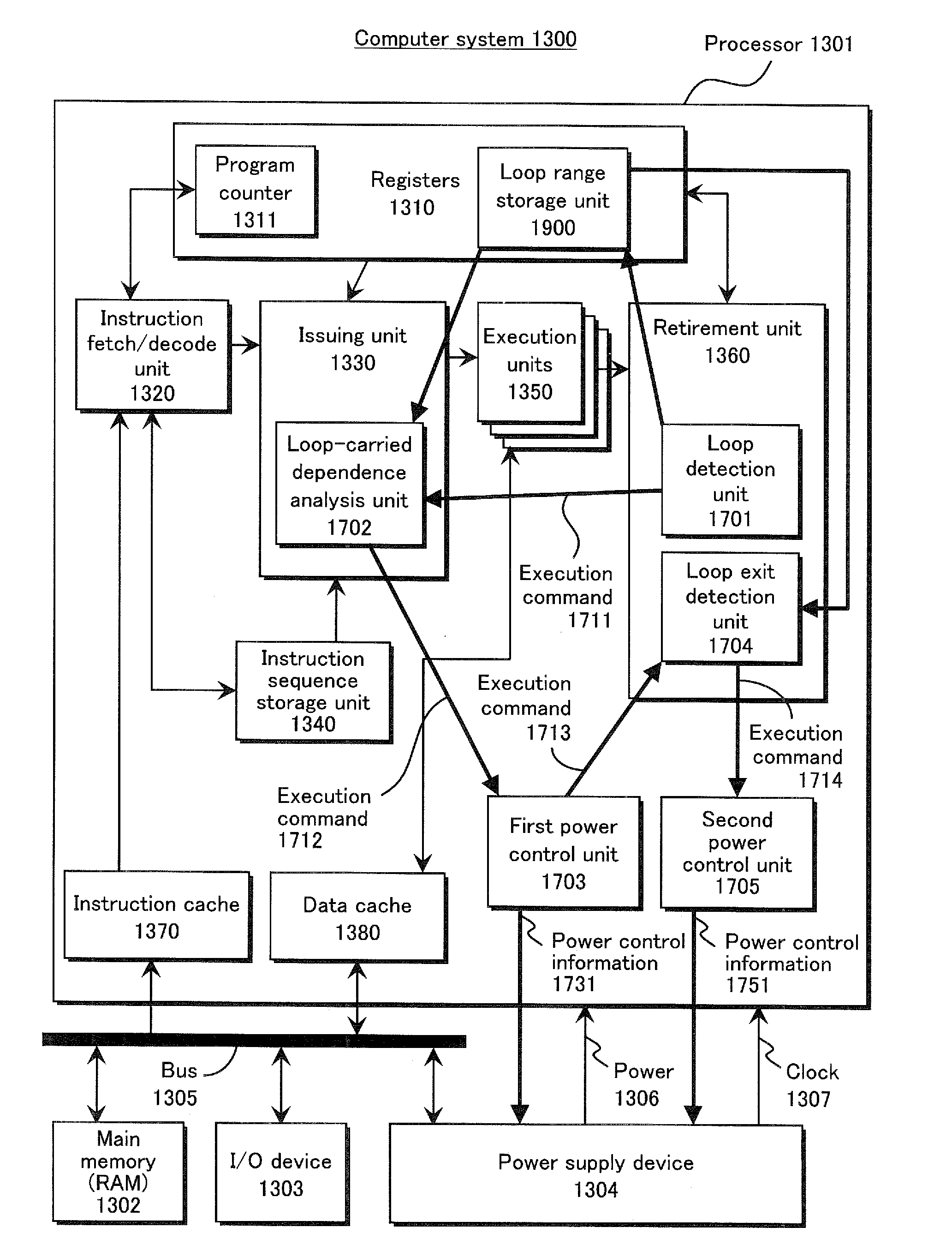

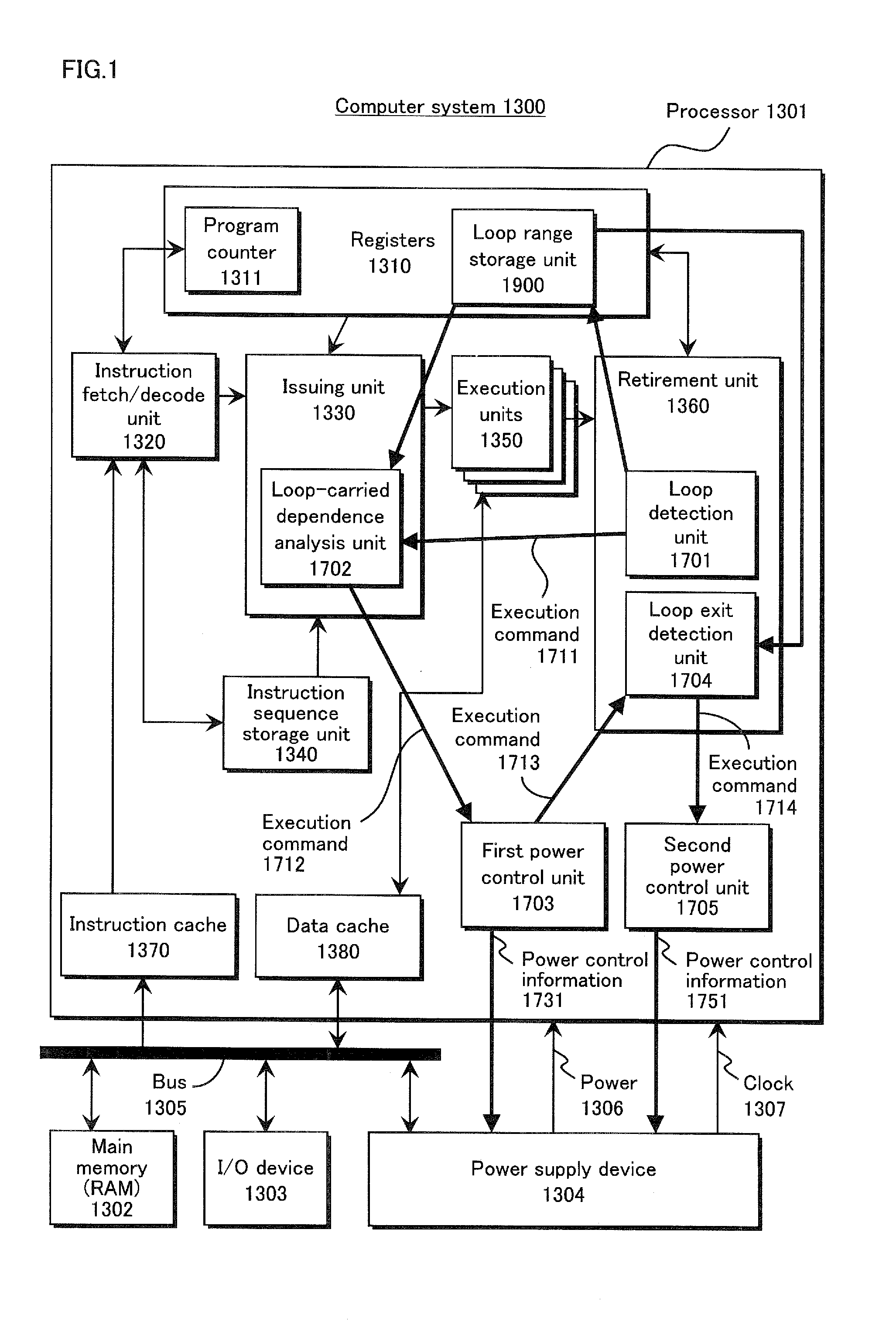

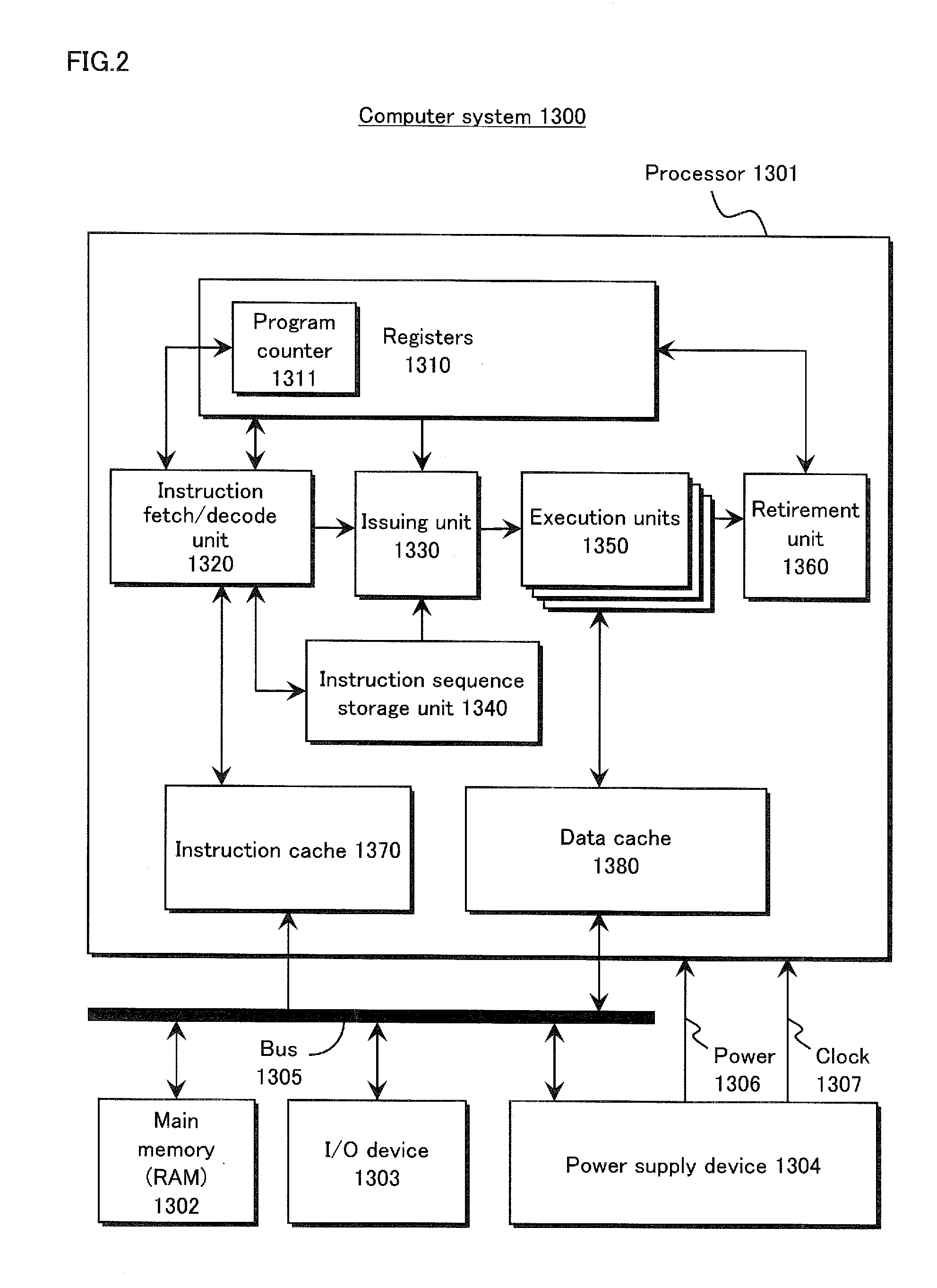

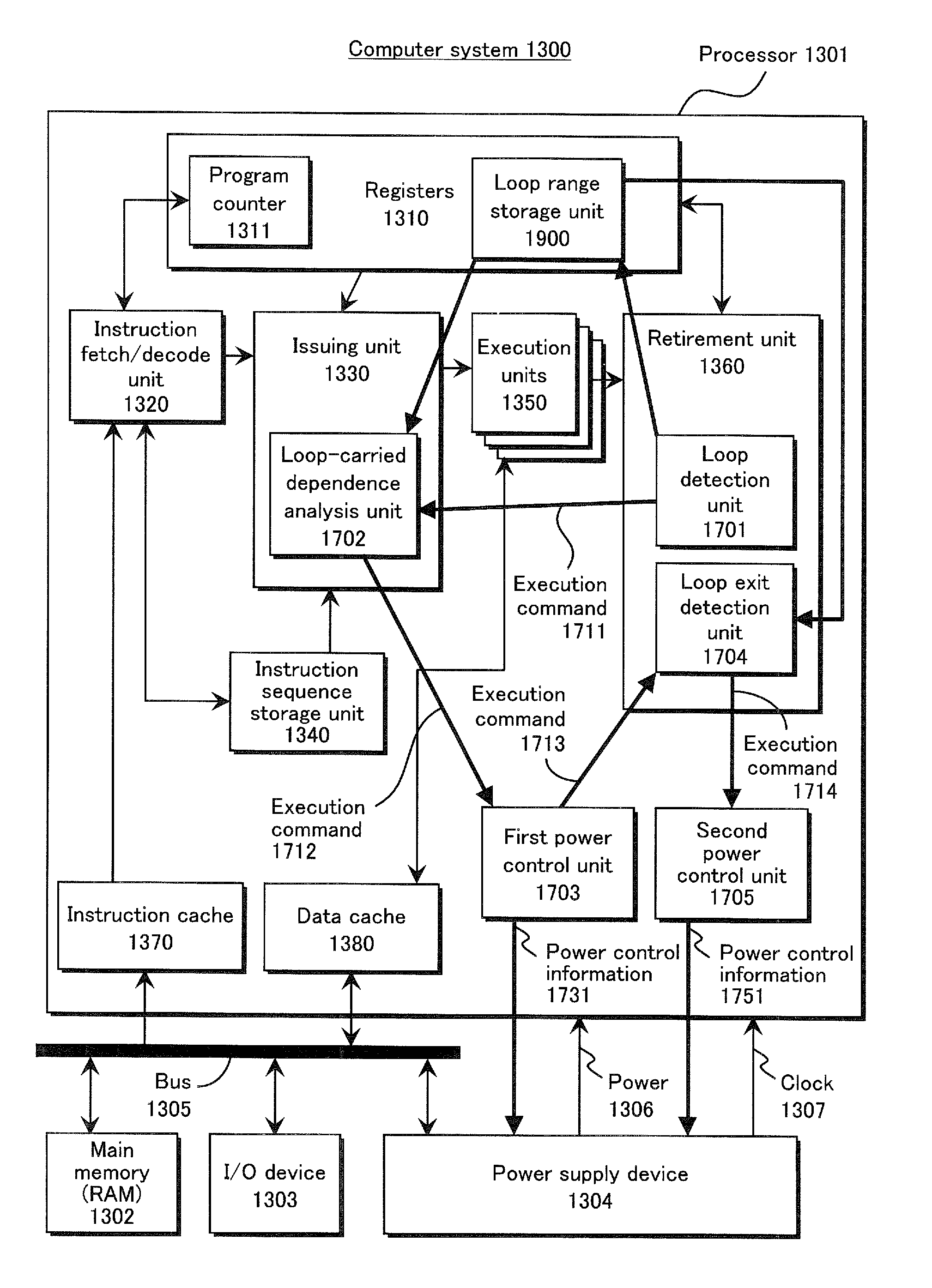

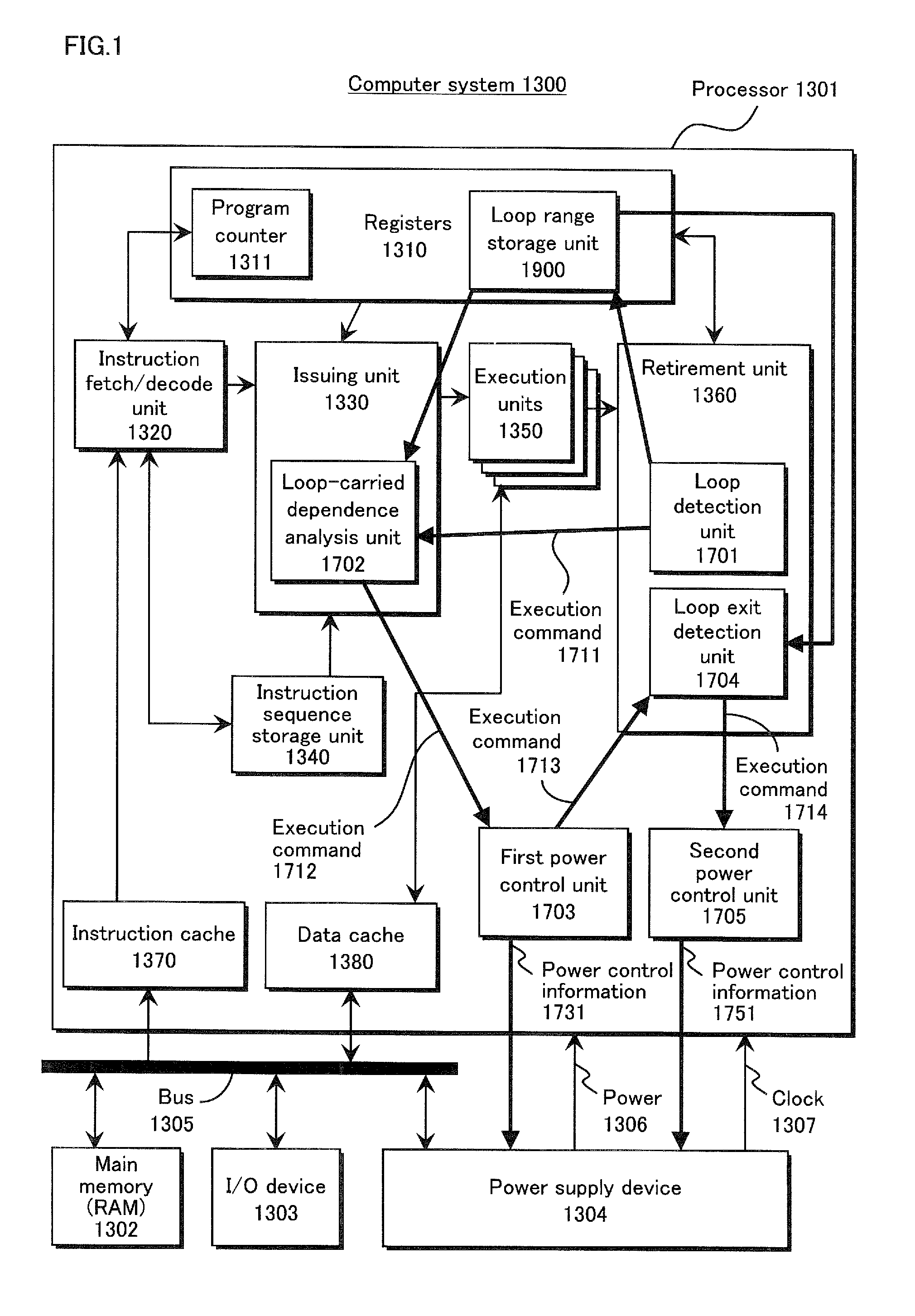

Integrated circuit, computer system, and control method

Inactive | US20120179924A1 | Easily detect loop | Reduce the amount of power | Volume/mass flow measurement | Concurrent instruction execution | Computerized system | Engineering

An integrated circuit provided with a processor includes a loop detection unit that detects execution of a loop in the processor, a loop-carried dependence analysis unit that analyzes the loop in order to detect loop-carried dependence, and a power control unit that performs power saving control when no loop-carried dependence is detected. By detecting whether a loop has loop-carried dependence, loops for calculation or the like can be excluded from power saving control. As a result, a larger variety of busy-waits can be detected, and the amount of power wasted by a busy-wait can be reduced.

Owner:PANASONIC INTELLECTUAL PROPERTY MANAGEMENT CO LTD

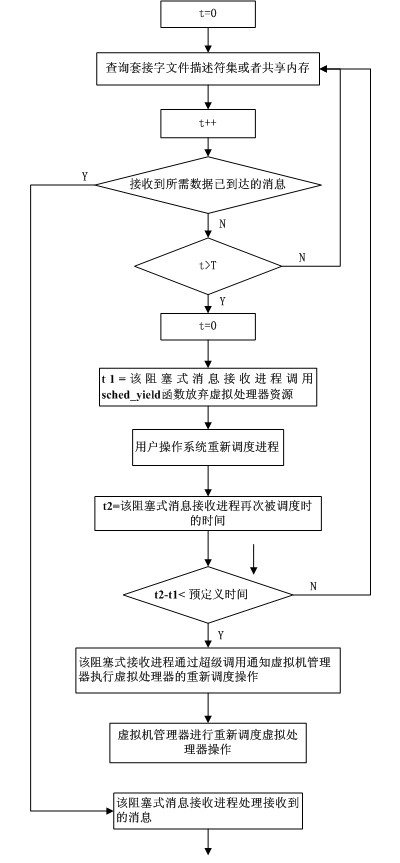

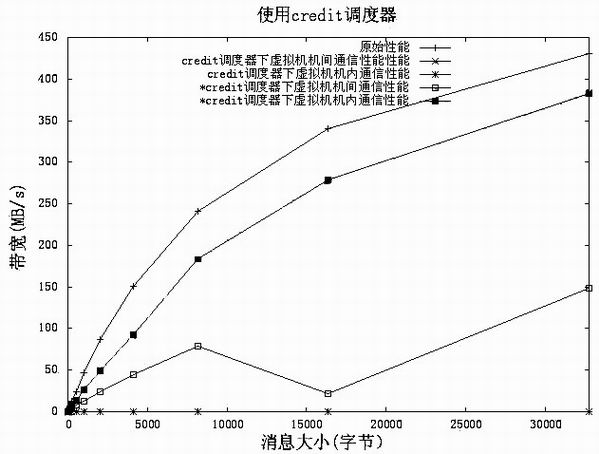

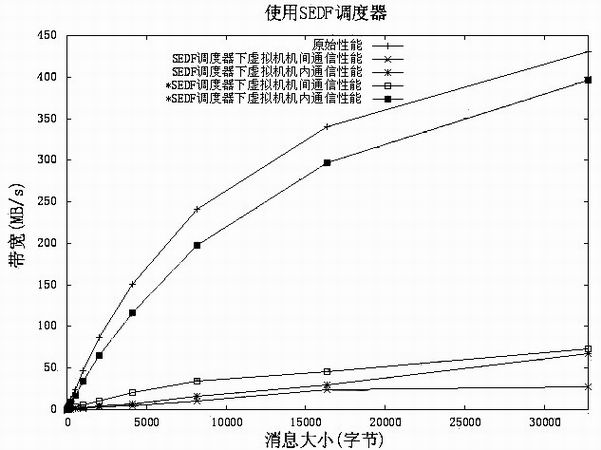

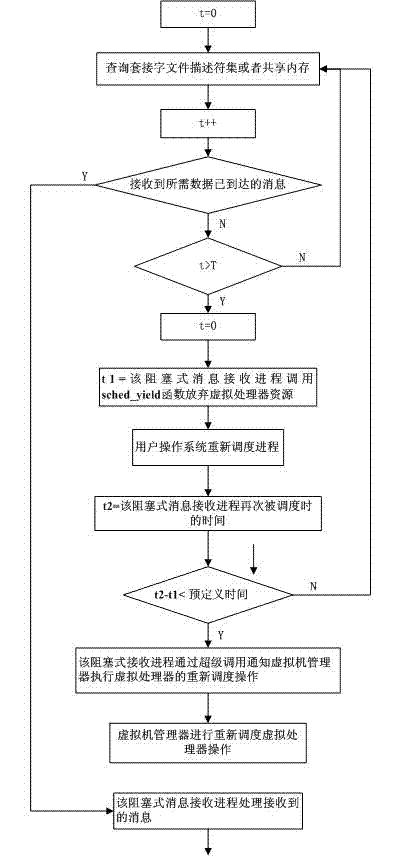

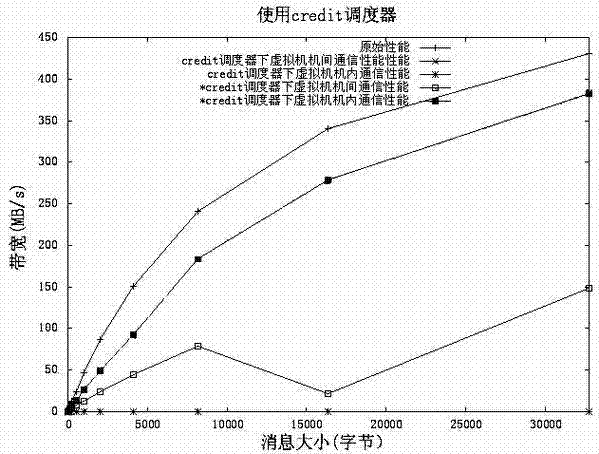

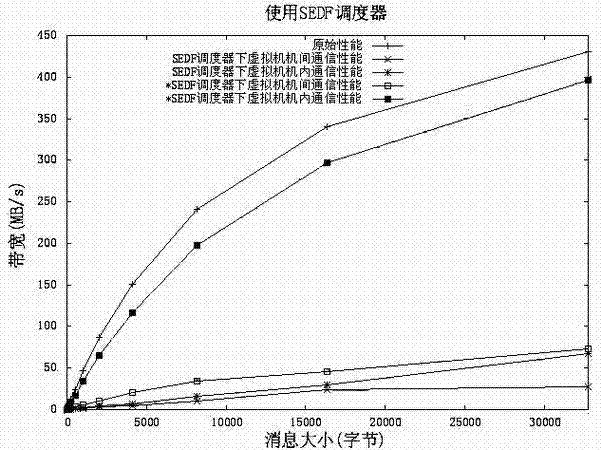

Method for receiving message passing interface (MPI) information under circumstance of over-allocation of virtual machine

Inactive | CN101968749A | Improve communication performance | Improve performance | Multiprogramming arrangements | Software simulation/interpretation/emulation | Operational system | Message Passing Interface

The invention discloses a method for receiving Message Passing Interface (MPI) messages when virtual machines are over-allocated. The method comprises the following steps: in a blocking message-receiving process, polling a socket file descriptor set or shared memory, invoking the sched_yield function, and releasing the virtual processor resource that the process currently occupies; having the guest operating system of the virtual machine that contains the virtual processor query the virtual processor's run queue and select a schedulable process to run; when the blocking message-receiving process is rescheduled, judging whether the virtual machine manager needs to be notified to reschedule the virtual processor; and having the virtual machine manager reschedule the virtual processor through a hypercall issued from the blocking message-receiving process, which then handles the received message. The invention can reduce the performance loss caused by the "busy waiting" that the MPI library's message-receiving mechanism produces.
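The first step of this method, polling and then yielding the processor instead of spinning, might look like the sketch below. It covers only the user-space poll-and-yield loop; the run-queue query and the hypercall to the virtual machine manager are not reproduced. The names `receive_with_yield` and `yield_cpu` and the poll limit are assumptions for illustration.

```python
import os
import select
import socket
import time


def yield_cpu() -> None:
    """Give up the processor; falls back to sleep(0) where sched_yield is absent."""
    if hasattr(os, "sched_yield"):
        os.sched_yield()
    else:
        time.sleep(0)


def receive_with_yield(sock: socket.socket, max_polls: int = 100_000):
    """Poll `sock` without blocking, yielding the CPU after each empty poll.

    Returns (data, number_of_polls). `max_polls` is a hypothetical safety limit.
    """
    for polls in range(1, max_polls + 1):
        readable, _, _ = select.select([sock], [], [], 0)  # timeout 0: pure poll
        if readable:
            return sock.recv(4096), polls
        yield_cpu()  # let another runnable process use the (virtual) processor
    raise TimeoutError("no message arrived within the poll limit")


sender, receiver = socket.socketpair()
sender.sendall(b"hello")
data, polls = receive_with_yield(receiver)
print(data, polls)
sender.close()
receiver.close()
```

Yielding after each empty poll keeps the receive path responsive while letting other processes on an over-committed virtual processor make progress.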

Owner:HUAZHONG UNIV OF SCI & TECH

System and method for mitigating the impact of branch misprediction when exiting spin loops

Active | US9304776B2 | Digital computer details | Next instruction address formation | Program instruction | Computerized system

A computer system may recognize a busy-wait loop in program instructions at compile time and/or may recognize busy-wait looping behavior during execution of program instructions. The system may recognize that the exit condition for a busy-wait loop is specified by a conditional branch type instruction in the program instructions. In response to identifying the loop and the conditional branch type instruction that specifies its exit condition, the system may influence or override the prediction made by a dynamic branch predictor, so that the predictor predicts that the exit condition will be met and the loop will be exited, regardless of any observed behavior of that branch instruction. The looping instructions may implement waiting for an inter-thread communication event to occur or for a lock to become available. When the exit condition is met, the loop may be exited without incurring a misprediction delay.

Owner:ORACLE INT CORP

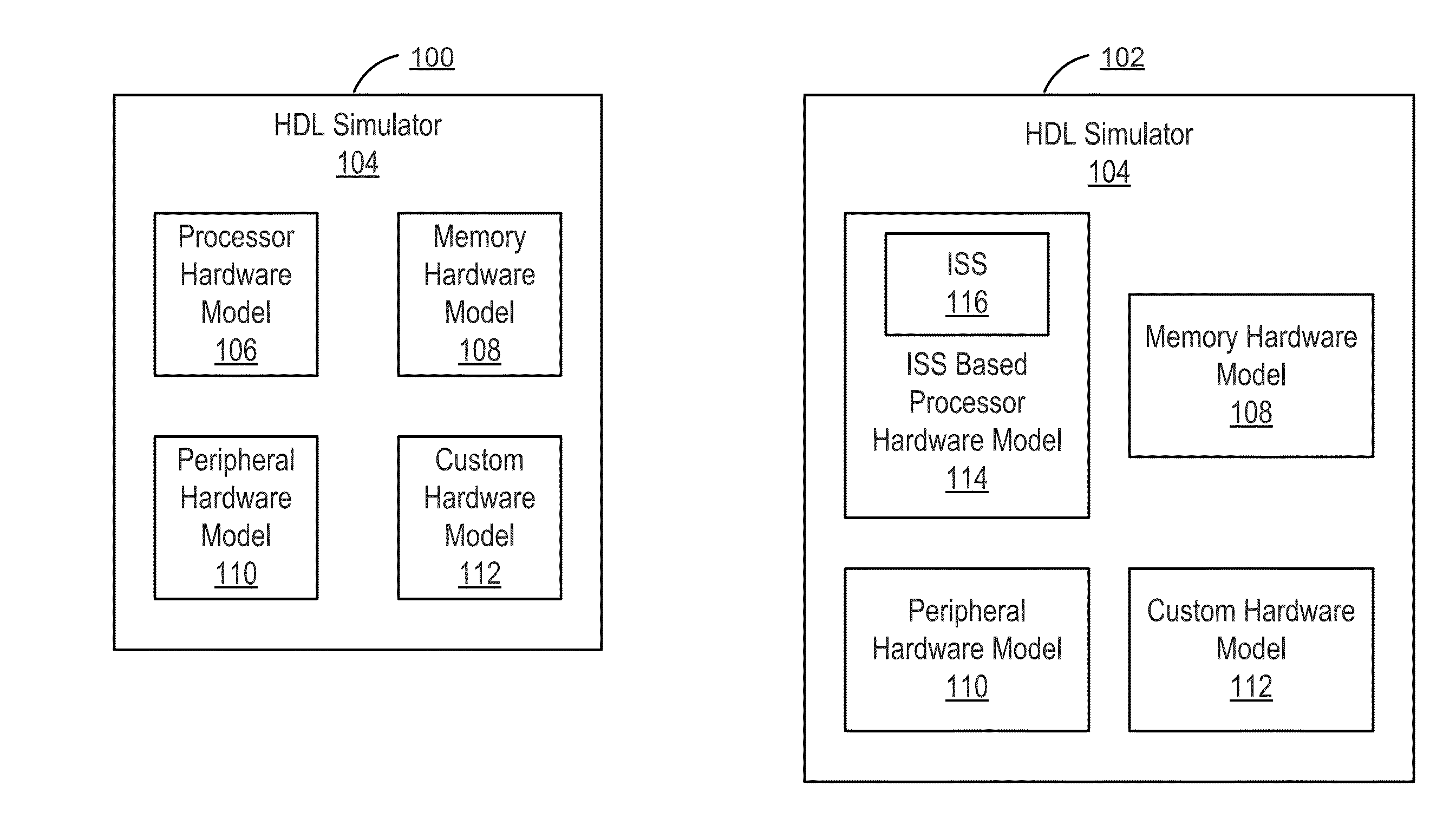

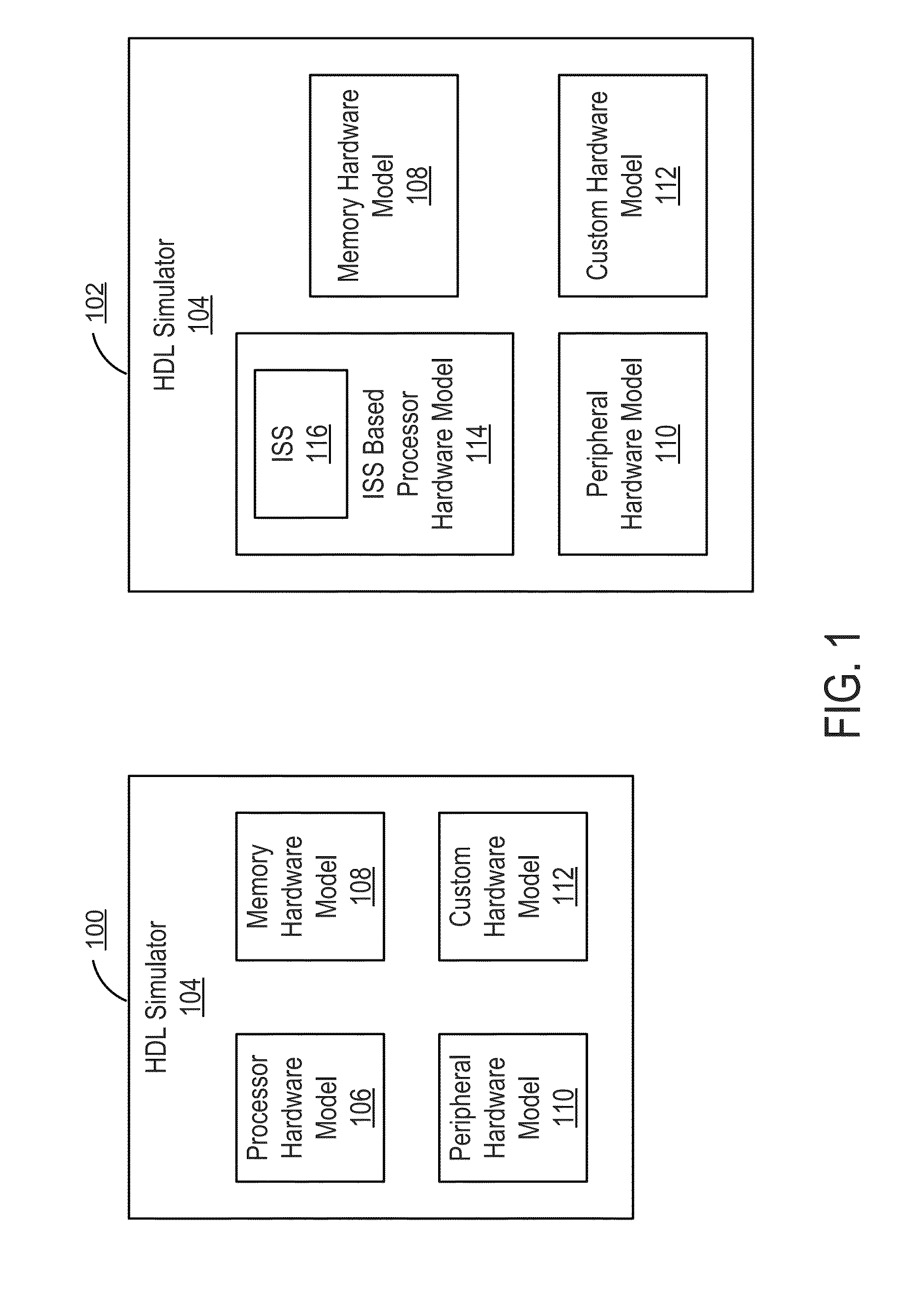

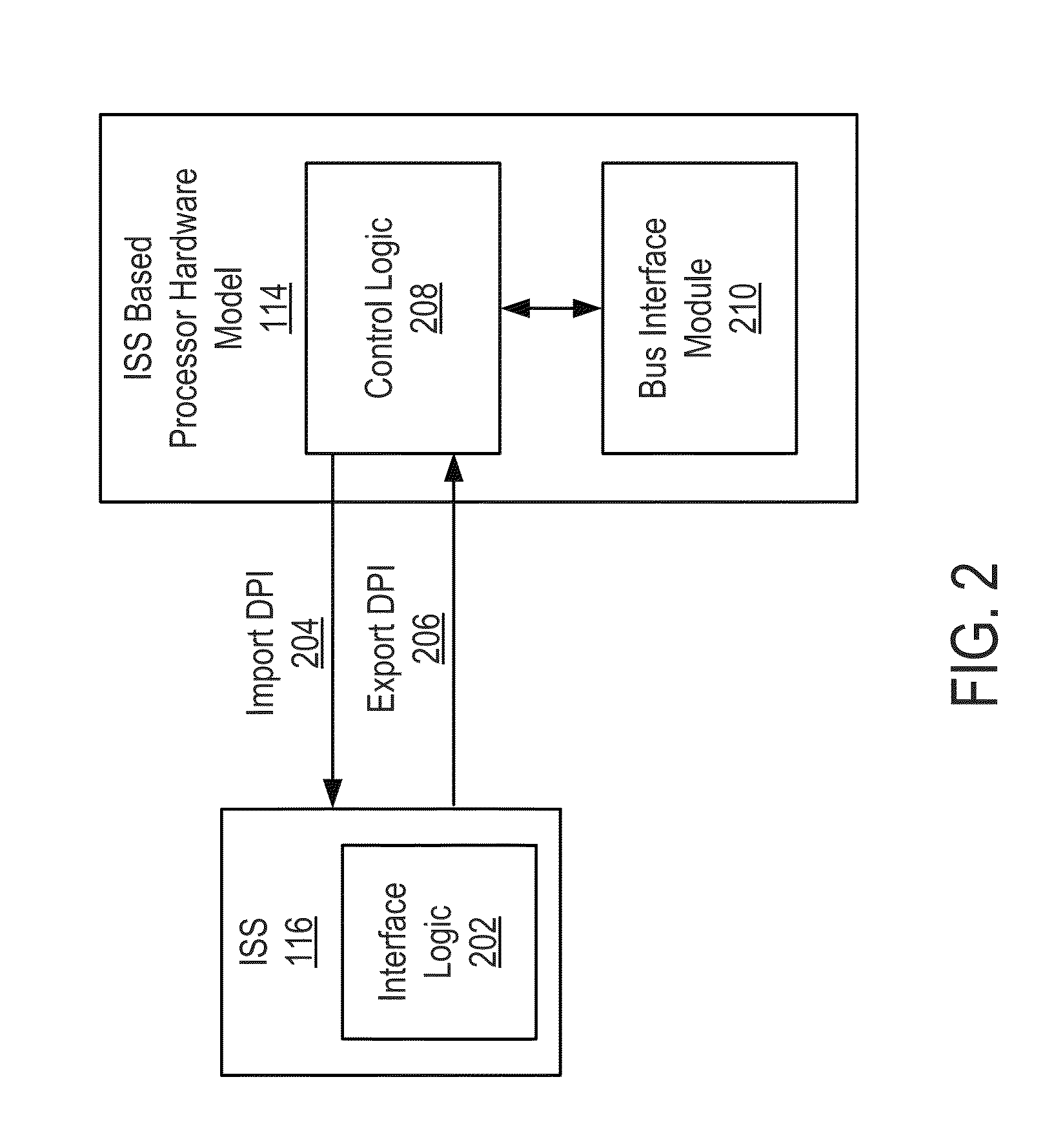

Efficient mechanism in hardware and software co-simulation system

Active | US20150356219A1 | Improving user controllability | Improve ease of use | CAD circuit design | Special data processing applications | Computer hardware | Usability

The described embodiments provide an efficient mechanism for performing hardware and software co-simulation that greatly simplifies system implementation and improves user controllability and ease of use. In this mechanism, the hardware and software co-simulators synchronize at a finite number of predetermined synchronization points (e.g., five synchronization points) without using polling or busy-wait techniques. The hardware and software co-simulators run freely and independently of each other until one of the synchronization points is reached. At that point the simulators can communicate and/or control other simulation states, thereby reducing the amount of communication and control between the simulators.

Owner:SYNOPSYS INC

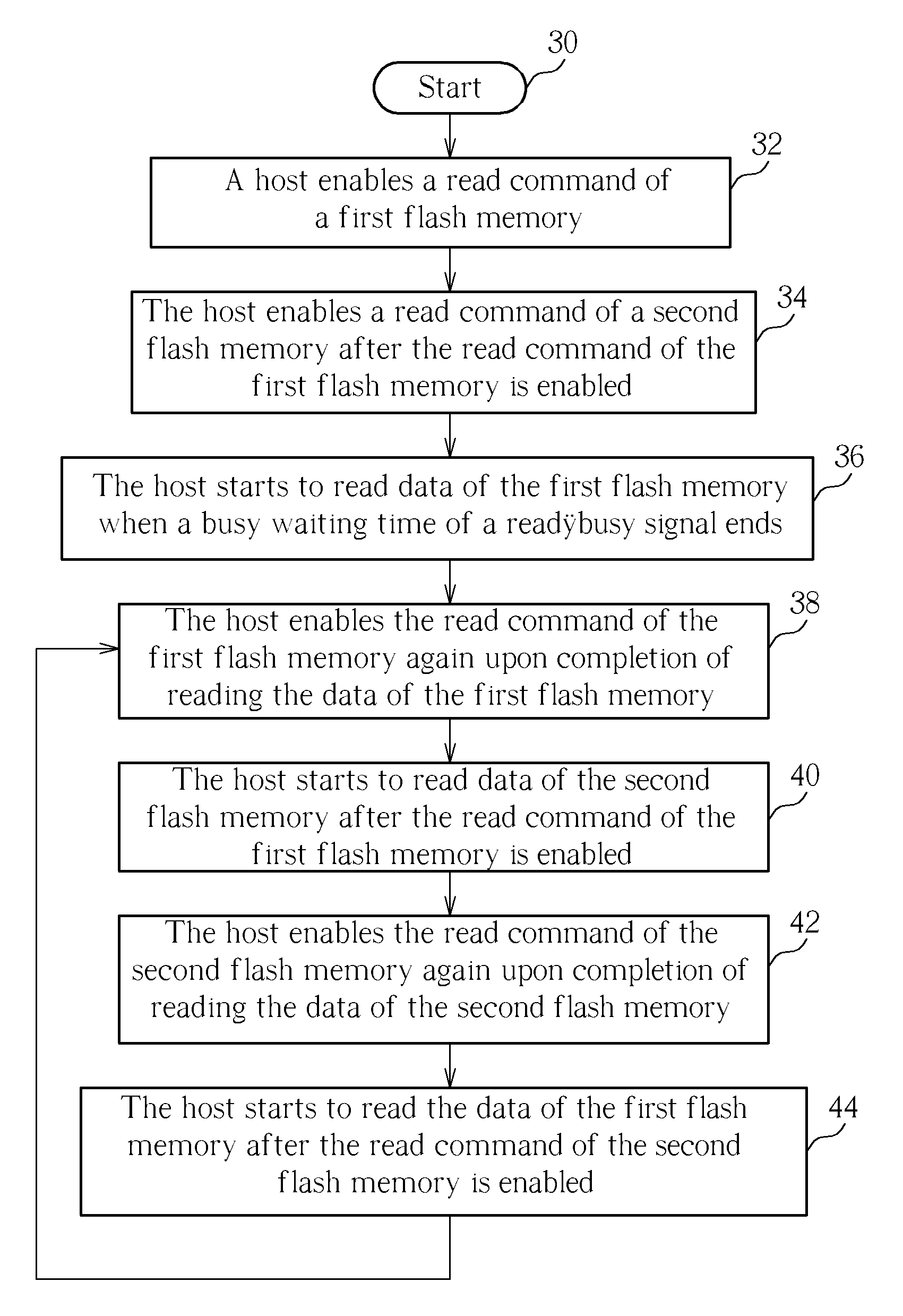

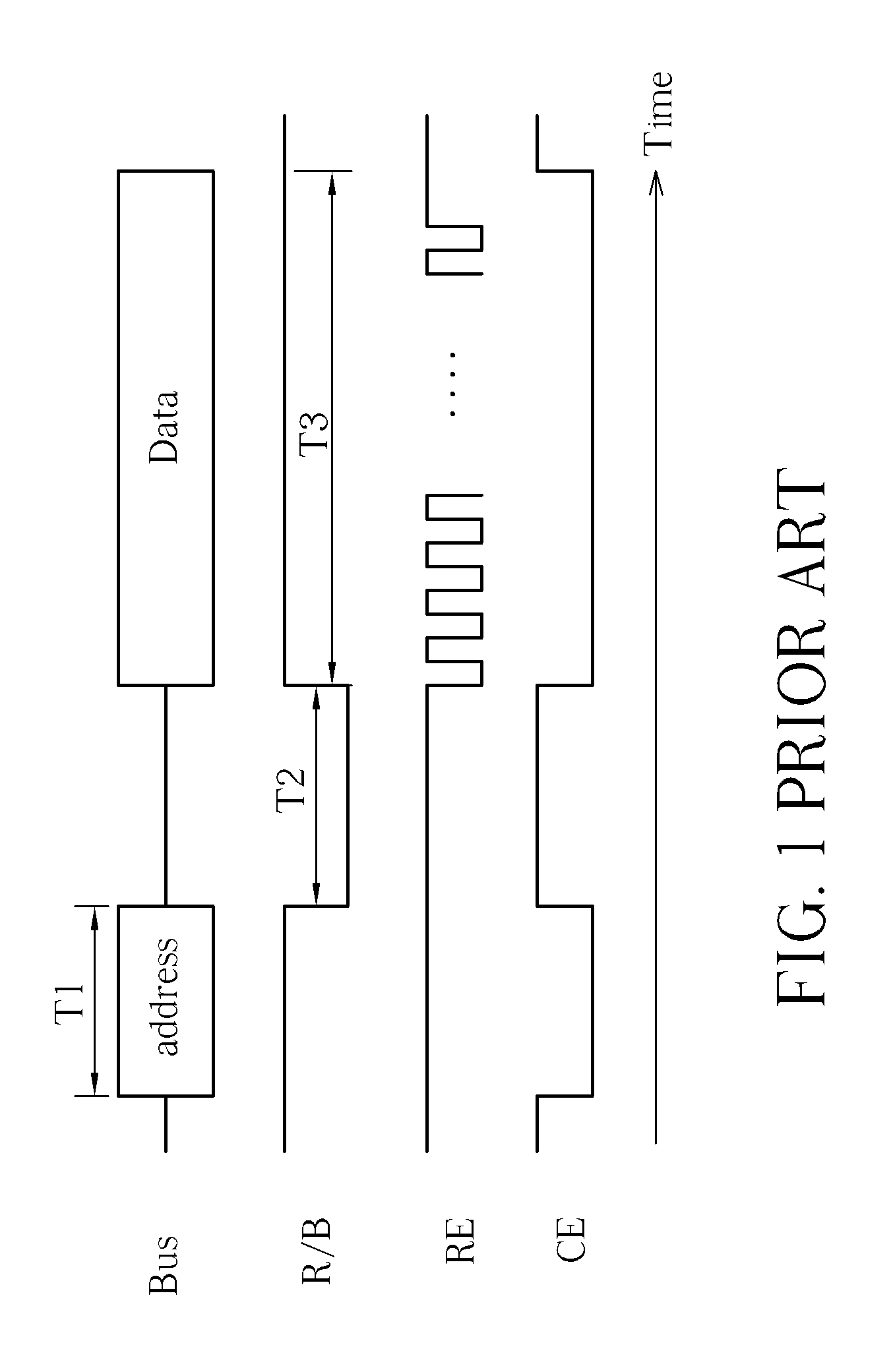

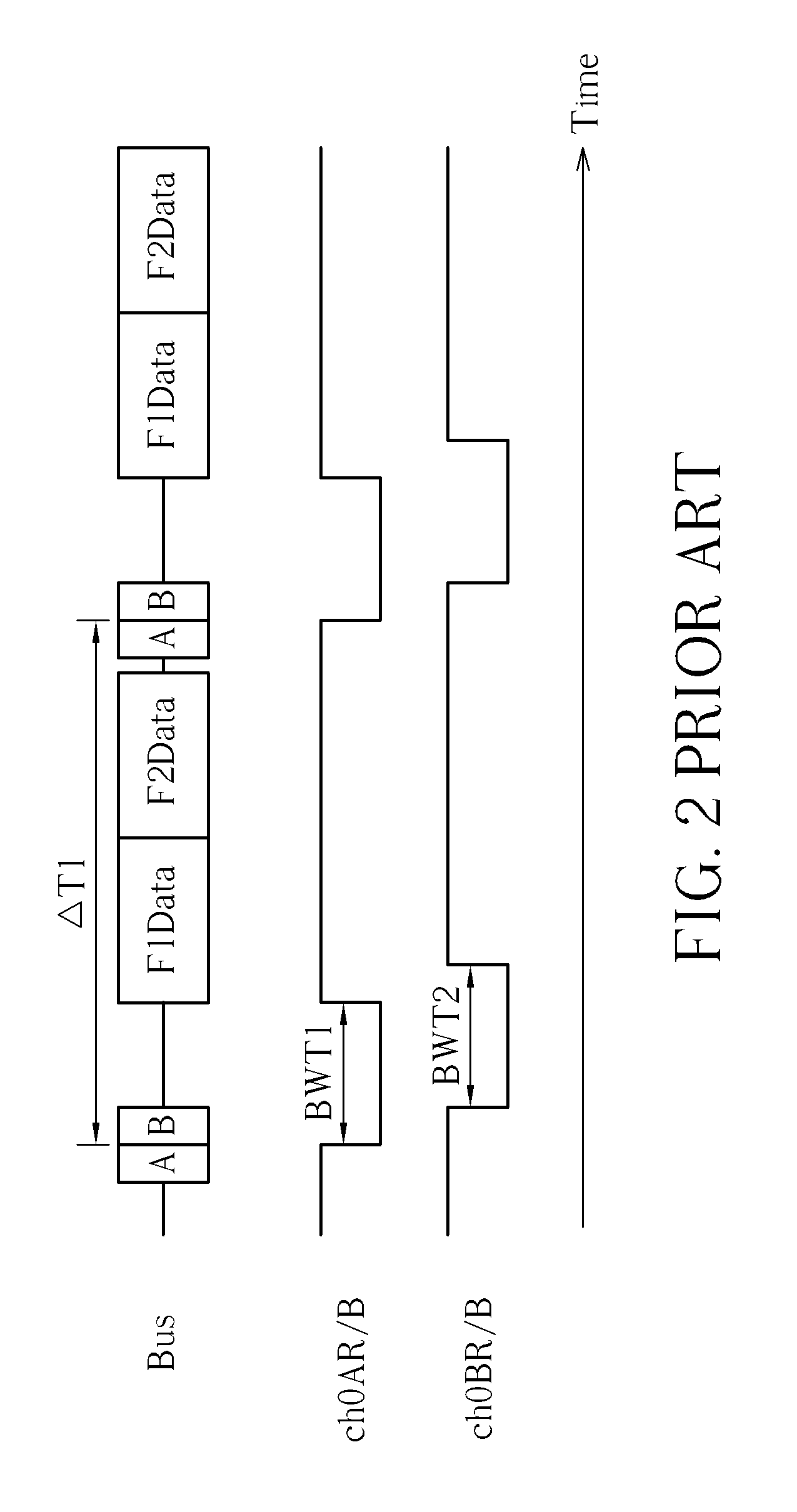

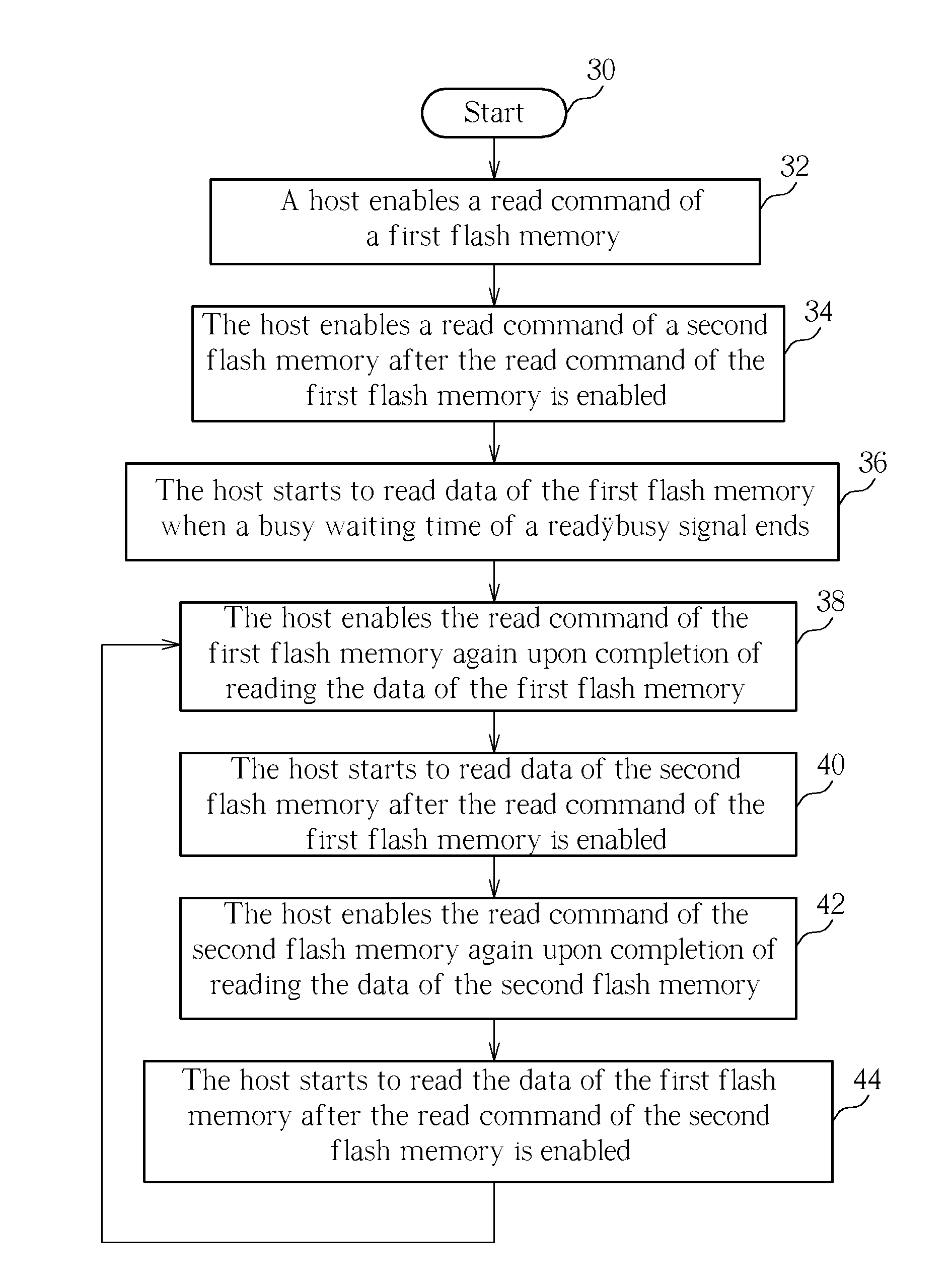

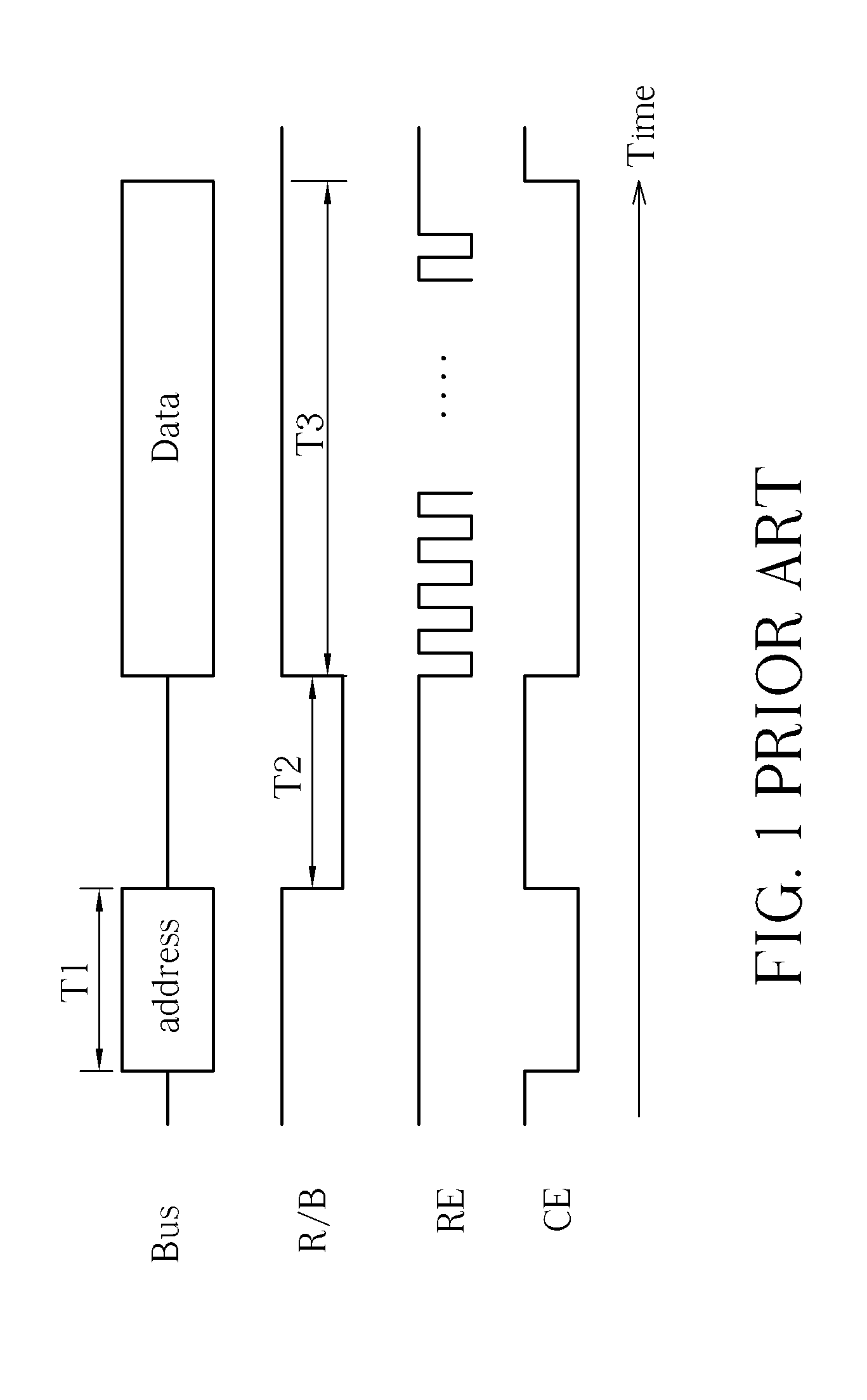

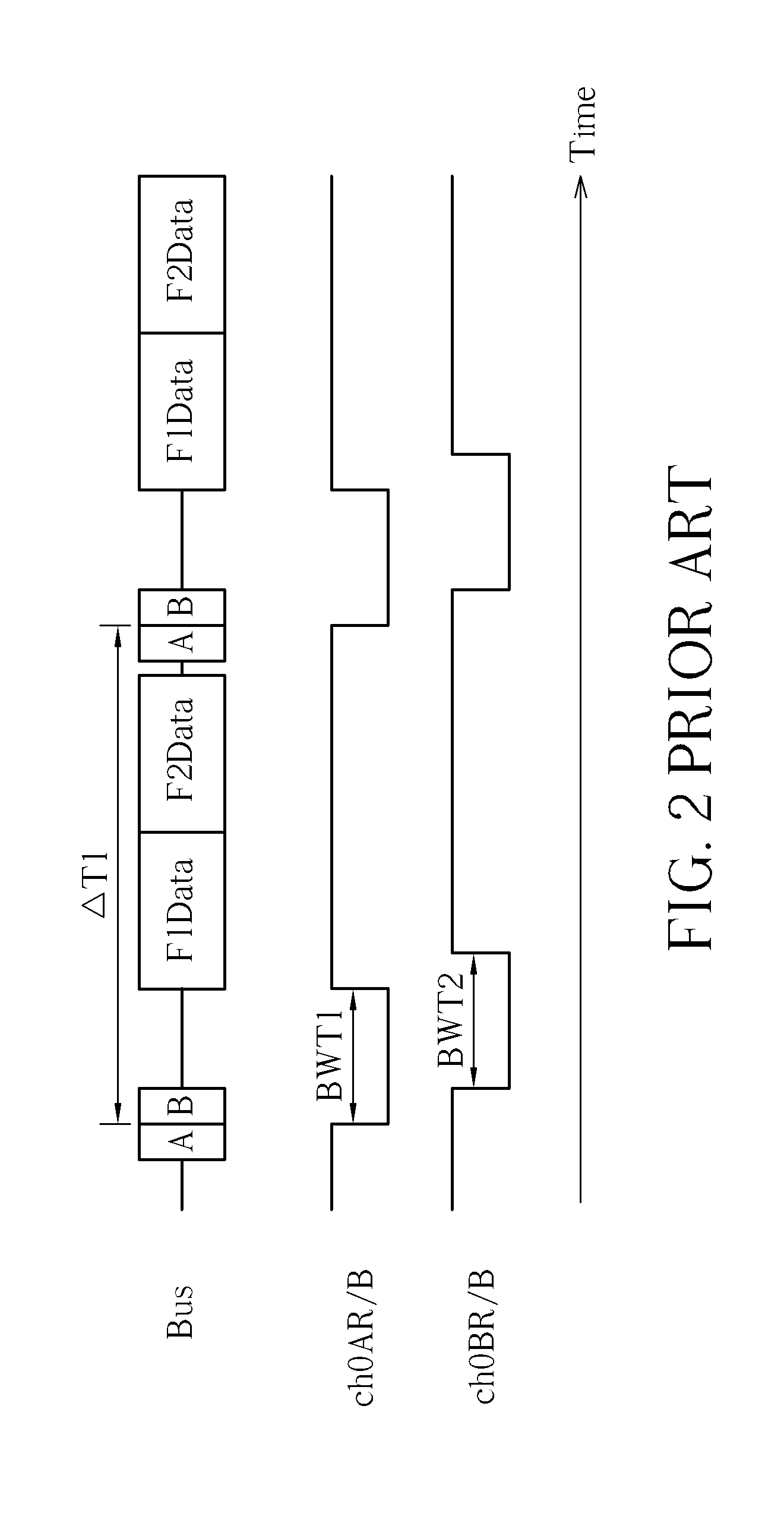

Method for operating flash memories on a bus

A read command of a first flash memory is enabled. After the read command of the first flash memory is enabled, the ready/busy signal of the first flash memory enters a busy waiting time, and a read command of a second flash memory starts to be enabled. Data of the first flash memory starts to be read when the busy waiting time is over. The read command of the first flash memory is enabled again upon completion of reading the data of the first flash memory. Data of the second flash memory starts to be read after the read command of the first flash memory is enabled again. The read command of the second flash memory is enabled again upon completion of reading the data of the second flash memory.

Owner:ETRON TECH INC

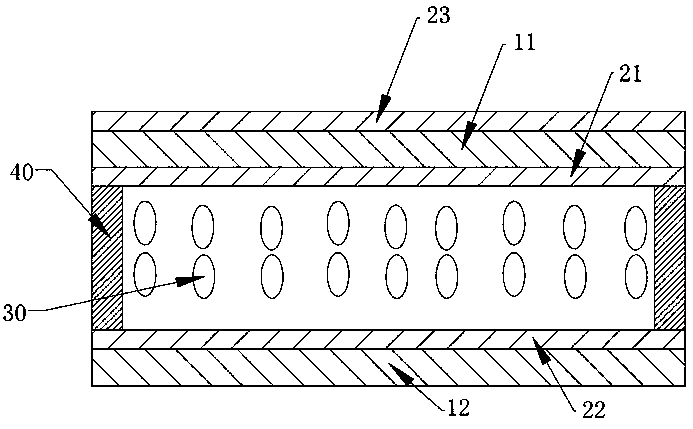

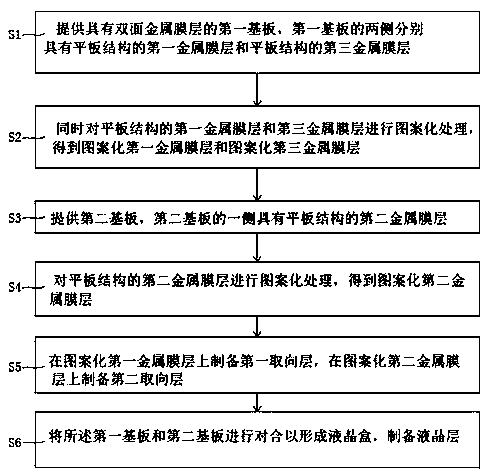

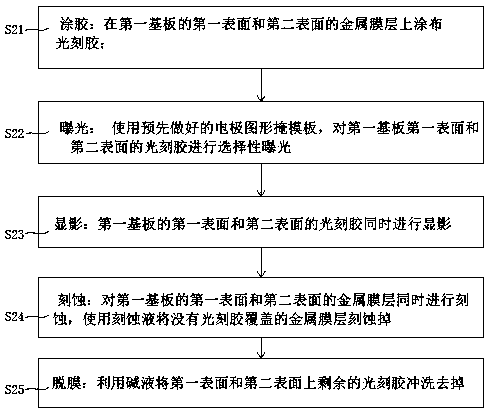

Flat-panel liquid crystal antenna and manufacturing method thereof

Pending | CN109830806A | Large-scale manufacturing | Save the yellow light process | High frequency circuit adaptations | Printed circuit aspects | Metal | Liquid crystal cell

Owner:TRULY SEMICON

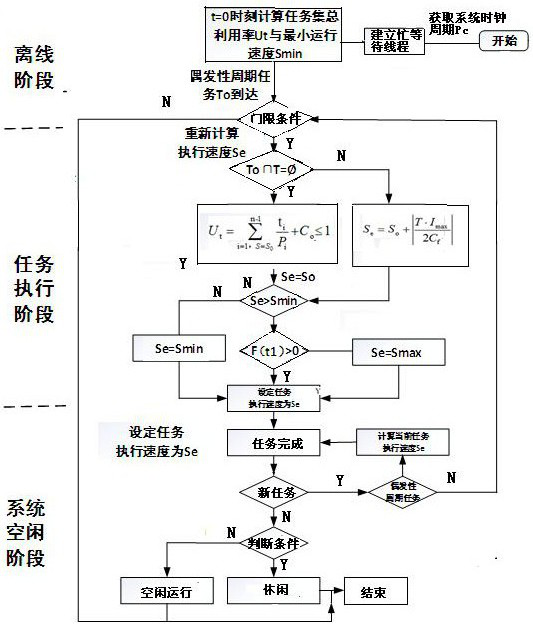

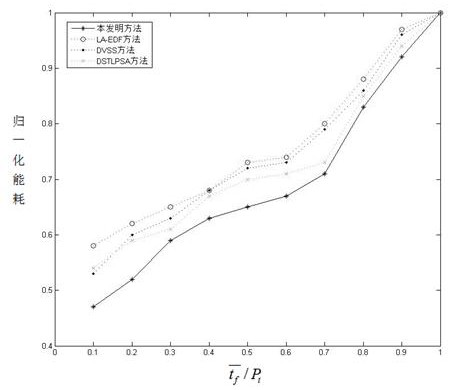

Low-power-consumption scheduling method based on high-performance open type numerical control system

Pending | CN112130992A | Slow down | Guaranteed real-time | Resource allocation | Digital data processing details | Time condition | Simulation

The invention relates to a low-power-consumption scheduling method based on a high-performance open type numerical control system. Existing energy-saving scheduling methods for real-time tasks do not consider the characteristics of accidental periodic tasks or the time and energy consumed during CPU acceleration, so extra energy loss may occur during processor speed adjustment and the overall power consumption of the system increases. In the low-power-consumption scheduling method, which meets the real-time condition of the high-performance open type numerical control system, a busy waiting thread is established before a task set is scheduled, and the power consumption of the CPU in an idle state and the execution speed of subsets in the task set are calculated. Then, on the premise of ensuring the real-time performance of the system, a threshold condition is used to judge whether a task can enter the task preparation queue; the task execution speed is recalculated and compared with the minimum operation speed the system allows to obtain an optimal CPU operation speed. The invention is applied to the numerical control field.

Owner:HARBIN UNIV

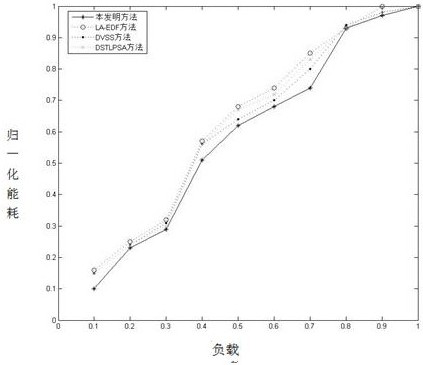

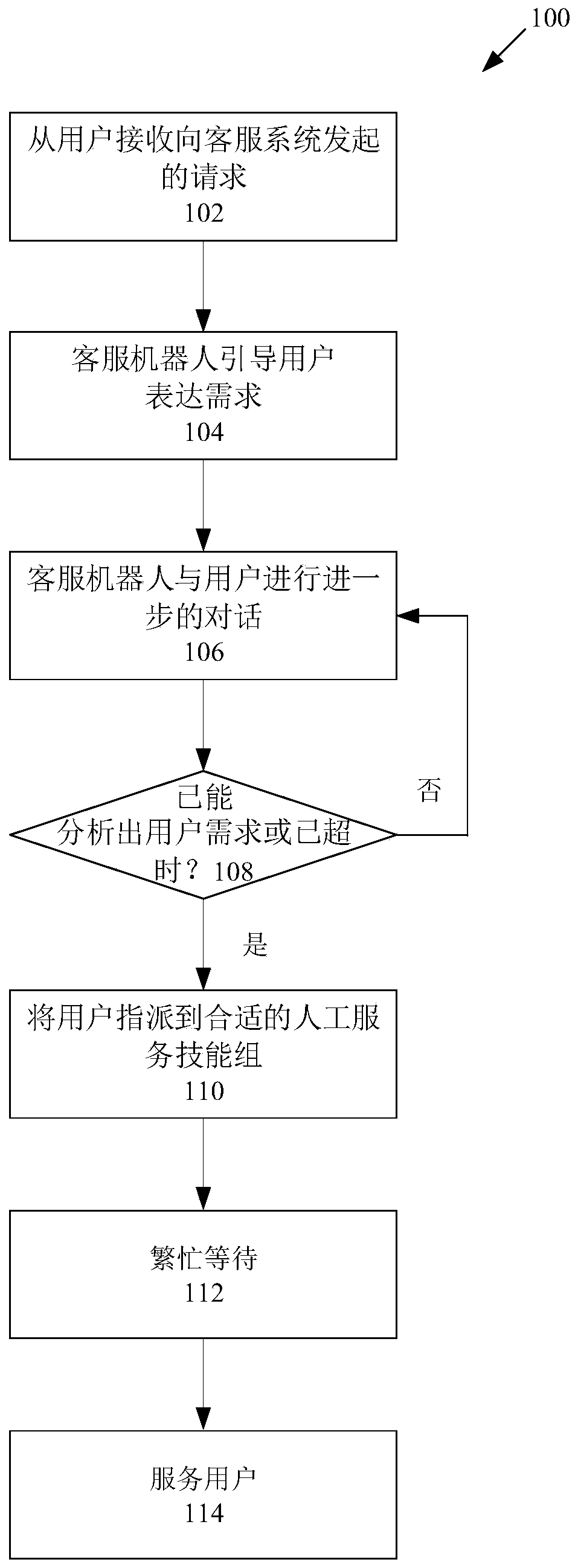

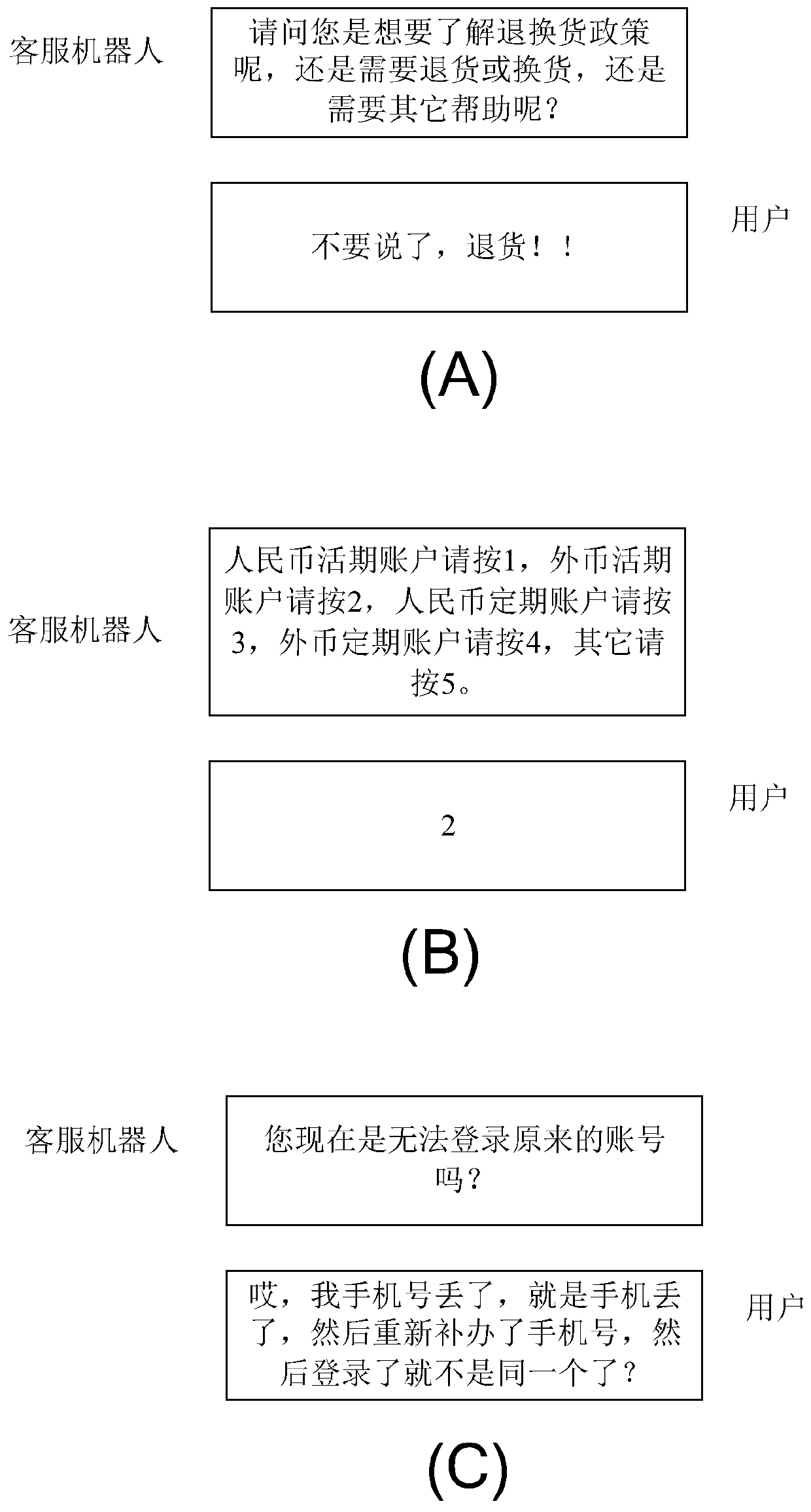

Method, device and equipment for personalized busy waiting service based on machine learning

Pending | CN110060083A | Digital data information retrieval | Customer communications | User needs | Personalization

The invention relates to a method for personalized busy waiting service based on machine learning. The method comprises the steps of analyzing the user requirements through man-machine conversation; assigning the user to a corresponding manual service and entering a busy wait when the analysis determines that the user demand or the man-machine conversation exceeds a limit; and, during the busy wait, using a machine learning-based model to provide a personalized busy wait service to the user, where the personalized busy wait service includes using the machine learning-based model to provide a wait-to-dispatch adapted to the user or to further analyze the user demand. The invention also relates to a corresponding device, equipment, and a computer readable medium.

Owner:ADVANCED NEW TECH CO LTD

Computer system

Active | US20130219392A1 | Reduce power consumption | Reduce processing time | Energy efficient ICT | Energy efficient computing | Physics processing unit | Block method

In a computer system according to the background art, a virtual processor was blocked whenever a request to halt it was detected. With blocking, the latency of exiting the virtual halt state was long enough to cause a performance problem. Here, a virtual machine monitor selects between two methods: a busy-wait method, which repeatedly checks whether the virtual halt state has ended while the virtual processor stays on the physical processor, and a blocking method, which stops execution of the virtual processor, yields the physical processor, removes the virtual processor from scheduling on it, and schedules other virtual processors there. The selection is based on a predicted halt duration for the virtual processor, computed as the average of its latest N halt durations.

Owner:HITACHI LTD

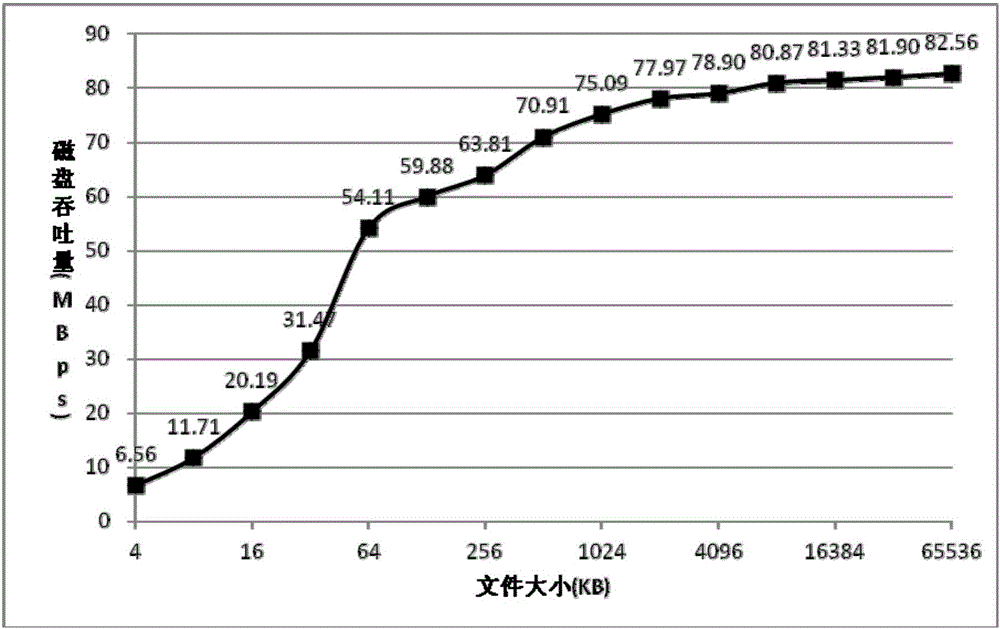

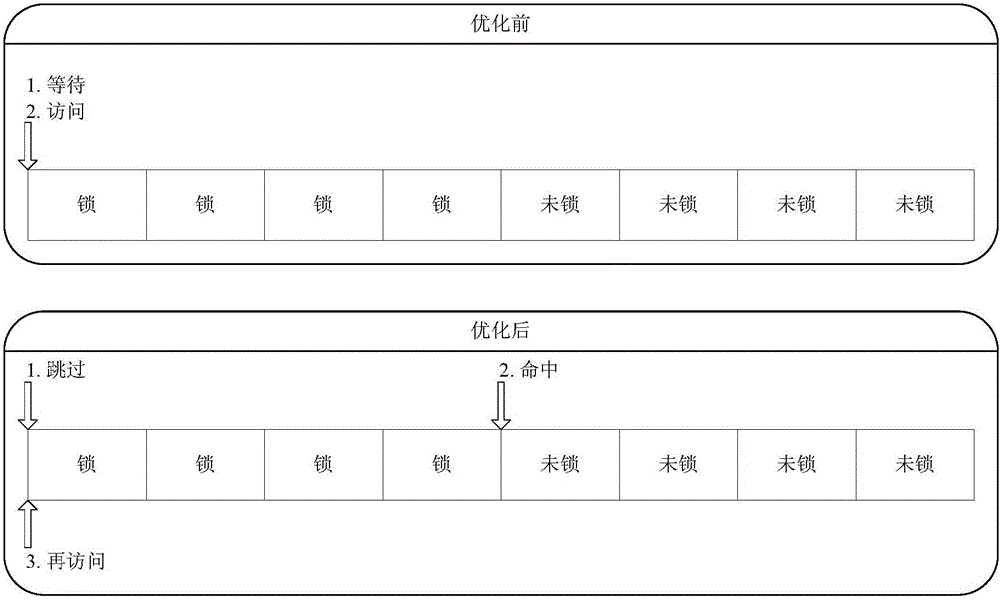

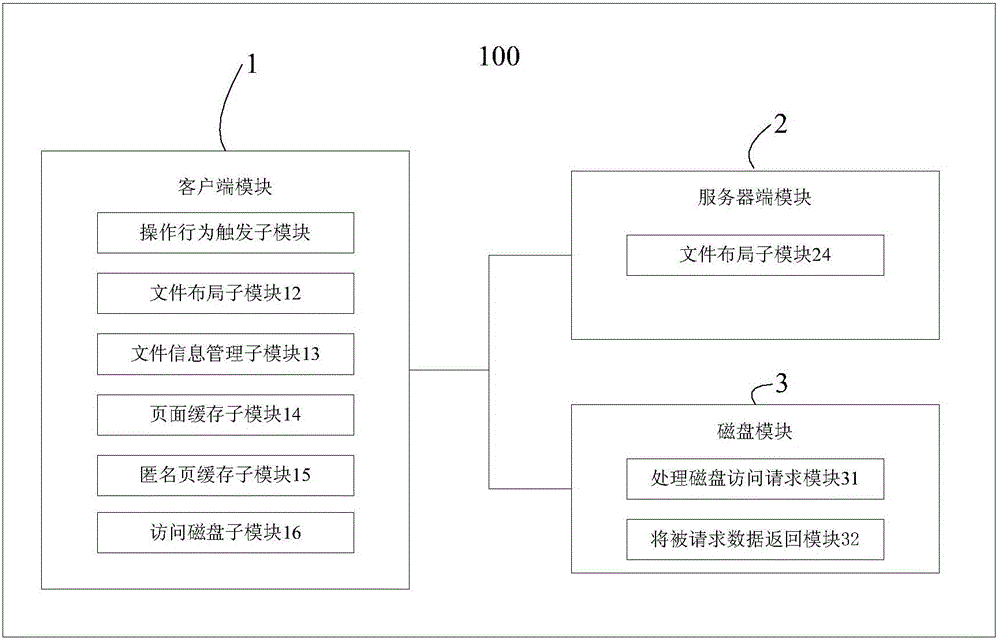

System and method of busy-wait after pre-reading small file in parallel network file system

Inactive | CN105095353A | Improve read access performance | Special data processing applications | Network File System | Client-side

The present invention discloses a system and a method of busy-wait after pre-reading a small file in a parallel network file system. The system comprises: a client, configured to determine a data page number of the current access file according to a file layout of a current access file, and access a data page of the current access file in a disk; and a server, configured to obtain the file layout of the current access file, and send the file layout to the client. When an upper application finds a locked current data page in a cache, the client marks the current data page as "not accessed", accesses a next data page by skipping the current data page, and accesses the current data page again after accessing all data pages.
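The client-side behavior described here, skipping a locked page and returning to it after the other pages, can be sketched as a two-pass read. The function and parameter names are hypothetical, and the spin on the deferred page in the second pass is an assumption drawn from the patent title rather than from the abstract text.

```python
def read_pages(page_numbers, is_locked, read_page):
    """Read pages in order, deferring any page that is locked in the cache.

    Returns (results_by_page, deferred_pages).
    """
    results = {}
    deferred = []
    for page in page_numbers:
        if is_locked(page):
            deferred.append(page)  # mark as "not accessed" and skip for now
        else:
            results[page] = read_page(page)
    for page in deferred:
        while is_locked(page):  # assumed busy-wait until the lock clears
            pass
        results[page] = read_page(page)
    return results, deferred


# Simulated cache: page 2 reports "locked" for its first three checks.
remaining_locked_checks = {2: 3}
read_order = []


def is_locked(page):
    if remaining_locked_checks.get(page, 0) > 0:
        remaining_locked_checks[page] -= 1
        return True
    return False


def read_page(page):
    read_order.append(page)
    return f"data-{page}"


results, deferred = read_pages([0, 1, 2, 3], is_locked, read_page)
print(read_order, deferred)
```

Deferring the locked page lets the reads of the remaining pages overlap with whatever is holding the lock, so the eventual wait is shorter than waiting in place.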

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI +1

Aggressive content pre-fetching during pre-boot runtime to support speedy OS booting

A method and system for content pre-fetching during a processing system pre-boot runtime. First, it is determined when a processor of a processing system is in one of a busy wait state and an idle state during a pre-boot runtime of the processing system. Then, content is pre-fetched from a data storage unit of the processing system. The content is pre-fetched based upon a pre-fetch profile. The content is loaded into system memory of the processing system.

Owner:INTEL CORP

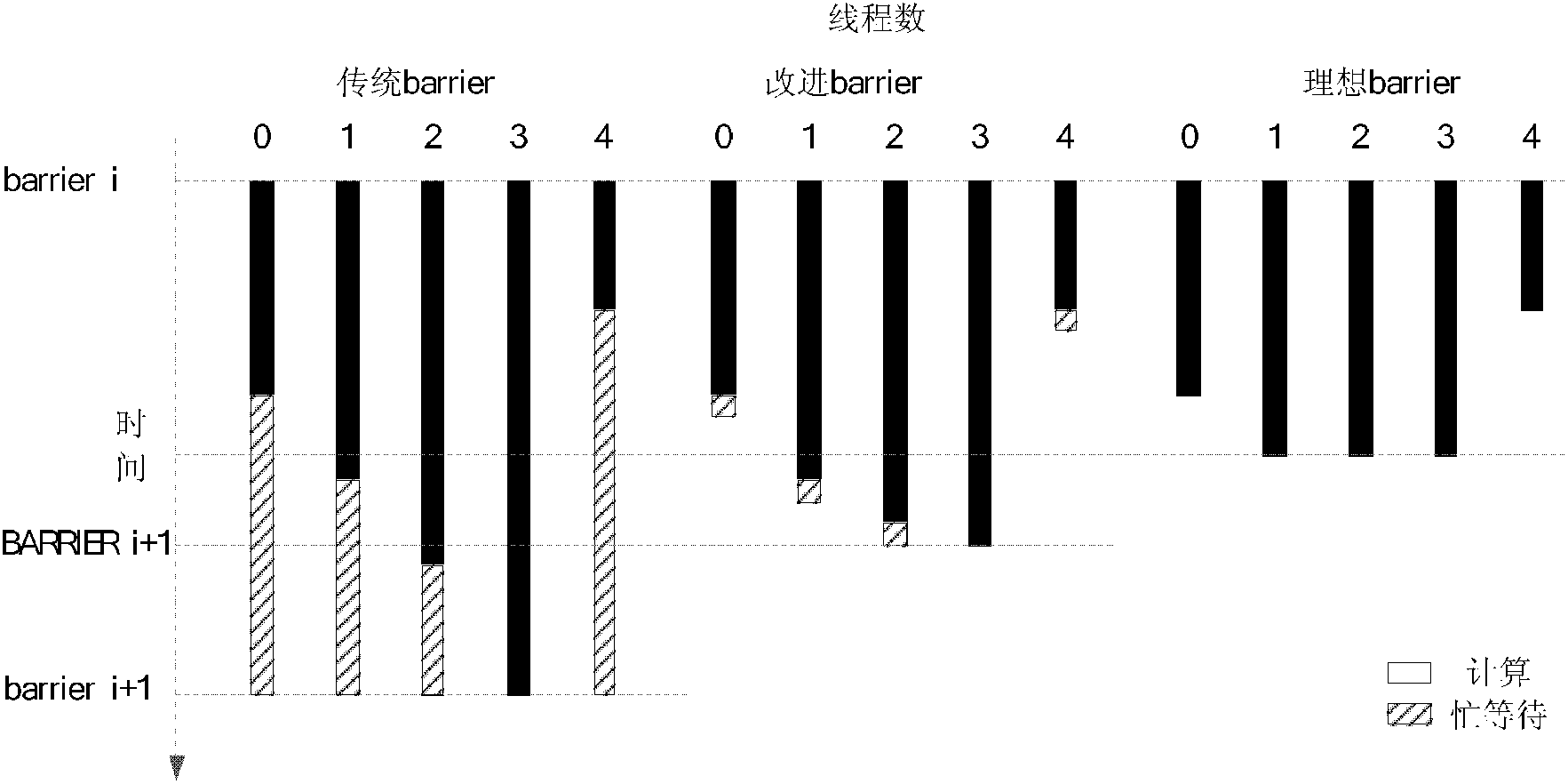

Method and system for reducing power consumption of multi-thread program

Active | CN103336571A | Reduce power consumption | Power supply for data processing | Prediction interval | Computer science

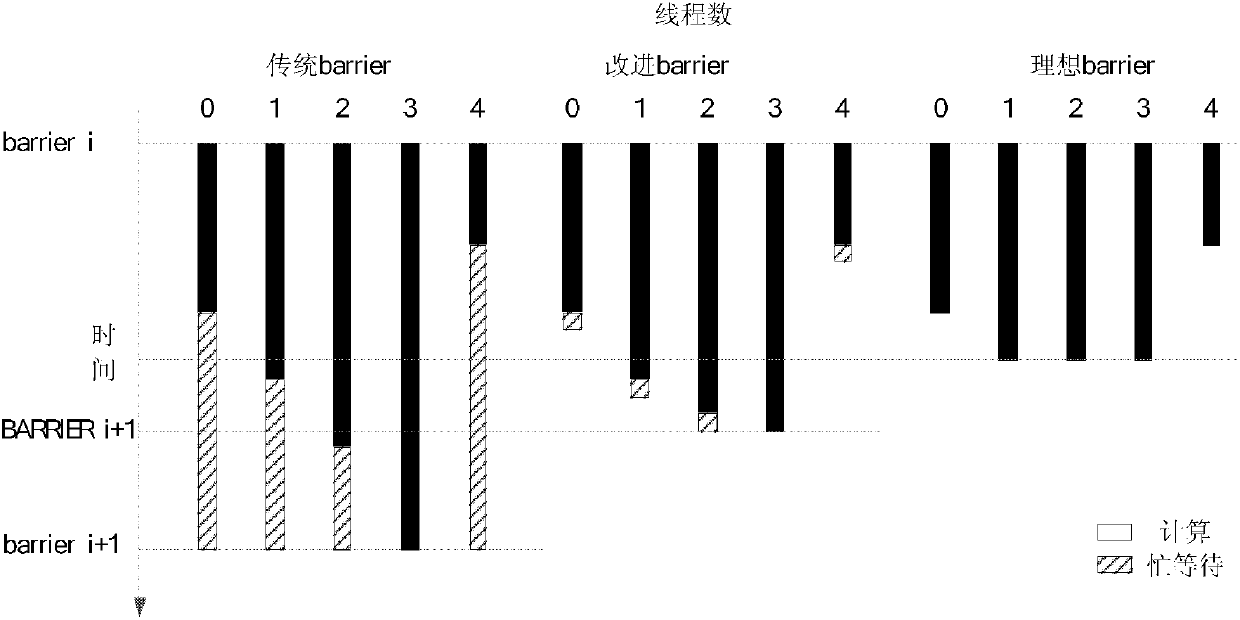

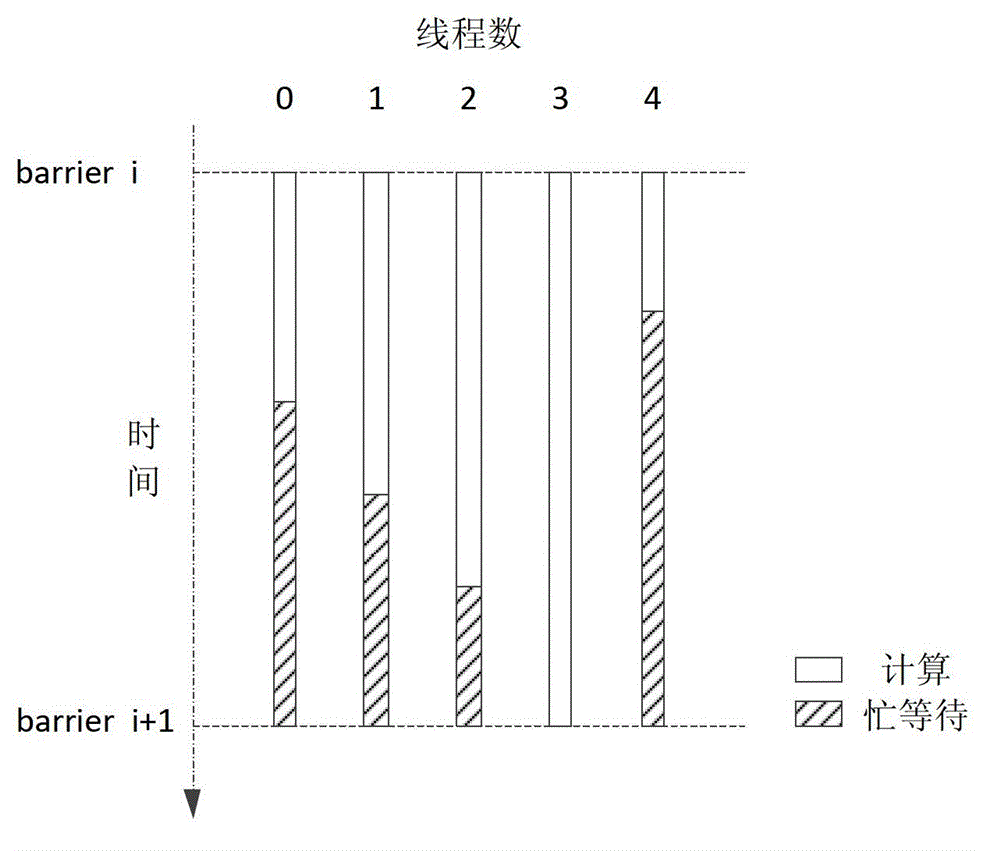

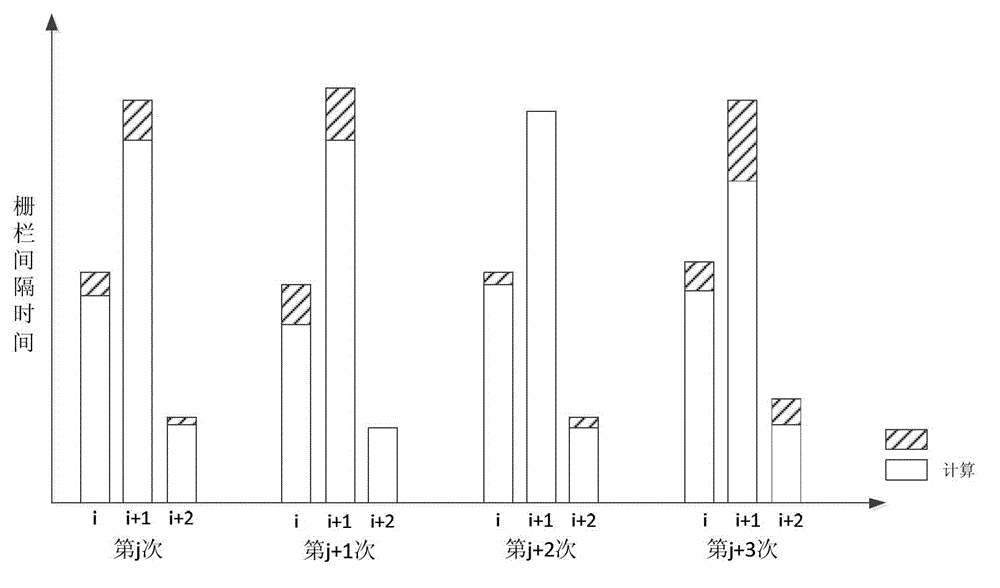

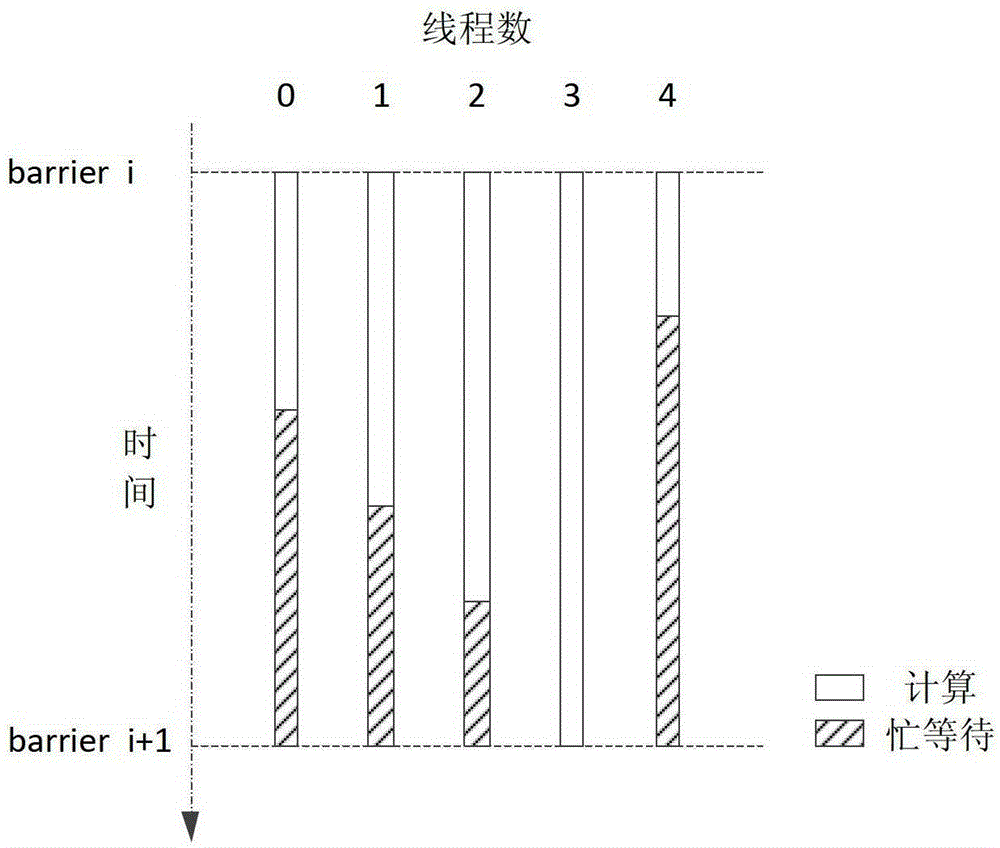

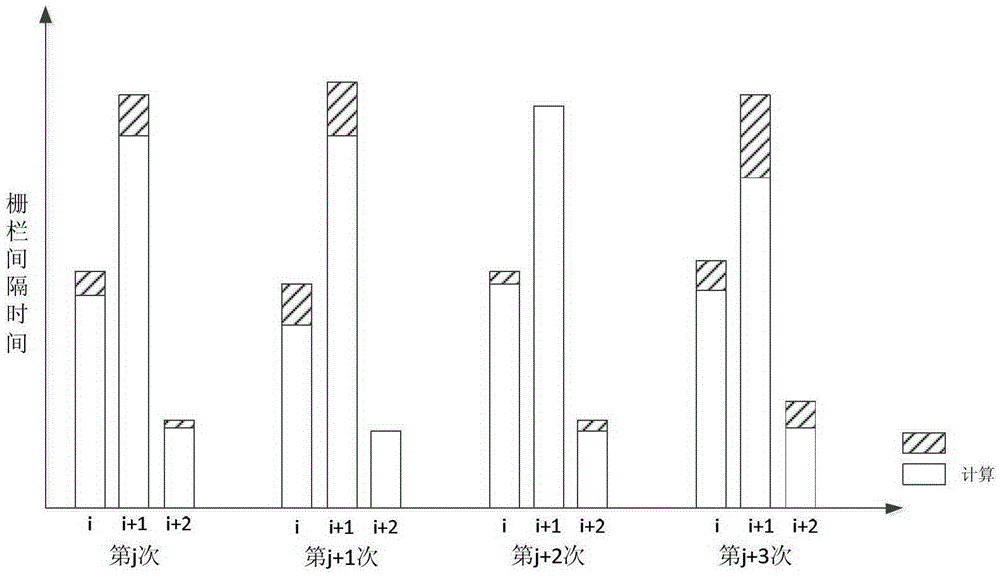

The invention discloses a method and a system for reducing the power consumption of a multi-thread program. The method comprises the following steps. When a thread arrives at the first barrier, the address of the barrier and the time at which the last thread leaves the barrier are recorded in a barrier interval time predicting table. When the last thread leaves barrier i+1, the time at which the last thread left barrier i is subtracted from the current time to obtain the interval time of barrier i, and the address and interval time of barrier i are written into the table. When threads arrive at the same barrier again, the thread that reaches the barrier synchronization point first reads the interval time from the table and subtracts its computation time to predict its busy waiting time, then chooses an appropriate low power consumption mode to enter. When the predicted interval time of the barrier is about to elapse, that thread is restored to the normal power consumption mode and the table is updated, so that the power consumption of the whole processor is reduced.
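The arithmetic in this abstract (interval time of barrier i, then predicted busy waiting time = interval time minus computation time) can be sketched as follows. The class name, the power-mode names, and their minimum-wait thresholds are hypothetical, and the example uses a single barrier address executed repeatedly in a loop to keep the table keying simple.

```python
class BarrierIntervalTable:
    """Maps a barrier address to the last observed interval between releases."""

    def __init__(self):
        self.intervals = {}
        self._last_addr = None
        self._last_leave = None

    def last_thread_left(self, addr, now):
        """Called when the last thread leaves a barrier at time `now`."""
        if self._last_addr is not None:
            # interval of barrier i = leave time of barrier i+1 - leave time of barrier i
            self.intervals[self._last_addr] = now - self._last_leave
        self._last_addr, self._last_leave = addr, now

    def predict_wait(self, addr, compute_time):
        """Predicted busy waiting time = recorded interval - computation time."""
        interval = self.intervals.get(addr)
        if interval is None:
            return None  # no history yet for this barrier
        return max(0.0, interval - compute_time)


def choose_power_mode(predicted_wait, modes):
    """Pick the deepest mode whose minimum wait fits; `modes` is sorted ascending."""
    chosen = modes[0][0]
    for name, min_wait in modes:
        if predicted_wait >= min_wait:
            chosen = name
    return chosen


table = BarrierIntervalTable()
table.last_thread_left(0x1000, now=0.0)
table.last_thread_left(0x1000, now=10.0)  # interval for 0x1000 becomes 10.0

# Hypothetical modes with the minimum wait that makes entering each worthwhile.
modes = [("normal", 0.0), ("light_sleep", 1.0), ("deep_sleep", 5.0)]
wait = table.predict_wait(0x1000, compute_time=6.0)
mode = choose_power_mode(wait, modes)
print(wait, mode)
```

A thread that finishes its computation early gets a long predicted wait and can afford a deeper low-power mode; one that arrives late stays in the normal mode.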

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

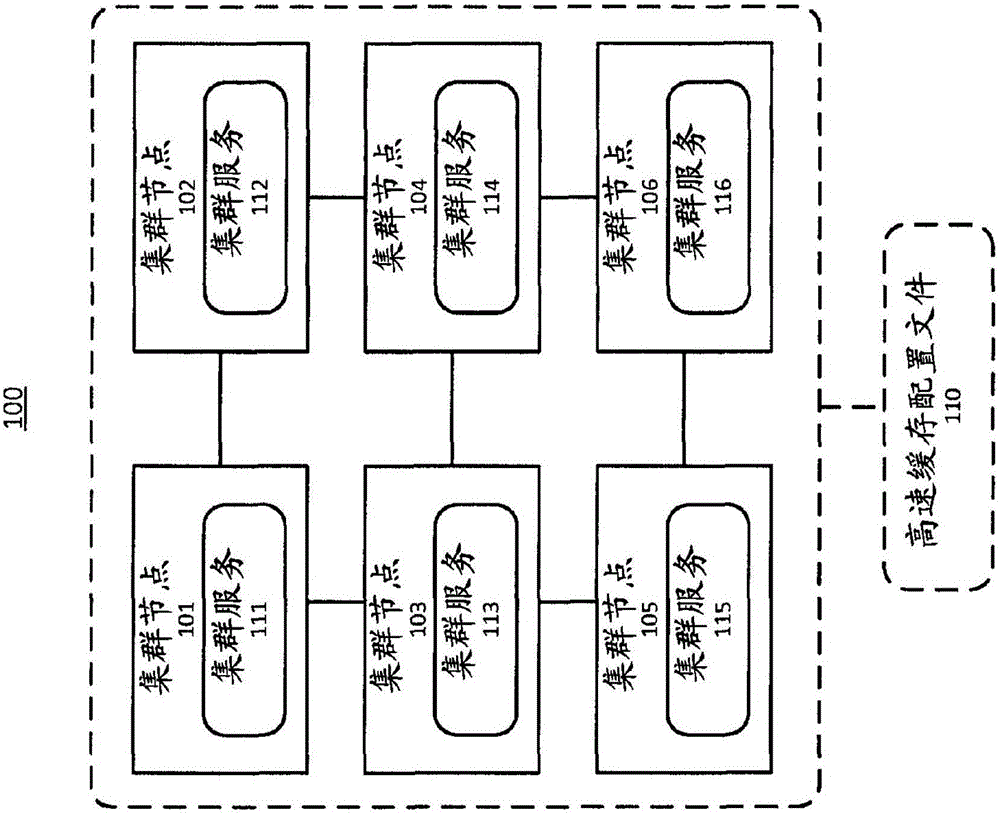

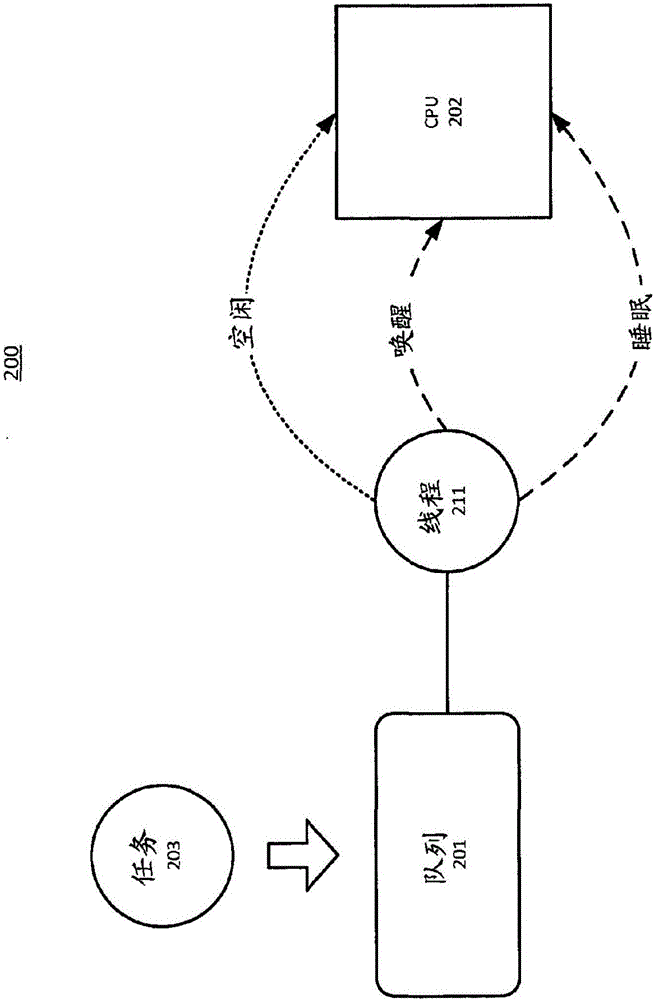

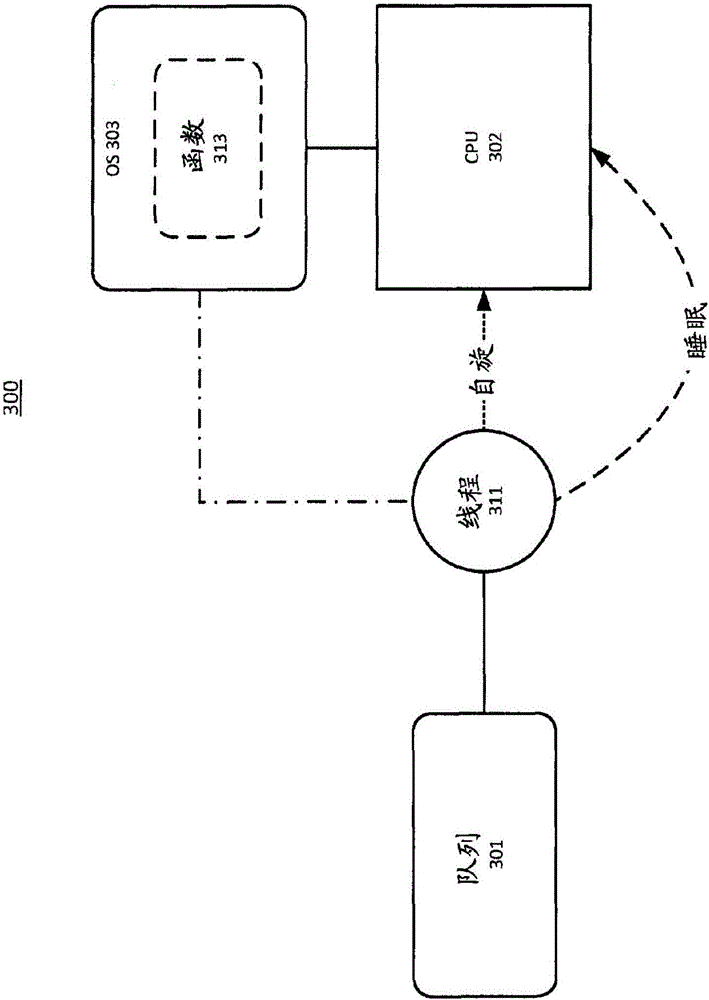

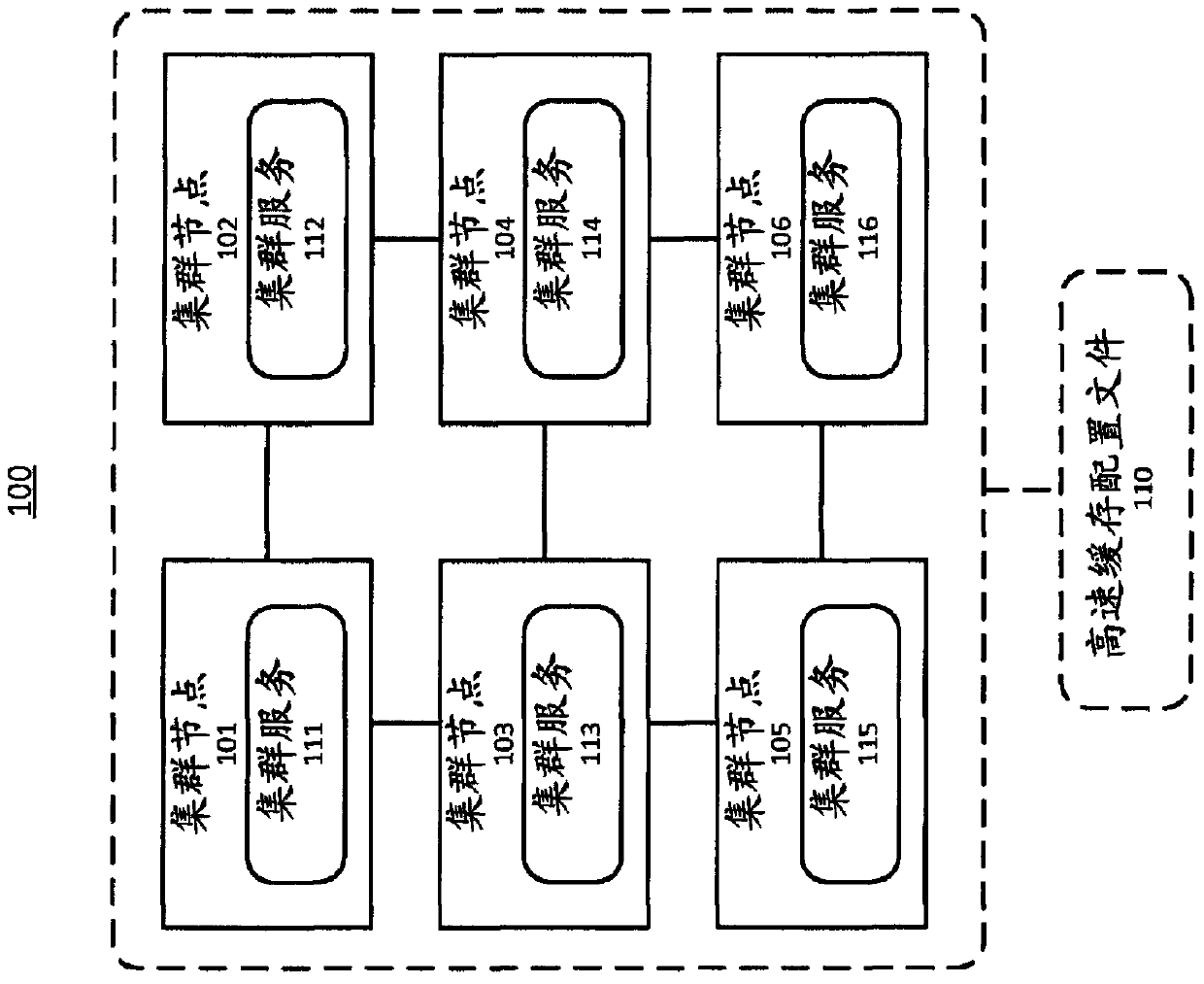

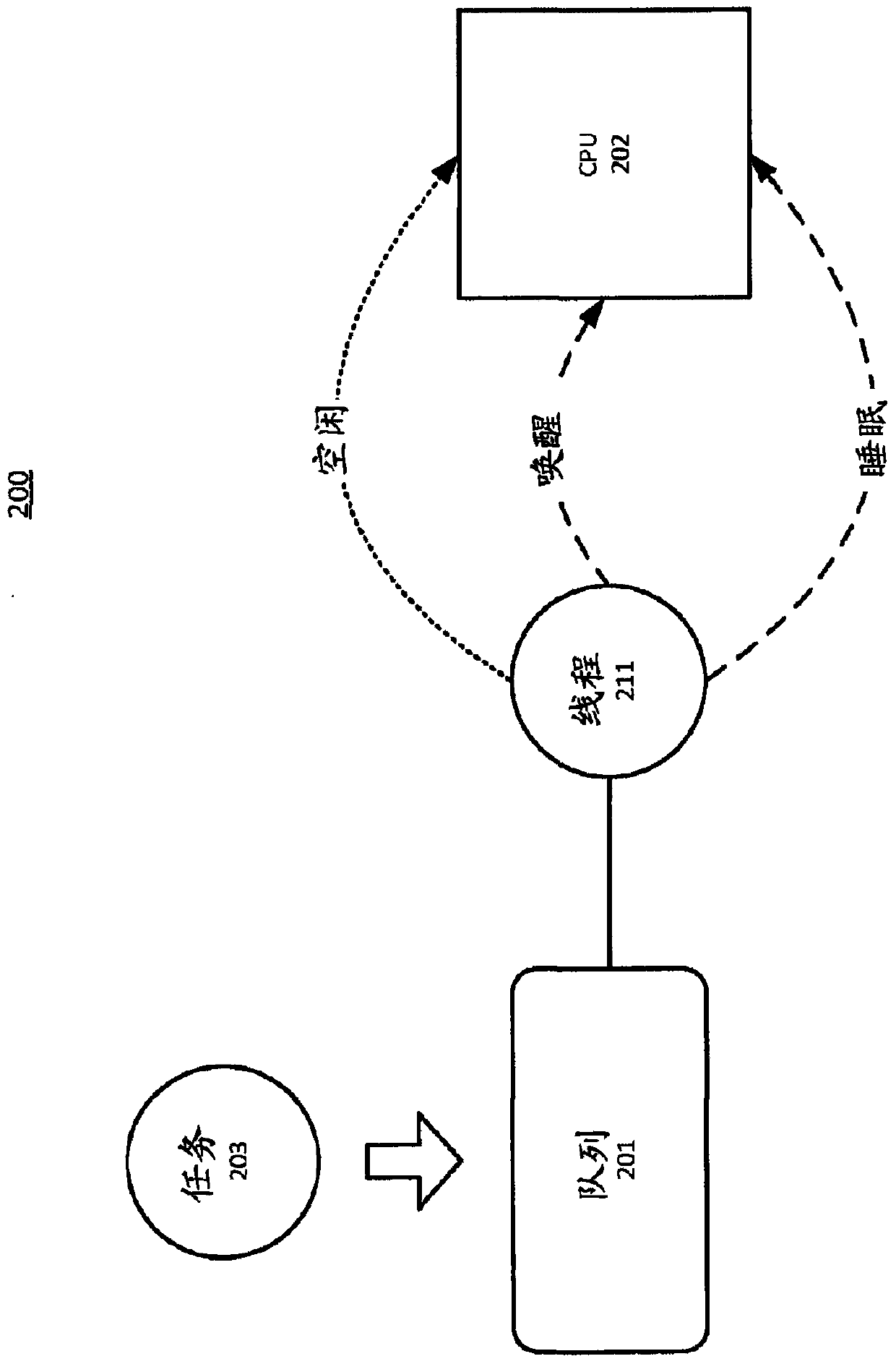

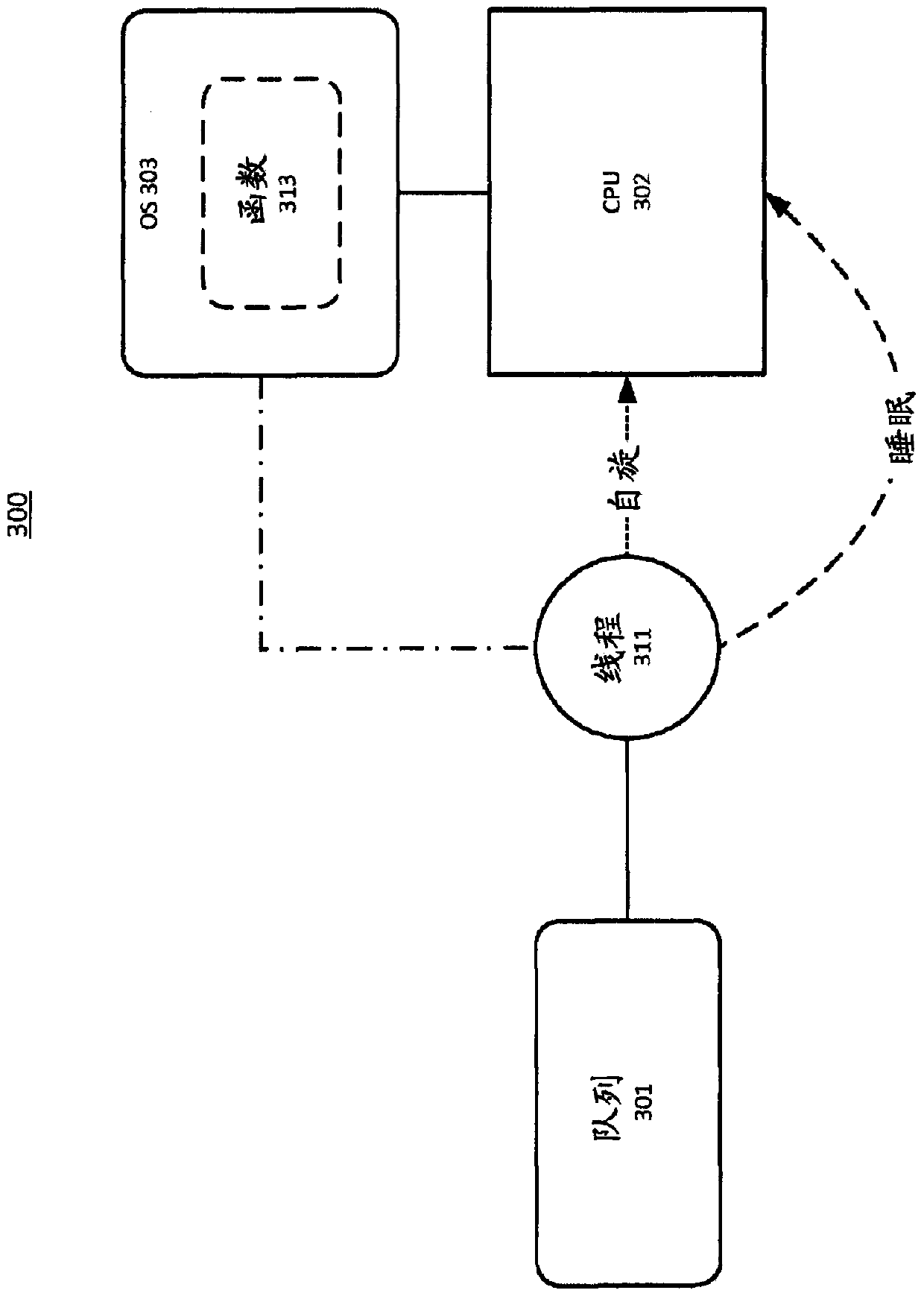

System and method for supporting adaptive busy wait in a computing environment

Active | CN105830029A | Program initiation/switching | Digital data processing details | Sleep state | Distributed computing

A system and method can support queue processing in a computing environment such as a distributed data grid. A thread can be associated with a queue in the computing environment, wherein the thread runs on one or more microprocessors that support a central processing unit (CPU). The system can use the thread to process one or more tasks when said one or more tasks arrive at the queue. Furthermore, the system can configure the thread to be in one of a sleep state and an idle state adaptively, when there is no task in the queue.
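One way to read "adaptively" here is a worker that keeps spinning on an empty queue for a bounded number of checks and only then starts sleeping. The sketch below takes that reading; the spin limit, the sleep length, and the `None` shutdown sentinel are assumptions, not details from the abstract.

```python
import queue
import threading
import time


def worker(tasks, results, spin_limit=1000, sleep_s=0.001):
    """Process tasks from `tasks`; spin briefly when empty, then back off to sleep."""
    idle_spins = 0
    while True:
        try:
            item = tasks.get_nowait()
        except queue.Empty:
            if idle_spins < spin_limit:
                idle_spins += 1      # idle state: stay on the CPU and re-check
            else:
                time.sleep(sleep_s)  # sleep state: release the CPU
            continue
        idle_spins = 0               # work arrived, so spin again next time
        if item is None:             # hypothetical shutdown sentinel
            return
        results.append(item * 2)


tasks = queue.Queue()
results = []
thread = threading.Thread(target=worker, args=(tasks, results))
thread.start()
for n in (1, 2, 3):
    tasks.put(n)
tasks.put(None)
thread.join()
print(results)
```

Resetting the spin counter whenever a task arrives keeps latency low during bursts, while the sleep branch bounds CPU use when the queue stays empty.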

Owner:ORACLE INT CORP

NoC (Network-on-Chip) multi-core processor multi-thread resource allocation processing method and system

Active | CN102591722B | Improve performance | Improve acceleration performance | Resource allocation | Networks on chip | Resource allocation

The invention provides a NoC (Network-on-Chip) multi-core processor multi-thread resource allocation processing method and system. The system comprises a detection unit, an exclusive control index table, and a depriving and distributing processing unit. The detection unit detects the address and value of each exclusive control while a multi-thread program runs on the NoC multi-core processor and writes them into the exclusive control index table, which stores them. The depriving and distributing processing unit arranges threads whose exclusive control has the same address into a registration queue, takes away the core resources that some threads in the queue are spending on busy waiting, and distributes those resources to other threads. The overall performance and scalability of the multi-thread program on the multi-core processor are effectively improved.

Owner:LOONGSON TECH CORP

Integrated circuit, computer system, and control method, including power saving control to reduce power consumed by execution of a loop

Inactive | US8918664B2 | Easily detect loop | Reduce the amount required | Volume/mass flow measurement | Power supply for data processing | Engineering | Integrated circuit

An integrated circuit provided with a processor includes a loop detection unit that detects execution of a loop in the processor, a loop-carried dependence analysis unit that analyzes the loop in order to detect loop-carried dependence, and a power control unit that performs power saving control when no loop-carried dependence is detected. By detecting whether a loop has loop-carried dependence, loops for calculation or the like can be excluded from power saving control. As a result, a larger variety of busy-waits can be detected, and the amount of power wasted by a busy-wait can be reduced.

Owner:PANASONIC INTELLECTUAL PROPERTY MANAGEMENT CO LTD

Log pre-writing method and system based on RocksDB

Pending | CN114138200A | Increase storage capacity | Take advantage of | Database updating | Input/output to record carriers | File system | Parallel computing

The invention relates to a log pre-writing method and system based on RocksDB. A fixed number of threads bound to cores are started to accept, manage, execute, and return write requests for NVMe SSD devices. A custom file system partitions, manages, and optimizes the hybrid storage devices. SPDK is used to read data from the high-speed NVMe SSD devices to recover the pre-write log. The synchronization mechanism of the pre-write file system is implemented with a sliding window algorithm and/or a bitmap algorithm together with a busy waiting technique. The method and system can fully exploit the high storage performance of NVMe SSDs, greatly increase database throughput, keep all hardware devices fully utilized, avoid the database write-speed problem caused by slow disk writes, and improve the speed and efficiency of database recovery, so the read and write performance of the database is greatly improved.

Owner:上海沄熹科技有限公司

System and method for supporting adaptive busy waiting in a computing environment

Active | CN105830029B | Program initiation/switching | Digital data processing details | Sleep state | Parallel computing

A system and method that can support queue processing in a computing environment such as a distributed data grid. A thread may be associated with a queue in a computing environment where the thread runs on one or more microprocessors supporting a central processing unit (CPU). When one or more tasks arrive on the queue, the system can use threads to process the one or more tasks. Additionally, the system can adaptively configure threads to be in one of the sleep and idle states when there are no tasks in the queue.

Owner:ORACLE INT CORP

Method for receiving message passing interface (MPI) information under circumstance of over-allocation of virtual machine

Inactive | CN101968749B | Improve communication performance | Improve performance | Multiprogramming arrangements | Software simulation/interpretation/emulation | Operational system | Message Passing Interface

The invention discloses a method for receiving Message Passing Interface (MPI) messages when virtual machines are over-allocated. The method comprises the following steps: in a blocking message-receiving process, polling a socket file descriptor set or shared memory, invoking the sched_yield function, and releasing the virtual processor resource that the process currently occupies; having the guest operating system of the virtual machine that contains the virtual processor query the virtual processor's run queue and select a schedulable process to run; when the blocking message-receiving process is rescheduled, judging whether the virtual machine manager needs to be notified to reschedule the virtual processor; and having the virtual machine manager reschedule the virtual processor through a hypercall issued from the blocking message-receiving process, which then handles the received message. The invention can reduce the performance loss caused by the "busy waiting" that the MPI library's message-receiving mechanism produces.

Owner:HUAZHONG UNIV OF SCI & TECH

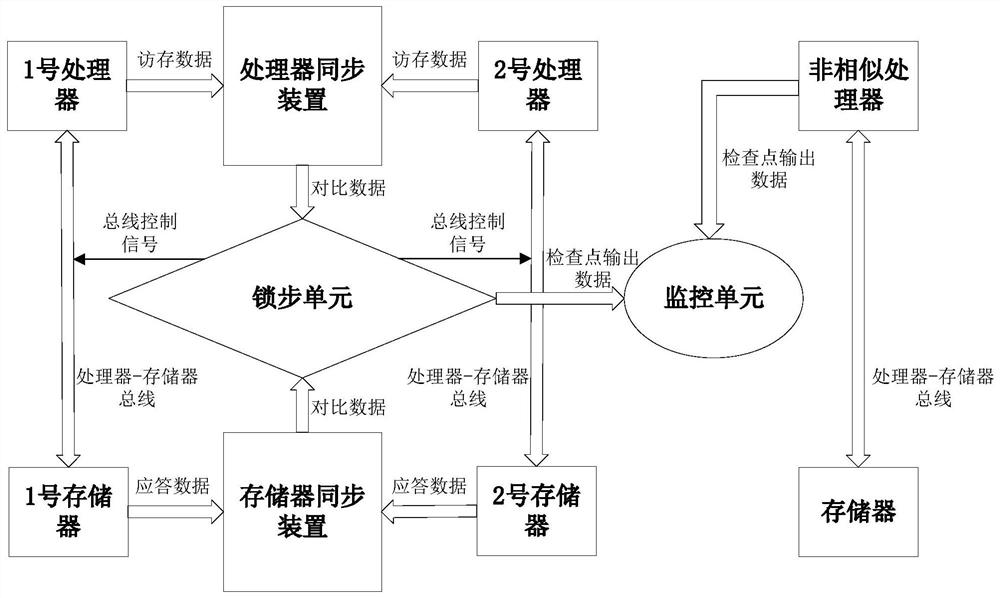

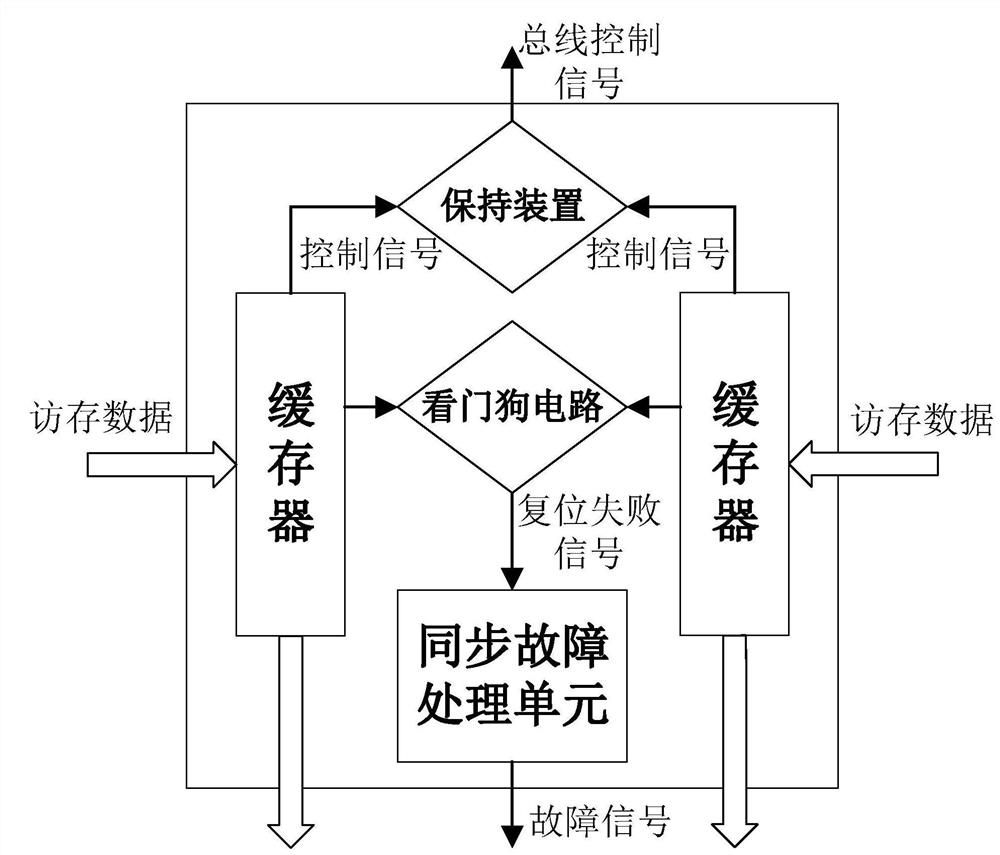

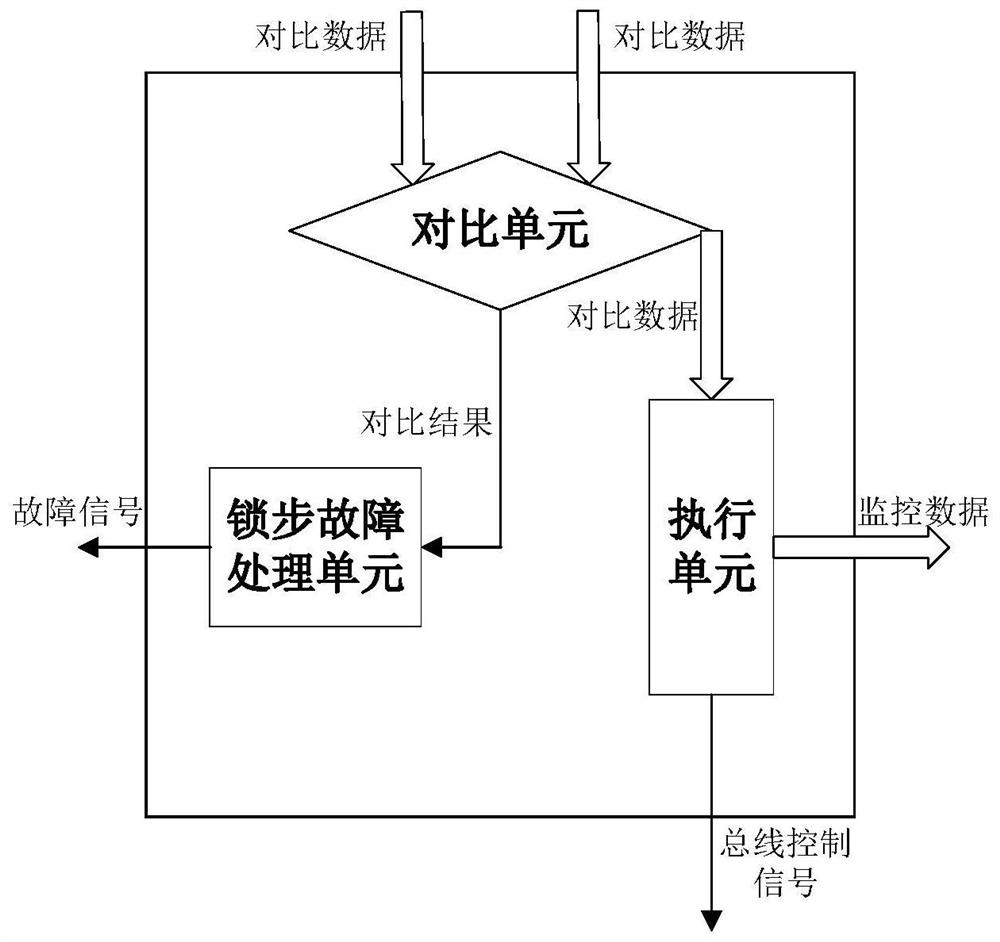

A high-security computer system based on lockstep and monitoring and its design method

Active | CN109815040B | Improve detection rate | Reduce busy waiting time | Redundant hardware error correction | Term memory | System failure

The invention provides a high-security computer system based on lockstep and monitoring, and a design method thereof. When a read operation is performed, the dual processors each read data from the same address of the two memories, and the data are compared. If the comparison succeeds, the data is sent to the corresponding processor; if it fails, a fault handling operation is performed. A comparison module for monitoring and lockstep is set up, and checkpoint output is added to the load. When a checkpoint arrives, a cross comparison is performed. If the comparison succeeds, the system continues to operate; if it fails, a troubleshooting operation is performed. The invention realizes fault detection and location at the instruction level, greatly reduces system faults caused by random faults in the memory, improves the detection rate of dual-processor common-mode faults in the lockstep module, and further improves the safety of the entire system. It effectively solves the problem of mismatched running speeds between dissimilar processors and reduces the busy waiting time of the monitoring unit.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

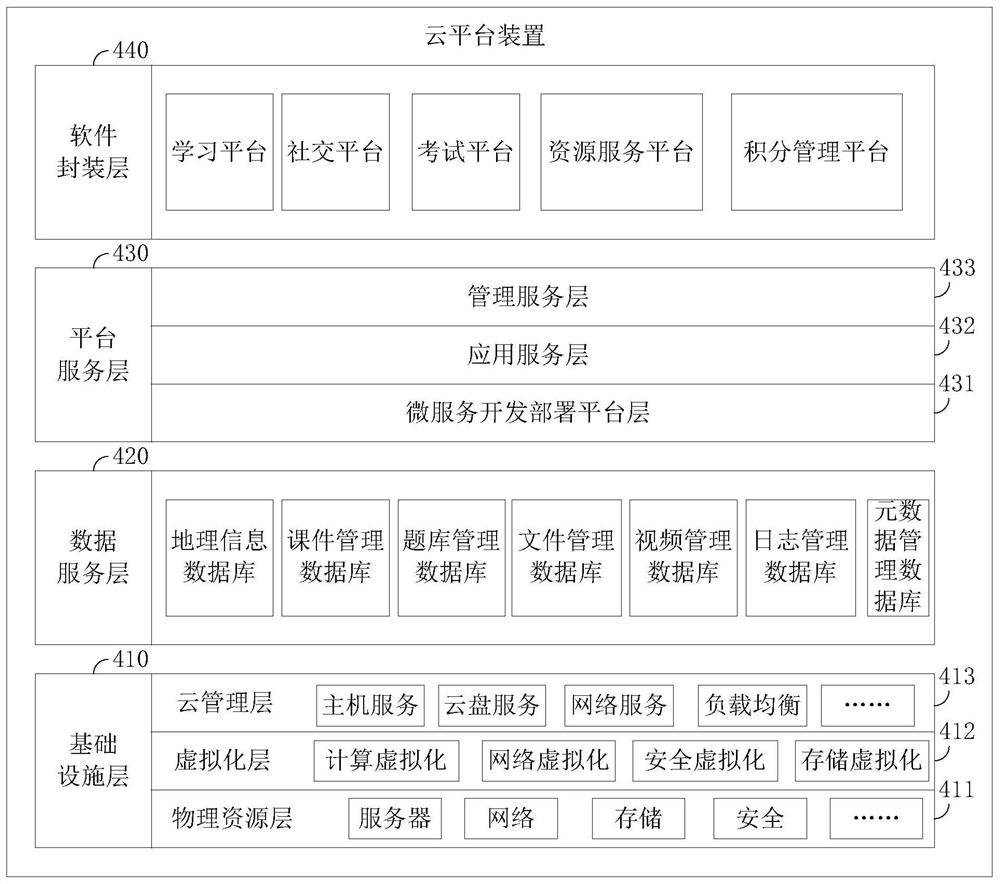

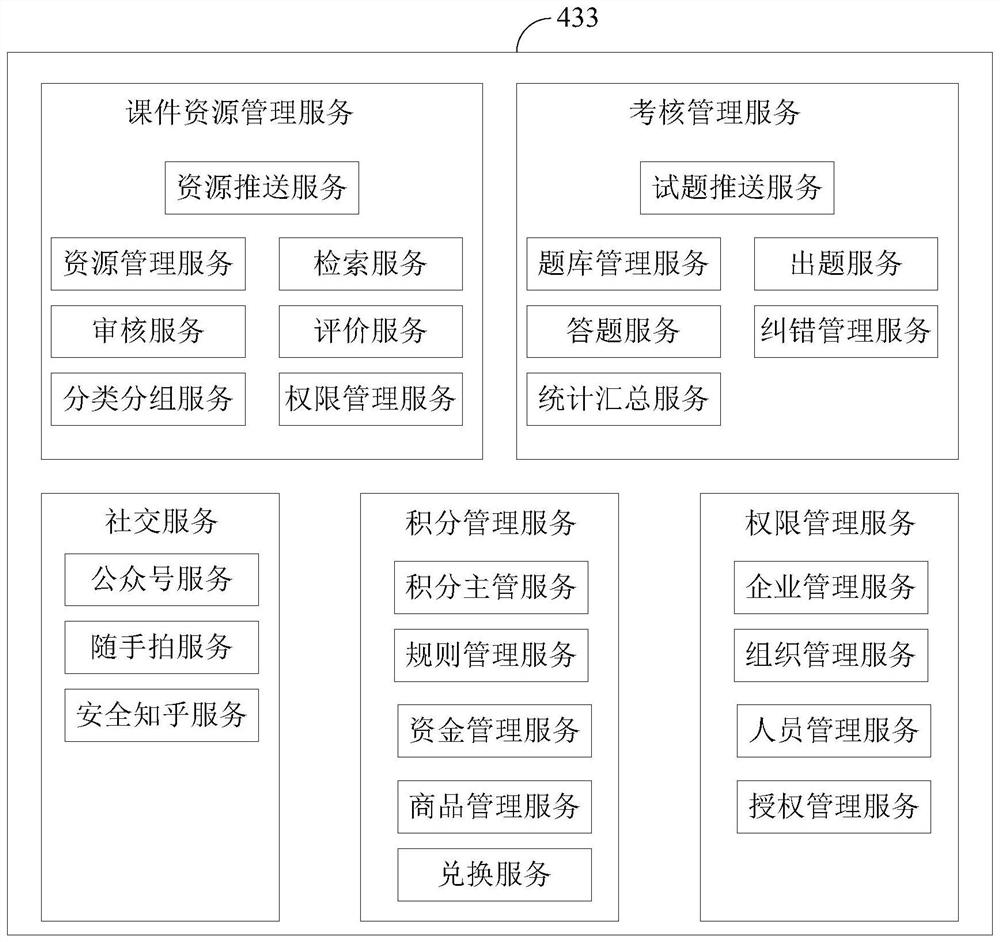

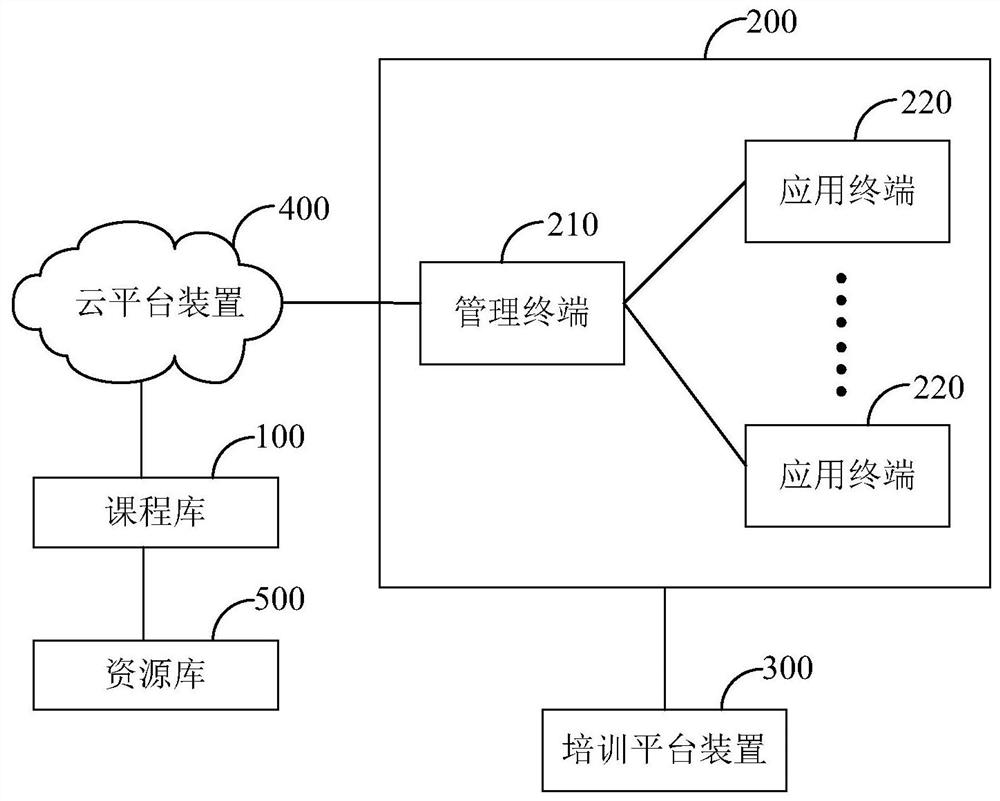

A system for crew safety training

Embodiments of the present invention provide a cloud platform device and system for team safety training, which belong to the fields of safety engineering technology and information technology. The cloud platform device adopts a layered architecture, and includes from bottom to top: an infrastructure layer, which is used to provide physical resources suitable for team safety training, and perform virtualization processing and cloud management of the physical resources; a data service layer , for providing data resources suitable for team safety training; platform service layer, for developing application services and management services suitable for team training in a microservice encapsulation method based on the physical resources and the data resources; and software encapsulation The layer is used to encapsulate and release the services developed by the platform service layer to the training platform. The solution of the present invention can be used to carry out various forms of team safety training activities, and can realize continuous updating of training content through cloud push.

Owner:CHINA PETROLEUM & CHEM CORP +1

Method for operating flash memories on a bus

Active | US20120151122A1 | The process is compact and efficient | Memory addressing/allocation/relocation | Busy waiting | Flash memory

Owner:ETRON TECH INC

A method and system for reducing power consumption of multithreaded programs

Active | CN103336571B | Reduce power consumption | Power supply for data processing | Prediction interval | Computer science

The invention discloses a method and a system for reducing the power consumption of a multi-thread program. The method comprises the following steps. When a thread arrives at the first barrier, the address of the barrier and the time at which the last thread leaves the barrier are recorded in a barrier interval time predicting table. When the last thread leaves barrier i+1, the time at which the last thread left barrier i is subtracted from the current time to obtain the interval time of barrier i, and the address and interval time of barrier i are written into the table. When threads arrive at the same barrier again, the thread that reaches the barrier synchronization point first reads the interval time from the table and subtracts its computation time to predict its busy waiting time, then chooses an appropriate low power consumption mode to enter. When the predicted interval time of the barrier is about to elapse, that thread is restored to the normal power consumption mode and the table is updated, so that the power consumption of the whole processor is reduced.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

Electronic device and power consumption control method thereof

Pending | CN113986663A | Increase power consumption | Improve convenience | Digital data processing details | Hardware monitoring | Operational system | Wait state

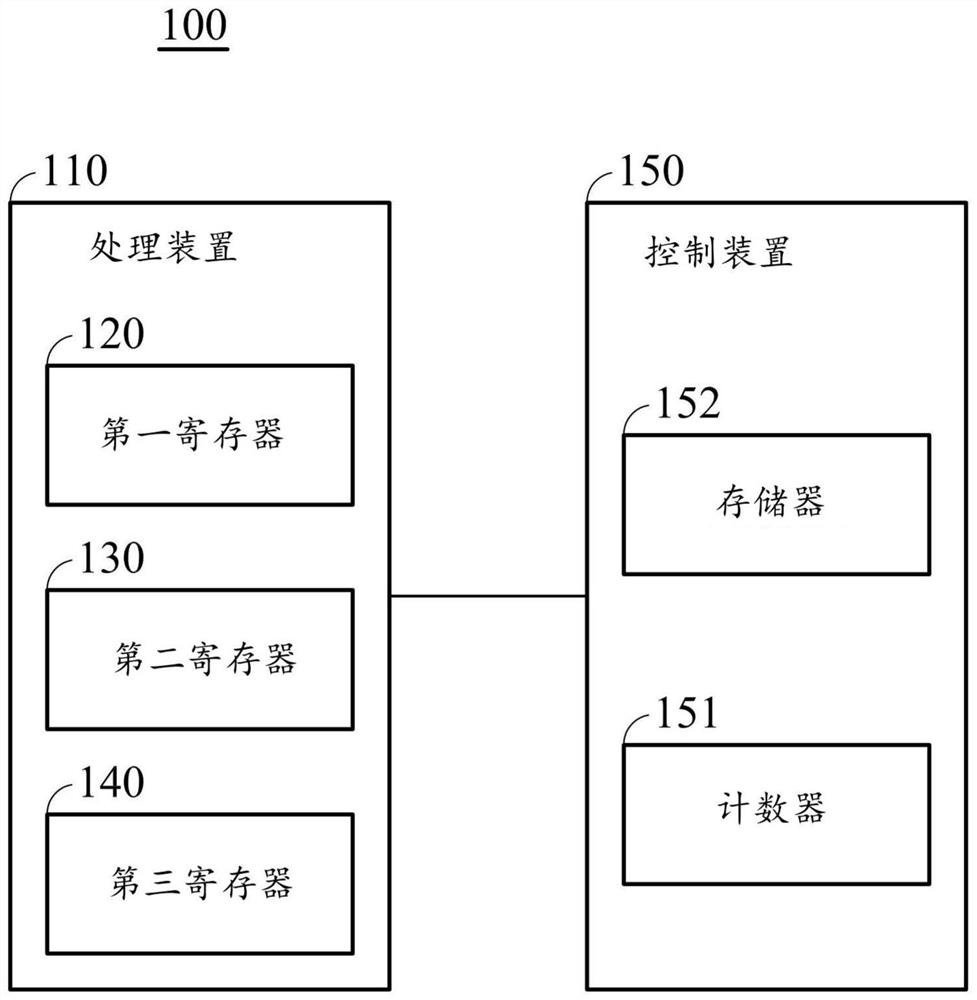

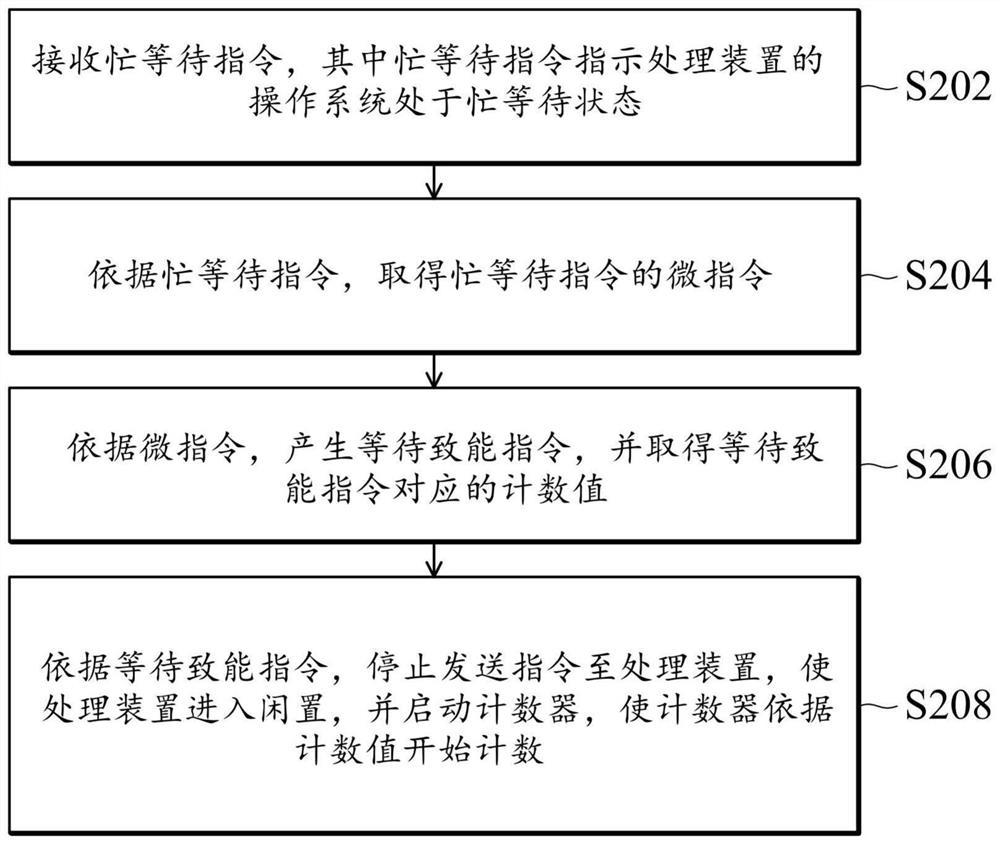

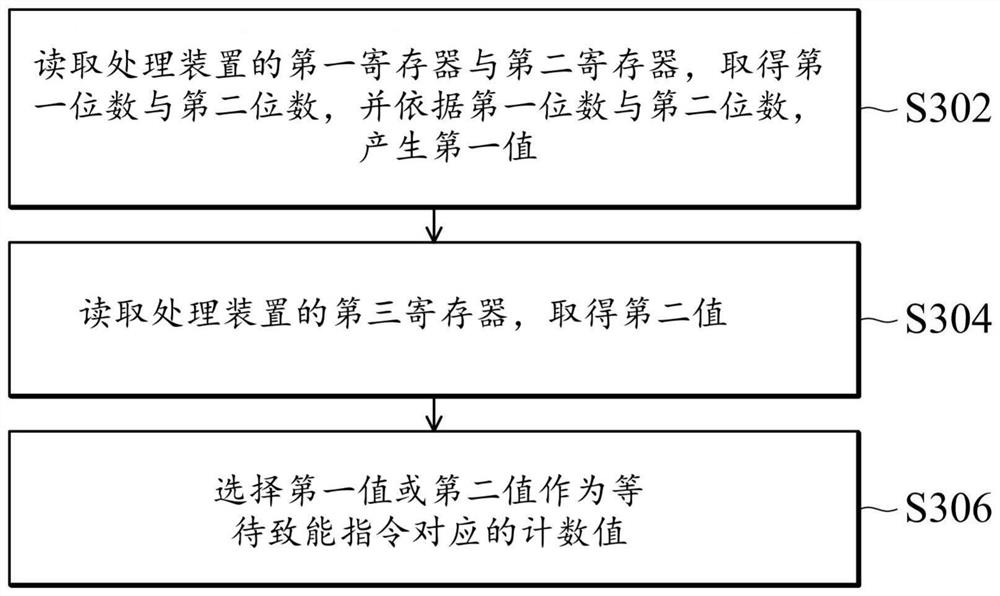

The invention relates to an electronic device and a power consumption control method thereof. The method comprises the following steps: receiving a busy waiting instruction, wherein the busy waiting instruction indicates that an operating system of the processing device is in a busy waiting state; obtaining a micro instruction of the busy waiting instruction according to the busy waiting instruction; generating a waiting enabling instruction according to the micro instruction, and obtaining a count value corresponding to the waiting enabling instruction; and, according to the waiting enabling instruction, stopping sending instructions to the processing device so that the processing device enters an idle state, and starting the counter so that it counts according to the count value.

Owner:VIA ALLIANCE SEMICON CO LTD

© 2025 PatSnap. All rights reserved.