42 results about how to "mitigate disadvantage" in patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

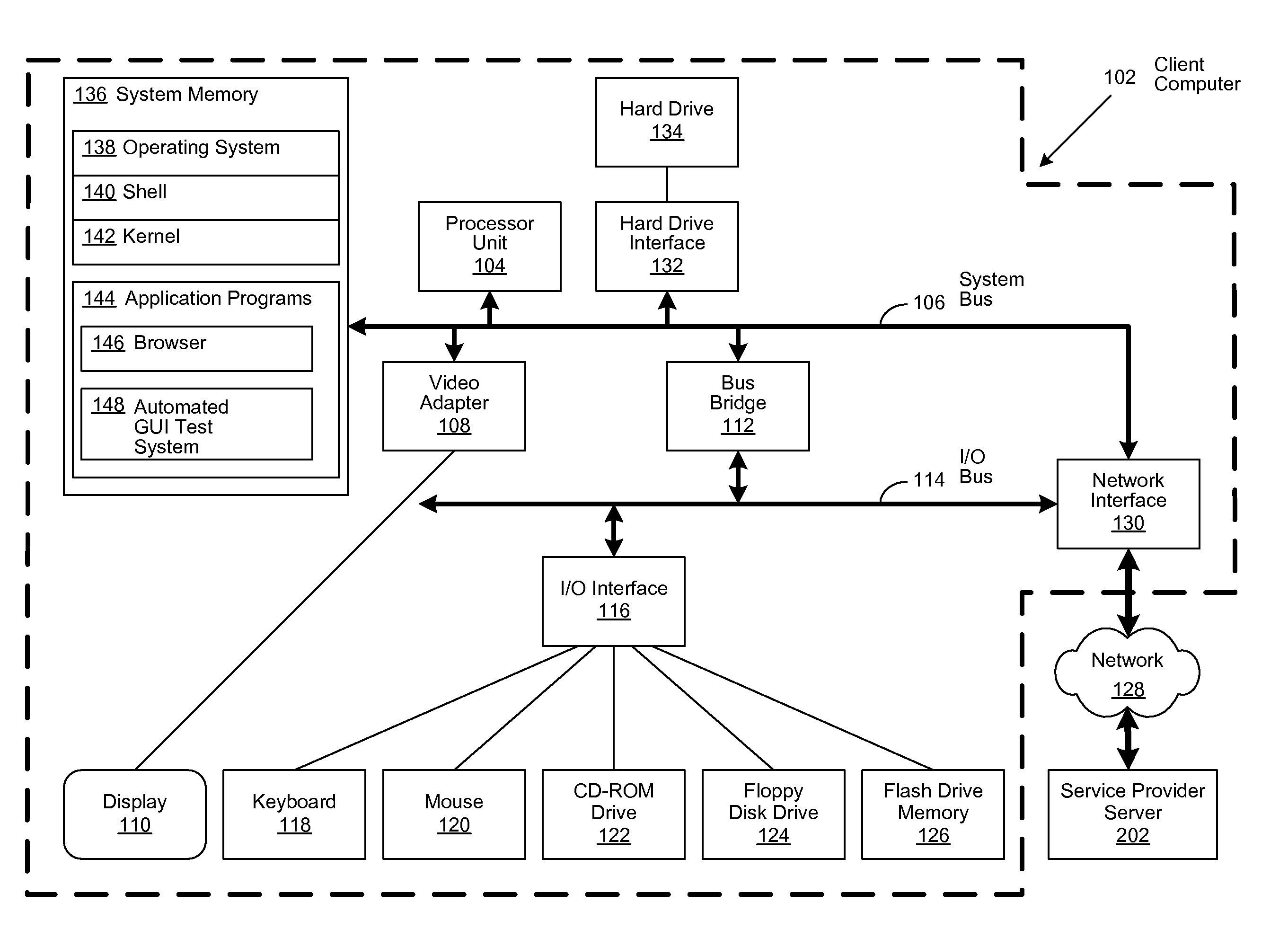

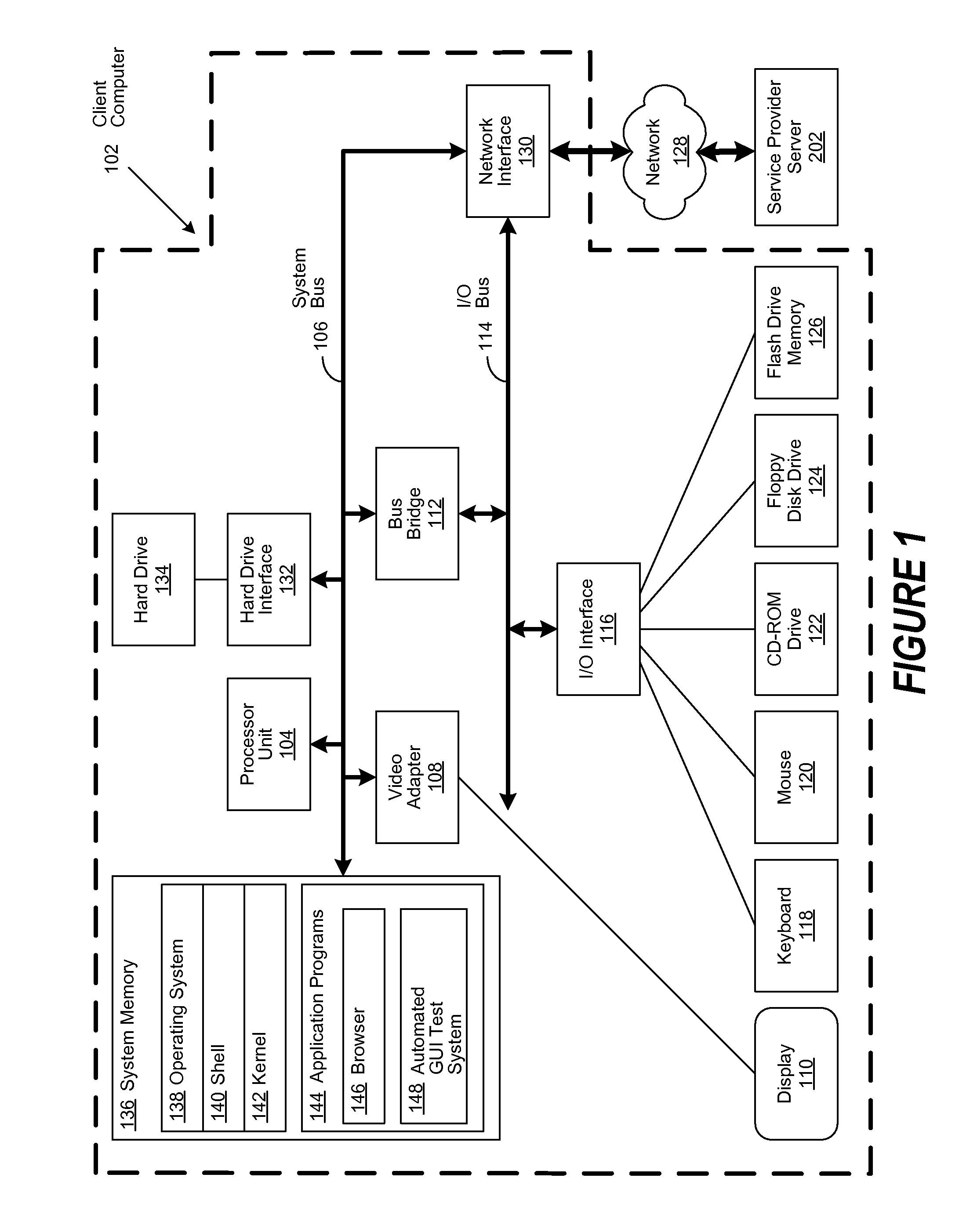

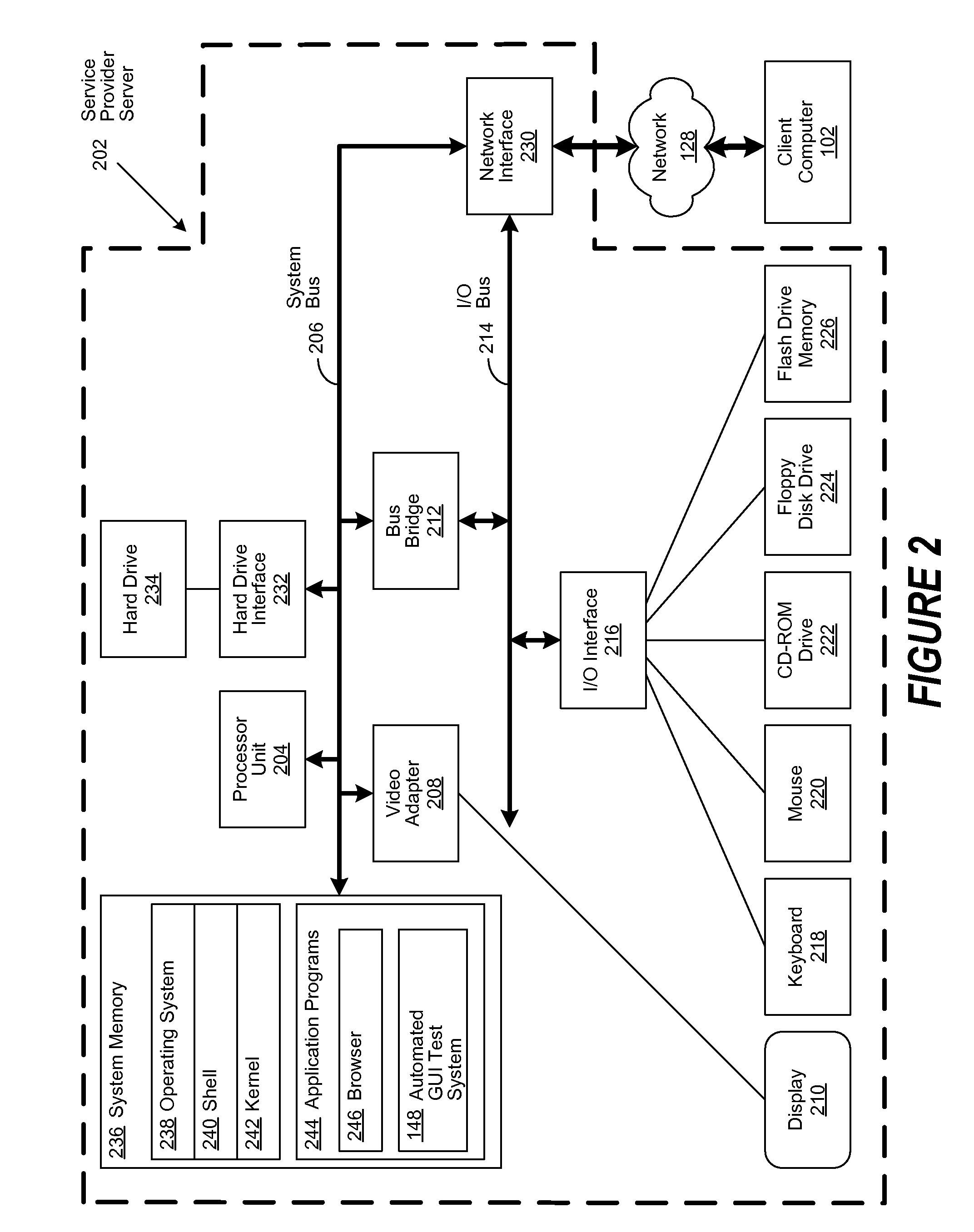

Method for Creating Error Tolerant and Adaptive Graphical User Interface Test Automation

Active | US20080010537A1 | Mitigate disadvantage; Increase reliability; Error detection/correction; Test procedures; Graphical user interface

A method, apparatus and computer-usable medium for the improved automated testing of a software application's graphical user interface (GUI) through implementation of a recording agent that allows the GUI interactions of one or more human software testers to be captured and incorporated into an error-tolerant and adaptive automated GUI test system. A recording agent is implemented to capture the GUI interactions of one or more human software testers. Testers enact a plurality of predetermined test cases or procedures, with known inputs compared against preconditions and expected outputs compared against the resulting postconditions, which are recorded and compiled into an aggregate test procedure. The resulting aggregate test procedure is amended and configured to correct and/or reconcile identified abnormalities to create a final test procedure that is implemented in an automated testing environment. The results of each test run are subsequently incorporated into the automated test procedure, making it more error-tolerant and adaptive as the number of test runs increases.

Owner: IBM CORP
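
The abstract compares expected outputs against observed postconditions and feeds each run's results back into the procedure. The Python sketch below illustrates only that feedback loop, under stated assumptions: the step encoding (such as `"click:Save"`), the `TestStep` class and the rule that only reviewer-approved deviations become accepted outcomes are hypothetical and are not taken from the patent.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class TestStep:
    """One recorded GUI interaction plus the postconditions accepted for it."""
    action: str  # hypothetical encoding, e.g. "click:Save"
    accepted: Set[str] = field(default_factory=set)


def check_run(steps: List[TestStep], observed: List[str]) -> List[int]:
    """Return indices of steps whose observed postcondition is not accepted.

    Assumes exactly one observed postcondition per recorded step, in order.
    """
    if len(steps) != len(observed):
        raise ValueError("expected one observation per step")
    return [i for i, (step, obs) in enumerate(zip(steps, observed))
            if obs not in step.accepted]


def incorporate_run(steps: List[TestStep], observed: List[str],
                    approved: Set[int]) -> None:
    """Add reviewer-approved deviations to the accepted outcomes so that
    later runs tolerate them (the assumed 'adaptive' behaviour)."""
    for i in approved:
        steps[i].accepted.add(observed[i])
```

For a two-step procedure where the second step unexpectedly shows a "Discard changes?" dialog, `check_run` flags index 1; once that outcome is approved and incorporated, the same run passes.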

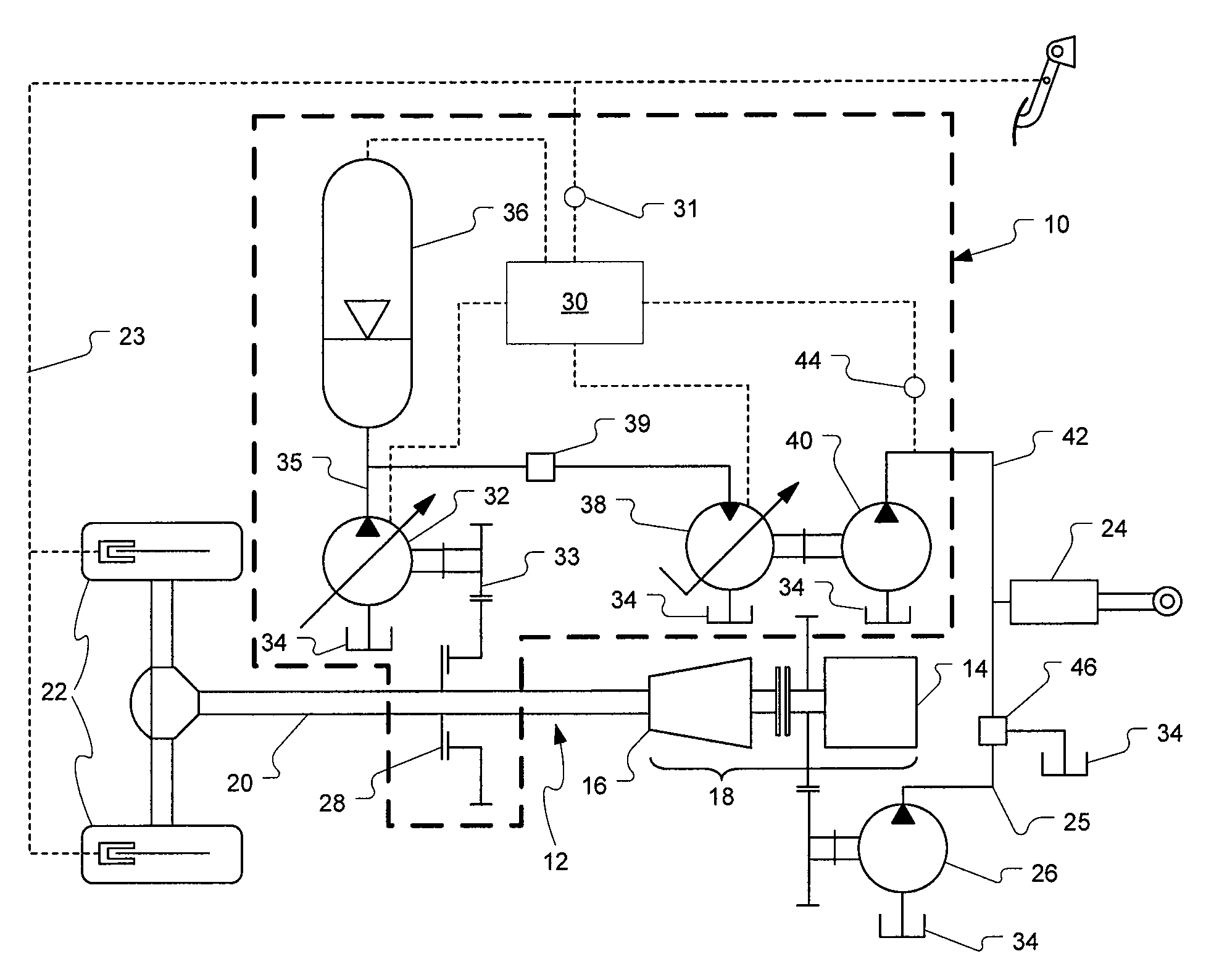

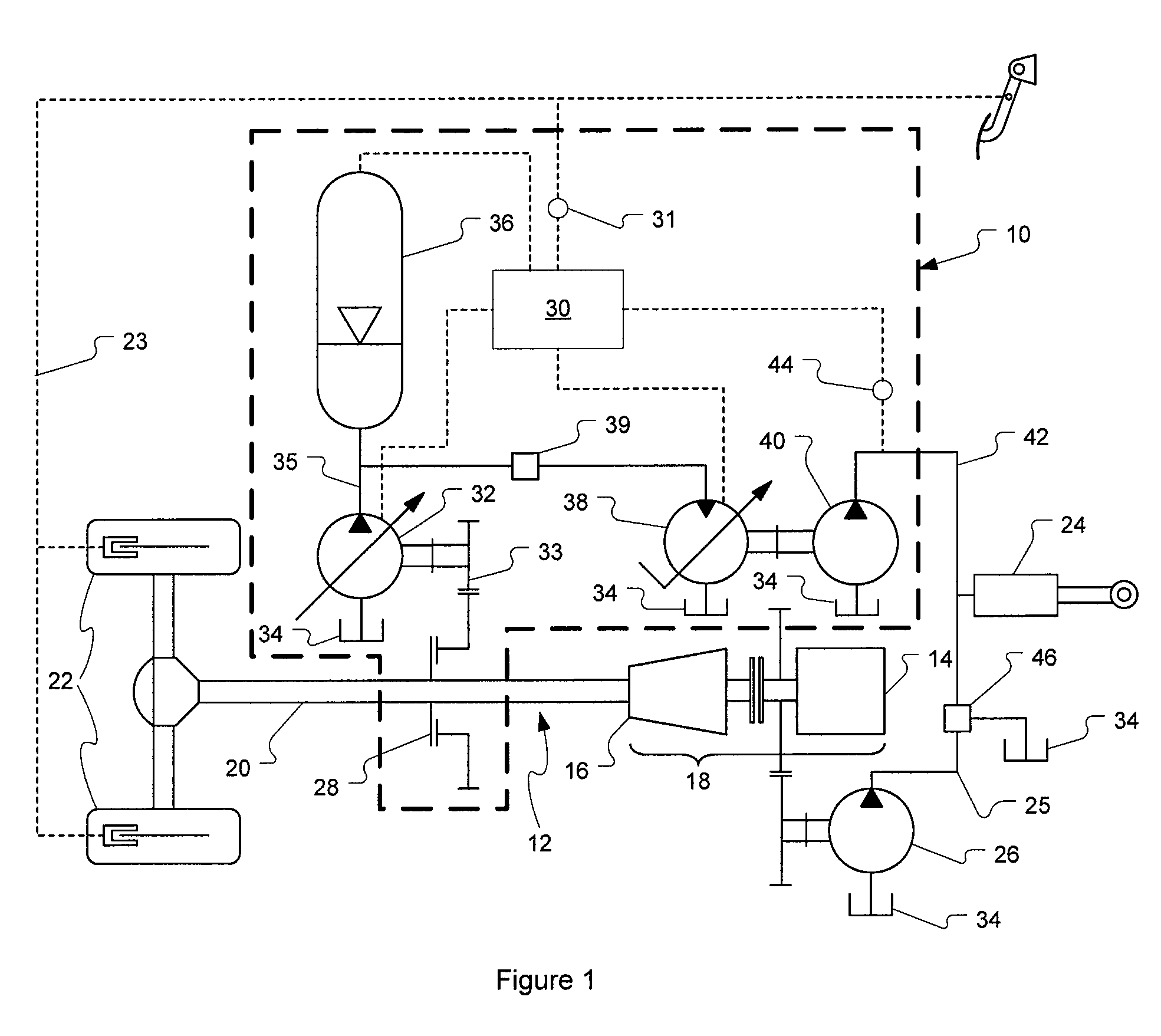

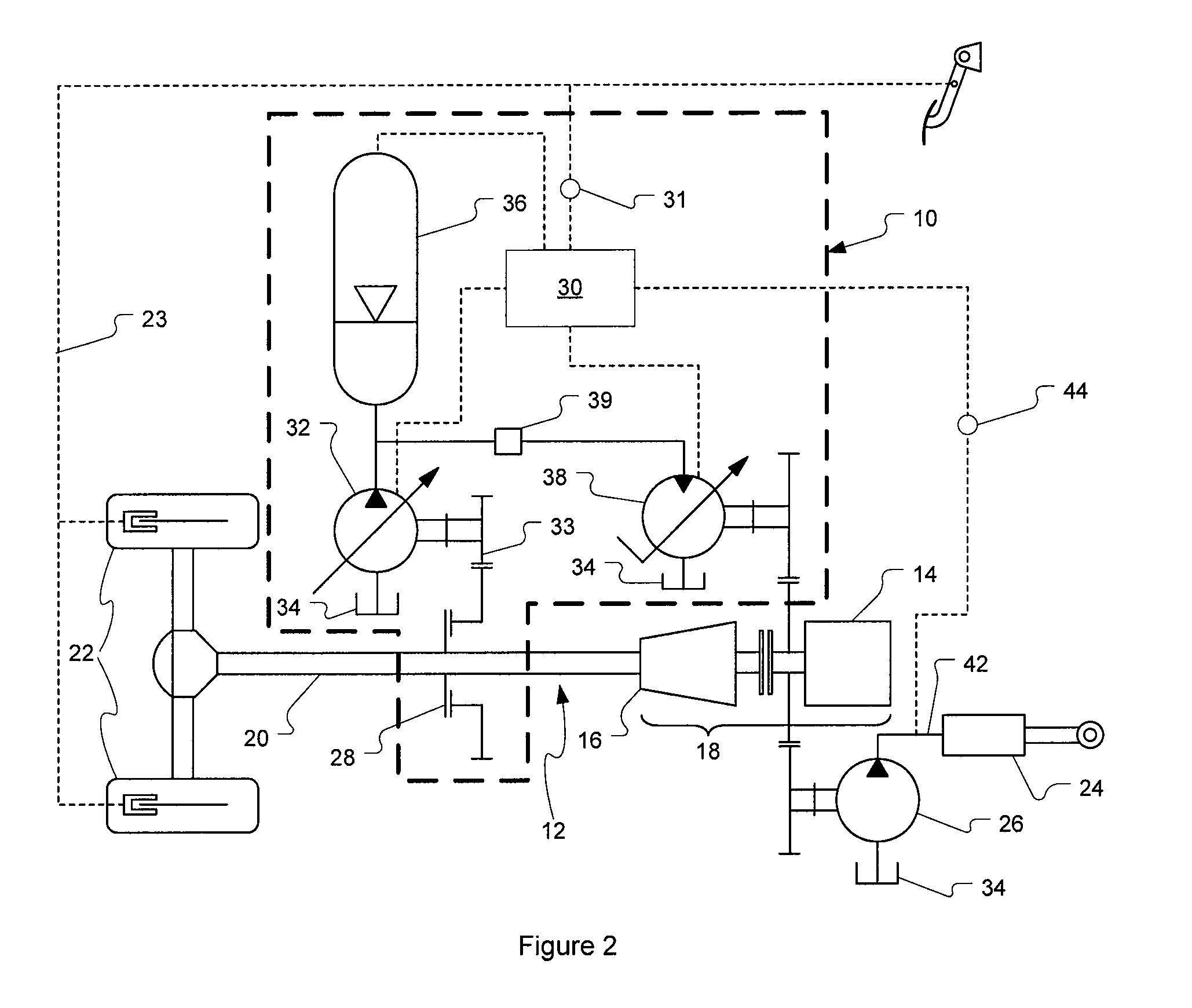

Braking energy recovery system for a vehicle and vehicle equipped with same

Active | US20100141024A1 | Mitigate disadvantage; Overcomes or mitigates one or more disadvantages; Auxiliary drives; Hydrostatic brakes; Automotive engineering; Hydraulic accumulator

A braking energy recovery system adapted for use on a vehicle and a vehicle having such a system installed. The vehicle has an engine-transmission assembly, a driveshaft, a braking system and an auxiliary system. The energy recovery system comprises a first pump, a hydraulic accumulator and a hydraulic motor. The first pump is a variable displacement hydraulic pump. The hydraulic accumulator is connected to the first pump and is operative to store hydraulic fluid under pressure. The hydraulic motor is hydraulically connected to the accumulator to receive hydraulic fluid. The motor is adapted to drive a second hydraulic pump, which is hydraulically connected to the auxiliary system, using hydraulic energy stored in the accumulator.

Owner: DEV EFFENCO INC +1

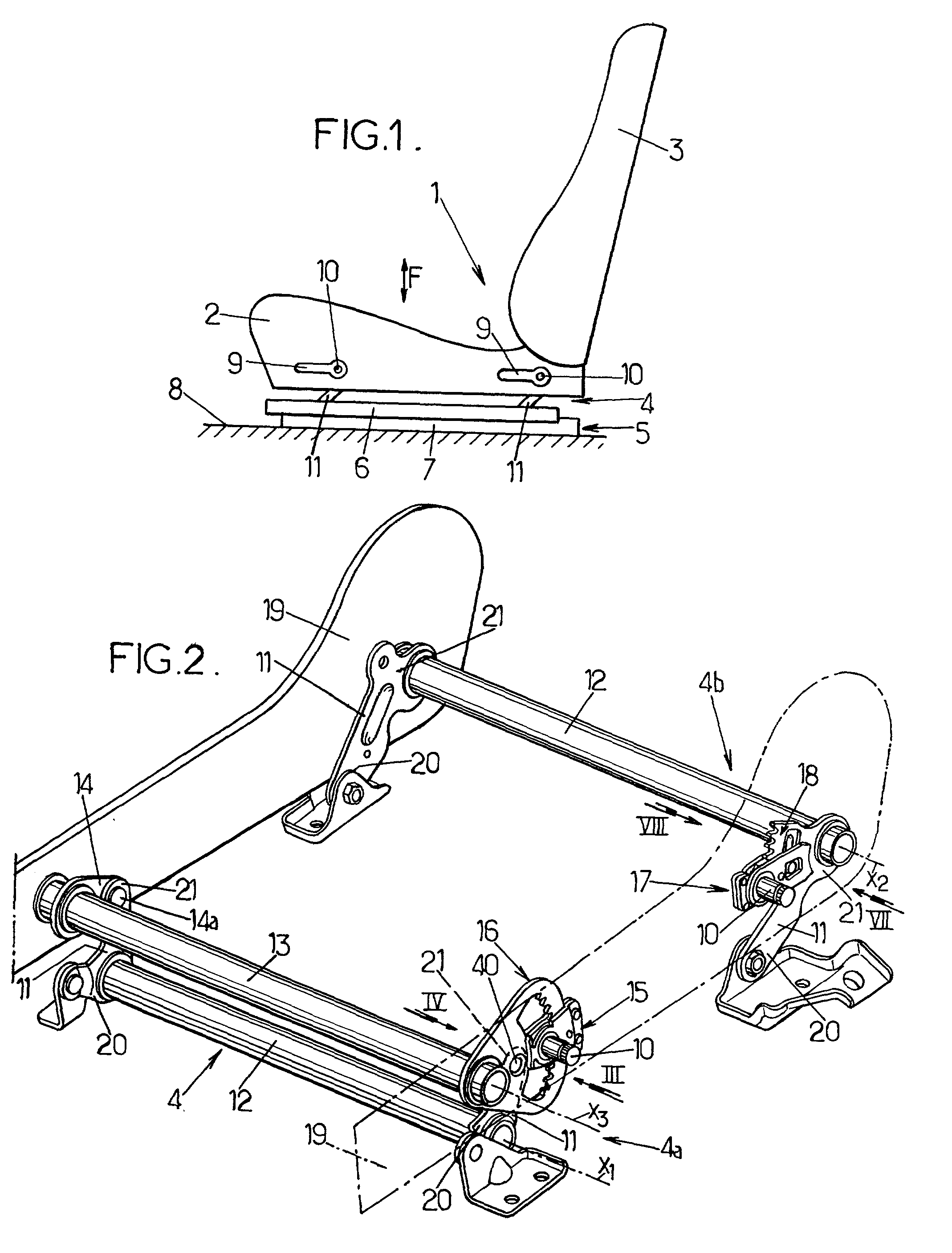

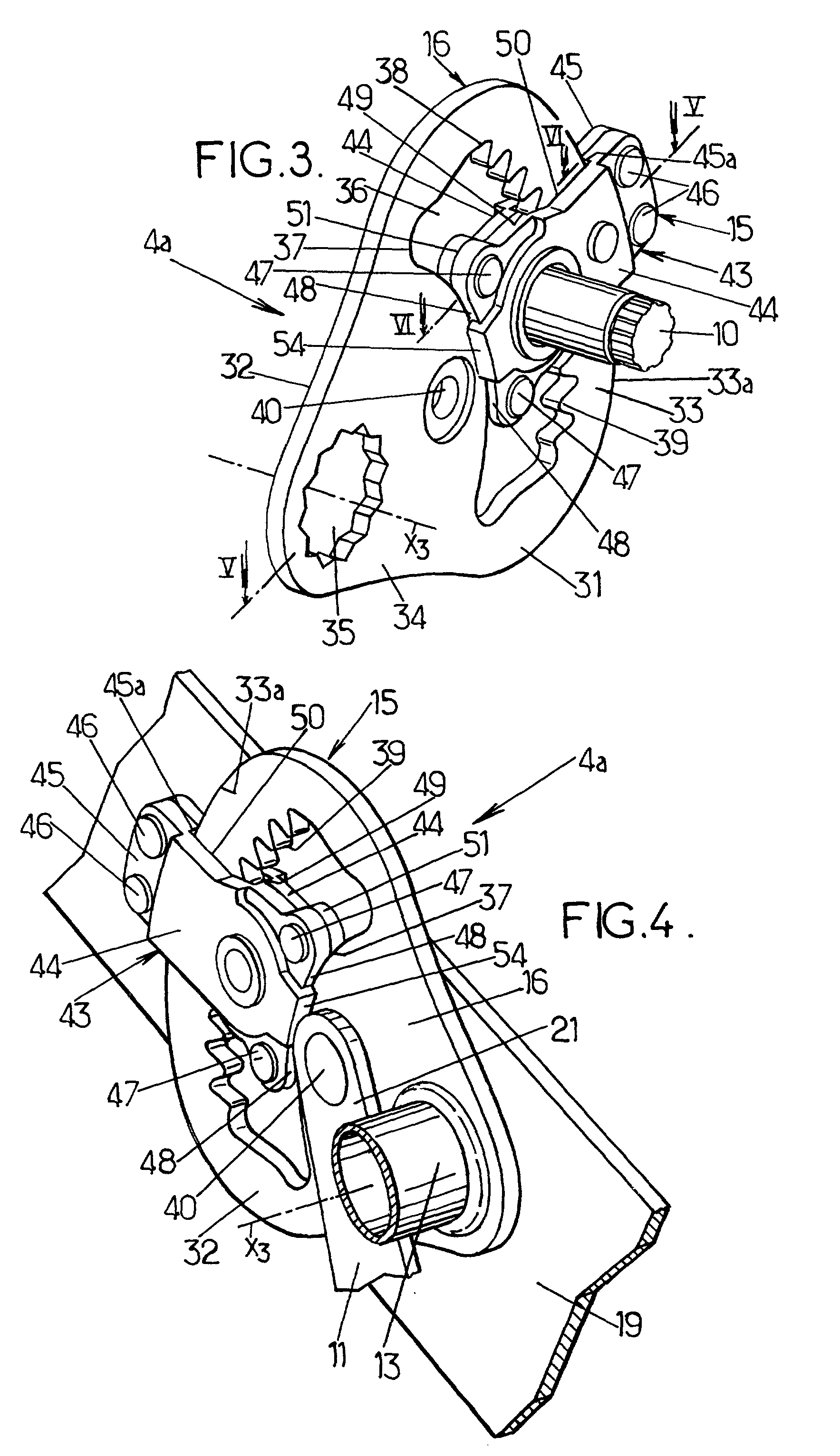

Vehicle seat comprising a height-adjusting mechanism, and a control device for such a seat

Inactive | US20010035673A1 | Mitigate disadvantage; Weight control; Operating chairs; Dental chairs; Cushion; Engineering

A vehicle seat is provided with a height-adjusting mechanism comprising a link connected to a rack. The rack is pivotally mounted to the seat cushion and has a circular toothed section that meshes with a pinion. The pinion is mounted to the cushion by means of a rigid yoke whose plates are guided without free play by arcuate guides formed by the rack; a part of the yoke engages in an arcuate slot formed in the rack.

Owner: FAURECIA

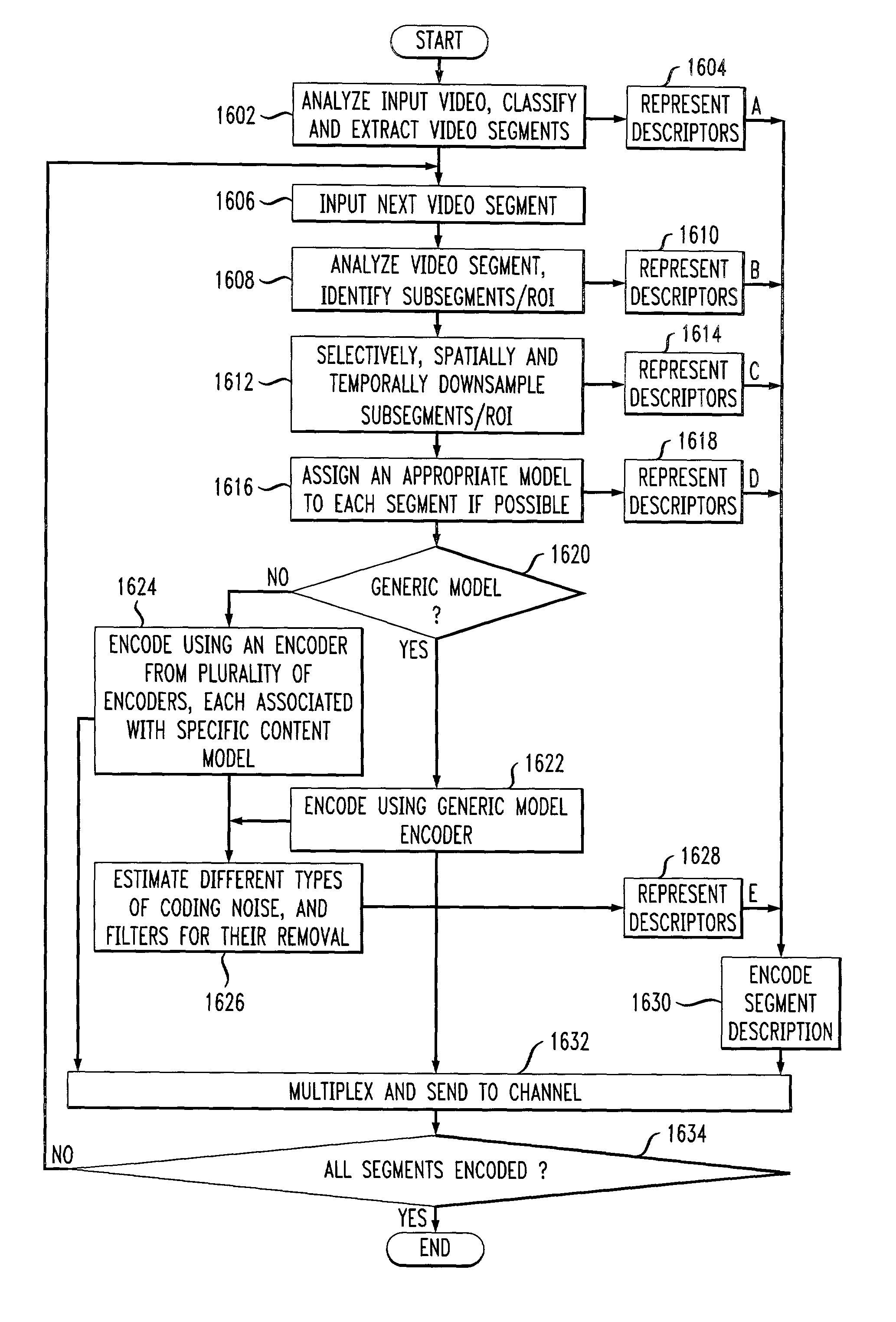

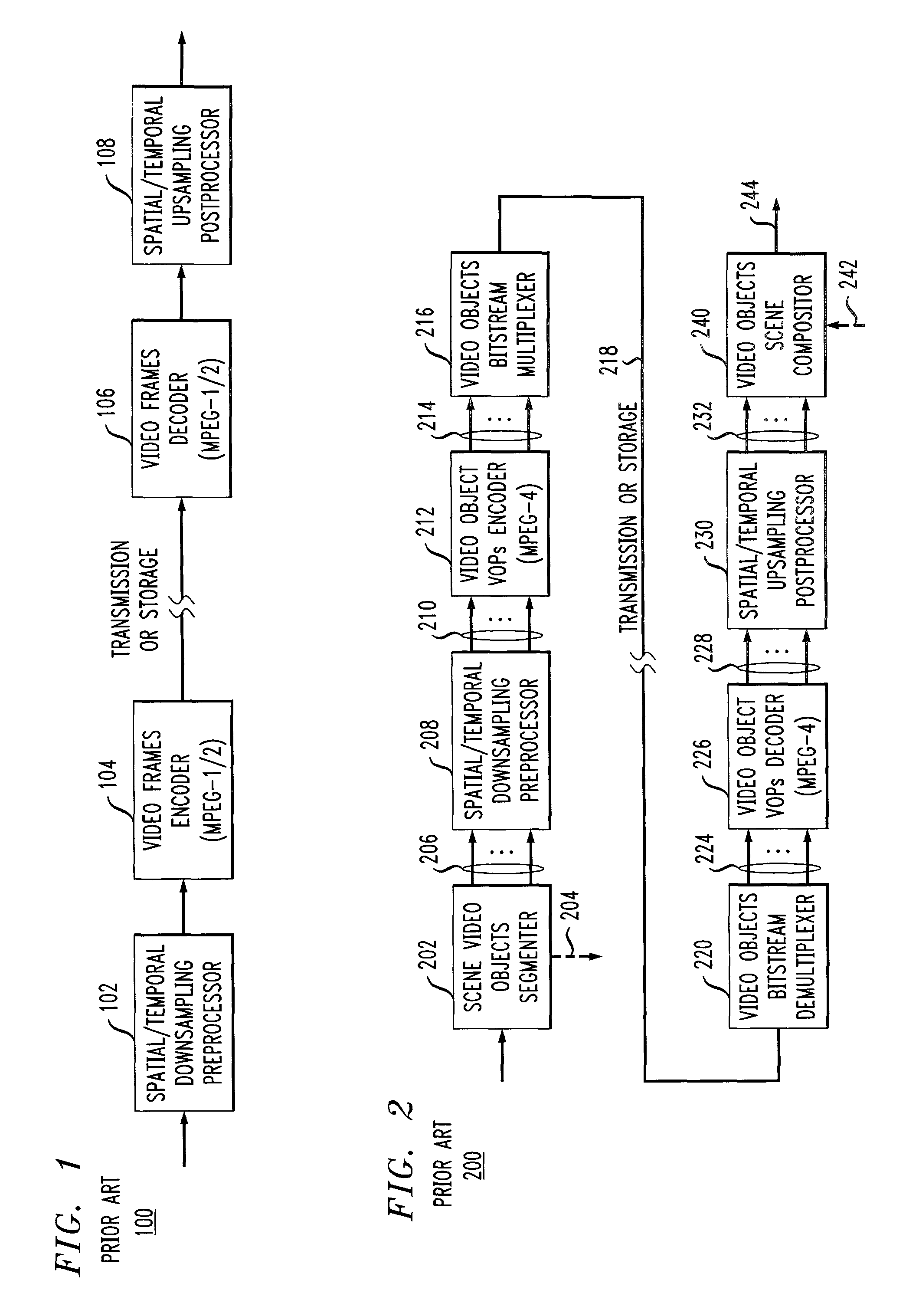

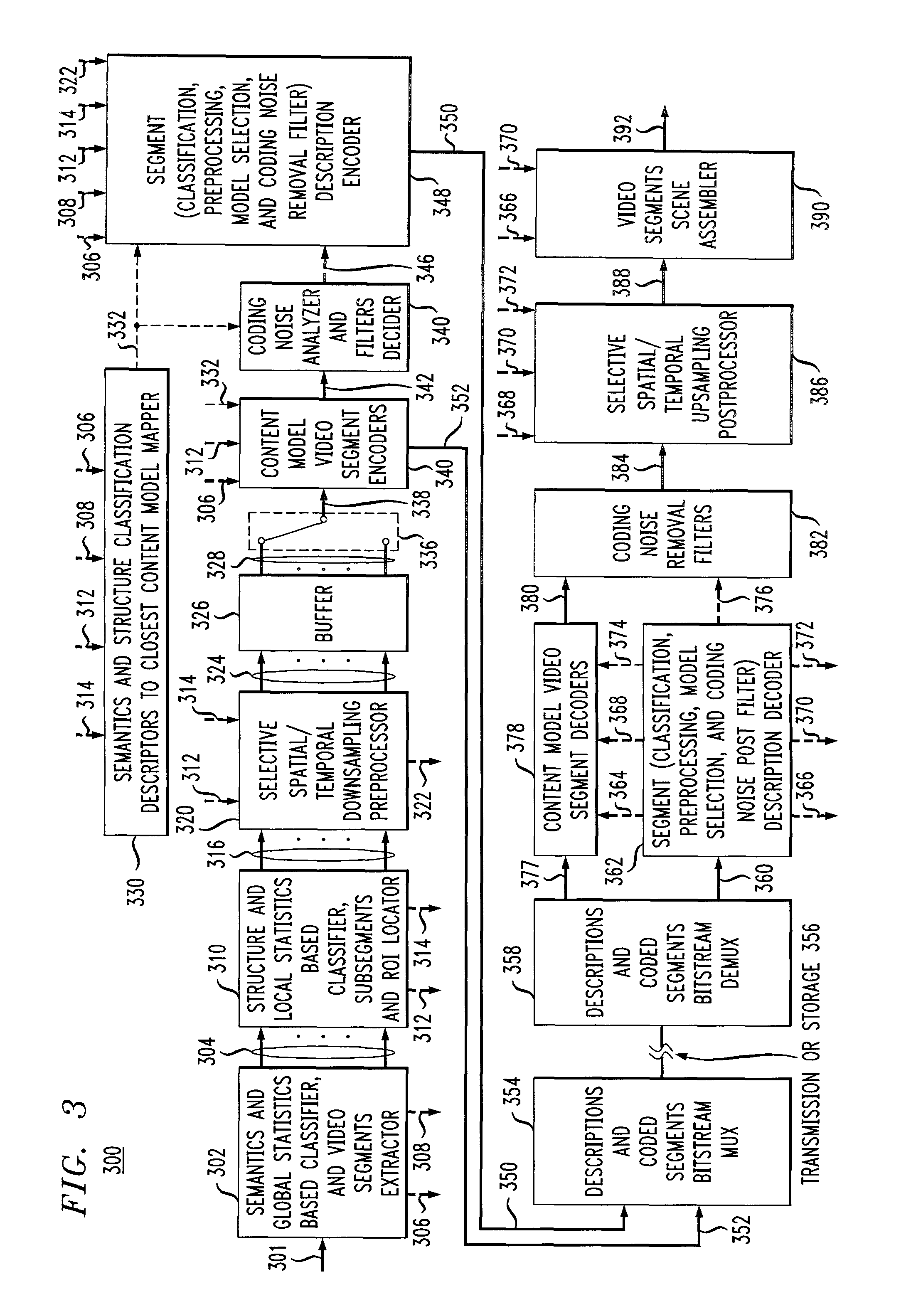

Method of content adaptive video encoding

Active | US7773670B1 | Increase in cost; Mitigate disadvantage; Color television with pulse code modulation; Color television with bandwidth reduction; Adaptive encoding; Bitstream

A method of content-adaptive video encoding is disclosed. The method comprises segmenting video content into segments based on predefined classifications or models. Examples of such classifications include action scenes, slow scenes, low- or high-detail scenes, and scene brightness. Based on its classification, each segment is encoded with an encoder chosen from a plurality of encoders, each of which is associated with a model; the chosen encoder is particularly suited to the subject matter of that segment. The coded bitstream for each segment includes information identifying the encoder used. Using this information, a matching decoder is chosen from a plurality of decoders, so that each segment is decoded by a decoder suited to its classification or model. Scenes that do not fall into a predefined classification, or that are difficult to classify from their content, are segmented, coded and decoded using a generic coder and decoder.

Owner: AMERICAN TELEPHONE & TELEGRAPH CO
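
The classify, select-encoder, tag-bitstream, select-decoder flow can be sketched in Python as follows. This is a toy illustration, not the patented codec: the single "motion score" feature, its thresholds, and the use of `zlib` at different compression levels as stand-ins for per-class video encoders are all assumptions.

```python
import zlib
from typing import Callable, Dict, List, Tuple

# Hypothetical stand-ins for per-class video codecs: zlib at different levels
# plays the role of "an encoder suited to this class of content".
ENCODERS: Dict[str, Callable[[bytes], bytes]] = {
    "action": lambda raw: zlib.compress(raw, 1),
    "slow": lambda raw: zlib.compress(raw, 9),
    "generic": lambda raw: raw,  # fallback for scenes outside the classes
}
DECODERS: Dict[str, Callable[[bytes], bytes]] = {
    "action": zlib.decompress,
    "slow": zlib.decompress,
    "generic": lambda coded: coded,
}


def classify(motion: float) -> str:
    """Toy classifier on a normalised motion score in [0, 1] (assumed feature)."""
    if motion >= 0.7:
        return "action"
    if motion <= 0.2:
        return "slow"
    return "generic"  # does not fit a predefined class


def encode(segments: List[Tuple[float, bytes]]) -> List[Tuple[str, bytes]]:
    """Encode each segment with its class's encoder and tag the output with
    the encoder ID, mirroring the per-segment information in the bitstream."""
    coded = []
    for motion, raw in segments:
        cls = classify(motion)
        coded.append((cls, ENCODERS[cls](raw)))
    return coded


def decode(coded: List[Tuple[str, bytes]]) -> List[bytes]:
    """Pick the matching decoder from the ID carried with each segment."""
    return [DECODERS[cls](payload) for cls, payload in coded]
```

Because the encoder ID travels with each coded segment, the decoder side needs no separate classification step; it simply dispatches on the tag.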

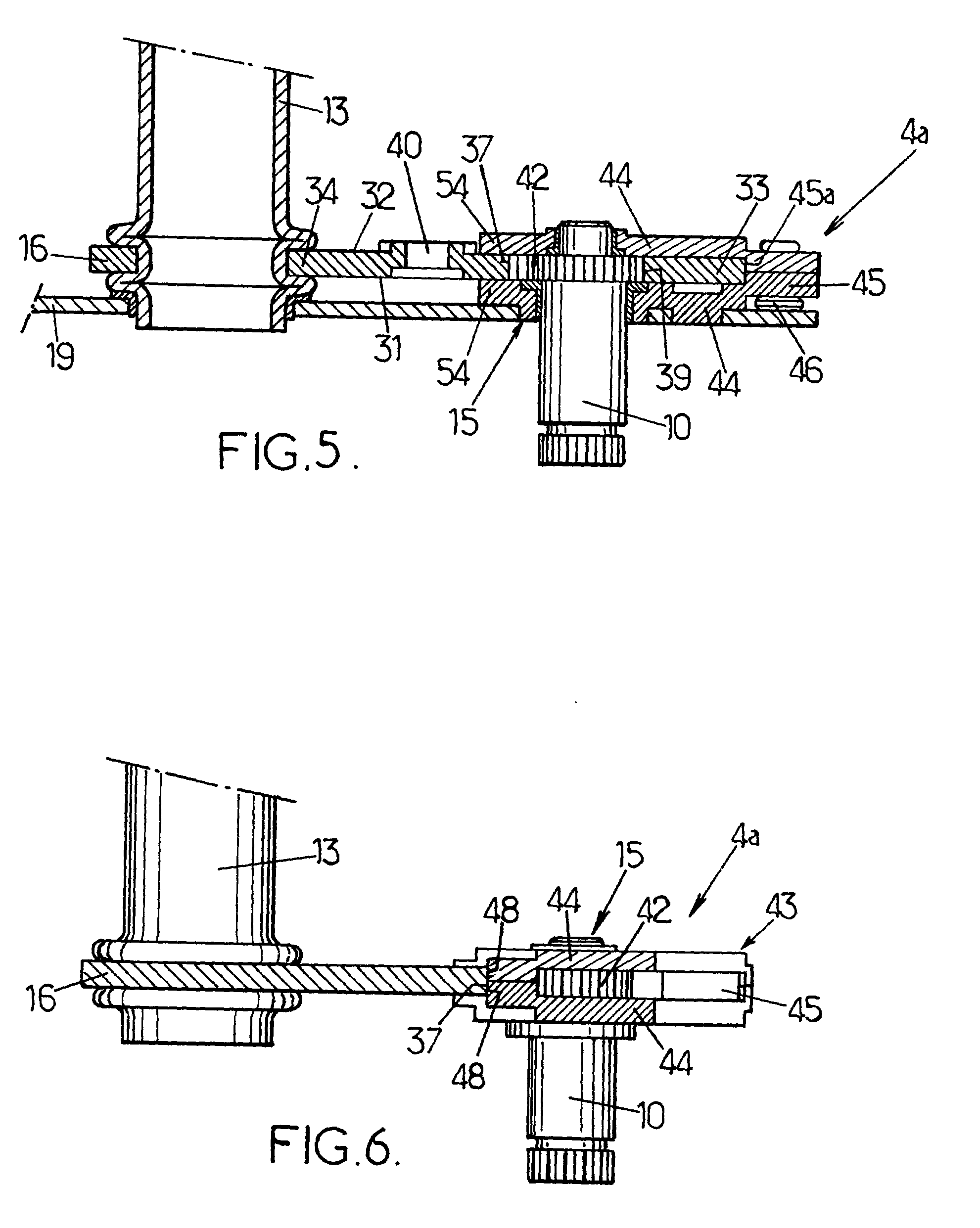

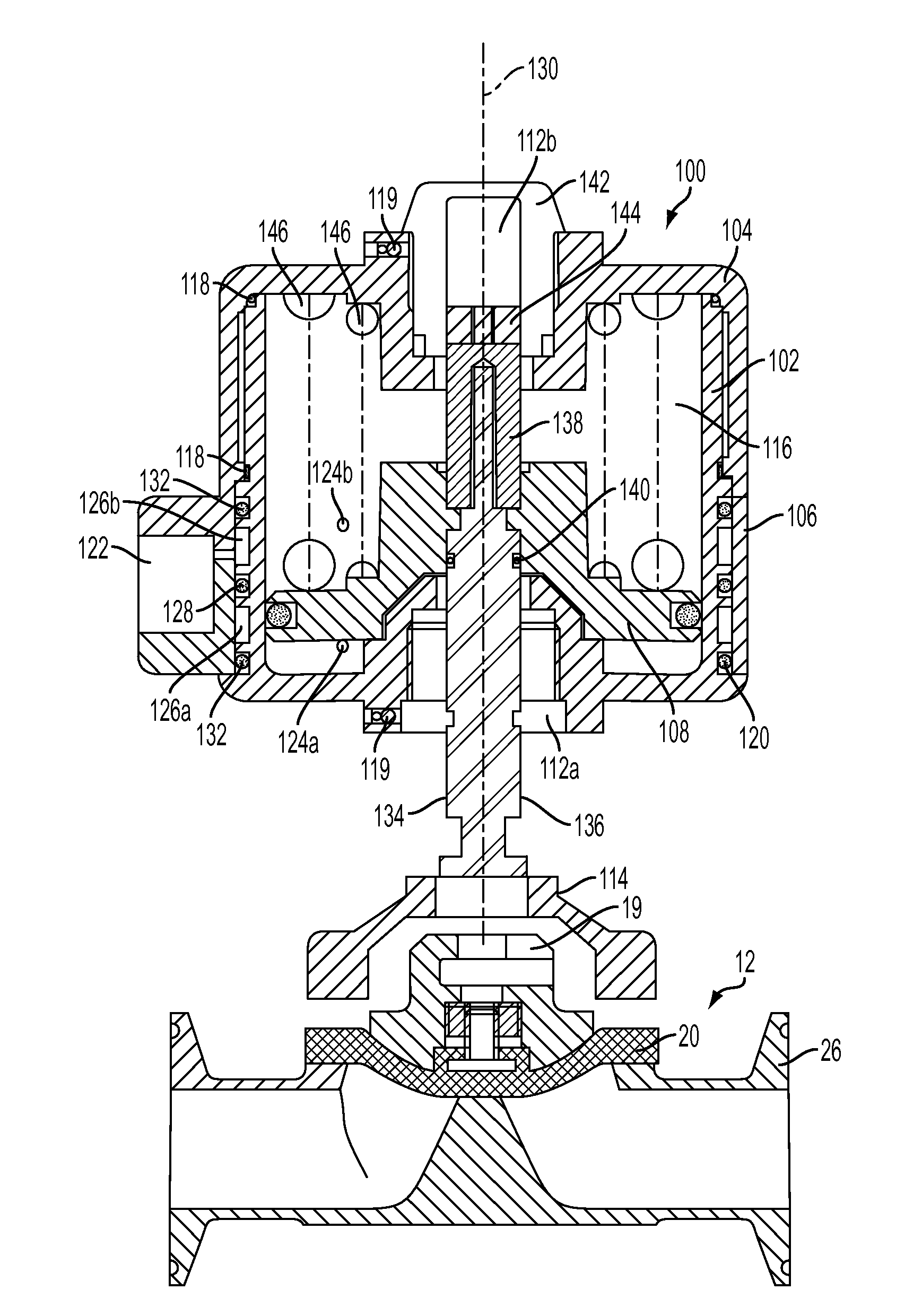

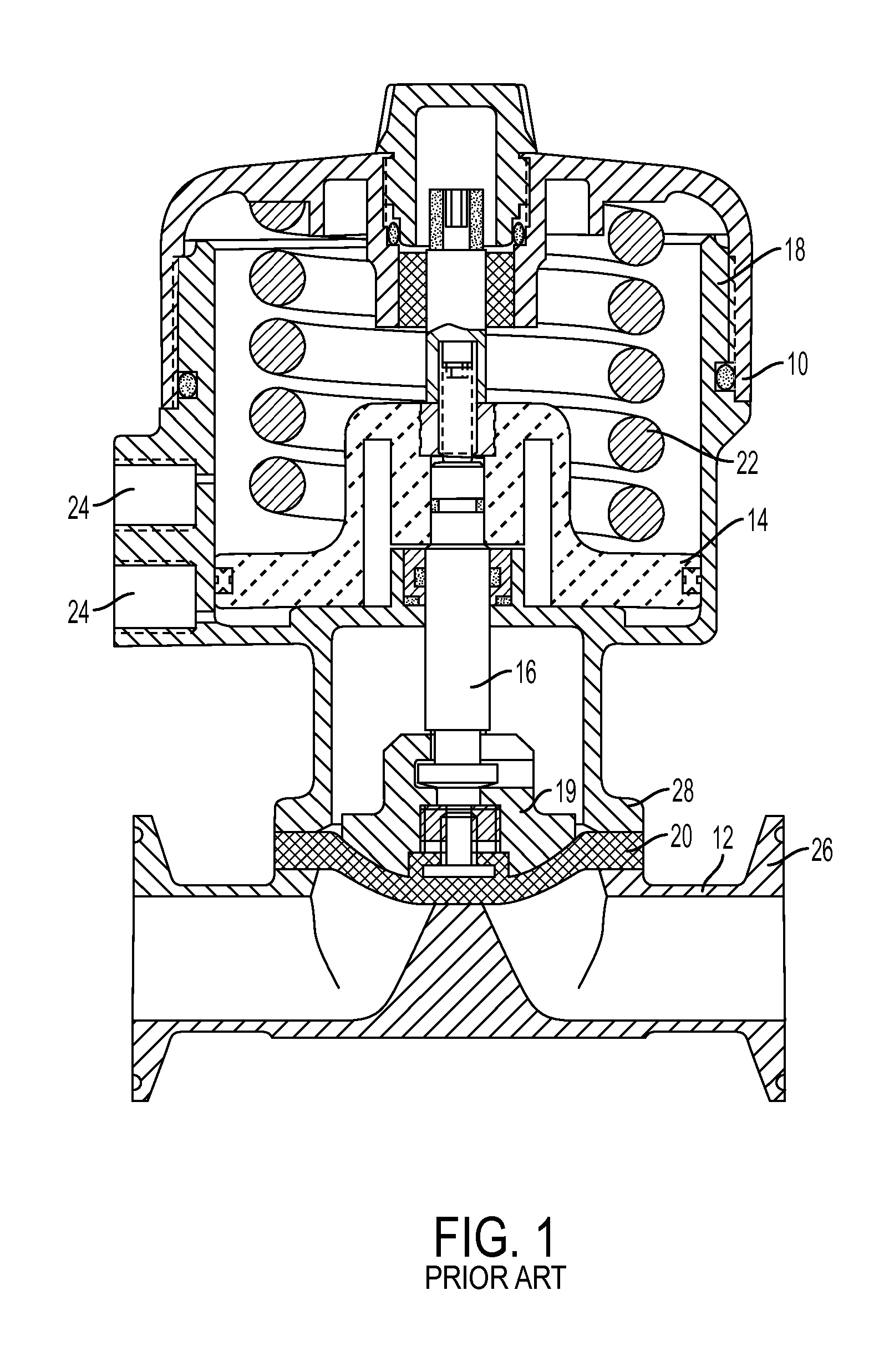

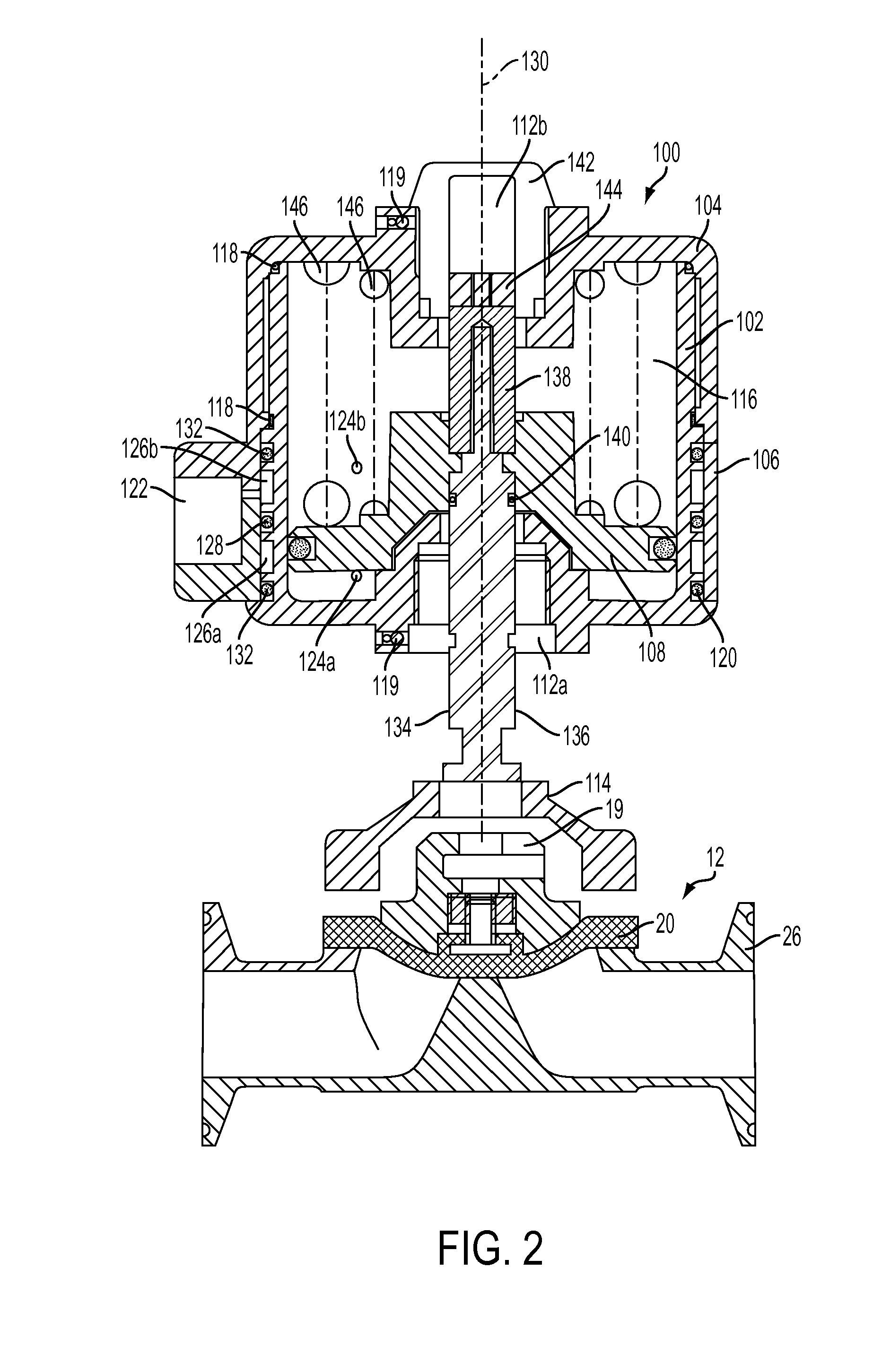

Actuator for operating valves such as diaphragm valves

Active | US20100072410A1 | Mitigate disadvantage; Overcomes or mitigates one; Operating means/releasing devices for valves; Servomotor components; Piston; Engineering

An actuator for operating a valve comprises a housing, a cap, a ring and a piston. The housing has a connecting interface for being mounted on the valve. The cap substantially covers a first portion of the housing. Both the cap and the housing define a substantially enclosed space inside the housing. The ring sealably covers a second portion of the housing. The ring is equipped with two ports that are in fluid communication with the enclosed space. The piston is slidably located inside the housing. Advantageously, an actuator assembly may include the actuator as previously described and one bonnet, one of the connecting interfaces of the actuator being connected to the bonnet. The bonnet is adapted to be mounted to a valve body.

Owner: RICHARDS INDS

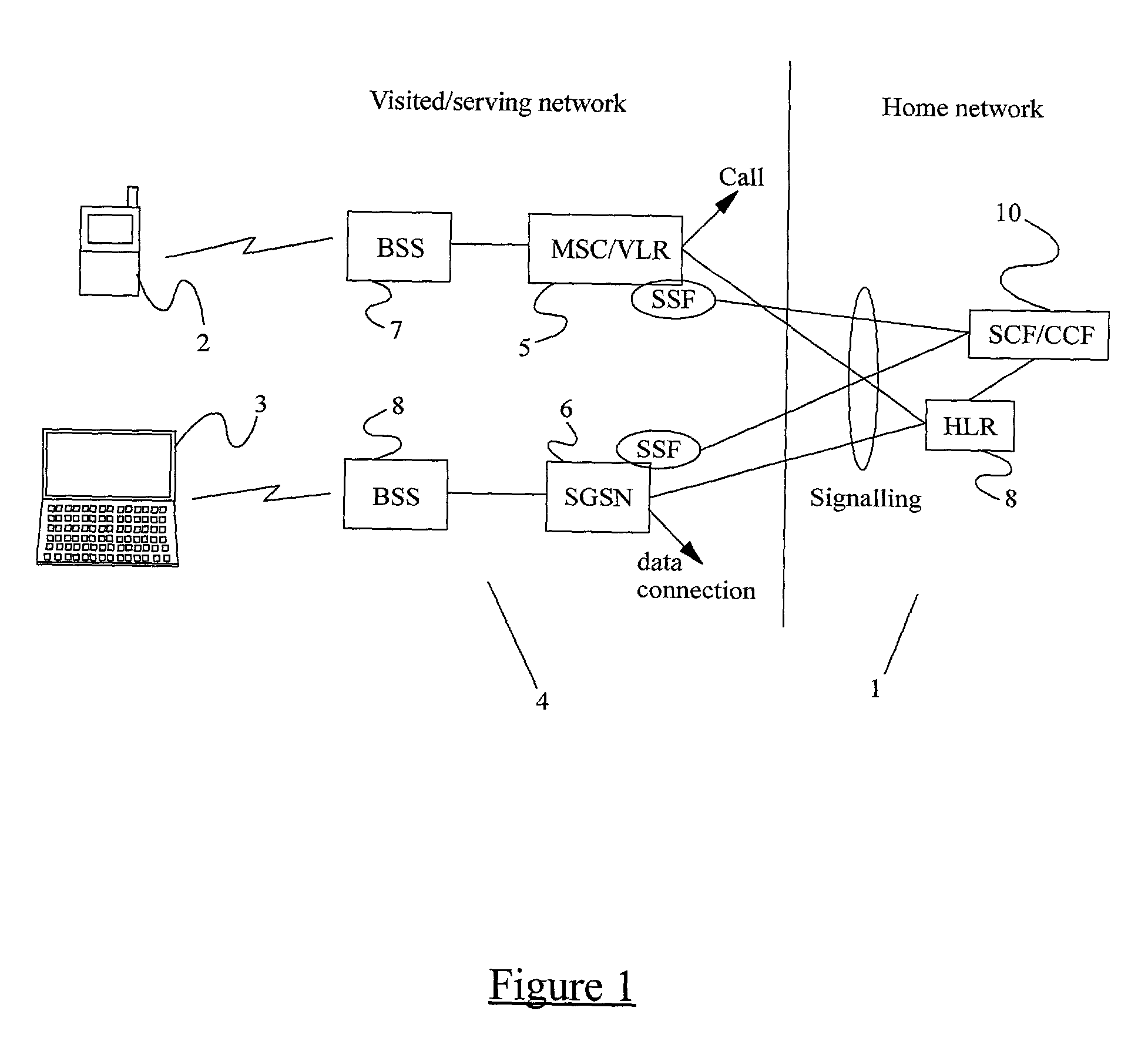

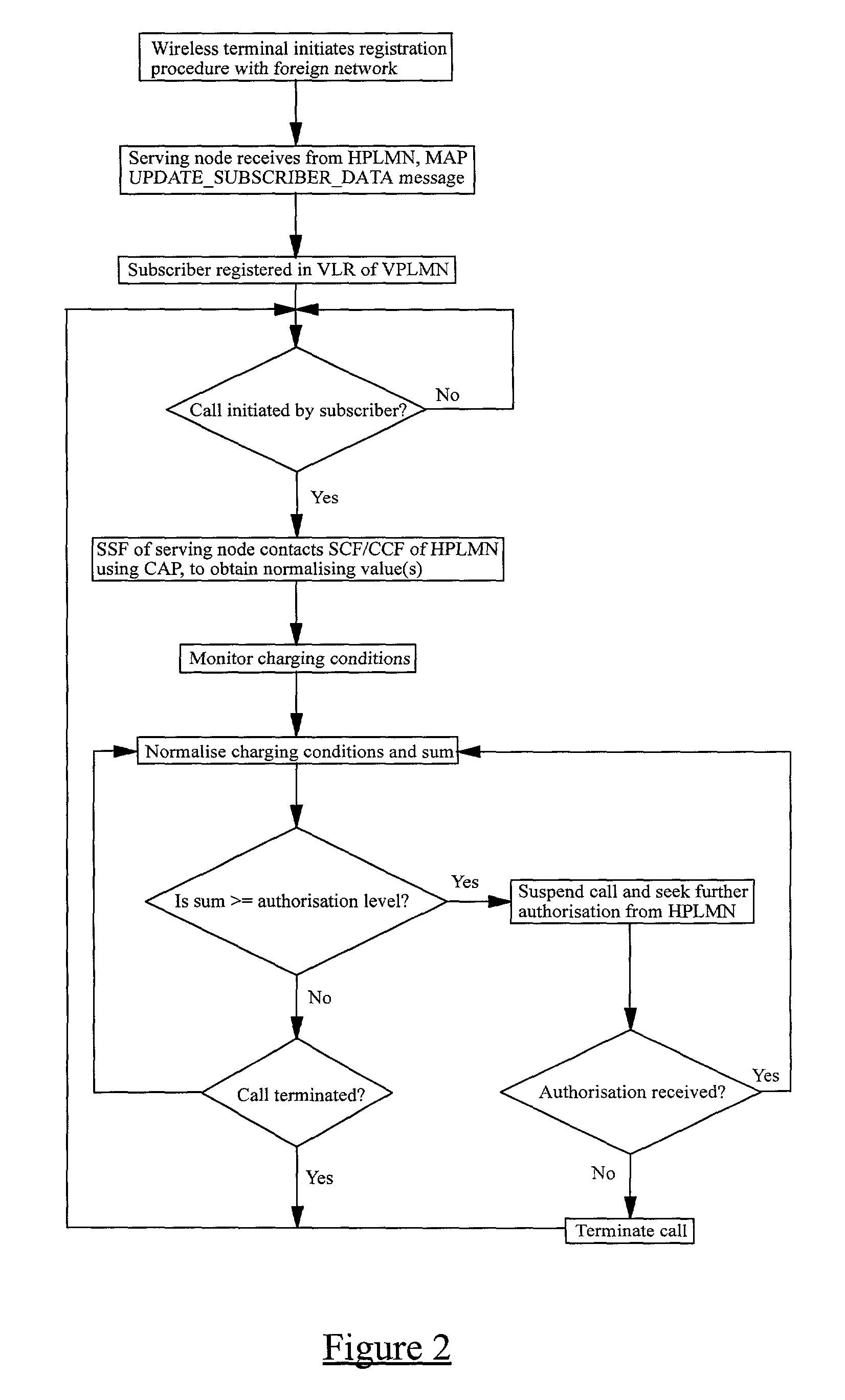

Cost control in a telecommunications system

Inactive | US20010023181A1 | Reduce charging related signalling traffic; Mitigate disadvantage; Unauthorised/fraudulent call prevention; Eavesdropping prevention circuits; Telecommunications service; Standardization

A method of monitoring the chargeable activities of a user in a mobile telecommunications network. The method comprises monitoring one or more conditions on which charging may be based and normalising each monitored condition so that it can be compared against a standard value and/or used to calculate a charge.

Owner: TELEFON AB LM ERICSSON (PUBL)

Expandable Orthopedic Device

Active | US20140031940A1 | Mitigate disadvantage; Enhanced advantage; Internal osteosynthesis; Spinal implants; Filling materials; Anatomical structures

Methods and apparatuses for restoring human or animal bone anatomy. The method may include introducing into a bone an expansible implant capable of expansion in a single determined plane, positioning the implant in the bone so that the single determined plane corresponds to a bone restoration plane, and opening out the implant in the bone restoration plane. A first support surface and a second support surface spread tissues within the bone. Embodiments of the disclosure may also include injecting a filling material around the implant.

Owner: STRYKER EUROPEAN OPERATIONS LIMITED

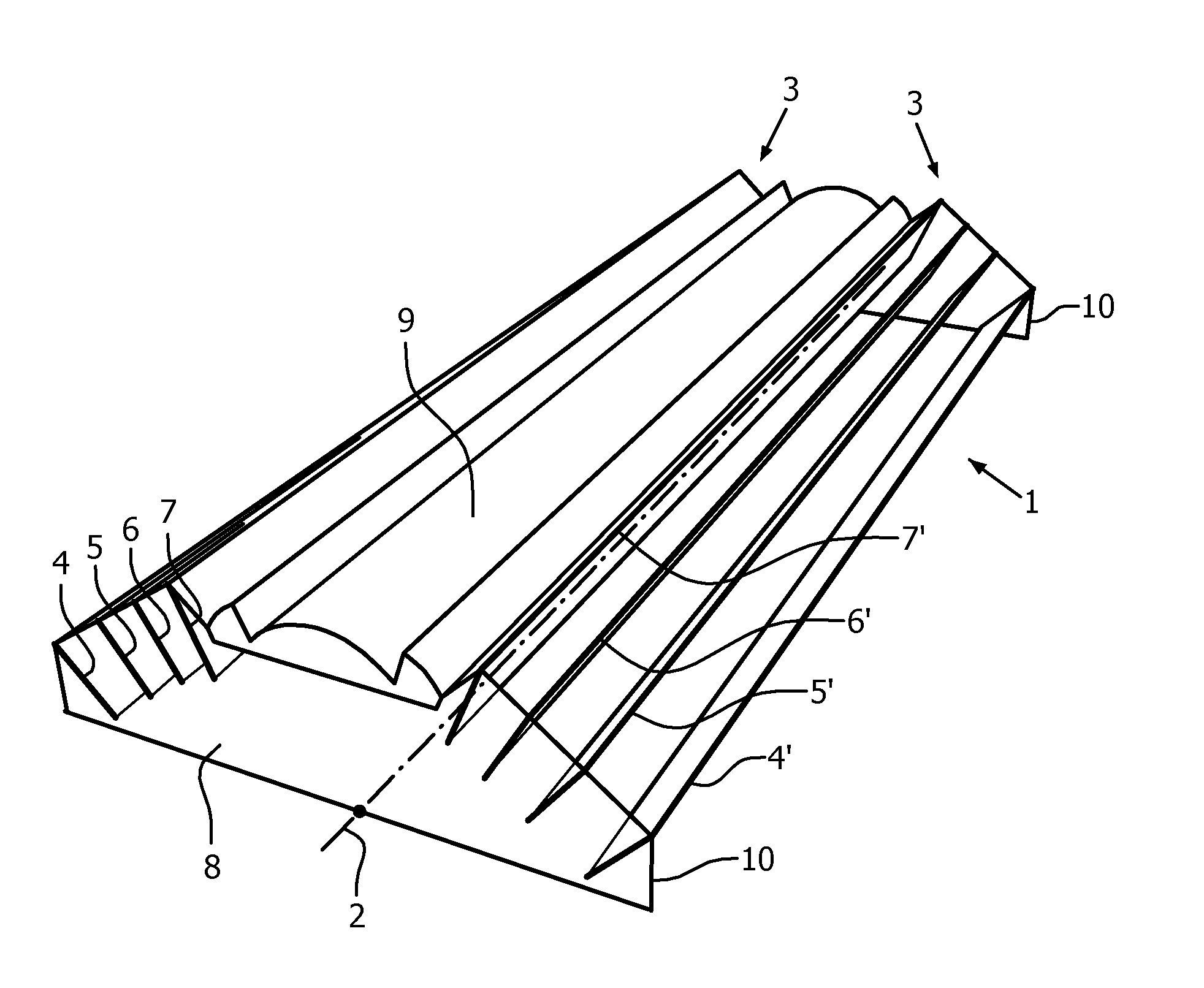

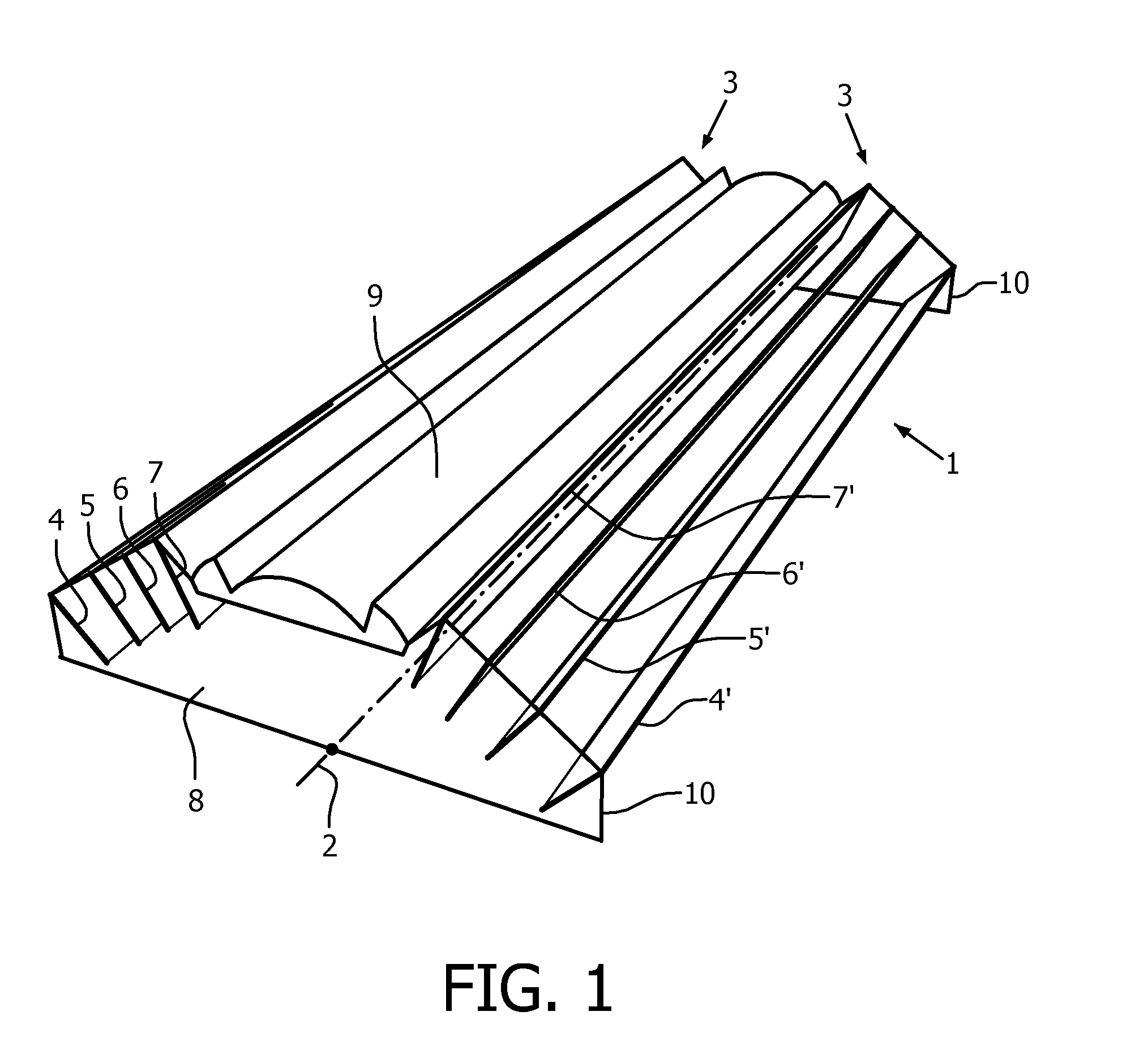

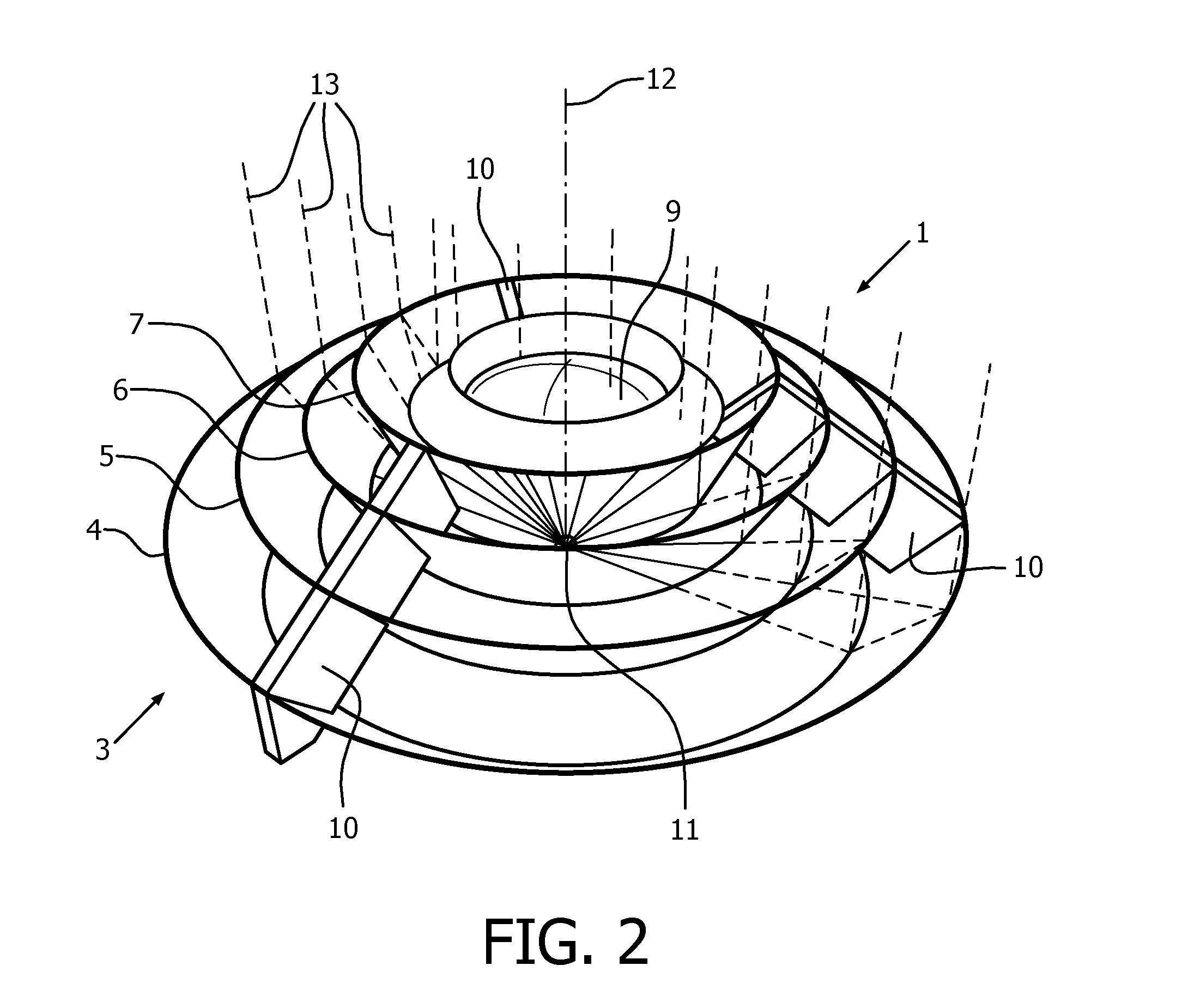

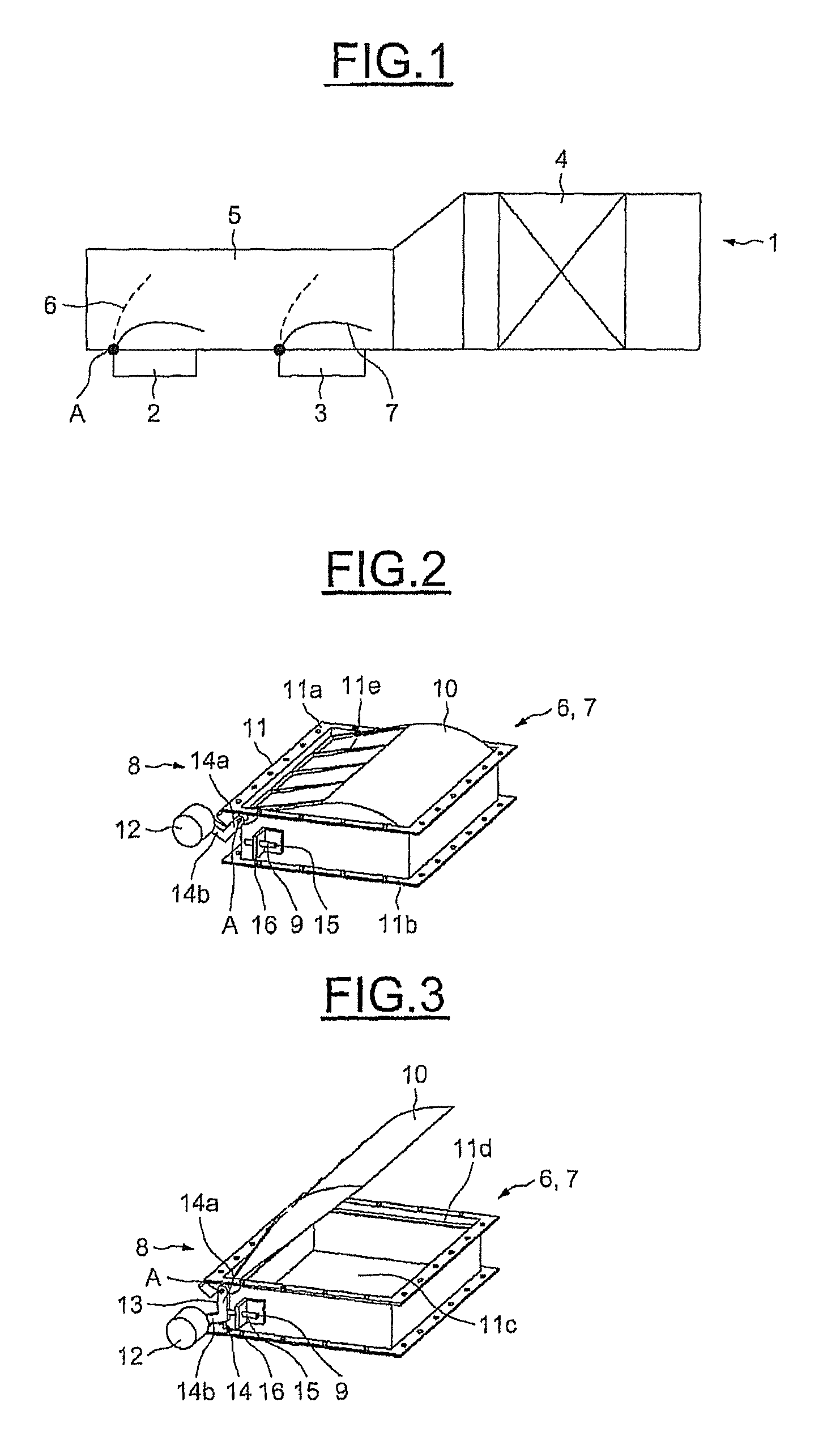

Lighting device with a LED and an improved reflective collimator

Inactive | US20150219308A1 | Improve heat dissipation; Mitigate disadvantage; Line/current collector details; Point-like light source; Collimator; Engineering

The invention relates to a lighting device (1) comprising a housing (8) with a light source connector for an LED (11), a reflective collimator (3) and a refractive collimator (9), as well as to a method for their manufacture. The reflective collimator (3) comprises a plurality of reflective segments (4, 4′, 5, 5′, 6, 6′, 7, 7′), which are spaced apart from each other by air slits suitable for dissipating heated air. The segments (4, 4′, 5, 5′, 6, 6′, 7, 7′) are adapted to reflect laterally emitted light generated by the light source (11) towards a direction which is substantially parallel to said main direction. Lighting devices according to this invention may have a compact design and improved dissipation of the heat generated by the LEDs.

Owner: KONINKLIJKE PHILIPS ELECTRONICS NV

Position finding system

Active | US20120153089A1 | Mitigate disadvantage; Reduce disadvantages; Electric/electromagnetic visible signalling; Alarms; Metallic materials; Transponder

Position finding system having a sensor unit and a transmitter unit. The sensor unit comprises a first RFID transponder reader unit, a first inductive detector unit, and an analysis unit connected to the RFID transponder reader unit and the inductive detector unit; the transmitter unit comprises an RFID transponder and a metallic material. The sensor unit is movable relative to the transmitter unit. The RFID transponder reader unit is configured for absolute position finding and outputs a first position value, and the inductive detector unit is configured for absolute position finding and outputs a second position value. The analysis unit is configured to determine, from the data acquired from the transmitter unit, an absolute position of the sensor unit from the first and second position values.

Owner: PEPPERL FUCHS GMBH
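
The abstract does not say how the analysis unit combines the two absolute position values. One plausible scheme, shown here purely as an assumption, is a plausibility check followed by an inverse-variance weighted mean; the per-sensor standard deviations and the disagreement limit are hypothetical parameters.

```python
def fuse_positions(p_rfid: float, sigma_rfid: float,
                   p_inductive: float, sigma_inductive: float,
                   max_disagreement: float) -> float:
    """Combine two absolute position readings into one estimate.

    Hypothetical scheme: reject the pair if the two values disagree by more
    than max_disagreement; otherwise return the inverse-variance weighted
    mean, so the more precise sensor receives the larger weight.
    """
    if sigma_rfid <= 0 or sigma_inductive <= 0:
        raise ValueError("standard deviations must be positive")
    if abs(p_rfid - p_inductive) > max_disagreement:
        raise ValueError("position values are inconsistent")
    w_rfid = 1.0 / sigma_rfid ** 2
    w_ind = 1.0 / sigma_inductive ** 2
    return (w_rfid * p_rfid + w_ind * p_inductive) / (w_rfid + w_ind)
```

With an RFID reading of 100.0 (sigma 2.0) and an inductive reading of 101.0 (sigma 1.0), the weights are 0.25 and 1.0 and the fused position is approximately 100.8, pulled towards the more precise inductive value.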

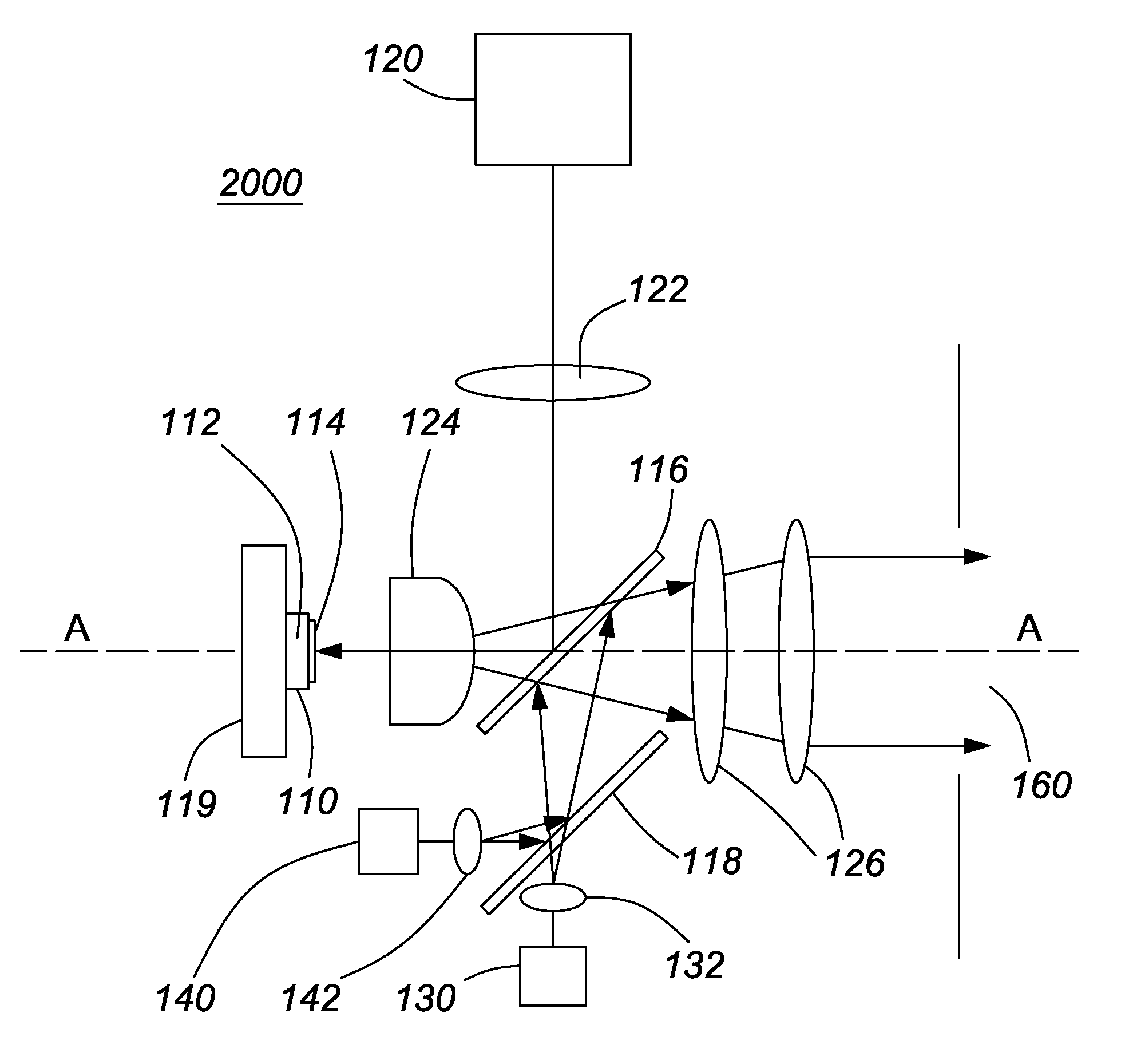

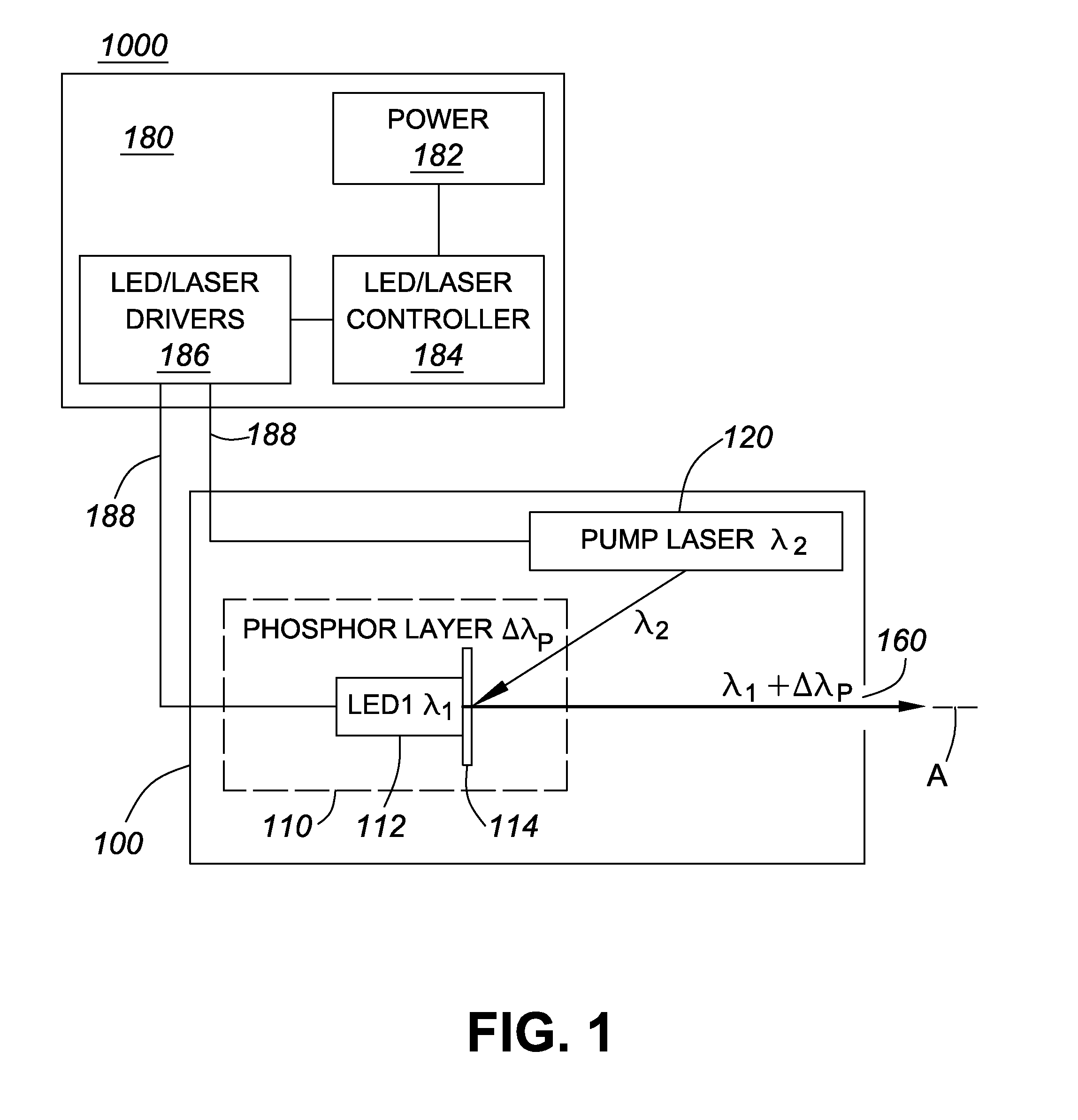

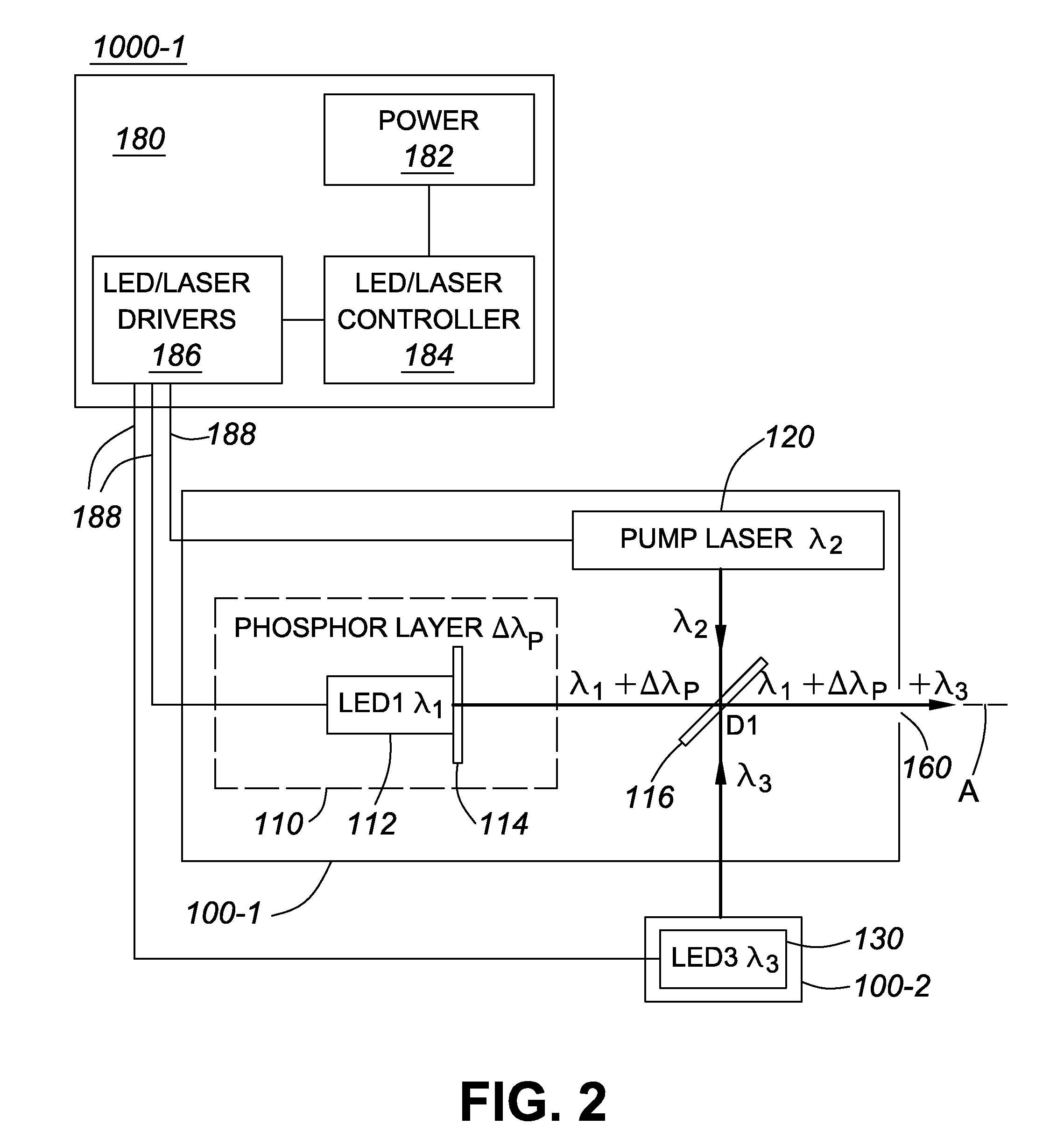

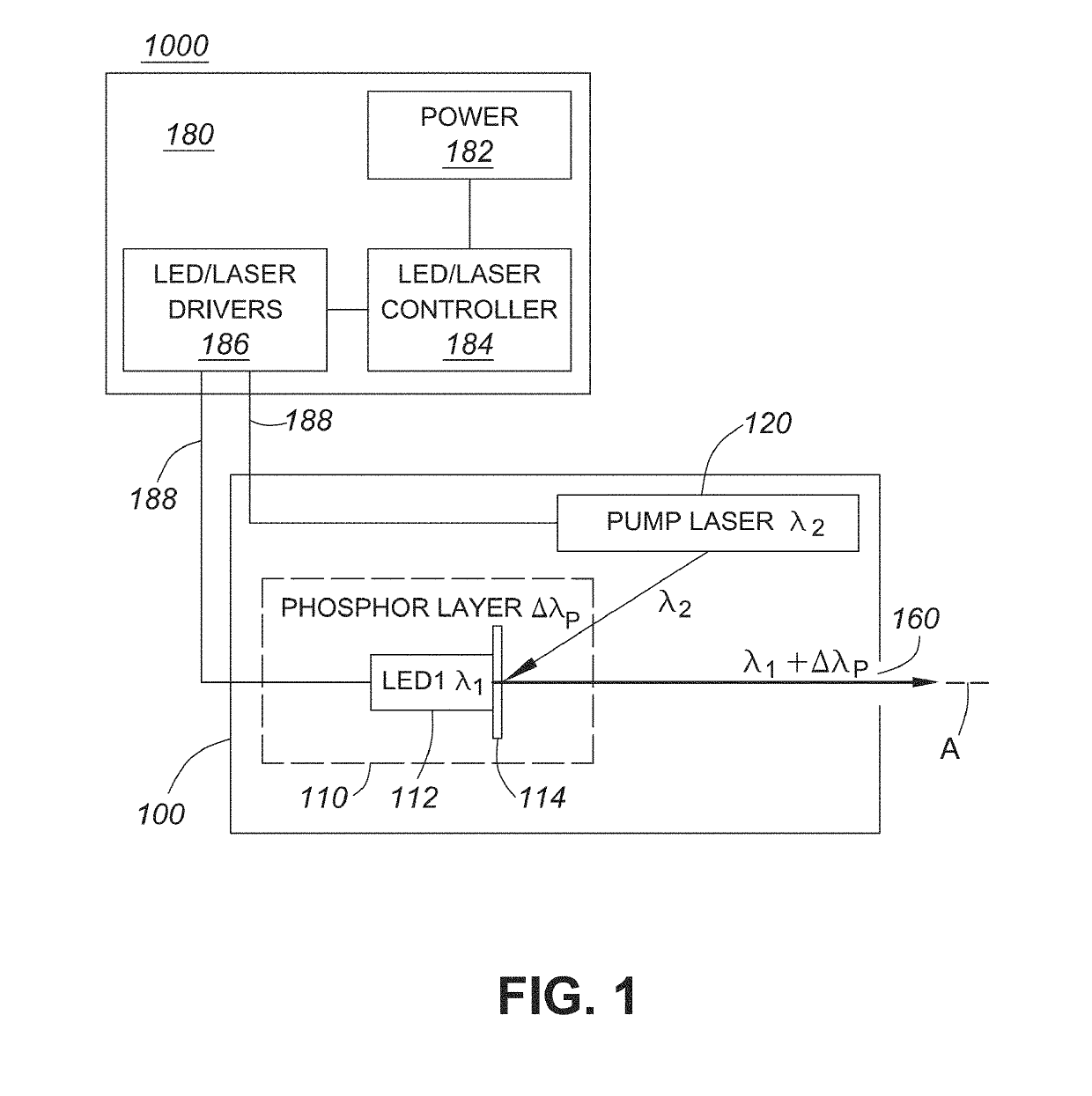

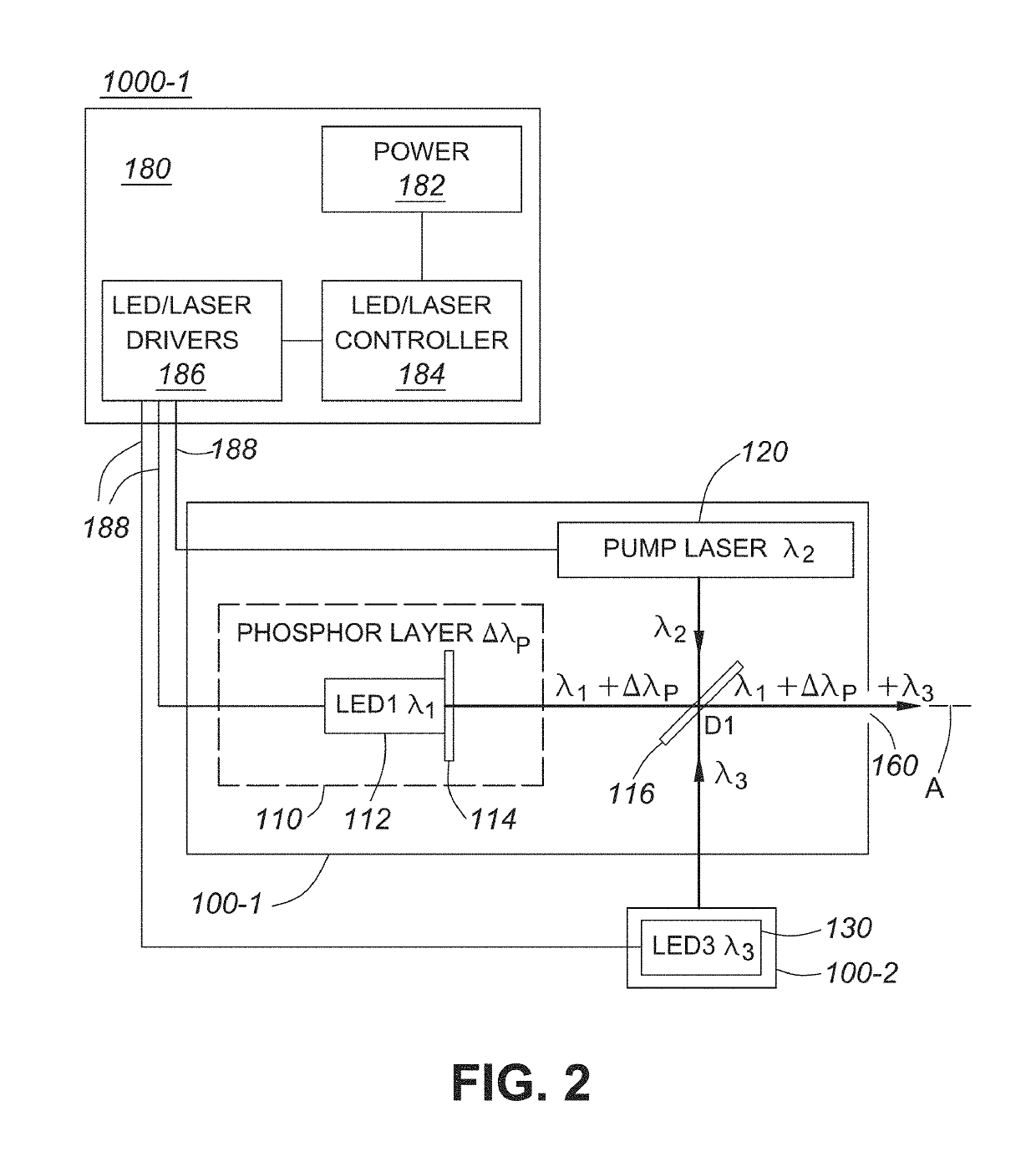

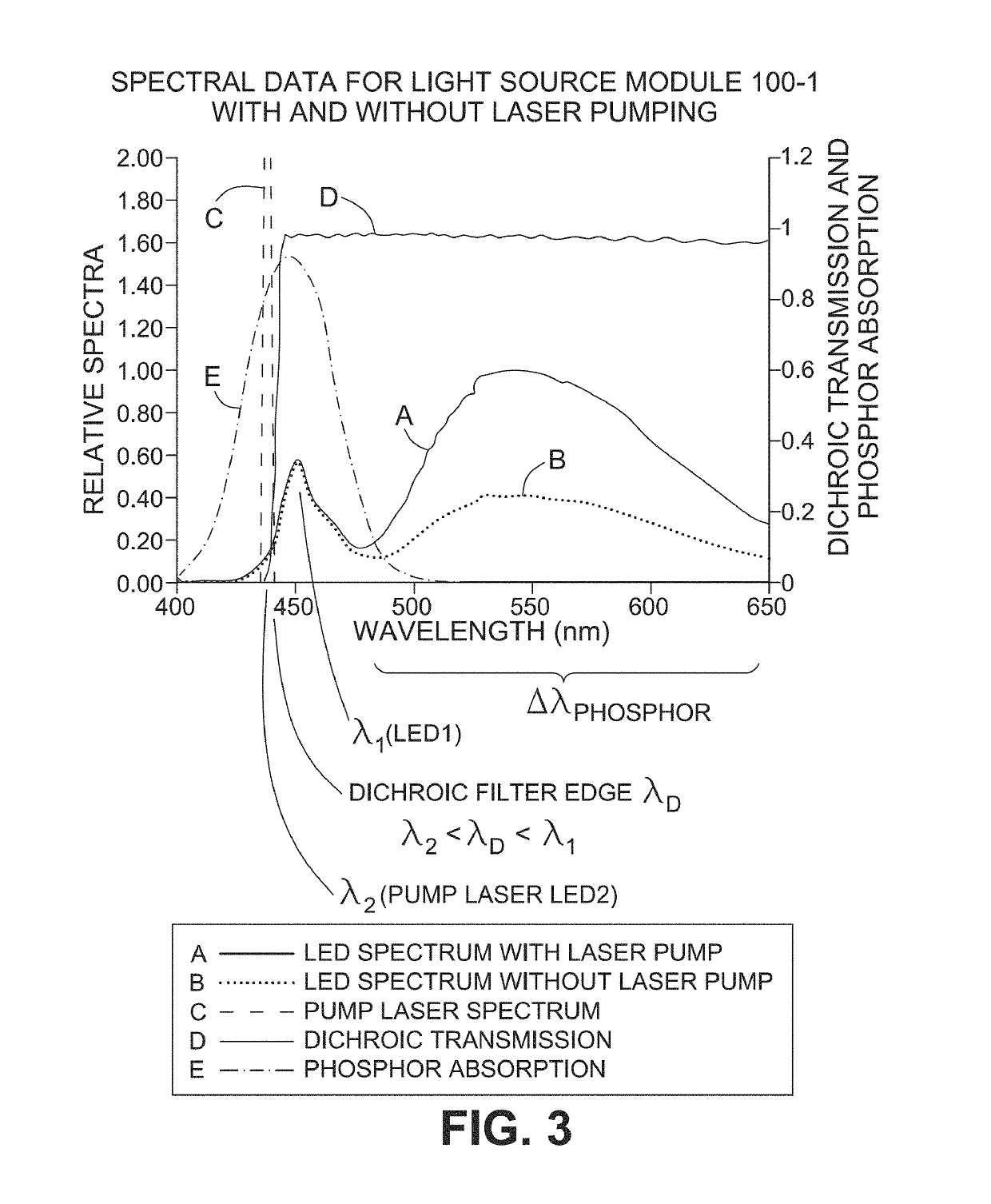

High brightness solid state illumination system for fluorescence imaging and analysis

Active | US20140340869A1 | Overcome disadvantage; Mitigate disadvantage; Electroluminescent light sources; Material analysis by optical means; Physics; Broadband

A solid state illumination system is disclosed, wherein a light source module comprises a first light source, comprising an LED and a phosphor layer, the LED emitting a wavelength λ1 in an absorption band of the phosphor layer for generating longer wavelength broadband light emission from the phosphor, ΔλPHOSPHOR, and a second light source comprising a laser emitting a wavelength λ2 in the absorption band of the phosphor layer. While operating the LED, concurrent laser pumping of the phosphor layer increases the light emission of the phosphor, and provides high brightness emission, e.g. in green, yellow or amber spectral regions. Additional modules, which provide emission at other wavelengths, e.g. in the UV and near UV spectral bands, together with dichroic beam-splitters/combiners allow for an efficient, compact, high-brightness illumination system, suitable for fluorescence imaging and analysis.

Owner: EXCELITAS CANADA

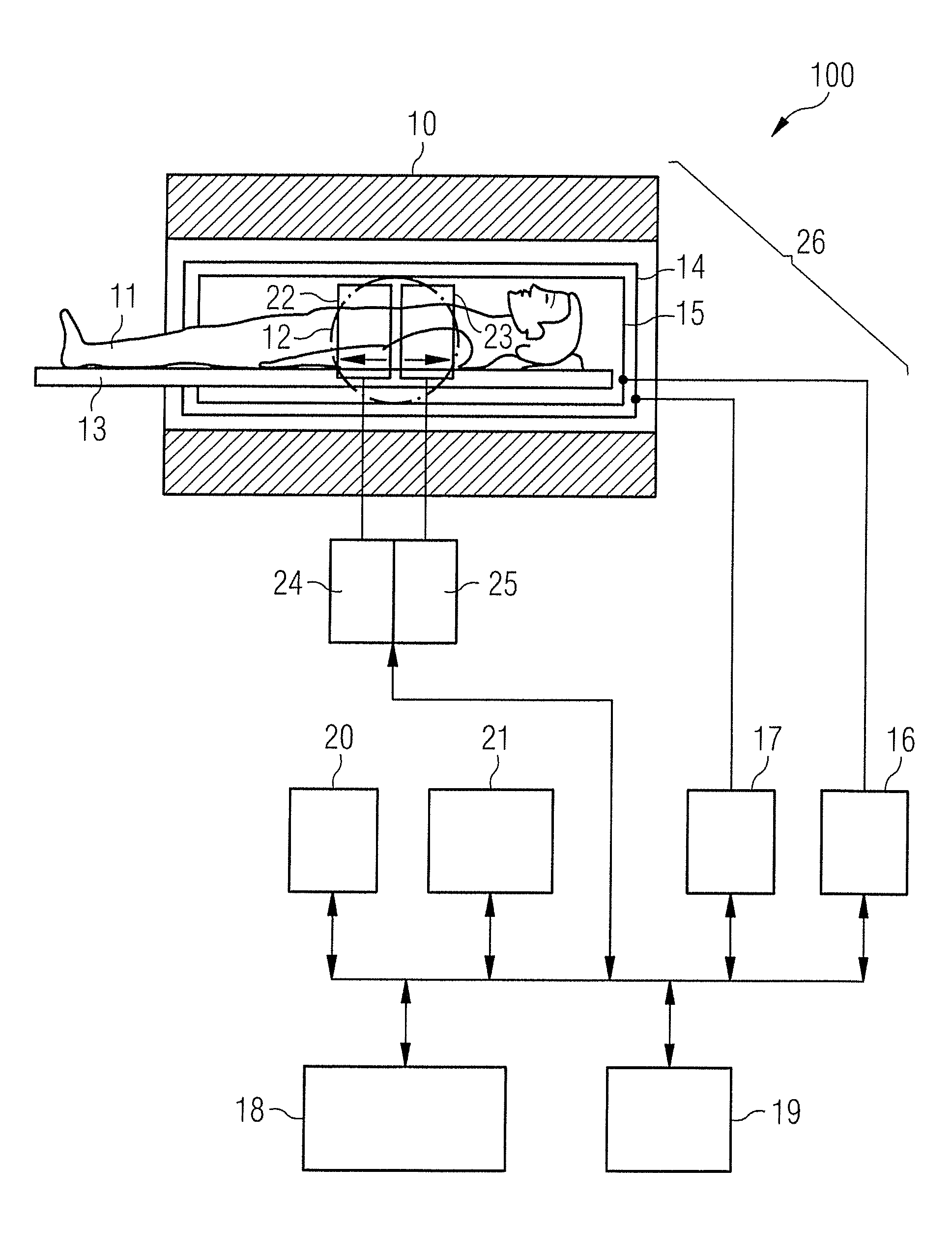

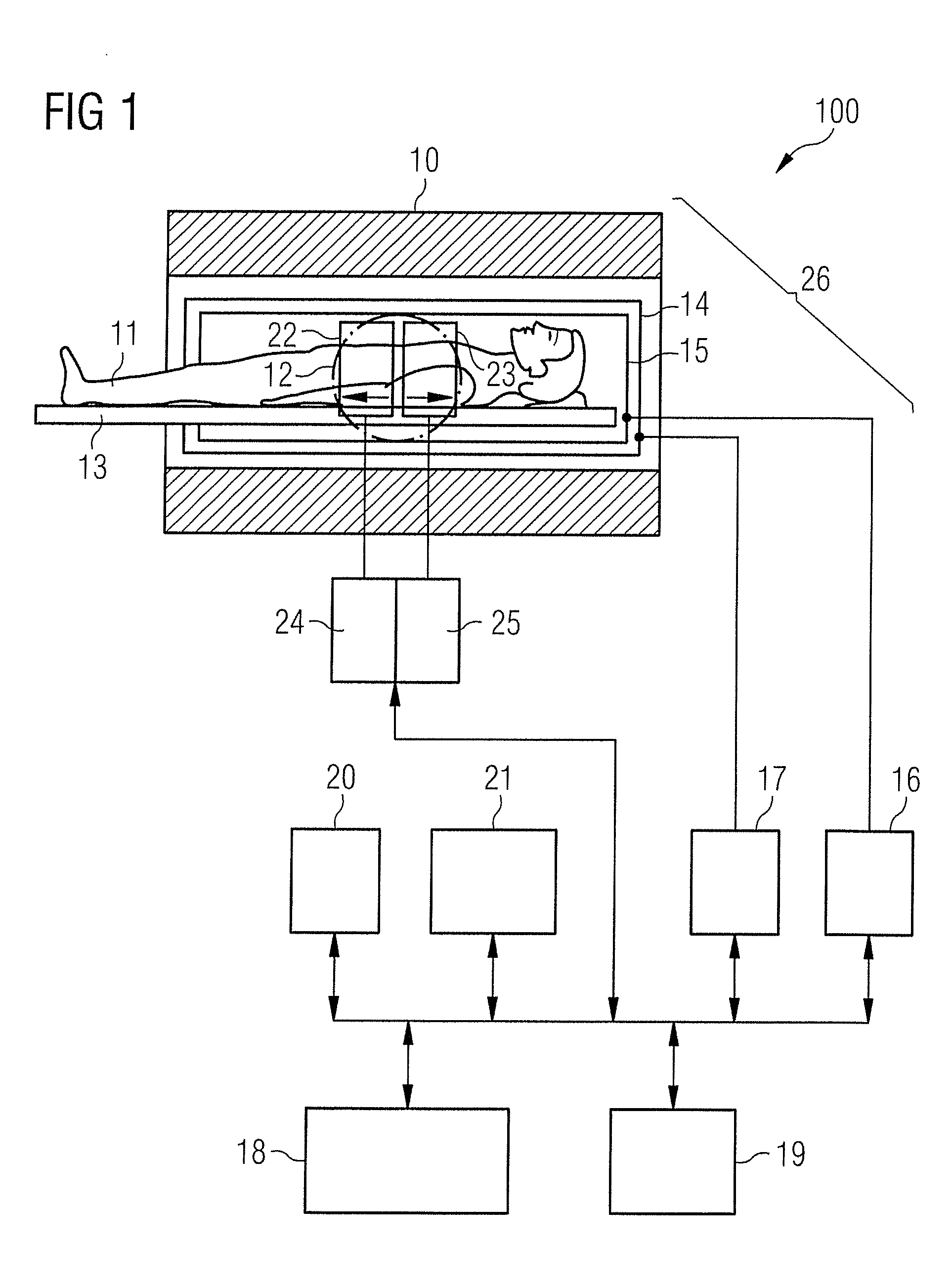

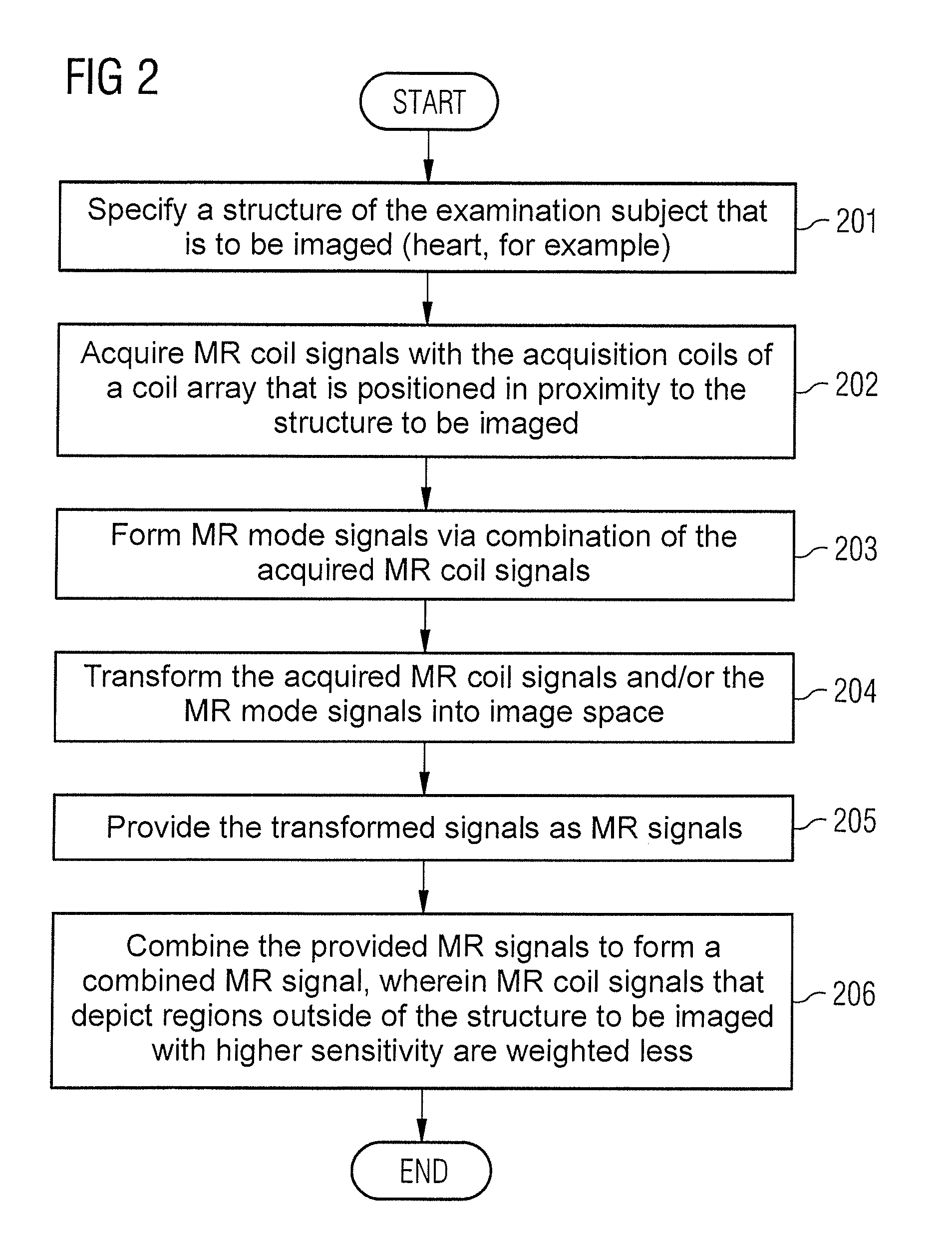

Method and magnetic resonance system for combining signals acquired from different acquisition coils

Active | US20120112751A1 | Mitigate disadvantage; Avoid artifacts; Diagnostic recording/measuring; Sensors; Nuclear magnetic resonance; Resonance

In a method and magnetic resonance (MR) apparatus for combining MR signals that were acquired with different acquisition coils from a region of an examination subject, at least two MR signals, based on MR signals acquired with at least two different acquisition coils, are provided to a processor. Because the respective acquisition coils are arranged at spatially different positions, the at least two MR signals image the region of the examination subject with different sensitivity profiles. The provided MR signals are combined to form a combined MR signal in which unwanted MR signal portions are suppressed. The suppression is implemented by giving MR signal portions acquired with an acquisition coil that detects the unwanted portions with increased sensitivity, compared with the other acquisition coils, a lower weight in the combined MR signal than the other MR signal portions.

Owner: SIEMENS HEALTHCARE GMBH
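
The weighting idea (coils more sensitive to the unwanted signal count for less) can be illustrated with a short Python sketch. The specific rule used here, a weight proportional to 1 / (1 + s) normalised to sum to one, where s is a coil's sensitivity to the unwanted portion, is an assumption chosen for clarity and is not stated in the abstract.

```python
from typing import List, Sequence


def coil_weights(unwanted_sensitivity: Sequence[float]) -> List[float]:
    """Assumed rule: w_i proportional to 1 / (1 + s_i), normalised to sum
    to 1, where s_i >= 0 is how strongly coil i picks up the unwanted
    signal portion. A higher s_i therefore gives a smaller weight."""
    if any(s < 0 for s in unwanted_sensitivity):
        raise ValueError("sensitivities must be non-negative")
    raw = [1.0 / (1.0 + s) for s in unwanted_sensitivity]
    total = sum(raw)
    return [r / total for r in raw]


def combine_signals(signals: Sequence[Sequence[complex]],
                    unwanted_sensitivity: Sequence[float]) -> List[complex]:
    """Weighted sample-by-sample sum of the per-coil MR signals."""
    weights = coil_weights(unwanted_sensitivity)
    n = len(signals[0])
    if any(len(s) != n for s in signals):
        raise ValueError("all coil signals must have the same length")
    return [sum(w * sig[k] for w, sig in zip(weights, signals))
            for k in range(n)]
```

For two coils with unwanted-signal sensitivities 0 and 1, the weights come out as 2/3 and 1/3, so the coil that sees more of the unwanted portion contributes half as much to the combined signal.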

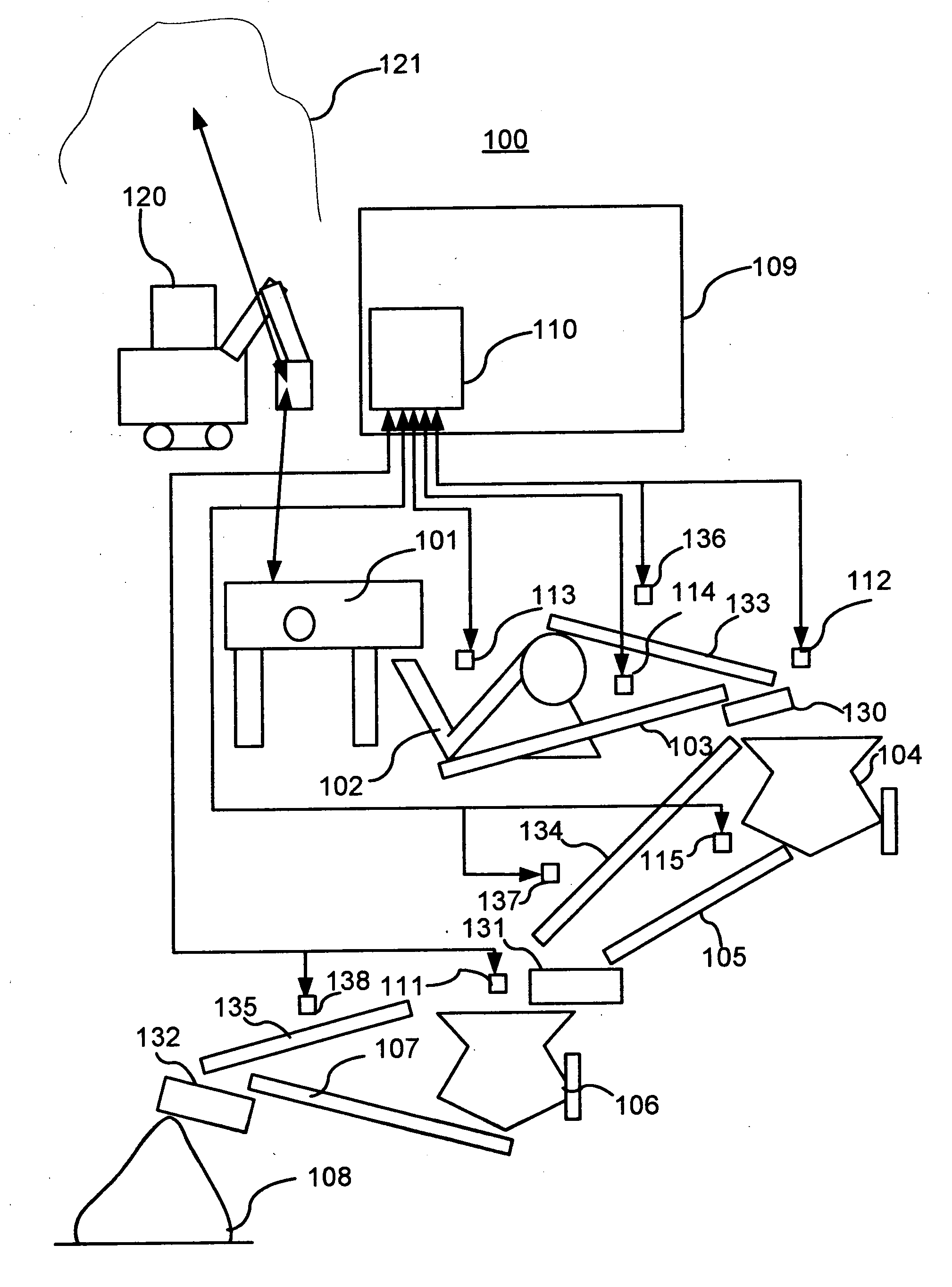

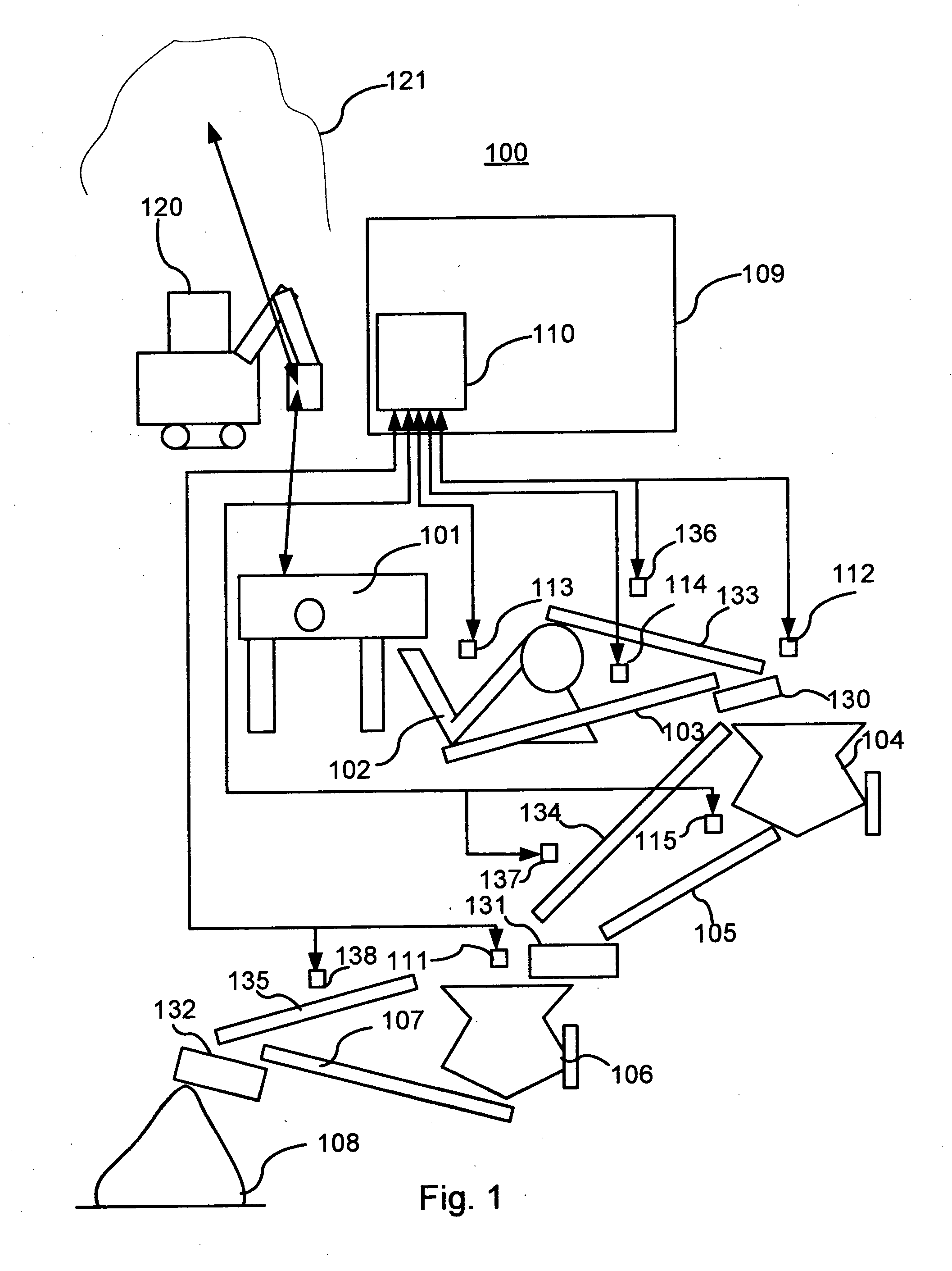

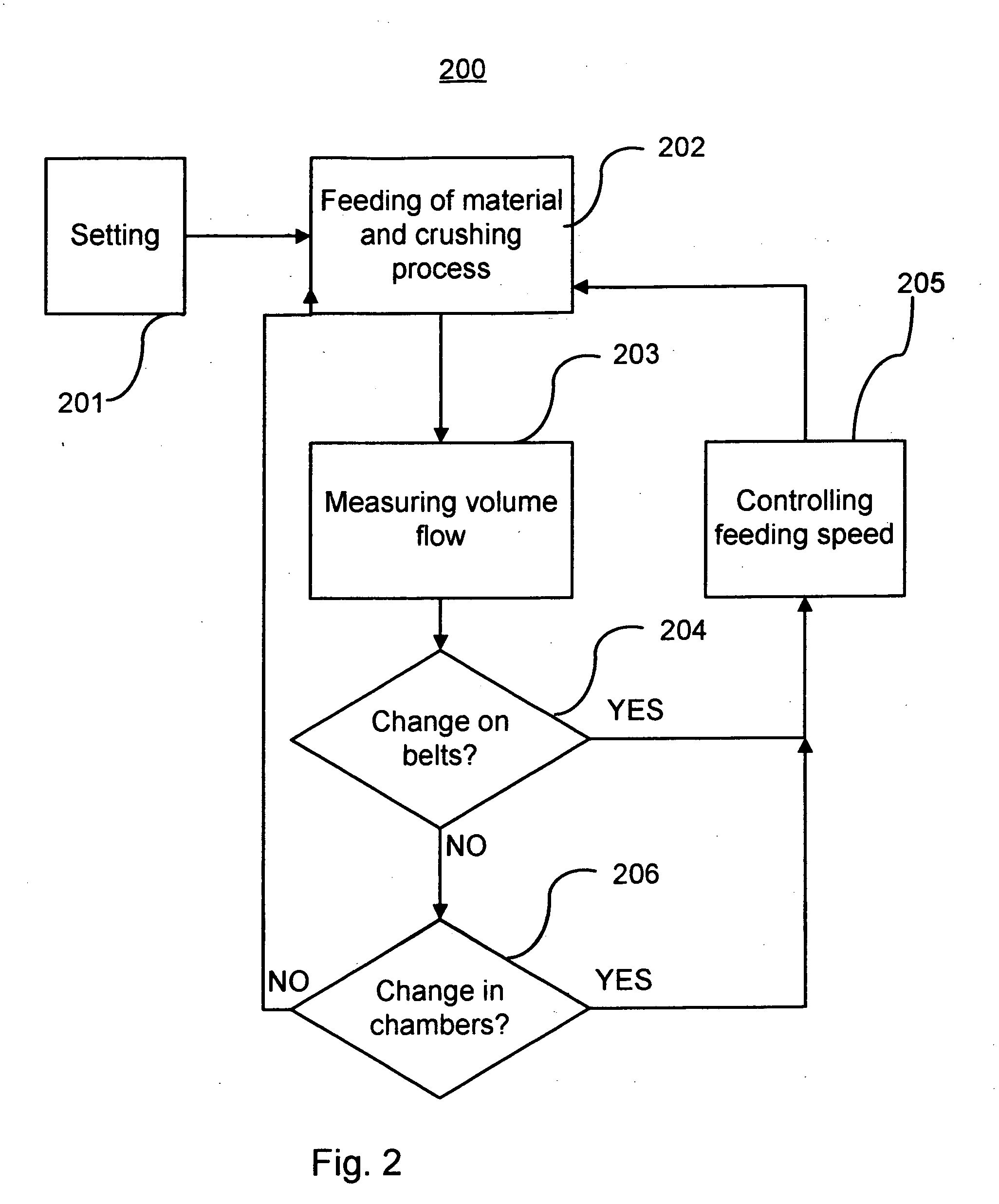

Method and equipment for controlling crushing process

Active | US20110089270A1 | Eliminate disadvantage; Mitigate disadvantage; Cocoa; Grain treatments; Engineering; Crusher

A method, a system and a crushing plant for controlling a crushing process, which crushing plant includes a feeder for feeding material to be crushed to a crusher, a first crusher for crushing the fed material, a second crusher for crushing the crushed material and a conveyor for conveying the crushed material from said first crusher to said second crusher. A crushing plant includes measurement means for measuring the volume flow of the crushed material and control means for controlling the feeding speed of the material to be crushed responsive to change in the volume flow of the crushed material.

Owner: METSO OUTOTEC FINLAND OY
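
Controlling feed speed in response to changes in the measured volume flow can be sketched as one step of an incremental proportional controller. The control law, gain and speed limits below are assumptions for illustration; the abstract does not specify the controller design.

```python
def next_feed_speed(speed: float, measured_flow: float, target_flow: float,
                    gain: float, min_speed: float, max_speed: float) -> float:
    """One step of an incremental proportional controller (assumed design).

    If the measured volume flow of crushed material falls below the target,
    the feeder speeds up; if it rises above the target, the feeder slows
    down. The result is clamped to the feeder's permitted speed range.
    """
    error = target_flow - measured_flow
    return max(min_speed, min(max_speed, speed + gain * error))
```

Called once per measurement interval, a flow of 80 against a target of 100 with gain 0.5 raises a feed speed of 50 to 60, while a flow of 120 lowers it to 40; the clamp keeps the feeder within its physical limits.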

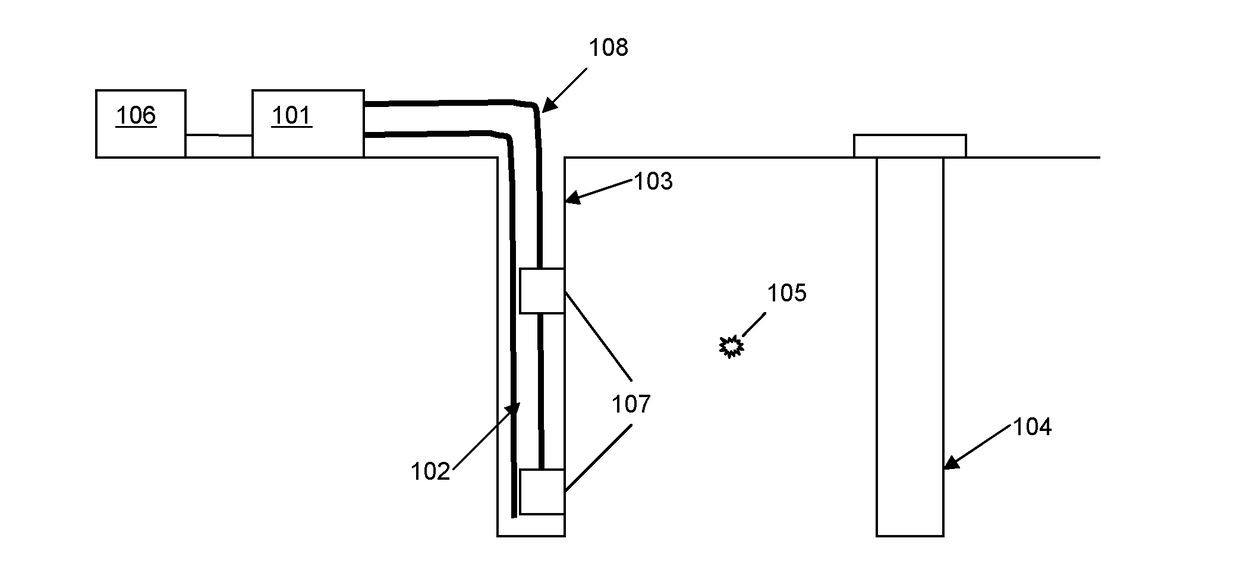

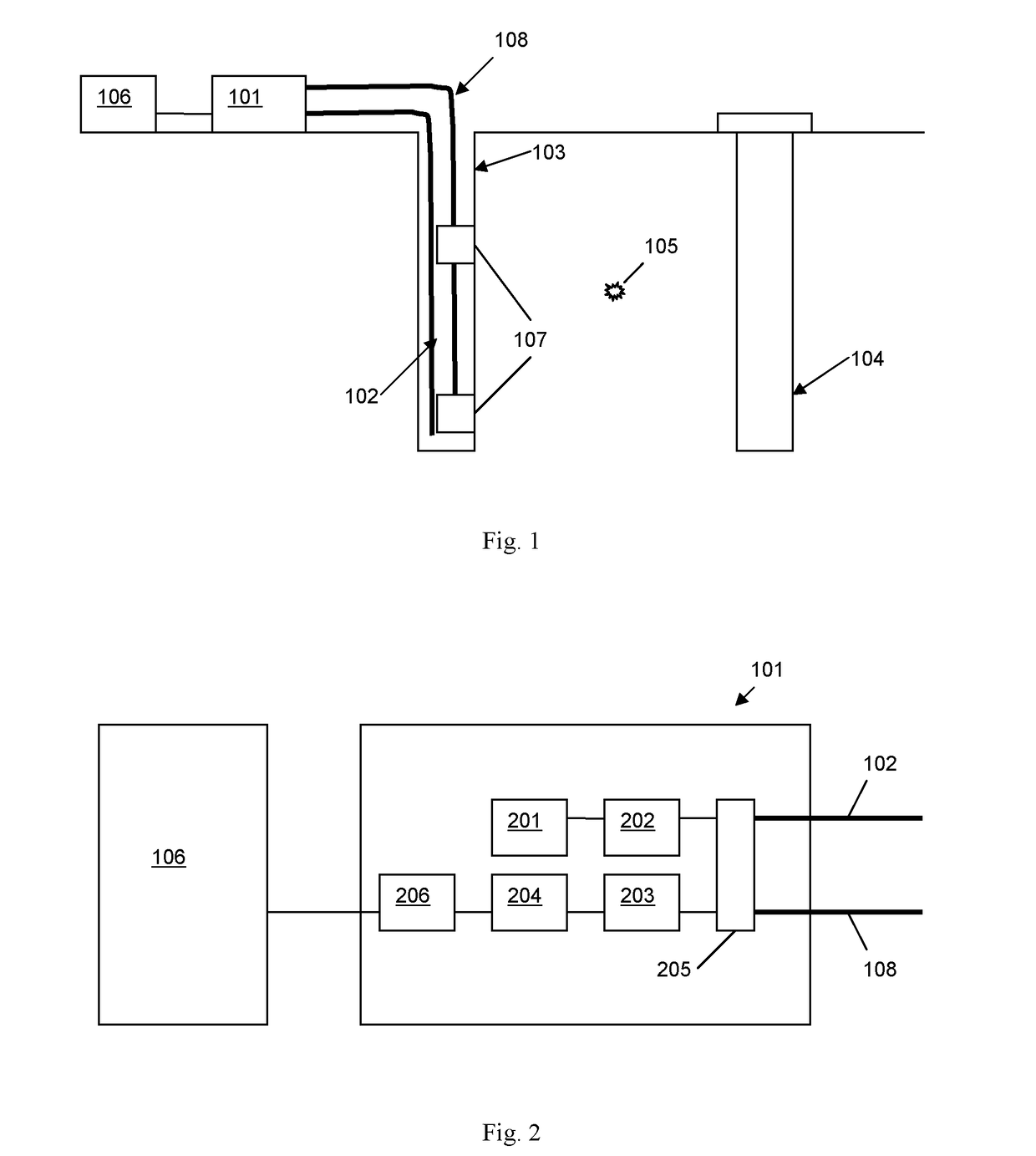

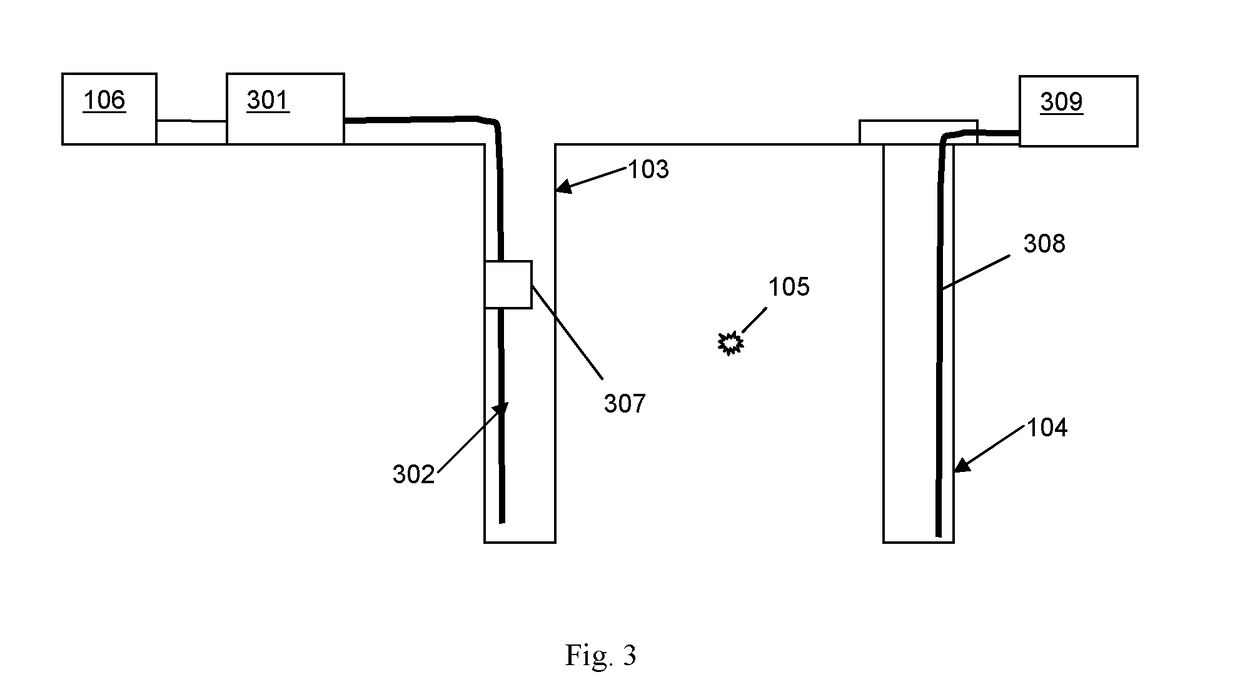

Seismic monitoring

Active | US9759824B2 | Mitigate disadvantage; Avoid the need; Subsonic/sonic/ultrasonic wave measurement; Seismic signal receivers; Geophone; Region of interest

The application describes methods and apparatus for seismic monitoring using fiber optic distributed acoustic sensing (DAS). The method involves interrogating a first optical fiber (102) deployed in an area of interest to provide a distributed acoustic sensor comprising a plurality of longitudinal sensing portions of fiber and also monitoring at least one geophone (107) deployed in the area of interest. The signal from the at least one geophone is analyzed to detect an event of interest (105). If an event of interest is detected the data from the distributed acoustic sensor acquired during said event of interest is recorded. The geophone may be co-located with part of the sensing fiber and in some embodiments may be integrated (307) with the sensing fiber.

Owner: OPTASENSE HLDG LTD
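
The abstract leaves open how the geophone signal is analysed for an event of interest. A common detector in seismology is the STA/LTA ratio (short-term average over long-term average of signal amplitude), which is used below as an assumed stand-in; the window lengths, threshold and the premise that geophone and DAS share one time base are likewise assumptions.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def sta_lta_triggers(trace: Sequence[float], n_sta: int, n_lta: int,
                     threshold: float) -> List[int]:
    """Return sample indices where the STA/LTA ratio of |trace| first rises
    to or above threshold (rising edges only)."""
    if not 0 < n_sta < n_lta:
        raise ValueError("need 0 < n_sta < n_lta")
    triggers: List[int] = []
    active = False
    for end in range(n_lta, len(trace) + 1):
        sta = sum(abs(x) for x in trace[end - n_sta:end]) / n_sta
        lta = sum(abs(x) for x in trace[end - n_lta:end]) / n_lta
        ratio = sta / lta if lta > 0 else 0.0
        if ratio >= threshold and not active:
            triggers.append(end - 1)  # last sample of the short window
            active = True
        elif ratio < threshold:
            active = False
    return triggers


def record_das_window(das_frames: Sequence[T], trigger: int,
                      pre: int, post: int) -> List[T]:
    """Keep only the DAS frames around a trigger (assumes a shared time base)."""
    return list(das_frames[max(0, trigger - pre):trigger + post])
```

Only the DAS frames surrounding each geophone trigger are kept, which reflects the abstract's point that DAS data is recorded when the geophone indicates an event of interest rather than continuously.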

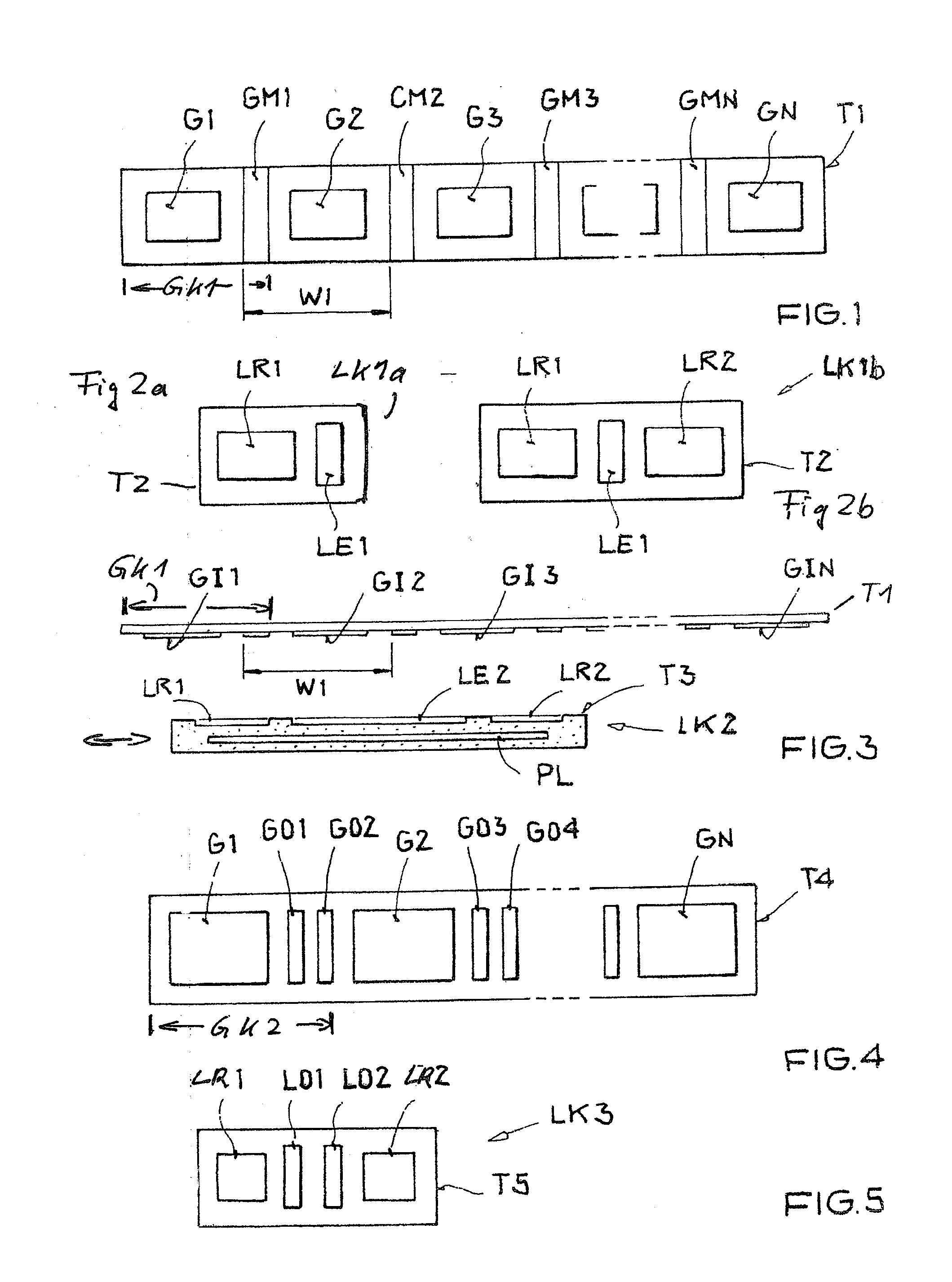

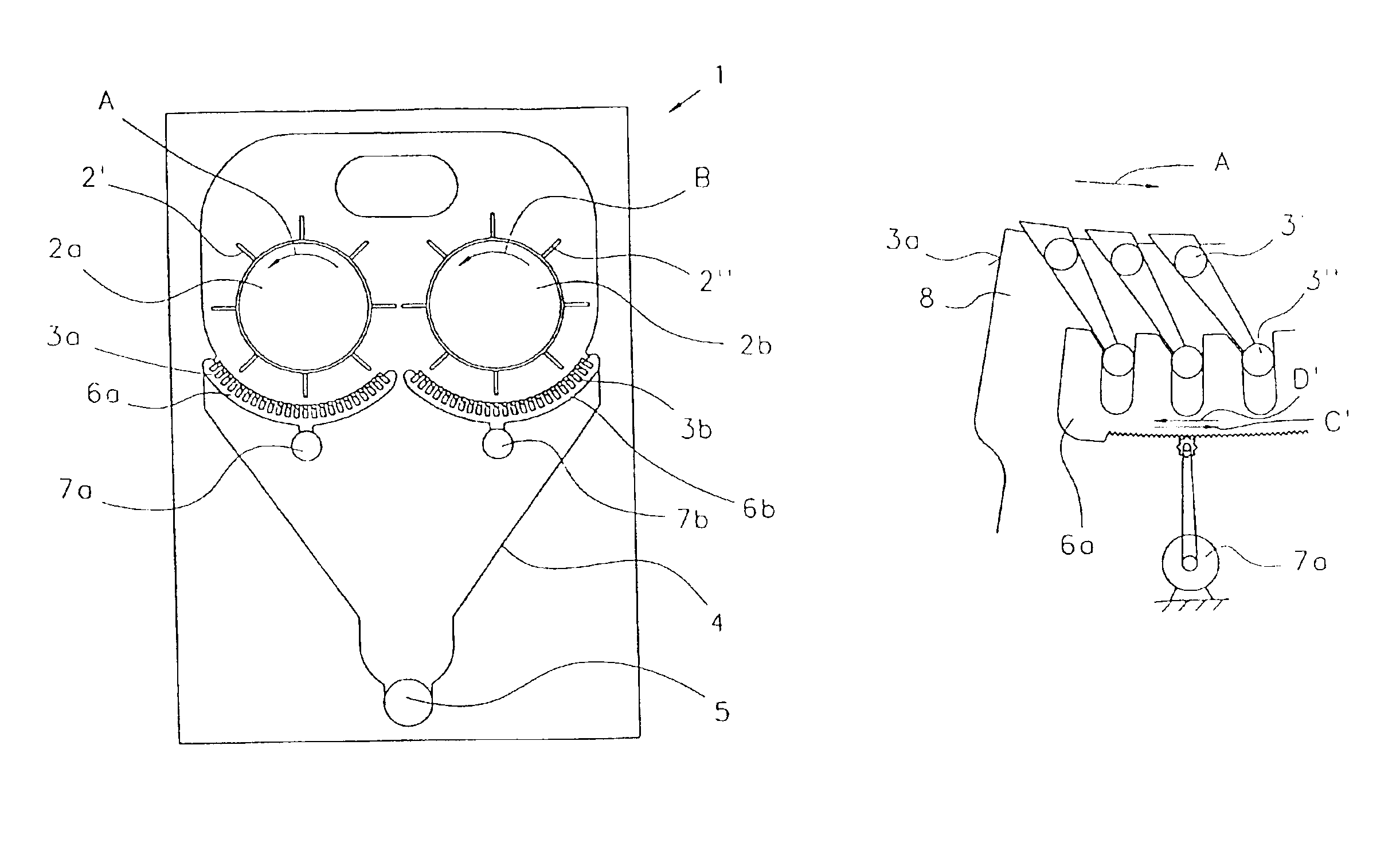

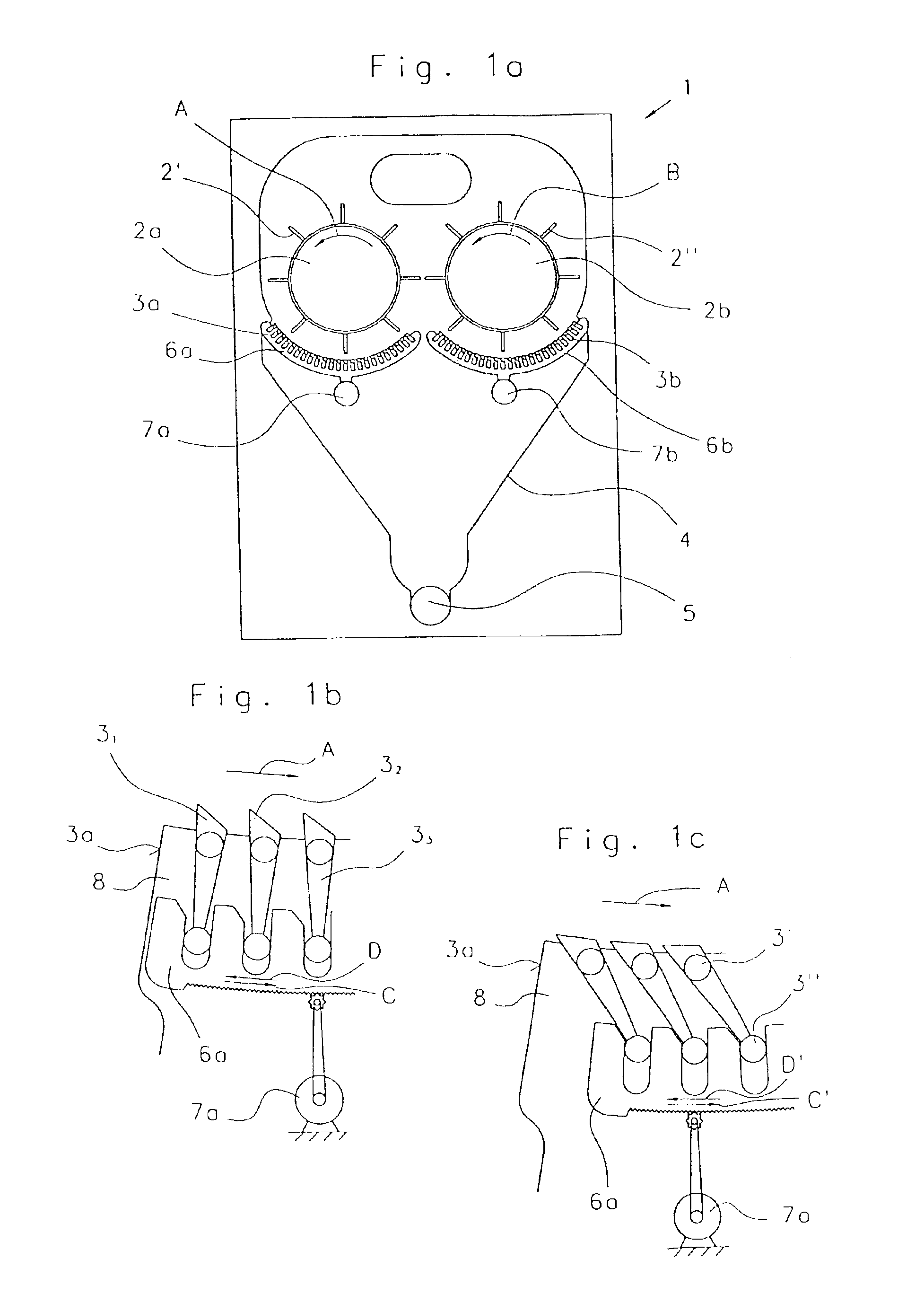

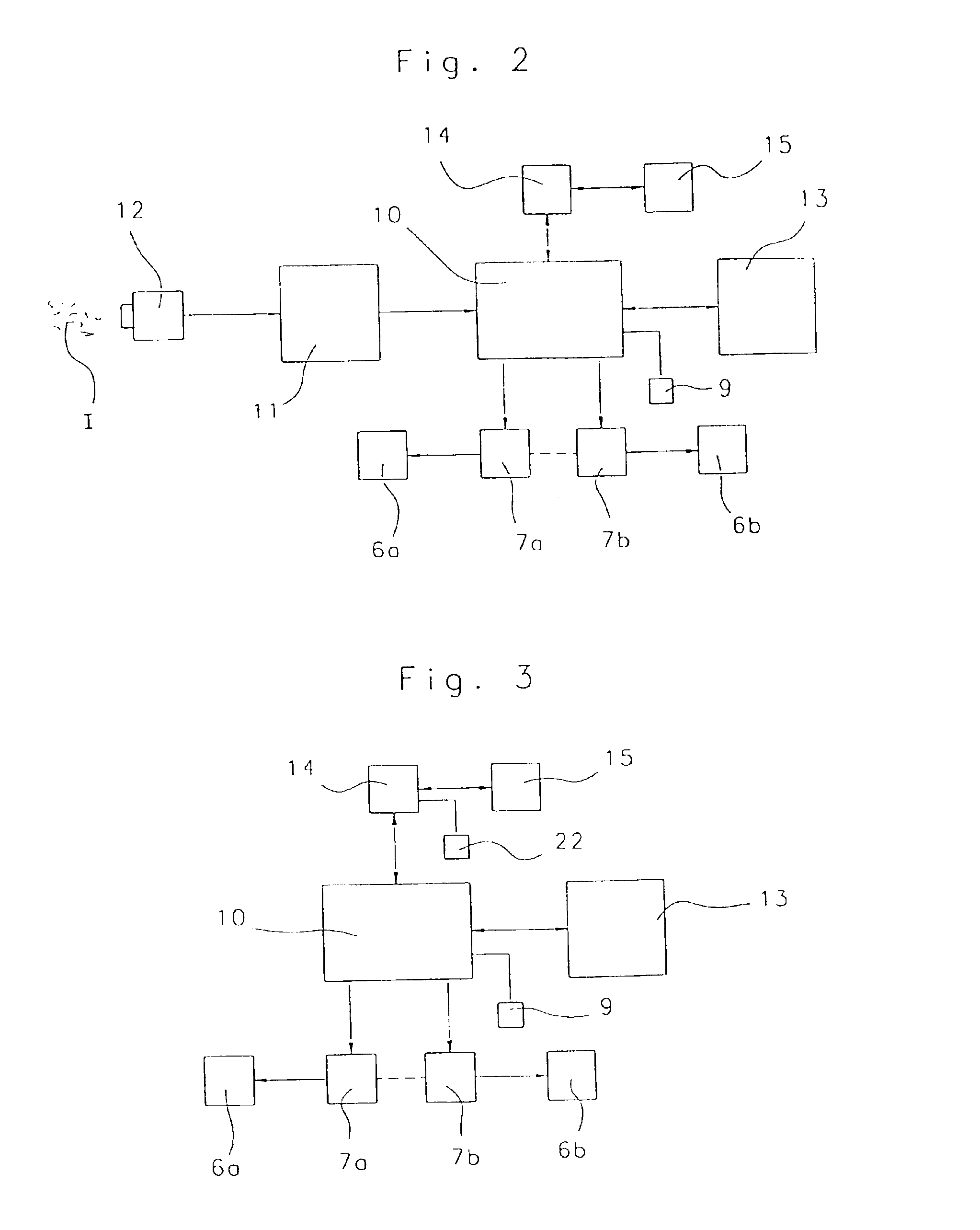

Apparatus for decorating stiff objects by screen printing

Inactive | US20050223918A1 | Mitigate disadvantage; Reduce disadvantages; Liquid surface applicators; Inking apparatus; Engineering; Electrical and Electronics engineering

Owner: KBA KAMMANN

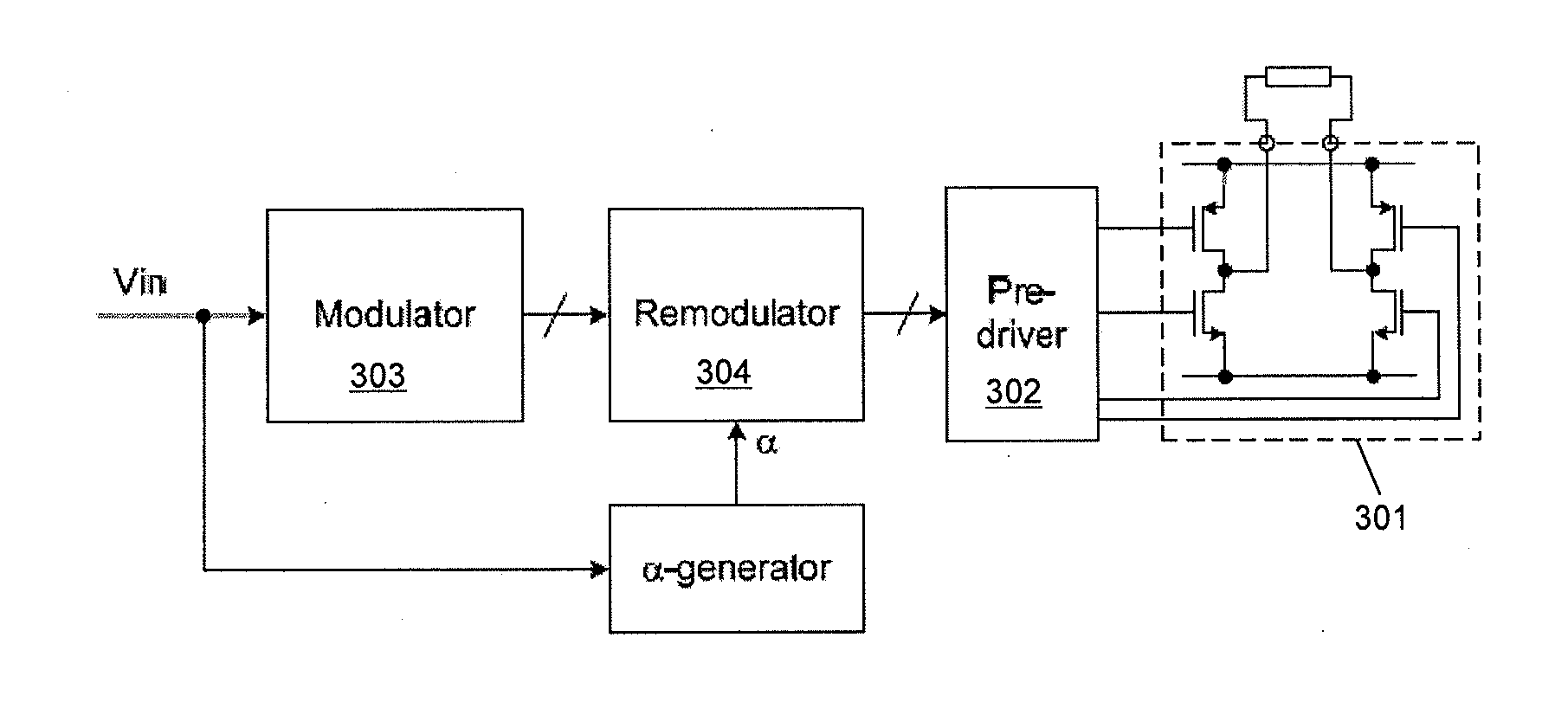

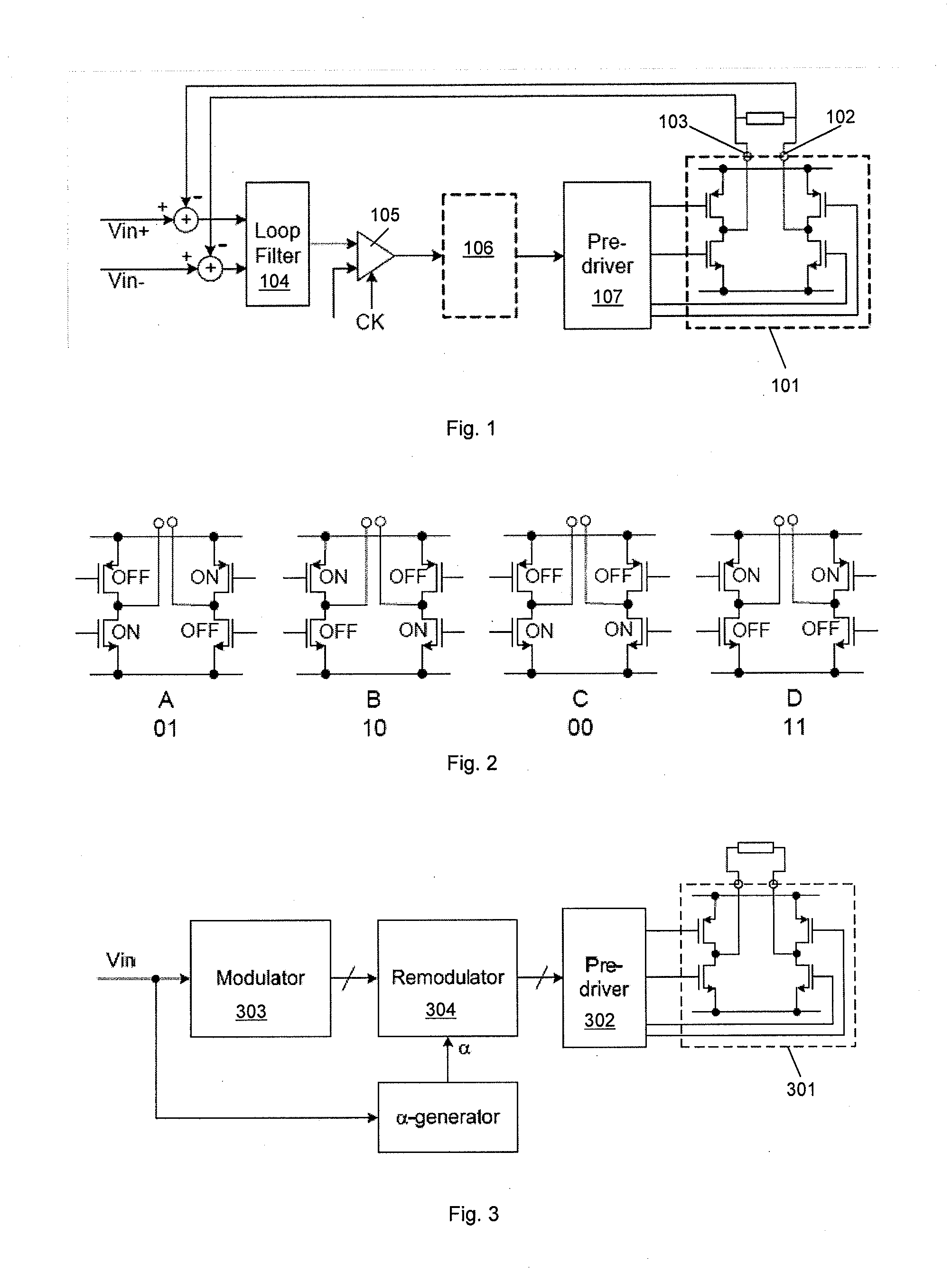

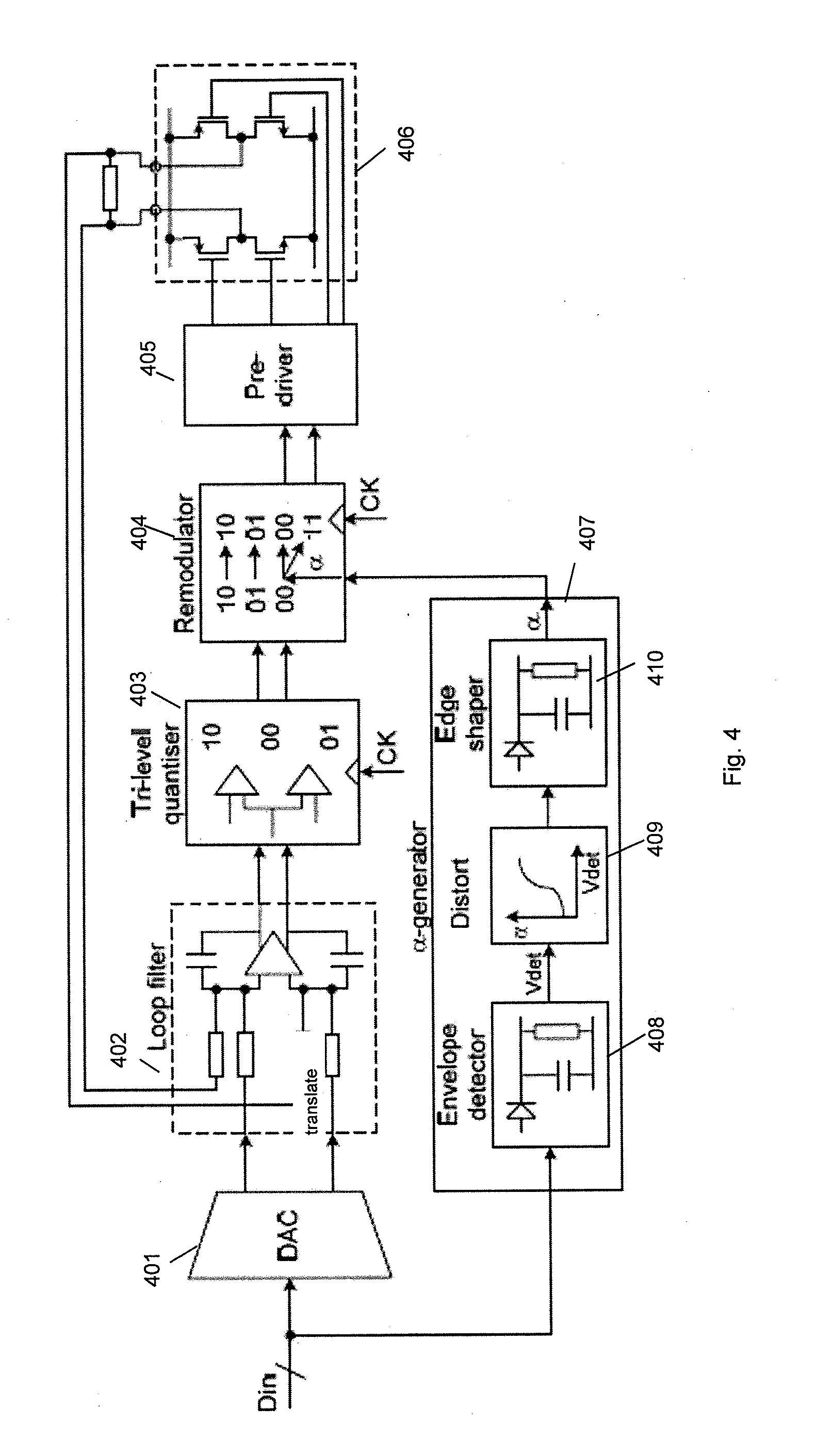

Amplifier circuit

Active | US20130120063A1 | Mitigate disadvantage; Reduce gap; Amplifier with semiconductor-devices/discharge-tubes; H bridge; Engineering

Class D amplifier circuits for amplifying an input signal. The amplifier has an H-bridge output stage and thus has switches for switchably connecting a first output to a first voltage, e.g. Vdd, or a second voltage (e.g. ground) and for switchably connecting a second output to the first or second voltages. A switch controller is configured to control the H-bridge stage so as to vary between a plurality of states including at least a first state in which the outputs are both connected to the first voltage and a second state in which the outputs are both connected to said second voltage. The switch controller is configured to vary the proportion of time spent in the first state relative to the second state based on an indication of the amplitude of the input signal. The amplifier may therefore have first circuitry for deriving a proportion value (α) based on the input signal (Din) and second circuitry for generating control signals for selecting the first state or said second state based on the proportion value (α).

Owner: CIRRUS LOGIC INC
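
The two pieces of circuitry described (one deriving α from the input, one selecting the first or second state according to α) can be mimicked in Python. Both the linear mapping from amplitude to α and the error-feedback accumulator used to spread the first-state cycles evenly are hypothetical choices made for illustration; the abstract does not disclose either.

```python
from typing import List


def proportion_from_amplitude(amplitude: float, full_scale: float) -> float:
    """Hypothetical mapping from an input-amplitude indication to the
    proportion alpha: linear in |amplitude|, clamped to [0, 1]."""
    if full_scale <= 0:
        raise ValueError("full_scale must be positive")
    return min(1.0, abs(amplitude) / full_scale)


def select_states(alpha: float, n_cycles: int) -> List[str]:
    """Choose the first state ("both-high": both outputs at the first
    voltage) or the second state ("both-low": both outputs at the second
    voltage) on each cycle so that the fraction of first-state cycles tracks
    alpha. Uses a first-order error-feedback accumulator (assumed technique).
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    acc = 0.0
    states = []
    for _ in range(n_cycles):
        acc += alpha
        if acc >= 1.0:
            states.append("both-high")
            acc -= 1.0
        else:
            states.append("both-low")
    return states
```

With an amplitude of 0.5 on a full scale of 2.0, α is 0.25 and every fourth cycle selects the first state, so 2 of 8 cycles are "both-high".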

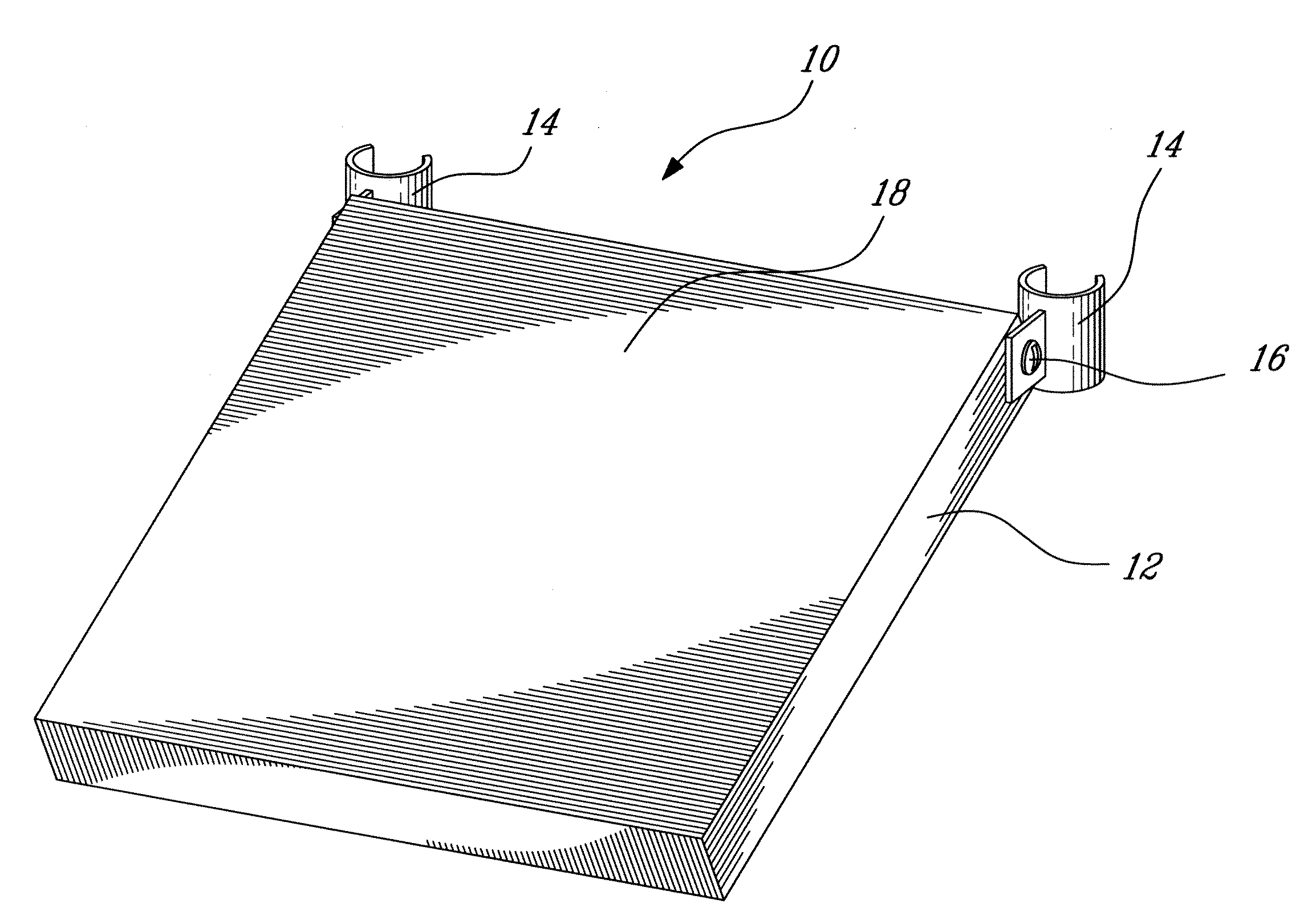

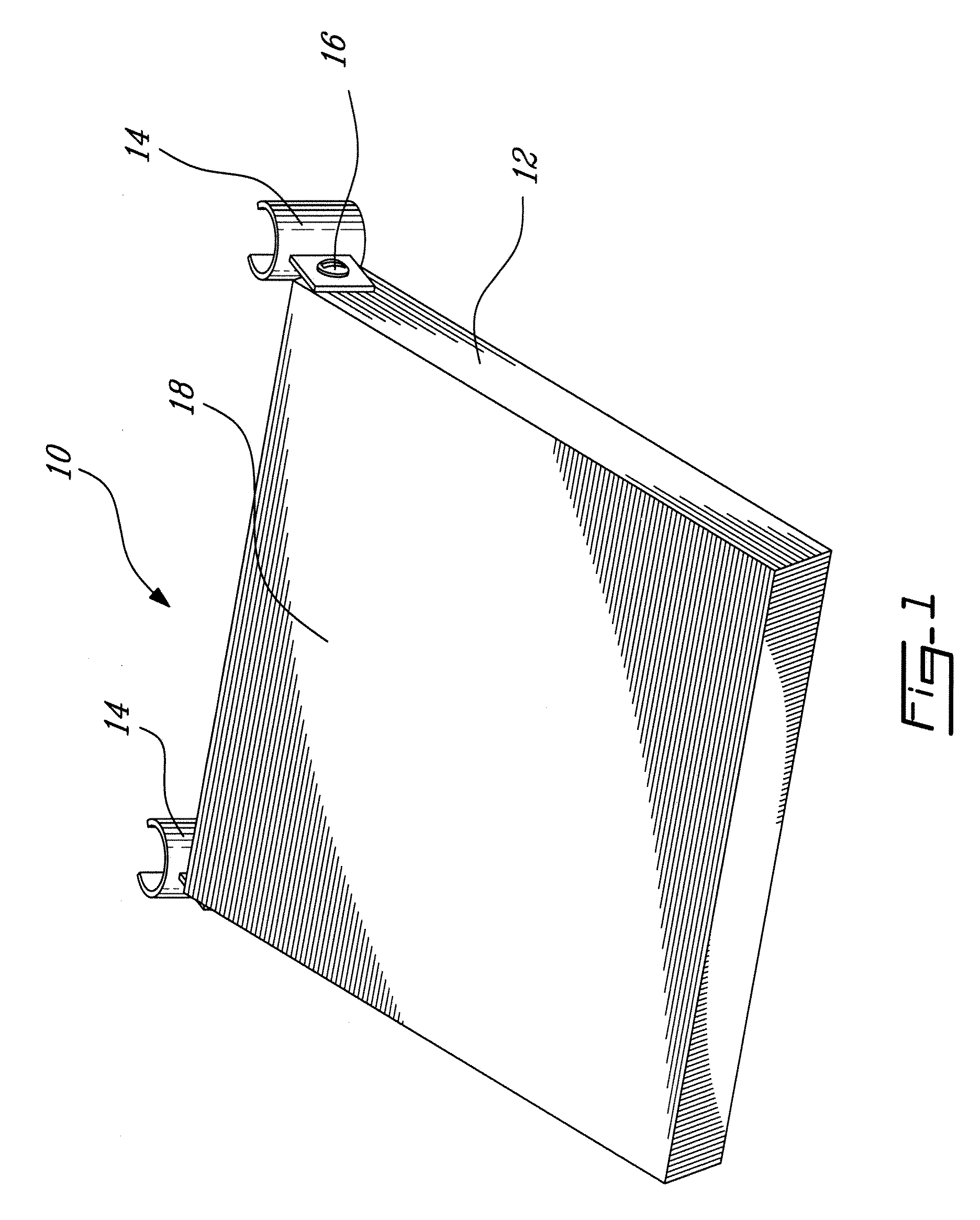

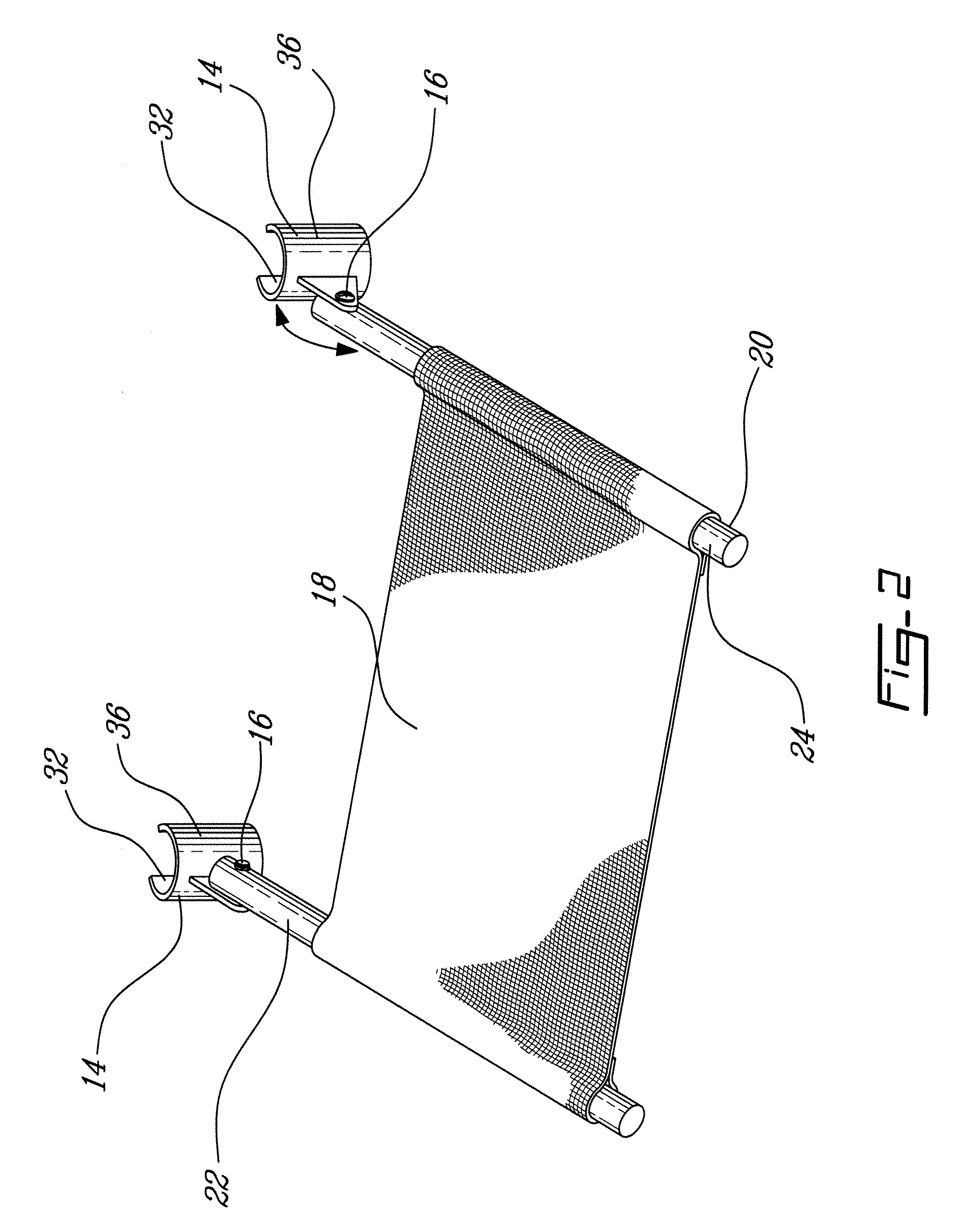

Leg rest for a stroller

Inactive | US20080217981A1 | Mitigate disadvantage; Overcomes or mitigates one or; Carriage/perambulator accessories; Carriage/perambulator with multiple axes; Support surface; Chassis

A removable leg rest for a stroller having a chassis made of chassis members. The removable leg rest comprises a support structure, at least one fastening mechanism and at least one angle adjusting mechanism. The support structure has a leg support surface. The fastening mechanism is attached to the support structure and is operative to fix the support structure to the chassis. The angle adjusting mechanism is used to set an angle of the support structure with respect to the chassis members.

Owner: TON THAT QUOC THANH

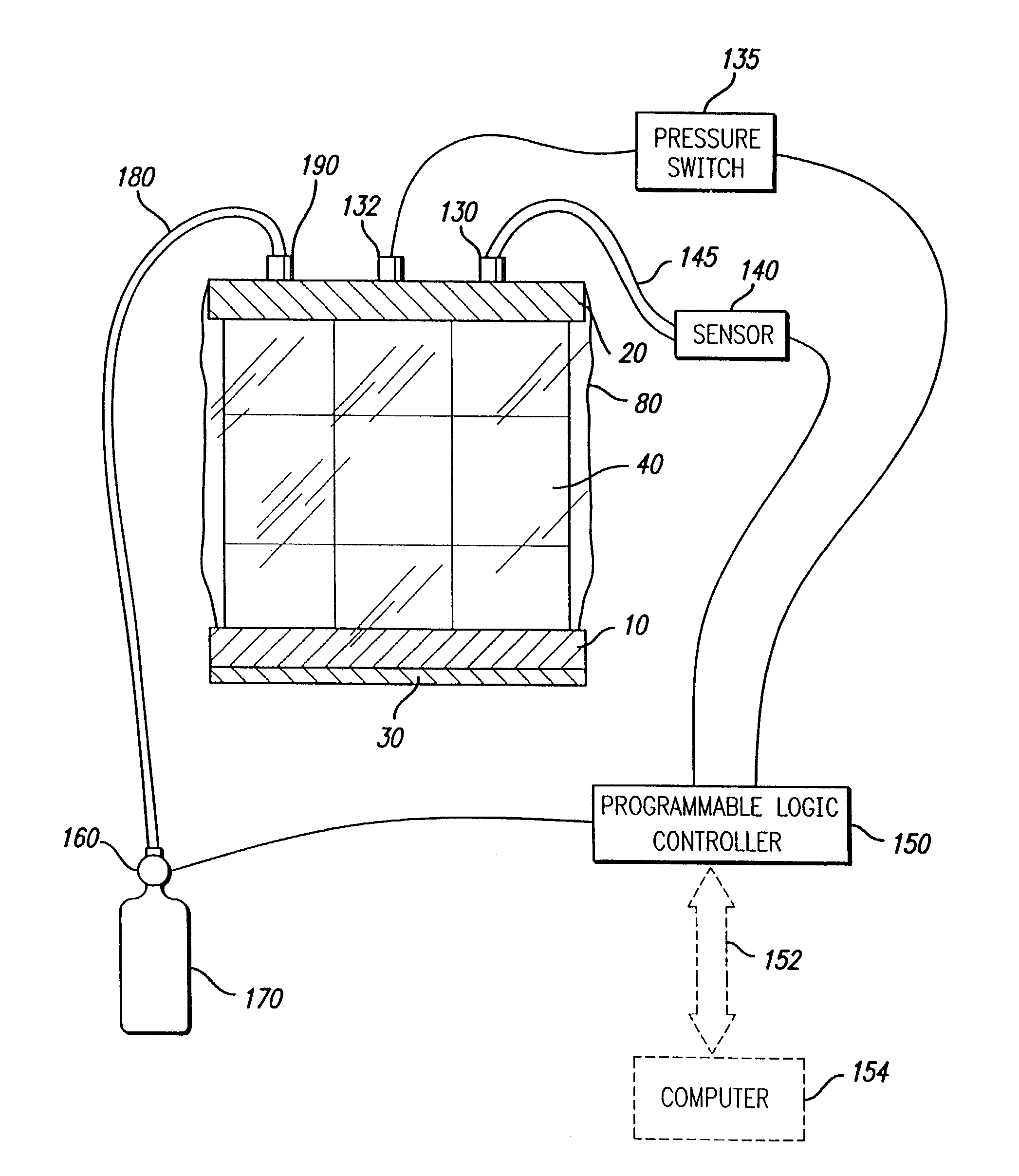

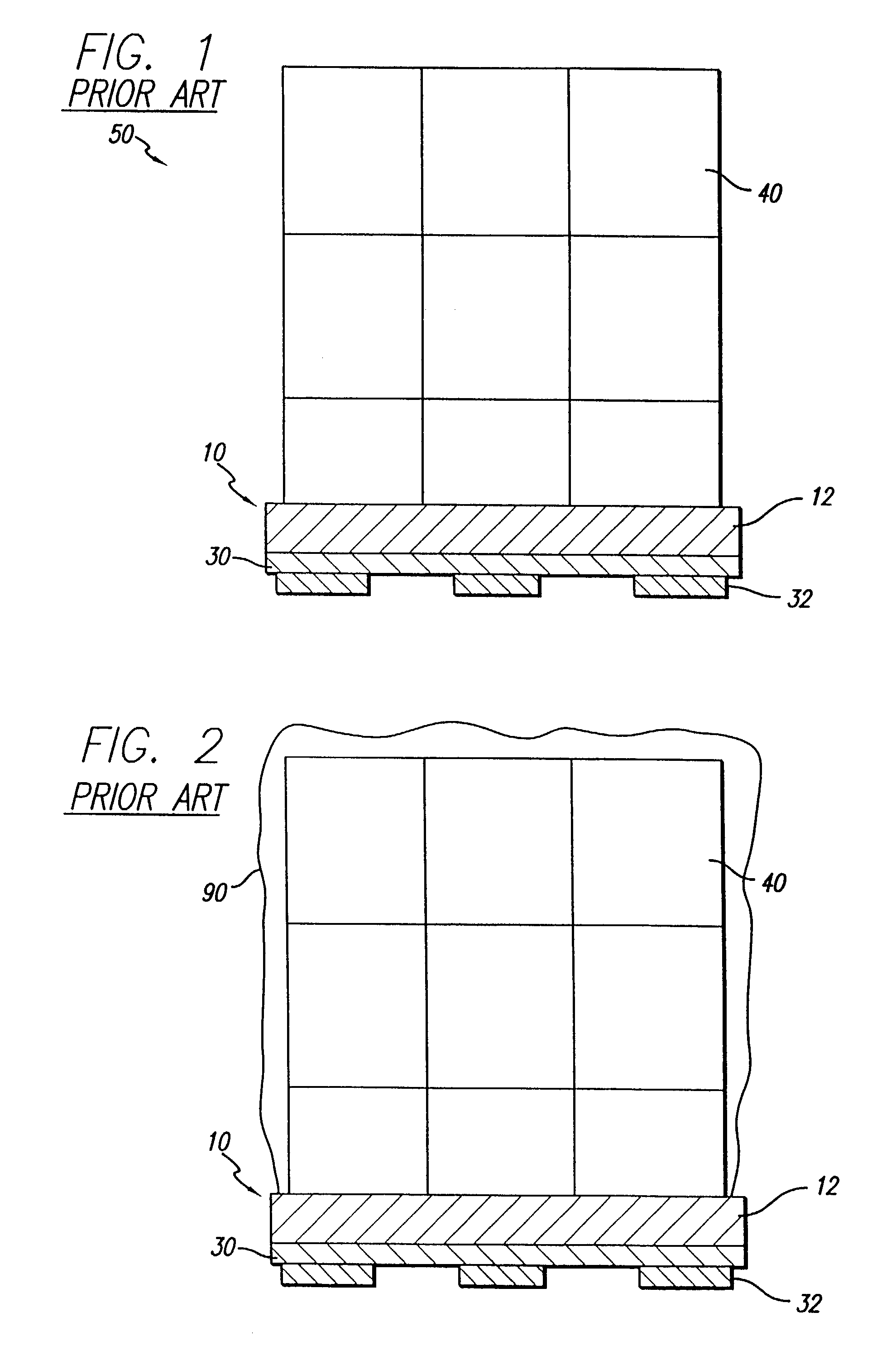

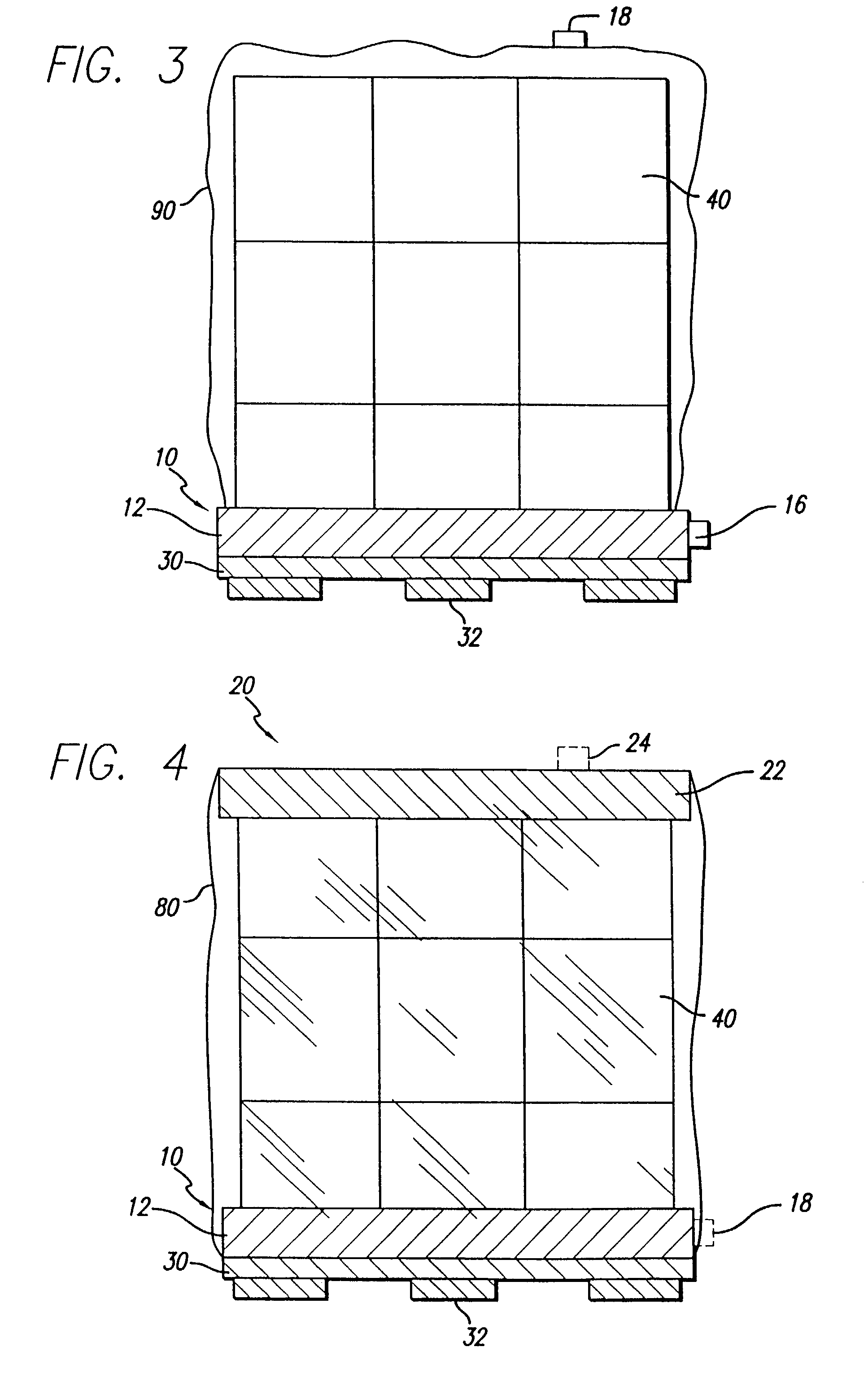

System and method for providing a regulated atmosphere for packaging perishable goods

Inactive | US7644560B2 | Mitigate disadvantage; Reduce disadvantages; Wrappers shrinkage; Web rotation wrapping; Engineering; Pallet

A new method and system for establishing, and optionally maintaining, a desired atmosphere for perishable or atmosphere-sensitive goods during their storage and/or transportation. In one embodiment, a conveyor is used to move a pallet with goods from station to station. A mechanical arm at a sheeting station lifts goods from a pallet while one or more sheets are placed between the goods and the pallet. A bottom plate with fingers having hollow tubes holds the pallet while the pallet is being wrapped and enclosed. A portable manifold may also be connected to the hollow tubes and a controller samples and adjusts atmosphere inside the pallet via the portable manifold.

Owner: THE BOWDEN GROUP

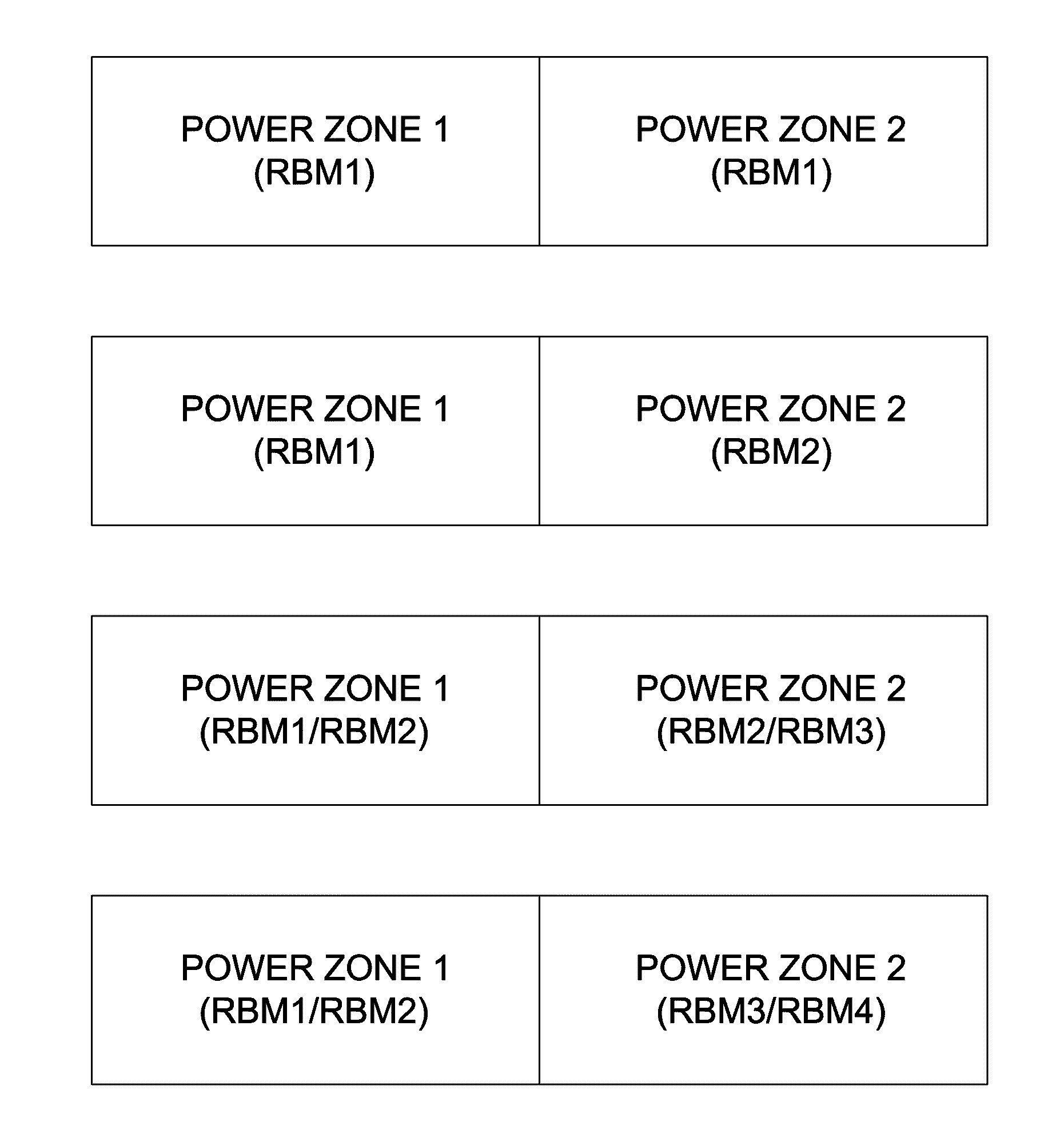

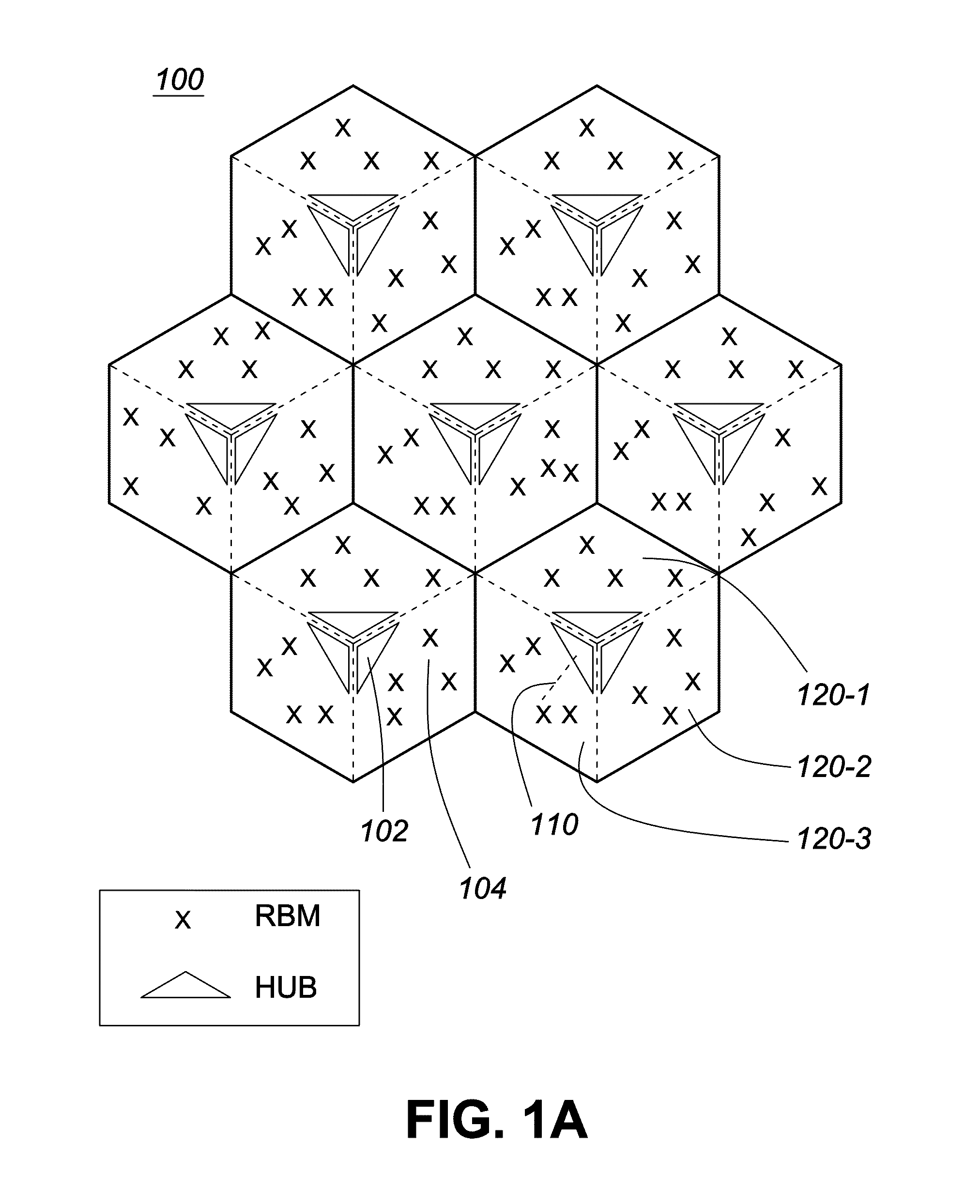

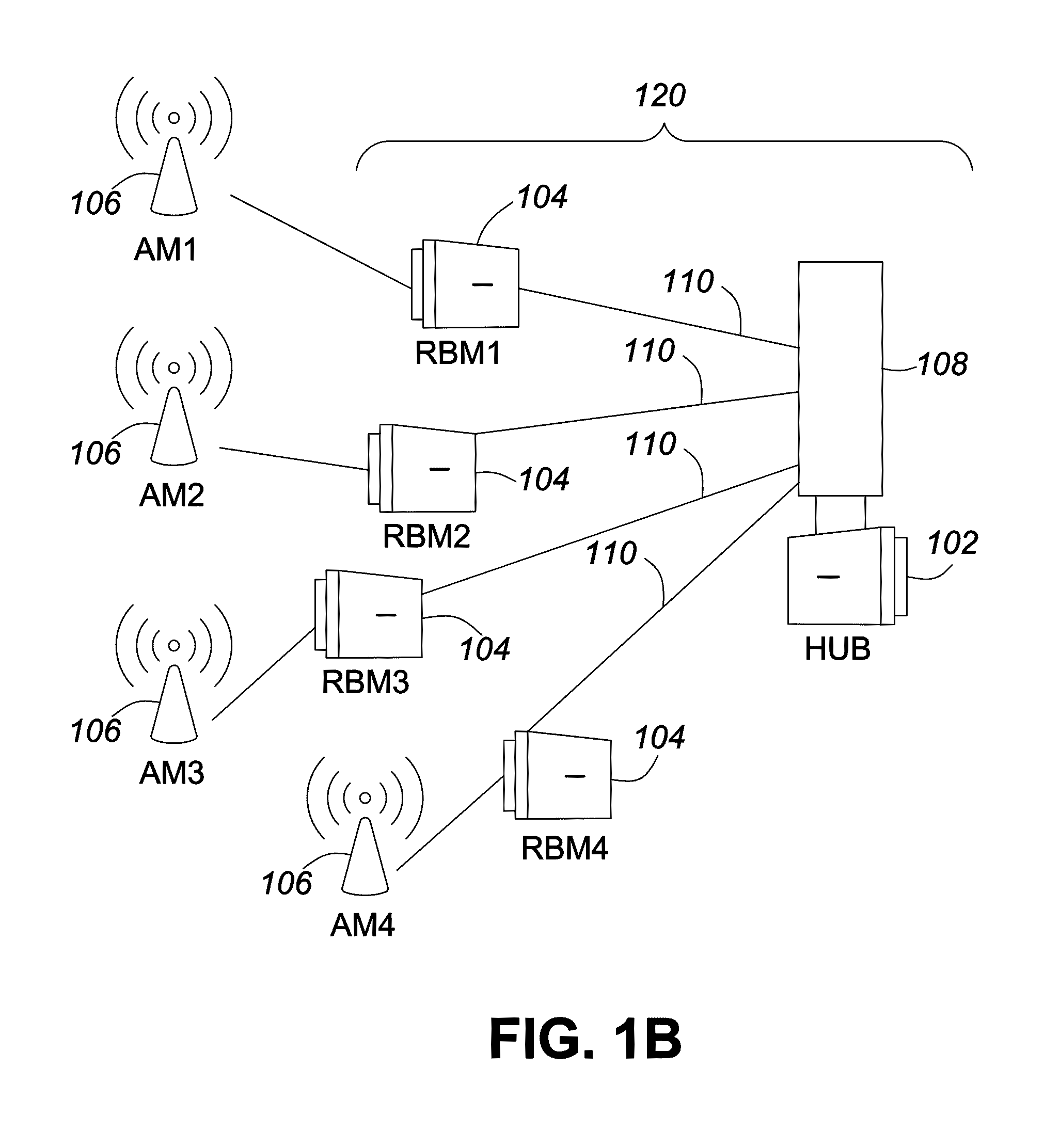

Method and apparatus for performance management in wireless backhaul networks via power control

Active | US20140126500A1 | Mitigate disadvantage; Eliminate disadvantage; Power management; Site diversity; Power control

Owner: BLINQ NETWORKS

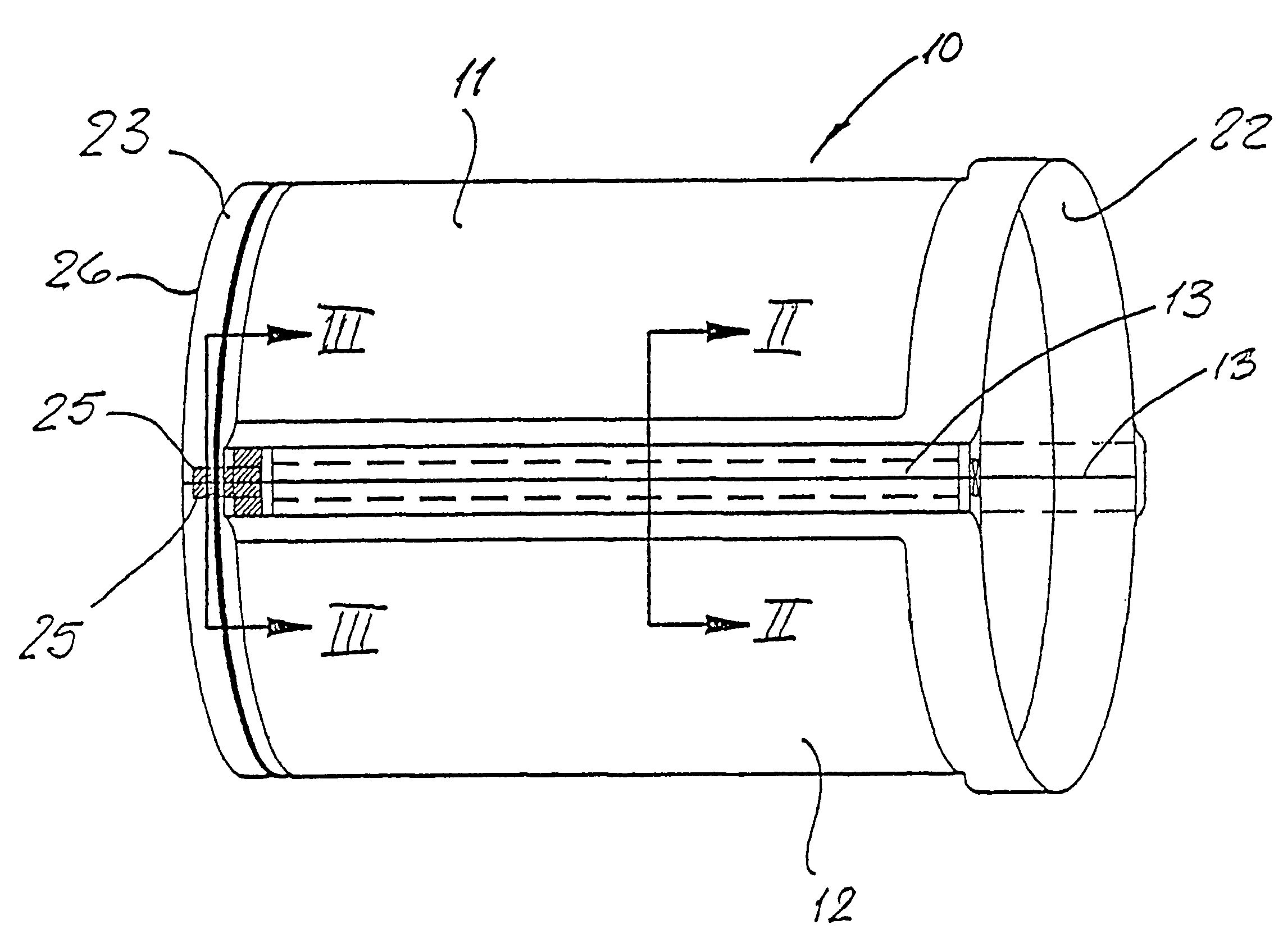

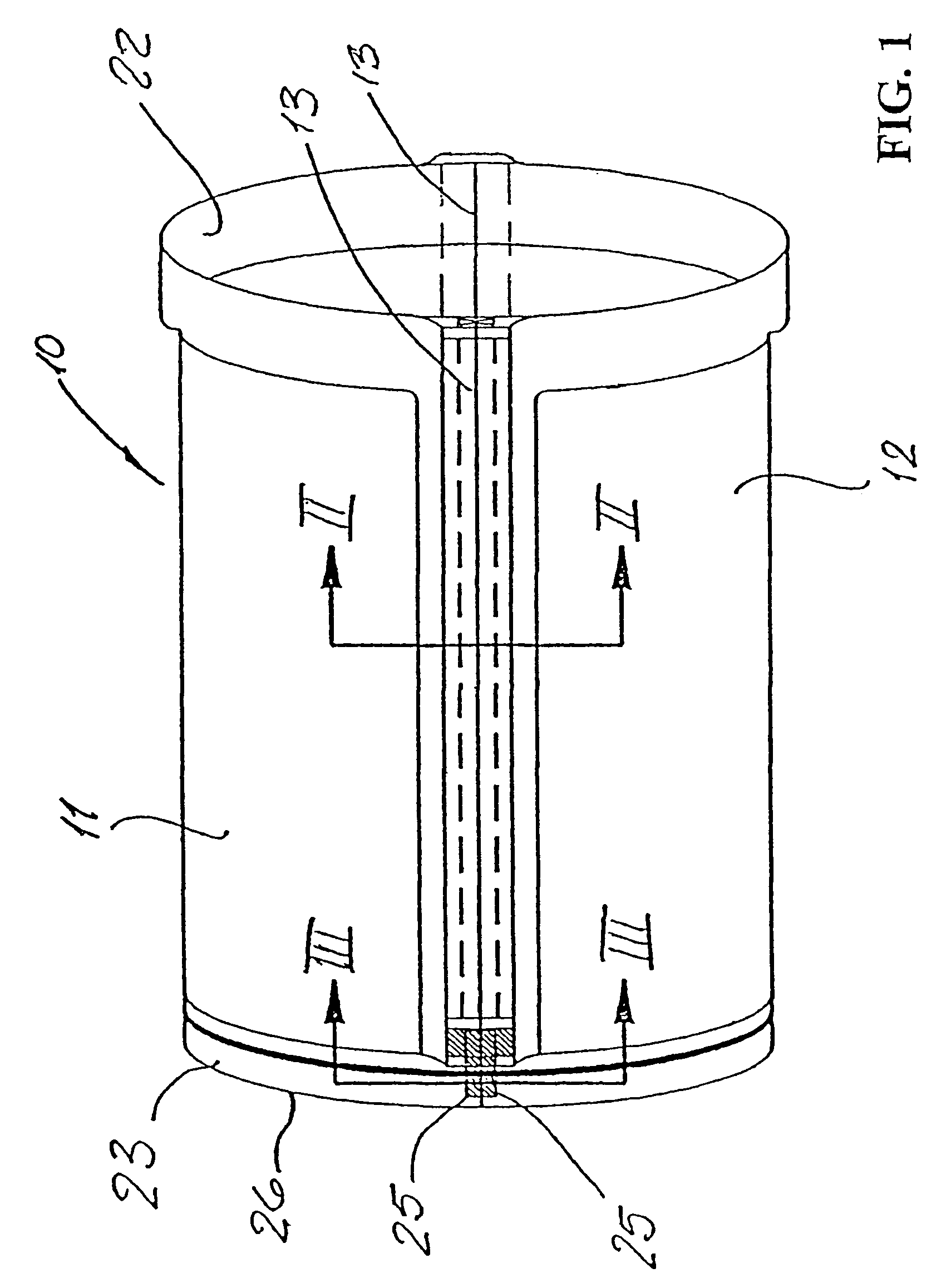

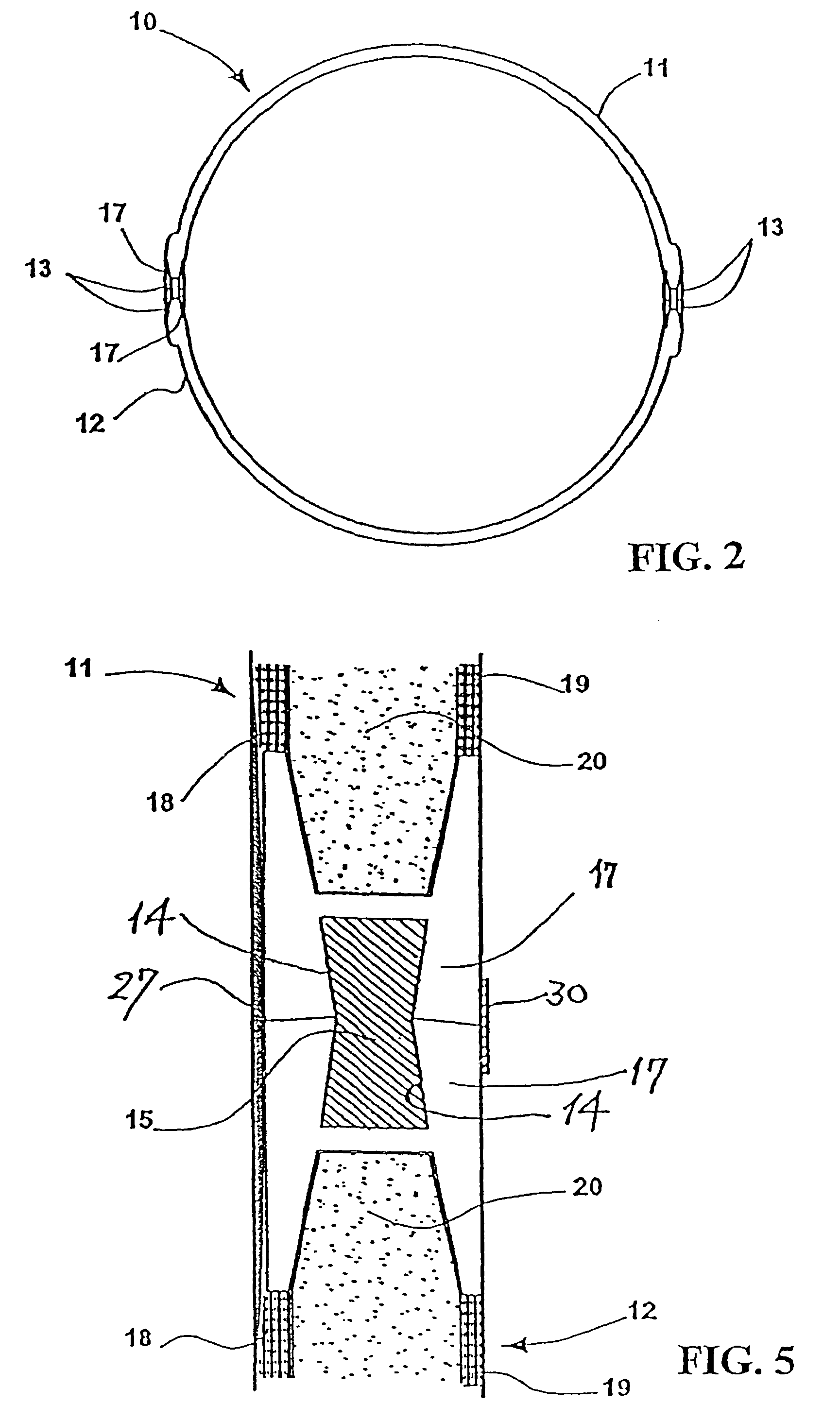

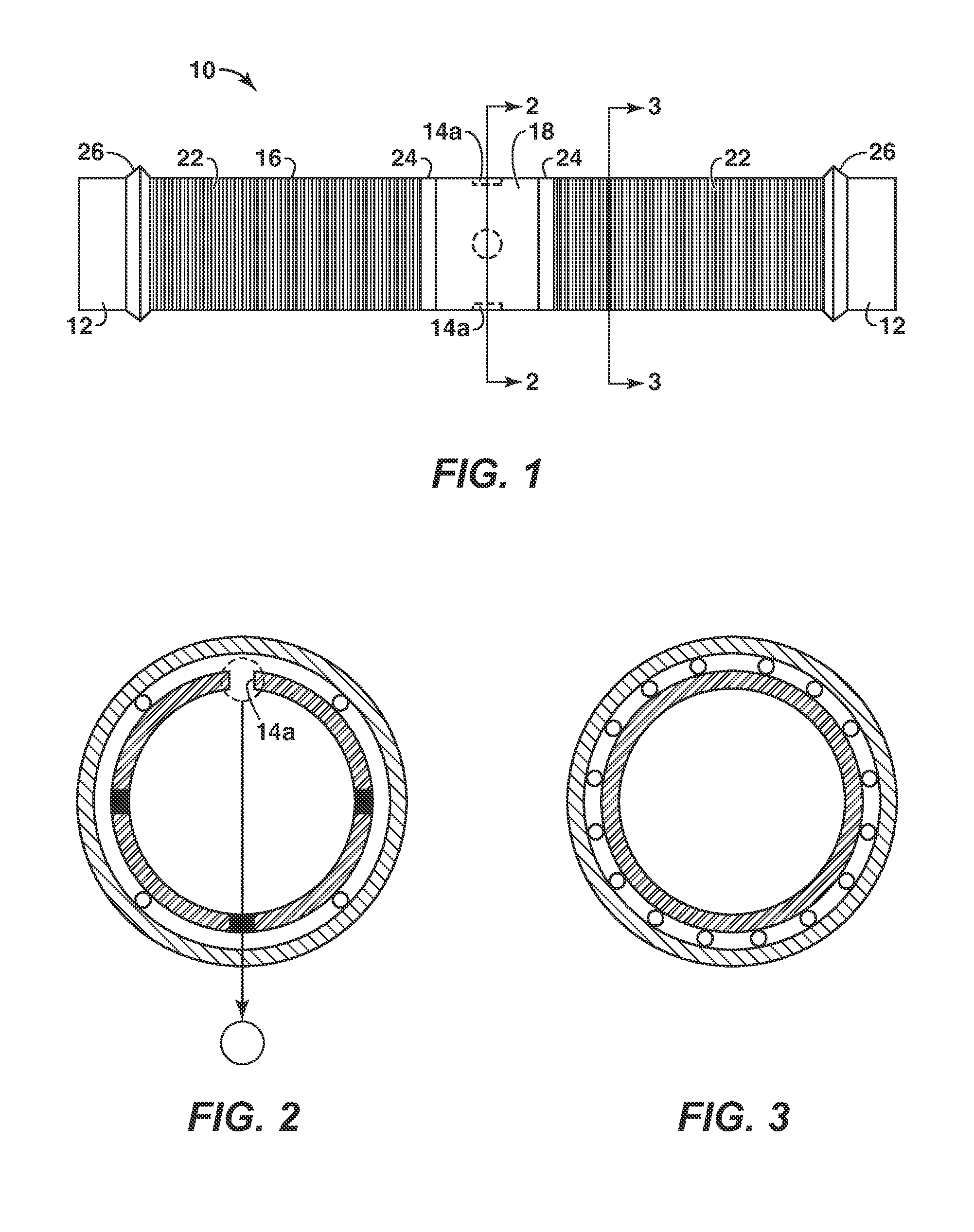

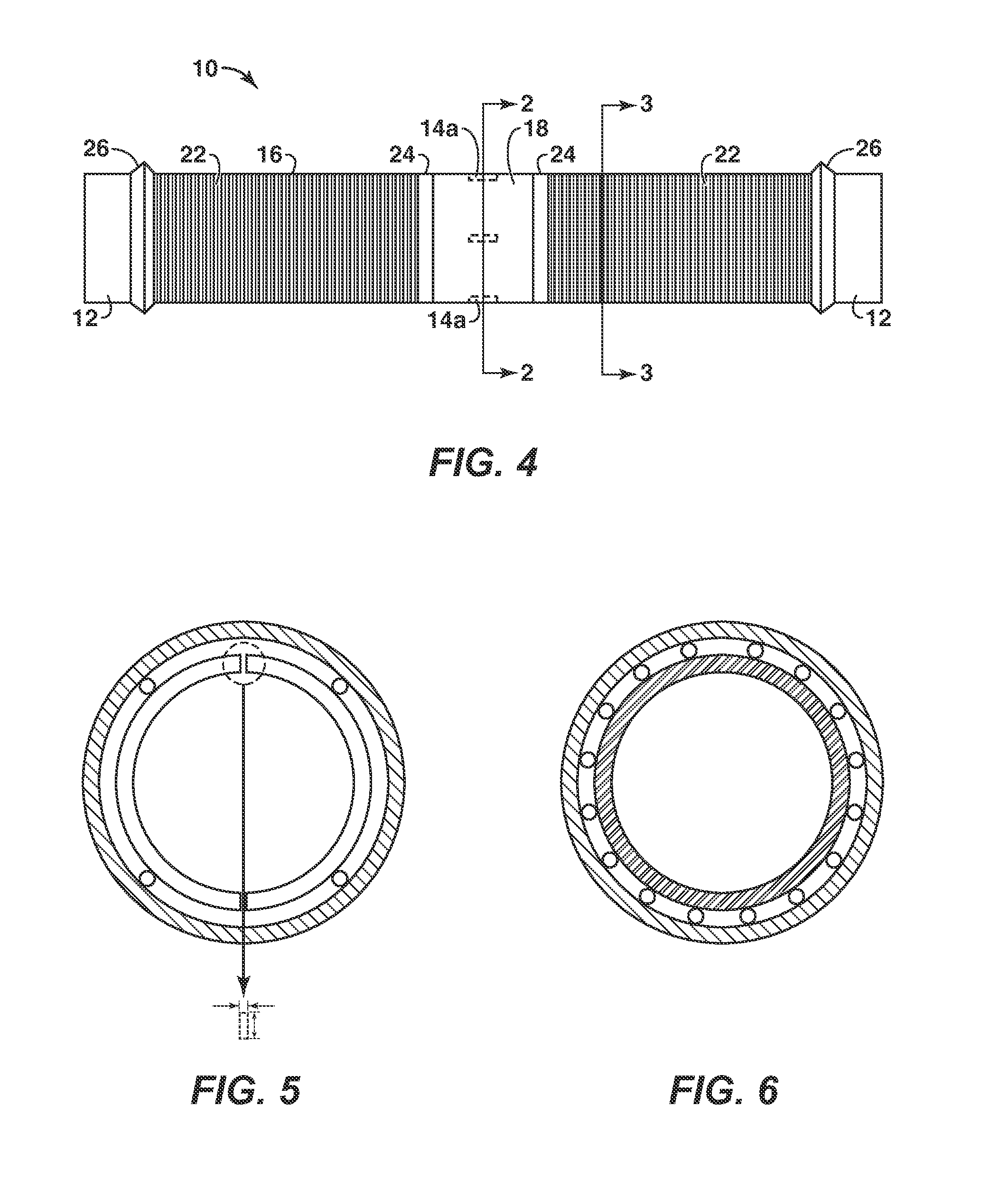

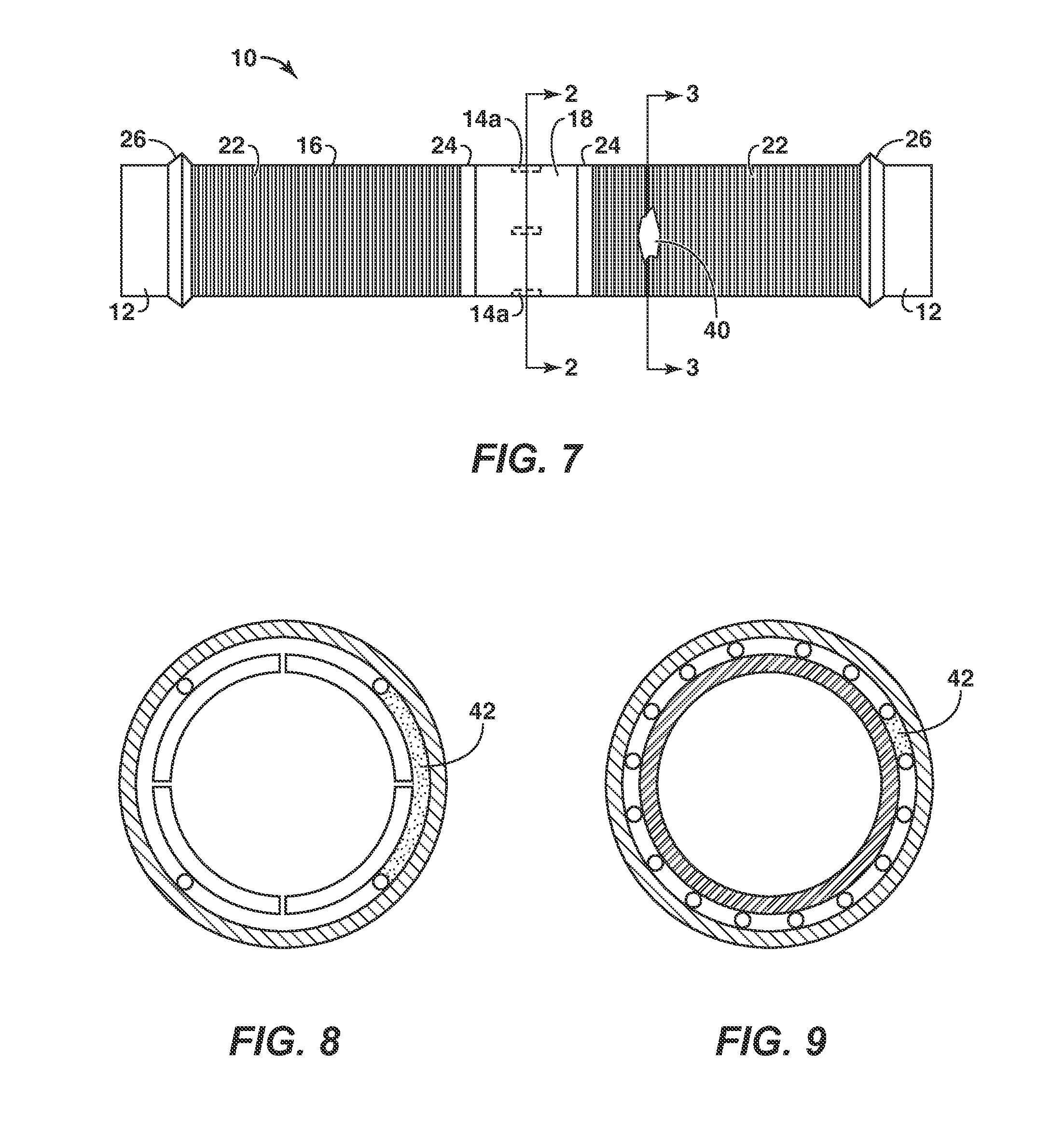

Pipe construction system

Inactive | US7238256B2 | Mitigate disadvantage; Increase radial thickness; Lamination; Lamination apparatus; Engineering; Sealant

A pipe construction system which utilizes a multiplicity of arcuate pipe segments 11, 12 to form a pipe section 10 and which sections are assembled together to form a length of pipe. Each segment 11, 12 has two longitudinal edges 13 of increased radial thickness and having a groove 14 formed in the circumferential direction whereby an interlocking and sealing member 15 may be located in the aligned grooves 14 of two adjacent segments 11, 12. One end of a pipe section 10 assembled from the segments defines a socket 22 for receiving a spigot 23 defined at the other end of a like section. The spigot end of each segment has a pocket 25 formed along said edge 13 but within the thickness of the major area of the segment, which pocket 25 mates with a corresponding pocket 25 of an adjacent segment whereby sealant may be disposed in the mating pockets.

Owner: SABAS LIMITED

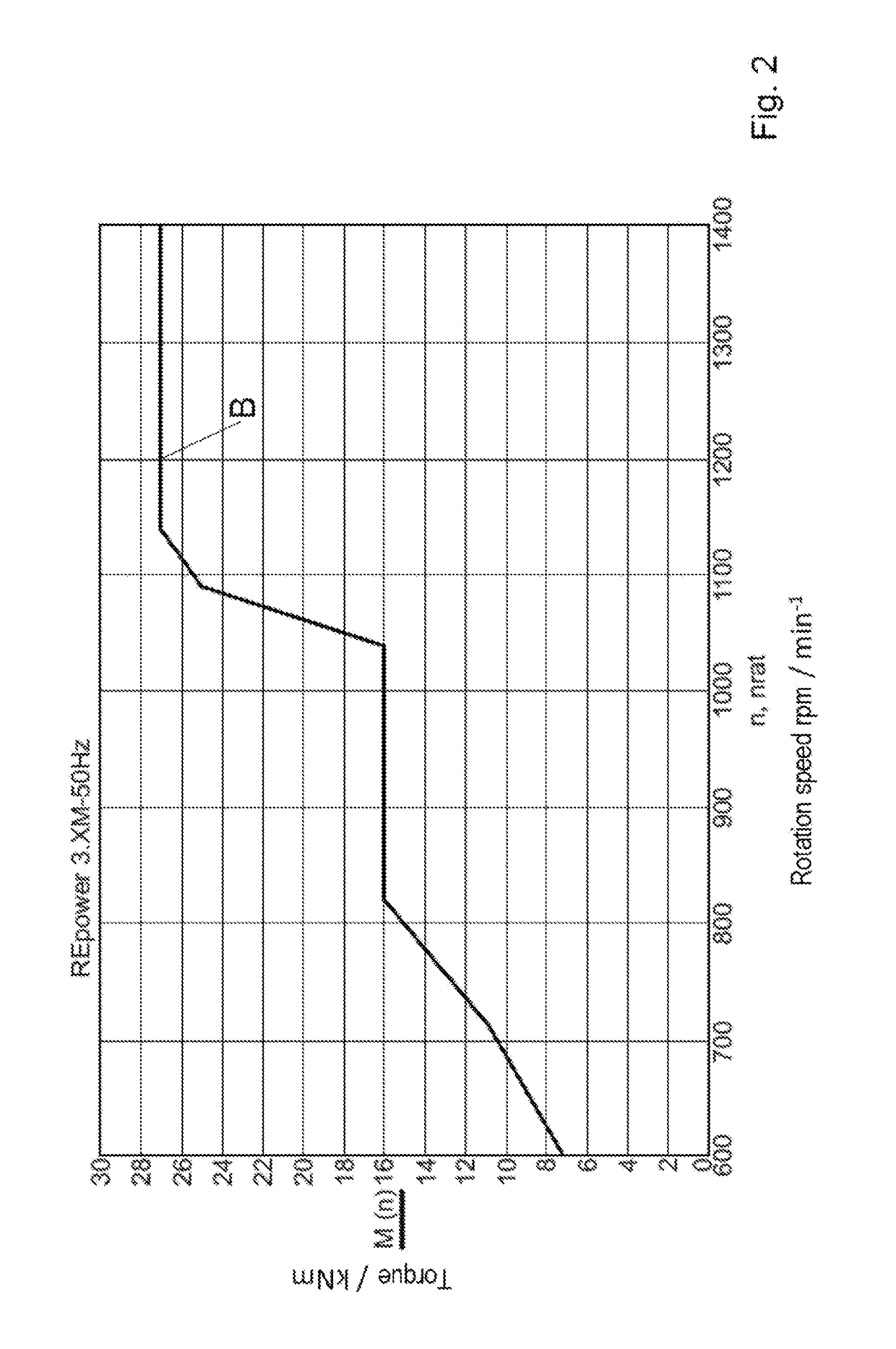

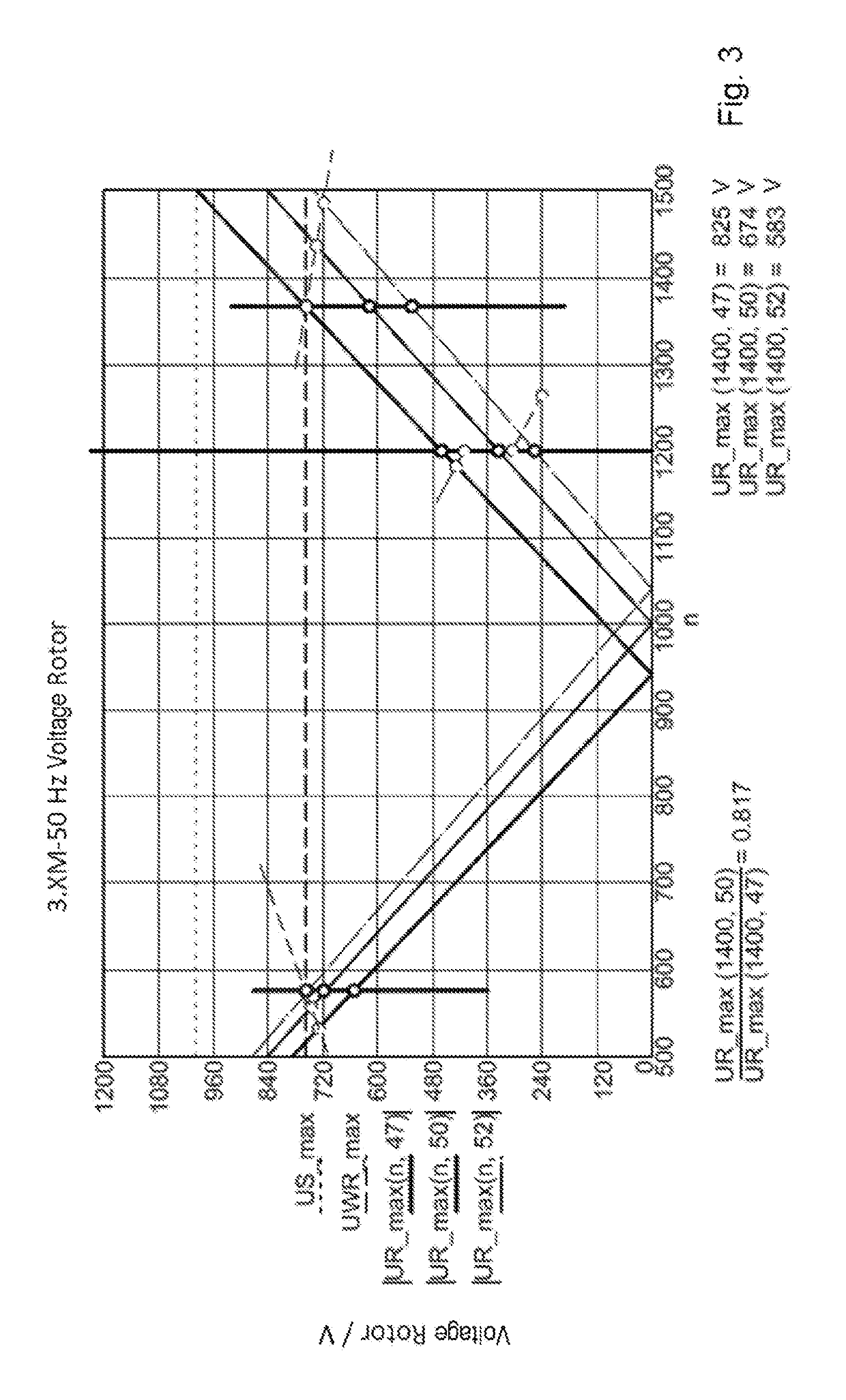

Control of a Wind Turbine by Changing Rotation Rate Parameters

Active | US20190214927A1 | Mitigate disadvantage; Change effect; Generator control circuits; Generation protection through control; Rate parameter; Wind force

A method for controlling a wind turbine having a wind rotor (2), a doubly-fed induction generator (1) driven by the rotor, and a converter (4) that is electrically connected to feed electrical energy into an electrical grid (8) having at least one grid parameter, the wind turbine also having a controller with a memory in which rotation rate parameters are stored. The method is characterized in that at least one variable characteristic curve is determined between at least one of the rotation rate parameters and the at least one grid parameter; the at least one characteristic curve is stored in the memory; the at least one grid parameter is measured and the measurements are fed to the controller; and the values of the at least one rotation rate parameter that are associated with the grid parameter measurements via the at least one characteristic curve are activated.

Owner: SENVION DEUT GMBH
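
Storing a characteristic curve and activating the rotation rate value associated with a measured grid parameter amounts to a table lookup with interpolation. The Python sketch below assumes piecewise-linear interpolation held flat outside the curve's range; the controller class, the curve name and the example points (grid frequency in Hz against a rotor speed limit in rpm) are hypothetical.

```python
from bisect import bisect_right
from typing import Dict, List, Tuple

Curve = List[Tuple[float, float]]  # (grid parameter, rotation-rate parameter)


def interpolate(curve: Curve, x: float) -> float:
    """Piecewise-linear lookup on a curve sorted by x, held flat outside its range."""
    xs = [p[0] for p in curve]
    if x <= xs[0]:
        return curve[0][1]
    if x >= xs[-1]:
        return curve[-1][1]
    i = bisect_right(xs, x)  # guarantees xs[i - 1] <= x < xs[i]
    (x0, y0), (x1, y1) = curve[i - 1], curve[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


class TurbineController:
    """Toy controller whose 'memory' holds characteristic curves (hypothetical)."""

    def __init__(self) -> None:
        self.memory: Dict[str, Curve] = {}

    def store_curve(self, name: str, points: Curve) -> None:
        self.memory[name] = sorted(points)

    def activate(self, name: str, grid_measurement: float) -> float:
        """Return the rotation-rate value associated with a grid measurement."""
        return interpolate(self.memory[name], grid_measurement)
```

With a stored curve of (49.0 Hz, 1500 rpm), (50.0 Hz, 1800 rpm) and (51.0 Hz, 1600 rpm), a measured frequency of 49.5 Hz activates 1650 rpm. Keeping the curve as data rather than code is one way to make it "variable" in the sense of the abstract: a new curve can be stored without changing the controller.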

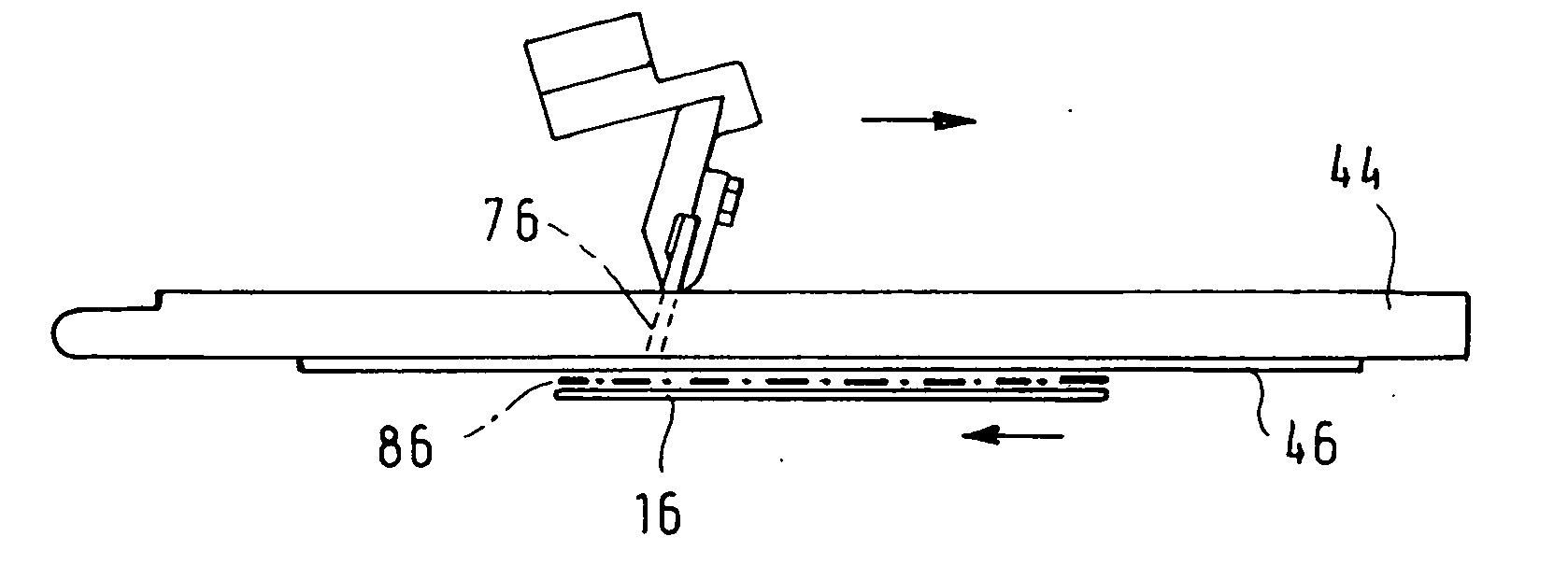

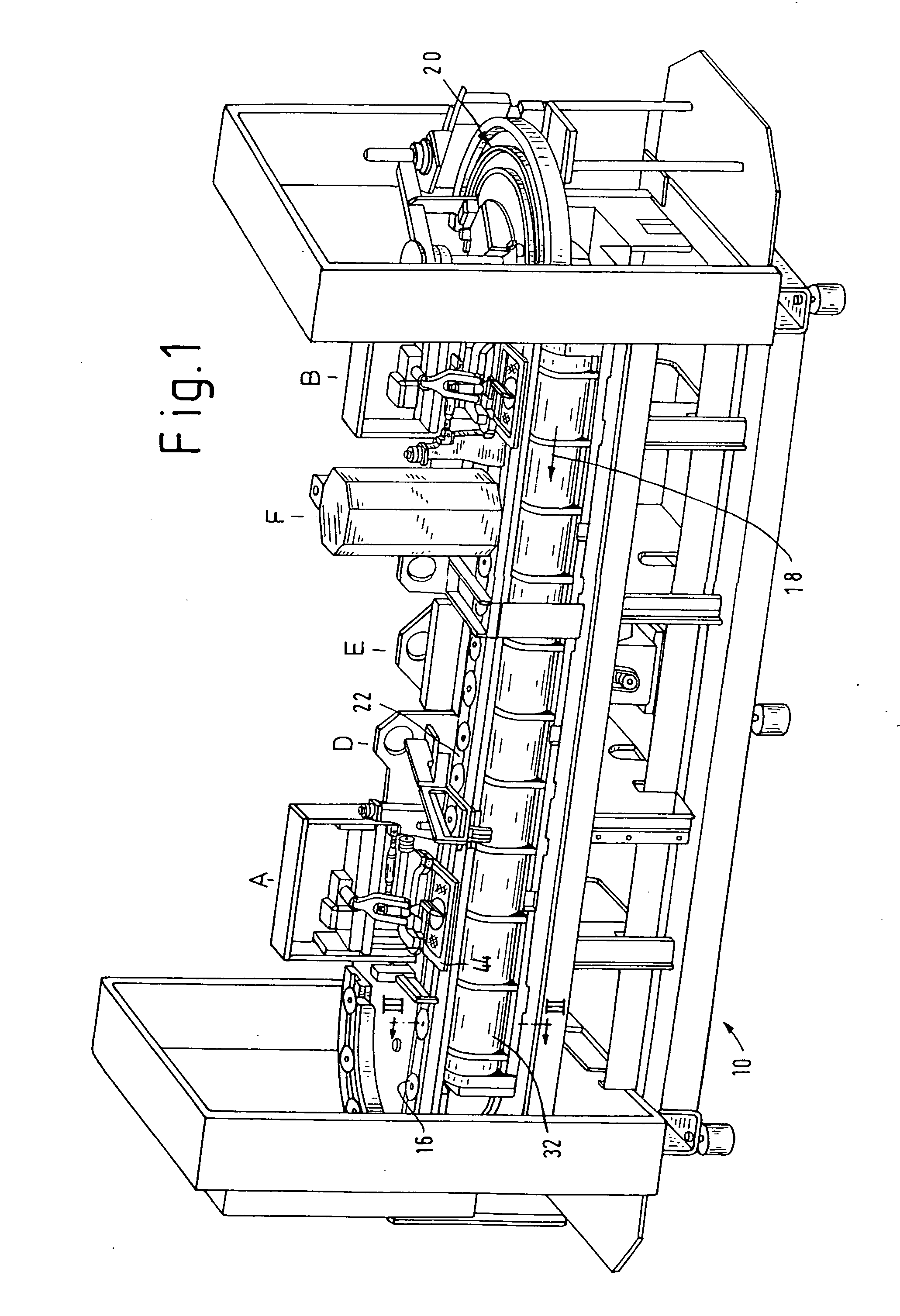

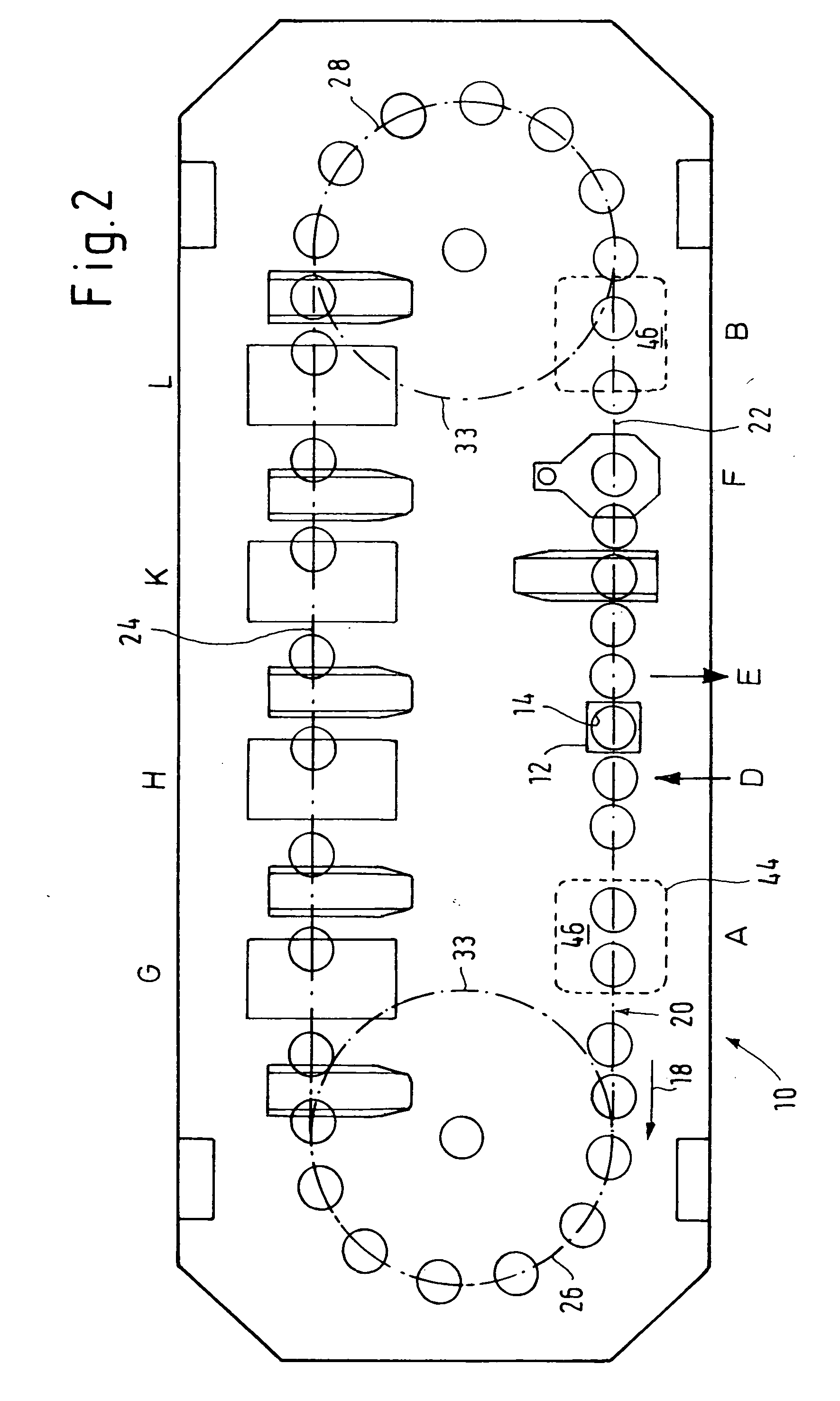

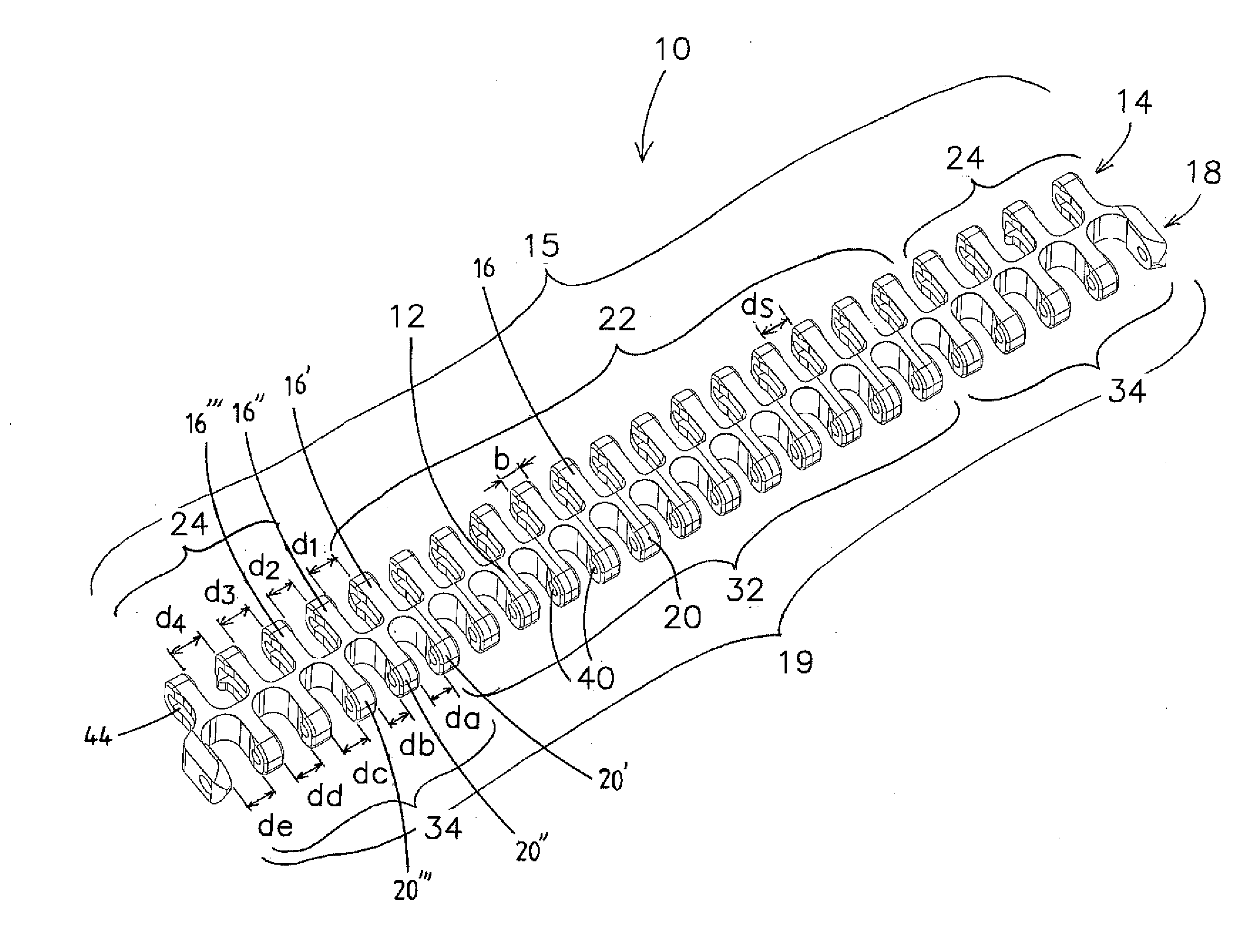

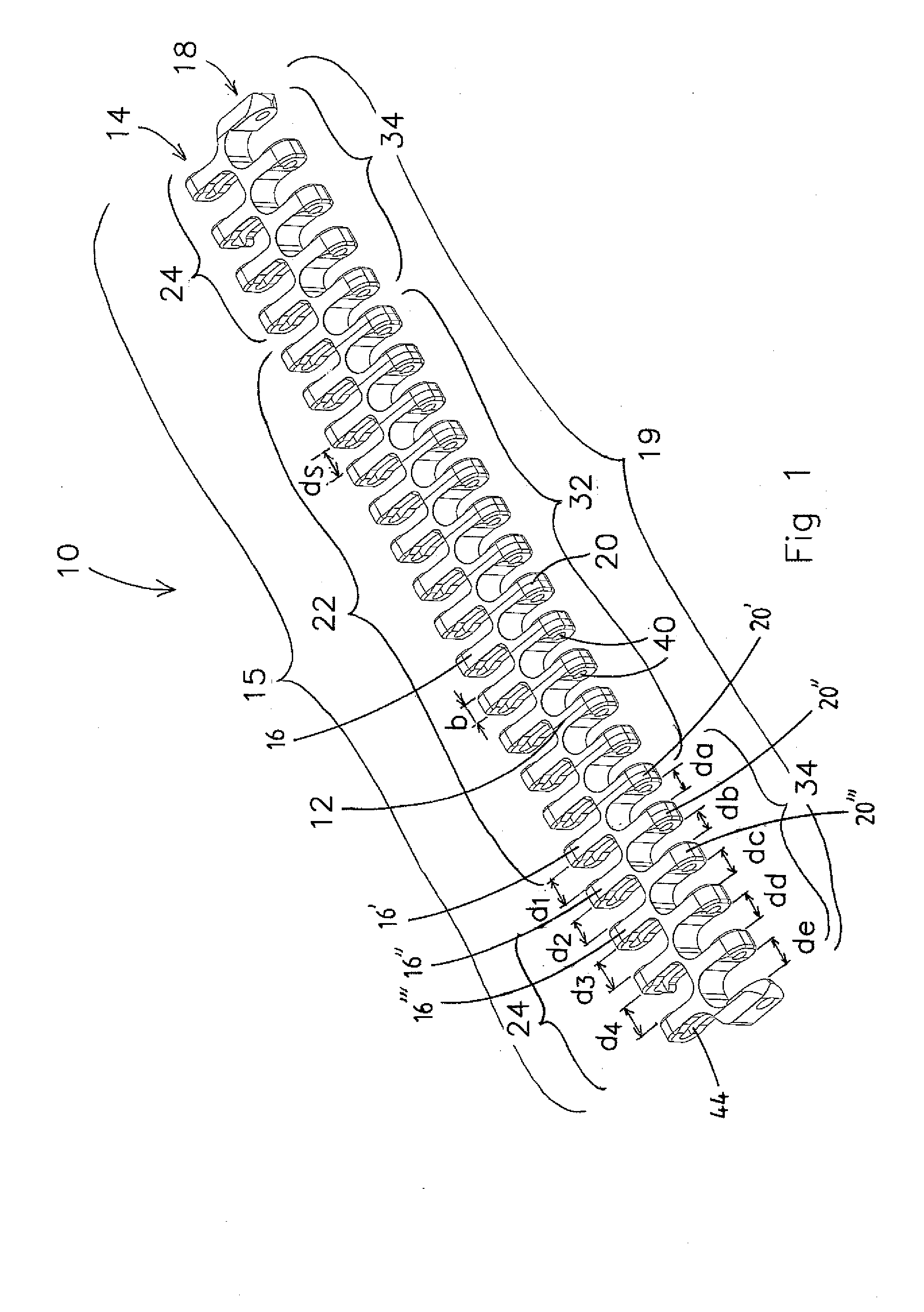

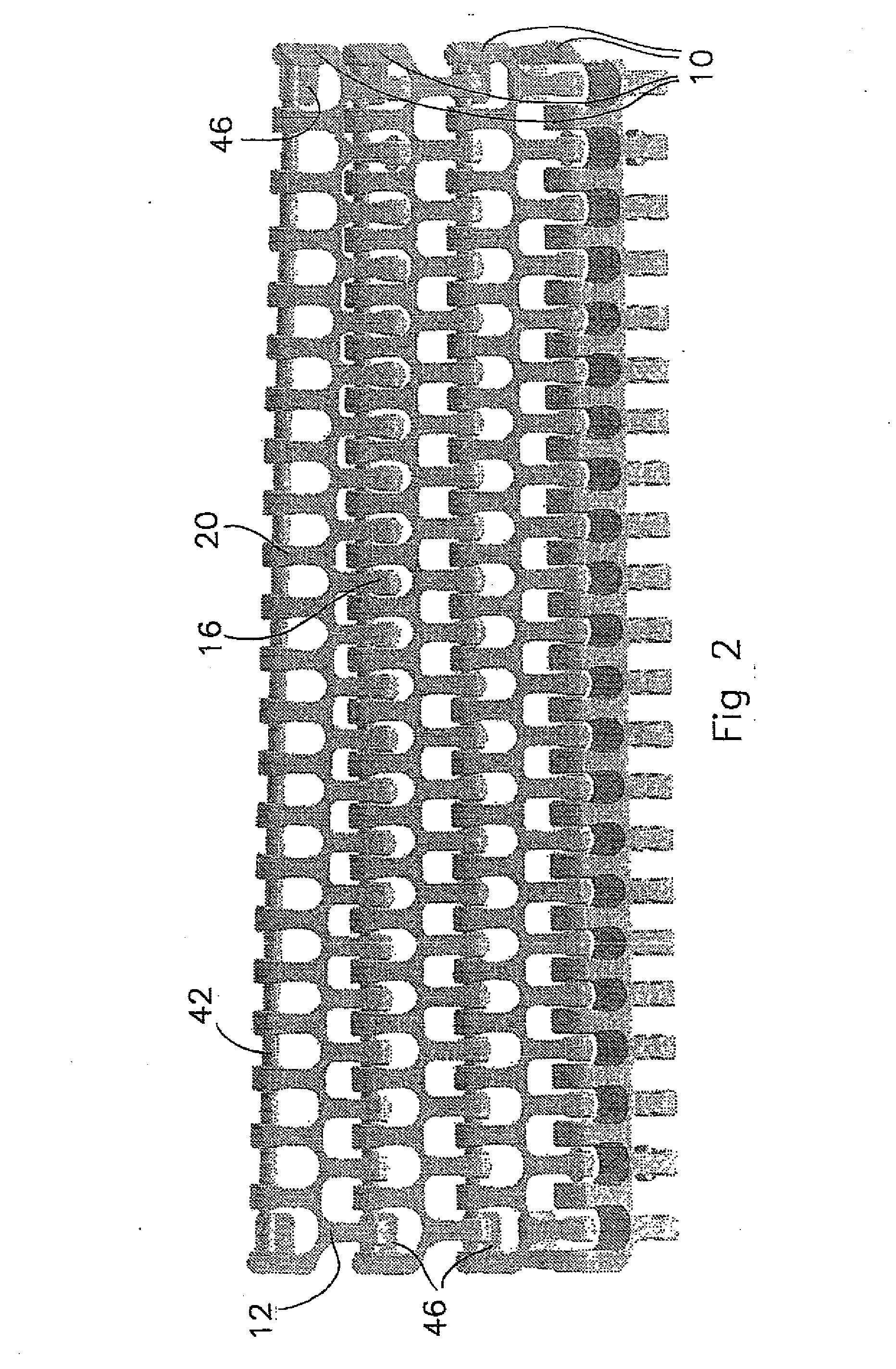

Modularly constructed conveyor belt, and module

Active | US20090057108A1 | Mitigate disadvantage; Compact structure; Conveyors; Roller-ways; Modularity; Mechanical engineering

A conveyor belt for following a conveyance path with straight and curved conveyance path sections is constructed of module rows of a module (10) or a plurality of modules situated side by side in the transverse direction of the conveyor belt. Adjacent module rows are connected to each other by means of connecting pins (42). Each module comprises a set (15) of first aligned spaced fingers (16) with transverse aperture (40), and also a set (19) of second aligned spaced fingers (20) with transverse aperture (44), which in general are disposed in a staggered position relative to the first fingers (16). The mutual distance between at least the three outermost fingers of a set situated side by side and having the same width in a set is greater than the mutual distance between fingers situated side by side at a distance from the abovementioned at least three outermost fingers. A shorter run-in length and run-out length of a curved conveyance section of the conveyance path is achieved in this way.

Owner: AMMERAAL BELTECH BV

Well completion for viscous oil recovery

Described is a well completion for evenly distributing a viscosity reducing injectant (e.g. steam and/or solvent, e.g. in SAGD or CSS) into a hydrocarbon reservoir (e.g. of bitumen), for evenly distributing produced fluids (most specifically vapor influx) and for limiting entry of particulate matter into the well upon production. On injection, the injectant passes through a limited number of slots in a base pipe, is deflected into an annulus between the base pipe and a screen or the like, and passes through the screen into the reservoir. On production, hydrocarbons pass from the reservoir through the screen into a compartmentalized annulus. The screen limits entry of particulate matter (e.g. sand). The hydrocarbons then pass through the slots in the base pipe and into the well. Where a screen is damaged, the compartmentalization and the slots in the base pipe limit particulate matter entry into the well.

Owner: EXXONMOBIL UPSTREAM RES CO

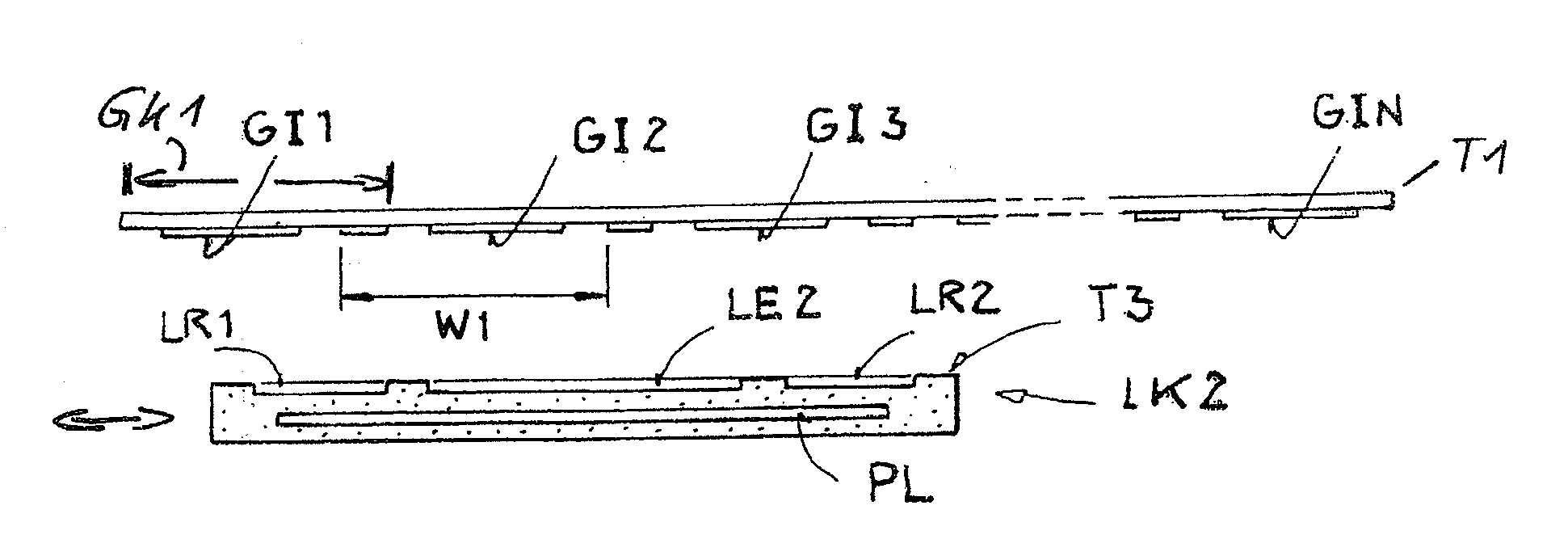

Method and apparatus at a spinning preparation machine for cleaning fiber material

Inactive | US6865781B2 | Mitigate disadvantage; Improve and undisrupted production; Mechanical impurity removal; Fibre cleaning/opening; Fiber; Mechanical engineering

In a method at a spinning preparation machine for cleaning fiber material, especially cotton (for example a cleaner, opener, carding machine or the like), the nature of the trash is examined and the result is used to adjust at least one adjustable cleaning element, for example a separating blade, cleaning grid or the like. To achieve improved and undisrupted production by simple means, the optimum adjustment of the at least one cleaning element for a specific fiber batch is stored in a memory of an electronic control and regulation device, and when the same fiber batch is processed again, that optimum adjustment is implemented automatically.

Owner: TRUETZSCHLER GMBH & CO KG
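
The store-and-recall behaviour described for the control and regulation device resembles a memoised lookup keyed by fiber batch. In the sketch below, the batch identifier type, the settings dictionary keys and the `determine_optimum` callback (standing in for the trash examination) are all hypothetical.

```python
from typing import Callable, Dict, Hashable

Settings = Dict[str, float]  # e.g. {"separating_blade_mm": 3.0} (hypothetical keys)


class CleaningSettingsMemory:
    """Stores the optimum cleaning-element adjustment per fiber batch."""

    def __init__(self, determine_optimum: Callable[[object], Settings]) -> None:
        # determine_optimum stands in for the trash examination (hypothetical).
        self._determine = determine_optimum
        self._stored: Dict[Hashable, Settings] = {}

    def settings_for(self, batch_id: Hashable, trash_sample: object) -> Settings:
        """Reuse the stored optimum for a known batch; otherwise examine the
        trash, store the result and return it."""
        if batch_id not in self._stored:
            self._stored[batch_id] = self._determine(trash_sample)
        return self._stored[batch_id]
```

When a batch such as "cotton-lot-17" is processed a second time, the stored adjustment is returned directly and the trash examination is not repeated.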

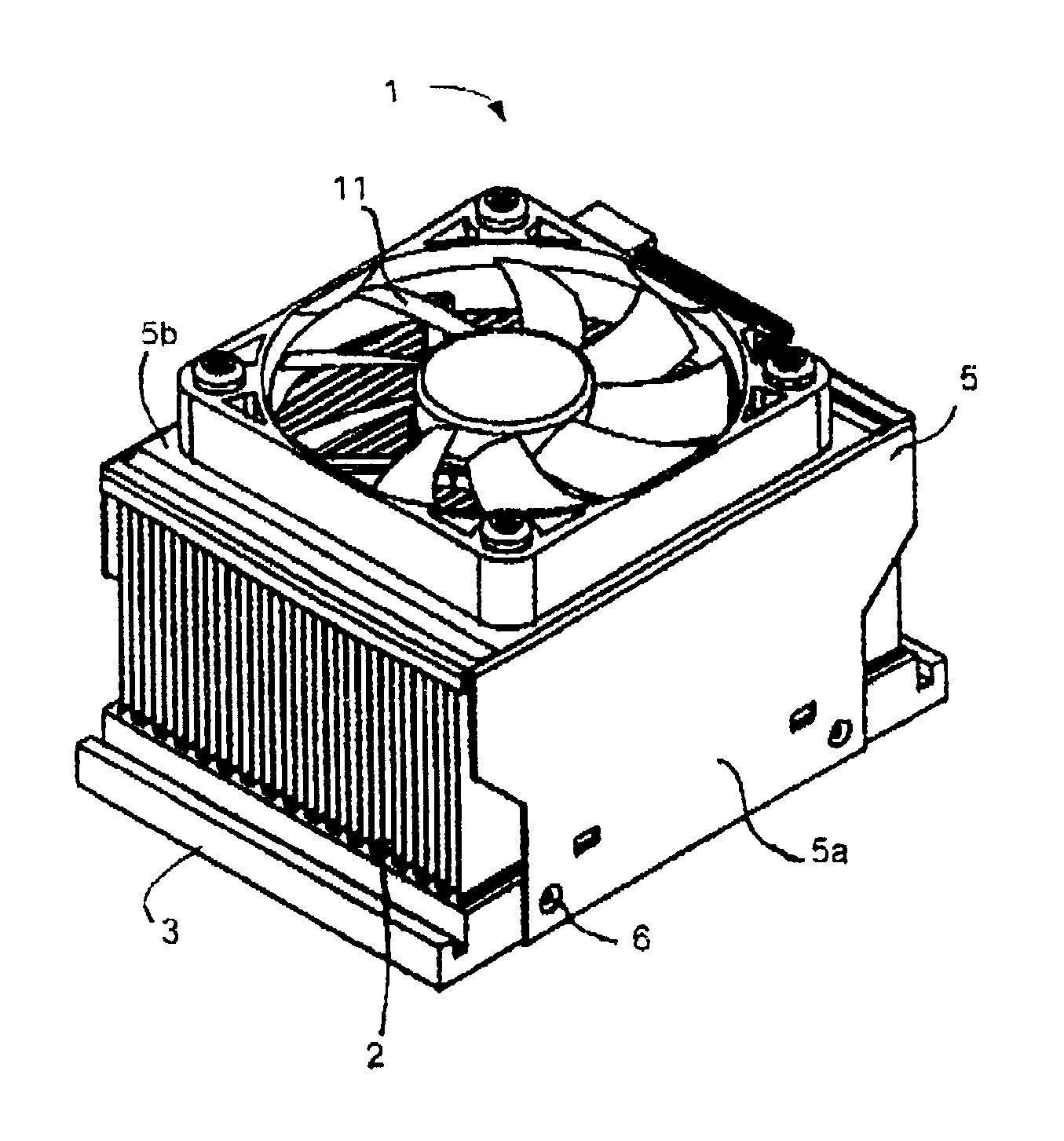

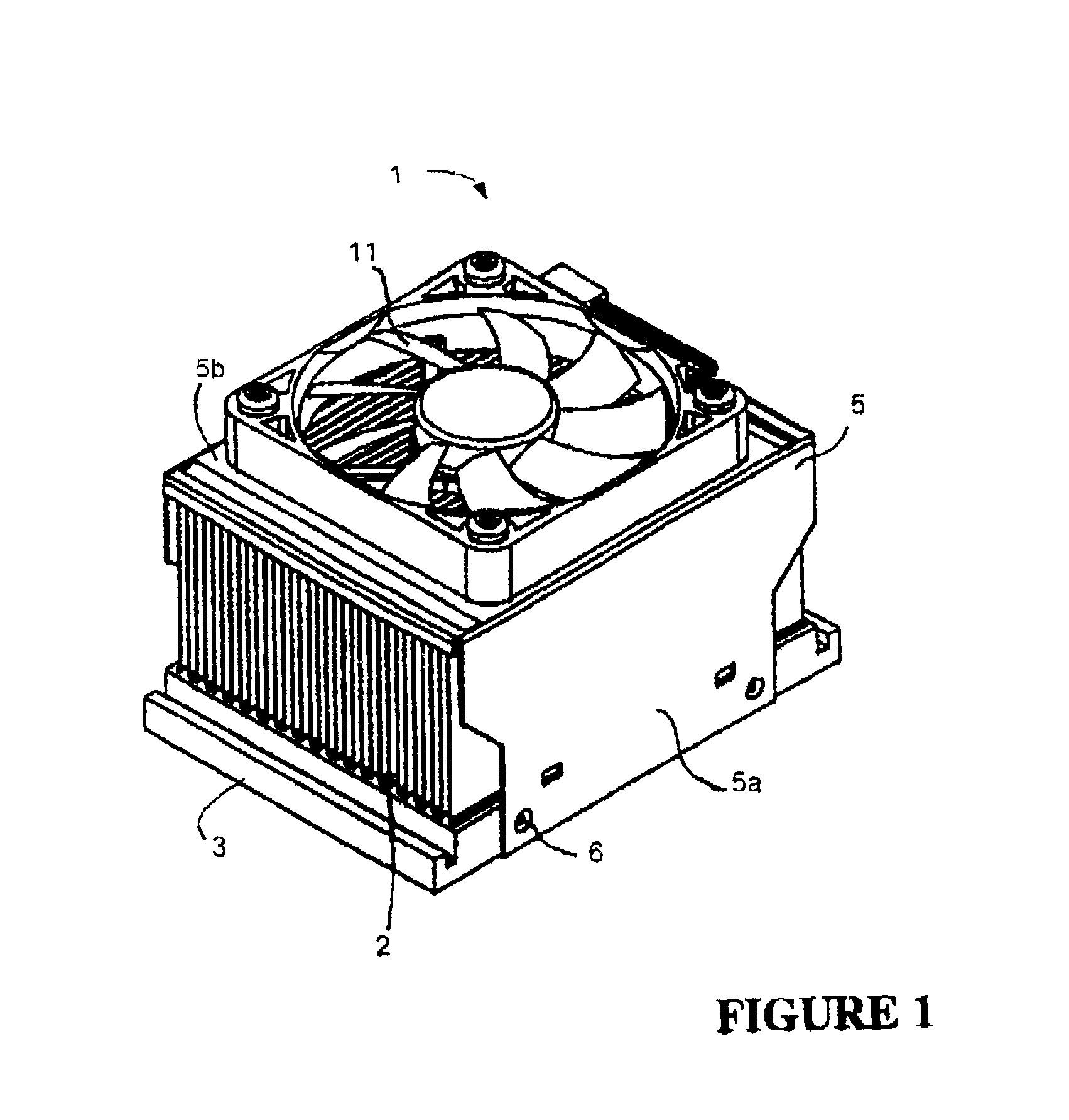

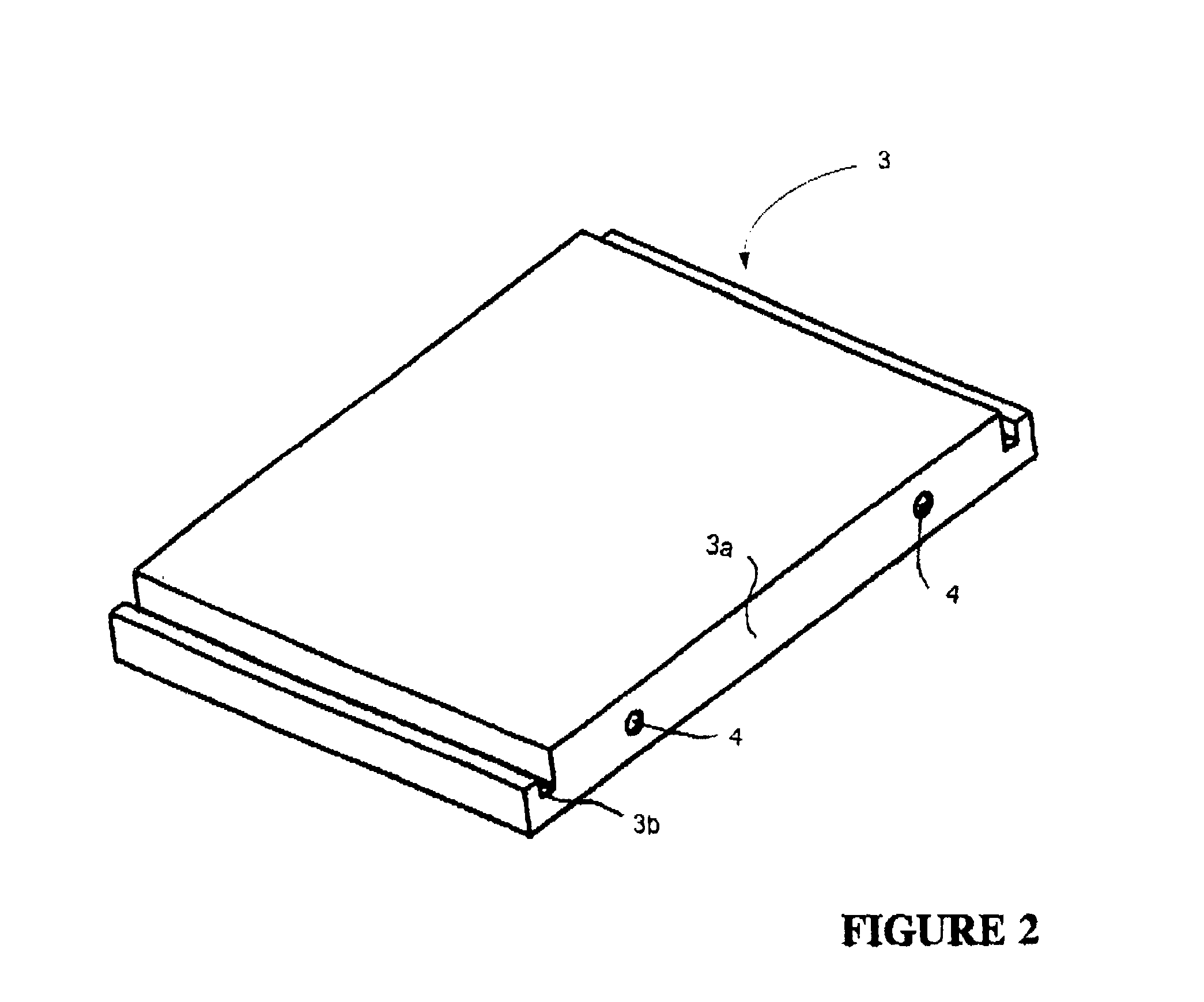

Folded-fin heat sink assembly and method of manufacturing same

Inactive | US6854181B2 | Mitigate disadvantage; Control pressure; Semiconductor/solid-state device details; Solid-state devices; Heat spreader; Engineering

A method of assembling a folded-fin heat sink assembly, the assembly including a base plate, a folded-fin assembly and a shroud, includes positioning the folded-fin assembly on the base plate, placing the shroud over the folded-fin assembly, urging the shroud to press the folded-fin assembly against the base plate, and bonding the folded-fin assembly to the base plate and to the shroud. According to an embodiment, the shroud acts as an extension of the folded-fin heat sink, providing additional fin surfaces through which heat can be dissipated.

Owner: TYCO ELECTRONICS CANADA

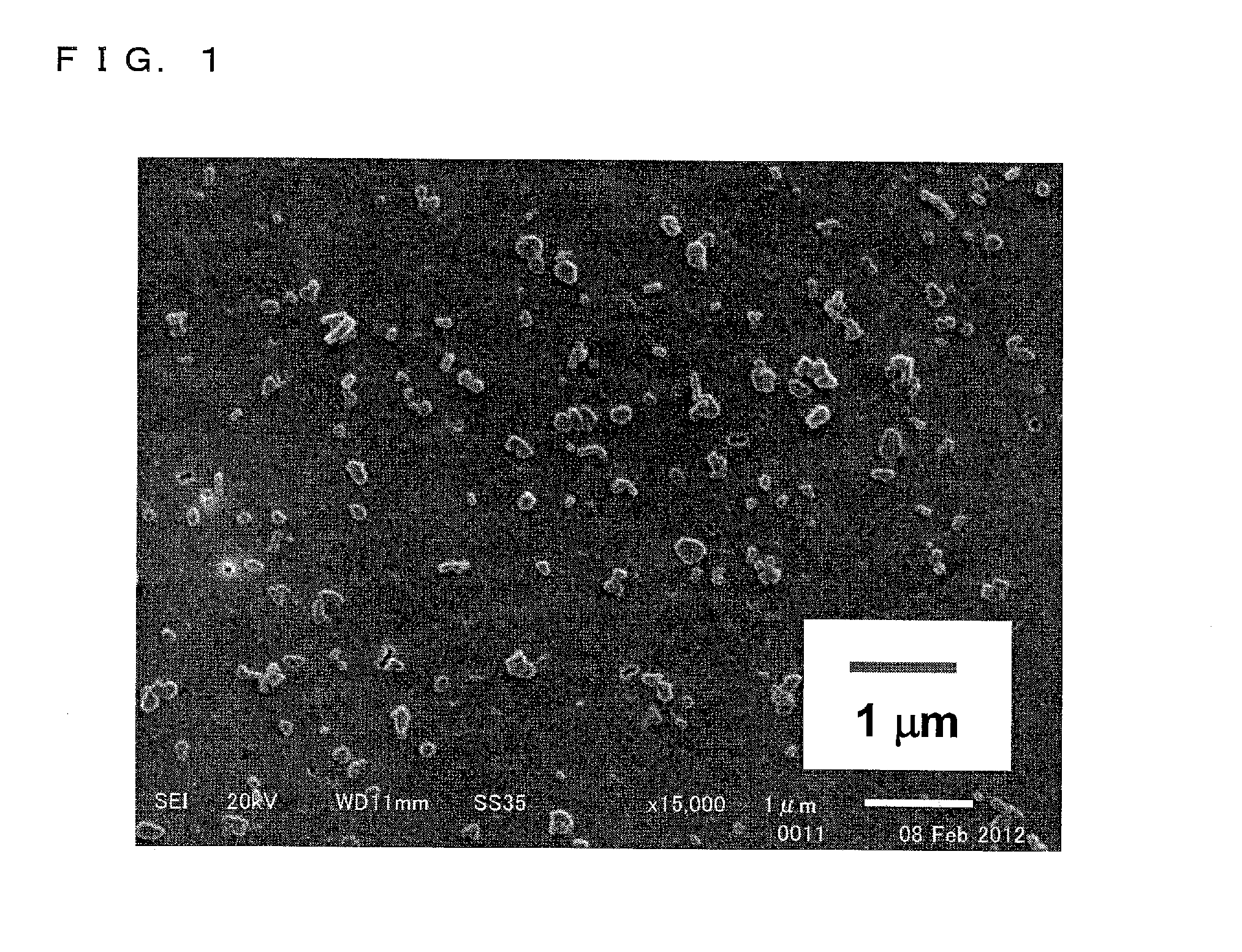

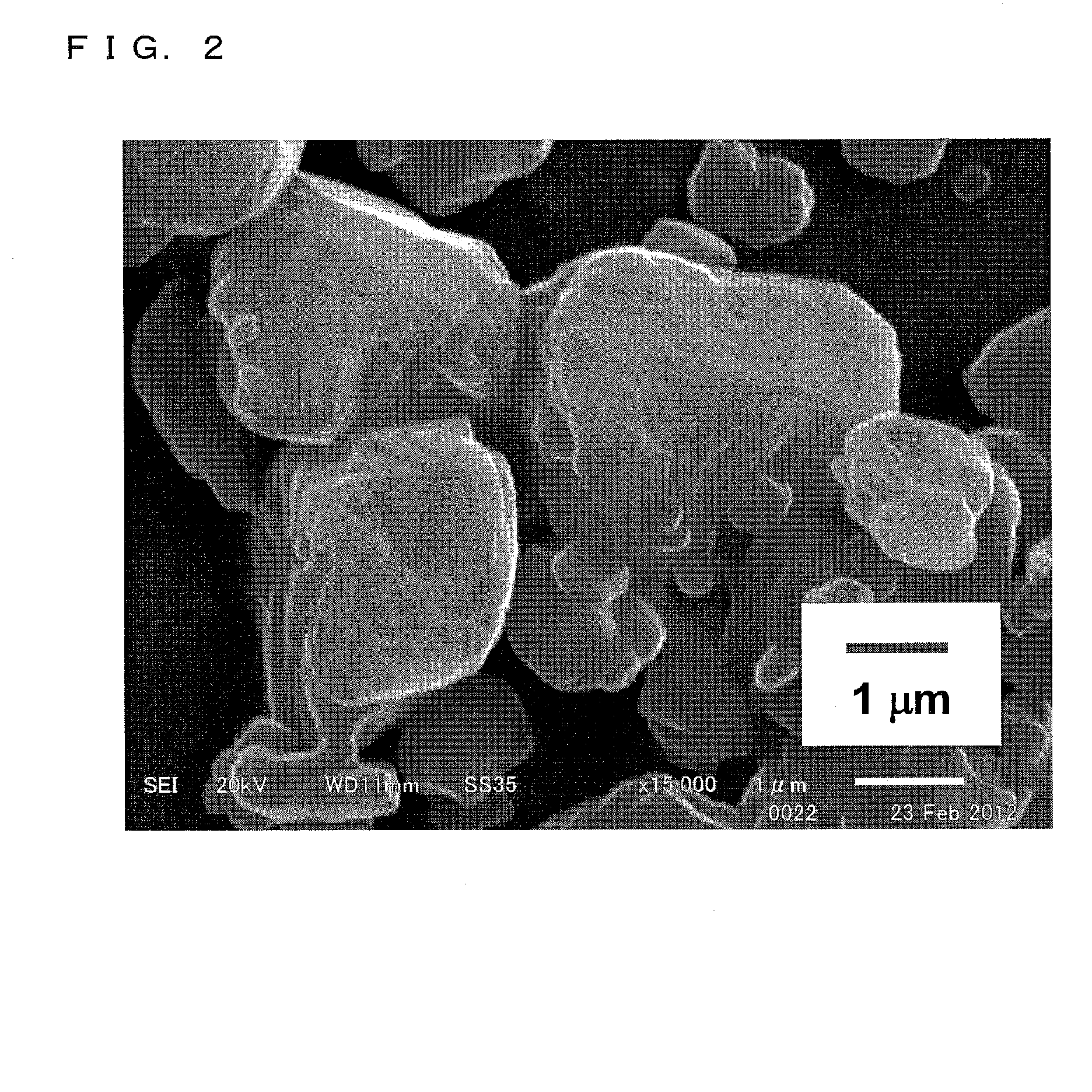

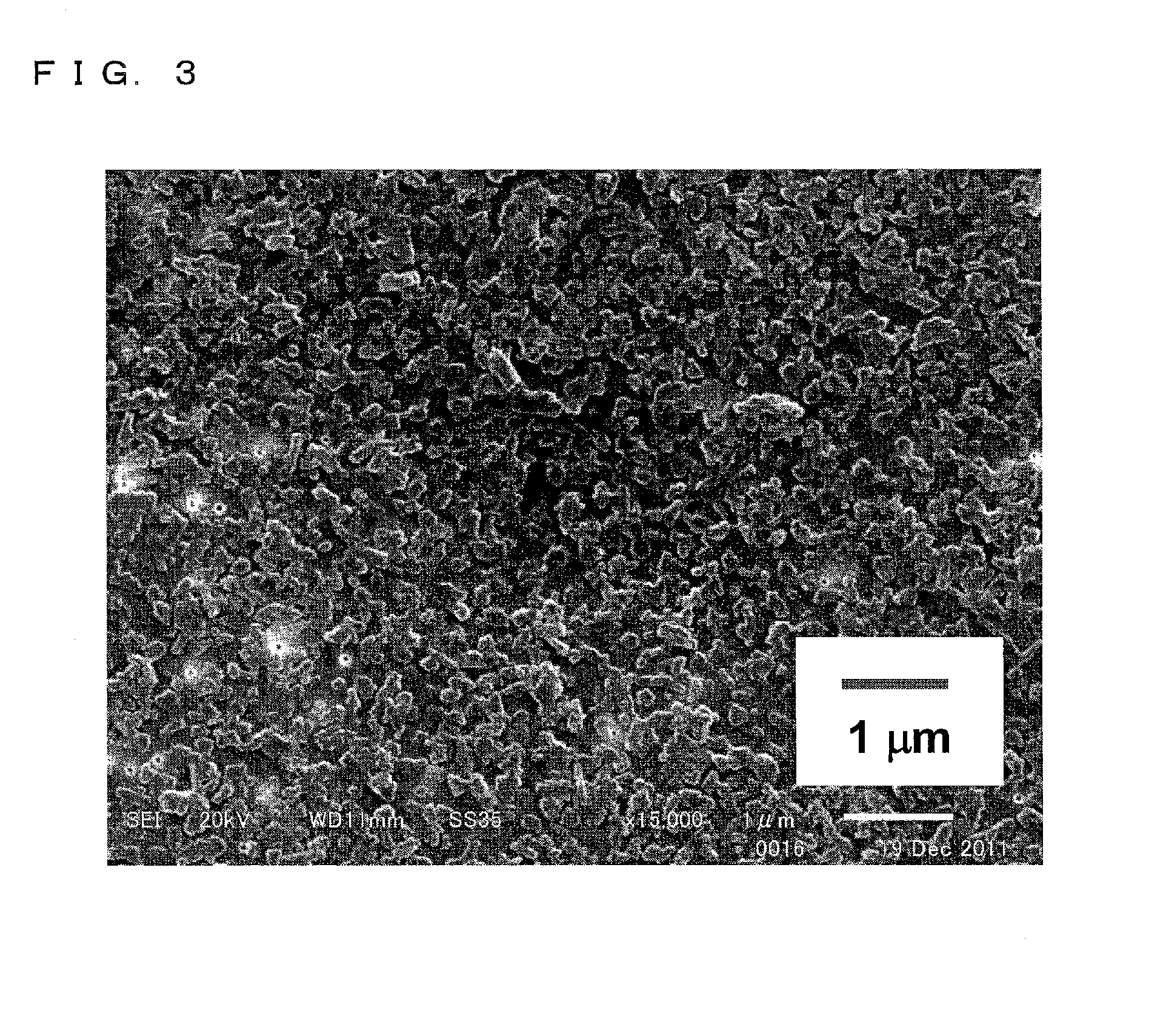

Method for producing an aqueous dispersion of drug nanoparticles and use thereof

Inactive | US20150087624A1 | Inhibition formation; Mitigate disadvantage; Biocide; Senses disorder; Solvent; Freeze dry

An aqueous dispersion in which nanoparticles are dispersed in water is produced by a method that includes freeze-drying a frozen sample of a liquid mixture of a first solution and a second solution, and dispersing the freeze-dried sample in water. The liquid mixture contains an active ingredient and an ointment base; the first solution uses an organic solvent as its solvent, and the second solution uses water as its solvent. The method can provide an aqueous composition in which nanoparticles are dispersed and which can be used stably as an aqueous dispersion preparation.

Owner: OSAKA UNIV

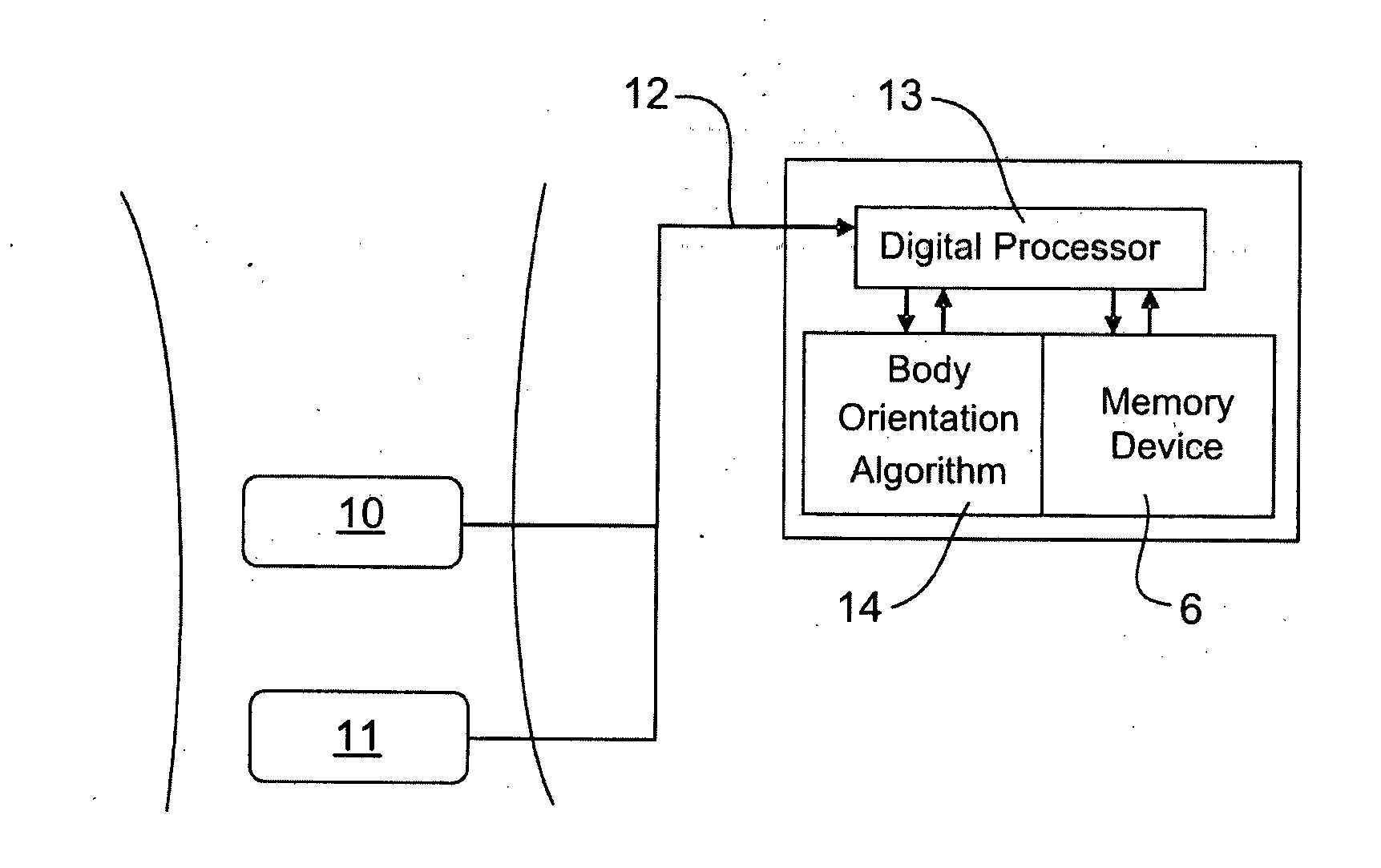

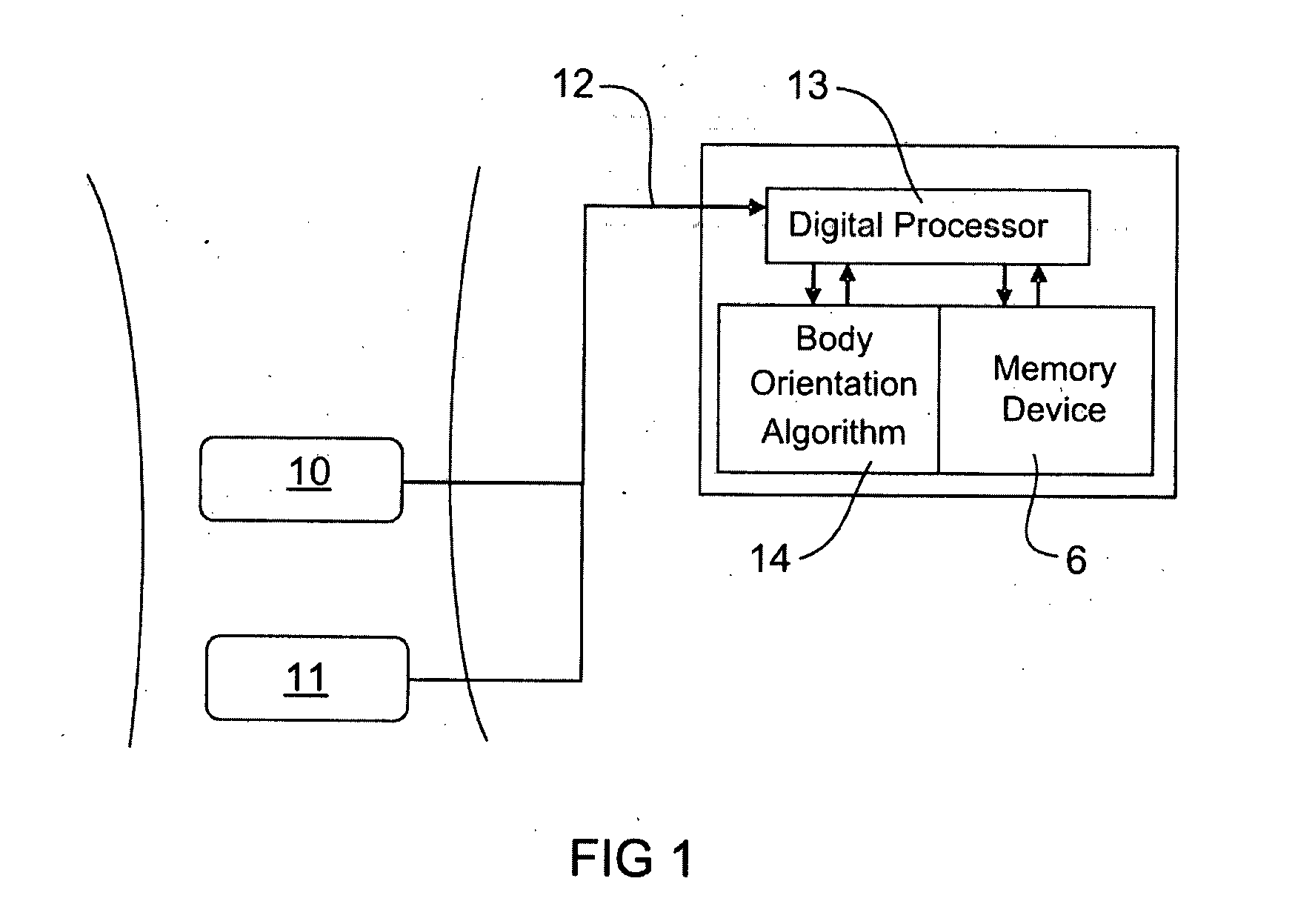

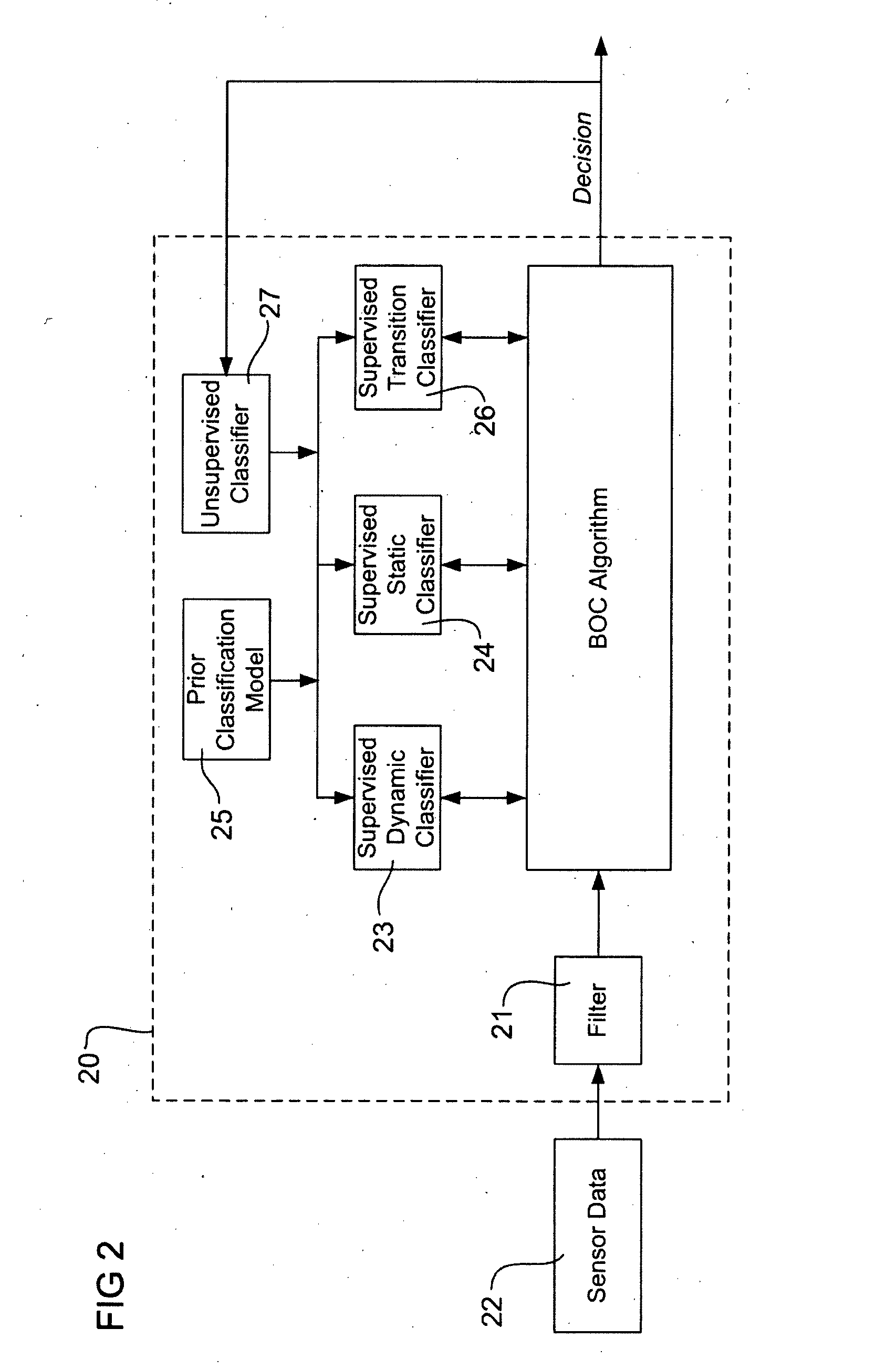

Apparatus and method for classifying orientation of a body of a mammal

Active | US20140032124A1 | Mitigate disadvantage; Improve accuracy; Angles/taper measurements; Inertial sensors; Computer vision; Animal science

Apparatus is disclosed for providing classification of body orientation of a mammal. The apparatus includes means (10, 11) for measuring position of said body relative to a frame of reference at one or more points on the body, wherein said means for measuring includes at least one position sensor. The apparatus includes means (12) for providing first data indicative of said position; means (15) for storing said data at least temporarily; and means (13, 14) for processing said data to provide said classification of body orientation. A method for providing classification of body orientation of a mammal is also disclosed.

Owner: DORSAVI LTD
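
As a concrete but assumed example of "processing said data to provide said classification", a static accelerometer reading can be mapped to a body orientation class via the direction of gravity. The sensor frame, the 30-degree threshold and the class names are illustrative assumptions and are not taken from the patent.

```python
import math


def classify_orientation(ax: float, ay: float, az: float,
                         upright_max_deg: float = 30.0) -> str:
    """Classify trunk orientation from a static accelerometer reading.

    Assumed sensor frame: x along the spine towards the head, y across the
    body, z out of the chest. At rest the accelerometer reads +1 g along
    whichever axis points up. Thresholds are illustrative only.
    """
    norm = math.sqrt(ax * ax + ay * ay + az * az)
    if norm == 0.0:
        raise ValueError("reading has zero magnitude")
    # Angle between the spine axis and vertical (0 deg means upright).
    tilt = math.degrees(math.acos(max(-1.0, min(1.0, ax / norm))))
    if tilt <= upright_max_deg:
        return "upright"
    if tilt >= 180.0 - upright_max_deg:
        return "inverted"
    if abs(az) >= abs(ay):
        return "supine" if az > 0 else "prone"
    return "side-lying"
```

A reading of (1, 0, 0) g is classified as upright, (0, 0, 1) g as supine (chest facing up) and (0, 0, -1) g as prone. A real system would likely filter readings over time and handle movement, which this single-sample sketch ignores.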

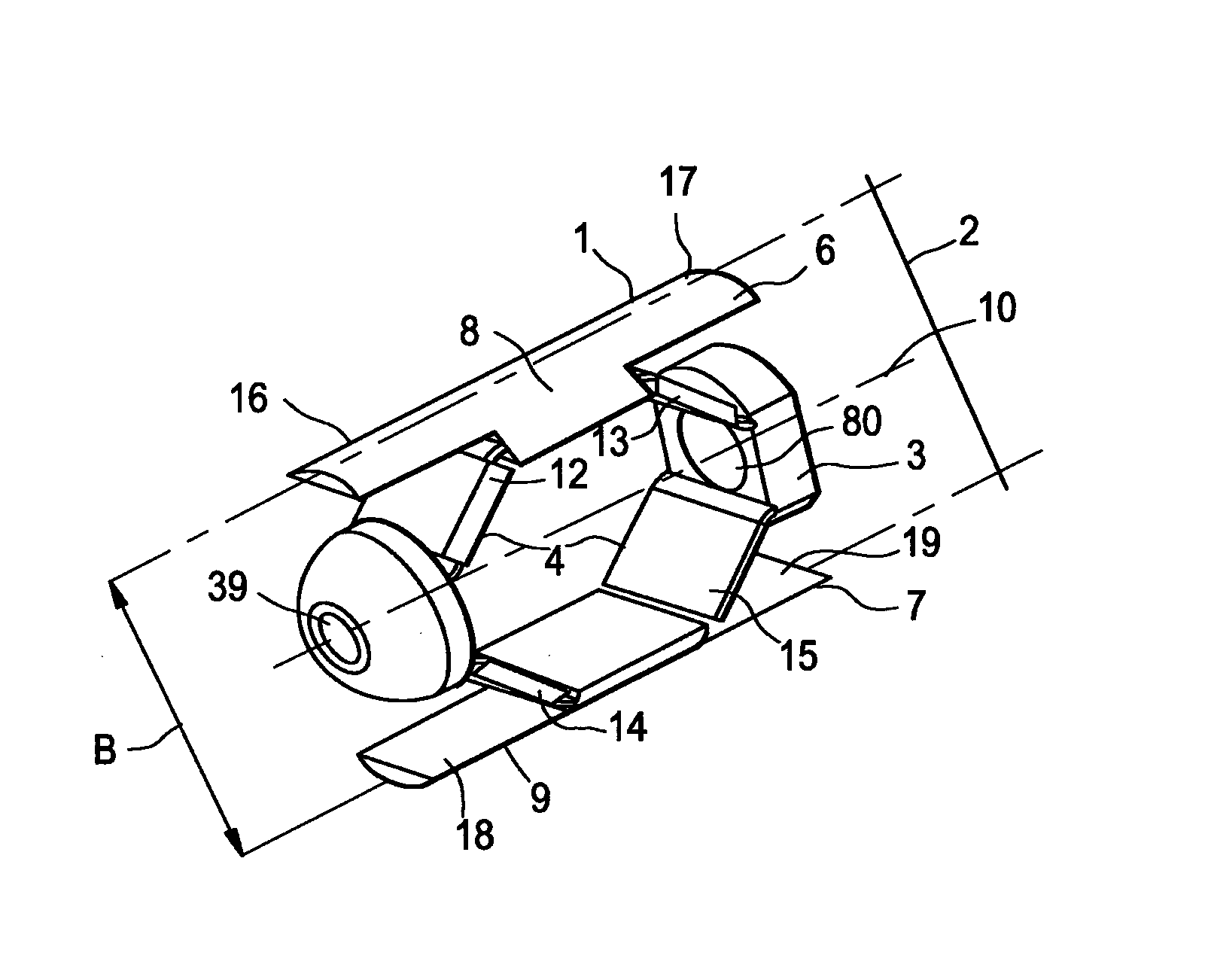

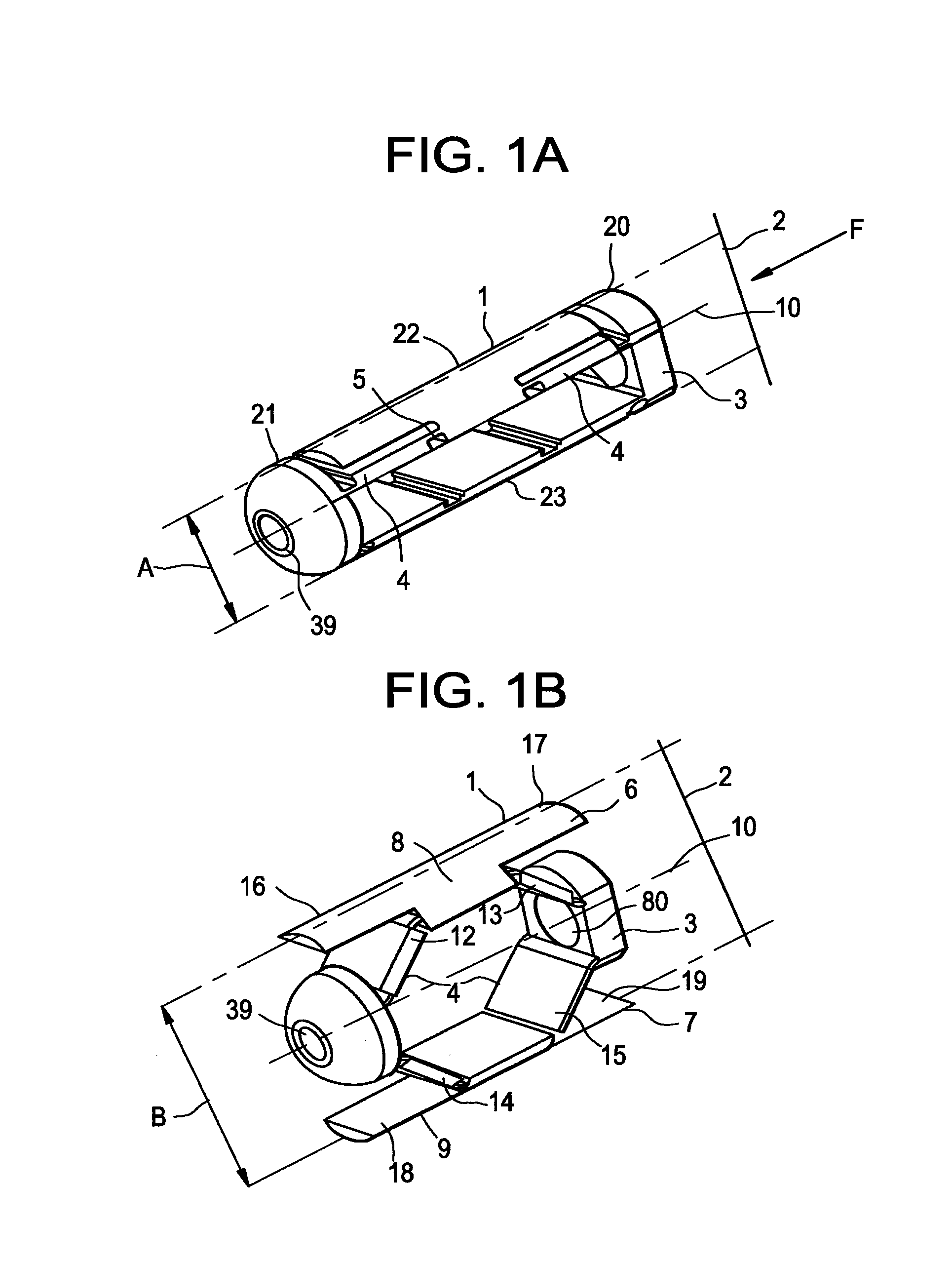

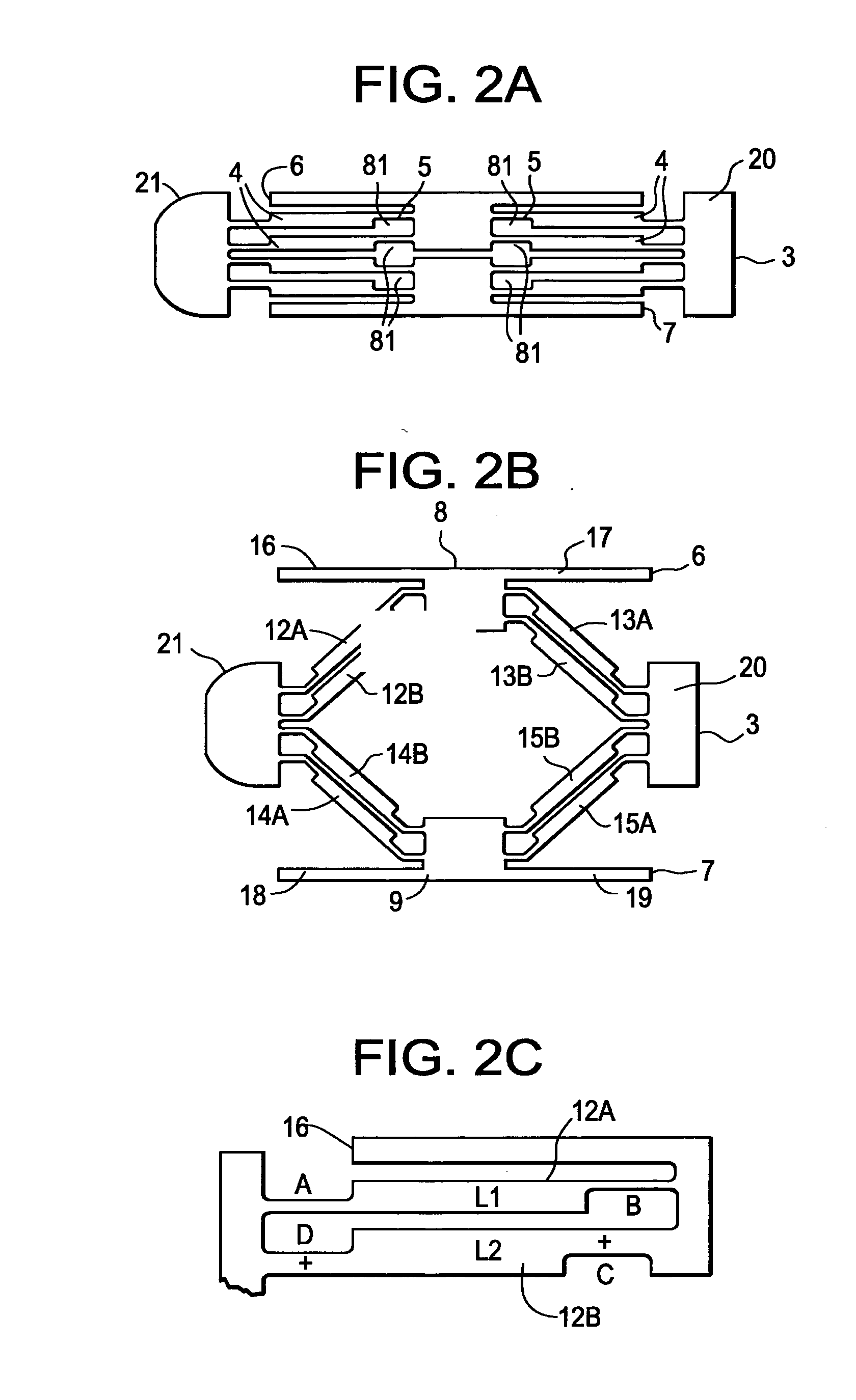

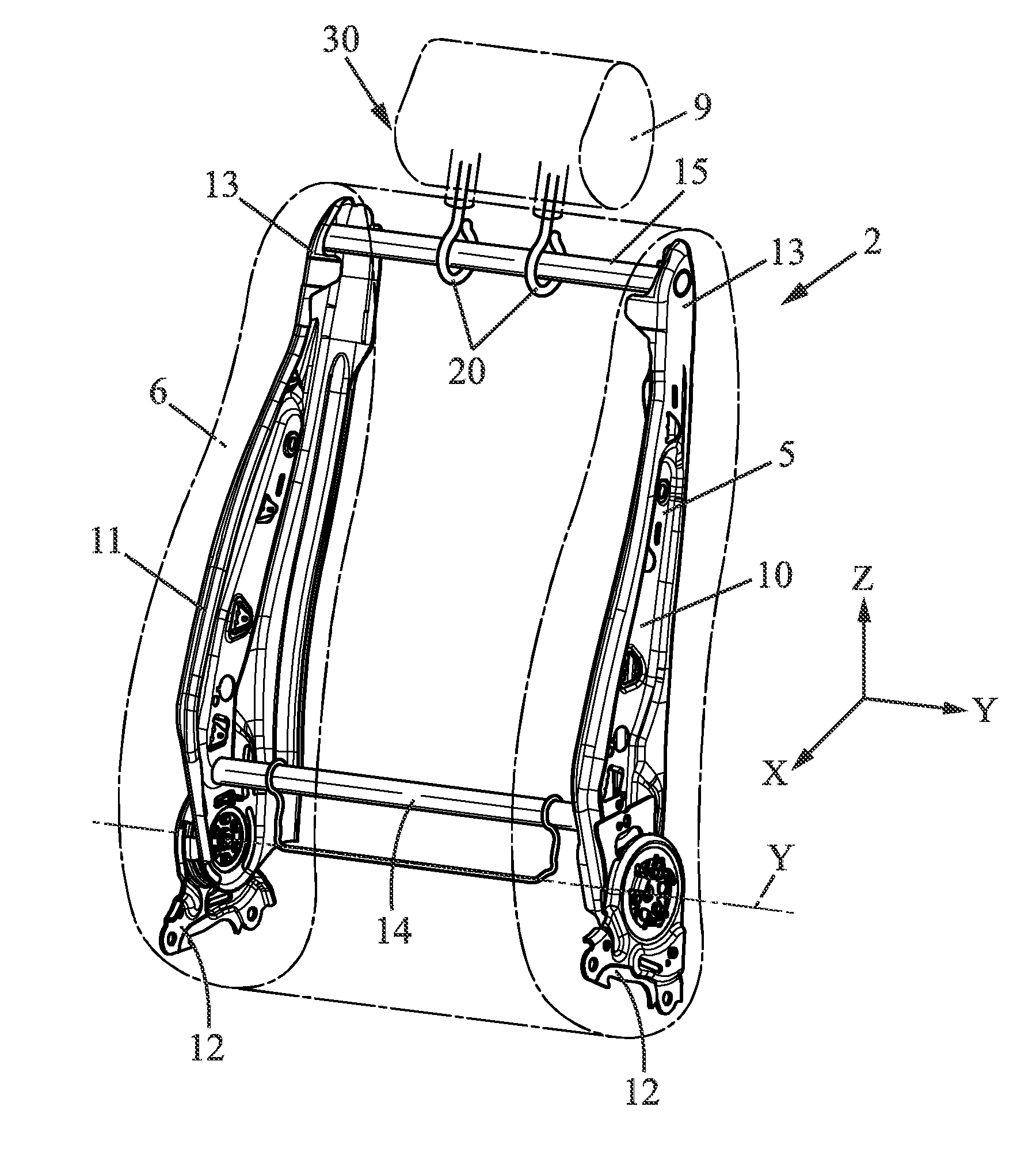

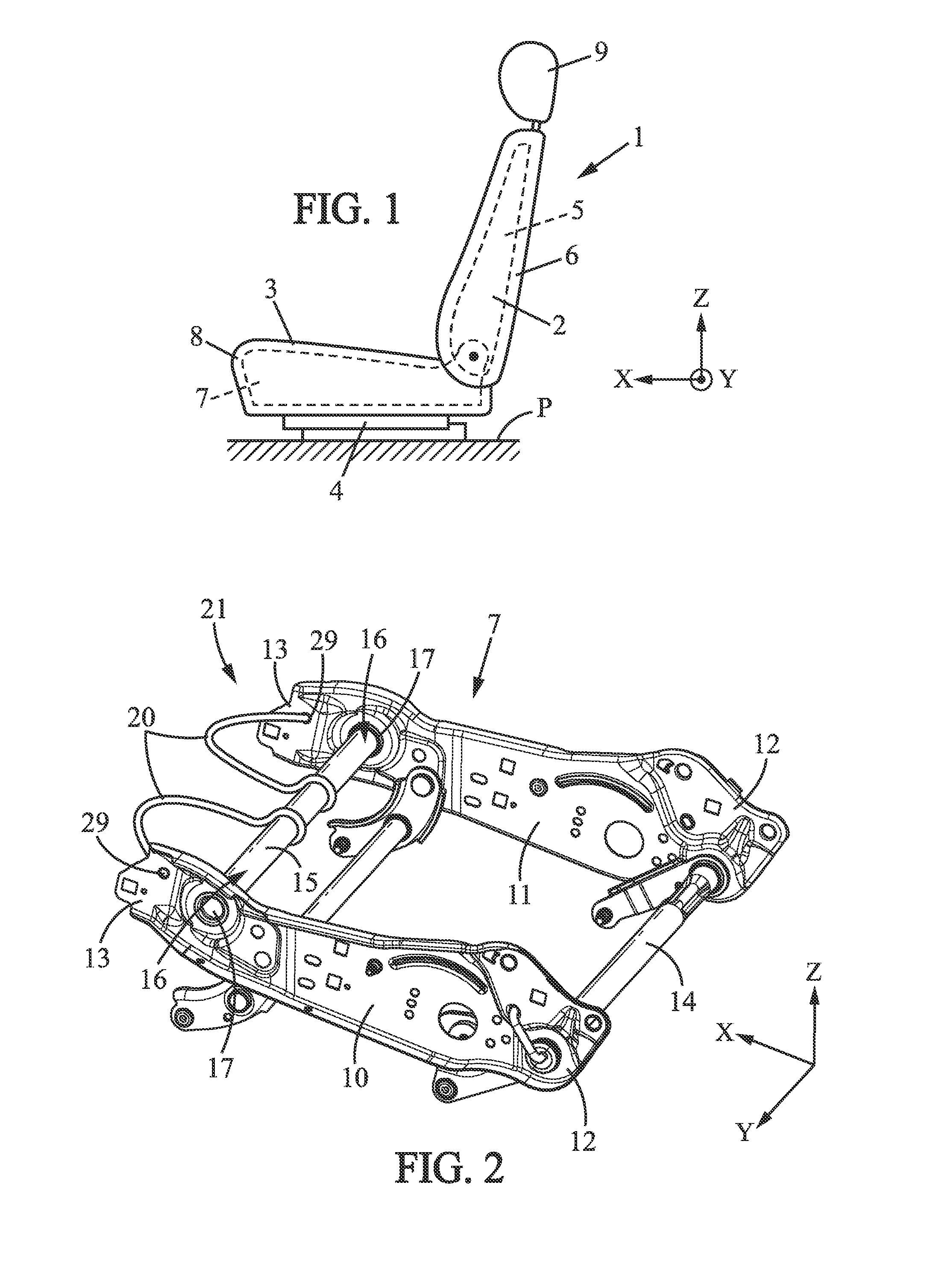

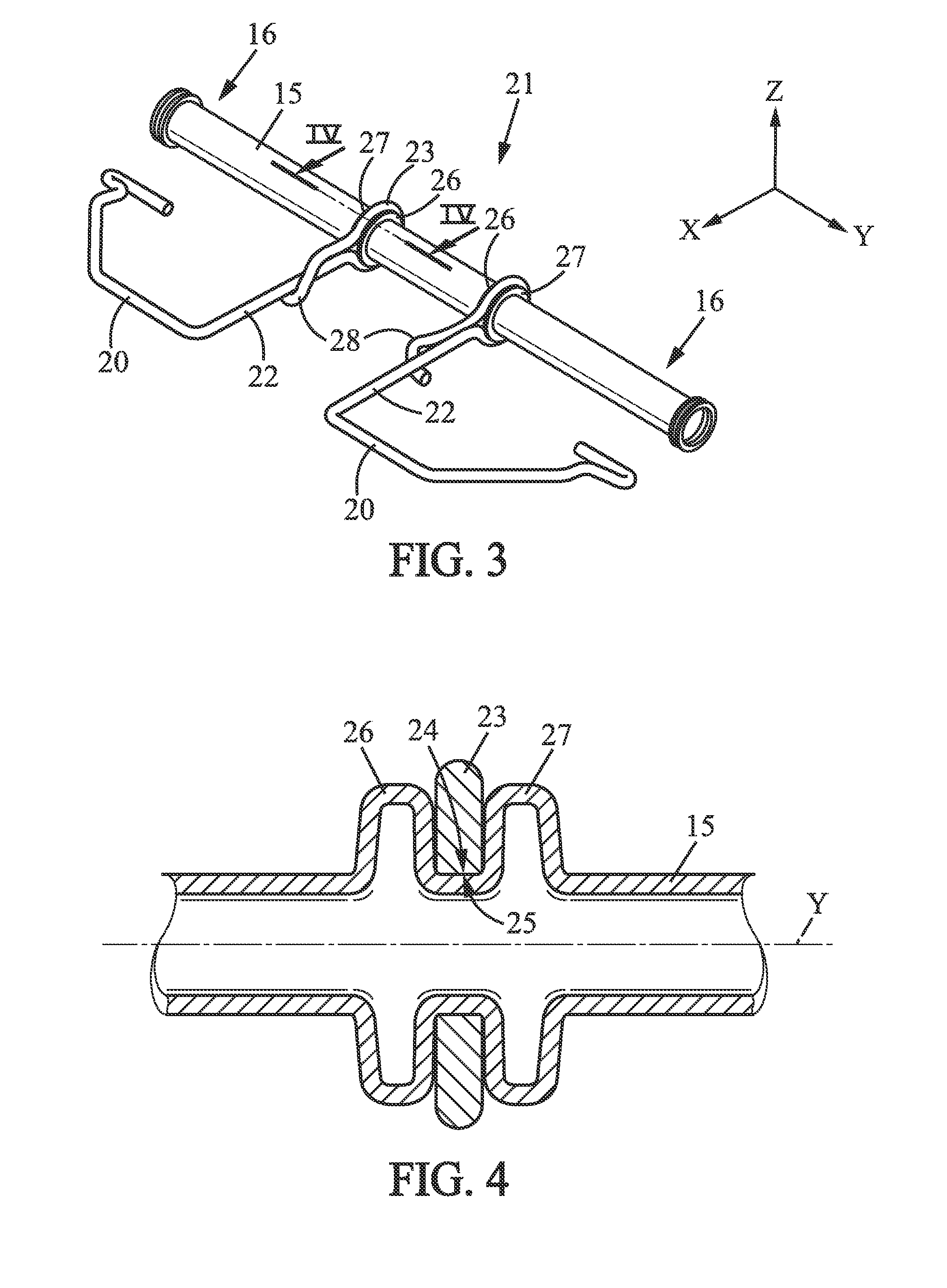

Assembly for a seat frame, seat frame and method for manufacturing such an assembly

The present invention relates to an assembly (21), particularly for a seat frame (7), comprising a structural tube (15) and at least one frame wire (20) mounted on the structural tube (15). The frame wire is mounted on the structural tube (15) by metal forming without the addition of external material. The present invention also relates to a seat frame (7), particularly for a backrest or a seat portion of a vehicle seat, comprising a left side flank (10) and/or a right side flank (11). The seat frame comprises at least one structural tube (15) mounted to the left side flank (10) and/or to the right side flank (11) by metal forming without the addition of external material.

Owner: FAURECIA

High Brightness Solid State Illumination System for Fluorescence Imaging and Analysis

Active | US20190121146A1 | Overcome disadvantage; Mitigate disadvantage; Semiconductor laser optical device; Microscopes; Fluorescent imaging; Lighting system

An illumination system includes a phosphor to emit light in a wavelength band ΔλPHOSPHOR, a second light source to emit light at a second wavelength λ2 within an absorption band of the phosphor, a third light source to emit light at a third wavelength λ3 and a fourth light source to emit light at a fourth wavelength λ4. A controller drives the second, third and fourth light sources. A first dichroic optical element: 1) directs light from the phosphor to an optical output of the system, 2) directs light from the third light source to the optical output, and 3) directs light from the fourth light source to the optical output. A second dichroic optical element: 1) directs light from the third light source to the first dichroic optical element, and 2) directs the light from the fourth light source to the first dichroic optical element.

Owner: EXCELITAS CANADA

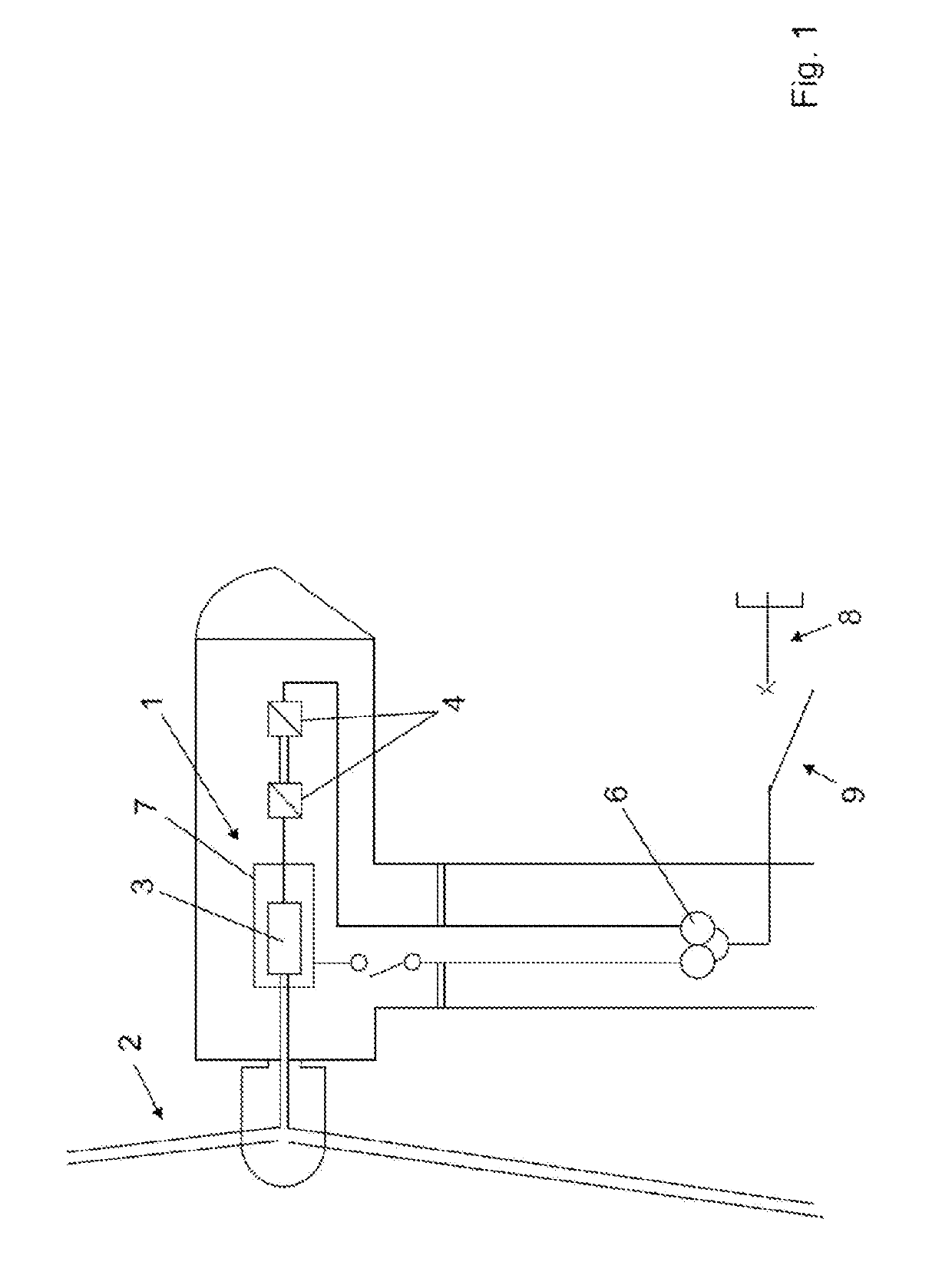

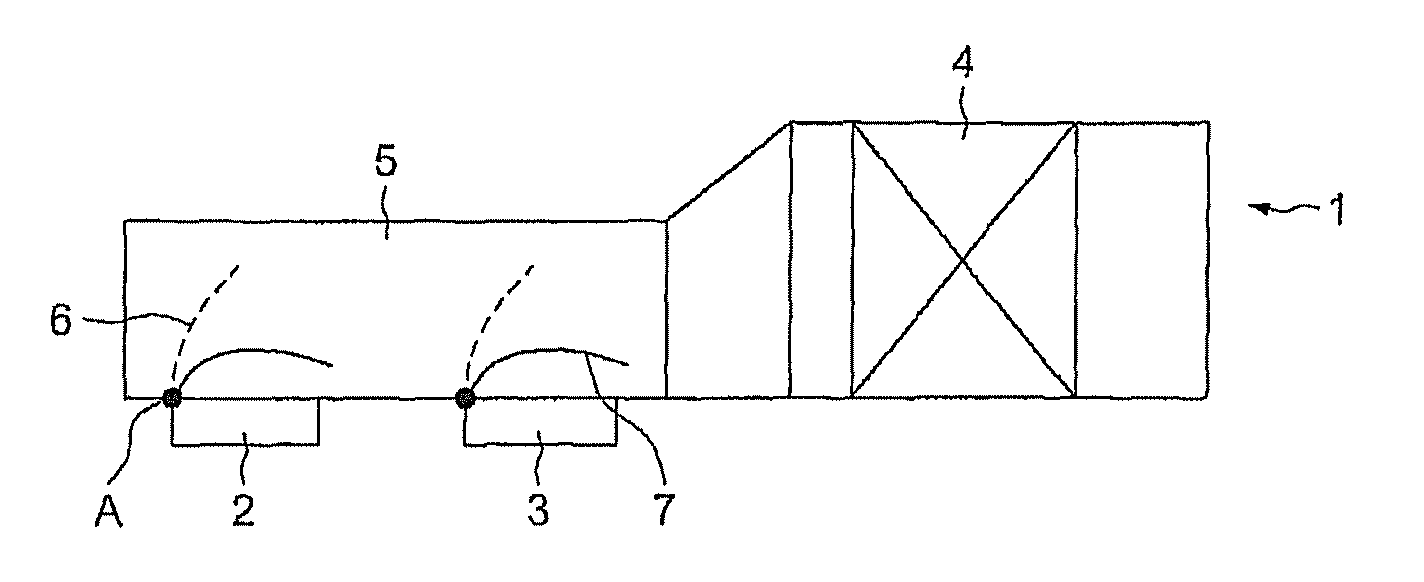

System and method for ventilating a turbine

Inactive | US9109810B2 | Preserving quiet operation; Mitigate disadvantage; Turbine/propulsion engine cooling; Check valves; Gas turbines; Engineering

This ventilation system (1), notably for a gas turbine, comprises at least one fan (2) opening into an air extraction duct (5). It comprises at least one device (6) for varying the pressure drop in the air extraction duct (5), the device comprising means (8) for regulating the air flow rate in the air extraction duct (5).

Owner: GE ENERGY PRODS FRANCE

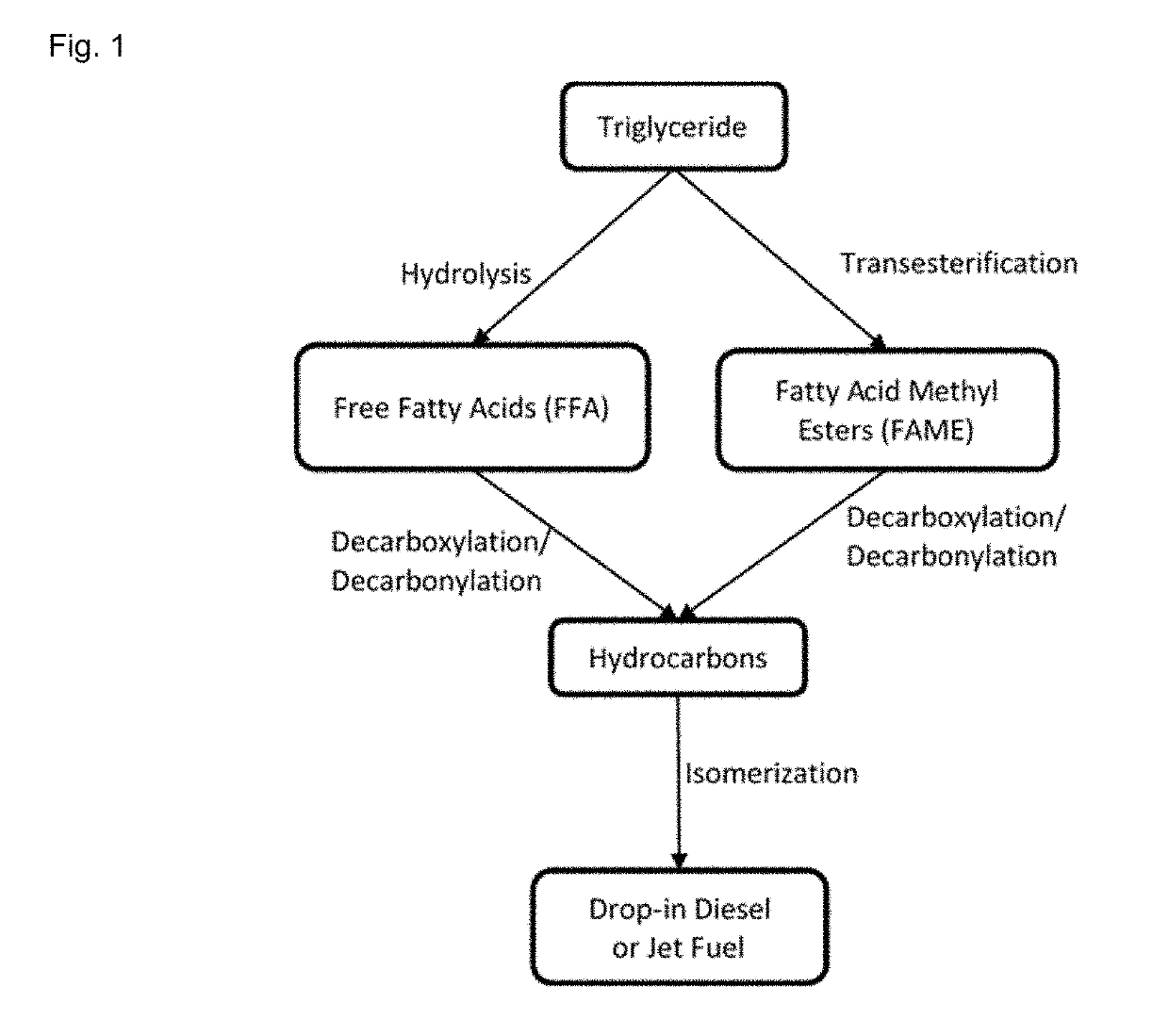

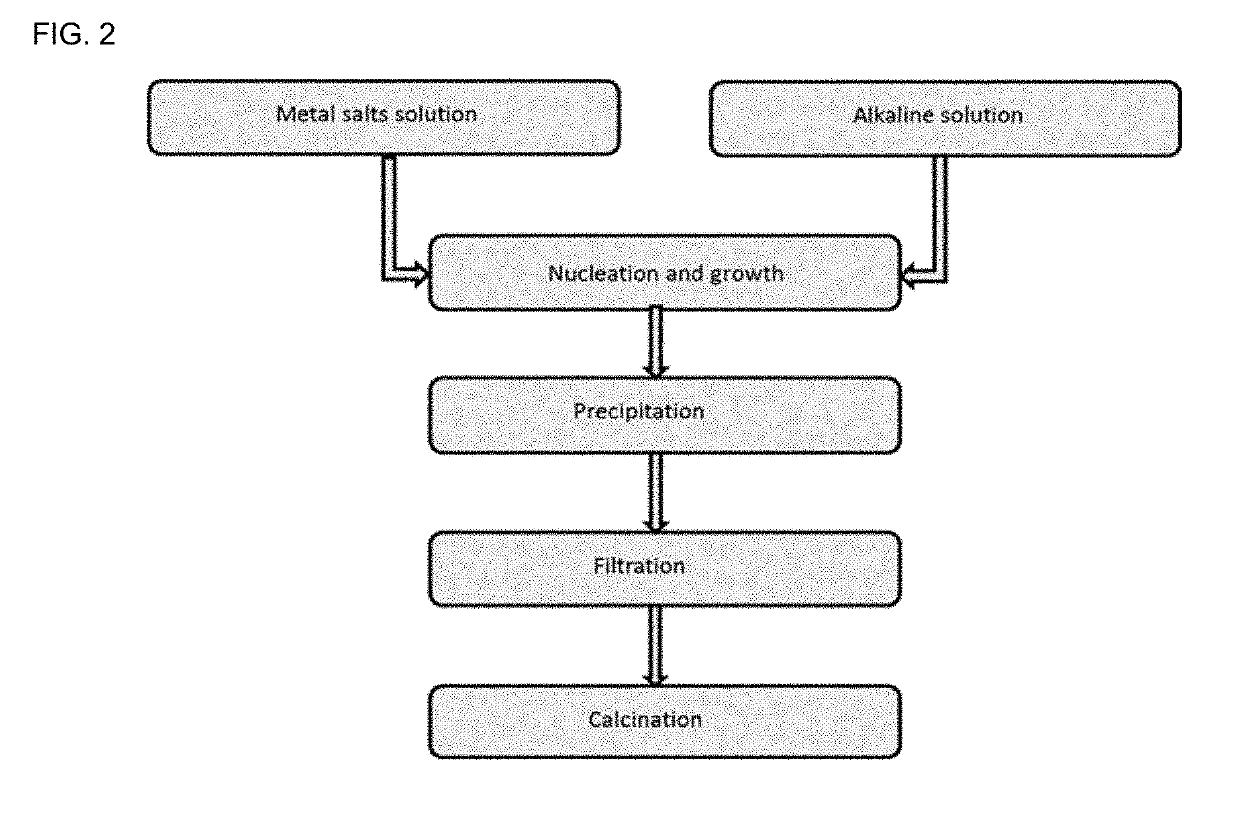

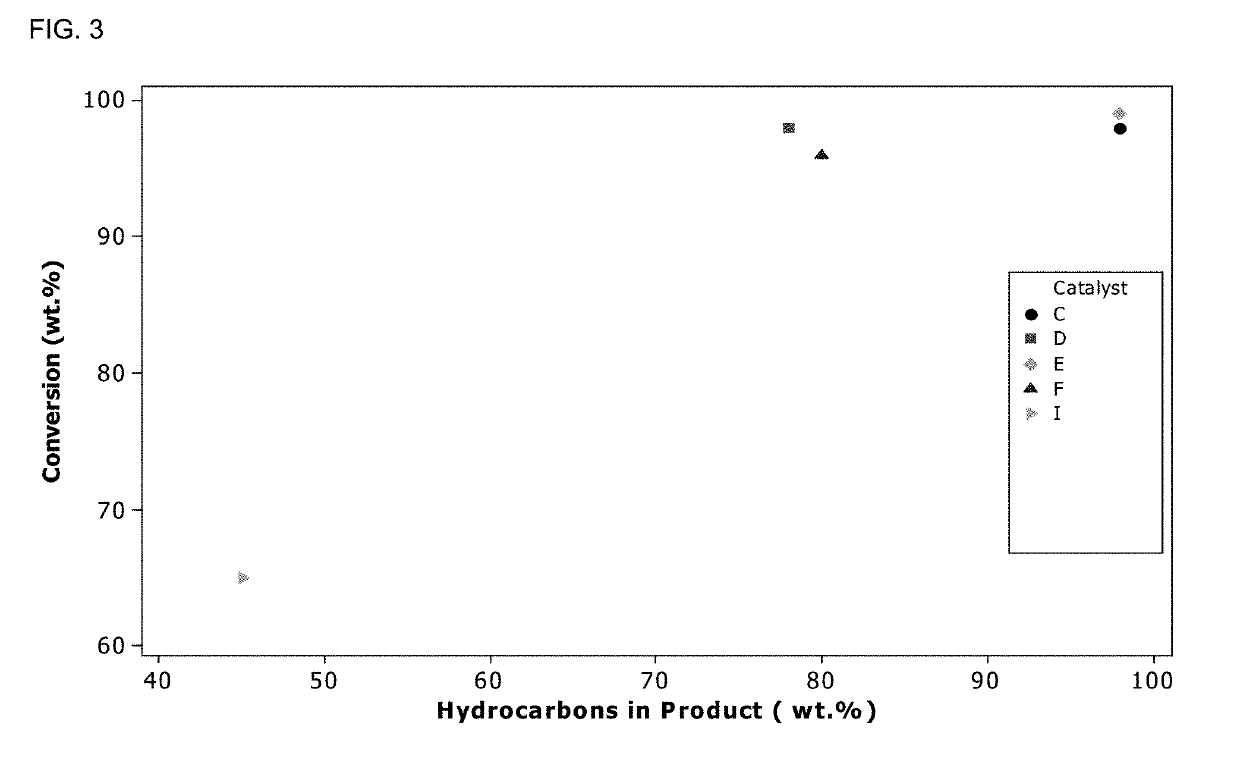

Process for the production of hydrocarbon biofuels

Inactive | US20190136140A1 | Mitigate disadvantage; Low cost; Heterogeneous catalyst chemical elements; Biofuels; Deoxygenation; Organic compound

A method of deoxygenating a feedstock comprising at least one oxygenated organic compound to form a hydrocarbon product is disclosed. The method comprises contacting the feedstock with a catalyst under conditions that promote deoxygenation of the at least one oxygenated compound, wherein the catalyst comprises a mixed metal oxide of the empirical formula (M2)y(M1)O–ZnO–(Al2O3)x. The invention is useful in the production of renewable fuels, such as renewable diesel and jet fuel.

Owner: SASKATCHEWAN RESEARCH COUNCIL

© 2025 PatSnap. All rights reserved.