1557 patent results for "RAID processing unit"

A RAID processing unit (RPU) is an integrated circuit that performs specialized calculations in a RAID host adapter. XOR calculations, for example, are necessary for calculating parity data, and for maintaining data integrity when writing to a disk array that uses a parity drive or data striping. An RPU may perform these calculations more efficiently than the computer's central processing unit (CPU).
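
The XOR parity arithmetic that an RPU offloads fits in a few lines. The following is a minimal Python sketch that assumes a RAID 4/5-style stripe of equal-length blocks. The function names are hypothetical, and the code runs on the CPU only to show the math; it does not describe how any particular RPU implements it.

```python
def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equal-length blocks: the core parity operation."""
    if not blocks or any(len(b) != len(blocks[0]) for b in blocks):
        raise ValueError("need one or more blocks of equal length")
    out = bytearray(len(blocks[0]))
    for block in blocks:
        for i, byte in enumerate(block):
            out[i] ^= byte
    return bytes(out)


def compute_parity(data_blocks: list[bytes]) -> bytes:
    # Full-stripe write: parity is the XOR of every data block in the stripe.
    return xor_blocks(data_blocks)


def rebuild_block(surviving_blocks: list[bytes], parity: bytes) -> bytes:
    # One lost block equals the XOR of the parity with all surviving data blocks.
    return xor_blocks(surviving_blocks + [parity])


def update_parity(old_parity: bytes, old_data: bytes, new_data: bytes) -> bytes:
    # Small write: new parity = old parity ^ old data ^ new data,
    # so the other data blocks in the stripe need not be read.
    return xor_blocks([old_parity, old_data, new_data])


if __name__ == "__main__":
    stripe = [b"\x01\x02", b"\x10\x20", b"\xff\x00"]
    parity = compute_parity(stripe)
    print(parity.hex())                                         # ee22
    print(rebuild_block([stripe[0], stripe[2]], parity).hex())  # 1020
```

The small-write path illustrates why offloading matters: a single block update in RAID 5 costs extra reads and XORs on every write.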

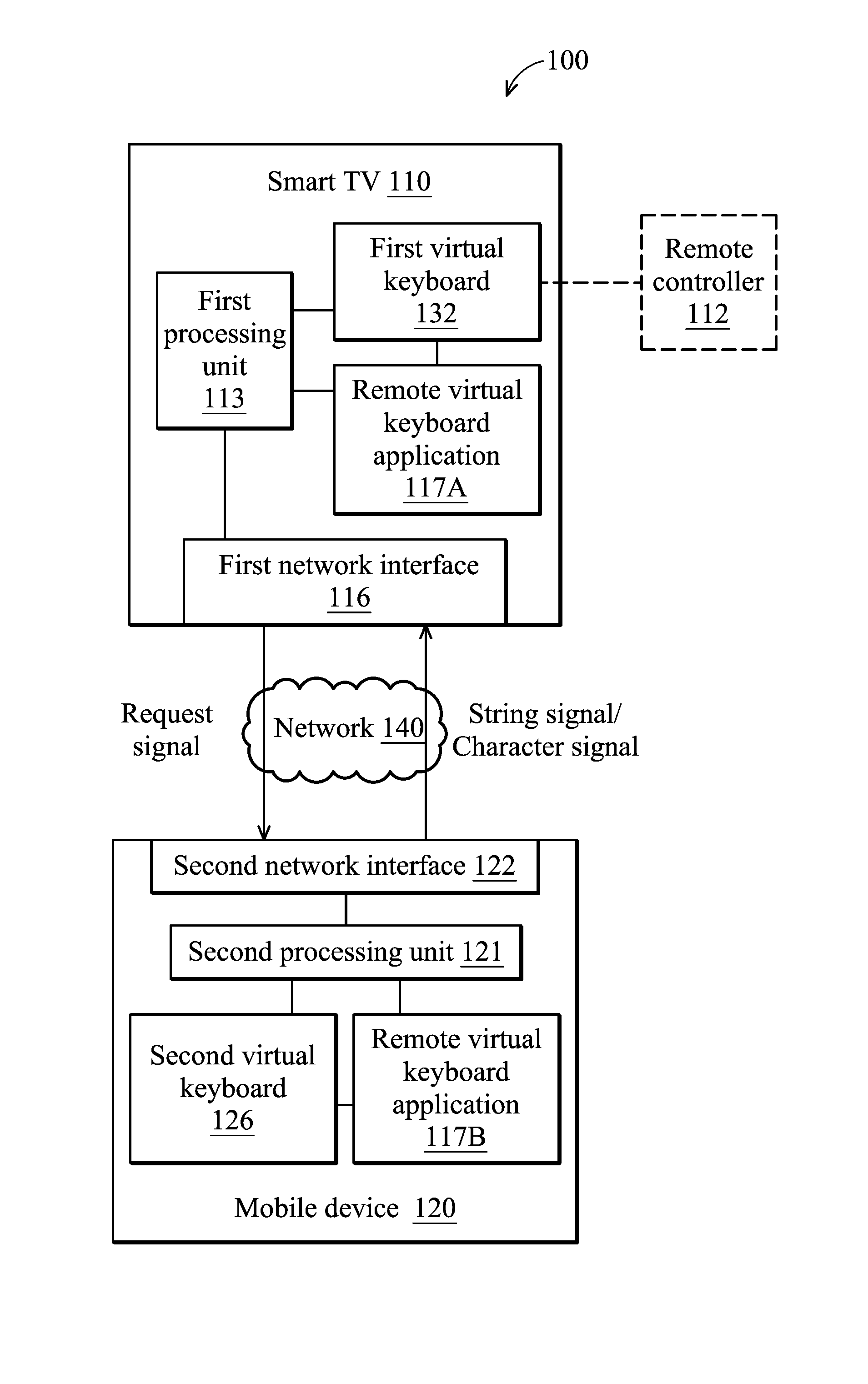

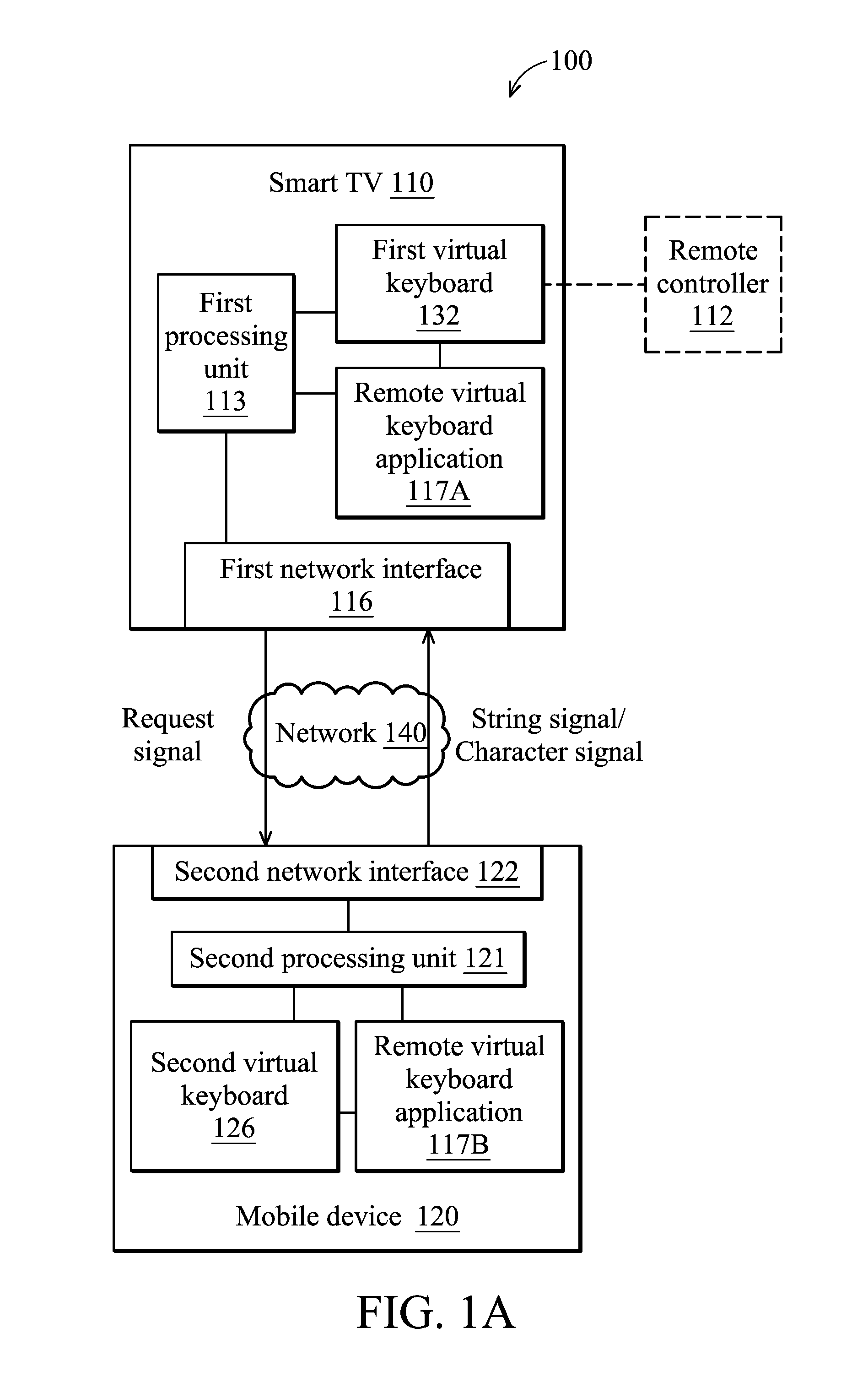

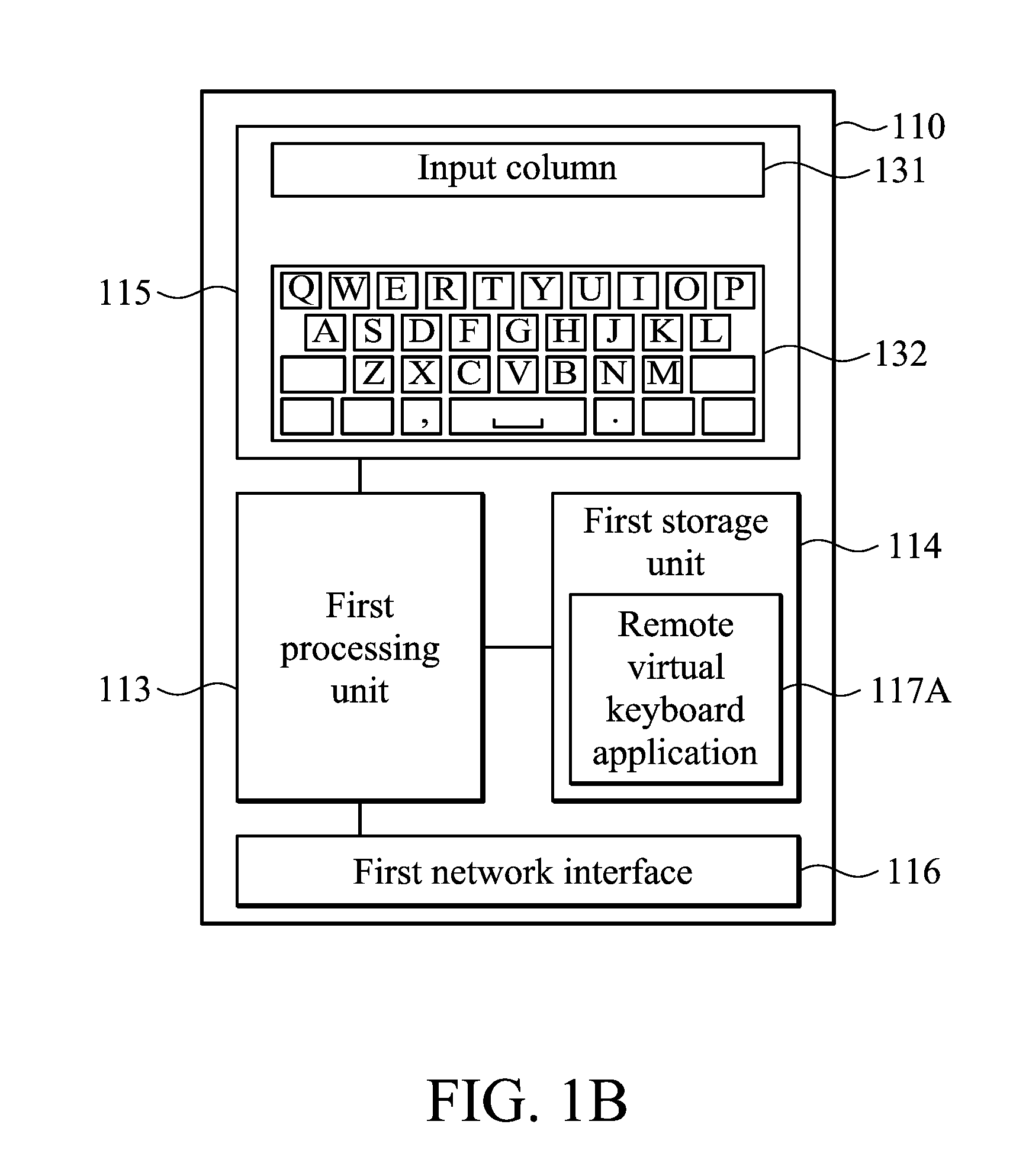

Smart TV system and input operation method

Active · US20130291015A1 · Television system details; Color television details; Television system; Application software

A smart TV is provided. The smart TV has a network interface configured to connect the smart TV with a mobile device via a network, and a processing unit configured to execute a first remote virtual keyboard application that activates a remote virtual keyboard mode of the smart TV. When the smart TV generates an input column in response to an input demand, the processing unit generates an input interface comprising a first virtual keyboard. When the remote virtual keyboard mode is activated and there is an input demand, the processing unit hides the first virtual keyboard so that it is not displayed, and the mobile device replaces the hidden first virtual keyboard for accepting input from the user.

Owner:WISTRON CORP

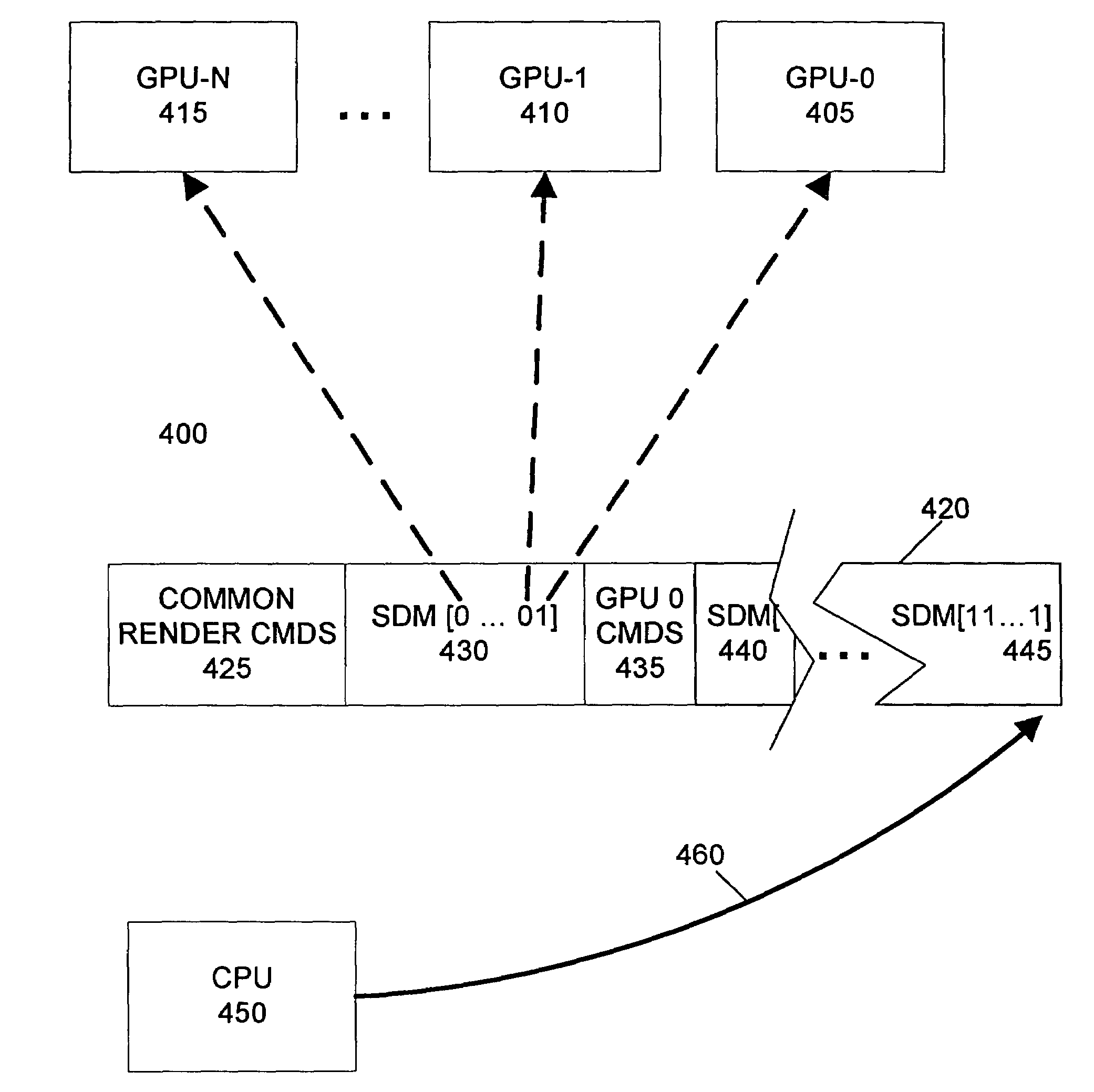

Programming multiple chips from a command buffer

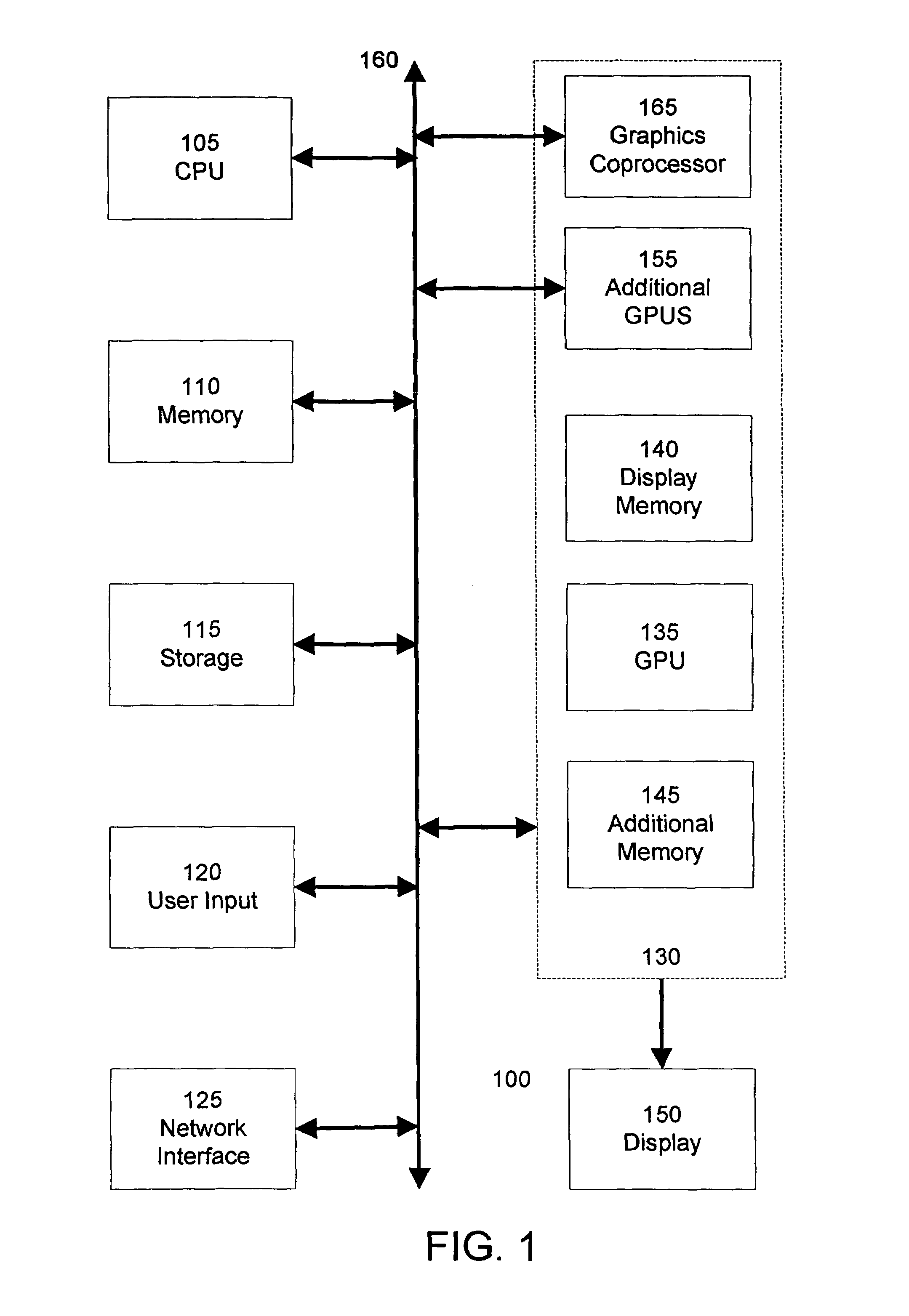

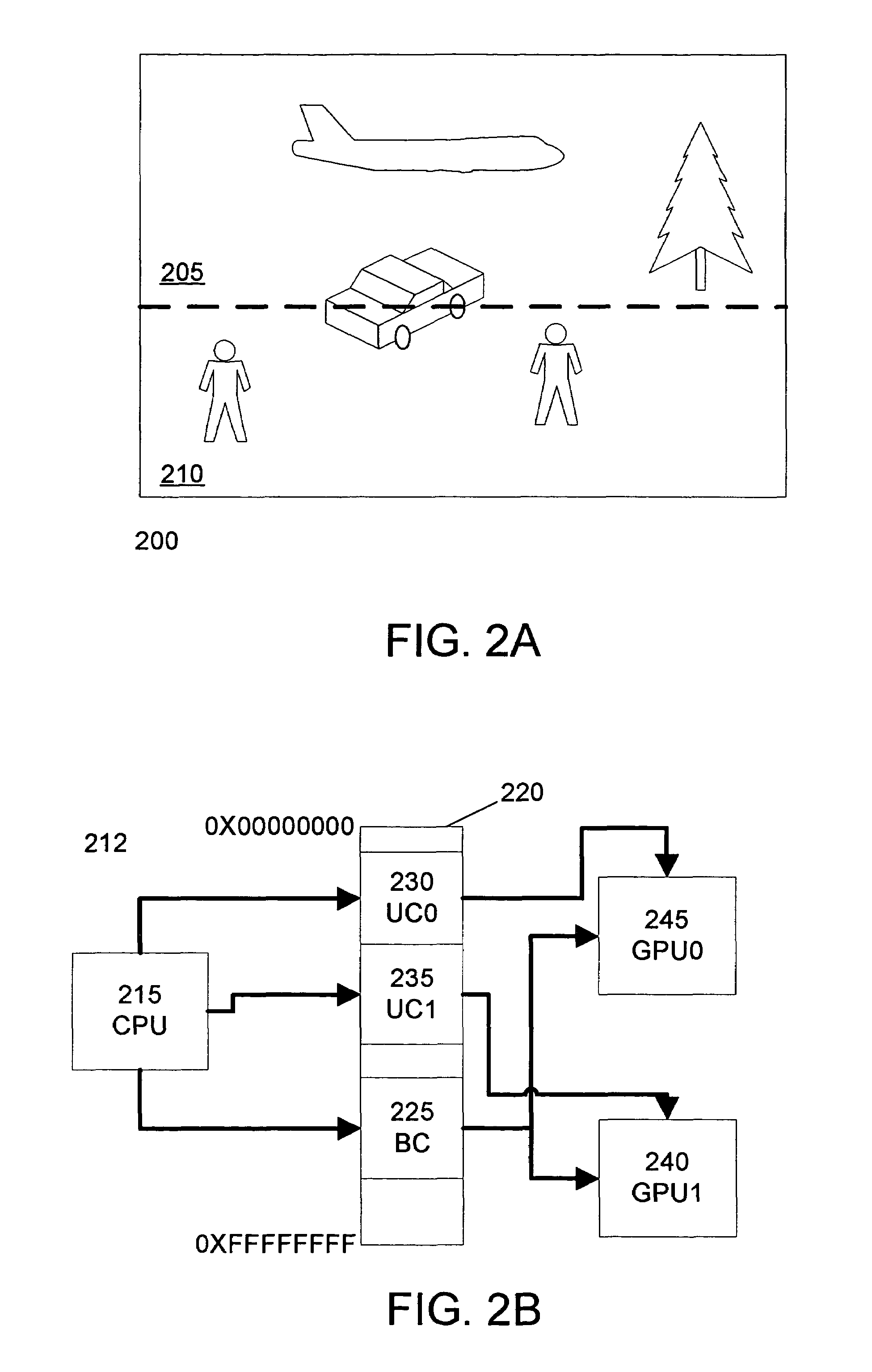

Active · US7015915B1 · Keep in sync; Single instruction multiple data multiprocessors; Processor architectures/configuration; Graphics; Coprocessor

A CPU selectively programs one or more graphics devices by writing a control command to the command buffer that designates a subset of graphics devices to execute subsequent commands. Graphics devices not designated by the control command will ignore the subsequent commands until re-enabled by the CPU. The non-designated graphics devices will continue to read from the command buffer to maintain synchronization. Subsequent control commands can designate different subsets of graphics devices to execute further subsequent commands. Graphics devices include graphics processing units and graphics coprocessors. A unique identifier is associated with each of the graphics devices. The control command designates a subset of graphics devices according to their respective unique identifiers. The control command includes a number of bits. Each bit is associated with one of the unique identifiers and designates the inclusion of one of the graphics devices in the first subset of graphics devices.

Owner:NVIDIA CORP
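
The bitmask scheme above can be modeled as a toy Python simulation. It assumes that bit *i* of the control command's mask selects the device whose unique identifier is *i*, and that every device executes commands until it sees the first mask. The class and command names are hypothetical. Every device advances its own read pointer over every command, so the devices stay synchronized, and only the designated ones act on non-control commands.

```python
from dataclasses import dataclass, field


@dataclass
class SetDeviceMask:
    """Control command: bit i set means the device with unique ID i executes
    the commands that follow, until the next SetDeviceMask."""
    mask: int


@dataclass
class Command:
    name: str  # stand-in for an ordinary rendering command


@dataclass
class GraphicsDevice:
    device_id: int
    enabled: bool = True  # assumption: every device executes until the first mask
    read_ptr: int = 0
    executed: list = field(default_factory=list)

    def consume(self, buffer: list) -> None:
        while self.read_ptr < len(buffer):
            cmd = buffer[self.read_ptr]
            self.read_ptr += 1  # every device advances, so all stay in sync
            if isinstance(cmd, SetDeviceMask):
                self.enabled = bool((cmd.mask >> self.device_id) & 1)
            elif self.enabled:
                self.executed.append(cmd.name)
            # otherwise the command is read but ignored by this device


if __name__ == "__main__":
    buffer = [
        Command("clear"),
        SetDeviceMask(0b01), Command("draw_top_half"),
        SetDeviceMask(0b10), Command("draw_bottom_half"),
        SetDeviceMask(0b11), Command("present"),
    ]
    gpus = [GraphicsDevice(0), GraphicsDevice(1)]
    for gpu in gpus:
        gpu.consume(buffer)
        print(gpu.device_id, gpu.executed)
```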

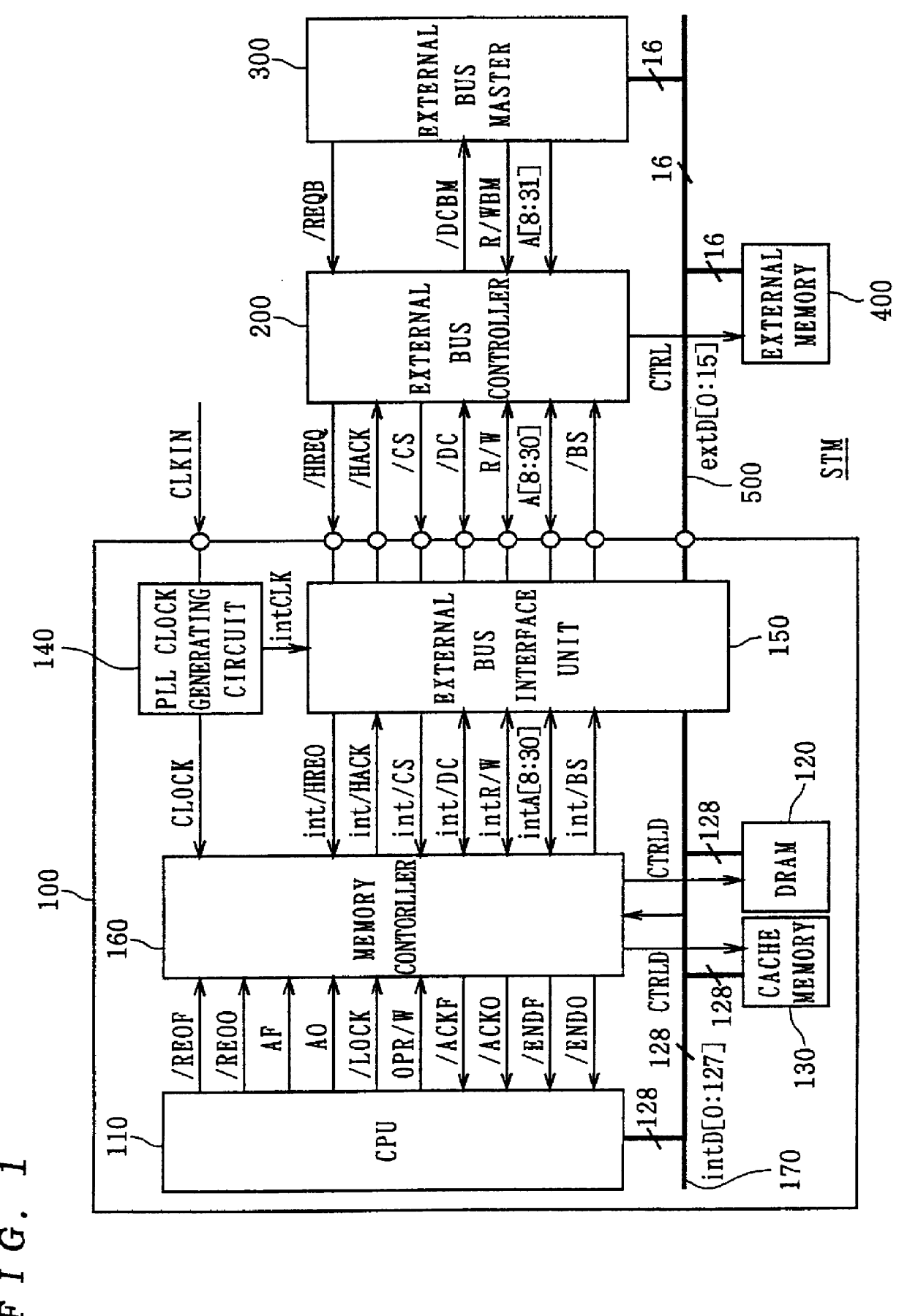

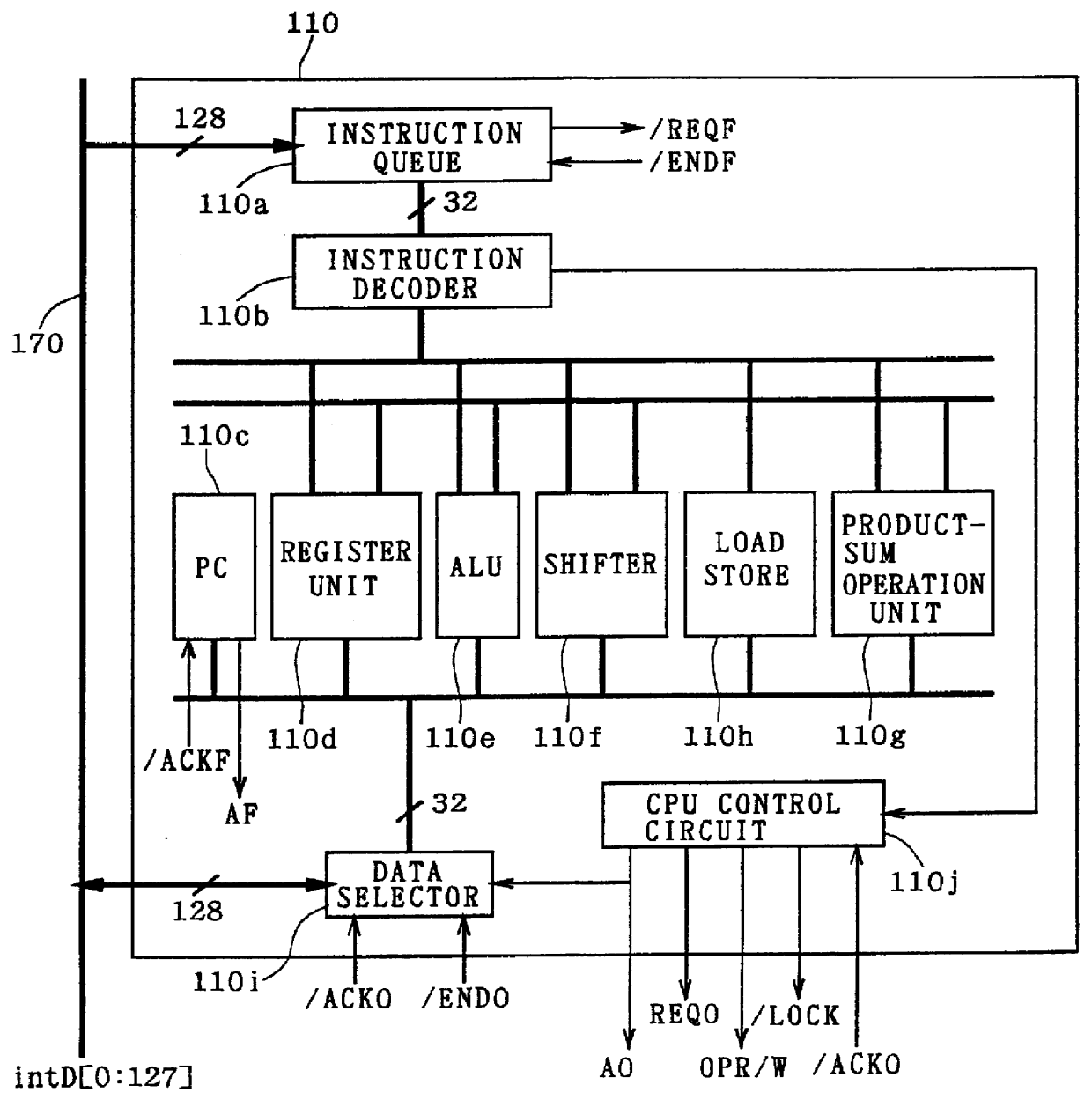

Computer system and semiconductor device on one chip including a memory and central processing unit for making interlock access to the memory

Inactive · US6101584A · Unauthorized memory use protection; Multiple digital computer combinations; Chip select; Computerized system

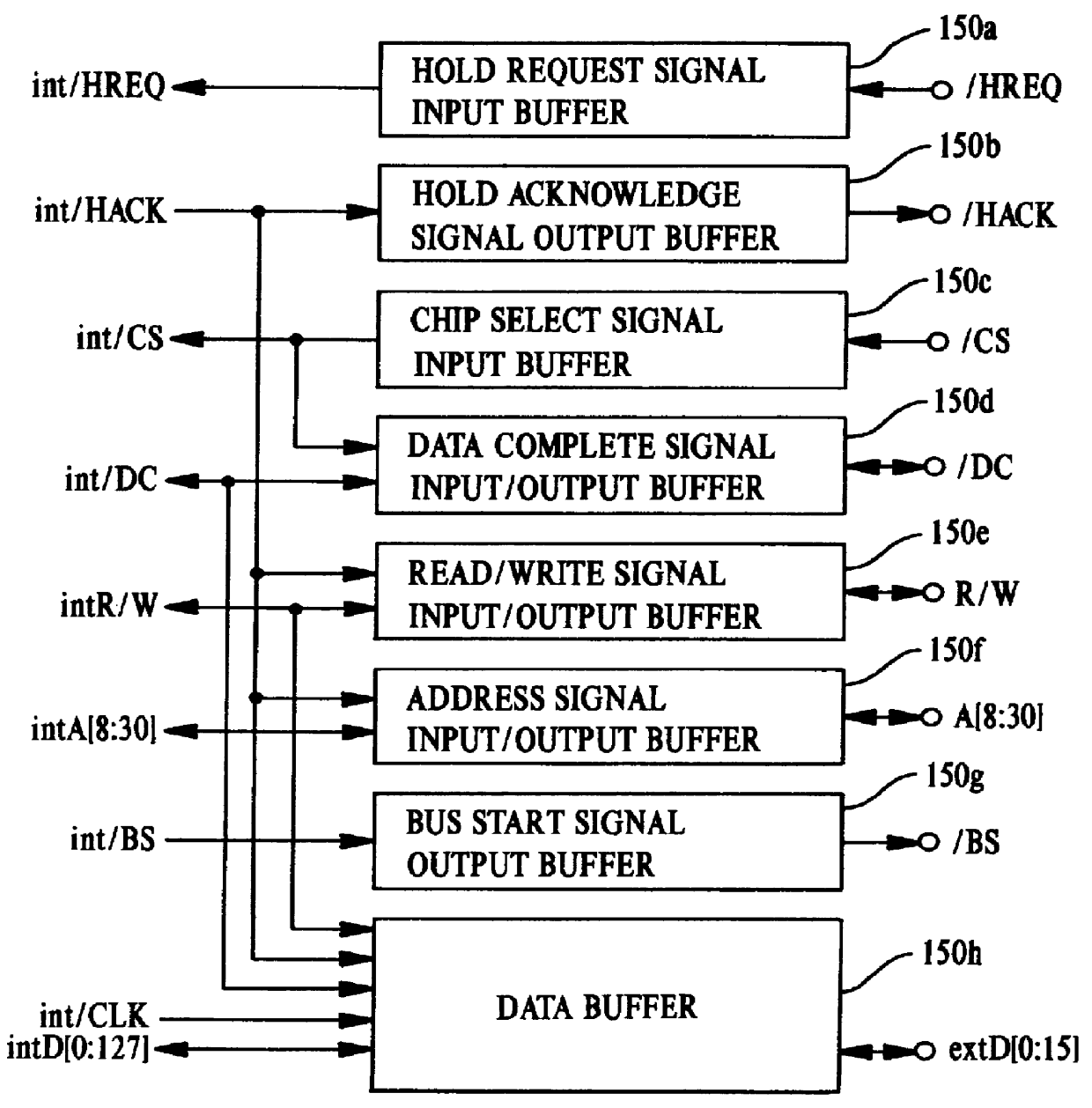

A central processing unit (CPU) with a built-in dynamic random-access memory (DRAM) has exclusive access to the DRAM while it performs an interlock access to the DRAM. A memory controller prevents the DRAM from being externally accessed while the CPU is performing the interlock access. When the memory controller receives an external request to access the DRAM while the CPU is performing an interlock access, it outputs a response signal indicating that external access to the DRAM is excluded or inhibited. The request signal can be a hold request signal for requesting bus rights, or a chip select signal. The response signal can be a hold acknowledge signal or a data complete signal. The memory controller can be switched between first and second lock modes: hold request and hold acknowledge signals are used in the first lock mode, and chip select and data complete signals are used in the second lock mode.

Owner:MITSUBISHI ELECTRIC CORP

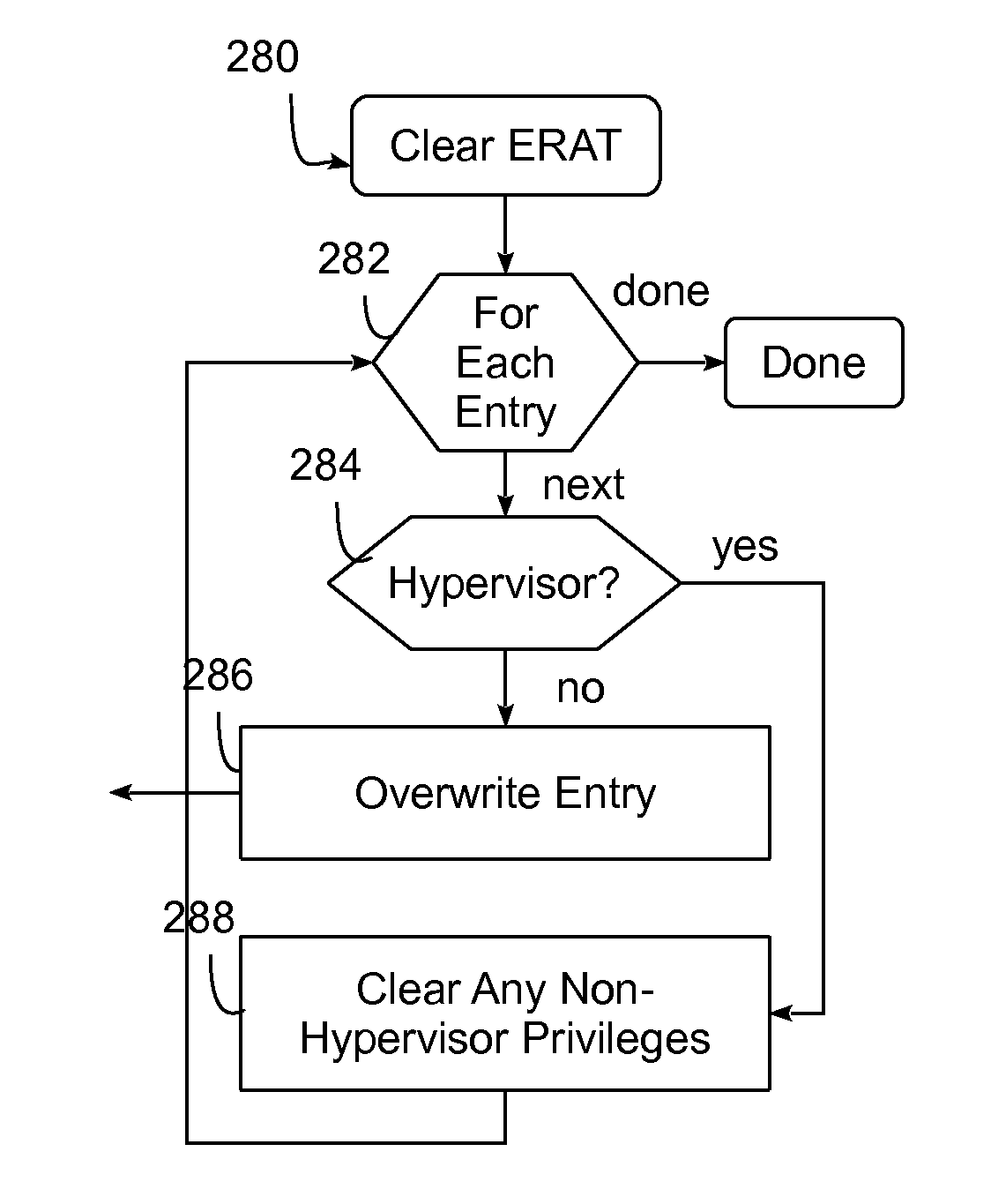

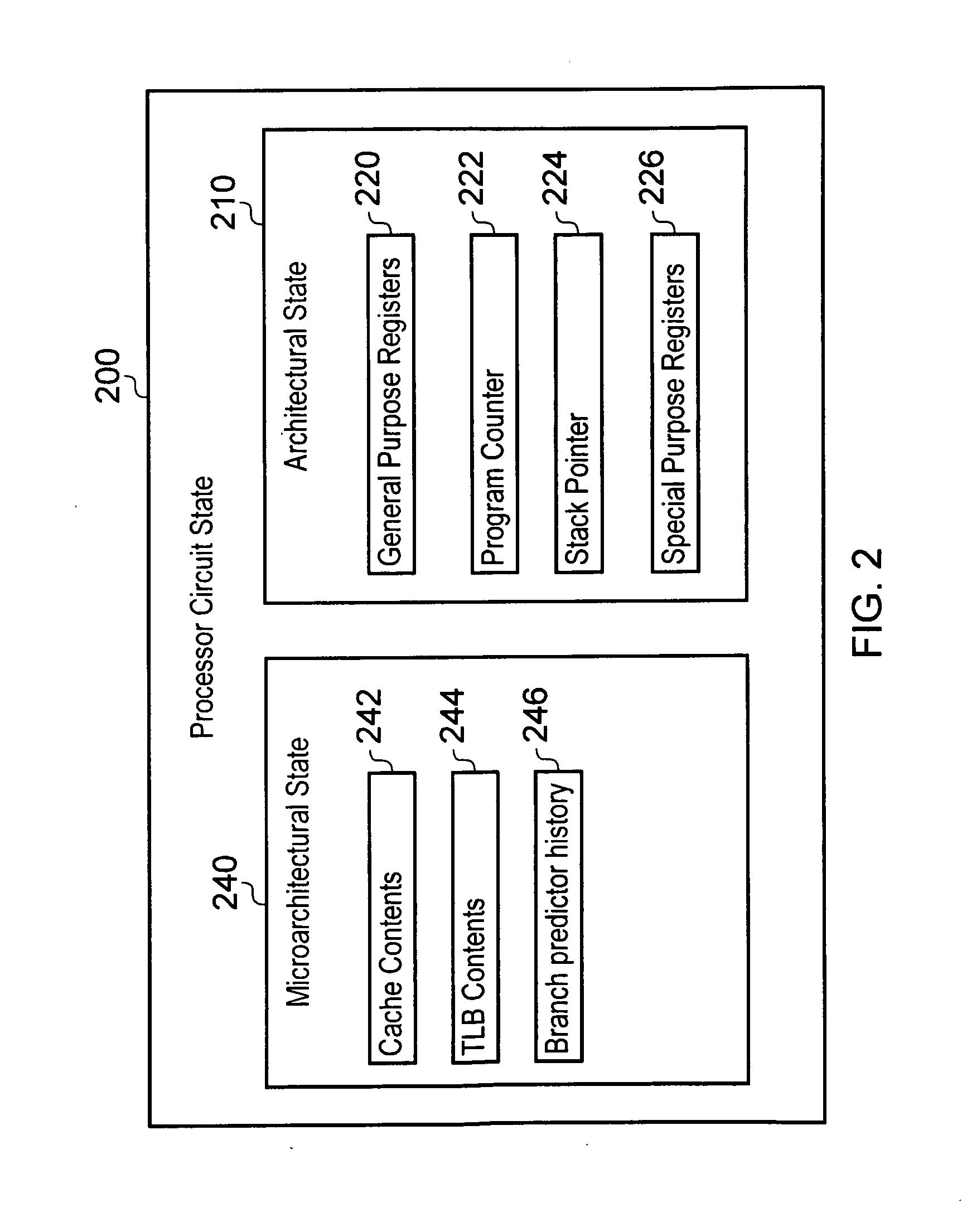

Instruction set architecture with secure clear instructions for protecting processing unit architected state information

Inactive · US20140230077A1 · Digital data processing details; Analogue secrecy/subscription systems; Computer hardware; Supervisory program

A method and circuit arrangement utilize secure clear instructions defined in an instruction set architecture (ISA) for a processing unit to clear, overwrite or otherwise restrict unauthorized access to the internal architected state of the processing unit in association with context switch operations. The secure clear instructions are executable by a hypervisor, operating system, or other supervisory program code in connection with a context switch operation, and the processing unit includes security logic that is responsive to such instructions to restrict access by an operating system or process associated with an incoming context to architected state information associated with an operating system or process associated with an outgoing context.

Owner:IBM CORP

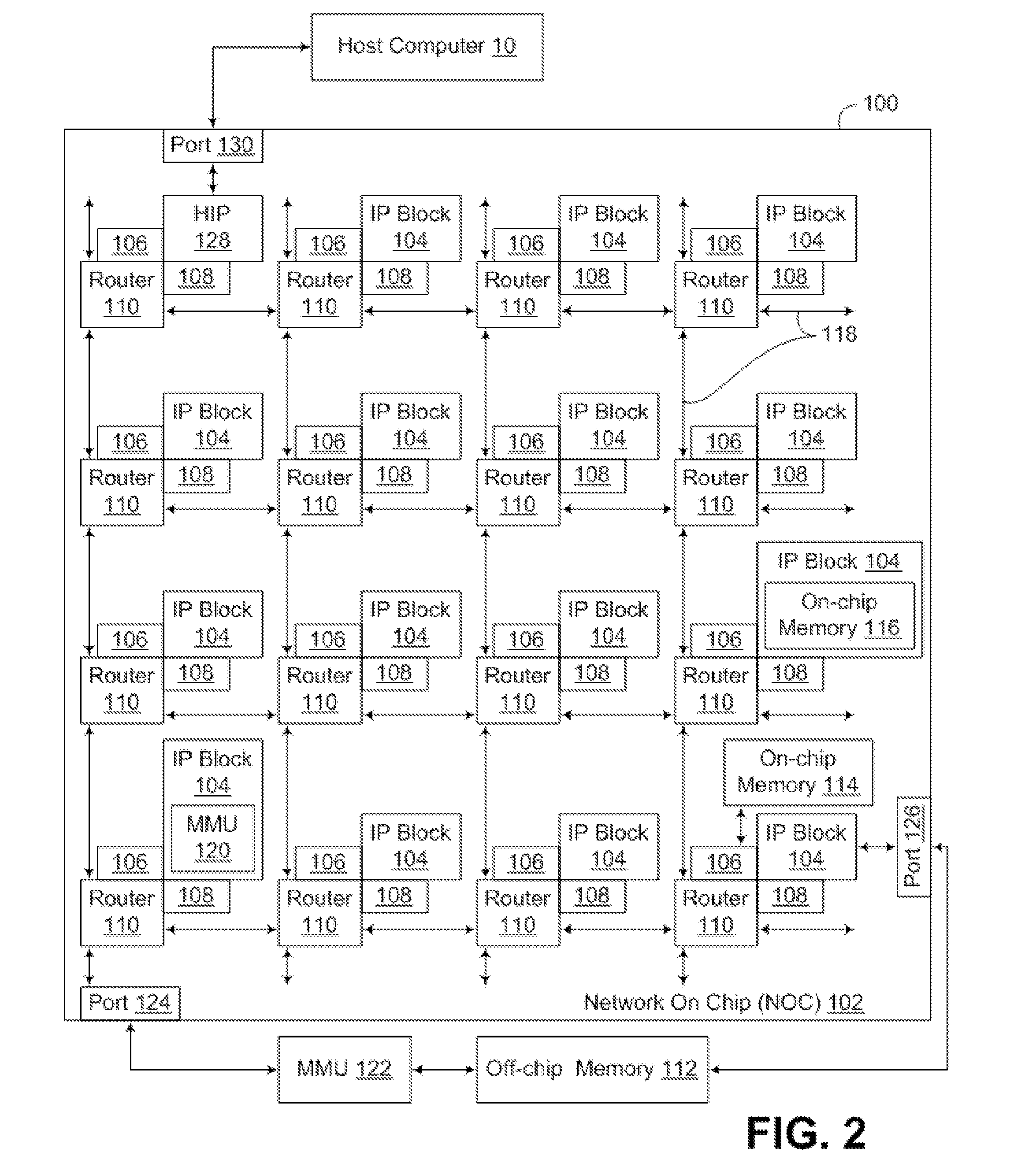

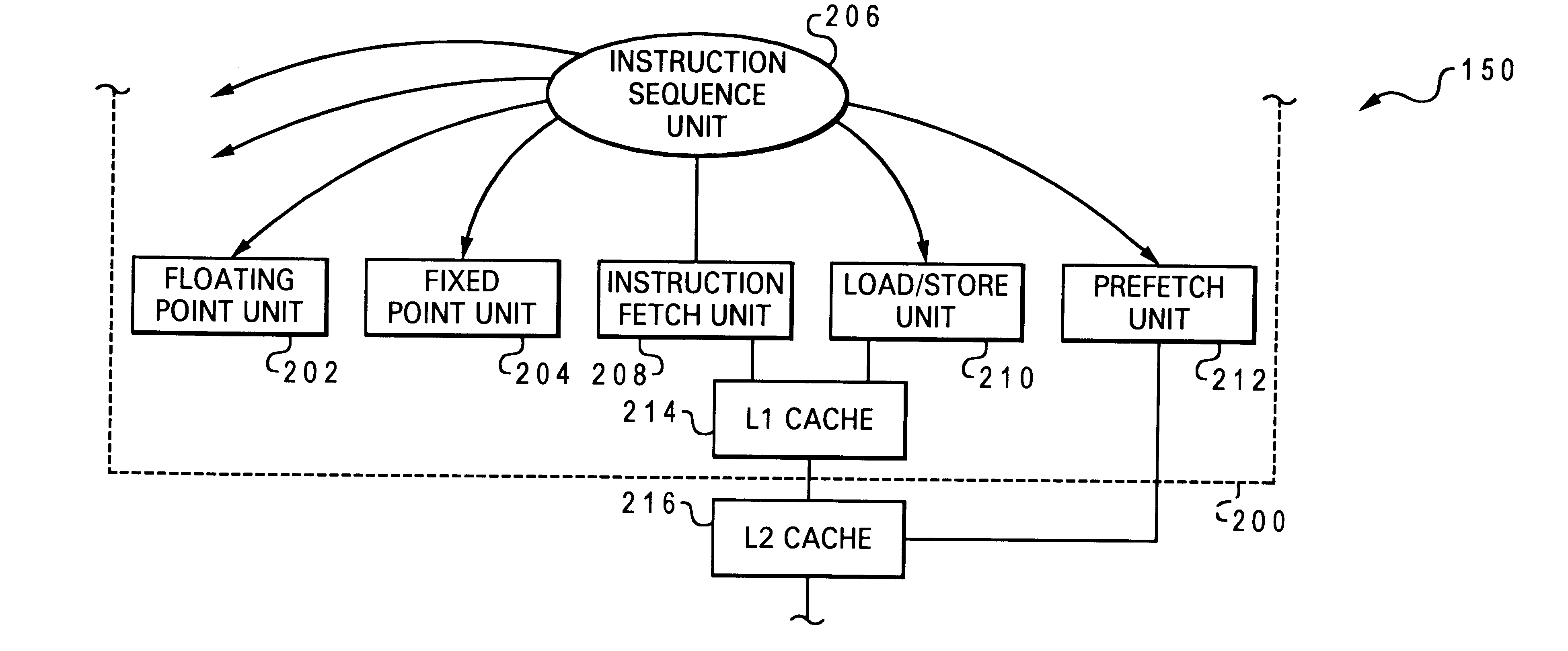

Optimized cache allocation algorithm for multiple speculative requests

A method of operating a computer system is disclosed in which an instruction having an explicit prefetch request is issued directly from an instruction sequence unit to a prefetch unit of a processing unit. In a preferred embodiment, two prefetch units are used. The first prefetch unit is hardware independent and dynamically monitors one or more active streams associated with operations carried out by a core of the processing unit. The second prefetch unit is aware of the lower-level storage subsystem and sends, with the prefetch request, an indication that a prefetch value is to be loaded into a lower-level cache of the processing unit. The invention may associate each prefetch request with a stream ID of an associated processor stream, or with a processor ID of the requesting processing unit (the latter is particularly useful for caches shared by a processing unit cluster). If another prefetch value is requested from the memory hierarchy and the cache has already reached its prefetch limit of cache usage, a cache line containing one of the earlier prefetch values is allocated to receive the new prefetch value.

Owner:IBM CORP
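
The allocation rule in the last sentence can be sketched as a small Python model of one cache. The assumptions are loud: the cache is a single LRU-ordered set, "earlier prefetch value" is taken to mean the least recently used prefetched line, and a demand hit is assumed to turn a prefetched line into a normal line. The class and method names are hypothetical. The point is that once the prefetch limit is met, a new prefetch displaces an older prefetch instead of a demand-loaded line.

```python
from collections import OrderedDict


class PrefetchLimitedCache:
    """Cache model in which prefetched lines may occupy at most `prefetch_limit` lines."""

    def __init__(self, capacity: int, prefetch_limit: int):
        self.capacity = capacity
        self.prefetch_limit = prefetch_limit
        self.lines = OrderedDict()  # address -> True if line holds a prefetch value; LRU first

    def _prefetched_lines(self) -> list:
        return [addr for addr, is_prefetch in self.lines.items() if is_prefetch]

    def demand_load(self, addr: int) -> None:
        if addr in self.lines:
            self.lines[addr] = False  # assumption: a demand hit makes it a normal line
            self.lines.move_to_end(addr)
            return
        if len(self.lines) >= self.capacity:
            self.lines.popitem(last=False)  # ordinary LRU eviction
        self.lines[addr] = False

    def prefetch(self, addr: int) -> None:
        if addr in self.lines:
            return
        earlier = self._prefetched_lines()
        if len(earlier) >= self.prefetch_limit:
            # Prefetch limit met: reuse the line holding the oldest earlier
            # prefetch value rather than evicting a demand-loaded line.
            del self.lines[earlier[0]]
        elif len(self.lines) >= self.capacity:
            self.lines.popitem(last=False)
        self.lines[addr] = True
```

For example, with `prefetch_limit=2`, a third outstanding prefetch replaces the first one, and the demand-loaded line stays resident even though the cache still has free capacity.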

Unit for processing numeric and logic operations for use in central processing units (CPUs), multiprocessor systems, data-flow processors (DSPs), systolic processors and field programmable gate arrays (FPGAs)

Inactive · US20030056085A1 · The process is convenient and fast; Simplifies (re)configuration; Energy efficient ICT; Multiple digital computer combinations; Bus mastering; Broadcasting

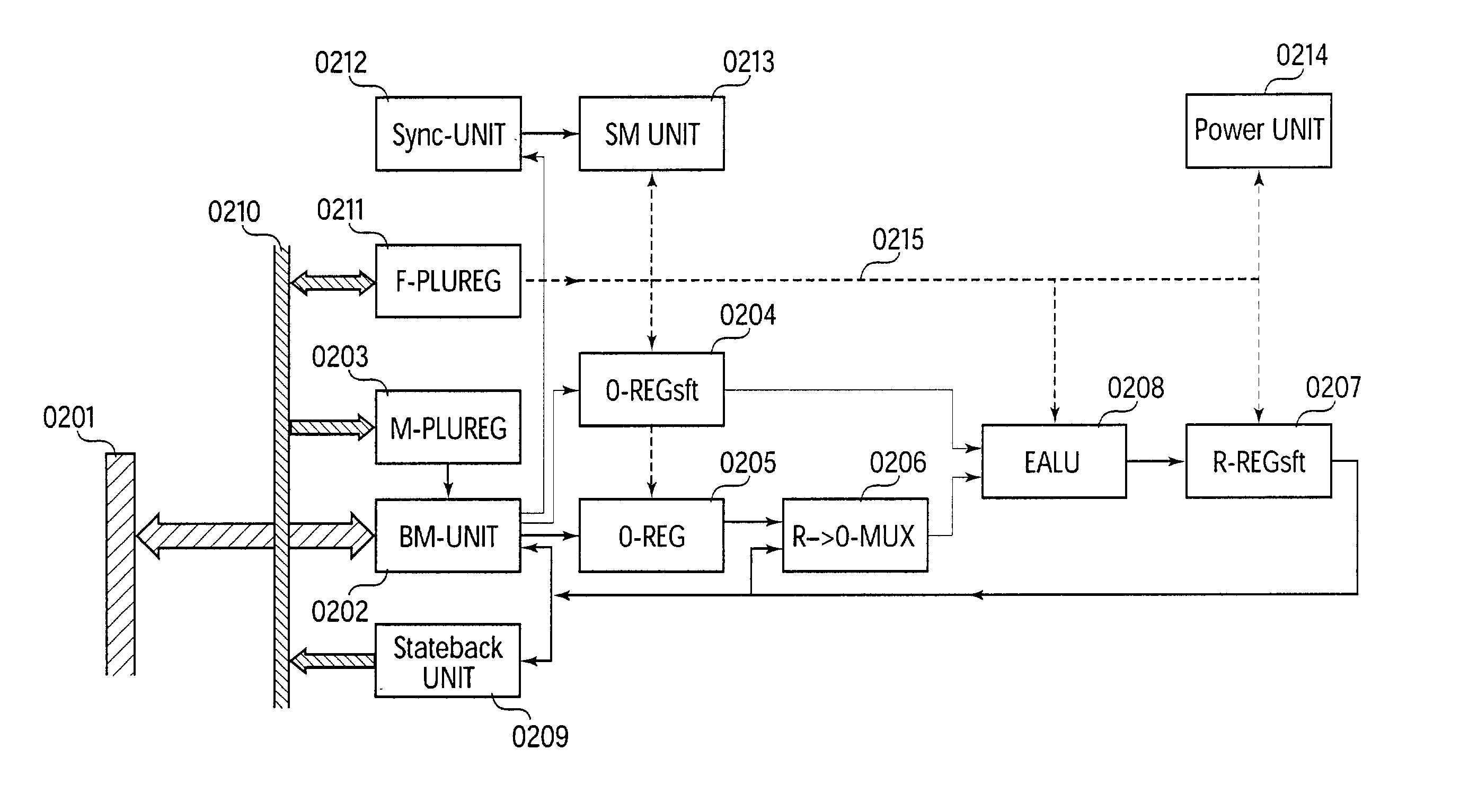

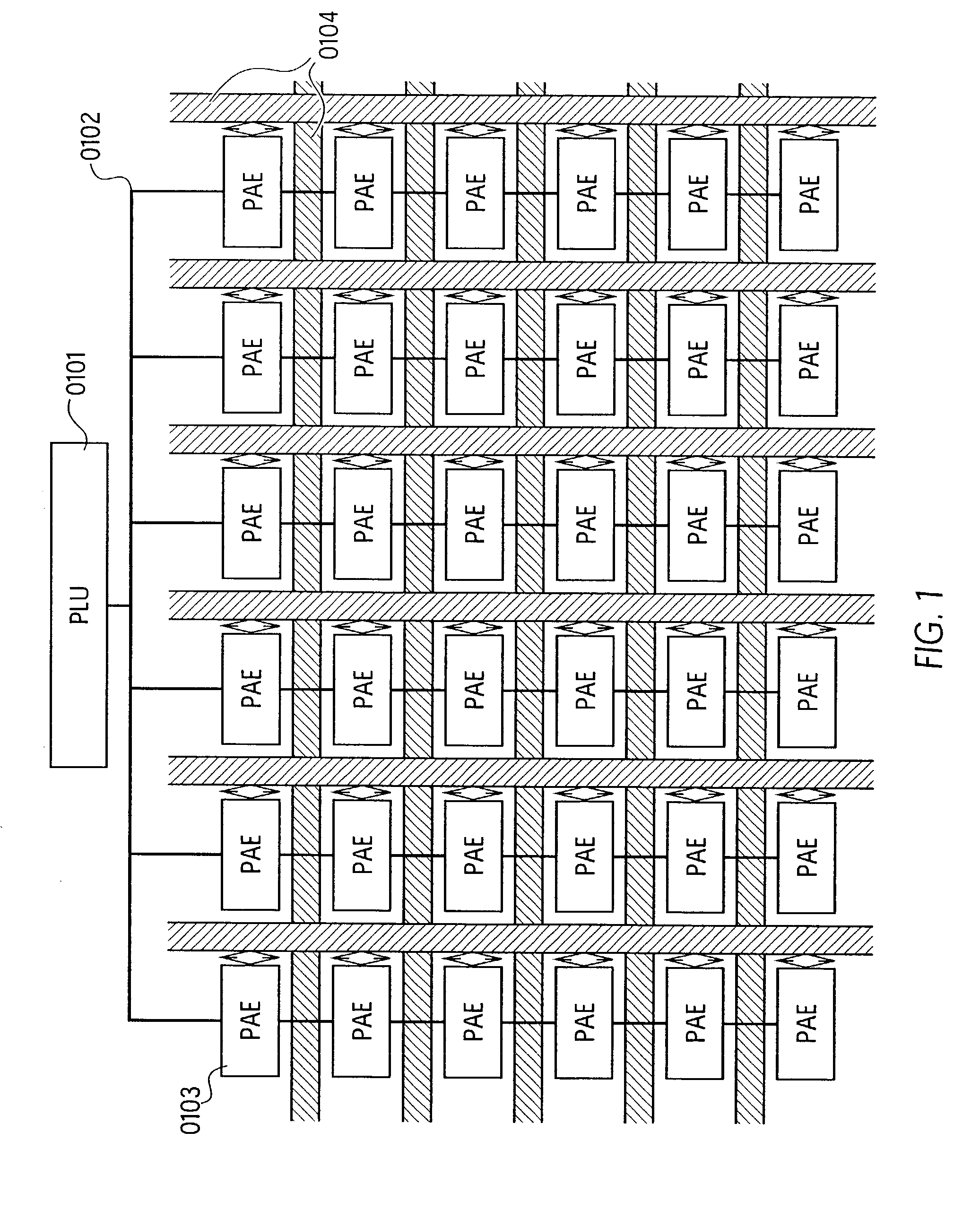

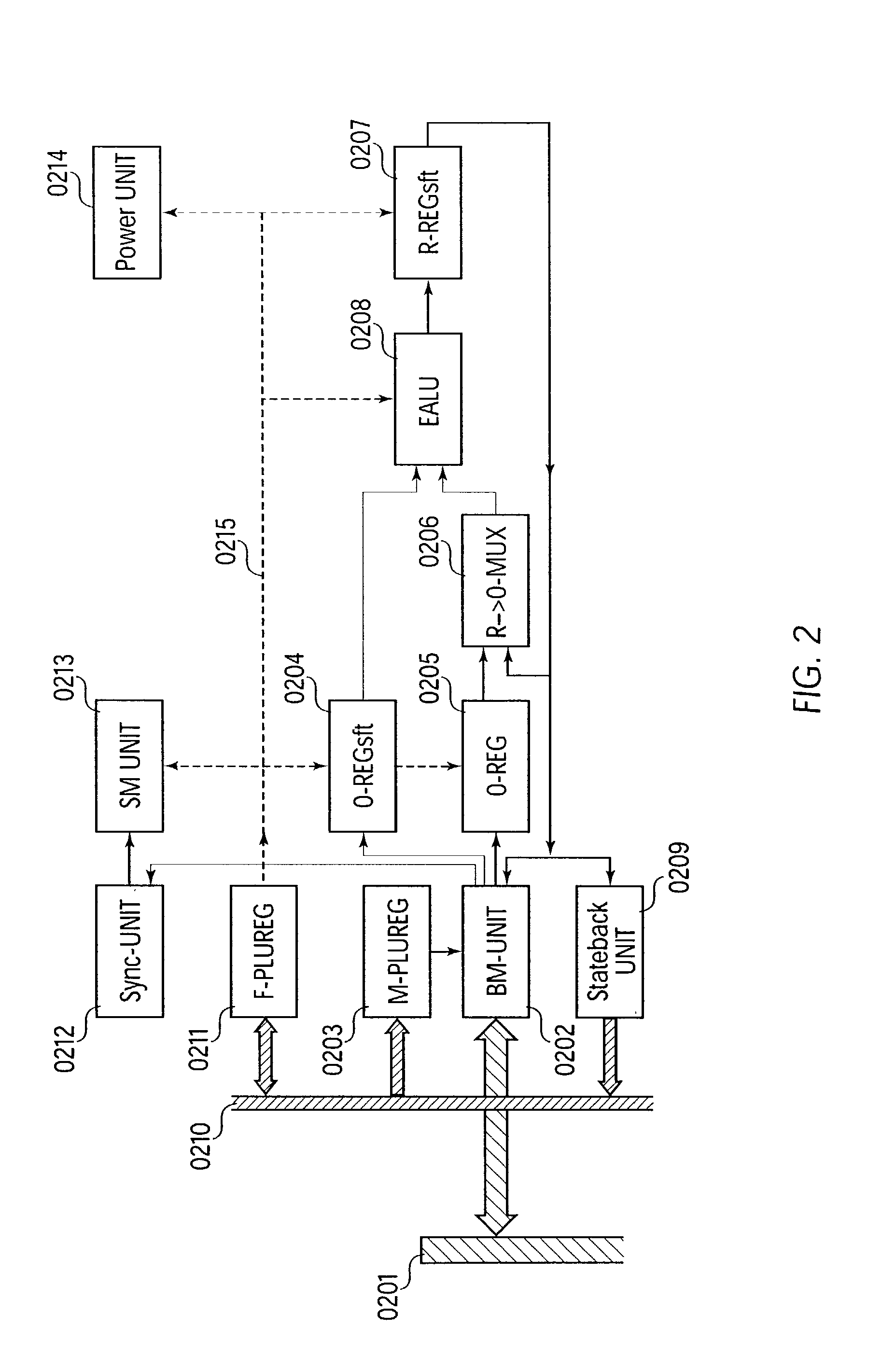

An expanded arithmetic and logic unit (EALU) with special extra functions is integrated into a configurable unit for performing data processing operations. The EALU is configured by a function register, which greatly reduces the volume of data required for configuration. The cell can be cascaded freely over a bus system, the EALU being decoupled from the bus system over input and output registers. The output registers are connected to the input of the EALU to permit serial operations. A bus control unit is responsible for the connection to the bus, which it connects according to the bus register. The unit is designed so that distribution of data to multiple receivers (broadcasting) is possible. A synchronization circuit controls the data exchange between multiple cells over the bus system. The EALU, the synchronization circuit, the bus control unit, and registers are designed so that a cell can be reconfigured on site independently of the cells surrounding it. A power-saving mode which shuts down the cell can be configured through the function register; clock rate dividers which reduce the working frequency can also be set.

Owner:PACT +1

Mobile phone distribution system

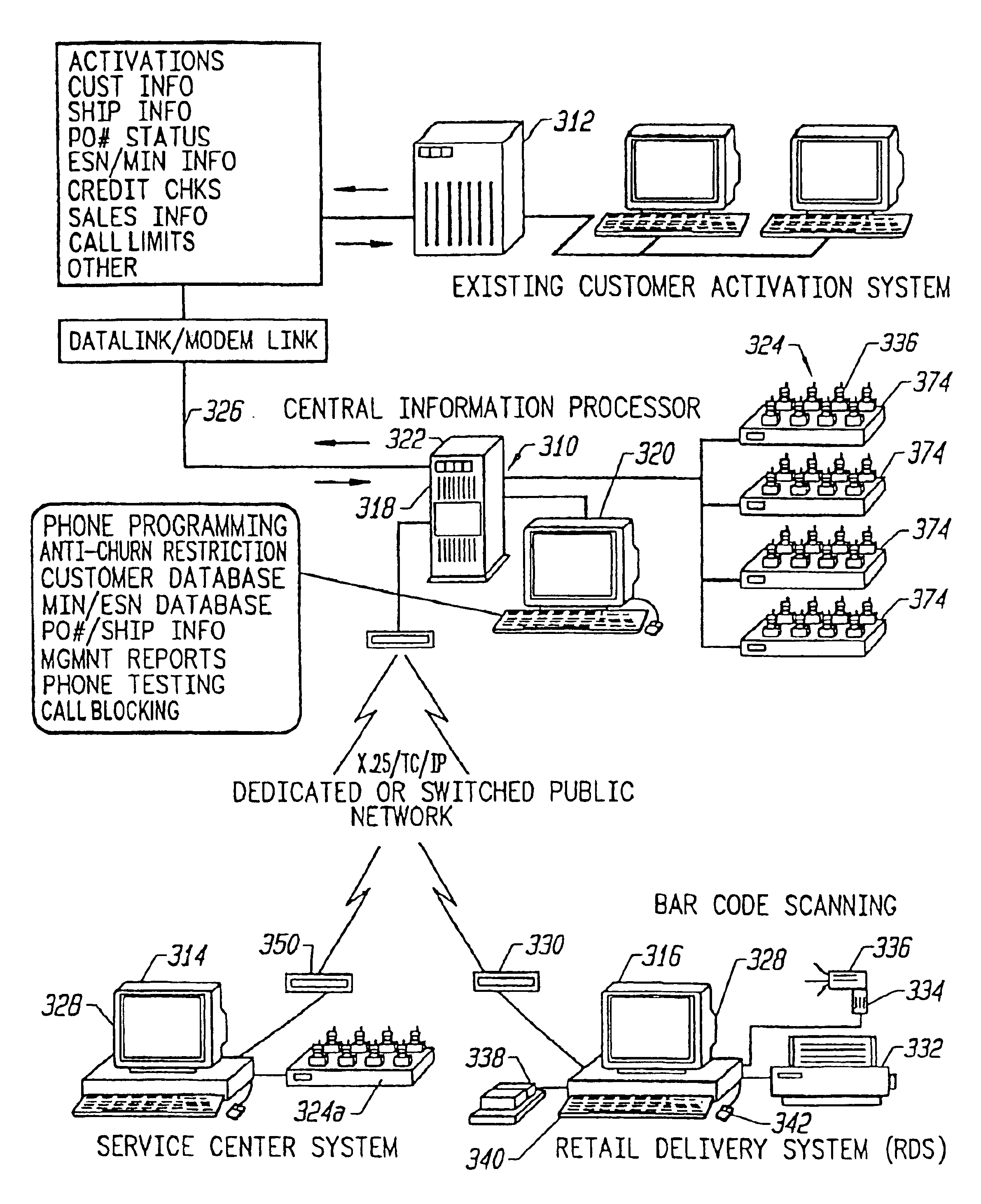

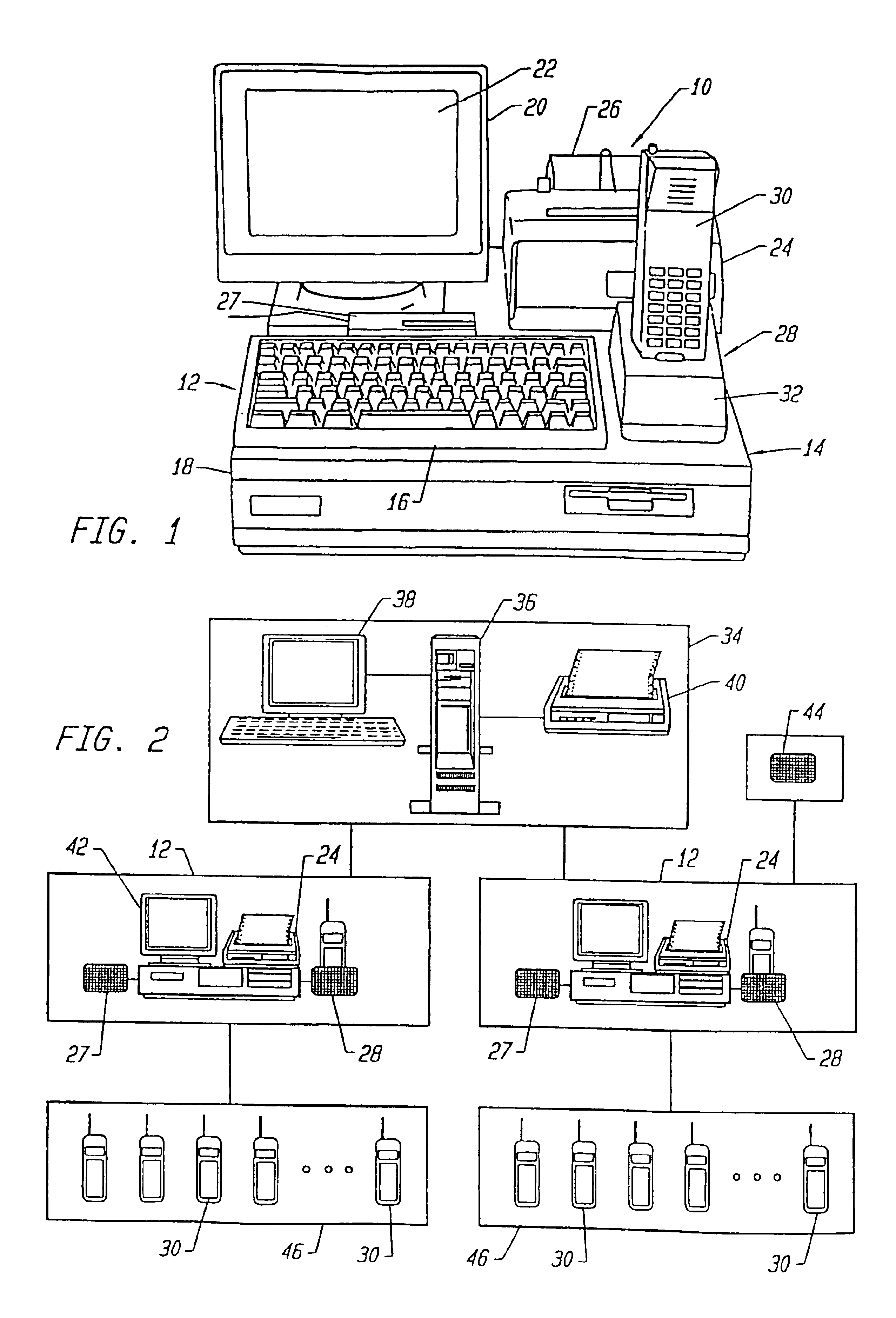

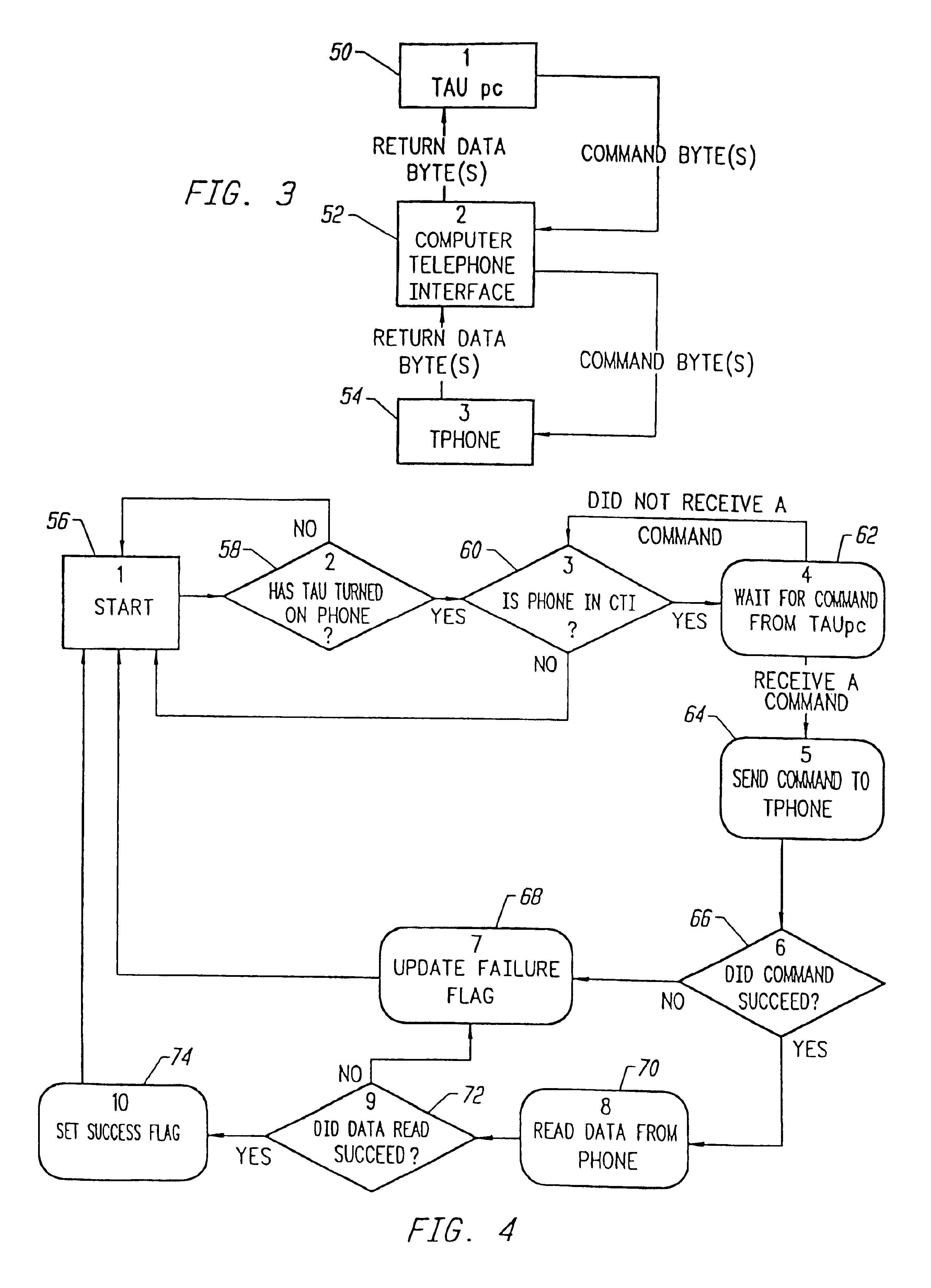

Inactive · US7058386B2 · Avoid switching; Avoid drudgery; Accounting/billing services; Unauthorised/fraudulent call prevention; Credit card; Automatic programming

A mobile telephone programming and accounting system that includes an integrated hardware system interlinking a telephone unit, a telephone interlink receiver, and a central processing unit connected to the interlink receiver. The hardware system also preferably includes a receipt printer and a credit card reader. The telephone unit is preferably equipped with an internal real time clock and calendar circuit and memory store to record the time and date of calls for reporting to the central processing unit to enable tracking and detailed accounting of calls. The interlink receiver in the improved design includes a gang platform for programming multiple phone units, which may be phone units of different manufacturers, and provides for automatic programming of the multiple units and, in the retail distribution setting, programming the operating parameters and assignment of the phone unit to a service provider with encryption keys to reduce service churning.

Owner:TRACFONE WIRELESS

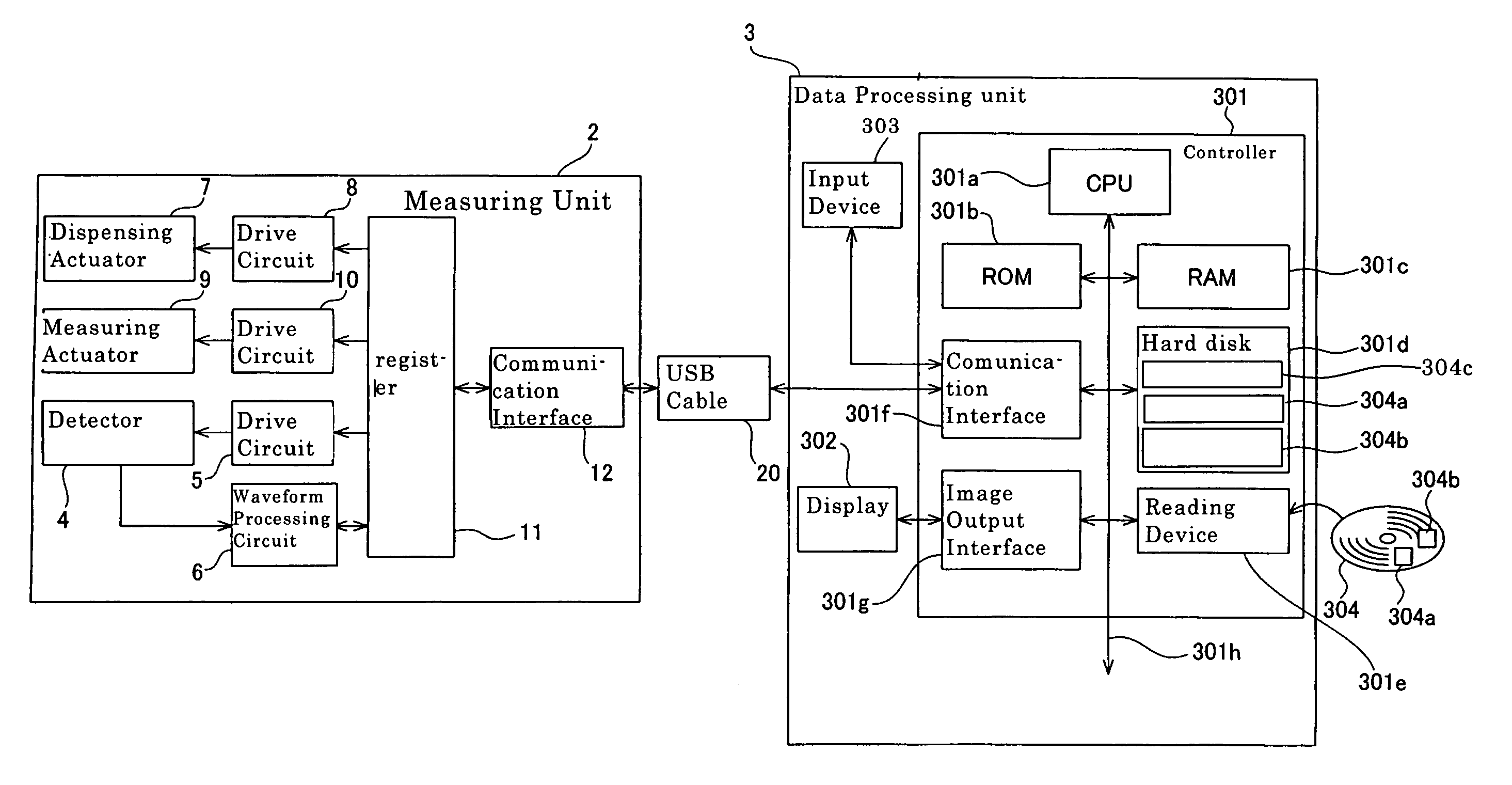

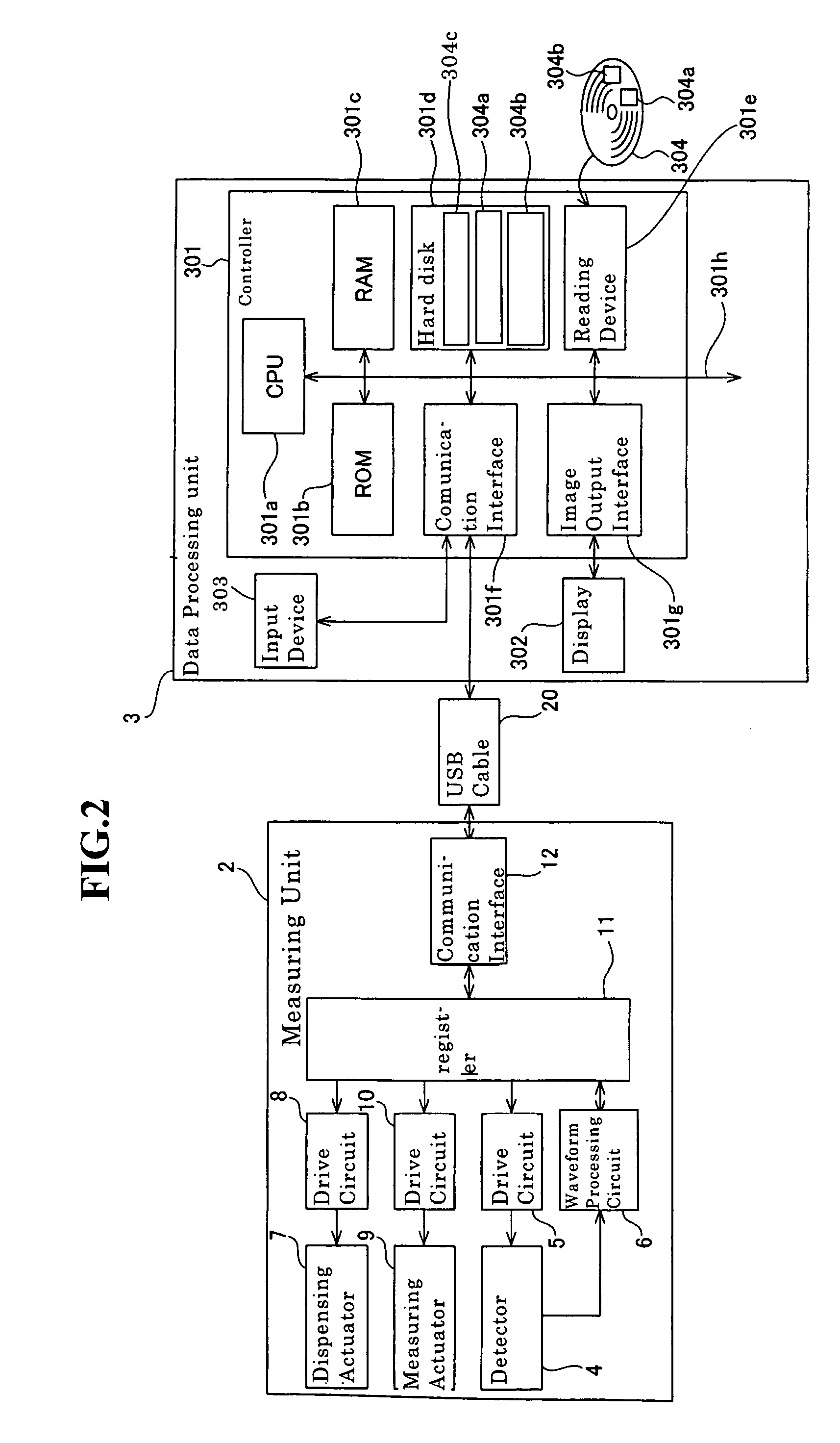

Sample processing apparatus and data processing apparatus

A sample processing apparatus, comprising: a sample processing unit for processing a sample, wherein the sample processing unit comprises an operating device for processing a sample, a drive circuit for driving the operating device, and a first communication part which is configured so as to be capable of external communication; and a data processing unit for processing data output from the sample processing unit, wherein the data processing unit comprises a processor for generating control data for controlling the operating device, and a second communication part which is configured so as to be capable of communicating with the first communication part for transmitting the control data generated by the processor to the first communication part, wherein the drive circuit is configured so as to drive the operating device based on the control data received by the first communication part, is disclosed. A data processing apparatus is also disclosed.

Owner:SYSMEX CORP

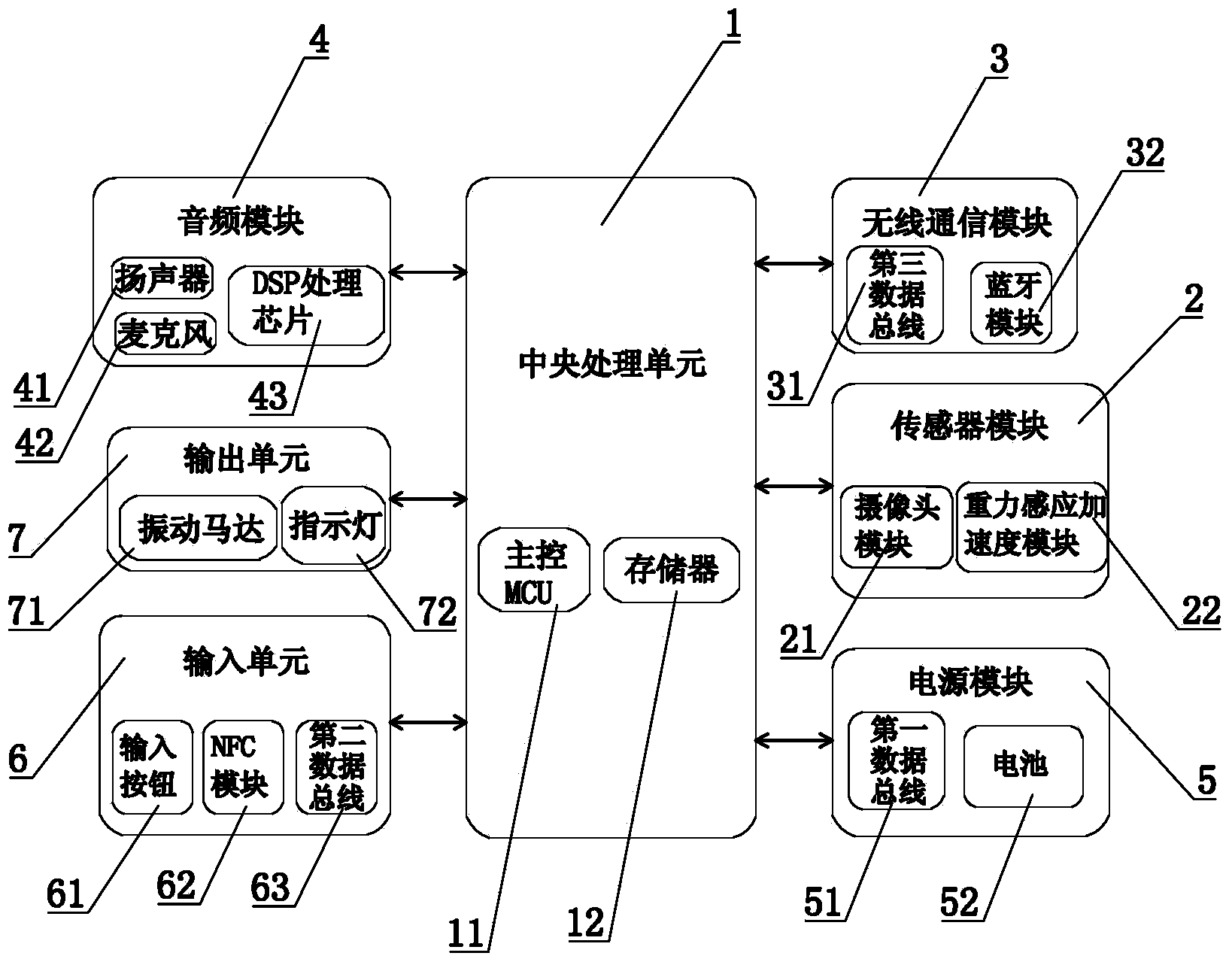

Intelligent bracelet

Active · CN103445409A · Monitor exercise status; Shoot in time; Bracelets; Wrist-watch straps; Electricity; Digital signal processing

The invention discloses an intelligent bracelet. The intelligent bracelet comprises a sensor module, an audio module, a power module, a central processing unit and a wireless communication module, wherein the sensor module is electrically connected with the central processing unit and is used for detecting information on an intelligent bracelet wearer and transmitting the information to the central processing unit; the audio module comprises a speaker, a microphone and a DSP (Digital Signal Processing) chip and is used for encoding and decoding an audio signal; the central processing unit comprises a master control MCU (Micro Control Unit) and is used for receiving and storing information acquired by the sensor module and coordinating the work of the audio module and the wireless communication module; the wireless communication module is used for realizing wireless communication connection between the intelligent bracelet and an intelligent mobile phone of a user, so that the interactive transmission of the audio signal between the intelligent bracelet and the intelligent mobile phone is realized. The intelligent bracelet disclosed by the invention can be in wireless synchronous connection with the intelligent mobile phone, and the intelligent bracelet has a call receiving function.

Owner:QINGDAO GOERTEK

Storage system

A technique is provided for updating the firmware on a circuit board that forms part of the control unit of a storage system while services to clients continue. Each of the plurality of blades that make up the control unit holds its current BIOS in flash memory. A cluster is formed that includes the blade subject to the firmware update. During the update, the processing for the blade being updated includes moving the services provided by that blade's OS to the OS of another blade in the cluster, and then updating the current BIOS of the blade, which no longer provides services, using a BIOS update program and a new BIOS image.

Owner:RAKUTEN GRP INC

Data processing system

Active · US20110271126A1 · Easy to adapt; Data processing process; Energy efficient ICT; Volume/mass flow measurement; Data processing system; Power domains

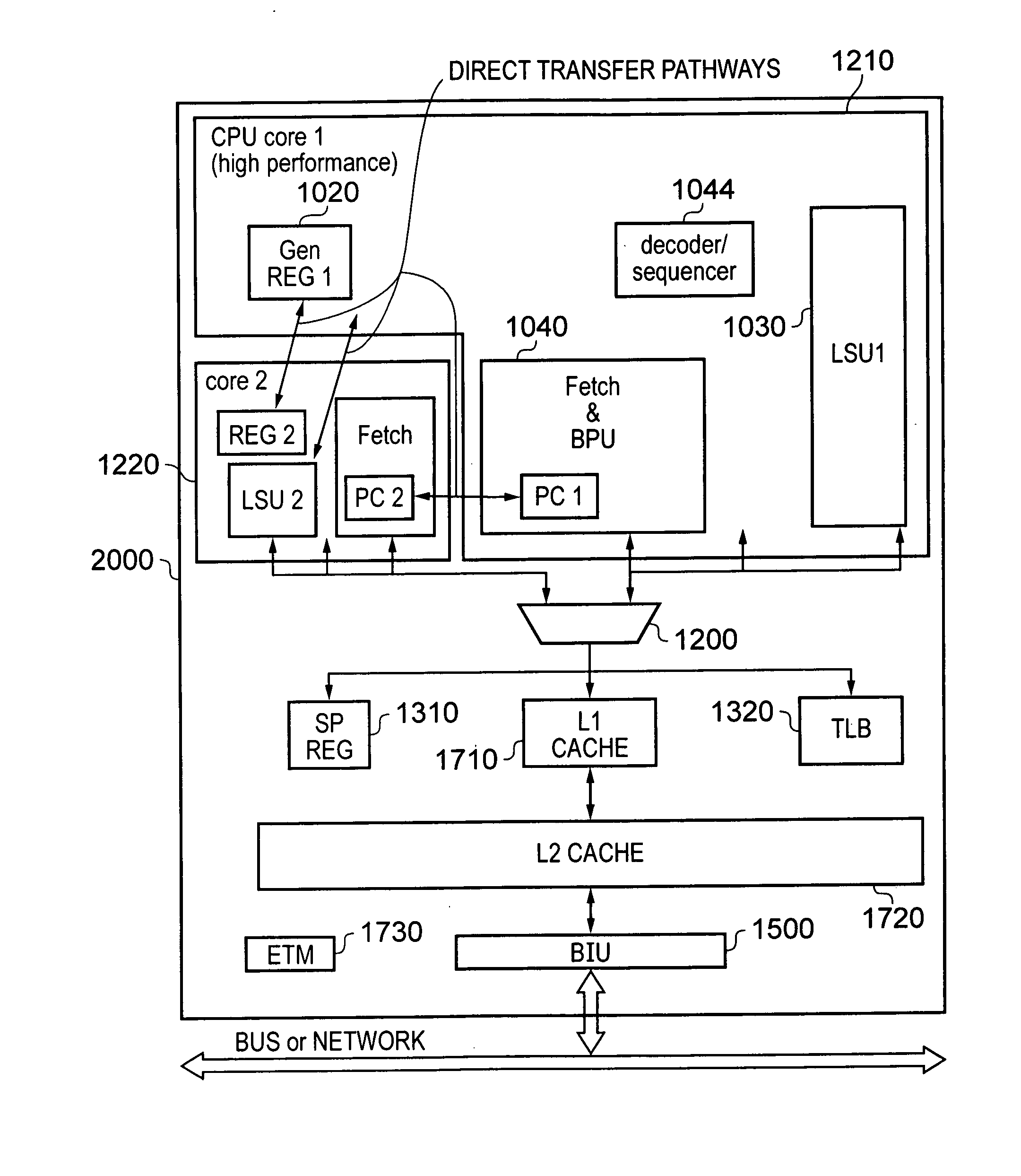

A data processing apparatus is provided comprising first processing circuitry, second processing circuitry and shared processing circuitry. The first processing circuitry and second processing circuitry are configured to operate in different first and second power domains respectively and the shared processing circuitry is configured to operate in a shared power domain. The data processing apparatus forms a uni-processing environment for executing a single instruction stream in which either the first processing circuitry and the shared processing circuitry operate together to execute the instruction stream or the second processing circuitry and the shared processing circuitry operate together to execute the single instruction stream. Execution flow transfer circuitry is provided for transferring at least one bit of processing-state restoration information between the two hybrid processing units.

Owner:ARM LTD

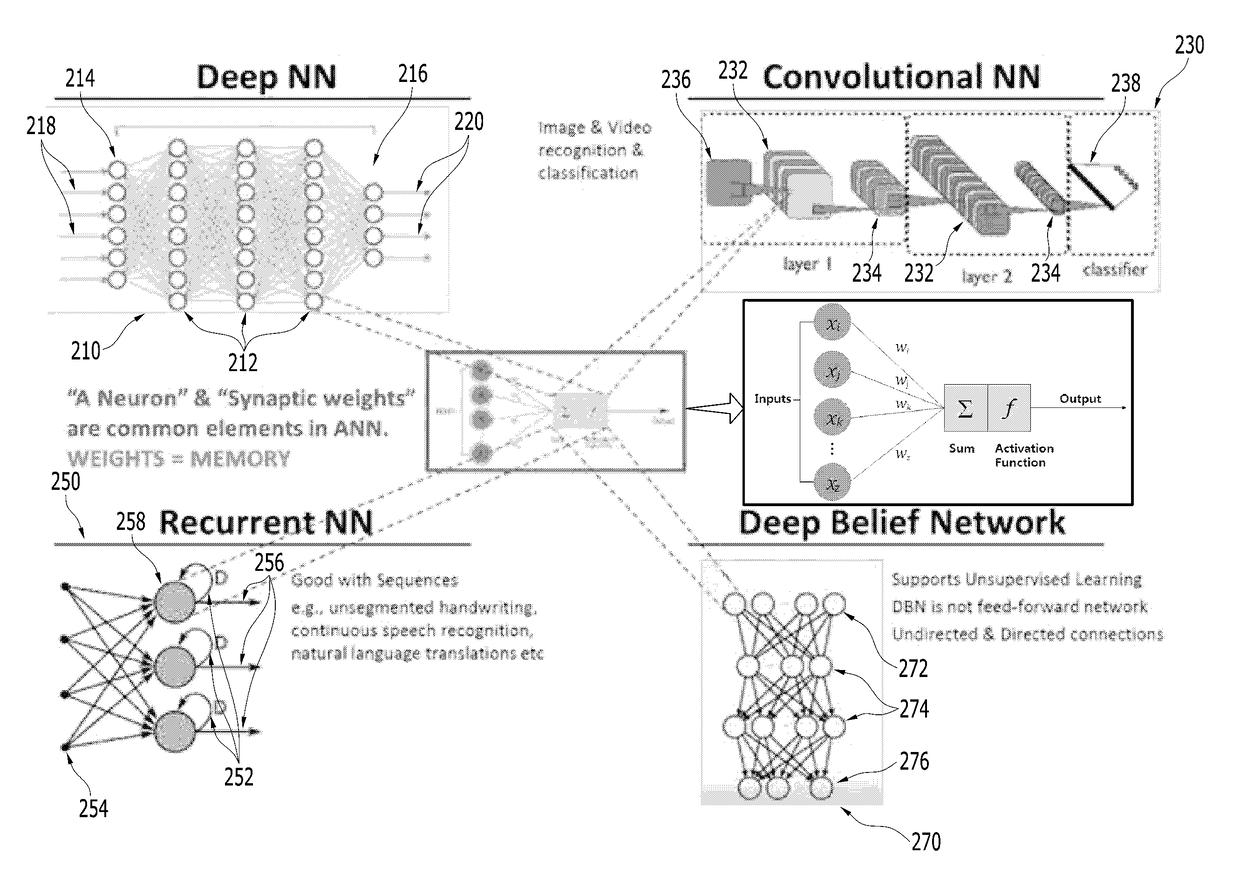

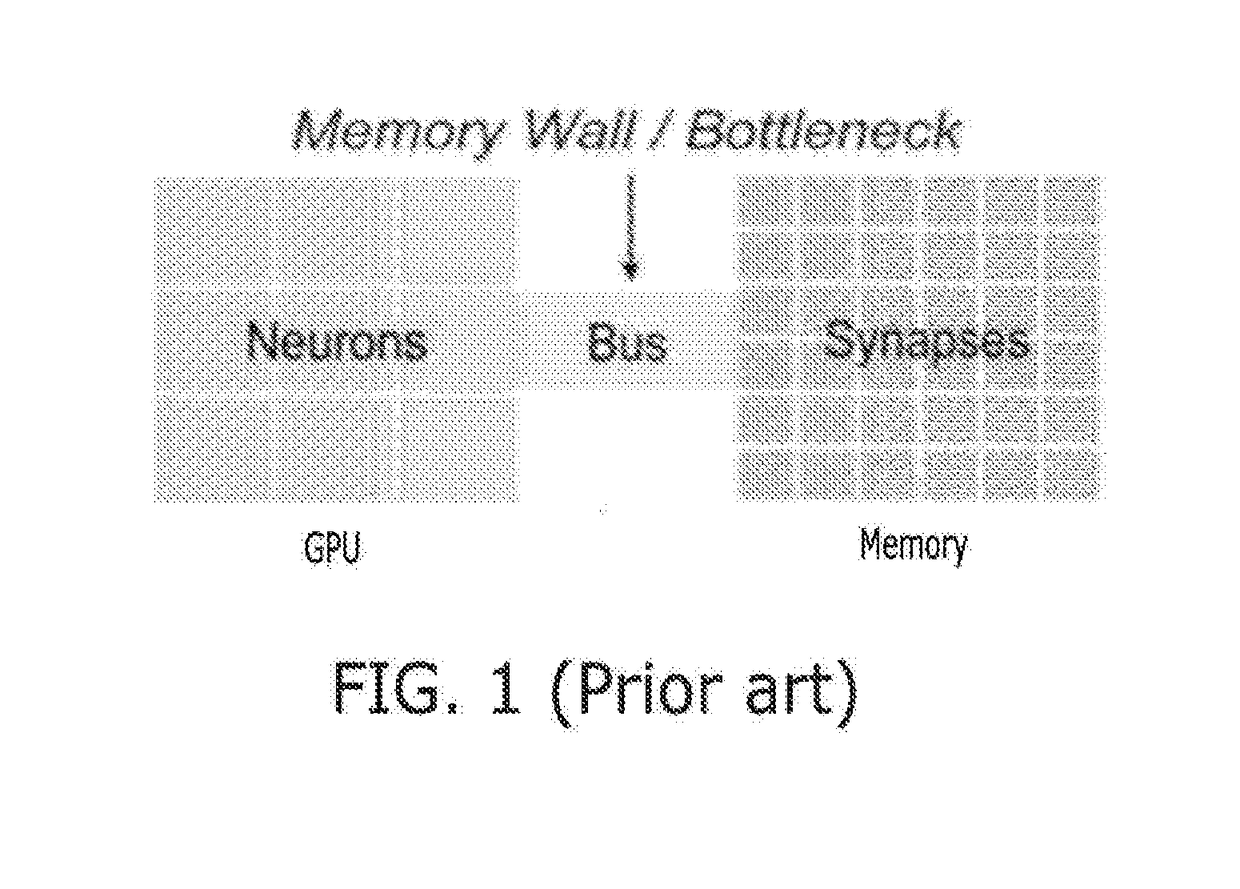

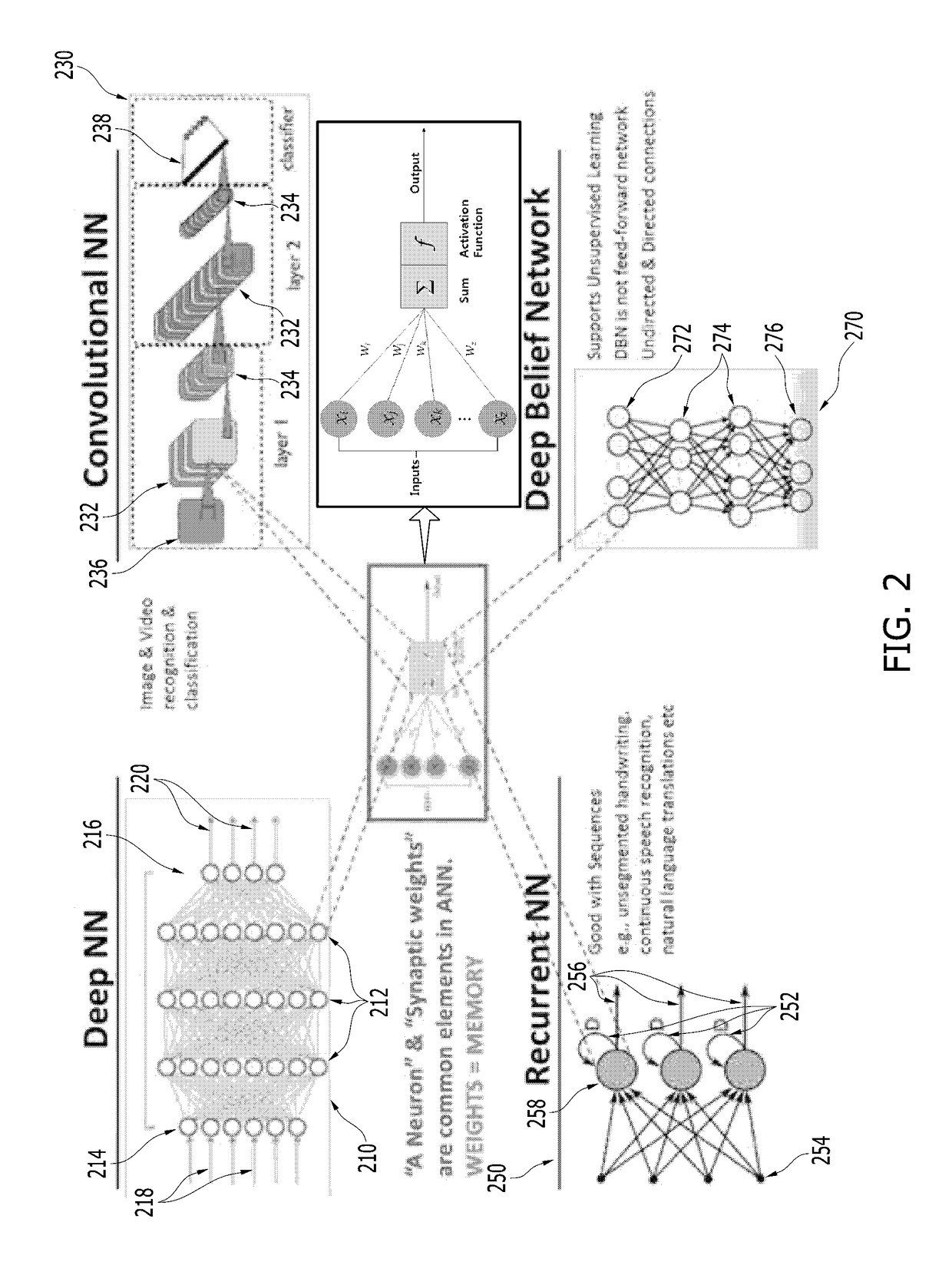

Neural network hardware accelerator architectures and operating method thereof

Active · US20180075344A1 · Improve performance; Improve efficiency; Computation using non-contact making devices; Digital storage; Timestamp; Neural network system

A memory-centric neural network system and operating method thereof includes: a processing unit; semiconductor memory devices coupled to the processing unit, which contain instructions executed by the processing unit; a weight matrix constructed with rows and columns of memory cells, in which the inputs of the memory cells in the same row are connected to one of the Axons and the outputs of the memory cells in the same column are connected to one of the Neurons; timestamp registers that register timestamps of the Axons and the Neurons; and a lookup table containing adjusting values indexed in accordance with the timestamps. The processing unit updates the weight matrix in accordance with the adjusting values.

Owner:SK HYNIX INC
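
The timestamp-driven weight update can be sketched in Python. The abstract does not say how the lookup table is indexed, so this sketch assumes an STDP-style scheme (spike-timing-dependent plasticity). The table is keyed by the difference between a Neuron's and an Axon's timestamps, and the adjusting values in `build_lut` are invented for illustration. Rows of `weights` correspond to Axons and columns to Neurons, matching the abstract.

```python
def build_lut(window: int) -> dict:
    """Hypothetical adjusting values indexed by dt = t_neuron - t_axon.

    dt > 0 (axon fired first) strengthens the cell, dt < 0 weakens it,
    and the magnitude shrinks as |dt| grows.
    """
    lut = {0: 0}
    for dt in range(1, window + 1):
        lut[dt] = window + 1 - dt
        lut[-dt] = -(window + 1 - dt)
    return lut


def update_weights(weights, axon_ts, neuron_ts, lut):
    """weights[r][c] is the memory cell joining Axon r (row) to Neuron c (column)."""
    for r, t_axon in enumerate(axon_ts):
        for c, t_neuron in enumerate(neuron_ts):
            dt = t_neuron - t_axon
            if dt in lut:  # timing difference outside the window: no adjustment
                weights[r][c] += lut[dt]
    return weights


if __name__ == "__main__":
    lut = build_lut(window=3)
    print(update_weights([[0, 0], [0, 0]], axon_ts=[5, 10], neuron_ts=[6, 8], lut=lut))
```

A table lookup keeps the per-cell update to one subtraction and one read, which suits hardware better than evaluating an exponential learning curve for every cell.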

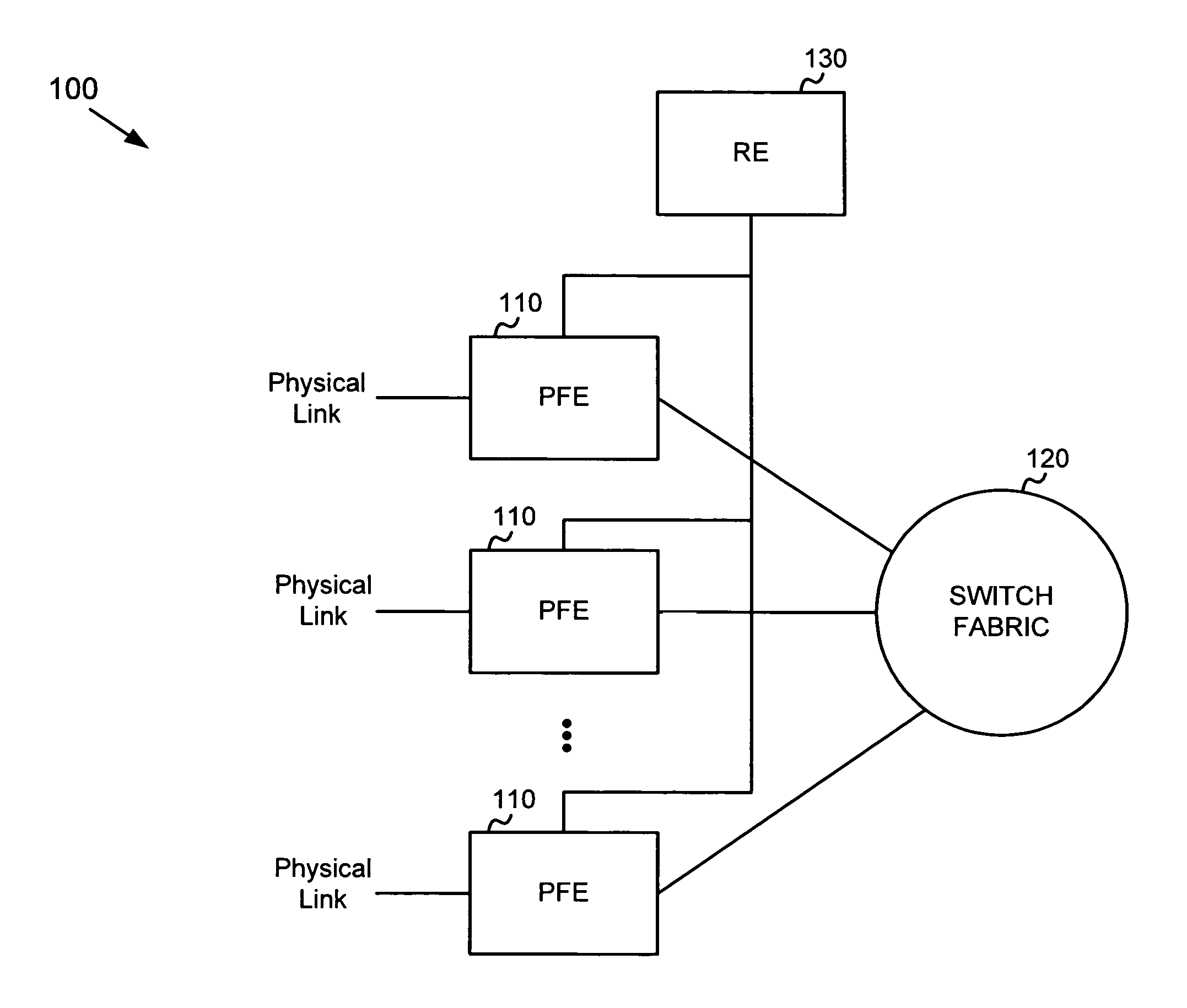

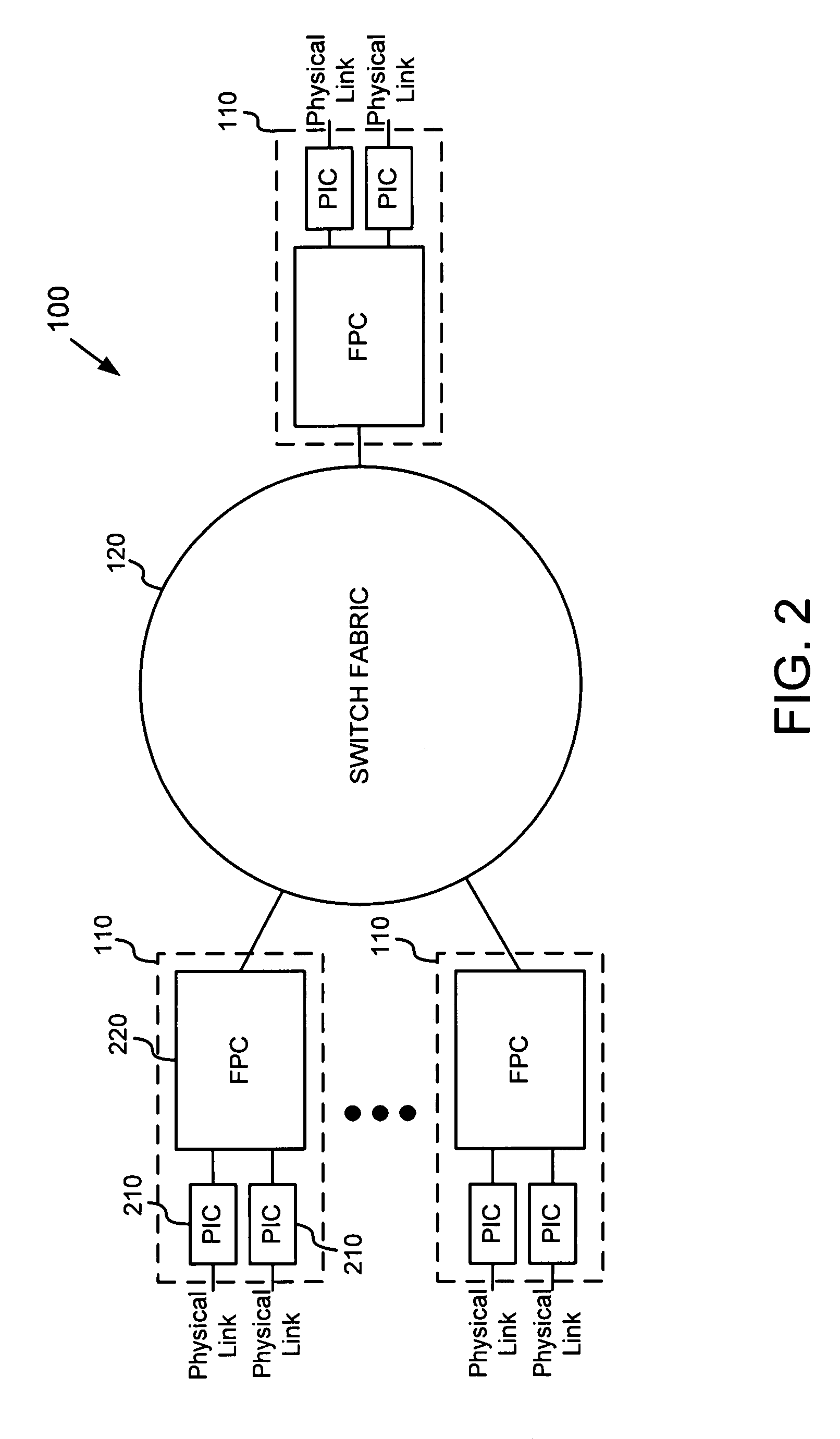

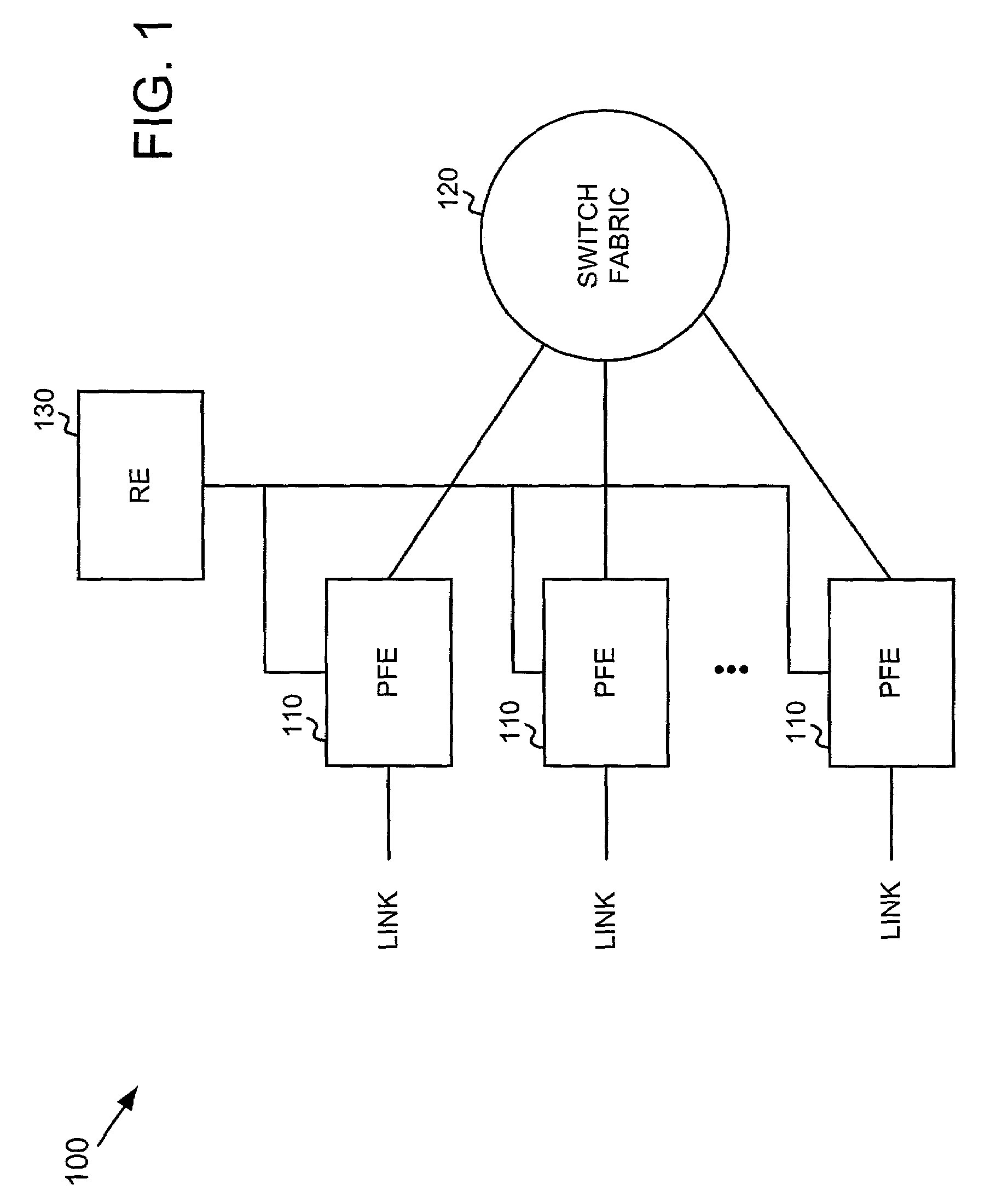

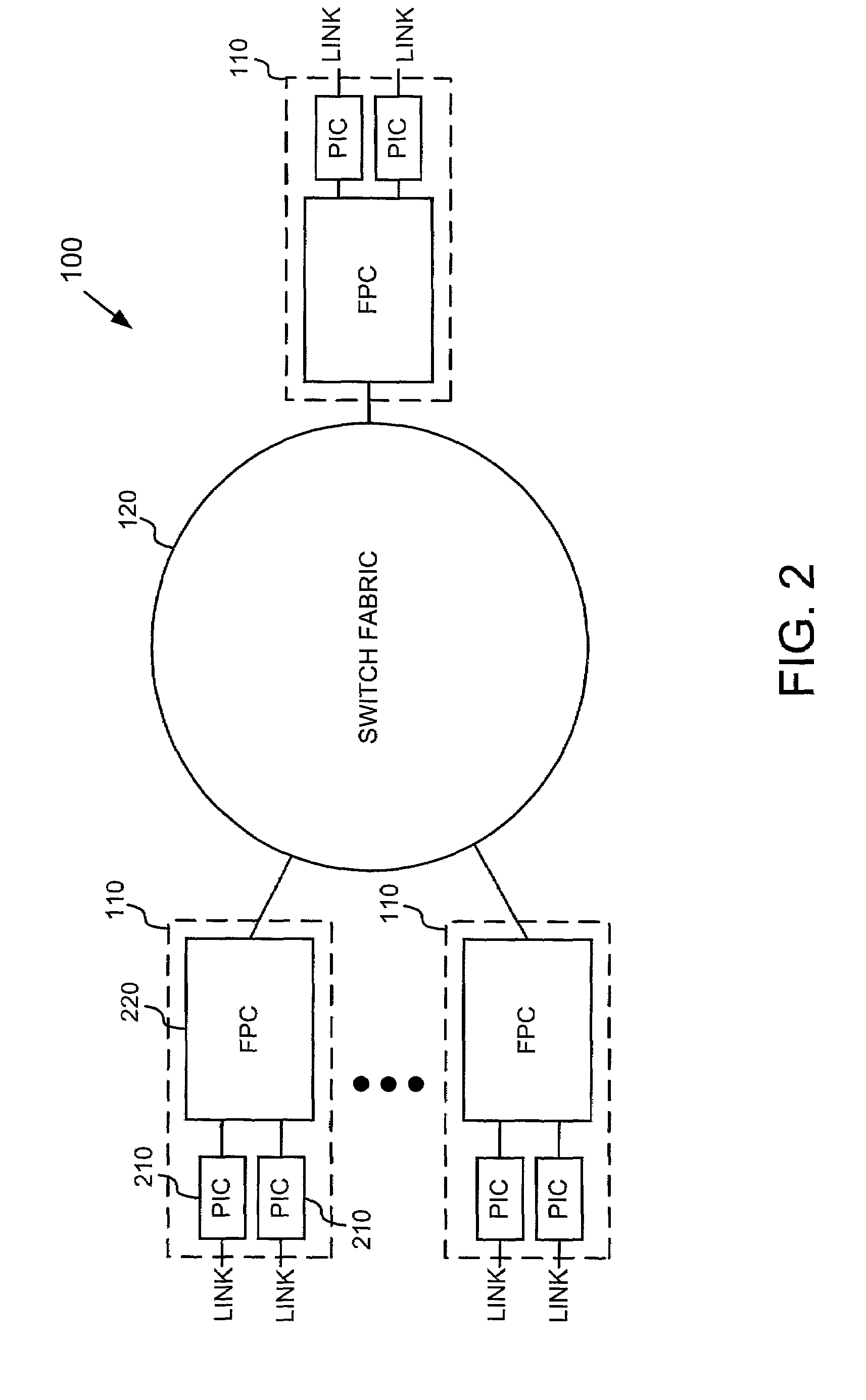

Maintaining packet order using hash-based linked-list queues

Inactive · US7764606B1 · Error prevention; Frequency-division multiplex details; Data stream; Parallel processing

Ordering logic ensures that data items being processed by a number of parallel processing units are unloaded from the processing units in the original per-flow order that the data items were loaded into the parallel processing units. The ordering logic includes a pointer memory, a tail vector, and a head vector. Through these three elements, the ordering logic keeps track of a number of “virtual queues” corresponding to the data flows. A round robin arbiter unloads data items from the processing units only when a data item is at the head of its virtual queue.

Owner:JUNIPER NETWORKS INC
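
The three structures named above (pointer memory, head vector, tail vector) can be sketched in Python as linked-list virtual queues threaded through the processing-unit slots. The method names are hypothetical, and `flow_id % num_buckets` stands in for whatever hash the hardware uses. If two flows collide in one bucket, they are merely serialized together, which still preserves each flow's own order.

```python
class OrderingLogic:
    """Per-flow ordering across parallel processing units using virtual queues."""

    def __init__(self, num_units: int, num_buckets: int):
        self.num_buckets = num_buckets
        self.next_ptr = [None] * num_units   # pointer memory: unit -> next unit in its queue
        self.head = [None] * num_buckets     # head vector
        self.tail = [None] * num_buckets     # tail vector
        self.bucket_of = [None] * num_units  # virtual queue of each busy unit
        self.done = [False] * num_units      # processing finished, awaiting unload
        self.rr = 0                          # round-robin arbiter position

    def load(self, unit: int, flow_id: int) -> None:
        b = flow_id % self.num_buckets       # stand-in for the hardware hash
        self.bucket_of[unit], self.next_ptr[unit], self.done[unit] = b, None, False
        if self.tail[b] is None:
            self.head[b] = unit              # virtual queue was empty
        else:
            self.next_ptr[self.tail[b]] = unit
        self.tail[b] = unit

    def finish(self, unit: int) -> None:
        self.done[unit] = True

    def unload_one(self):
        """Round-robin scan; unload only a finished item at the head of its queue."""
        n = len(self.next_ptr)
        for i in range(n):
            unit = (self.rr + i) % n
            b = self.bucket_of[unit]
            if b is not None and self.done[unit] and self.head[b] == unit:
                self.head[b] = self.next_ptr[unit]
                if self.head[b] is None:
                    self.tail[b] = None
                self.bucket_of[unit], self.done[unit] = None, False
                self.rr = (unit + 1) % n
                return unit
        return None
```

If an item from flow 1 finishes before an earlier item from the same flow, it waits in its unit until the earlier one is unloaded, while items from other flows can still leave.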

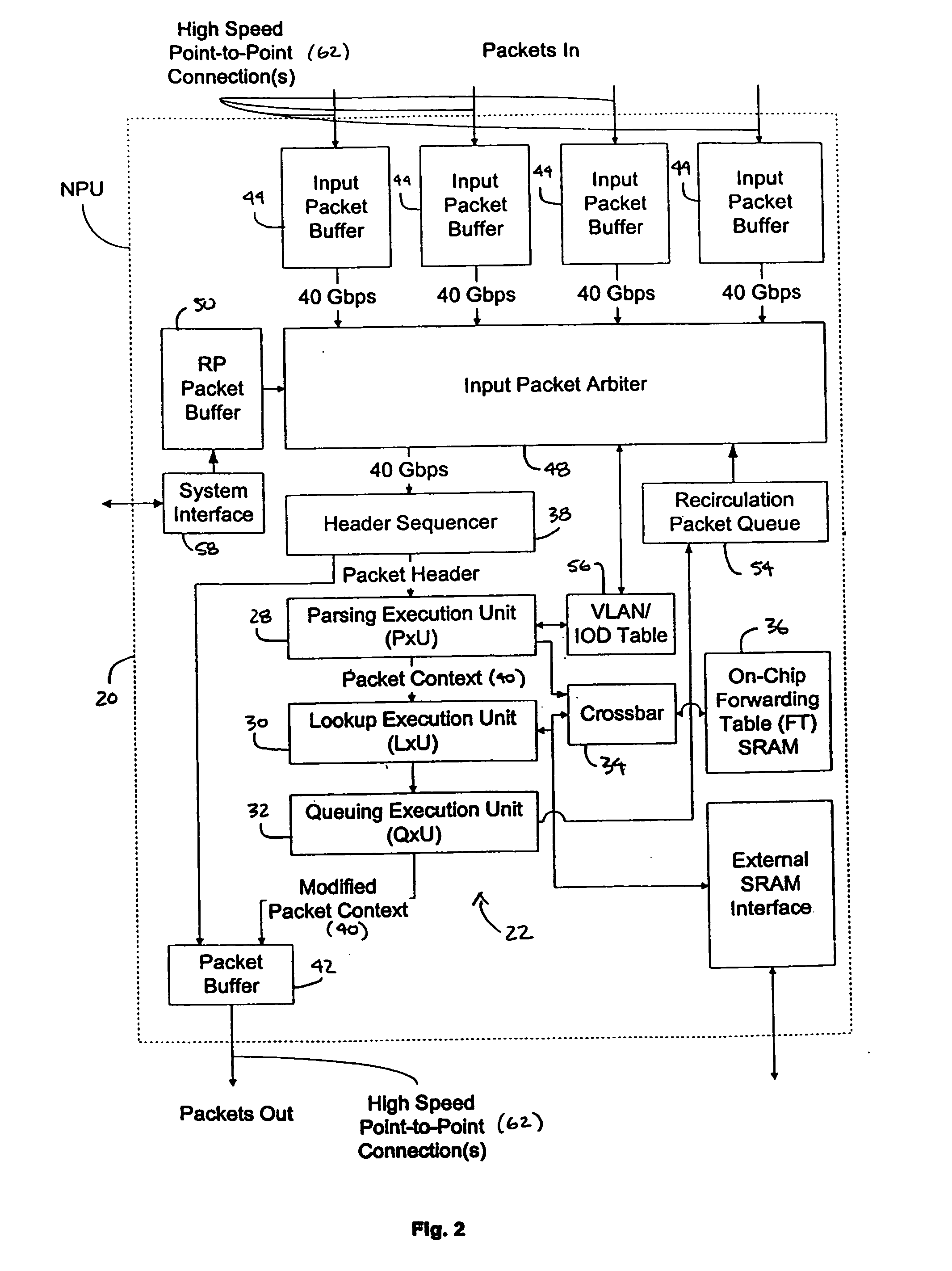

Processing unit for efficiently determining a packet's destination in a packet-switched network

Inactive · US20060117126A1 · Input is huge; Eliminate need; Transmission; Electric digital data processing; Main processing unit; Single stage

A processor for use in a router, the processor having a systolic array pipeline for processing data packets to determine to which output port of the router the data packet should be routed. In one embodiment, the systolic array pipeline includes a plurality of programmable functional units and register files arranged sequentially as stages, for processing packet contexts (which contain the packet's destination address) to perform operations, under programmatic control, to determine the destination port of the router for the packet. A single stage of the systolic array may contain a register file and one or more functional units such as adders, shifters, logical units, etc., for performing, in one example, very long instruction word (VLIW) operations. The processor may also include a forwarding table memory, on-chip, for storing routing information, and a cross bar selectively connecting the stages of the systolic array with the forwarding table memory.

Owner:CISCO TECH INC

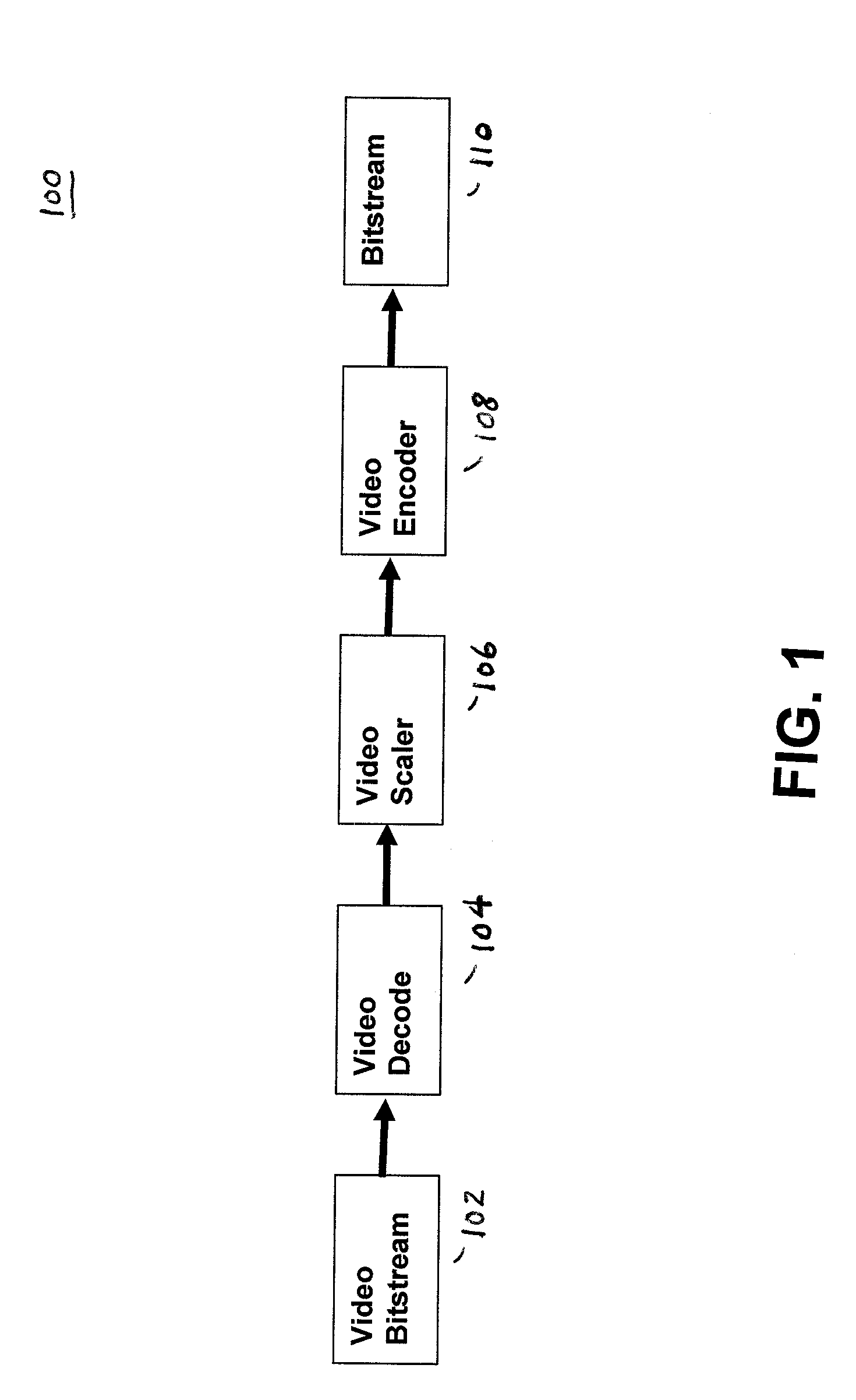

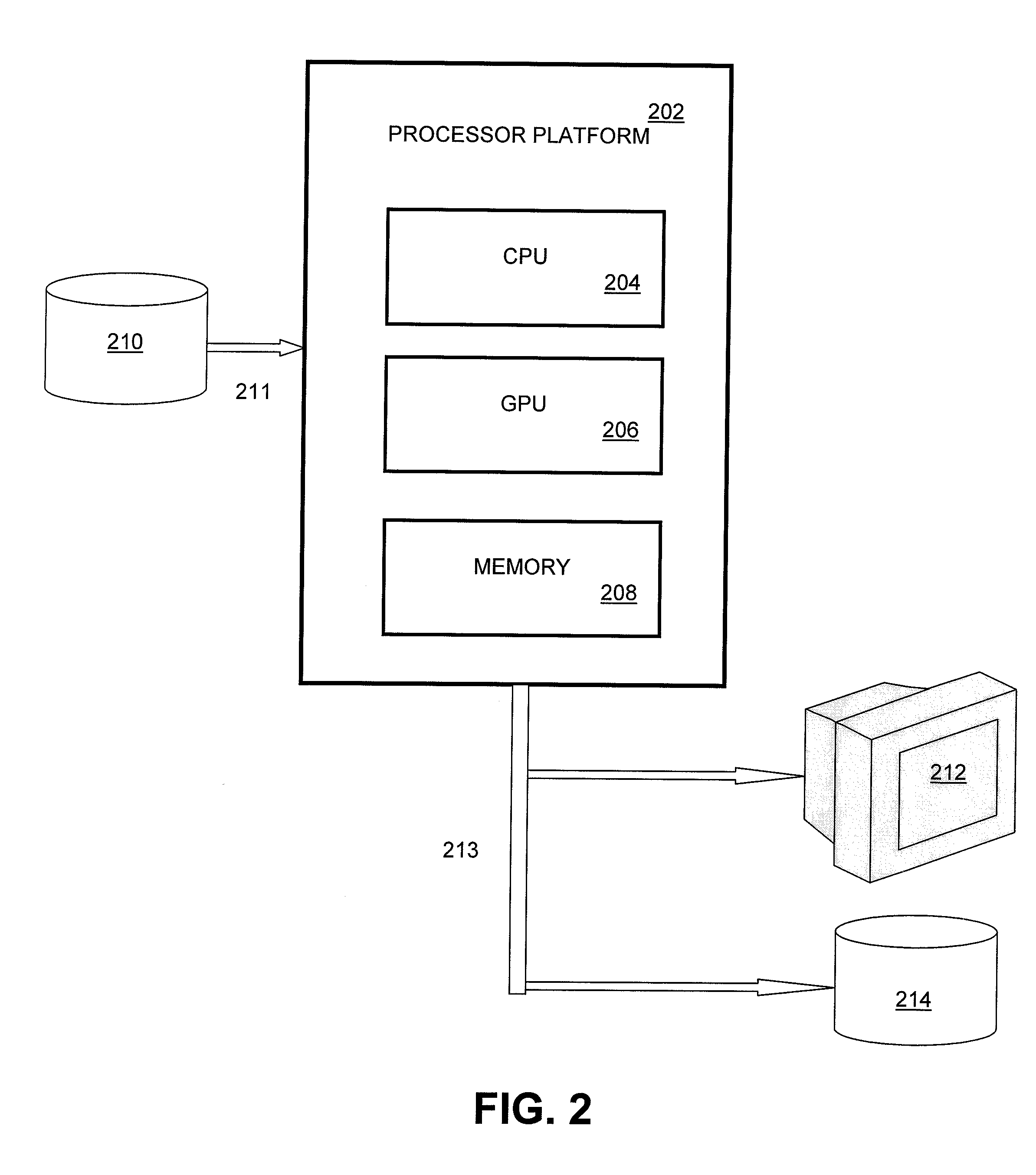

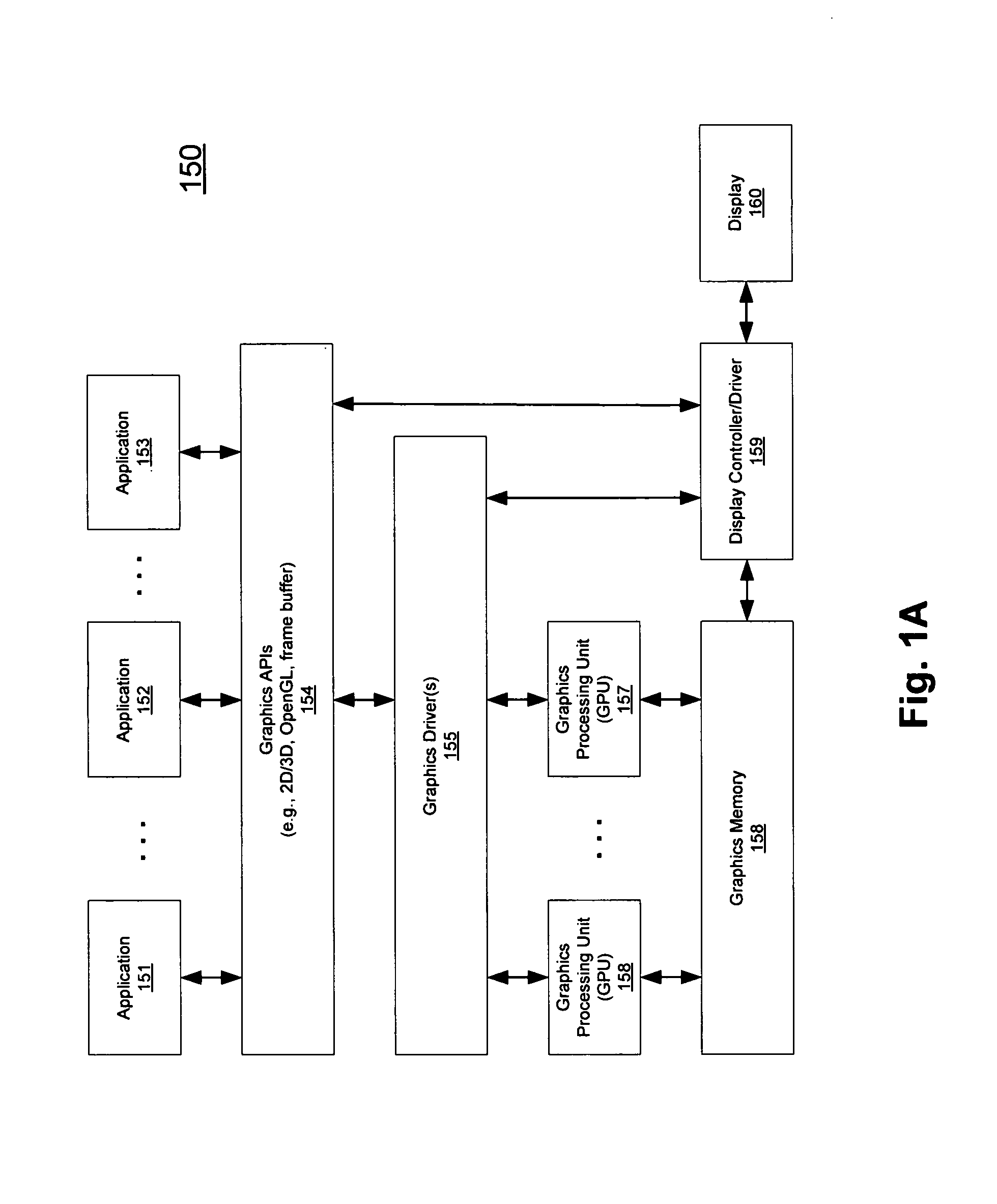

Software Video Transcoder with GPU Acceleration

Active · US20090060032A1 · Picture reproducers using cathode ray tubes; Picture reproducers with optical-mechanical scanning; Transcoding; Workload

Embodiments of the invention as described herein provide a solution to the problems of conventional methods as stated above. In the following description, various examples are given for illustration, but none are intended to be limiting. Embodiments are directed to a transcoding system that shares the workload of video transcoding through the use of multiple central processing unit (CPU) cores and/or one or more graphical processing units (GPU), including the use of two components within the GPU: a dedicated hardcoded or programmable video decoder for the decode step and compute shaders for scaling and encoding. The system combines usage of an industry standard Microsoft DXVA method for using the GPU to accelerate video decode with a GPU encoding scheme, along with an intermediate step of scaling the video.

Owner:ADVANCED MICRO DEVICES INC

Design method of hardware accelerator based on LSTM recursive neural network algorithm on FPGA platform

Inactive · CN108090560A · Improve forecast; Improve performance; Neural architectures; Physical realisation; Neural network hardware; Low resource

The invention discloses a method for accelerating an LSTM neural network algorithm on an FPGA platform. The FPGA platform comprises a general-purpose processor, a field-programmable gate array and a storage module. The method comprises the following steps: an LSTM neural network is constructed using TensorFlow and its parameters are trained; the parameters of the LSTM network are compressed, which addresses the shortage of storage resources on the FPGA; based on the prediction process of the compressed LSTM network, the part of the computation suitable for running on the field-programmable gate array is determined; based on that part, a software and hardware co-computing mode is determined; and, according to the logic resources and bandwidth of the FPGA, the number and type of IP cores are determined and acceleration is carried out on the field-programmable gate array using hardware operation units. A hardware processing unit for accelerating the LSTM neural network can thus be designed quickly according to the available hardware resources, and it offers higher performance and lower power consumption than the general-purpose processor.

Owner:SUZHOU INST FOR ADVANCED STUDY USTC

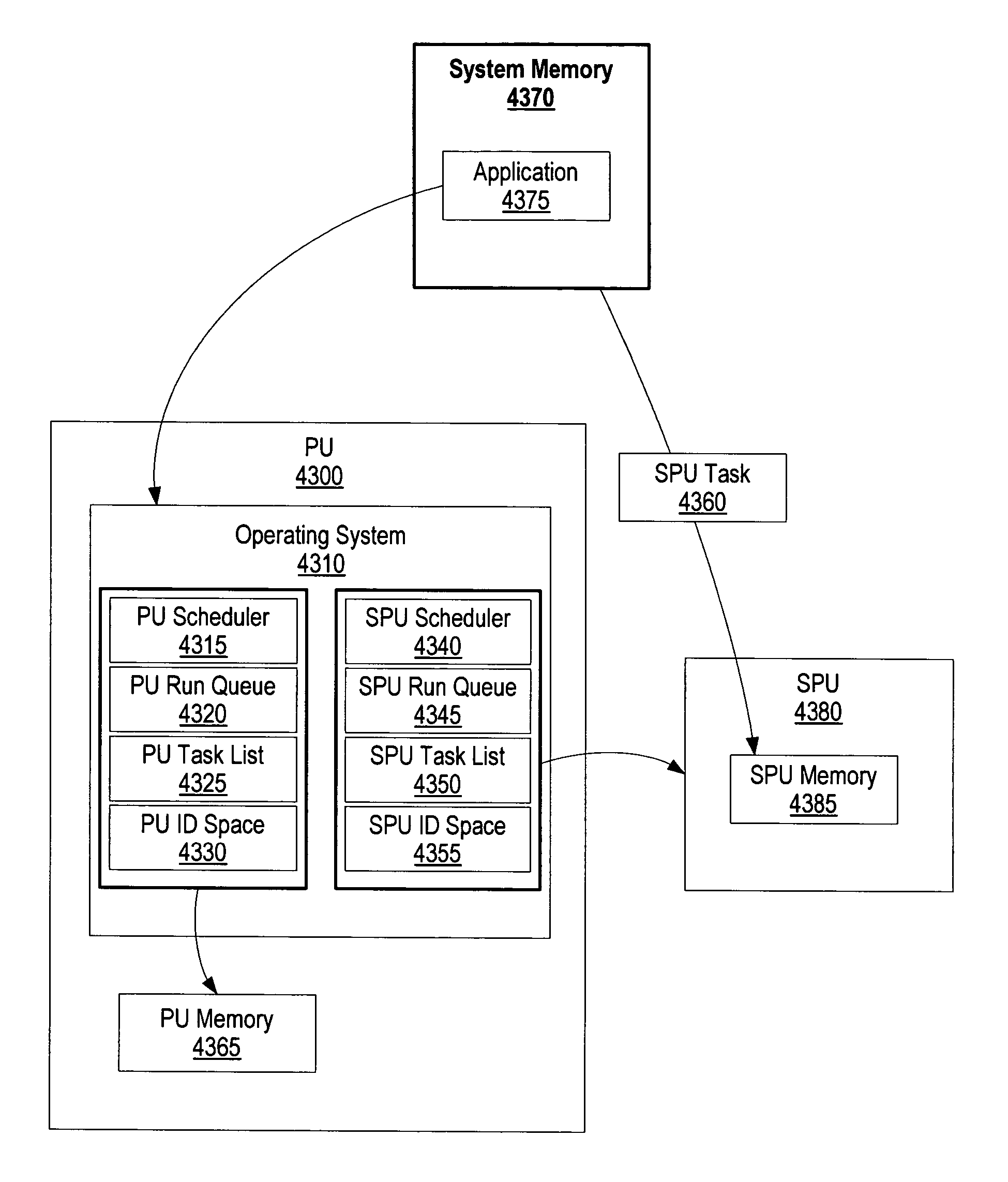

Asymmetric heterogeneous multi-threaded operating system

A method for an asymmetric heterogeneous multi-threaded operating system is presented. A processing unit (PU) provides a trusted mode environment in which an operating system executes. A heterogeneous processor environment includes a synergistic processing unit (SPU) that does not provide trusted mode capabilities. The PU operating system uses two separate and distinct schedulers, a PU scheduler and an SPU scheduler, to schedule tasks on a PU and an SPU, respectively. In one embodiment, the heterogeneous processor environment includes a plurality of SPUs. In this embodiment, the SPU scheduler may use a single SPU run queue to schedule tasks for the plurality of SPUs, or it may use a plurality of run queues to schedule SPU tasks, whereby each of the run queues corresponds to a particular SPU.

Owner:GLOBALFOUNDRIES INC
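
The two SPU run-queue options can be contrasted in a short Python sketch. The class names are hypothetical, and the trusted-mode machinery is not modeled. The sketch only shows that the PU and SPU schedulers are kept separate, and that the SPU scheduler can draw from either one shared queue or one queue per SPU.

```python
from collections import deque
from typing import Optional


class SPUScheduler:
    """Schedules SPU tasks from one shared run queue or from one run queue per SPU."""

    def __init__(self, num_spus: int, per_spu_queues: bool = False):
        self.per_spu_queues = per_spu_queues
        self.run_queues = [deque() for _ in range(num_spus if per_spu_queues else 1)]

    def submit(self, task, spu: Optional[int] = None) -> None:
        if self.per_spu_queues:
            if spu is None:
                raise ValueError("per-SPU mode needs a target SPU")
            self.run_queues[spu].append(task)
        else:
            self.run_queues[0].append(task)  # any idle SPU may take it

    def next_task(self, spu: int):
        queue = self.run_queues[spu if self.per_spu_queues else 0]
        return queue.popleft() if queue else None


class HeterogeneousOS:
    """The PU scheduler and the SPU scheduler are separate and distinct."""

    def __init__(self, num_spus: int, per_spu_queues: bool = False):
        self.pu_run_queue = deque()  # tasks for the trusted-mode PU
        self.spu_scheduler = SPUScheduler(num_spus, per_spu_queues)

    def next_pu_task(self):
        return self.pu_run_queue.popleft() if self.pu_run_queue else None
```

A shared queue balances load automatically across SPUs. Per-SPU queues trade that for affinity, for example keeping a task's code and data resident in one SPU's local store.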

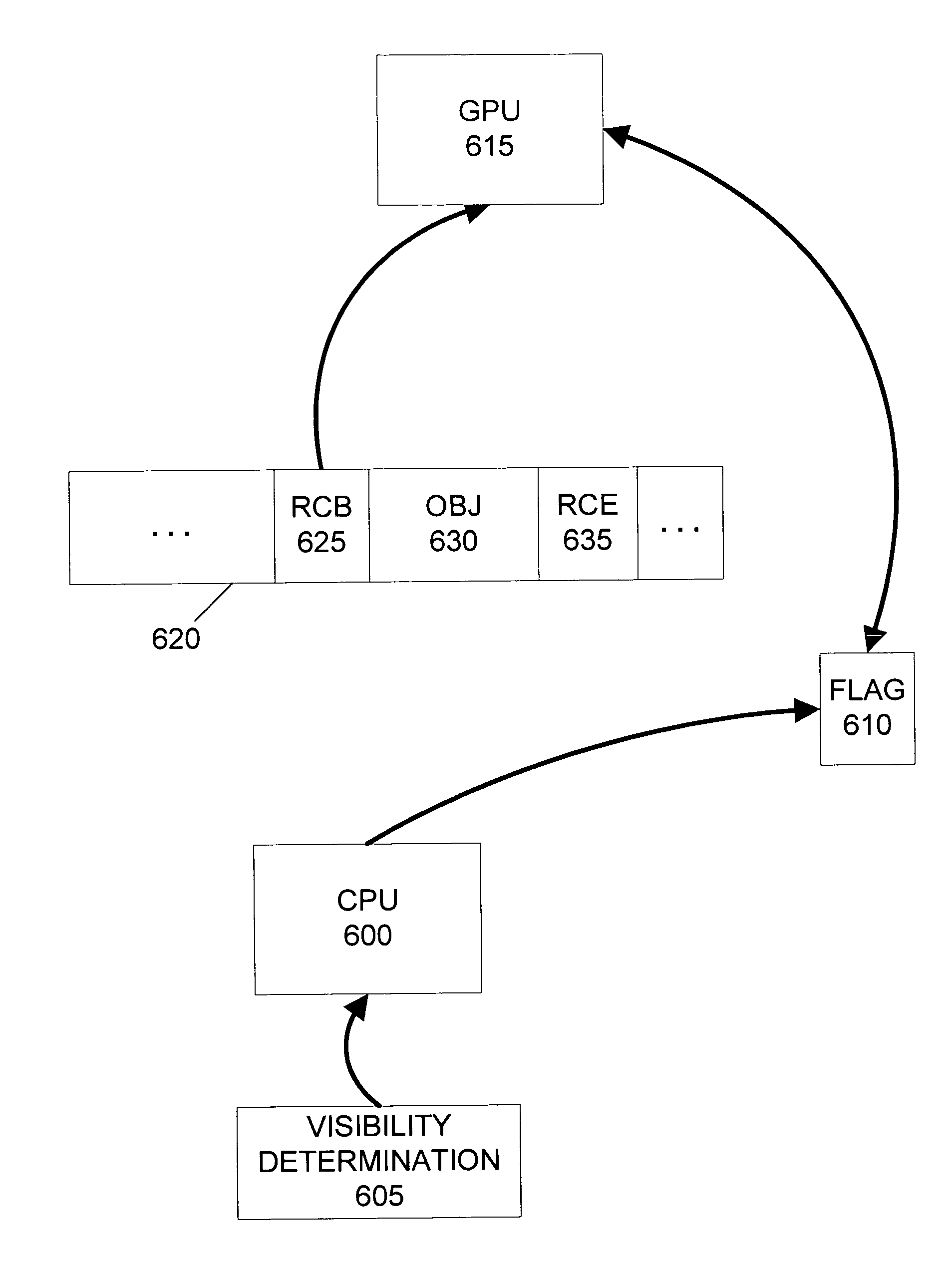

Asynchronous conditional graphics rendering

Active · US7388581B1 · Digital computer details; Processor architectures/configuration; Computational science; Graphics

A graphics processing unit implements conditional rendering by putting itself in a state in which it does not execute any rendering commands. Once the graphics processing unit is placed in this state, all subsequent rendering commands are ignored until another rendering command explicitly removes the graphics processing unit from this state. Conditional rendering commands enable the graphics processing unit to place itself in and out of this state based upon the value of a flag in memory. Conditional rendering commands can include conditions that must be satisfied by the flag value in order to change the state of the graphics processing unit. The value of the flag can be set by the graphics processing unit itself, a second graphics processing unit, a graphics coprocessor, or the central processing unit. This enables a wide variety of conditional rendering methods to be implemented.

Owner:NVIDIA CORP
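
The skip state described above can be modeled as a toy command processor in Python. The tuple command encoding, the class name and the flag address are hypothetical. The sketch assumes that a conditional command enters the skip state when the predicate on the memory flag is *not* satisfied, and that an explicit end command leaves it. The flag can be written by any agent (here the test simply assigns to the shared dict), matching the abstract's point that the CPU or another GPU may set it.

```python
import operator


class ConditionalGPU:
    """Toy command processor: while `skipping` is set, draw commands are ignored."""

    def __init__(self, memory: dict):
        self.memory = memory  # shared memory holding condition flags
        self.skipping = False
        self.rendered = []

    def execute(self, cmd: tuple) -> None:
        kind = cmd[0]
        if kind == "cond_begin":
            # ("cond_begin", flag_addr, predicate, reference): keep rendering only
            # if predicate(flag, reference) holds; otherwise enter the skip state.
            _, addr, predicate, reference = cmd
            self.skipping = not predicate(self.memory[addr], reference)
        elif kind == "cond_end":
            self.skipping = False  # explicitly leave the skip state
        elif kind == "draw" and not self.skipping:
            self.rendered.append(cmd[1])


if __name__ == "__main__":
    mem = {0x40: 0}  # e.g. an occlusion-query sample count
    gpu = ConditionalGPU(mem)
    for c in [("cond_begin", 0x40, operator.ne, 0), ("draw", "teapot"), ("cond_end",), ("draw", "hud")]:
        gpu.execute(c)
    print(gpu.rendered)  # ['hud']
```

Because the flag is read when the command executes, the CPU never has to wait for the flag's producer to finish before submitting the conditional draws, which is what makes the scheme asynchronous.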

CPU/GPU DCVS co-optimization for reducing power consumption in graphics frame processing

Active · US20150317762A1 · Minimize power consumption; Energy efficient ICT; Static indicating devices; Graphics; Computational science

Systems, methods, and computer programs are disclosed for minimizing power consumption in graphics frame processing. One such method comprises: initiating graphics frame processing to be cooperatively performed by a central processing unit (CPU) and a graphics processing unit (GPU); receiving CPU activity data and GPU activity data; determining a set of available dynamic clock and voltage/frequency scaling (DCVS) levels for the GPU and the CPU; and selecting from the set of available DCVS levels an optimal combination of a GPU DCVS level and a CPU DCVS level, based on the CPU and GPU activity data, which minimizes a combined power consumption of the CPU and the GPU during the graphics frame processing.

Owner:QUALCOMM INC
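
The joint selection step can be sketched as an exhaustive search in Python under several stated assumptions, none of which come from the abstract. The activity data is assumed to be already converted into cycle counts per frame. CPU and GPU work are assumed to run back to back within a frame budget. Each DCVS level is assumed to have a fixed active power. Minimizing energy per frame is equivalent to minimizing average power at a fixed frame rate. The function name and all numbers are hypothetical.

```python
from itertools import product


def select_dcvs_levels(cpu_levels, gpu_levels, cpu_mcycles, gpu_mcycles, frame_budget_ms):
    """Return (energy_uj, cpu_mhz, gpu_mhz) for the lowest-energy level pair that
    meets the frame budget, or None if no pair does.

    Each level is (frequency_mhz, active_power_mw); work is in millions of cycles.
    """
    best = None
    for (cpu_mhz, cpu_mw), (gpu_mhz, gpu_mw) in product(cpu_levels, gpu_levels):
        cpu_ms = cpu_mcycles * 1000 / cpu_mhz
        gpu_ms = gpu_mcycles * 1000 / gpu_mhz
        if cpu_ms + gpu_ms > frame_budget_ms:  # assumes serial CPU then GPU work
            continue
        energy_uj = cpu_mw * cpu_ms + gpu_mw * gpu_ms  # mW * ms = uJ
        if best is None or energy_uj < best[0]:
            best = (energy_uj, cpu_mhz, gpu_mhz)
    return best


if __name__ == "__main__":
    cpu = [(500, 100), (1000, 300), (1500, 600)]
    gpu = [(200, 150), (400, 450)]
    print(select_dcvs_levels(cpu, gpu, cpu_mcycles=5, gpu_mcycles=2, frame_budget_ms=16.0))
```

With these made-up numbers the search picks the 1000 MHz CPU level with the 200 MHz GPU level (3000 uJ). Choosing the slowest CPU level on its own would force the GPU up to 400 MHz to meet the budget, which costs 3250 uJ. This illustrates why the two levels are selected jointly rather than independently.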

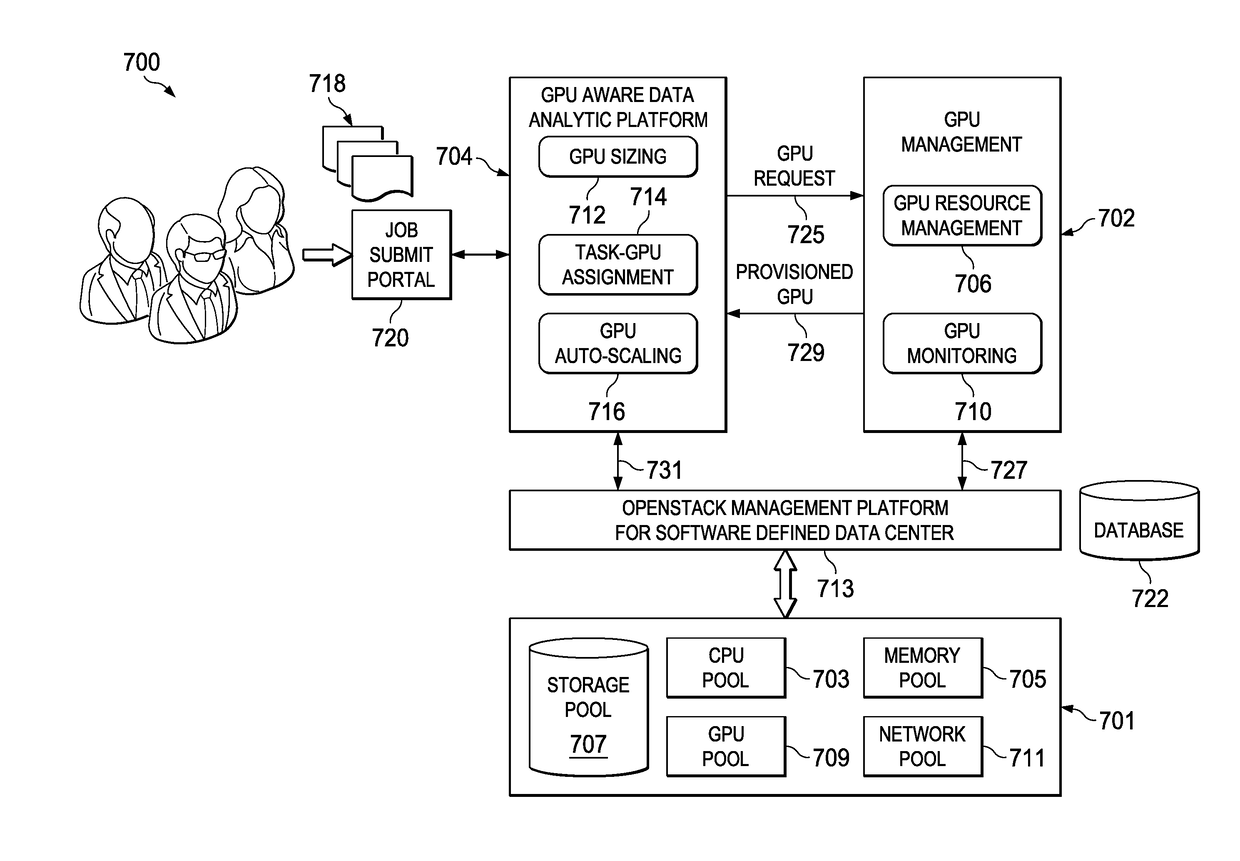

Dynamically provisioning and scaling graphic processing units for data analytic workloads in a hardware cloud

Active · US20170293994A1 · Improves GPU utilization; Fine granularity and agile; Processor architectures/configuration; Program control; Resource pool; Graphics

Server resources in a data center are disaggregated into shared server resource pools, including a graphics processing unit (GPU) pool. Servers are constructed dynamically, on-demand and based on workload requirements, by allocating from these resource pools. According to this disclosure, GPU utilization in the data center is managed proactively by assigning GPUs to workloads in a fine granularity and agile way, and de-provisioning them when no longer needed. In this manner, the approach is especially advantageous to automatically provision GPUs for data analytic workloads. The approach thus provides for a “micro-service” enabling data analytic workloads to automatically and transparently use GPU resources without providing (e.g., to the data center customer) the underlying provisioning details. Preferably, the approach dynamically determines the number and the type of GPUs to use, and then during runtime auto-scales the GPUs based on workload.

Owner:IBM CORP

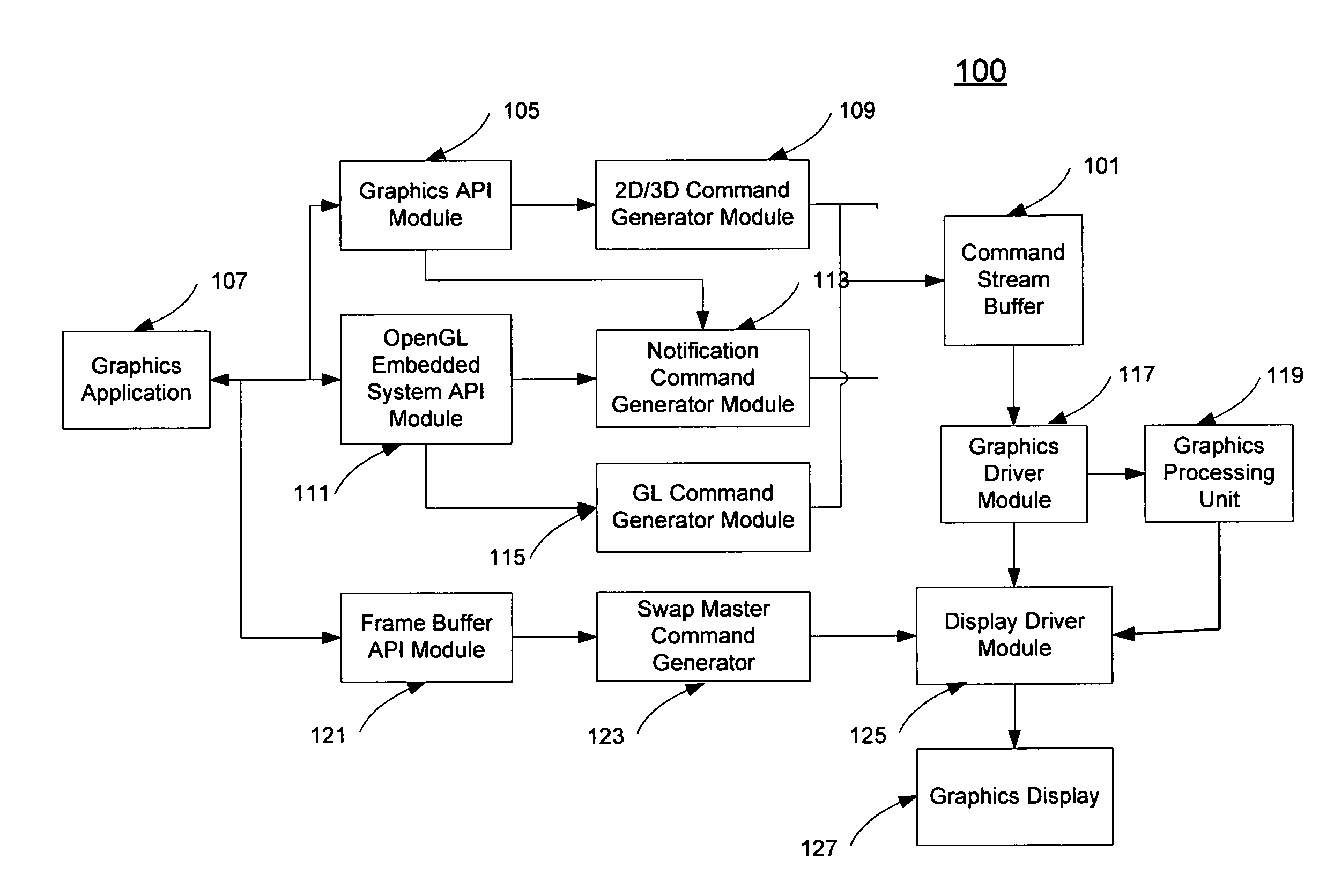

Serializing command streams for graphics processors

Active · US20080303835A1 · Image memory management; Cathode-ray tube indicators; Application programming interface; Serialization

A method and an apparatus for determining a dependency relationship between graphics commands based on availability of graphics hardware resources to perform graphics processing operations according to the dependency relationship are described. The graphics commands may be received from graphics APIs (application programming interfaces) for rendering a graphics object. A graphics driver may transmit a portion or all of the received graphics commands to a graphics processing unit (GPU) or a media processor based on the determined dependency relationship between the graphics commands.

Owner:APPLE INC

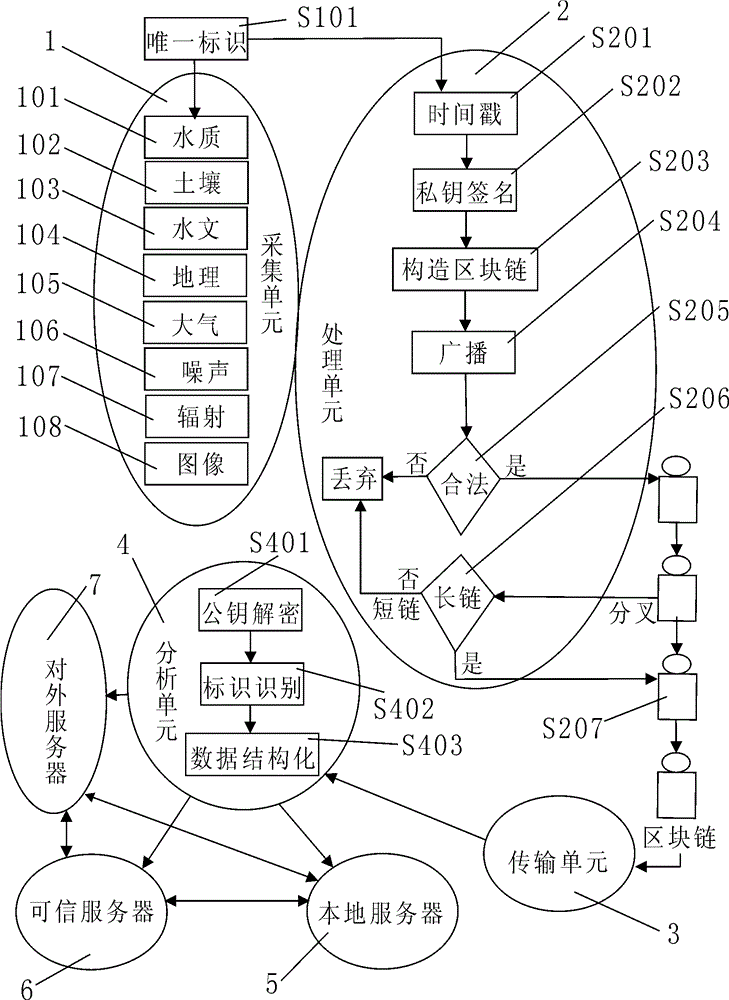

Block chain architecture-based ecological environment monitoring system and implementation method thereof

Active · CN106407481A · Ensure integrity; Ensure authenticity; Data processing applications; General water supply conservation; Ecological environment; Timestamp

The invention relates to a blockchain-based ecological environment monitoring system comprising collection units, a processing unit, a transmission unit, an analysis unit, a local server, a trusted server and an external server. Each collection device has an unalterable device serial number, which is combined with geographic information to form a unique identifier across the whole network. A timestamp and a DSA private-key signature are added to the real-time monitoring data of each collection unit, and a new block, which cannot be tampered with and is not anonymous, is constructed and added to the blockchain. All participants in the network hold copies of the blockchain, so the credibility of the data can be confirmed without checking by any central authority. At the same time, ecological environment monitoring information and data are made open to the public, so that illegal acts such as excess emissions, data tampering and data falsification can be effectively blocked, the integrity and authenticity of the data are ensured, and the uniformity and effectiveness of ecological environment monitoring are strengthened.

Owner:福州微启迪物联科技有限公司
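
The hash-linking that makes past readings tamper-evident can be sketched with the Python standard library. One substitution is named plainly here: the abstract specifies a DSA private-key signature, but this sketch uses HMAC-SHA256 as a symmetric stand-in so that it needs no third-party crypto package. A real deployment would sign with the device's private key and verify with its public key. The block layout, field names and identifier format are hypothetical.

```python
import hashlib
import hmac
import json
import time

GENESIS = "0" * 64
FIELDS = ("device_id", "reading", "timestamp", "prev_hash")


def _body(block: dict) -> bytes:
    # Canonical serialization of the authenticated fields.
    return json.dumps({k: block[k] for k in FIELDS}, sort_keys=True).encode()


def make_block(prev_hash: str, device_id: str, reading: dict, key: bytes, timestamp=None) -> dict:
    block = {
        "device_id": device_id,   # e.g. serial number combined with location
        "reading": reading,
        "timestamp": time.time() if timestamp is None else timestamp,
        "prev_hash": prev_hash,   # links this block to the previous one
    }
    body = _body(block)
    # HMAC-SHA256 stands in for the DSA signature described in the abstract.
    block["tag"] = hmac.new(key, body, hashlib.sha256).hexdigest()
    block["hash"] = hashlib.sha256(body + block["tag"].encode()).hexdigest()
    return block


def verify_chain(chain: list, keys: dict) -> bool:
    prev = GENESIS
    for block in chain:
        body = _body(block)
        expected = hmac.new(keys[block["device_id"]], body, hashlib.sha256).hexdigest()
        if block["prev_hash"] != prev or not hmac.compare_digest(expected, block["tag"]):
            return False
        if hashlib.sha256(body + block["tag"].encode()).hexdigest() != block["hash"]:
            return False
        prev = block["hash"]
    return True
```

Editing any earlier reading changes that block's authenticated body, so verification fails. Recomputing the tag and hash would then break the `prev_hash` link in every later block.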

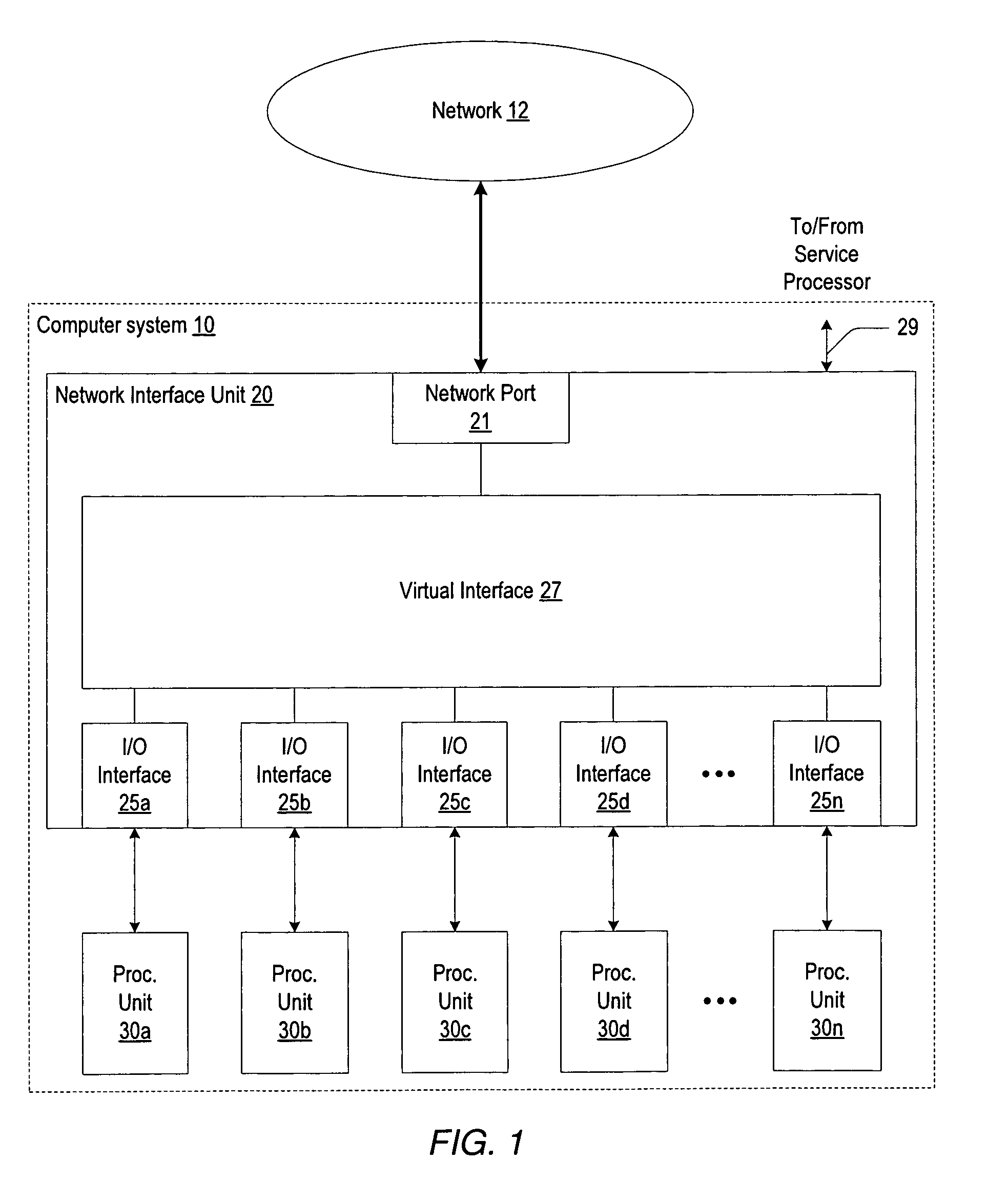

Shared virtual network interface

A system includes one or more processing units coupled to a network interface unit. The network interface unit may include a network port for connection to a network and a virtual interface that may be configured to distribute an available communication bandwidth of the network port between the one or more processing units. The network port may include a shared media access control (MAC) unit. The virtual interface may include a plurality of processing unit resources each associated with a respective one of the one or more processing units. Each of the processing unit resources may include an I/O interface unit coupled to a respective one of the one or more processing units via an I/O interconnect, and an independent programmable virtual MAC unit that is programmably configured by the respective one of the one or more processing units. The virtual interface may also include a receive datapath and a transmit datapath that are coupled between and shared by the plurality of processing unit resources and the network port.

Owner:ORACLE INT CORP

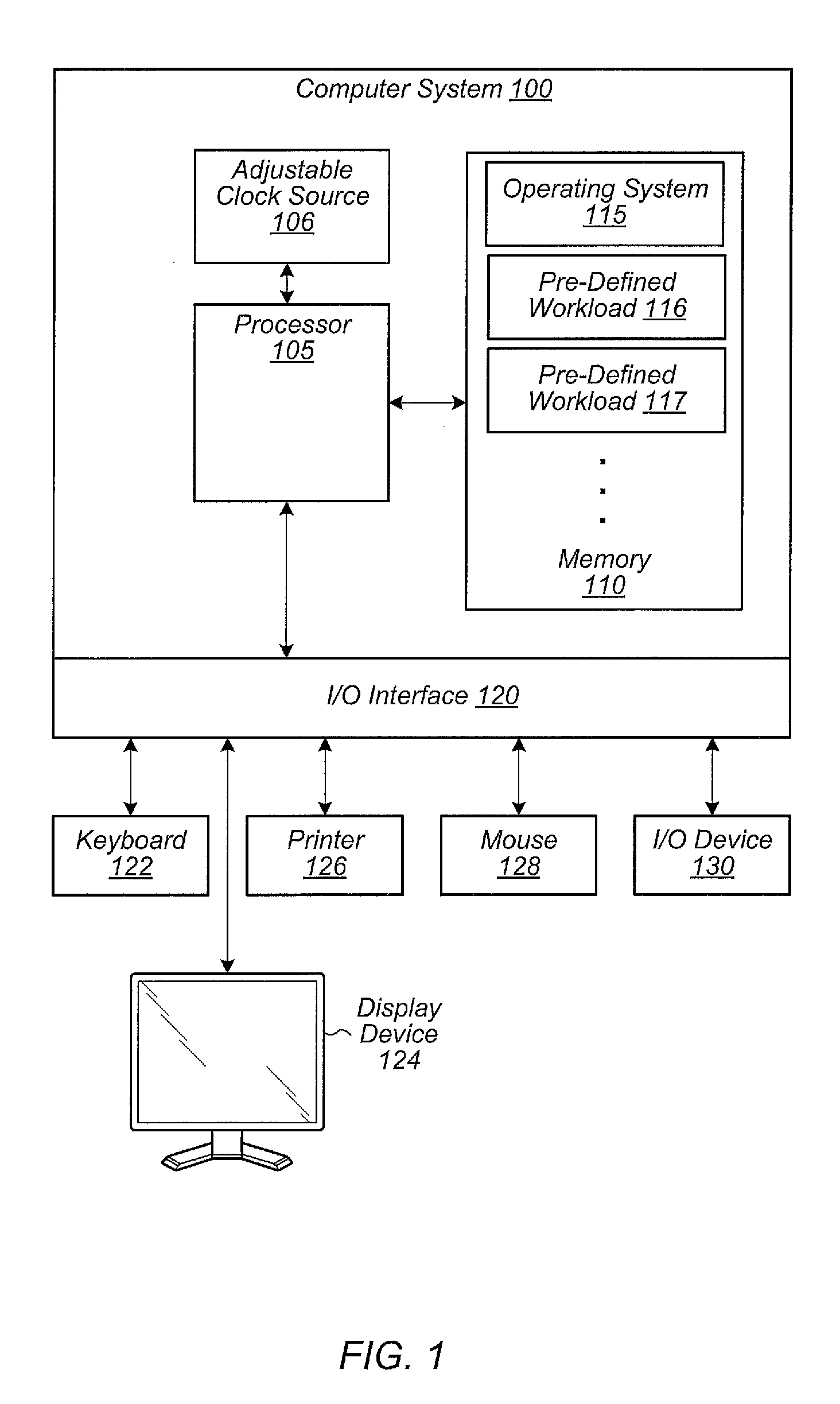

Adjusting the clock frequency of a processing unit in real-time based on a frequency sensitivity value

A system, method, and medium for adjusting an input clock frequency of a processor in real-time based on one or more hardware metrics. First, the processor is characterized for a plurality of workloads. Next, the frequency sensitivity value of the processor for each of the workloads is calculated. Hardware metrics are also monitored and the values of these metrics are stored for each of the workloads. Then, linear or polynomial regression is performed to match the metrics to the frequency sensitivity of the processor. The linear or polynomial regression will produce a formula and coefficients, and the coefficients are applied to the metrics in real-time to calculate a frequency sensitivity value of an application executing on the processor. Then, the frequency sensitivity value is utilized to determine whether to adjust the input clock frequency of the processor.

Owner:ADVANCED MICRO DEVICES INC

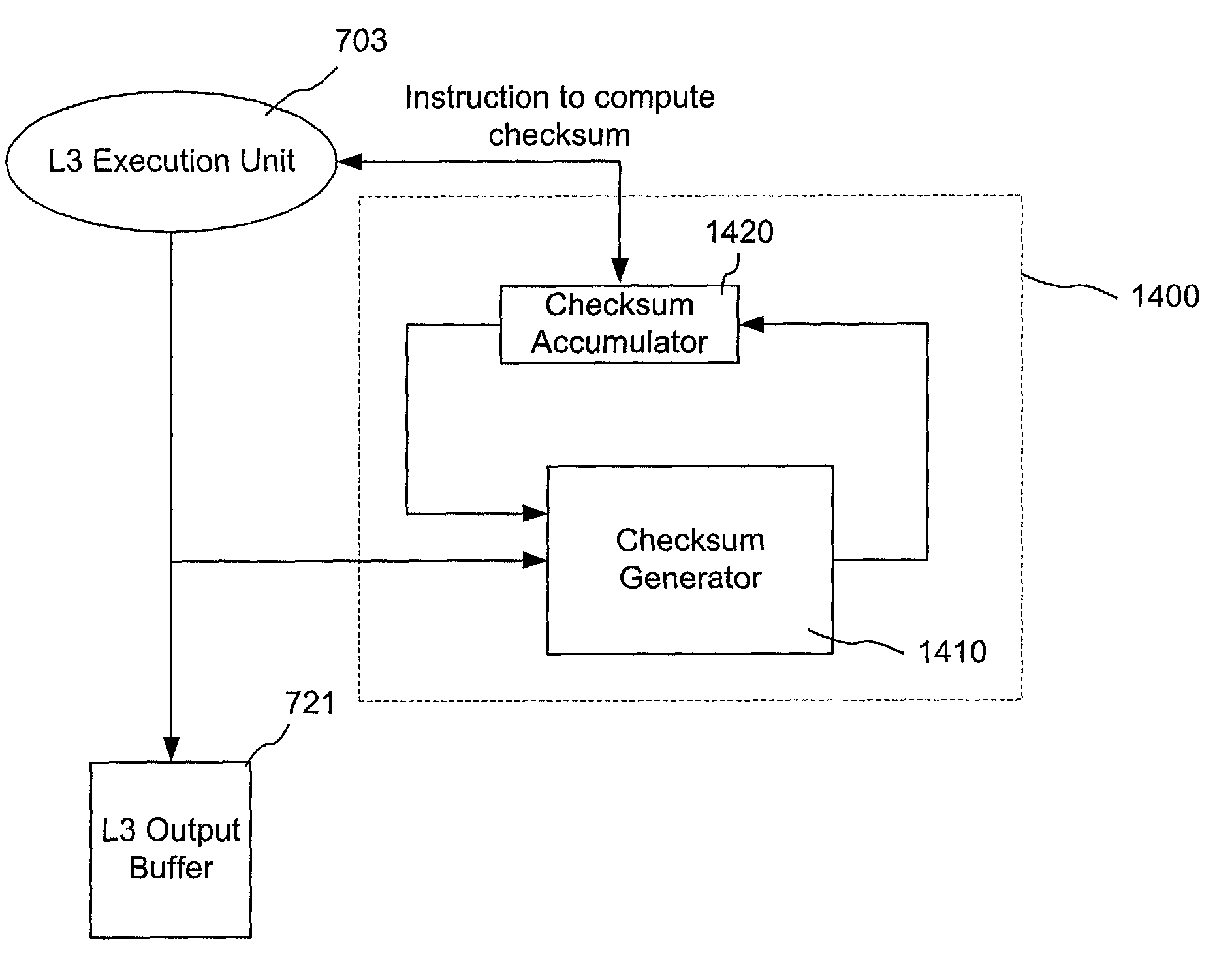

On the fly header checksum processing using dedicated logic

Inactive · US7283528B1 · Speed up the process; Error detection/correction; Code conversion; Checksum; Computer science

A packet header processing engine includes a packet processing unit that is configured to generate the packet header information based on the packet header data. A checksum generating unit is connected to the packet processing unit. The checksum generating unit is configured to compute and store a partial checksum for a packet header being processed by the packet processing unit. After all packet header information for a packet is stored in the buffer, the checksum generating unit contains a complete checksum for the packet header.

Owner:JUNIPER NETWORKS INC
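
The abstract does not name the checksum algorithm. This Python sketch assumes the Internet (IPv4 header) checksum from RFC 1071 to show how a partial checksum can be kept up to date as header bytes are produced. Chunks of any length are accepted, an odd trailing byte is held until its partner arrives, and the complete checksum is available as soon as the last byte has been fed in. The class name is hypothetical.

```python
class RunningChecksum:
    """Incremental 16-bit ones'-complement (RFC 1071 style) checksum."""

    def __init__(self):
        self.partial = 0      # folded running sum, always <= 0xFFFF
        self._pending = None  # odd trailing byte awaiting its pair

    def update(self, chunk: bytes) -> None:
        data = (bytes([self._pending]) if self._pending is not None else b"") + chunk
        self._pending = None
        if len(data) % 2:
            self._pending = data[-1]
            data = data[:-1]
        for i in range(0, len(data), 2):
            self.partial += (data[i] << 8) | data[i + 1]
            self.partial = (self.partial & 0xFFFF) + (self.partial >> 16)  # end-around carry

    def digest(self) -> int:
        total = self.partial
        if self._pending is not None:  # pad a final odd byte with zero
            total += self._pending << 8
            total = (total & 0xFFFF) + (total >> 16)
        return (~total) & 0xFFFF


if __name__ == "__main__":
    # IPv4 header with its checksum field zeroed, fed in uneven pieces.
    hdr = bytes.fromhex("450000730000400040110000c0a80001c0a800c7")
    c = RunningChecksum()
    for piece in (hdr[:3], hdr[3:11], hdr[11:]):
        c.update(piece)
    print(hex(c.digest()))  # 0xb861
```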

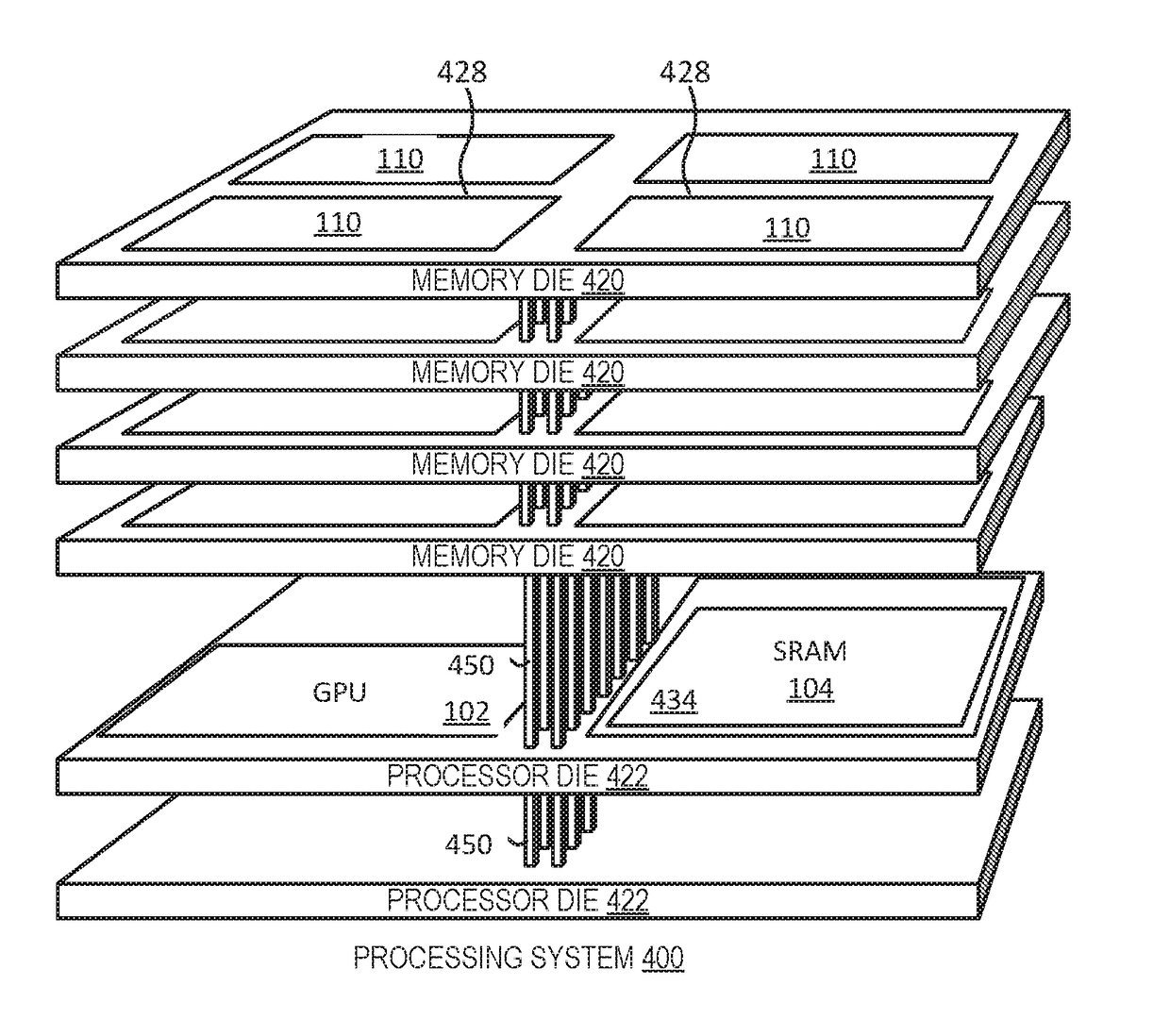

Hierarchical register file at a graphics processing unit

A processor employs a hierarchical register file for a graphics processing unit (GPU). A top level of the hierarchical register file is stored at a local memory of the GPU (e.g., a memory on the same integrated circuit die as the GPU). Lower levels of the hierarchical register file are stored at a different, larger memory, such as a remote memory located on a different die than the GPU. A register file control module monitors the status of in-flight wavefronts at the GPU, and in particular whether each in-flight wavefront is active, predicted to become active, or inactive. The register file control module places execution data for active and predicted-active wavefronts in the top level of the hierarchical register file and places execution data for inactive wavefronts at lower levels of the hierarchical register file.

Owner:ADVANCED MICRO DEVICES INC
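
The placement policy can be sketched in Python. The abstract does not say what happens when active and predicted-active wavefronts together exceed the top level's capacity, so this sketch assumes that active wavefronts are placed first, predicted-active ones next, and any overflow spills to the lower level. The function and enum names are hypothetical.

```python
from enum import Enum


class WaveState(Enum):
    ACTIVE = 0
    PREDICTED_ACTIVE = 1
    INACTIVE = 2


def place_wavefronts(wavefronts: dict, top_capacity: int):
    """Split wavefront register contexts between the on-die top level and a larger
    lower level. `wavefronts` maps wavefront id -> (state, registers)."""
    top, lower = {}, {}
    # Active first, then predicted-active, then inactive (sorted() is stable).
    for wid, (state, regs) in sorted(wavefronts.items(), key=lambda kv: kv[1][0].value):
        if state is not WaveState.INACTIVE and len(top) < top_capacity:
            top[wid] = regs
        else:
            lower[wid] = regs  # inactive, or overflow beyond the top level (assumption)
    return top, lower


if __name__ == "__main__":
    waves = {
        "w0": (WaveState.INACTIVE, [0]),
        "w1": (WaveState.ACTIVE, [1]),
        "w2": (WaveState.PREDICTED_ACTIVE, [2]),
        "w3": (WaveState.ACTIVE, [3]),
    }
    top, lower = place_wavefronts(waves, top_capacity=2)
    print(sorted(top), sorted(lower))  # ['w1', 'w3'] ['w0', 'w2']
```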

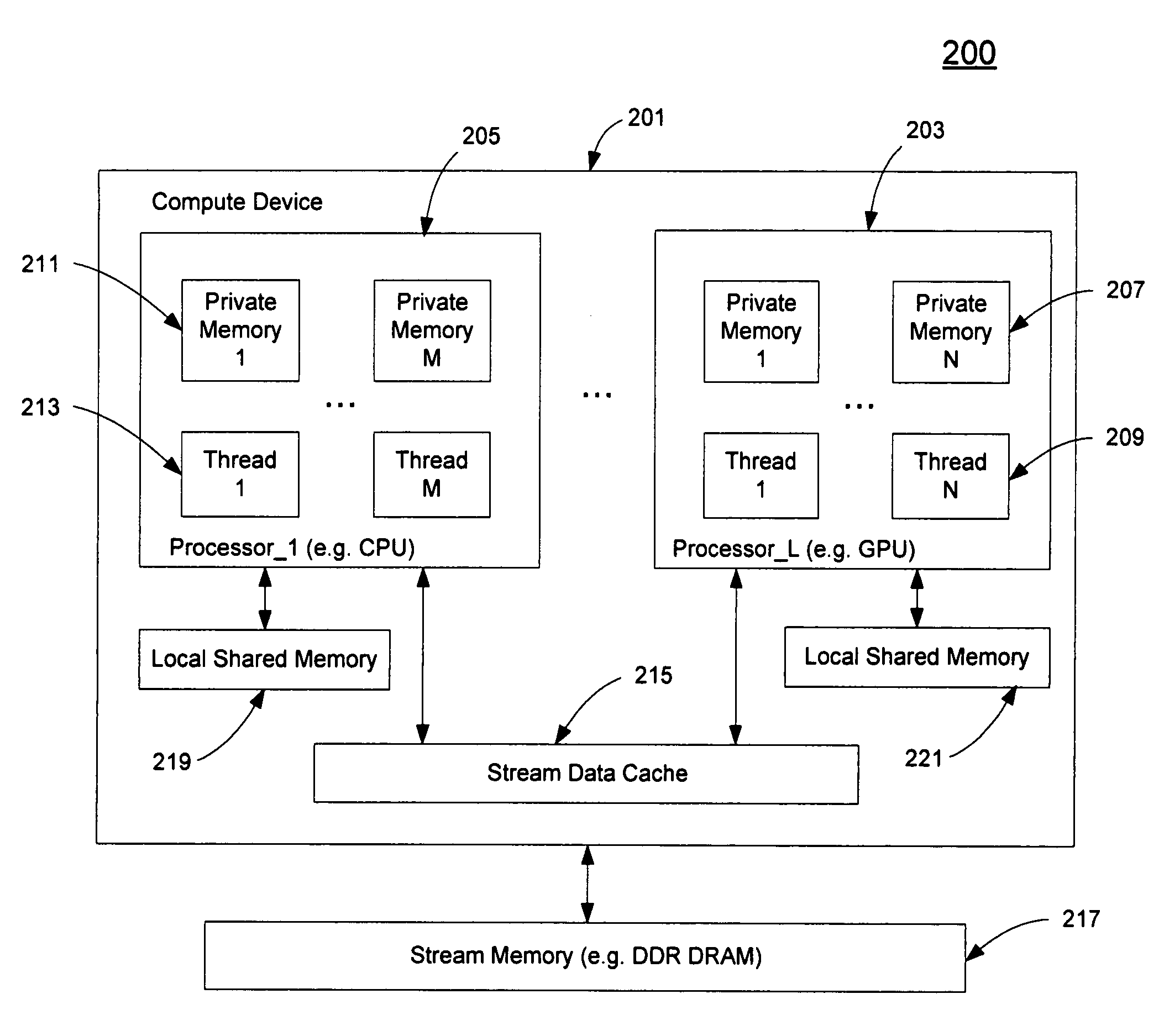

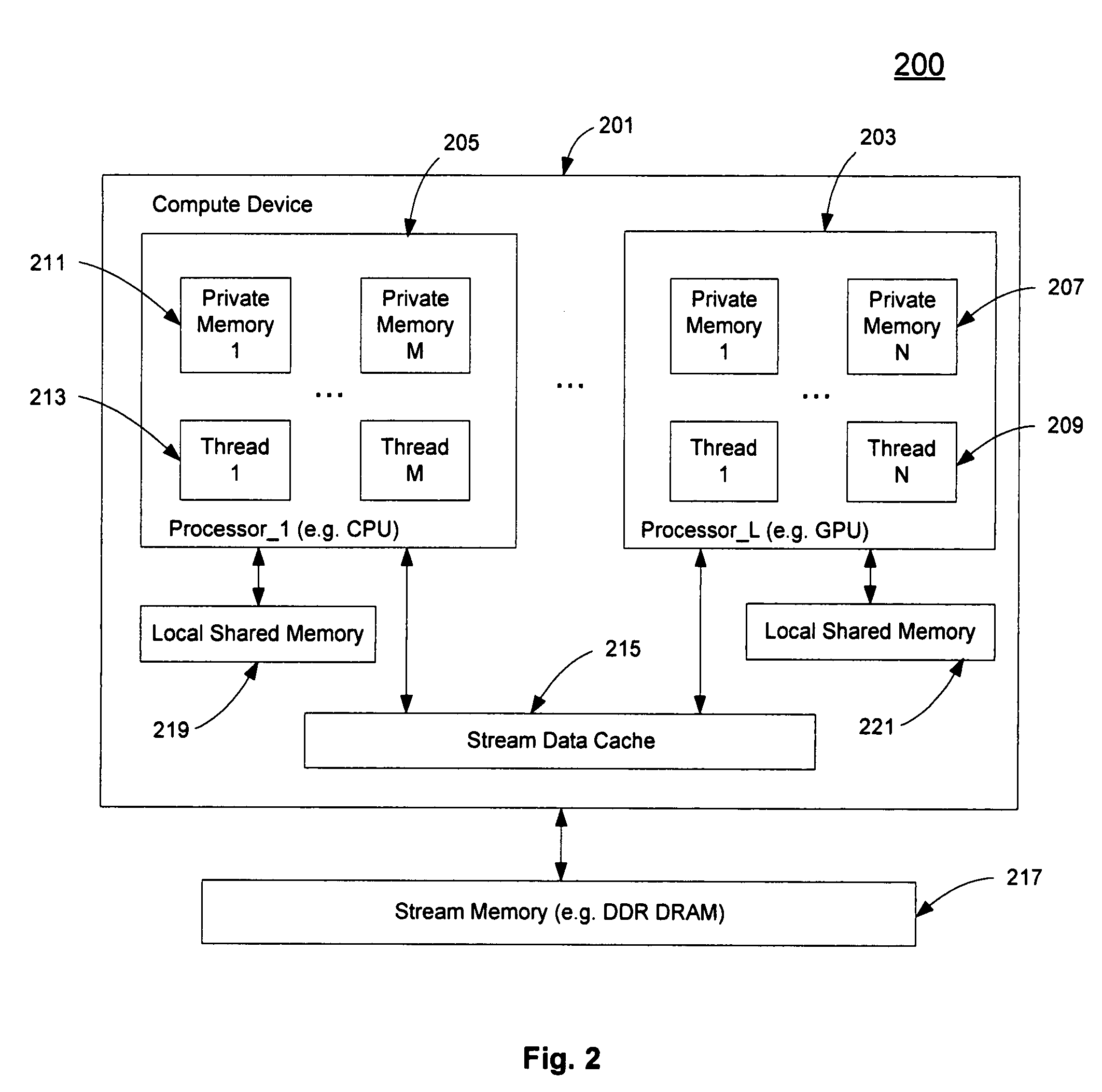

Shared stream memory on multiple processors

Active · US20080276064A1 · Memory addressing/allocation/relocation; Digital computer details; Graphics; Application software

A method and an apparatus are described that allocate a stream memory and/or a local memory for a variable in an executable loaded from a host processor to a compute processor, according to whether the compute processor supports a storage capability. The compute processor may be a graphics processing unit (GPU) or a central processing unit (CPU). Alternatively, an application running in a host processor configures storage capabilities in a compute processor, such as a CPU or GPU, to determine a memory location for accessing a variable in an executable executed by a plurality of threads in the compute processor. The configuration and allocation are based on API calls in the host processor.

Owner:APPLE INC

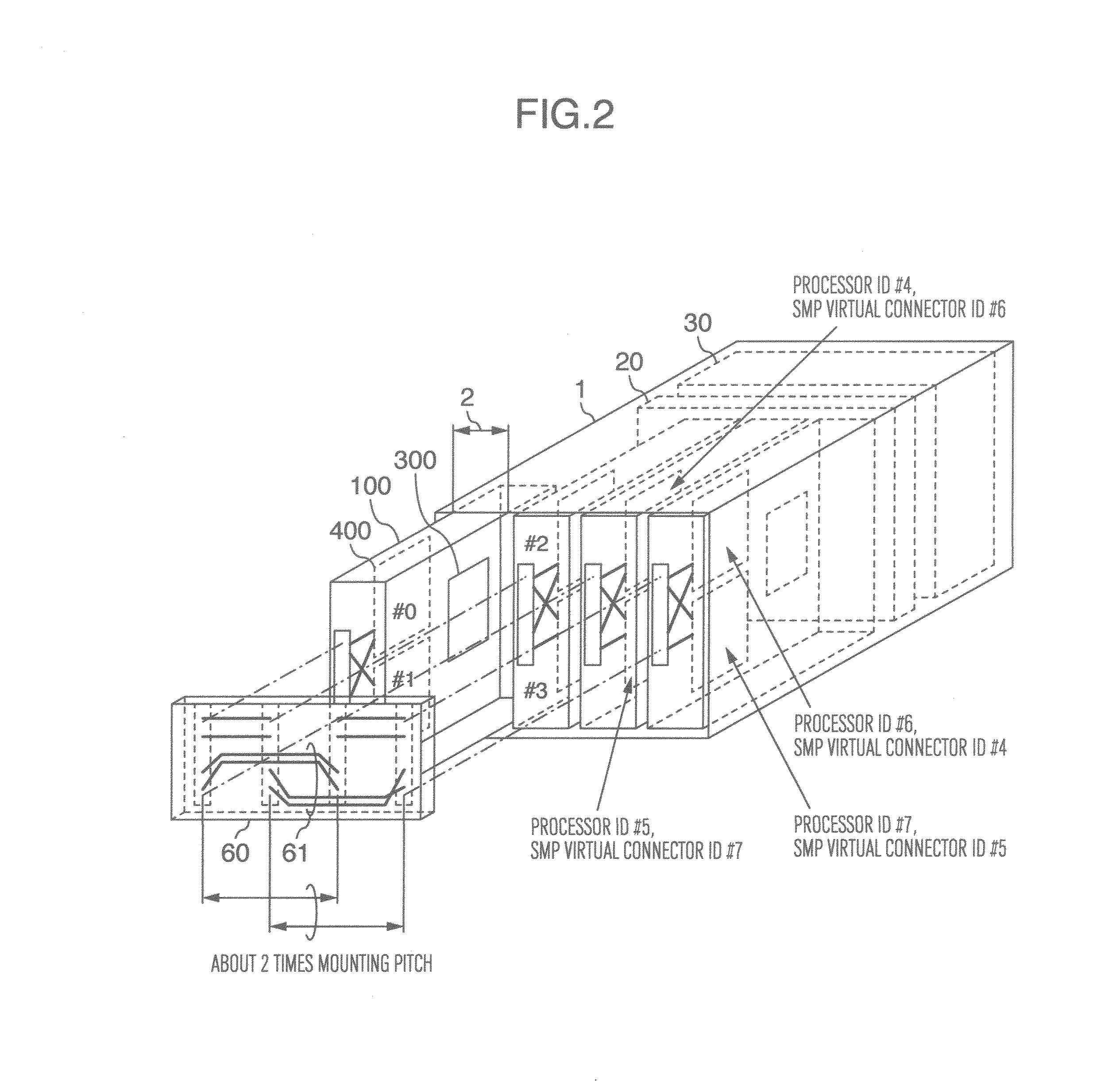

Blade server apparatus

Active · US20120054469A1 · Shorten the line length; Improve signal transmission quality; Program control using stored programs; General purpose stored program computer; Computer science; Blade server

A blade server apparatus including a plurality of server modules, a backplane for mounting the plurality of server modules thereon, and an SMP coupling device having wiring lines to SMP couple the plurality of server modules. Each of the server modules has one or more processors controlled by firmware and a module manager for managing its own server module, the module manager has an ID determiner for informing each processor of a processor ID, each processor has a processing unit and an SMP virtual connecting unit for instructing ones of wiring lines of the SMP coupling device through which a packet received from the processing unit is to be transmitted, and an ID converter for converting the processor ID and informing it to the virtual connecting unit is provided within the firmware.

Owner:HITACHI LTD

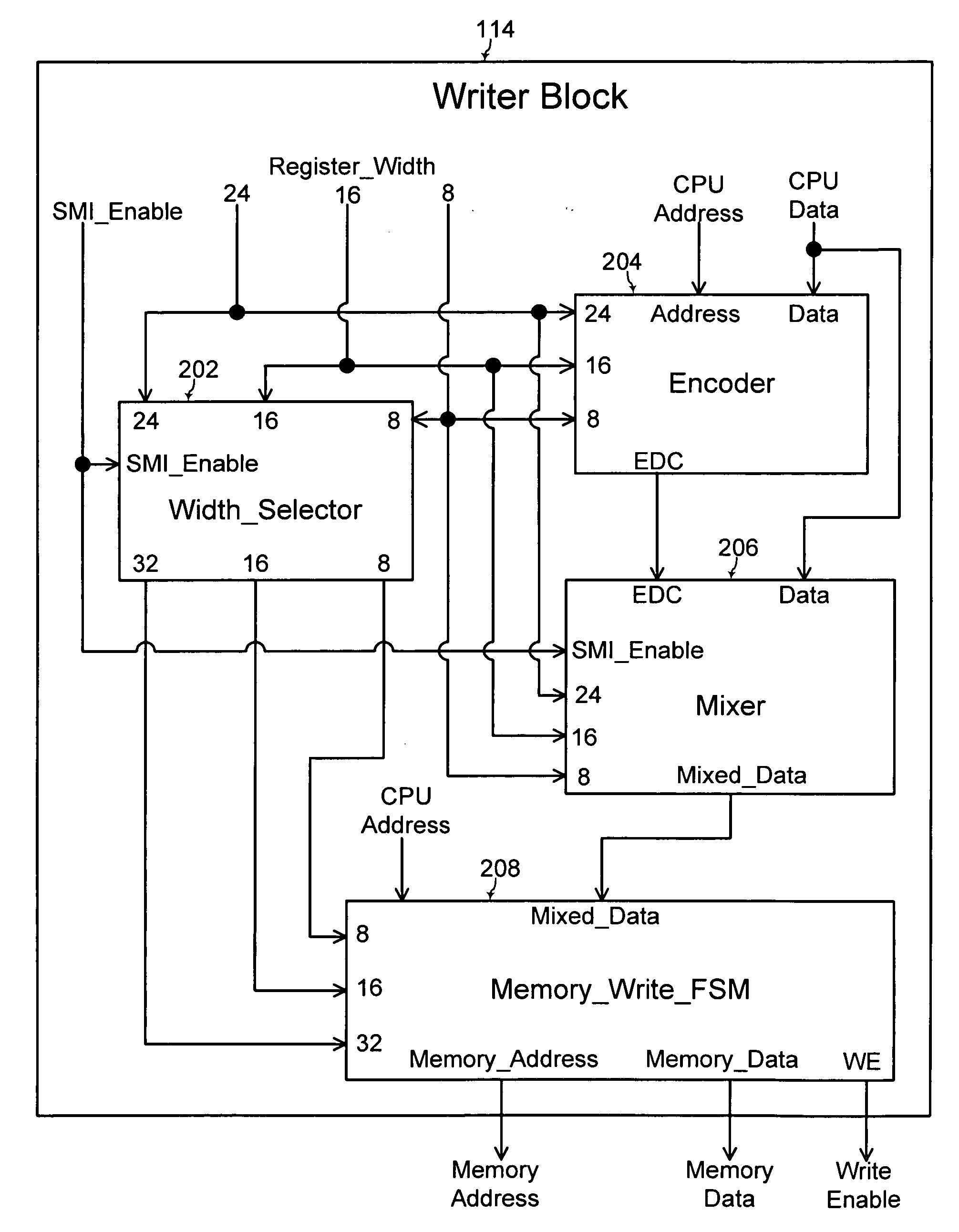

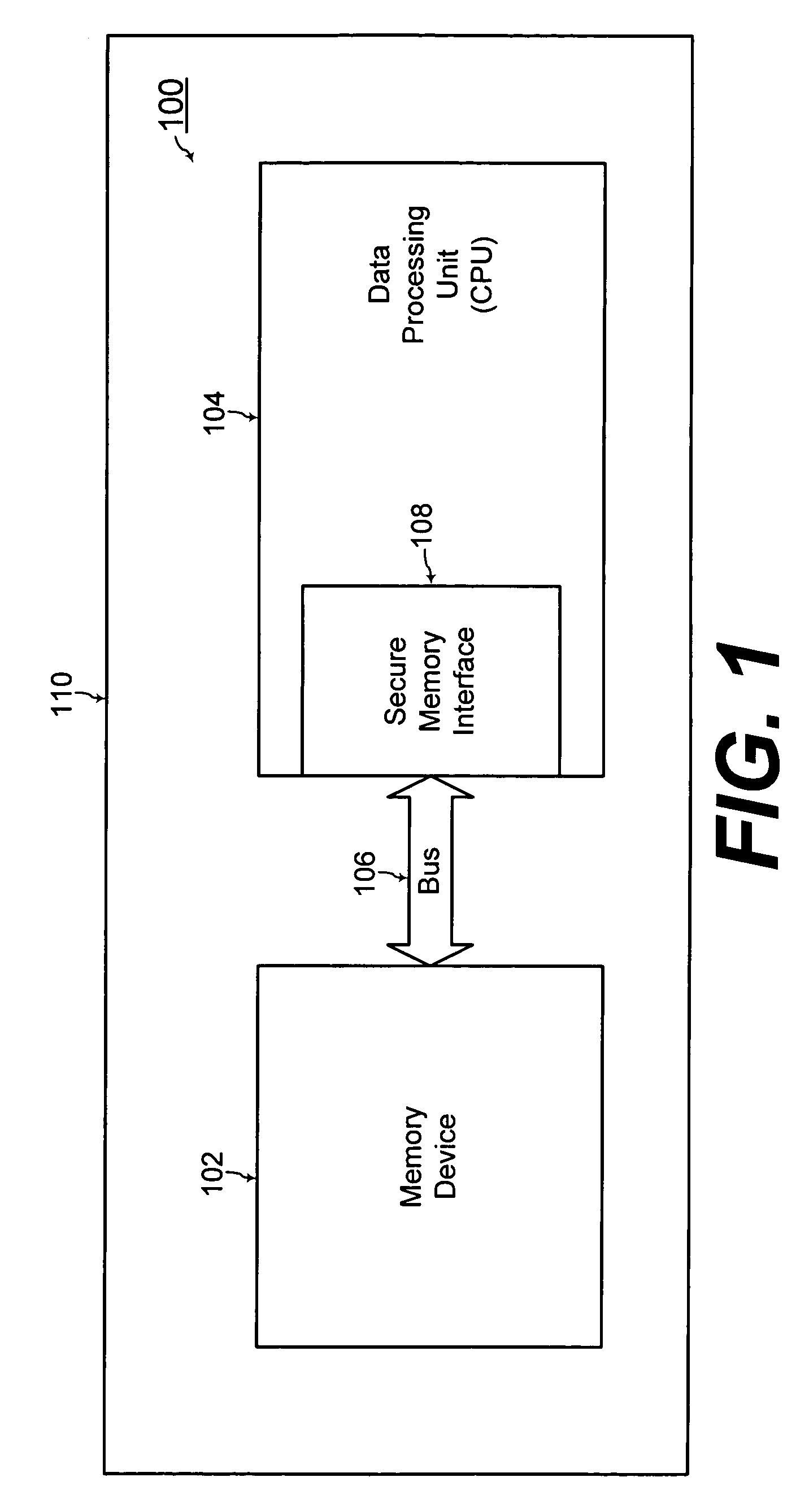

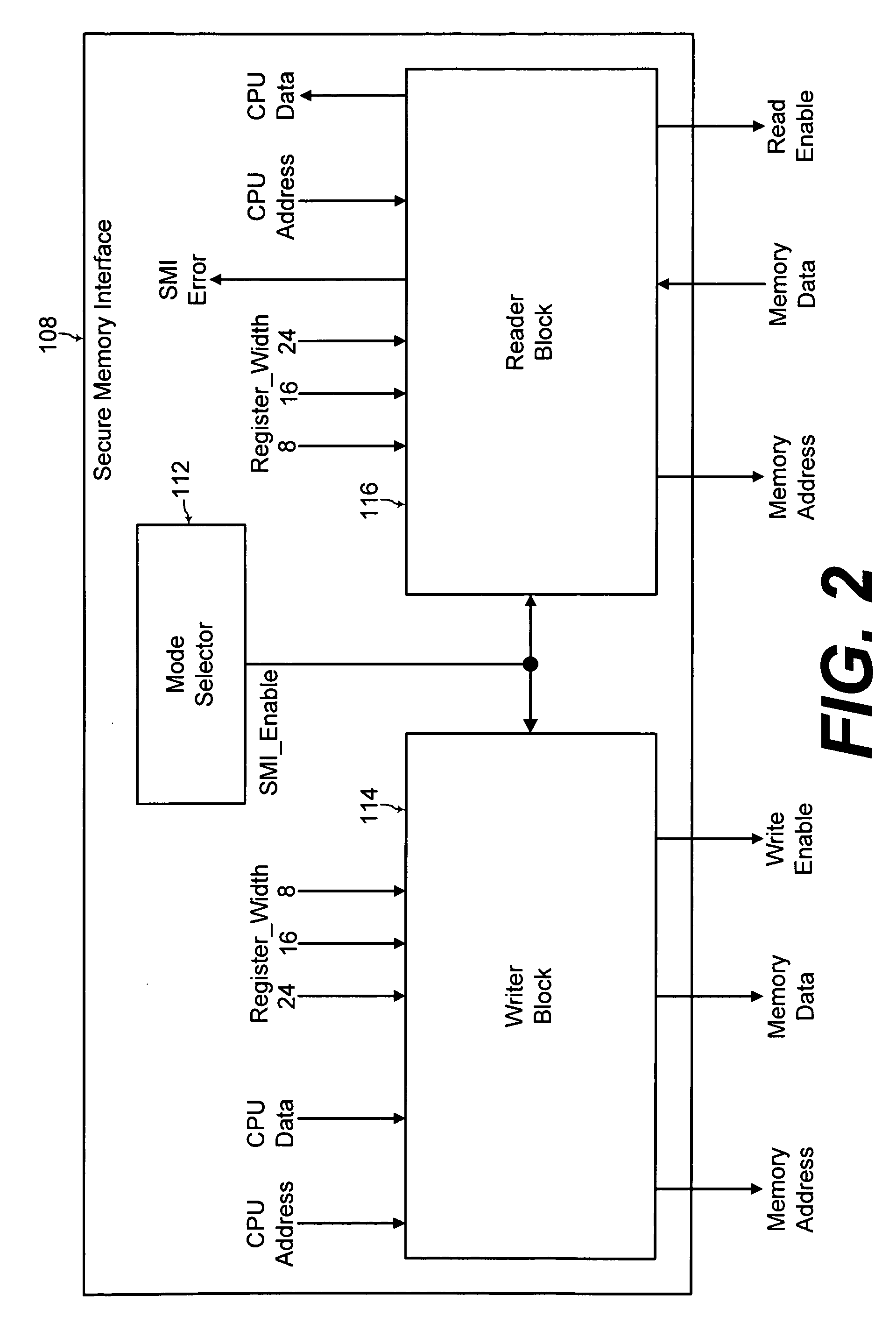

Secure memory interface

Active · US20100082927A1 · Efficient detection; Easy access; Digital computer details; Unauthorized memory use protection; Main processing unit; Memory interface

A secure memory interface includes a reader block, a writer block, and a mode selector for detecting fault injection into a memory device when a secure mode is activated. The mode selector activates or deactivates the secure mode using memory access information from a data processing unit. Thus, the data processing unit flexibly specifies the amount and location of the secure data stored into the memory device.

Owner:SAMSUNG ELECTRONICS CO LTD

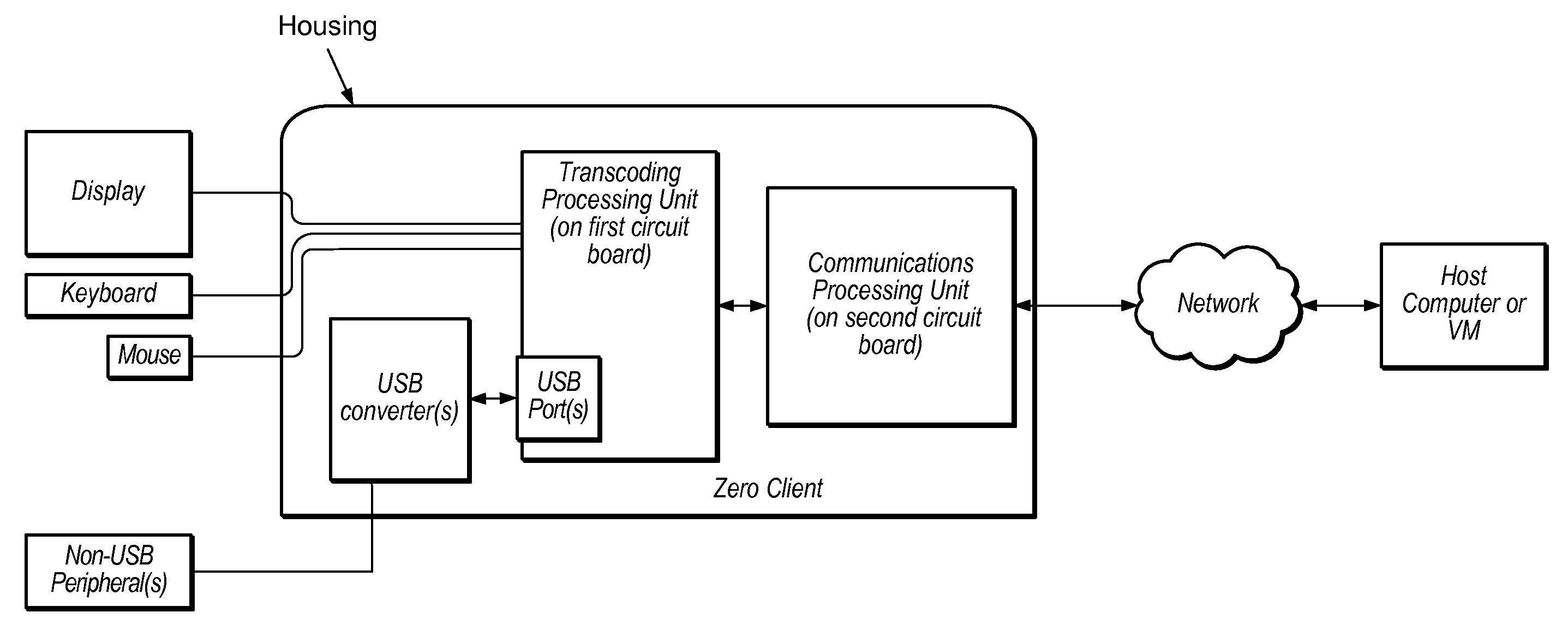

Zero client device with integrated serial or parallel ports

System and method for zero client communications. A zero client device includes a housing, and in the housing, a transcoding processing unit (transcoder) and a communications processing unit coupled to the transcoder. The transcoder is configured to receive input data from human interface device(s), encode the input data, and provide the encoded input data to the communications processing unit for transmission over a network to a server. The communications processing unit is configured to receive the encoded input data from the transcoder, transmit the encoded input data over the network to the server, receive output data from the server, and send the output data to the transcoder. The transcoder is further configured to receive the output data from the communications processing unit, decode the output data, and send the decoded output data to at least one of the human interface devices.

Owner:CLEARCUBE TECHNOLOGY INC

© 2025 PatSnap. All rights reserved.