90 patent results for "Process (computing)"

In computing, a process is the instance of a computer program that is being executed by one or many threads. It contains the program code and its activity. Depending on the operating system (OS), a process may be made up of multiple threads of execution that execute instructions concurrently.

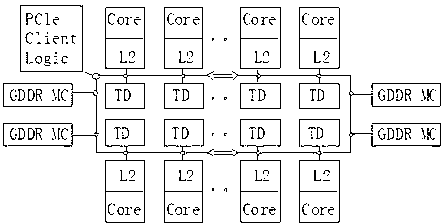

Object-oriented, parallel language, method of programming and multi-processor computer

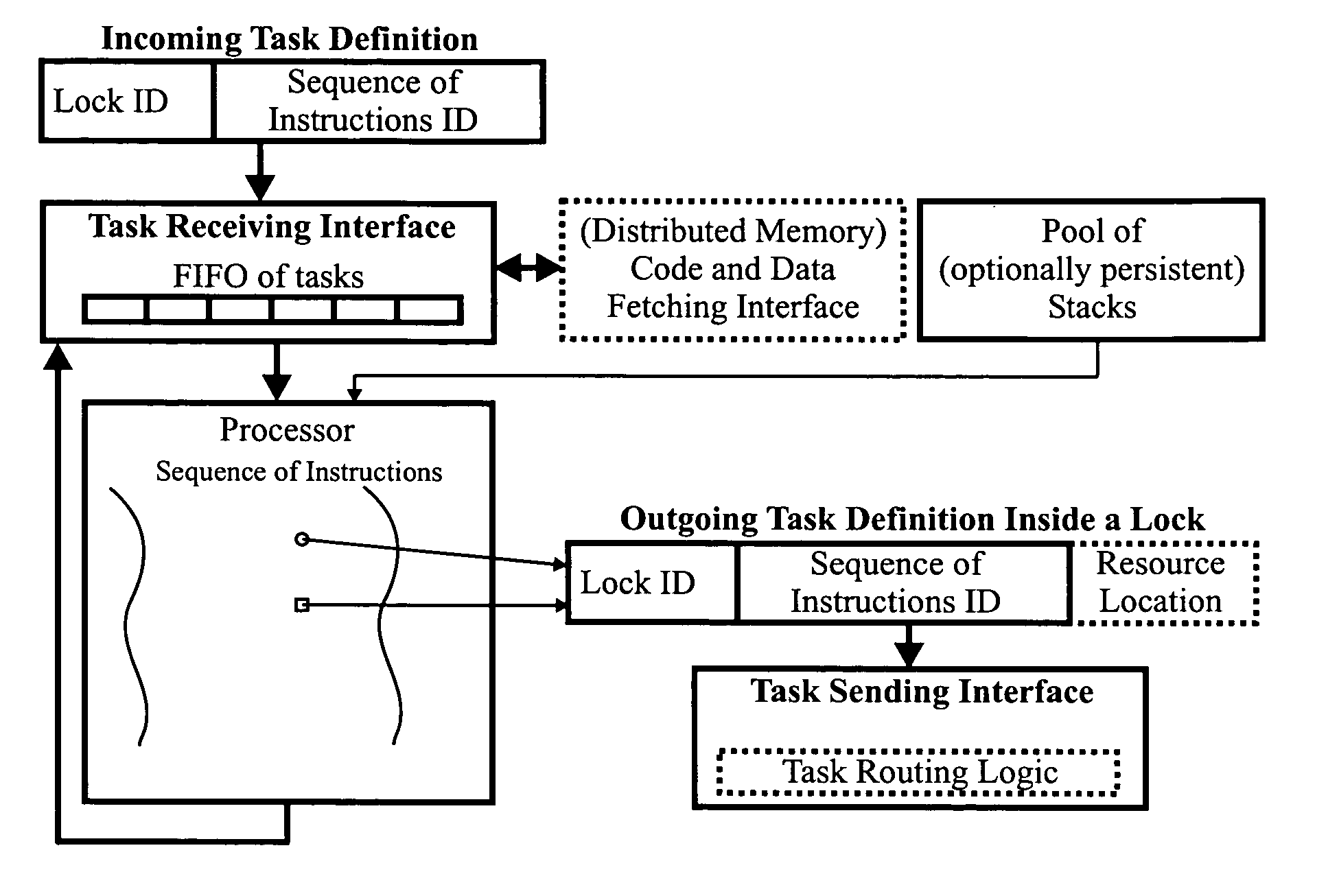

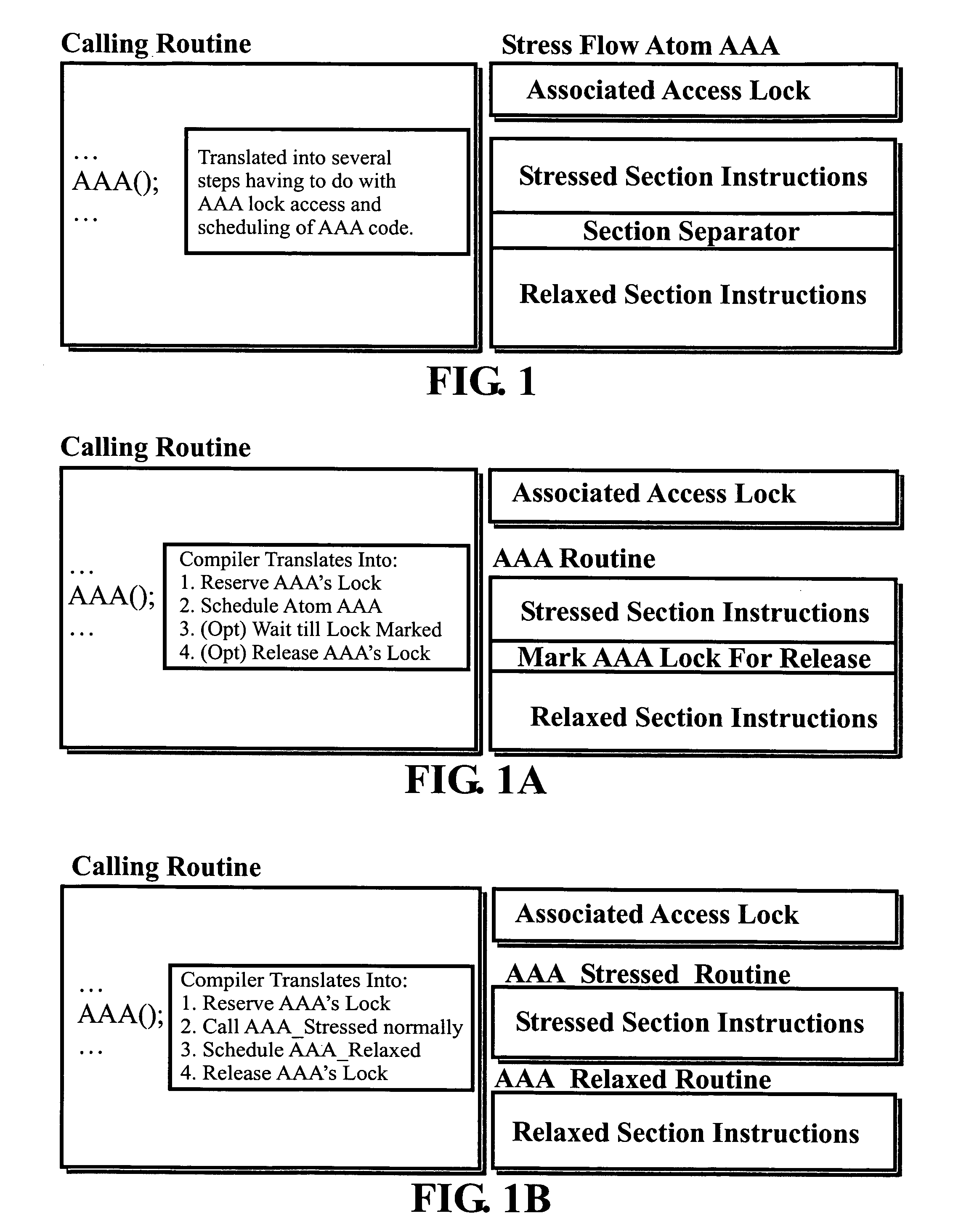

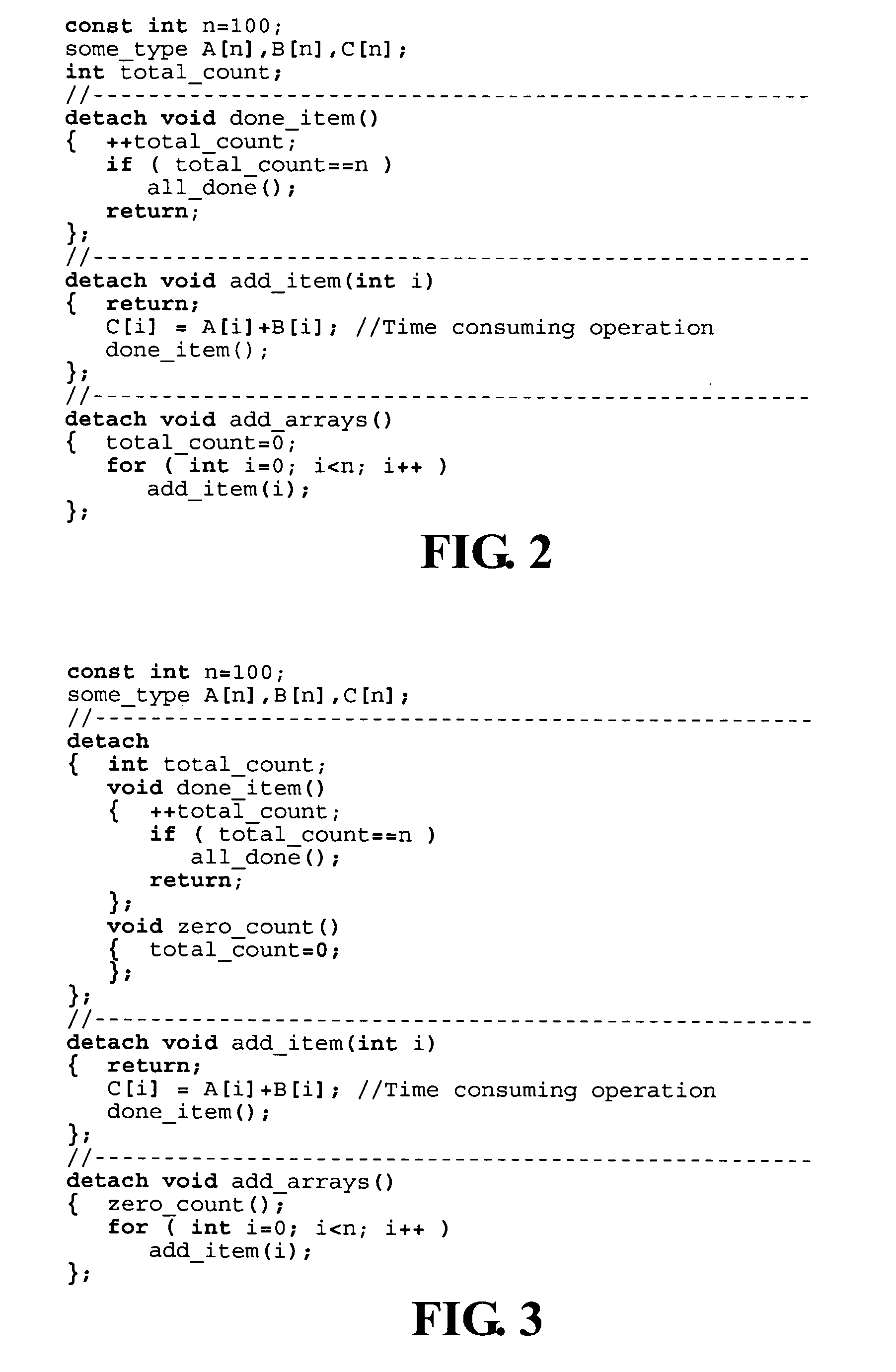

This invention relates to the architecture and synchronization of multi-processor computing hardware. It establishes a new method of programming, of process synchronization, and of computer construction, which the inventor names stress-flow, that combines the benefits of two opposing legacy programming concepts (data flow and control flow) within one cohesive, powerful, object-oriented scheme. The invention also relates to the construction of object-oriented, parallel computer languages (both script and visual), to compiler construction, and to a method of writing programs to be executed on fully parallel (multi-processor), virtually parallel, and single-processor multitasking computer systems.

Owner:JANCZEWSKA NATALIA URSZULA +2

Parallel-aware, dedicated job co-scheduling method and system

Inactive · US20050131865A1 · Tags: Improve performance, Improve scalability, Program initiation/switching, Special data processing applications, Extensibility, Operating system

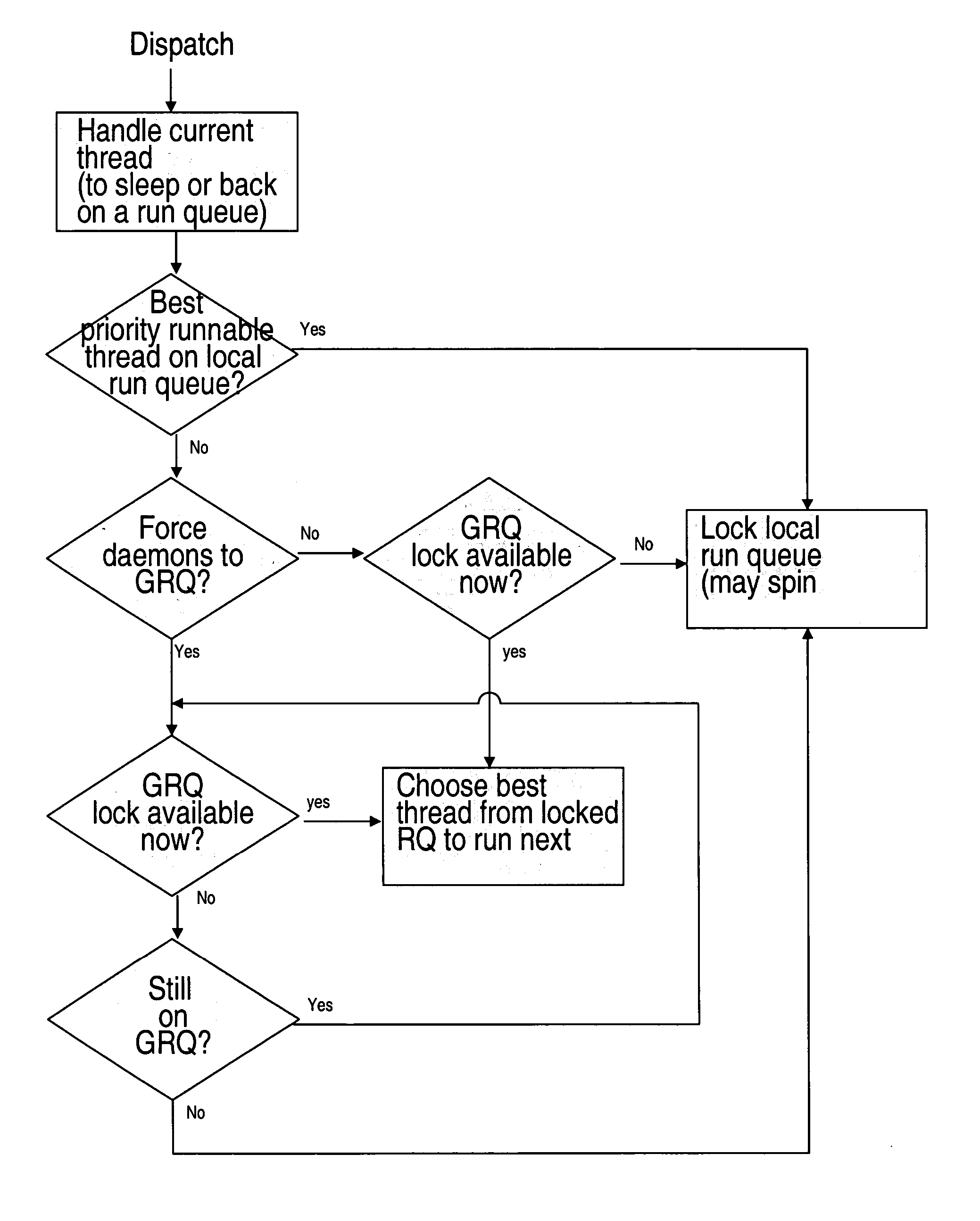

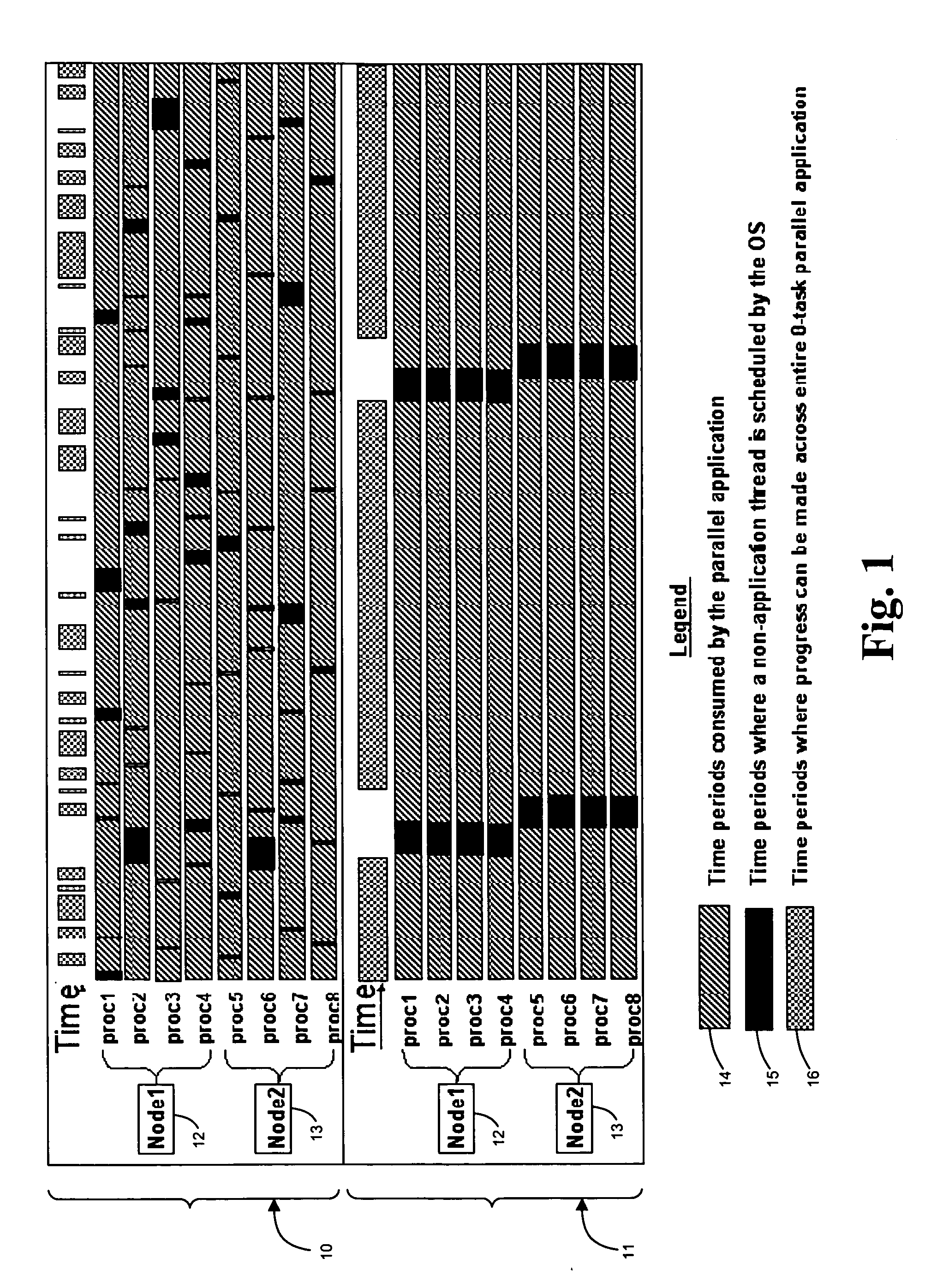

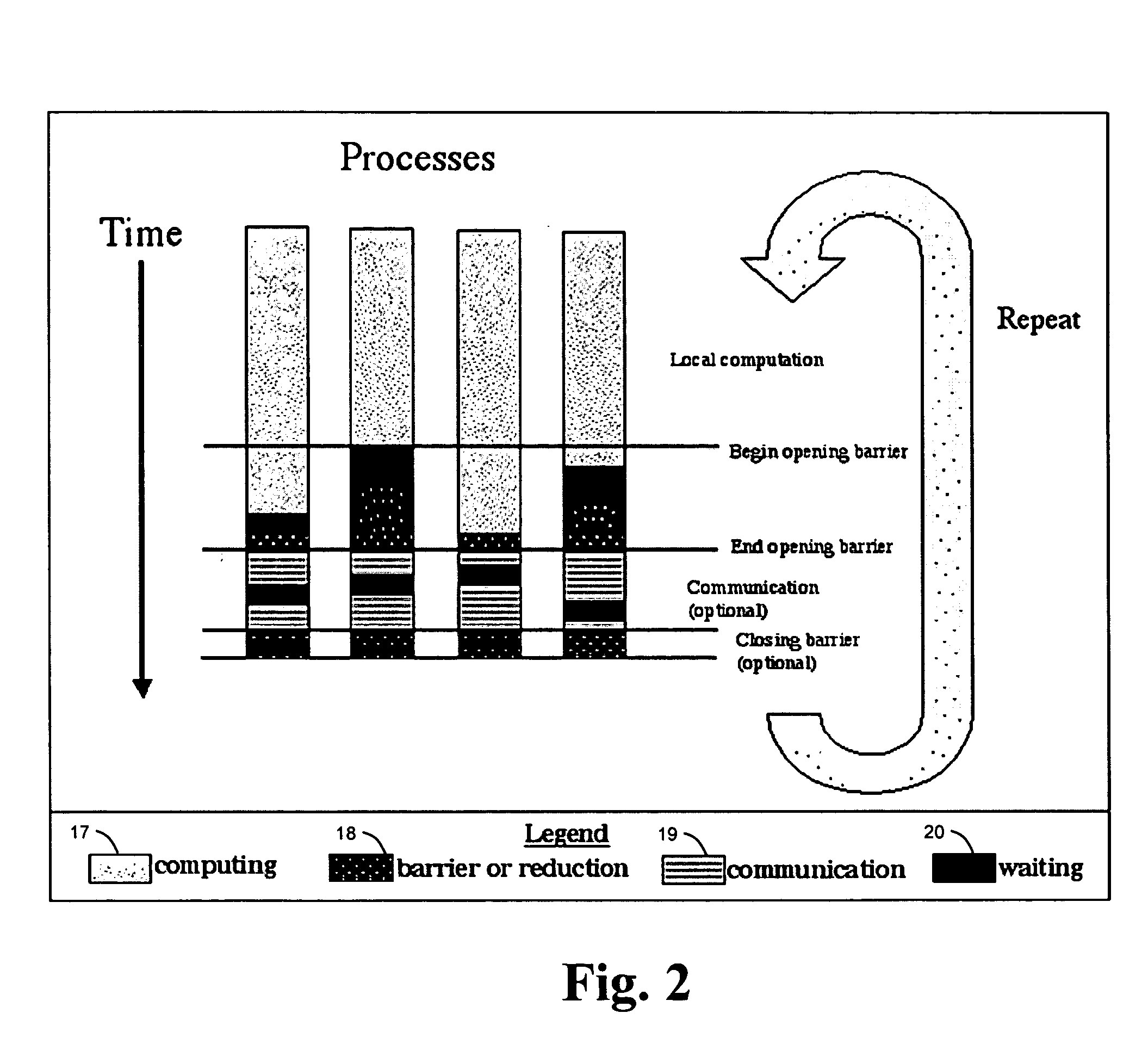

In a parallel computing environment comprising a network of SMP nodes, each having at least one processor, a parallel-aware co-scheduling method and system improve the performance and scalability of a dedicated parallel job that has synchronizing collective operations. The method and system use a global co-scheduler and an operating system kernel dispatcher adapted to coordinate interfering system and daemon activities on a node and across nodes, promoting intra-node and inter-node overlap of those interfering activities as well as intra-node and inter-node overlap of the synchronizing collective operations. In this manner, the impact of random short-lived interruptions, such as timer-decrement processing and periodic daemon activity, on synchronizing collective operations is minimized for large processor-count SPMD bulk-synchronous programs.

Owner:LAWRENCE LIVERMORE NAT SECURITY LLC

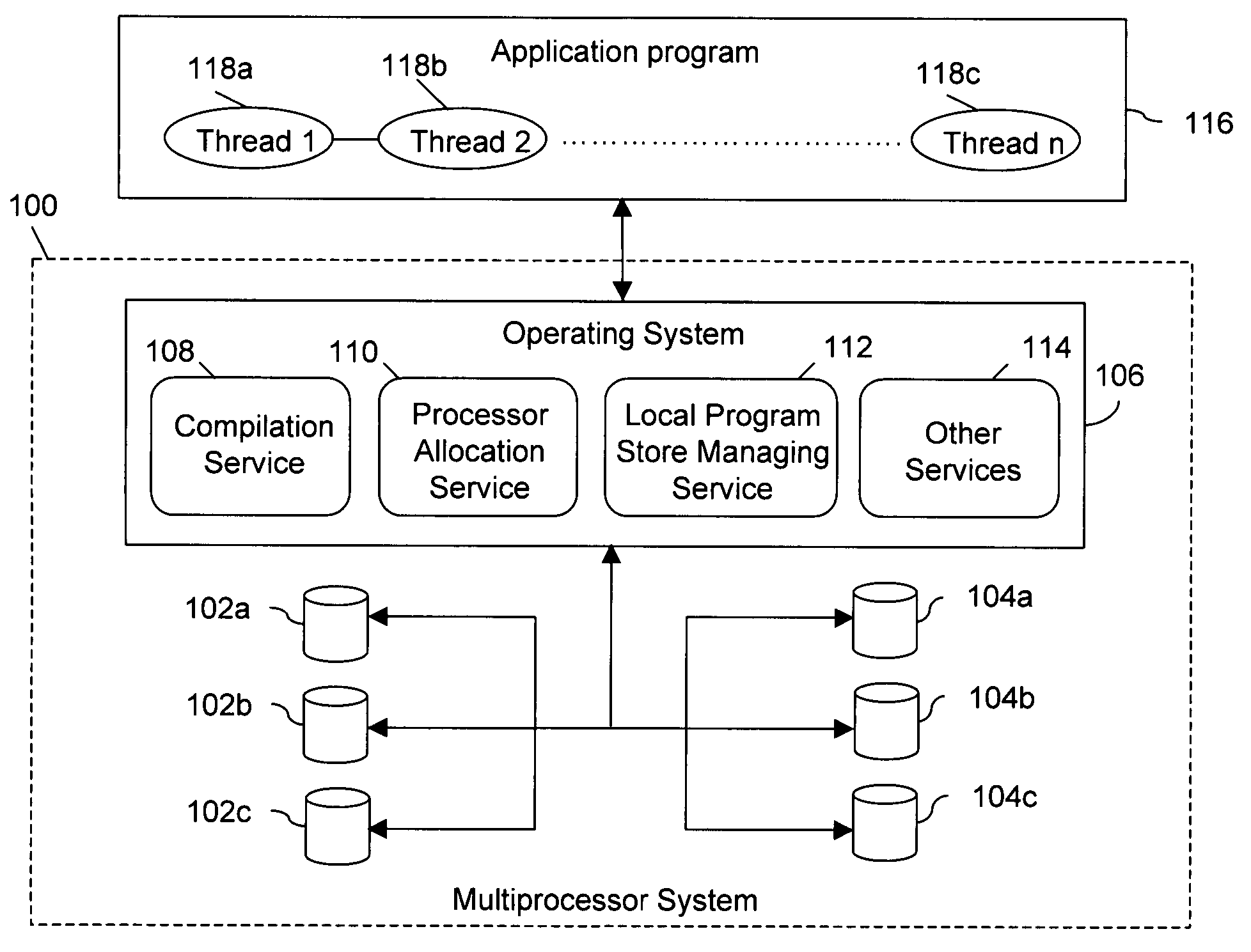

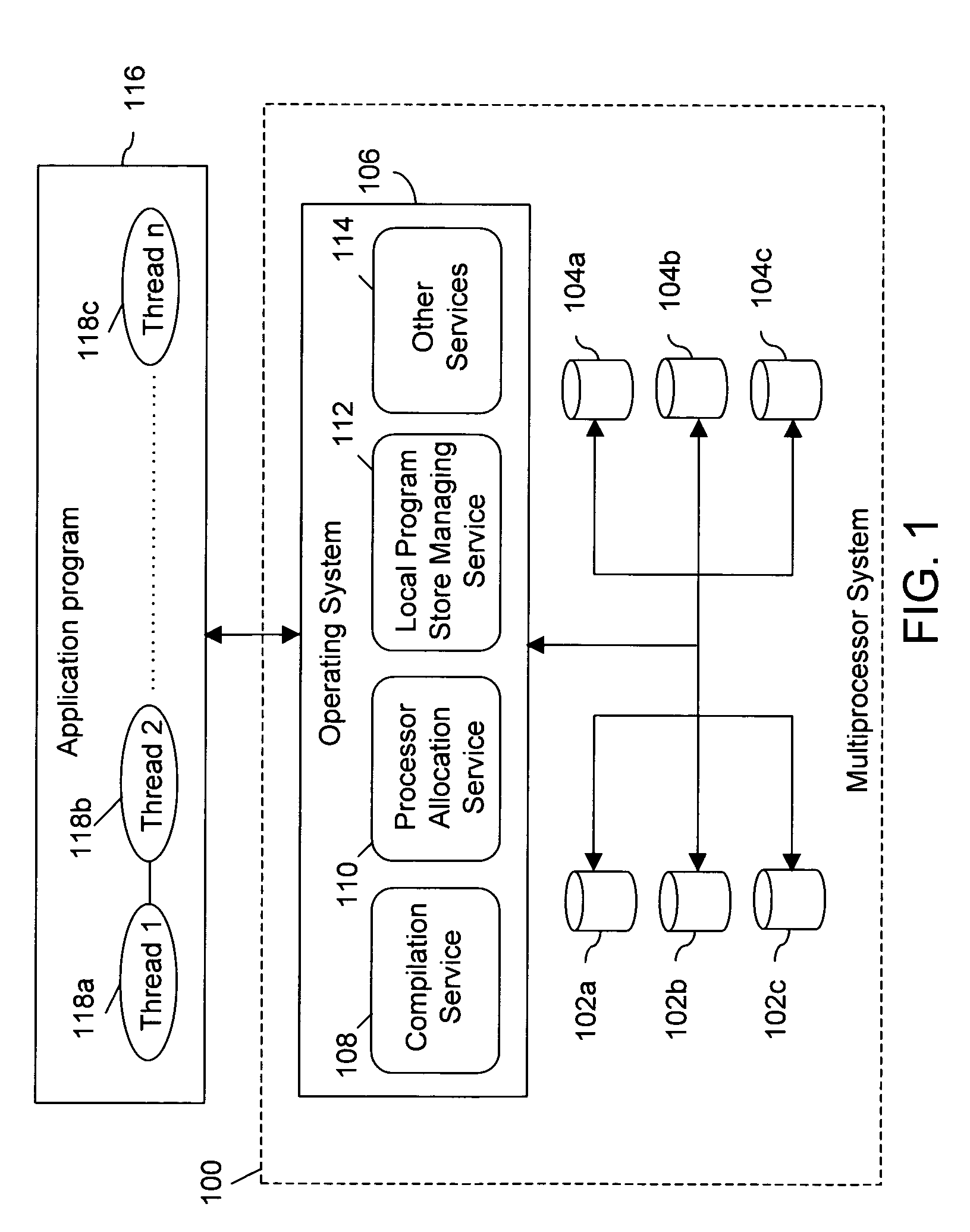

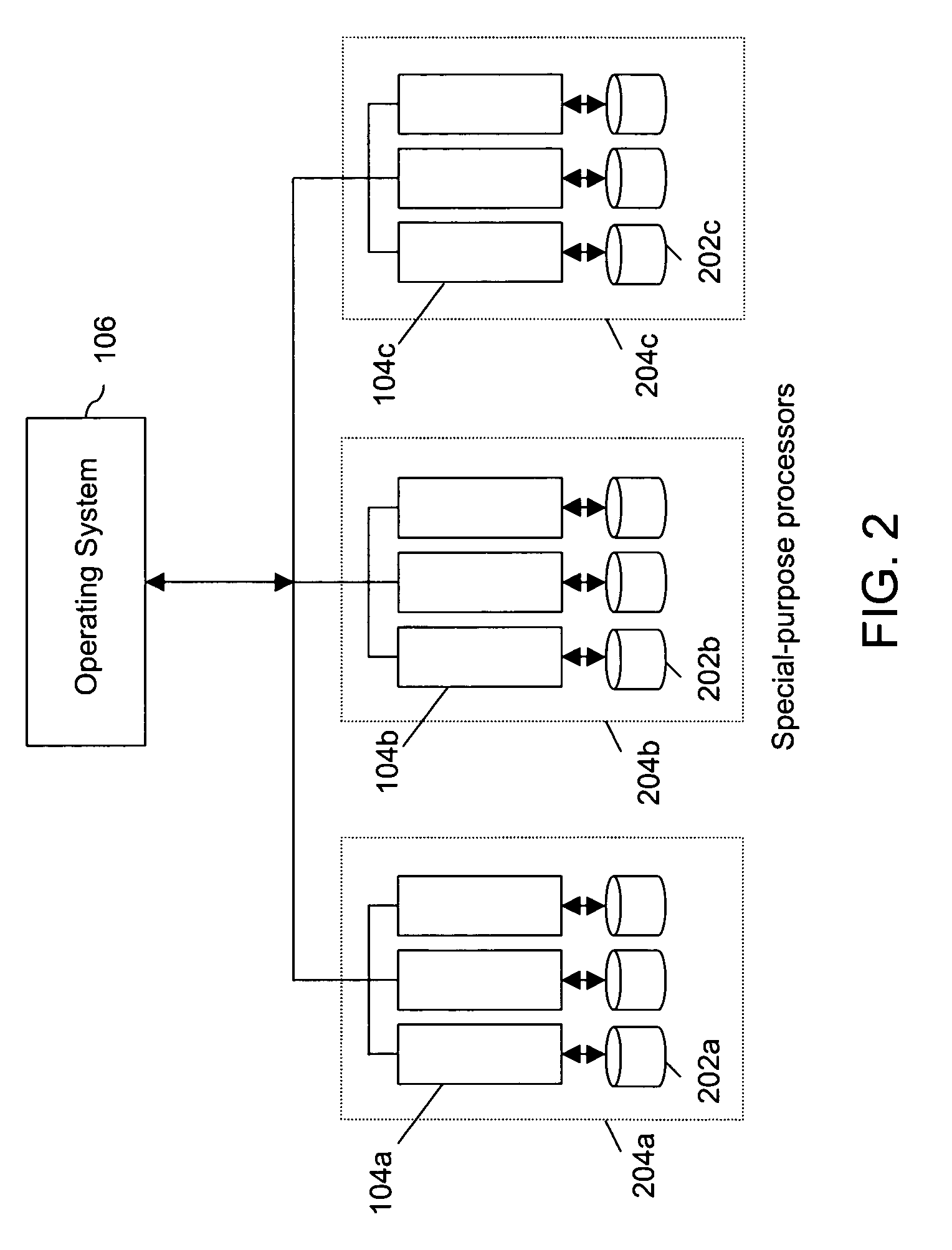

Method and system for allocation of special purpose computing resources in a multiprocessor system

Inactive · US20050022173A1 · Tags: Reduce number, Facilitates efficient allocation, Program initiation/switching, Specific program execution arrangements, Request queue, Procfs

A method and system for allocating special-purpose computing resources in a multiprocessor system capable of executing a plurality of threads in a parallel manner is disclosed. A thread requesting the execution of a specific program is allocated a special-purpose processor with the requested program loaded on its local program store. The programs in the local stores of the special-purpose processors can be evicted and replaced by the requested programs, if no compatible processor is available to complete a request. The thread relinquishes the control of the allocated processor once the requested process is executed. When no free processors are available, the pending threads are blocked and added to a request-queue. As soon as a processor becomes free, it is allocated to one of the pending threads in a first-in-first-out manner, with special priority given to a thread requesting a program already loaded on the processor.

Owner:CODITO TECH
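As an illustration only, the allocation policy in this abstract (prefer a free special-purpose processor that already holds the requested program, otherwise reload one; block requesters in a FIFO request queue; on release, favour a waiting thread whose program is already loaded) might be sketched in Python as below. The class and method names are hypothetical, and loading a program into a processor's local store is reduced to recording its name.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class Processor:
    pid: int
    loaded_program: Optional[str] = None  # program in the local program store
    busy: bool = False


class SpecialPurposeAllocator:
    """Hypothetical allocator following the policy described in the abstract."""

    def __init__(self, n_processors: int):
        self.processors = [Processor(i) for i in range(n_processors)]
        self.queue = deque()  # pending (thread_id, program) requests, FIFO

    def request(self, thread_id: str, program: str) -> Optional[int]:
        """Return the allocated processor id, or None if the thread blocks."""
        free = [p for p in self.processors if not p.busy]
        if not free:
            self.queue.append((thread_id, program))
            return None
        # Prefer a free processor that already has the program loaded;
        # otherwise evict whatever the first free processor holds.
        proc = next((p for p in free if p.loaded_program == program), free[0])
        proc.loaded_program = program
        proc.busy = True
        return proc.pid

    def release(self, pid: int) -> Optional[str]:
        """Free a processor and hand it to a waiting thread, if any."""
        proc = self.processors[pid]
        proc.busy = False
        if not self.queue:
            return None
        # FIFO order, but a waiter whose program is already loaded goes first.
        idx = next(
            (i for i, (_, prog) in enumerate(self.queue) if prog == proc.loaded_program),
            0,
        )
        thread_id, program = self.queue[idx]
        del self.queue[idx]
        proc.loaded_program = program
        proc.busy = True
        return thread_id


if __name__ == "__main__":
    alloc = SpecialPurposeAllocator(2)
    print(alloc.request("t1", "fft"), alloc.request("t2", "aes"))  # 0 1
    print(alloc.request("t3", "aes"), alloc.request("t4", "fft"))  # None None
    print(alloc.release(0))  # t4: processor 0 already holds "fft"
```

Here `t4` jumps ahead of the earlier-queued `t3` because processor 0 already has `fft` loaded, which mirrors the "special priority" rule in the abstract; with no such match, plain FIFO order applies.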

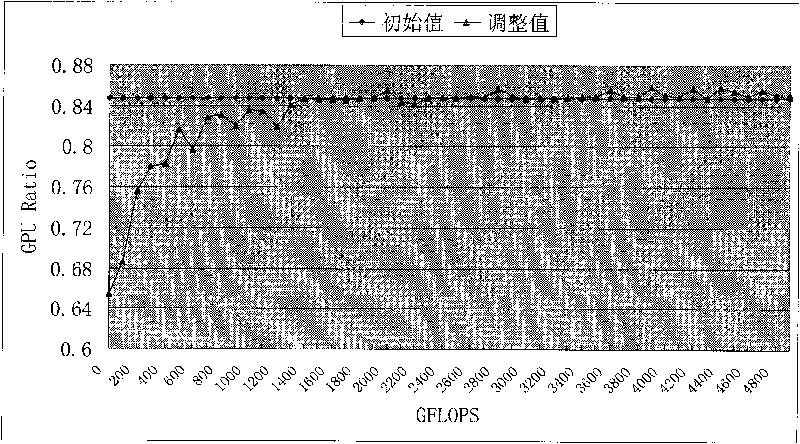

Method for partitioning dynamic tasks of CPU and GPU based on load balance

Inactive · CN101706741A · Tags: Guaranteed task load balancing, Improve performance, Resource allocation, Application software, Real-time computing

Owner:NAT UNIV OF DEFENSE TECH

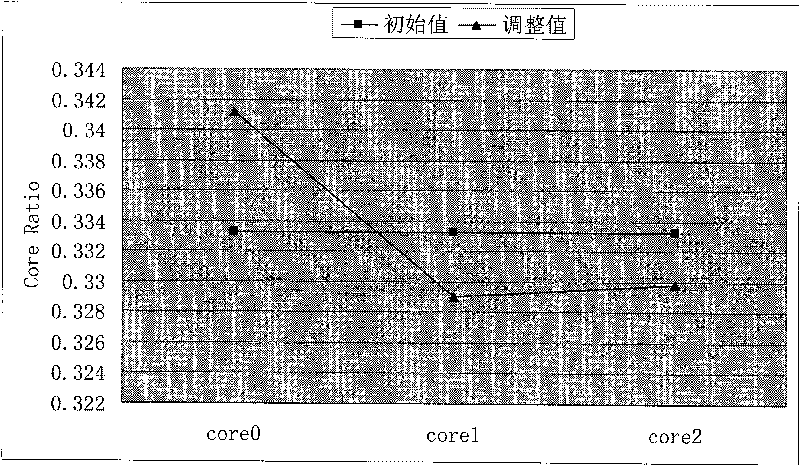

Load balancing optimization method based on CPU (central processing unit) and MIC (many integrated core) framework processor cooperative computing

Inactive · CN103279391A · Tags: Improve computing efficiency, Improve performance, Resource allocation, Computer architecture, Thread scheduling

The invention provides a load balancing optimization method for cooperative computing with CPU (central processing unit) and MIC (Many Integrated Core) architecture processors. It addresses load balancing among computing nodes, between the CPU and MIC devices within each node, and among the computing cores inside the CPU and MIC devices. The method specifically includes task partitioning optimization, process/thread scheduling optimization, thread affinity optimization, and similar techniques. It applies to software that uses CPU and MIC cooperative computing and guides developers to optimize and modify such software for load balancing in a scientific, effective, and systematic way, maximizing the software's utilization of computing resources and markedly improving its computing efficiency and overall performance.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

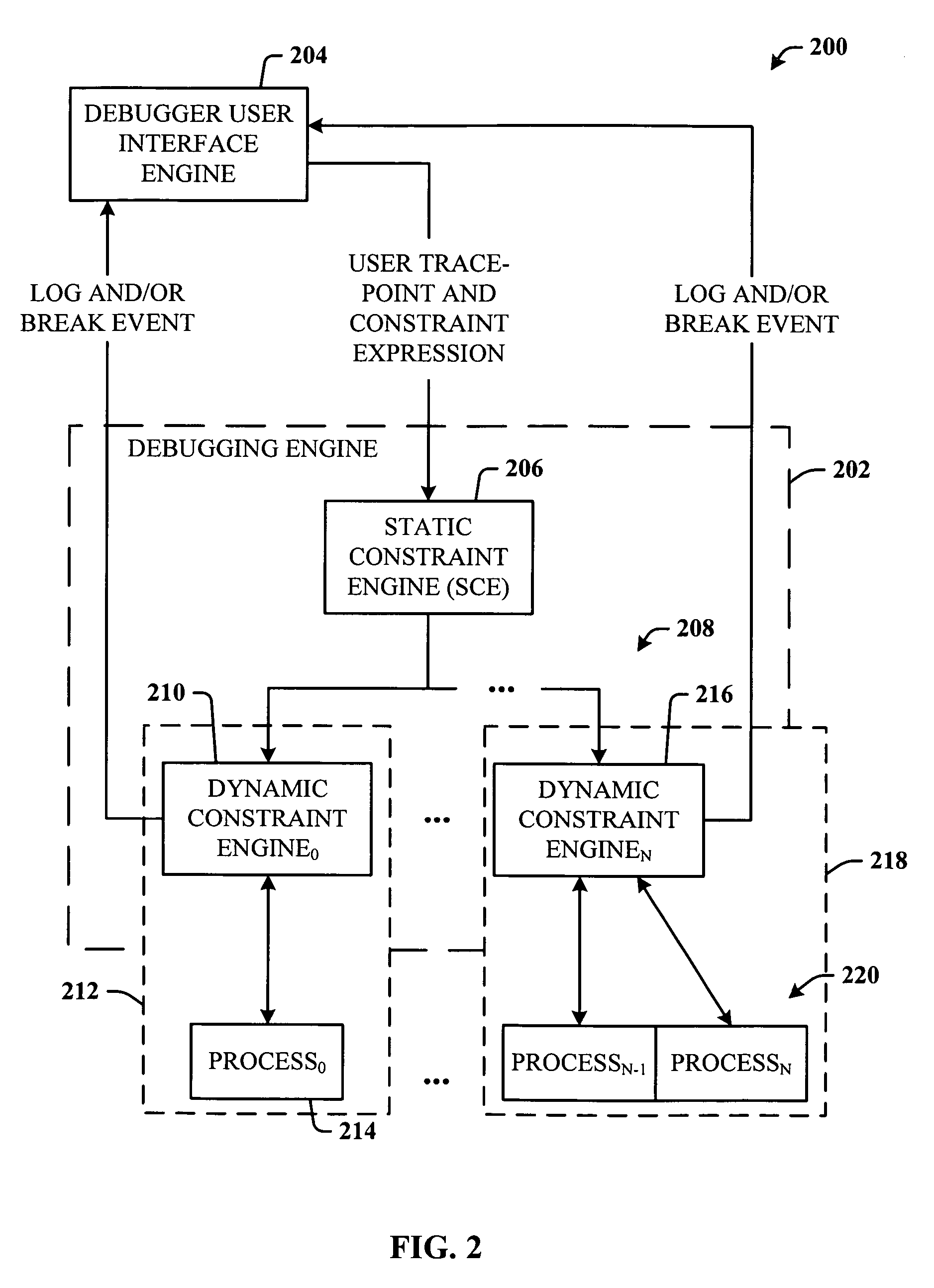

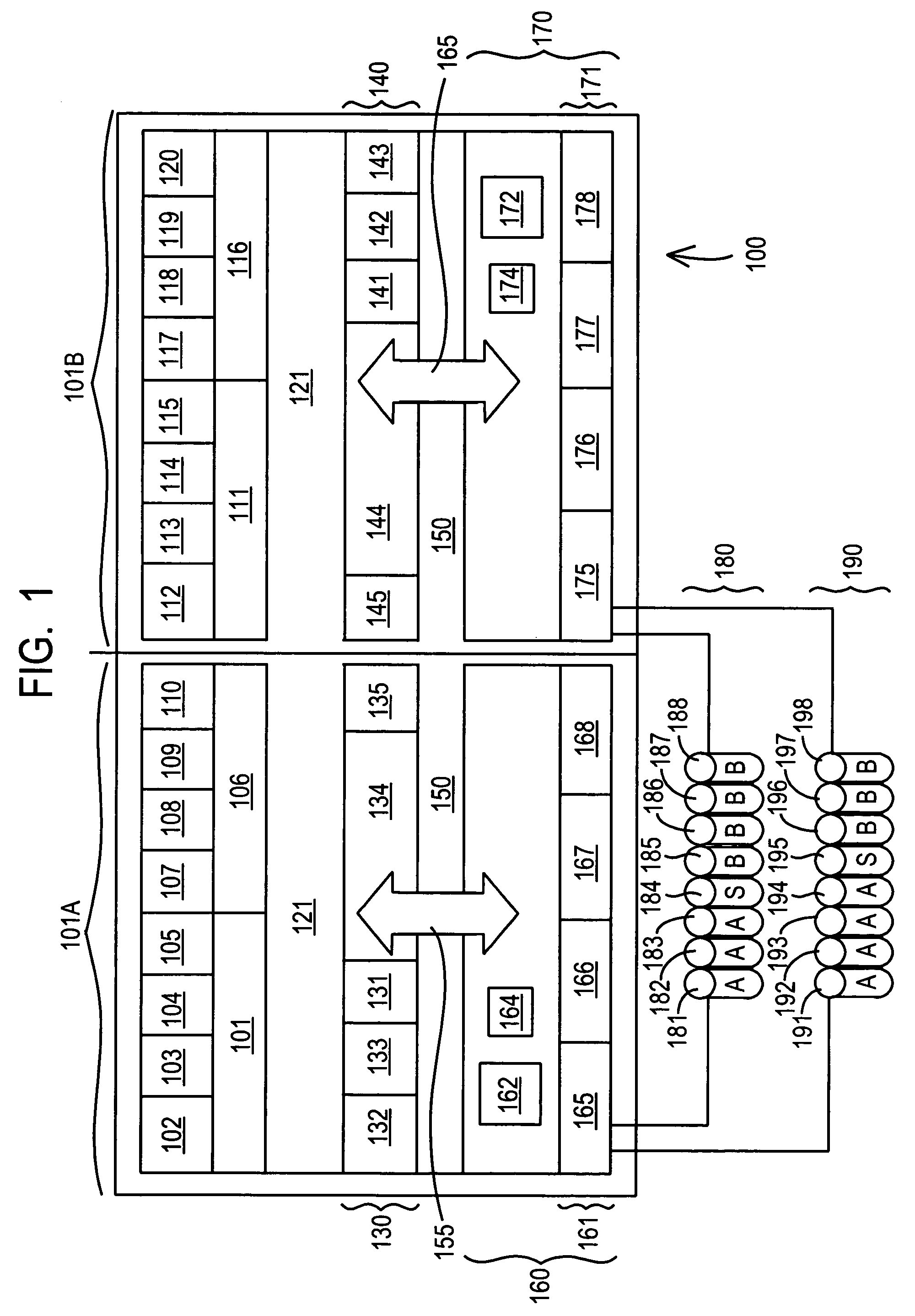

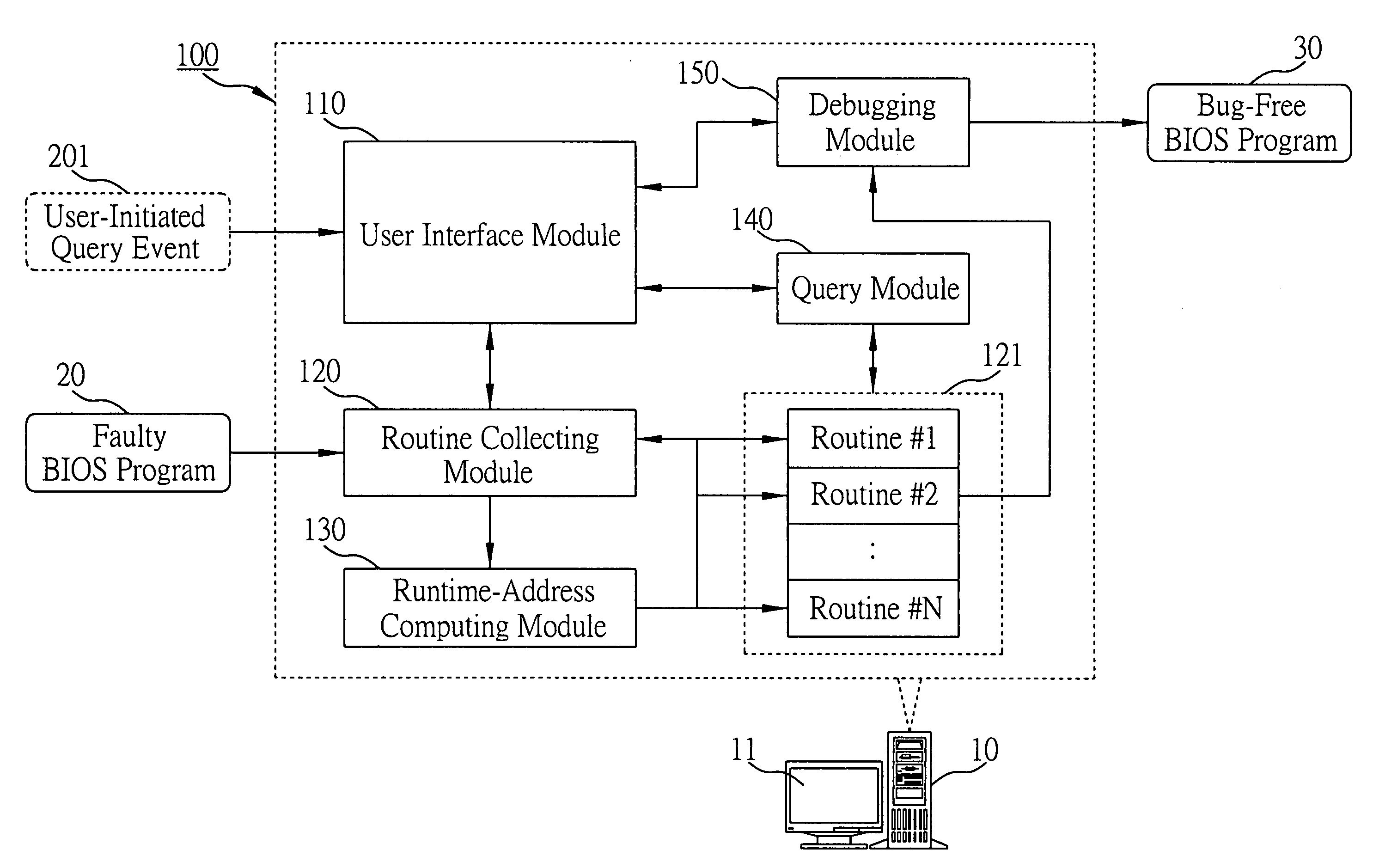

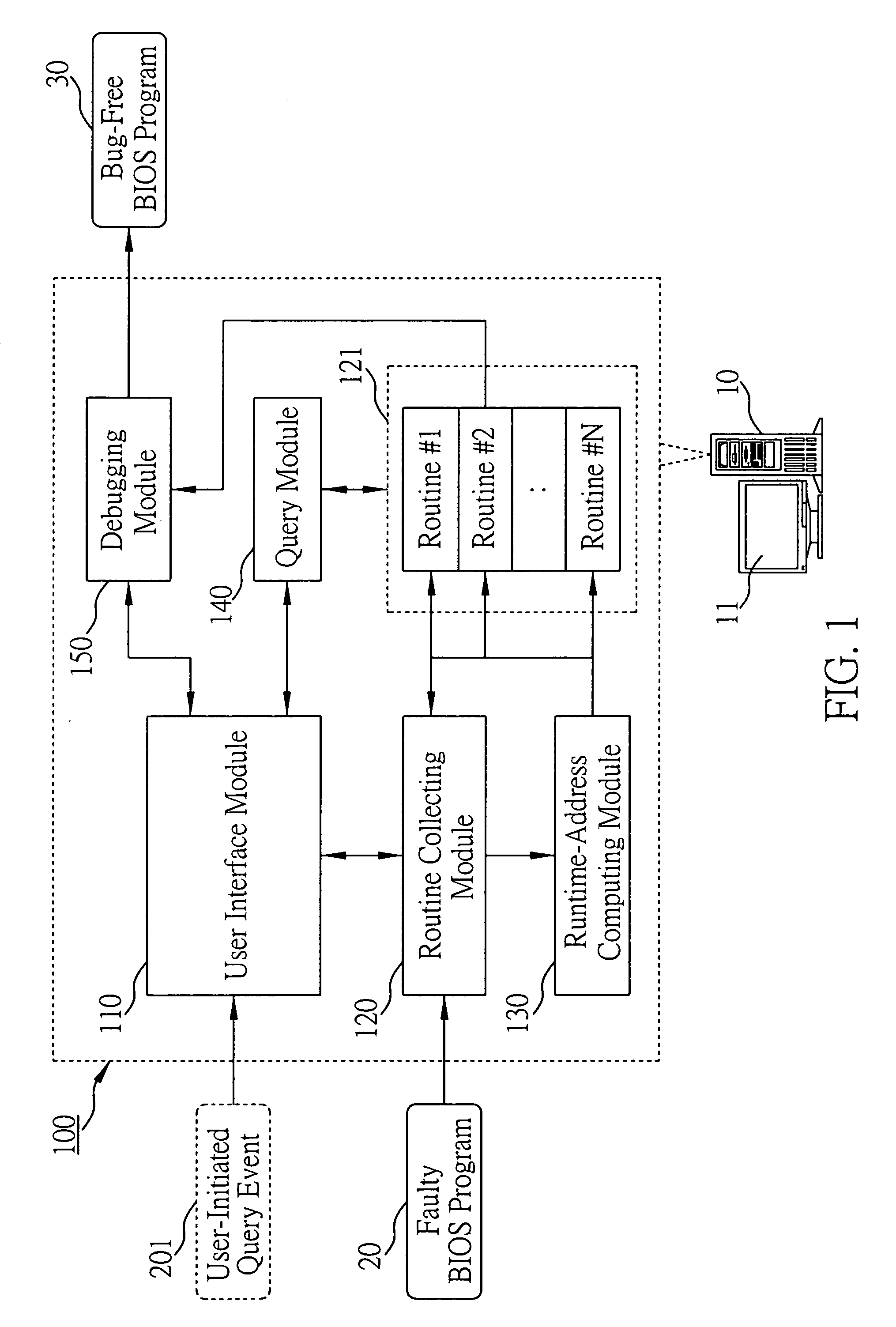

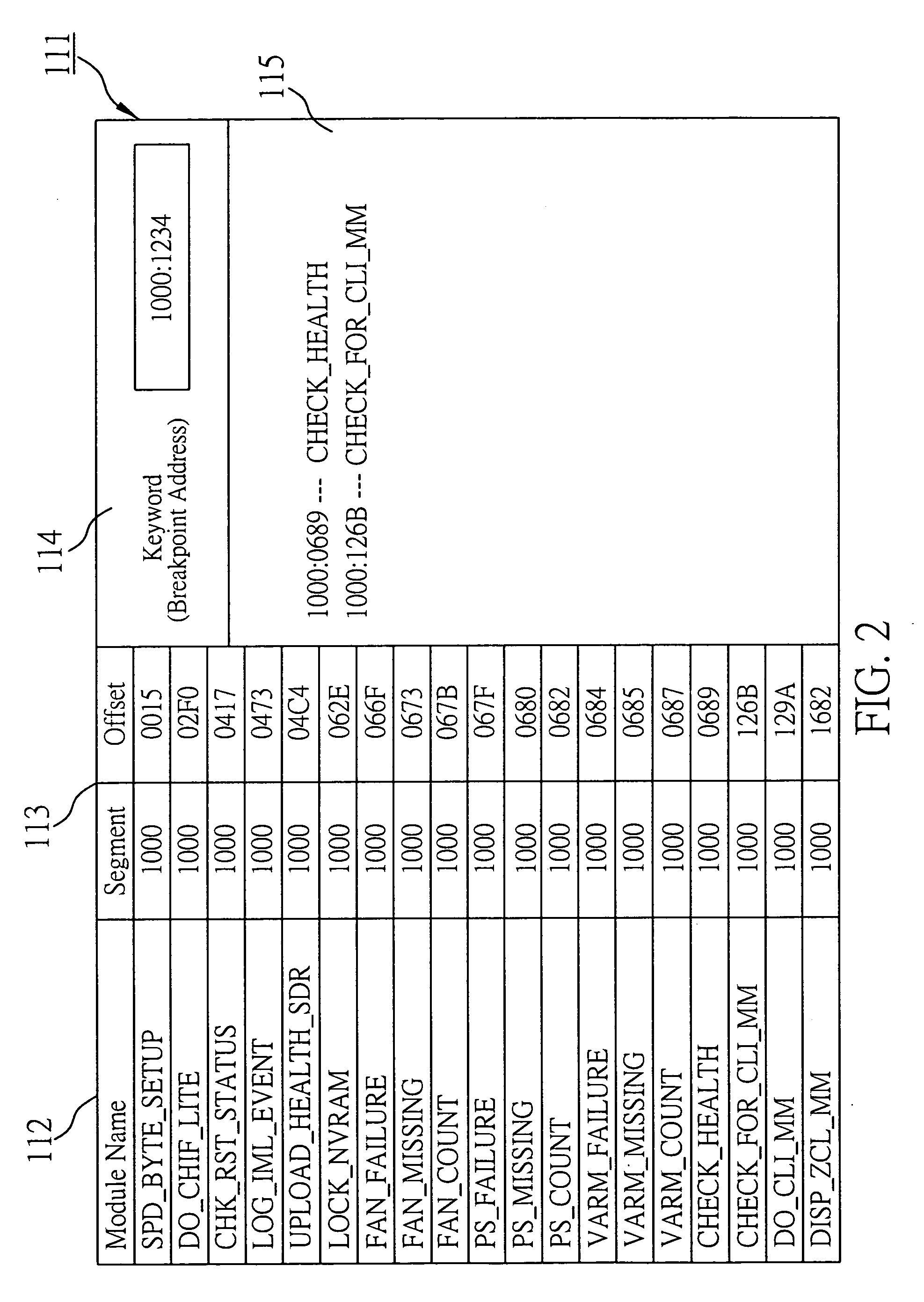

Breakpoint logging and constraint mechanisms for parallel computing systems

Inactive · US7634761B2 · Tags: Error detection/correction, Specific program execution arrangements, Concurrent computation, Parallel computing

Owner:MICROSOFT TECH LICENSING LLC

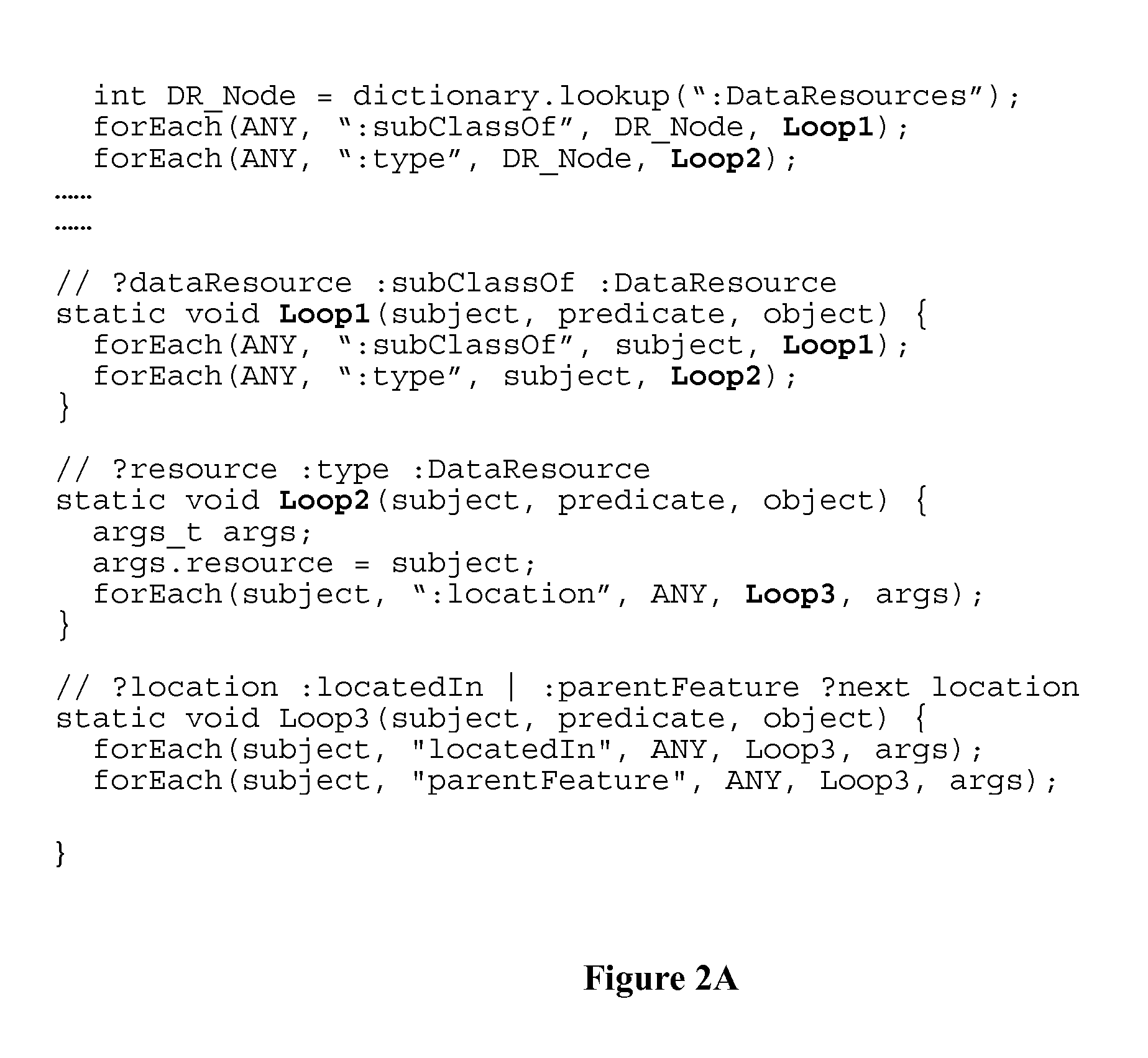

System and method of storing and analyzing information

Active · US20160026677A1 · Tags: Digital data information retrieval, Digital data processing details, Theoretical computer science, Runtime library

A system and method of storing and analyzing information is disclosed. The system includes a compiler layer to convert user queries to data parallel executable code. The system further includes a library of multithreaded algorithms, processes, and data structures. The system also includes a multithreaded runtime library for implementing compiled code at runtime. The executable code is dynamically loaded on computing elements and contains calls to the library of multithreaded algorithms, processes, and data structures and the multithreaded runtime library.

Owner:BATTELLE MEMORIAL INST

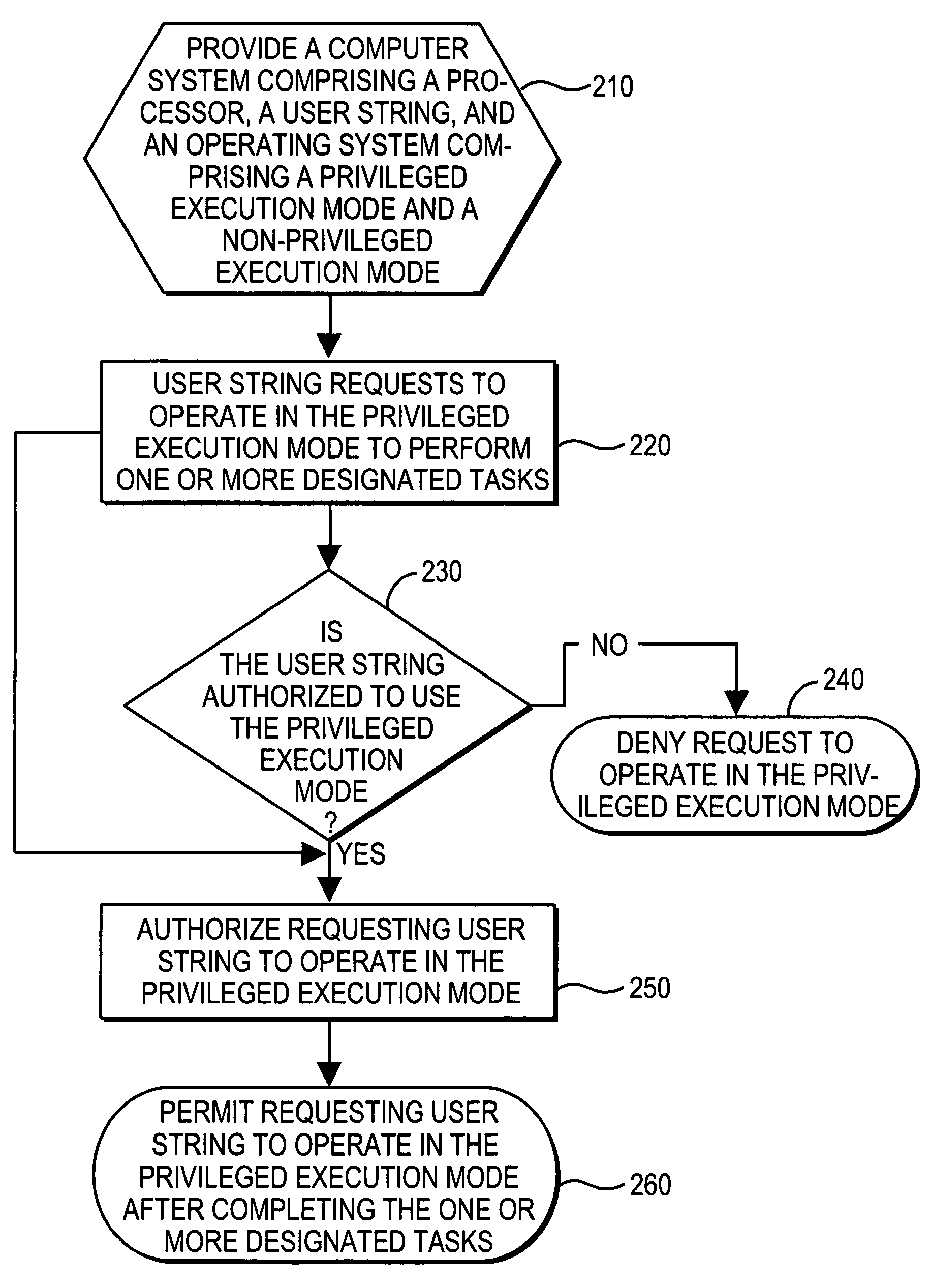

Method to enable user mode process to operate in a privileged execution mode

Owner:INT BUSINESS MASCH CORP

Computer program code debugging method and system

Inactive · US20070168978A1 · Tags: The process is convenient and fast, Conveniently and efficiently debug, Error detection/correction, Specific program execution arrangements, BIOS, Program code

A computer program code debugging method and system is proposed for use with a computer platform, providing a user-operated debugging function for a faulty computer program such as a BIOS program. The method automatically collects all the constituent routines of the faulty program and computes their runtime addresses, so that the user can use the runtime breakpoint address of the faulty program as a key to find the problem-causing routine that contains that address, and then focus debugging on that routine rather than on the entire program. This allows software engineers to debug a faulty computer program more conveniently and efficiently.

Owner:INVENTEC CORP

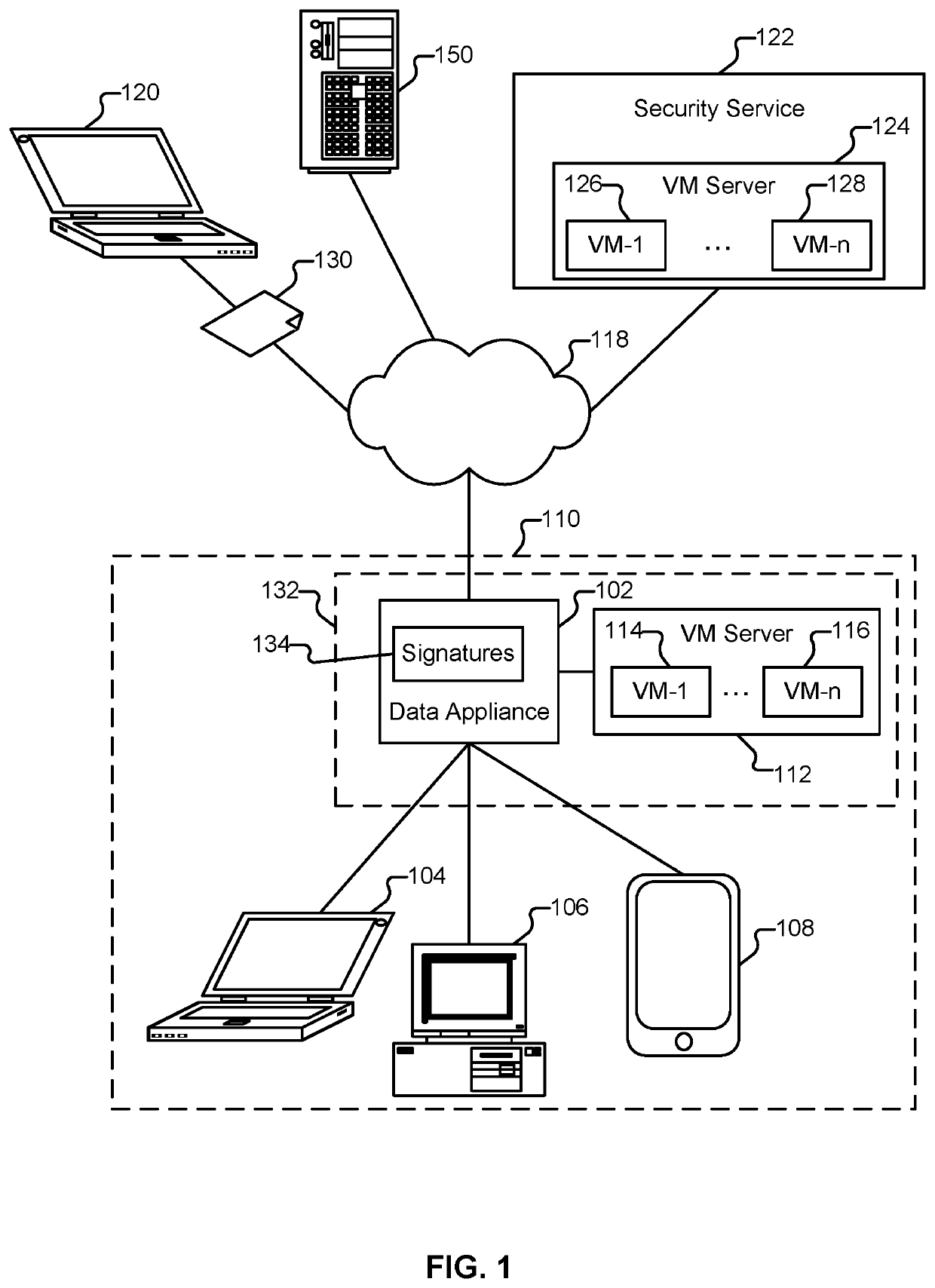

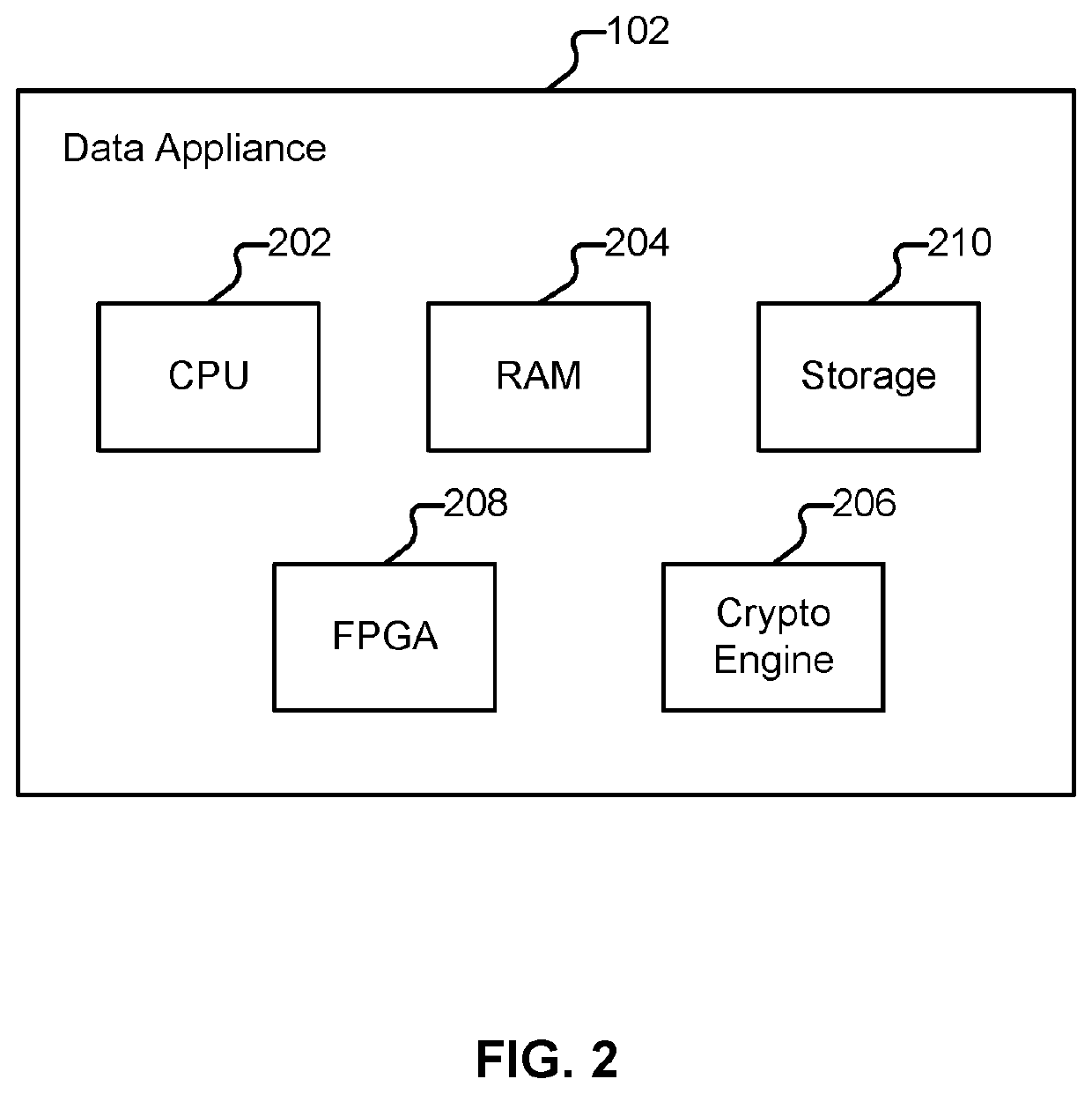

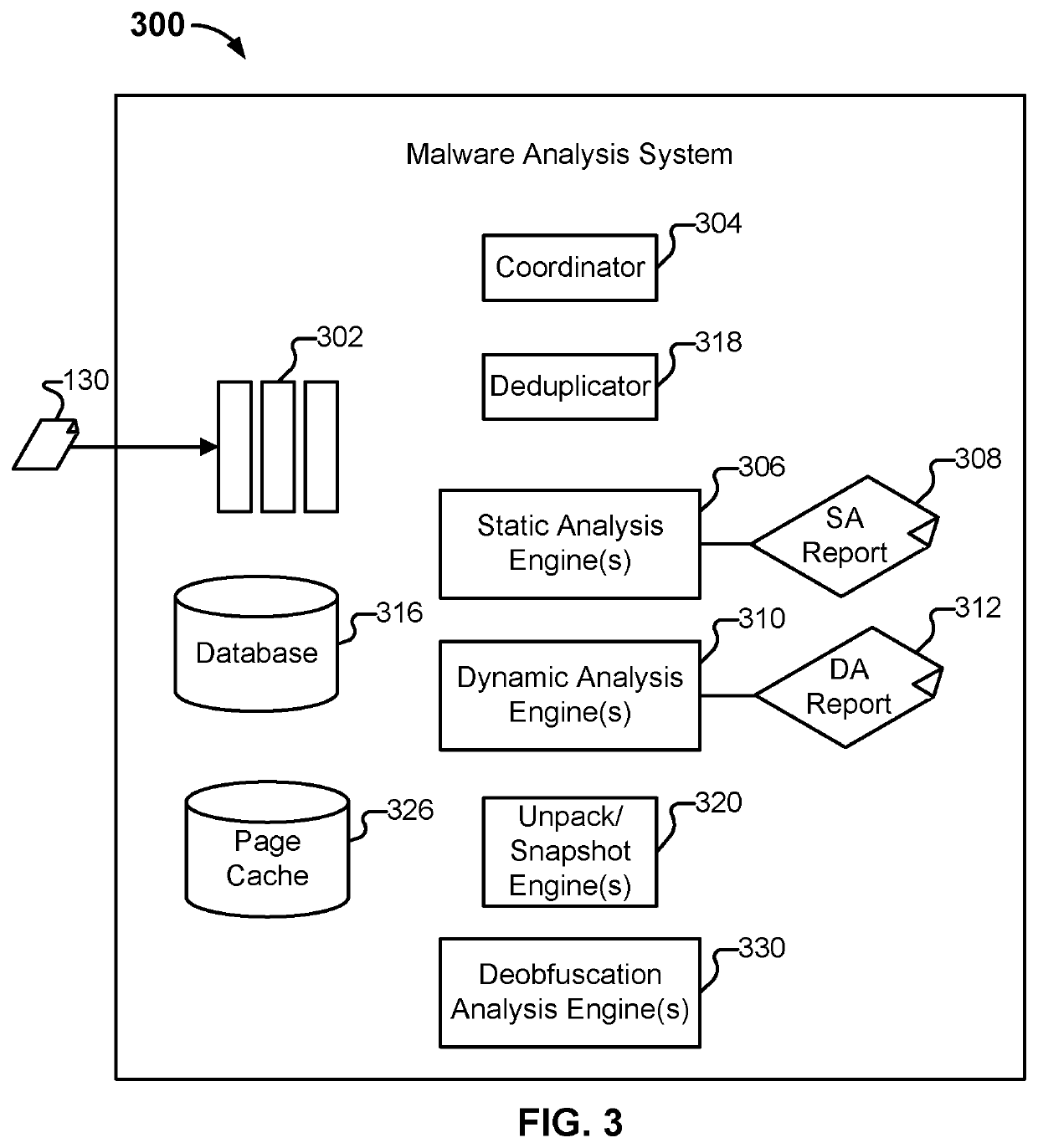

Efficient program deobfuscation through system API instrumentation

Active · US10565376B1 · Tags: Memory architecture accessing/allocation, Input/output to record carriers, Software engineering, Process (computing)

Techniques for efficient program deobfuscation through system application program interface (API) instrumentation are disclosed. In some embodiments, a system / process / computer program product for efficient program deobfuscation through system API instrumentation includes monitoring changes in memory after a system call event during execution of a malware sample in a computing environment; and generating a signature based on an analysis of the monitored changes in memory after the system call event during execution of the malware sample in the computing environment.

Owner:PALO ALTO NETWORKS INC
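The abstract describes monitoring memory changes after a system call and deriving a signature from them. A heavily simplified Python sketch of that idea, assuming two raw memory snapshots taken before and after a system call event (how they are captured is out of scope), compares the snapshots page by page and hashes the changed pages. The page size, function names, and the use of a SHA-256 digest as the "signature" are illustrative assumptions, not the patented analysis.

```python
import hashlib

PAGE_SIZE = 4096  # assumed page granularity for the comparison


def changed_pages(before: bytes, after: bytes, page_size: int = PAGE_SIZE) -> list[int]:
    """Offsets of pages whose contents differ between the two snapshots."""
    length = max(len(before), len(after))
    return [
        off
        for off in range(0, length, page_size)
        if before[off:off + page_size] != after[off:off + page_size]
    ]


def signature_from_changes(after: bytes, offsets: list[int], page_size: int = PAGE_SIZE) -> str:
    """Hash the post-syscall contents of the changed pages into a hex digest."""
    digest = hashlib.sha256()
    for off in offsets:
        digest.update(off.to_bytes(8, "little"))  # include where the change happened
        digest.update(after[off:off + page_size])
    return digest.hexdigest()


if __name__ == "__main__":
    before = bytes(3 * PAGE_SIZE)
    after = bytearray(before)
    after[PAGE_SIZE + 10] = 0x90  # simulate code being unpacked into page 1
    offsets = changed_pages(before, bytes(after))
    print(offsets)  # [4096]
    print(signature_from_changes(bytes(after), offsets)[:16])
```

Restricting the hash to pages that changed after the system call is the point of the sketch: unpacked or deobfuscated code tends to appear only in those regions, so the rest of the image need not be rescanned.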

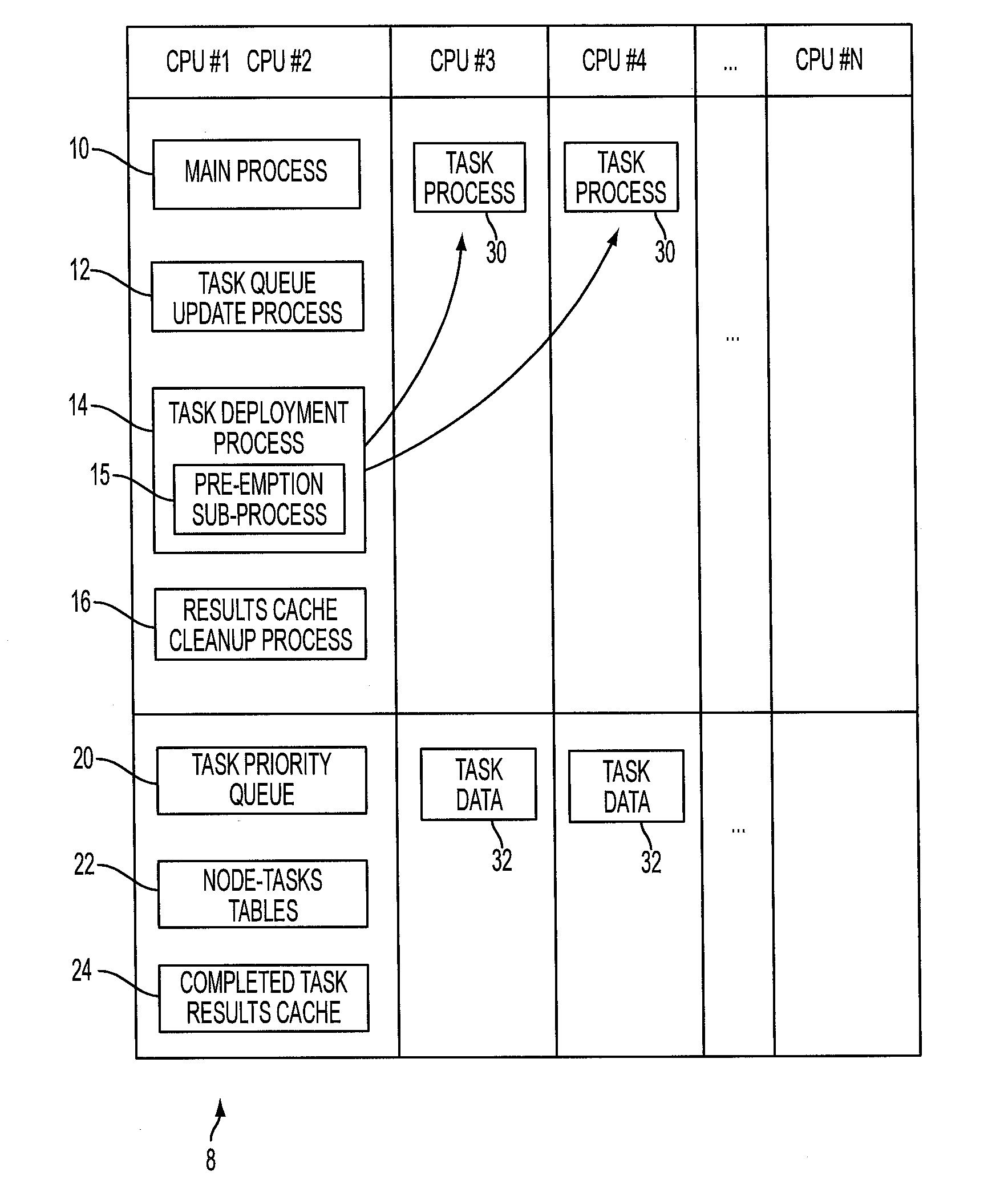

Transportation network micro-simulation pre-emptive decomposition

Active · US20160124770A1 · Tags: Simple technology, Program initiation/switching, Probabilistic networks, Decomposition, Traffic network

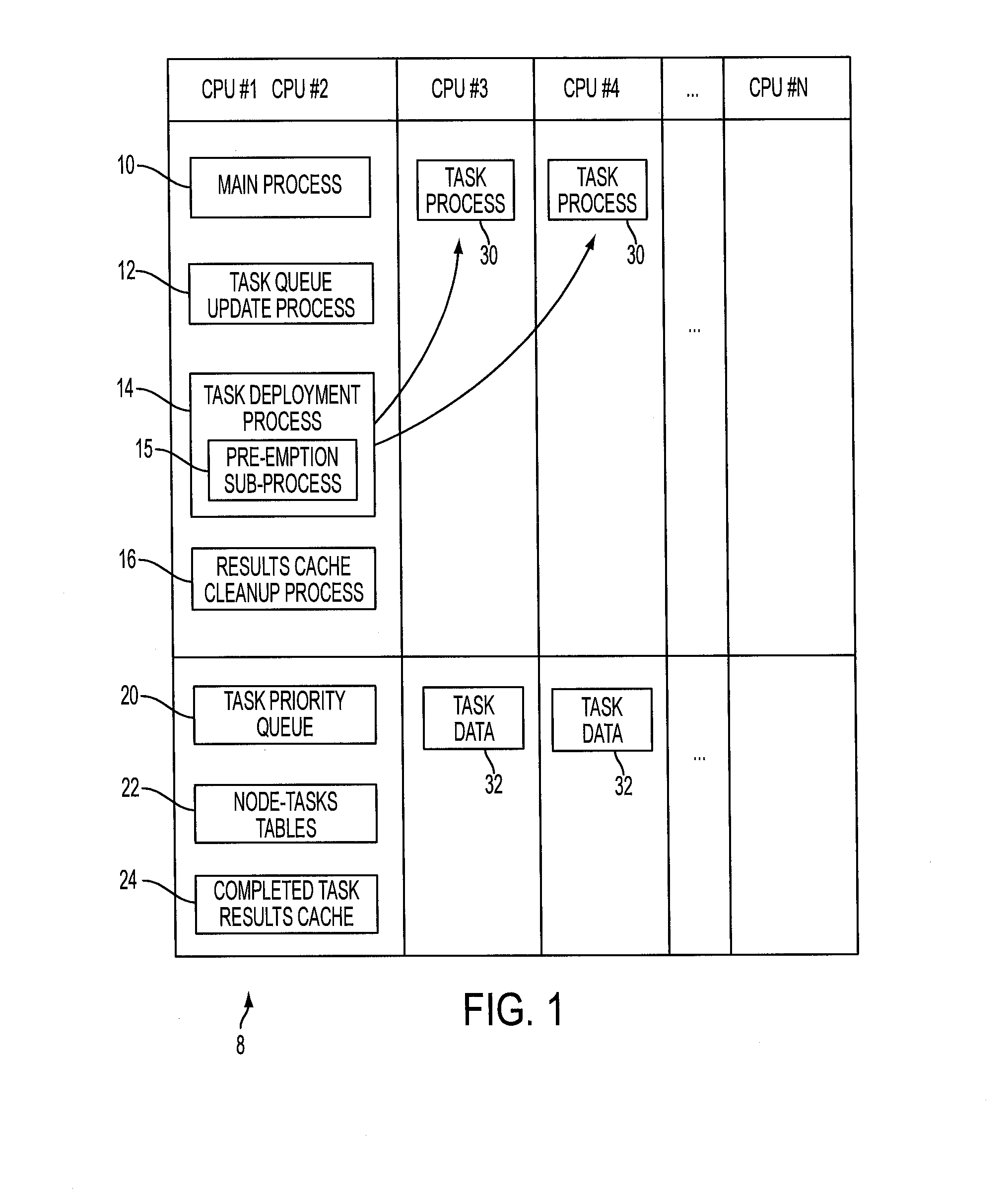

In a parallel computing method performed by a parallel computing system comprising a plurality of central processing units (CPUs), a main process executes. Tasks are executed in parallel with the main process on CPUs not used in executing the main process. Results of completed tasks are stored in a cache, from which the main process retrieves completed task results when needed. The initiation of task execution is controlled by a priority ranking of tasks based on at least probabilities that task results will be needed by the main process and time limits for executing the tasks. The priority ranking of tasks is from the vantage point of a current execution point in the main process and is updated as the main process executes. An executing task may be pre-empted by a task having higher priority if no idle CPU is available.

Owner:CONDUENT BUSINESS SERVICES LLC
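The abstract ranks speculative tasks by the probability that the main process will need their results and by their time limits, and lets a higher-priority task pre-empt a running one when no CPU is idle. The scoring rule is not given there, so the Python sketch below assumes a hypothetical score of probability divided by time limit; the class names are also illustrative, task names are assumed unique, and real execution and re-ranking as the main process advances are left out.

```python
import heapq
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    p_needed: float    # estimated probability the main process needs the result
    time_limit: float  # time budget for the task, in seconds

    def score(self) -> float:
        # Hypothetical ranking rule: likely-needed, short tasks rank highest.
        return self.p_needed / self.time_limit


class SpeculativeScheduler:
    def __init__(self, n_cpus: int):
        self.n_cpus = n_cpus  # CPUs not used by the main process
        self.running = []     # tasks currently occupying a CPU
        self.pending = []     # min-heap of (-score, name, task)
        self.cache = {}       # name -> result, read by the main process

    def submit(self, task: Task) -> None:
        heapq.heappush(self.pending, (-task.score(), task.name, task))
        self._dispatch()

    def complete(self, task: Task, result) -> None:
        self.running.remove(task)
        self.cache[task.name] = result
        self._dispatch()

    def _dispatch(self) -> None:
        while self.pending:
            best = self.pending[0][2]
            if len(self.running) < self.n_cpus:
                heapq.heappop(self.pending)  # an idle CPU is available
                self.running.append(best)
                continue
            weakest = min(self.running, key=Task.score)
            if best.score() <= weakest.score():
                break  # nothing in the queue outranks a running task
            # No idle CPU: pre-empt the lowest-priority running task.
            heapq.heappop(self.pending)
            self.running.remove(weakest)
            heapq.heappush(self.pending, (-weakest.score(), weakest.name, weakest))
            self.running.append(best)
```

With one spare CPU, submitting a task scored 0.1 and then one scored 0.9 leaves the second running and puts the first back in the queue; completing the second stores its result in `cache` and restarts the first.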

Synthesis Path For Transforming Concurrent Programs Into Hardware Deployable on FPGA-Based Cloud Infrastructures

FPGAs may be exploited for acceleration by transforming concurrent programs. One example mode of operation may include one or more of the following: creating synchronous hardware accelerators from concurrent asynchronous programs at the software level, taking as input software instructions that describe concurrent behavior through a communicating sequential processes (CSP) model, in which concurrent processes exchange messages over channels; mapping, on a computing device, each concurrent process to synchronous dataflow primitives (at least one of join, fork, merge, steer, variable, and arbiter); producing a clocked digital logic description for upload to one or more field-programmable gate array (FPGA) devices; remapping primitives in the output design for throughput, clock rate, and resource usage via retiming; and creating an annotated graph of the input software description for debugging concurrent code on the FPGA devices.

Owner:RECONFIGURE IO LTD

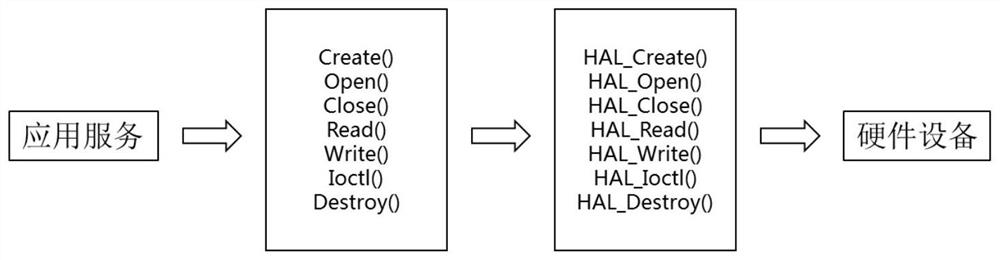

Lightweight application adaptation method for edge computing heterogeneous environment

Pending · CN113687913A · Tags: Solve the problem of repeatedly compiling applications, Program code adaption, Software simulation/interpretation/emulation, Virtualization, Operating system

The invention relates to a lightweight application adaptation method for heterogeneous edge computing environments. It combines cross-compilation and virtualization to build an integrated development environment driven by an automated pipeline: compilers for different hardware environments are packaged, a containerized cross-compilation environment automatically chains together the steps and tasks of the compilation process, and a unified workflow framework is provided for heterogeneous environments. The code to be compiled and the compilation tools are combined through container persistence, and a container automatically runs the compilation pipeline to produce an executable program for each heterogeneous environment. With this method, edge computing applications can be conveniently built across heterogeneous environments; the method adapts to various edge operating system environments and supports heterogeneous CPUs. This solves the problem of having to compile applications repeatedly for each heterogeneous environment.

Owner:SHANDONG LANGCHAO YUNTOU INFORMATION TECH CO LTD

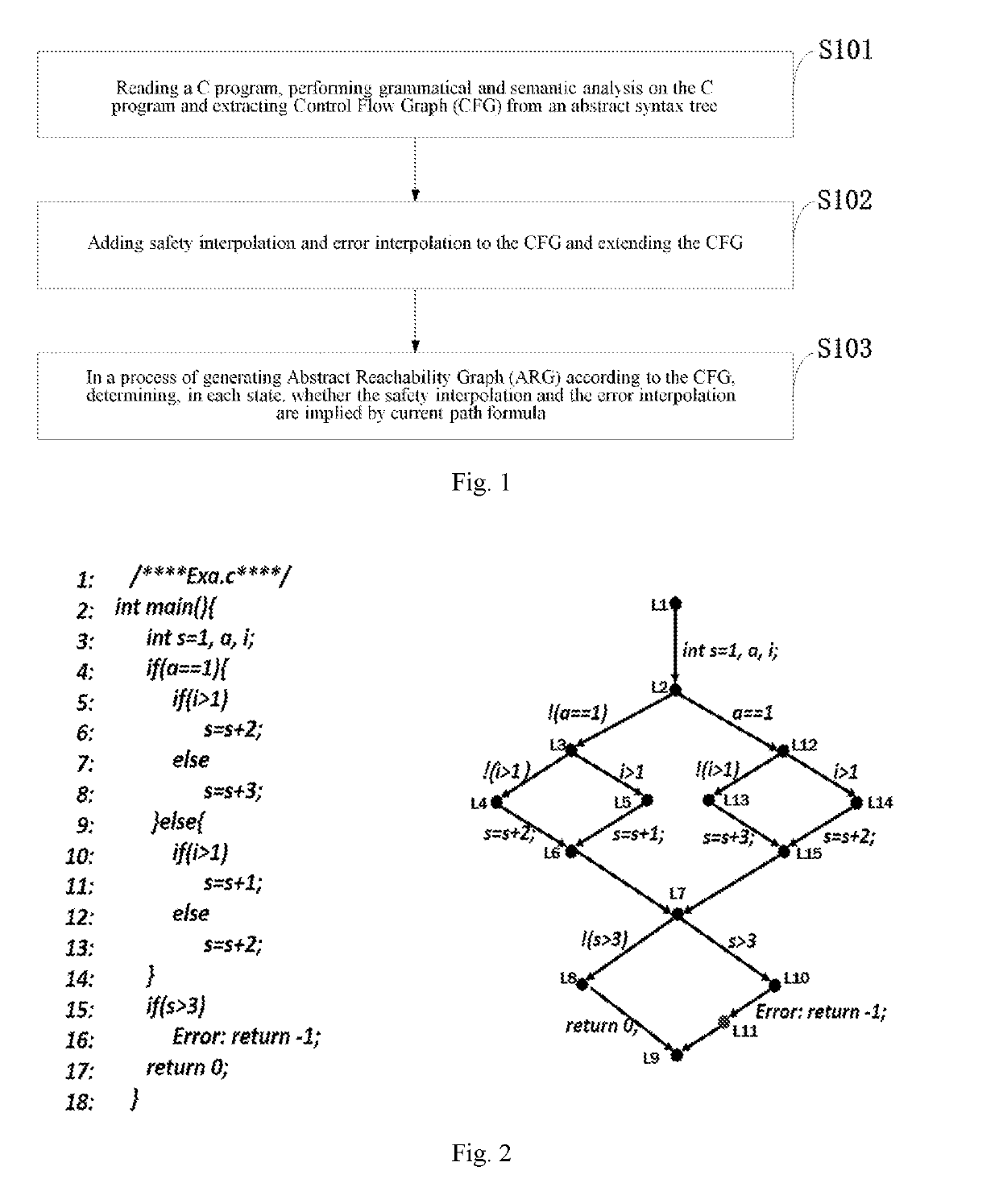

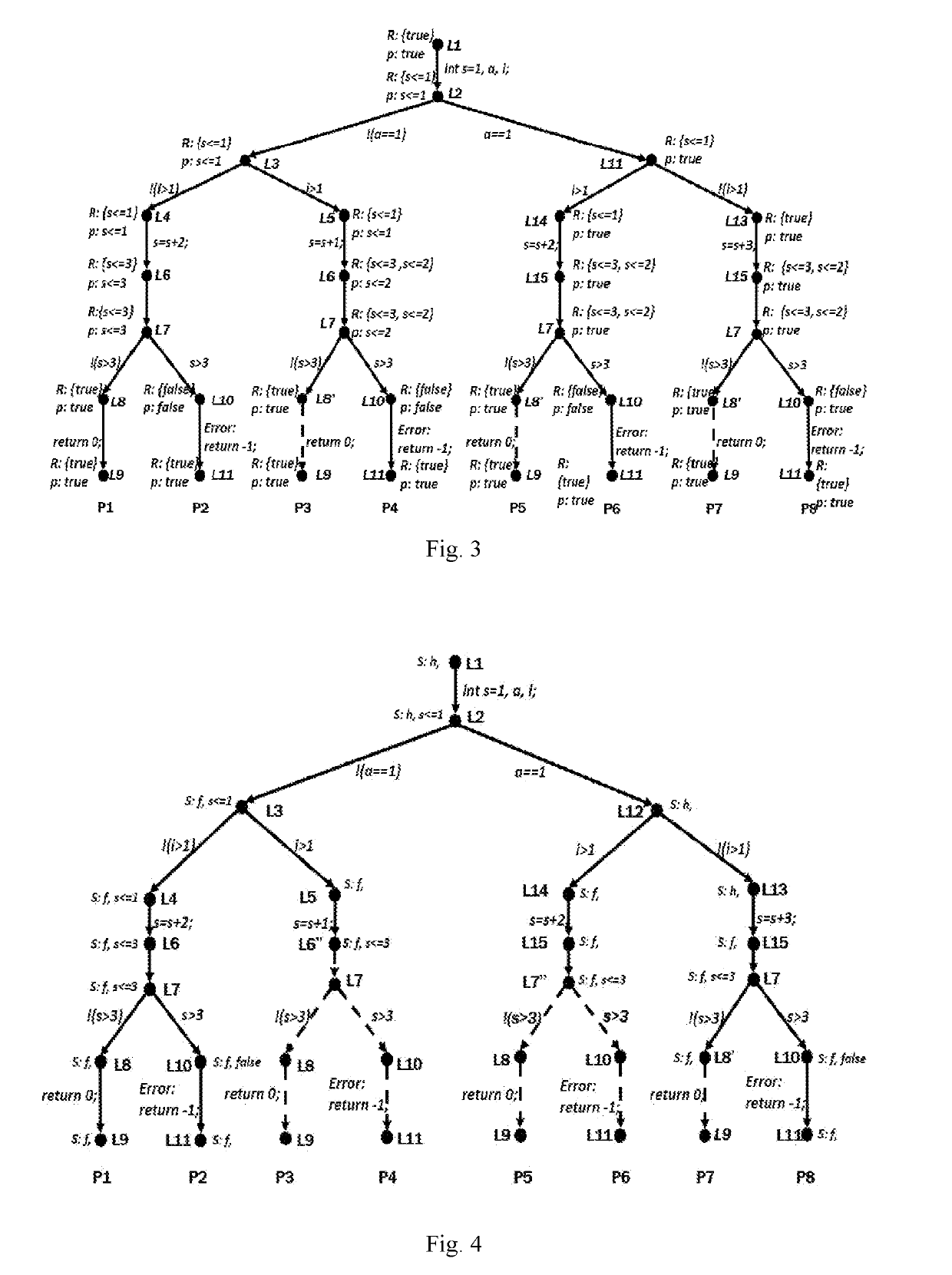

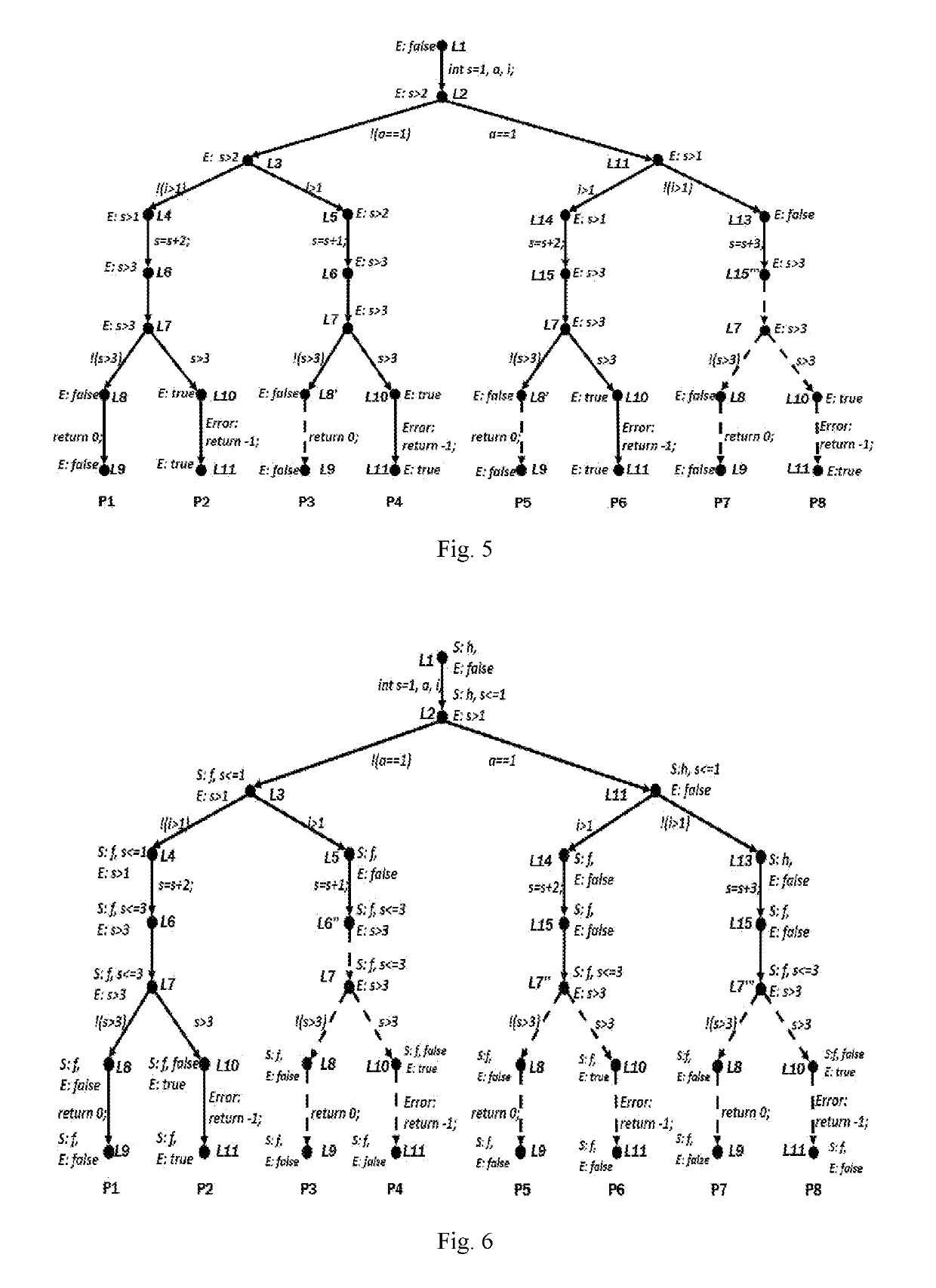

Interpolation Based Path Reduction Method in Software Model Checking

Active · US20190095314A1 · Tags: Reduces path search space, Improve verification efficiency, Software testing/debugging, Errors and residuals, Abstract syntax tree

A method for model checking path reduction based on interpolation comprises: reading a C program, performing syntactic and semantic analysis on it, and extracting a control flow graph (CFG) from the abstract syntax tree; adding safety (S) interpolation and error (E) interpolation to the CFG to extend it; and, while generating the abstract reachability graph (ARG) from the CFG, determining in each state whether the S and E interpolations are implied by the current path formula. Computing the S and E interpolations improves verification efficiency and makes model checking more practical for large-scale programs. The S interpolation avoids unnecessary traversal, greatly reducing the number of ARG states; the E interpolation quickly determines whether the program has a true counterexample, accelerating verification.

Owner:XIDIAN UNIV

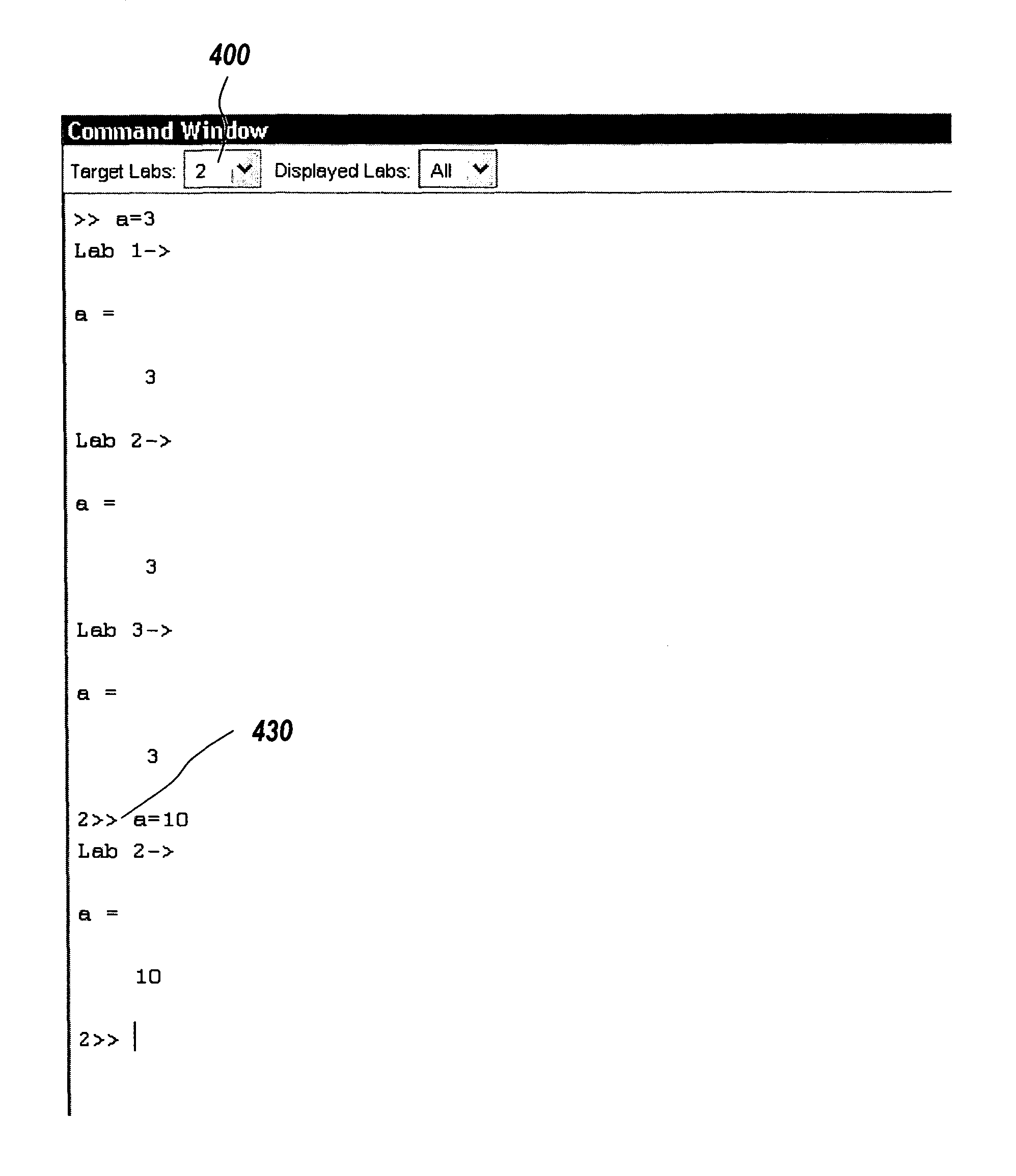

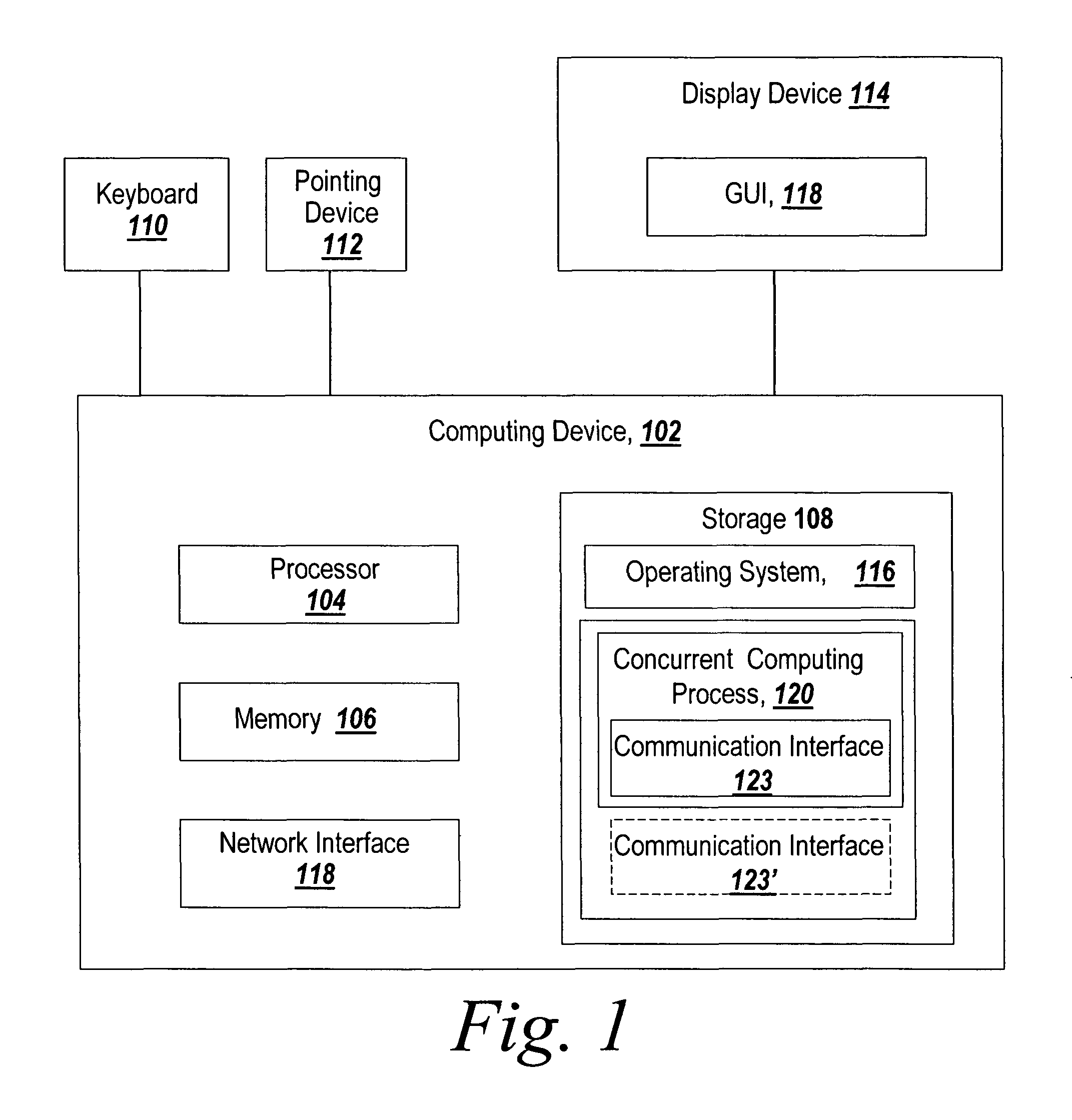

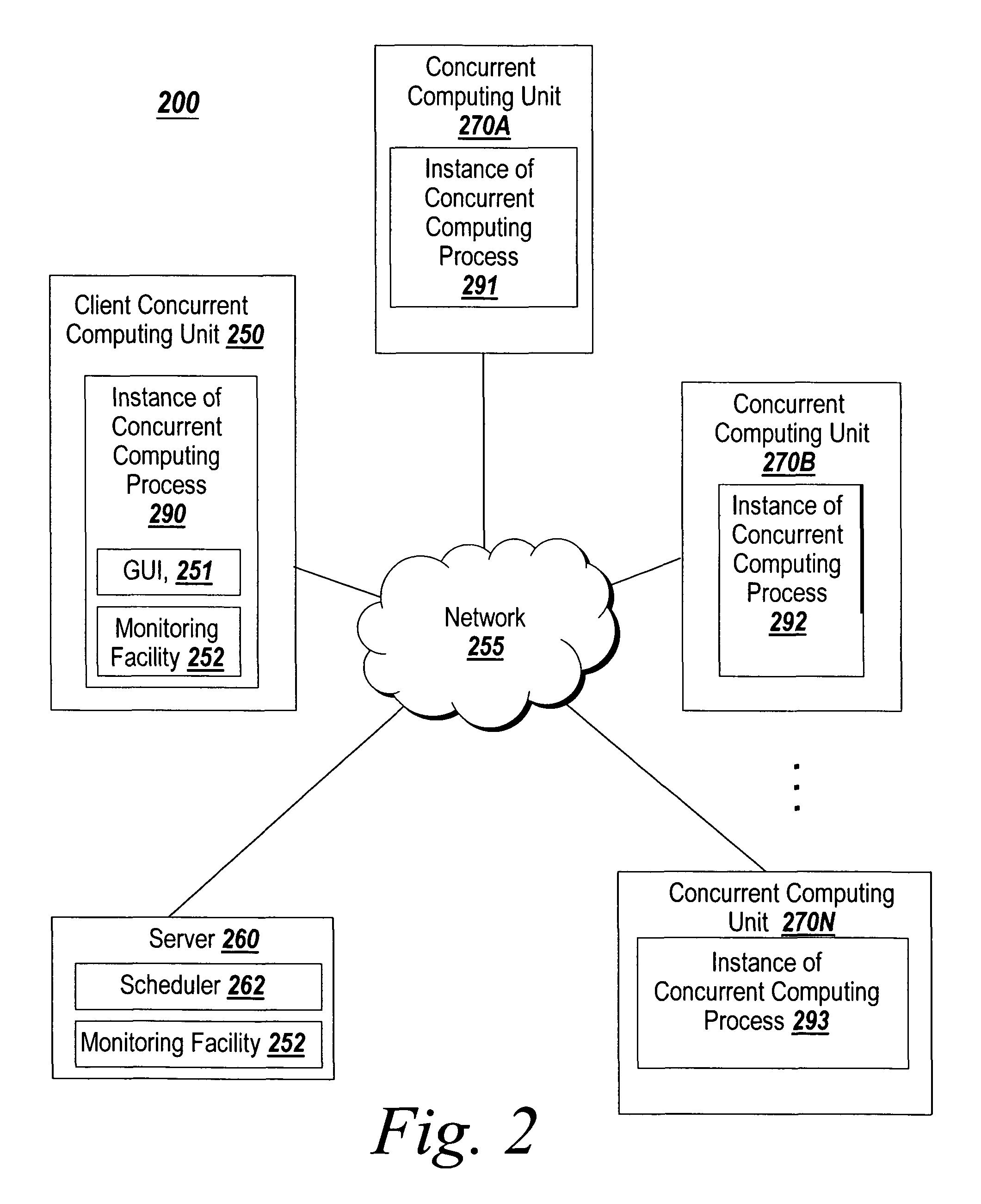

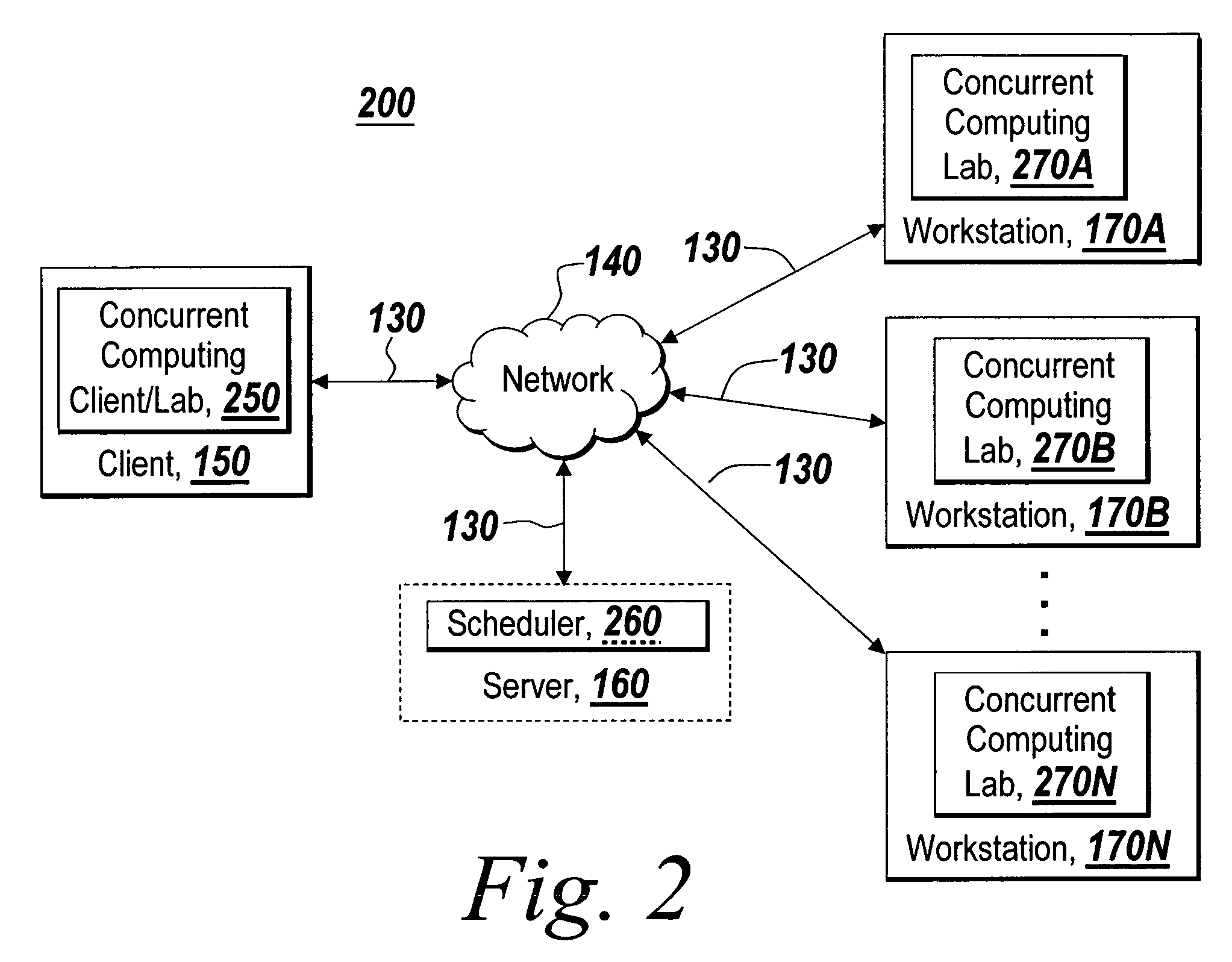

System and method for targeting commands to concurrent computing units executing a concurrent computing process

A graphical user interface for a concurrent computing environment is discussed that conveys the concurrent nature of the environment and allows a user to monitor the status of a concurrent process being executed on multiple concurrent computing units. The graphical user interface allows the user to target specific concurrent computing units to receive commands, and it alters the command prompt to reflect the currently targeted concurrent computing units.

Owner:THE MATHWORKS INC

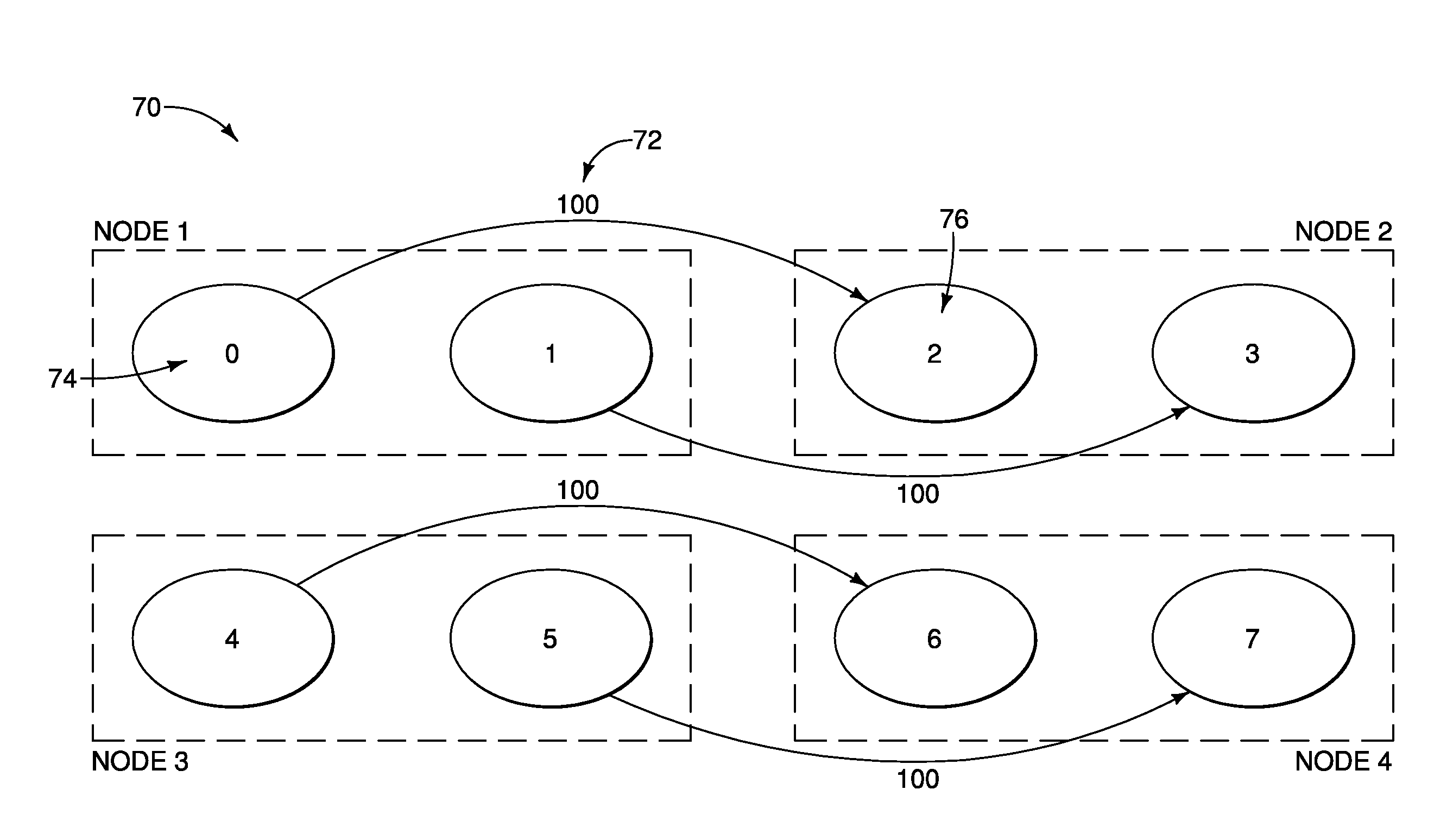

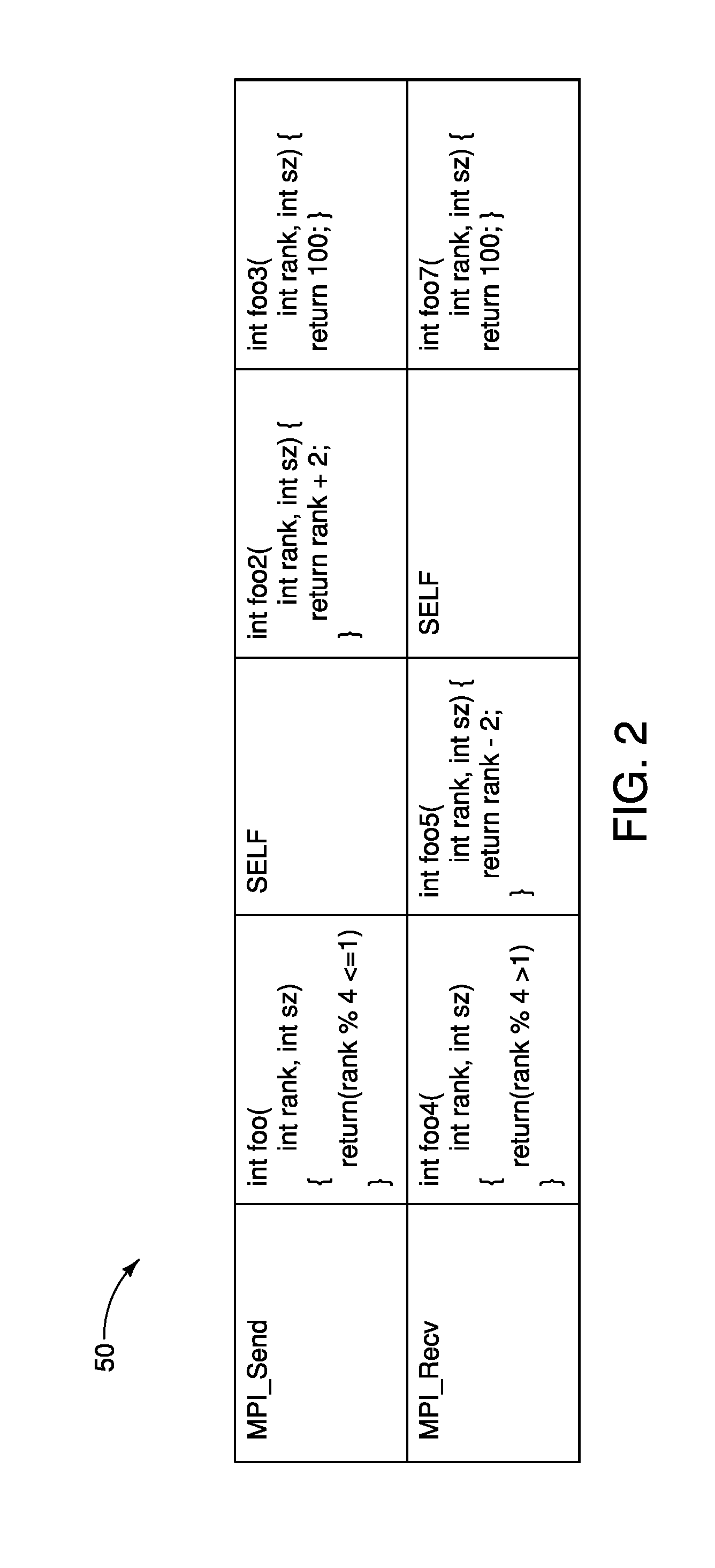

Process mapping in parallel computing

Inactive · US20100218190A1 · Tags: Easy to learn, Reduce communication overhead, Digital data information retrieval, Interprogram communication, Message Passing Interface, Communication Analysis

A method of mapping processes to processors in a parallel computing environment where a parallel application is to be run on a cluster of nodes wherein at least one of the nodes has multiple processors sharing a common memory, the method comprising using compiler based communication analysis to map Message Passing Interface processes to processors on the nodes, whereby at least some more heavily communicating processes are mapped to processors within nodes. Other methods, apparatus, and computer readable media are also provided.

Owner:IBM CORP
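This abstract relies on compiler-based communication analysis. Assuming that analysis has already produced an estimate of the data exchanged between each pair of MPI ranks, a simple greedy placement that keeps the most heavily communicating pairs on the same shared-memory node might look like the Python sketch below. The greedy heuristic, the input format, and the function name are illustrative assumptions, not the patented mapping algorithm.

```python
def map_ranks_to_nodes(n_ranks, comm, n_nodes, cores_per_node):
    """Greedy rank-to-node placement.

    comm maps a rank pair (i, j) to its estimated communication volume,
    assumed to come from a prior compiler-based communication analysis.
    Returns a dict rank -> node index.
    """
    if n_ranks > n_nodes * cores_per_node:
        raise ValueError("not enough processors for all ranks")
    placement = {}
    load = [0] * n_nodes

    def place(rank, node):
        placement[rank] = node
        load[node] += 1

    # Visit rank pairs from the heaviest to the lightest communicator.
    for (i, j), _volume in sorted(comm.items(), key=lambda kv: -kv[1]):
        ni, nj = placement.get(i), placement.get(j)
        if ni is None and nj is None:
            # Put both ranks on the emptiest node that still has two free cores.
            candidates = [n for n in range(n_nodes) if load[n] + 2 <= cores_per_node]
            if candidates:
                node = min(candidates, key=lambda n: load[n])
                place(i, node)
                place(j, node)
        elif ni is None and load[nj] < cores_per_node:
            place(i, nj)  # join the partner's node if it has room
        elif nj is None and load[ni] < cores_per_node:
            place(j, ni)

    # Ranks not yet placed go to the least-loaded node.
    for rank in range(n_ranks):
        if rank not in placement:
            place(rank, min(range(n_nodes), key=lambda n: load[n]))
    return placement
```

For example, with four ranks on two dual-core nodes and `comm = {(0, 1): 100, (2, 3): 90, (0, 2): 1, (1, 3): 1}`, the sketch returns `{0: 0, 1: 0, 2: 1, 3: 1}`: the two heavy pairs each share a node, and only the light traffic crosses the network.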

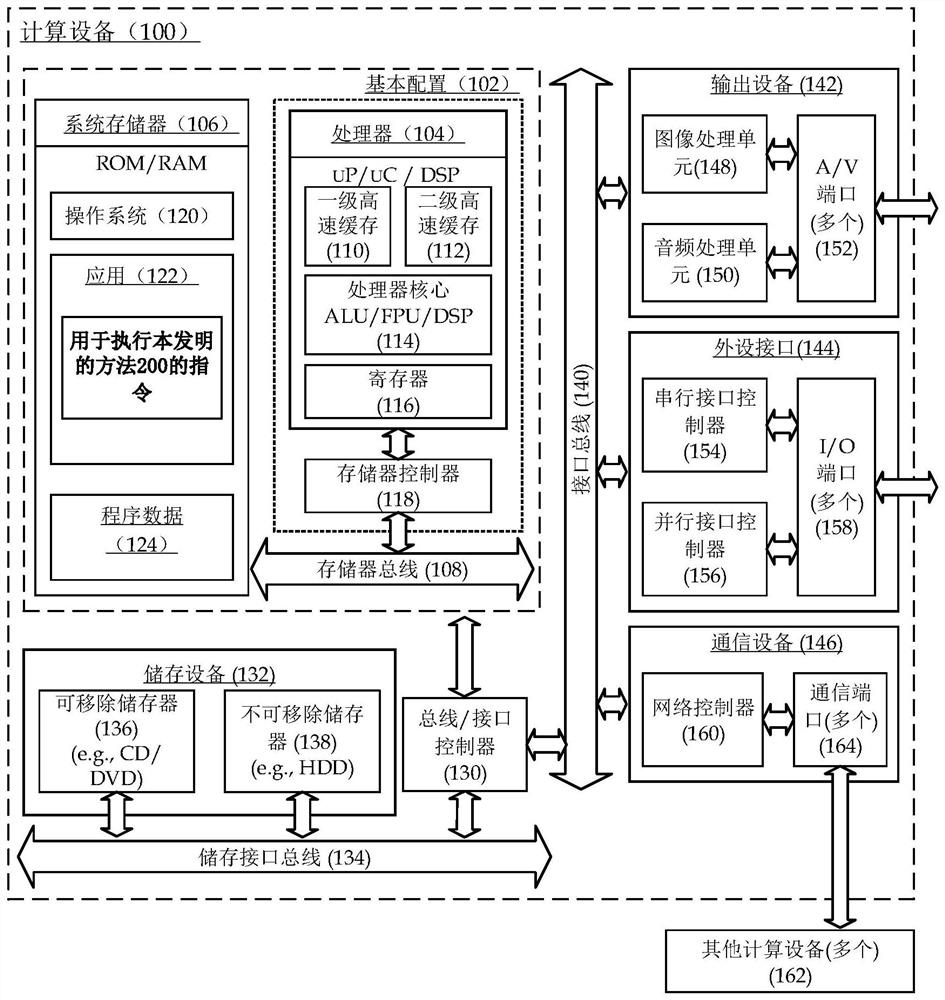

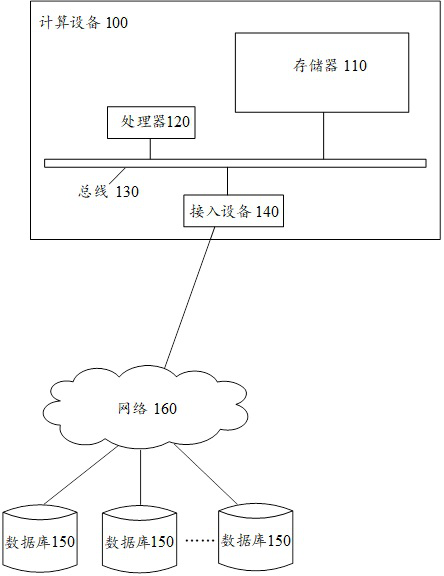

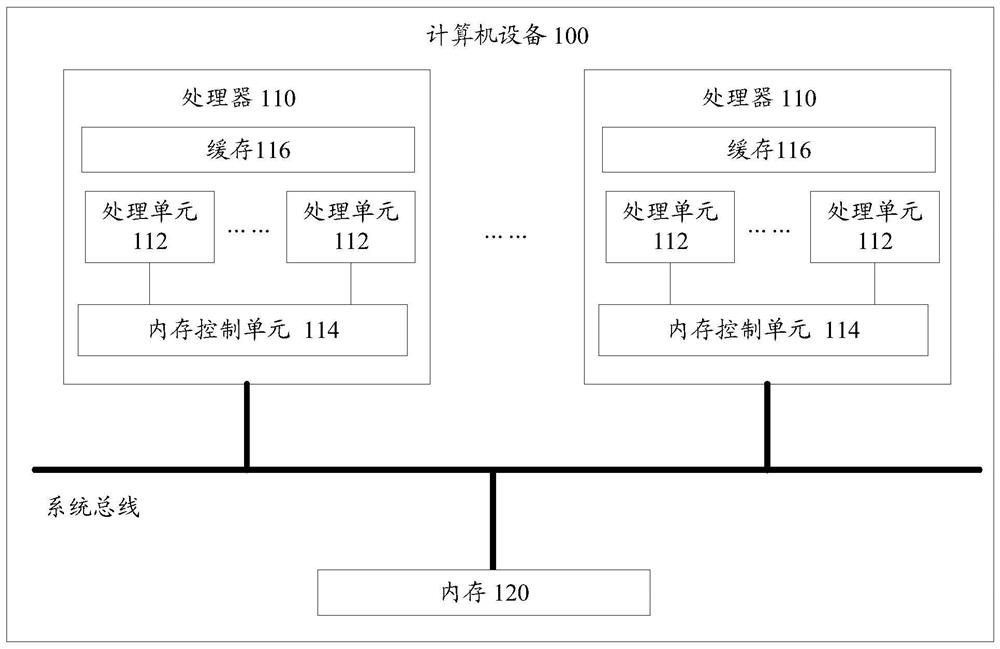

Heterogeneous program execution method and device, computing equipment and readable storage medium

Active · CN113127100A · Tags: Improve operational efficiency, Guaranteed uptime, Program loading/initiating, Energy efficient computing, Memory address, Process (computing)

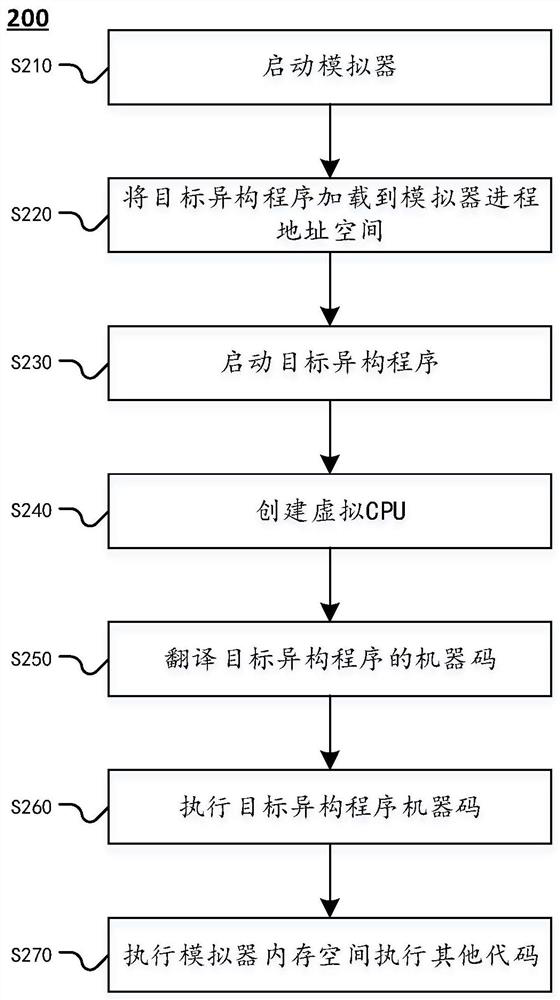

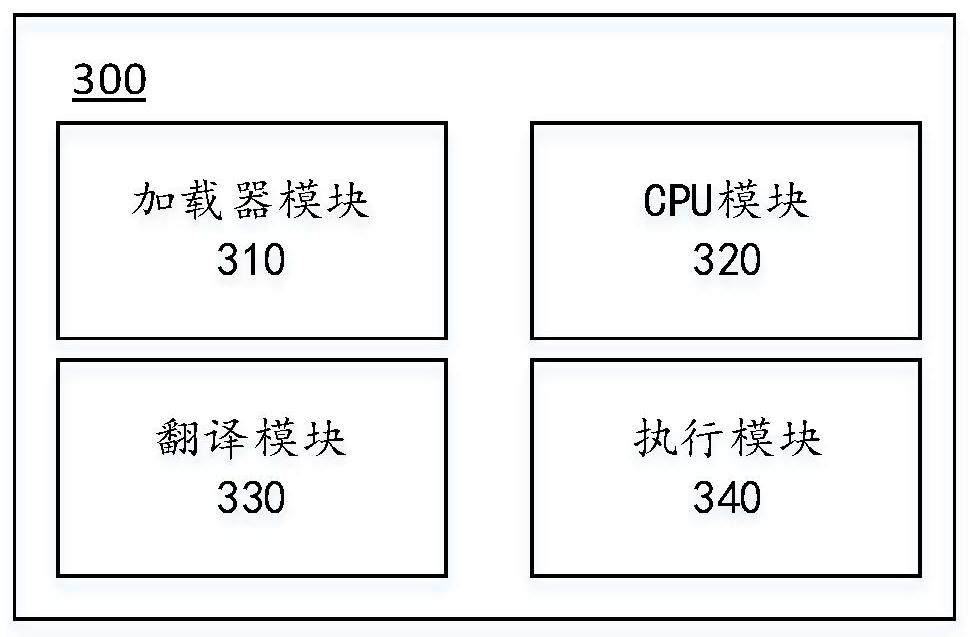

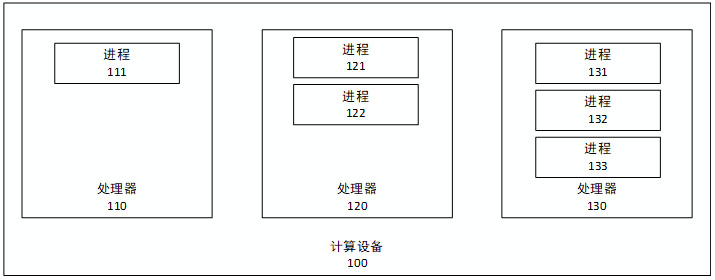

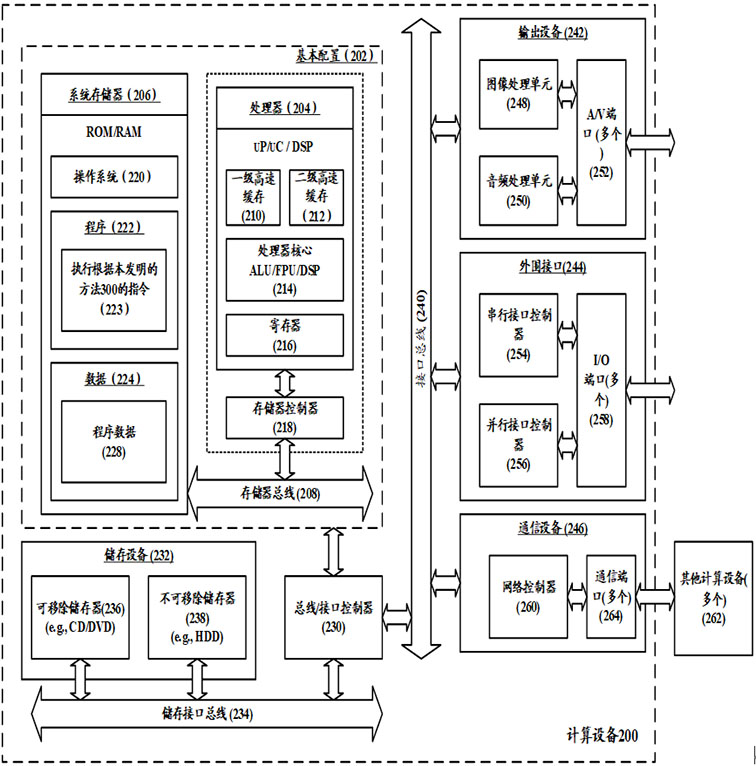

The invention discloses a heterogeneous program execution method suitable for execution in a simulator that resides on a computing device and on which a target heterogeneous program is installed. The method comprises: when the simulator starts, obtaining the target heterogeneous program on the simulator; loading the target heterogeneous program into the memory address space of the simulator process; creating a virtual CPU in response to the start request of the target heterogeneous program; and executing the target heterogeneous program on the virtual CPU. The invention also discloses a corresponding device, computing equipment, and a readable storage medium.

Owner:武汉深之度科技有限公司 (Wuhan Deepin Technology Co., Ltd.)

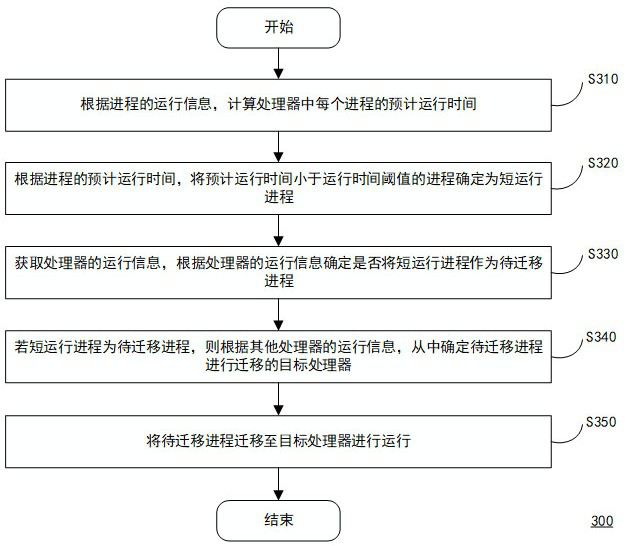

Process migration method, computing device and storage medium

Active · CN113553164A · Tags: Improve efficiency, Inhibit migration, Program initiation/switching, Process (computing), Term memory

The invention discloses a process migration method, a computing device, and a storage medium. The method comprises: calculating the predicted running time of each process on a processor from the processes' running information; classifying processes whose predicted running time is below a running-time threshold as short-running processes; acquiring the processor's running information and deciding from it whether a short-running process should be migrated; if so, selecting a target processor based on the running information of the other processors; and migrating the process to the target processor. This avoids migrating every short-running process, reduces how often short-running processes are migrated, and lessens the loss of cache and memory affinity that migration causes, so that when short-running processes are migrated, system performance and processor utilization improve.

Owner:UNIONTECH SOFTWARE TECH CO LTD
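The steps in this abstract (predict each process's running time, treat processes below a threshold as short-running, migrate one only when its processor's state warrants it, and pick a target from the other processors' state) can be sketched in Python as below. The moving-average predictor, the run-queue-length load metric, both thresholds, and all names are assumptions for illustration; the abstract does not specify them.

```python
from dataclasses import dataclass, field


@dataclass
class Proc:
    pid: int
    past_runtimes_ms: list  # observed run lengths, most recent last


@dataclass
class Cpu:
    cpu_id: int
    procs: list = field(default_factory=list)

    @property
    def load(self) -> int:
        return len(self.procs)  # assumed load metric: number of runnable processes


def predicted_runtime(proc: Proc, alpha: float = 0.5) -> float:
    # Hypothetical predictor: exponential moving average of past runtimes.
    estimate = proc.past_runtimes_ms[0]
    for r in proc.past_runtimes_ms[1:]:
        estimate = alpha * r + (1 - alpha) * estimate
    return estimate


def plan_short_process_migrations(cpus, short_ms=5.0, busy_load=2):
    """Return (pid, from_cpu, to_cpu) moves, applying them to `cpus`."""
    moves = []
    for src in cpus:
        for proc in list(src.procs):
            if predicted_runtime(proc) >= short_ms:
                continue  # not a short-running process; ignored in this sketch
            if src.load <= busy_load:
                continue  # source CPU is not busy enough to justify migrating
            dst = min(cpus, key=lambda c: c.load)
            if dst is src or dst.load + 1 >= src.load:
                continue  # moving would not improve the balance
            src.procs.remove(proc)
            dst.procs.append(proc)
            moves.append((proc.pid, src.cpu_id, dst.cpu_id))
    return moves
```

Once the source CPU's load drops to `busy_load`, remaining short-running processes stay put, which reflects the abstract's aim of not migrating every short-running process and so preserving cache and memory affinity.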

Hot update method and device for Unity, computing equipment and computer readable storage medium

Active · CN114115964A · Tags: Fix compatibility issues, Reduce incompatibility, Software engineering, Metadata management

The invention provides a hot update method and device for Unity, computing equipment, and a computer-readable storage medium. The method adds an interpreter implemented in C++ to the IL2CPP tool, turning a runtime that supports only AOT static compilation into one that supports both the AOT compiler and the interpreter. The runtime metadata management module is also modified so that hot-update assembly dynamic libraries can be loaded dynamically. During function calls, the loading of assemblies is intercepted so that functions from hot-update assemblies are directed to the interpreter, which executes them; this unifies function calling and overcomes long-standing shortcomings of prior hot-update schemes.

Owner:在线途游(北京)科技有限公司 (Online Tuyou (Beijing) Technology Co., Ltd.) +1

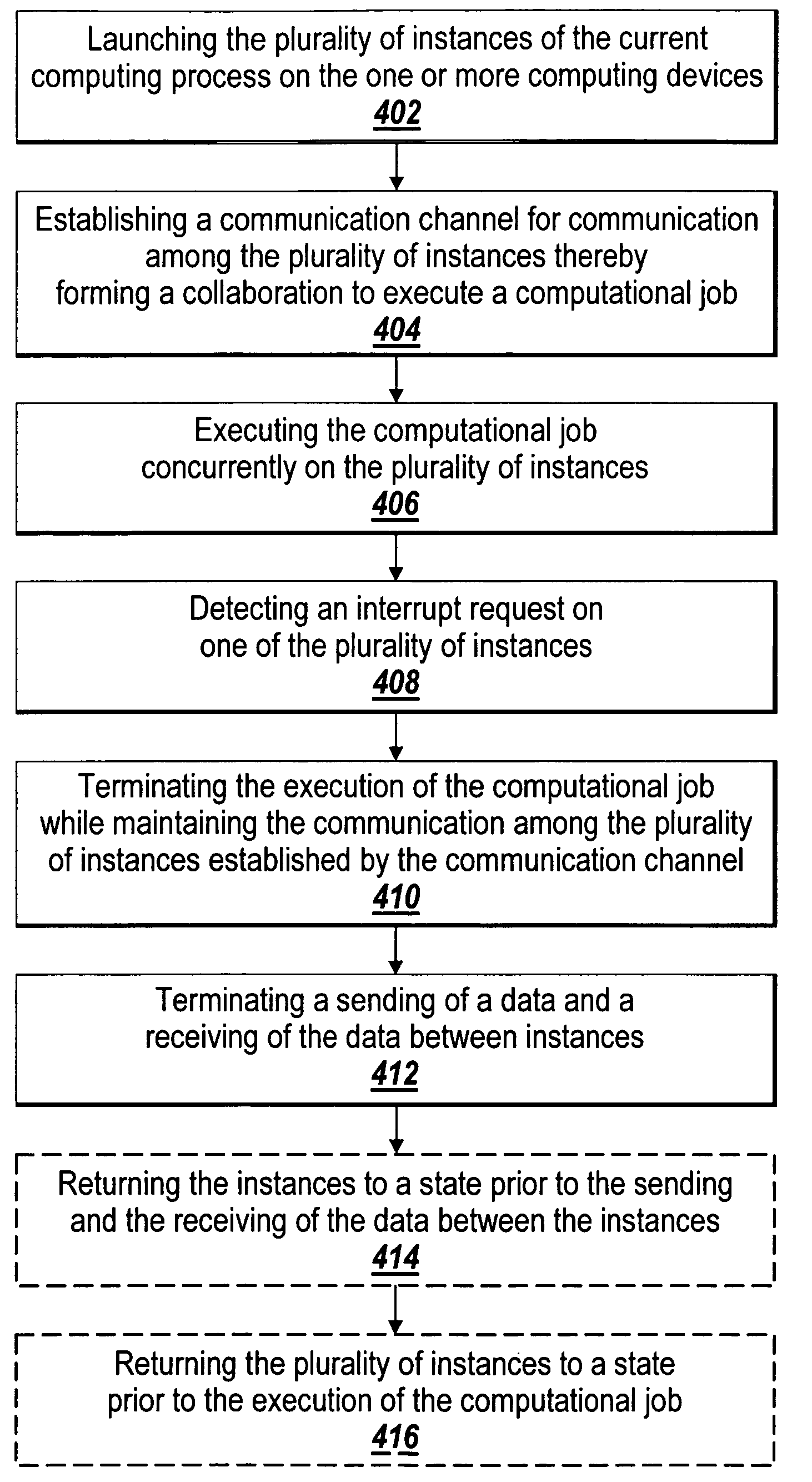

Exception handling in a concurrent computing process

Active · US8156493B2 · Tags: Error prevention, Frequency-division multiplex details, Concurrent computing, Process (computing)

The present invention provides a method and system for exception handling in an executable process executing in a concurrent computing environment. The present invention allows a user to interrupt the executable process at any stage of the computation without disconnecting the communication channel among the instances of the executable process. Additionally, the present invention also allows interrupting the concurrent computing process at any stage when an error occurs in the executable process or the communication channel without terminating the communication among the instances of the executable process. Upon notification of an interrupt request, each of the instances of the executable process in the concurrent computing environment flushes any pending incoming messages to return itself to a previous known state while maintaining the communication channel between the instances of the executable process.

Owner:THE MATHWORKS INC

Detecting malware via scanning for dynamically generated function pointers in memory

Active · US20200201998A1 · Tags: Hardware monitoring, Platform integrity maintenance, Computer hardware, Function pointer

Owner:PALO ALTO NETWORKS INC

Method for managing physical bare computer based on Ironic

Pending · CN112948008A · Tags: Make full use of memory space, Implement auto-discovery, Bootstrapping, Redundant operation error correction, Software engineering, Process (computing)

The invention provides a method for managing physical bare-metal servers based on Ironic, in the field of cloud computing. After a bare-metal server boots from a mini-ramdisk, the mini-ramdisk can access the complete memory space; an ipa-boot-script process is then started to download and start the IPA (Ironic Python Agent), and finally the ironic conductor takes over management of the bare-metal server.

Owner:SHANDONG LANGCHAO YUNTOU INFORMATION TECH CO LTD

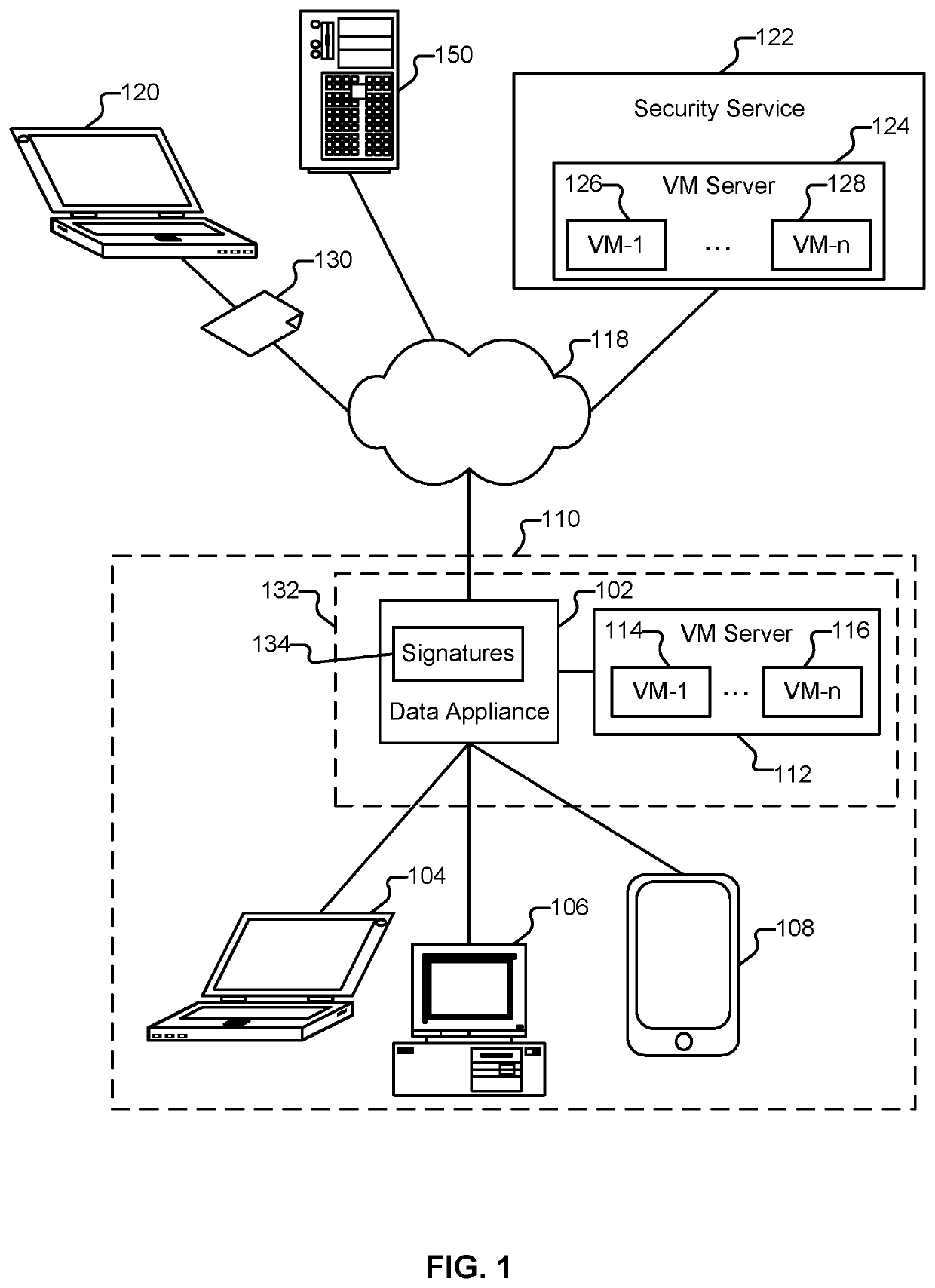

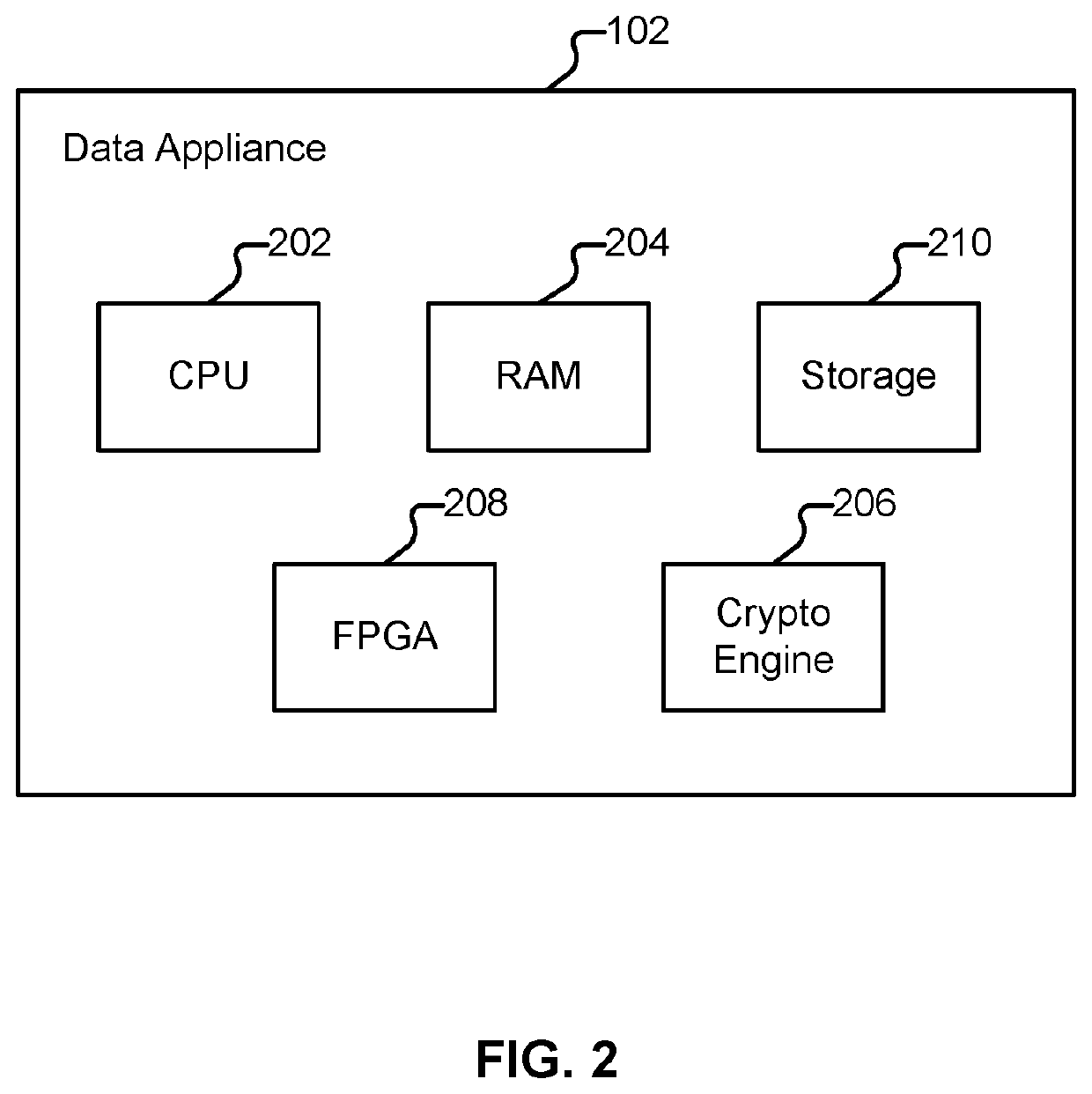

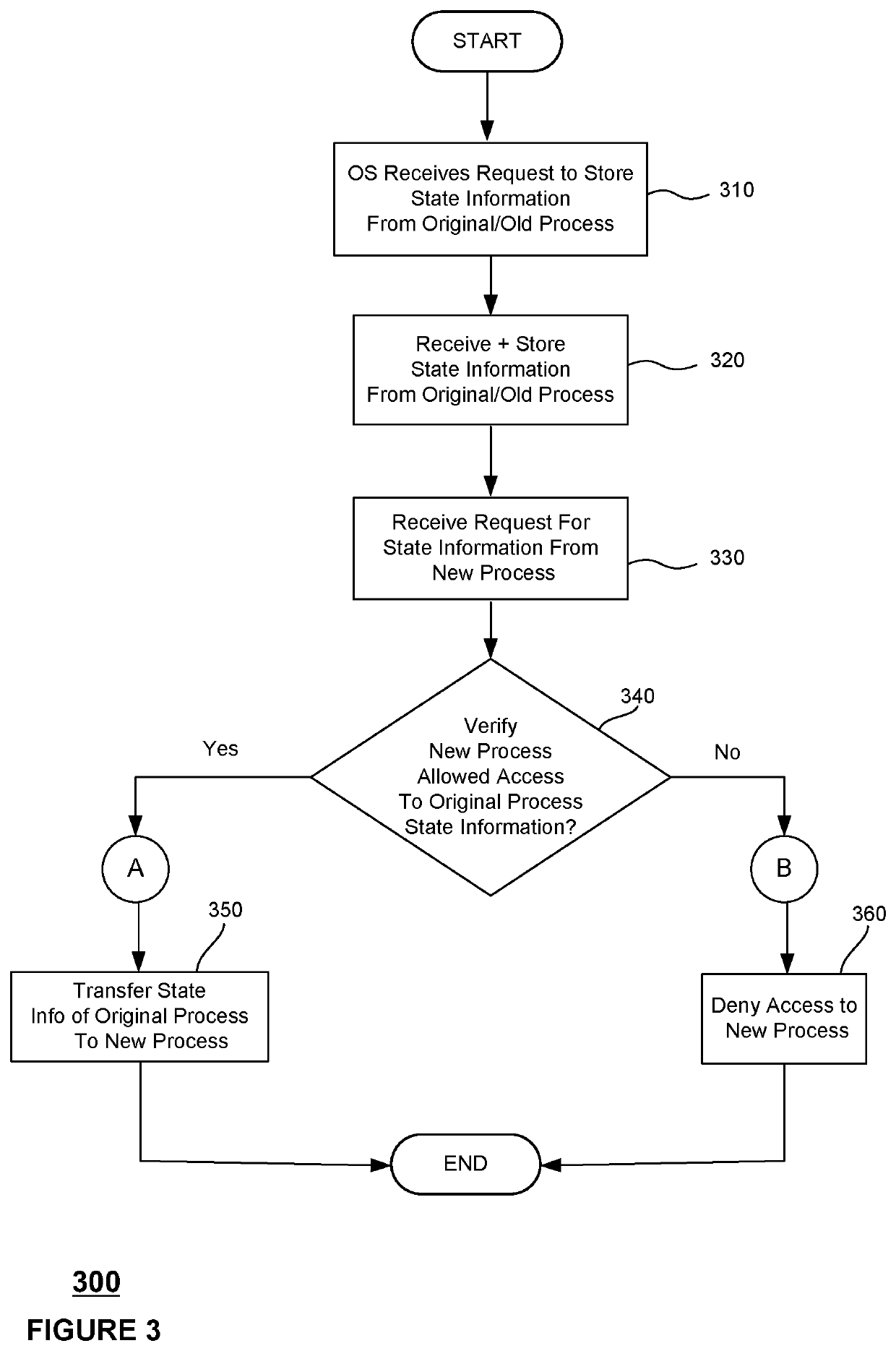

Transferral Of Process State And/Or Components In Computing Environments

Active · US20200319918A1 · Tags: Program initiation/switching, Resource allocation, Software engineering, Process (computing)

This technology relates to transferring state information between processes or active software programs in a computing environment, where a new instance of a process or software program may receive such state information even after the original (old) instance that owned the state information has terminated, either naturally or unnaturally.

Owner:GOOGLE LLC

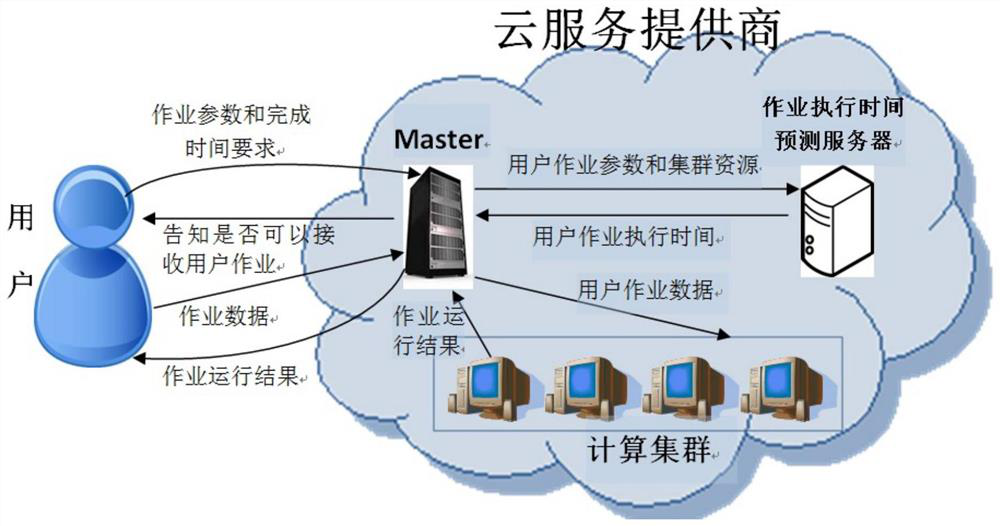

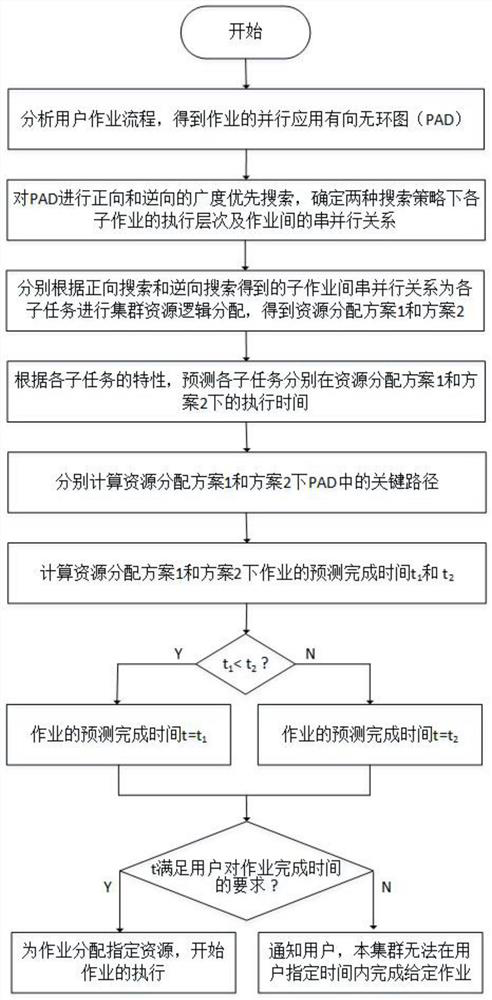

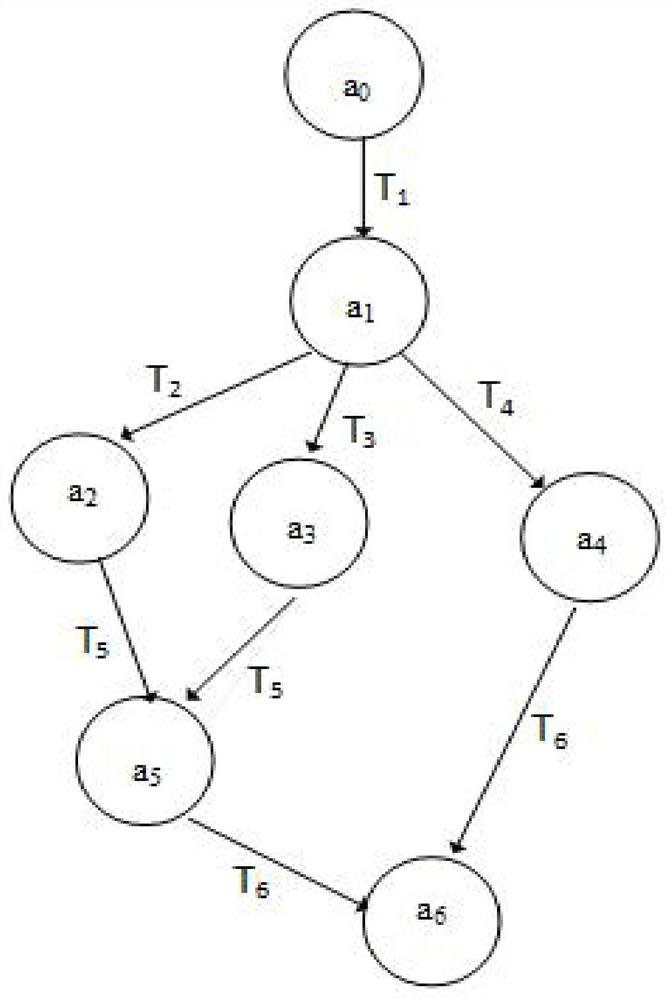

Distributed cluster resource scheduling method based on user operation process

Pending · CN113157421A · Tags: Improve parallelism, Improve service quality, Program initiation/switching, Resource allocation, Resource scheduling, Process (computing)

The invention relates to a distributed cluster resource scheduling method based on the user's job process. The method first analyzes the execution-order constraints among the sub-jobs of a job and determines the serial/parallel execution order of the sub-jobs. Following that order, it logically allocates resources to the sub-jobs, predicts each sub-job's execution time under that allocation, and then predicts the job's execution time by computing the critical path of the job process. Because jobs submitted by users usually carry a completion-time constraint, the predicted completion time can be used to judge whether the cluster can serve the user in time. Experiments show that, compared with Spark's default resource allocation algorithm, the proposed algorithm shortens job execution time by 16.81%. The algorithm increases the parallelism of sub-job execution, shortens job execution time, and helps improve the quality of service of cloud service platforms, supercomputing centers, data centers, and similar facilities.

Owner:ZHEJIANG UNIV OF TECH
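The prediction step in this abstract reduces to a longest-path (critical path) computation over the sub-job dependency graph. Assuming each sub-job's execution time under its resource allocation has already been predicted (the prediction model itself is not shown) and that every dependency is itself a key of `durations`, a Python sketch using the standard-library `graphlib` module (Python 3.9+) follows; the function name and input format are illustrative.

```python
from graphlib import TopologicalSorter


def critical_path(durations, deps):
    """Predict a job's completion time as the length of its critical path.

    durations: sub-job -> predicted execution time under its allocation
    deps:      sub-job -> iterable of sub-jobs that must finish first
    Returns (predicted completion time, sub-jobs on the critical path).
    """
    graph = {job: set(deps.get(job, ())) for job in durations}
    finish, via = {}, {}
    for job in TopologicalSorter(graph).static_order():
        # A sub-job can start only once its slowest predecessor has finished.
        prev = max(graph[job], key=lambda d: finish[d], default=None)
        start = finish[prev] if prev is not None else 0.0
        finish[job] = start + durations[job]
        via[job] = prev
    end = max(finish, key=finish.get)
    path = []
    node = end
    while node is not None:
        path.append(node)
        node = via[node]
    return finish[end], path[::-1]
```

For `durations = {"load": 2, "clean": 3, "feature": 4, "train": 5}` with `clean` and `feature` depending on `load` and `train` depending on both, the sketch returns `(11.0, ["load", "feature", "train"])`; comparing that figure against the user's completion-time constraint is the admission check the abstract describes.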

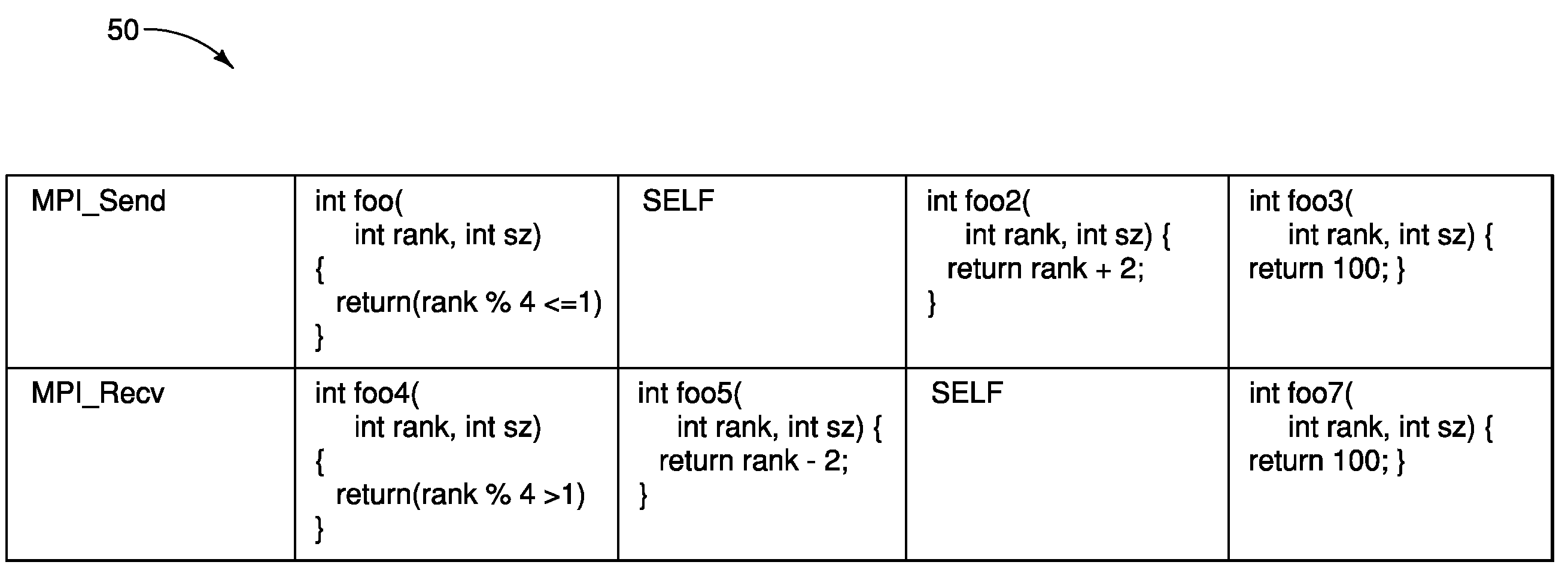

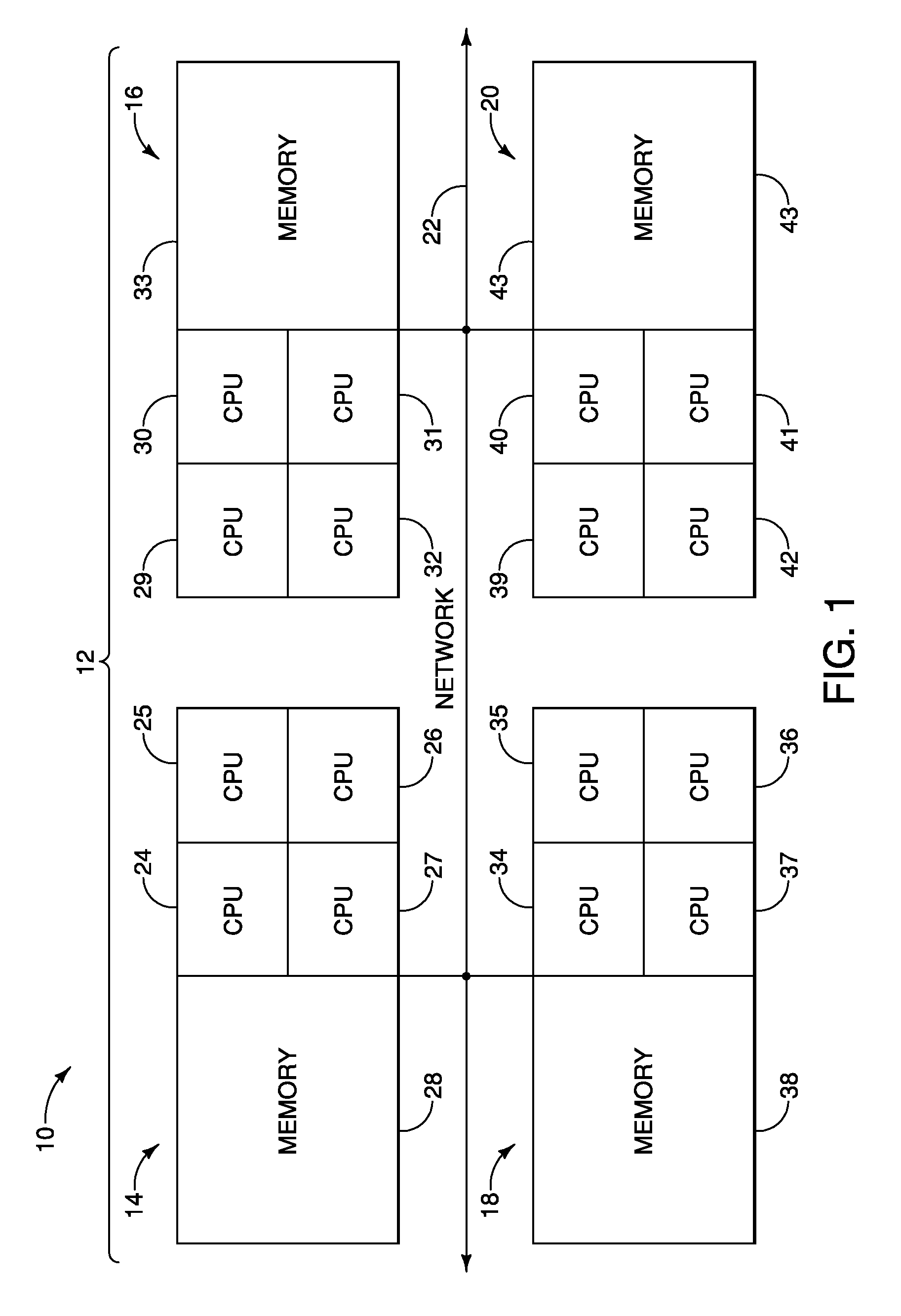

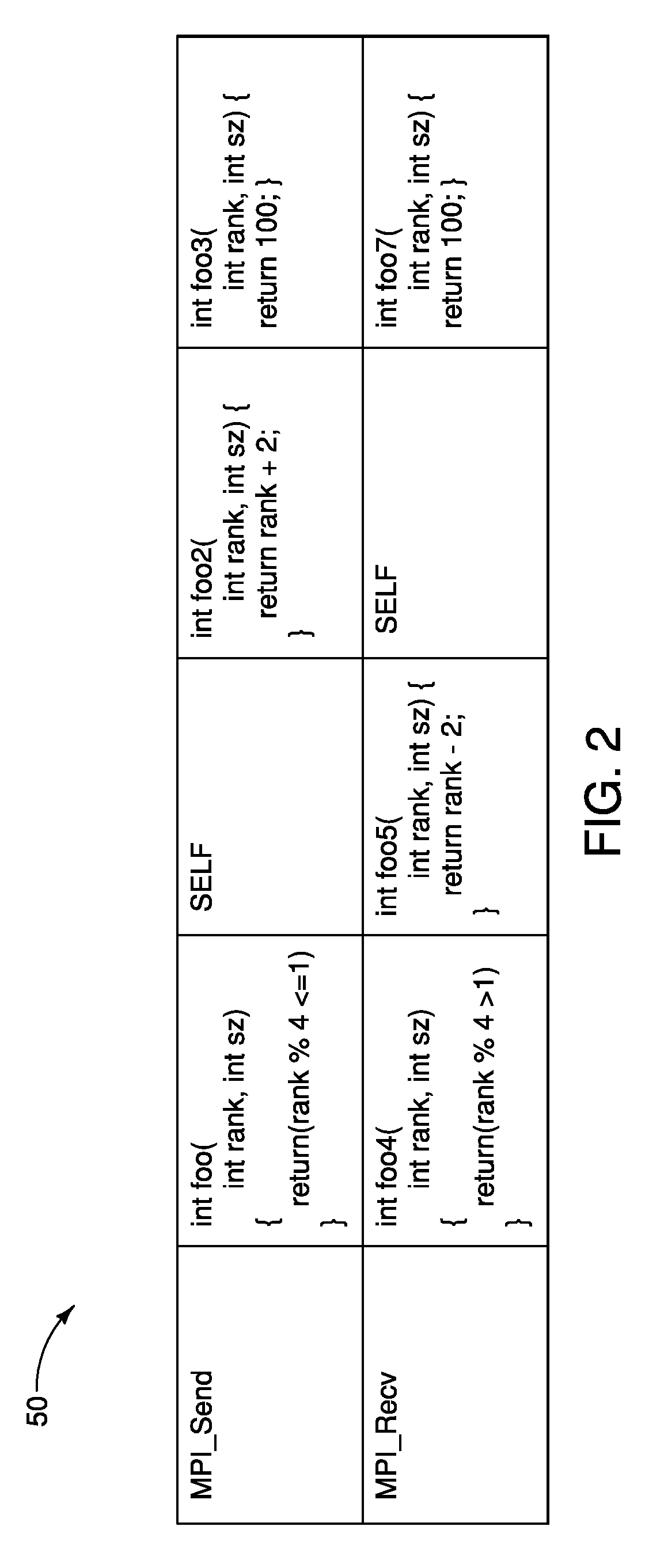

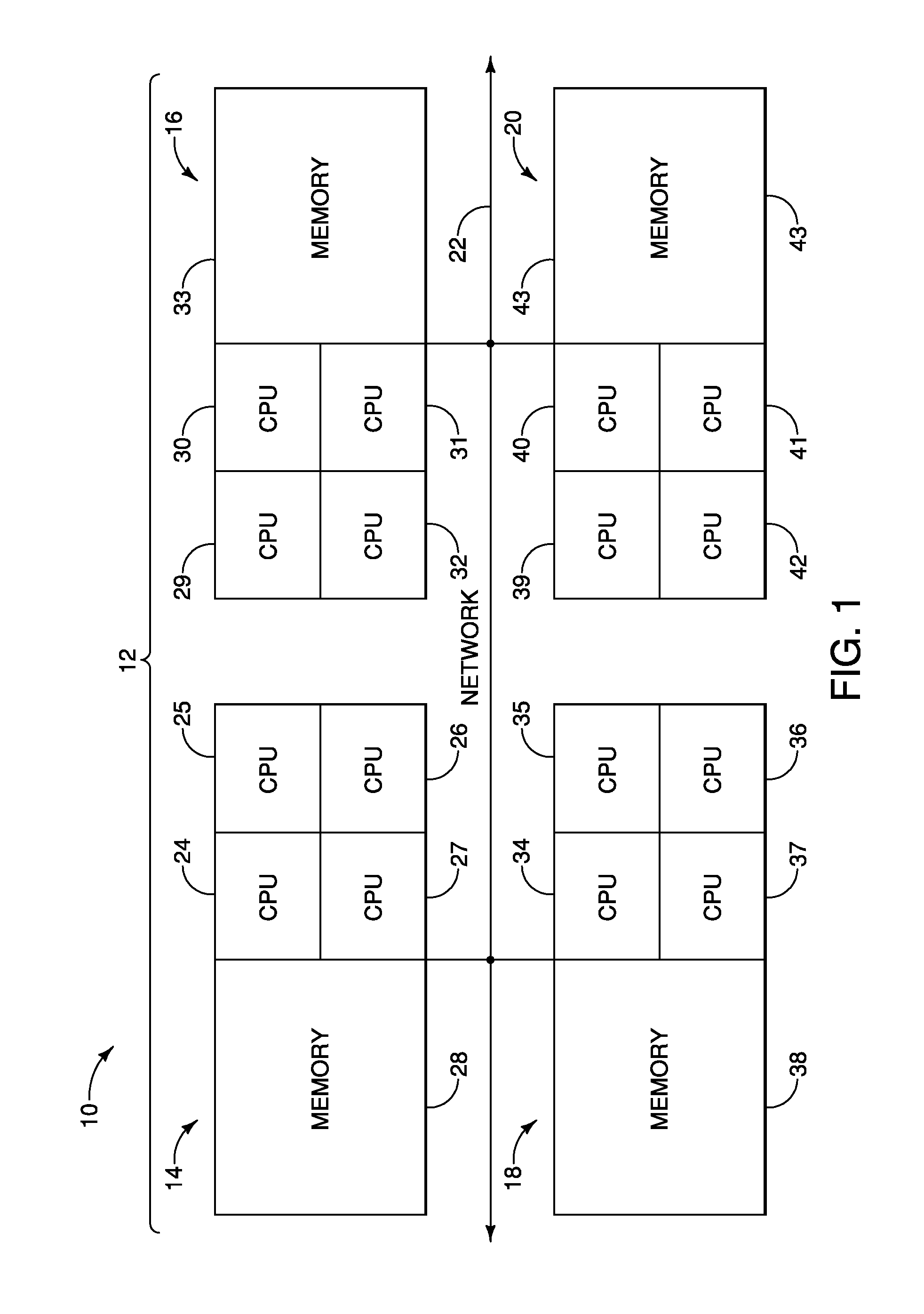

Process mapping parallel computing

Inactive · US20130139175A1 · Tags: Network can be high, Reduce communication overhead, Multiprogramming arrangements, Memory systems, Computer architecture, Concurrent computation

A method of mapping processes to processors in a parallel computing environment where a parallel application is to be run on a cluster of nodes wherein at least one of the nodes has multiple processors sharing a common memory, the method comprising using compiler based communication analysis to map Message Passing Interface processes to processors on the nodes, whereby at least some more heavily communicating processes are mapped to processors within nodes. Other methods, apparatus, and computer readable media are also provided.

Owner:IBM CORP

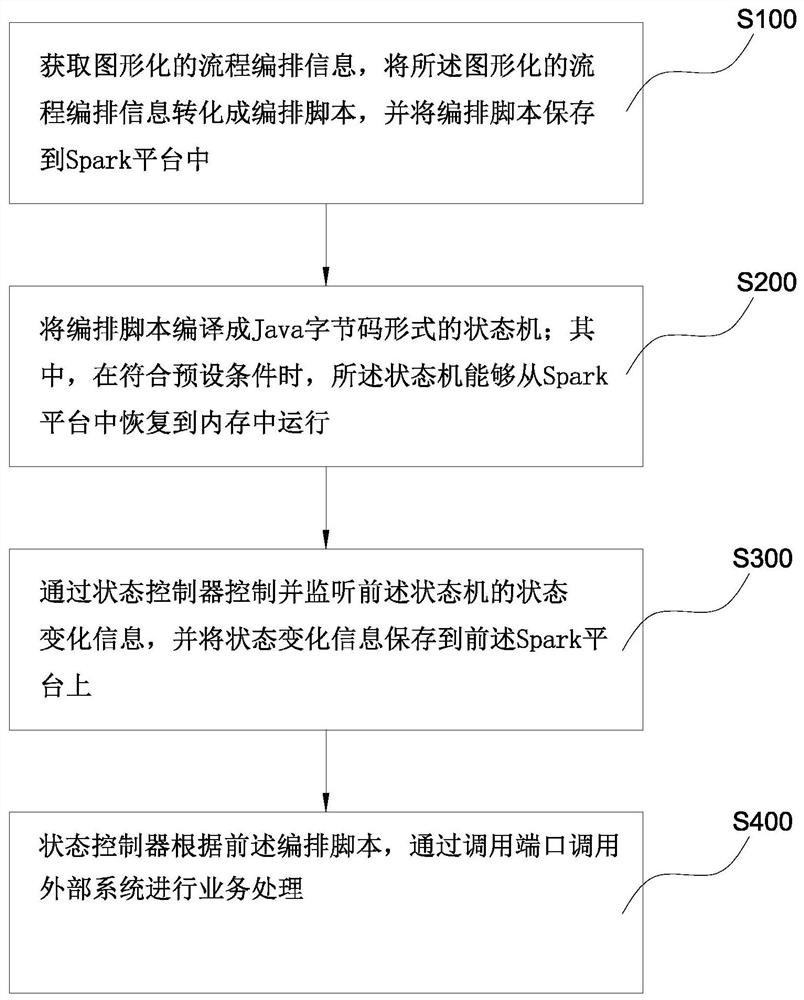

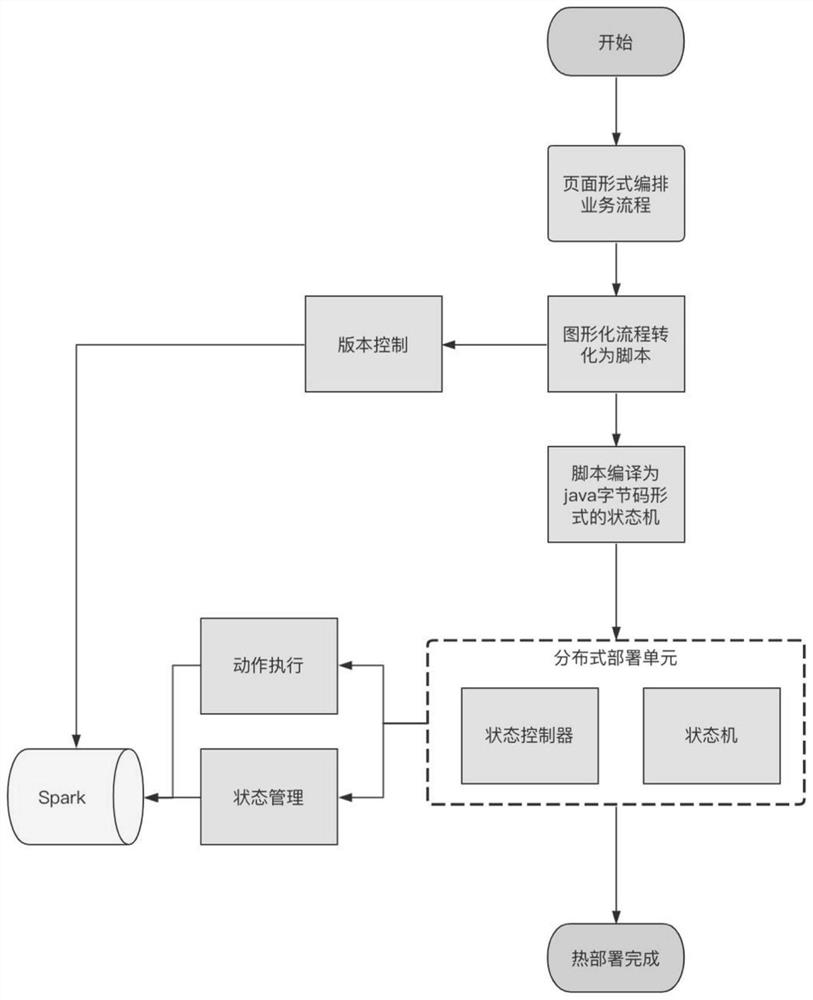

Process engine implementation method and system based on Spark and parallel memory computing

Pending · CN112379884A · Tags: Increase elasticity, Program initiation/switching, Database management systems, Data stream, Process (computing)

The invention discloses a process engine implementation method and system based on Spark and parallel in-memory computing, in the technical field of process automation. The method comprises: acquiring graphical process orchestration information, converting it into an orchestration script, and storing the script on a Spark platform; compiling the orchestration script into a state machine in Java bytecode form, which can be restored from the Spark platform into memory for execution; controlling and monitoring the state changes of the state machine through a state controller and storing the state change information on the Spark platform; and having the state controller call external systems for business processing through a call interface according to the orchestration script. The invention can achieve distributed data-flow automation and business-process automation at the same time, balancing high throughput with instant responsiveness, and is particularly suited to high-throughput scenarios.

Owner:李斌 (Li Bin)

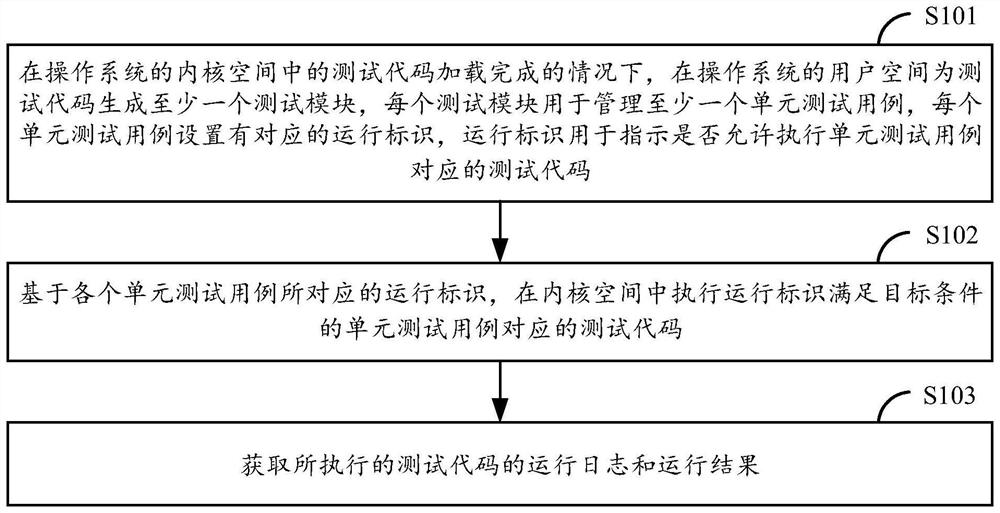

Unit testing method and device, computing equipment and medium

Pending · CN114691496A · Tags: To achieve stand-alone operation, Improve efficiency, Software testing/debugging, Operating system, Process (computing)

One or more embodiments of the invention provide a unit test method and apparatus, a computing device, and a medium that offer a finer-grained unit test case management framework. When test code is to be loaded into the kernel space of the operating system, at least one test module is generated for the test code in user space; each test module manages at least one unit test case, and each unit test case has a corresponding operation identifier, so the test code can be tested on the basis of these identifiers. The test code for the unit test cases whose operation identifiers meet a target condition is executed in kernel space, producing an operation log and an operation result. This allows one or several unit test cases to run independently without rewriting a kernel module that contains only those test cases, which improves the efficiency of the unit test process.

Owner:ALIBABA (CHINA) CO LTD

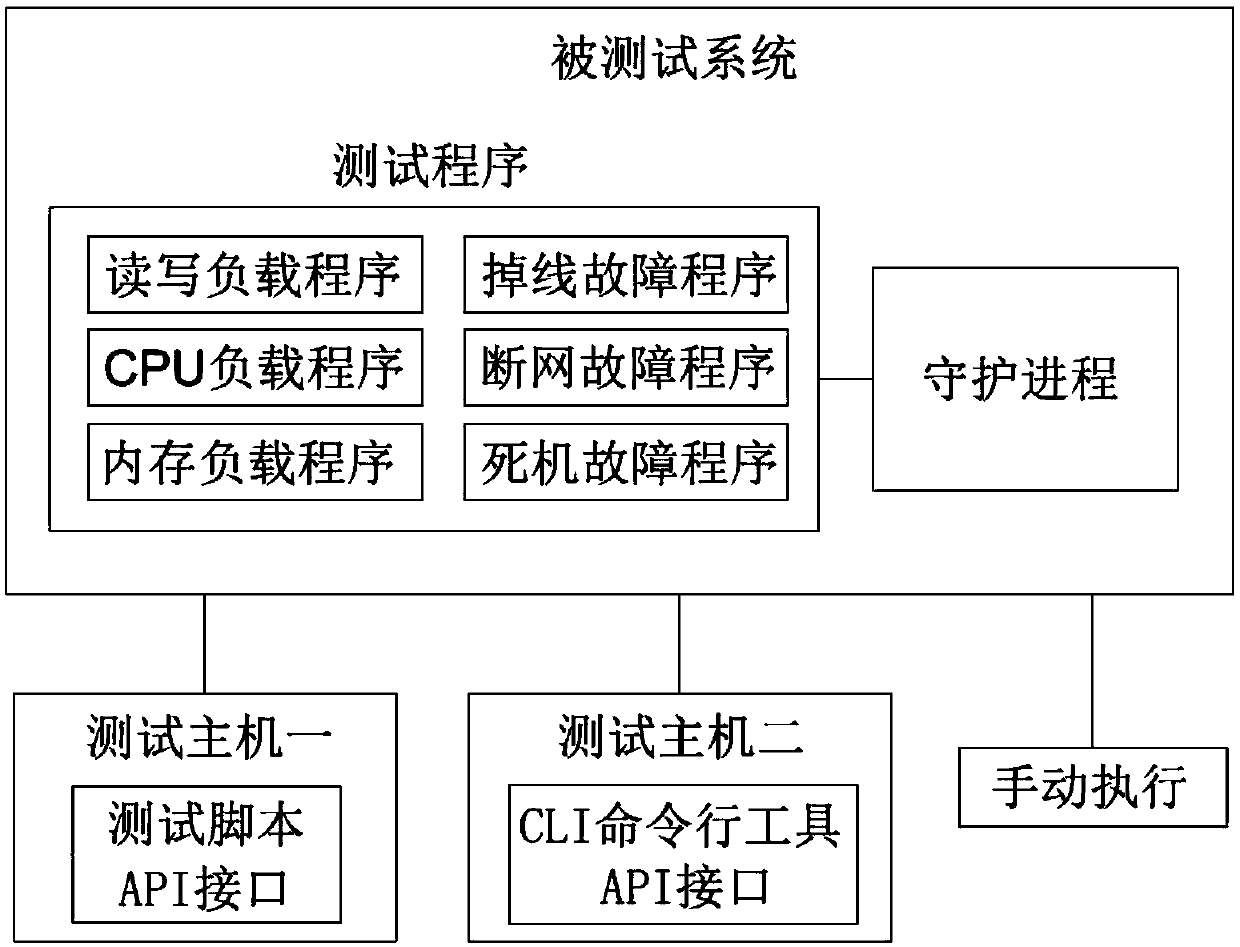

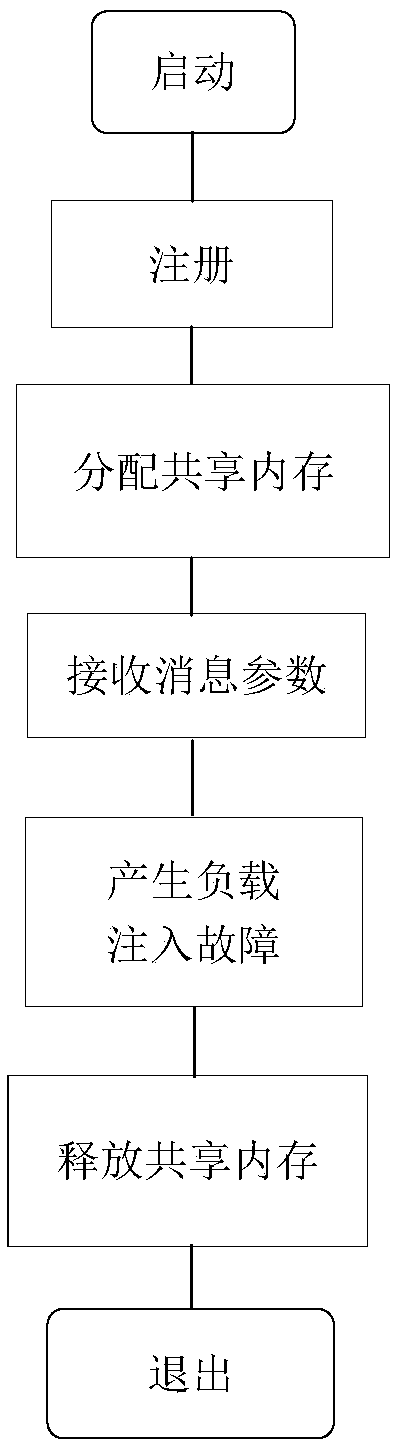

Test method of computer system

Pending · CN110188028A · Tags: Effective isolation, Flexible assembly, Software testing/debugging, Present method, Load generation

The invention belongs to the field of computers and discloses a computer system test method applied to a test host and a system under test. Multiple test programs, and a daemon process that manages them, are installed on the system under test. The test programs are written within a unified test program framework. During testing, the test host communicates with the daemon process: it sends requests, and the daemon process receives them and starts, stops, or fine-tunes the test programs on the system under test. Compared with the prior art, writing different types of load-generation and fault-injection programs within a unified framework allows faults and loads to be assembled flexibly, various computing loads to be generated as required, and software and hardware faults to be injected flexibly.

Owner:西安奥卡云数据科技有限公司 (Xi'an Aoka Cloud Data Technology Co., Ltd.)

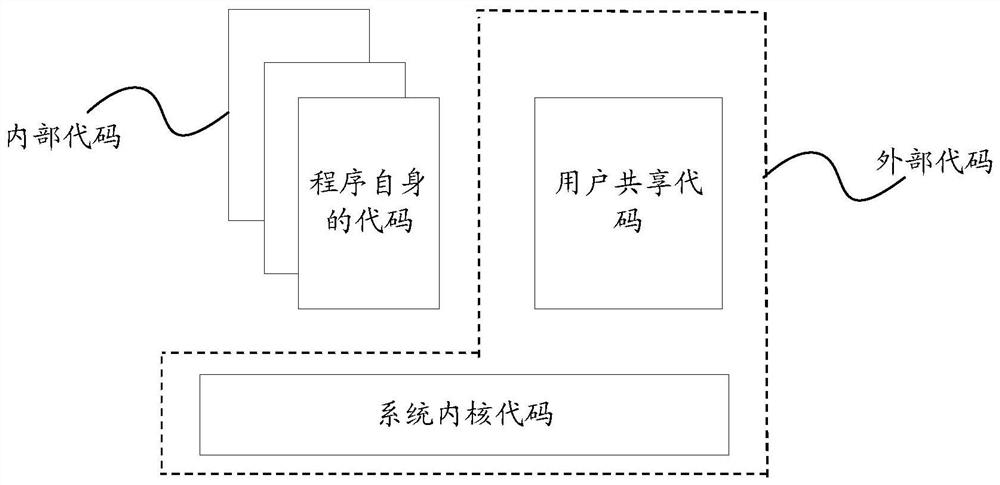

Program code execution behavior monitoring method and computer equipment

Pending · CN113268726A · Tags: Internal/peripheral component protection, Platform integrity maintenance, Programming language, Operating system

The invention provides a program code monitoring method in which computer equipment executes, in a virtual execution environment, first code corresponding to a first program code. The first code is external code, that is, code called by the first program code other than its internal code; external code includes system code provided by the operating system of the computing device, while internal code is the code of the process generated by the first program code. While the first code is executing, if the next code to be executed (the second code) is internal code, the computer equipment switches the execution environment of the first program code to a simulated execution environment before the second code runs, and then executes the second code there. The method achieves instruction-level monitoring of program code while reducing performance overhead and improving the operating efficiency of the system.

Owner:HUAWEI TECH CO LTD

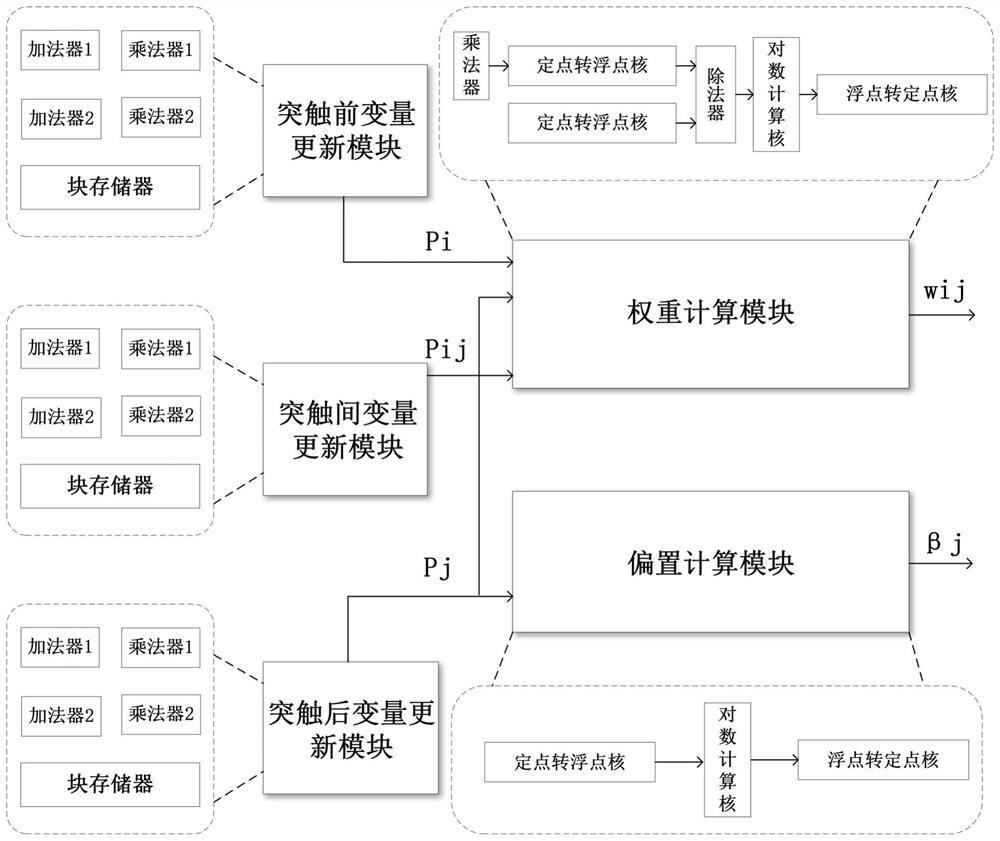

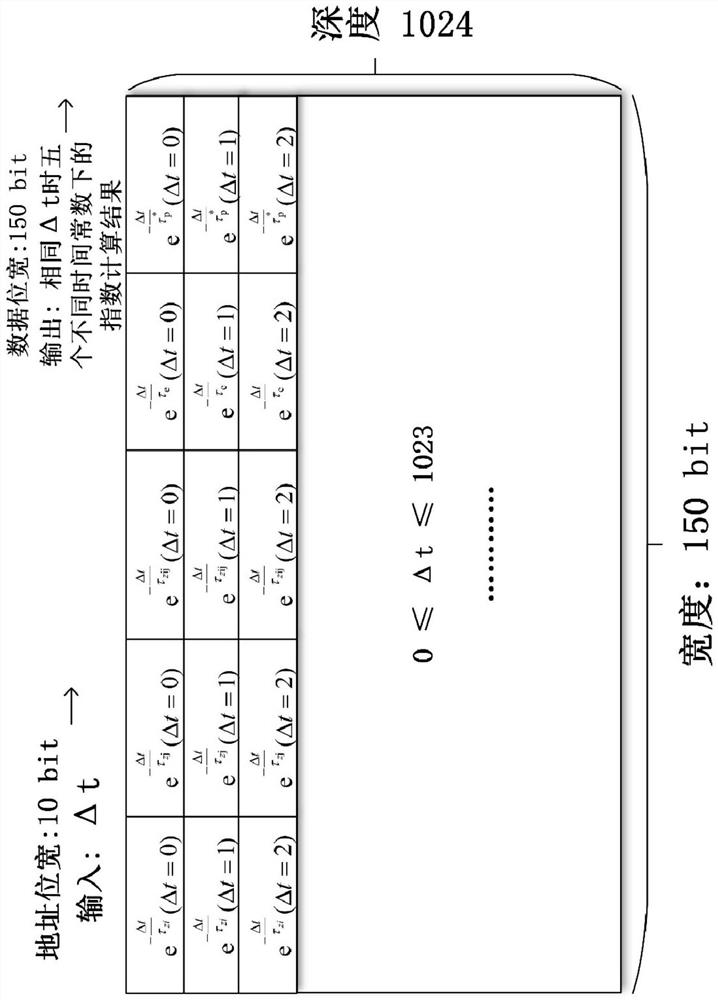

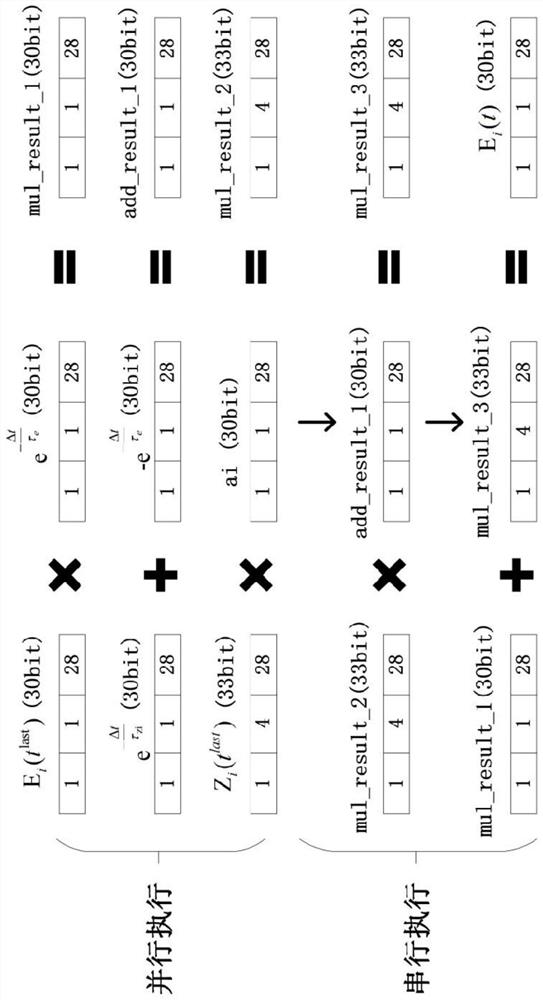

FPGA-based design method for improving the speed of BCPNN

Pending · CN111882050A · Tags: Reduce overhead, Increase weight, Neural architectures, Physical realisation, Multiplexing, Binary multiplier

The invention discloses an FPGA-based design method for speeding up a BCPNN (Bayesian Confidence Propagation Neural Network), in the technical field of artificial intelligence. The method updates the synaptic state variables, weights, and biases of the BCPNN in hardware through a modular design; implements the exponential operation on the FPGA with a lookup table; uses a parallel algorithm to speed up the weight and bias updates of the synaptic states; and multiplexes the adder and multiplier modules to reduce resource overhead while keeping the same computing performance. The method offers both higher computing performance and higher computational accuracy, and effectively speeds up the weight and bias updates of the BCPNN.

Owner:FUDAN UNIV +1
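The lookup-table exponential mentioned in this abstract can be illustrated in software. The Python sketch below precomputes exp(x) at evenly spaced points and linearly interpolates between neighbouring entries, saturating outside the table range. The input range [-8, 0], the 256-entry table, the class name, and the use of floating point rather than the fixed-point arithmetic an FPGA design would typically use are all assumptions; the abstract gives no table parameters.

```python
import math


class ExpLUT:
    """Approximate exp(x) on [lo, hi] with a precomputed table plus linear
    interpolation, the kind of table a hardware design might hold in ROM."""

    def __init__(self, lo: float = -8.0, hi: float = 0.0, size: int = 256):
        self.lo, self.hi, self.size = lo, hi, size
        self.step = (hi - lo) / (size - 1)
        self.table = [math.exp(lo + i * self.step) for i in range(size)]

    def __call__(self, x: float) -> float:
        x = min(max(x, self.lo), self.hi)  # saturate outside the table range
        pos = (x - self.lo) / self.step
        i = min(int(pos), self.size - 2)   # index of the left table entry
        frac = pos - i
        return self.table[i] + frac * (self.table[i + 1] - self.table[i])


if __name__ == "__main__":
    lut = ExpLUT()
    worst = max(abs(lut(x / 100) - math.exp(x / 100)) for x in range(-800, 1))
    print(f"max abs error on [-8, 0]: {worst:.2e}")
```

Only one table read pair, one subtraction, and one multiply-add are needed per evaluation, which is why a table is attractive in hardware compared with evaluating a series expansion.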

© 2025 PatSnap. All rights reserved.