32 patent results for "General matrix"

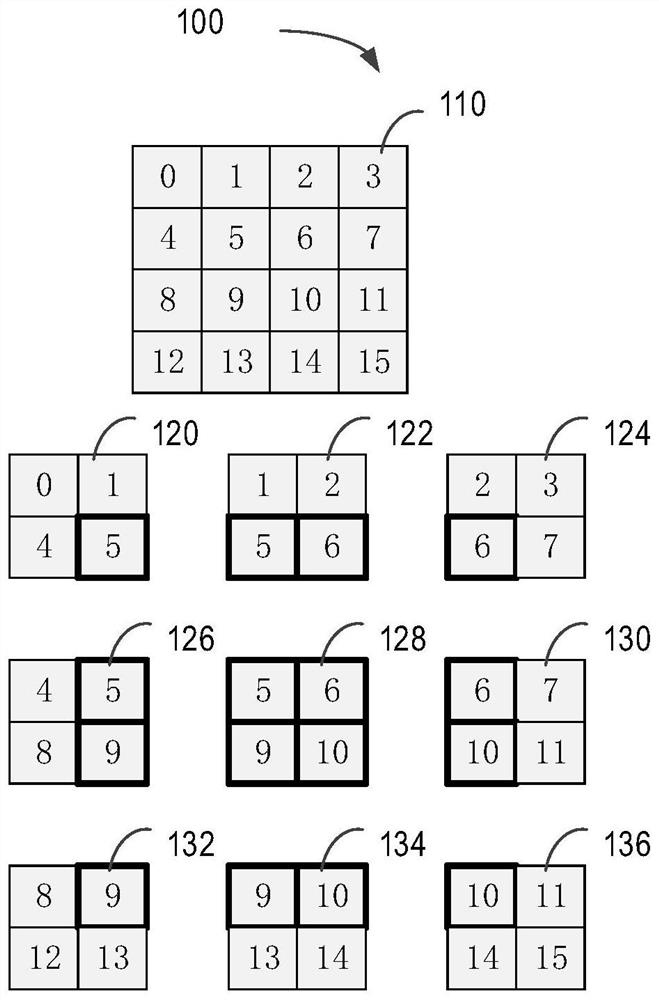

Optimizing performance of a graphics processing unit for efficient execution of general matrix operations

Active | US20050197977A1 | Improve performance | Improve the display effect | Digital computer details | Processor architectures/configuration | Computational science | Graphics

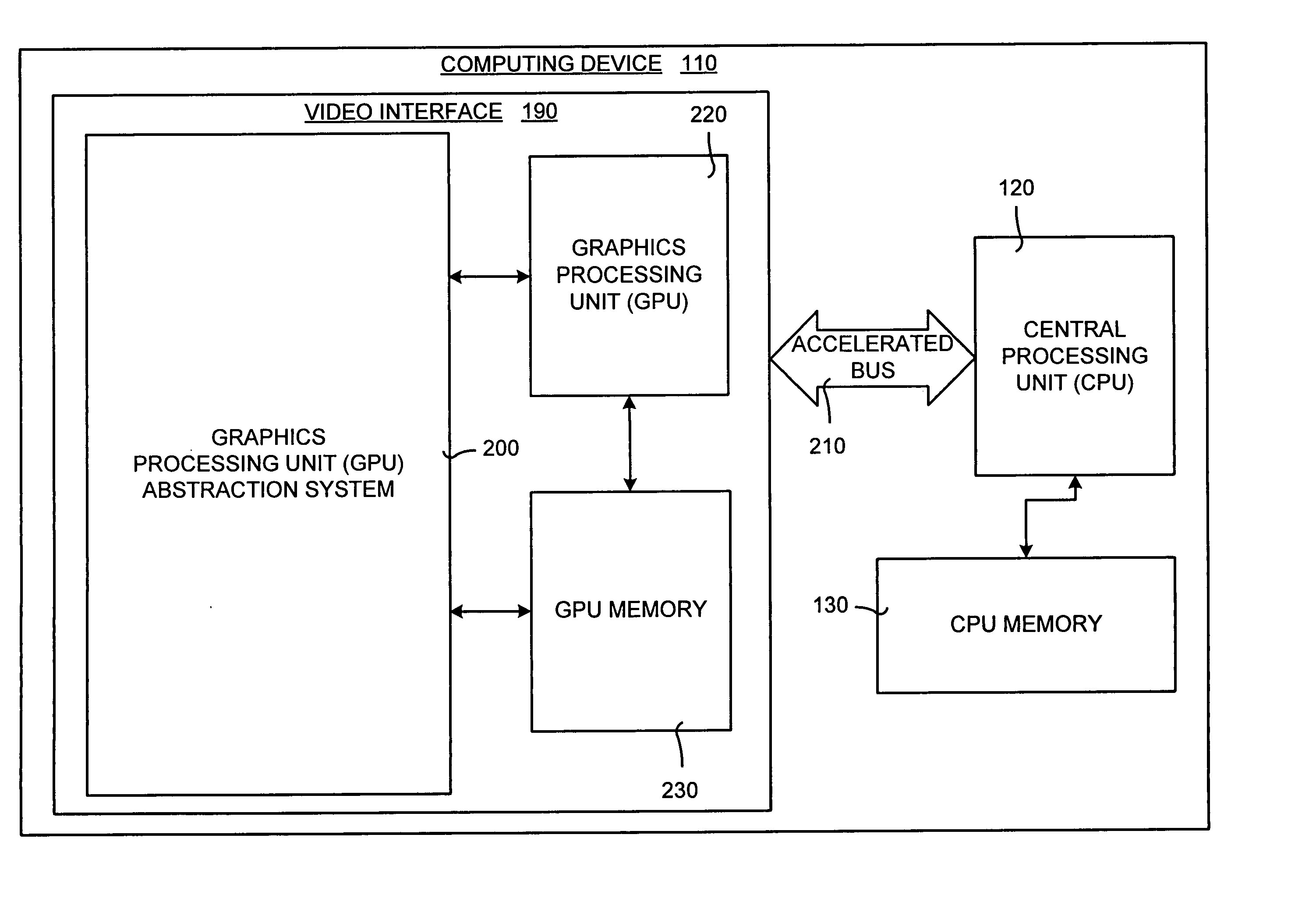

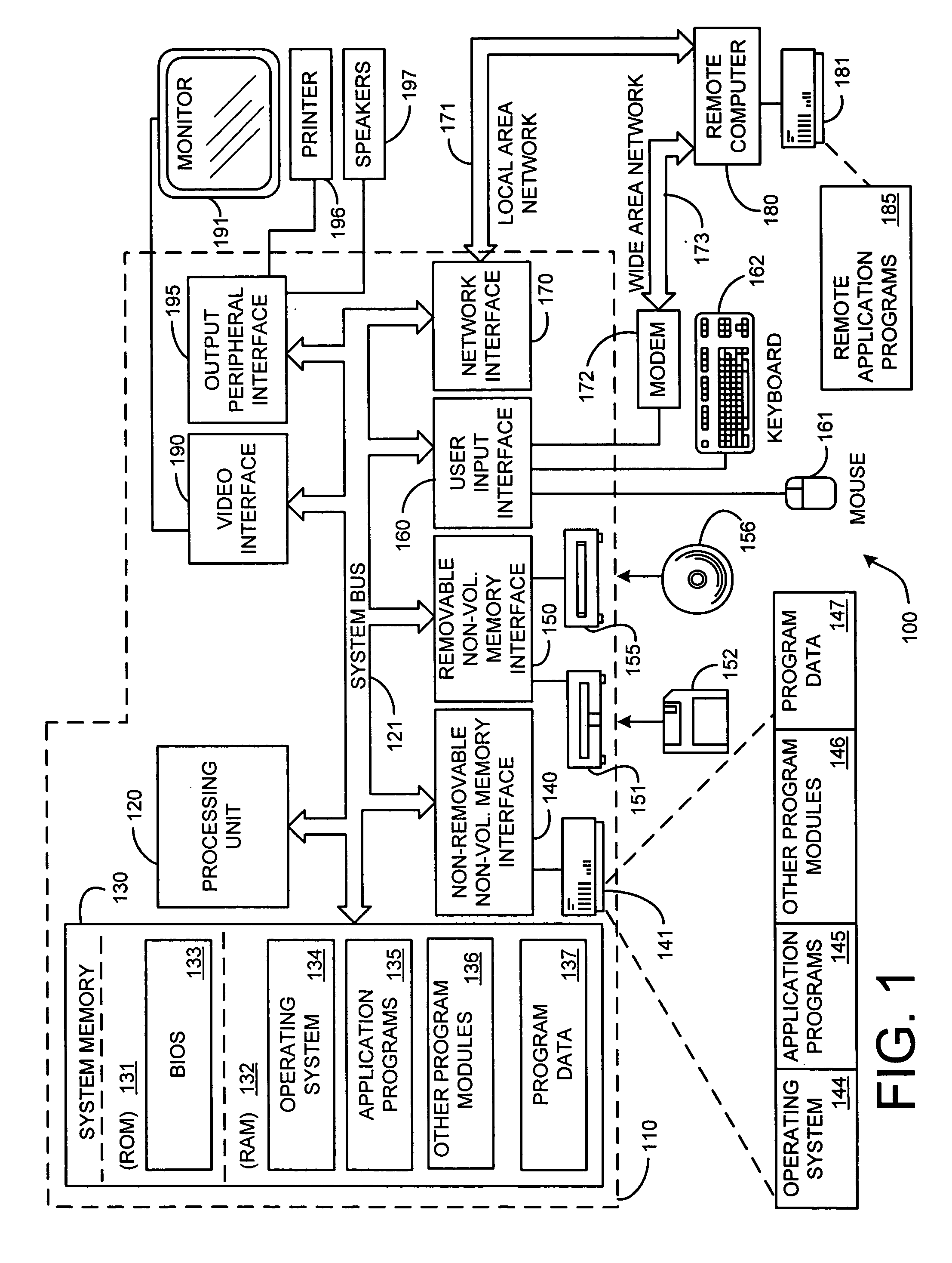

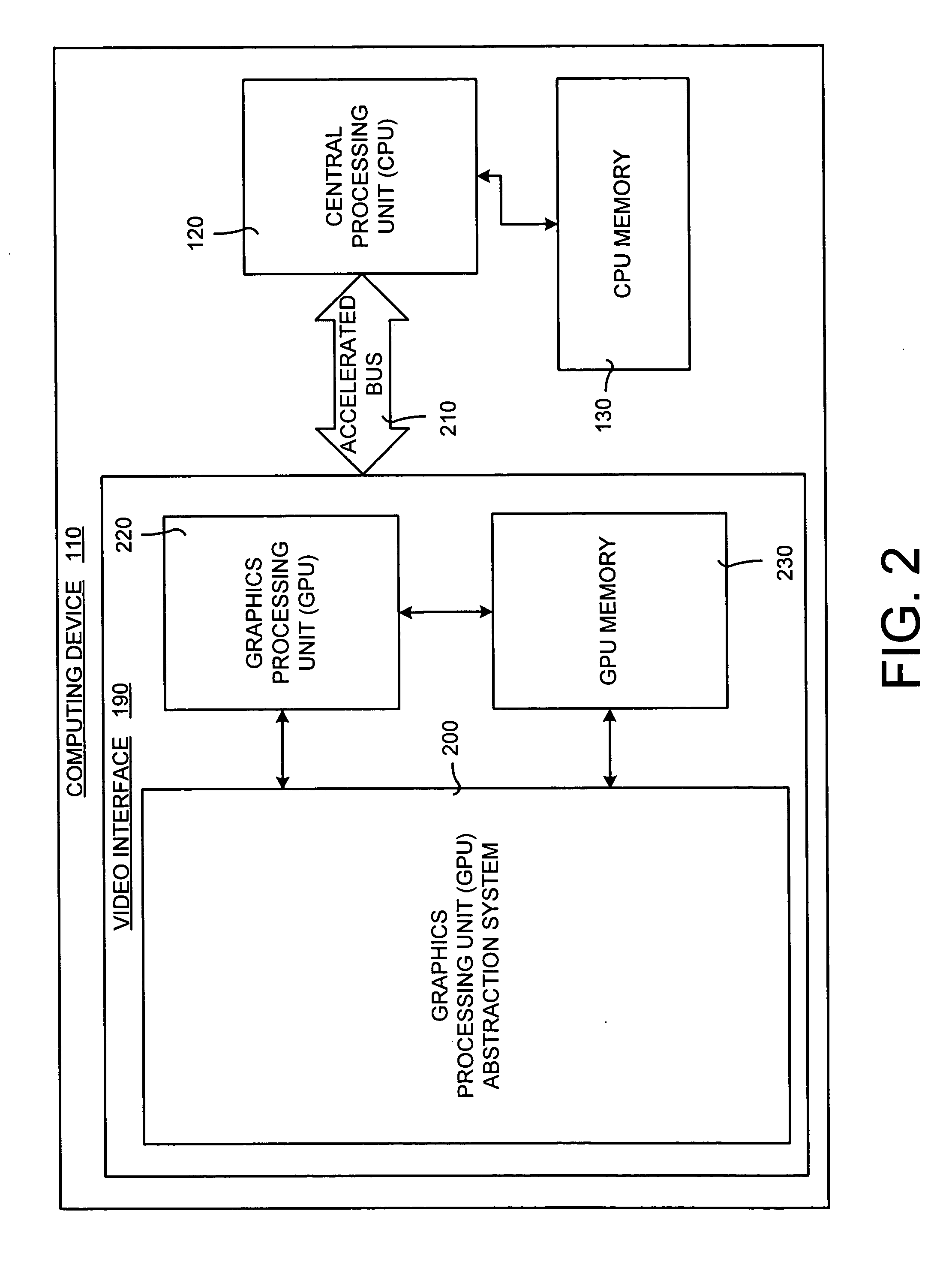

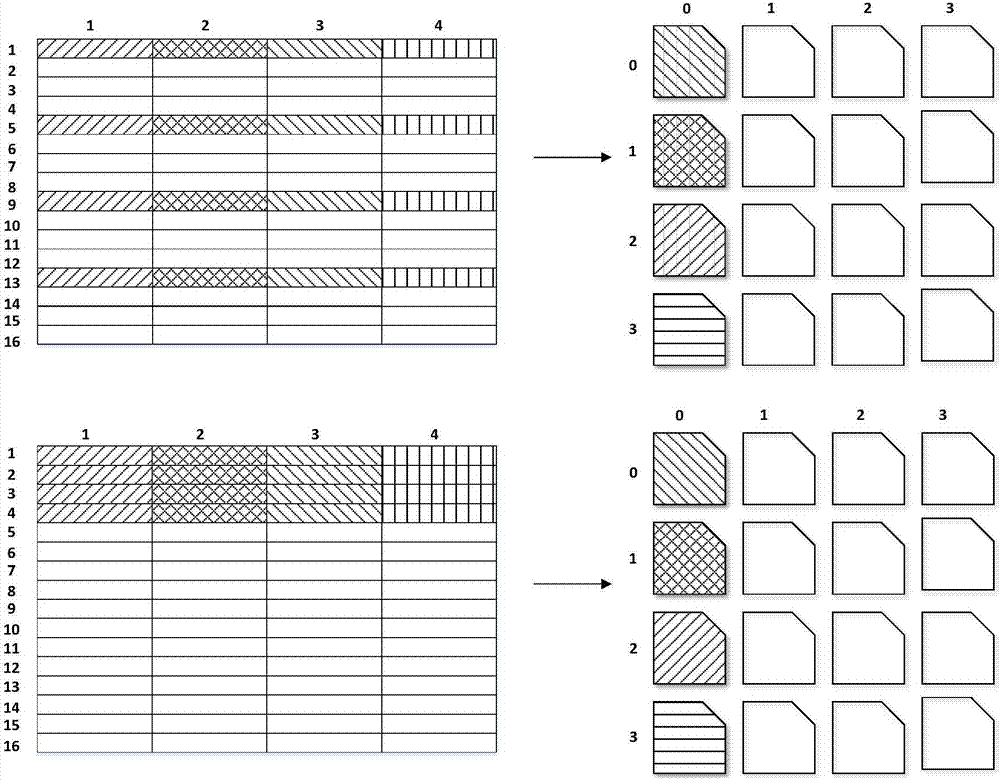

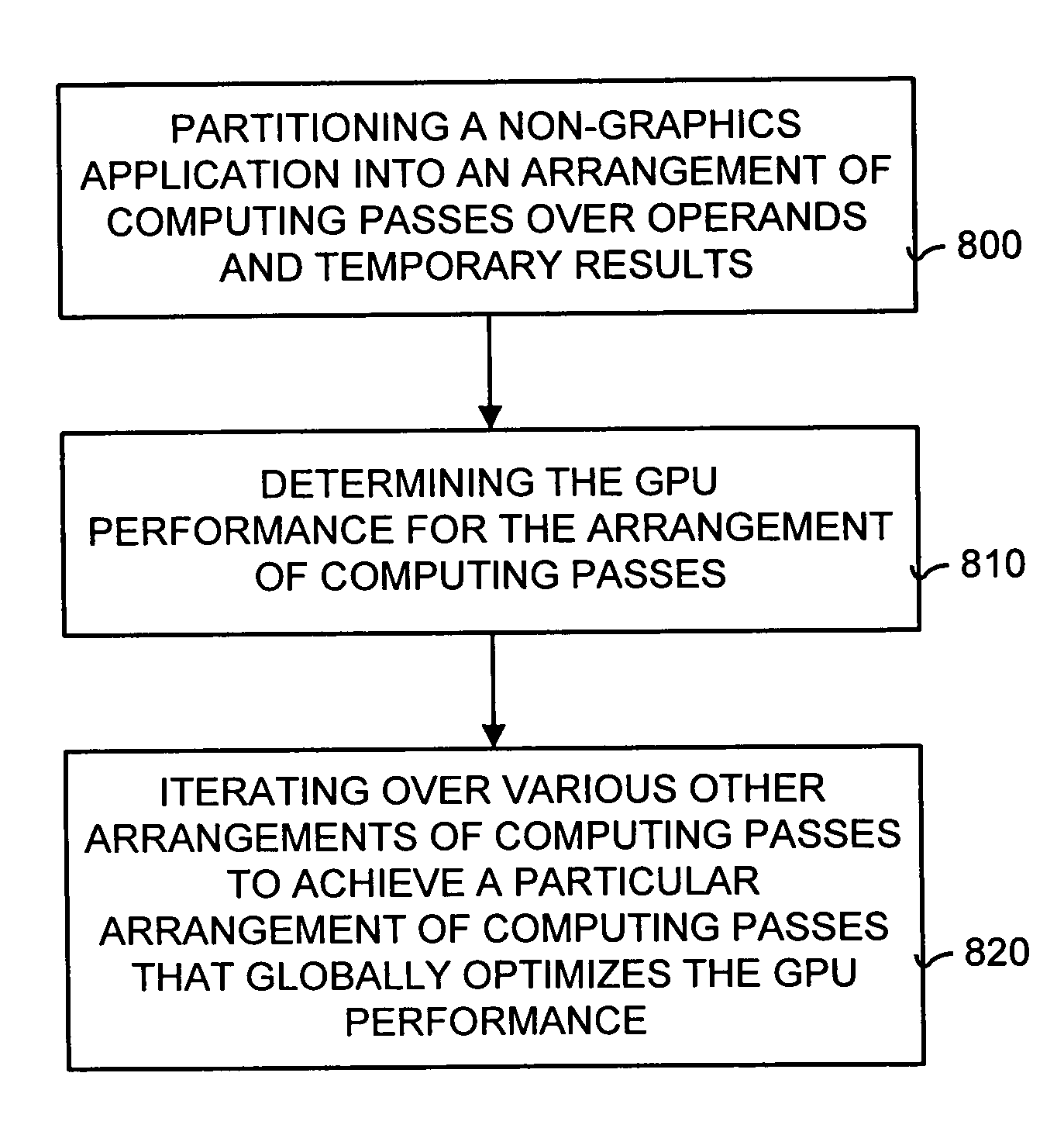

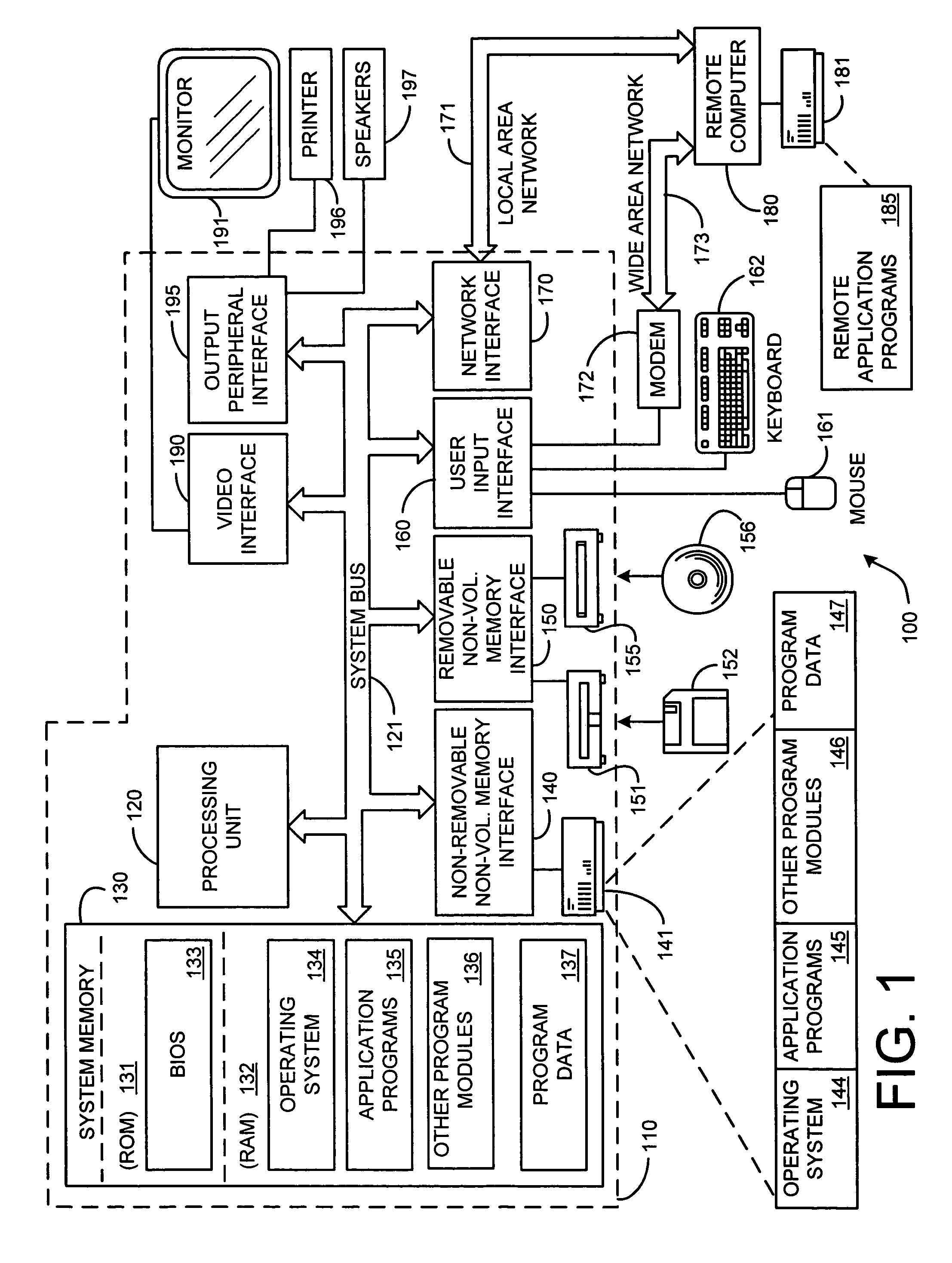

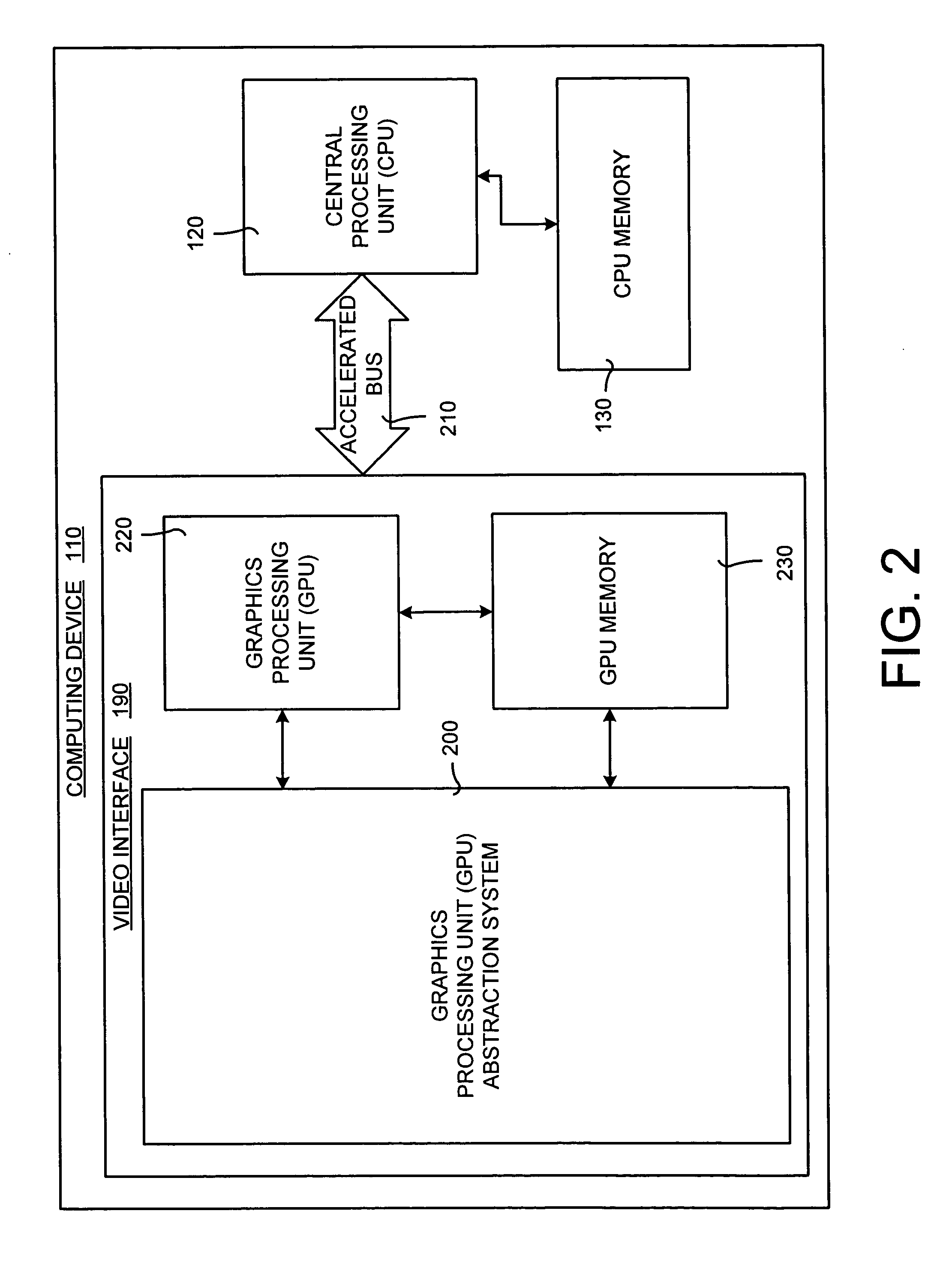

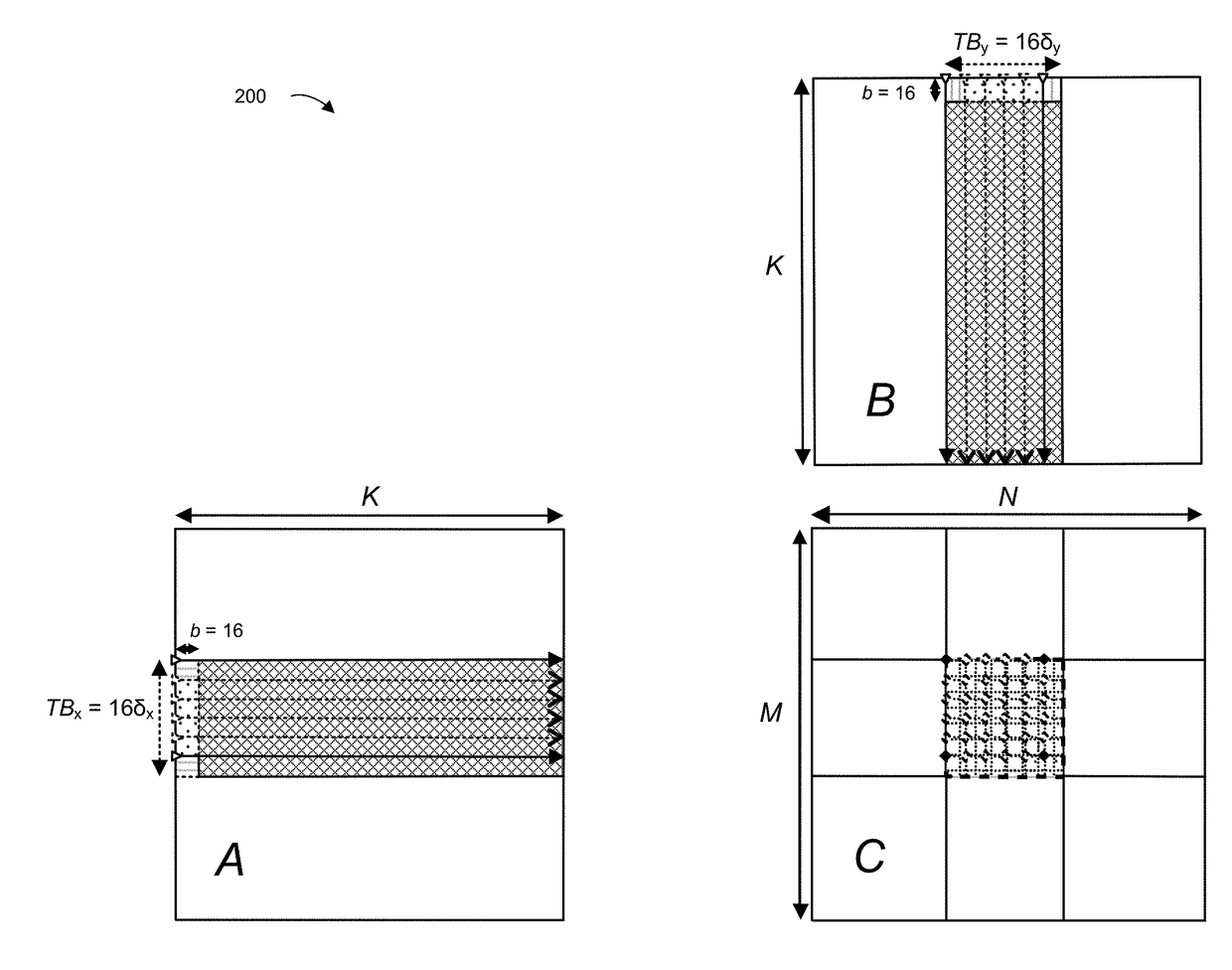

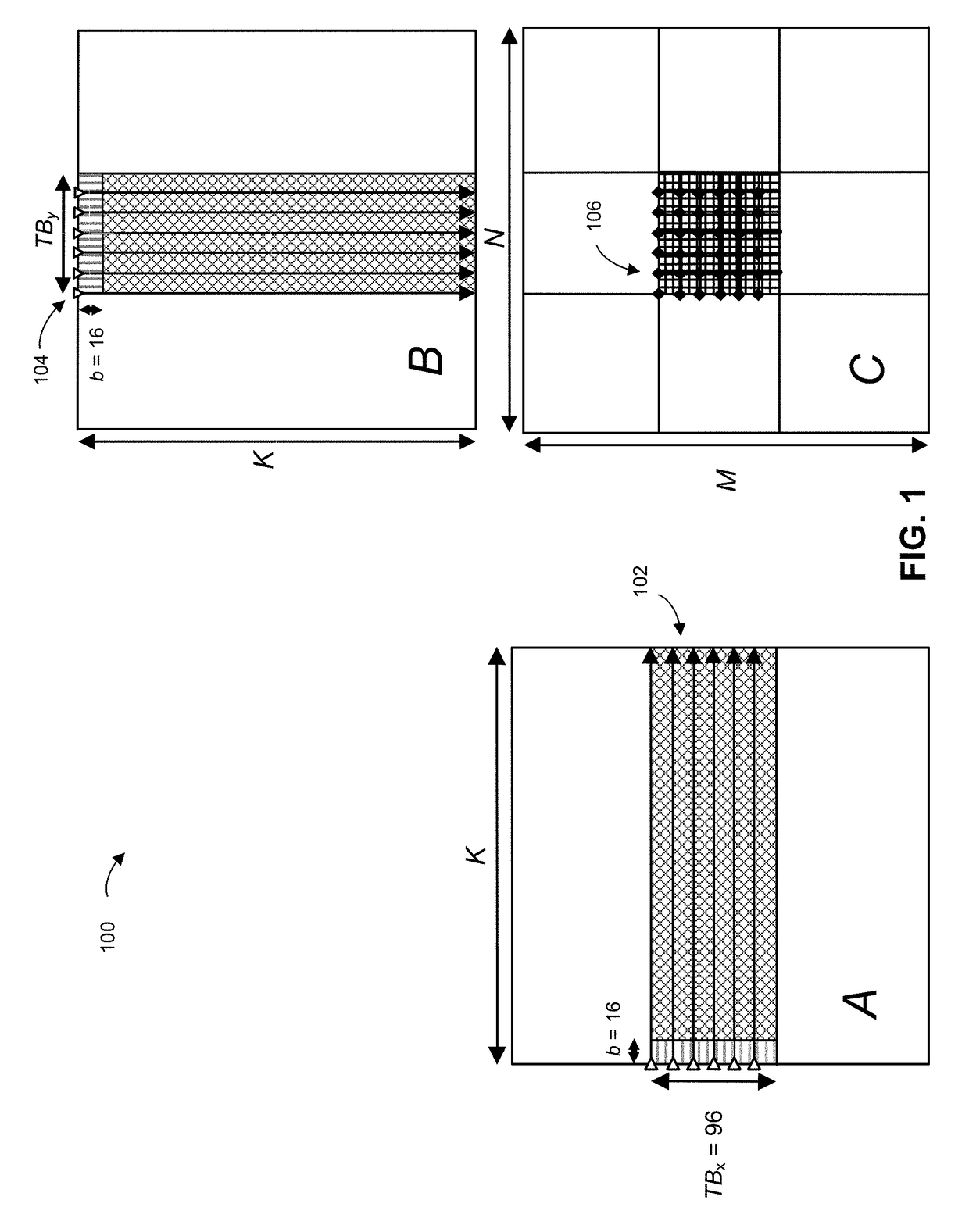

A system and method for optimizing the performance of a graphics processing unit (GPU) for processing and execution of general matrix operations such that the operations are accelerated and optimized. The system and method describe the layouts of operands and results in graphics memory, as well as the partitioning of the processes into a sequence of passes through a macro step. Specifically, operands are placed in memory in a pattern, results are written into memory in a pattern appropriate for use as operands in a later pass, data sets are partitioned to ensure that each pass fits into fixed-size memory, and the execution model incorporates generally reusable macro steps for use in multiple passes. These features enable greater efficiency and speed in processing and executing general matrix operations.

Owner:MICROSOFT TECH LICENSING LLC

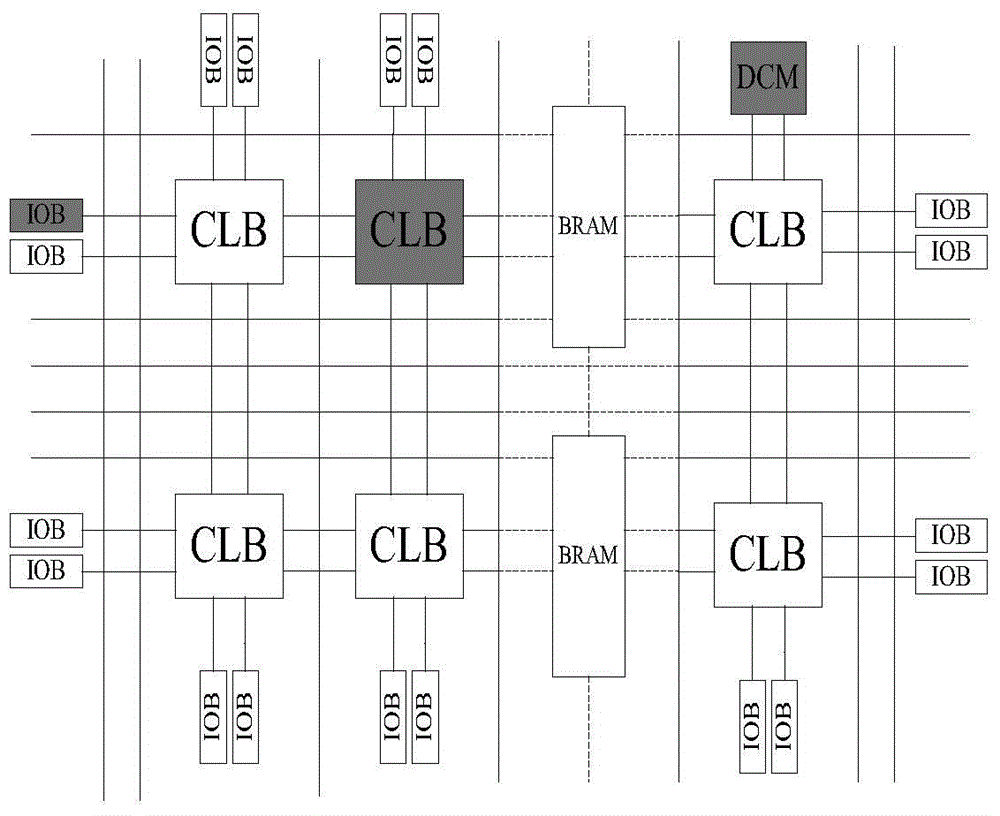

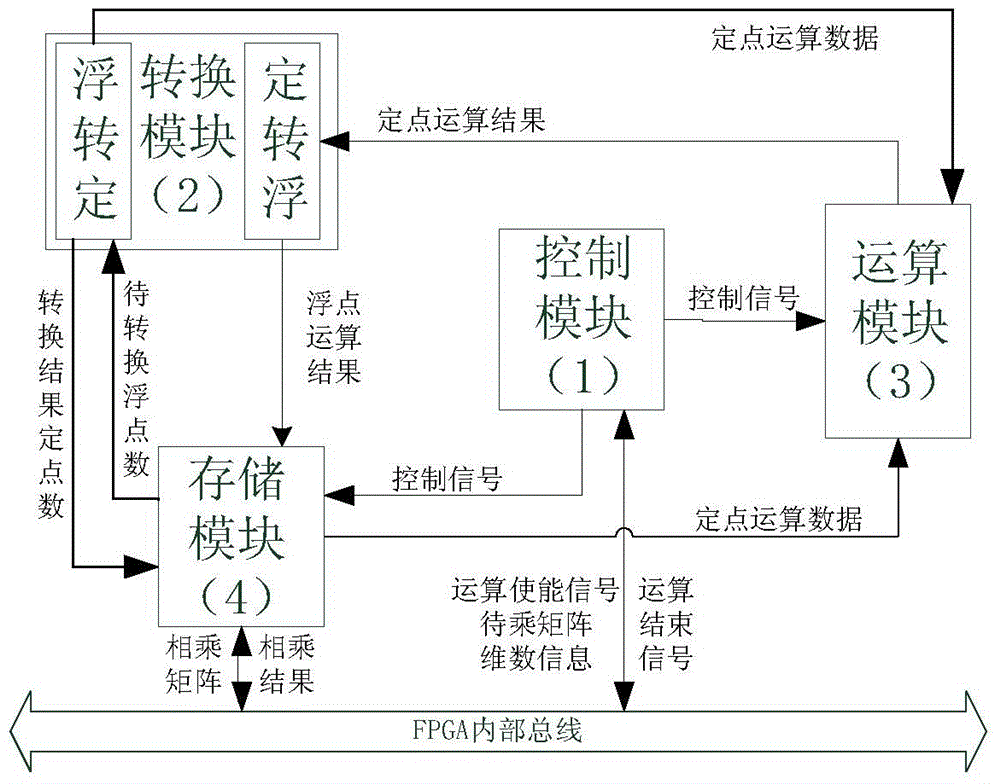

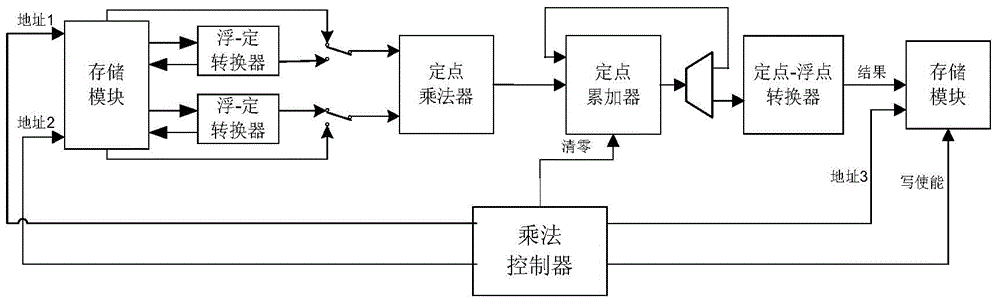

FPGA (Field Programmable Gate Array)-based general matrix fixed-point multiplier and calculation method thereof

Active | CN104572011A | Improve computing efficiency | Improve performance | Computation using non-contact making devices | General matrix | Control signal

The invention discloses an FPGA (Field Programmable Gate Array)-based general matrix fixed-point multiplier. The internal structure of the multiplier consists of a control module, a conversion module, an operation module and a storage module. The control module generates control signals according to the dimensions of the matrices to be operated on. The conversion module converts between fixed-point and floating-point numbers during operation. The operation module reads operation data from the storage module and the conversion module, performs fixed-point multiplication and fixed-point accumulation, and stores the result in the storage module. The storage module caches the data of the matrices to be operated on and of the result matrix, provides an interface compatible with the bus signals, and allows access by other components on the bus. The high efficiency of fixed-point calculation in hardware is fully utilized; the operation structure converts and operates on the data simultaneously to improve the overall operation speed, and a plurality of matrix fixed-point multipliers can be used simultaneously for parallel calculation. Fixed-point multiplication of matrices of arbitrary dimensions is thus supported while high calculation efficiency is maintained. Compared with matrix multiplication performed with floating-point numbers, the multiplier greatly improves calculation efficiency.
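The convert, multiply-accumulate, and convert-back flow described in this abstract can be illustrated in software. The following is a minimal sketch only, not the patented hardware design: the Q-format with 8 fractional bits and the names `FRAC_BITS`, `to_fixed`, `to_float`, and `fixed_matmul` are assumptions made for illustration.

```python
FRAC_BITS = 8              # assumed number of fractional bits (hypothetical choice)
SCALE = 1 << FRAC_BITS


def to_fixed(x: float) -> int:
    """Convert a float to a fixed-point integer with FRAC_BITS fractional bits."""
    return int(round(x * SCALE))


def to_float(q: int, frac_bits: int = FRAC_BITS) -> float:
    """Convert a fixed-point integer back to a float."""
    return q / (1 << frac_bits)


def fixed_matmul(a, b):
    """Multiply a (m x k) by b (k x n) using only integer multiply-accumulate."""
    qa = [[to_fixed(v) for v in row] for row in a]
    qb = [[to_fixed(v) for v in row] for row in b]
    m, k, n = len(qa), len(qb), len(qb[0])
    out = []
    for i in range(m):
        row = []
        for j in range(n):
            acc = 0
            for p in range(k):
                # Each product carries 2 * FRAC_BITS fractional bits.
                acc += qa[i][p] * qb[p][j]
            row.append(to_float(acc, 2 * FRAC_BITS))
        out.append(row)
    return out


a = [[1.5, 2.0], [0.25, -1.0]]
b = [[2.0, 0.5], [1.0, 4.0]]
print(fixed_matmul(a, b))
```

The accumulator is kept at double the fractional width so that no precision is lost until the final conversion; a hardware design would also have to bound the accumulator width, which this sketch ignores because Python integers are unbounded.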

Owner:上海碧帝数据科技有限公司

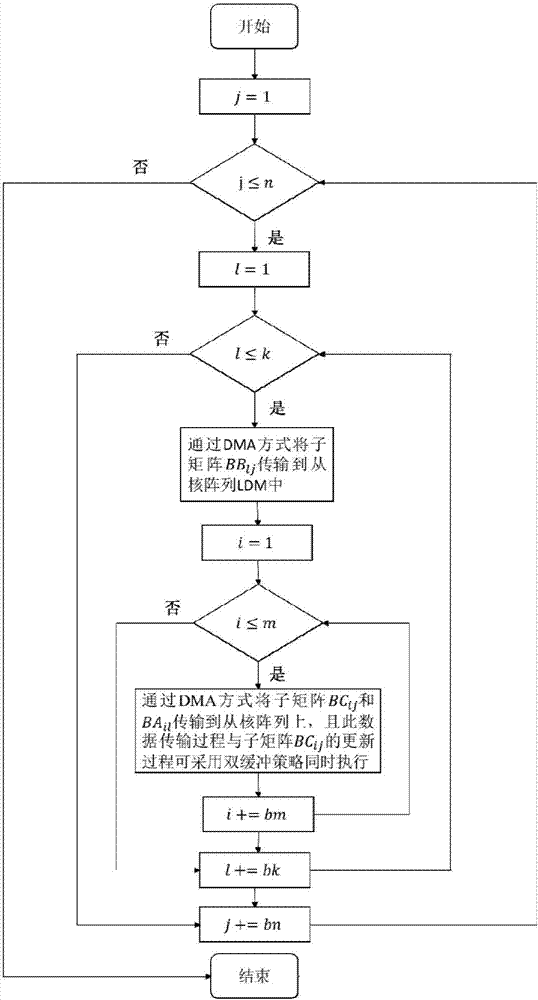

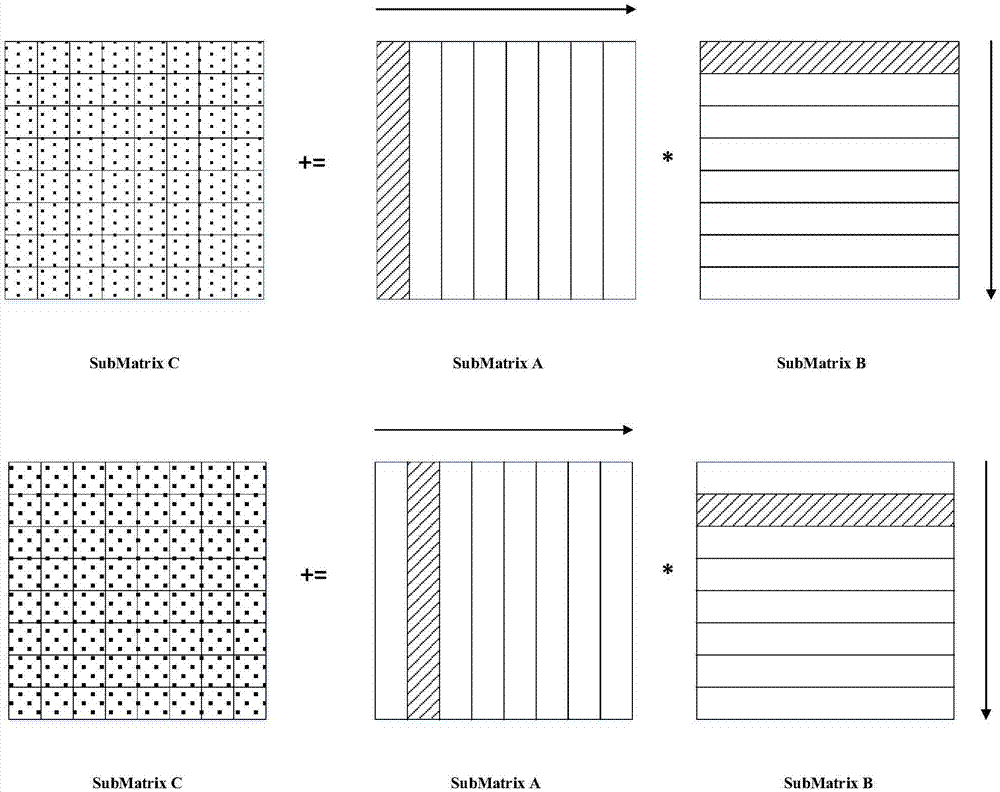

A GEMM (general matrix-matrix multiplication) high-performance realization method based on a domestic SW 26010 many-core CPU

Active | CN107168683A | Solve the problem that the computing power of slave cores cannot be fully utilized | Improve performance | Register arrangements | Concurrent instruction execution | Function optimization | Assembly line

The invention provides a GEMM (general matrix-matrix multiplication) high-performance realization method based on a domestic SW 26010 many-core CPU. For the domestic SW 26010 many-core processor, based on the platform characteristics of its storage structure, memory access, hardware pipelines, and register-level communication mechanism, the matrix partitioning and inter-core data mapping method is optimized and a top-down, three-level partitioned parallel block matrix multiplication algorithm is designed. A method for sharing data among slave-core computing resources is designed based on the register-level communication mechanism, and a double-buffering strategy that overlaps computation with memory access is designed using the asynchronous DMA data transmission mechanism between master and slave cores. For a single slave core, a loop unrolling strategy and a software pipelining arrangement are designed; function optimization is achieved using an efficient register partitioning scheme together with SIMD vectorization and multiply-add instructions. Compared with GotoBLAS, a single-core open-source BLAS math library, the high-performance GEMM achieves an average speed-up ratio of 227.94 and a highest speed-up ratio of 296.93.
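The core idea of block matrix multiplication mentioned above can be sketched with a single level of tiling. This is an illustrative sketch under stated assumptions, not the patented three-level scheme: it omits register-level communication, DMA double buffering, and SIMD, and the function name `blocked_matmul` and the `block` parameter are hypothetical.

```python
def blocked_matmul(a, b, block=2):
    """Compute c = a @ b by iterating over block x block tiles.

    a is m x k and b is k x n, both given as lists of lists.
    """
    m, k, n = len(a), len(b), len(b[0])
    c = [[0.0] * n for _ in range(m)]
    for i0 in range(0, m, block):            # tile rows of c
        for j0 in range(0, n, block):        # tile columns of c
            for p0 in range(0, k, block):    # tiles along the shared dimension
                for i in range(i0, min(i0 + block, m)):
                    for p in range(p0, min(p0 + block, k)):
                        a_ip = a[i][p]       # reused across the inner j loop
                        for j in range(j0, min(j0 + block, n)):
                            c[i][j] += a_ip * b[p][j]
    return c


print(blocked_matmul([[1, 2, 3], [4, 5, 6]], [[7, 8], [9, 10], [11, 12]]))
```

On real hardware the tile size would be chosen so that one tile of each operand fits in the fastest available memory; here it only changes the loop order, not the result.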

Owner:INST OF SOFTWARE - CHINESE ACAD OF SCI +1

Optimizing performance of a graphics processing unit for efficient execution of general matrix operations

Active | US7567252B2 | Improve performance | Improve the display effect | Digital computer details | Processor architectures/configuration | Graphics | Data set

A system and method for optimizing the performance of a graphics processing unit (GPU) for processing and execution of general matrix operations such that the operations are accelerated and optimized. The system and method describe the layouts of operands and results in graphics memory, as well as the partitioning of the processes into a sequence of passes through a macro step. Specifically, operands are placed in memory in a pattern, results are written into memory in a pattern appropriate for use as operands in a later pass, data sets are partitioned to ensure that each pass fits into fixed-size memory, and the execution model incorporates generally reusable macro steps for use in multiple passes. These features enable greater efficiency and speed in processing and executing general matrix operations.

Owner:MICROSOFT TECH LICENSING LLC

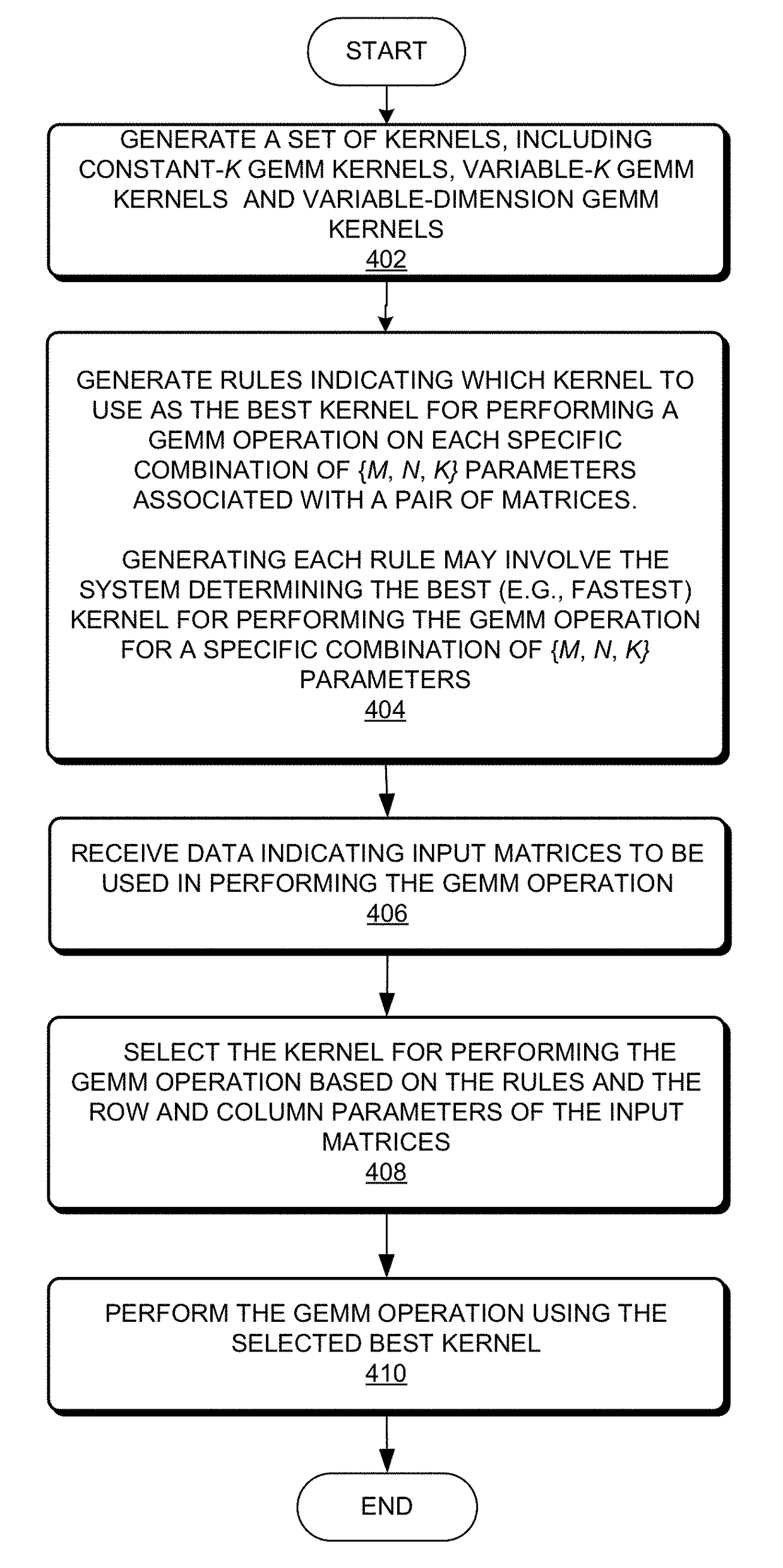

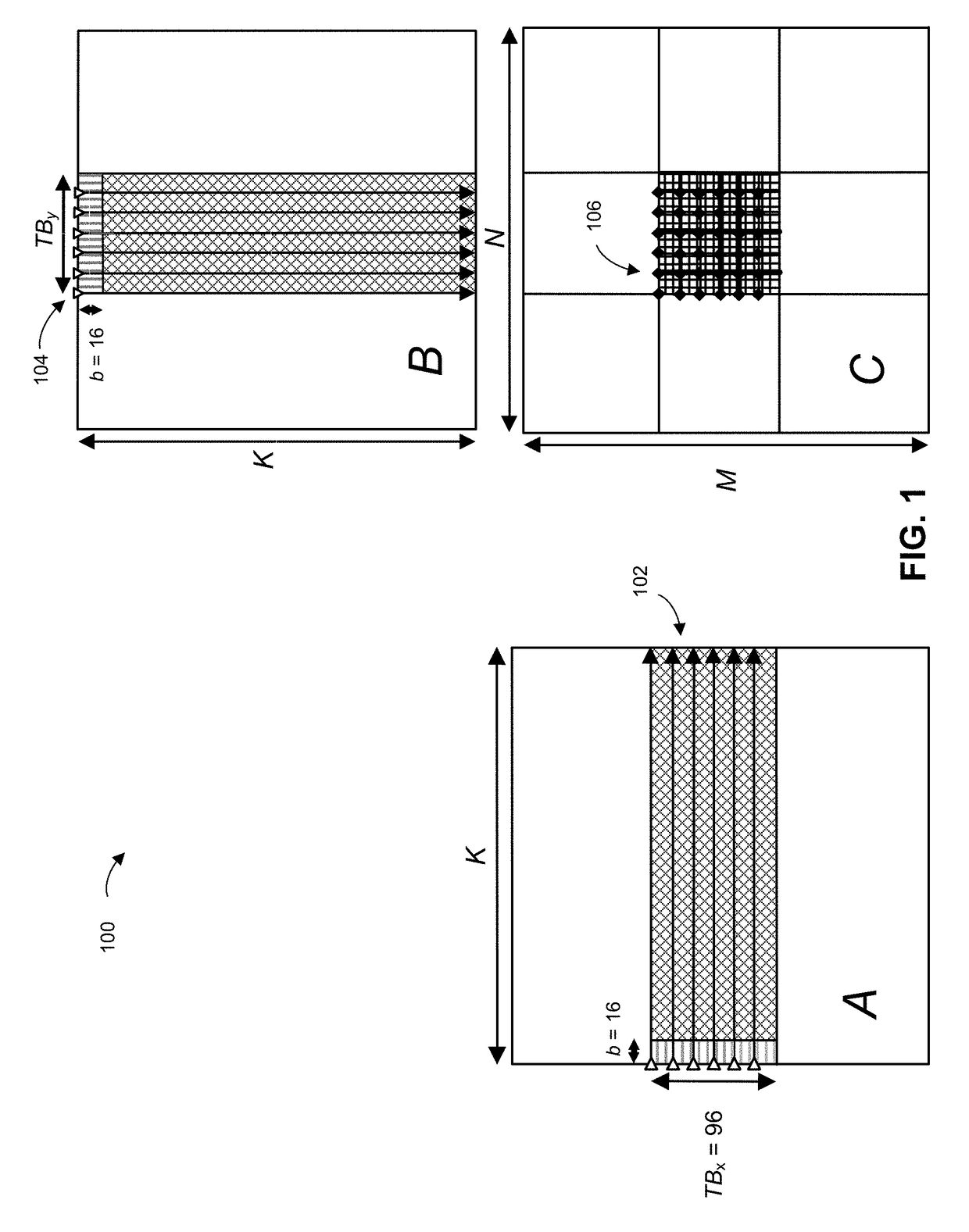

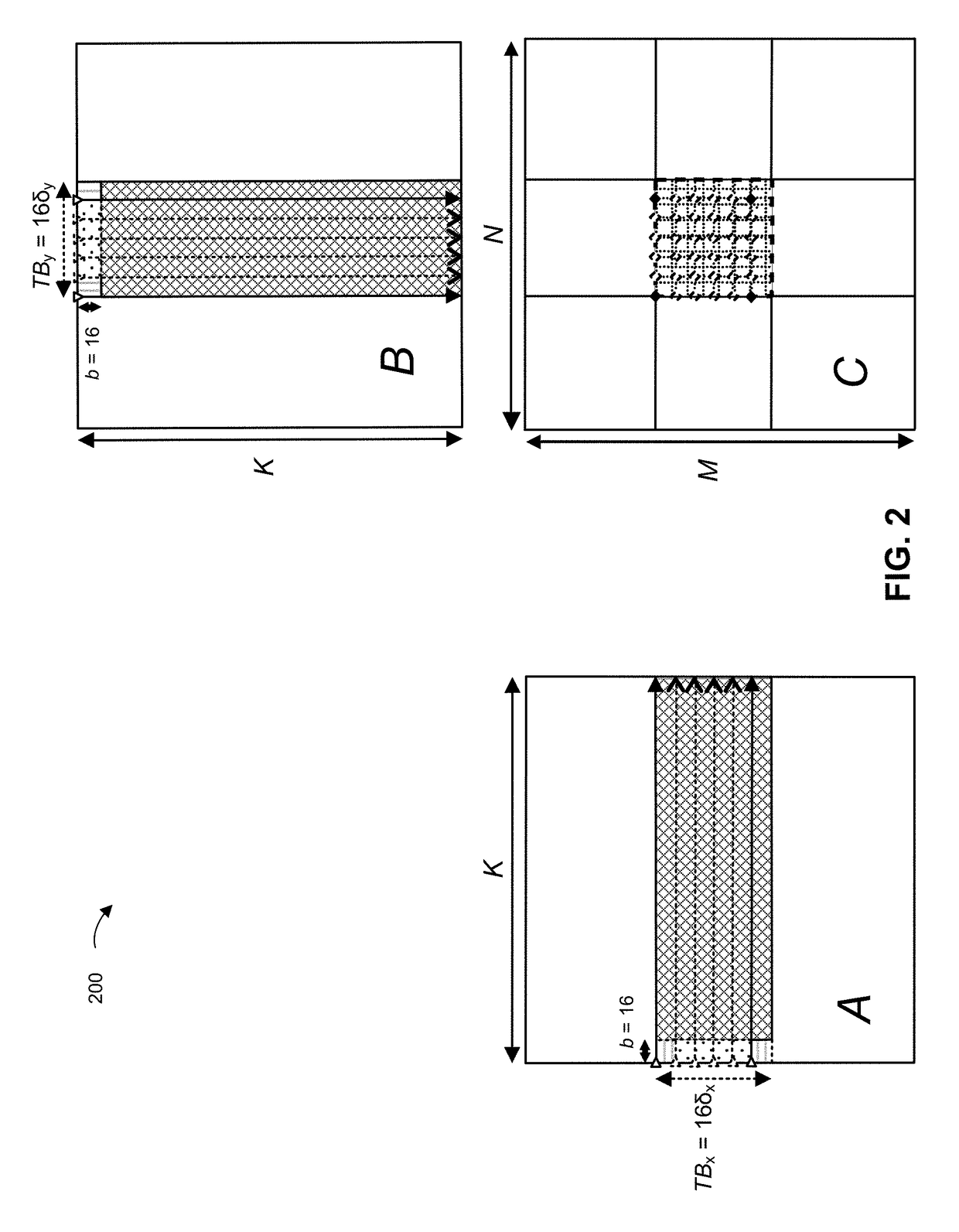

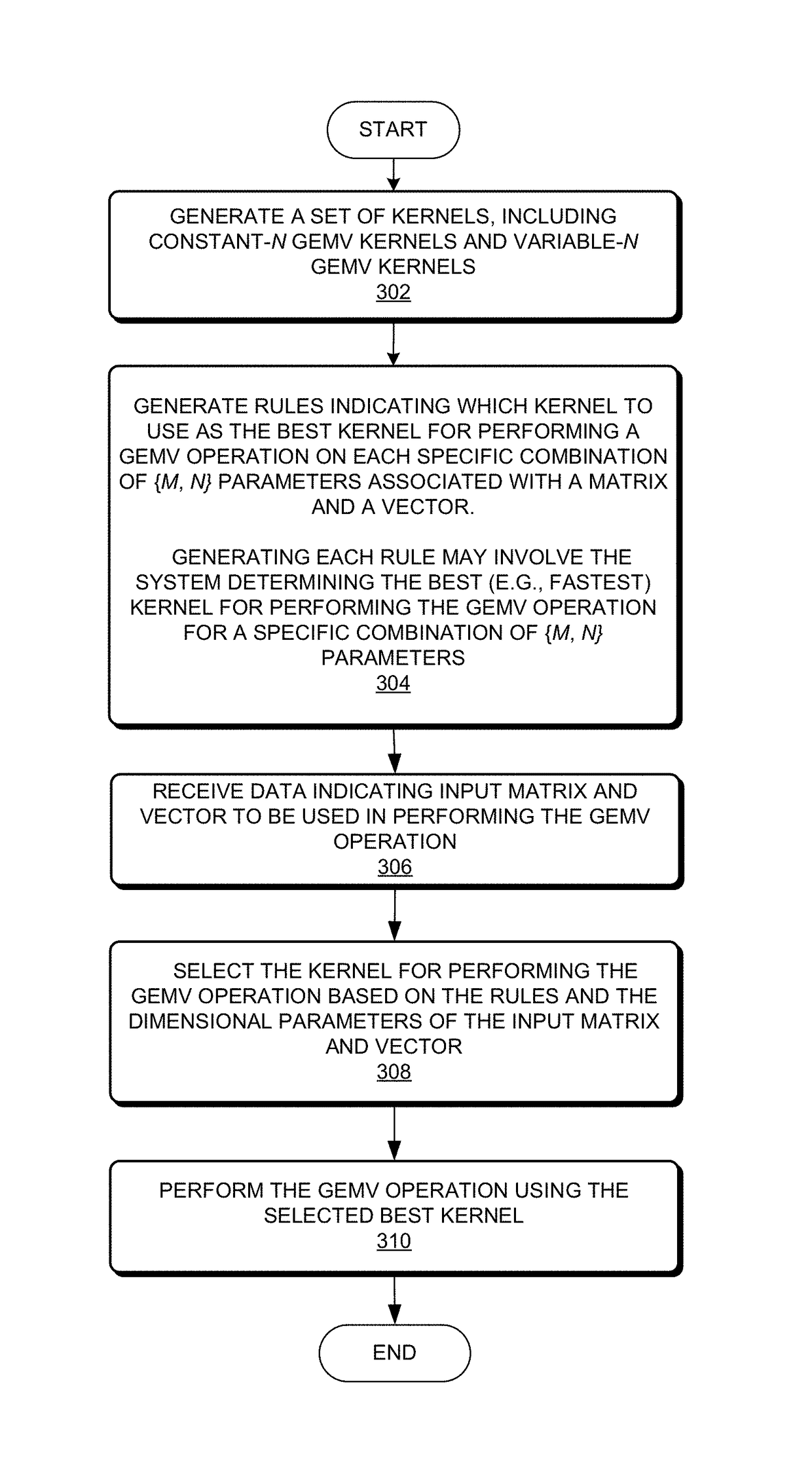

System and method for speeding up general matrix-matrix multiplication on the GPU

Active | US20170344514A1 | Increase the number of | Test can be redundant | Complex mathematical operations | Graphics | Computational science

A method and system for performing general matrix-matrix multiplication (GEMM) operations on a graphics processor unit (GPU) using Smart kernels. During operation, the system may generate a set of kernels that includes at least one of a variable-dimension variable-K GEMM kernel, a variable-dimension constant-K GEMM kernel, or a combination thereof. A constant-K GEMM kernel performs computations for matrices with a specific value of K (e.g., the number of columns in a first matrix and the number of rows in a second matrix). Variable-dimension GEMM kernels allow for flexibility in the number of rows and columns used by a thread block to perform matrix multiplication for a sub-matrix. The system may generate rules to select the best (e.g., fastest) kernel for performing computations according to the particular parameter combination of the matrices being multiplied.

Owner:XEROX CORP

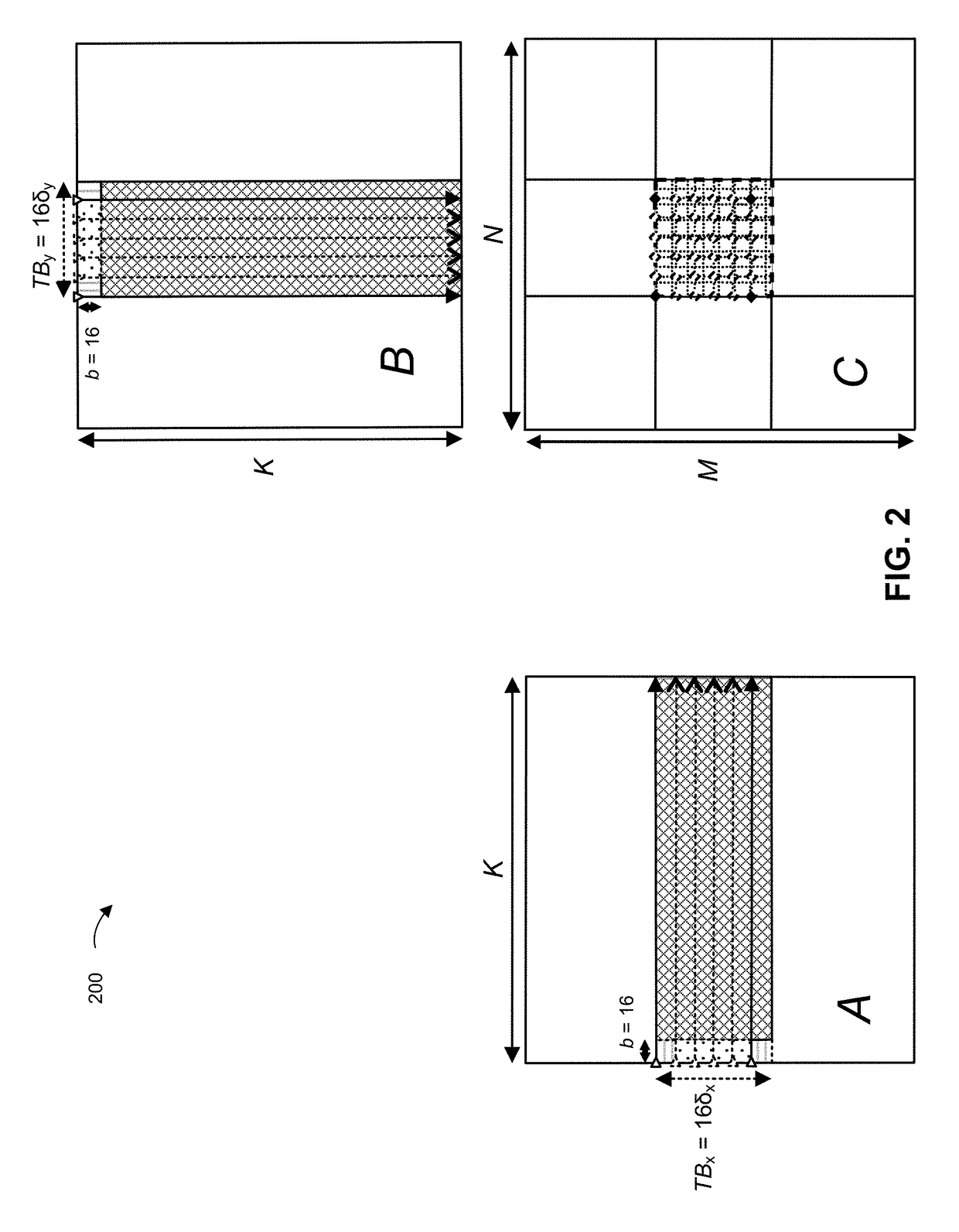

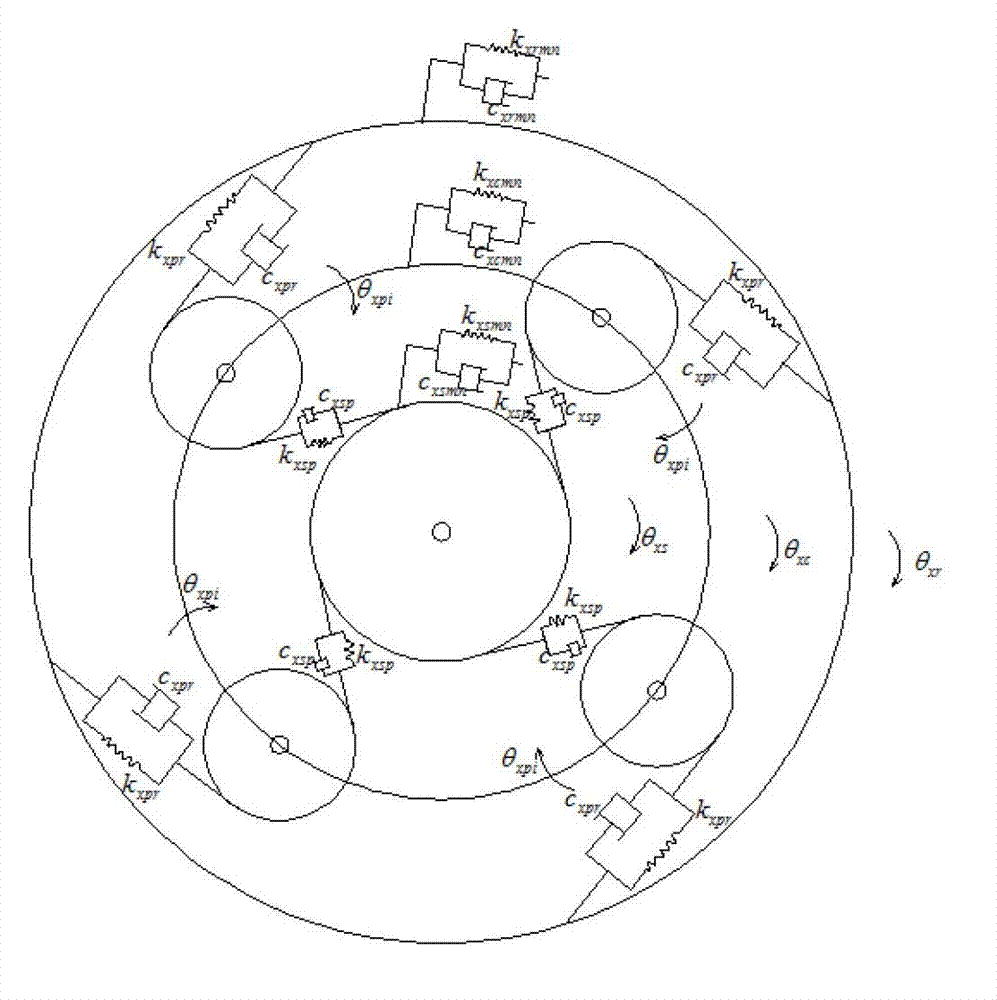

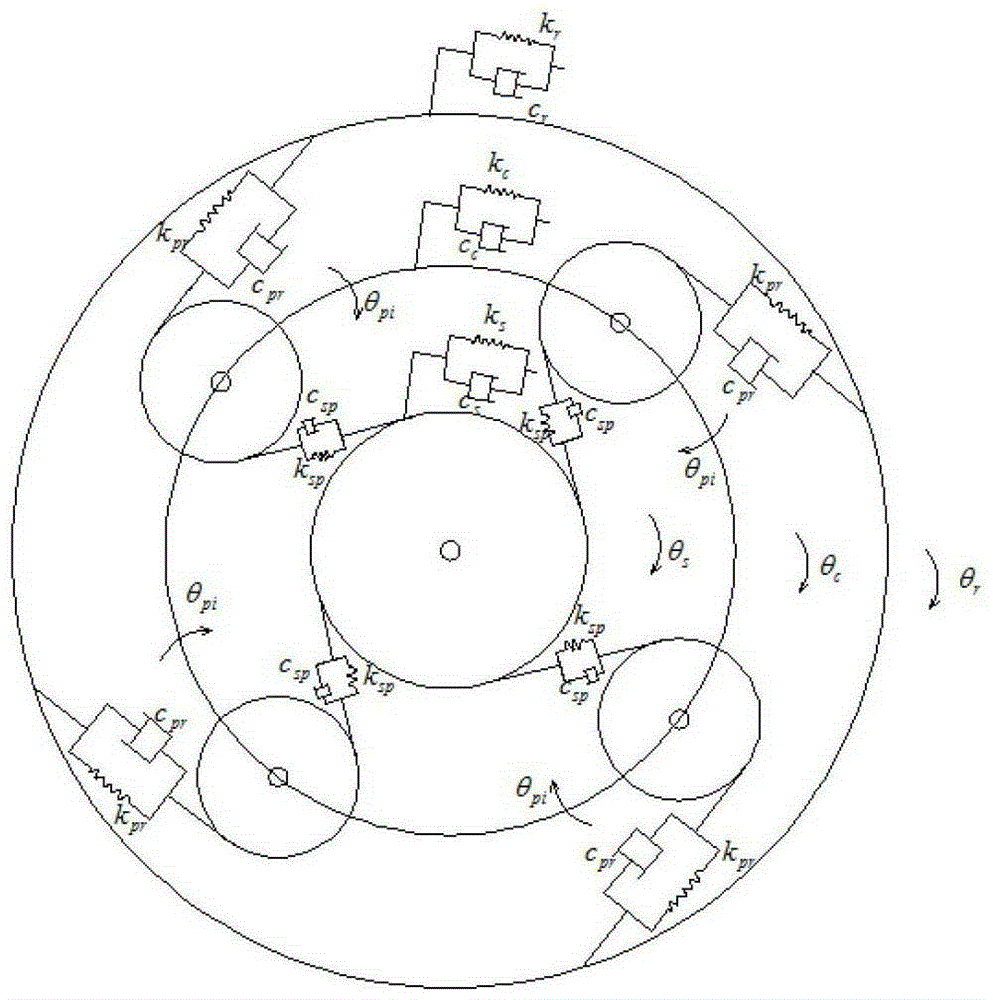

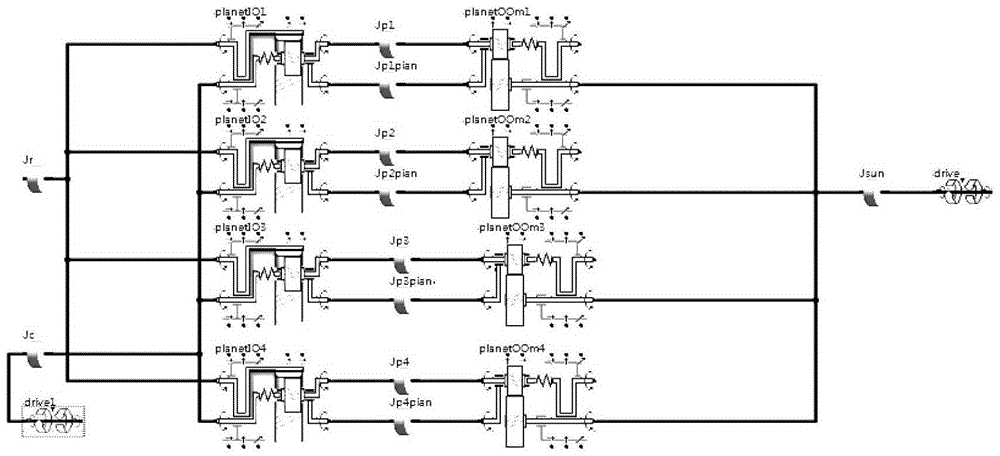

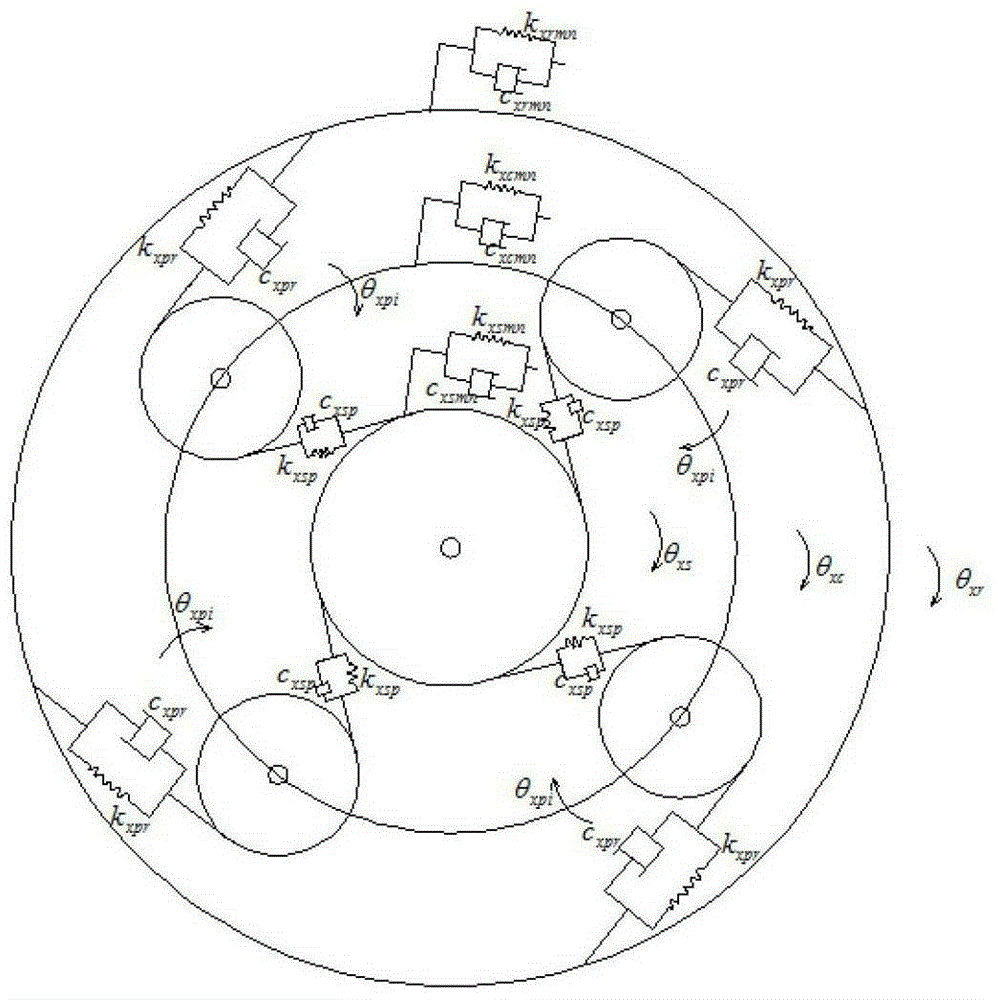

Method for analyzing torsional vibration inherent characteristic of planet gear transmission system

Active | CN102968537A | Accurate calculation | Versatility | Special data processing applications | System matrix | Engineering

A method for analyzing the inherent torsional vibration characteristics of a planet gear transmission system comprises the following four steps: (1) carrying out mathematical modeling of the pure torsional vibration of a single damped planet row by using a Lagrangian equation; (2) analyzing the inherent characteristics of the transmission system; (3) verifying the influence of the damping on the natural frequency through multiple groups of calculations; and (4) establishing a general matrix for the planet gear transmission system and carrying out modeling simulation verification through instance value calculation and simulation X. With the invention, the damped natural vibration differential equation obtained for the planet transmission system, expressed in the form of parameterized matrices (a stiffness matrix, a damping matrix and a connecting matrix), is general; corresponding matrix forms are directly selected for corresponding parts according to the different connecting structures and planet gear system parameters to construct an integral system matrix; and after the parameters are substituted, the inherent characteristics of the transmission system are rapidly and accurately analyzed.

Owner:BEIHANG UNIV

System and method for speeding up general matrix-matrix multiplication on the GPU

Active | US10073815B2 | Reduce in quantity | Increase the number of | Complex mathematical operations | Graphics | Computational science

Owner:XEROX CORP

Method and system for conducting compression and query on sparse matrix

Active | CN104809161A | Efficient compression | Achieve direct access | Special data processing applications | Array data structure | Algorithm

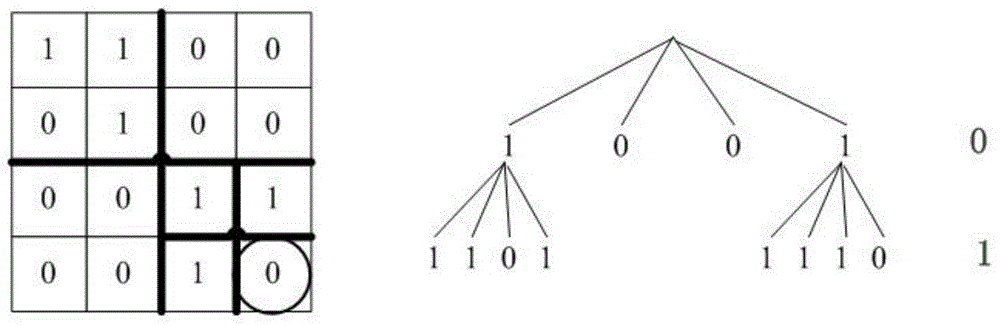

The invention relates to a method and system for compressing and querying a sparse matrix. The method improves on the k²-tree method in how the rank operation is handled and in the processing of general matrices and non-zero matrices. First, the sparse matrix to be processed is preprocessed to obtain a square sparse matrix A whose cells hold 0 or 1. Then a k²-tree algorithm is applied to obtain the arrays T (tree) and L (leaves); rank values are stored at fixed intervals according to the information in T (tree) to obtain Rank (tree); and the V (leaves) and rank (leaves) values are obtained from L (leaves) and the corresponding original sparse matrix. The values stored in sparse matrix A can then be queried by inputting the coordinates of the cell of interest. The sparse matrix is compressed effectively, queries are faster, and more storage space is saved.
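For orientation, the standard k²-tree that this abstract builds on can be sketched as follows. This is a minimal sketch of the textbook structure with k = 2, not the improved variant claimed in the patent: it does not build the sampled Rank (tree) array or the V (leaves) values, and it computes rank with a naive prefix sum. The names `build_k2tree` and `query_k2tree` are hypothetical, and the matrix side is assumed to be a power of k.

```python
def build_k2tree(matrix, k=2):
    """Return (T, L): internal-node bits and leaf bits in level order."""
    n = len(matrix)
    T, L = [], []
    level = [(0, 0, n)]                      # (row, col, size) of nodes to split
    while level:
        next_level = []
        for r, c, size in level:
            sub = size // k
            for i in range(k):
                for j in range(k):
                    r0, c0 = r + i * sub, c + j * sub
                    bit = int(any(matrix[x][y]
                                  for x in range(r0, r0 + sub)
                                  for y in range(c0, c0 + sub)))
                    if sub == 1:
                        L.append(bit)        # single cell: leaf bit
                    else:
                        T.append(bit)        # submatrix: internal bit
                        if bit:
                            next_level.append((r0, c0, sub))
        level = next_level
    return T, L


def query_k2tree(T, L, n, row, col, k=2):
    """Return the 0/1 value of cell (row, col) using only T and L."""
    bits = T + L
    pos, size = 0, n                         # pos: start of current child block
    while True:
        size //= k
        pos += (row // size) * k + (col // size)
        if bits[pos] == 0:
            return 0                         # empty submatrix
        if pos >= len(T):
            return 1                         # reached a set leaf
        row, col = row % size, col % size
        pos = sum(T[:pos + 1]) * k * k       # naive rank: children block start


M = [[0, 1, 0, 0],
     [0, 0, 0, 0],
     [0, 0, 1, 0],
     [0, 0, 0, 1]]
T, L = build_k2tree(M)
print(T, L, query_k2tree(T, L, 4, 0, 1))
```

The `sum(T[:pos + 1])` call is the rank operation; storing rank values at fixed intervals, as the abstract describes, would replace this linear scan with a lookup plus a short local count.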

Owner:INST OF INFORMATION ENG CAS

Dataflow accelerator architecture for general matrix-matrix multiplication and tensor computation in deep learning

Active | US20200184001A1 | Lower latency | Memory architecture accessing/allocation | Systolic arrays | General matrix | Data stream

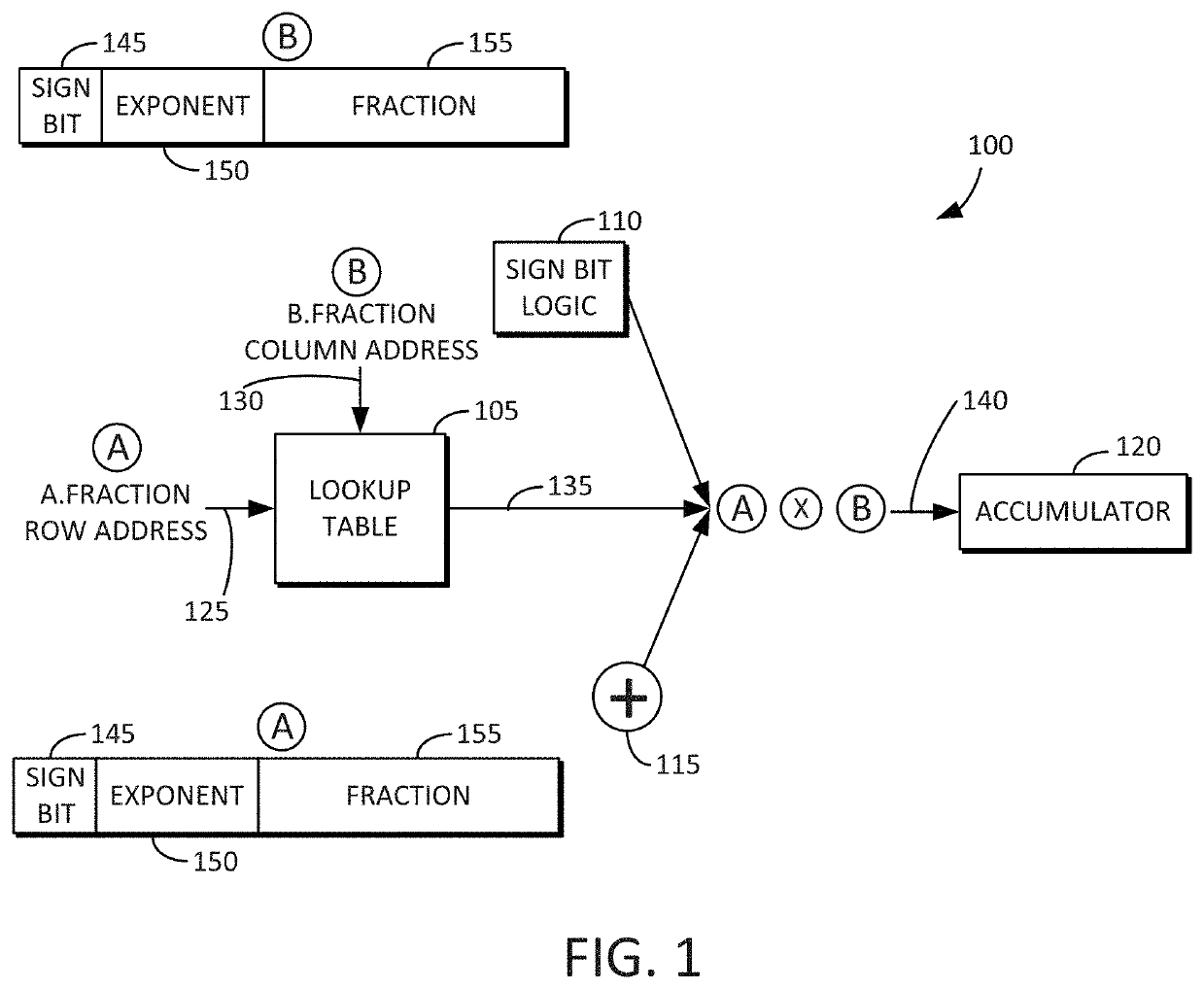

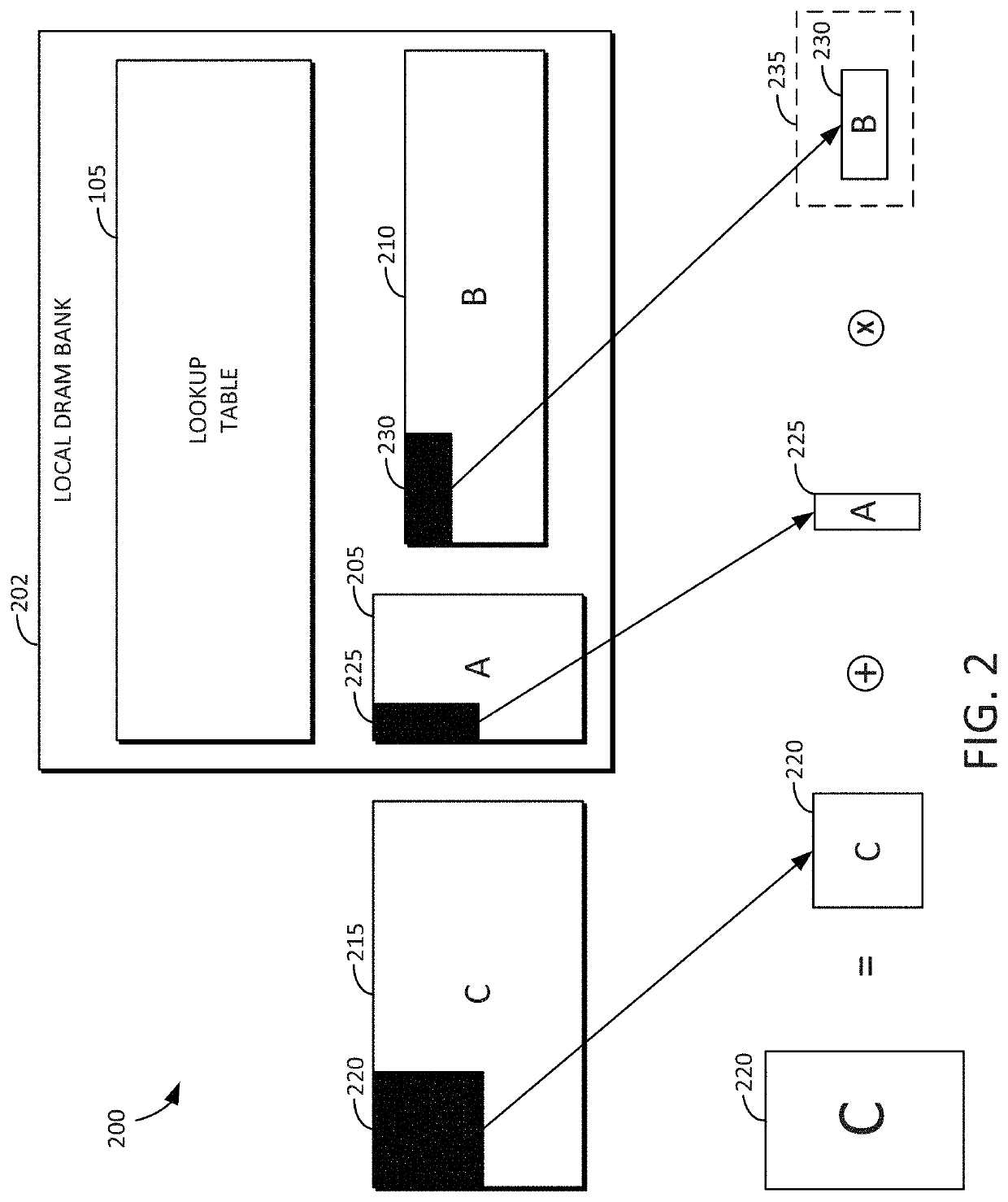

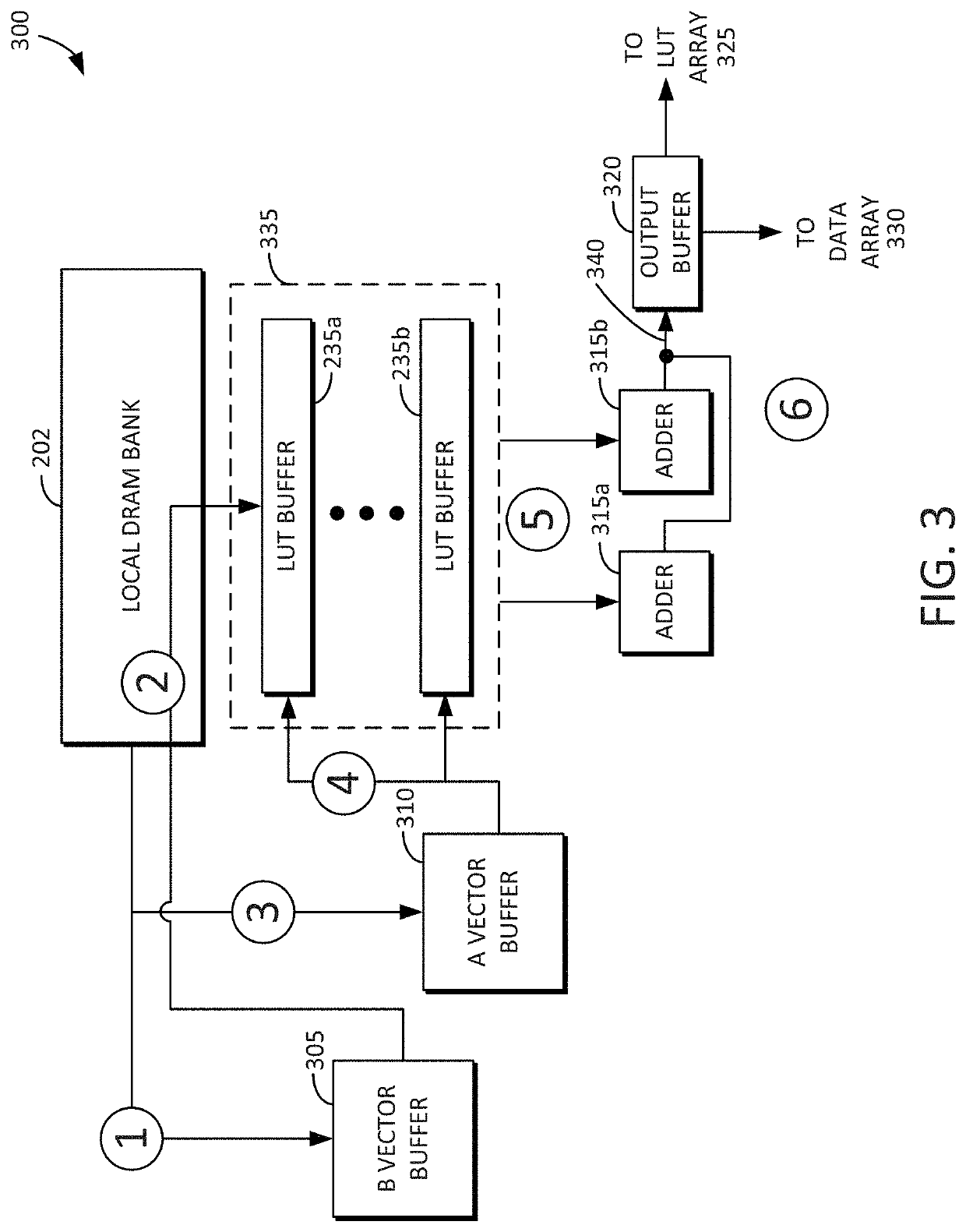

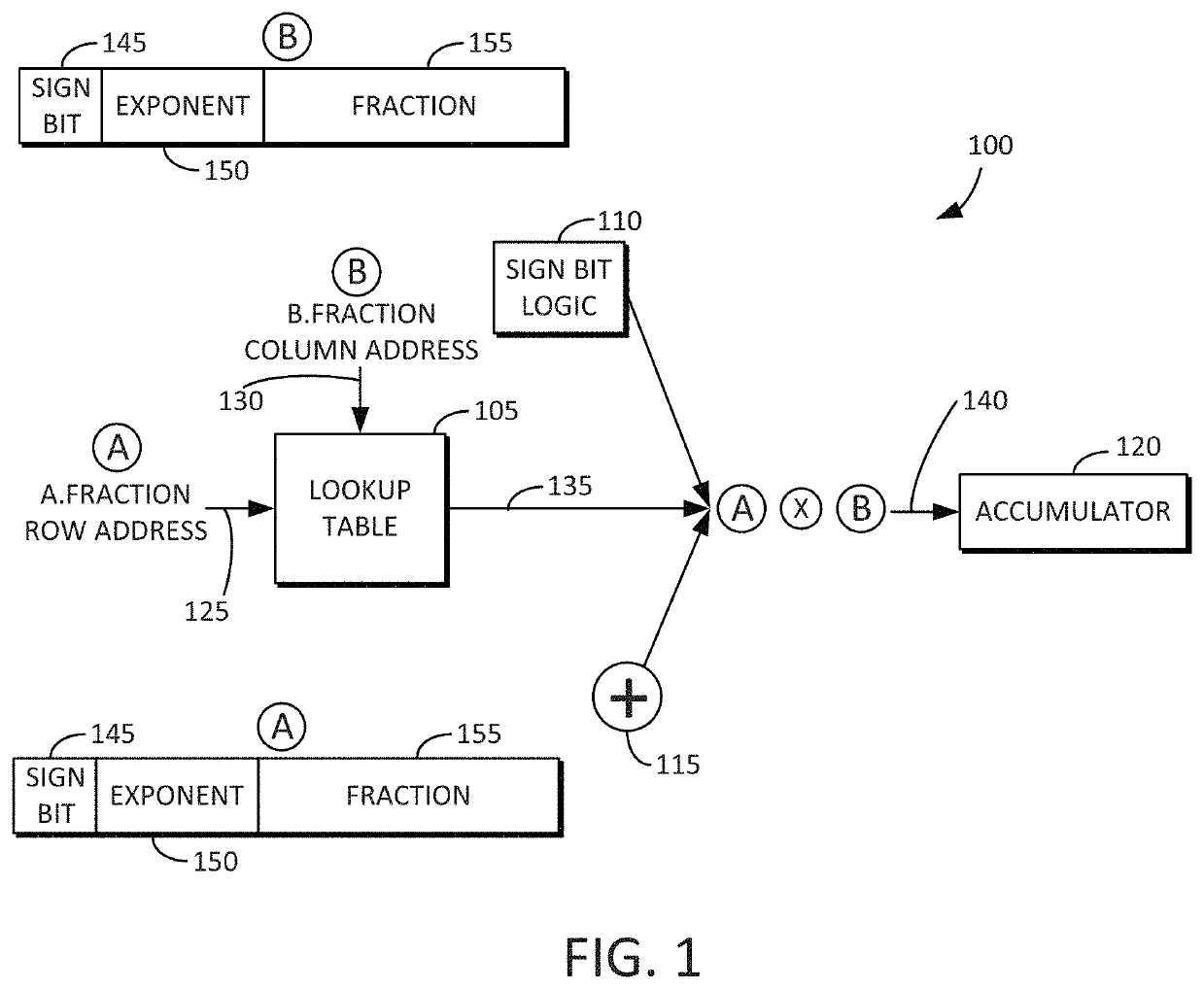

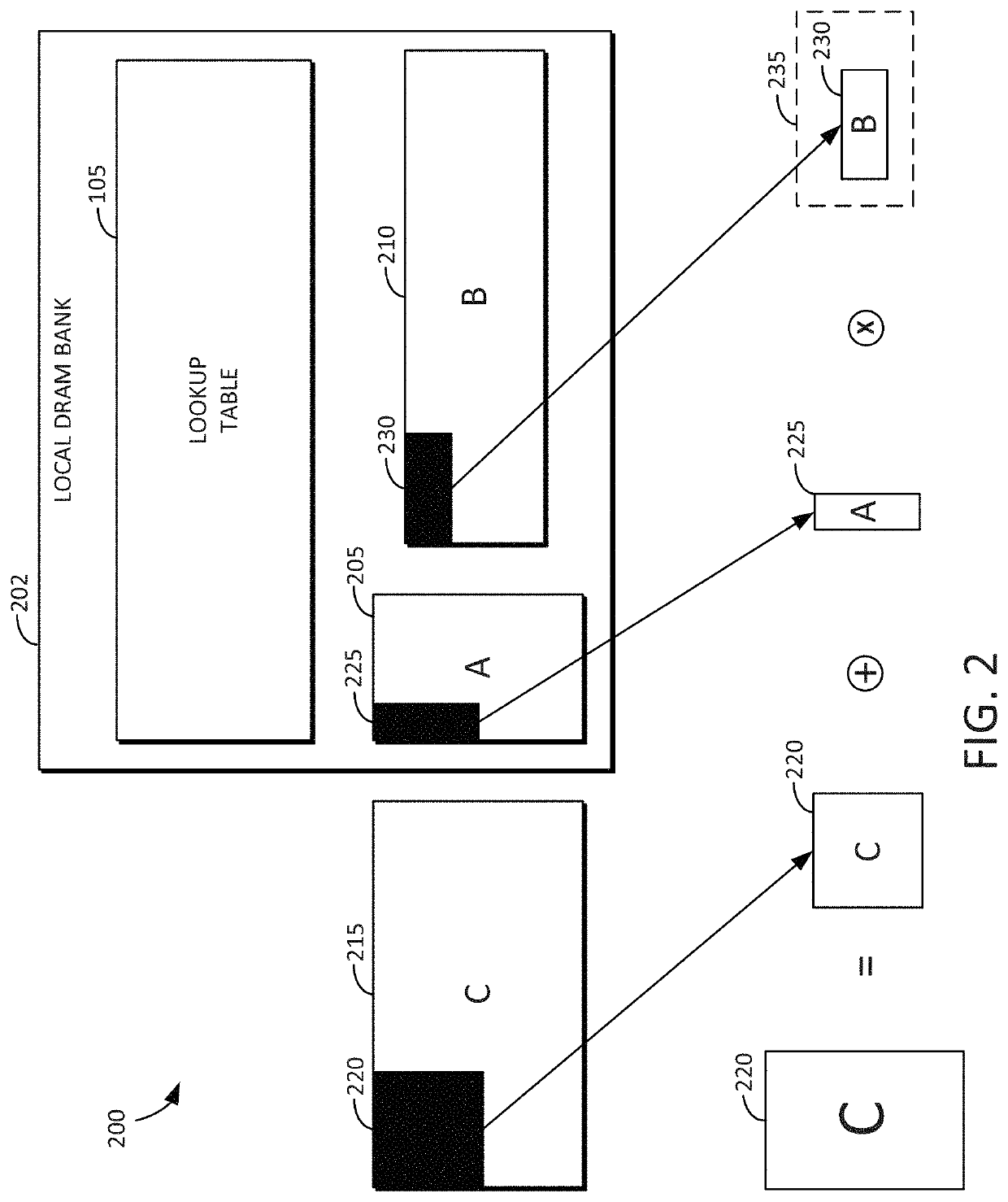

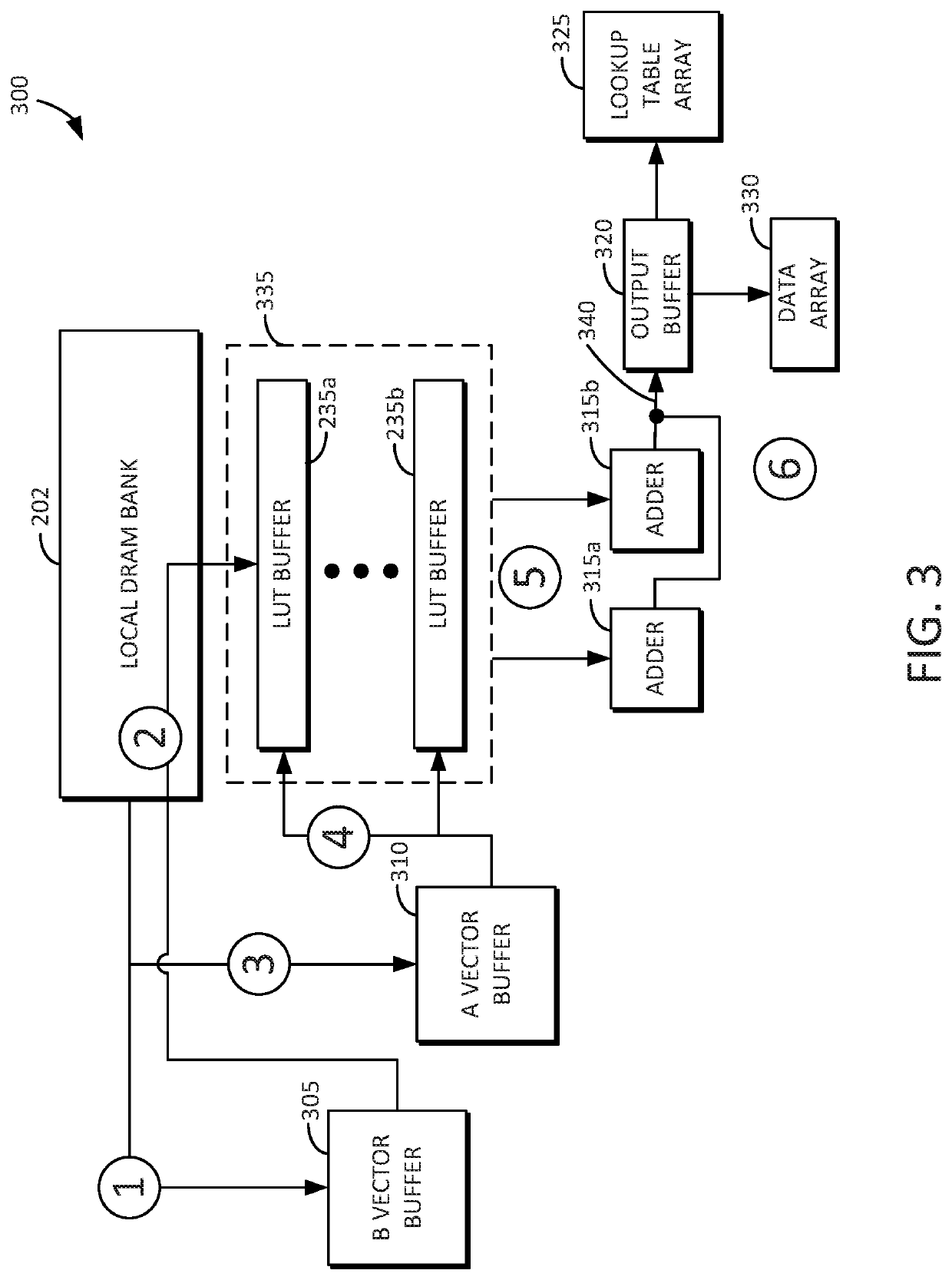

A general matrix-matrix multiplication (GEMM) dataflow accelerator circuit is disclosed that includes a smart 3D stacking DRAM architecture. The accelerator circuit includes a memory bank, a peripheral lookup table stored in the memory bank, and a first vector buffer to store a first vector that is used as a row address into the lookup table. The circuit includes a second vector buffer to store a second vector that is used as a column address into the lookup table, and lookup table buffers to receive and store lookup table entries from the lookup table. The circuit further includes adders to sum a first product and a second product, and an output buffer to store the sum. The lookup table buffers determine a product of the first vector and the second vector without performing a multiply operation. The embodiments include a hierarchical lookup architecture to reduce latency. Accumulation results are propagated in a systolic manner.
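The idea of replacing multiplications with table lookups indexed by a row address and a column address can be sketched in software. This is an illustrative sketch only, assuming unsigned 4-bit operands; the names `BITS`, `LUT`, and `lut_dot` are hypothetical, and none of the DRAM, buffering, or systolic details of the circuit are modeled.

```python
BITS = 4                   # assumed operand width (hypothetical choice)
SIZE = 1 << BITS

# The table is filled once, ahead of time; entry [r][c] holds r * c.
LUT = [[r * c for c in range(SIZE)] for r in range(SIZE)]


def lut_dot(xs, ys):
    """Dot product of two vectors of BITS-bit unsigned integers.

    Each element of xs acts as a row address and each element of ys as a
    column address; the run-time loop performs only lookups and additions.
    """
    total = 0
    for x, y in zip(xs, ys):
        total += LUT[x][y]
    return total


print(lut_dot([1, 2, 3], [4, 5, 6]))
```

The table grows as 2^(2 * BITS) entries, which is why this approach suits low-precision operands such as those used in quantized deep learning inference.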

Owner:SAMSUNG ELECTRONICS CO LTD

Dataflow accelerator architecture for general matrix-matrix multiplication and tensor computation in deep learning

Active | US11100193B2 | Lower latency | Memory architecture accessing/allocation | Systolic arrays | General matrix | Data stream

A general matrix-matrix multiplication (GEMM) dataflow accelerator circuit is disclosed that includes a smart 3D stacking DRAM architecture. The accelerator circuit includes a memory bank, a peripheral lookup table stored in the memory bank, and a first vector buffer to store a first vector that is used as a row address into the lookup table. The circuit includes a second vector buffer to store a second vector that is used as a column address into the lookup table, and lookup table buffers to receive and store lookup table entries from the lookup table. The circuit further includes adders to sum a first product and a second product, and an output buffer to store the sum. The lookup table buffers determine a product of the first vector and the second vector without performing a multiply operation. The embodiments include a hierarchical lookup architecture to reduce latency. Accumulation results are propagated in a systolic manner.

Owner:SAMSUNG ELECTRONICS CO LTD

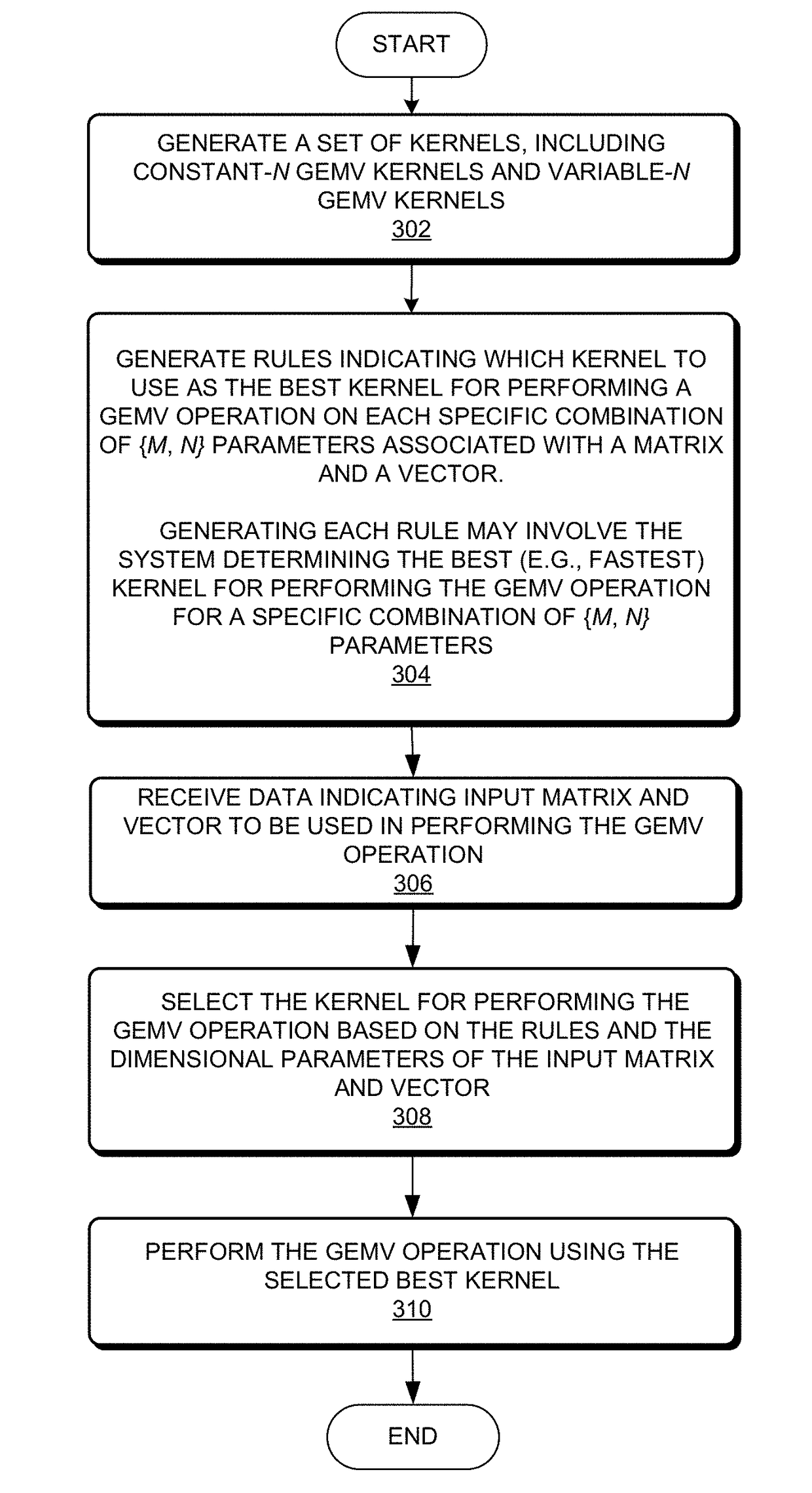

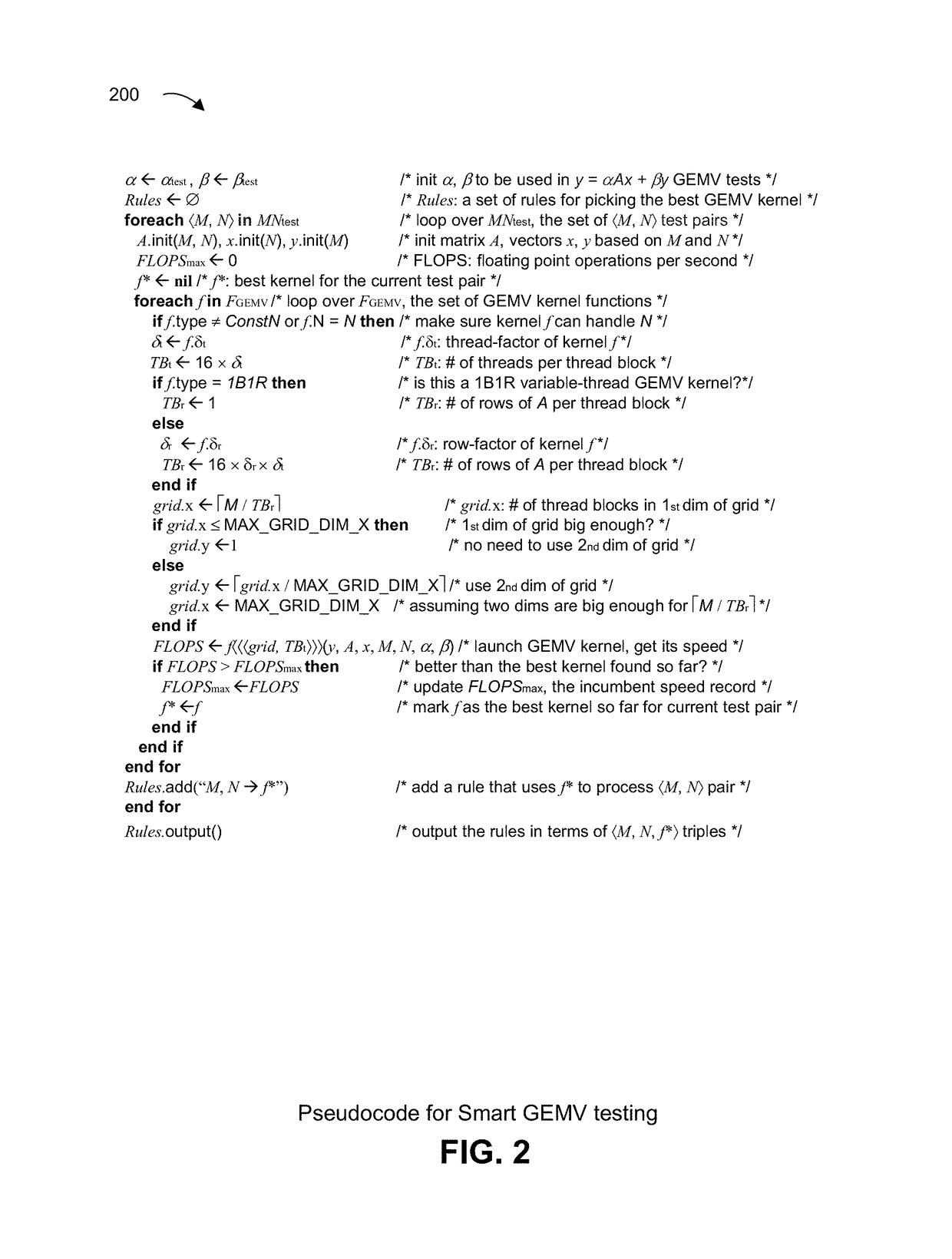

System and method for speeding up general matrix-vector multiplication on GPU

Inactive | US20170372447A1 | Increase the number of rows | Reduce in quantity | Image memory management | Computational science | Graphics

A method and system for performing general matrix-vector multiplication (GEMV) operations on a graphics processor unit (GPU) using Smart kernels. During operation, the system may generate a set of kernels that includes at least one of a variable-N GEMV kernel and a constant-N GEMV kernel. A constant-N GEMV kernel performs computations for matrix and vector combinations with a specific value of N (e.g., the number of columns in a matrix and the number of rows in a vector). Variable-N GEMV kernels may perform computations for all values of N. The system may also generate 1B1R kernels, constant-N variable-rows GEMV kernels, and variable-N variable-rows GEMV kernels. The system may generate constant-N variable-threads GEMV kernels, and variable-N variable-threads GEMV kernels. The system may also generate variable-threads-rows GEMV kernels for the set. This may include ConstN kernels (e.g., constant-N variable-threads-rows GEMV kernels), and VarN kernels (e.g., variable-N variable-threads-rows GEMV kernels).

Owner:PALO ALTO RES CENT INC

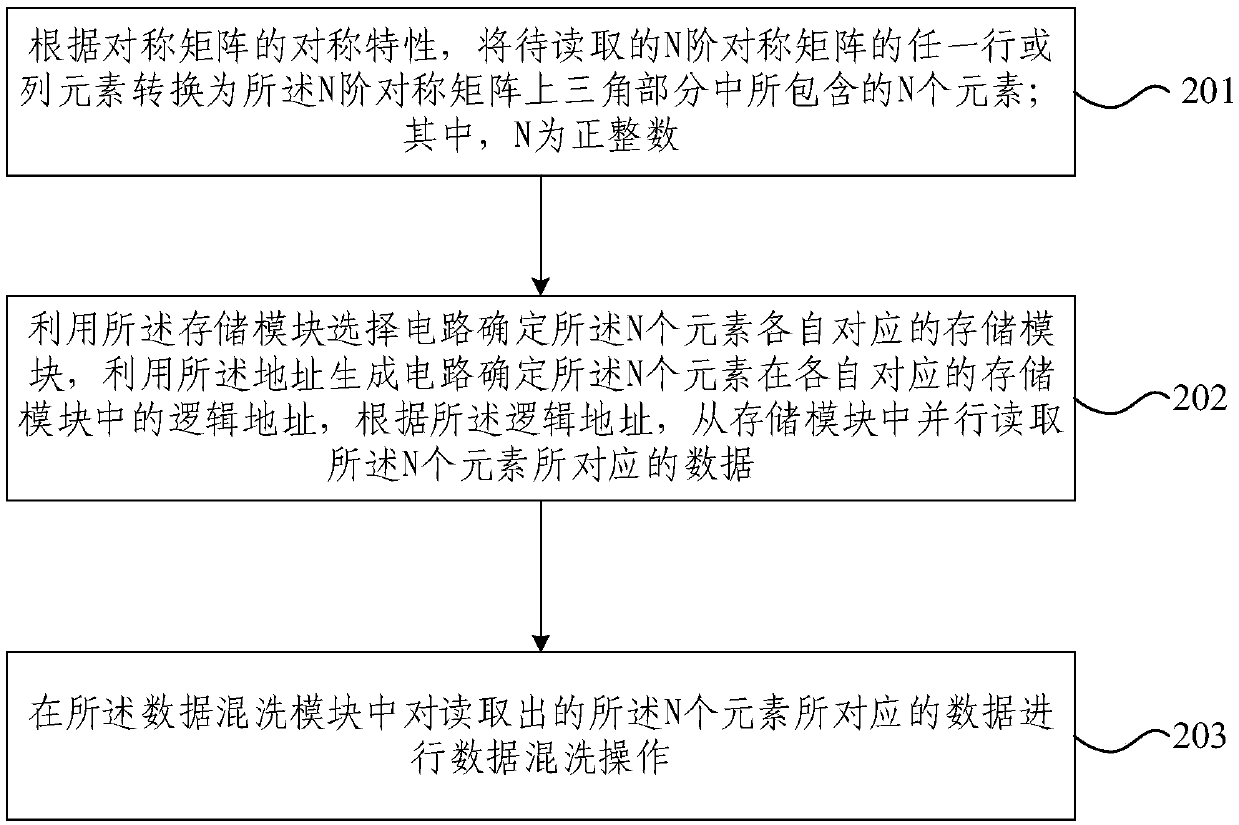

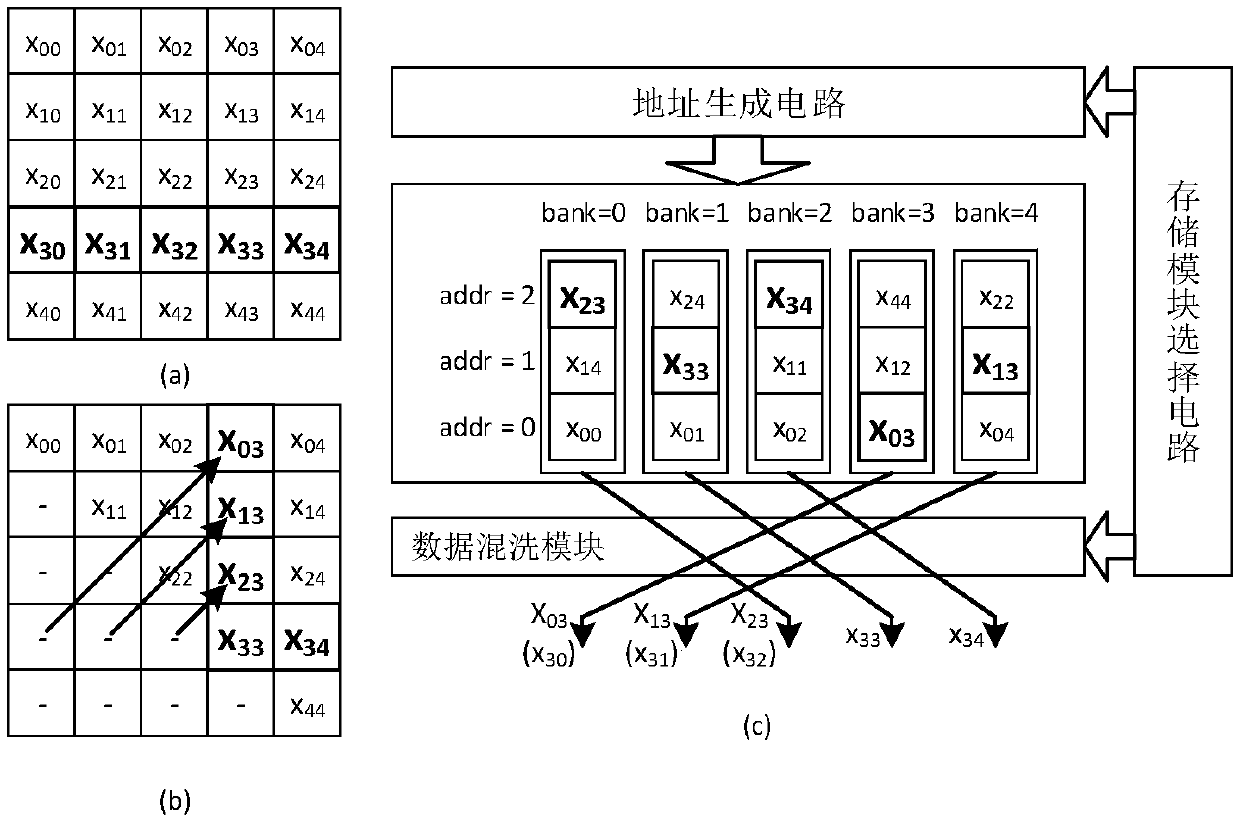

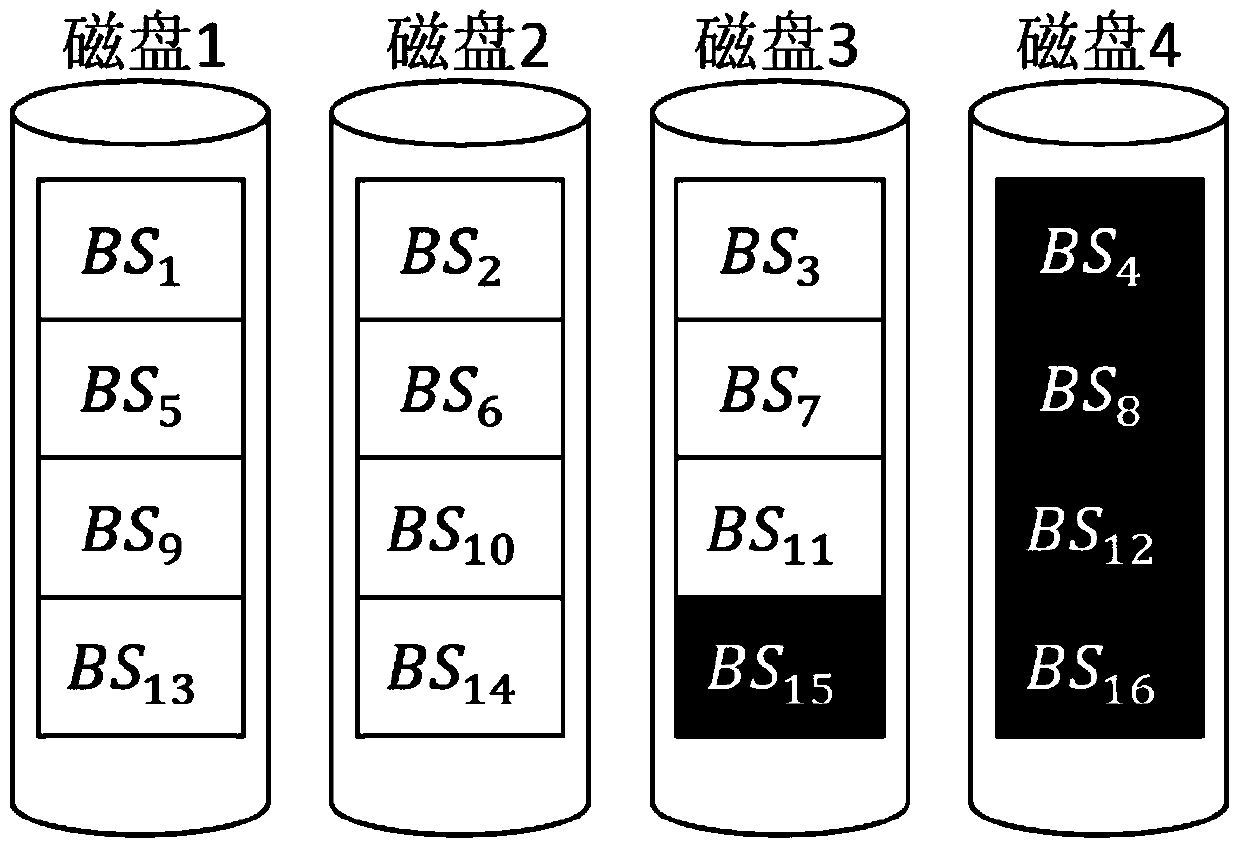

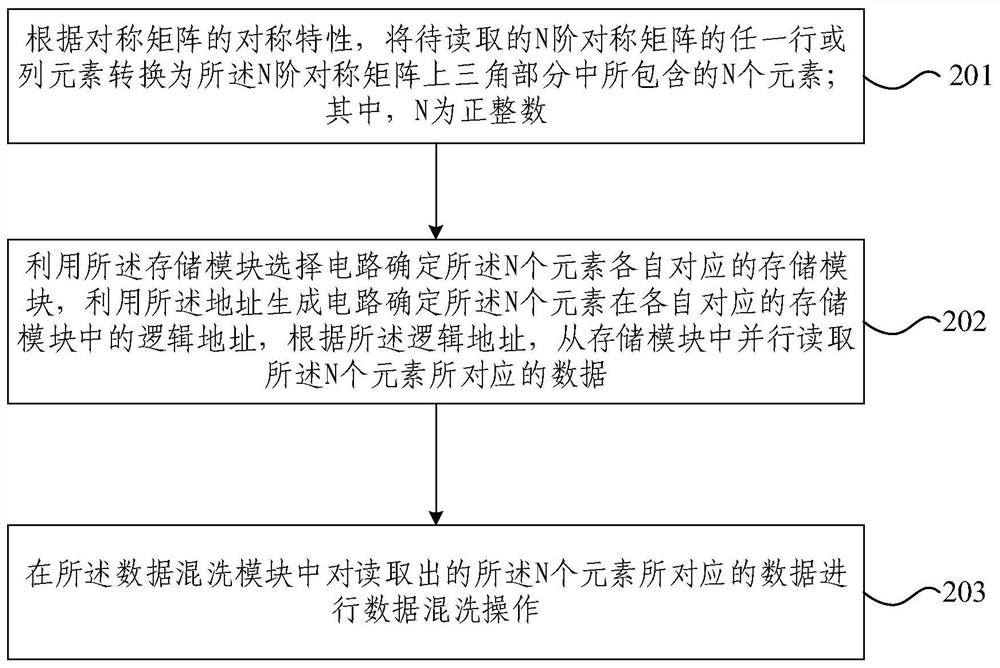

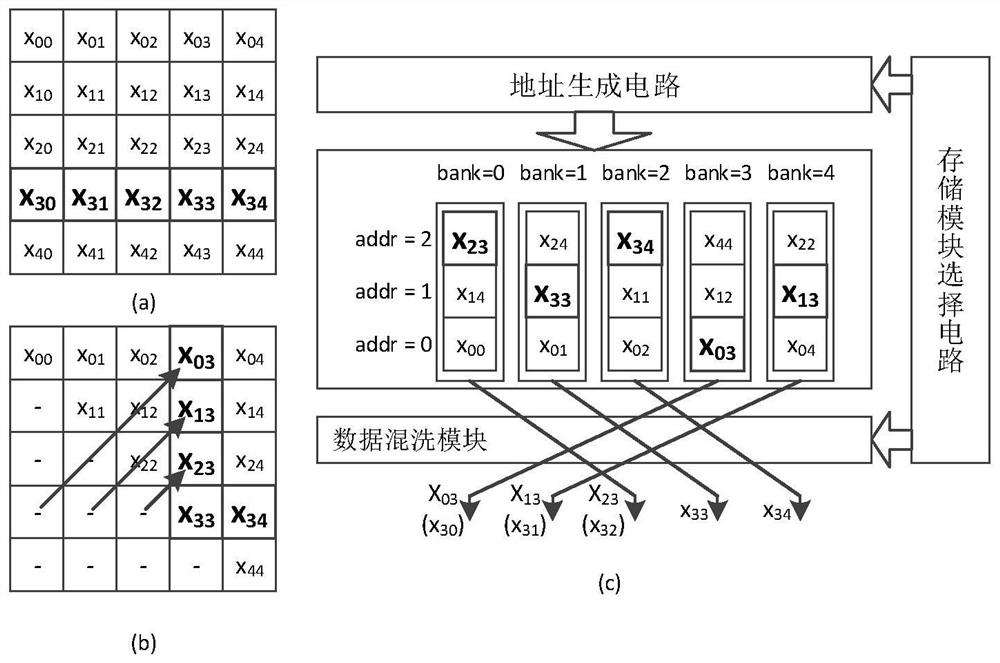

Upper triangular part storage device of a symmetric matrix and a parallel reading method

Active | CN109614149A | Take advantage of | Improve algorithm efficiency | Concurrent instruction execution | Memory systems | General matrix | Parallel computing

The embodiment of the invention provides an upper triangular part storage device of a symmetric matrix and a parallel reading method. The device comprises: a storage module selection circuit, which is used for selecting the storage module corresponding to each element of the upper triangular part of the symmetric matrix to be accessed; an address generation circuit, which is used for calculating the logical address, within the corresponding storage module, of each element of the upper triangular part of the symmetric matrix to be accessed; m parallel storage modules, which are used for storing the data corresponding to the elements of the upper triangular part of the symmetric matrix to be accessed; and a data shuffling module, which is used for performing a shuffling operation on the data read from the storage modules. According to the embodiment of the invention, only the upper triangular part of the symmetric matrix needs to be stored, any row vector and any column vector of the symmetric matrix can be read and recovered in parallel, and the parallel computing units of the hardware can be fully utilized, so that the algorithm efficiency of symmetric matrix operations can be raised to the level of general matrix operations.
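The address calculation behind storing only the upper triangle can be illustrated with a row-major packed layout. This is a software sketch under stated assumptions, not the device described above: it uses a single flat array rather than m parallel storage modules, has no shuffling stage, and the names `packed_index`, `pack_upper`, and `read_row` are hypothetical.

```python
def packed_index(i, j, n):
    """Index of element (i, j) of an n x n symmetric matrix in packed storage.

    Only elements with i <= j are stored, row by row; (i, j) with i > j is
    redirected to its mirror element (j, i).
    """
    if i > j:
        i, j = j, i
    # Rows 0..i-1 contribute n, n-1, ..., n-i+1 stored elements.
    return i * n - i * (i - 1) // 2 + (j - i)


def pack_upper(sym):
    """Flatten the upper triangle (including the diagonal) row by row."""
    n = len(sym)
    return [sym[i][j] for i in range(n) for j in range(i, n)]


def read_row(packed, i, n):
    """Recover the full row i of the symmetric matrix from packed storage."""
    return [packed[packed_index(i, j, n)] for j in range(n)]


sym = [[1, 2, 3],
       [2, 4, 5],
       [3, 5, 6]]
packed = pack_upper(sym)
print(packed, read_row(packed, 2, 3))
```

Because the matrix is symmetric, column i equals row i, so `read_row` also serves for column reads; packed storage needs n(n+1)/2 entries instead of n².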

Owner:极芯通讯技术(南京)有限公司

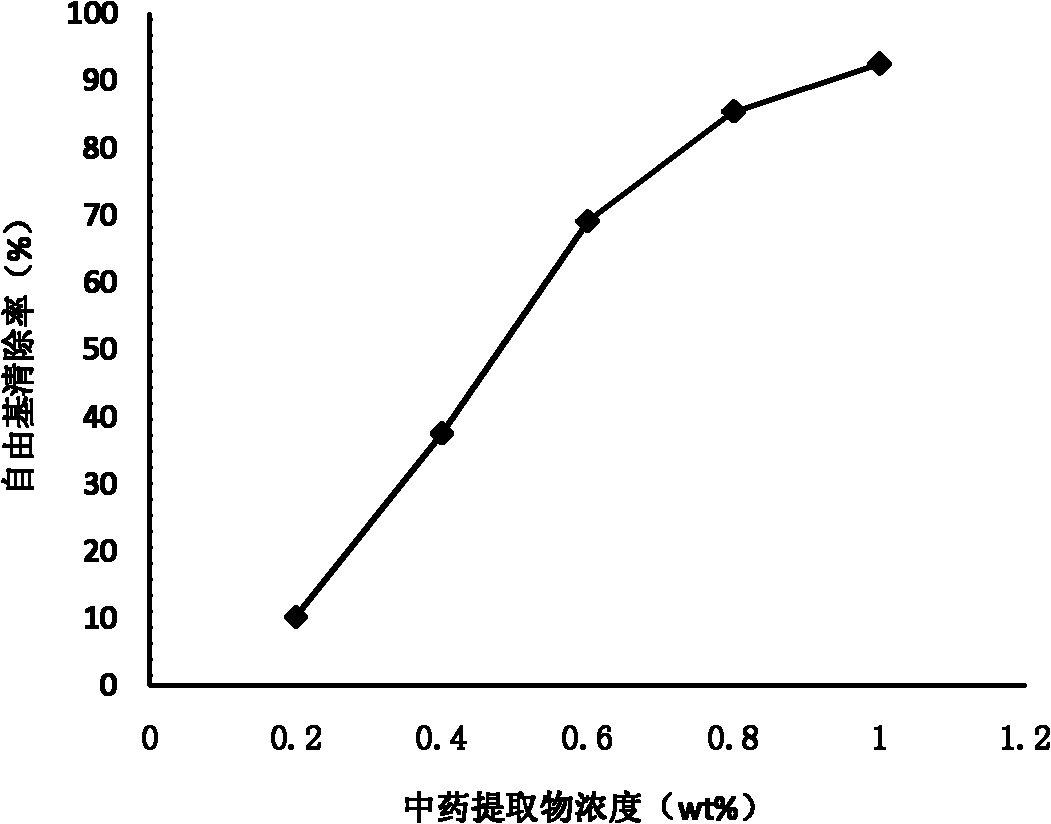

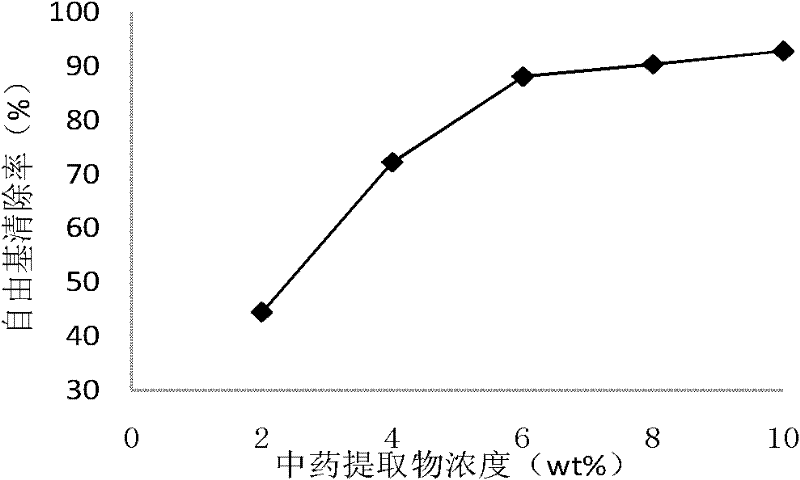

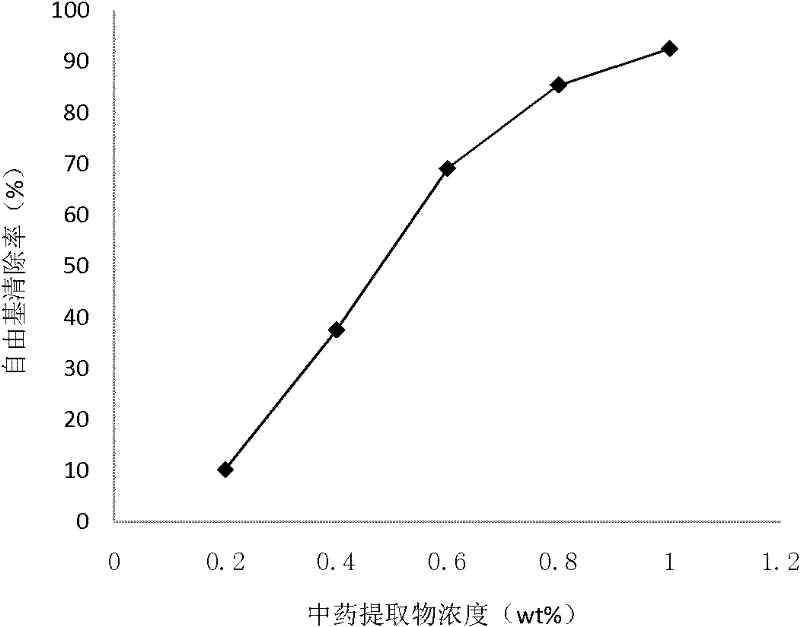

Skin care combination with effect of dispelling wrinkles, preparation and preparation method

Active | CN102178631A | Improve anti-wrinkle effect | Easy to prepare | Cosmetic preparations | Toilet preparations | General matrix | Additive ingredient

The invention provides a traditional Chinese medicine mask with an anti-wrinkle effect and a preparation method of the mask. Based on traditional Chinese medicine theory, the mask aims for skin health and is formulated with a prescription traditional Chinese medicine extract as the main active additive and the general matrix ingredients of cosmetics as the auxiliary material. The traditional Chinese medicine mask is not only simple to produce, natural and mild, safe, non-irritating, and easy to absorb, but is also capable of invigorating blood circulation and eliminating stasis, promoting the circulation of qi and dredging collaterals, scavenging free radicals, and improving facial metabolism, thus moisturizing the skin and delaying skin aging.

Owner:董银卯

System and method for speeding up general matrix-vector multiplication on GPU

Inactive | US10032247B2 | Reduce in quantity | Image memory management | Cathode-ray tube indicators | Graphics | Computational science

A method and system for performing general matrix-vector multiplication (GEMV) operations on a graphics processor unit (GPU) using Smart kernels. During operation, the system may generate a set of kernels that includes at least one of a variable-N GEMV kernel and a constant-N GEMV kernel. A constant-N GEMV kernel performs computations for matrix and vector combinations with a specific value of N (e.g., the number of columns in a matrix and the number of rows in a vector). Variable-N GEMV kernels may perform computations for all values of N. The system may also generate 1B1R kernels, constant-N variable-rows GEMV kernels, and variable-N variable-rows GEMV kernels. The system may generate constant-N variable-threads GEMV kernels, and variable-N variable-threads GEMV kernels. The system may also generate variable-threads-rows GEMV kernels for the set. This may include ConstN kernels (e.g., constant-N variable-threads-rows GEMV kernels), and VarN kernels (e.g., variable-N variable-threads-rows GEMV kernels).

Owner:PALO ALTO RES CENT INC

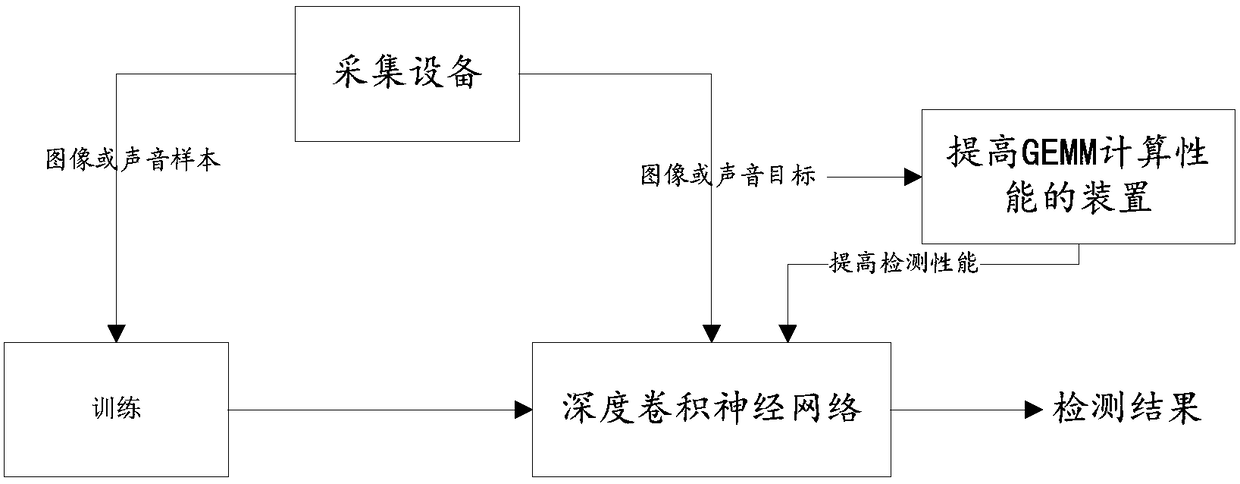

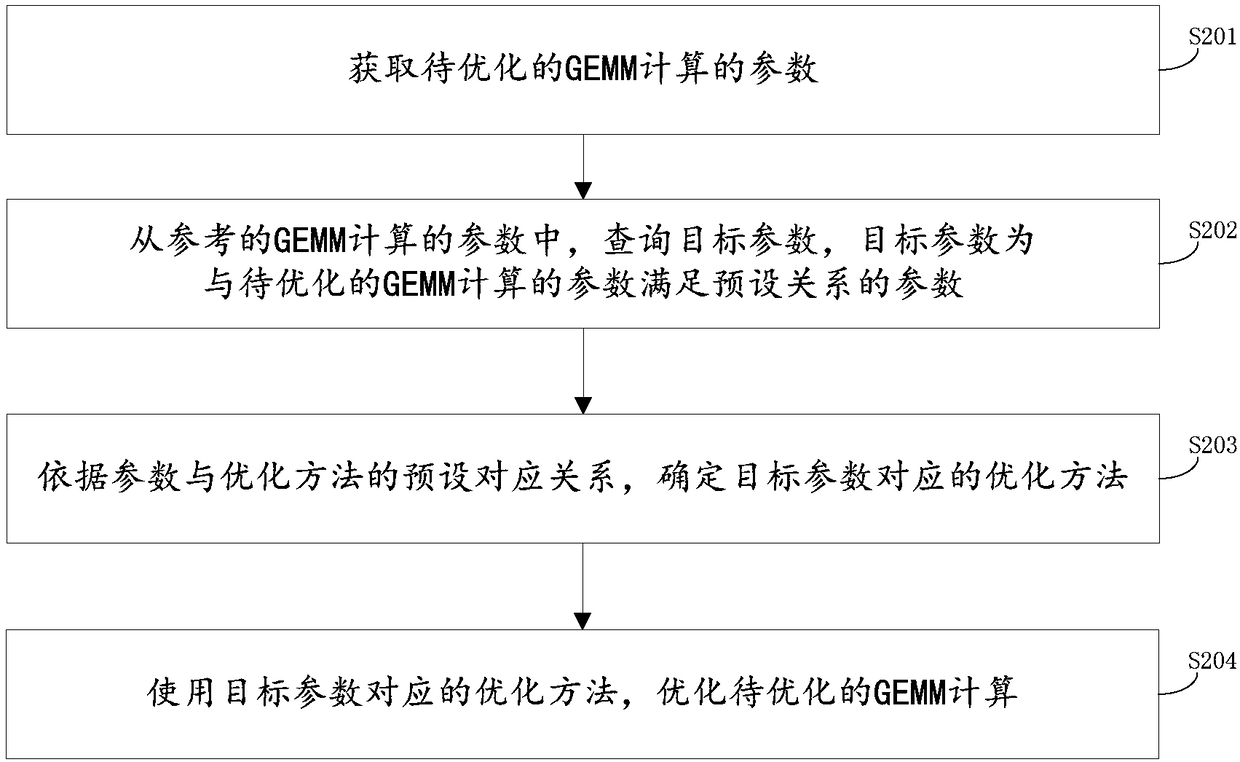

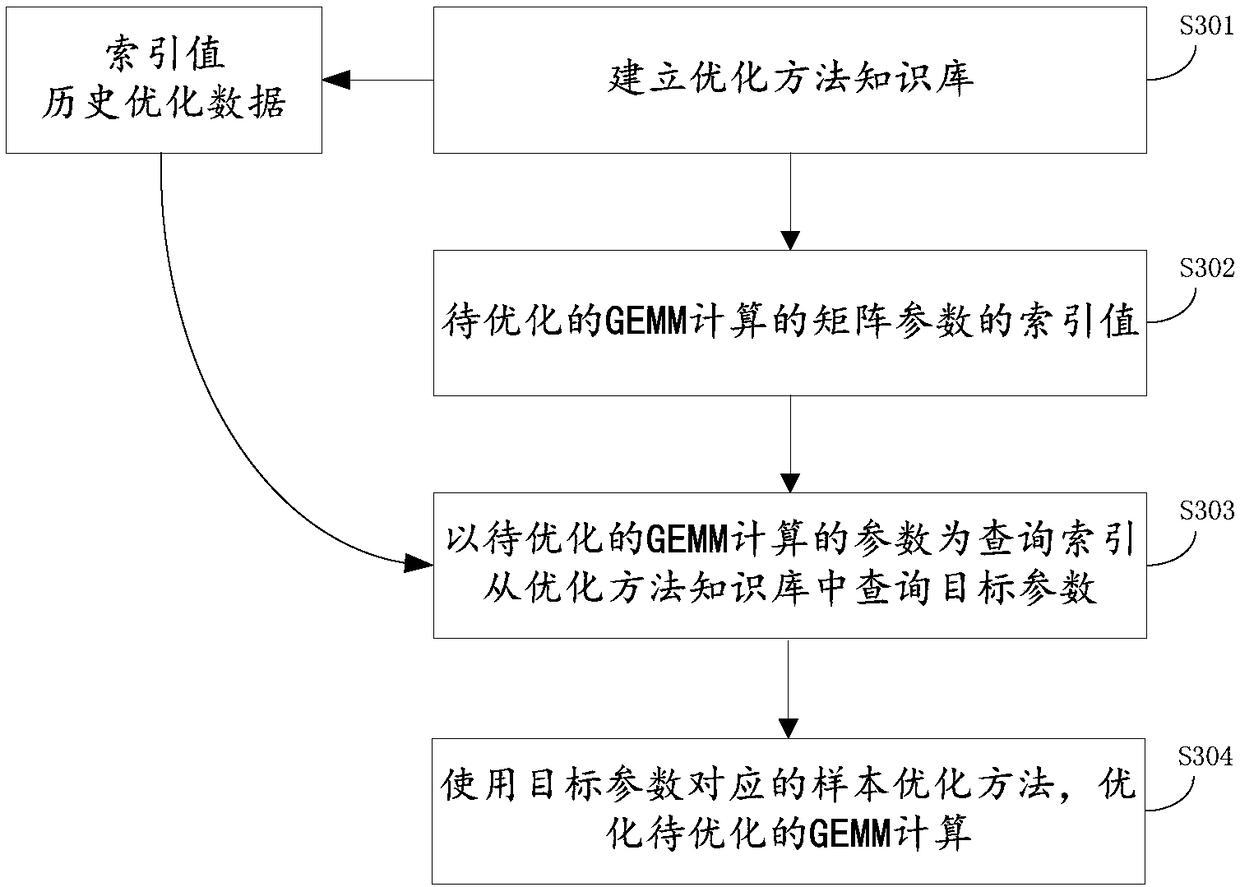

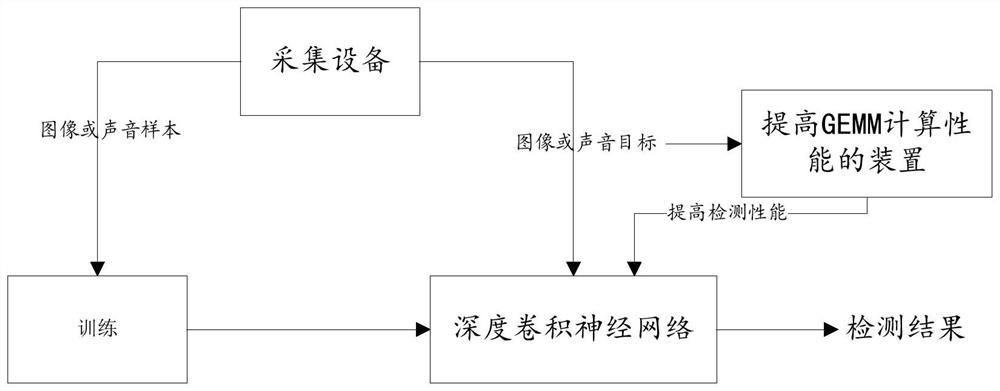

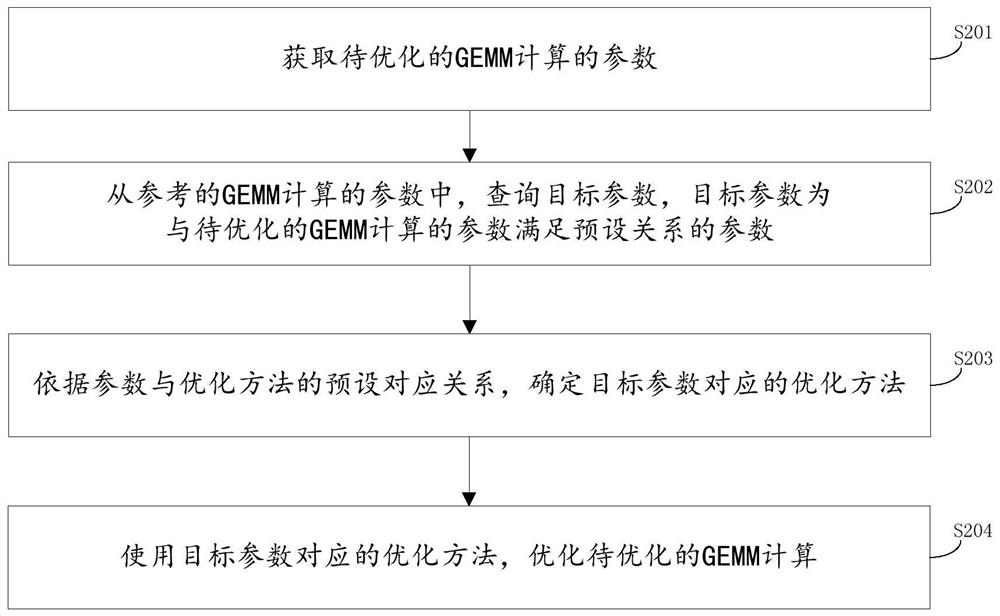

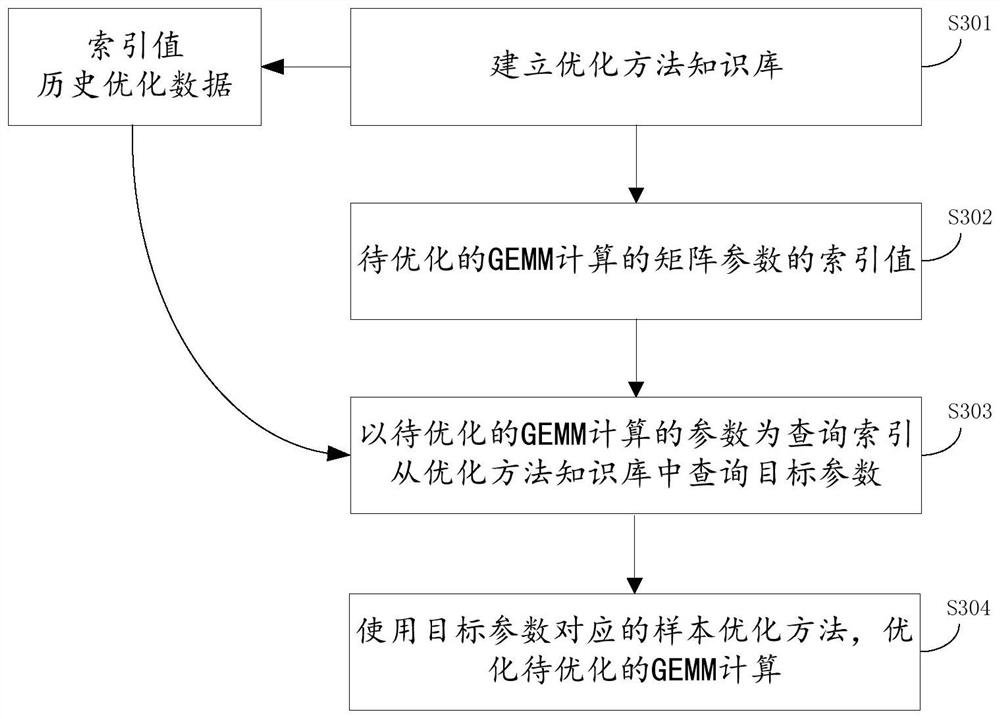

A method and a device for improving the computing performance of a GEMM

The present application provides a method and apparatus for improving the computational performance of GEMM. The method comprises obtaining the parameters of a general matrix-matrix multiplication (GEMM) calculation to be optimized and querying target parameters from the parameters of at least one historical GEMM calculation, where the target parameters are parameters that satisfy a preset relationship with the parameters of the GEMM calculation to be optimized. According to a preset correspondence between parameters and optimization methods, the optimization method corresponding to the target parameters is determined, and the GEMM calculation to be optimized is optimized using that method. The parameters of a GEMM calculation are determined based on the sizes of the matrices participating in it. Because the characteristics of the matrices involved in the GEMM calculation are used as the basis for its optimization, the performance of the GEMM calculation can be improved even when the matrices are small or irregularly shaped, as occurs in target detection using deep convolutional neural networks.

Owner:XFUSION DIGITAL TECH CO LTD

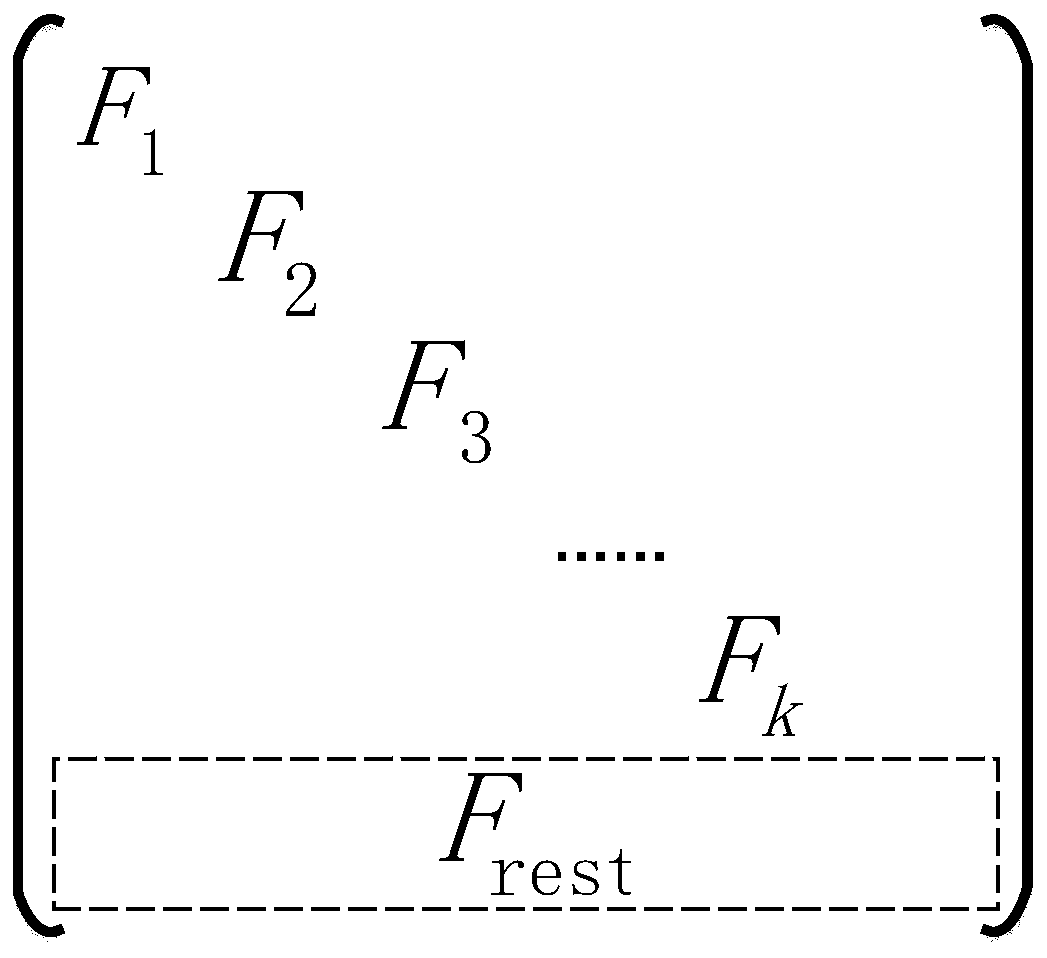

A General Matrix Optimization Method for Accelerating Erasure Code Encoding and Decoding Process

Active | CN104991740B | Take full advantage of parallel processing capabilities | Reduce the number of times | Input/output to record carriers | Redundant data error correction | Matrix decomposition | General matrix

The invention discloses a universal matrix optimization method for accelerating erasure correcting code encoding and decoding processes. The method comprises the steps of: during encoding and decoding, decomposing a check matrix into a plurality of mutually independent sub-matrixes and a residual sub-matrix to enable encoding and decoding operation to be partially executed in parallel; and in addition, adjusting a matrix calculation sequence to reduce data block calculation frequency involved in the encoding and decoding processes so as to reduce the time cost for calculation. The method improves the performance of the erasure correcting code encoding and decoding processes, especially the performance of operation in a multi-core processor. The encoding and decoding processes realized by using the method can utilize potential parallel encoding capability, make full use of parallel processing capability of the multi-core processor and shorten the calculation time.

Owner:HUAZHONG UNIV OF SCI & TECH

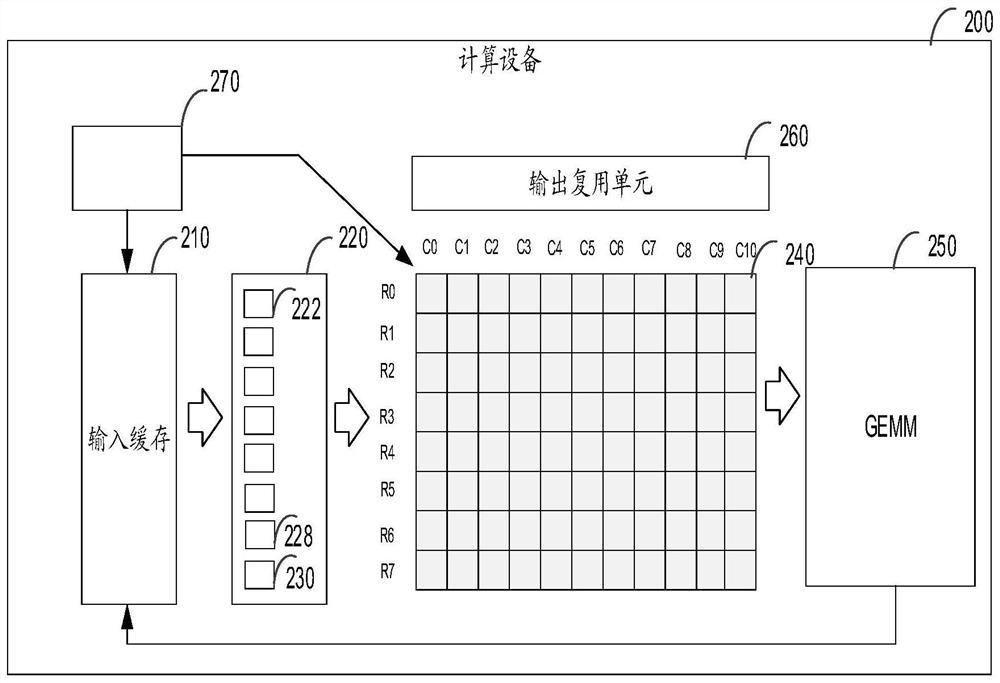

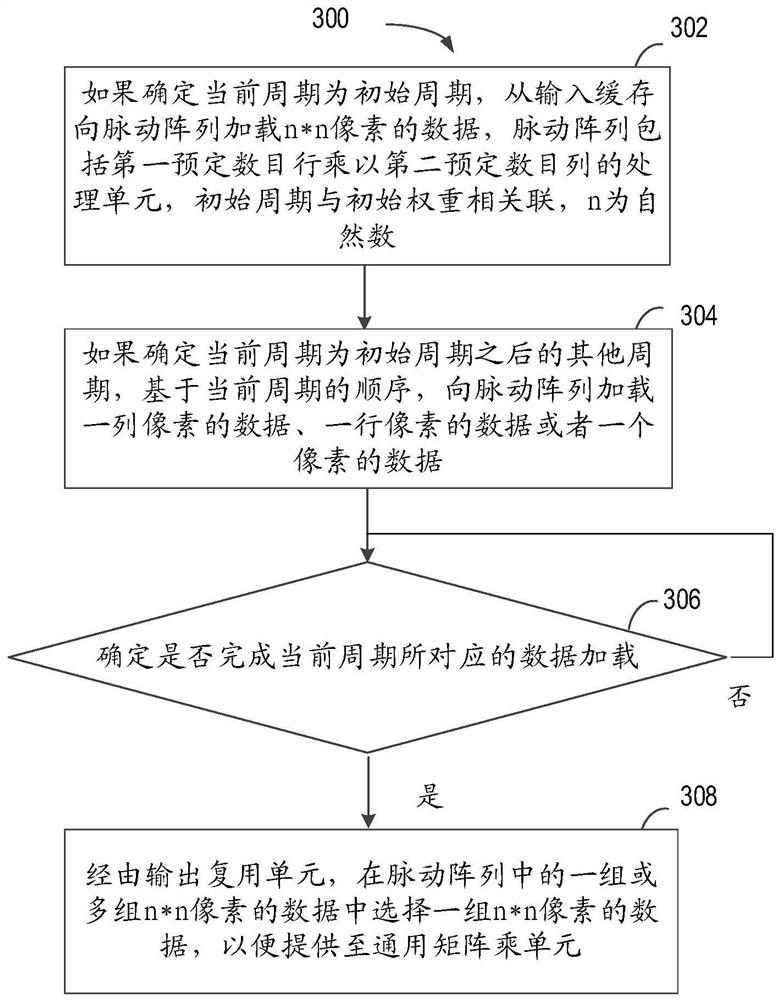

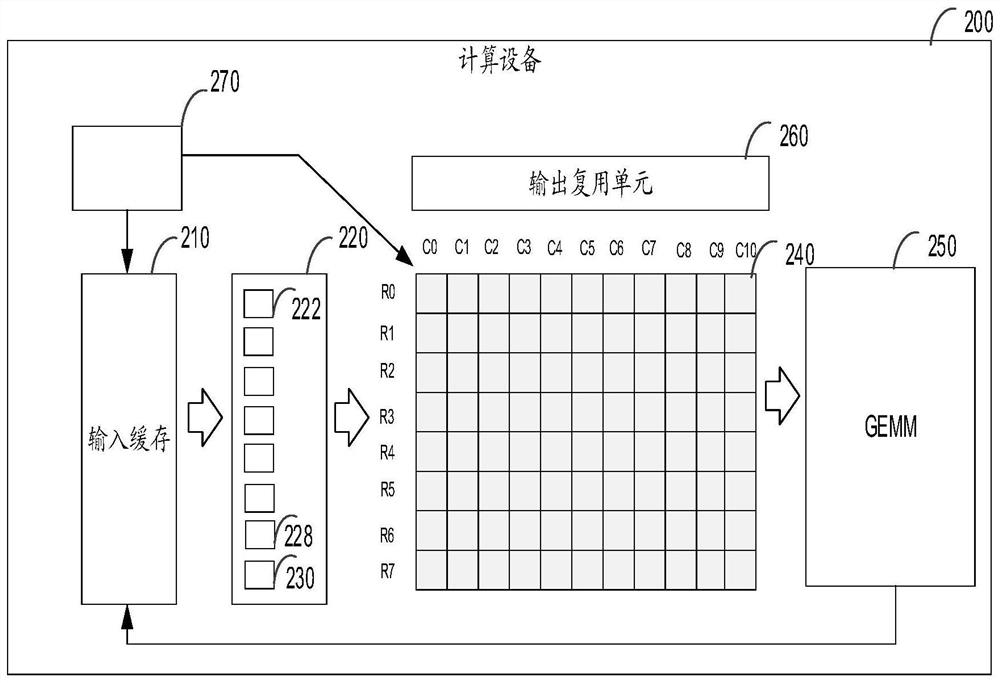

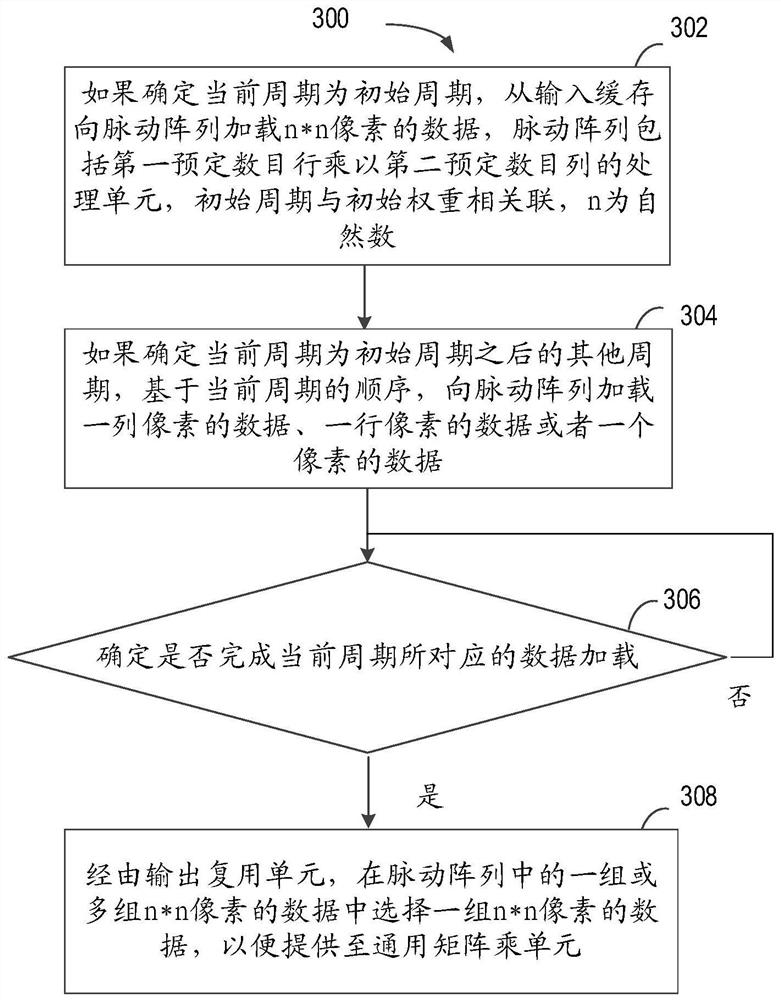

Method, computing device, and computer-readable storage medium for convolution computation

The present disclosure relates to a method for convolution calculation, a computing device, and a computer-readable storage medium. The method includes: if the current cycle is determined to be the initial cycle, loading the data of n*n pixels from the input buffer into the systolic array, where the systolic array includes processing units arranged in a first predetermined number of rows by a second predetermined number of columns, the initial cycle is associated with the initial weight, and n is a natural number; if the current cycle is determined to be another cycle after the initial cycle, loading the data of a column of pixels, a row of pixels, or a single pixel into the systolic array based on the sequence of the current cycle; and if the data loading for the current cycle is determined to be complete, selecting, via the output multiplexing unit, one group of n*n pixel data from the one or more groups of n*n pixel data in the systolic array and providing it to the general matrix multiplication unit. According to the embodiments of the present disclosure, bandwidth occupation and power consumption can be effectively reduced.

Owner:SHANGHAI BIREN TECH CO LTD

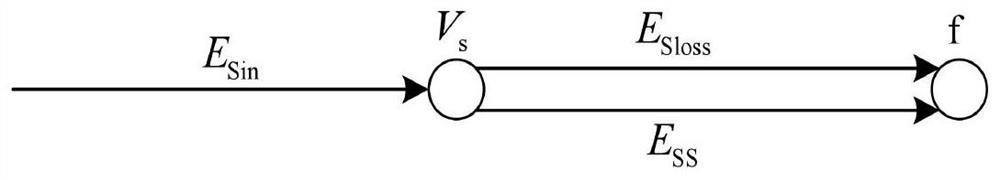

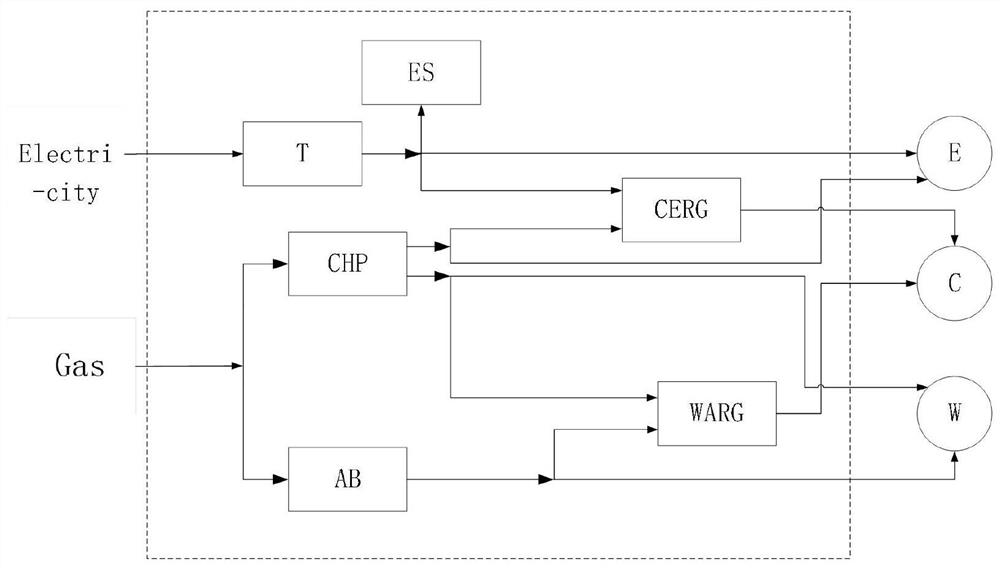

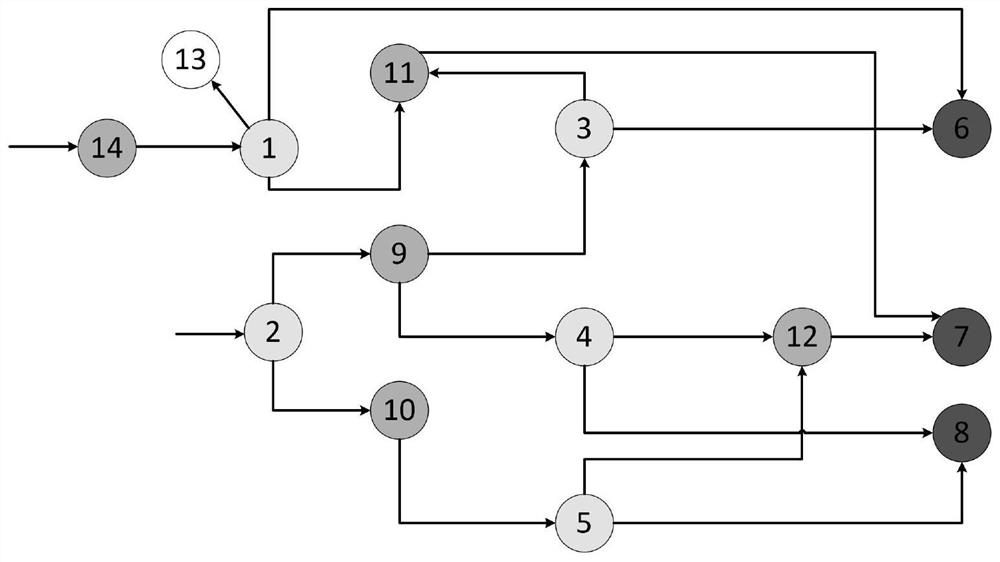

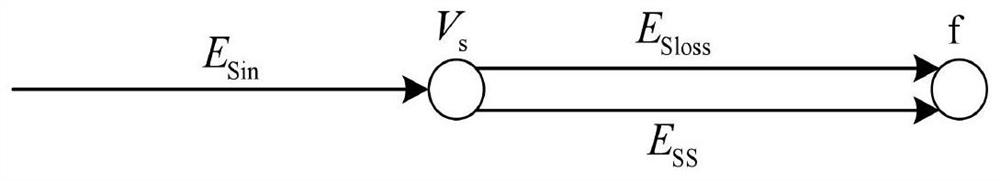

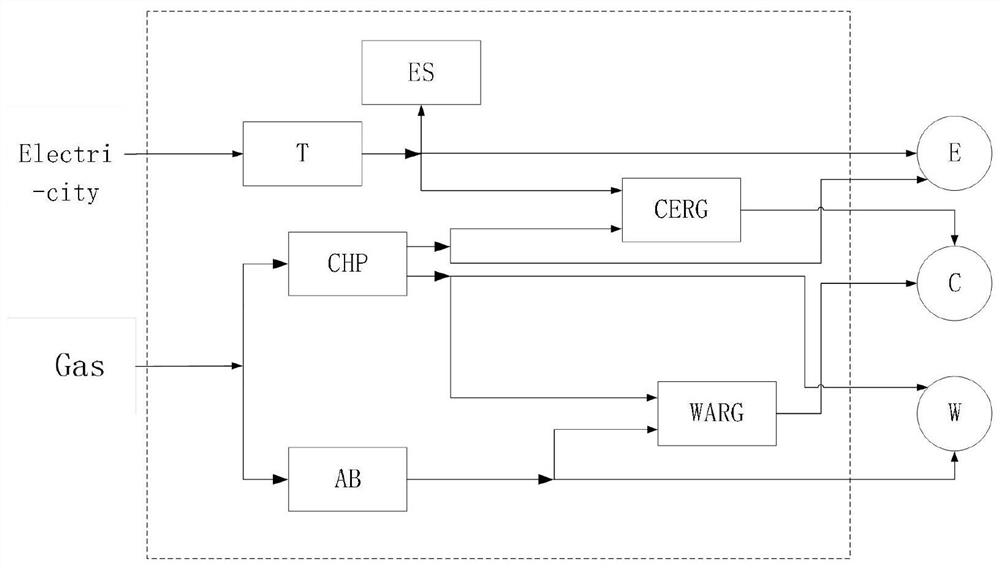

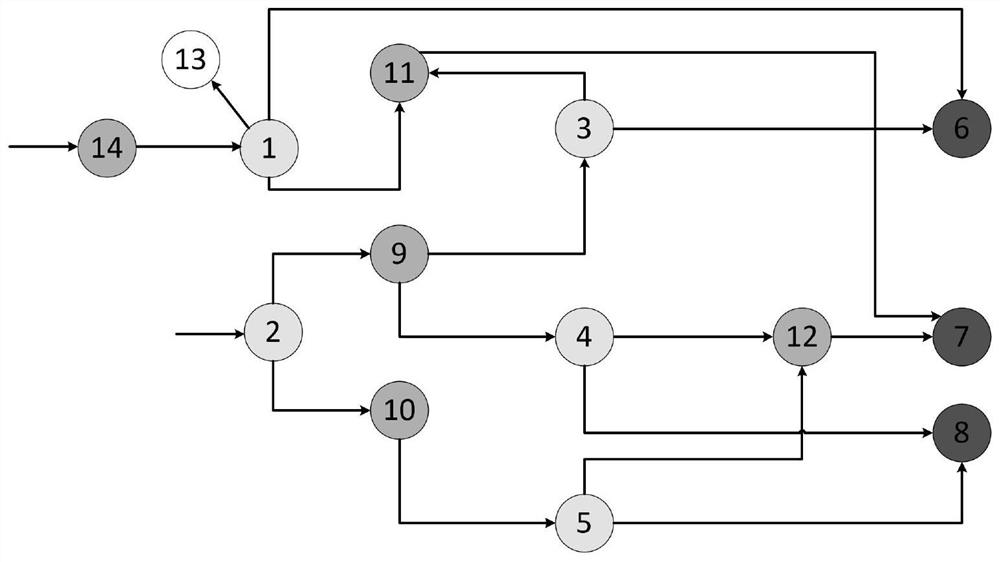

Multi-energy system general matrix modeling method based on graph theory and network flow

Active | CN113515856A | Small amount of calculation | Geometric CAD | Design optimisation/simulation | Algorithm | Graph theoretic

The invention relates to a general matrix modeling method for multi-energy systems based on graph theory and network flow, comprising the following steps: first, a group of new definitions is provided to construct a unified energy flow network of the multi-energy system, including energy storage; second, matrices describing the topological structure and energy conversion characteristics of the multi-energy system are given; on this basis, the matrix modeling process for the energy flow equations is elaborated; and then matrix analysis is applied to obtain a coupling matrix. The method is suitable for a multi-energy system (MES) with a relatively simple structure and also for systems whose topological structure contains a plurality of energy distribution devices or conversion devices; sparse matrices are adopted for both simple and complex systems, so the calculation amount is small. The method has the advantages of scientificity, reasonability, high practicability and good effect.

Owner:NORTHEAST DIANLI UNIVERSITY

Method for convolution calculation, computing device and computer readable storage medium

Active | CN112614040A | Processor architectures/configuration | Physical realisation | General matrix | Parallel computing

The invention relates to a method for convolution calculation, a computing device and a computer readable storage medium. The method comprises the steps of: if it is determined that the current period is the initial period, loading n * n pixel data from an input cache to a systolic array, wherein the systolic array comprises processing units arranged in a first preset number of rows by a second preset number of columns, the initial period is associated with an initial weight, and n is a natural number; if it is determined that the current period is another period after the initial period, loading data of a column of pixels, data of a row of pixels or data of a single pixel to the systolic array based on the sequence of the current period; and if it is determined that the data loading corresponding to the current period is completed, selecting one group of n * n pixel data from one or more groups of n * n pixel data in the systolic array through the output multiplexing unit so as to provide the n * n pixel data to the general matrix multiplication unit. According to the embodiment of the invention, bandwidth occupation and power consumption can be effectively reduced.

Owner:SHANGHAI BIREN TECH CO LTD

A method and device for improving GEMM computing performance

The application provides a method and device for improving GEMM computing performance. The method obtains the parameters of a general matrix-matrix multiplication (GEMM) calculation to be optimized and queries target parameters from the parameters of at least one historical GEMM calculation, where the target parameters are parameters that satisfy a preset relationship with the parameters of the GEMM calculation to be optimized. The optimization method corresponding to the target parameters is determined according to a preset correspondence between parameters and optimization methods, and that method is used to optimize the GEMM calculation to be optimized. The parameters of a GEMM calculation are determined based on the sizes of the matrices involved in it. Because the characteristics of the matrices participating in the GEMM calculation are used as the basis for optimizing it, the performance of the GEMM calculation can be improved even when the matrices are small or irregularly shaped, as occurs when a deep convolutional neural network is used for target detection.

Owner:XFUSION DIGITAL TECH CO LTD

A Method for Analyzing the Intrinsic Characteristics of Torsional Vibration in Planetary Gear Transmission System

Active | CN102968537B | Accurate calculation | Versatility | Special data processing applications | System matrix | Engineering

A method for analyzing the inherent torsional vibration characteristics of a planet gear transmission system comprises the following four steps: (1) carrying out mathematical modeling of the pure torsional vibration of a single damped planet row by using a Lagrangian equation; (2) analyzing the inherent characteristics of the transmission system; (3) verifying the influence of the damping on the natural frequency through multiple groups of calculations; and (4) establishing a general matrix for the planet gear transmission system and carrying out modeling simulation verification through instance value calculation and simulation X. With the invention, the damped natural vibration differential equation obtained for the planet transmission system, expressed in the form of parameterized matrices (a stiffness matrix, a damping matrix and a connecting matrix), is general; corresponding matrix forms are directly selected for corresponding parts according to the different connecting structures and planet gear system parameters to construct an integral system matrix; and after the parameters are substituted, the inherent characteristics of the transmission system are rapidly and accurately analyzed.

Owner:BEIHANG UNIV

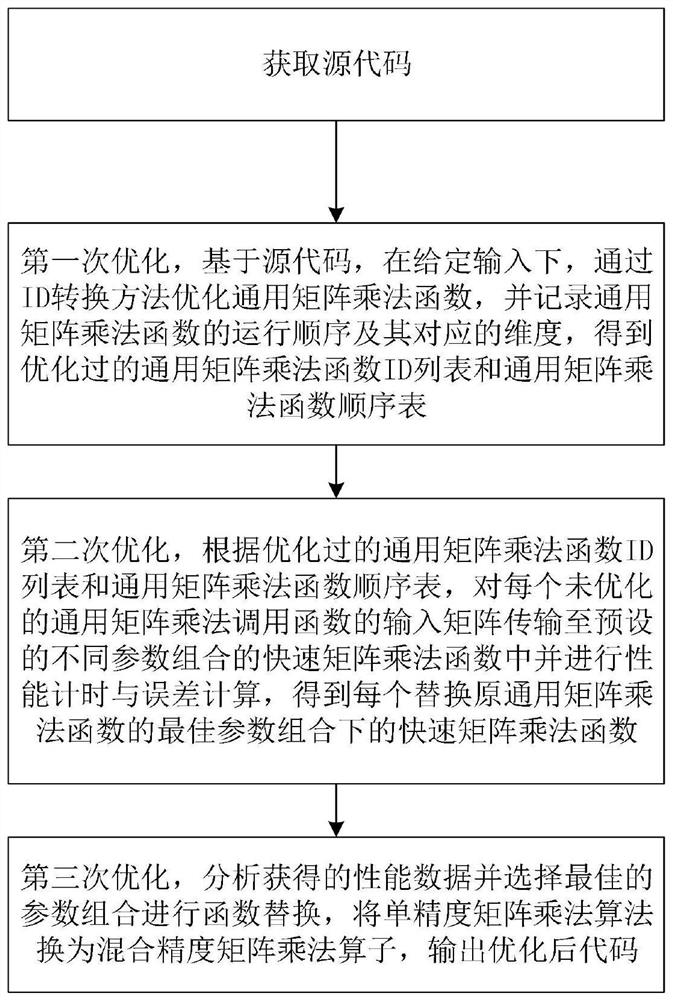

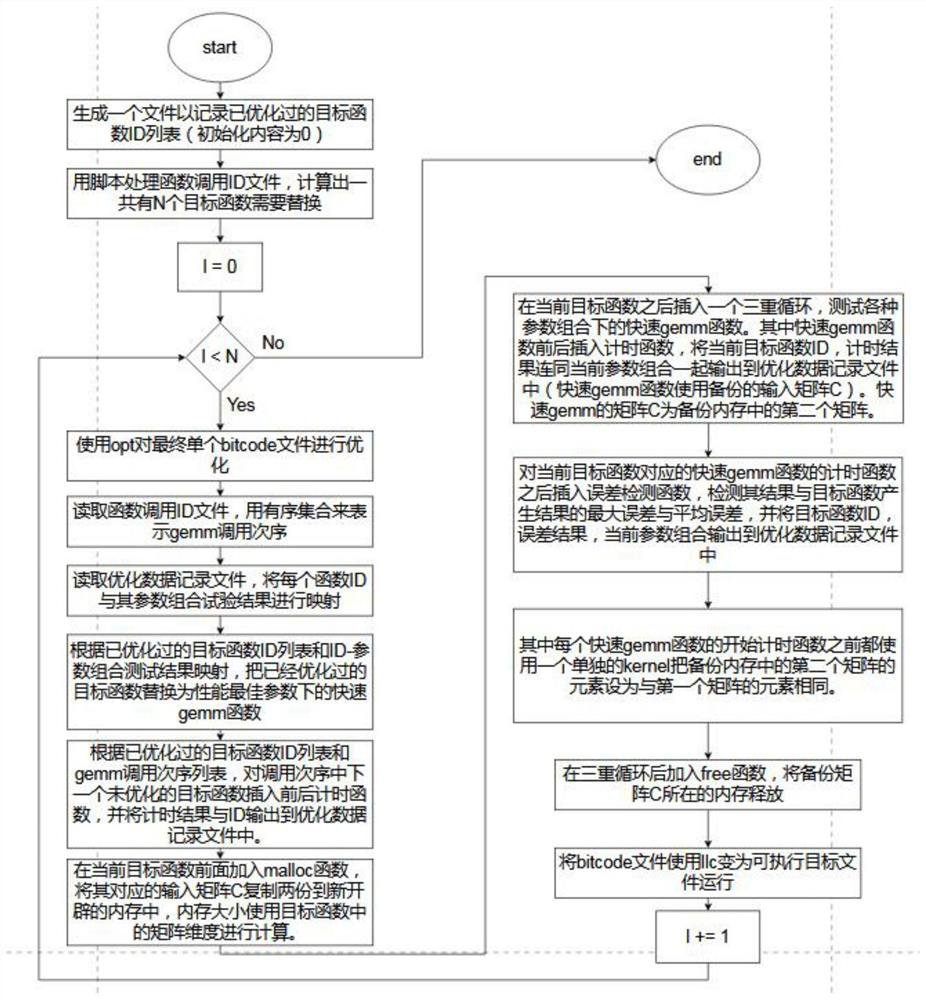

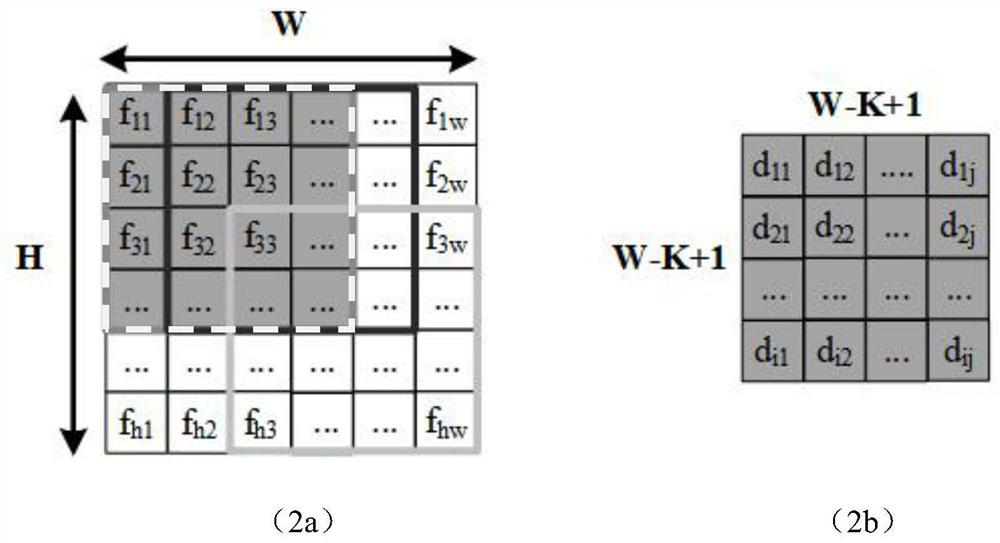

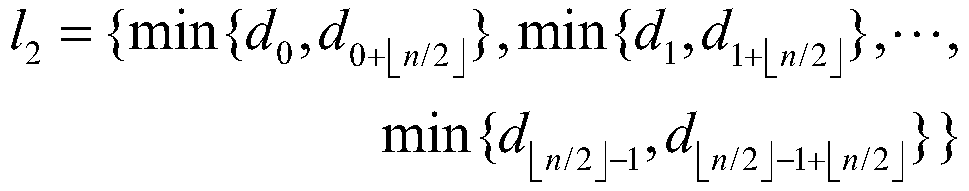

Error-controllable hybrid precision operator automatic optimization method

Pending | CN114217817A | Full use of mixed-precision computing capabilities | Improve the efficiency of multiplication operations | Code refactoring | Complex mathematical operations | General matrix | Algorithm

The invention discloses an error-controllable hybrid precision operator automatic optimization method, which comprises the following steps of: first optimization: under given input, optimizing a general matrix multiplication function through an ID (Identity) conversion method, and recording an operation sequence and a corresponding dimension of the general matrix multiplication function; second optimization: according to an optimized general matrix multiplication GEMM function ID list and a general matrix multiplication function sequence list, transmitting the input of each non-optimized general matrix multiplication calling function to a preset fast matrix multiplication function of different parameter combinations, and carrying out performance timing and error calculation; and third optimization: analyzing the obtained data, converting a single-precision matrix multiplication algorithm into a mixed-precision matrix multiplication operator, and outputting an optimized code. By using the method, the hybrid precision GEMM operator can help to improve the performance in a more complex and wider high-performance computing program. The method can be widely applied to the field of compilers.

Owner:SUN YAT SEN UNIV

Convolutional neural network weight gradient optimization method based on data stream

Active | CN112633498A | Accelerated training | Reduce transfer time | Neural architectures | Energy efficient computing | Data stream | General matrix

The invention discloses a convolutional neural network weight gradient optimization method based on a data stream, and provides a configurable data stream architecture design for convolutional neural network weight gradient optimization, so that convolution operations of different sizes in weight gradient calculation can be supported, and the degree of parallelism is K * K (the convolution kernel size) times that of serial input. The training performance of the whole convolutional neural network is improved, and the problem that convolution operations of different sizes are difficult to realize in weight gradient calculation is solved. Compared with the prior art, the method has the following advantages: (1) the acceleration effect is obvious: for weight gradient calculation, the degree of parallelism is improved by a factor of K * K compared with that of the original serial scheme, and the transmission time of data input is remarkably reduced, so that the purpose of accelerating the whole network training is achieved, and 1-1 / (K * K)% of input storage can be reduced compared with a general matrix multiplication scheme; and (2) applicability and universality are satisfied at the same time.

Owner:TIANJIN UNIV

A Parallel Acceleration Method of Network Intrusion Detection Based on KNN Algorithm

Active | CN108600246B | Improve parallelism | Improve execution efficiency | Character and pattern recognition | Transmission | Data set | Concurrent computation

A parallel acceleration method for network intrusion detection based on the KNN algorithm. The method uses the CUDA parallel computing model. First, a parallelism analysis is performed on KNN-based network intrusion detection. When the distances from the network intrusion detection data points to the training data set are computed, the general matrix multiplication function provided by CUDA is used for acceleration, which improves the computation speed. Then, in the distance sorting stage, two parallel sorting strategies are provided, and the sorting algorithm with the shorter sorting time, judged from sorting results on a small amount of data, is selected for distance sorting. Finally, the classification stage of the data points is based on CUDA atomic add operations. Experimental results show that the proposed acceleration method is effective: while maintaining the detection rate, it effectively improves the parallel acceleration performance of network intrusion detection.
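The reason a general matrix multiplication helps with KNN distances is the identity ||q - t||² = ||q||² + ||t||² - 2 q·t: the cross terms for all query and training pairs form a single matrix product. The following is a CPU-only sketch of that identity in plain Python, not the CUDA implementation described above; the function names and the toy labels are hypothetical, and on a GPU the `matmul_nt` step would be the part handed to a GEMM routine.

```python
from collections import Counter


def matmul_nt(a, b):
    """Return a @ b^T for row-major lists of lists (the GEMM step)."""
    return [[sum(x * y for x, y in zip(ra, rb)) for rb in b] for ra in a]


def squared_distances(queries, train):
    """Squared Euclidean distance from every query to every training point."""
    cross = matmul_nt(queries, train)
    qn = [sum(x * x for x in q) for q in queries]
    tn = [sum(x * x for x in t) for t in train]
    return [[qn[i] + tn[j] - 2 * cross[i][j] for j in range(len(train))]
            for i in range(len(queries))]


def knn_predict(queries, train, labels, k):
    """Label each query by majority vote among its k nearest training points."""
    preds = []
    for row in squared_distances(queries, train):
        nearest = sorted(range(len(train)), key=row.__getitem__)[:k]
        votes = Counter(labels[j] for j in nearest)
        preds.append(votes.most_common(1)[0][0])
    return preds


train = [[3, 4], [1, 0], [0, 1]]
labels = ["attack", "normal", "normal"]
print(squared_distances([[0, 0]], train), knn_predict([[0, 0]], train, labels, k=2))
```

Here the sort is a plain sequential sort and the vote uses a `Counter`; these stand in for the parallel sorting strategies and atomic add operations mentioned in the abstract.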

Owner:ZHEJIANG UNIV OF TECH
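The reason a matrix multiplication routine helps with KNN distances is the identity ||q - t||^2 = ||q||^2 + ||t||^2 - 2 q.t, which turns all pairwise dot products into a single matrix product. The following is a minimal CPU sketch of that idea in plain Python, not the patented CUDA implementation: `matmul` merely stands in for a GPU GEMM call, the vote counter stands in for the atomic add step, and all names and data are hypothetical.

```python
def matmul(a, b):
    """Plain triple-loop matrix product; a stand-in for a GPU GEMM call."""
    inner, cols = len(b), len(b[0])
    return [[sum(row[t] * b[t][j] for t in range(inner)) for j in range(cols)]
            for row in a]


def squared_distances(queries, train):
    """Pairwise squared distances via ||q||^2 + ||t||^2 - 2 * (Q @ T^T)."""
    train_t = [list(col) for col in zip(*train)]  # transpose of the training set
    cross = matmul(queries, train_t)
    q_norm = [sum(v * v for v in q) for q in queries]
    t_norm = [sum(v * v for v in t) for t in train]
    return [[q_norm[i] + t_norm[j] - 2 * cross[i][j] for j in range(len(train))]
            for i in range(len(queries))]


def knn_predict(queries, train, labels, k):
    """Label each query by majority vote among its k nearest training points."""
    predictions = []
    for row in squared_distances(queries, train):
        nearest = sorted(range(len(train)), key=row.__getitem__)[:k]
        votes = {}
        for idx in nearest:
            votes[labels[idx]] = votes.get(labels[idx], 0) + 1
        predictions.append(max(votes, key=votes.get))
    return predictions
```

On a GPU, the `cross` term is the part that maps onto a library GEMM; the norms and the final subtraction are cheap element-wise work.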

Skin care combination with effect of dispelling wrinkles, preparation and preparation method

Active · CN102178631B · Improve anti-wrinkle effect · Easy to prepare · Cosmetic preparations · Toilet preparations · General matrix · Additive ingredient

The invention provides a traditional Chinese medicine mask with an anti-wrinkle effect and a preparation method for the mask. Based on traditional Chinese medicine theory, the mask aims at skin health and is formulated with a prescription traditional Chinese medicine extract as the main active additive and the general matrix ingredients used in cosmetics as auxiliary materials. The traditional Chinese medicine mask is not only simple to prepare, natural and mild, safe, non-irritating and easy to absorb, but also capable of invigorating blood circulation and eliminating stasis, promoting the circulation of qi and dredging collaterals, scavenging free radicals and improving facial metabolism, thus moisturizing the skin and delaying skin aging.

Owner:董银卯

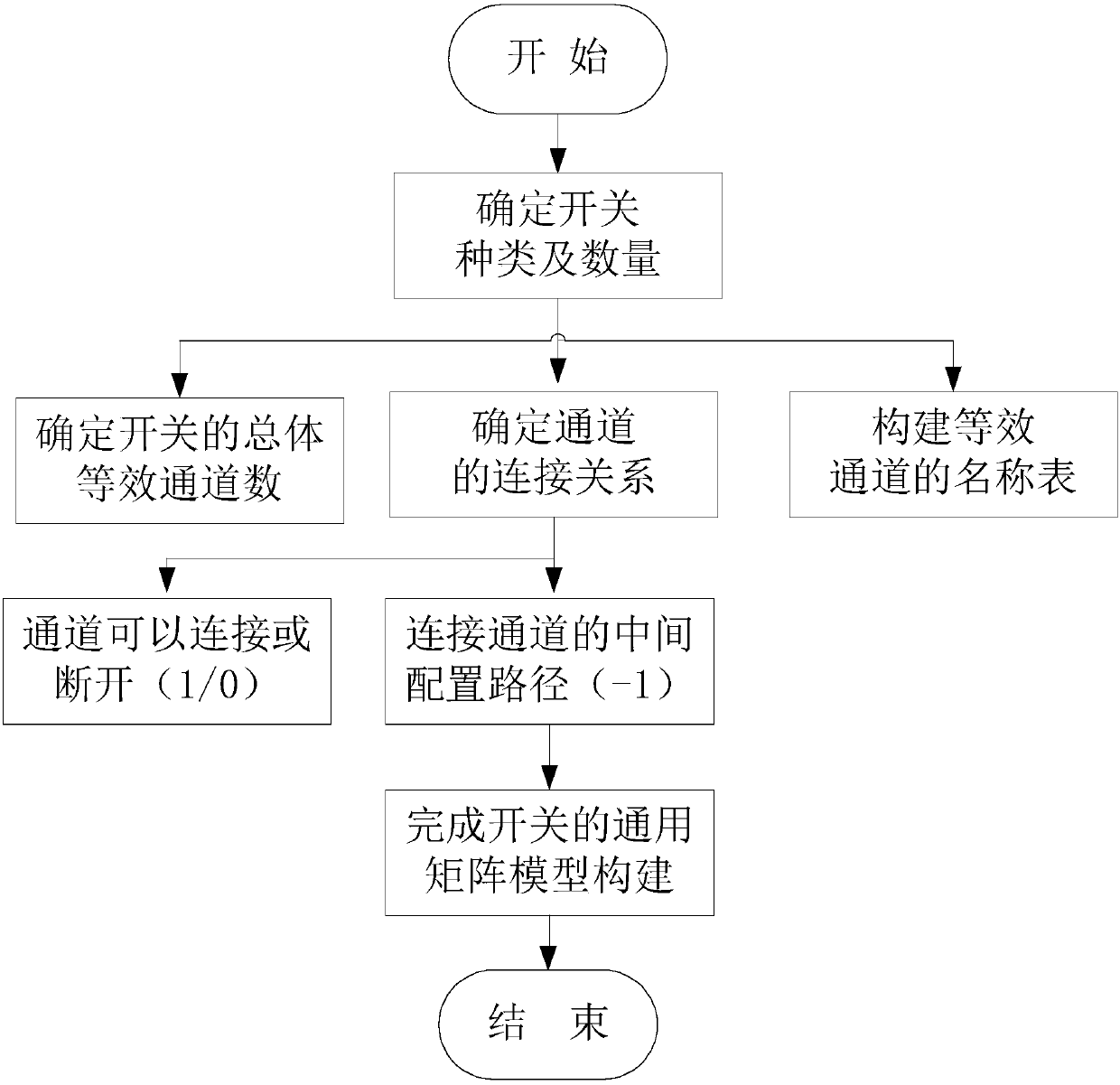

A general model-based switch driving method

Active · CN104899041B · Conducive to design and development · Good programming versatility · Creation/generation of source code · General matrix · Computer module

The switch driving method based on a general model belongs to the field of general model construction for switches and addresses the problem that existing switch driving modules cannot drive a variety of switch structures. Steps 11 and 12 establish the general matrix model. Step 11: determine the type and quantity of the switches. Step 12: treat each terminal of each switch as a channel, obtain the channel numbers of all switches from their type and quantity, determine the connection relationship of each channel, and name the channels to construct an equivalent channel name table. All named channels serve as both the row items and the column items of the equivalent channel name table; the space enclosed by the row items and column items constitutes the model matrix, which is filled with the corresponding values according to the connection relationship of each channel, completing the general matrix model. Step 2: drive the switch using the established general matrix model.

Owner:HARBIN INST OF TECH
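The model matrix described in the switch-driving abstract above can be pictured as a square table indexed by channel names on both axes. The sketch below is an illustration only: the abstract does not say which values fill the matrix, so the 0/1 encoding (1 meaning the two channels can be connected) is an assumption, and the channel names and function names are hypothetical.

```python
def build_model_matrix(channels, connections):
    """Build a channel-by-channel matrix from a list of connectable channel pairs."""
    index = {name: i for i, name in enumerate(channels)}  # channel name table
    size = len(channels)
    matrix = [[0] * size for _ in range(size)]
    for a, b in connections:
        # Assumed encoding: 1 marks a possible connection, stored symmetrically.
        matrix[index[a]][index[b]] = 1
        matrix[index[b]][index[a]] = 1
    return index, matrix


def can_connect(index, matrix, a, b):
    """Look up whether two named channels can be connected."""
    return matrix[index[a]][index[b]] == 1


# A single-pole double-throw switch modeled as three channels (hypothetical names).
CHANNELS = ["SW1_COM", "SW1_NO", "SW1_NC"]
CONNECTIONS = [("SW1_COM", "SW1_NO"), ("SW1_COM", "SW1_NC")]
INDEX, MATRIX = build_model_matrix(CHANNELS, CONNECTIONS)
```

Because every switch type reduces to the same table, a driver written against the matrix does not need to know the physical structure of the switch it is driving.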

Upper Triangular Partial Storage Device and Parallel Reading Method of Symmetric Matrix

Active · CN109614149B · Take advantage of · Improve algorithm efficiency · Concurrent instruction execution · Memory systems · Computer architecture · Concurrent computation

The embodiment of the invention provides an upper triangular part storage device for a symmetric matrix and a parallel reading method. The device comprises: a storage module selection circuit, which selects the storage module corresponding to each element of the upper triangular part of the symmetric matrix to be accessed; an address generation circuit, which calculates the logical address of each such element within its corresponding storage module; m parallel storage modules, which store the data corresponding to the elements of the upper triangular part of the symmetric matrix to be accessed; and a data shuffling module, which performs a shuffling operation on the data read from the storage modules. According to the embodiment, only the upper triangular part of the symmetric matrix needs to be stored, any row vector or column vector of the symmetric matrix can be read and recovered in parallel, and the parallel computing units of the hardware can be fully utilized, so that the algorithm efficiency of symmetric matrix operations can be raised to the level of general matrix operations.

Owner:极芯通讯技术(安吉)有限公司
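The core idea of storing only the upper triangle and still recovering any row or column can be sketched in software. The example below uses the common row-major packed layout and covers only the address calculation; it does not model the m parallel storage modules, the module selection circuit or the data shuffling described in the abstract above, and the function names are hypothetical.

```python
def packed_index(i, j, n):
    """Offset of element (i, j) in row-major packed upper-triangular storage."""
    if i > j:
        i, j = j, i  # symmetry: A[i][j] == A[j][i]
    # Row i starts after the i rows above it, which hold n, n-1, ... elements.
    return i * n - i * (i - 1) // 2 + (j - i)


def pack_upper(matrix):
    """Keep only the elements on or above the diagonal, row by row."""
    n = len(matrix)
    return [matrix[i][j] for i in range(n) for j in range(i, n)]


def read_row(packed, i, n):
    """Recover full row i of the symmetric matrix from packed storage."""
    return [packed[packed_index(i, j, n)] for j in range(n)]


def read_column(packed, j, n):
    """Recover full column j of the symmetric matrix from packed storage."""
    return [packed[packed_index(i, j, n)] for i in range(n)]
```

An n x n symmetric matrix then needs n * (n + 1) / 2 stored elements instead of n * n. In hardware, the per-element lookups in `read_row` would be issued to different storage modules in the same cycle, which is what the selection and shuffling circuits make possible.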

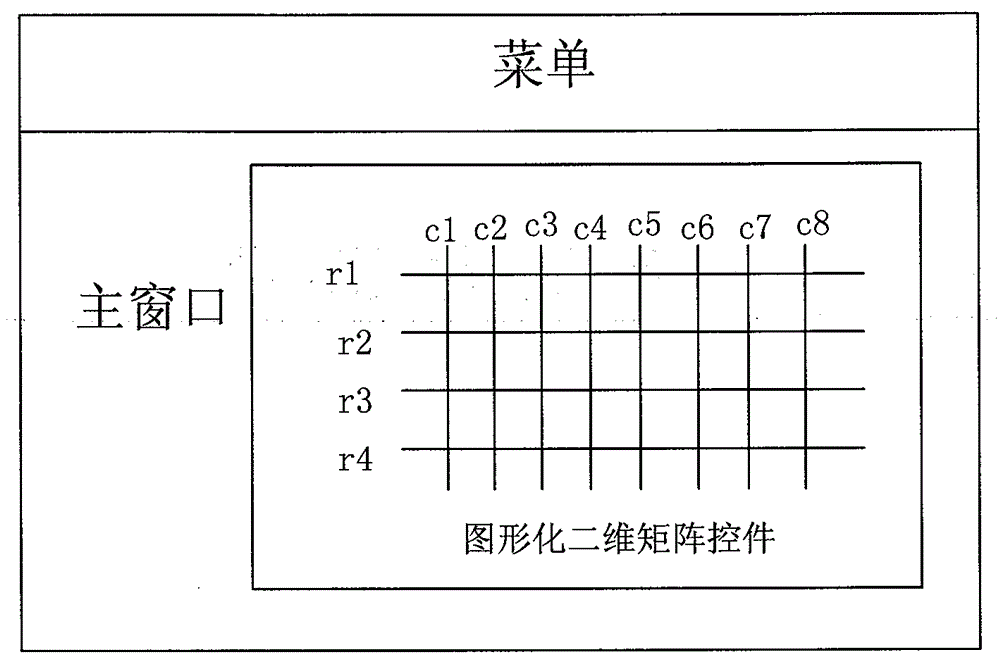

The Method of Quickly Realizing the Graphical Control System of Matrix Switch

Active · CN103294532B · Achieving a modular design · Reduce development difficulty · Multiprogramming arrangements · General matrix · Topology information

The invention discloses a method for rapid implementation of a matrix switch graphical control system. The method uses a switch control middleware, a graphical matrix control and a switch driving program, and comprises the following steps: configuring the operating environment and using the graphical matrix control and the switch control middleware to build a general system framework; defining a general matrix switch functional interface, with the switch driving program of each matrix switch implementing the functions defined by that interface, and using the general system framework to load the switch driving program to generate the matrix switch graphical control system; using the switch control middleware to obtain switch topology information from the switch driving program and build a two-dimensional graphical matrix; and using the switch control middleware to operate the switch module through mouse operations on the two-dimensional graphical matrix. The invention reduces the development and maintenance difficulty of the matrix switch graphical control system and shortens the development cycle of new products.

Owner:CHINA ELECTRONIS TECH INSTR CO LTD

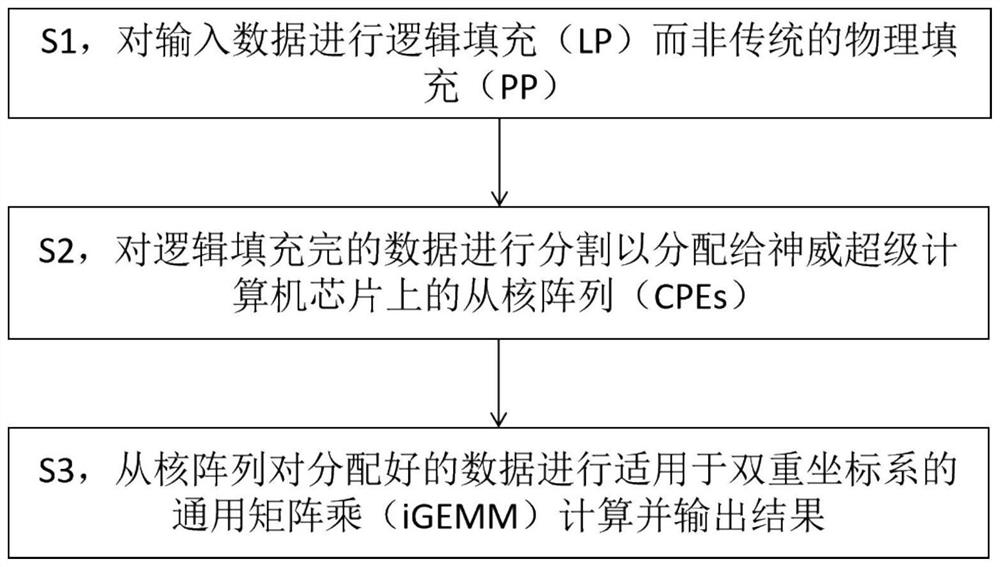

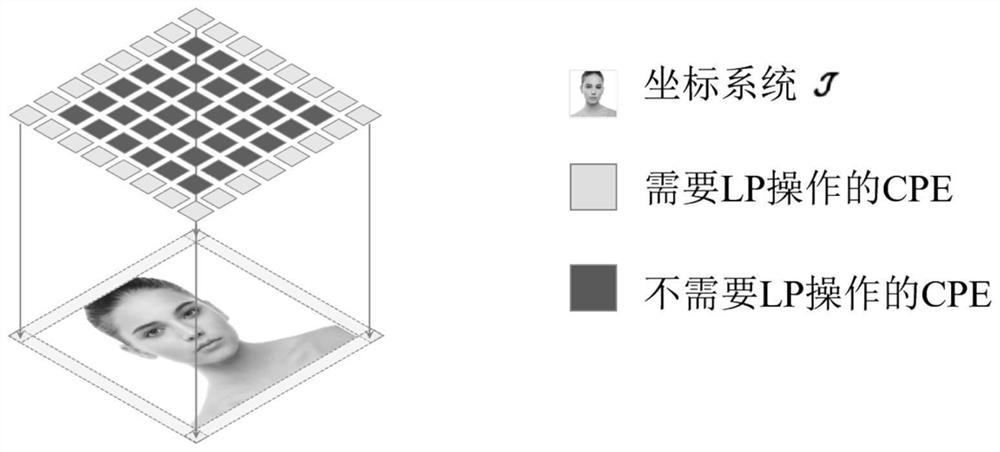

Convolutional neural network calculation method for many-core processor based on double coordinate systems

Pending · CN114742692A · Reduce space complexity · Reduce redundancy · Image analysis · Image memory management · Supercomputer · Engineering

The invention discloses a convolutional neural network calculation method for many-core processors based on a dual coordinate system. It relates to the field of SW heterogeneous many-core processors and deep learning, addresses the problem that the pressure between computation and communication is relatively high under existing communication constraints, and provides the following scheme. The method comprises the following steps: S1, applying logical padding (LP) to the input data instead of traditional physical padding (PP); S2, partitioning the logically padded data and distributing it to the slave core array (CPEs) on a supercomputer chip; and S3, having the slave core array perform general matrix multiplication (iGEMM) calculation adapted to the dual coordinate system on the distributed data and output the result. The method reduces the pressure between computation and communication under the communication constraints and lowers space and time complexity, making faster matrix multiplication possible.

Owner:UNIVERSITY OF CHINESE ACADEMY OF SCIENCES
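Logical padding, as opposed to physical padding, can be illustrated by never materializing the zero border: reads are addressed in padded coordinates and translated to physical coordinates on the fly. The sketch below is one plausible reading of the "dual coordinate system" in the abstract above, not the patented iGEMM kernel, and it ignores the partitioning across slave cores. All names are hypothetical.

```python
def padded_read(image, row, col, pad):
    """Read a value using padded coordinates without storing the padding."""
    r, c = row - pad, col - pad  # translate padded coordinates to physical ones
    if 0 <= r < len(image) and 0 <= c < len(image[0]):
        return image[r][c]
    return 0  # positions in the virtual border read as zero


def conv2d_logical_padding(image, kernel, pad):
    """Stride-1 2D convolution (cross-correlation form) over a virtually padded image."""
    k = len(kernel)
    out_h = len(image) + 2 * pad - k + 1
    out_w = len(image[0]) + 2 * pad - k + 1
    return [[sum(kernel[a][b] * padded_read(image, i + a, j + b, pad)
                 for a in range(k) for b in range(k))
             for j in range(out_w)]
            for i in range(out_h)]
```

Only the original image is ever stored or transferred, which is the source of the space saving; the cost is a bounds check on each read.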

A general matrix modeling method for multi-energy systems based on graph theory and network flow

Active · CN113515856B · Small amount of calculation · Geometric CAD · Design optimisation/simulation · Graph theoretic · NetFlow

Owner:NORTHEAST DIANLI UNIVERSITY


© 2025 PatSnap. All rights reserved.