33 results about "Asynchronous I/O" patented technology

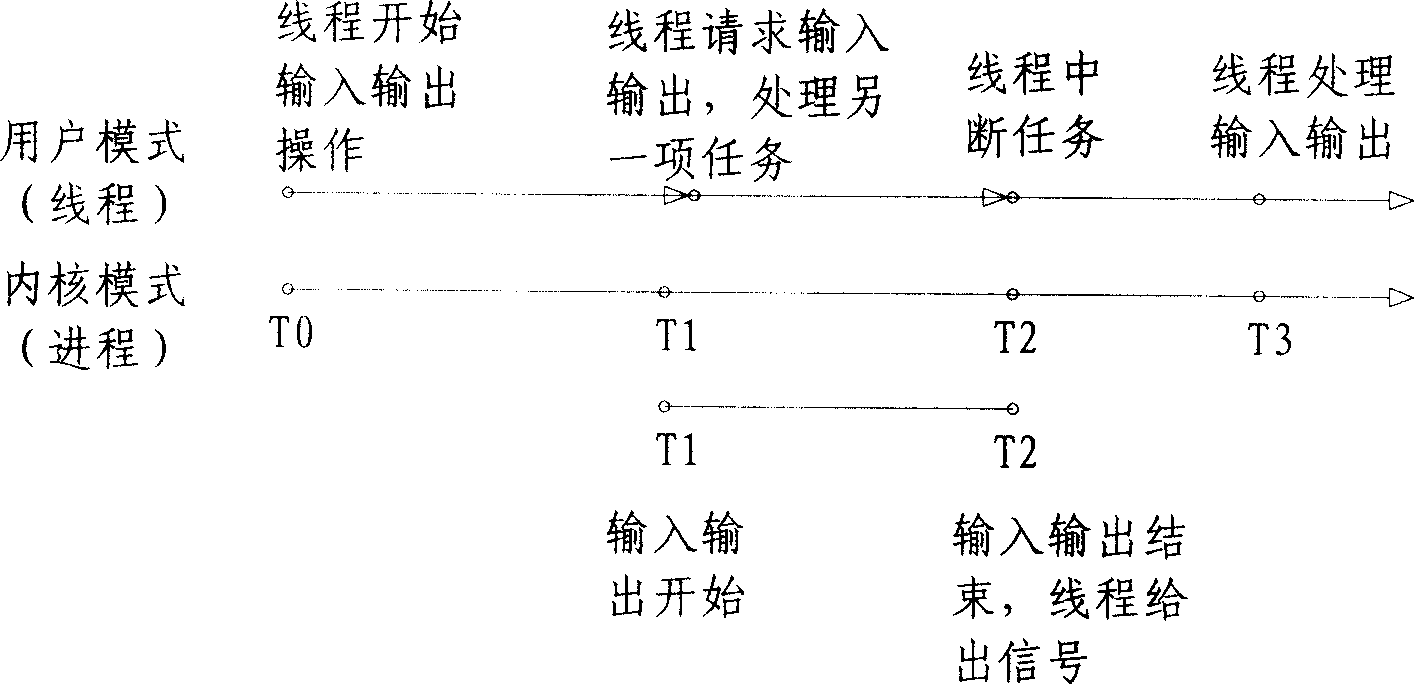

In computer science, asynchronous I/O (also non-sequential I/O) is a form of input/output processing that permits other processing to continue before the transmission has finished. Input and output (I/O) operations on a computer can be extremely slow compared to the processing of data. An I/O device can incorporate mechanical devices that must physically move, such as a hard drive seeking a track to read or write; this is often orders of magnitude slower than the switching of electric current. For example, during a disk operation that takes ten milliseconds to perform, a processor that is clocked at one gigahertz could have performed ten million instruction-processing cycles.
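The arithmetic and the overlap described above can be sketched in a few lines of Python. This is only an illustration: `asyncio.sleep` stands in for a slow device, and the names `cycles_during`, `fake_disk_read`, `read_blocks`, and `timed_run` are hypothetical helpers, not part of any patent or library discussed here.

```python
import asyncio
import time

CLOCK_HZ = 1_000_000_000   # 1 GHz processor, as in the text
DISK_OP_SECONDS = 0.010    # 10 ms disk operation, as in the text


def cycles_during(op_seconds: float, clock_hz: int = CLOCK_HZ) -> int:
    """Clock cycles that elapse while one I/O operation is in flight."""
    return round(op_seconds * clock_hz)


async def fake_disk_read(block: int) -> int:
    # asyncio.sleep simulates the device latency and yields to the event loop,
    # so other work can proceed while this "read" is pending.
    await asyncio.sleep(DISK_OP_SECONDS)
    return block


async def read_blocks(n: int) -> list[int]:
    # Issue all reads first, then wait for them together.
    return await asyncio.gather(*(fake_disk_read(b) for b in range(n)))


def timed_run(n: int) -> tuple[list[int], float]:
    """Run n overlapping reads and return (results, elapsed seconds)."""
    start = time.perf_counter()
    results = asyncio.run(read_blocks(n))
    return results, time.perf_counter() - start
```

Because the simulated reads overlap, `timed_run(50)` should finish in far less than the 0.5 s that fifty sequential 10 ms operations would need.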

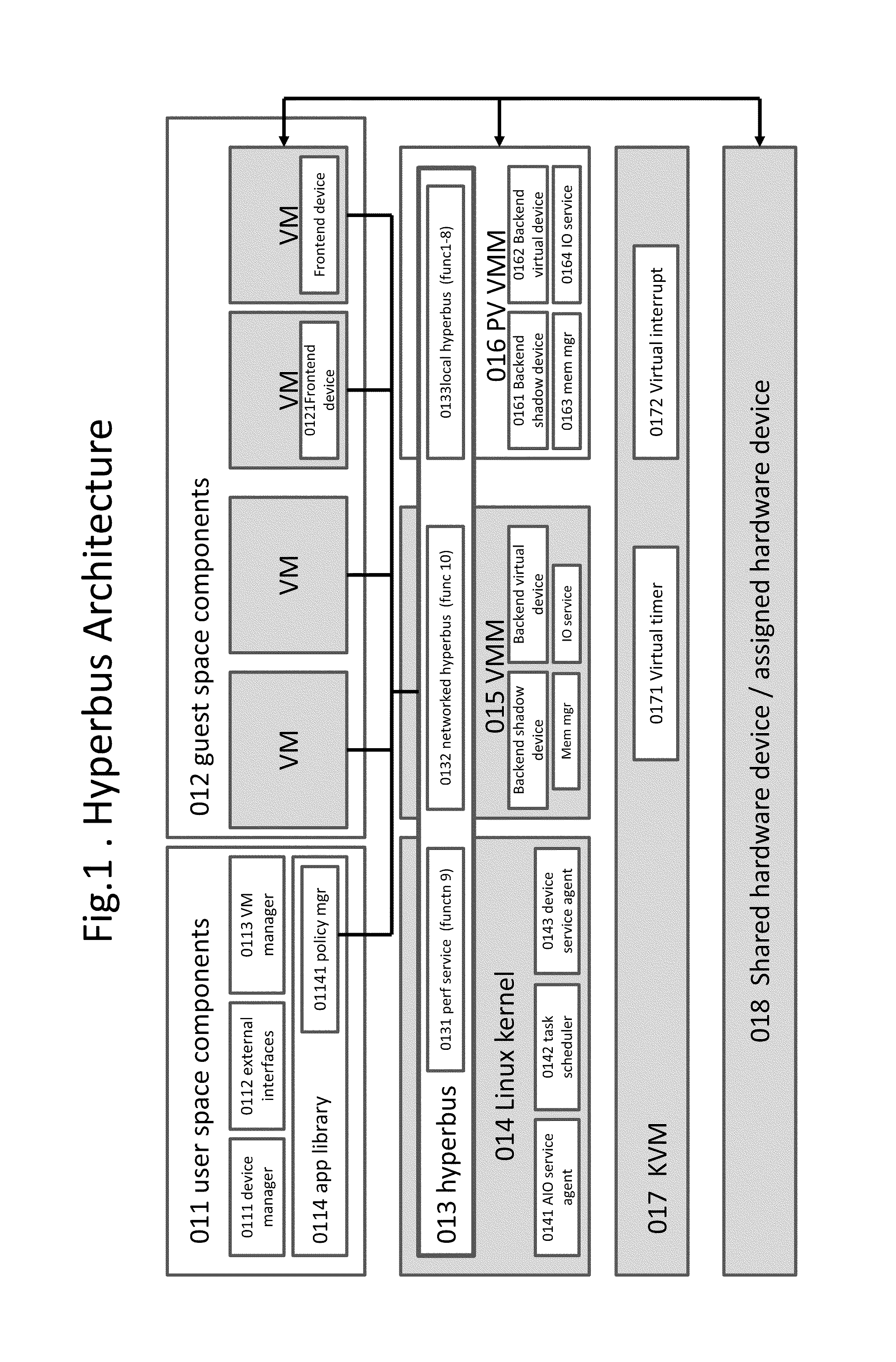

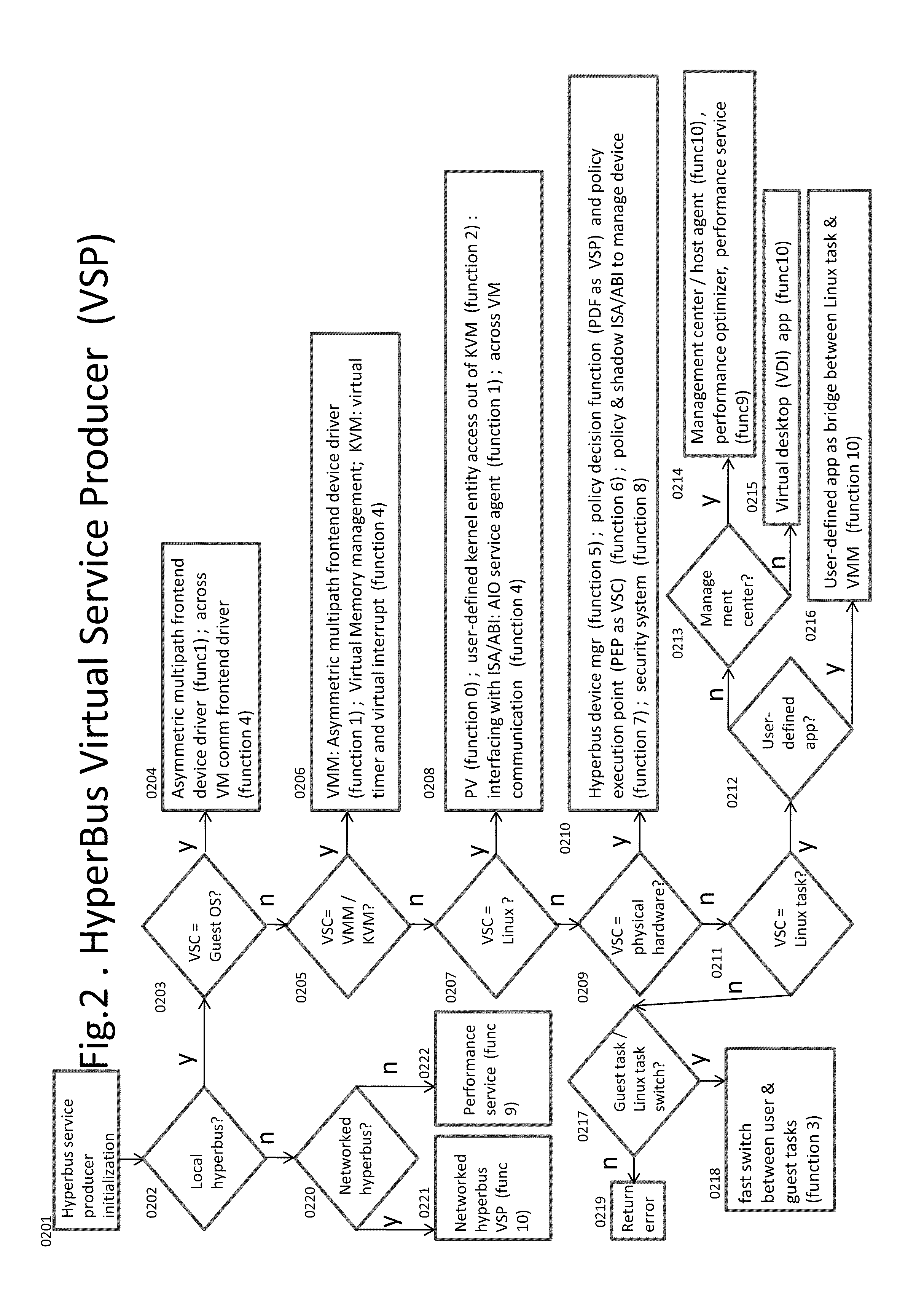

Kernel Bus System to Build Virtual Machine Monitor and the Performance Service Framework and Method Therefor

Inactive · US20110296411A1 · Fast delivery · Improve performance · Software simulation/interpretation/emulation · Memory systems · Virtualization · System configuration

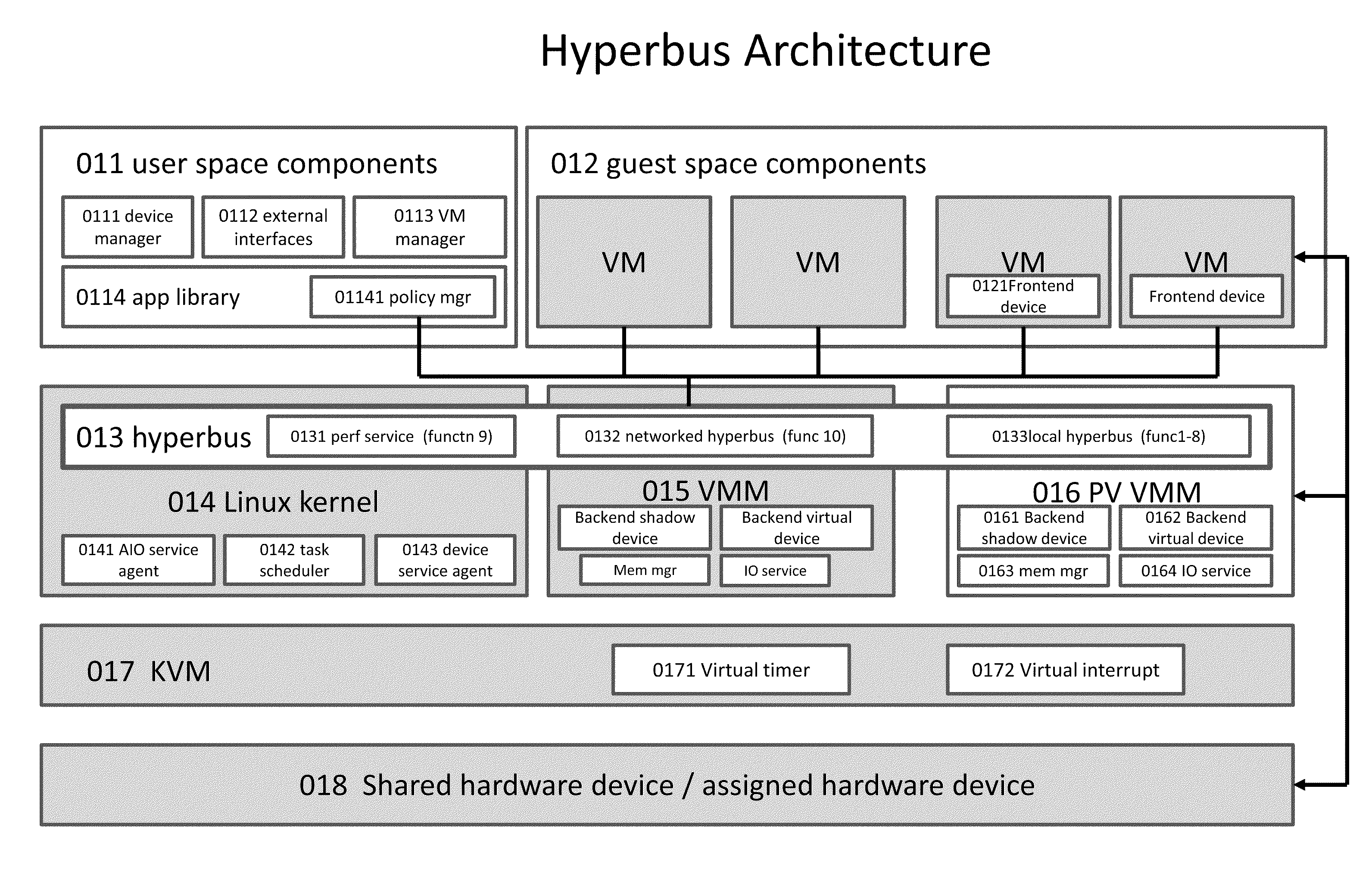

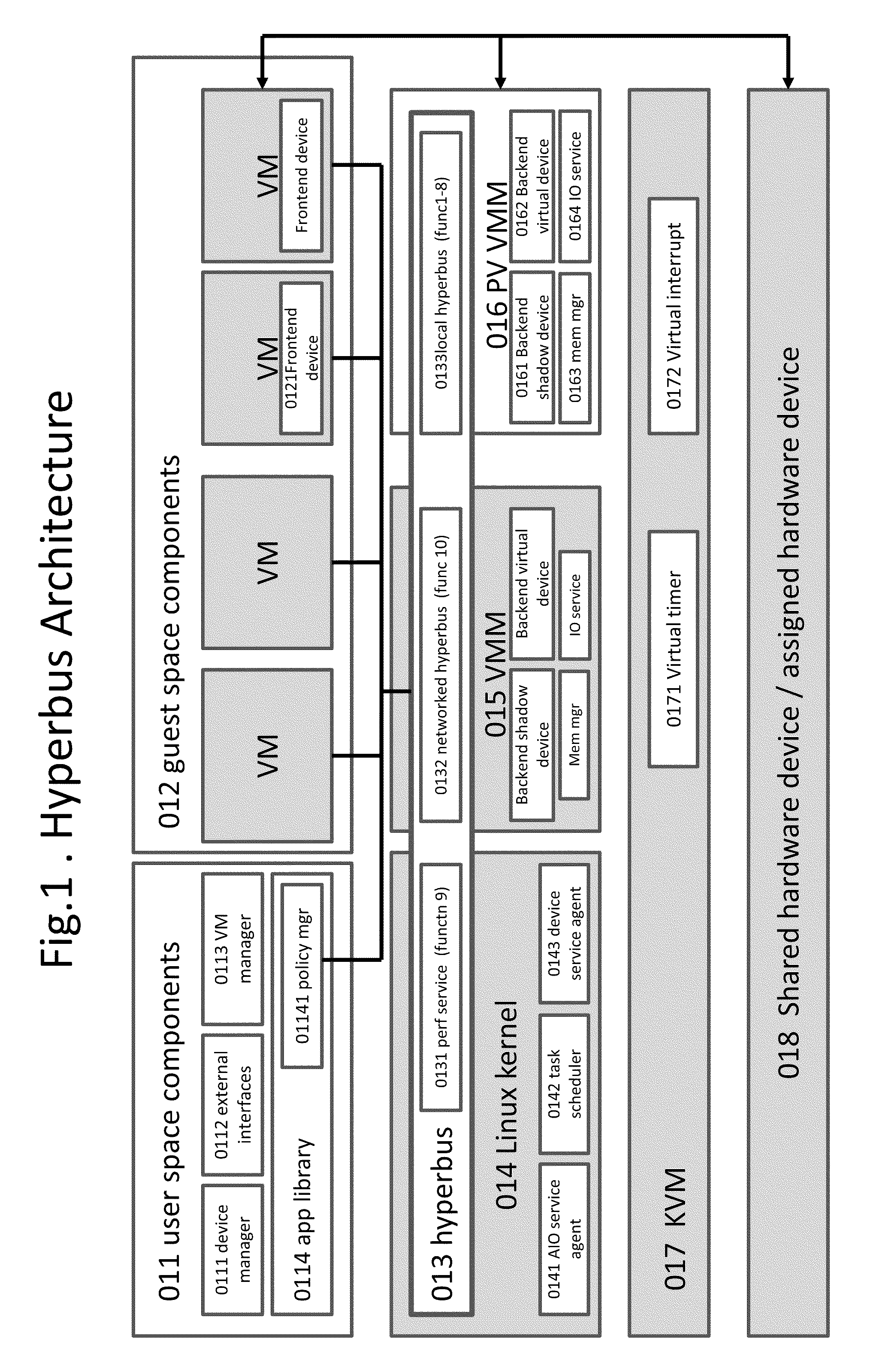

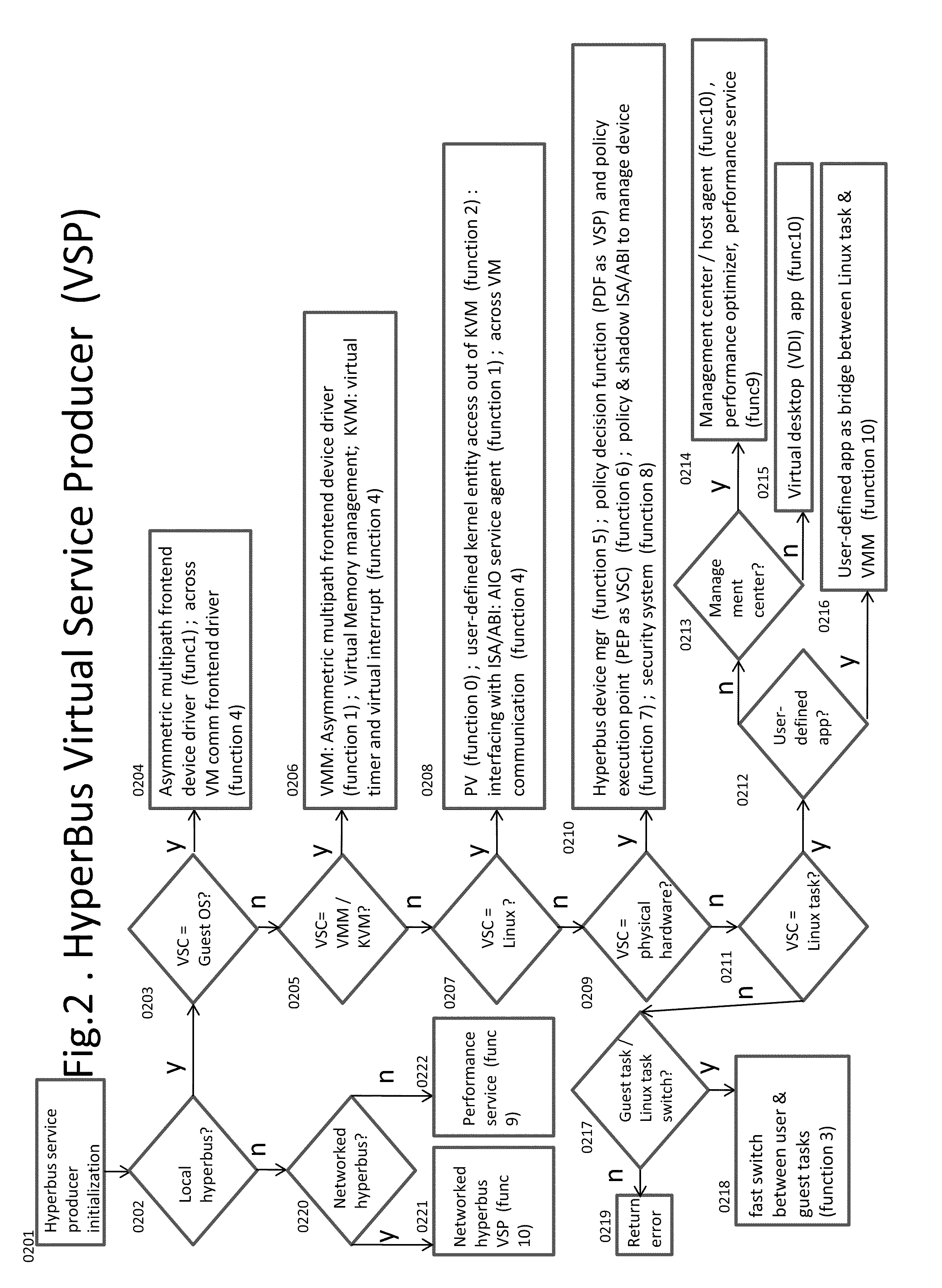

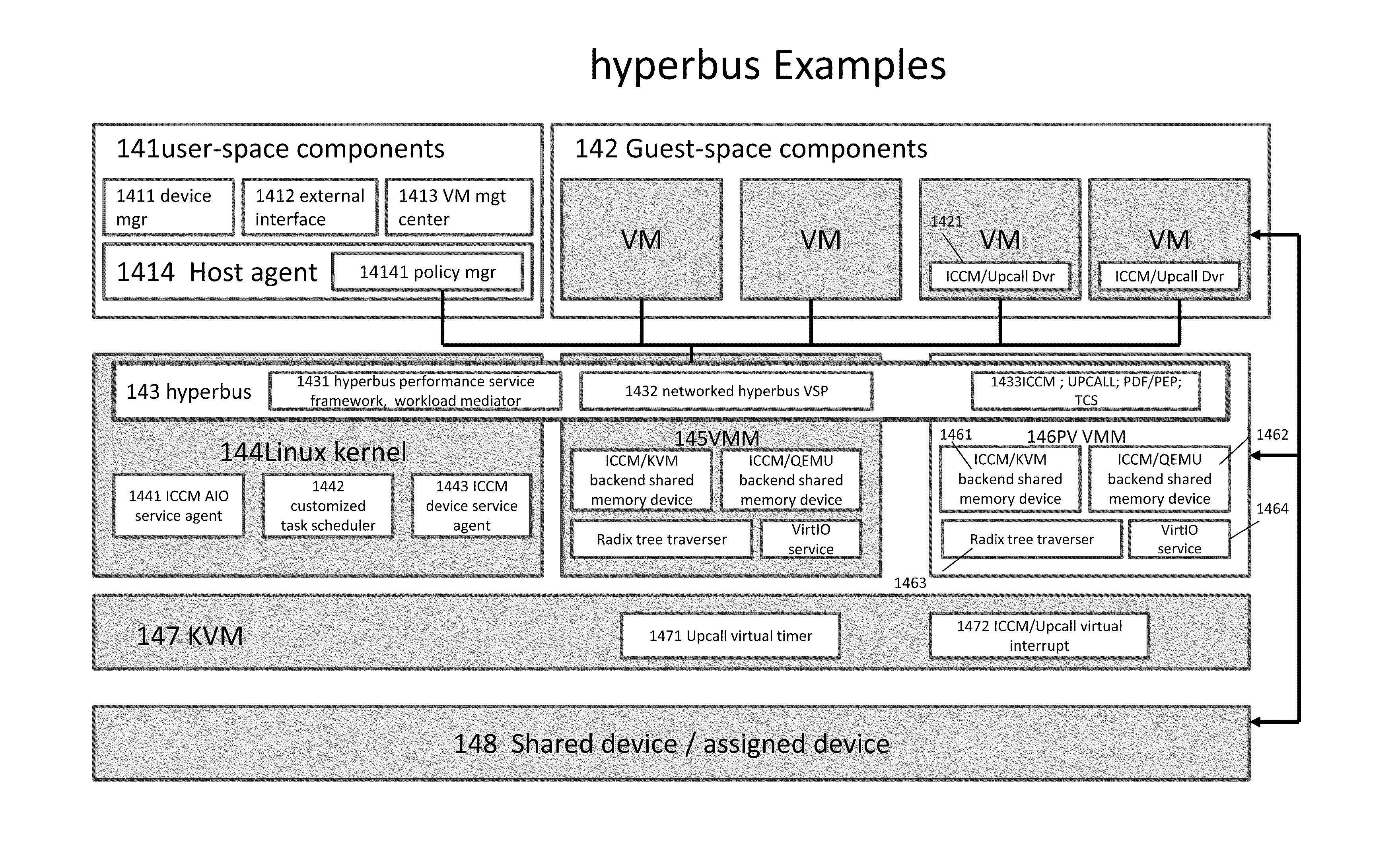

Some embodiments concern a kernel bus system for building at least one virtual machine monitor. The kernel bus system is based on a kernel-based virtual machine (KVM) and is configured to run on a host computer. The host computer comprises one or more processors, one or more hardware devices, and memory. The kernel bus system can include: (a) a hyperbus; (b) one or more user space components; (c) one or more guest space components configured to interact with the one or more user space components via the hyperbus; (d) one or more VMM components having one or more frontend devices configured to perform I/O operations with the one or more hardware devices of the host computer using a zero-copy method or non-pass-thru method; (e) one or more para-virtualization components having (1) a virtual interrupt configured to use one or more processor instructions to swap the one or more processors of the host computer between a kernel space and a guest space; and (2) a virtual I/O driver configured to enable synchronous I/O signaling, asynchronous I/O signaling and payload delivery, and pass-through delivery independent of QEMU emulation; and (f) one or more KVM components. The hyperbus, the one or more user space components, the one or more guest space components, the one or more VMM components, the one or more para-virtualization components, and the one or more KVM components are configured to run on the one or more processors of the host computer. Other embodiments are disclosed.

Owner:TRANSOFT

Kernel bus system with a hyberbus and method therefor

Inactive · US8832688B2 · Fast delivery · Improve performance · Multiprogramming arrangements · Software simulation/interpretation/emulation · Virtualization · Zero-copy

Some embodiments concern a kernel bus system for building at least one virtual machine monitor. The kernel bus system can include: (a) a hyperbus; (b) user space components; (c) guest space components configured to interact with the user space components via the hyperbus; (d) VMM components having frontend devices configured to perform I/O operations with the hardware devices of the host computer using a zero-copy method or non-pass-thru method; (e) para-virtualization components having (1) a virtual interrupt module configured to use processor instructions to swap the processors of the host computer between a kernel space and a guest space; and (2) a virtual I/O driver configured to enable synchronous I/O signaling, asynchronous I/O signaling and payload delivery, and pass-through delivery independent of QEMU emulation; and (f) KVM components. Other embodiments are disclosed.

Owner:TRANSOFT

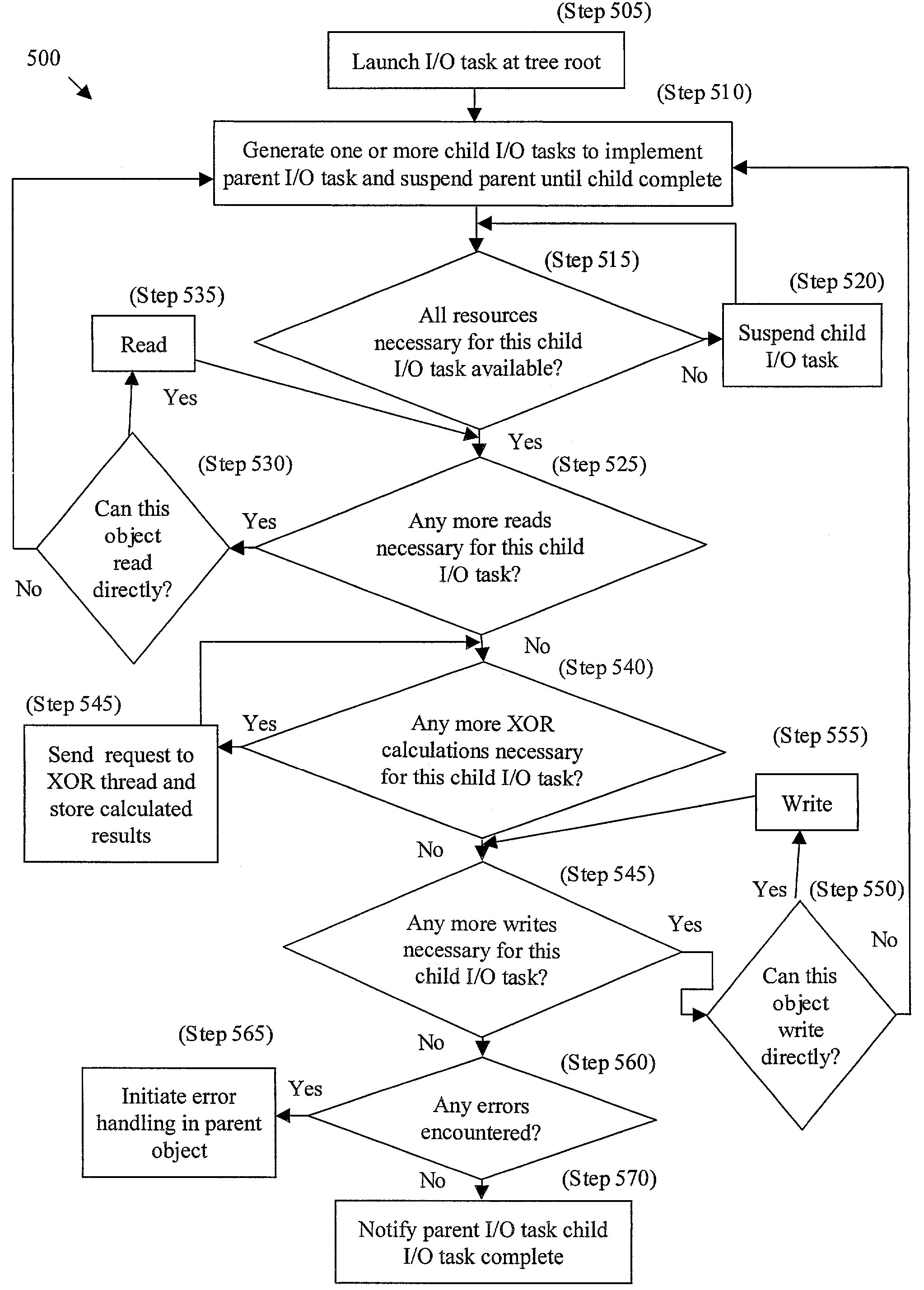

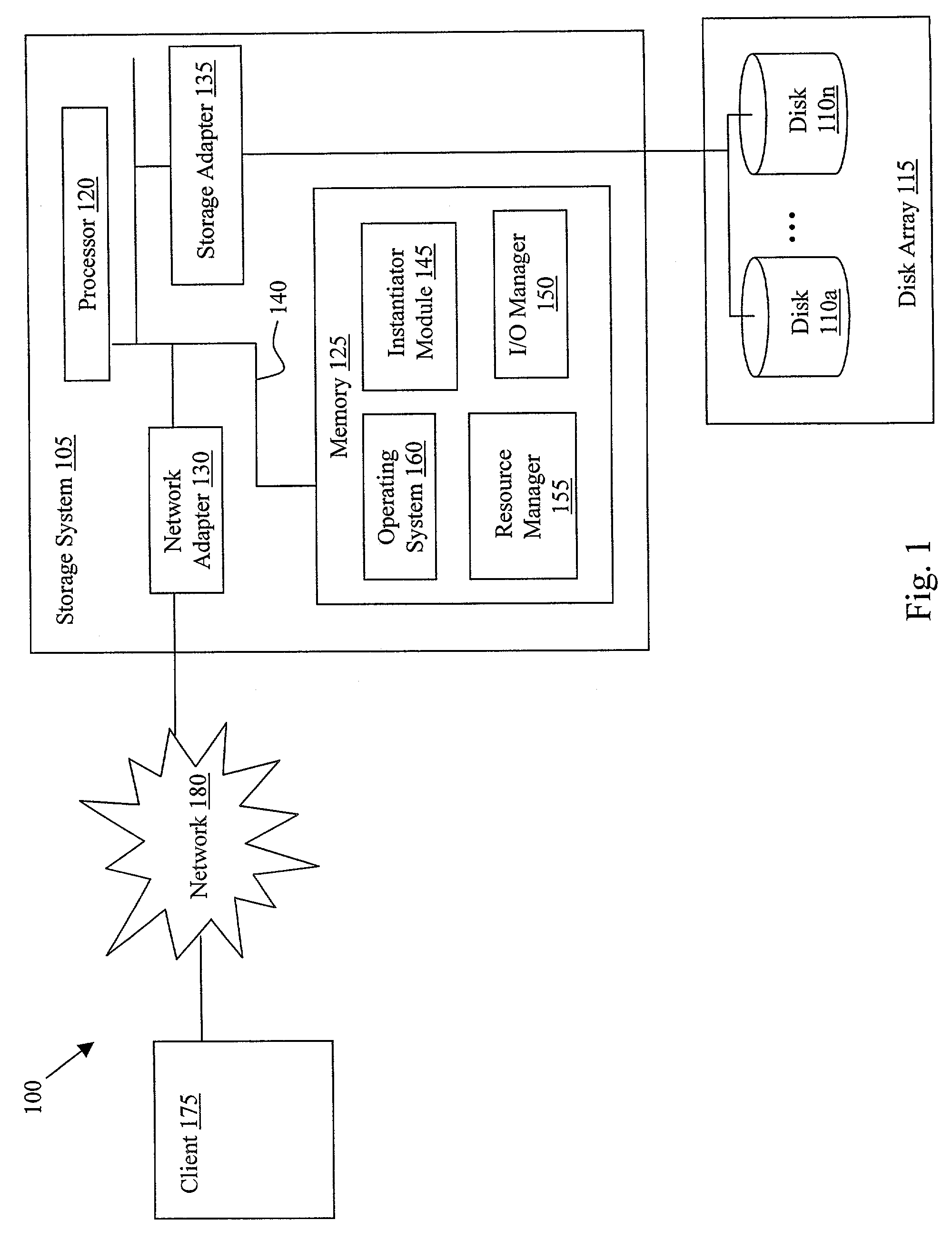

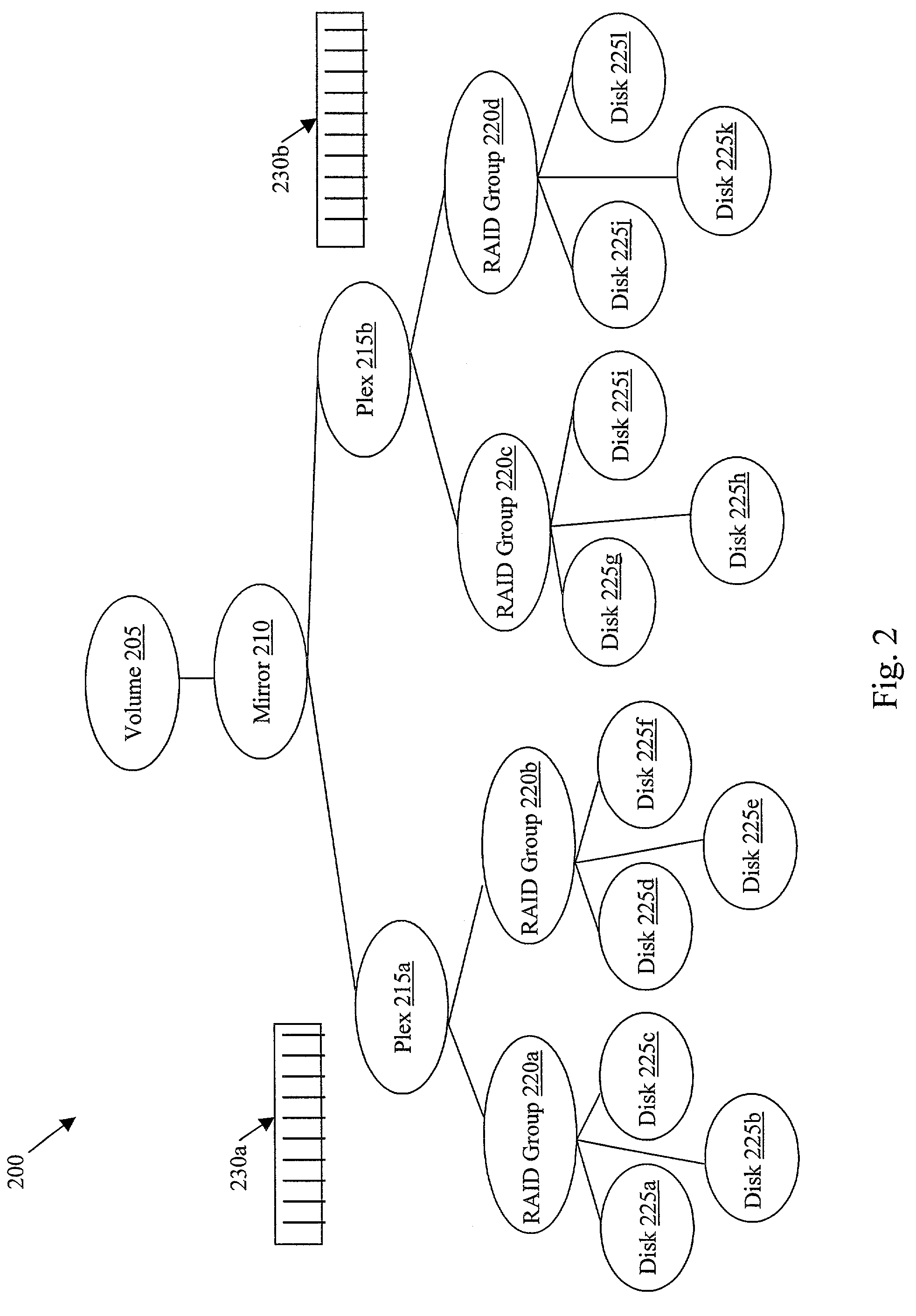

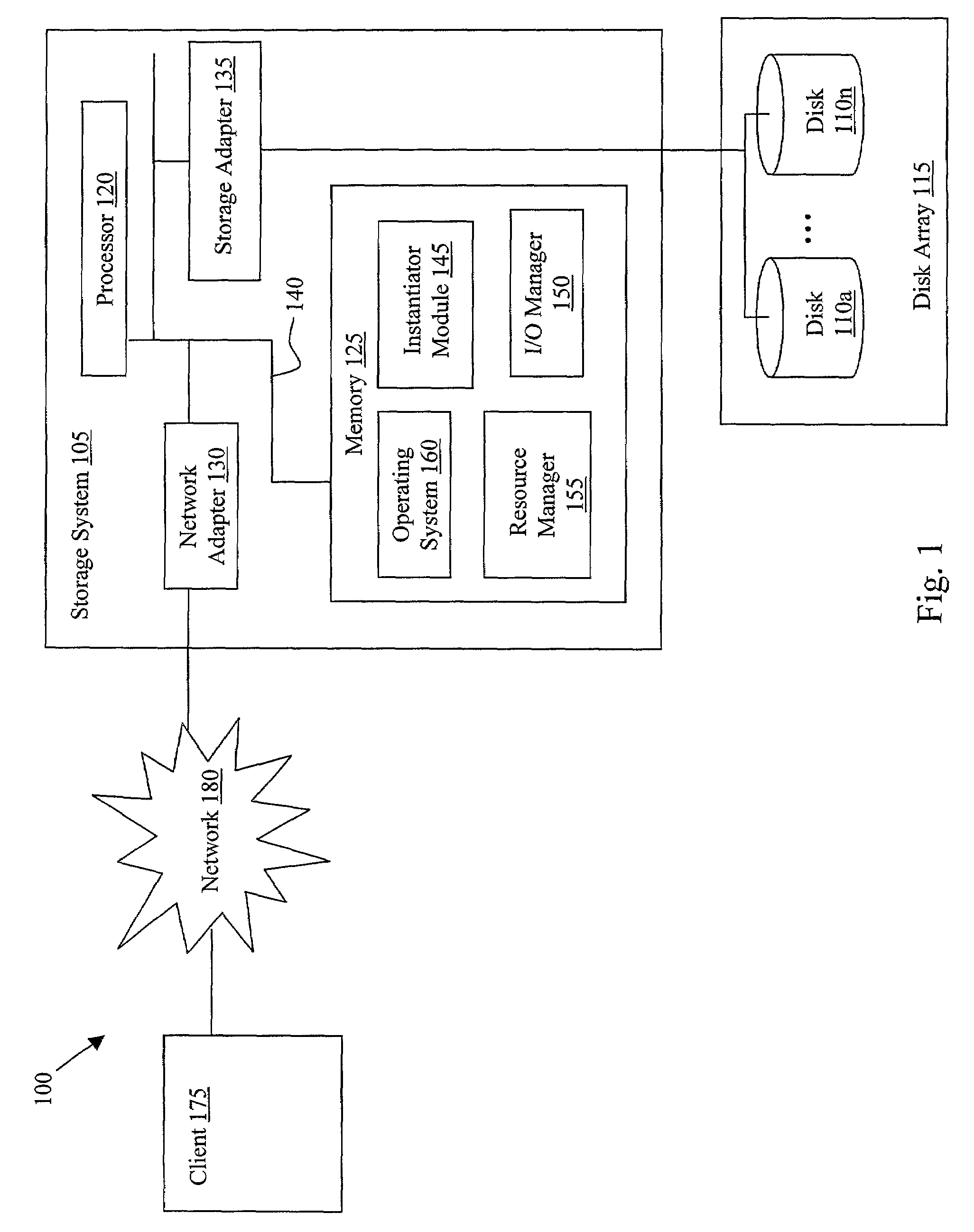

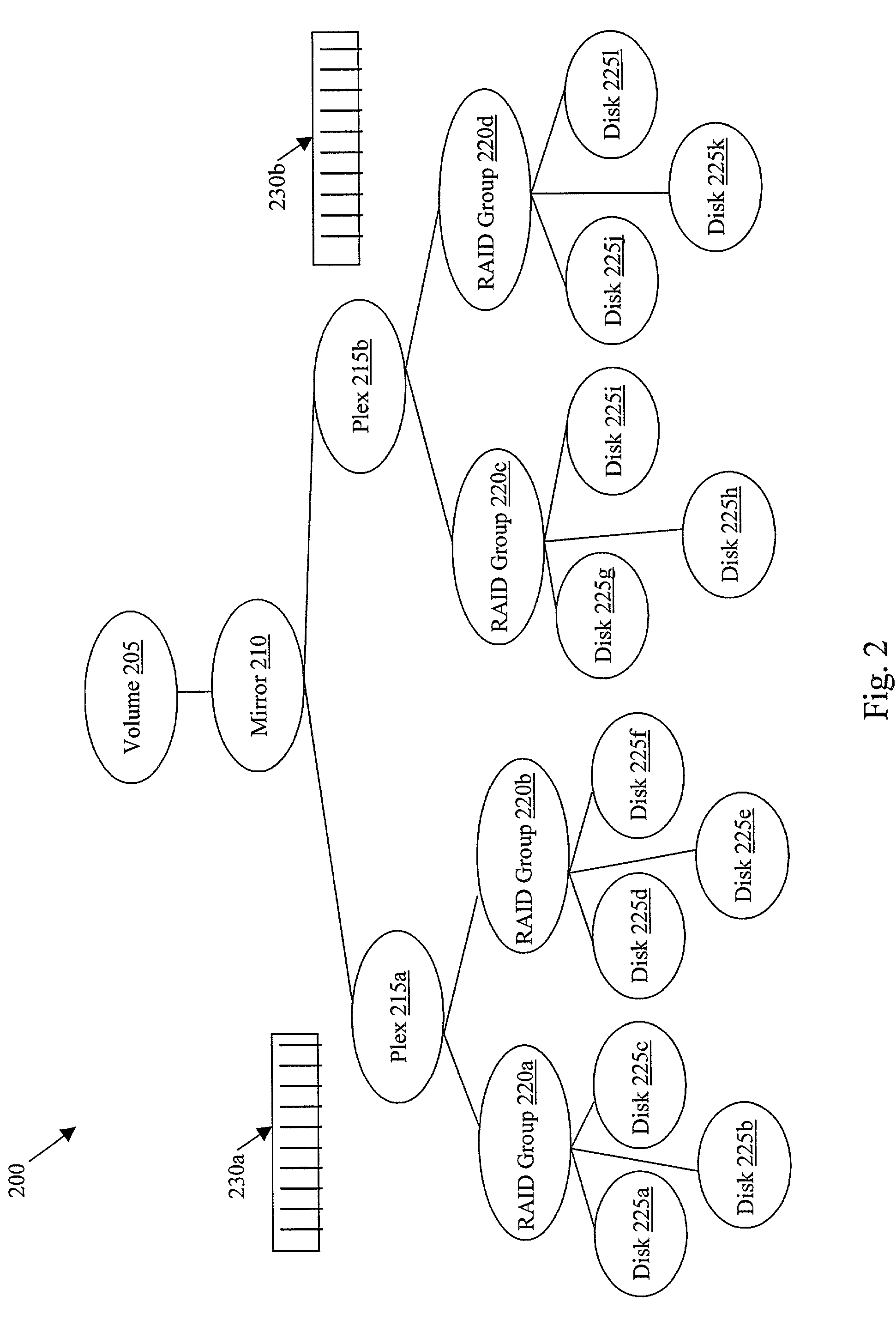

Method and apparatus for resource allocation in a raid system

Active · US7254813B2 · Load balancing · Efficient use of resources · Input/output to record carriers · Error detection/correction · RAID · Computer architecture

The present invention implements an I/O task architecture in which an I/O task requested by the storage manager, for example a stripe write, is decomposed into a number of lower-level asynchronous I/O tasks that can be scheduled independently. Resources needed by these lower-level I/O tasks are dynamically assigned, on an as-needed basis, to balance the load and use resources efficiently, achieving higher scalability. A hierarchical order is assigned to the I/O tasks to ensure that there is a forward progression of the higher-level I/O task and to ensure that resources do not become deadlocked.

Owner:NETWORK APPLIANCE INC

Intelligence education E-card system platform based on internet of things and cloud computation

Inactive · CN102800038A · Solve the problem of fragmentation · Share in real time · Data processing applications · Transmission · Embedded system · Metadata

The invention discloses an intelligent education E-card system platform based on the Internet of Things and cloud computing. The platform comprises an IaaS (Infrastructure as a Service) unit, a PaaS (Platform as a Service) unit, a SaaS (Software as a Service) unit, a data collector, and a sensing terminal. The IaaS unit is responsible for transferring and processing information obtained by the sensing layer, providing infrastructure as a service. The PaaS unit forms a comprehensive service platform with RFID (Radio Frequency Identification) and data communication technology, using cloud computing as the underlying platform. The SaaS unit supports a plurality of front-end browsers and is connected to the infrastructure through connectivity access points, adopting industry-advanced technical standards and specifications and an SOA (Service-Oriented Architecture) system structure. The data collector supports a plurality of communication protocols, applies to a plurality of communication modes, adopts the IOCP (I/O Completion Port) I/O model, and uses a thread pool to process asynchronous I/O requests. The sensing terminal comprises a POS (Point-of-Sale) machine, a building machine, a recognizing machine, multimedia, a channel machine, a vehicle-mounted machine, a water controller, and other special-purpose terminal equipment.

Owner:王向东

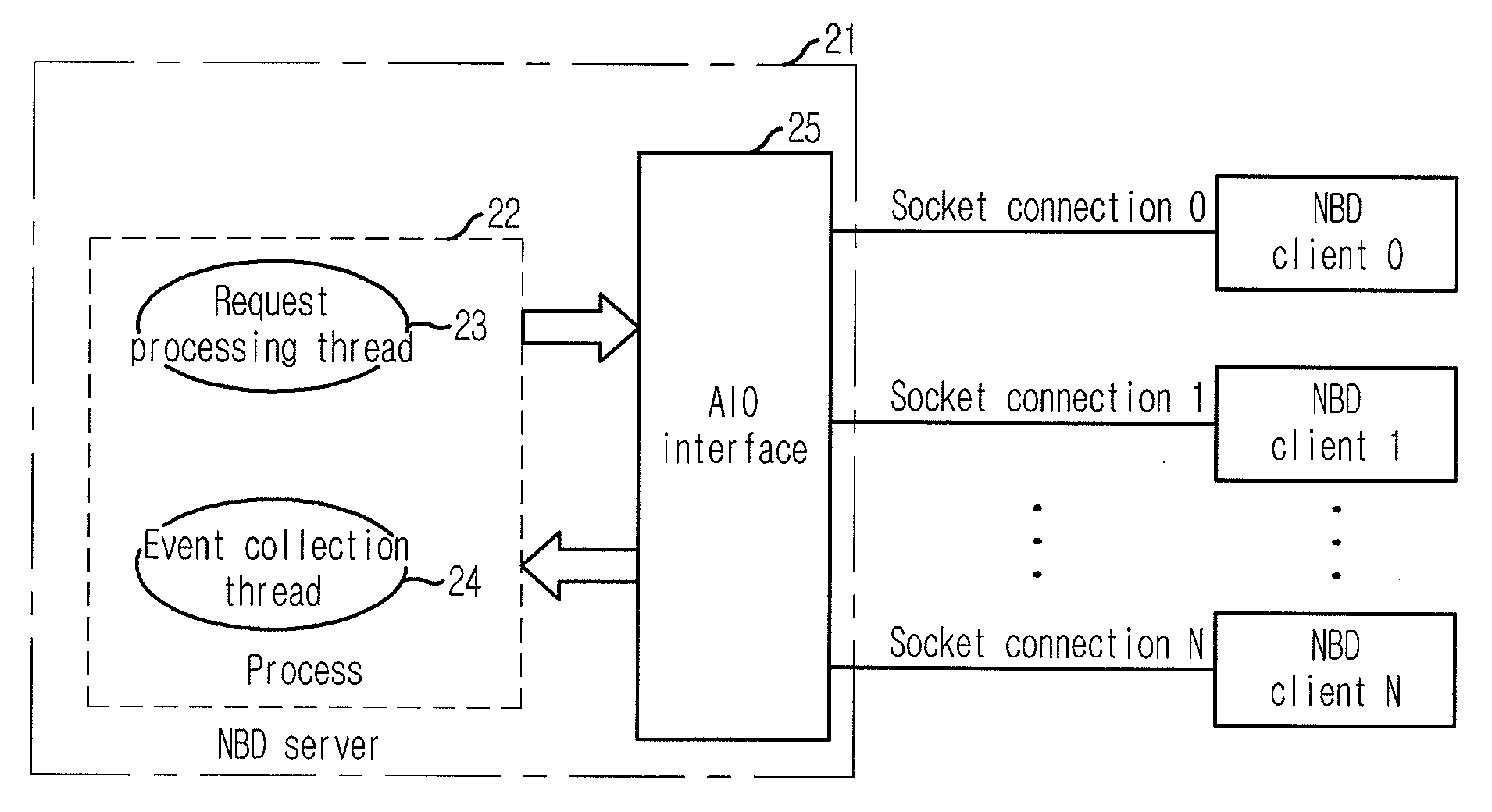

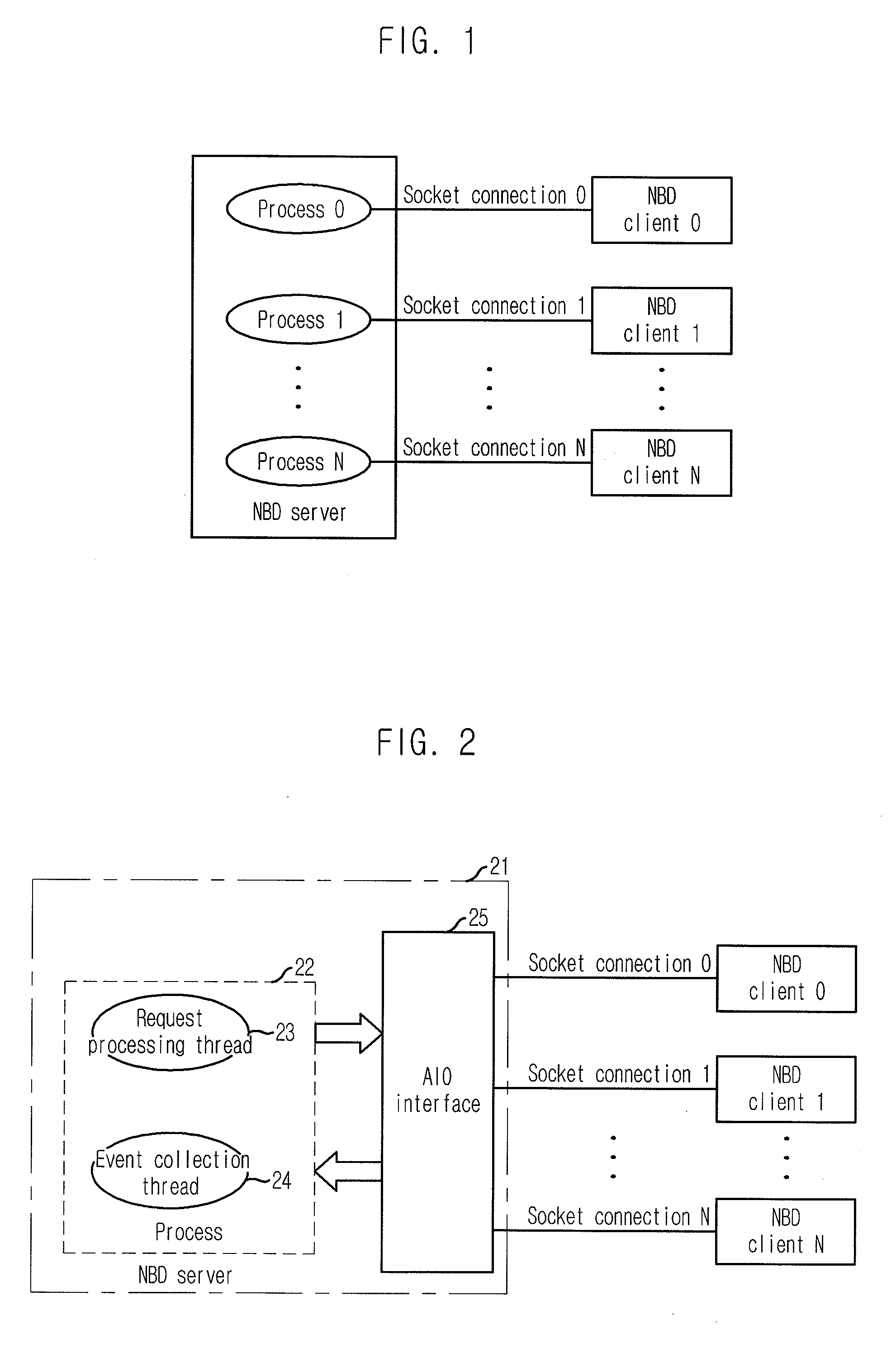

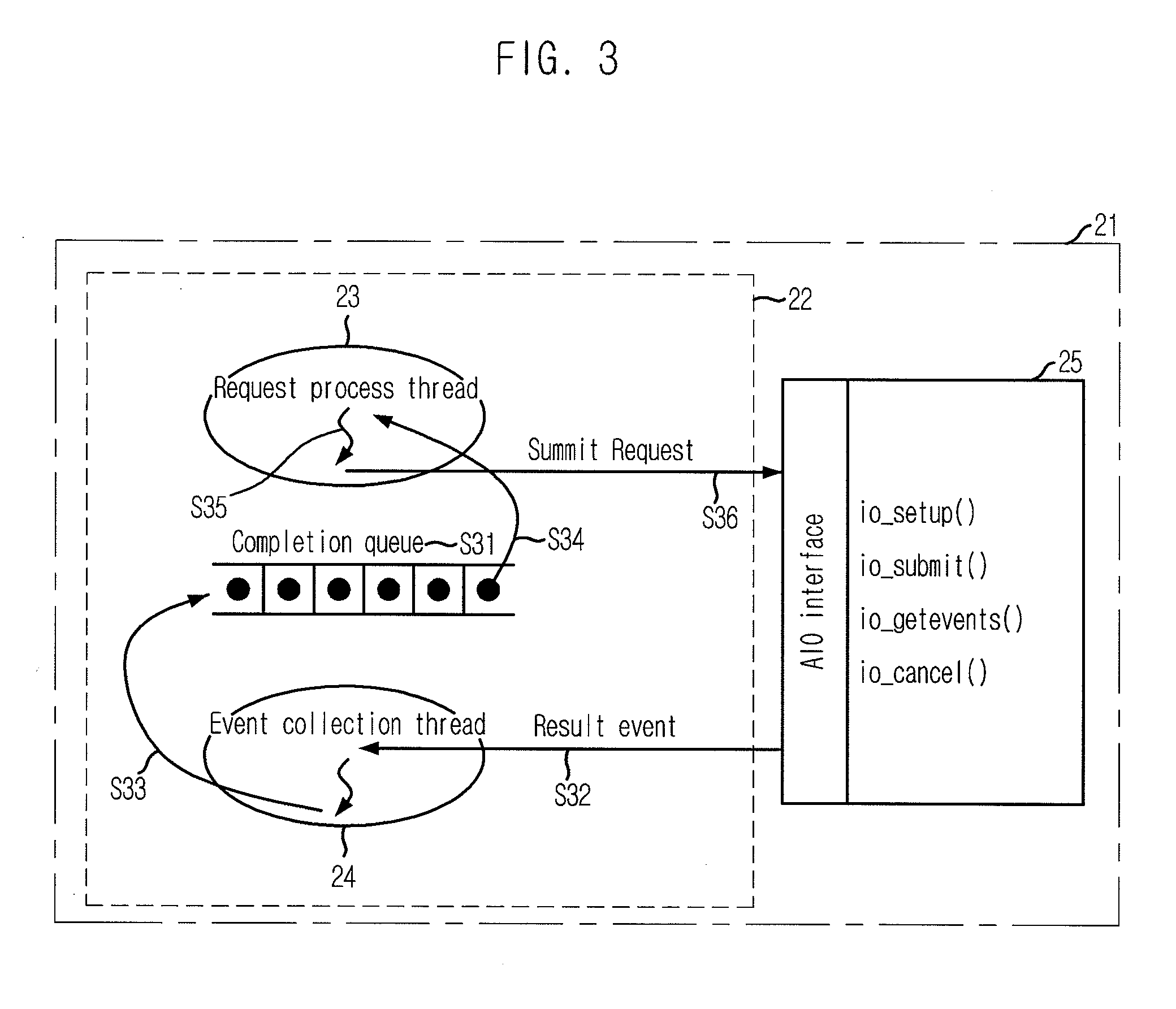

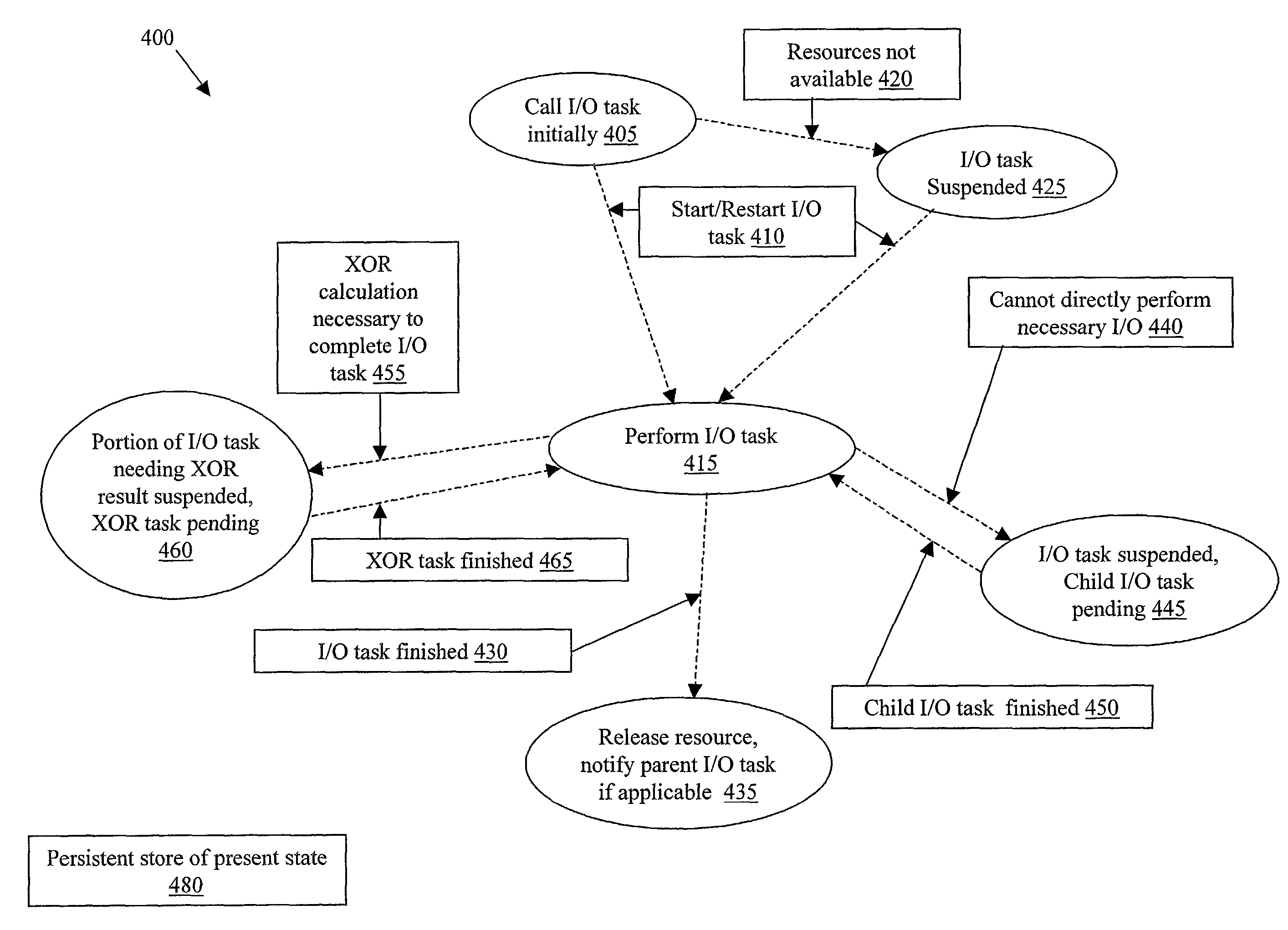

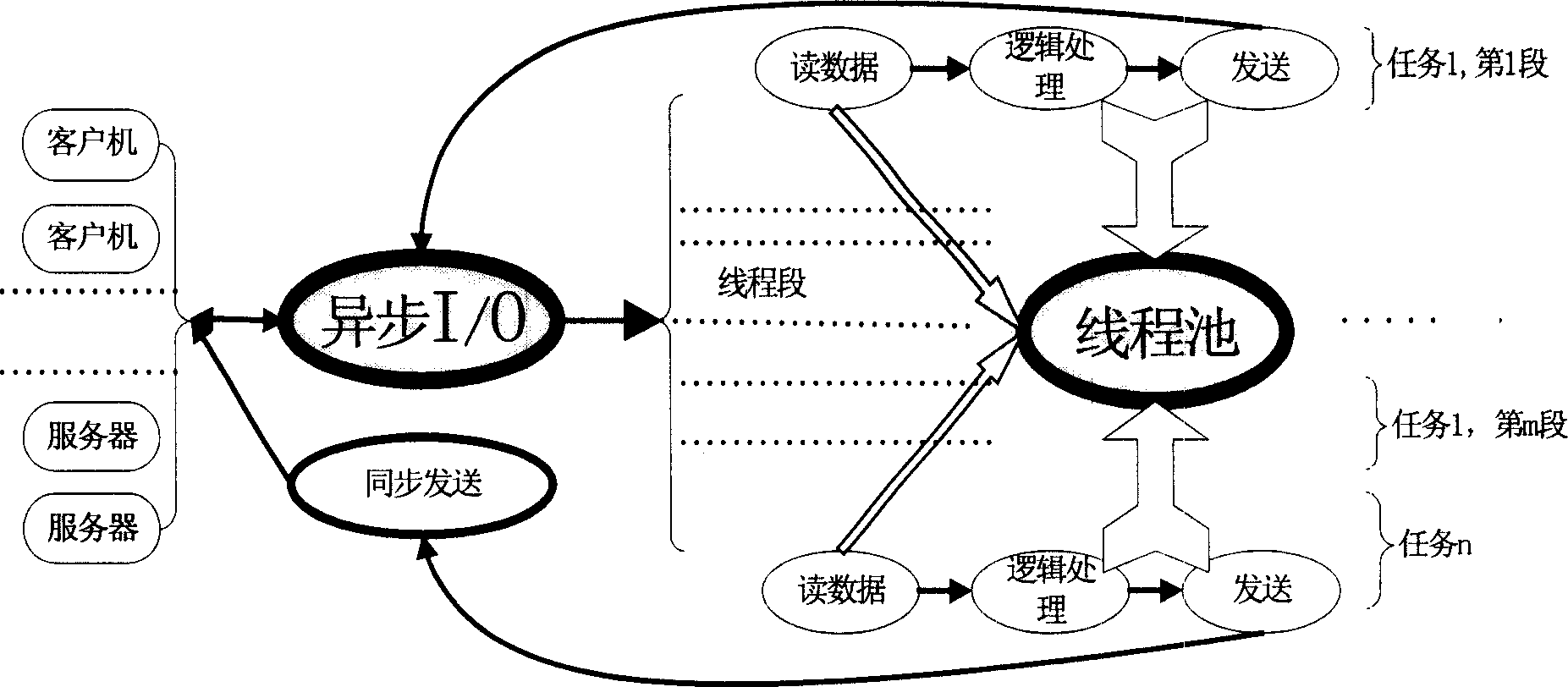

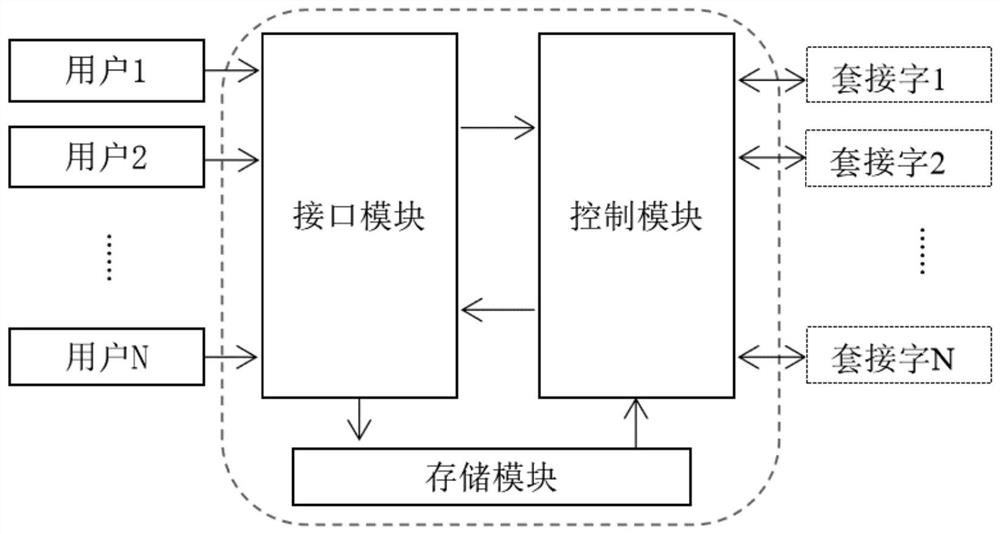

Network block device using network asynchronous I/O

Inactive · US20080133654A1 · Effectively providing service · Effective service · Multiple digital computer combinations · Transmission · Client-side · Asynchronous I/O

A network block device using a network asynchronous I/O method is provided. The network block device includes an asynchronous I/O interface for managing a plurality of socket connections; a request processing unit for analyzing a request from a client through a socket, reading data from a disk and transmitting the read data to the client through the socket, and writing the data transmitted through the socket to the disk, through the asynchronous I/O interface; and a request processing unit for collecting a result event of processing an operation asynchronously requested by the request processing unit and informing the request processing unit of the collected result event, through the asynchronous I/O interface.

Owner:ELECTRONICS & TELECOMM RES INST

Method and apparatus for runtime resource deadlock avoidance in a raid system

Active · US7437727B2 · Load balancing · Efficient use of resources · Input/output to record carriers · Program initiation/switching · RAID · Asynchronous I/O

The present invention implements an I/O task architecture in which an I/O task requested by the storage manager, for example a stripe write, is decomposed into a number of lower-level asynchronous I/O tasks that can be scheduled independently. Resources needed by these lower-level I/O tasks are dynamically assigned, on an as-needed basis, to balance the load and use resources efficiently, achieving higher scalability. A hierarchical order is assigned to the I/O tasks to ensure that there is a forward progression of the higher-level I/O task and to ensure that resources do not become deadlocked.

Owner:NETWORK APPLIANCE INC

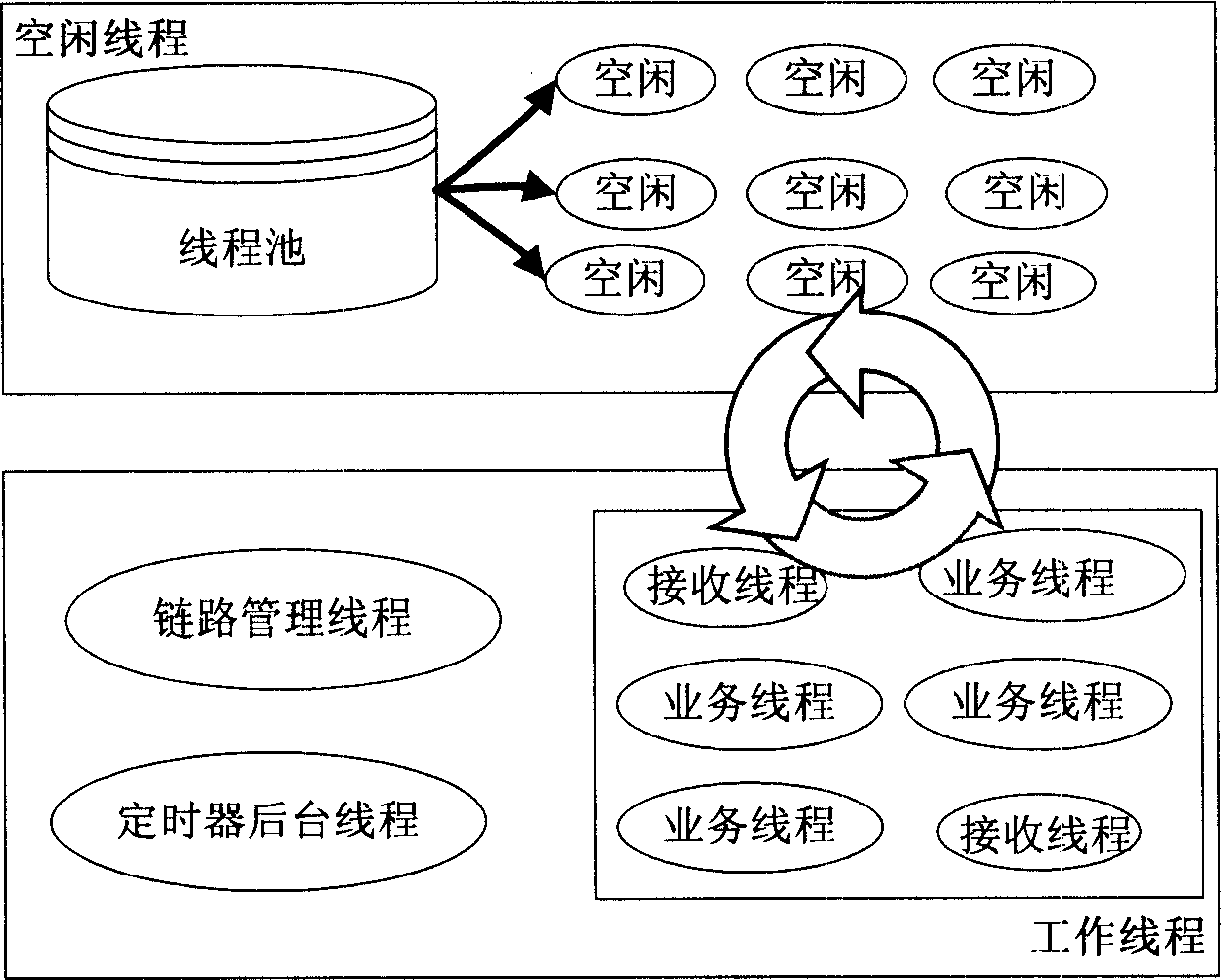

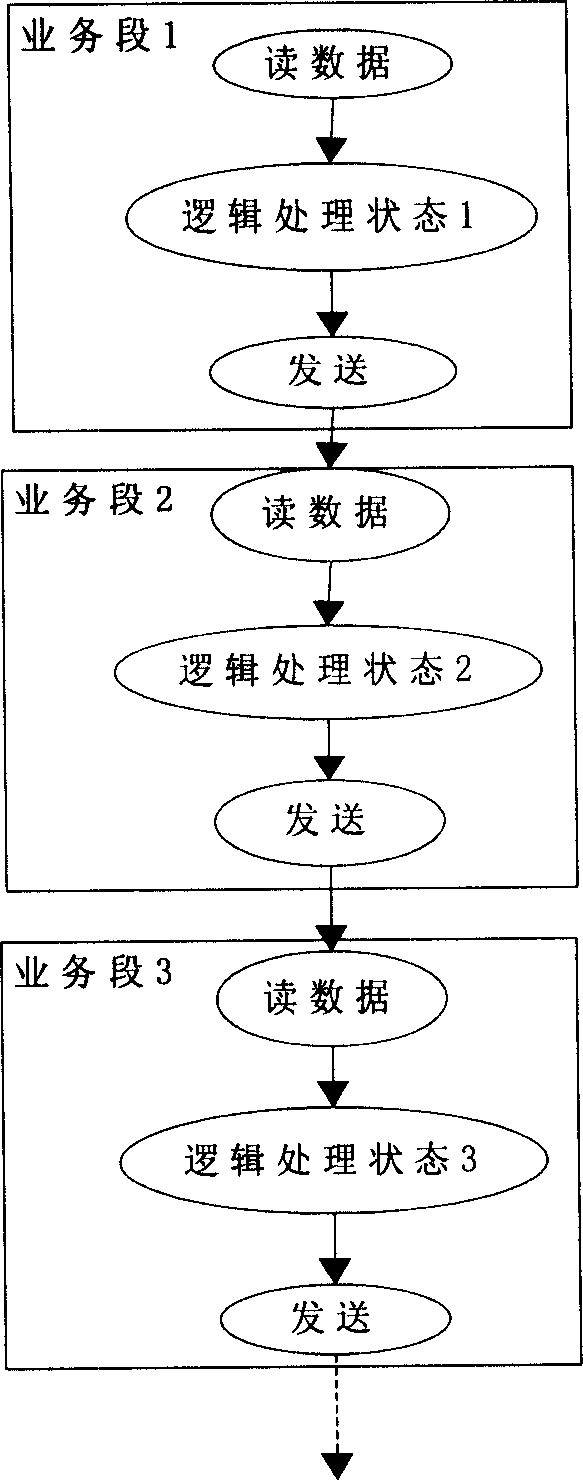

Method for applied server of computer system

Inactive · CN1584842A · To achieve the effect of automatic adjustment · Increase space · Multiprogramming arrangements · Transmission · Internal memory · Application server

A method for implementing an application server in a computer system includes: forming a thread pool in the computer system; splitting the server threads into connection threads, receiving threads, and service threads, most of which are drawn from the thread pool and returned to it after each piece of data or service has been processed; and storing the process flow in internal memory.

Owner:ZTE CORP

Communication optimization method and system

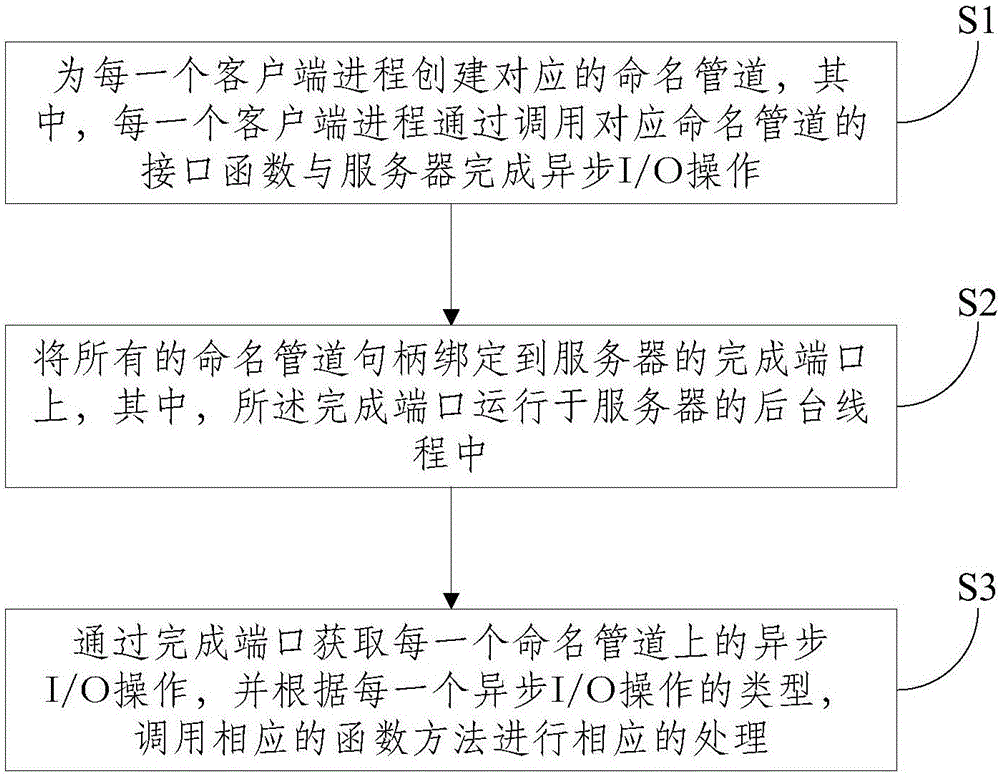

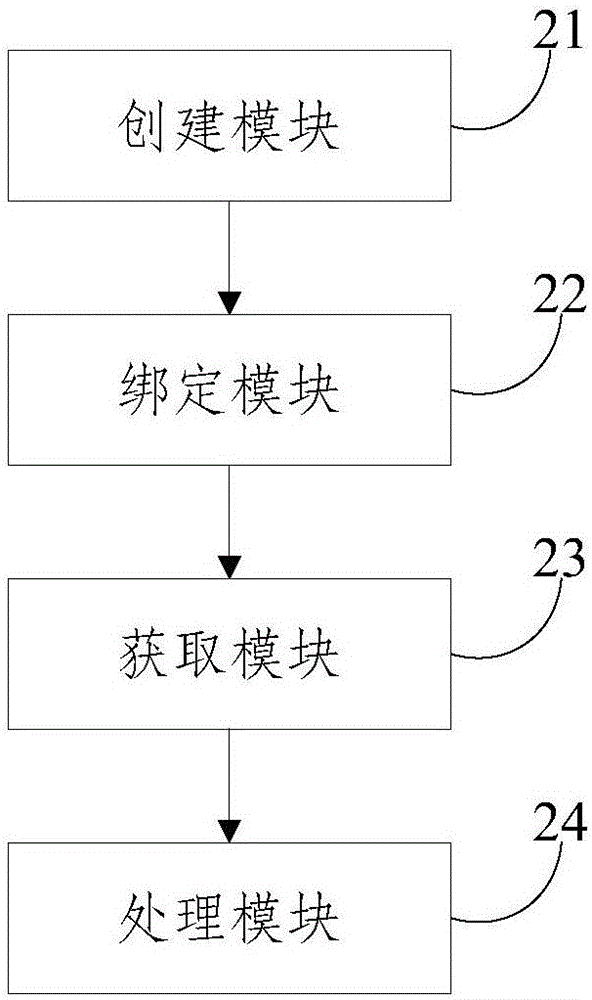

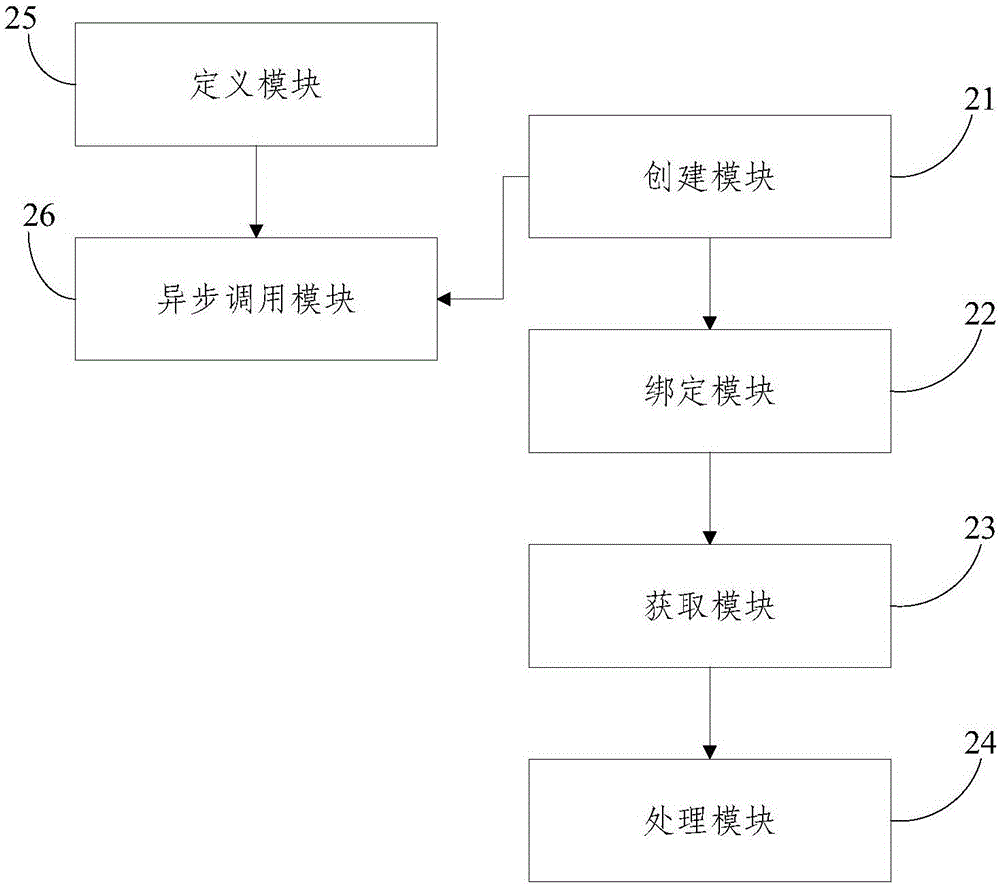

Active · CN107526645A · Improve processing efficiency · Avoid blocking · Interprogram communication · Asynchronous I/O · Function method

The invention provides a communication optimization method and system. The method comprises the steps of: S1, creating a corresponding named pipe for each client process; S2, binding all named pipe handles to a completion port of a server, wherein the completion port runs in a background thread; and S3, obtaining the asynchronous I/O operations on each named pipe through the completion port and, according to the type of each asynchronous I/O operation, calling a corresponding function to process it. Because all the named pipe handles corresponding to the client processes are bound to one completion port, the asynchronous I/O operations of all clients are managed by that completion port, which only needs to run in a single background thread. The request events of all clients can therefore be processed by one background thread; compared with a conventional approach that needs a plurality of threads, thread blocking is avoided and CPU processing efficiency is improved.

Owner:WUHAN DOUYU NETWORK TECH CO LTD

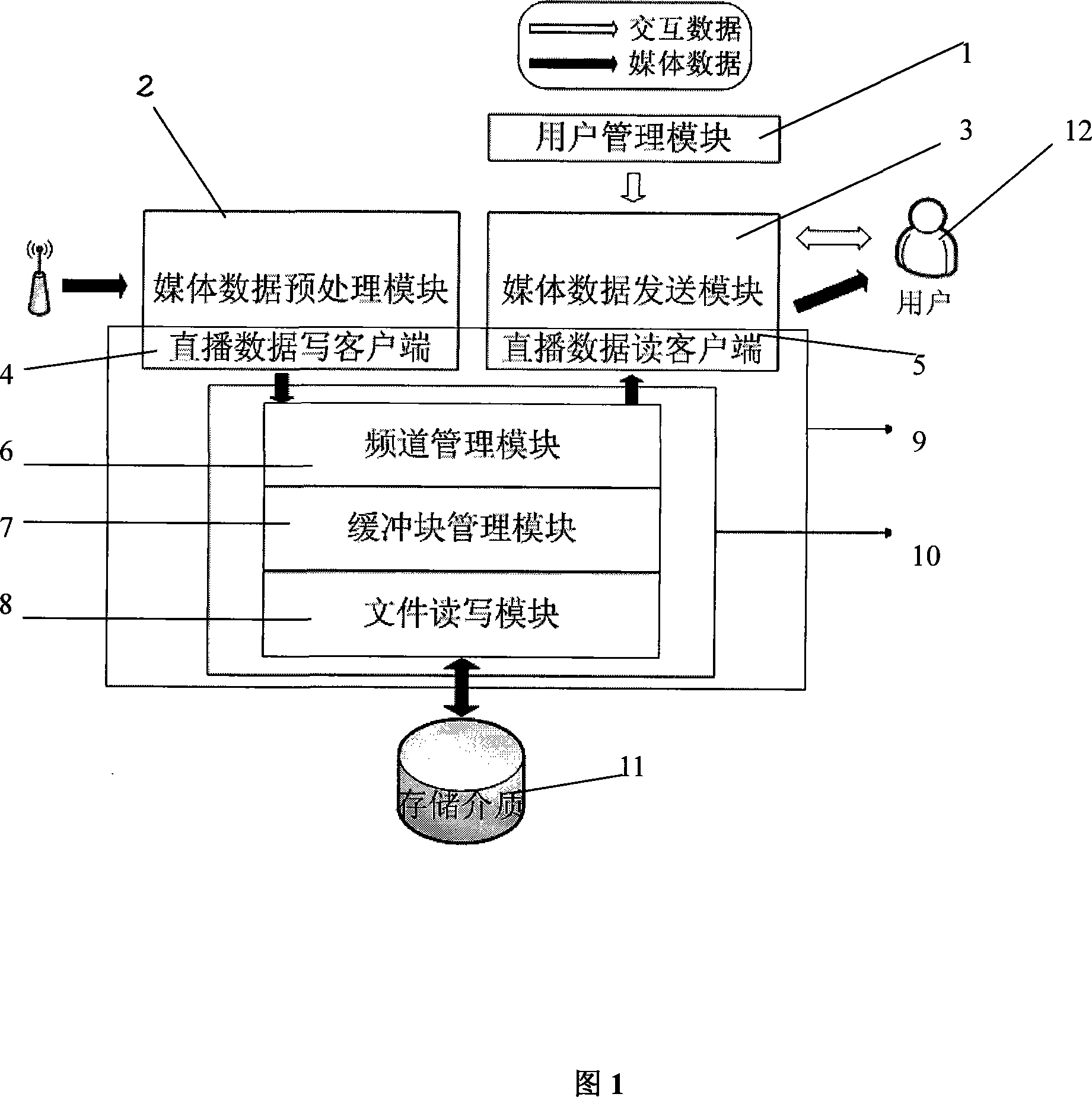

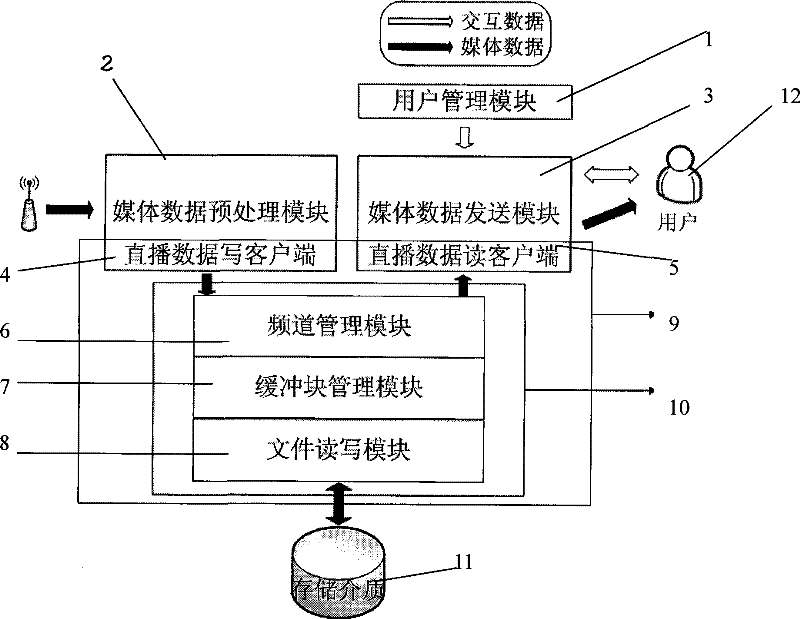

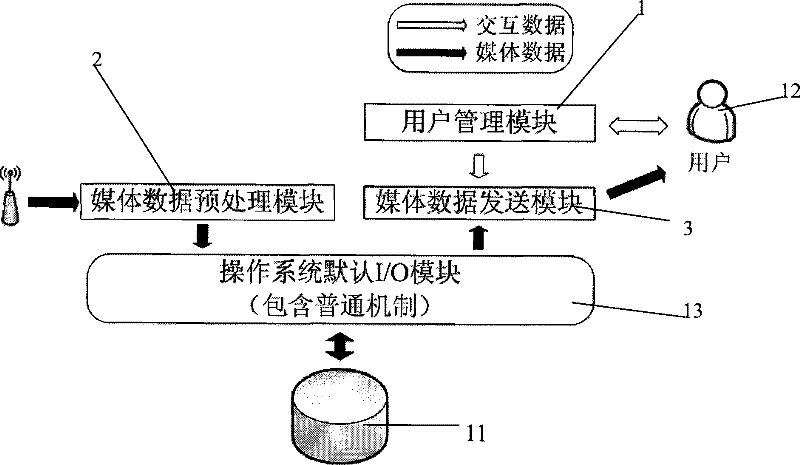

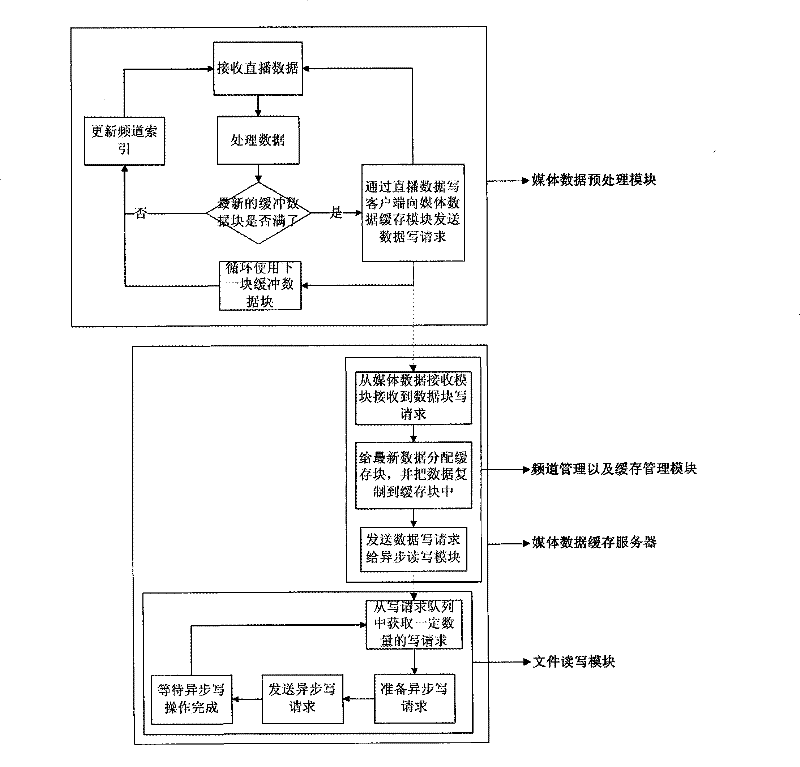

Special disk reading and writing system suitable for IPTV direct broadcast server with time shift

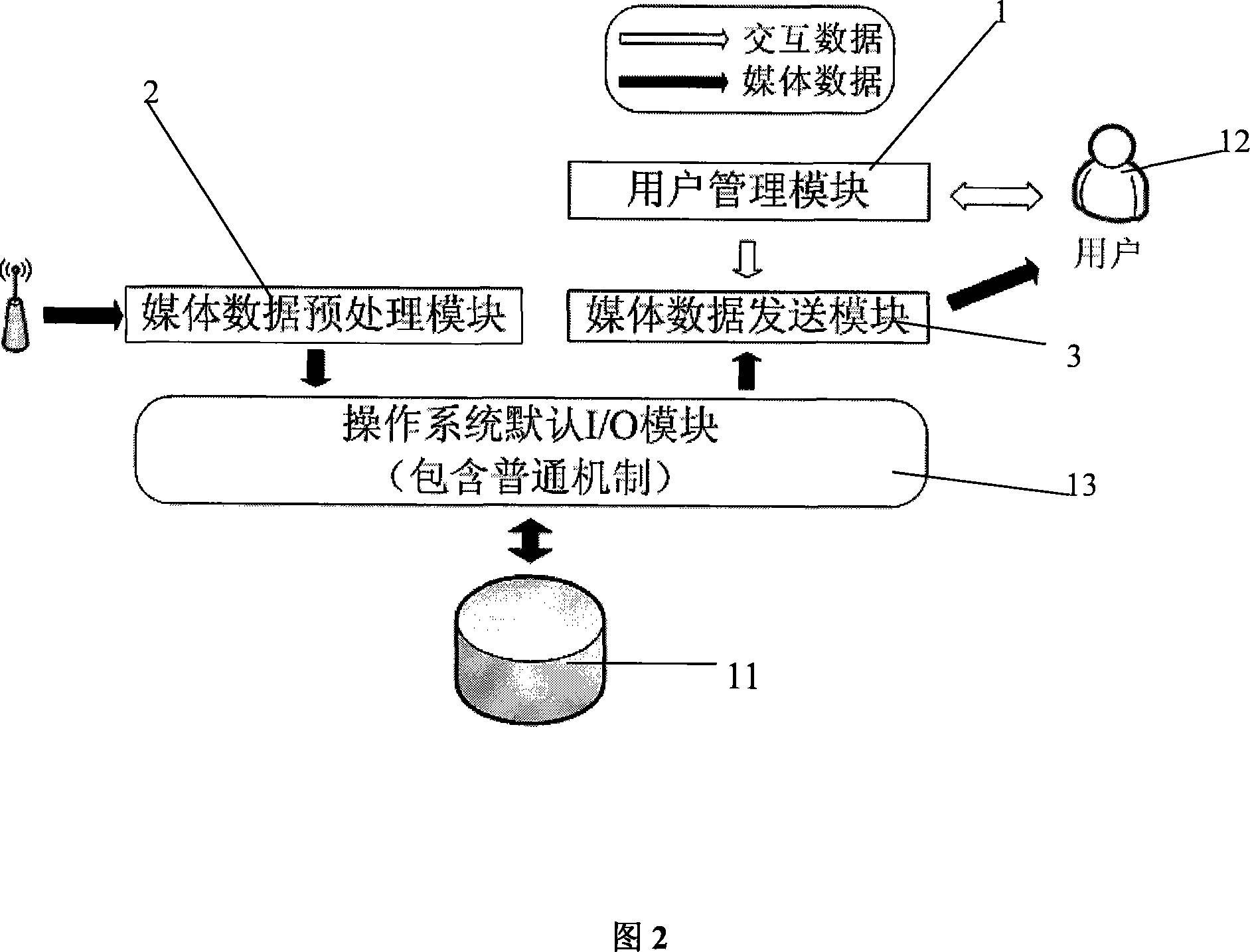

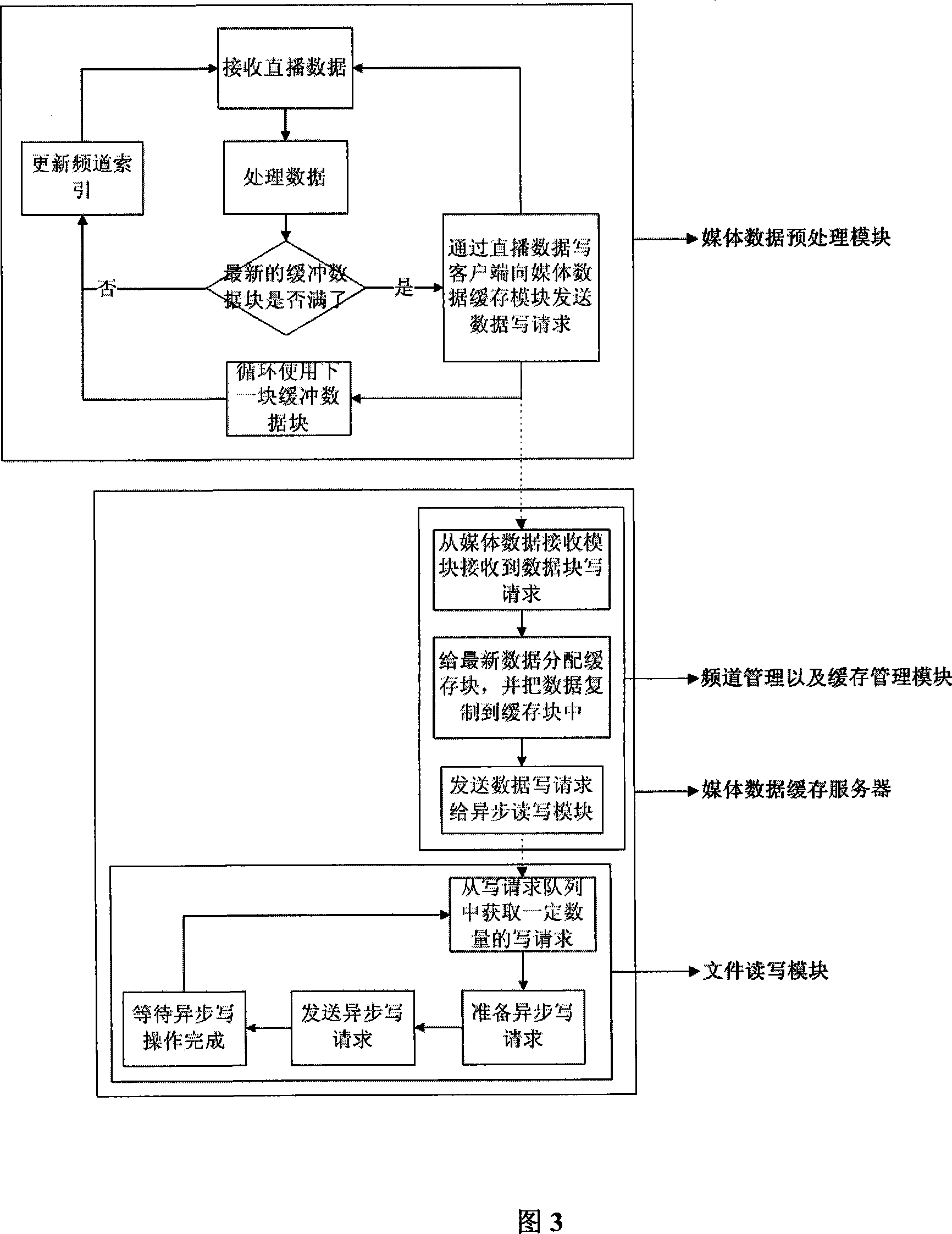

The disclosed special disk read-write system for a time-shift IPTV live server comprises: a media data buffer server including a channel management module, a buffer management module with memory based on tmpfs and memory file mapping, and a file read/write module using direct I/O and asynchronous I/O; and several buffer clients including the direct data write/read client. The live server also comprises a data pre-processing module, a transmission module, and a user management module. The invention improves overall system performance.

Owner:SHANGHAI CLEARTV CORP LTD

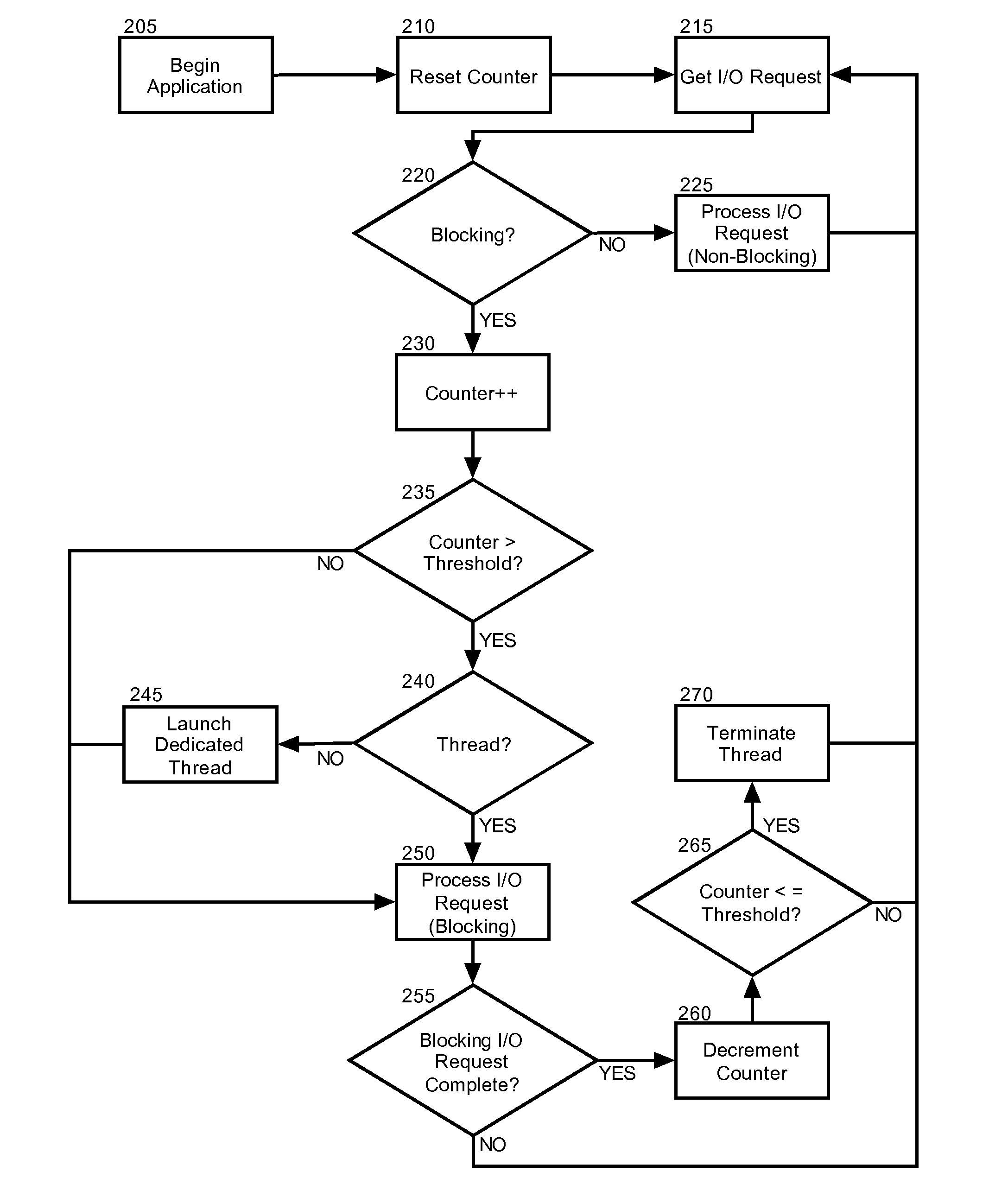

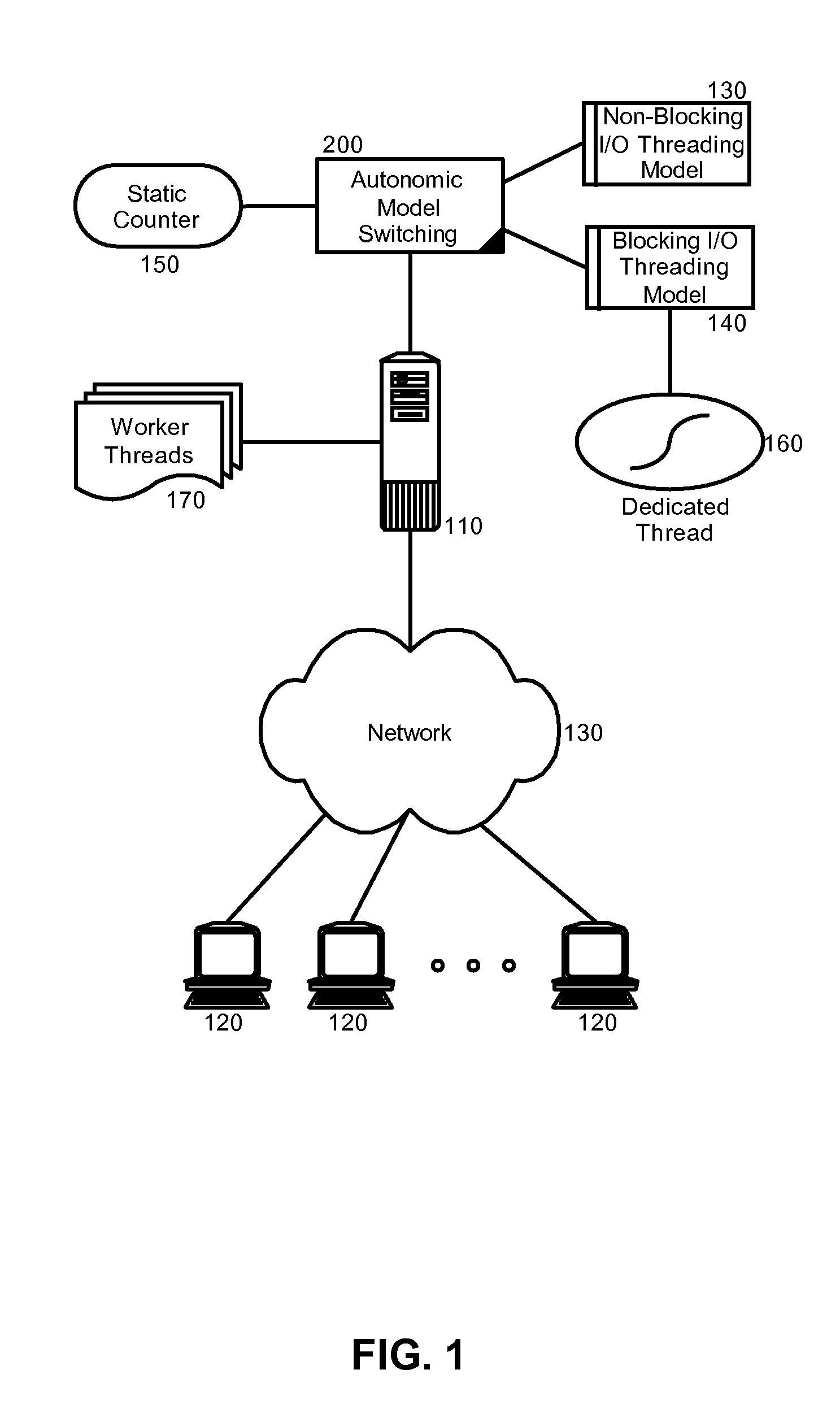

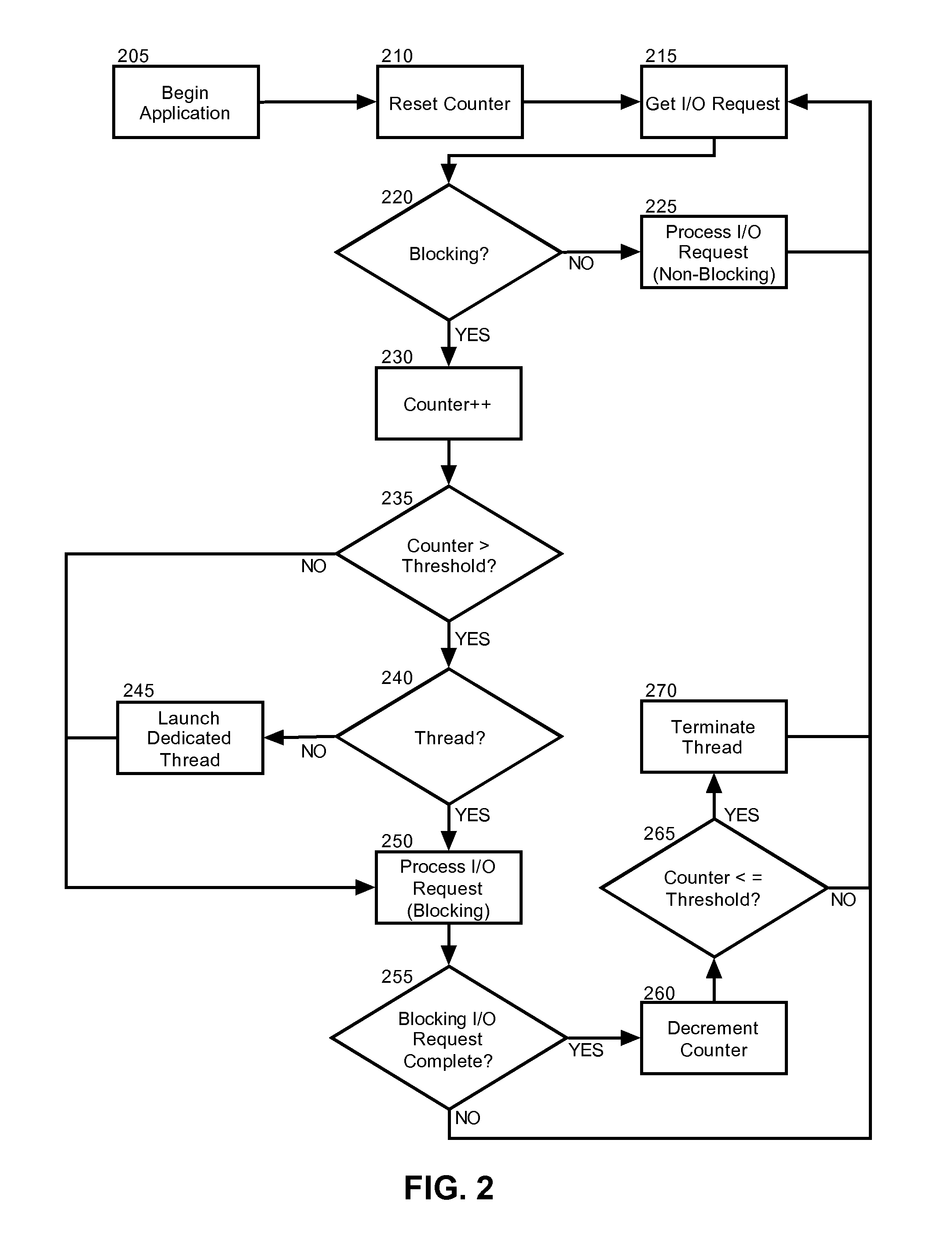

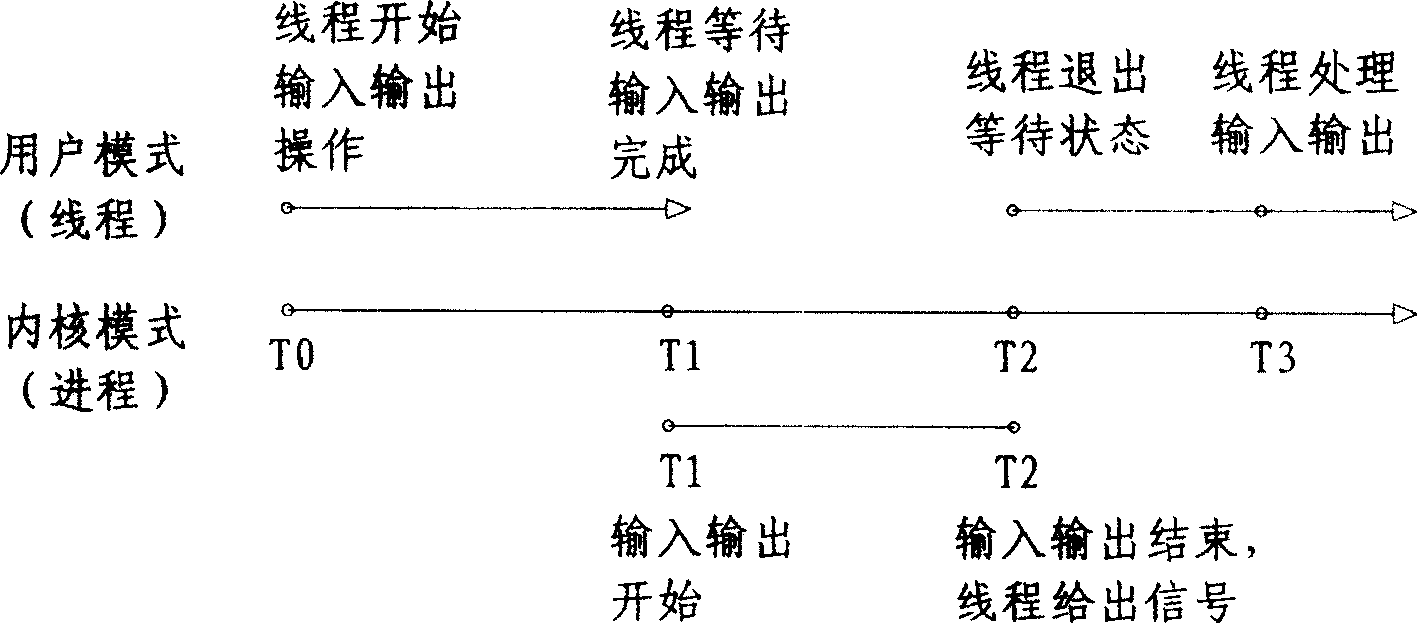

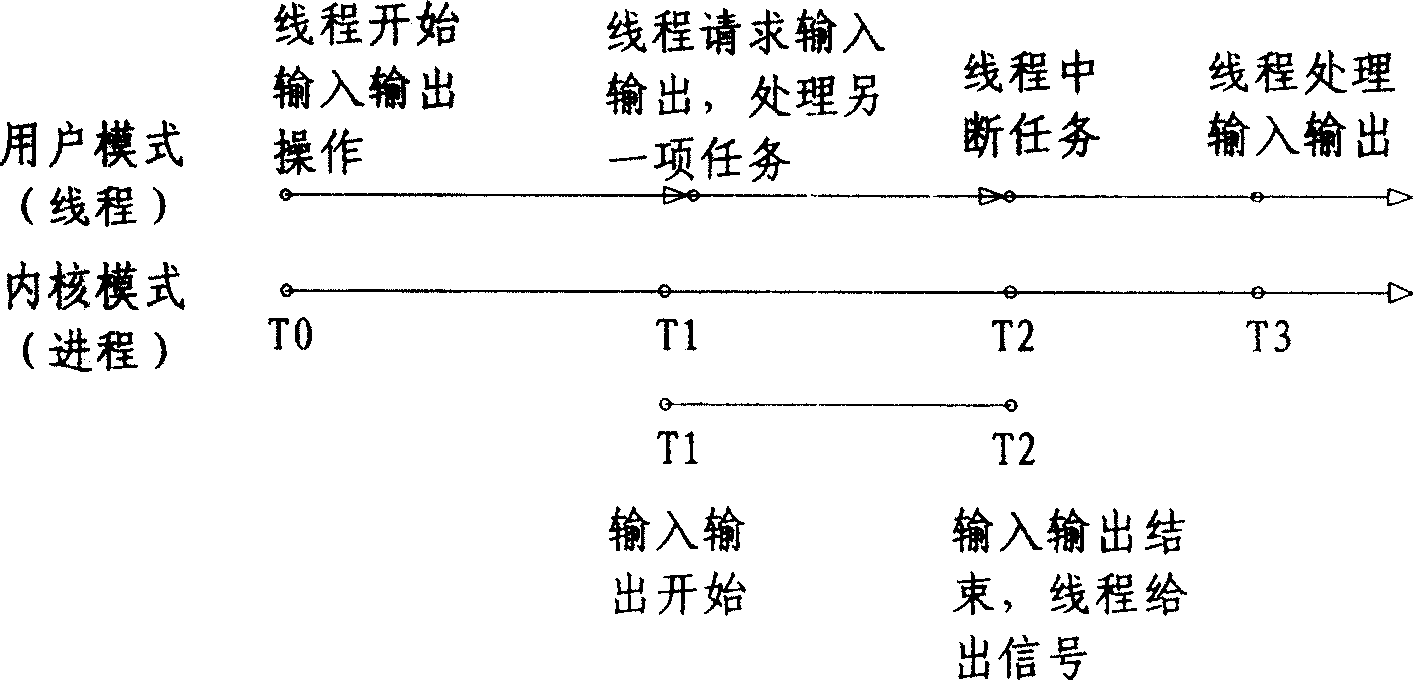

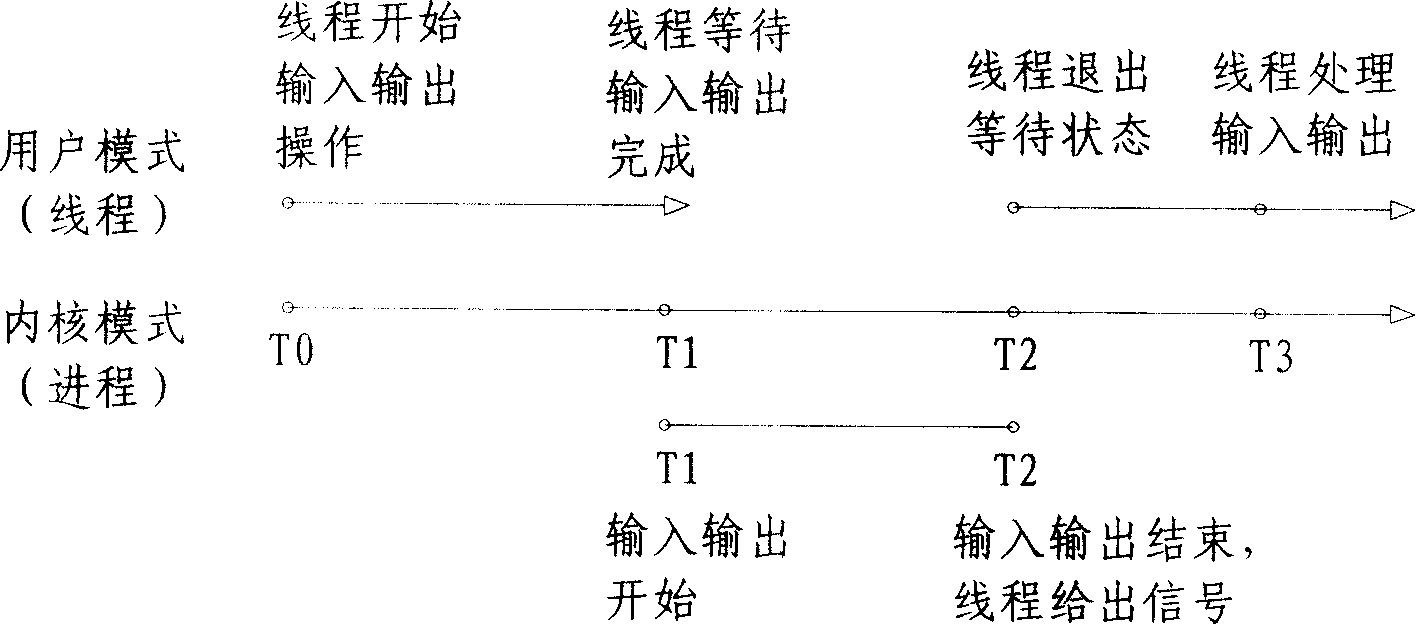

Autonomic threading model switch based on input/output request type

Inactive · US20080126613A1 · Input/output processes for data processing · Data conversion · State of art · Computer architecture

Embodiments of the present invention address deficiencies of the art in respect to threading model switching between asynchronous I/O and synchronous I/O models and provide a novel and non-obvious method, system and computer program product for autonomic threading model switching based upon I/O request types. In one embodiment, a method for autonomic threading model switching based upon I/O request types can be provided. The method can include selectably activating and de-activating a blocking I/O threading model according to a volume of received and completed blocking I/O requests.

Owner:IBM CORP

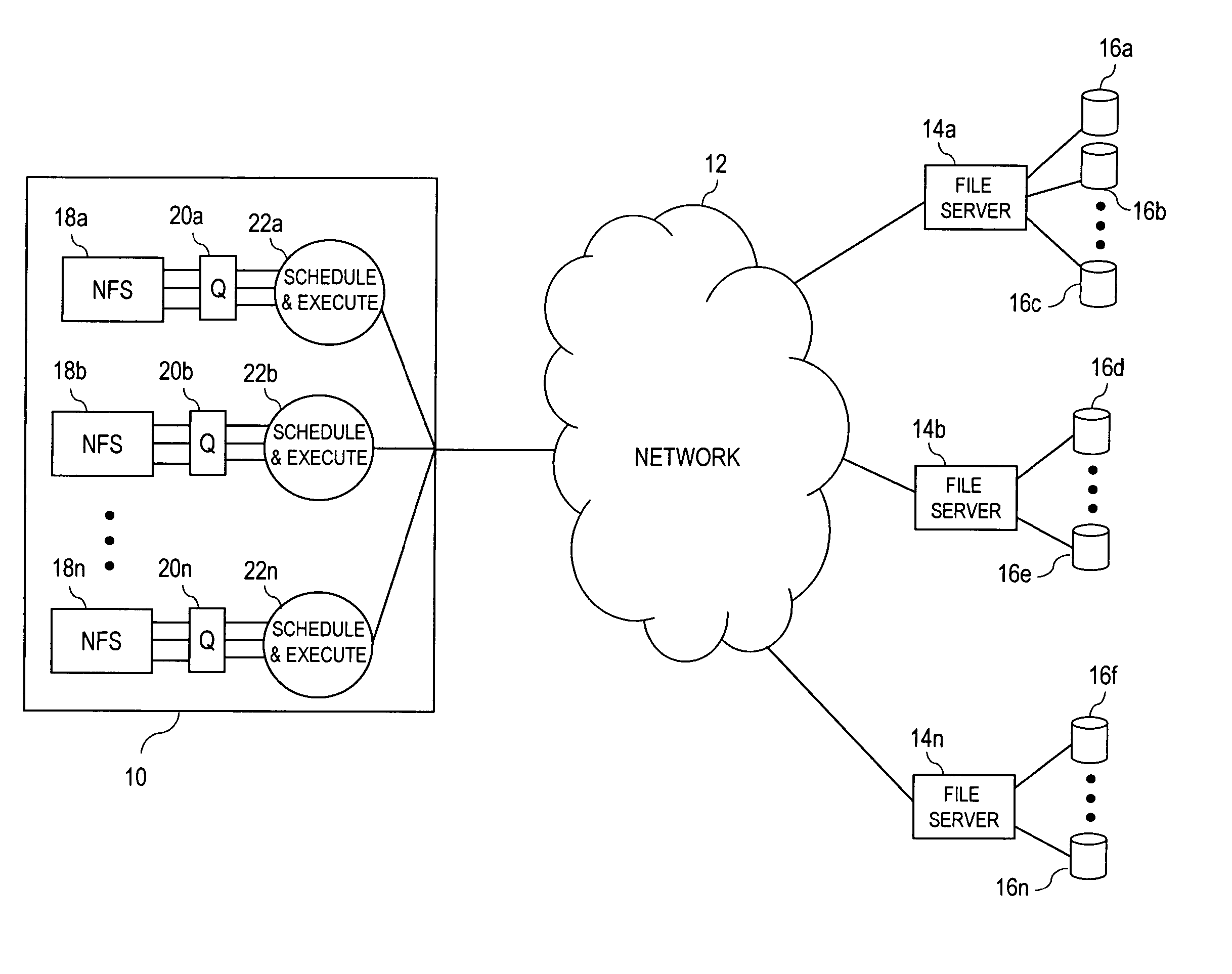

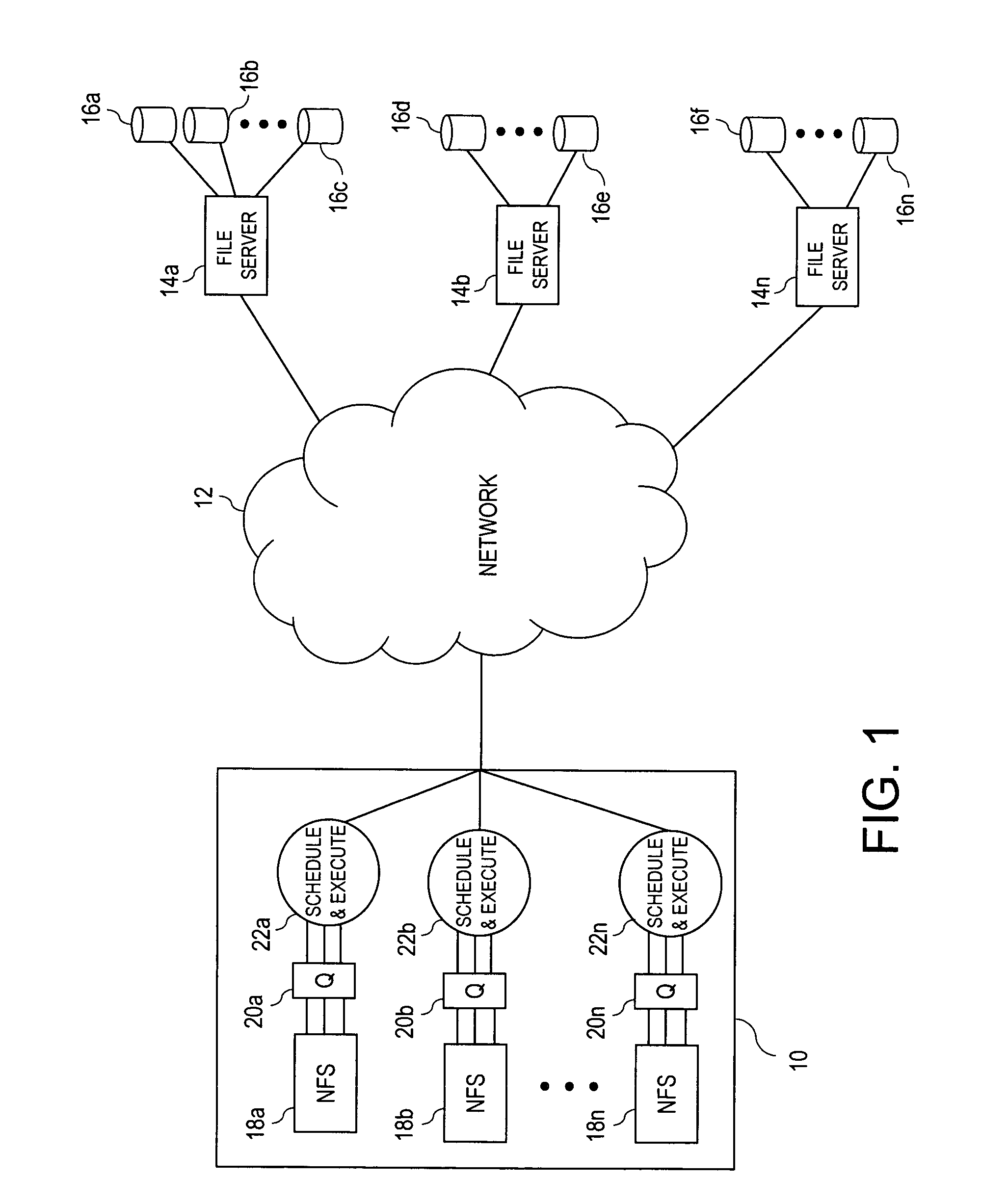

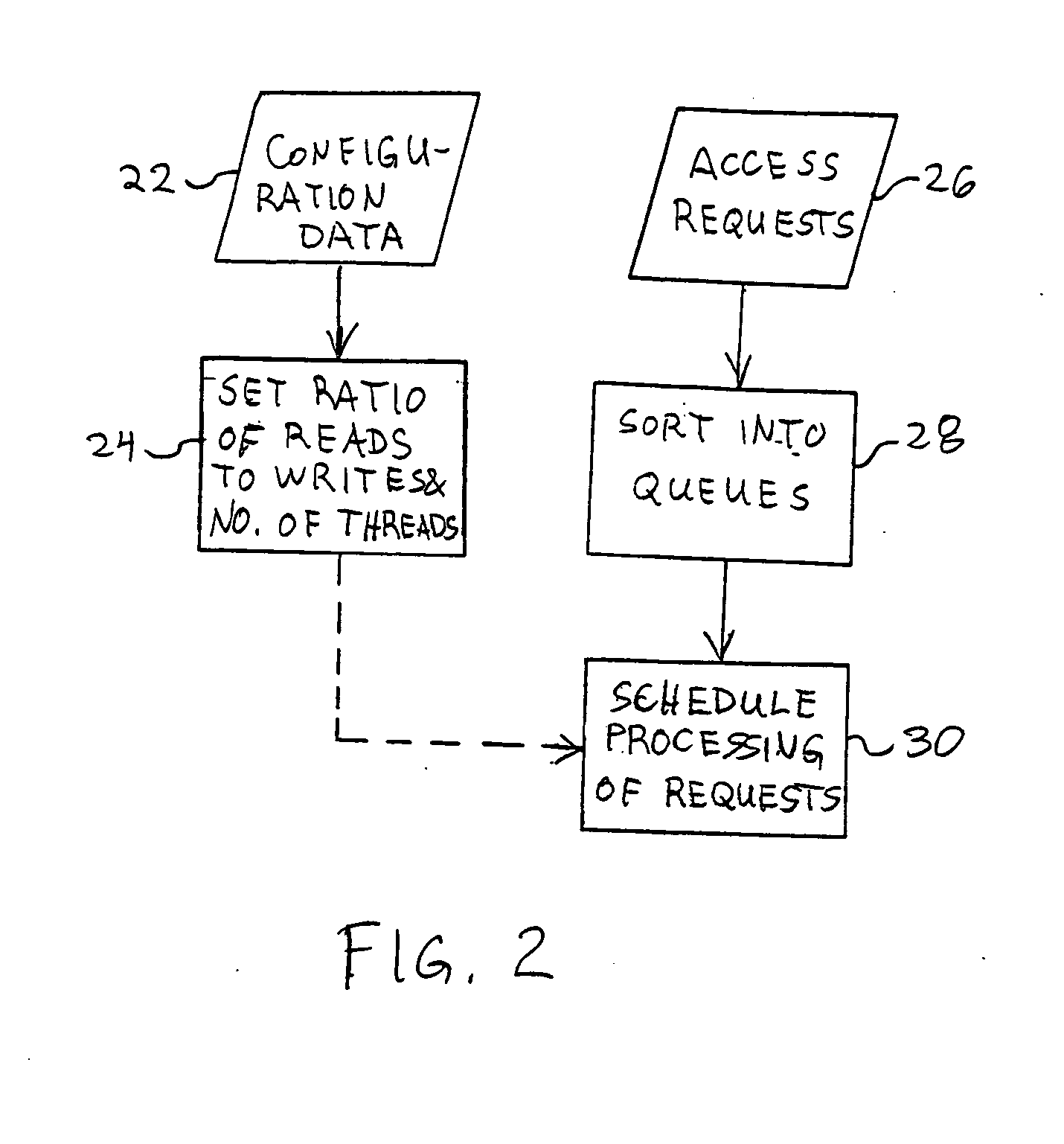

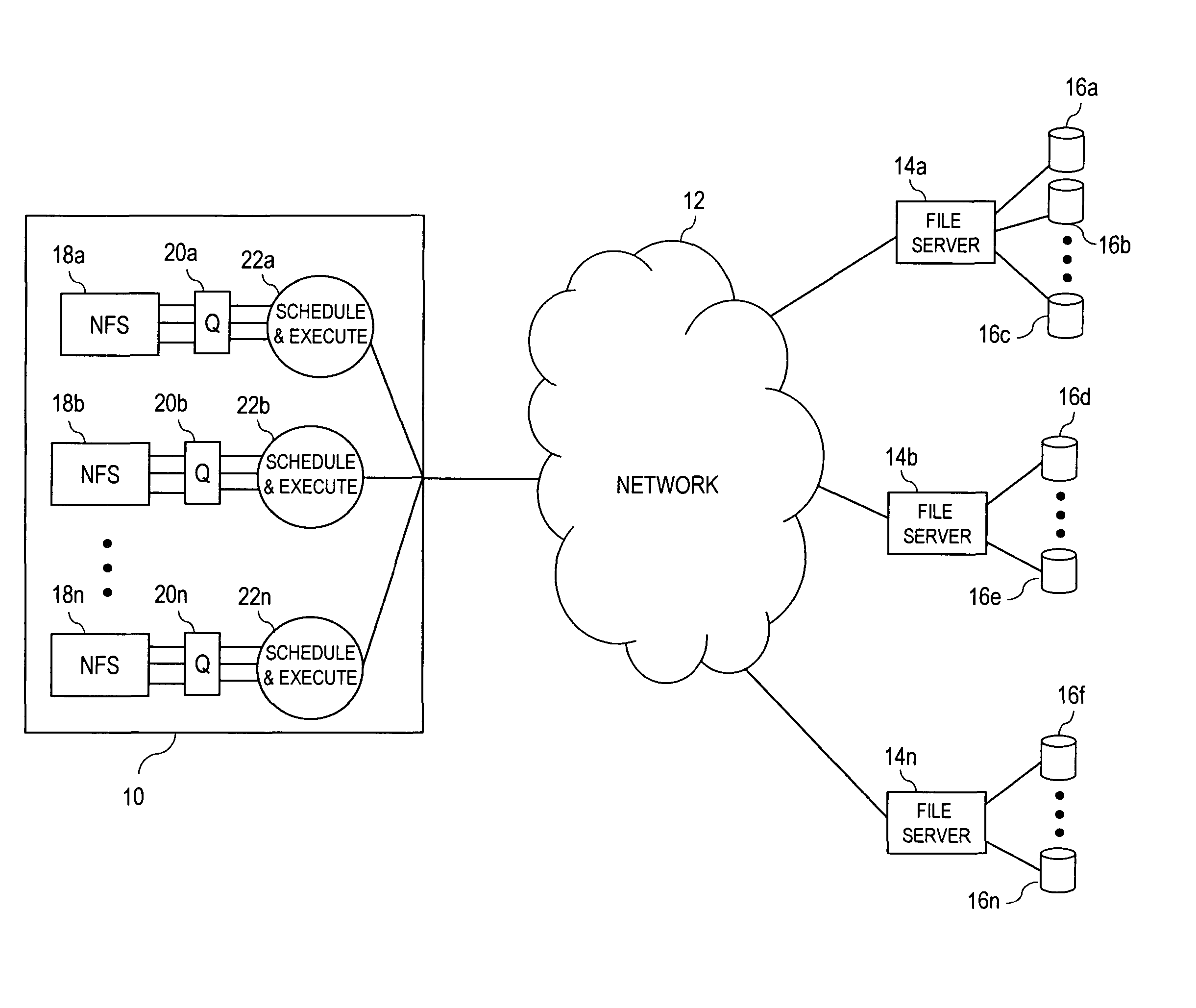

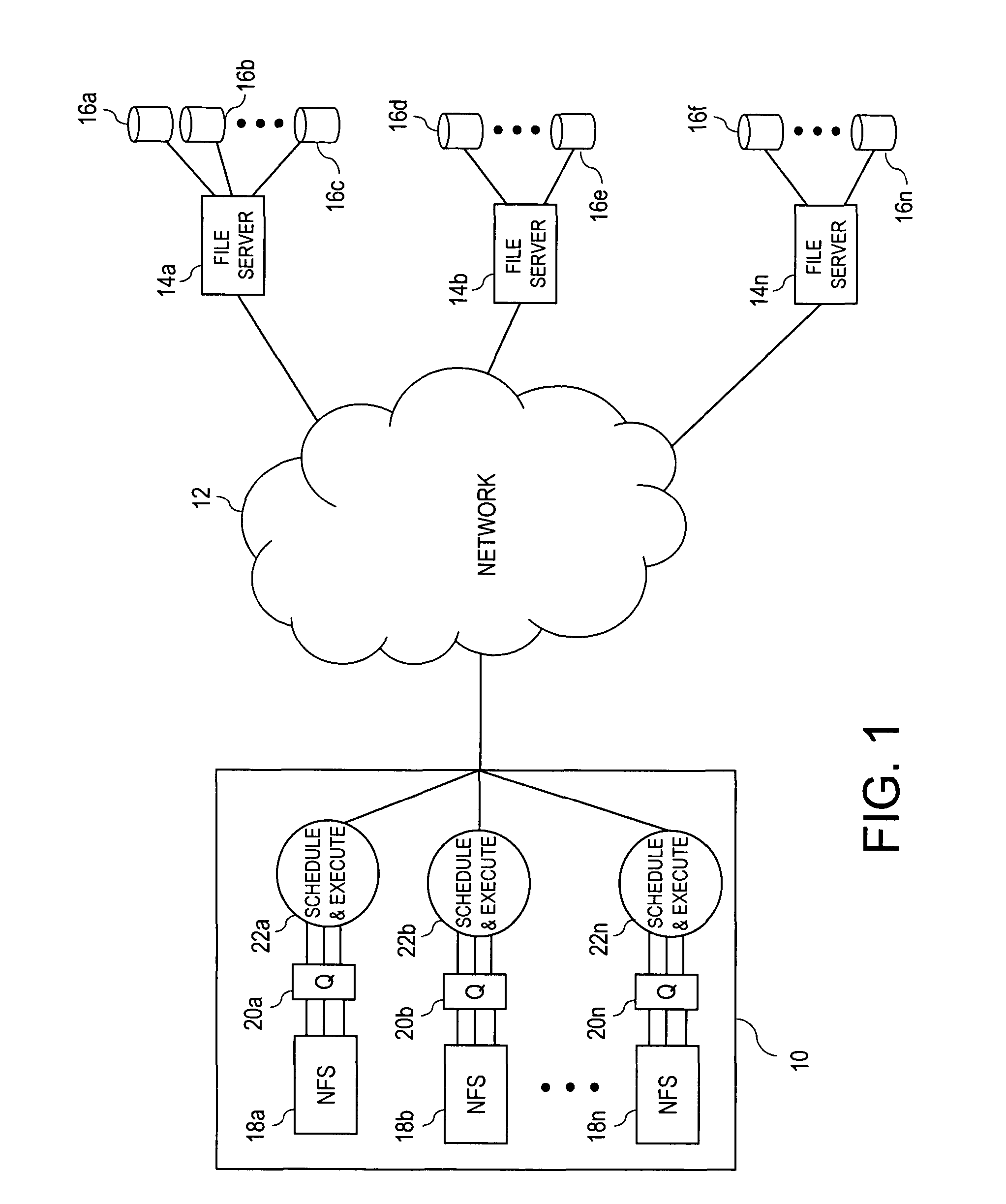

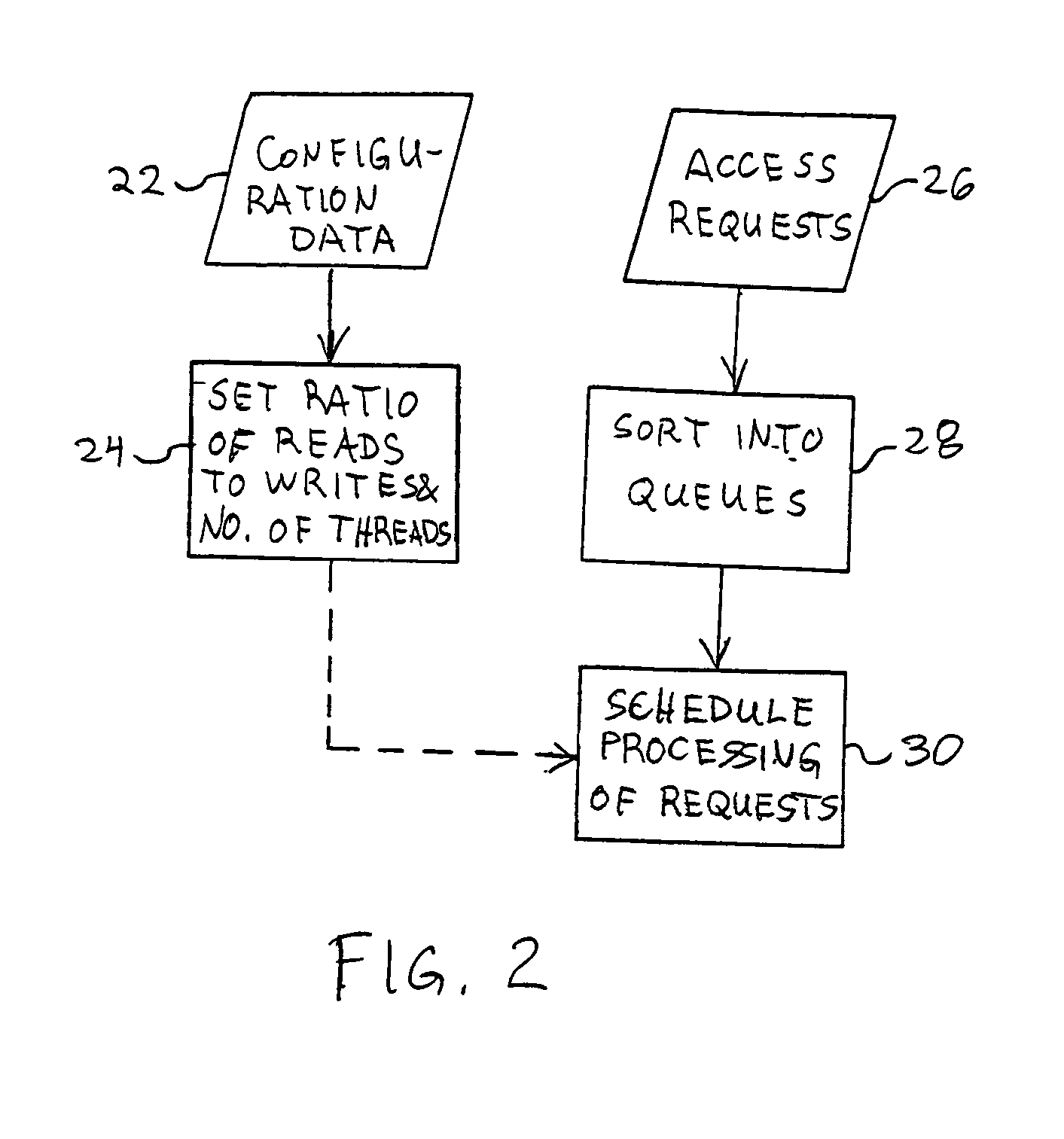

Network filesystem asynchronous I/O scheduling

Active · US20050015385A1 · Minimum delay · Digital data processing details · Computer security arrangements · File system · Network File System

Resource acquisition requests for a filesystem are executed under user configurable metering. Initially, a system administrator sets a ratio of N:M for executing N read requests for M write requests. As resource acquisition requests are received by a filesystem server, the resource acquisition requests are sorted into queues, e.g., where read and write requests have at least one queue for each type, plus a separate queue for metadata requests as they are executed ahead of any waiting read or write request. The filesystem server controls execution of the filesystem resource acquisition requests to maintain the ratio set by the system administrator.
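One way to picture the N:M metering described in this abstract is the minimal Python sketch below. It is not the patented implementation: the class name `RatioScheduler`, the three queue names, and the rule for starting a new N:M cycle are all assumptions made for illustration.

```python
from collections import deque


class RatioScheduler:
    """Serve metadata first, then about N reads for every M writes."""

    def __init__(self, n_reads: int, m_writes: int):
        if n_reads < 1 or m_writes < 1:
            raise ValueError("both sides of the N:M ratio must be at least 1")
        self.n_reads, self.m_writes = n_reads, m_writes
        self.queues = {"meta": deque(), "read": deque(), "write": deque()}
        self._budget = {"read": n_reads, "write": m_writes}

    def submit(self, kind: str, request) -> None:
        # Incoming requests are sorted into one queue per type.
        self.queues[kind].append(request)

    def next_request(self):
        # Metadata requests run ahead of any waiting read or write.
        if self.queues["meta"]:
            return self.queues["meta"].popleft()
        for _ in range(2):
            for kind in ("read", "write"):
                if self._budget[kind] > 0 and self.queues[kind]:
                    self._budget[kind] -= 1
                    return self.queues[kind].popleft()
            # Nothing runnable within the current budget: start a new N:M cycle
            # (an assumed policy that keeps the scheduler work-conserving).
            self._budget = {"read": self.n_reads, "write": self.m_writes}
        return None  # all queues are empty
```

With a 2:1 ratio and a backlog of four reads and two writes, this sketch serves two reads, one write, two reads, one write, with any queued metadata request jumping ahead of them.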

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP +1

Network filesystem asynchronous I/O scheduling

Active · US8635256B2 · Minimum delay · Digital data processing details · Computer security arrangements · File system · Network File System

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP +1

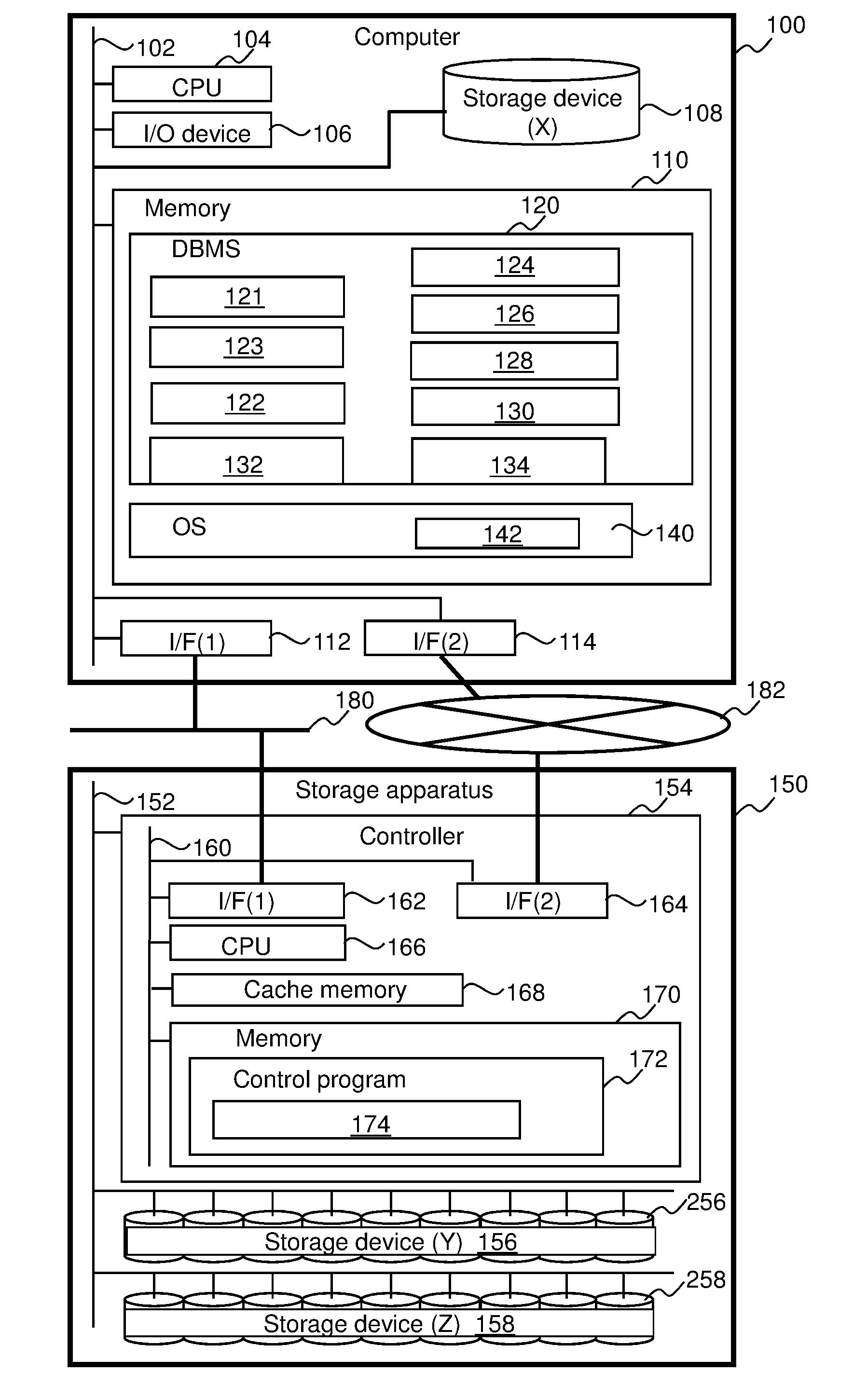

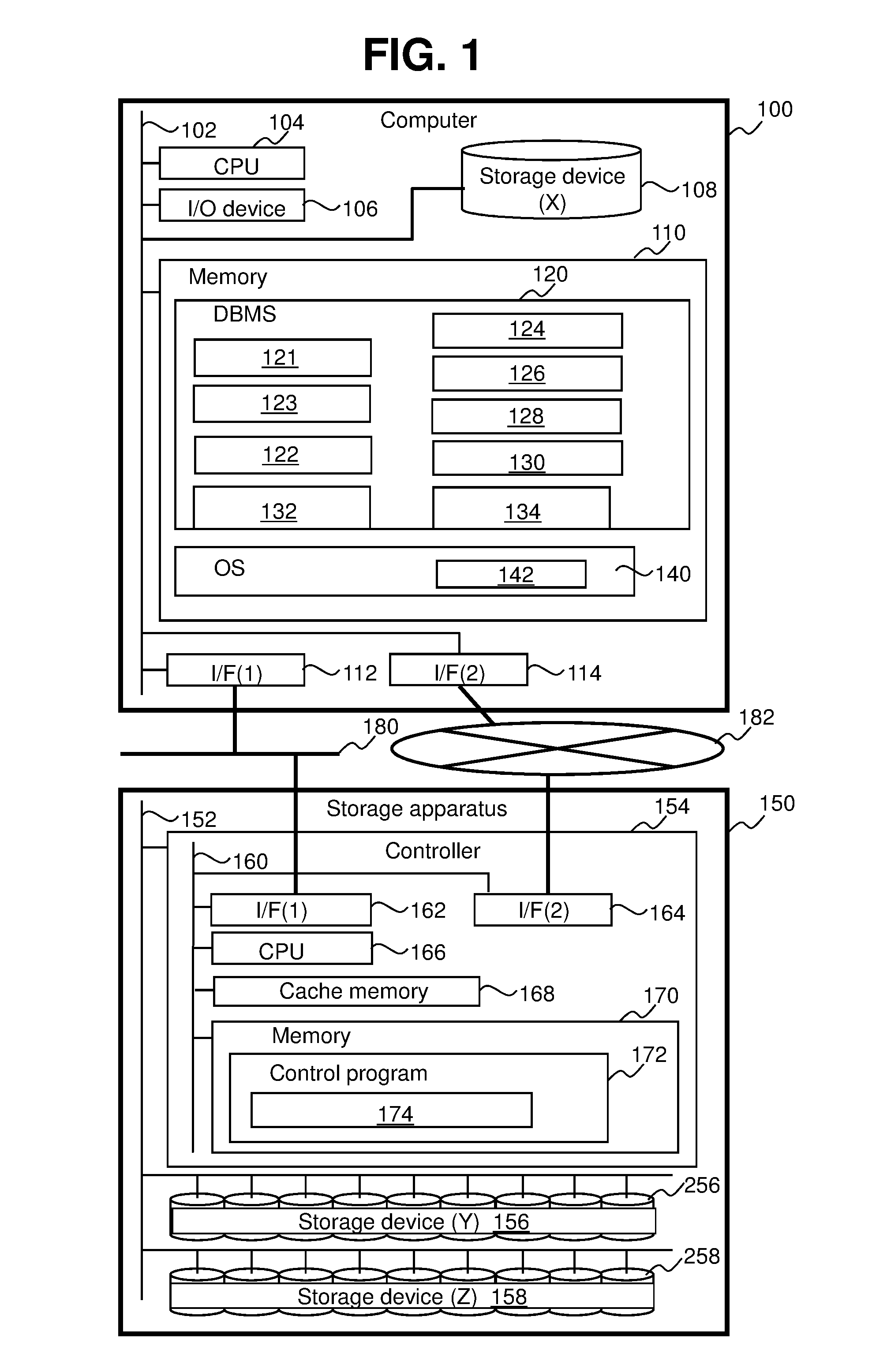

Database management system, computer, and database management method

Active · US20170004172A1 · Improve performance · Special data processing applications · Wait state · Asynchronous I/O

Owner:HITACHI LTD +1

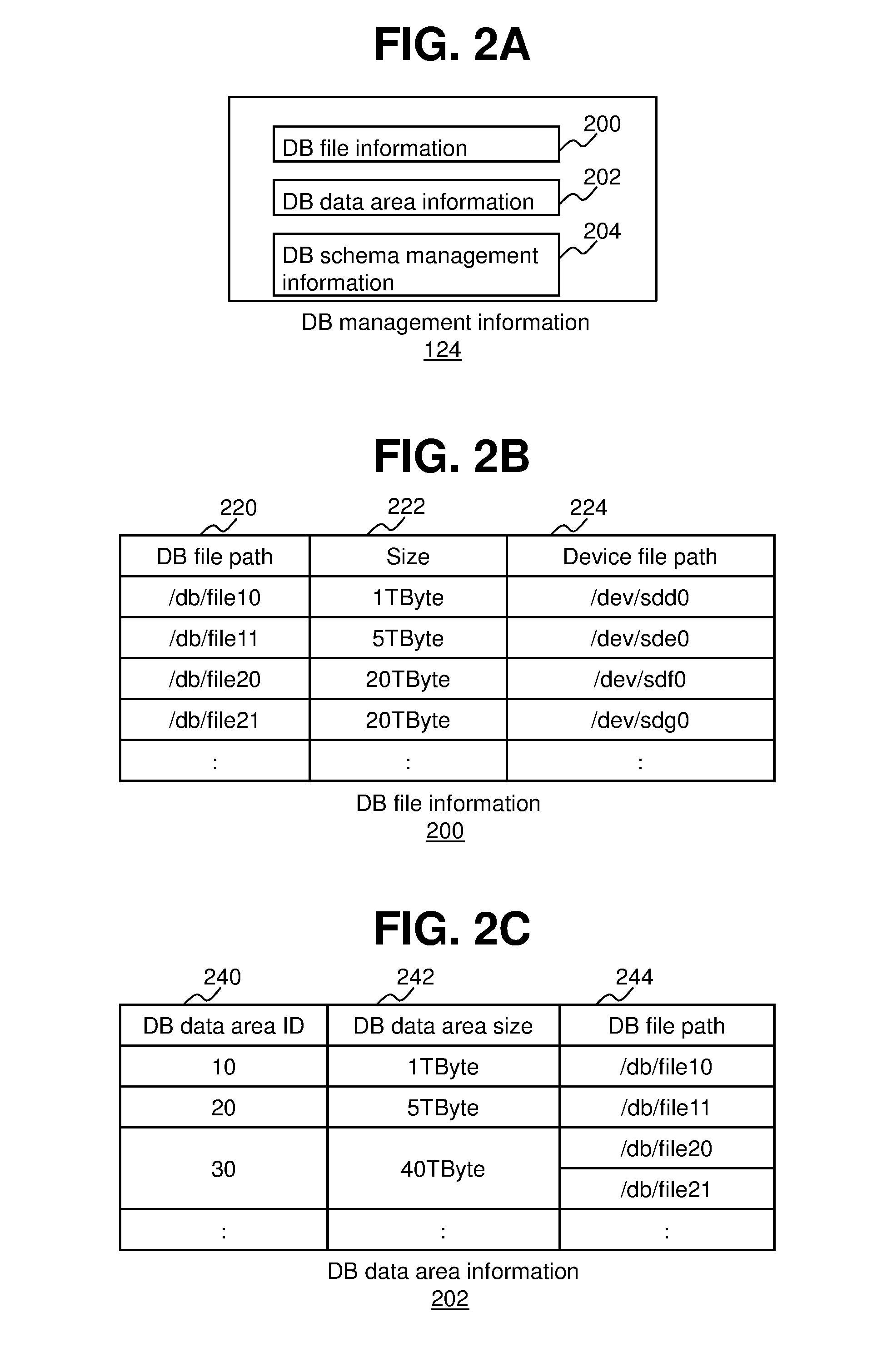

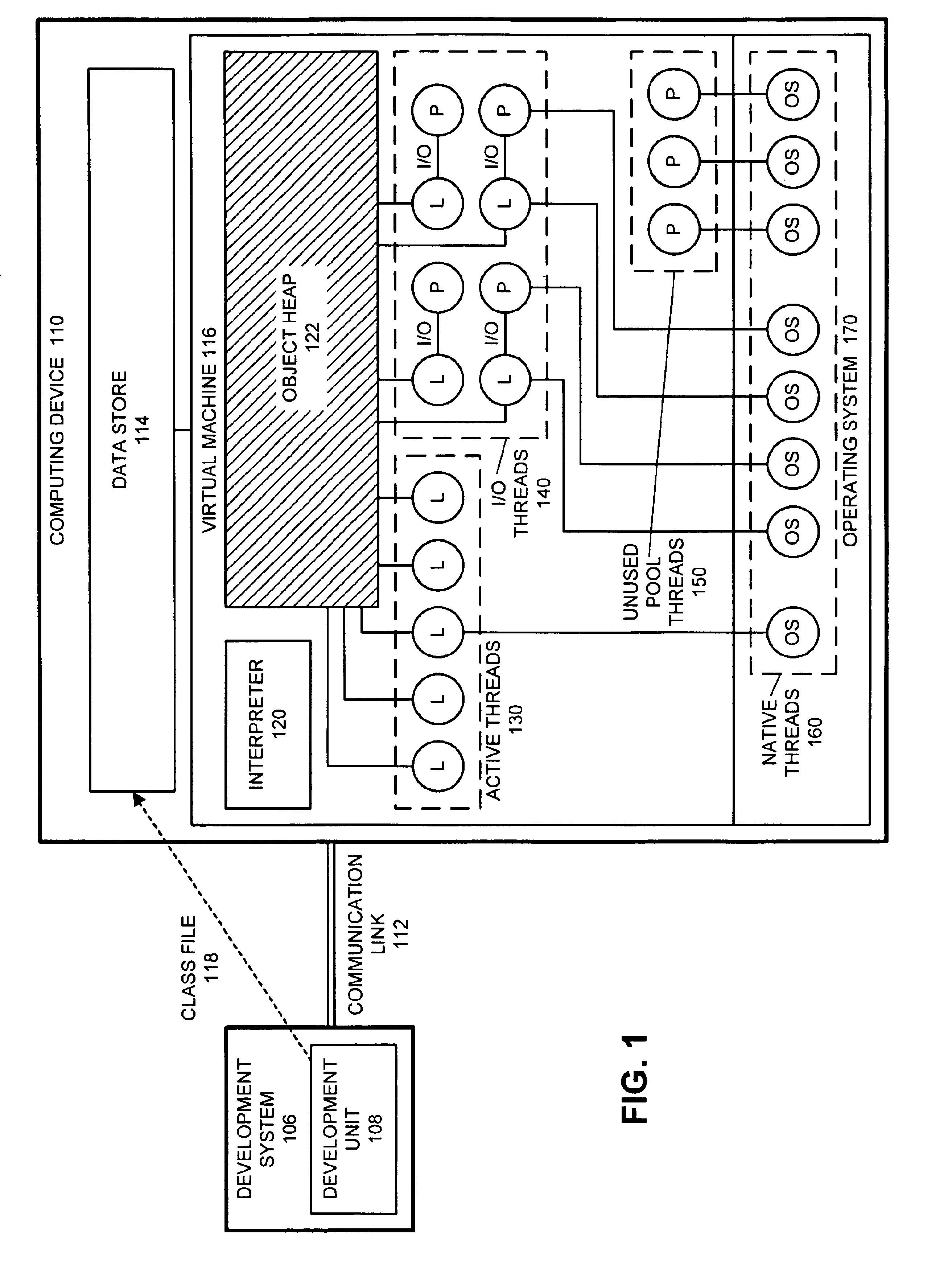

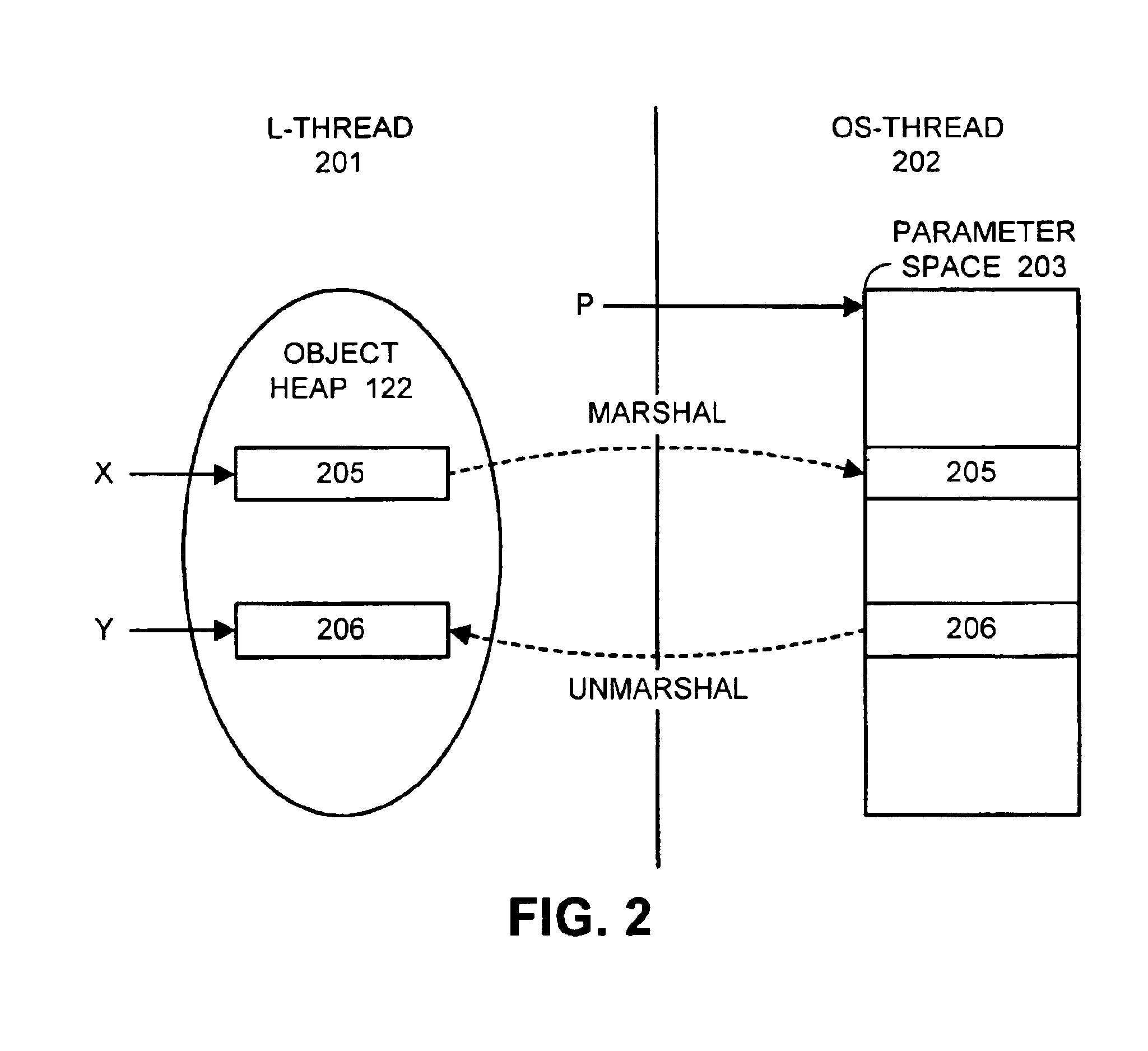

Method and apparatus for managing independent asynchronous I/O operations within a virtual machine

Inactive · US6865738B2 · Facilitates performing independent asynchronous I/O operation · Resource allocation · Software simulation/interpretation/emulation · Operational system · Asynchronous I/O

One embodiment of the present invention provides a system that facilitates performing independent asynchronous I/O operations within a platform-independent virtual machine. Upon encountering an I/O operation, a language thread within the system marshals parameters for the I/O operation into a parameter space located outside of an object heap of the platform-independent virtual machine. Next, the language thread causes an associated operating system thread (OS-thread) to perform the I/O operation, wherein the OS-thread accesses the parameters from the parameter space. In this way, the OS-thread does not access the parameters in the object heap directly while performing the I/O operation.

Owner:ORACLE INT CORP

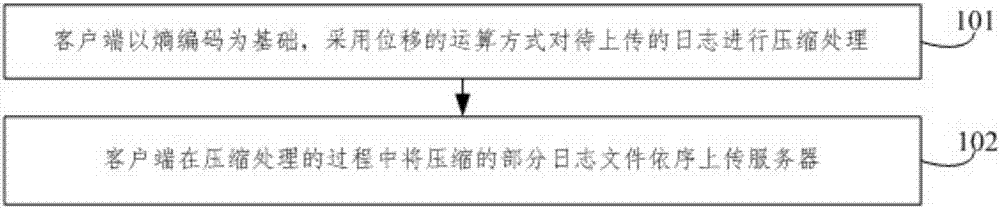

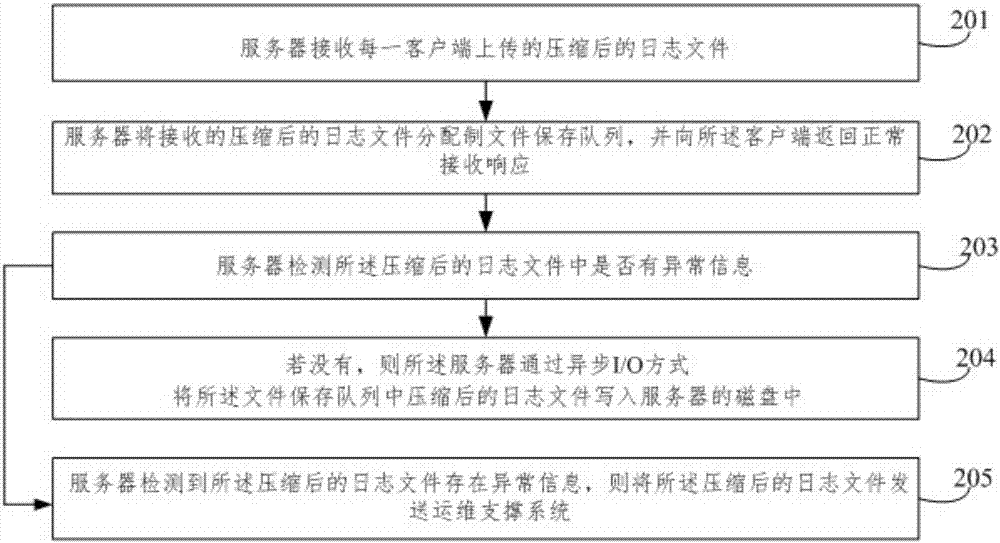

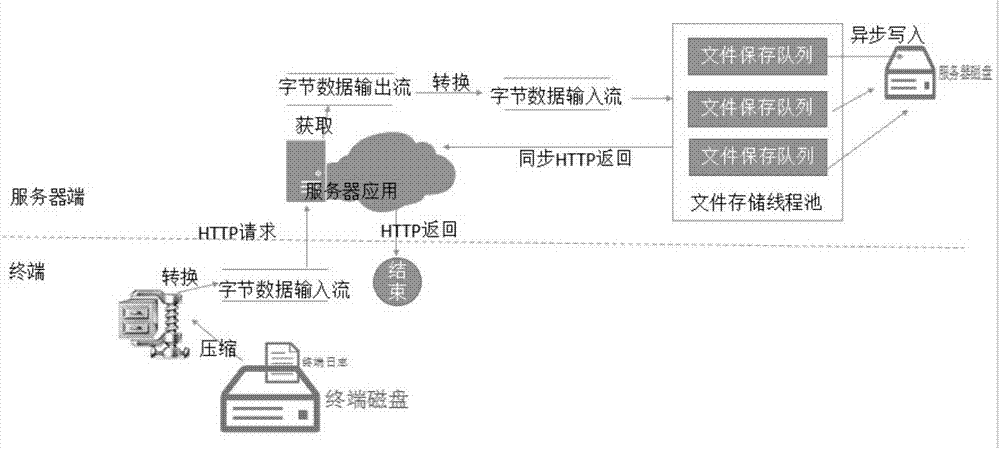

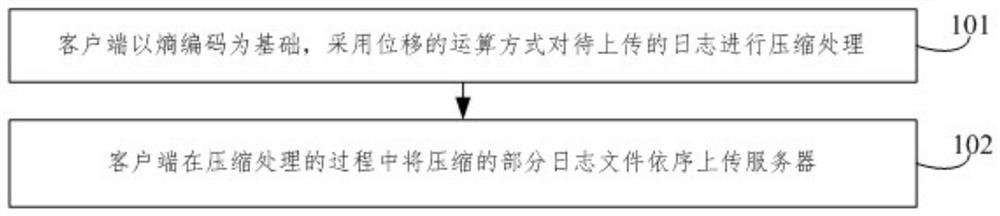

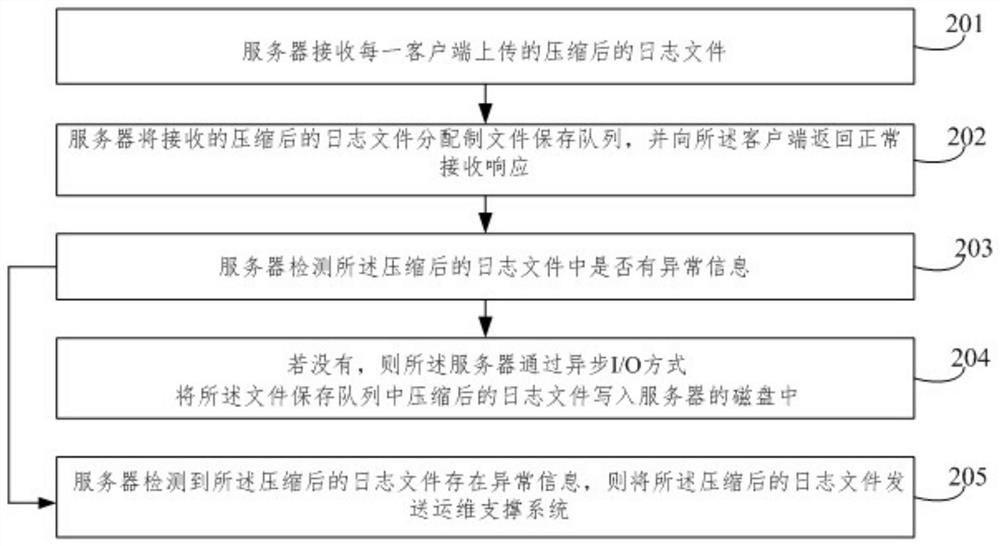

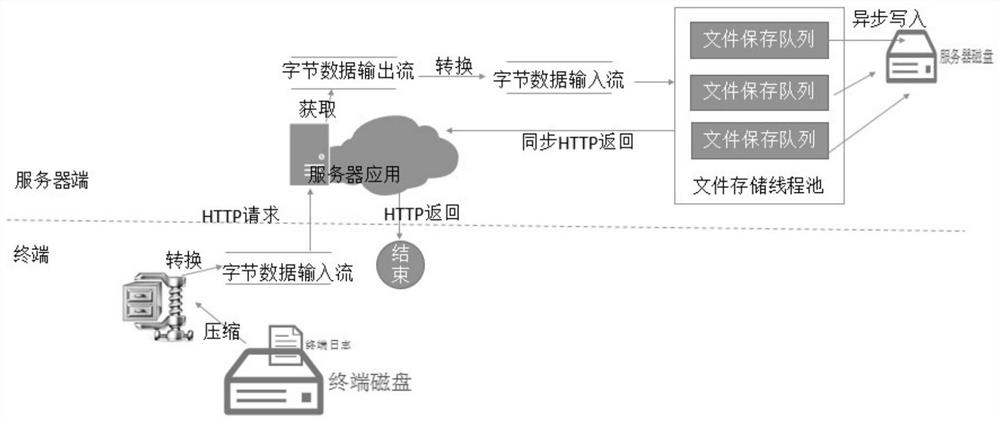

Optimization method for compressed uploading of self-service terminal log

Active · CN108011966A · Good functional experience · Reduce waiting time for server response · Hardware monitoring · Transmission · Computer terminal · Client-side

The invention provides an optimization method for compressed uploading of self-service terminal logs. The method comprises the following steps: a client compresses the logs to be uploaded by means of displacement operations on the basis of entropy coding; during compression, the client uploads the compressed log files to the server in order; the server receives the compressed log files uploaded by each client; the server places the received compressed log files in a file saving queue and returns a normal receiving response to the client; the server detects whether the compressed log files contain abnormal information; and if they do not, the server writes the compressed log files in the file saving queue to the server's disk using asynchronous I/O. The method effectively reduces the time the client spends waiting for the server's response and improves the functional experience of the self-service terminal.

Owner:GUANGDONG KAMFU TECH CO LTD

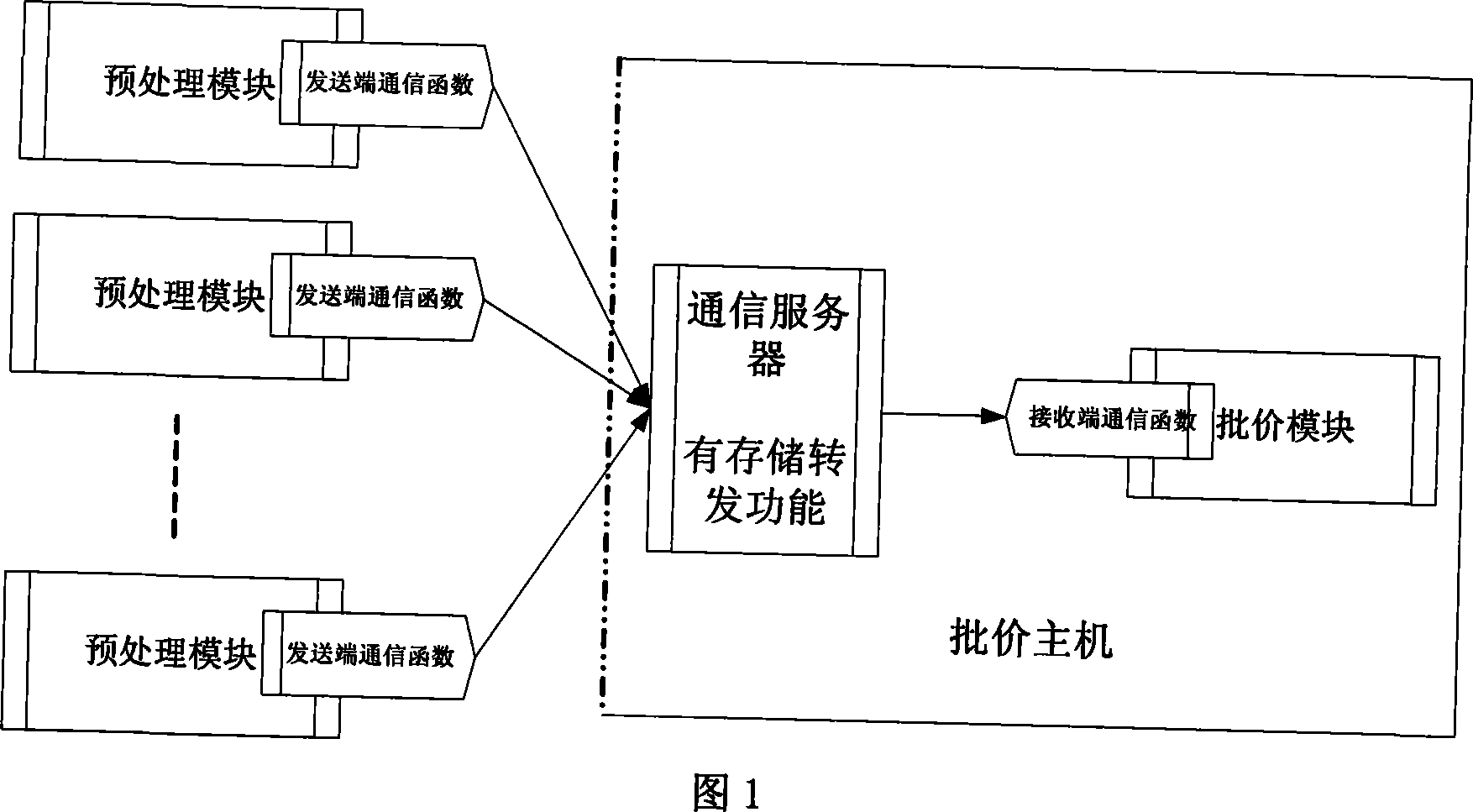

A parallel charging treatment method based on communication transmission

Inactive · CN101217608A · Fast transmission · Improve satisfaction · Telephonic communication · Transmission · File transmission · Data file

The invention discloses a parallel billing processing method based on communication transmission, in which a preprocessing module and a billing module communicate. The preprocessing module converts the file format of original bills, and the billing module calculates charges from the bill files. The flow is characterized in that: a communication server is arranged between the preprocessing module and the billing module; the preprocessing module sends data to the communication server; the communication server stores and forwards the data sent by the preprocessing module; and the billing module receives the data stored and forwarded by the communication server. The communication server ensures asynchronous data communication between the preprocessing module and the billing module. The invention adopts an asynchronous I/O mode, transmits data files through sockets, and carries out parallel billing processing, thereby improving file transmission speed and billing performance, helping to prevent subscribers from falling into arrears, and improving the satisfaction of subscribers inquiring about telephone fees.

Owner:CHINA MOBILE GROUP SICHUAN

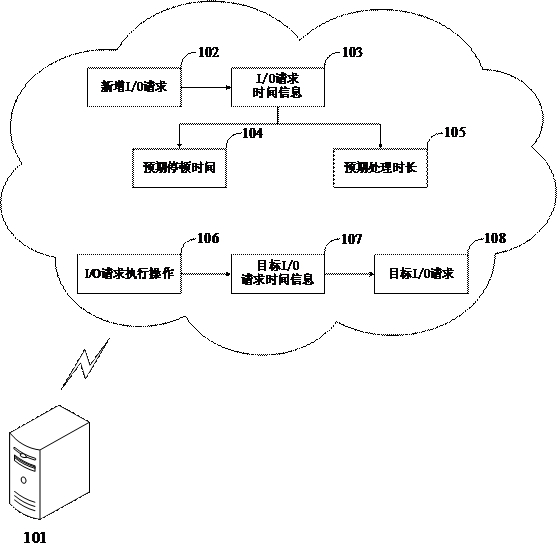

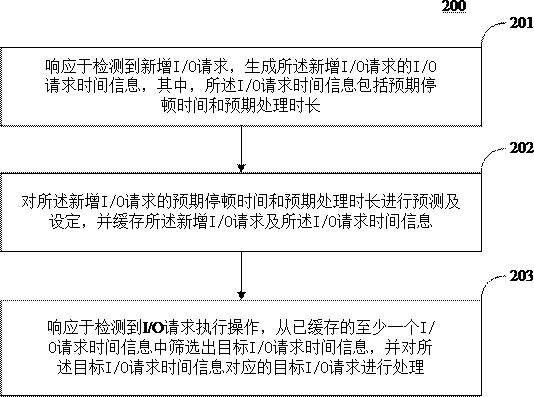

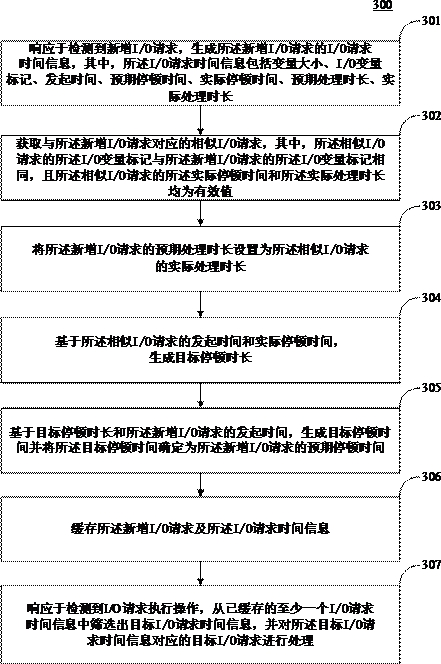

Asynchronous I/O request scheduling method and system, electronic equipment and medium

Active · CN114706820A · Avoid efficiency · Avoiding Wrong Technical Issues · File access structures · File/folder operations · Time information · Computer network

The invention relates to the technical field of I/O request processing, and provides an asynchronous I/O request scheduling method and device, electronic equipment and a computer readable storage medium. The method comprises the steps that in response to a detected newly-added I/O request, I/O request time information of the newly-added I/O request is generated, and the I/O request time information comprises expected pause time and expected processing duration; predicting and setting the expected pause time and the expected processing duration of the newly-added I/O request, and caching the newly-added I/O request and I/O request time information; and in response to the detected I/O request execution operation, screening out target I/O request time information from the cached at least one piece of I/O request time information, and processing a target I/O request corresponding to the target I/O request time information. According to the embodiment of the invention, through the steps, the technical problems that the processing efficiency is reduced and errors occur when the I/O requests are operated in a large quantity in a short time can be avoided.

Owner:BEIJING KAPULA SCI&TECH CO LTD

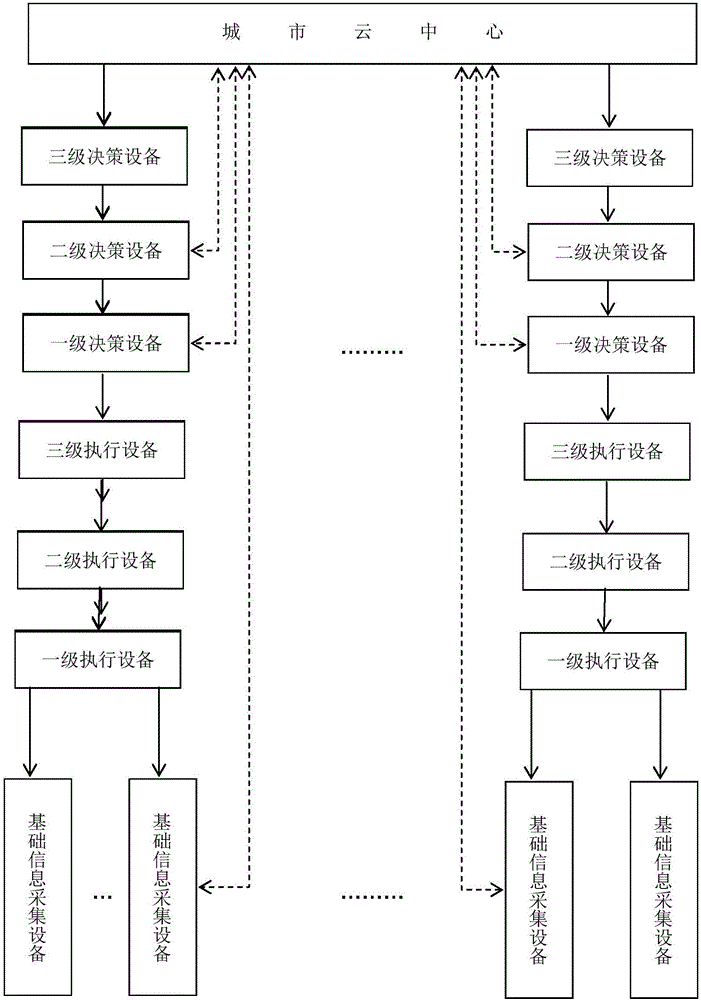

Intelligent city management system based on cloud platform

Inactive · CN106327405A · Solve problems such as disorderly waste of investment · Improve accuracy · Data processing applications · In-memory database · Relational database

The invention provides an intelligent city management system based on a cloud platform. The system comprises a basic information acquisition device, a primary execution device, a secondary execution device, a tertiary execution device, a primary decision device, a secondary decision device, a tertiary decision device, and a city cloud center. The city cloud center is connected with the basic information acquisition device, the primary decision device, and the secondary decision device directly or indirectly, and adopts in-memory database technology, non-relational database technology, caching technology, and asynchronous I/O processing technology for the different types of data uploaded by the devices. Through unified allocation and management of the multiple tiers of devices, resources are used more rationally, and problems such as waste caused by uncoordinated resource investment are solved.

Owner:TERMINUSBEIJING TECH CO LTD

Autonomic threading model switch based on input/output request type

Inactive · US8055806B2 · Input/output processes for data processing · Data conversion · State of art · Computer architecture

Embodiments of the present invention address deficiencies of the art in respect to threading model switching between asynchronous I/O and synchronous I/O models and provide a novel and non-obvious method, system and computer program product for autonomic threading model switching based upon I/O request types. In one embodiment, a method for autonomic threading model switching based upon I/O request types can be provided. The method can include selectably activating and de-activating a blocking I/O threading model according to a volume of received and completed blocking I/O requests.

Owner:IBM CORP

Asynchronous I/O operation method and device based on socket

Active · CN113162932A · Get rid of dependence · Improve adaptability · Transmission · Electric digital data processing · Computer hardware · Multiplexing

The invention relates to the technical field of network communication, and particularly discloses a socket-based asynchronous I/O operation method and device. The method comprises the following steps: a management socket creation step: creating a management socket, and binding the management socket to a local address; a proxy thread creation step: creating a proxy thread, adding the management socket to the read operation control information of the I/O multiplexing function, and calling the I/O multiplexing function; and a proxy thread running step: receiving an asynchronous operation request of a user through the management socket, executing a corresponding action according to the asynchronous operation request, and then sending a message to the user thread. By adopting the technical scheme of the invention, adaptability to the operating system can be improved.
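The three steps in this abstract map naturally onto standard socket calls. The sketch below is a rough Python illustration, assuming a UDP management socket on the loopback address, `select.select` as the I/O multiplexing function, and a simple text protocol of the form `"<operation> <argument>"`; the names `start_proxy`, `request`, and `handlers` are hypothetical and do not come from the patent.

```python
import select
import socket
import threading


def start_proxy(handlers):
    """Create the management socket and proxy thread; return (address, thread)."""
    mgmt = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    mgmt.bind(("127.0.0.1", 0))        # bind the management socket to a local address
    address = mgmt.getsockname()

    def proxy_loop():
        with mgmt:
            while True:
                # The management socket is in the read set of the multiplexing call.
                readable, _, _ = select.select([mgmt], [], [])
                for sock in readable:
                    data, user_addr = sock.recvfrom(4096)
                    op, _, arg = data.decode().partition(" ")
                    if op == "stop":
                        sock.sendto(b"stopped", user_addr)
                        return
                    action = handlers.get(op)
                    reply = action(arg) if action else f"unknown operation: {op}"
                    # Send the result back to the requesting user thread.
                    sock.sendto(reply.encode(), user_addr)

    thread = threading.Thread(target=proxy_loop, daemon=True)
    thread.start()
    return address, thread


def request(proxy_addr, message, timeout=2.0):
    """Send one asynchronous-operation request from a user thread and await the reply."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as user:
        user.bind(("127.0.0.1", 0))
        user.settimeout(timeout)
        user.sendto(message.encode(), proxy_addr)
        reply, _ = user.recvfrom(4096)
        return reply.decode()
```

Because only `select` and plain sockets are used, the same sketch runs on the major desktop operating systems, which is in the spirit of the portability goal the abstract states.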

Owner:航天新通科技有限公司

Special disk reading and writing system suitable for IPTV direct broadcast server with time shift

Inactive · CN101060418B · Special service provision for substation · Memory systems · Cache server · Process module

The invention belongs to the network multimedia field and specifically relates to a special disk read-write system for a time-shift IPTV live server, and to a time-shift IPTV live server using the same. The disk read-write system comprises: a media data buffer server including a channel management module, a buffer management module with memory based on tmpfs and memory file mapping, and a file read/write module using direct I/O and asynchronous I/O technology; and several buffer clients including the direct data write/read client. The time-shift IPTV live server also comprises a data pre-processing module, a transmission module, and a user management module. The special disk read-write system passes the media data from the data pre-processing module to the transmission module, which finally sends it to every client player, improving the overall system performance of the time-shift IPTV live server.

Owner:SHANGHAI CLEARTV CORP LTD

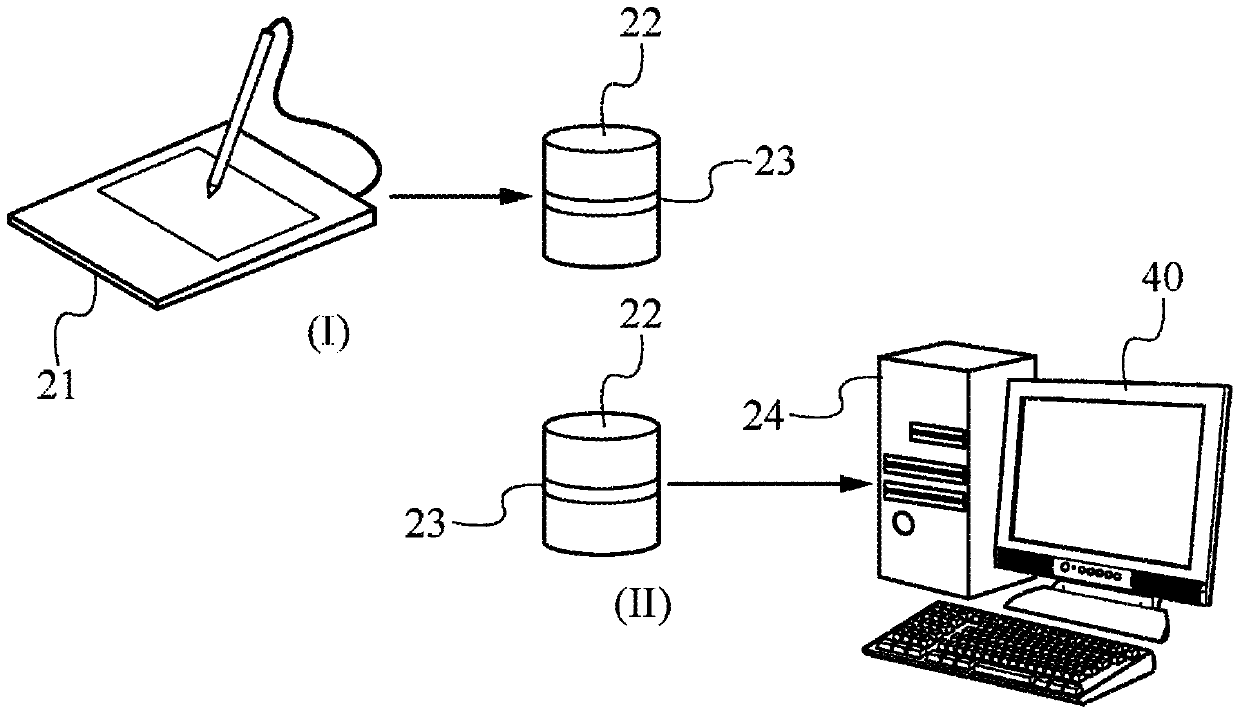

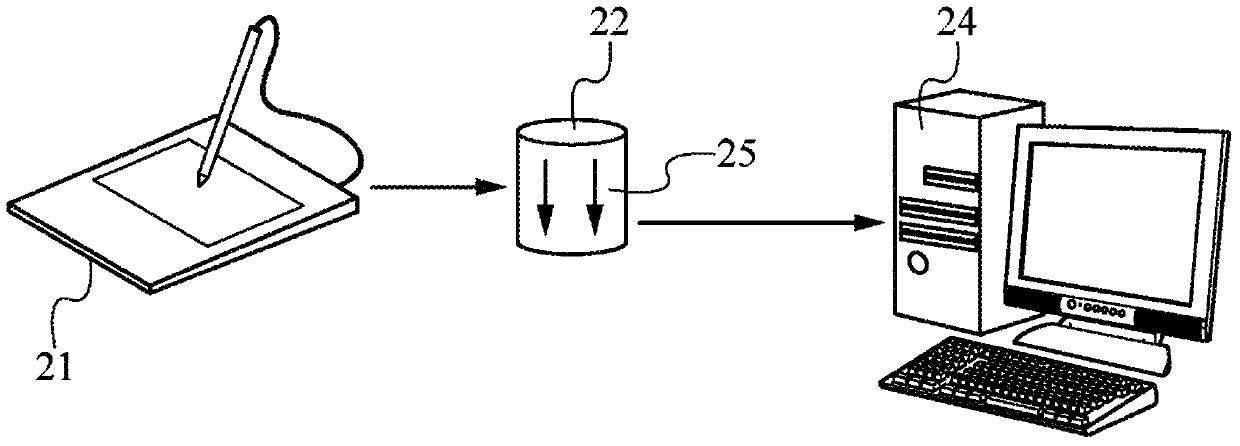

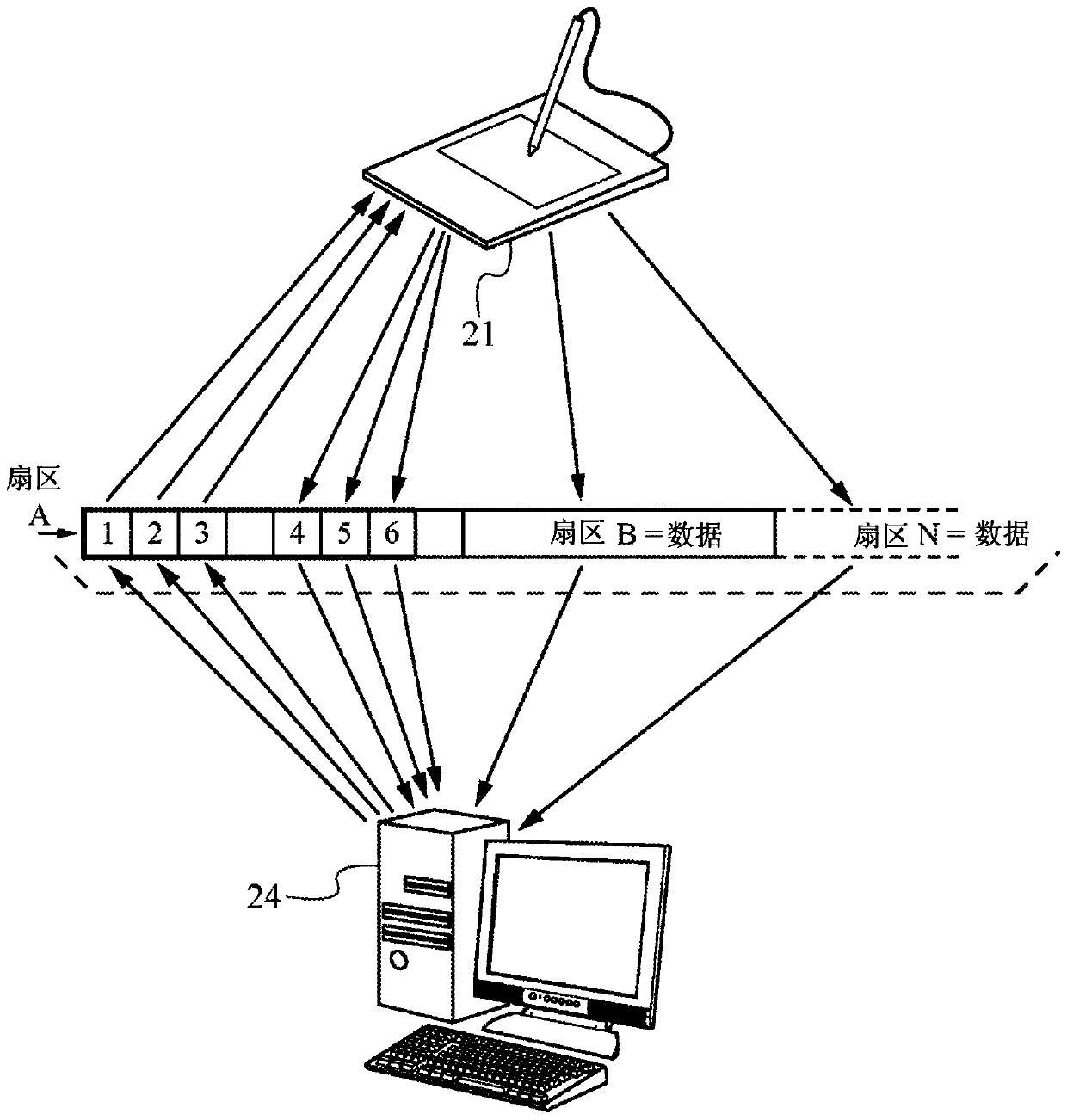

Method for exchanging control via a usb stick and associated devices allowing implementation thereof

Active · CN105917322B · Eliminate the possibility of loss · Character and pattern recognition · Securing communication · Data pack · Engineering

A real-time system for bidirectional storage and transfer of control information and data between a processor and an external serial peripheral unit, such as a tablet and/or digitizer for handwritten signatures, during I/O. An external medium for storing static data is used to form a transfer buffer capable of operating with the serial peripheral unit, which is treated as a removable virtual disk (a "USB drive"). Only files constituted as a linear sequence of data in sectors of constant size, which can be shared between the serial peripheral unit (21) and the processor (24), are used; both the serial peripheral unit (21) and the processor (24) are able to read and write the file at virtually the same time. Asynchronous I/O transfers are defined with data block contents on the order of kilobytes and with storage areas that are as large as expected and definitely larger than the data packets, used as temporary buffers for data waiting to be transferred.

Owner:HERMES COMM S R L S

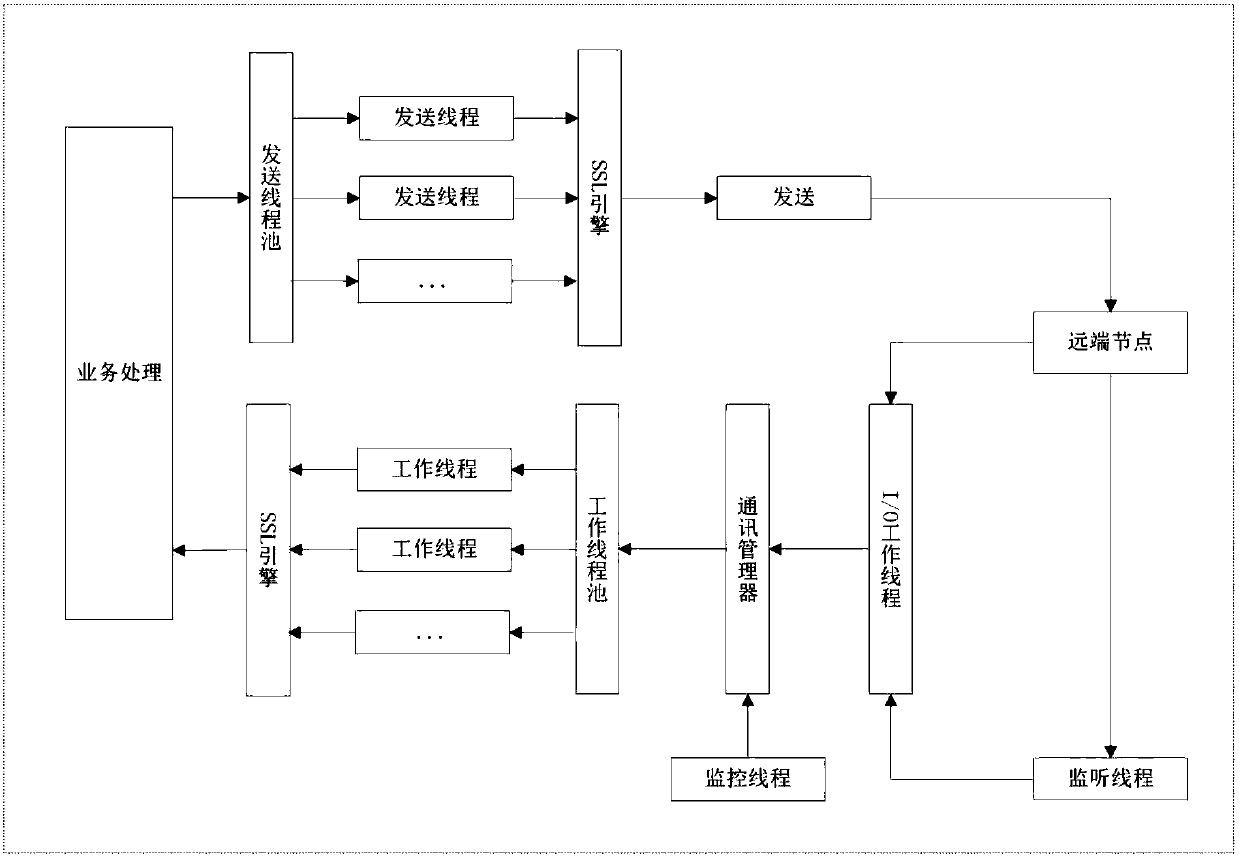

Large concurrent encrypted communication algorithm for secure authentication gateway

Inactive · CN109639619A · Increase the number of concurrent connections · Coping with shock · Transmission · Secure transmission · Network communication

The invention discloses a large concurrent encrypted communication algorithm for a secure authentication gateway, relating to a secure data transmission method. A traditional communication encryption library adopts a synchronous processing method that tightly couples communication and encryption, so concurrency can only be increased by adding threads or processes; because the number of threads and processes is limited, the network bandwidth and the processing power of the CPU cannot be fully utilized. In the disclosed algorithm, network communication and SSL processing are implemented independently, so each can be optimized independently; the asynchronous I/O mechanism of the operating system is fully used to improve throughput; thread pool technology is used to make full use of the computing power of the CPU; and queue technology is used to buffer peak data. The main advantage of the algorithm is that it achieves SSL encrypted communication with high concurrency (greater than 50,000), a high number of connections per second (greater than 500), high throughput (greater than 800 Mb/s), and tolerance of high peak loads.

Owner:北京安软天地科技有限公司

Response times in asynchronous I/O-based software using thread pairing and co-execution

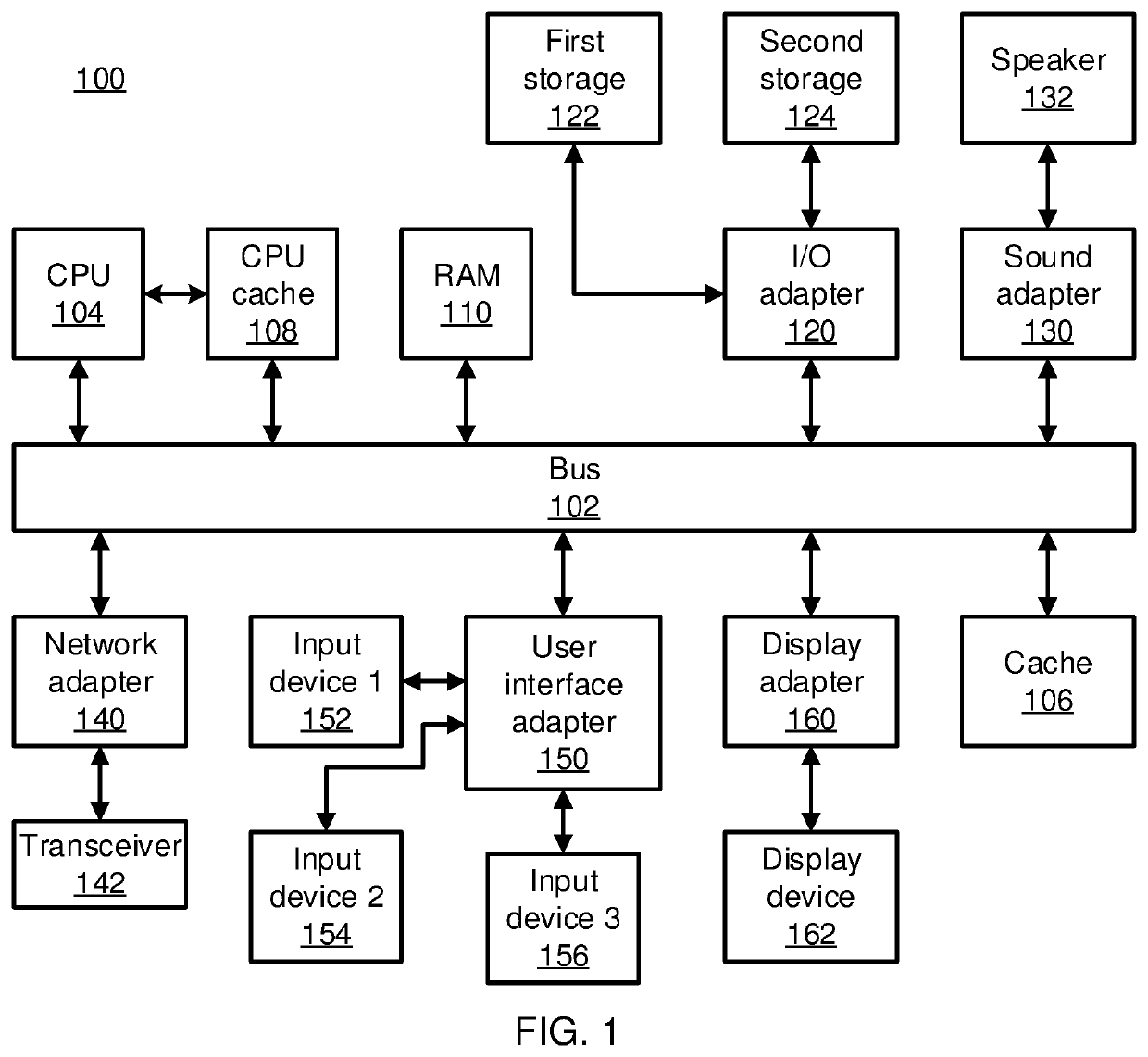

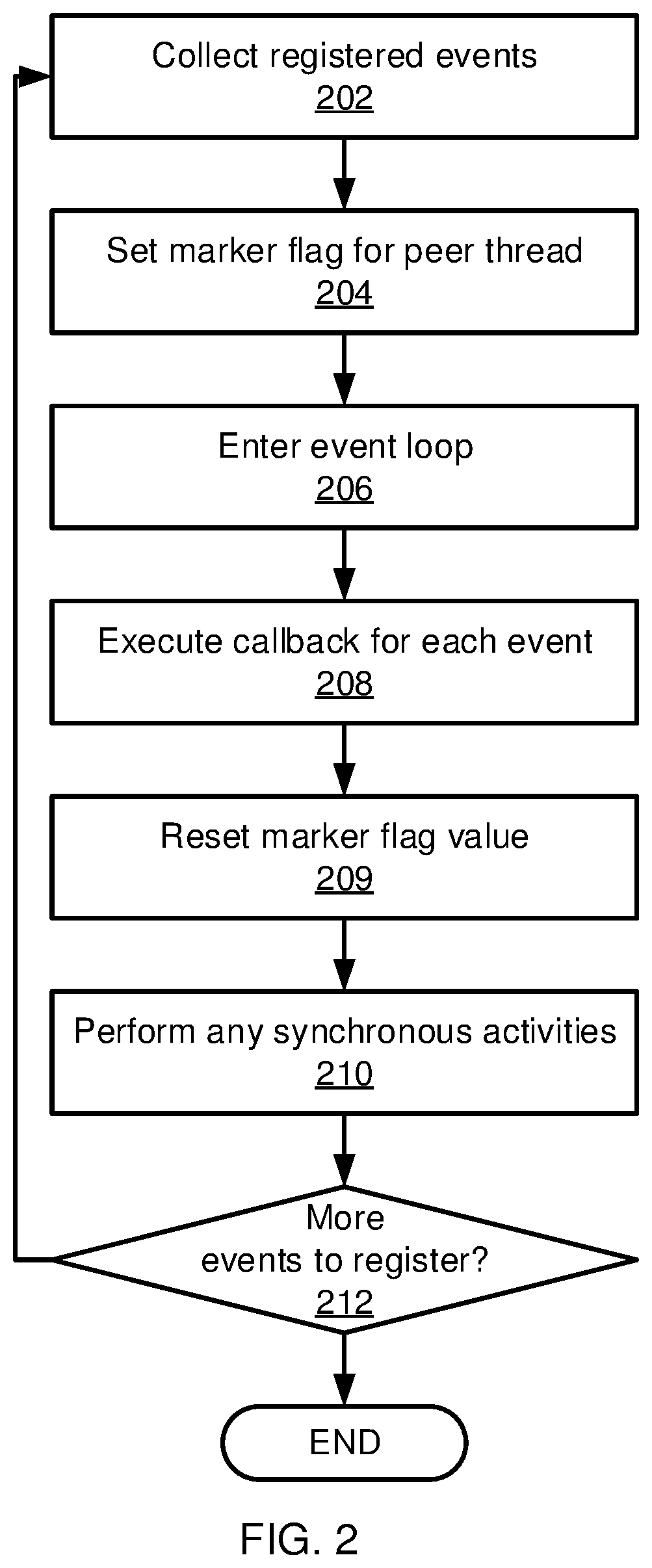

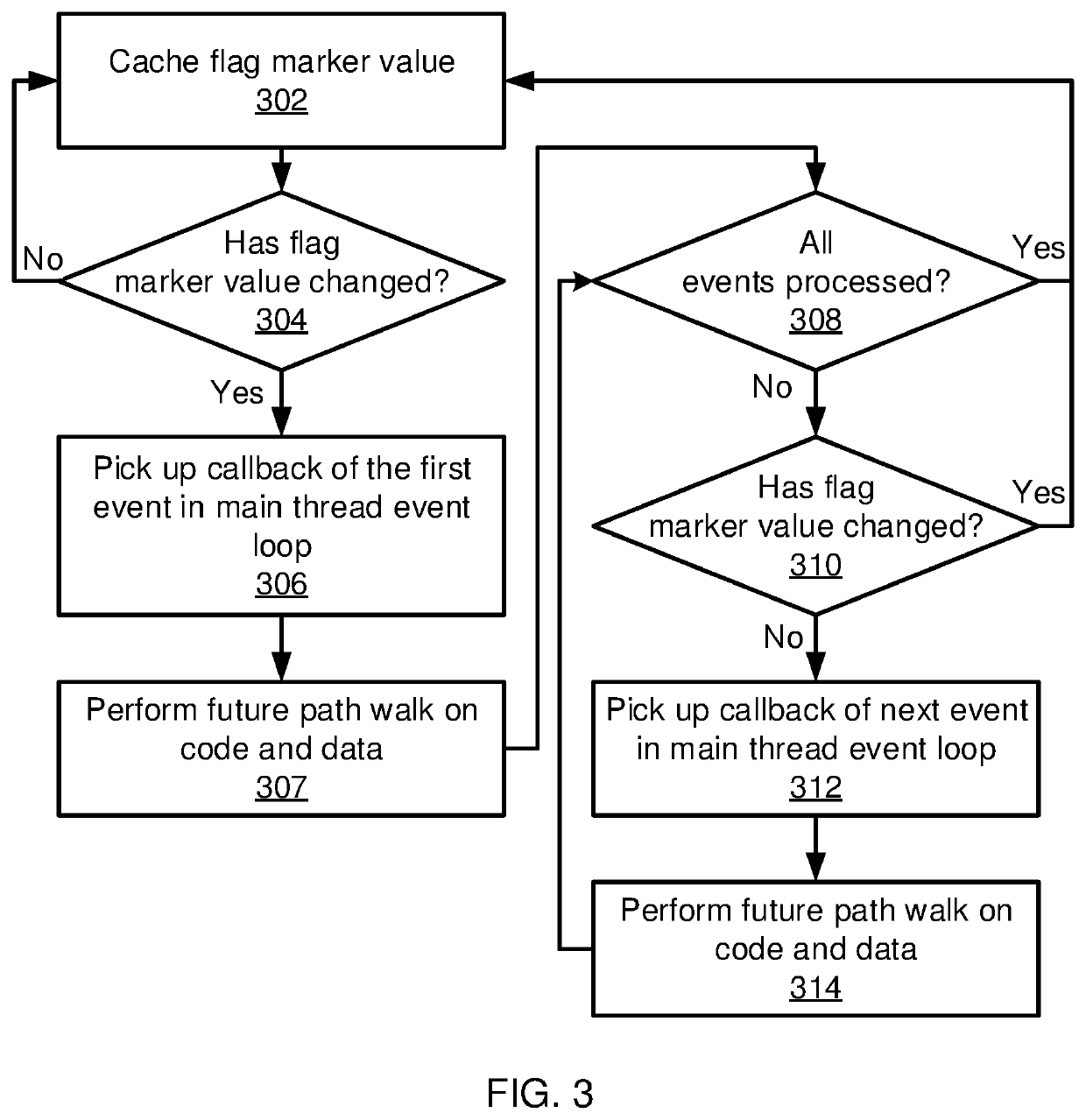

Methods and systems for pre-fetching operations include executing event callbacks in an event loop using a processor until execution stops on a polling request. A path walk is performed on future events in the event loop until the polling request returns to pre-fetch information for the future events into a processor cache associated with the processor. Execution of the event callbacks in the event loop is resumed after the polling request returns.

Owner:INT BUSINESS MACHINES CORP

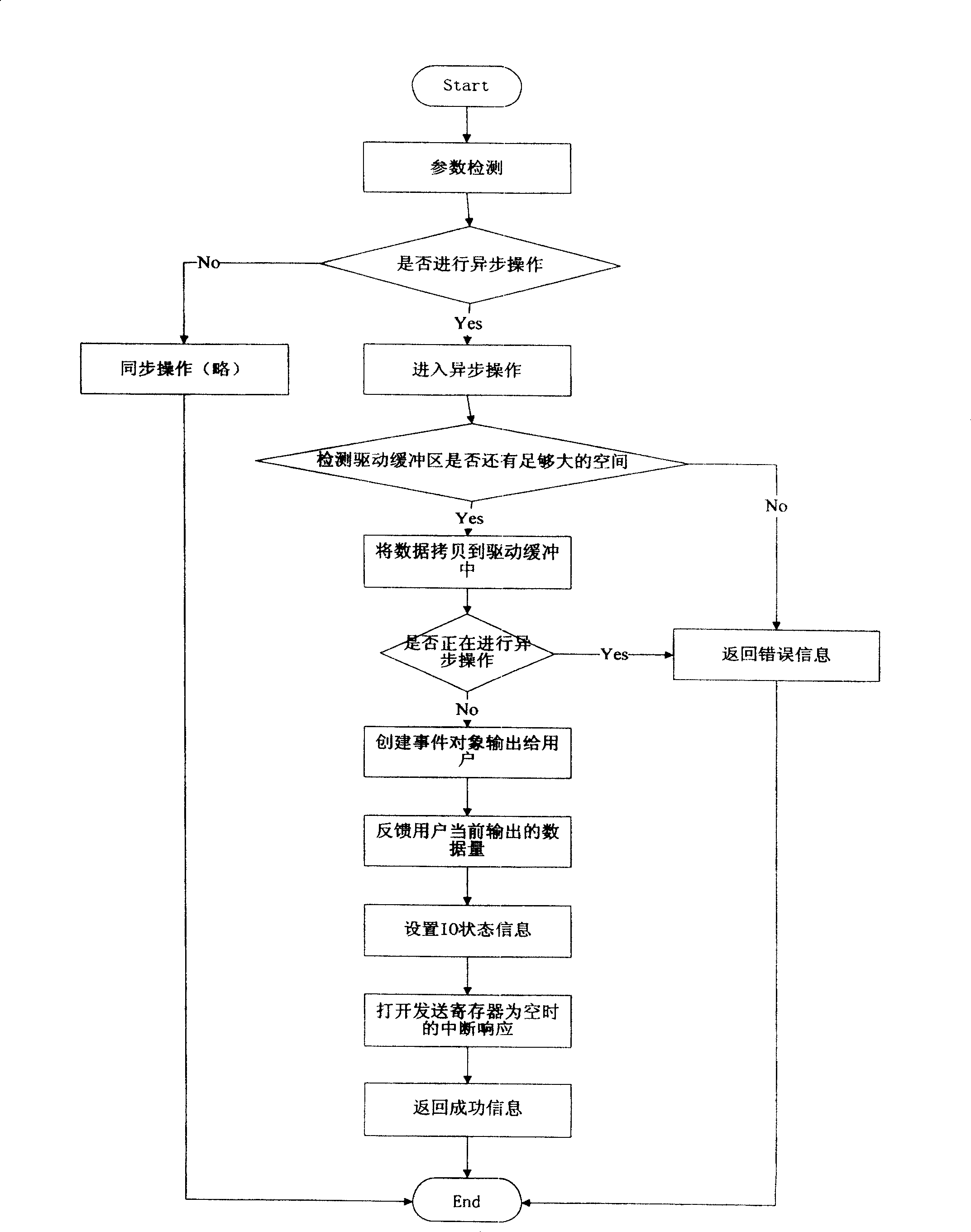

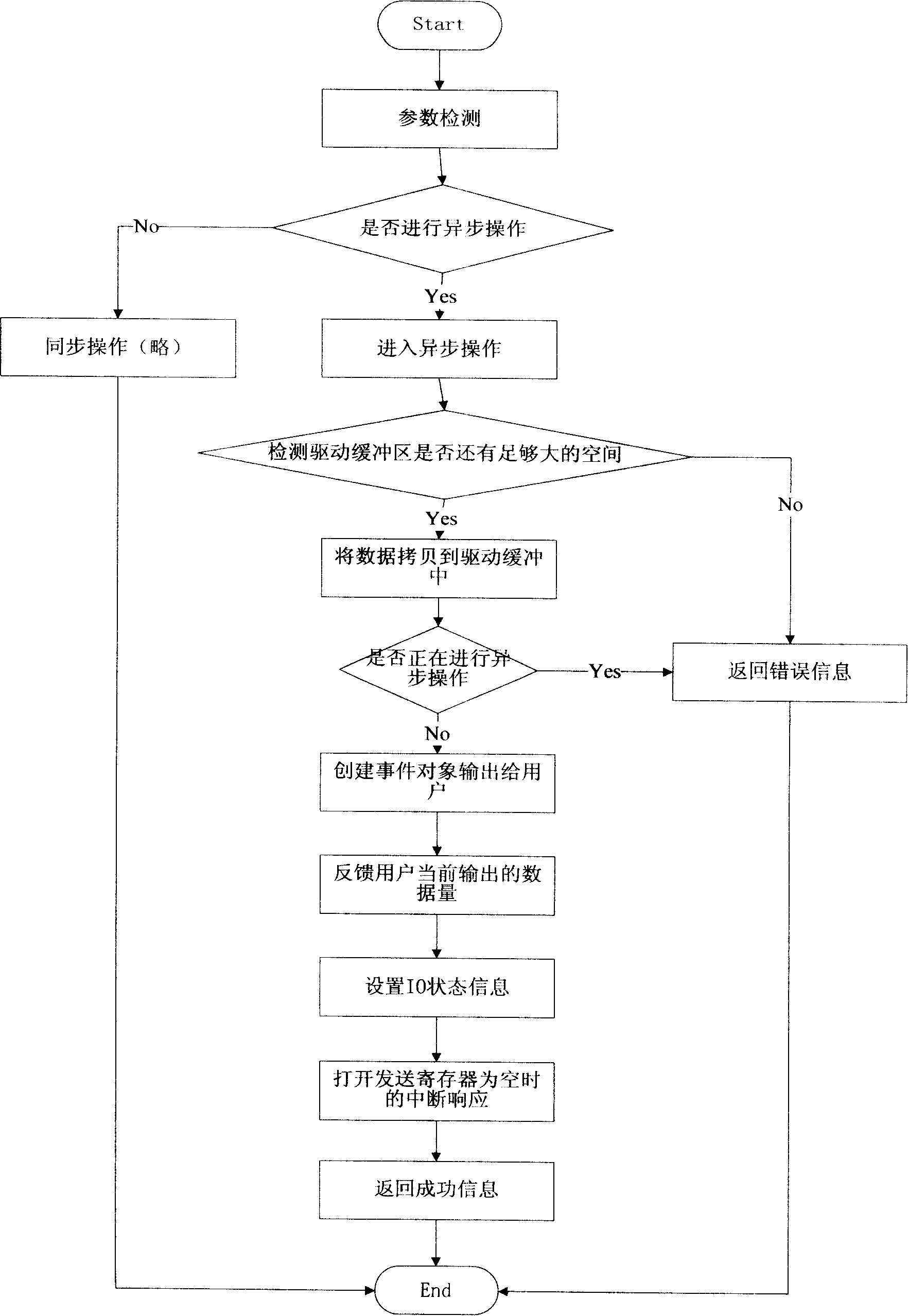

Method for processing asynchronous input/output signal

Inactive · CN100511153C · Solve the problem of asynchronous input and output · Versatile · Multiprogramming arrangements · Asynchronous circuit · Computer programming

The invention discloses an asynchronous input/output signal processing method. It provides an event-based signal processing mechanism and designs the driver interface specifically to cooperate with that mechanism. The method includes the following steps: step 1, a user thread, acting as the user of a driver, requests input/output signal processing through the driver interface; step 2, the driver creates an event object and exports it to the user thread; step 3, the user thread obtains the input/output processing signal through the event object and completes the corresponding operation according to the driver's definition of the signal, while the driver completes the input/output operation of the device and notifies the user thread to process the signal through the event object. The method simplifies some advanced synchronization mechanisms and makes them available to driver designers; a user thread can perform asynchronous I/O operations with multiple devices at the same time; and drivers can customize the signal semantics of asynchronous I/O to provide richer functions.
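The three steps can be mimicked in user space with ordinary threads. The following Python sketch is only an analogy: `IoEvent`, `ToyDriver`, and `write_to_all` are hypothetical names, a background thread plays the role of the device, and the signal payload (a small dictionary) is an assumed example of driver-defined signal semantics.

```python
import threading


class IoEvent:
    """Event object that the driver exports to a user thread (step 2)."""

    def __init__(self):
        self._ready = threading.Event()
        self.signal = None

    def notify(self, signal):
        # Called by the driver once the device operation has completed.
        self.signal = signal
        self._ready.set()

    def wait(self, timeout=None):
        # Called by the user thread; blocks until the driver signals (step 3).
        if not self._ready.wait(timeout):
            raise TimeoutError("no signal from driver")
        return self.signal


class ToyDriver:
    """Stand-in for a device driver; not a real driver API."""

    def __init__(self, device_name):
        self.device_name = device_name

    def create_event(self):
        return IoEvent()

    def start_write(self, event, data):
        def device_work():
            # The driver decides what the signal means; here it is a status record.
            event.notify({"device": self.device_name, "status": "done", "bytes": len(data)})

        threading.Thread(target=device_work, daemon=True).start()


def write_to_all(drivers, data):
    """One user thread starts I/O on several devices, then collects every signal."""
    events = []
    for driver in drivers:
        event = driver.create_event()      # steps 1 and 2: request and receive an event object
        driver.start_write(event, data)    # the I/O proceeds without blocking the caller
        events.append(event)
    return [event.wait(timeout=2.0) for event in events]
```

Calling `write_to_all([ToyDriver("uart0"), ToyDriver("disk0")], b"hello")` returns one signal per device, in the order the devices were given.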

Owner:KORTIDE LTD

Asynchronous output incoming signal processing method

Inactive · CN101158912A · Solve the problem of asynchronous input and output · Versatile · Multiprogramming arrangements · Asynchronous I/O · Computer programming

The invention discloses an asynchronous input/output signal processing method. It provides an event-based signal processing mechanism and designs the driver interface specifically to cooperate with that mechanism. The method comprises the following steps: step 1, a user thread, acting as the user of a driver, requests input/output signal processing through the driver interface; step 2, the driver creates an event instance and exports it to the user thread; step 3, the user thread obtains the input/output processing signal through the event instance and completes the relevant operation according to the driver's definition of the signal, while the driver completes the device input/output operation and notifies the user thread to process the signal through the event instance. Some high-level synchronization mechanisms are simplified and made available to driver designers; a user thread can conduct asynchronous I/O operations with a plurality of devices simultaneously; and the driver can define the signal semantics of the asynchronous I/O itself, thereby providing richer functions.

Owner:KORTIDE LTD

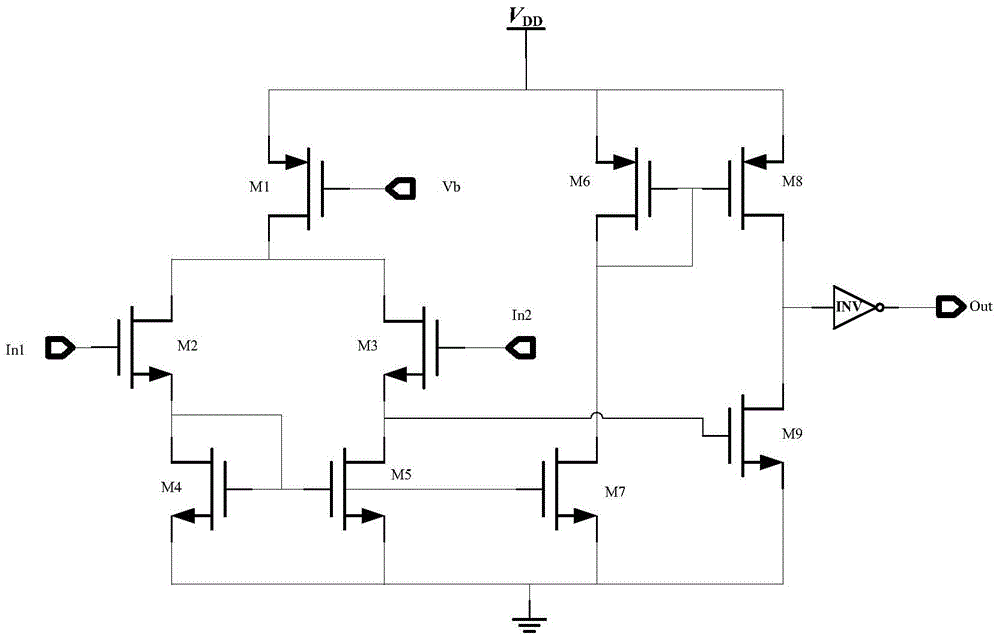

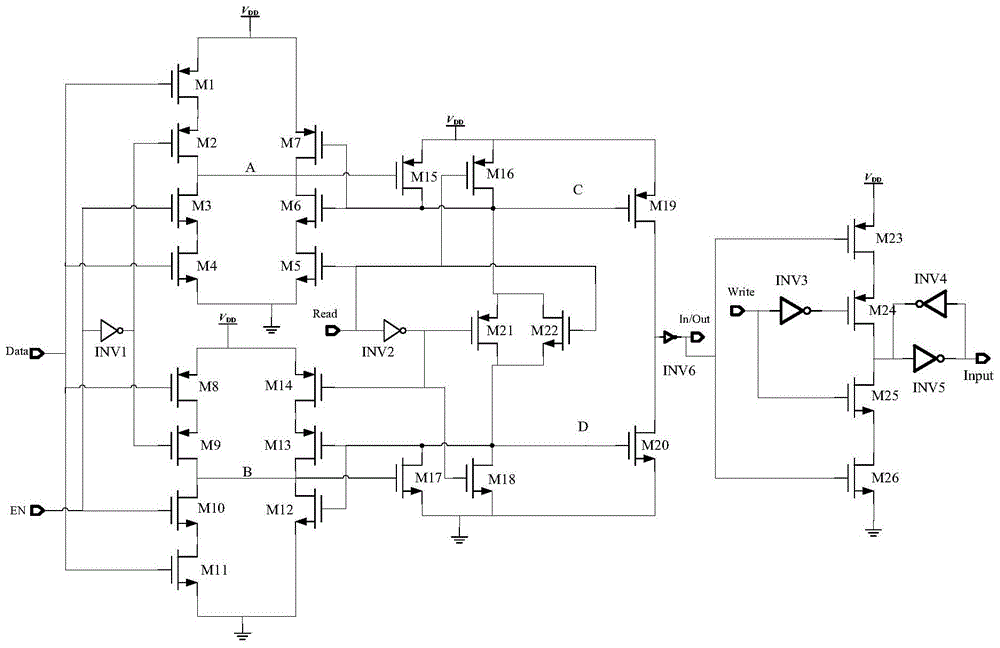

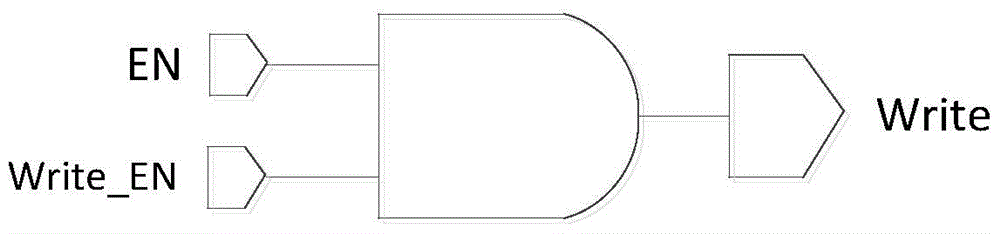

An I/O interface circuit for asynchronous SRAM

The invention discloses an I/O interface circuit for an asynchronous SRAM, belonging to the technical field of SRAM data reading and writing. The interface circuit includes a read circuit and a write circuit; the output end of the read circuit is connected to the input end of the write circuit; the read circuit is connected to the enable signal, the data signal, and the read signal; and the write circuit is connected to the write signal. The circuit uses the enable signal and the read signal to control data output, and uses the write signal to control data writing, so that data can be read or written conveniently and quickly. Introducing positive feedback in the read circuit improves the accuracy of the level signal; because there is no path from the power supply VDD to the ground GND, the power consumption of the read circuit is greatly reduced; and using the read signal to control when the transistor turns on speeds up the response of the circuit.

Owner:BEIJING ZHONGKE XINWEITE SCI & TECH DEV

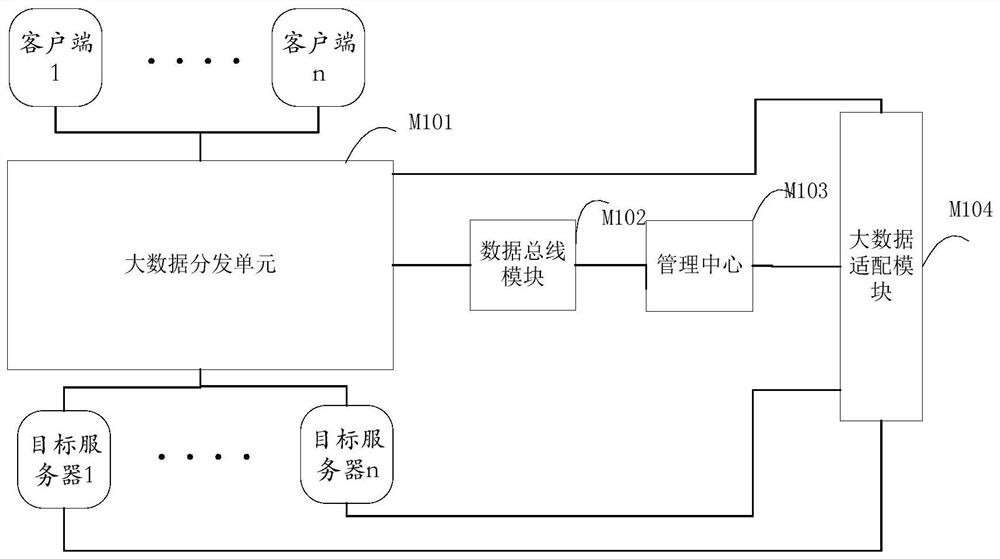

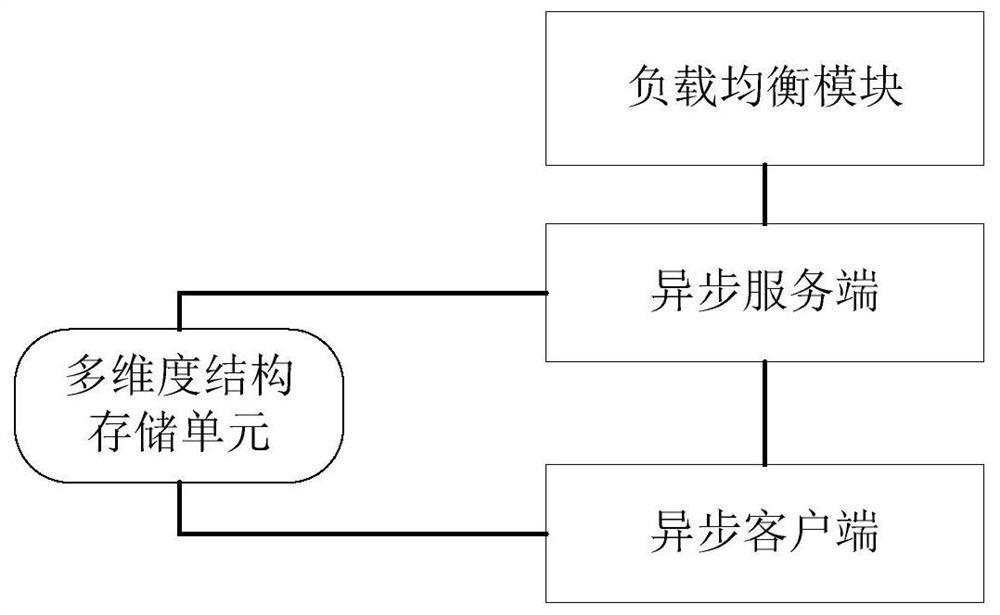

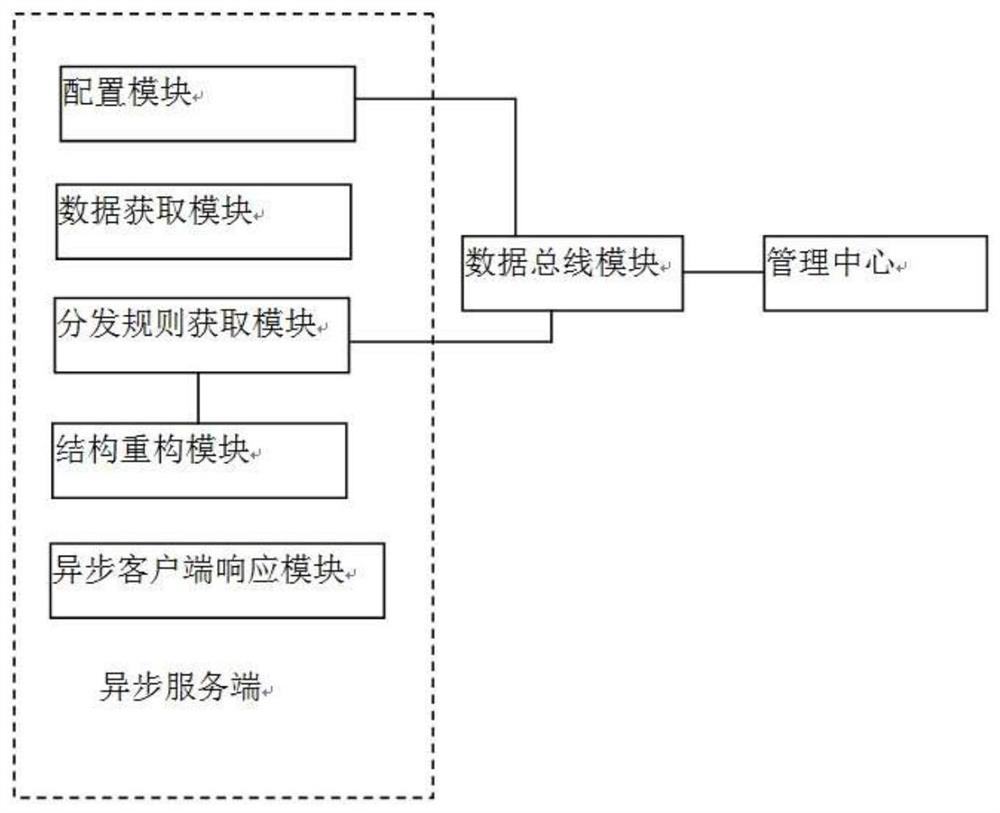

A system and method for distributing data in a big data platform

The system and method for distributing data in a big data platform of the present invention use asynchronous I/O as the technical basis for a big data distribution unit that distributes big data at high speed. Server and client threads are separated to improve the throughput of data distribution; a multi-dimensional structure storage unit guarantees the complete structure of the data; and a big data management center and a data bus module guarantee the accuracy and correctness of data distribution. As a result, all parts can cooperate at high speed without waiting on each other for resources, and resources can be fully utilized. The whole system also shows good scalability.

Owner:亿阳安全技术有限公司

An optimization method for self-service terminal log compression upload

Active · CN108011966B · Good functional experience · Reduce waiting time for server response · Hardware monitoring · Transmission · Asynchronous I/O · Entropy encoding

The present invention provides an optimization method for compressing and uploading self-service terminal logs. The method includes: the client compresses the logs to be uploaded by means of displacement operations on the basis of entropy coding; during compression, the client uploads the log files that have already been compressed to the server in sequence; the server receives the compressed log file uploaded by each client; the server places the received compressed log file in the file saving queue and returns a normal reception response to the client; the server detects whether the compressed log file contains any abnormal information; and if not, the server writes the compressed log file in the file saving queue to the server's disk by means of asynchronous I/O. The method effectively reduces the time the client waits for the server's response and improves the functional experience of the self-service terminal.
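The server side of this flow (acknowledge first, check and write later) can be sketched as follows. This is a loose Python illustration under several assumptions: `zlib` stands in for the patent's compression scheme, "abnormal information" is taken to mean a payload that fails to decompress, and a background writer thread approximates asynchronous disk I/O. The names `LogServer`, `receive`, and `flush` are hypothetical.

```python
import queue
import threading
import zlib
from pathlib import Path


class LogServer:
    """Acknowledge uploads immediately; validate and write them in the background."""

    def __init__(self, out_dir):
        self.out_dir = Path(out_dir)
        self.save_queue = queue.Queue()     # the "file saving queue"
        self.rejected = []                  # names of uploads found to be abnormal
        threading.Thread(target=self._drain, daemon=True).start()

    def receive(self, name: str, payload: bytes) -> str:
        # Enqueue and answer at once, so the client does not wait for the disk.
        self.save_queue.put((name, payload))
        return "OK"

    def _drain(self):
        while True:
            name, payload = self.save_queue.get()
            try:
                # Assumed check: an upload is abnormal if it cannot be decompressed.
                zlib.decompress(payload)
                (self.out_dir / name).write_bytes(payload)
            except zlib.error:
                self.rejected.append(name)
            finally:
                self.save_queue.task_done()

    def flush(self):
        """Block until every queued upload has been checked and written or rejected."""
        self.save_queue.join()
```

The design point the abstract emphasizes is visible in `receive`: the response is returned before any disk work happens, so client wait time does not depend on write latency.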

Owner:GUANGDONG KAMFU TECH CO LTD

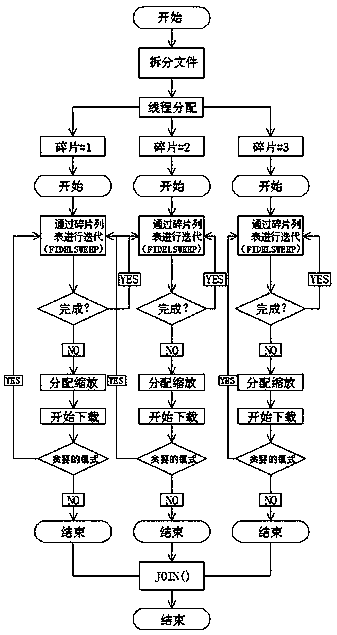

Full-bandwidth use ensuring method under situation that concurrent asynchronous connection without being interrupted

Inactive · CN108900638A · Stable mass data transmission · Firmly connected · Transmission · Asynchronous I/O · Operating system

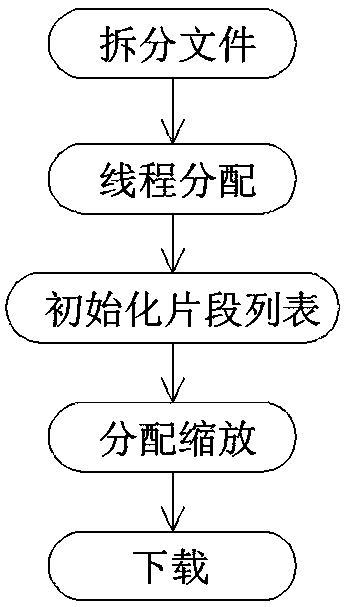

The invention provides a method for ensuring full-bandwidth use while concurrent asynchronous connections remain uninterrupted. The method comprises the steps of: splitting files; distributing threads; initializing a fragment list; distributing and zooming; and downloading. The method exploits the advantages of multithreading and asynchronous I/O to the greatest extent in order to maximize use of network and hardware resources. Building on multi-threaded connection tasks, it establishes connections to multiple servers and divides all dialogues into a plurality of fragments; meanwhile, the quickest resource holder is optimized, providing a novel method for the field of multi-threaded downloading.

Owner:陈若天
