70 results about how to "Reduce queuing delay" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

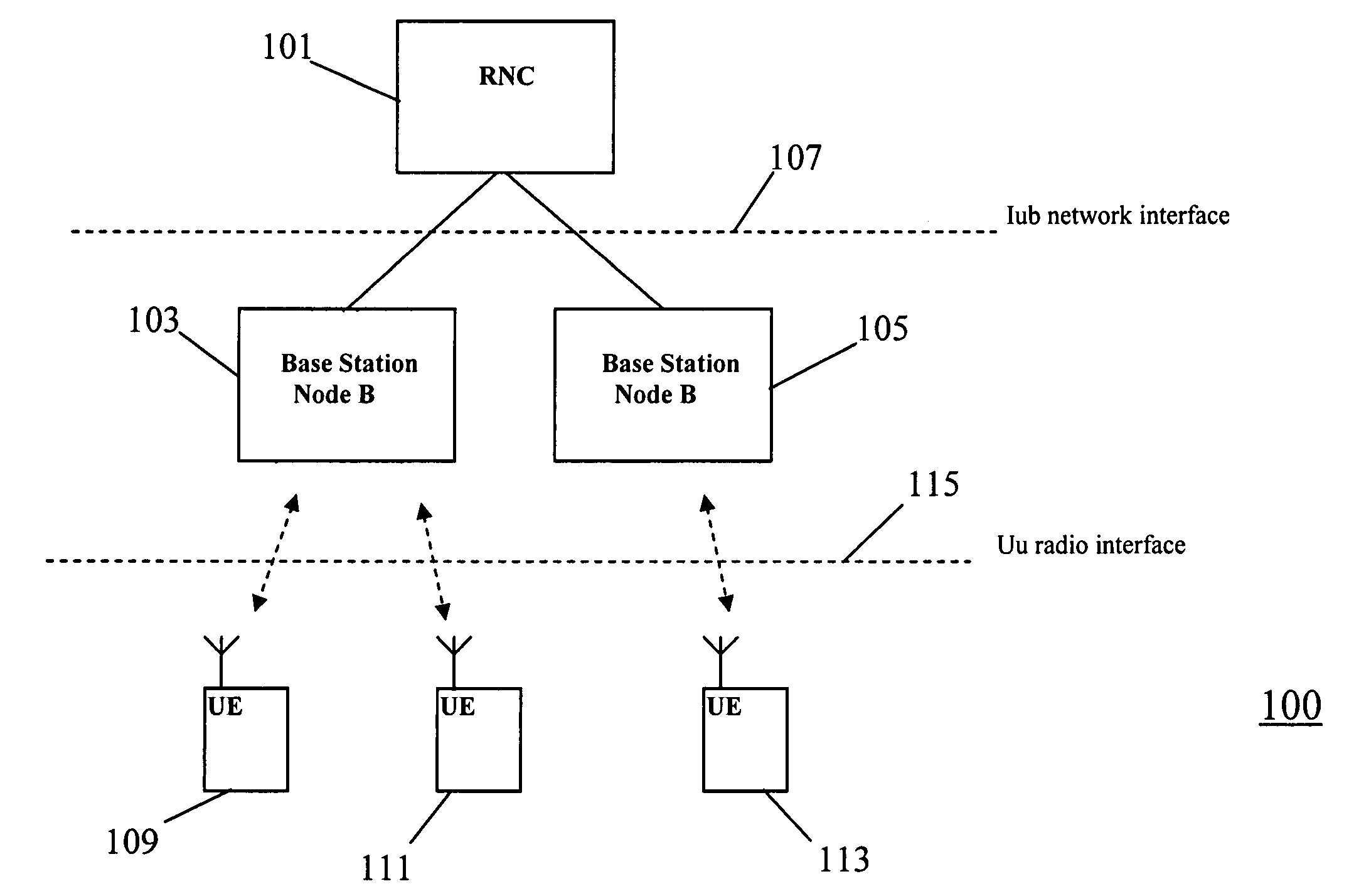

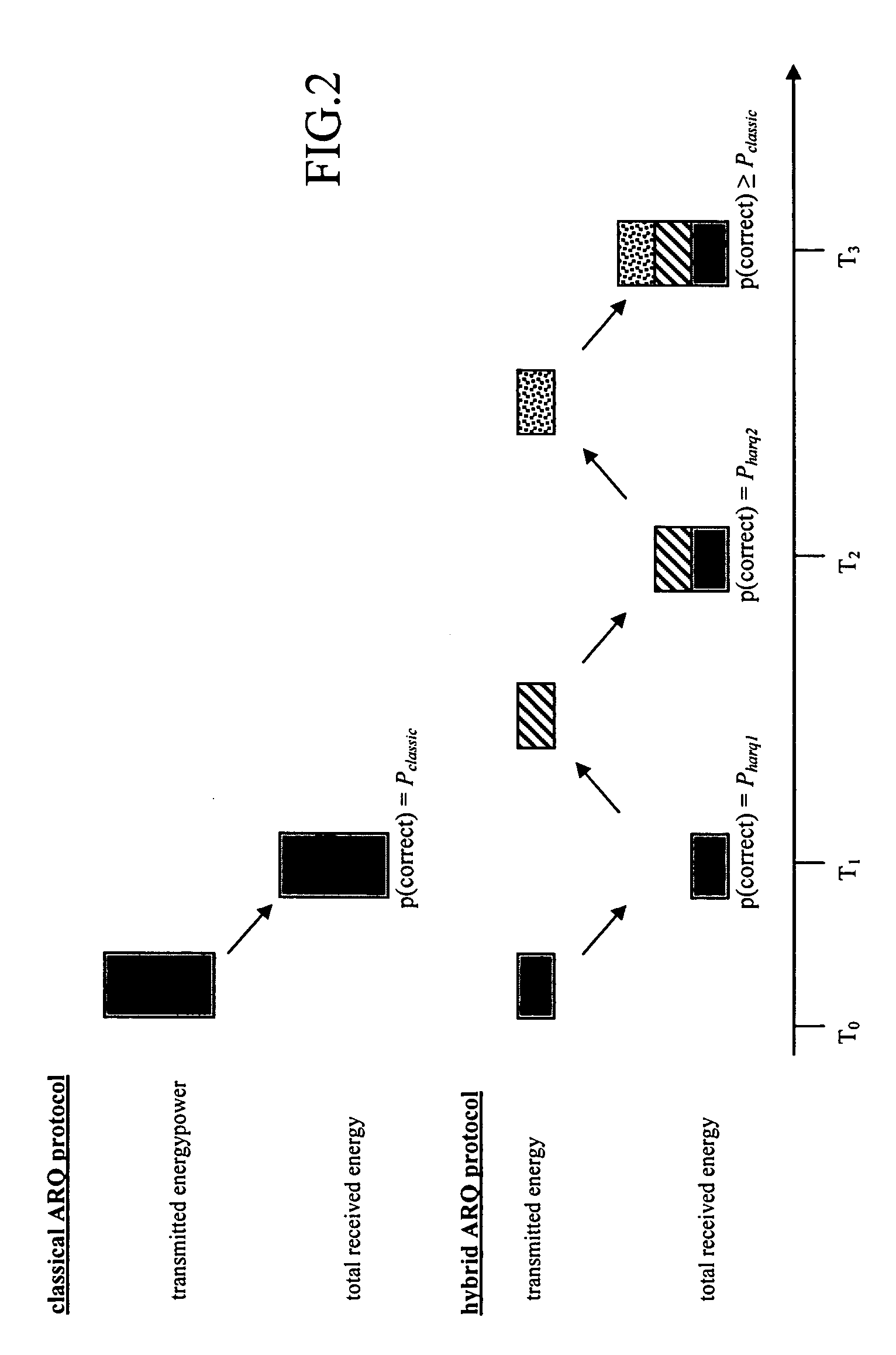

Retransmission scheme in a cellular communication system

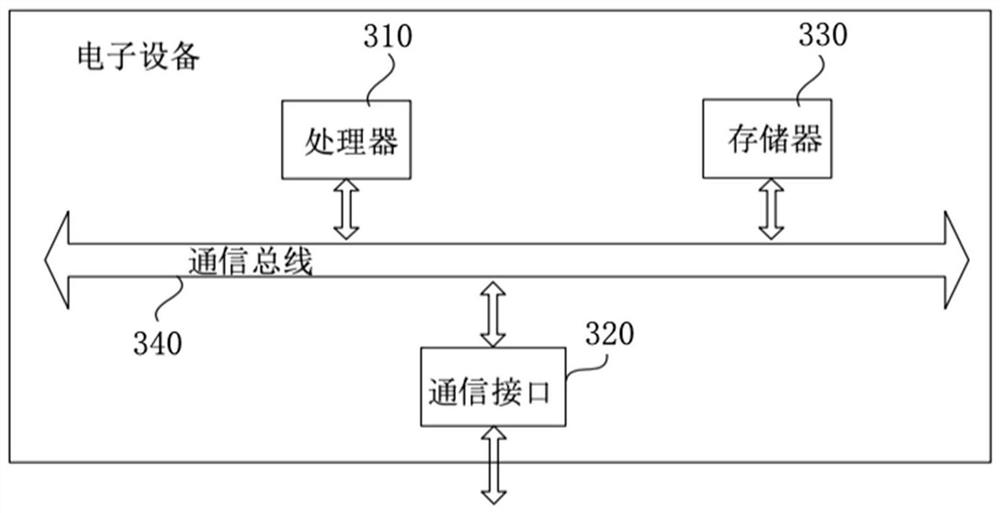

Active | US20060084389A1 | Improve performance; Reduce delays; Power management; Network traffic/resource management; Operating point; Target control

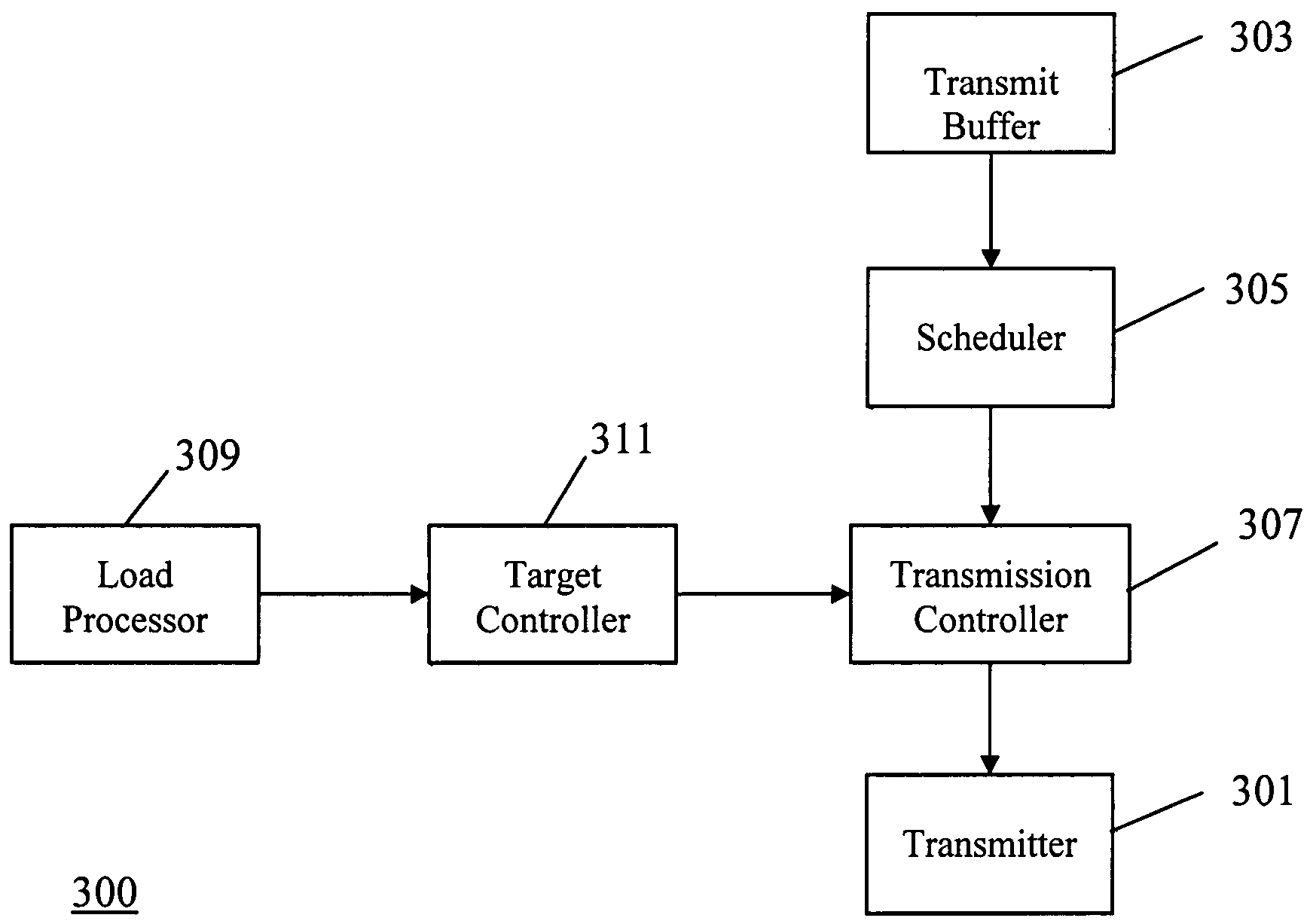

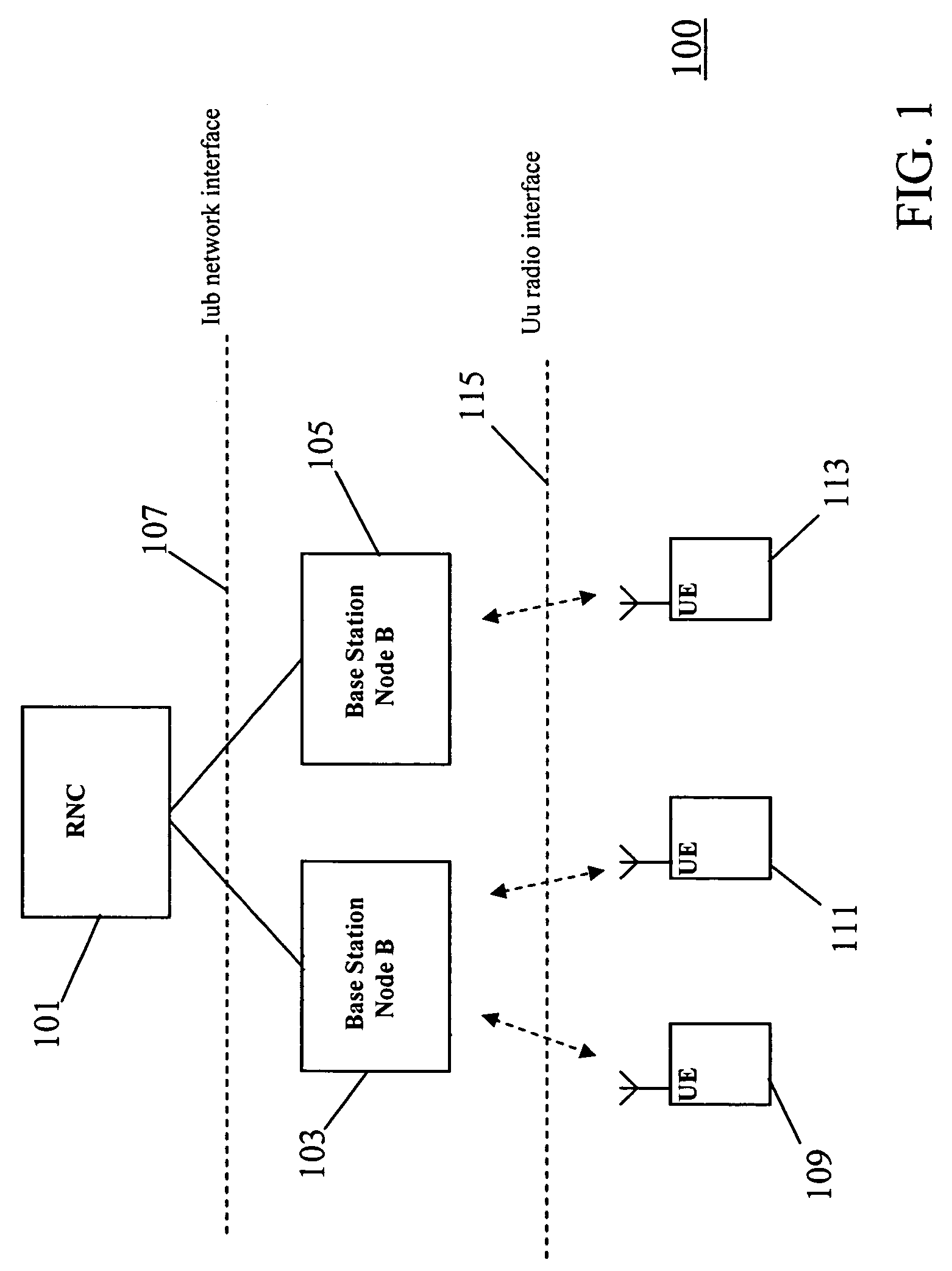

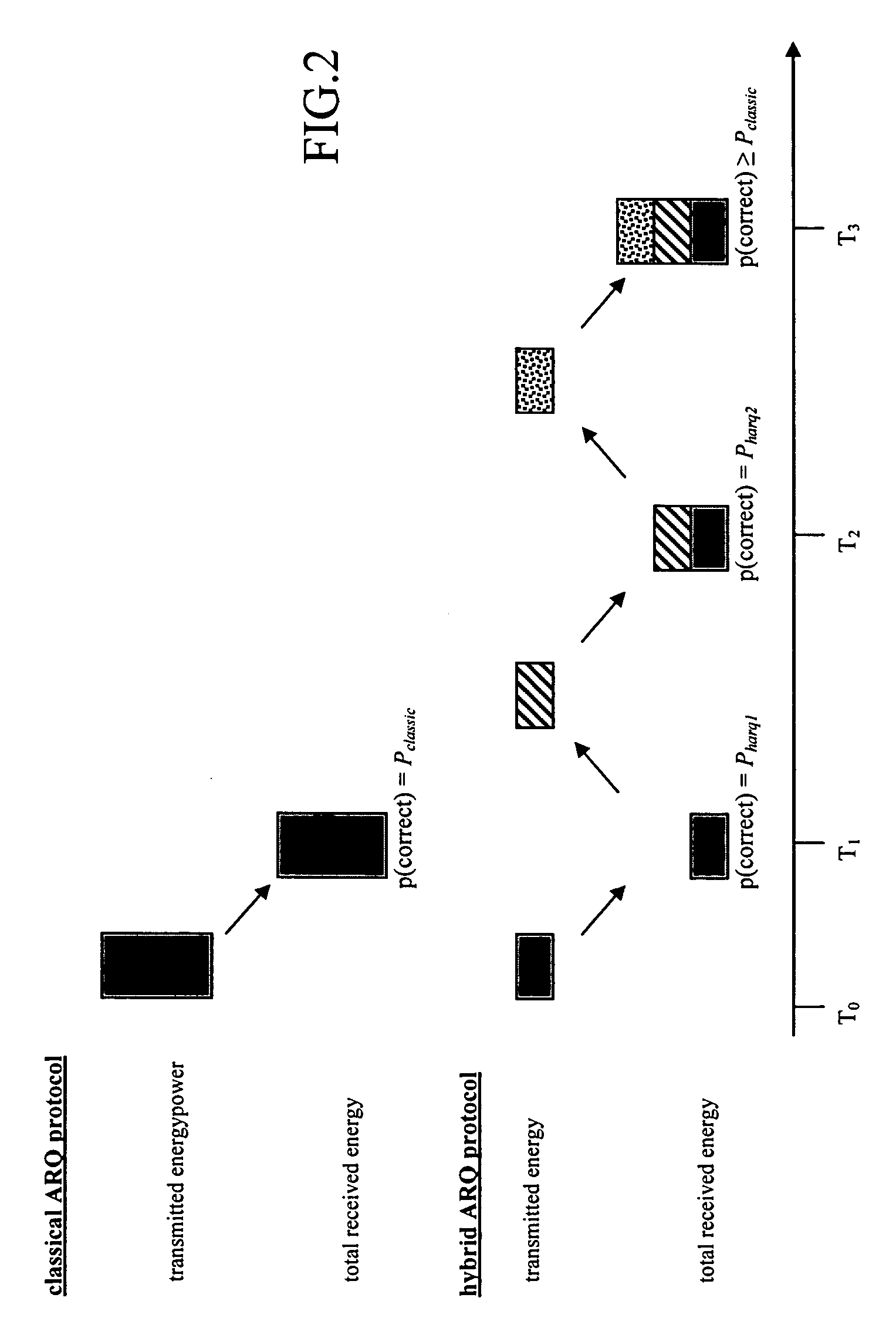

An apparatus (300) for a cellular communication system (100) comprises a buffer (303) which receives data for transmission over an air interface (115). The buffer (303) is coupled to a scheduler (305) which schedules the data and allocates the physical resource of the air interface (115). The transmissions use a retransmission scheme such as a Hybrid Automatic Repeat reQuest (HARQ) scheme. A load processor (309) determines a load characteristic associated with the scheduler (305), and a target controller (311) sets a target parameter for the retransmission scheme in response to the load characteristic. Specifically, a block error rate target may be set in response to the load level of one or more cells. A transmission controller (307) sets a transmission parameter for a transmission in response to the target parameter, and a transmitter (301) transmits the data using that parameter. The operating point of the retransmission scheme can thus be adjusted dynamically, reducing overall latency.

Owner:SONY CORP
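To make the load-dependent operating point concrete, here is a minimal Python sketch. It is not the patented method: the linear load-to-target mapping, the default targets, the function names `bler_target` and `pick_mcs`, and the choice to tolerate a higher BLER at heavy load are all illustrative assumptions.

```python
def bler_target(load: float, light_load_target: float = 0.01,
                heavy_load_target: float = 0.2) -> float:
    """Hypothetical target controller: map cell load in [0, 1] to a BLER target.

    Assumption: at light load a low BLER keeps retransmissions (and latency) down;
    at heavy load a higher BLER lets each transmission use fewer resources.
    """
    load = min(max(load, 0.0), 1.0)  # clamp the load characteristic to [0, 1]
    return light_load_target + load * (heavy_load_target - light_load_target)


def pick_mcs(predicted_bler: dict[int, float], target: float) -> int:
    """Hypothetical transmission controller: choose the highest modulation and
    coding scheme (MCS) index whose predicted BLER does not exceed the target."""
    eligible = [mcs for mcs, bler in predicted_bler.items() if bler <= target]
    # Fall back to the most robust (lowest) MCS if nothing meets the target.
    return max(eligible) if eligible else min(predicted_bler)


if __name__ == "__main__":
    table = {0: 0.001, 5: 0.05, 10: 0.3}
    print(pick_mcs(table, bler_target(0.1)))  # light load
    print(pick_mcs(table, bler_target(0.9)))  # heavy load
```

With the example table, a load of 0.1 selects MCS 0 and a load of 0.9 selects MCS 5, i.e. more aggressive link adaptation when the cell is busy.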

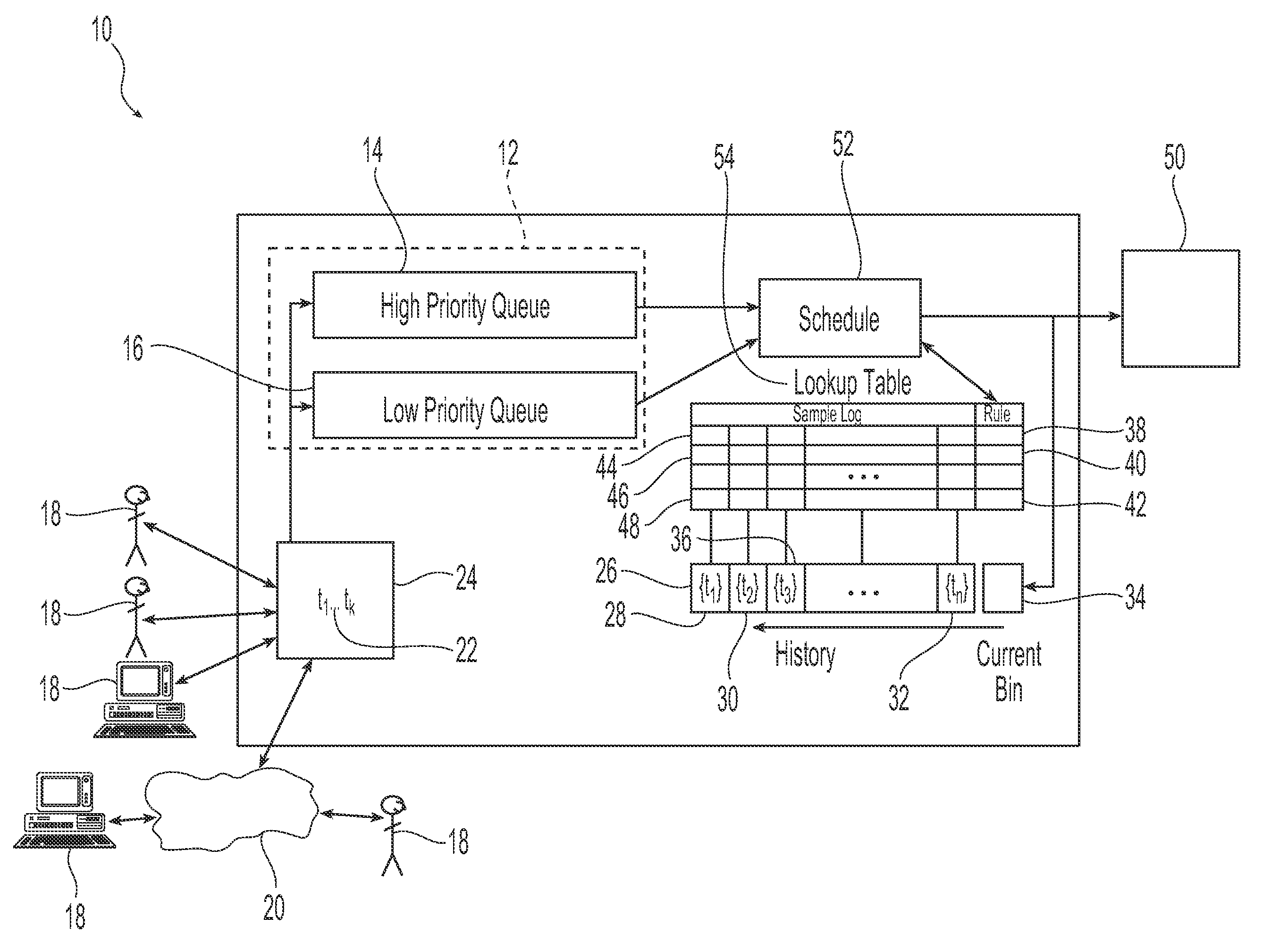

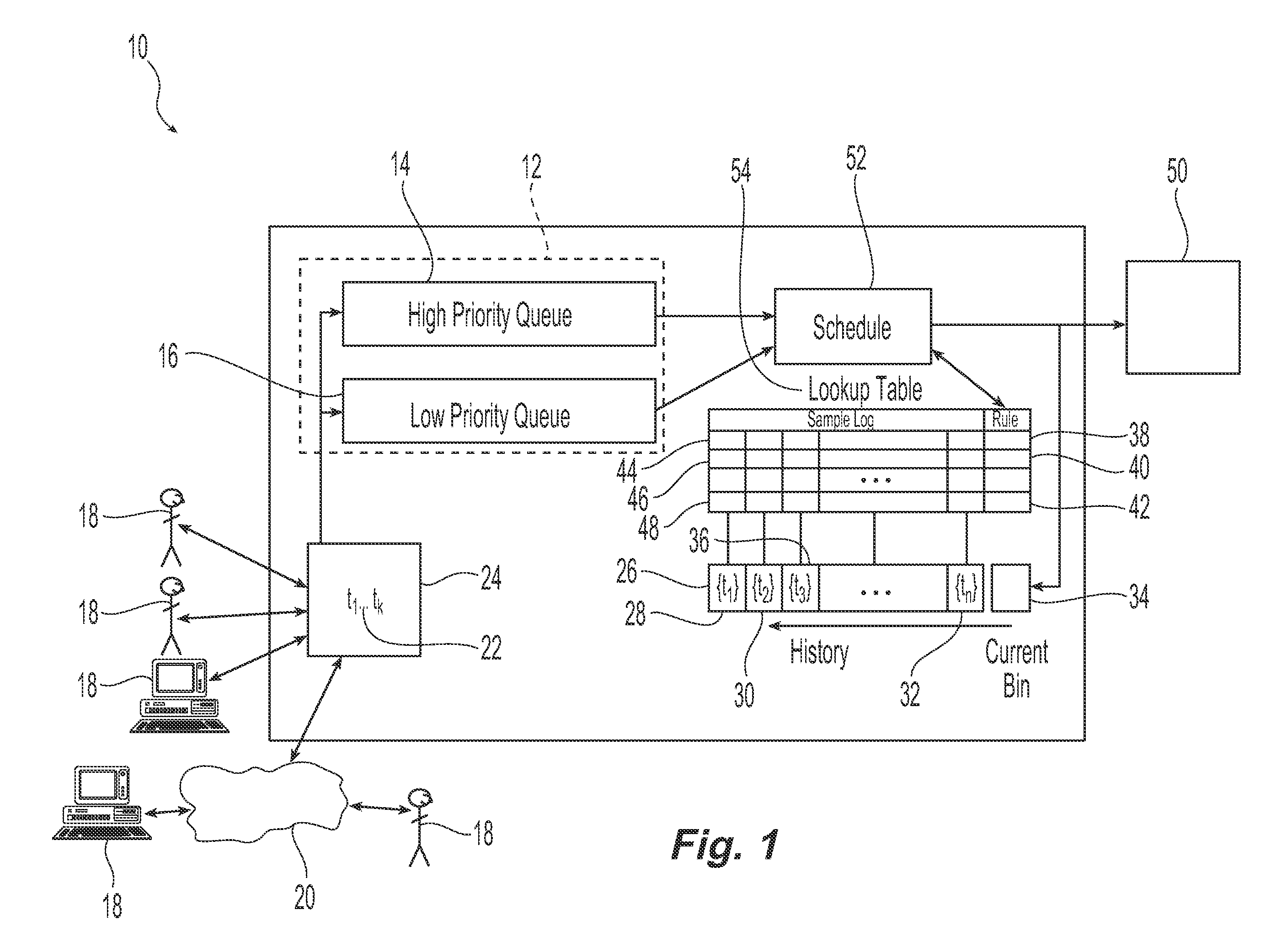

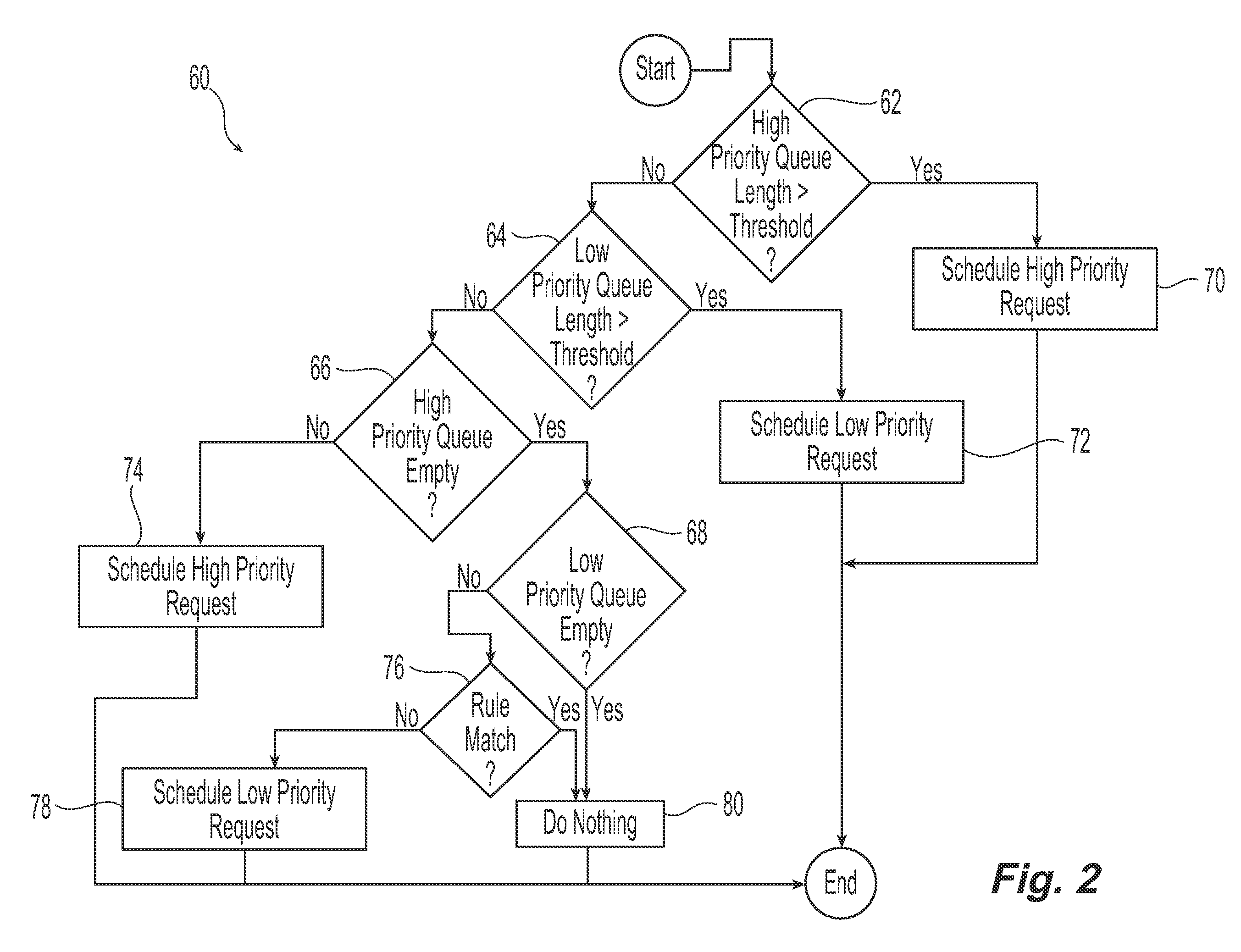

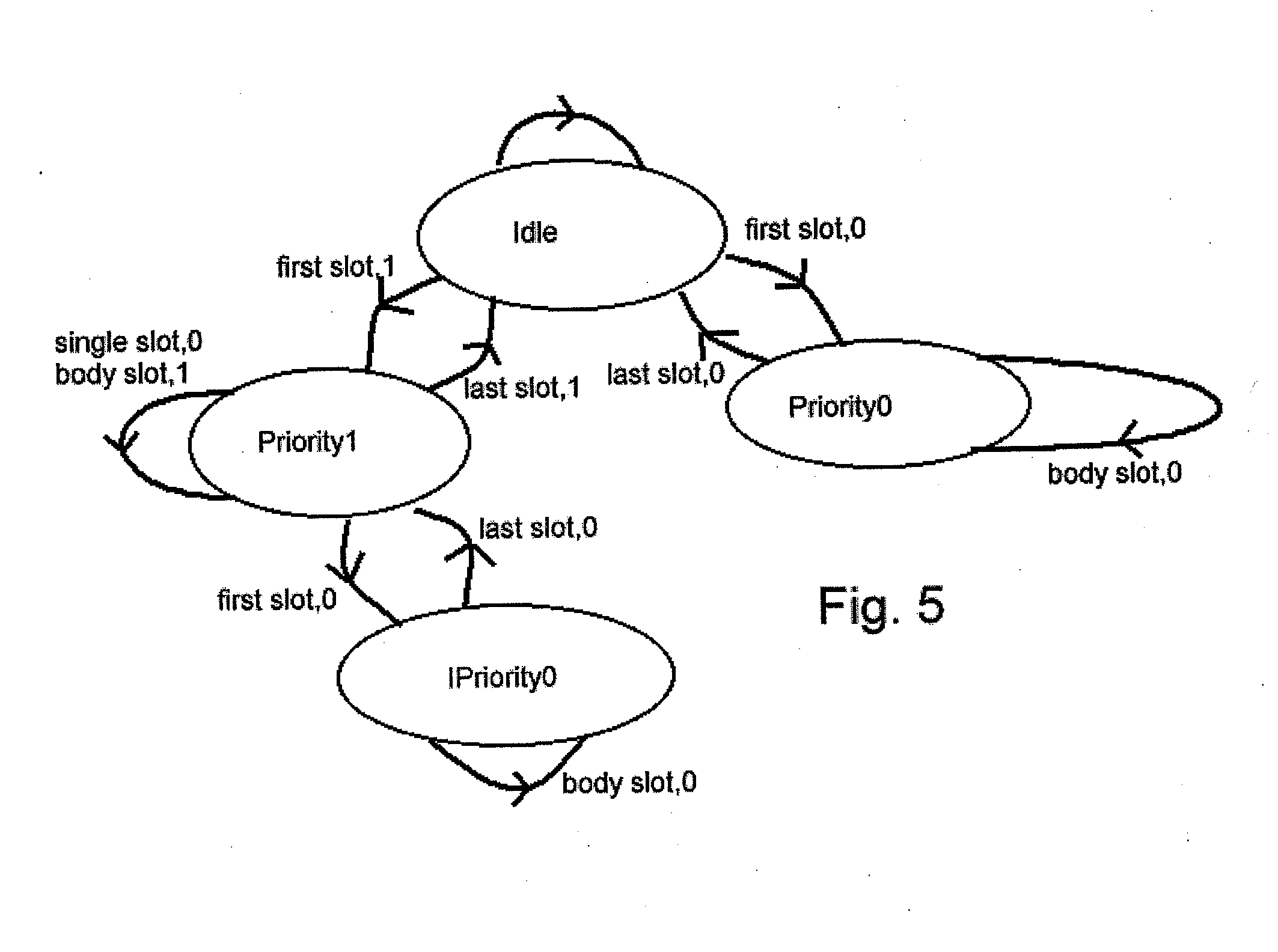

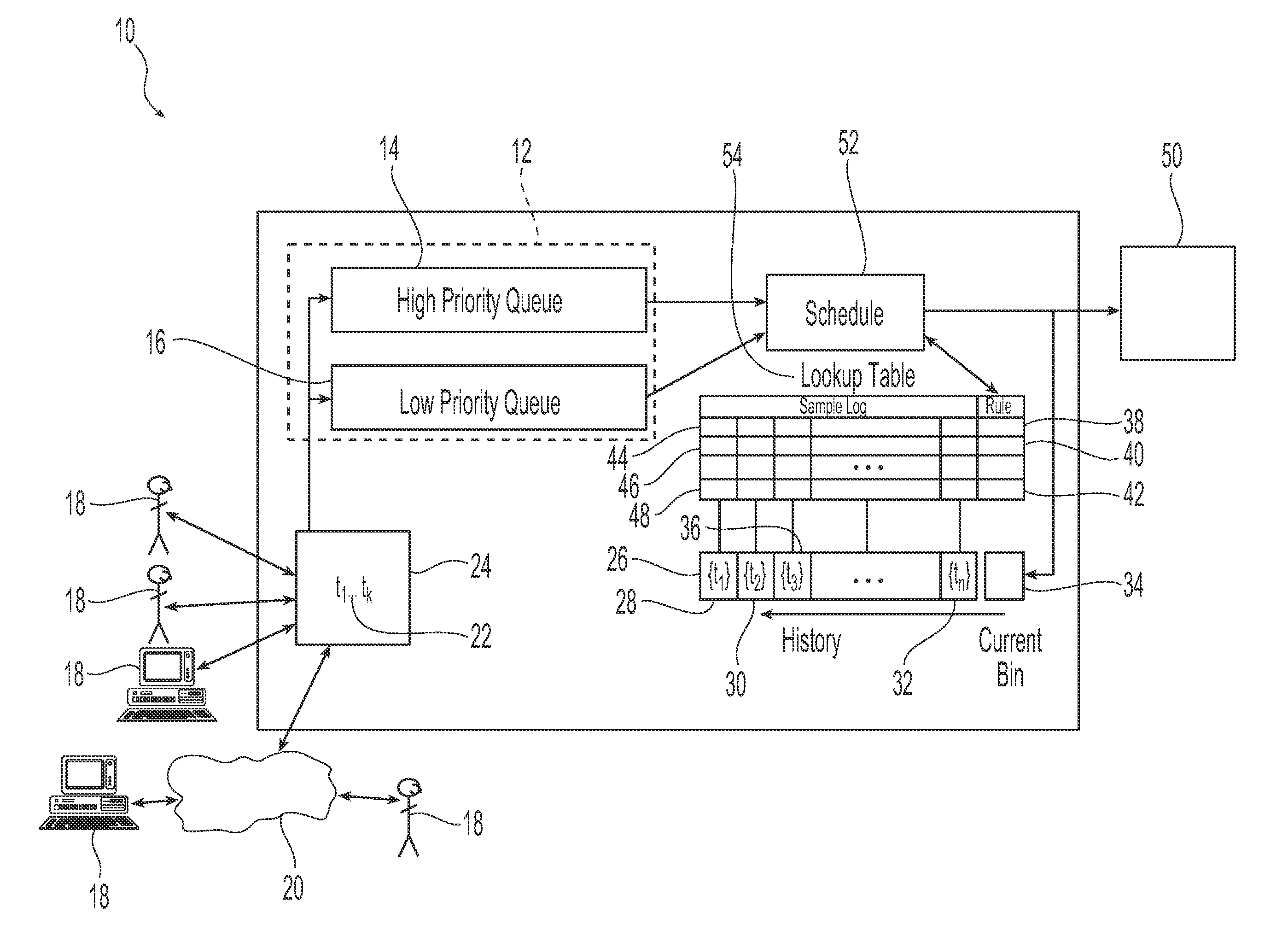

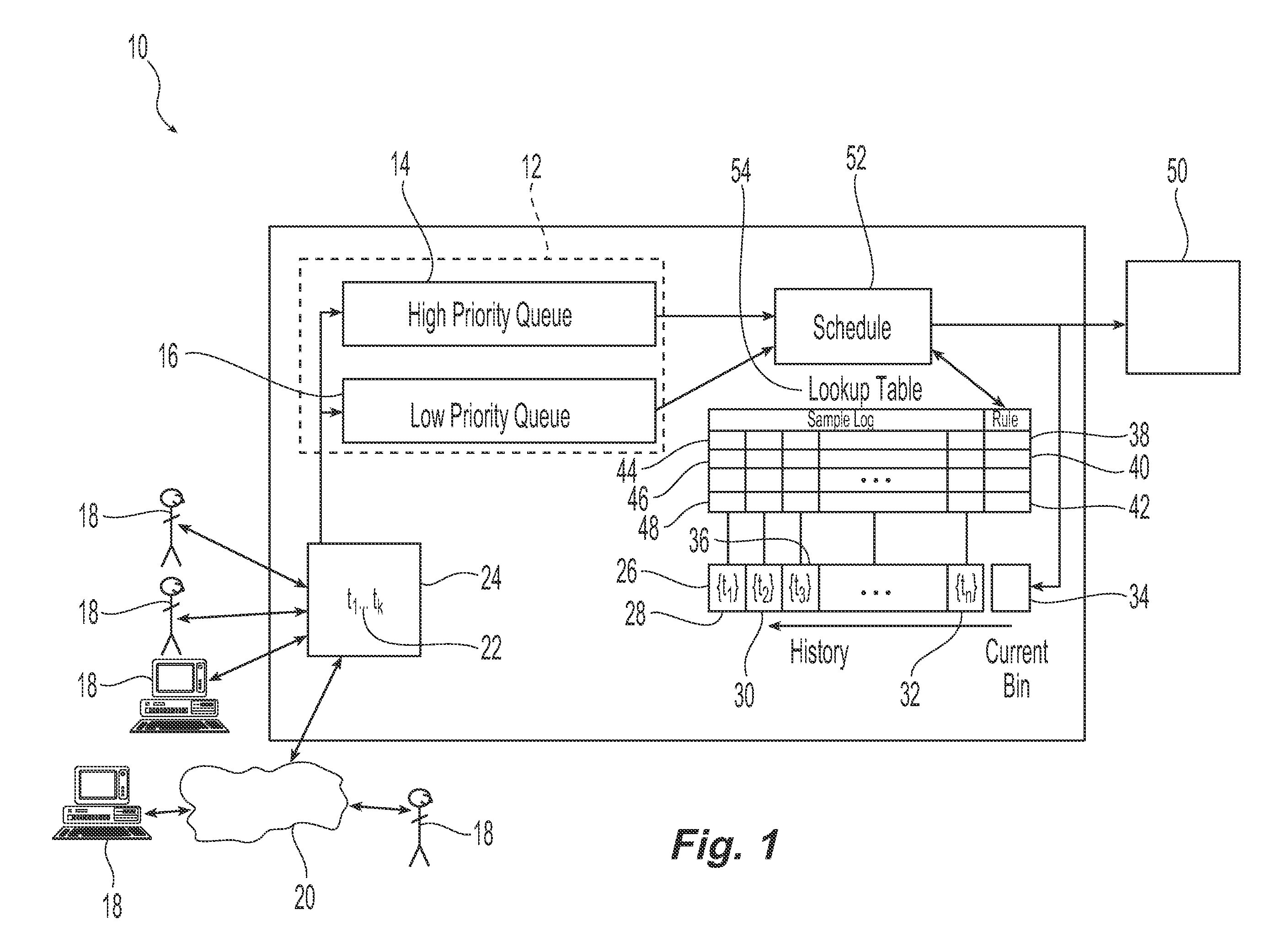

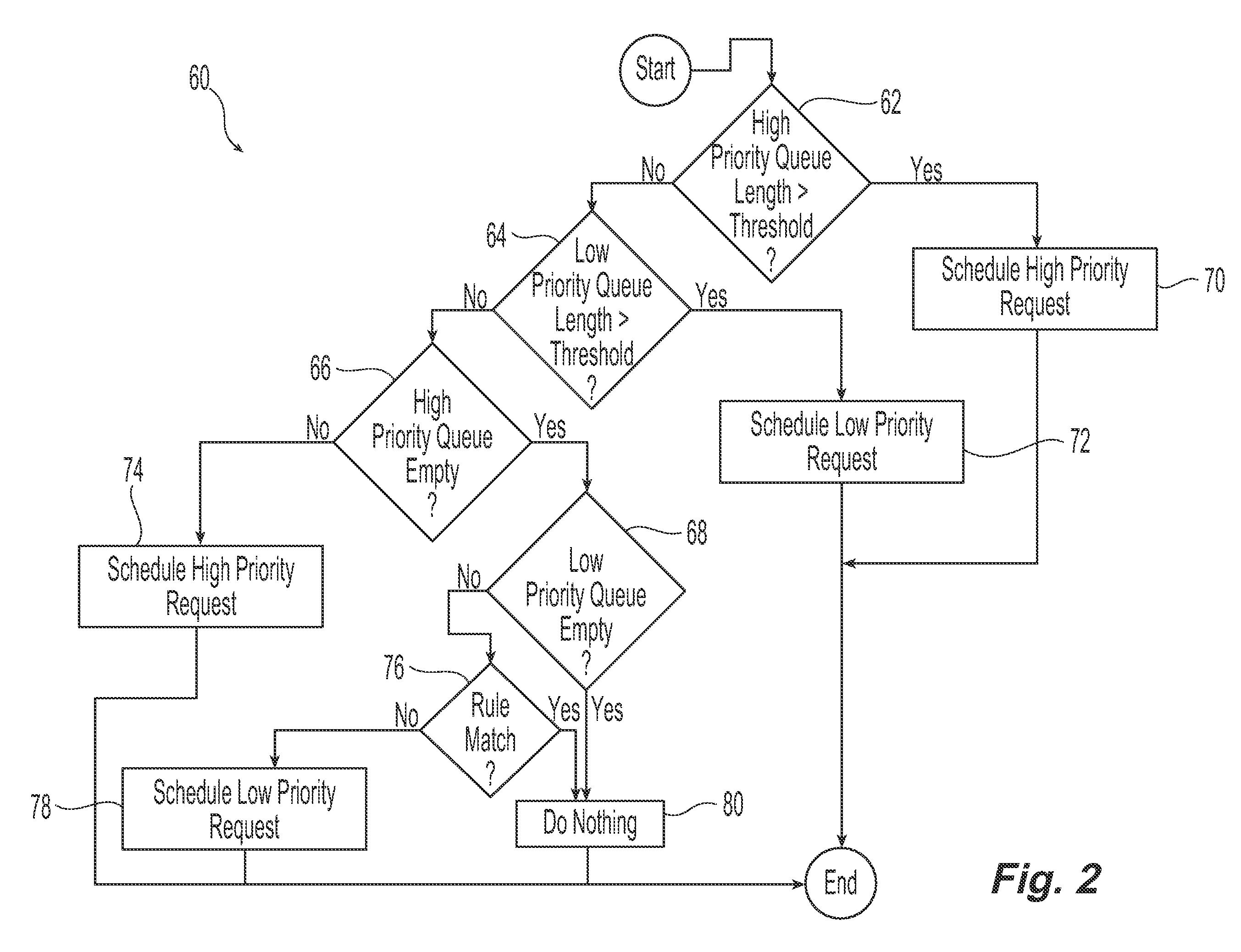

Prediction Based Priority Scheduling

Active | US20080222640A1 | Interference minimization; Reduce queuing delay; Multiprogramming arrangements; Memory systems; Computerized system; Computing systems

Systems and methods are provided that schedule task requests within a computing system based on the history of task requests. This history can be represented by a historical log that records the receipt of high-priority task request submissions over time. The historical log, in combination with other user-defined scheduling rules, is used to schedule the task requests. Task requests in the computer system are maintained in a list that can be divided into a hierarchy of queues differentiated by the priority level of the task requests they contain. The user-defined scheduling rules give scheduling priority to higher-priority task requests, and the historical log is used to predict subsequent submissions of high-priority task requests, so that lower-priority task requests that would interfere with them are delayed or not scheduled for processing.

Owner:IBM CORP
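A minimal sketch of the prediction idea, assuming the historical log is just a list of recent high-priority submission times and that the next arrival is predicted as the last arrival plus the mean gap. The abstract does not specify the predictor, and the class and method names are hypothetical.

```python
from __future__ import annotations

from collections import deque


class PredictiveScheduler:
    """Hypothetical sketch: hold back low-priority tasks that would overlap a
    predicted high-priority arrival."""

    def __init__(self, history_size: int = 16) -> None:
        # Historical log of recent high-priority submission times (seconds).
        self.log: deque[float] = deque(maxlen=history_size)

    def record_high_priority(self, t: float) -> None:
        self.log.append(t)

    def predict_next_high(self) -> float | None:
        """Assumed predictor: last arrival plus the mean inter-arrival gap."""
        if len(self.log) < 2:
            return None
        times = list(self.log)
        gaps = [later - earlier for earlier, later in zip(times, times[1:])]
        return times[-1] + sum(gaps) / len(gaps)

    def may_start_low_priority(self, now: float, duration: float) -> bool:
        """Start a low-priority task only if it finishes before the predicted
        high-priority arrival; a prediction already in the past is treated as stale."""
        predicted = self.predict_next_high()
        return predicted is None or predicted < now or now + duration <= predicted
```

With arrivals logged at 0, 10 and 20 s, the next one is predicted at 30 s, so a 5 s task may start at 22 s but a 10 s task is deferred.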

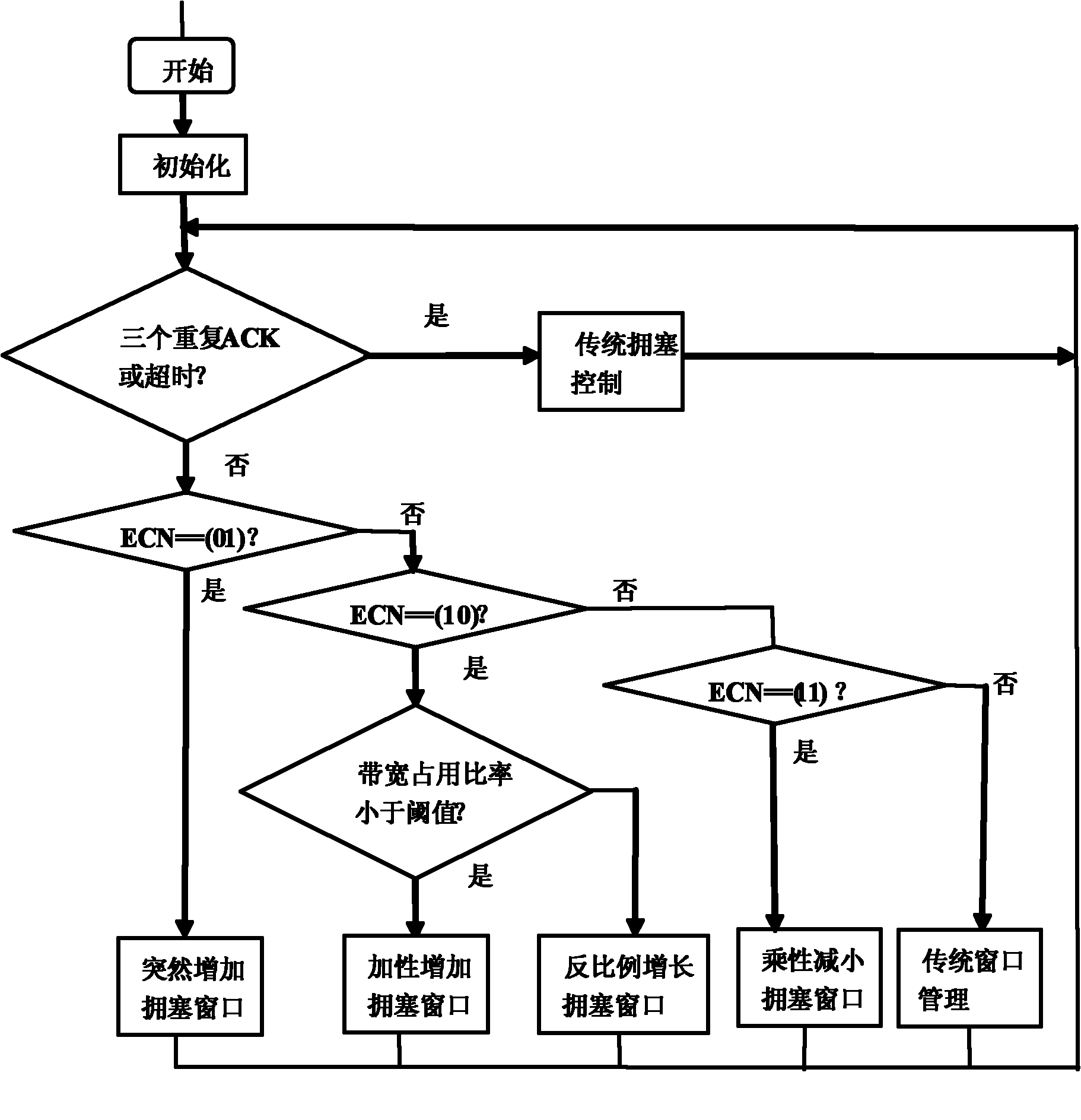

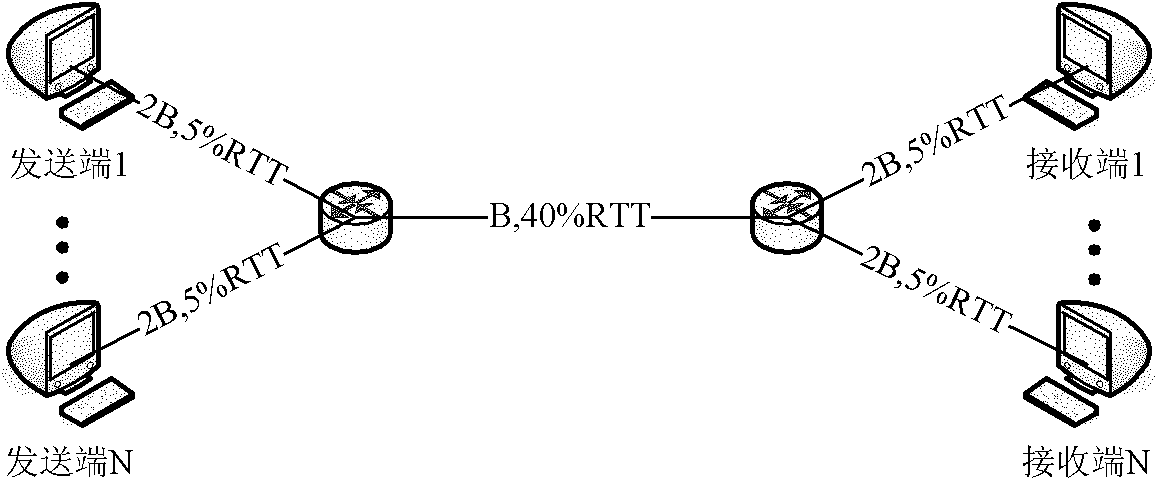

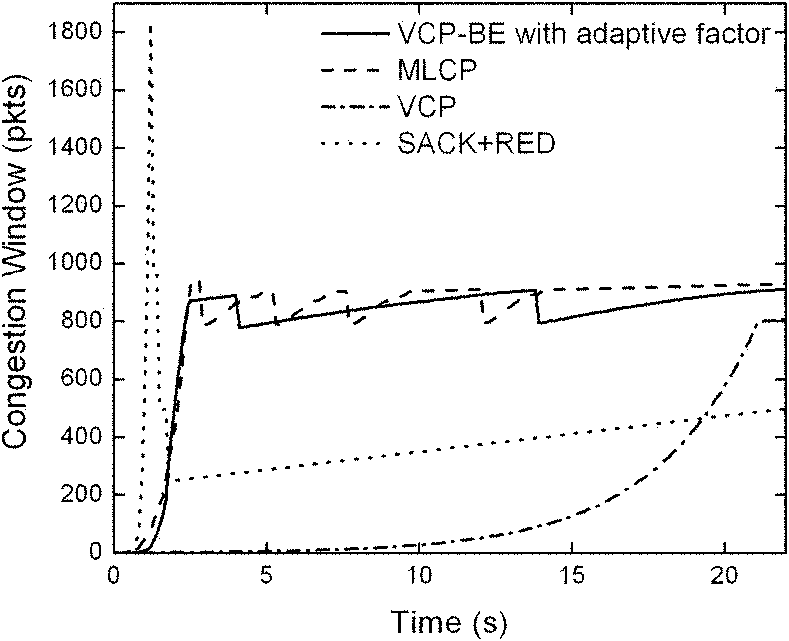

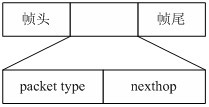

Explicit congestion control method based on bandwidth estimation in high-bandwidth delay network

Inactive | CN101964755A | High popularity; Efficiency; Data switching networks; High bandwidth; Explicit Congestion Notification

The invention discloses an explicit congestion control method based on bandwidth estimation in a high bandwidth-delay network. The method comprises the following steps: the TCP (Transmission Control Protocol) sender estimates the currently available network bandwidth by observing the intervals at which ACKs (acknowledgements) arrive; routers feed back network state through the 2-bit ECN (Explicit Congestion Notification) field in the packet header; the sender judges the network state from this explicit feedback together with the estimated available bandwidth; and the congestion control mechanism is adjusted according to the result. Congestion is thus judged by combining end-to-end feedback with explicit router feedback: the bandwidth estimate refines the coarse-grained explicit feedback into finer congestion information. As a result, the algorithm keeps congestion feedback precise while reducing explicit feedback overhead, makes the protocol easier to deploy, and improves its efficiency and convergence rate.

Owner:中科博华信息科技有限公司 (Zhongke Bohua Information Technology Co., Ltd.)

Retransmission scheme in a cellular communication system

Active | US7292825B2 | Improve performance; Reduce delays; Error prevention/detection by using return channel; Frequency-division multiplex details; Operating point; Target control

Abstract identical to that of US20060084389A1 above.

Owner:SONY CORP

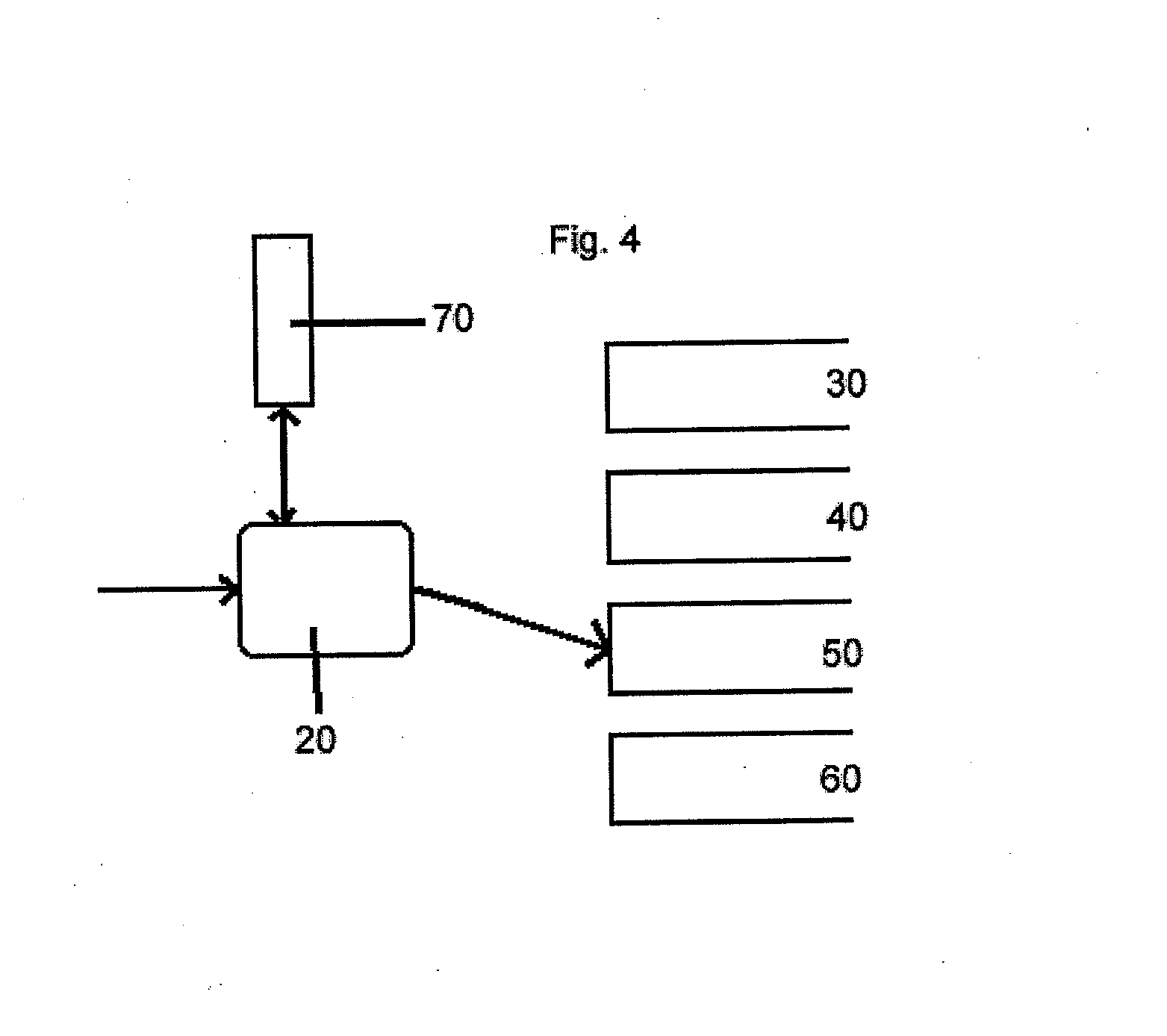

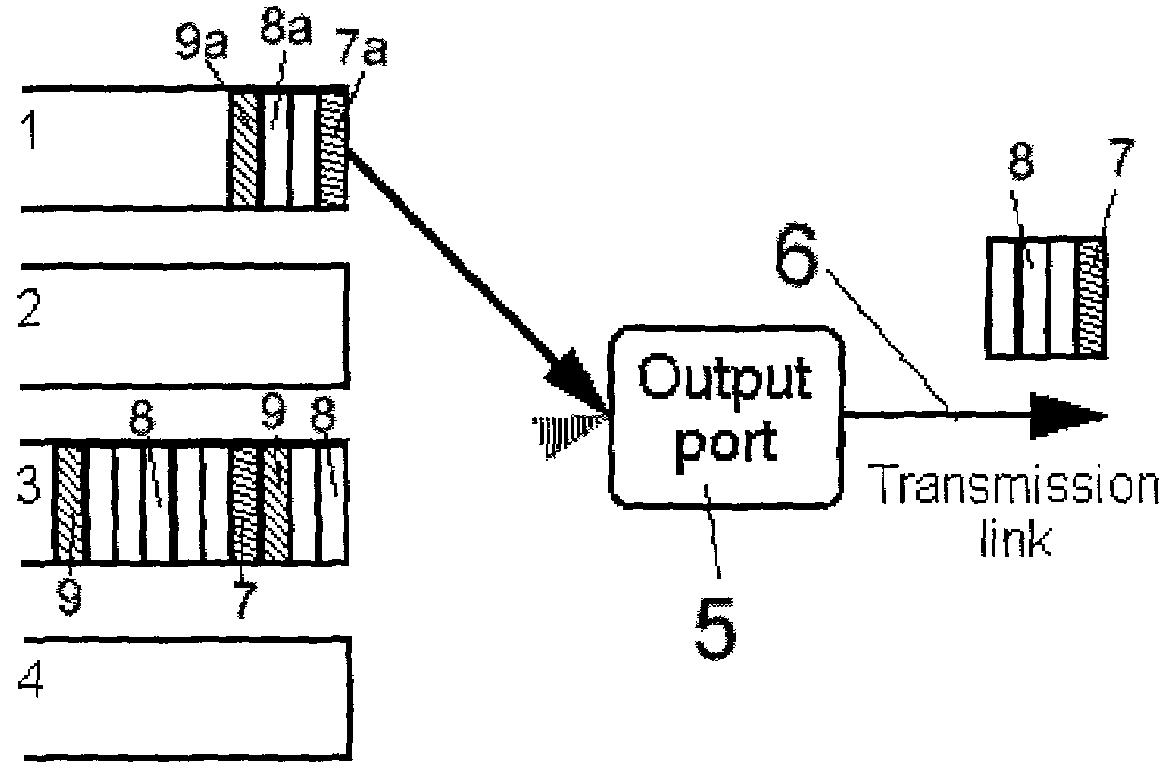

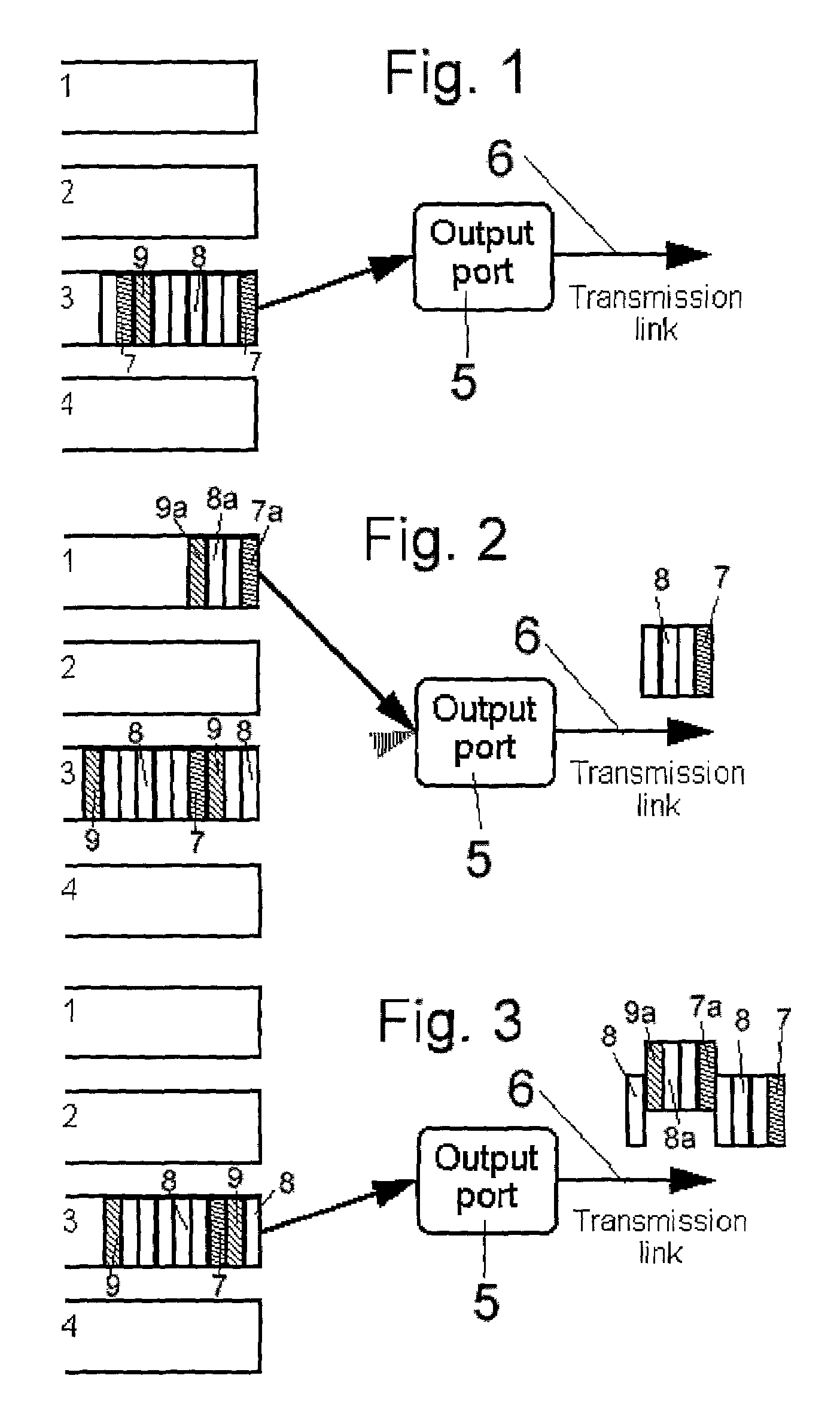

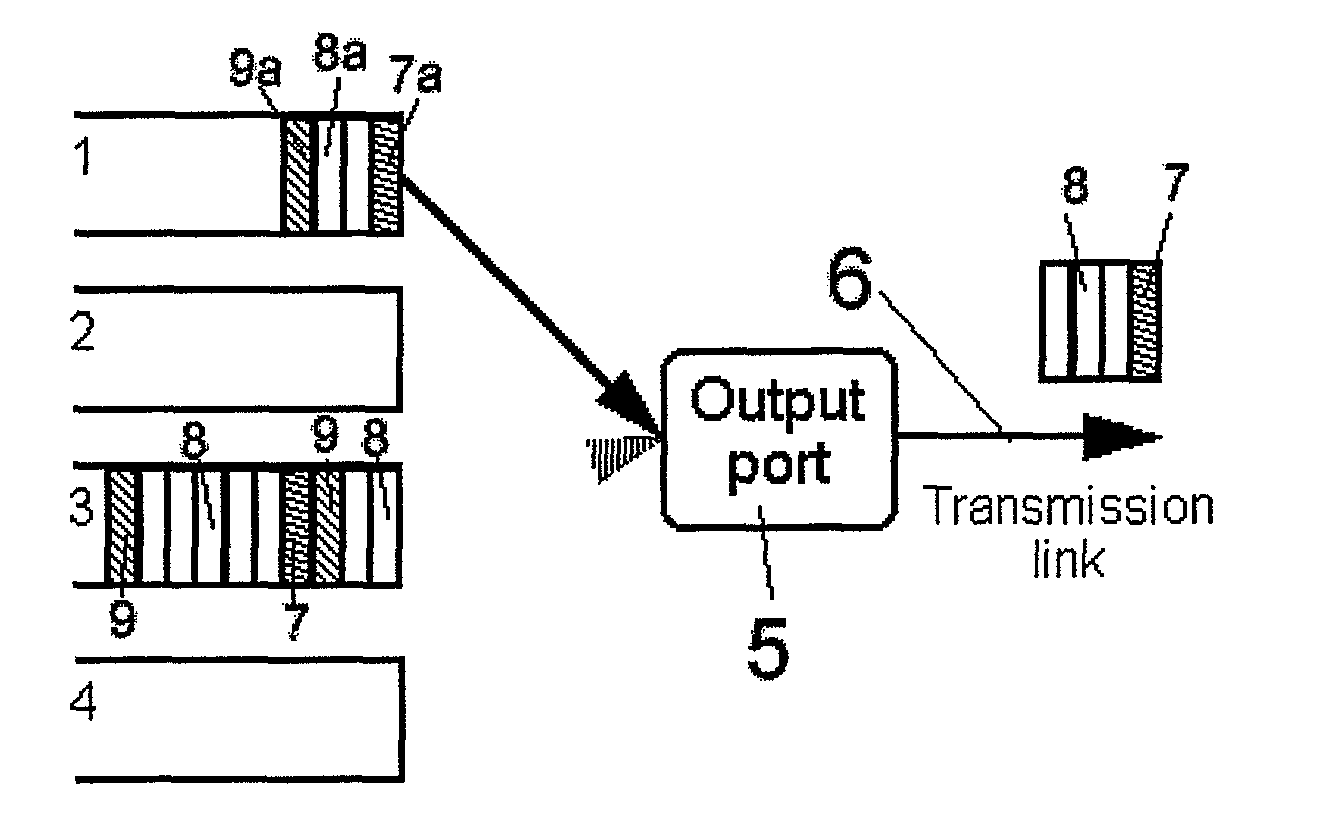

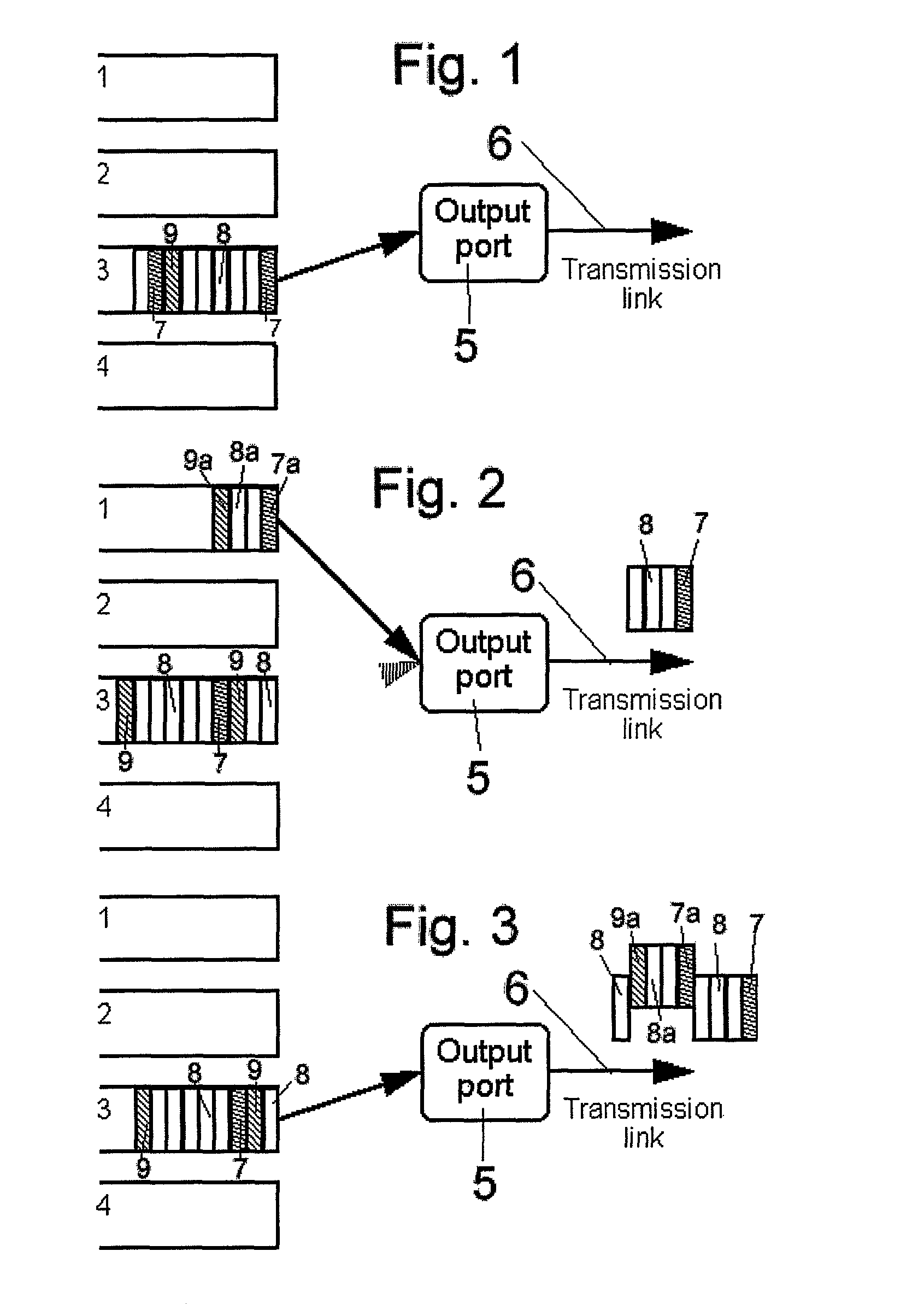

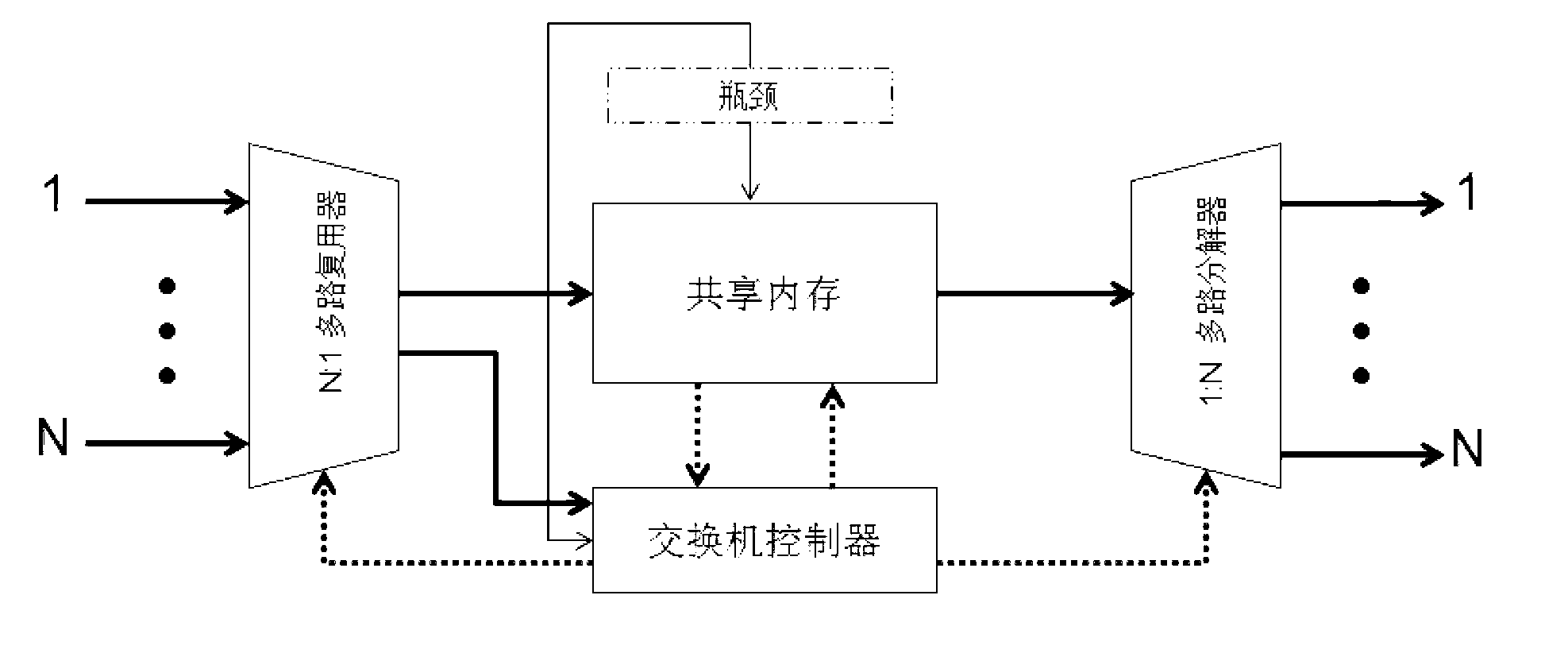

Switch and a switching method

Inactive | US20080137675A1 | Quickly and efficiently switching; Reduce impact; Star/tree networks; Store-and-forward switching systems; Traffic capacity; Data stream

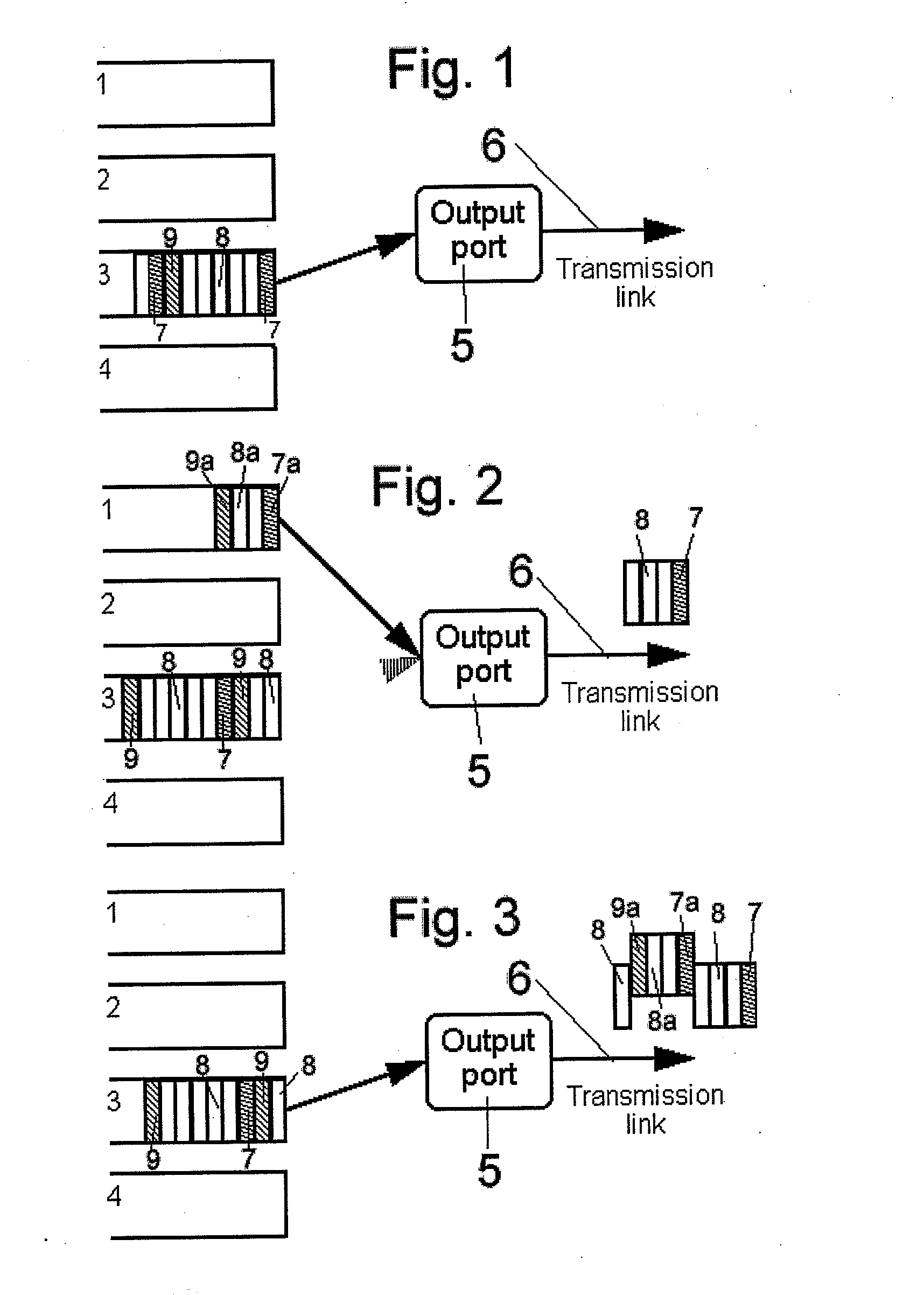

A switch at the transmission end of a system includes a number of memory devices defining queues for receiving traffic to be switched, each queue having an associated predetermined priority classification, and a processor for controlling the transmission of traffic from the queues. The processor transmits traffic from higher-priority queues before traffic from lower-priority queues, and monitors the queues to determine whether traffic has arrived at a queue with a higher priority classification than the queue currently being served. If so, it suspends the current transmission after the current minimum transmittable element has been sent, transmits the traffic from the higher-priority queue, and then resumes the suspended transmission. At the receiving end, a switch with its own processor separates the interleaved traffic into output queues so that the individual traffic streams can be reassembled from the data stream.

Owner:WSOU INVESTMENTS LLC
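The preemption rule can be sketched as a strict-priority scheduler that re-examines its queues at every element boundary. This is an illustrative Python sketch, not the patented switch: `PreemptiveSwitch`, the priority numbering (0 is highest), and modelling frames as counts of fixed-size elements are assumptions, and receiver-side reassembly is omitted.

```python
from __future__ import annotations

from collections import deque


class PreemptiveSwitch:
    """Strict-priority queues with preemption at element boundaries.

    Priority 0 is the highest (assumption). Each frame is modelled as a count
    of fixed-size "minimum transmittable elements".
    """

    def __init__(self, num_priorities: int) -> None:
        self.queues = [deque() for _ in range(num_priorities)]

    def enqueue(self, priority: int, frame_id: str, num_elements: int) -> None:
        # Each entry is [frame_id, next_element_index, total_elements].
        self.queues[priority].append([frame_id, 0, num_elements])

    def step(self) -> tuple[str, int] | None:
        """Send one element from the highest-priority non-empty queue.

        Because the choice is re-made before every element, a higher-priority
        arrival suspends the current frame after its current element, and the
        suspended frame resumes from where it stopped once that queue drains.
        """
        for queue in self.queues:
            if queue:
                frame = queue[0]
                sent = (frame[0], frame[1])
                frame[1] += 1
                if frame[1] == frame[2]:
                    queue.popleft()  # frame completely transmitted
                return sent
        return None  # nothing to send
```

Enqueuing a 3-element "bulk" frame, sending one element, and then enqueuing a 1-element "voice" frame yields the order bulk 0, voice 0, bulk 1, bulk 2: the voice frame preempts after the current element and the bulk frame resumes where it stopped.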

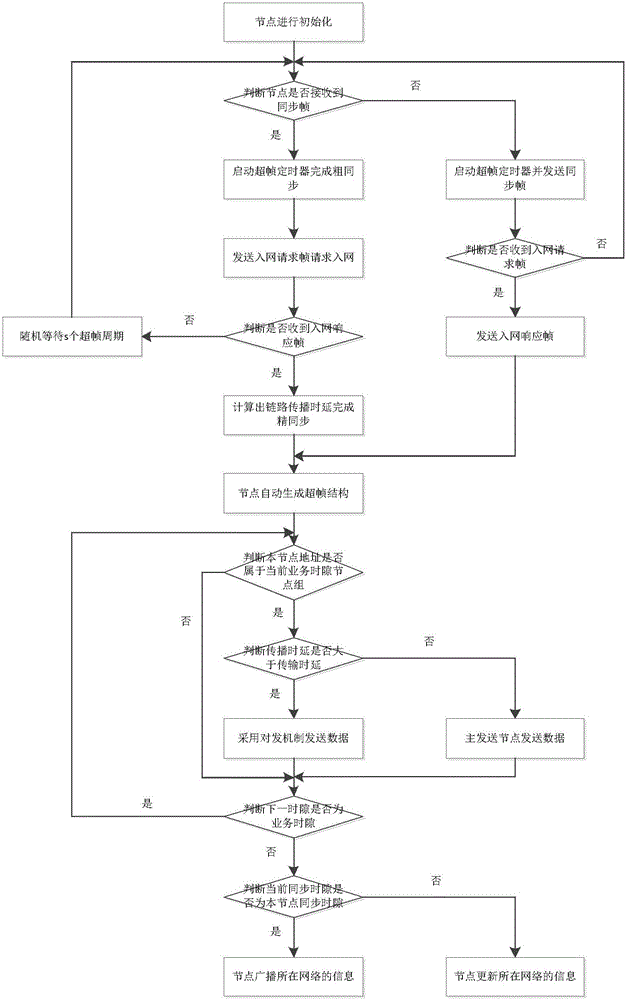

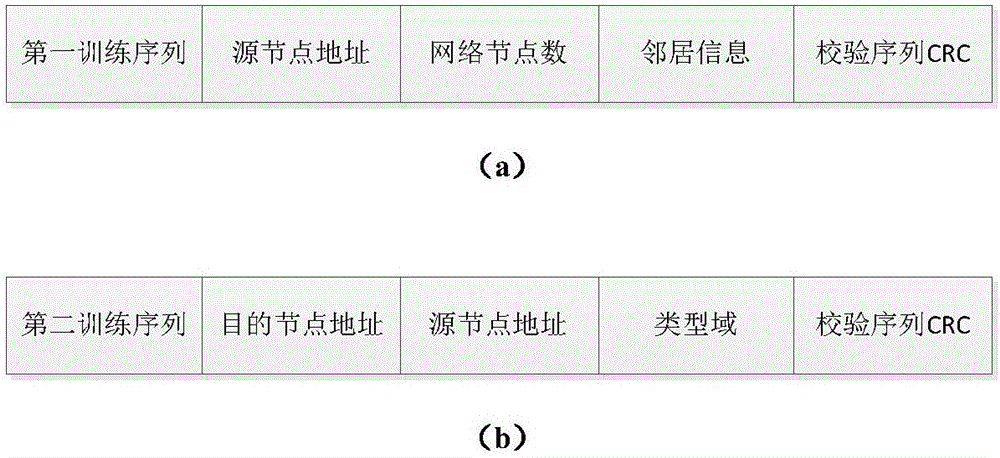

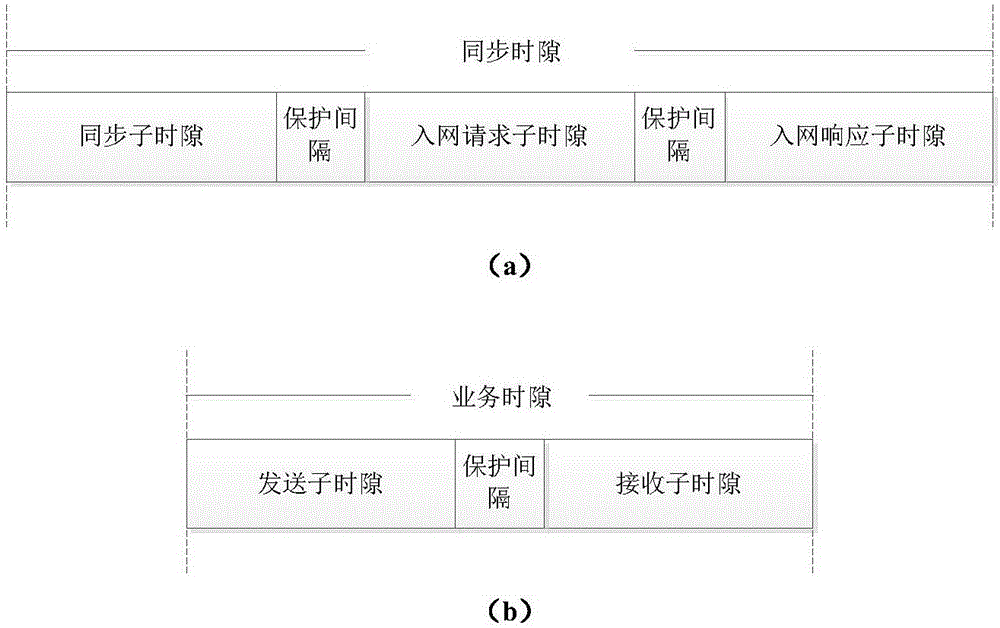

TDMA based long propagation delay wireless link time slot distribution method

Active | CN106658735A | High speed; Improve efficiency; Time-division multiplex; Wireless communication; Wireless ad hoc network; Synchronous frame

The invention discloses a TDMA-based time slot allocation method for wireless links with long propagation delay, intended mainly to solve the low throughput and low channel utilization of existing long-propagation-delay wireless ad hoc networks. The implementation comprises the following steps: (1) initialize the node; (2) determine whether the node has received a synchronization frame; if so, the node performs network-access synchronization, otherwise it builds a network using its local clock as the reference; (3) after synchronization, the node automatically generates a superframe structure and divides local time into multiple time slots with different functions; (4) the node determines whether the current slot is a service slot; if so, it sends and receives data, otherwise it updates network information. Because TDMA is used as the channel access mode, nodes can access the channel without collisions, and an interactive sending mechanism together with a data-frame queue scheduling mechanism increases network throughput and channel utilization and reduces the queuing delay of data frames. The method is applicable to time division multiple access ad hoc networks.

Owner:XIDIAN UNIV

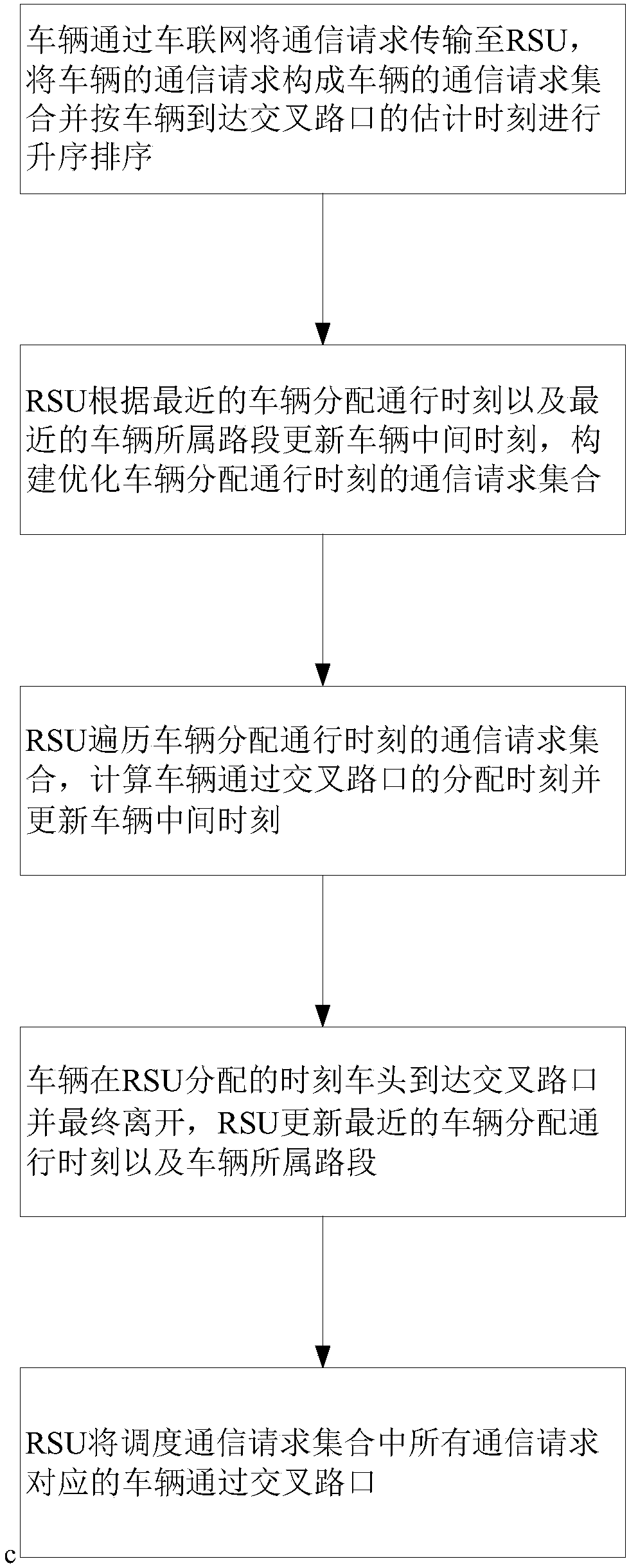

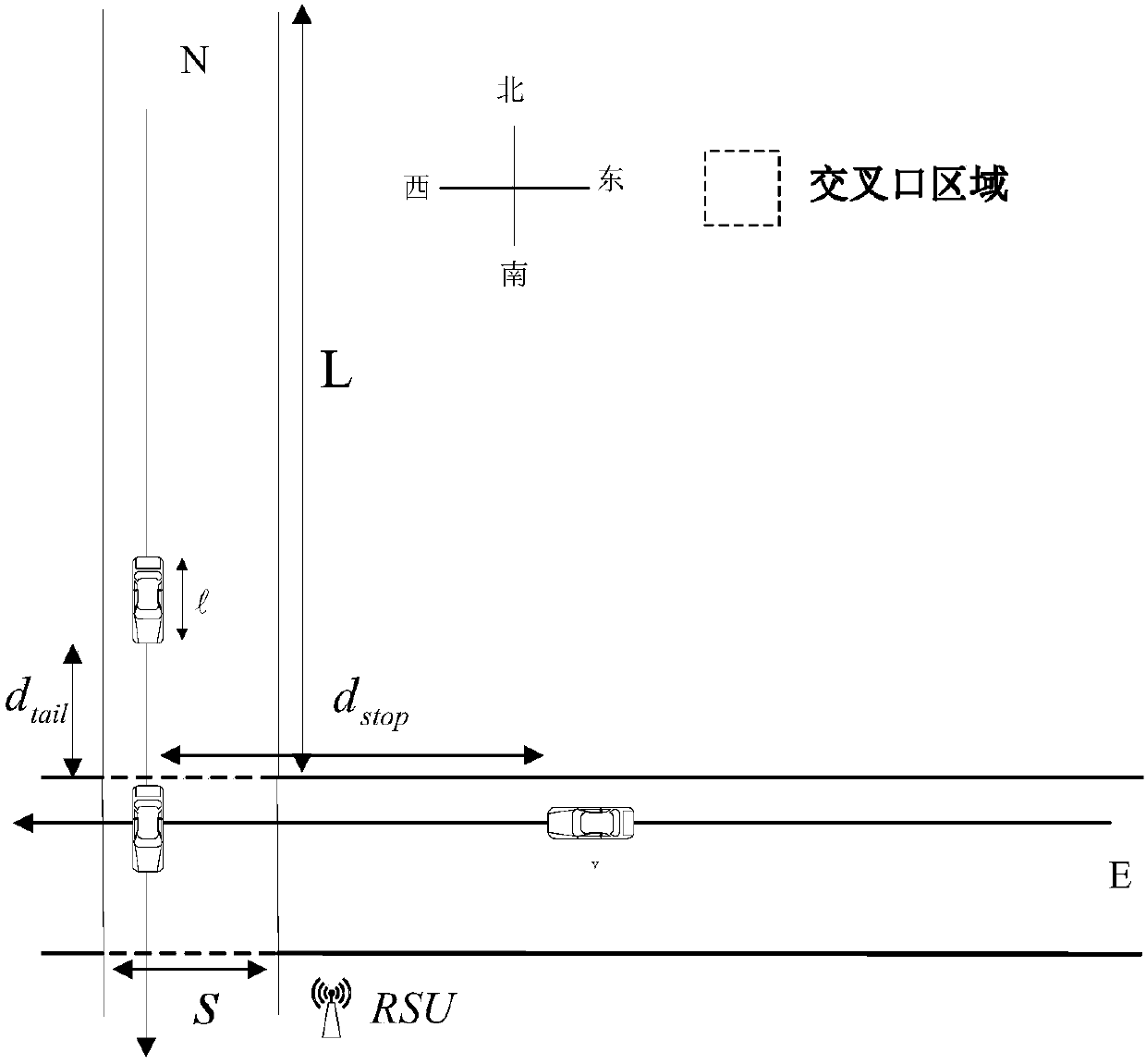

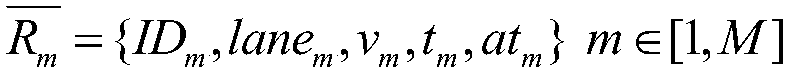

Vehicle scheduling method for junction without signal lights in autonomous driving environment

Active | CN108281026A | Improve traffic capacity; Improve traffic efficiency; Road vehicles traffic control; Particular environment based services; Signal light; Real-time computing

The invention provides a vehicle scheduling method for junctions without traffic signals in an autonomous driving environment. Vehicles transmit communication requests to an RSU (roadside unit), where they form a communication request set sorted in ascending order of each vehicle's scheduled arrival time at the junction. The RSU updates the vehicles' intermediate times according to the allocation time of the most recently scheduled vehicle and the road segment that vehicle belongs to, and the request set is used to optimize vehicle allocation times. The RSU traverses the request set, calculates the time at which each vehicle is allocated to pass through the junction, and updates the intermediate times. The front of each vehicle reaches the junction at the time allocated by the RSU and the vehicle then leaves, after which the RSU updates the stored allocation time and road segment. In this way the RSU schedules all vehicles in the request set through the junction. Compared with the prior art, the method reduces the overall time vehicles take to pass through the junction.

Owner:WUHAN UNIV
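The RSU's allocation loop can be sketched as a single pass over requests sorted by expected arrival, where the only state carried forward is the last allocated time and that vehicle's road segment. The headway values, the single-conflict-zone model of the junction, and the function name are assumptions for illustration.

```python
def allocate_pass_times(requests: list[tuple[str, str, float]],
                        same_road_headway: float = 1.0,
                        cross_road_clearance: float = 3.0) -> dict[str, float]:
    """requests: (vehicle_id, road_segment, expected_arrival_s) tuples."""
    schedule: dict[str, float] = {}
    last_time = None   # allocation time of the most recently scheduled vehicle
    last_road = None   # road segment that vehicle came from
    for vehicle, road, arrival in sorted(requests, key=lambda r: r[2]):
        if last_time is None:
            allocated = arrival  # first vehicle passes as soon as it arrives
        else:
            # Followers on the same segment need only a short headway; a vehicle
            # from a conflicting segment must wait for the junction to clear.
            gap = same_road_headway if road == last_road else cross_road_clearance
            allocated = max(arrival, last_time + gap)
        schedule[vehicle] = allocated
        last_time, last_road = allocated, road
    return schedule
```

For vehicles a (north, 0 s), b (east, 1 s) and c (east, 2 s), the sketch allocates 0 s, 3 s and 4 s: b waits for the junction to clear, and c follows b at the shorter same-road headway.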

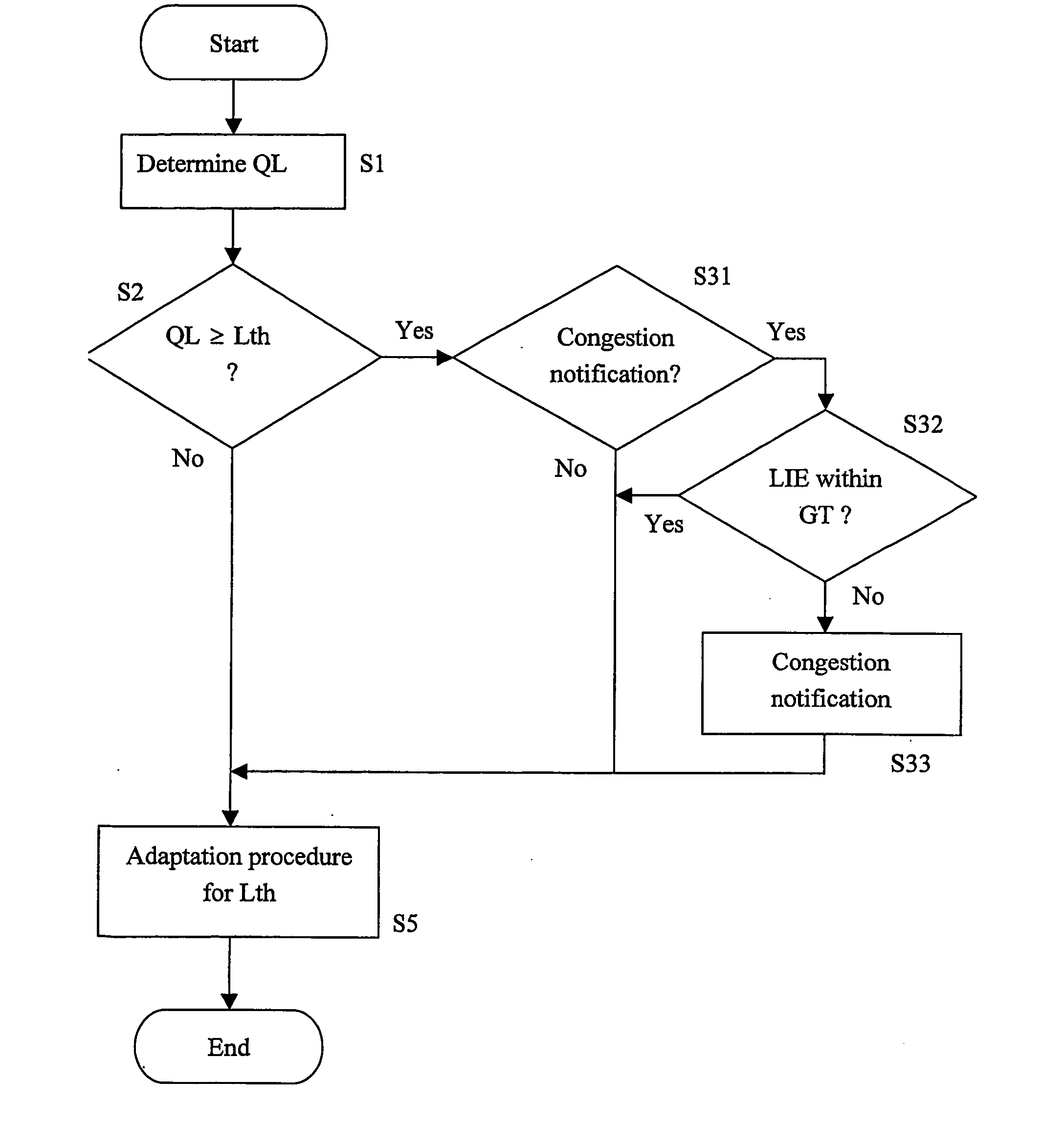

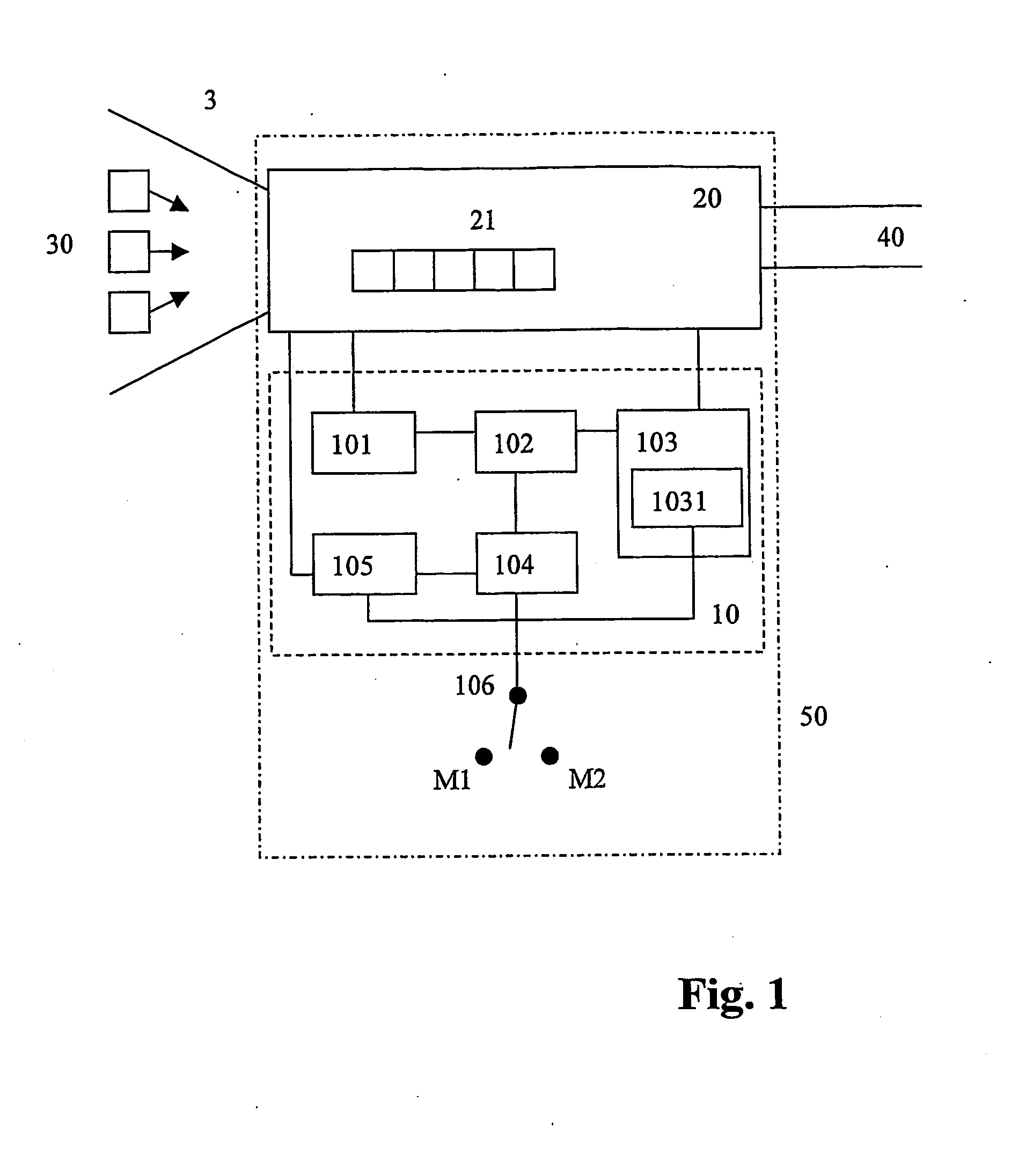

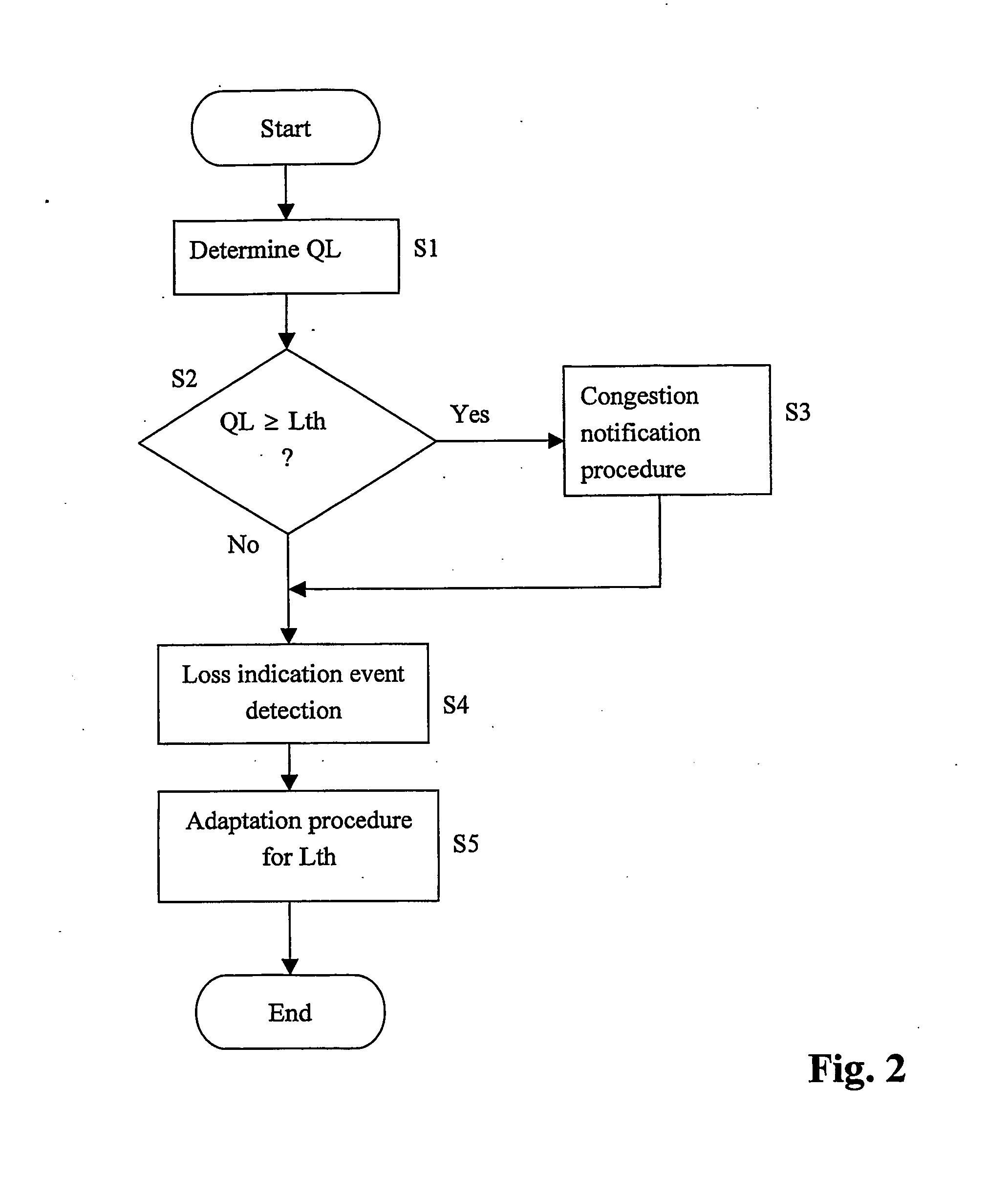

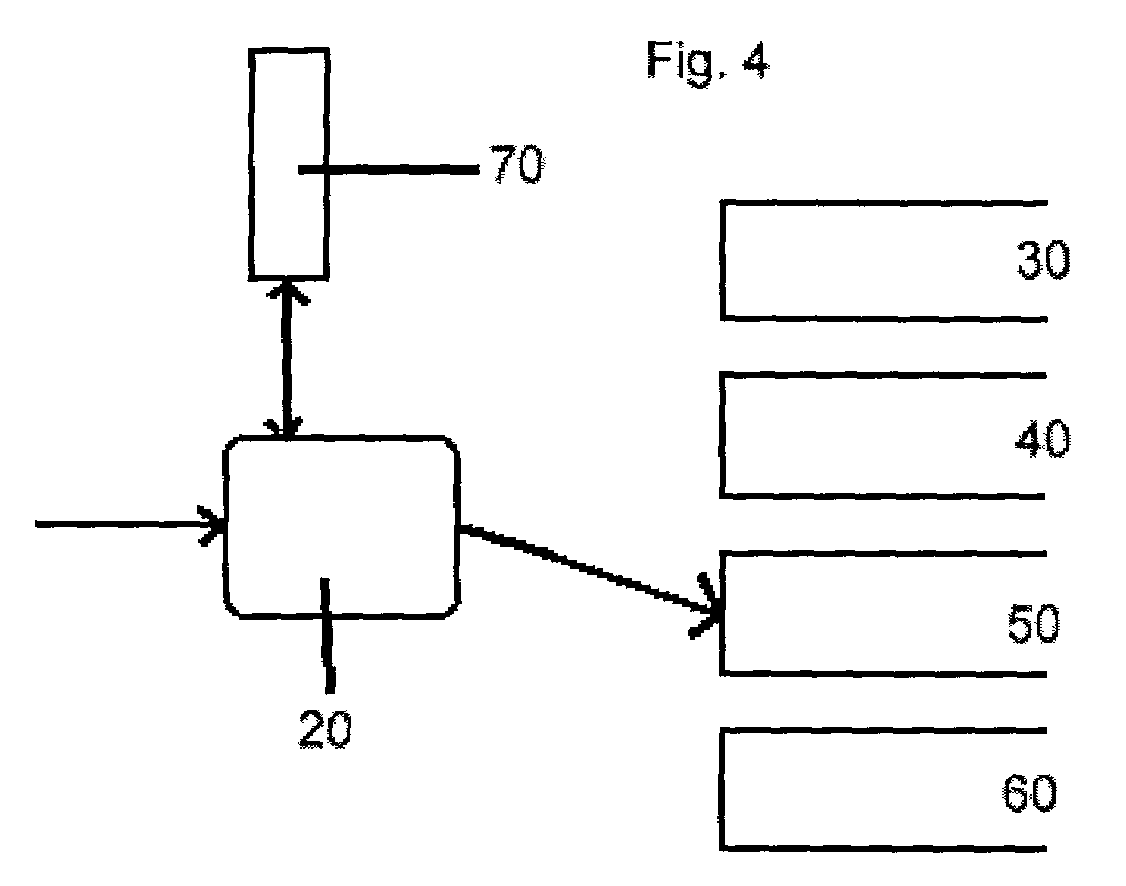

Method and device for controlling a queue buffer

Inactive | US20070091799A1 | Reduce delays; Maximize utilization; Error prevention; Frequency-division multiplex details; Capacity value; Control system

A queue buffer control system is described in which a queue length parameter QL is compared with a length threshold Lth to trigger a congestion notification procedure; that procedure includes a decision step that determines whether or not to perform congestion notification. An automatic threshold adaptation procedure S5 adapts the threshold Lth on the basis of an estimated link capacity value LC. Procedure S5 can operate in at least a first and a second adaptation mode: the first mode aims to minimize queuing delay and adapts Lth on the basis of n·LC, where n ≥ 1; the second mode aims to maximize utilization and adapts Lth on the basis of m·LC, where m > 1 and m > n.

Owner:TELEFON AB LM ERICSSON (PUBL)
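A minimal sketch of the two adaptation modes, assuming LC is expressed in packets per reference interval so that n·LC and m·LC can be compared directly with the queue length QL. The function names, the default n and m, and the mode strings are hypothetical.

```python
def adapt_threshold(link_capacity: float, mode: str,
                    n: float = 1.0, m: float = 3.0) -> float:
    """Hypothetical version of procedure S5.

    link_capacity: estimated link capacity LC, expressed here (assumption) in
    packets per reference interval so that n*LC and m*LC are queue lengths.
    """
    if n < 1 or m <= 1 or m <= n:
        raise ValueError("need n >= 1, m > 1 and m > n")
    if mode == "min_delay":
        return n * link_capacity          # first mode: small queue, low delay
    if mode == "max_utilization":
        return m * link_capacity          # second mode: larger queue, full link
    raise ValueError(f"unknown mode: {mode}")


def exceeds_threshold(queue_length: float, threshold: float) -> bool:
    """QL > Lth triggers the congestion-notification decision procedure."""
    return queue_length > threshold
```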

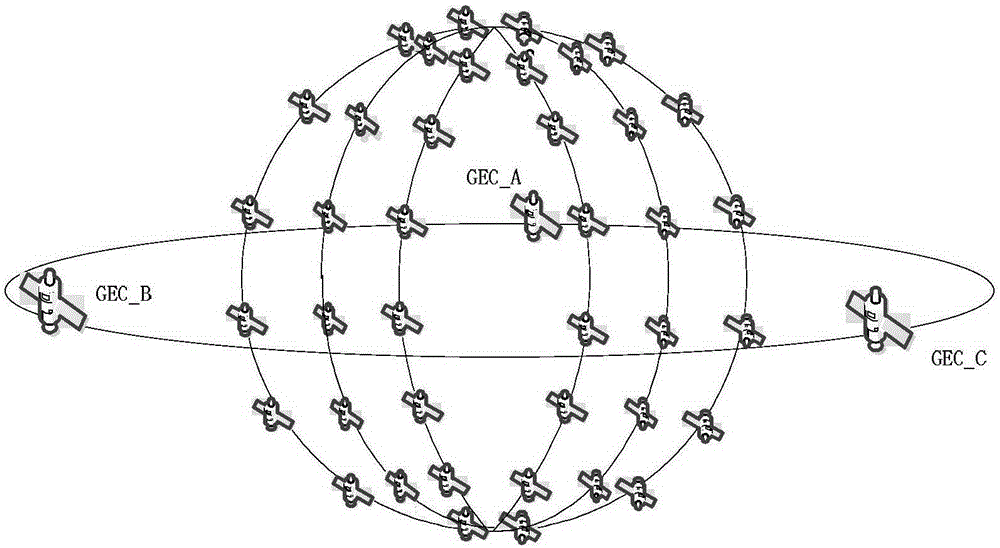

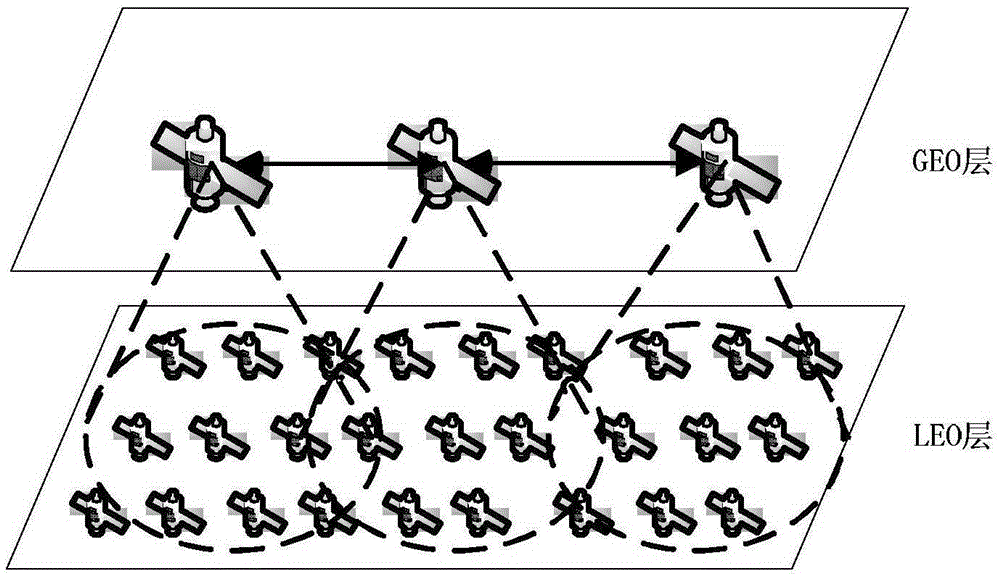

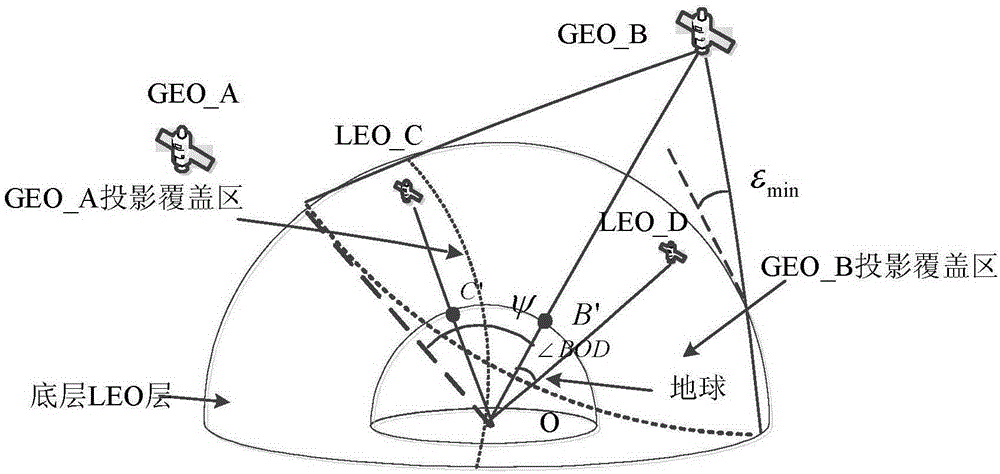

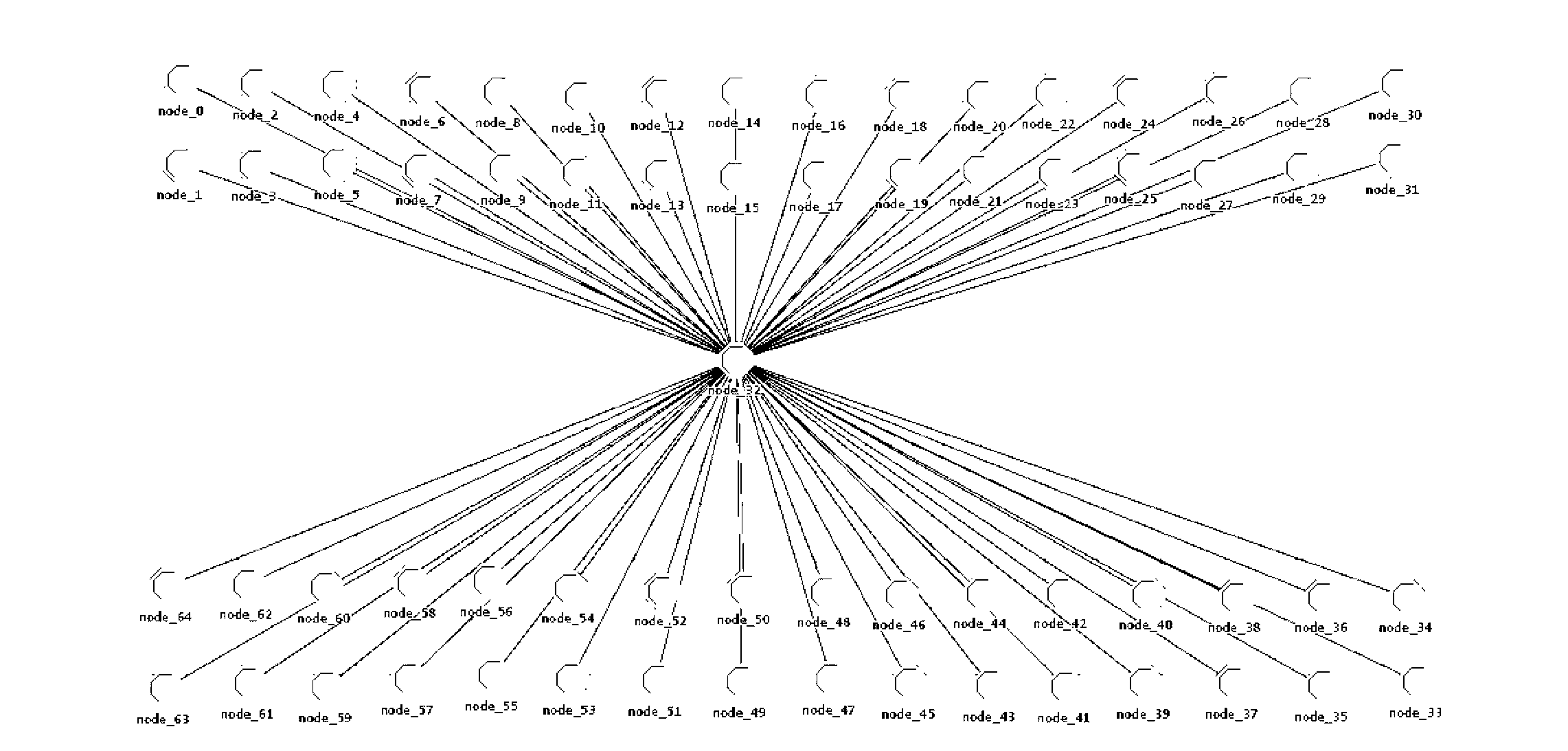

Virtual node based distributed GEO/LEO mixed network routing method

Active | CN105228209A | Avoid Congested Situations; Large coverage area; Network topologies; Delayed time; Earth surface

The invention relates to a virtual-node-based distributed routing method for hybrid GEO/LEO networks. It addresses the problem that existing satellite network routing algorithms cannot compute routes in real time and incur excessive delay once queuing delay is taken into account. The earth's surface is divided evenly into 72 logical areas by latitude and longitude, and LEO satellites are associated with these logical areas; the lower-layer LEO satellites are divided into three groups by the GEO layer, each group forming an LEO family; routing is updated in a data-driven manner; and a route computation method is provided. To meet the different requirements of real-time and non-real-time services, the method combines the advantages of GEO and LEO networks into a two-layer satellite network in which different layers carry different types of packets, preventing congestion at the current hop. The virtual-node approach handles the continuous topology changes caused by satellite mobility, and the influence of queuing delay is taken into account.

Owner:HARBIN INST OF TECH
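The abstract says only that the surface is divided evenly into 72 areas by latitude and longitude. One grid that gives 72 equal-angle cells is 6 latitude bands by 12 longitude bands of 30 degrees each; the sketch below assumes that grid (the patent may use a different one) and maps a LEO satellite's sub-satellite point to an area id. The constants and function name are hypothetical.

```python
LAT_BANDS = 6    # assumption: six 30-degree latitude bands
LON_BANDS = 12   # assumption: twelve 30-degree longitude bands (6 x 12 = 72)


def logical_area(lat_deg: float, lon_deg: float) -> int:
    """Map a point (e.g. a LEO sub-satellite point) to a logical area id 0..71."""
    if not -90.0 <= lat_deg <= 90.0:
        raise ValueError("latitude must be within [-90, 90]")
    lon = (lon_deg + 180.0) % 360.0  # normalize longitude to [0, 360)
    # Clamp so the north pole (lat = 90) and rounding edge cases stay in range.
    row = min(int((lat_deg + 90.0) // (180.0 / LAT_BANDS)), LAT_BANDS - 1)
    col = min(int(lon // (360.0 / LON_BANDS)), LON_BANDS - 1)
    return row * LON_BANDS + col
```

As a satellite moves, its area id changes while the area itself stays fixed, which is what lets a route be expressed in terms of stable logical areas rather than individual satellites.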

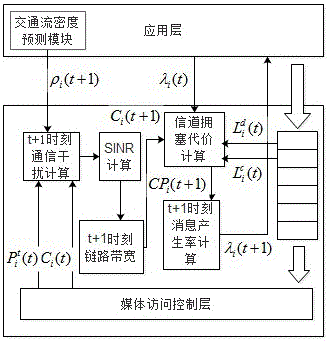

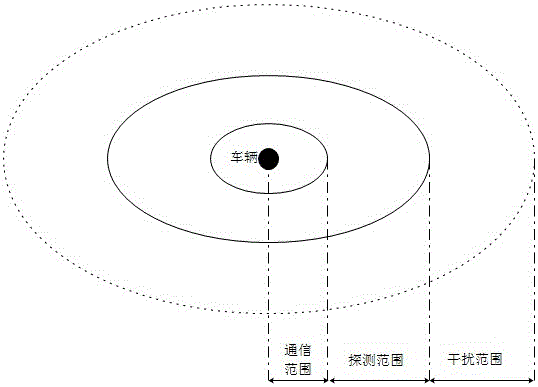

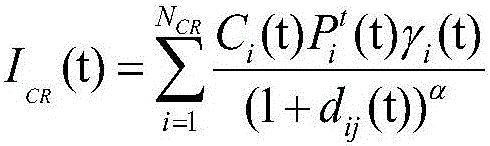

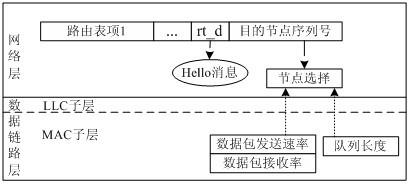

Adaptive rate control method based on mobility and DSRC/WAVE network relevance feedback

Inactive | CN105791143A | Improve utilization; Accurately control the target; Data switching networks; Generation rate; Signal-to-noise ratio (imaging)

The invention belongs to the technical field of vehicular networking communication and relates to an adaptive rate control method based on mobility and DSRC/WAVE network relevance feedback. The method establishes a traffic flow density prediction module, a communication interference calculation module for time t+1, an SINR calculation module, an available link bandwidth calculation module for time t+1, a channel congestion cost calculation module, and an adaptive message generation rate calculation module. The traffic flow density at the next time step is predicted; from this density, the transmit power, and the rate, an interference model of the communication process is built, the SINR is calculated, and the node's available link bandwidth at the next time step is predicted. A channel congestion cost model is then built on the basis of the transmission-rate mismatch and the transmission-queue-length mismatch, and the message generation rate for the next time step is adjusted adaptively. Because the rate is adjusted in advance using prediction, channel congestion is avoided, and low communication delay and a high packet transmission rate are achieved at low computational time and cost.

Owner:DALIAN UNIV OF TECH

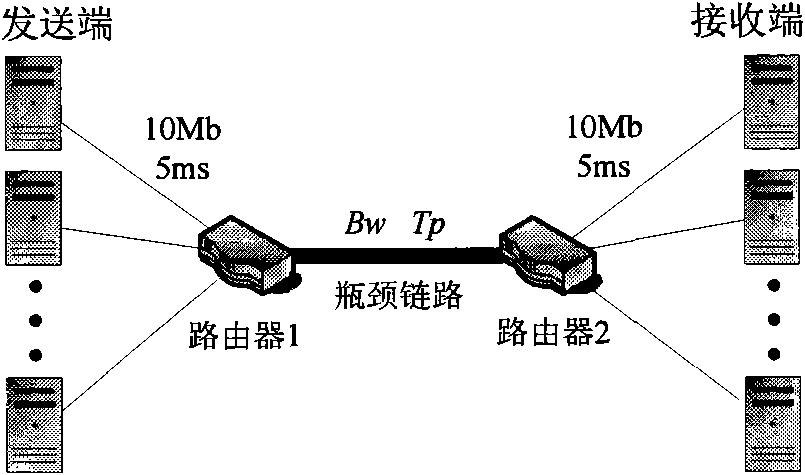

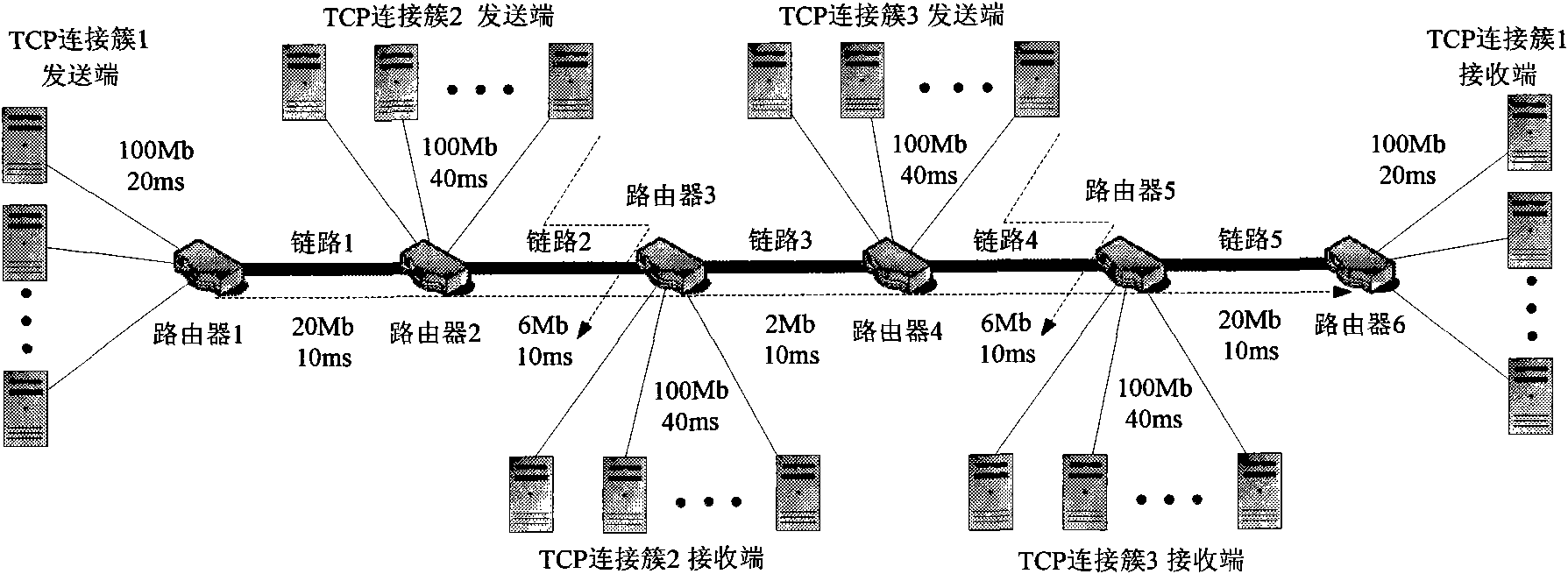

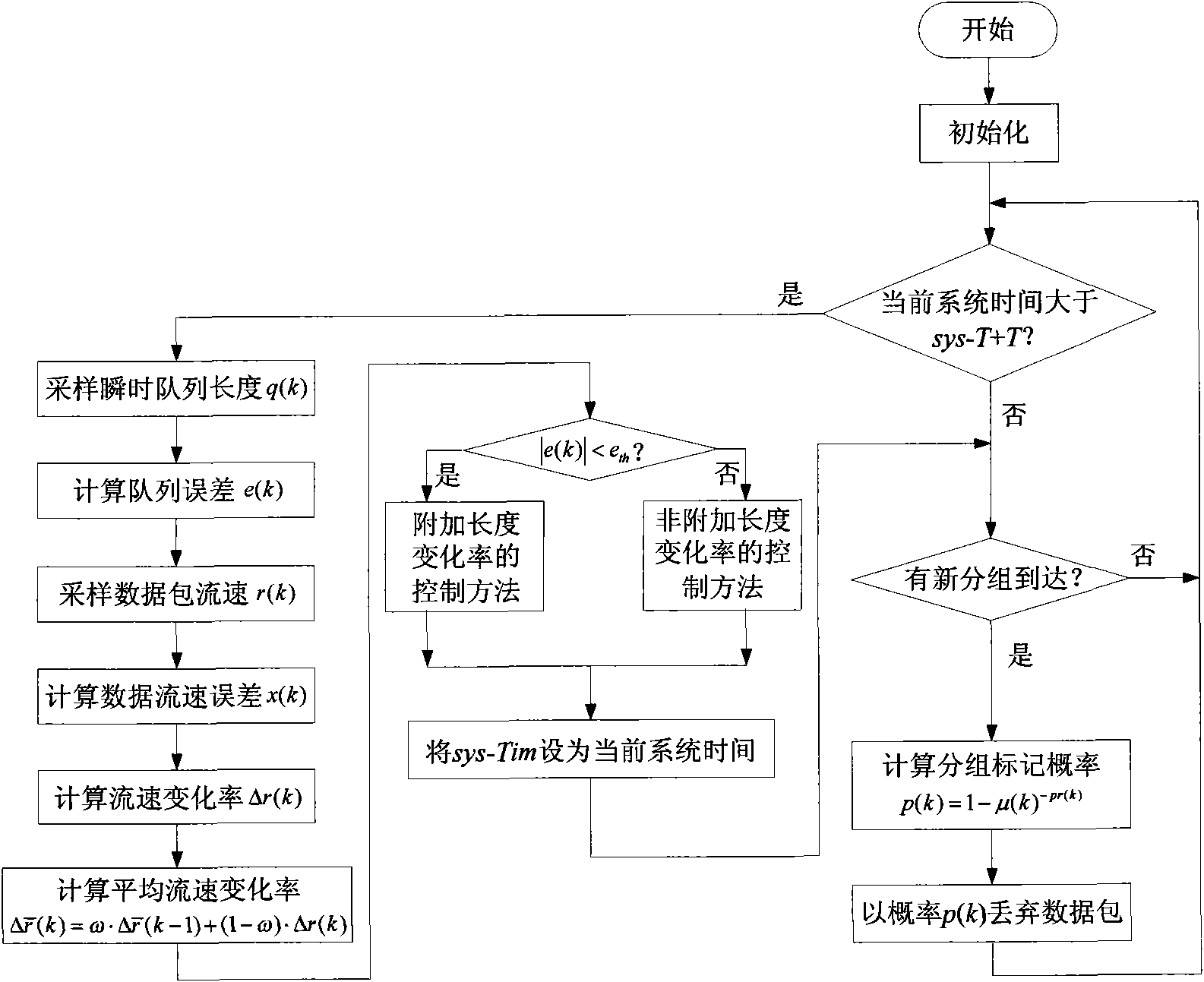

Adaptive congestion control method for communication network

Inactive | CN101635674A | Detect congestion good; Simple structure; Data switching networks; Utilization factor; Conversion coefficients

The invention relates to an adaptive congestion control method for communication networks, in the technical field of network engineering. In each sampling period the method: calculates the queue error e(k); measures the input rate r(k) of data packets; calculates the data rate difference x(k); calculates the rate of change Δr(k) of the data flow rate and its average Δ*(k); compares the instantaneous queue error e(k) with a preset error threshold e_th; calculates a modified price pr(k) and a probability conversion coefficient μ(k) according to the selected control method; and calculates a drop probability p(k) from pr(k) and μ(k) using the patent's formula (not reproduced in this abstract), dropping packets accordingly. The method has a simple structure and good scalability, effectively addresses known problems of REM (Random Exponential Marking), reduces router queuing delay and jitter, keeps link utilization high, and adapts well to complex dynamic environments.

Owner:SHANGHAI JIAO TONG UNIV
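Because the patent's drop-probability formula is not reproduced, the following Python sketch shows the textbook REM update that the method sets out to improve, not the patented control law. The price grows with the weighted queue error and the rate mismatch, and the drop probability is 1 - phi**(-price); the parameter values and class name are illustrative assumptions.

```python
class RemMarker:
    """Textbook Random Exponential Marking (REM), NOT the patent's modified law."""

    def __init__(self, target_queue: float, capacity: float,
                 gamma: float = 0.001, alpha: float = 0.1, phi: float = 1.001) -> None:
        self.target_queue = target_queue  # b*: desired queue length (packets)
        self.capacity = capacity          # c: link capacity (packets per period)
        self.gamma, self.alpha, self.phi = gamma, alpha, phi
        self.price = 0.0

    def update(self, queue_len: float, input_rate: float) -> float:
        """Run once per sampling period k; returns the drop probability p(k)."""
        queue_error = queue_len - self.target_queue   # e(k)
        rate_mismatch = input_rate - self.capacity     # input rate minus capacity
        # Price rises under congestion and decays (not below 0) when the link is idle.
        self.price = max(0.0, self.price
                         + self.gamma * (self.alpha * queue_error + rate_mismatch))
        return 1.0 - self.phi ** (-self.price)
```

The exponential mapping is what makes REM's end-to-end drop probability depend on the sum of link prices along a path; the patent's modified price pr(k) and coefficient μ(k) replace this mapping with its own.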

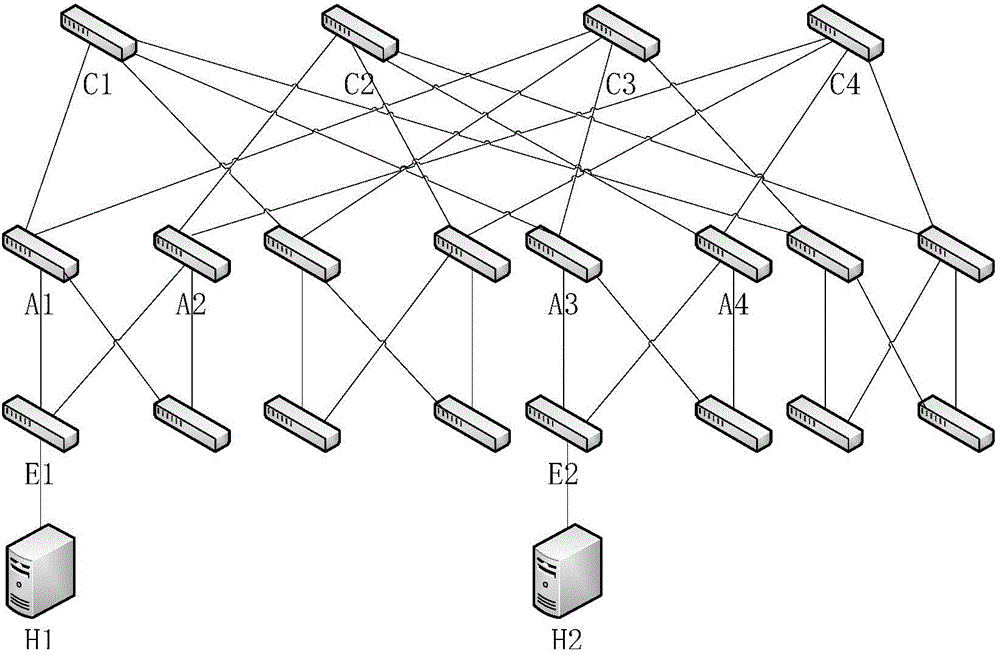

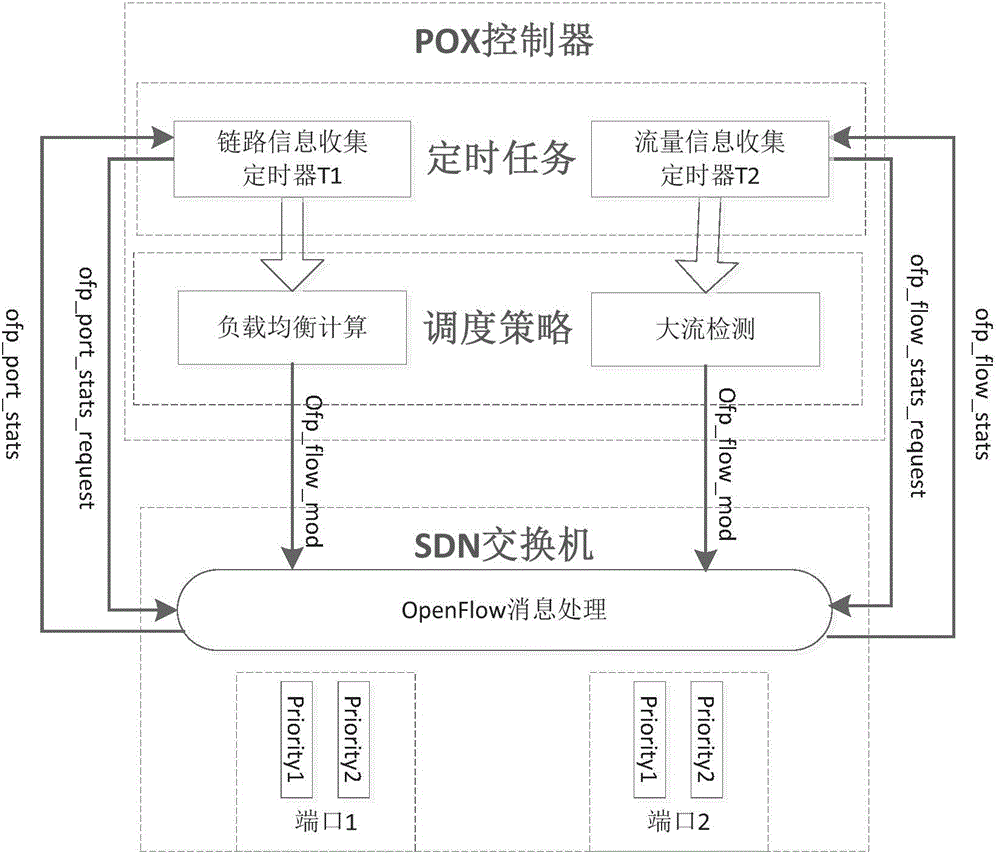

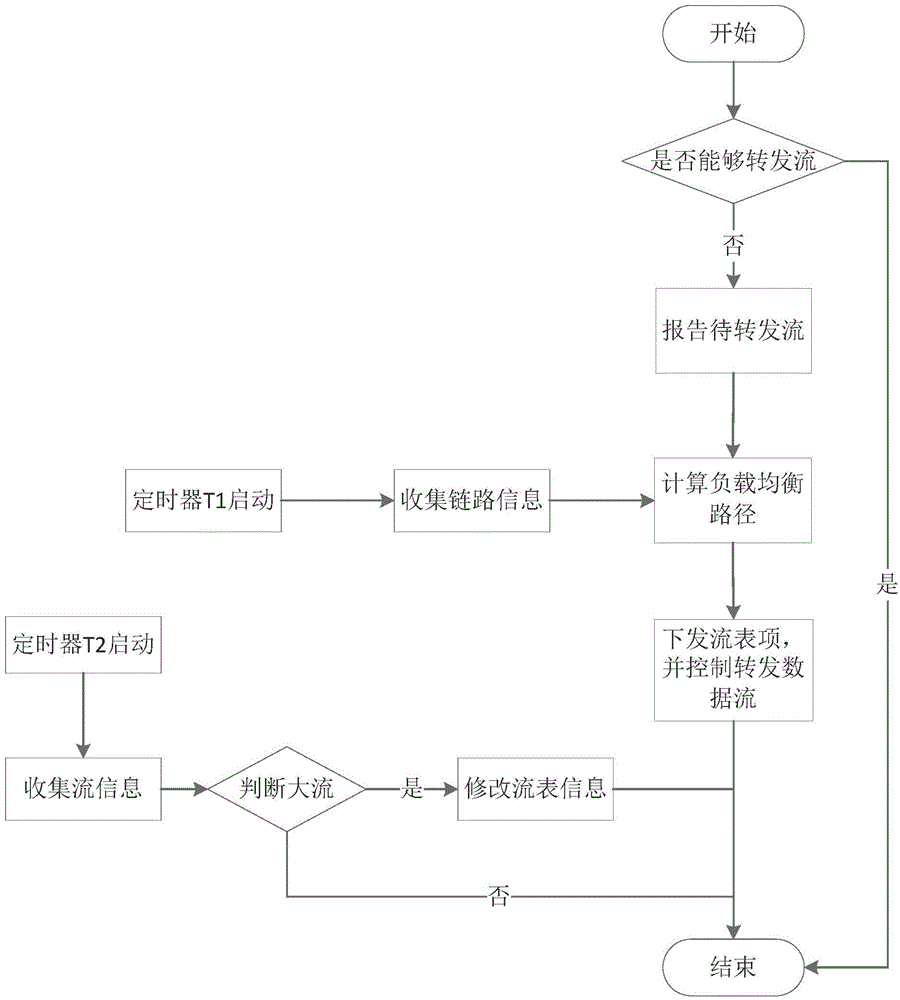

Data center network flow scheduling method based on round-robin

Active | CN104836750A | Reduce queuing delay; Balance workload; Data switching networks; Data center; The Internet

The invention discloses a round-robin-based flow scheduling method for data center networks. In data center networks with redundant links, the method uses SDN (software-defined networking) to take the distribution of long and short flows into account, dynamically control how flows are forwarded, and dynamically adjust the queuing state of flows in SDN switches according to flow size. The method achieves load balancing of data flows in the data center network and reduces the delay of short flows.

Owner:DALIAN UNIV OF TECH

Prediction based priority scheduling

Active | US8185899B2 | Reduce queuing delay; Interference minimization; Multiprogramming arrangements; Memory systems; Computerized system; Computing systems

Abstract identical to that of US20080222640A1 above.

Owner:INT BUSINESS MASCH CORP

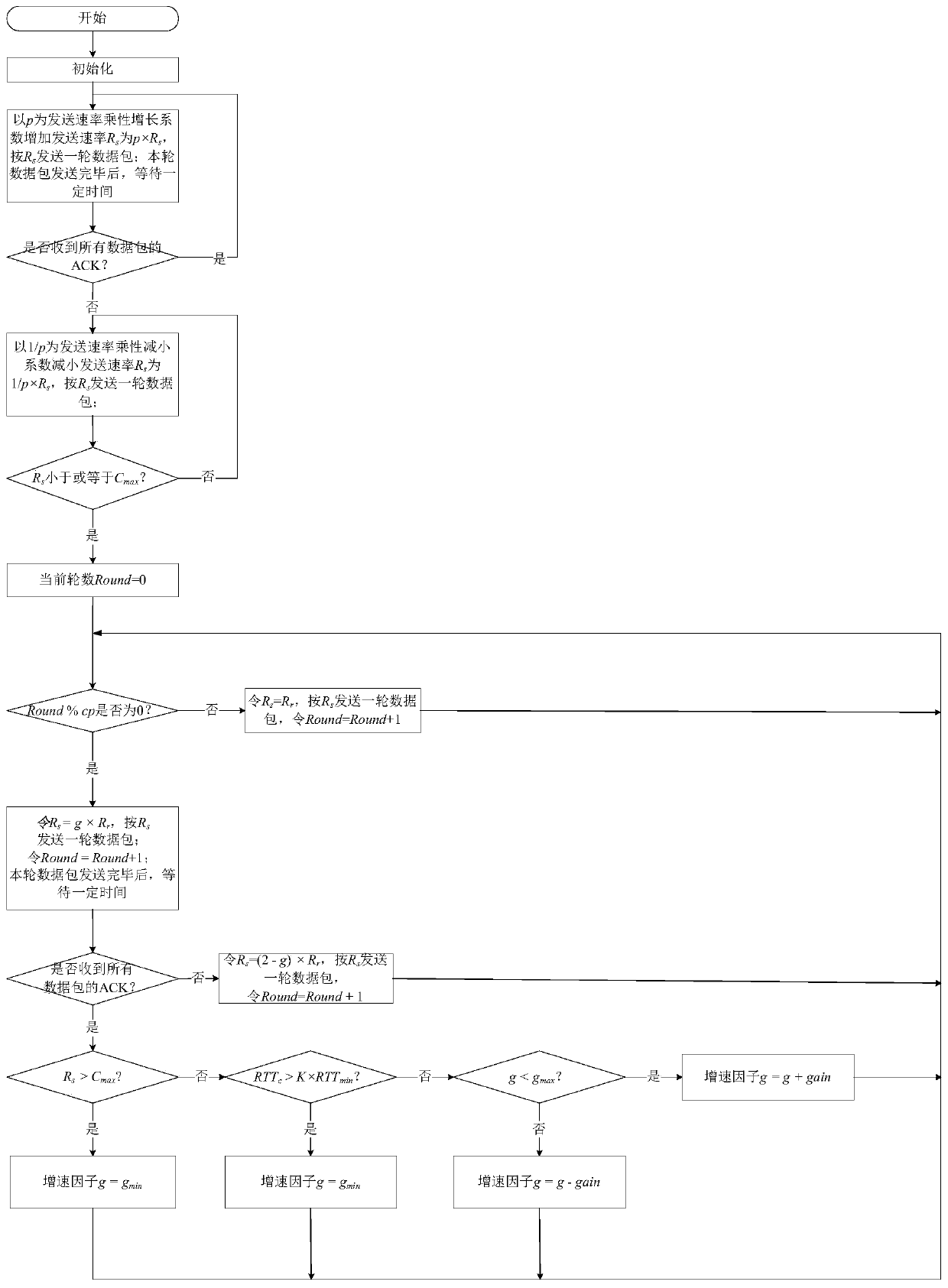

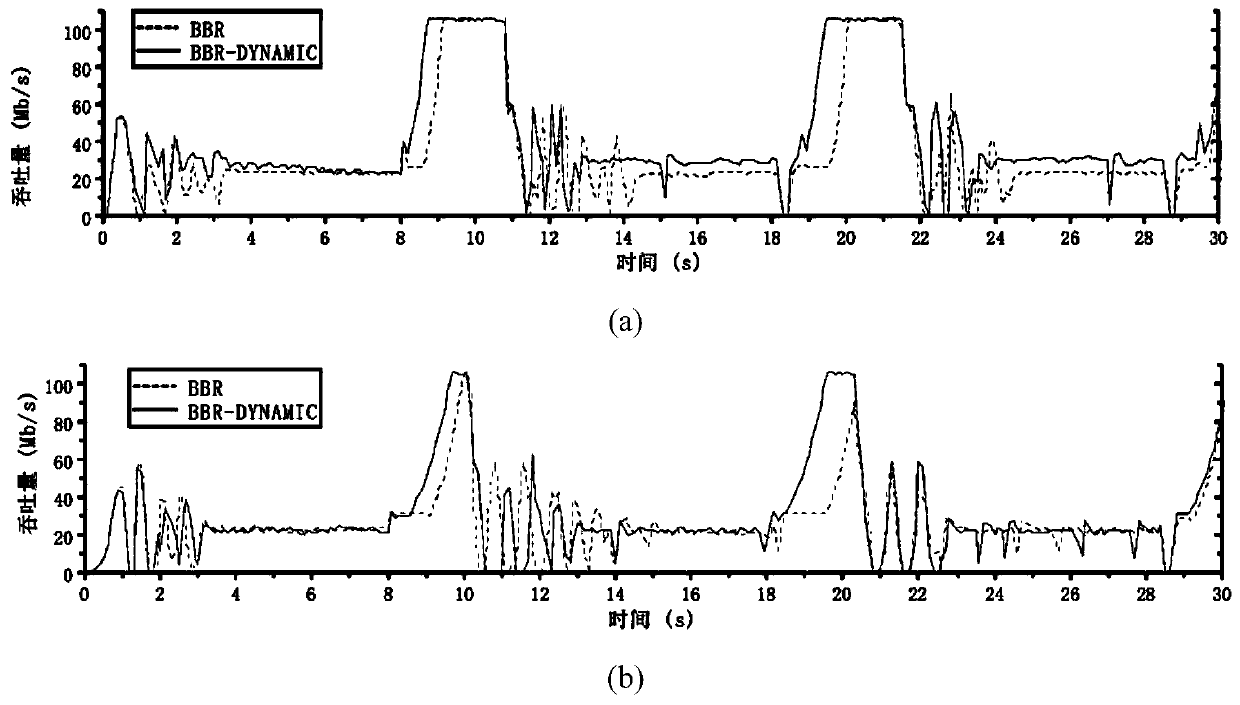

Sending rate adjusting method in bandwidth detection stage and congestion control algorithm

Active | CN110572333A | Small increase; Congestion doesn't get worse; Data switching networks; Acceleration factor; Network packet

The invention discloses a method for adjusting the sending rate during the bandwidth detection stage, and a corresponding congestion control algorithm. In the first RTT of the bandwidth detection stage, the sending rate is increased by a speed-up factor g and one round of data packets is sent to probe the bandwidth. The congestion state of the network is judged by whether the ACKs for all packets sent in that round arrive within a certain period. When the network condition is good, the acceleration factor of the sending rate is adjusted according to the current round-trip delay and the historical maximum transmission rate; when it is poor, a round of packets is sent at a reduced rate, i.e. in the second RTT of the bandwidth detection stage the sending rate is lowered to drain the queue. For the remainder of the bandwidth detection stage, the sending rate is set according to the real-time transmission rate. The method balances transmission efficiency, convergence, and fairness.

Owner:CENT SOUTH UNIV

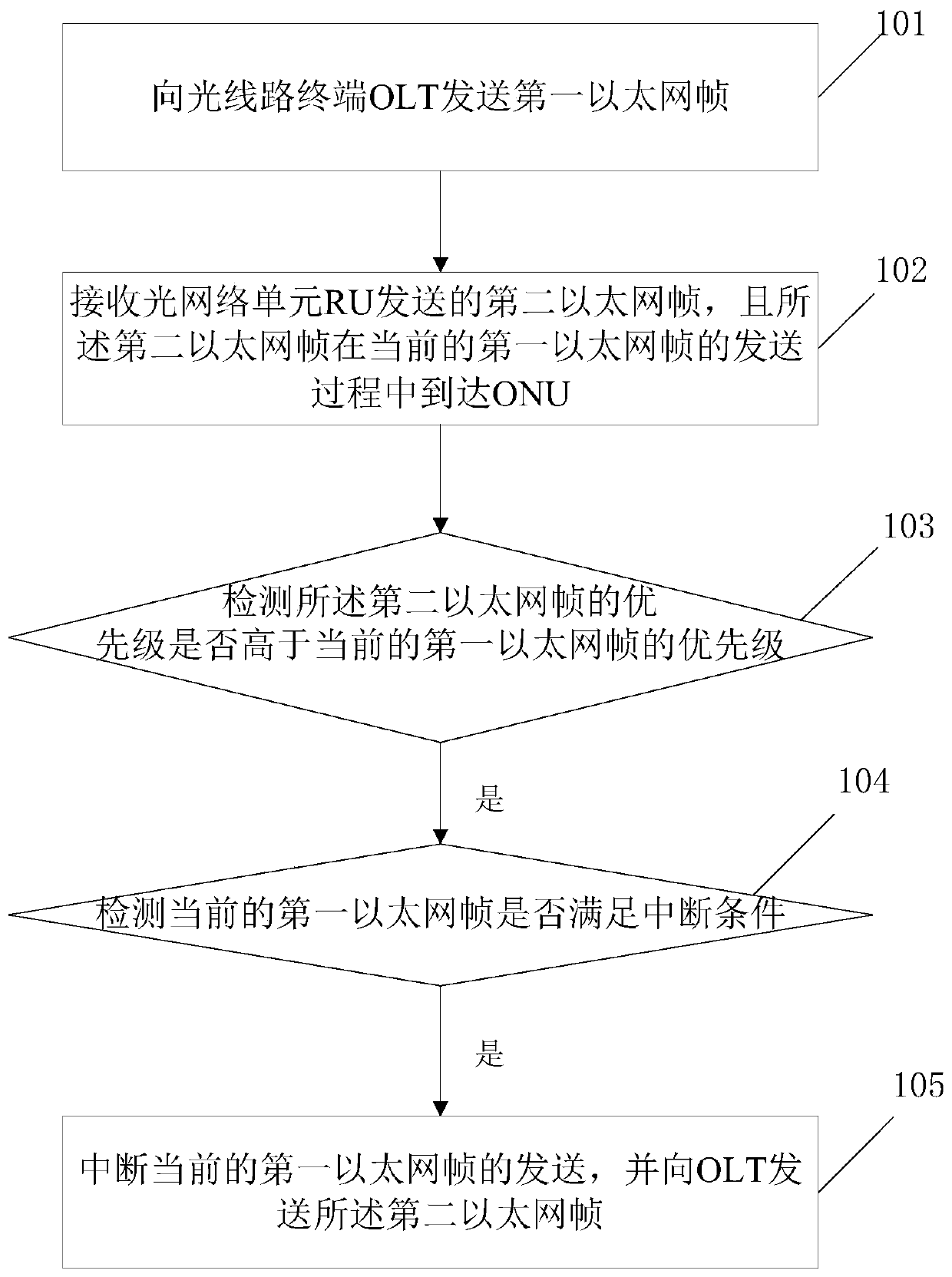

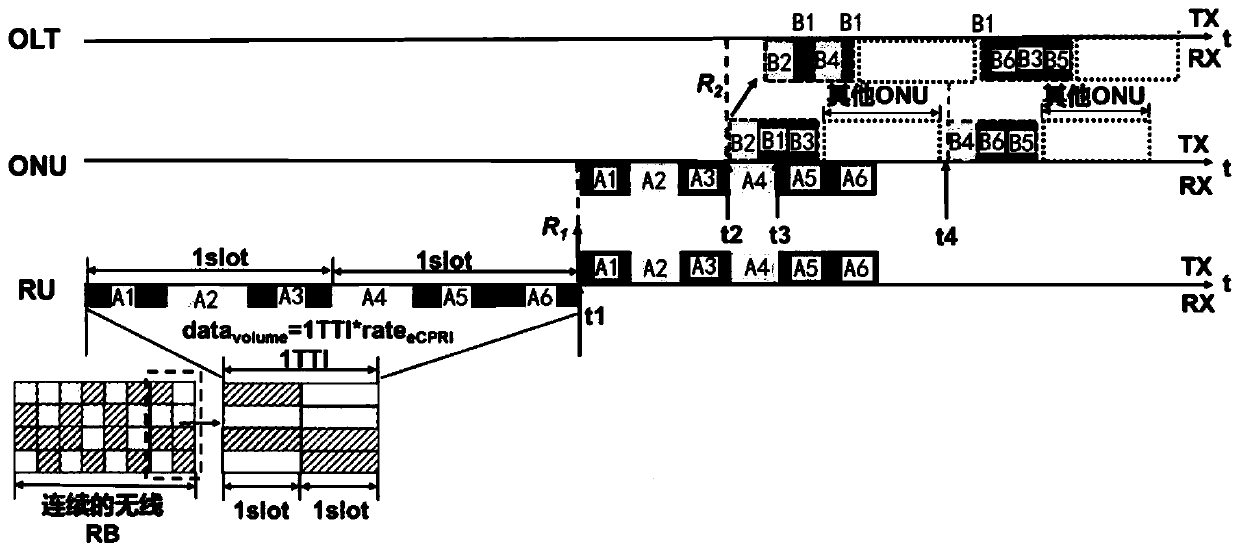

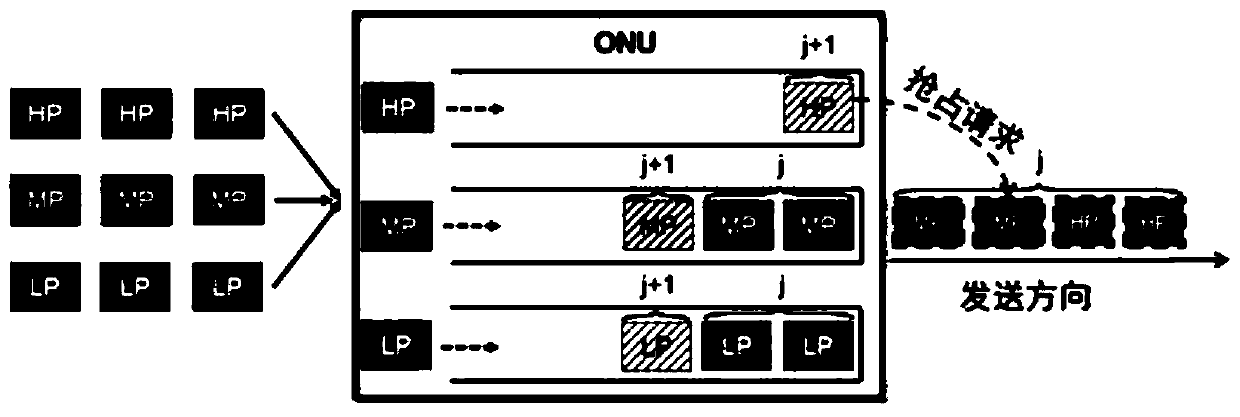

TDM-PON mobile forward transmission optical network data transmission method and device based on frame preemption

Inactive | CN110933532A | Reduce queuing delay; Multiplex system selection arrangements; Engineering; Optical line termination

The invention discloses a TDM-PON (Time Division Multiplexing Passive Optical Network) mobile fronthaul data transmission method and device based on frame preemption. The method is applied to an optical network unit (ONU) and comprises the following steps: sending a first Ethernet frame to an optical line terminal (OLT); receiving a second Ethernet frame sent by a radio unit (RU), where the second frame reaches the ONU while the first frame is still being sent; detecting whether the second frame has a higher priority than the first; if so, detecting whether the first frame meets an interrupt condition; and if it does, interrupting transmission of the first frame and sending the second frame to the OLT, thereby reducing the queuing delay of delay-sensitive services.

Owner:BEIJING UNIV OF POSTS & TELECOMM
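The abstract does not define the interrupt condition. The sketch below assumes one loosely modelled on IEEE 802.3br frame preemption, where both the already-sent part and the remainder of the preempted frame must reach a minimum fragment size (64 bytes here). The function name, the priority convention (a larger value means higher priority), and the threshold are all assumptions.

```python
MIN_FRAGMENT_BYTES = 64  # assumption, loosely modelled on IEEE 802.3br


def should_preempt(current_priority: int, bytes_sent: int, frame_length: int,
                   new_priority: int, min_fragment: int = MIN_FRAGMENT_BYTES) -> bool:
    """Decide whether a newly arrived frame may interrupt the frame in flight.

    Larger priority values mean higher priority (assumption).
    """
    if new_priority <= current_priority:
        return False  # only a strictly higher-priority frame may preempt
    remaining = frame_length - bytes_sent
    # Assumed interrupt condition: both fragments must be large enough to send.
    return bytes_sent >= min_fragment and remaining >= min_fragment
```

Near the start or end of a long frame the sketch declines to interrupt, on the reasoning that the saving is small and a too-short fragment could not be sent on its own.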

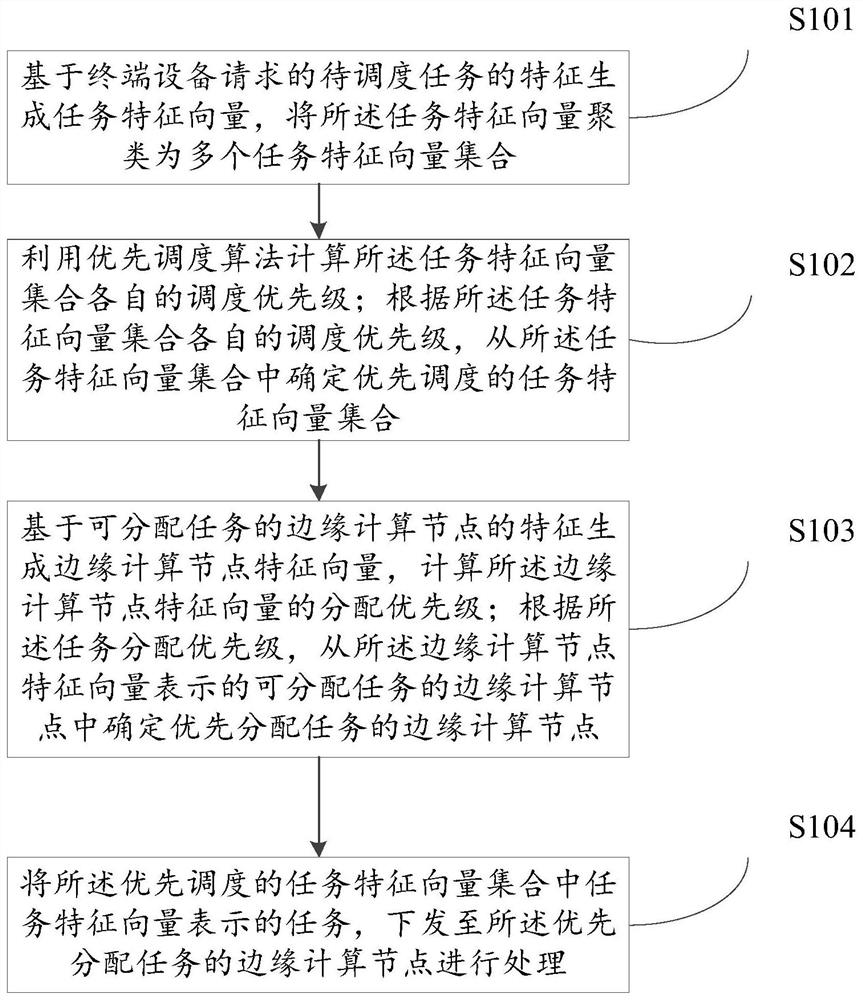

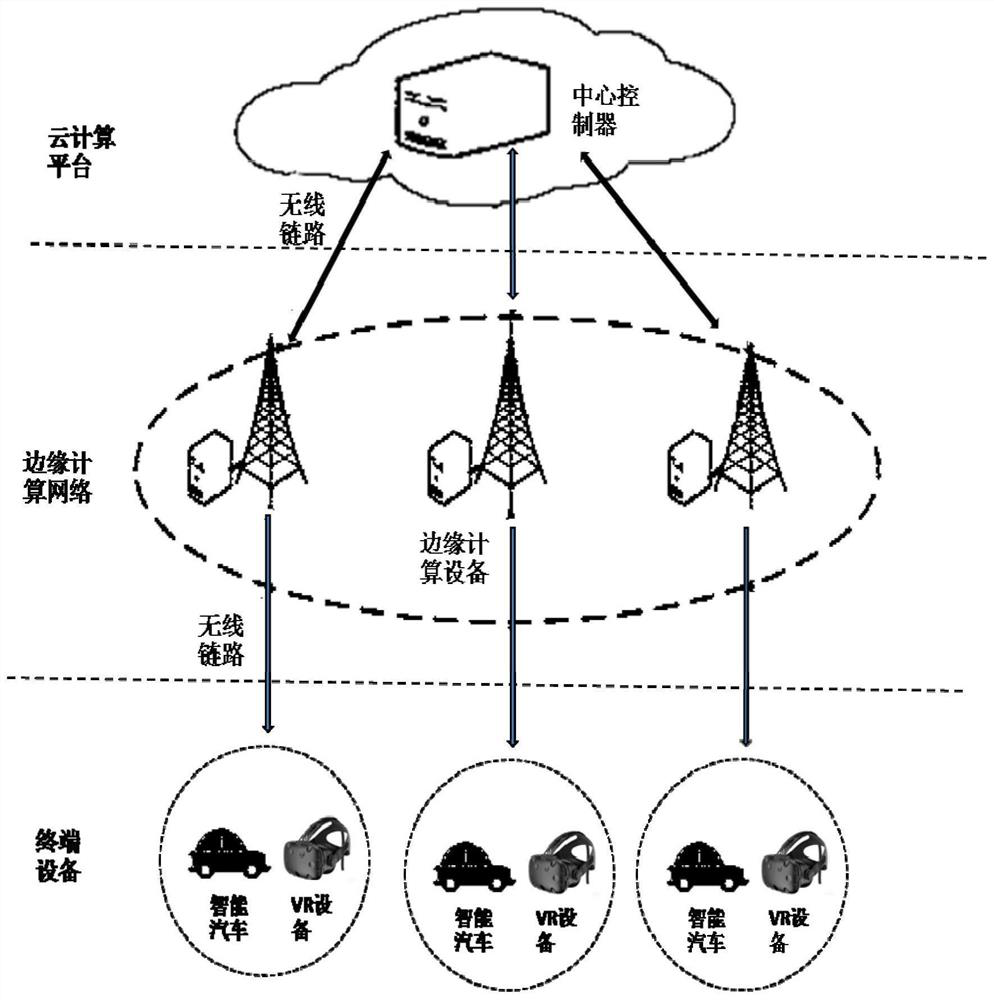

Task scheduling method and device based on edge computing network

Active | CN111679904A | Reduce latency; Reduce power consumption; Resource allocation; Character and pattern recognition; Edge computing; Transmission time delay

An embodiment of the invention provides a task scheduling method based on an edge computing network. The cloud computing platform performs a dual computation using both task scheduling priorities and the allocation priorities of edge computing nodes. Tasks with strict latency requirements are scheduled first and allocated to edge computing nodes close to the terminal devices for processing. This reduces task processing delay, network transmission delay, and overall system overhead when the network handles user-related interactive tasks, and improves the user experience.

Owner:BEIJING 21VIANET DATA CENT

Switch and a switching method

Inactive | US7352695B2 | Quickly and efficiently switching; Reduce impact; Error prevention; Frequency-division multiplex details; Traffic capacity; Data stream

Abstract identical to that of US20080137675A1 above.

Owner:WSOU INVESTMENTS LLC

Switch and a switching method

Inactive | US7768914B2 | Quickly and efficiently switching; Reduce impact; Error prevention; Transmission systems; Traffic capacity; Data stream

Owner:WSOU INVESTMENTS LLC

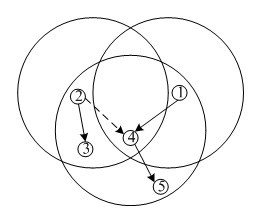

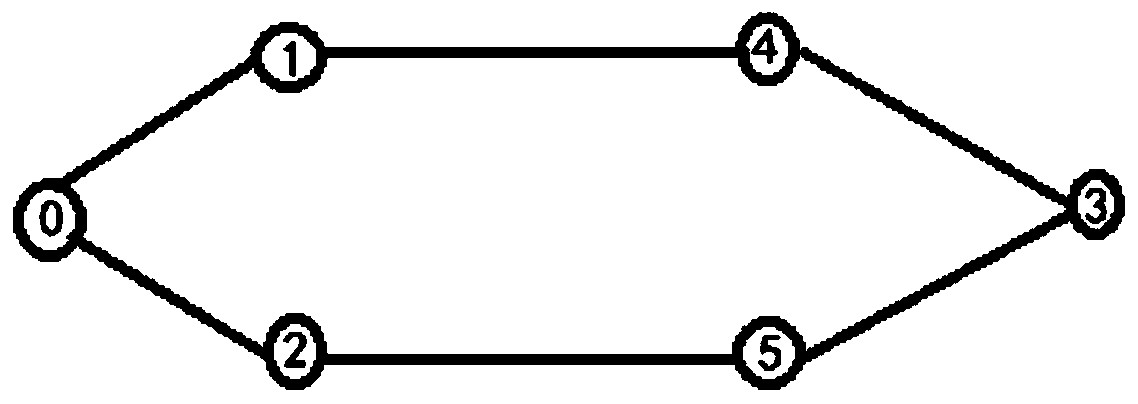

Cross-layer QOS (Quality of Service) routing method based on node occupancy rate in wireless sensor network

Active | CN102523616A | Reduce latency; Load balancing; Wireless communication; Quality of service; Wireless mesh network

The invention provides a cross-layer QoS (Quality of Service) routing method based on node occupancy rate for wireless sensor networks. Routing proceeds as follows: 1) the source node sends RREQ (Route Request) packets to adjacent idle nodes, or, if there are none, to adjacent nodes with a low occupancy rate; 2) the receiving node compares the target node address with its own routing table; if the address is in the table, go to step 3), otherwise go to step 4); 3) send the RREQ packet to the target node; 4) send the RREQ packet to adjacent idle nodes, or, if there are none, to adjacent nodes with a low occupancy rate, and return to step 2); 5) once the target node receives the RREQ packet, a communication link is established. Node selection is governed by occupancy rate, so during route discovery nodes that are currently transmitting are bypassed, which reduces queuing delay.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
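The RREQ forwarding rule in steps 1) to 4) reduces to one choice per hop. A minimal sketch, assuming occupancy is a fraction in [0, 1] where 0 means idle and that "low occupancy" means the lowest value among neighbors; the function name is hypothetical.

```python
def rreq_recipients(target: str, routing_table: set[str],
                    neighbor_occupancy: dict[str, float]) -> list[str]:
    """Return the nodes a relay should forward an RREQ packet to."""
    if target in routing_table:
        return [target]                      # step 3): a route to the target is known
    idle = sorted(n for n, occ in neighbor_occupancy.items() if occ == 0.0)
    if idle:
        return idle                          # prefer idle neighbors
    if not neighbor_occupancy:
        return []                            # dead end: no neighbors at all
    lowest = min(neighbor_occupancy.values())
    # Otherwise fall back to the least-occupied neighbor(s).
    return sorted(n for n, occ in neighbor_occupancy.items() if occ == lowest)
```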

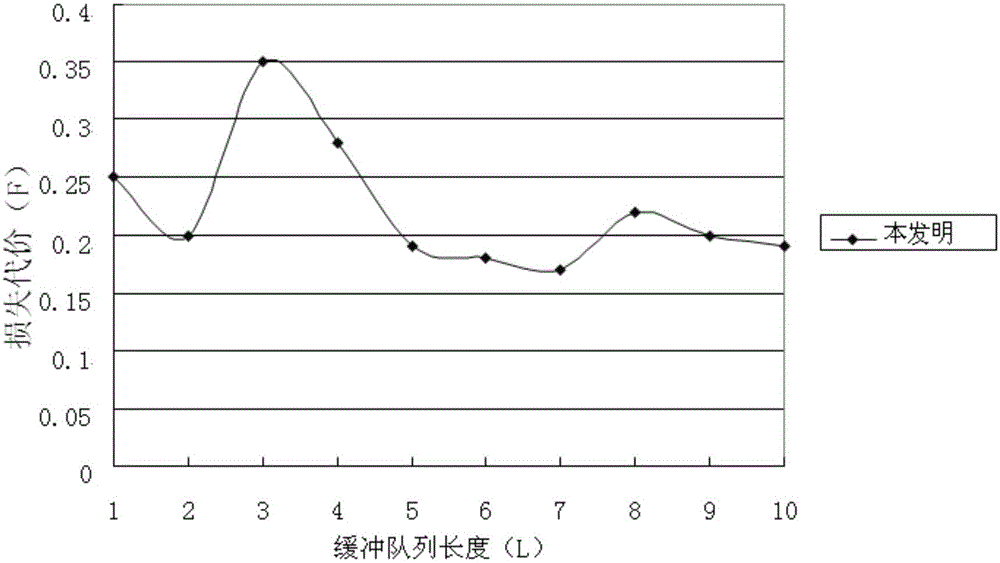

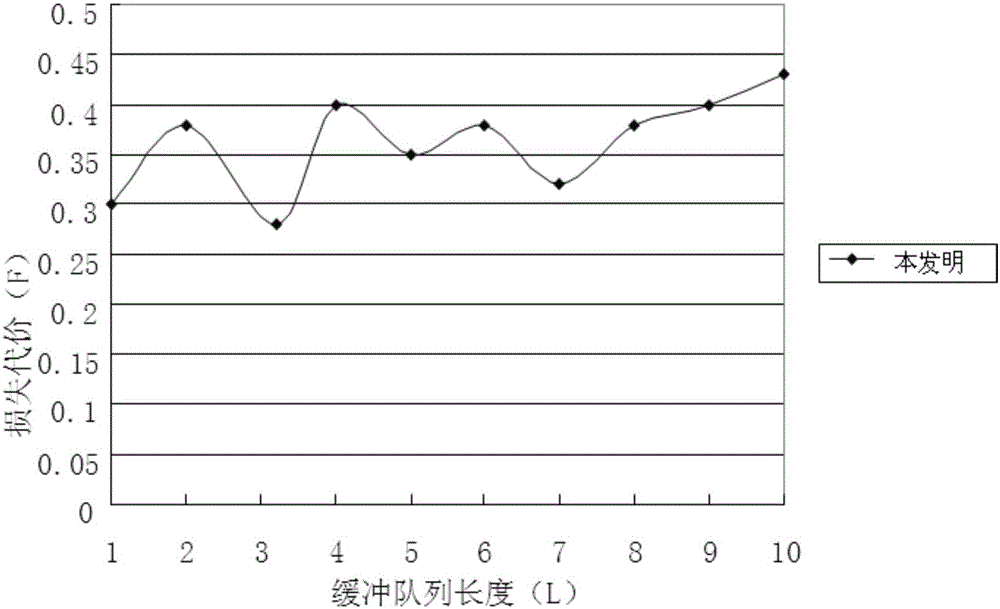

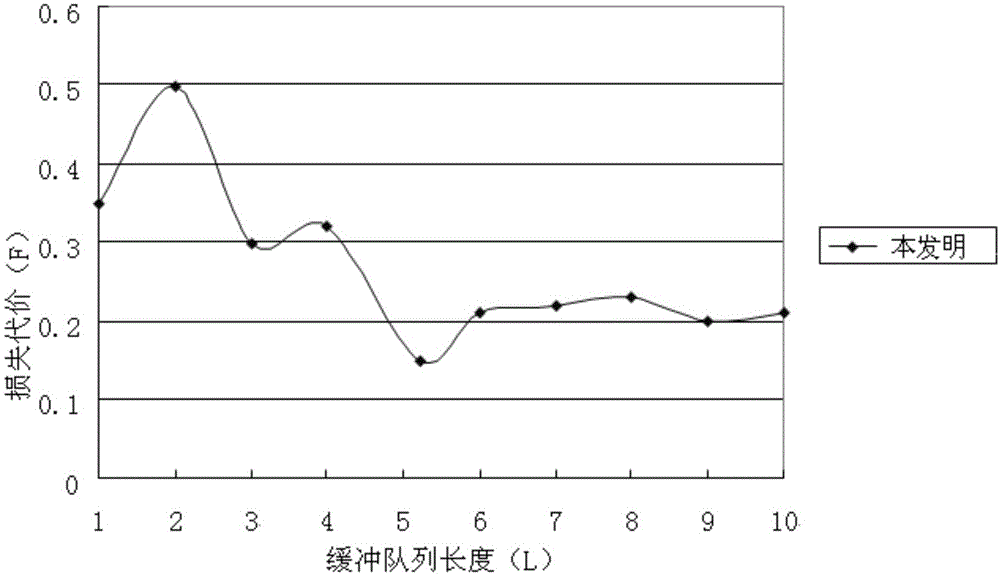

A method for setting a message buffer queue length

Inactive | CN105119843A | Excellent bandwidth utilization; Reduce queuing delay; Data switching networks; Computer network technology; Mathematical model

The invention relates to a method for setting the length of a message buffer queue, in the technical field of computer networks. A mathematical model of the Ethernet buffer queue is established using queuing theory; the loss cost of Ethernet communication is then calculated based on an M/G/1/∞ queuing model; finally, the optimal Ethernet buffer queue length, i.e. the one that minimizes loss cost, is calculated. The optimal buffer queue length can therefore be computed and set dynamically as the Ethernet bandwidth utilization changes, reducing network data queuing delay, ensuring real-time transmission of network data, and achieving optimal Ethernet bandwidth utilization.

Owner:NO 8357 RES INST OF THE THIRD ACADEMY OF CHINA AEROSPACE SCI & IND
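The patent's loss-cost function is not given, but the M/G/1 model it builds on has a closed-form mean queuing delay, the Pollaczek-Khinchine formula W_q = λE[S²] / (2(1 - ρ)) with utilization ρ = λE[S]. The sketch below computes it and the mean queue length via Little's law; how the patent turns these quantities into a loss cost is not shown, and the function names are hypothetical.

```python
def mg1_mean_wait(arrival_rate: float, mean_service: float,
                  service_second_moment: float) -> float:
    """Pollaczek-Khinchine mean waiting time in queue for an M/G/1 queue."""
    rho = arrival_rate * mean_service  # server utilization
    if not 0.0 <= rho < 1.0:
        raise ValueError("queue is unstable unless 0 <= utilization < 1")
    return arrival_rate * service_second_moment / (2.0 * (1.0 - rho))


def mg1_mean_queue_length(arrival_rate: float, mean_service: float,
                          service_second_moment: float) -> float:
    """Mean number of packets waiting, via Little's law L_q = lambda * W_q."""
    return arrival_rate * mg1_mean_wait(arrival_rate, mean_service,
                                        service_second_moment)
```

As sanity checks, with λ = 0.5 and unit mean service time, deterministic service (E[S²] = 1) gives W_q = 0.5, matching M/D/1, and exponential service (E[S²] = 2) gives W_q = 1.0, matching M/M/1.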

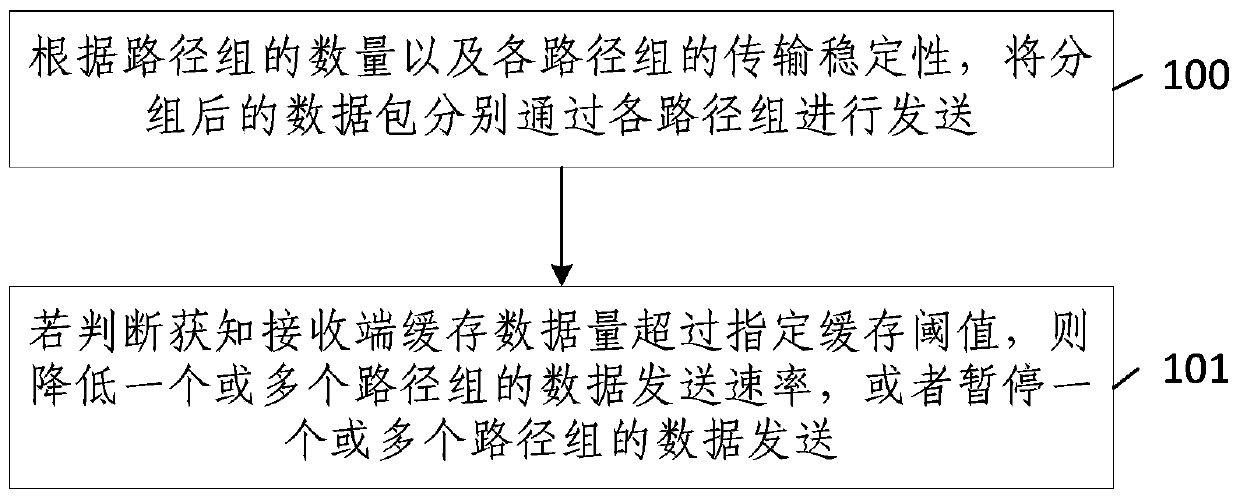

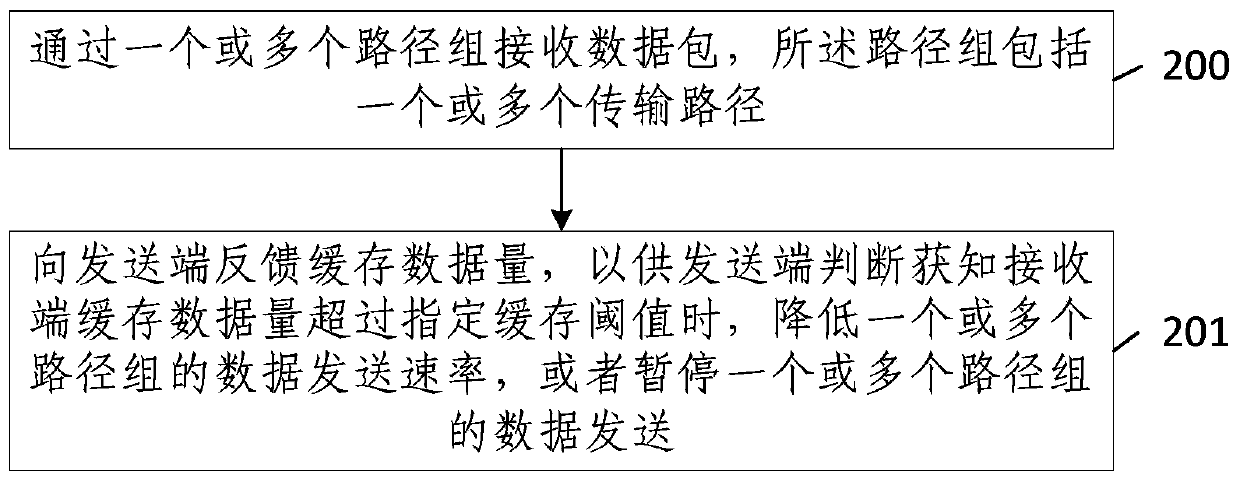

Multi-path transmission method and device based on SCTP-CMT transmission protocol

Inactive | CN110022261A | Reduce delivery pressure; Improve sending efficiency; Data switching networks; Transmission protocol; Telecommunications link

An embodiment of the invention provides a multi-path transmission method and device based on the SCTP-CMT (Stream Control Transmission Protocol with Concurrent Multipath Transfer) protocol. The method comprises: sending grouped data packets over each path group according to the number of path groups and the transmission stability of each group, where a path group comprises one or more transmission paths; and, if the amount of data cached at the receiving end is judged to exceed a specified cache threshold, reducing the data transmission rate of one or more path groups or pausing their transmission. Because more transmission links are used, the total amount of data transmitted increases, the load on each link decreases, and sending efficiency improves. In addition, when the receiver's cache grows too large, the rate can be controlled according to the amount of cached data, which prevents the receiver's cache from being blocked by excessive data, a condition that would lower receiving efficiency and degrade the whole communication link.

Owner:BEIJING UNIV OF POSTS & TELECOMM
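A sketch of the two mechanisms in the abstract, assuming each path group has a numeric stability score, packets are shared in proportion to it, and the least stable group is throttled first when the receiver's buffer passes the threshold. The scoring, the throttling order, and the function names are hypothetical.

```python
def split_packets(n_packets: int, stability: dict[str, float]) -> dict[str, int]:
    """Share n_packets among path groups in proportion to their (assumed)
    stability scores; leftover packets go to the most stable groups."""
    total = sum(stability.values())
    shares = {group: int(n_packets * s / total) for group, s in stability.items()}
    leftover = n_packets - sum(shares.values())
    for group in sorted(stability, key=stability.get, reverse=True)[:leftover]:
        shares[group] += 1
    return shares


def throttle_path_groups(rates: dict[str, float], stability: dict[str, float],
                         buffered_bytes: int, threshold_bytes: int,
                         backoff: float = 0.5) -> dict[str, float]:
    """If the receiver's buffer exceeds the threshold, cut the rate of the least
    stable path group by `backoff` (backoff=0.0 pauses it). Hypothetical policy."""
    new_rates = dict(rates)
    if buffered_bytes > threshold_bytes and rates:
        worst = min(rates, key=lambda group: stability[group])
        new_rates[worst] = rates[worst] * backoff
    return new_rates
```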

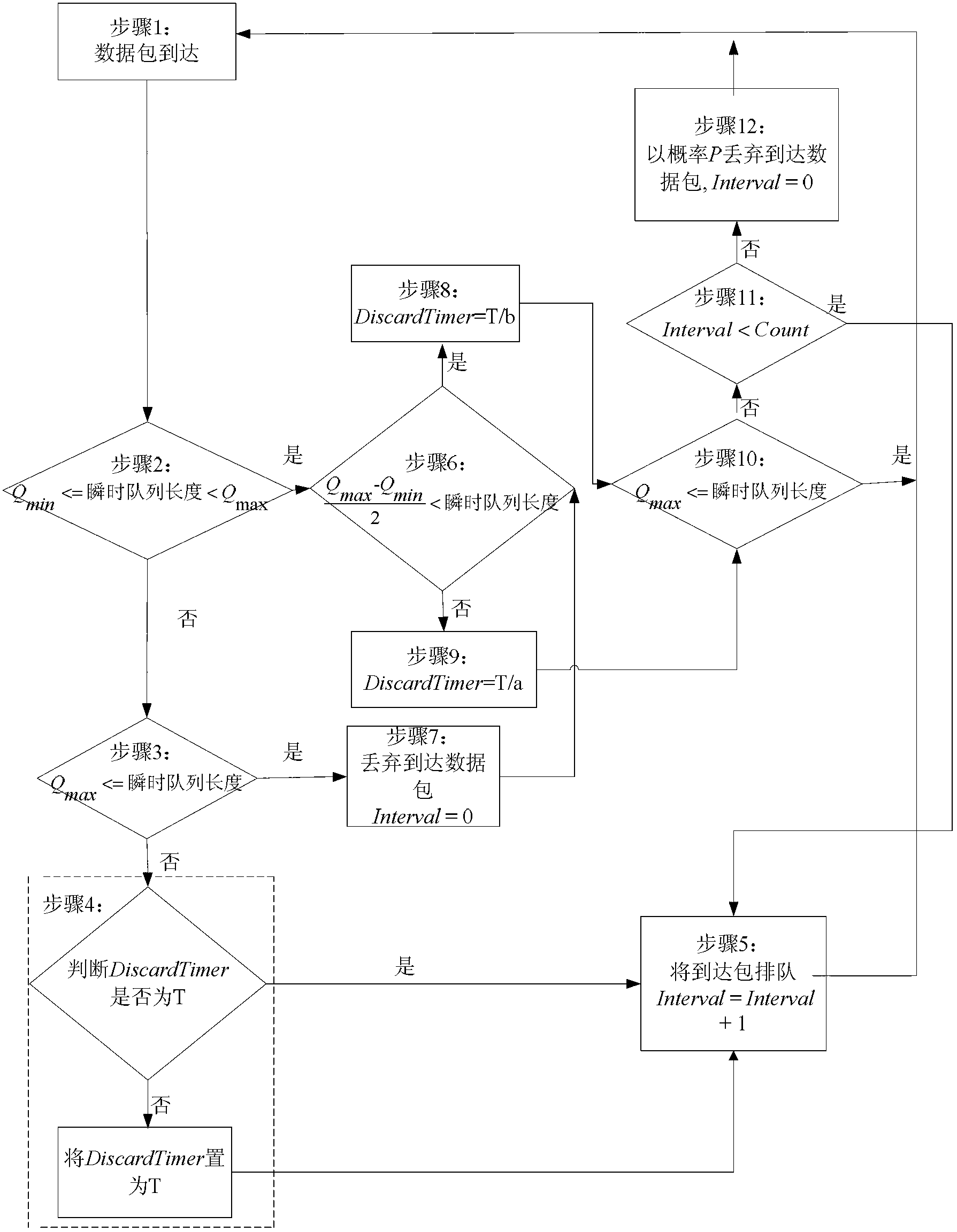

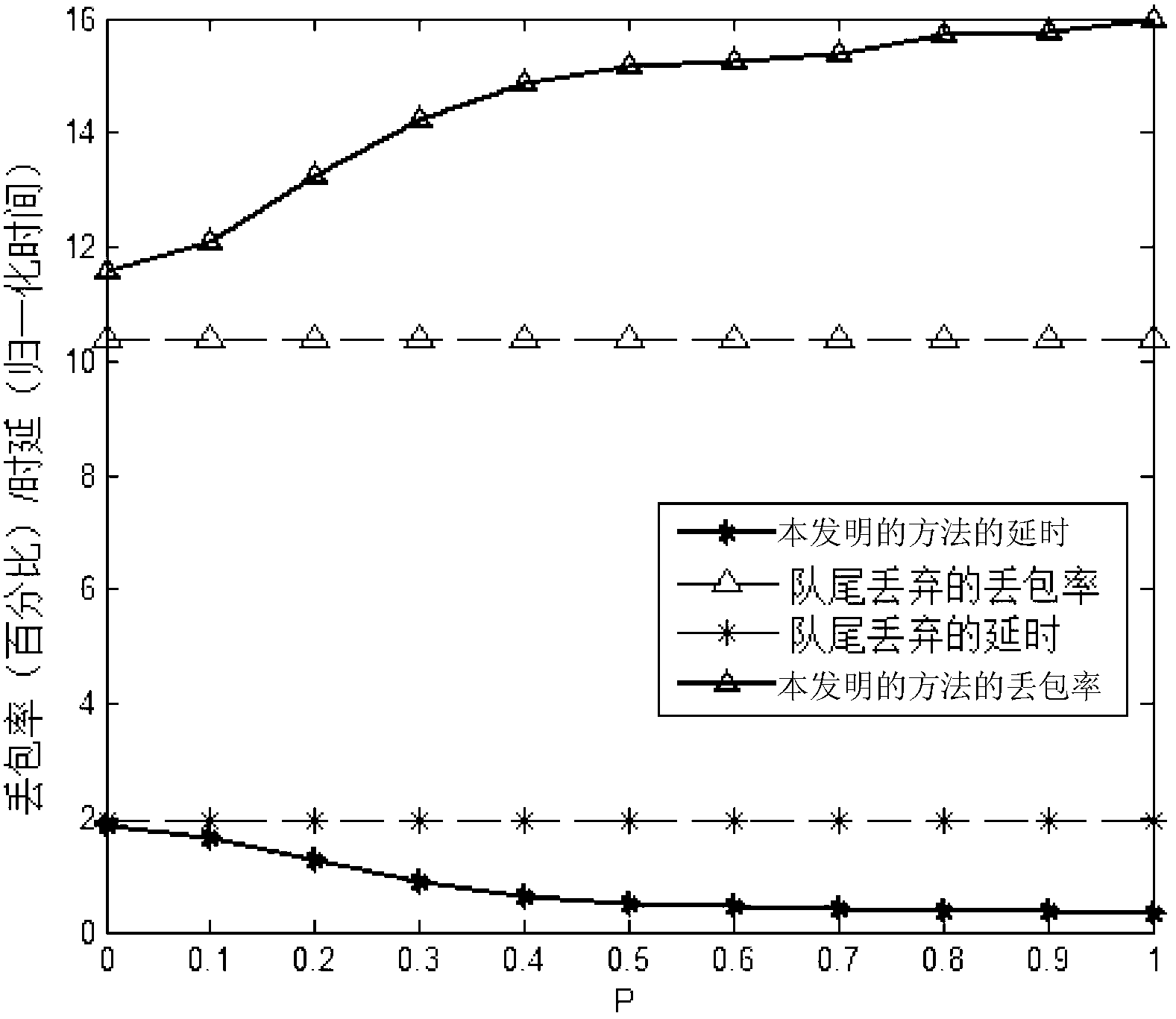

Packet loss method in LTE and LTE-A system based on RED algorithm

Inactive | CN102801502A | Reduce queuing delay; Improve adaptability; Error prevention; Random early detection; Packet loss

The invention discloses a packet dropping method for LTE (Long Term Evolution) and LTE-A (LTE-Advanced) systems based on the RED (Random Early Detection) algorithm. It aims to solve the long waiting times that delay-sensitive data flows experience in the RLC (Radio Link Control) layer buffer of LTE and LTE-A systems when congestion, or even buffer overflow, occurs. Packet dropping is controlled by changing the setting of the discard timer in LTE and LTE-A systems and by comparing the instantaneous queue length in the buffer with a minimum threshold Qmin and a maximum threshold Qmax. Drops occur randomly with probability P, which keeps packet loss random and fair and helps the algorithm avoid consecutive packet losses.

Owner:HARBIN INST OF TECH

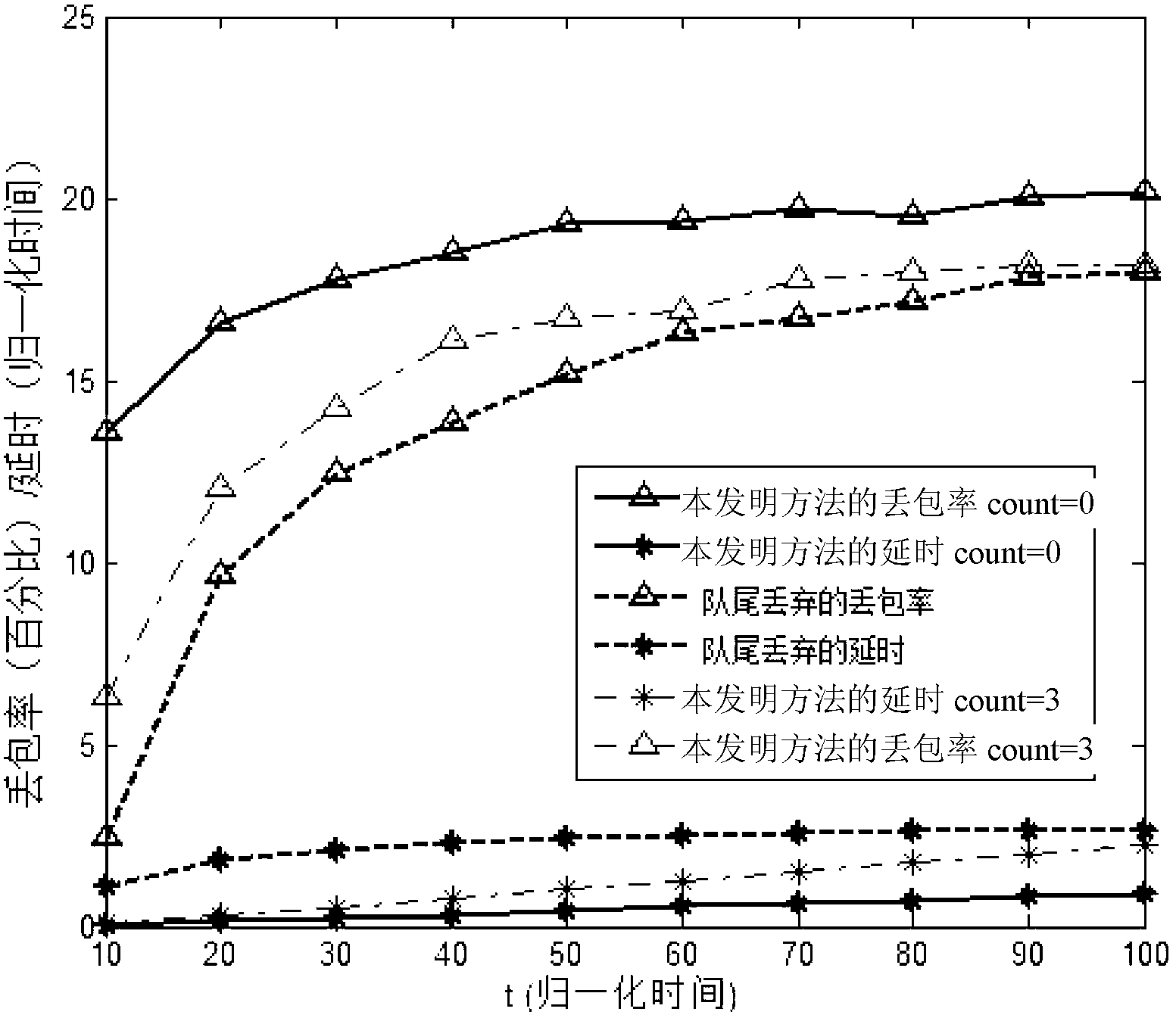

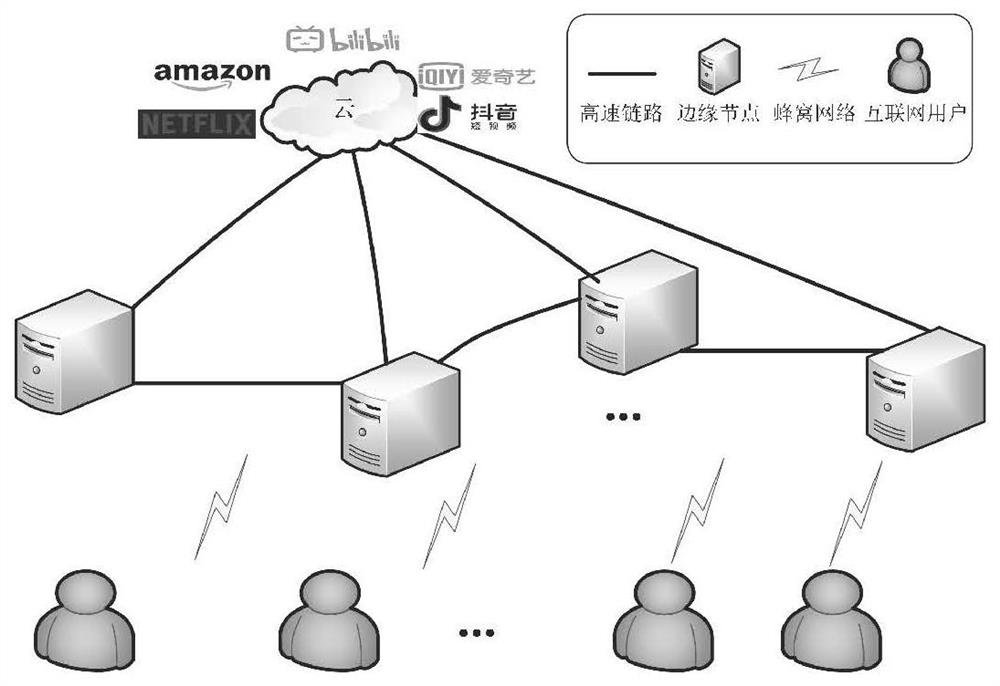

Load balancing edge cooperative caching method for Internet scene differentiated service

Active | CN112039943A | Meet the needs of different service levels; Reduce queuing delay; Transmission; Differentiated service; Queuing delay

The invention discloses a load-balancing edge cooperative caching method for differentiated services in Internet scenarios. The method comprises: S1, defining the response actions and caching parameters of edge nodes in an edge cooperative caching system after a user sends an application service request; and S2, initializing parameters, executing the edge cooperative caching process, and invoking a load balancing strategy and a differentiated service strategy. The differentiated service strategy meets the different service-level requirements of different users in Internet scenarios, while the load balancing strategy reduces the queuing delay of user requests, makes node response delay more stable, and improves the user experience.

Owner:SUN YAT SEN UNIV

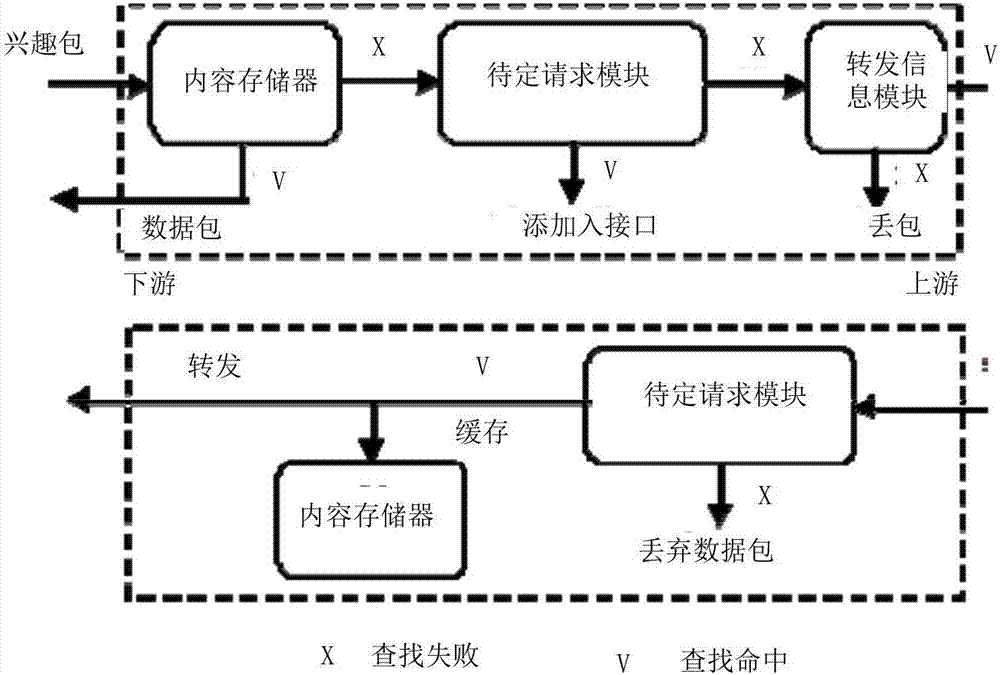

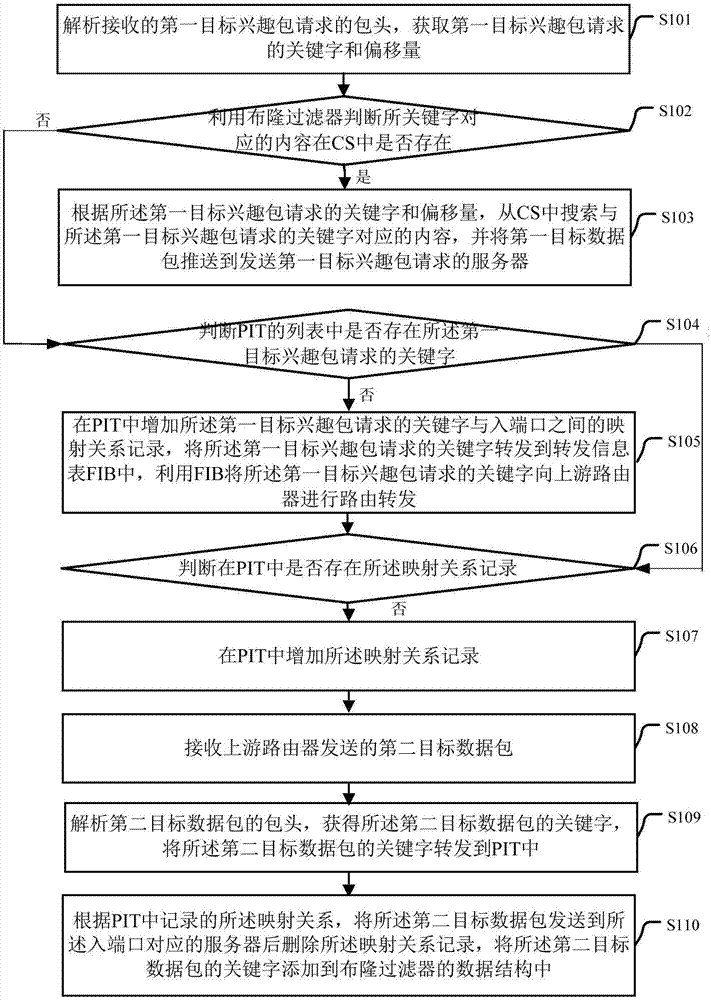

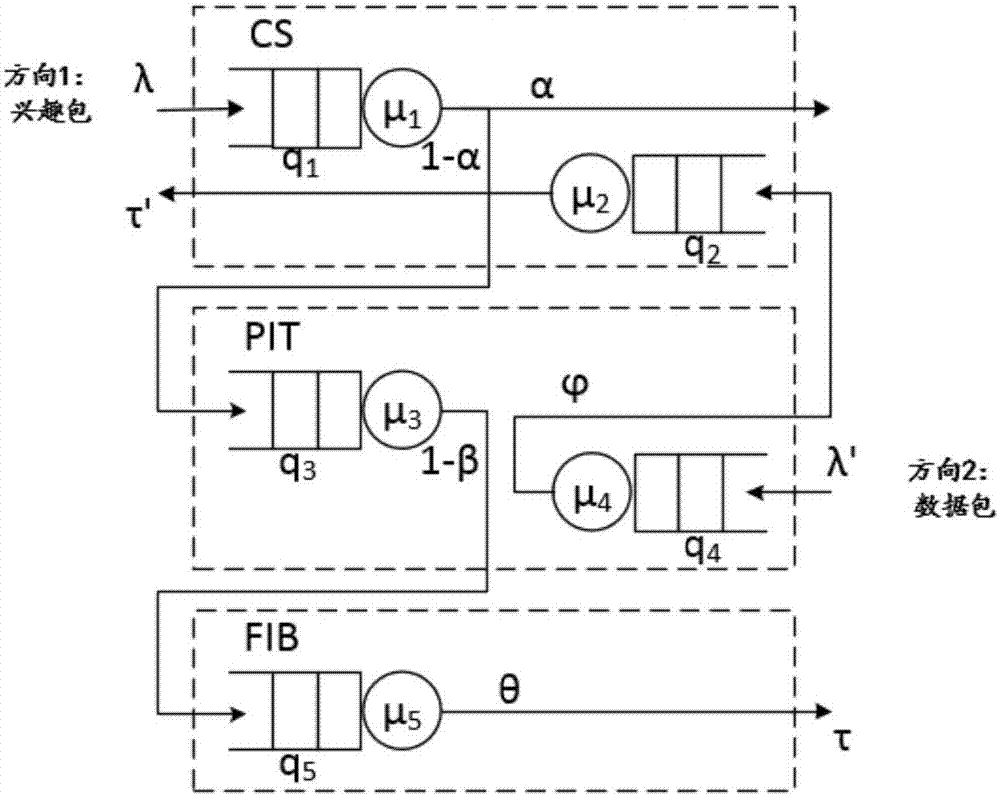

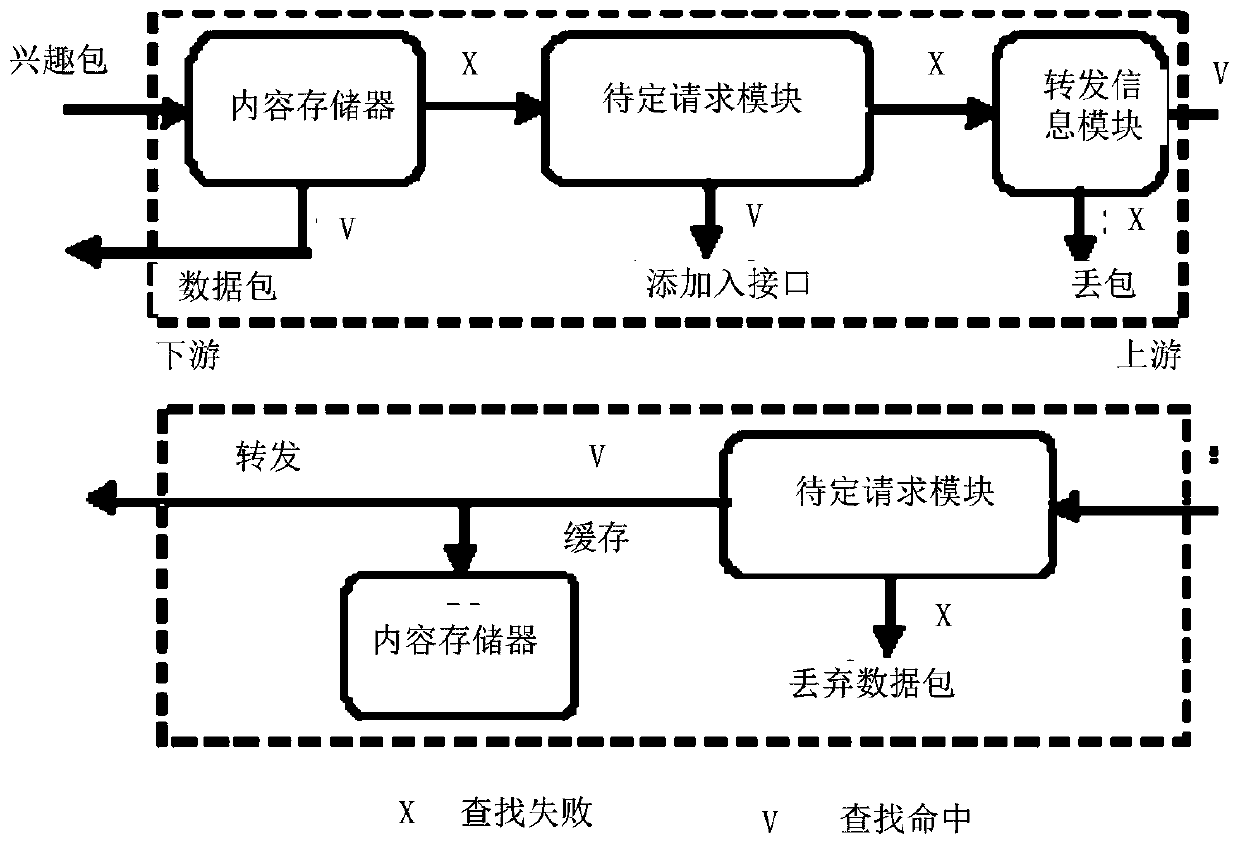

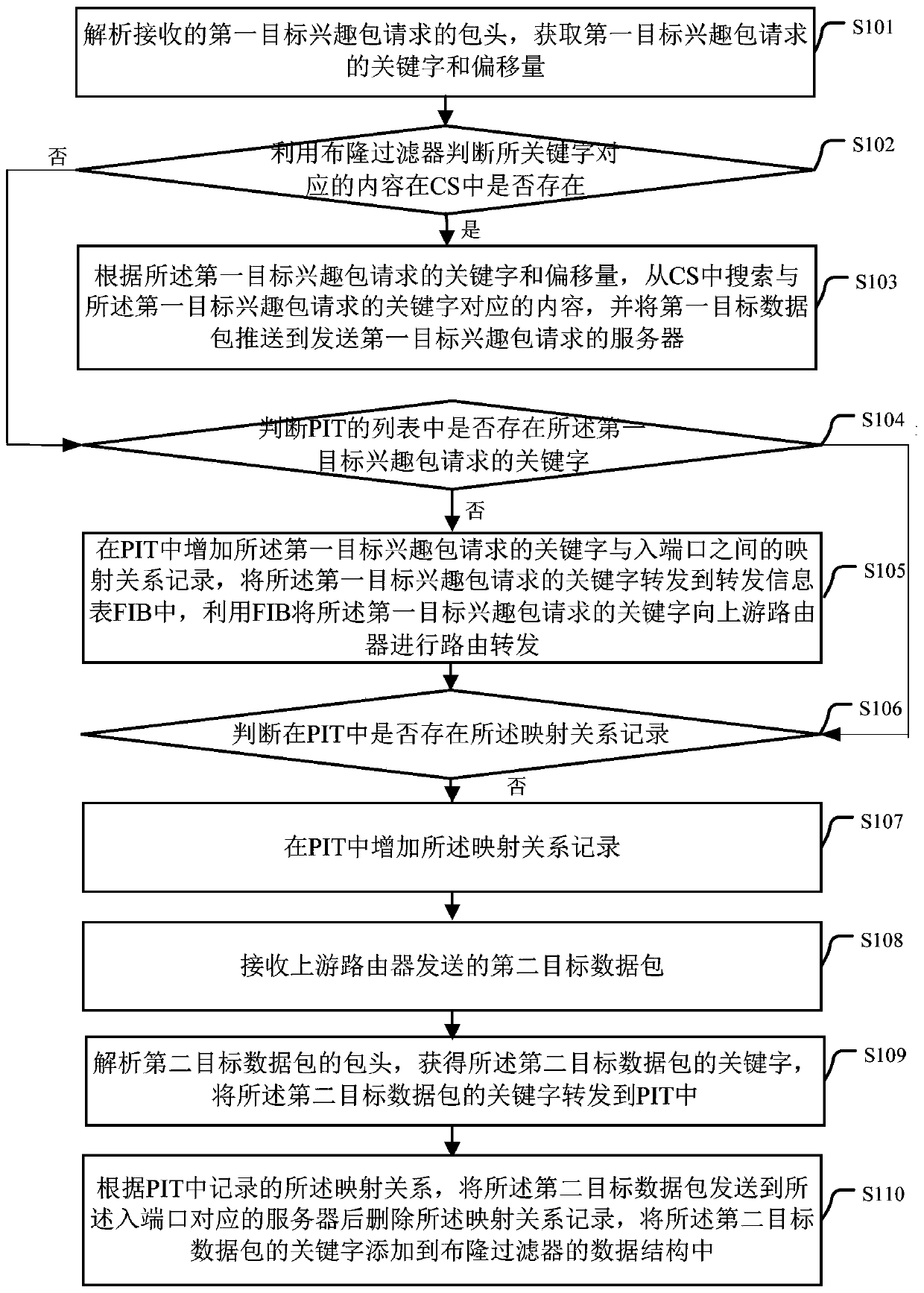

Non-blocking content caching method and device for content router

Active | CN107454142A | Improve performance; Take advantage of; Data switching networks; Network packet; Bloom filter

An embodiment of the invention provides a non-blocking content caching method and device for a content router. The method comprises: parsing the header of a received first target interest packet to obtain keywords and an offset; using a Bloom filter to determine whether content corresponding to the keywords exists in the CS (Content Store); if it exists, checking whether the I/O waiting queue of the CS is shorter than a preset threshold; if so, pushing a first target data packet to the server that sent the first target interest packet; if not, checking whether the keywords exist in the pending interest table (PIT); if they do not, sending the keywords to the FIB (forwarding information base), so that an upstream router forwards them using the FIB; receiving the returned second target data packet; sending it to the server corresponding to the port and then deleting the mapping record; and adding the keywords to the Bloom filter. The method solves the problem of frequent CS congestion.

Owner:BEIJING UNIV OF POSTS & TELECOMM
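The CS lookup path can be sketched with a small Bloom filter and a decision function. This is an illustrative Python sketch: the filter size and hash count, the return labels, and the PIT handling (aggregating a repeated interest and recording a new one before forwarding, as standard NDN forwarding does) are assumptions beyond the abstract.

```python
import hashlib


class BloomFilter:
    """Minimal Bloom filter over content names (one byte per bit, for clarity)."""

    def __init__(self, size_bits: int = 1024, num_hashes: int = 3) -> None:
        self.size = size_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(size_bits)

    def _positions(self, key: str):
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{key}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.size

    def add(self, key: str) -> None:
        for pos in self._positions(key):
            self.bits[pos] = 1

    def might_contain(self, key: str) -> bool:
        # False means definitely absent; True may be a false positive.
        return all(self.bits[pos] for pos in self._positions(key))


def handle_interest(name: str, cs_filter: BloomFilter, io_queue_len: int,
                    io_threshold: int, pit: set[str]) -> str:
    """Hypothetical decision flow for an incoming interest packet."""
    if cs_filter.might_contain(name) and io_queue_len < io_threshold:
        return "serve_from_cs"       # CS hit and the I/O waiting queue is short
    if name in pit:
        return "aggregate_in_pit"    # assumption: wait for the pending request
    pit.add(name)                    # assumption: record the pending interest
    return "forward_via_fib"         # fetch upstream instead of blocking on CS I/O
```

The key design point is that a busy CS is treated like a miss: rather than queue behind slow storage I/O, the router fetches the content upstream.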

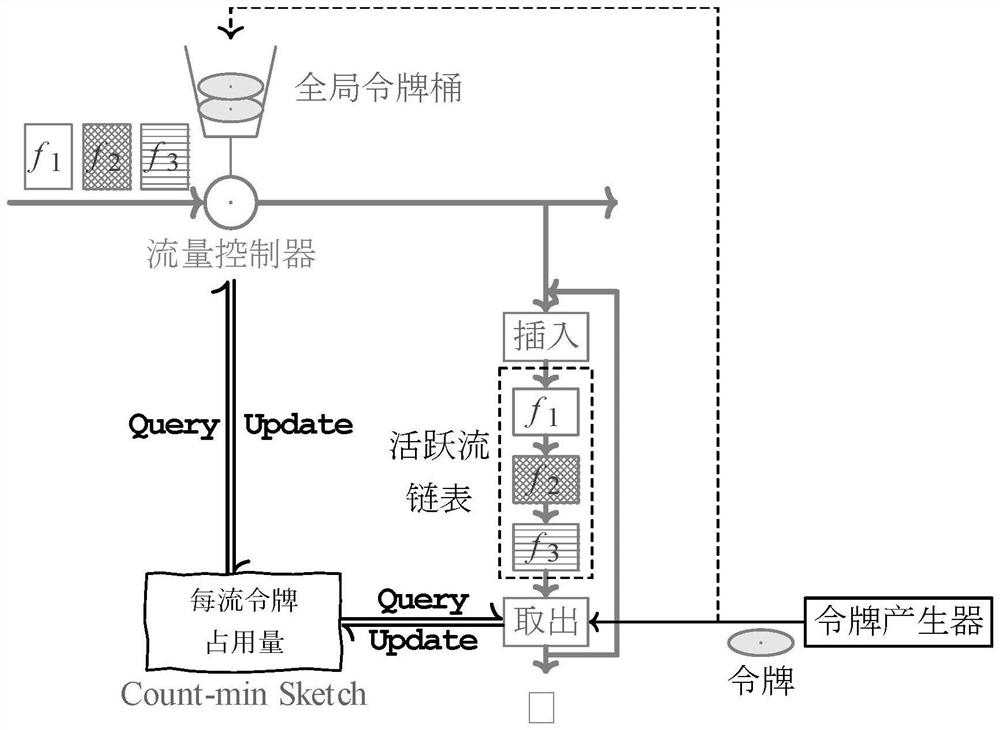

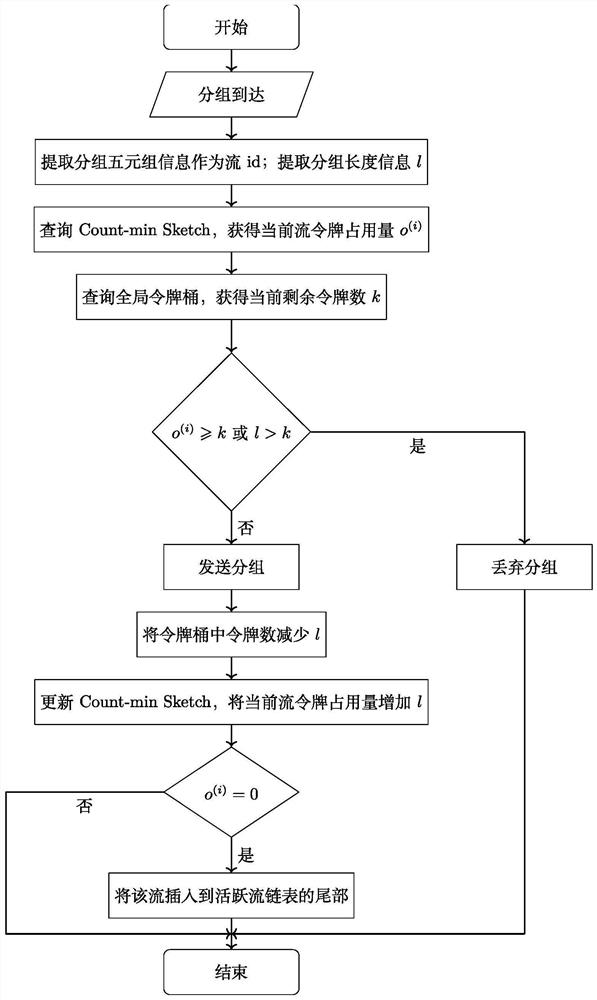

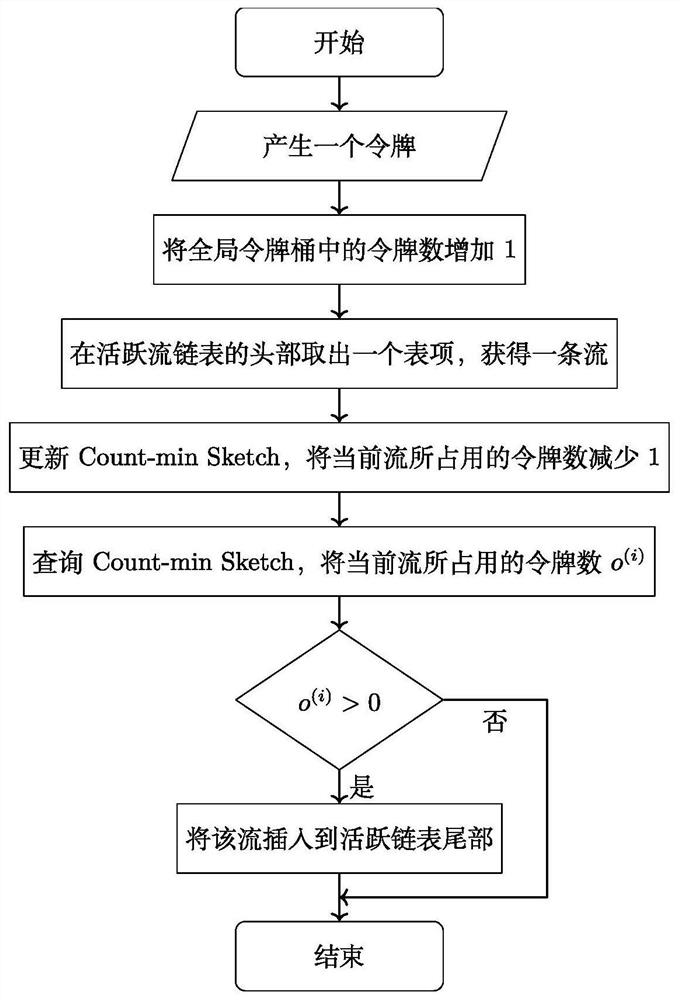

Fair network flow control method and device

Active | CN112787950A | Prevent full | Reduce transfer time | Data switching networks | Computer network | Internet traffic

The invention discloses a fair network flow control method and device. The method comprises the following steps: (1) when a packet of length 1 arrives, the flow controller decides whether to let it pass based on the token occupation amount of the flow to which the packet belongs and the number of tokens currently in the global token bucket; if the packet is allowed to pass, the token occupation amount of its flow is increased by 1 through a Count-min Sketch, and if that flow's token occupation amount was 0 before the packet arrived, the flow is inserted at the tail of the active flow linked list; and (2) tokens are generated at a preset rate; each time a token is generated, the number of tokens in the global token bucket is increased by 1, a flow is taken from the head of the active flow linked list, and its token occupation amount is decreased by 1 through the Count-min Sketch structure; if the flow's token occupation amount is still greater than 0, the flow is re-inserted at the tail of the active flow linked list. The method allocates tokens fairly to every active flow, so that all flows passing through the flow controller share bandwidth resources fairly.

Owner:XI AN JIAOTONG UNIV
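The two event handlers in steps (1) and (2) can be sketched as below, using a Count-Min Sketch with signed (+1/-1) updates for the per-flow token occupation amount and a `collections.deque` in place of the active flow linked list. The abstract does not give the exact admission rule, so several details are assumptions: a packet passes only if the global bucket holds a token and its flow occupies fewer than a hypothetical `max_occupancy` tokens, a passing packet removes one token from the global bucket, and the bucket is capped at `bucket_size`. The sketch dimensions are likewise illustrative.

```python
import hashlib
from collections import deque


class CountMinSketch:
    """Count-Min Sketch with signed updates; never undercounts while true counts stay >= 0."""

    def __init__(self, width=64, depth=4):
        self.width = width
        self.depth = depth
        self.table = [[0] * width for _ in range(depth)]

    def _index(self, key, row):
        digest = hashlib.blake2b(f"{row}:{key}".encode(), digest_size=8).digest()
        return int.from_bytes(digest, "big") % self.width

    def update(self, key, delta):
        for row in range(self.depth):
            self.table[row][self._index(key, row)] += delta

    def query(self, key):
        return min(self.table[row][self._index(key, row)] for row in range(self.depth))


class FairFlowController:
    def __init__(self, bucket_size, max_occupancy):
        self.bucket_size = bucket_size
        self.tokens = bucket_size            # tokens currently in the global bucket
        self.max_occupancy = max_occupancy   # hypothetical per-flow cap (assumption)
        self.sketch = CountMinSketch()       # per-flow token occupation amounts
        self.active = deque()                # active flow list: head = left, tail = right

    def on_packet(self, flow_id):
        """Step (1): a unit-length packet of flow_id arrives; return True if it passes."""
        occupied = self.sketch.query(flow_id)
        if self.tokens == 0 or occupied >= self.max_occupancy:
            return False
        self.tokens -= 1                     # passing consumes a global token (assumption)
        self.sketch.update(flow_id, +1)
        if occupied == 0:
            self.active.append(flow_id)      # flow just became active: insert at tail
        return True

    def on_token_generated(self):
        """Step (2): called at the preset token-generation rate."""
        if self.tokens < self.bucket_size:   # cap the bucket at its capacity (assumption)
            self.tokens += 1
        if self.active:
            flow_id = self.active.popleft()  # take the flow at the head
            self.sketch.update(flow_id, -1)
            if self.sketch.query(flow_id) > 0:
                self.active.append(flow_id)  # still holds tokens: back to the tail
```

Releasing one token per generation event in round-robin order over the active list is what spreads tokens evenly across flows. Because Count-Min estimates can only overcount, a hash collision can make an idle flow look occupied; the sketch width and depth trade memory against that error.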

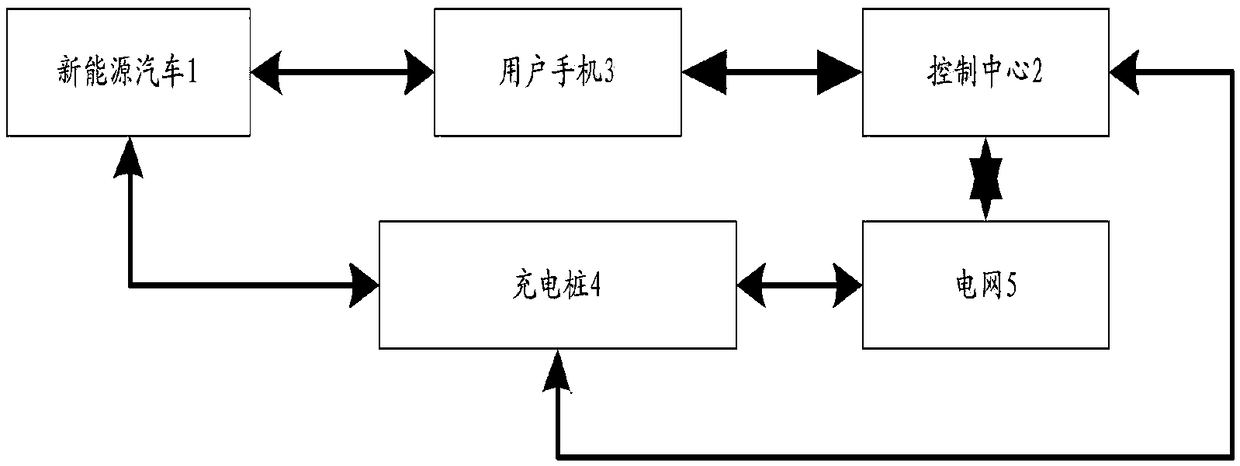

New energy automobile charging control system

Inactive | CN108790879A | Reduce queuing delay | Short time spent | Substation equipment | Vehicular energy storage | Time delays | Charge and discharge

The invention discloses a new energy automobile charging control system comprising new energy automobiles 1, a control center 2, user phones 3, charging piles 4 and a power grid 5. Vehicles that need charging are allocated precisely to charging piles in time order, so charging is standardized and controllable and charge and discharge control is carried out at full efficiency. Throughout the dispatching process, vehicles do not need to queue and wait, which reduces queuing delay and shortens the time required for charging and discharging. Because voice or video call data packets serve as channel carriers, a channel can be established between a vehicle user and the control center at any time; hidden addresses are embedded in the payload of these data packets, which greatly increases channel capacity and makes interference between channels unlikely even when many vehicles are present.

Owner:宁波市鄞州智伴信息科技有限公司 (Ningbo Yinzhou Zhiban Information Technology Co., Ltd.)
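The abstract does not state how vehicles are allocated to charging piles. One plausible reading of "allocated in time order" is a greedy scheduler that assigns each vehicle, in arrival order, to the pile that becomes free first. The sketch below assumes that rule and known charging durations; `schedule_charging` and its parameters are hypothetical.

```python
import heapq


def schedule_charging(arrivals, charge_durations, num_piles):
    """Assign vehicles (arrivals sorted ascending) to the earliest-free pile.

    Returns a list of (vehicle, pile_id, start_time, wait_time) tuples.
    """
    piles = [(0.0, pile_id) for pile_id in range(num_piles)]  # (free_at, pile_id)
    heapq.heapify(piles)
    plan = []
    for vehicle, (arrive, duration) in enumerate(zip(arrivals, charge_durations)):
        free_at, pile_id = heapq.heappop(piles)  # pile that frees up soonest
        start = max(arrive, free_at)
        plan.append((vehicle, pile_id, start, start - arrive))
        heapq.heappush(piles, (start + duration, pile_id))
    return plan
```

If the control center sent each vehicle its computed start time, the vehicle could arrive when its pile is free instead of queuing on site; that use of the schedule is likewise an assumption about how the system avoids physical queues.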

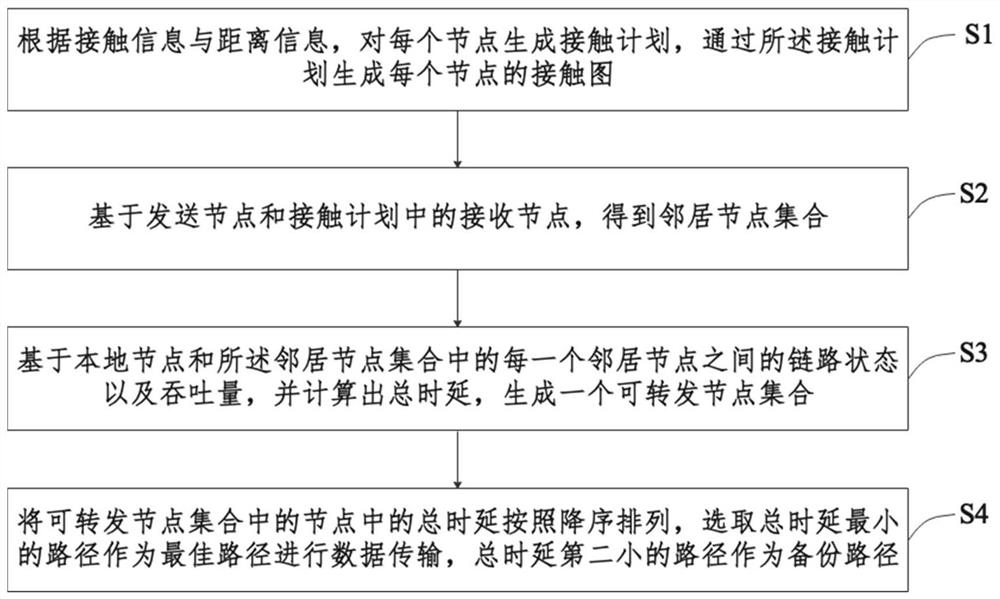

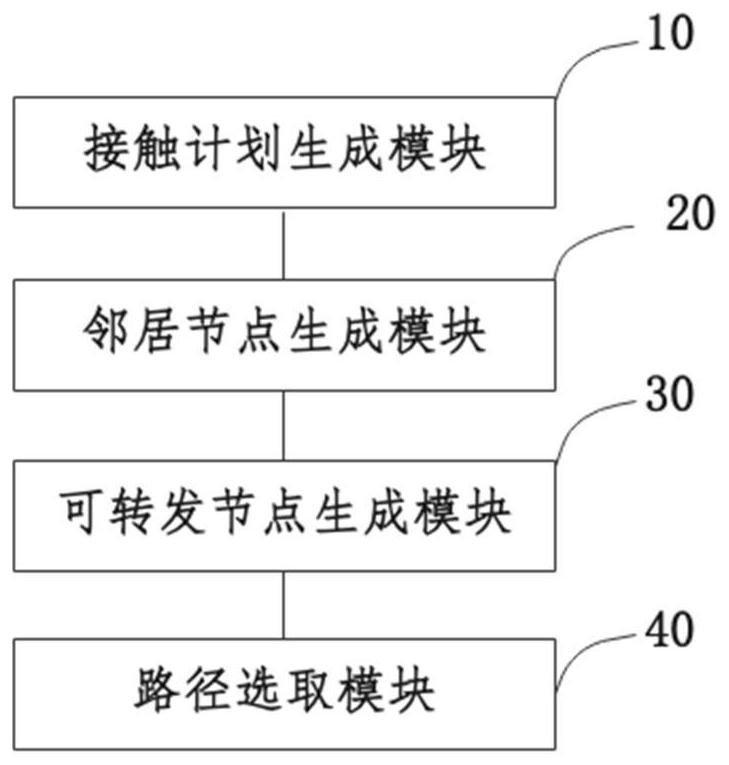

Method and system for selecting DTN network routing path of low-orbit satellite

Active | CN112261681A | Reduce queuing delay | Queuing delay will not cause | Radio transmission | Wireless communication | PathPing | Backup path

The embodiment of the invention provides a low earth orbit satellite DTN routing path selection method and system. The method comprises: generating a contact plan for each node from contact information and distance information, and generating each node's contact graph from its contact plan; obtaining a neighbor node set based on the sending node and receiving node in the contact plan; calculating the total delay based on the link state and throughput between the local node and each neighbor node in the neighbor node set, and generating a forwardable node set; and sorting the nodes in the forwardable node set by total delay in descending order, selecting the path with the minimum total delay as the optimal path for data transmission and the path with the second-smallest total delay as the backup path. Because the scheme fully accounts for link state and queuing delay, it does not cause node or network congestion and can effectively reduce the impact of queuing delay on overall network performance while guaranteeing traffic volume.

Owner:BEIHANG UNIV
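The abstract does not give the total-delay formula. A minimal sketch of the selection step, assuming that the total delay to each neighbor is propagation delay (distance divided by the speed of light) plus transmission delay (bundle size divided by throughput) plus queuing delay (existing backlog divided by throughput). `NeighborLink`, `total_delay` and `select_paths` are hypothetical names. The sketch sorts in ascending order, which yields the same minimum and second-minimum as the descending sort described in the abstract.

```python
from dataclasses import dataclass

LIGHT_SPEED_KM_S = 299_792.458


@dataclass
class NeighborLink:
    node: str
    distance_km: float
    throughput_bps: float
    queued_bits: float  # backlog already waiting on this link (assumed known)


def total_delay(link, bundle_bits):
    """Assumed model: propagation + transmission + queuing delay, in seconds."""
    propagation = link.distance_km / LIGHT_SPEED_KM_S
    transmission = bundle_bits / link.throughput_bps
    queuing = link.queued_bits / link.throughput_bps
    return propagation + transmission + queuing


def select_paths(neighbors, bundle_bits):
    """Return (primary, backup) next hops with the smallest total delays."""
    ranked = sorted(neighbors, key=lambda link: total_delay(link, bundle_bits))
    primary = ranked[0].node if ranked else None
    backup = ranked[1].node if len(ranked) > 1 else None
    return primary, backup
```

Including the queued backlog in the cost is what steers traffic away from congested neighbors; without it, a short but heavily loaded link would keep attracting bundles.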

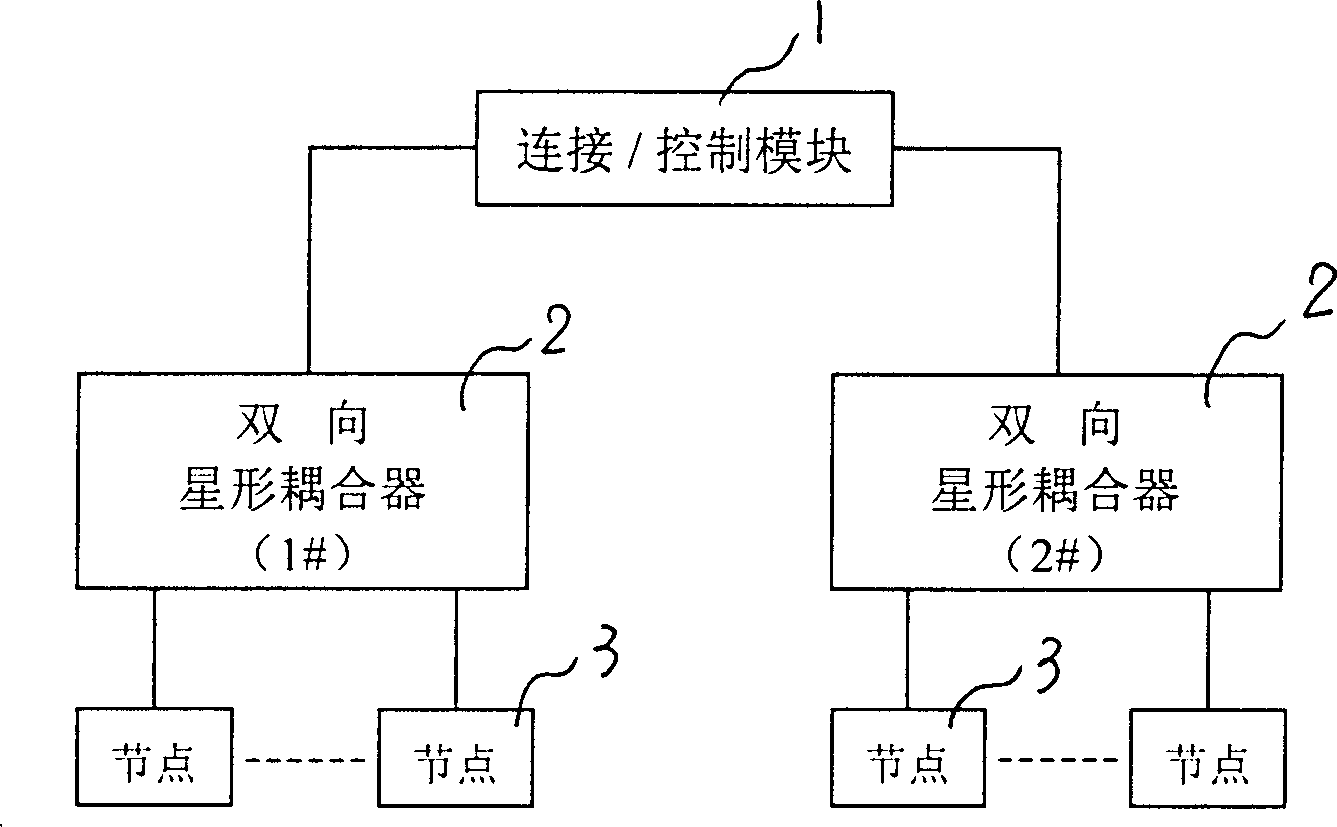

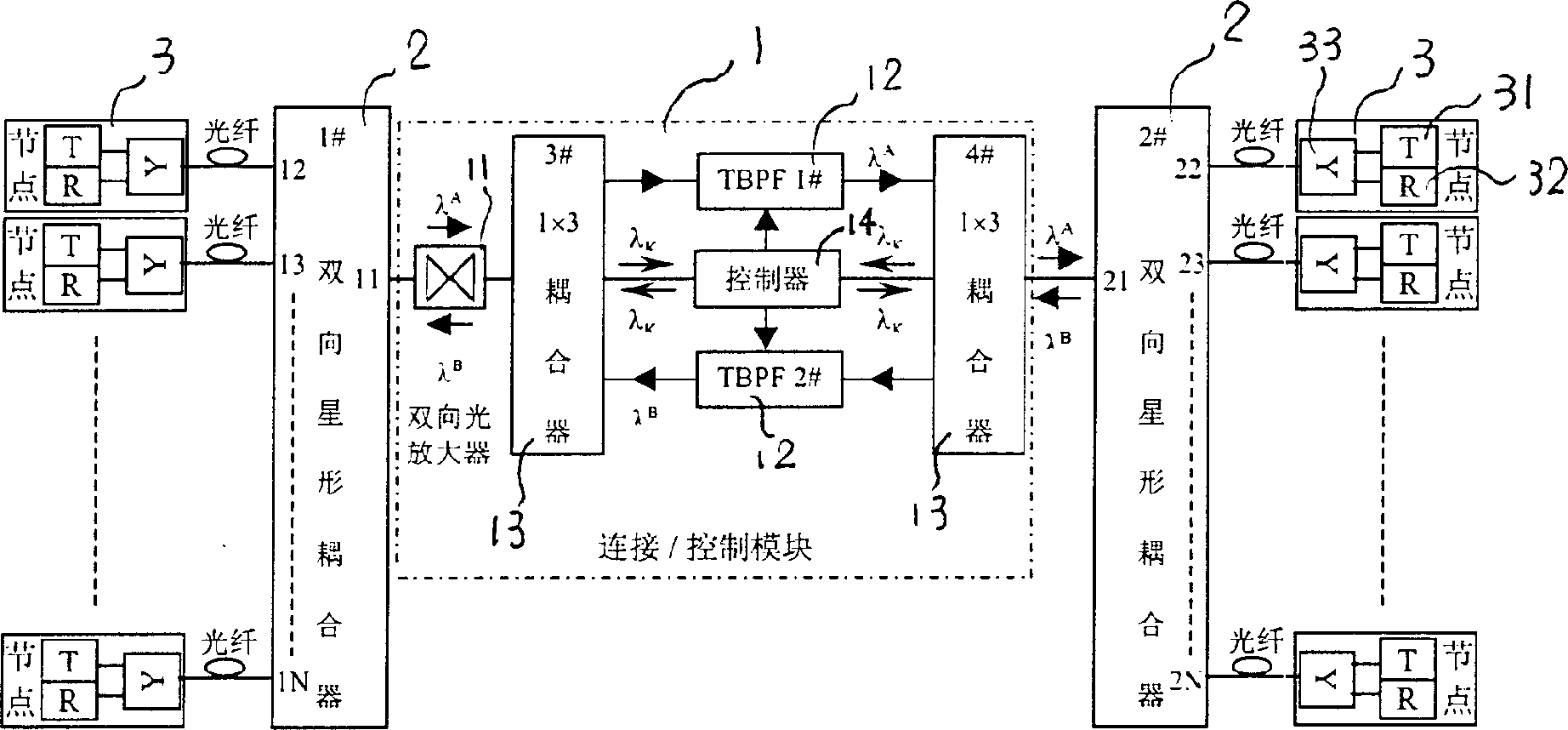

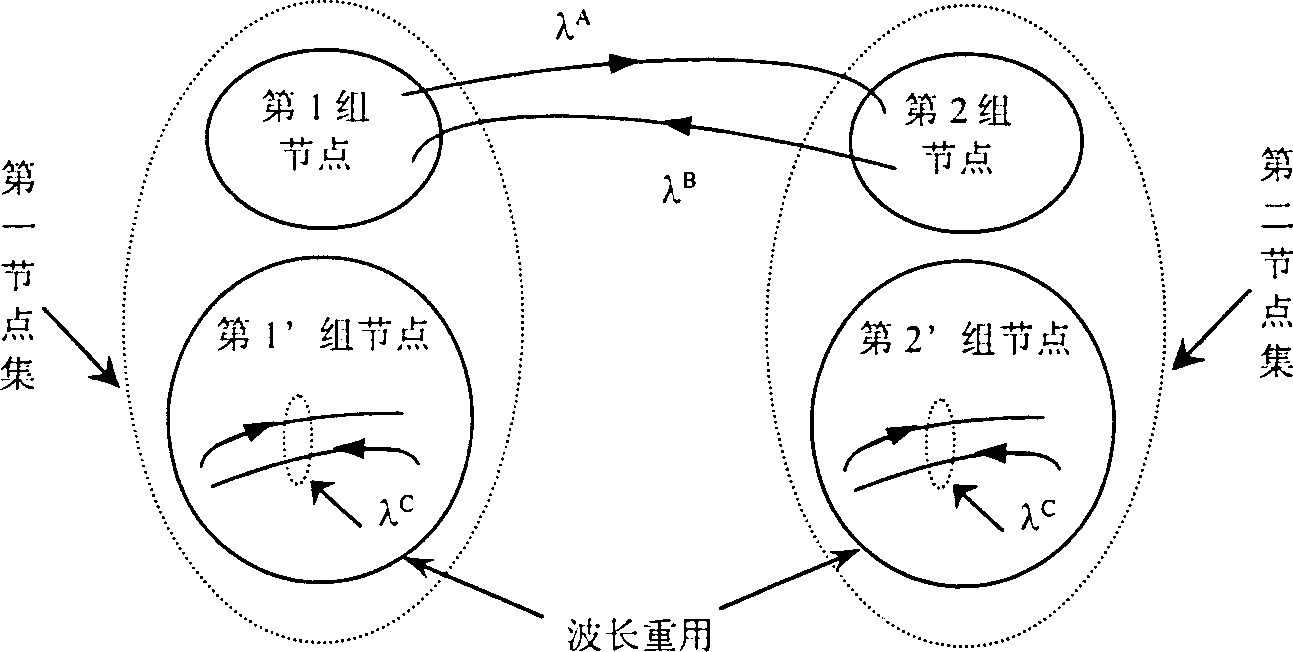

Single-fiber bidirectional transmission multi-wavelength optical network system capable of partial wavelength reuse

Inactive | CN1427570A | Improve throughput | Reduce queuing delay | Wavelength-division multiplex systems | Fibre transmission | Multi wavelength | Network performance

A multi-wavelength optical network that uses single-fibre bidirectional transmission to reuse part of its wavelengths consists of a link/control module, two bidirectional star couplers and 2(N-1) nodes. Each bidirectional star coupler is connected to the link/control module through one port and an optical fibre, and can connect to (N-1) nodes through one optical fibre per node. Its advantages are that the number of nodes is doubled, network throughput is high, time delay is short, and the number of optical fibres required is halved.

Owner:SHANGHAI BELL

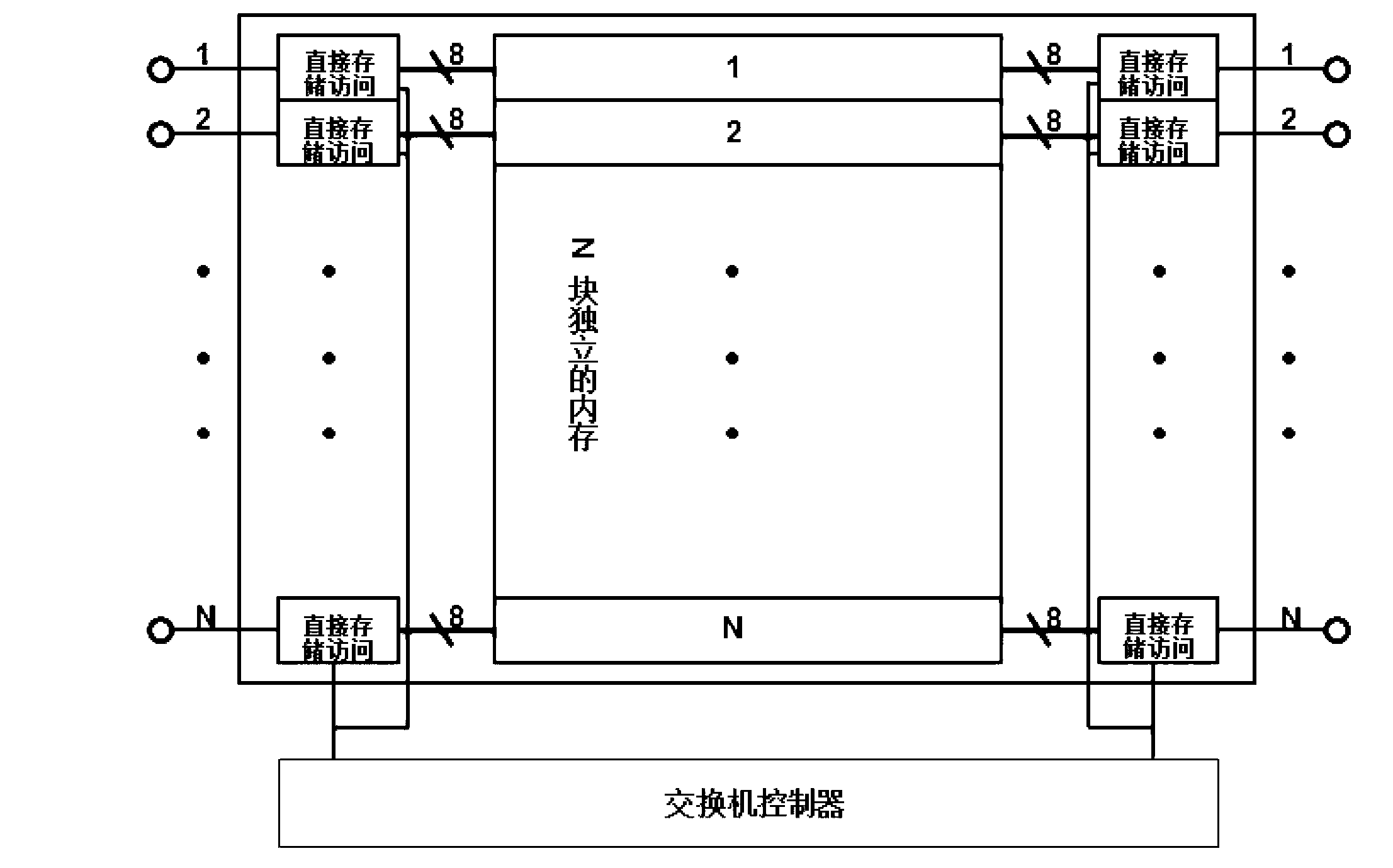

AFDX (Avionics Full-Duplex Switched Ethernet) network switch with time-space separation characteristic

Active | CN103067309A | Queue size reduction | Reduce queuing delay | Data switching networks | Avionics Full-Duplex Switched Ethernet | Time delays

The invention discloses an AFDX (Avionics Full-Duplex Switched Ethernet) network switch with a time-space separation characteristic. The switch comprises N input ports, N output ports, 2N DMA (direct memory access) units, a switch controller and N memories: each input port is connected to one memory through one DMA, that memory is in turn connected to an output port through another DMA, and all 2N DMAs are connected to the switch controller. The switch is easy to implement in software and hardware, and while maintaining 100% throughput it greatly reduces the delay of a data packet passing through the switch, the queuing delay of the packet in the switch's buffer, and the size of the buffer queue.

Owner:SHANGHAI JIAO TONG UNIV

Caching method and device for content router

Active | CN110062045A | Improve performance | Solve the problem of frequent congestion | Data switching networks | Bloom filter | Core router

The embodiment of the invention provides a caching method and device for a content router. The method comprises the following steps: parsing the packet header of a received first target interest packet request to obtain the keywords and offsets, and determining whether the I/O waiting queue in the CS is shorter than a set threshold; if so, pushing the first target data packet to the server that sent the first target interest packet request; if not, determining whether the keyword exists in the pending interest table (PIT), and, if it is absent from the PIT, sending the keyword to the FIB so that an upstream router routes and forwards it using the FIB; receiving the second target data packet sent back, sending it to the server corresponding to the port according to the PIT, deleting the mapping record, and adding the keyword to the data structure of the Bloom filter. The method and device solve the problem of frequent CS congestion.

Owner:BEIJING UNIV OF POSTS & TELECOMM
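The PIT bookkeeping in this procedure can be sketched as follows: record the requesting port, forward only the first pending request for a keyword through the FIB (the usual PIT aggregation behavior, assumed here because the abstract only covers the "absent from the PIT" case), then deliver the returned data packet to every recorded port, delete the record, and mark the keyword as cached. `PendingInterestTable`, `on_data_packet`, and the plain `set` standing in for the Bloom filter are illustrative assumptions, not the patent's data structures.

```python
from collections import defaultdict


class PendingInterestTable:
    """Maps a content keyword to the set of downstream ports waiting for it."""

    def __init__(self):
        self.entries = defaultdict(set)

    def on_interest(self, key, in_port):
        """Record the requesting port; return True if the interest must go to the FIB."""
        needs_forwarding = key not in self.entries  # no pending request for this key yet
        self.entries[key].add(in_port)
        return needs_forwarding

    def take_ports(self, key):
        """Return the waiting ports (sorted) and delete the mapping record."""
        return sorted(self.entries.pop(key, set()))


def on_data_packet(pit, key, cached_keys):
    """Deliver returned data to waiting ports, then mark the keyword as cached."""
    ports = pit.take_ports(key)
    cached_keys.add(key)  # stand-in for adding the keyword to the Bloom filter
    return ports
```

Aggregating repeated interests in the PIT means only one upstream request is outstanding per keyword, which keeps duplicate fetches from adding to the CS I/O queue.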

© 2025 PatSnap. All rights reserved.