34 results for patented technology on how to "Reduce context switching"

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

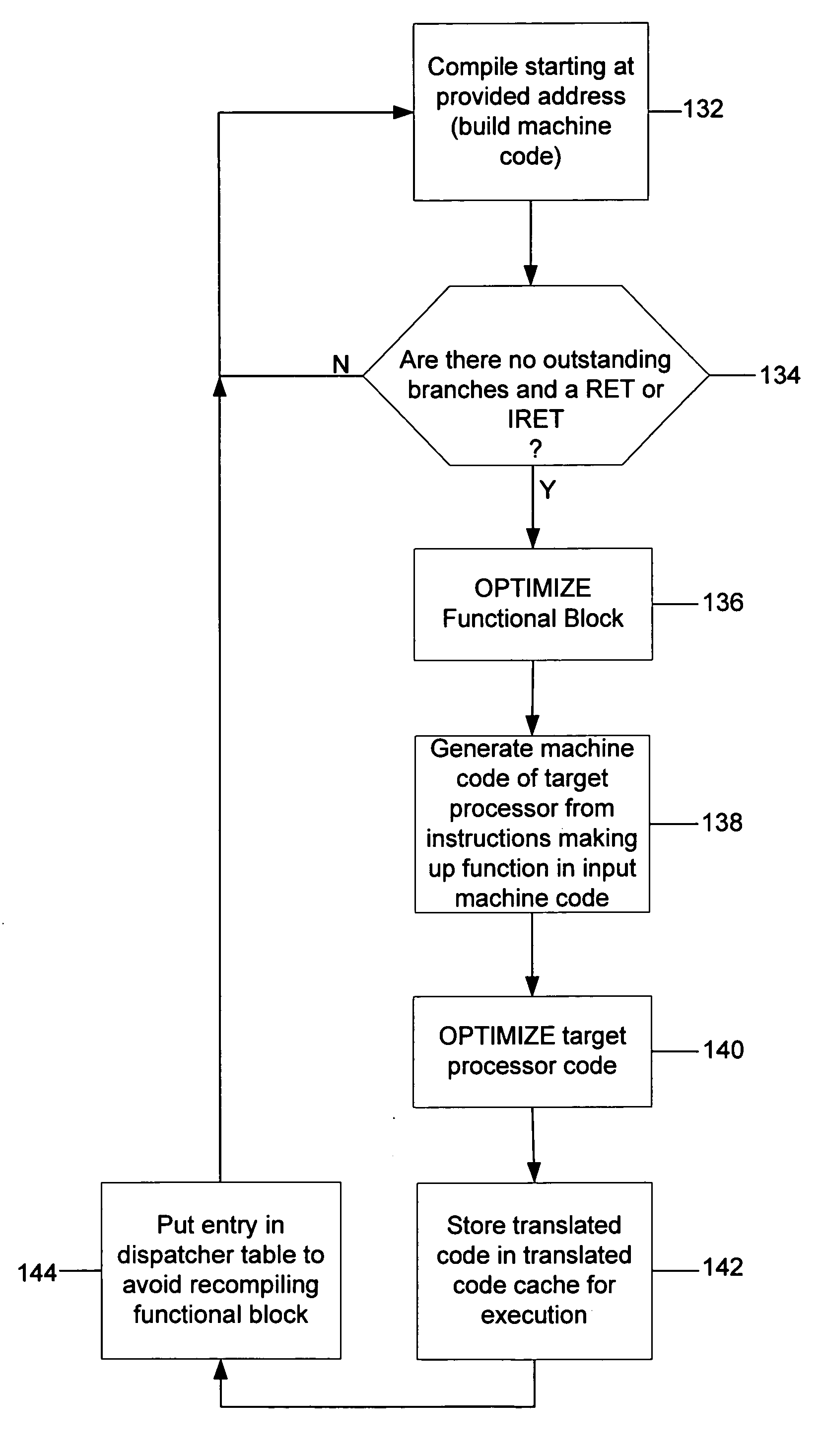

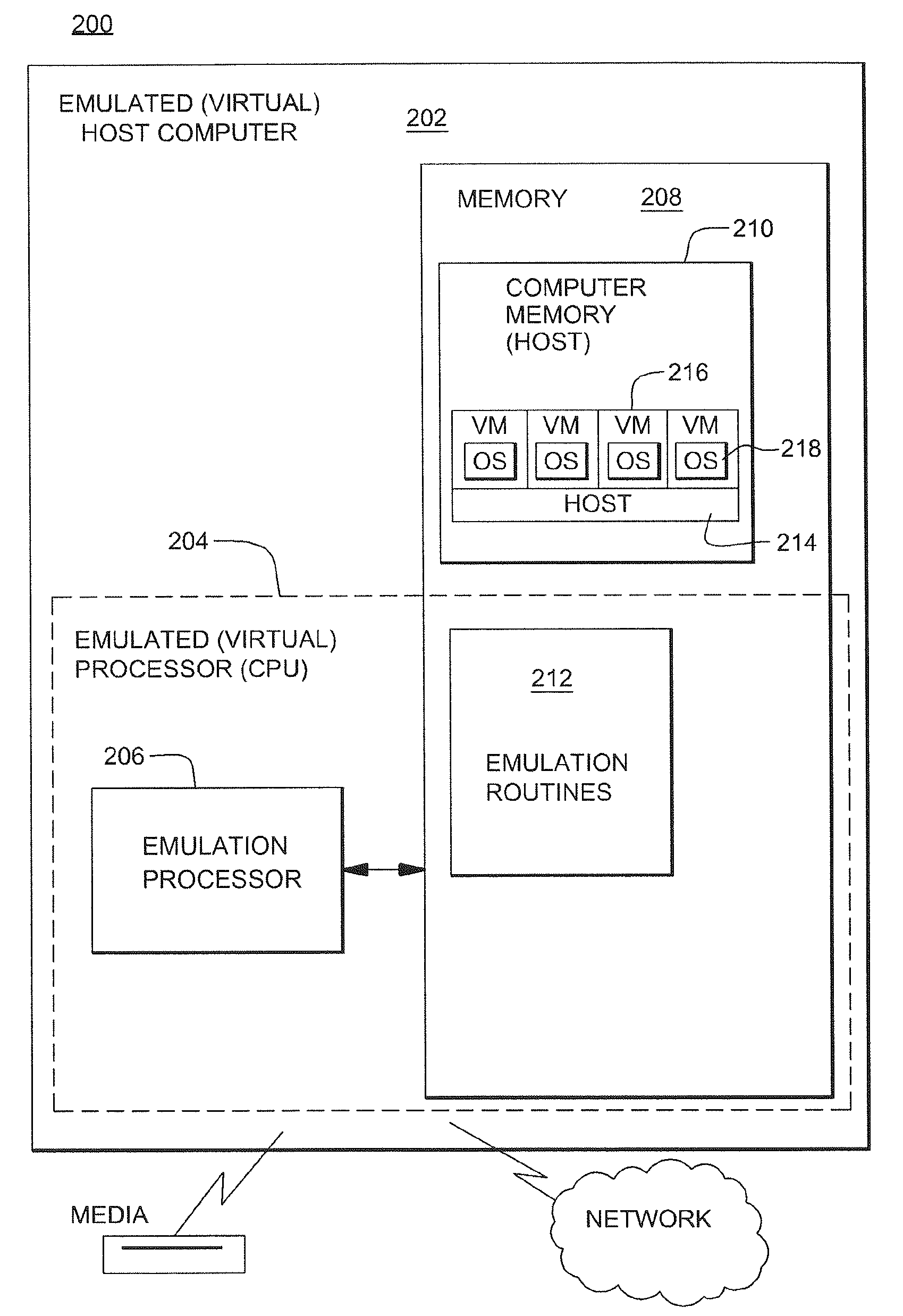

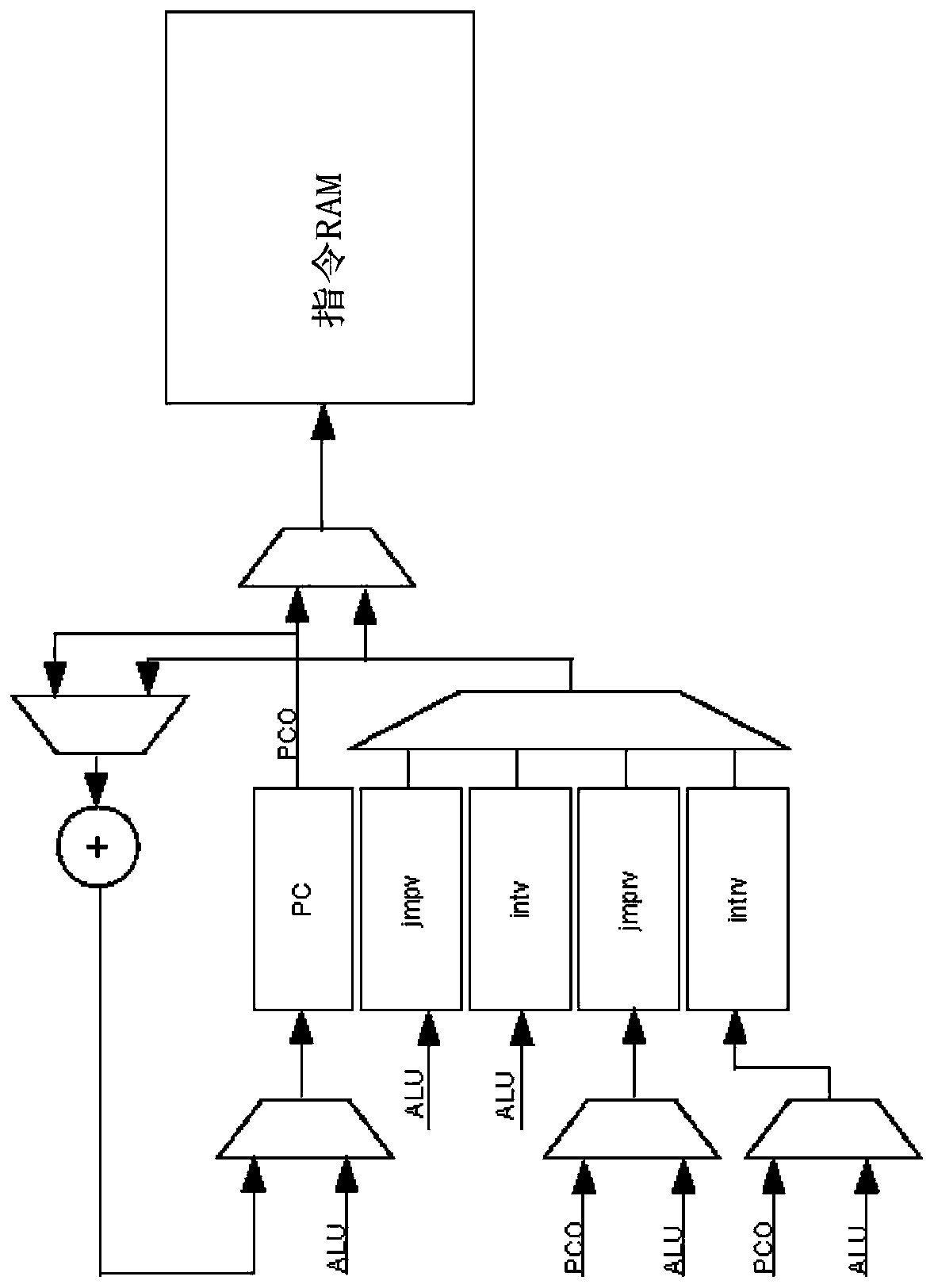

Function-level just-in-time translation engine with multiple pass optimization

Inactive | US20070006178A1 | Improve translation; Reduce context switching; Binary to binary; Program control; Code Translation; Software emulation

A JIT binary translator translates code at the function level of the source code rather than at the opcode level. Instead of fetching a single instruction, the JIT binary translator of the invention grabs an entire x86 function from the source stream, translates the whole function into an equivalent function for the target processor, and executes that function all at once before returning to the source stream, thereby reducing context switching. Also, because the JIT binary translator sees the context of the entire source function at once, the software emulator can optimize the translation. For example, the JIT binary translator might translate a sequence of x86 instructions into an efficient equivalent PowerPC (PPC) sequence. Many such optimizations result in a tighter emulated binary.

Owner:MICROSOFT TECH LICENSING LLC
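
The function-level idea can be illustrated with a toy emulator. This is a minimal sketch, not the patented translator: it assumes a made-up three-opcode source ISA (`mov`, `add`, `ret`) and uses Python closures in place of target-processor code; `peephole`, `translate_function`, and `FunctionJIT` are hypothetical names. Because the translator sees the whole function, it can merge back-to-back `add`s, and the emulator loop hands control to translated code once per call instead of once per instruction.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical toy source ISA: ("mov", reg, imm), ("add", reg, imm), ("ret",)
Instr = Tuple
Regs = Dict[str, int]


def peephole(func: List[Instr]) -> List[Instr]:
    """Merge back-to-back adds to the same register; needs the whole function in view."""
    out: List[Instr] = []
    for ins in func:
        prev = out[-1] if out else None
        if ins[0] == "add" and prev is not None and prev[0] == "add" and prev[1] == ins[1]:
            out[-1] = ("add", ins[1], prev[2] + ins[2])
        else:
            out.append(ins)
    return out


def translate_function(func: List[Instr]) -> Callable[[Regs], None]:
    """Translate one entire source function into a single host-side callable."""
    ops: List[Callable[[Regs], None]] = []
    for ins in peephole(func):
        if ins[0] == "mov":
            ops.append(lambda r, d=ins[1], v=ins[2]: r.__setitem__(d, v))
        elif ins[0] == "add":
            ops.append(lambda r, d=ins[1], v=ins[2]: r.__setitem__(d, r[d] + v))
        elif ins[0] == "ret":
            break
        else:
            raise ValueError(f"unsupported opcode {ins[0]!r}")

    def translated(regs: Regs) -> None:
        # The whole function runs here before control returns to the emulator loop.
        for op in ops:
            op(regs)

    translated.op_count = len(ops)  # type: ignore[attr-defined]
    return translated


class FunctionJIT:
    def __init__(self, program: Dict[int, List[Instr]]):
        self.program = program                          # function address -> source code
        self.cache: Dict[int, Callable[[Regs], None]] = {}
        self.dispatches = 0                             # emulator-to-translated-code transitions

    def call(self, addr: int, regs: Regs) -> None:
        if addr not in self.cache:                      # translate once, reuse afterwards
            self.cache[addr] = translate_function(self.program[addr])
        self.dispatches += 1
        self.cache[addr](regs)
```

For the function `mov eax, 1; add eax, 2; add eax, 3; ret`, the two adds collapse into one, so the translated function performs two operations and `dispatches` grows by one per call.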

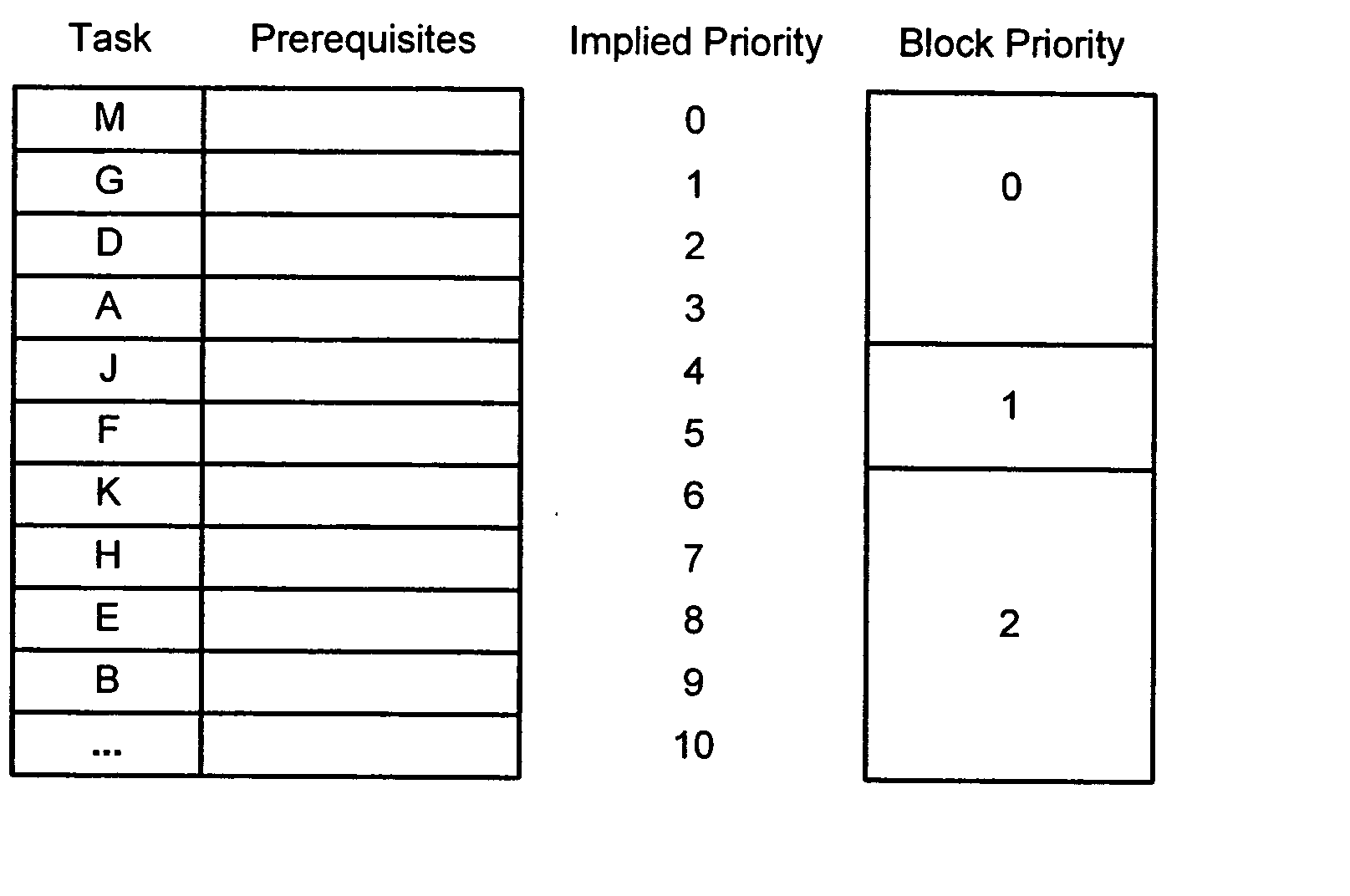

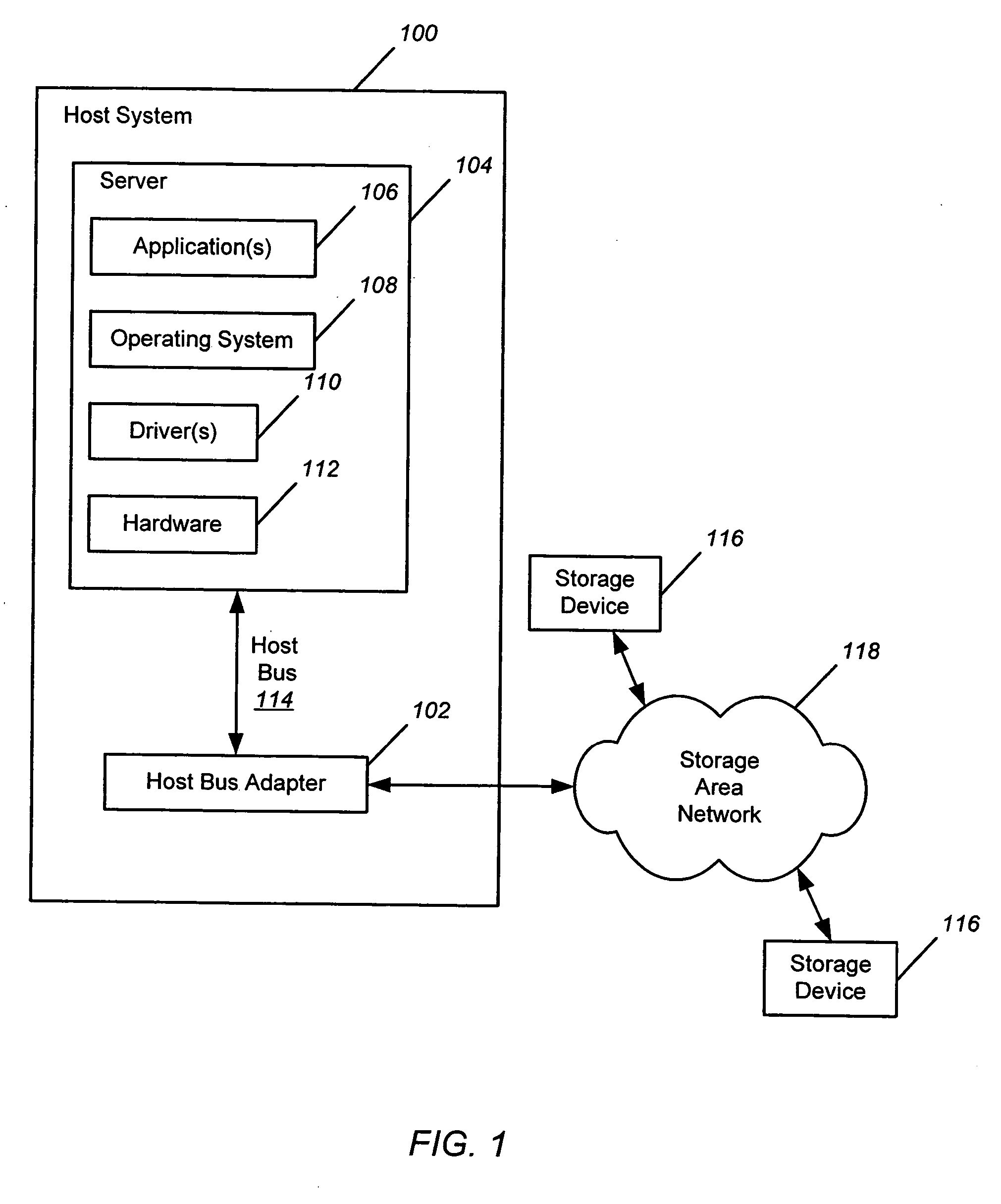

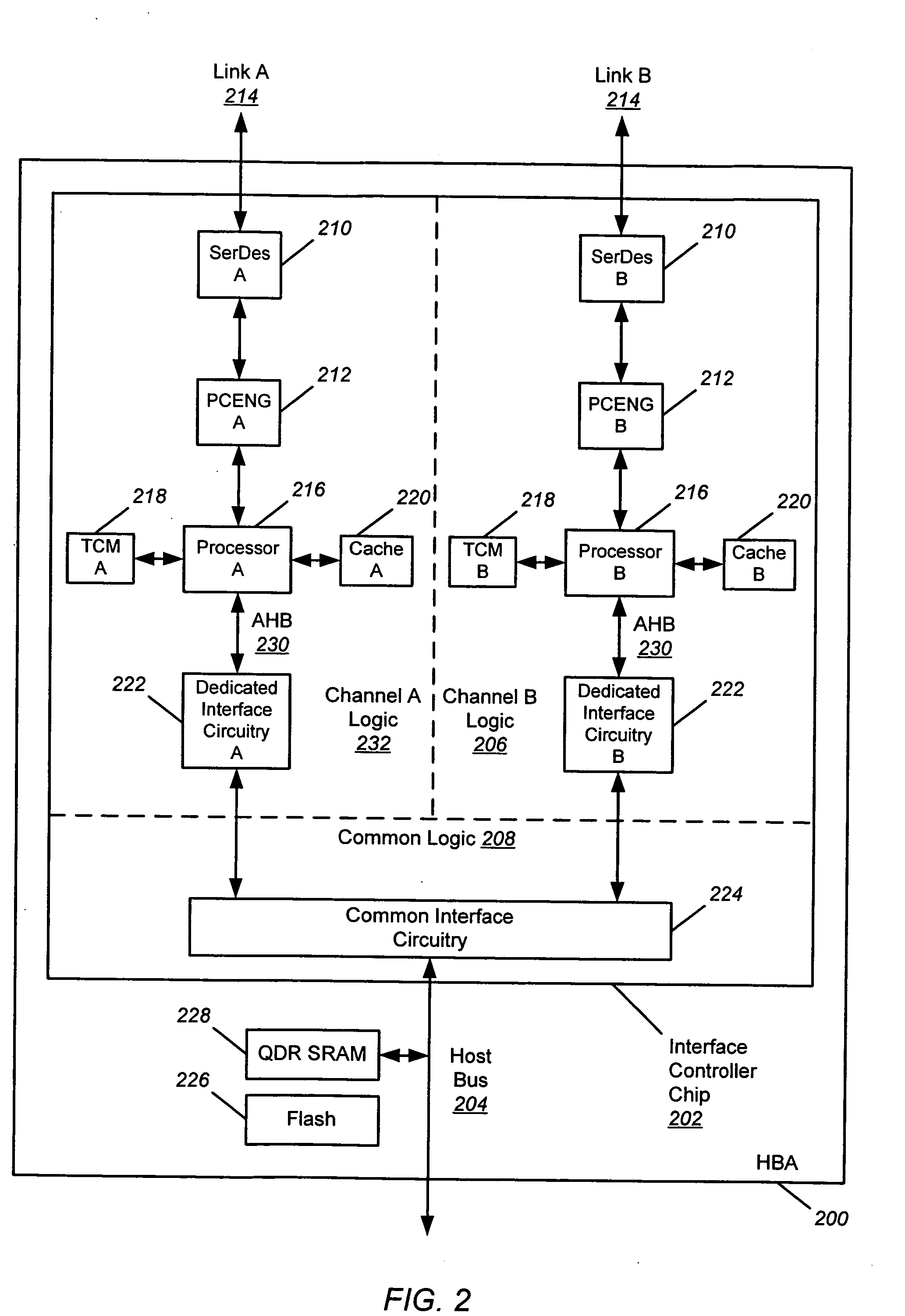

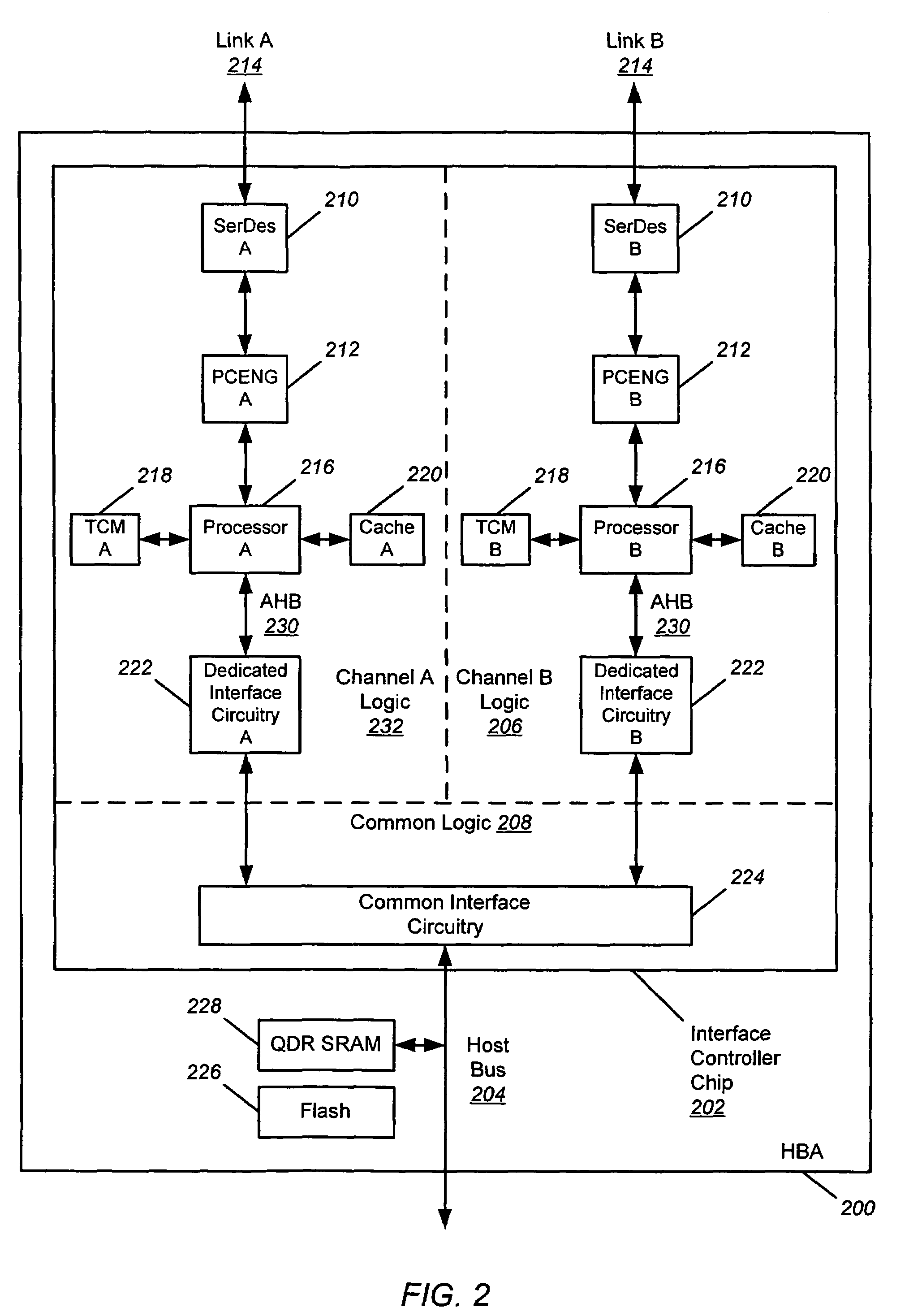

Prerequisite-based scheduler

Inactive | US20050240924A1 | Easy to operate; Reduce context switching; Program initiation/switching; Digital computer details; Operating system; Precondition

A prerequisite-based scheduler is disclosed that takes into account the system resources a task needs in order to execute. Tasks are scheduled only when they can run to completion, so a task, once dispatched, is guaranteed not to become blocked. In a prerequisite table, each task occupies a row and each resource the tasks may need occupies a column. The bottom row holds the system state, which represents the current state of all resources in the system. If a Boolean AND of a task's prerequisite row and the system state equals the prerequisite row, the task is dispatchable. In one embodiment of the present invention, the prerequisite-based scheduler (dispatcher) walks through the prerequisite table from top to bottom until it finds a task whose prerequisites are satisfied by the system state, and dispatches that task.

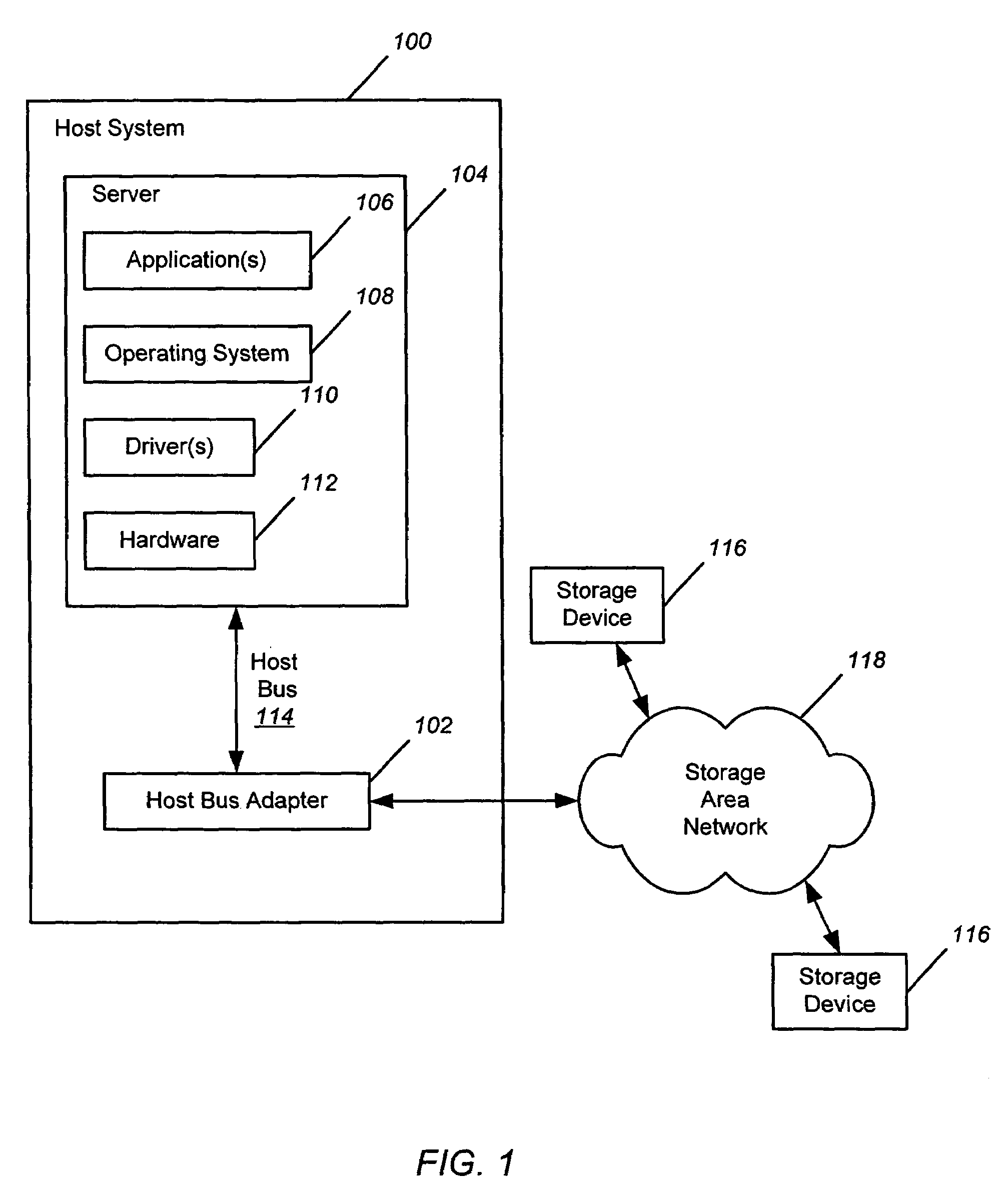

Owner:AVAGO TECH INT SALES PTE LTD
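
The dispatchability test reduces to a bitmask comparison. A minimal sketch, assuming each row of the prerequisite table is packed into an integer with one bit per resource; the resource names below are hypothetical.

```python
from typing import Optional, Sequence

# Hypothetical resources; each bit is one column of the prerequisite table.
DMA_CHANNEL = 1 << 0
TX_BUFFER = 1 << 1
RX_BUFFER = 1 << 2
LINK_UP = 1 << 3


def is_dispatchable(prereq_row: int, system_state: int) -> bool:
    # (prerequisites AND state) must reproduce the prerequisite row exactly
    return (prereq_row & system_state) == prereq_row


def pick_task(table: Sequence[int], system_state: int) -> Optional[int]:
    """Walk the table top to bottom; return the index of the first dispatchable task."""
    for task_id, prereq_row in enumerate(table):
        if is_dispatchable(prereq_row, system_state):
            return task_id
    return None  # nothing can run to completion right now
```

With `DMA_CHANNEL`, `RX_BUFFER`, and `LINK_UP` available but no `TX_BUFFER`, a first row that needs `TX_BUFFER` fails the test, and the walk continues to the next row.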

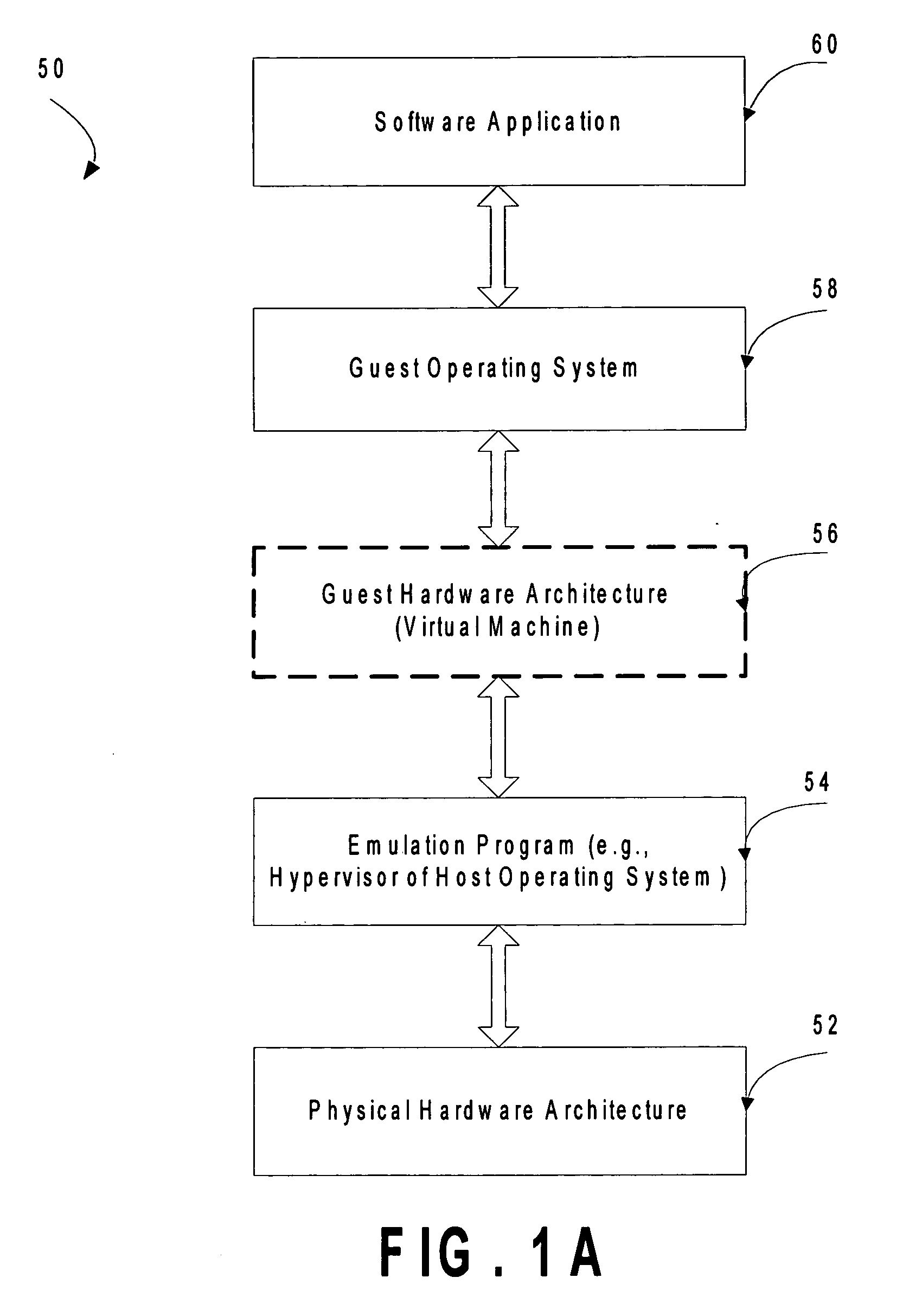

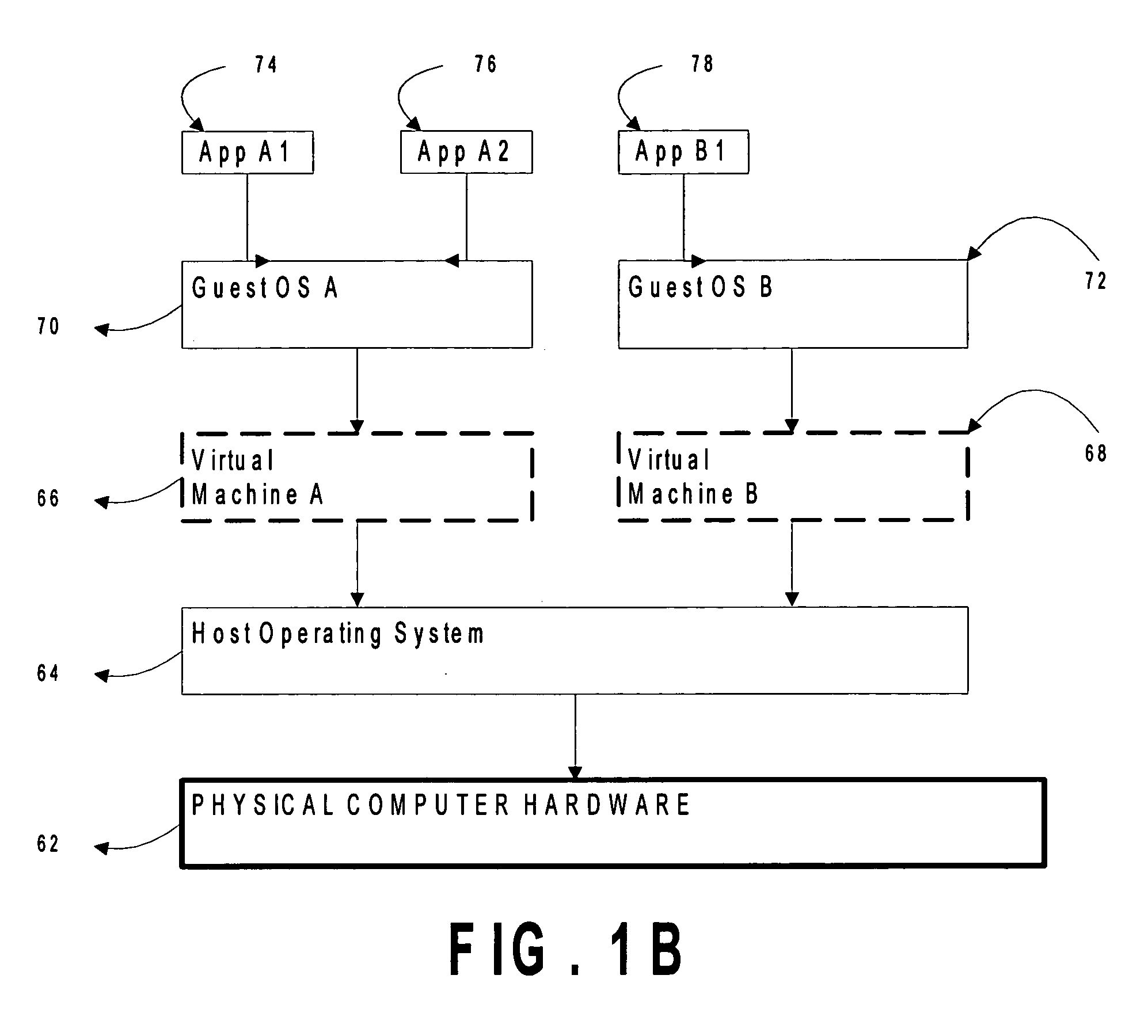

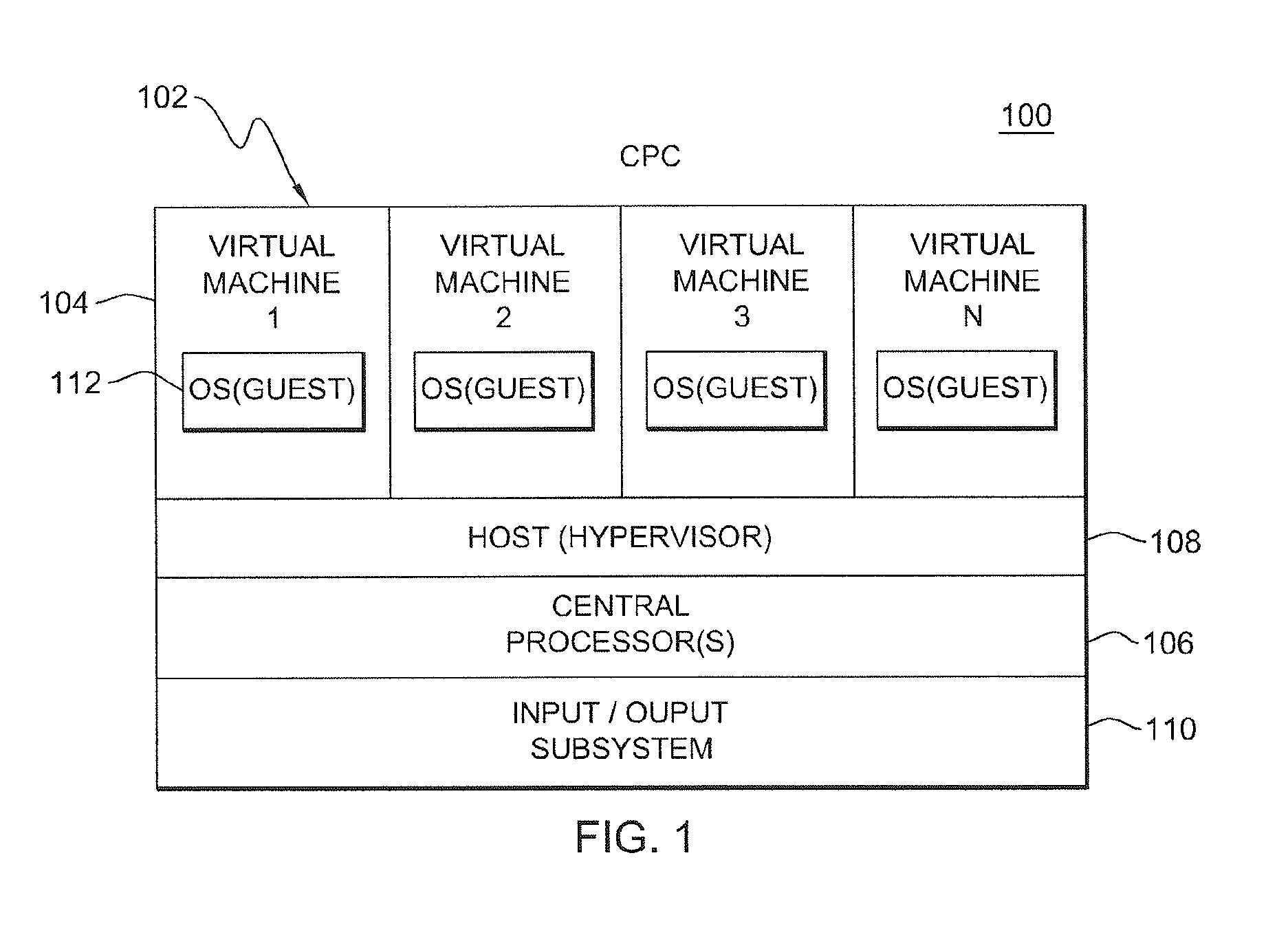

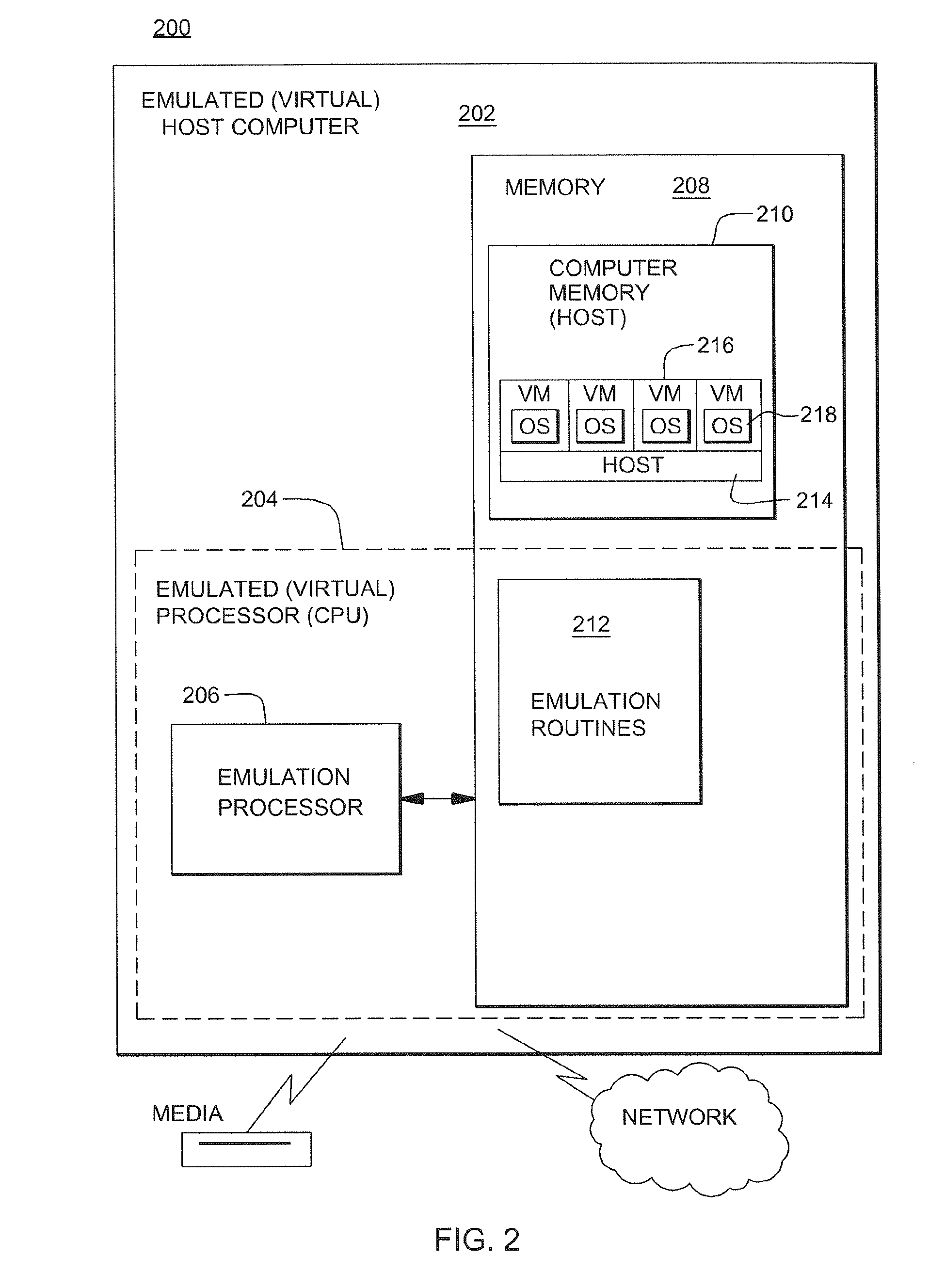

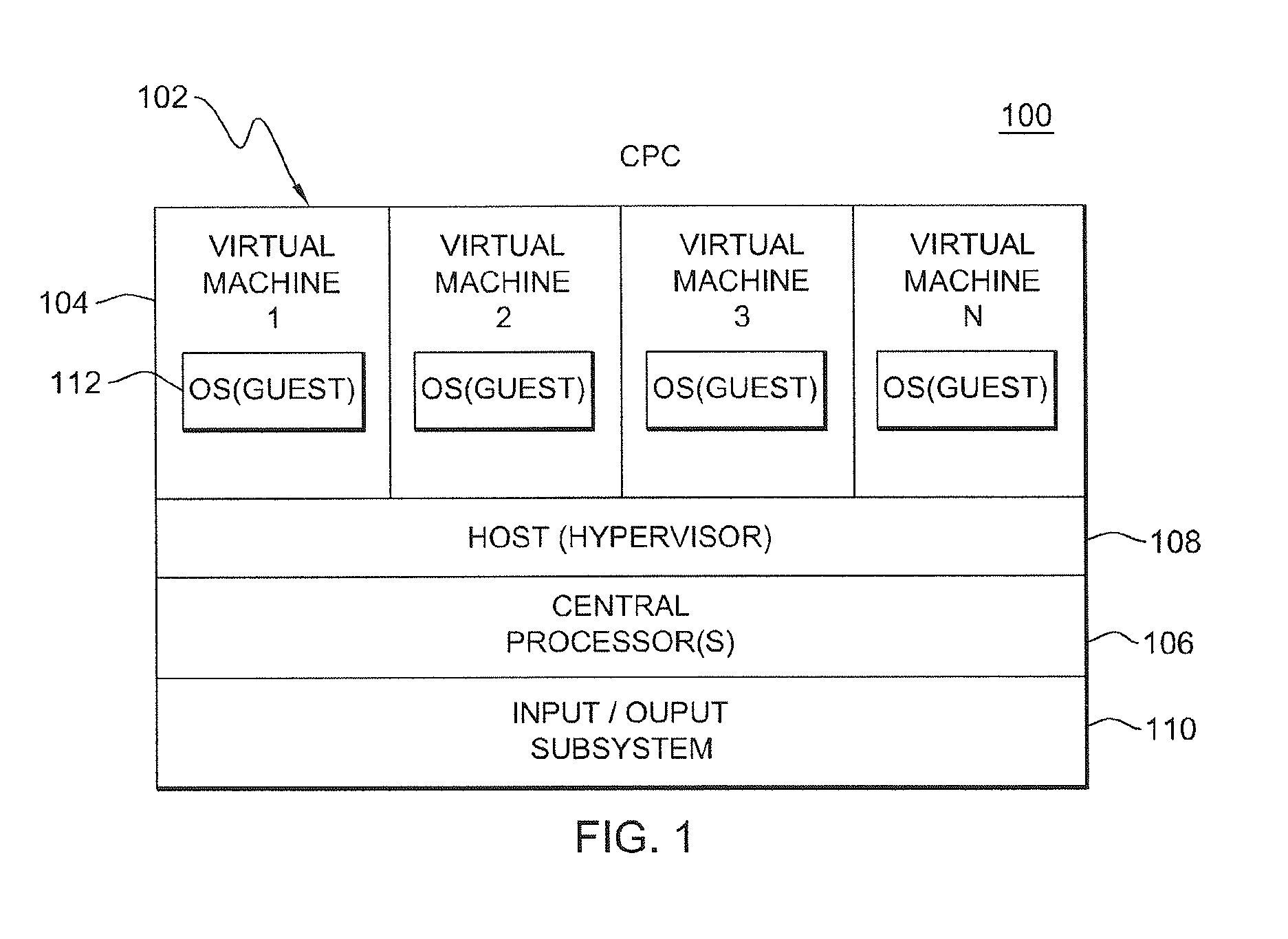

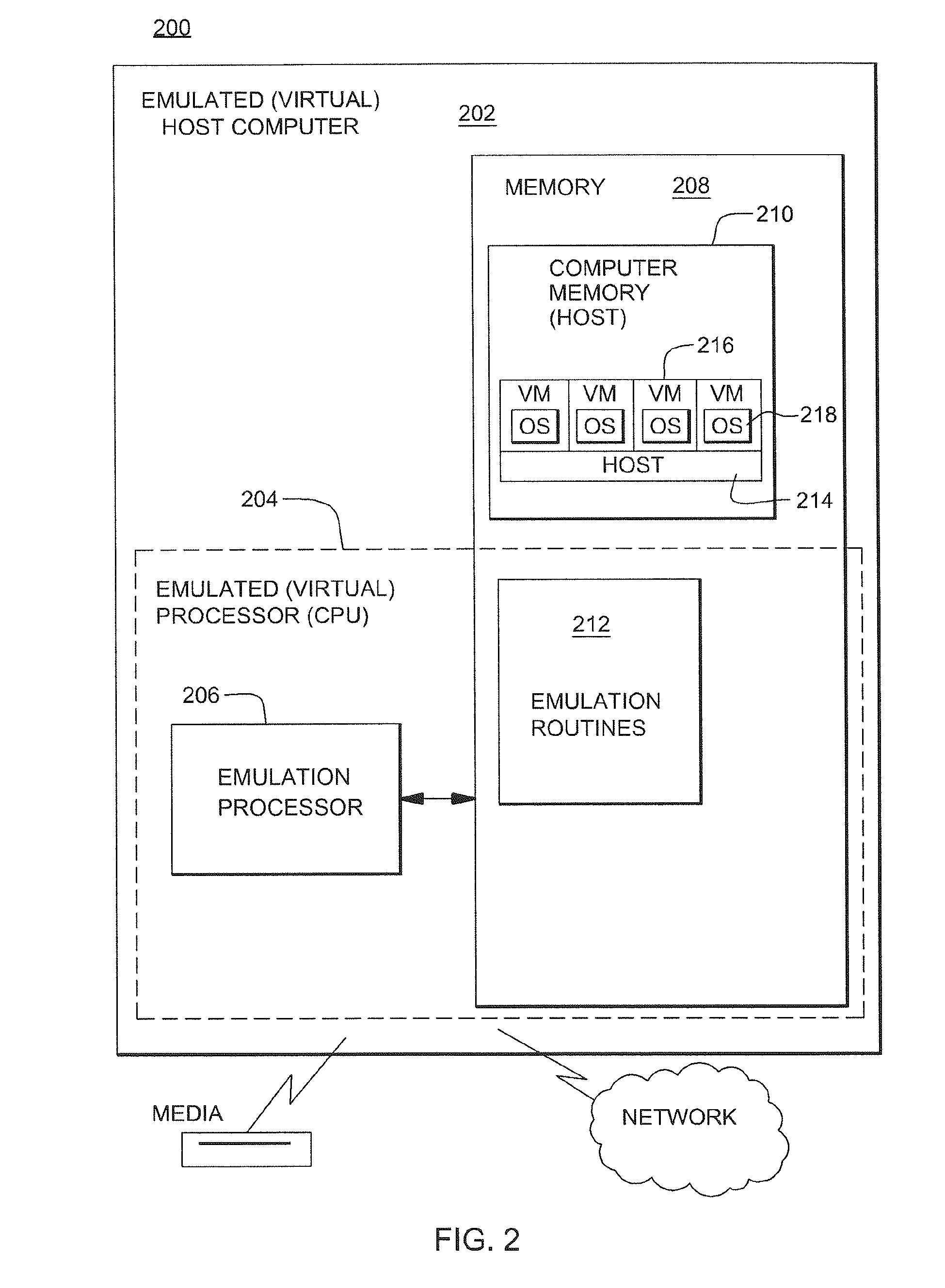

Optimizations of a perform frame management function issued by pageable guests

Active | US20090216984A1 | Reduce context switching; Easy to process; Memory architecture accessing/allocation; Register arrangements; Computer hardware; Paging

Optimizations are provided for frame management operations, including a clear operation and/or a set storage key operation, requested by pageable guests. The operations are performed, absent host intervention, on frames not resident in host memory. The operations may be specified in an instruction issued by the pageable guests.

Owner:IBM CORP

Optimizations of a perform frame management function issued by pageable guests

Active | US8086811B2 | Reduce context switching; Easy to process; Memory architecture accessing/allocation; Register arrangements; Computer hardware; Paging

Optimizations are provided for frame management operations, including a clear operation and/or a set storage key operation, requested by pageable guests. The operations are performed, absent host intervention, on frames not resident in host memory. The operations may be specified in an instruction issued by the pageable guests.

Owner:IBM CORP

High Performance, High Bandwidth Network Operating System

Inactive | US20120039336A1 | Reduce memory usage; Reduce context switching; Data switching by path configuration; Program control; Network operating system; Operational system

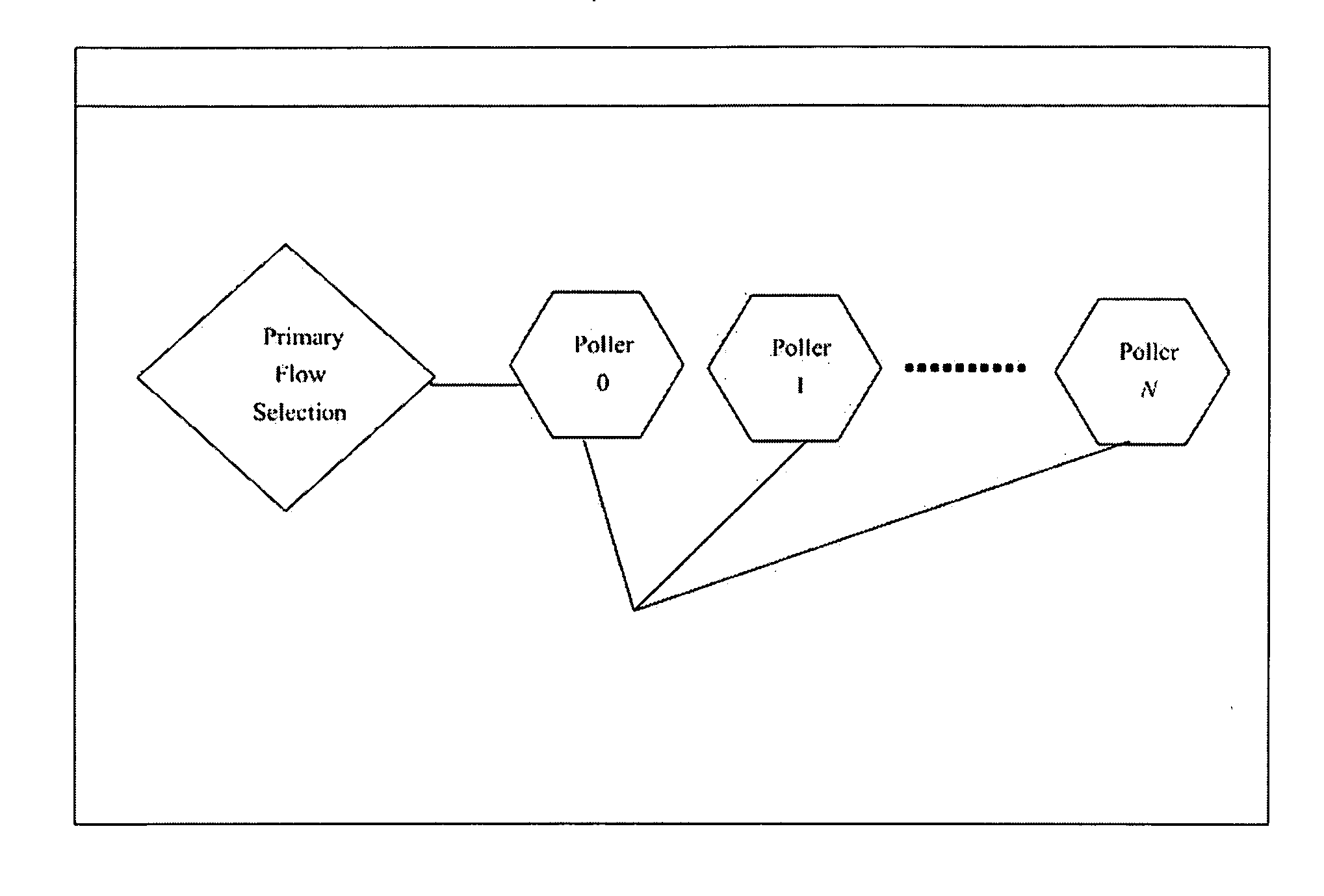

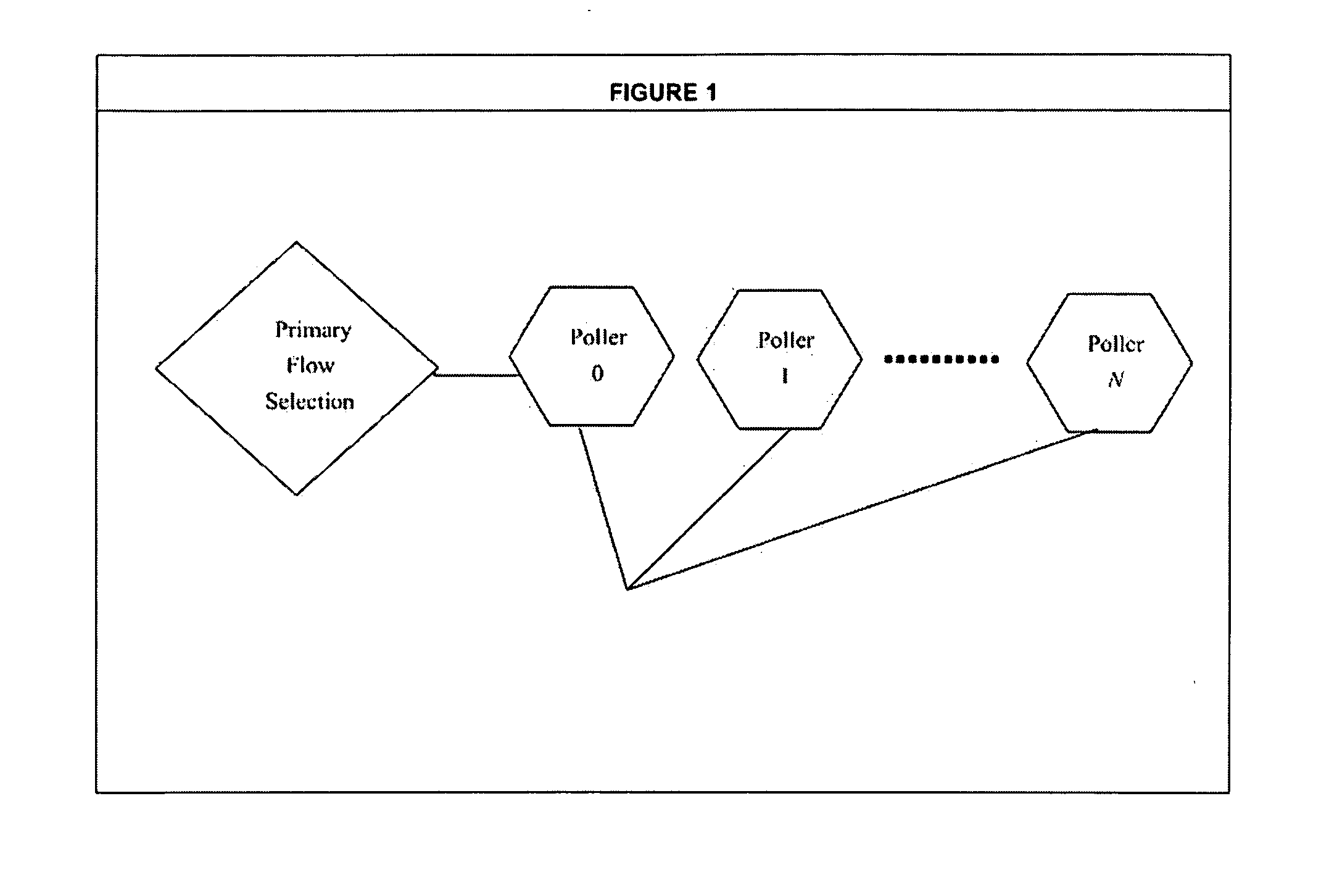

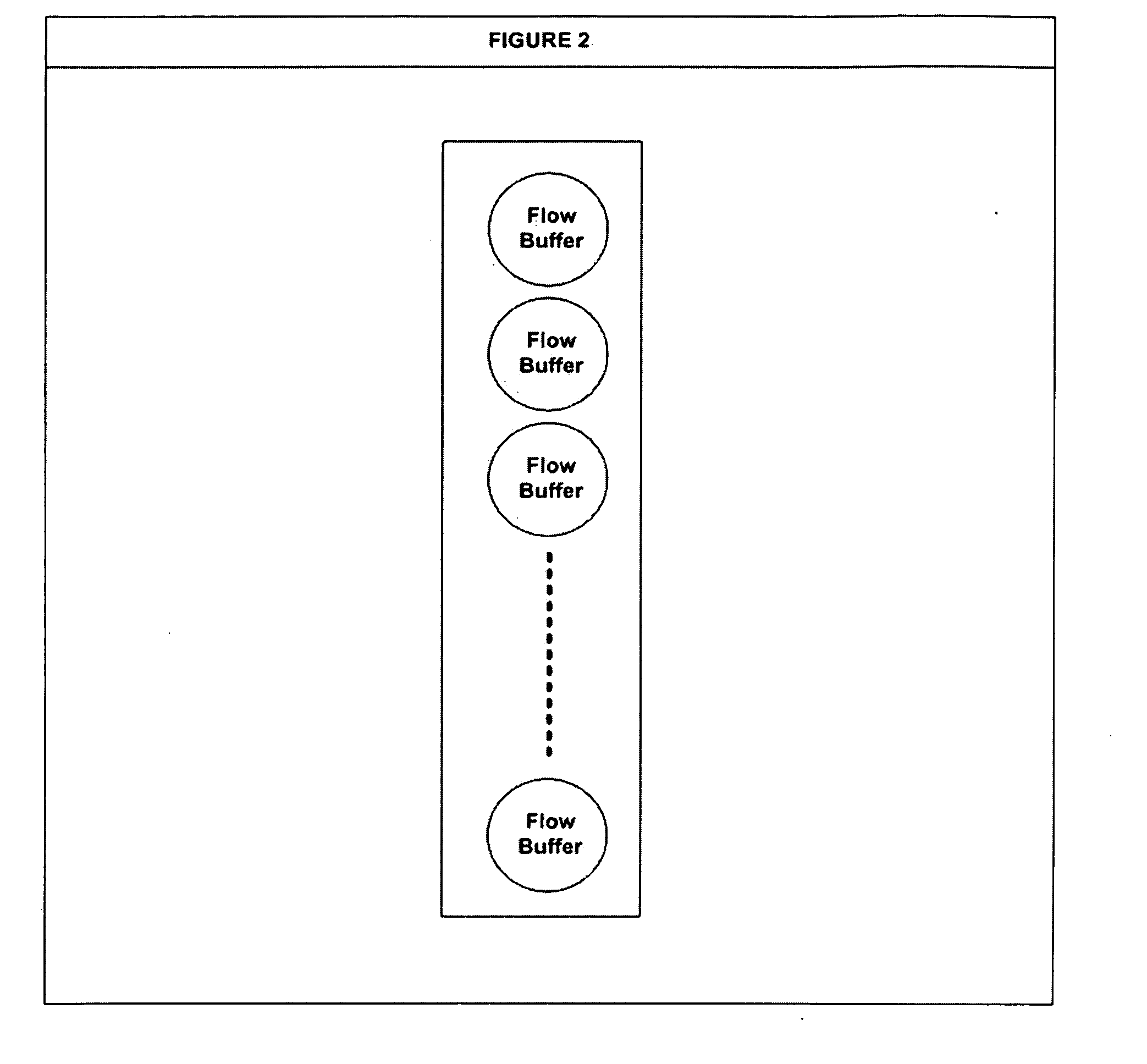

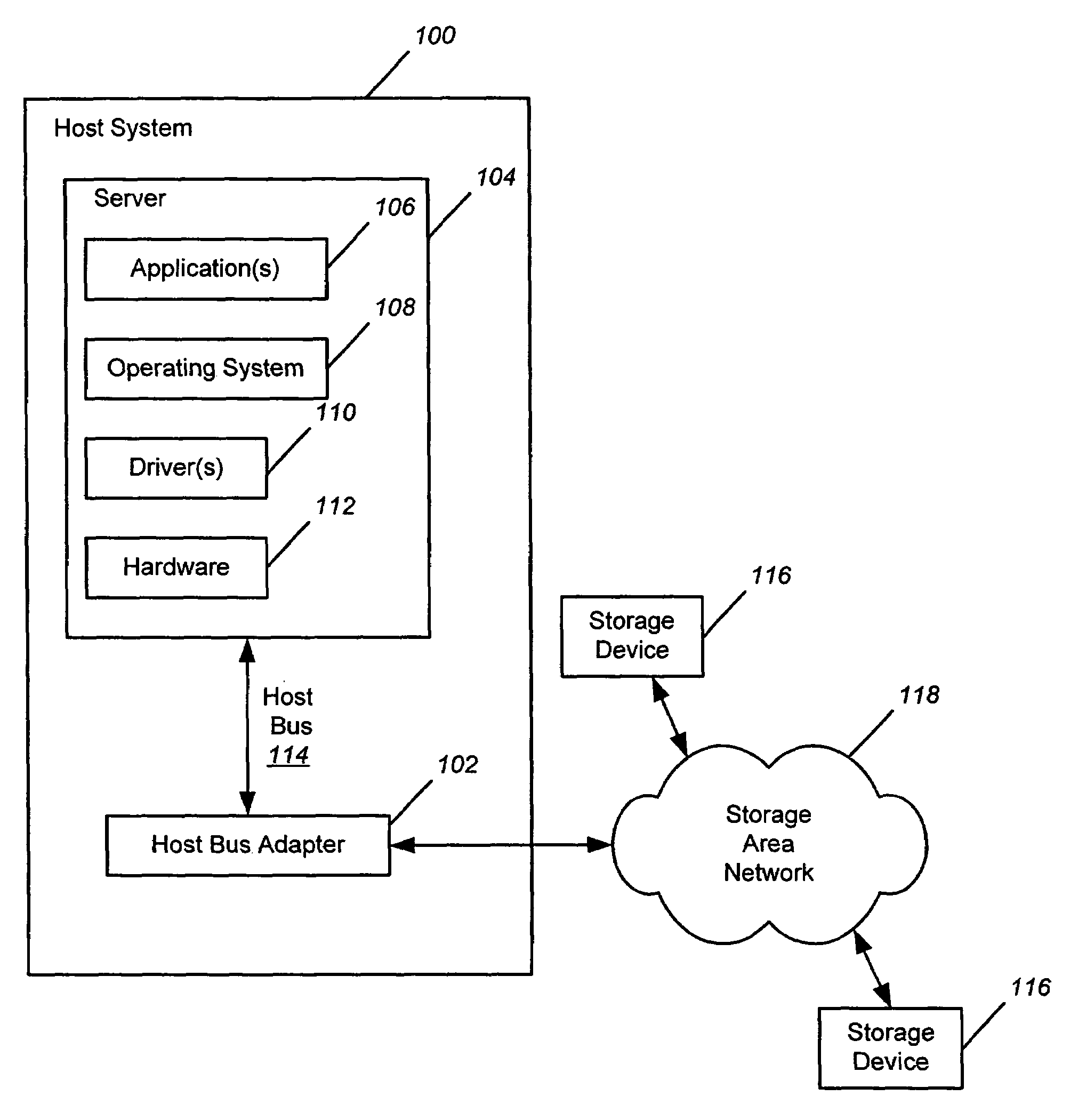

The present subject matter relates to computer operating systems, network interface cards and drivers, CPUs, random access memory, and high bandwidth. More specifically, a Linux operating system uses specially designed stream buffers, polling systems that interface with network interface cards, and multiple threads to deliver packets through the kernel to applications with high performance and high bandwidth. A system and method are provided for capturing, aggregating, pre-analyzing, and delivering packets to user space within a kernel, primarily for use by intrusion detection systems at multi-gigabit line rates.

Owner:RICHMOND ALFRED +2

Prerequisite-based scheduler

Inactive | US7370326B2 | Reduce context switching; Easy to operate; Program initiation/switching; Digital computer details; Operating system; Precondition

A prerequisite-based scheduler is disclosed that takes into account the system resources a task needs in order to execute. Tasks are scheduled only when they can run to completion, so a task, once dispatched, is guaranteed not to become blocked. In a prerequisite table, each task occupies a row and each resource the tasks may need occupies a column. The bottom row holds the system state, which represents the current state of all resources in the system. If a Boolean AND of a task's prerequisite row and the system state equals the prerequisite row, the task is dispatchable. In one embodiment of the present invention, the prerequisite-based scheduler (dispatcher) walks through the prerequisite table from top to bottom until it finds a task whose prerequisites are satisfied by the system state, and dispatches that task.

Owner:AVAGO TECH INT SALES PTE LTD

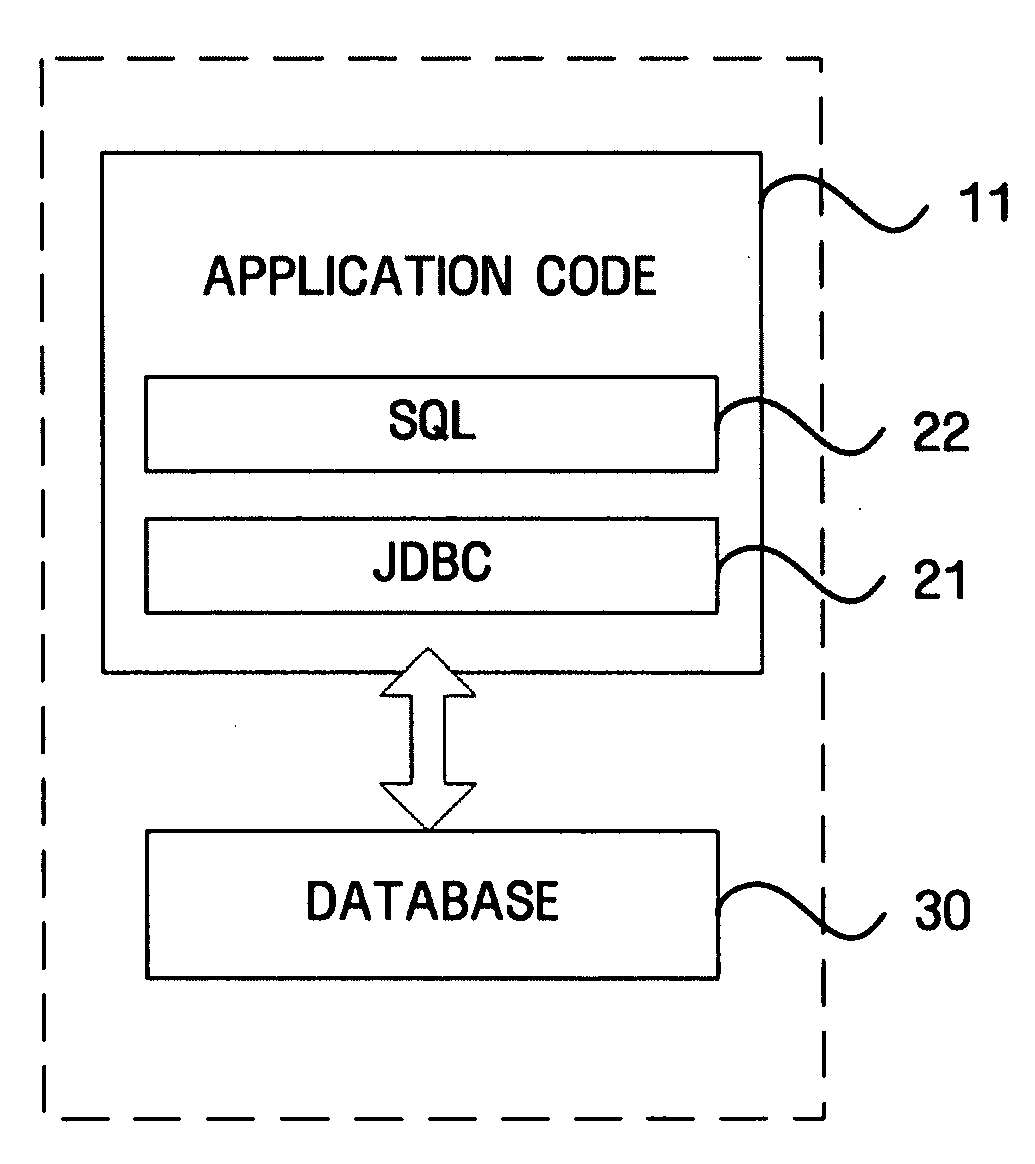

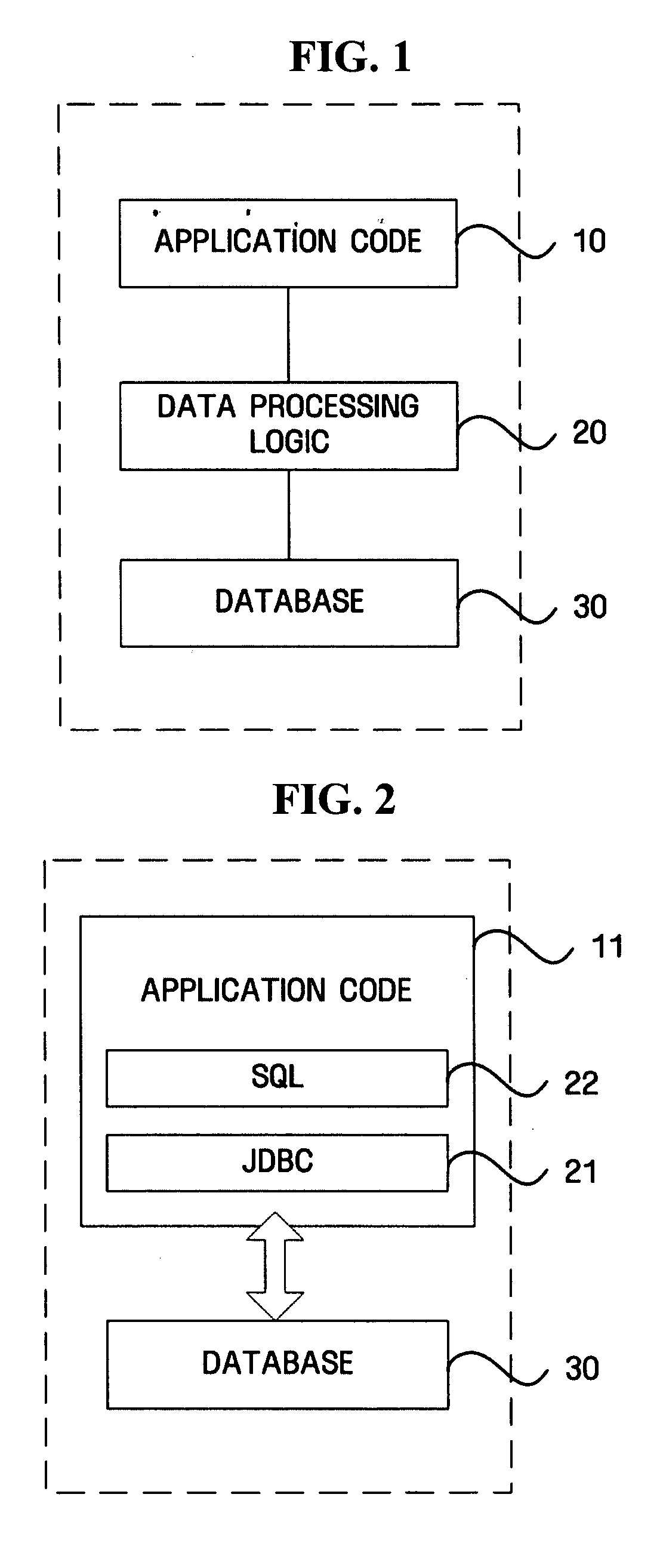

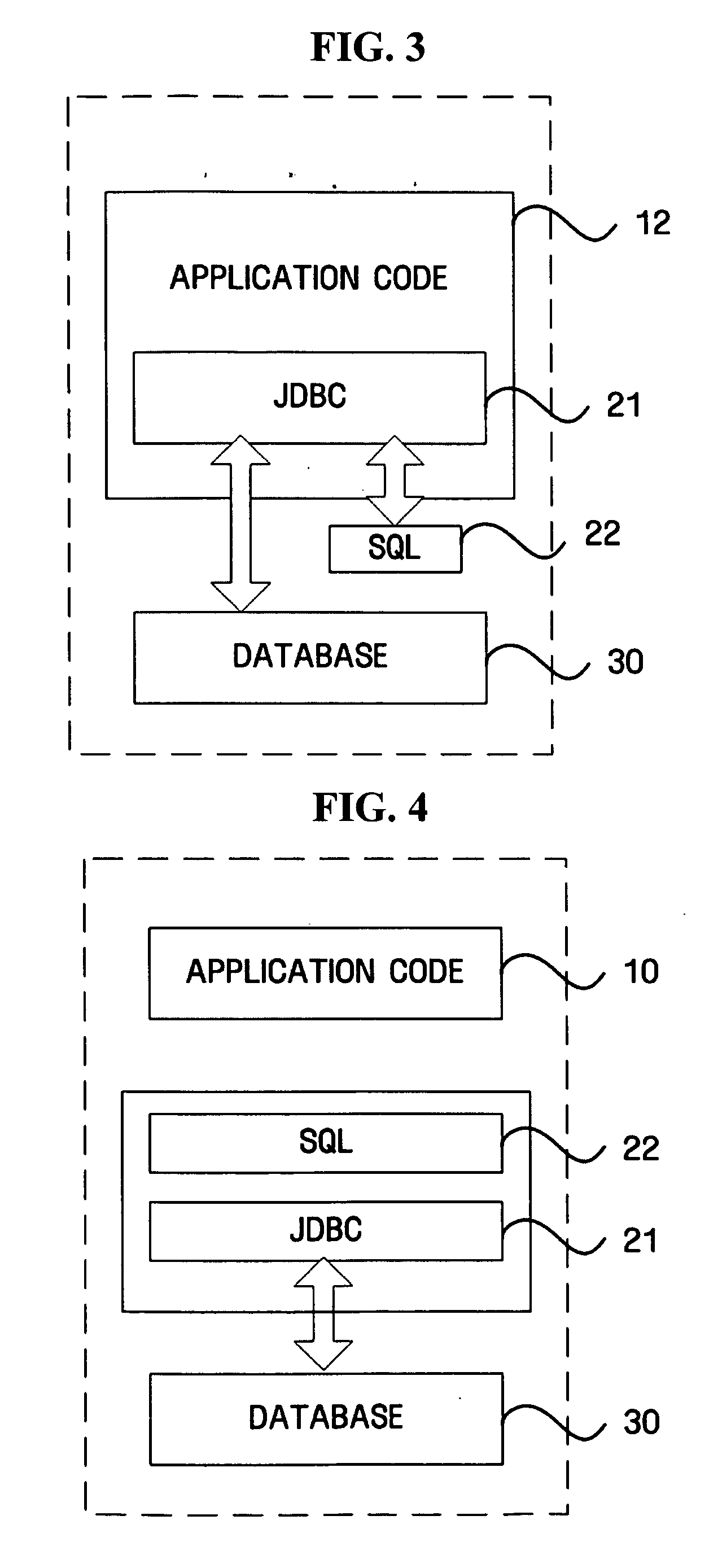

System and method for implementing database application while guaranteeing independence of software modules

Inactive | US20060277158A1 | Reduce context switching; Guaranteed independence; Digital data protection; Programming languages/paradigms; Software engineering; Processing element

Provided are a system and method for implementing a database application while guaranteeing the independence of software modules. The system includes an XML processing unit, an SQL information unit, an object pool, and a scheduler. The system ensures the independence of software modules and flexibility in developing database applications, and reduces the development and maintenance costs of software programs.

Owner:SAMSUNG ELECTRONICS CO LTD

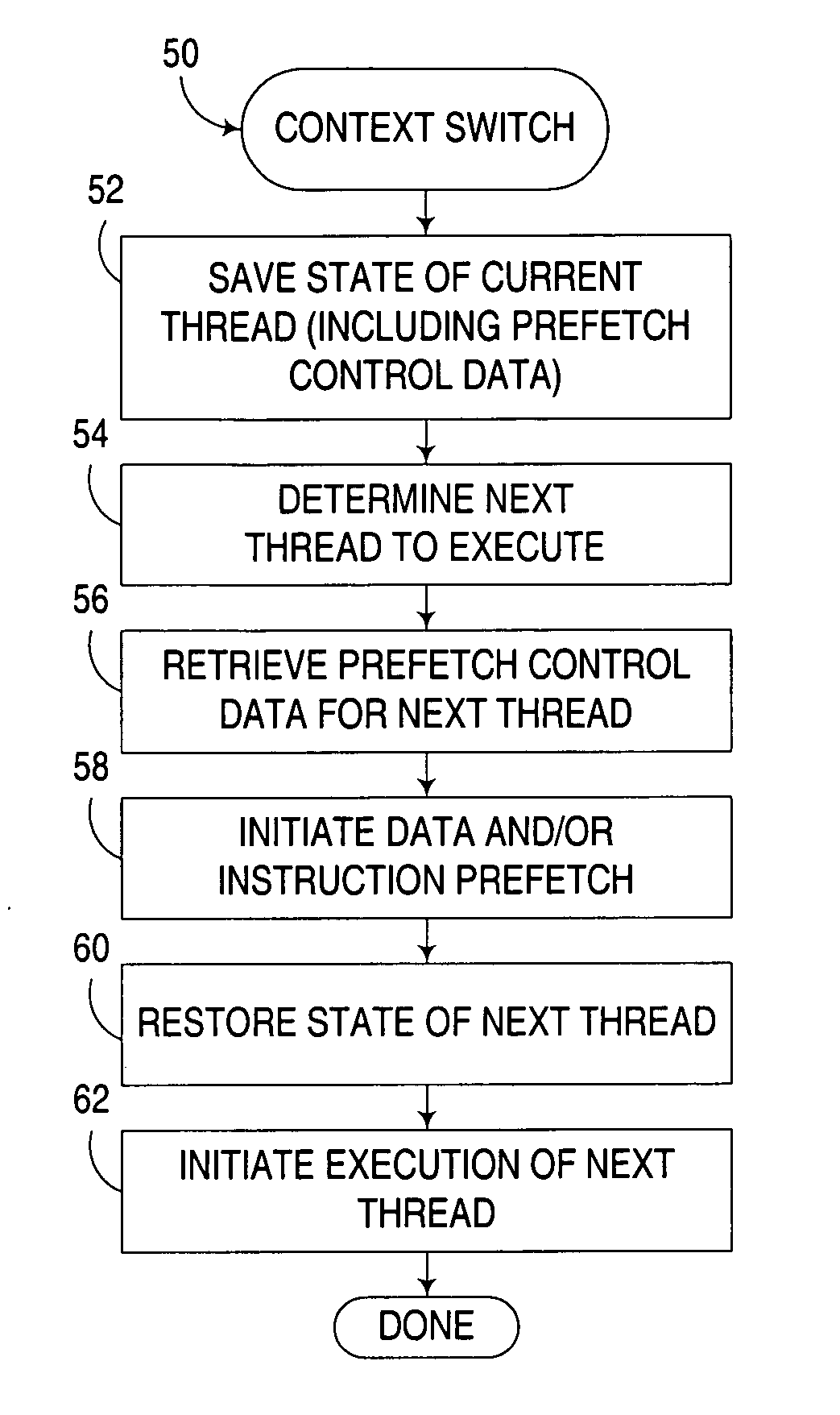

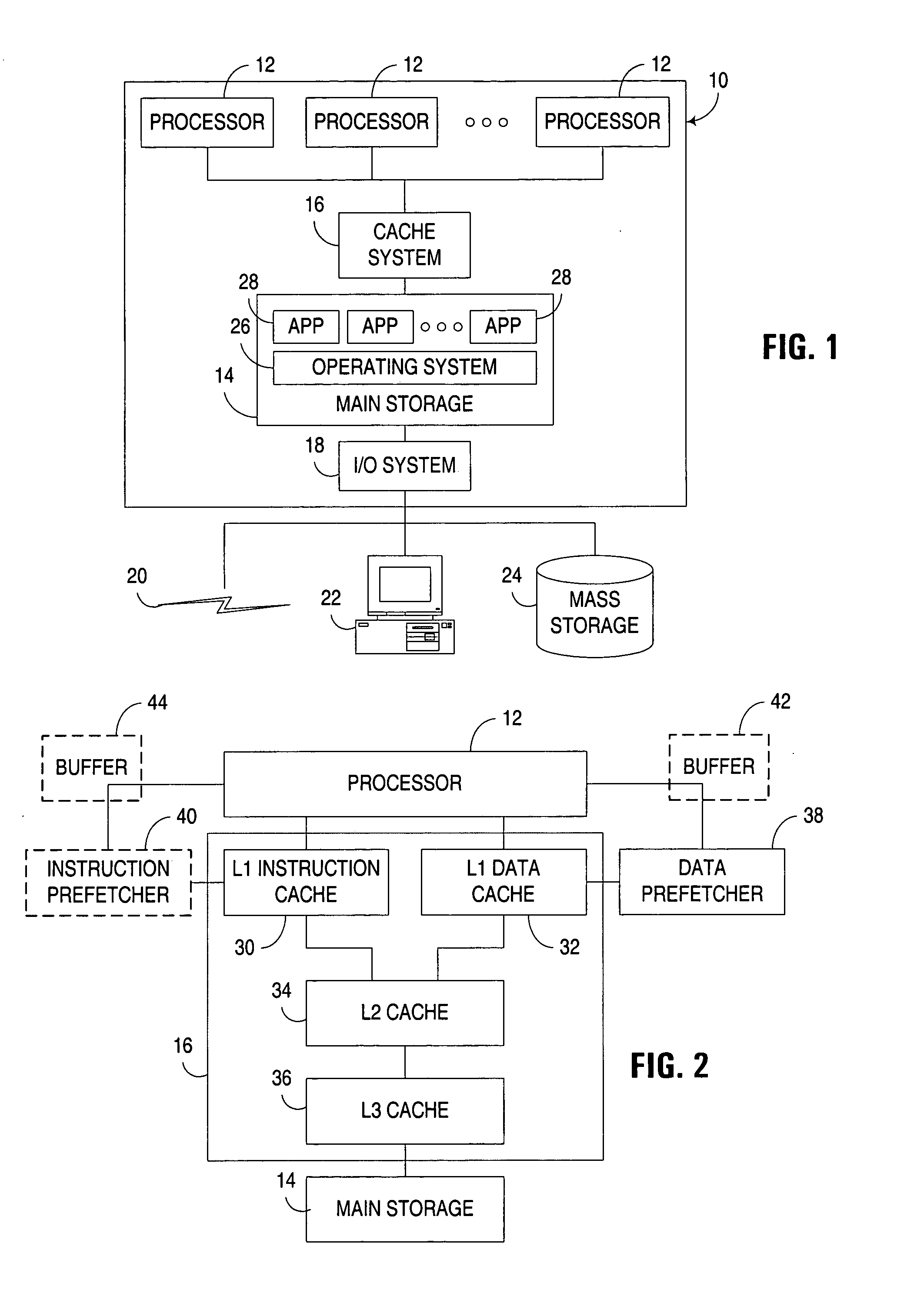

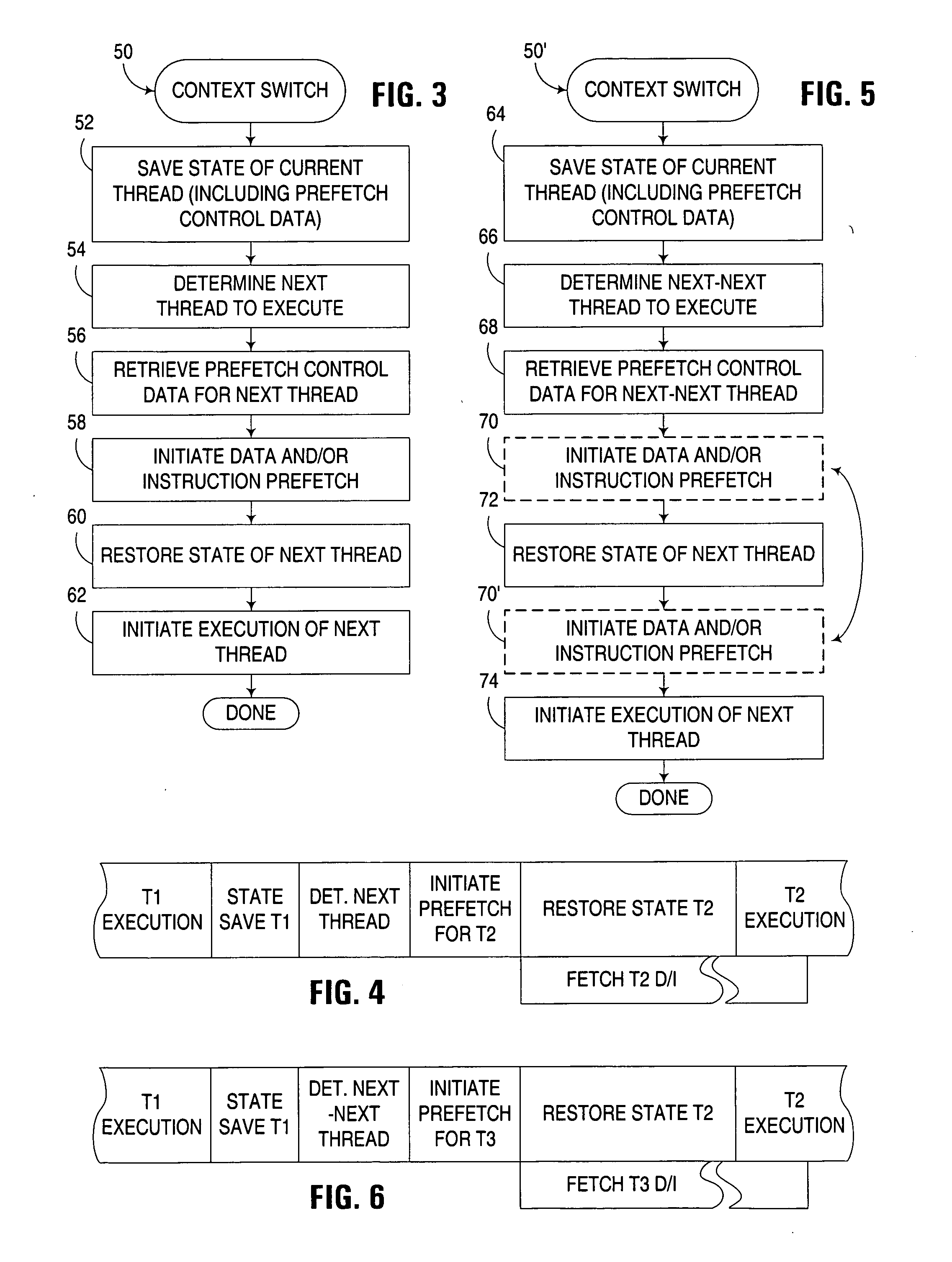

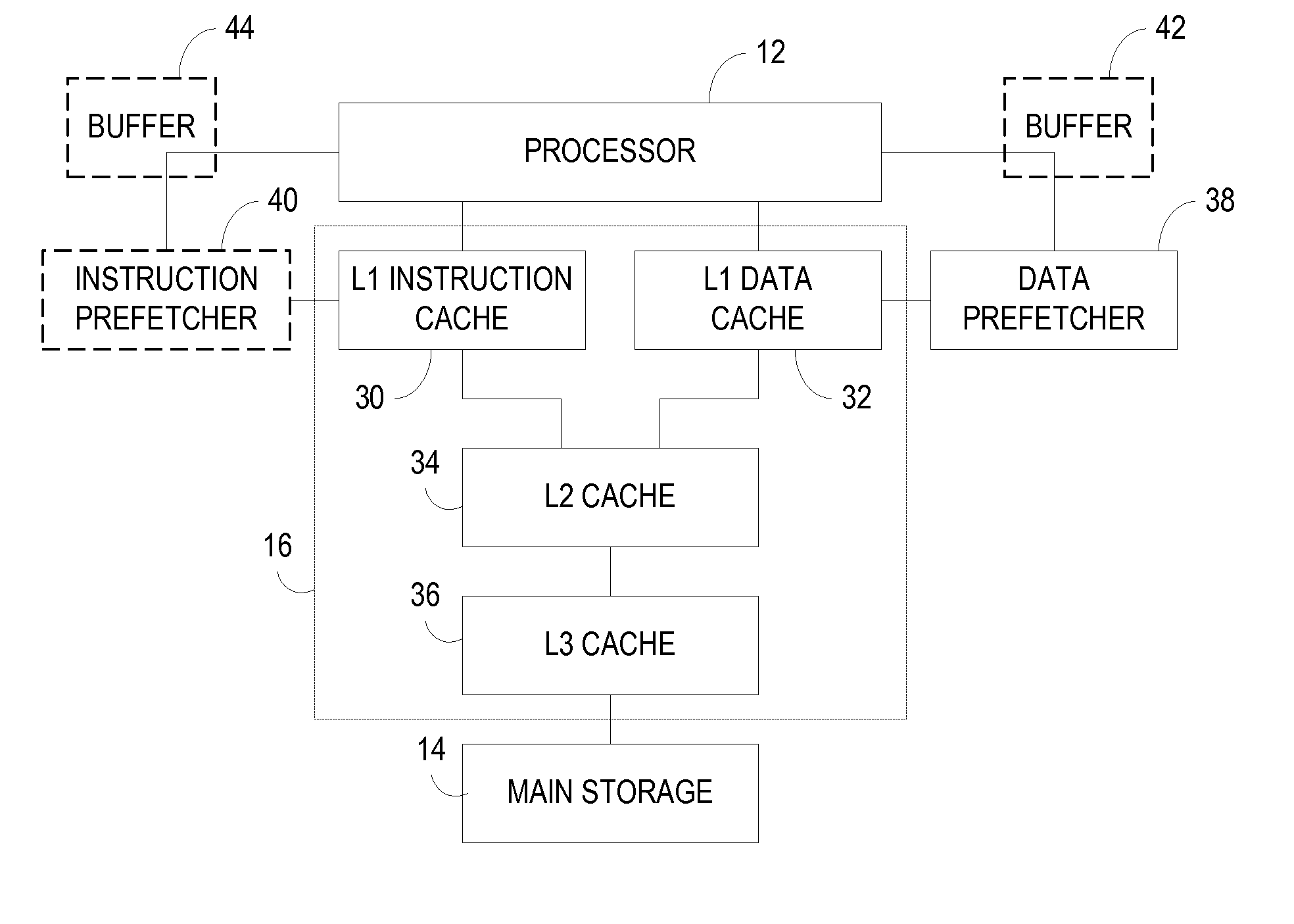

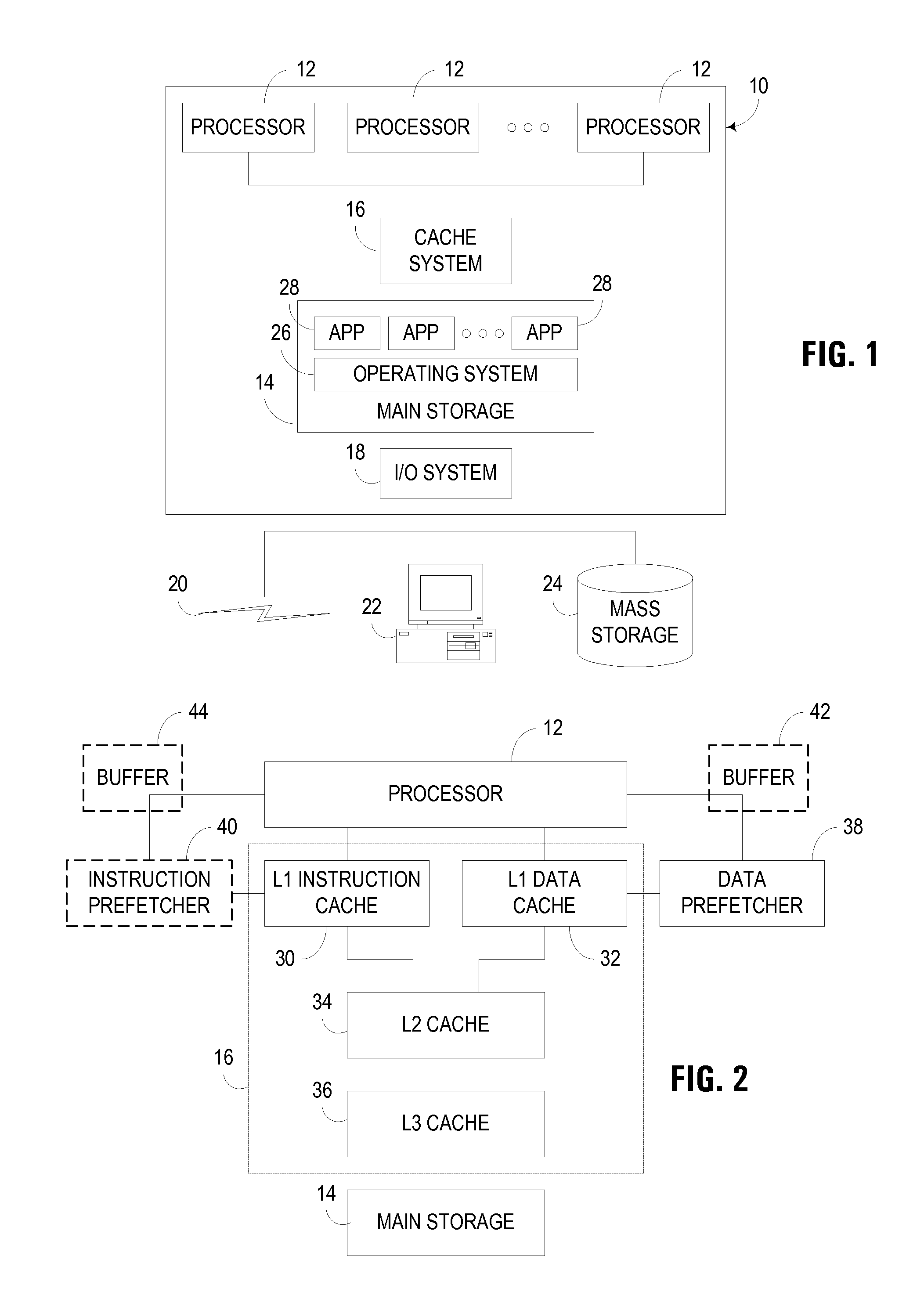

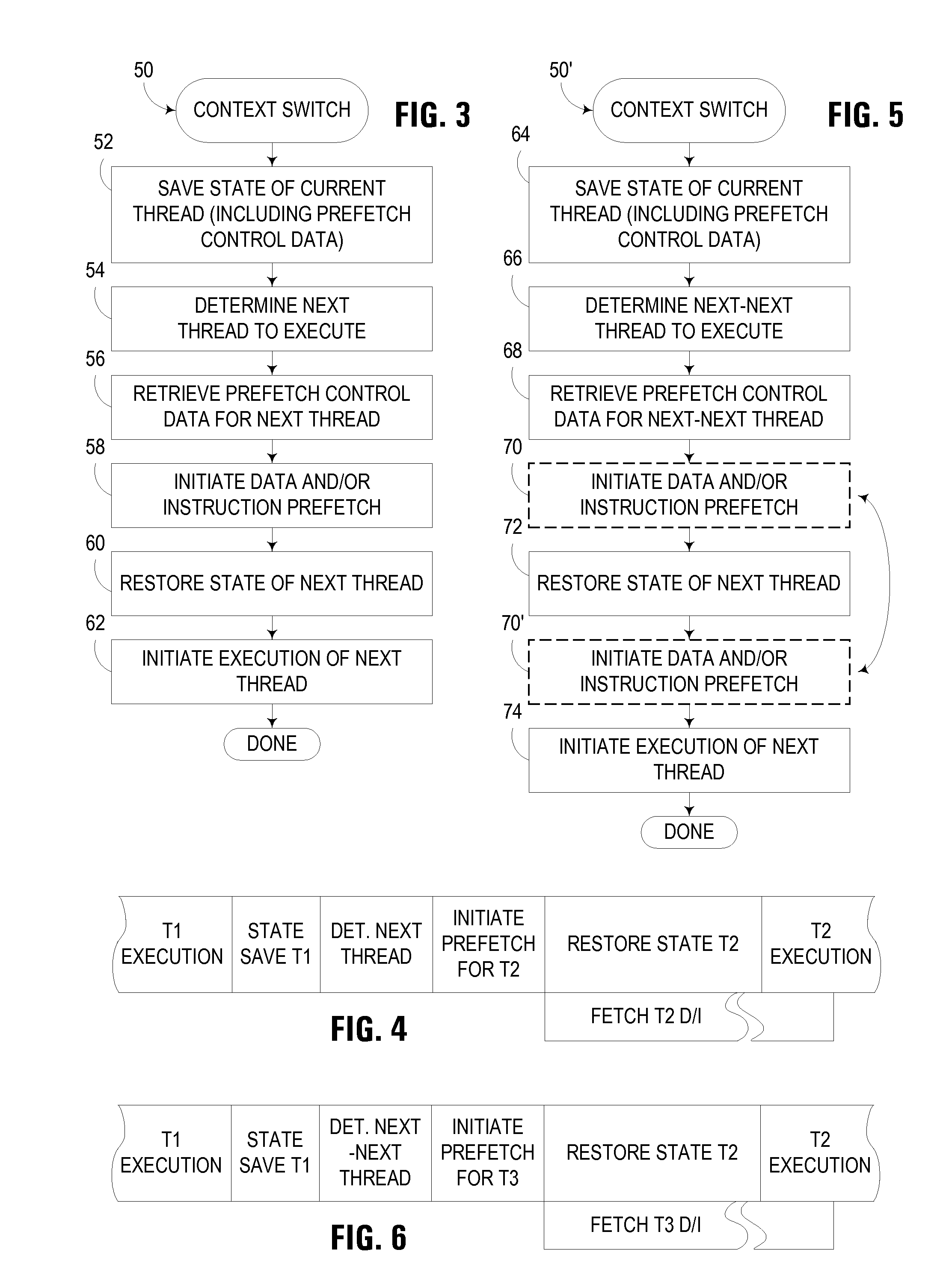

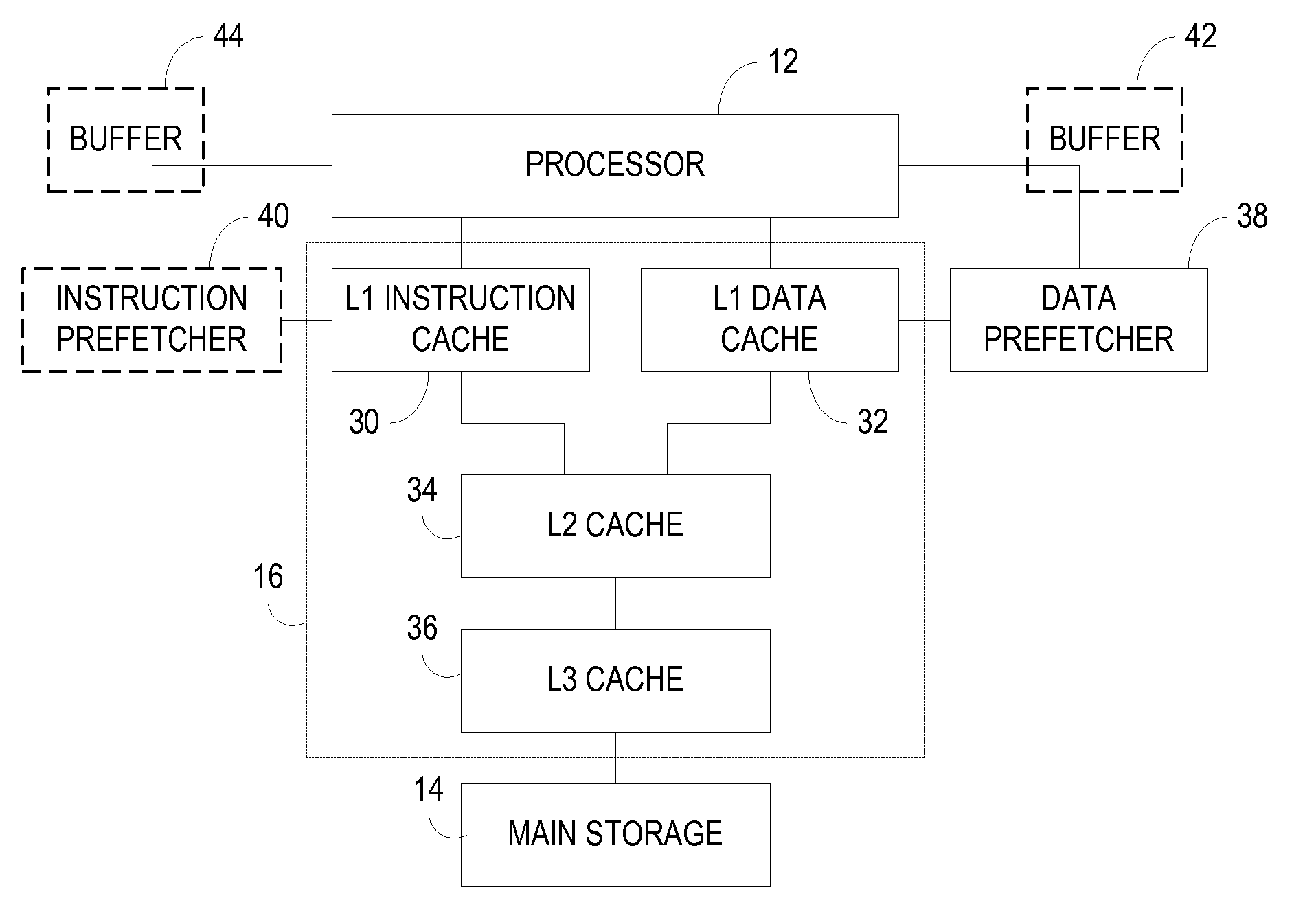

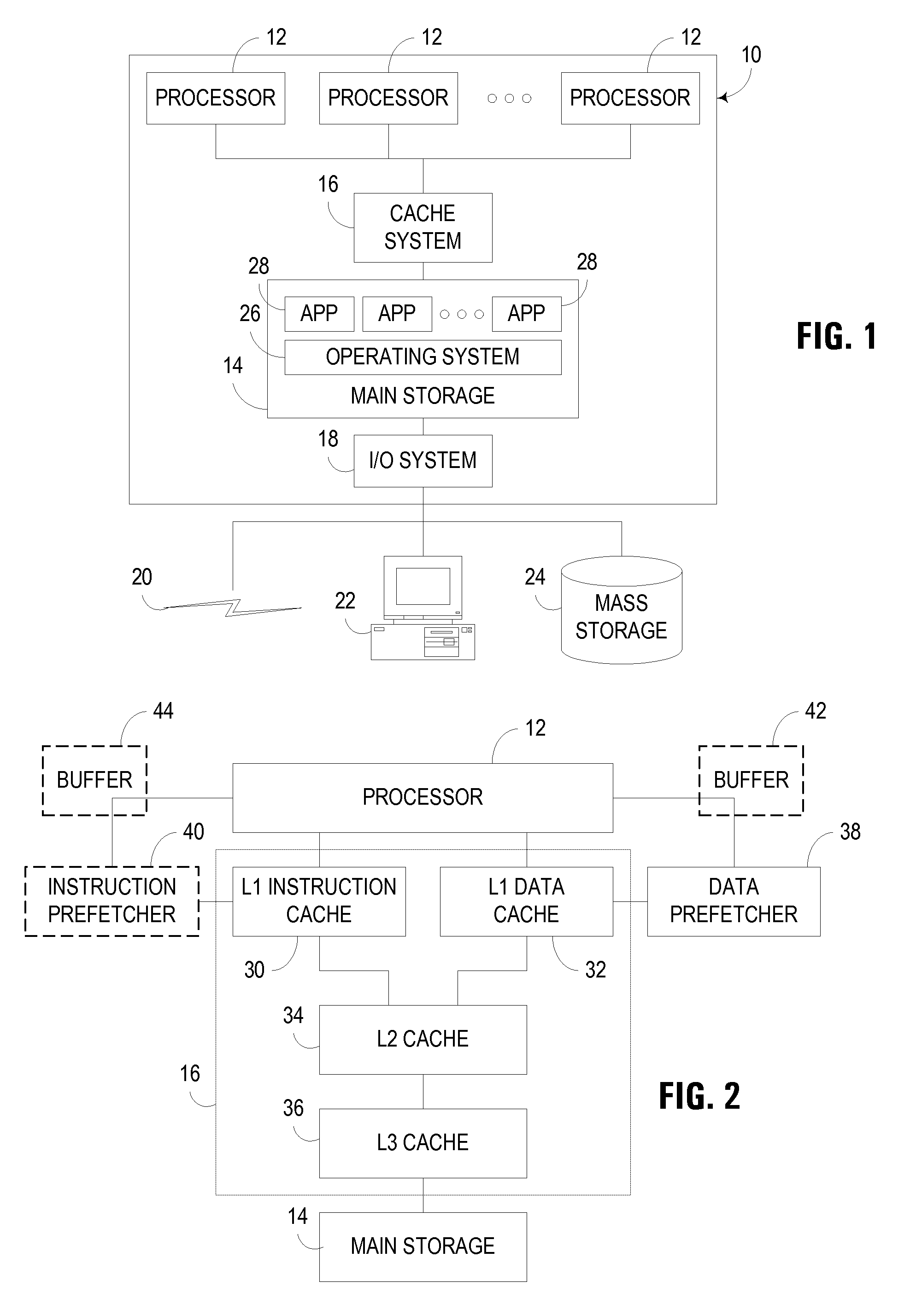

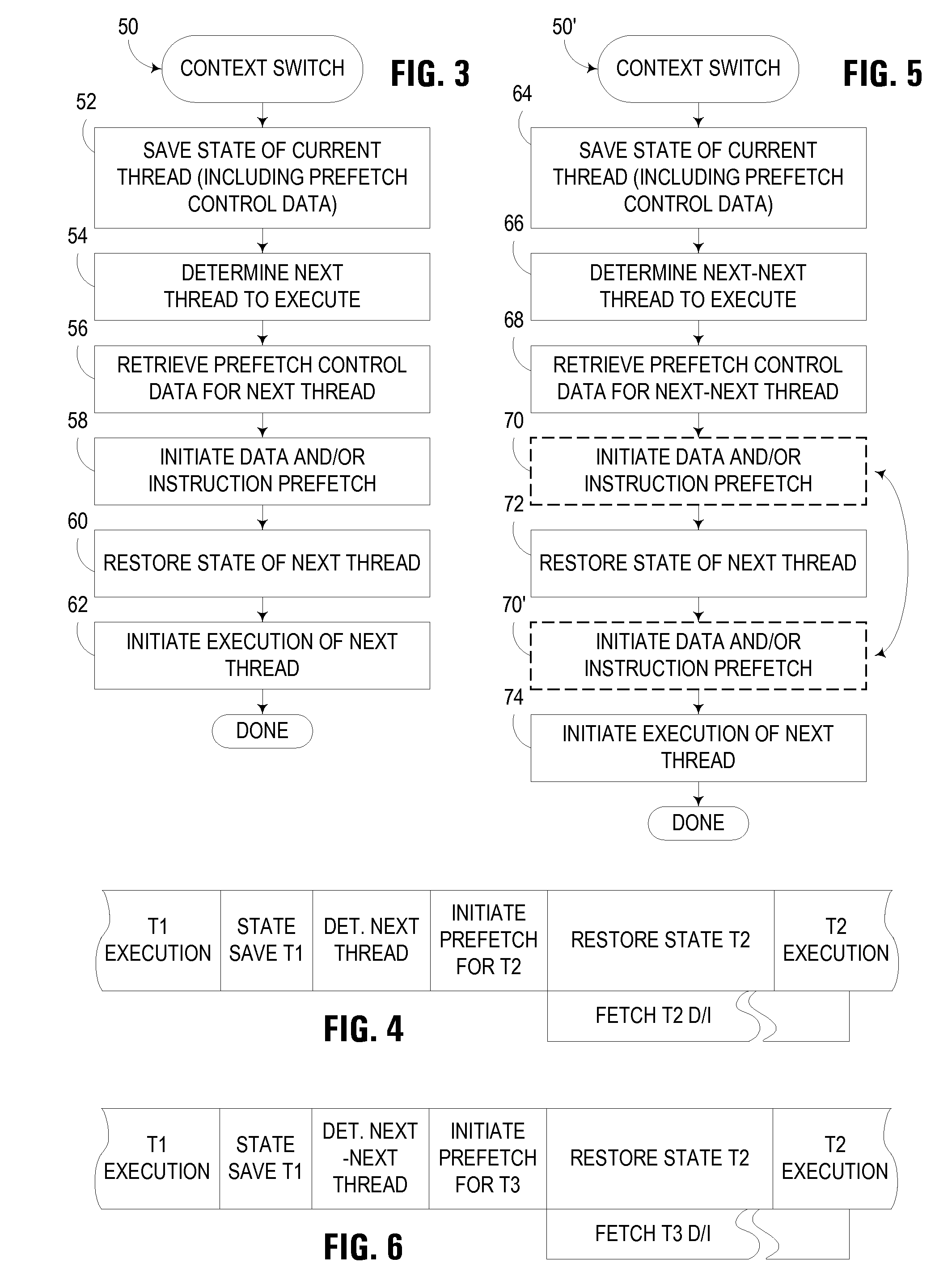

Context switch data prefetching in multithreaded computer

Inactive | US20050138627A1 | Reduce context switching; Improve system performance; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Operating system; Context switch

An apparatus, program product and method initiate, in connection with a context switch operation, a prefetch of data likely to be used by a thread prior to resuming execution of that thread. As a result, once it is known that a context switch will be performed to a particular thread, data may be prefetched on behalf of that thread so that when execution of the thread is resumed, more of the working state for the thread is likely to be cached, or at least in the process of being retrieved into cache memory, thus reducing cache-related performance penalties associated with context switching.

Owner:IBM CORP
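
The effect of prefetching at switch time can be shown with a toy cache model. This sketch assumes a small LRU cache and assumes each thread's working set is already known when it is switched in (for example, recorded when it was last switched out); `LRUCache` and `run` are hypothetical names. It counts only demand misses and treats prefetches as free, which overstates the benefit: in hardware, the gain comes from overlapping the prefetch latency with the switch itself.

```python
from collections import OrderedDict
from typing import Dict, Iterable, List


class LRUCache:
    def __init__(self, capacity: int):
        self.capacity = capacity
        self.lines: "OrderedDict[int, None]" = OrderedDict()
        self.misses = 0

    def _install(self, addr: int) -> None:
        self.lines[addr] = None
        self.lines.move_to_end(addr)
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)   # evict the least recently used line

    def access(self, addr: int) -> None:
        """Demand access by the running thread; counts misses."""
        if addr in self.lines:
            self.lines.move_to_end(addr)
        else:
            self.misses += 1
            self._install(addr)

    def prefetch(self, addrs: Iterable[int]) -> None:
        """Bring lines in without counting them as demand misses."""
        for addr in addrs:
            self._install(addr)


def run(schedule: List[str], working_sets: Dict[str, List[int]],
        capacity: int, prefetch: bool) -> int:
    cache = LRUCache(capacity)
    for thread in schedule:                        # each entry is one switch to `thread`
        if prefetch:
            cache.prefetch(working_sets[thread])   # issued during the switch, before resuming
        for addr in working_sets[thread]:
            cache.access(addr)
    return cache.misses
```

With two threads whose working sets each fill the whole cache, alternating `A, B, A, B` costs 16 demand misses without prefetching and none with it in this idealized model.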

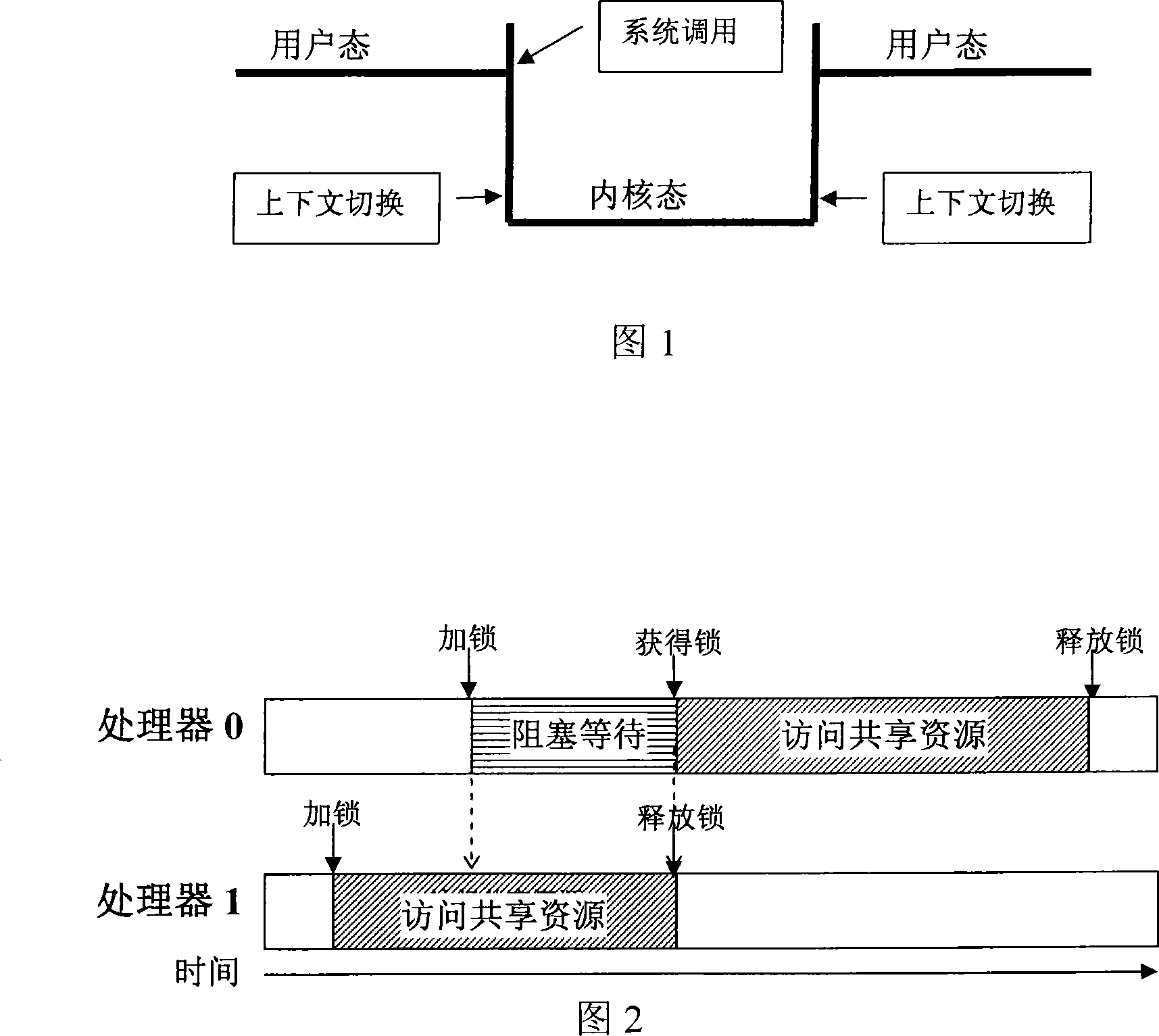

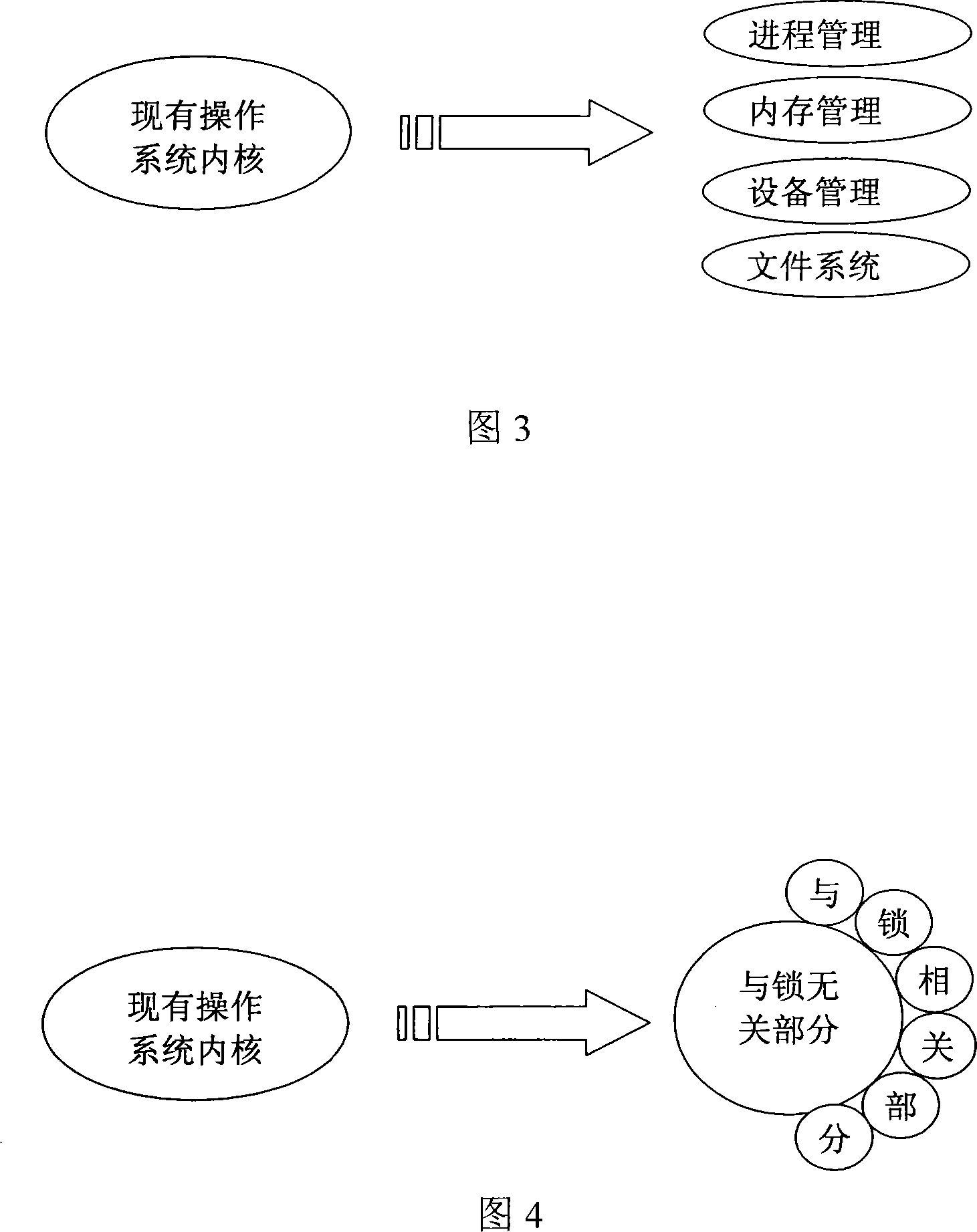

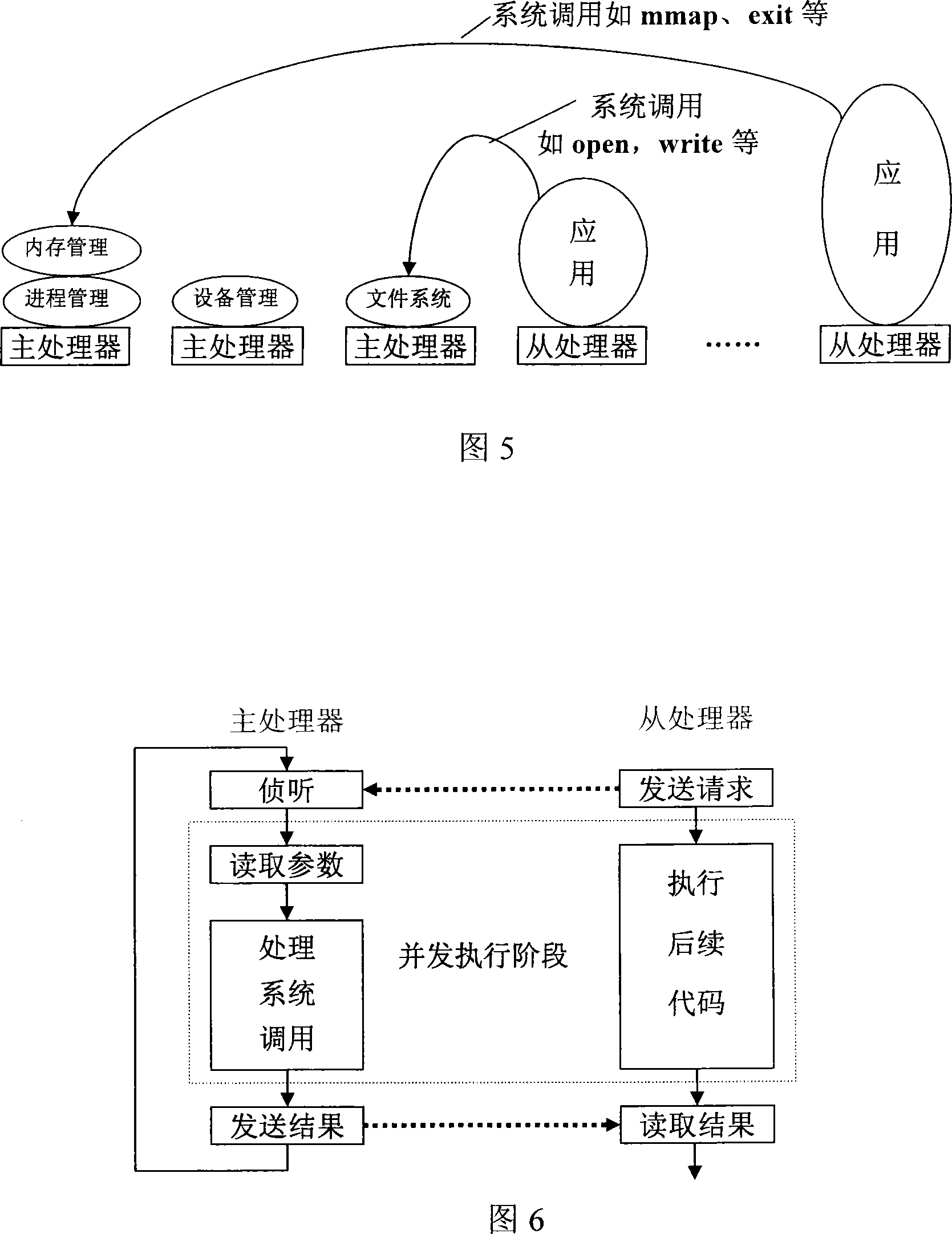

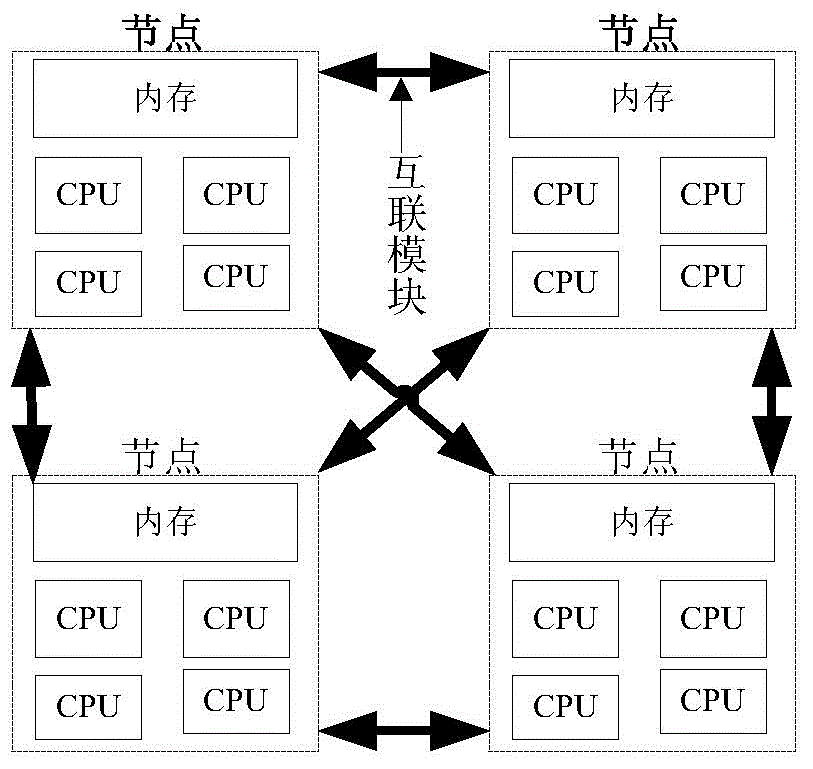

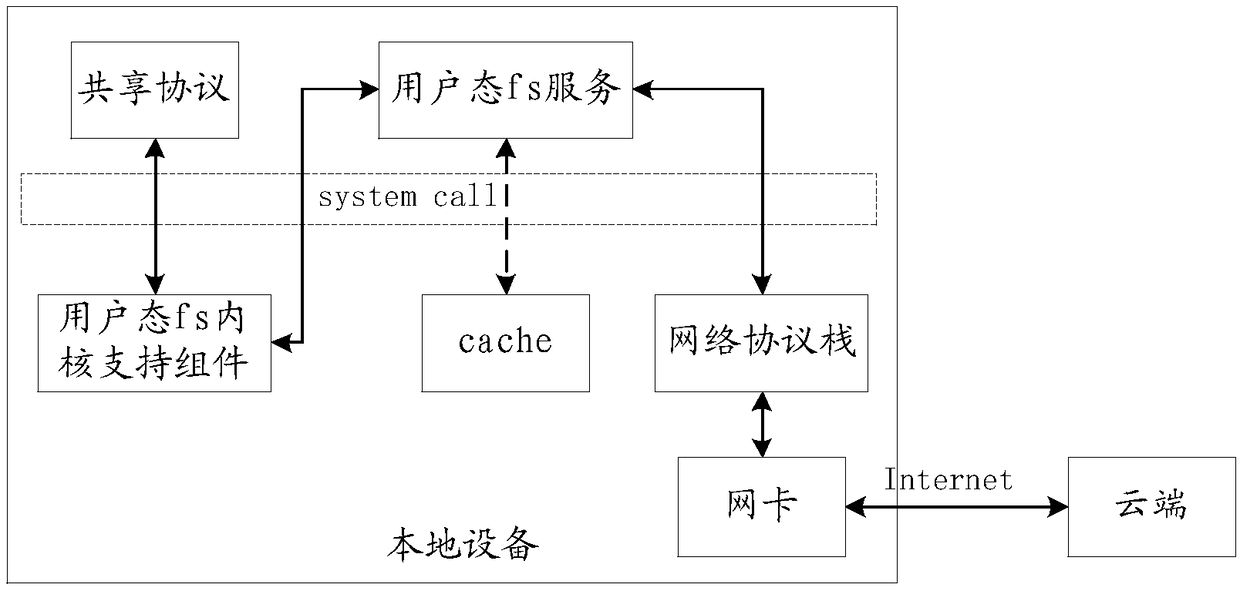

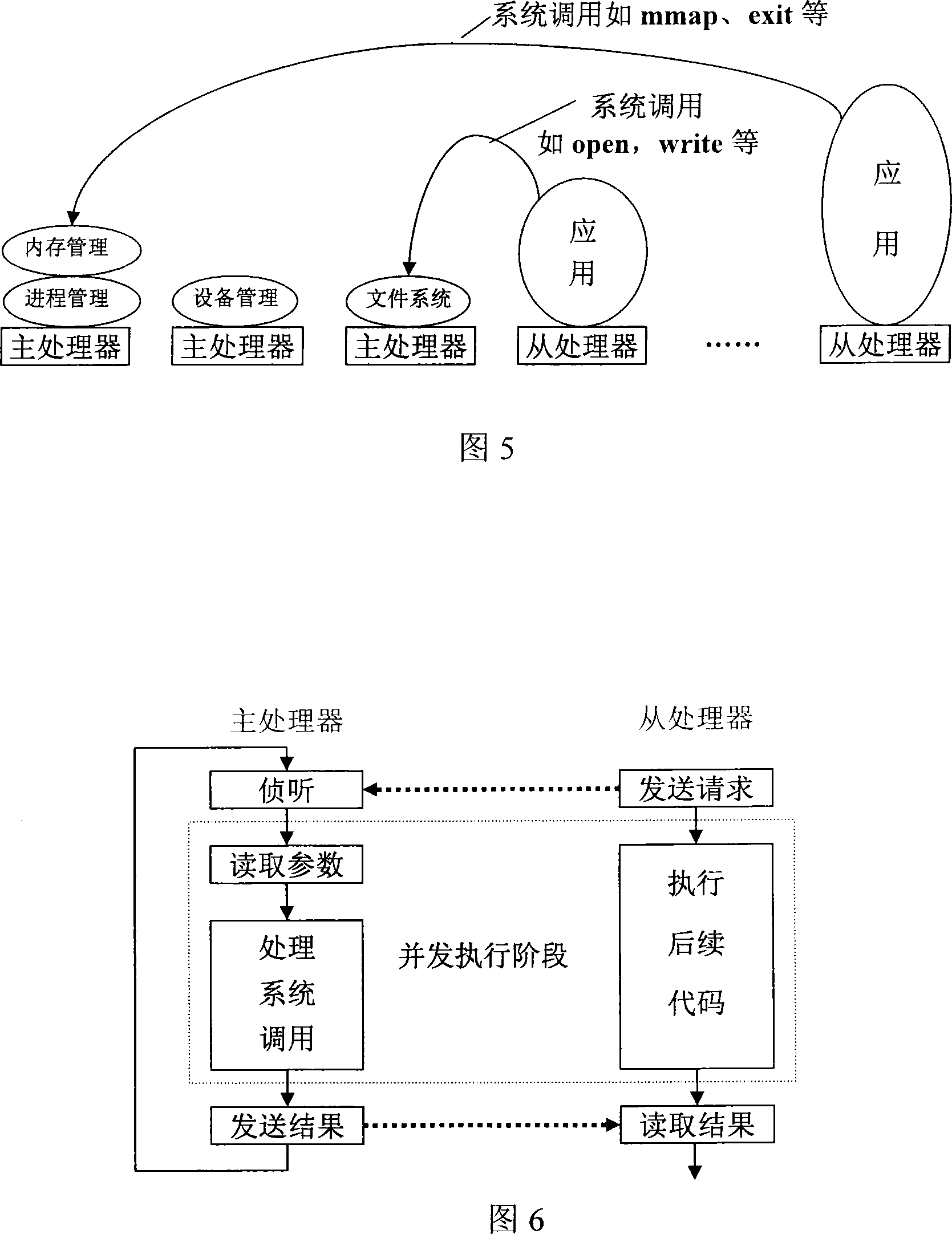

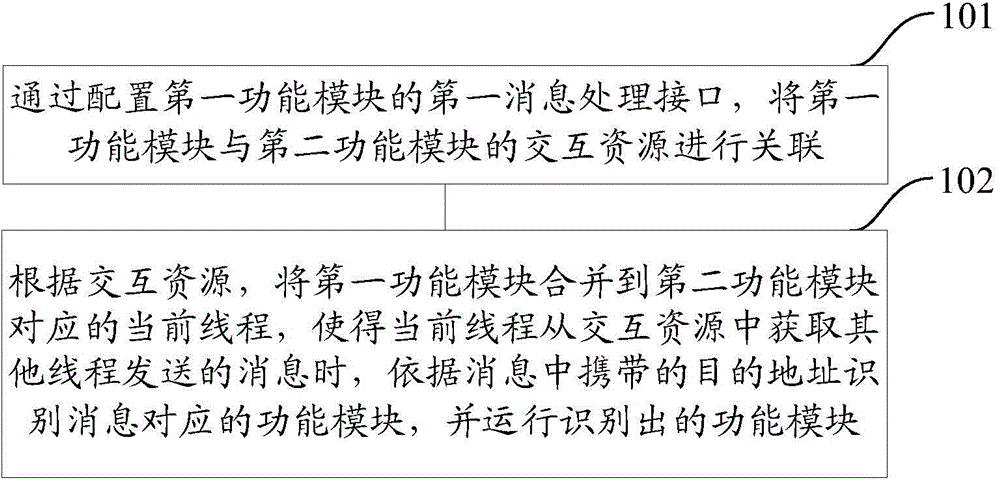

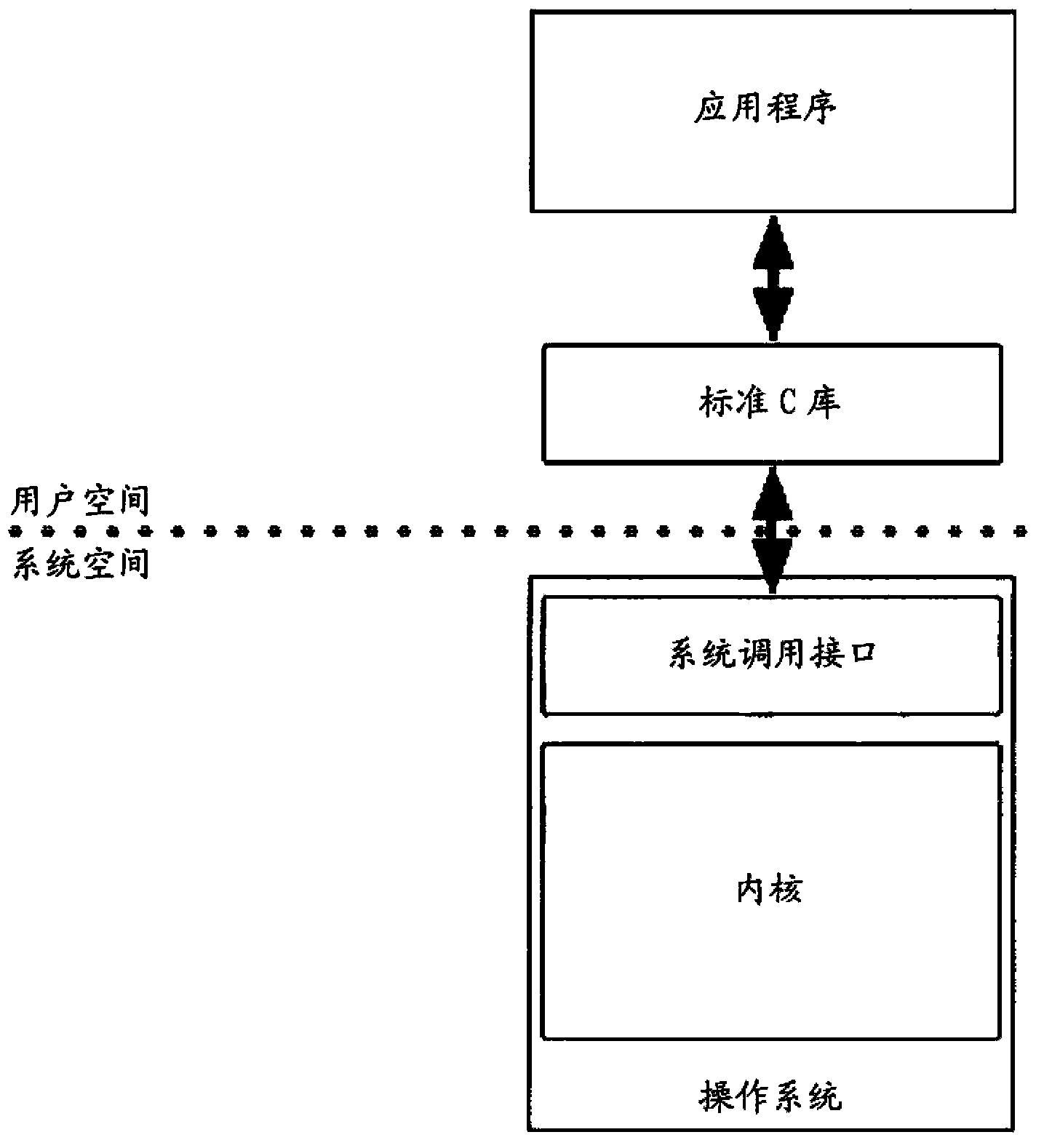

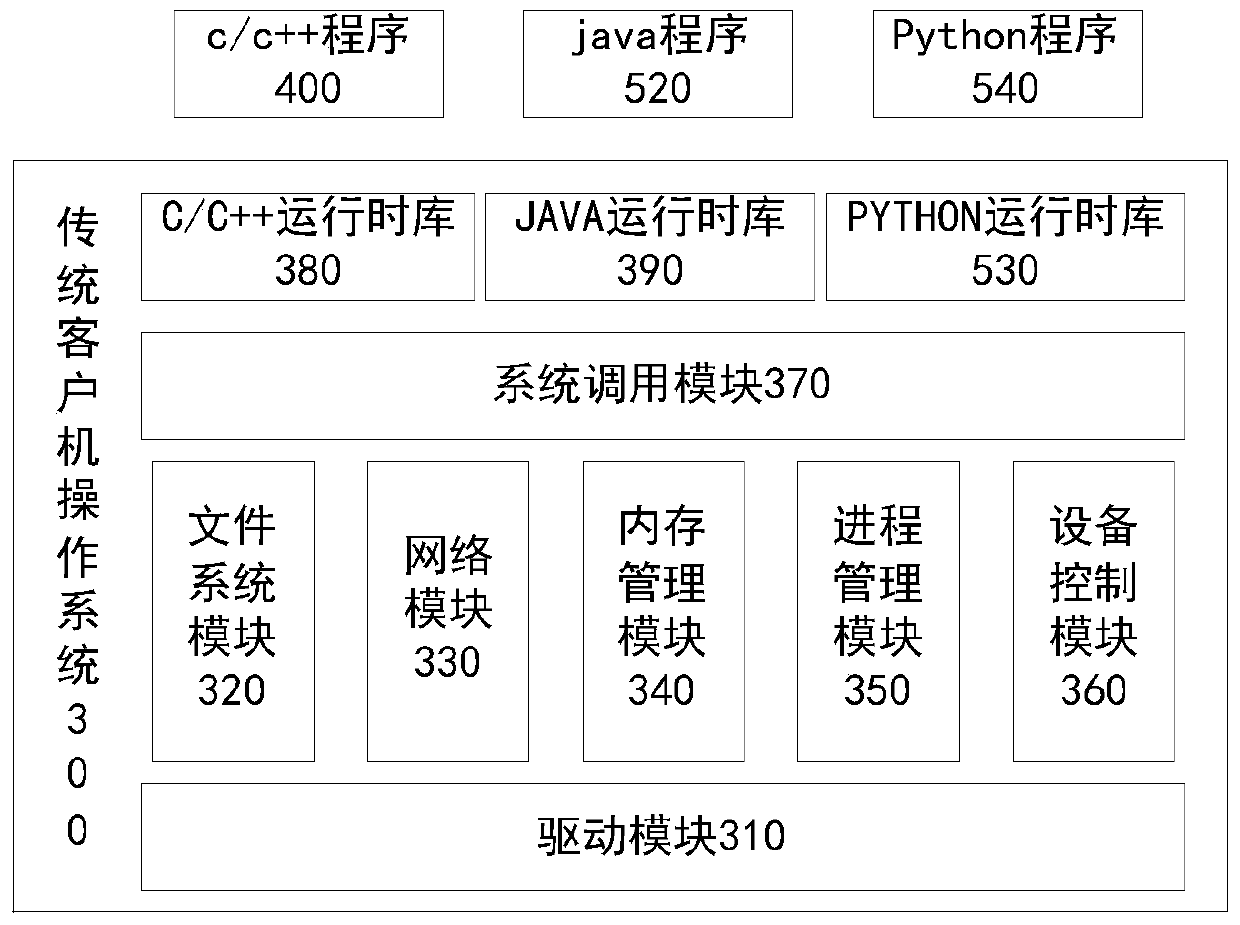

Operating system and operating system management method

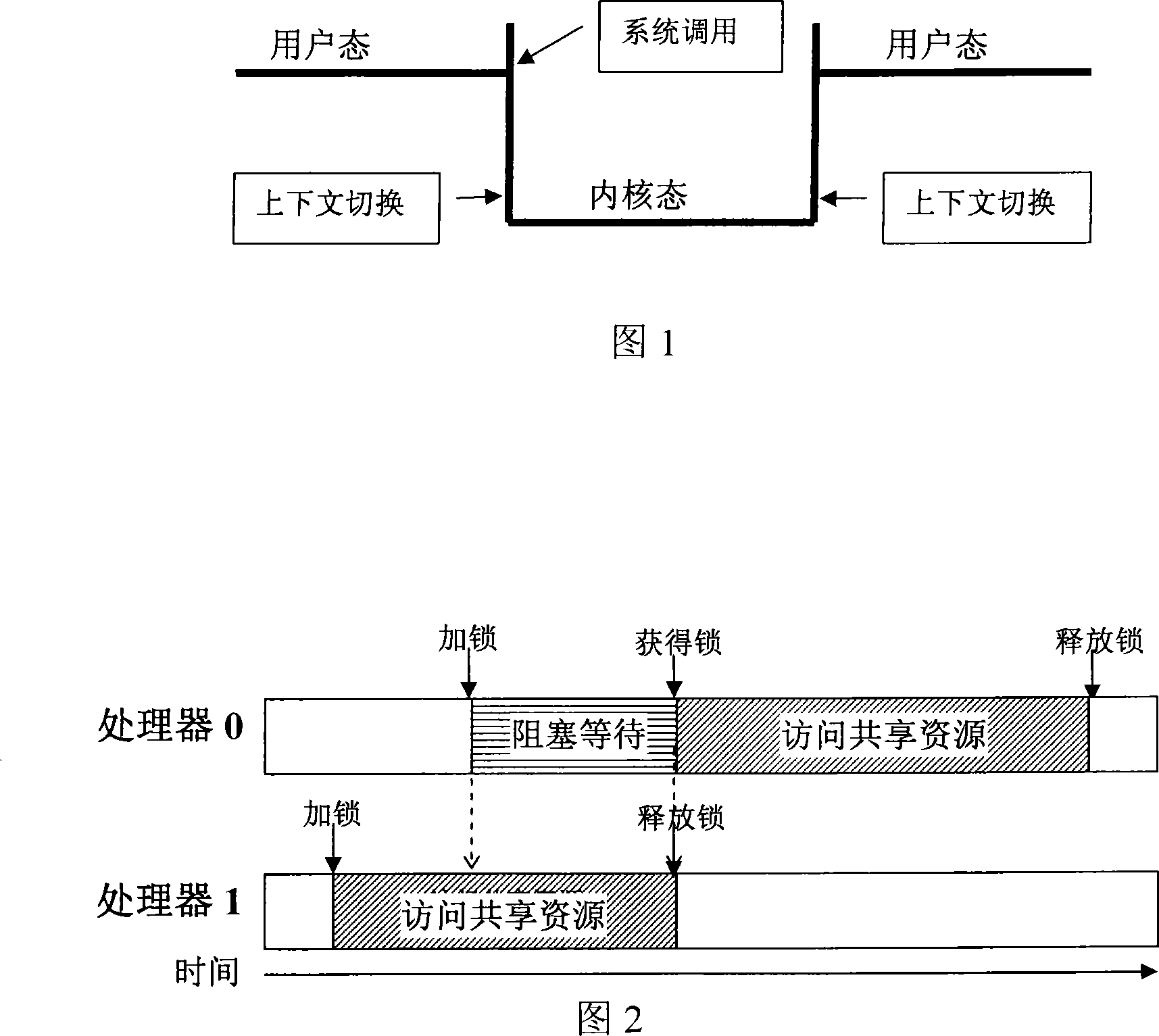

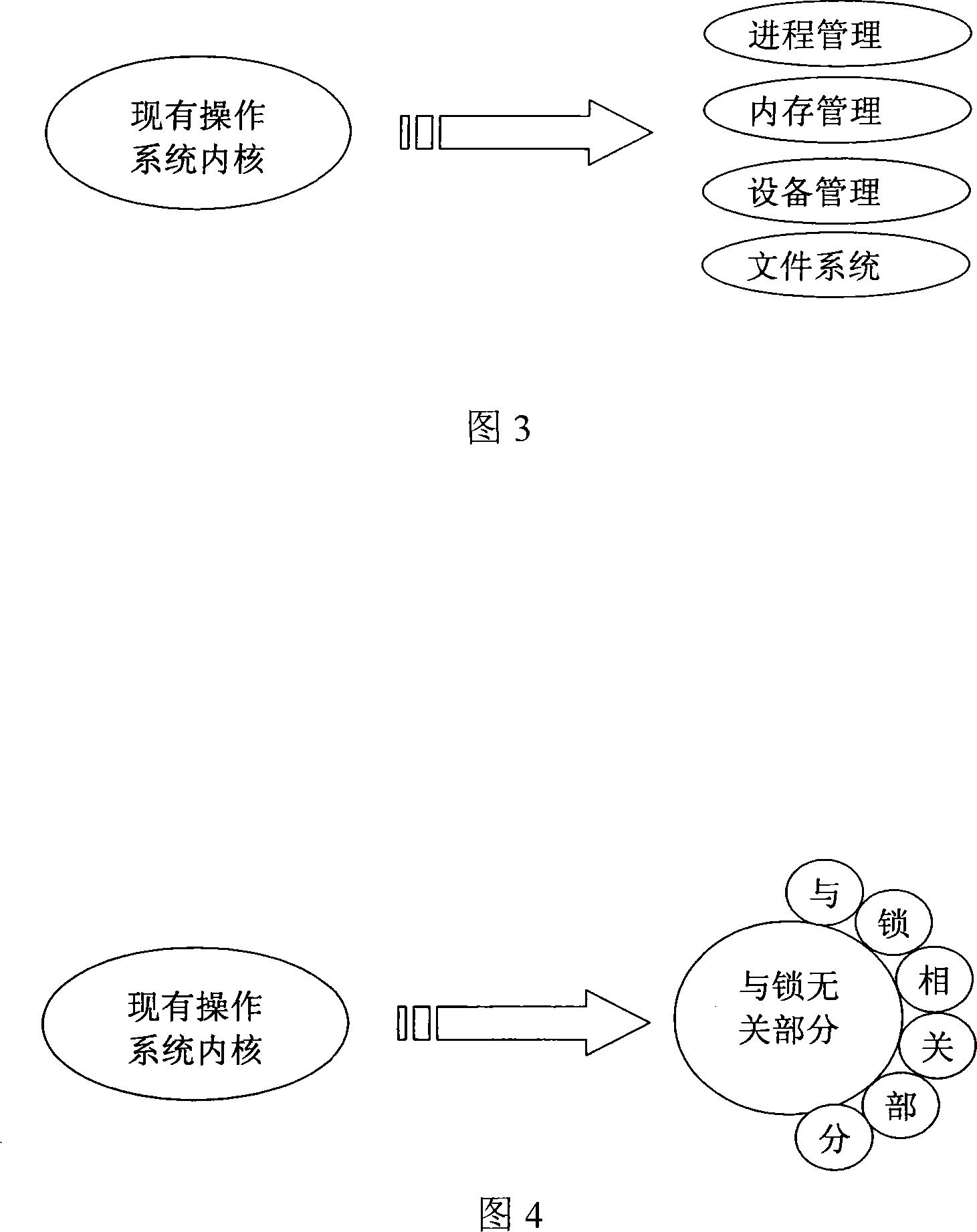

Inactive | CN101196816A | Reduce context switching; Improve performance; Interprogram communication; Specific program execution arrangements; Application software; System call

The invention discloses an operating system and a management method. The operating system comprises a plurality of kernel service modules, each running in kernel mode and corresponding to a type of system call, distributed across at least one processor and/or processor core; and a plurality of application management modules, which manage application programs and processes and are placed on processors and/or cores different from those of the kernel service modules. The kernel service modules communicate with application programs through system call messages. The management method comprises: step S1, the application sends a system call message and then, without waiting, executes code that does not depend on the result of the call; step S2, the kernel service module receives the system call message and sends the result back to the application; step S3, the application receives the result and executes the code that depends on it.

Owner:INST OF COMPUTING TECHNOLOGY - CHINESE ACAD OF SCI
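
Steps S1 to S3 amount to an asynchronous system-call interface. A minimal sketch, assuming a message queue between the application and one kernel service module; Python threads stand in for separate cores, so this shows the message flow rather than real parallelism. `KernelService`, `send`, `application`, and the `getsize` call are hypothetical.

```python
import queue
import threading
from typing import Any, Callable, Dict, Tuple


class KernelService(threading.Thread):
    """Stand-in for a kernel service module that receives system calls as messages."""

    def __init__(self, handlers: Dict[str, Callable[..., Any]]):
        super().__init__(daemon=True)
        self.handlers = handlers
        self.requests: "queue.Queue[Tuple[str, tuple, queue.Queue]]" = queue.Queue()

    def run(self) -> None:
        while True:
            name, args, reply = self.requests.get()
            reply.put(self.handlers[name](*args))    # S2: send the result back

    def send(self, name: str, *args: Any) -> "queue.Queue[Any]":
        reply: "queue.Queue[Any]" = queue.Queue(maxsize=1)
        self.requests.put((name, args, reply))      # S1: post the request, do not block
        return reply


def application(svc: KernelService) -> Tuple[int, int]:
    pending = svc.send("getsize", b"hello world")   # S1
    independent = sum(range(10))                    # work that does not need the result
    size = pending.get(timeout=5)                   # S3: block only when the result is needed
    return independent, size + 1                    # work that depends on the result
```

Here `application` overlaps its independent computation with the service call and waits only at the point where the result is used.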

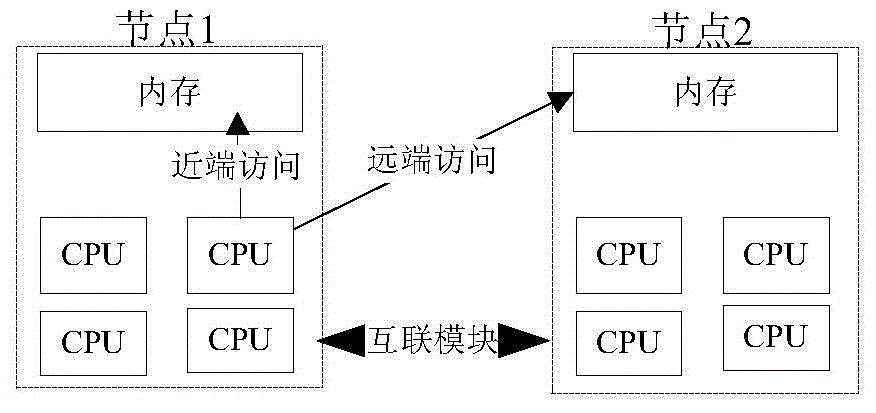

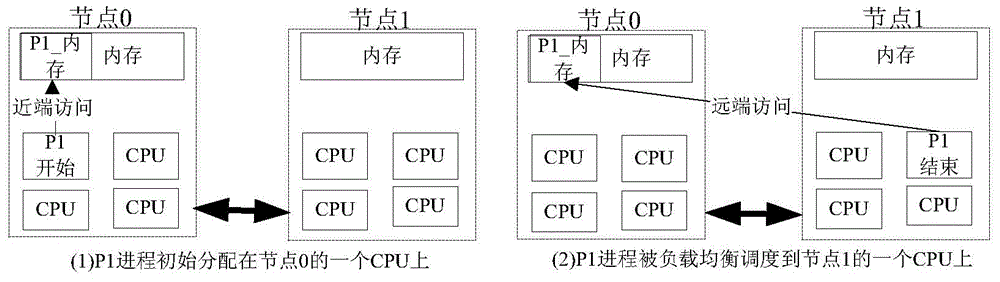

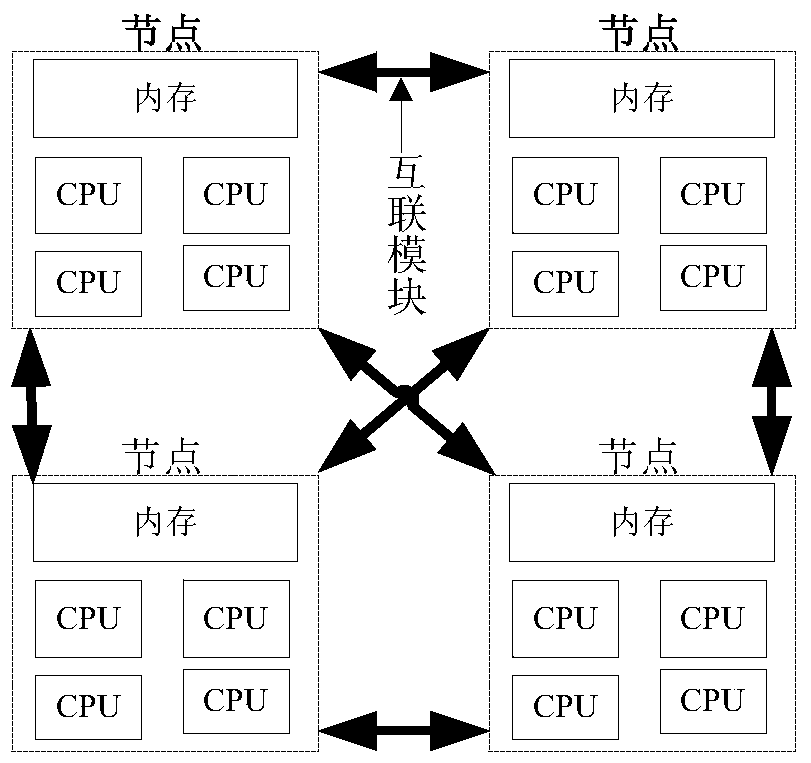

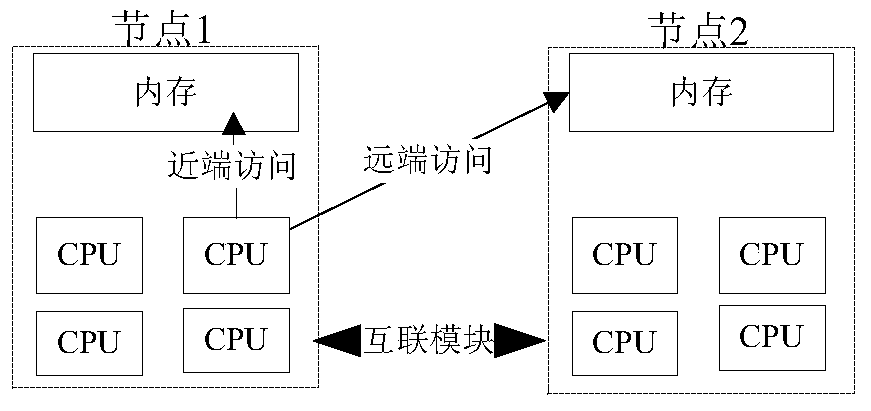

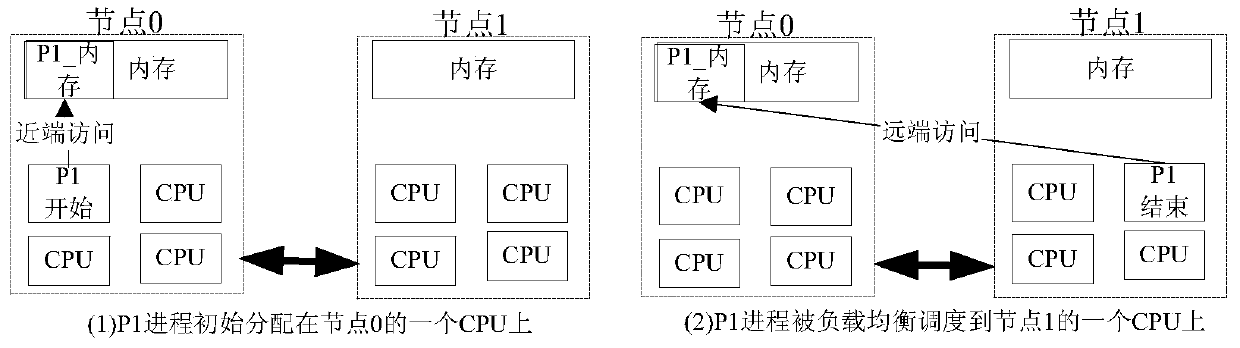

Memory migration method and memory migration device

Active | CN105159841A | Reduce context switching; Improve the efficiency of memory migration; Memory addressing/allocation/relocation; Physical address; Context switch

The invention discloses a memory migration method and a memory migration device. The method comprises the following steps: when a memory data migration request from any source node in a storage system is received, dividing the data to be migrated into memory blocks based on the continuity of its physical addresses, and determining how many memory blocks the data occupies on the source node after division; acquiring the same number of memory blocks on a target node in the storage system; and migrating the data to the memory blocks on the target node block by block. The invention further discloses a corresponding memory migration device. By grouping the data to be migrated into memory blocks, acquiring memory blocks with contiguous physical addresses on the target node, and migrating the data from the source node to those blocks block by block, the method reduces the number of context switches during migration and improves memory migration efficiency.

Owner:HUAWEI TECH CO LTD
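
The block-wise migration can be sketched as follows. This assumes memory is tracked as page frame numbers, that each contiguous block can be moved in a single operation, and that the target node has a free contiguous region starting at a known frame (a simple bump allocator stands in for the target node's allocator). The function names are hypothetical.

```python
from typing import List, Tuple

Block = Tuple[int, int]  # (start page frame number, length in pages)


def split_contiguous(pfns: List[int]) -> List[Block]:
    """Group source page frames (assumed unique) into runs of consecutive physical addresses."""
    blocks: List[Block] = []
    for pfn in sorted(pfns):
        if blocks and blocks[-1][0] + blocks[-1][1] == pfn:
            start, length = blocks[-1]
            blocks[-1] = (start, length + 1)
        else:
            blocks.append((pfn, 1))
    return blocks


def allocate_target(blocks: List[Block], target_free_start: int) -> List[Block]:
    """Reserve one contiguous target block per source block, same count and sizes."""
    targets: List[Block] = []
    cursor = target_free_start
    for _, length in blocks:
        targets.append((cursor, length))
        cursor += length
    return targets


def migrate(pfns: List[int], target_free_start: int) -> List[Tuple[Block, Block]]:
    """Return one (source block, target block) copy operation per block, not per page."""
    src = split_contiguous(pfns)
    return list(zip(src, allocate_target(src, target_free_start)))
```

For source frames `0, 1, 2, 5, 6, 9`, the data splits into three blocks, so three block moves replace six page moves.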

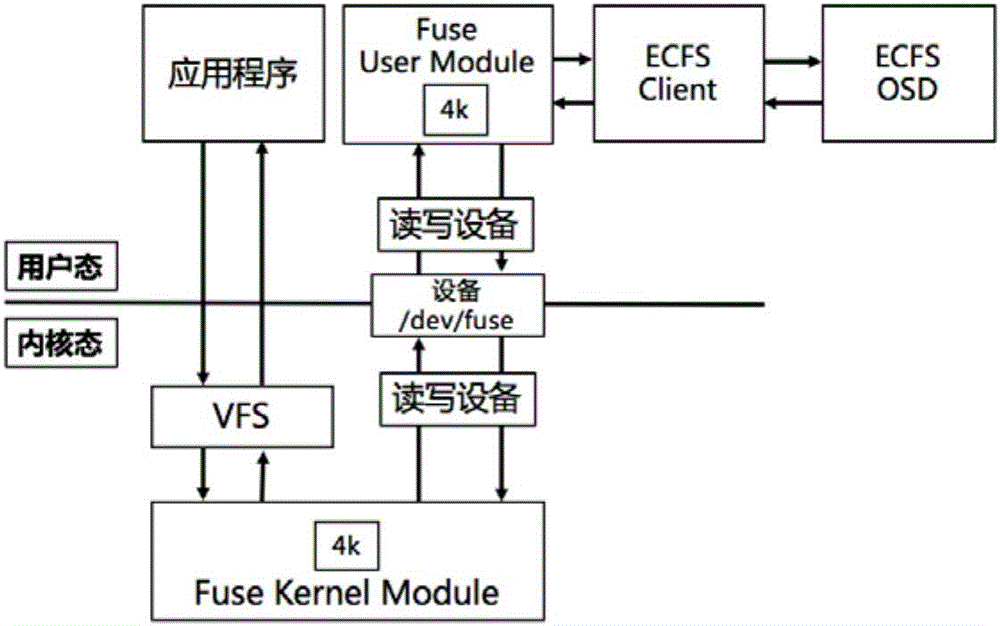

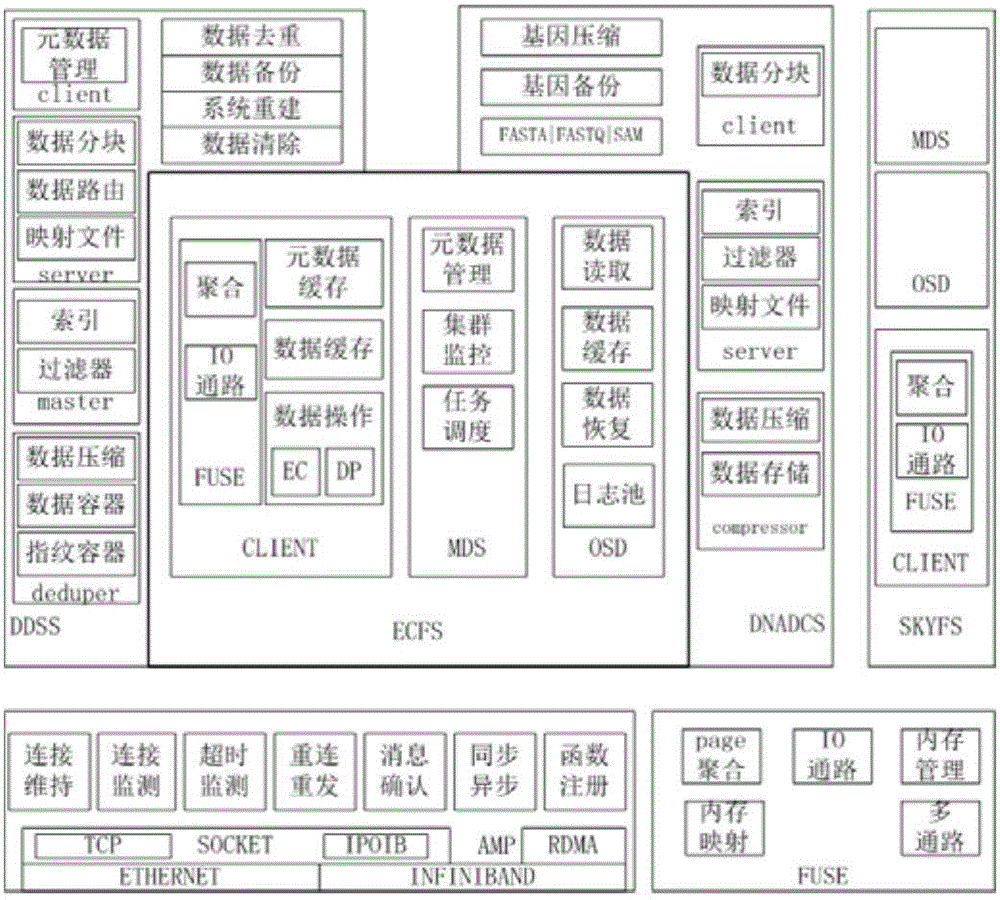

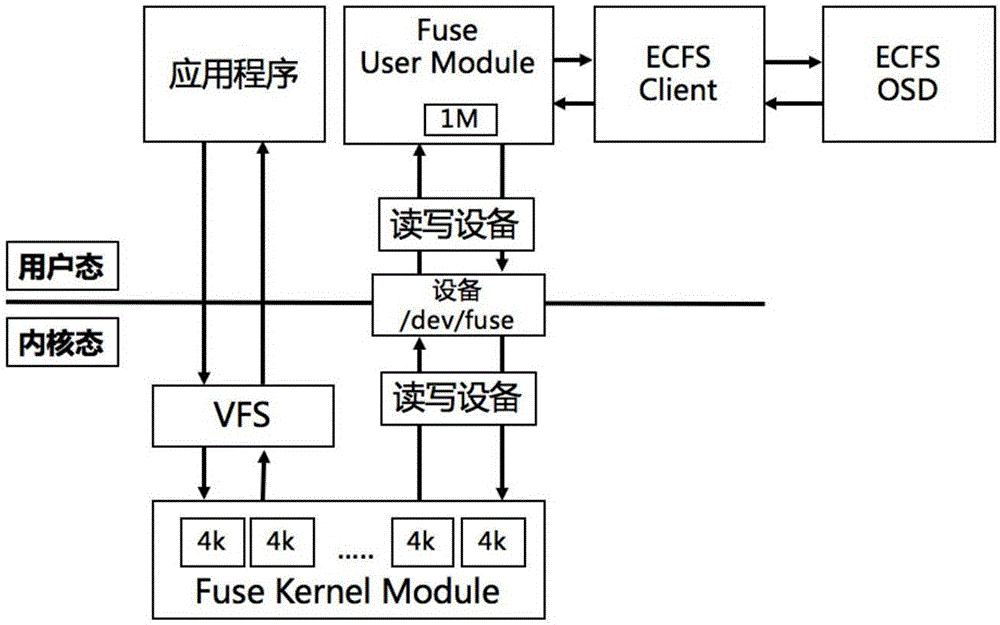

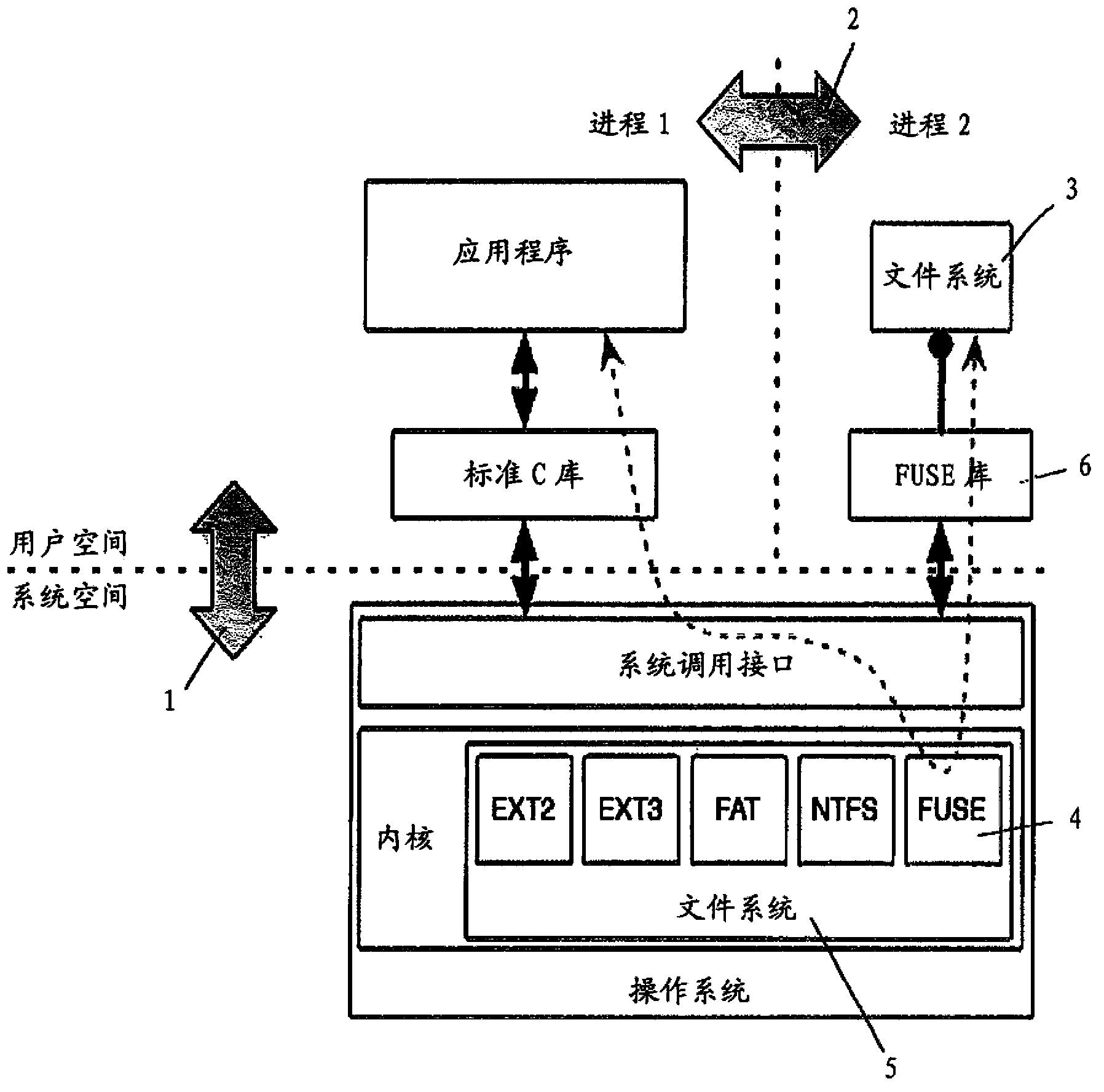

Multi-virtual-machine mapping and multipath FUSE acceleration method and system based on KVM

Active | CN106708627A | Relieve pressure; Abundant resources; Resource allocation; Software simulation/interpretation/emulation; System call; Client-side

The invention provides a multi-virtual-machine mapping and multipath FUSE acceleration method and system based on KVM. The method comprises the following steps: (1) adding a delayed-write function to the data path of the existing FUSE kernel module: data arriving through the VFS layer is aggregated in the FUSE kernel module, the system call returns as soon as the data reaches the kernel, and the data is passed to the user-mode client for processing only when the aggregated data reaches a certain size or when no new data has been added within a certain timer period; (2) mounting the FUSE kernel module at multiple mount points. By splitting off the FUSE module's functionality and using a multi-virtual-machine mapping mechanism, data from multiple virtual machines is mapped to the host machine, and the processing done by the split-off client is moved to the host side. This reduces the load on the virtual machines and leaves them more resources for computation and processing tasks.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI +1
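
The delayed-write step can be sketched as a small aggregation buffer. This is a user-space model, not FUSE kernel code: `flush_to_client` is a hypothetical callback standing in for the kernel-to-user-mode transfer, and the size and idle thresholds are assumed parameters. The clock is injected so the timer path can be tested.

```python
from typing import Callable, List


class DelayedWriteBuffer:
    """Aggregate small writes and hand them to the user-mode client only in batches."""

    def __init__(self, flush_to_client: Callable[[bytes], None], max_bytes: int,
                 idle_timeout: float, clock: Callable[[], float]):
        self.flush_to_client = flush_to_client
        self.max_bytes = max_bytes
        self.idle_timeout = idle_timeout
        self.clock = clock
        self.pending: List[bytes] = []
        self.size = 0
        self.last_append = clock()

    def write(self, data: bytes) -> int:
        self.pending.append(data)
        self.size += len(data)
        self.last_append = self.clock()
        if self.size >= self.max_bytes:   # size threshold reached: pass the batch on
            self.flush()
        return len(data)                  # the write call returns without waiting for the client

    def tick(self) -> None:
        """Called by a periodic timer: flush if nothing was added for idle_timeout."""
        if self.pending and self.clock() - self.last_append >= self.idle_timeout:
            self.flush()

    def flush(self) -> None:
        if self.pending:
            self.flush_to_client(b"".join(self.pending))
            self.pending.clear()
            self.size = 0
```

Each flush is one hand-off to user mode, so many small writes cost one transition instead of one each.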

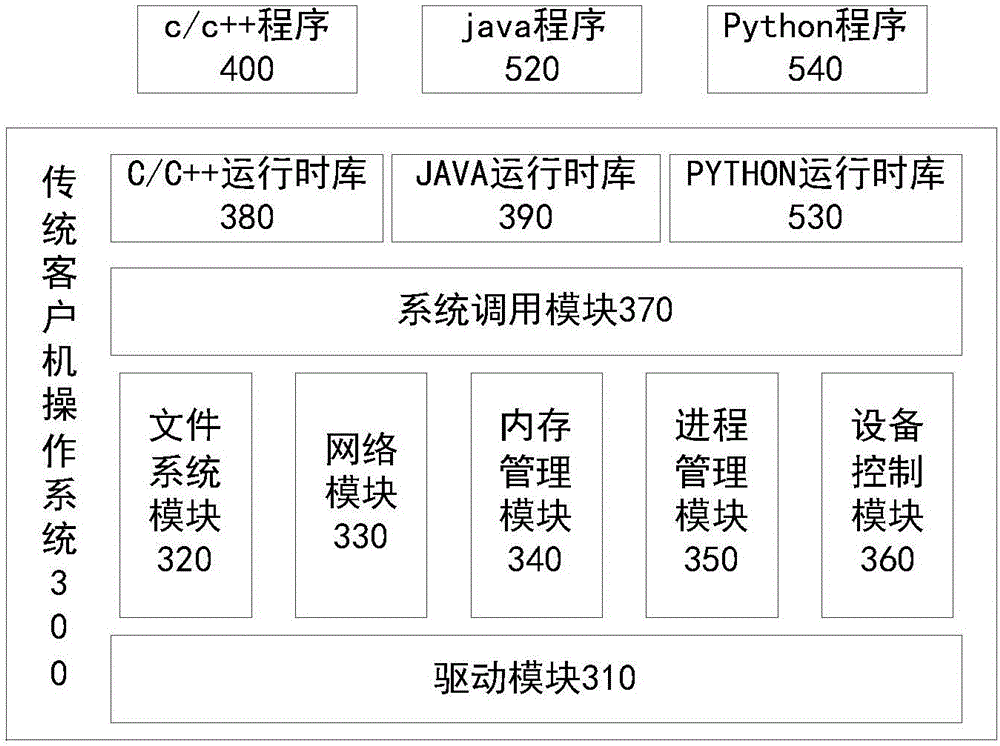

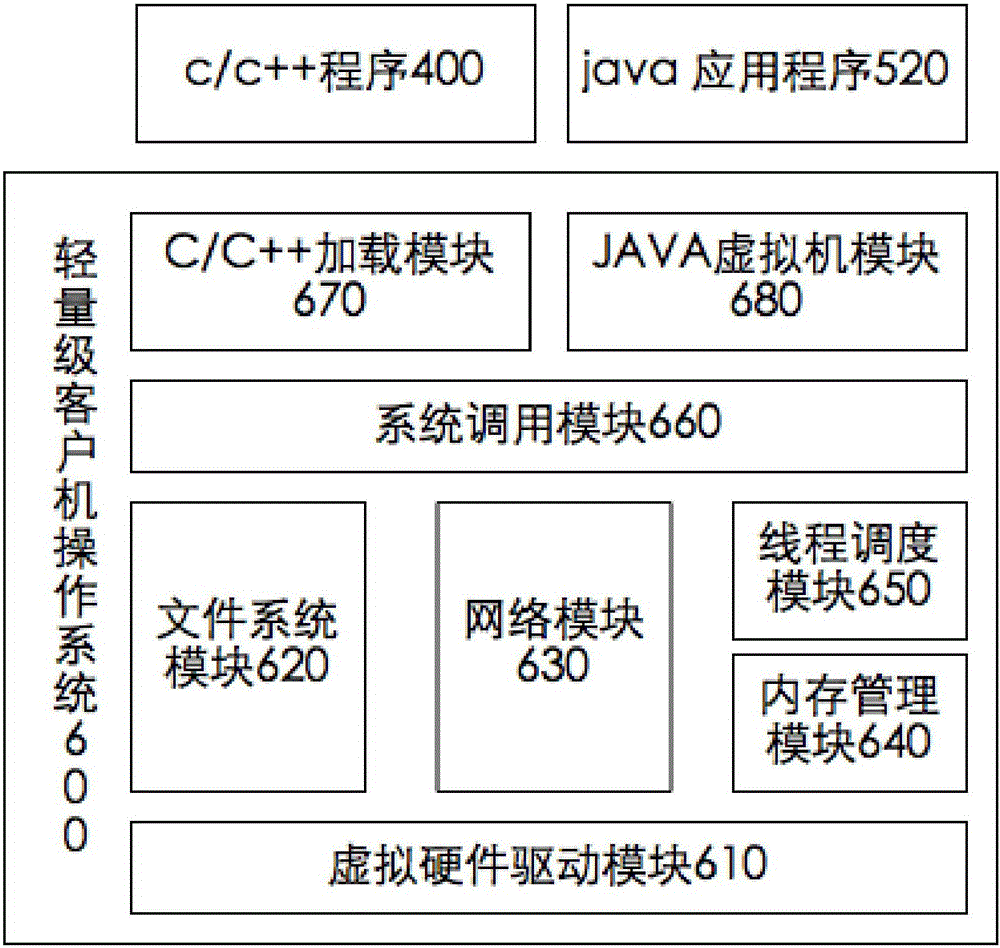

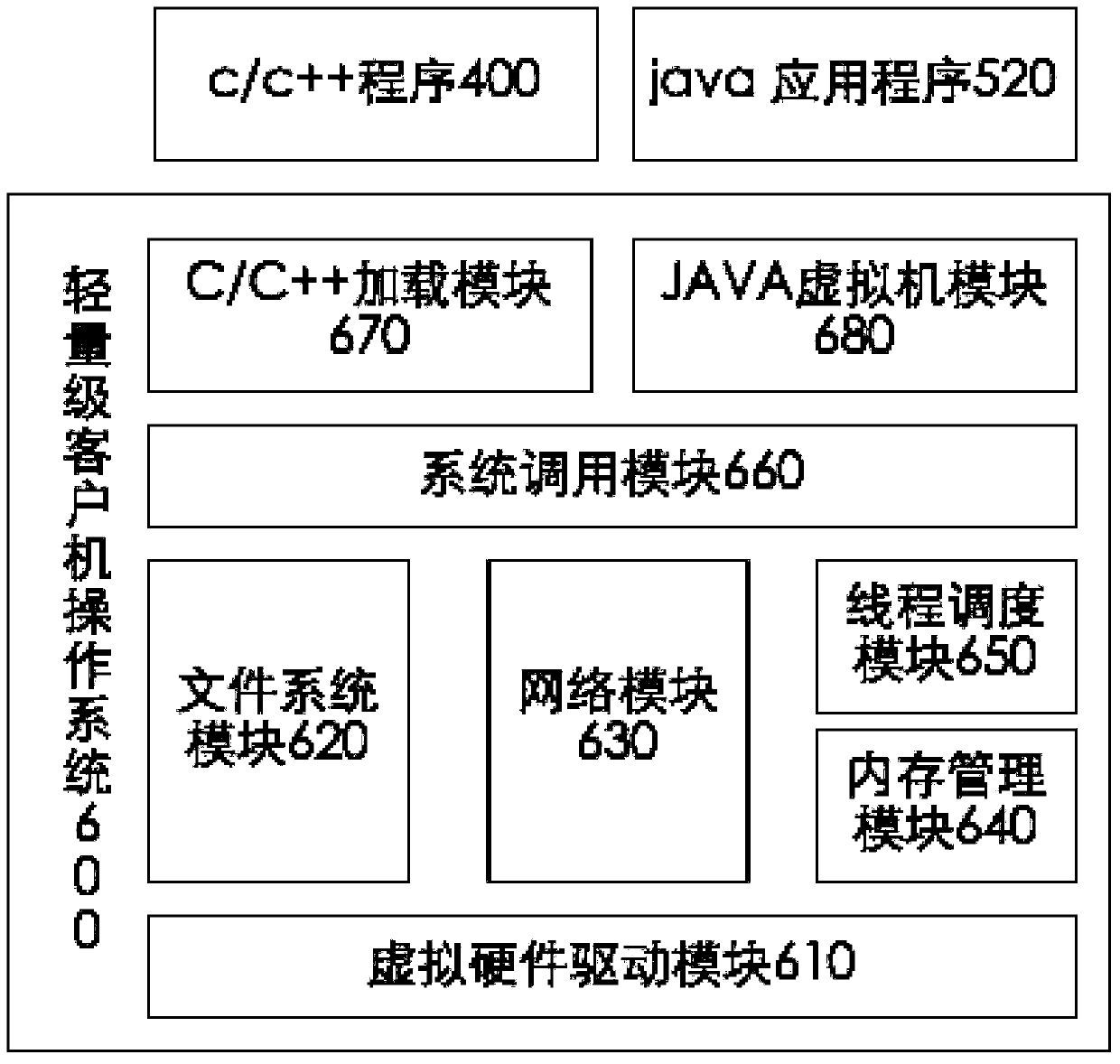

Method and system for making a client computer operating system lightweight, and virtualized operating system

Active | CN106339257A | Fix performance issues; Solve function; Resource allocation; System call; Application software

The invention discloses a method for making a guest operating system lightweight, a lightweight guest operating system for cloud environments, and a virtualized operating system based on the lightweight guest operating system. The invention aims to address the performance and functional limitations of application programs in cloud environments, simplify the design of guest operating systems and improve their performance, and reduce the number of abstraction and protection layers. The lightweight operating system mainly comprises a virtual hardware driver module, a file system module, a network module, a memory management module, a thread scheduling module, and a system call module. Each lightweight guest operating system runs only one application program, which removes redundant, costly isolation inside the guest; a single address space is used, and all threads and cores share one page table to reduce context switching. C/C++ and Java programs can run on it, and application performance is effectively improved; the system serves as an efficient container for running applications.

Owner:CHINA STANDARD SOFTWARE

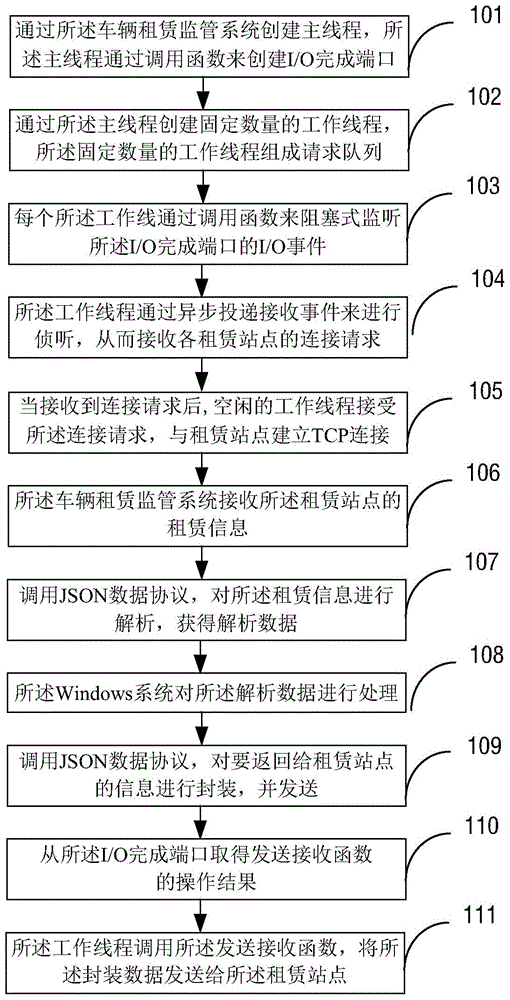

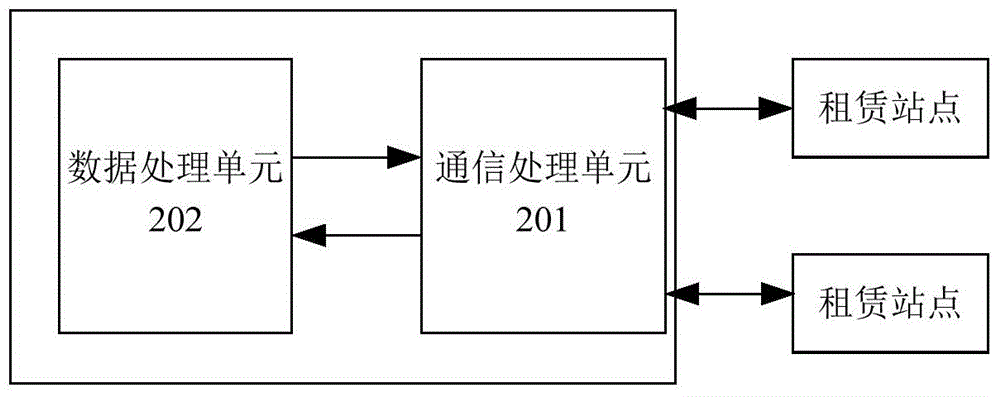

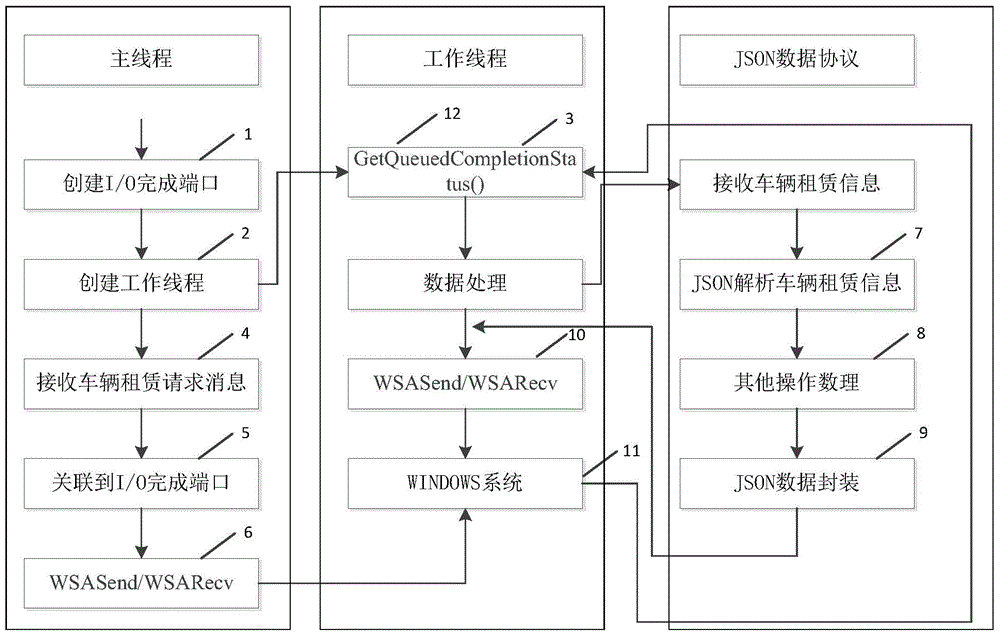

Communication method of vehicle rental information of public transportation system, and communication system

Inactive | CN104917811A | Increase profit; Reasonable coordination; Buying/selling/leasing transactions; Transmission; Communication interface; Asynchronous communication

The invention provides a method and system for communicating vehicle rental information in a public transportation system. It solves the problem of data communication between a vehicle rental supervision system and a large number of rental stations in browser/server (B/S) mode, increases the maximum number of concurrent users the system can support, and improves data transmission efficiency. The communication method comprises running the vehicle rental supervision system as a Windows service application, building a communication server on the I/O completion port (IOCP) model, and creating a fixed number of communication worker threads that communicate asynchronously with the rental stations. After a TCP connection is established between a rental station and the communication server, all exchanged data is packaged and parsed using a JSON data protocol.

Owner:上海行践公共自行车有限公司

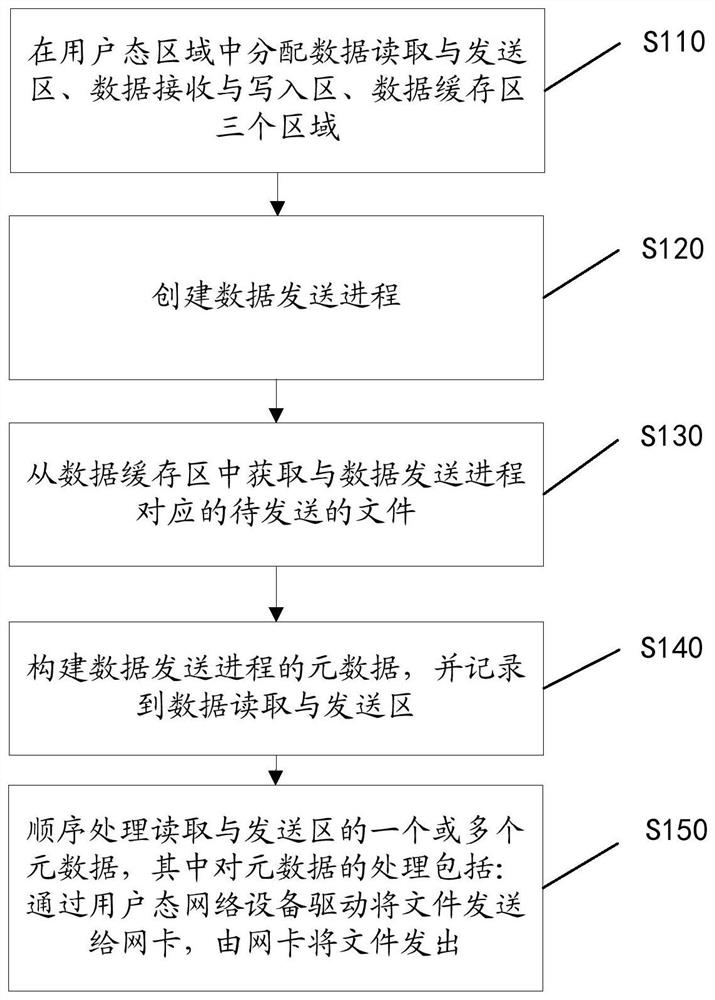

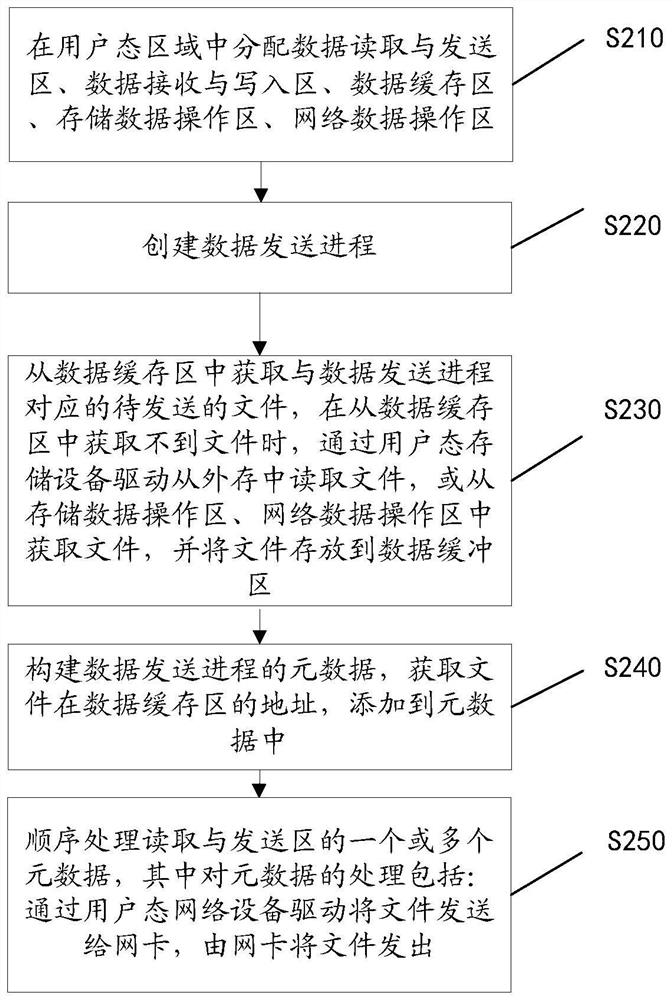

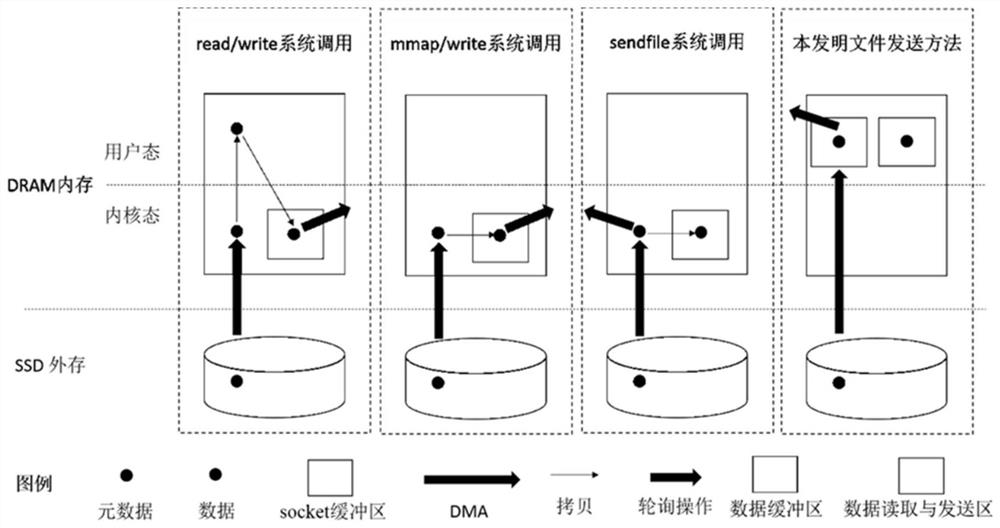

User-mode file sending method, file receiving method, and file transceiving device

Active | CN108989432A | Improve transceiver performance; Reduce copy; Transmission; Special data processing applications; State space; Network interface controller

The invention discloses a user-mode file sending method, a file receiving method, and a file transceiving device. The file sending method comprises the following steps: allocating a data read-and-send area, a data receive-and-write area, and a data buffer area in user-mode memory; creating a data sending process; obtaining, from the data buffer area, the file to be sent by the data sending process; constructing metadata for the data sending process and recording it in the read-and-send area; and processing the one or more metadata entries in the read-and-send area in sequence, where processing an entry comprises passing the file to a network adapter via a user-mode network device driver and sending it through the network adapter. In this scheme, data is sent and received entirely in the user-mode space of the transceiving process; kernel-mode space is not needed and the kernel is bypassed completely, so data copies, context switches, and interrupts are markedly reduced and transceiving performance is improved.

Owner:NANJING ZHONGXING XIN SOFTWARE CO LTD

Context switch data prefetching in multithreaded computer

Inactive | US20080201529A1 | Reduce context switching; Improve system performance; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Operating system; Context switch

An apparatus, program product and method initiate, in connection with a context switch operation, a prefetch of data likely to be used by a thread prior to resuming execution of that thread. As a result, once it is known that a context switch will be performed to a particular thread, data may be prefetched on behalf of that thread so that when execution of the thread is resumed, more of the working state for the thread is likely to be cached, or at least in the process of being retrieved into cache memory, thus reducing cache-related performance penalties associated with context switching.

Owner:IBM CORP

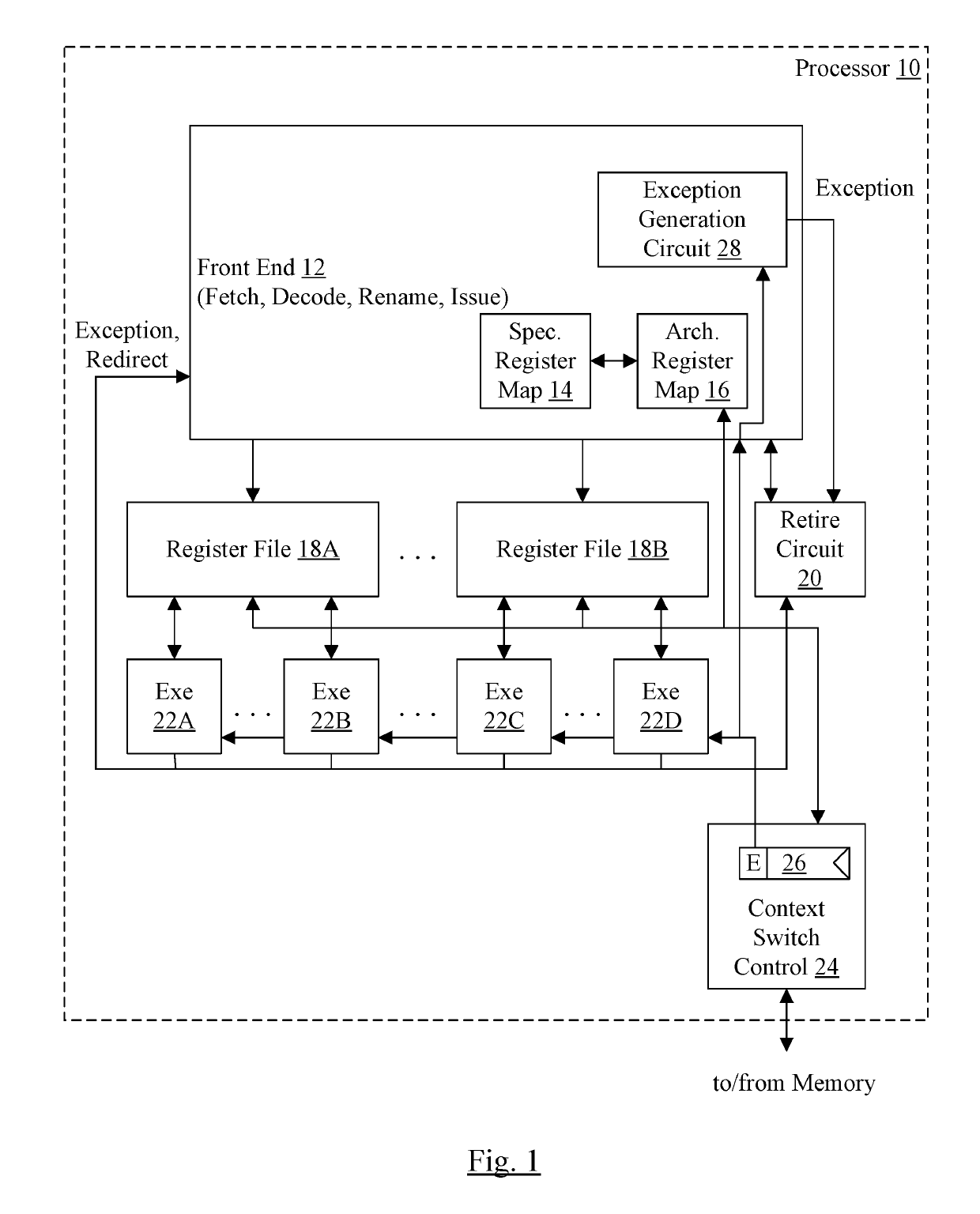

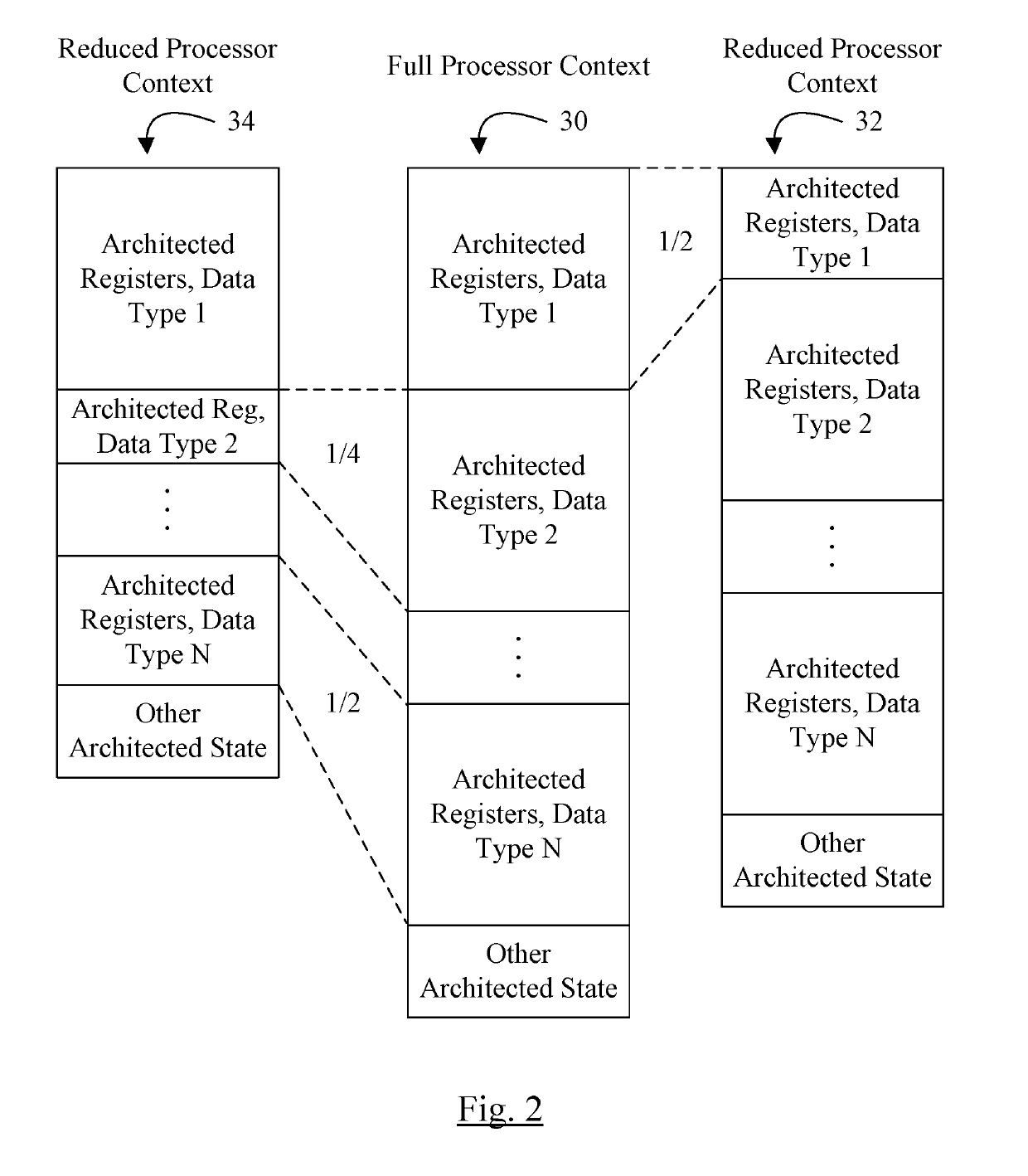

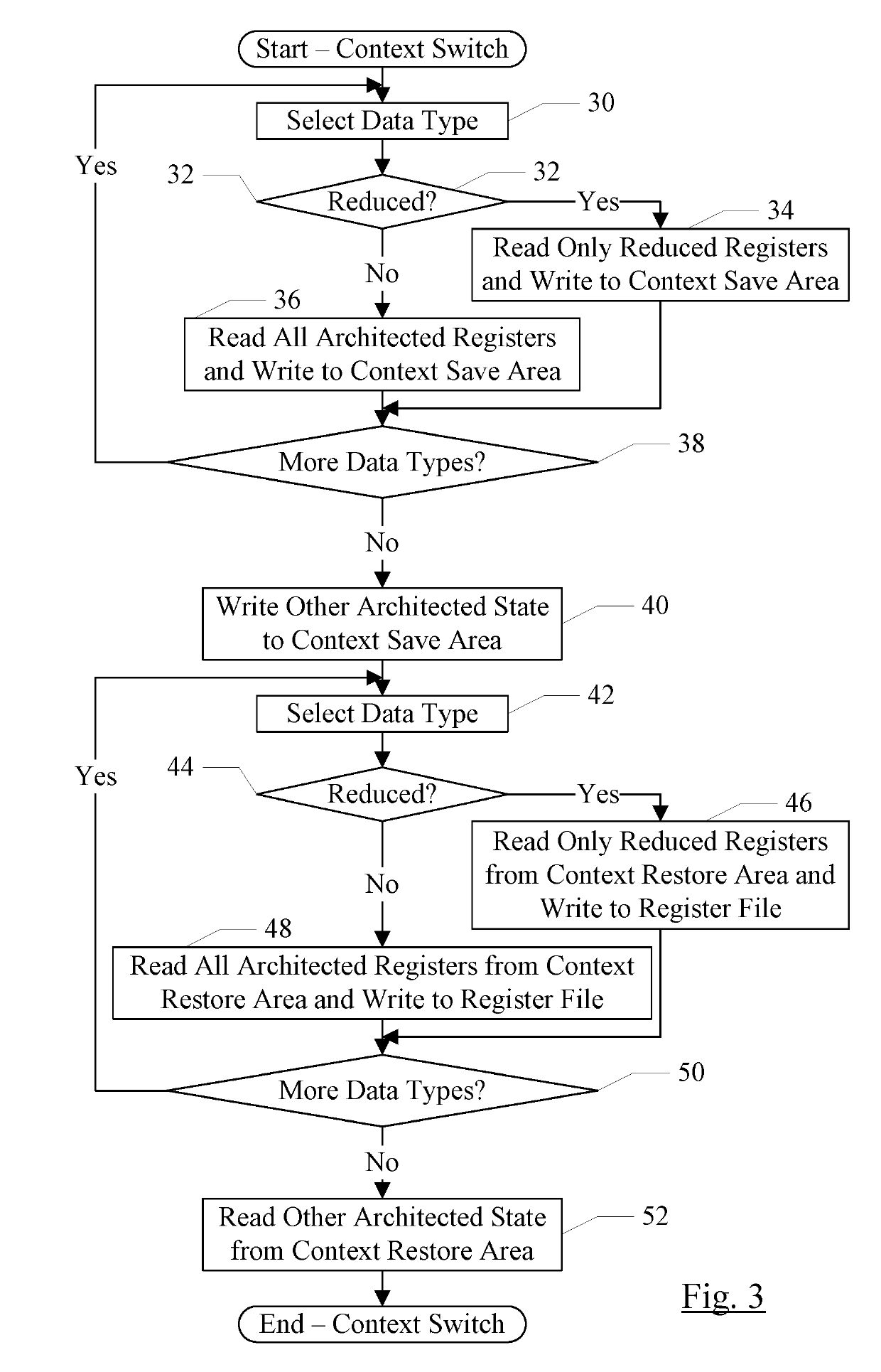

Context Switch Optimization

Inactive | US20190220417A1 | Reduced memory footprint; Increase performance; Program initiation/switching; Register arrangements; Data type; Register file

In an embodiment, a processor may include a register file comprising one or more sets of registers for one or more data types specified by the instruction set architecture (ISA) that the processor implements. The processor may have a processor mode in which the context is reduced compared with the full context. For example, for at least one of the data types, the registers included in the reduced context exclude one or more of the registers that the ISA defines for that data type; in an embodiment, half or more of the registers for the data type may be excluded. When operating in the reduced-context mode, the processor may detect instructions that use excluded registers and signal an exception for them, preventing use of the excluded registers.

Owner:APPLE INC
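
The reduced-context mode can be modeled as a register file that checks every access. A minimal sketch, assuming a hypothetical ISA with 32 vector registers (`v0` to `v31`) of which the lower 16 remain in the reduced context; a real processor enforces this in hardware, and `ReducedContextError` is a Python stand-in for the exception.

```python
from typing import Dict


class ReducedContextError(Exception):
    """Raised for accesses to a register excluded from the reduced context."""


class RegisterFile:
    def __init__(self, num_vector_regs: int = 32, reduced_count: int = 16):
        self.regs: Dict[str, int] = {f"v{i}": 0 for i in range(num_vector_regs)}
        self.reduced = {f"v{i}" for i in range(reduced_count)}  # assumed: lower half kept
        self.reduced_mode = False

    def check(self, name: str) -> None:
        if self.reduced_mode and name not in self.reduced:
            raise ReducedContextError(name)

    def write(self, name: str, value: int) -> None:
        self.check(name)
        self.regs[name] = value

    def read(self, name: str) -> int:
        self.check(name)
        return self.regs[name]

    def save_context(self) -> Dict[str, int]:
        """Context-switch save: only registers in the active context are stored."""
        names = self.reduced if self.reduced_mode else self.regs.keys()
        return {n: self.regs[n] for n in names}
```

In reduced mode a context save stores 16 registers instead of 32, and any access to `v16` or above raises `ReducedContextError`.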

Context switch data prefetching in multithreaded computer

Inactive | US20080201565A1 | Reduce context switching; Improve system performance; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Operating system; Context switch

An apparatus, program product and method initiate, in connection with a context switch operation, a prefetch of data likely to be used by a thread prior to resuming execution of that thread. As a result, once it is known that a context switch will be performed to a particular thread, data may be prefetched on behalf of that thread so that when execution of the thread is resumed, more of the working state for the thread is likely to be cached, or at least in the process of being retrieved into cache memory, thus reducing cache-related performance penalties associated with context switching.

Owner:INT BUSINESS MASCH CORP

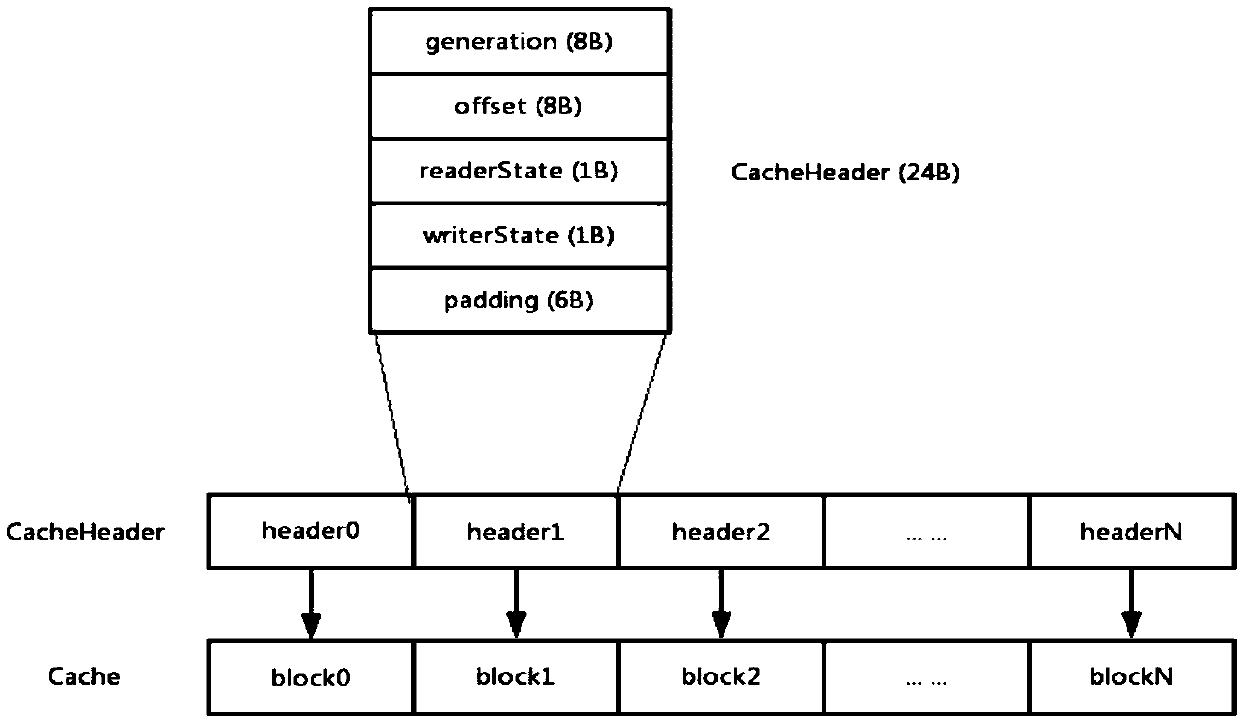

Data storage method, device, and computer-readable storage medium

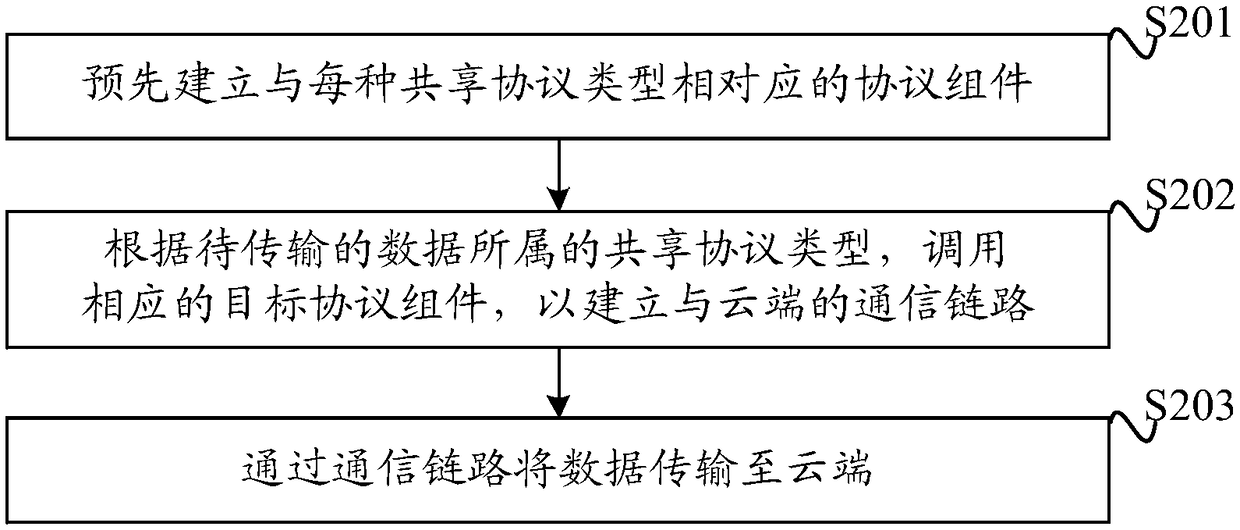

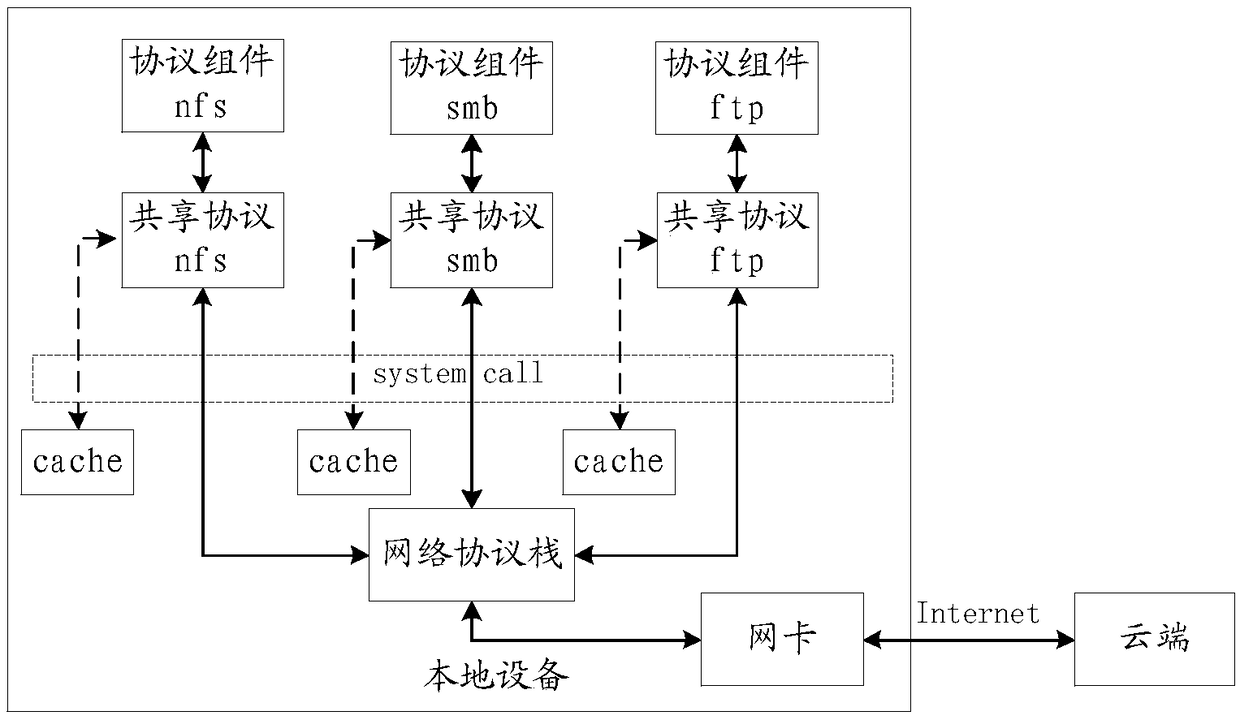

An embodiment of the invention discloses a data storage method, a device, and a computer-readable storage medium. A protocol component is pre-established for each shared protocol type, and the program code for communicating with the cloud is integrated into the protocol components. When the local device receives data, it calls the target protocol component corresponding to the data's shared protocol type, which establishes a communication link with the cloud, and the data is transferred to the cloud over that link. Because the protocol components are established in advance, the device can communicate with the cloud directly, which reduces context switches between kernel mode and user mode, simplifies the data transmission flow, shortens the time spent on transmission, and further improves the data processing performance of the local device.

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD

Operating system and operating system management method

Inactive | CN101196816B | Improve performance; Improve operational efficiency; Interprogram communication; Specific program execution arrangements; Operational system; Systems management

The invention discloses an operating system and a management method. The operating system comprises a plurality of kernel service modules, each running in kernel mode and corresponding to a type of system call, distributed across at least one processor and/or processor core; and a plurality of application management modules, which manage application programs and processes and are placed on processors and/or cores different from those of the kernel service modules. The kernel service modules communicate with application programs through system call messages. The management method comprises: step S1, the application sends a system call message and then, without waiting, executes code that does not depend on the result of the call; step S2, the kernel service module receives the system call message and sends the result back to the application; step S3, the application receives the result and executes the code that depends on it.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

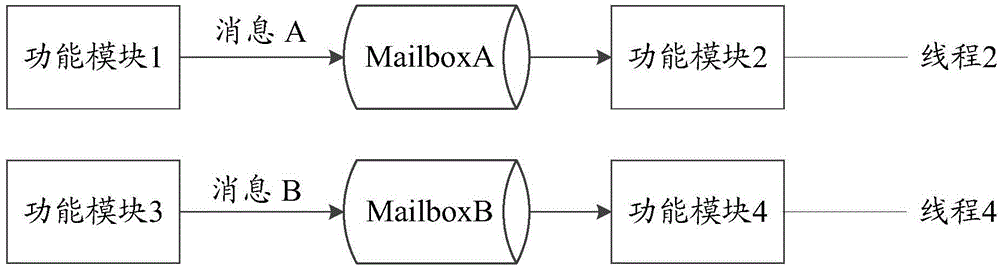

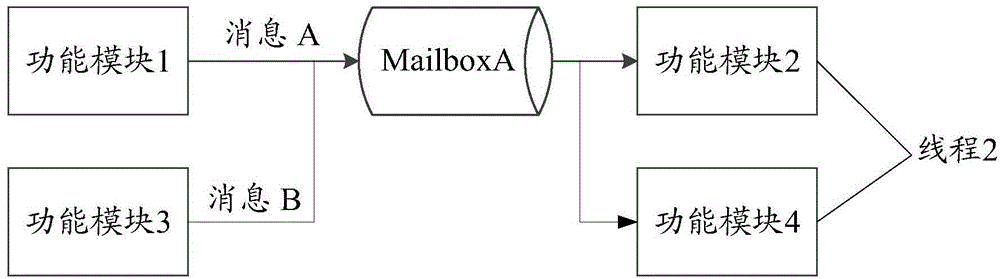

Optimization method and device for system performance

Active | CN104636206A | Convenience to merge; Reduce consumption; Program initiation/switching; Resource allocation; Computer module; Message processing

An embodiment of the invention provides a method and device for optimizing system performance. In the method, the interaction resources of a first function module and a second function module are associated by configuring a first message processing interface of the first function module. Based on these interaction resources, the first function module is merged into the current thread that runs the second function module, so that when the current thread receives, through the interaction resources, messages sent by other threads, it can identify the function module each message is for from the destination address the message carries, and run that module. This reduces context switches and message exchanges between threads, and reduces the waste of interaction resources caused by frequent switching among many threads.

Owner:新奇点智能科技集团有限公司
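
Merging modules into one thread turns inter-thread messages into in-thread dispatch on the destination address. A minimal sketch, assuming a queue serves as the shared interaction resource and that destination addresses are plain strings; `MergedThread`, `drain`, and the module names in the usage are hypothetical.

```python
import queue
from typing import Callable, Dict, List, Tuple

Message = Tuple[str, str]  # (destination address, payload)


class MergedThread:
    """One thread hosting several function modules behind a single inbound queue."""

    def __init__(self) -> None:
        self.inbox: "queue.Queue[Message]" = queue.Queue()
        self.modules: Dict[str, Callable[[str], str]] = {}

    def register(self, address: str, handler: Callable[[str], str]) -> None:
        self.modules[address] = handler

    def drain(self) -> Dict[str, List[str]]:
        """Process all queued messages, routing each by its destination address."""
        results: Dict[str, List[str]] = {}
        while True:
            try:
                dest, payload = self.inbox.get_nowait()
            except queue.Empty:
                return results
            # Direct call in the same thread: no switch to another module's thread.
            results.setdefault(dest, []).append(self.modules[dest](payload))
```

Registering a `parser` module and a `logger` module on the same `MergedThread` lets messages for either be handled without a thread switch.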

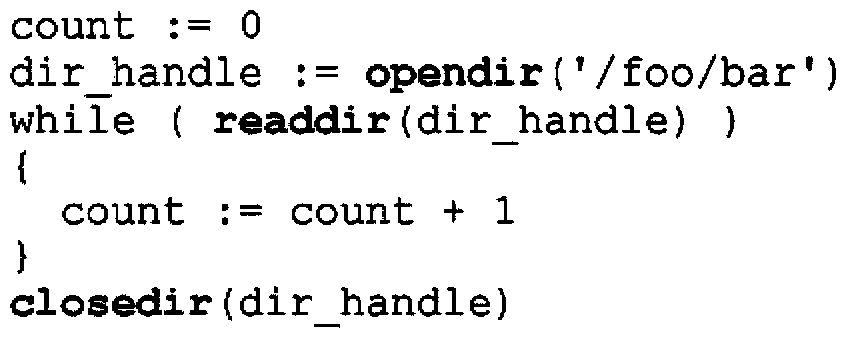

Method for backward-compatible aggregate file system operation performance improvement, and respective apparatus

Active | CN103443789A | Without breaking interoperability; Not required to call; File system administration; Special data processing applications; File system; System call

The method for operating a file system comprises: defining a virtual file that provides a result from a file directory that would otherwise require many system calls; distinguishing the virtual file from the real files of the directory by a unique name; and retrieving the result from the directory by opening the virtual file and reading its content. The virtual file is designed specifically for a file system operation.

Owner:INTERDIGITAL CE PATENT HLDG
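
The virtual-file idea can be shown with an in-memory file system that counts calls. A minimal sketch, assuming a reserved name (`.dir-summary`, hypothetical) and a JSON map of file sizes as the aggregate result; `ToyFS` is hypothetical, and a real implementation would live inside the file system driver.

```python
import json
from typing import Dict, List

VIRTUAL_NAME = ".dir-summary"  # hypothetical reserved name; must not collide with real files


class ToyFS:
    """In-memory directory that counts simulated system calls."""

    def __init__(self, files: Dict[str, bytes]):
        self.files = files
        self.syscalls = 0

    def listdir(self) -> List[str]:
        self.syscalls += 1
        return sorted(self.files)

    def stat_size(self, name: str) -> int:
        self.syscalls += 1
        return len(self.files[name])

    def read(self, name: str) -> bytes:
        self.syscalls += 1  # open + read counted as one call for simplicity
        if name == VIRTUAL_NAME:
            # Built inside the file system, so the caller pays for a single call.
            summary = {n: len(data) for n, data in sorted(self.files.items())}
            return json.dumps(summary).encode()
        return self.files[name]
```

Collecting sizes the conventional way costs one listing call plus one call per file; reading the virtual file returns the same information in one call.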

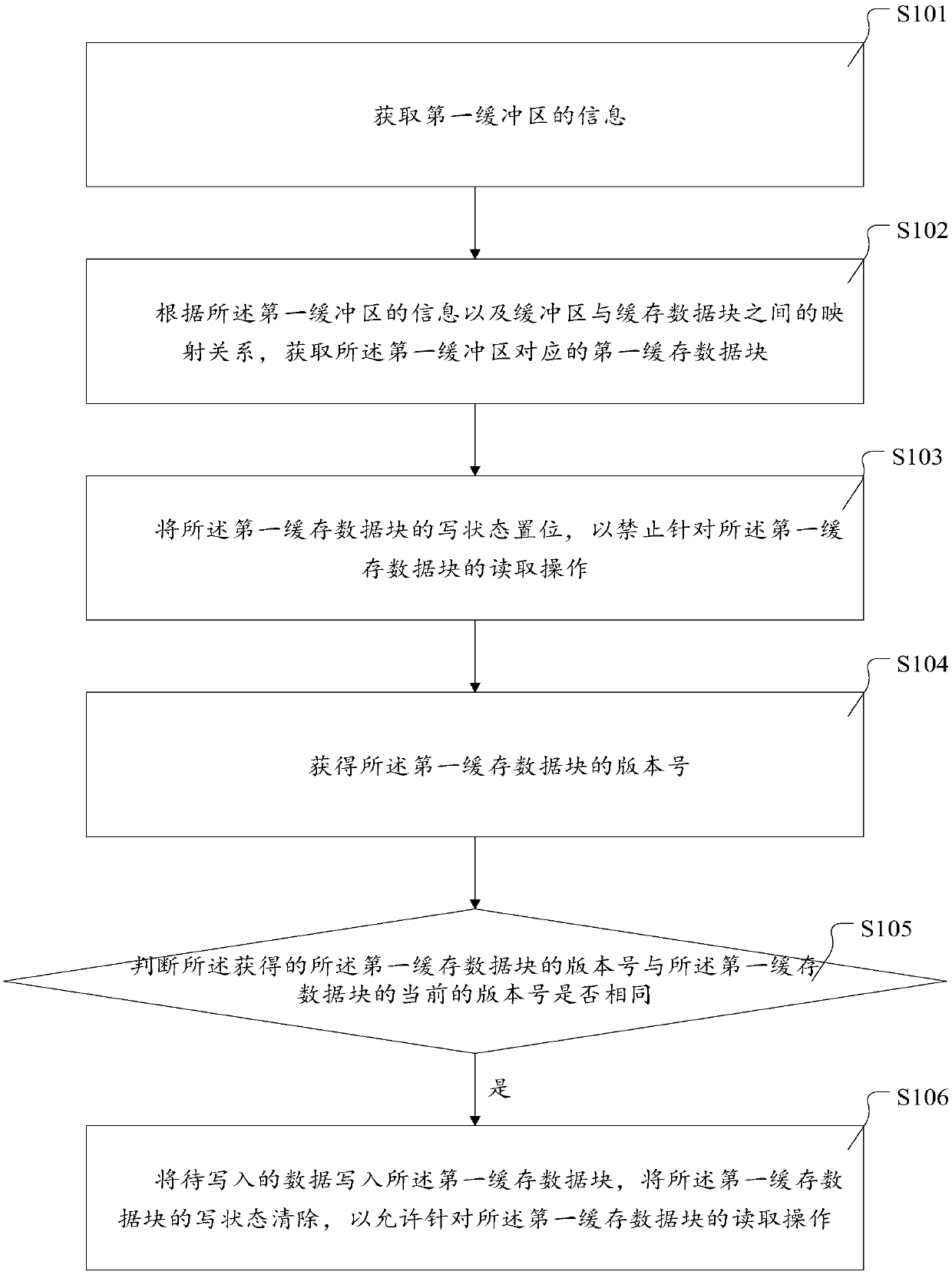

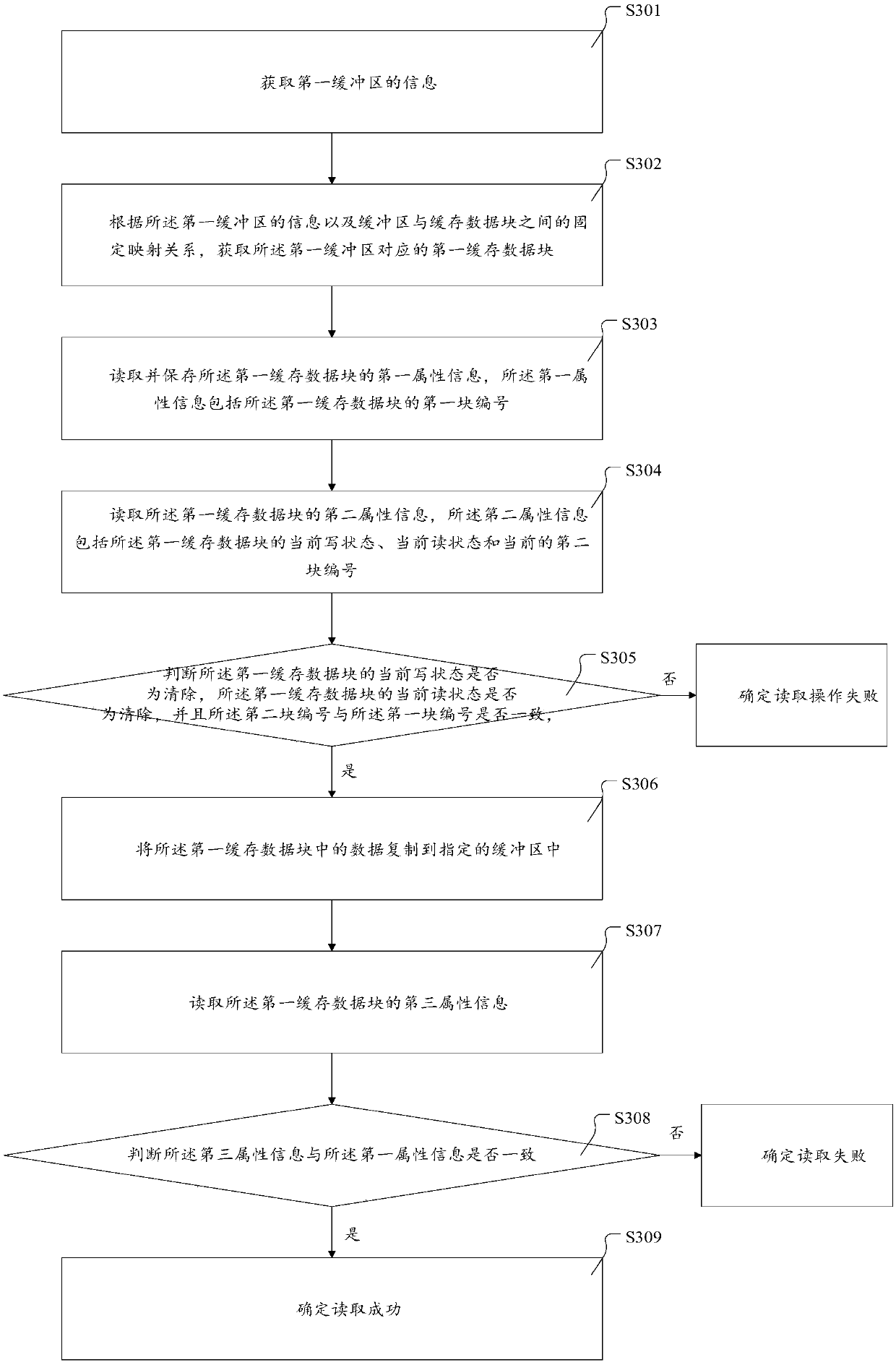

Data processing method and device

Active | CN110874273A | Reduce congestion; Reduce context switching; Program synchronisation; Interprogram communication; Parallel computing; Computer engineering

The invention discloses a data processing method and device. The data processing method comprises the following steps: obtaining information about a first buffer area; obtaining the first cache data block corresponding to the first buffer area according to that information and a mapping between buffer areas and cache data blocks; setting the write state of the first cache data block to forbid read operations on it; obtaining a version number of the first cache data block; determining whether the obtained version number is the same as the current version number of the first cache data block; and, if so, writing the data into the first cache data block and clearing its write state to allow read operations again. The method solves the problem of concurrent reading and writing of cached data with a single producer and multiple consumers.

Owner:ALIBABA GRP HLDG LTD
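
The write path can be sketched as follows for one producer. This assumes the producer obtained the block's version number earlier and that the version is incremented after each successful write; neither detail is stated above. The names are hypothetical, and this single-threaded Python model shows only the control flow: a real implementation would need atomic operations and memory barriers for the `writing` flag and the version.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class CacheBlock:
    data: bytes = b""
    version: int = 0
    writing: bool = False  # while True, readers are refused


class SingleProducerCache:
    def __init__(self, buffer_to_block: Dict[str, CacheBlock]):
        self.buffer_to_block = buffer_to_block        # mapping: buffer area -> cache block

    def write(self, buffer_name: str, expected_version: int, data: bytes) -> bool:
        block = self.buffer_to_block[buffer_name]     # look up the block for the buffer area
        block.writing = True                          # forbid reads
        try:
            if block.version != expected_version:     # version changed since it was obtained
                return False
            block.data = data
            block.version += 1                        # assumption: bump version per write
            return True
        finally:
            block.writing = False                     # allow reads again

    def read(self, buffer_name: str) -> Optional[bytes]:
        block = self.buffer_to_block[buffer_name]
        if block.writing:
            return None                               # consumer retries later
        return block.data
```

A write made with a stale version number is rejected and leaves the block's data unchanged.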

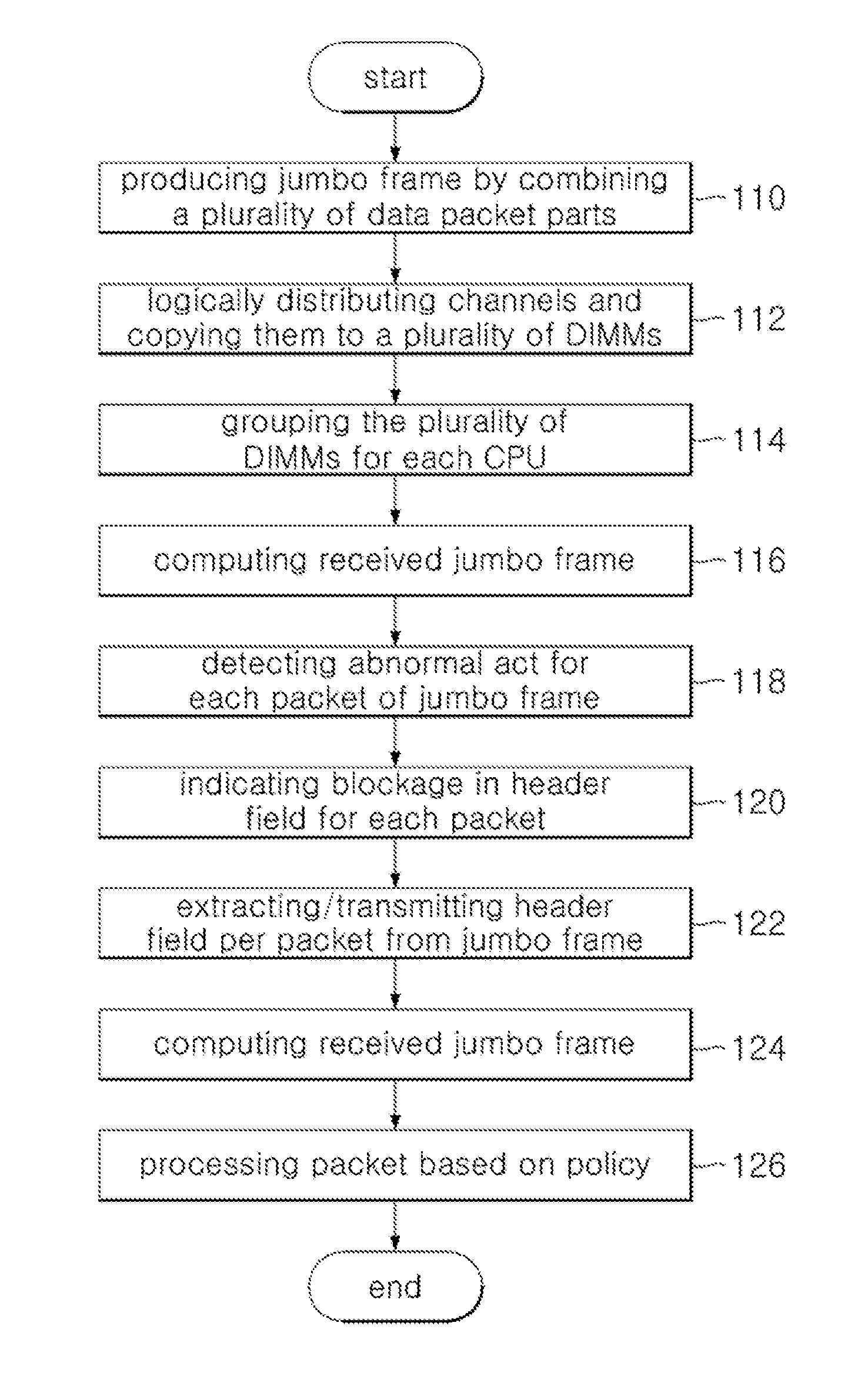

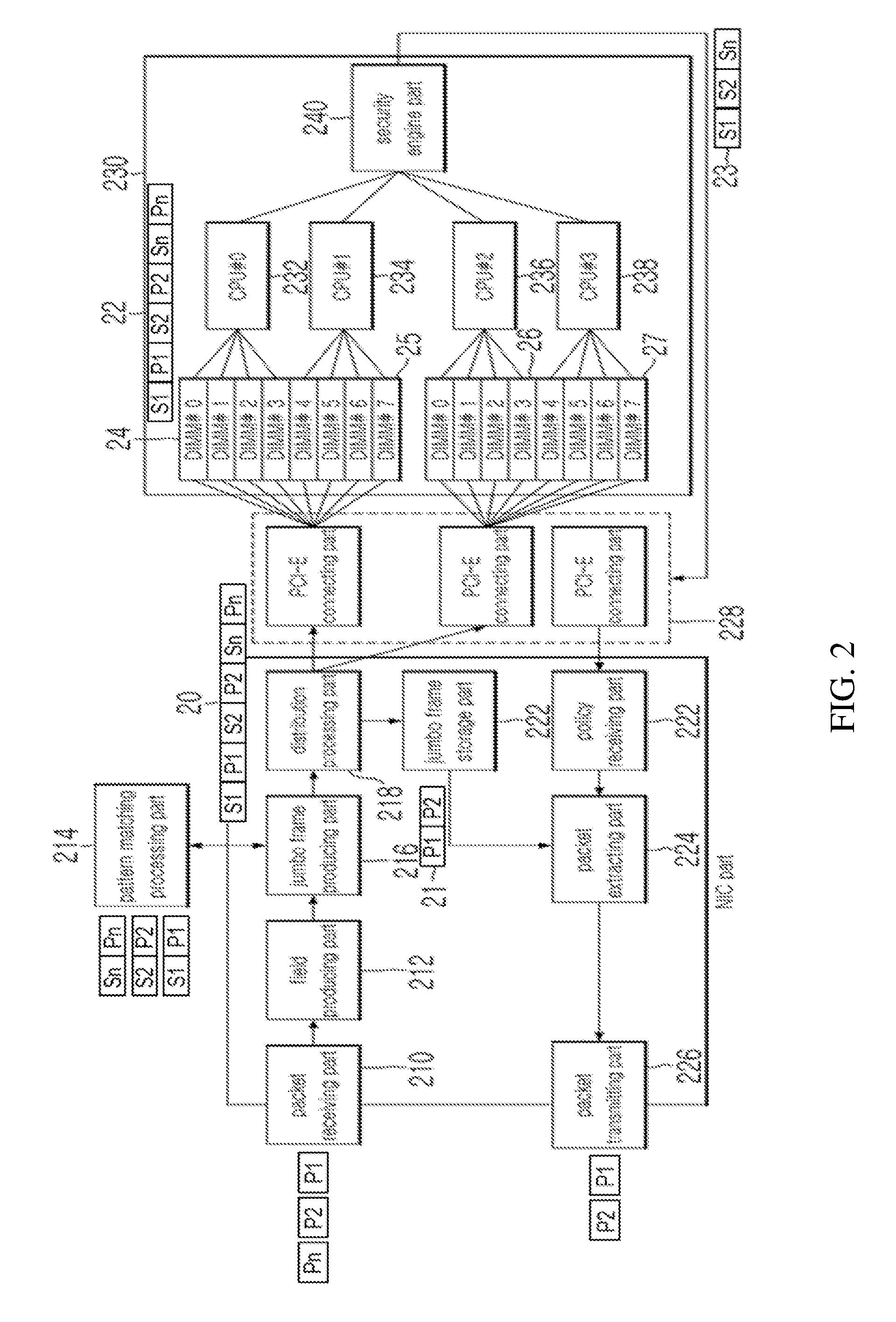

Method and apparatus for service traffic security using DIMM channel distribution in multicore processing system

Active | US20160373475A1 | Reduce context switching; Easy to process; Input/output to record carriers; Transmission; Traffic capacity; Process systems

The present invention relates to a multicore communication processing service. More specifically, aspects of the invention provide a technique for converting a plurality of data packets into a single jumbo frame, copying the jumbo frame to a plurality of dual in-line memory modules (DIMMs) by logical distribution, and processing the jumbo frame on each CPU, including multicore processors, associated with the DIMM channels. This reduces the number of packets per second and makes efficient use of memory and CPU resources. In addition, a header field is added to or removed from each data packet in the jumbo frame depending on whether the jumbo frame is being transmitted to or received from a network interface card (NIC), or the data packets are processed using the header fields only. This minimizes packet-receive events and reduces the context switching they cause, improving jumbo frame processing performance.

Owner:WINS CO LTD
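
The aggregation and distribution steps can be sketched as follows. This assumes a 2-byte length header per packet inside the jumbo frame, a 9000-byte jumbo frame limit, and simple round-robin assignment of frames to DIMM channels; all three are illustrative choices, and the function names are hypothetical.

```python
import struct
from typing import List

HEADER = struct.Struct("!H")  # assumed per-packet header: 2-byte big-endian length


def build_jumbo(packets: List[bytes], max_size: int = 9000) -> List[bytes]:
    """Pack many small packets into as few jumbo frames as fit under max_size."""
    frames: List[bytes] = []
    current = bytearray()
    for pkt in packets:
        record = HEADER.pack(len(pkt)) + pkt
        if current and len(current) + len(record) > max_size:
            frames.append(bytes(current))
            current = bytearray()
        current += record
    if current:
        frames.append(bytes(current))
    return frames


def split_jumbo(frame: bytes) -> List[bytes]:
    """Recover the original packets by walking the per-packet headers."""
    packets: List[bytes] = []
    offset = 0
    while offset < len(frame):
        (length,) = HEADER.unpack_from(frame, offset)
        offset += HEADER.size
        packets.append(frame[offset:offset + length])
        offset += length
    return packets


def distribute(frames: List[bytes], channels: int) -> List[List[bytes]]:
    """Round-robin jumbo frames across DIMM channels (one CPU per channel, assumed)."""
    queues: List[List[bytes]] = [[] for _ in range(channels)]
    for i, frame in enumerate(frames):
        queues[i % channels].append(frame)
    return queues
```

Two hundred 100-byte packets fit into three jumbo frames, so the receive path handles three events instead of two hundred.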

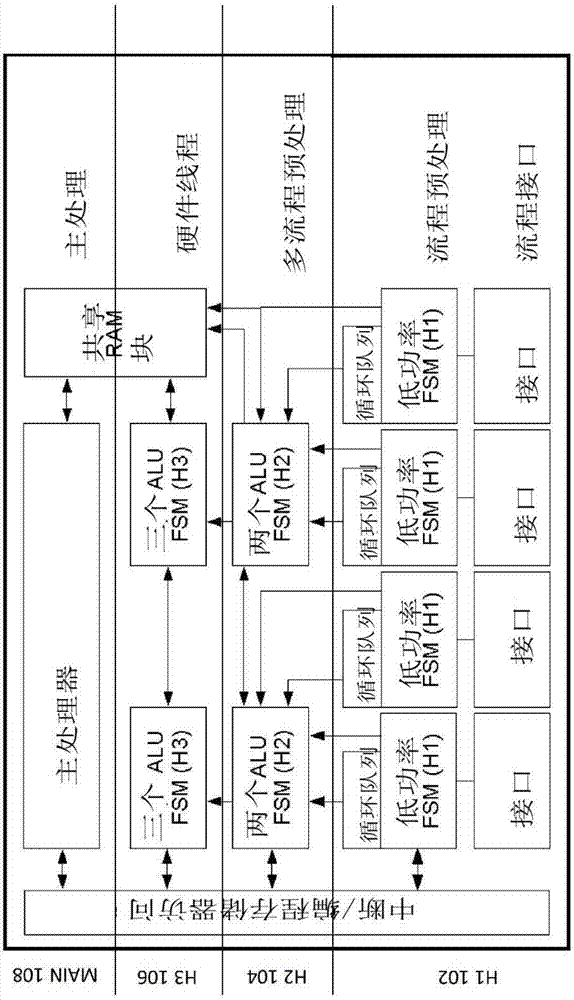

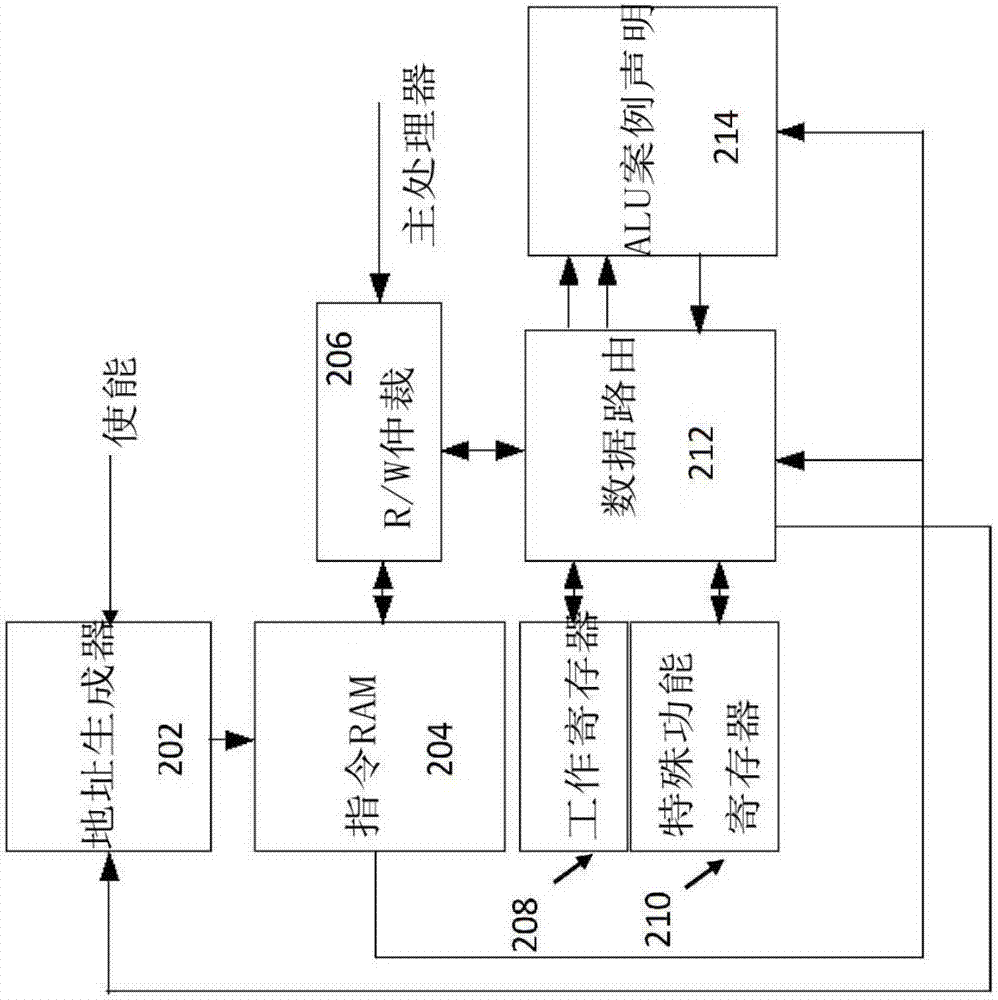

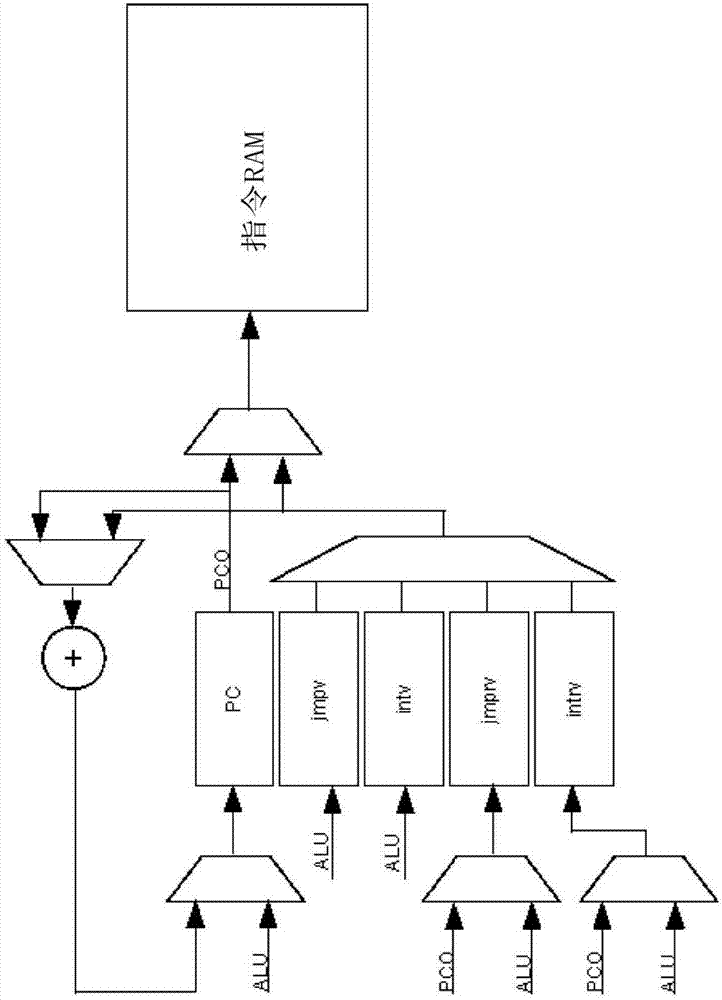

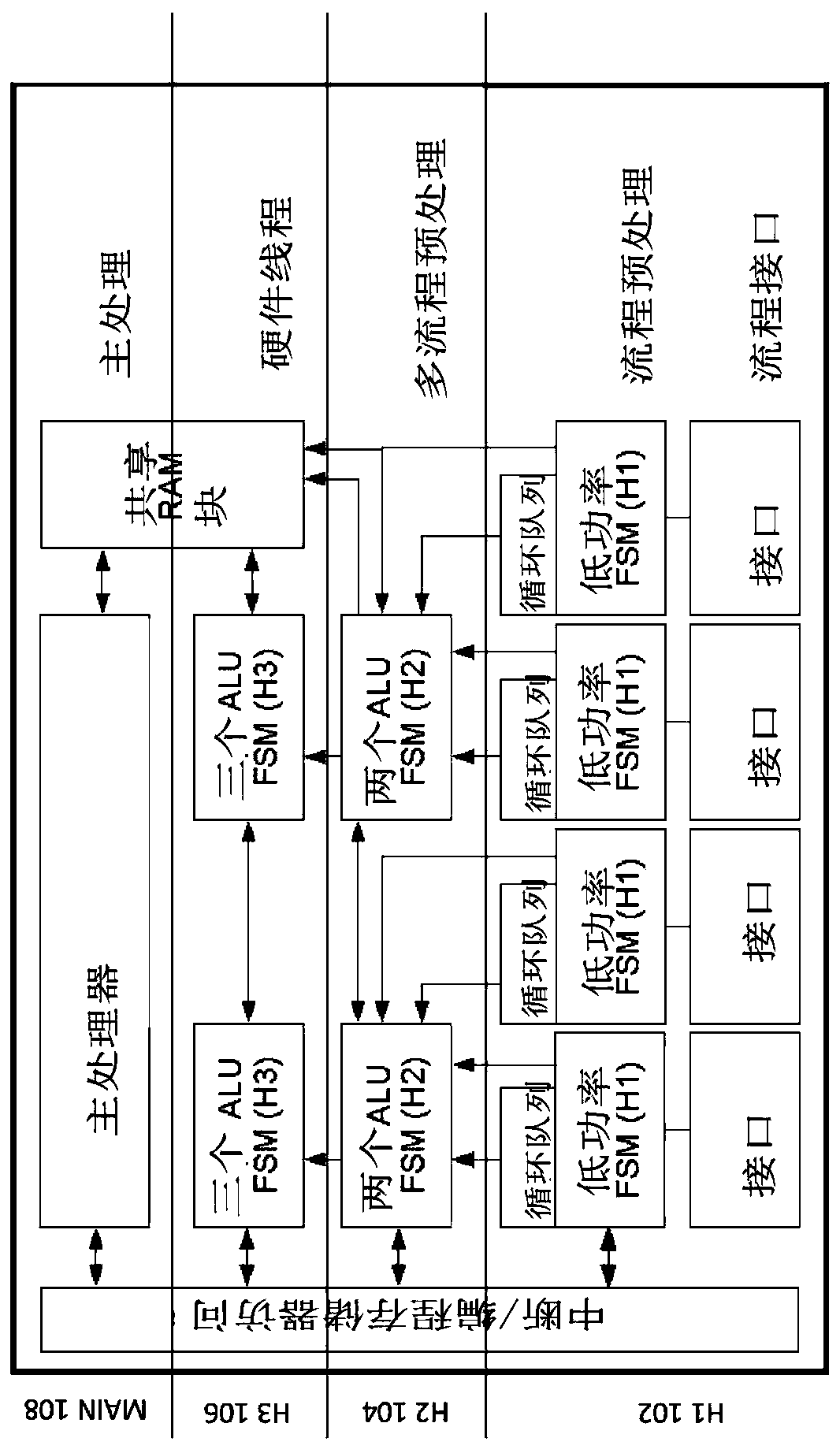

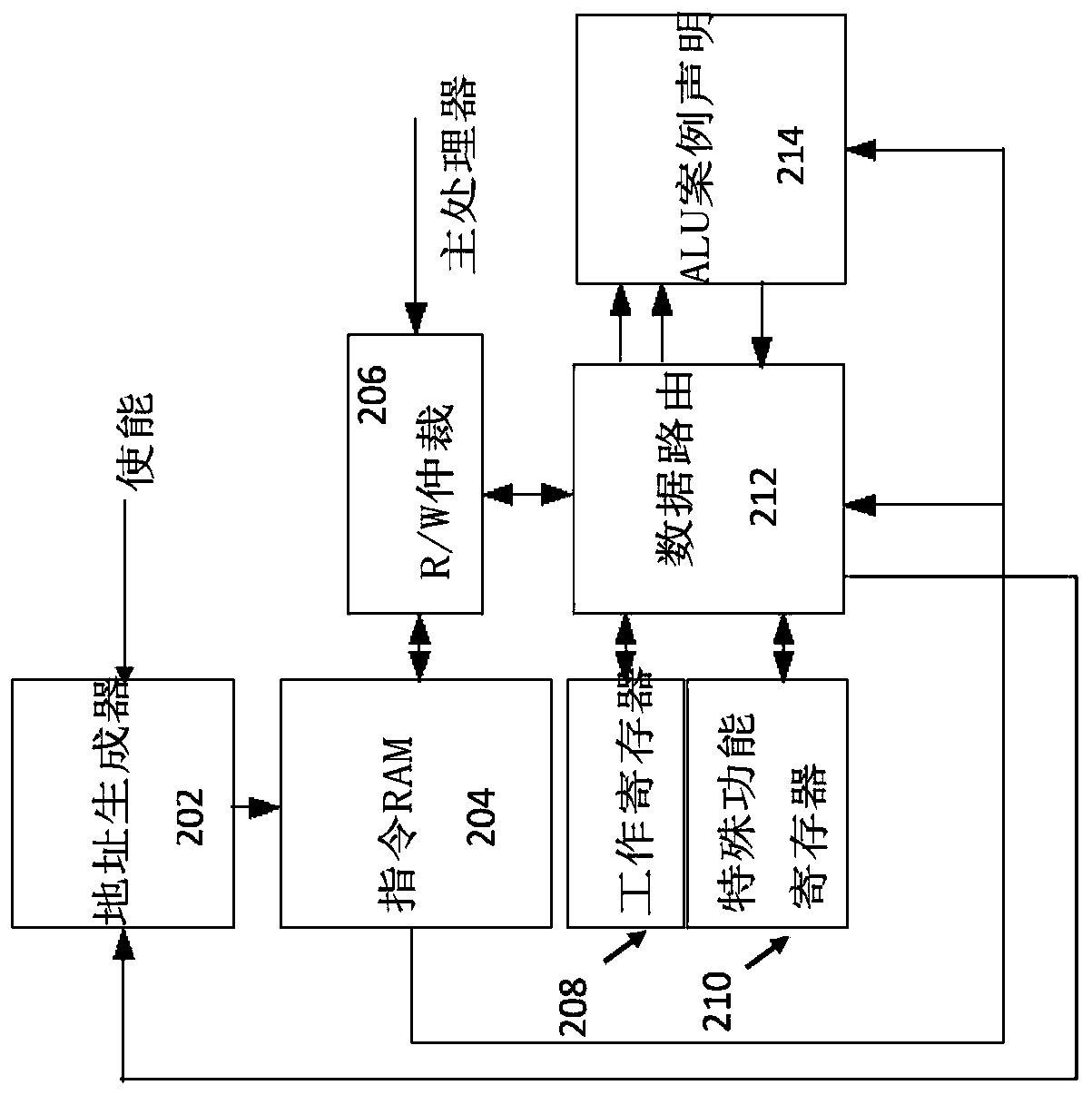

Configurable pre-processing array

Active | CN107113719A | Reduce I/O access; Maintain hard real-time performance; Power management; Power supply for data processing; Pre processor; Event management

A scalable and configurable pre-processor array can allow minimal digital activity while maintaining hard real-time performance. The pre-processor array is specially designed to process real-time sensor data. The interconnected processing units of the array can drastically reduce context swaps, memory accesses, main processor input/output accesses, and real-time event management overhead.

Owner:ANALOG DEVICES INC

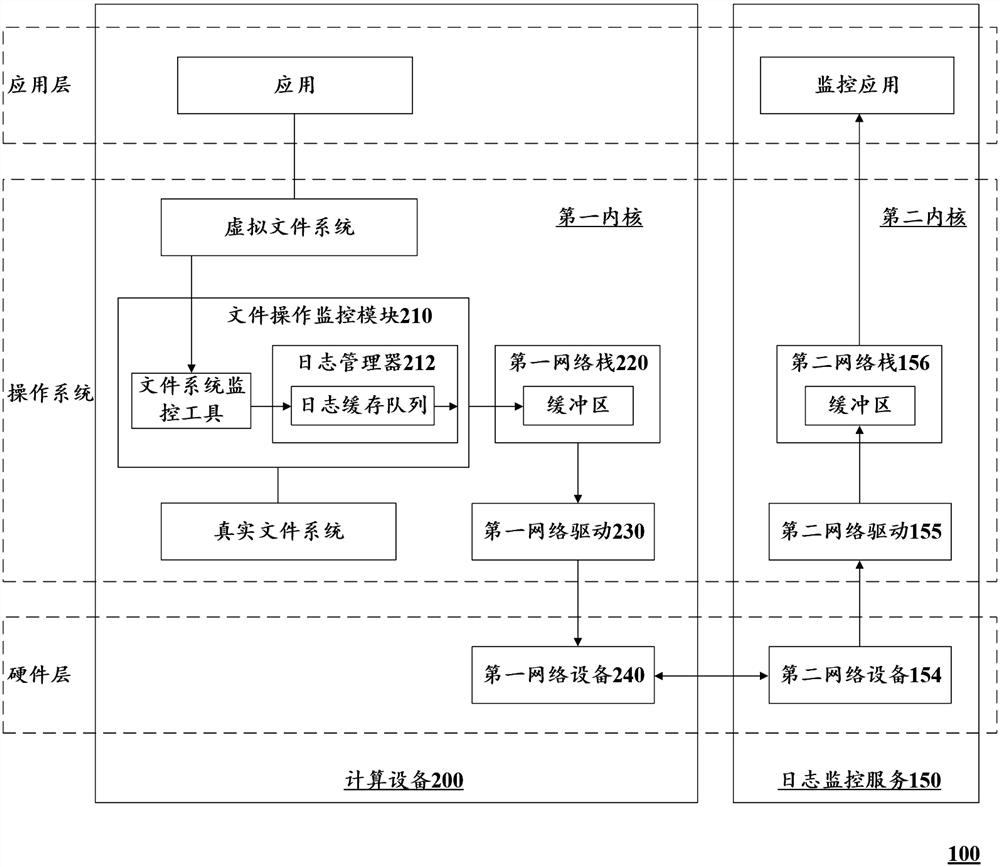

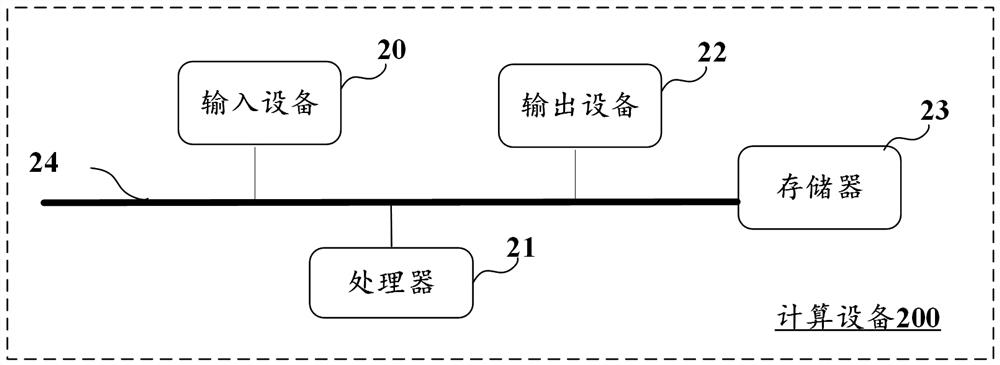

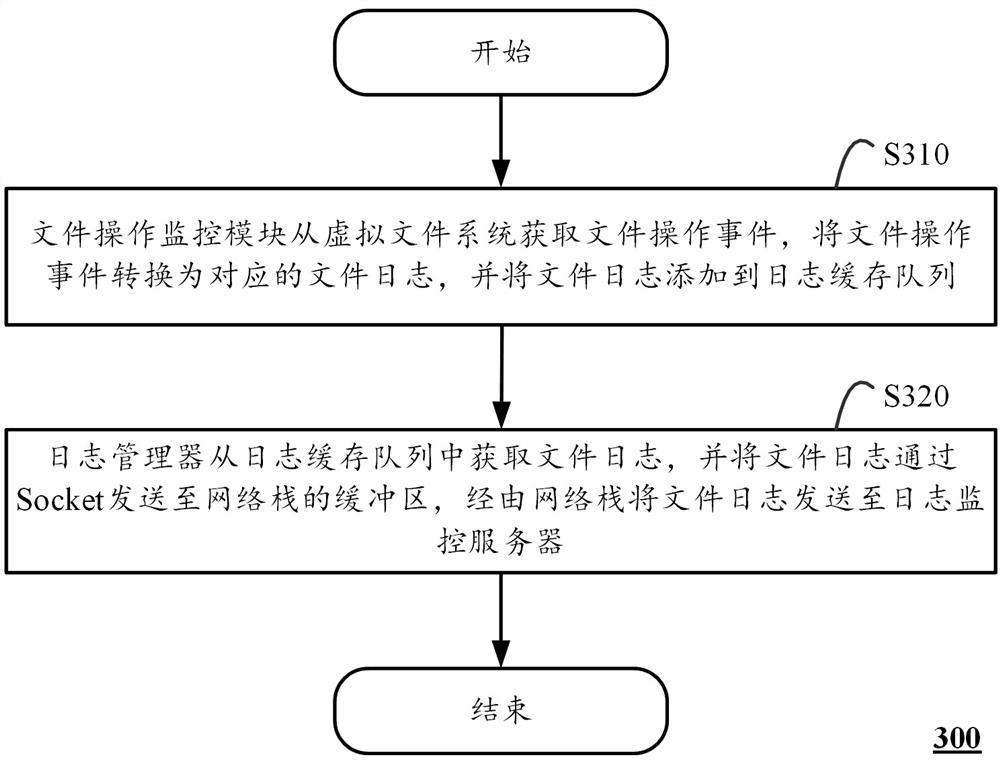

File log monitoring method and system and computing equipment

Active | CN114780353A | Avoid omissions; Reduce consumption; Hardware monitoring; Transmission; Virtual file system; Operational system

The invention discloses a file log monitoring method and system and a computing device, relating to the technical field of file systems. The method is executed in the operating system of the computing device. A file operation monitoring module is established between the virtual file system and the real file system in the kernel of the operating system; the module includes a log manager, which includes a log cache queue. The kernel further includes a network stack connected to the virtual file system and the real file system. In the method, the file operation monitoring module obtains a file operation event from the virtual file system, converts it into a corresponding file log, and adds the log to the log cache queue; the log manager then takes the file log from the queue, writes it through a socket into a buffer of the network stack, and sends it to the log monitoring server via the network stack. With this scheme, file logs can be sent to the log monitoring server efficiently while occupying few system resources.

Owner:UNIONTECH SOFTWARE TECH CO LTD

Method and device for memory migration

Active | CN105159841B | Reduce context switching; Improve the efficiency of memory migration; Memory addressing/allocation/relocation; Physical address; Computer science

The invention discloses a memory migration method and a memory migration device. The method comprises the following steps: when a memory data migration request from any source node in a storage system is received, dividing the data to be migrated into memory blocks based on the continuity of its physical addresses, and determining how many memory blocks the data occupies on the source node after division; acquiring the same number of memory blocks on a target node in the storage system; and migrating the data to the memory blocks on the target node block by block. The invention further discloses a corresponding memory migration device. By grouping the data to be migrated into memory blocks, acquiring memory blocks with contiguous physical addresses on the target node, and migrating the data from the source node to those blocks block by block, the method reduces the number of context switches during migration and improves memory migration efficiency.

Owner:HUAWEI TECH CO LTD

Configurable preprocessing array

Active | CN107113719B | Reduce I/O access; Maintain hard real-time performance; Power management; Power supply for data processing; Term memory; Processing element

A scalable and configurable array of preprocessors enables minimal digital activity while maintaining hard real-time performance. The preprocessor array is dedicated to processing real-time sensor data. The array's interconnected processing units can greatly reduce context switching, memory access, host processor I/O access, and real-time event management overhead.

Owner:ANALOG DEVICES INC

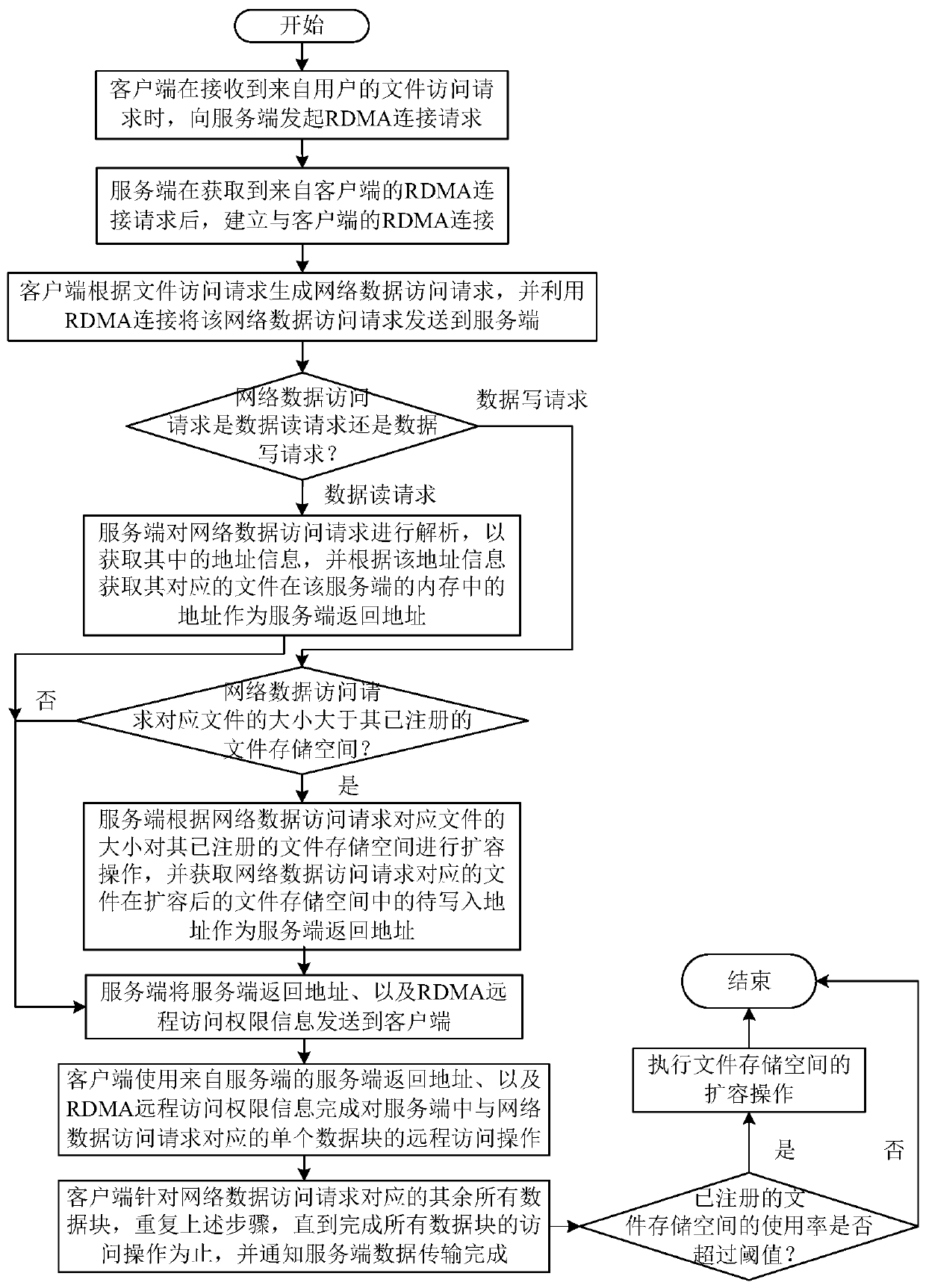

A distributed file system data transmission method and system based on an RDMA network

Active | CN110191194B | Reduce overhead | Improve data transfer efficiency | Digital data information retrieval | Transmission | Data access | Term memory

The invention discloses a data transmission method for a distributed file system based on an RDMA network. The method comprises the following steps: a client receives a file access request from a user and initiates an RDMA connection request to the server; the server receives the RDMA connection request and establishes an RDMA connection with the client; the client generates a network data access request from the file access request and sends it to the server over the RDMA connection; and the server parses the network data access request to obtain the address information it contains, uses that address information to find the address of the corresponding file in server memory (the server return address), and sends the server return address together with RDMA remote access permission information to the client. The invention addresses the high memory-operation overhead of data transmission in existing distributed file systems, as well as the high server-side load and long transmission delay.

Owner:HUAZHONG UNIV OF SCI & TECH
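The request/response flow of the RDMA method above can be simulated in a few lines of Python. No real RDMA verbs are used: `Server.memory` stands in for a registered memory region, `rkey` for the remote access permission information, and `rdma_read` for a one-sided RDMA READ that, on real hardware, would be served by the network card without involving the server CPU. All class and method names are hypothetical, and the final direct read by the client is an assumption; the abstract stops at returning the address and permission information.

```python
import secrets
from dataclasses import dataclass


@dataclass
class AccessGrant:
    addr: int    # server return address: offset of the file in server memory
    length: int  # number of bytes the client may read
    rkey: int    # stand-in for the RDMA remote access permission (remote key)


class Server:
    def __init__(self) -> None:
        self.memory = bytearray()                  # simulated registered memory region
        self.index: dict[str, tuple[int, int]] = {}  # file path -> (addr, length)
        self.rkey = secrets.randbits(32)           # simulated remote key

    def store(self, path: str, data: bytes) -> None:
        """Place a file's contents in server memory and record its address."""
        self.index[path] = (len(self.memory), len(data))
        self.memory += data

    def handle_request(self, path: str) -> AccessGrant:
        """Resolve the requested file to its in-memory address plus access key."""
        addr, length = self.index[path]
        return AccessGrant(addr, length, self.rkey)

    def rdma_read(self, addr: int, length: int, rkey: int) -> bytes:
        """Simulated one-sided RDMA READ: checks the key, then copies bytes out."""
        if rkey != self.rkey:
            raise PermissionError("invalid remote key")
        return bytes(self.memory[addr:addr + length])


class Client:
    def __init__(self, server: Server) -> None:
        self.server = server  # stands in for an established RDMA connection

    def read_file(self, path: str) -> bytes:
        grant = self.server.handle_request(path)  # request/response exchange
        return self.server.rdma_read(grant.addr, grant.length, grant.rkey)
```

The design point the simulation mirrors is that the server only resolves an address once; the bulk data transfer is a direct read, which is where the abstract's claimed reduction in server load would come from.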

Method and system for making guest operating system lightweight, and virtualized operating system

Active | CN106339257B | Fix performance issues | Solve function | Resource allocation | Operational system | System call

The invention discloses a method for making a guest operating system lightweight, a lightweight guest operating system for cloud environments, and a virtualized operating system based on that lightweight guest. It aims to overcome the performance and functional limitations of programs in cloud environments, simplify the design of the guest operating system, improve its performance, and reduce the number of abstraction and protection layers. The lightweight operating system mainly comprises a virtual hardware driver module, a file system module, a network module, a memory management module, a thread scheduling module, and a system call module. Only one application runs in each lightweight guest operating system, which removes redundant and costly "isolation" inside the guest; the system uses a single address space and one page table shared by all threads and the kernel, reducing context switches. It can run C/C++ and Java applications, effectively improves application performance, and serves as an efficient container for running applications.

Owner:CHINA STANDARD SOFTWARE

User state file sending method, file receiving method and file sending and receiving device

Active | CN108989432B | Improve transceiver performance | Reduce copy | Digital data information retrieval | Interprogram communication | Recipient side | Database

The invention discloses a user-mode file sending method, a file receiving method, and a file sending and receiving device. The file sending method includes: allocating a data reading-and-sending area, a data receiving-and-writing area, and a data buffer area in user space; creating a data sending process; obtaining the file to be sent for that process from the data buffer area; building metadata for the data sending process and recording it in the reading-and-sending area; and processing the one or more metadata entries in the reading-and-sending area in sequence, where processing an entry means passing the file to the network card through a user-mode network device driver, after which the network card transmits it. Because the data sending and receiving processes complete all transmission and reception in user space, without using kernel space and thus bypassing the kernel entirely, the solution significantly reduces copying, context switching, and interrupts, and improves data sending and receiving performance.

Owner:NANJING ZHONGXING XIN SOFTWARE CO LTD
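The send path of the user-mode method above can be sketched as a metadata queue drained in order. This is a simplified Python model under stated assumptions: the areas are plain in-process containers, the metadata fields are invented for illustration, and `nic_send` is a hypothetical callback standing in for the user-mode network device driver. The receiving-and-writing area is omitted.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable


@dataclass
class SendMetadata:
    process_id: int  # hypothetical: which data sending process owns this entry
    file_name: str   # key of the file in the data buffer area
    offset: int
    length: int


class UserSpaceSender:
    def __init__(self, nic_send: Callable[[memoryview], None]) -> None:
        self.buffer_area: dict[str, bytes] = {}        # data buffer area
        self.send_area: deque[SendMetadata] = deque()  # reading-and-sending area
        self.nic_send = nic_send  # hypothetical user-mode NIC driver entry point
        self._next_pid = 0

    def create_send_process(self, file_name: str) -> int:
        """Create a sending process and record its metadata in the send area."""
        self._next_pid += 1
        data = self.buffer_area[file_name]
        self.send_area.append(SendMetadata(self._next_pid, file_name, 0, len(data)))
        return self._next_pid

    def process_send_area(self) -> int:
        """Handle metadata entries in order; return how many files were sent."""
        sent = 0
        while self.send_area:
            meta = self.send_area.popleft()
            data = self.buffer_area[meta.file_name]
            # memoryview slices the buffer without copying the file bytes.
            self.nic_send(memoryview(data)[meta.offset:meta.offset + meta.length])
            sent += 1
        return sent
```

Passing a `memoryview` rather than a sliced `bytes` object keeps the sketch in the spirit of the abstract's goal of reducing copies, though a real user-mode driver would hand the NIC a DMA-able buffer instead.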

© 2025 PatSnap. All rights reserved.