53 results about "Strategic learning" patented technology

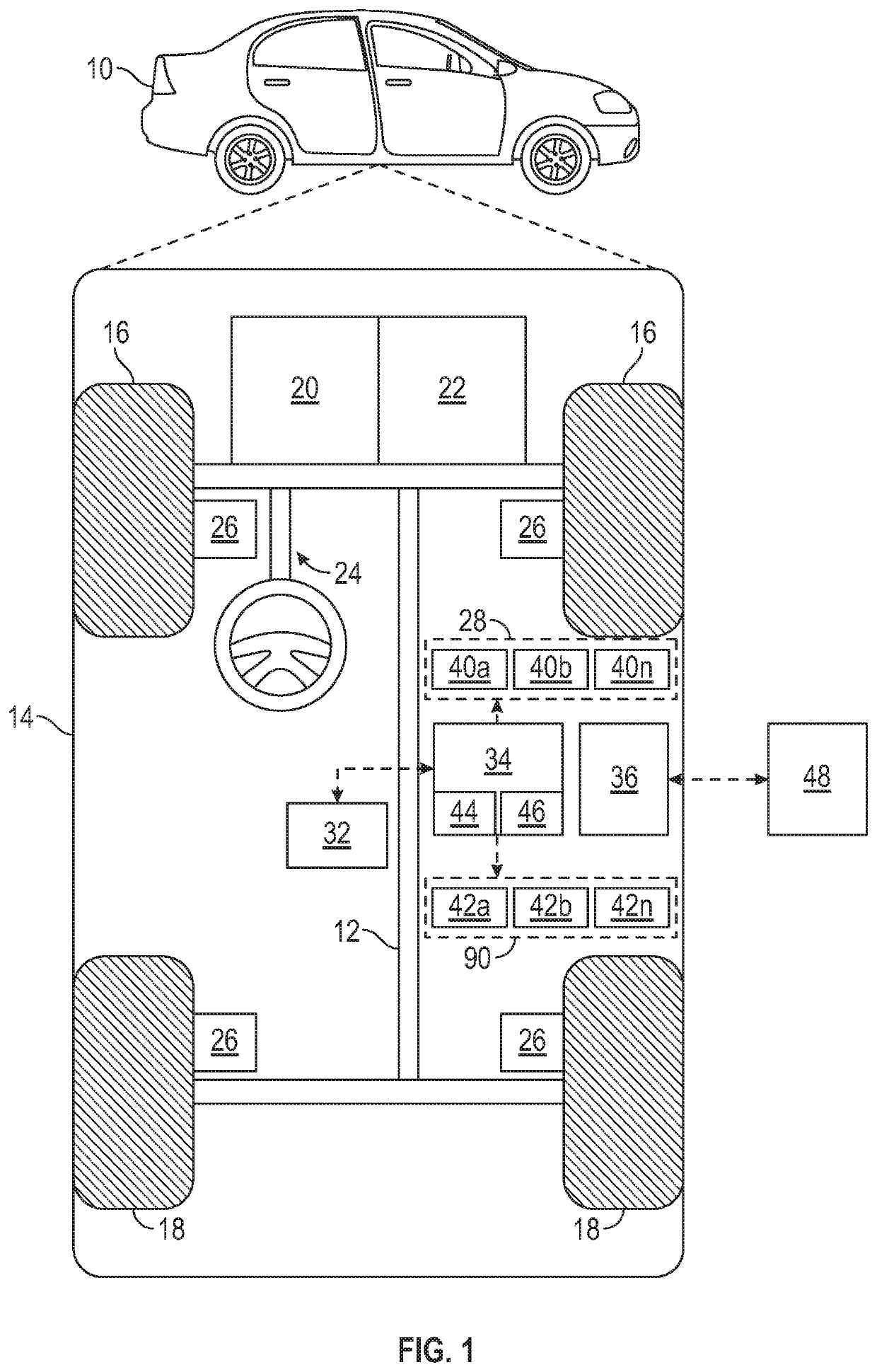

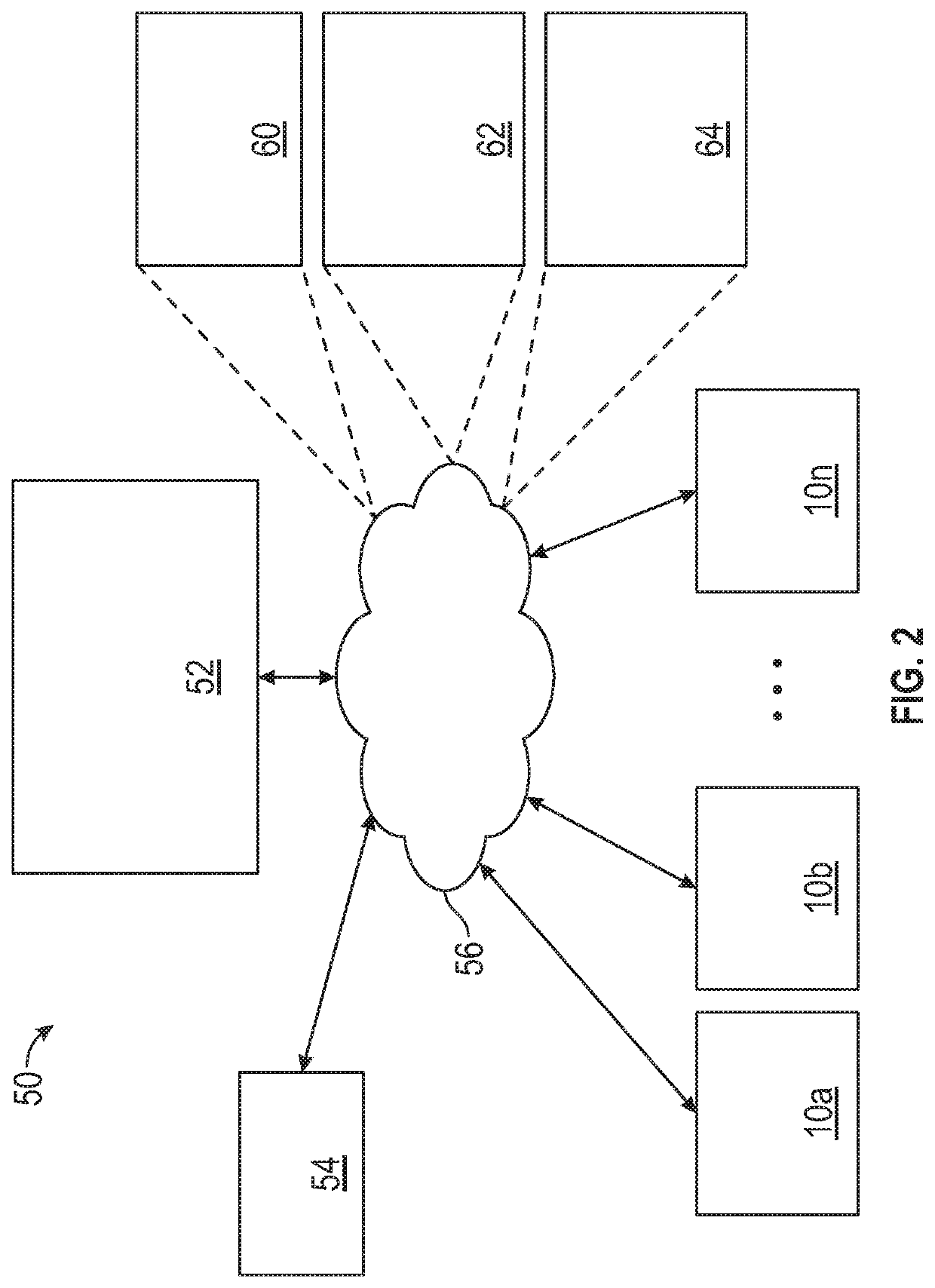

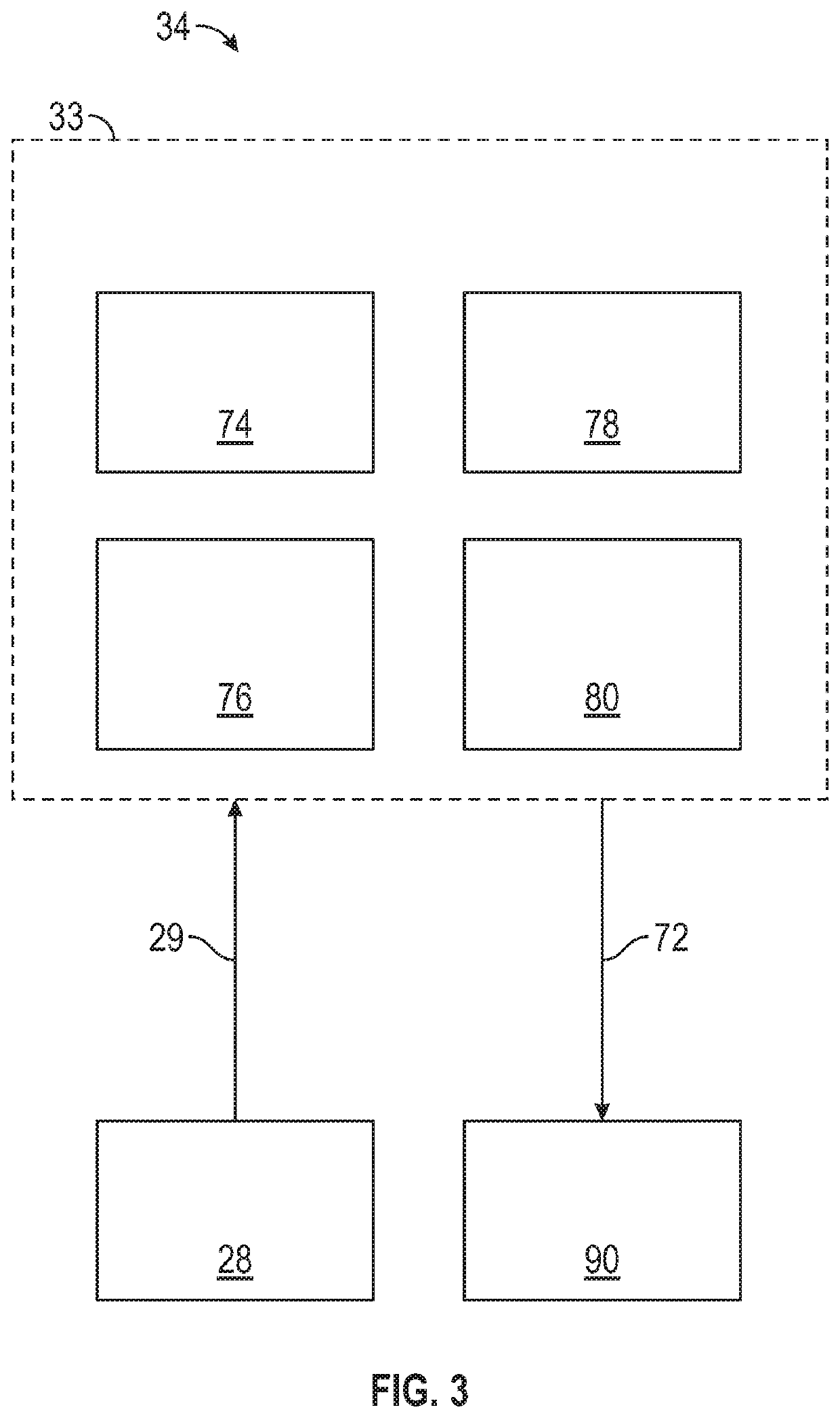

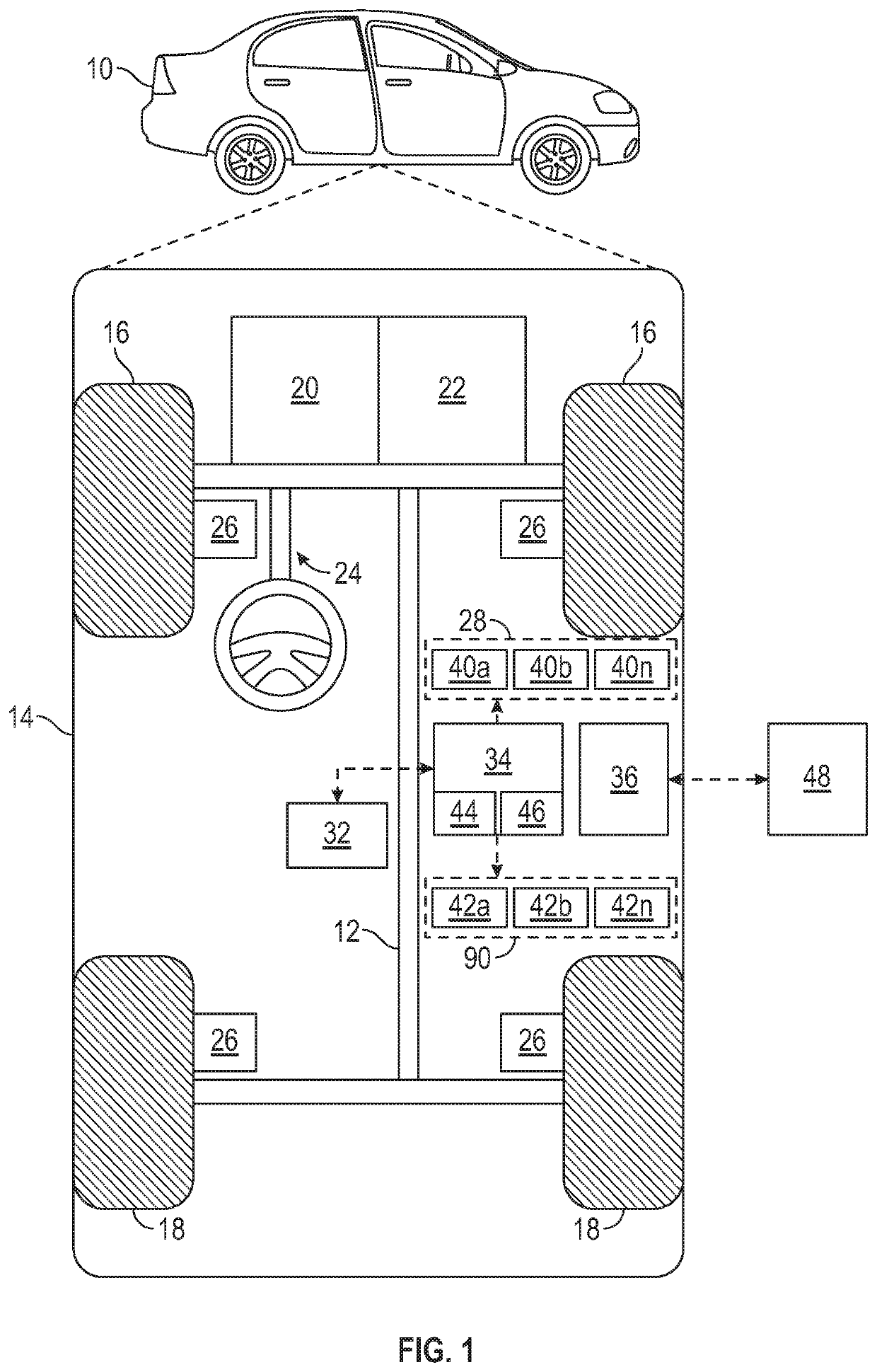

Systems, methods and controllers for an autonomous vehicle that implement autonomous driver agents and driving policy learners for generating and improving policies based on collective driving experiences of the autonomous driver agents

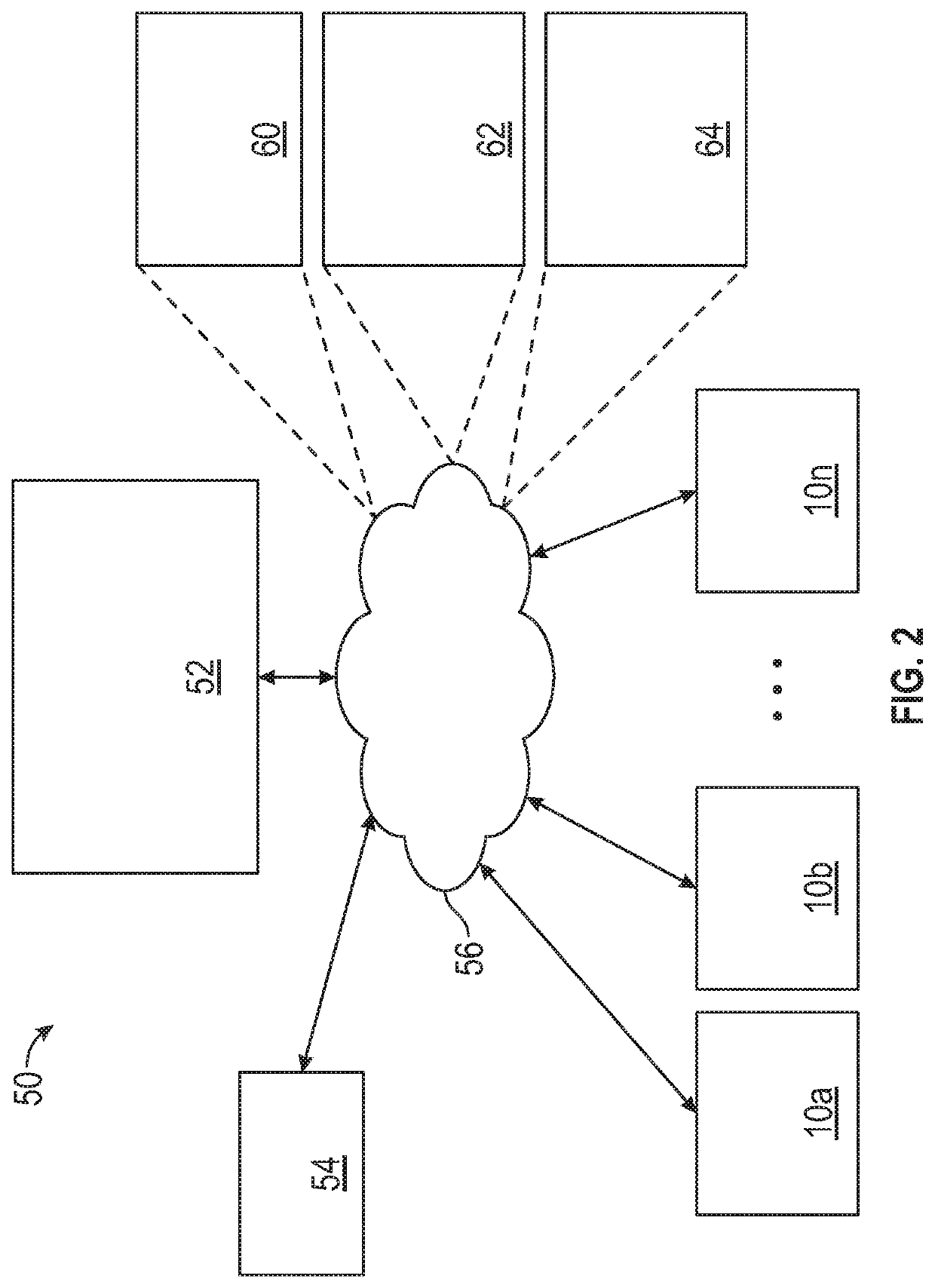

Systems and methods are provided for autonomous driving policy generation. The system can include a set of autonomous driver agents and a driving policy generation module that includes a set of driving policy learner modules for generating and improving policies based on the collective experiences collected by the driver agents. The driver agents can collect driving experiences to create a knowledge base. The driving policy learner modules can process the collective driving experiences to extract driving policies. The driver agents can be trained via the driving policy learner modules in a parallel and distributed manner to find novel and efficient driving policies and behaviors more quickly. Parallel and distributed learning can enable accelerated training of multiple autonomous intelligent driver agents.

Owner:GM GLOBAL TECH OPERATIONS LLC

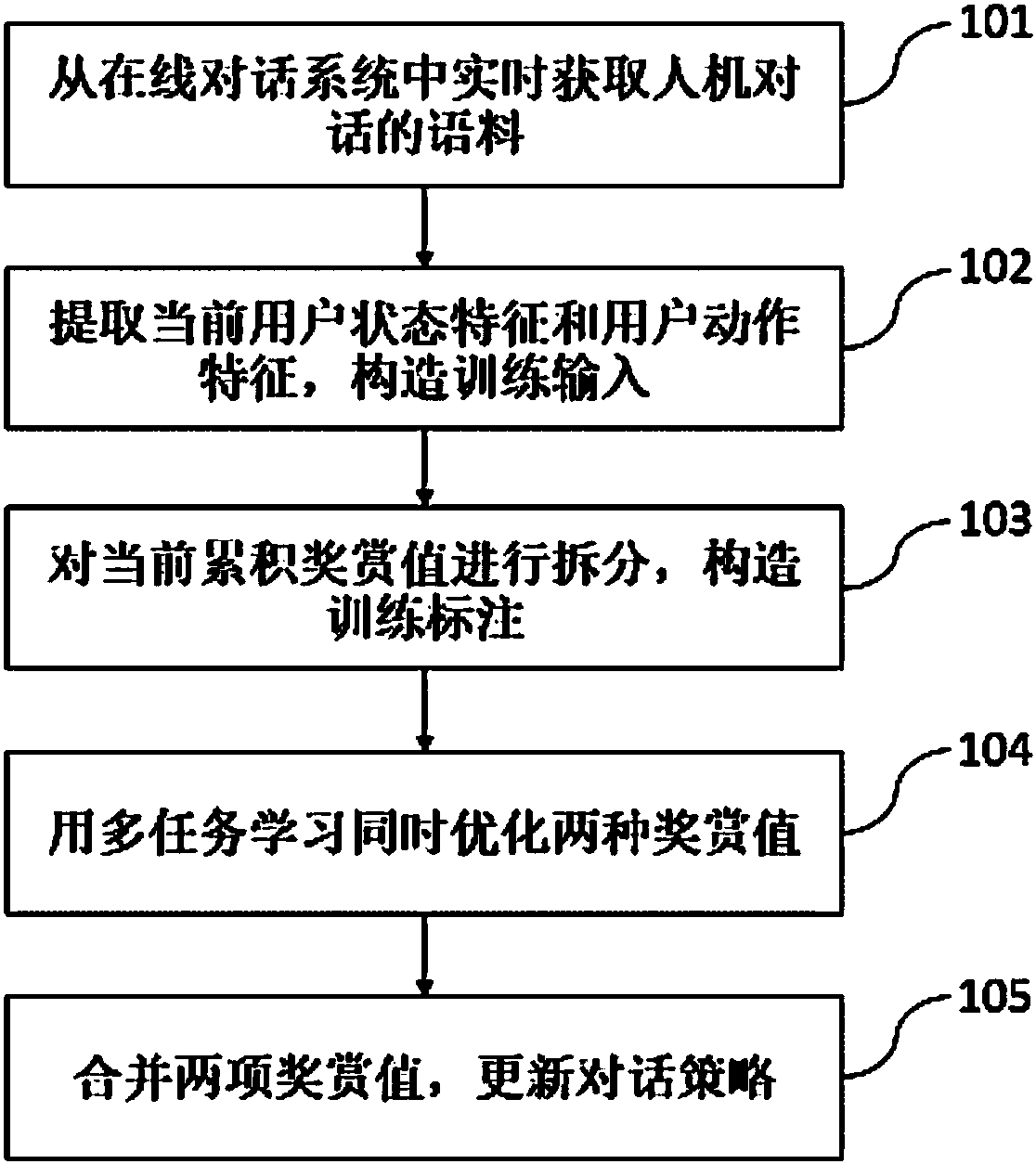

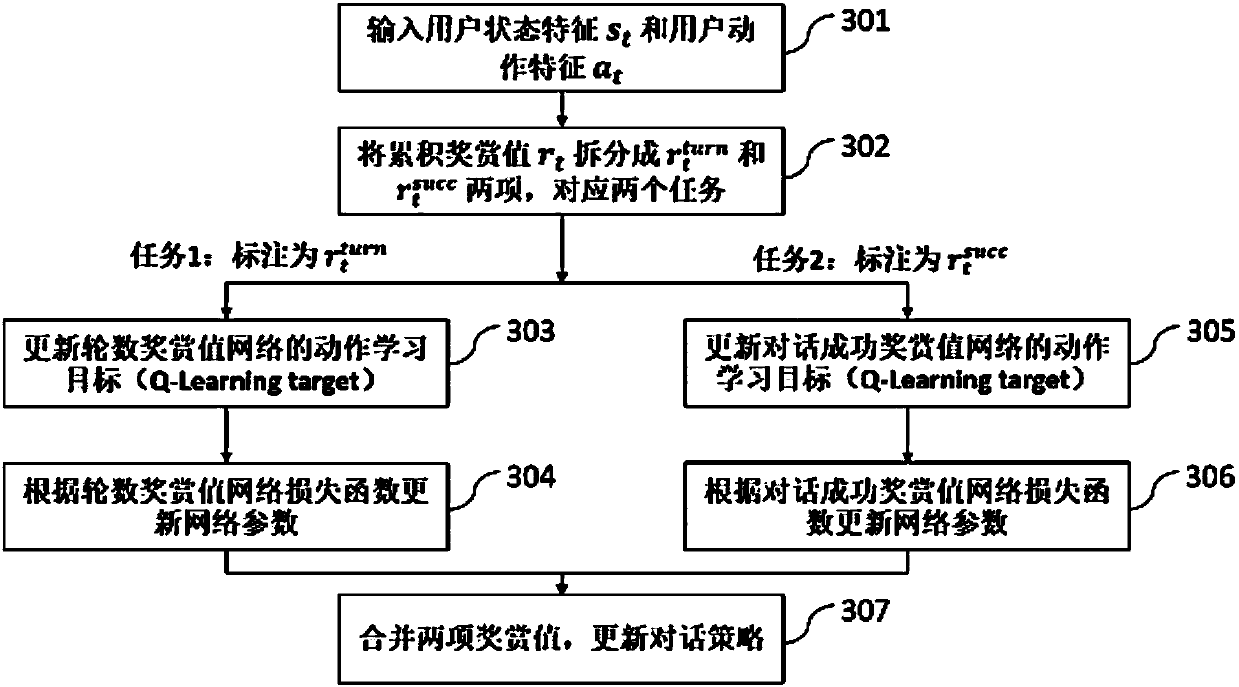

Dialog strategy online realization method based on multi-task learning

Active | CN107357838A | Avoid Manual Design Rules | Save human effort | Speech recognition | Special data processing applications | Man machine | Network structure

The invention discloses a method for online realization of a dialog strategy based on multi-task learning. Corpus information from a man-machine dialog is acquired in real time, and the current user state features and user action features are extracted and assembled into the training input. The single accumulated reward used in dialog strategy learning is then split into a dialog-round-count reward and a dialog-success reward, which serve as training labels, and two separate value models are optimized simultaneously through multi-task learning during online training. Finally, the two reward values are merged and the dialog strategy is updated. The method adopts a reinforcement learning framework and optimizes the dialog strategy through online learning, so rules and strategies need not be designed manually for each domain, and the method can adapt to domain information structures of differing complexity and to data of different scales. Splitting the original task of optimizing a single accumulated reward and optimizing the parts jointly with multi-task learning yields a better network structure and lowers the variance of the training process.

Owner:AISPEECH CO LTD
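
The reward split described in this abstract can be illustrated with a small tabular sketch. It is not the patented implementation: the abstract describes value models trained online as a network, whereas the `SplitRewardQ` class, the state and action names, and the reward magnitudes below are all hypothetical stand-ins chosen to show two value estimates trained on separate reward signals and merged for action selection.

```python
from collections import defaultdict


class SplitRewardQ:
    """Tabular stand-in for two value models, one per reward component."""

    def __init__(self, actions, alpha=0.5, gamma=0.9):
        self.actions = list(actions)
        self.alpha = alpha  # learning rate (assumed value)
        self.gamma = gamma  # discount factor (assumed value)
        self.q_turn = defaultdict(float)     # value of the round-count reward
        self.q_success = defaultdict(float)  # value of the dialog-success reward

    def merged(self, state, action):
        # Merge step: the policy acts on the sum of both value estimates.
        return self.q_turn[(state, action)] + self.q_success[(state, action)]

    def best_action(self, state):
        return max(self.actions, key=lambda a: self.merged(state, a))

    def update(self, state, action, r_turn, r_success, next_state, done):
        # Both tables bootstrap from the action that is greedy under the merged value.
        next_action = None if done else self.best_action(next_state)
        for table, reward in ((self.q_turn, r_turn), (self.q_success, r_success)):
            target = reward
            if not done:
                target += self.gamma * table[(next_state, next_action)]
            key = (state, action)
            table[key] += self.alpha * (target - table[key])


agent = SplitRewardQ(actions=["ask_slot", "confirm"])
# One short dialog: every turn costs -1, success pays +20 at the end.
agent.update("start", "ask_slot", -1.0, 0.0, "filled", done=False)
agent.update("filled", "confirm", -1.0, 20.0, None, done=True)
print(agent.best_action("filled"))
```

Keeping the two tables separate means each one sees a reward signal with a narrower range than the combined reward, which is one plausible reading of the variance reduction the abstract claims.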

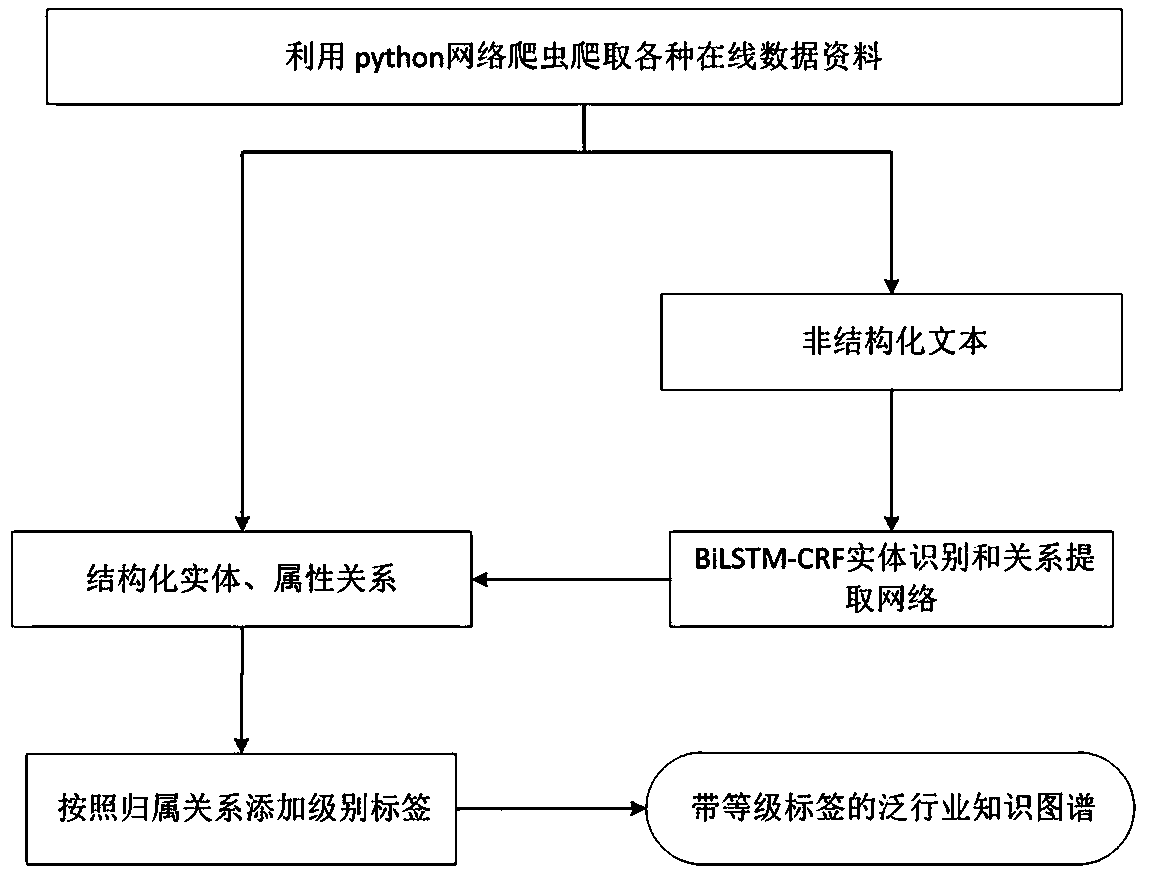

Dialogue auxiliary system based on team learning and hierarchical reasoning

Active | CN110175227A | Meet the needs of general knowledge query | Improve experience | Inference methods | Special data processing applications | Empirical learning | Team learning

The invention discloses a dialogue auxiliary system based on team learning and hierarchical reasoning. First, a generic industry knowledge graph, which also includes general entities and attribute entries from outside the industry, is crawled and generated; this meets users' needs for general knowledge queries and facilitates rapid migration of knowledge bases between industries. Second, the multi-level reasoning network provided by the invention supports complex semantic reasoning, enables man-machine conversation based on a reasoning process, and can at the same time perform accurate service recommendation and product marketing. Finally, a strategy learning network based on reinforcement learning learns a ranking strategy from accumulated historical interaction experience, so that the user experience improves continuously. Deploying the system extends the functionality and improves the effectiveness of an existing dialogue system.

Owner:SHANDONG SYNTHESIS ELECTRONICS TECH

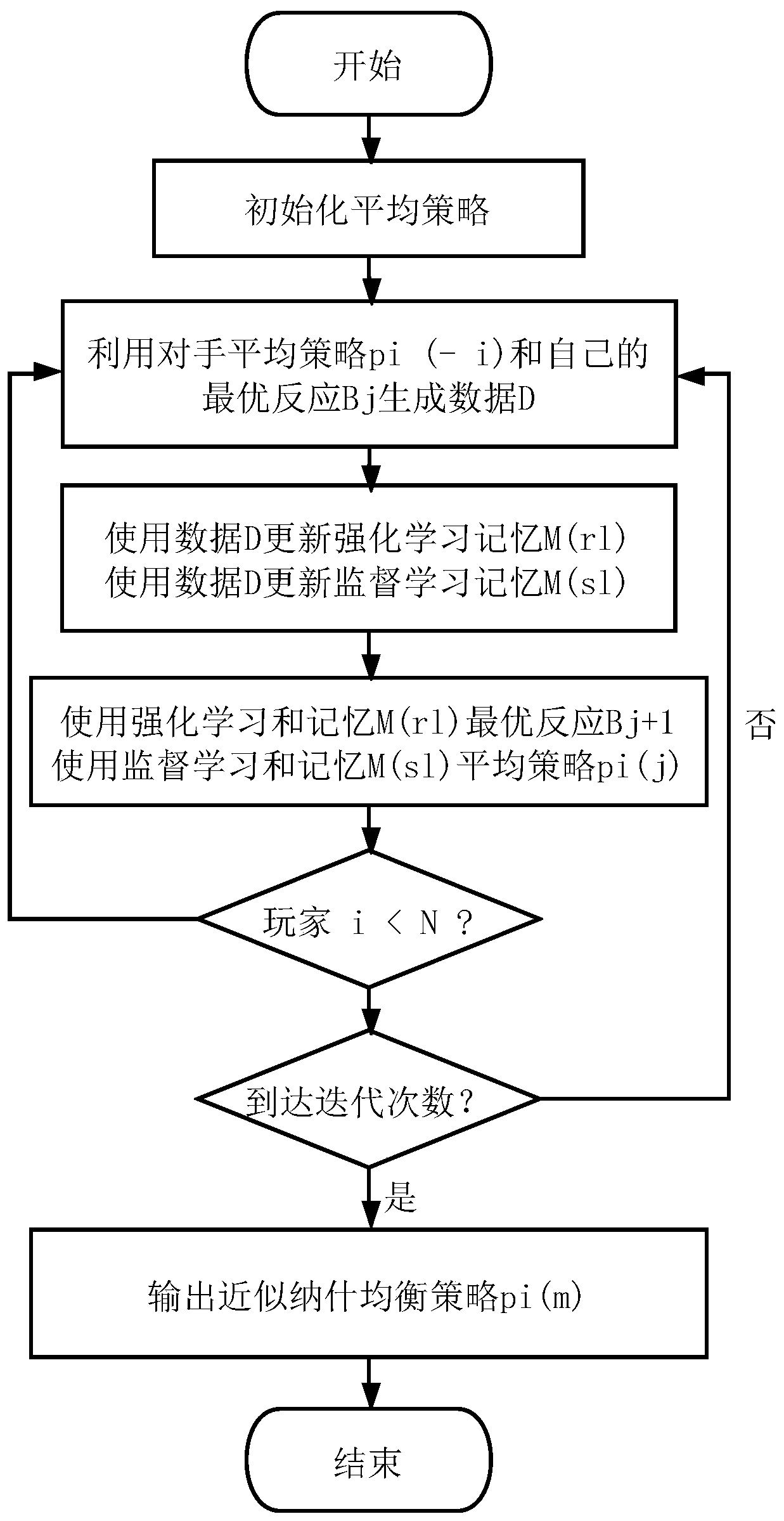

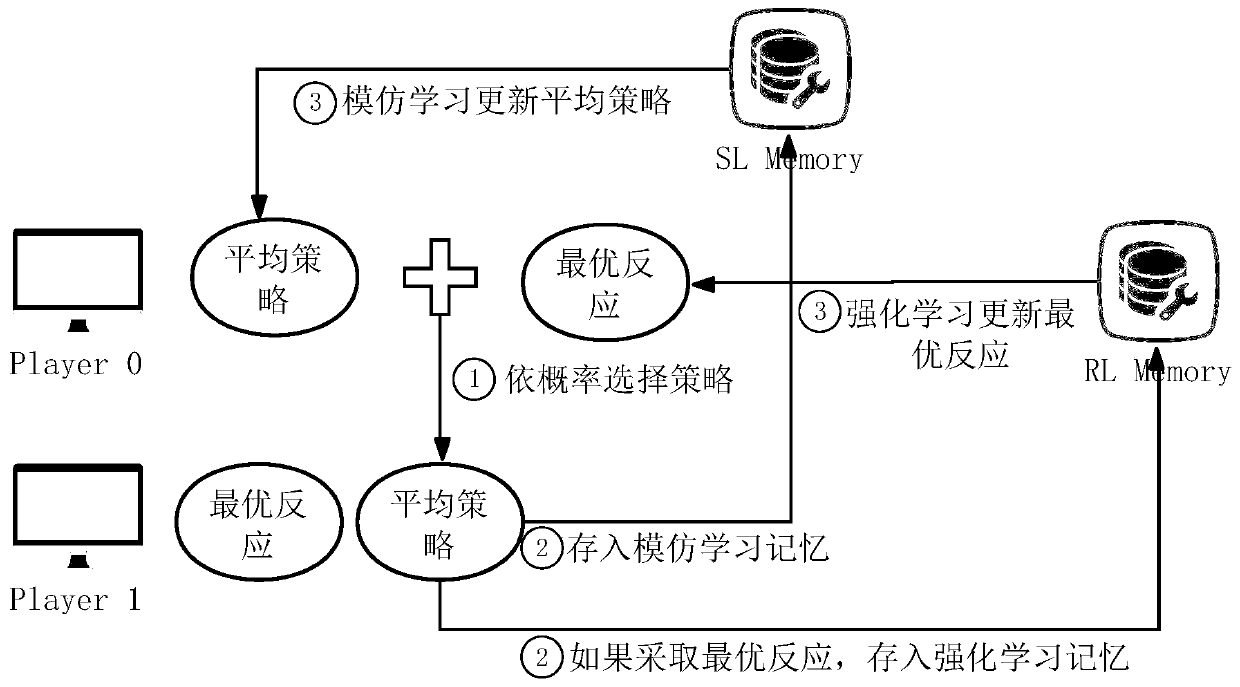

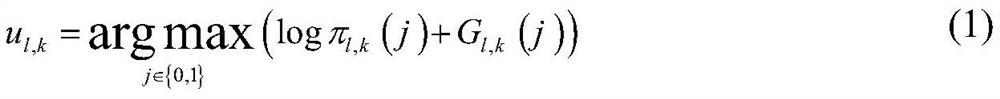

Fictitious self-play-based multi-person incomplete information game policy resolving method, device and system as well as storage medium

The invention provides a fictitious self-play-based multi-person incomplete information game policy resolving method, device and system, as well as a storage medium. The method comprises the following steps: for the two-player game, the average policy is generated using multi-class logistic regression and reservoir sampling, and the best-response policy is generated using a DQN (Deep Q-Network) and a circular buffer memory; for the multi-player game, the best-response policy is implemented using the multi-agent proximal policy optimization (MAPPO) algorithm, while agent training is coordinated using multi-agent NFSP (Neural Fictitious Self-Play). The method has the following beneficial effects: a fictitious self-play algorithm framework is introduced; the Texas Hold'em poker policy optimization process is partitioned into best-response policy learning and average policy learning, which are implemented by deep reinforcement learning and imitation learning respectively; and a more general multi-agent optimal policy learning method is designed.

Owner:HARBIN INST OF TECH SHENZHEN GRADUATE SCHOOL
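
The two memories named in this abstract behave quite differently, and a minimal sketch may make the contrast concrete. The `ReservoirBuffer` class below is an illustrative implementation of standard reservoir sampling (Algorithm R), and the circular memory is simply a bounded `deque`; the capacities and the integer "transitions" are assumptions for the demo, not values from the patent.

```python
import random
from collections import deque


class ReservoirBuffer:
    """Keeps a uniform random sample of every item ever added (Algorithm R)."""

    def __init__(self, capacity, rng=None):
        self.capacity = capacity
        self.items = []
        self.seen = 0
        self.rng = rng or random.Random()

    def add(self, item):
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(item)
        else:
            # Replace a stored item with probability capacity / seen.
            j = self.rng.randrange(self.seen)
            if j < self.capacity:
                self.items[j] = item


# Average-policy memory: a sample over the whole history of play.
average_policy_memory = ReservoirBuffer(capacity=3, rng=random.Random(0))
# Best-response memory: a circular buffer that keeps only recent experience.
best_response_memory = deque(maxlen=4)

for step in range(10):
    average_policy_memory.add(step)
    best_response_memory.append(step)

print(list(best_response_memory))   # the four most recent steps
print(average_policy_memory.items)  # three steps drawn from all ten
```

The design intent, as commonly described for NFSP, is that the average policy should reflect the agent's behavior over all of training, while the best-response learner should track the opponents' current behavior.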

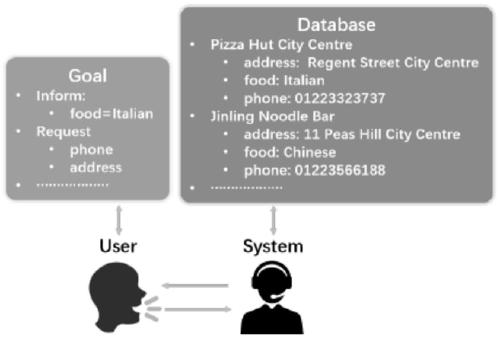

Efficient dialogue policy learning

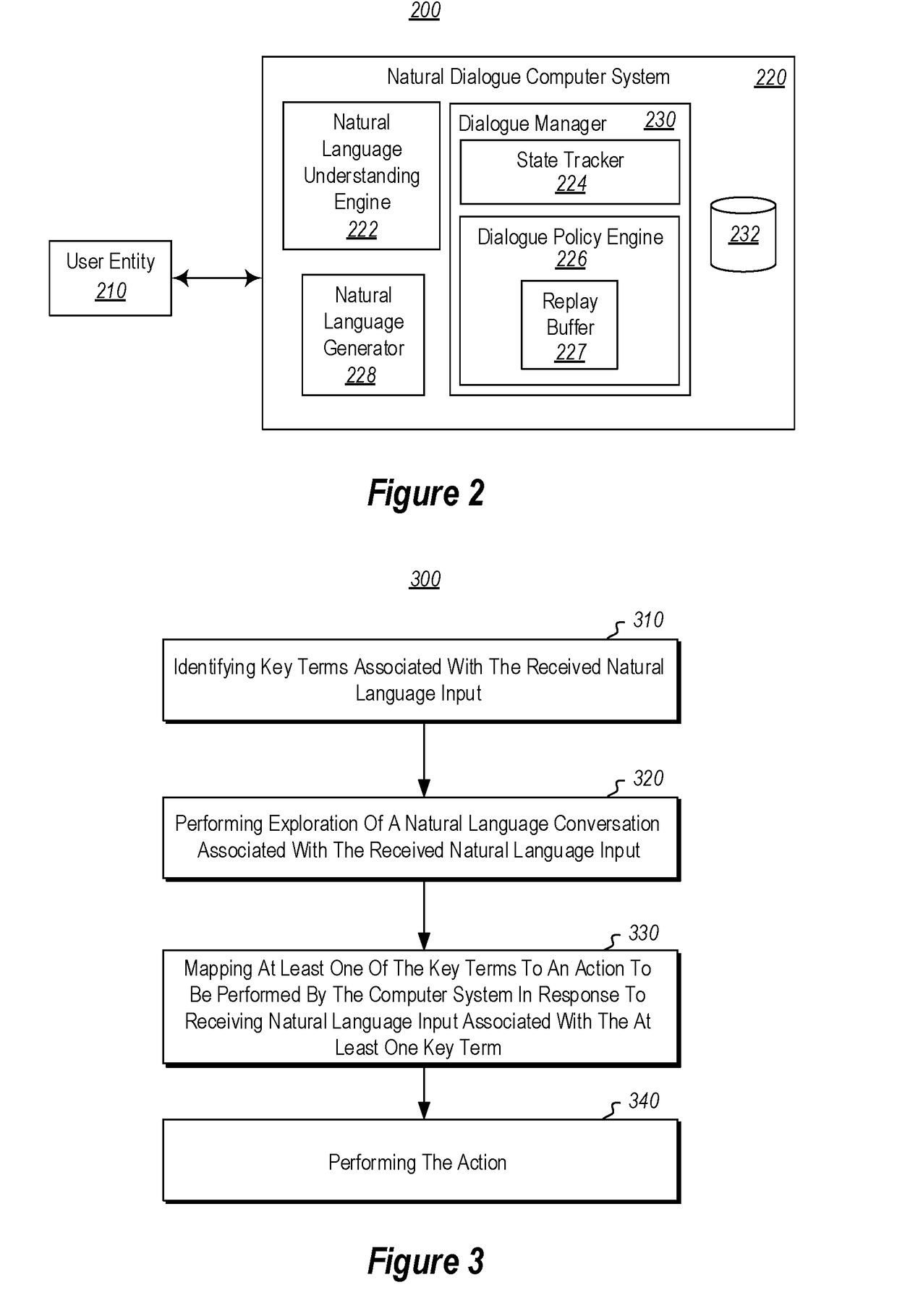

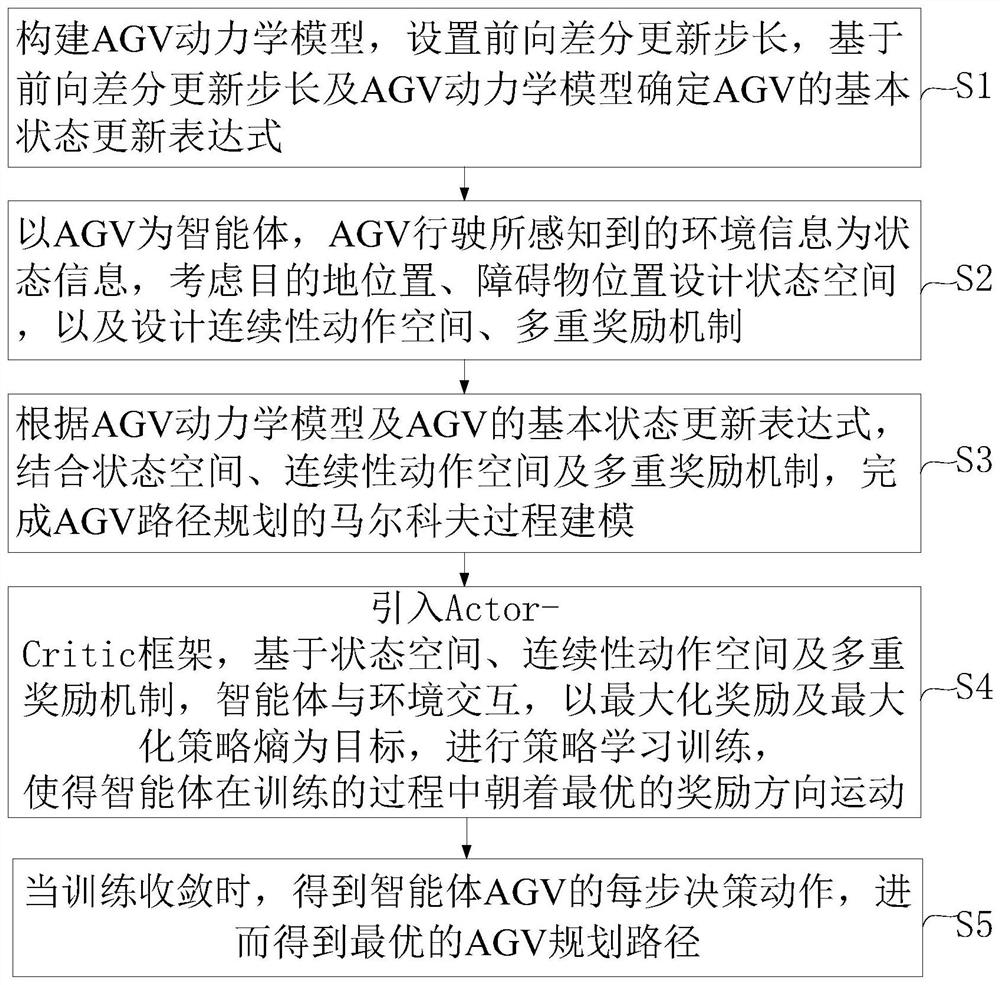

Active | US20180052825A1 | Narrow distribution | More certainty | Mathematical models | Semantic analysis | Key terms | Computerized system

Efficient exploration of natural language conversations associated with dialogue policy learning may be performed using probabilistic distributions. Exploration may comprise identifying key terms associated with the received natural language input utilizing the structured representation. Identifying key terms may include converting raw text of the received natural language input into a structured representation. Exploration may also comprise mapping at least one of the key terms to an action to be performed by the computer system in response to receiving natural language input associated with the at least one key term. Mapping may then be performed using a probabilistic distribution. The action may then be performed by the computer system. A replay buffer may also be utilized by the computer system to track what has occurred in previous conversations. The replay buffer may then be pre-filled with one or more successful dialogues to jumpstart exploration.

Owner:MICROSOFT TECH LICENSING LLC
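
As a rough illustration of two ideas in this abstract, the sketch below pre-fills a replay buffer with successful dialogues and picks an action for a key term by sampling from a probability distribution. The abstract does not say which distribution or selection rule is used; the Gaussian beliefs and the Thompson-style "sample, then take the maximum" rule here are assumptions, as are the function names and the data layout.

```python
import random
from collections import deque


def prefill(buffer, dialogues):
    """Seed the replay buffer with turns from successful dialogues only."""
    for dialogue in dialogues:
        if dialogue["success"]:
            buffer.extend(dialogue["turns"])


def sample_action(beliefs, key_term, rng):
    """Draw one value per action from its Gaussian belief and pick the largest."""
    draws = {
        action: rng.gauss(mean, std)
        for action, (mean, std) in beliefs[key_term].items()
    }
    return max(draws, key=draws.get)


replay = deque(maxlen=1000)
prefill(replay, [
    {"success": True, "turns": [("greet", "ask_issue"), ("refund", "open_ticket")]},
    {"success": False, "turns": [("refund", "small_talk")]},
])

# Hypothetical beliefs: (mean, standard deviation) of each action's value.
beliefs = {"refund": {"open_ticket": (1.0, 0.3), "small_talk": (0.2, 0.3)}}
print(len(replay), sample_action(beliefs, "refund", random.Random(0)))
```

Wide distributions make the choice more exploratory; as the distributions narrow, the choice settles on the action with the highest mean.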

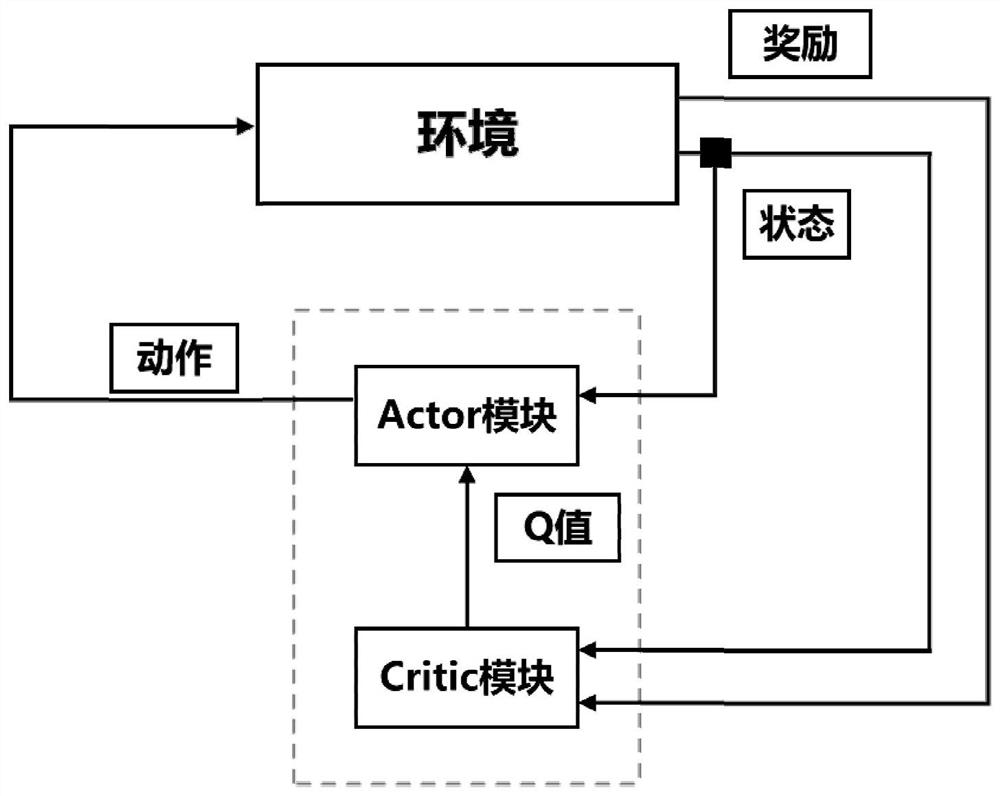

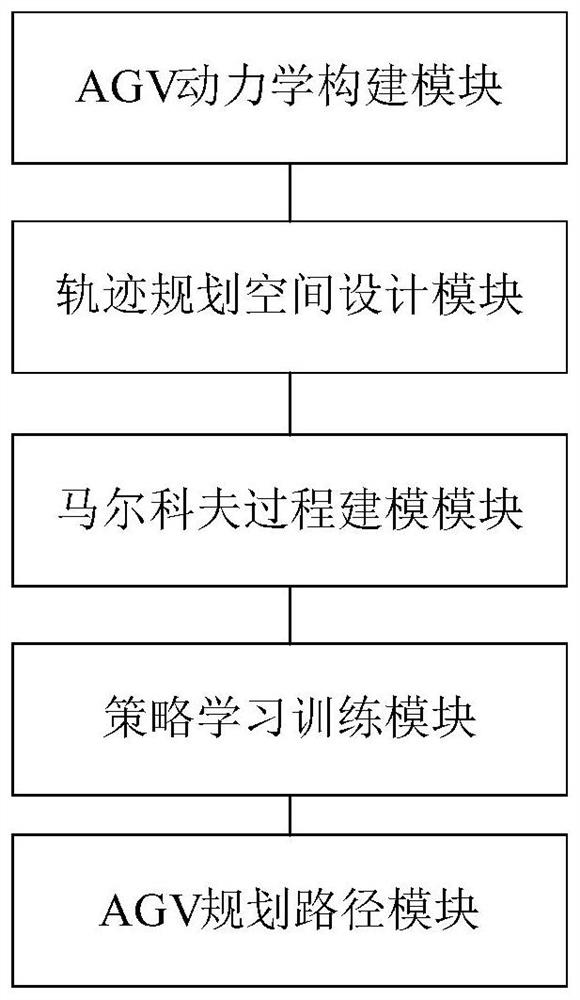

AGV path planning method and system based on reinforcement learning

Active | CN113485380A | Realize real-time decision control | High generalizability | Position/course control in two dimensions | Vehicles | Decision control | Control engineering

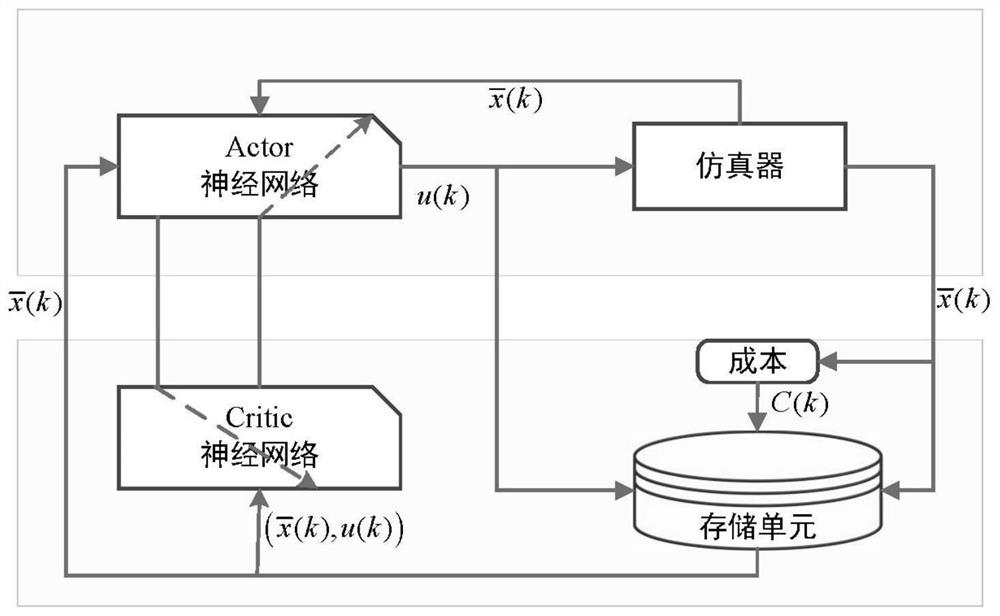

The invention provides an AGV path planning method and system based on reinforcement learning, aiming to solve the problem that existing reinforcement-learning-based AGV path planning methods consume a large amount of time and computing power. In the method, an AGV dynamics model is constructed. The AGV acts as the agent, and the environment information sensed while the AGV drives serves as the state information; the state space is designed to take the destination position and obstacle positions into account, and a continuous action space and a multiple reward mechanism are designed. Path planning is modeled as a Markov process based on the state space, the continuous action space and the multiple reward mechanism. Arbitrary start points, target points and obstacles at any position can be specified in the state space, so generalization is high. An Actor-Critic framework is introduced for strategy learning and training, so online operation avoids heavy computation; computing requirements are low, and real-time decision control of the AGV for any target and obstacle can be realized.

Owner:GUANGDONG UNIV OF TECH

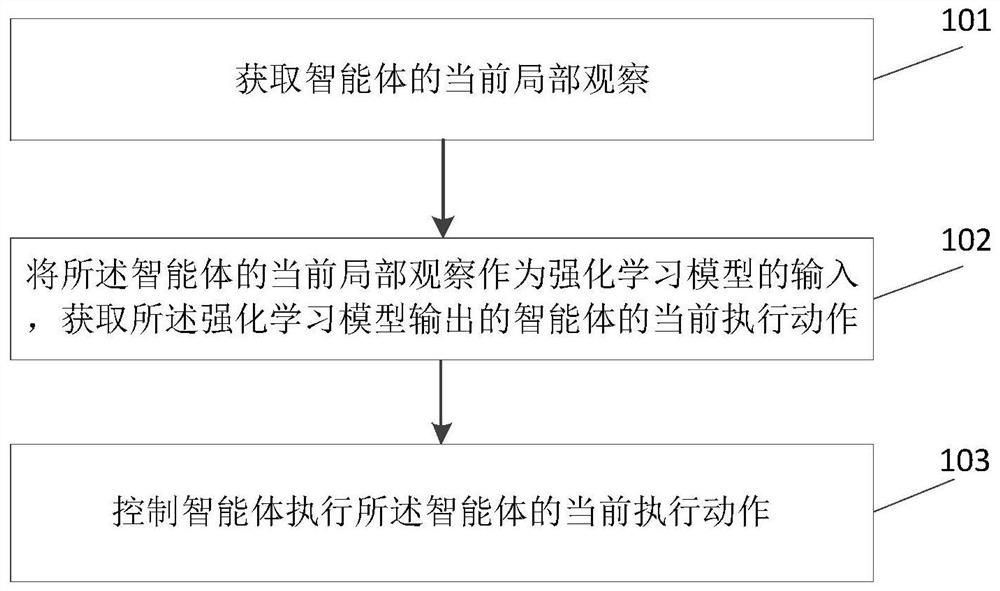

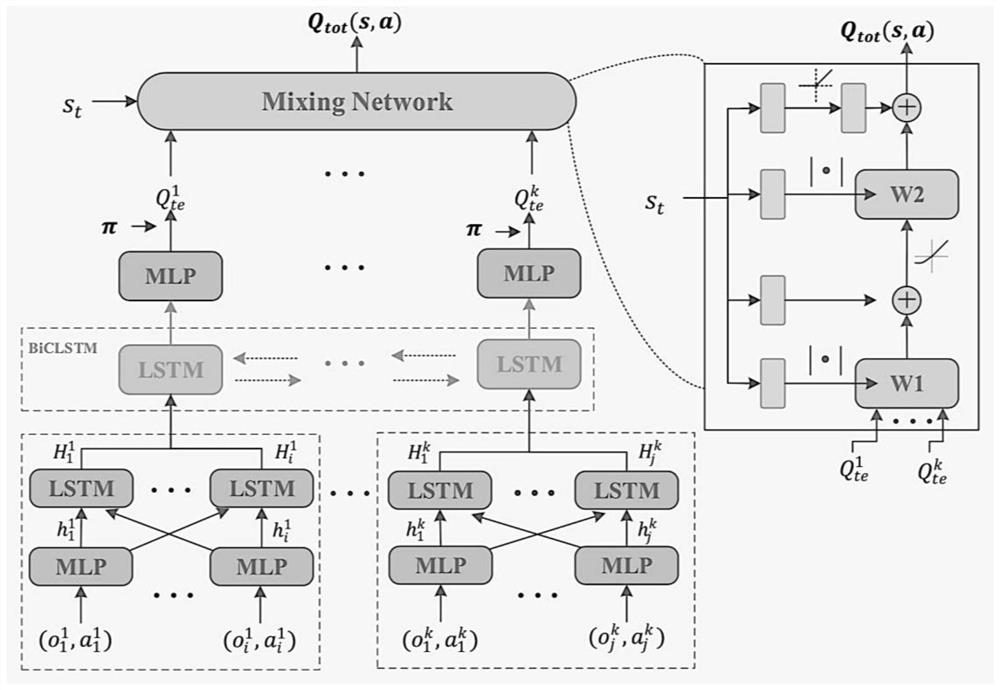

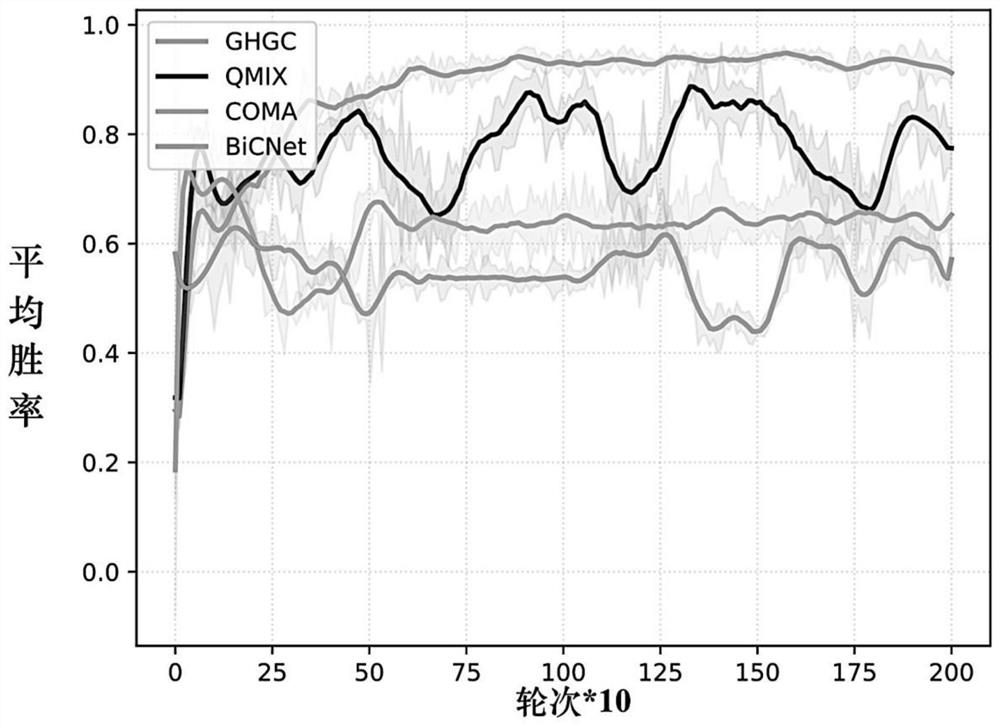

Intelligent agent control method and device based on reinforcement learning

Pending | CN112215350A | Optimize quantity | Ease of Expansion of Types | Neural architectures | Machine learning | Engineering | Human–computer interaction

The invention relates to an intelligent agent control method and device based on reinforcement learning. The method comprises the steps of: obtaining the current local observation of an agent; feeding that observation into a reinforcement learning model and obtaining the agent's current action as the model's output; and controlling the agent to execute that action. The technical scheme provided by the invention effectively simplifies the strategy learning process in large-scale multi-agent systems, makes it easy to expand the number and types of agents, and has potential value in large-scale real-world applications.

Owner:Tianjin (Binhai) Artificial Intelligence Military-Civil Fusion Innovation Center (天津(滨海)人工智能军民融合创新中心)

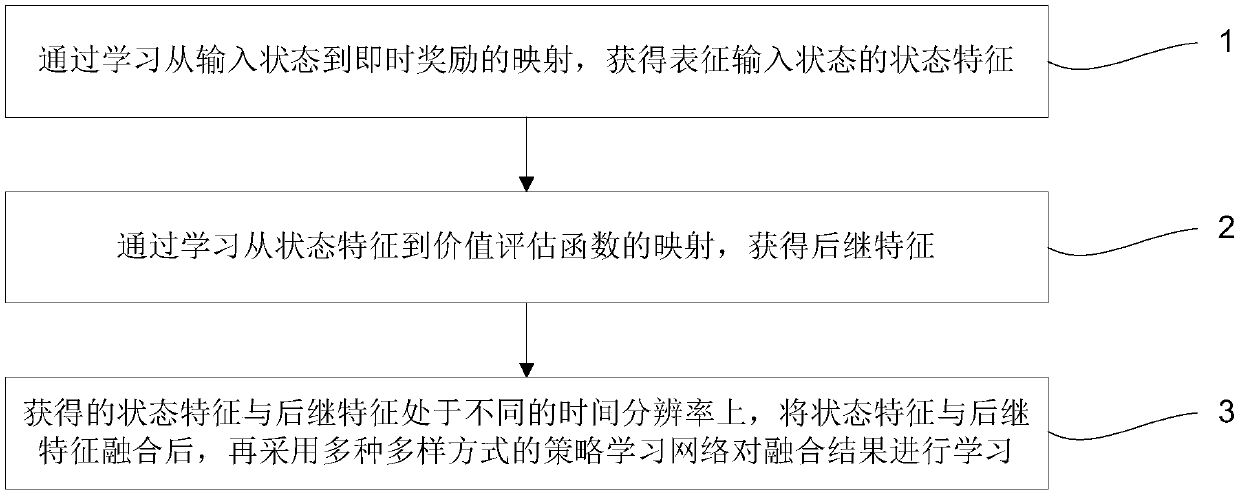

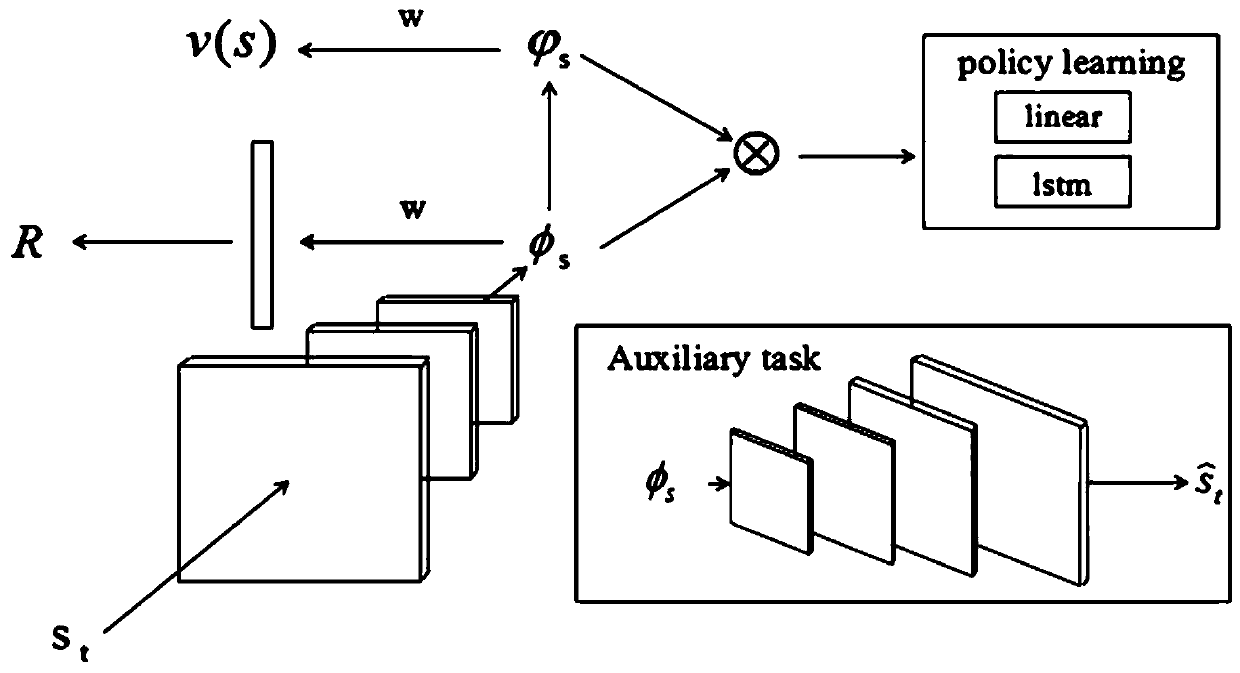

Joint learning method for features and strategies based on state features and subsequent features

Active | CN108898221A | Improve utilization efficiency | Efficient use of | Physical realisation | Neural learning methods | Pattern recognition | Image resolution

The invention discloses a joint learning method for features and strategies based on state features and subsequent (successor) features. The method comprises: obtaining state features that represent an input state by learning a mapping from the input state to the immediate reward; obtaining subsequent features by learning a mapping from the state features to a value evaluation function; and, because the state features and subsequent features are at different temporal resolutions, fusing the two and learning from the fused result with a variety of strategy learning networks. Compared with a traditional agent network, the method uses sample information more efficiently. Compared with other algorithms, learning is markedly faster, and the network converges sooner and achieves better learning results.

Owner:UNIV OF SCI & TECH OF CHINA

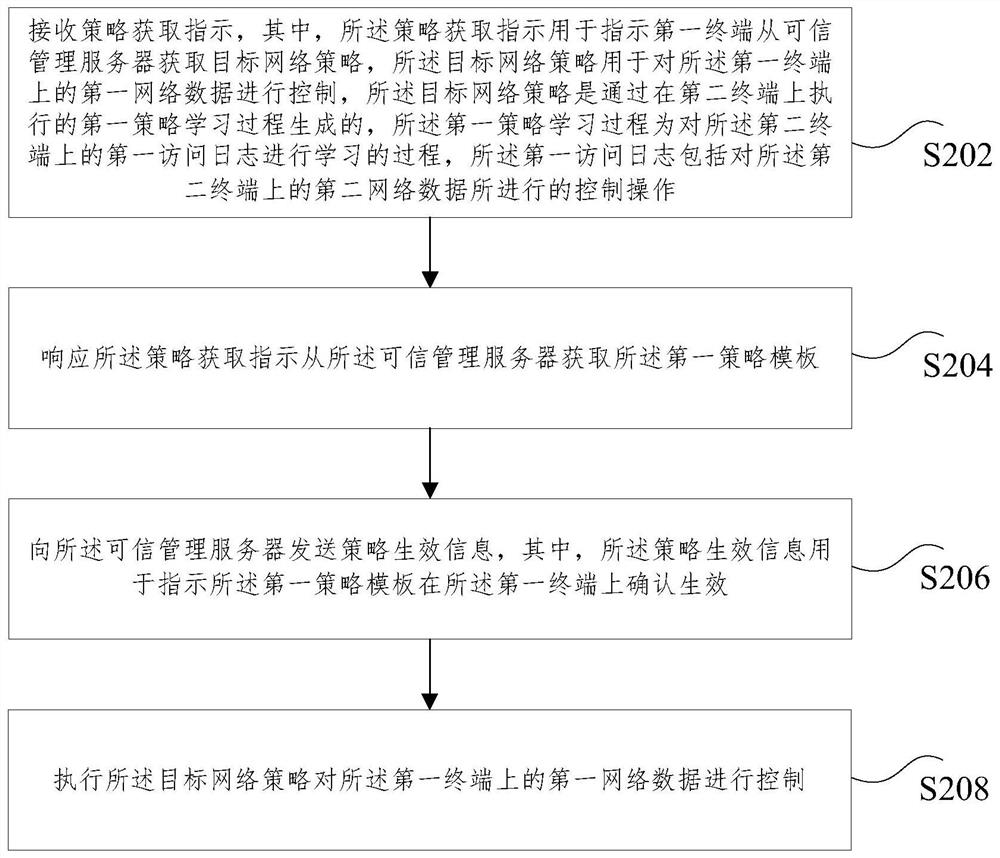

Network access control method and device

Active | CN111901147A | Improve configuration efficiency | Solve technical problems with low configuration efficiency | Data switching networks | Network Access Control | Network data

The invention relates to a network access control method and device. The method comprises the following steps: receiving a strategy obtaining instruction, which instructs a first terminal to obtain a target network strategy from a trusted management server, wherein the target network strategy is used for controlling first network data on the first terminal and is generated through a first strategy learning process executed on a second terminal, the first strategy learning process being a process of learning from a first access log on the second terminal; obtaining the target network strategy from the trusted management server in response to the strategy obtaining instruction; sending strategy effective information to the trusted management server, the strategy effective information indicating that the target network strategy has been confirmed to be effective on the first terminal; and executing the target network strategy to control the first network data on the first terminal. The application solves the technical problem in the related art that network access strategies are configured with relatively low efficiency.

Owner:BEIJING KEXIN HUATAI INFORMATION TECH

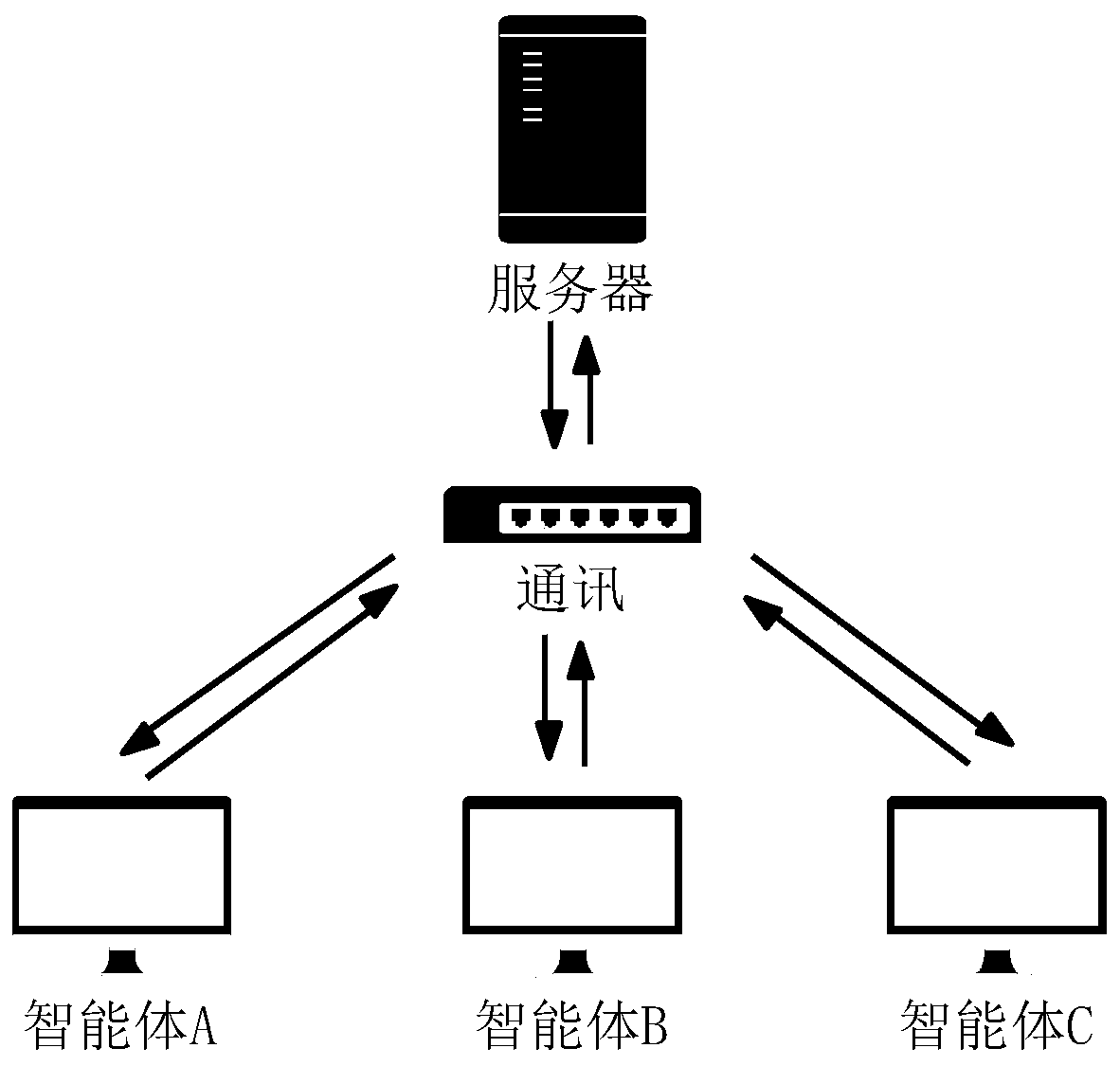

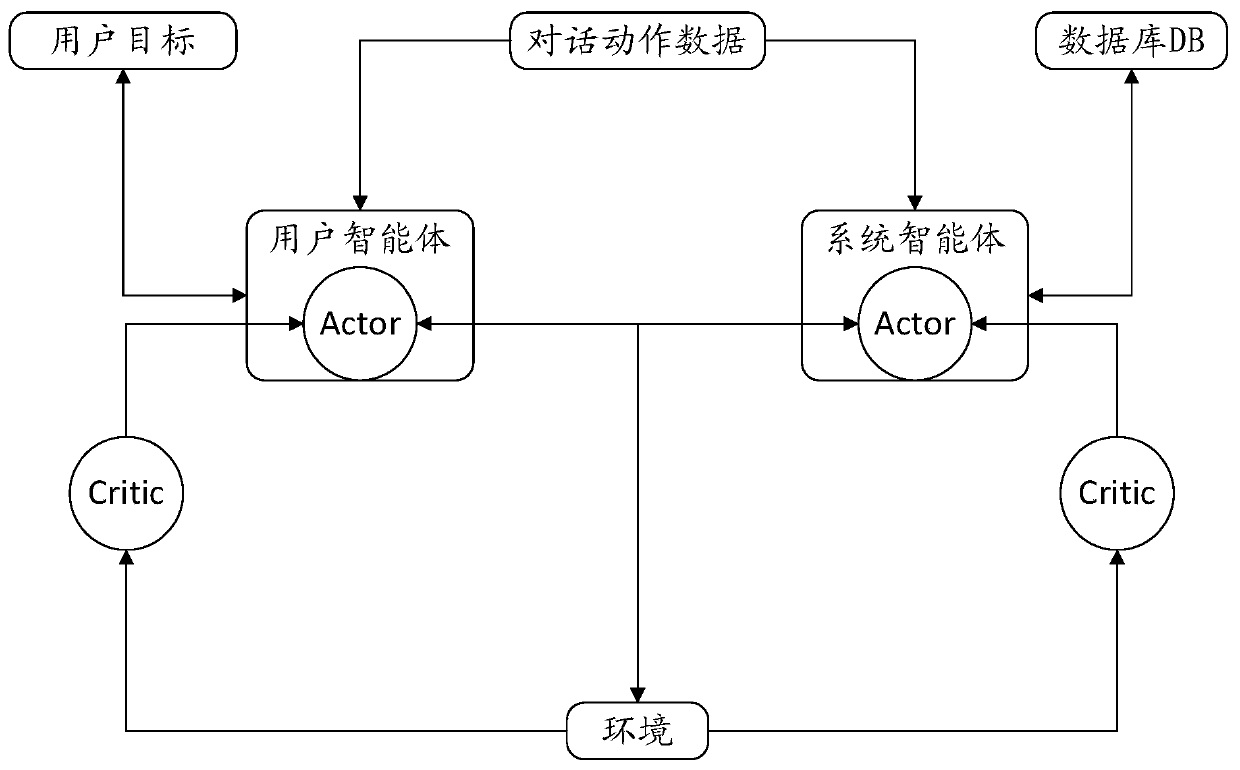

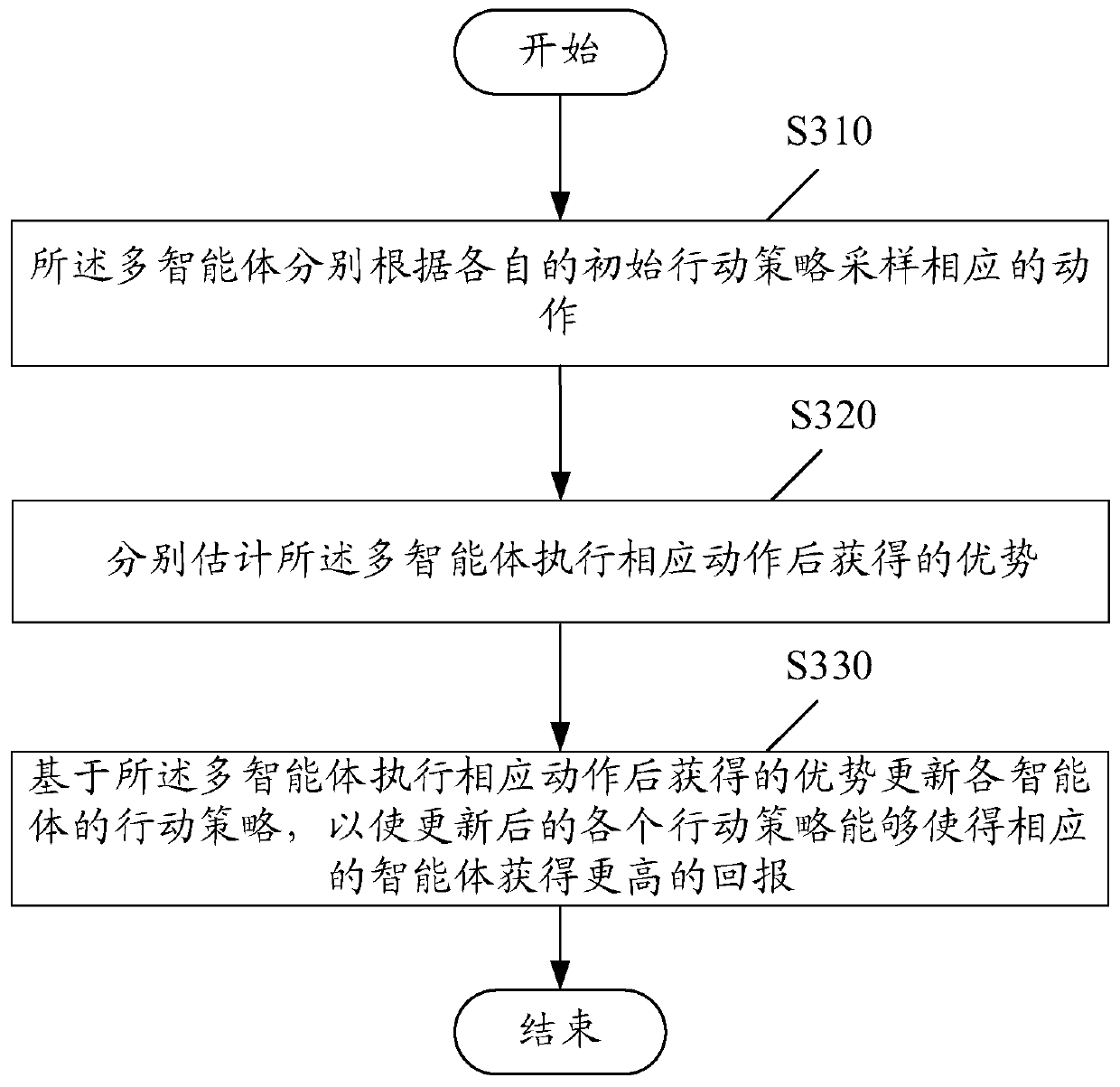

Multi-agent action strategy learning method and device, medium and computing equipment

Pending | CN111309880A | No need for human supervision | Save time and cost | Digital data information retrieval | Neural architectures | Engineering | Human–computer interaction

The embodiment of the invention provides a multi-agent action strategy learning method comprising the steps of: having multiple agents sample corresponding actions according to their respective initial action strategies; estimating the advantage obtained after each agent executes its action; and updating the action strategy of each agent based on those advantages, so that each updated action strategy enables the corresponding agent to obtain a higher return. The method is applied to task-oriented machine learning scenarios and trains several cooperative agents (that is, several action strategies) at the same time. No pre-built simulator is used for the agents to interact with and no manual supervision is needed, which greatly saves time and resources. In addition, so that all agents can learn good action strategies, different rewards are distributed to the individual agents, allowing the multiple agents to learn better action strategies.

Owner:TSINGHUA UNIV

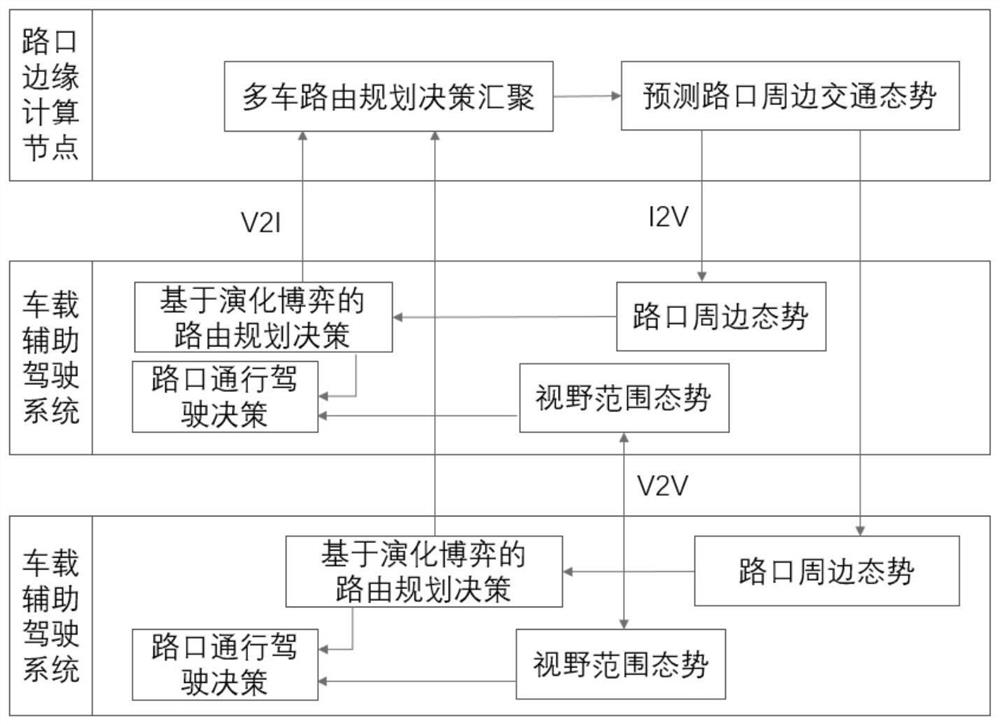

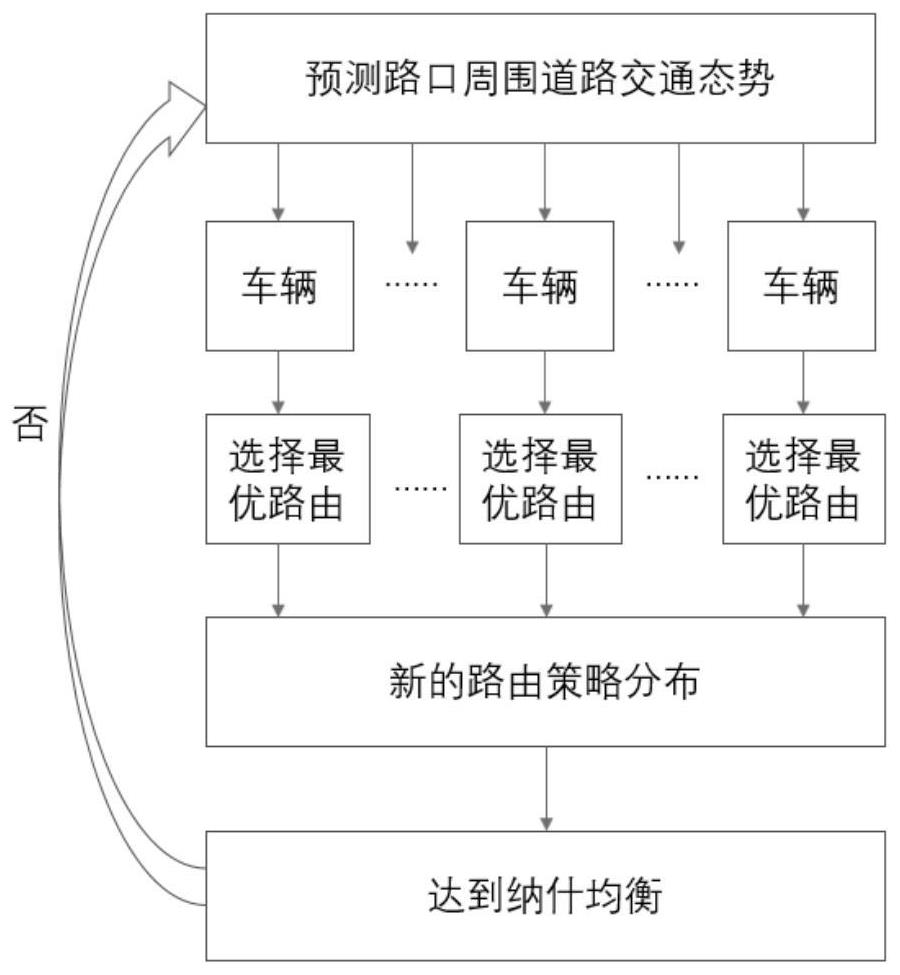

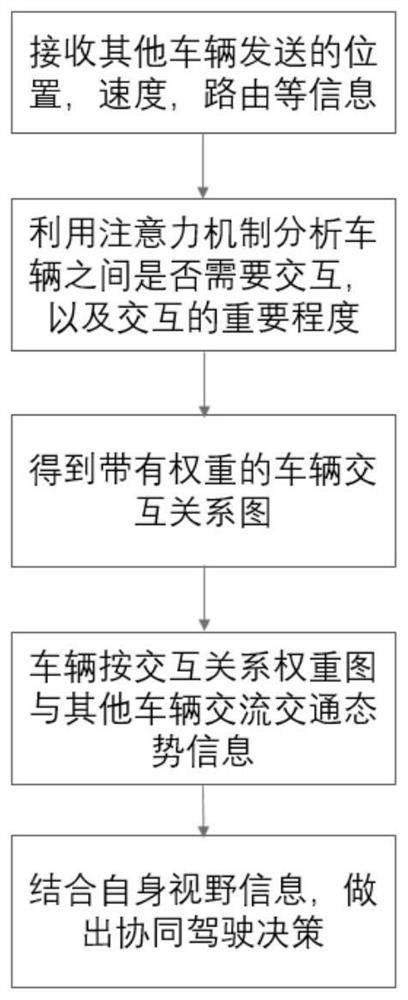

Multi-vehicle collaborative planning method based on distributed crowd-sourcing learning

Active | CN114283607A | Low computing performance | Lower requirement | Particular environment based services | Detection of traffic movement | Routing decision | Edge server

The invention discloses a multi-vehicle collaborative planning method based on distributed crowd-sourcing learning, belonging to the technical field of multi-vehicle-road collaborative decision making. An edge server is used to reduce the computing and communication capability required of each vehicle. An evolutionary game models the continuous game played between vehicles during route planning; when the game reaches a stable state, each vehicle obtains the routing decision that maximizes its own benefit. An intersection-passing driving decision module is deployed on each vehicle: each vehicle is treated as an independent decision maker, and the cooperative driving behavior of multiple vehicles at the intersection is modeled using the strategy learning capability of deep reinforcement learning. A traffic situation prediction module is deployed and computed at the roadside edge, and communication among vehicles and roads extends traffic situation awareness beyond each vehicle's limited field of view. The invention optimizes several aspects of road resource use: the space-time utilization of the intersection, the space-time utilization of road resources around the intersection, and the throughput of the intersection.

Owner:BEIJING UNIV OF POSTS & TELECOMM

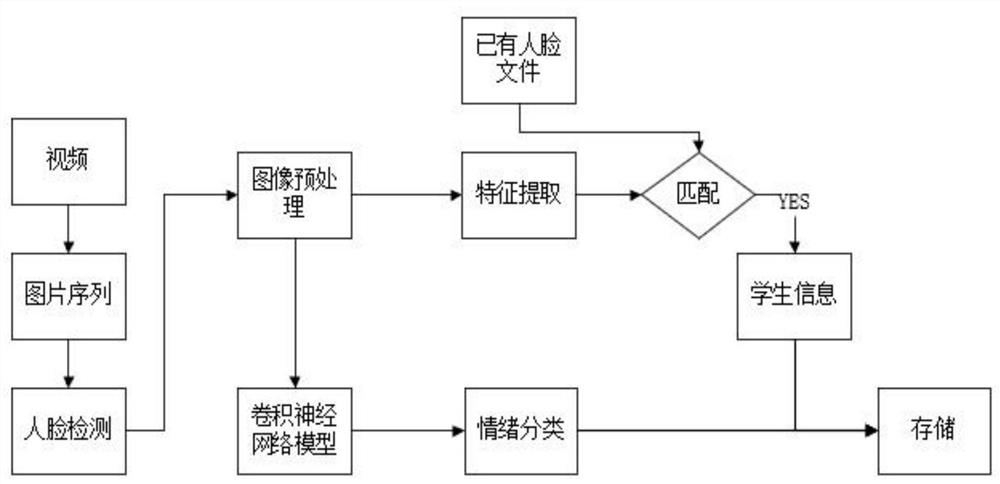

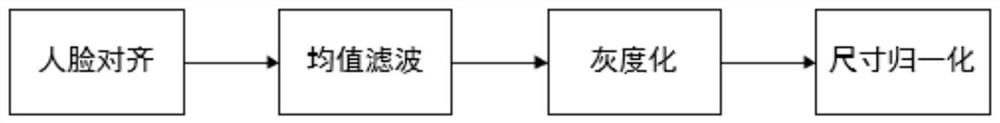

Student learning emotion recognition method based on convolutional neural network

Pending | CN113657168A | Solve the problem of difficult emotion perception | Solve the problem that is not easy to perceive | Character and pattern recognition | Neural architectures | Online learning | Biology

The invention discloses a student learning emotion recognition method based on a convolutional neural network. The method comprises the following steps: classifying the student's expressions with a convolutional neural network model, dividing the student's learning emotion into positive and negative emotion according to the expressions, storing the student information and emotion information, and feeding the student's learning emotion back to teachers, parents and students. This addresses the problem that students' emotions are not easy to perceive, supports teachers in optimizing classroom settings and paying attention to students' learning emotions, and helps safeguard classroom effectiveness. It can also support online learning and the detection of student engagement, so that educators can adjust teaching strategies and learners can adjust their learning states.

Owner:XIAN UNIV OF TECH

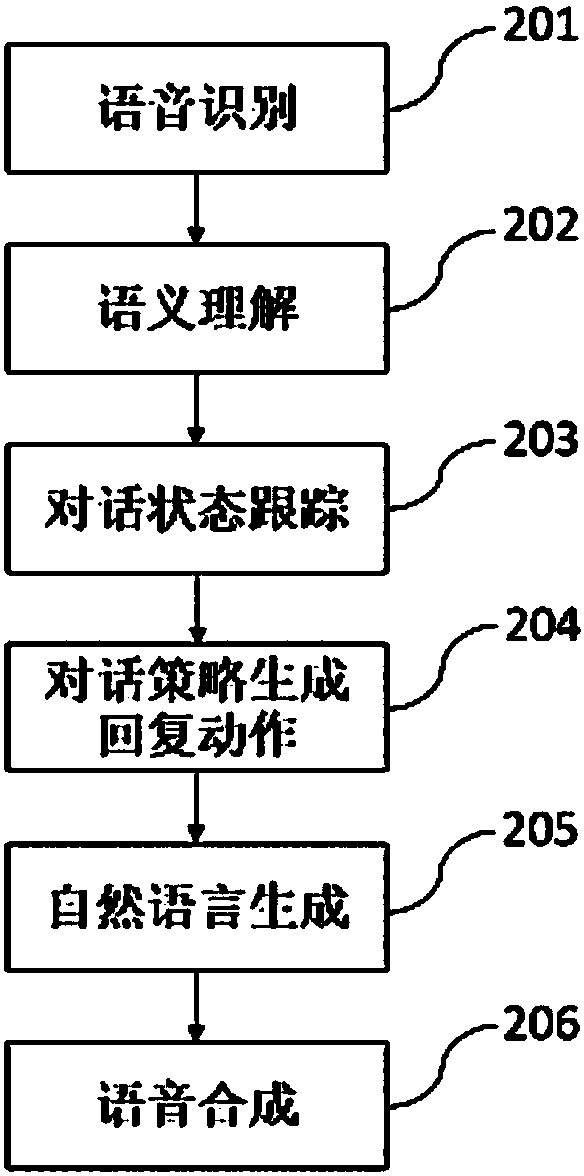

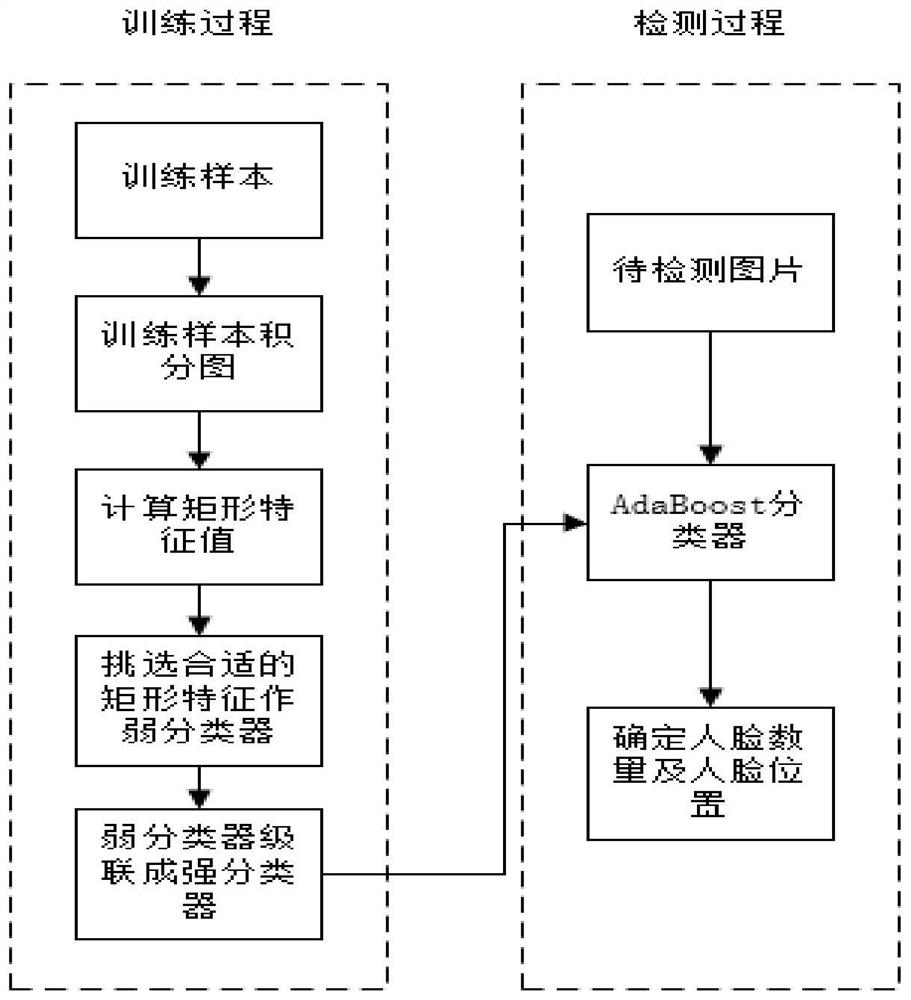

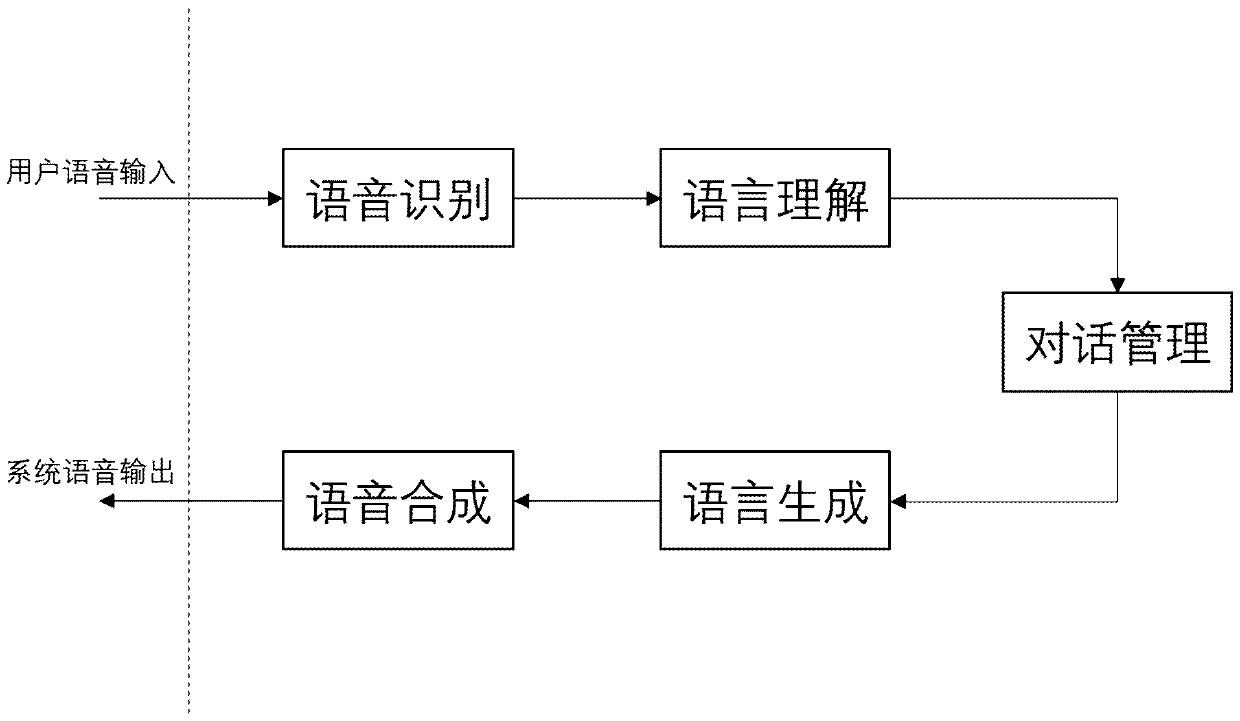

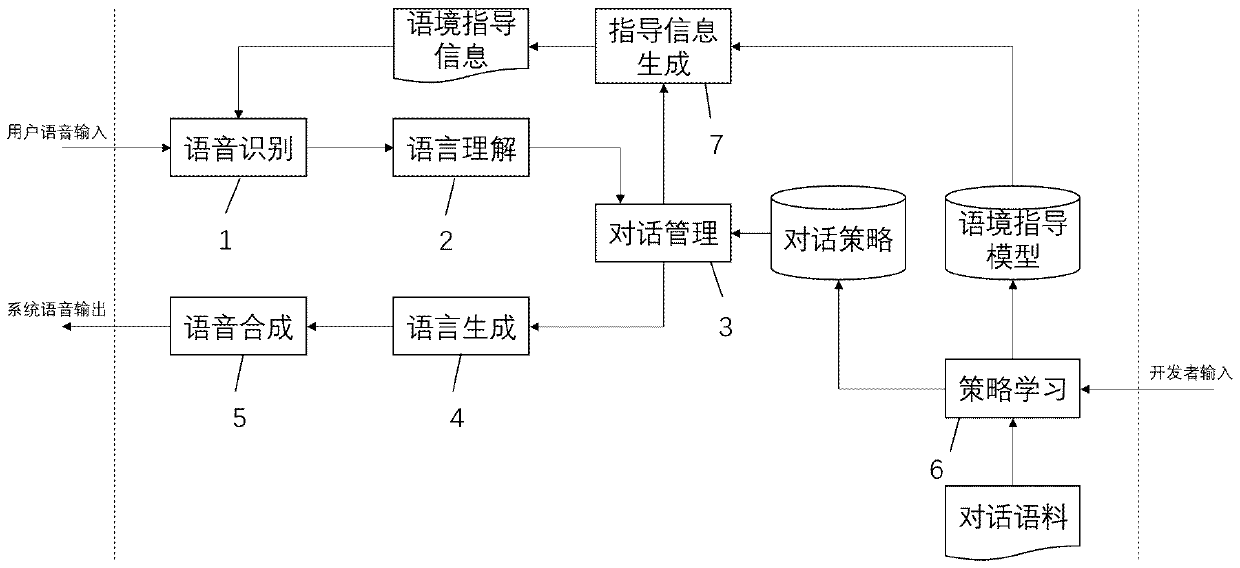

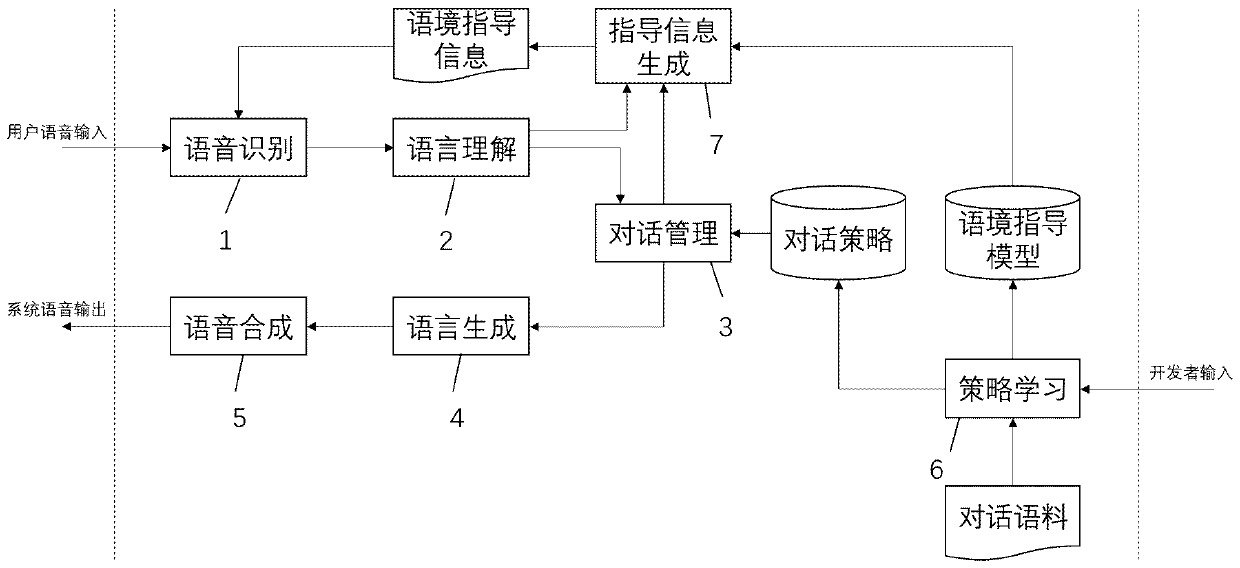

Spoken language dialogue management method and system

The invention discloses a spoken language dialogue management system comprising a speech recognizer, a language understanding unit, a dialogue manager, a language generation unit, a speech synthesizer, a context guidance information generator and a strategy learning unit. The invention further discloses a spoken language dialogue management method. With this technical scheme, the dialogue manager maintains dialogue state information during the dialogue, and the system generates context guidance information from the current dialogue state and dynamically guides the speech recognizer to better recognize the natural language a user is likely to use in the current context, which greatly improves speech recognition accuracy. The speech recognizer does not need domain-specific training corpora prepared in advance or domain-specific language models trained in advance; instead it dynamically adjusts its language models according to context, which reduces the up-front workload of training language models and improves the production efficiency of man-machine conversation systems.

Owner:Dalian Instant Intelligence Technology Co., Ltd. (大连即时智能科技有限公司)

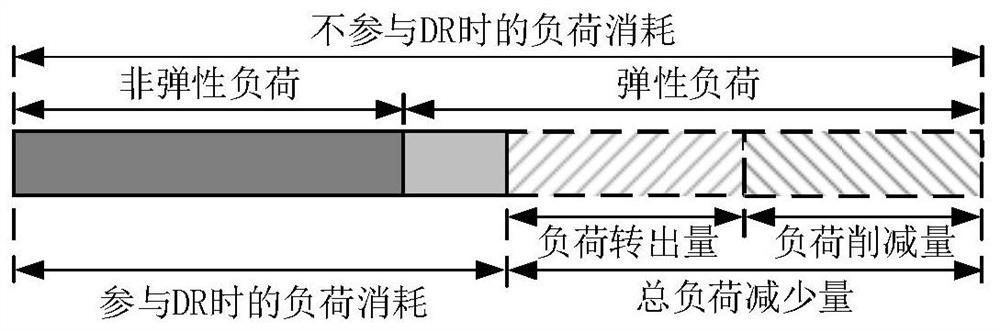

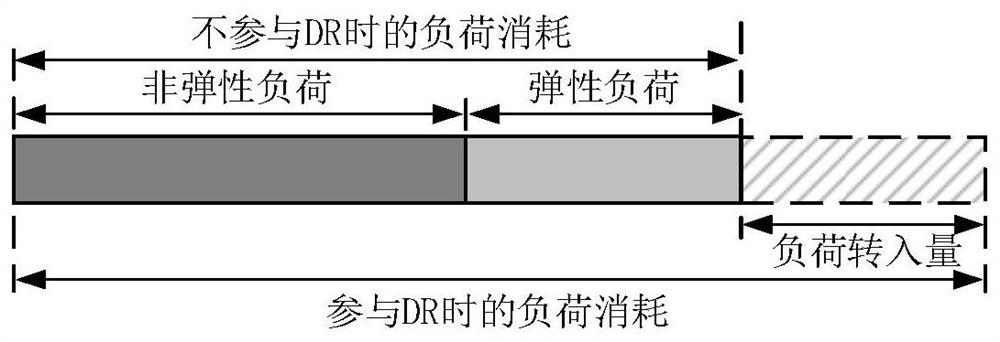

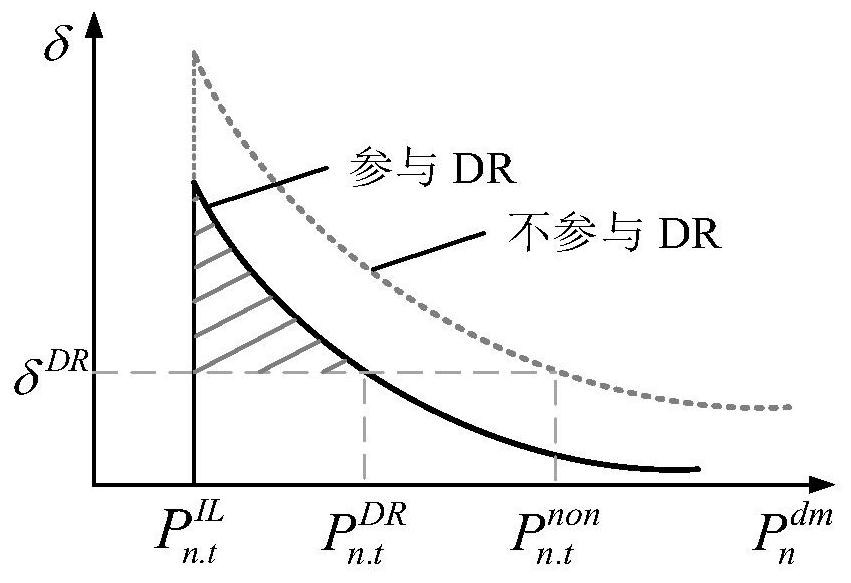

Optimal bidding strategy solving method

Pending | CN113191804A | Fully consider incompleteness | Take full account of uncertainty | Mathematical models | Market predictions | Financial transaction | Decision taking

The invention belongs to the technical field of market transactions and particularly relates to an optimal bidding strategy solving method. Traditional evolutionary game methods struggle to obtain a stable evolutionary equilibrium solution when the opponent's decisions are uncertain. The method addresses the problem of solving the optimal bidding strategy for demand response (DR) resources for users participating in demand-side bidding, and comprises the steps of: 1) establishing a user-participation DR income model based on the consumer demand curve, comprehensively considering the goals of users participating in the market, and proposing an evolutionary game model based on the bounded rationality of the game participants; and 2) considering the incompleteness of market information and the uncertainty of participants' decisions, providing an optimal bidding strategy learning algorithm based on Q-learning and compound differential evolution to solve the established evolutionary game model. The resulting evolutionary game model based on users' bounded rationality helps users formulate an optimal DR bidding strategy.

Owner:XI AN JIAOTONG UNIV +1

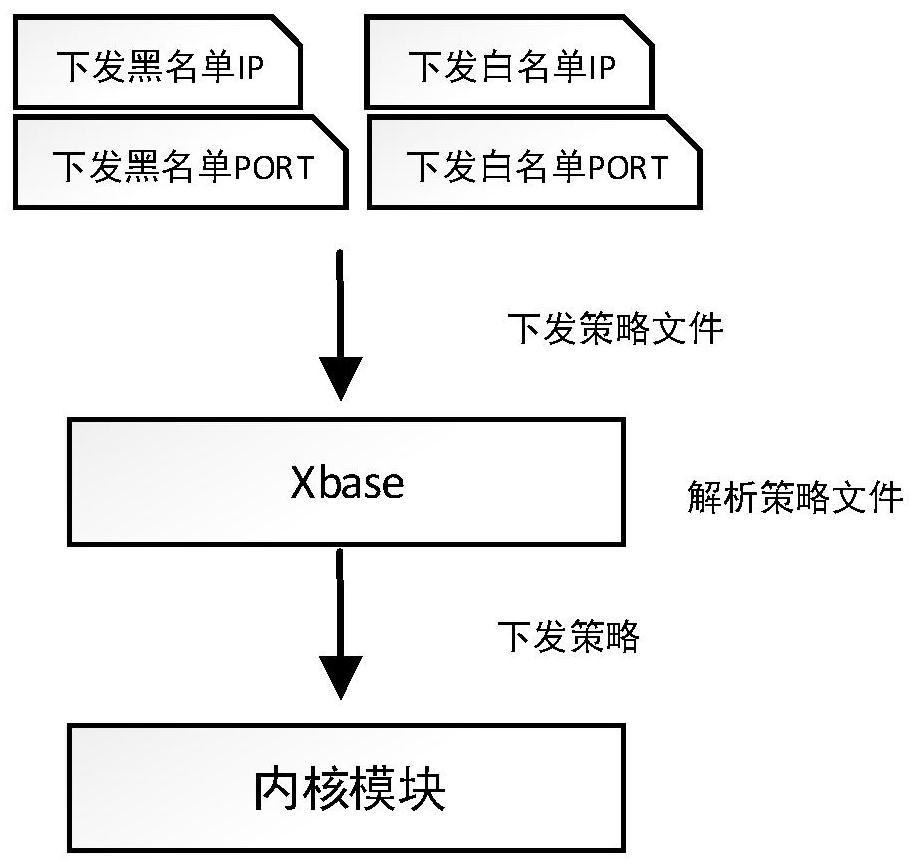

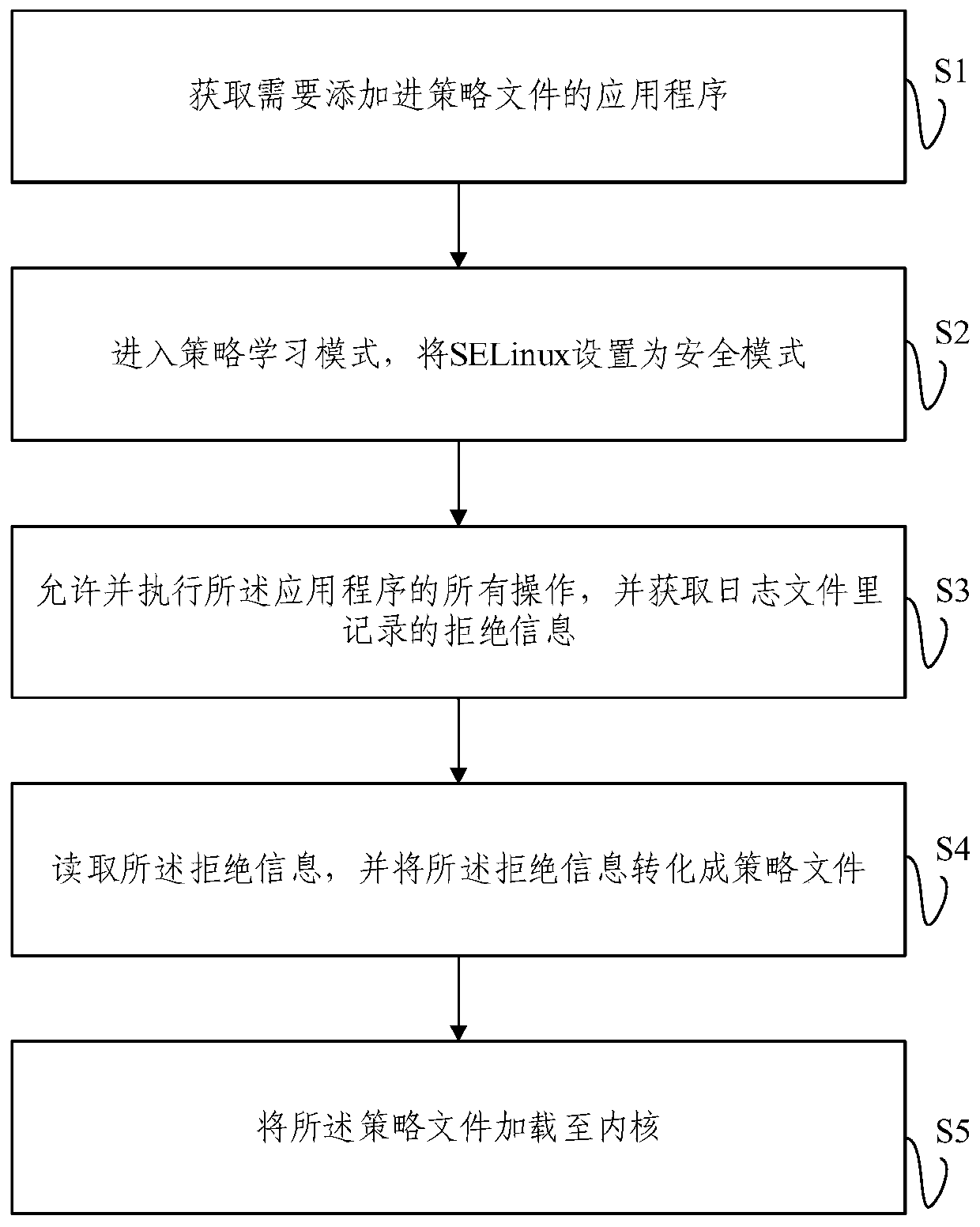

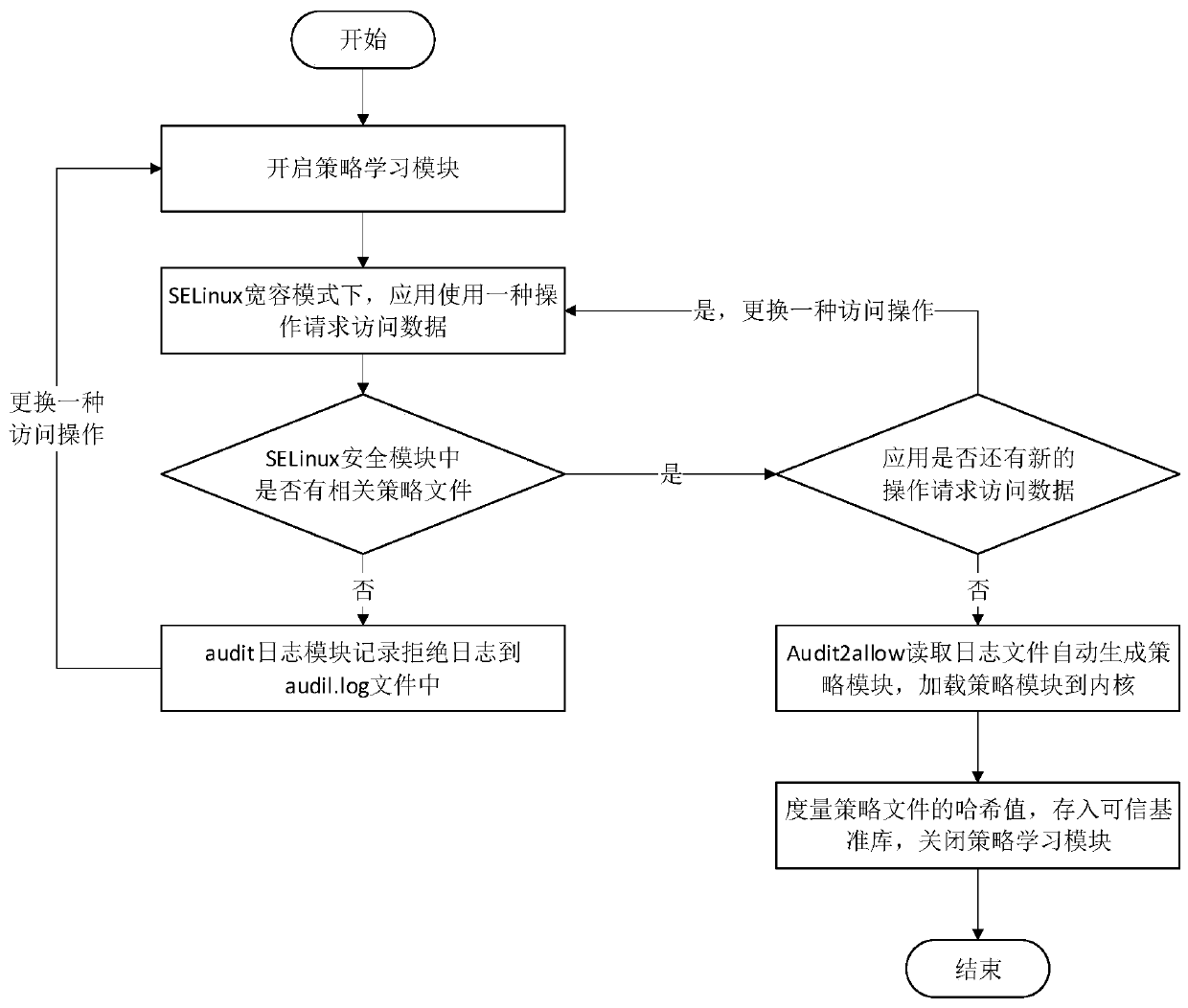

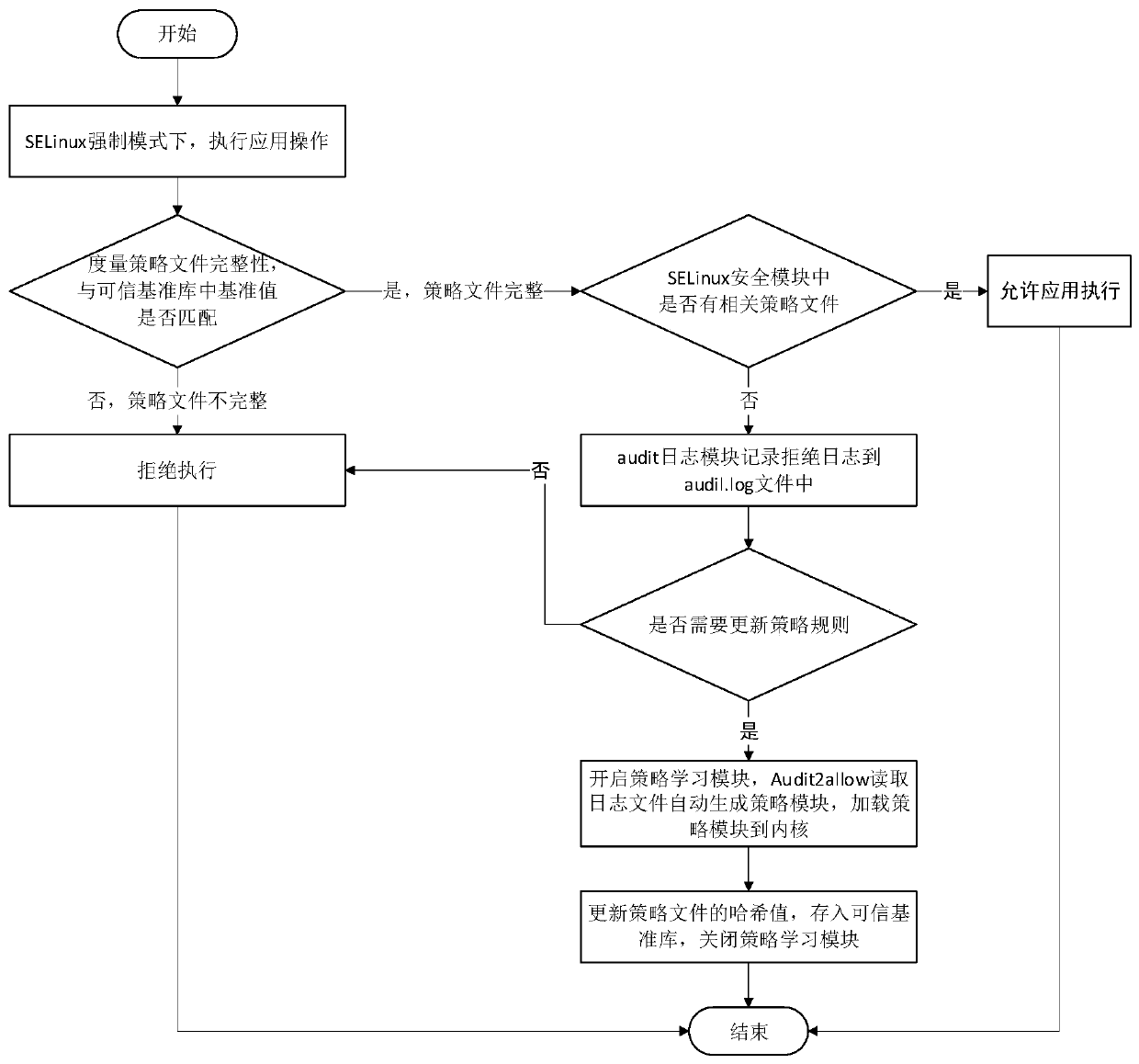

Self-learning credible strategy construction method and system based on SELinux

The embodiment of the invention provides a self-learning credible strategy construction method and system based on SELinux. The method comprises the steps of: acquiring an application program that needs to be added to a strategy file; entering a strategy learning mode and setting SELinux to tolerance (permissive) mode; allowing and executing all operations of the application program, and obtaining the rejection information recorded in a log file; reading the rejection information and converting it into a strategy file; and loading the strategy file into the kernel. For a newly installed application program, SELinux is placed in tolerance mode and the strategy file is constructed in a single training pass that executes the application operations to be added to the strategy file. Even though the strategy for an earlier operation has not yet been added, later operations are still allowed to execute, so the learning process is never interrupted, and the credible strategy needed by the application program is constructed without affecting the running program.

Owner:BEIJING UNIV OF TECH
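
The step "reading rejection information and converting it into a strategy file" can be sketched as a small log parser. This is only an illustration of the idea, not the patented system: it assumes the rejection information takes the form of standard SELinux AVC denial records in the audit log, and the helper names and the sample type `myapp_t` are hypothetical. On a real system the existing `audit2allow` tool performs this conversion.

```python
import re

# Matches the fields of an AVC denial record that an allow rule needs.
AVC_RE = re.compile(
    r"avc:\s+denied\s+\{\s*(?P<perms>[^}]*?)\s*\}"
    r".*?scontext=(?P<scontext>\S+)"
    r".*?tcontext=(?P<tcontext>\S+)"
    r".*?tclass=(?P<tclass>\S+)"
)


def type_of(context):
    """Return the type field of a user:role:type[:level] security context."""
    return context.split(":")[2]


def denials_to_rules(log_lines):
    """Group denied permissions by (source type, target type, class)."""
    grouped = {}
    for line in log_lines:
        match = AVC_RE.search(line)
        if match is None:
            continue  # not an AVC denial record
        key = (
            type_of(match["scontext"]),
            type_of(match["tcontext"]),
            match["tclass"],
        )
        grouped.setdefault(key, set()).update(match["perms"].split())
    return [
        f"allow {src} {tgt}:{cls} {{ {' '.join(sorted(perms))} }};"
        for (src, tgt, cls), perms in sorted(grouped.items())
    ]


sample_log = [
    'type=AVC msg=audit(1600000000.123:456): avc:  denied  { read } for  pid=1234 '
    'comm="myapp" name="app.conf" dev="sda1" ino=42 '
    'scontext=system_u:system_r:myapp_t:s0 tcontext=system_u:object_r:etc_t:s0 '
    'tclass=file permissive=1',
]
for rule in denials_to_rules(sample_log):
    print(rule)
```

Because permissive mode logs each denial without blocking the operation, a single run of the application can surface every rule it needs, which matches the abstract's point that learning is not interrupted by the first missing rule.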

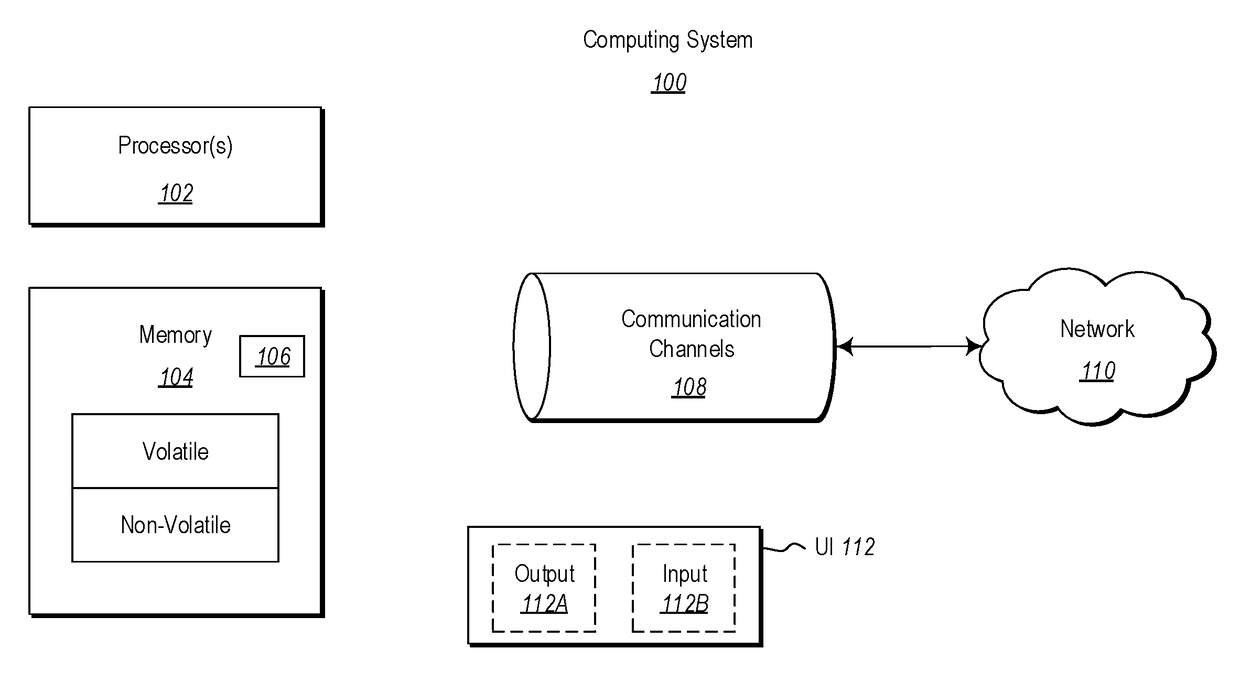

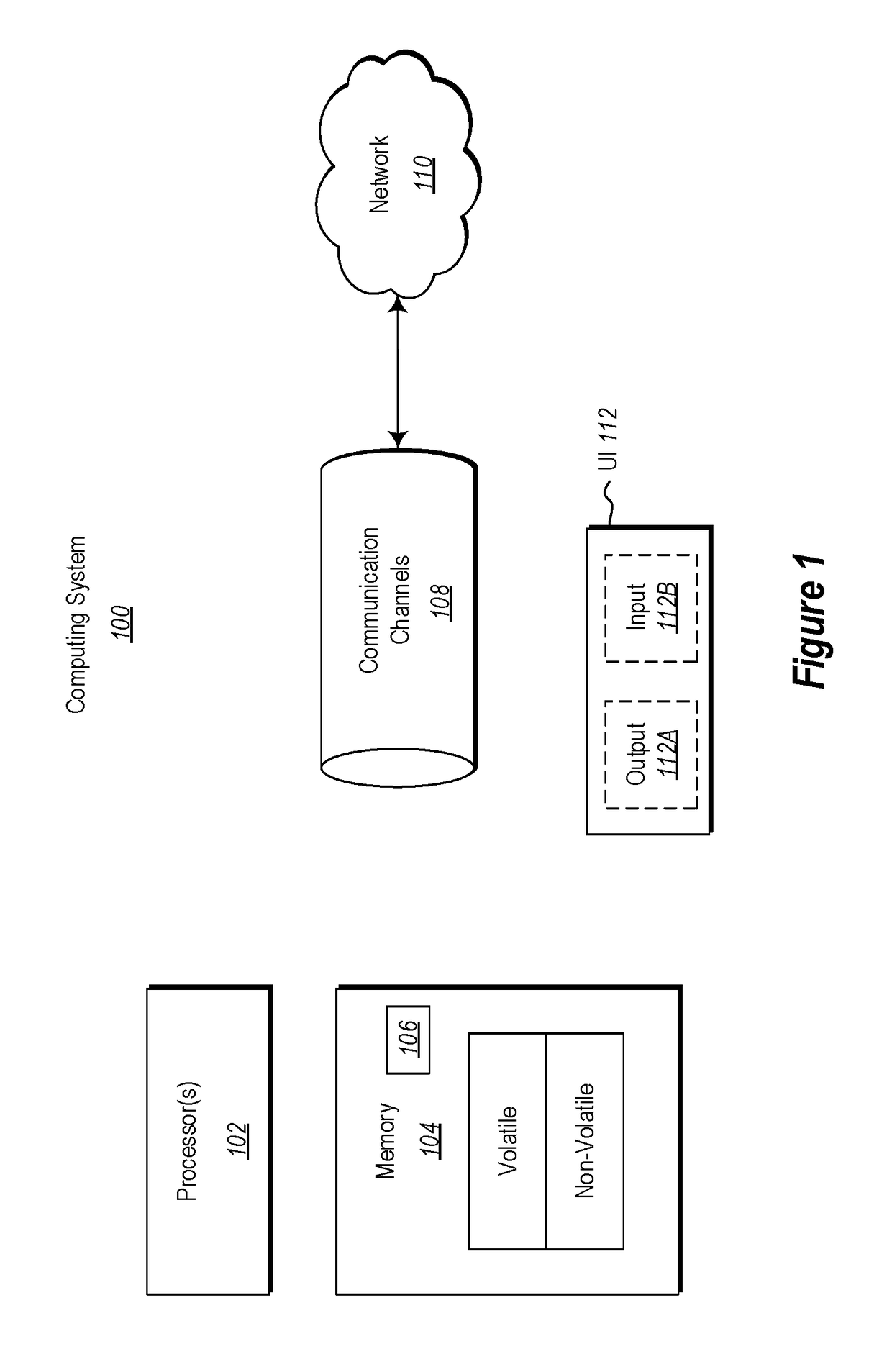

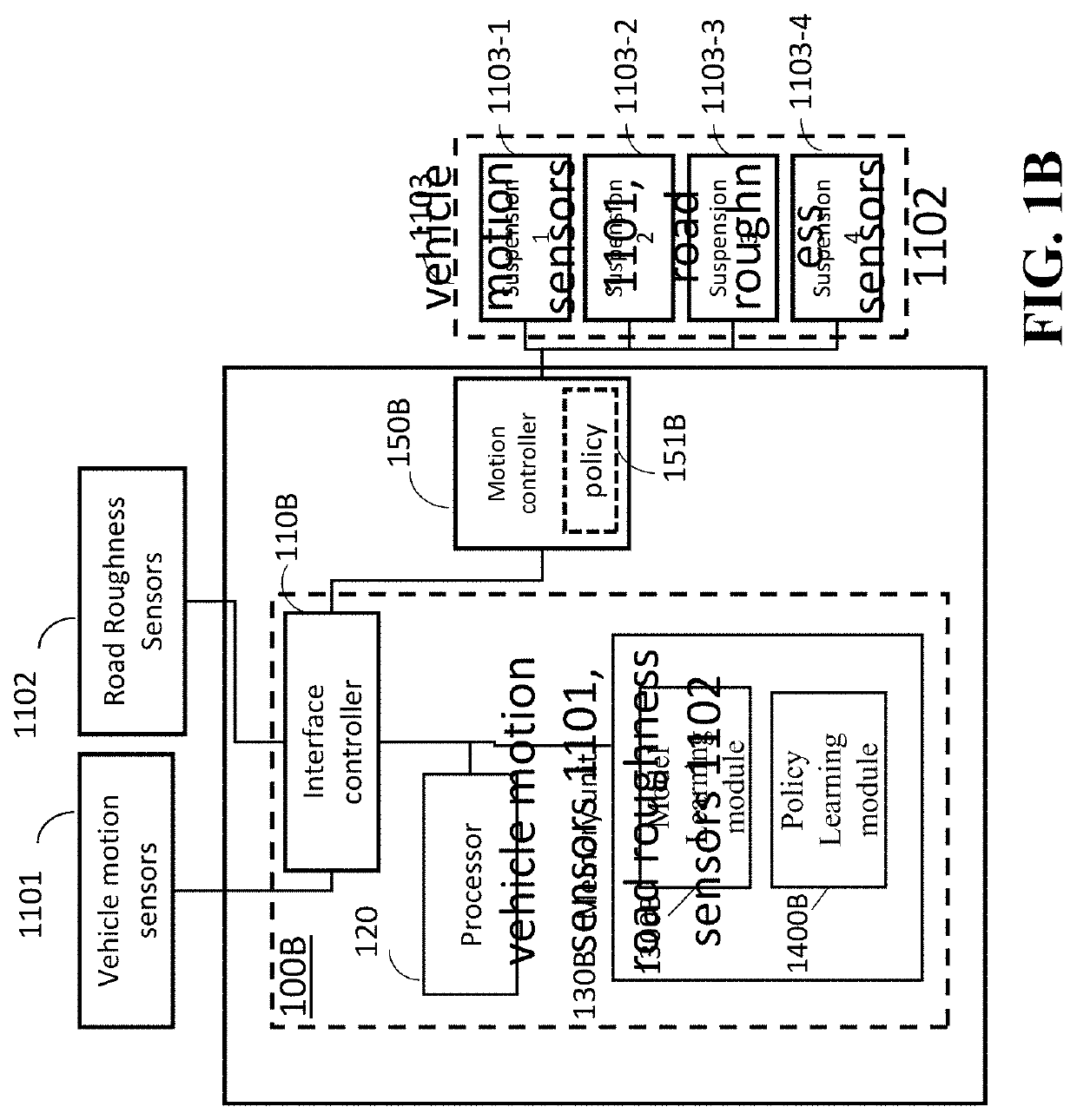

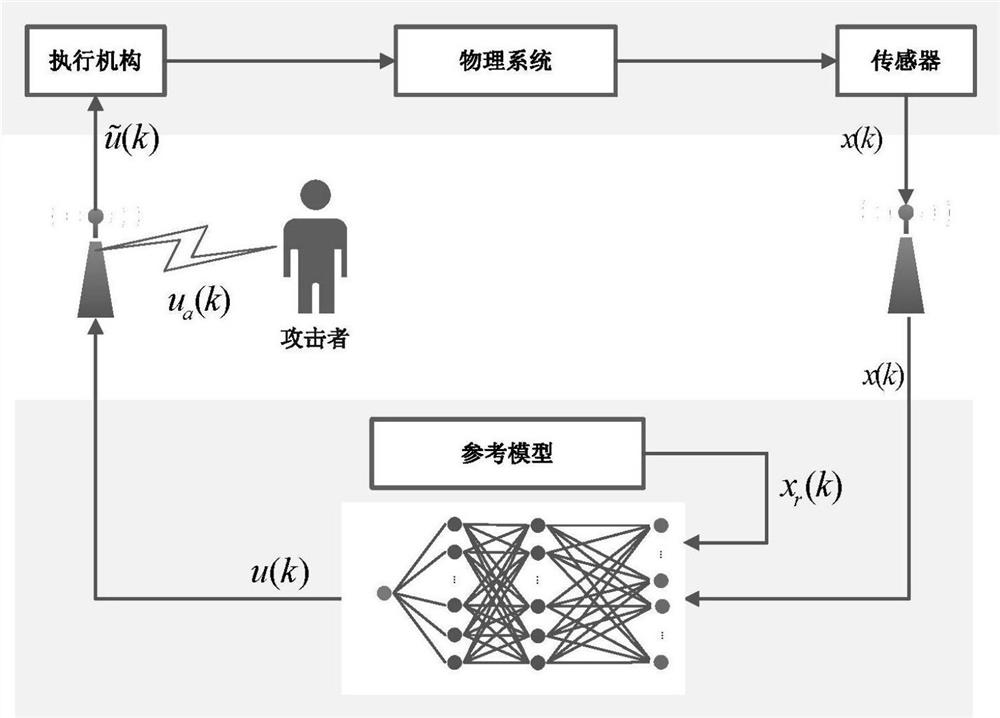

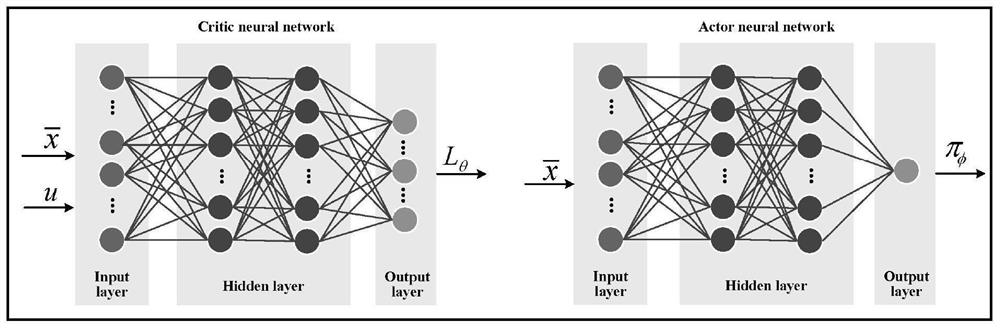

Information physical system safety control method based on deep reinforcement learning

Active | CN113885330A | Guaranteed stability | Improve control | Adaptive control | Lyapunov stability | Dynamic equation

The invention discloses a cyber-physical system (information physical system) security control method based on deep reinforcement learning, belonging to the technical field of information security. It addresses the poor control performance, under network attack, of security control strategies designed with existing methods. The dynamic equation of the cyber-physical system under attack is described as a Markov decision process, and on this basis the security control problem under false data injection attacks is converted into a control strategy learning problem that uses only data. Building on the soft actor-critic reinforcement learning framework, a soft actor-critic algorithm based on a Lyapunov function is proposed together with a novel deep neural network training framework; Lyapunov stability theory is incorporated into the design, which ensures the stability of the cyber-physical system and effectively improves control performance. The method can be applied to the security control of cyber-physical systems.

Owner:HARBIN INST OF TECH

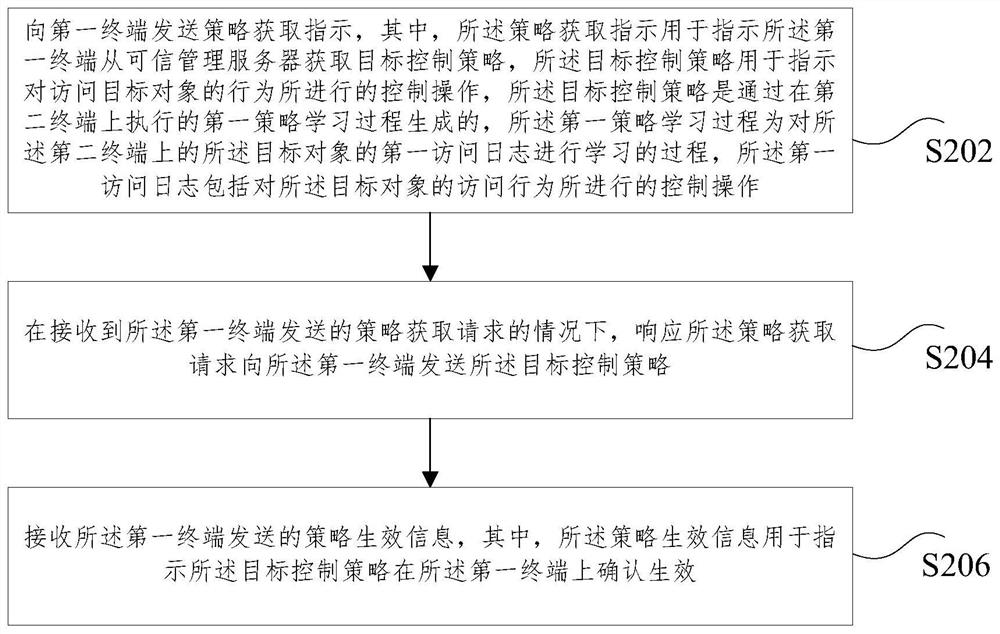

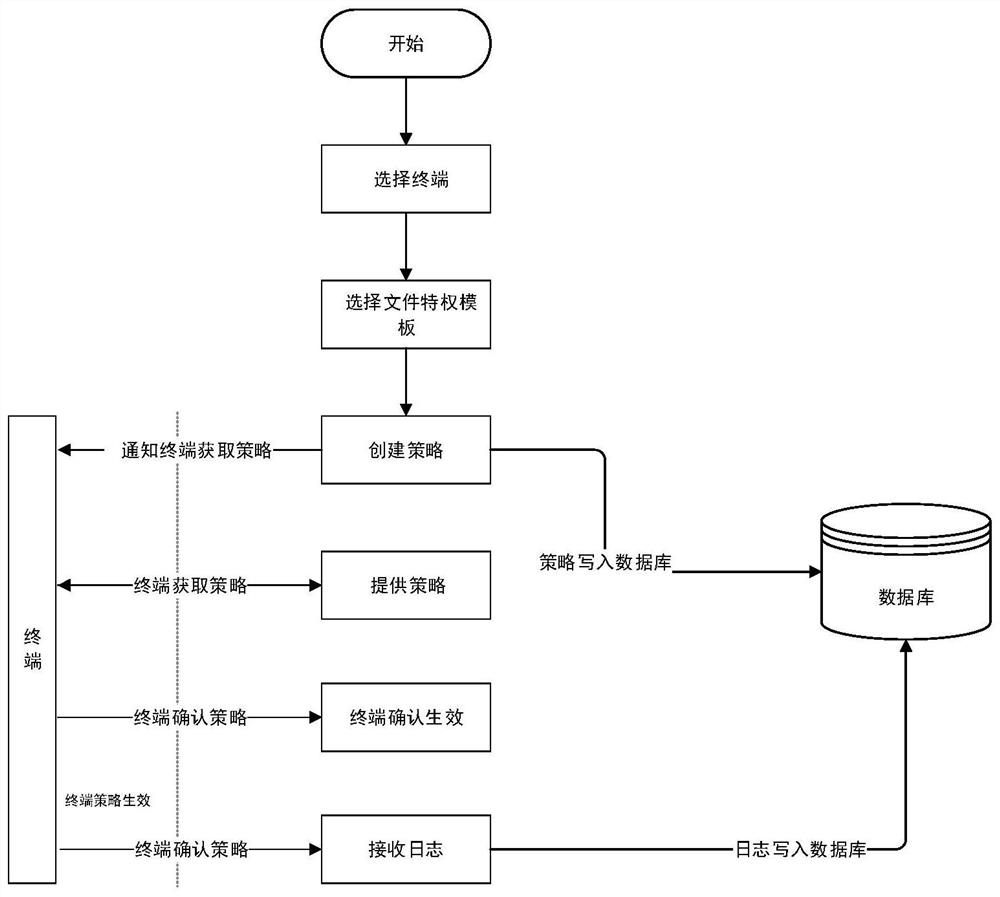

Object access strategy configuration method and device

Pending | CN111897768A | Improve configuration efficiency | Avoid duplication | Digital data protection | File system administration | Target control | Server

The invention relates to an object access strategy configuration method and device. The method comprises the following steps: sending a strategy obtaining instruction to a first terminal, which instructs the first terminal to obtain a target control strategy from a trusted management server, wherein the target control strategy indicates a control operation to be performed on the behavior of accessing a target object and is generated through a first strategy learning process executed on a second terminal, the first strategy learning process being a process of learning from a first access log of the target object on the second terminal; when a strategy obtaining request sent by the first terminal is received, sending the target control strategy to the first terminal in response to the request; and receiving strategy effective information sent by the first terminal, the strategy effective information indicating that the target control strategy has been confirmed to be effective on the first terminal. The application solves the technical problem in the related art that object access strategies are configured with relatively low efficiency.

Owner:BEIJING KEXIN HUATAI INFORMATION TECH

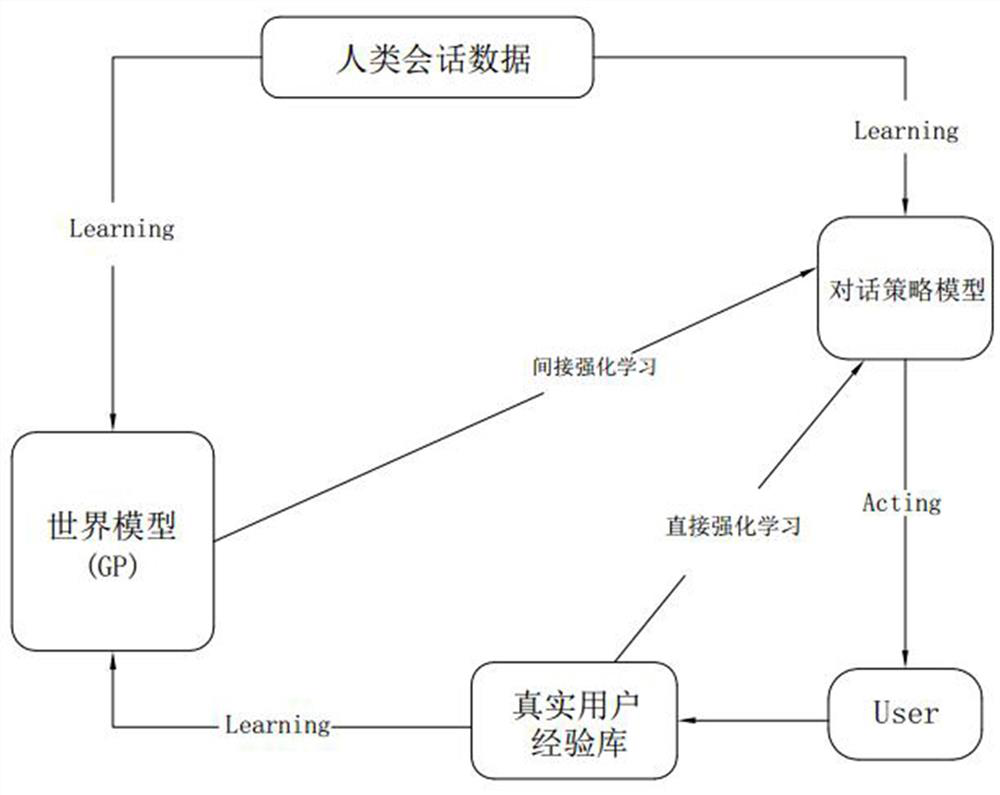

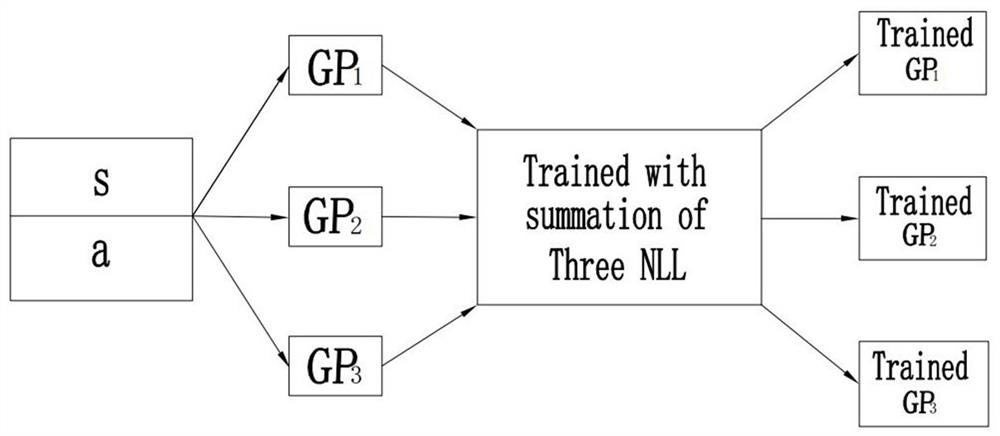

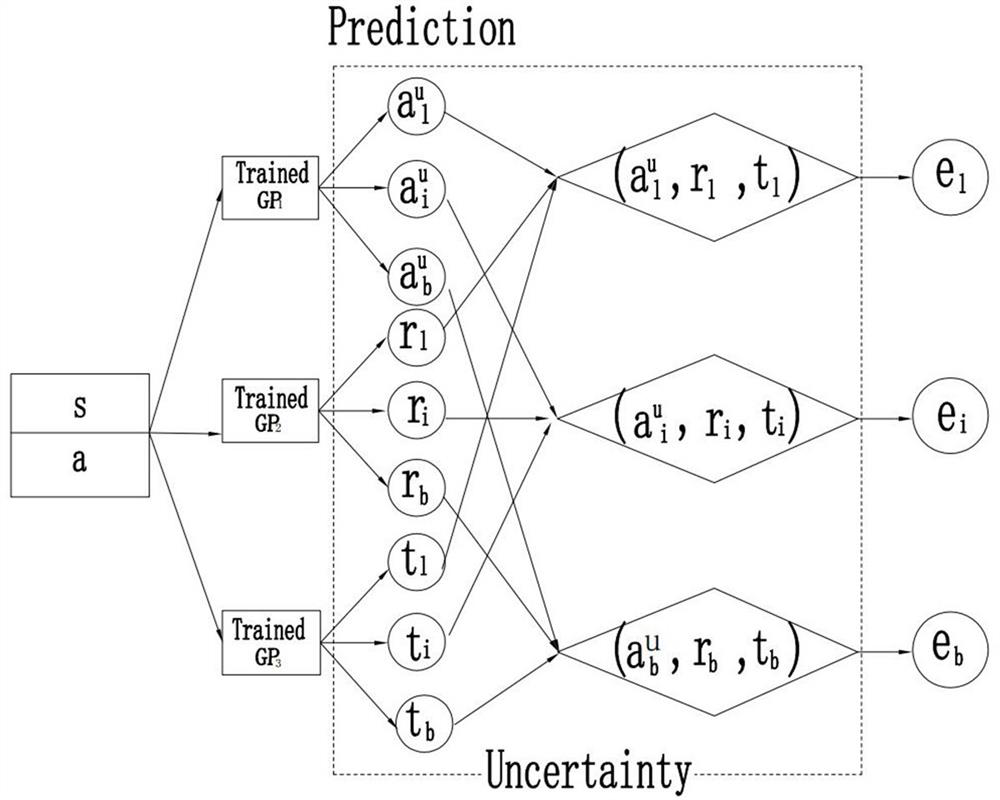

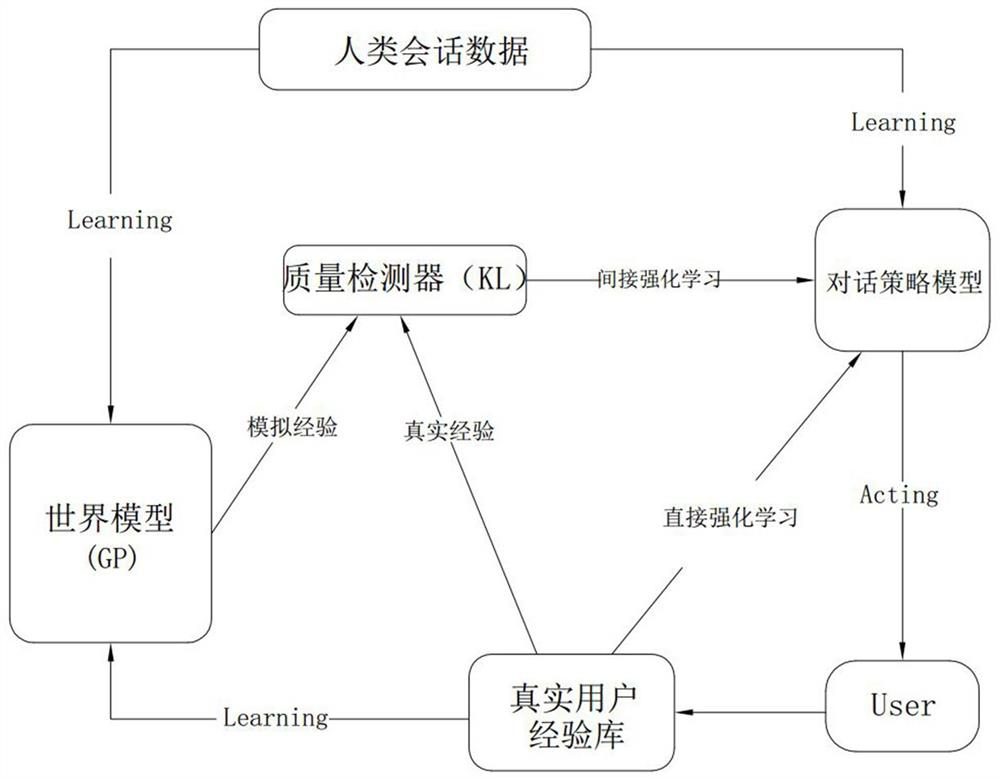

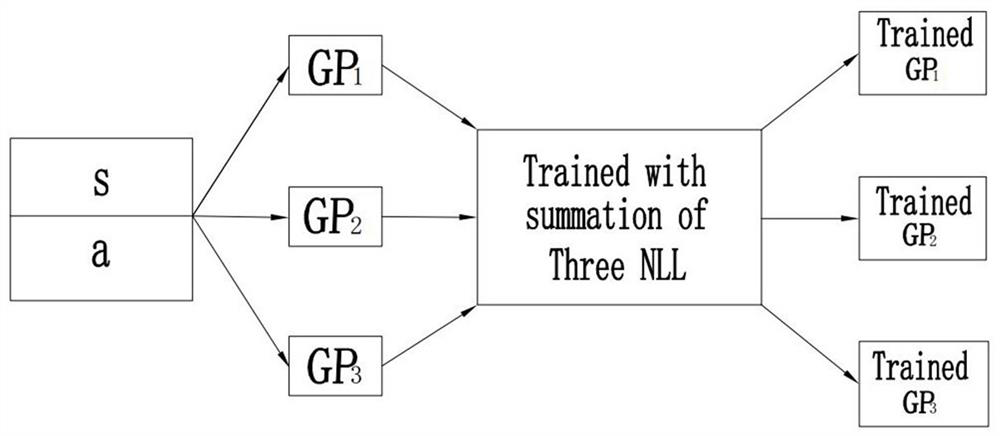

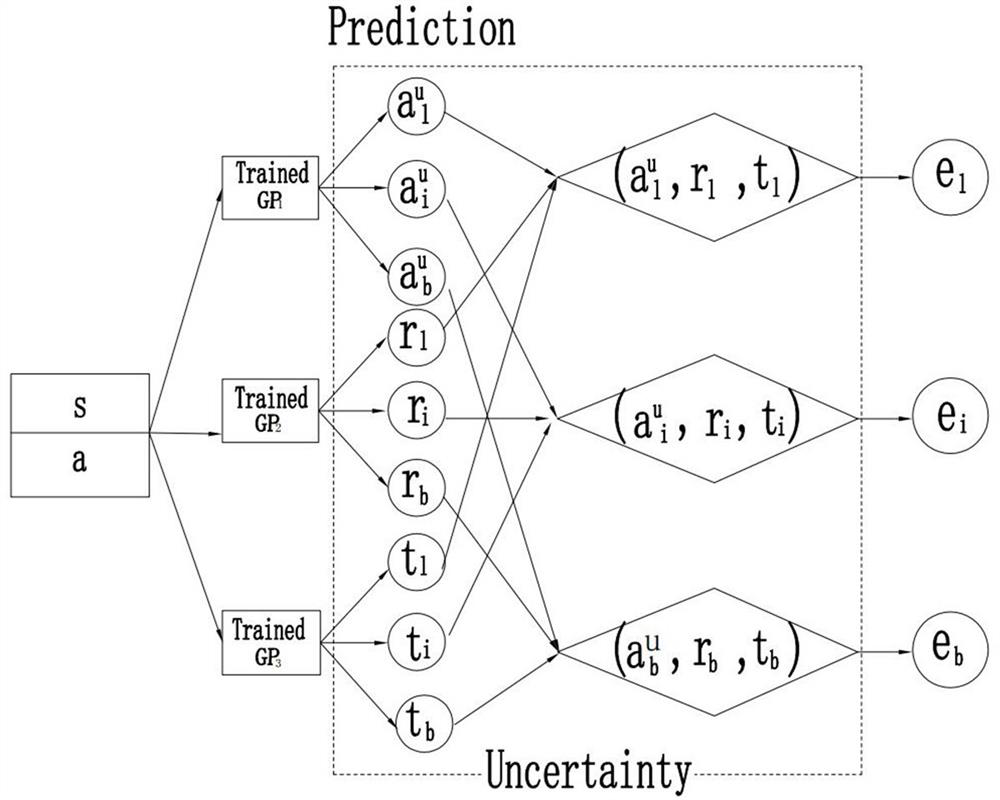

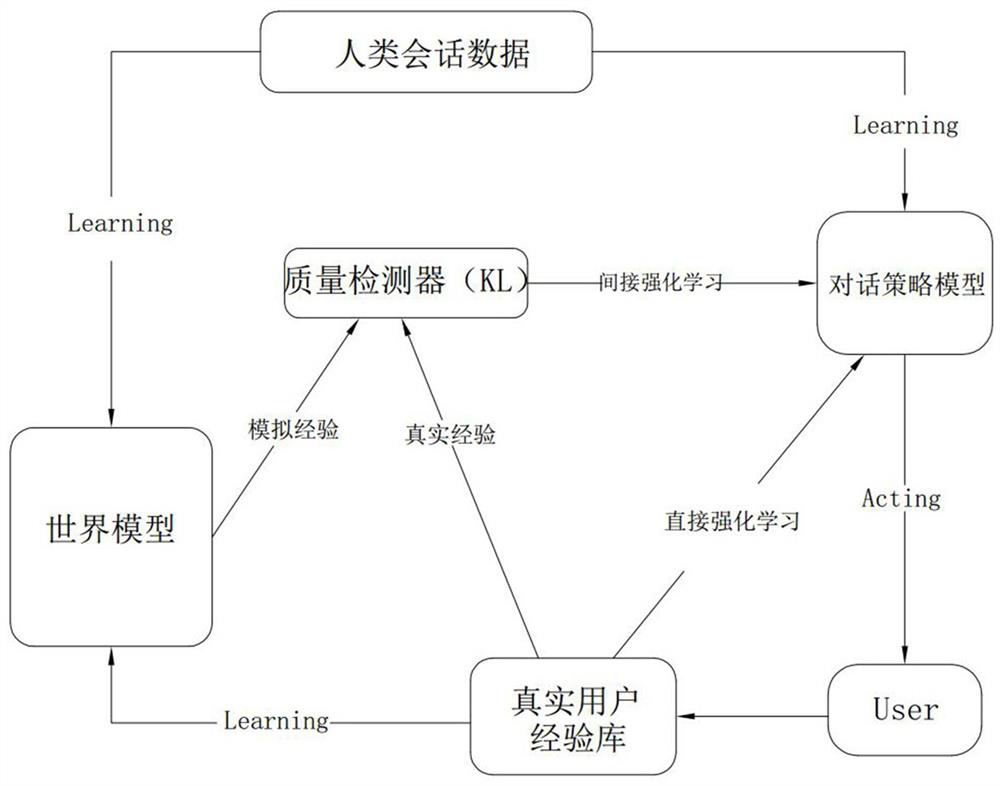

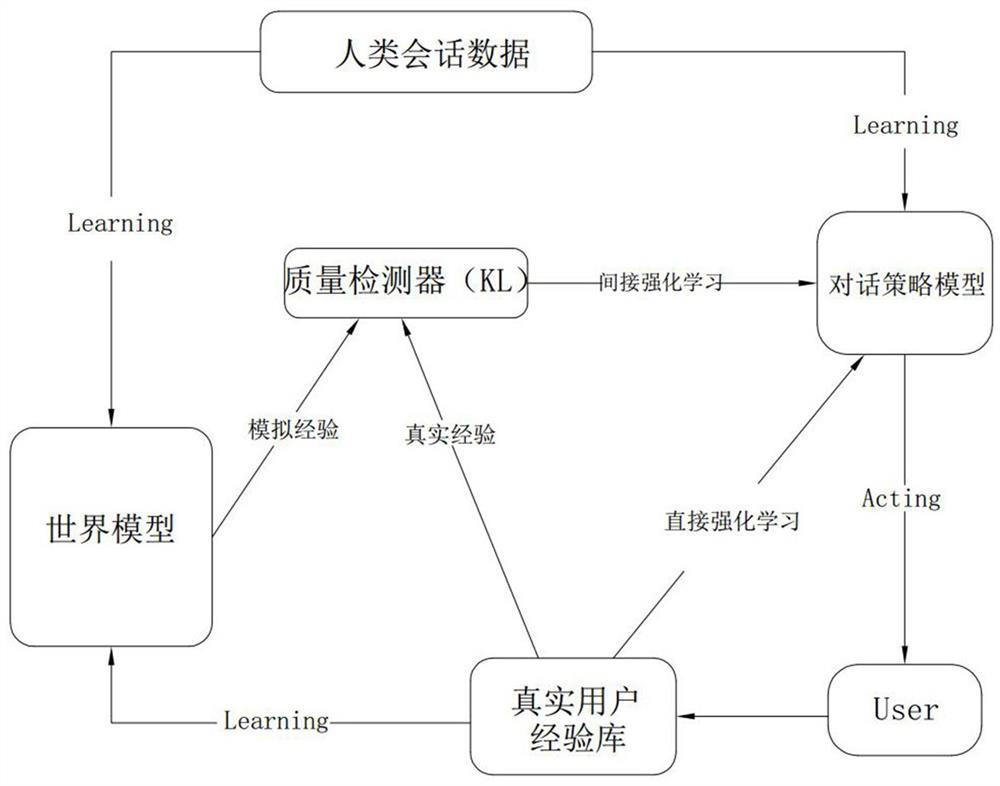

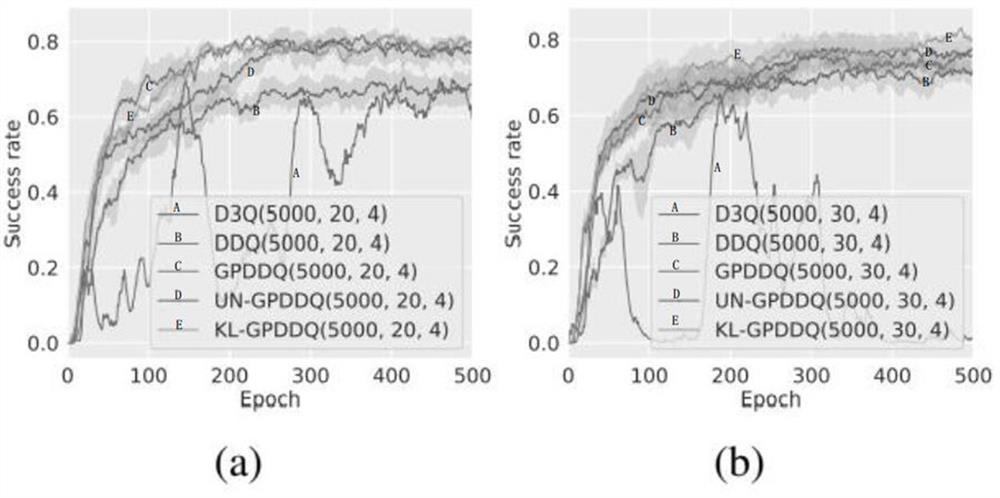

A method for generating high-quality simulated experiences for dialogue policy learning

Active | CN112989017B | Digital data information retrieval | Artificial life | Engineering | Artificial intelligence

The invention provides a method for generating high-quality simulated experience for dialogue policy learning, belonging to the field of machine learning, and comprising the following steps: S1. generating simulated experience through prediction by a GP-based world model; S2. storing the simulated experience in a buffer for dialogue policy model training. Because the world model in this solution is based on a Gaussian process (GP), it avoids the problem that the quality of simulated experience generated by a traditional DNN model depends on the amount of training data, where too little data leads to poor learning results and low learning efficiency.

Owner:NANHU LAB

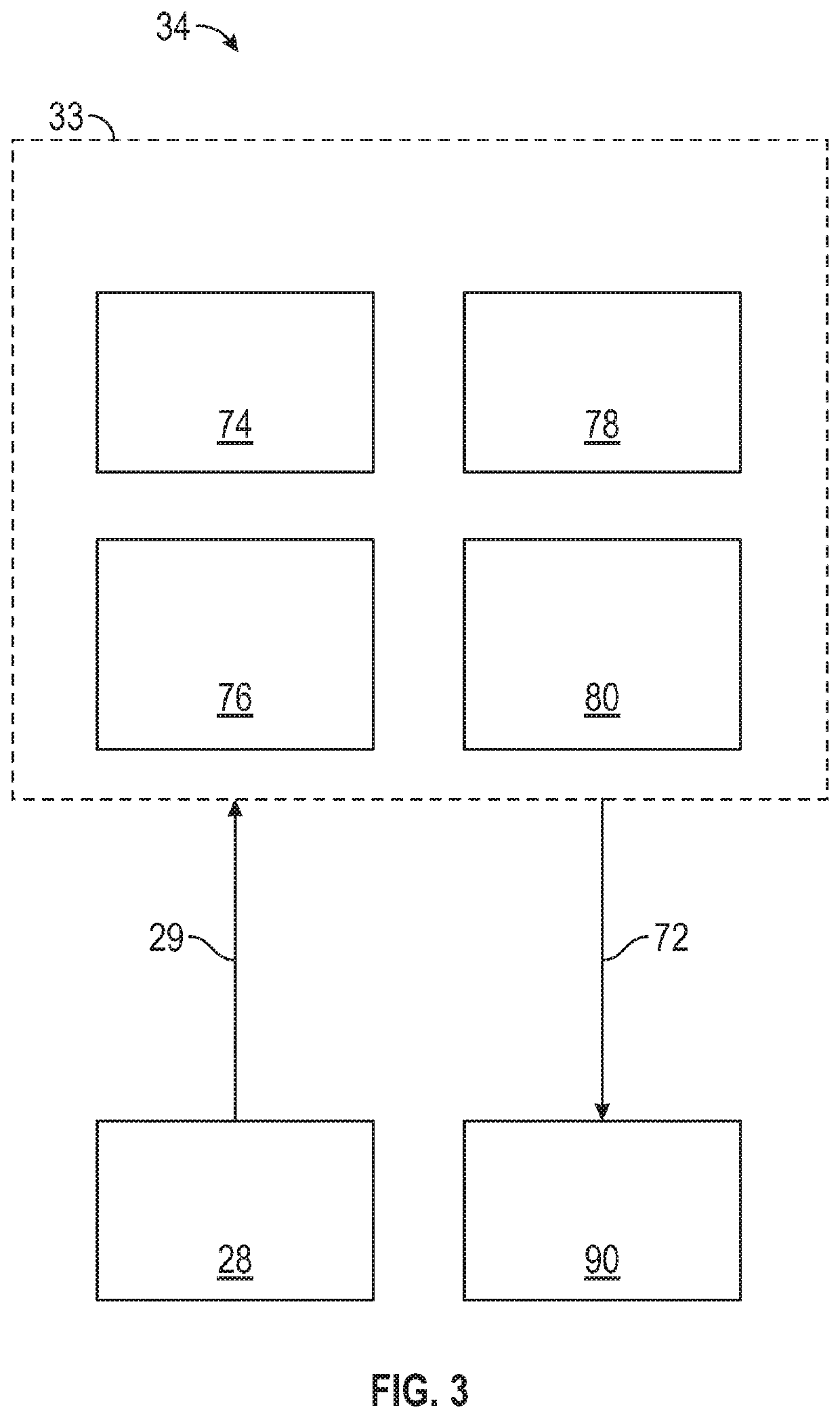

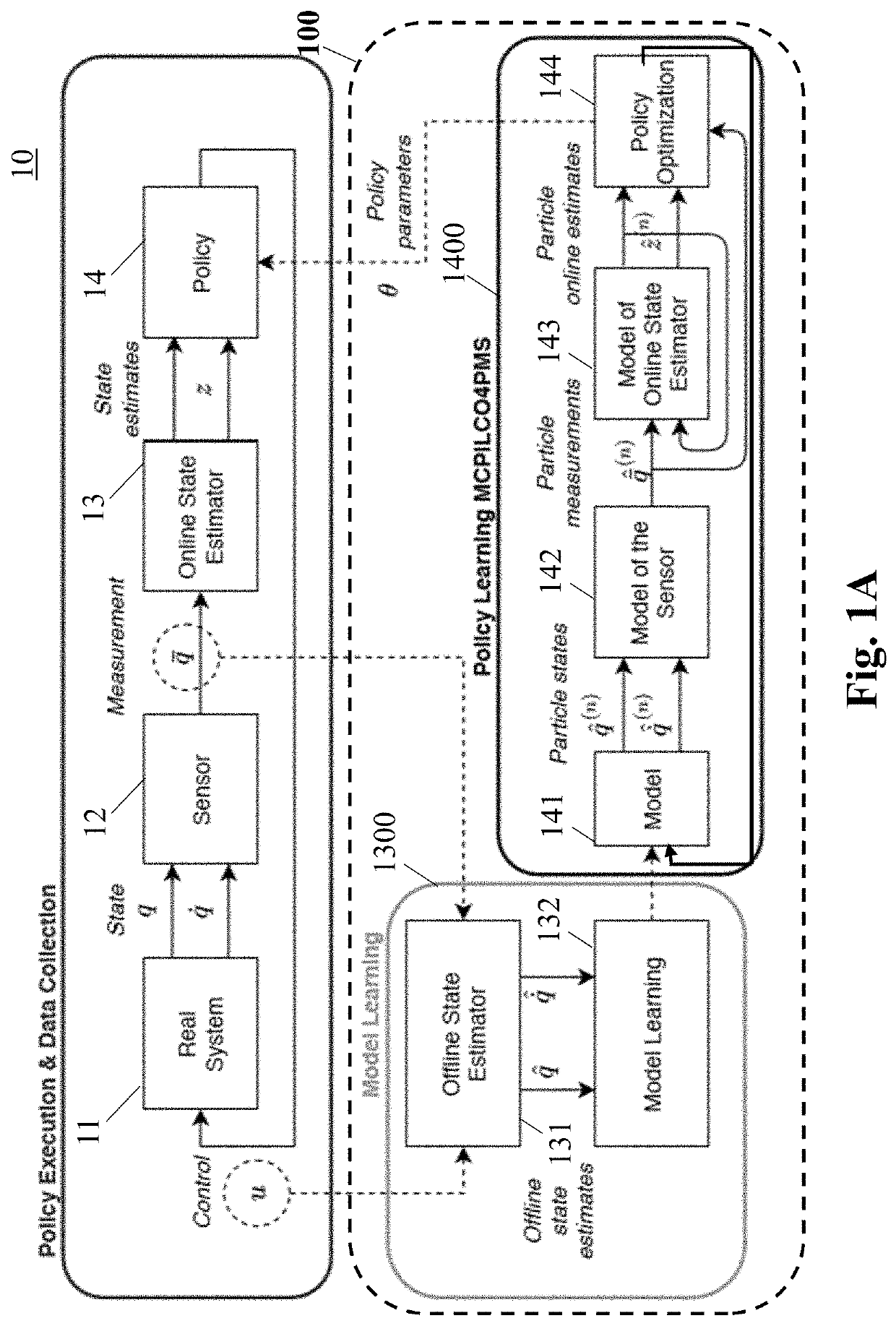

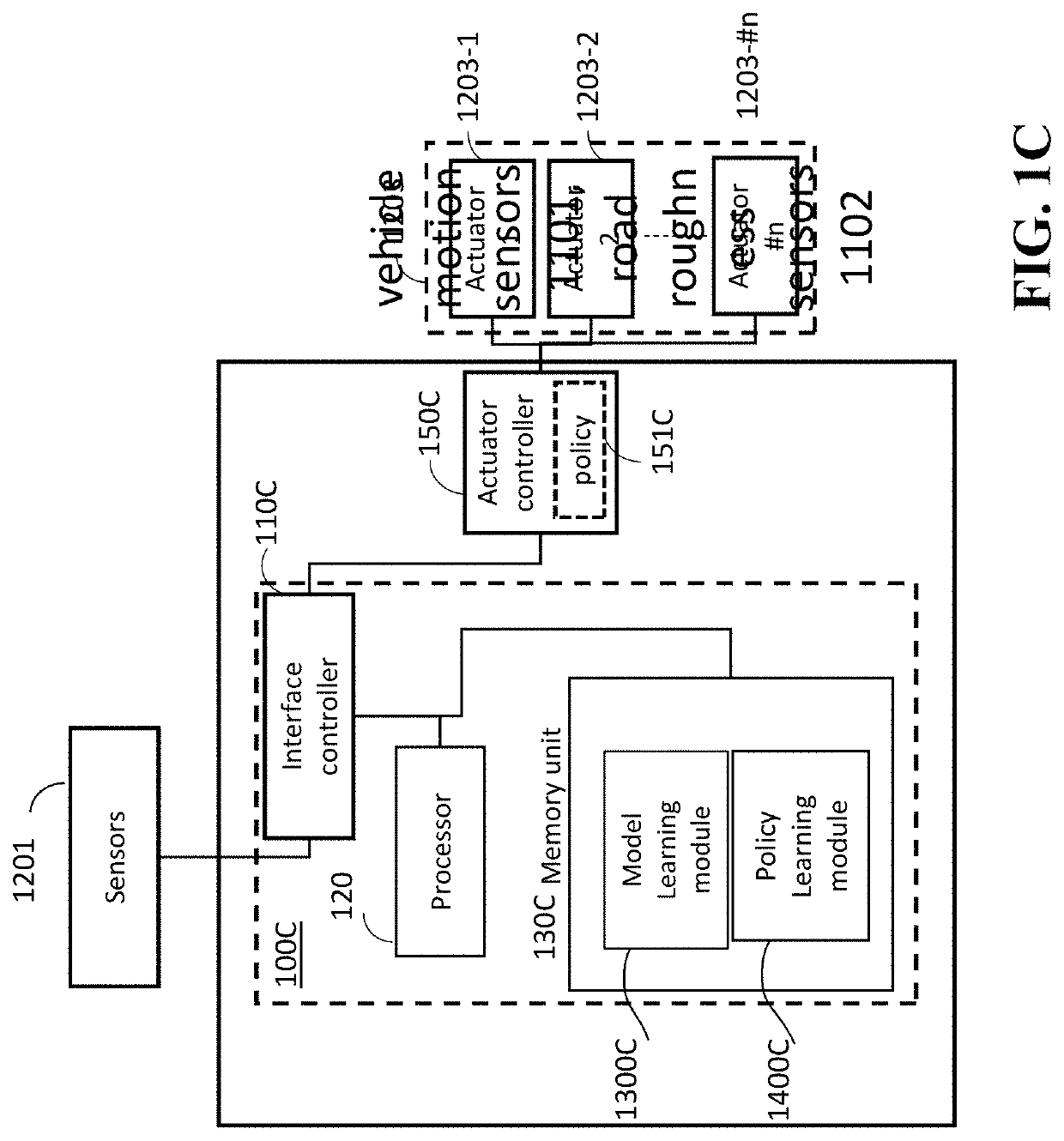

Method and System for Modelling and Control Partially Measurable Systems

Pending | US20220179419A1 | High data efficiency | Substantial data efficiency in solving | Mathematical models | Kernel methods | Control system | ModelSim

A controller is provided for controlling a system that includes a policy configured to control the system. The controller includes an interface connected to the system and configured to acquire an action state and a measurement state via sensors measuring the system, a memory to store computer-executable program modules including a model learning module and a policy learning module, and a processor configured to perform the steps of the program modules. The steps include offline modeling to generate offline-learning states based on the action state and measurement state using the model learning program, providing the offline states to the policy learning program to generate policy parameters, and updating the policy of the system to operate the system based on the policy parameters. To generate the policy parameters, the policy learning program uses a dropout method to improve the optimization of the policy parameters, a particle method to compute and evaluate the evolution of the particle states, and a model of the sensor and a model of the online estimator to generate online estimates of the particle states, which approximate the state estimates based on the particle states generated from the model learning program.

Owner:MITSUBISHI ELECTRIC RES LAB INC

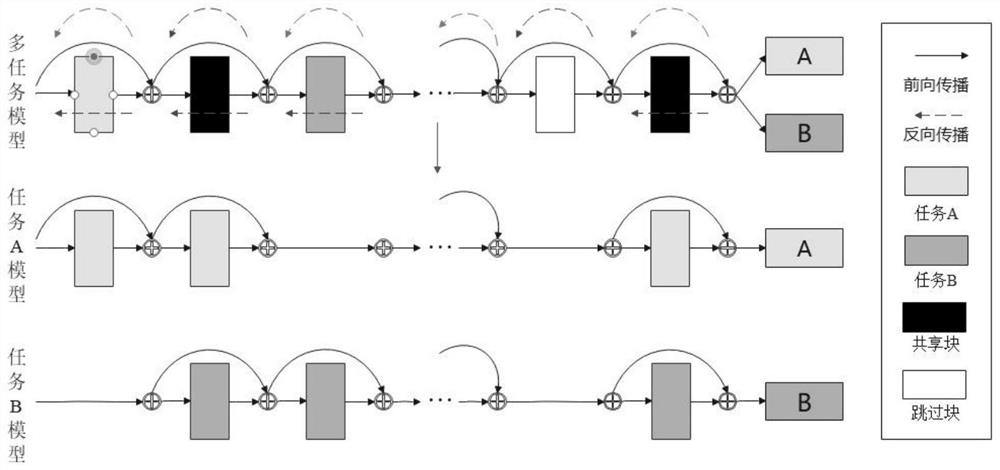

Multi-task network model training method and system based on adaptive task weight

Pending | CN114819091A | Avoid monotony | Optimizing Learning Strategies | Image analysis | Program initiation/switching | Data set | Task network

The invention relates to a multi-task network model training method and system based on adaptive task weights. A task-specific strategy learns the sharing pattern: it autonomously selects which layers are executed in the multi-task network and at the same time searches for weights matched to the tasks, so that the model is trained better. The multi-task network model is built on ResNet, the learning strategy is optimized according to the images in the data set during training, and the monotony of the multi-task model is overcome while task metrics improve. A multi-task loss function suitable for regression and classification tasks is derived from maximum likelihood estimation in probability theory; the task weights are adjusted automatically during training to improve model performance, which solves the problem of inflexible task weights.

Owner:HANGZHOU DIANZI UNIV
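
The abstract does not state the loss function it derives. For orientation only, a widely used maximum-likelihood weighting for one regression task and one classification task has the form below. It is offered as an assumption about the general shape of such a loss, not as the patent's formula; the symbols $\sigma_1$ and $\sigma_2$ (learned per-task noise scales), $\mathcal{L}_{\mathrm{reg}}$ and $\mathcal{L}_{\mathrm{cls}}$ are introduced here for illustration.

```latex
% Regression head with Gaussian likelihood  p(y | f(x)) = N(f(x), \sigma_1^2):
-\log p(y \mid f(x)) = \frac{1}{2\sigma_1^2}\,\lVert y - f(x) \rVert^2 + \log \sigma_1 + \text{const}

% Classification head with a temperature-scaled softmax (approximation):
-\log p(c \mid f(x), \sigma_2) \approx \frac{1}{\sigma_2^2}\,\mathcal{L}_{\mathrm{cls}} + \log \sigma_2

% Joint loss, with  \mathcal{L}_{\mathrm{reg}} = \lVert y - f(x) \rVert^2
% and  \mathcal{L}_{\mathrm{cls}}  the cross-entropy:
\mathcal{L}(\sigma_1, \sigma_2)
  = \frac{1}{2\sigma_1^2}\,\mathcal{L}_{\mathrm{reg}}
  + \frac{1}{\sigma_2^2}\,\mathcal{L}_{\mathrm{cls}}
  + \log \sigma_1 + \log \sigma_2
```

In this form the weights $1/(2\sigma_1^2)$ and $1/\sigma_2^2$ are learned alongside the network, and the $\log \sigma$ terms keep them from collapsing to zero, which is one way task weights can adjust automatically during training.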

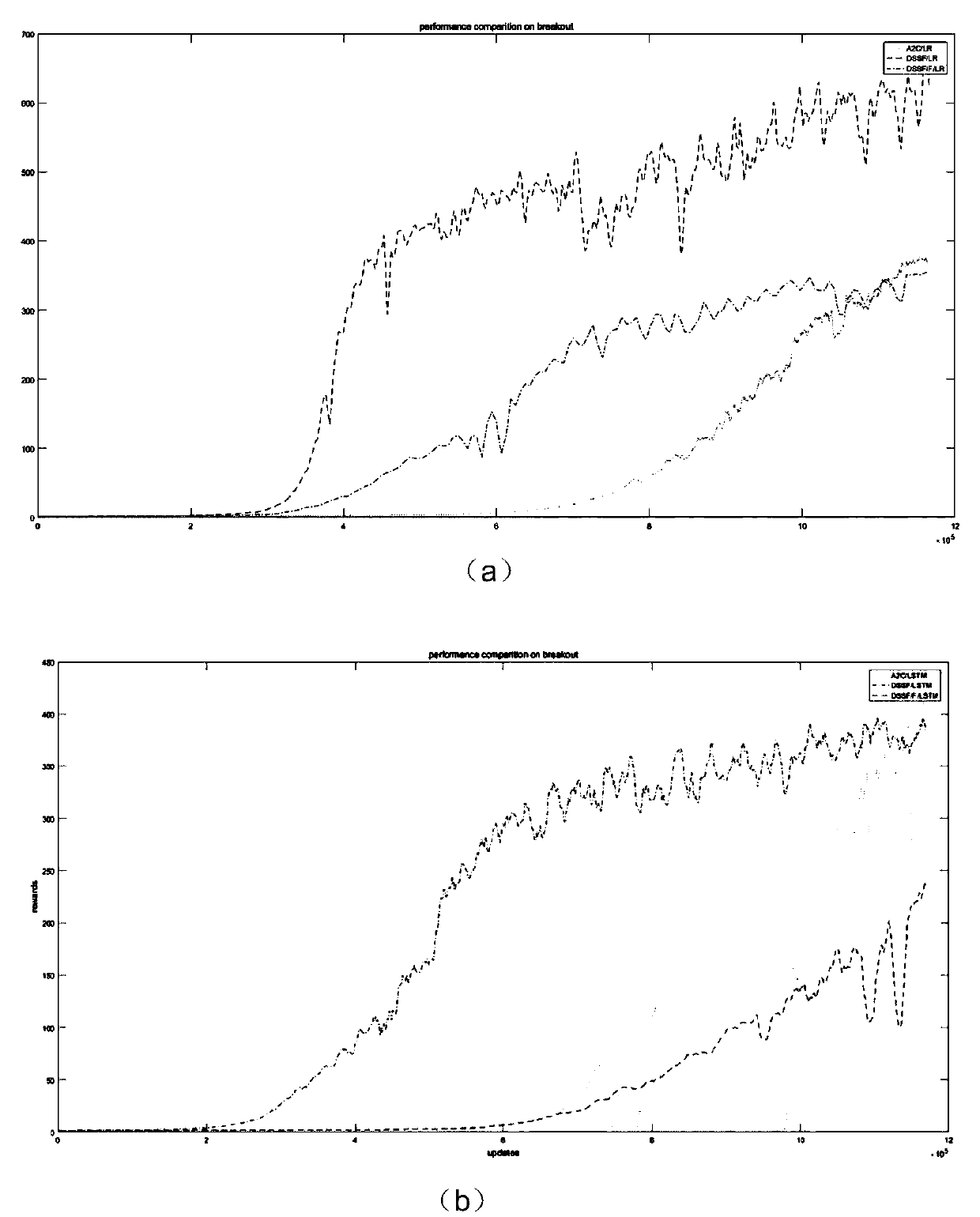

Ceph system performance optimization strategy and system based on deep reinforcement learning

Inactive | CN111581178A | Improve performance | High speed | Digital data information retrieval | Character and pattern recognition | File system | Data access

The invention discloses a Ceph system performance optimization system based on deep reinforcement learning. The system is composed of a data source module, a data access mode learning module, an evaluation mechanism learning module and a system parameter adjustment learning module. The Ceph system performance optimization strategy based on deep reinforcement learning is realized through the following steps: S1, preprocessing a data source; S2, learning and classifying a Ceph file system running environment model; S3, carrying out evaluation mechanism learning; and S4, learning a Ceph file system parameter adjustment strategy. The method combines a deep reinforcement learning algorithm with interactive learning between an A2C model and the Ceph file system to obtain optimized parameters, so that the optimal system parameters for a given data access mode can be selected. The method adapts to different data access modes and hardware configurations, obtains the optimal system parameters through intelligent learning so that the system can be configured according to them, and thereby improves the performance of the Ceph file system.

Owner:STATE GRID ANHUI ELECTRIC POWER +1

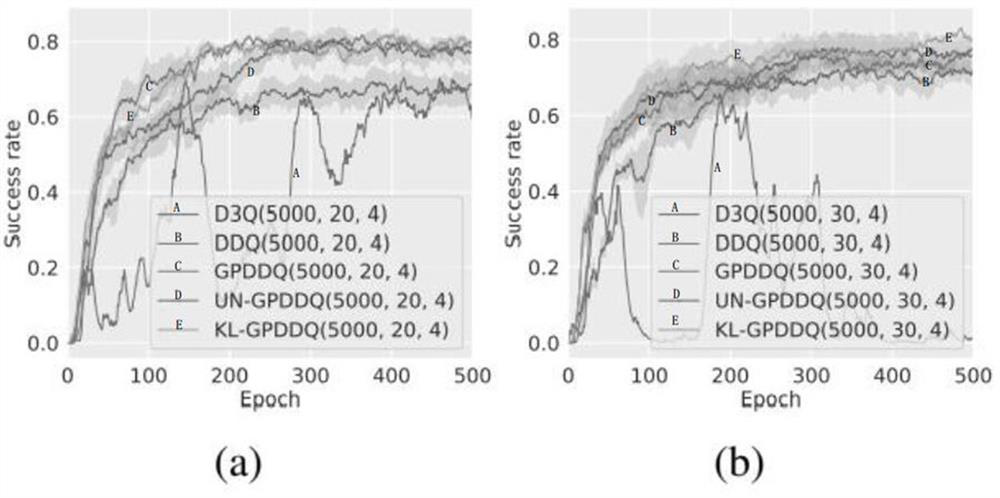

A deep GP-based Dyna-Q method for dialogue policy learning

Active | CN113392956B | Easy to analyze | Avoid the problem of needing to rely on the amount of training data | Digital data information retrieval | Neural architectures | Simulation | Artificial intelligence

Owner:NANHU LAB

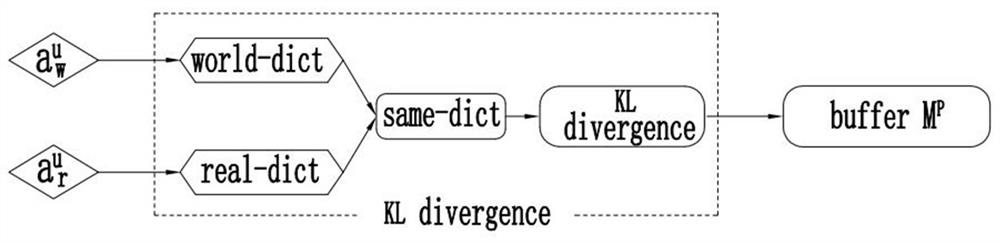

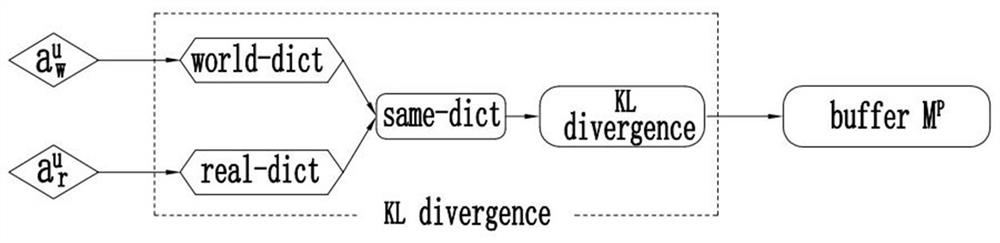

Method and system for detecting quality of simulated user experience in dialogue strategy learning

Active | CN112989016B | Improve assessment | Improve computing efficiency | Artificial life | Machine learning | Robustification | Engineering

Owner:NANHU LAB

Method for improving network information sharing level based on evolutionary game theory

Pending | CN114257507A | Increase the level of sharing | Improve cooperation efficiency | Machine learning | Transmission | Computer network | Information sharing

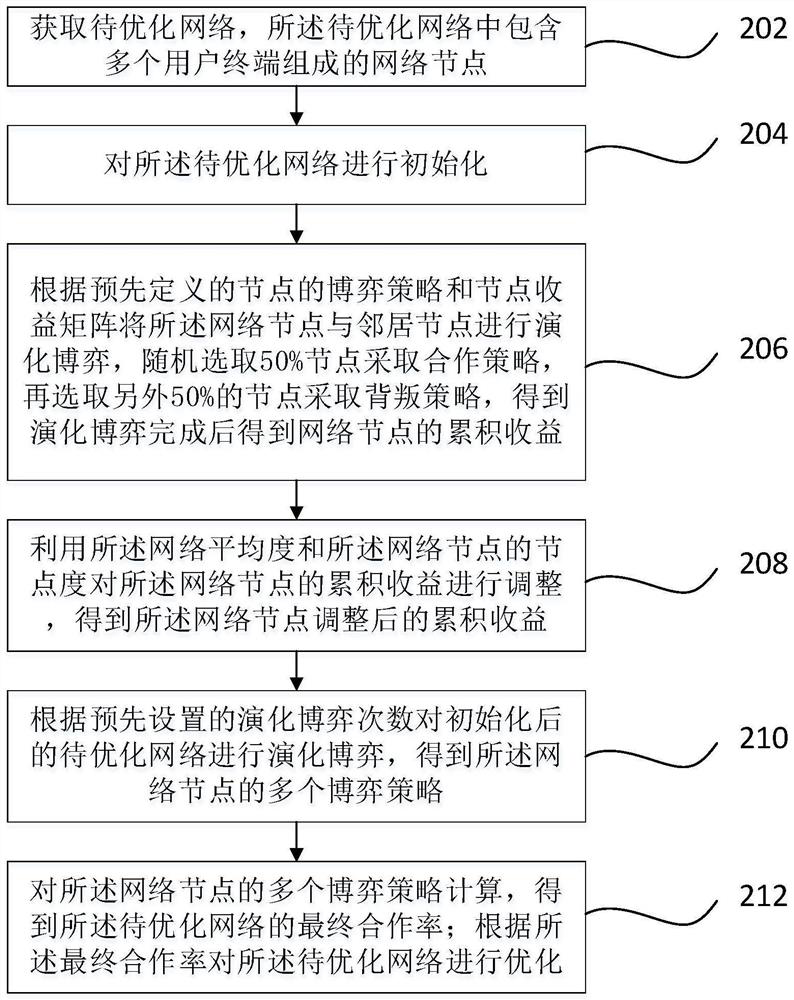

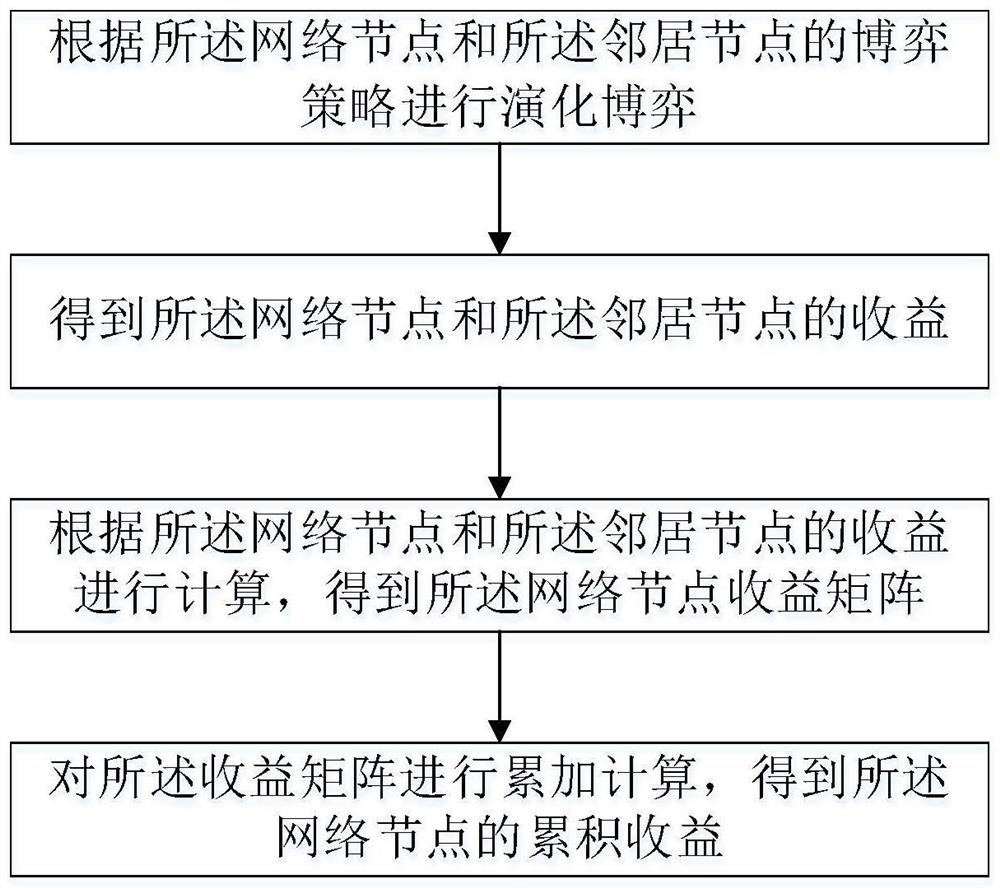

The invention relates to a method for improving the network information sharing level based on evolutionary game theory. The method comprises the following steps: initializing the network and determining its structure, the number of nodes, and the average degree dmean; defining the payoff matrix and the game strategies of the nodes; initializing the system parameters and determining the number e of evolutionary game rounds; having each node play the game with its neighbor nodes to obtain an accumulated payoff; introducing the node degree into the strategy update by adjusting the node payoff used for the update; having each node randomly select a neighbor node for strategy learning; updating the strategy of each node in the network; and checking whether the maximum number of evolution rounds has been reached, after which the final cooperation rate of the network is calculated. By adding the node degree of each individual to the strategy update process, the method effectively promotes cooperative behavior in the network and improves the network information sharing level.

Owner:NAT UNIV OF DEFENSE TECH
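The steps in the abstract above can be sketched as a small simulation. This is an illustrative sketch only, not the patented procedure: the payoff values in `PAYOFF`, the random-graph construction, the degree adjustment (dividing the accumulated payoff by degree raised to a hypothetical exponent `alpha`) and the Fermi imitation rule with parameter `noise` are all assumptions, since the listing does not give the actual formulas.

```python
import math
import random

# Hypothetical payoff table: PAYOFF[(my_move, their_move)] is my payoff.
# 1 = cooperate (share information), 0 = defect (withhold).
PAYOFF = {(1, 1): 1.0, (1, 0): 0.0, (0, 1): 1.3, (0, 0): 0.1}


def random_graph(n, mean_degree, rng):
    """Build an undirected random graph with roughly the given mean degree."""
    neighbors = [set() for _ in range(n)]
    target_edges = n * mean_degree // 2
    edges = 0
    while edges < target_edges:
        a, b = rng.sample(range(n), 2)
        if b not in neighbors[a]:
            neighbors[a].add(b)
            neighbors[b].add(a)
            edges += 1
    return [sorted(s) for s in neighbors]


def cooperation_rate(n=200, mean_degree=6, rounds=100,
                     alpha=1.0, noise=0.5, seed=0):
    """Run the evolutionary game and return the final share of cooperators."""
    rng = random.Random(seed)
    neighbors = random_graph(n, mean_degree, rng)
    strategy = [rng.randint(0, 1) for _ in range(n)]
    for _ in range(rounds):
        # Each node plays every neighbor and accumulates its payoff.
        payoff = [sum(PAYOFF[(strategy[i], strategy[j])] for j in neighbors[i])
                  for i in range(n)]
        # Assumed degree adjustment: scale payoff down by degree ** alpha.
        fitness = [payoff[i] / max(len(neighbors[i]), 1) ** alpha
                   for i in range(n)]
        updated = list(strategy)
        for i in range(n):
            if not neighbors[i]:
                continue  # isolated nodes have nobody to imitate
            j = rng.choice(neighbors[i])
            # Assumed Fermi rule: imitate j more often when j did better.
            x = max(-50.0, min(50.0, (fitness[i] - fitness[j]) / noise))
            if rng.random() < 1.0 / (1.0 + math.exp(x)):
                updated[i] = strategy[j]
        strategy = updated  # synchronous update of all nodes
    return sum(strategy) / n
```

Calling `cooperation_rate(seed=1)` returns a fraction between 0 and 1; comparing runs with `alpha=0.0` and `alpha=1.0` is one way to explore how a degree adjustment might influence cooperation under these assumed rules.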

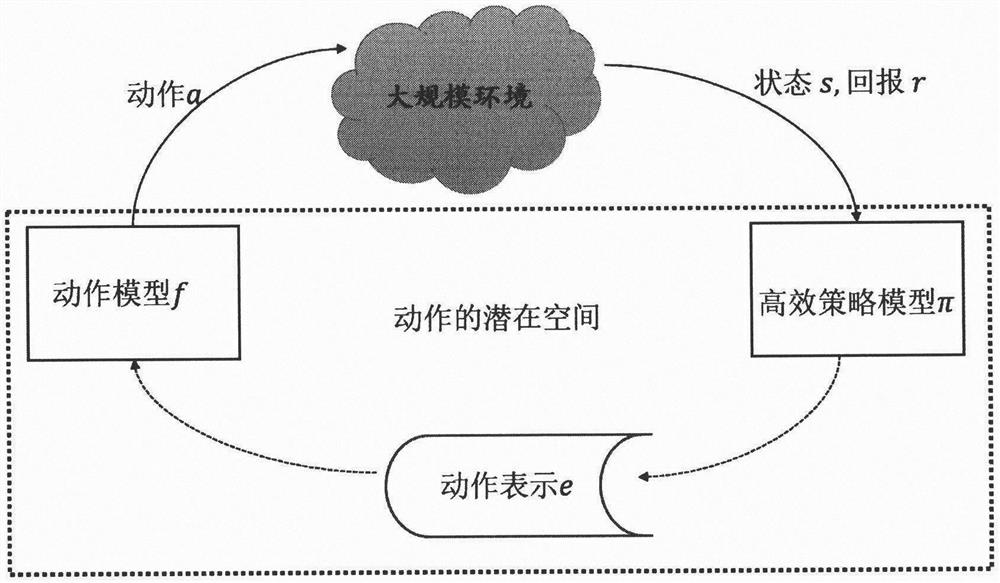

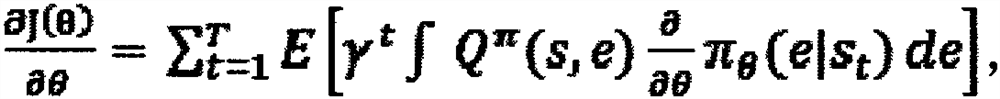

Reinforcement learning strategy learning method based on potential action representation space

Pending | CN111950691A | Improve generalization ability | Fast learning | Neural architectures | Neural learning methods | Offline learning | Supervised learning

Sample utilization and learning efficiency are important bottlenecks for deep reinforcement learning in practical applications. To obtain a general strategy quickly and accurately in the real world, the invention provides a reinforcement learning strategy learning method based on a potential (latent) action representation space: the method learns the strategy in the potential space of actions and maps the action representation to the real action space. Because the strategy is a mapping from a state to an action representation, the search space of strategy learning can be reduced and the strategy learning efficiency is improved. The action representations can be learned offline with mature supervised learning, which can further increase the learning speed and improve stability. In addition, as long as the characteristics of the adopted actions are similar, the learned strategy can be generalized to the action space of the current task, even when that task differs from the one used for training, after fine-tuning on a small number of learning samples, so the generalization ability of the strategy representation is greatly improved.

Owner:TIANJIN UNIV OF SCI & TECH
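The idea in the abstract above, a policy that outputs a point in an action representation space which is then mapped to a real action, can be illustrated with a toy example. Everything here is hypothetical: the 2-D embeddings in `ACTION_EMBEDDINGS`, the hand-written `policy`, and the nearest-neighbor `decode` step are stand-ins for components that the patent would learn, and are not taken from the source.

```python
import math

# Hypothetical action embeddings; in practice these would be learned
# offline (for example with supervised learning), not hand-written.
ACTION_EMBEDDINGS = {
    "left": (-1.0, 0.0),
    "right": (1.0, 0.0),
    "up": (0.0, 1.0),
    "down": (0.0, -1.0),
}


def policy(state):
    """Toy policy: map a state (offset to a goal) to a latent action vector."""
    dx, dy = state
    norm = math.hypot(dx, dy)
    if norm == 0.0:
        return (0.0, 0.0)
    return (dx / norm, dy / norm)


def decode(latent):
    """Map a latent action vector to the nearest real action."""
    return min(ACTION_EMBEDDINGS,
               key=lambda name: math.dist(latent, ACTION_EMBEDDINGS[name]))


def act(state):
    """State -> action representation -> real action."""
    return decode(policy(state))
```

With these toy definitions, `act((3.0, 0.5))` selects `"right"` and `act((0.0, -2.0))` selects `"down"`. Swapping in a different `ACTION_EMBEDDINGS` table changes the real action set without touching `policy`, which is the kind of reuse the abstract describes.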

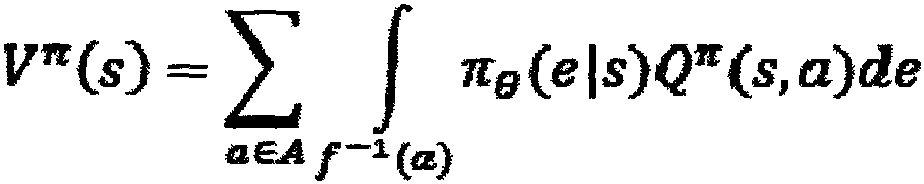

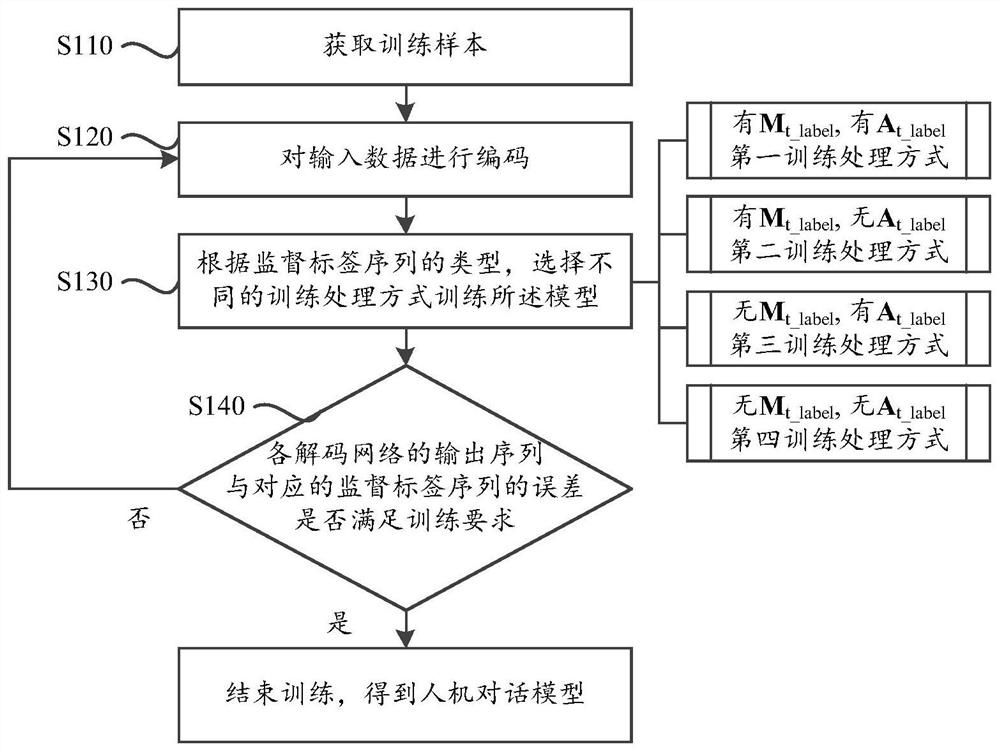

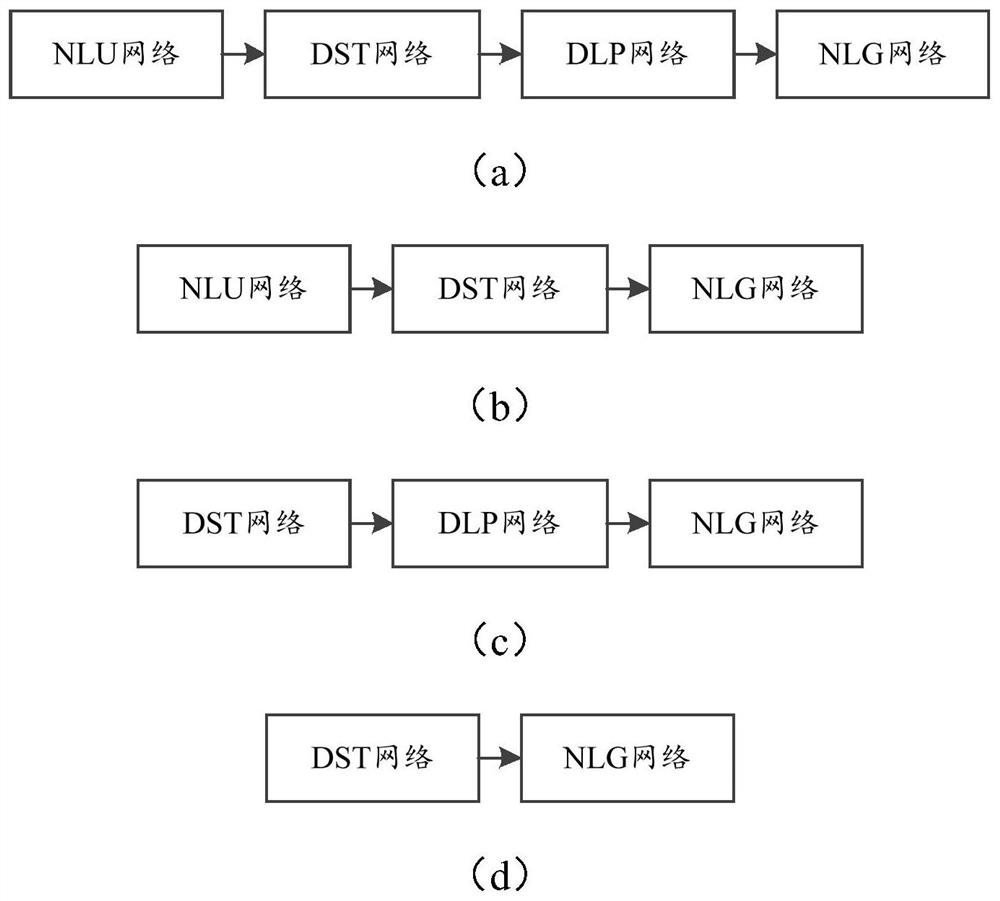

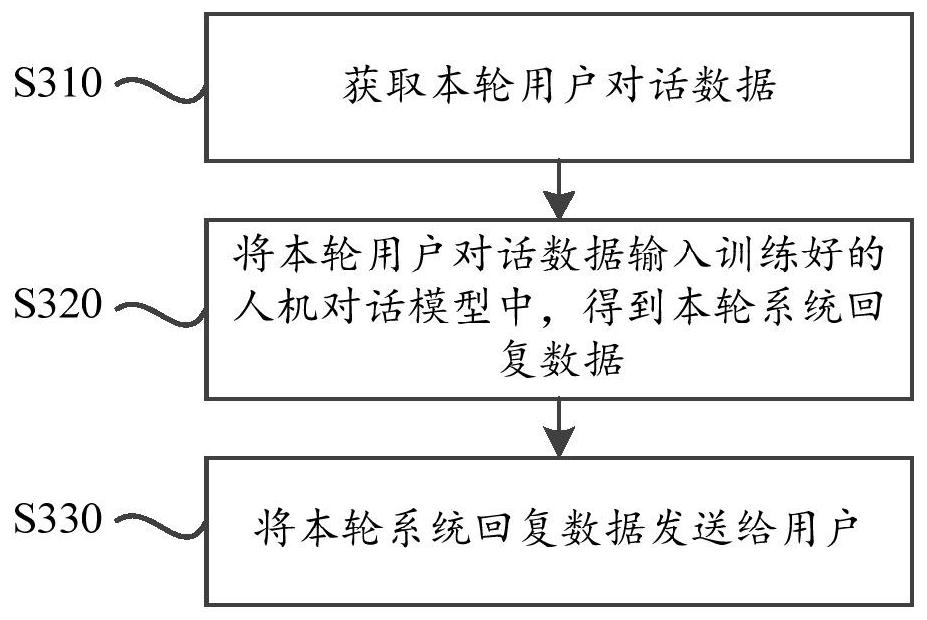

Man-machine dialogue model training method and man-machine dialogue method

Active | CN113050787A | Improve performance | Avoid problems prone to error accumulation | Input/output for user-computer interaction | Machine learning | Natural language understanding | Dialog system

The invention provides a man-machine dialogue model training method and a man-machine dialogue method. The man-machine dialogue model integrates sub-modules of a pipelined dialogue system into an overall end-to-end structural framework; the current round of dialogue data and the previous round of historical data of a user are obtained and coded into a vector sequence; and finally, through four sub-modules of natural language understanding, dialogue state tracking, dialogue strategy learning and natural language generation in sequence, a current round of reply of the system is obtained. The invention further provides a man-machine dialogue method which is suitable for performing man-machine interaction by utilizing the model. According to the invention, the structures of the sub-modules can be flexibly selected according to the types of the supervision labels contained in the training data when the man-machine dialogue model is trained, all the sub-modules can be optimized at the same time, the problem that errors are continuously accumulated and spread is avoided, the system reply accuracy can be improved, and the number of samples used during training is greatly reduced.

Owner:SHANGHAI XIAOI ROBOT TECH CO LTD

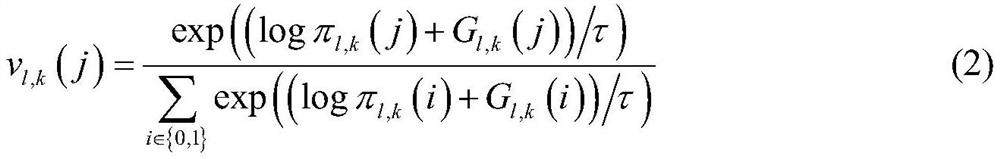

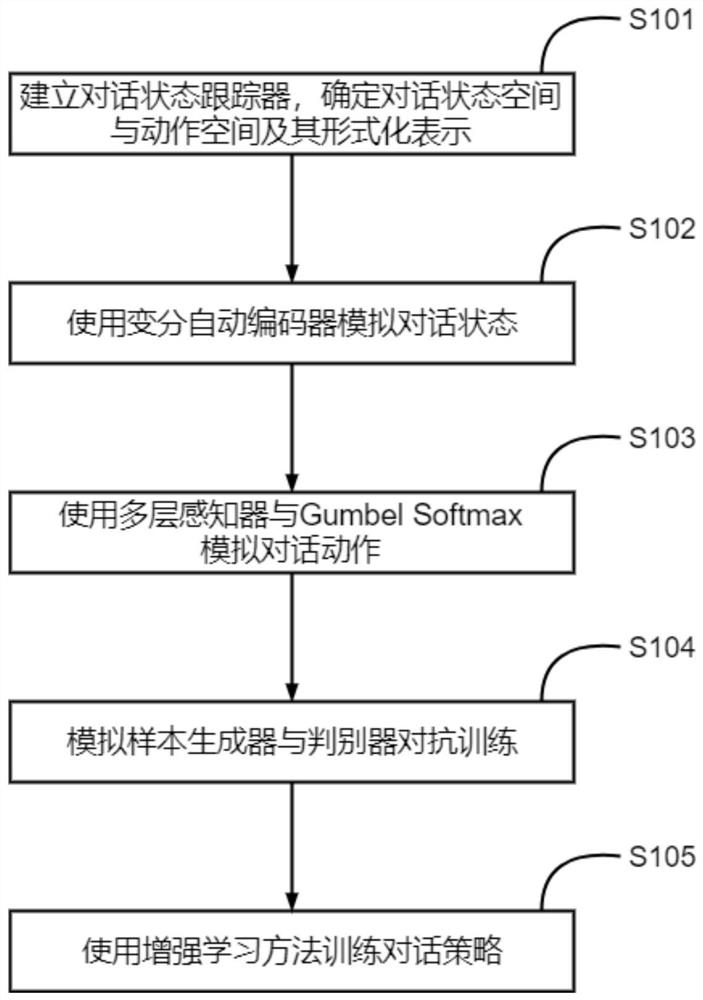

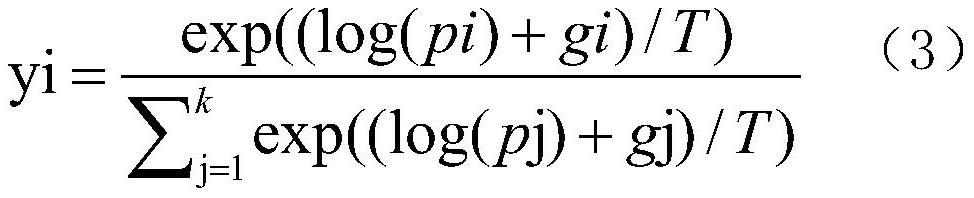

Task-oriented dialogue strategy generation method

Pending | CN112949858A | Quality improvement | Character and pattern recognition | Neural learning methods | Discriminator | Direct feedback

The invention relates to a task-oriented dialogue strategy generation method comprising the following steps: establishing a dialogue state tracker and determining the dialogue state space, the action space and their formal representations; simulating the dialogue state with a variational autoencoder; simulating the dialogue action with a multi-layer perceptron and Gumbel-Softmax; performing adversarial training on the simulation sample generator and a discriminator; and finally training a dialogue strategy with a reinforcement learning method. First, the simulation sample generator is used for learning a reward function, and the loss from the discriminator can be fed back directly to the generator for optimization. Second, the trained discriminator is used as the dialogue reward in the reinforcement learning process to guide dialogue strategy learning, and the dialogue strategy can be updated with any reinforcement learning algorithm. By distinguishing dialogues generated by humans from those generated by the machine, the method can infer the common information contained in high-quality human dialogues and then use that learned information, by way of transfer learning, to guide dialogue strategy learning in a new field.

Owner:网经科技(苏州)有限公司
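The abstract above mentions Gumbel-Softmax for simulating dialogue actions. As a rough illustration of that sampling trick on its own (not of the patent's generator network), the sketch below draws a relaxed one-hot sample from a list of action logits using only the standard library. The function name, the `temperature` default and the example logits are assumptions for illustration.

```python
import math
import random


def gumbel_softmax(logits, temperature=1.0, rng=random):
    """Return a relaxed one-hot sample over the given action logits."""
    perturbed = []
    for logit in logits:
        # Clamp the uniform draw away from 0 and 1 so both logs are finite.
        u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)
        gumbel_noise = -math.log(-math.log(u))
        perturbed.append((logit + gumbel_noise) / temperature)
    # Numerically stable softmax over the perturbed, scaled logits.
    peak = max(perturbed)
    exps = [math.exp(v - peak) for v in perturbed]
    total = sum(exps)
    return [e / total for e in exps]


# Example: three hypothetical dialogue actions with unnormalized scores.
sample = gumbel_softmax([2.0, 0.5, -1.0], temperature=0.5,
                        rng=random.Random(0))
```

The result is a probability vector that sums to 1. Lower temperatures push samples closer to one-hot vectors, while higher temperatures give smoother ones; in a neural generator the same computation is done on tensors so that gradients can flow through the sample.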

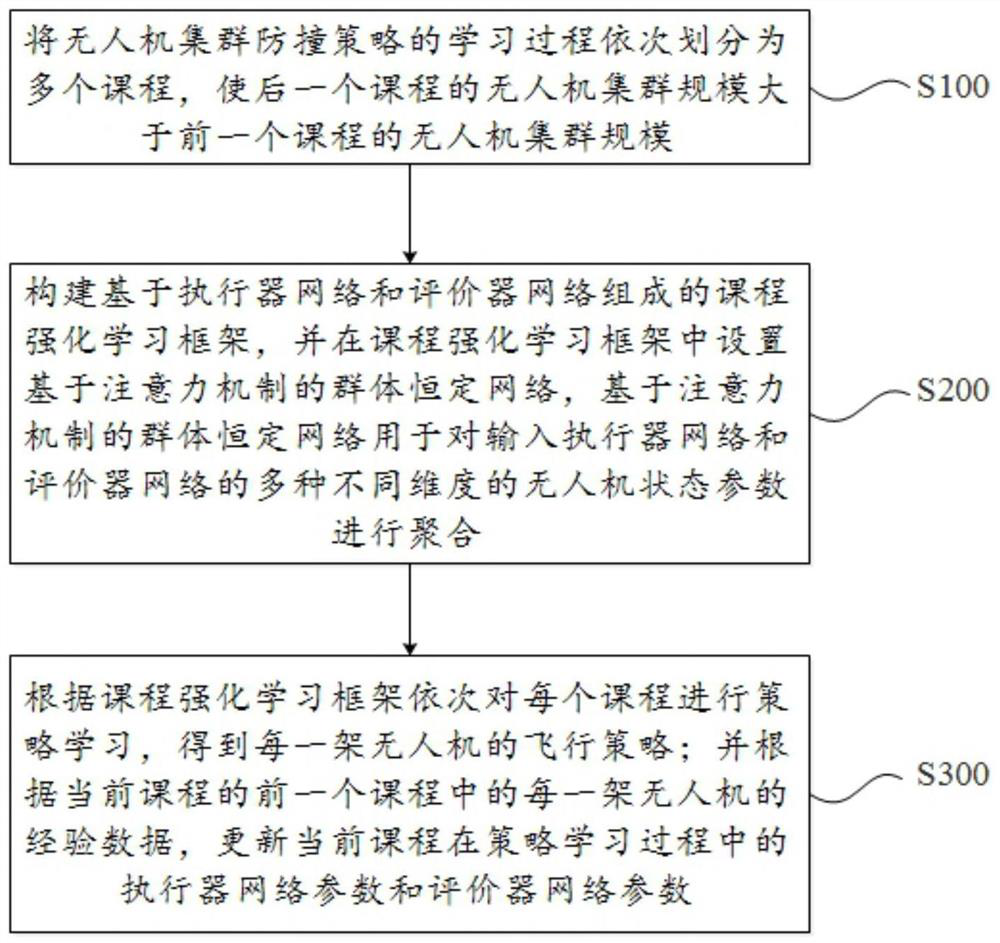

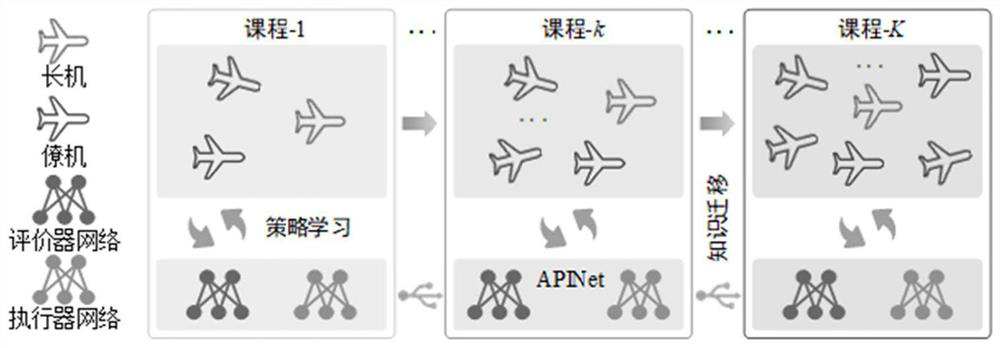

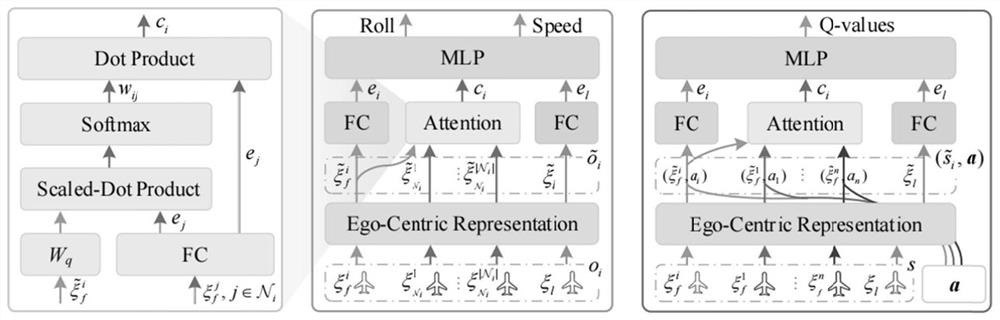

Large-scale unmanned aerial vehicle cluster flight method based on deep reinforcement learning

Pending | CN114578860A | Improve learning efficiency | Improve training efficiency | Position/course control in three dimensions | Simulation | Uncrewed vehicle

The invention discloses a large-scale unmanned aerial vehicle cluster flight method based on deep reinforcement learning. The method comprises the following steps: dividing the learning process of an unmanned aerial vehicle cluster anti-collision strategy into a sequence of courses (curriculum stages), with the cluster scale of each course larger than that of the previous course; constructing a curriculum reinforcement learning framework based on an actor network and a critic network, and setting a group constant network based on an attention mechanism in the framework; carrying out strategy learning on each course in sequence according to the curriculum reinforcement learning framework to obtain a flight strategy for each unmanned aerial vehicle; and, during strategy learning, updating the actor network parameters and the critic network parameters of the current course according to the experience data of each unmanned aerial vehicle from the previous course. The invention can effectively improve the learning and training efficiency of large-scale unmanned aerial vehicle clusters, effectively avoids collisions within the cluster during flight, and has strong generalization ability.

Owner:NAT UNIV OF DEFENSE TECH

Method and system for detecting simulated user experience quality in dialog policy learning

Active | CN112989016A | Improve assessment | Improve computing efficiency | Artificial life | Machine learning | Robustification | Engineering

The invention provides a method and a system for detecting simulated user experience quality in dialogue strategy learning. The method comprises the following steps: S1, generating simulated experience by a world model; S2, performing quality detection on the simulation experience through a quality detector based on KL divergence; and S3, storing the simulation experience which is qualified in quality detection for dialogue strategy model training. According to the scheme, the quality detector based on the KL divergence is introduced, the quality of simulation experience can be evaluated more easily and effectively, the calculation efficiency is greatly improved while the robustness and effectiveness of dialogue strategies are ensured, and the purpose of effectively controlling the quality of the simulation experience is achieved.

Owner:NANHU LAB
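Steps S1 to S3 of the abstract above can be pictured with a small filter that keeps a batch of simulated experience only when its KL divergence from real experience is low. This is a sketch under stated assumptions: the listing does not say which distributions are compared, so comparing dialogue-act frequency distributions, the smoothing constant `eps`, and the `threshold` value are all hypothetical choices.

```python
import math
from collections import Counter


def distribution(items, vocab, eps=1e-6):
    """Smoothed relative frequency of each vocabulary entry in items."""
    counts = Counter(items)
    total = len(items) + eps * len(vocab)
    return {v: (counts[v] + eps) / total for v in vocab}


def kl_divergence(p, q):
    """KL(p || q) for two distributions over the same keys."""
    return sum(p[k] * math.log(p[k] / q[k]) for k in p)


def passes_quality_check(simulated, real, threshold=0.5):
    """Accept simulated experience whose act distribution is close to real."""
    vocab = set(simulated) | set(real)
    p = distribution(simulated, vocab)
    q = distribution(real, vocab)
    return kl_divergence(p, q) <= threshold


# Hypothetical dialogue acts from real users and from a world model.
real_acts = ["inform", "request", "inform", "confirm", "bye"]
good_batch = ["inform", "request", "inform", "confirm", "bye"]
bad_batch = ["bye"] * 5

# S3: only batches that pass the check go into the training buffer.
replay_buffer = [batch for batch in (good_batch, bad_batch)
                 if passes_quality_check(batch, real_acts)]
```

Here `good_batch` matches the real distribution exactly and is kept, while `bad_batch` concentrates all its mass on a single act and is rejected, so `replay_buffer` ends up holding only `good_batch`.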


© 2025 PatSnap. All rights reserved.