263 results about "Result vector" patented technology

The resultant is the vector sum of two or more vectors. If displacement vectors A and B are added together, the result is the vector R, called the resultant vector. Any two vectors can be added as long as they represent the same kind of vector quantity.
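As a small illustration of the definition above, the resultant can be computed component by component. This is a minimal sketch; the function name `resultant` and the sample vectors are assumptions made for the example.

```python
def resultant(*vectors):
    """Return the component-wise sum of vectors of equal dimension."""
    if not vectors:
        raise ValueError("need at least one vector")
    n = len(vectors[0])
    if any(len(v) != n for v in vectors):
        raise ValueError("all vectors must have the same dimension")
    # zip(*vectors) groups the first components, the second components, ...
    return [sum(components) for components in zip(*vectors)]


A = [3.0, 4.0]    # hypothetical displacement vector
B = [1.0, -2.0]   # hypothetical displacement vector
R = resultant(A, B)
print(R)  # [4.0, 2.0]
```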

Running-min and running-max instructions for processing vectors using a base value from a key element of an input vector

Active | US8417921B2 | Software engineering | General purpose stored program computer | Control vector | Theoretical computer science

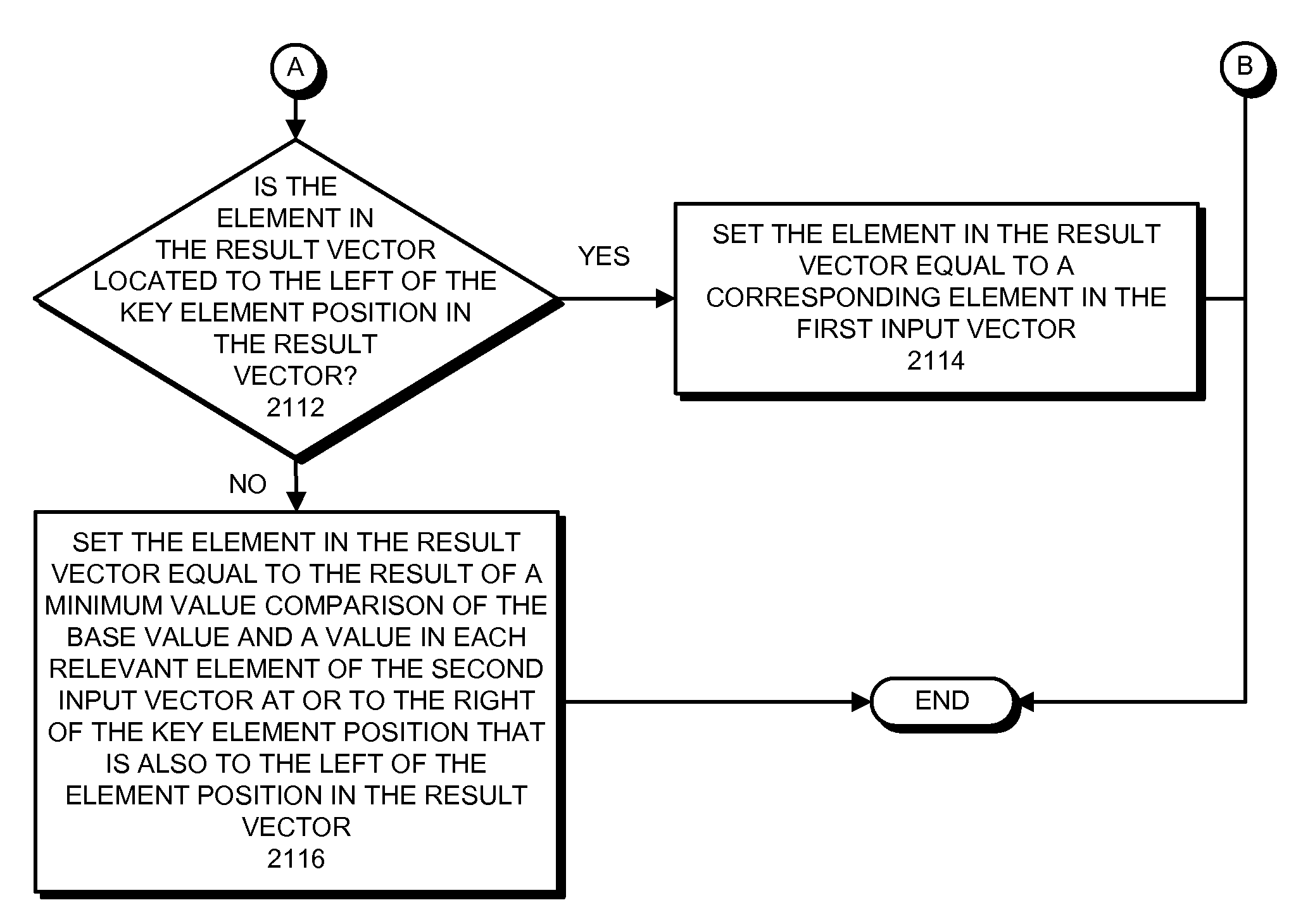

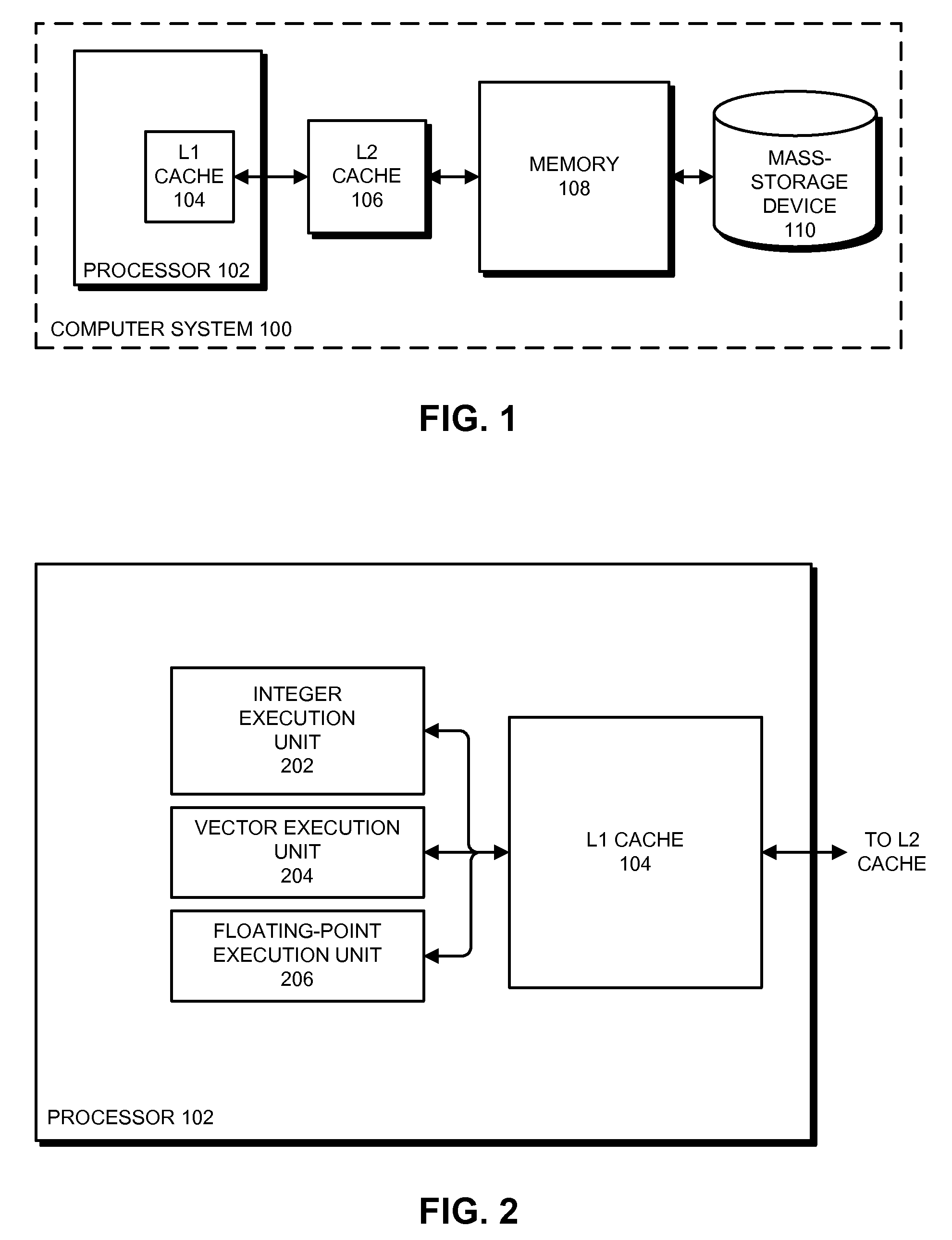

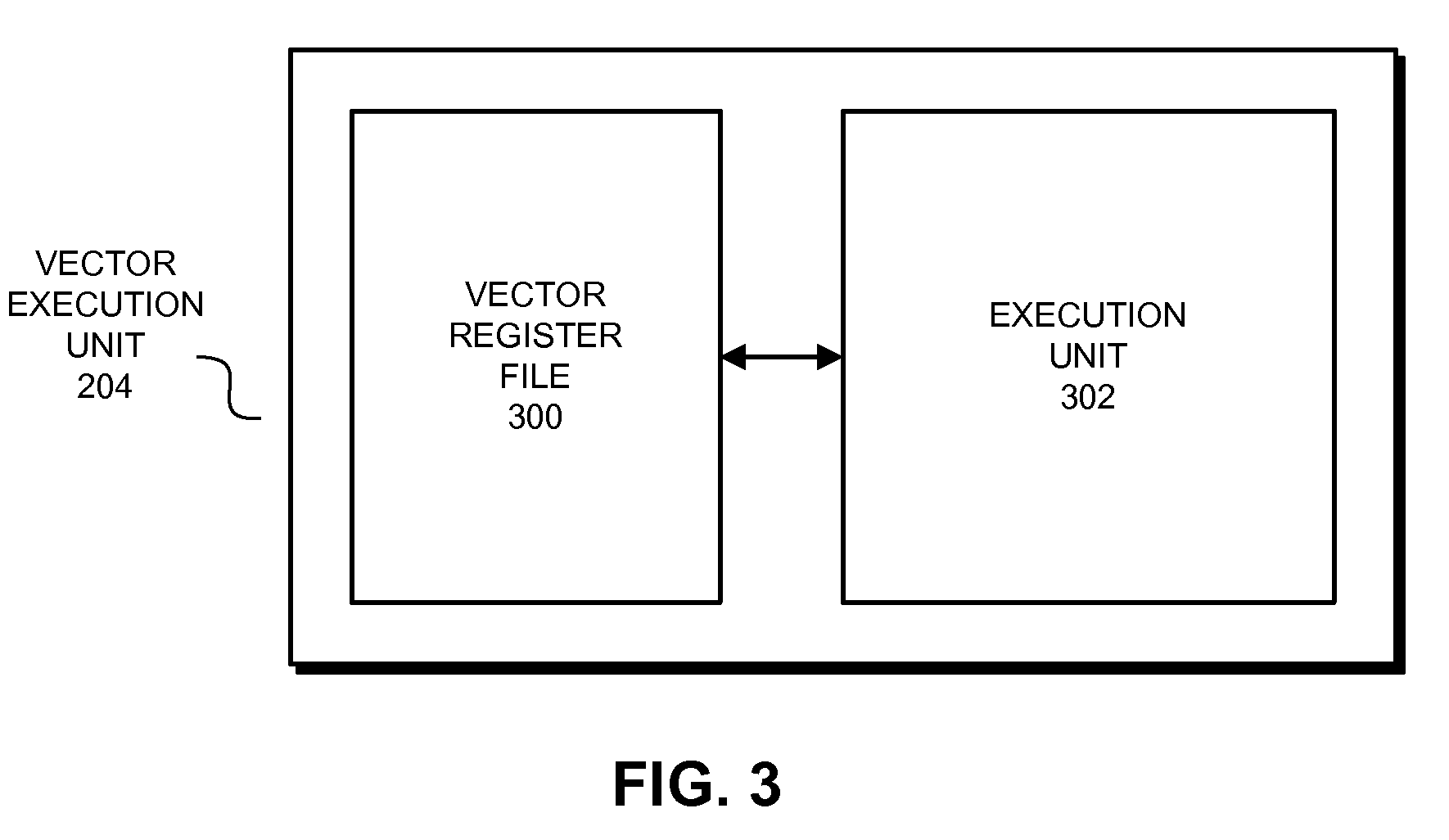

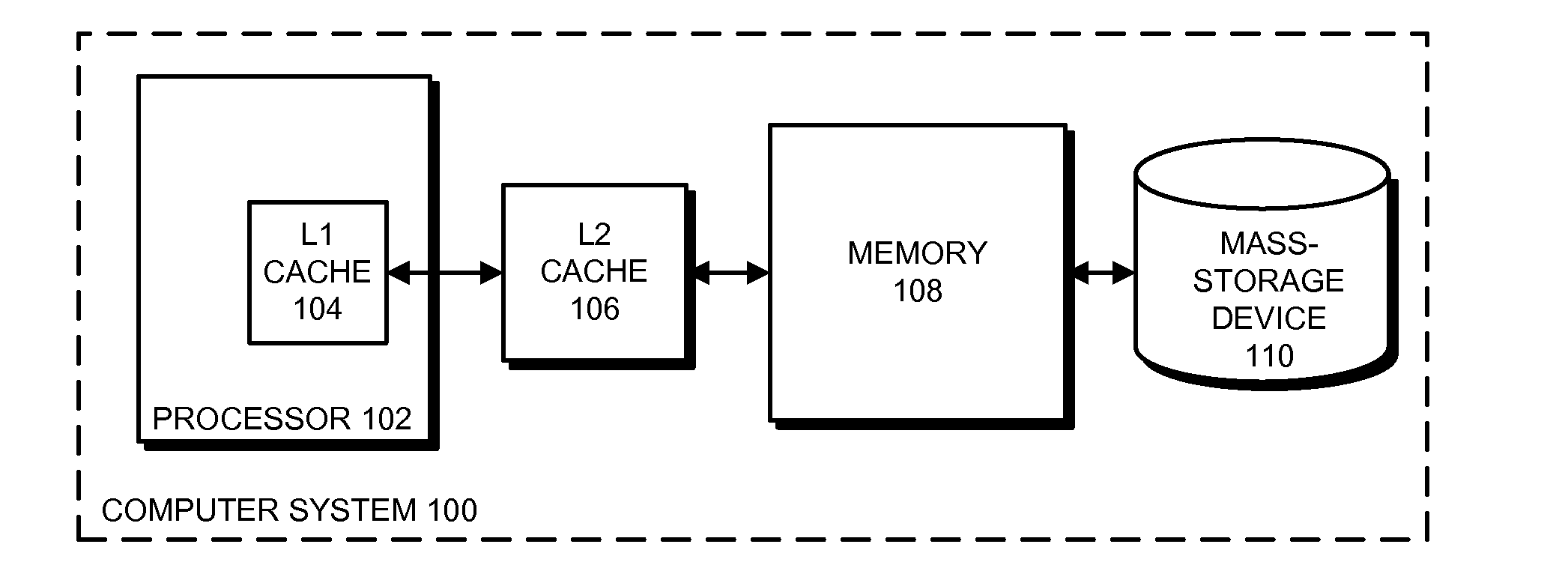

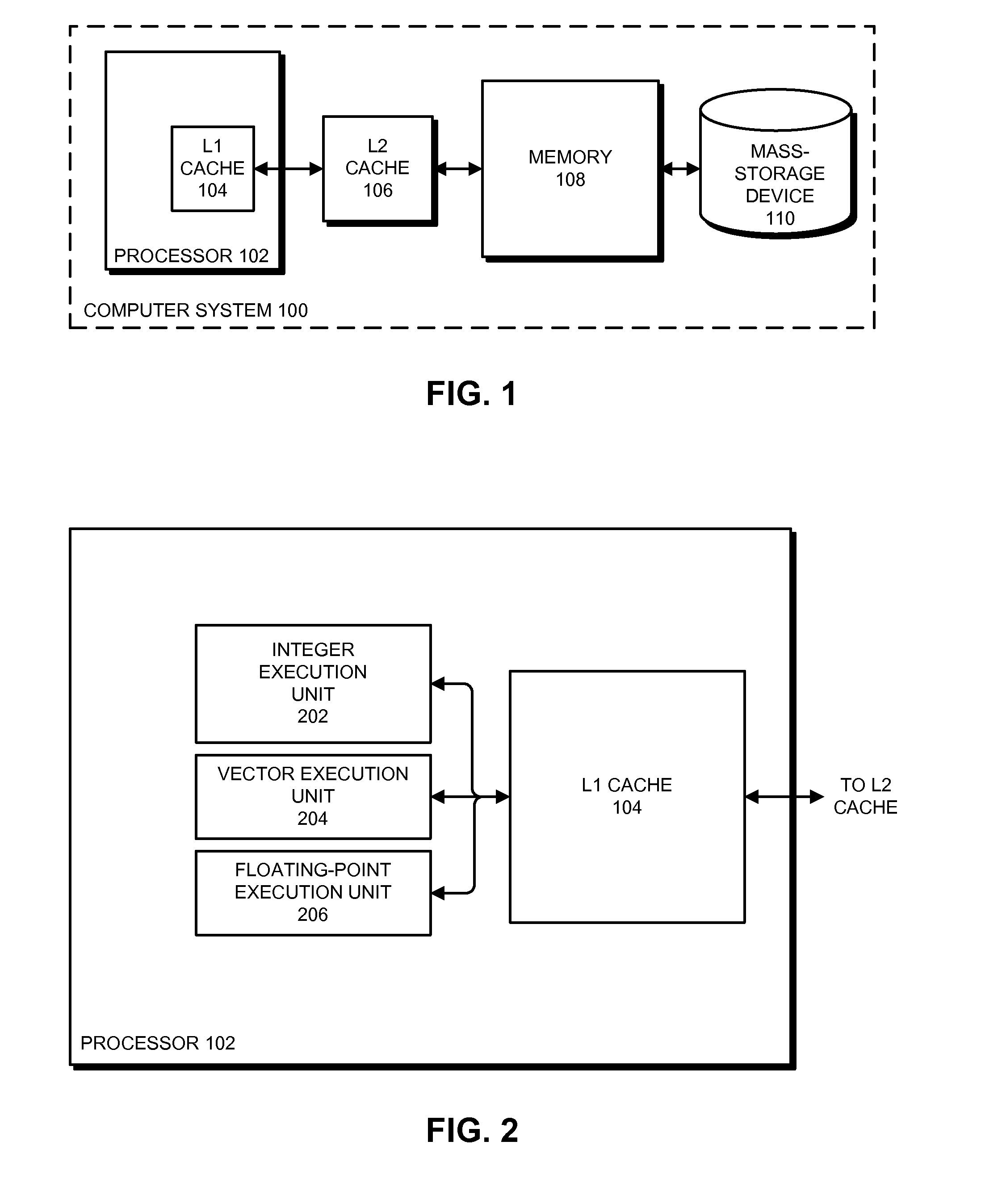

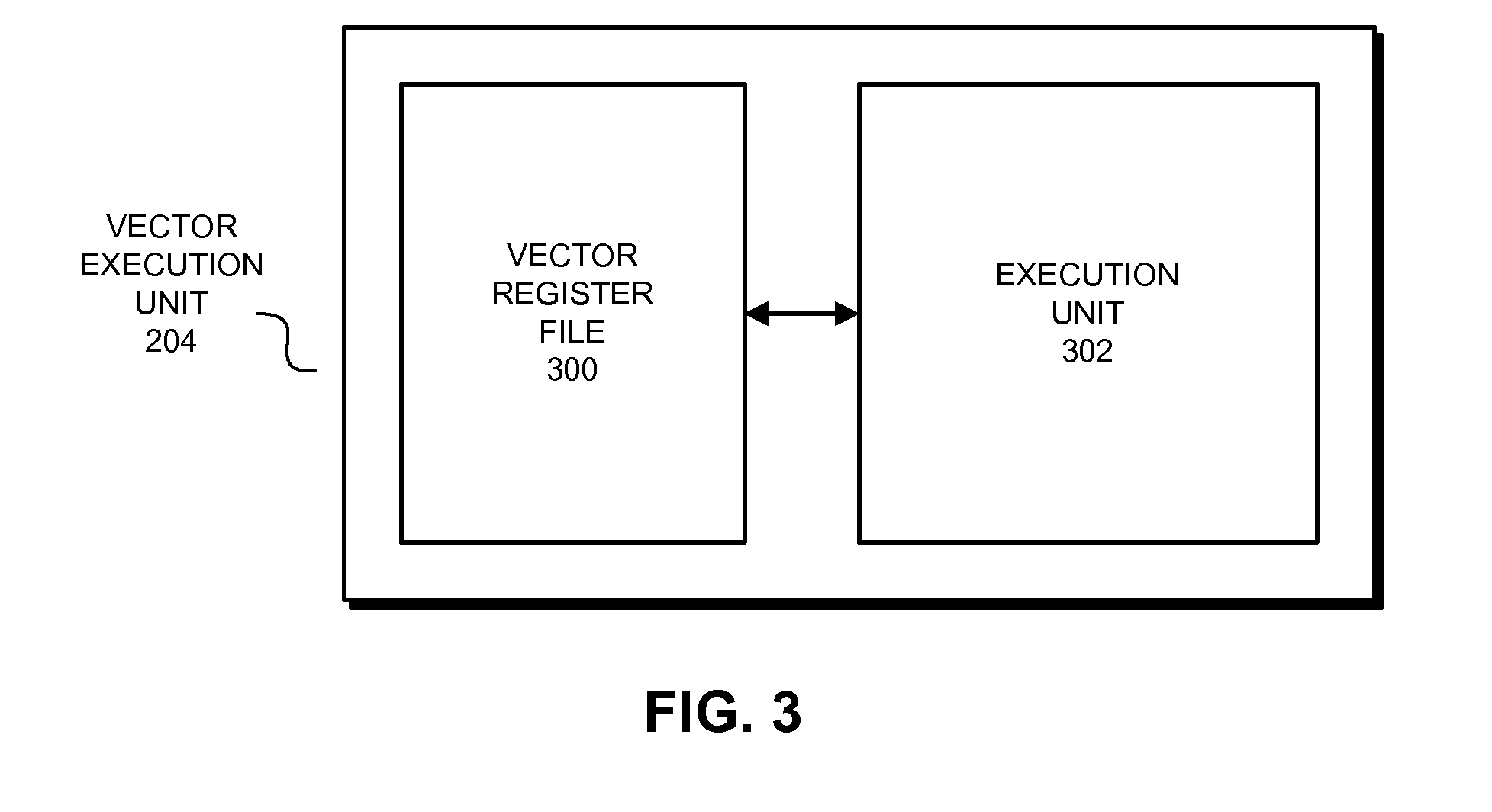

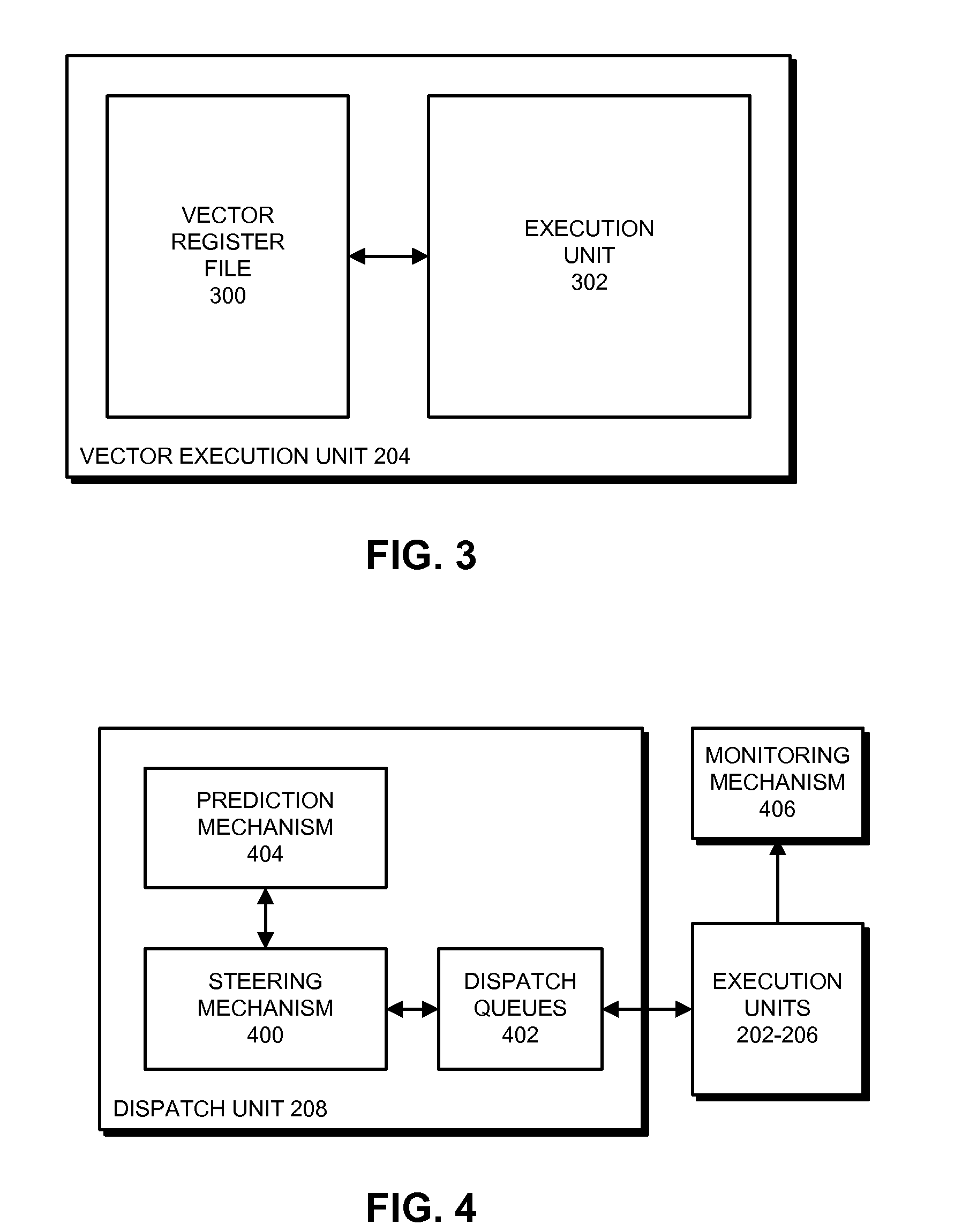

The described embodiments provide a processor for generating a result vector that contains results from a comparison operation. During operation, the processor receives a first input vector, a second input vector, and a control vector. When subsequently generating a result vector, the processor first captures a base value from a key element position in the first input vector. For selected elements in the result vector, the processor compares the base value and values from relevant elements to the left of a corresponding element in the second input vector, and writes the result into the element in the result vector. In the described embodiments, the key element position and the relevant elements can be defined by the control vector and an optional predicate vector.

Owner:APPLE INC

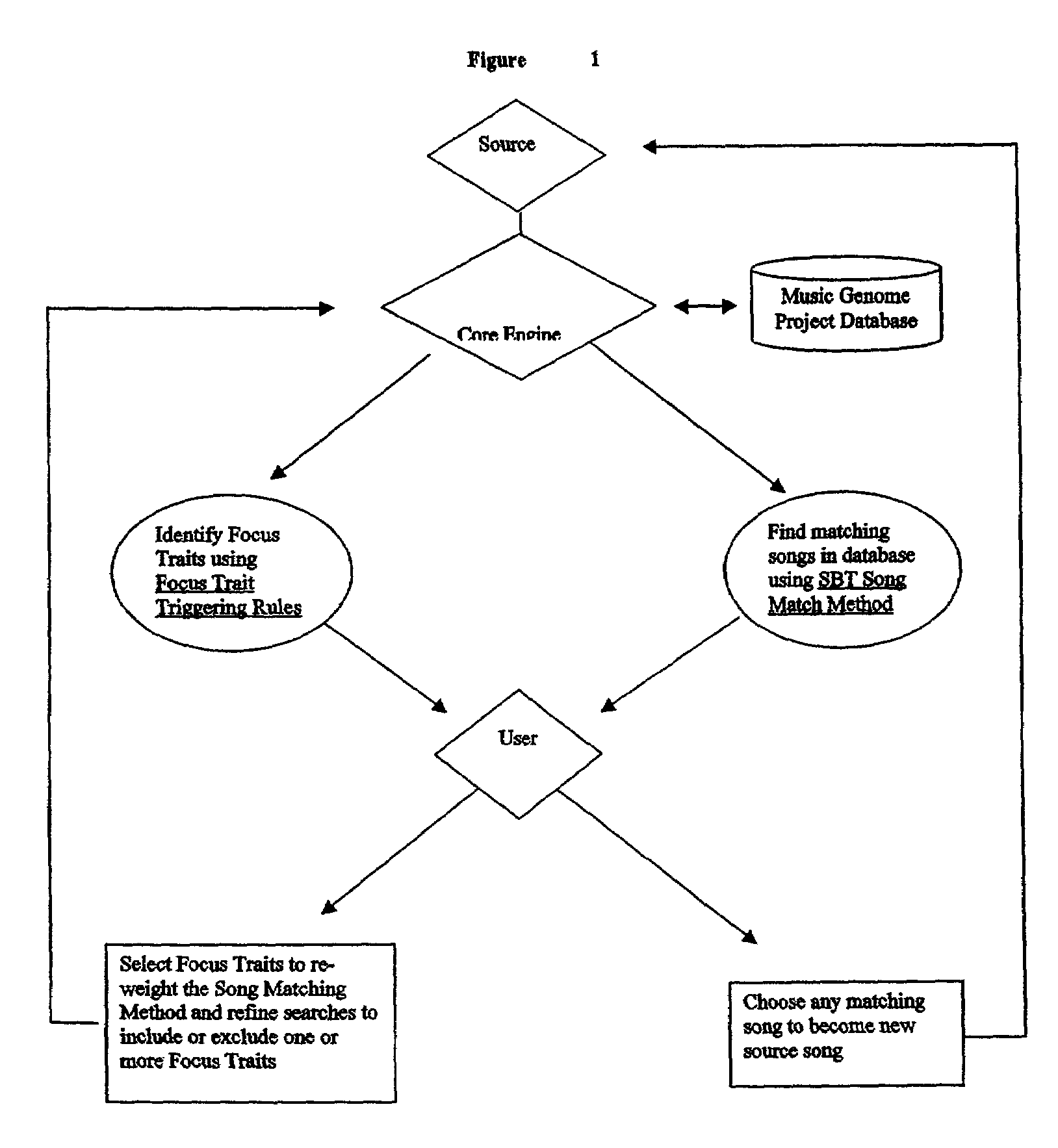

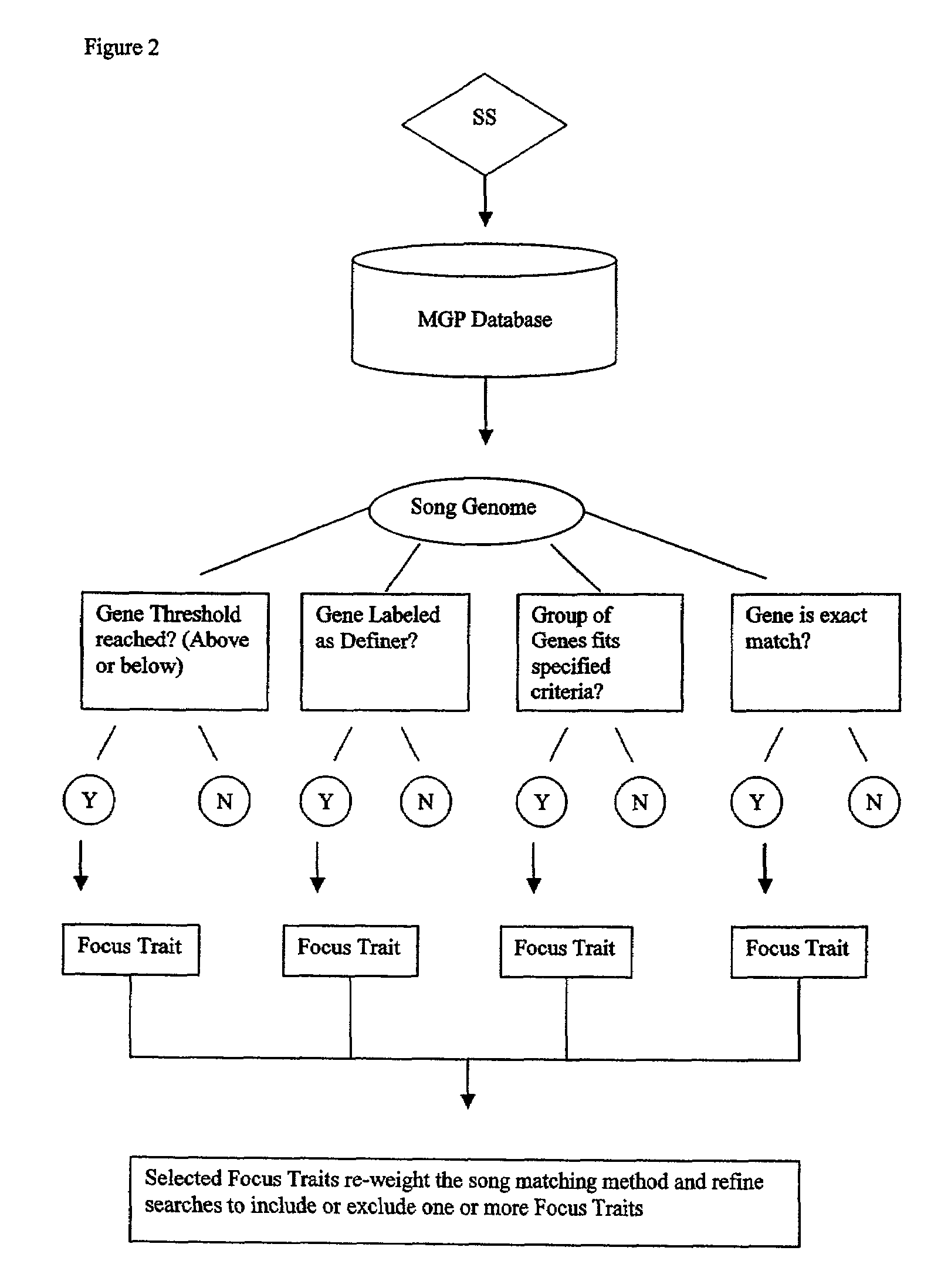

Consumer item matching method and system

Inactive | US7003515B1 | Digital data information retrieval | Data processing applications | Pattern recognition | Matching methods

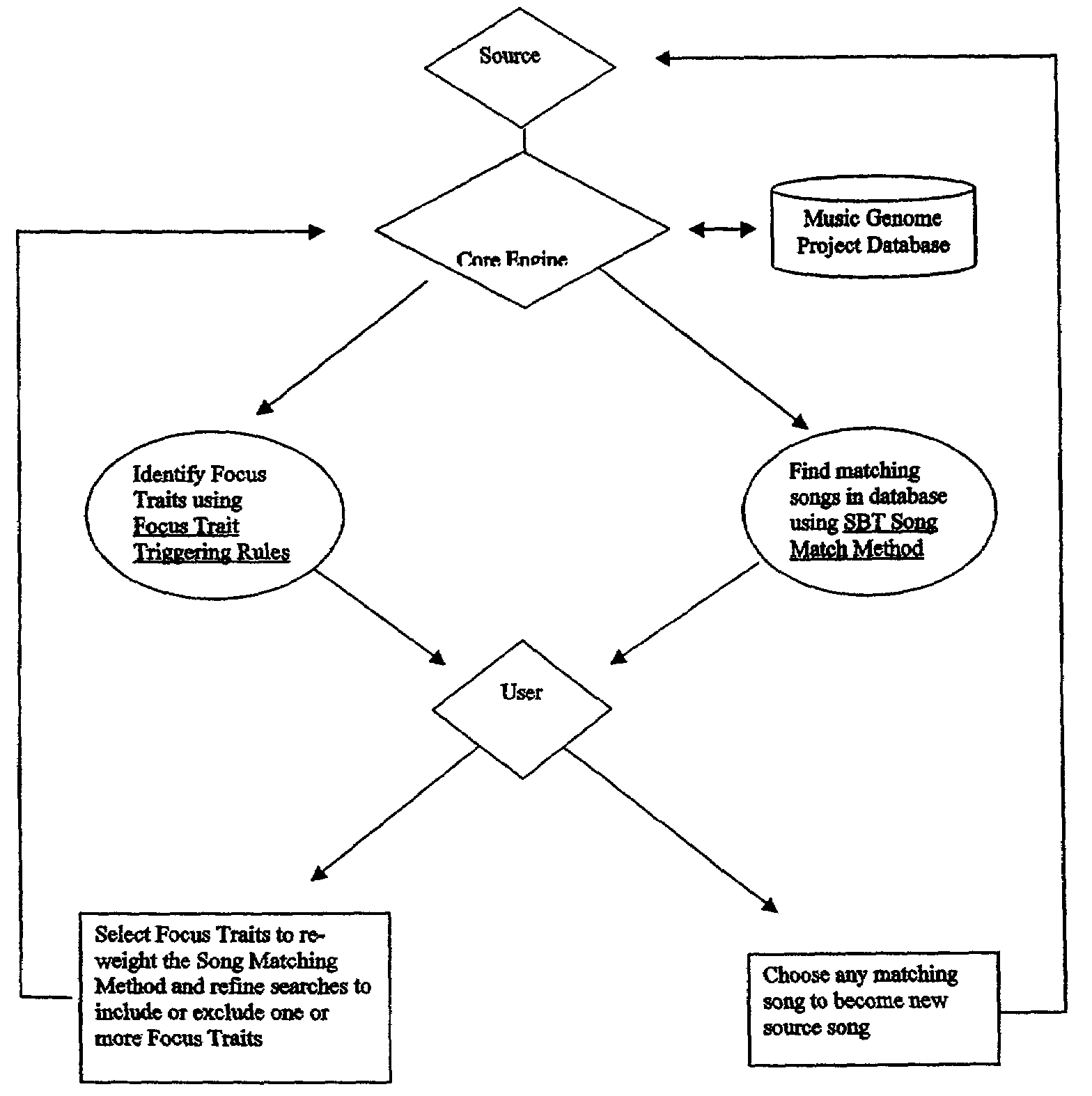

A method of determining at least one match item corresponding to a source item. A database of multiple items such as songs is created. Each song is represented by an n-dimensional database vector in which each element corresponds to one of n musical characteristics of the song. An n-dimensional source song vector that corresponds to the musical characteristics of a source song is determined. A distance between the source song vector and each database song vector is calculated, each distance being a function of the differences between the n musical characteristics of the source song vector and one of the database song vectors. The calculation of the distances may include the application of a weighting factor to the musical characteristics of the resulting vector. A match song is selected based on the magnitude of the distance between the source song and each database song after applying any weighting factors.

Owner:PANDORA MEDIA
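The matching step in the abstract above can be sketched as a weighted nearest-neighbour search. The abstract only says the distance is "a function of the differences", so the weighted Euclidean distance used here is an assumption, as are the names `weighted_distance` and `best_match` and the sample data.

```python
import math


def weighted_distance(source, candidate, weights):
    """Weighted Euclidean distance between two characteristic vectors (assumed metric)."""
    return math.sqrt(
        sum(w * (s - c) ** 2 for s, c, w in zip(source, candidate, weights))
    )


def best_match(source, database, weights):
    """Return the key of the database vector closest to the source vector."""
    return min(
        database,
        key=lambda name: weighted_distance(source, database[name], weights),
    )


# Hypothetical database: three songs, three musical characteristics each.
songs = {
    "song_a": [0.9, 0.1, 0.5],
    "song_b": [0.2, 0.8, 0.4],
    "song_c": [0.8, 0.2, 0.9],
}
source = [0.85, 0.15, 0.5]
weights = [1.0, 1.0, 0.5]  # hypothetical weighting factors

match = best_match(source, songs, weights)
print(match)  # song_a
```

Raising a weight makes differences in that characteristic count more toward the distance, which is one way to read the "weighting factor" the abstract mentions.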

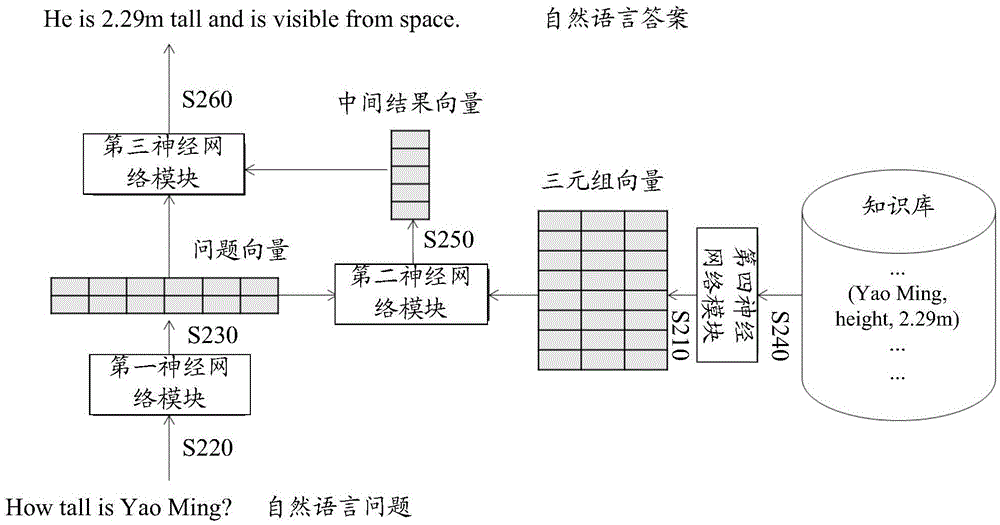

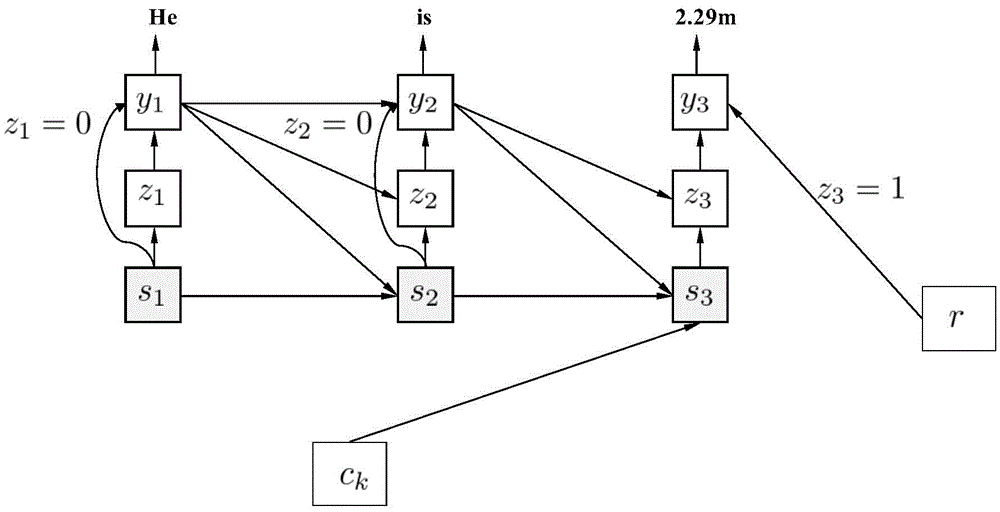

Method and neural network system used for man-machine conversation, and user equipment

The invention discloses a method and a neural network system for man-machine conversation, and user equipment. The method comprises the following steps: vectorizing a natural language question and a knowledge base; obtaining, through vector computation, an intermediate result vector that is based on the knowledge base and expresses the similarity between the natural language question and a knowledge base answer; and computing, from the question vector and the intermediate result vector, a correct, fact-based natural language answer. The method combines conversation with knowledge-base question answering, so that natural language interaction can be carried out with users and fact-based natural language answers can be given on the basis of the knowledge base.

Owner:HUAWEI TECH CO LTD

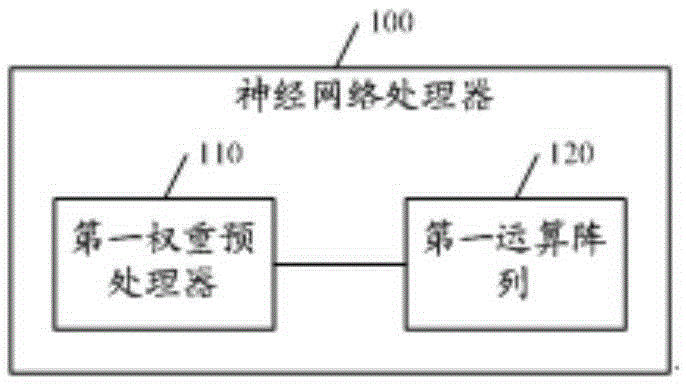

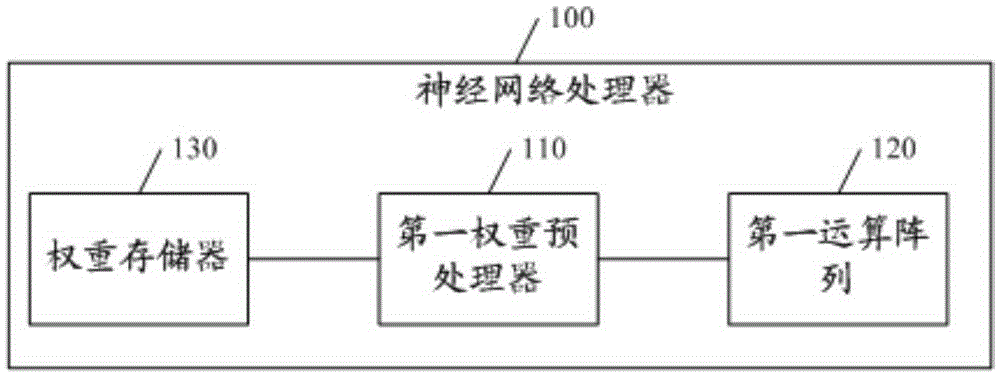

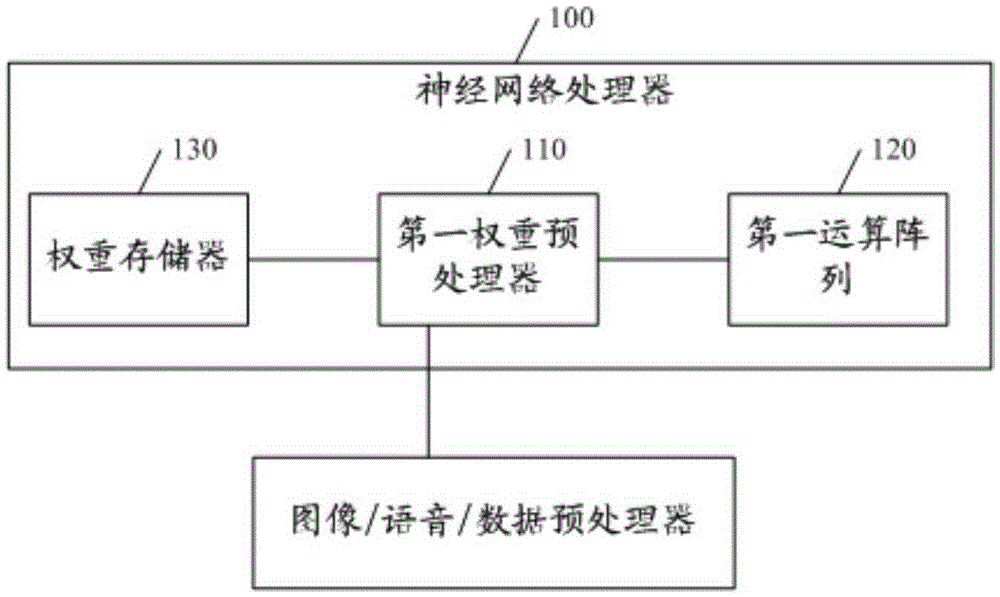

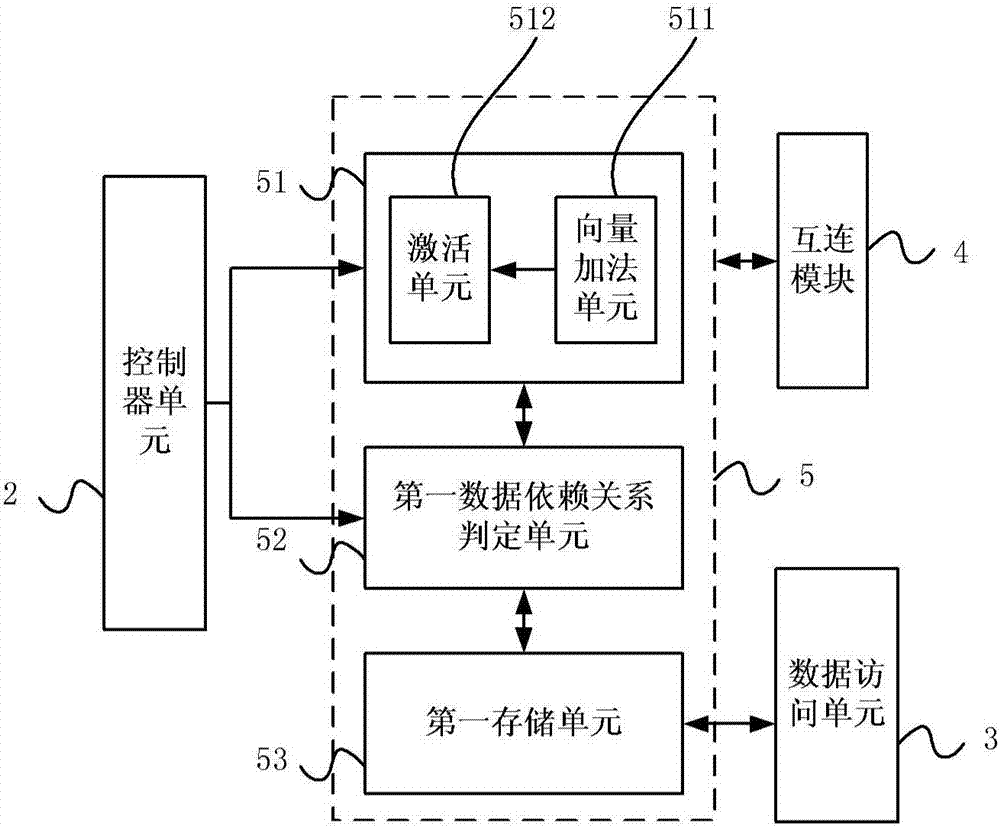

Neural network processor and convolutional neural network processor

Active | CN105260776A | Range space expansion | Meet precision requirements | Physical realisation | Real arithmetic | Domain space

The embodiment of the invention discloses a neural network processor and a convolutional neural network processor. The neural network processor can comprise a first weight preprocessor and a first operation array, wherein the first weight preprocessor is used for receiving a vector V<x> including M elements; the normalized value domain space of the element V(x-i) of the vector V<x> is a real number which is greater than or equal to 0 and is smaller than or equal to 1; an M*P weight vector matrix Q<x> is used for performing weighting operation on the M elements of the vector V<x> to obtain M weighting operation result vectors; the first operation array is used for accumulating the elements with the same positions in the M weighting operation result vectors to obtain P accumulated values; a vector V<y> including P elements is obtained according to the P accumulated values; and the vector V<y> is output. The technical scheme provided by the embodiment has the advantage that the expansion of the application range of neural network operation is facilitated.

Owner:HUAWEI TECH CO LTD
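The weighting and accumulation described in the abstract above can be sketched as follows: each of the M input elements scales one row of the M×P weight matrix, and the M weighted vectors are summed position by position into P values. This is a minimal sketch under stated assumptions: the function name `weighted_accumulate` is hypothetical, and the accumulated values are returned directly as the output vector, whereas the abstract leaves open how the output is derived from them.

```python
def weighted_accumulate(v, q):
    """Weight each row of the M x P matrix q by the matching element of v,
    then accumulate the M weighted vectors position by position."""
    if len(v) != len(q):
        raise ValueError("q must have one row per element of v")
    if any(not 0.0 <= x <= 1.0 for x in v):
        raise ValueError("elements of v are expected to be normalized to [0, 1]")
    # M weighting-operation result vectors, each of length P
    weighted = [[x * w for w in row] for x, row in zip(v, q)]
    # Accumulate elements that share a position: P accumulated values
    return [sum(column) for column in zip(*weighted)]


v = [0.5, 1.0, 0.0]                         # M = 3 normalized inputs
q = [[2.0, 4.0], [1.0, -1.0], [7.0, 7.0]]   # M x P weights, P = 2
y = weighted_accumulate(v, q)
print(y)  # [2.0, 1.0]
```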

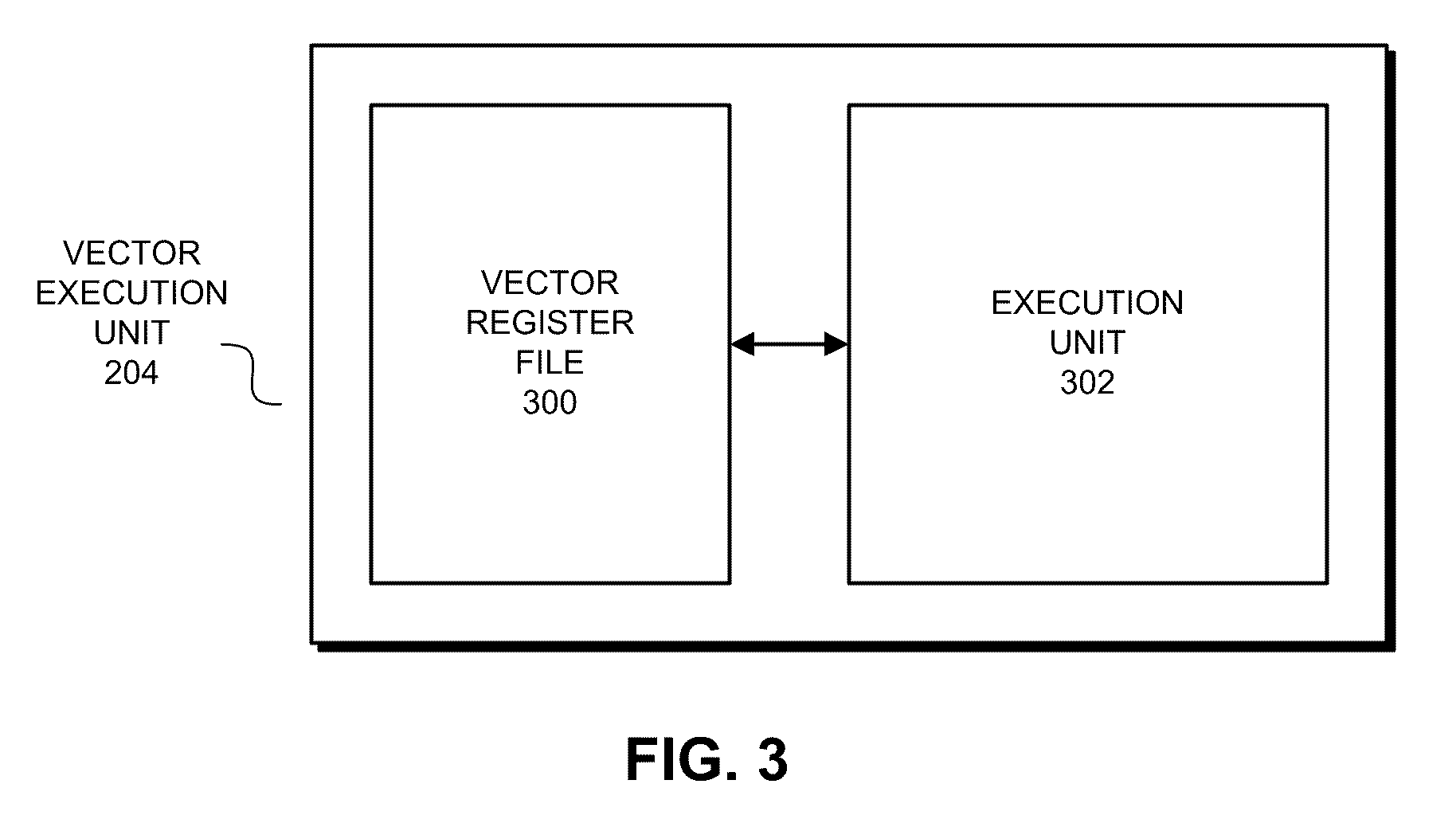

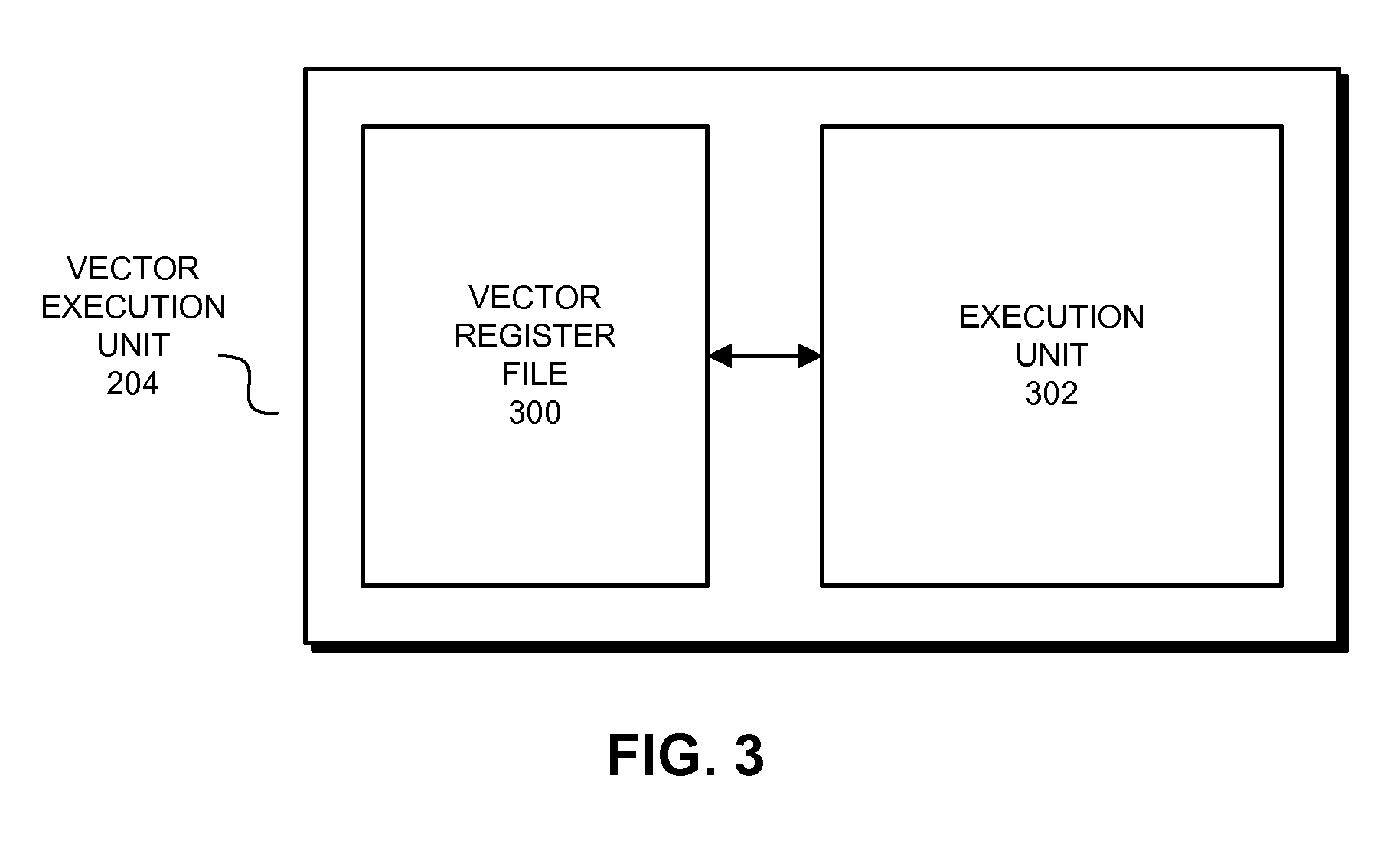

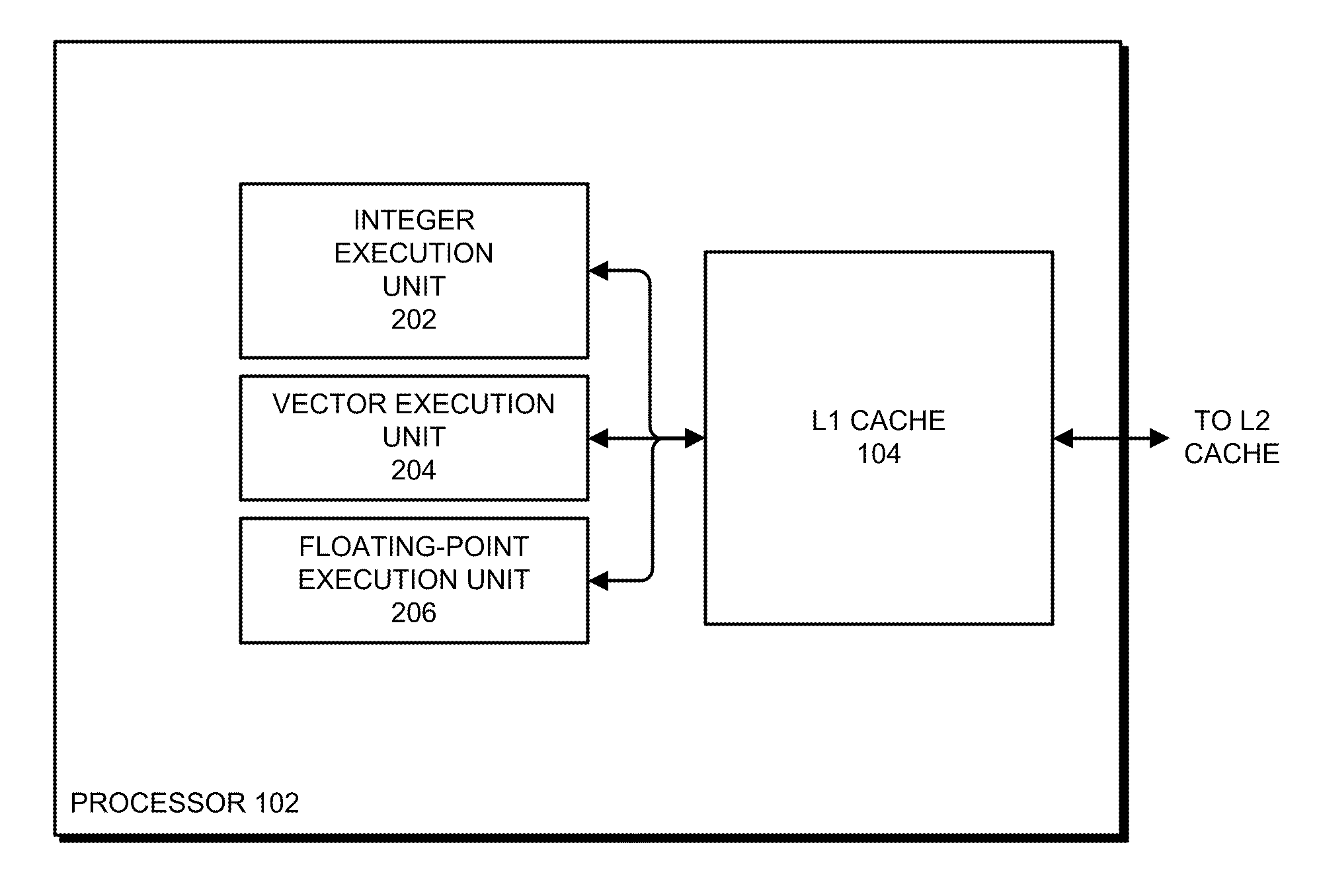

Dynamic Data Driven Alignment and Data Formatting in a Floating-Point SIMD Architecture

Inactive | US20100095087A1 | Minimizing amount of data | Quantity minimization | Program control using stored programs | Handling data according to predetermined rules | Control vector | Processor register

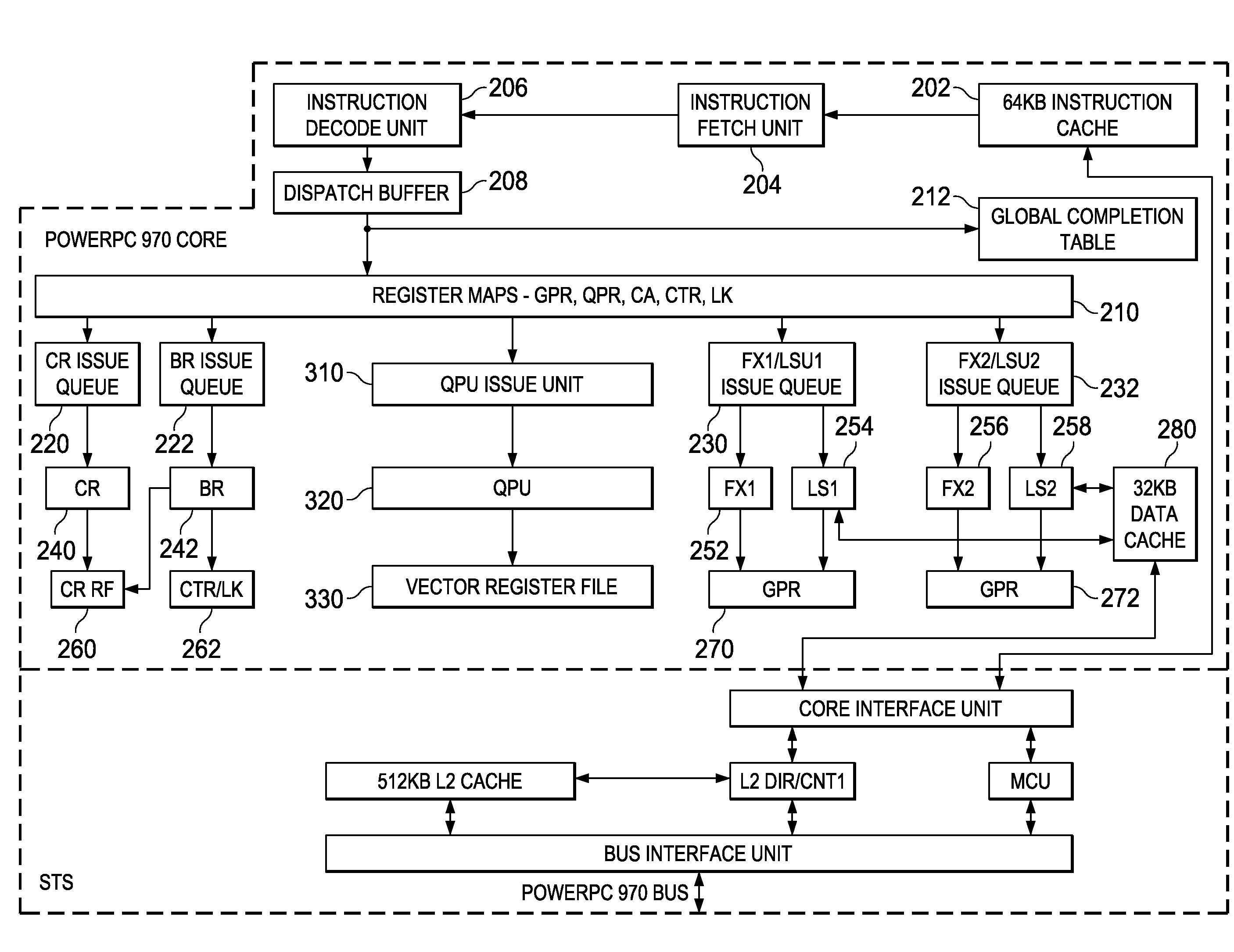

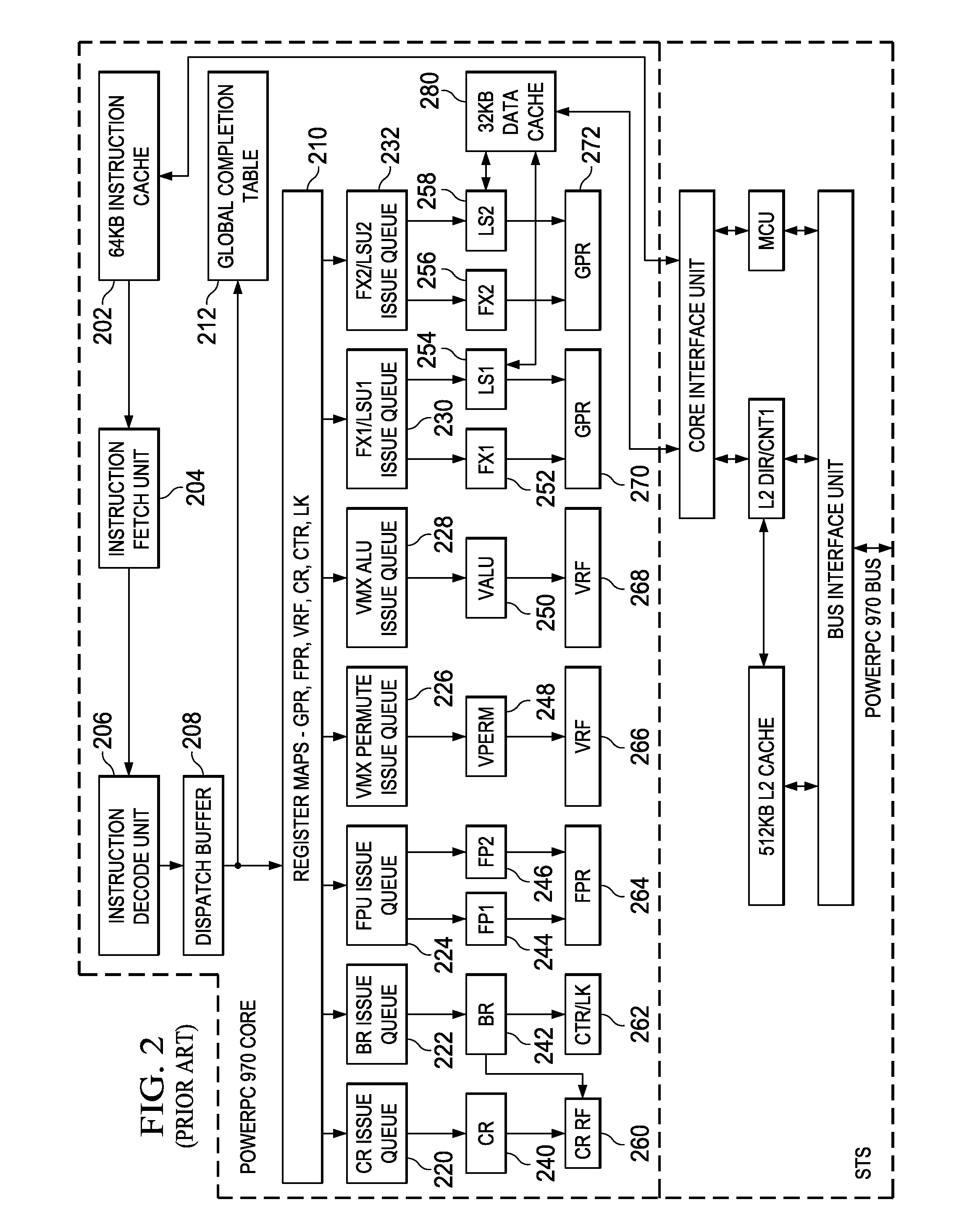

Mechanisms are provided for dynamic data driven alignment and data formatting in a floating point SIMD architecture. At least two operand inputs are input to a permute unit of a processor. Each operand input contains at least one floating point value upon which a permute operation is to be performed by the permute unit. A control vector input, having a plurality of floating point values that together constitute the control vector input, is input to the permute unit of the processor for controlling the permute operation of the permute unit. The permute unit performs a permute operation on the at least two operand inputs according to a permutation pattern specified by the plurality of floating point values that constitute the control vector input. Moreover, a result output of the permute operation is output from the permute unit to a result vector register of the processor.

Owner:IBM CORP

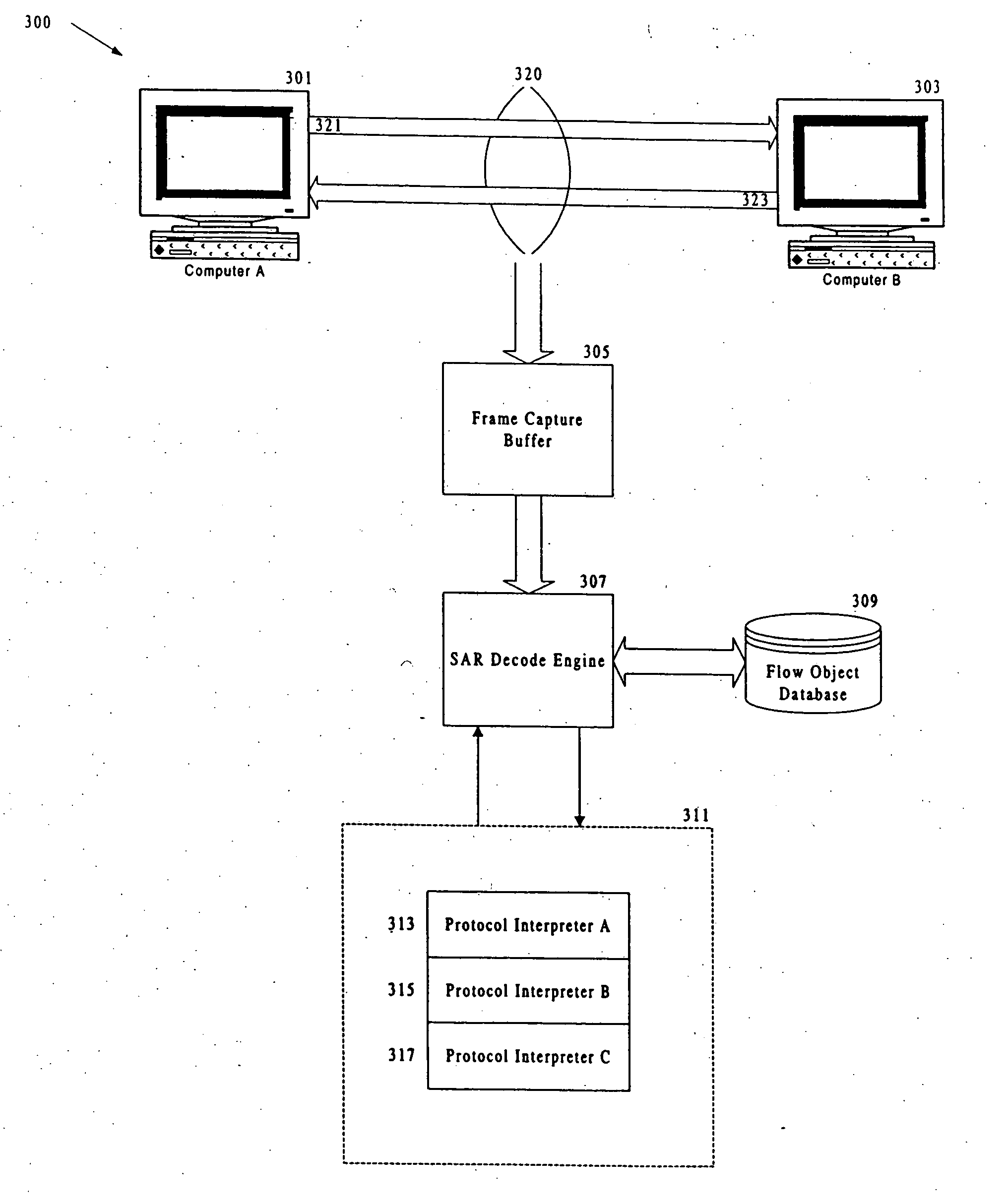

Multi-layer protocol reassembly that operates independently of underlying protocols, and resulting vector list corresponding thereto

Inactive | US6968554B1 | Reduce complexity | Improve performance | Multiple digital computer combinations | Transmission | Data stream | Logical representation

A segmentation and re-assembly (SAR) decode engine receives protocol data units of data from a communication channel between two computers, sequences the protocol data units, and re-assembles the data in the protocol data units into the messages exchanged by the computers. The SAR decode engine is responsible for unpacking the payloads from the protocol data units as instructed by a protocol interpreter associated with the protocol data unit, and for creating and maintaining a flow object database containing flow objects representing the data flows at each protocol layer. The SAR decode engine creates a protocol flow object for each protocol layer and logically links the protocol flow object to circuit flow objects that define two one-way circuits within the channel. The circuit flow objects linked to a protocol flow object are logical representations of the protocol data units for the next higher protocol layer. For protocols that fragment data, each circuit flow object is a vector list containing one or more vectors that define the length, starting location and position of the data fragments in the immediately lower layer circuit flow objects.

Owner:NETWORK GENERAL TECH

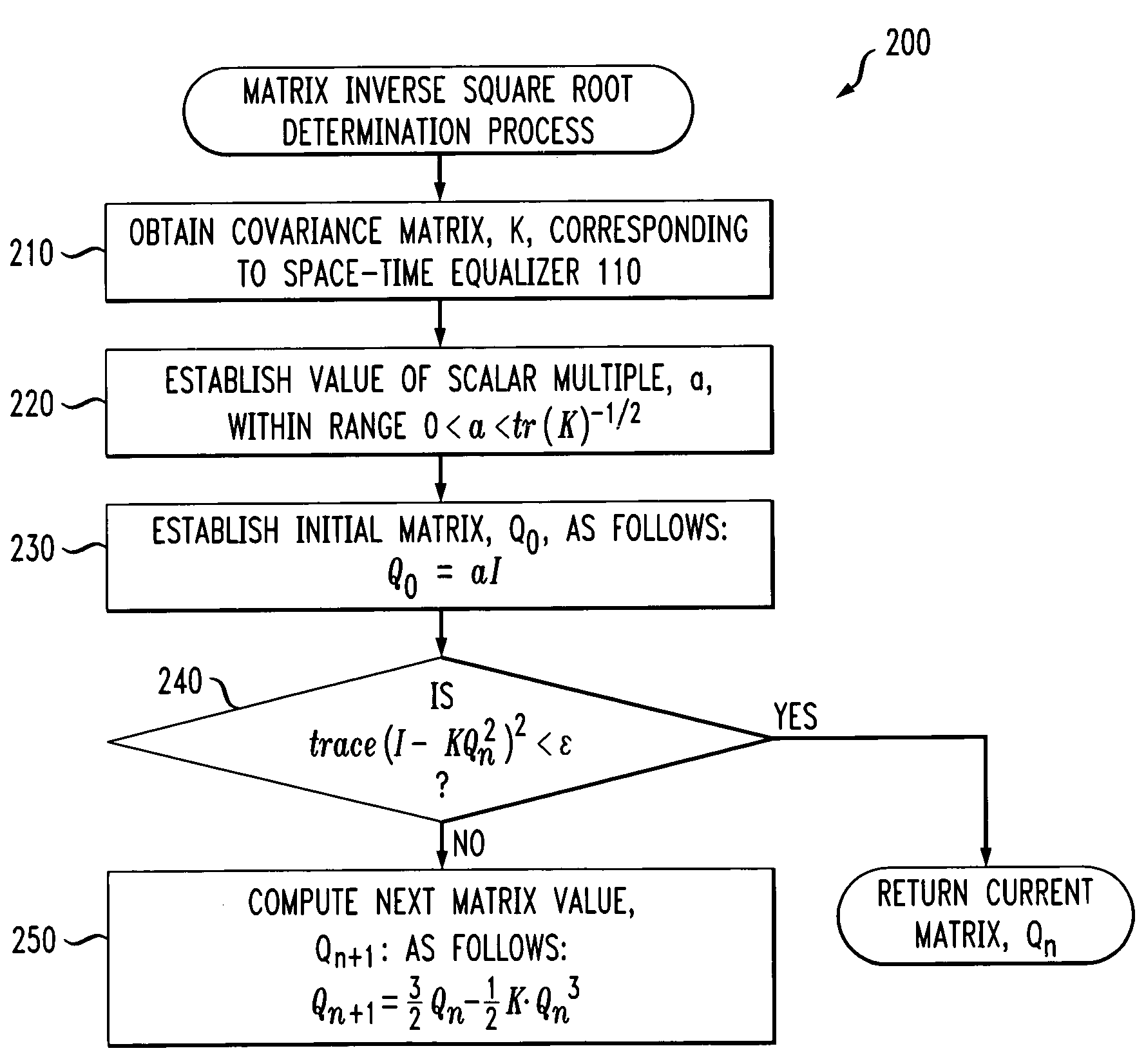

Method and apparatus for determining an inverse square root of a given positive-definite hermitian matrix

Generally, a method and apparatus are provided for computing a matrix inverse square root of a given positive-definite Hermitian matrix, K. The disclosed technique for computing an inverse square root of a matrix may be implemented, for example, by the noise whitener of a MIMO receiver. Conventional noise whitening algorithms whiten a non-white vector, X, by applying a matrix, Q, to X, such that the resulting vector, Y, equal to Q·X, is a white vector. Thus, the noise whitening algorithms attempt to identify a matrix, Q, that when multiplied by the non-white vector, will convert the vector to a white vector. The disclosed iterative algorithm determines the matrix, Q, given the covariance matrix, K. The disclosed matrix inverse square root determination process initially establishes an initial matrix, Q0, by multiplying an identity matrix by a scalar value and then continues to iterate and compute another value of the matrix, Qn+1, until a convergence threshold is satisfied. The disclosed iterative algorithm only requires multiplication and addition operations and allows incremental updates when the covariance matrix, K, changes.

Owner:LGS INNOVATIONS +1
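The abstract above does not give the update rule, so the sketch below substitutes a well-known Newton–Schulz-style iteration, Q ← ½·Q·(3I − K·Q²), which also starts from a scaled identity and uses only matrix multiplications and additions inside the loop. It is restricted to real symmetric positive-definite matrices for simplicity. The function names, the choice of the initial scalar (1/√trace(K)) and the stopping test are all assumptions, not the patented method.

```python
def matmul(a, b):
    """Plain dense matrix product of two lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def inverse_sqrt(k, tol=1e-12, max_iter=100):
    """Approximate K^(-1/2) for a real symmetric positive-definite K.

    Assumed iteration (Newton-Schulz style): Q <- 0.5 * Q * (3I - K Q^2).
    """
    n = len(k)
    trace = sum(k[i][i] for i in range(n))
    scale = trace ** -0.5  # assumed scalar; keeps eigenvalues of K*Q0^2 in (0, 1]
    q = [[scale if i == j else 0.0 for j in range(n)] for i in range(n)]
    for _ in range(max_iter):
        kqq = matmul(k, matmul(q, q))
        # Convergence test: largest entry of the residual I - K Q^2
        residual = max(
            abs((1.0 if i == j else 0.0) - kqq[i][j])
            for i in range(n)
            for j in range(n)
        )
        if residual < tol:
            break
        correction = [
            [(3.0 if i == j else 0.0) - kqq[i][j] for j in range(n)]
            for i in range(n)
        ]
        q = [[0.5 * x for x in row] for row in matmul(q, correction)]
    return q


K = [[4.0, 1.0], [1.0, 3.0]]   # hypothetical covariance matrix
Q = inverse_sqrt(K)
whitened = matmul(Q, matmul(K, Q))  # should be close to the identity
```

Because the starting matrix is a multiple of the identity, every iterate is a polynomial in K and commutes with it, which is why this uncoupled form of the iteration is usable here.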

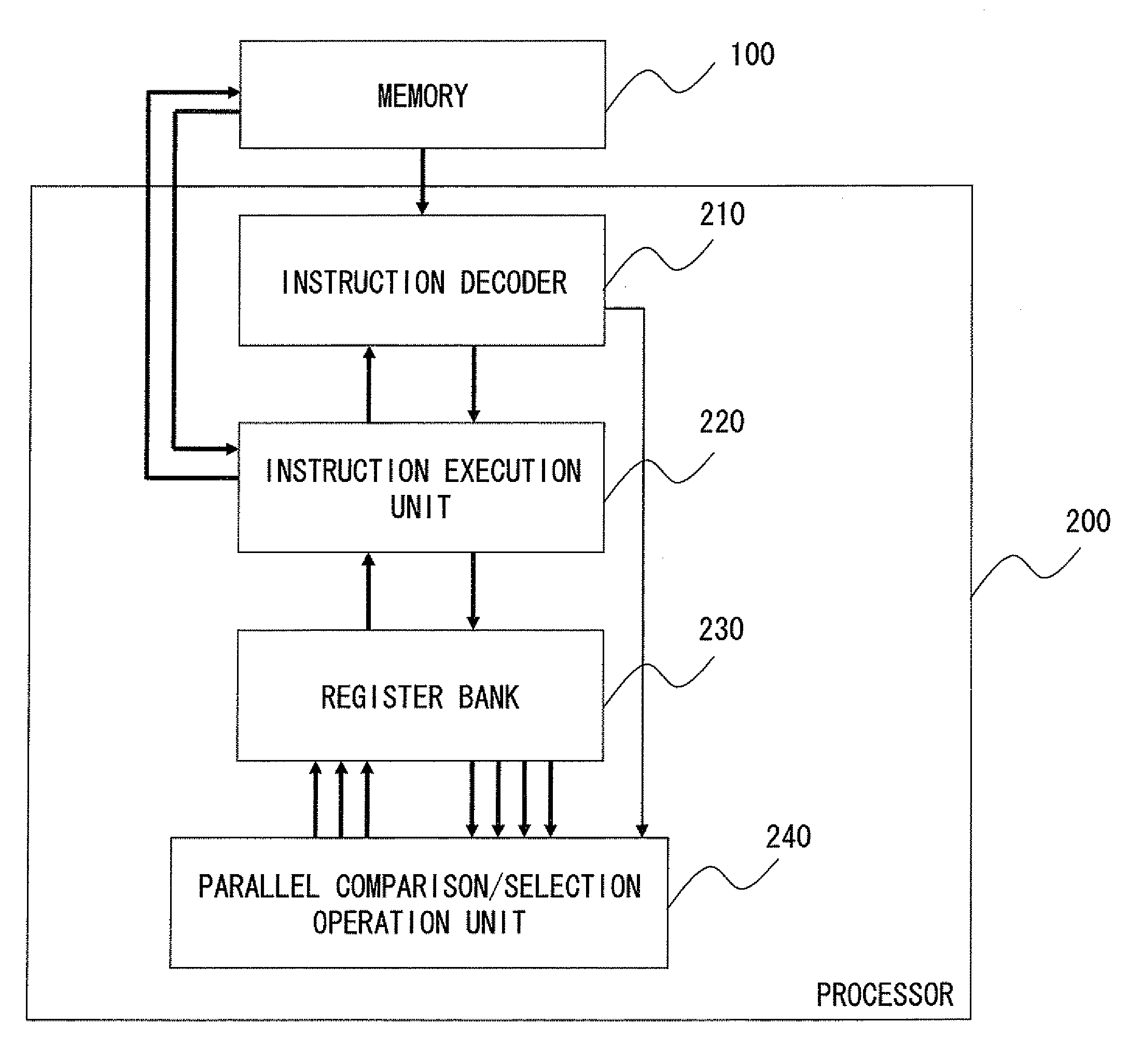

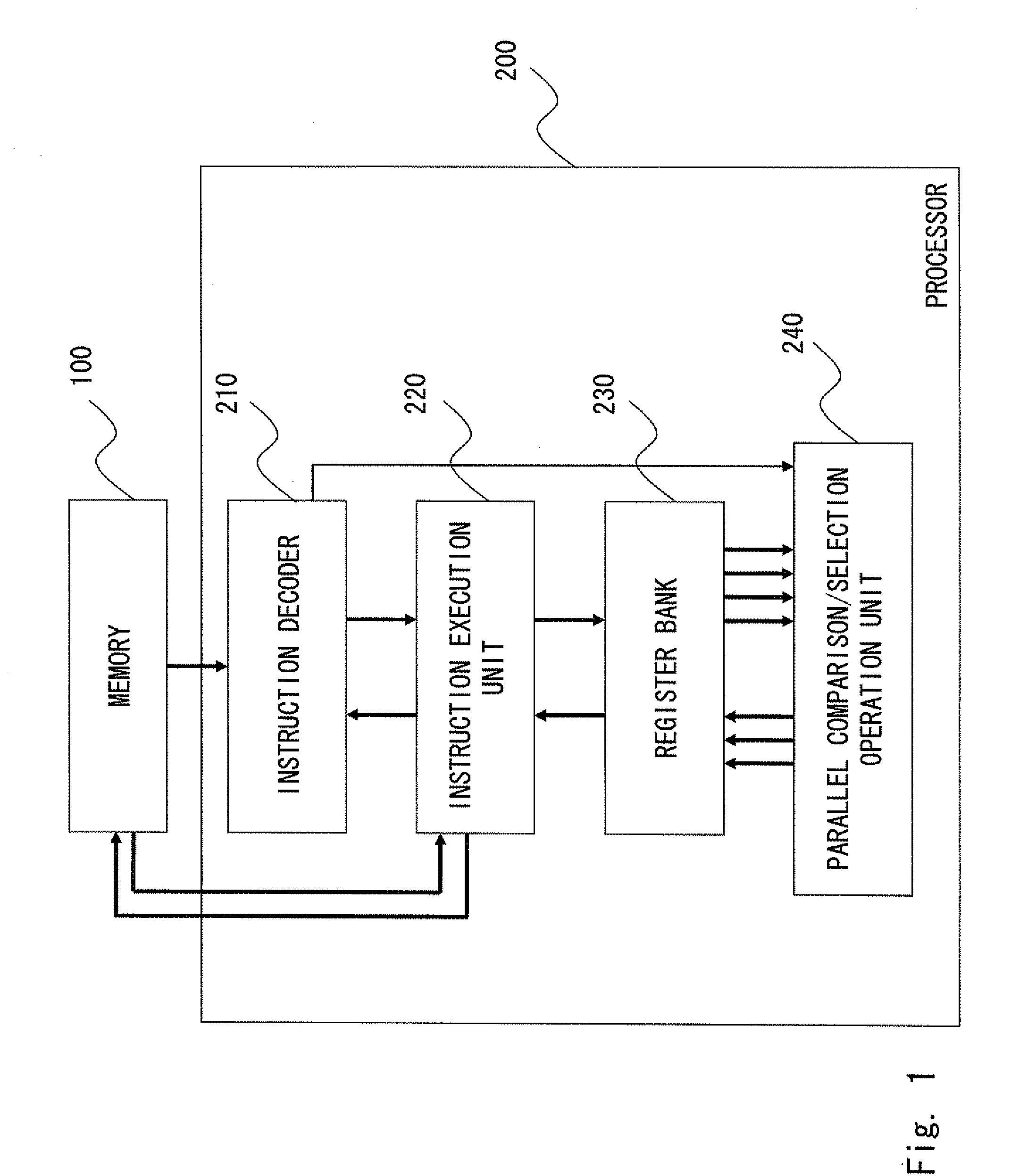

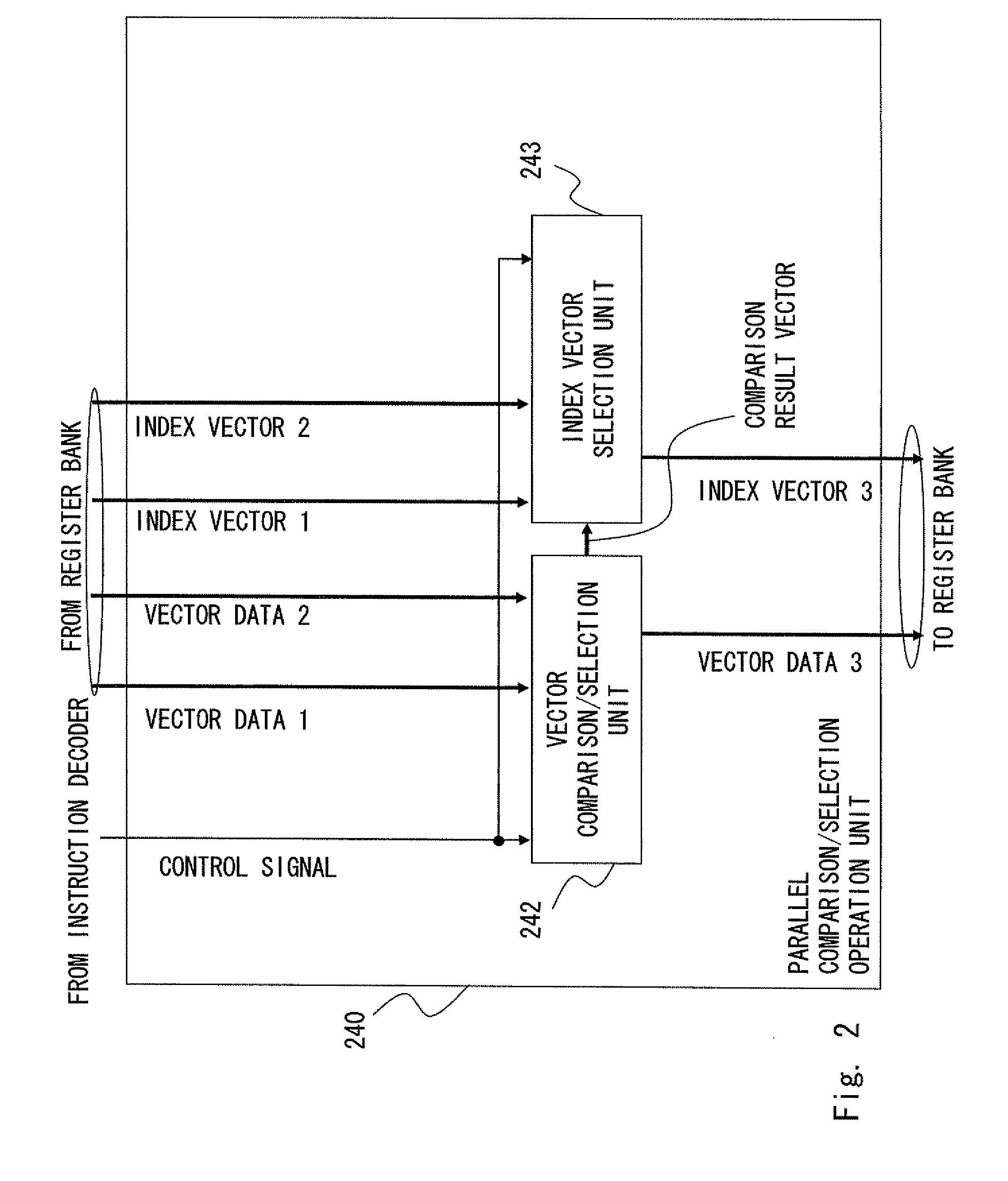

Parallel comparison/selection operation apparatus, processor, and parallel comparison/selection operation method

Inactive | US20120023308A1 | Efficient execution | Digital data processing details | General purpose stored program computer | Parallel computing | Result vector

Provided is a parallel comparison/selection operation apparatus which efficiently executes a search for a maximum value or a search for a minimum value with an index. The parallel comparison/selection operation apparatus includes a vector comparison/selection unit 242 that compares each element included in vector data 1 and vector data 2 for each corresponding element using the vector data 1 and the vector data 2, selects one element of the vector data 1 and the vector data 2 based on the comparison result, and generates vector data 3 including the selected element, and an index vector selection unit 243 that selects one element of an index vector 1 and an index vector 2 based on the comparison result vector using the index vector 1 of the vector data 1, the index vector 2 of the vector data 2, and the comparison result vector to generate and output an index vector 3 including the selected element.

Owner:NEC CORP +1
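The element-wise comparison and the index selection driven by the same comparison result vector can be sketched in a few lines. This is an illustrative software model of the maximum-search case only; the function name `compare_select_max` and the tie-breaking rule (prefer vector data 1 on equality) are assumptions.

```python
def compare_select_max(data1, index1, data2, index2):
    """Select the larger element per position and carry its index along."""
    # Comparison result vector: True where the element from data1 is chosen
    mask = [a >= b for a, b in zip(data1, data2)]
    data3 = [a if m else b for a, b, m in zip(data1, data2, mask)]
    index3 = [i if m else j for i, j, m in zip(index1, index2, mask)]
    return data3, index3


data1, index1 = [5, 1, 9, 2], [0, 1, 2, 3]
data2, index2 = [3, 8, 9, 7], [4, 5, 6, 7]
data3, index3 = compare_select_max(data1, index1, data2, index2)
print(data3)   # [5, 8, 9, 7]
print(index3)  # [0, 5, 2, 7]
```

Repeating this step over successive chunks of a long array leaves, in each lane, the running maximum and the position it came from; a final reduction across lanes then yields the overall maximum with its index.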

Computer product and method for sparse matrices

Inactive | US6243734B1 | Computation using non-contact making devices | Complex mathematical operations | Array data structure | Theoretical computer science

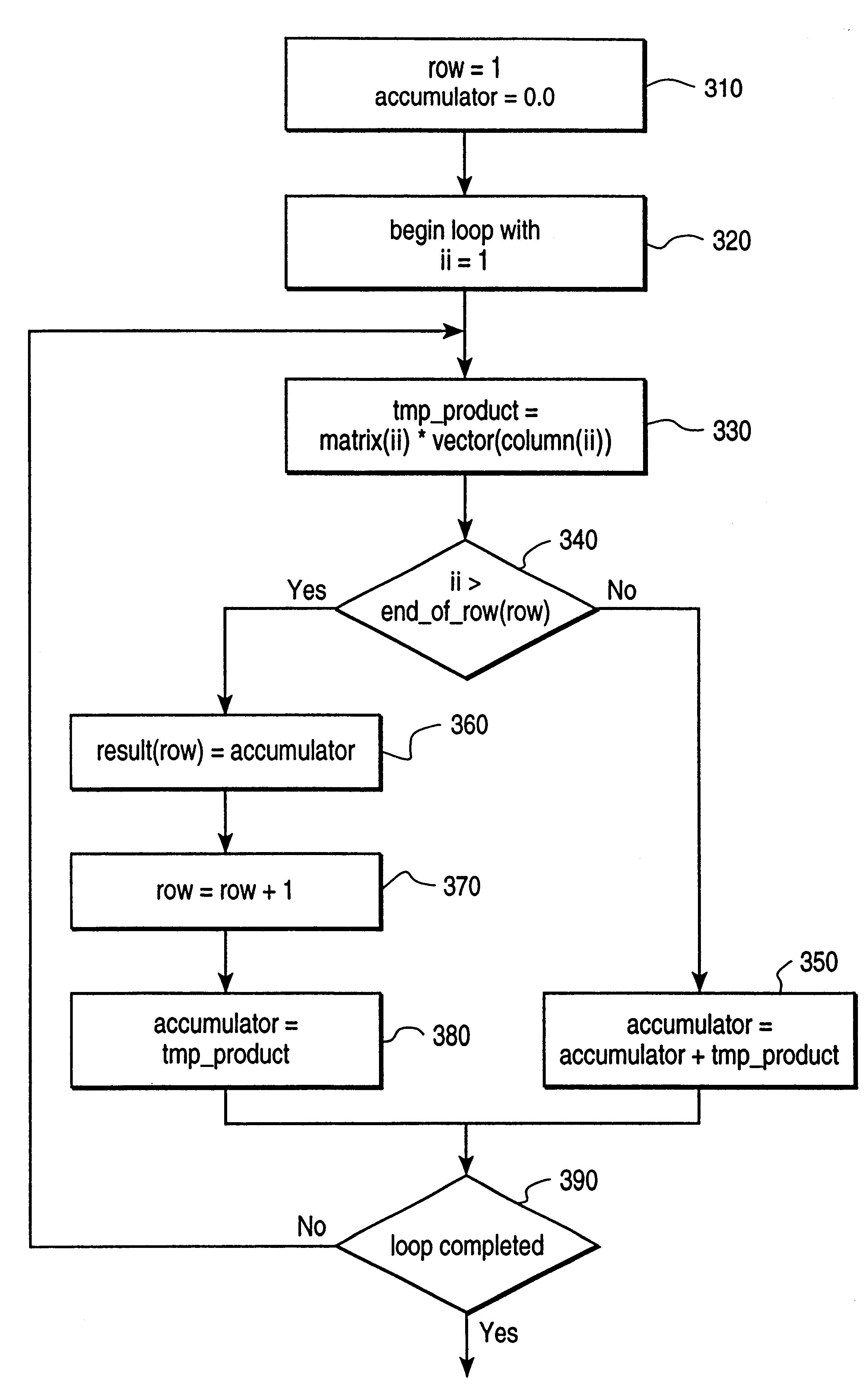

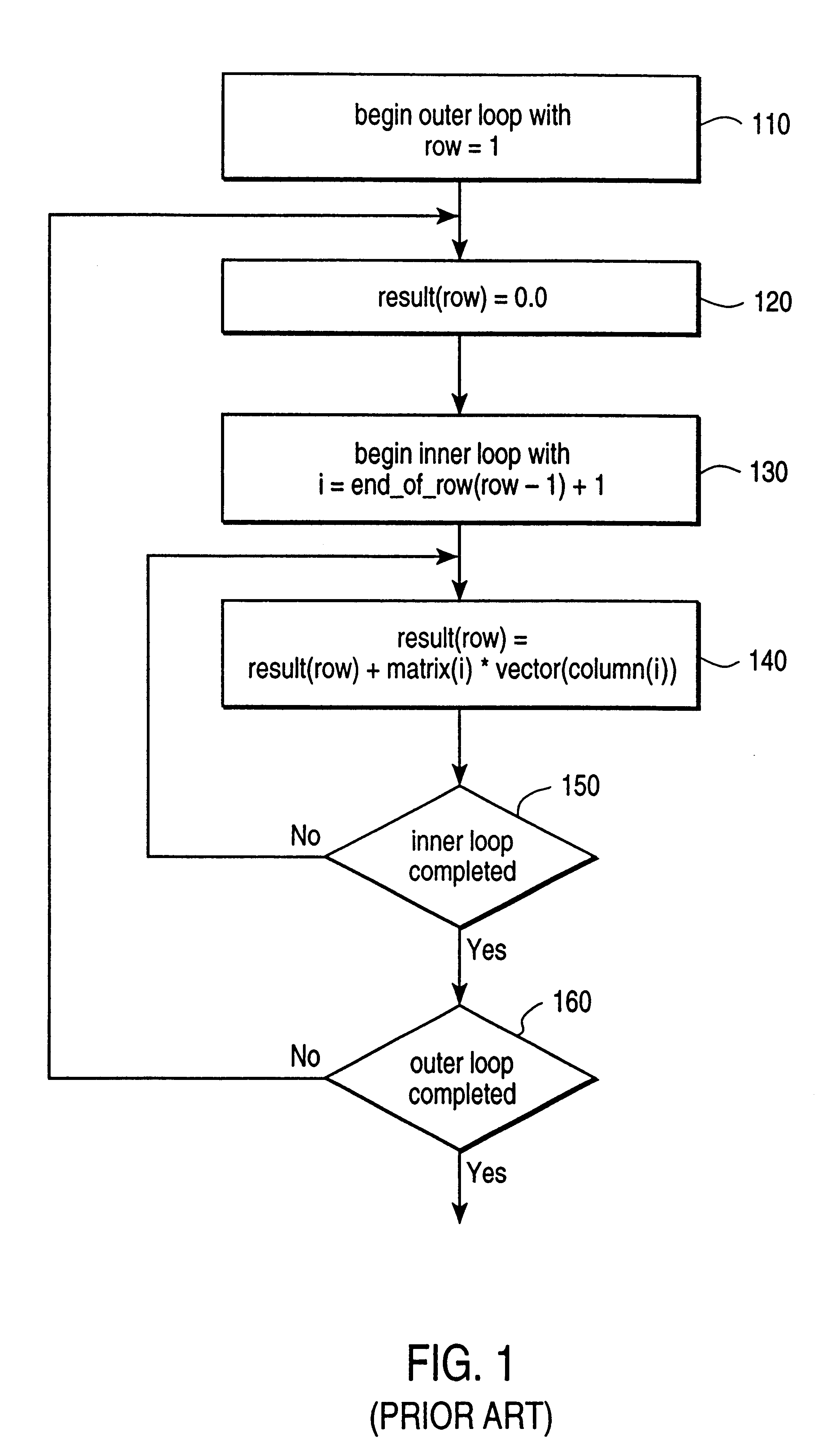

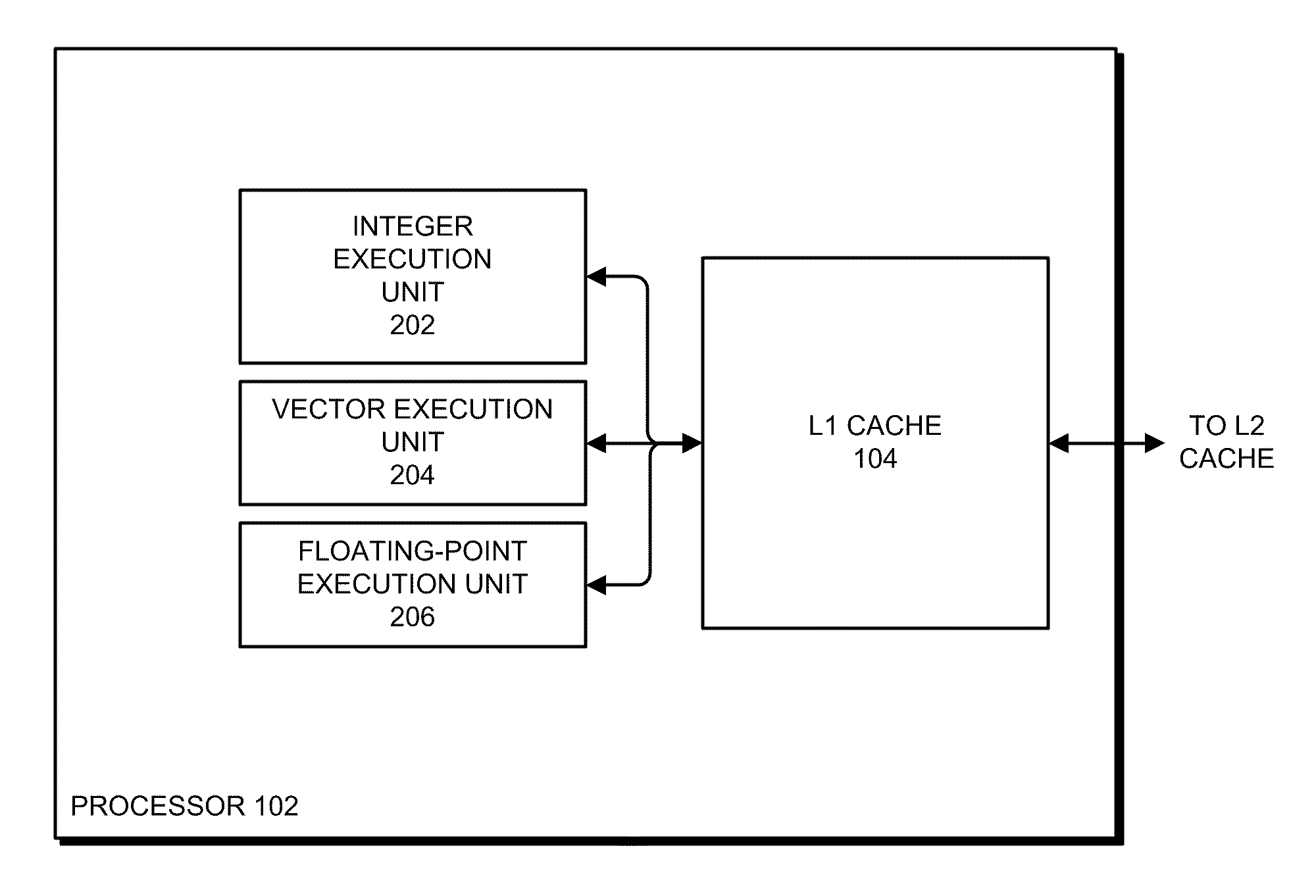

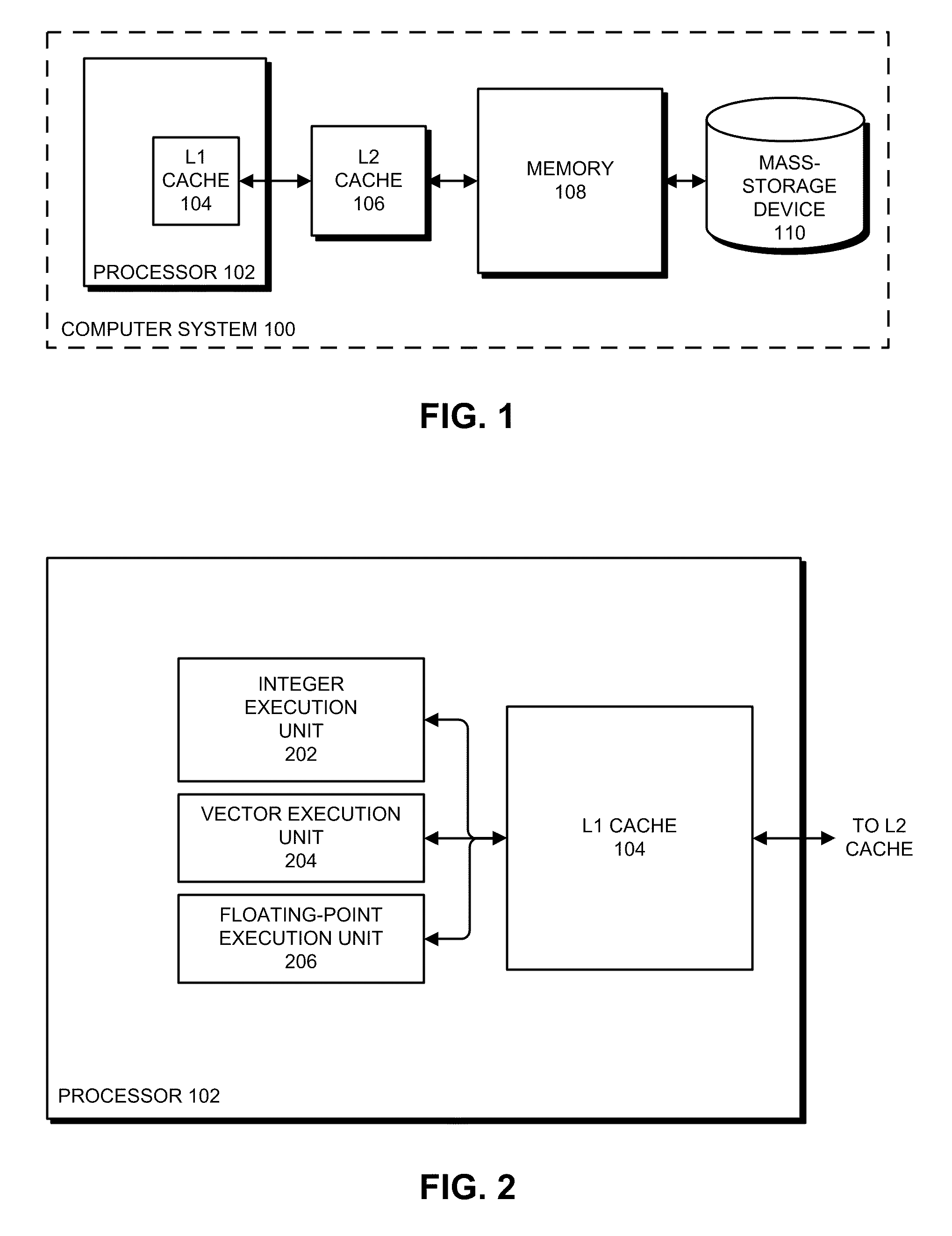

A computer program product and method for multiplying a sparse matrix by a vector are disclosed. The computer program product includes a computer readable medium for storing instructions, which, when executed by a computer, cause the computer to efficiently multiply a sparse matrix by a vector, and produce a resulting vector. The computer is made to create a first array containing the non-zero elements of the sparse matrix, and a second array containing the end_of_row position of the last non-zero element in each row of the sparse matrix. A variable is initialized, and then, for each row of the second array, the computer is made to do one of two things. Either, it equates the variable to the sum of the variable and the product of a particular element of the first array and a particular element of the vector. Or, it equates a particular element of the resulting vector to the variable, and then equates the variable to a particular value.

Owner:INTEL CORP
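A rough sketch of the multiplication described above, using one array of non-zero values and one array of end-of-row positions. The abstract does not say how column positions are stored, so the separate `columns` array is an assumption, as is the convention that each `row_end` entry is one past the row's last non-zero element; the function name is hypothetical.

```python
def sparse_matvec(values, columns, row_end, x):
    """Multiply a sparse matrix by the vector x.

    values  -- non-zero elements, row by row
    columns -- column of each non-zero element (assumed extra array)
    row_end -- for each row, one past the position of its last non-zero in values
    """
    result = []
    pos = 0
    for end in row_end:
        acc = 0.0                    # the running variable from the abstract
        while pos < end:
            acc += values[pos] * x[columns[pos]]
            pos += 1
        result.append(acc)           # row finished: store, then start over
    return result


# Matrix [[1, 0, 2], [0, 0, 0], [0, 3, 4]] in the assumed layout
values = [1.0, 2.0, 3.0, 4.0]
columns = [0, 2, 1, 2]
row_end = [2, 2, 4]
y = sparse_matvec(values, columns, row_end, [1.0, 2.0, 3.0])
print(y)  # [7.0, 0.0, 18.0]
```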

Running-and, running-or, running-xor, and running-multiply instructions for processing vectors

The described embodiments provide a processor for generating a result vector with shifted values. During operation, the processor receives a first input vector, a second input vector, and a control vector. When generating the result vector, the processor first captures a base value from a key element position in the second input vector. The processor then writes the product of the base value and values from relevant elements in the first input vector into selected elements in the result vector. In addition, a predicate vector can be used to control the values that are written to the result vector.

Owner:APPLE INC

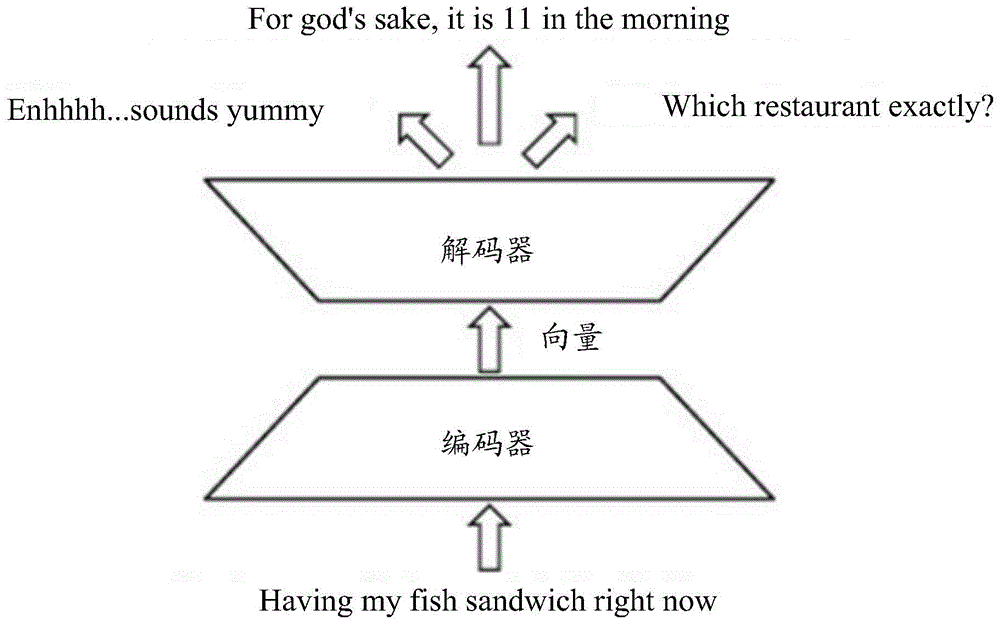

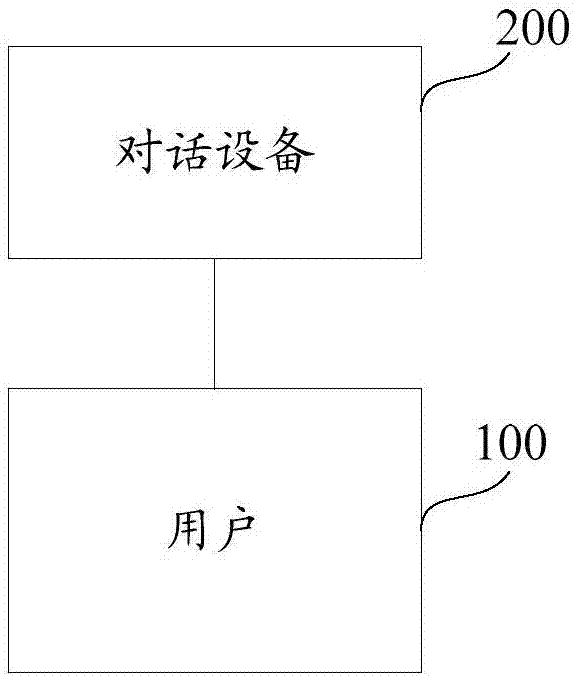

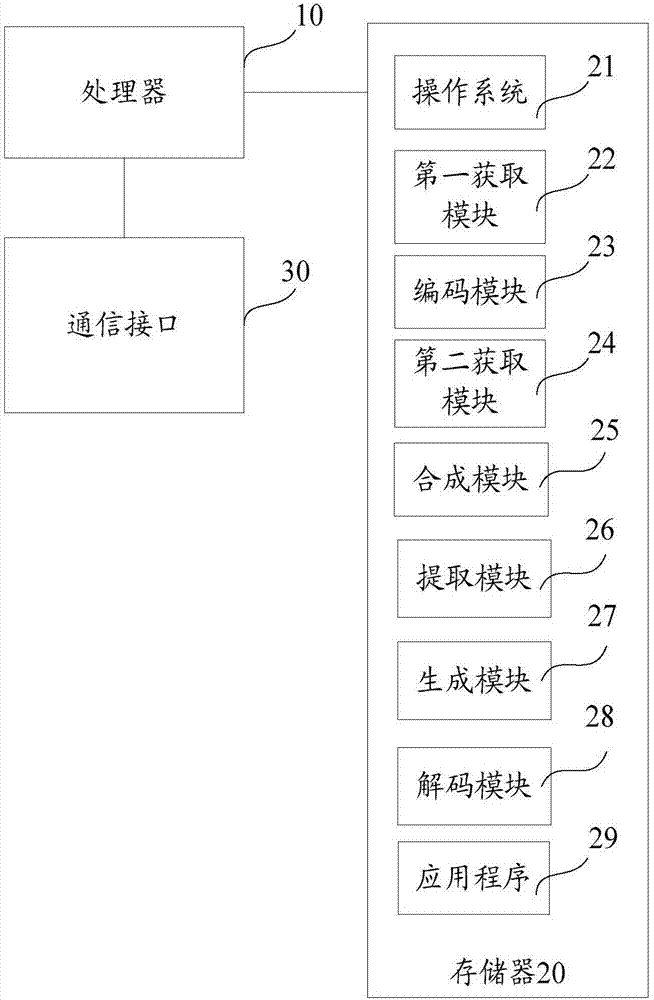

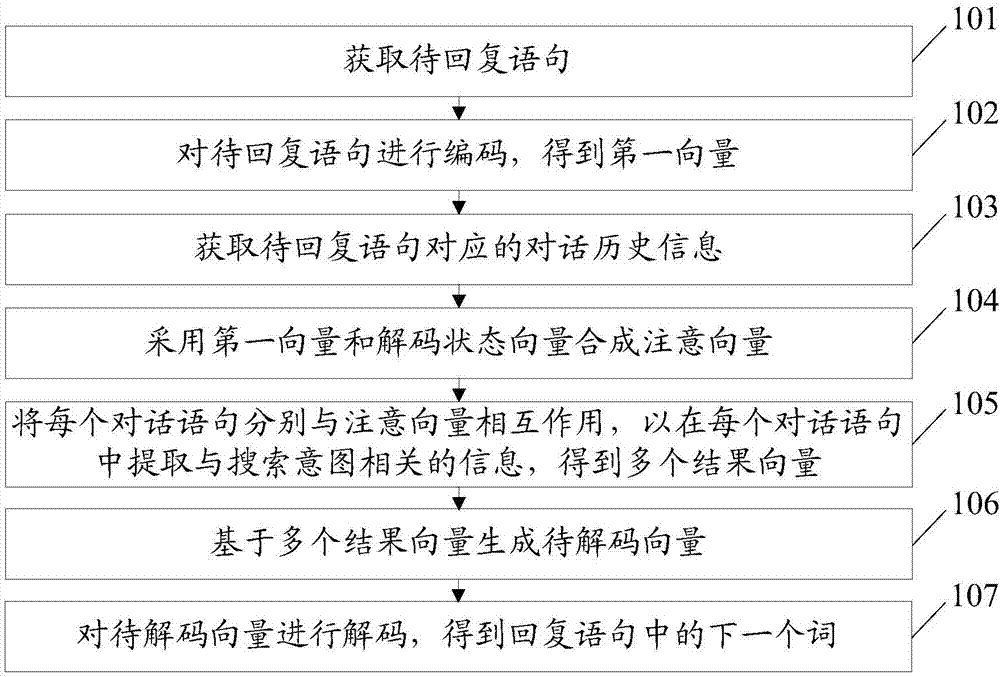

Deep learning-based dialogue method, device and equipment

Active | CN107885756A | Meet needs | Effective handling | Natural language translation | Semantic analysis | Learning based | Theoretical computer science

The invention discloses a deep learning-based dialogue method, device and equipment, and belongs to the field of artificial intelligence. The method includes the steps of obtaining a to-be-answered statement; encoding the to-be-answered statement to obtain a first vector, wherein the first vector is the representation of the to-be-answered statement; obtaining the dialogue history information corresponding to the to-be-answered statement, wherein the dialogue history information includes at least one round of dialogue statements, and each round of dialogue statements includes two dialogue statements; synthesizing an attention vector by using the first vector and a decoding state vector, wherein the decoding state vector is used for representing the state of a decoder when the most recent word in an answered sentence is output, and the attention vector is used for representing a search intention; enabling each dialogue statement to interact with the attention vector respectively to extract the information related to the search intention in each dialogue statement to obtain multiple result vectors; generating a to-be-decoded vector based on the multiple result vectors; decoding the to-be-decoded vector to obtain a next word in the answered sentence. According to the method, device and equipment, the answered sentence takes a dialogue history as reference.

Owner:HUAWEI TECH CO LTD

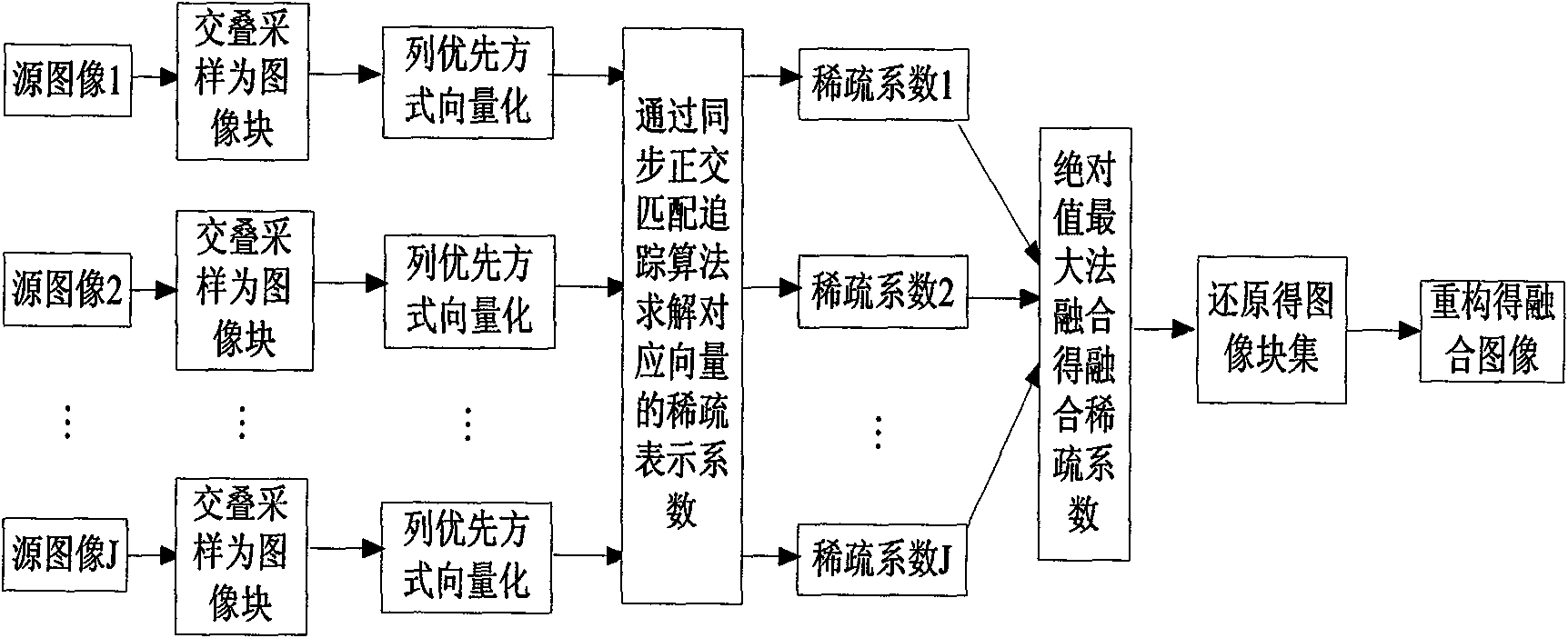

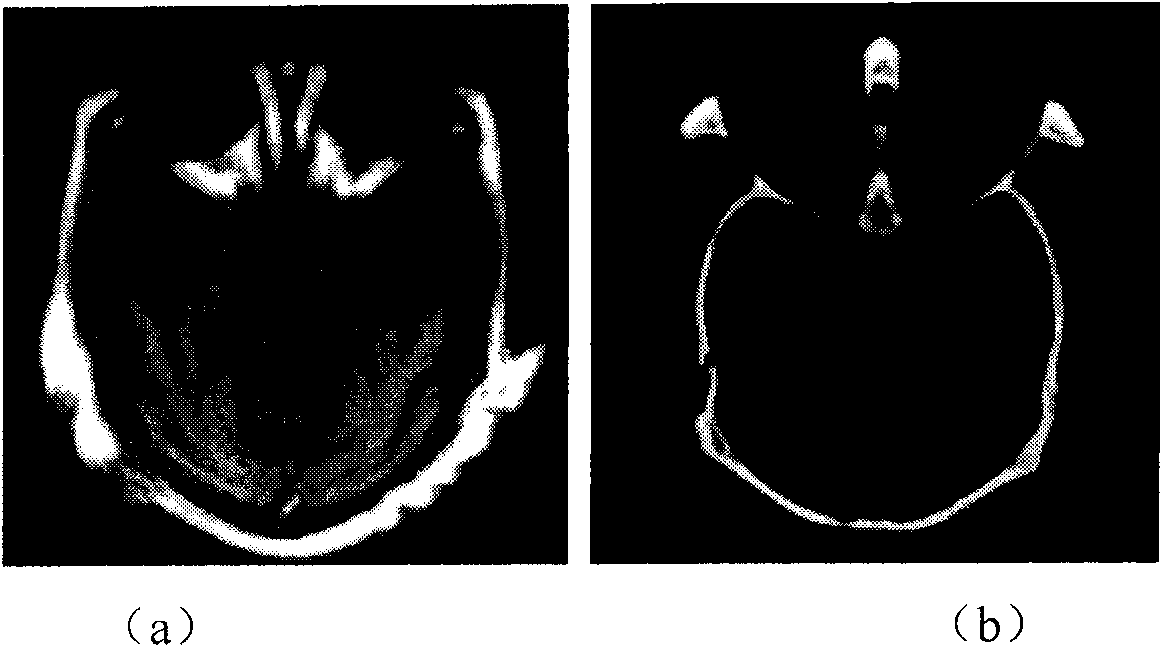

Multi-source image fusion method based on synchronous orthogonal matching pursuit algorithm

Inactive | CN101540045A | Conform to visual characteristics | Sparse Signal Representation | Image enhancement | Pattern recognition | Slide window

The invention discloses a multi-source image fusion method based on the synchronous orthogonal matching pursuit algorithm. The method comprises the following steps: sampling each source image pixel by pixel, in an overlapping manner, into image blocks of the same size using a sliding window of fixed size, and expanding each image block column by column into a column vector; obtaining the sparse representation coefficients of each vector over the over-complete dictionary with the synchronous orthogonal matching pursuit algorithm; fusing the corresponding coefficients by the maximum absolute value method; inverse-transforming the fused sparse representation coefficients, according to the over-complete dictionary, into the fusion result vector corresponding to the vectors; and restoring all the fusion result vectors to image blocks and reconstructing the fused image. The invention takes the intrinsic sparsity of images into account; sparse representation presents the useful information of each source image more effectively and achieves a better fusion effect. The invention is therefore of practical value for the post-processing and image display of various application systems.

Owner:HUNAN UNIV

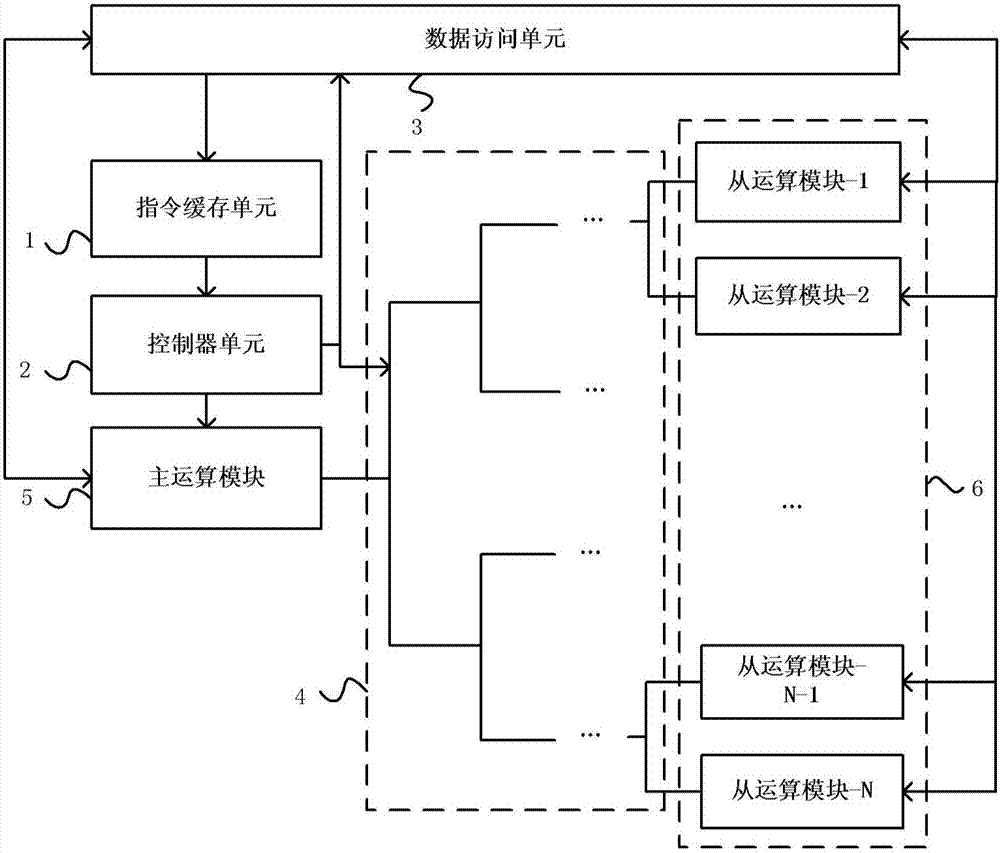

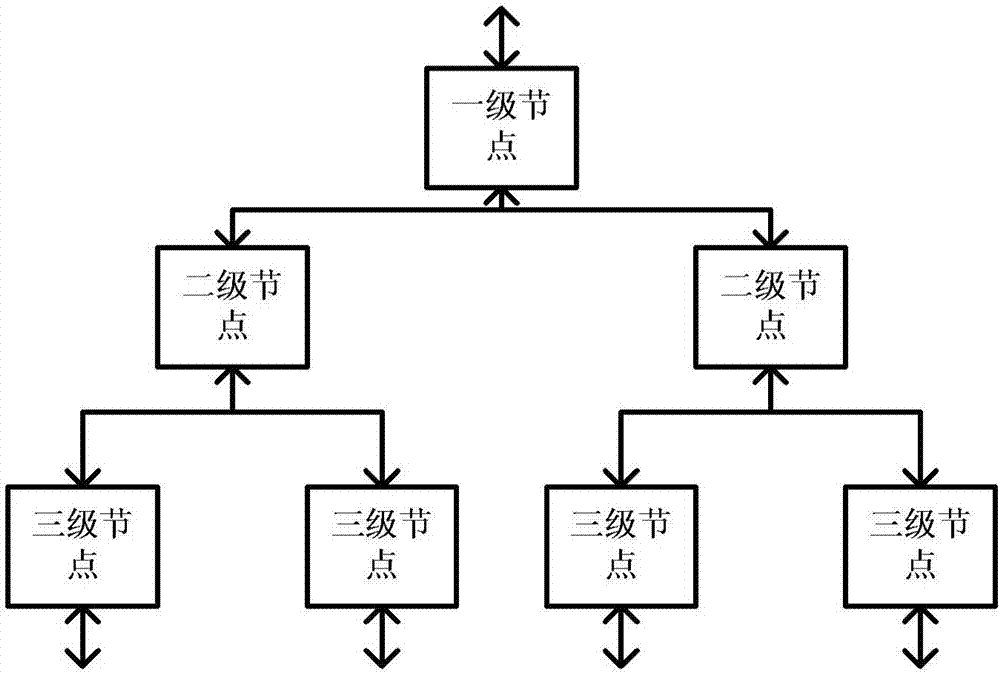

Device used for executing forward operations of neural network of fully-connected layers and methods

Active | CN107315571A | Memory architecture accessing/allocation | Concurrent instruction execution | Data access | Computer module

The invention provides a device for executing forward operations of fully-connected layers of an artificial neural network. The device includes an instruction storage unit, a controller unit, a data access unit, an interconnection module, a main operation module and a plurality of slave operation modules. Forward operations of one or more fully-connected layers of the artificial neural network can be realized with the device. For each layer, a weighted summation is first carried out on the input neuron vector to calculate an intermediate result vector of the current layer; a bias is then added to the intermediate result vector and an activation is applied to obtain an output neuron vector, which is used as the input neuron vector of the next layer.

Owner:CAMBRICON TECH CO LTD
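The per-layer computation in the abstract above (weighted summation, bias, activation, output fed to the next layer) can be sketched in software as follows. This models only the arithmetic, not the hardware modules; the function names, the ReLU activation and the sample weights are assumptions for illustration.

```python
def relu(z):
    """Assumed activation function; the abstract does not name one."""
    return max(0.0, z)


def fc_forward(x, weights, bias, activation=relu):
    """One fully-connected layer: weighted sum, then bias, then activation."""
    # Intermediate result vector: one weighted sum per output neuron
    intermediate = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    return [activation(s + b) for s, b in zip(intermediate, bias)]


def forward(x, layers, activation=relu):
    """Chain layers: each output neuron vector is the next layer's input."""
    for weights, bias in layers:
        x = fc_forward(x, weights, bias, activation)
    return x


layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 1.0]),  # 2 inputs -> 2 outputs
    ([[2.0, 1.0]], [-0.5]),                   # 2 inputs -> 1 output
]
out = forward([1.0, 2.0], layers)
print(out)  # [2.0]
```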

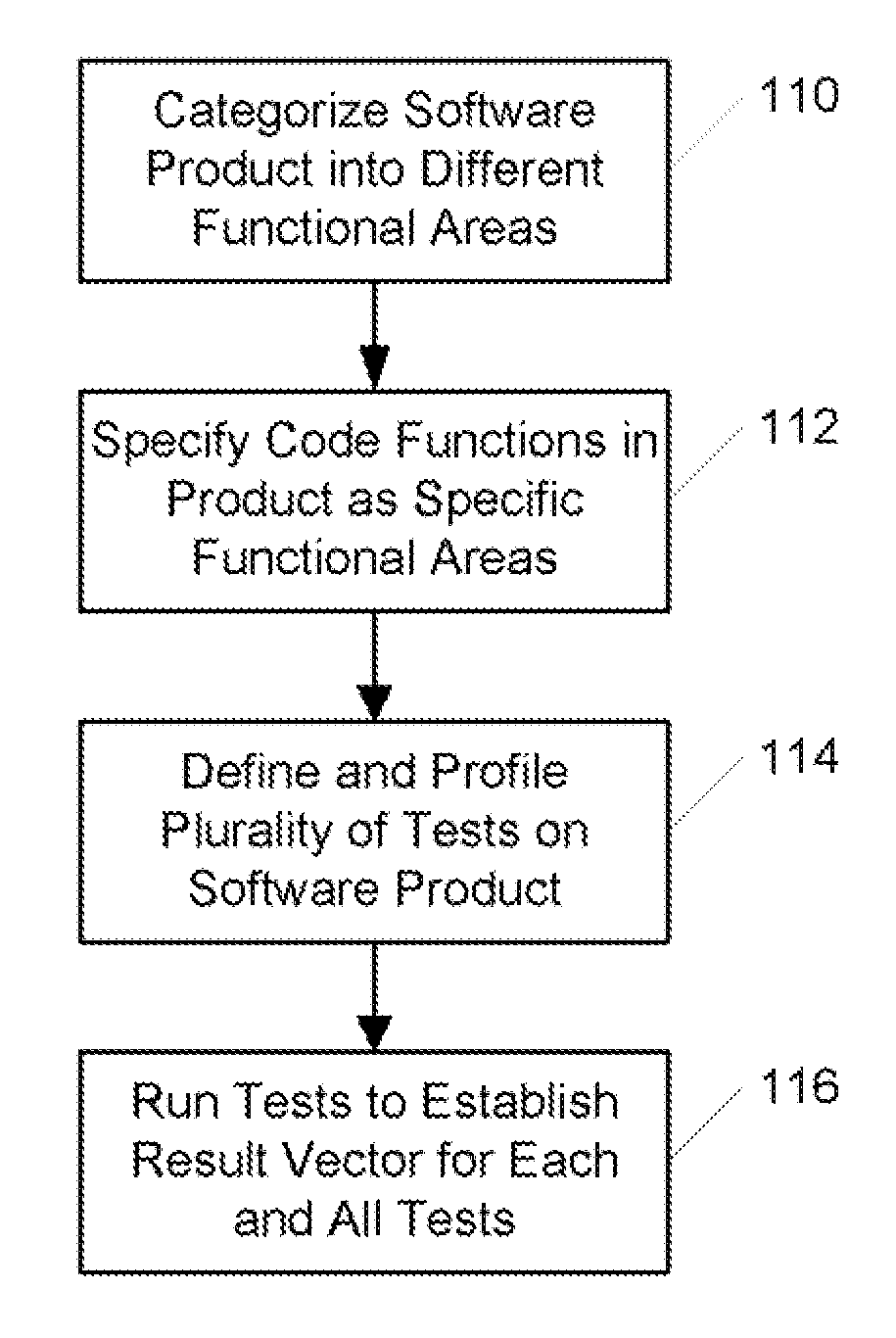

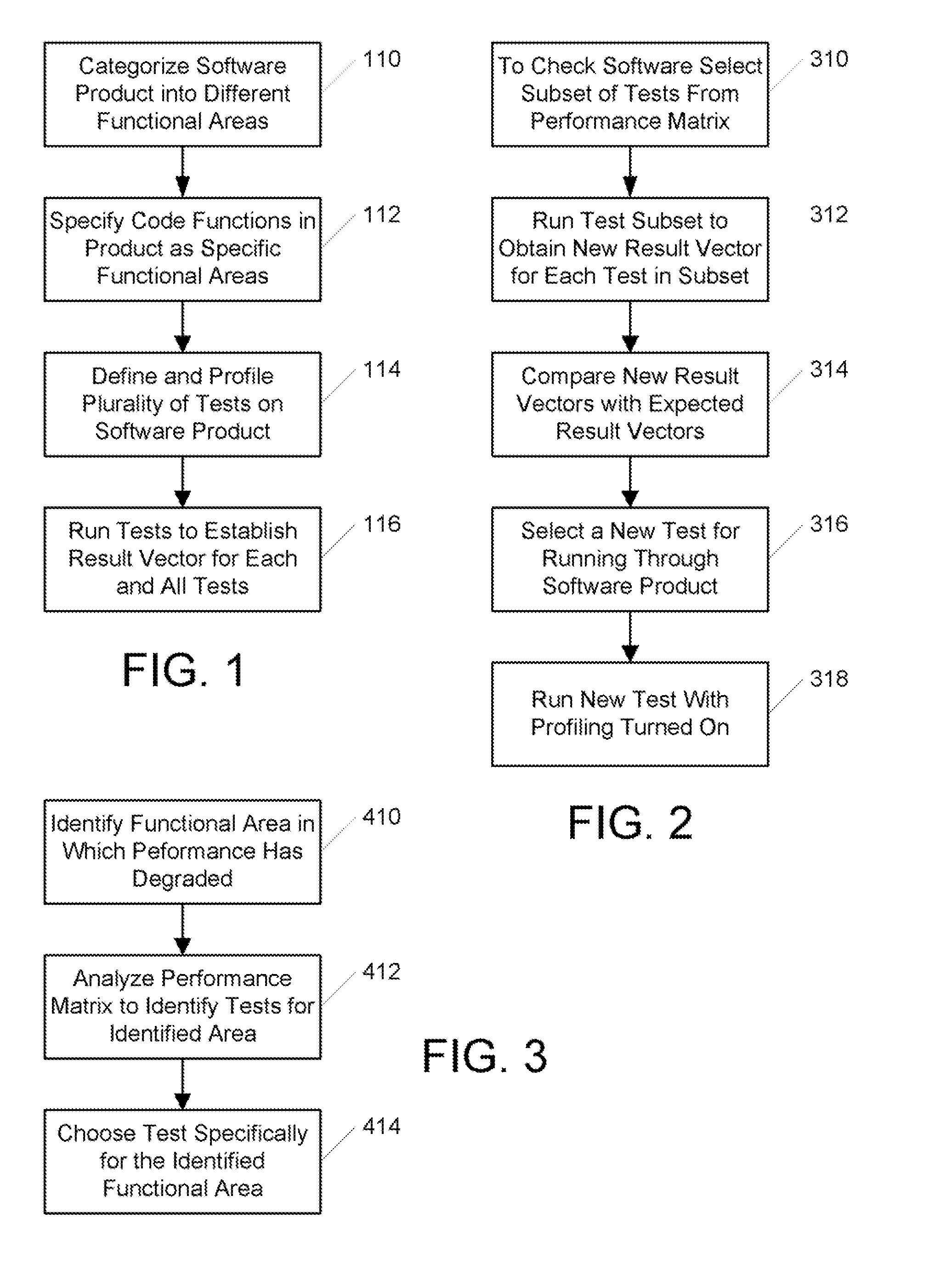

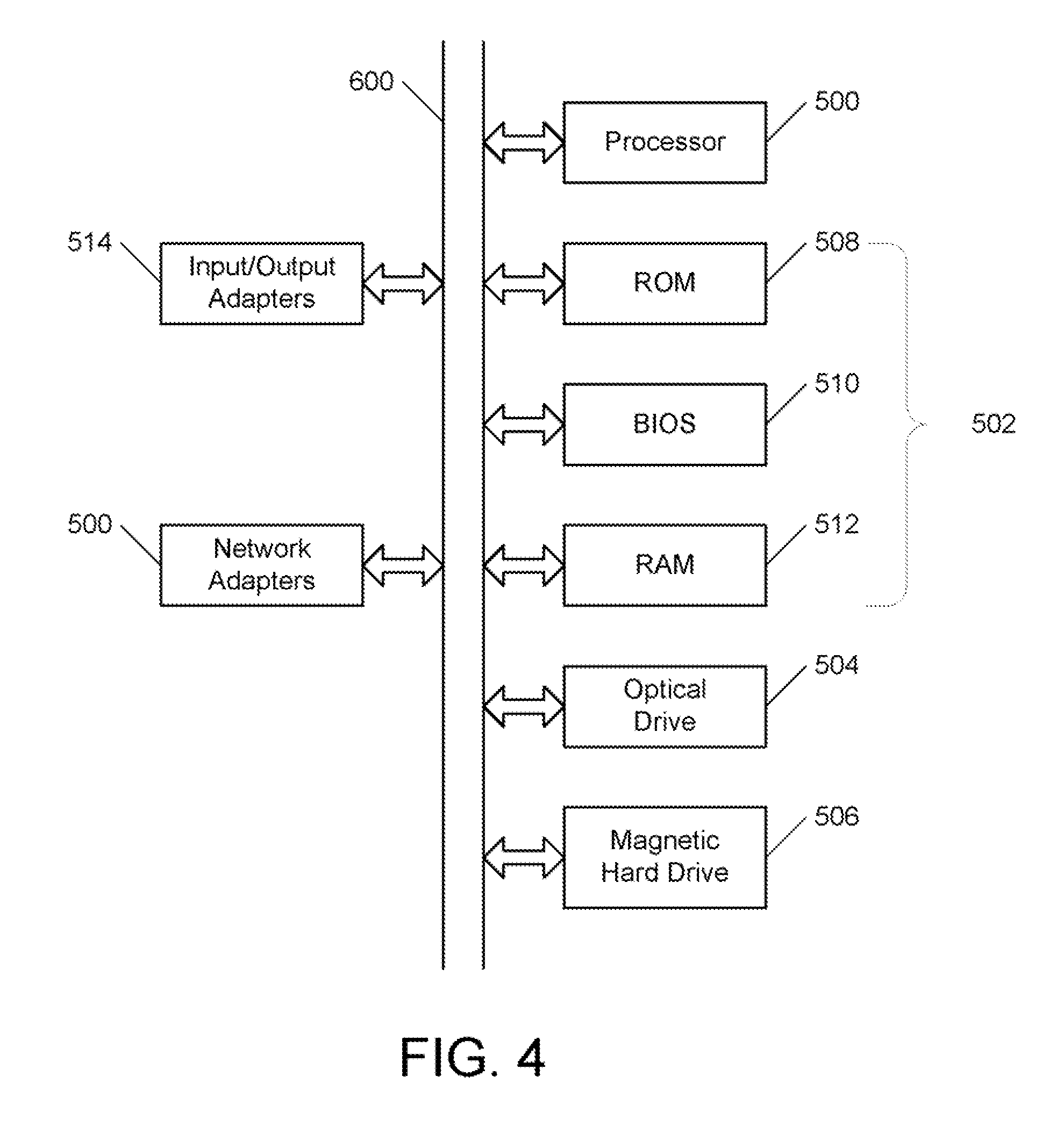

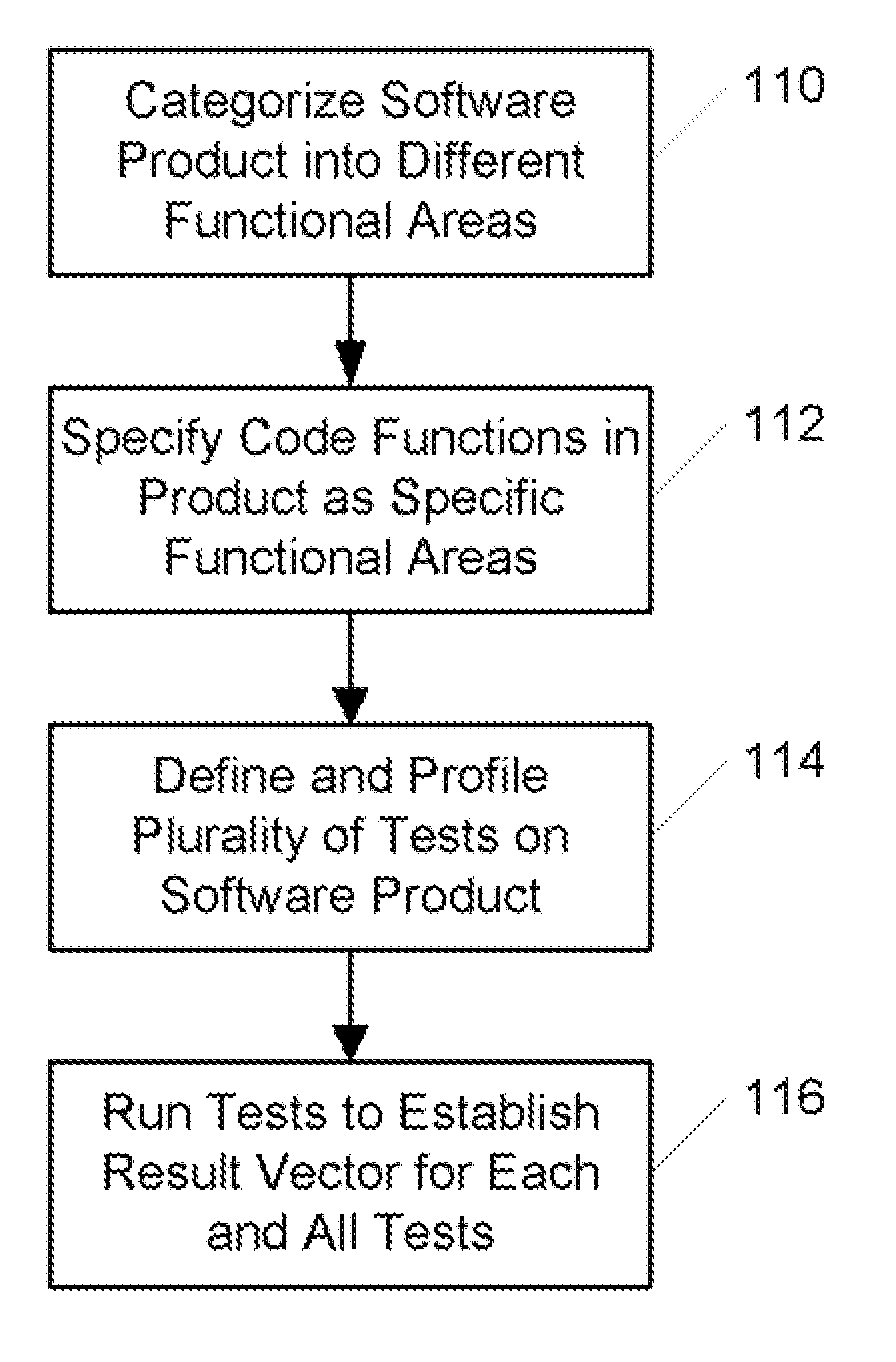

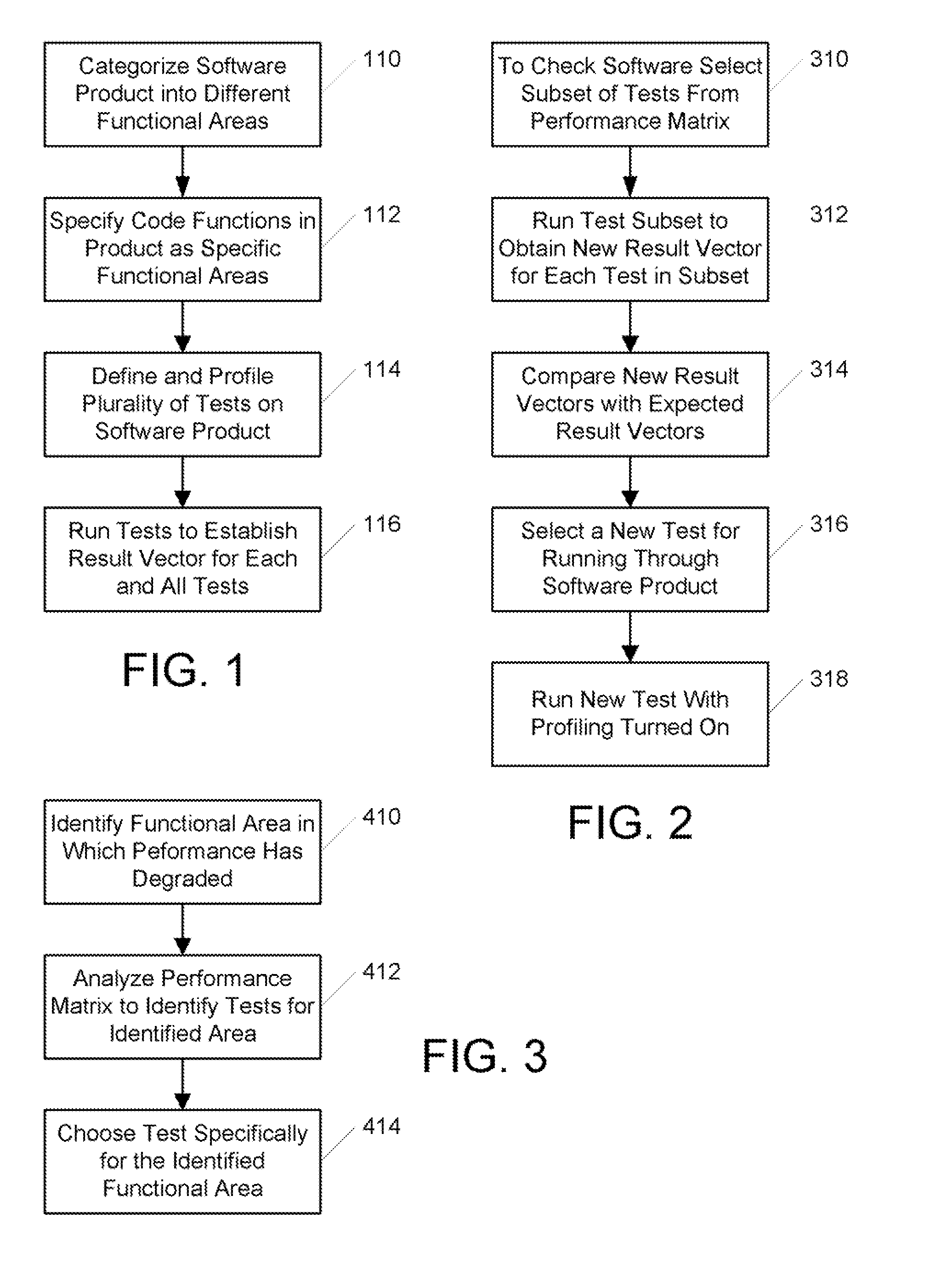

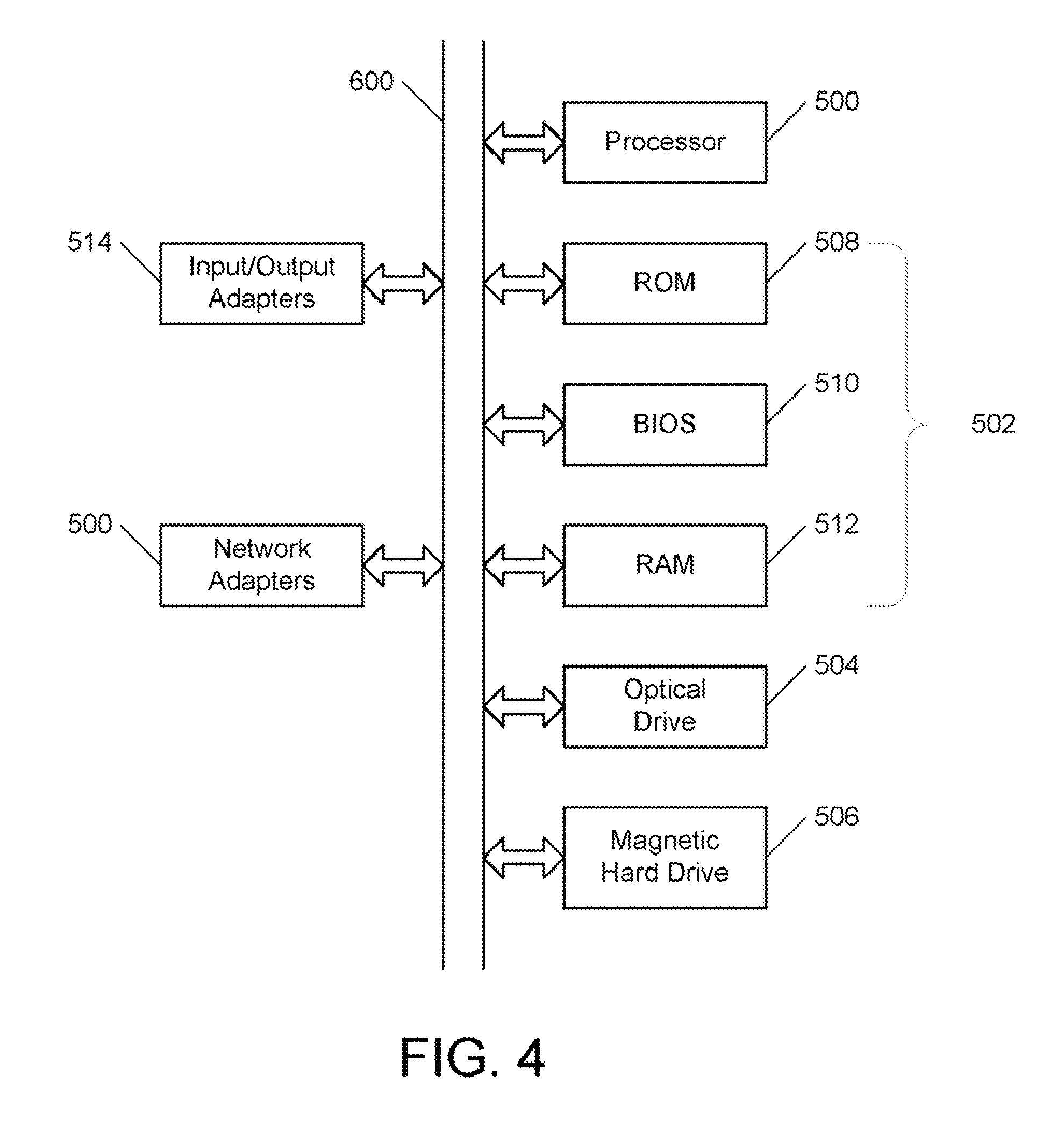

Software testing method and system

Inactive | US8056060B2 | Electronic circuit testing | Error detection/correction | Parallel computing | Test sequence

A software product is tested by first obtaining a performance matrix for the software product, the performance matrix containing the profile results of a plurality of tests on the software product, and an expected result vector for the plurality of tests. A test sequence is then executed for the software product, the sequence comprising selecting a subset of the plurality of tests, running the test subset to obtain a new result vector for the test subset, comparing the new result vector entry with the expected result vector entry for the same test, selecting a test (which may be one of the subset or may be a new test) according to the outcome of the result vector comparison and the performance matrix, and running the selected test under profile.

Owner:IBM CORP

Actual instruction and actual-fault instructions for processing vectors

Active | US20110035567A1 | Software engineering | General purpose stored program computer | Parallel computing | Result vector

The described embodiments include a processor that executes a vector instruction. The processor starts by receiving a vector instruction that optionally receives a predicate vector (which has N elements) as an input. The processor then executes the vector instruction. In the described embodiments, executing the vector instruction causes the processor to generate a result vector. When generating the result vector, if the predicate vector is received, for each element in the result vector for which a corresponding element of the predicate vector is active, otherwise, for each element of the result vector, the processor determines element positions for which a fault was masked during a prior operation. The processor then updates elements in the result vector to identify a leftmost element for which a fault was masked.

Owner:APPLE INC

Software Testing Method and System

Inactive | US20080046791A1 | Electronic circuit testing | Error detection/correction | Parallel computing | Test sequence

A software product is tested by first obtaining a performance matrix for the software product, the performance matrix containing the profile results of a plurality of tests on the software product, and an expected result vector for the plurality of tests. A test sequence is then executed for the software product, the sequence comprising selecting a subset of the plurality of tests, running the test subset to obtain a new result vector for the test subset, comparing the new result vector entry with the expected result vector entry for the same test, selecting a test (which may be one of the subset or may be a new test) according to the outcome of the result vector comparison and the performance matrix, and running the selected test under profile.

Owner:IBM CORP

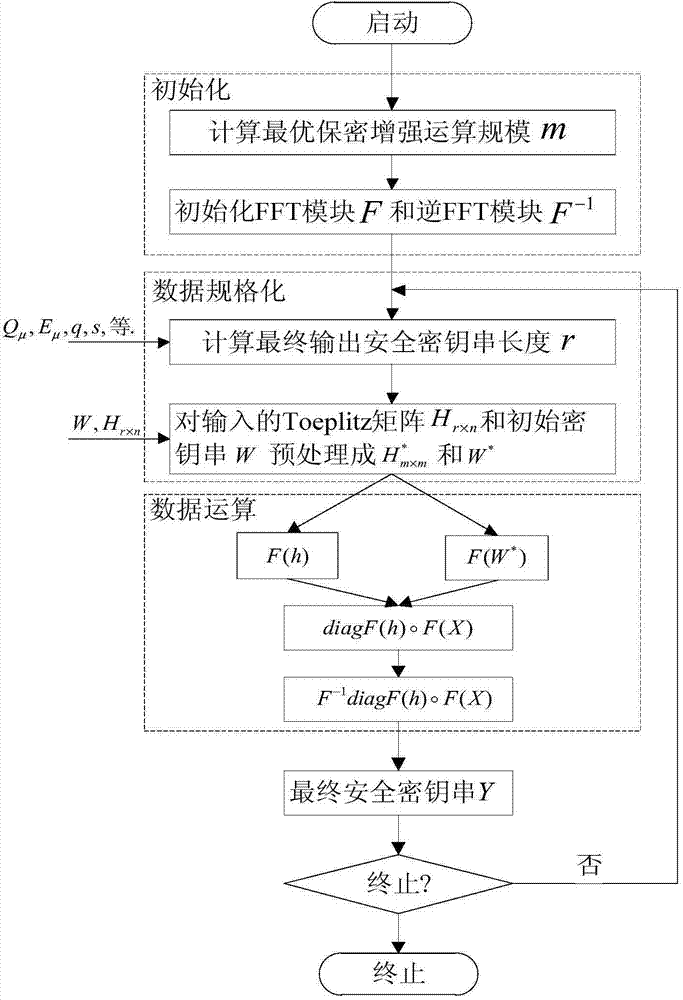

Quantum secret key distribution privacy amplification method supporting large-scale dynamic changes

Active | CN104506313A | Increase flexibility | Easy to handle | Key distribution for secure communication | Counting rate | Data operations

The invention discloses a quantum secret key distribution privacy amplification method supporting large-scale dynamic changes. The method includes three steps. First, initialization: when the privacy amplification method is started, the optimal operation scale m of the FFT module is calculated from the actual running parameters of the quantum secret key distribution system, and FFT and inverse FFT operation modules of scale m are initialized. Second, data normalization: the final secure secret key length r is calculated from the detector counting rate Qμ of the quantum secret key distribution system, the quantum bit error rate Eμ, the corrected weak-security secret key length n and the security parameter s of the system, and the initial secret key string and a Toeplitz matrix are normalized according to the parameters m, n and r. Third, data operation: the product of the Toeplitz matrix and the initial secret key string is computed using the FFT, and the first r items of the result are taken to form a result vector, namely the final secure secret key. The method has the advantages of high flexibility and better processing performance.

Owner:NAT UNIV OF DEFENSE TECH
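To make the data-operation step above concrete, the sketch below multiplies an r×n binary Toeplitz matrix by an n-bit key string directly over GF(2). The abstract performs this product with the FFT for speed; the direct double loop here is a deliberate simplification, and the function name, the seed layout (`n + r - 1` bits, with `T[i][j] = seed[i - j + n - 1]`) and the sample bits are assumptions.

```python
def toeplitz_hash(key_bits, seed_bits, r):
    """Compress an n-bit key to r bits with a Toeplitz matrix over GF(2).

    The r x n matrix is defined by n + r - 1 seed bits, with
    T[i][j] = seed_bits[i - j + n - 1] (assumed layout).
    """
    n = len(key_bits)
    if len(seed_bits) != n + r - 1:
        raise ValueError("seed must contain n + r - 1 bits")
    result = []
    for i in range(r):
        bit = 0
        for j in range(n):
            # AND is multiplication and XOR is addition in GF(2)
            bit ^= seed_bits[i - j + n - 1] & key_bits[j]
        result.append(bit)
    return result


key = [1, 0, 1, 1]          # hypothetical corrected key string, n = 4
seed = [1, 1, 0, 1, 0]      # hypothetical Toeplitz seed, n + r - 1 = 5 bits
final_key = toeplitz_hash(key, seed, 2)
print(final_key)  # [1, 1]
```

The direct product costs on the order of r·n bit operations; an FFT-based version reduces this for long keys, which is why the abstract sizes the FFT module first.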

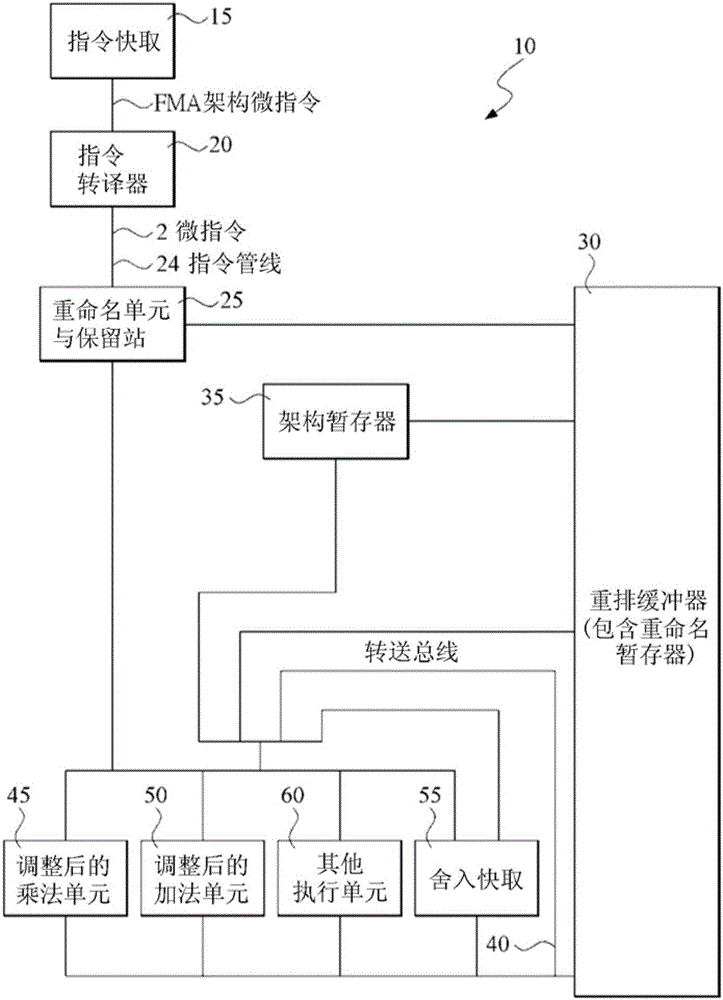

Split-path fused multiply-accumulate operation using first and second sub-operations

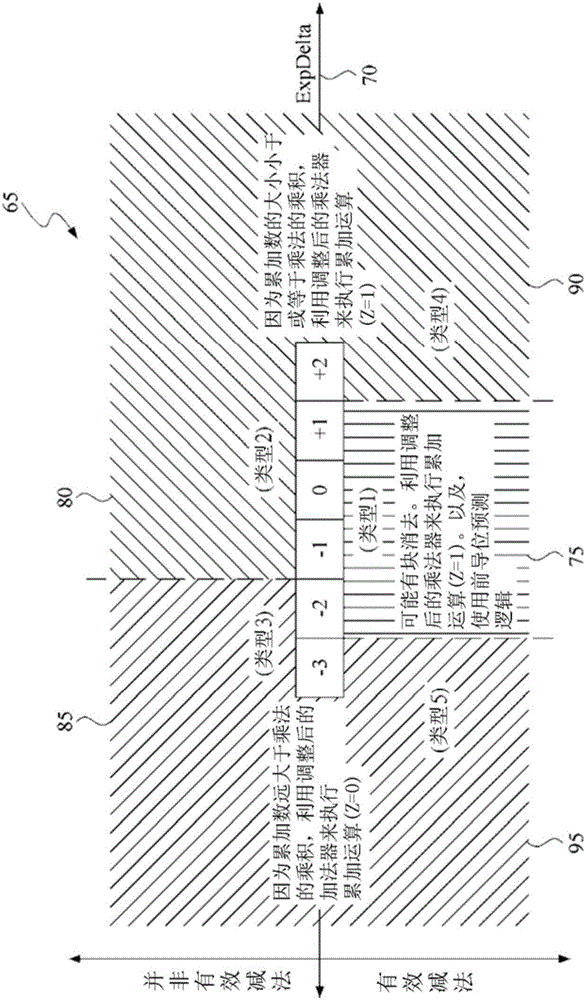

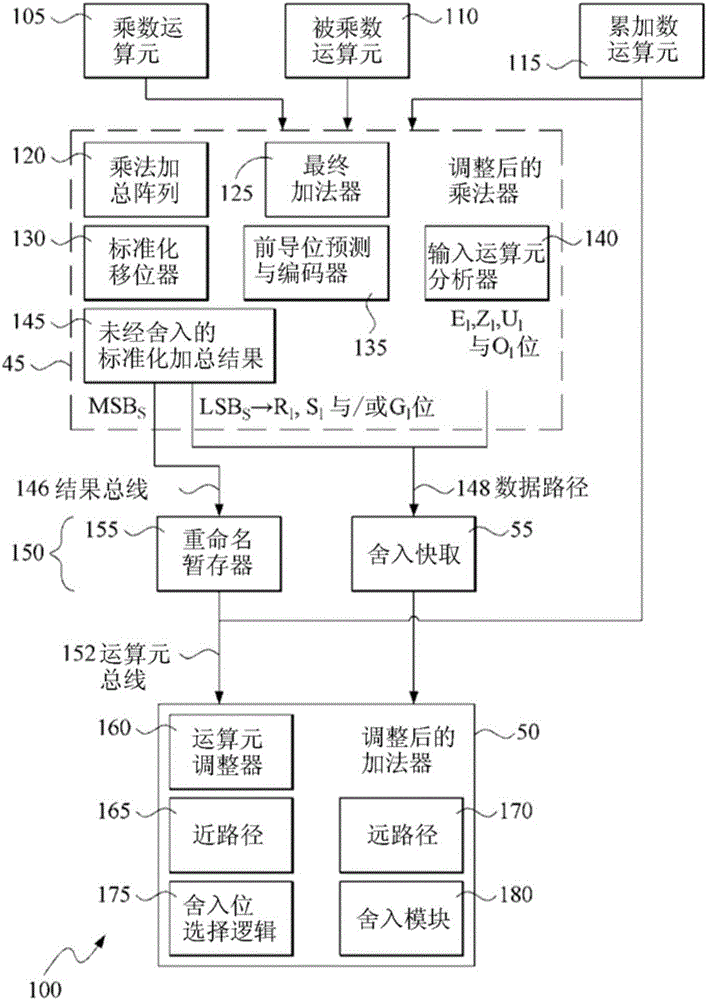

Active | CN105849690A | Reduce cumulative power consumption | Round cache avoidance | Digital data processing details | Instruction analysis | Multiply–accumulate operation | Parallel computing

A microprocessor executes a fused multiply-accumulate operation of a form +-A * B +- C by dividing the operation in first and second suboperations. The first suboperation selectively accumulates the partial products of A and B with or without C and generates an intermediate result vector and a plurality of calculation control indicators. The calculation control indicators indicate how subsequent calculations to generate a final result from the intermediate result vector should proceed. The intermediate result vector, in combination with the plurality of calculation control indicators, provides sufficient information to generate a result indistinguishable from an infinitely precise calculation of the compound arithmetic operation whose result is reduced in significance to a target data size.

Owner:上海兆芯集成电路股份有限公司

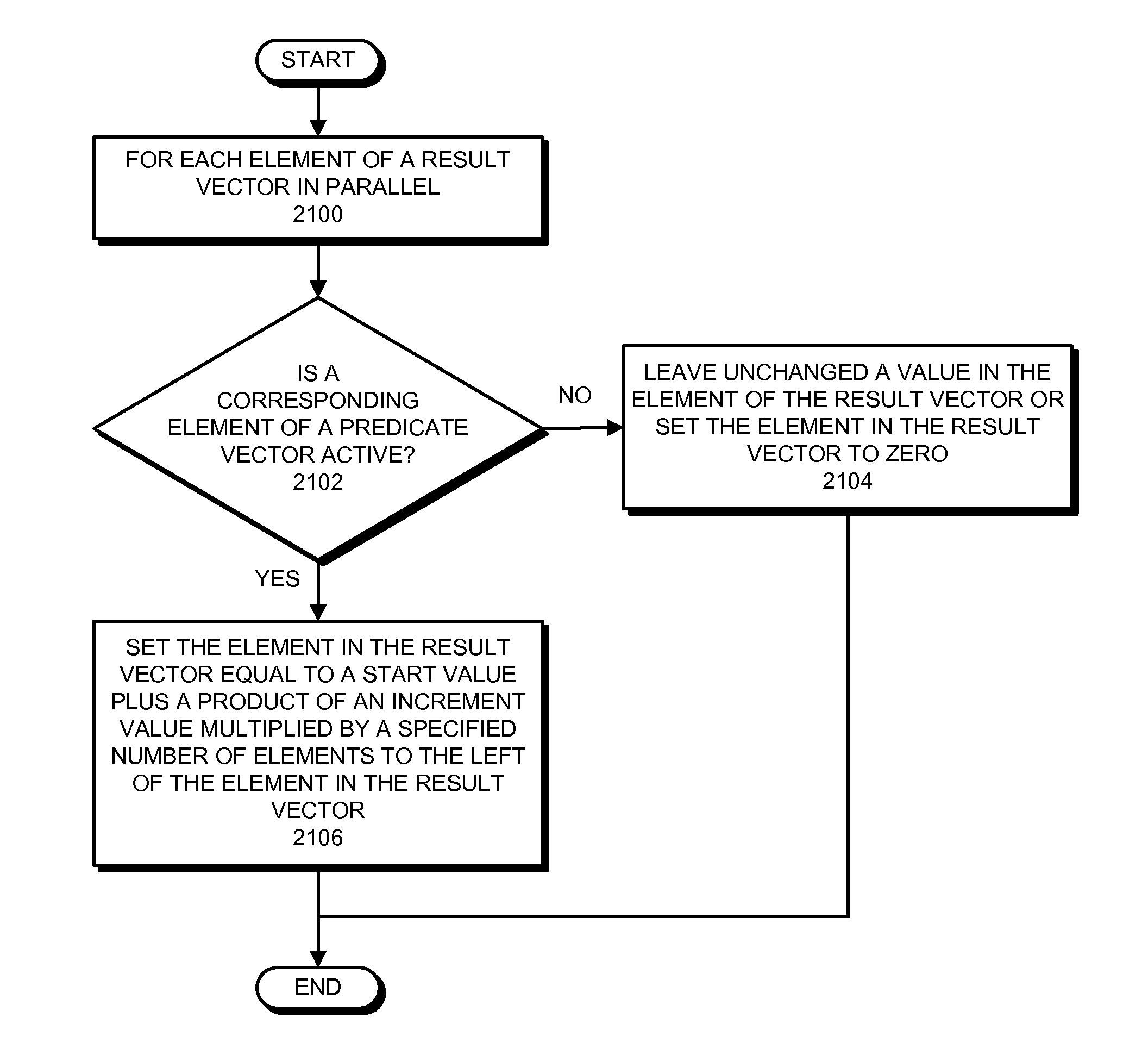

Vector index instruction for generating a result vector with incremental values based on a start value and an increment value

The described embodiments include a processor that executes a vector instruction. The processor starts by receiving a start value and an increment value, and optionally receiving a predicate vector with N elements as inputs. The processor then executes the vector instruction. Executing the vector instruction causes the processor to generate a result vector. When generating the result vector, if the predicate vector is received, for each element in the result vector for which a corresponding element of the predicate vector is active, otherwise, for each element in the result vector, the processor sets the element in the result vector equal to the start value plus a product of the increment value multiplied by a specified number of elements to the left of the element in the result vector.

Owner:APPLE INC
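A software model of the vector index operation described above might look like the following. It is a sketch under assumptions: the "specified number of elements to the left" is taken to be the element's position, inactive elements keep their previous value, and the function name `vector_index` is hypothetical.

```python
def vector_index(start, increment, n, predicate=None, previous=None):
    """Fill a result vector with start + increment * position.

    With a predicate, only active elements are written; inactive
    elements keep their prior value (assumed behaviour).
    """
    result = list(previous) if previous is not None else [0] * n
    for i in range(n):
        if predicate is None or predicate[i]:
            result[i] = start + increment * i  # i elements lie to the left
    return result


print(vector_index(10, 3, 5))                   # [10, 13, 16, 19, 22]
print(vector_index(10, 3, 5, [1, 0, 1, 0, 1]))  # [10, 0, 16, 0, 22]
```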

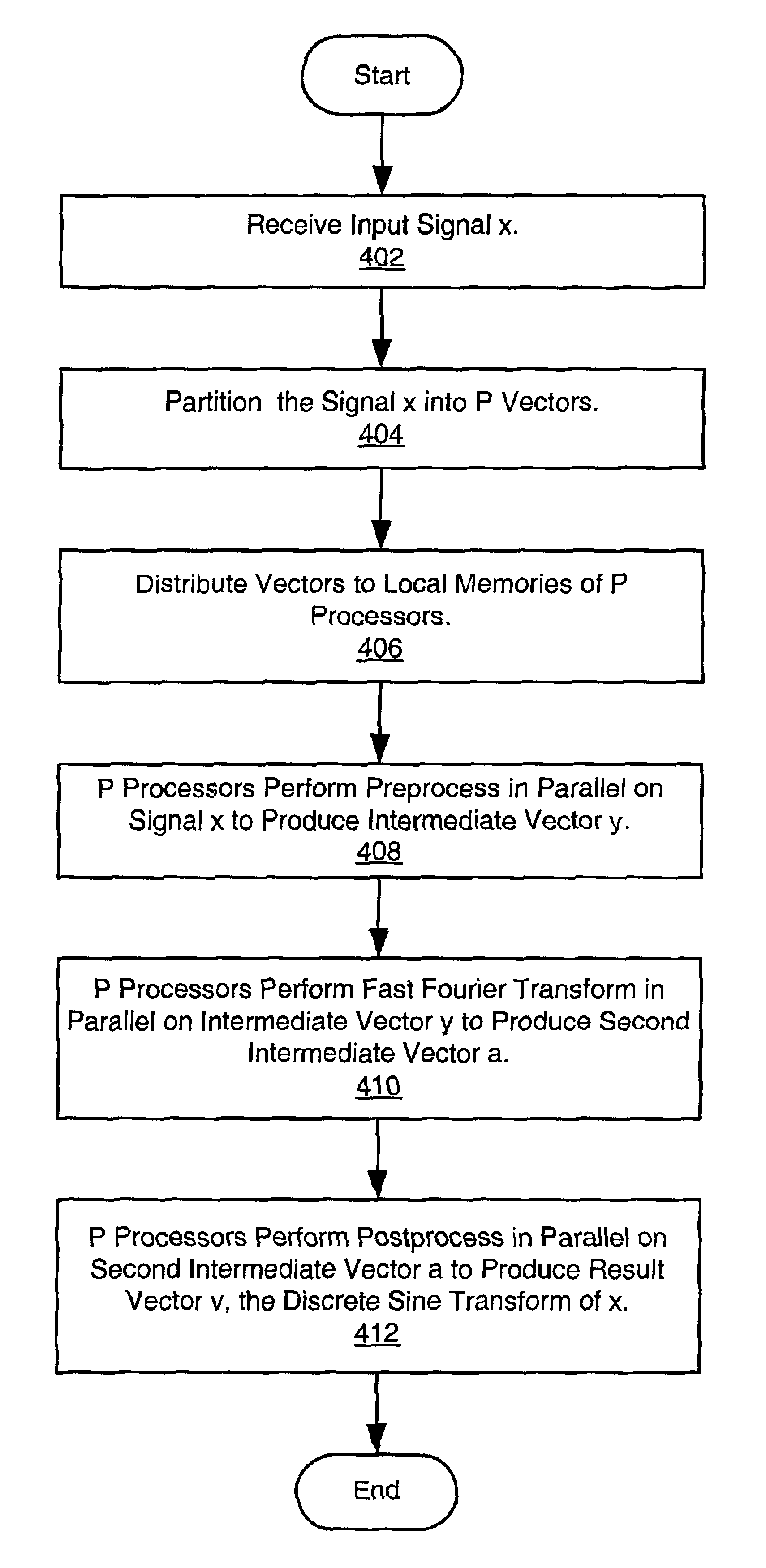

System and method for computing a discrete transform

Inactive | US6839727B2 | Digital computer details | Complex mathematical operations | Fast Fourier transform | Post processor

A system and method for parallel computation of Discrete Sine and Cosine Transforms. The computing system includes a plurality of interconnected processors and corresponding local memories. An input signal x is received, partitioned into P local vectors xi, and distributed to the local memories. The preprocessors may calculate a set of coefficients for use in computing the transform. The processors perform a preprocess in parallel on the input signal x to generate an intermediate vector y. The processors then perform a Fast Fourier Transform in parallel on the intermediate vector y, generating a second intermediate vector a. Finally, the processors perform a post-process on the second intermediate vector a, generating a result vector v, the Discrete Transform of signal x. In one embodiment, the method generates the Discrete Sine Transform of the input signal x. In another embodiment, the method generates the Discrete Cosine Transform of the input signal x.

Owner:ORACLE INT CORP

Face recognition and movement track judging methods under high noise

Inactive | CN107153820A | Make sure the target is reliable | Reduce the possibility of misjudgment | Character and pattern recognition | Feature extraction | K matrix

The invention discloses face recognition and movement track judging methods under high noise. The face recognition method comprises an image acquisition step, a feature extraction step, a feature comparison step, and a judgment step. In the image acquisition step, images of a video stream are acquired at intervals. In the feature comparison step, extracted features are compared with features stored in a face library, corresponding result vectors <id1, id2,...,idk> and <score1, score2,...,scorek> (respectively representing the IDs of the k most similar persons in the face library and their similarity) are obtained for each face, and two m*k matrices are obtained for each image, wherein m is the number of persons in the frame. In the judgment step, a candidate person set is obtained according to images decoded from the same video stream and the number of times each ID appears in the comparison results, and a suspect ID is determined according to the number of times each ID in the candidate person set appears in images decoded from different video streams and the similarity. The method has high detection reliability and a low misjudgment rate under high noise.

Owner:UNIV OF ELECTRONIC SCI & TECH OF CHINA

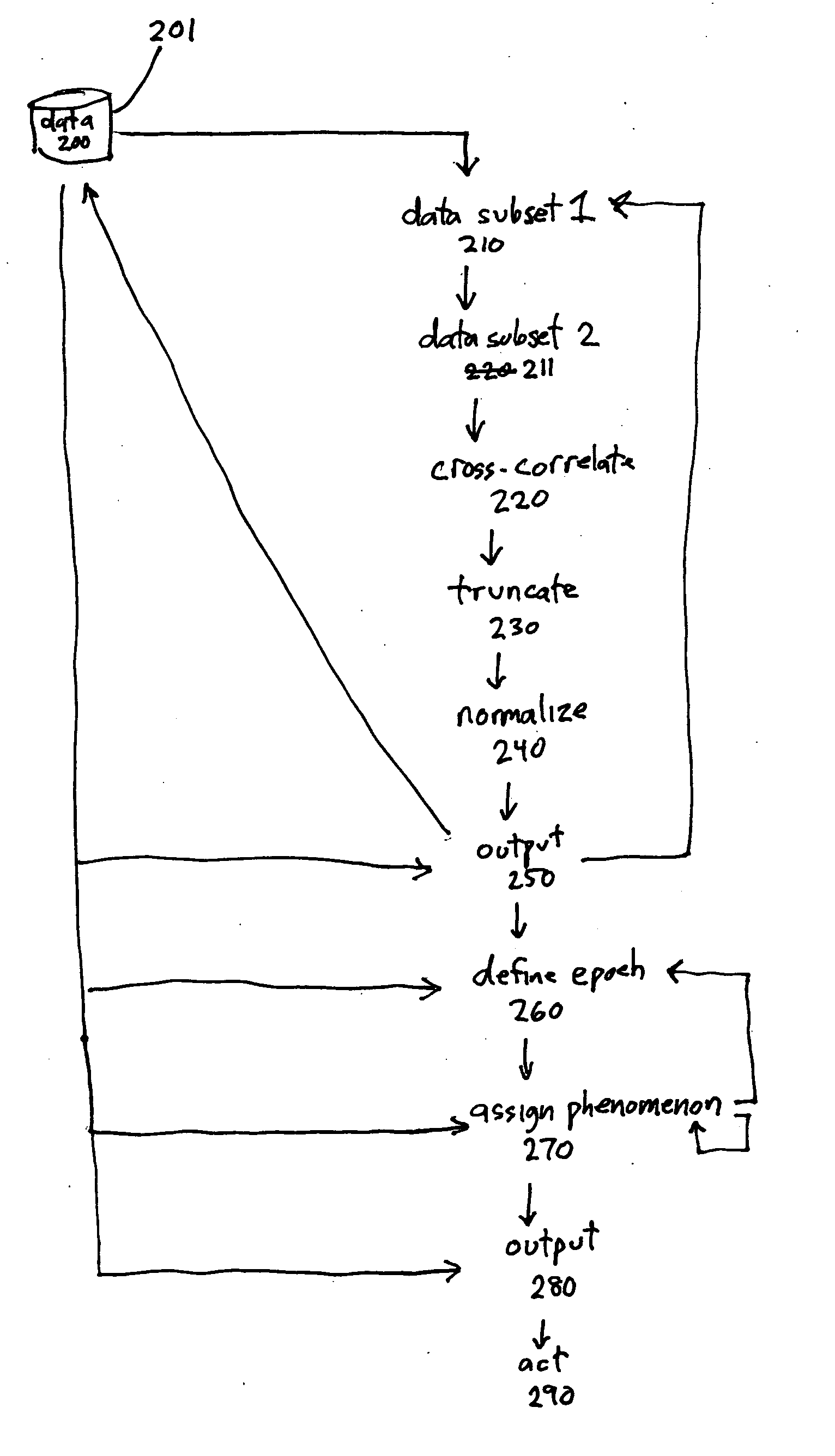

System and method for assessment of sleep

Inactive | US20070179395A1 | Eliminate lag time | Auscultation instruments | Respiratory organ evaluation | Biological body | Rapid Eye Movements

A method and system for characterizing breathing in an organism are disclosed. The method acquires data values indicative of periodic respiratory function, for example, tracheal sound envelope data acquired during a period of time associated with a sleep period of the organism. The method defines a first subset of data values among the data values and a second subset of data values among the data values. Cross-correlating the first and second subsets of data values yields a first result vector which is truncated, normalized, and output. These steps are optionally repeated for other subsets of data. From the resulting output, the organism's average respiratory rate may be determined. In addition, periods of time corresponding to sleep, wakefulness, rapid-eye movement (REM) sleep, and non-REM sleep may be identified. The time it takes the organism to fall asleep may also be determined.

Owner:SOTOS JOHN G +1

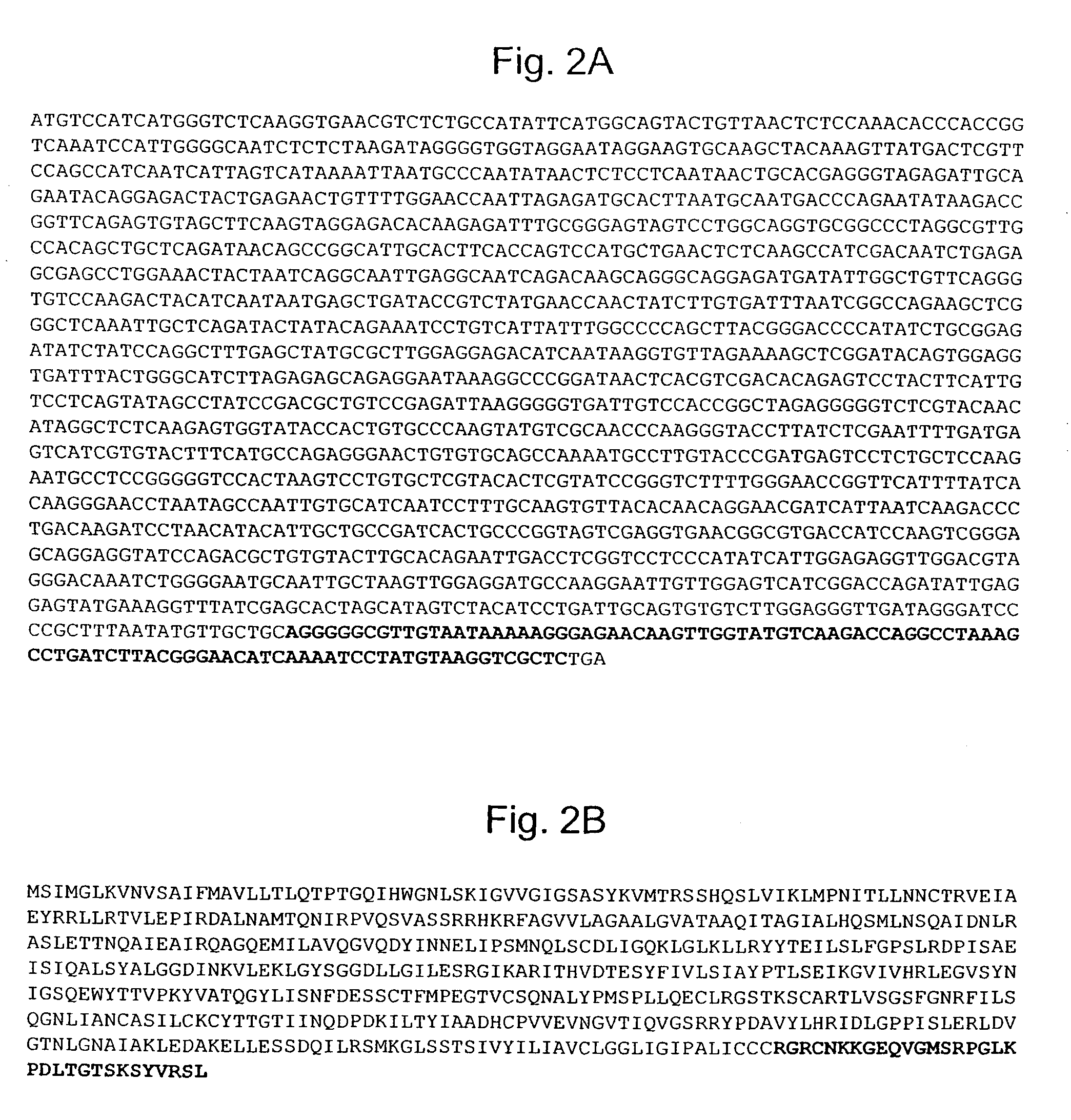

Pseudotyping of retroviral vectors, methods for production and use thereof for targeted gene transfer and high throughput screening

Active | US20100189690A1 | Increase in titer and transduction efficiency | High transduction efficiency | SsRNA viruses negative-sense | Biocide | Surface marker | Morbillivirus

The invention relates to the pseudotyping of retroviral vectors with heterologous envelope proteins derived from the Paramyxoviridae family, genus Morbillivirus, and various uses of the resulting vector particles. The present invention is based on the unexpected and surprising finding that the incorporation of morbillivirus F and H proteins having truncated cytoplasmic tails into lentiviral vector particles, and the complex interaction of these two proteins during cellular fusion, allows for a superior and more effective transduction of cells. Moreover, these pseudotyped vector particles allow the targeted gene transfer into a given cell type of interest by modifying a mutated and truncated H protein with a single-chain antibody or ligand directed against a cell surface marker of the target cell.

Owner:BUNDESREPUBLIK DEUTLAND LETZTVERTRETEN DURCH DEN PRASIDENTEN DES PAUL EHRLICH INSTITUTS

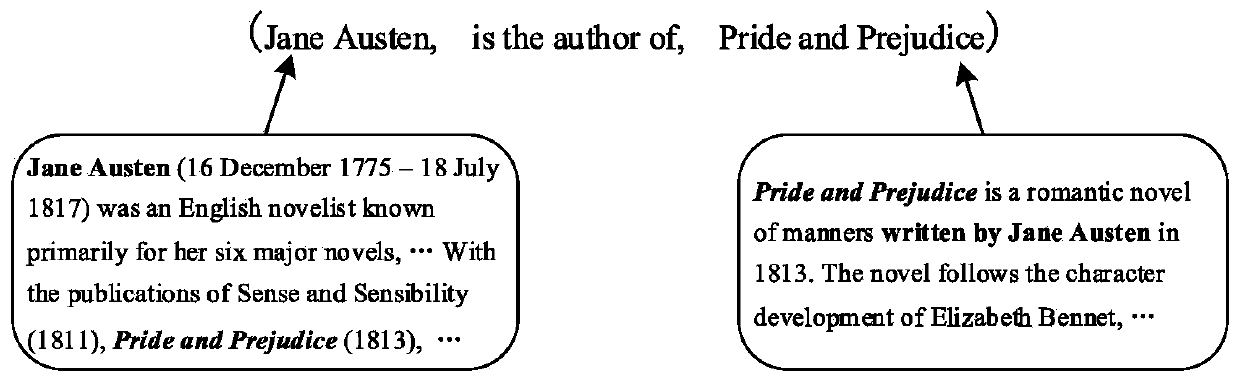

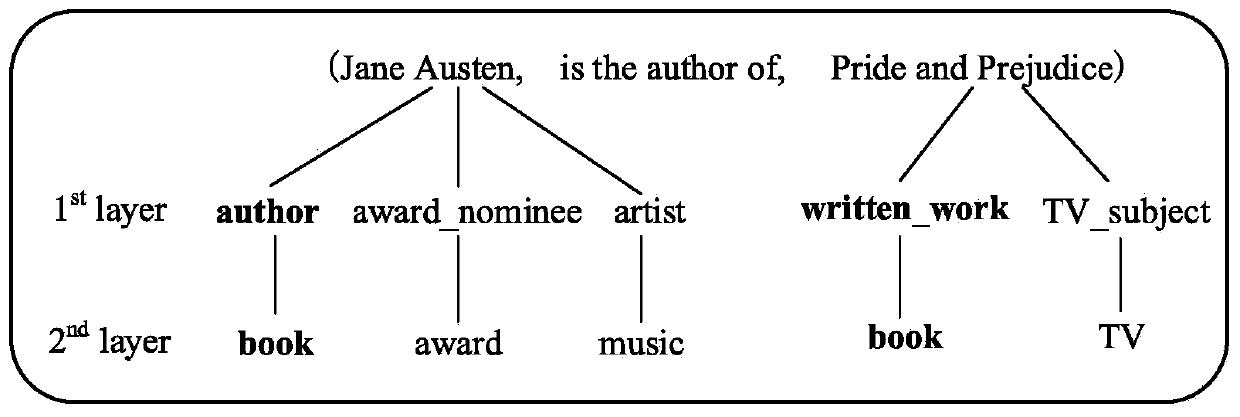

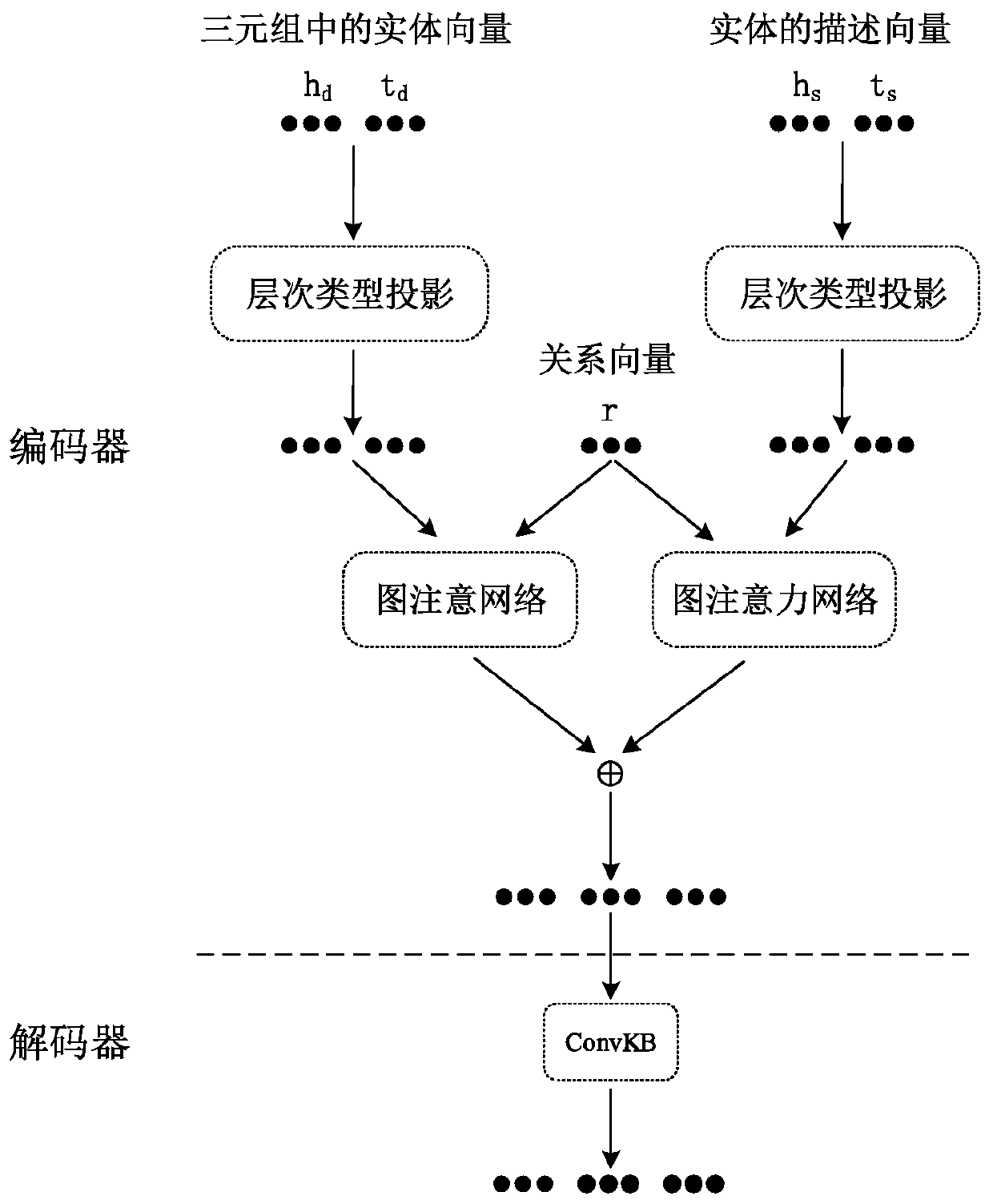

Knowledge representation learning method fusing multi-source information

Active | CN111538848A | Meet the characteristics | Improve sparse problems | Neural architectures | Neural learning methods | Encoder decoder | Semantic matching

The invention discloses a knowledge representation learning method fusing multi-source information, and belongs to the technical field of natural language processing. The method comprises the following steps: combining the hierarchical type information of an entity, the text description information of the entity, graph topological structure information and a triple through an encoder model to obtain a preliminary fusion result of the multi-source information; and inputting the preliminary fusion vector of the multi-source information into a decoder model for further training to obtain a final entity vector and a relationship vector. Entity hierarchy type information, entity text description information, graph structure information and the original triple are combined through a self-defined encoder, so that the characteristics of entities and relationships in a knowledge graph can be expressed more fully; and a ConvKB model is used as the decoder, the result vector generated by the encoder is input into the convolutional neural network for semantic matching, and global information among the different dimensions of the triple is captured.

Owner:HUAZHONG UNIV OF SCI & TECH

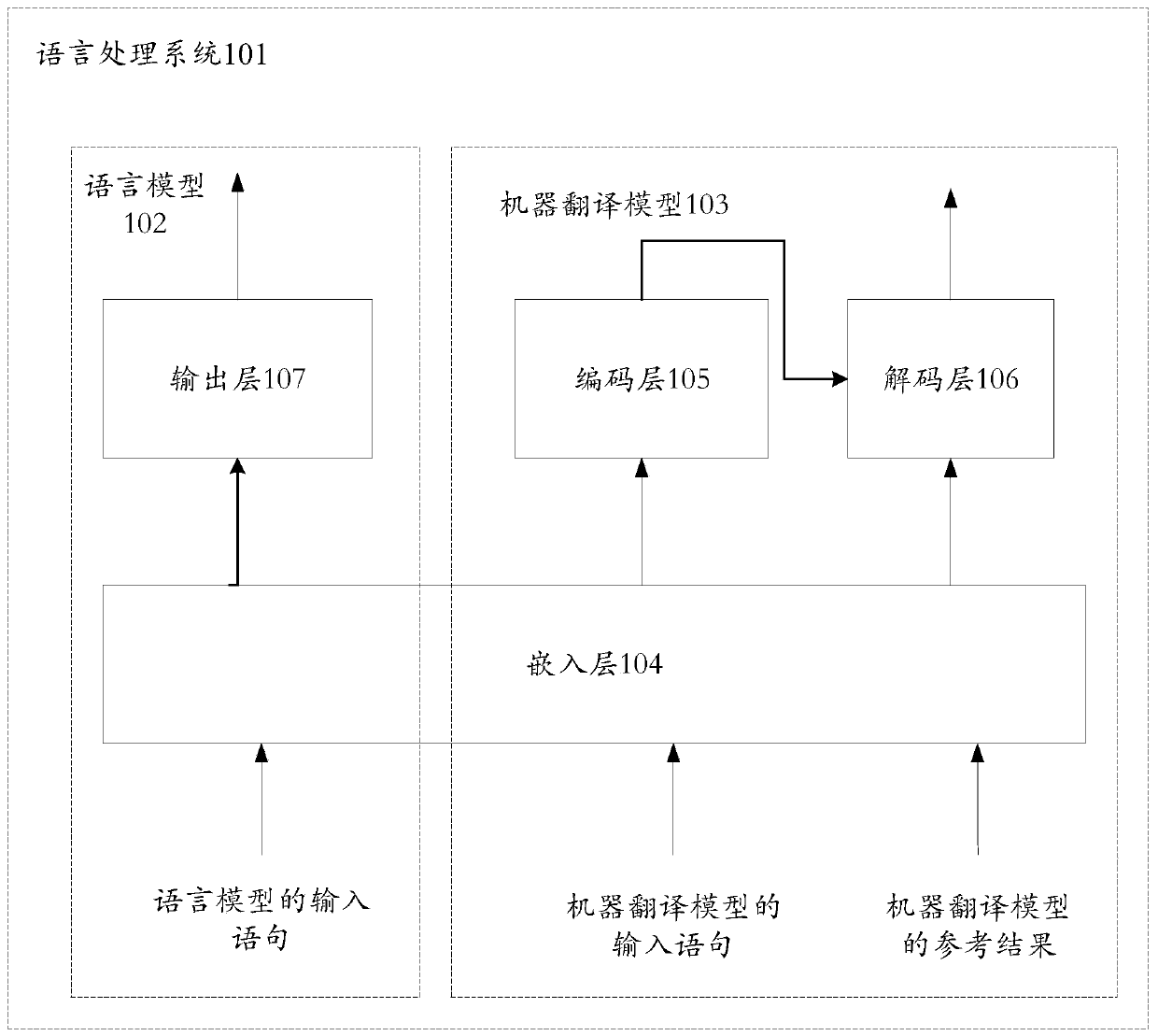

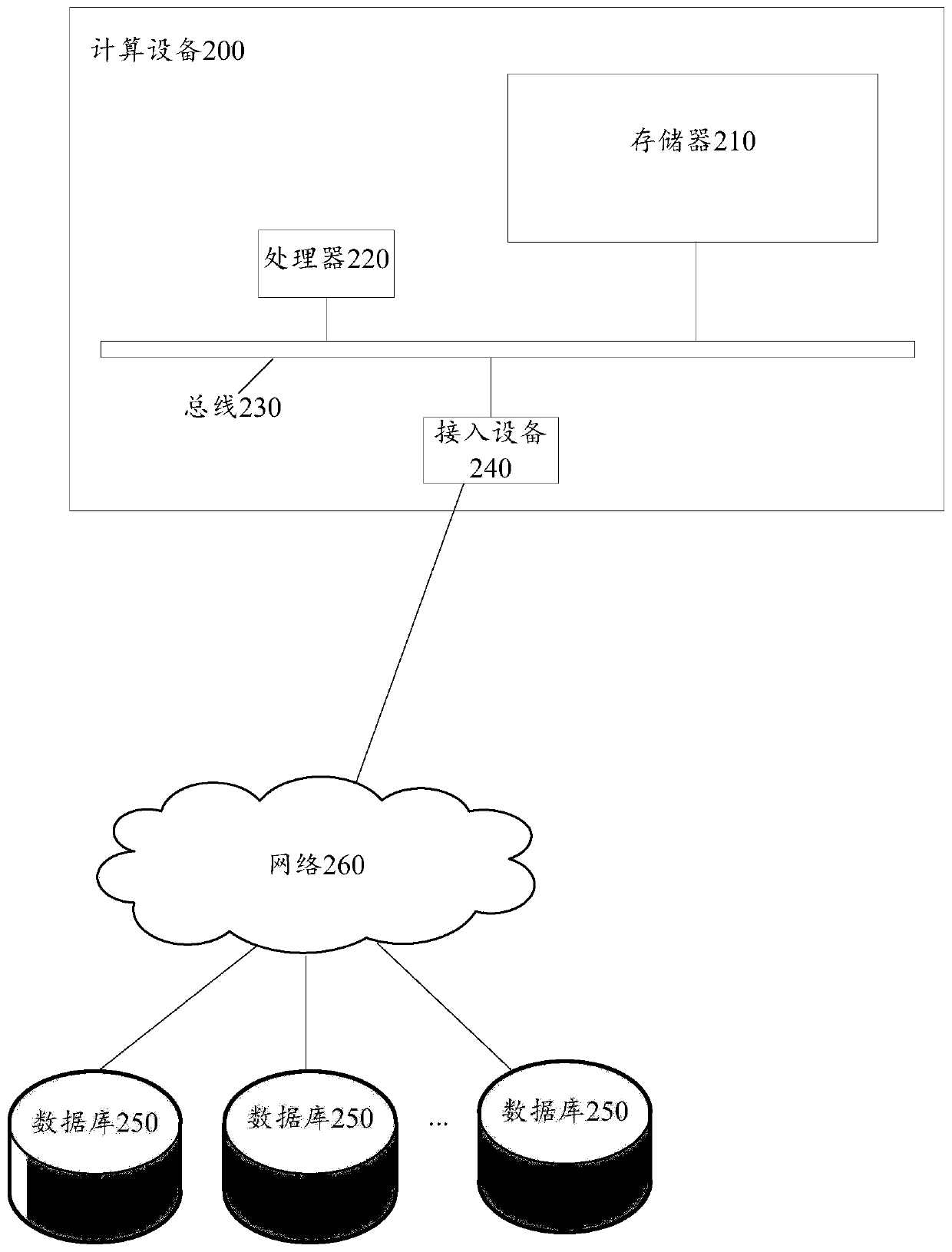

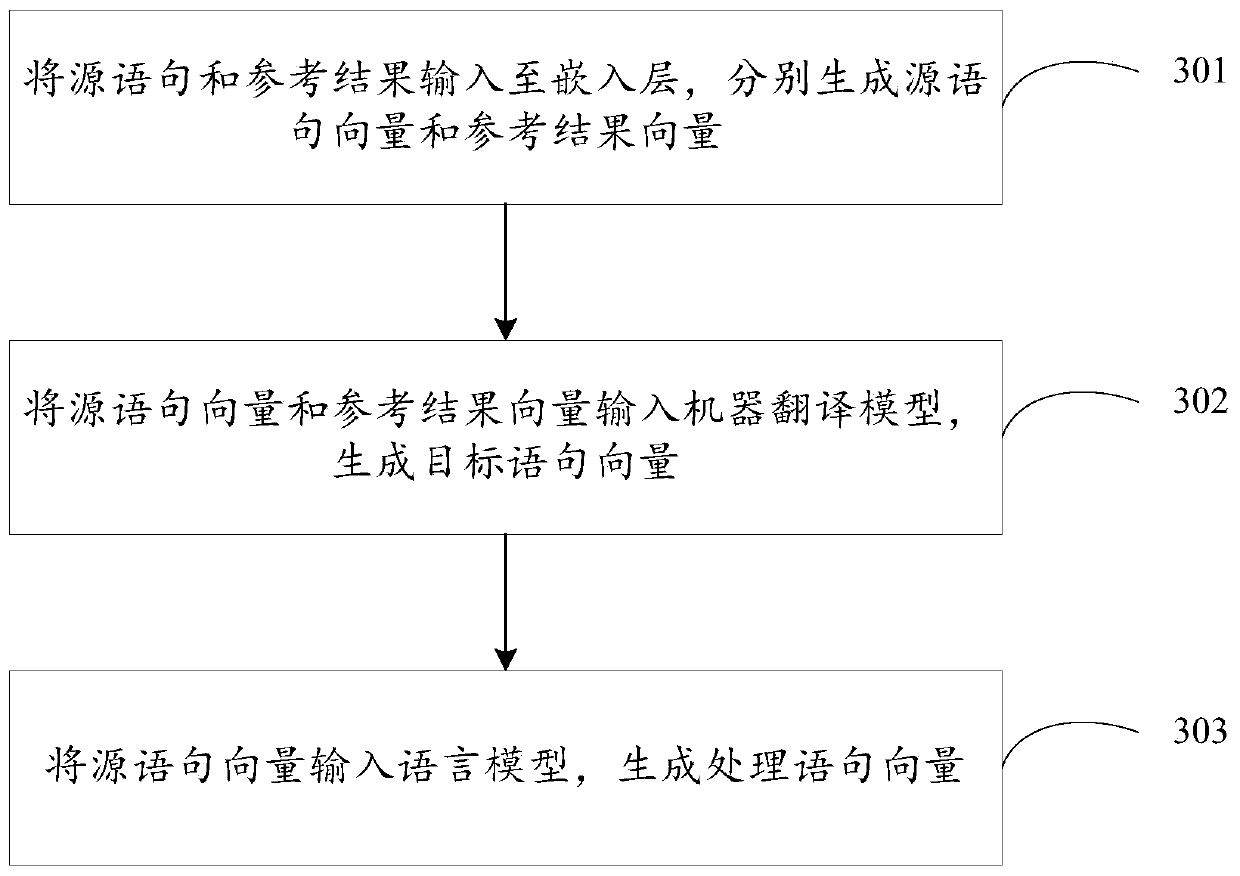

Language processing method and device and training method and device of language processing system

Active | CN109858044A | Implement joint training | Improve generalization ability | Neural architectures | Special data processing applications | Machine translation | Human language

The invention provides a language processing method and device and a training method and device of a language processing system. The language processing system comprises a language model and a machine translation model, and the language model and the machine translation model comprise the same embedded layer. The language processing method comprises the steps that a source statement and a reference result are input into the embedded layer, and a source statement vector and a reference result vector are generated respectively; the source statement vector and the reference result vector are input into the machine translation model to generate a target statement vector; and the source statement vector is input into the language model to generate a processing statement vector. As the machine translation model and the language model share the training result, compared with the prior art, the generalization effect of the language model and the machine translation model can be improved, and a better output result is obtained.

Owner:成都金山互动娱乐科技有限公司 +1

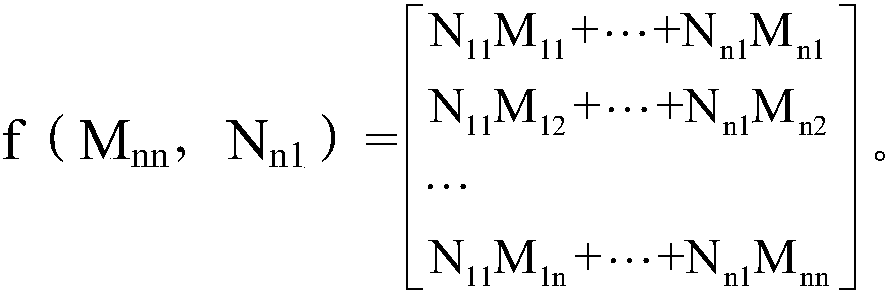

Computing method applied to symmetric matrix and vector multiplication

Active | CN107590106A | Improve computing efficiency | Reduce waste | Complex mathematical operations | Diagonal | Parallel processing

The invention discloses a computing method applied to symmetric matrix and vector multiplication. The method is used for computing the product of an (n1×n1) symmetric matrix and n1-dimensional column vectors, wherein first, the (n1×n1) symmetric matrix and the n1-dimensional column vectors are partitioned, and microscale data extension is performed on the matrix blocks on the diagonal of the partitioned (n1×n1) symmetric matrix to turn them into symmetric matrix blocks; then the n1-dimensional column vectors are partitioned, an intermediate data block is computed according to the partitioned matrix, and a final result vector is computed according to the intermediate data block. Through the computing method applied to symmetric matrix and vector multiplication, on the premise of performing parallel processing on the symmetric matrix, waste of storage space by the symmetric matrix can be reduced, and the computing efficiency of symmetric matrix and vector multiplication can be improved.
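The general idea of saving storage by exploiting symmetry can be sketched as follows. This is a generic blocked symmetric matrix-vector product, not the patented procedure: it does not model the "microscale data extension" of diagonal blocks, and the names `upper_blocks` and `symv_blocked` and the block size `b` are hypothetical.

```python
import numpy as np

def upper_blocks(A, b):
    """Keep only the b-by-b blocks on or above the block diagonal of symmetric A."""
    n = A.shape[0]
    starts = range(0, n, b)
    return {(i, j): A[i:i + b, j:j + b].copy()
            for i in starts for j in starts if j >= i}

def symv_blocked(blocks, x, b):
    """Compute y = A @ x from the stored upper blocks only."""
    y = np.zeros_like(x, dtype=float)
    for (i, j), B in blocks.items():
        y[i:i + b] += B @ x[j:j + b]          # contribution of block (i, j)
        if i != j:
            y[j:j + b] += B.T @ x[i:i + b]    # mirrored block (j, i) = B^T
    return y

# Usage example: compare against the dense product on a small symmetric matrix.
M = np.arange(25, dtype=float).reshape(5, 5)
A = M + M.T
x = np.arange(5, dtype=float)
y = symv_blocked(upper_blocks(A, 2), x, 2)
```

Each off-diagonal block is stored once and used twice, so roughly half of the matrix is kept in memory; the per-block updates are independent apart from the accumulation into `y`, which is what makes a parallel variant plausible.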

Owner:SUZHOU RICORE IC TECH LTD

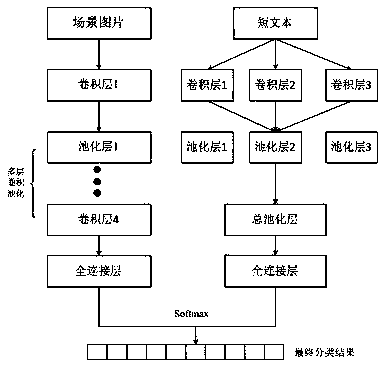

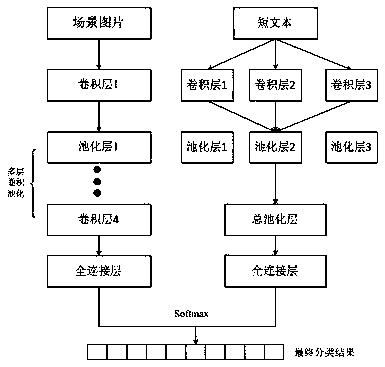

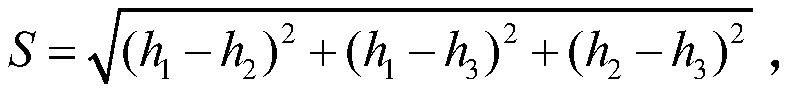

Multi-modal scene recognition method based on deep learning

Active | CN110046656A | Improve accuracy | Accurate scene recognition means | Character and pattern recognition | Neural architectures | Group of pictures | Image prediction

The invention discloses a multi-modal scene recognition method based on deep learning. The multi-modal scene recognition method comprises the following steps: S1, carrying out word segmentation processing on a short text; S2, inputting a group of pictures, the segmented words of the short text and the corresponding tags into respective convolutional neural networks for training; S3, training a short text classification model; S4, training a picture classification model; S5, respectively calculating the cross entropies of the fully connected layer outputs in S3 and S4 against a standard classification result, calculating an average Euclidean distance which serves as a loss value, then feeding the loss value back to the respective convolutional neural networks, and finally obtaining a complete multi-modal scene recognition model; S6, adding the text and the image prediction result vectors to obtain a final classification result; and S7, respectively inputting the short text and the image to be identified into the trained multi-modal scene recognition model, and performing scene recognition. The invention provides a multi-modal scene searching mode, and more accurate and convenient scene recognition is provided for users.
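Step S6, adding the two prediction result vectors, can be sketched as below. The abstract does not say whether the vectors being added are raw network outputs or normalized probabilities; this sketch assumes softmax probabilities, and the function names and example numbers are hypothetical.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over a 1-D array of scores."""
    z = np.asarray(z, dtype=float)
    e = np.exp(z - z.max())
    return e / e.sum()

def fuse_predictions(text_logits, image_logits):
    """Add the text and image prediction vectors and pick the top class.

    Assumes both models score the same classes in the same order.
    """
    combined = softmax(text_logits) + softmax(image_logits)
    return int(np.argmax(combined)), combined

# Usage example: the text model favors class 0, the image model favors
# class 2 more strongly, so the summed vector selects class 2.
label, combined = fuse_predictions([2.0, 0.1, 0.1], [0.1, 0.2, 3.0])
```

Summing normalized vectors lets the more confident modality dominate when the two disagree, which is one simple reading of the fusion step.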

Owner:NANJING UNIV OF POSTS & TELECOMM

Shift-in-right instructions for processing vectors

The described embodiments provide a processor for generating a result vector with shifted values from an input vector. During operation, the processor receives an input vector and a control vector. Using these vectors, the processor generates the result vector, which can contain shifted values or propagated values from the input vector, depending on the value of the control vector. In addition, a predicate vector can be used to control the values that are written to the result vector.

Owner:APPLE INC

Predicting a result of a dependency-checking instruction when processing vector instructions

The described embodiments include a processor that executes a vector instruction. In the described embodiments, while dispatching instructions at runtime, the processor encounters a dependency-checking instruction. Upon determining that a result of the dependency-checking instruction is predictable, the processor dispatches a prediction micro-operation associated with the dependency-checking instruction, wherein the prediction micro-operation generates a predicted result vector for the dependency-checking instruction. The processor then executes the prediction micro-operation to generate the predicted result vector. In the described embodiments, when executing the prediction micro-operation, the processor sets elements of the predicted result vector to zero: if a predicate vector is received, only those elements for which the predicate vector is active; otherwise, every element.

Owner:APPLE INC

Multi-layer protocol reassembly that operates independently of underlying protocols, and resulting vector list corresponding thereto

Inactive | US20060031847A1 | Reduce complexity | Improve performance | Multiprogramming arrangements | Multiple digital computer combinations | Data stream | Logical representation

Owner:NETWORK GENERAL TECH


© 2025 PatSnap. All rights reserved.