Multi-modal scene recognition method based on deep learning

A multi-modal scene recognition method based on deep learning, applied in the field of pattern recognition and artificial intelligence. It addresses problems of existing scene recognition methods, such as complex implementation, and improves recognition accuracy while making scene recognition easier to carry out.

Embodiment Construction

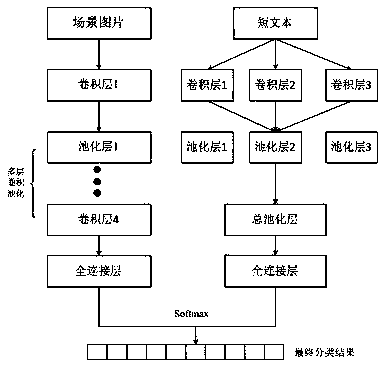

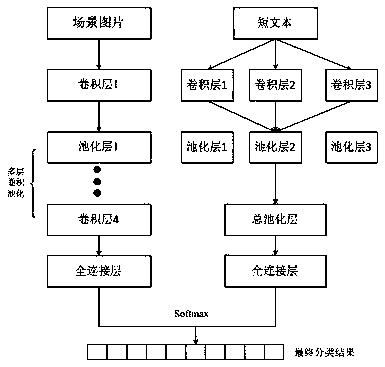

[0035] To address the inaccurate results and high complexity of existing scene recognition methods, the present invention provides a new multi-modal scene recognition method based on deep learning. The method extracts feature information from the image and text modalities and fuses the multi-modal feature information to improve the accuracy of scene recognition.
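The fusion idea in this paragraph can be illustrated with a minimal sketch. The text available here does not say how the fusion is performed, so the version below assumes the simplest option, concatenating the per-modality feature vectors and scoring them with a linear layer plus softmax. The names `fuse_features` and `classify`, the feature values, and the weights are all hypothetical and chosen only for illustration.

```python
import math


def fuse_features(image_feat, text_feat):
    """Concatenate per-modality feature vectors (assumed fusion strategy)."""
    return list(image_feat) + list(text_feat)


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(fused, weights, biases):
    """Linear layer followed by softmax over hypothetical scene classes."""
    scores = [
        sum(w * x for w, x in zip(row, fused)) + b
        for row, b in zip(weights, biases)
    ]
    return softmax(scores)


# Made-up features standing in for the outputs of the image and text networks.
image_feat = [0.2, 0.9]
text_feat = [0.7, 0.1, 0.4]
fused = fuse_features(image_feat, text_feat)

# Made-up weights for two hypothetical scene classes.
weights = [
    [1.0, 0.0, 0.5, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.5, 0.5],
]
biases = [0.0, 0.0]
probs = classify(fused, weights, biases)
```

In a trained system the weights would be learned jointly with, or on top of, the two feature extractors; concatenation is only one of several possible fusion schemes.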

[0036] Further, the deep learning-based multi-modal scene recognition method of the present invention includes the following steps.

[0037] S1. Use the jieba ("stutter") word segmentation tool to segment the short texts into words.
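To show what step S1 produces, here is a small self-contained sketch of dictionary-based word segmentation. It is not the algorithm used by the tool named in S1 (in Python that tool is the jieba package, where `jieba.lcut(text)` returns a list of tokens); it swaps in simple forward maximum matching against a toy dictionary so the example runs without third-party dependencies. The function name `segment`, the dictionary, and the sample sentence are illustrative assumptions.

```python
def segment(text, dictionary, max_len=4):
    """Forward maximum matching: a simple stand-in for a real segmenter."""
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest candidate first, falling back to a single character.
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if size == 1 or piece in dictionary:
                tokens.append(piece)
                i += size
                break
    return tokens


# Toy dictionary and sentence ("children play football in the park").
toy_dict = {"公园", "里", "孩子", "踢足球", "足球"}
tokens = segment("公园里孩子踢足球", toy_dict)
print(tokens)  # ['公园', '里', '孩子', '踢足球']
```

The output of this step is a list of word tokens per short text, which is what the later steps consume.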

[0038] S2. Input a group of pictures, the segmented short texts, and their corresponding labels into the respective convolutional neural networks for training.
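Before segmented text and labels can be fed to a network, they generally have to be turned into fixed-length numeric arrays. The sketch below shows one common way to do that, assuming a vocabulary built from the training tokens, integer ids, and right-padding to a fixed length. The sample records, file names, field names, and the helpers `build_vocab` and `encode` are hypothetical; the source does not specify this preprocessing.

```python
def build_vocab(tokenized_texts):
    """Map each distinct token to an integer id; 0 is padding, 1 is unknown."""
    vocab = {"<pad>": 0, "<unk>": 1}
    for tokens in tokenized_texts:
        for tok in tokens:
            if tok not in vocab:
                vocab[tok] = len(vocab)
    return vocab


def encode(tokens, vocab, max_len):
    """Convert tokens to ids, truncating or right-padding to max_len."""
    ids = [vocab.get(t, vocab["<unk>"]) for t in tokens[:max_len]]
    return ids + [vocab["<pad>"]] * (max_len - len(ids))


# Hypothetical paired samples: one picture, one segmented short text, one label.
samples = [
    {"image": "img_001.jpg", "tokens": ["park", "children", "football"], "label": "park"},
    {"image": "img_002.jpg", "tokens": ["kitchen", "cooking"], "label": "kitchen"},
]

vocab = build_vocab(s["tokens"] for s in samples)
label_ids = {name: i for i, name in enumerate(sorted({s["label"] for s in samples}))}

# Inputs for the text network and the shared label vector.
text_batch = [encode(s["tokens"], vocab, max_len=4) for s in samples]
label_batch = [label_ids[s["label"]] for s in samples]
```

The image paths would be loaded and resized separately for the image network; both networks are trained against the same `label_batch`.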

[0039] S3. Train a short text classification model. This specifically includes the following steps:

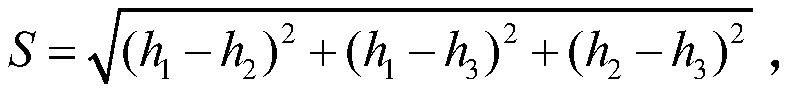

[0040] S31. During the training process of the short text classification model, quantify the word segmentation results of the input short text and inp...
