87 results about "Processor scheduling" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

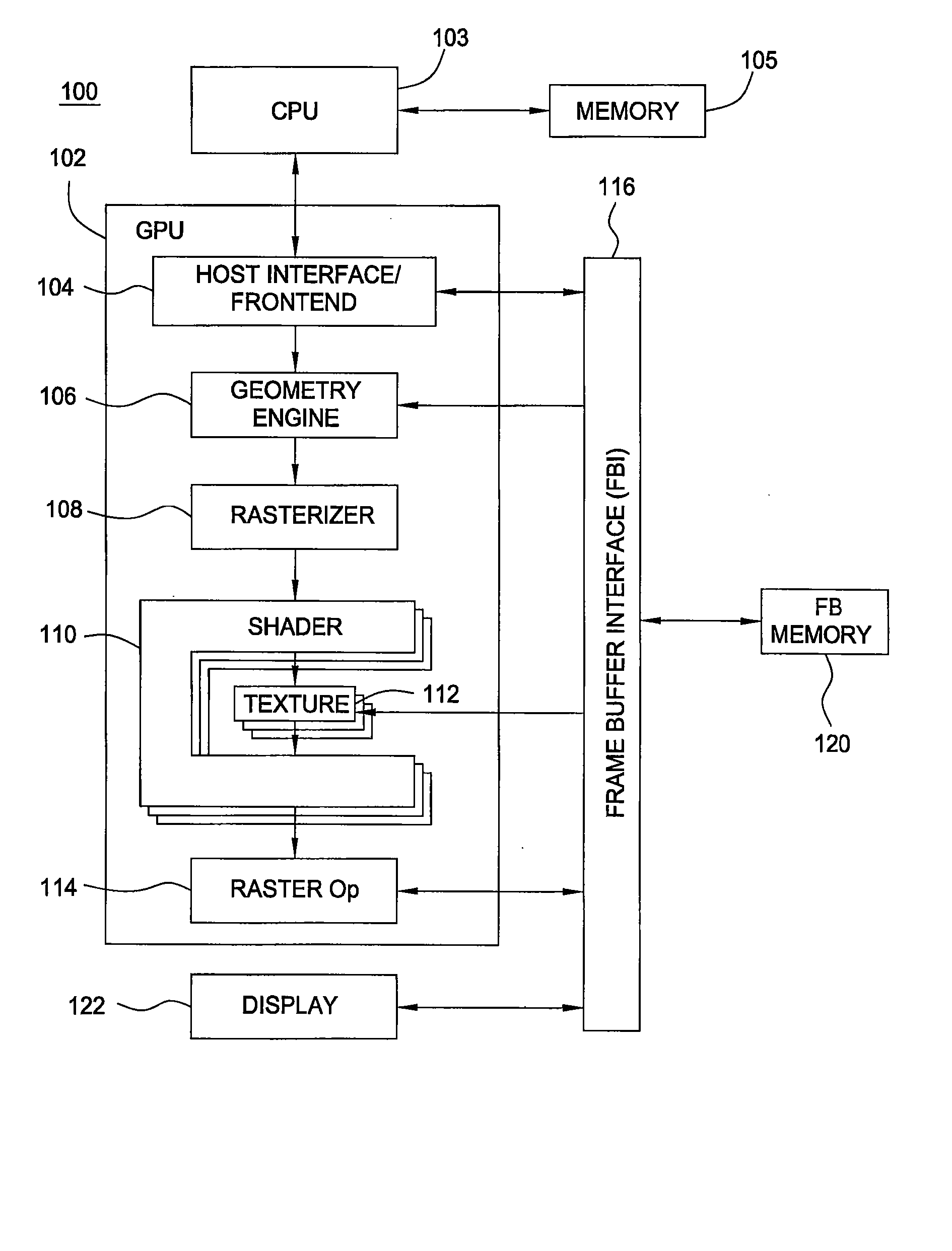

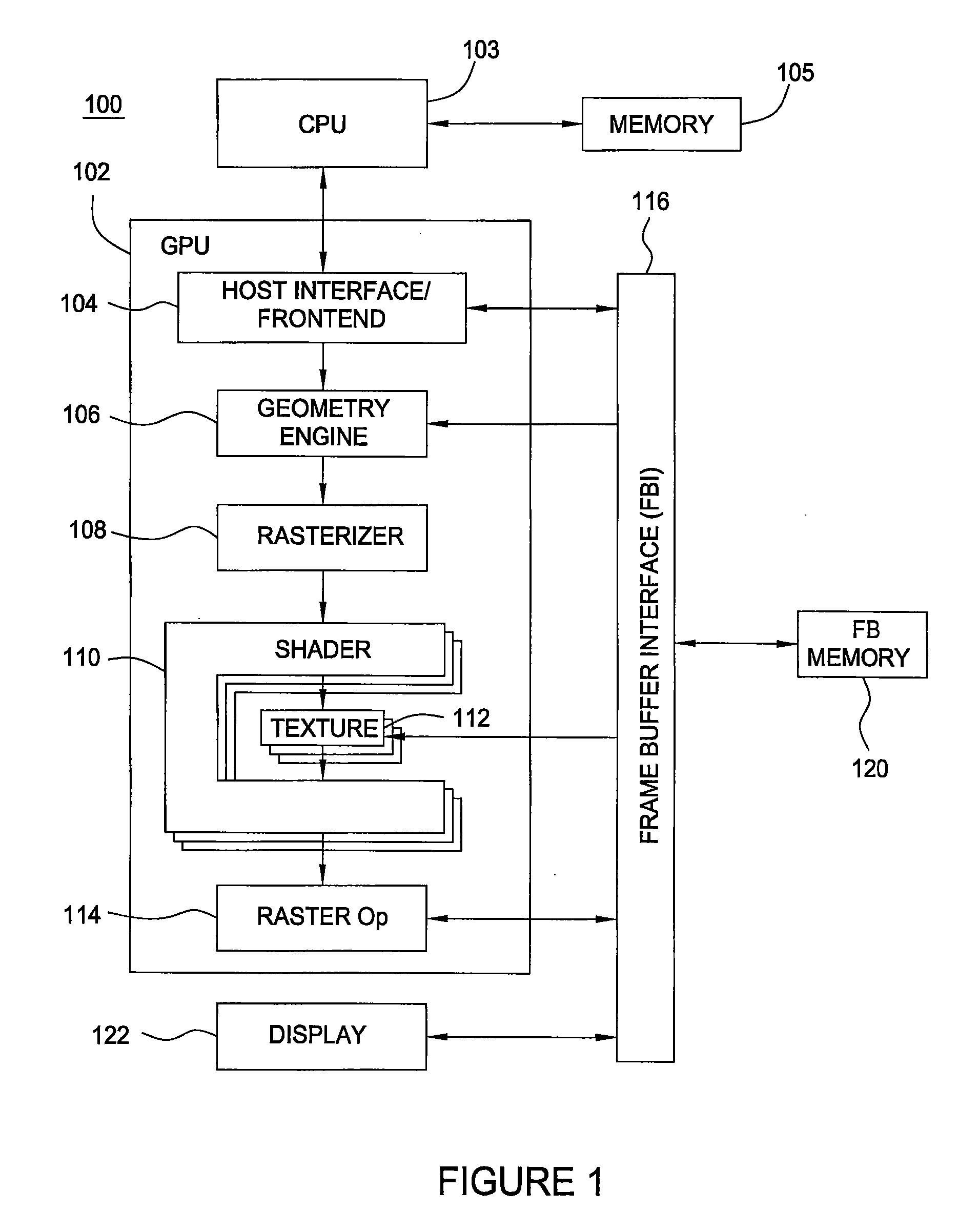

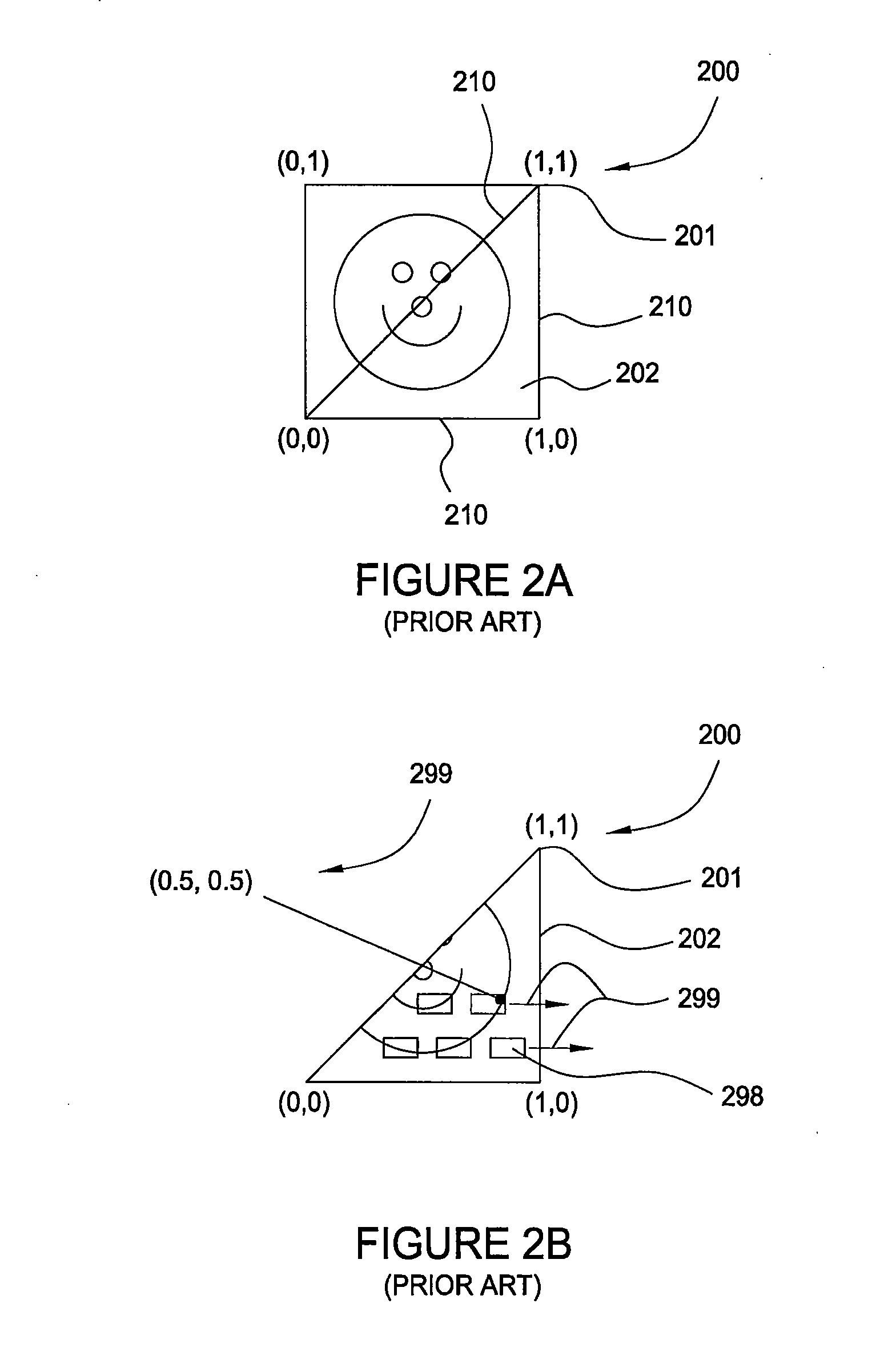

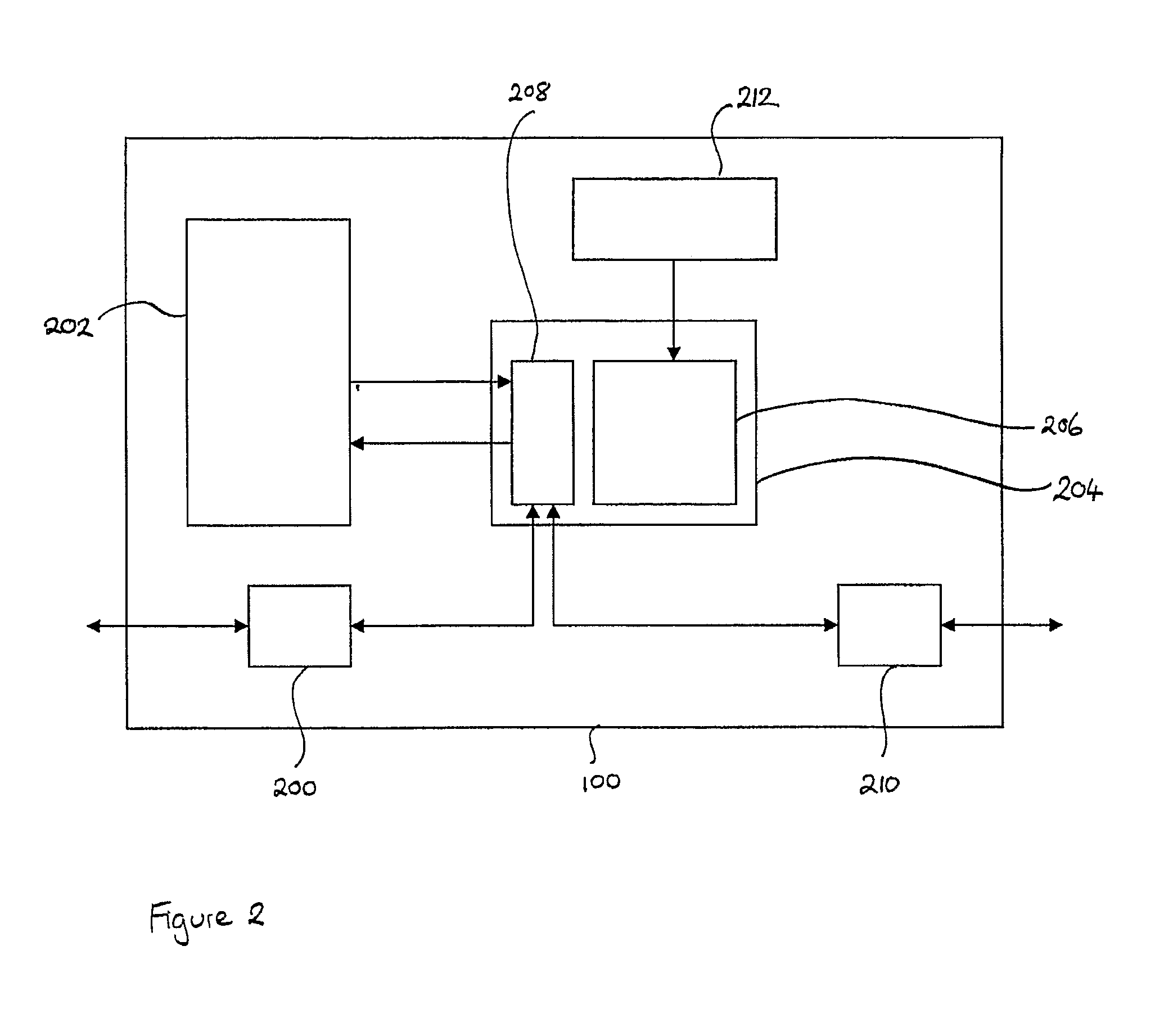

Scalable shader architecture

Active | US20050225554A1 | Perform operation | Multiple digital computer combinations | Processor architectures/configuration | Computational science | Processor scheduling

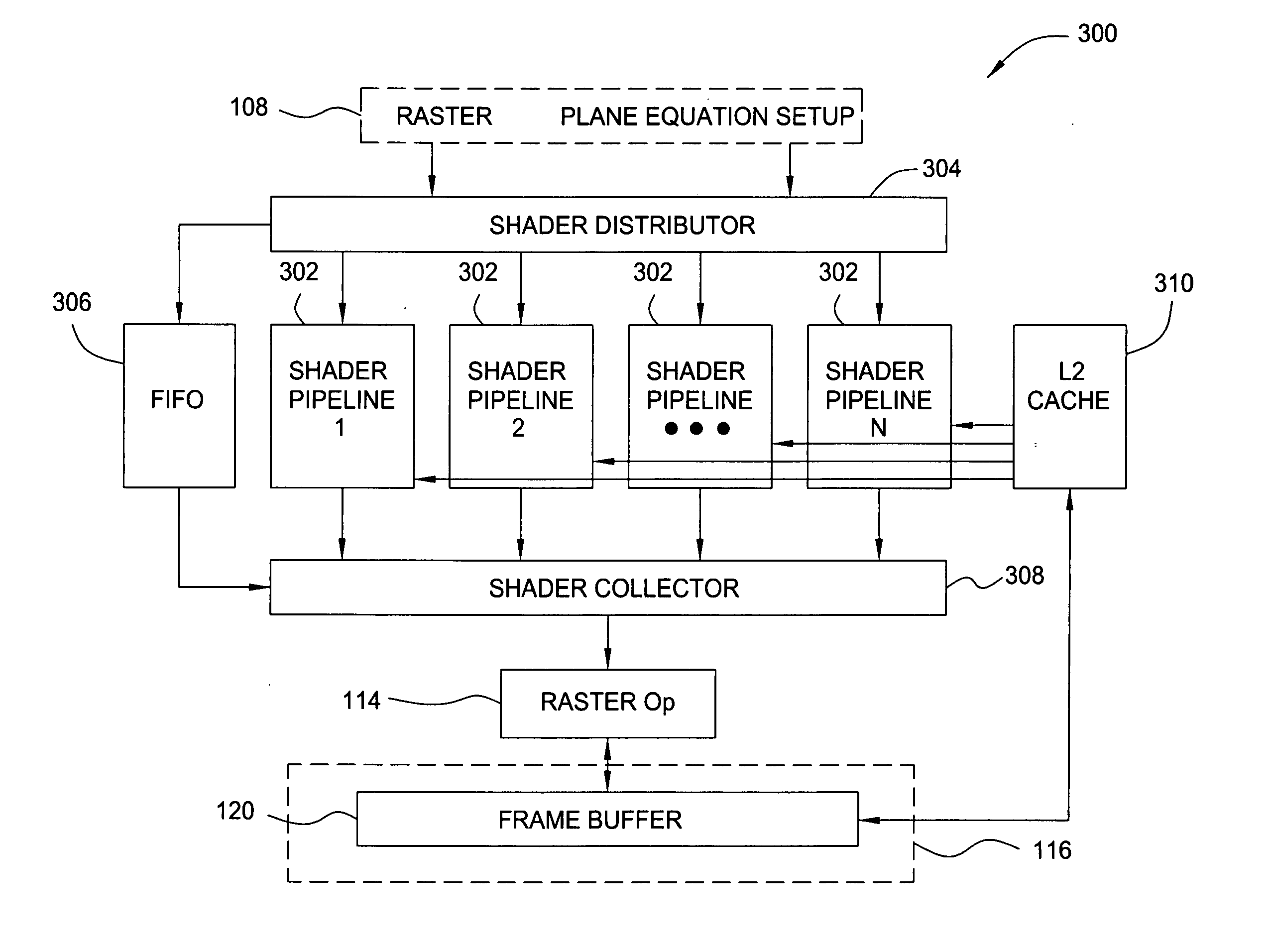

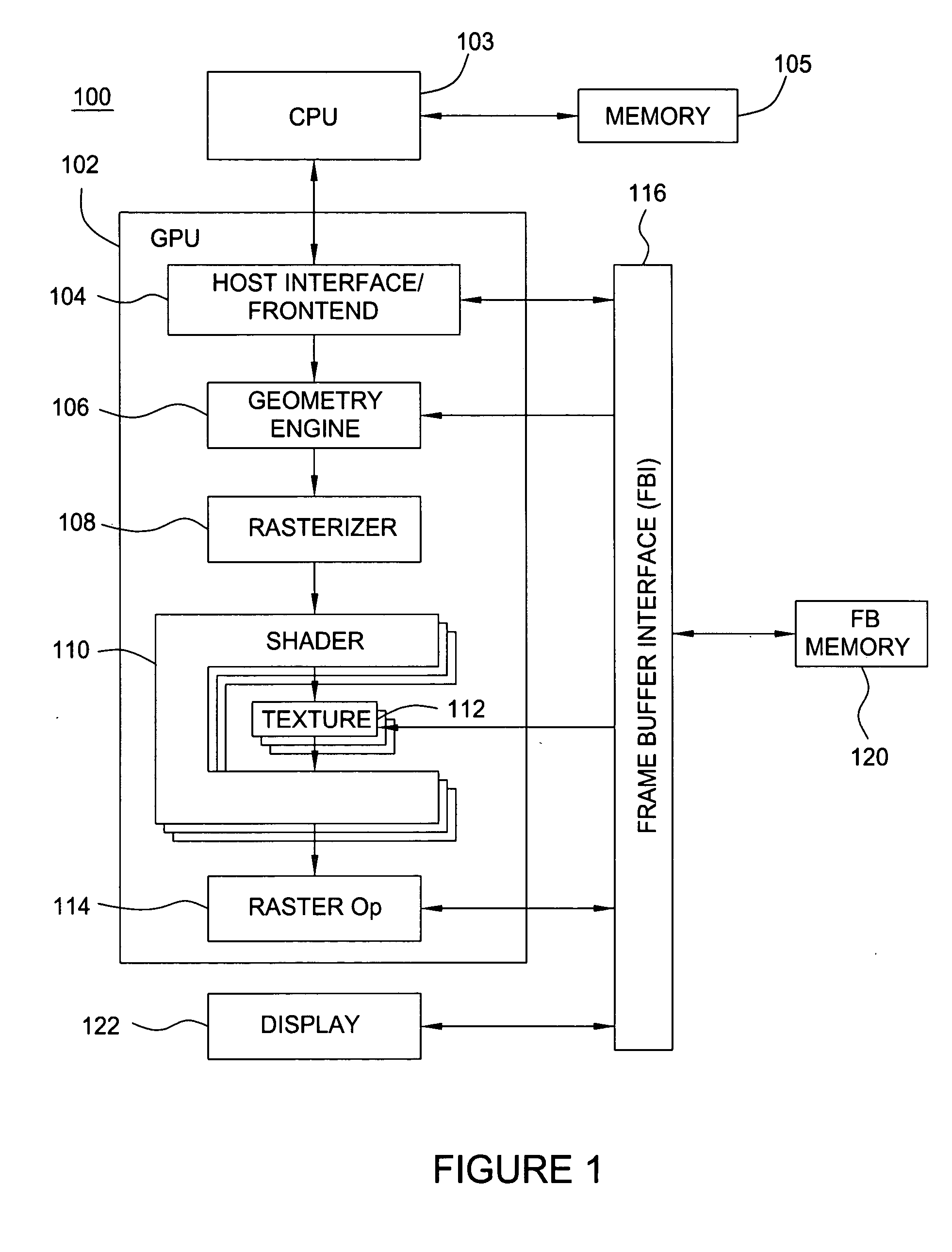

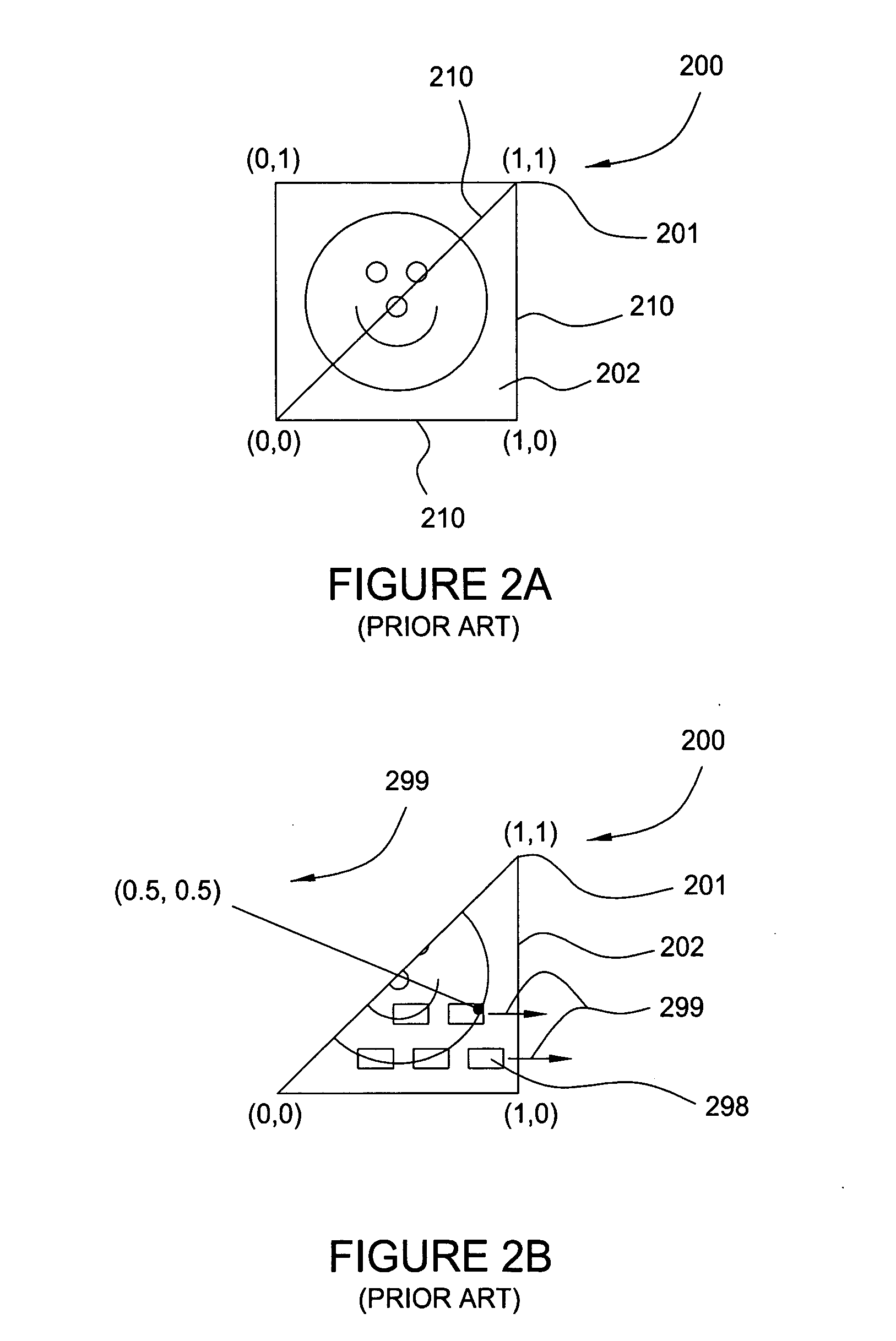

A scalable shader architecture is disclosed. In accord with that architecture, a shader includes multiple shader pipelines, each of which can perform processing operations on rasterized pixel data. Shader pipelines can be functionally removed as required, thus preventing a defective shader pipeline from causing a chip rejection. The shader includes a shader distributor that processes rasterized pixel data and then selectively distributes the processed rasterized pixel data to the various shader pipelines, beneficially in a manner that balances workloads. A shader collector formats the outputs of the various shader pipelines into proper order to form shaded pixel data. A shader instruction processor (scheduler) programs the individual shader pipelines to perform their intended tasks. Each shader pipeline has a shader gatekeeper that interacts with the shader distributor and with the shader instruction processor such that pixel data that passes through the shader pipelines is controlled and processed as required.

Owner:NVIDIA CORP

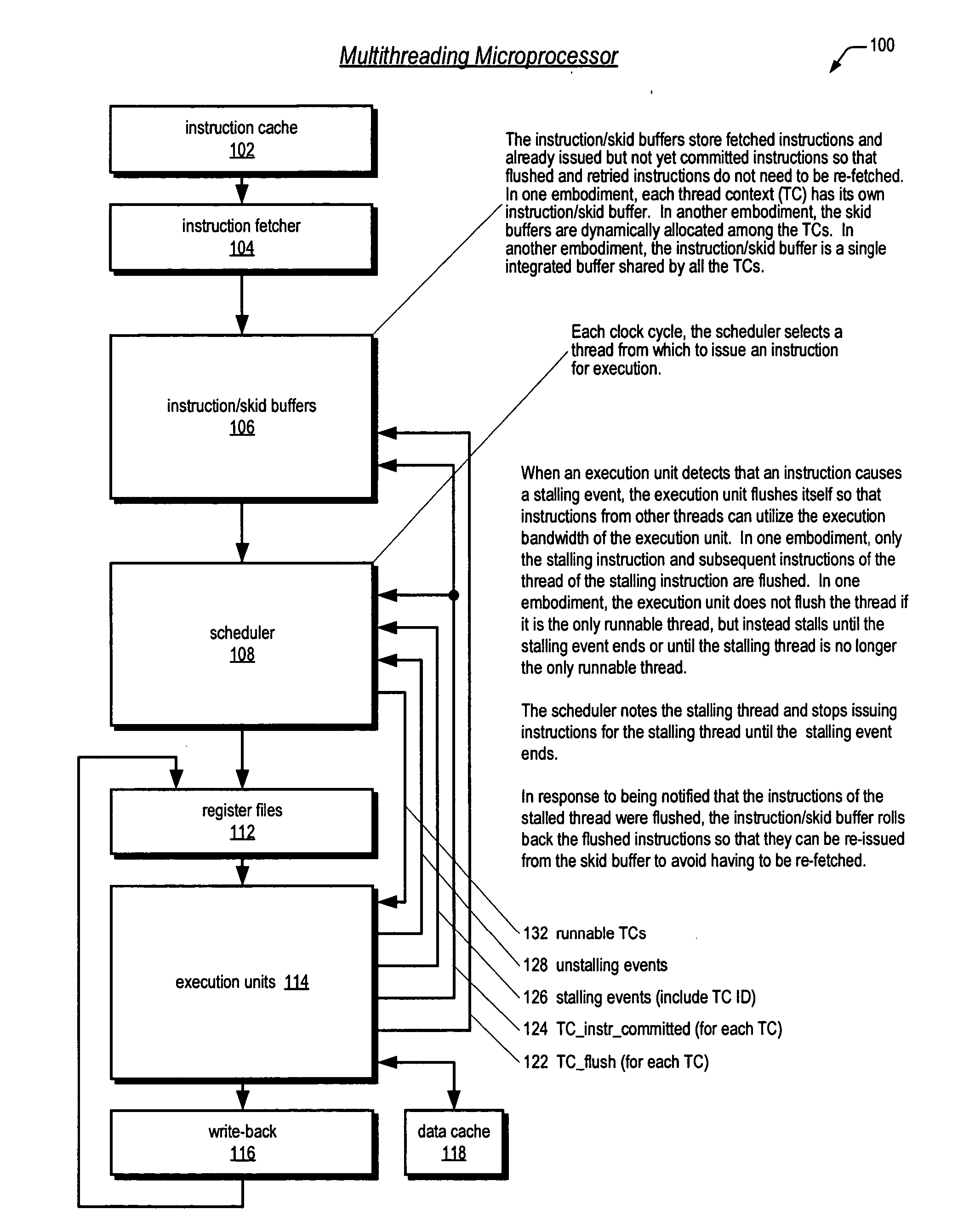

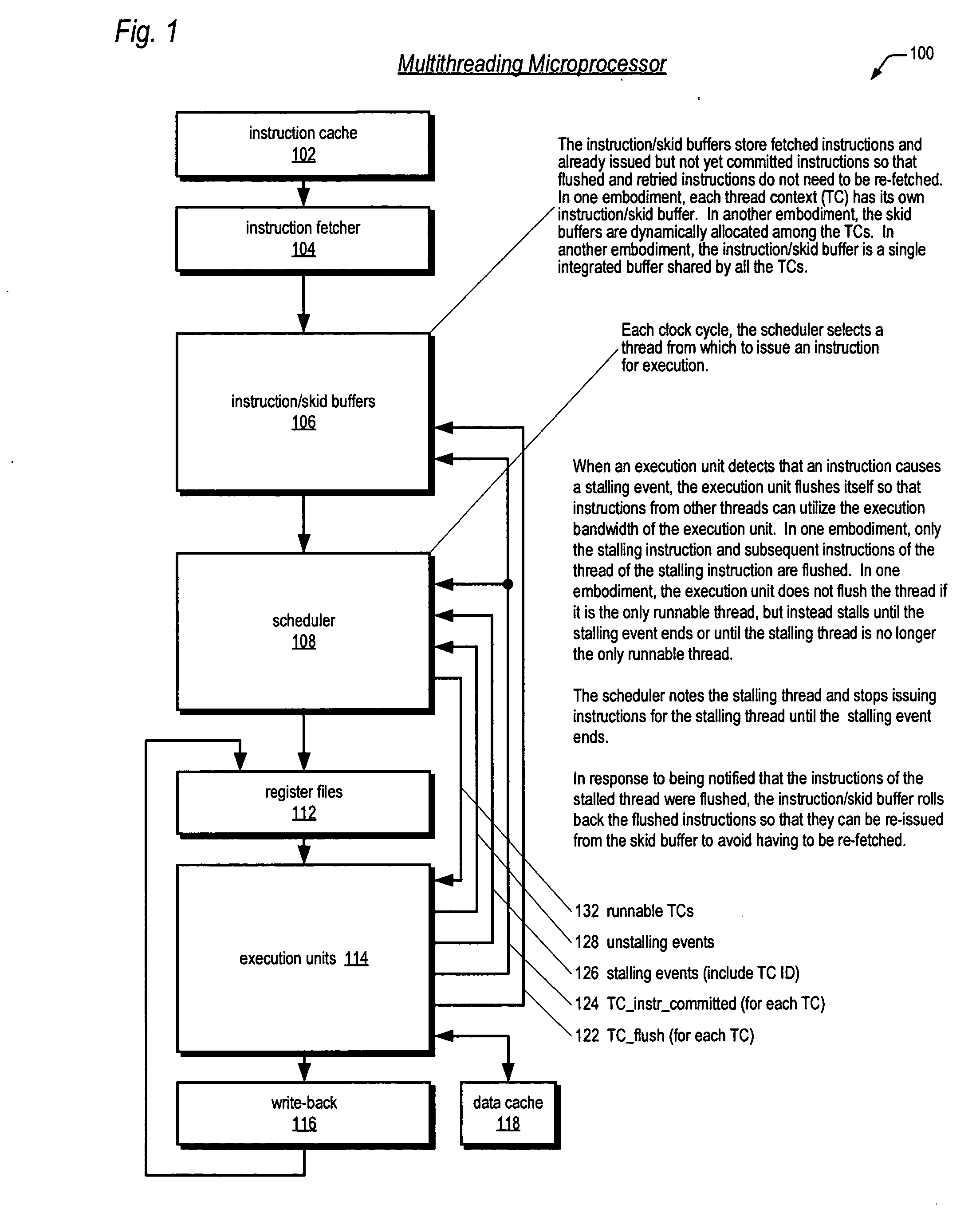

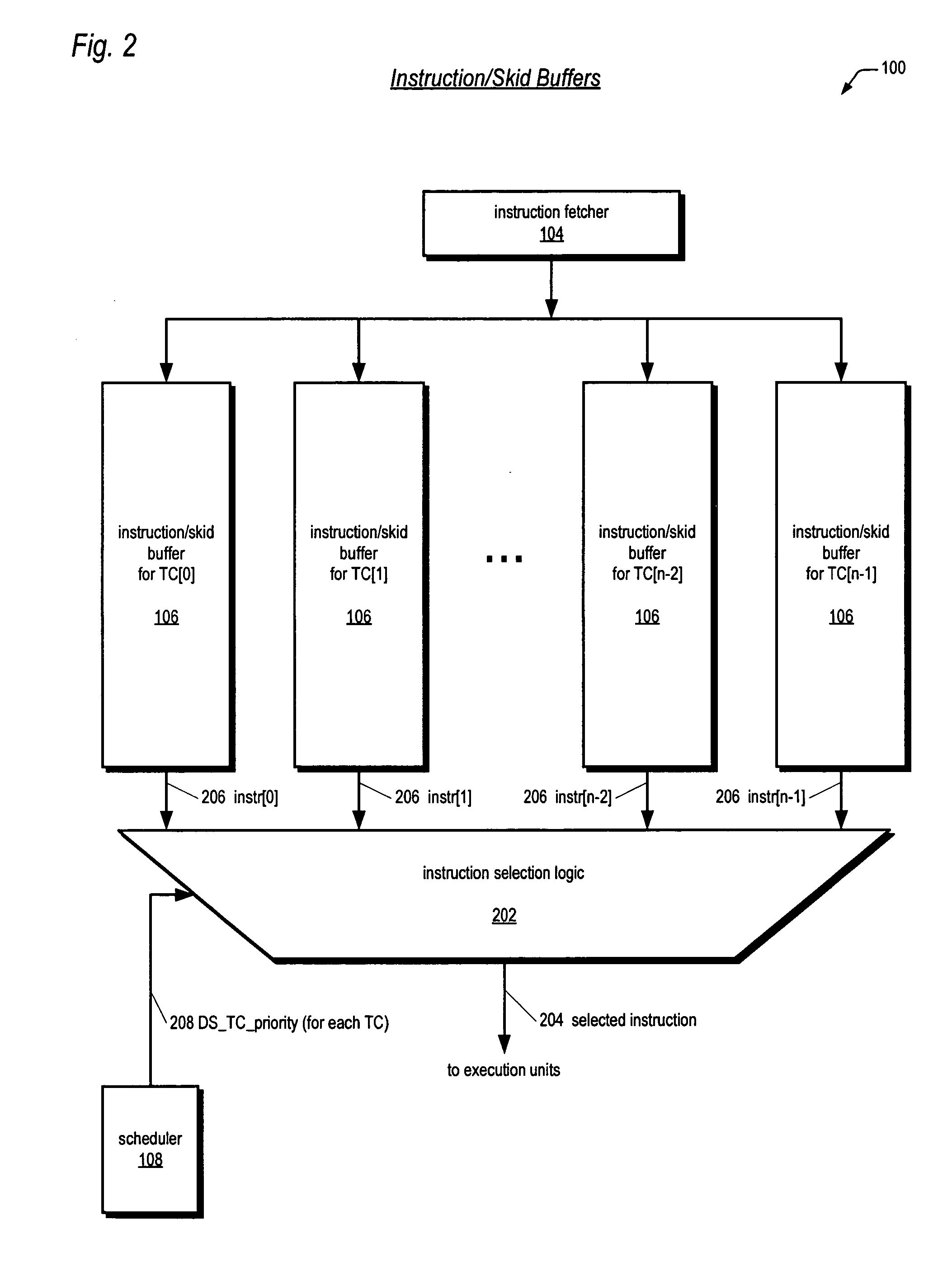

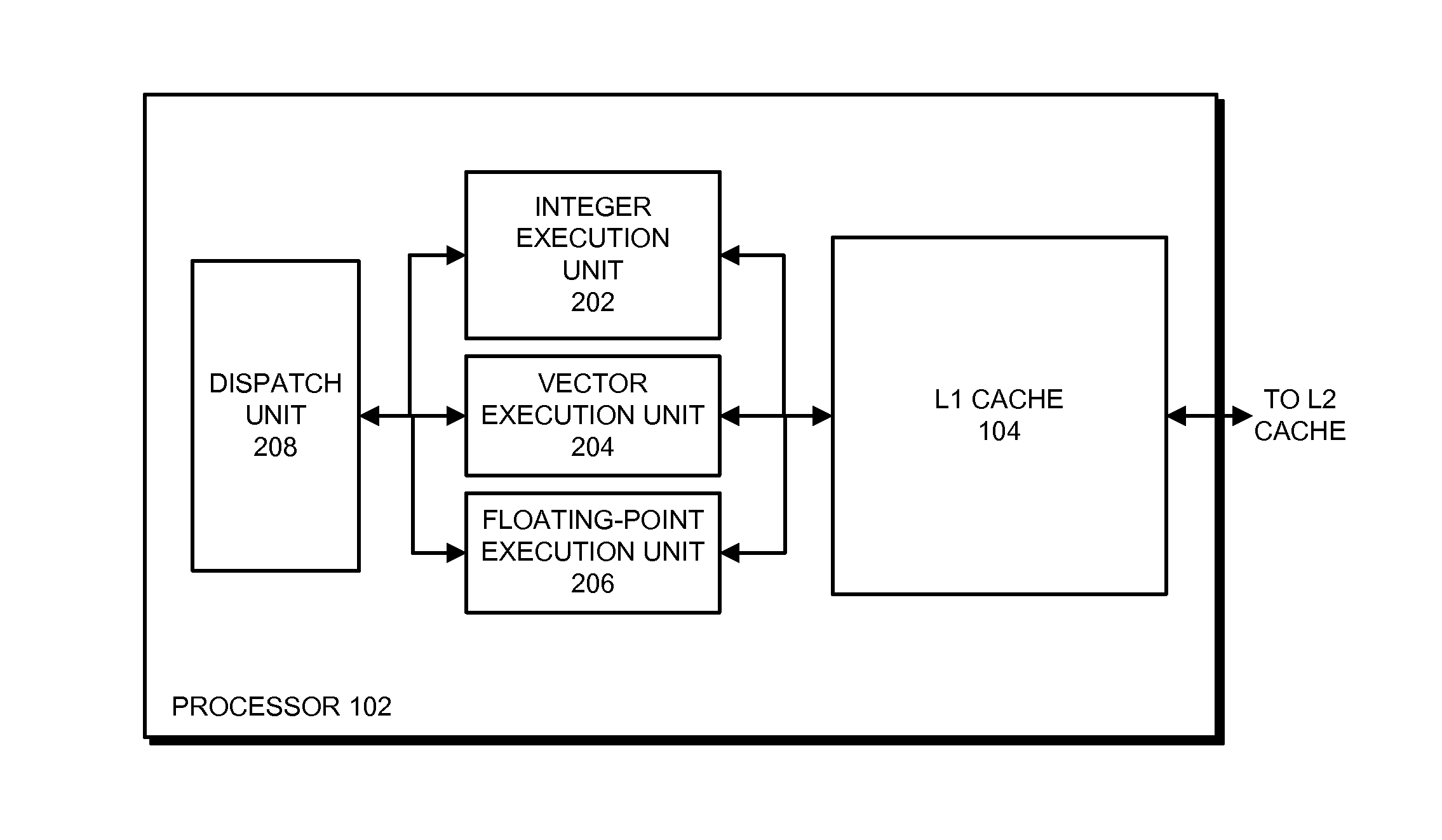

Barrel-incrementer-based round-robin apparatus and instruction dispatch scheduler employing same for use in multithreading microprocessor

Inactive | US20060179194A1 | Selectively disable | Digital data processing details | Program control | Processor scheduling | Shared resource

A circuit for selecting one of N requestors in a round-robin fashion is disclosed. The circuit 1-bit left rotatively increments a first addend by a second addend to generate a sum that is ANDed with the inverse of the first addend to generate a 1-hot vector indicating which of the requestors is selected next. The first addend is an N-bit vector where each bit is false if the corresponding requestor is requesting access to a shared resource. The second addend is a 1-hot vector indicating the last selected requestor. A multithreading microprocessor dispatch scheduler employs the circuit for N concurrent threads, each thread having one of P priorities. The dispatch scheduler generates P N-bit 1-hot round-robin bit vectors, and each thread's priority is used to select the appropriate round-robin bit from the P vectors for combination with the thread's priority and an issuable bit to create a dispatch level used to select a thread for instruction dispatching.

Owner:ARM FINANCE OVERSEAS LTD
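
The round-robin selection described in this abstract can be imitated in software. The following is a minimal sketch under one reading of the abstract: the "1-bit left rotative increment" is modeled as adding the last-selected vector, rotated left by one bit, to the first addend with an end-around carry. The function names and the integer bit-vector encoding are assumptions for illustration and are not taken from the patent.

```python
def rotl1(value: int, n: int) -> int:
    """Rotate an n-bit value left by one bit."""
    mask = (1 << n) - 1
    return ((value << 1) | (value >> (n - 1))) & mask


def round_robin_select(requests: int, last_selected: int, n: int) -> int:
    """Return a 1-hot vector for the next requestor, or 0 if nobody requests.

    requests:      n-bit vector, bit i set if requestor i wants the resource.
    last_selected: 1-hot n-bit vector marking the previously selected requestor.
    """
    mask = (1 << n) - 1
    # First addend: bit is 0 (false) where the requestor IS requesting.
    first_addend = ~requests & mask
    # Add the rotated second addend; the carry ripples through non-requestors.
    total = first_addend + rotl1(last_selected, n)
    if total > mask:
        # End-around carry: the ripple wraps from the top bit to bit 0.
        total = (total & mask) + 1
    # AND with the inverse of the first addend keeps only the selected requestor.
    return total & ~first_addend & mask
```

For example, with four requestors, requests `0b1010`, and last selection `0b0010`, the function returns `0b1000`: requestor 3 is the next requesting index after requestor 1.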

Method, apparatus and computer program product for scheduling multiple threads for a processor

Inactive | US20040015684A1 | Program initiation/switching | Digital computer details | Computer architecture | Processor scheduling

In one form of the invention, a method for scheduling multiple instruction threads for a processor in an information handling system includes communicating, to processor circuitry by an operating system, a selected schedule of instruction threads for a set of instructions. The processor circuitry switches from executing one of the threads with one of the contexts to executing another of the threads with another of the contexts, responsive to the schedule received from the operating system.

Owner:IBM CORP

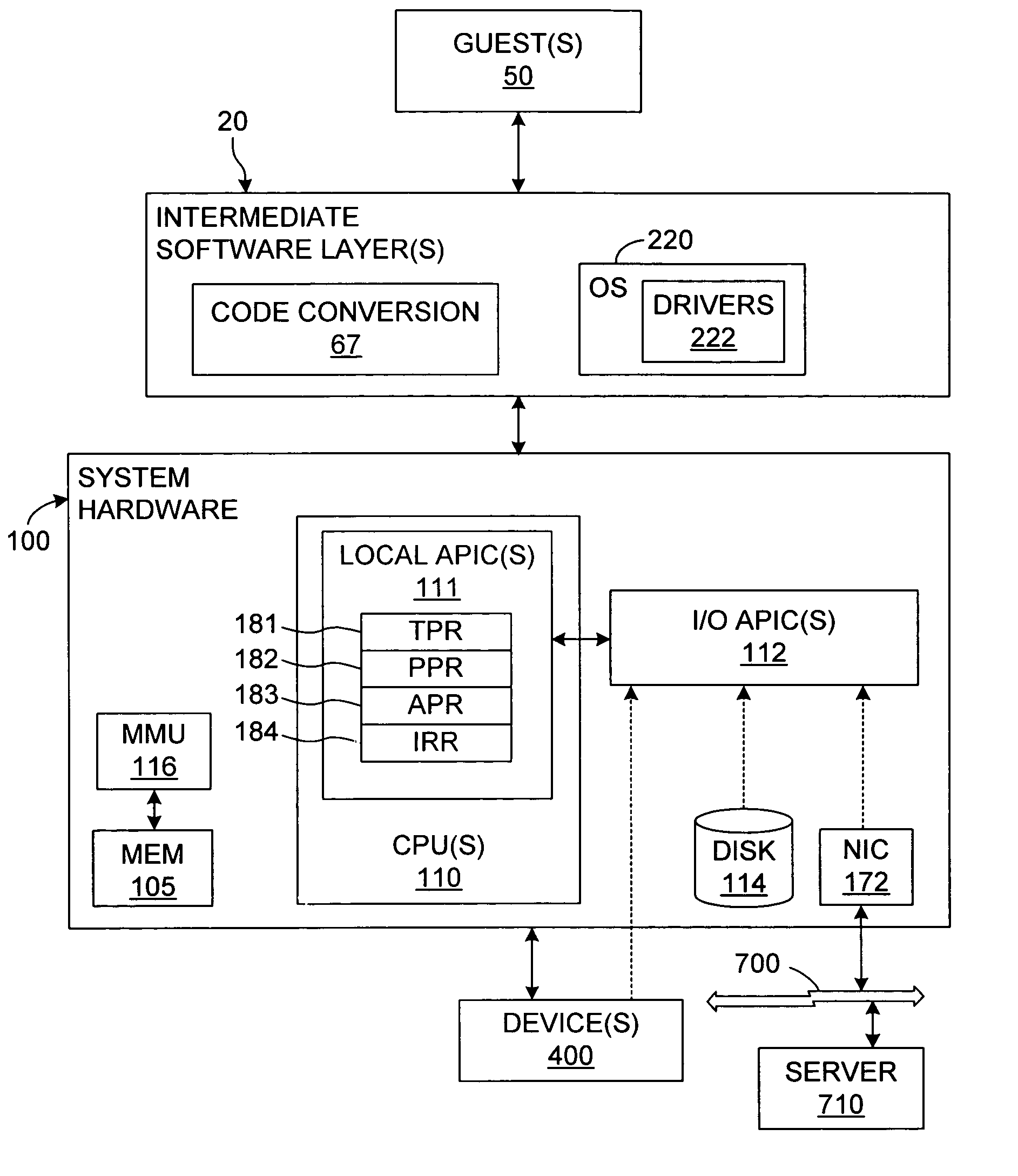

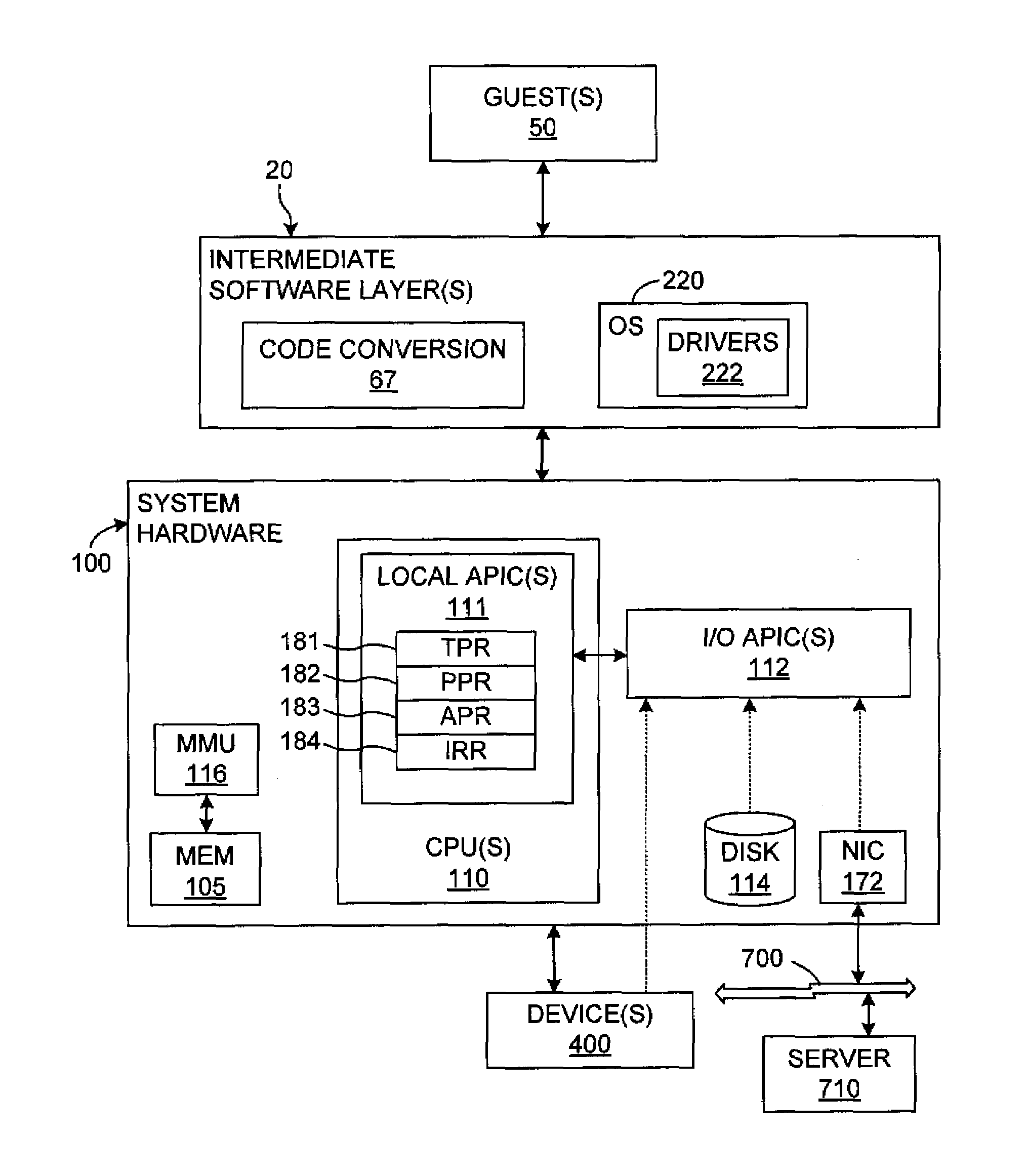

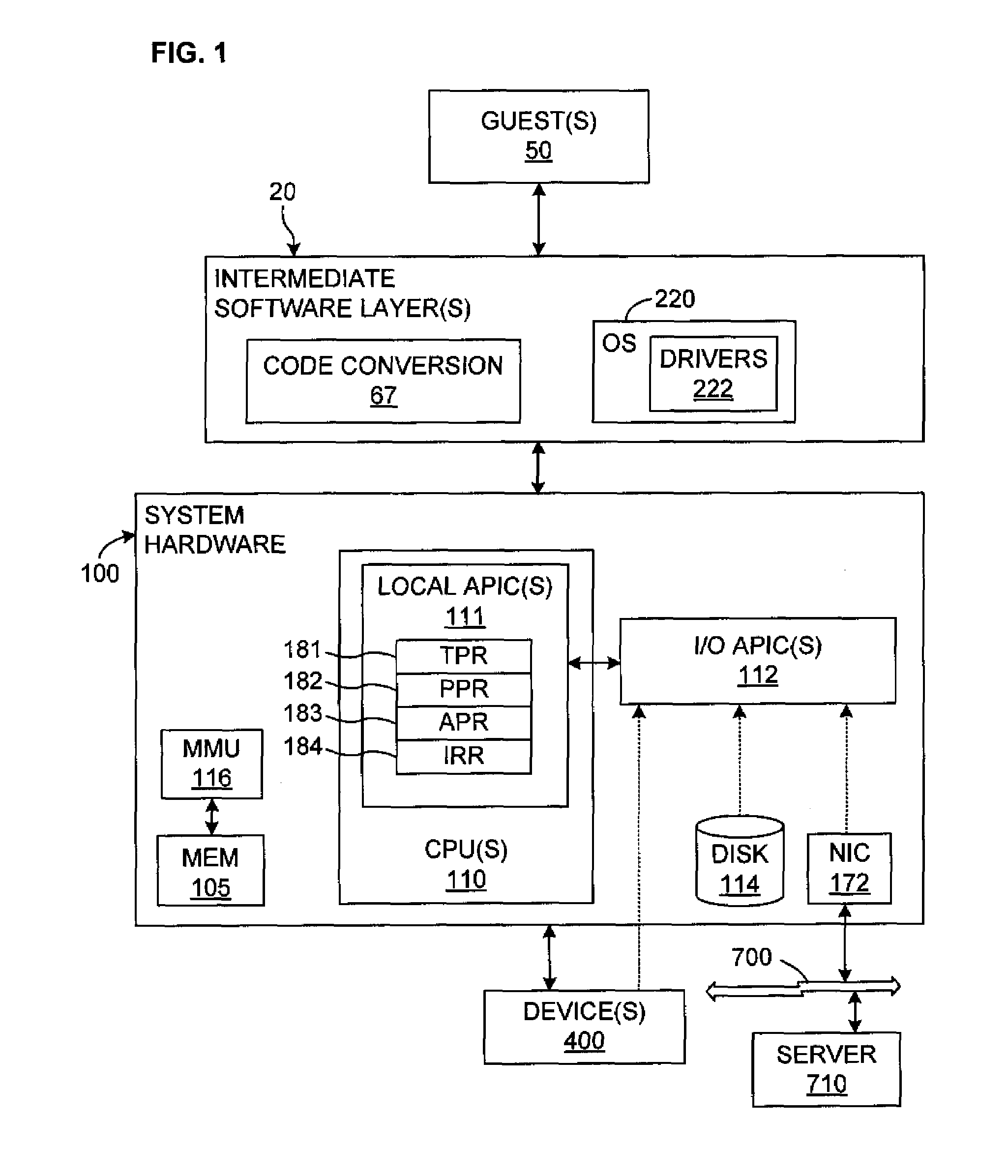

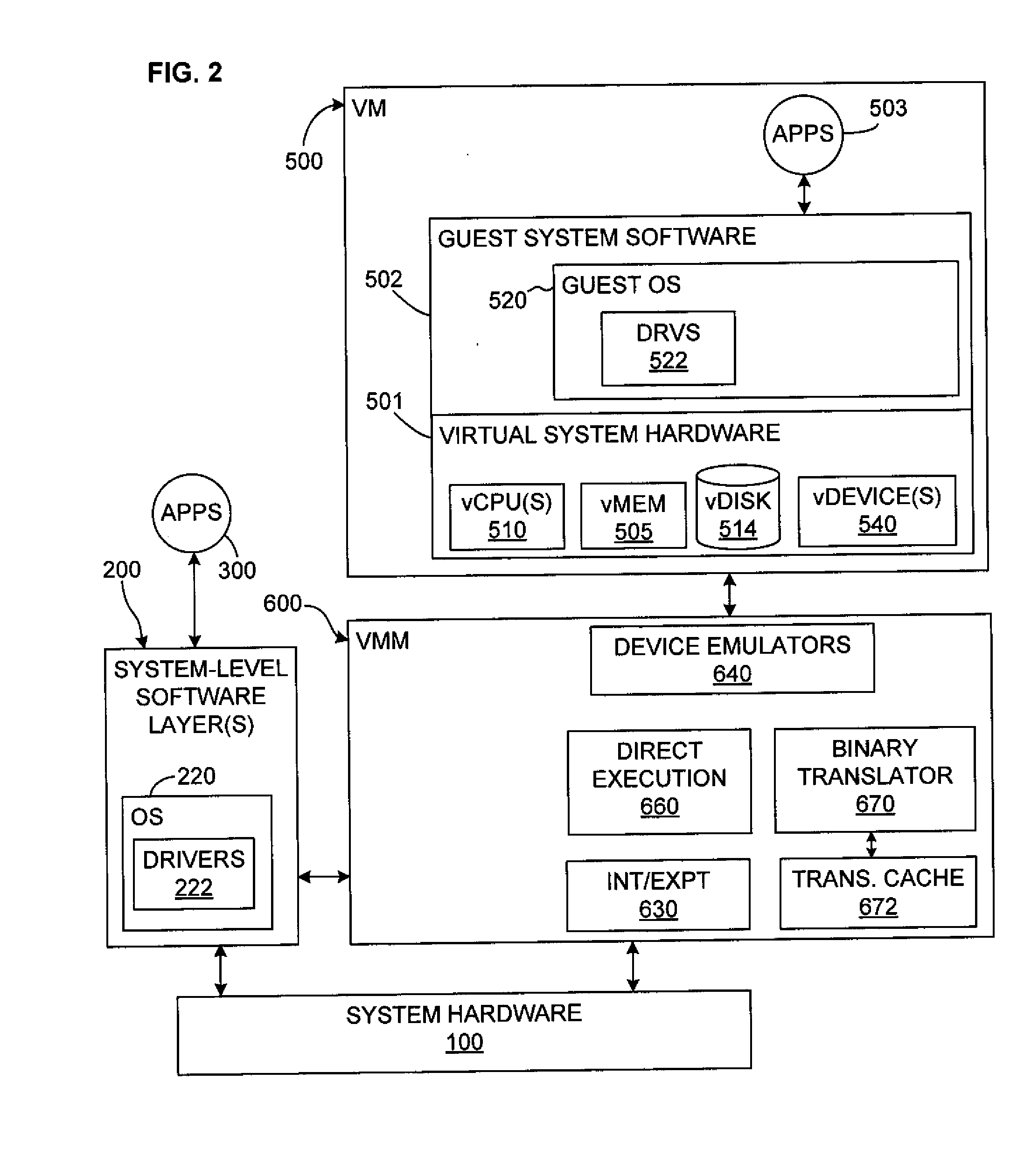

System and method for virtualizing processor and interrupt priorities

Active | US7590982B1 | Multiprogramming arrangements | Software simulation/interpretation/emulation | Virtualization | Processor scheduling

Dispatching of interrupts to a processor is conditionally suppressed, that is, only if an old priority value and a new priority value are either both less than or both greater than a maximum pending priority value. This conditional avoidance of dispatching is preferably implemented by a virtual priority module within a binary translator in a virtualized computer system and relates to interrupts directed to a virtualized processor by a virtualized local APIC.

Owner:VMWARE INC
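
The suppression condition stated in this abstract reduces to a small predicate. The sketch below is an illustration only: the function and parameter names are hypothetical, priorities are assumed to be plain integers, and the case where a priority equals the maximum pending priority is treated as "do not suppress" because the abstract mentions only strict less-than and greater-than.

```python
def can_suppress_dispatch(old_priority: int, new_priority: int,
                          max_pending: int) -> bool:
    """Return True when interrupt dispatching may be skipped.

    Dispatch is suppressed only if the old and new priority values lie on
    the same side of the maximum pending priority value.
    """
    both_below = old_priority < max_pending and new_priority < max_pending
    both_above = old_priority > max_pending and new_priority > max_pending
    return both_below or both_above
```

When the priority change crosses the maximum pending priority, for example from 3 to 7 with a maximum pending priority of 5, the predicate returns `False` and dispatching proceeds as usual.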

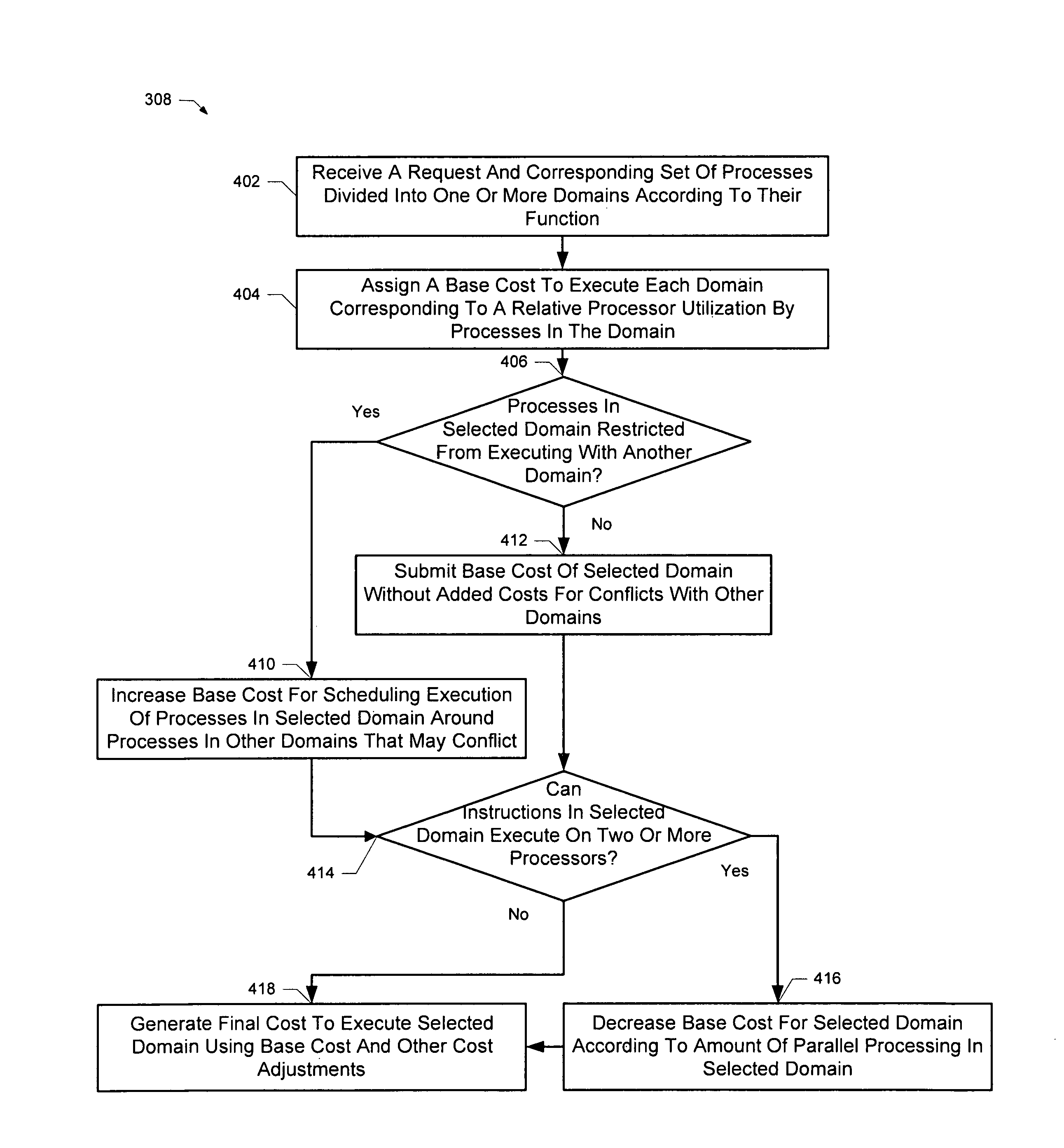

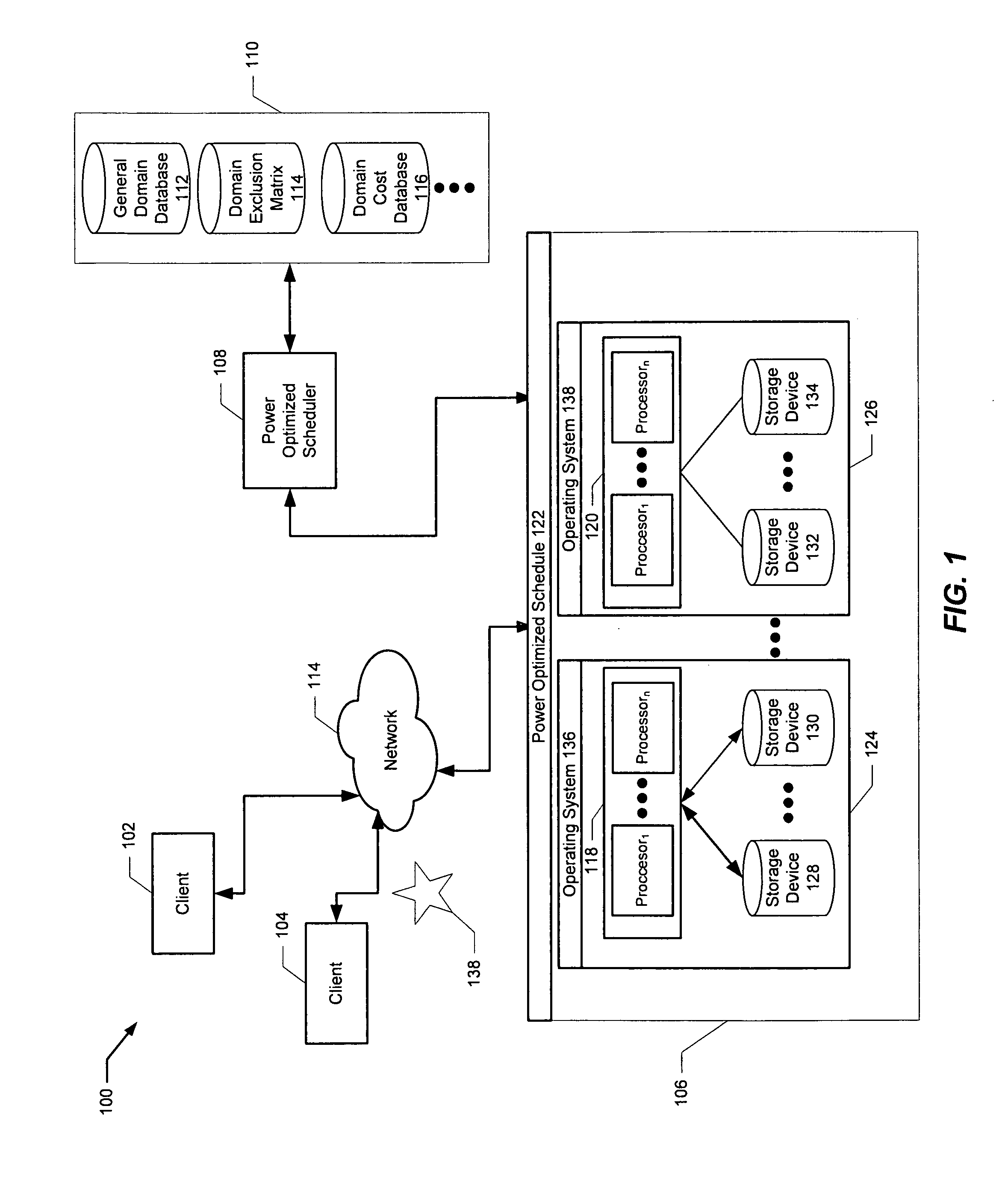

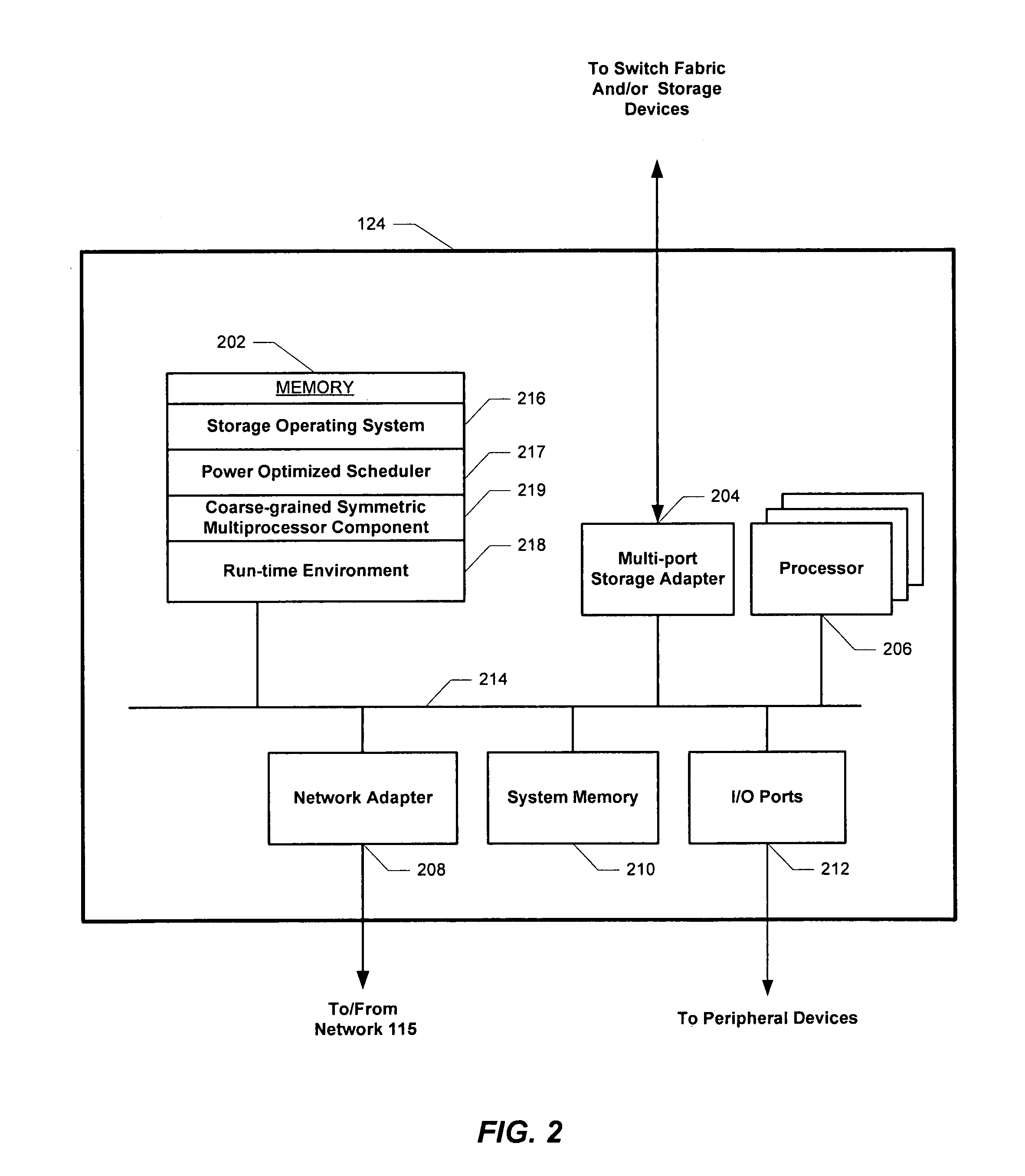

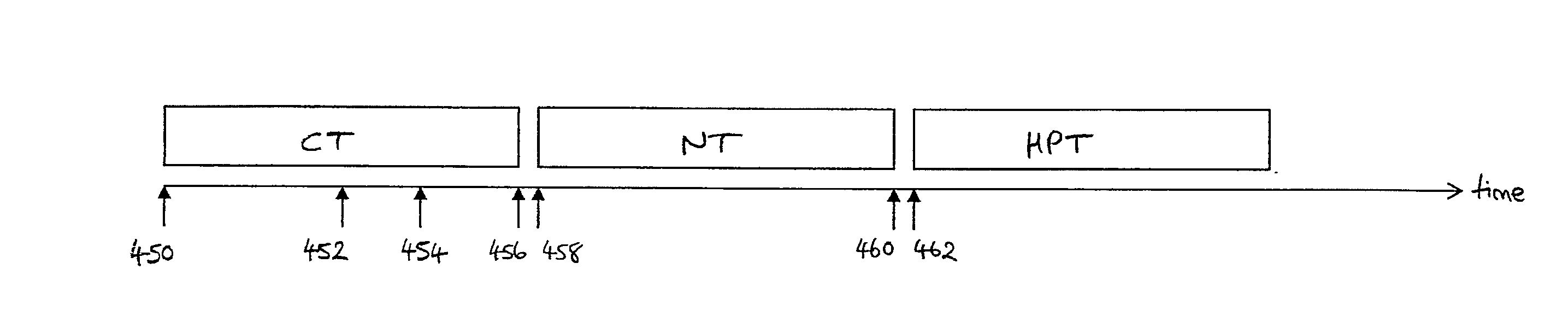

Processor scheduling method and system using domains

Active | US8578386B1 | Increase the number of | Avoid conflict | Energy efficient ICT | Digital data processing details | Processor scheduling | Workload

Aspects of the present invention concern a method and system for scheduling a request for execution on multiple processors. This scheduler divides processes from the request into a set of domains. Instructions in the same domain are capable of executing the instructions associated with the request in a serial manner on a processor without conflicts. A relative processor utilization for each domain in the set of domains is based upon a workload corresponding to an execution of the request. If processors are available, the present invention provisions a subset of the available processors to fulfill an aggregate processor utilization. The aggregate processor utilization is created from a combination of the relative processor utilization associated with each domain in the set of domains. If processors are not needed, some processors may be shut down. Shutting down processors in accordance with the schedule saves energy without sacrificing performance.

Owner:NETWORK APPLIANCE INC

Task scheduling method and apparatus

Active | US20090031319A1 | Multiprogramming arrangements | Memory systems | Processor scheduling | Computer science

Owner:NXP USA INC

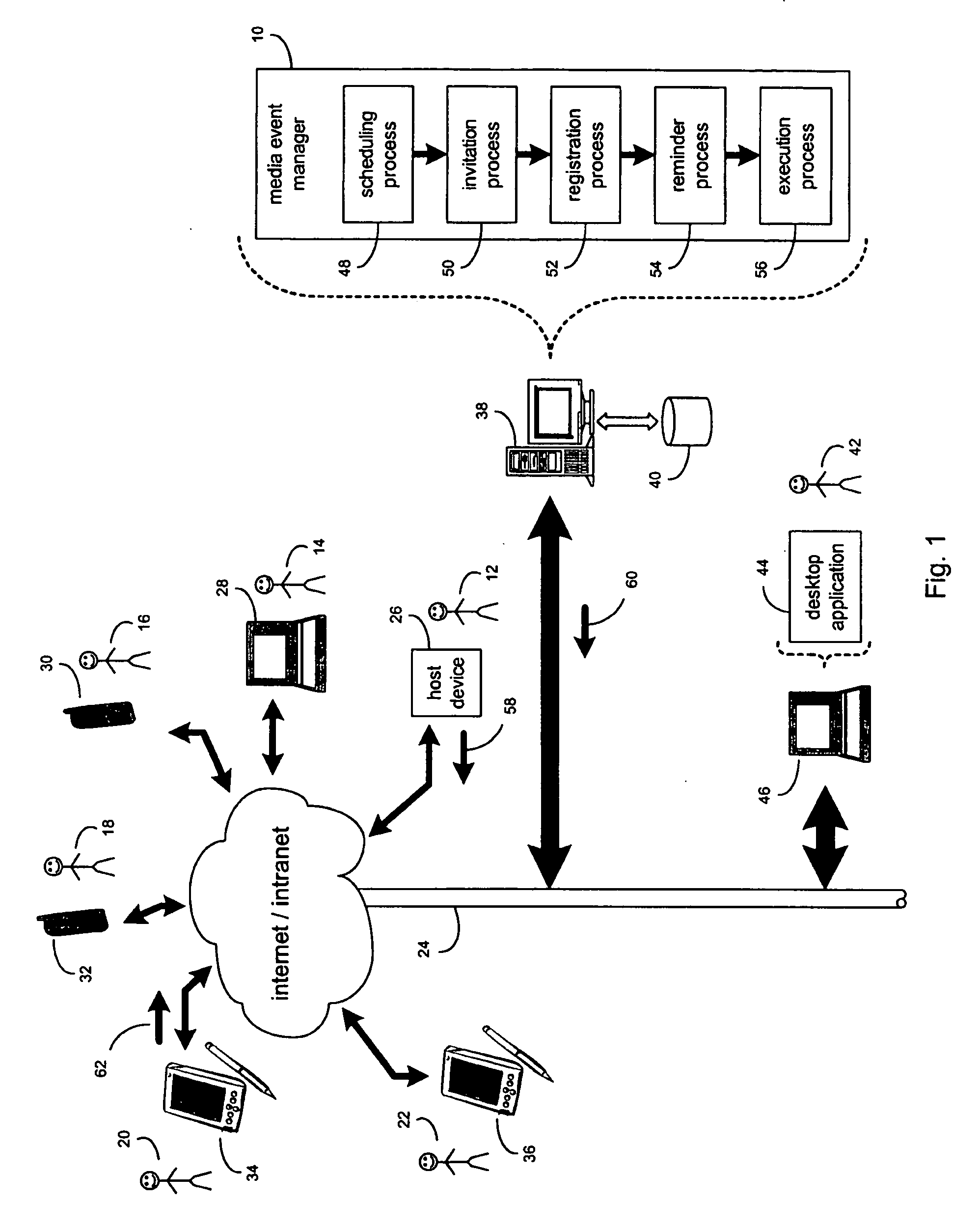

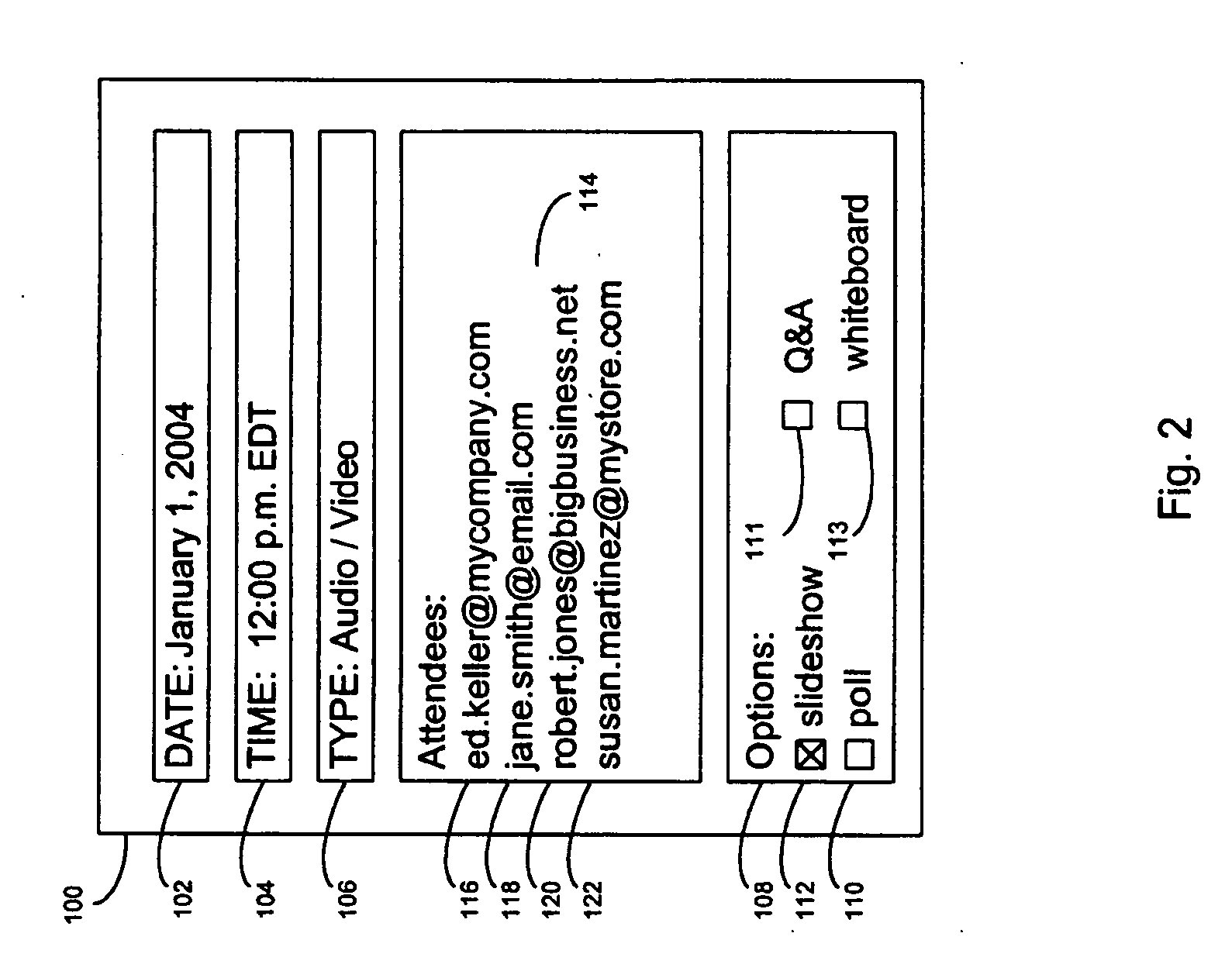

Event scheduling

Active | US20050160367A1 | Special service provision for substation | Visual indication | Processor scheduling | Event scheduling

In one aspect, the invention is a computer program product residing on a computer readable medium having a plurality of instructions stored thereon. The instructions, when executed by a processor, cause that processor to schedule a network-based media event and to invite an attendee to attend the network-based media event. Other aspects of the invention include a process and a method.

Owner:INTEL CORP

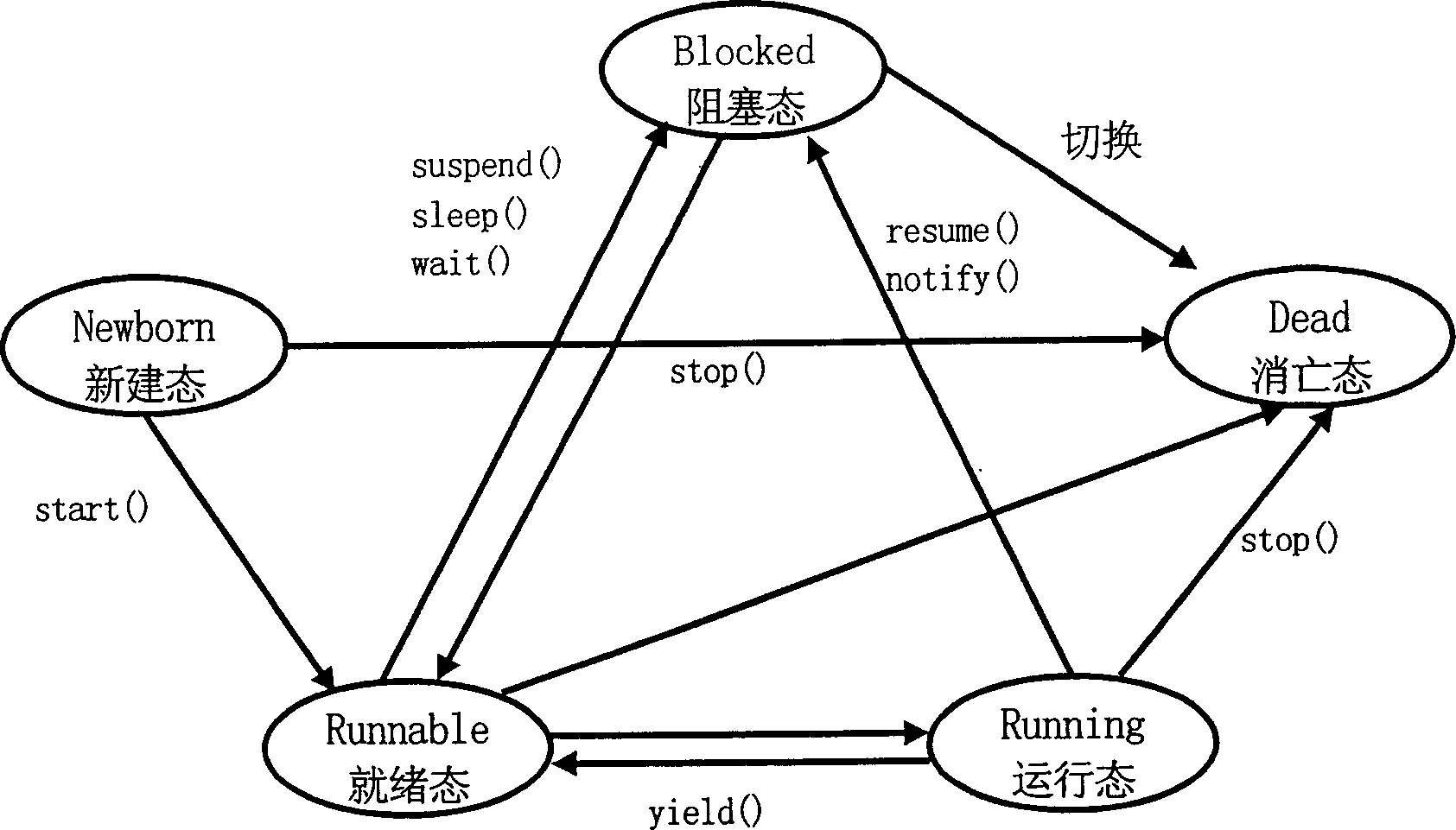

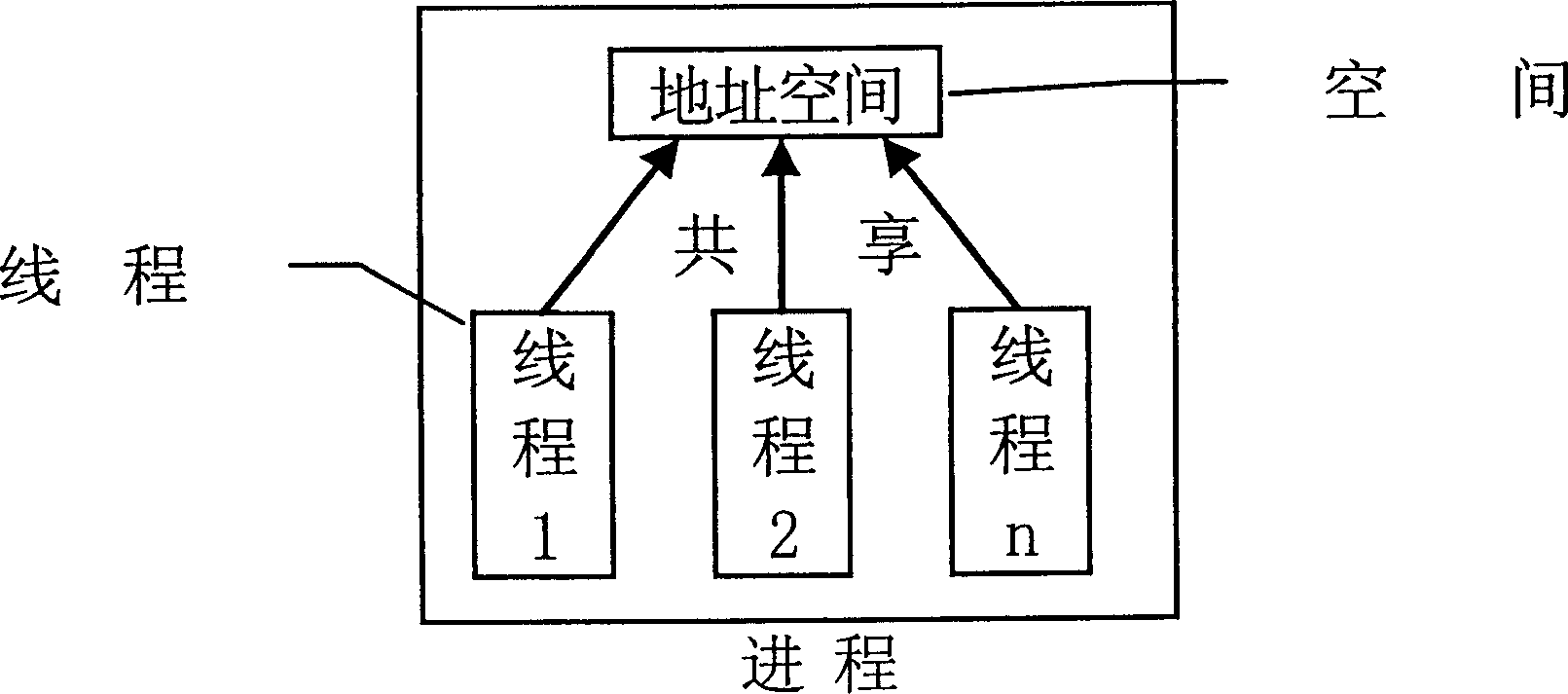

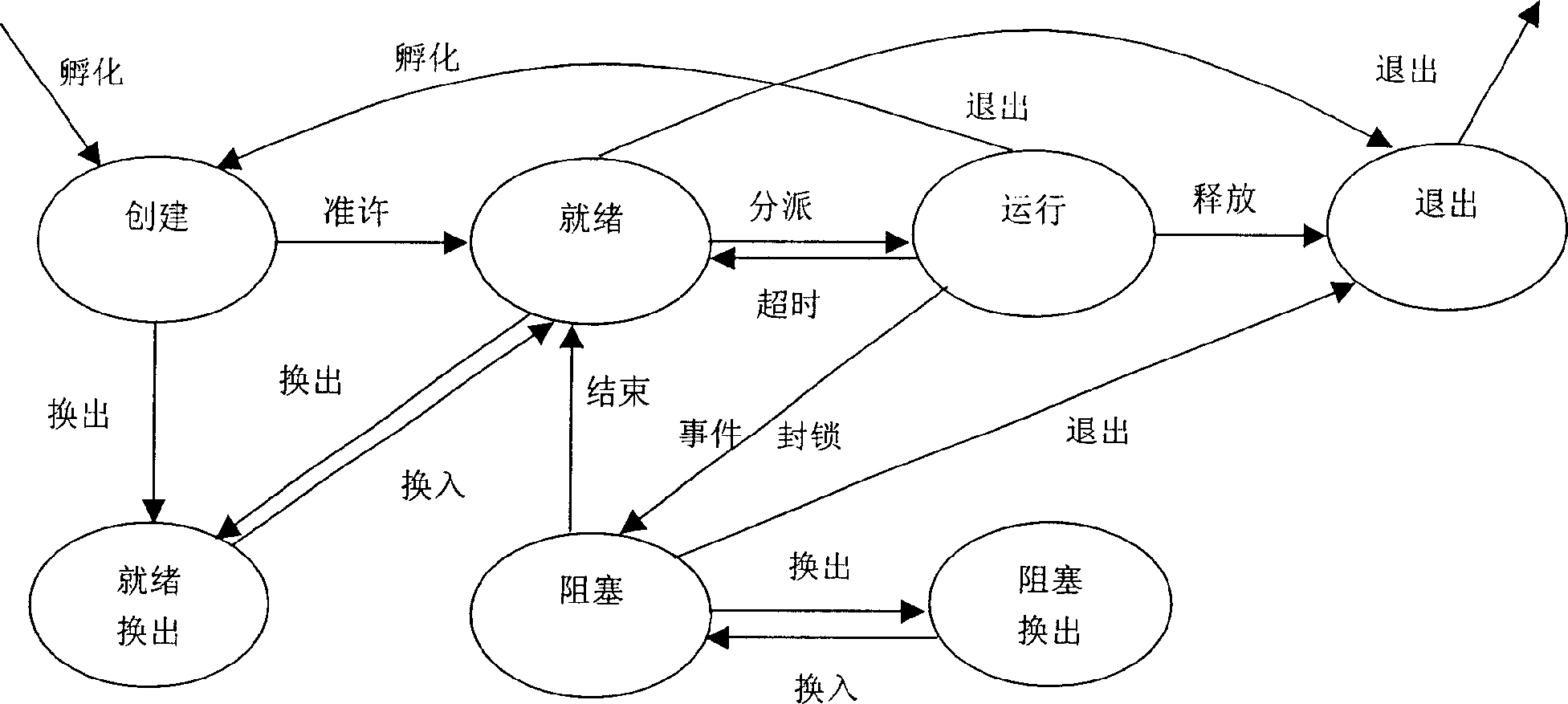

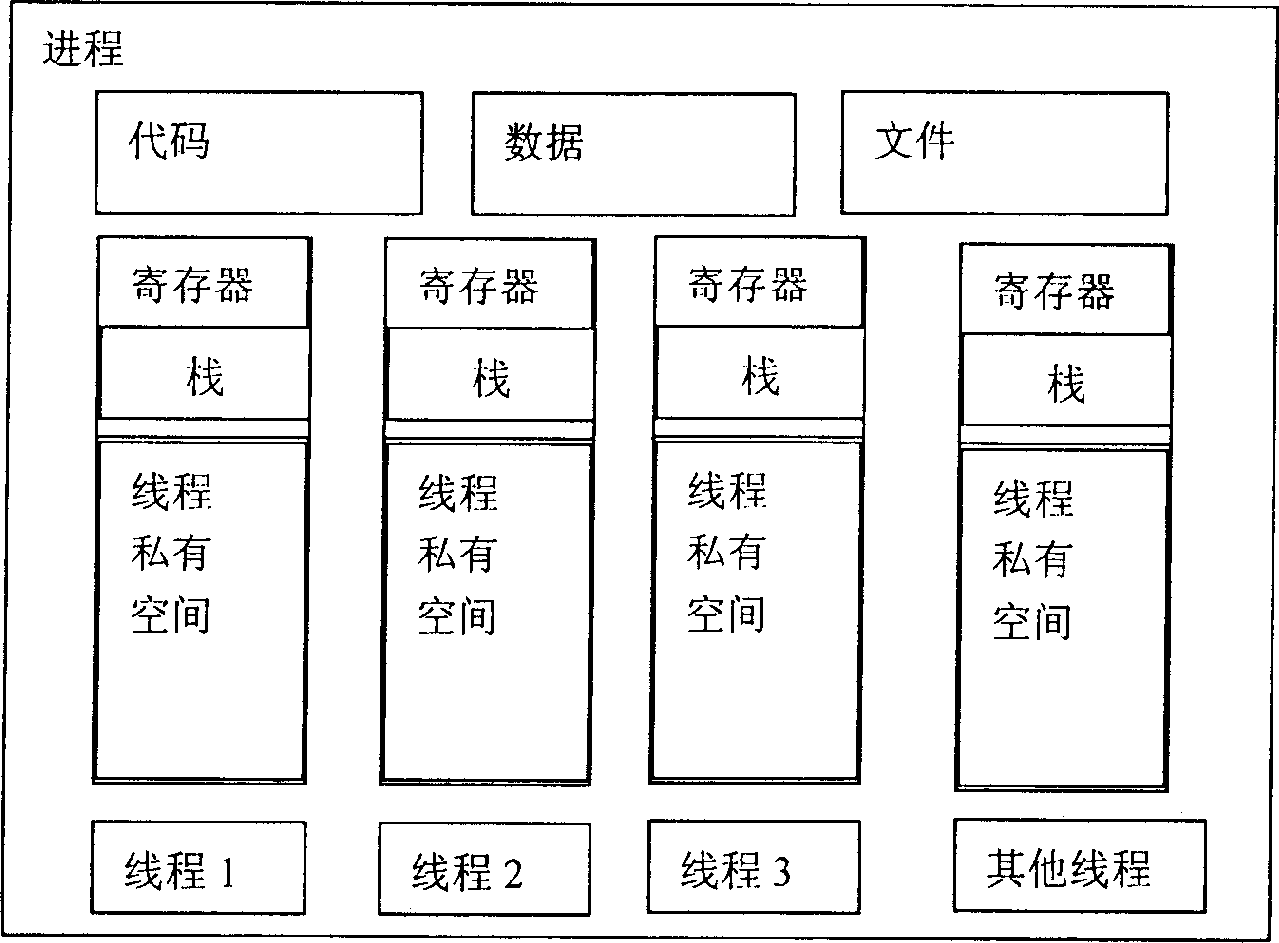

Thread implementation and thread state switching method in Java operating system

Inactive | CN1801101A | Quick switch | Reduce administrative overhead | Program initiation/switching | Operational system | Processor scheduling

The invention discloses a method for implementing threads and switching thread states in a Java operating system. A thread provides an execution path within a process and is the basic unit of processor scheduling; all threads in the same process share the address space and resources acquired by that process. A thread has five different states and can switch efficiently among them, which improves system performance. The invention is significant for embedded system environments, especially for Java operating systems on embedded systems.

Owner:ZHEJIANG UNIV

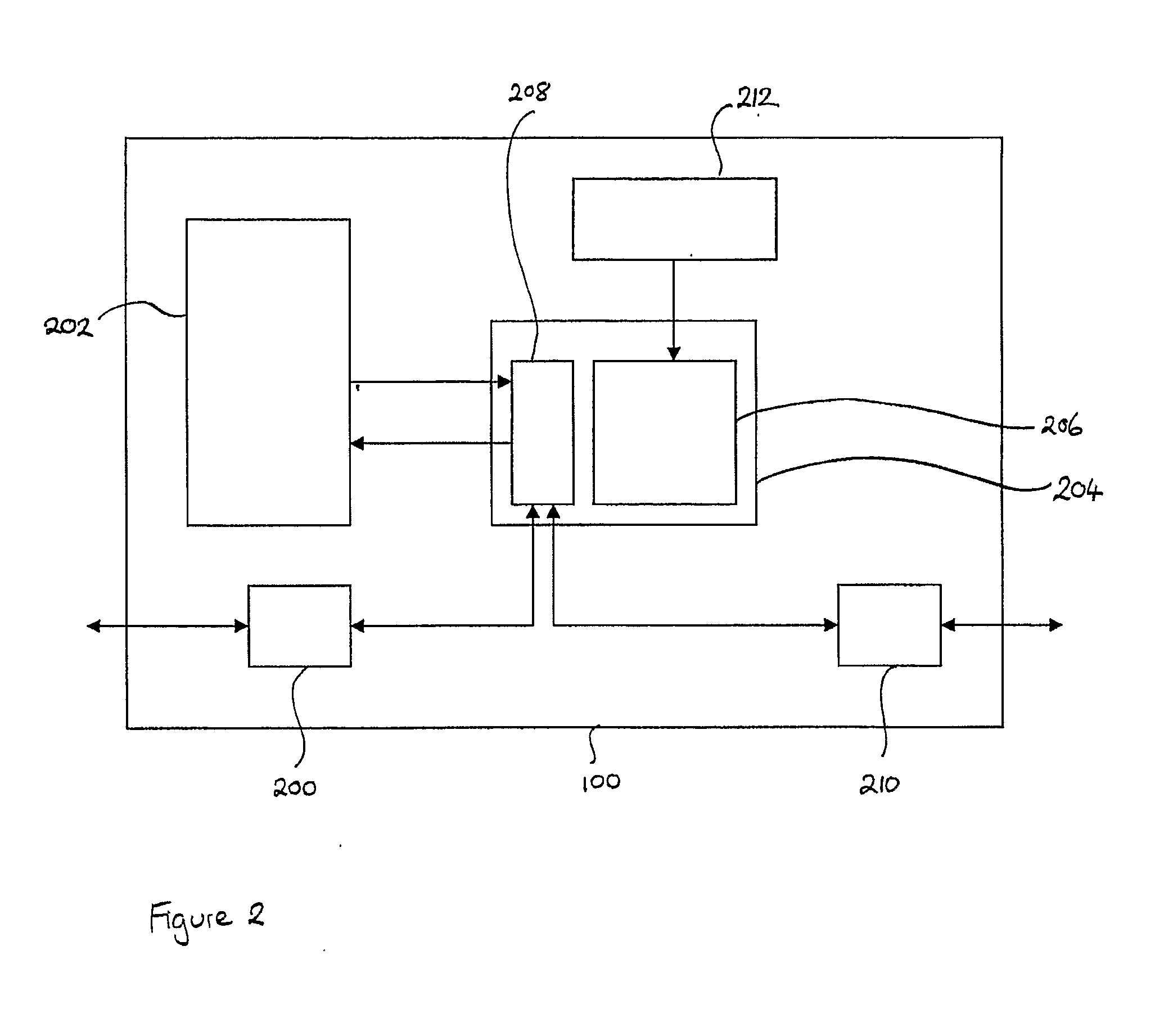

Scalable shader architecture

Active | US20080094405A1 | Processor architectures/configuration | Electric digital data processing | Computational science | Processor scheduling

A scalable shader architecture is disclosed. In accord with that architecture, a shader includes multiple shader pipelines, each of which can perform processing operations on rasterized pixel data. Shader pipelines can be functionally removed as required, thus preventing a defective shader pipeline from causing a chip rejection. The shader includes a shader distributor that processes rasterized pixel data and then selectively distributes the processed rasterized pixel data to the various shader pipelines, beneficially in a manner that balances workloads. A shader collector formats the outputs of the various shader pipelines into proper order to form shaded pixel data. A shader instruction processor (scheduler) programs the individual shader pipelines to perform their intended tasks. Each shader pipeline has a shader gatekeeper that interacts with the shader distributor and with the shader instruction processor such that pixel data that passes through the shader pipelines is controlled and processed as required.

Owner:NVIDIA CORP

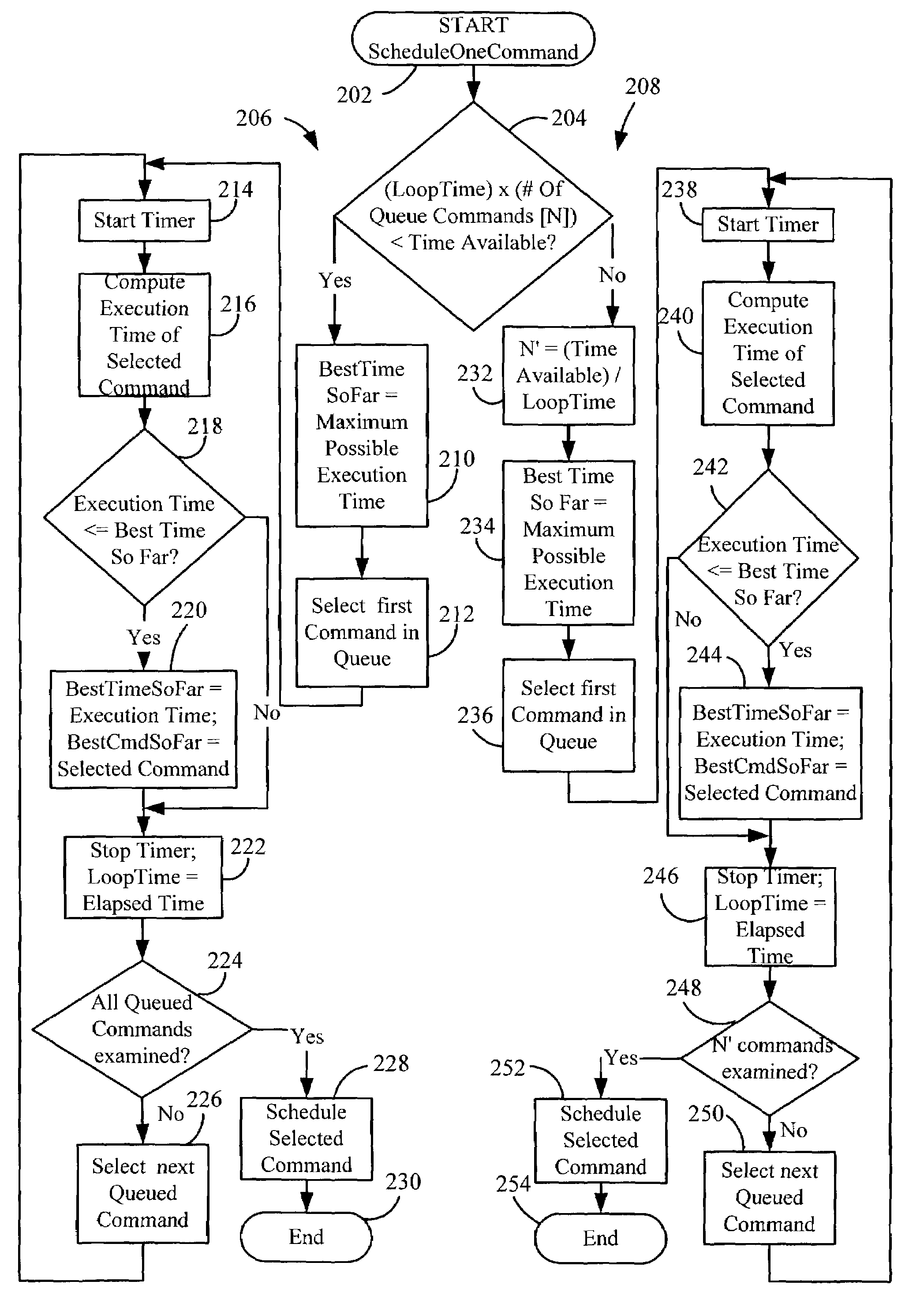

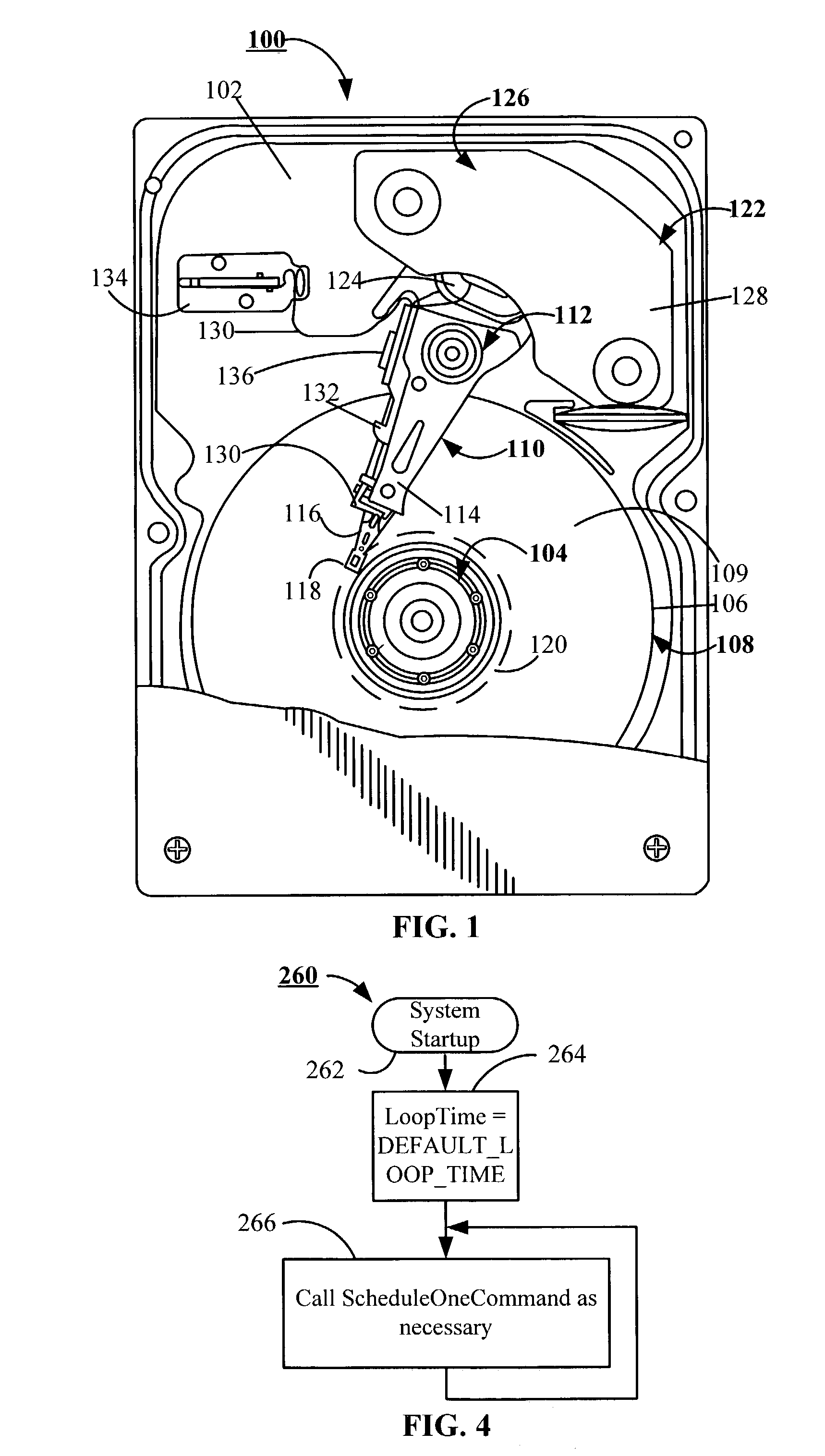

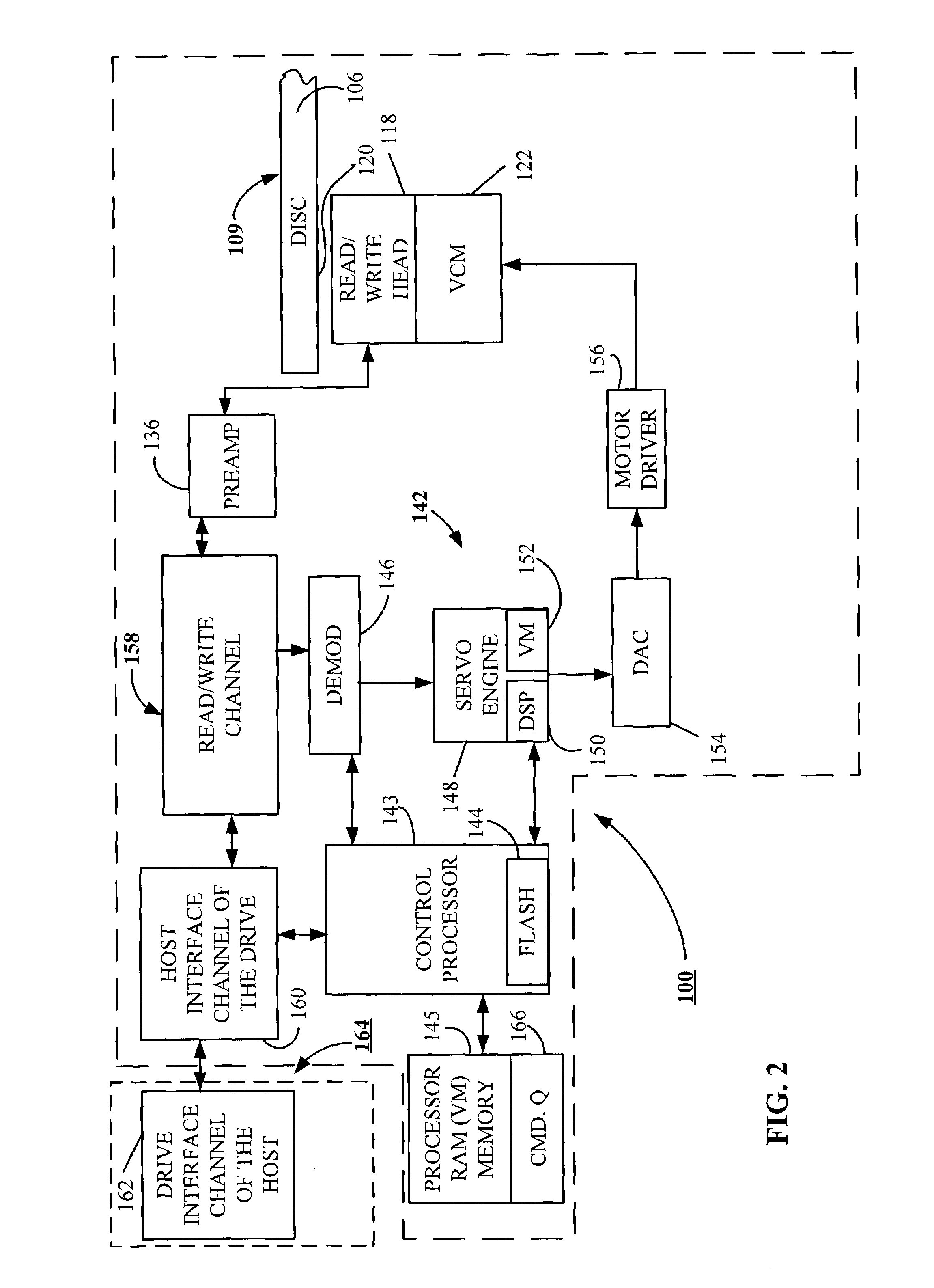

Execution time dependent command schedule optimization

Inactive | US7003644B2 | Improve data throughput | Input/output to record carriers | Memory systems | Processor scheduling | Parallel computing

A disc drive with a control processor programmed with an execution time dependent command schedule optimization method to effect data throughput with a host device. The disc drive includes a head disc assembly executing commands scheduled by the control processor. The control processor selects and schedules a next optimum command from among commands analyzed by the control processor during a time the head disc assembly is executing a current command. The steps utilized by the control processor to select and schedule the next optimum command include executing a first command with the head disc assembly, determining a computation time for a second command, storing the computation time as a computation time estimate, and using the stored computation time estimate to determine the number of commands in a command queue for analysis to provide a level of command schedule optimization commensurate with the available time.

Owner:SEAGATE TECH LLC

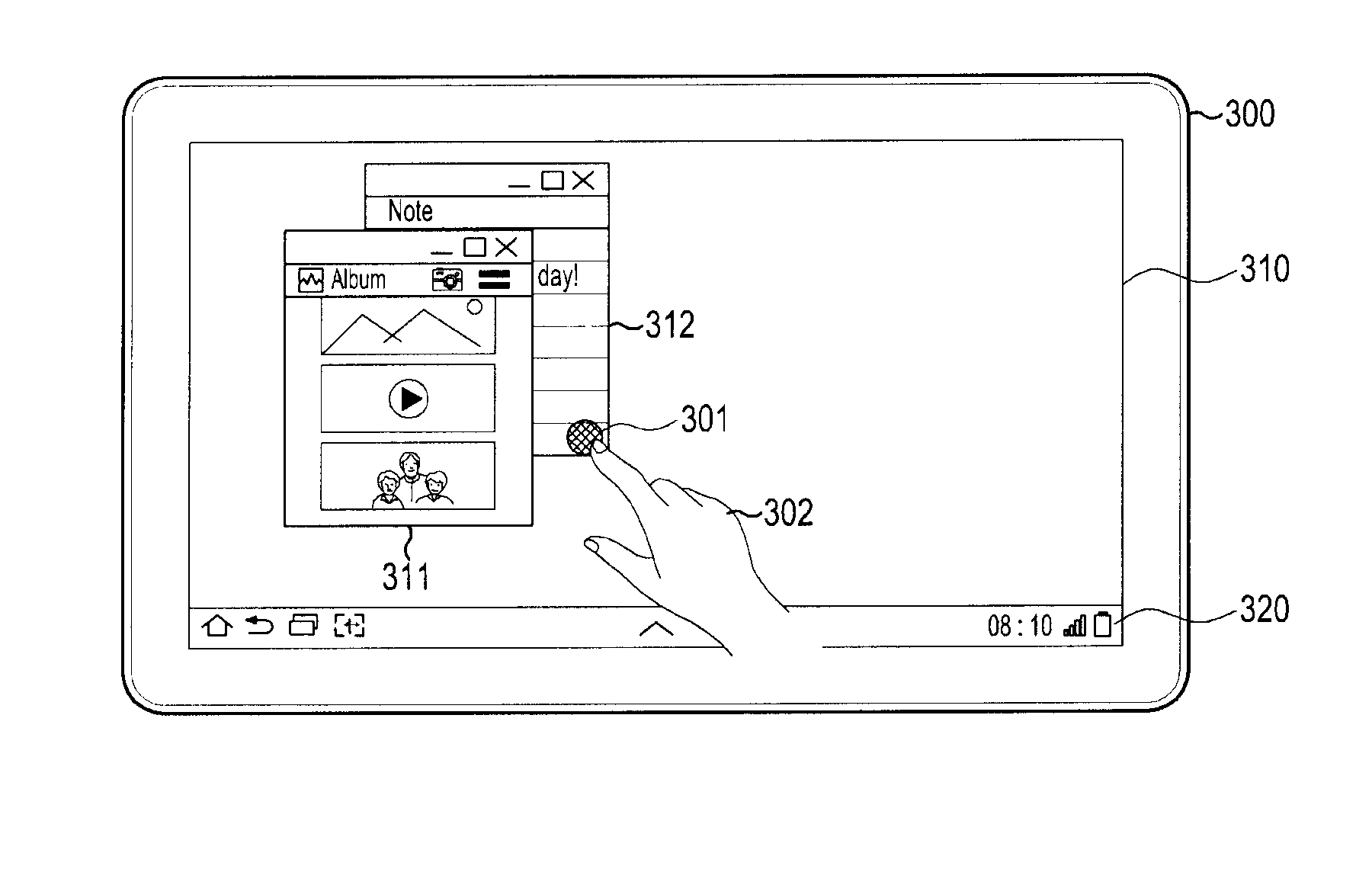

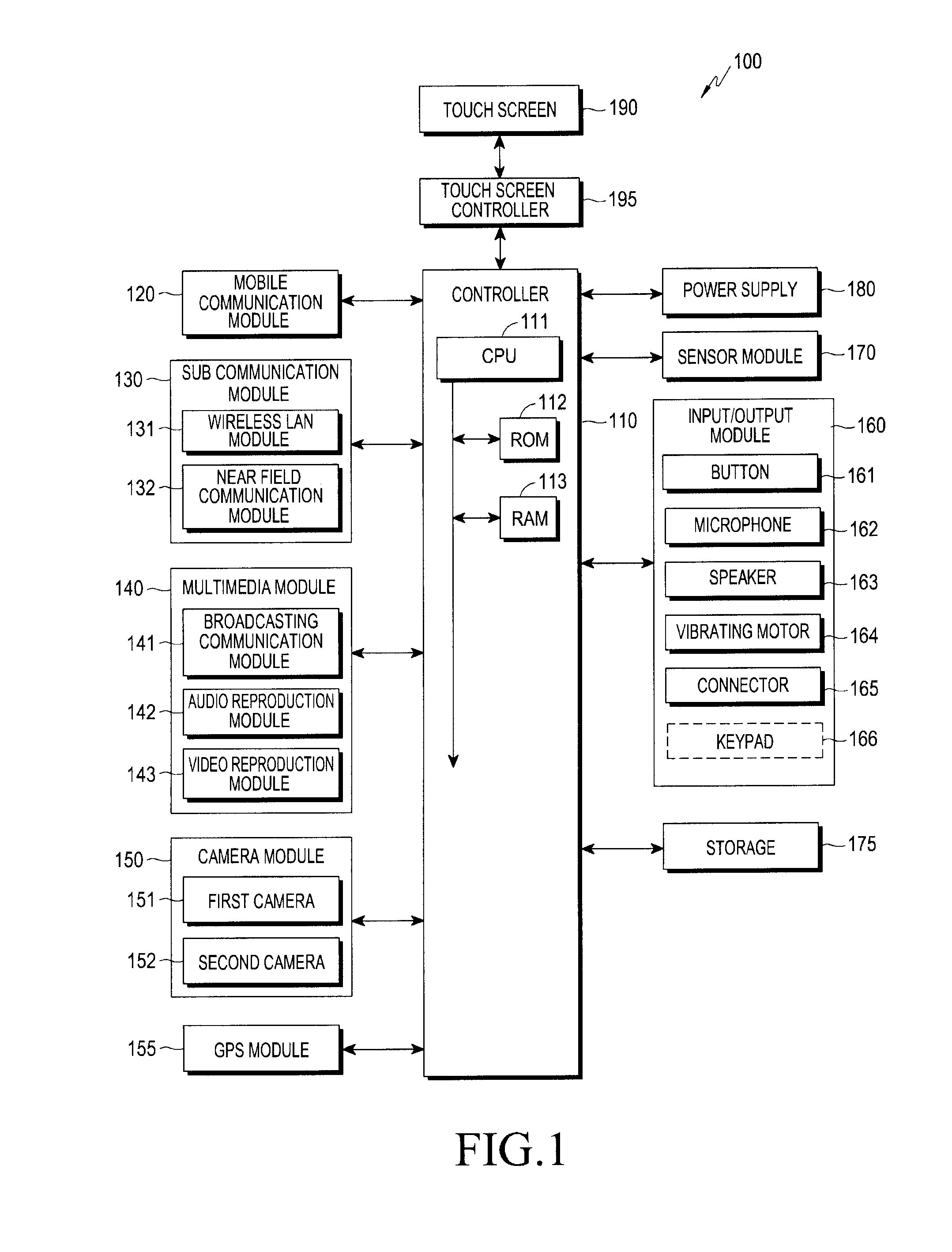

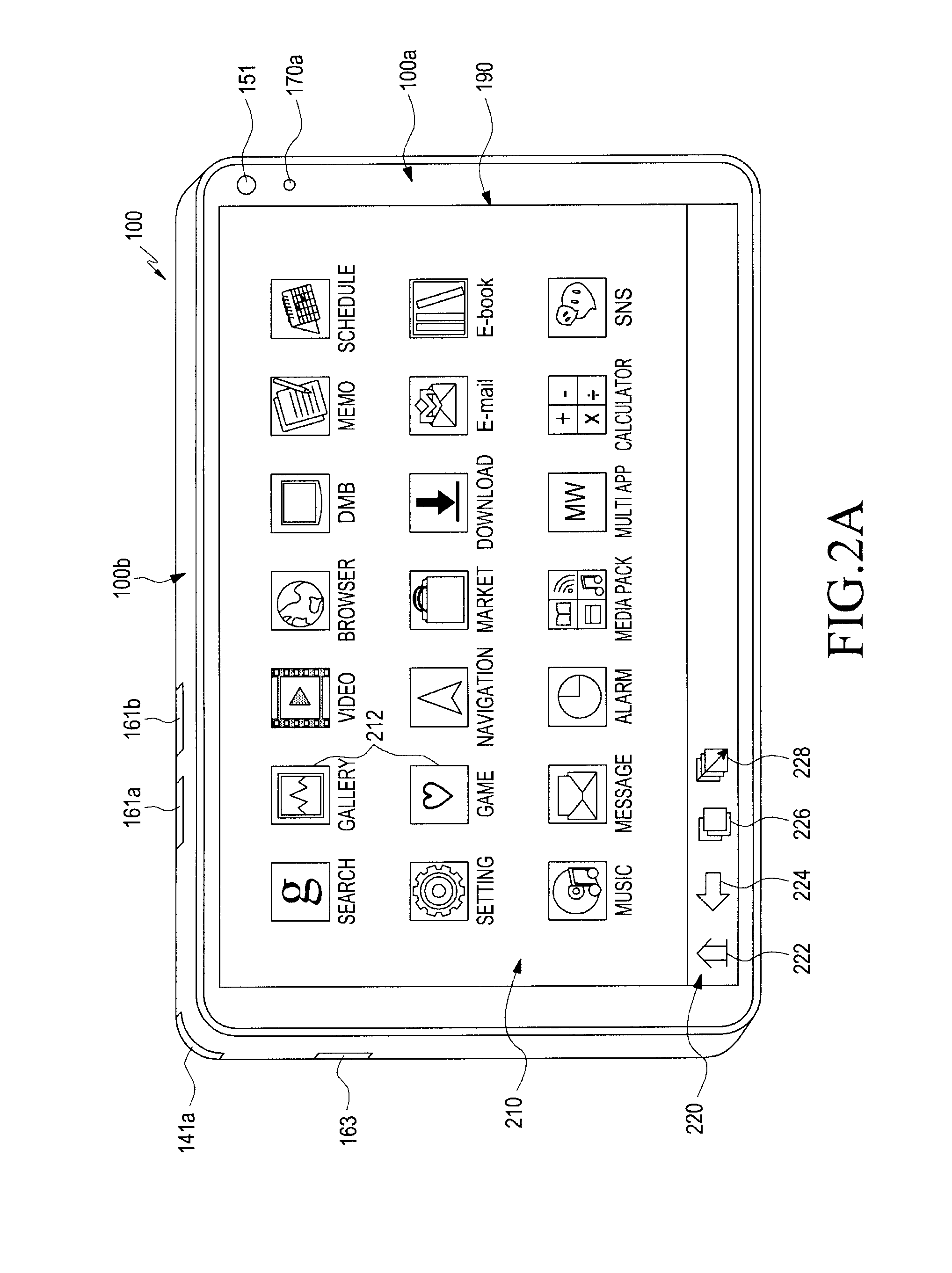

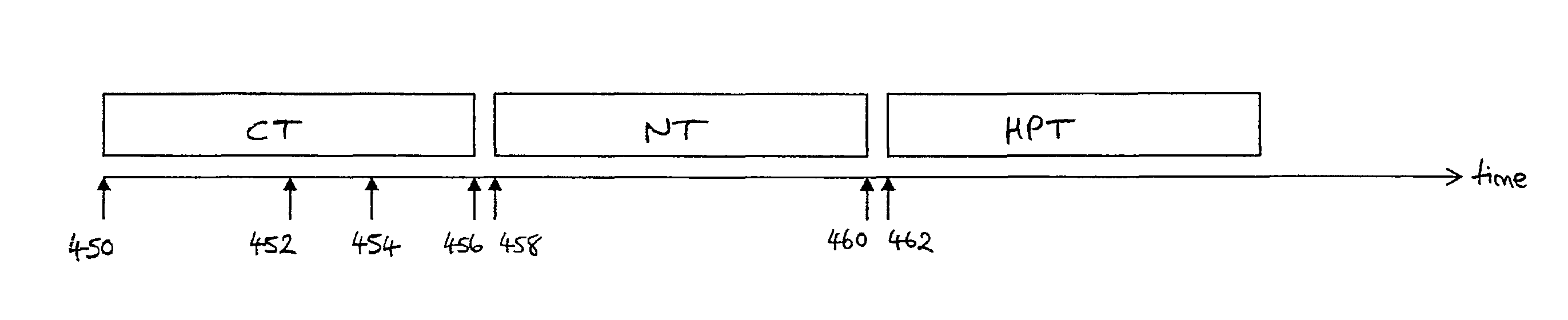

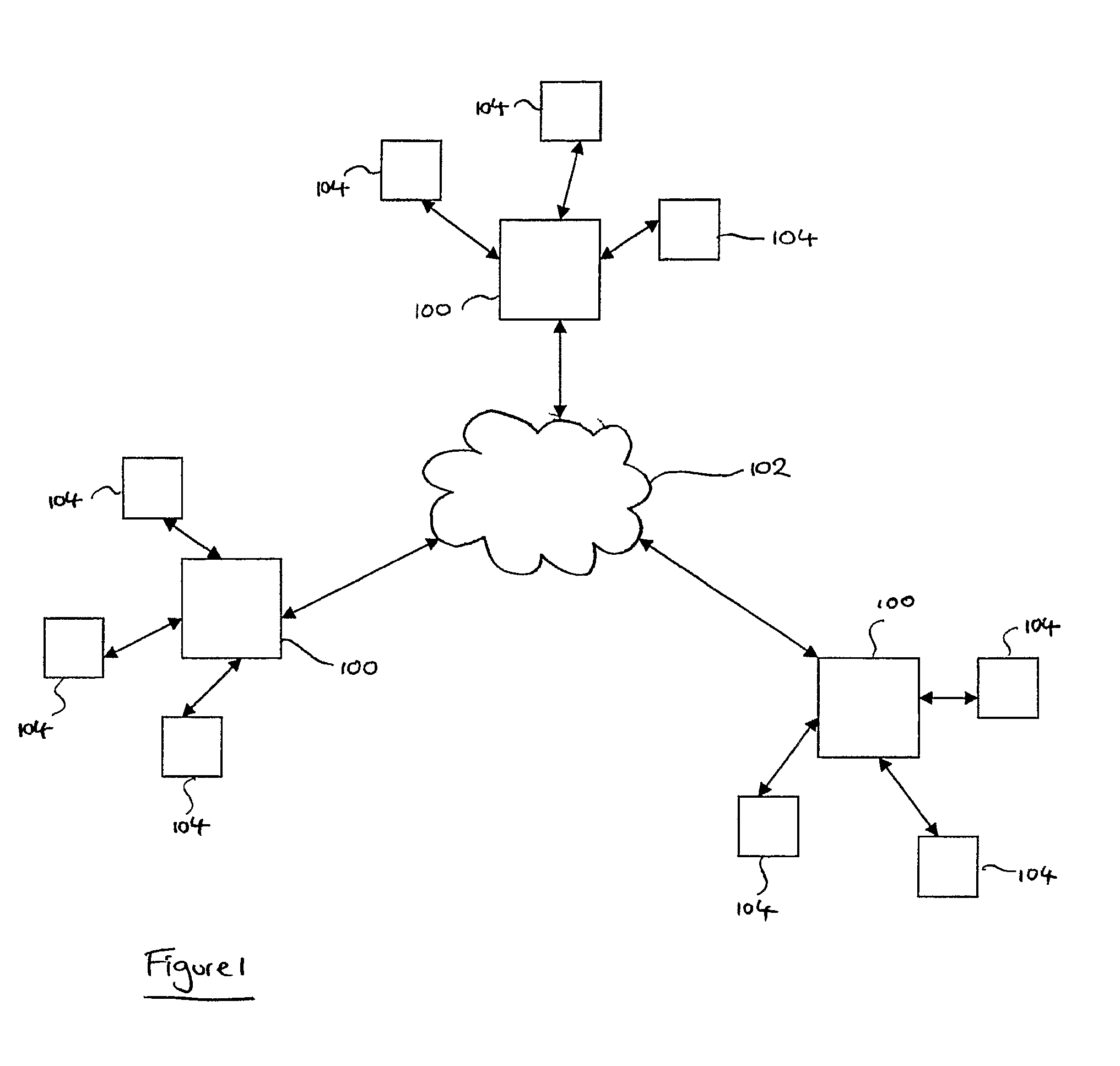

Display device for executing plurality of applications and method of controlling the same

In one aspect, a method is provided for controlling a display device, comprising: displaying, on a touchscreen display, a first window executing a first application and a second window executing a second application; receiving, at the touchscreen display, a first command input to the first window and a second command input to the second window; determining whether the first command and the second command are received simultaneously; dispatching, by a processor, the first command; and dispatching, by the processor, the second command.

Owner:SAMSUNG ELECTRONICS CO LTD

Task scheduling method and apparatus

Owner:NXP USA INC

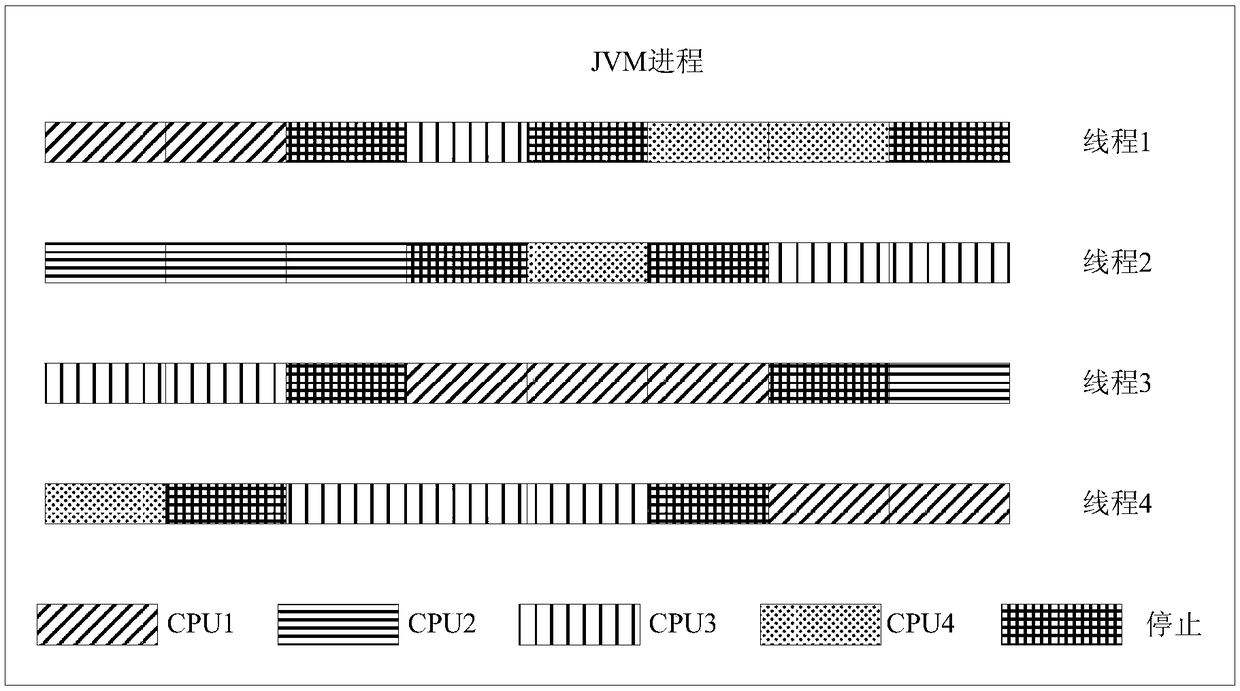

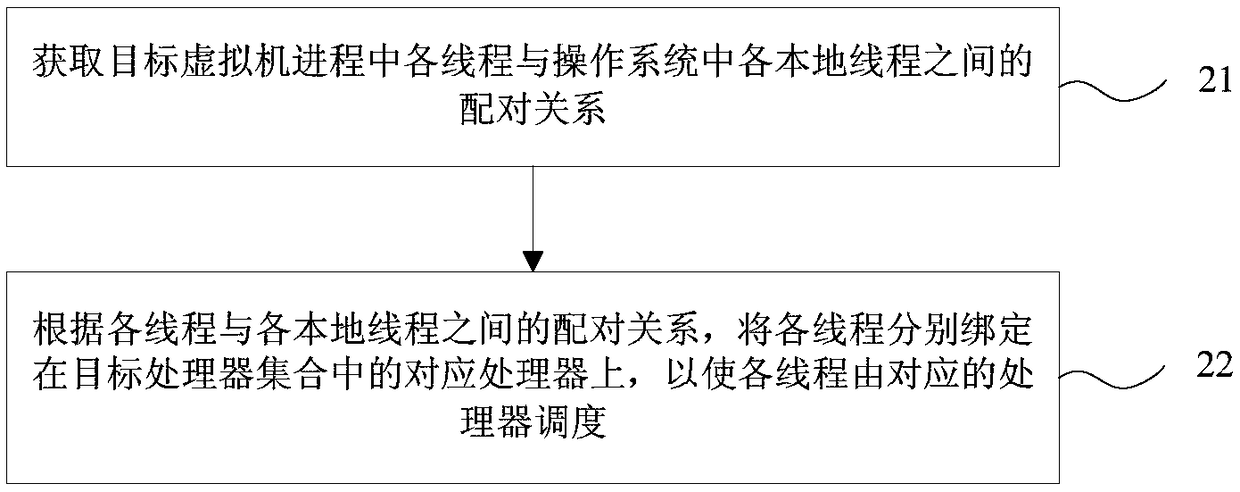

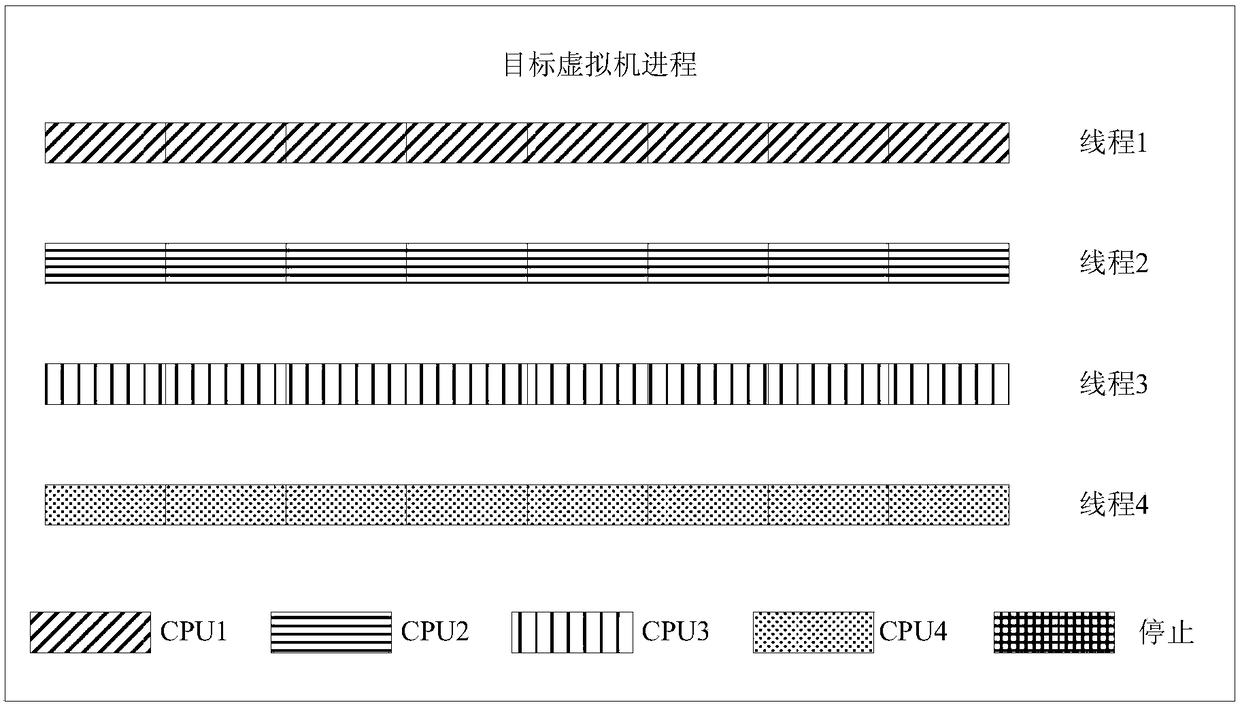

Thread scheduling method and device, electronic equipment and storage medium

Inactive | CN108804211A | Improve real-time performance | Guaranteed real-time | Program initiation/switching | Resource allocation | Operational system | Processor scheduling

Embodiments of the invention provide a thread scheduling method and device, electronic equipment and a storage medium. The method comprises the steps of acquiring a pairing relation between each thread in a target virtual machine process and each local thread in an operating system; and, according to the pairing relation, binding each thread to a corresponding processor in a target processor set, so that each thread is dispatched by the corresponding processor. According to the technical scheme, contention for the time slices of the same processor when multiple threads execute is avoided; the blocking of multi-thread task queues caused by too many service threads on the same processor and overly long service times of real-time threads is resolved; the execution delay of other threads is shortened; and the real-time performance of thread execution is improved.

Owner:HUAWEI CLOUD COMPUTING TECH CO LTD

Thread implementation and thread state transition method for embedded SRAM operating system

Inactive | CN1825286A | Quick switch | Work around limited features | Program initiation/switching | Process systems | Operational system

The invention provides a method for implementing threads and switching thread states in an embedded SRAM operating system. Because the operating system resides in SRAM and resources are limited, the thread is used as the basis of operating system scheduling. A process is composed of threads, each of which is an execution path within the process, and each process may contain multiple parallel execution paths, that is, multithreading. The thread is the basic unit of processor scheduling, and all threads in the same process share the main memory space and resources obtained by the process. A thread has seven different states and can switch efficiently between them, which makes full use of the characteristics of SRAM and is of particular significance for how an embedded SRAM operating system handles system resources.

Owner:ZHEJIANG UNIV

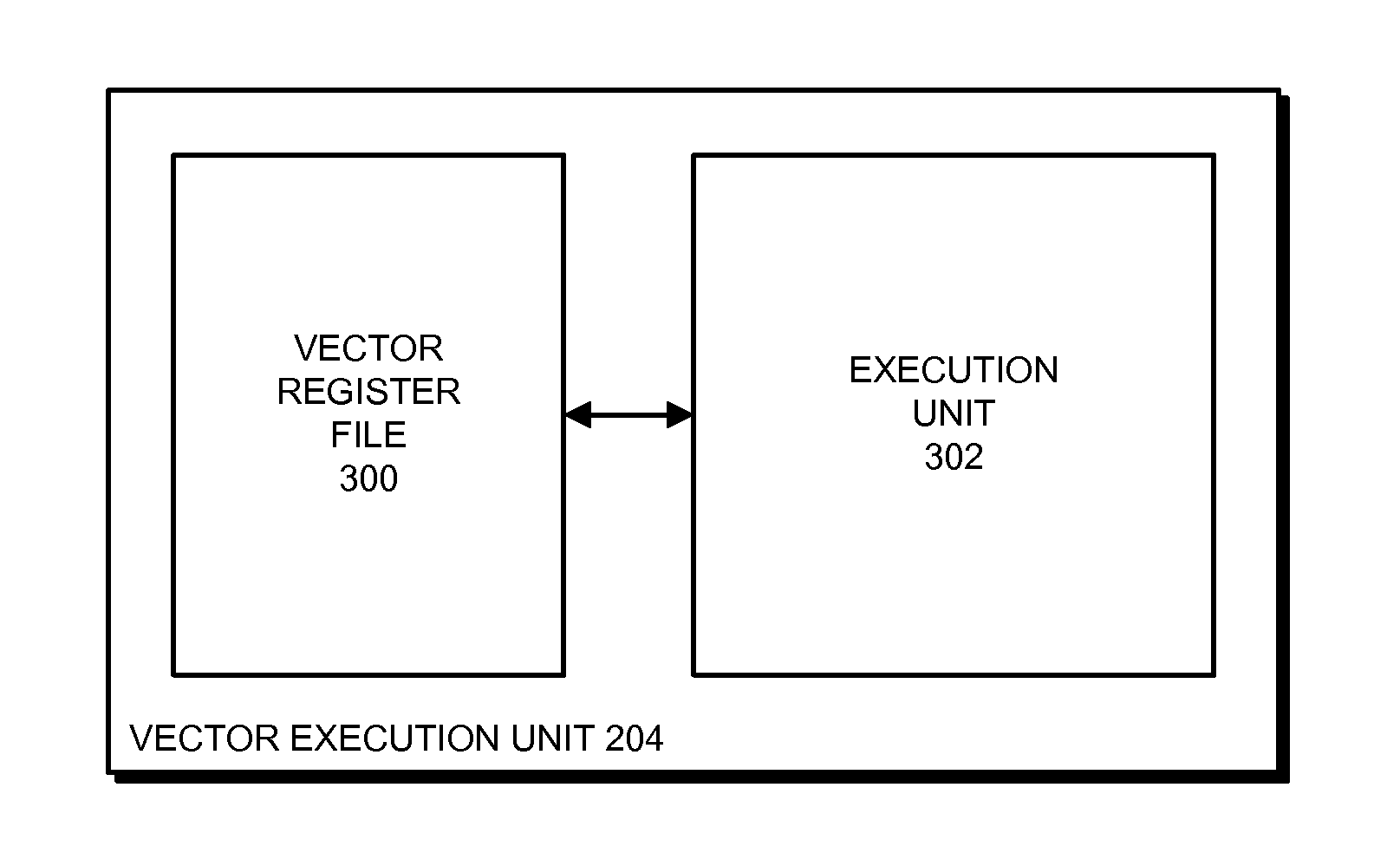

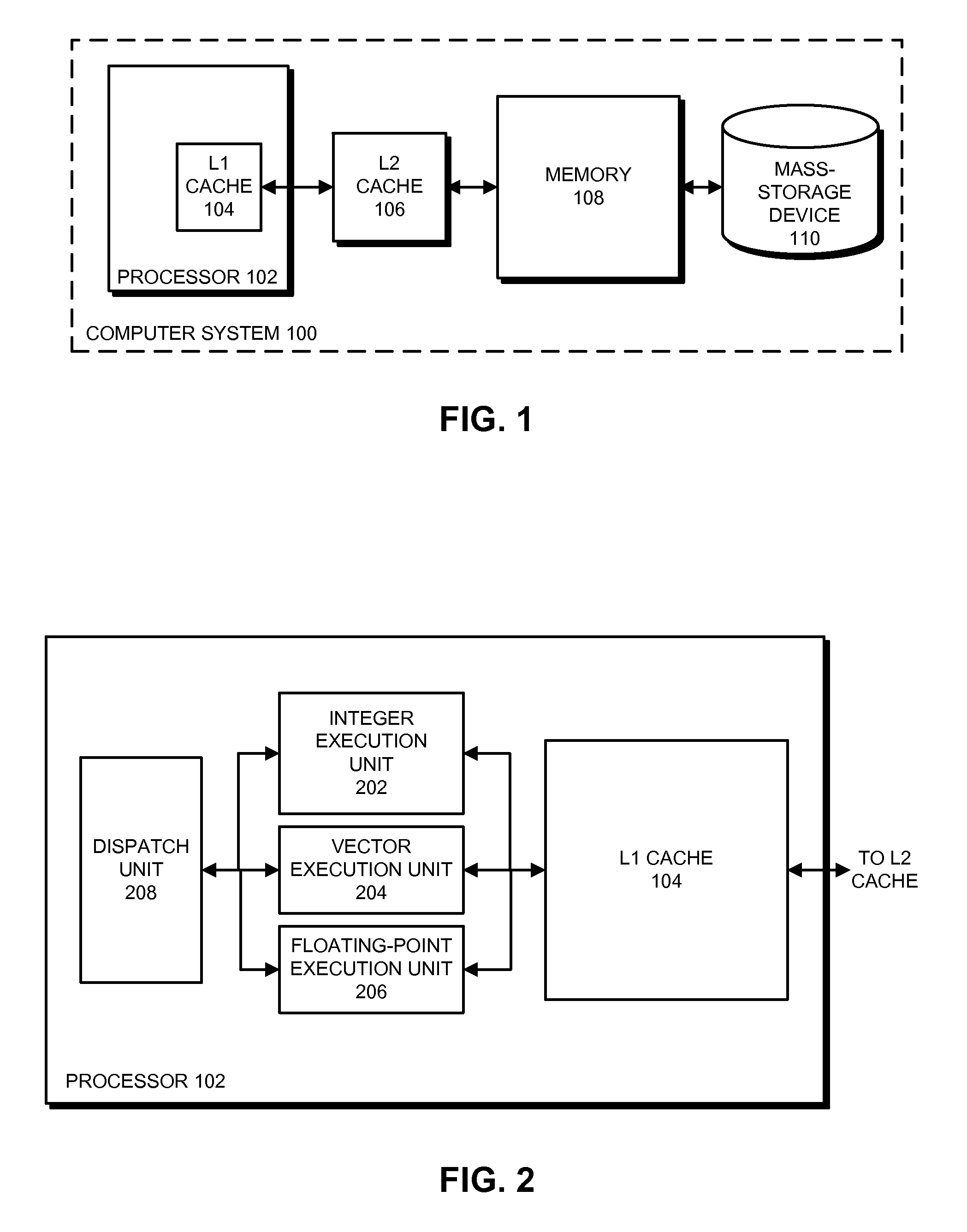

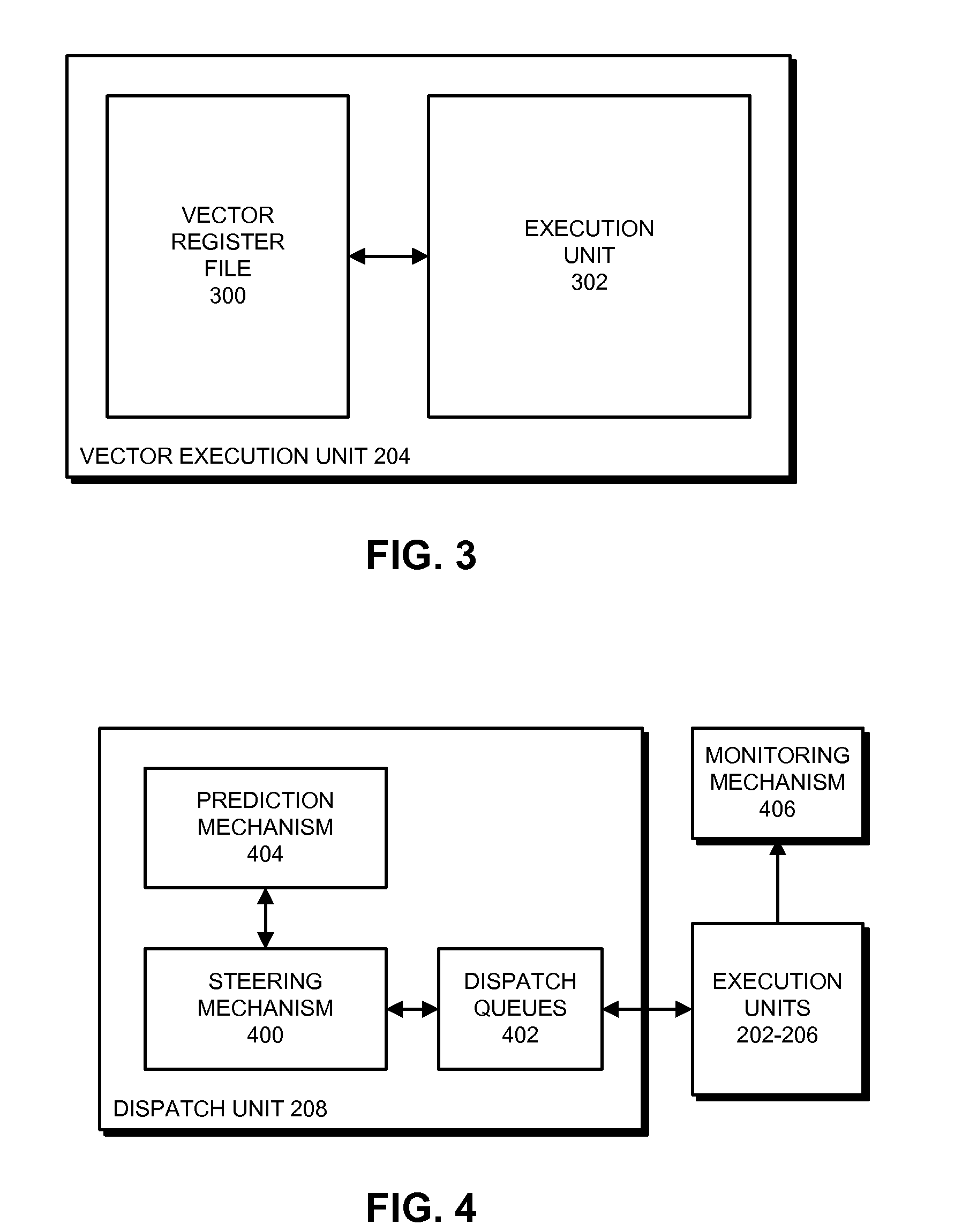

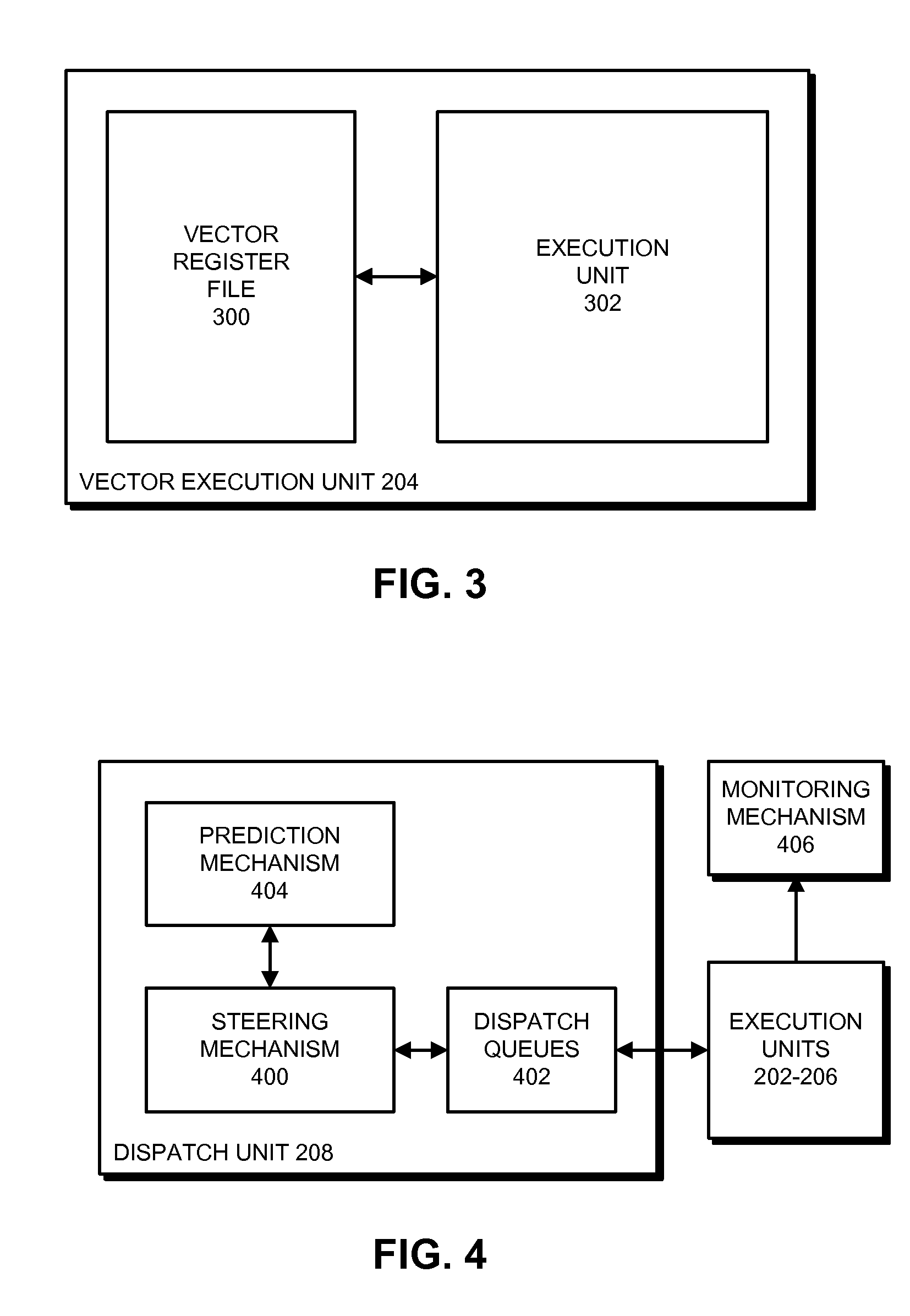

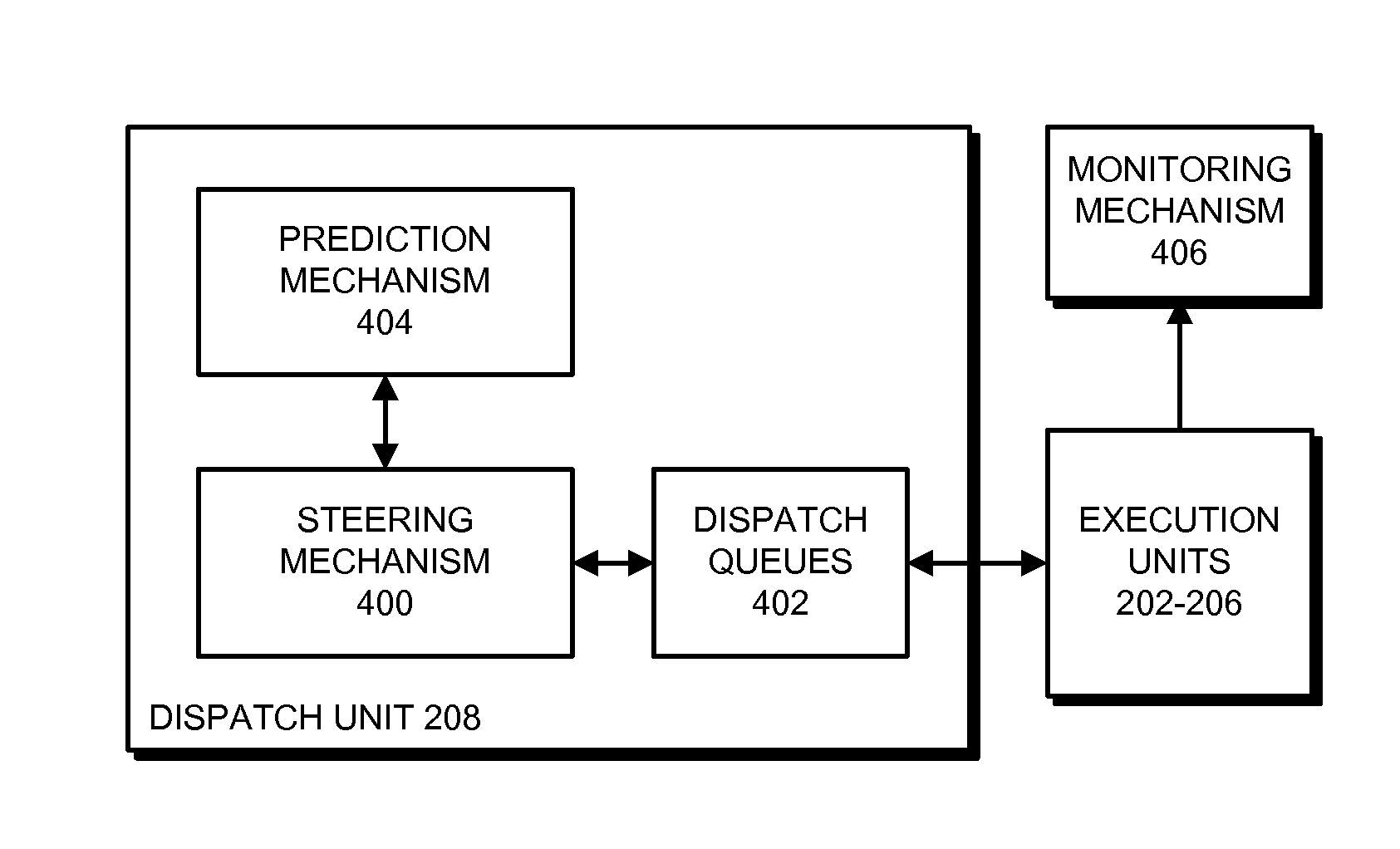

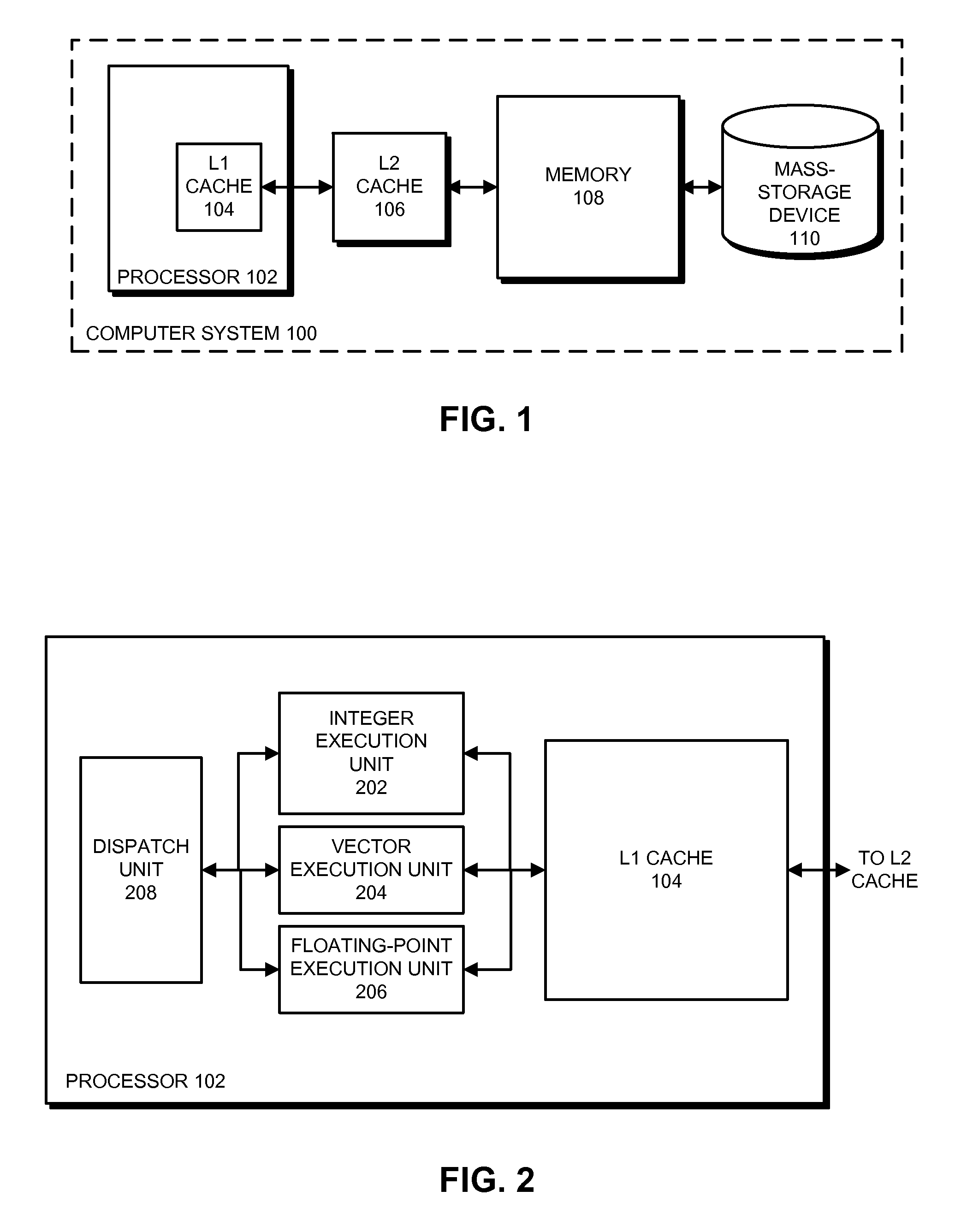

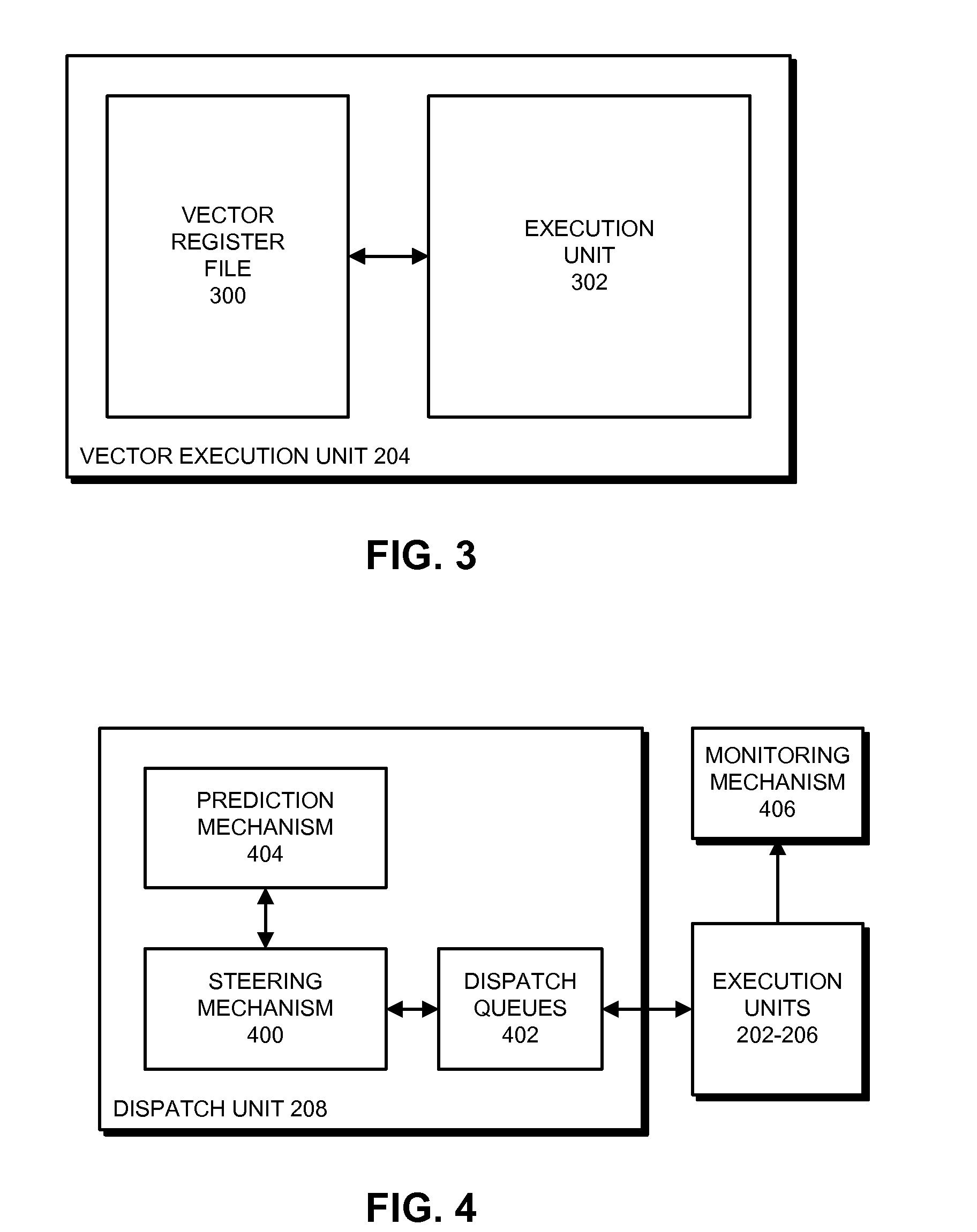

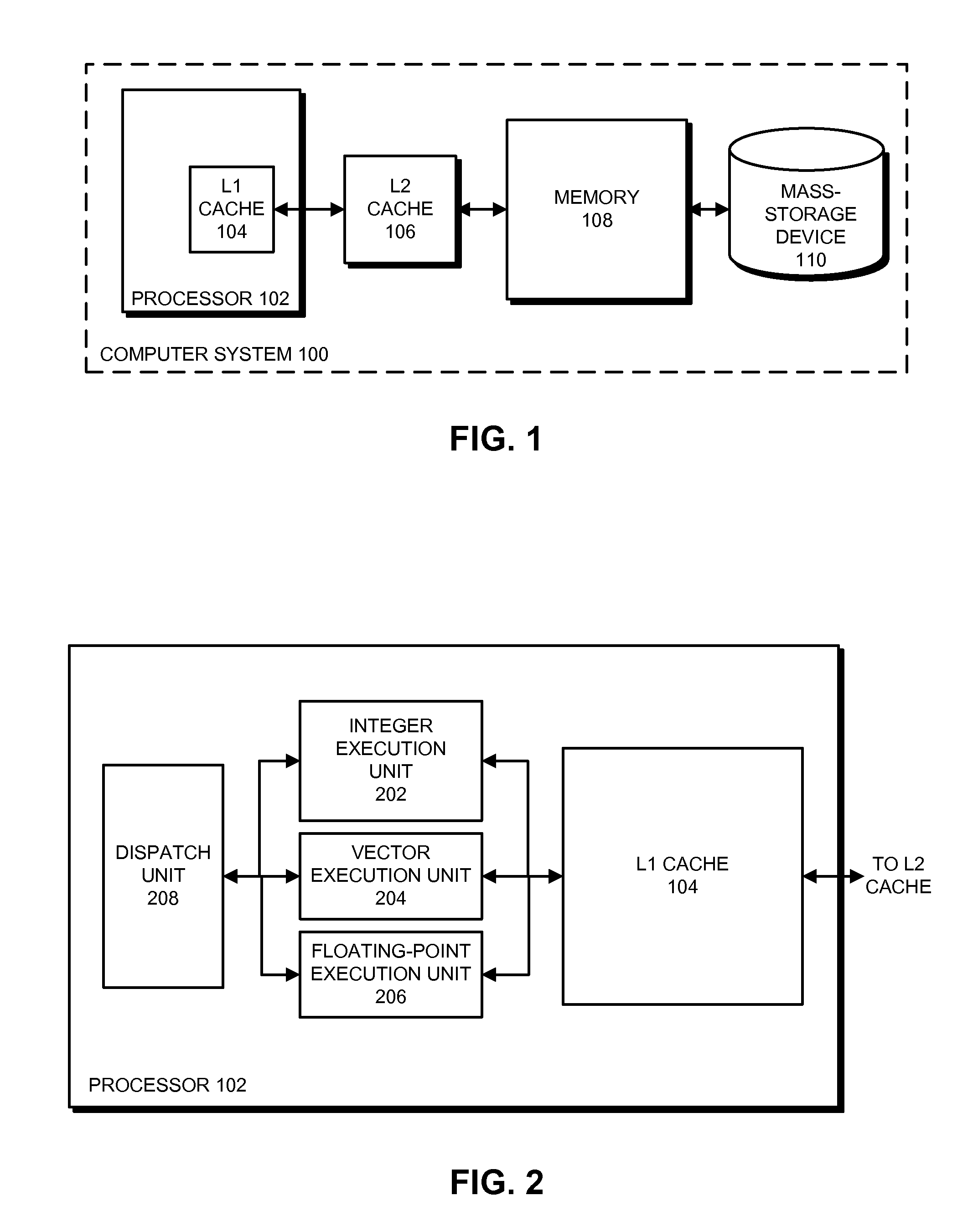

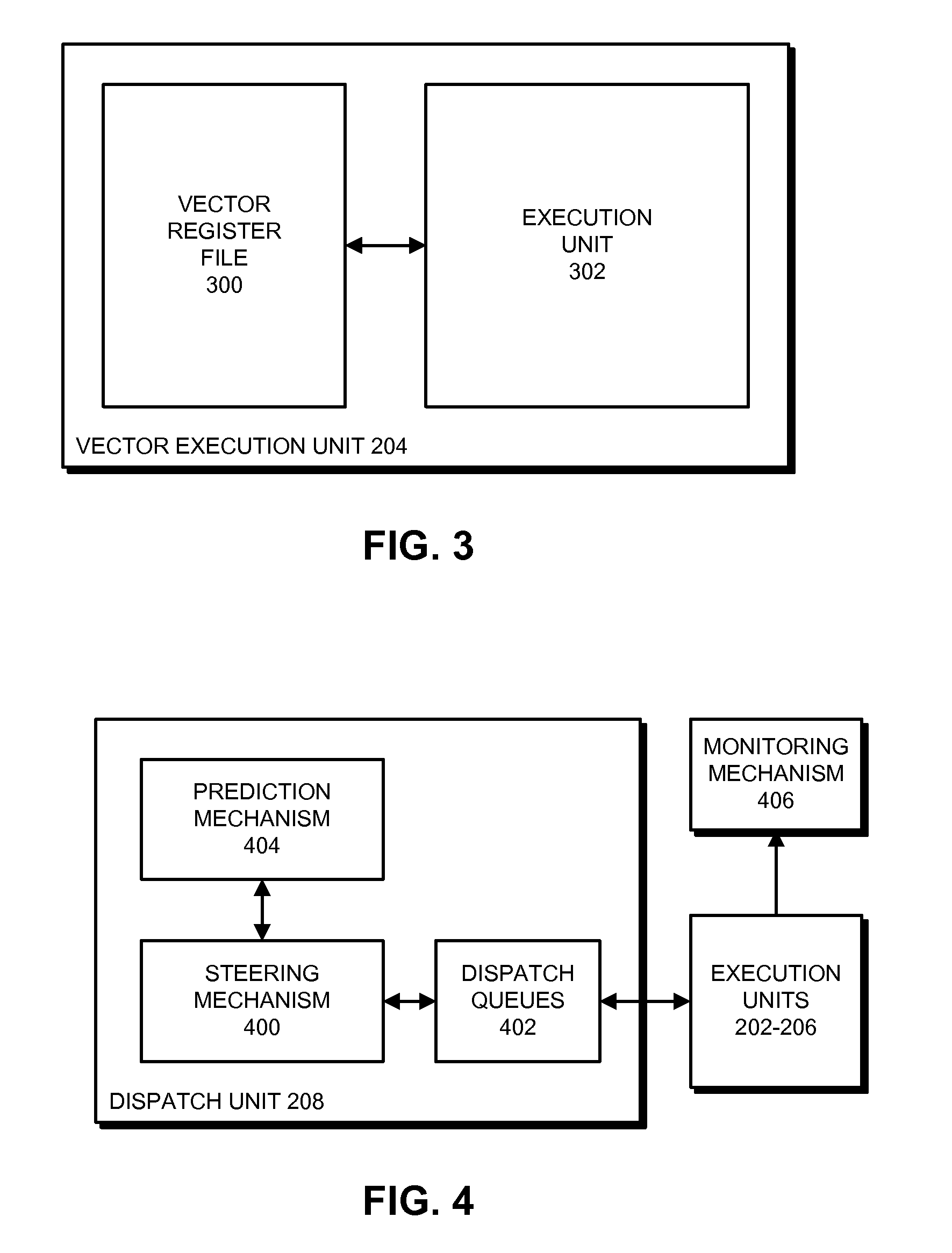

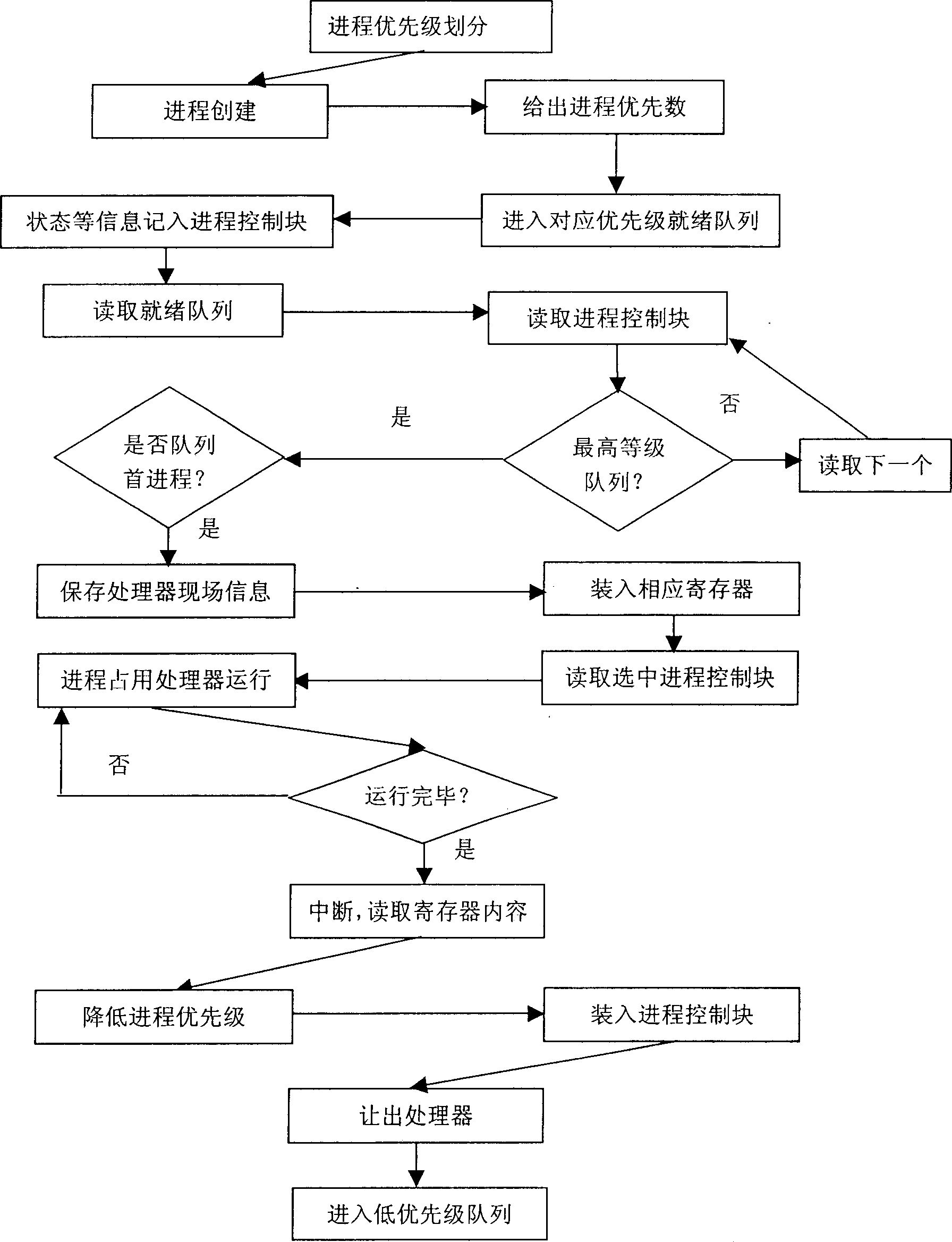

Predicting a result of a dependency-checking instruction when processing vector instructions

The described embodiments include a processor that executes a vector instruction. In the described embodiments, while dispatching instructions at runtime, the processor encounters a dependency-checking instruction. Upon determining that a result of the dependency-checking instruction is predictable, the processor dispatches a prediction micro-operation associated with the dependency-checking instruction, wherein the prediction micro-operation generates a predicted result vector for the dependency-checking instruction. The processor then executes the prediction micro-operation to generate the predicted result vector. In the described embodiments, when executing the prediction micro-operation to generate the predicted result vector, if a predicate vector is received, for each element of the predicted result vector for which the predicate vector is active, otherwise, for each element of the predicted result vector, the processor sets the element to zero.

Owner:APPLE INC

System and Method for Virtualizing Processor and Interrupt Priorities

Active | US20090300250A1 | Multiprogramming arrangements | Software simulation/interpretation/emulation | Virtualization | Processor scheduling

Dispatching of interrupts to a processor is conditionally suppressed, that is, only if an old priority value and a new priority value are either both less than or both greater than a maximum pending priority value. This conditional avoidance of dispatching is preferably implemented by a virtual priority module within a binary translator in a virtualized computer system and relates to interrupts directed to a virtualized processor by a virtualized local APIC.

Owner:VMWARE INC

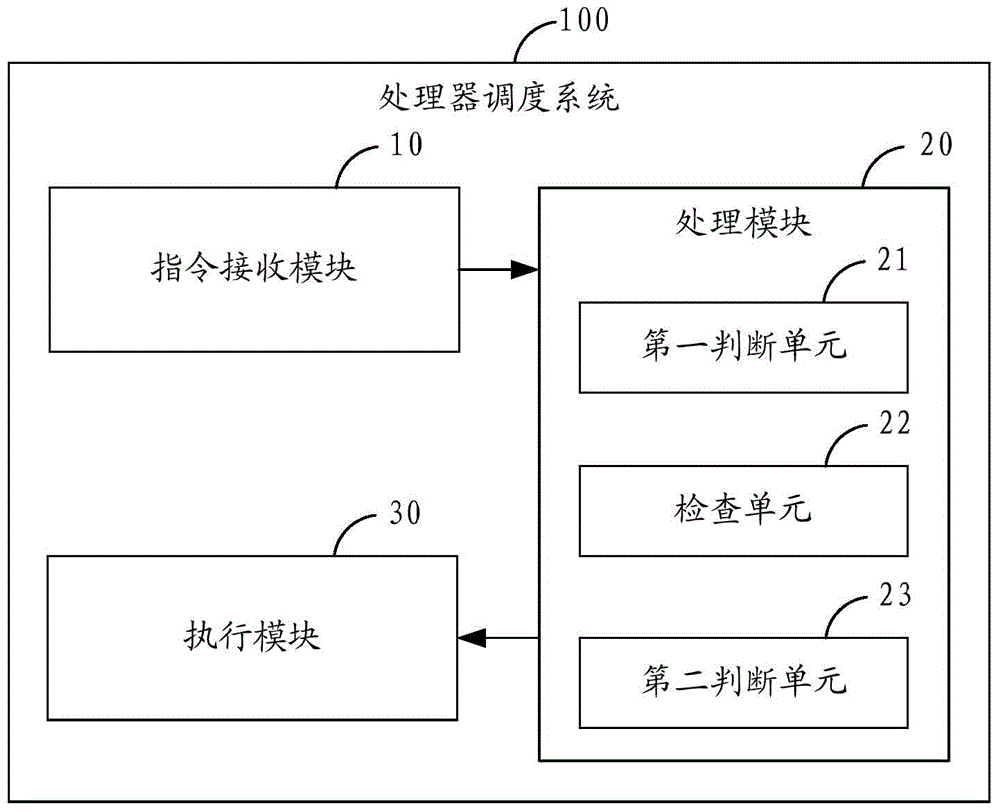

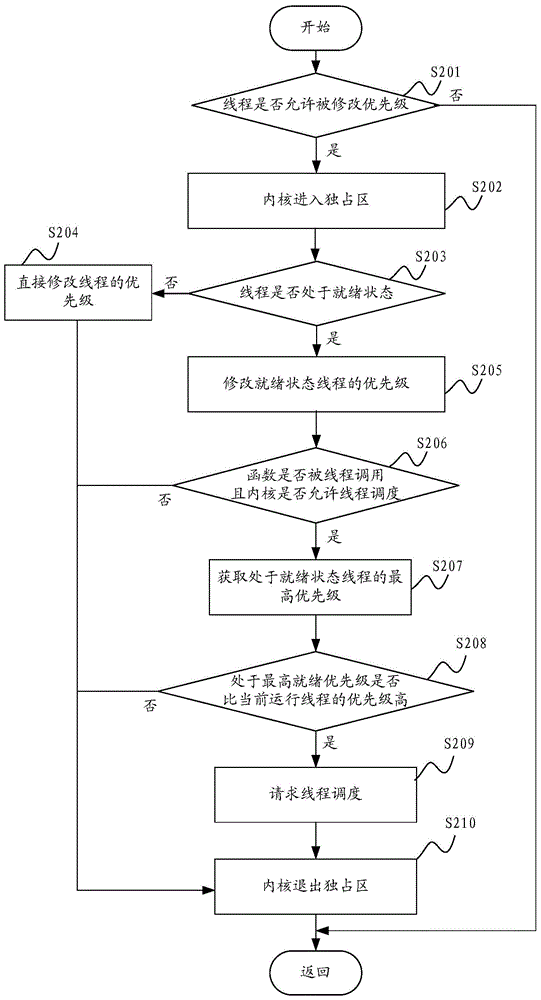

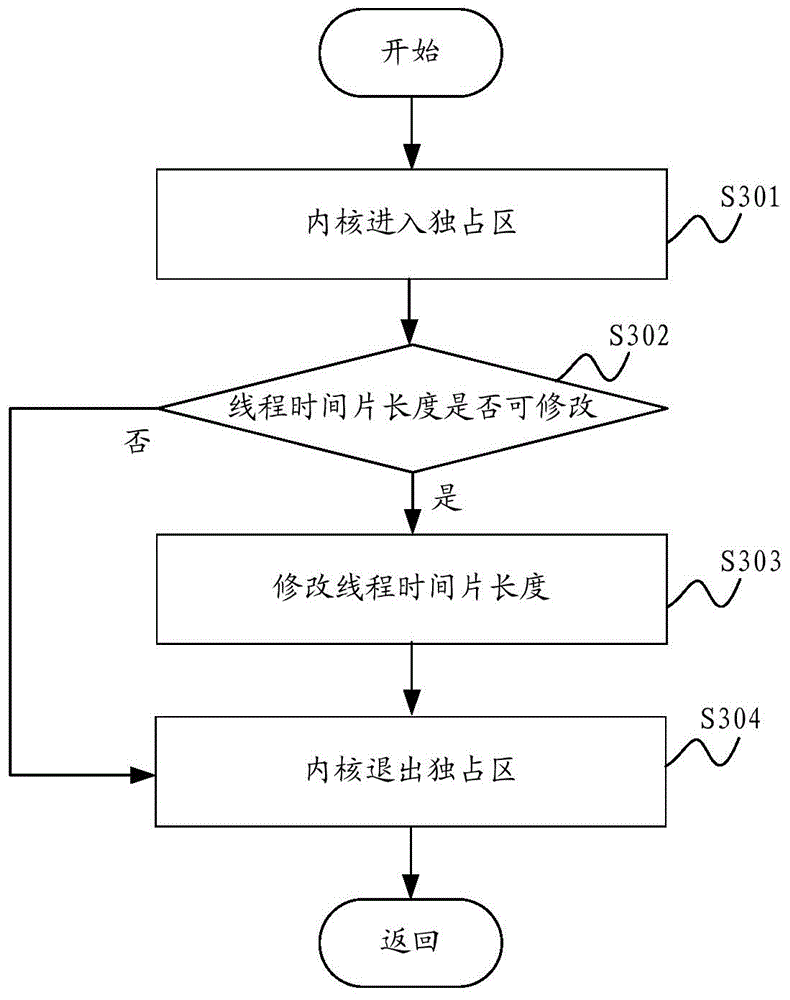

Processor scheduling method and system

Inactive | CN106293902A | Improve switching efficiency | Improve real-time performance | Program initiation/switching | Processor scheduling | Real-time computing

The invention is applicable to the technical field of communications, and provides a processor scheduling method and system. The method comprises the steps of receiving a thread priority or time slice modification instruction; modifying the priority or time slice of a thread and acquiring a thread with the highest priority in the ready state; and when a thread under a running state currently exits a kernel exclusive area, executing the thread with the highest priority in the ready state in a processor according to the length of the time slice. The processor scheduling method provided by the invention combines a priority enforcement mechanism and a round-robin mechanism; a priority preemption mechanism is adopted between threads of different priorities, and the round-robin mechanism is adopted between threads of the same priority; since a scheduling mechanism is based on threads, switching between threads consumes a very small amount of resources, therefore, the switching efficiency of occupying a CPU between threads of different priorities and the same priority is improved, the fairness of occupying processor resources between the threads is considered, and the system real-time performance is high.

Owner:YULONG COMPUTER TELECOMM SCI (SHENZHEN) CO LTD
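
The combination of priority preemption between priority levels and round-robin within a level can be sketched as follows. This is a simplified illustration, not the patented method: the class and method names are hypothetical, a larger number is assumed to mean a higher priority, and the kernel exclusive area and time-slice lengths mentioned in the abstract are not modeled.

```python
from collections import deque


class PriorityRoundRobin:
    """Ready queues keyed by priority; larger number = higher priority (assumed)."""

    def __init__(self):
        self.ready = {}  # priority -> deque of thread names

    def add(self, name, priority):
        """Put a thread at the back of the ready queue for its priority."""
        self.ready.setdefault(priority, deque()).append(name)

    def pick_next(self):
        """Pop the front thread of the highest-priority non-empty queue."""
        if not self.ready:
            return None
        top = max(self.ready)
        queue = self.ready[top]
        name = queue.popleft()
        if not queue:
            del self.ready[top]
        return name, top

    def time_slice_expired(self, name, priority):
        """Round-robin: a thread whose slice ended goes to the back of its queue."""
        self.add(name, priority)


def run(scheduler, slices):
    """Simulate a number of time slices and return the execution order."""
    order = []
    for _ in range(slices):
        picked = scheduler.pick_next()
        if picked is None:
            break
        name, priority = picked
        order.append(name)
        scheduler.time_slice_expired(name, priority)
    return order
```

In this sketch, threads `b` and `c` at priority 2 alternate while thread `a` at priority 1 waits, and a thread added later at priority 3 is picked before either of them.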

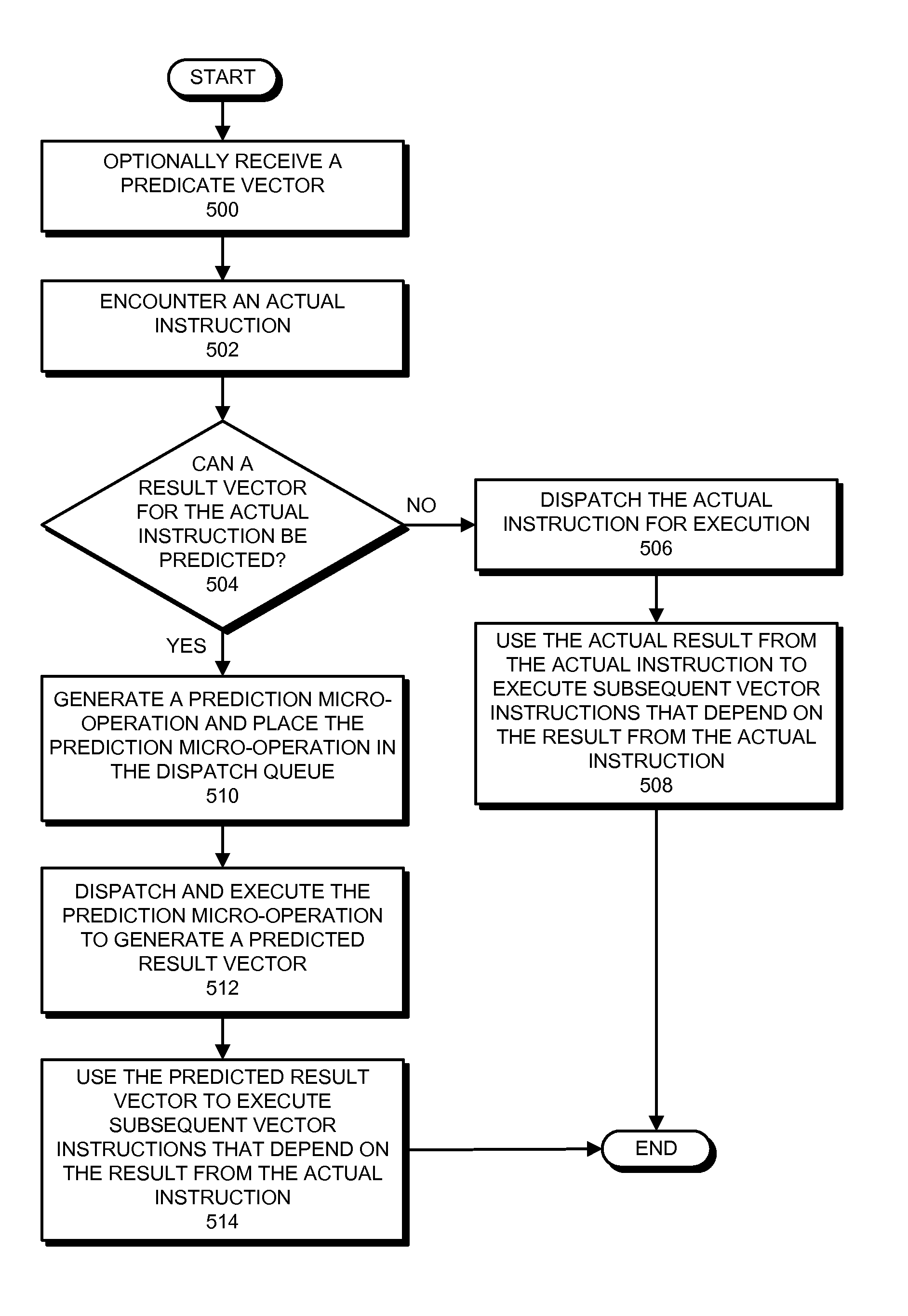

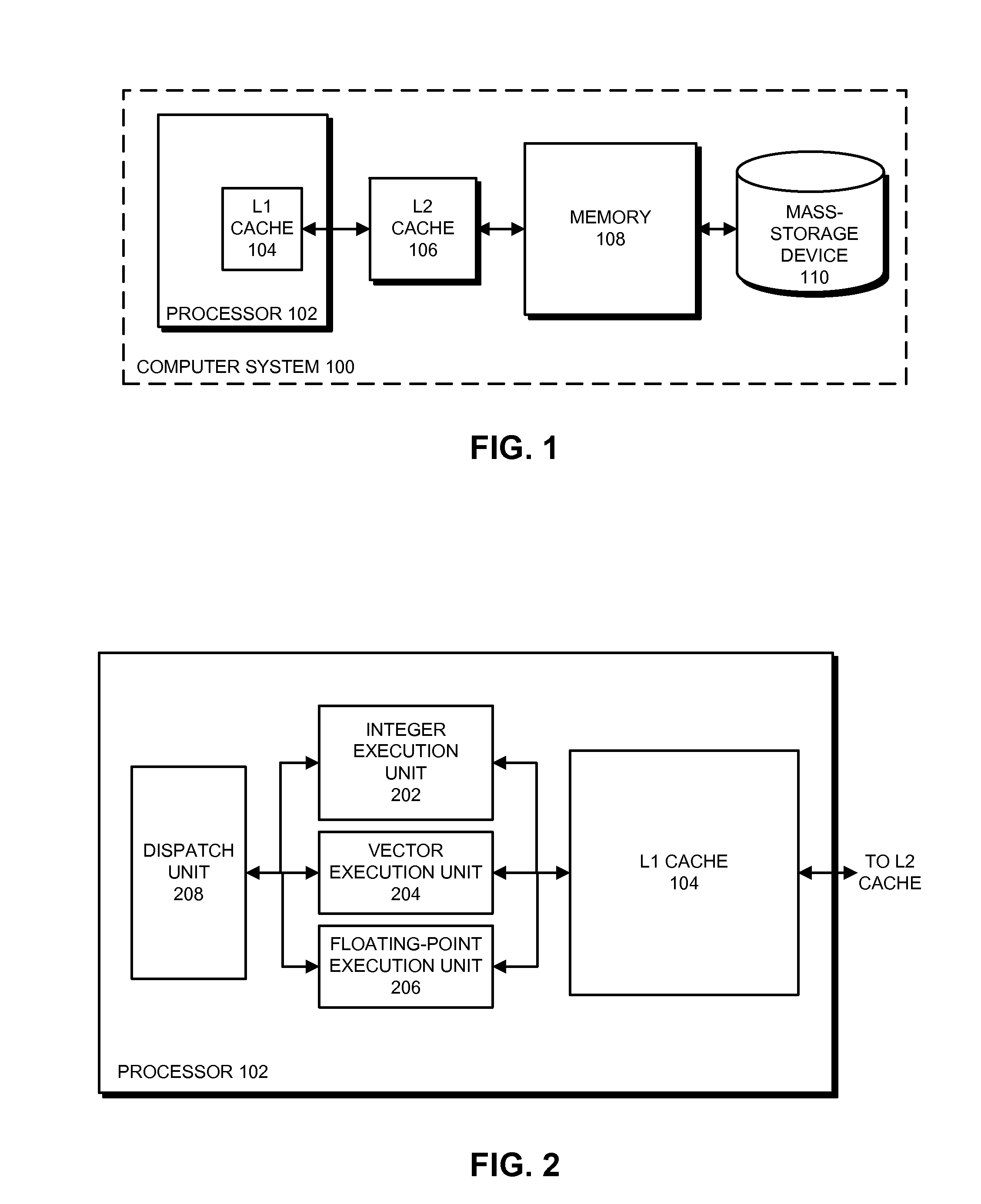

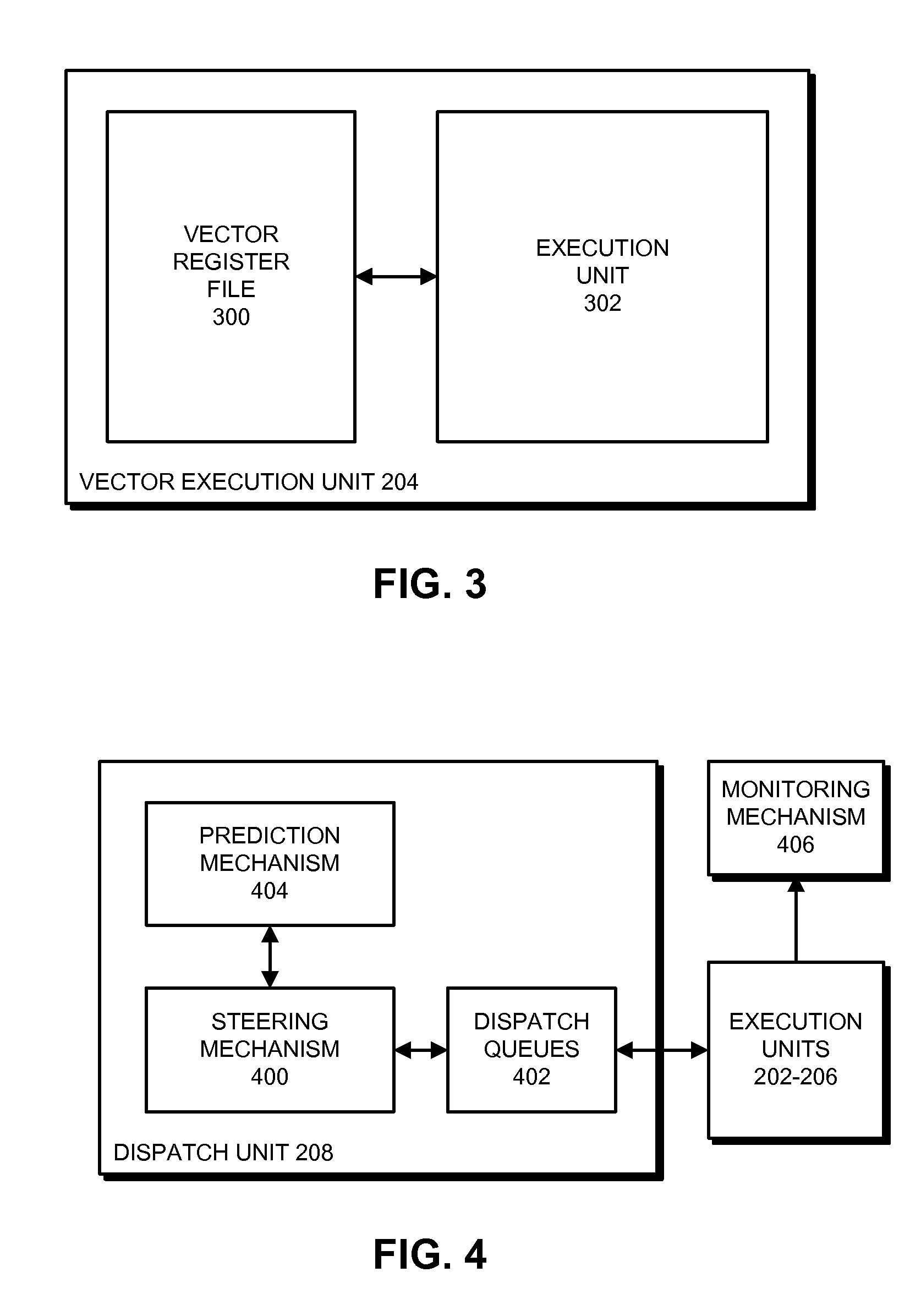

Predicting a result for an actual instruction when processing vector instructions

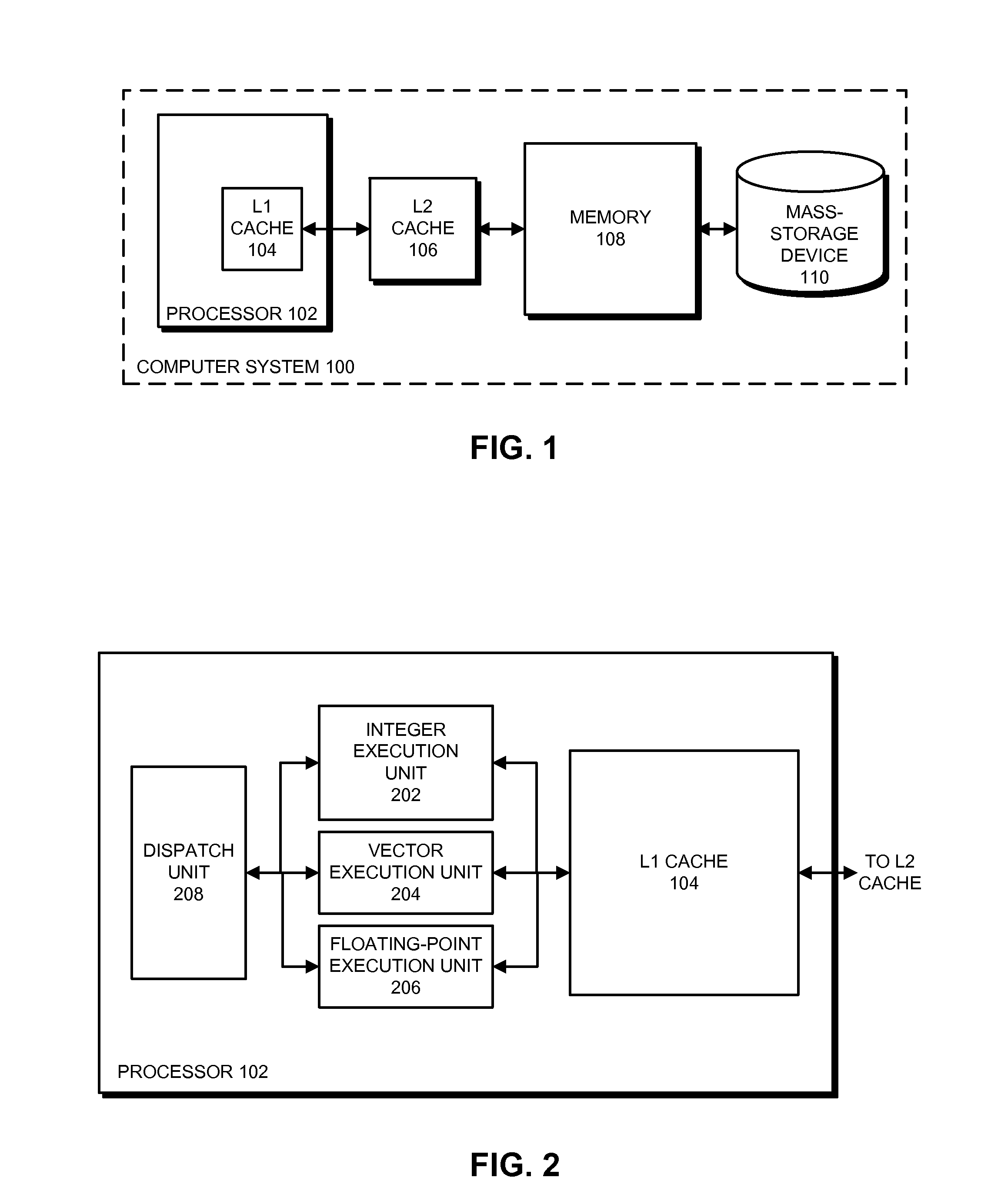

Active | US20120191957A1 | Digital computer details | Specific program execution arrangements | Micro-operation | Processor scheduling

The described embodiments provide a processor that executes vector instructions. In the described embodiments, while dispatching instructions at runtime, the processor encounters an Actual instruction. Upon determining that a result of the Actual instruction is predictable, the processor dispatches a prediction micro-operation associated with the Actual instruction, wherein the prediction micro-operation generates a predicted result vector for the Actual instruction. The processor then executes the prediction micro-operation to generate the predicted result vector. In the described embodiments, when executing the prediction micro-operation to generate the predicted result vector, if the predicate vector is received, for each element of the predicted result vector for which the predicate vector is active, otherwise, for each element of the predicted result vector, generating the predicted result vector comprises setting the element of the predicted result vector to true.

Owner:APPLE INC

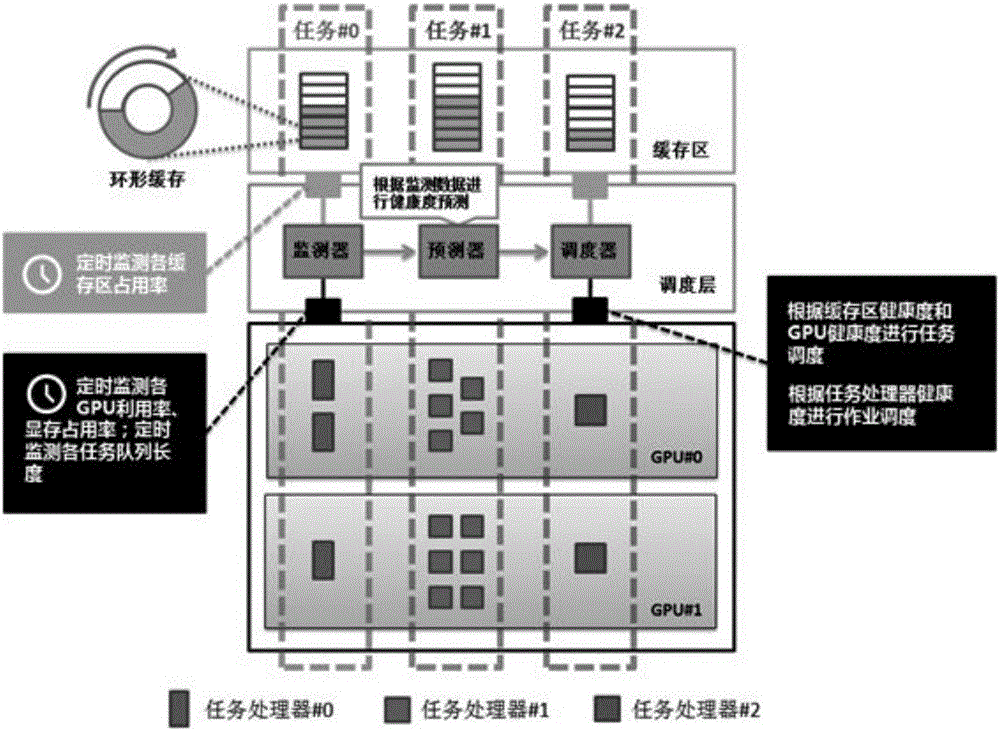

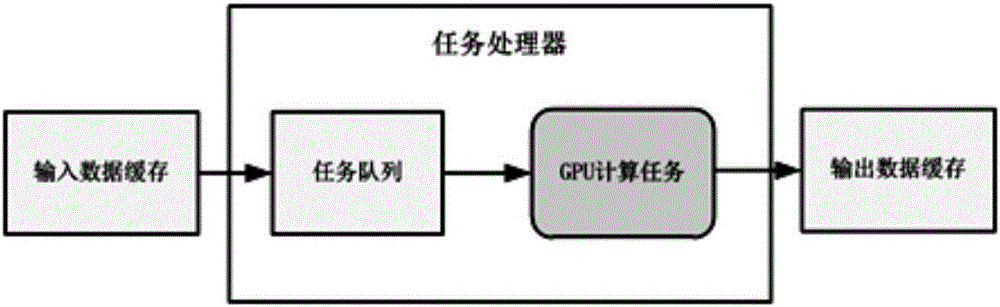

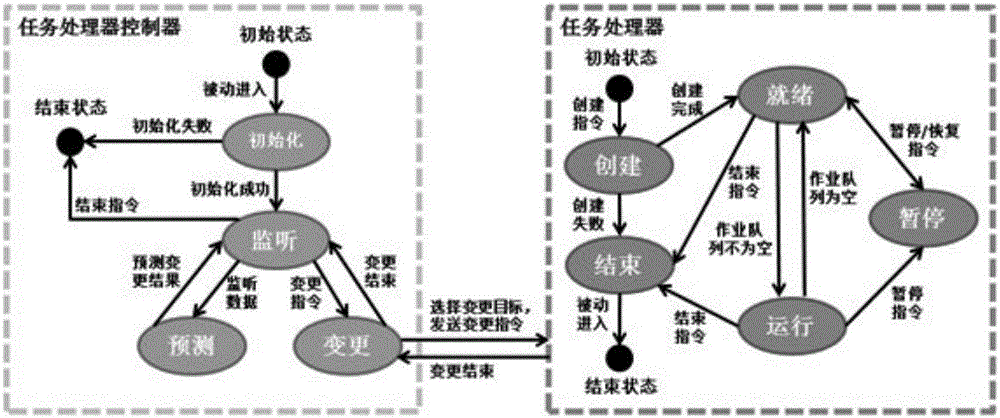

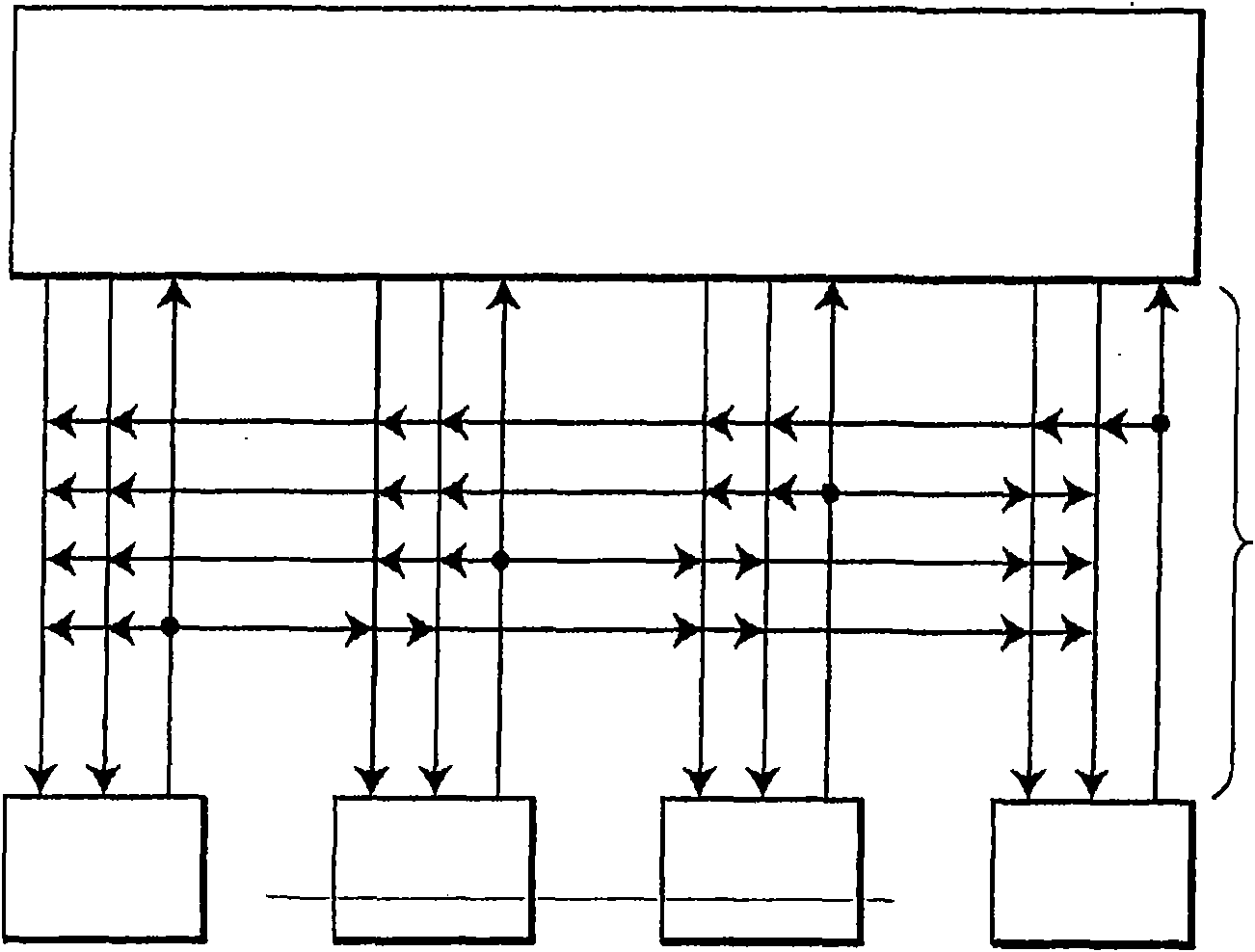

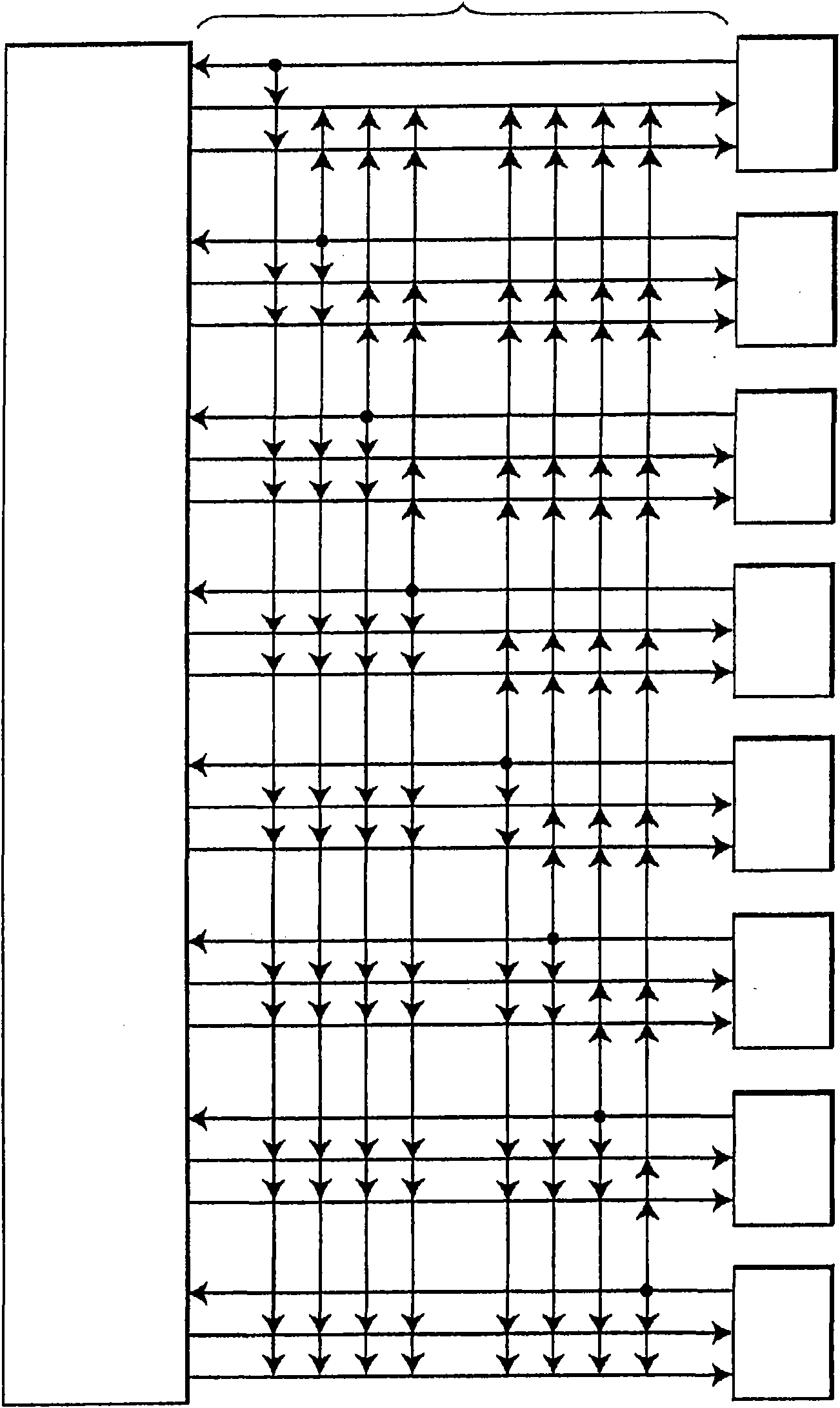

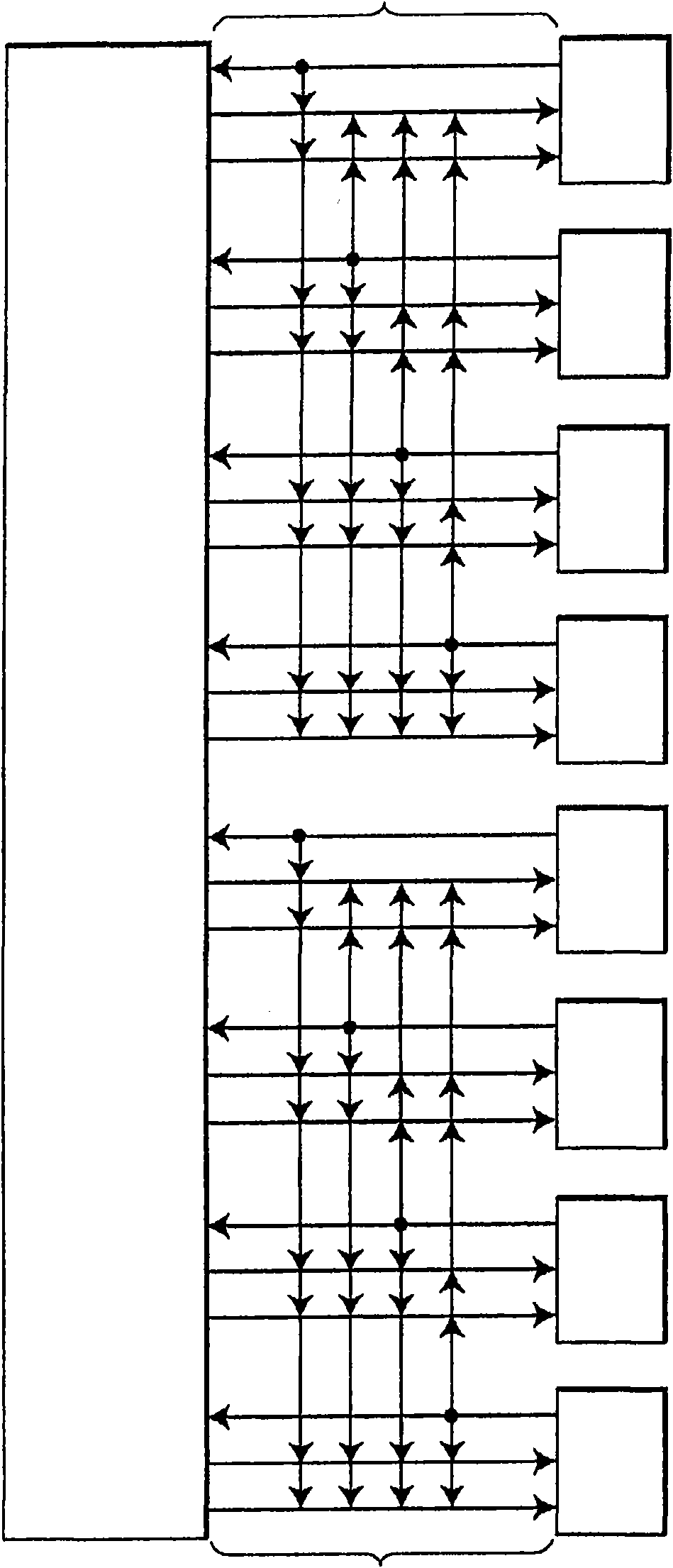

Real-time processing method of multiple video data on multi-GPU (multiple graphics processing unit) platform

Active | CN106686352A | Take advantage of | Calculation speed | Closed circuit television systems | Processor architectures/configuration | Processor scheduling | Multi gpu

The invention provides a real-time processing method of multiple video data on multi-GPU (multiple graphics processing unit) platform; the method mainly comprises the steps of 1, establishing a layered parallel structure based on task processors; 2, initializing the task processors in the layered parallel structure, and receiving monitoring video data and processing the monitoring video data in real time by each task processor; 3, carrying out environment monitoring, calculating task queue health level, task cache region health level and health level of each GPU according to the results of environment monitoring; 4, scheduling the task processors and distributing tasks according to the task queue health level, the cache region health level and the health level of each GPU; 5, repeating the steps 3 and 4 regularly so that balanced load is maintained among the GPUs. A complete parallel scheduling and data management scheme is designed for the real-time processing of multiple video multiple tasks on the multi-GPU platform, the great calculating capacity of a multi-GPU processor can be utilized efficiently, and calculating speed is increased greatly.

Owner:PEKING UNIV

Virtual functional units for VLIW processors

Inactive | CN101553780A | Improve performance | Concurrent instruction execution | Scheduling function | Processor scheduling

A virtual functional unit design is presented that is employed in a statically scheduled VLIW processor. "Virtual" views of the functional unit appear to the processor scheduler that exceed the number of physical instantiations of the functional unit. As a result, significant processor performance improvements can be achieved for those types of functional units that are too difficult or too costly to physically duplicate. By providing different virtual views to the different clusters of a VLIW processor, the compiler/scheduler can generate more efficient code for the processor than for a processor without virtual views in which the physical unit is restricted to a subset of the processor's clusters. The compiler/scheduler guarantees that the restrictions with respect to scheduling of operations for functional units with multiple virtual views are met. Non-clustered processors also benefit from virtual views. By providing multiple virtual views in multiple issue slots of a physical functional unit, the compiler/scheduler has more freedom to schedule operations for the functional unit.

Owner:NXP BV

Predicting a result of a dependency-checking instruction when processing vector instructions

Active | US9122485B2 | Digital computer details | Concurrent instruction execution | Processor scheduling | Micro-operation

The described embodiments include a processor that executes a vector instruction. In the described embodiments, while dispatching instructions at runtime, the processor encounters a dependency-checking instruction. Upon determining that a result of the dependency-checking instruction is predictable, the processor dispatches a prediction micro-operation associated with the dependency-checking instruction, wherein the prediction micro-operation generates a predicted result vector for the dependency-checking instruction. The processor then executes the prediction micro-operation to generate the predicted result vector. In the described embodiments, when executing the prediction micro-operation to generate the predicted result vector, if a predicate vector is received, for each element of the predicted result vector for which the predicate vector is active, otherwise, for each element of the predicted result vector, the processor sets the element to zero.

Owner:APPLE INC

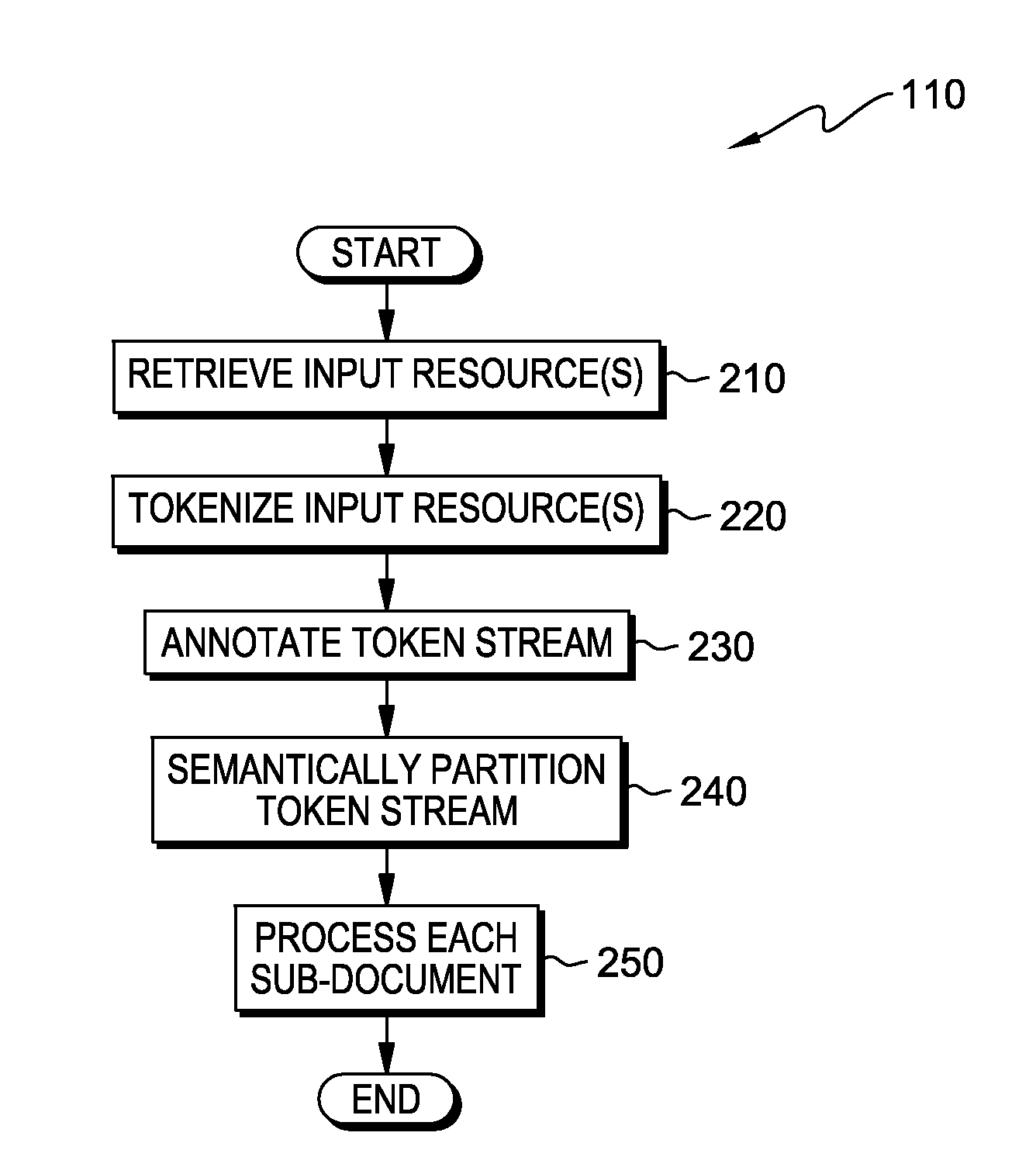

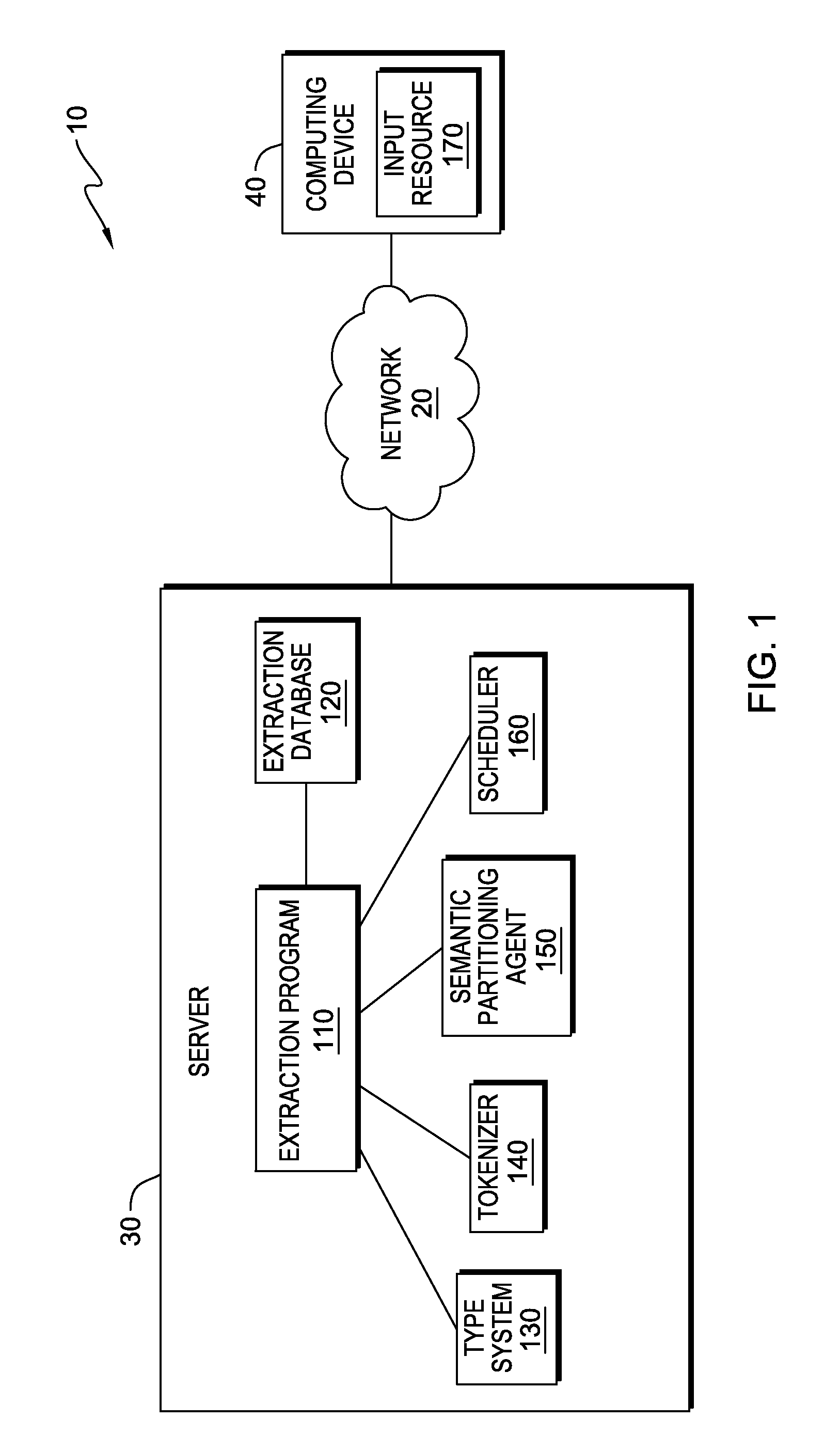

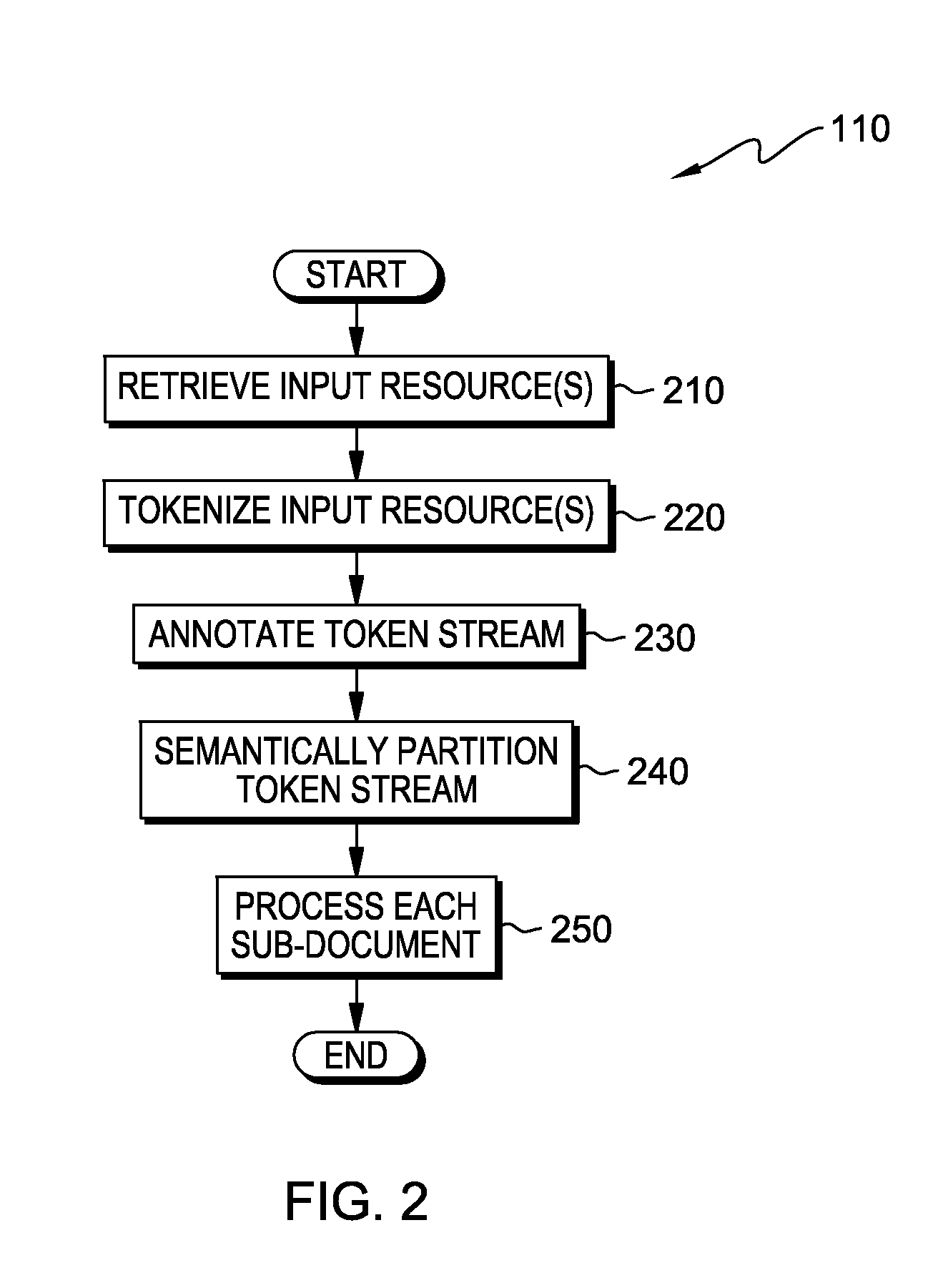

Parallelizing semantically split documents for processing

Active | US20160179775A1 | Semantic analysis | Digital data processing details | Processor scheduling | Document preparation

In an approach for parallelizing document processing in an information handling system, a processor receives a document, wherein the document includes text content. A processor extracts information from the text content, utilizing natural language processing and semantic analysis, to form tokenized semantic partitions, comprising a plurality of sub-documents. A processor schedules a plurality of concurrently executing threads to process the plurality of sub-documents.

Owner:IBM CORP

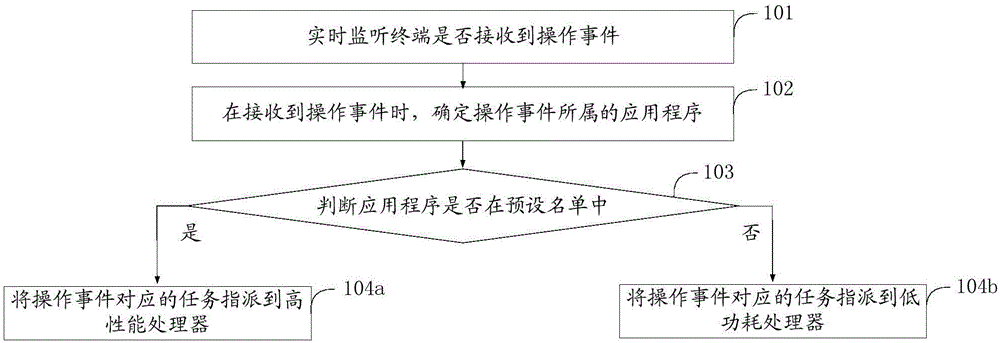

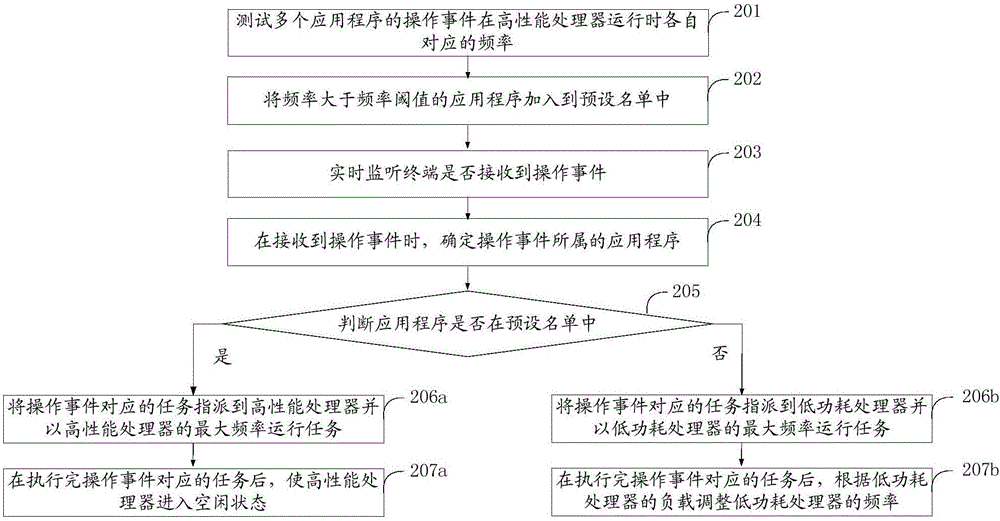

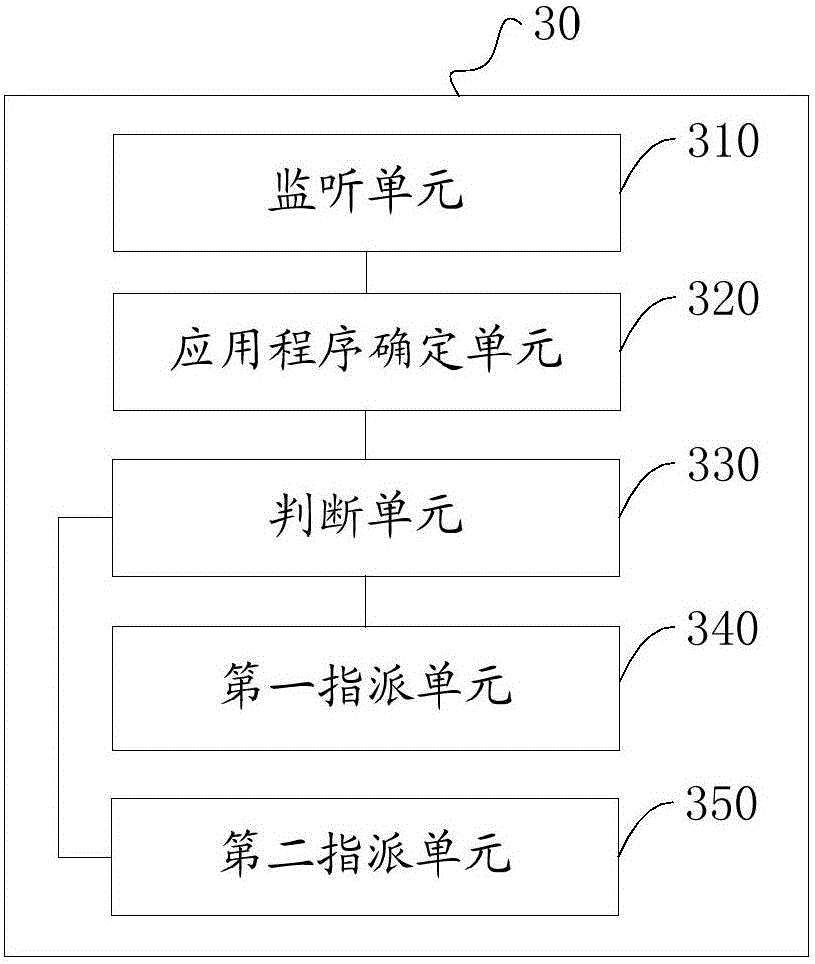

Method for scheduling processor and terminal

Inactive | CN106406494A | Reduce power consumption | Power supply for data processing | Processor scheduling | Computer terminal

The embodiment of the invention discloses a method for scheduling a processor and a terminal. The method comprises the steps of firstly monitoring whether the terminal receives an operational event or not in real time; determining an application program to which the operational event belongs when the operational event is received; judging whether the application program is in a default list or not; if so, assigning a task corresponding to the operational event to a high performance processor; and if not, assigning the task corresponding to the operational event to a low power consumption processor. According to the method for scheduling the processor and the terminal, the power consumption of a system can be reduced.

Owner:SHENZHEN GIONEE COMM EQUIP
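
The decision step in this abstract is a membership test on the default list. A minimal sketch follows, assuming applications are identified by name; the constant names, function name, and example application names are hypothetical and not taken from the patent.

```python
HIGH_PERFORMANCE = "high_performance"
LOW_POWER = "low_power"


def assign_processor(app_name, default_list):
    """Choose a processor class for a task raised by an operational event.

    Tasks from applications in the default list go to the high performance
    processor; all other tasks go to the low power consumption processor.
    """
    if app_name in default_list:
        return HIGH_PERFORMANCE
    return LOW_POWER


# Hypothetical default list of applications that need the fast processor.
DEFAULT_LIST = {"game", "camera"}
```

With this default list, `assign_processor("game", DEFAULT_LIST)` selects the high performance processor, while an event from an unlisted application such as `"notes"` is routed to the low power processor.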

Predicting a result for an actual instruction when processing vector instructions

Active | US9098295B2 | Digital computer details | Concurrent instruction execution | Micro-operation | Processor scheduling

The described embodiments provide a processor that executes vector instructions. In the described embodiments, while dispatching instructions at runtime, the processor encounters an Actual instruction. Upon determining that a result of the Actual instruction is predictable, the processor dispatches a prediction micro-operation associated with the Actual instruction, wherein the prediction micro-operation generates a predicted result vector for the Actual instruction. The processor then executes the prediction micro-operation to generate the predicted result vector. In the described embodiments, when executing the prediction micro-operation to generate the predicted result vector, if the predicate vector is received, for each element of the predicted result vector for which the predicate vector is active, otherwise, for each element of the predicted result vector, generating the predicted result vector comprises setting the element of the predicted result vector to true.

Owner:APPLE INC

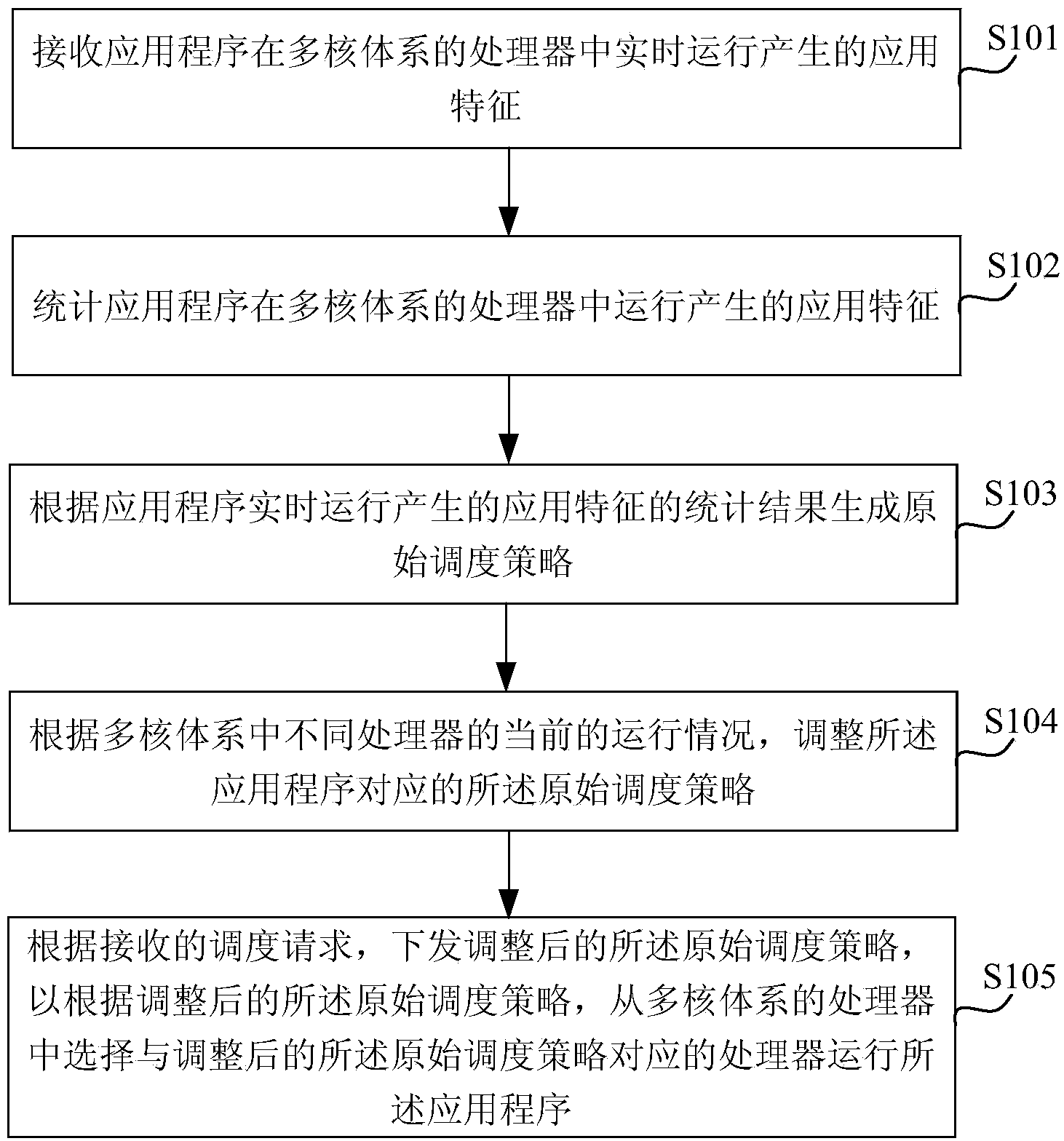

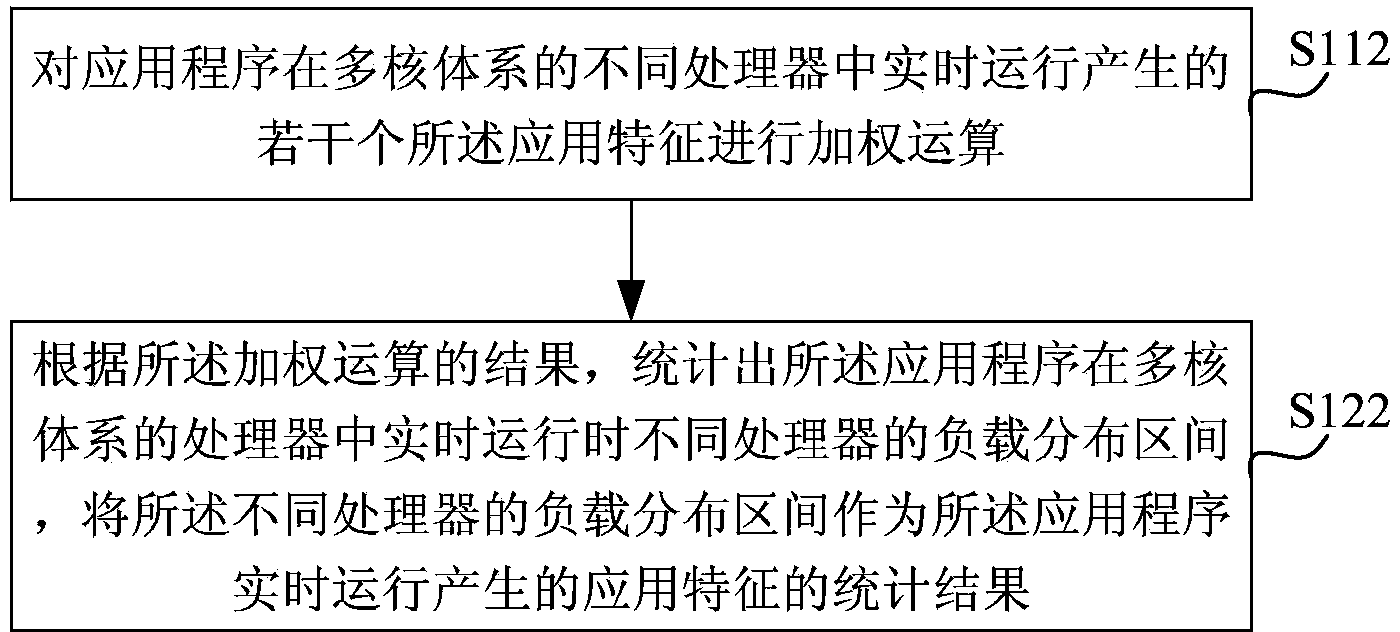

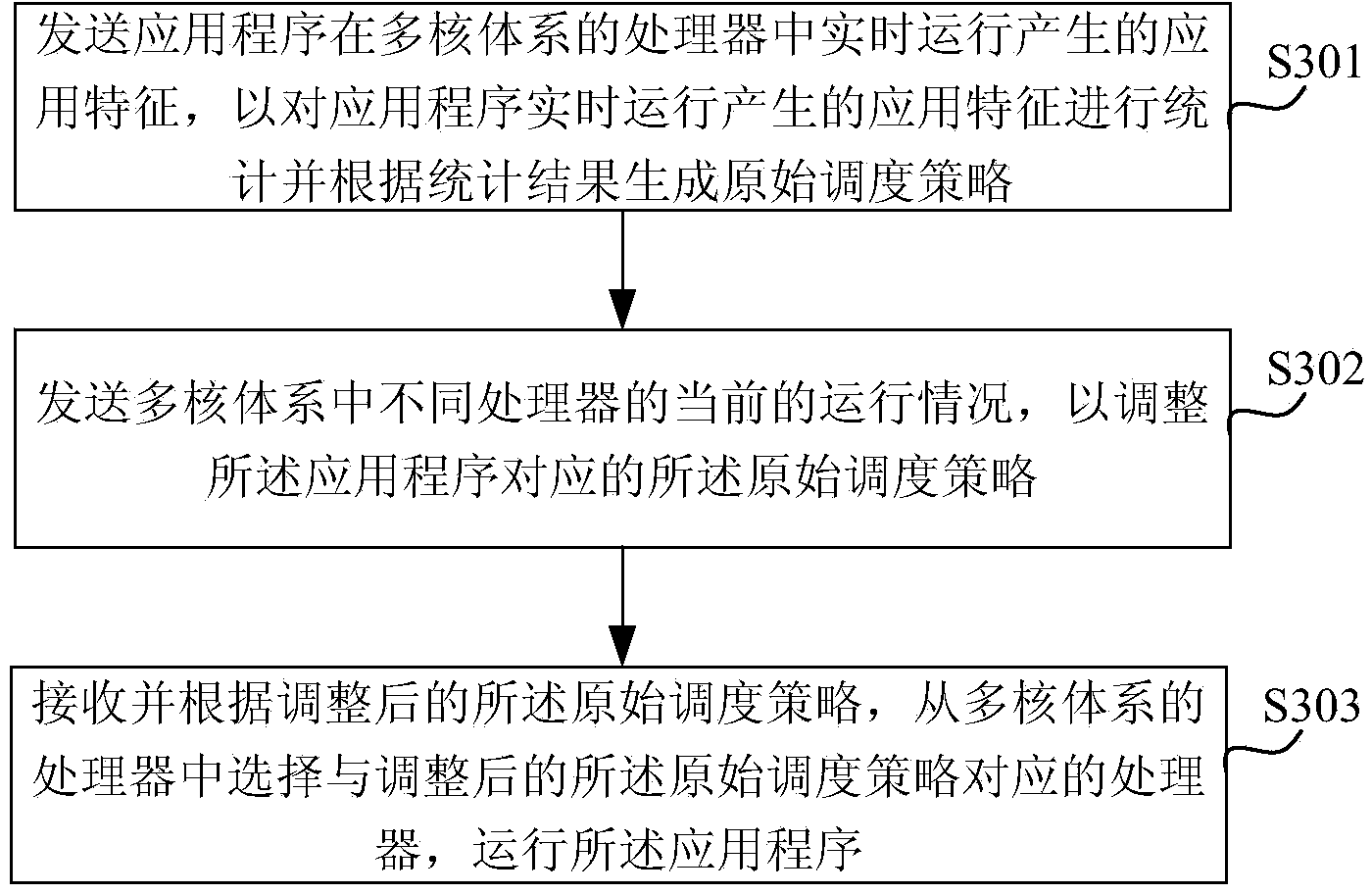

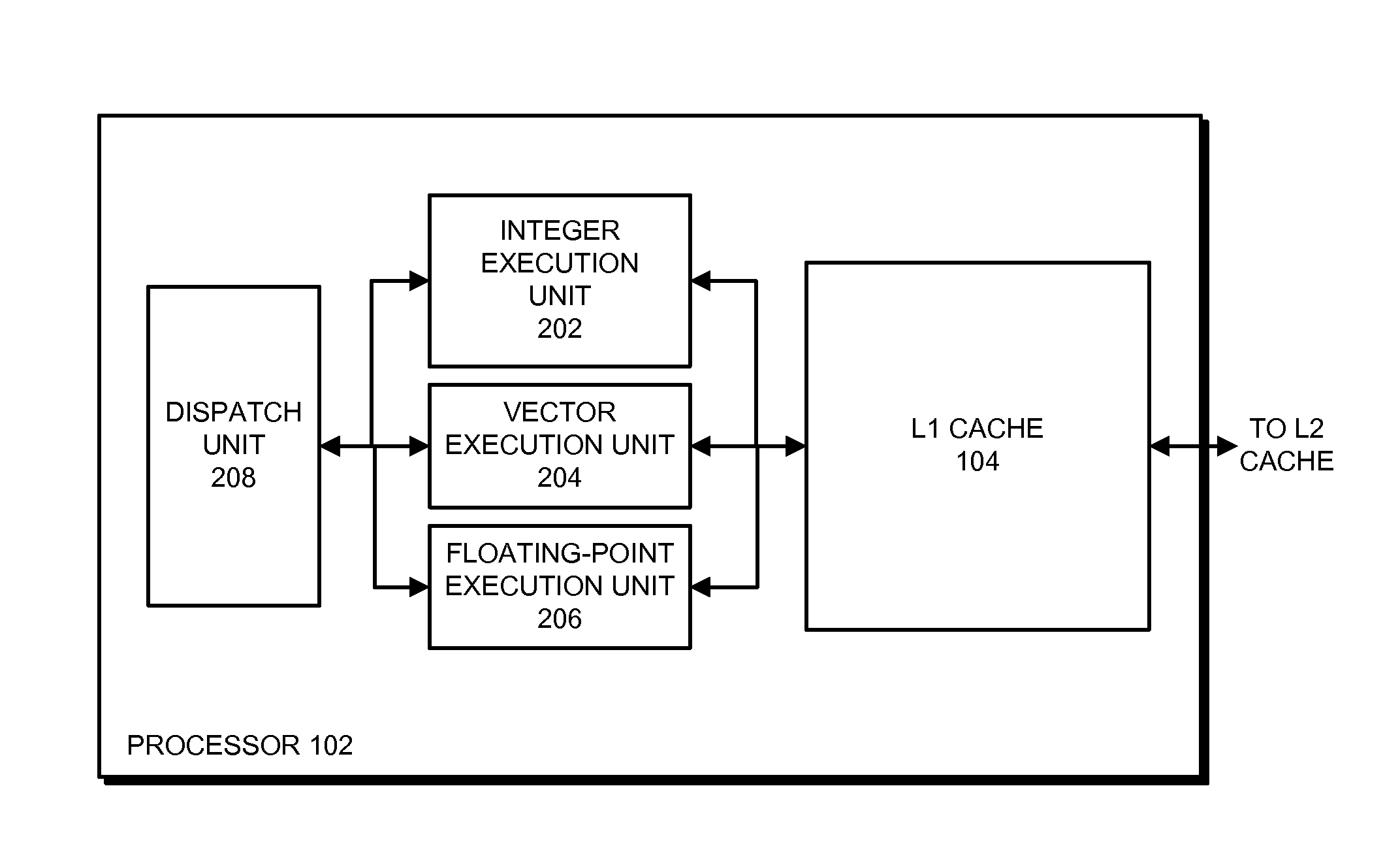

Method and device for generating processor scheduling policy in multi-core system and scheduling system

Inactive | CN103942103A | Program initiation/switching | Resource allocation | Processor scheduling | Optimal scheduling

The invention discloses a method and device for generating a processor scheduling policy in a multi-core system, and a scheduling system. The generating method comprises the following steps: application characteristics generated while an application program runs in real time on the processors of the multi-core system are received and aggregated into statistics, and an original scheduling policy is generated from the statistical result; the original scheduling policy corresponding to the application program is adjusted according to the current operating conditions of the different processors in the multi-core system; in response to a received scheduling request, the adjusted scheduling policy is issued, and the processor corresponding to the adjusted policy is selected from the processors of the multi-core system to run the application program. The type of the application program does not need to be marked, the optimal scheduling mode of different application programs can be determined, and the prior-art difficulty of determining the optimal scheduling mode because application programs exhibit many types of behavior is avoided.

Owner:LE SHI ZHI ZIN ELECTRONIC TECHNOLOGY (TIANJIN) LTD

Predicting a result for a predicate-generating instruction when processing vector instructions

Active | US20120191950A1 | Unpredictable result | Digital computer details | Memory systems | Micro-operation | Processor scheduling

The described embodiments provide a processor that executes vector instructions. In the described embodiments, while dispatching instructions at runtime, the processor encounters a predicate-generating instruction. Upon determining that a result of the predicate-generating instruction is predictable, the processor dispatches a prediction micro-operation associated with the predicate-generating instruction, wherein the prediction micro-operation generates a predicted result vector for the predicate-generating instruction. The processor then executes the prediction micro-operation to generate the predicted result vector. In the described embodiments, when executing the prediction micro-operation to generate the predicted result vector, if the predicate vector is received, for each element of the predicted result vector for which the predicate vector is active, otherwise, for each element of the predicted result vector, generating the predicted result vector comprises setting the element of the predicted result vector to true.

Owner:APPLE INC

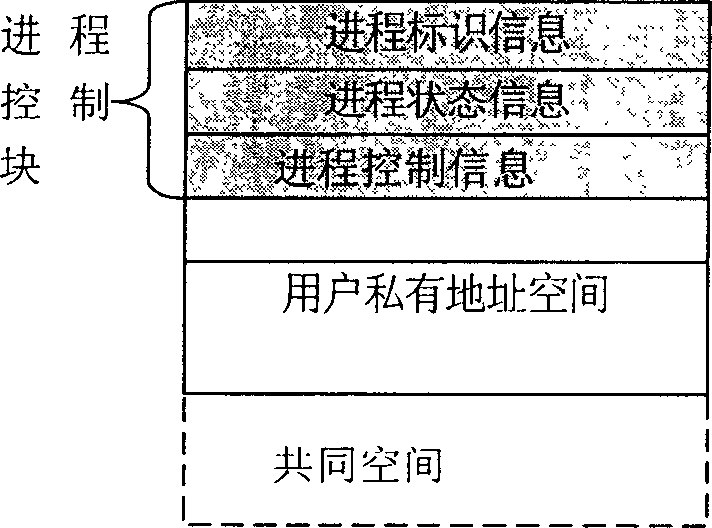

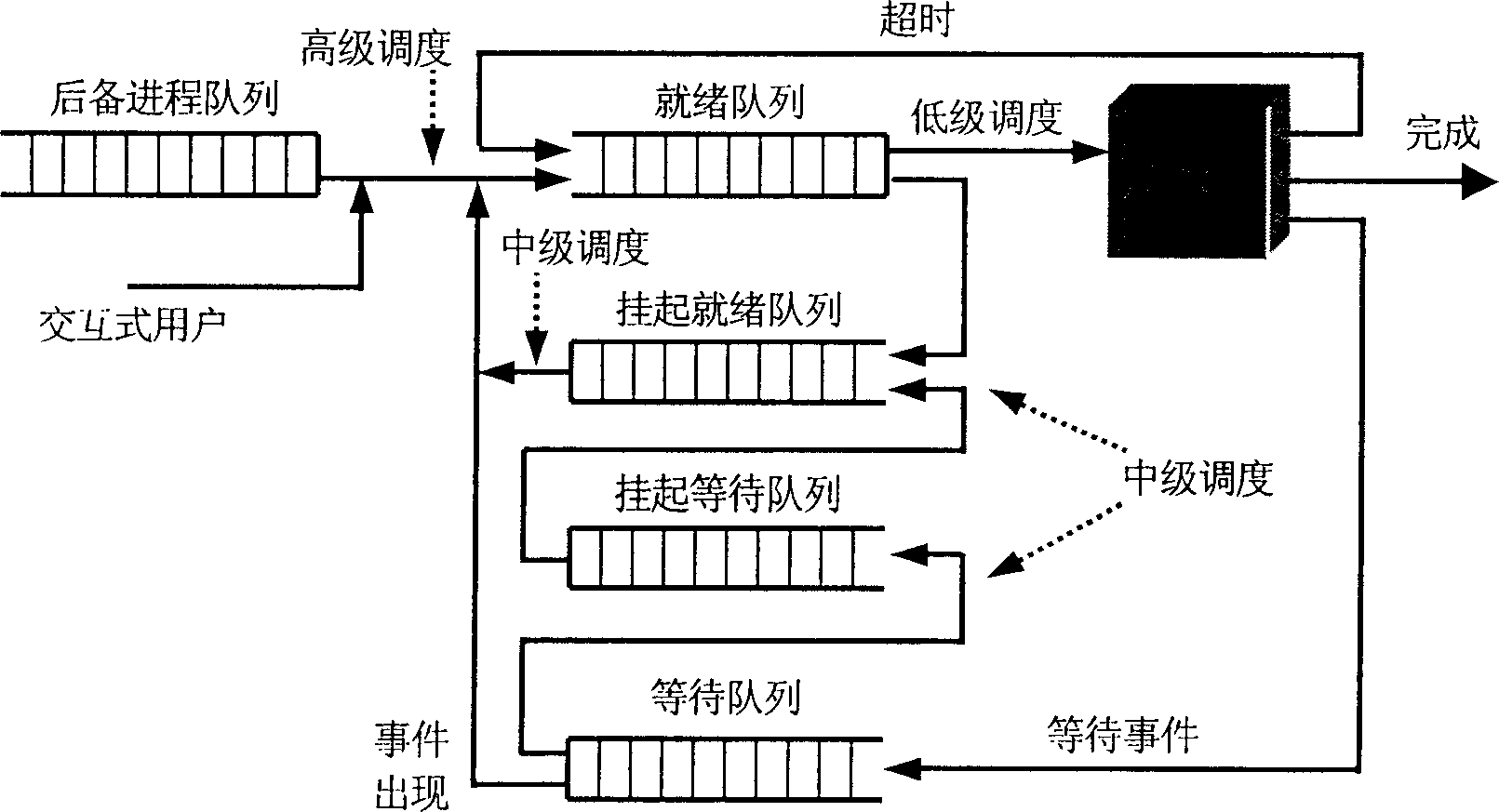

Method for implementing process multi-queue dispatching of embedded SRAM operating system

Inactive | CN1825288A | Fast dispatch response | Increase occupancy | Program initiation/switching | Operational system | Processor scheduling

The invention discloses a method for implementing multi-queue process scheduling in an embedded SRAM operating system. Ready processes are divided into two or more levels, a ready queue is established for each level, and higher-priority queues are ordinarily given shorter time slices. Each time, the scheduler first selects a process from the highest-priority ready queue and selects from a lower-priority ready queue only when no process is available in the higher-priority queues. The method provides good process scheduling performance.

Owner:ZHEJIANG UNIV
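
The multi-queue selection rule above can be sketched with one ready queue per level. This is an illustrative sketch only: the helper names and the time-slice values are assumptions, index 0 is taken to be the highest-priority level, and how processes move between levels is not modeled because the abstract does not describe it.

```python
from collections import deque


def make_queues(time_slices):
    """Create one (ready queue, time slice) pair per level.

    Index 0 is the highest-priority level; by convention it is given the
    shortest time slice.
    """
    return [(deque(), time_slice) for time_slice in time_slices]


def select_next(queues):
    """Pick from the highest-priority non-empty queue.

    Returns (process, level, time_slice), or None when every queue is empty.
    """
    for level, (queue, time_slice) in enumerate(queues):
        if queue:
            return queue.popleft(), level, time_slice
    return None
```

For instance, with two levels created by `make_queues([10, 40])`, a process waiting in level 0 is always chosen before one in level 1, and it receives the shorter slice of 10 units.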

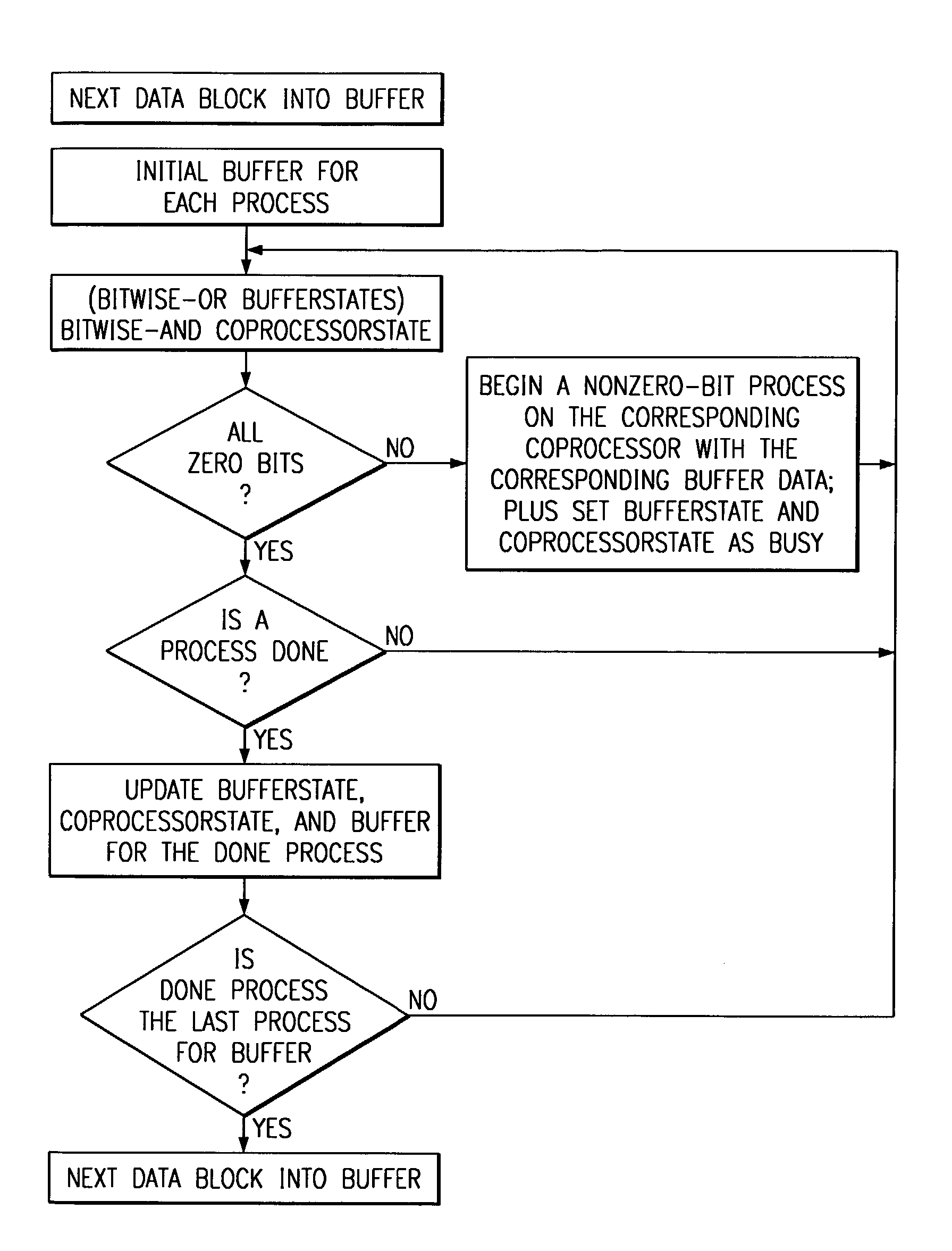

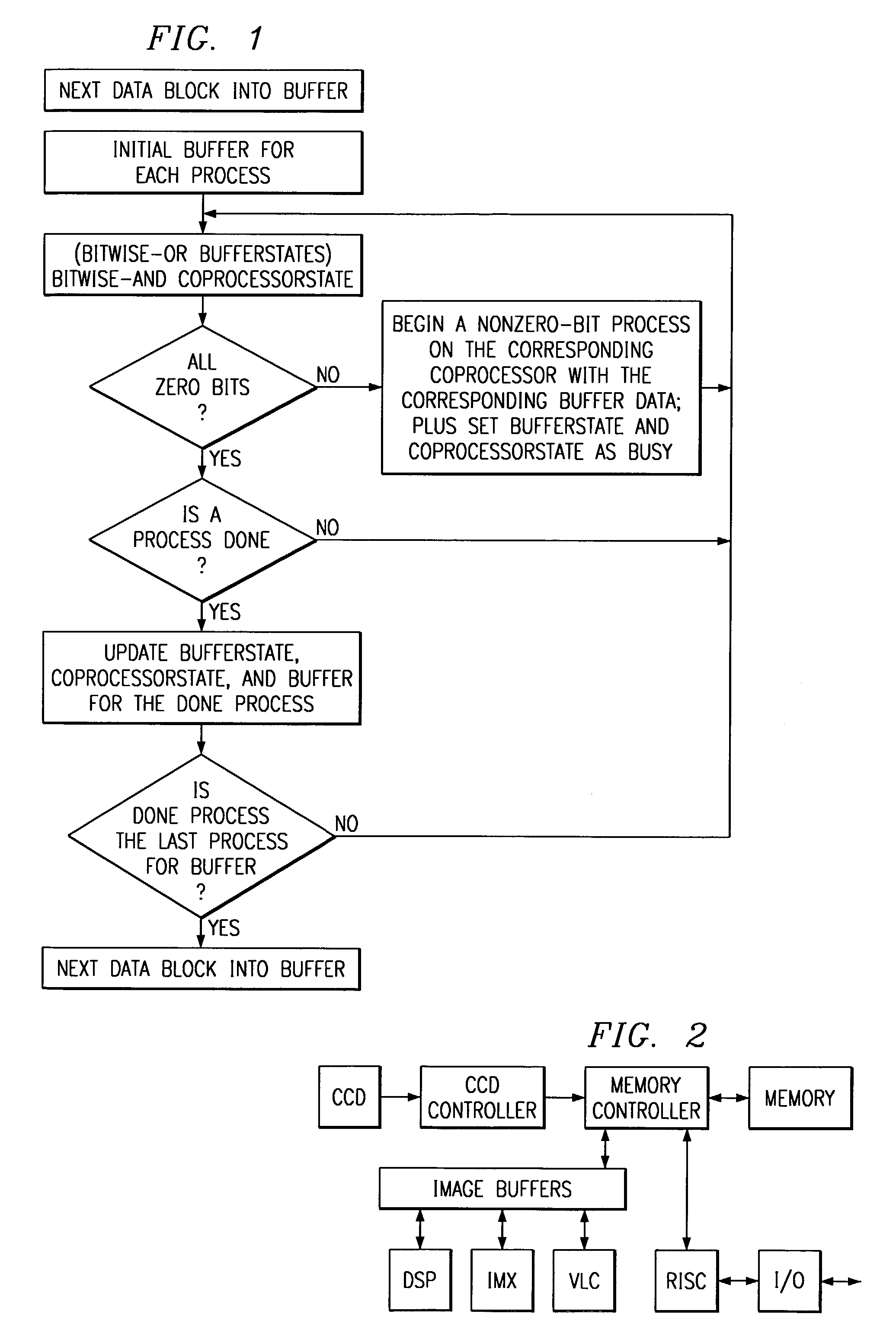

Method for scheduling processors and coprocessors with bit-masking

Active | US7346898B2 | Simple scheduling computation | Simple calculation | Television system details | Resource allocation | Coprocessor | Processor scheduling

Multiple coprocessors are scheduled to run parallel processing steps using bit arithmetic: each data block buffer has a bitmask indicating its next processing step, and a further bitmask indicates the processing steps with available coprocessors. ANDing each buffer's bitmask with the bitmask of available processing steps determines which processing steps to run. This allows parallel processing of the data blocks in the various buffers.

Owner:TEXAS INSTR INC
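
The bitmask test in this abstract amounts to one AND per buffer. The sketch below assumes one bit per processing step; the step names and their bit positions are hypothetical and chosen only for illustration.

```python
# Hypothetical processing steps, one bit each.
STEP_A = 0b001
STEP_B = 0b010
STEP_C = 0b100


def steps_to_run(buffer_masks, available_mask):
    """AND each buffer's next-step bitmask with the available-steps bitmask.

    A non-zero result means the buffer's next step has a free coprocessor
    and can start now; zero means the buffer has to wait.
    """
    return [mask & available_mask for mask in buffer_masks]


# Three buffers waiting for steps A, B and C; coprocessors for A and C are free.
ready = steps_to_run([STEP_A, STEP_B, STEP_C], STEP_A | STEP_C)
```

Here `ready` is `[0b001, 0, 0b100]`: the first and third buffers can proceed in parallel, while the second waits until the coprocessor for step B becomes available.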

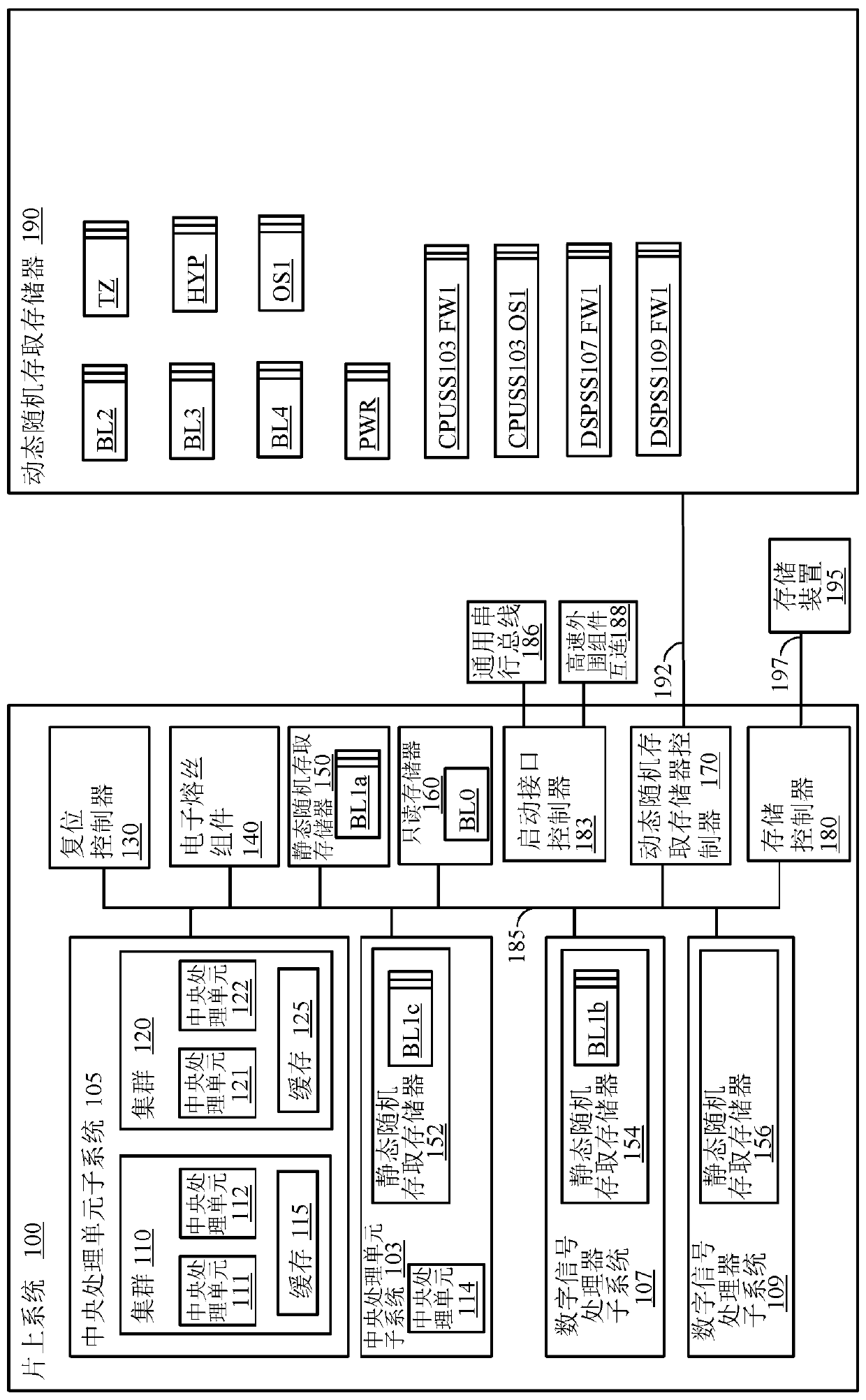

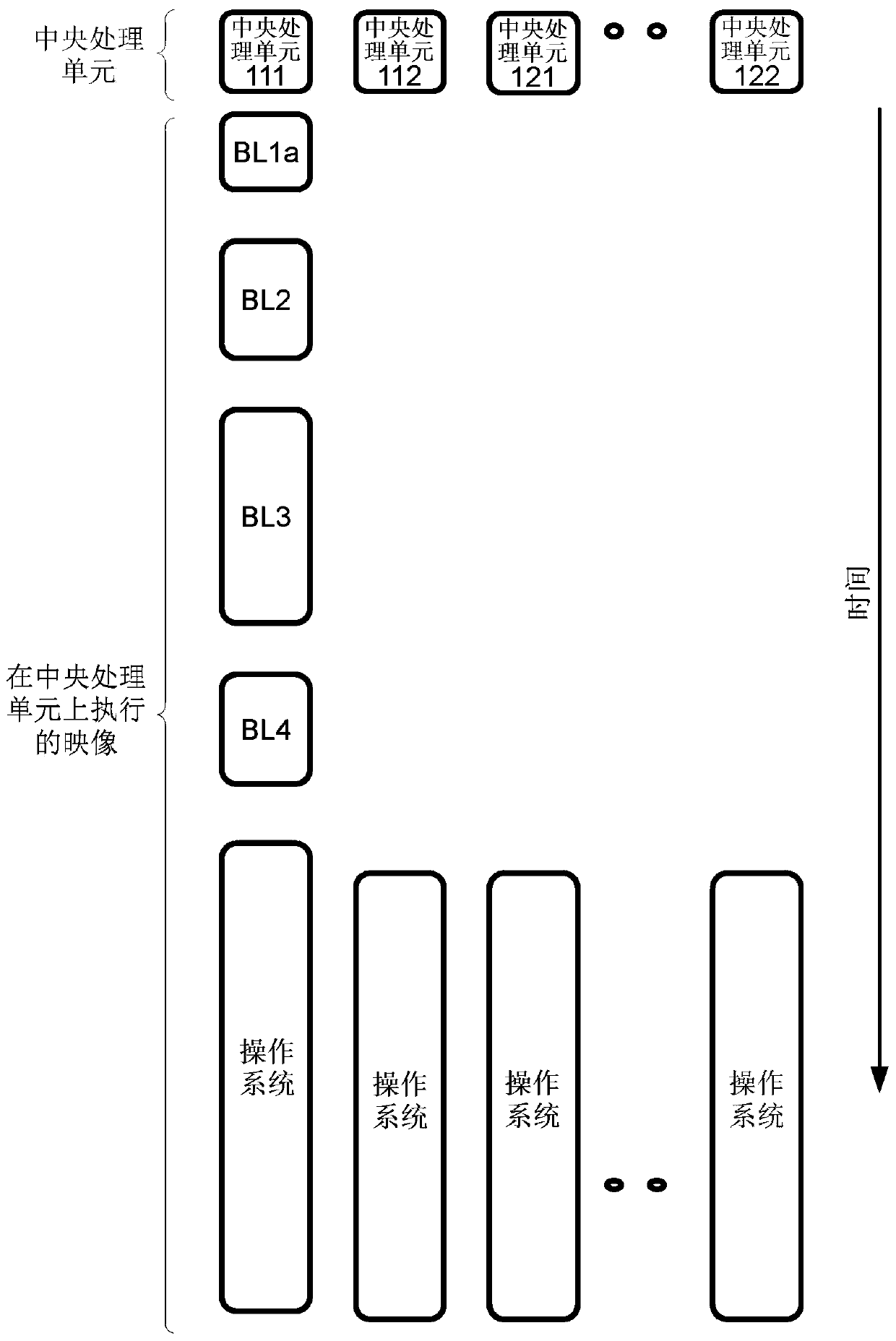

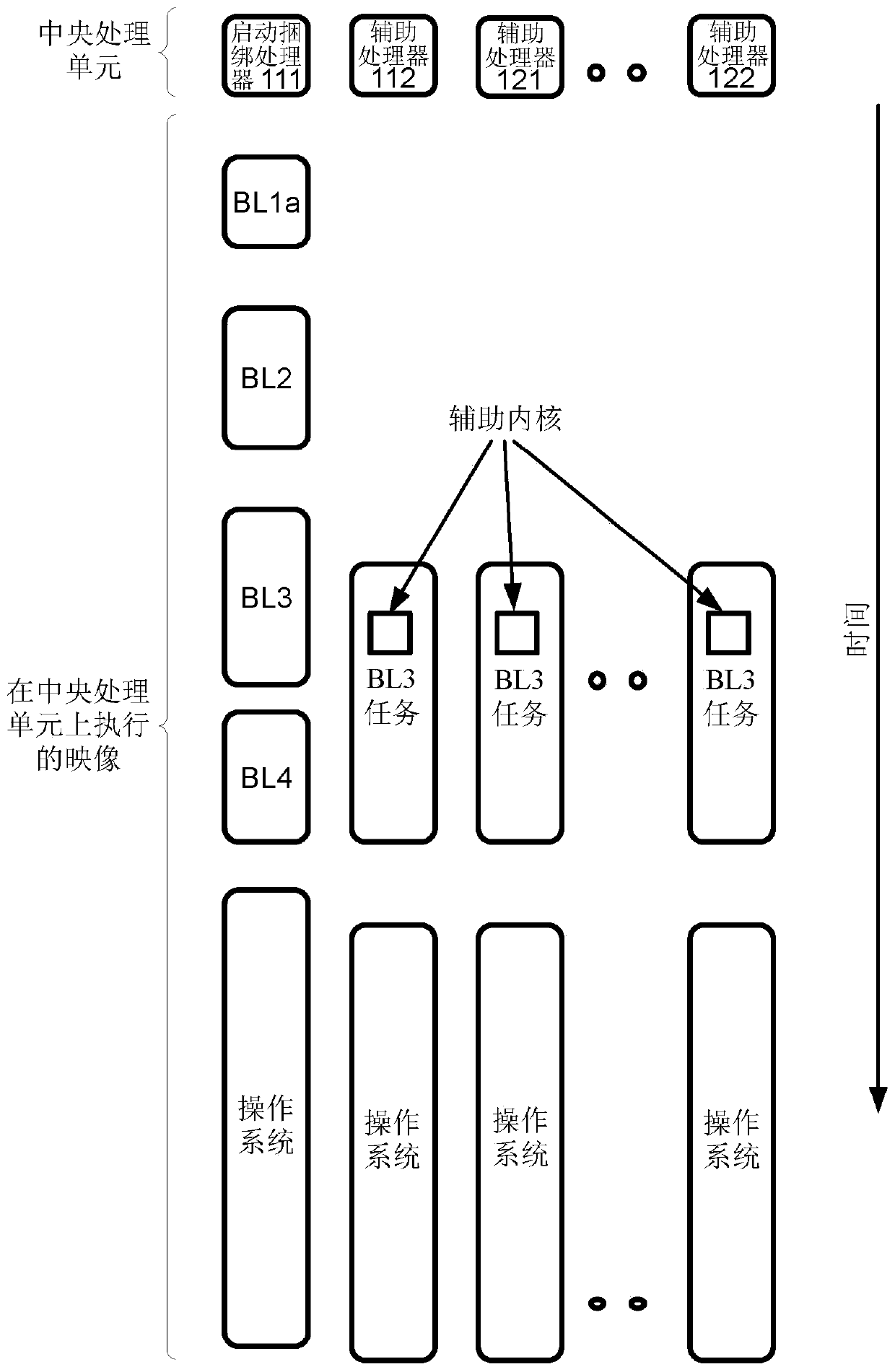

Multicore framework for use in pre-boot environment of system-on-chip

Pending | CN111095205A | Interprogram communication | Digital computer details | Computer architecture | Processor scheduling

Various aspects are described herein. In some aspects, the disclosure provides a method of enabling a multicore framework in a pre-boot environment for a system-on-chip (SoC) comprising a plurality of processors comprising a first processor and a second processor. The method includes initiating, by the first processor, bootup of the SoC into a pre-boot environment. The method further includes scheduling, by the first processor, execution of one or more boot-up tasks by a second processor. The method further includes executing, by the second processor, the one or more boot-up tasks in the pre-boot environment. The method further includes executing, by the first processor, one or more additional tasks in parallel with the second processor executing the one or more boot-up tasks.

Owner:QUALCOMM INC

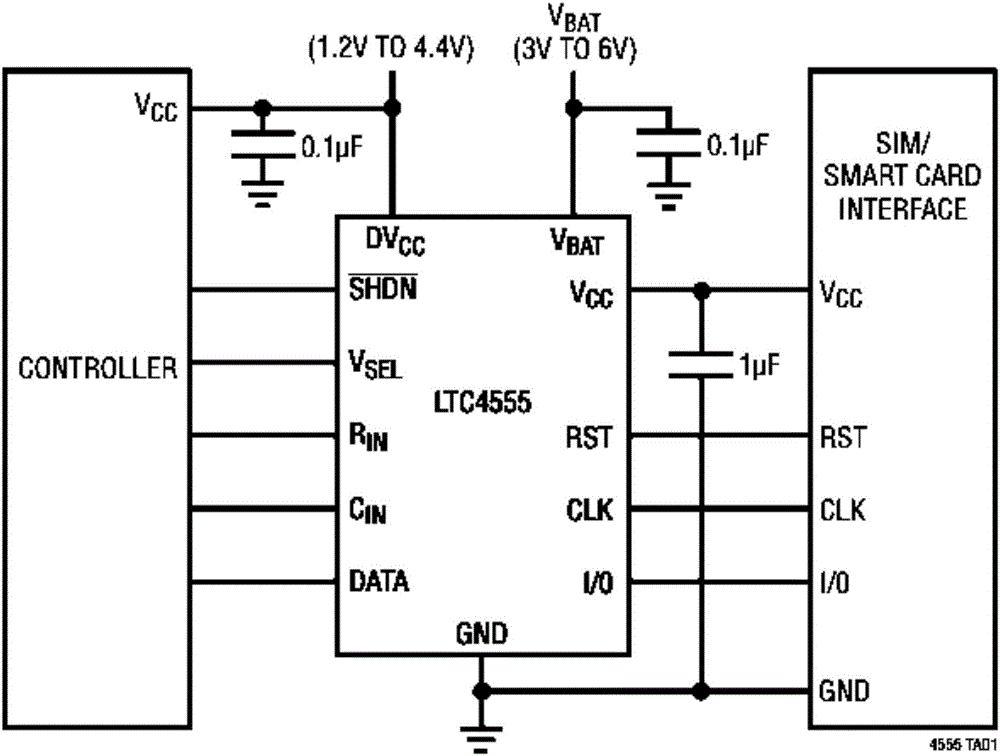

Beidou-based high frequency information communication system and method

Active | CN106658466A | Avoid damage | Low load capacity requirement | Radio transmission | Electric digital data processing | Communications system | Processor scheduling

The invention discloses a Beidou-based high frequency information communication system. The system comprises a Beidou communicator, a central control processor, a power module, an SIM card dispatcher and an SIM card array, wherein the central control processor, the Beidou communicator, the SIM card dispatcher and the SIM card array are directly connected with each other, and the Beidou communicator and the central control processor are each connected with the power module. The central control processor dispatches a plurality of Beidou SIM cards to connect to the Beidou communicator in sequence within a short time; the Beidou communicator acquires the authorization information of the SIM cards and then sends the queued information out in sequence, so that a large amount of information can be sent within a short time.

Owner:江苏星宇芯联电子科技有限公司
