89 patent results for "Memory-mapped file"

A memory-mapped file is a segment of virtual memory that has been assigned a direct byte-for-byte correlation with some portion of a file or file-like resource. This resource is typically a file that is physically present on disk, but can also be a device, shared memory object, or other resource that the operating system can reference through a file descriptor. Once present, this correlation between the file and the memory space permits applications to treat the mapped portion as if it were primary memory.
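The definition above can be illustrated with Python's standard `mmap` module. This is a minimal sketch that assumes a POSIX or Windows system and a throwaway temporary file. The program edits the file by assigning to a slice of the mapping, and the change is visible when the file is read back in the ordinary way.

```python
import mmap
import os
import tempfile


def mmap_roundtrip() -> bytes:
    """Edit a file through a memory mapping, then return the file's bytes as read from disk."""
    fd, path = tempfile.mkstemp()
    try:
        with os.fdopen(fd, "r+b") as f:
            f.write(b"hello, file")  # mmap cannot map an empty file, so give it content first
            f.flush()
            # Length 0 maps the whole file; ACCESS_WRITE writes changes through to the file.
            with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_WRITE) as mm:
                mm[0:5] = b"HELLO"  # ordinary slice assignment, no explicit write() call
                mm.flush()          # ask the OS to write the dirty page back to disk
        with open(path, "rb") as f:
            return f.read()
    finally:
        os.remove(path)


print(mmap_roundtrip())  # b'HELLO, file'
```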

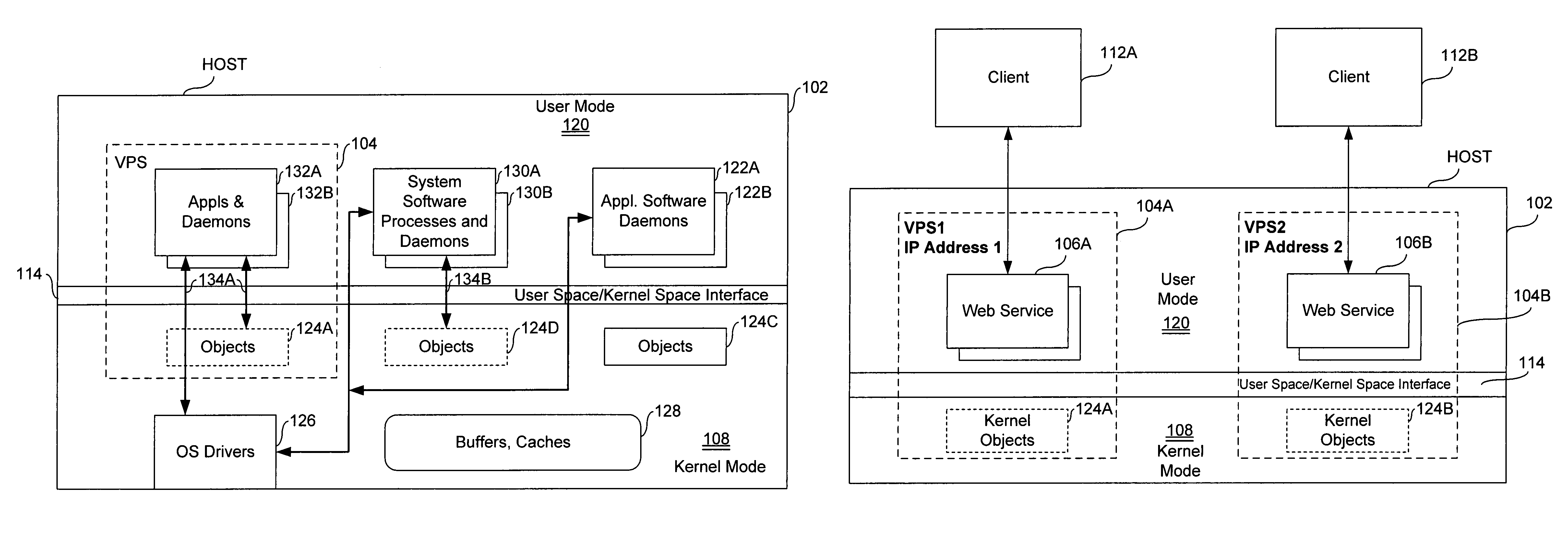

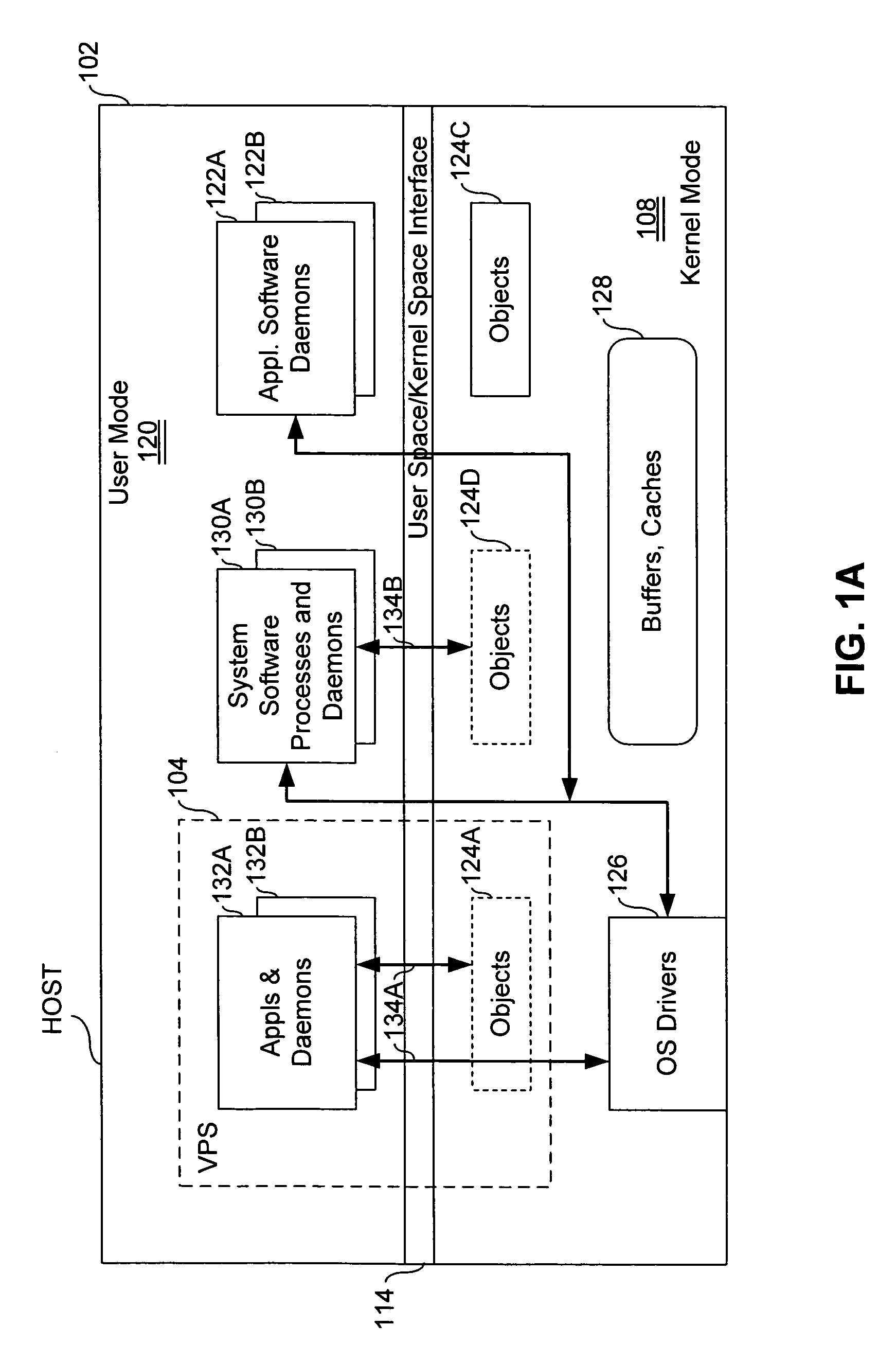

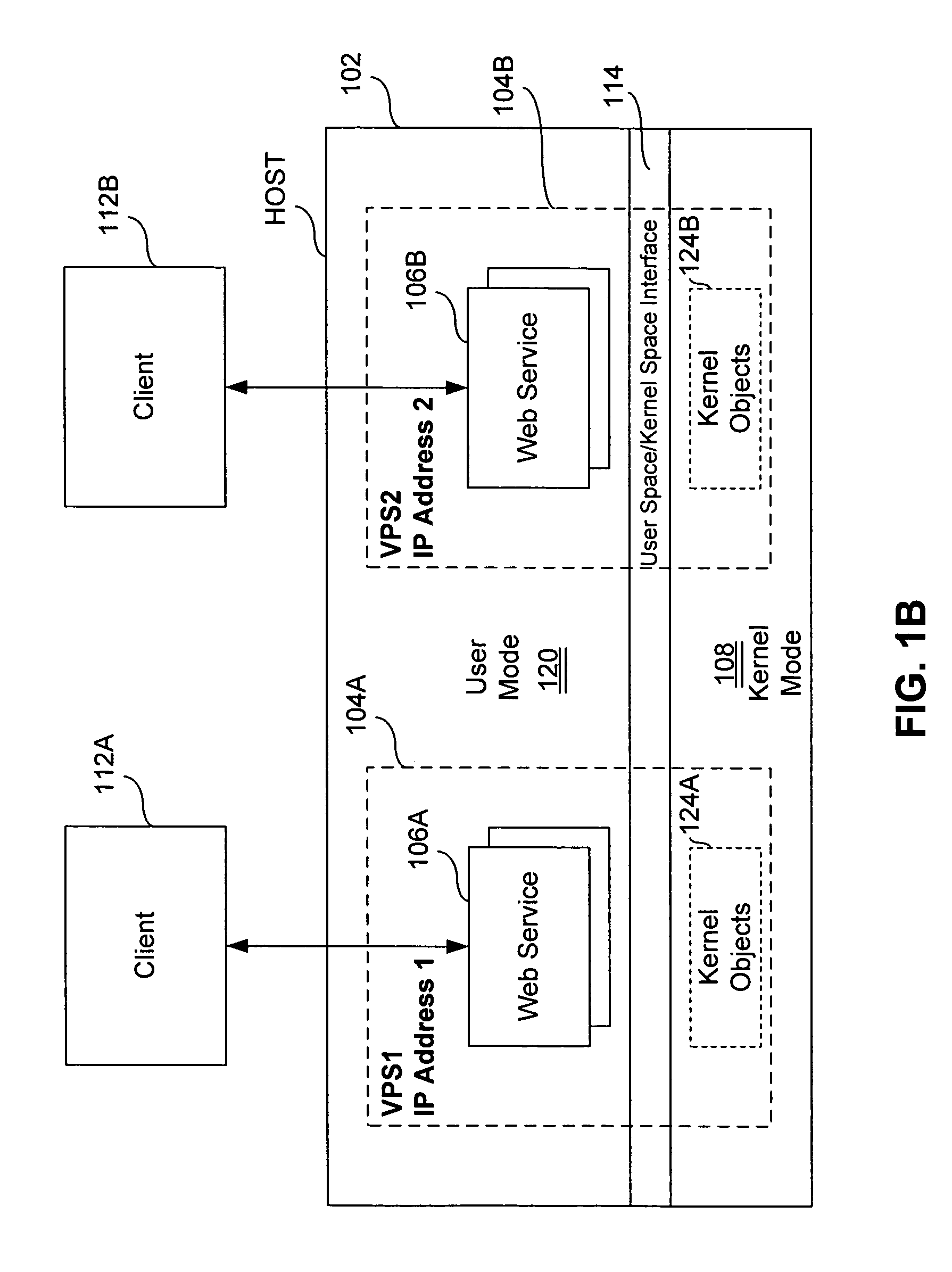

Virtual private server with enhanced security

Active | US7461144B1 | Improve security; Data processing applications; Digital computer details; Computer resources; Operational system

An end-user computer includes a processor running an operating system. A plurality of virtual private servers (VPSs) are supported within the operating system, and a plurality of applications are available to the user of the end-user computer. The applications are launched within different VPSs; at least one VPS has multiple applications launched within it. At least two applications launched within different VPSs communicate with each other using secure communication means, such as firewalls, proxies, dedicated clipboards, named pipes, shared memory, dedicated inter-process communications, Local Procedure Calls/Remote Procedure Calls, APIs, network sockets, TCP/IP communications, network protocol communications, and memory-mapped files. VPSs can be dynamically created and terminated. VPS control means available to the user include means for creating and terminating VPSs; file system and registry backup; control information for backup and restore of data at the VPS level; rules for placing applications and processes and for creating and supporting the corresponding VPSs; the granularity of isolation for VPSs, applications, and processes; computer resource control; definition of permissible operations for inter-VPS communication; and definition of permissible operations for inter-process communication.

Owner:VIRTUOZZO INT GMBH

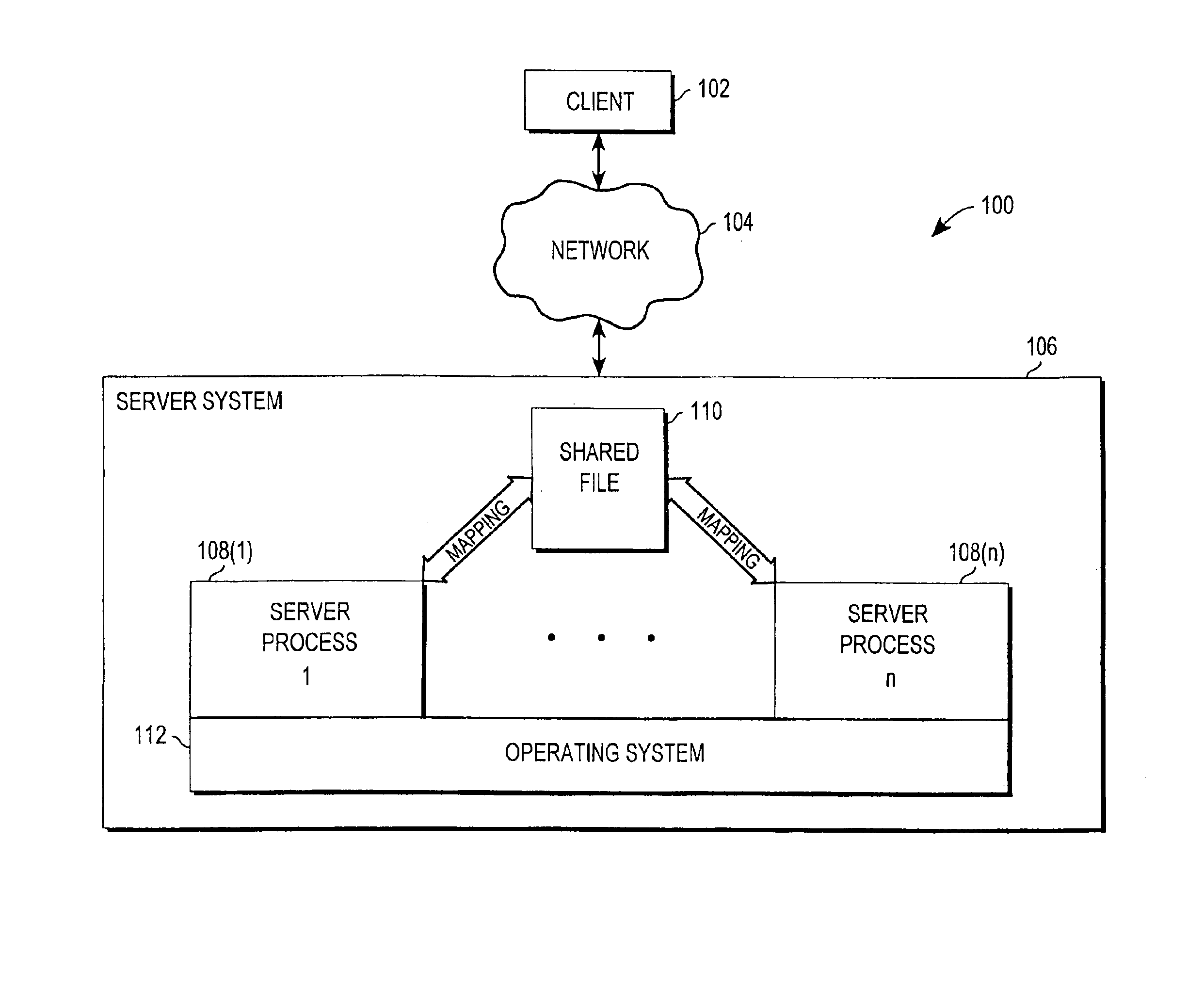

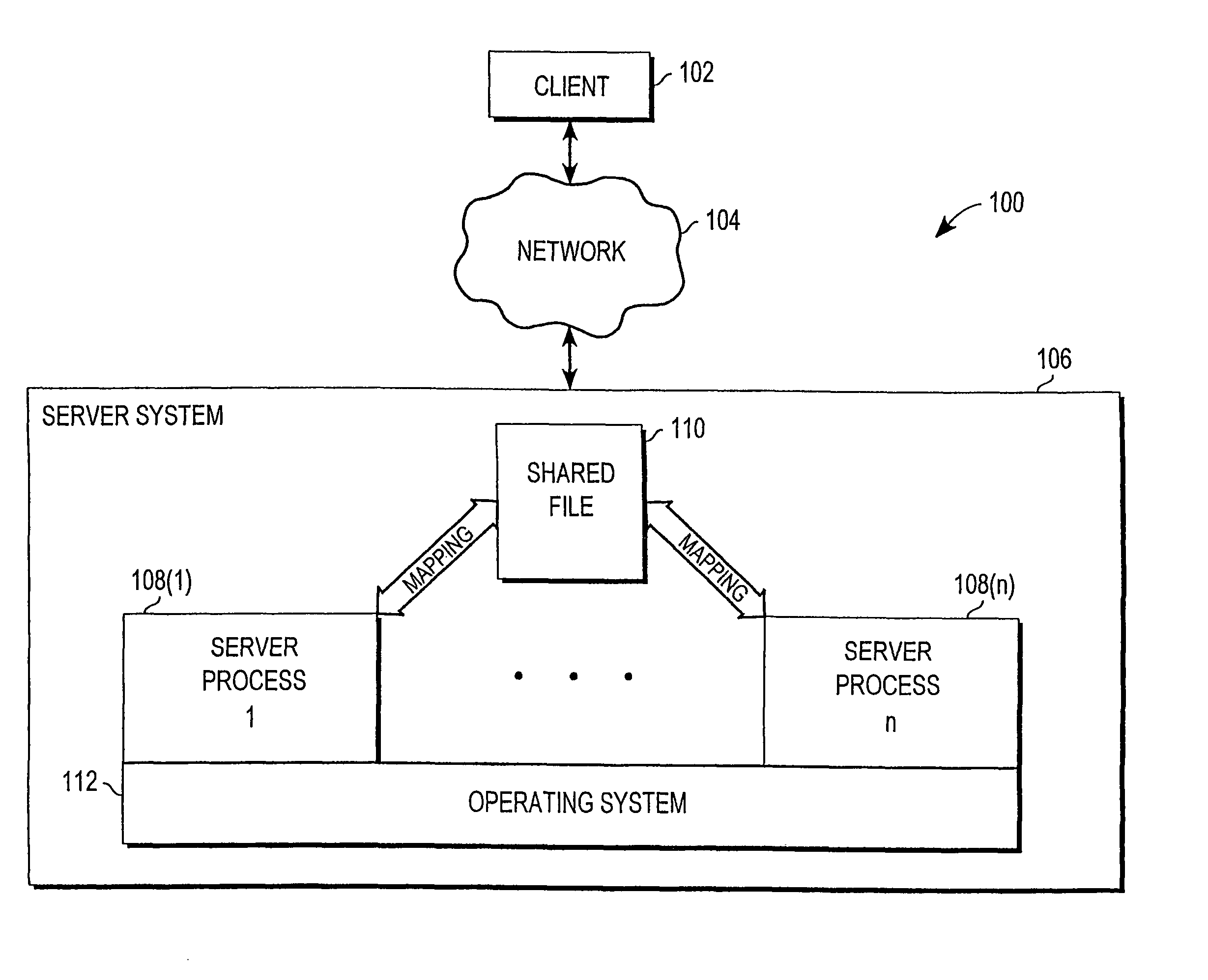

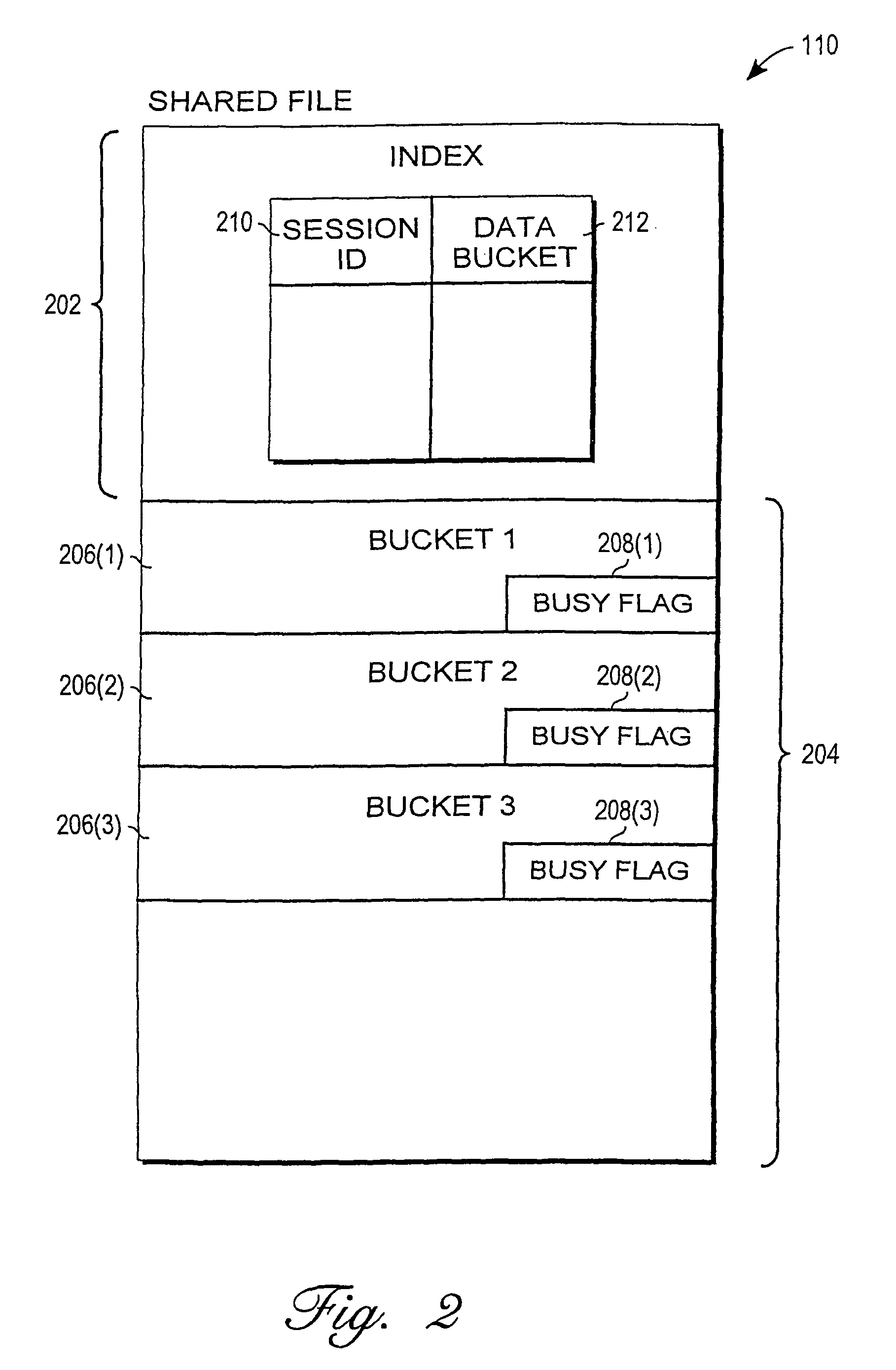

Mechanism for enabling session information to be shared across multiple processes

Inactive | US6938085B1 | Optimization mechanism; Program initiation/switching; Resource allocation; Session management; Operational system

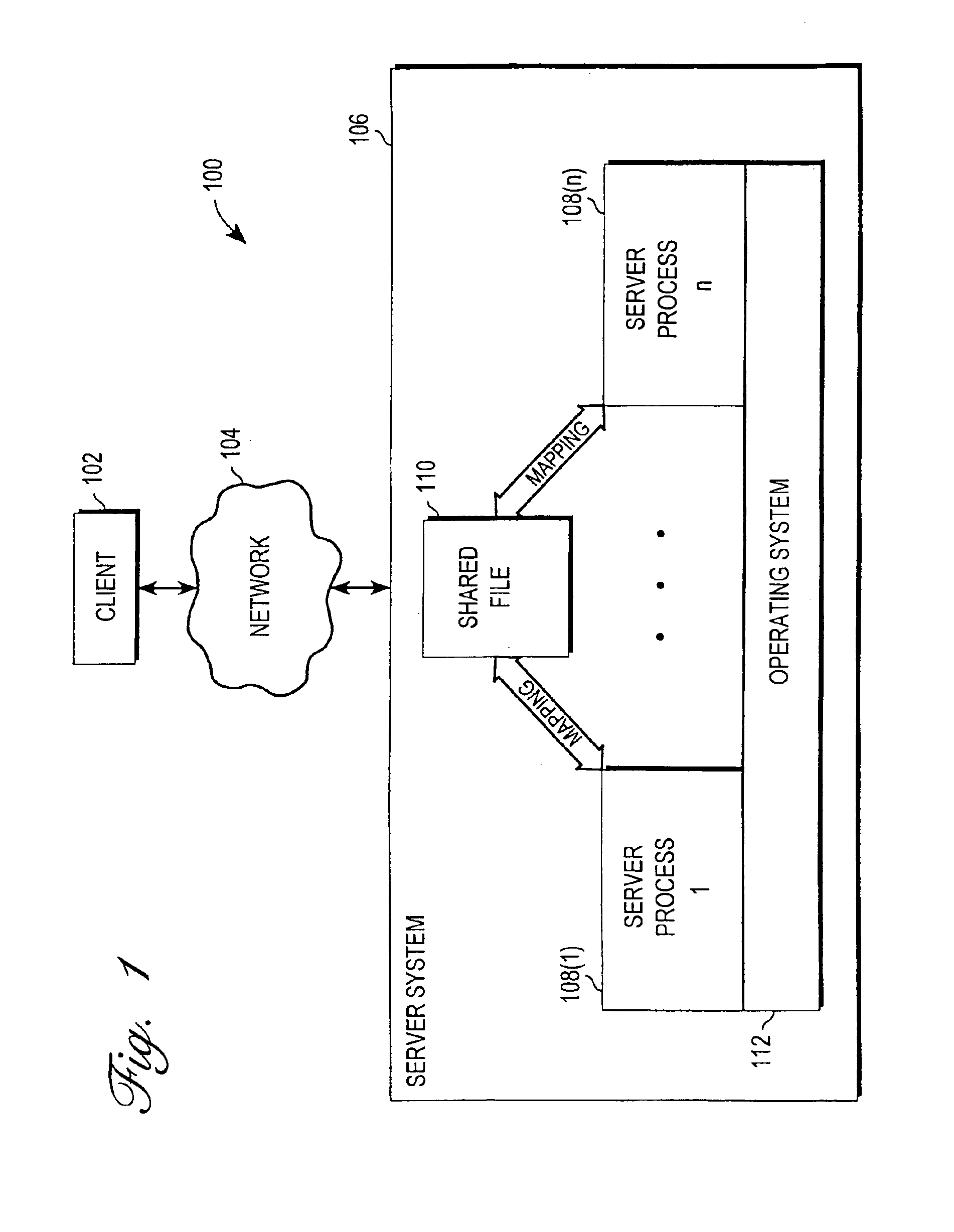

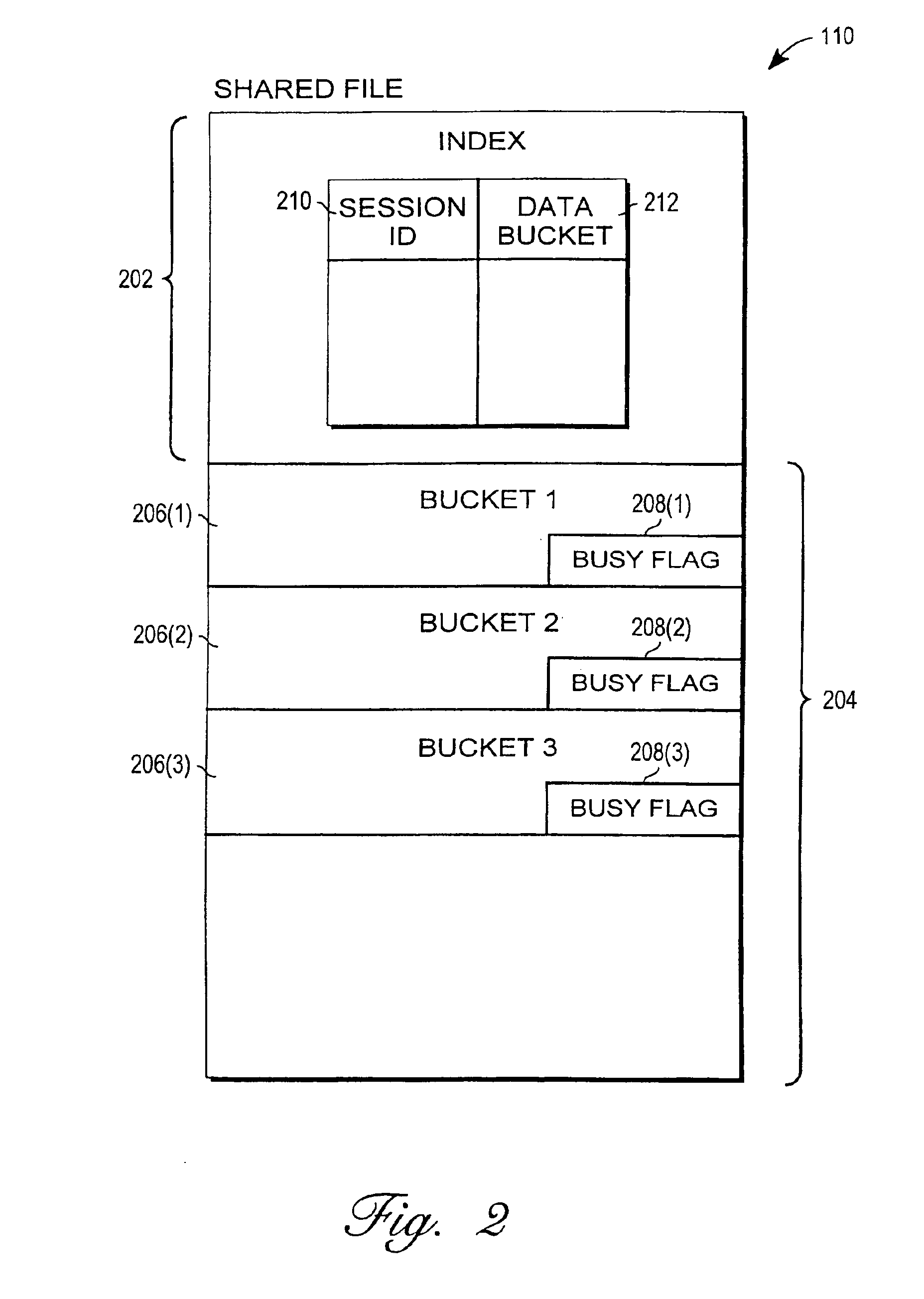

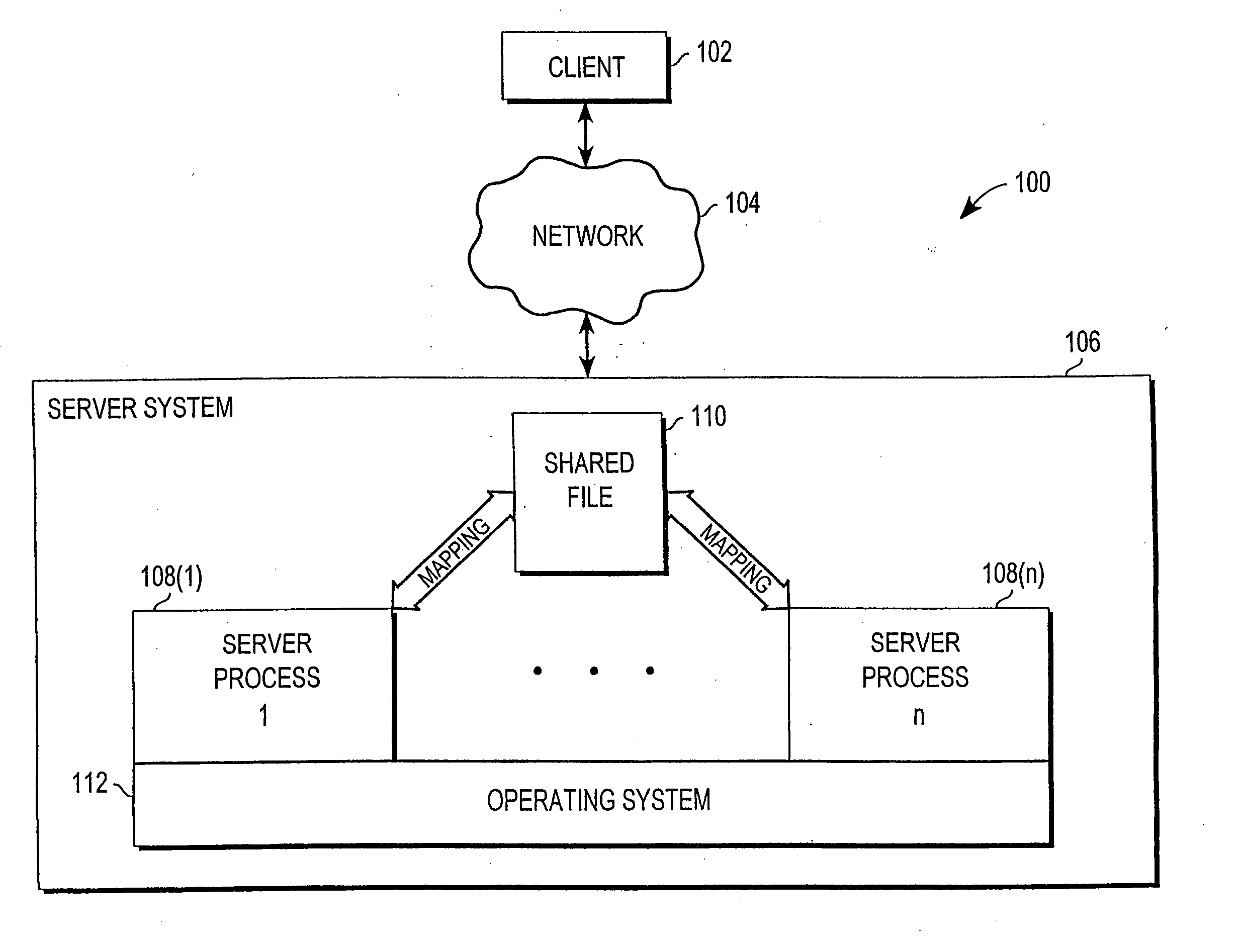

A mechanism for enabling session information to be shared across multiple processes in a multi-process environment is disclosed. There is provided a shared persistent memory-mapped file in a file system, which is mapped to the memory space of each of the processes. This file is used by all of the processes to store session information. Because the memory space of each process is mapped to the shared file, each process is able to access and manipulate all of the sessions in the system. Thus, sessions are no longer maintained on a process-specific basis. Rather, they are maintained on a centralized, shared basis. As a result, different requests pertaining to the same session may be serviced by different server processes without any adverse effects. Each process will be able to access and manipulate all of the state information pertaining to that session. By enabling session information to be shared, this mechanism eliminates the session management errors experienced by the prior art.

Owner:ORACLE INT CORP
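A minimal sketch of the shared-session idea, using Python's `mmap` module. The slot layout (`SLOT`, `SLOTS`) and the helper `record_request` are hypothetical, not taken from the patent. To keep the example self-contained, two independent mappings of the same file inside one process stand in for two server processes. There is no locking here; concurrent writers to the same slot would need some form of synchronization.

```python
import mmap
import os
import struct
import tempfile

# Hypothetical layout: SLOTS fixed-size records of (session id, request count), both uint32.
SLOT = struct.Struct("<II")
SLOTS = 16


def record_request(mm: mmap.mmap, session_id: int) -> int:
    """Bump the shared request count for session_id and return the new count."""
    off = (session_id % SLOTS) * SLOT.size
    sid, count = SLOT.unpack_from(mm, off)
    if sid != session_id:  # empty or reused slot: start a fresh session record
        count = 0
    SLOT.pack_into(mm, off, session_id, count + 1)
    return count + 1


def serve_from_two_mappings() -> list:
    fd, path = tempfile.mkstemp()
    os.close(fd)
    try:
        with open(path, "r+b") as f:
            f.truncate(SLOTS * SLOT.size)  # zero-filled shared session file
        # Two independent handles and mappings stand in for two server processes.
        with open(path, "r+b") as fa, open(path, "r+b") as fb:
            with mmap.mmap(fa.fileno(), 0) as a, mmap.mmap(fb.fileno(), 0) as b:
                # Requests for the same session alternate between the two "processes".
                return [record_request(a, 42), record_request(b, 42), record_request(a, 42)]
    finally:
        os.remove(path)


print(serve_from_two_mappings())  # [1, 2, 3]
```

Each mapping sees the count left by the other because both are backed by the same file, which is the property the abstract relies on.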

Mechanism for enabling session information to be shared across multiple processes

Inactive | US20050204045A1 | Optimization mechanism; Program initiation/switching; Resource allocation; Session management; Operational system

A mechanism for enabling session information to be shared across multiple processes in a multi-process environment is disclosed. There is provided a shared persistent memory-mapped file in a file system, which is mapped to the memory space of each of the processes. This file is used by all of the processes to store session information. Because the memory space of each process is mapped to the shared file, each process is able to access and manipulate all of the sessions in the system. Thus, sessions are no longer maintained on a process-specific basis. Rather, they are maintained on a centralized, shared basis. As a result, different requests pertaining to the same session may be serviced by different server processes without any adverse effects. Each process will be able to access and manipulate all of the state information pertaining to that session. By enabling session information to be shared, this mechanism eliminates the session management errors experienced by the prior art.

Owner:ORACLE INT CORP

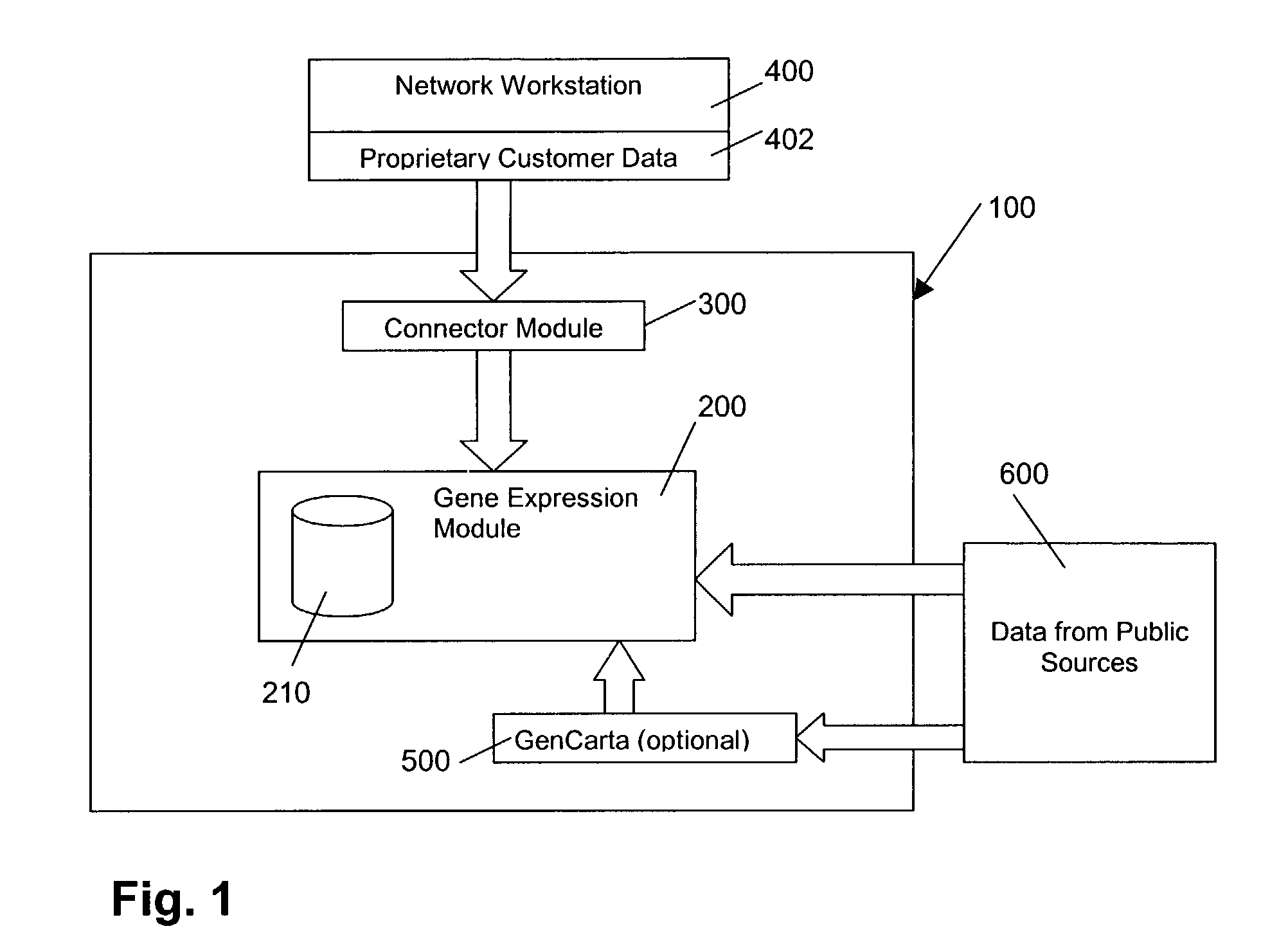

Analysis engine and work space manager for use with gene expression data

Inactive | US7251642B1 | Provide flexibility; Provides portability; Data processing applications; Digital data processing details; Data warehouse; Application programming interface

A platform for managing, integrating, and analyzing gene expression data is disclosed. The platform includes a Run Time Engine (RTE) that provides more direct, quicker, and more efficient access to gene expression data through the use of memory-mapped files. The platform also includes a workspace implemented as directories whose data objects combine XML descriptors with binary data objects that store gene and sample identifiers and the input parameters of saved analysis sessions. The platform provides several Application Programming Interfaces (APIs) to a data warehouse, including a low-level C++ API, a high-level C++ API, a Perl API, an R API, and a CORBA API, for accessing gene expression data in the RTE memory-mapped files. These APIs offer quicker and more direct access to memory, improving the speed of overall operations.

Owner:OCIMUM BIO SOLUTIONS

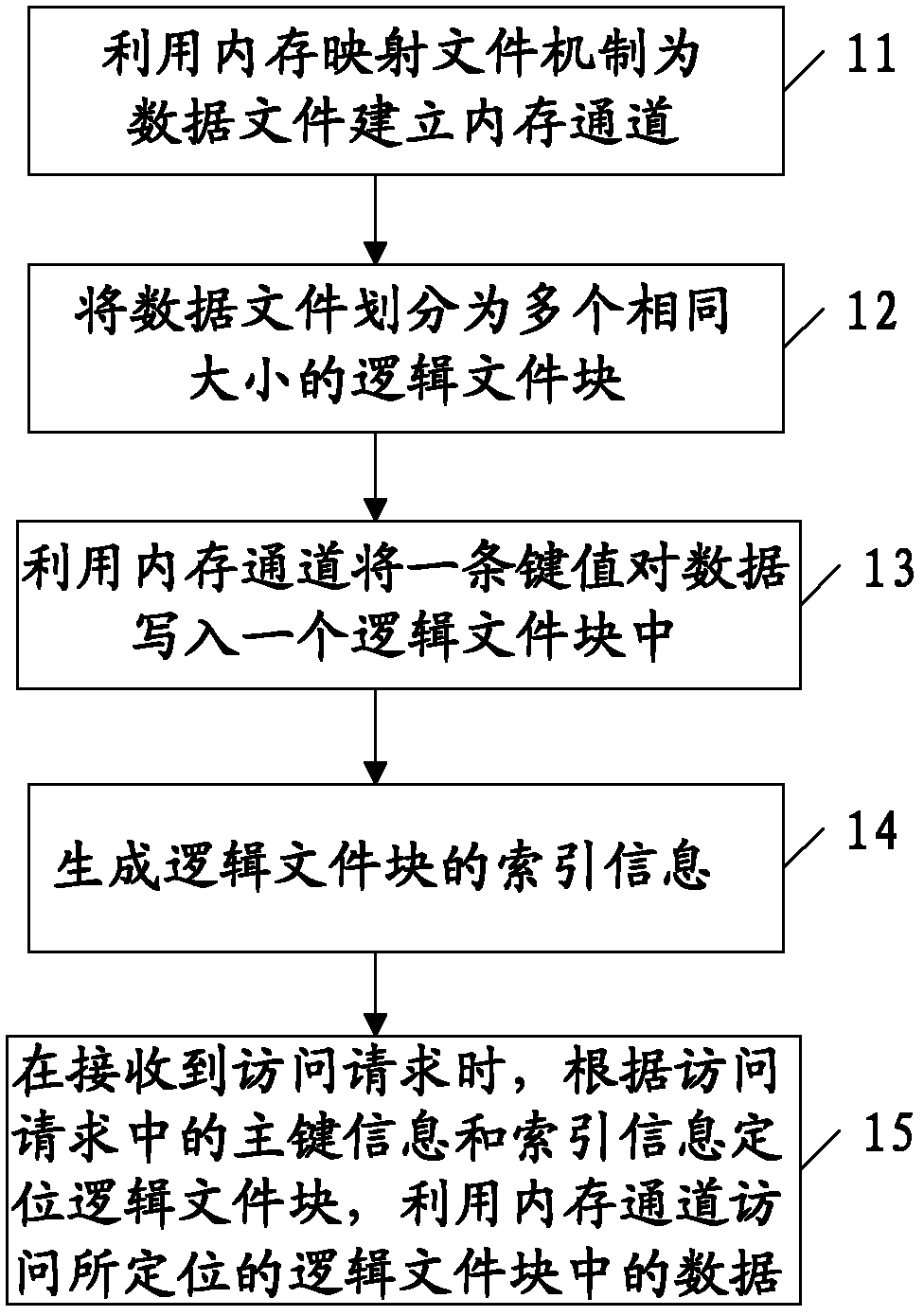

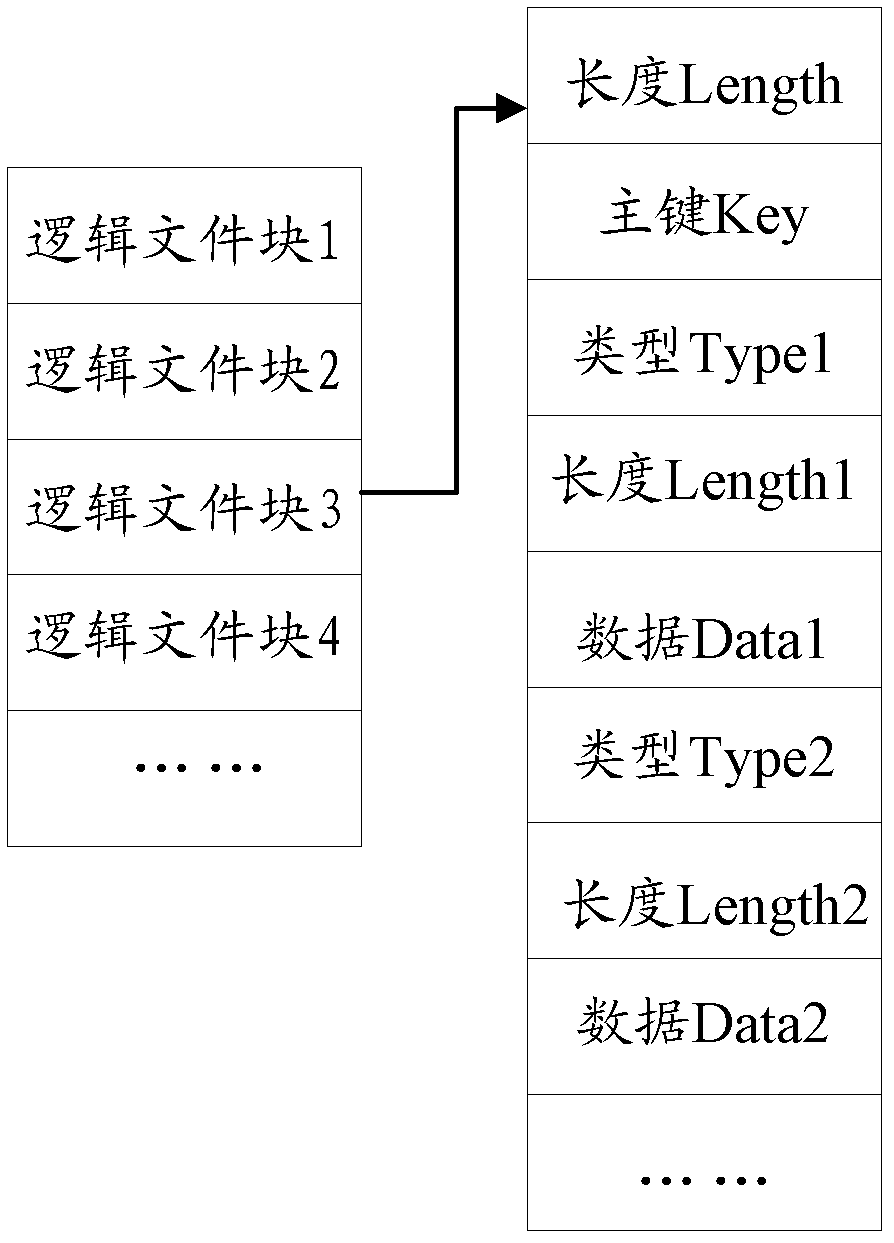

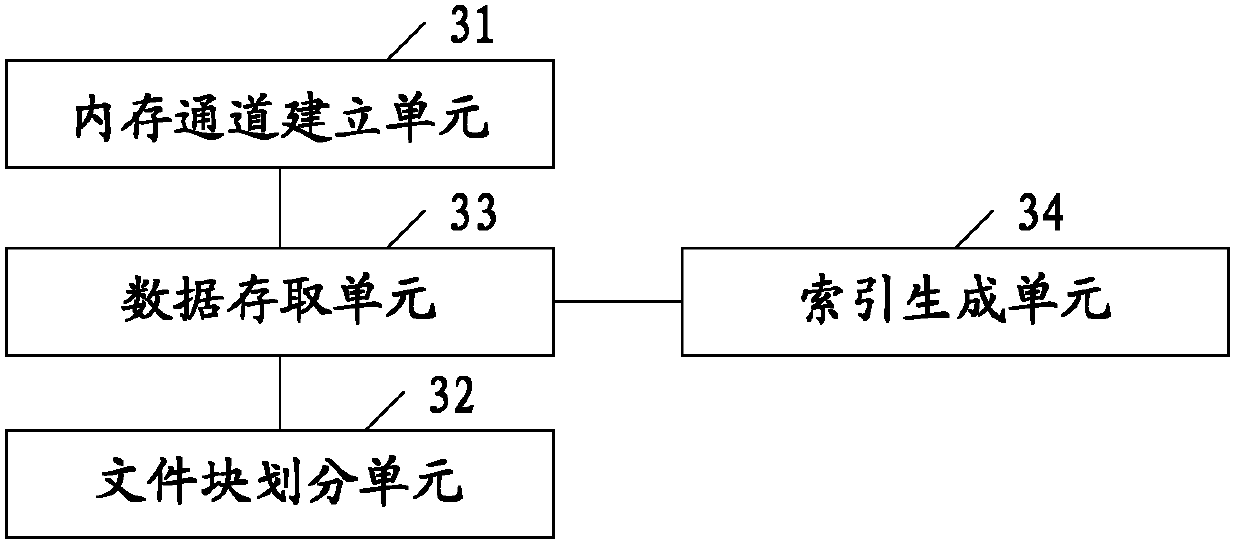

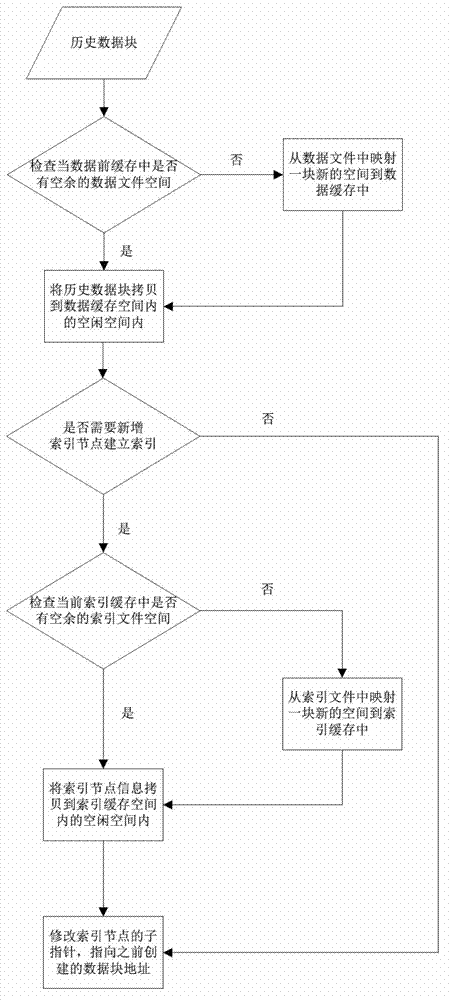

Data access method and device

Active | CN103294710A | Improve performance; Increase flexibility; Special data processing applications; Access method; Paired Data

The invention discloses a data access method and device. In one embodiment, the method comprises the following steps: a memory channel is established for a data file stored on disk using a memory-mapped file mechanism; the data file is divided into a plurality of logical file blocks of equal size; the memory channel is used to write a key-value pair into a logical file block; and index information for the logical file block is generated. When an access request is received, the logical file block is located according to the primary key information in the request and the index information, and the data in that block is accessed through the memory channel. The scheme improves disk performance and data access flexibility, reduces data transmission over the network, speeds up access responses, and makes data access more efficient.

Owner:BEIJING FEINNO COMM TECH
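The block-and-index scheme in this abstract can be sketched as follows. `BLOCK_SIZE`, the record header, and the `BlockStore` class are illustrative assumptions; the patent does not specify a record format, and the index here is an ordinary in-memory dictionary from primary key to block number.

```python
import mmap
import os
import struct
import tempfile

BLOCK_SIZE = 64                # hypothetical logical block size in bytes
HEADER = struct.Struct("<HH")  # key length, value length


class BlockStore:
    """Key-value records in equal-size logical blocks of a memory-mapped data file."""

    def __init__(self, mm: mmap.mmap):
        self.mm = mm
        self.nblocks = len(mm) // BLOCK_SIZE
        self.next_free = 0
        self.index = {}  # primary key -> logical block number

    def put(self, key: bytes, value: bytes) -> None:
        record = HEADER.pack(len(key), len(value)) + key + value
        if len(record) > BLOCK_SIZE:
            raise ValueError("record does not fit in one logical block")
        block = self.index.get(key)
        if block is None:  # new key: take the next unused block
            if self.next_free >= self.nblocks:
                raise RuntimeError("no free logical blocks")
            block = self.next_free
            self.next_free += 1
        off = block * BLOCK_SIZE
        self.mm[off:off + len(record)] = record  # write through the mapping
        self.index[key] = block

    def get(self, key: bytes) -> bytes:
        off = self.index[key] * BLOCK_SIZE  # locate the block via the index
        klen, vlen = HEADER.unpack_from(self.mm, off)
        start = off + HEADER.size + klen
        return self.mm[start:start + vlen]


def demo_block_store():
    fd, path = tempfile.mkstemp()
    os.close(fd)
    try:
        with open(path, "r+b") as f:
            f.truncate(8 * BLOCK_SIZE)  # an 8-block data file
            with mmap.mmap(f.fileno(), 0) as mm:
                store = BlockStore(mm)
                store.put(b"user:1", b"alice")
                store.put(b"user:2", b"bob")
                return store.get(b"user:2"), dict(store.index)
    finally:
        os.remove(path)
```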

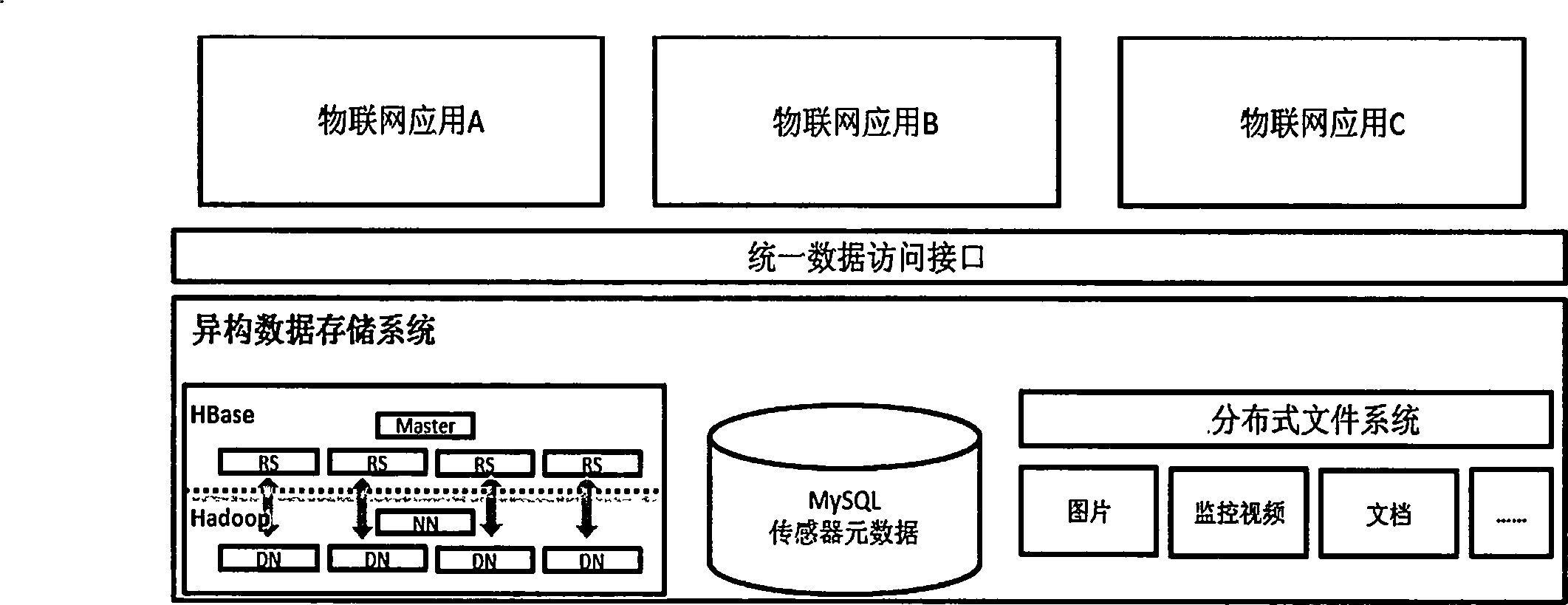

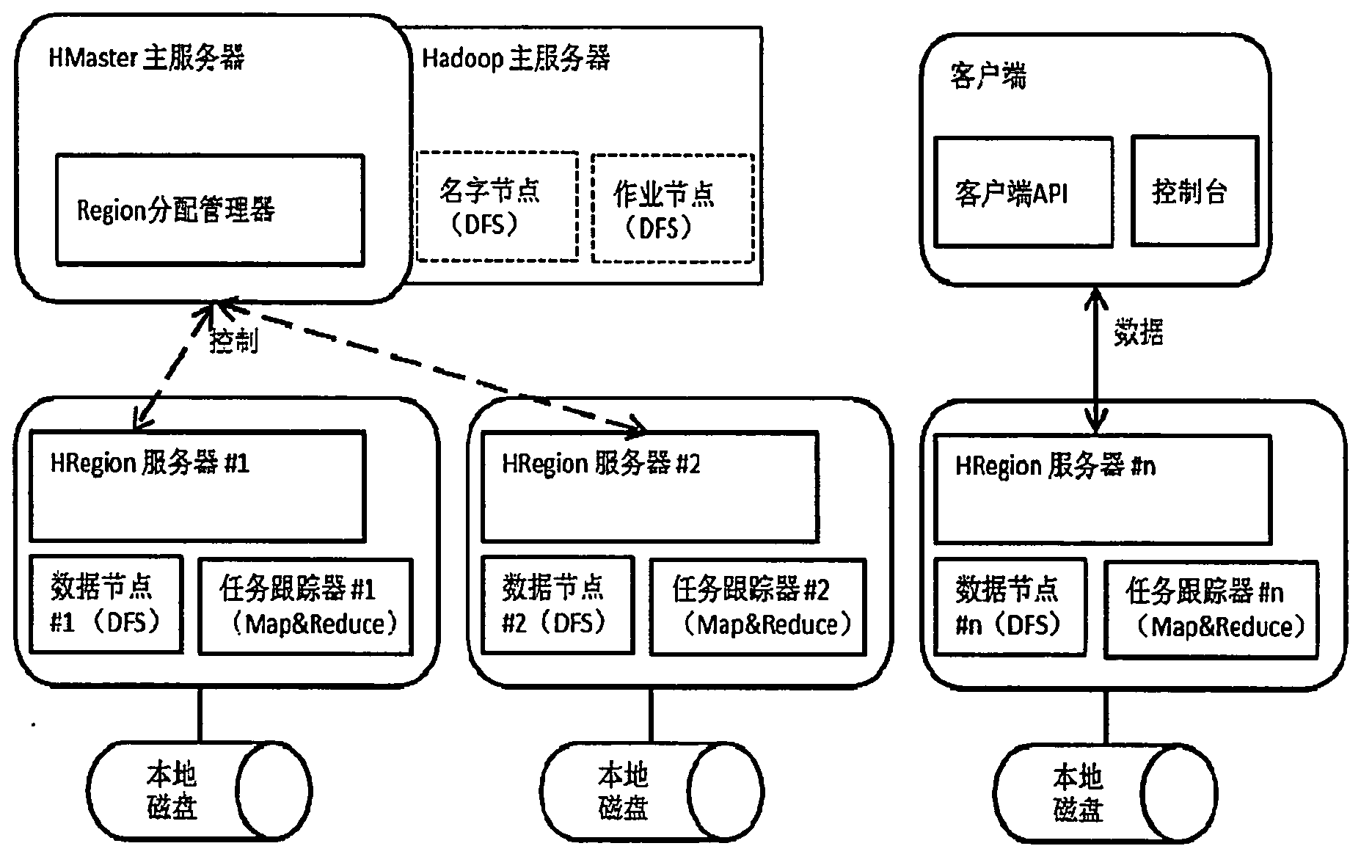

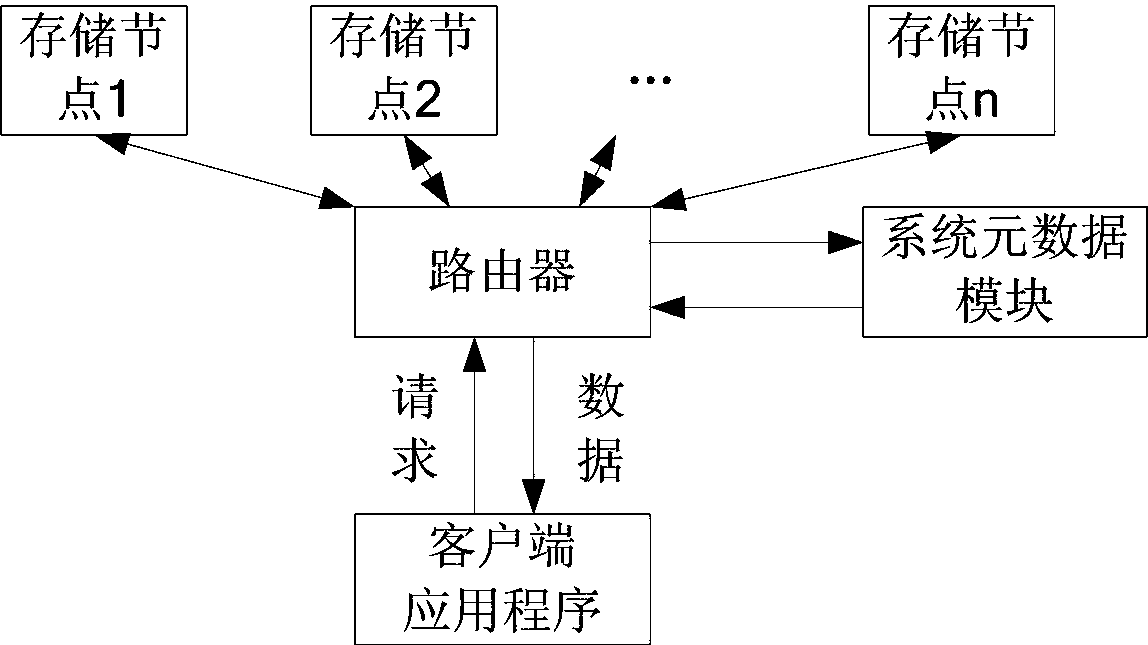

Internet of things heterogeneous data storage method and system

The invention discloses an Internet of Things heterogeneous data storage method and storage system. In the method, data of various types are stored column-wise in the HBase distributed storage system, a MySQL metadata database performs registration management of the stored data, and the data access layer is configured centrally so that database-backed applications can use a unified data interface. Through this unified interface and storage mode, the method and system effectively support storing data of different types and from different sources; extending the column-oriented storage mechanism with HBase allows storage capacity to scale horizontally; a memory-mapped file mechanism improves the efficiency of storing and managing large files; and redundant data storage greatly improves system reliability and accessibility. The method and system address the storage of many data types, store and use large files efficiently, and are low in cost and highly robust.

Owner:上海和伍物联网系统有限公司

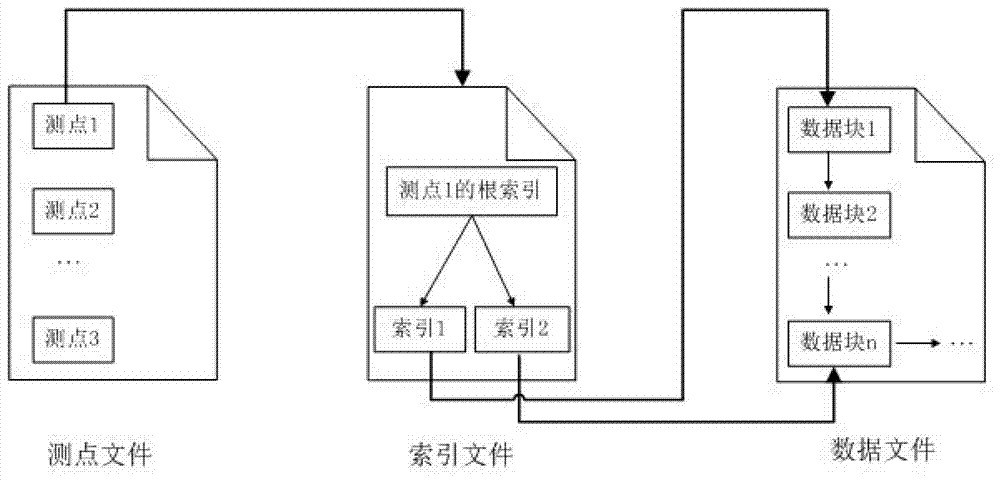

Indexing method applied to time sequence historical database

Active | CN102890722A | Reduce access; Reduce visit frequency; Special data processing applications; Data access; Access frequency

The invention discloses an indexing method for a time-series historical database that enables efficient storage and retrieval. Multi-way tree indexes are built using time points as keys; the indexes are stored in time order in an index file on disk, and the time-series historical data are stored in time order in a data file on disk. During data access, memory-mapping the files in batches reduces how often the files must be accessed, which keeps storage and retrieval efficient while consuming little memory.

Owner:STATE GRID CORP OF CHINA +2
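Keeping records in time order in a mapped file lets a query jump straight to the right position. As a simpler stand-in for the patent's multi-way tree index, this sketch binary-searches fixed-size, time-ordered records directly in the mapping. The record layout `REC` and the helper names are hypothetical.

```python
import mmap
import os
import struct
import tempfile

REC = struct.Struct("<qd")  # hypothetical record: int64 timestamp, float64 value


def lower_bound(mm, t: int) -> int:
    """Index of the first record whose timestamp is >= t (records are stored in time order)."""
    lo, hi = 0, len(mm) // REC.size
    while lo < hi:
        mid = (lo + hi) // 2
        ts, _ = REC.unpack_from(mm, mid * REC.size)
        if ts < t:
            lo = mid + 1
        else:
            hi = mid
    return lo


def query_range(path: str, t0: int, t1: int) -> list:
    """Return (timestamp, value) pairs with t0 <= timestamp < t1."""
    out = []
    with open(path, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        n = len(mm) // REC.size
        i = lower_bound(mm, t0)
        while i < n:
            ts, value = REC.unpack_from(mm, i * REC.size)
            if ts >= t1:
                break
            out.append((ts, value))
            i += 1
    return out


def demo_time_series():
    fd, path = tempfile.mkstemp()
    try:
        with os.fdopen(fd, "wb") as f:
            for t in range(0, 100, 10):  # timestamps 0, 10, ..., 90 in time order
                f.write(REC.pack(t, t / 10))
        return query_range(path, 25, 55)
    finally:
        os.remove(path)


print(demo_time_series())  # [(30, 3.0), (40, 4.0), (50, 5.0)]
```

Only the pages touched by the search and the matching records are brought into memory, which is the kind of small memory footprint the abstract describes.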

Mechanism for enabling session information to be shared across multiple processes

Inactive | US8103779B2 | Optimization mechanism; Program initiation/switching; Resource allocation; Session management; Operational system

A mechanism for enabling session information to be shared across multiple processes in a multi-process environment is disclosed. There is provided a shared persistent memory-mapped file in a file system, which is mapped to the memory space of each of the processes. This file is used by all of the processes to store session information. Because the memory space of each process is mapped to the shared file, each process is able to access and manipulate all of the sessions in the system. Thus, sessions are no longer maintained on a process-specific basis. Rather, they are maintained on a centralized, shared basis. As a result, different requests pertaining to the same session may be serviced by different server processes without any adverse effects. Each process will be able to access and manipulate all of the state information pertaining to that session. By enabling session information to be shared, this mechanism eliminates the session management errors experienced by the prior art.

Owner:ORACLE INT CORP

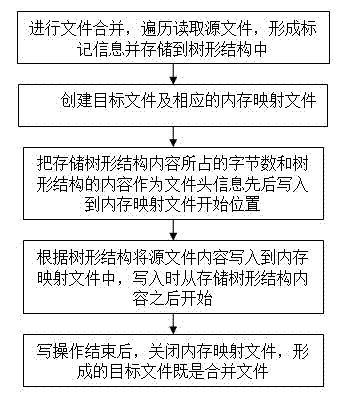

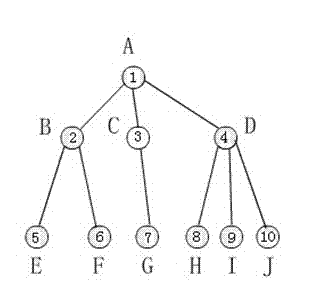

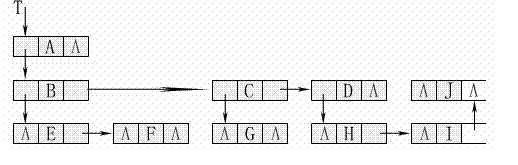

Method for joining files and method for splitting files

Active | CN102508880A | Improve merge speed; Easy to operate; Special data processing applications; File system; Byte

The invention relates to computer file system technology, in particular to a method for joining files and a method for splitting files. The joining method comprises the following steps: traversing the source files to build source file marker information and storing it in a tree structure; creating a target file and a corresponding memory-mapped file; writing, as file header information at the start of the memory-mapped file, the number of bytes occupied by the stored tree structure followed by the tree structure itself; writing the contents of the source files listed in the tree structure into the memory-mapped file, starting immediately after the stored tree structure; and closing the memory-mapped file once writing is complete, yielding the target (joined) file. The invention also provides a method for splitting such joined target files. The methods speed up joining and splitting, allow a given source file to be located and extracted quickly from the joined file, and make the files much more convenient for users to work with.

Owner:HUAWEI TEHCHNOLOGIES CO LTD
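A sketch of the join/split layout, assuming a flat JSON index in place of the patent's tree structure. The joined file starts with the byte length of the index, followed by the index itself and then the concatenated source contents, all written through a memory mapping. `join_files` and `split_one` are hypothetical names, and sources are passed as in-memory bytes for brevity.

```python
import json
import mmap
import os
import struct
import tempfile

LEN = struct.Struct("<Q")  # byte count of the serialized index, stored first


def join_files(sources: dict, target: str) -> None:
    """sources maps a name to its bytes (stand-in for reading source files from disk)."""
    # Hypothetical flat index {name: [offset, size]} in place of the patent's tree structure.
    index, offset = {}, 0
    for name, data in sources.items():
        index[name] = [offset, len(data)]
        offset += len(data)
    header = json.dumps(index).encode()
    total = LEN.size + len(header) + offset
    with open(target, "w+b") as f:
        f.truncate(total)  # size the target file before mapping it
        with mmap.mmap(f.fileno(), total) as mm:
            mm[0:LEN.size] = LEN.pack(len(header))
            mm[LEN.size:LEN.size + len(header)] = header
            base = LEN.size + len(header)  # contents start right after the index
            for name, data in sources.items():
                start = base + index[name][0]
                mm[start:start + len(data)] = data
            mm.flush()


def split_one(target: str, name: str) -> bytes:
    """Extract a single source file from a joined file without reading the others."""
    with open(target, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        (hlen,) = LEN.unpack_from(mm, 0)
        index = json.loads(mm[LEN.size:LEN.size + hlen])
        off, size = index[name]
        start = LEN.size + hlen + off
        return mm[start:start + size]


def demo_join_split():
    fd, path = tempfile.mkstemp()
    os.close(fd)
    try:
        join_files({"a.txt": b"alpha", "b.bin": b"\x00\x01\x02"}, path)
        return split_one(path, "a.txt"), split_one(path, "b.bin")
    finally:
        os.remove(path)
```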

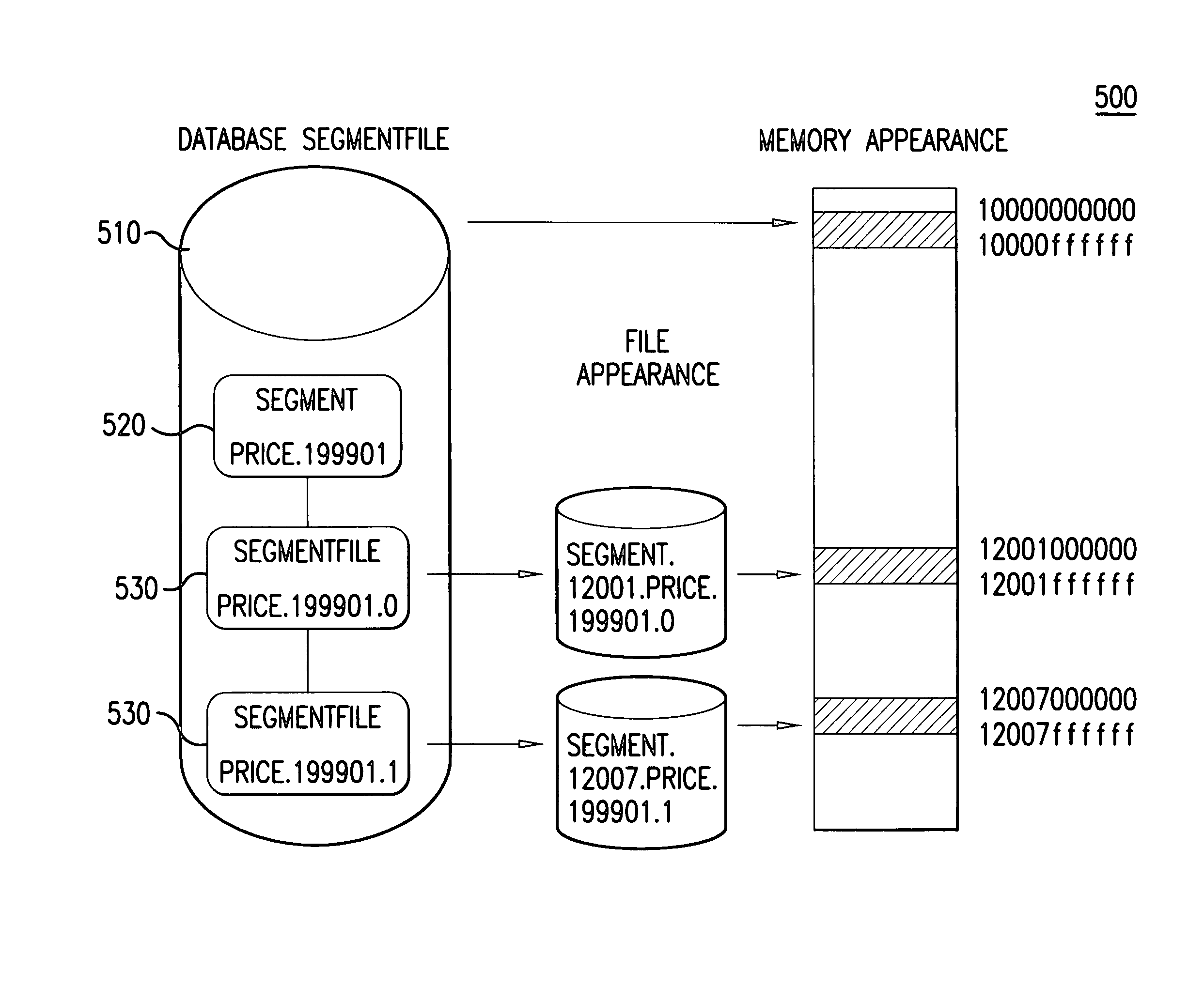

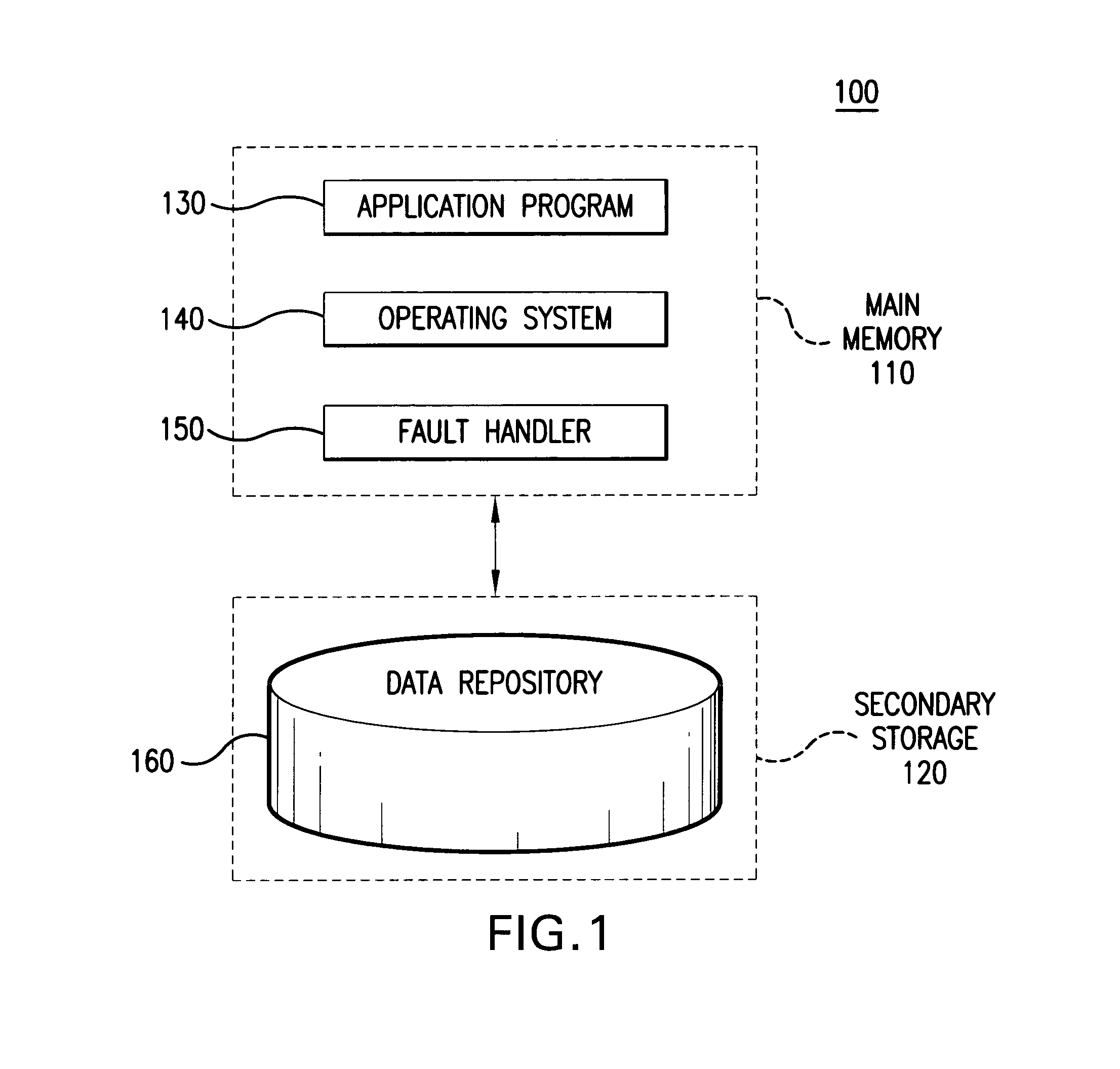

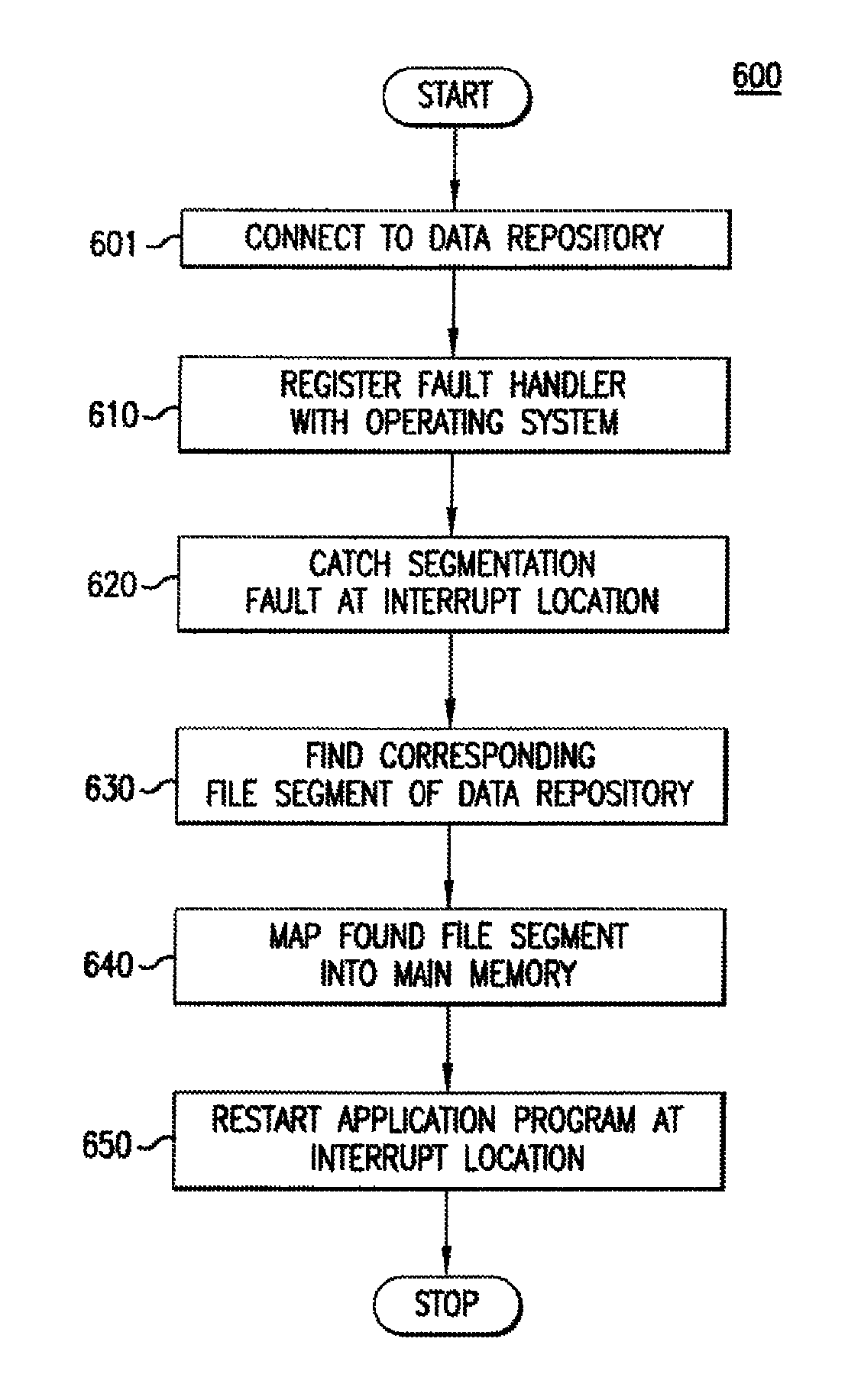

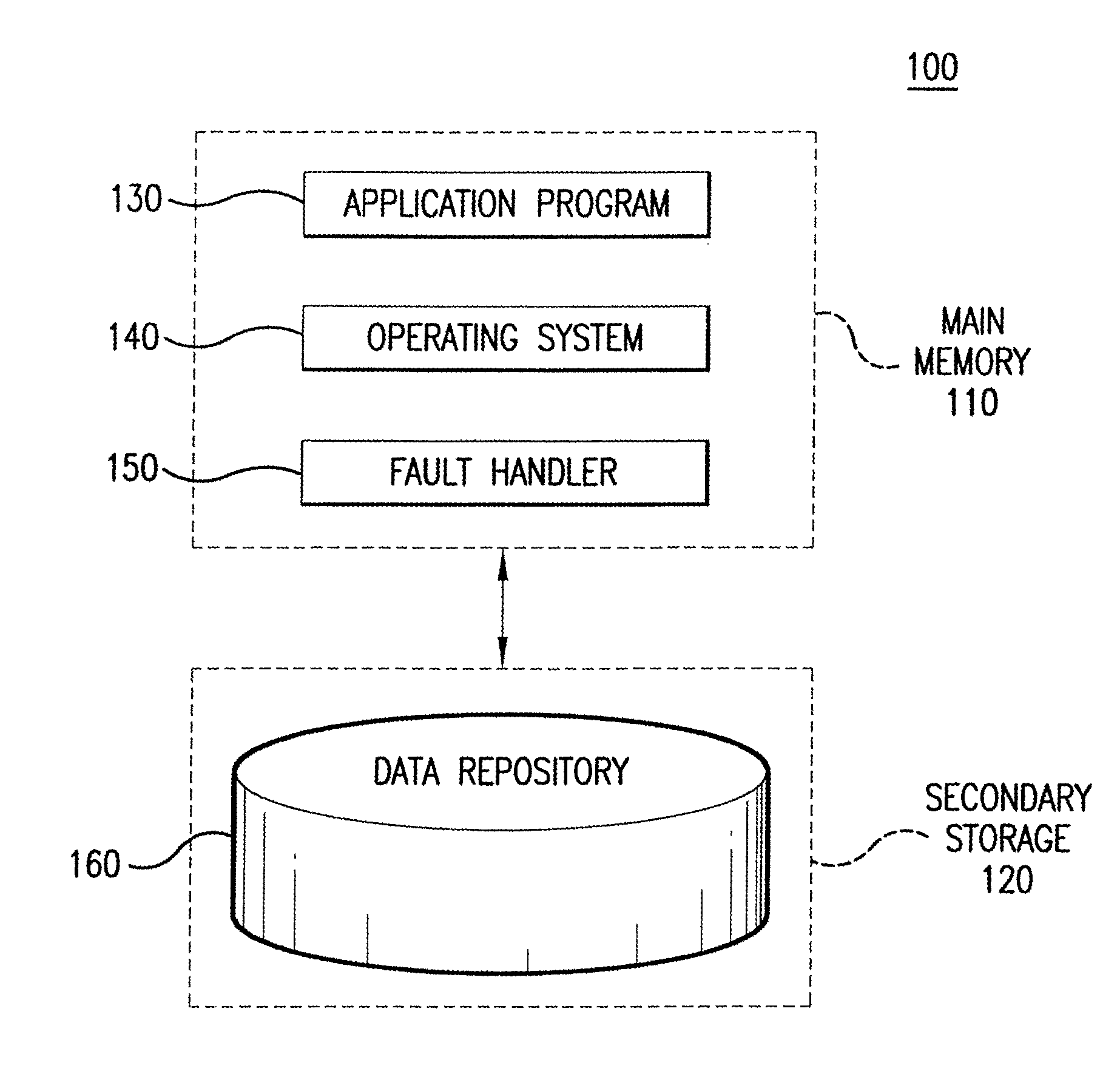

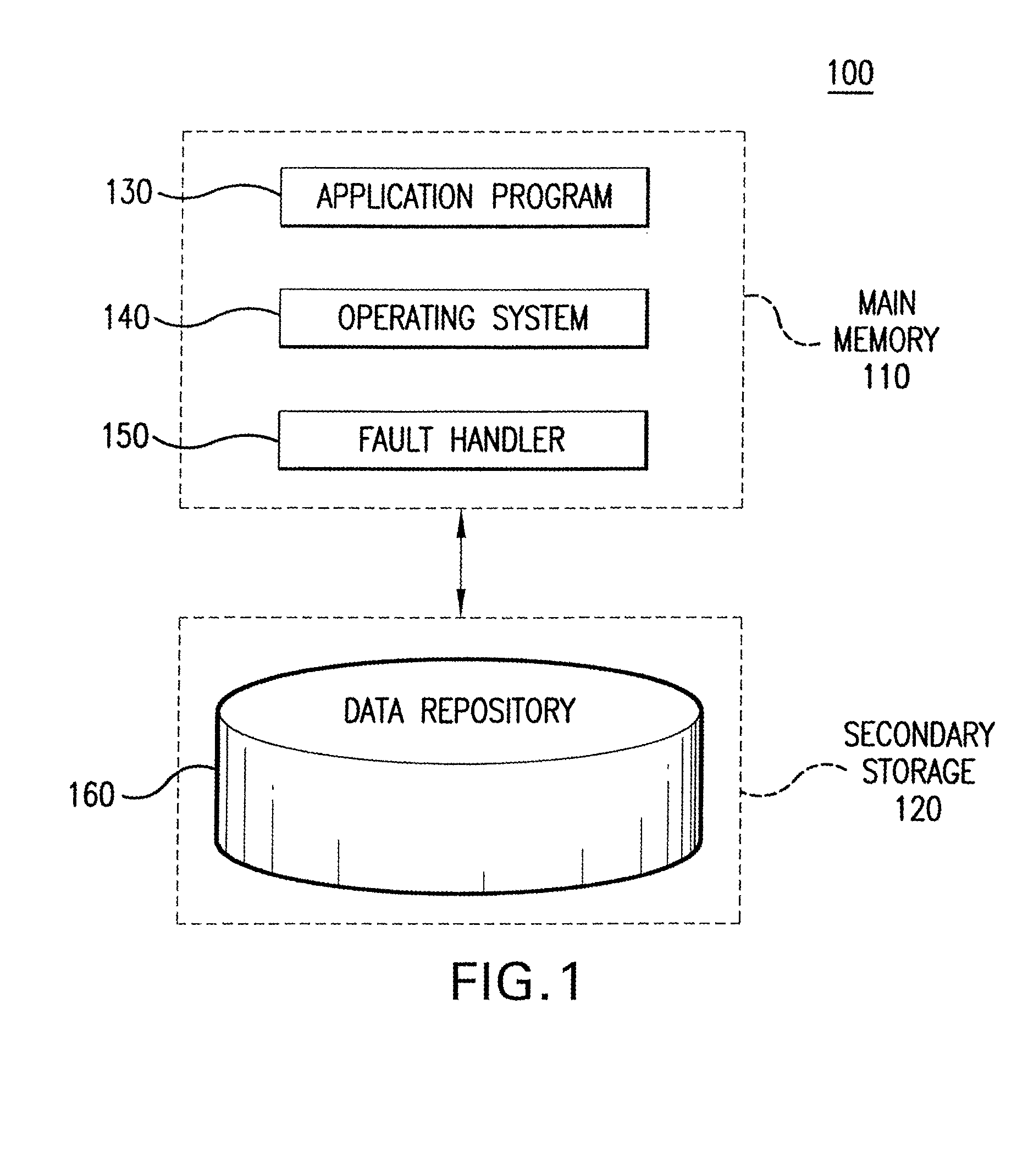

Segmented global area database

Active | US7222117B1 | Addressing slow performance; Reduce the amount required; Data processing applications; Error detection/correction; Operational system; Application software

Embodiments of the present invention relate to memory management methods and systems for object-oriented databases (OODB). In an embodiment, a database includes a plurality of memory-mapped file segments stored on at least one nonvolatile memory medium and not in main memory. An application program connects to the database. A fault handler associated with the database is registered with the operating system of the application program. The fault handler catches a segmentation fault that is issued for an object referenced by the application program and resident in the database. A file segment corresponding to the referenced object is found and mapped into main memory. The application program is restarted. Because data is transparently mapped into and out of the main memory without copying the data, objects may be read with near zero latency, and size restrictions on the database may be eliminated.

Owner:ADVENT SOFTWARE

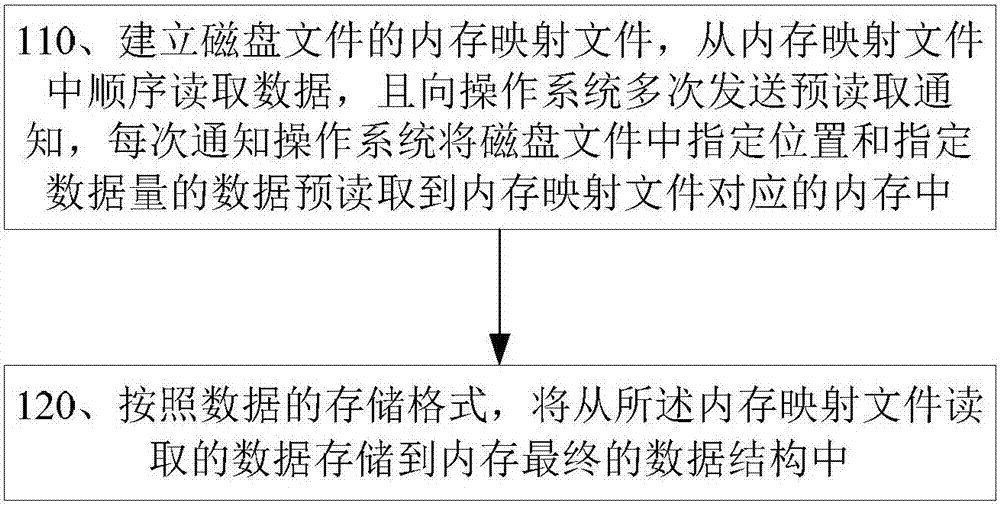

Method and device for document loading

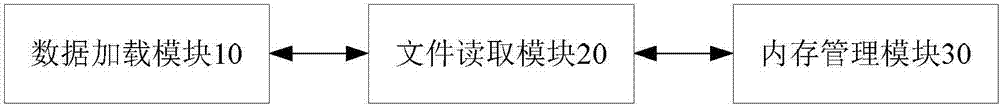

Active | CN107480150A | Improve loading speed; Stable and controllable memory usage; Special data processing applications; Operational system; Memory-mapped file

The invention provides a method and device for file loading. The file-loading device establishes a memory-mapped file for a disk file and reads data sequentially from it, repeatedly sending pre-read notifications to the operating system; each notification asks the operating system to pre-read data of a designated size at a designated position in the disk file into the memory backing the memory-mapped file. The device then stores the data read from the memory-mapped file into the final in-memory data structure according to the data's storage format. The device comprises a data loading module, a file reading module, and a memory management module. The method and device increase loading speed and keep memory usage during loading stable and controllable.

Owner:ALIBABA GRP HLDG LTD
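The pre-read notifications described above correspond closely to `madvise` with `MADV_WILLNEED`, which Python exposes as `mmap.madvise` on platforms that support it (Python 3.8+, not Windows). This sketch assumes a file of little-endian int32 values whose size is a multiple of 4; the window size and the decoding step are illustrative, not the patent's.

```python
import mmap
import os
import struct
import tempfile

WINDOW = 16 * mmap.PAGESIZE  # hypothetical pre-read window; page aligned, as madvise requires
REC = struct.Struct("<i")    # hypothetical storage format: little-endian int32 values


def load_ints(path: str) -> list:
    """Decode a file of int32 values, hinting the OS to pre-read the next window."""
    result = []
    with open(path, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        # madvise exists only where the platform provides it (Python 3.8+, not Windows).
        can_advise = hasattr(mm, "madvise") and hasattr(mmap, "MADV_WILLNEED")
        size = len(mm)
        for start in range(0, size, WINDOW):
            nxt = start + WINDOW
            if can_advise and nxt < size:
                # Pre-read notice: ask the kernel to fetch the next window while this one is decoded.
                mm.madvise(mmap.MADV_WILLNEED, nxt, min(WINDOW, size - nxt))
            chunk = mm[start:min(nxt, size)]
            # Convert raw bytes into the final in-memory structure (a list of ints here).
            result.extend(v for (v,) in REC.iter_unpack(chunk))
    return result


def demo_load():
    fd, path = tempfile.mkstemp()
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(b"".join(REC.pack(i) for i in range(50_000)))
        return load_ints(path)
    finally:
        os.remove(path)
```

Because only one window is decoded at a time and the rest is left to the page cache, the process's own memory use stays bounded by the size of the final data structure plus one window.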

Method of and apparatus for recovery of in-progress changes made in a software application

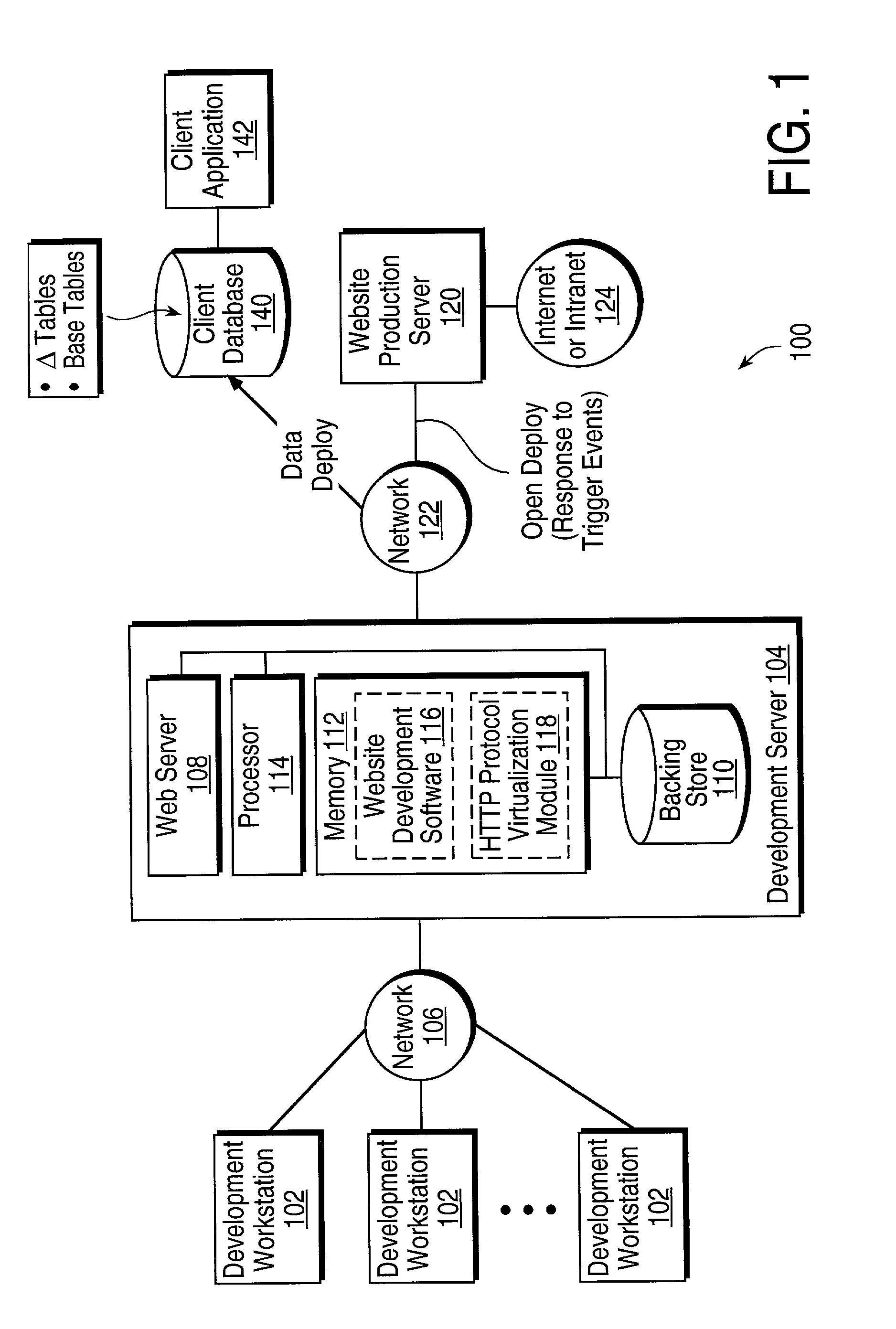

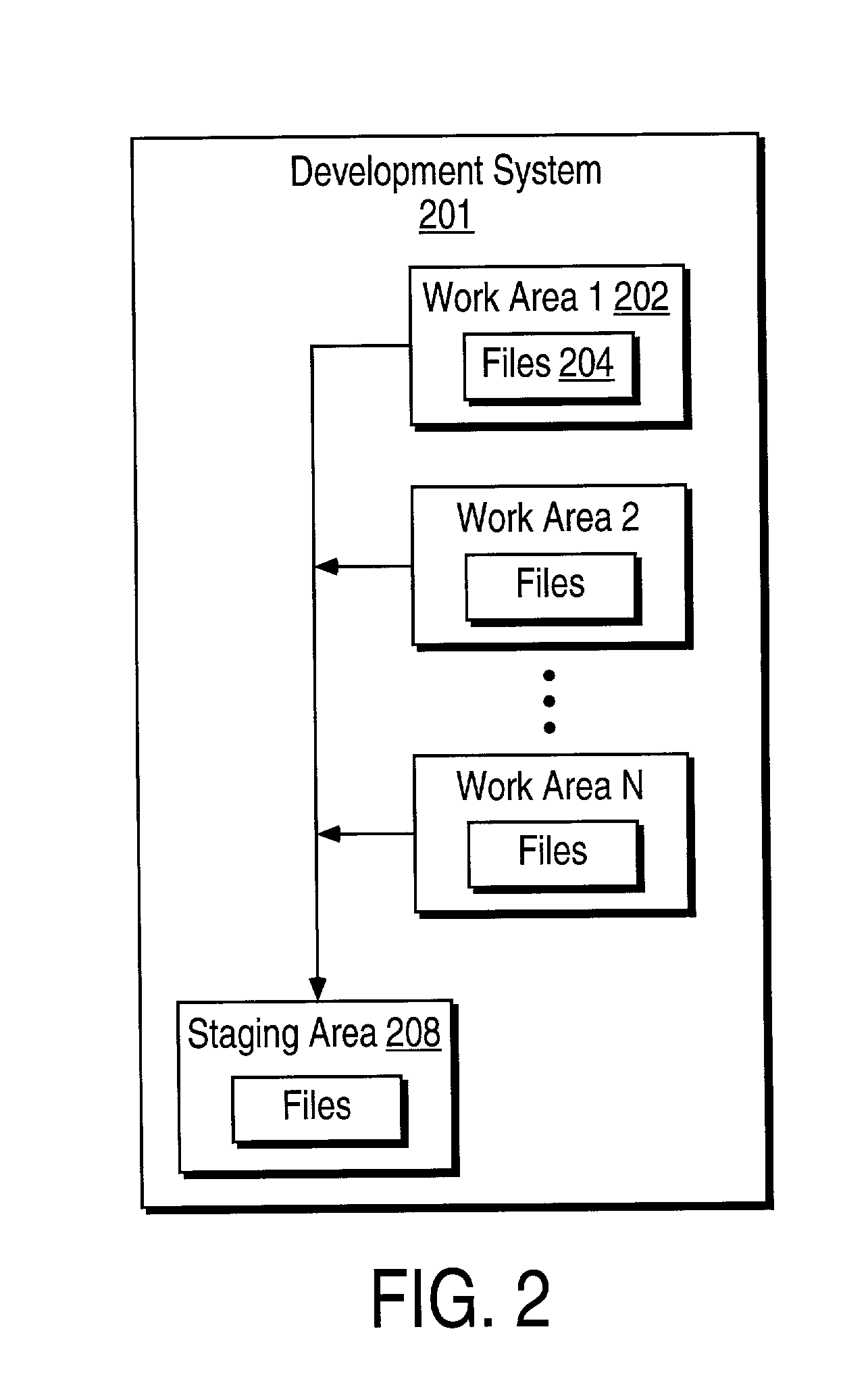

Inactive | US20020129042A1 | Reduce the amount of data; Reduce of data time; Memory loss protection; Version control; Application software; System failure

Provided are a method and apparatus that preserve and recover in-progress developments and changes made in a content development system in the event of a process or system failure. The in-progress data developments and changes may be preserved as they are created, and then retrieved and recovered after the system or process is recovered. In one embodiment, the cache memory contents are mirrored into a memory-mapped file, providing a redundant location for cache data. In the event of a failure, the mirrored cache information can be recovered from the file. This extra cache location can be filled and flushed as needed by the system as the cache data is stored to disk or another memory location, which makes preserving it unnecessary. The invention further includes a method and apparatus for recovering and restarting the process or system itself after such a failure. Using one or both features, an application may reduce the amount of data and processing time lost to a process or system failure.

Owner:HEWLETT PACKARD DEV CO LP
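A minimal sketch of mirroring a cache into a memory-mapped file and recovering it afterwards. The fixed `CAPACITY`, the length-prefixed JSON payload, and the `MirroredCache`/`recover` names are assumptions for illustration. Rewriting the whole payload on every change keeps the example short but is not crash-atomic; a real implementation would need a more careful on-disk format.

```python
import json
import mmap
import os
import struct
import tempfile

LEN = struct.Struct("<I")  # byte length of the mirrored payload
CAPACITY = 4096            # hypothetical fixed size of the mirror file


class MirroredCache:
    """In-memory cache whose contents are mirrored into a memory-mapped file."""

    def __init__(self, path: str):
        self.data = {}
        self._f = open(path, "r+b")
        self._f.truncate(CAPACITY)
        self._mm = mmap.mmap(self._f.fileno(), CAPACITY)

    def set(self, key: str, value: str) -> None:
        self.data[key] = value
        blob = json.dumps(self.data).encode()
        if LEN.size + len(blob) > CAPACITY:
            raise ValueError("mirror file too small")
        self._mm[LEN.size:LEN.size + len(blob)] = blob
        self._mm[0:LEN.size] = LEN.pack(len(blob))  # tells recover() how many bytes are valid
        self._mm.flush()

    def close(self) -> None:
        self._mm.close()
        self._f.close()


def recover(path: str) -> dict:
    """Rebuild the cache contents from the mirror file after a failure."""
    with open(path, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        (n,) = LEN.unpack_from(mm, 0)
        return json.loads(mm[LEN.size:LEN.size + n]) if n else {}


def demo_recovery():
    fd, path = tempfile.mkstemp()
    os.close(fd)
    try:
        cache = MirroredCache(path)
        cache.set("title", "Draft")
        cache.set("body", "in-progress text")
        cache.close()  # stands in for the process dying; nothing else was saved
        return recover(path)
    finally:
        os.remove(path)
```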

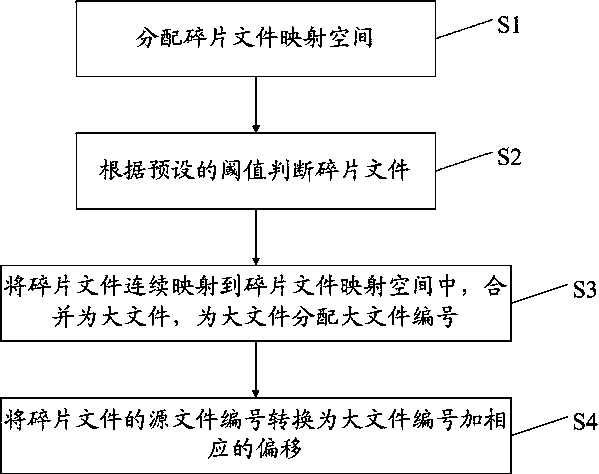

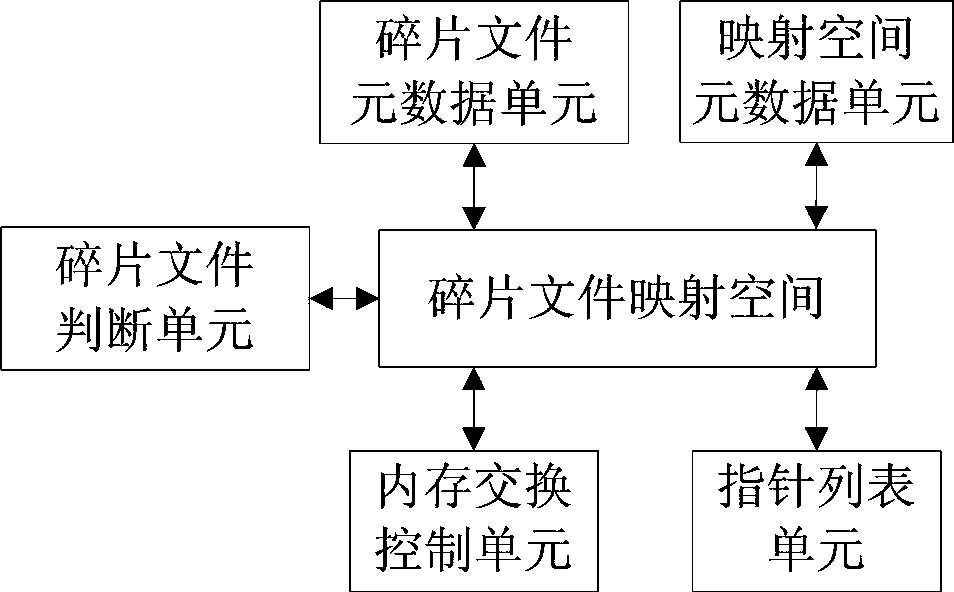

Fragmented-file storage method based on distributed storage system

Active | CN103488685A | Improve utilization efficiency; Improve processing efficiency; Memory addressing/allocation/relocation; Special data processing applications; Memory-mapped file; Metadata

The invention discloses a fragmented-file storage method for a distributed storage system. Memory-mapped files are used to map fragmented (small) files contiguously into memory, where they are combined into a large file that is then stored. Correspondingly, the invention provides storage nodes comprising a fragmented-file judgment unit, a fragmented-file mapping space, a fragmented-file metadata unit, a memory-mapping-space metadata unit, a pointer list unit, and a memory swap control unit. Because fragmented files are combined into a large file before storage, disk utilization and the efficiency of processing sequential fragmented files are greatly improved; because the memory mapping space uses non-swappable memory together with matching file priorities and swap policies, the efficiency of processing random fragmented files is also greatly improved.

Owner:上海网达软件股份有限公司

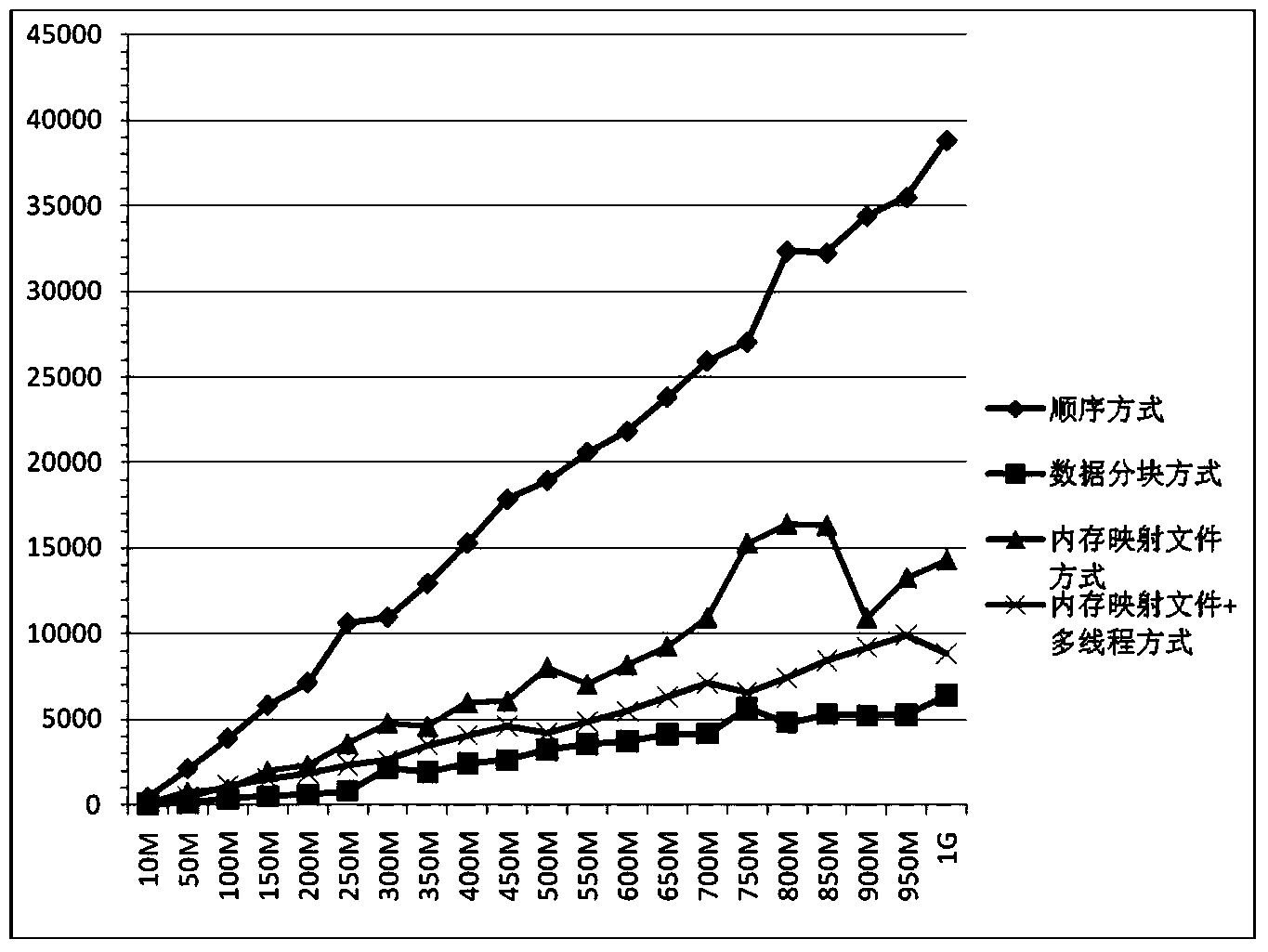

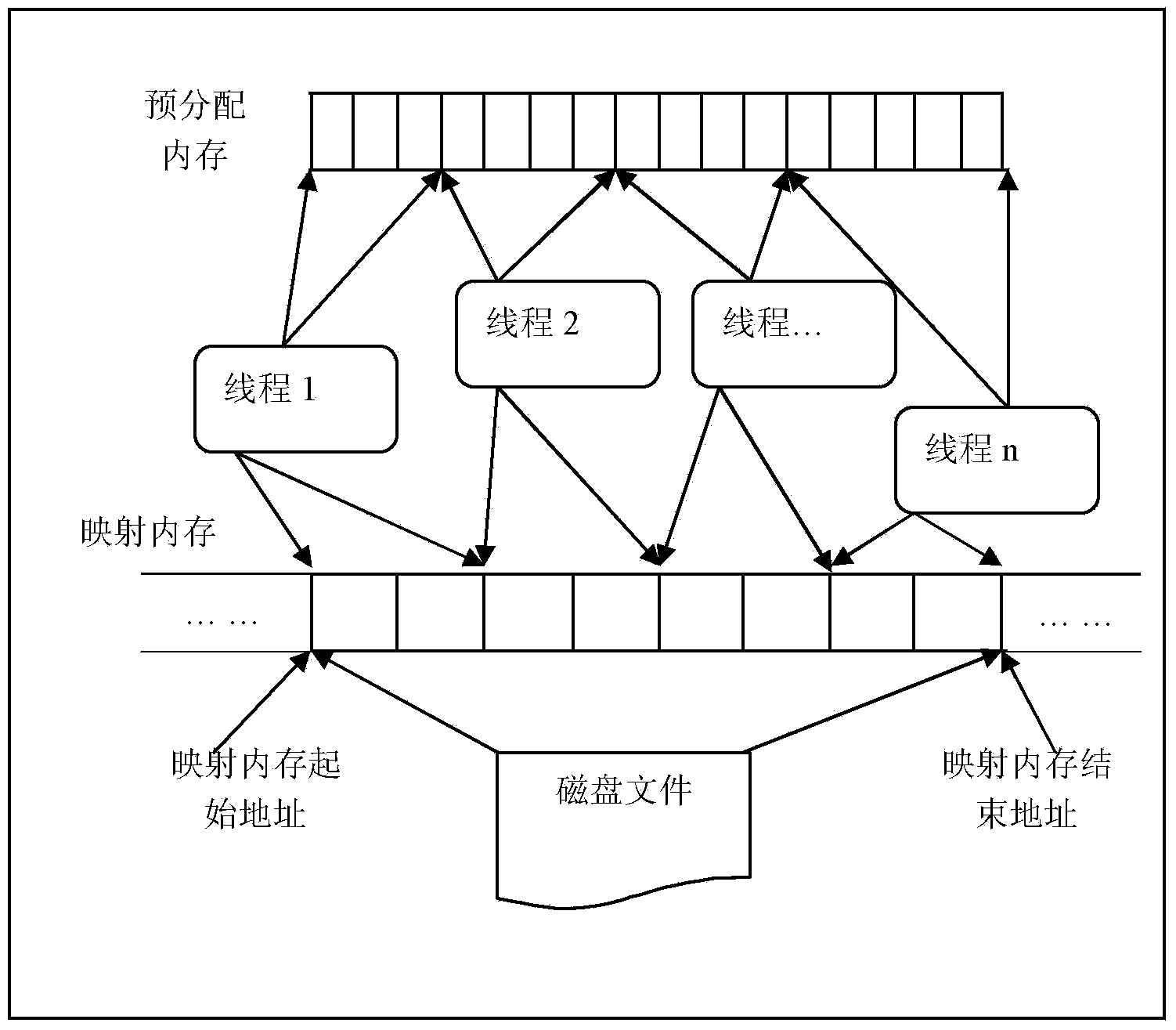

Rapid large-scale point-cloud data reading method based on memory pre-distribution and multi-point writing technology

Inactive | CN104268096A | Improve reading speed; Shorten read time; Memory addressing/allocation/relocation; Concurrent instruction execution; Point cloud; Distributed memory

The invention discloses a rapid method for reading large-scale point-cloud data based on memory pre-allocation and multi-point writing, in the technical field of point-cloud data file reading. It addresses the long read times and slow reading of existing large-scale point-cloud data files. The method consists of a memory pre-allocation process and a multi-point writing process. First, the number of points in the point-cloud data file is determined, the memory needed to hold all of them is computed, and memory of that size is pre-allocated for the point-cloud data. Second, the point-cloud data file is mapped into memory through a memory-mapped file mechanism, and a thread pool with a designated number of threads is created; each thread parses part of the point-cloud data in the mapped memory and writes its results into the pre-allocated memory, realizing multi-point writing. Test results indicate that the method increases the reading speed of point-cloud data files, particularly large-scale ones, by 220% to 300%.

Owner:SOUTHWEAT UNIV OF SCI & TECH
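The two phases (pre-allocate, then let a thread pool parse ranges of the mapped file into the pre-allocated buffer) can be sketched in Python. The point format `POINT` (three float32 coordinates per point) is a hypothetical binary layout, not the format used in the patent. Because of CPython's global interpreter lock, these threads illustrate the structure rather than deliver a real parallel speedup.

```python
import mmap
import os
import struct
import tempfile
from array import array
from concurrent.futures import ThreadPoolExecutor

POINT = struct.Struct("<3f")  # hypothetical binary point format: x, y, z as float32


def read_points(path: str, workers: int = 4) -> array:
    with open(path, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        n = len(mm) // POINT.size          # step 1: count the points from the file size
        out = array("f", [0.0]) * (3 * n)  # pre-allocate x, y, z for every point

        def parse(lo: int, hi: int) -> None:
            # Each thread decodes its own range and writes to its own part of `out`.
            for i in range(lo, hi):
                x, y, z = POINT.unpack_from(mm, i * POINT.size)
                out[3 * i], out[3 * i + 1], out[3 * i + 2] = x, y, z

        step = -(-n // workers)  # ceiling division; n >= 1 since mmap rejects empty files
        los = range(0, n, step)
        his = [min(lo + step, n) for lo in los]
        with ThreadPoolExecutor(max_workers=workers) as pool:
            list(pool.map(parse, los, his))  # list() re-raises any worker exception
    return out


def demo_points():
    fd, path = tempfile.mkstemp()
    try:
        with os.fdopen(fd, "wb") as f:
            for i in range(10):  # 10 points whose coordinates are 0.0, 1.0, ..., 29.0
                f.write(POINT.pack(float(3 * i), float(3 * i + 1), float(3 * i + 2)))
        return read_points(path)
    finally:
        os.remove(path)
```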

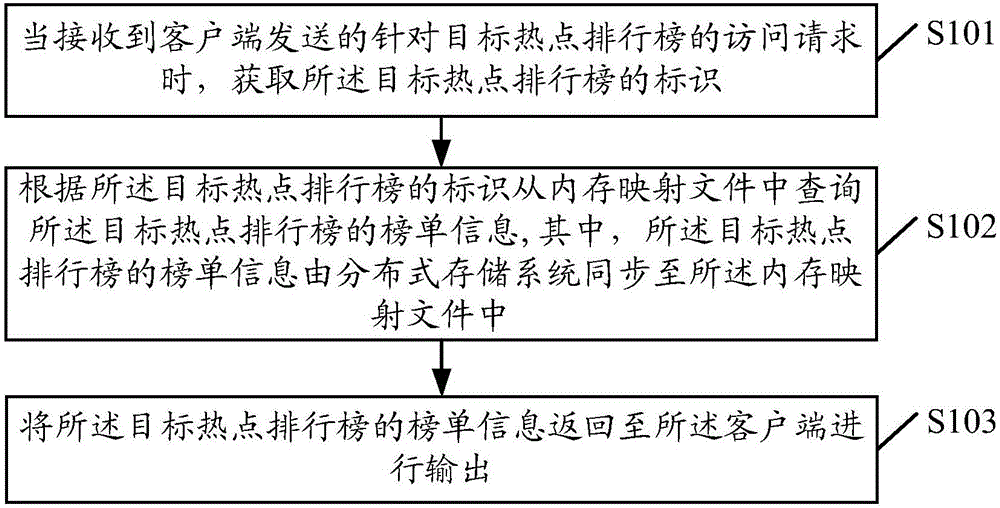

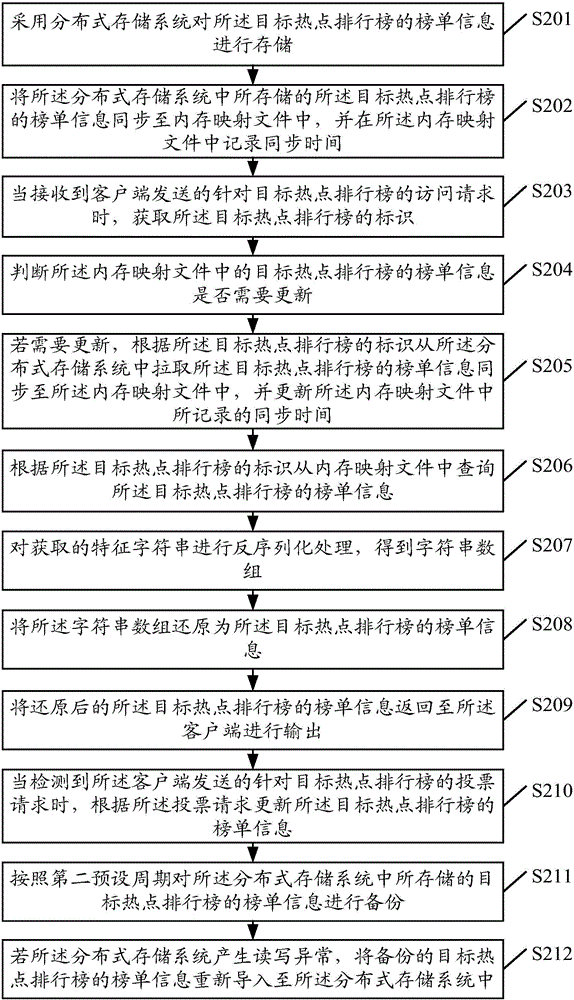

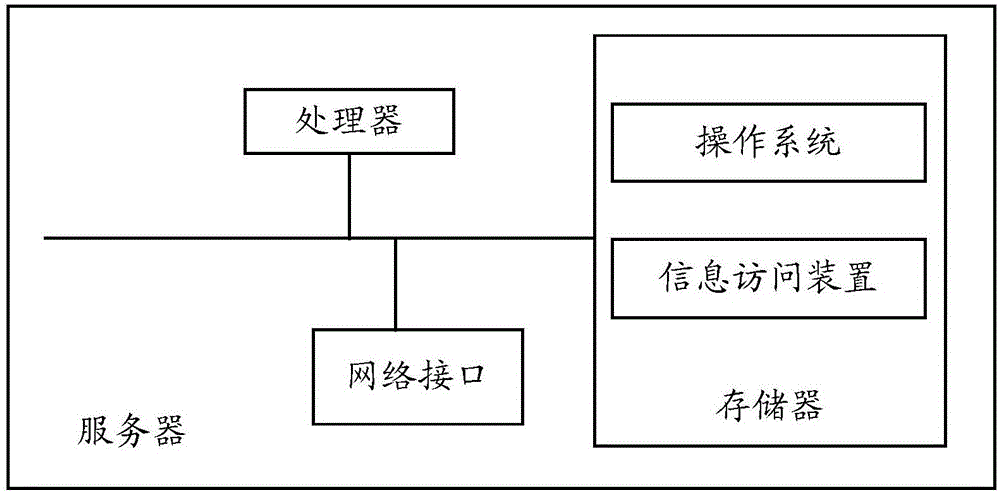

Information access method and apparatus, and server

Inactive | CN106302829A | Improve access efficiency; Meet the needs of timely acquisition; Transmission; Internal memory; Information access

An embodiment of the invention provides an information access method and apparatus, and a server. The method may comprise the following steps: when a client's request to access a target hotspot ranking list is received, obtaining the identifier of that ranking list; querying the list information of the target hotspot ranking list from a memory-mapped file according to the identifier, where the list information in the memory-mapped file is kept synchronized by a distributed storage system; and returning the list information to the client for output. The method and apparatus support massive access to hotspot ranking lists, improve information access efficiency, and satisfy users' need to obtain information promptly.

Owner:TENCENT MUSIC & ENTERTAINMENT SHENZHEN CO LTD

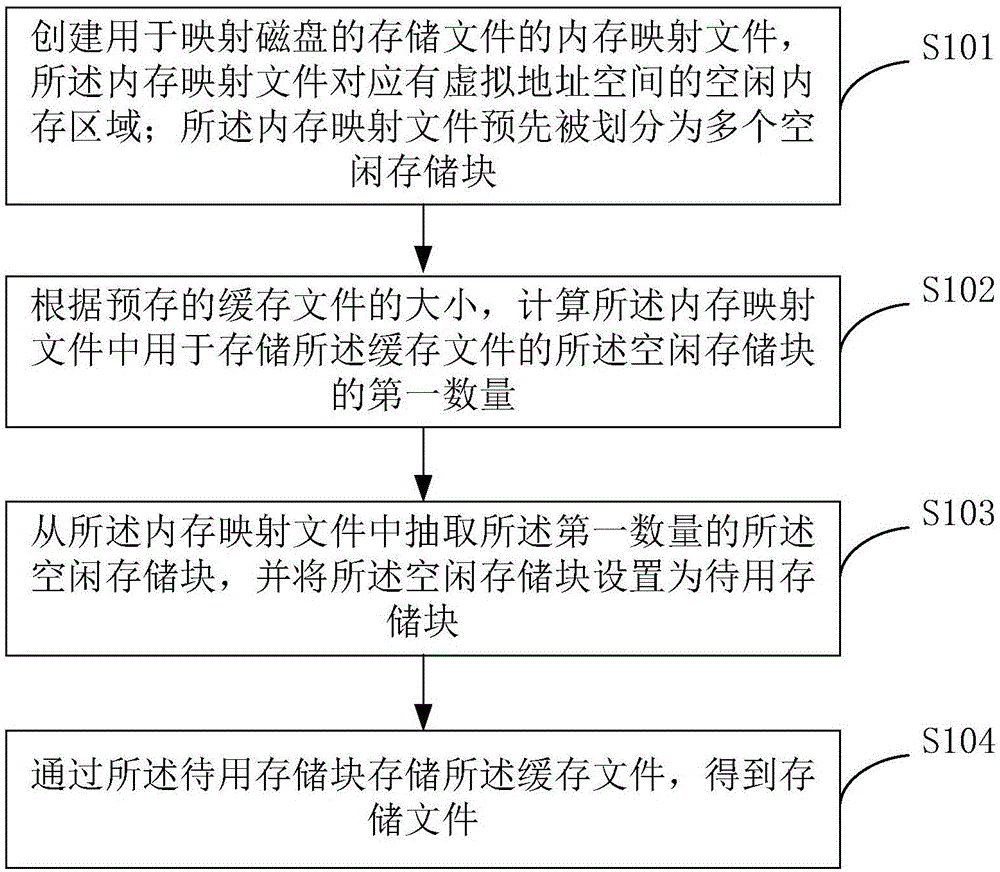

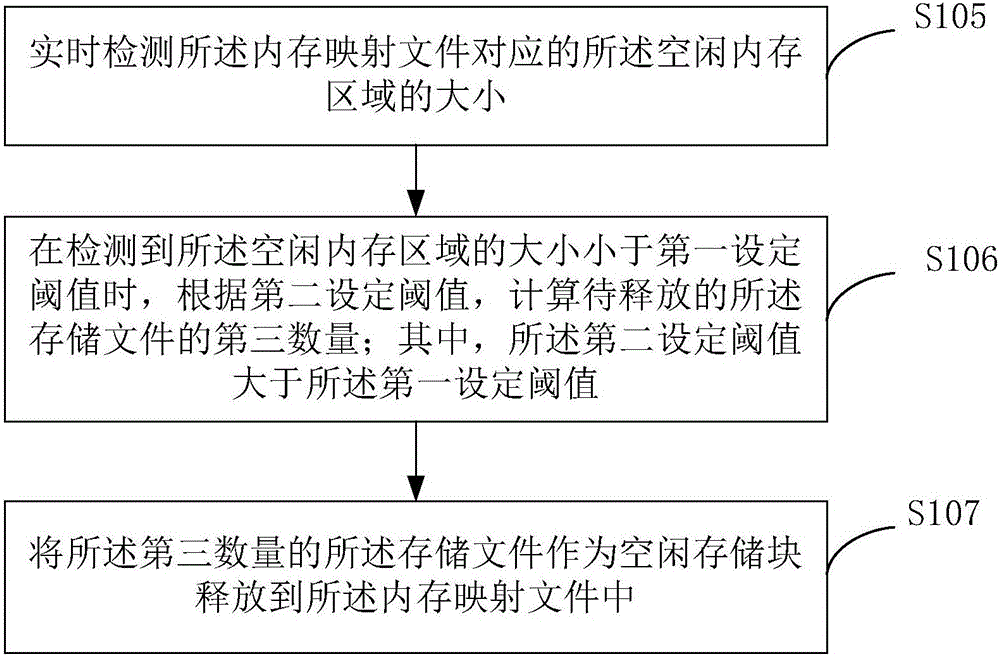

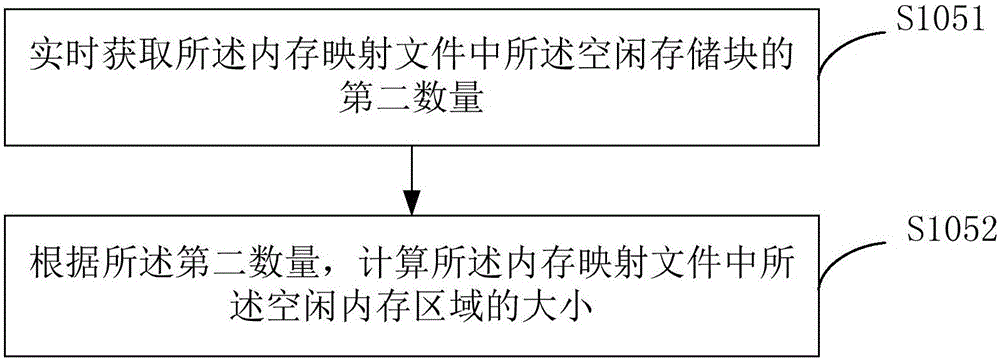

Method and device for caching data in disk

The invention provides a method and device for caching data on disk, in the technical field of data storage. A memory-mapped file is created to map a storage file on the disk, corresponding to a free memory area of the virtual address space, and is divided in advance into multiple free storage blocks. Based on the size of a cache file to be stored, the number of free storage blocks needed to hold it is calculated; that many free blocks are taken from the memory-mapped file and marked as in use; and the cache file is stored in those blocks to produce the storage file. With this method and device, a cache space made up of multiple free storage blocks can be written and reclaimed repeatedly, which maximizes cache space utilization, increases the cache hit rate, and speeds up writing and reading of cache files.

Owner:NETPOSA TECH
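A sketch of the free-block scheme: the mapped file is divided into fixed-size blocks, a cache file takes as many free blocks as its size requires, and evicting it returns those blocks for reuse. `BLOCK` and the `BlockCache` class are illustrative assumptions, and the bookkeeping lives in ordinary Python structures rather than in the file.

```python
import mmap
import os
import tempfile

BLOCK = 512  # hypothetical storage block size in bytes


class BlockCache:
    """Cache files stored in fixed-size blocks of a memory-mapped disk file."""

    def __init__(self, mm: mmap.mmap):
        self.mm = mm
        self.free = list(range(len(mm) // BLOCK))  # every block starts out free
        self.files = {}                            # name -> (block numbers, byte size)

    def store(self, name: str, data: bytes) -> None:
        need = -(-len(data) // BLOCK)  # ceiling division: blocks this cache file needs
        if need > len(self.free):
            raise ValueError("not enough free blocks")
        blocks = [self.free.pop() for _ in range(need)]  # move blocks to "in use"
        for i, b in enumerate(blocks):
            piece = data[i * BLOCK:(i + 1) * BLOCK]
            self.mm[b * BLOCK:b * BLOCK + len(piece)] = piece
        self.files[name] = (blocks, len(data))

    def load(self, name: str) -> bytes:
        blocks, size = self.files[name]
        raw = b"".join(self.mm[b * BLOCK:(b + 1) * BLOCK] for b in blocks)
        return raw[:size]

    def evict(self, name: str) -> None:
        blocks, _ = self.files.pop(name)
        self.free.extend(blocks)  # the blocks can be rewritten by later files
```

Because a file's blocks need not be contiguous, space freed by evictions can be reused even when it is scattered through the mapped file.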

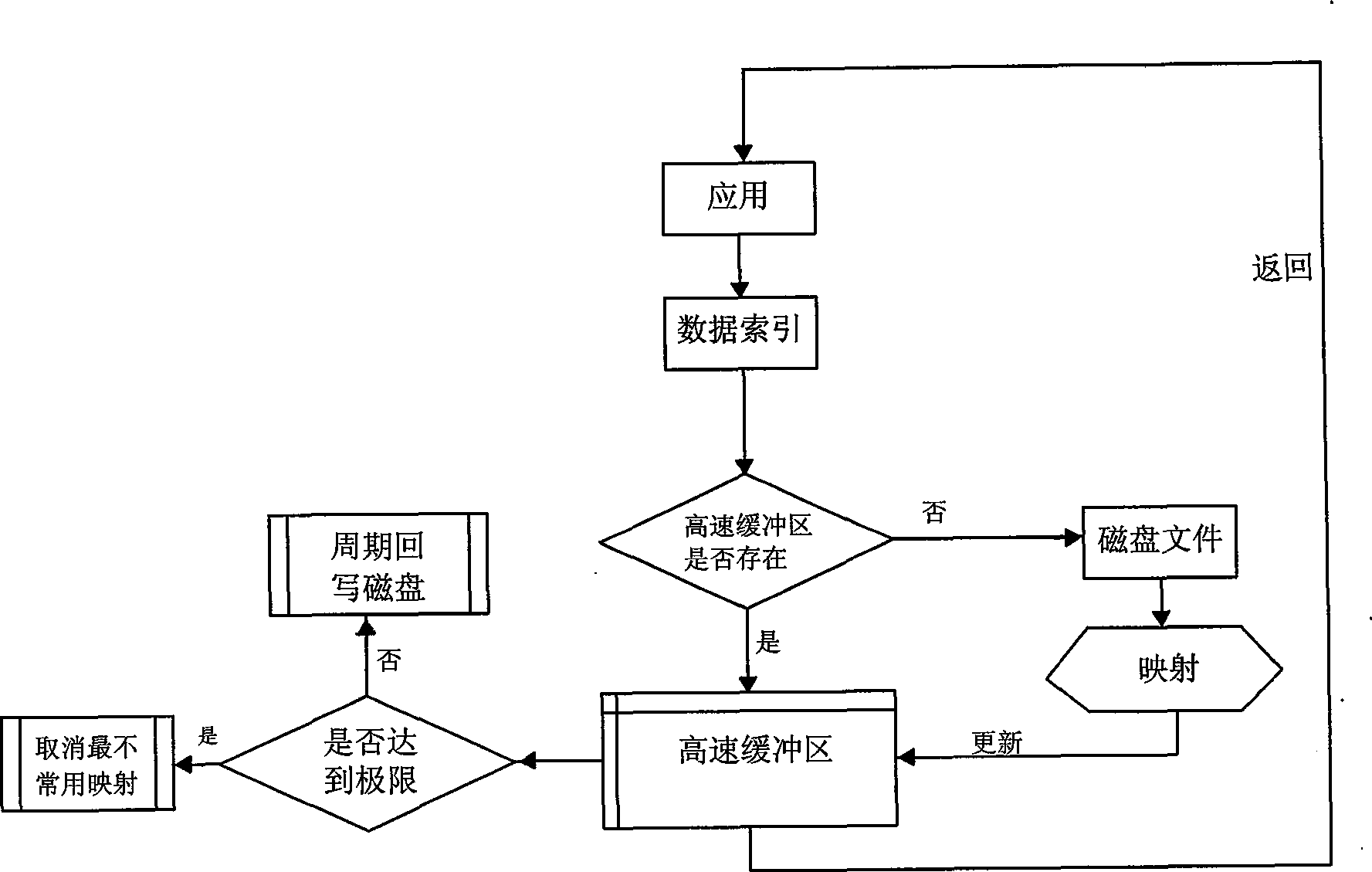

High-speed concurrent access method for power system large data files across platform

Active | CN101520797A | Convenience; Improve high-speed performance; Memory addressing/allocation/relocation; Special data processing applications; Access method; Electric power system

The invention discloses a high-speed, cross-platform method for concurrent access to large data files in power systems. A cache management system is set up that centrally manages all data files while the system runs and is responsible for interacting with the actual disk files. The data files are processed as memory-mapped files: the cache management system divides each data file into fixed-size memory-mapped blocks and concurrently maps the portions of the file, one by one, into the address space of the data-processing service process. The cache management system also maintains a high-speed buffer so that data already loaded is not reloaded when it is accessed again, improving access efficiency and speed. Through the interaction between this common cache management system and the actual disk files, the method gives applications convenient, fast, and reliable concurrent access to large data files.

Owner:STATE GRID ELECTRIC POWER RES INST
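Mapping a large file in fixed-size windows, and reusing windows that are already mapped, can be sketched with the `offset` argument of `mmap.mmap`, which must be a multiple of `mmap.ALLOCATIONGRANULARITY`. The `WindowedFile` class, its least-recently-used eviction, and the `maps_created` counter are assumptions added for illustration; the patent does not specify an eviction policy.

```python
import mmap
import os
import tempfile
from collections import OrderedDict


class WindowedFile:
    """Reads a large file through fixed-size mapped windows, reusing windows already mapped."""

    def __init__(self, path: str, window: int = mmap.ALLOCATIONGRANULARITY, max_windows: int = 4):
        if window % mmap.ALLOCATIONGRANULARITY:
            raise ValueError("window must be a multiple of mmap.ALLOCATIONGRANULARITY")
        self._f = open(path, "rb")
        self.size = os.fstat(self._f.fileno()).st_size
        self.window = window
        self.max_windows = max_windows
        self.cache = OrderedDict()  # window number -> mmap, least recently used first
        self.maps_created = 0

    def _window(self, w: int) -> mmap.mmap:
        mm = self.cache.get(w)
        if mm is not None:
            self.cache.move_to_end(w)  # already loaded: no new mapping needed
            return mm
        off = w * self.window          # aligned offset, as mmap requires
        mm = mmap.mmap(self._f.fileno(), min(self.window, self.size - off),
                       access=mmap.ACCESS_READ, offset=off)
        self.maps_created += 1
        self.cache[w] = mm
        if len(self.cache) > self.max_windows:
            _, oldest = self.cache.popitem(last=False)
            oldest.close()
        return mm

    def read(self, pos: int, n: int) -> bytes:
        out = bytearray()
        while n > 0 and pos < self.size:
            w, rel = divmod(pos, self.window)
            chunk = self._window(w)[rel:rel + n]  # may stop at the window boundary
            out += chunk
            pos += len(chunk)
            n -= len(chunk)
        return bytes(out)

    def close(self) -> None:
        for mm in self.cache.values():
            mm.close()
        self._f.close()
```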

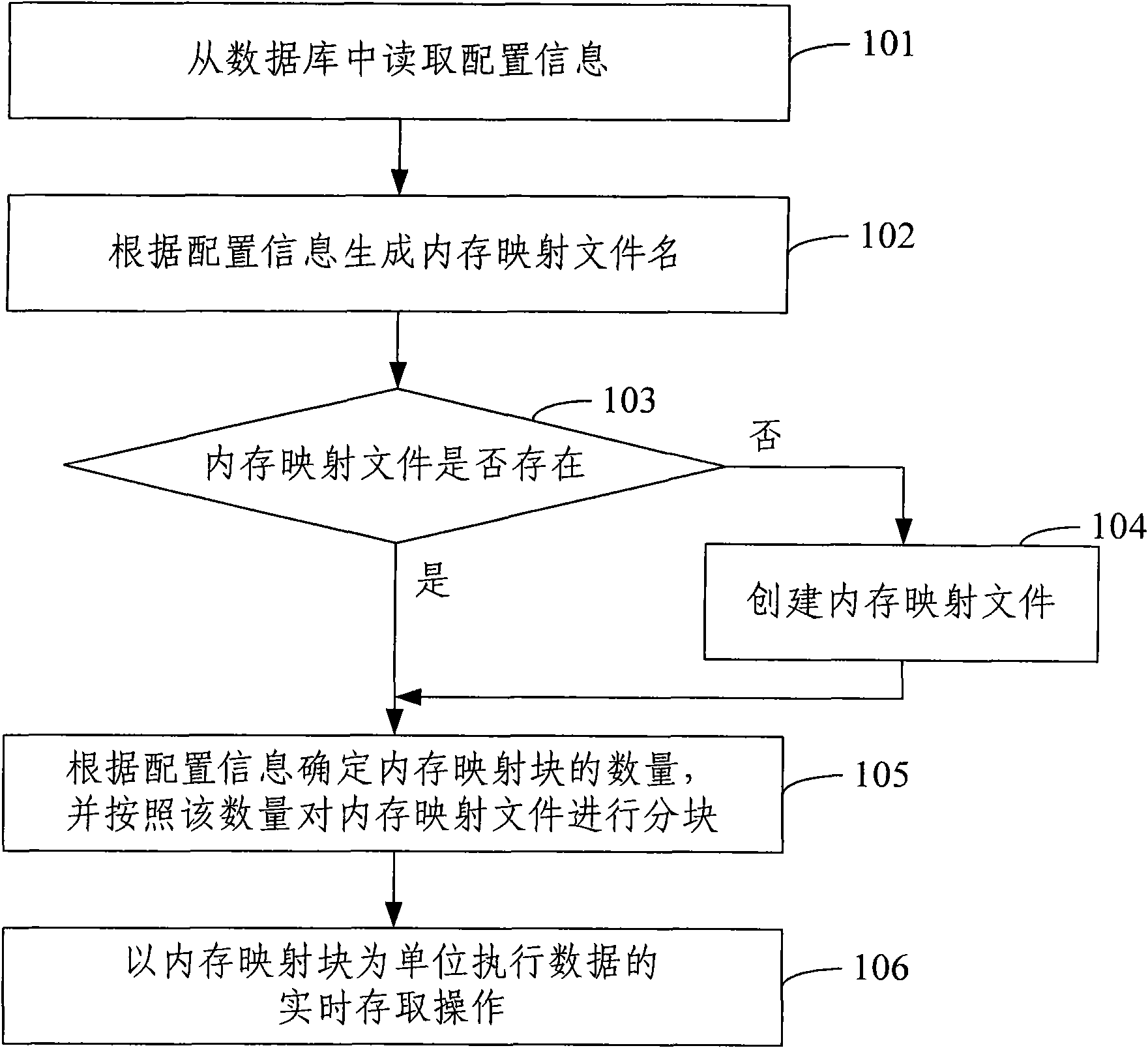

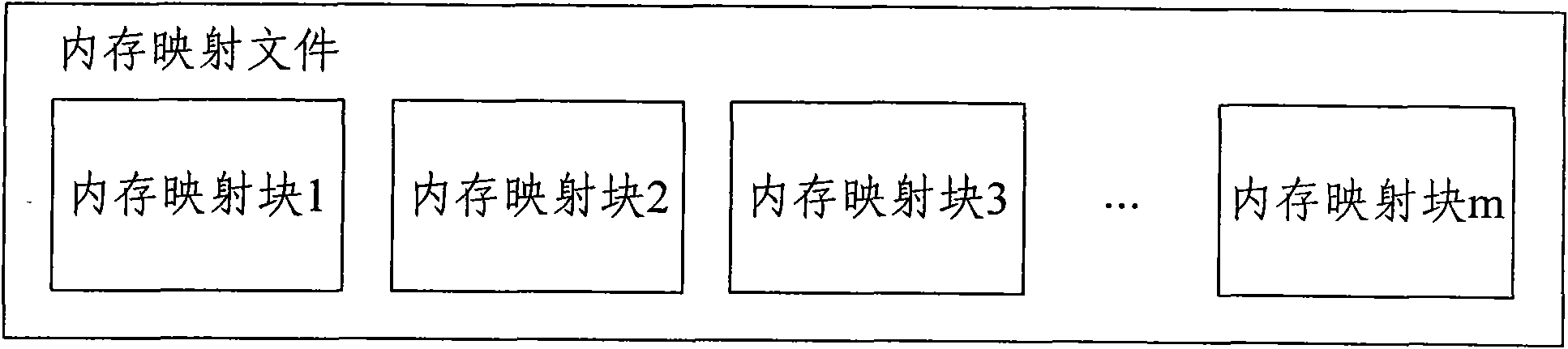

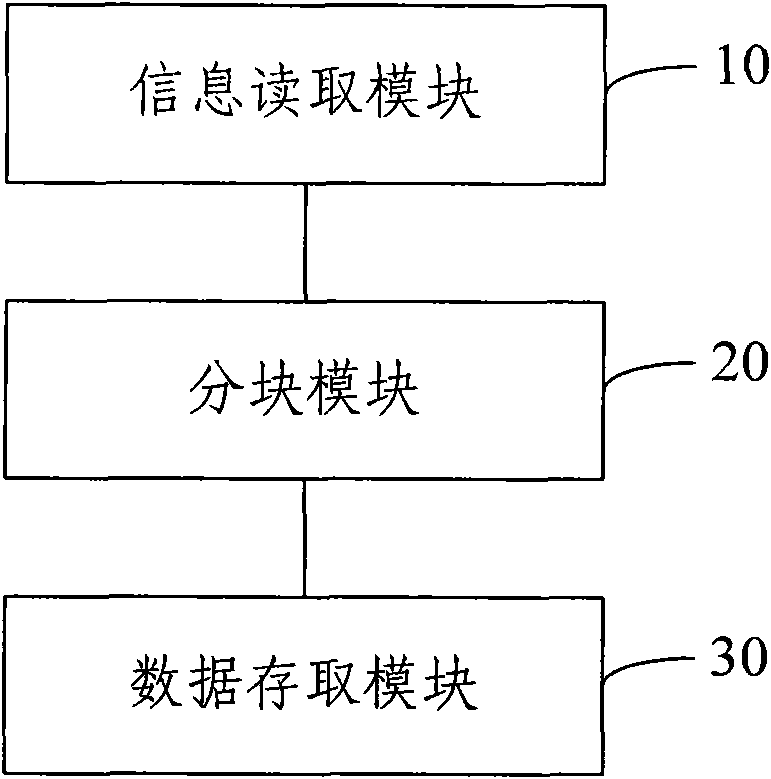

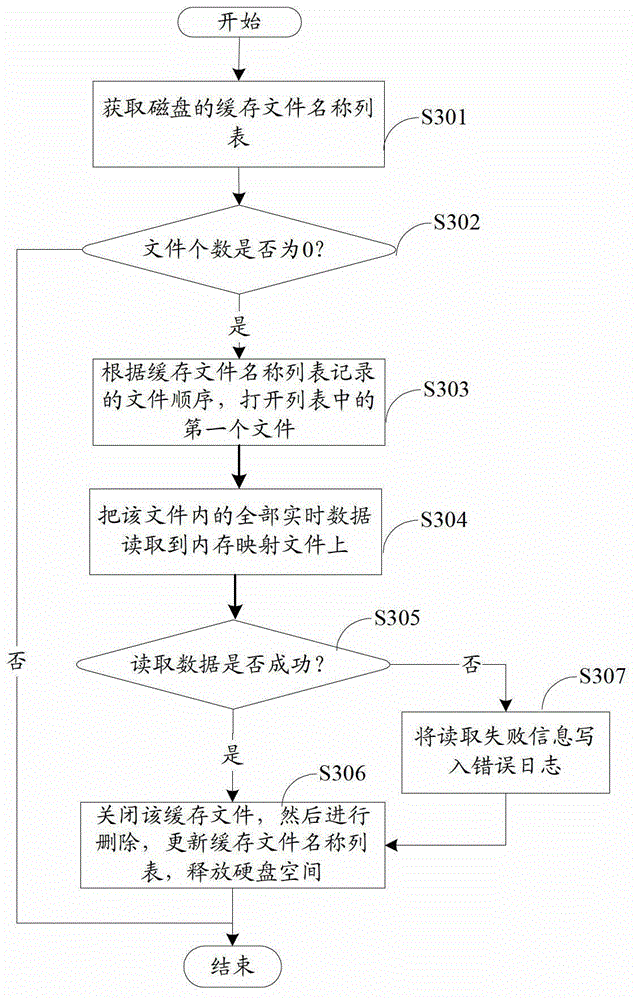

Method and system for real-time data memory

Inactive | CN101567002A | Improve efficiency; Lower latency; Special data processing applications; Real-time data; Memory map

The invention discloses a method for real-time data storage, comprising the following steps: reading preset configuration information; determining the number of memory-mapped blocks from that configuration; partitioning the memory-mapped file into that number of blocks; and performing real-time data access operations with the memory-mapped block as the unit. The invention also discloses a system for real-time data storage. Because the memory-mapped file is accessed by partition, the system can process multiple data requests in parallel, improving performance; and because the number of partitions can be configured to suit practical needs, the scheme scales well.

Owner:北京中企开源信息技术有限公司

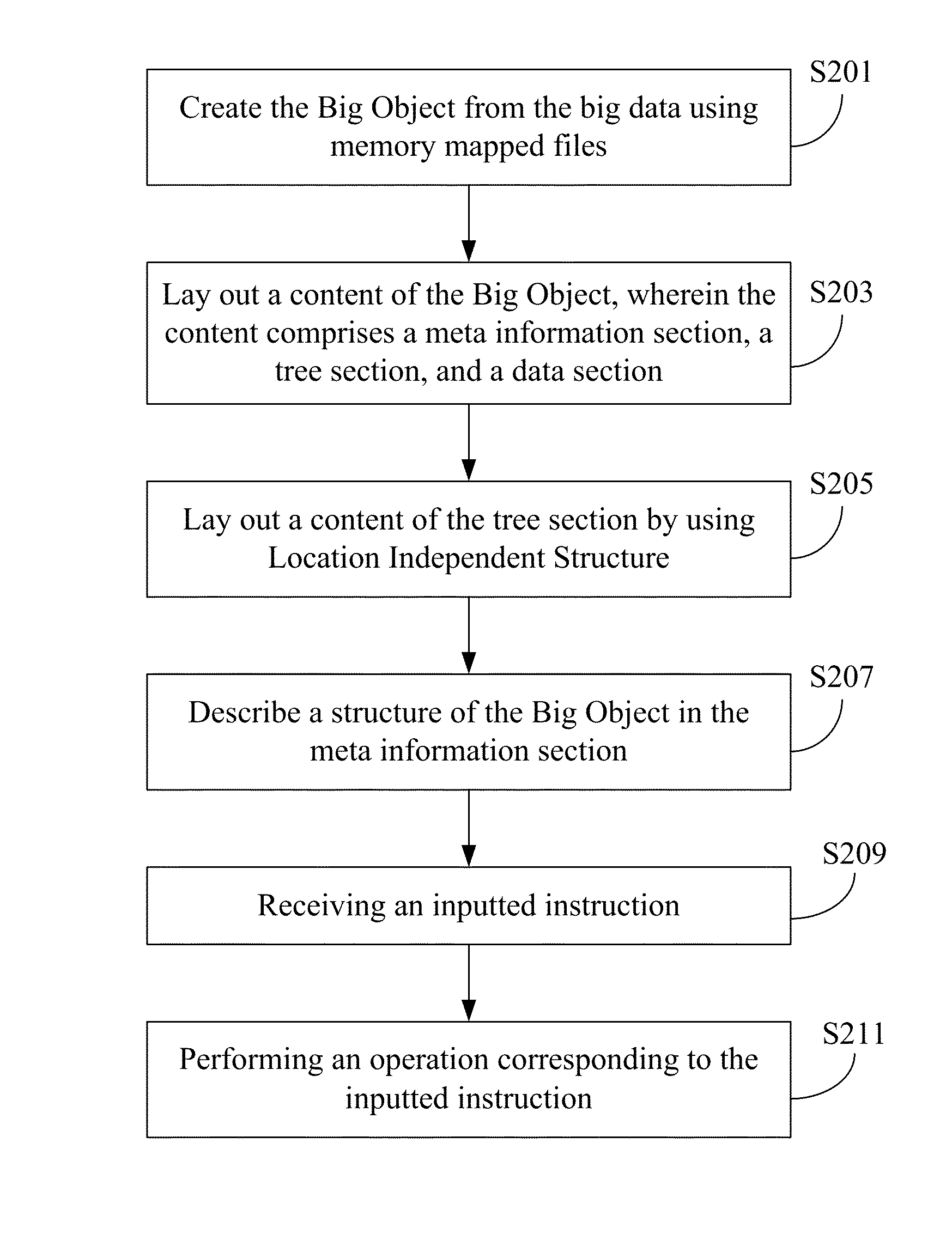

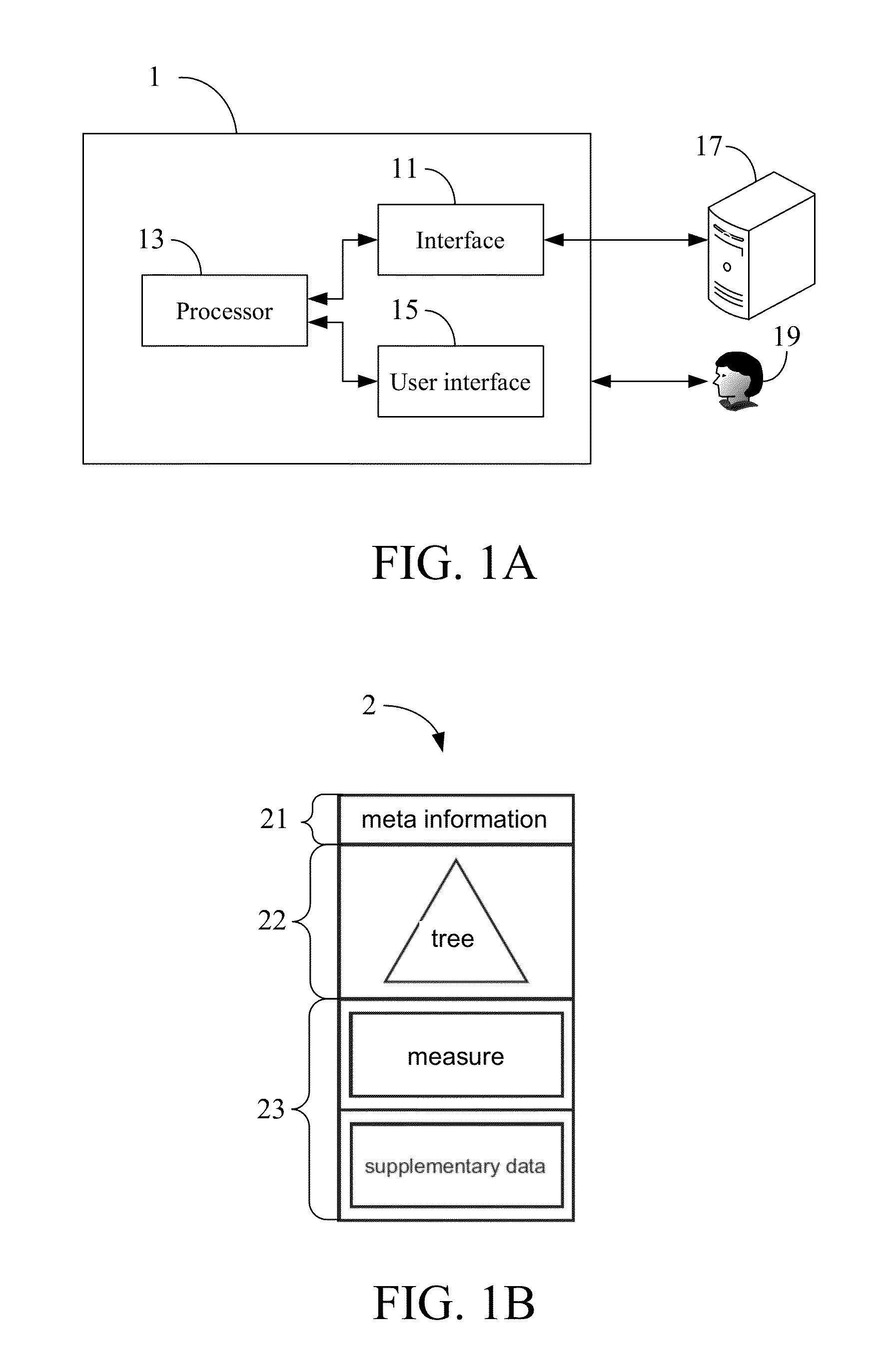

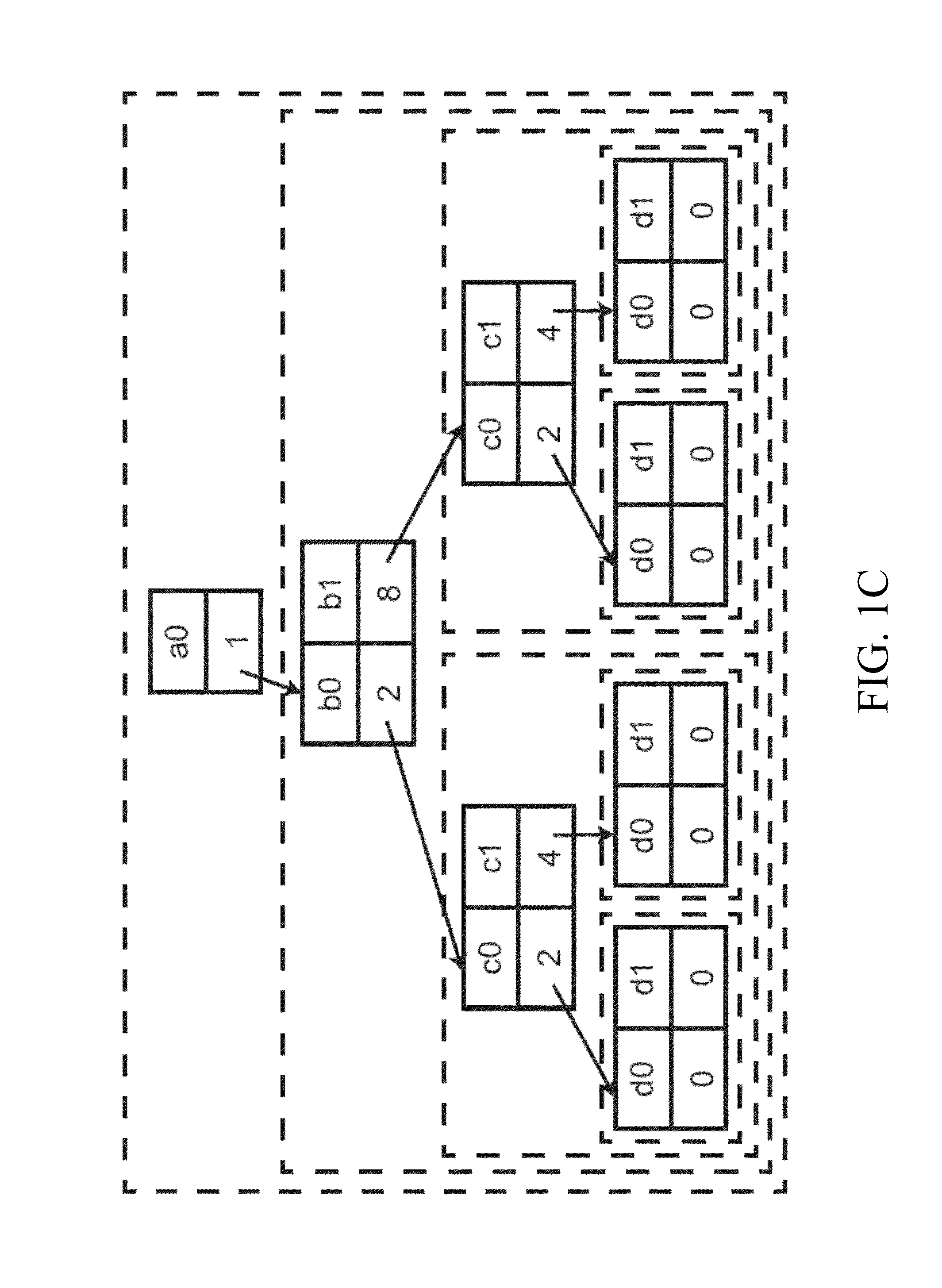

Apparatus and method for realizing big data into a big object and non-transitory tangible machine-readable medium thereof

Active | US8364723B1 | Efficient access; Digital data processing details; Multi-dimensional databases; Memory-mapped file; Data science

An apparatus and method for realizing big data into a Big Object and a non-transitory tangible machine-readable medium are provided. The apparatus comprises an interface and a processor. The interface is configured to access big data stored in a storage device. The processor is configured to create the Big Object from the big data using memory mapped files. The processor further lays out a content of the Big Object, wherein the content comprises a meta information section, a tree section, and a data section. The processor further lays out a content of the tree section by using LIS and describes a structure of the Big Object in the meta information section.

Owner:BIGOBJECT

Multi-writer in-memory non-copying database (MIND) system and method

Active | US8291269B1 | Error detection/correction; Object oriented databases; In-memory database; Object oriented databases

Embodiments of the invention relate to memory management methods and systems for object-oriented databases (OODB). In an embodiment, a database includes a plurality of memory-mapped file segments stored on at least one nonvolatile memory medium and not in main memory. An application program connects to the database with a plurality of writing processes to simultaneously write to an in-memory database, each writing process updating its own disk-based logfile, such that the effective disk writing speed is substantially increased and lock conflicts reduced.

Owner:ADVENT SOFTWARE

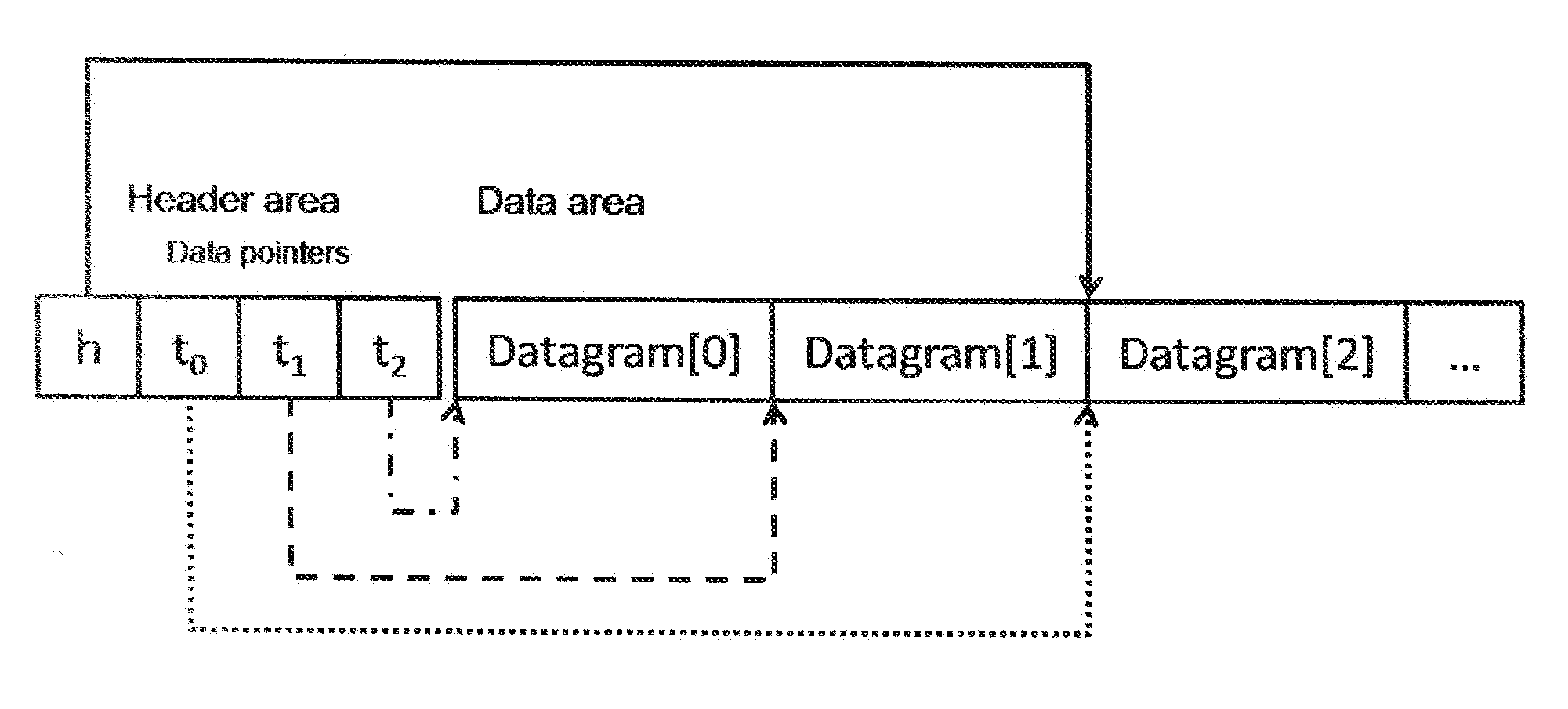

OS-independent Framework and Software Library for Real Time Inter-process Data Transfer (Data River)

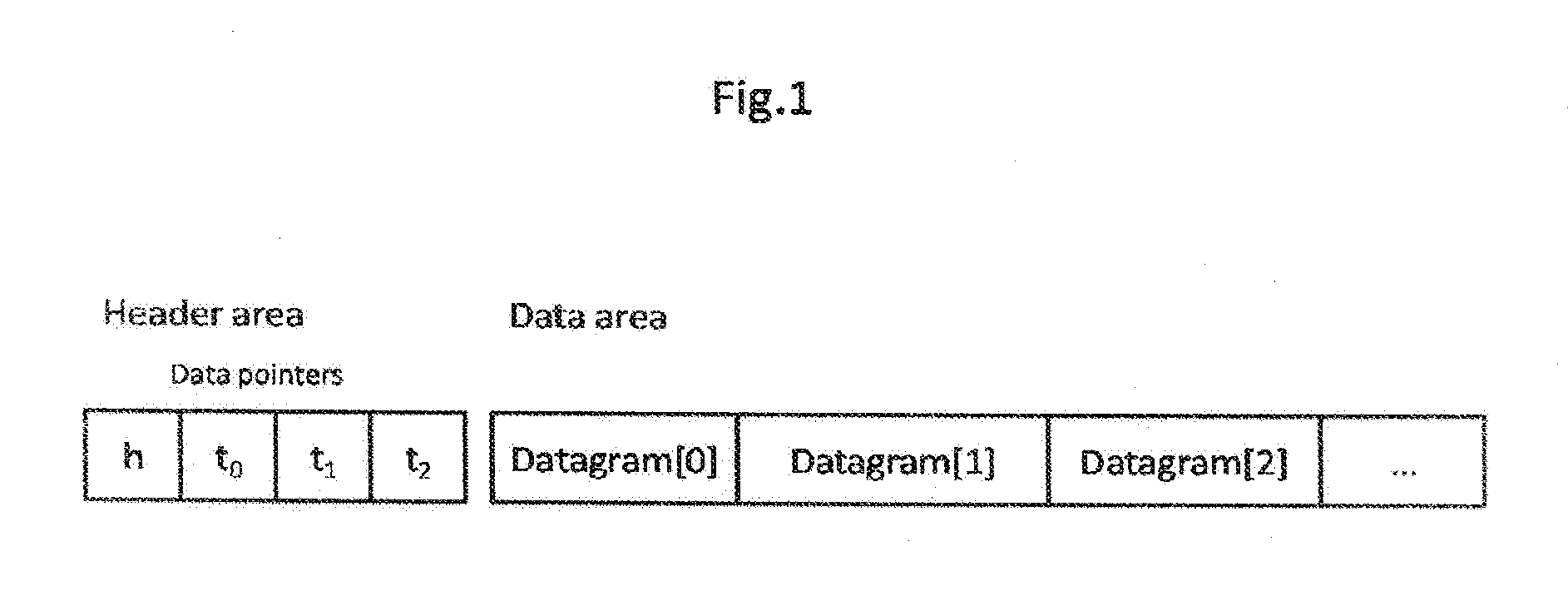

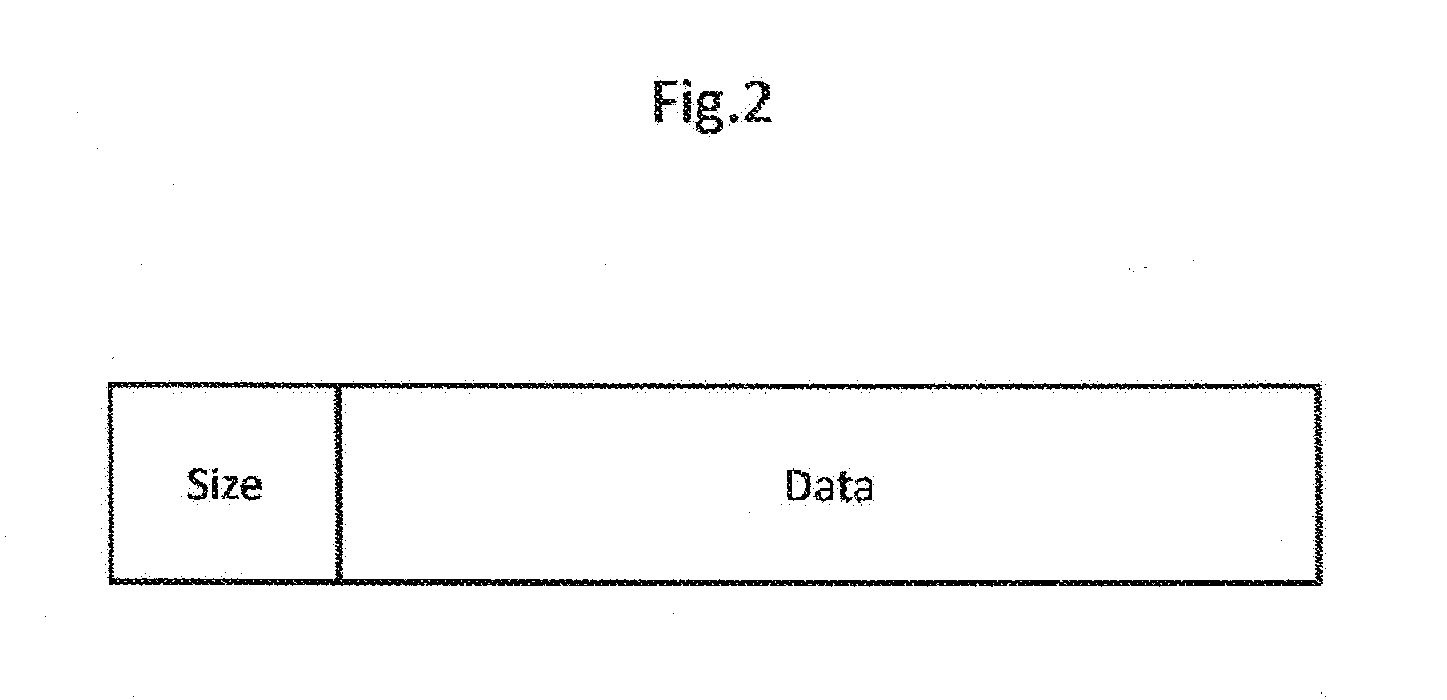

Proposed is a secure real-time inter-process data exchange mechanism based on memory-mapped files (MMF). Modified FIFO access to the data (“one head, multiple tails”) is provided by pointers residing in an MMF buffer: a process writes to the buffer's data area using the “head” pointer, and processes read the data using “tail” pointers. Only one shared data block is accessible at any given time, which achieves secure, high-performance, real-time data sharing between processes without OS-dependent thread-synchronization techniques. The invention is implemented as OS-specific dynamic libraries that form a platform-independent software layer hiding the implementation details from the programmer. The libraries are accessed through a simple, easy-to-use API with a small number of high-level functions whose syntax is consistent across languages (C, C++, Pascal, MATLAB) and OS platforms (Windows, Linux, MacOSX).

Owner:VANKOV ANDRE
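The "one head, multiple tails" FIFO can be sketched as a ring of fixed-size slots with a head counter and one tail counter per reader, all stored in the shared buffer. The sizes, the 1-byte length prefix, and the `push`/`pop` names are assumptions. For a self-contained demo this uses an anonymous mapping inside one process; real cross-process use would map a shared file, and plain Python writes give no memory-ordering guarantees across processes.

```python
import mmap
import struct

COUNTER = struct.Struct("<Q")           # unsigned 64-bit counters stored in the shared buffer
NTAILS, NSLOTS, SLOT = 2, 4, 32         # hypothetical: 2 readers, 4 slots of 32 bytes
DATA_OFF = COUNTER.size * (1 + NTAILS)  # counter 0 is the head, 1..NTAILS are the tails
BUF_SIZE = DATA_OFF + NSLOTS * SLOT


def _get(mm, i: int) -> int:
    return COUNTER.unpack_from(mm, i * COUNTER.size)[0]


def _set(mm, i: int, value: int) -> None:
    COUNTER.pack_into(mm, i * COUNTER.size, value)


def push(mm, msg: bytes) -> bool:
    """Single writer: append msg at the head unless the slowest reader would be overrun."""
    head = _get(mm, 0)
    slowest = min(_get(mm, 1 + t) for t in range(NTAILS))
    if head - slowest >= NSLOTS or len(msg) >= SLOT:
        return False
    off = DATA_OFF + (head % NSLOTS) * SLOT
    mm[off] = len(msg)                   # 1-byte length prefix
    mm[off + 1:off + 1 + len(msg)] = msg
    _set(mm, 0, head + 1)                # advance the head only after the slot is written
    return True


def pop(mm, t: int):
    """Reader t: return its next unread message, or None if it has caught up."""
    tail, head = _get(mm, 1 + t), _get(mm, 0)
    if tail == head:
        return None
    off = DATA_OFF + (tail % NSLOTS) * SLOT
    msg = mm[off + 1:off + 1 + mm[off]]
    _set(mm, 1 + t, tail + 1)
    return msg


ring = mmap.mmap(-1, BUF_SIZE)  # anonymous, zero-filled mapping for the demo
push(ring, b"hello")
print(pop(ring, 0), pop(ring, 1))  # b'hello' b'hello'
```

Each reader advances only its own tail, so every reader sees every message, and the writer waits on the slowest reader rather than on a lock.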

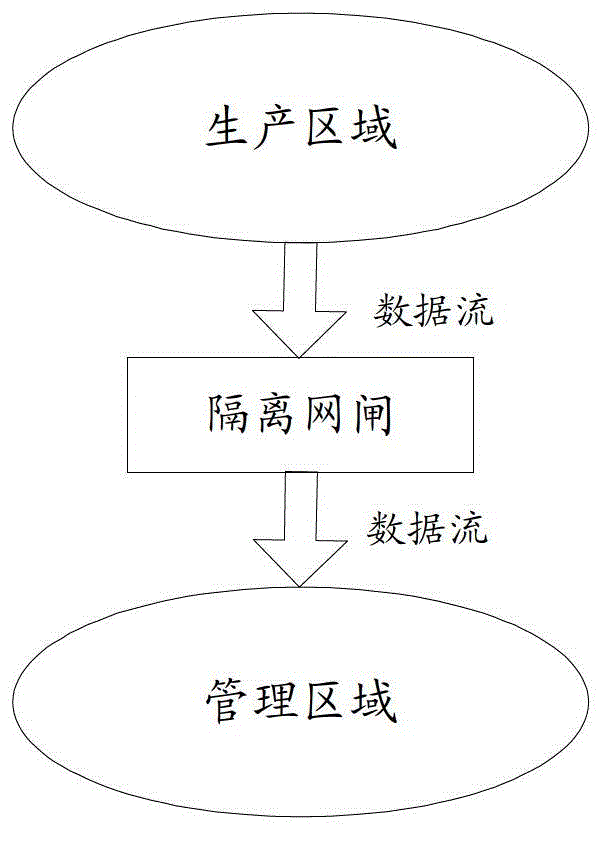

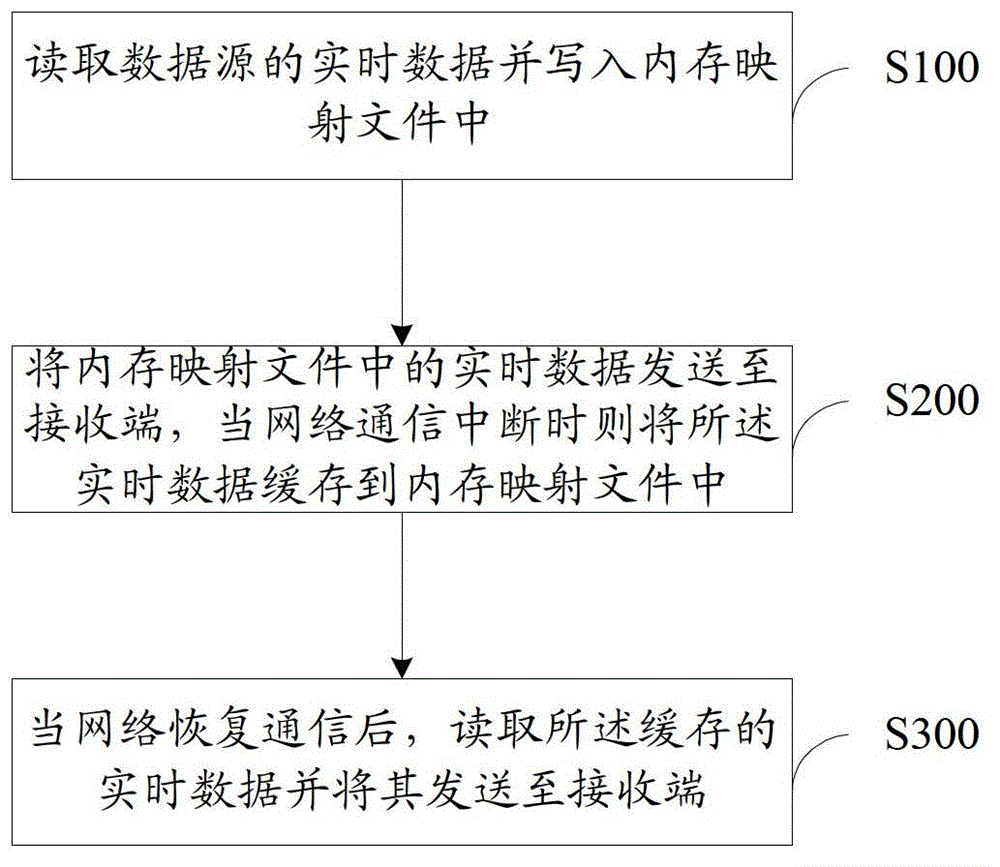

Data sending method

Active | CN102868552A | Guaranteed uptime; Guaranteed continuity; Data switching networks; Real-time data; Instability

The invention provides a data sending method comprising the following steps: reading real-time data from a data source and writing it into a memory-mapped file; sending the real-time data in the memory-mapped file to a receiving end and, when network communication is interrupted, caching the real-time data in the memory-mapped file; and, when network communication recovers, reading the cached real-time data and sending it to the receiving end. The method keeps data transmission continuous when the network is unstable, so that the information system operates steadily, safely, and efficiently.

Owner:ELECTRIC POWER RES INST OF GUANGDONG POWER GRID

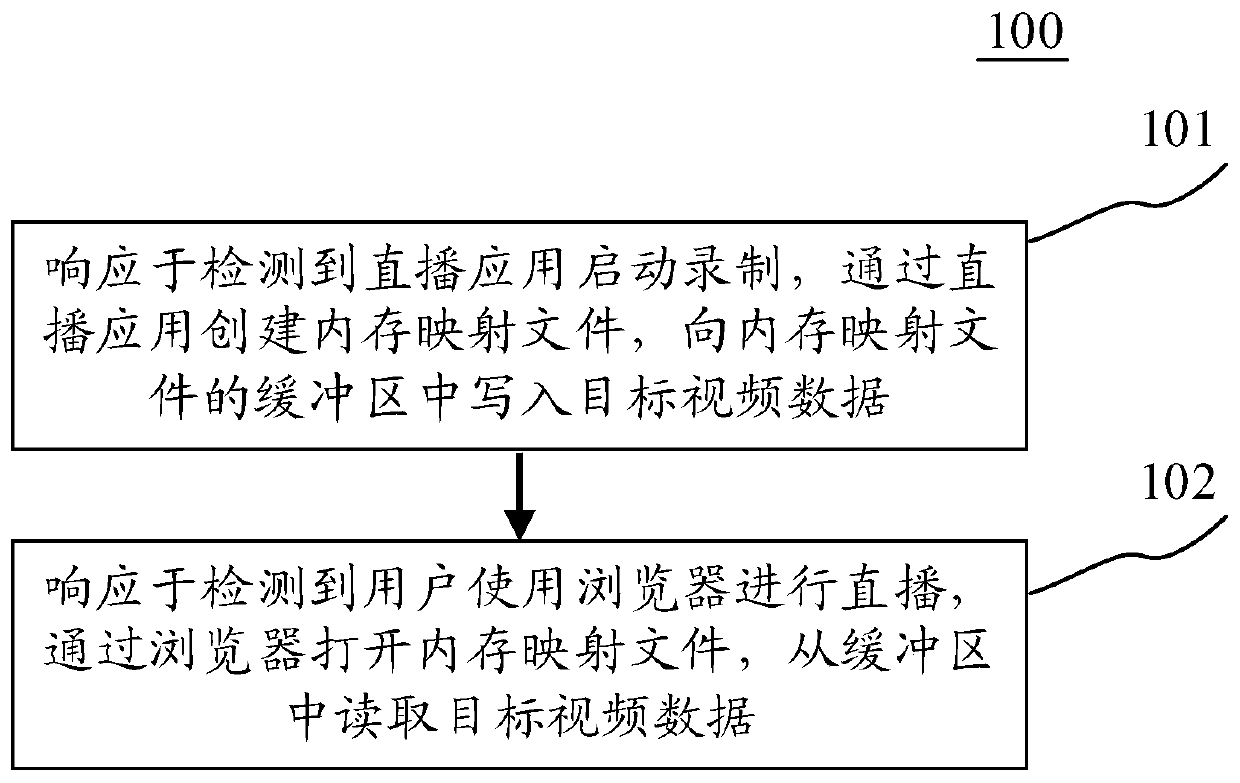

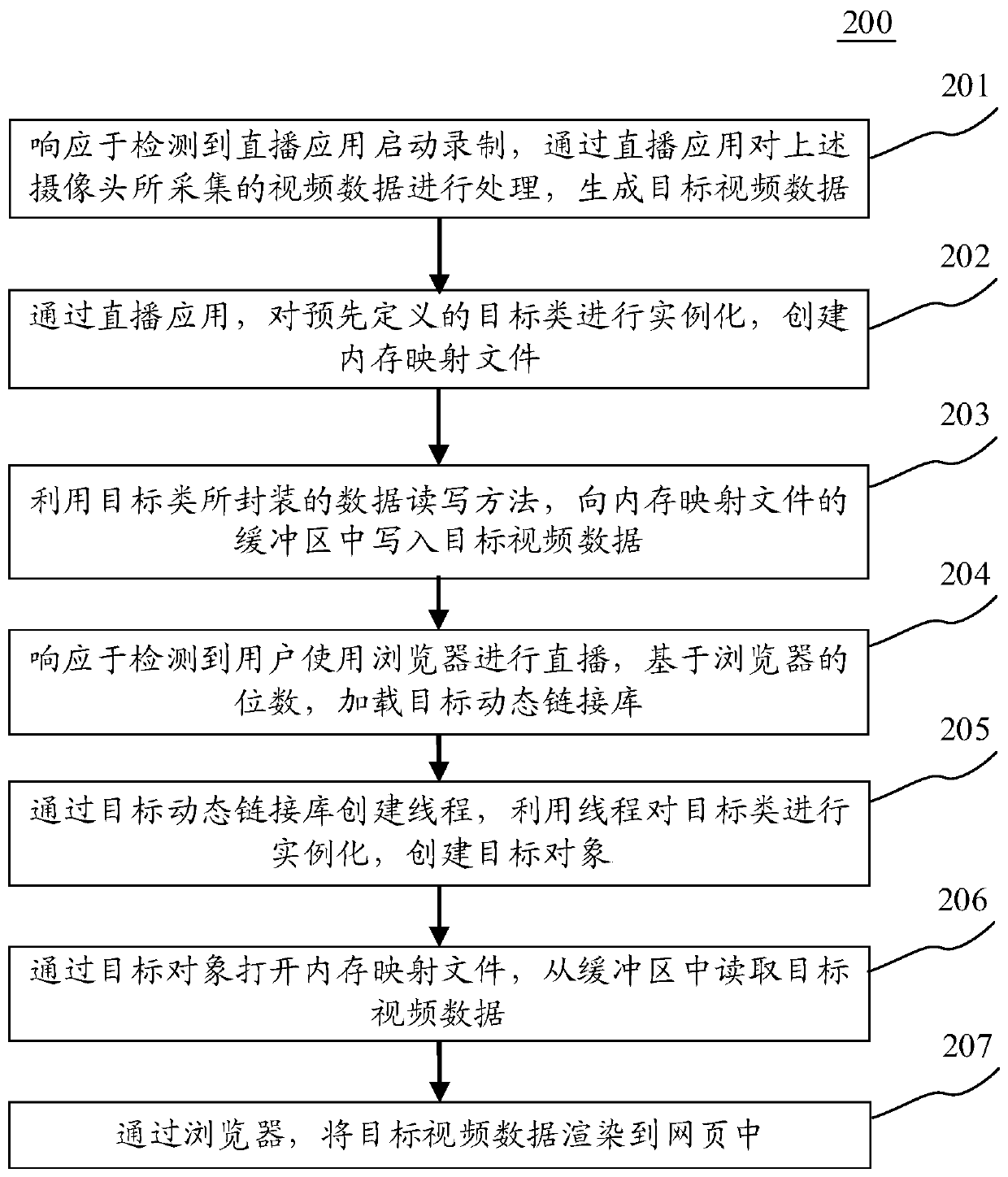

Communication method and device, terminal device and computer readable medium

An embodiment of the invention discloses a communication method and device, a terminal device, and a computer-readable medium. In one embodiment, in response to detecting that a live broadcast application has started recording, the live broadcast application creates a memory-mapped file and writes target video data into a buffer area of that file; in response to detecting that a user is broadcasting live through a browser, the browser opens the memory-mapped file and reads the target video data from the buffer area, accessing the memory-mapped file by loading a target dynamic link library that matches the browser's bitness. This embodiment enables communication between a 64-bit browser and a 32-bit live broadcast application.

Owner:BEIJING QIYI CENTURY SCI & TECH CO LTD

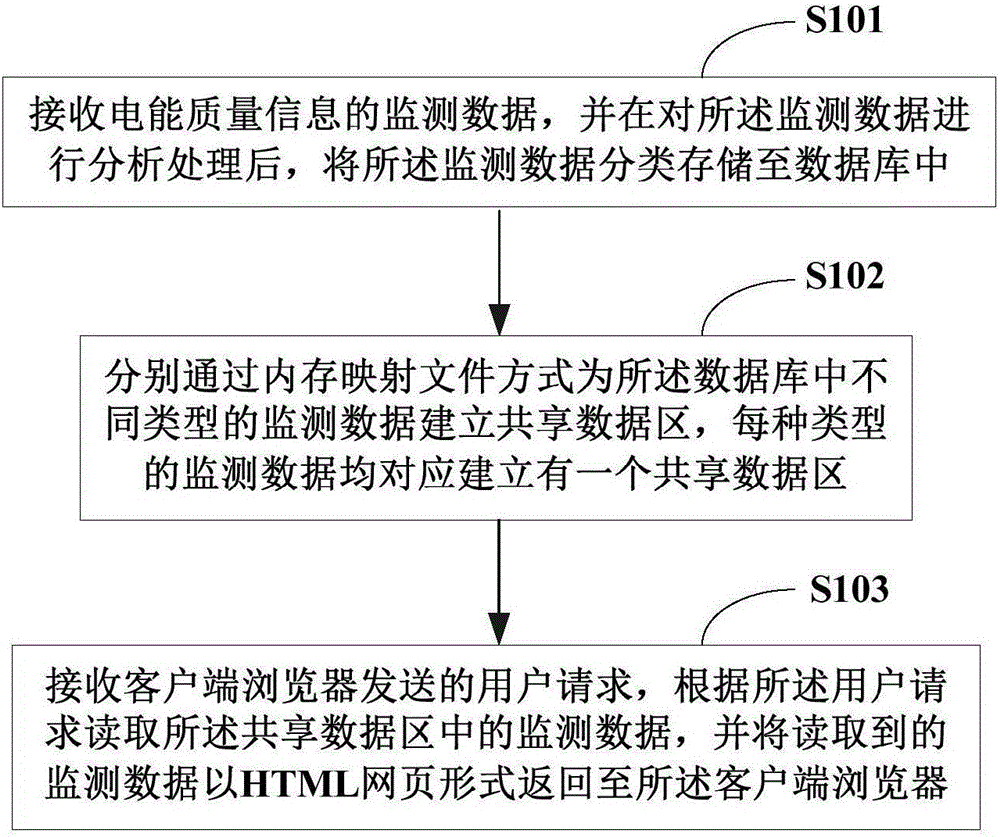

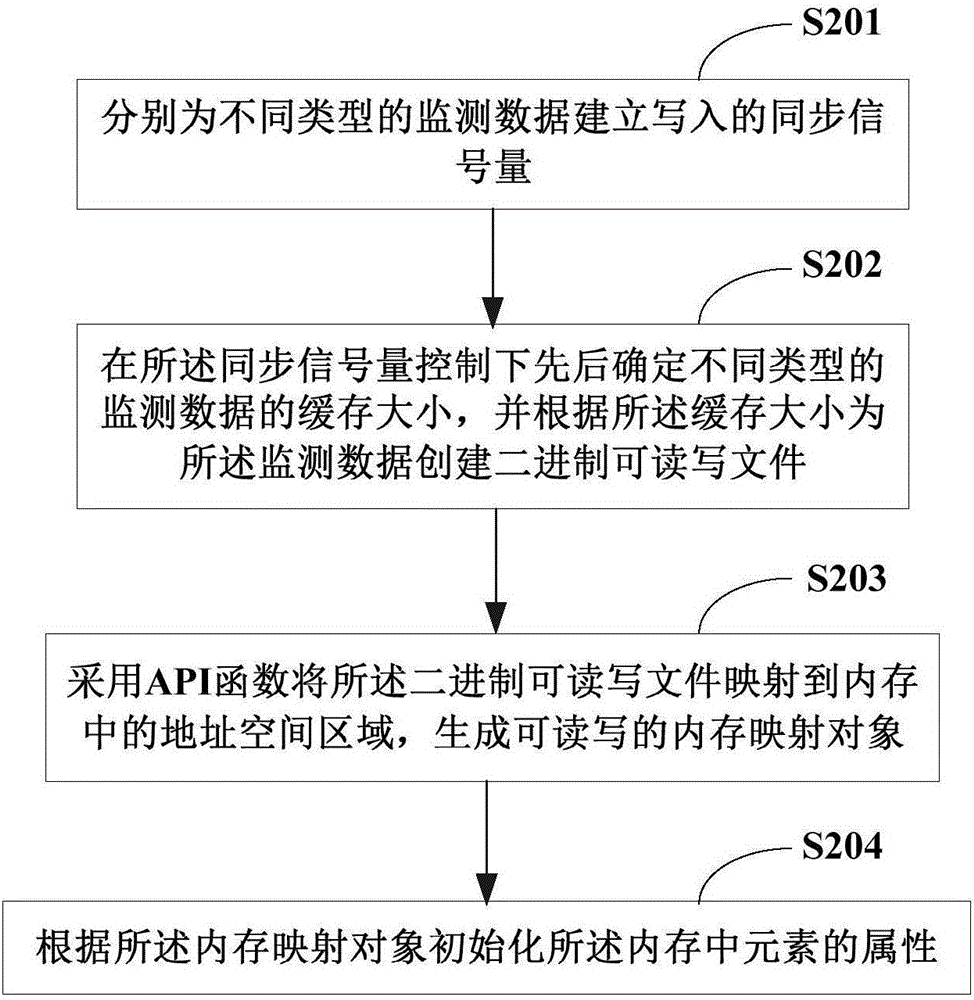

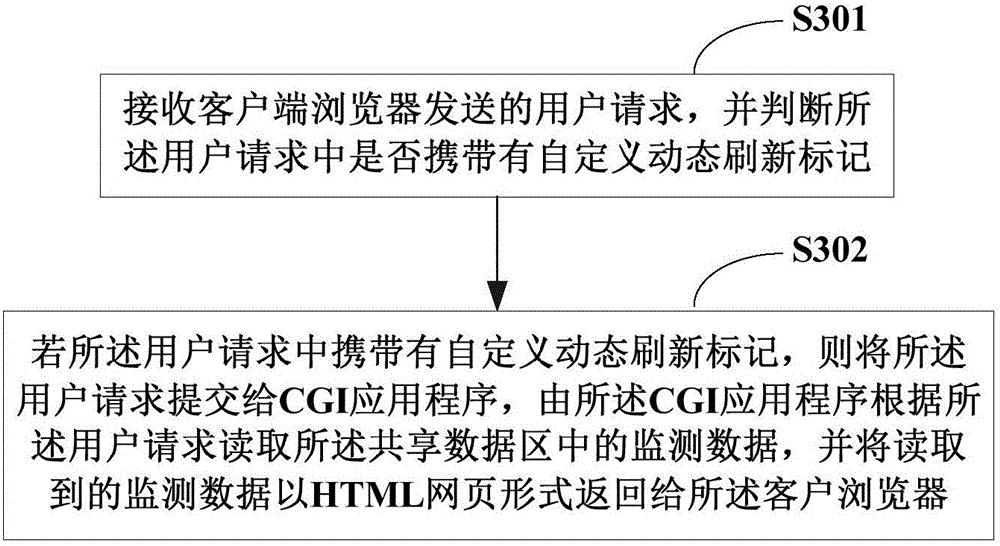

Method and system for monitoring electric energy quality

Active | CN106372069A | High speed; Shorten the time; Electrical testing; Website content management; Power quality; Pager

The invention provides a method and system for monitoring electric power quality, in the technical field of electric power monitoring. Monitoring data on power quality is received, analyzed, processed, and stored in a database by category. Using memory-mapped files, a separate shared data area is established for each type of monitoring data in the database. When a user request is received from a client browser, the monitoring data in the corresponding shared data area is read according to the request and returned to the browser as an HTML web page. With this invention, the client browser obtains a complete Web page more quickly, the time needed to display the Web page is greatly reduced, the tasks of the power quality monitoring terminal are optimized, the HTML page can be refreshed dynamically, and users can perform online testing and maintenance.

Owner:深圳金奇辉电气有限公司

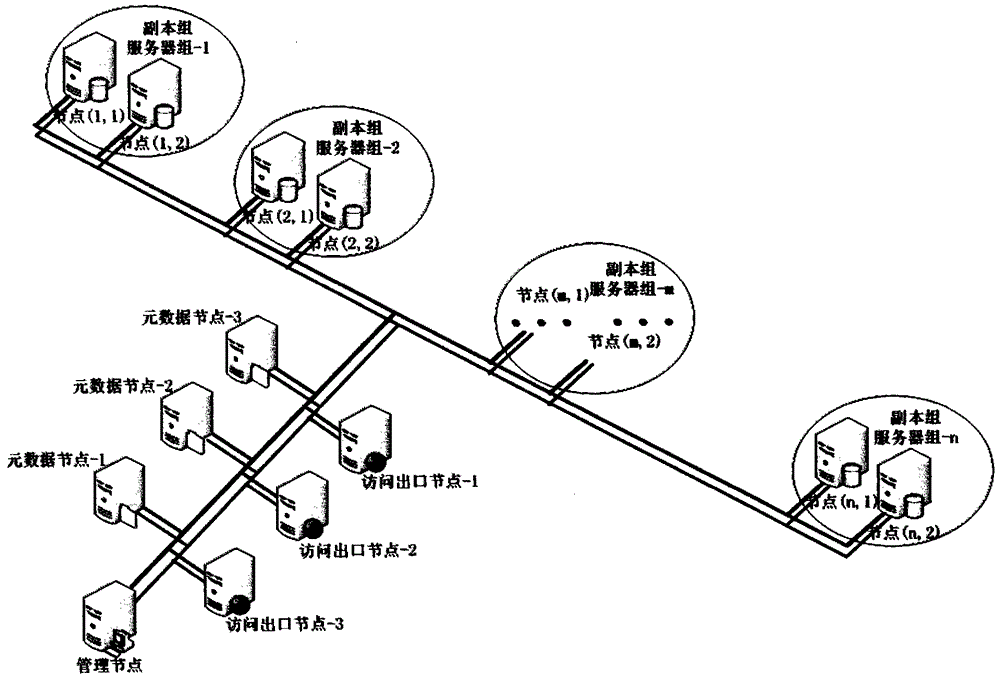

Mass class locator data storage method

Inactive | CN104636332A | Easy to control; Realize dynamic expansion; Special data processing applications; Input/output processes for data processing; Data information; Data store

The invention provides a method for storing massive volumes of locator data. A locator-data storage system built on a MongoDB server cluster serves as the supporting platform. It uses data sharding and distributed storage in a shared-nothing architecture. Data are partitioned along the dimensions the services require and distributed evenly across all servers and disks. This keeps the system's read and write loads as balanced as possible, brings the I/O of every node into play for reads and writes, and makes the cluster's full aggregate I/O capacity available. In addition, memory-mapped files keep accessed data in the servers' memory, minimizing the latency that disk I/O would otherwise cause.

Owner:CHINA CHANGFENG SCI TECH IND GROUP CORP
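
The even distribution of data across servers can be illustrated with hash partitioning on a chosen dimension (a shard key). This is a sketch of the general technique, not MongoDB's own balancer, which provides hashed sharding natively. The `locator_id` field and the shard count are hypothetical.

```python
import hashlib


def shard_for(key: str, num_shards: int) -> int:
    """Map a shard-key value to a shard index using a stable hash.

    hashlib is used instead of the built-in hash(), whose output for str
    changes between interpreter runs."""
    digest = hashlib.blake2b(key.encode("utf-8"), digest_size=8).digest()
    return int.from_bytes(digest, "big") % num_shards


def partition(records: list, shard_key: str, num_shards: int) -> list:
    """Shared-nothing placement: every record lives on exactly one shard."""
    shards = [[] for _ in range(num_shards)]
    for record in records:
        shards[shard_for(str(record[shard_key]), num_shards)].append(record)
    return shards
```

A stable hash keeps every record with the same key on the same shard across restarts. Each server therefore only ever serves its own slice of the data, which is the shared-nothing property.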

Multi-writer in-memory non-copying database (MIND) system and method

Active | US8769350B1 | Error detection/correction; Object oriented databases; In-memory database

Embodiments of the invention relate to memory-management methods and systems for object-oriented databases (OODBs). In one embodiment, a database comprises a plurality of memory-mapped file segments stored on at least one nonvolatile memory medium rather than in main memory. An application program connects to the database through multiple writing processes that write to the in-memory database simultaneously. Each process updates its own disk-based log file, which substantially increases the effective disk write speed and reduces lock conflicts.

Owner:ADVENT SOFTWARE
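
One way to read "each writing process updating its own disk-based logfile" is sketched below. It rests on a strong simplifying assumption that is not from the patent: each record slot in the memory-mapped database file is owned by exactly one writer (`slot % num_writers == writer_id`). Writers therefore never touch the same bytes and need no shared lock. Each writer appends to its own log file and forces the entry to disk before changing the mapped data. The slot layout and all names are hypothetical.

```python
import mmap
import os
import struct

SLOT = struct.Struct("<q")  # hypothetical layout: one signed 64-bit value per slot


def create_db(path: str, num_slots: int) -> None:
    with open(path, "wb") as f:
        f.truncate(SLOT.size * num_slots)


def writer(db_path: str, log_dir: str, writer_id: int, num_writers: int, updates) -> str:
    """One writing process: log each update to its own file, then apply it to the mapping."""
    log_path = os.path.join(log_dir, f"writer-{writer_id}.log")
    with open(db_path, "r+b") as f, mmap.mmap(f.fileno(), 0) as mm, \
            open(log_path, "a") as log:
        for slot, value in updates:
            if slot % num_writers != writer_id:
                raise ValueError(f"slot {slot} is not owned by writer {writer_id}")
            log.write(f"{slot} {value}\n")
            log.flush()
            os.fsync(log.fileno())  # the log entry reaches disk before the data changes
            SLOT.pack_into(mm, slot * SLOT.size, value)
        mm.flush()
    return log_path


def read_slot(db_path: str, slot: int) -> int:
    with open(db_path, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        return SLOT.unpack_from(mm, slot * SLOT.size)[0]
```

In a real deployment, each `writer` call would run in its own process (for example, via `multiprocessing`). The calls can also simply be made one after another, which keeps the example deterministic.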

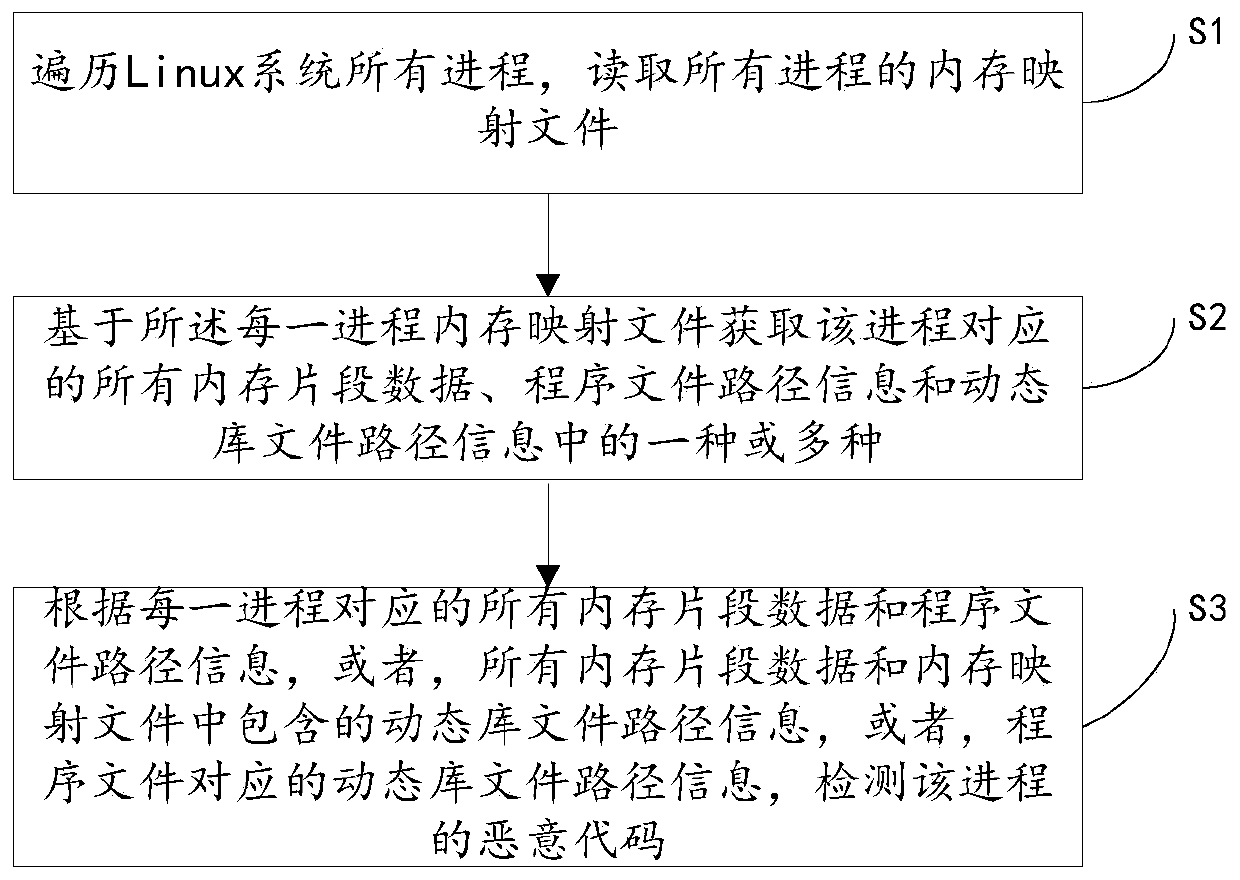

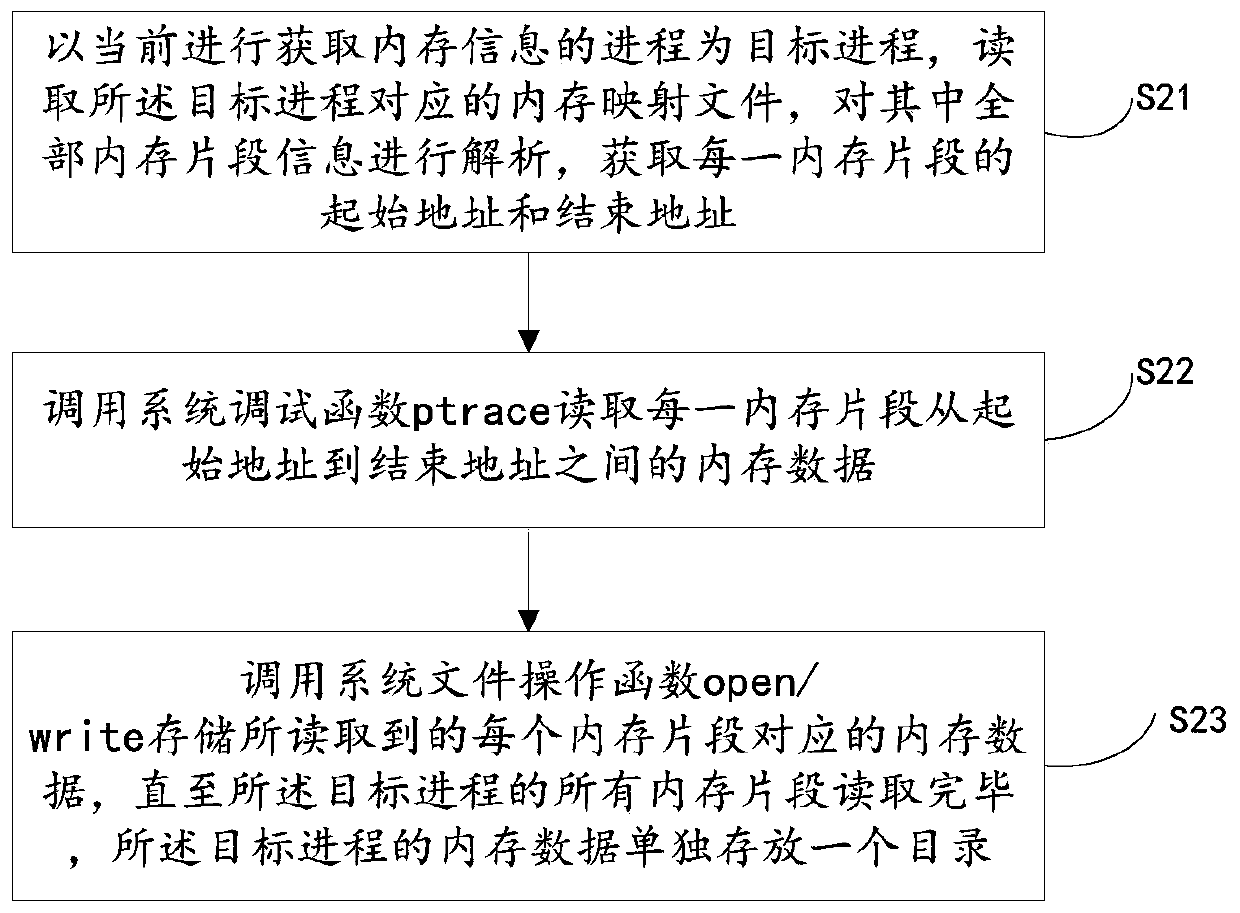

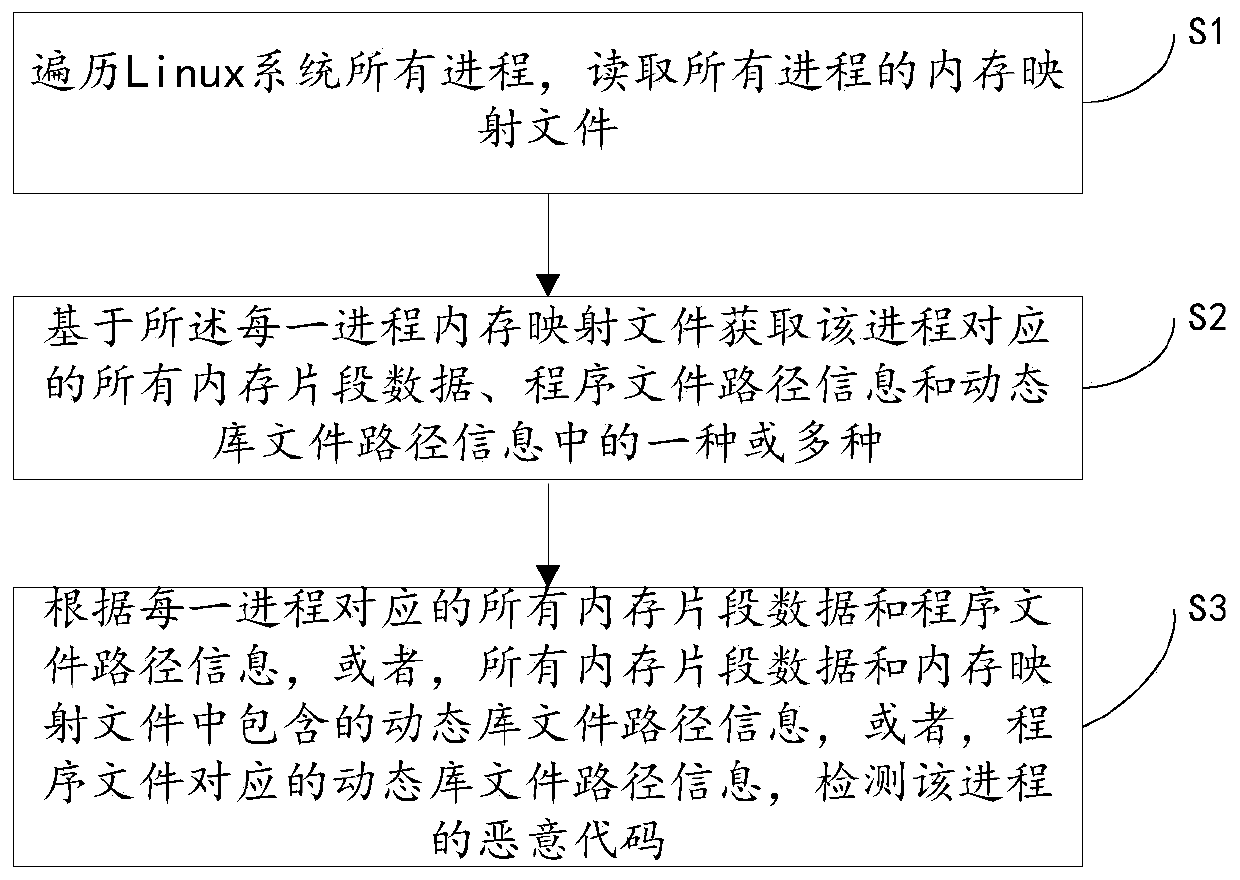

Linux platform process memory malicious code evidence obtaining method, controller and medium

Active | CN109918907A | Improve security; Avoid completeness; Platform integrity maintenance; Memory address; Memory forensics

The invention relates to a method, a controller, and a medium for obtaining evidence of malicious code in process memory on the Linux platform. The method comprises the following steps:

- Traverse all processes in the Linux system and read each process's memory mapping file.
- From each process's memory mapping file, obtain one or more of the following: all memory segment data of the process, the program file path information, and the dynamic library file path information.
- Detect malicious code in the process based on one of three combinations:
  - all memory segment data together with the process's program file path information;
  - all memory segment data together with the dynamic library file path information contained in the memory mapping file;
  - the dynamic library file path information corresponding to the program file.

The method uses the per-process memory mapping files of the Linux operating system to determine each process's memory address layout. It therefore accurately obtains the complete memory of every process in the system and effectively discovers malicious code in Linux system memory, improving the security of the Linux system. The memory forensics method is general and stable.

Owner:NAT COMP NETWORK & INFORMATION SECURITY MANAGEMENT CENT
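
On Linux, a process's memory layout is exposed in `/proc/<pid>/maps`. Assuming that is the "memory mapping file" the abstract refers to, the sketch below parses it into segments and lists the program and shared-library paths. It also flags executable anonymous regions and writable-and-executable regions. That flagging rule is a common memory-forensics heuristic, chosen here as an assumption; it is not the patent's detection logic. Dumping the segment contents (for example, from `/proc/<pid>/mem`) is omitted.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Segment:
    start: int
    end: int
    perms: str
    offset: int
    path: Optional[str]  # None for anonymous mappings


def parse_maps(text: str) -> List[Segment]:
    """Parse /proc/<pid>/maps lines: address perms offset dev inode [pathname]."""
    segments = []
    for line in text.splitlines():
        parts = line.split(maxsplit=5)
        if len(parts) < 5:
            continue  # skip blank or malformed lines
        start, end = (int(x, 16) for x in parts[0].split("-"))
        path = parts[5].rstrip() if len(parts) == 6 else None
        segments.append(Segment(start, end, parts[1], int(parts[2], 16), path))
    return segments


def file_backed_paths(segments: List[Segment]) -> List[str]:
    """Distinct on-disk files mapped into the process (program image and libraries)."""
    return sorted({s.path for s in segments if s.path and s.path.startswith("/")})


def suspicious_segments(segments: List[Segment]) -> List[Segment]:
    """Heuristic: executable regions that are also writable, or that have no backing file."""
    return [s for s in segments if "x" in s.perms and ("w" in s.perms or s.path is None)]


def read_process_maps(pid="self") -> List[Segment]:
    """Read a live process's map (Linux only; other processes need suitable privileges)."""
    with open(f"/proc/{pid}/maps") as f:
        return parse_maps(f.read())
```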

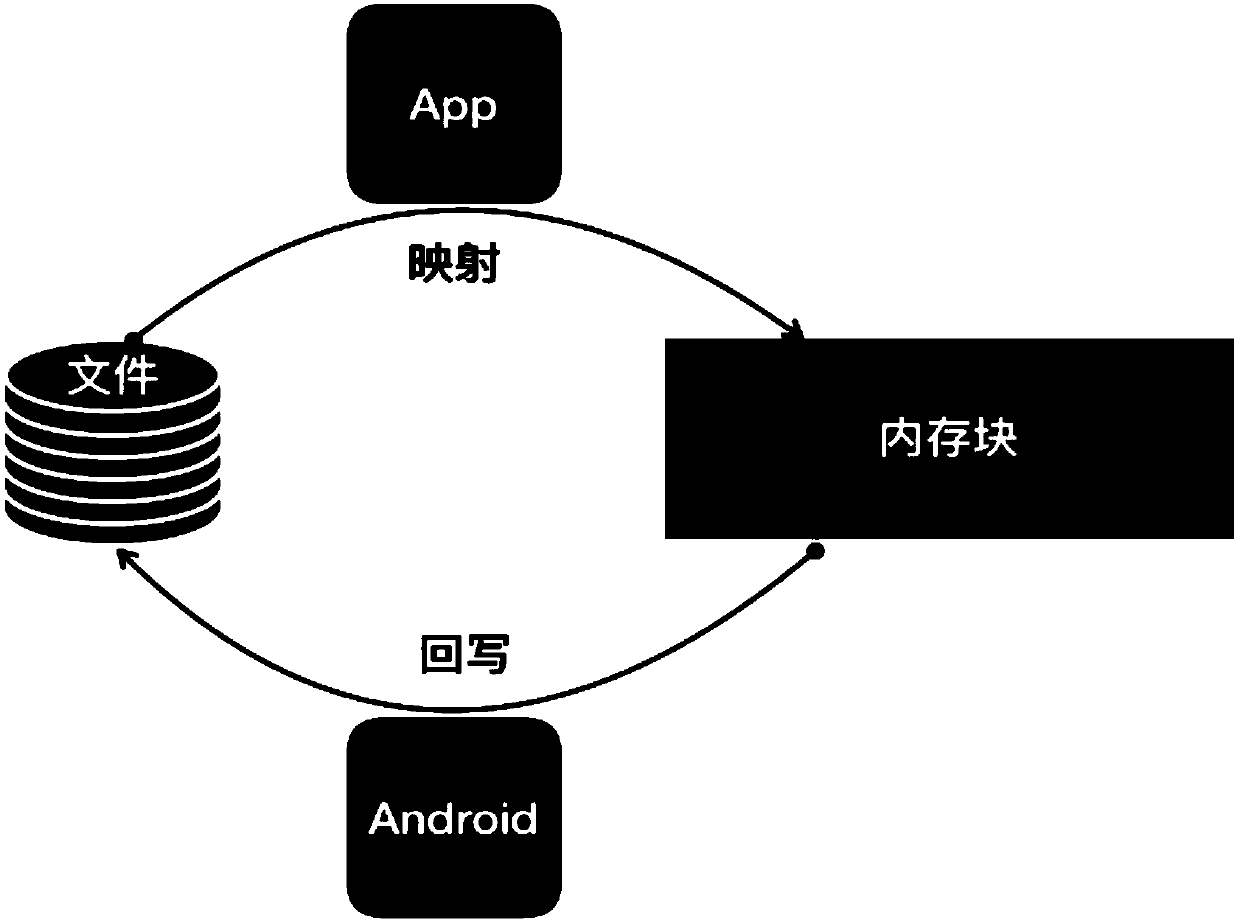

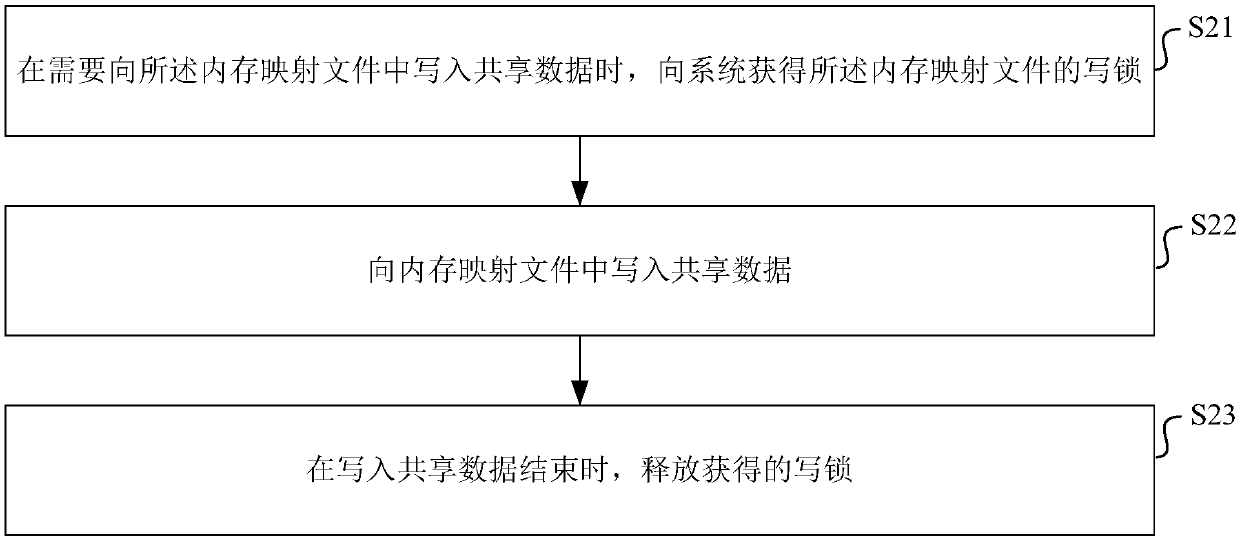

Data access method and device and storage medium

Active | CN110399227A | Achieve permanent storage; Avoid loss; Program synchronisation; Operational system; Access method

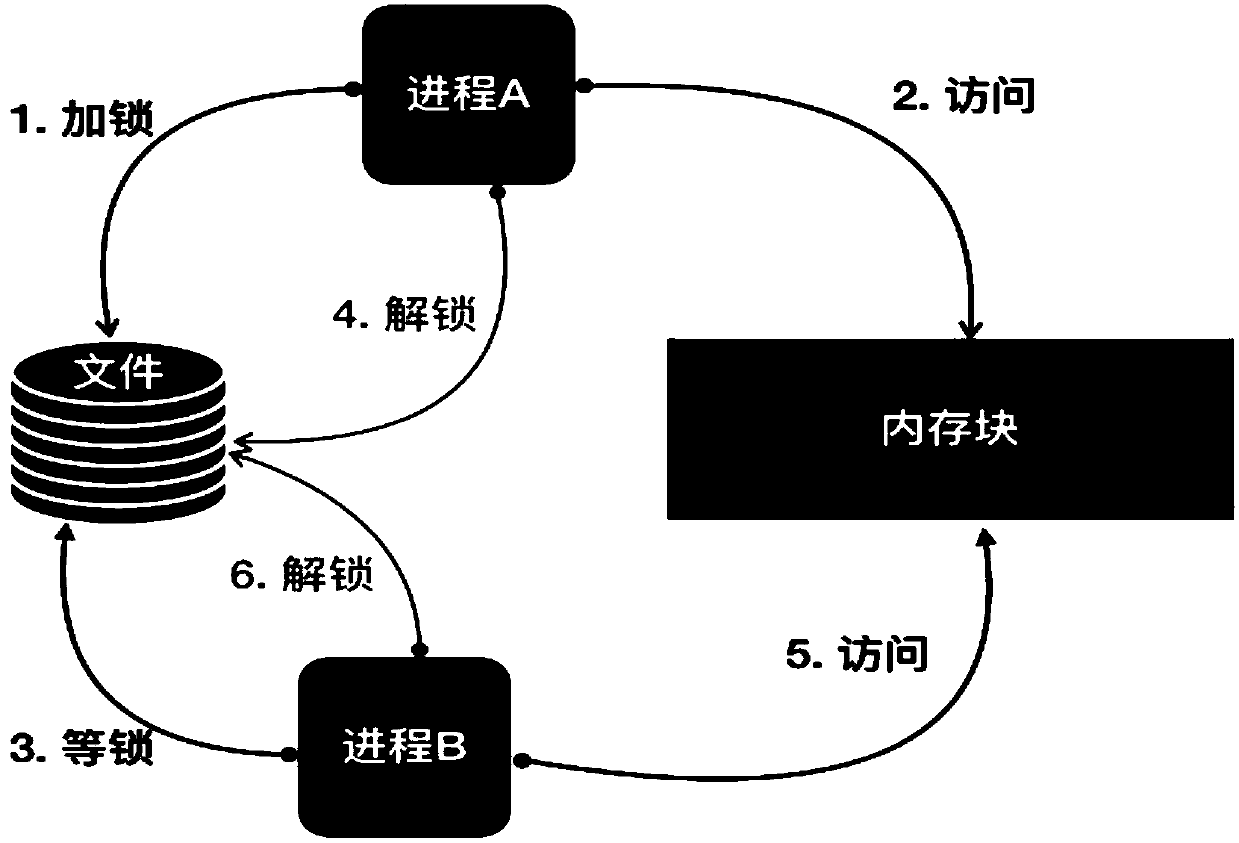

The invention discloses a data access method, a data access device, and a storage medium that achieve multi-process data access while also storing data persistently. The data access method comprises establishing a memory-mapped file for storing shared data. The file is created when an Android application requests memory space from the Android operating system, and all processes of the application share that memory space. Whenever a process needs to write shared data into the memory-mapped file, it performs the following steps:

1. Obtain a write lock on the memory-mapped file from the system. While the lock is held, other processes in the application can neither read data from nor write data into the memory block holding the shared data being written.
2. Write the shared data into the memory-mapped file.
3. Release the write lock once the write is finished.

Owner:TENCENT TECH (SHENZHEN) CO LTD
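
Android apps would do this in Java or Kotlin. As a language-neutral illustration, the sketch below uses Python on a POSIX system. It assumes the "write lock" is a POSIX byte-range record lock (`fcntl.lockf`) covering only the block being written, which matches the per-block locking in the abstract. The function names are hypothetical.

```python
import fcntl
import mmap
import os


def create_shared_file(path: str, size: int) -> None:
    with open(path, "wb") as f:
        f.truncate(size)


def write_block(path: str, offset: int, data: bytes) -> None:
    """Write one block of shared data while holding an exclusive lock on that block."""
    if not data:
        raise ValueError("data must be non-empty")  # a zero-length lock would extend to EOF
    with open(path, "r+b") as f:
        # Record lock on bytes [offset, offset + len(data)); waits while another process holds it.
        fcntl.lockf(f, fcntl.LOCK_EX, len(data), offset, os.SEEK_SET)
        try:
            with mmap.mmap(f.fileno(), 0) as mm:
                if offset + len(data) > len(mm):
                    raise ValueError("block extends past the end of the mapping")
                mm[offset:offset + len(data)] = data
                mm.flush()
        finally:
            fcntl.lockf(f, fcntl.LOCK_UN, len(data), offset, os.SEEK_SET)  # release the write lock


def read_block(path: str, offset: int, length: int) -> bytes:
    """Read a block under a shared lock, so a cooperating writer process cannot be mid-write."""
    if length <= 0:
        raise ValueError("length must be positive")
    with open(path, "rb") as f:
        fcntl.lockf(f, fcntl.LOCK_SH, length, offset, os.SEEK_SET)
        try:
            with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
                return mm[offset:offset + length]
        finally:
            fcntl.lockf(f, fcntl.LOCK_UN, length, offset, os.SEEK_SET)
```

Record locks are advisory. They exclude only processes that also take the lock, and they do not exclude threads of the same process. Every process in the application must therefore go through `write_block` and `read_block`.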

Method for reading dynamic memory mapping file in ground test device embedded software

Inactive | CN108052460A | Improve reading efficiency; Improve execution efficiency; Memory architecture accessing/allocation; Resource allocation; Data file; Computer module

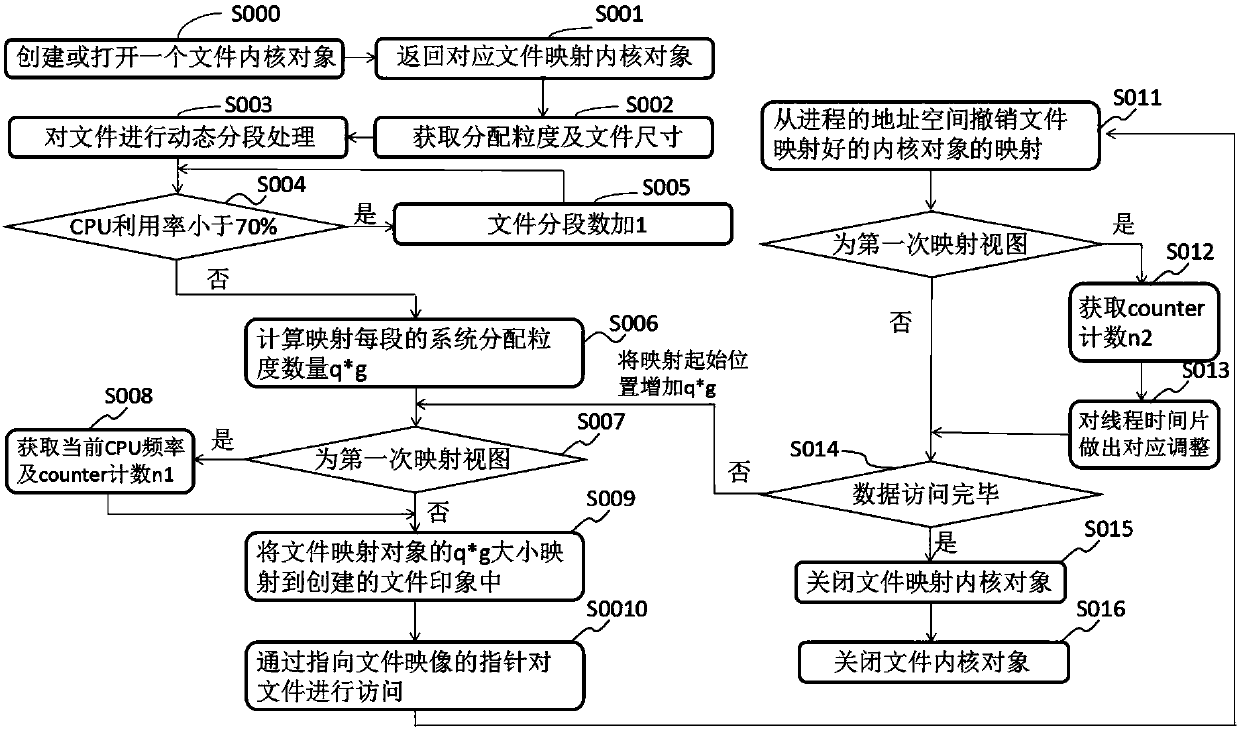

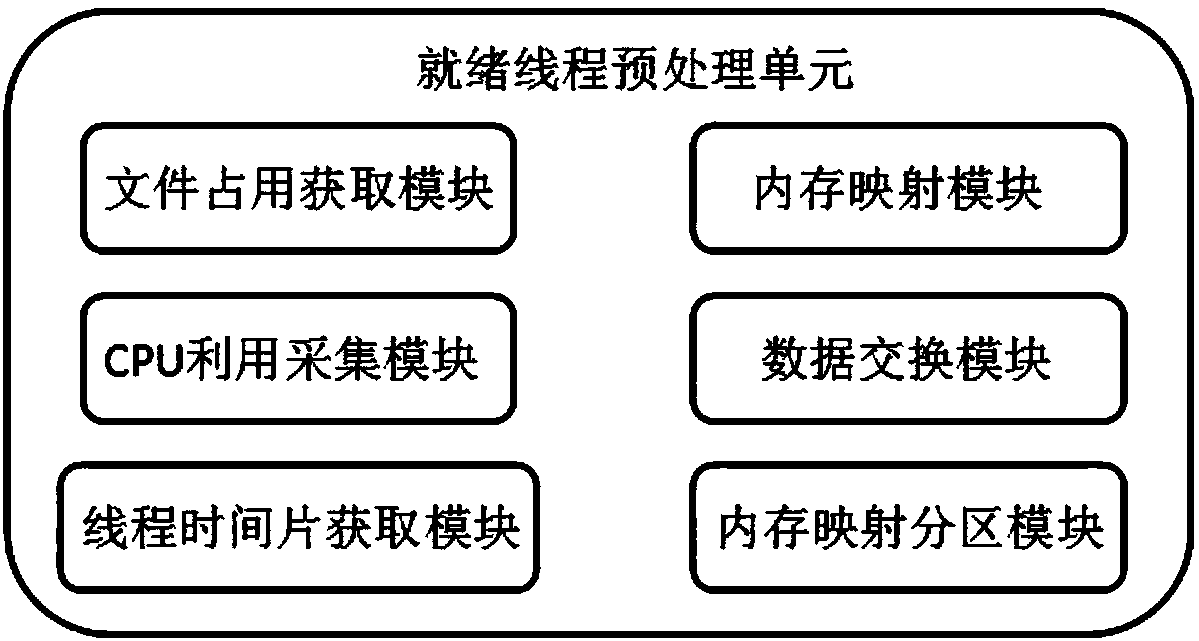

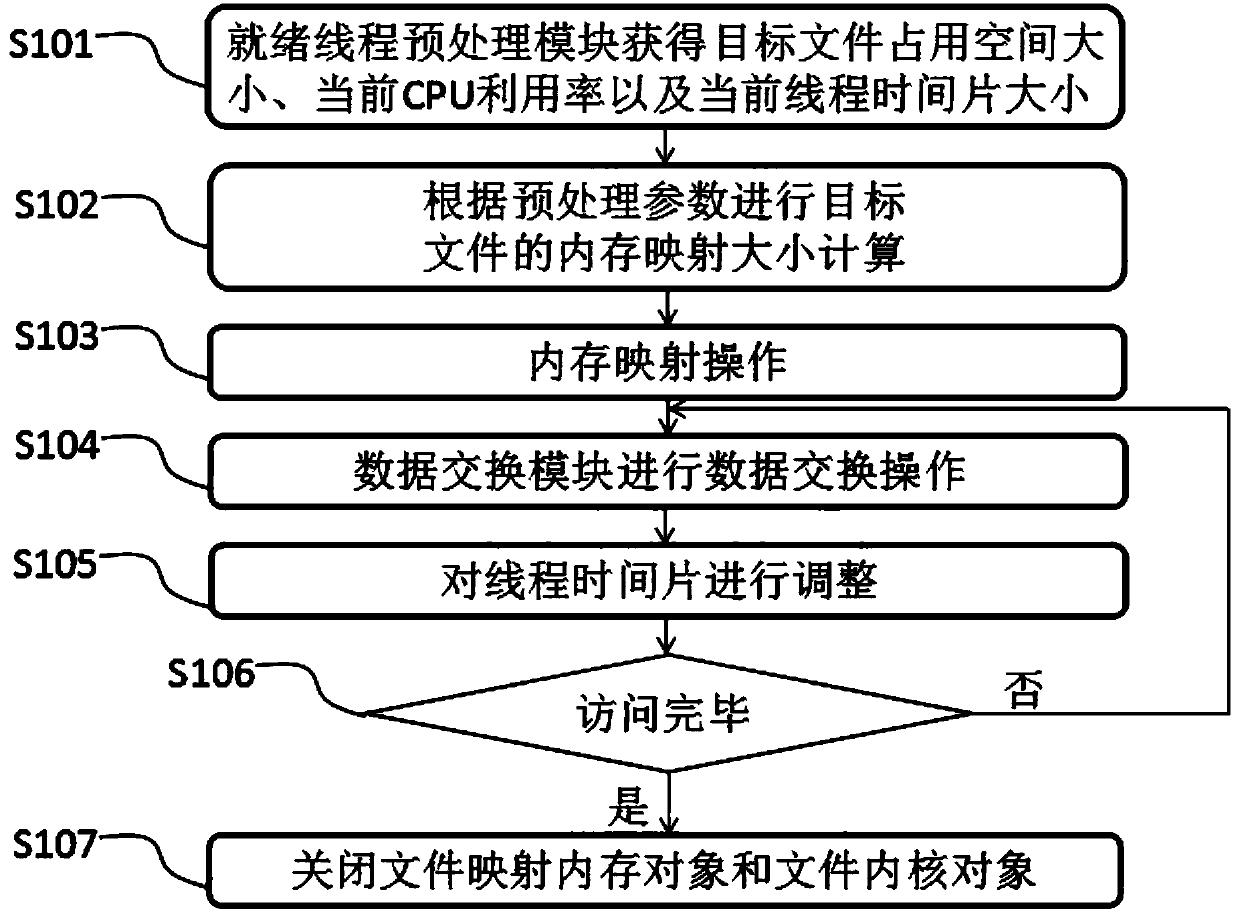

The invention discloses a method for reading a dynamically memory-mapped file in the embedded software of ground test equipment and relates to the technical field of data processing. The method proceeds as follows:

1. A ready-thread preprocessing unit obtains the information collected by three modules: a file-occupied-space acquisition module, a CPU acquisition module, and a current-thread time-slice acquisition module.
2. The unit performs a memory-mapping operation according to the mapping size computed for the target file.
3. A data exchange module performs the data exchange operation.
4. The thread time slice is adjusted accordingly.
5. The data are read by mapping successive segments in a loop according to the computed values.

Because a large data file is mapped dynamically, the granularity of the mapped segments changes with factors such as the file size and the state of the embedded software. The execution time slice is adjusted according to how long each mapped segment takes to read. This effectively improves file-reading and thread-execution efficiency and reduces resource overhead.

Owner:安徽雷威智能科技有限公司 (Anhui Leiwei Intelligent Technology Co., Ltd.)
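
The segment-by-segment reading can be sketched with Python's `mmap`. The sketch maps one window of the file at a time and doubles or halves the window depending on how long the previous window took to process. The timing target, window bounds, and function name are illustrative assumptions. Unlike the patented method, the sketch does not adjust thread time slices. Mapping offsets must be multiples of `mmap.ALLOCATIONGRANULARITY`, so the window is always kept a multiple of it.

```python
import mmap
import os
import time

GRAN = mmap.ALLOCATIONGRANULARITY  # mapping offsets must be multiples of this


def read_in_windows(path, process, initial_window=16 * GRAN, target_seconds=0.01,
                    max_window=1024 * GRAN):
    """Map a large file one window at a time, resizing the window after each pass.

    `process` receives each read-only mapped window. It must not keep references
    (e.g. memoryviews) to the window after returning, because the window is unmapped.
    Returns the number of windows that were mapped."""
    size = os.path.getsize(path)
    window = max(GRAN, initial_window // GRAN * GRAN)
    max_window = max(GRAN, max_window // GRAN * GRAN)
    offset = windows = 0
    with open(path, "rb") as f:
        while offset < size:
            length = min(window, size - offset)
            started = time.perf_counter()
            with mmap.mmap(f.fileno(), length, access=mmap.ACCESS_READ, offset=offset) as mm:
                process(mm)
            elapsed = time.perf_counter() - started
            offset += length  # stays a multiple of GRAN because the window does
            windows += 1
            # Grow the window when a pass was quick, shrink it when a pass was slow.
            if elapsed < target_seconds / 2:
                window = min(max_window, window * 2)
            elif elapsed > target_seconds:
                window = max(GRAN, window // 2 // GRAN * GRAN)
    return windows
```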

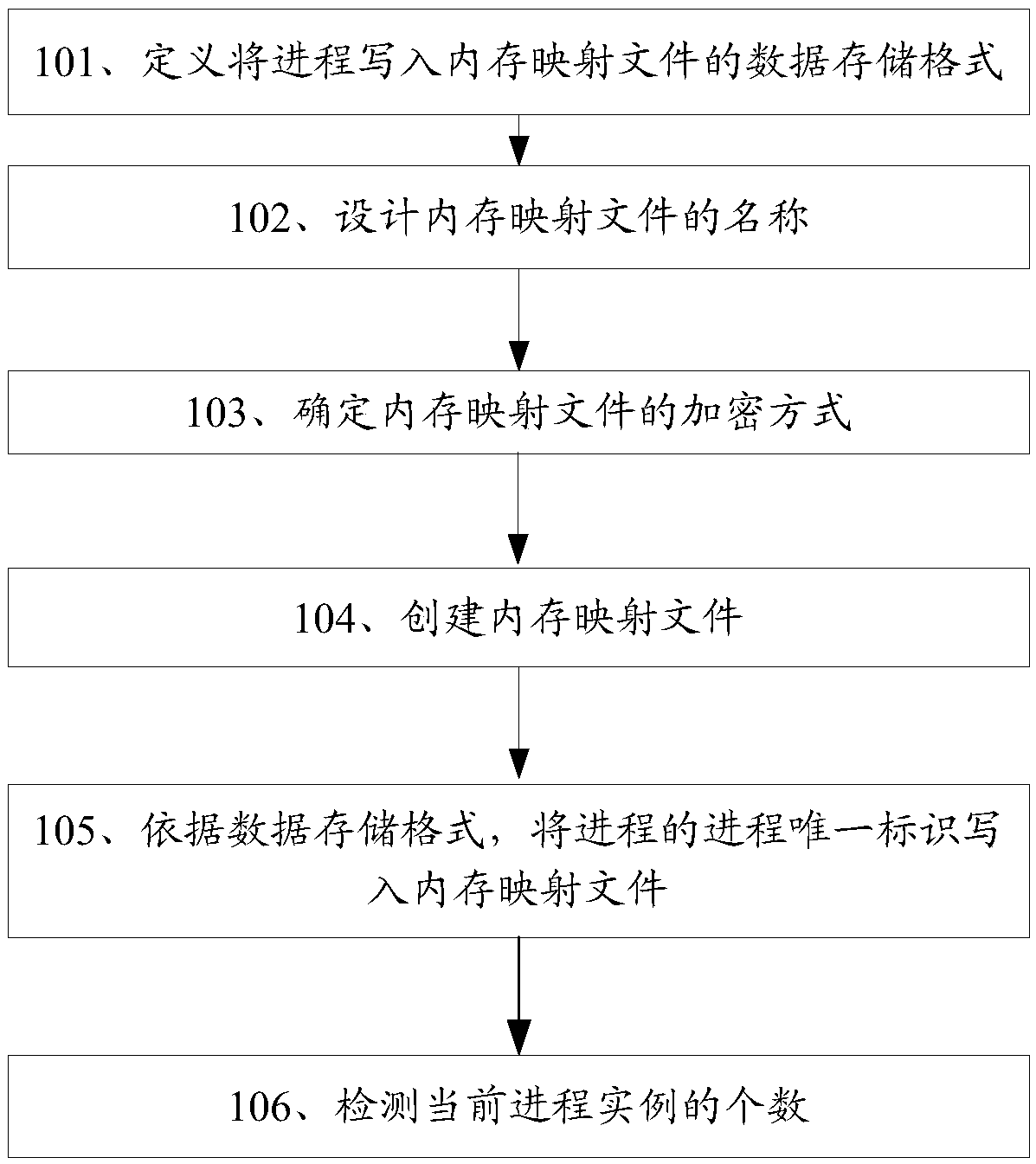

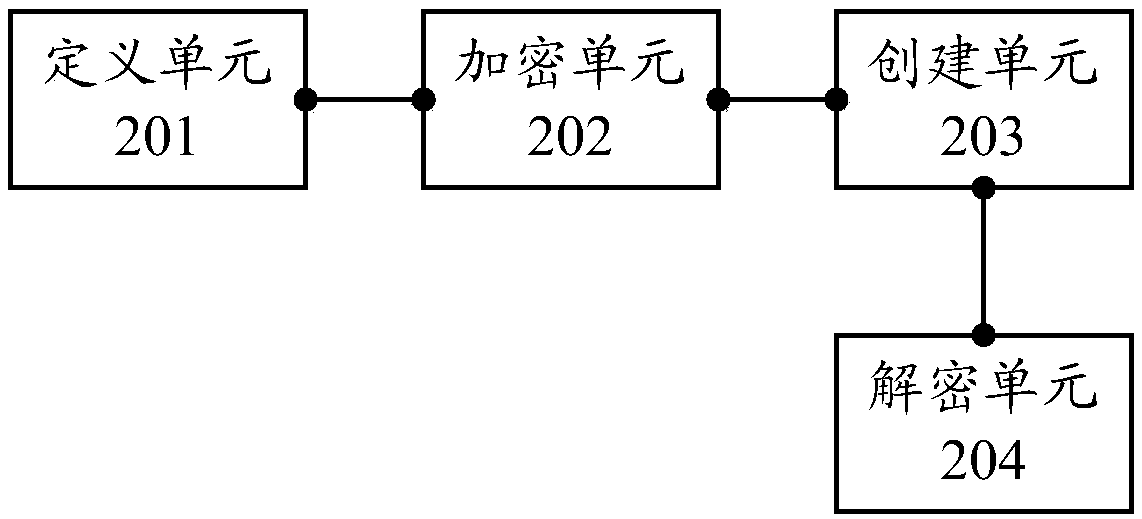

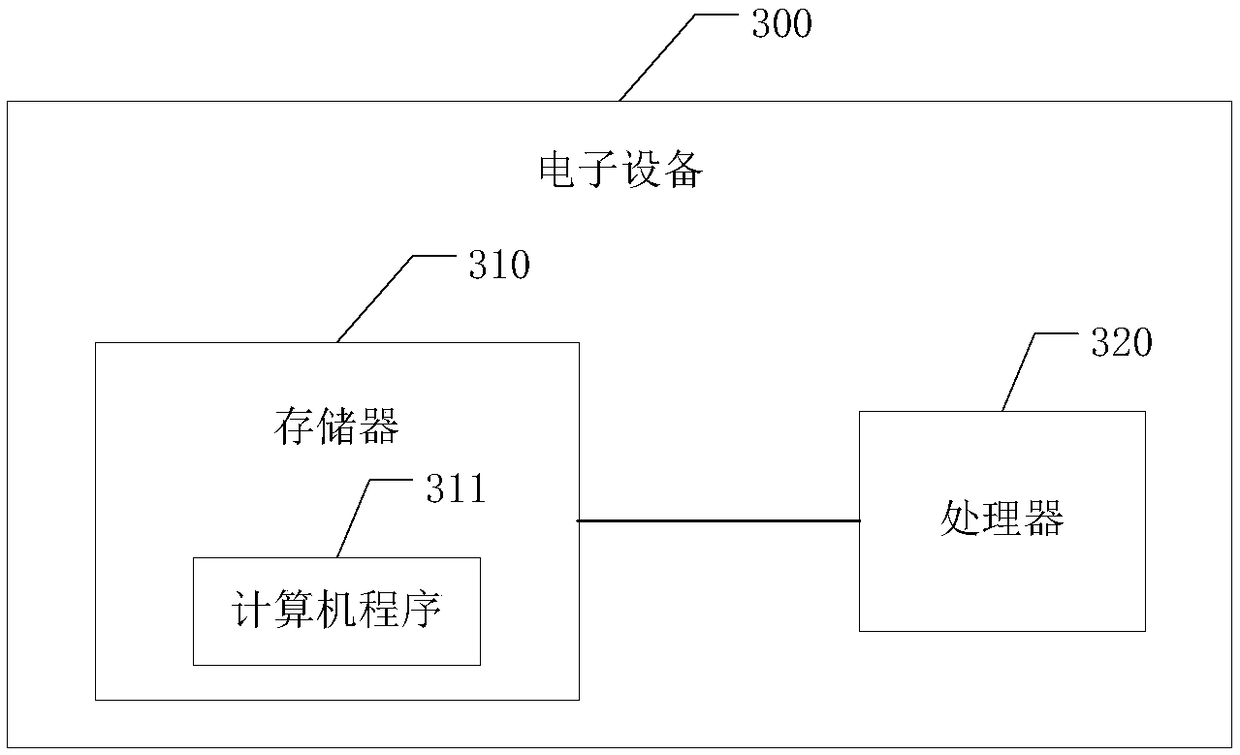

A method and device for detecting the number of process instances based on a memory mapping file

Active | CN109324952A | Covert detection; Hardware monitoring; Digital data protection; Software engineering; Unique identifier

An embodiment of the invention discloses a method and device for detecting the number of process instances based on a memory-mapped file. It allows the number of simultaneously running instances of a process to be detected more covertly. The method comprises the following steps:

1. Define the data storage format in which a process writes to the memory-mapped file.
2. Obtain the process's unique identifier and encrypt it, producing an encrypted KEY and an encrypted unique process identifier. The encrypted identifier changes with the boot state.
3. Create the memory-mapped file. Write a signature at the head of the file according to the data storage format, then write the encrypted KEY and the encrypted unique process identifier into the file.
4. When the actual number of instances of the current process needs to be detected, read the memory-mapped file according to the data storage format. Decrypt the encrypted unique process identifiers; the decrypted identifiers give the number of current process instances.

Owner:WUHAN DOUYU NETWORK TECH CO LTD
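
The following is a minimal sketch of the instance-counting file. It assumes a fixed layout of an 8-byte signature followed by 64 slots of 16 bytes, with a `flock` to serialize access (POSIX only). XOR with a key-derived pad stands in for real encryption and is NOT secure. The signature value, layout, and all names are hypothetical. `boot_key` shows one possible way, on Linux, to make the stored values change with each boot, as the abstract describes.

```python
import fcntl
import hashlib
import mmap
import os
from contextlib import contextmanager

MAGIC = b"INSTMAP1"  # hypothetical signature written at the head of the file
SLOT_SIZE = 16       # one 16-byte obfuscated identifier per slot
MAX_SLOTS = 64
FILE_SIZE = len(MAGIC) + SLOT_SIZE * MAX_SLOTS
EMPTY = bytes(SLOT_SIZE)


def _obfuscate(data: bytes, key: bytes) -> bytes:
    # Placeholder for real encryption: XOR with a key-derived pad. NOT secure.
    pad = hashlib.sha256(key).digest()[:SLOT_SIZE]
    return bytes(a ^ b for a, b in zip(data, pad))


@contextmanager
def _mapped(path: str):
    """Open (creating if needed) the instance map under an exclusive file lock."""
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
    try:
        fcntl.flock(fd, fcntl.LOCK_EX)  # serialize every reader and writer
        if os.fstat(fd).st_size == 0:
            os.ftruncate(fd, FILE_SIZE)
            os.pwrite(fd, MAGIC, 0)  # signature at the head of the file
        with mmap.mmap(fd, FILE_SIZE) as mm:
            if mm[:len(MAGIC)] != MAGIC:
                raise ValueError("bad signature: not an instance map")
            yield mm
    finally:
        os.close(fd)  # closing the descriptor also releases the flock


def _slots(mm):
    for i in range(MAX_SLOTS):
        start = len(MAGIC) + i * SLOT_SIZE
        yield i, start, mm[start:start + SLOT_SIZE]


def register_instance(path: str, uid: bytes, key: bytes) -> int:
    """Record one running instance; `uid` is its 16-byte unique identifier."""
    if len(uid) != SLOT_SIZE:
        raise ValueError("uid must be 16 bytes, e.g. uuid.uuid4().bytes")
    with _mapped(path) as mm:
        for i, start, raw in _slots(mm):
            if raw == EMPTY:
                mm[start:start + SLOT_SIZE] = _obfuscate(uid, key)
                return i
    raise RuntimeError("instance map is full")


def unregister_instance(path: str, slot: int) -> None:
    if not 0 <= slot < MAX_SLOTS:
        raise ValueError("slot out of range")
    with _mapped(path) as mm:
        start = len(MAGIC) + slot * SLOT_SIZE
        mm[start:start + SLOT_SIZE] = EMPTY


def count_instances(path: str, key: bytes) -> int:
    """Decrypt every occupied slot and count the distinct identifiers."""
    with _mapped(path) as mm:
        return len({_obfuscate(raw, key) for _, _, raw in _slots(mm) if raw != EMPTY})


def boot_key() -> bytes:
    """Assumption: on Linux, derive a key that changes on every boot from the kernel boot_id."""
    with open("/proc/sys/kernel/random/boot_id", "rb") as f:
        return f.read().strip()
```

Entries left by processes that crash without calling `unregister_instance` would still be counted. A real version would also store something like the PID so that stale entries can be detected.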

© 2025 PatSnap. All rights reserved.