High-speed concurrent access method for cross-platform big data files in power systems

A power system data access method, applied in electrical digital data processing, memory systems, special-purpose data processing applications, and similar fields. It addresses the problem that write speed and query efficiency struggle to meet application needs, and achieves convenient and stable operation.

Embodiment Construction

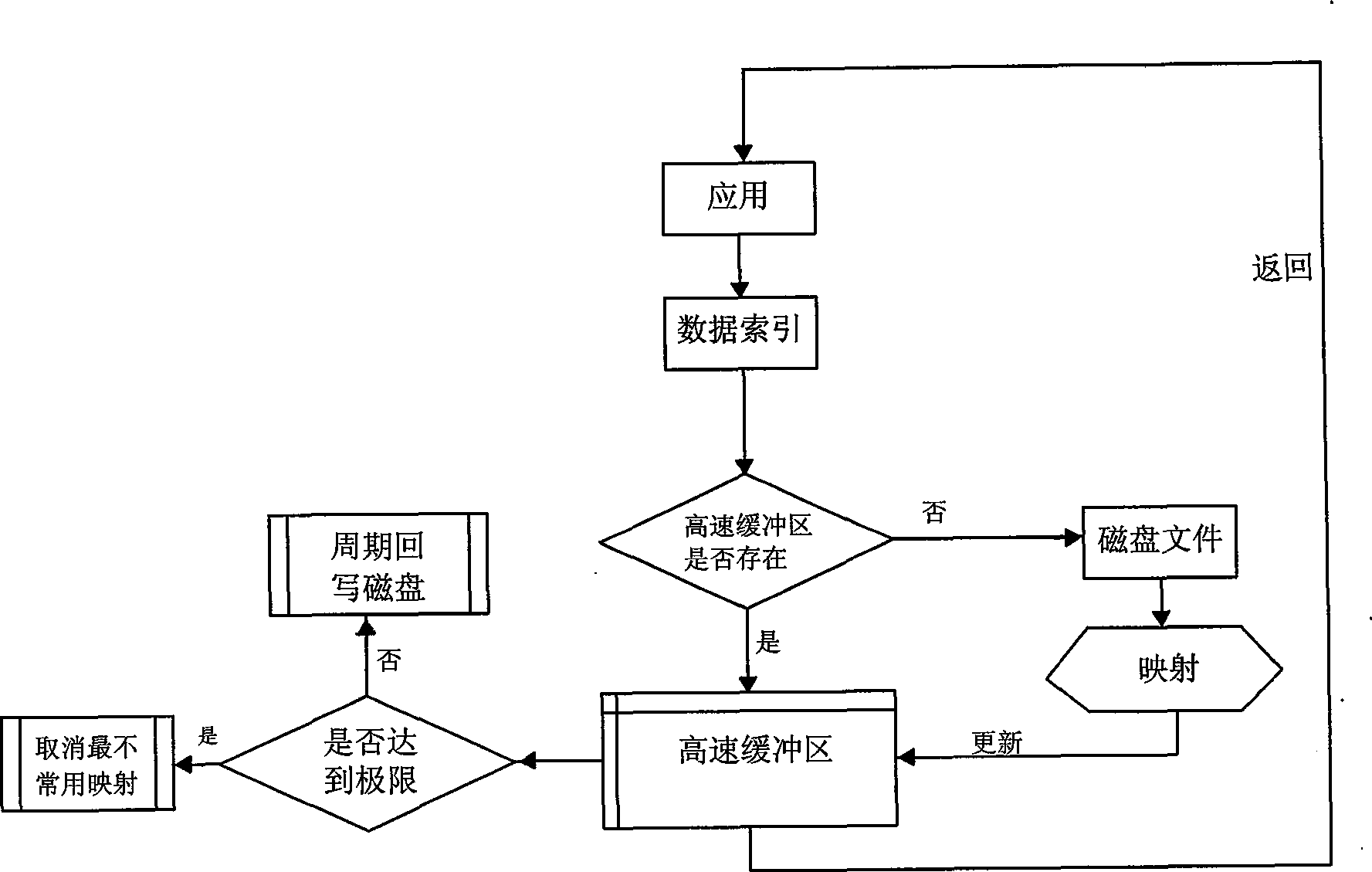

[0015] The present invention is further described below with reference to the accompanying drawing. Figure 1 is a flow chart of file processing by the cache management system in the method of the present invention for high-speed concurrent access to cross-platform big data files in power systems.

[0016] The cache management system of the present invention has a high-speed buffer. The purpose of the buffer is that data which has already been loaded does not need to be loaded again when it is accessed again, which improves access efficiency and speed. The buffer has a size limit; when its content exceeds this limit, the cache management system automatically removes the least frequently used data from the buffer.
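The buffer behaviour described in [0016] can be illustrated with a minimal sketch. It is not the patented implementation: the class name `LFUBuffer`, the `loader` callback, the byte-based size limit, and the per-key access counter are all assumptions made for illustration, and the sketch is single-threaded, so it says nothing about how the invention handles concurrency.

```python
from typing import Callable, Dict


class LFUBuffer:
    """Size-bounded buffer that evicts the least frequently used entry.

    Hypothetical sketch: names and the byte-based limit are assumptions.
    """

    def __init__(self, max_bytes: int, loader: Callable[[str], bytes]) -> None:
        self.max_bytes = max_bytes
        self.loader = loader              # assumed: reads one block from the data file
        self.data: Dict[str, bytes] = {}  # buffered blocks by key
        self.hits: Dict[str, int] = {}    # access count per buffered key
        self.used = 0                     # bytes currently buffered
        self.loads = 0                    # number of real loads performed

    def get(self, key: str) -> bytes:
        # Already loaded: serve from the buffer, no reload needed.
        if key in self.data:
            self.hits[key] += 1
            return self.data[key]
        # Not buffered: load once, store, then enforce the size limit.
        block = self.loader(key)
        self.loads += 1
        self.data[key] = block
        self.hits[key] = 1
        self.used += len(block)
        self._evict(keep=key)
        return block

    def _evict(self, keep: str) -> None:
        # Remove least frequently used entries until the limit is met,
        # never evicting the block that was just requested.
        while self.used > self.max_bytes:
            candidates = [k for k in self.data if k != keep]
            if not candidates:
                break
            victim = min(candidates, key=lambda k: self.hits[k])
            self.used -= len(self.data.pop(victim))
            del self.hits[victim]


# Usage: an 8-byte buffer with a fake loader that returns 4-byte blocks.
buf = LFUBuffer(max_bytes=8, loader=lambda key: key.encode() * 4)
for key in ["a", "a", "b", "c"]:
    buf.get(key)
print(sorted(buf.data), buf.loads)
```

In this run the second request for `"a"` is served from the buffer, and loading `"c"` pushes the buffer past its limit, so the entry with the lowest access count among the older entries (`"b"`) is removed. A linear scan for the victim keeps the sketch short; a production cache would likely use a frequency-ordered structure instead.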

[0017] The processing flow of the present invention is as follows:

[0018] When the application needs to process data, it first obtains, through an index mechanism, the index information that describes where the specific data is stored. After...
