243 results about how to "Reduce the amount of memory" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

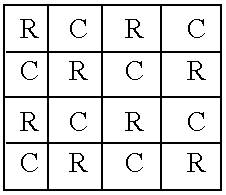

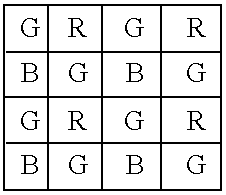

Image processing system to control vehicle headlamps or other vehicle equipment

Inactive | US6631316B2 | Reduce complexity | Low cost | Television system details | Digital data processing details | Imaging processing | Computer graphics (images)

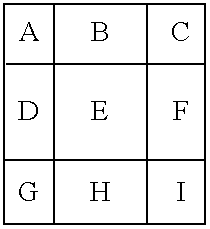

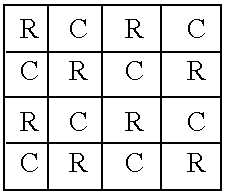

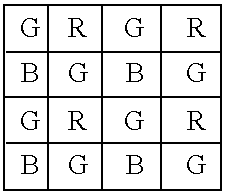

An imaging system of the invention includes an image array sensor including a plurality of pixels. Each of the pixels generates a signal indicative of the amount of light received on the pixel. The imaging system further includes an analog to digital converter for quantizing the signals from the pixels into a digital value. The system further includes a memory including a plurality of allocated storage locations for storing the digital values from the analog to digital converter. The number of allocated storage locations in the memory is less than the number of pixels in the image array sensor. According to another embodiment, an imaging device includes an image sensor having a plurality of pixels arranged in an array; and a multi-layer interference filter disposed over said pixel array, said multi-layer interference filter being patterned so as to provide filters of different colors to neighboring pixels or groups of pixels.

Owner:GENTEX CORP

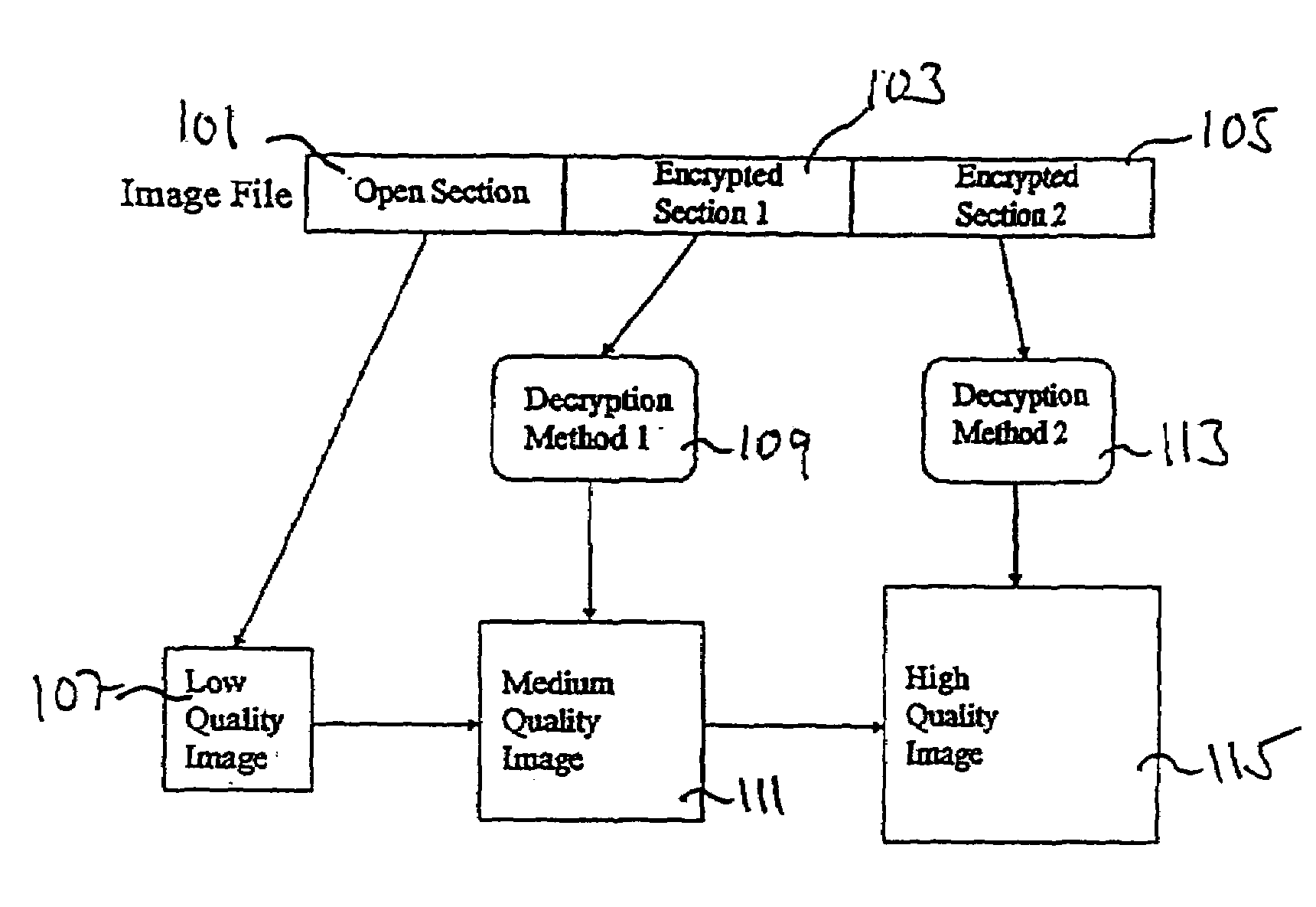

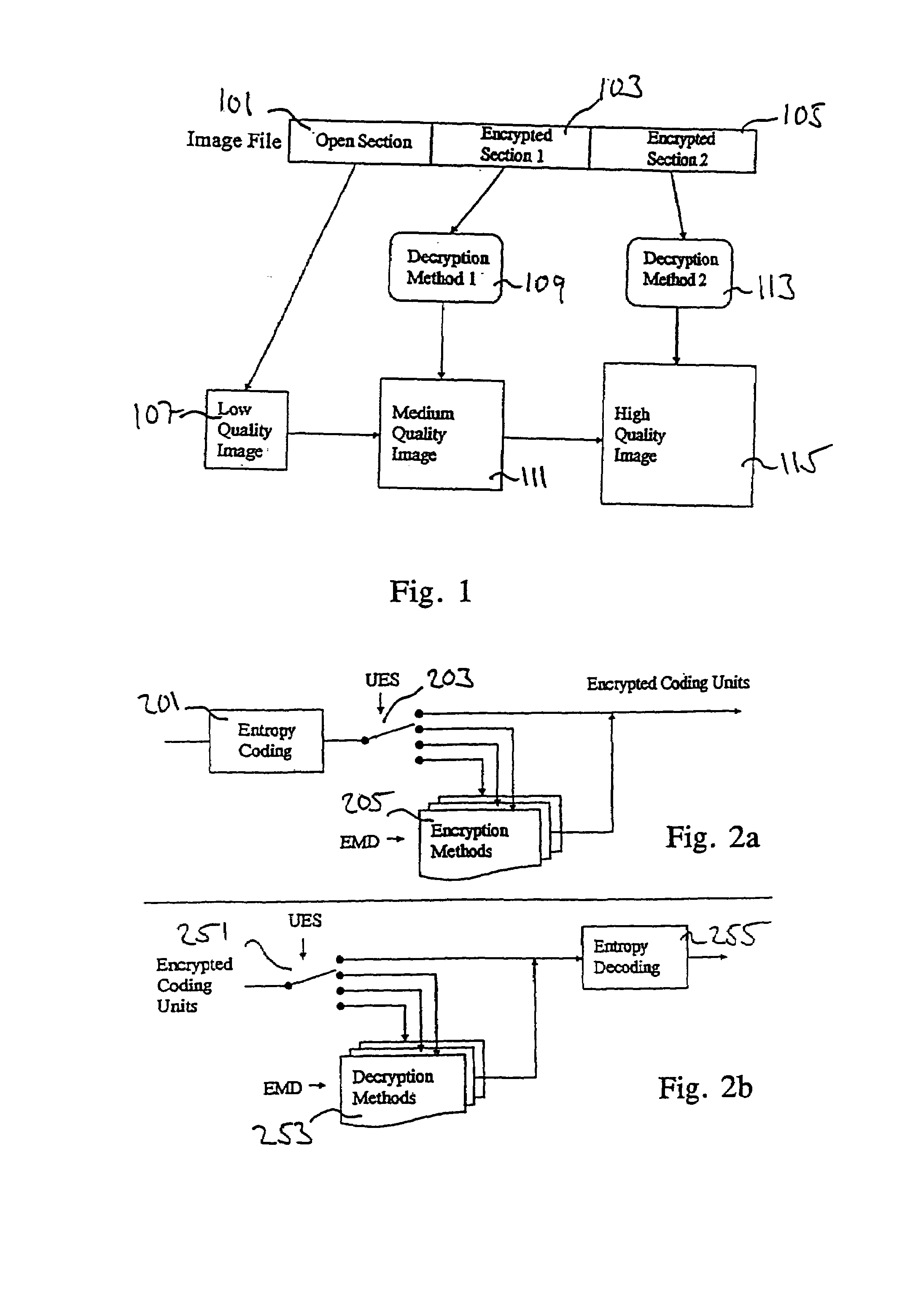

Method and a device for encryption of images

Inactive | US6931534B1 | Reduce transfer time | Reduce the amount of memory | Multiple keys/algorithms usage | User identity/authority verification | Computer hardware | Object based

In a method and a device for partial encryption and progressive transmission of images, a first section of the image file is compressed at reduced quality without decryption, and a second section of the image file is encrypted. Users having access to appropriate decryption keywords can decrypt this second section. The first section together with the decrypted second section can then be viewed as a full quality image. The storage space required for storing the first and second sections together is essentially the same as the storage space required for storing the unencrypted full quality image. By using the method and device as described herein, storage and bandwidth requirements for partially encrypted images are reduced. Furthermore, object based composition and processing of encrypted objects are facilitated, and regions of interest (ROIs) can be encrypted. Also, the shape of a ROI can be encrypted and the original object can be decrypted and restored in the compressed domain.

Owner:TELEFON AB LM ERICSSON (PUBL)

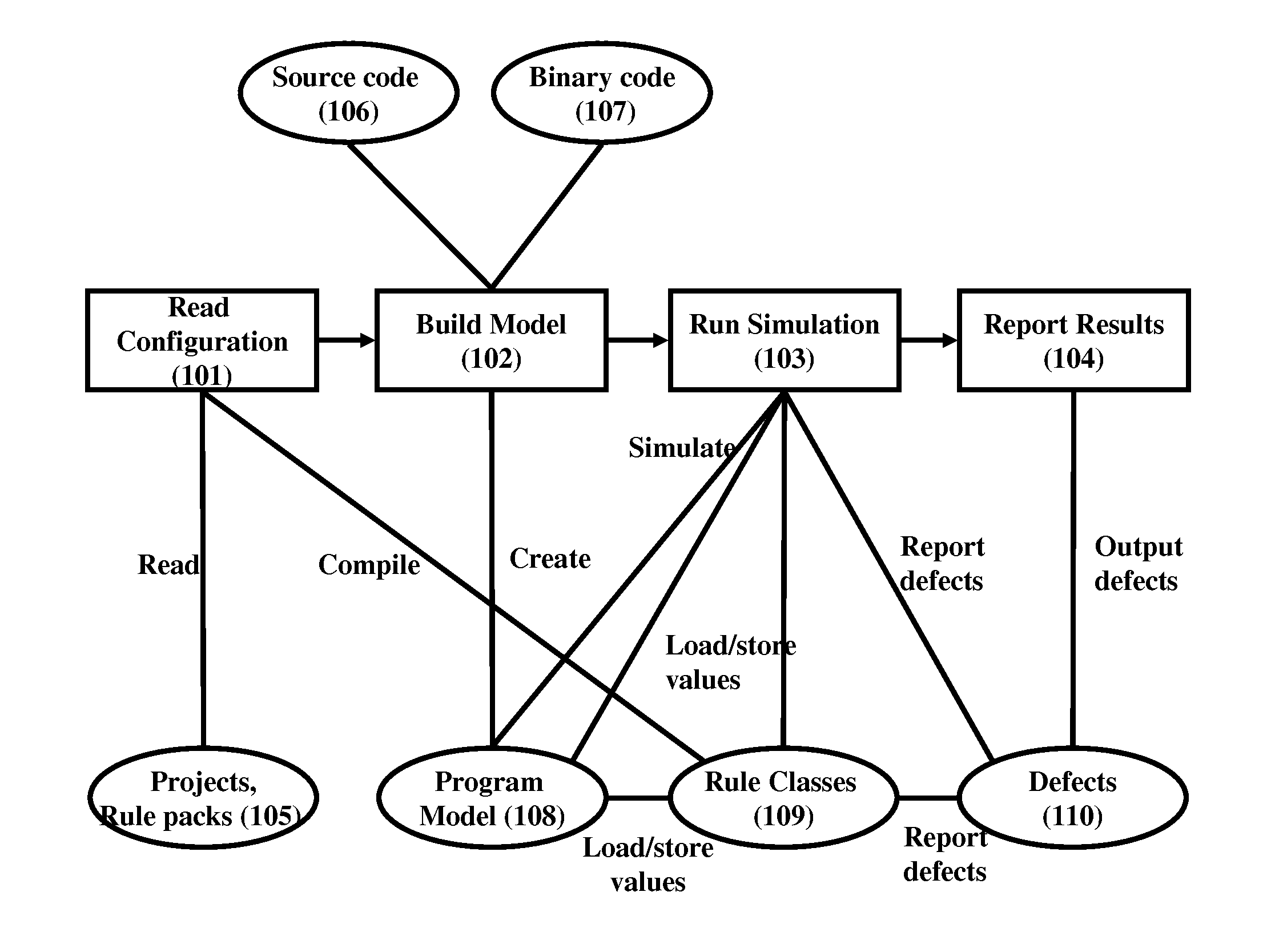

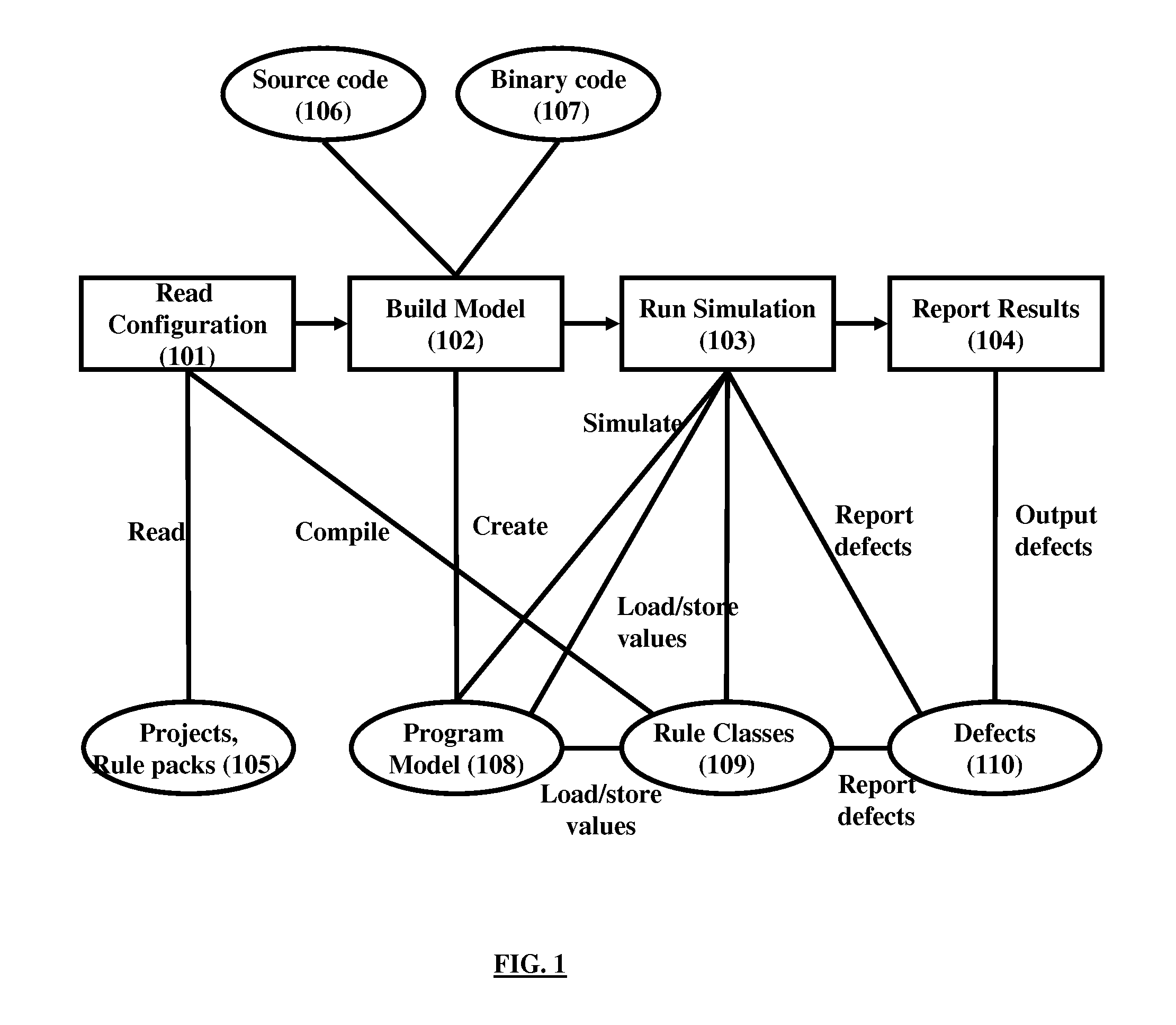

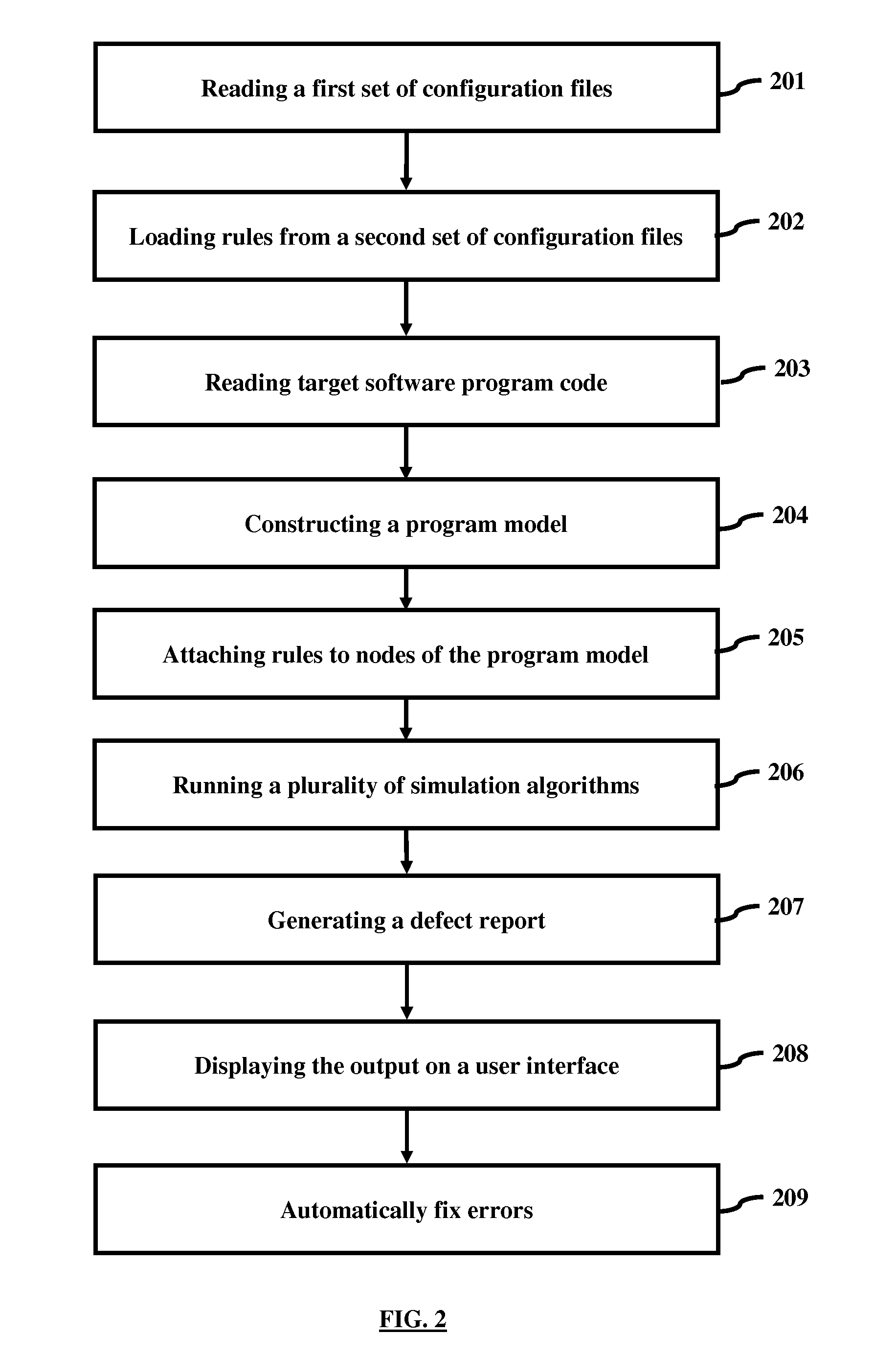

Method and Apparatus for Software Simulation

Inactive | US20100198799A1 | Reduce in quantity | Reduce the amount of memory | Digital data processing details | Software testing/debugging | Software emulation | Parallel computing

A software simulation method and program storage device for software defect detection and obtaining insight into software code is disclosed, where simulation consists of executing target software program code for multiple input values and multiple code paths at the same time, thus achieving 100% coverage over inputs and paths without actually running the target software. This allows simulation to detect many defects that are missed by traditional testing tools. The simulation method runs a plurality of algorithms where a plurality of custom defined and pre-defined rules are verified in target software to find defects and obtain properties of the software code.

Owner:KRISHNAN SANJEEV +1

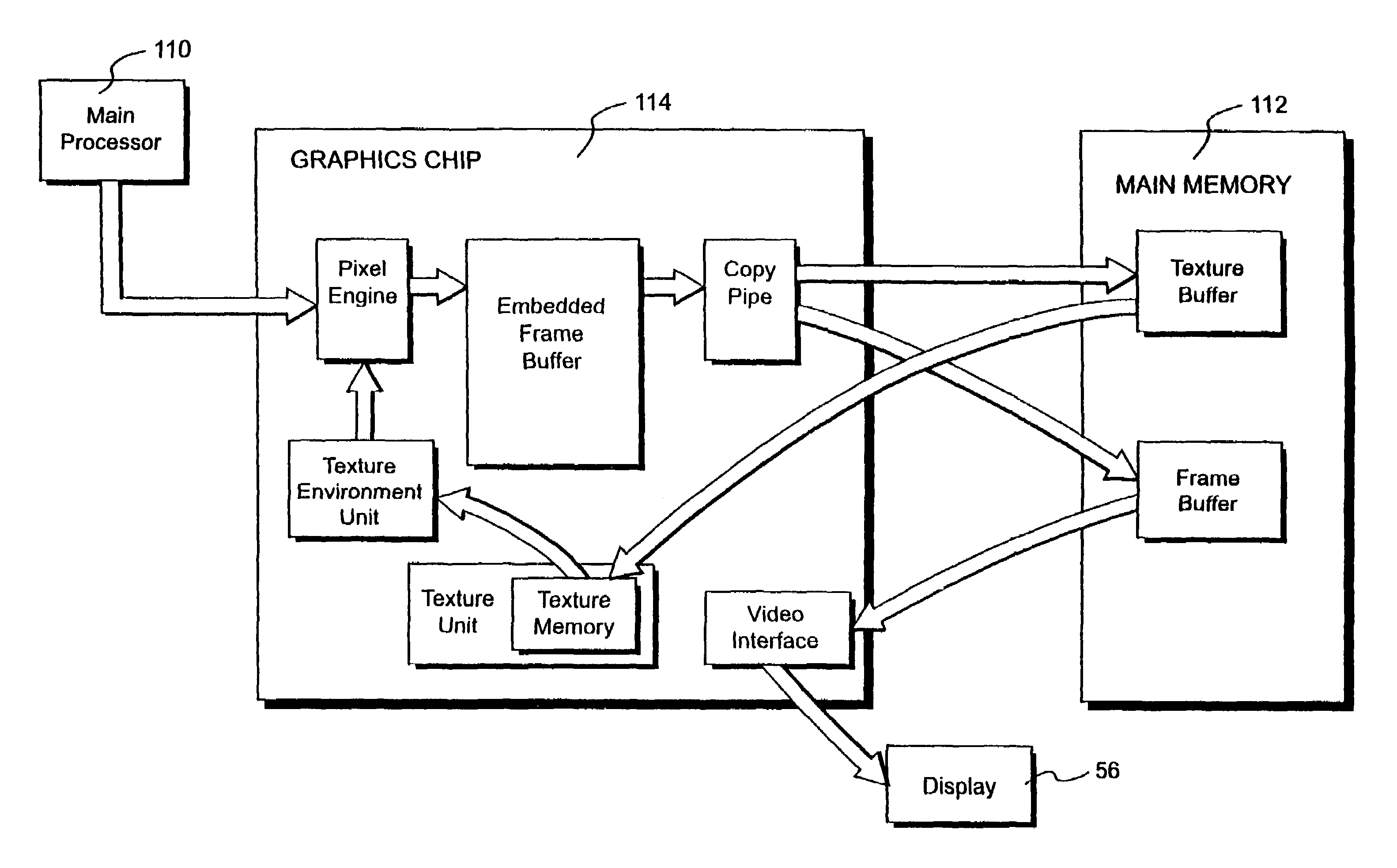

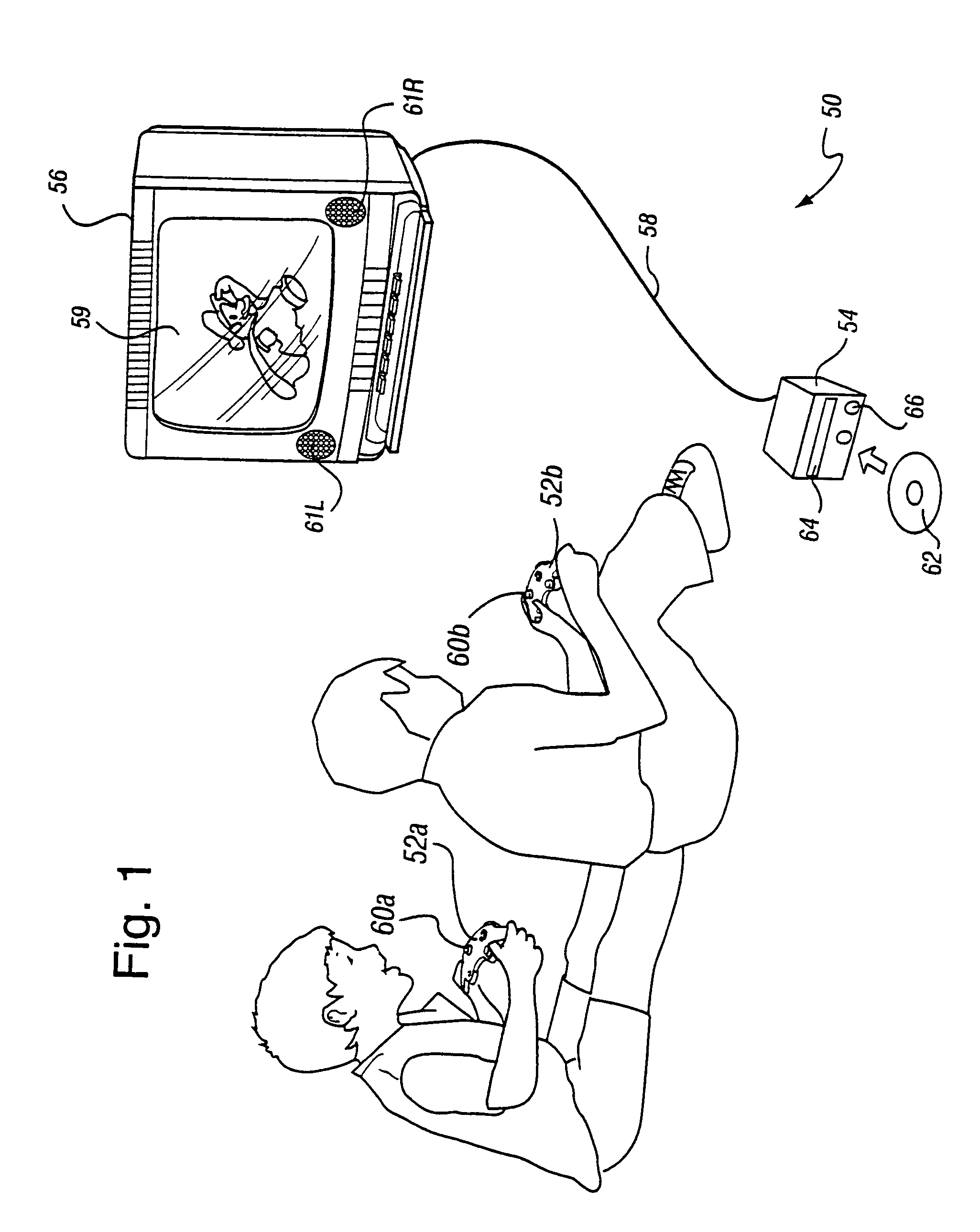

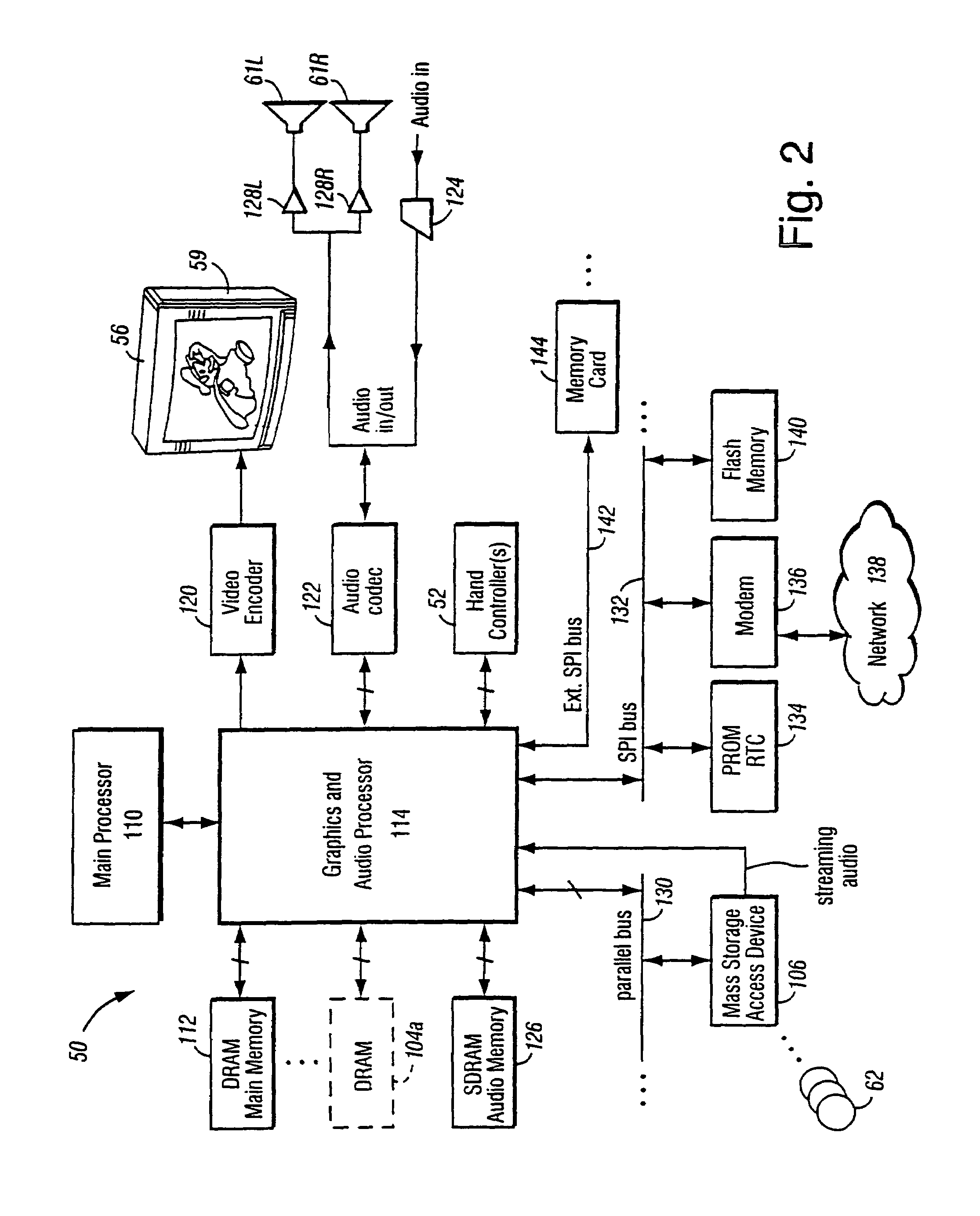

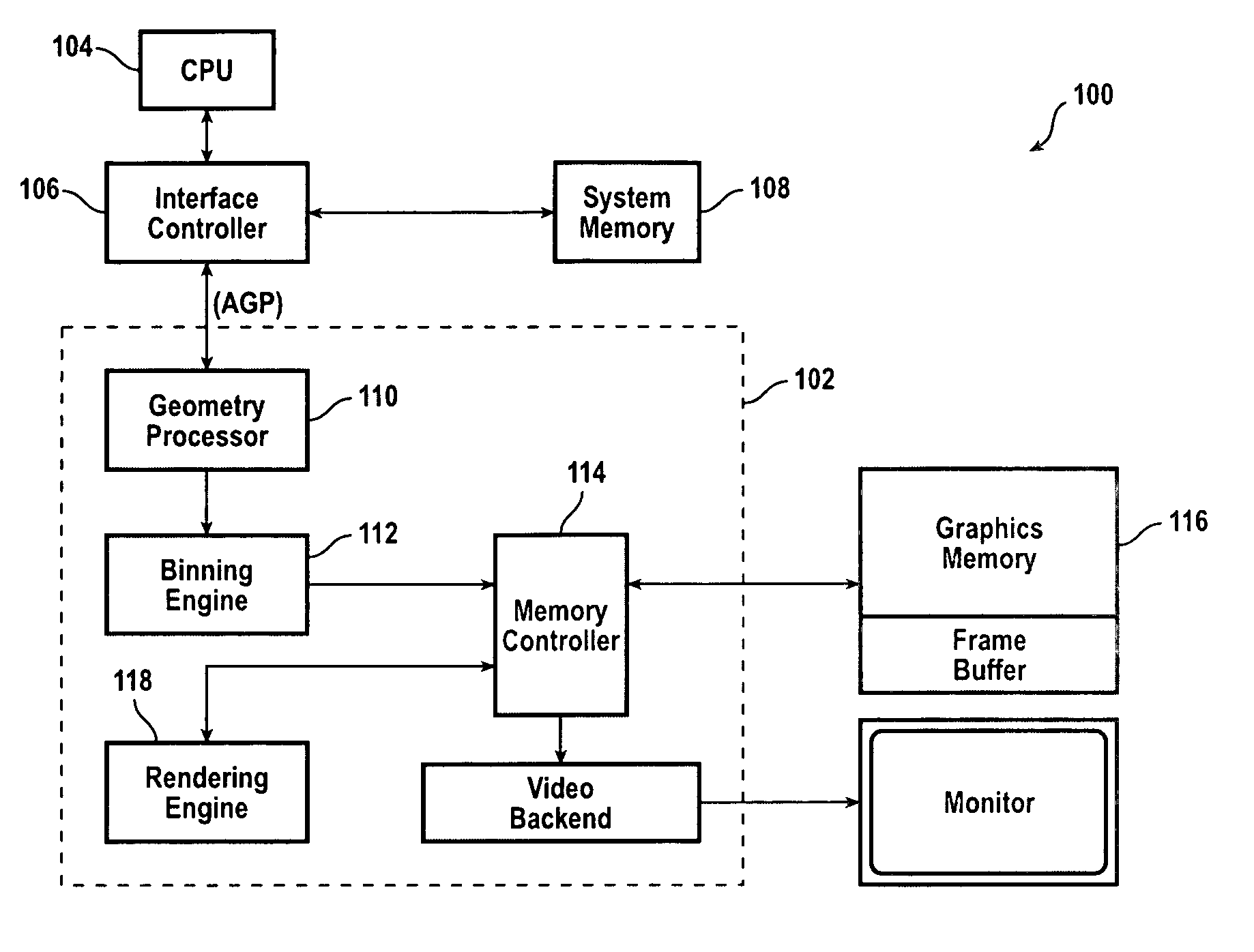

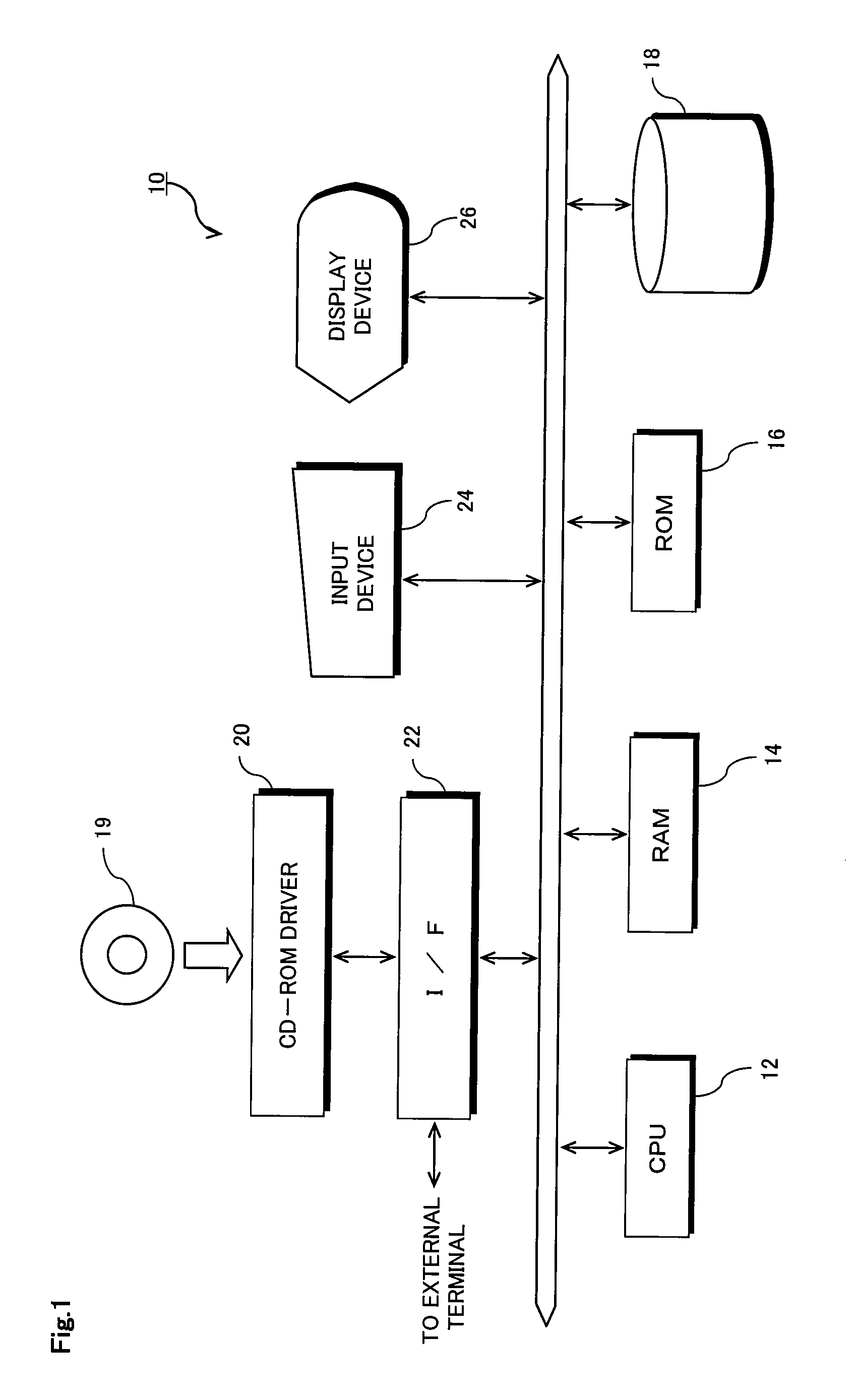

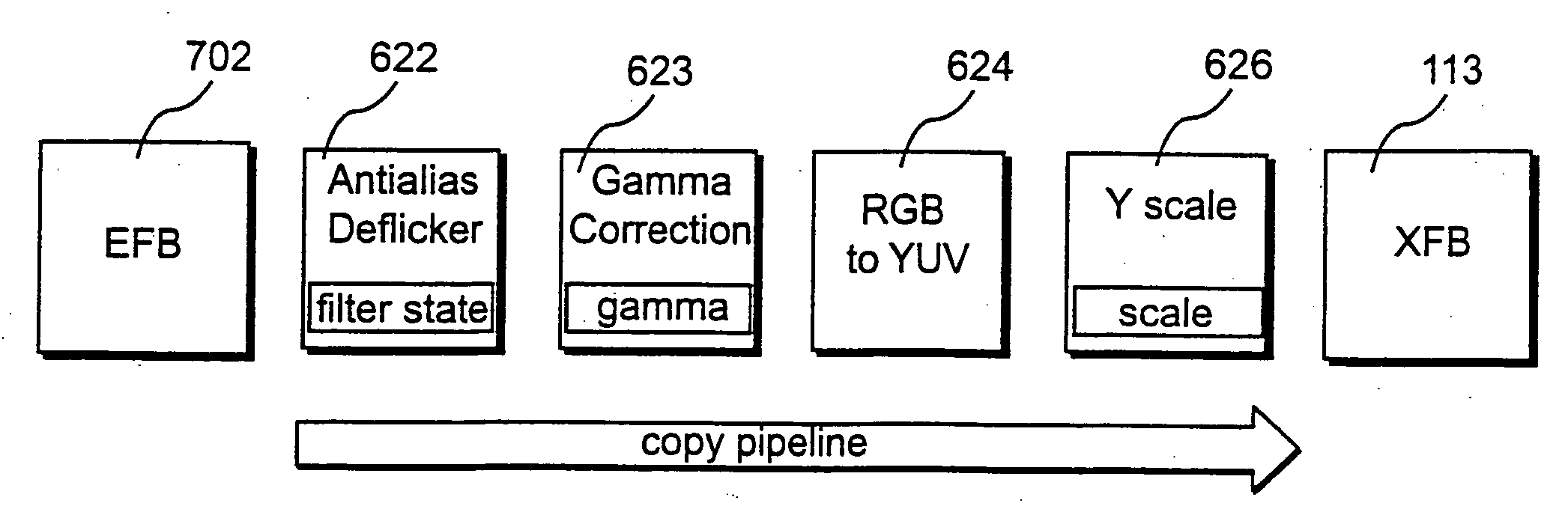

Graphics system with copy out conversions between embedded frame buffer and main memory

Inactive | US7184059B1 | Improve system flexibility | Easy to use | Image memory management | Cathode-ray tube indicators | Graphic system | Graphics processing unit

A graphics system including a custom graphics and audio processor produces exciting 2D and 3D graphics and surround sound. The system includes a graphics and audio processor including a 3D graphics pipeline and an audio digital signal processor. The graphics processor includes an embedded frame buffer for storing frame data prior to sending the frame data to an external location, such as main memory. A copy pipeline is provided which converts the data from one format to another format prior to writing the data to the external location. The conversion may be from one RGB color format to another RGB color format, from one YUV format to another YUV format, from an RGB color format to a YUV color format, or from a YUV color format to an RGB color format. The formatted data is either transferred to a display buffer, for use by the video interface, or to a texture buffer, for use as a texture by the graphics pipeline in a subsequent rendering process.

Owner:NINTENDO CO LTD

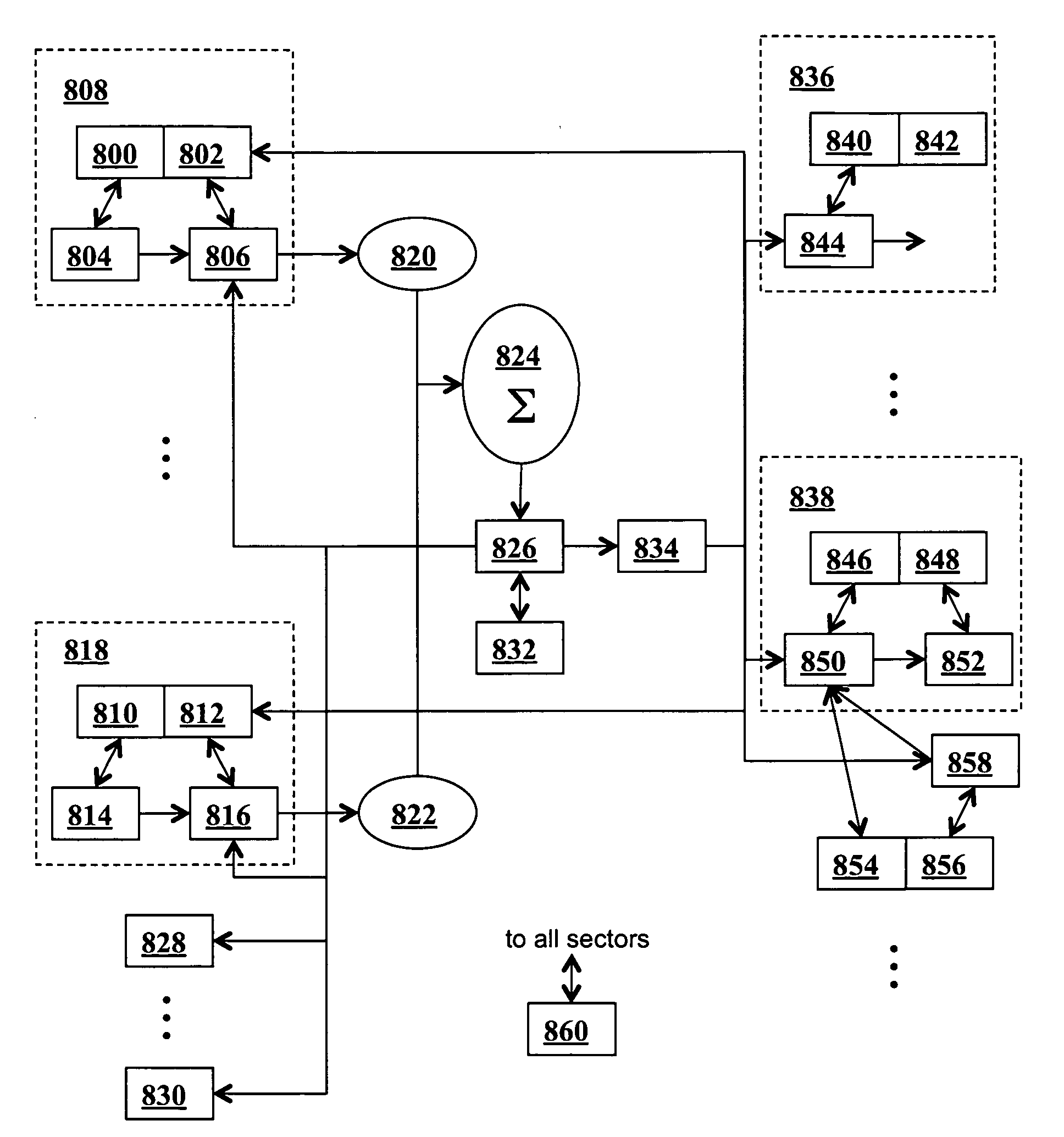

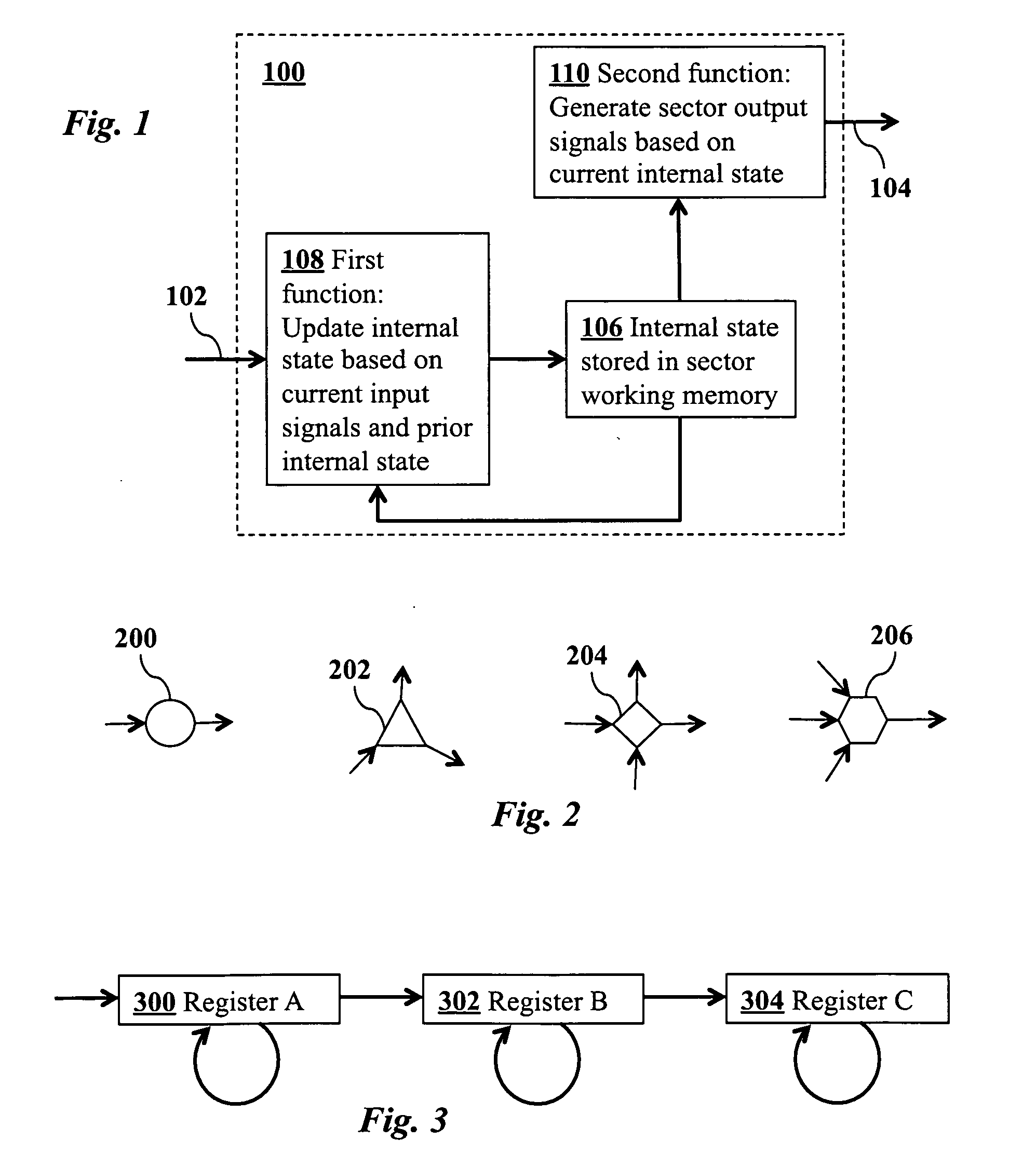

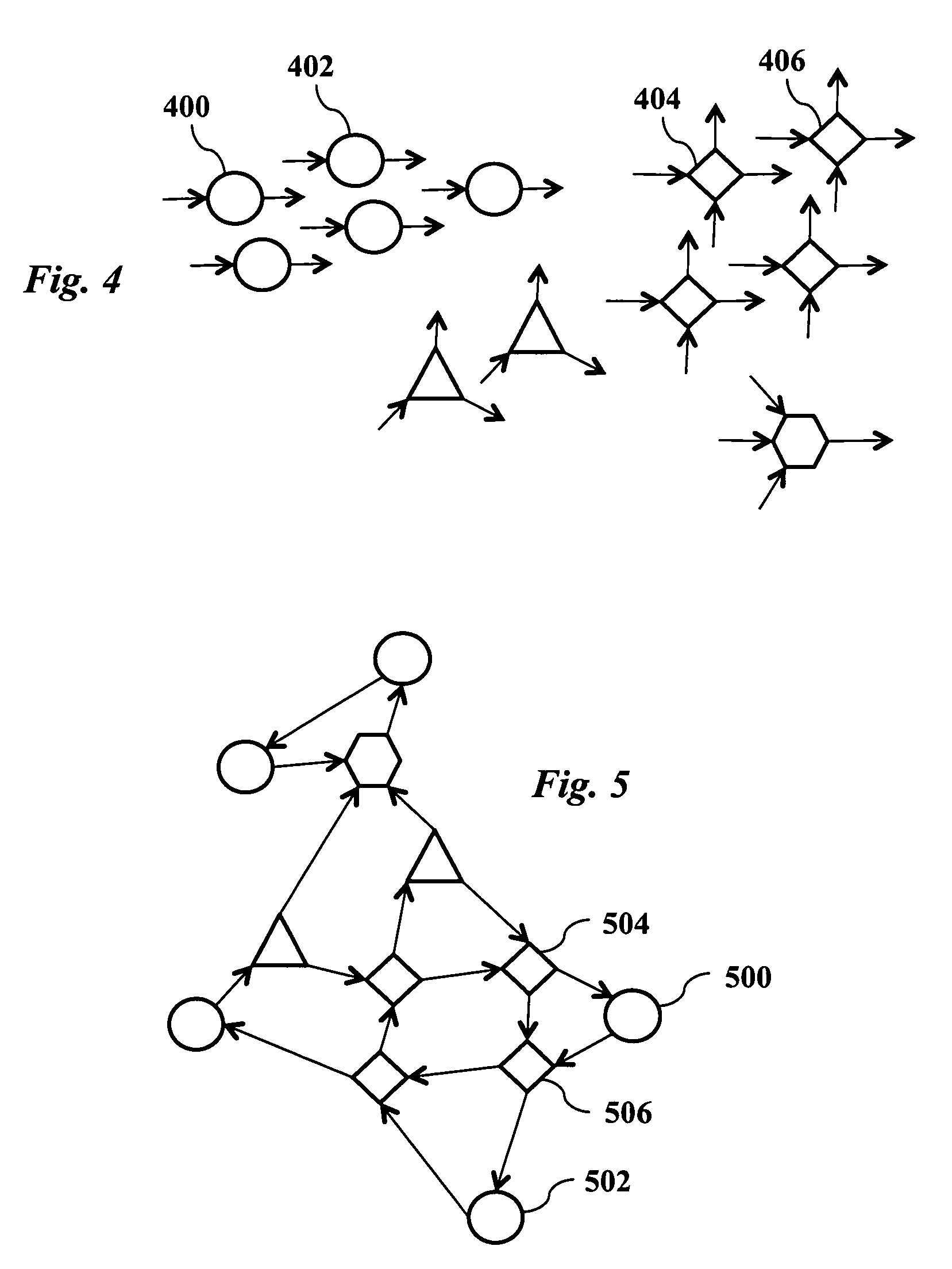

Method for efficiently simulating the information processing in cells and tissues of the nervous system with a temporal series compressed encoding neural network

Active | US20110016071A1 | Effective simulation | Exact reproduction | Digital computer details | Digital data | Information processing | Nervous system

A neural network simulation represents components of neurons by finite state machines, called sectors, implemented using look-up tables. Each sector has an internal state represented by a compressed history of data input to the sector and is factorized into distinct historical time intervals of the data input. The compressed history of data input to the sector may be computed by compressing the data input to the sector during a time interval, storing the compressed history of data input to the sector in memory, and computing from the stored compressed history of data input to the sector the data output from the sector.

Owner:CORTICAL DATABASE

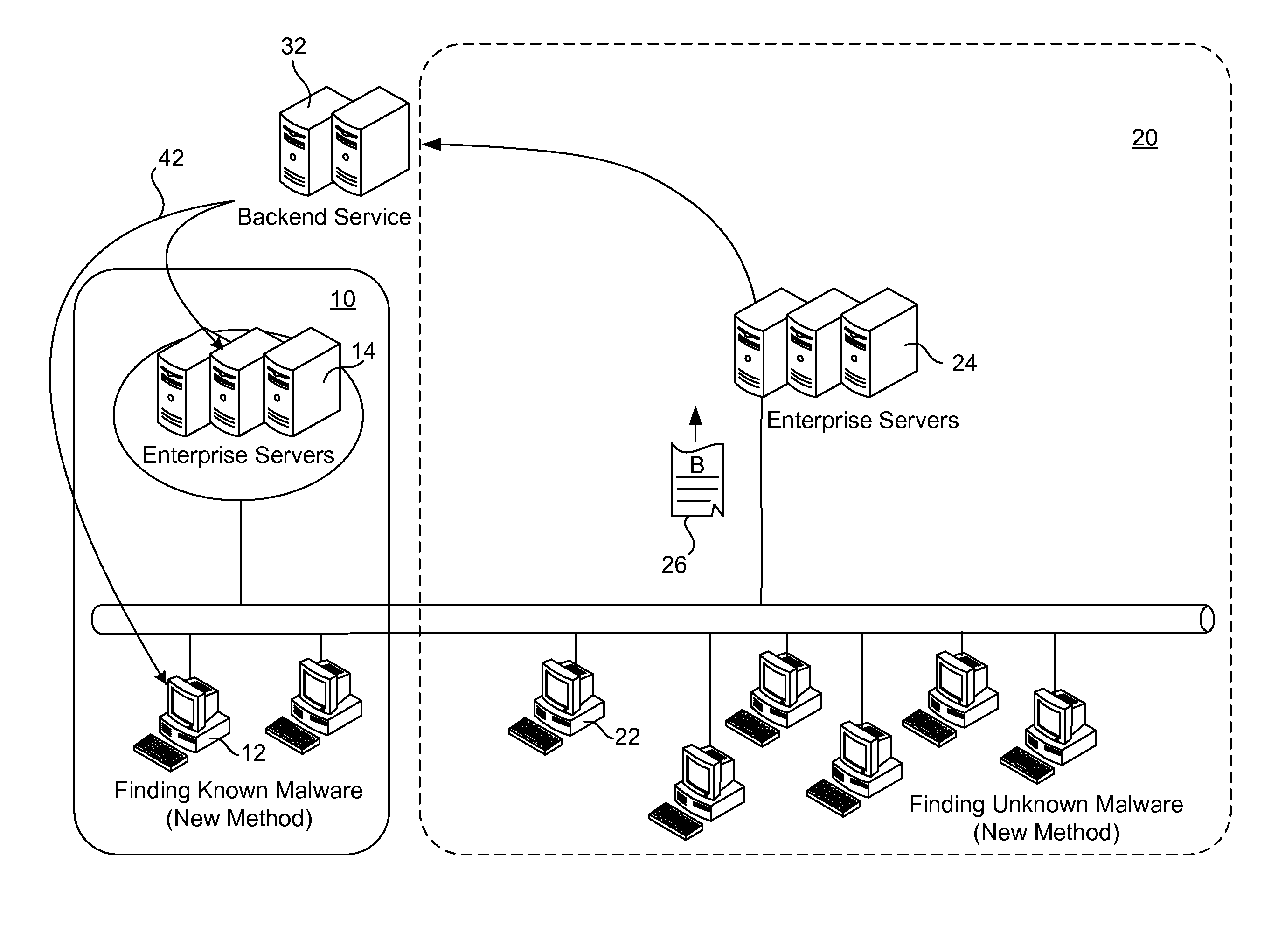

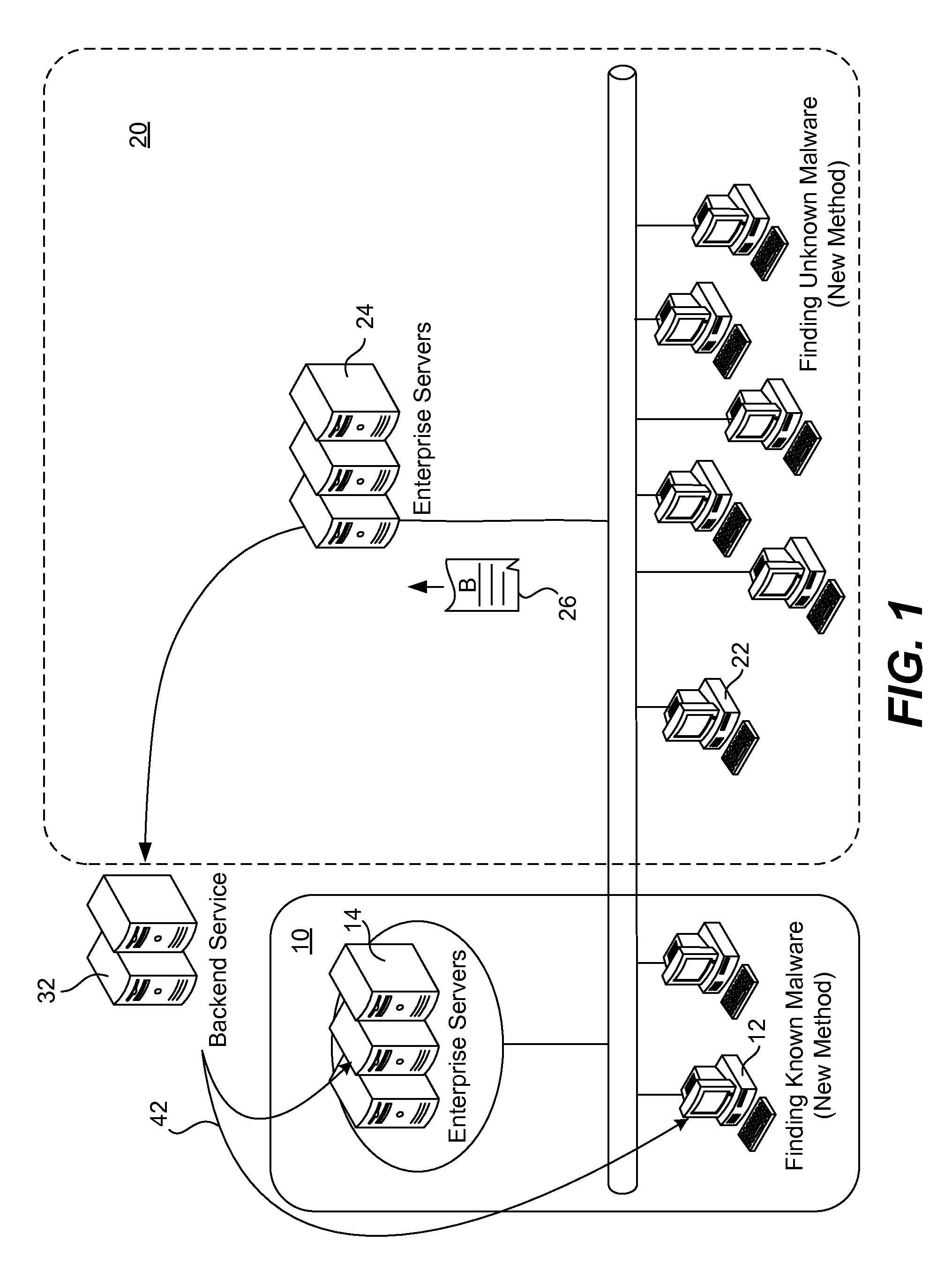

Zero day malware scanner

Active | US8375450B1 | Quick analysis | Reduce the amount of memory | Memory loss protection | User identity/authority verification | Client-side | Malware

A training model for malware detection is developed using common substrings extracted from known malware samples. The probability of each substring occurring within a malware family is determined and a decision tree is constructed using the substrings. An enterprise server receives indications from client machines that a particular file is suspected of being malware. The suspect file is retrieved and the decision tree is walked using the suspect file. A leaf node is reached that identifies a particular common substring, a byte offset within the suspect file at which it is likely that the common substring begins, and a probability distribution that the common substring appears in a number of malware families. A hash value of the common substring is compared (exact or approximate) against the corresponding substring in the suspect file. If positive, a result is returned to the enterprise server indicating the probability that the suspect file is a member of a particular malware family.

Owner:TREND MICRO INC

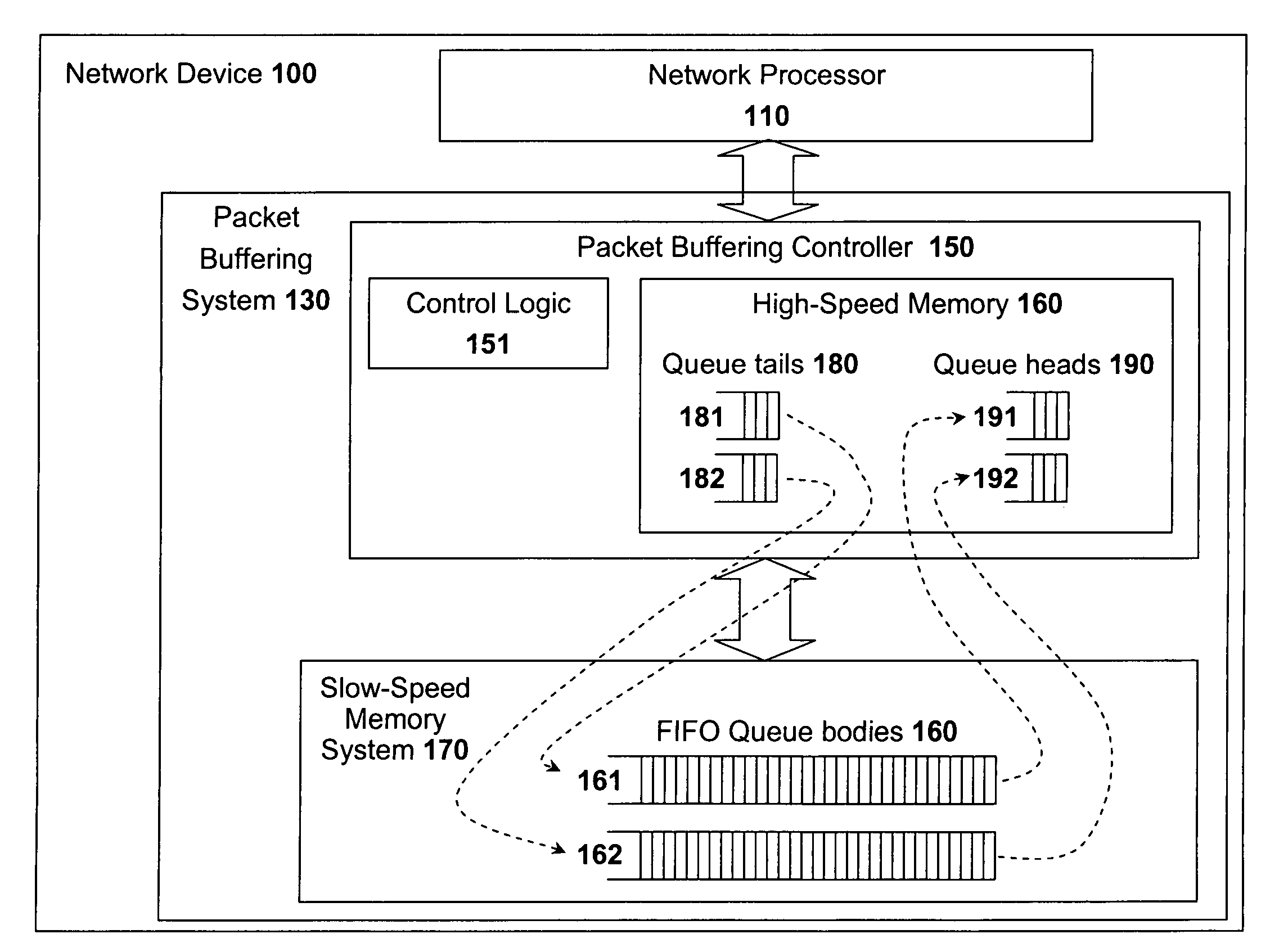

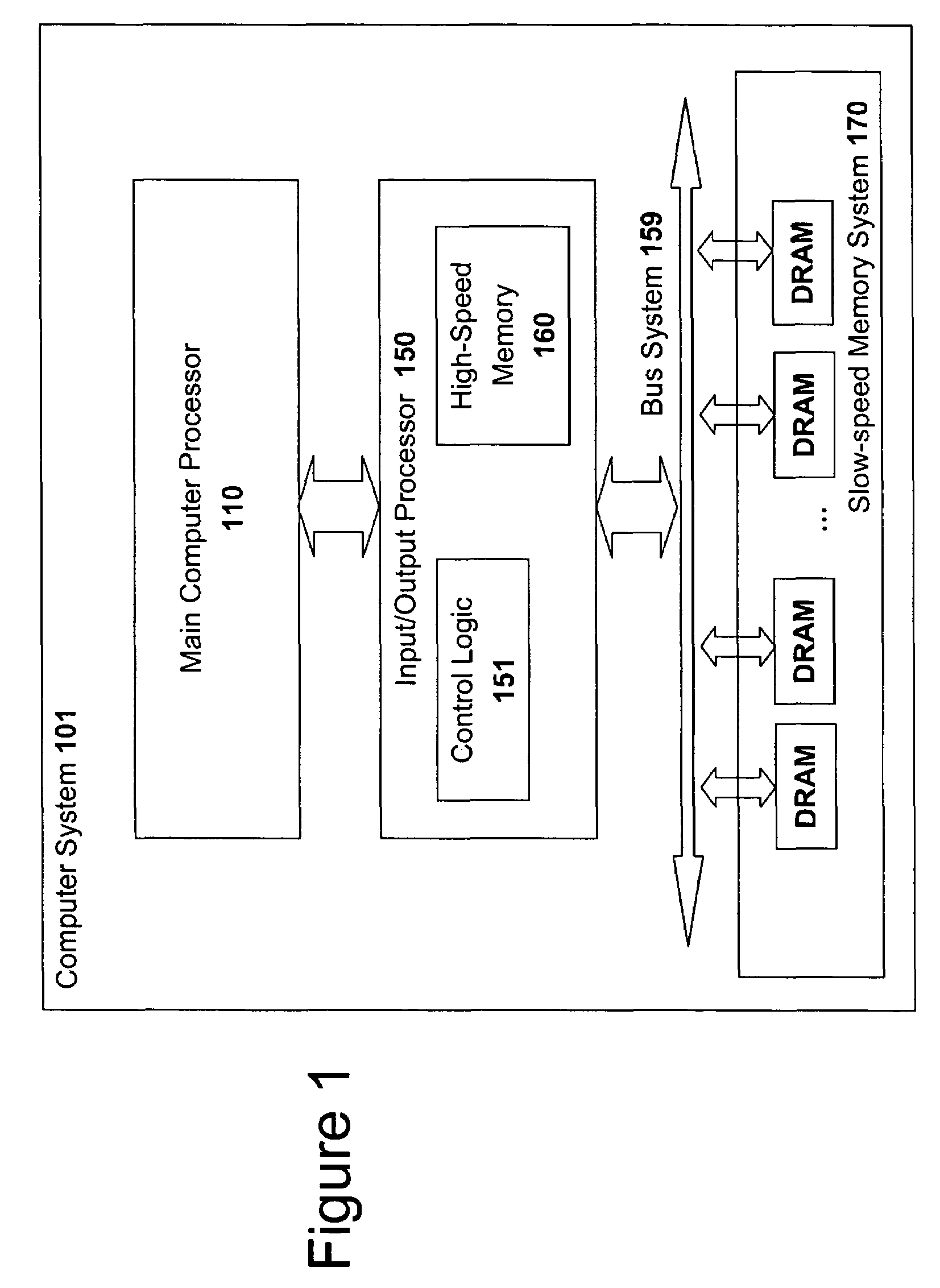

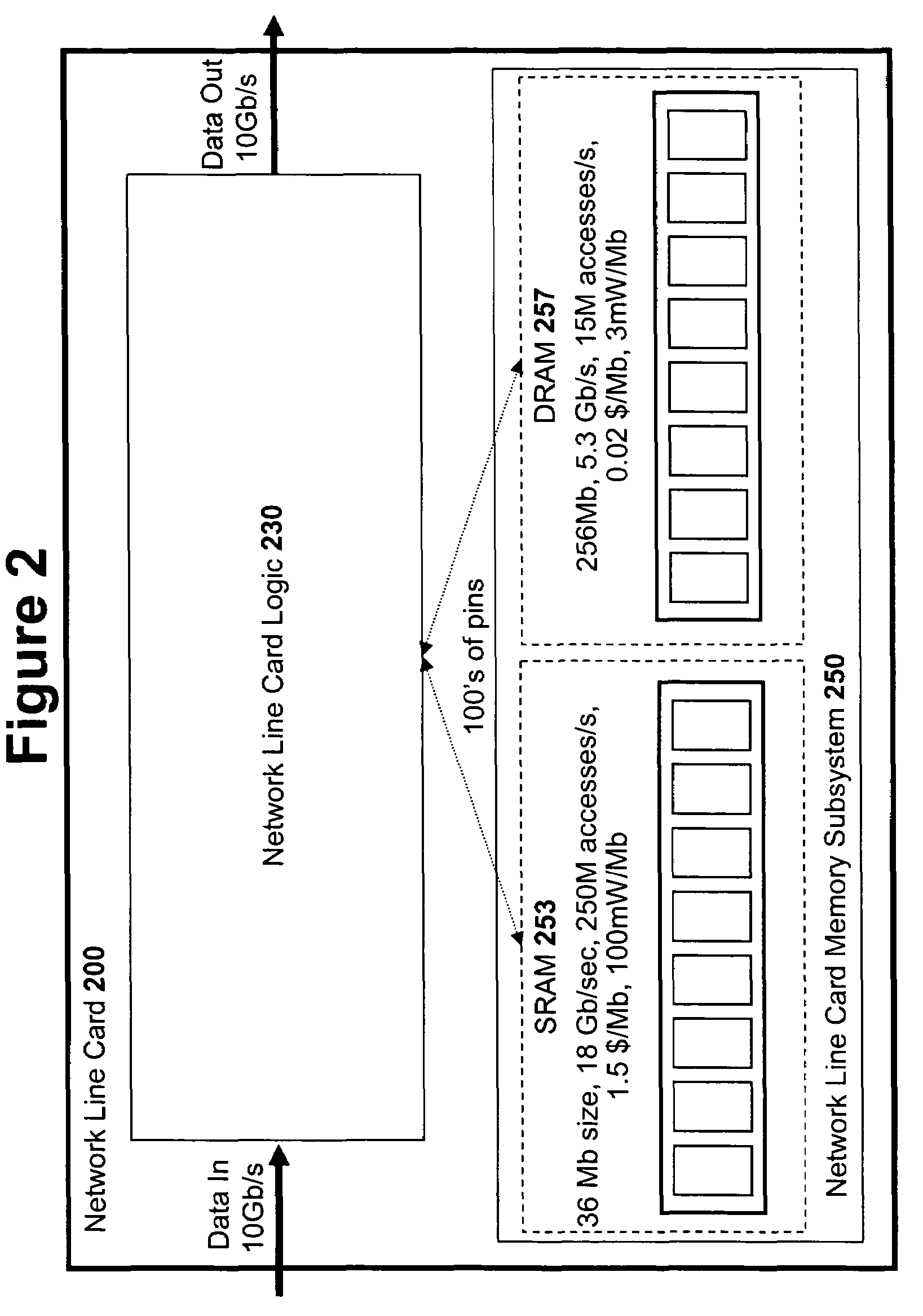

High speed memory and input/output processor subsystem for efficiently allocating and using high-speed memory and slower-speed memory

Active | US7657706B2 | Easy to handle | Simple task | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | High speed memory | Memory bank

An input/output processor for speeding the input/output and memory access operations for a processor is presented. The key idea of an input/output processor is to functionally divide input/output and memory access operations tasks into a compute intensive part that is handled by the processor and an I/O or memory intensive part that is then handled by the input/output processor. An input/output processor is designed by analyzing common input/output and memory access patterns and implementing methods tailored to efficiently handle those commonly occurring patterns. One technique that an input/output processor may use is to divide memory tasks into high frequency or high-availability components and low frequency or low-availability components. After dividing a memory task in such a manner, the input/output processor then uses high-speed memory (such as SRAM) to store the high frequency and high-availability components and a slower-speed memory (such as commodity DRAM) to store the low frequency and low-availability components. Another technique used by the input/output processor is to allocate memory in such a manner that all memory bank conflicts are eliminated. By eliminating any possible memory bank conflicts, the maximum random access performance of DRAM memory technology can be achieved.

Owner:CISCO TECH INC

Demand-based memory system for graphics applications

Inactive | US7102646B1 | High resolution | Small size of page | Memory addressing/allocation/relocation | Image memory management | Application software | Graphics

A memory system and methods of operating the same that drastically increase the efficiency in memory use and allocation in graphics systems. In a graphics system using a tiled architecture, instead of pre-allocating a fixed amount of memory for each tile, the invention dynamically allocates varying amounts of memory per tile depending on the demand. In one embodiment all or a portion of the available memory is divided into smaller pages that are preferably equal in size. Memory allocation is done by page based on the amount of memory required for a given tile.

Owner:NVIDIA CORP
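
The per-tile, page-based allocation described in this abstract can be illustrated with a minimal Python sketch. The class name `TilePageAllocator`, the 256-byte `PAGE_SIZE`, and the simple free-list strategy are assumptions made for illustration; none of them come from the patent.

```python
PAGE_SIZE = 256  # bytes per page (assumed value, for illustration only)


class TilePageAllocator:
    """Hands out equal-size pages to tiles on demand (hypothetical sketch)."""

    def __init__(self, total_pages):
        self.free_pages = list(range(total_pages))  # shared pool of page indices
        self.tile_pages = {}  # tile id -> page indices owned by that tile
        self.tile_bytes = {}  # tile id -> bytes written to that tile so far

    def write(self, tile, nbytes):
        """Account for nbytes of new data in a tile, taking pages only as needed."""
        used = self.tile_bytes.get(tile, 0) + nbytes
        needed = -(-used // PAGE_SIZE)  # ceiling division
        pages = self.tile_pages.setdefault(tile, [])
        extra = needed - len(pages)
        if extra > len(self.free_pages):
            raise MemoryError("page pool exhausted")
        for _ in range(extra):
            pages.append(self.free_pages.pop())
        self.tile_bytes[tile] = used
        return list(pages)


allocator = TilePageAllocator(total_pages=8)
allocator.write("tile0", 100)   # sparse tile: occupies a single page
allocator.write("tile1", 1000)  # dense tile: occupies four pages
```

Under a fixed scheme every tile would reserve its worst-case budget up front; in this sketch a sparse tile holds only one page, which is where the saving would come from.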

Image processing system to control vehicle headlamps or other vehicle equipment

Inactive | US20020156559A1 | Reduce complexity | Low cost | Television system details | Digital data processing details | Imaging processing | Analog-to-digital converter

An imaging system of the invention includes an image array sensor including a plurality of pixels. Each of the pixels generates a signal indicative of the amount of light received on the pixel. The imaging system further includes an analog to digital converter for quantizing the signals from the pixels into a digital value. The system further includes a memory including a plurality of allocated storage locations for storing the digital values from the analog to digital converter. The number of allocated storage locations in the memory is less than the number of pixels in the image array sensor. According to another embodiment, an imaging device includes an image sensor having a plurality of pixels arranged in an array; and a multi-layer interference filter disposed over said pixel array, said multi-layer interference filter being patterned so as to provide filters of different colors to neighboring pixels or groups of pixels.

Owner:GENTEX CORP

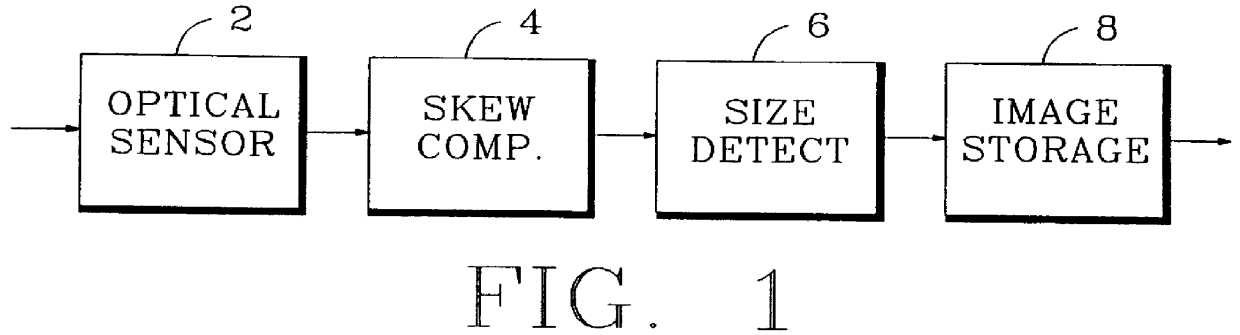

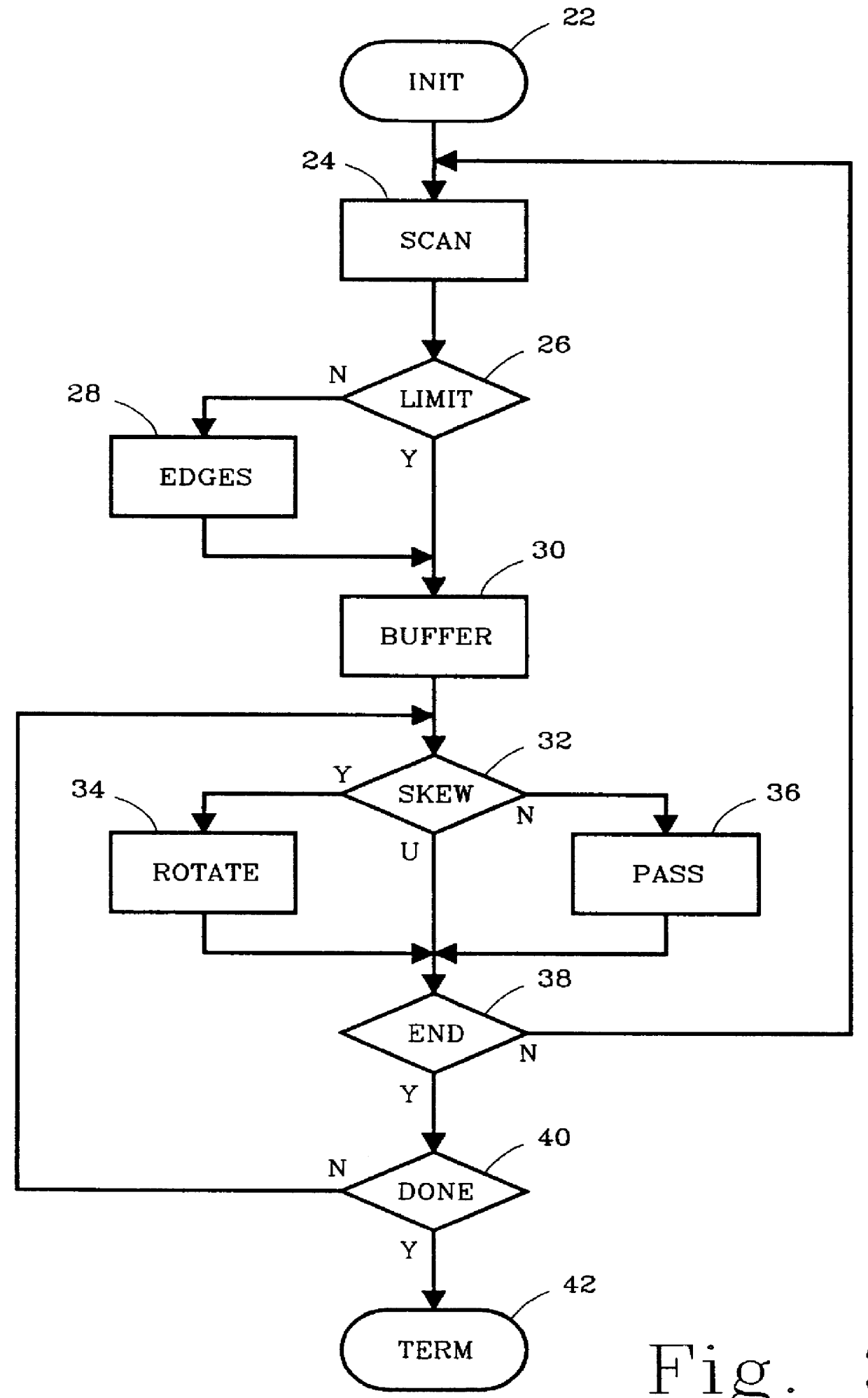

Method and apparatus for near real-time document skew compensation

Inactive | US6064778A | Reduce the amount of memory | Reduce delays | Character recognition | Pictorial communication | Computer graphics (images) | Skew angle

A document imaging system detects skew and/or size of a document. In one embodiment, a document imaging system generates scanning signals representing the document, analyzes the scanning signals to detect one or more edges of the document before the entire length of the document is scanned, establishes a skew angle between the detected edges and a reference orientation, and modifies the scanning signals to compensate for skew while the document is being scanned. In another embodiment, a document imaging system detects one or more edges of a document, defines a polygon having sides substantially congruent with the detected edges, and establishes the size of the document in response to the polygon.

Owner:PRIMAX ELECTRONICS LTD

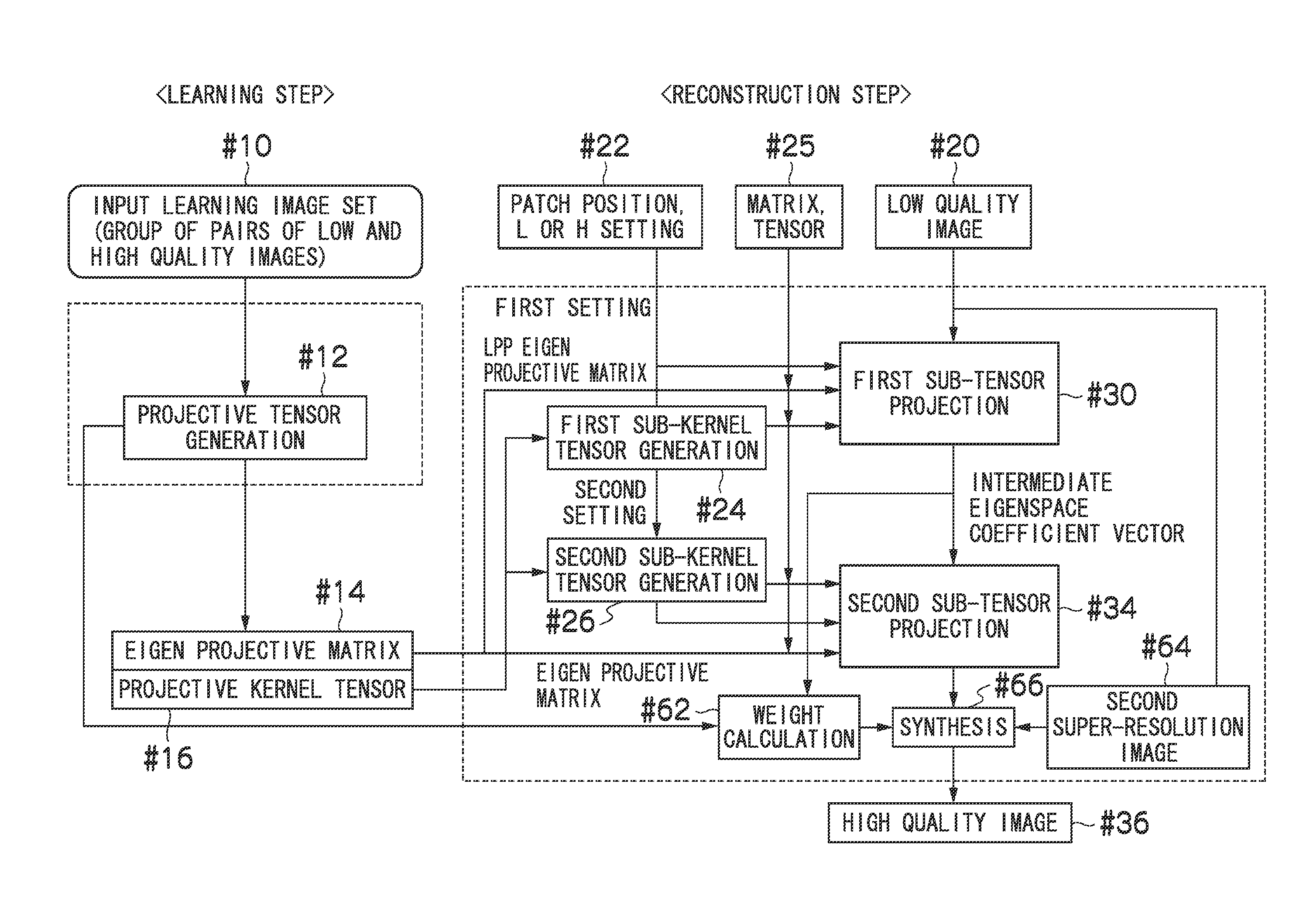

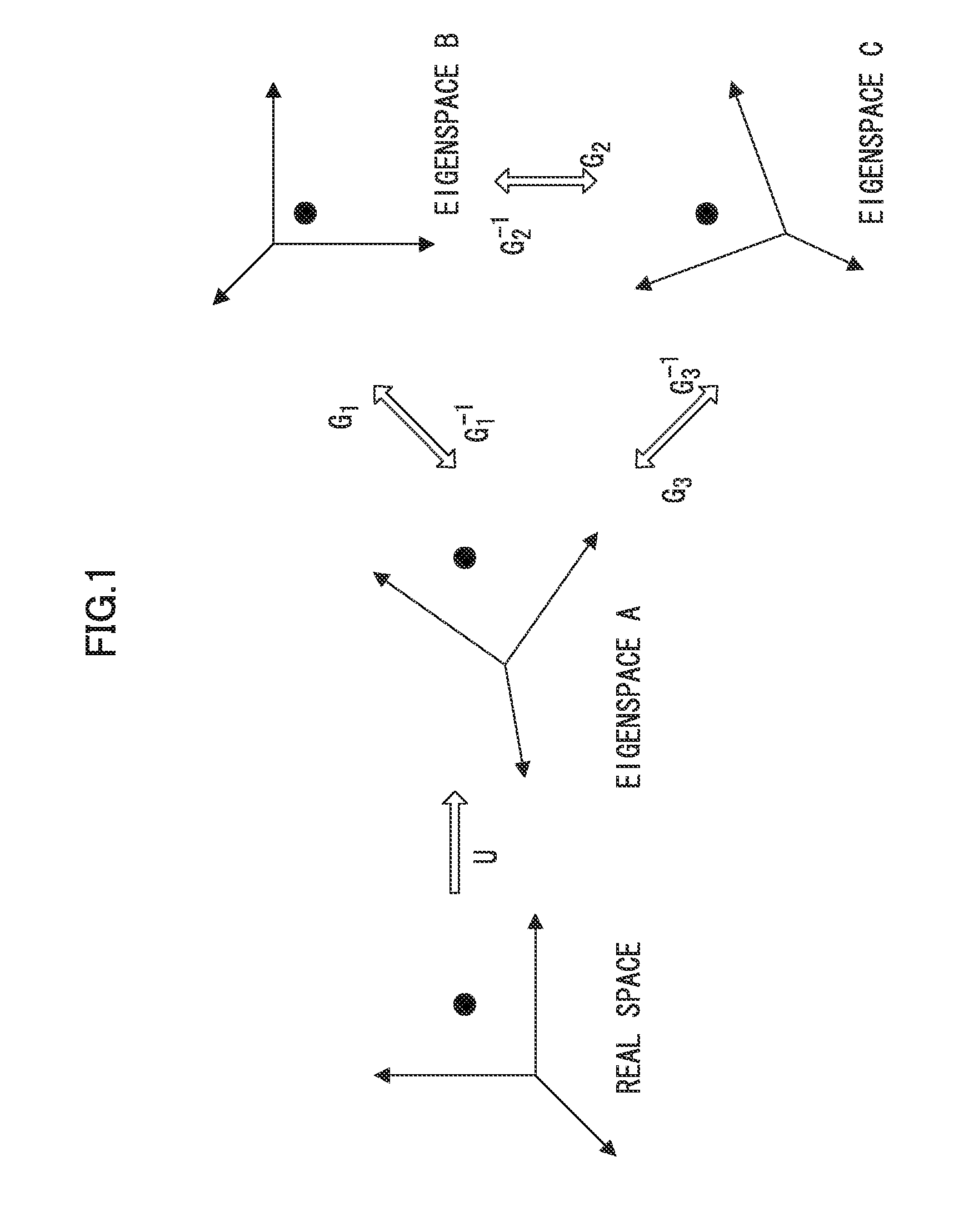

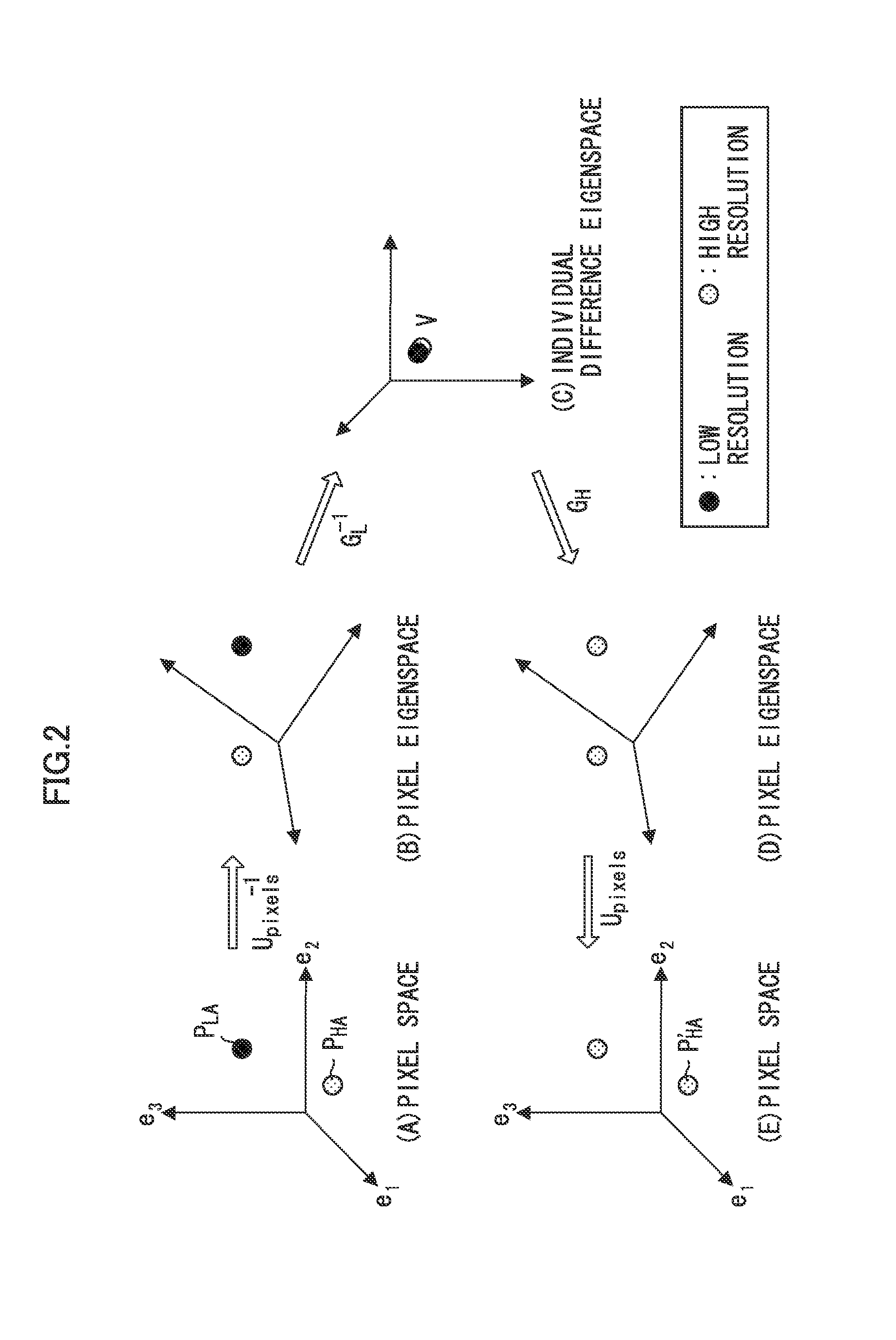

Image processing apparatus and method, data processing apparatus and method, and program and recording medium

Inactive | US20110026849A1 | Satisfactory reconstruction image | Inhibit deterioration | Geometric image transformation | Acquiring/recognising facial features | Imaging processing | Weight coefficient

The present invention determines the adopting ratio (weight coefficient) between the high image quality processing using the tensor projection method and the high image quality processing using another method according to the degree of deviation of the input condition of the input image, and combines these processes as appropriate. This allows a satisfactory reconstruction image to be acquired even in a case of deviation from the input condition, and avoids deterioration of the high quality image due to deterioration of the reconstruction image by the projective operation.

Owner:FUJIFILM CORP

Method and apparatus for scalable interconnect solution

Inactive | US7036101B2 | Low cost | Bulky design | Semiconductor/solid-state device manufacturing | Program control | Graphics | Computer architecture

An innovative routing method for an integrated circuit design layout. The layout can include design netlists and library cells. A multiple-level global routing can generate topological wire for each net. An area oriented graph-based detail routing on the design can be performed. A post route optimization after the detail routing can be performed to further improve the routing quality. Some methods can be single threaded all or some of the time, and/or multi-threaded some or all of the time.

Owner:CADENCE DESIGN SYST INC

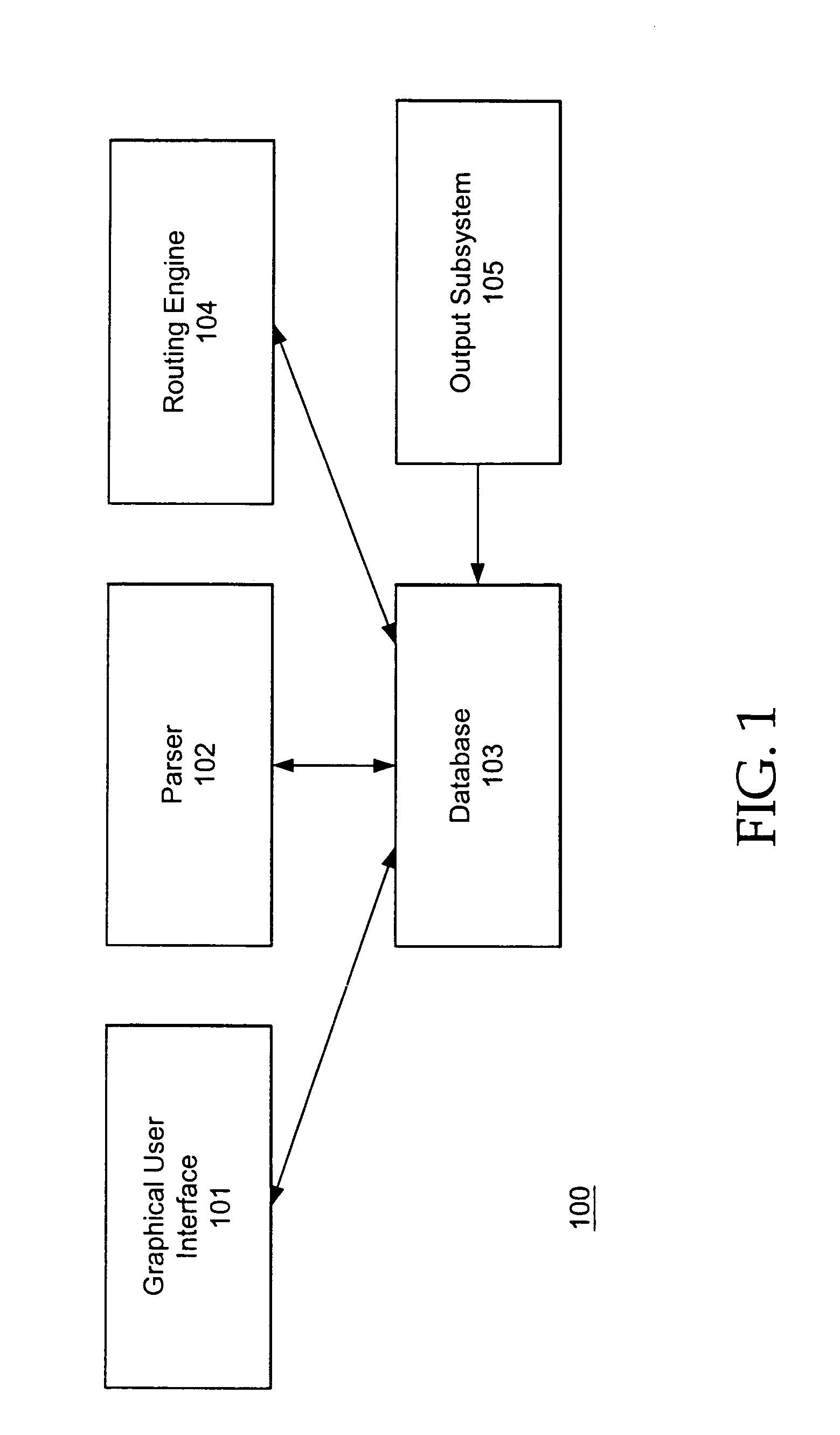

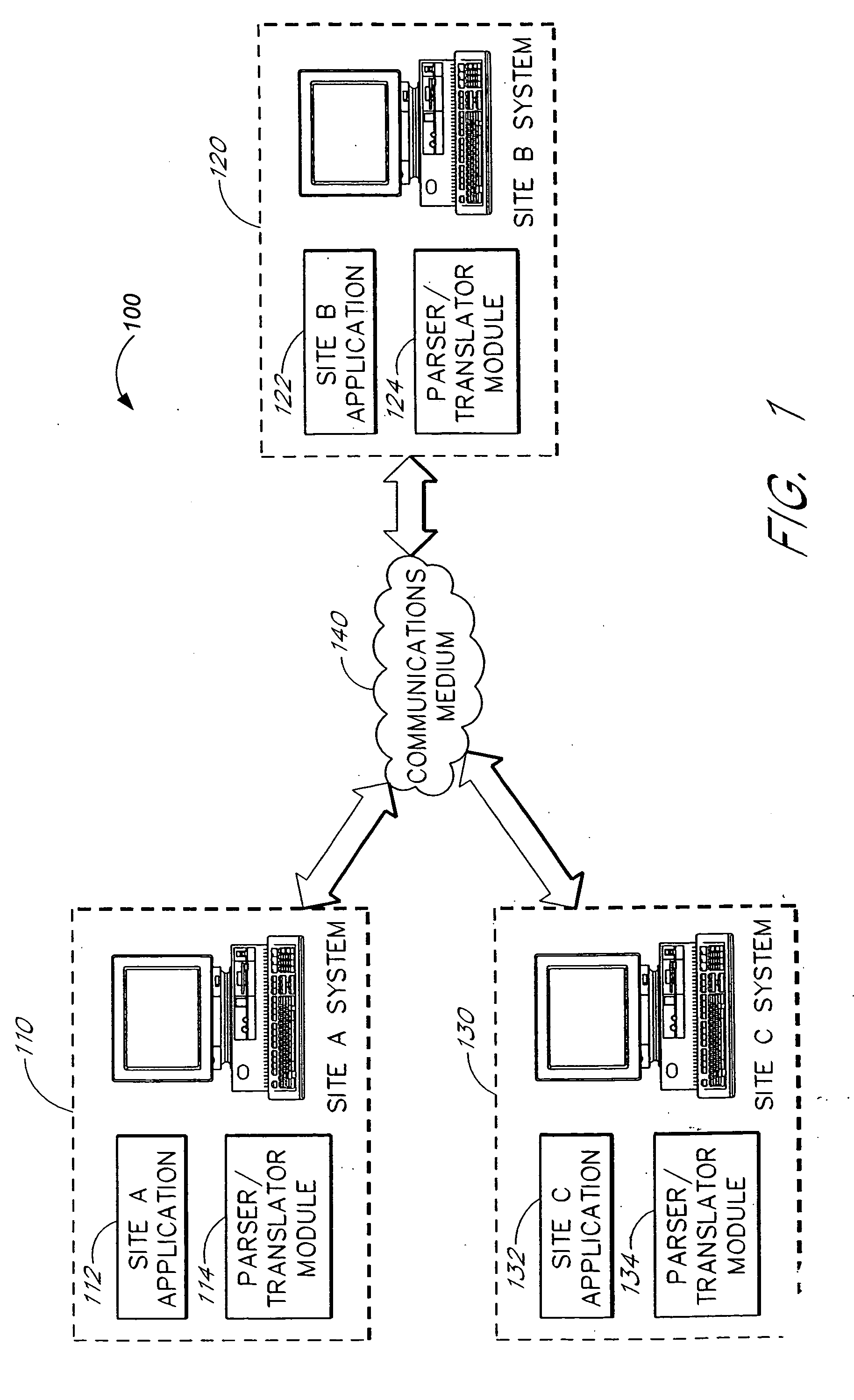

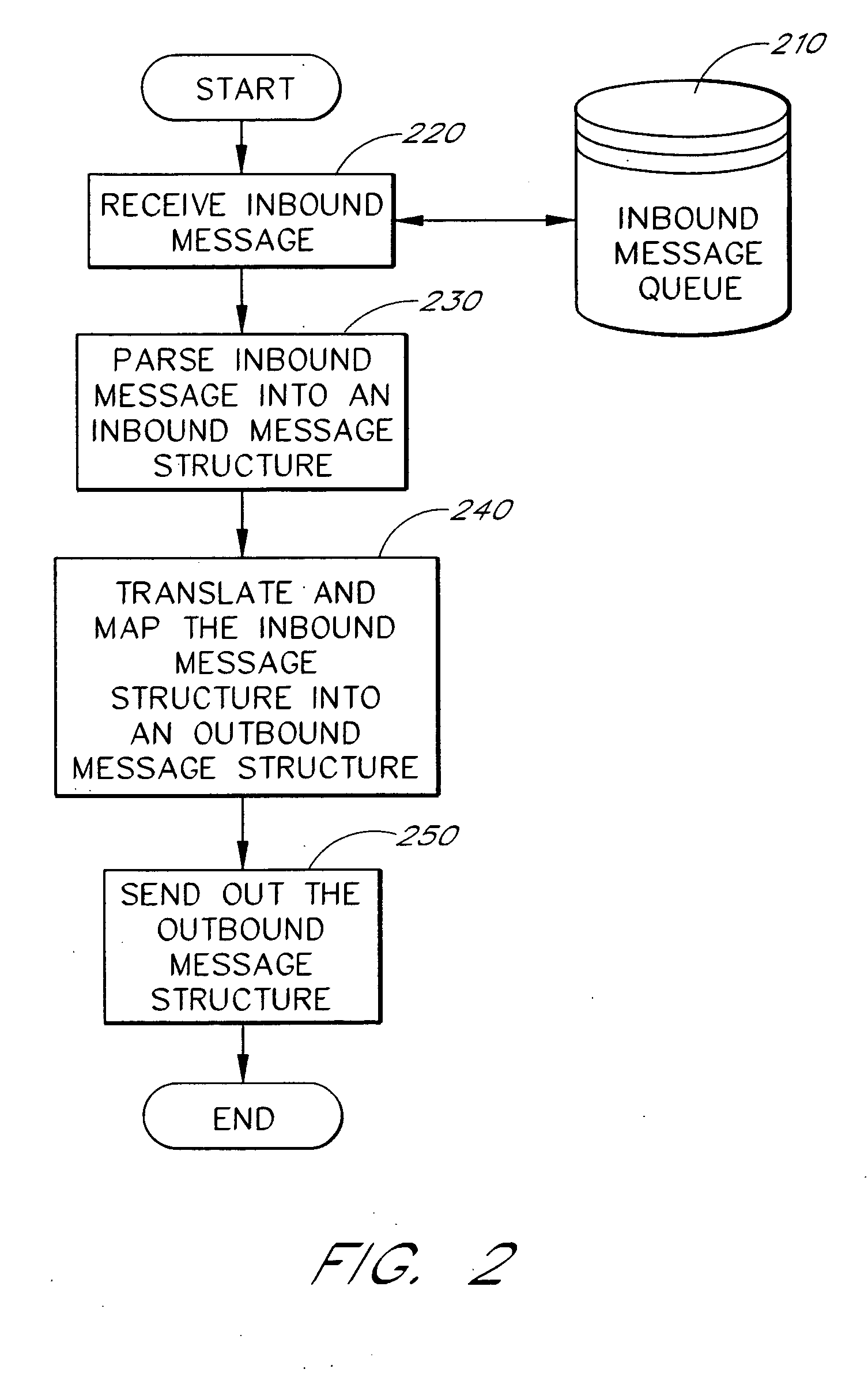

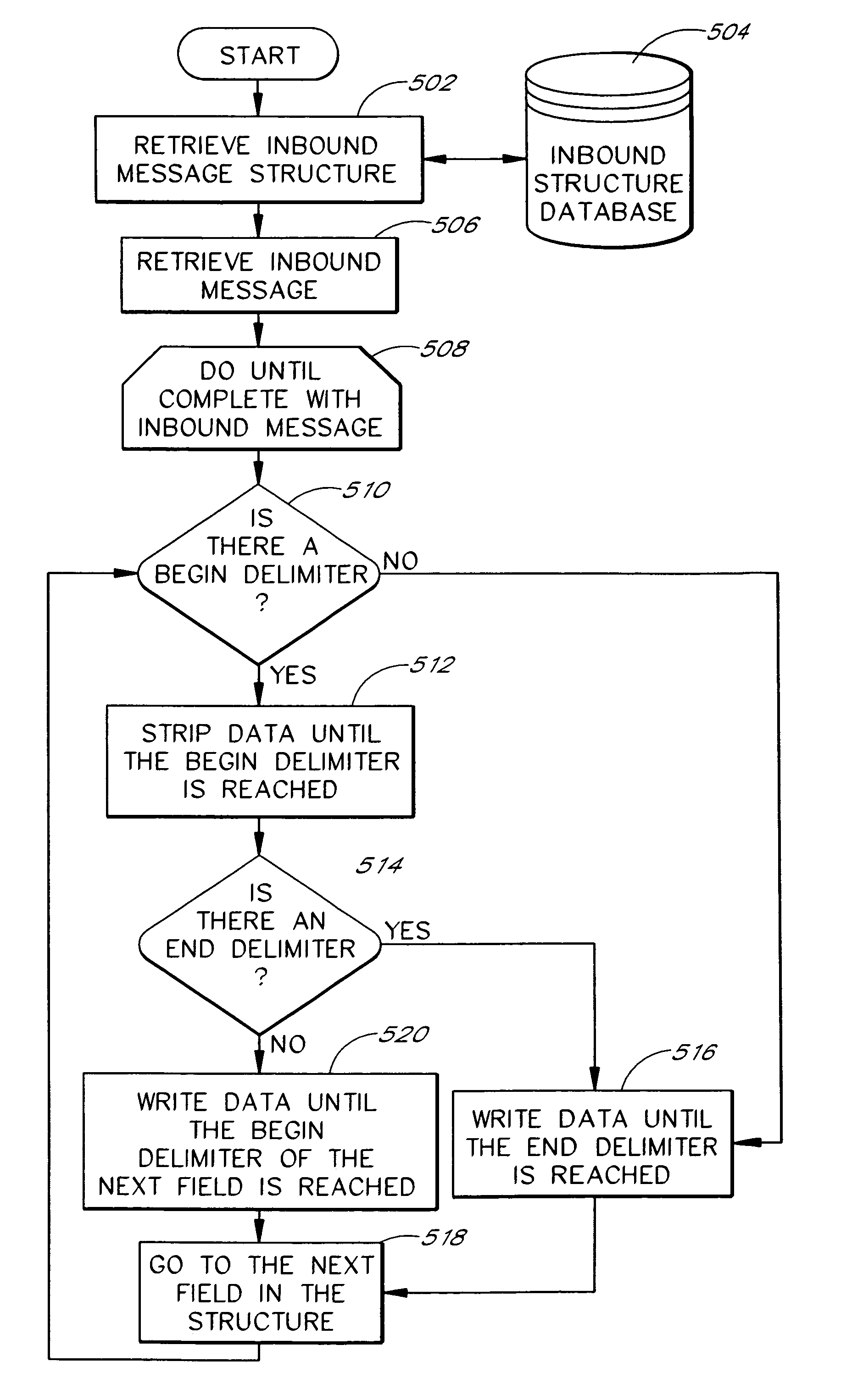

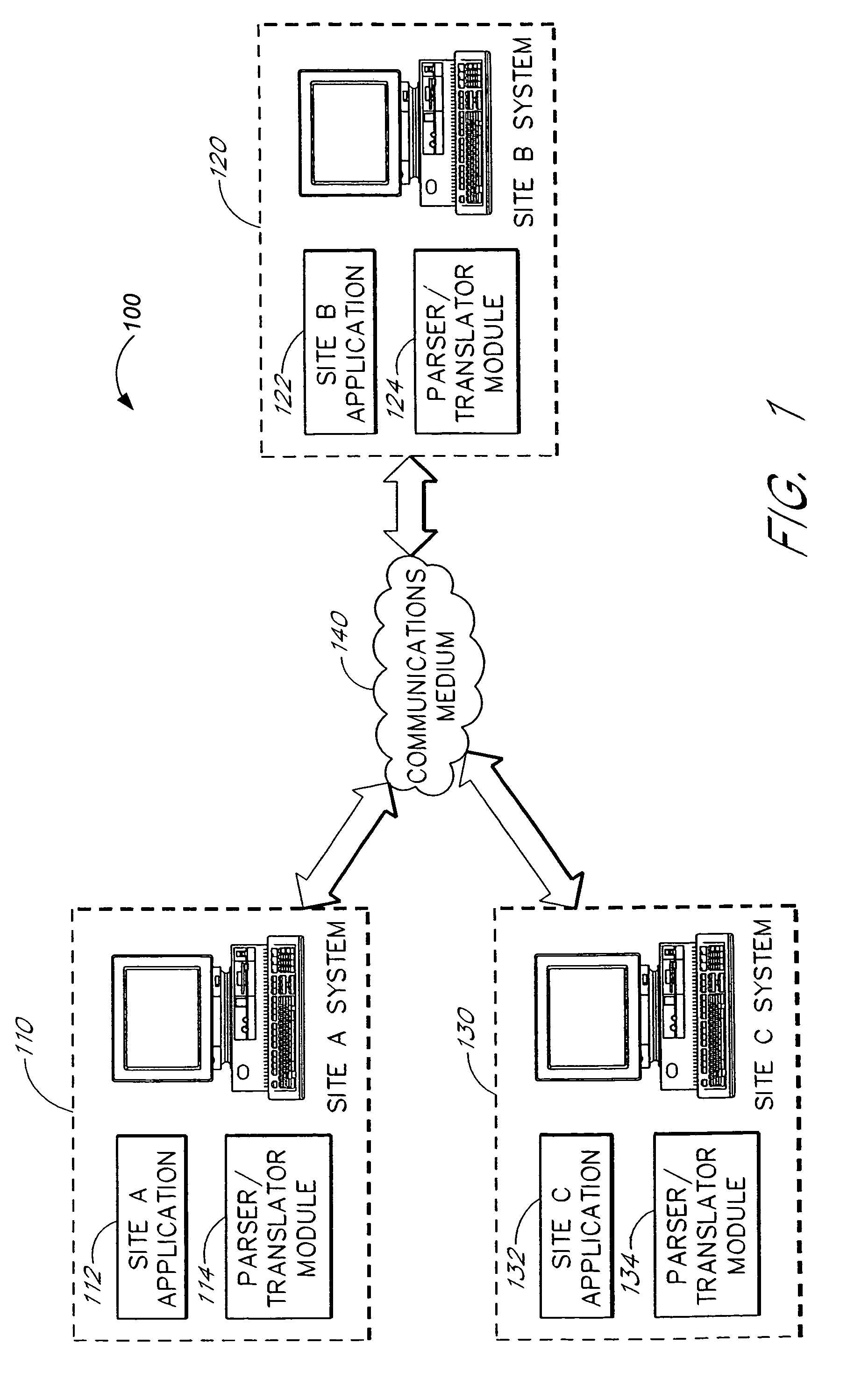

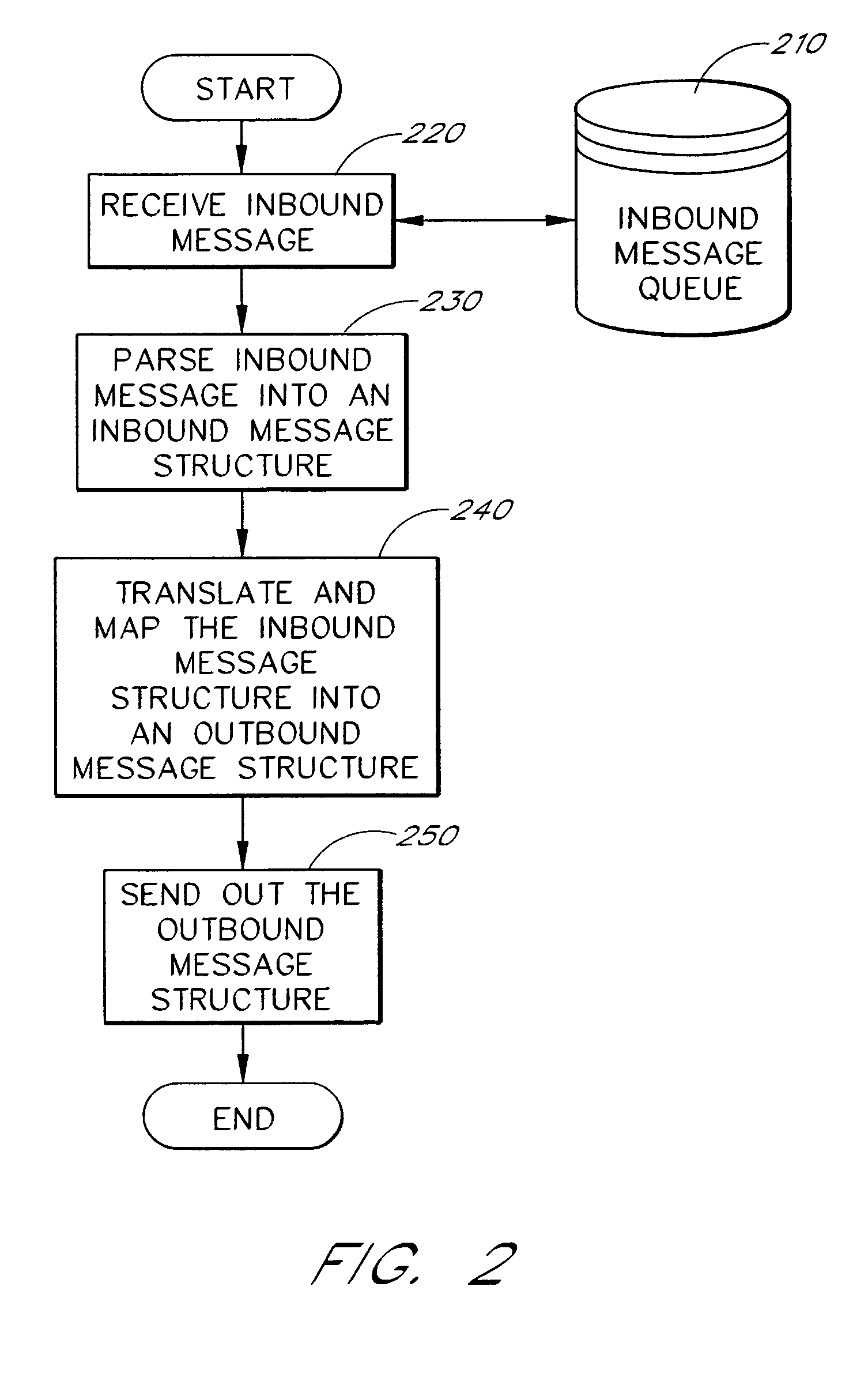

Message translation and parsing of data structures in a distributed component architecture

Inactive | US20050097566A1 | Efficiently parse | Efficiently translate | Interprogram communication | Multiple digital computer combinations | User needs | Message structure

The present invention is related to systems and methods that parse and/or translate inbound messages into outbound messages such that disparate computer systems can communicate intelligibly. In one embodiment, a system recursively parses the inbound message such that relatively fewer outbound message structure definitions are required and advantageously decreases the usage of resources by the system. Further, one system in accordance with the present invention allows an operator to configure the identity of a delimiter in the inbound message. The delimiter can span multiple characters and includes the logical inverse of a set of delimiters. The outbound message can be accessed at nodes within a hierarchy, as well as at leaves. Thus, a user need not know the precise location of data within the outbound message. A set of updating rules further permits the updating of an outbound message without having to re-parse an entire inbound message.

Owner:ORACLE INT CORP

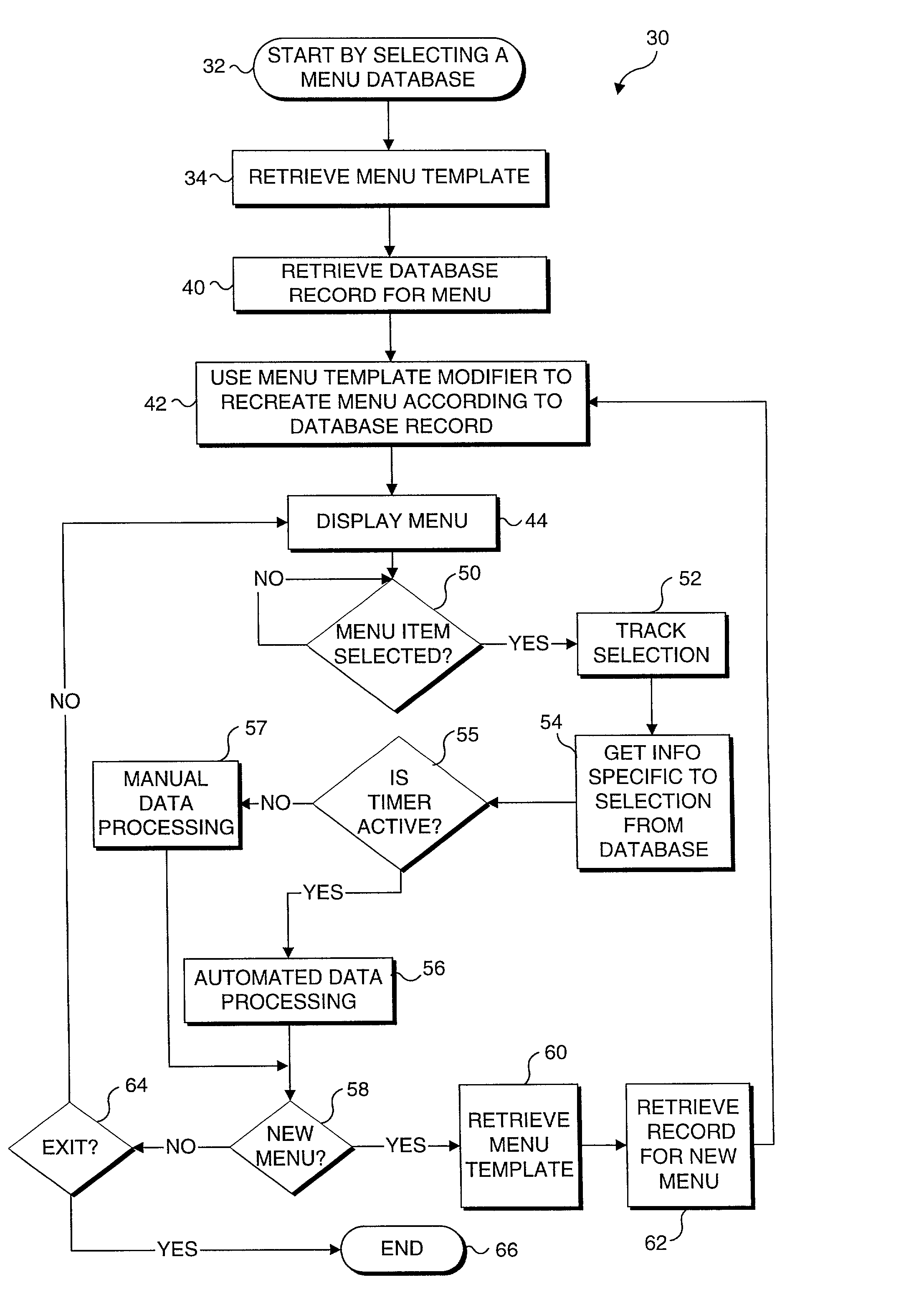

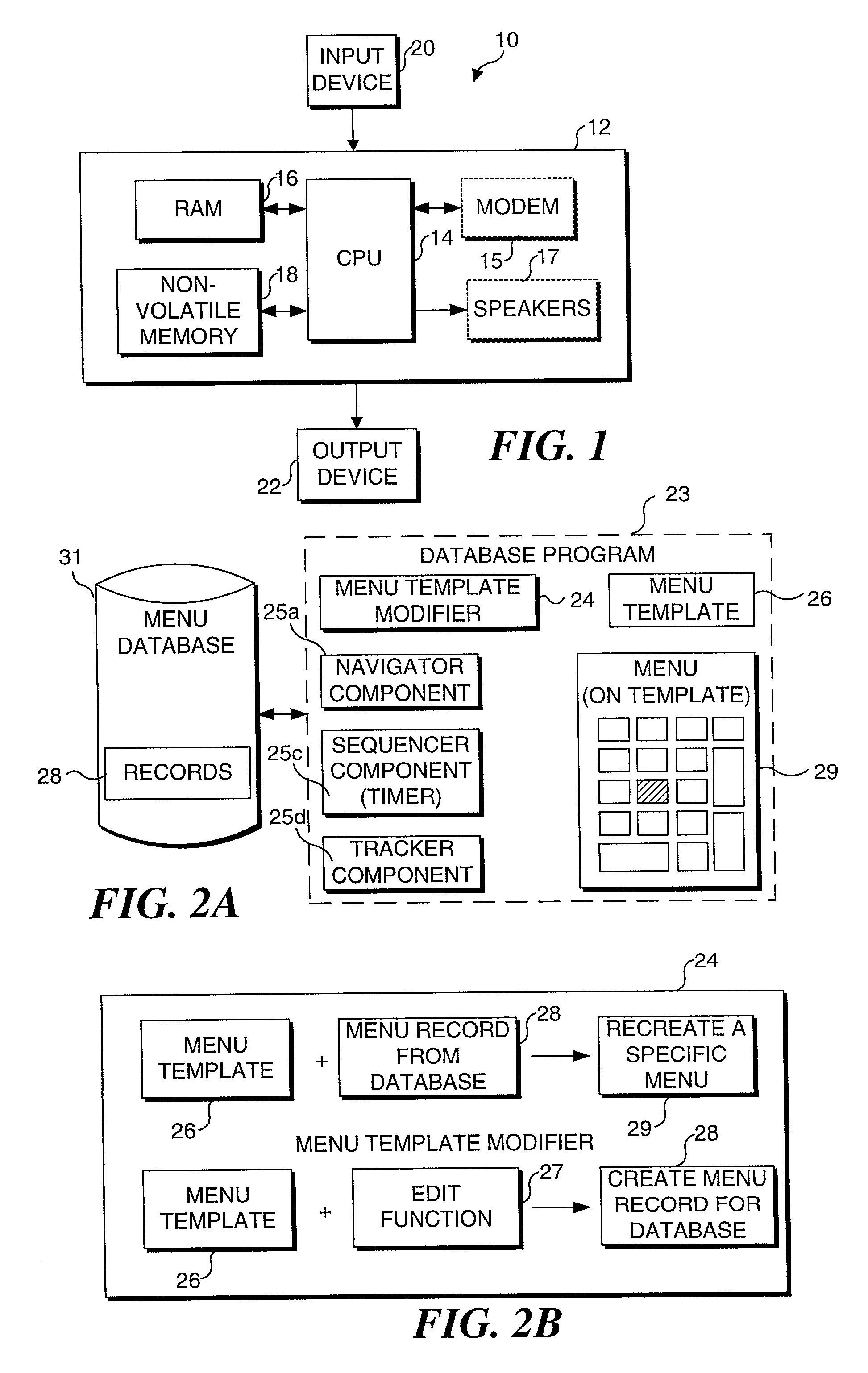

Method for generating and navigating a plurality of menus using a database and a menu template

Inactive | US7127679B2 | Reduce the amount of memory | Reduce the amount required | Digital data processing details | Execution for user interfaces | Spatial organization | Numeric keypad

Menus are created that facilitate access to data in a menu database. Preferably, the menus include a plurality of menu items laid out so as to duplicate the spatial organization of keys on a numeric keypad, such that a one-to-one relationship exists between the keys on the keypad and the menu items. The amount of memory required to store database records relating to individual menus is reduced, because a menu template provides general formatting information. Thus, database records need not include formatting details. When a menu is required, a menu template modifier uses the menu template and the corresponding database record to generate the desired menu. The menus are employed for accessing data in the menu database. An edit function is provided in the menu template modifier to enable custom menus to be developed and modified.

Owner:SOFTREK
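
The split between one shared menu template and small per-menu database records might look roughly like the following Python sketch. The names `KEYPAD_ROWS`, `CELL_WIDTH`, and `render_menu`, as well as the plain-text layout, are hypothetical and only illustrate the idea; the patent does not specify them.

```python
# Shared template: the keypad layout and cell formatting are stored once.
KEYPAD_ROWS = ("123", "456", "789", "*0#")  # assumed 12-key layout
CELL_WIDTH = 14                             # assumed column width


def render_menu(record):
    """Combine the shared template with one record (key -> label, no formatting)."""
    lines = []
    for row in KEYPAD_ROWS:
        cells = [f"[{key}] {record.get(key, '')}".ljust(CELL_WIDTH) for key in row]
        lines.append("".join(cells).rstrip())
    return "\n".join(lines)


# A database record carries only the labels, keyed by keypad position.
main_menu = {"1": "Inbox", "2": "Compose", "5": "Settings"}
print(render_menu(main_menu))
```

Because every record omits layout details, adding a menu costs only the storage for its labels.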

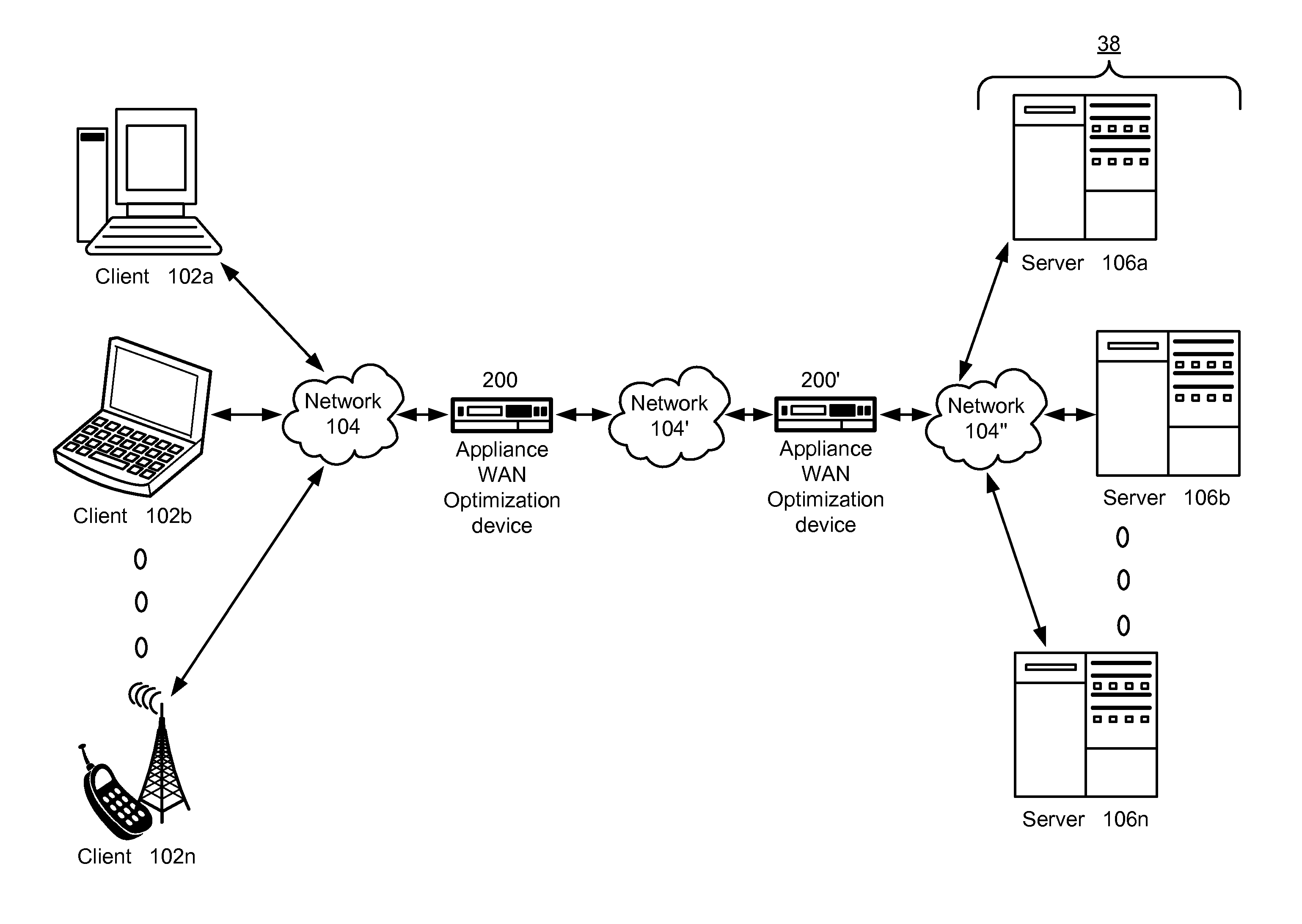

Prioritizing highly compressed traffic to provide a predetermined quality of service

Active | US20130094356A1 | Easy transfer | Reduce the amount of memory | Error prevention | Frequency-division multiplex details | Hard coding | Quality of service

A network optimization engine can be used to optimize the transmission of network traffic by employing means to prioritize highly compressed network traffic over other network traffic. The network optimization engine accomplishes network traffic optimization by calculating a compression ratio for received data packets and determining whether the calculated compression ratios exceed a compression ratio threshold. The predetermined compression ratio threshold can be a hard coded value or an empirically determined compression ratio threshold that is calculated using a sample of the received network packets. Network packets having a compression ratio that exceeds the compression ratio threshold are classified as highly compressed network traffic and transmitted according to a transmission scheme that is different than a transmission scheme used to transmit non-highly compressed network traffic.

Owner:CITRIX SYST INC
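
The threshold-based classification in this abstract can be sketched as follows. This is an illustration under stated assumptions: the compression ratio is taken to be uncompressed size divided by zlib-compressed size, the empirical threshold is taken to be the mean ratio of a packet sample, and the function names are hypothetical. The patent may define each of these differently.

```python
import zlib


def compression_ratio(payload: bytes) -> float:
    """Assumed definition: original size divided by zlib-compressed size."""
    return len(payload) / len(zlib.compress(payload))


def empirical_threshold(sample_packets) -> float:
    """Assumed rule: use the mean ratio over a sample of received packets."""
    ratios = [compression_ratio(p) for p in sample_packets]
    return sum(ratios) / len(ratios)


def classify(payload: bytes, threshold: float) -> str:
    """Label a packet so a scheduler could pick a transmission scheme for it."""
    if compression_ratio(payload) > threshold:
        return "highly_compressed"
    return "normal"


HARD_CODED_THRESHOLD = 2.0  # illustrative value only
repetitive = b"a" * 1000          # compresses very well
incompressible = bytes(range(256))  # no repeated patterns to exploit
labels = [classify(p, HARD_CODED_THRESHOLD) for p in (repetitive, incompressible)]
```

A scheduler would then send packets labelled `highly_compressed` through a different queue or transmission scheme from the rest.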

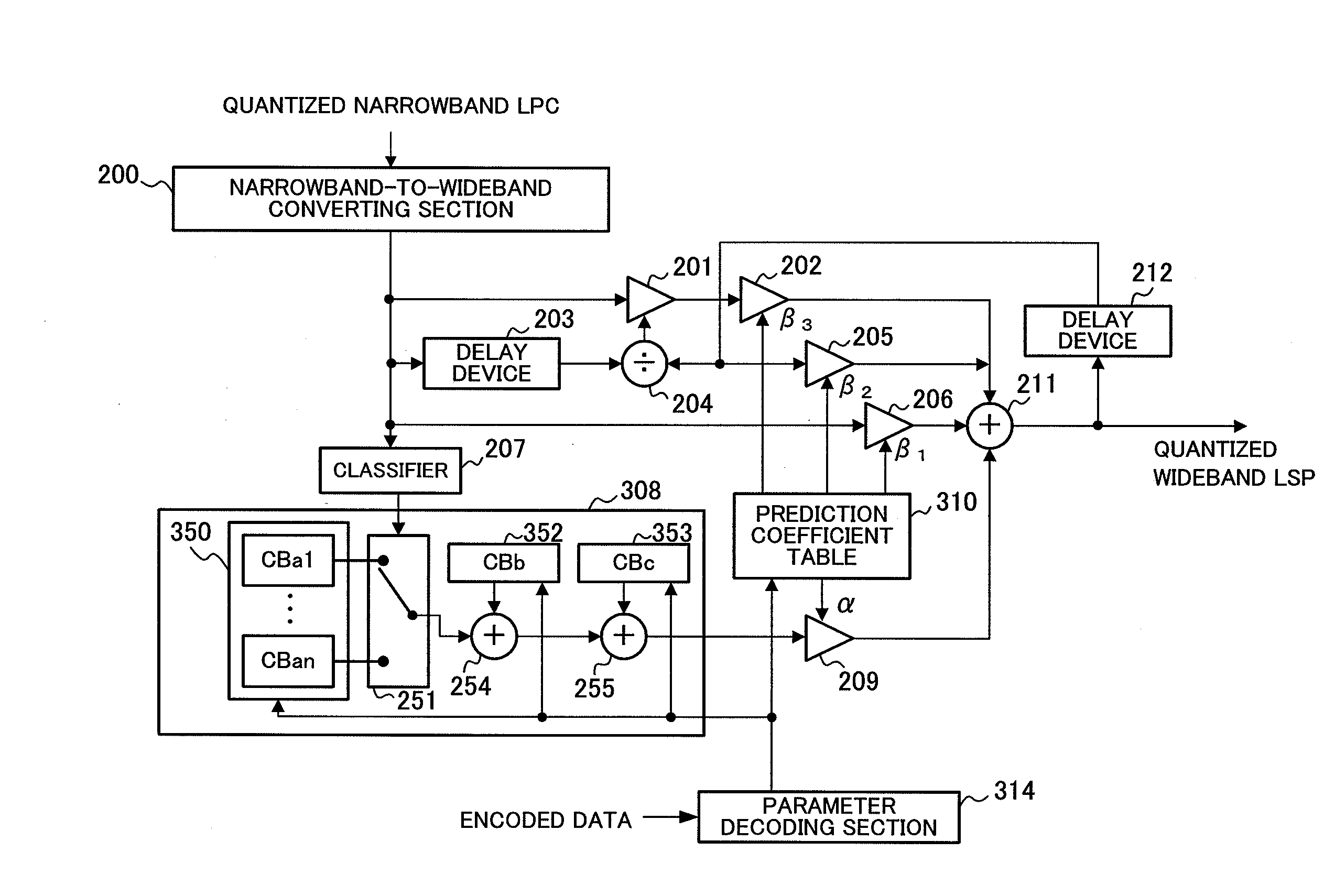

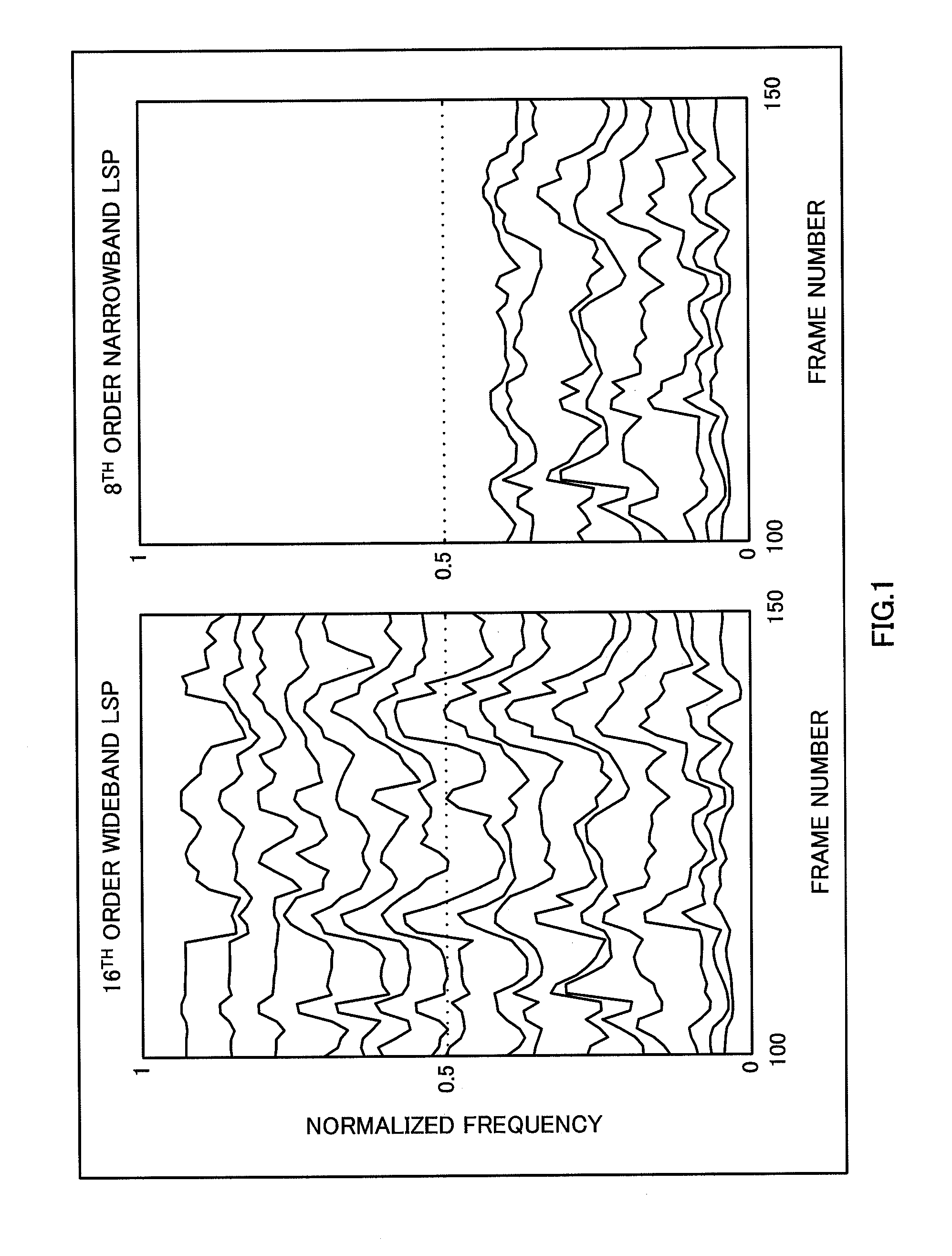

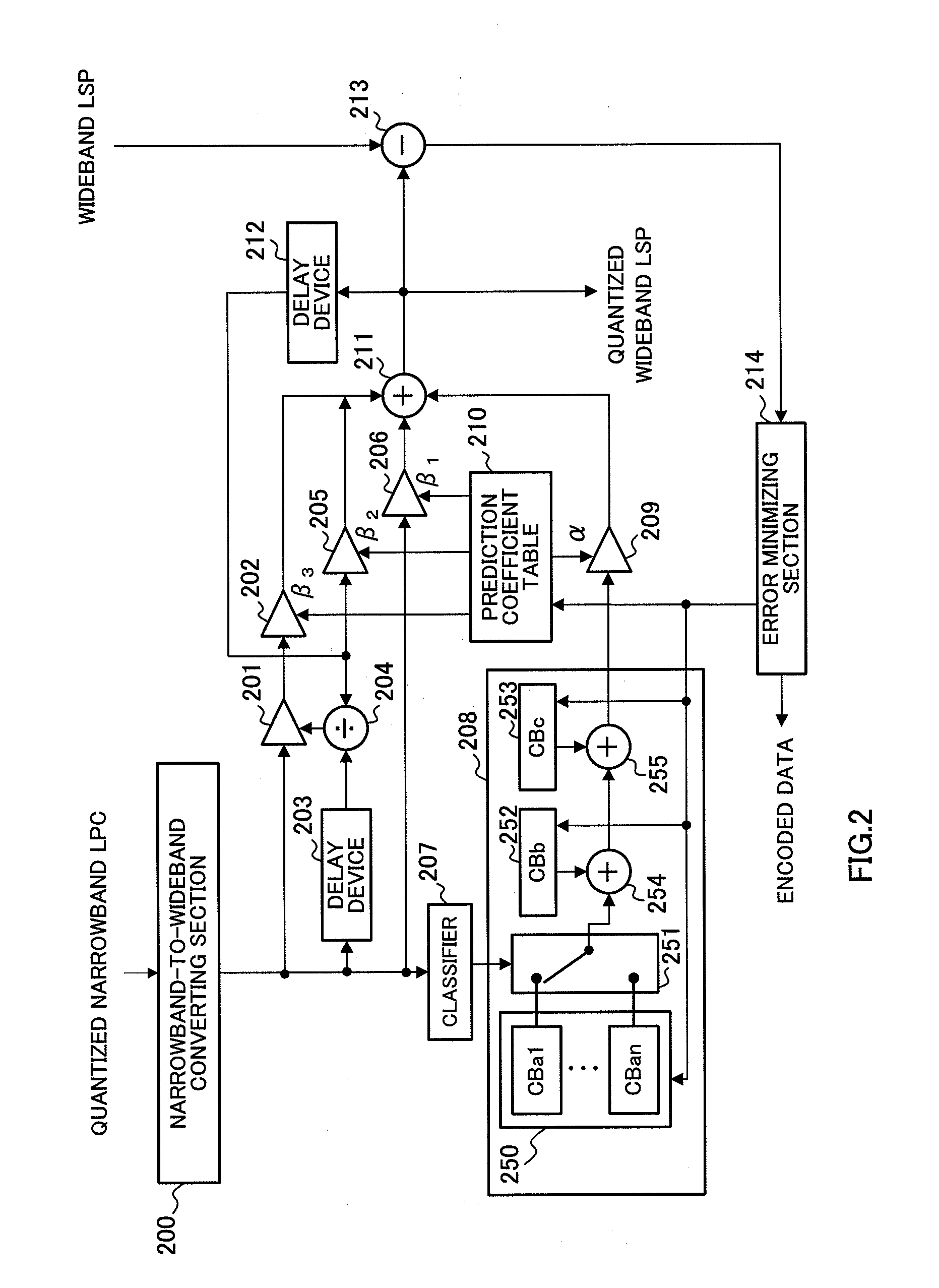

Scalable Encoding Apparatus, Scalable Decoding Apparatus, Scalable Encoding Method, Scalable Decoding Method, Communication Terminal Apparatus, and Base Station Apparatus

Active | US20080059166A1 | Improve Quantization Efficiency | Improve efficiency | Speech analysis | Computer architecture | Terminal equipment

A scalable encoding apparatus, a scalable decoding apparatus and the like are disclosed which can achieve a band scalable LSP encoding that exhibits both a high quantization efficiency and a high performance. In these apparatuses, a narrow band-to-wide band converting part (200) receives and converts a quantized narrow band LSP to a wide band, and then outputs the quantized narrow band LSP as converted (i.e., a converted wide band LSP parameter) to an LSP-to-LPC converting part (800). The LSP-to-LPC converting part (800) converts the quantized narrow band LSP as converted to a linear prediction coefficient and then outputs it to a pre-emphasizing part (801). The pre-emphasizing part (801) calculates and outputs the pre-emphasized linear prediction coefficient to an LPC-to-LSP converting part (802). The LPC-to-LSP converting part (802) converts the pre-emphasized linear prediction coefficient to a pre-emphasized quantized narrow band LSP as wide band converted, and then outputs it to a prediction quantizing part (803).

Owner:III HLDG 12 LLC

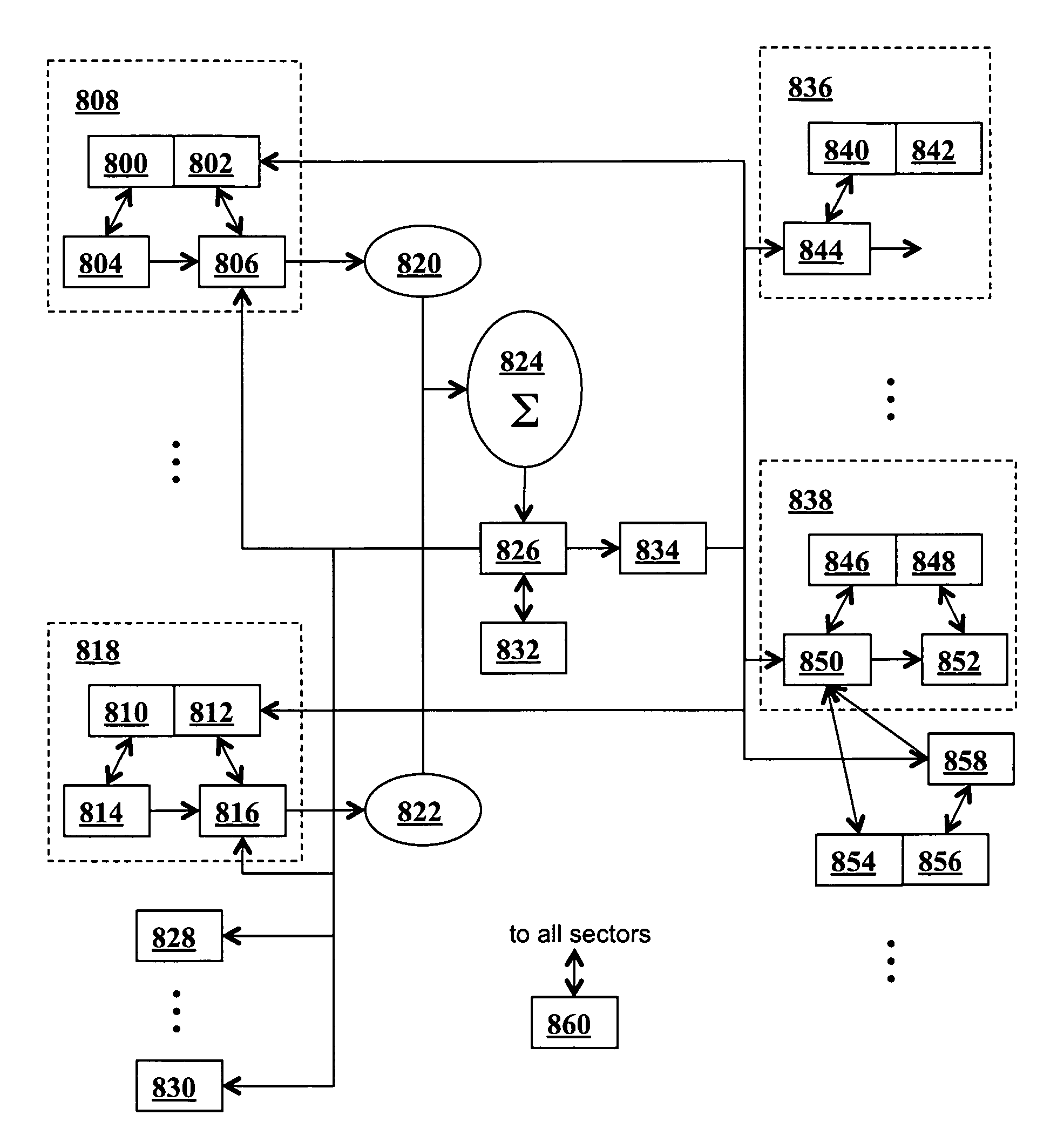

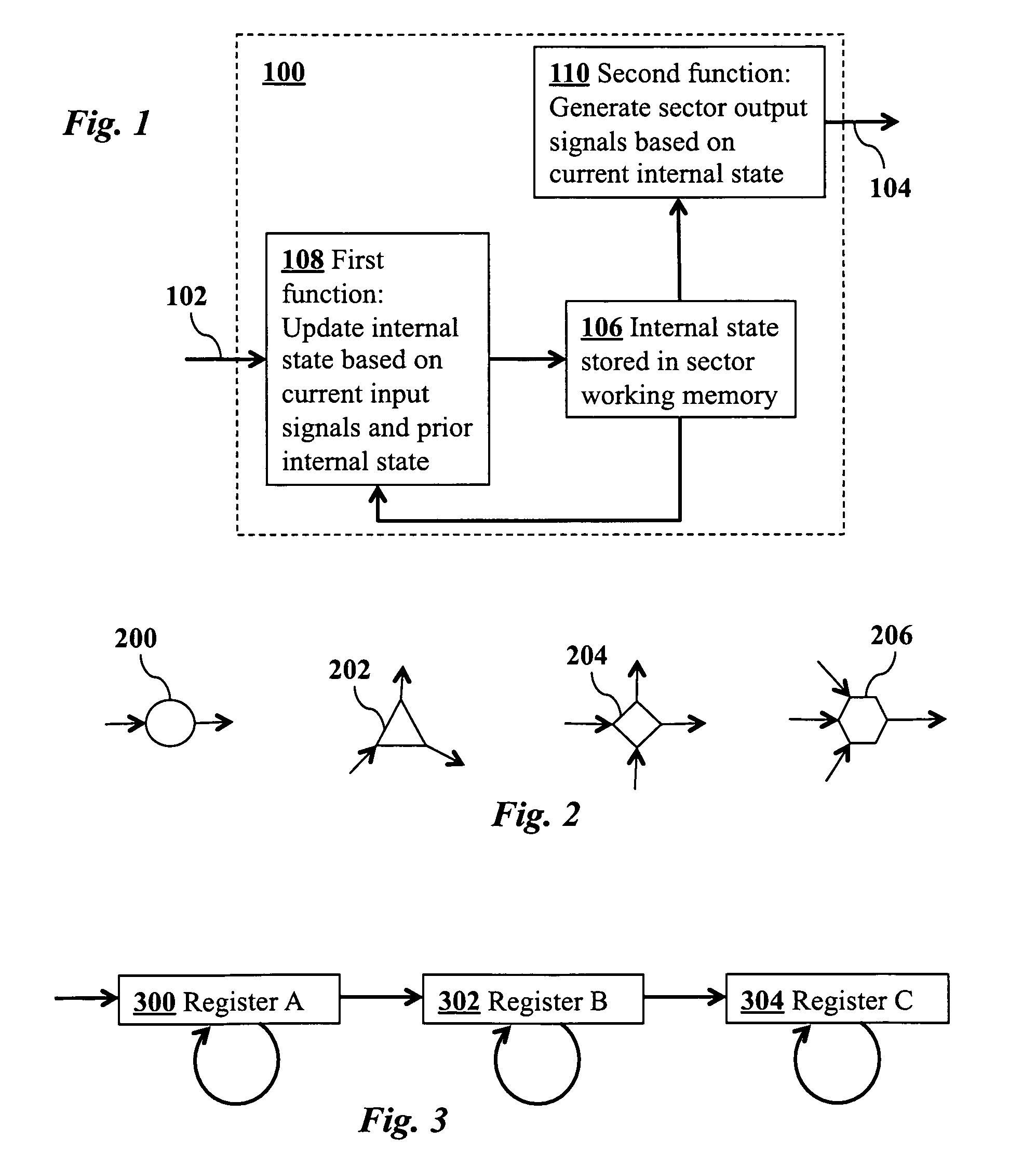

Method for efficiently simulating the information processing in cells and tissues of the nervous system with a temporal series compressed encoding neural network

Active | US8200593B2 | Effective simulation | Exact reproduction | Digital computer details | Digital data | Information processing | Nervous system

A neural network simulation represents components of neurons by finite state machines, called sectors, implemented using look-up tables. Each sector has an internal state represented by a compressed history of data input to the sector and is factorized into distinct historical time intervals of the data input. The compressed history of data input to the sector may be computed by compressing the data input to the sector during a time interval, storing the compressed history of data input to the sector in memory, and computing from the stored compressed history of data input to the sector the data output from the sector.

Owner:CORTICAL DATABASE

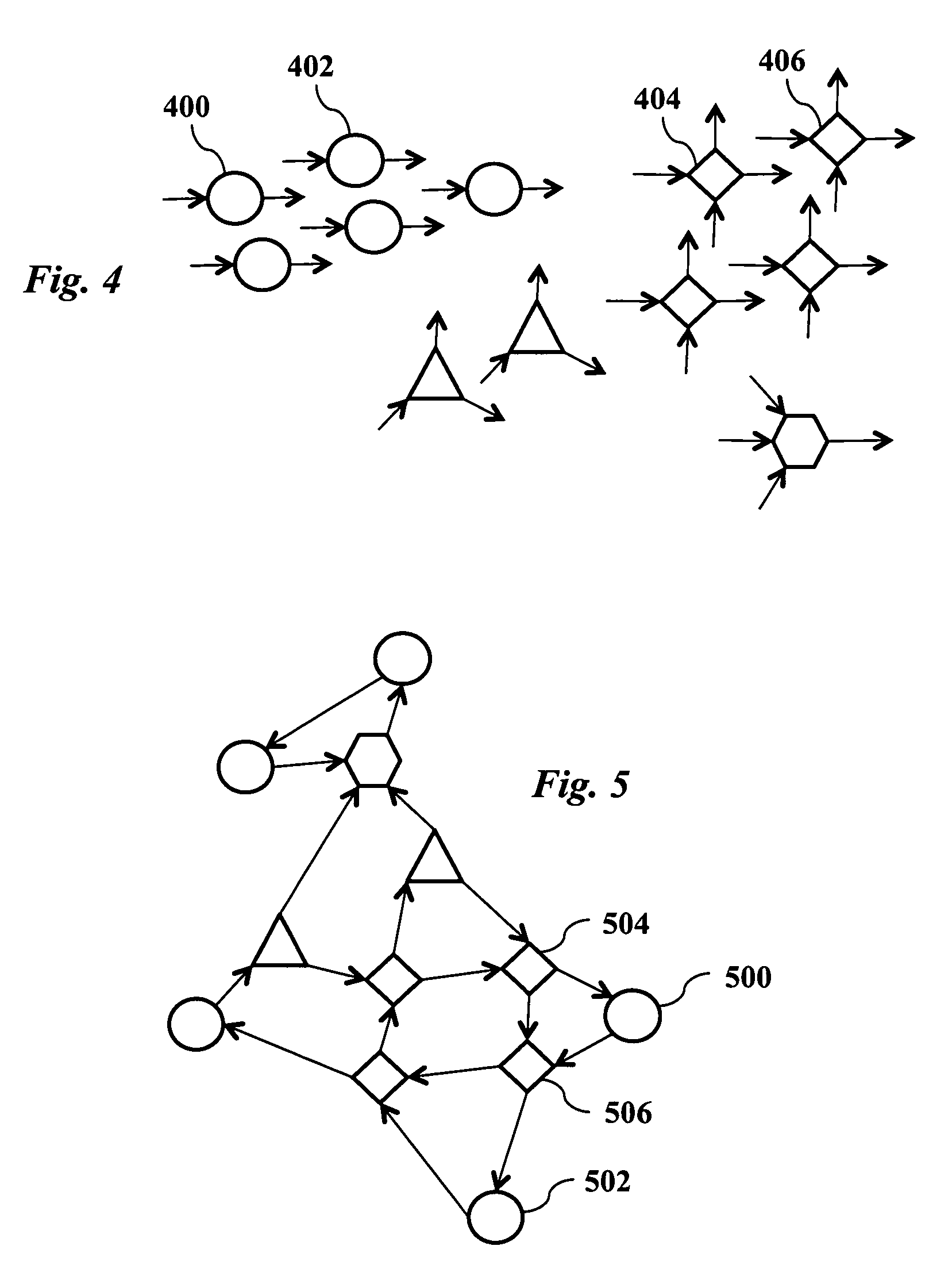

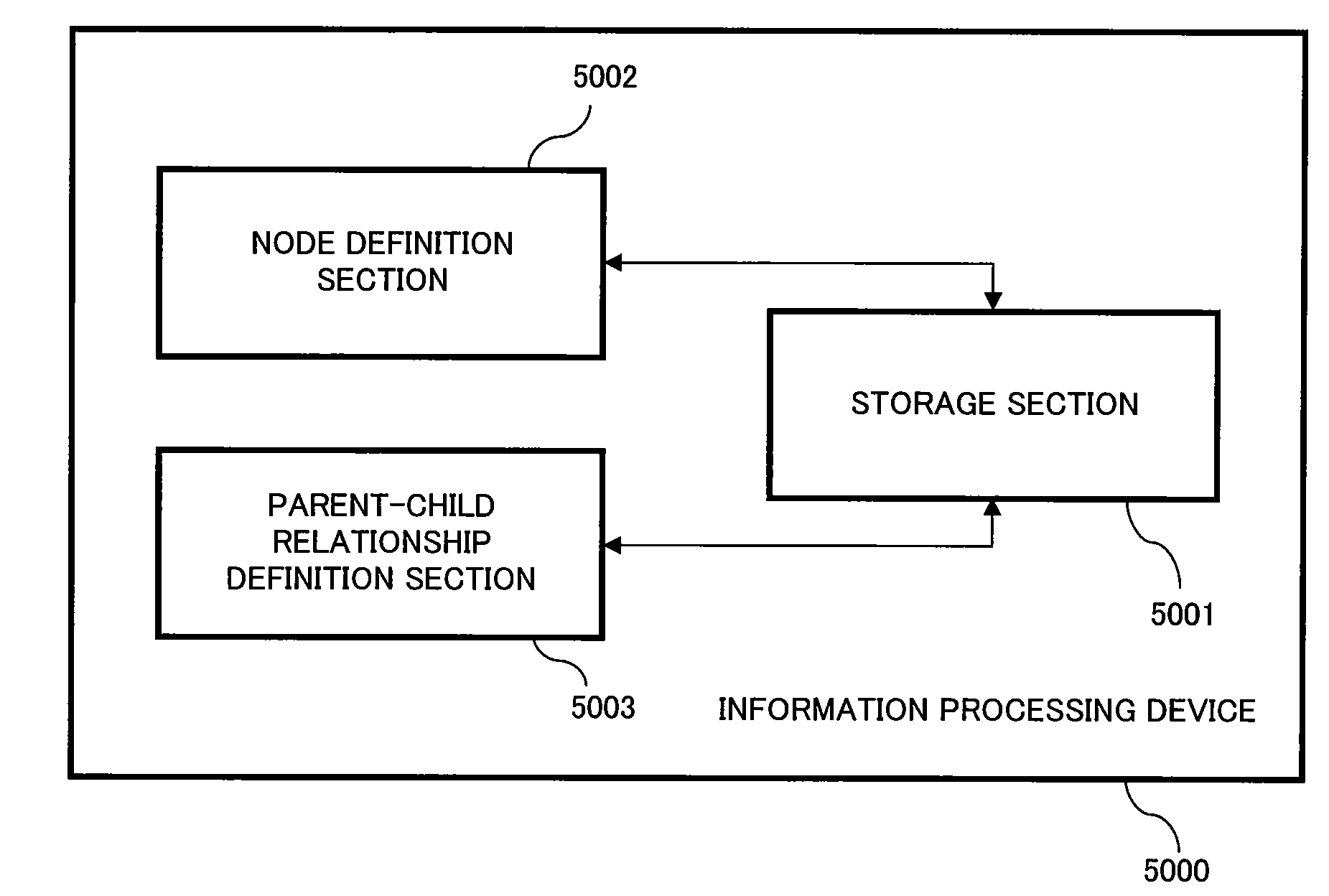

Method for Handling Tree-Type Data Structure, Information Processing Device, and Program

Inactive | US20080270435A1 | Reduce the amount of memory | Increase speed | Digital data information retrieval | Data processing applications | Information processing | Theoretical computer science

It is possible to express a tree-type data structure so as to effectively trace the relationship between data in the tree-type data structure (for example, parent-child, ancestor, descendant, brothers, generations). In the tree-type data structure, for each of non root nodes which are the nodes excluding the root node, their parent nodes are correlated so that the parent-child relationship between the nodes is expressed by using “child->parent” relationship. Accordingly, by specifying a child node, it is possible to promptly specify the only one parent node corresponding to the child node.

Owner:ESPERANT SYST CO LTD
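
A "child->parent" representation of this kind is commonly realized as a flat parent array, sketched below in Python. The `-1` root sentinel, the sample tree, and the helper names are assumptions for illustration and are not taken from the patent.

```python
ROOT = -1  # assumed sentinel: the root node has no parent

# parent[i] holds the index of node i's parent; one integer per node.
#        0
#       / \
#      1   2
#     / \   \
#    3   4   5
parent = [ROOT, 0, 0, 1, 1, 2]


def ancestors(parent, node):
    """Walk child->parent links up to the root, nearest ancestor first."""
    chain = []
    while parent[node] != ROOT:
        node = parent[node]
        chain.append(node)
    return chain


def children(parent, node):
    """Children are recovered by scanning for nodes that point at `node`."""
    return [i for i, p in enumerate(parent) if p == node]


def siblings(parent, node):
    """Nodes that share this node's parent, excluding the node itself."""
    if parent[node] == ROOT:
        return []
    return [i for i in children(parent, parent[node]) if i != node]
```

Each node stores exactly one integer, and finding the unique parent of a child is a single array lookup; the trade-off in this sketch is that listing children requires a scan.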

Graphics system with embedded frame buffer having reconfigurable pixel formats

Inactive | US20050162436A1 | Improve system flexibility | Easy to use | Indoor games | Cathode-ray tube indicators | Graphic system | Graphics processing unit

A graphics system including a custom graphics and audio processor produces exciting 2D and 3D graphics and surround sound. The system includes a graphics and audio processor including a 3D graphics pipeline and an audio digital signal processor. The graphics system has a graphics processor that includes an embedded frame buffer for storing frame data prior to sending the frame data to an external location, such as main memory. The embedded frame buffer is selectively configurable to store the following pixel formats: point sampled RGB color and depth, super-sampled RGB color and depth, and YUV (luma/chroma). Graphics commands are provided which enable the programmer to configure the embedded frame buffer for any of the pixel formats on a frame-by-frame basis.

Owner:NINTENDO CO LTD

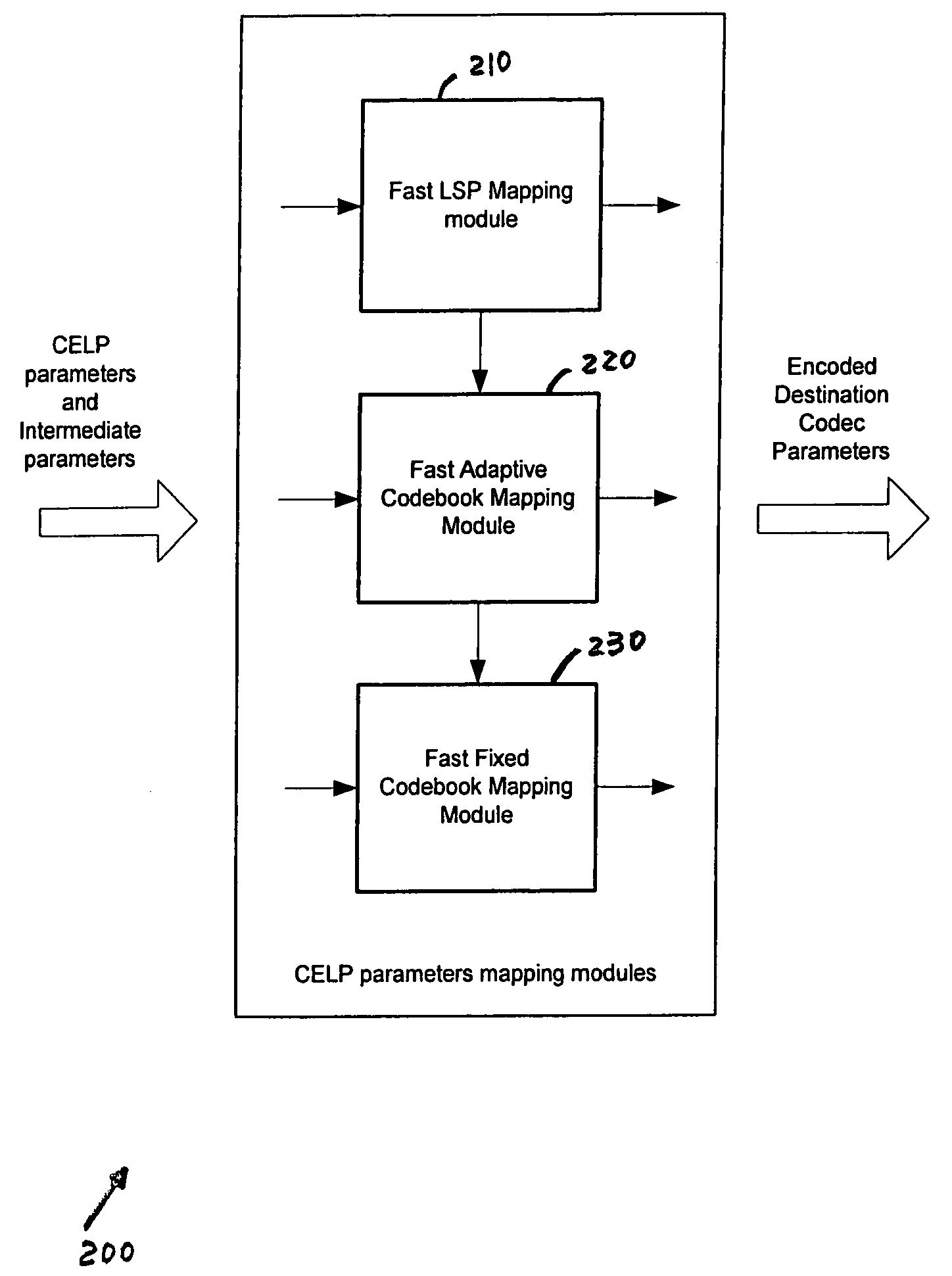

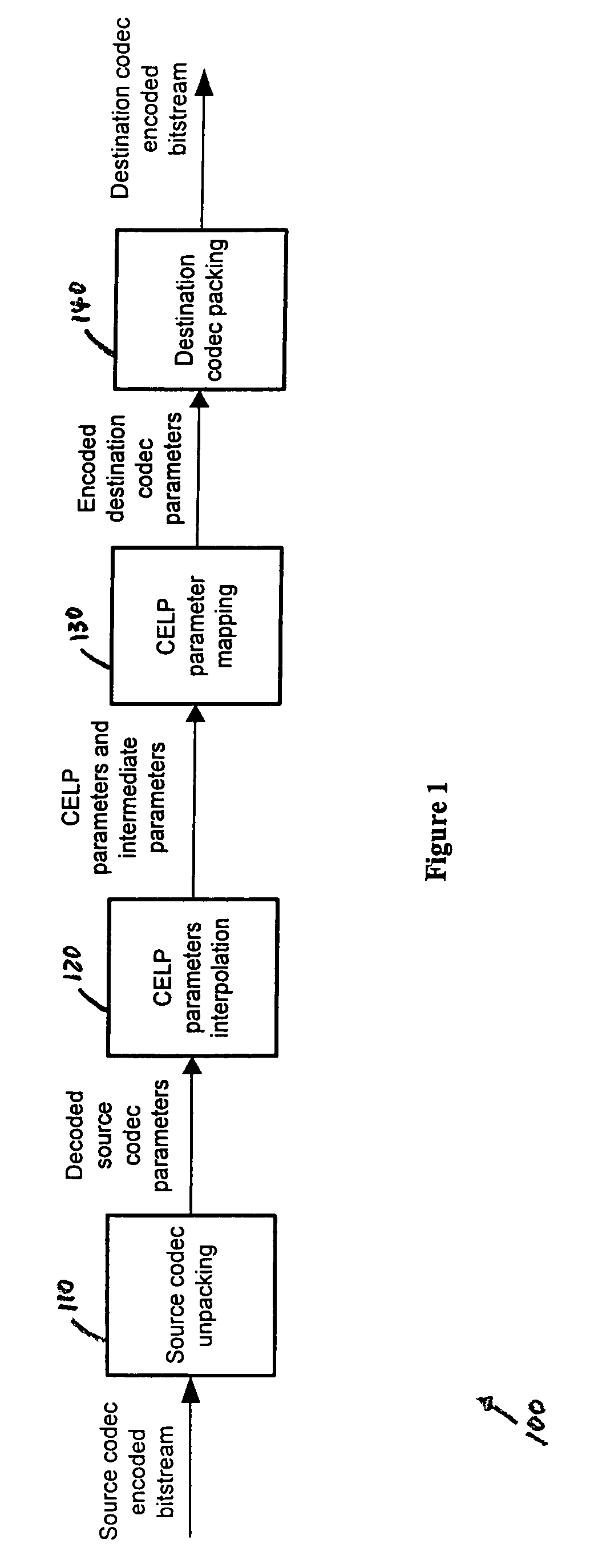

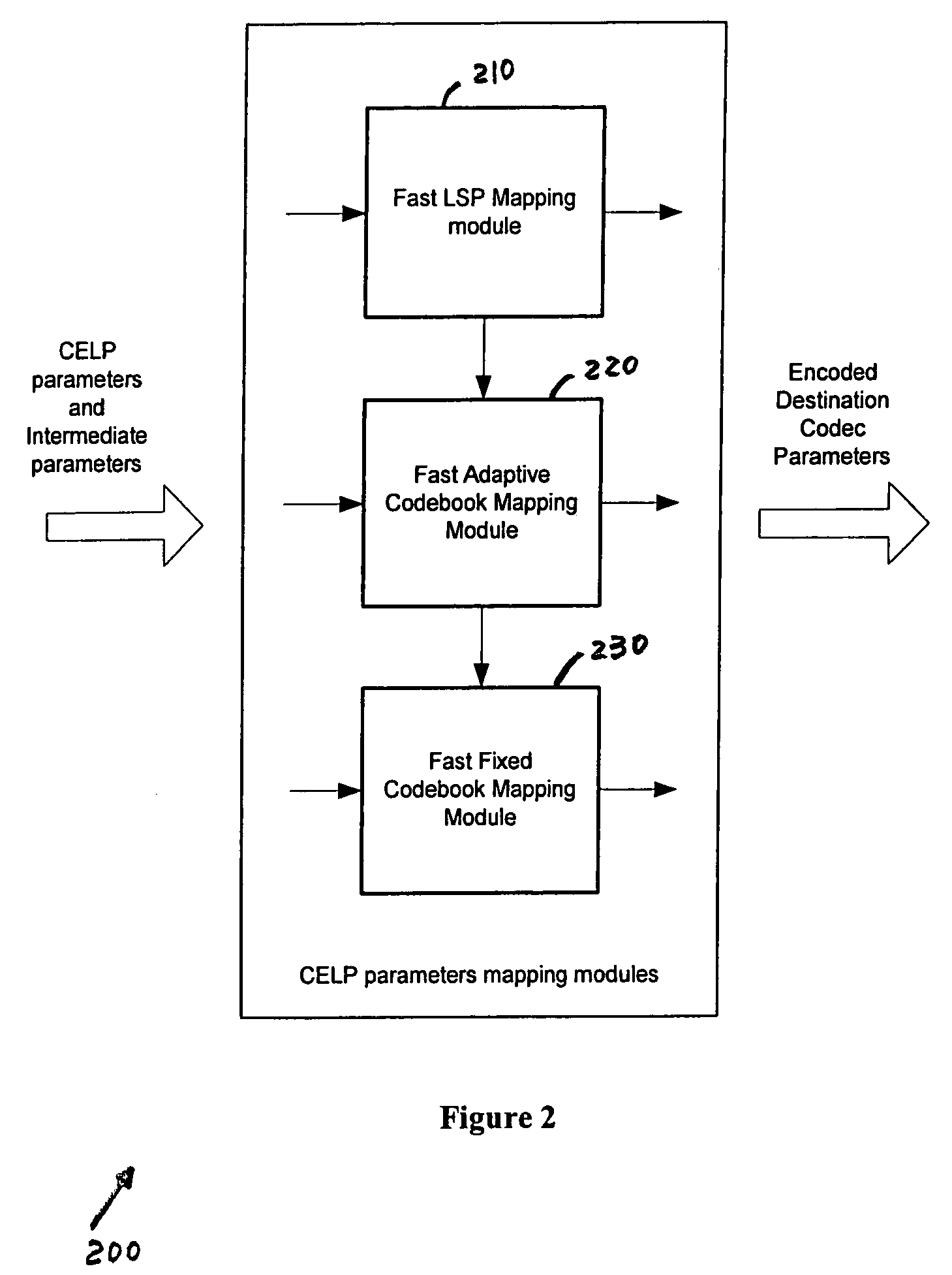

Method and apparatus for fast CELP parameter mapping

Inactive | US7363218B2 | Reduce the amount required | Reduce complexity | Speech analysis | Code conversion | Computer architecture | Computer module

An apparatus and method for mapping CELP parameters between a source codec and a destination codec. The apparatus includes an LSP mapping module, an adaptive codebook mapping module coupled to the LSP mapping module, and a fixed codebook mapping module coupled to the LSP mapping module and the adaptive codebook mapping module. The LSP mapping module includes an LP overflow module and an LSP parameter modification module. The adaptive codebook mapping module includes a first pitch gain codebook. The fixed codebook mapping module includes a first target processing module, a pulse search module, a fixed codebook gain estimation module, and a pulse position searching module.

Owner:ONMOBILE GLOBAL LTD

Message translation and parsing of data structures in a distributed component architecture

Inactive | US7526769B2 | Reduce the amount of memory | Adapt the behavior of the message structure | Interprogram communication | Multiple digital computer combinations | User needs | Computerized system

Owner:ORACLE INT CORP

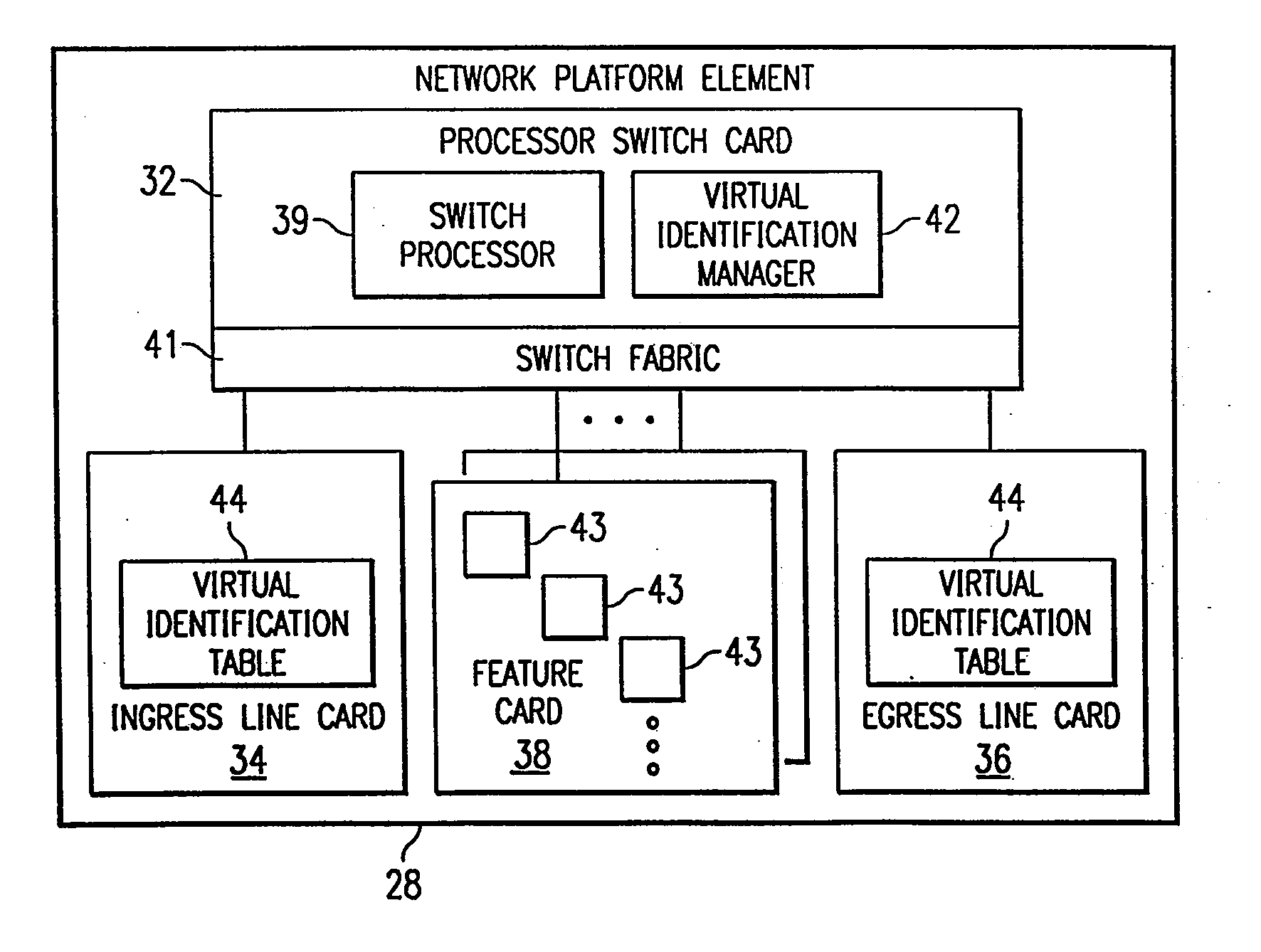

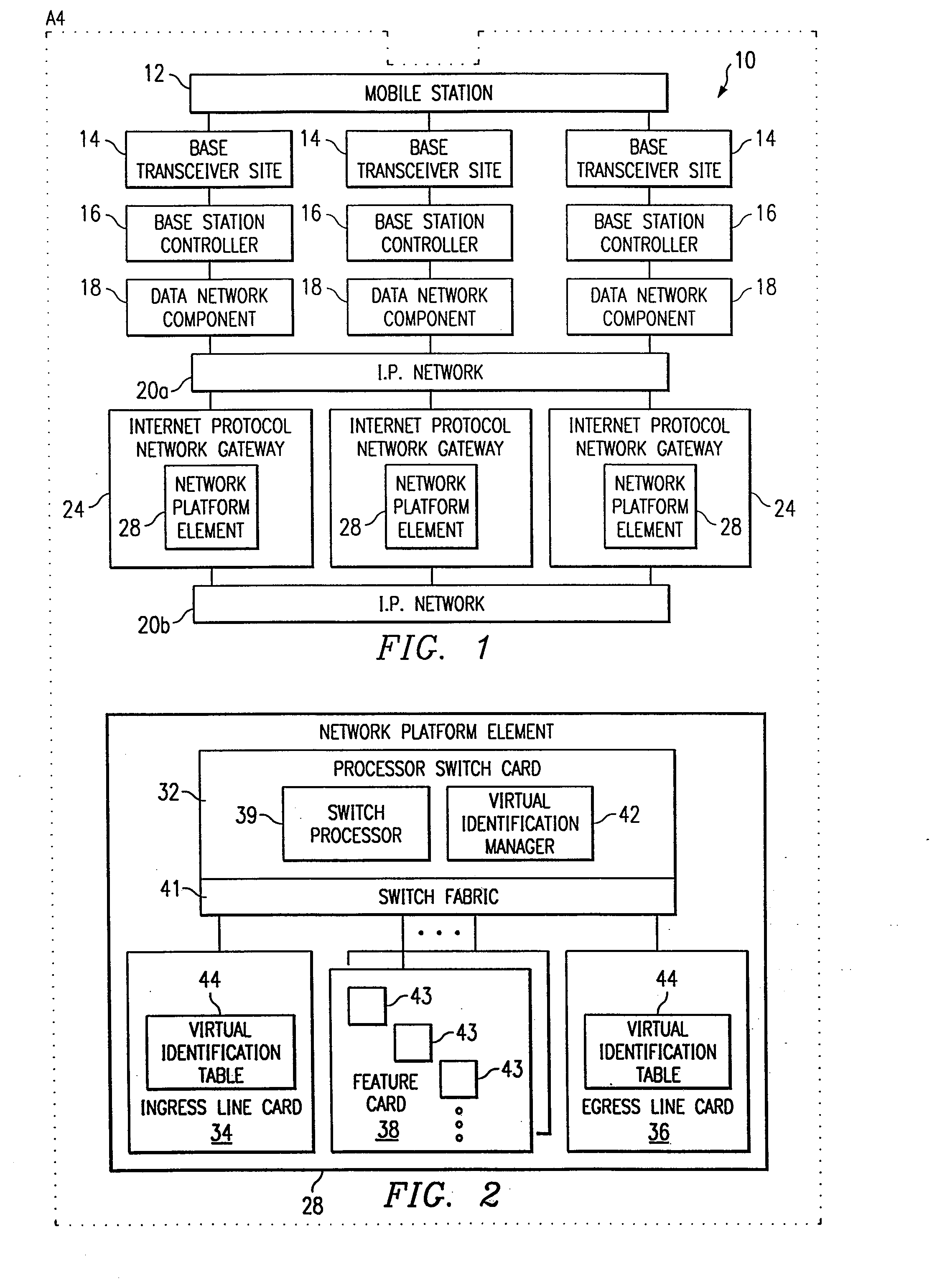

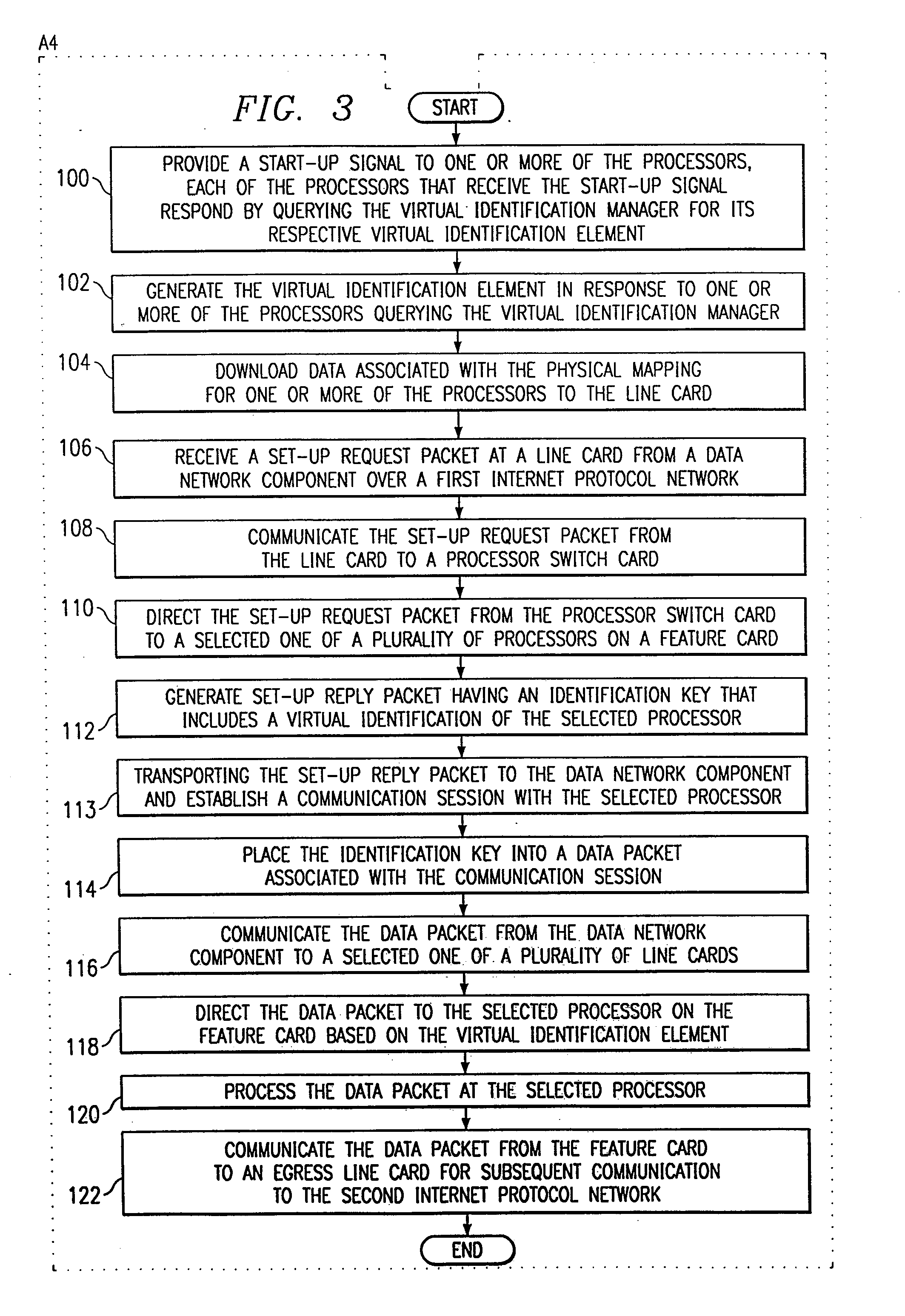

System and Method for Processing Packets in a Multi-Processor Environment

Inactive | US20070136489A1 | Extended route | Eliminate and greatly reduce disadvantage and problem | Time-division multiplex | Data switching by path configuration | Data pack | Multi processor

A method for processing packets in a multi-processor environment, that includes receiving a set-up request packet for a communication session and directing the set-up request packet to a selected one of a plurality of processors. A set-up reply packet is generated at the selected one of the plurality of processors, the set-up reply packet including a virtual identifier assigned to the selected one of the plurality of processors. The set-up reply packet is transported to establish the communication session.

Owner:CISCO TECH INC

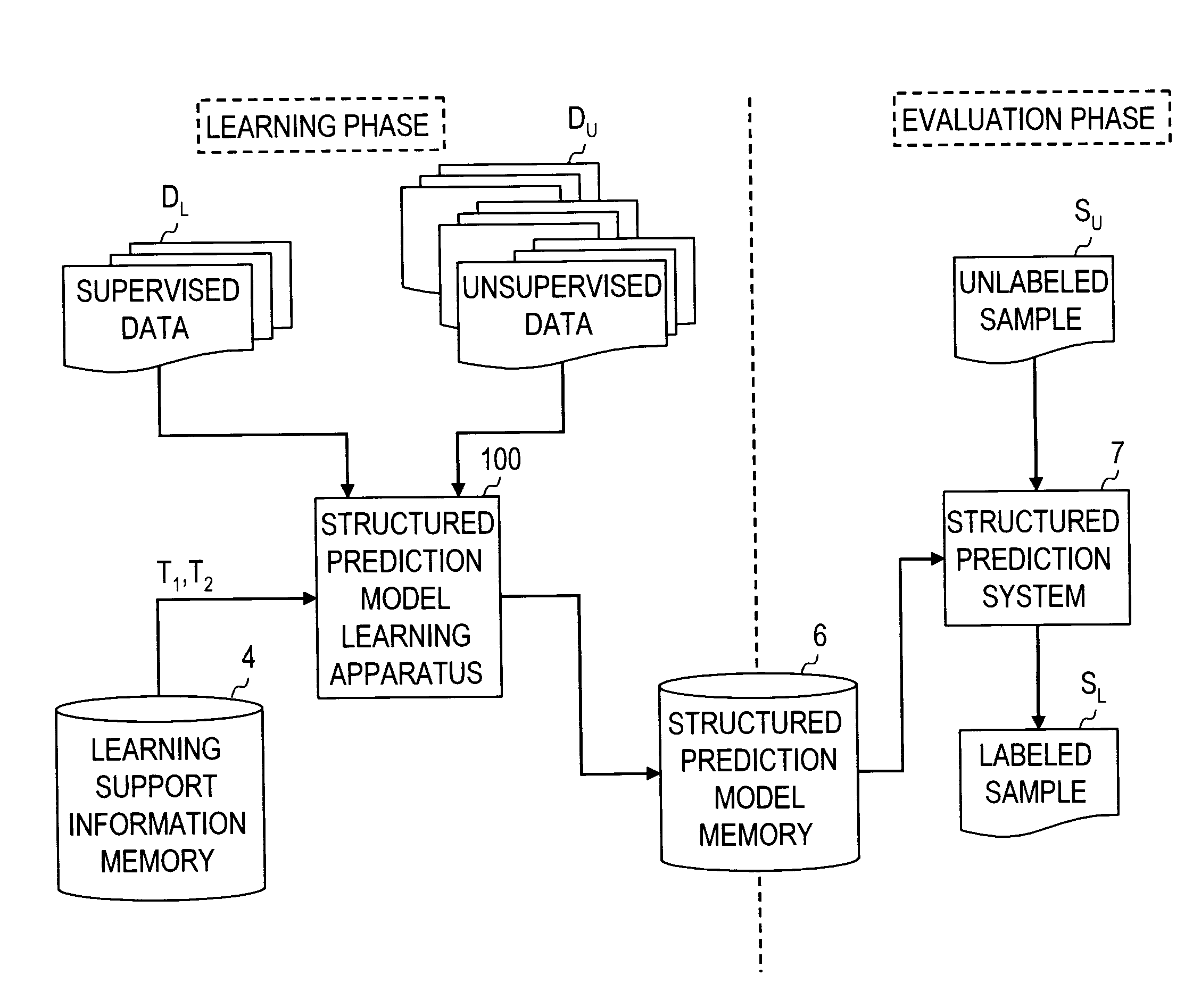

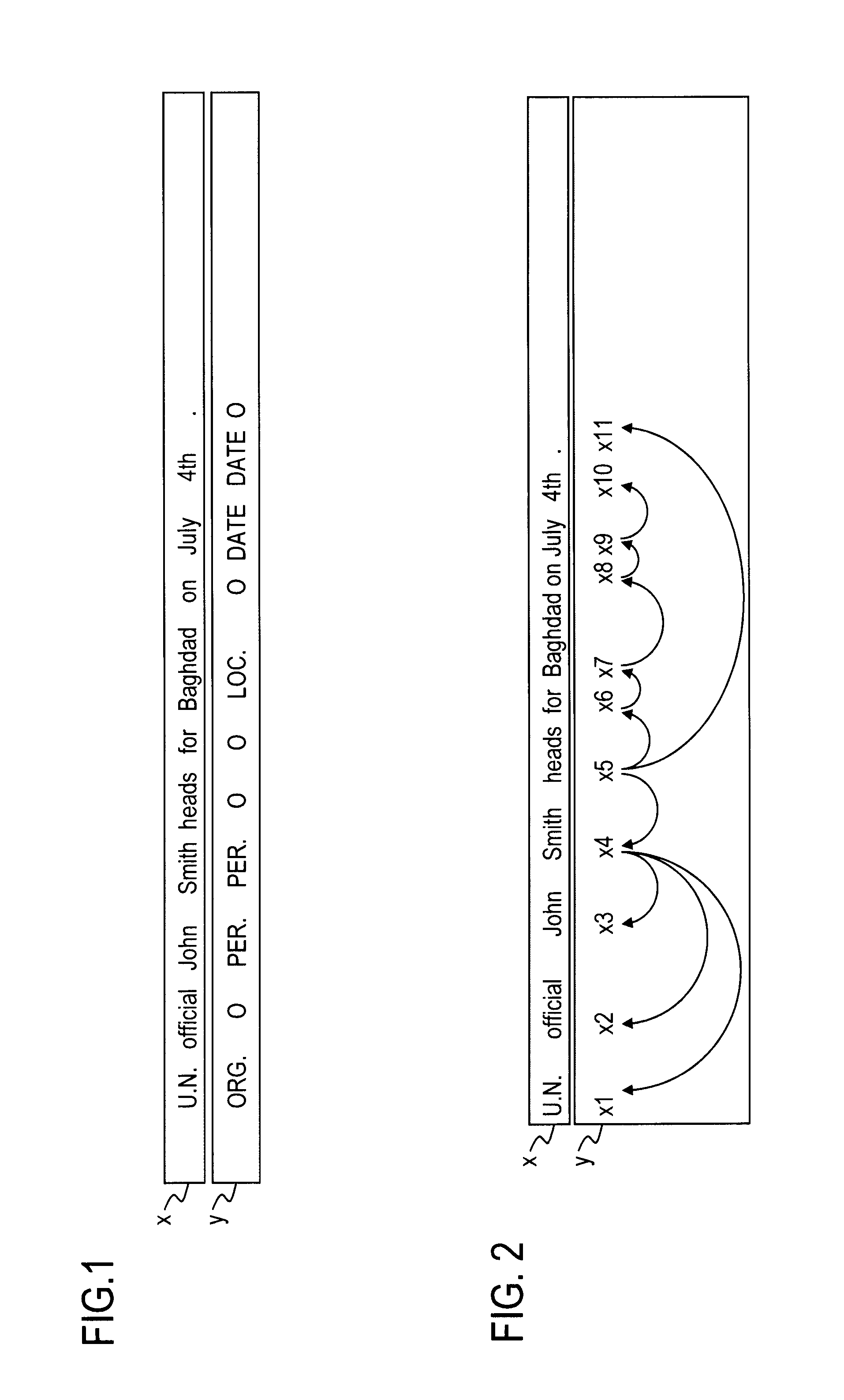

Structured prediction model learning apparatus, method, program, and recording medium

Active | US20120084235A1 | Reduce in quantity | Predictive performance | Digital computer details | Machine learning | Algorithm | Model parameters

A structured prediction model learning apparatus, method, program, and recording medium maintain prediction performance with a smaller amount of memory. An auxiliary model is introduced by defining the auxiliary model parameter set θ(k) with a log-linear model. A set Θ of auxiliary model parameter sets which minimizes the Bregman divergence between the auxiliary model and a reference function indicating the degree of pseudo accuracy is estimated by using unsupervised data. A base-model parameter set λ which minimizes an empirical risk function defined beforehand is estimated by using supervised data and the set Θ of auxiliary model parameter sets.

Owner:NIPPON TELEGRAPH & TELEPHONE CORP +1

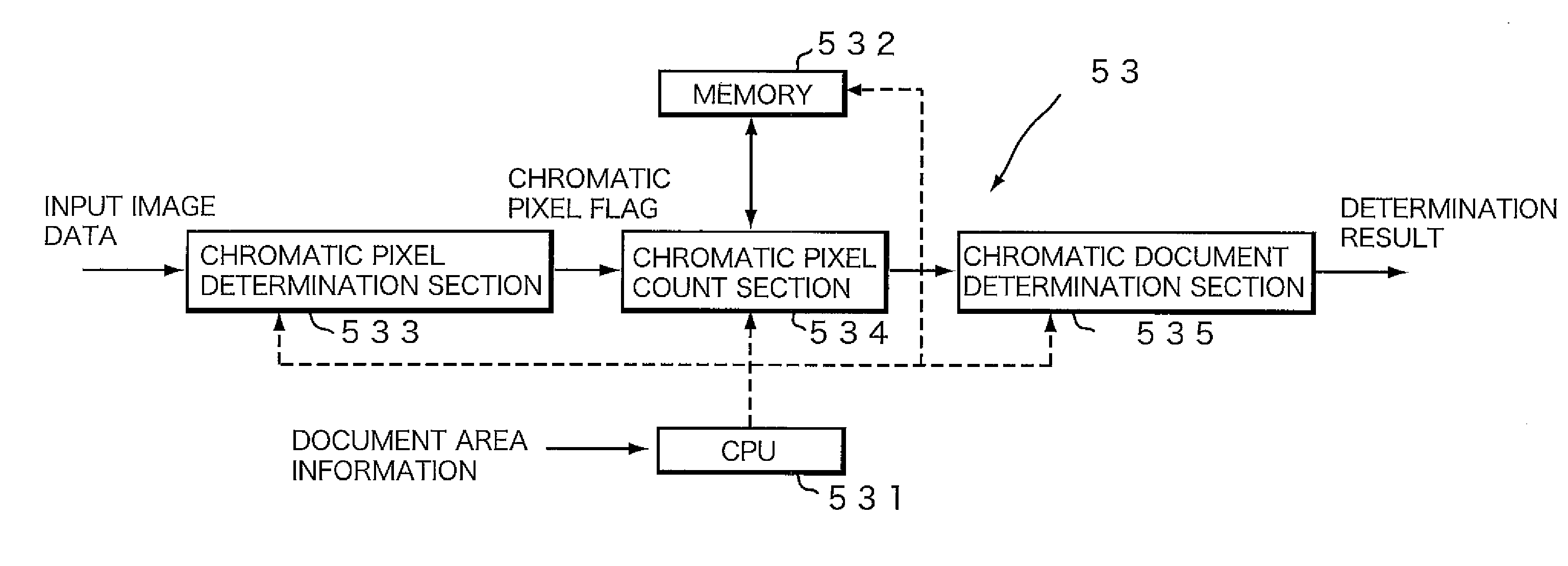

Image processing method, image processing apparatus, image forming apparatus, and recording medium

Inactive | US20080239354A1 | Reduce the amount of memory | Improve efficiency | Digitally marking record carriers | Digital computer details | Imaging processing | Computer graphics (images)

An available image area is recognized from information, including a read start position and a read end position of a document, a scaling for performing image processing, a processing object position, or the like. Subsequently, the number of chromatic pixels of one line is counted to output it to a memory, and when it is summed up to an end line of the available image area, a difference between a count number N1 in one previous line of the available image area and a count number N2 in the end line of the available image area is calculated to thereby compare the difference with a predetermined threshold value. When the difference is larger than the threshold, it is determined to be a chromatic image, whereas when the difference is smaller than the threshold, it is determined to be an achromatic image.

Owner:SHARP KK

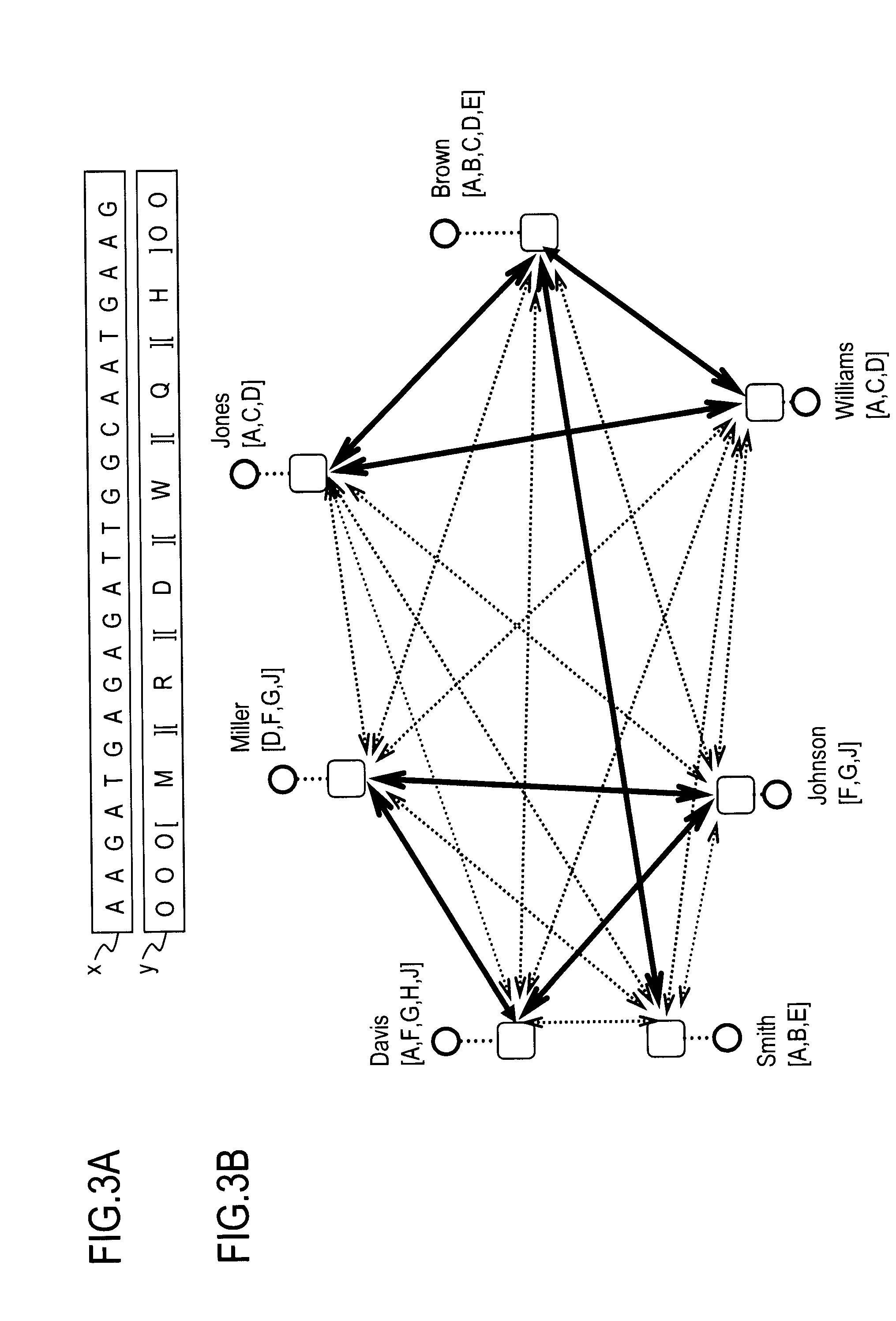

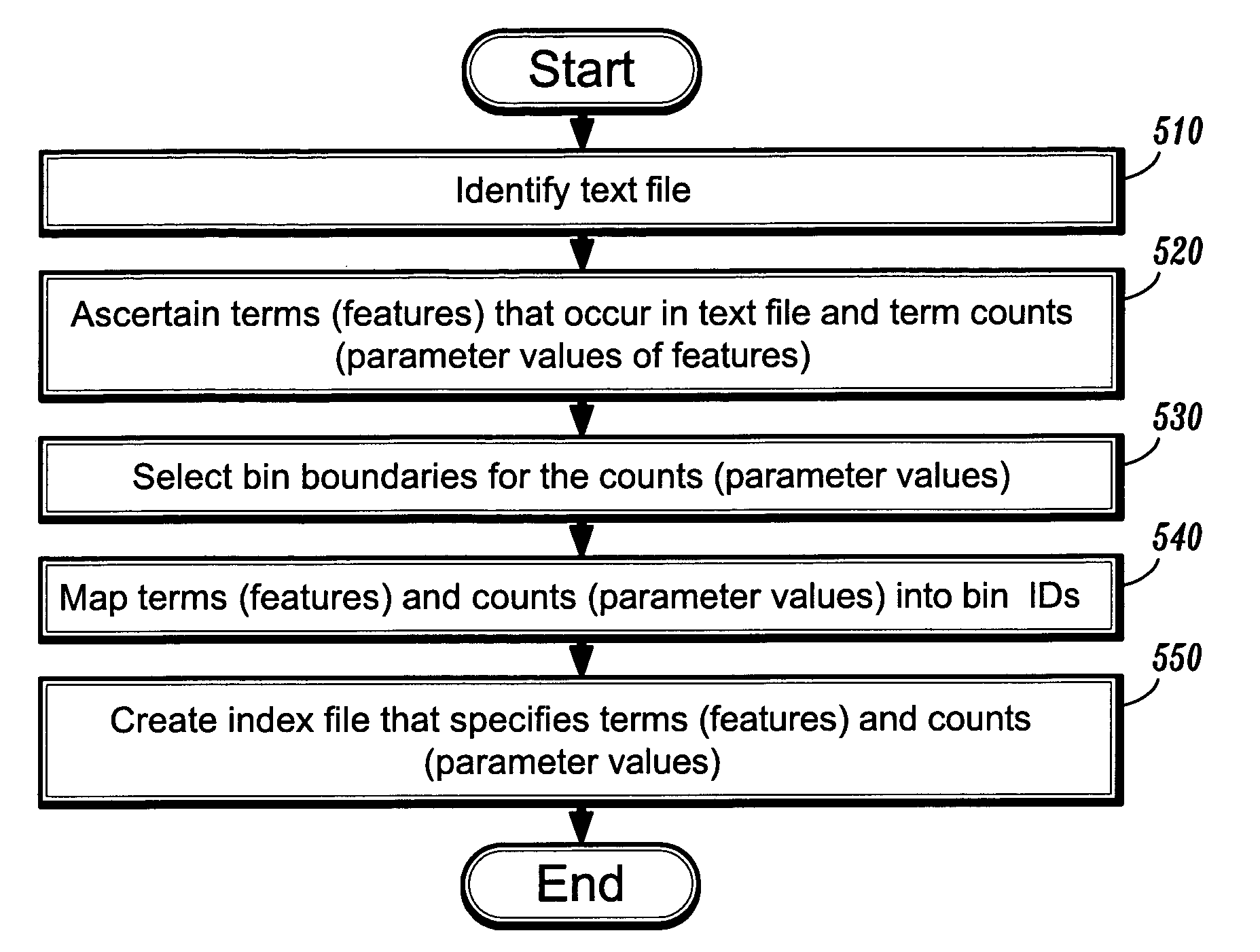

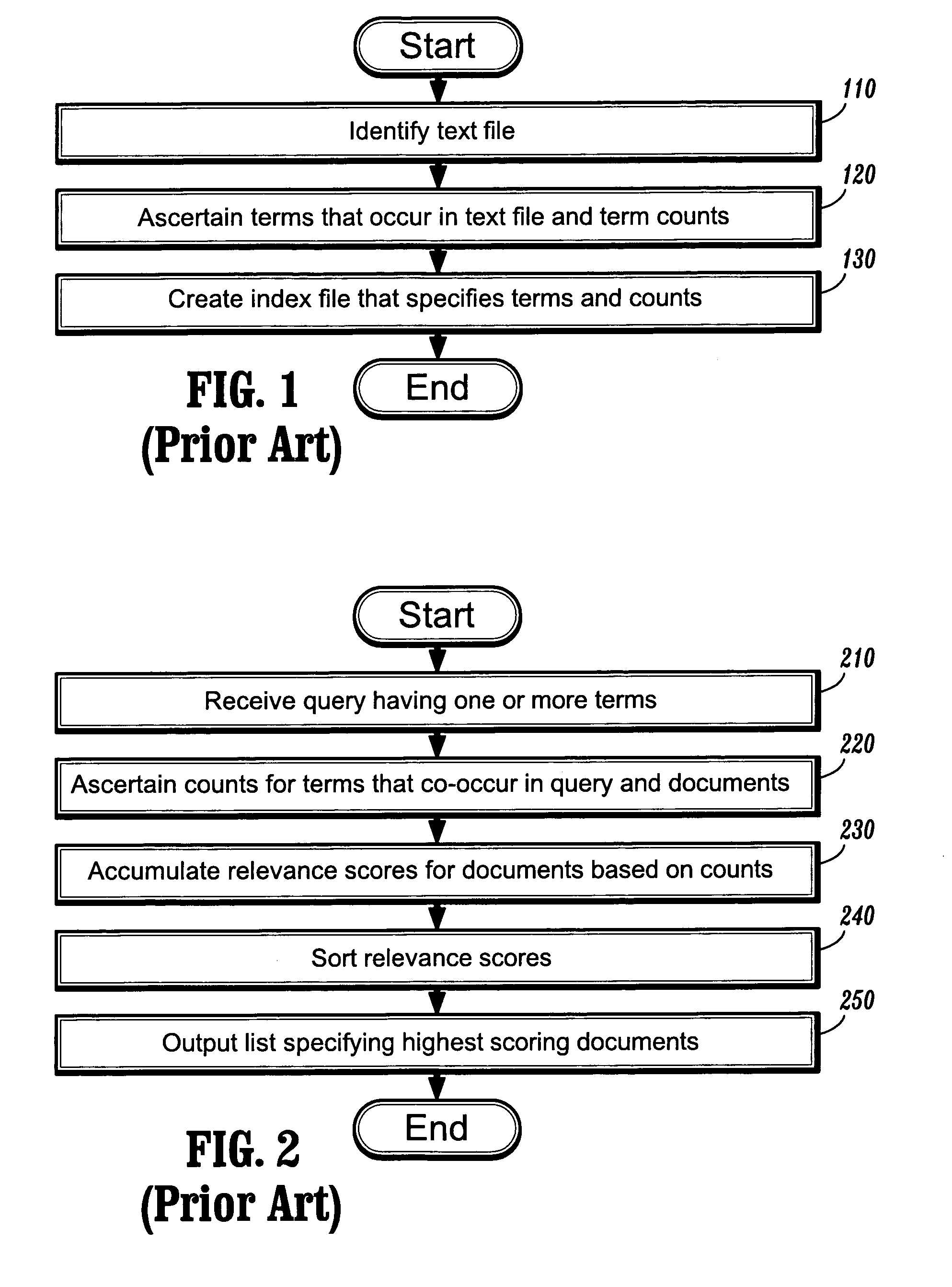

Compressing index files in information retrieval

Active | US7028045B2 | Reduced space requirement | Reduce spacing | Data processing applications | Digital data information retrieval | Data mining | Indexed file

There is provided a method for compressing an index file in an information retrieval system that retrieves information from a plurality of documents. Each of the plurality of documents has features occurring therein. Each of the features has parameters corresponding thereto. Parameter values corresponding to the parameters of the features are mapped into a plurality of bins. Bin identifiers are stored in the index file. Each of the bin identifiers identifies a bin to which is assigned at least one individual parameter value corresponding to at least one individual parameter.

Owner:TWITTER INC
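
The idea of storing bin identifiers instead of raw parameter values can be sketched in Python as below. The bin edges, the choice of parameter (a per-document occurrence count), and the function names are assumptions for illustration; the patent does not prescribe them.

```python
import bisect

# Assumed bin edges: bin 0 holds values below 2, bin 1 holds 2..3,
# bin 2 holds 4..7, bin 3 holds 8..15, bin 4 holds 16 and above.
EDGES = [2, 4, 8, 16]


def bin_id(value, edges=EDGES):
    """Map a raw parameter value to the identifier of the bin containing it."""
    return bisect.bisect_right(edges, value)


def bits_per_bin_id(edges=EDGES):
    """Bits needed to store any bin identifier (the largest id is len(edges))."""
    return max(1, len(edges).bit_length())


# Hypothetical feature parameter: occurrence counts of one term in seven documents.
raw_counts = [1, 2, 3, 4, 7, 8, 100]
index_entries = [bin_id(c) for c in raw_counts]
```

In this sketch each index entry needs 3 bits rather than a full integer, at the cost of no longer distinguishing values that fall in the same bin.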

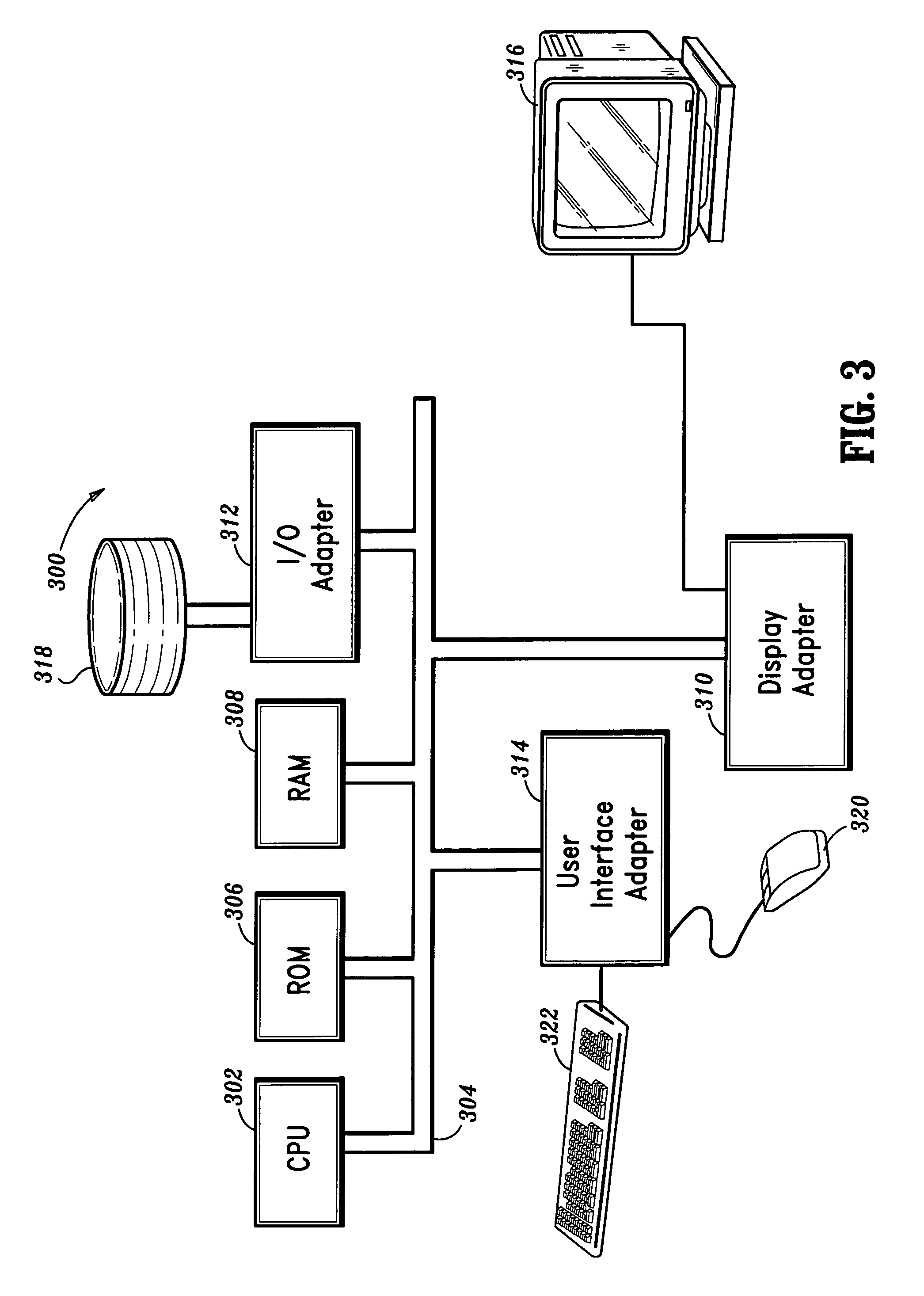

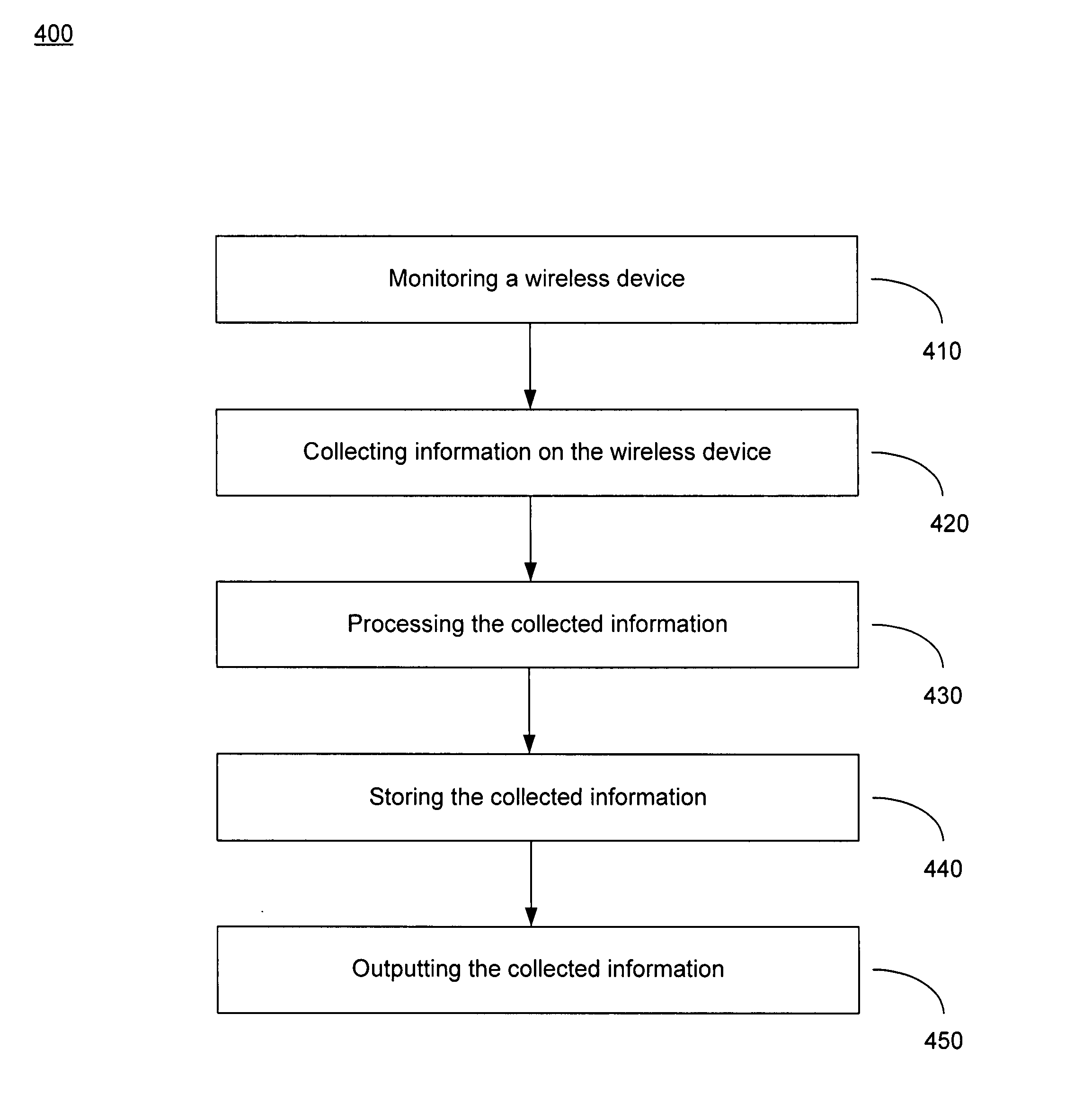

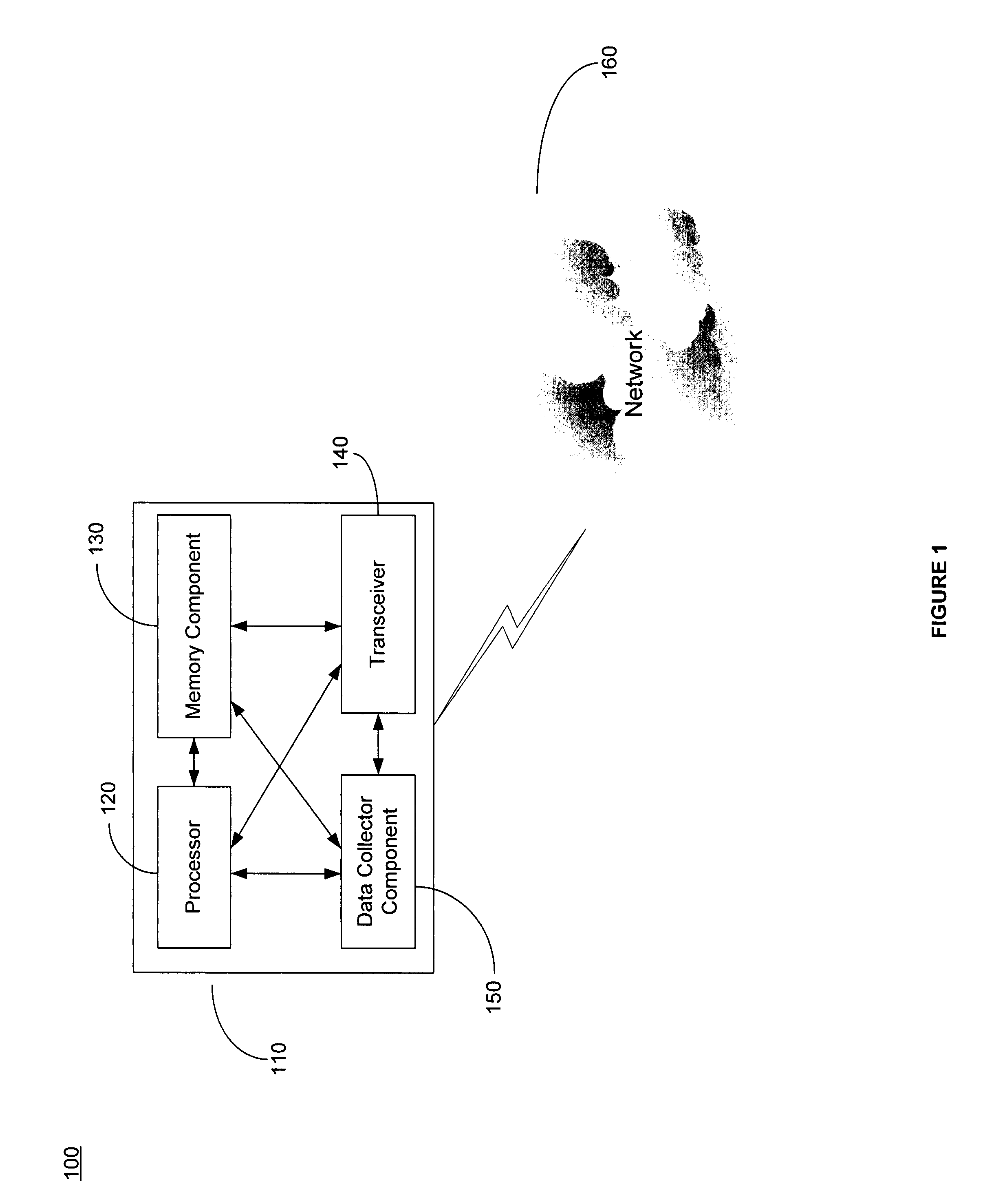

Method and system for collecting wireless information transparently and non-intrusively

Active | US8014726B1 | Minimizing slowdown | Risk minimization | Error prevention | Frequency-division multiplex details | Transceiver | Operational system

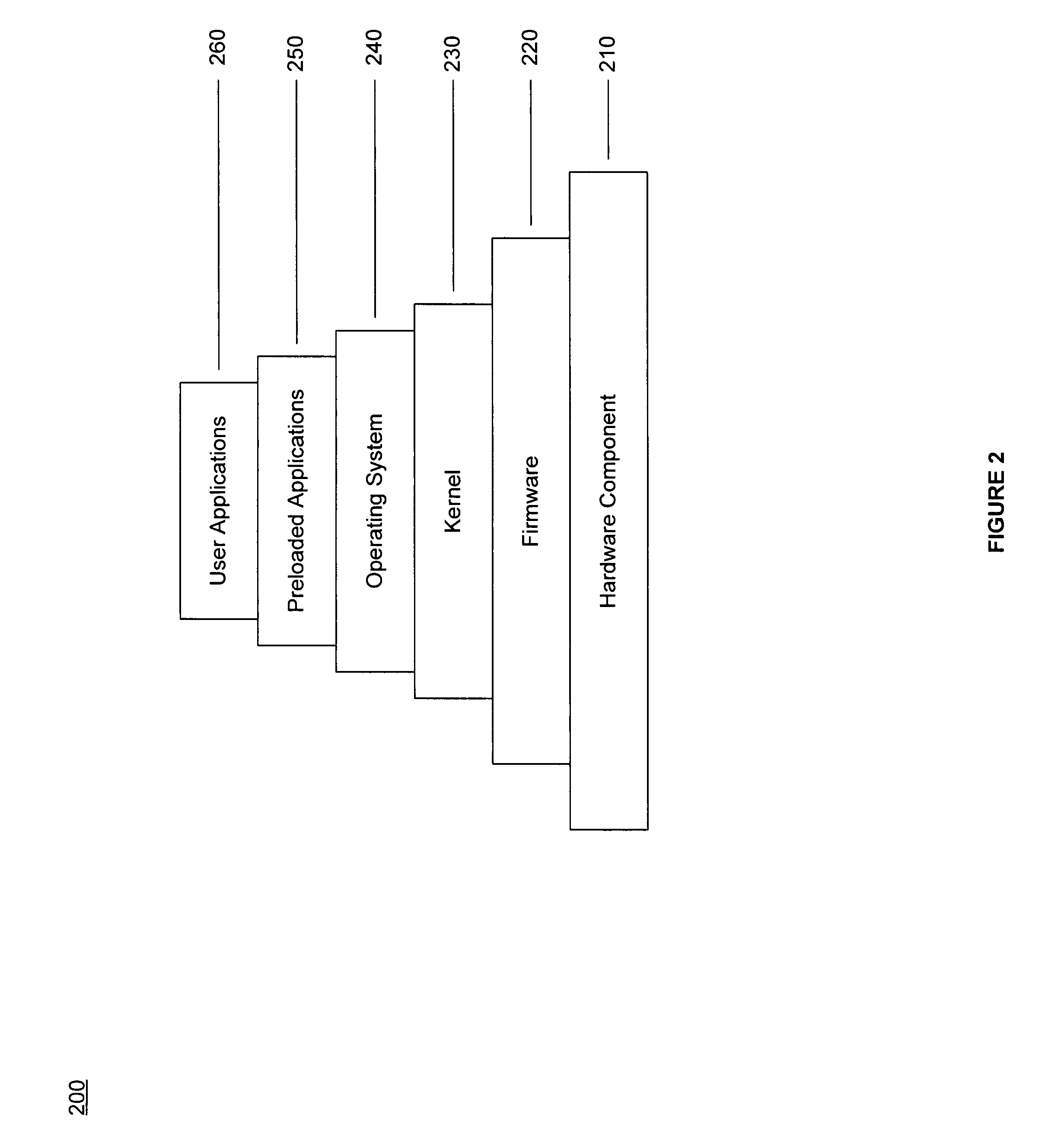

A method of collecting information on a wireless device. A wireless device may comprise a processor, a transceiver, a memory and a data collecting component. The data collecting component is operable to monitor the wireless device and collect information (e.g., wireless network information, wireless device information and wireless device usage information). In one embodiment, at least a portion of the data collecting component is installed within and/or below the operating system. For example, at least a portion of the data collecting component may be embedded within the operating system, the kernel, the firmware and/or on top of the firmware, the kernel or any combination thereof. In one embodiment, the collected information may be processed, stored and transmitted. Accordingly, the data collecting component operates in a non-intrusive, transparent manner by utilizing less memory space and produces fewer errors without causing a system slowdown while maintaining a high level of privacy and security.

Owner:THE NIELSEN CO (US) LLC

Inter-shader attribute buffer optimization

Active | US20110080415A1 | Reduce amount of memory | Reduce the amount of memory | Memory addressing/allocation/relocation | Animation | Shader | Computational science

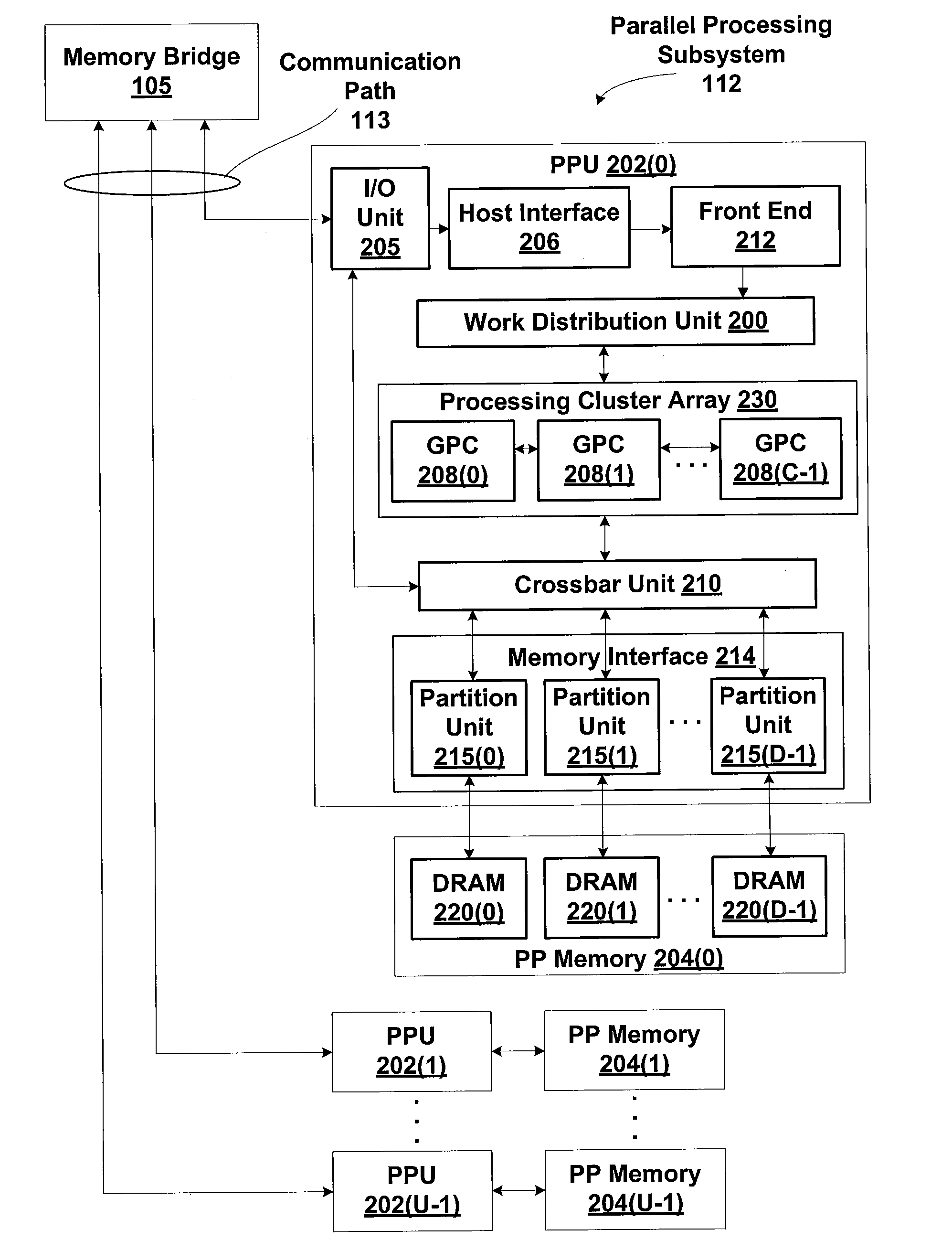

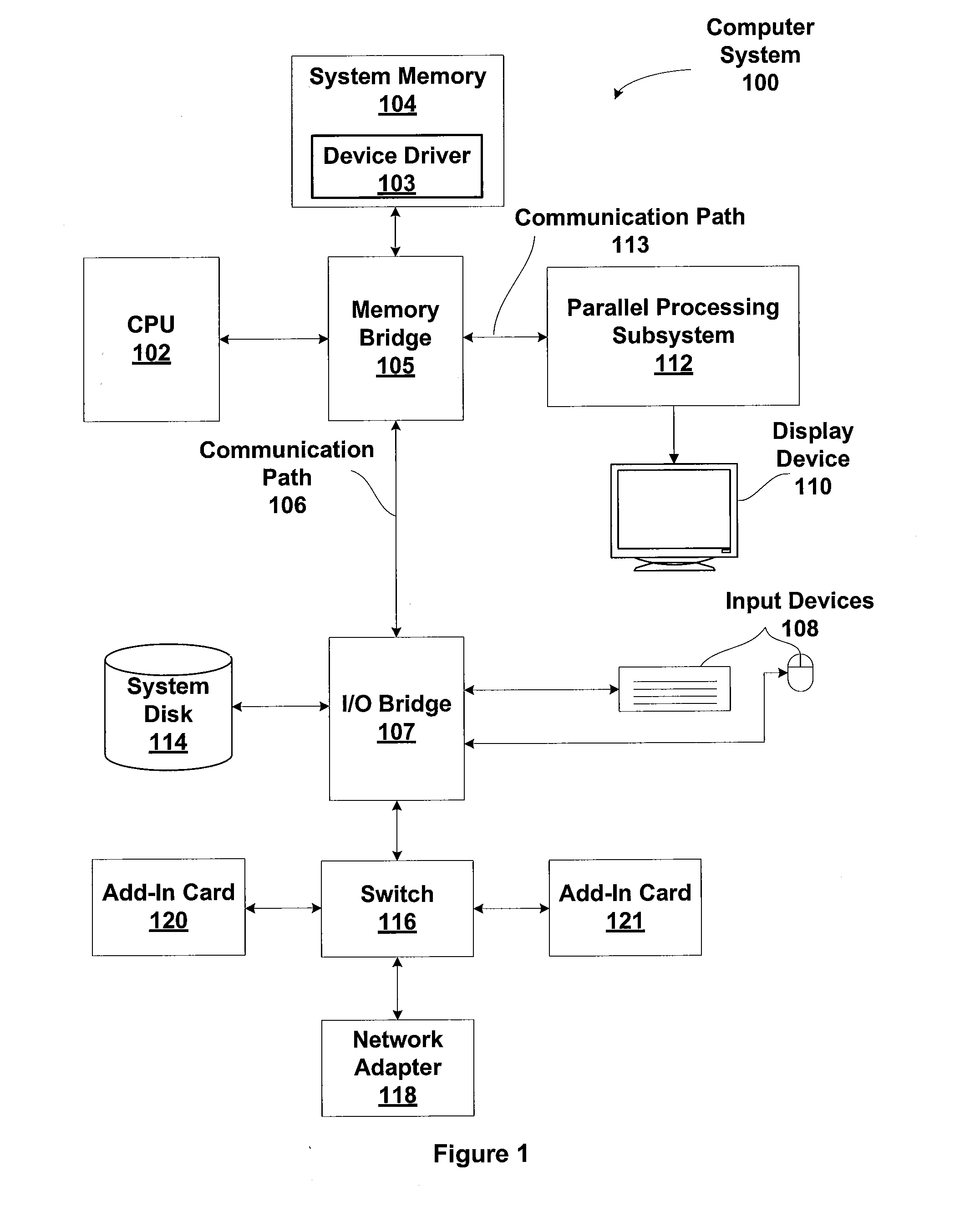

One embodiment of the present invention sets forth a technique for reducing the amount of memory required to store vertex data processed within a processing pipeline that includes a plurality of shading engines. The method includes determining a first active shading engine and a second active shading engine included within the processing pipeline, wherein the second active shading engine receives vertex data output by the first active shading engine. An output map is received and indicates one or more attributes that are included in the vertex data and output by the first active shading engine. An input map is received and indicates one or more attributes that are included in the vertex data and received by the second active shading engine from the first active shading engine. Then, a buffer map is generated based on the input map, the output map, and a pre-defined set of rules that includes rule data associated with both the first shading engine and the second shading engine, wherein the buffer map indicates one or more attributes that are included in the vertex data and stored in a memory that is accessible by both the first active shading engine and the second active shading engine.

Owner:NVIDIA CORP
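
One plausible reading of the buffer-map step is sketched below in Python: keep only the attributes that the first stage writes and the second stage reads, plus any attribute a rule forces into the buffer. The intersection logic, the `always_keep` rule set, and the attribute names are assumptions for illustration; the patent's actual rule set is not given in the abstract.

```python
def build_buffer_map(output_map, input_map, always_keep=frozenset()):
    """Return the attributes to store between two shading stages.

    output_map: attributes written by the first active shading engine.
    input_map: attributes read by the second active shading engine.
    always_keep: attributes that a (hypothetical) rule forces into the buffer.
    """
    consumed = set(output_map) & set(input_map)
    forced = set(always_keep) & set(output_map)
    keep = consumed | forced
    # Preserve the producer's attribute order for a stable buffer layout.
    return [attr for attr in output_map if attr in keep]


vertex_outputs = ["position", "normal", "color", "uv"]
geometry_inputs = ["position", "uv"]
buffer_map = build_buffer_map(vertex_outputs, geometry_inputs, always_keep={"color"})
```

Here `normal` is never read downstream and no rule requires it, so it is left out of the shared buffer, which is the kind of saving the abstract describes.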

Navigation apparatus and computer program

Inactive | US20090171570A1 | Easy to understand | Minimize | Instruments for road network navigation | Road vehicles traffic control | Liquid-crystal display | Computer science

When a map image in the periphery of a vehicle position is displayed on a liquid crystal display in 3D, POIs existing in a first searching region within a first searching distance from a current position of the vehicle are searched in an area in the vicinity of the vehicle position by setting all types of points as search targets, POIs existing in a second searching region within a second searching distance from a second searching point are searched in an area distant from the vehicle position by setting only specific types of points as search targets, and icon symbols indicating types and positions of the points are displayed on the map image while being overlapped therewith based on the searched POIs.

Owner:AISIN AW CO LTD

Multi-camera system for implementing digital slow shutter video processing using shared video memory

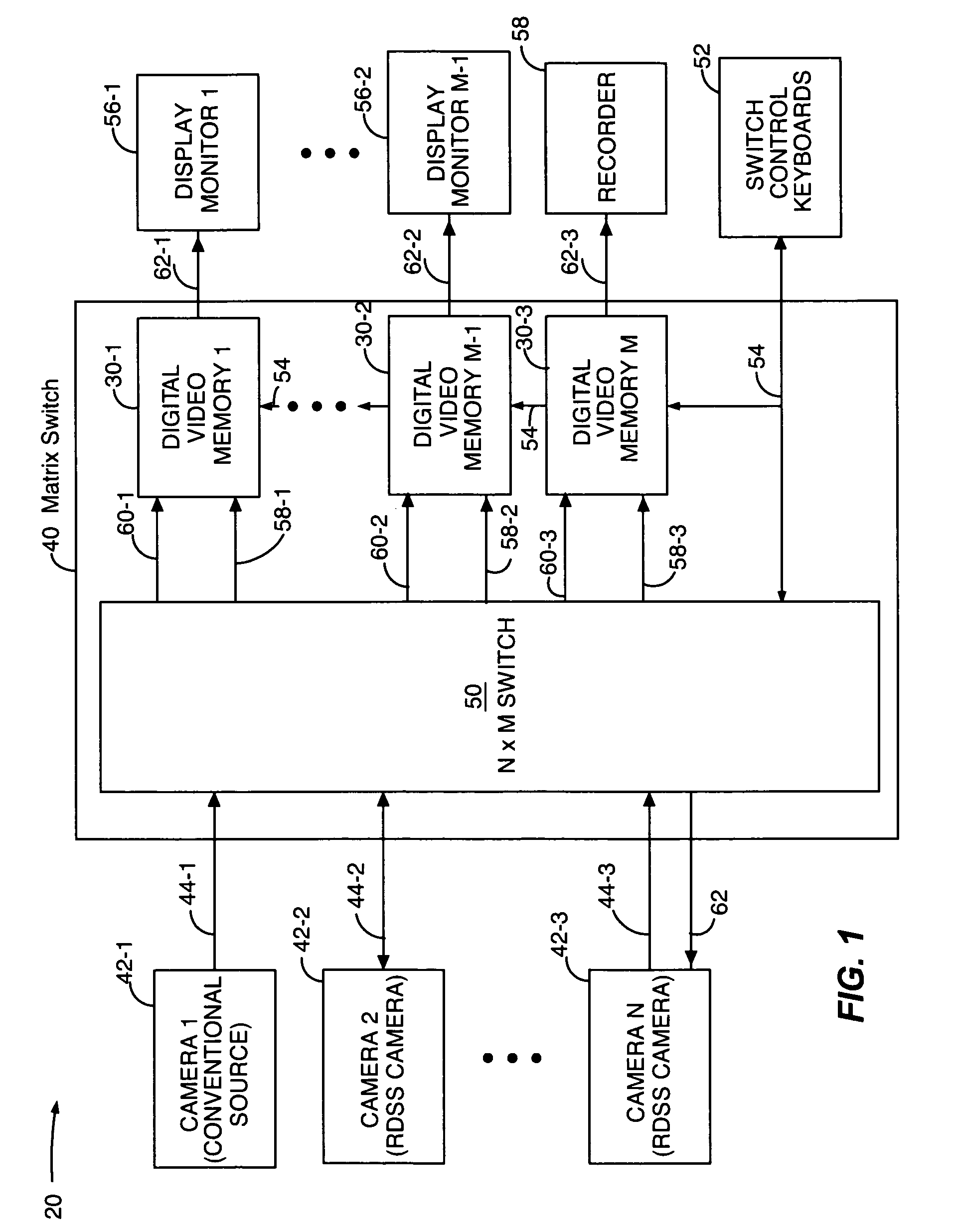

Inactive | US7064778B1 | Reduce the amount of memory | Low cost | Television system details | Color television details | Video memory | Video storage

A technique, specifically a method and apparatus that implements the method, that allows a memory to be located remotely from a video source. Specifically, the method provides a write control signal between a video source and a remote memory that allows the remote memory to provide a video image during slow-shutter operation of the camera.

Owner:PELCO INC

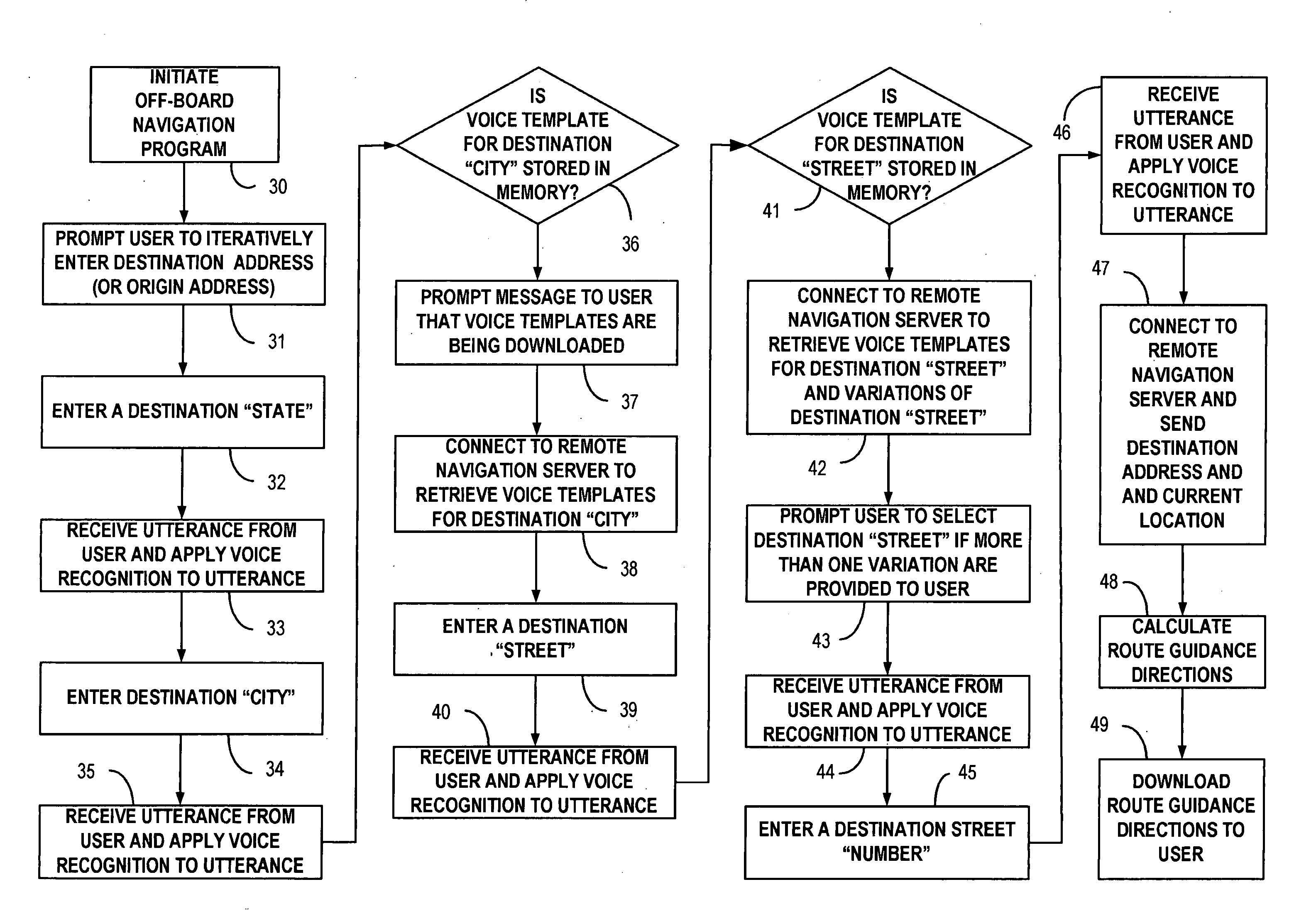

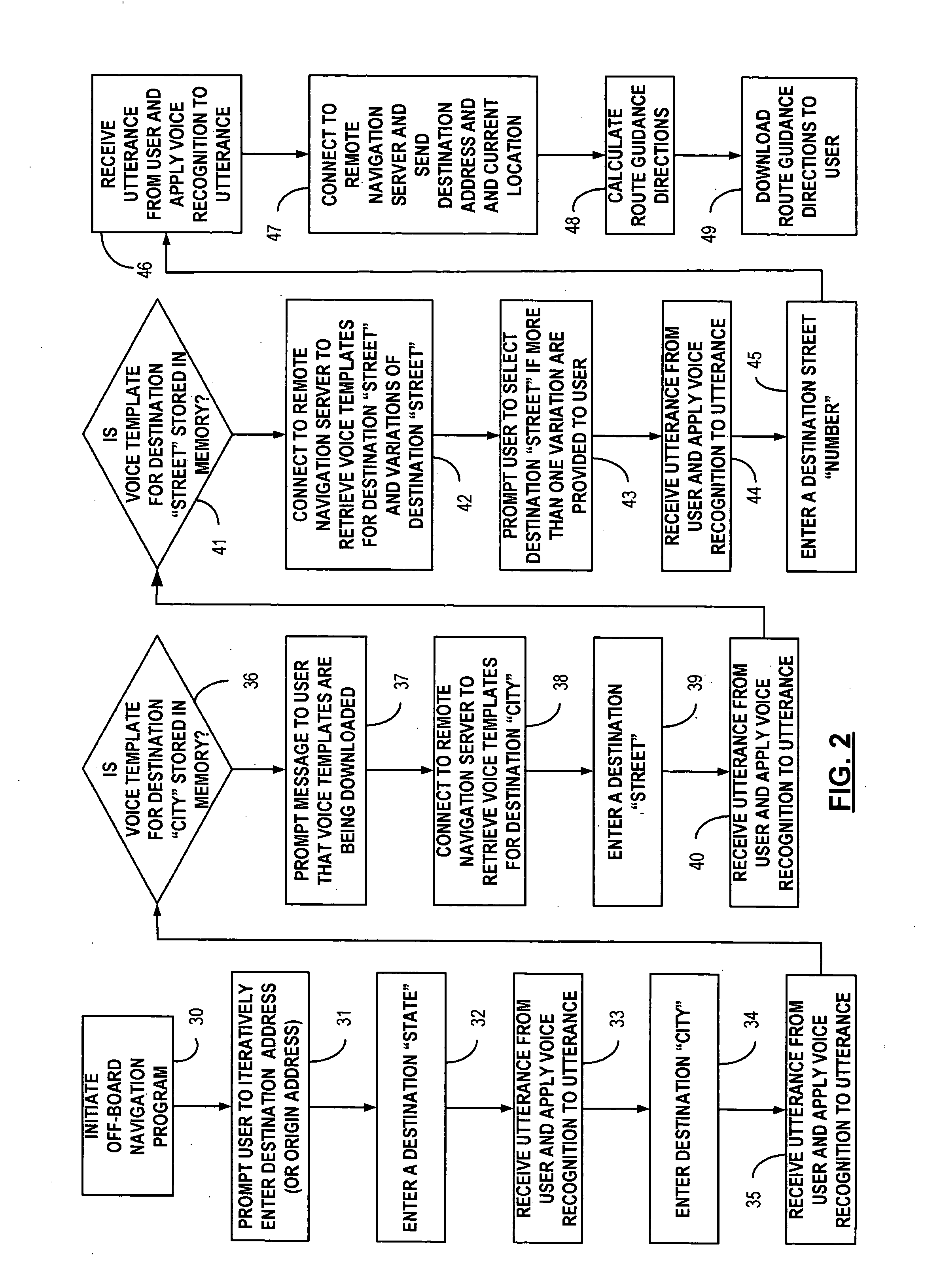

Remote navigation server interface

Inactive | US20060129311A1 | Reduce the amount of memory | Instruments for road network navigation | Road vehicles traffic control | In vehicle | Speech sound

A method is provided for generating a destination address in response to a plurality of oral inputs by a user. The user is prompted to orally input a primary geographical descriptor of the destination address to the in-vehicle navigation module. Primary geographical utterance data is generated in response to an oral input by the user. The primary matching voice templates stored in the in-vehicle navigation module are compared with the primary geographical utterance data. The secondary voice templates associated with the secondary geographical descriptors within the primary geographical descriptors are retrieved from the remote navigation server. The secondary geographical utterance data is generated in response to an oral input by the user. The stored secondary voice templates are compared with the secondary geographical utterance data. The destination address is generated in response to matching voice templates. The destination address is provided to a remote navigation server for calculating route guidance directions.

Owner:LEAR CORP
